An image coloring method based on a self-attention generative adversarial network

An image coloring technique based on self-attention, applicable to biological neural network models, image analysis, image data processing, and related fields. It addresses problems such as color blur and color overflow, and achieves a good coloring result.

Embodiment Construction

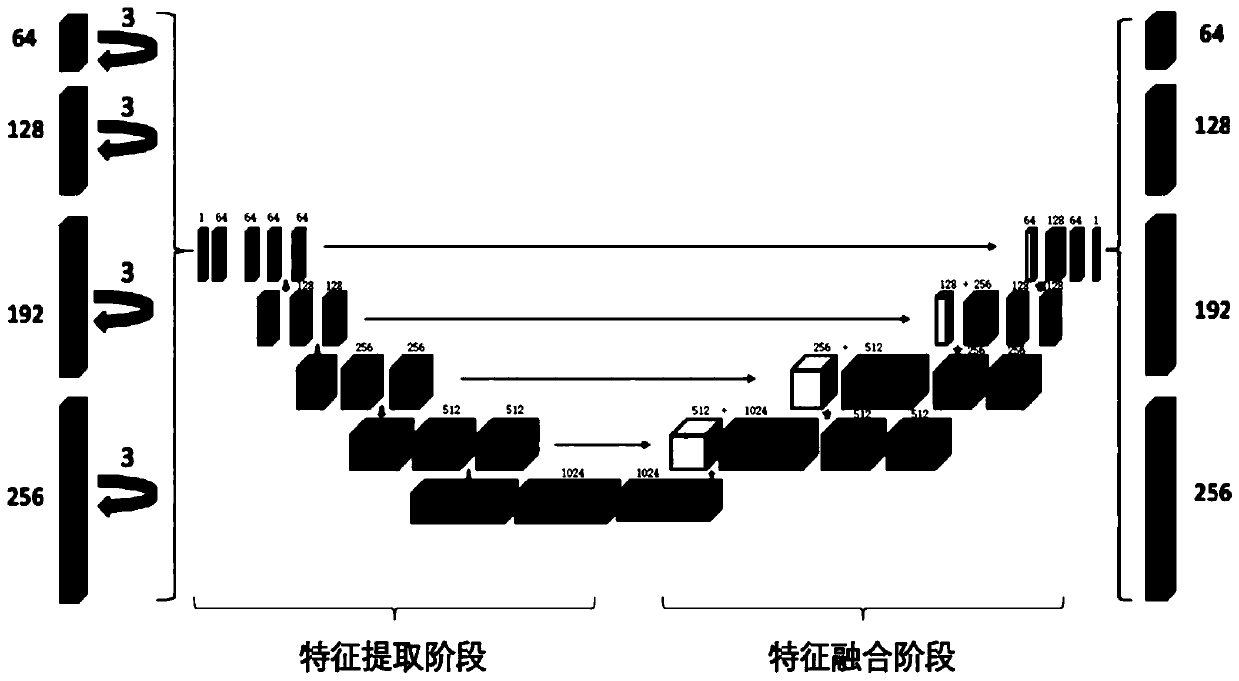

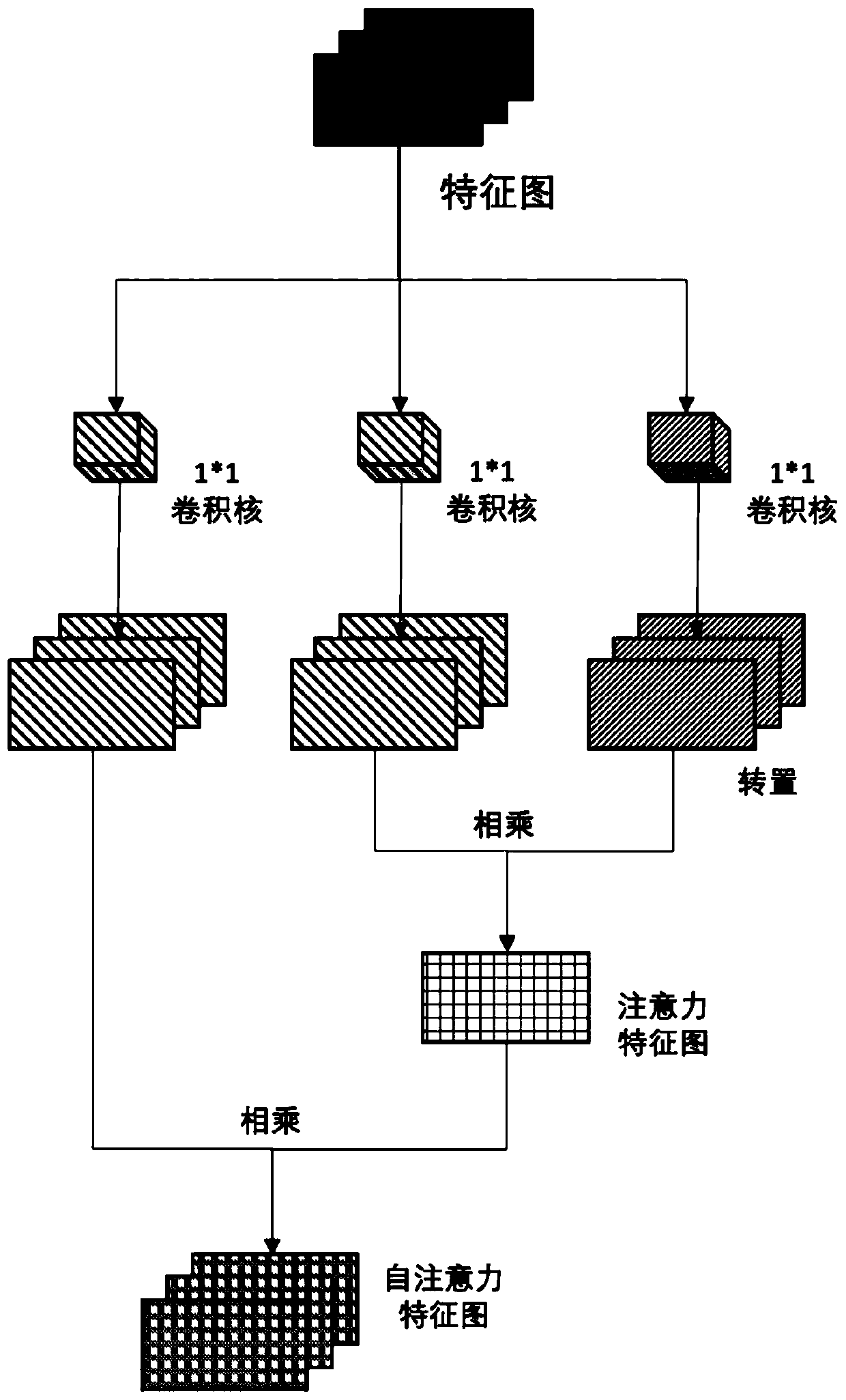

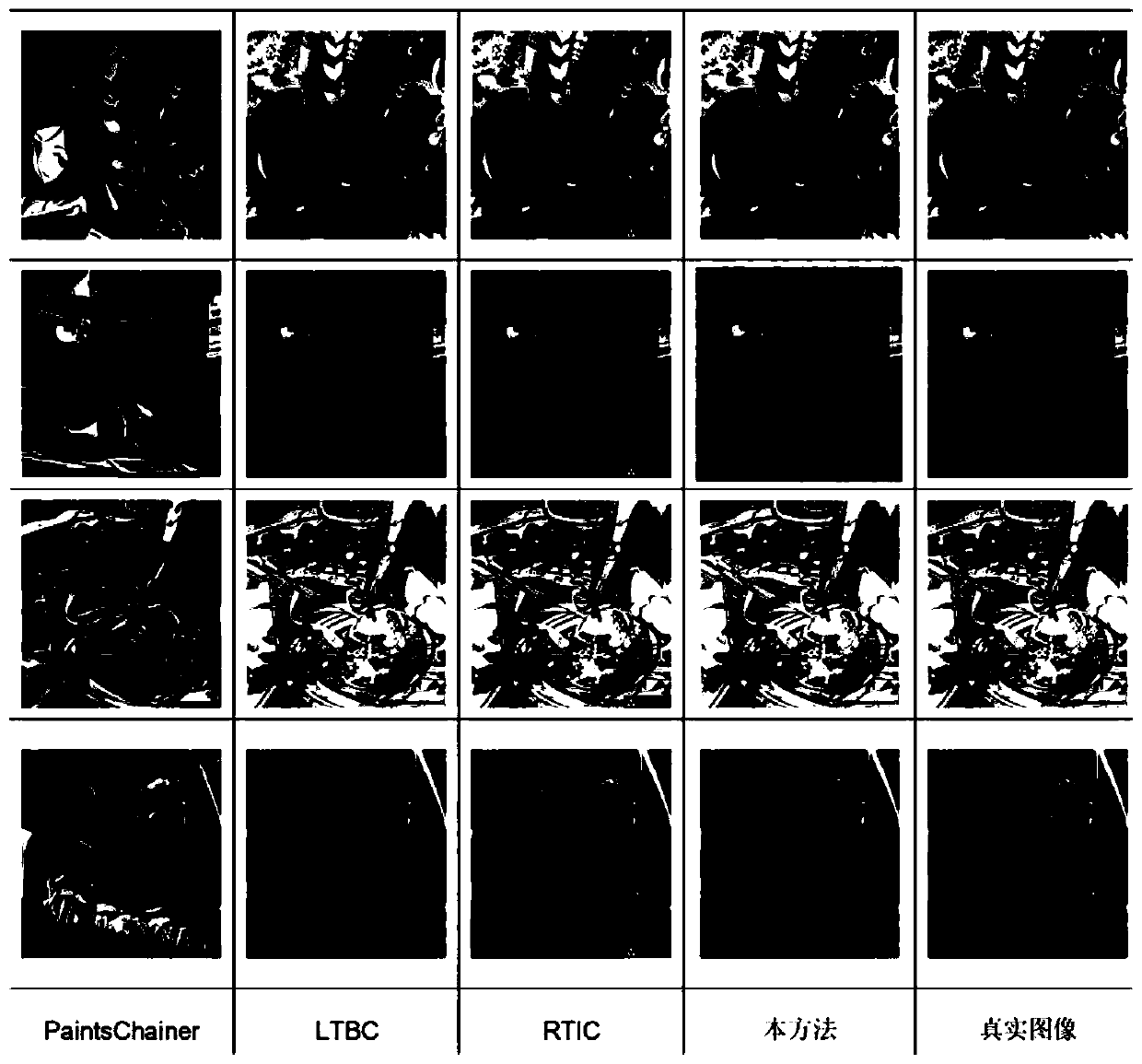

[0044] As shown in any one of Figures 1-5, the present invention discloses an image coloring method based on a self-attention generative adversarial network, which includes the following steps:

[0045] Step 1: To train the grayscale image coloring model, select the Konachan high-definition animation image data set and randomly crop the original 2K- or 4K-resolution images to obtain color original images. Then rotate and mirror each color original image, and obtain the corresponding grayscale image through an RGB-to-grayscale conversion. Next, cut the grayscale image I C and the color original image I C into sub-images of 1×512×512 and 3×512×512 respectively, and normalize them so that the pixel values are mapped to the interval [-1, 1], which yields the training data set.
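The preprocessing in Step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the function names (`random_crop`, `augment`, `to_gray`, `normalize`, `make_pair`) are hypothetical, the ITU-R BT.601 luma weights are assumed because the text only says "RGB-to-grayscale conversion", rotation is assumed to be by multiples of 90 degrees, and the two cropping stages are collapsed into a single 512×512 crop for brevity.

```python
import numpy as np

# Assumed grayscale weights (ITU-R BT.601); the source does not name a formula.
LUMA = np.array([0.299, 0.587, 0.114], dtype=np.float32)


def random_crop(img, size, rng):
    """Cut a random size x size patch from an H x W x 3 uint8 image."""
    h, w = img.shape[:2]
    top = int(rng.integers(0, h - size + 1))
    left = int(rng.integers(0, w - size + 1))
    return img[top:top + size, left:left + size]


def augment(img, rng):
    """Rotate by a random multiple of 90 degrees, then mirror half the time."""
    img = np.rot90(img, k=int(rng.integers(0, 4)))
    if rng.random() < 0.5:
        img = img[:, ::-1]  # horizontal mirror
    return img


def to_gray(img):
    """Weighted sum over the RGB channels, giving an H x W float array."""
    return img.astype(np.float32) @ LUMA


def normalize(x):
    """Map pixel values from [0, 255] to [-1, 1]."""
    return x / 127.5 - 1.0


def make_pair(img, size=512, rng=None):
    """Return (gray, color) arrays shaped 1 x size x size and 3 x size x size."""
    if rng is None:
        rng = np.random.default_rng()
    patch = augment(random_crop(img, size, rng), rng)
    gray = normalize(to_gray(patch))[None, ...]
    color = normalize(patch.astype(np.float32)).transpose(2, 0, 1)
    return gray.astype(np.float32), color.astype(np.float32)
```

Calling `make_pair` on an H×W×3 `uint8` array that is at least 512 pixels on each side returns one grayscale/color training pair in channel-first layout, with both arrays scaled to [-1, 1].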

[0046] Step 2: Expand the grayscale image in the training data set to three dimensions, which is consistent with the expected colo...
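One common way to give a single-channel grayscale array the same channel count as a color image is to repeat it along the channel axis. The sketch below assumes that approach; the source text is truncated before it states how the expansion is done, and `expand_gray` is a hypothetical helper name.

```python
import numpy as np


def expand_gray(gray):
    """Repeat a 1 x H x W grayscale array along the channel axis to 3 x H x W.

    Channel repetition is an assumption; the source does not specify the method.
    """
    if gray.ndim != 3 or gray.shape[0] != 1:
        raise ValueError("expected a 1 x H x W array")
    return np.repeat(gray, 3, axis=0)
```

With this choice, all three output channels carry identical values, so no color information is introduced before the generator sees the input.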
