317 results about "Two-vector" patented technology

A two-vector is a tensor of type (2,0). It is the dual of a two-form, meaning that it is a linear functional which maps two-forms to the real numbers (or, more generally, to scalars). The tensor product of a pair of vectors is a two-vector. Then, any two-vector can be expressed as a linear combination of tensor products of pairs of vectors, and in particular as a linear combination of tensor products of pairs of basis vectors.
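The definition can be made concrete with a small Python sketch. It assumes components in the standard basis of R^n. A two-vector is stored as an n×n array `T[i][j]`, a two-form as an antisymmetric array `omega[i][j]`, and the pairing between them is the full contraction. All function names are hypothetical.

```python
from itertools import product

def tensor_product(u, v):
    # Components of the two-vector u ⊗ v: T[i][j] = u[i] * v[j]
    return [[ui * vj for vj in v] for ui in u]

def basis(i, n):
    # Standard basis vector e_i of R^n
    return [1.0 if k == i else 0.0 for k in range(n)]

def from_basis(coeffs, n):
    # Rebuild a two-vector as the linear combination sum_{i,j} c_ij (e_i ⊗ e_j)
    T = [[0.0] * n for _ in range(n)]
    for (i, j), c in coeffs.items():
        E = tensor_product(basis(i, n), basis(j, n))
        for a, b in product(range(n), repeat=2):
            T[a][b] += c * E[a][b]
    return T

def pair(T, omega):
    # The two-vector acting as a linear functional: sum_{i,j} T[i][j] * omega[i][j]
    n = len(T)
    return sum(T[i][j] * omega[i][j] for i, j in product(range(n), repeat=2))

u, v = [1.0, 2.0], [3.0, 4.0]
T = tensor_product(u, v)                  # [[3.0, 4.0], [6.0, 8.0]]
coeffs = {(i, j): T[i][j] for i, j in product(range(2), repeat=2)}
assert from_basis(coeffs, 2) == T         # expansion in the e_i ⊗ e_j basis recovers T
omega = [[0.0, 1.0], [-1.0, 0.0]]         # area form on R^2 (antisymmetric)
print(pair(T, omega))                     # -2.0, i.e. u[0]*v[1] - u[1]*v[0]
```

With the area form, pairing u ⊗ v gives the signed area spanned by u and v.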

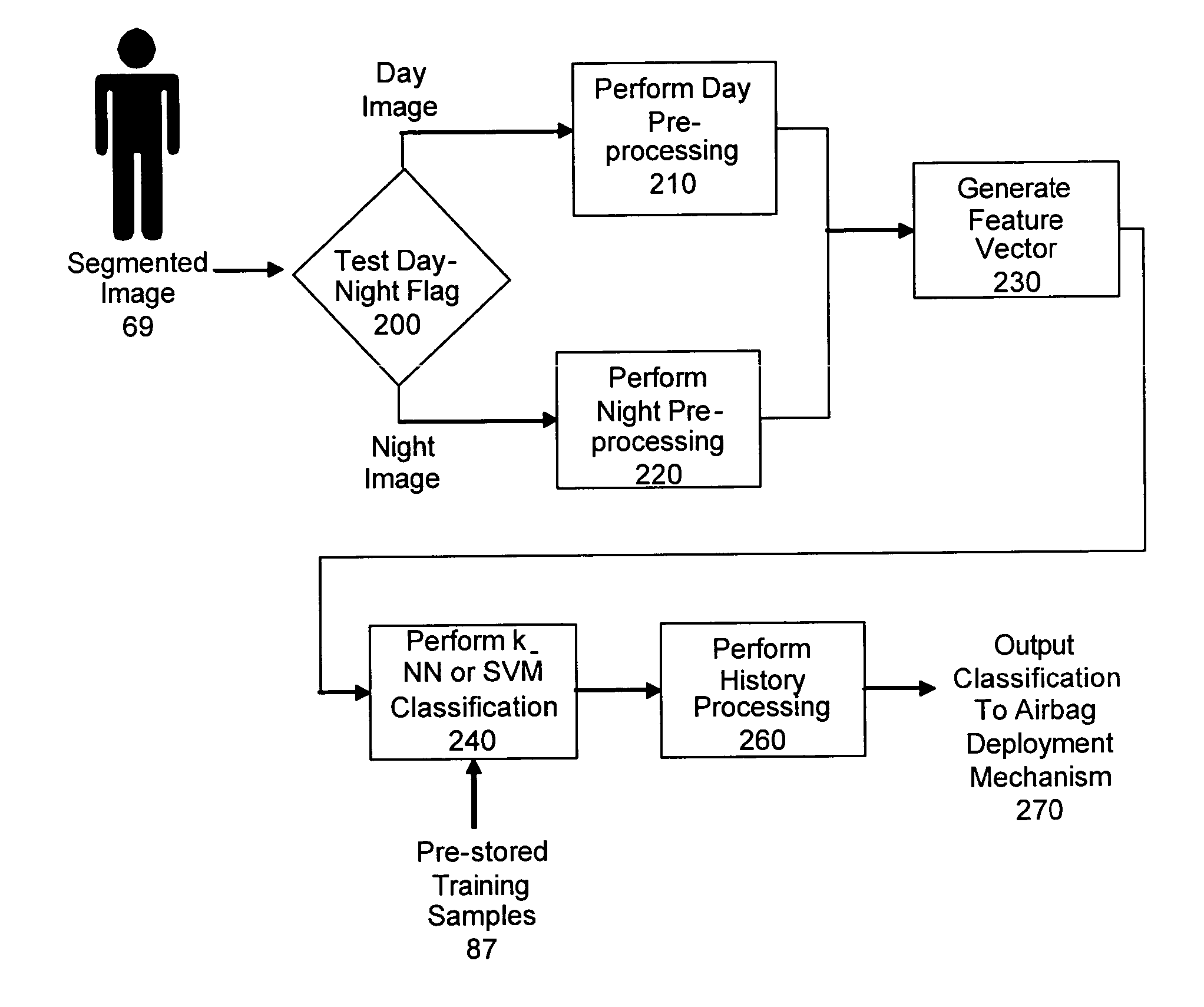

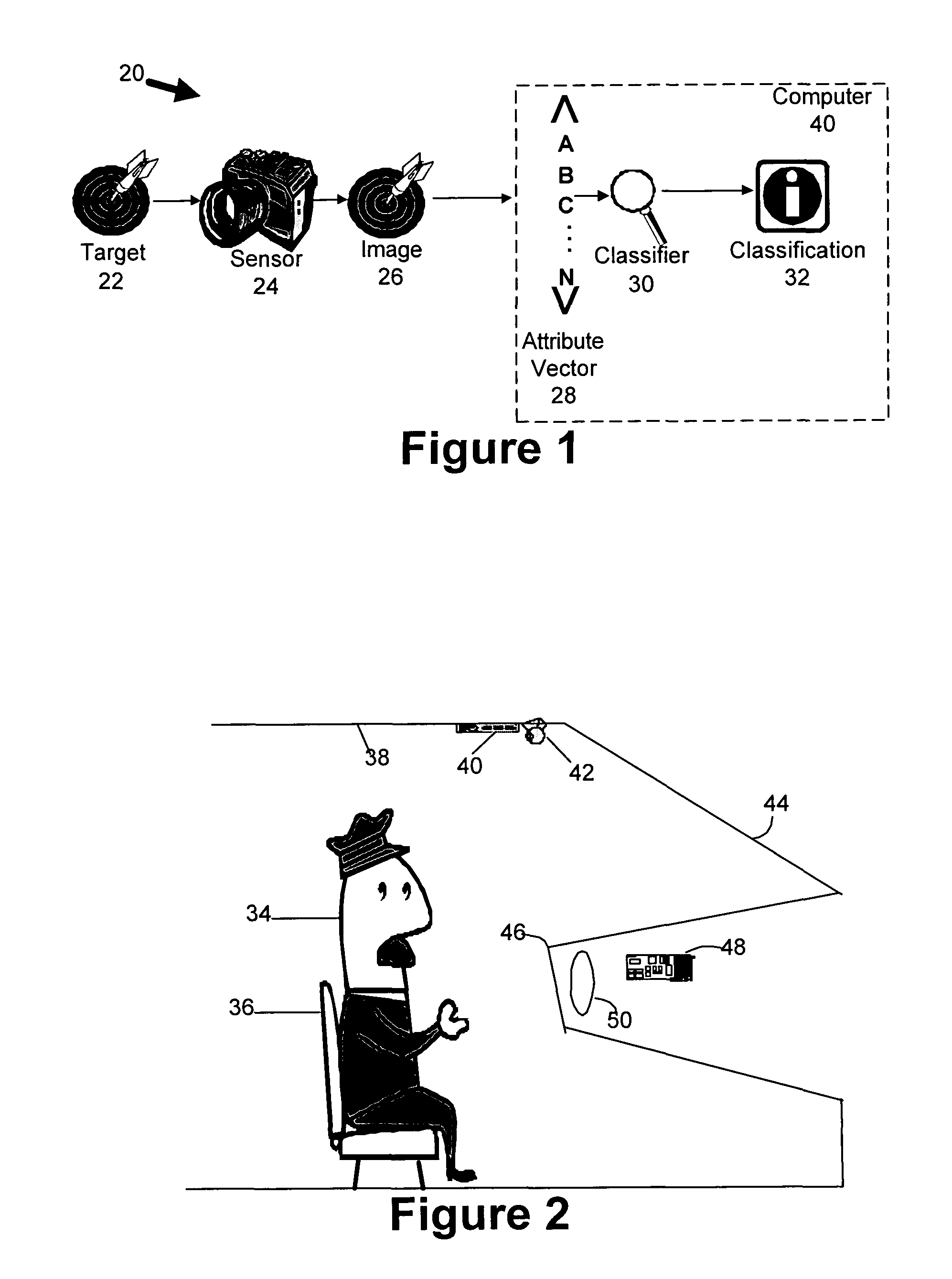

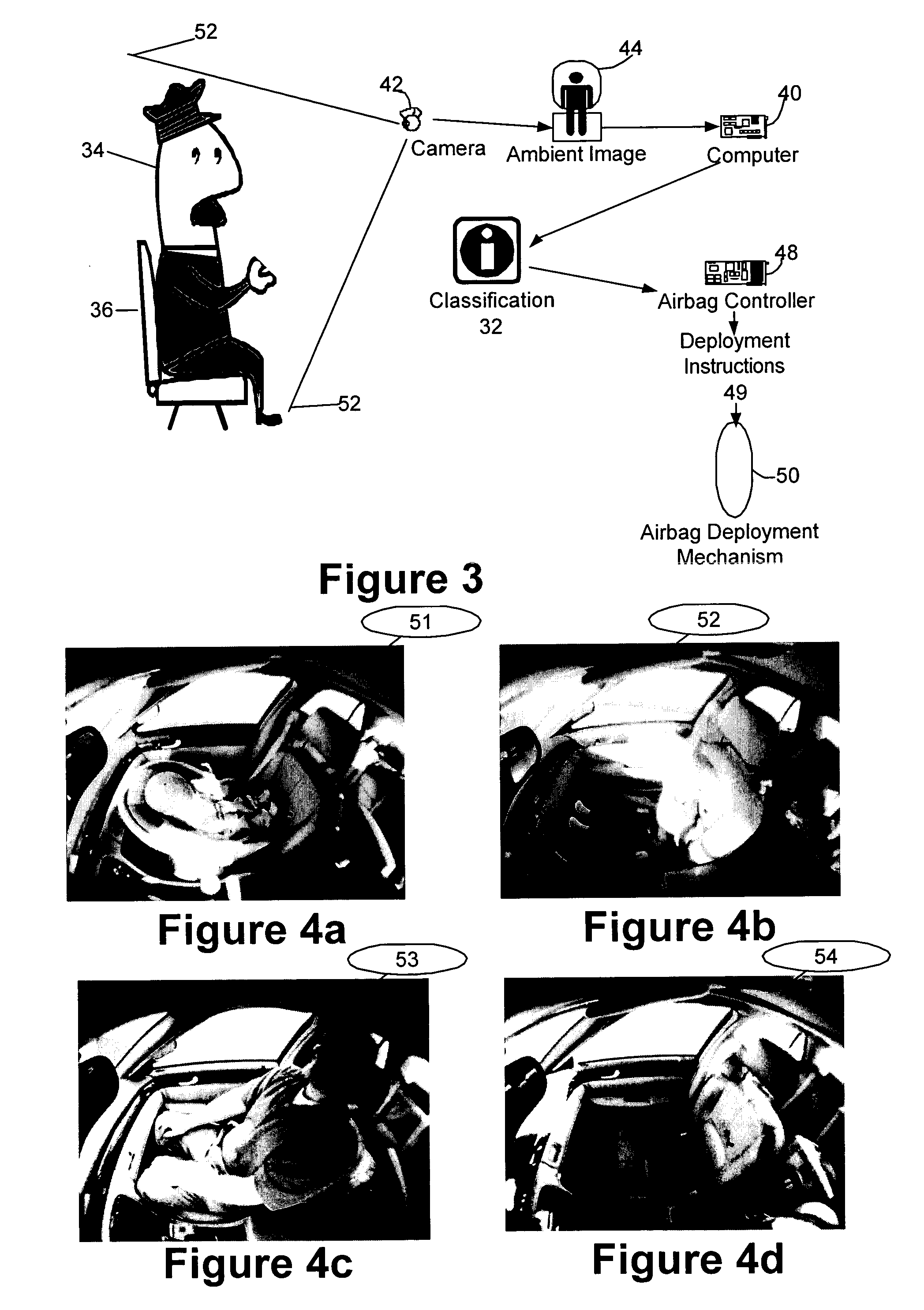

System or method for classifying images

Inactive | US20050271280A1 | Pedestrian/occupant safety arrangement; Biometric pattern recognition; Two-vector; Heuristic

A system or method (collectively, a “classification system”) is disclosed for classifying sensor images into one of several predefined classifications. Mathematical moments relating to various features or attributes in the sensor image are used to populate a vector of attributes. This vector is then compared to a corresponding template vector of attribute values. The template vector contains values for known classifications, which are preferably predefined. Comparing the two vectors yields various votes and confidence metrics, which are used to select the appropriate classification. In some embodiments, preparatory processing is performed before the attribute vector is loaded with values. Image segmentation is often desirable, and applying heuristics to adjust for environmental factors such as lighting can also be desirable. One embodiment of the system prevents deployment of an airbag when the occupant in the seat is a child or a rear-facing infant seat, or when the seat is empty.

Owner:EATON CORP
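The moment-vector comparison with votes and confidence can be illustrated with a minimal sketch. The abstract does not specify the attribute set, the voting rule or the template values, so all three are assumptions:

- The attributes are the raw moment M00 and the centroid.
- Each attribute votes for the class whose template value is nearest.
- Confidence is the winning share of the votes.
- The class labels and template numbers are invented for the example.

```python
def raw_moment(img, p, q):
    # Raw image moment M_pq = sum over pixels of x^p * y^q * intensity
    return sum((x ** p) * (y ** q) * val
               for y, row in enumerate(img) for x, val in enumerate(row))

def attribute_vector(img):
    # Hypothetical attribute set: total mass M00 and the centroid (M10/M00, M01/M00)
    m00 = raw_moment(img, 0, 0)
    return [m00, raw_moment(img, 1, 0) / m00, raw_moment(img, 0, 1) / m00]

def classify(attrs, templates):
    # Each attribute casts one vote for the class whose template value is closest;
    # confidence is the winning share of the votes
    votes = {label: 0 for label in templates}
    for k, a in enumerate(attrs):
        nearest = min(templates, key=lambda label: abs(templates[label][k] - a))
        votes[nearest] += 1
    winner = max(votes, key=votes.get)
    return winner, votes[winner] / len(attrs)

# Hypothetical templates for three occupant classes (values invented for the example)
templates = {"empty": [0, 1.0, 1.0], "adult": [5, 1.5, 1.8], "child": [2, 1.0, 1.5]}
img = [[0, 0, 0],
       [0, 1, 1],
       [0, 1, 1]]
label, confidence = classify(attribute_vector(img), templates)
print(label, round(confidence, 3))   # adult 0.667
```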

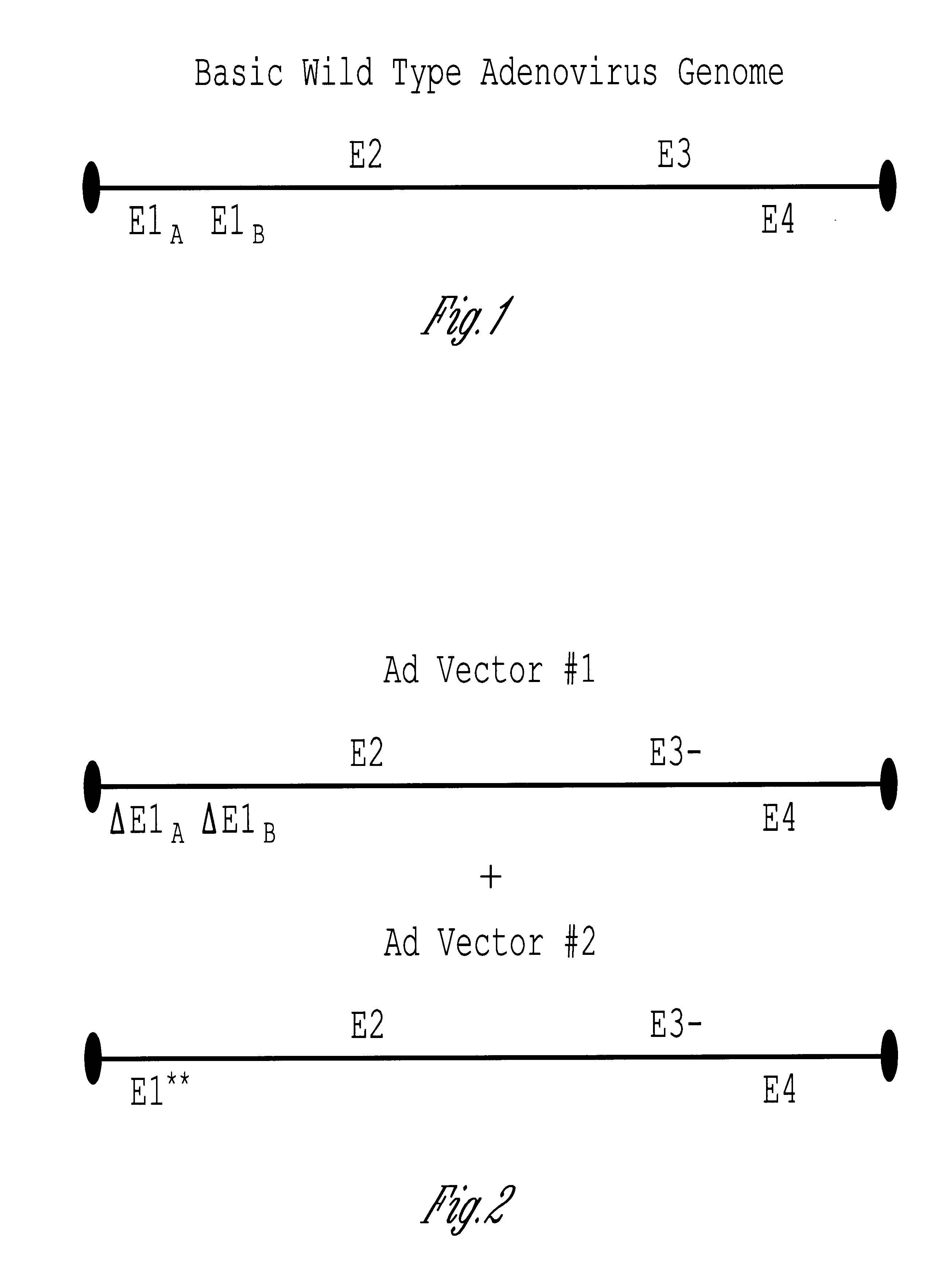

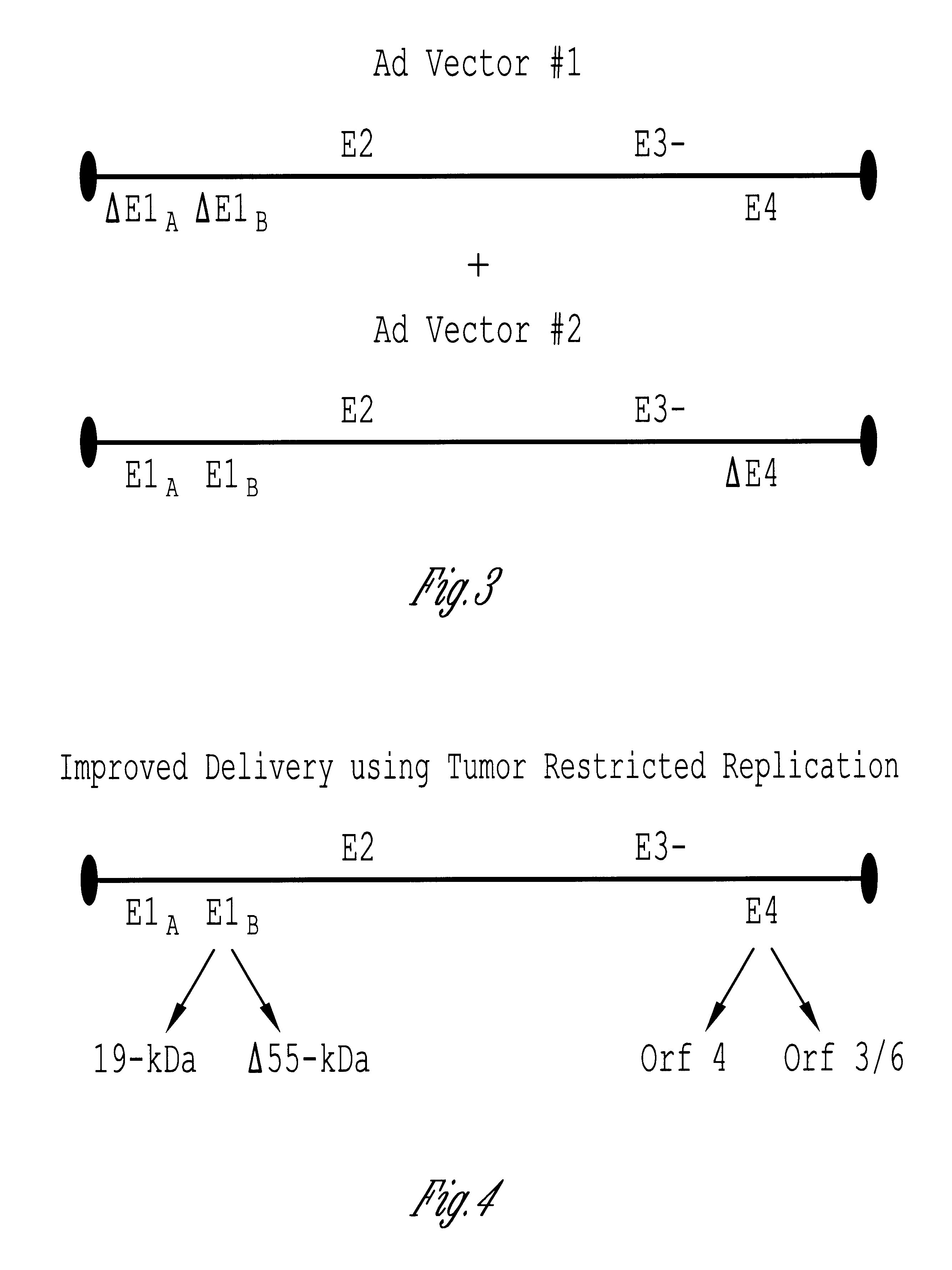

Methods and compositions for efficient gene transfer using transcomplementary vectors

The invention includes a viral vector method and composition comprising transcomplementary, replication-incompetent viral vectors, preferably adenoviral vectors, which are cotransformed into a recipient cell. The two vectors complement each other and thus allow viral replication. This synergistic combination enhances both gene delivery and expression of the genetic sequences contained within the vectors.

Owner:HUMAN GENE THERAPY RES INST

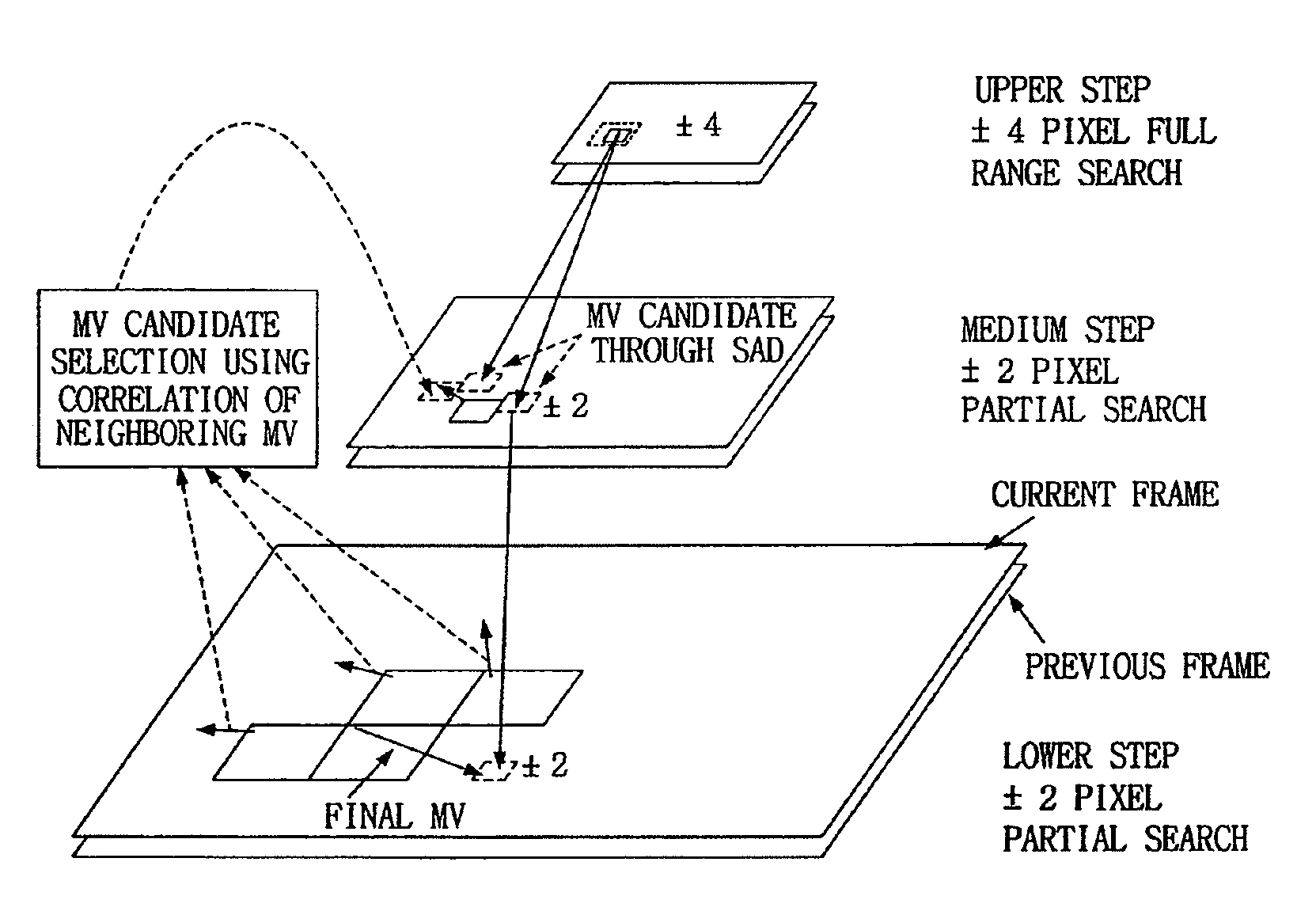

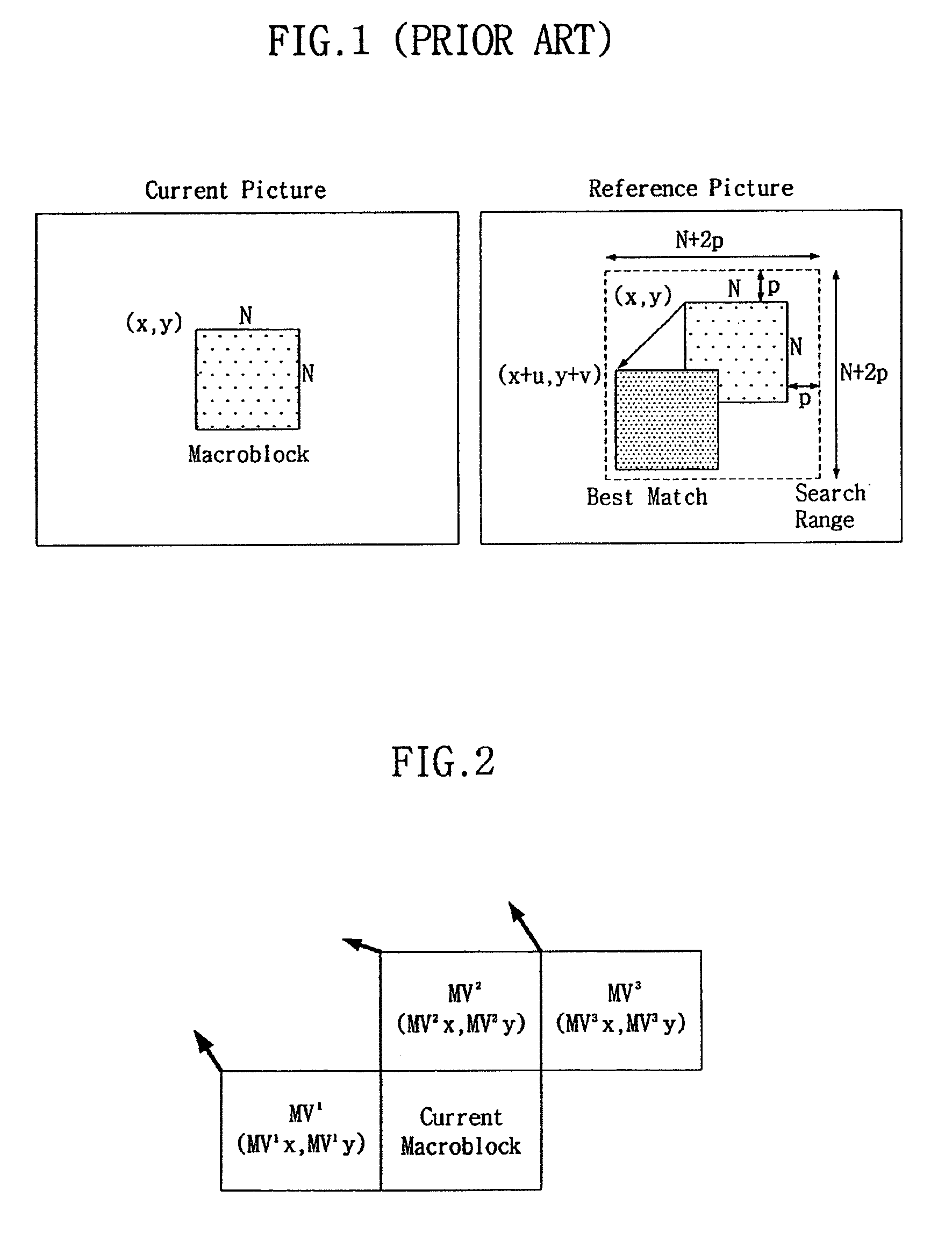

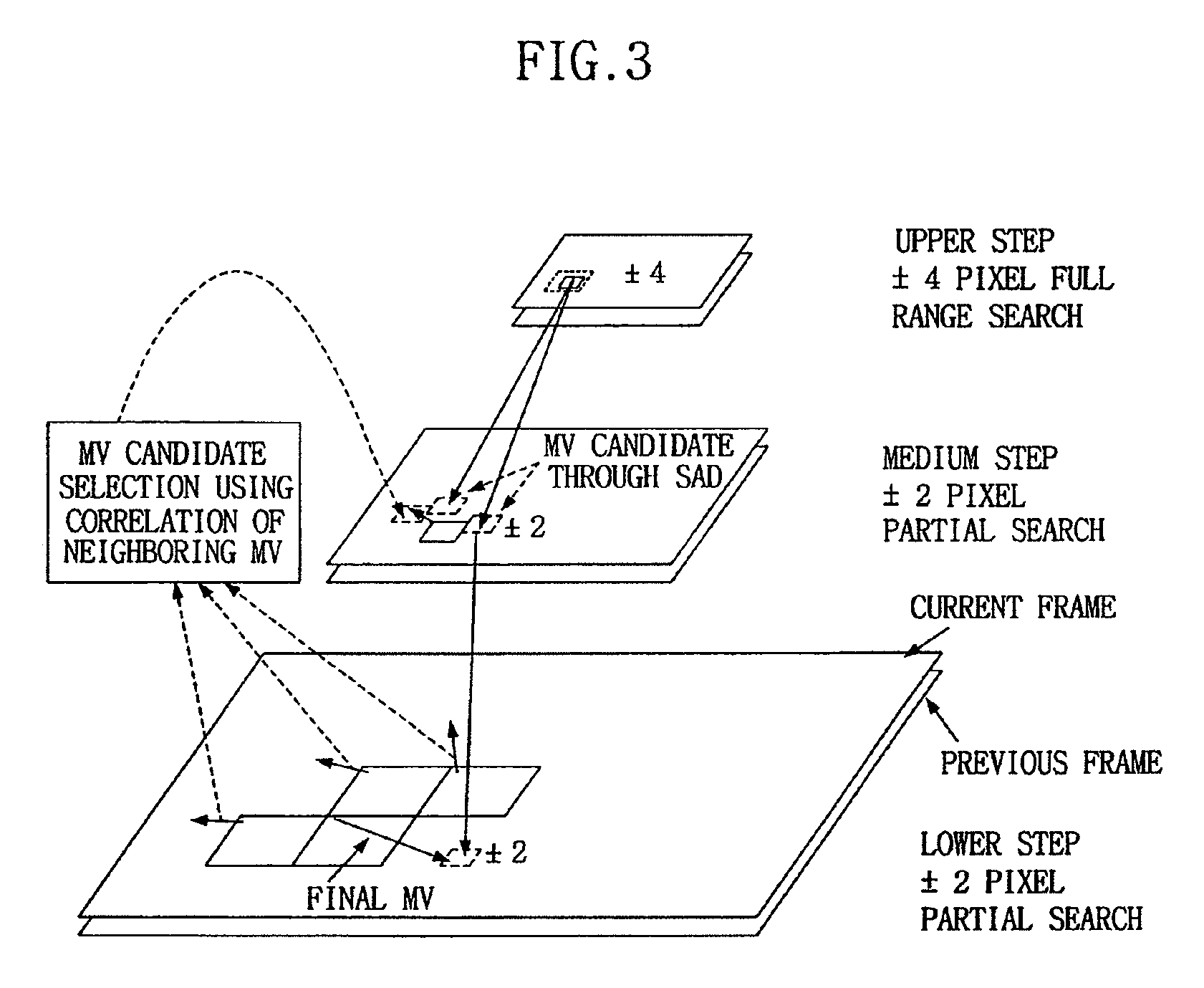

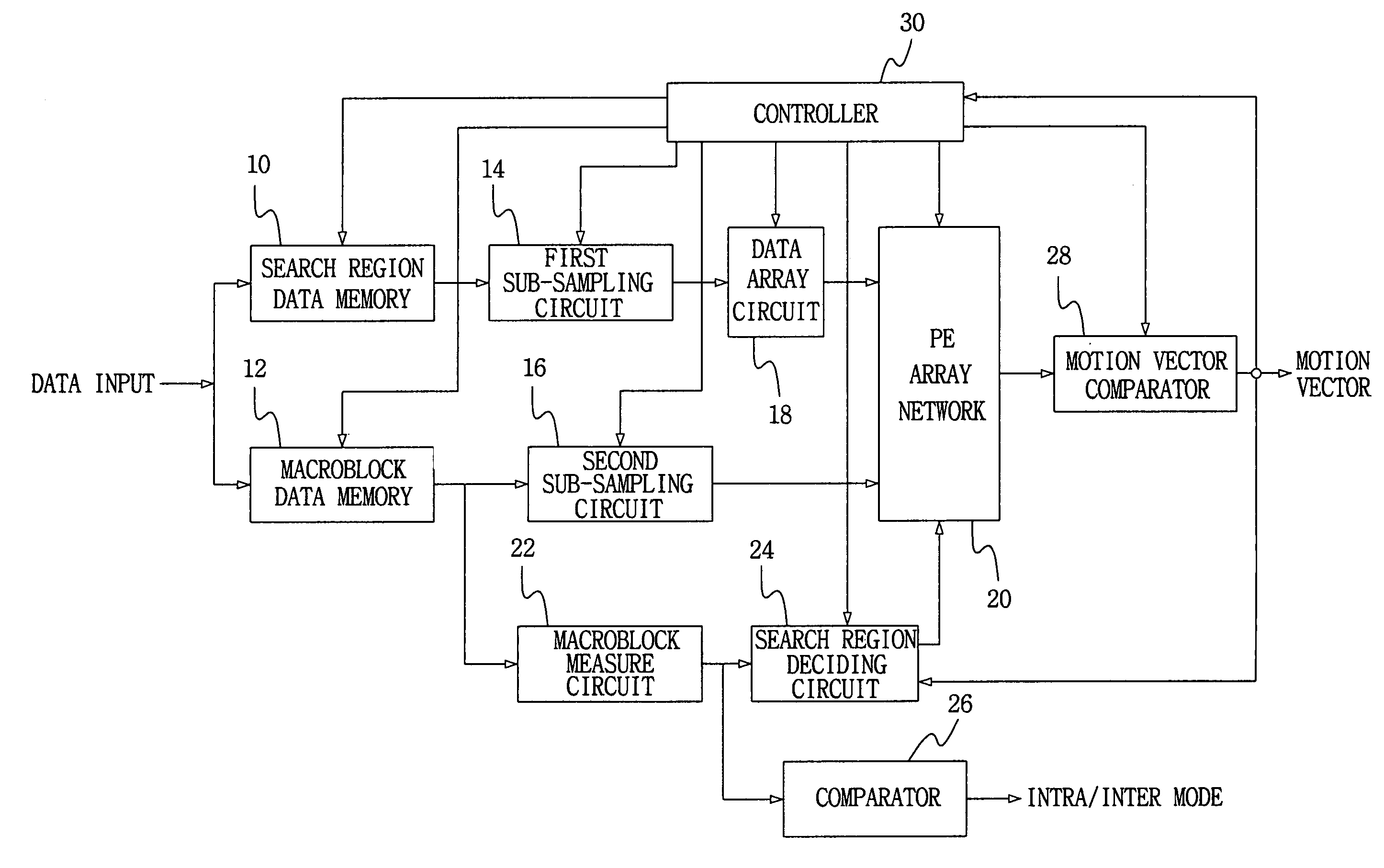

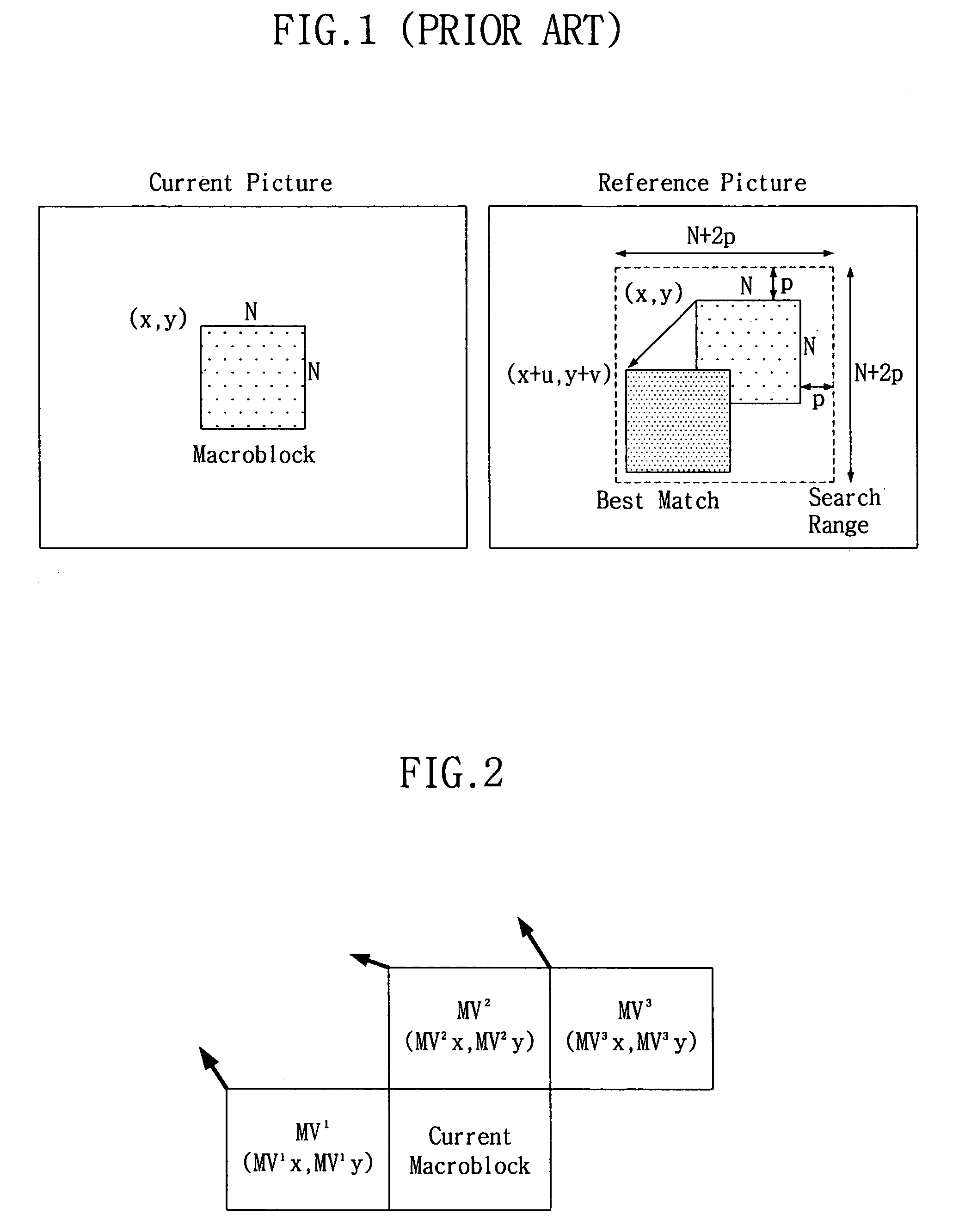

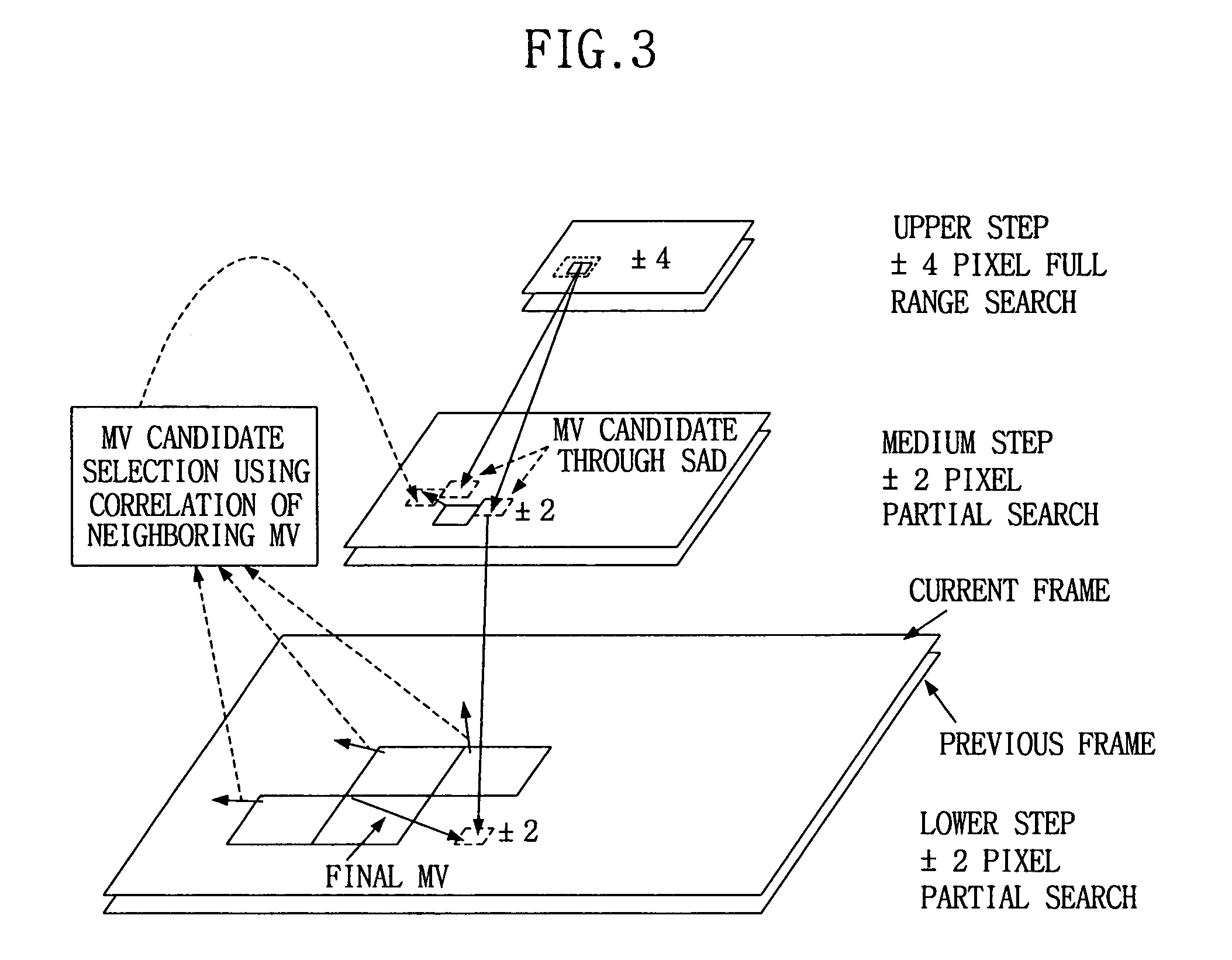

Device for and method of estimating motion in video encoder

Inactive | US7590180B2 | Reduce consumption; Shorten the time; Color television with pulse code modulation; Color television with bandwidth reduction; Spatial correlation; Computation complexity

A motion estimator and an estimation method for a video encoder reduce power consumption by reducing the computational complexity of motion estimation.

- In an upper step, a full search over a ±4 pixel search region is performed for a 4×4 pixel block at ¼ video resolution to detect two motion vector candidates.
- In a middle step, a partial search within a ±1 or ±2 search region is performed for an 8×8 block. The search covers the two vector candidates selected in the upper step and one vector candidate obtained from spatial correlation, and yields one motion vector candidate.
- In a lower step, a partial search over the ±1 or ±2 search region is performed for a 16×16 block at full resolution. A half-pixel search around the motion vector candidate obtained in this step then estimates the final motion vector.

The ±4 pixel search region is divided into four ±2 pixel search regions, which the estimator searches sequentially to output SAD values in sequence.

Owner:SAMSUNG ELECTRONICS CO LTD
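The basic operation behind each search step is sum-of-absolute-differences (SAD) block matching. The sketch below shows only that primitive as a full search over a ±`rng` window, keeping the two lowest-SAD candidates as the upper step does. It omits the resolution hierarchy, the sub-region split and the half-pixel refinement. Frame layout (lists of rows) and function names are assumptions.

```python
def block_sad(cur, ref, bx, by, dx, dy, n):
    # Sum of absolute differences between the n×n block at (bx, by) in the current
    # frame and the block displaced by (dx, dy) in the reference frame
    return sum(abs(cur[by + j][bx + i] - ref[by + dy + j][bx + dx + i])
               for j in range(n) for i in range(n))

def full_search(cur, ref, bx, by, n, rng, keep=2):
    # Exhaustive search over all displacements in [-rng, rng]^2 that stay inside
    # the reference frame; returns the `keep` lowest-SAD (sad, dx, dy) candidates
    h, w = len(ref), len(ref[0])
    scored = []
    for dy in range(-rng, rng + 1):
        for dx in range(-rng, rng + 1):
            x0, y0 = bx + dx, by + dy
            if 0 <= x0 and x0 + n <= w and 0 <= y0 and y0 + n <= h:
                scored.append((block_sad(cur, ref, bx, by, dx, dy, n), dx, dy))
    scored.sort()
    return scored[:keep]

# Synthetic frames: a unique 4×4 pattern that moves by (dx, dy) = (2, 1)
W = H = 16
ref = [[0] * W for _ in range(H)]
cur = [[0] * W for _ in range(H)]
for j in range(4):
    for i in range(4):
        ref[5 + j][6 + i] = 1 + i + 4 * j   # pattern at (x=6, y=5) in the reference
        cur[4 + j][4 + i] = 1 + i + 4 * j   # same pattern at (x=4, y=4) in the current frame

candidates = full_search(cur, ref, bx=4, by=4, n=4, rng=4)
print(candidates)
```

The best candidate here is `(0, 2, 1)`, a zero SAD at the true displacement.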

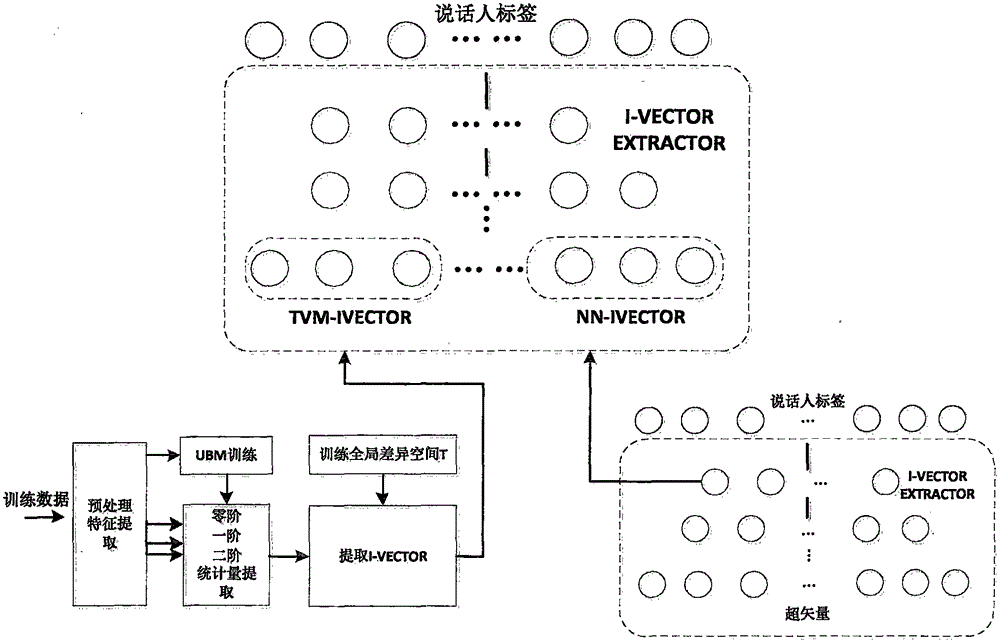

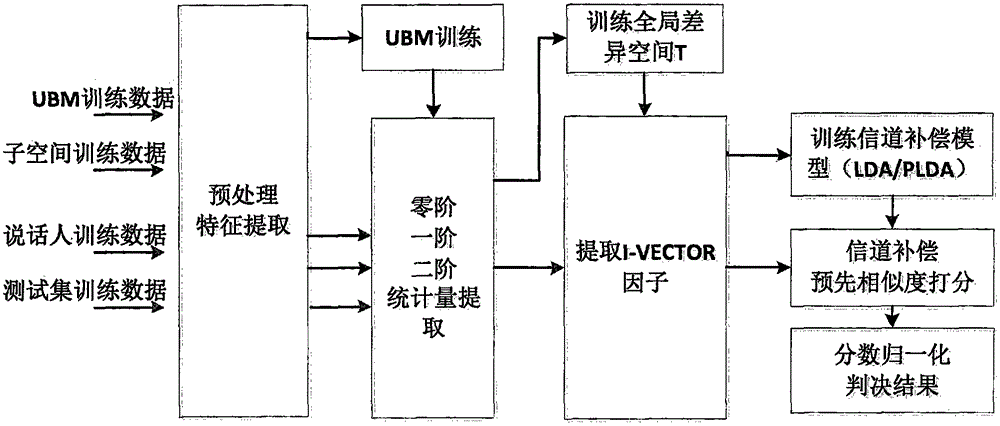

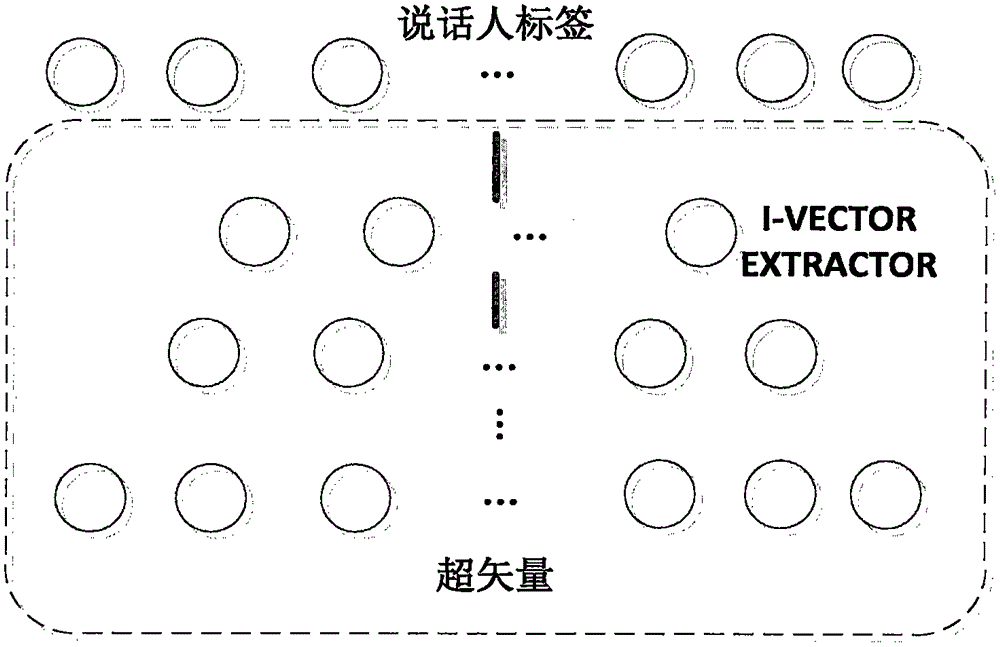

Voiceprint identification method based on global change space and deep learning hybrid modeling

Inactive | CN105575394A | Improve performance; Improve robustness; Speech analysis; Pattern recognition; Two-vector

The invention discloses a voiceprint identification method based on hybrid modeling with a global change space (total variability space) and deep learning. The method comprises the following steps:

1. Obtaining training data of speech segments, and using the global change space modeling method to extract an identity vector, yielding a TVM-IVECTOR.
2. Training with a deep neural network method to obtain an NN-IVECTOR.
3. Fusing the two vectors of the same audio file to obtain a new I-IVECTOR feature extractor.
4. For audio to be tested, fusing its TVM-IVECTOR and NN-IVECTOR and then extracting the final I-IVECTOR.
5. After channel compensation, scoring it against the speaker models in a model library to obtain the identification result.

The method is more robust to environmental interference such as environment mismatch, multi-channel variation and noise, and can improve voiceprint identification performance.

Owner:中科极限元(杭州)智能科技股份有限公司
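The fusion and scoring steps can be sketched under stated assumptions, since the abstract does not say how the two vectors are fused or scored:

- Fusion: each i-vector is length-normalized and the results are concatenated.
- Scoring: cosine similarity against enrolled speaker models.
- Channel compensation is omitted.
- The toy vectors are invented.

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def fuse(tvm_ivector, nn_ivector):
    # Hypothetical fusion rule: length-normalize each i-vector, then concatenate
    return l2_normalize(tvm_ivector) + l2_normalize(nn_ivector)

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def identify(test_vector, speaker_models):
    # Score the test vector against every enrolled speaker and return the best match
    scores = {spk: cosine(test_vector, model) for spk, model in speaker_models.items()}
    return max(scores, key=scores.get), scores

# Toy 2-dimensional i-vectors, invented for illustration
models = {"alice": fuse([1.0, 0.0], [0.0, 1.0]),
          "bob": fuse([0.0, 1.0], [1.0, 0.0])}
best, scores = identify(fuse([0.9, 0.1], [0.2, 0.8]), models)
print(best)   # alice
```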

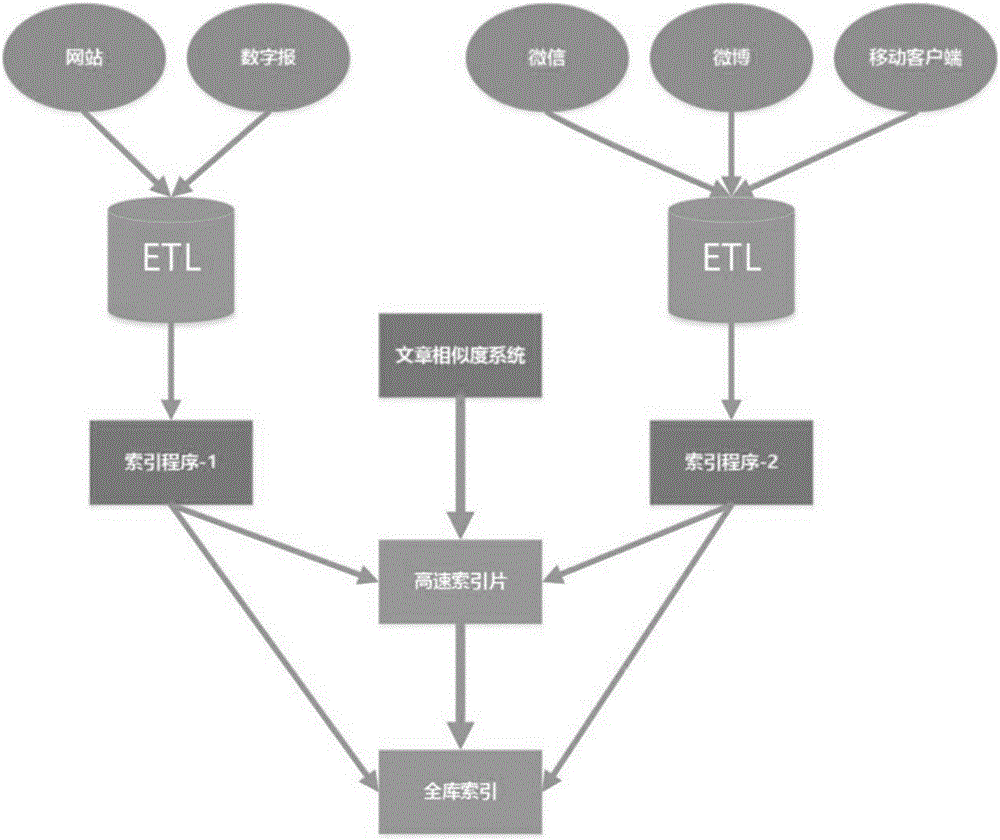

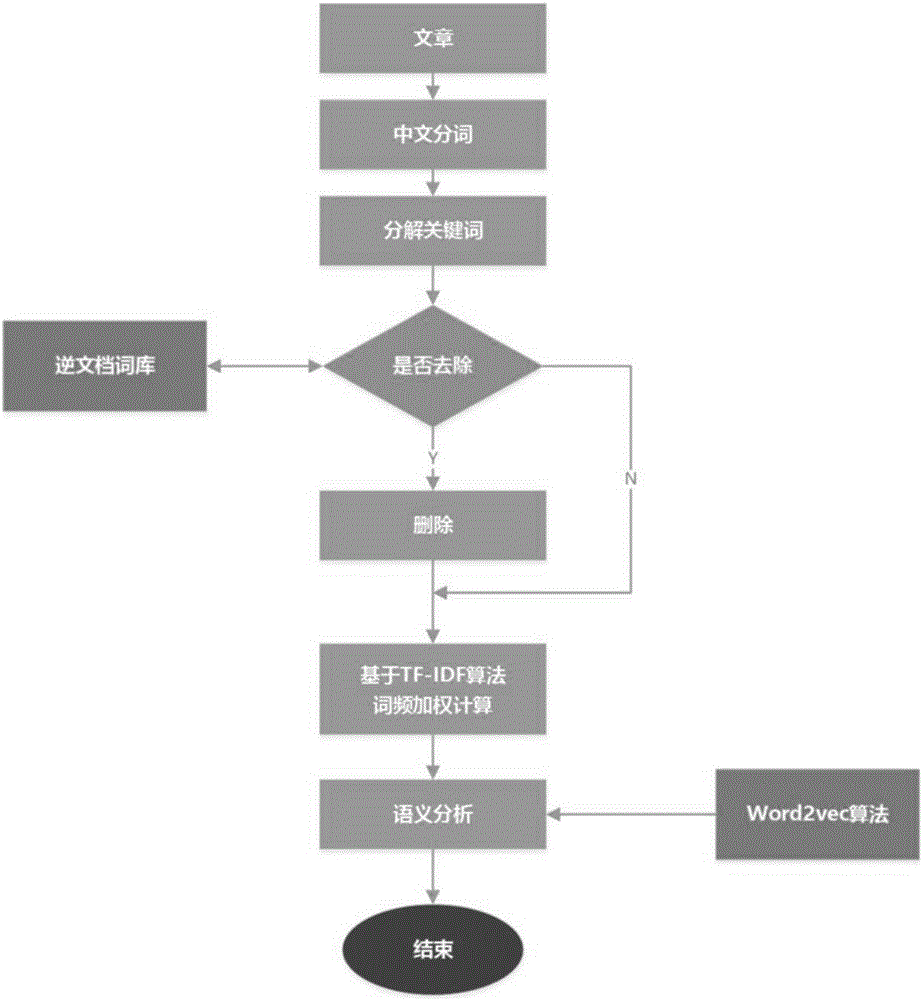

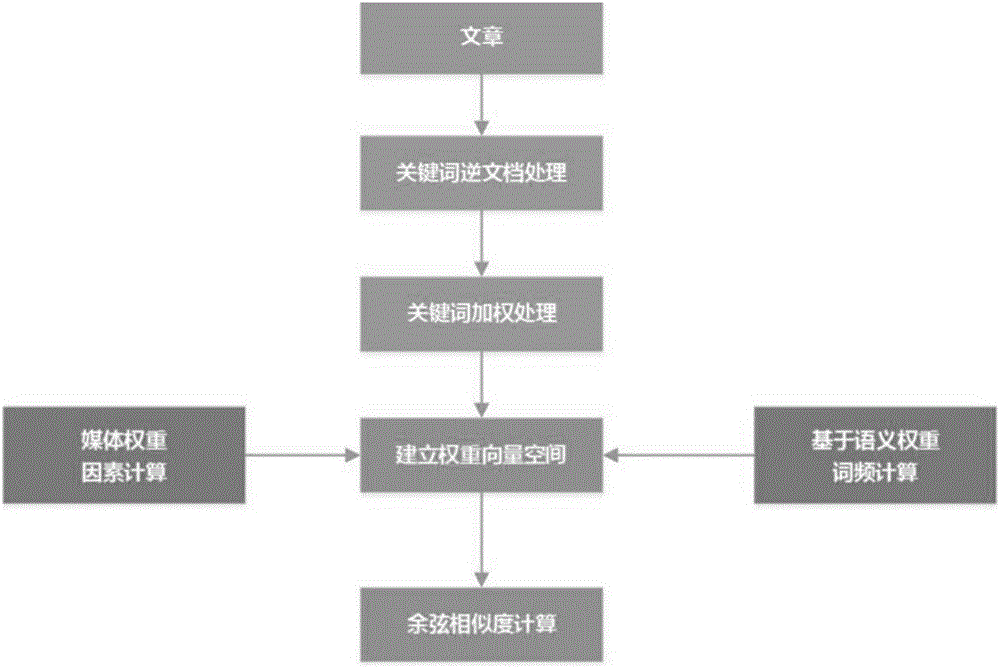

Document similarity calculating method and similar document whole-network retrieval tracking method

Inactive | CN106095737A | Accurate processing of similarity; Accurate analysis and statistics; Semantic analysis; Text database indexing; Cosine similarity; Two-vector

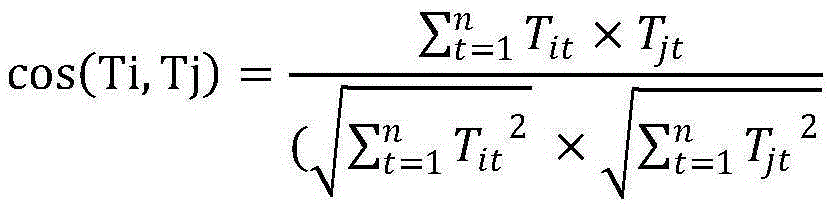

The invention relates to a document similarity calculation method and a whole-network retrieval and tracking method for similar documents. The document similarity calculation method includes the following steps:

- S01: performing word segmentation on an original document and a target document to obtain their respective sets of segmented words.
- S02: preprocessing and feature weighting. TF-IDF is used to calculate the weight of each segmented word and extract core keywords, Word2vec is used to mine the degree of correlation among the segmented words in the documents, and semantic analysis is performed on each document.
- S03: applying a vector space model and a cosine similarity algorithm. The cosine of the angle between the two document vectors is used to evaluate document similarity. Because the TF-IDF weights are non-negative, the cosine lies between 0 and 1, and a larger value indicates higher similarity.

The methods are suitable for tracking the redistribution of news information and for compiling transmissibility statistics.

Owner:HANGZHOU FANEWS TECH
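Steps S02 and S03 can be approximated by the following sketch of TF-IDF weighting plus cosine similarity. Assumptions:

- Word segmentation has already produced token lists.
- The Word2vec step is omitted.
- A smoothed IDF, log((1 + n) / (1 + df)) + 1, is used; the patent does not specify its IDF formula.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    # docs: list of token lists (word segmentation is assumed to be done already)
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    vocab = sorted(df)
    # Smoothed IDF (an assumption): log((1 + n) / (1 + df)) + 1, always positive
    idf = {t: math.log((1 + n) / (1 + df[t])) + 1.0 for t in vocab}
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append([tf[t] / len(doc) * idf[t] for t in vocab])
    return vectors

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

docs = [["stock", "market", "rises"],
        ["stock", "market", "falls"],
        ["football", "match", "tonight"]]
v = tfidf_vectors(docs)
print(round(cosine(v[0], v[1]), 3), cosine(v[0], v[2]))
```

Documents with no shared terms get a similarity of exactly 0.0, and non-negative weights keep every score in [0, 1].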

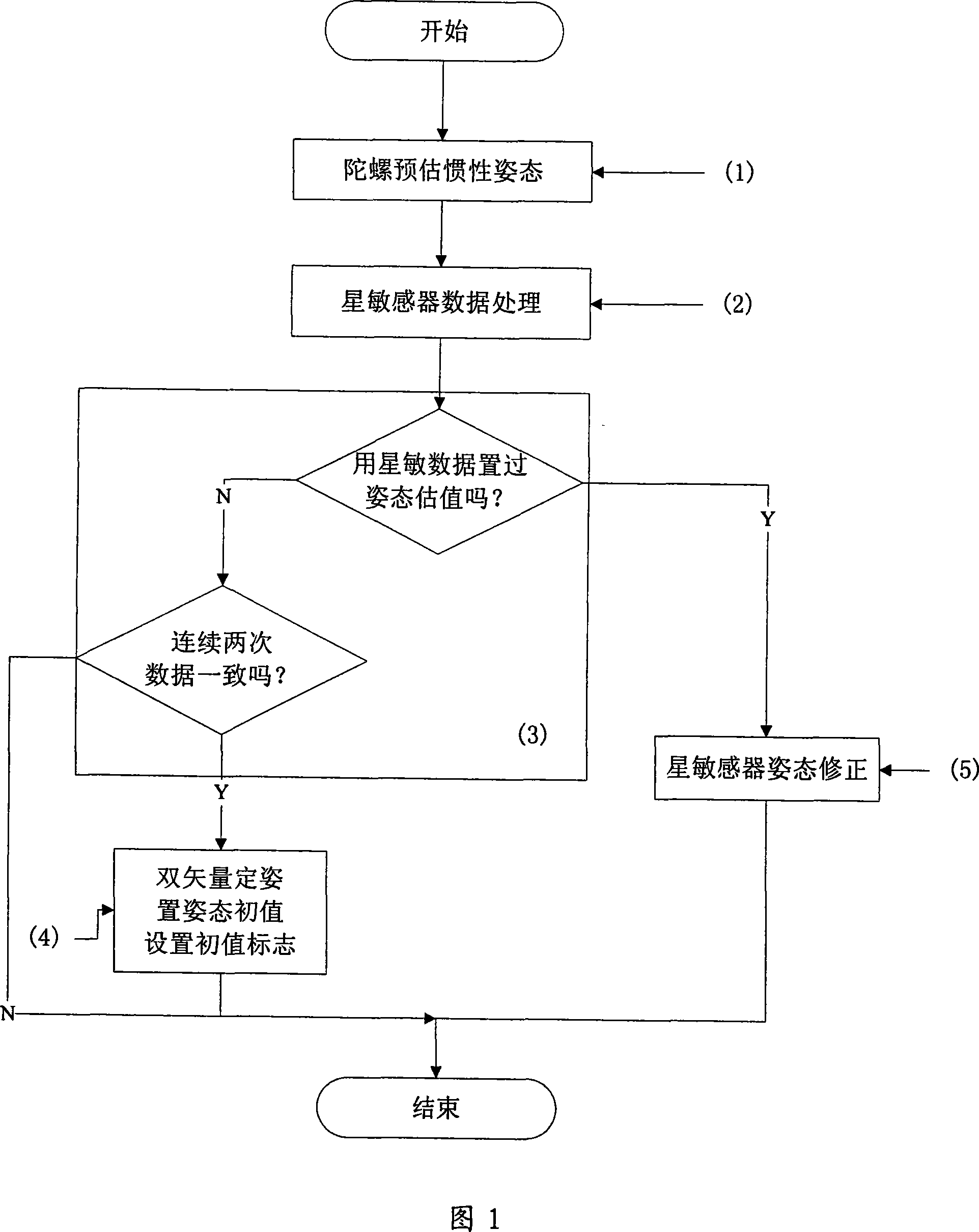

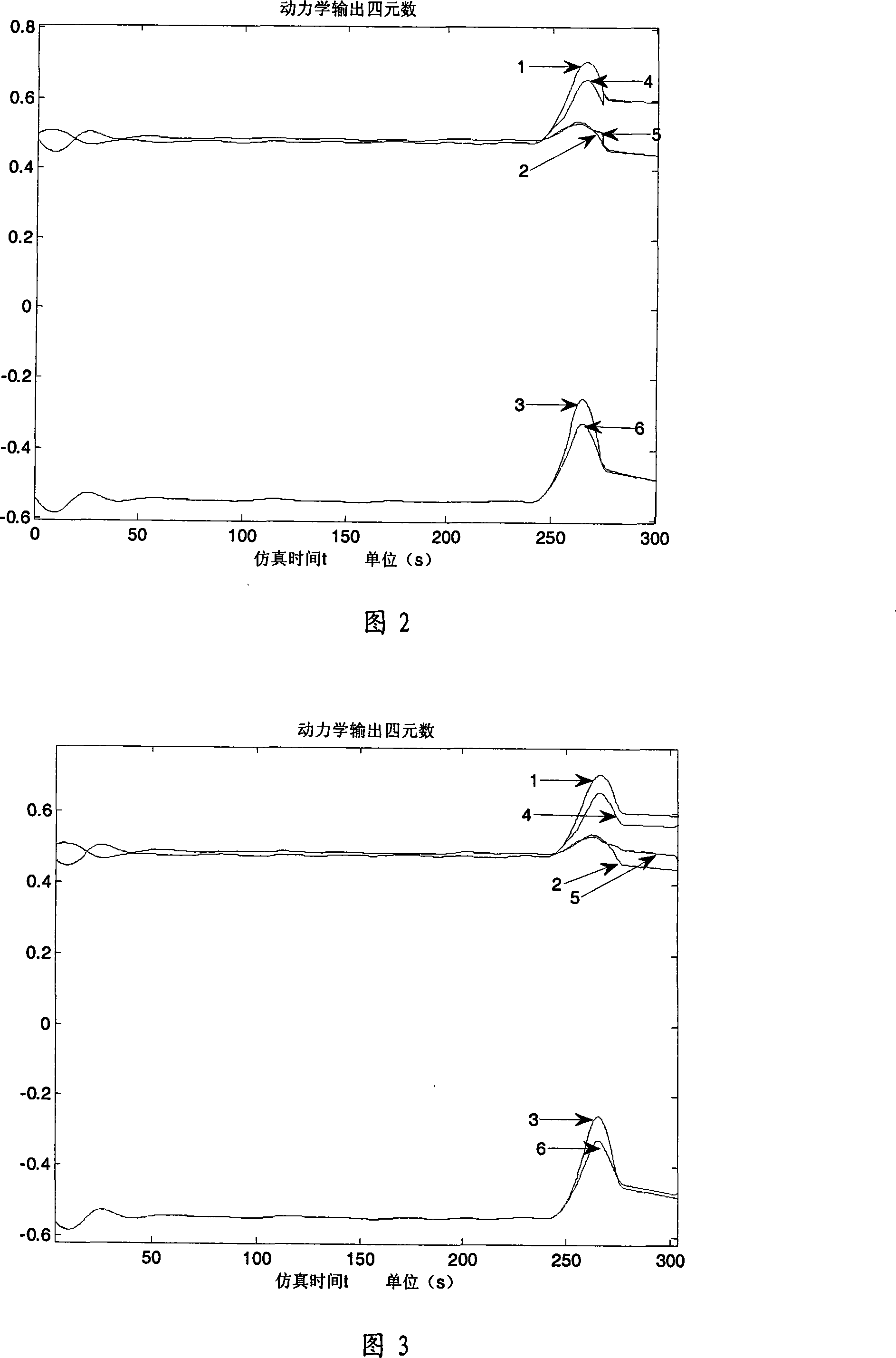

Star sensor attitude determination method during autonomous recovery from an orbit-control fault

Inactive | CN101214861A | Accurate estimate; Shorten the process of failure recovery; Spacecraft guiding apparatus; Two-vector; Optical axis

A star sensor attitude determination method for autonomous recovery from an orbit-control fault includes the following steps:

1. Predicting the satellite's inertial attitude from gyro measurements.
2. Calculating the filter innovation from the satellite's inertial attitude and from the optical-axis and lateral-axis vectors, expressed in the inertial coordinate system, that the star sensor measures and outputs. The change in the innovation between consecutive periods is also calculated and is used to judge the consistency of the star sensor data.
3. Judging the consistency of the star sensor data.
4. Determining the attitude from the two star sensor vectors.
5. Using the star-sensor-derived attitude as the initial value of the attitude estimate, and combining the star sensor with the gyro to correct the satellite attitude.

The method improves the reliability of orbit-control fault recovery, shortens the recovery time, and ensures that orbit control is recovered accurately and promptly.

Owner:BEIJING INST OF CONTROL ENG
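Step (4), determining attitude from two vectors, is classically solved with the TRIAD algorithm. The abstract does not name its method, so TRIAD here is a swapped-in, standard technique rather than the patent's own. The sketch builds an orthonormal triad from the two observed (body-frame) vectors and another from the same two vectors in the reference (inertial) frame. It combines them into a rotation matrix. Function names are hypothetical.

```python
import math

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def unit(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def triad_frame(v1, v2):
    # Orthonormal triad: t1 along v1, t2 normal to the v1-v2 plane, t3 completes it
    t1 = unit(v1)
    t2 = unit(cross(v1, v2))
    return [t1, t2, cross(t1, t2)]

def triad(b1, b2, r1, r2):
    # Attitude matrix A (row-major) with A @ r ≈ b: it rotates the reference-frame
    # vectors r1, r2 onto the body-frame measurements b1, b2. A = sum_k tb_k tr_k^T
    tb, tr = triad_frame(b1, b2), triad_frame(r1, r2)
    return [[sum(tb[k][i] * tr[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

# Example: the body frame is rotated 90° about z relative to the inertial frame
r1, r2 = [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]   # e.g. optical and lateral axes, inertial frame
b1, b2 = [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]   # the same axes as seen in the body frame
A = triad(b1, b2, r1, r2)
for row in A:
    print(row)
```

TRIAD trusts the first vector exactly and uses the second only to fix the roll about it. The more accurate measurement is therefore usually passed as `b1`/`r1`.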

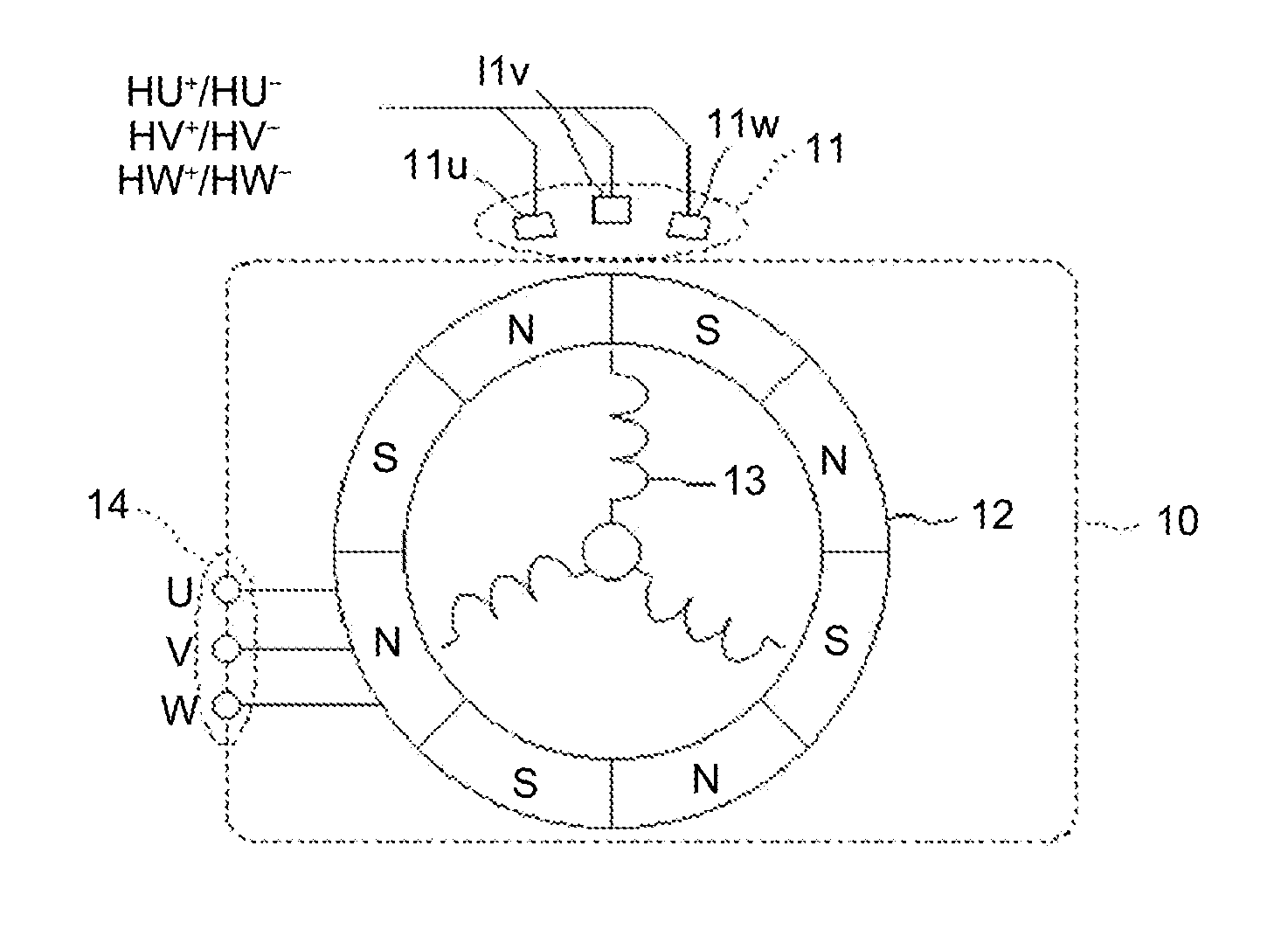

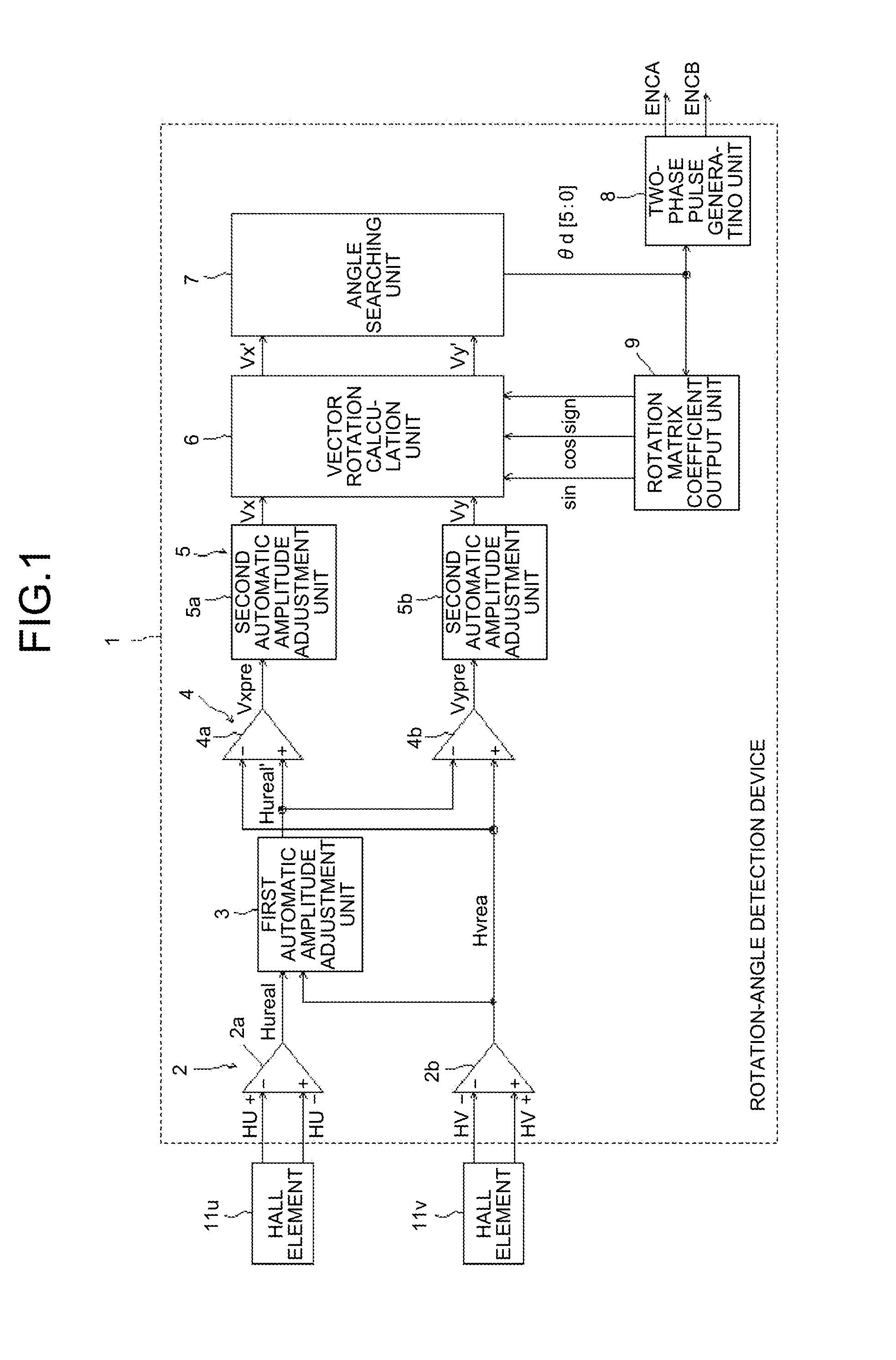

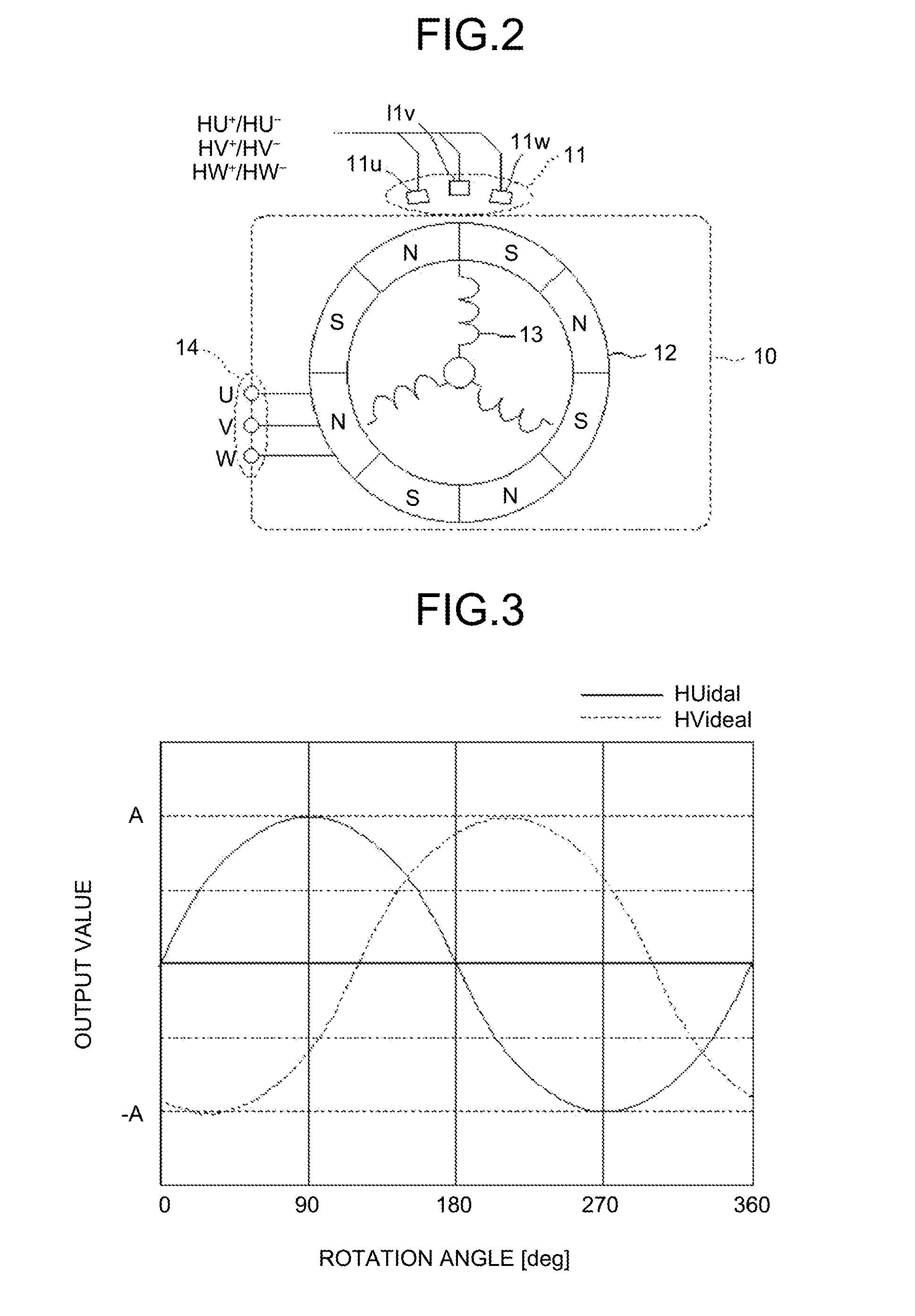

Rotation-angle detection device, image processing apparatus, and rotation-angle detection method

A rotation-angle detection device includes the following units:

- Multiple rotation detection units, whose outputs change in accordance with the rotation angle of a rotor. They are arranged so that their detection signals have different phases.
- An amplitude adjustment unit, which matches the amplitude values of these detection signals and outputs corrected signals.
- A vector generation unit, which adds and subtracts two of the corrected signals to generate two mutually perpendicular vector component signals.
- An amplitude correction unit, which matches the amplitudes of the two vector component signals and outputs corrected vector component signals.
- A rotation-angle searching unit, which searches for the rotation angle of the rotor on the basis of the vector represented by the two corrected vector component signals and outputs a detection angle.

Owner:RICOH KK
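Adding and subtracting two phase-shifted signals yields perpendicular components, which this sketch illustrates. Assumptions:

- Two sinusoidal sensor outputs, s1 = A1·sin(θ) and s2 = A2·sin(θ + φ), with known amplitudes and a known phase offset 0 < φ < π.
- The search for the rotation angle is done here with `atan2` rather than whatever search the patent uses.

The identities in the comments follow from the sum-to-product formulas.

```python
import math

def match_amplitudes(s1, s2, amp1, amp2):
    # Amplitude adjustment: scale each detection signal to unit amplitude
    # (amp1, amp2 are assumed known, e.g. from a calibration rotation)
    return s1 / amp1, s2 / amp2

def detect_angle(s1, s2, phase):
    # Unit-amplitude inputs s1 = sin(theta), s2 = sin(theta + phase), 0 < phase < pi.
    # Sum and difference give two perpendicular components of theta + phase/2:
    #   s1 + s2 = 2 cos(phase/2) sin(theta + phase/2)
    #   s2 - s1 = 2 sin(phase/2) cos(theta + phase/2)
    y = (s1 + s2) / (2 * math.cos(phase / 2))   # amplitude-corrected sine component
    x = (s2 - s1) / (2 * math.sin(phase / 2))   # amplitude-corrected cosine component
    return (math.atan2(y, x) - phase / 2) % (2 * math.pi)

phase = 2 * math.pi / 3   # hypothetical 120° offset between the two sensors
theta = 1.0
raw1, raw2 = 0.8 * math.sin(theta), 1.3 * math.sin(theta + phase)   # unequal raw amplitudes
print(detect_angle(*match_amplitudes(raw1, raw2, 0.8, 1.3), phase))  # ≈ 1.0
```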

Vector difference measures for data classifiers

Inactive | US20020147754A1 | Computation using non-contact making devices; Character and pattern recognition; Correlation coefficient; Two-vector

A method and apparatus are provided for forming a measure of difference between two data vectors, in particular for use in a trainable data classifier system. An association coefficient determined for the two vectors is used to form the measure of difference. A geometric difference between the two vectors may advantageously be combined with the association coefficient in forming the measure of difference. A particular application is the determination of conflicts between items of training data proposed for use in training a neural network to detect telecommunications account fraud or network intrusion.

Owner:CEREBRUS SOLUTIONS LTD
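The abstract names neither the association coefficient nor the combination rule. The sketch therefore uses the Pearson correlation coefficient (suggested by the entry's tags) and an invented combination: (1 − r)/2 plus a weighted Euclidean distance. The weight `beta` is a hypothetical parameter. The sketch only shows how an association term and a geometric term can complement each other.

```python
import math

def correlation(a, b):
    # Pearson correlation coefficient, used here as the association coefficient;
    # returns 0.0 when either vector has zero variance (an arbitrary convention)
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den else 0.0

def difference_measure(a, b, beta=0.1):
    # Hypothetical combination: (1 - r)/2 lies in [0, 1] and grows as the vectors
    # become less associated; beta weights the Euclidean (geometric) difference
    return (1.0 - correlation(a, b)) / 2.0 + beta * math.dist(a, b)

a, b, c = [1, 2, 3, 4], [2, 4, 6, 8], [4, 3, 2, 1]
print(round(difference_measure(a, b), 3), round(difference_measure(a, c), 3))   # 0.548 1.447
```

Vectors `a` and `b` are perfectly correlated, so only their geometric gap counts. Vector `c` is anti-correlated with `a` and scores as more different even though it is geometrically closer.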

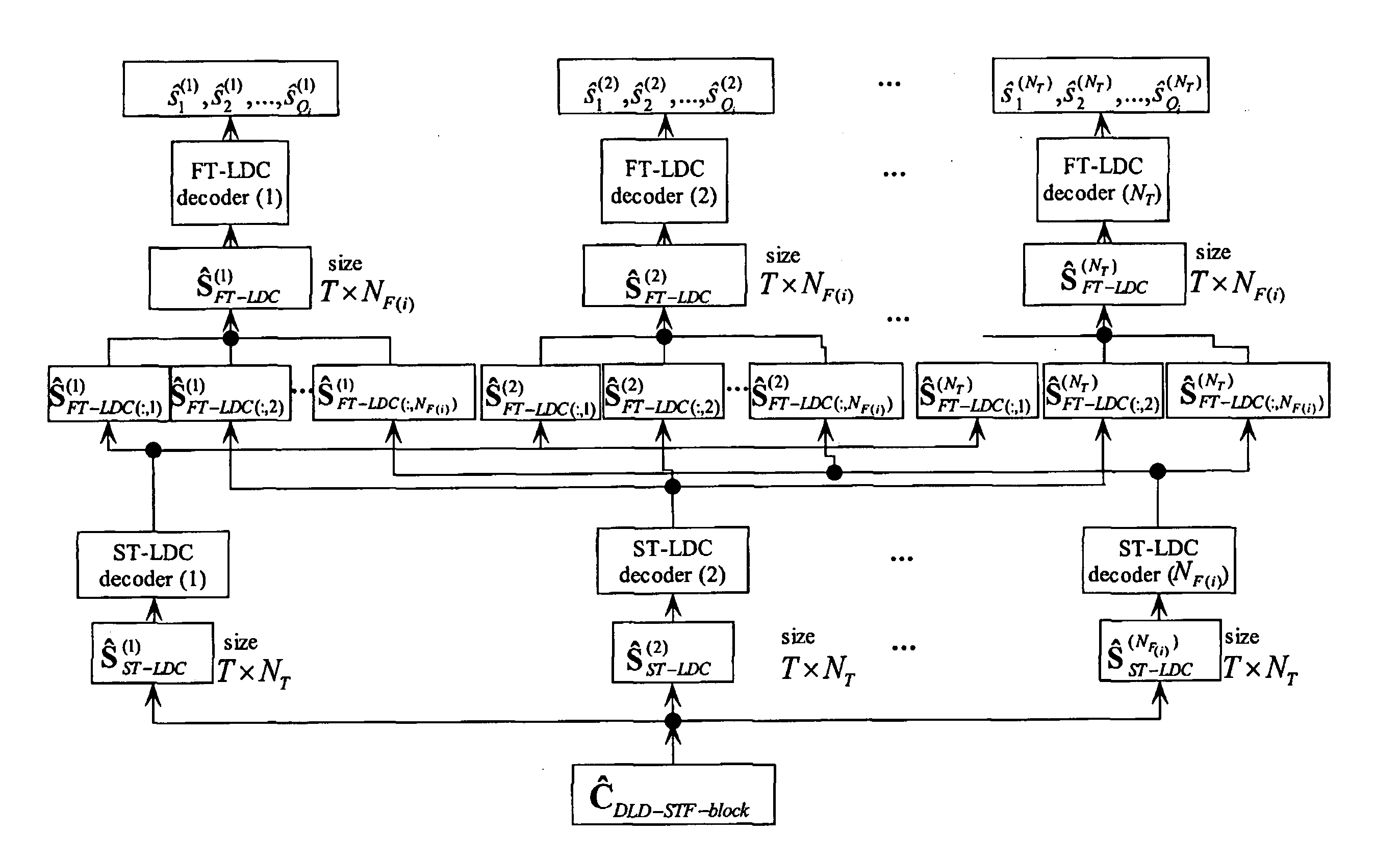

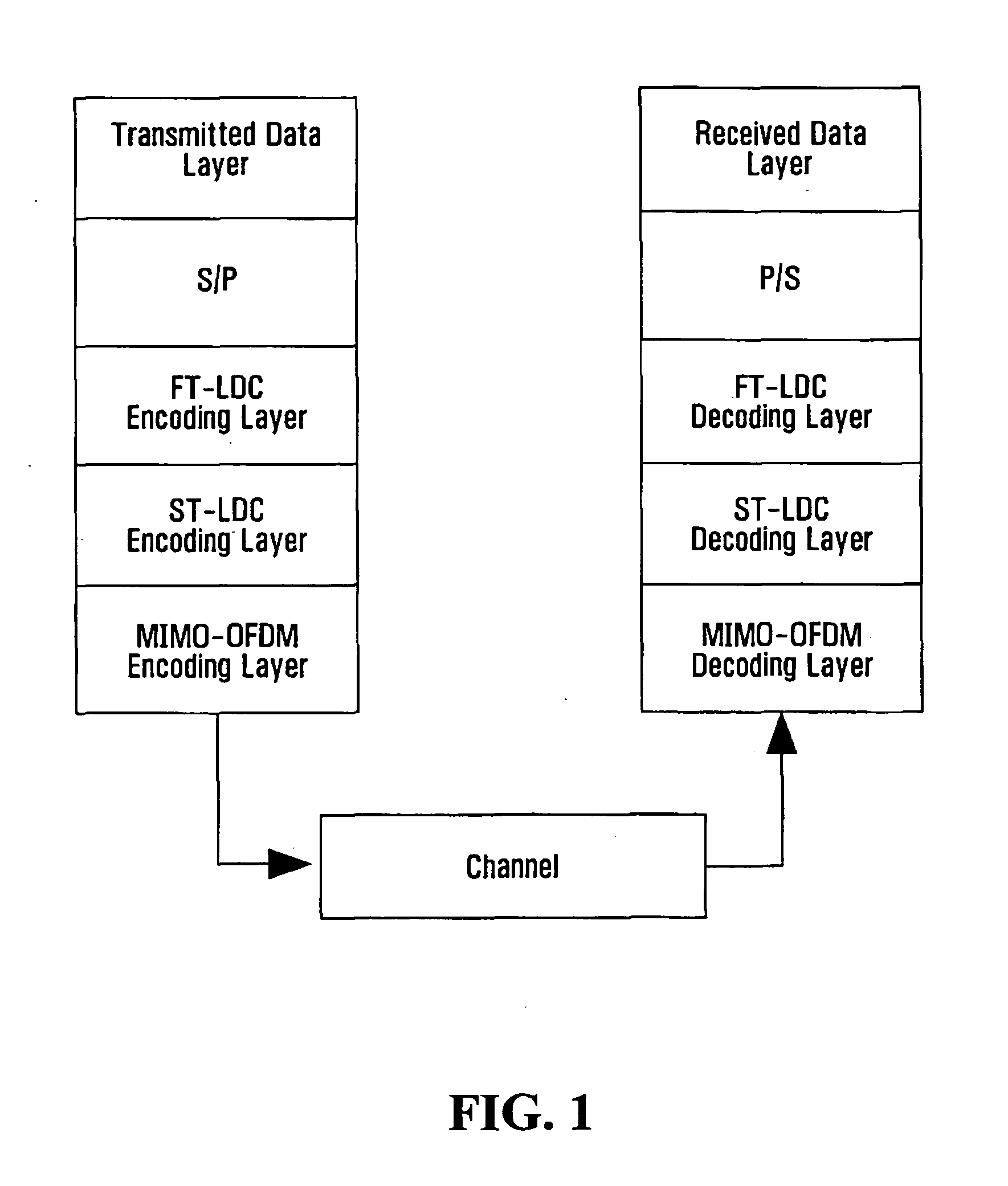

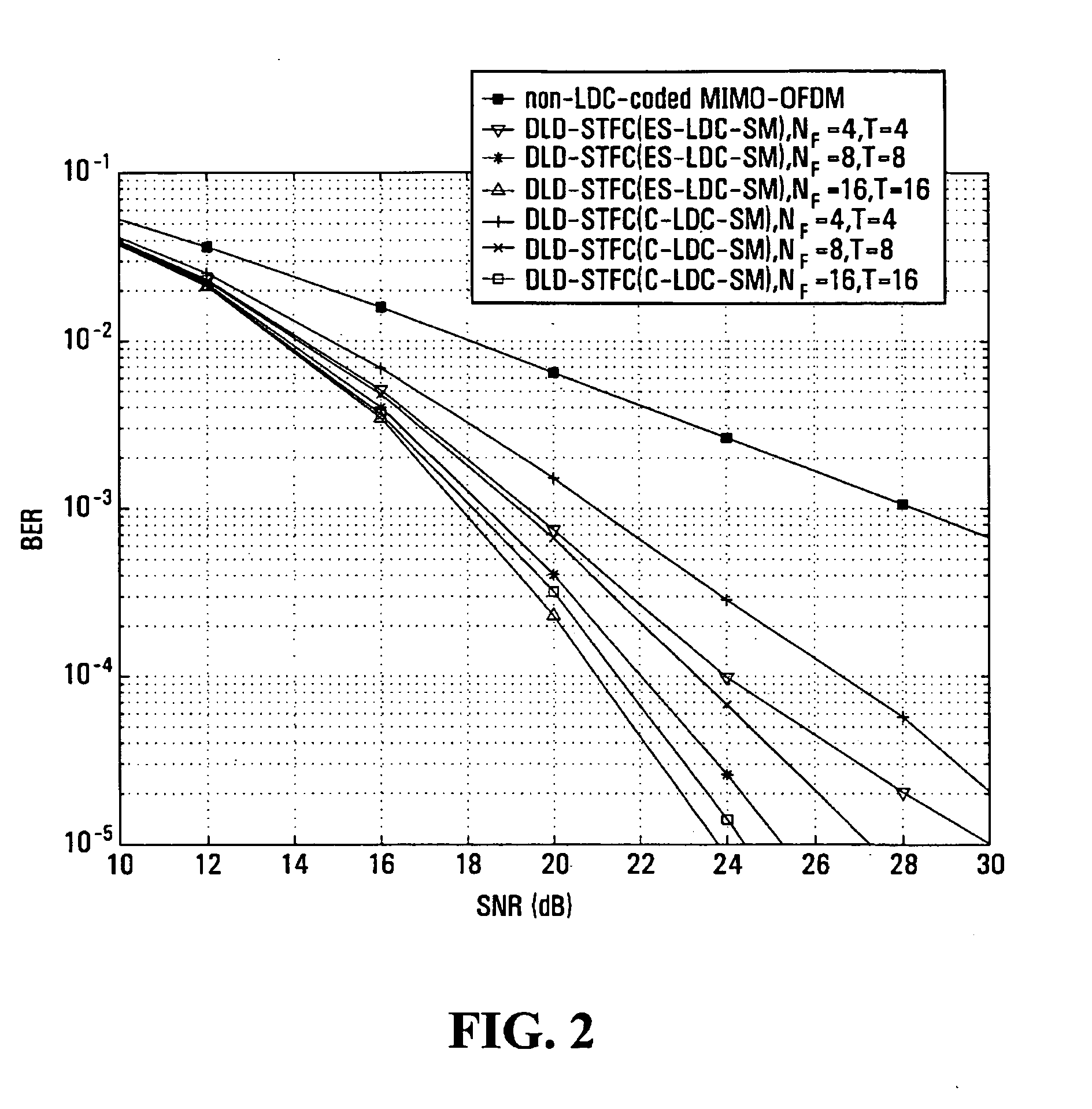

System and method employing linear dispersion over space, time and frequency

Inactive | US20070177688A1 | Diversity/multi-antenna systems; Forward error control use; Linear dispersion; Two-vector

Systems and methods for performing space time coding are provided. Two vector→matrix encoding operations are performed in sequence to produce a three dimensional result containing a respective symbol for each of a plurality of frequencies, for each of a plurality of transmit durations, and for each of a plurality of transmitter outputs. The two vector→matrix encoding operations may be for encoding in a) time-space dimensions and b) time-frequency dimensions sequentially or vice versa.

Owner:QUEENS UNIV OF KINGSTON
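Two successive vector→matrix linear dispersion steps can produce a three-dimensional result, as this sketch shows. Assumptions:

- Stage 1 maps the symbol vector to a (time × L) matrix using dispersion matrices `A`.
- Stage 2 maps each time row to a (frequency × transmitter) matrix using dispersion matrices `B`.
- The symbols are real-valued and all matrices are 2×2 and invented; practical systems use complex symbols and designed dispersion matrices.

The abstract does not specify the matrices or this exact arrangement.

```python
def vec_to_matrix(vec, dispersion):
    # Linear dispersion encoding: sum_k vec[k] * dispersion[k]
    rows, cols = len(dispersion[0]), len(dispersion[0][0])
    return [[sum(x * D[r][c] for x, D in zip(vec, dispersion)) for c in range(cols)]
            for r in range(rows)]

def encode(symbols, A, B):
    # Stage 1: symbol vector -> (time × L) matrix using dispersion matrices A
    X = vec_to_matrix(symbols, A)
    # Stage 2: each time row (length L) -> (frequency × transmitter) matrix using B,
    # giving a 3-D result indexed [time][frequency][transmitter]
    return [vec_to_matrix(row, B) for row in X]

A = [[[1, 0], [0, 1]], [[0, 1], [-1, 0]]]   # hypothetical 2×2 dispersion matrices
B = [[[1, 0], [0, 1]], [[0, 1], [1, 0]]]
print(encode([1, 2], A, B))   # [[[1, 2], [2, 1]], [[-2, 1], [1, -2]]]
```

Both stages are linear, so the whole encoder is linear in the symbols. This is what allows linear dispersion codes to be decoded with linear-algebra methods.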

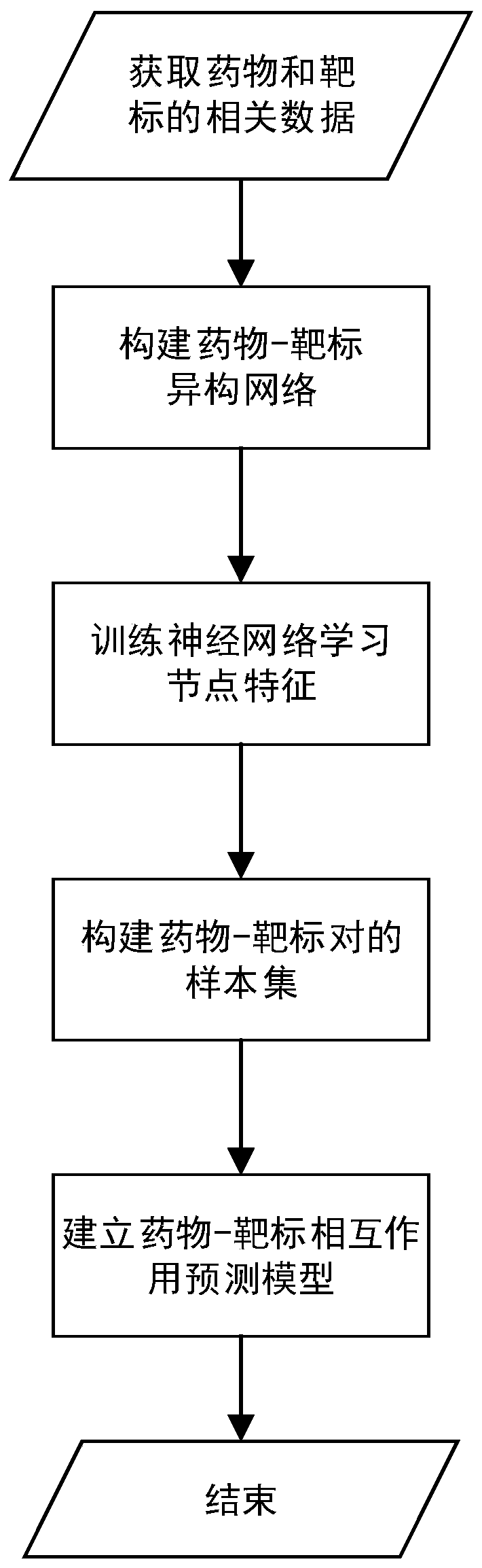

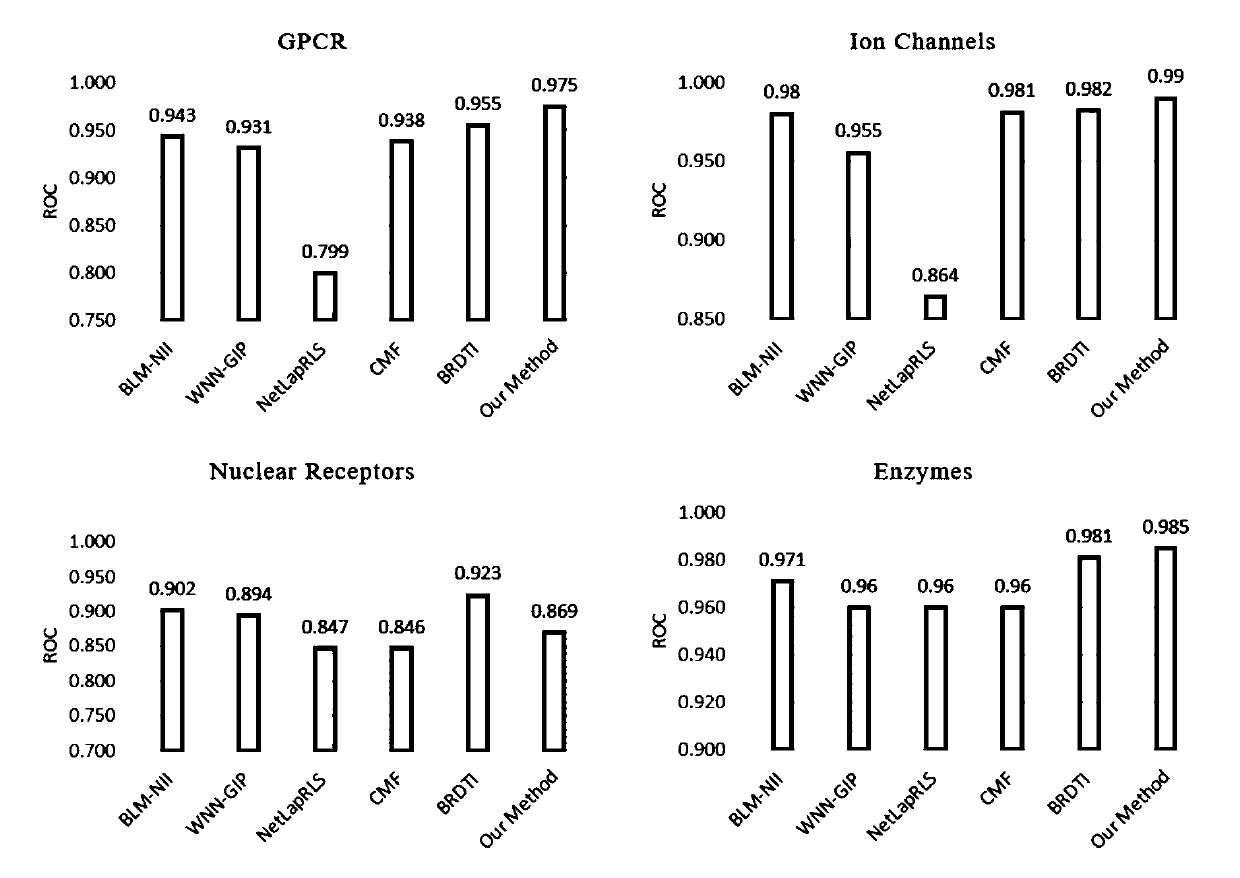

Built-in drug target interaction prediction method based on heterogeneous network

The invention discloses a built-in drug-target interaction prediction method based on a heterogeneous network. It rests on the assumption that chemically similar drugs often interact with similar targets. The method proceeds as follows:

1. Combining a drug-drug similarity network, a target-target similarity network and a drug-target interaction network into a drug-target heterogeneous network.
2. Generating walk sequences from each starting node, constructing a neural network classification model that takes the walk sequences as input, and training it to learn vector representations of all nodes.
3. To predict the interaction for a given drug-target pair, extracting the vector representations of the drug and the target from the learned node vectors and computing the Hadamard product of the two vectors.
4. Feeding the result to a random forest classifier to obtain the final prediction.

Experimental verification shows that the method achieves good prediction performance and applicability.

Owner:CENT SOUTH UNIV
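The sketch covers two pieces of this pipeline: uniform random walks over a toy heterogeneous network and the Hadamard-product pair feature. Assumptions:

- The network, the walk policy and the embedding values are invented. Real embeddings would be learned from the walks, and the feature would then go to a random forest classifier, which is not shown.

```python
import random

def random_walk(adj, start, length, rng):
    # Uniform random walk over the heterogeneous network (drug and target nodes)
    walk = [start]
    while len(walk) < length and adj[walk[-1]]:
        walk.append(rng.choice(adj[walk[-1]]))
    return walk

def pair_feature(embeddings, drug, target):
    # Element-wise (Hadamard) product of the drug and target vectors
    return [d * t for d, t in zip(embeddings[drug], embeddings[target])]

# Toy network: drug-drug, target-target, and drug-target edges (invented)
adj = {"d1": ["d2", "t1"], "d2": ["d1", "t2"],
       "t1": ["t2", "d1"], "t2": ["t1", "d2"]}
walk = random_walk(adj, "d1", 6, random.Random(0))

# Embeddings would be learned from such walks; these values are made up
embeddings = {"d1": [0.5, -1.0, 2.0], "t1": [2.0, 0.5, 0.25]}
print(walk)
print(pair_feature(embeddings, "d1", "t1"))   # [1.0, -0.5, 0.5]
```

The Hadamard product keeps the feature length equal to the embedding dimension. It is also symmetric in the two nodes, so the order of drug and target does not matter.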

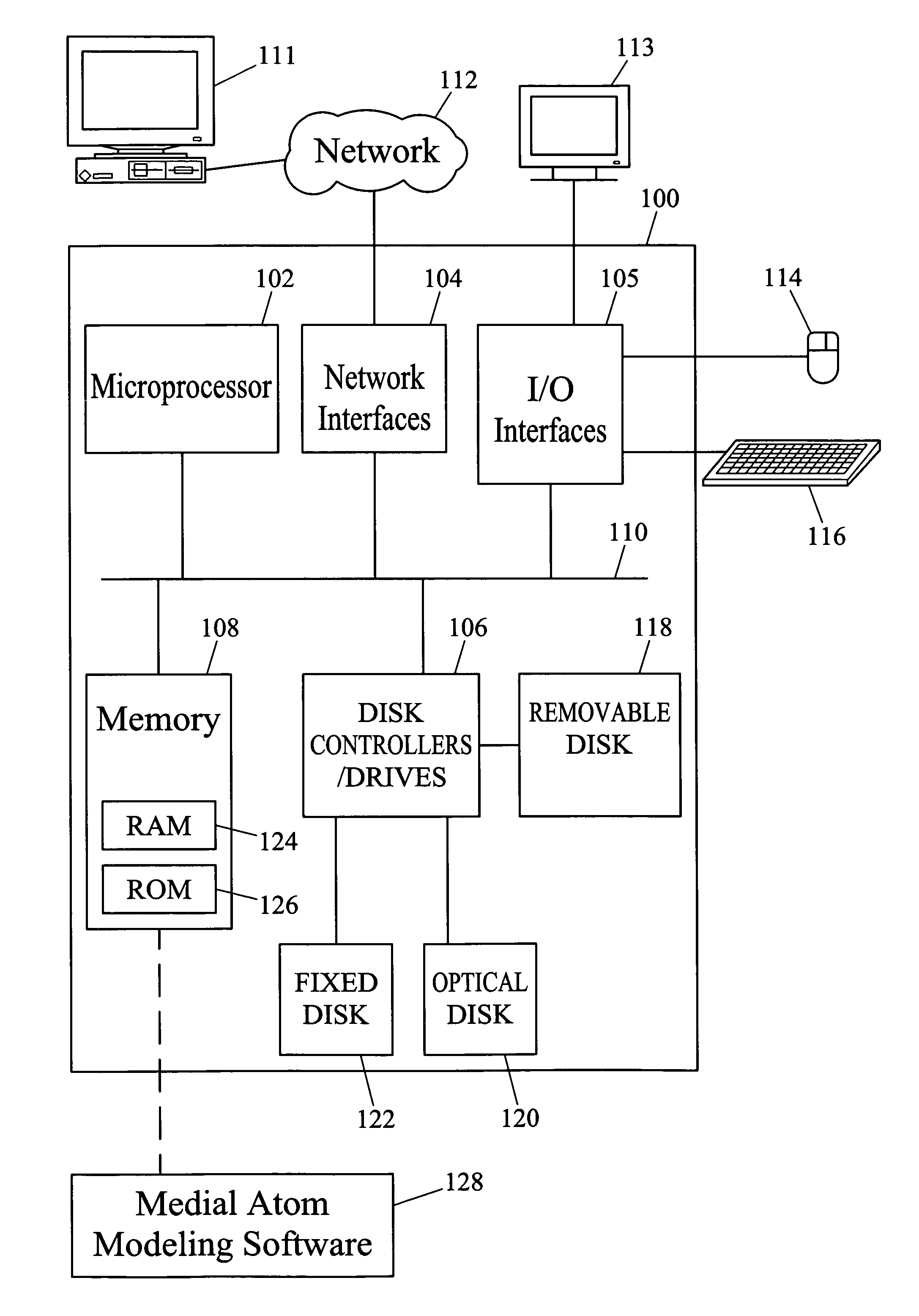

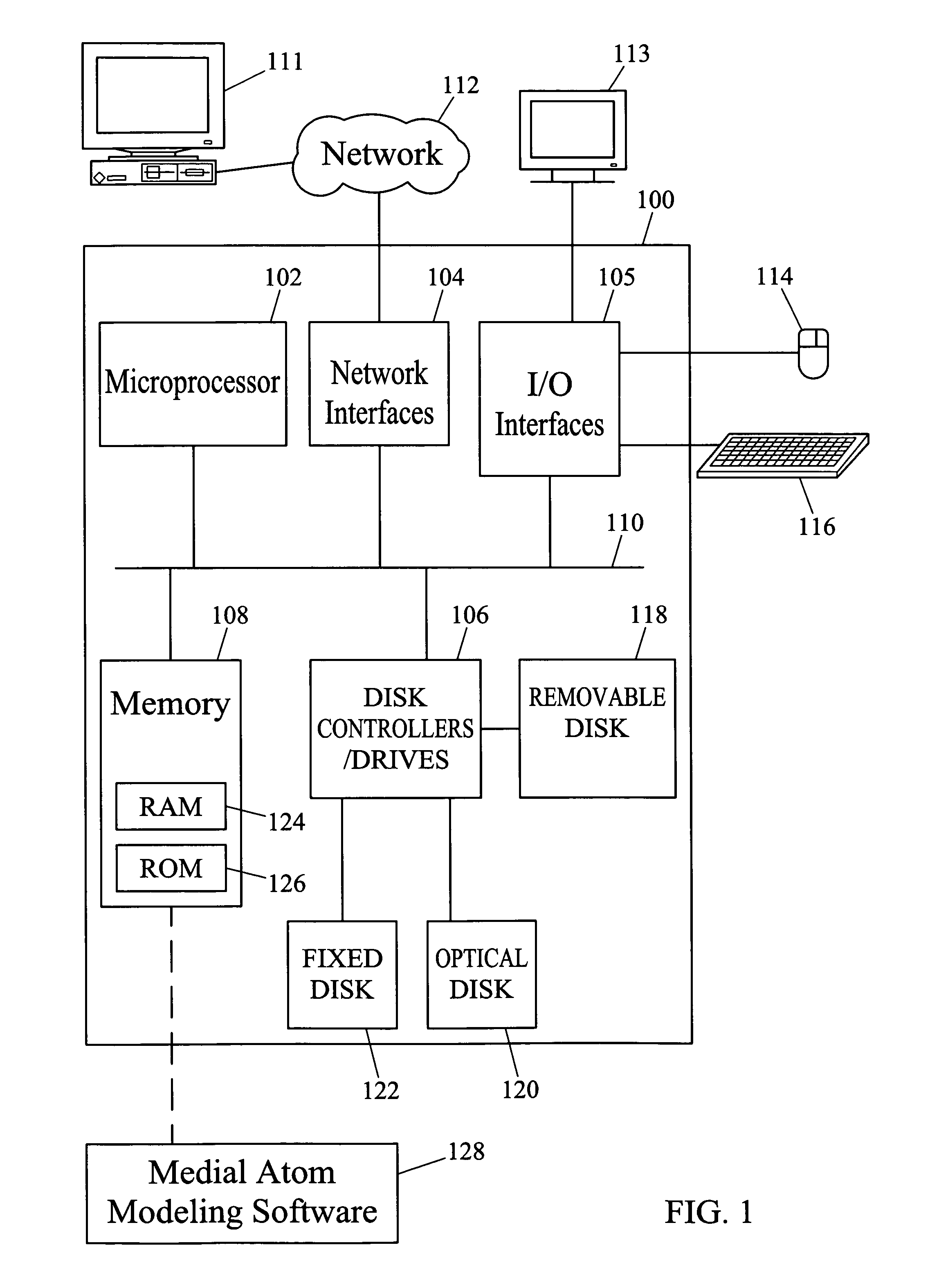

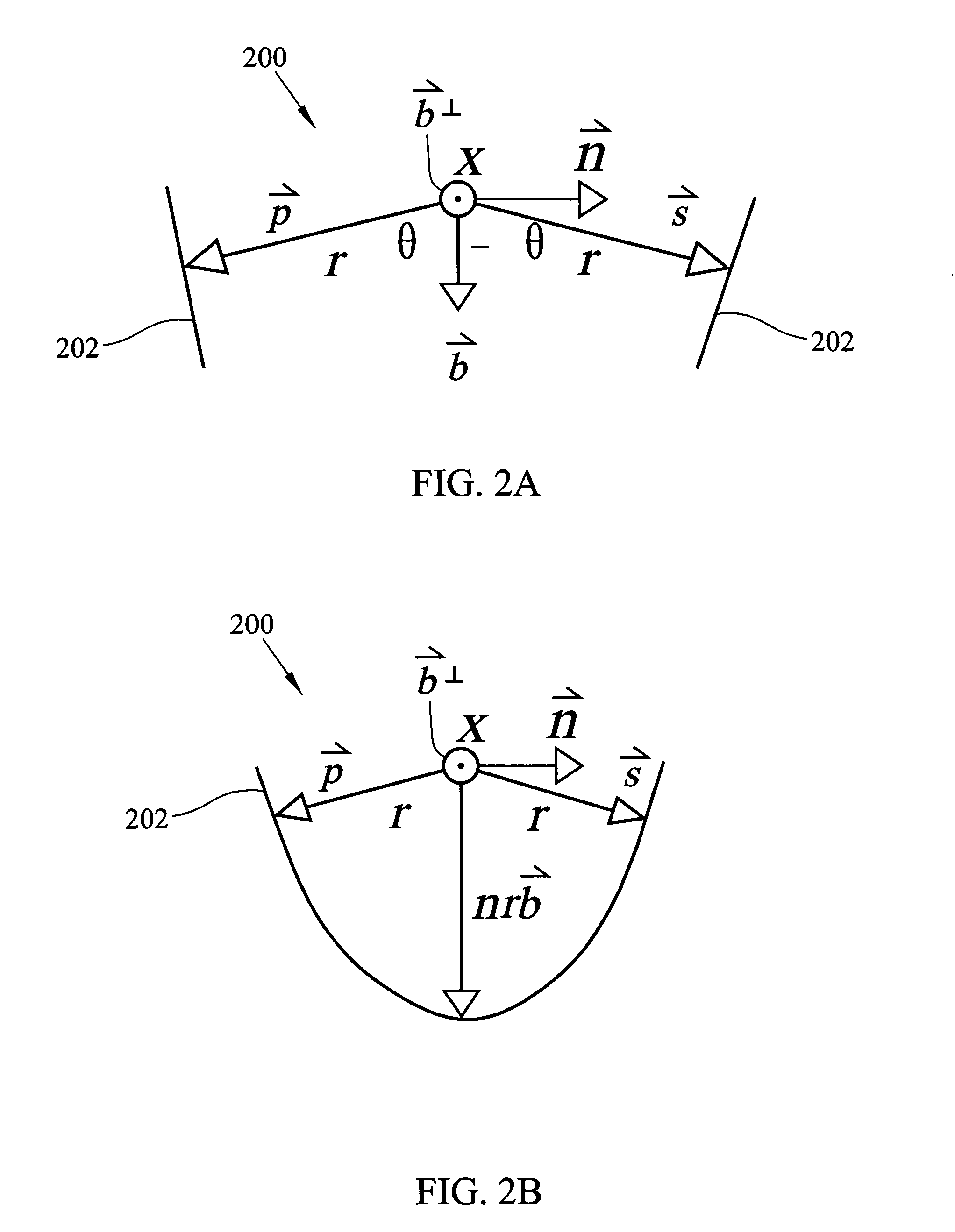

Methods and systems for modeling objects and object image data using medial atoms

Active | US7200251B2 | Shorten the time; Reduce the amount required; Analogue computers for chemical processes; Character and pattern recognition; Graphics; Two-vector

Methods and systems for modeling objects and object image data using medial atoms are disclosed. Objects and object image data can be modeled using medial atoms. Each medial atom includes at least two vectors having a common tail and extending towards an implied boundary of a model. The medial atoms may be aligned along one or more medial axes in the model. The model may include multiple sub-components, referred to as figures. The model and each of its figures may be represented in model-based and figure-based coordinates. The model may be automatically deformed into target image data using a hierarchy of geometric or probabilistic transformations.

Owner:NORTH CAROLINA UNIV OF THE
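A medial atom can be sketched in 2-D as a hub point with two spokes of length r that share the hub as their common tail. The spokes point toward the implied boundary. The parameterization below is an assumption: hub (x, y), radius r, bisector angle and half-angle between spokes. The patent's own atom parameters may differ.

```python
import math

def spoke_ends(x, y, r, bisector, half_angle):
    # 2-D medial atom (hypothetical parameterization): hub (x, y), radius r, and two
    # spokes of length r that share the hub as their common tail, pointing at
    # bisector ± half_angle toward the implied boundary
    return [(x + r * math.cos(bisector + s * half_angle),
             y + r * math.sin(bisector + s * half_angle)) for s in (1, -1)]

# A chain of atoms along a straight medial axis sketches a slab-like figure
atoms = [(float(i), 0.0, 1.0, 0.0, math.pi / 2) for i in range(3)]
boundary = [p for atom in atoms for p in spoke_ends(*atom)]
for px, py in boundary:
    print(round(px, 6), round(py, 6))
```

Each atom contributes two implied boundary points at distance r from its hub. Varying r and the spoke angles along the axis bends and thickens the figure.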

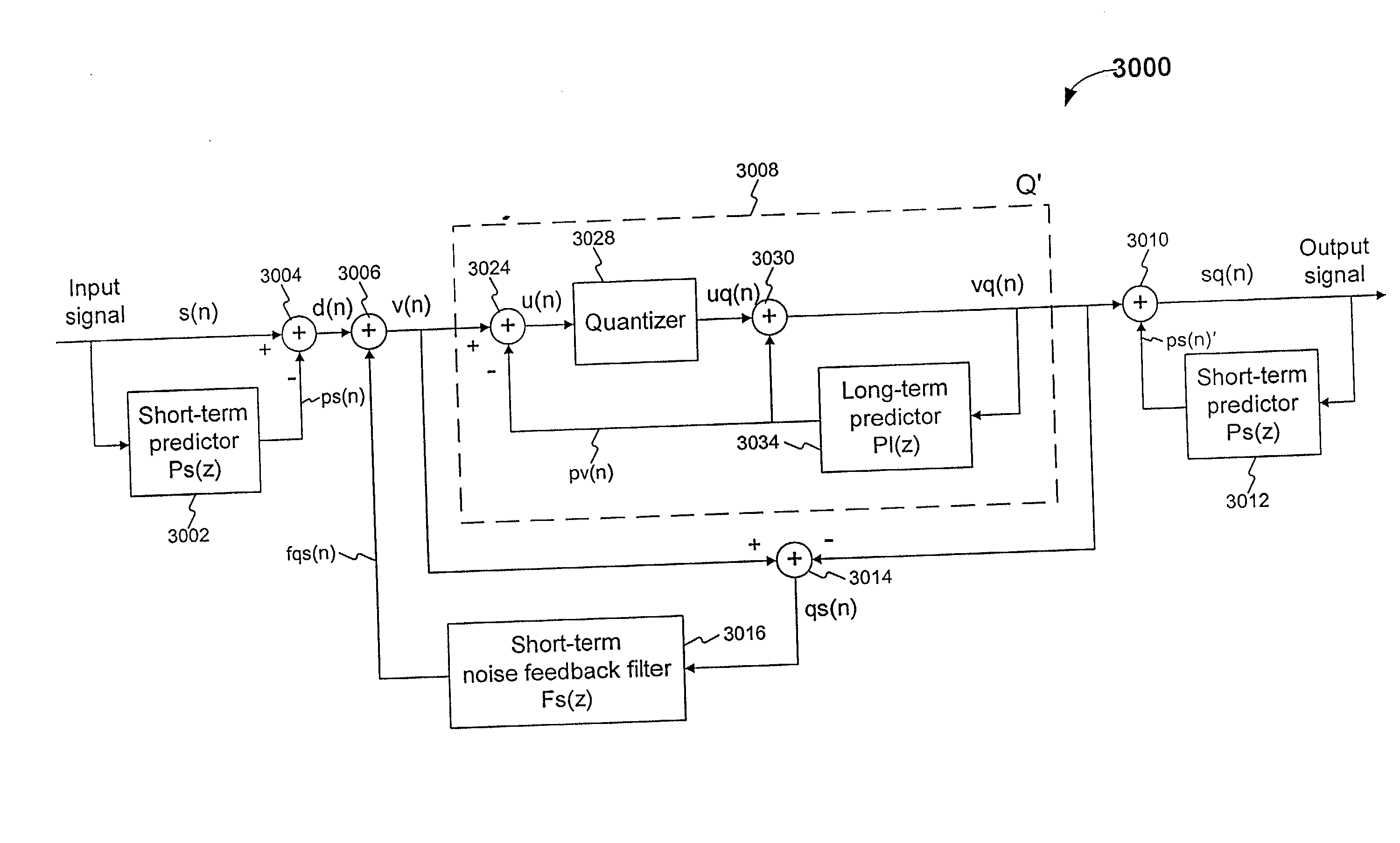

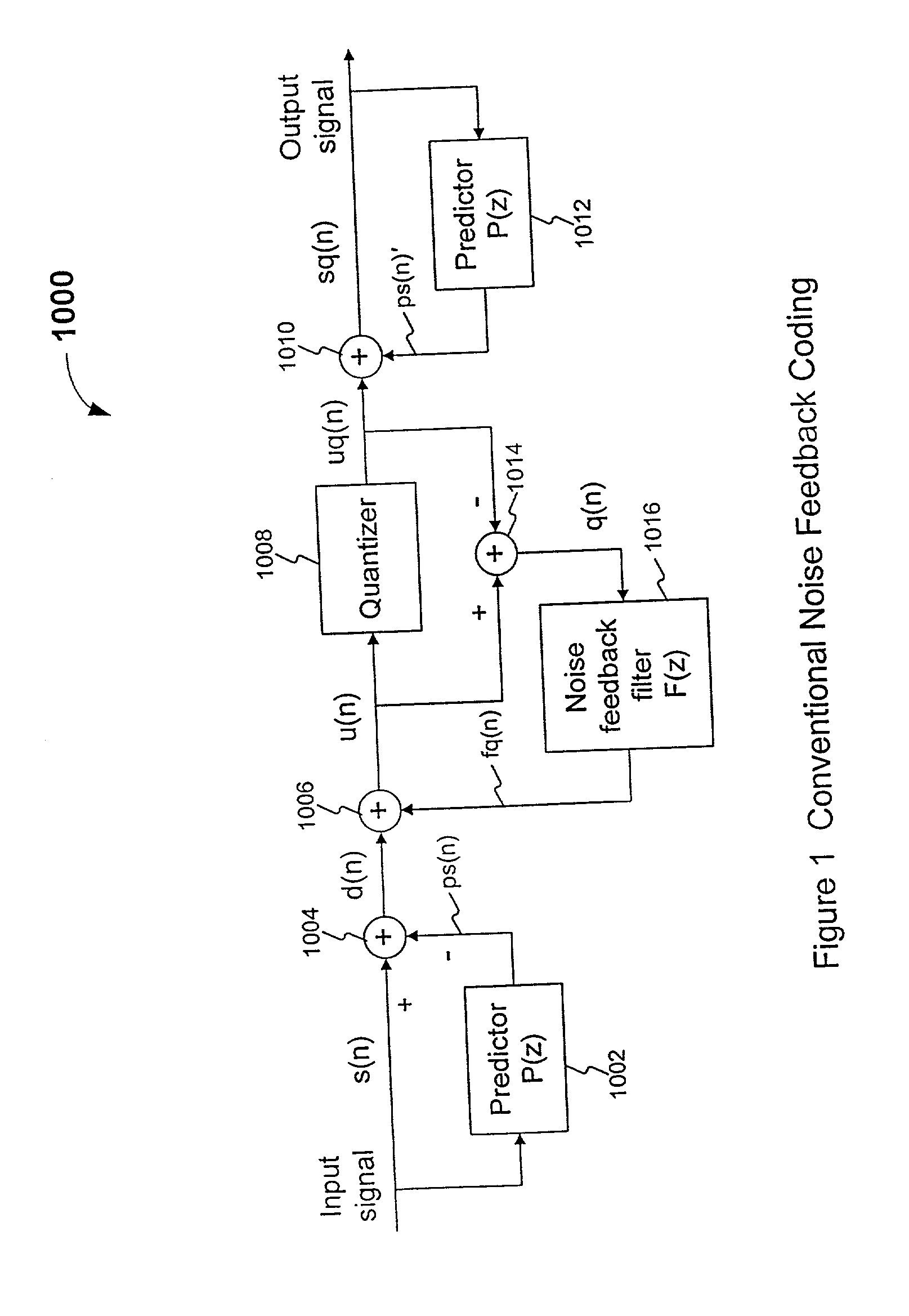

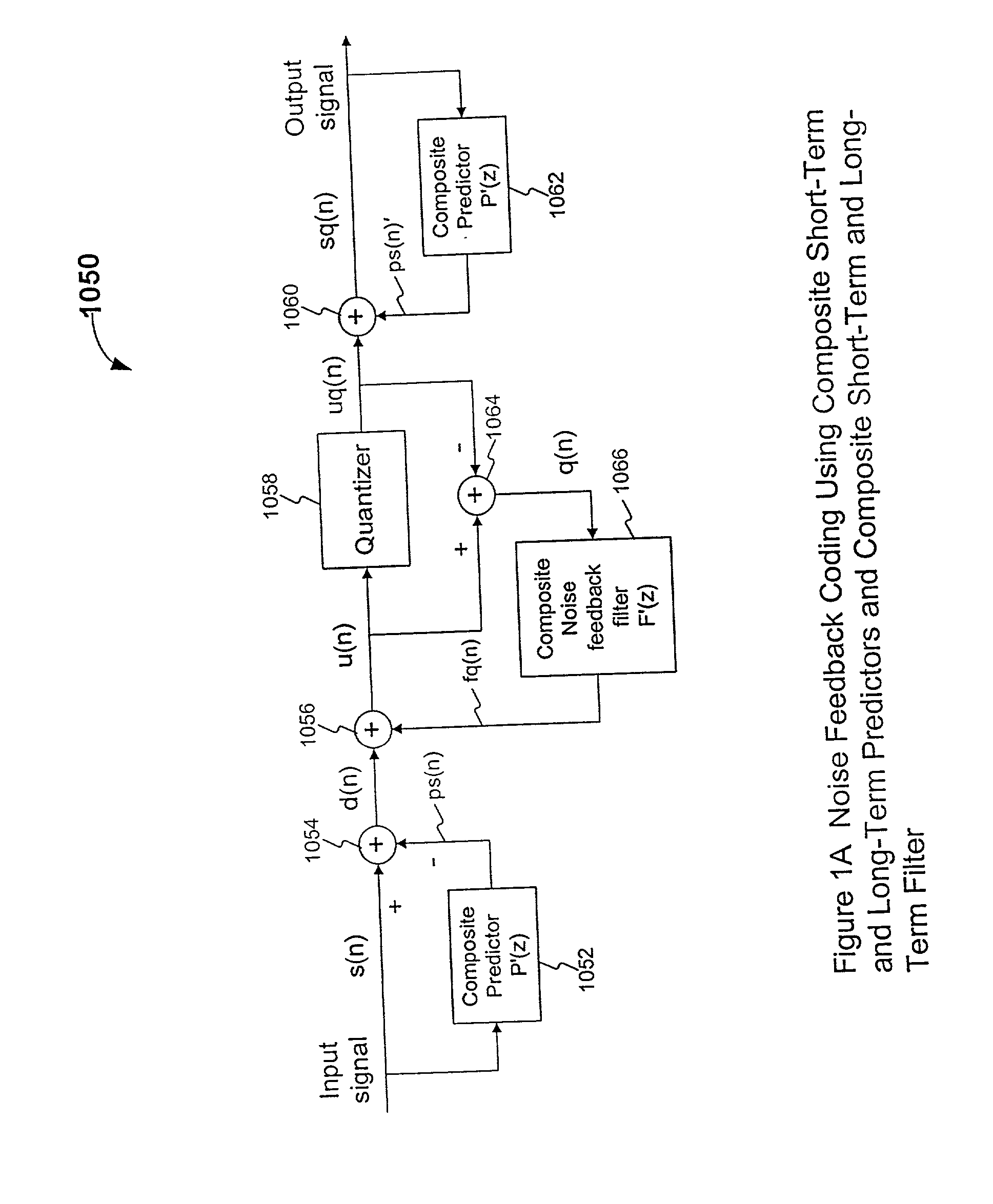

Noise feedback coding method and system for performing general searching of vector quantization codevectors used for coding a speech signal

Inactive | US20020069052A1 | Eliminate redundancy; Improve perceived quality; Speech analysis; Two-vector; Algorithm

A method searches a plurality of Vector Quantization (VQ) codevectors for the preferred one to use as the output of a vector quantizer encoding a speech signal. For each codevector, the method determines a quantized prediction residual vector and calculates the corresponding unquantized prediction residual vector. It then computes the energy of the difference between these two vectors, that is, of the VQ error vector. After each of the VQ codevectors has been tried, the codevector that minimizes the energy of the VQ error vector is selected as the output of the vector quantizer.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
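The codevector search is an exhaustive minimum-error-energy loop, shown in the sketch below. The callable `unquantized_for` is a hypothetical hook reflecting that, in noise feedback coding, the unquantized residual can depend on filter states and is recomputed for each candidate. The usage example simplifies this to a fixed target vector. The codebook values are invented.

```python
def search_codebook(codebook, unquantized_for):
    # Try every codevector; unquantized_for(cv) returns the unquantized prediction
    # residual that corresponds to that trial. Keep the codevector whose VQ error
    # vector (unquantized minus quantized) has the least energy.
    best_index, best_energy = -1, float("inf")
    for index, cv in enumerate(codebook):
        error = [u - q for u, q in zip(unquantized_for(cv), cv)]
        energy = sum(e * e for e in error)
        if energy < best_energy:
            best_index, best_energy = index, energy
    return best_index, best_energy

codebook = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]]
target = [1.2, 0.9]   # simplification: a fixed residual that ignores filter memory
index, energy = search_codebook(codebook, lambda cv: target)
print(index)   # 1
```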

Device for and method of estimating motion in video encoder

Inactive | US7362808B2 | Reduce power consumption; Shorten the time; Color television with pulse code modulation; Color television with bandwidth reduction; Spatial correlation; Computation complexity

A motion estimator and an estimation method for a video encoder reduce power consumption by reducing the computational complexity of motion estimation.

- In an upper step, a full search over a ±4 pixel search region is performed for a 4×4 pixel block at ¼ video resolution to detect two motion vector candidates.
- In a middle step, a partial search within a ±1 or ±2 search region is performed for an 8×8 block. The search covers the two vector candidates selected in the upper step and one vector candidate obtained from spatial correlation, and yields one motion vector candidate.
- In a lower step, a partial search over the ±1 or ±2 search region is performed for a 16×16 block at full resolution. A half-pixel search around the motion vector candidate obtained in this step then estimates the final motion vector.

The ±4 pixel search region is divided into four ±2 pixel search regions, which the estimator searches sequentially to output SAD values in sequence.

Owner:SAMSUNG ELECTRONICS CO LTD

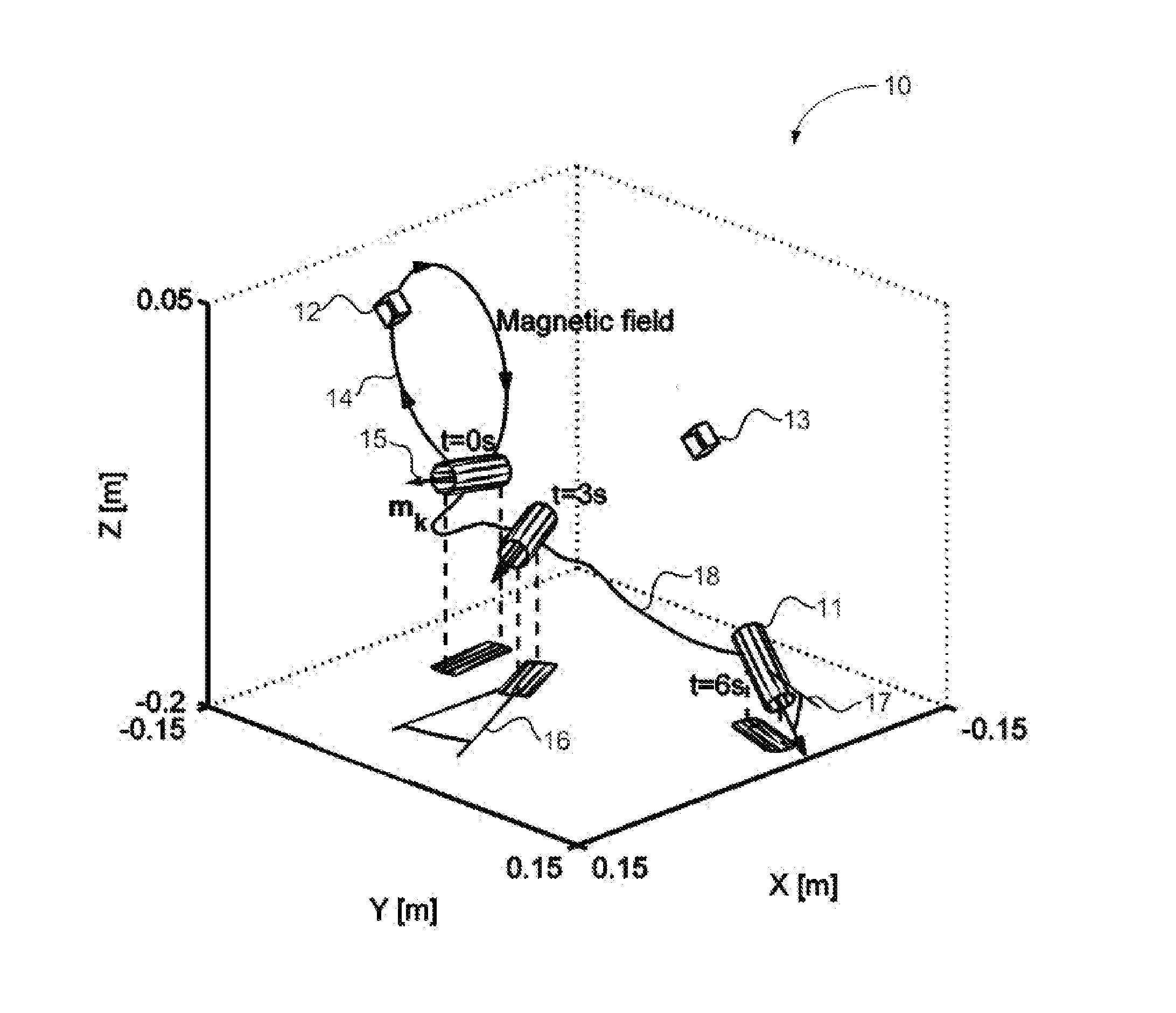

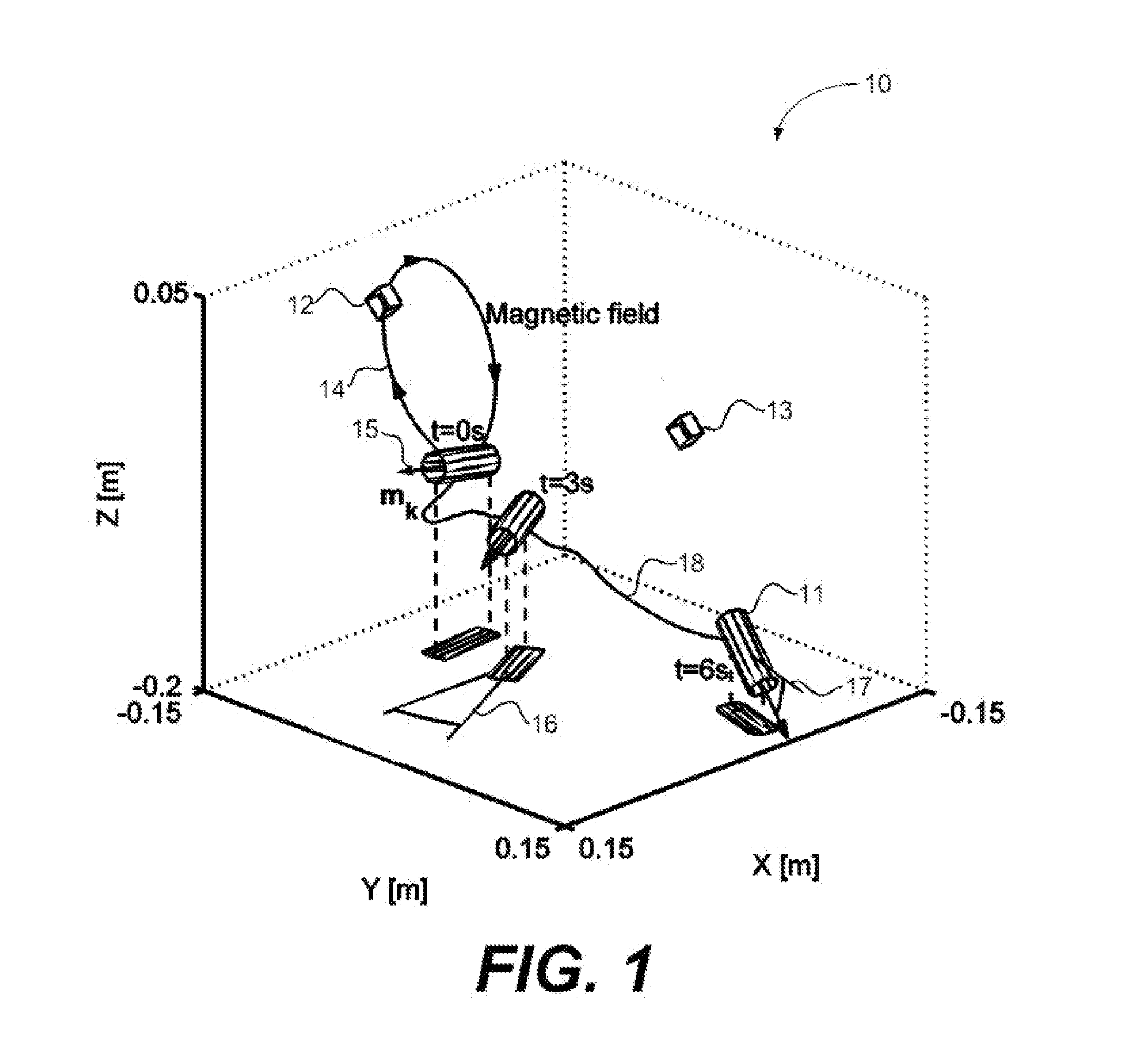

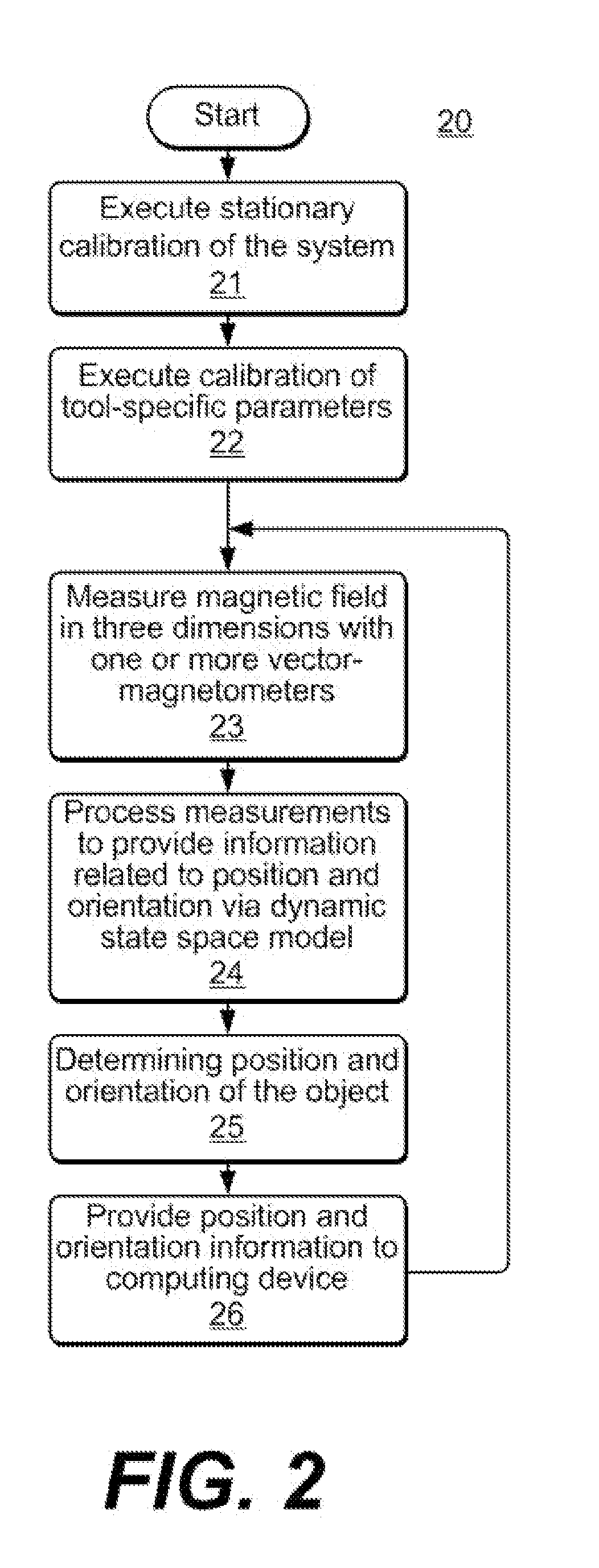

Method and Device for Pose Tracking Using Vector Magnetometers

Active | US20130249784A1 | Made very small and inexpensively; Battery charging; Magnetic measurements; Cathode-ray tube indicators; Magnetic tension force; Two-vector

In accordance with various embodiments of the invention, a user-borne computer input device is disclosed. It includes a 5D or greater “mouse” or other tool or object containing or consisting of one or more permanent magnets presenting permanent magnetic dipoles. The position and orientation of the device are determined from magnetic field strength measurements taken by at least two vector magnetometers fixed in a reference frame connected to the computing device. The system allows a user to interact with the computing device in at least five dimensions by manipulating the position and orientation of the device within a measurement volume, which may be a few cubic meters.

Owner:ADVANCED MAGNETIC INTERACTION
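Pose estimation from vector magnetometers usually starts from a forward model that predicts each sensor's reading for a candidate magnet pose. The sketch uses the standard point-dipole field, B = μ0/(4π) · (3 r̂ (m · r̂) − m) / |r|³, which is a textbook model rather than a detail confirmed by the abstract. Inverting it to find position and orientation would need a nonlinear least-squares solver, which is not shown. The sensor layout and magnet moment are hypothetical.

```python
import math

MU0_OVER_4PI = 1e-7   # mu_0 / (4 pi) in T·m/A

def dipole_field(moment, magnet_pos, sensor_pos):
    # Point-dipole model: B = mu0/(4 pi) * (3 r_hat (m · r_hat) - m) / |r|^3
    r = [s - p for s, p in zip(sensor_pos, magnet_pos)]
    dist = math.sqrt(sum(c * c for c in r))
    r_hat = [c / dist for c in r]
    m_dot_r = sum(m * c for m, c in zip(moment, r_hat))
    return [MU0_OVER_4PI * (3 * rh * m_dot_r - m) / dist ** 3
            for rh, m in zip(r_hat, moment)]

def predicted_readings(moment, magnet_pos, magnetometers):
    # Field predicted at every fixed magnetometer for one candidate magnet pose
    return [dipole_field(moment, magnet_pos, s) for s in magnetometers]

# Hypothetical setup: a 1 A·m² magnet pointing along +z and two fixed magnetometers
sensors = [(0.0, 0.0, 0.1), (0.1, 0.0, 0.0)]
readings = predicted_readings([0.0, 0.0, 1.0], (0.0, 0.0, 0.0), sensors)
print(readings)
```

The on-axis sensor sees about 2×10⁻⁴ T along +z and the equatorial sensor about 1×10⁻⁴ T along −z. This is the familiar 2:1 ratio between axial and equatorial dipole fields.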

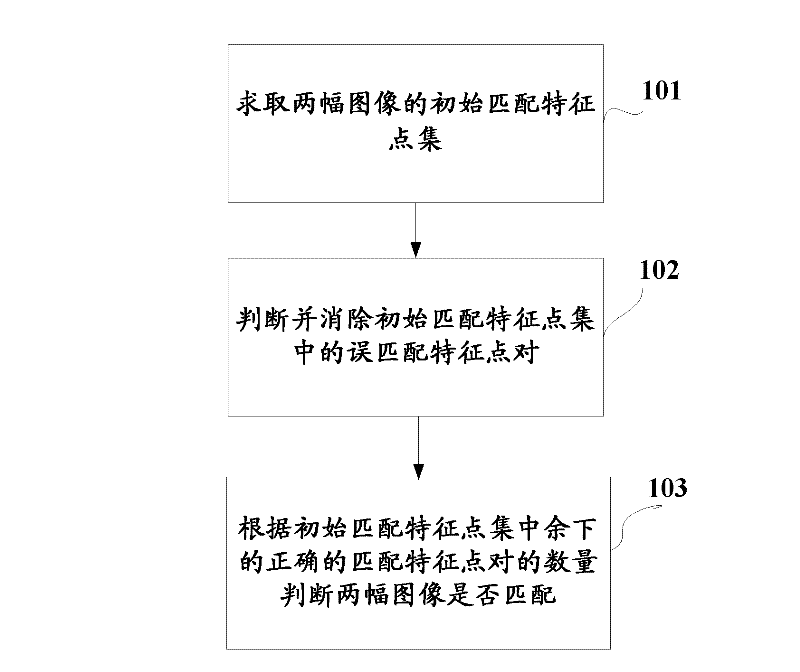

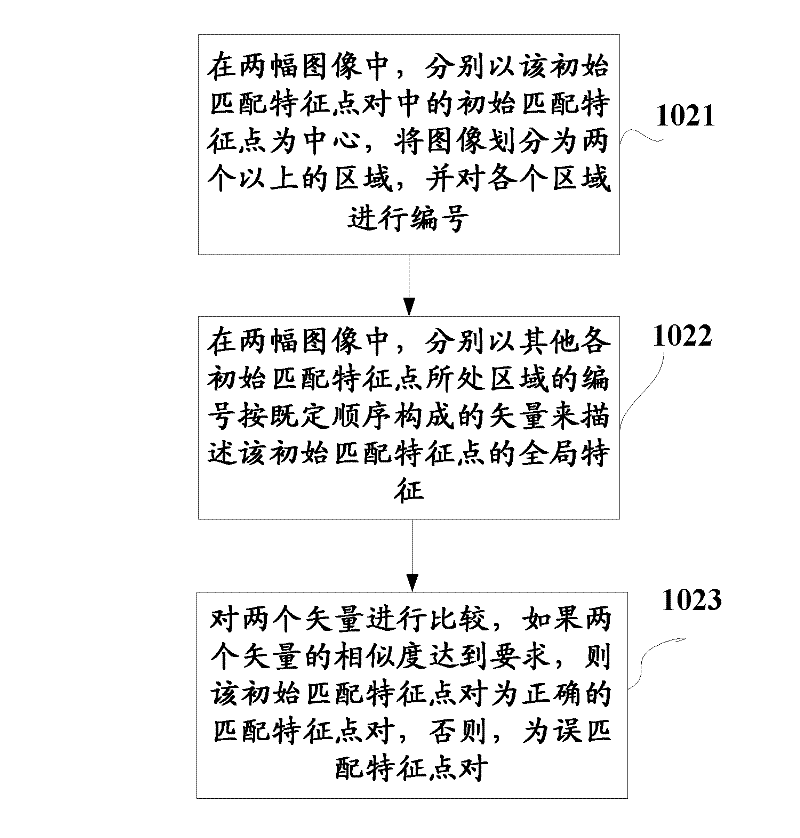

Image searching/matching method and system for the same

Inactive | CN102521838A | Eliminate mismatches; Improve accuracy; Image analysis; Special data processing applications; Pattern recognition; Two-vector

The invention discloses an image searching/matching method. The method first computes an initial set of matching feature points between two images, then identifies and eliminates erroneous matching pairs, and judges whether the two images match according to the number of correct matching pairs. Judging whether an initial matching pair is erroneous involves three steps:

1. In each of the two images, taking the initial matching feature point as the center, dividing the image into more than two regions and numbering each region.
2. In each image, describing the global feature of the initial matching feature point by a vector composed of the numbers of the regions in which each of the other initial matching feature points lies.
3. Comparing the two vectors. If their similarity meets the requirement, the initial matching feature points form a correct matching pair.

The method suits image searching under complex conditions such as image size variation, image embedding and viewing-angle variation, and it also has higher searching efficiency and recognition accuracy. The invention further discloses an image searching/matching system.

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT
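The region-number consistency check can be sketched as follows. Assumptions:

- Four quadrants around each candidate point, as one instance of "more than two regions".
- Agreement is the fraction of equal region numbers, with a hypothetical acceptance threshold of 0.7.
- The point sets are invented; the second layout is a scaled and shifted copy of the first with one corrupted match.

```python
def region(center, p):
    # Number the four quadrants around `center` (0..3); the quadrant split is an
    # assumed instance of "more than two regions"
    return (0 if p[0] >= center[0] else 1) + (0 if p[1] >= center[1] else 2)

def region_vector(i, pts):
    # Global descriptor of match i: region numbers of all other matched points
    return [region(pts[i], p) for j, p in enumerate(pts) if j != i]

def is_correct_match(i, pts_a, pts_b, threshold=0.7):
    va, vb = region_vector(i, pts_a), region_vector(i, pts_b)
    agreement = sum(x == y for x, y in zip(va, vb)) / len(va)
    return agreement >= threshold

pts_a = [(10, 10), (20, 10), (10, 20), (20, 20), (15, 5)]
pts_b = [(2 * x + 3, 2 * y + 1) for x, y in pts_a]   # same layout, scaled and shifted
pts_b[4] = (100, 100)                                # corrupt the last match
print([is_correct_match(i, pts_a, pts_b) for i in range(len(pts_a))])
# [True, True, True, True, False]
```

Region numbers are unchanged by scaling and translation, which is why this kind of descriptor tolerates image size variation.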

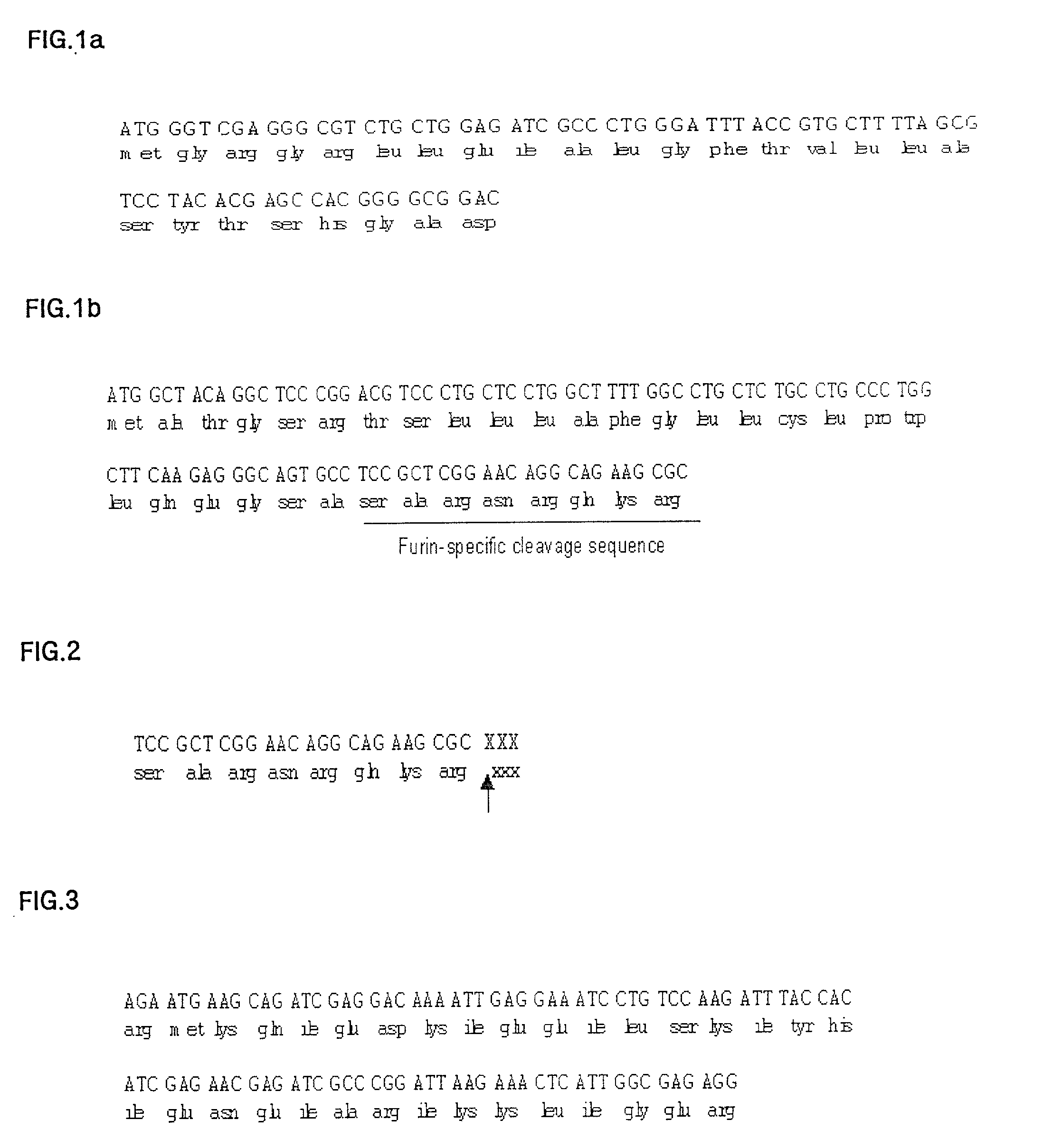

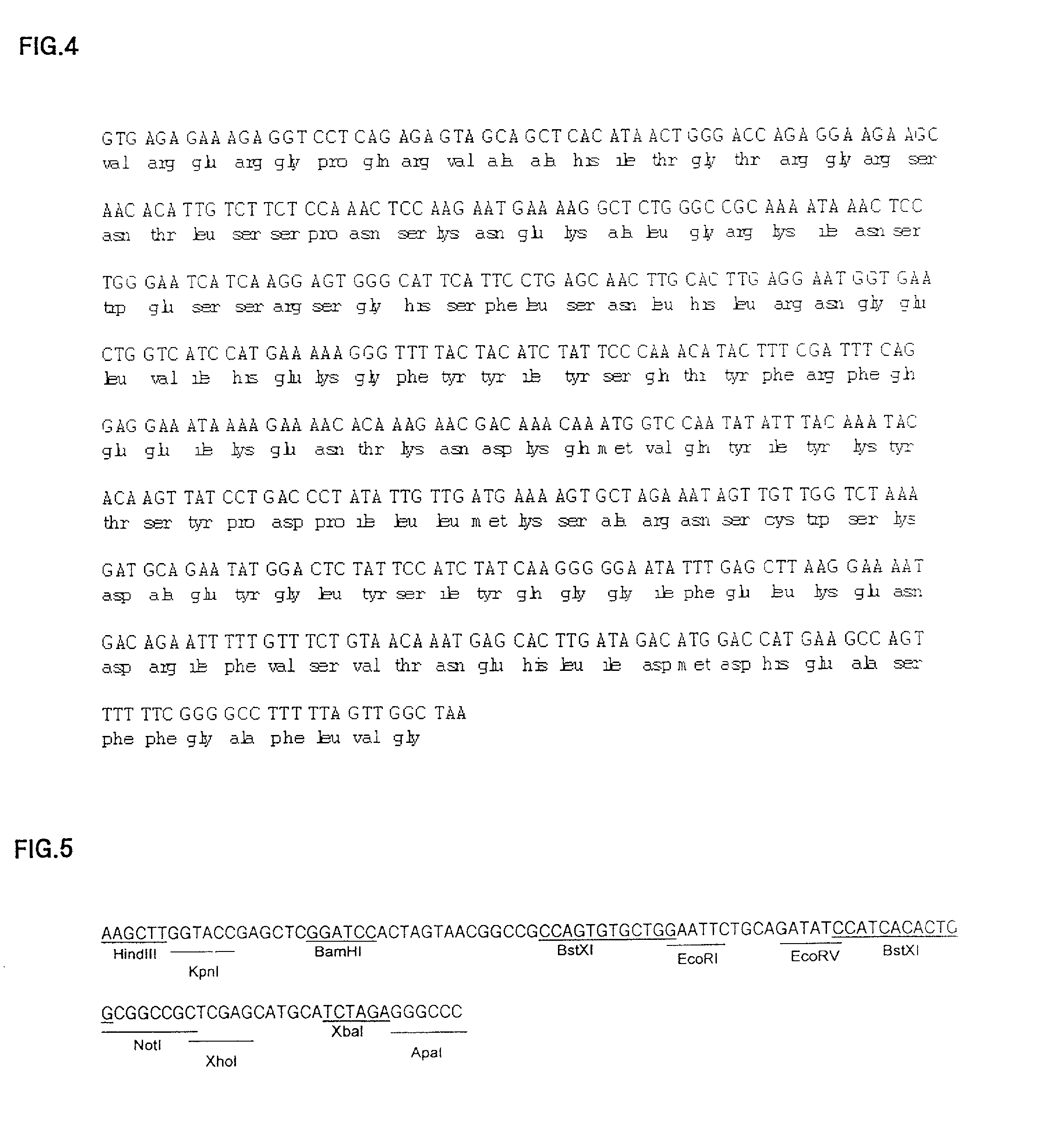

DNA cassette for the production of secretable recombinant trimeric TRAIL proteins, tetracycline/doxycycline-inducible adeno-associated virus vector, their combination and use in gene therapy

Inactive | US20020128438A1 | Enhanced homotrimer-forming function; Function increase; Peptide/protein ingredients; Antibody mimetics/scaffolds; Disease; Two-vector

The present invention relates to four things:

- The construction of a TRAIL DNA cassette for the production of a secretable trimeric rTRAIL.
- The development of pCMVdw and pAAVdw vectors harboring a feed-forward amplification loop type Tet-On system that can be packaged into AAV particles.
- The preparation of recombinant vectors by combining the TRAIL DNA cassette with the two vectors.
- The treatment of diseases, including cancer, using such vectors.

The present invention provides a TRAIL DNA cassette comprising a secretion signal (SS) sequence, a trimer-forming domain (TFD) and a TRAIL(114-281) coding cDNA. The cassette can be cloned into an appropriate expression vector. It can then be used in the mass production of a secretable recombinant trimeric TRAIL protein or administered to a patient for gene therapy.

Owner:SEOL DAI WU

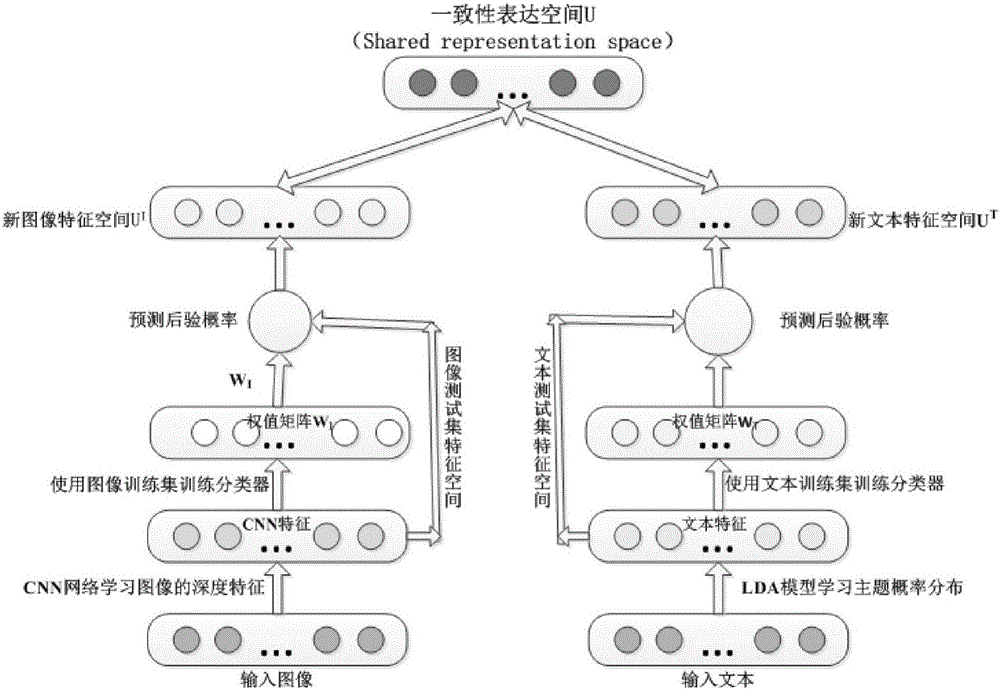

Cross-media retrieval method based on deep learning and consistent expression spatial learning

Active | CN106095829A | Express abstract concepts; Automatically learns well; Multimedia data querying; Special data processing applications; Feature vector; Two-vector

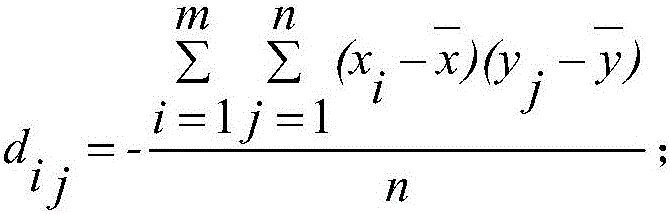

The invention relates to a cross-media retrieval method based on deep learning and consistent representation space learning. The method addresses two problems: feature selection, and similarity estimation between two highly heterogeneous feature spaces. It targets cross-media information in two modalities, images and text, and substantially improves the accuracy of mutual retrieval between them. In the proposed model, a normalized vector inner product is adopted as the similarity metric, taking into account the directions of the feature vectors of the two modalities. Each vector is first centered by subtracting the mean of its elements from each element, which eliminates the influence of the index dimension. The correlation of the two mean-removed vectors is then calculated to obtain an accurate similarity.

Owner:HUAQIAO UNIVERSITY

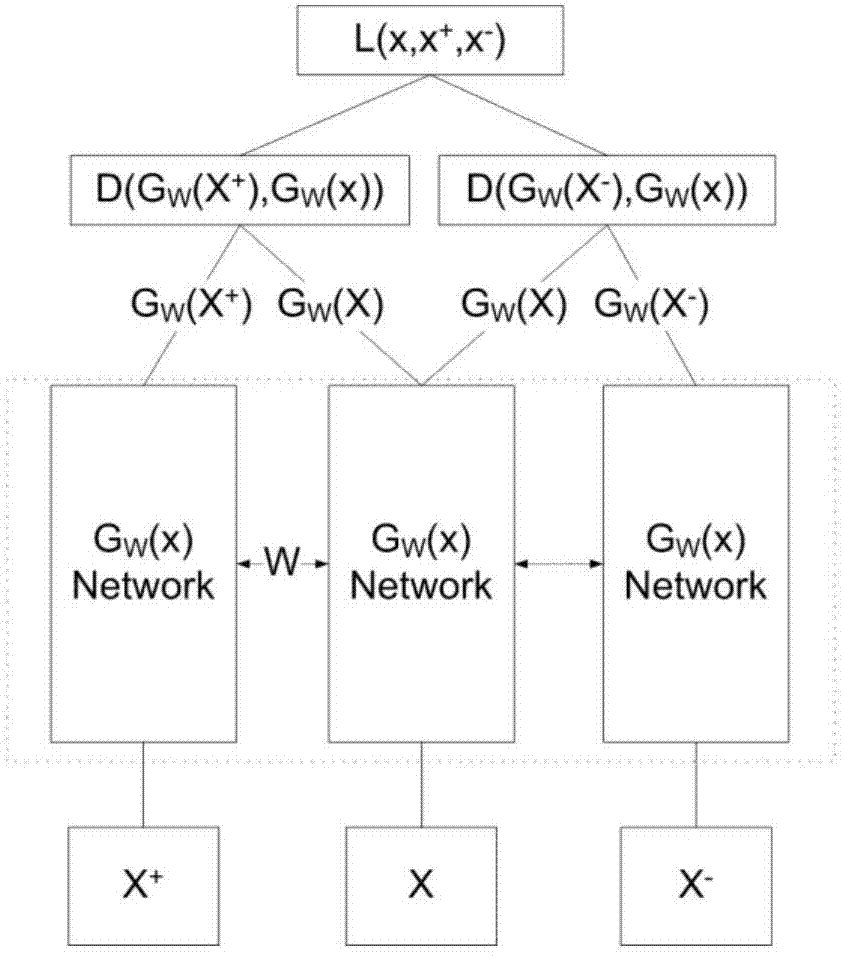

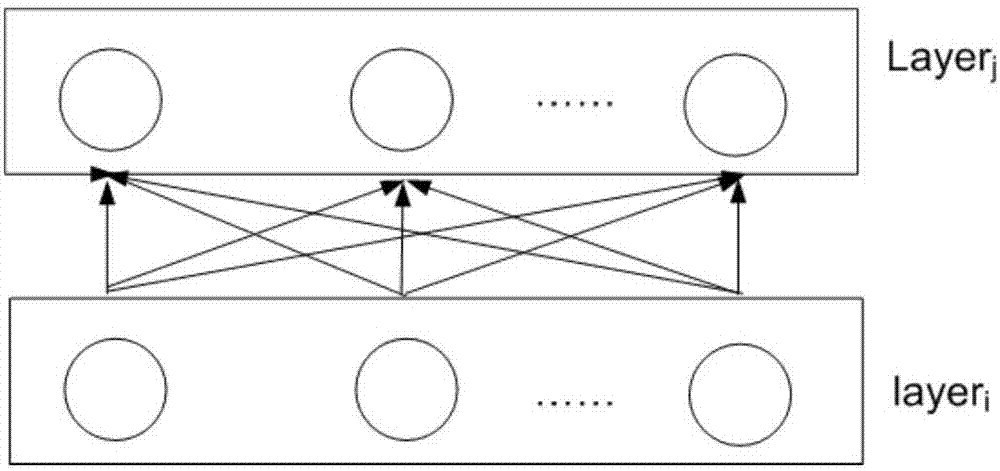

Image-text retrieval system and method based on multi-angle self-attention mechanism

Pending | CN109992686A | Convenience to follow; Optimization parameters; Still image data querying; Neural architectures; Pattern recognition; Two-vector

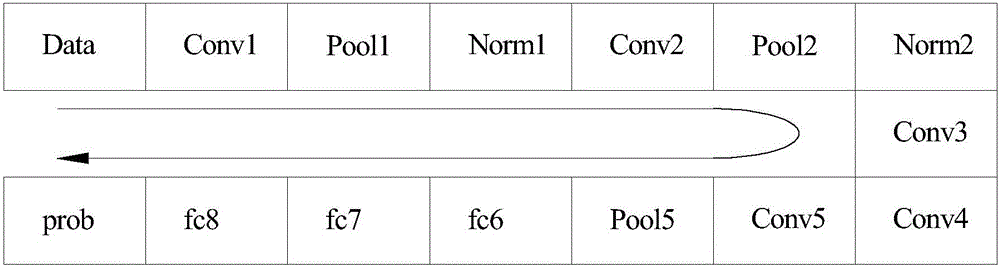

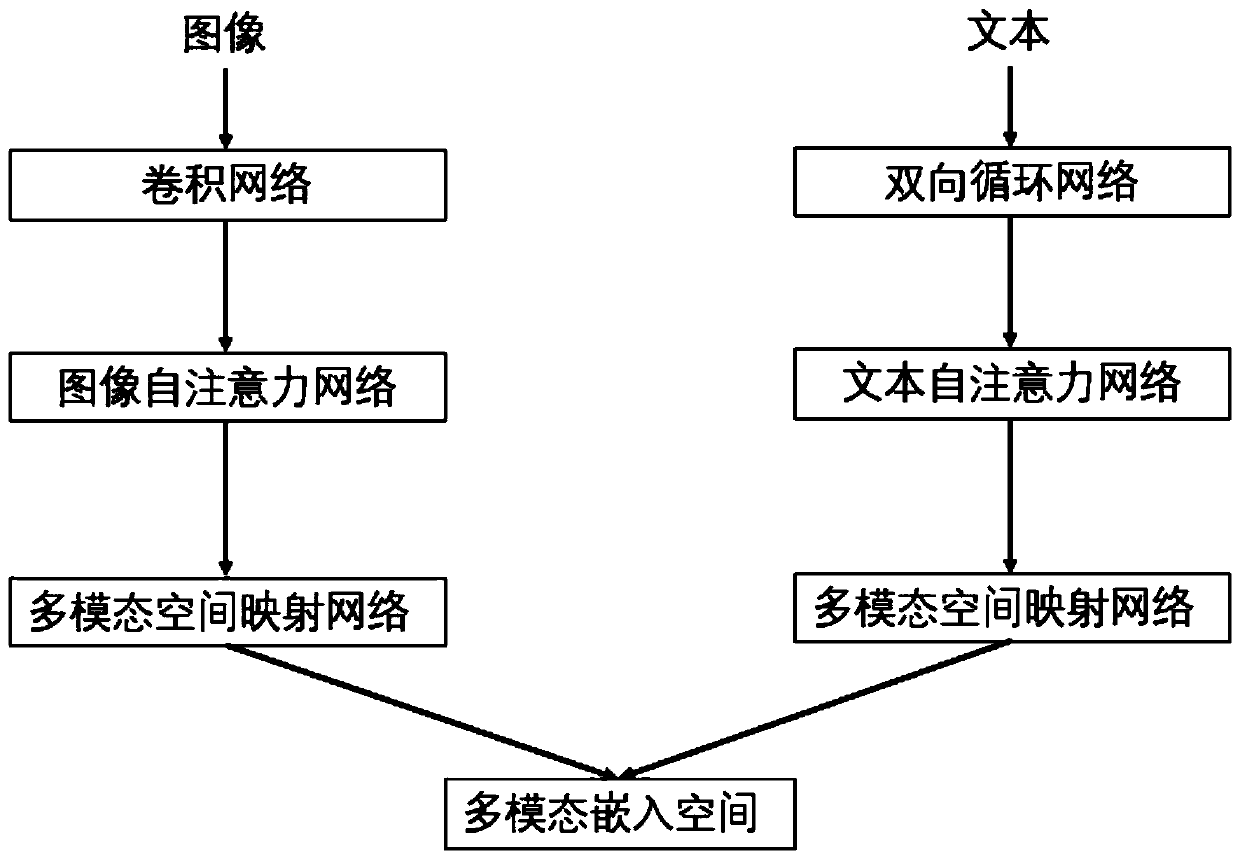

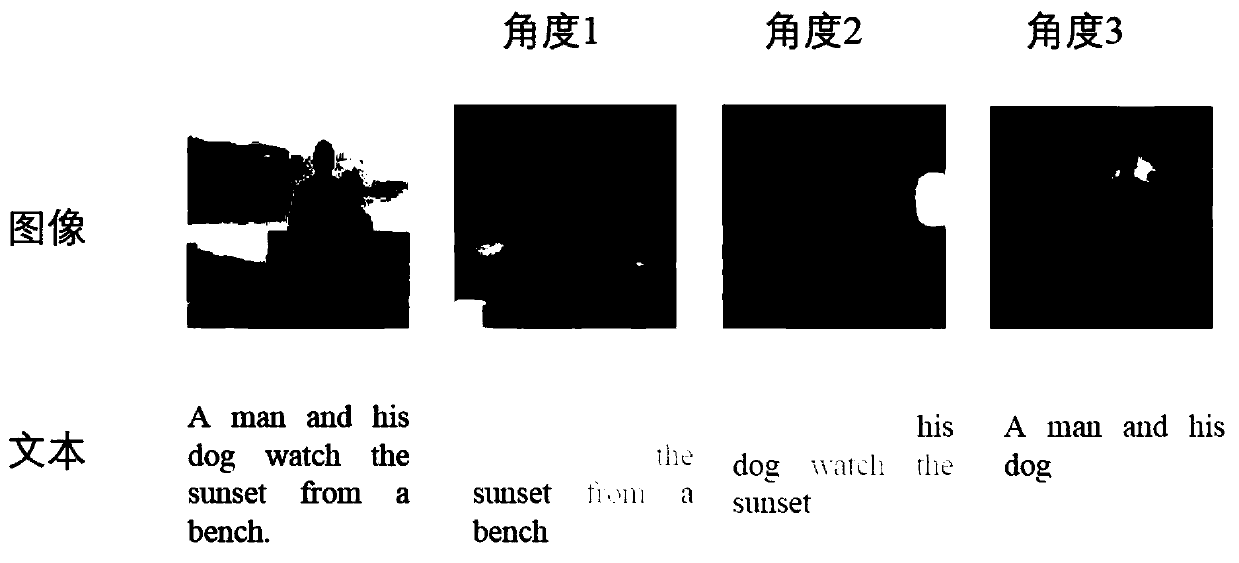

The invention belongs to the technical field of cross-modal retrieval and relates in particular to an image-text retrieval system and method based on a multi-angle self-attention mechanism. The system comprises the following components:

- A deep convolutional network, which acquires embedding vectors of image region features in an image embedding space.
- A bidirectional recurrent neural network, which acquires embedding vectors of word features in a text space. The two vectors are input to the image and text self-attention networks, respectively.
- An image and text self-attention network, which acquires embedded representations of key image regions and of key words in sentences.
- A multi-modal space mapping network, which acquires the embedded representations of images and text in the multi-modal space.
- A multi-stage training module, which learns the network parameters.

Good results are obtained on the public datasets Flickr30k and MSCOCO, with substantial performance improvements.

Owner:FUDAN UNIV
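The self-attention pooling step can be illustrated with the following sketch. It turns region (or word) embeddings into one attention-weighted embedding that highlights key regions. Assumptions:

- A single learned scoring vector `w` with softmax weights, which is a simplification; the patent's multi-angle mechanism is not specified in the abstract.
- The embeddings and `w` are invented.

```python
import math

def attention_pool(features, w):
    # Score each region/word embedding h_i with a (hypothetical) learned vector w,
    # softmax the scores, and return the attention-weighted sum and the weights
    scores = [sum(a * b for a, b in zip(w, h)) for h in features]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]   # subtract max for numerical stability
    total = sum(exps)
    weights = [e / total for e in exps]
    pooled = [sum(a * h[d] for a, h in zip(weights, features))
              for d in range(len(features[0]))]
    return pooled, weights

regions = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
pooled, weights = attention_pool(regions, w=[3.0, 0.0])
print([round(x, 3) for x in weights])
```

With an all-zero `w` the weights become uniform and the pooling reduces to a plain mean. A trained `w` concentrates the weight on the regions it scores highly.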

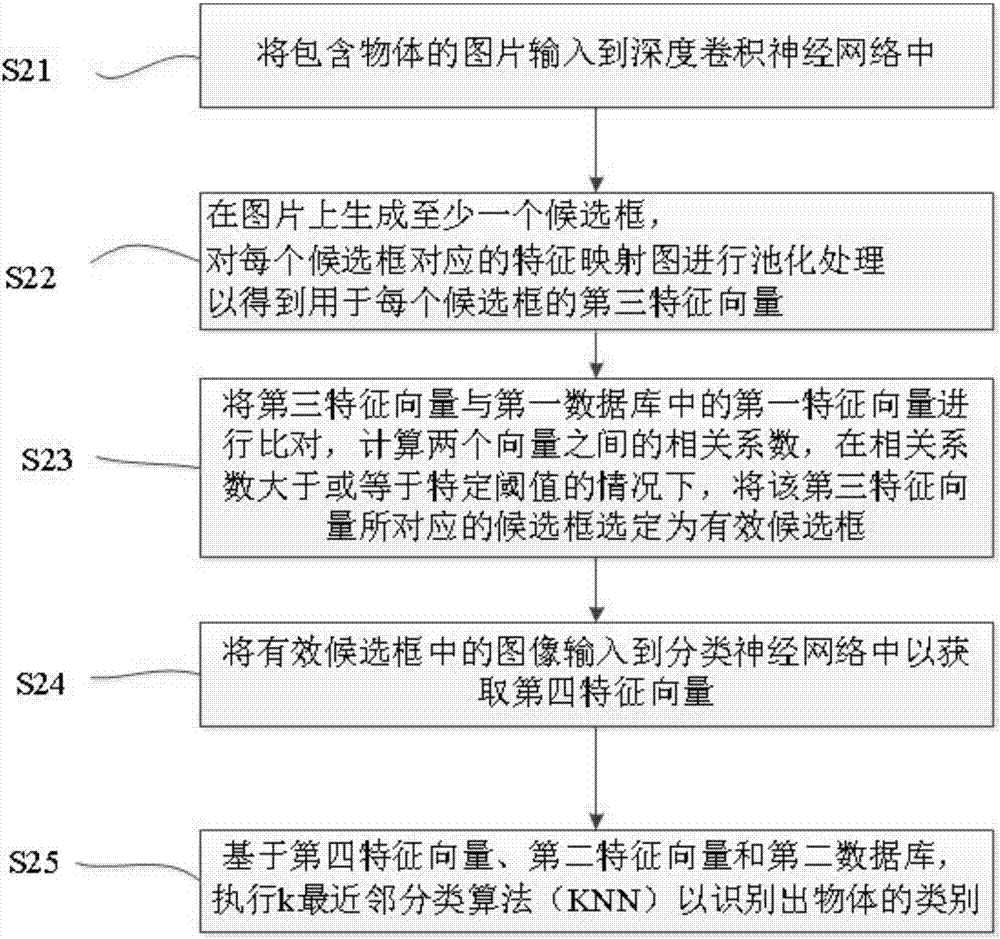

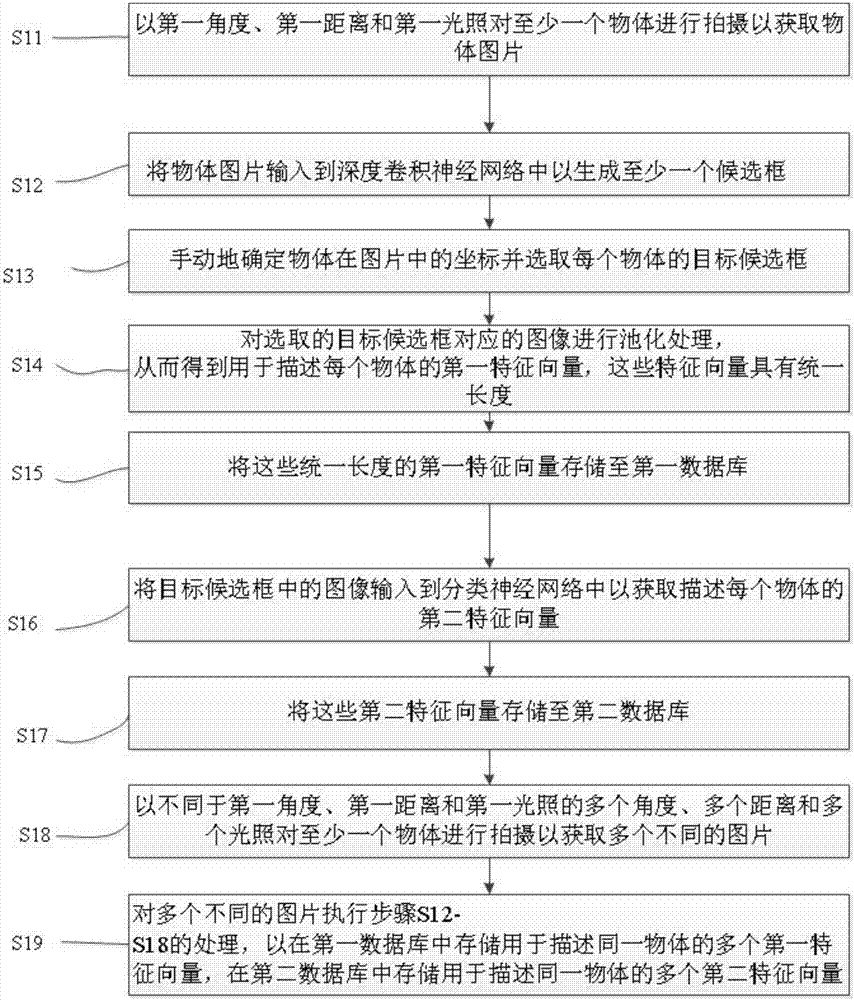

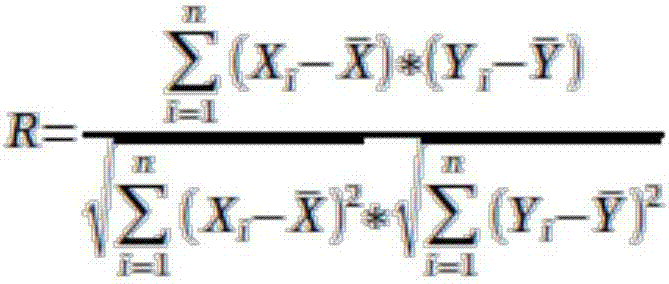

Image based object recognizing method

Active | CN106960214A | Improve classification accuracy; Improve recognition accuracy; Character and pattern recognition; Feature vector; Sorting algorithm

The invention provides an image-based object recognition method with two processes.

The training process establishes a first database of first feature vectors describing object shapes and a second database of second feature vectors describing object types.

The recognition process includes the following steps:

1. Inputting pictures into a deep convolutional neural network.
2. Generating at least one candidate box in each picture, and pooling the feature map corresponding to each candidate box to obtain third feature vectors.
3. Comparing the third feature vectors with the first feature vectors in the first database and calculating the coefficient of association between the two vectors. Candidate boxes whose coefficient of association is greater than or equal to a specific threshold are selected as bounding boxes.
4. Inputting the images in the bounding boxes to a classification neural network to obtain fourth feature vectors.
5. Based on the fourth feature vectors and the second feature vectors in the second database, running a kNN (k-nearest neighbor) algorithm to recognize object types.

Owner:北京一维弦教育科技有限公司
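The final kNN step amounts to a majority vote among the k stored feature vectors closest to the query. In this sketch, Euclidean distance, k = 3, the database contents and the labels are all assumptions.

```python
import math
from collections import Counter

def knn_classify(query, database, k=3):
    # database: list of (feature_vector, label); majority vote among the k nearest
    nearest = sorted(database, key=lambda item: math.dist(query, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

database = [([0.0, 0.1], "cup"), ([0.2, 0.0], "cup"), ([0.1, 0.3], "cup"),
            ([5.0, 5.1], "chair"), ([4.8, 5.3], "chair")]
print(knn_classify([0.5, 0.4], database))   # cup
```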

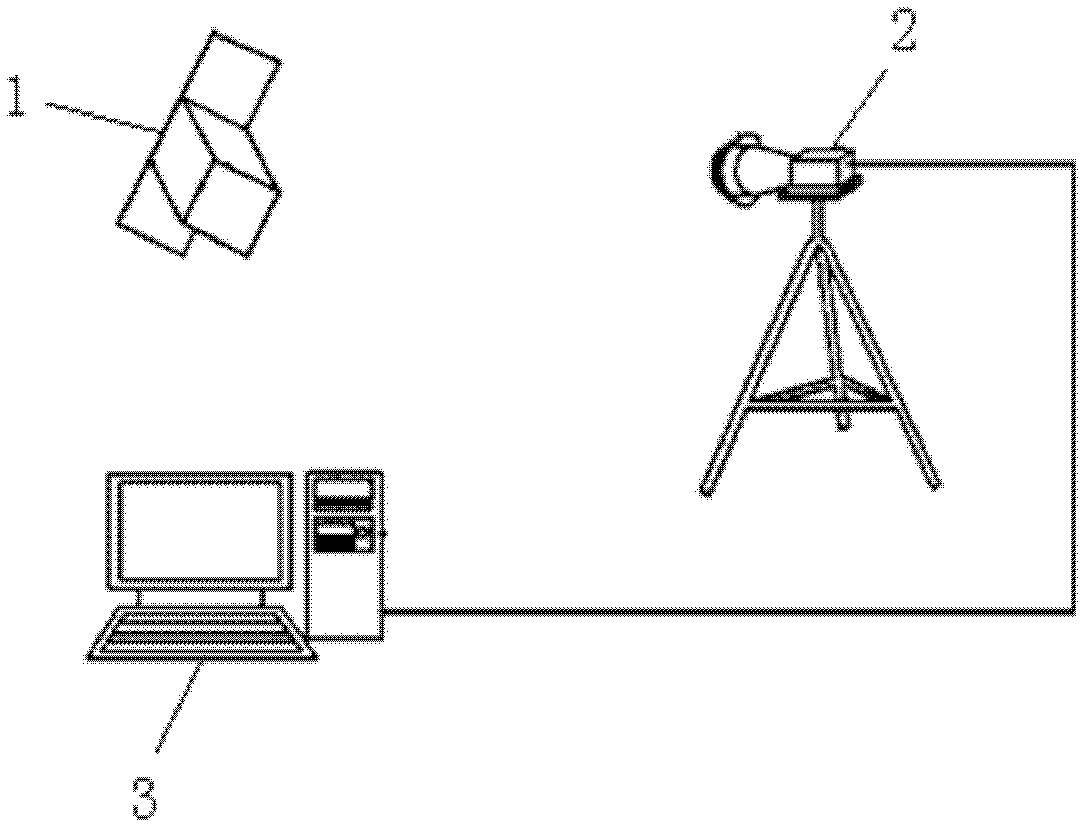

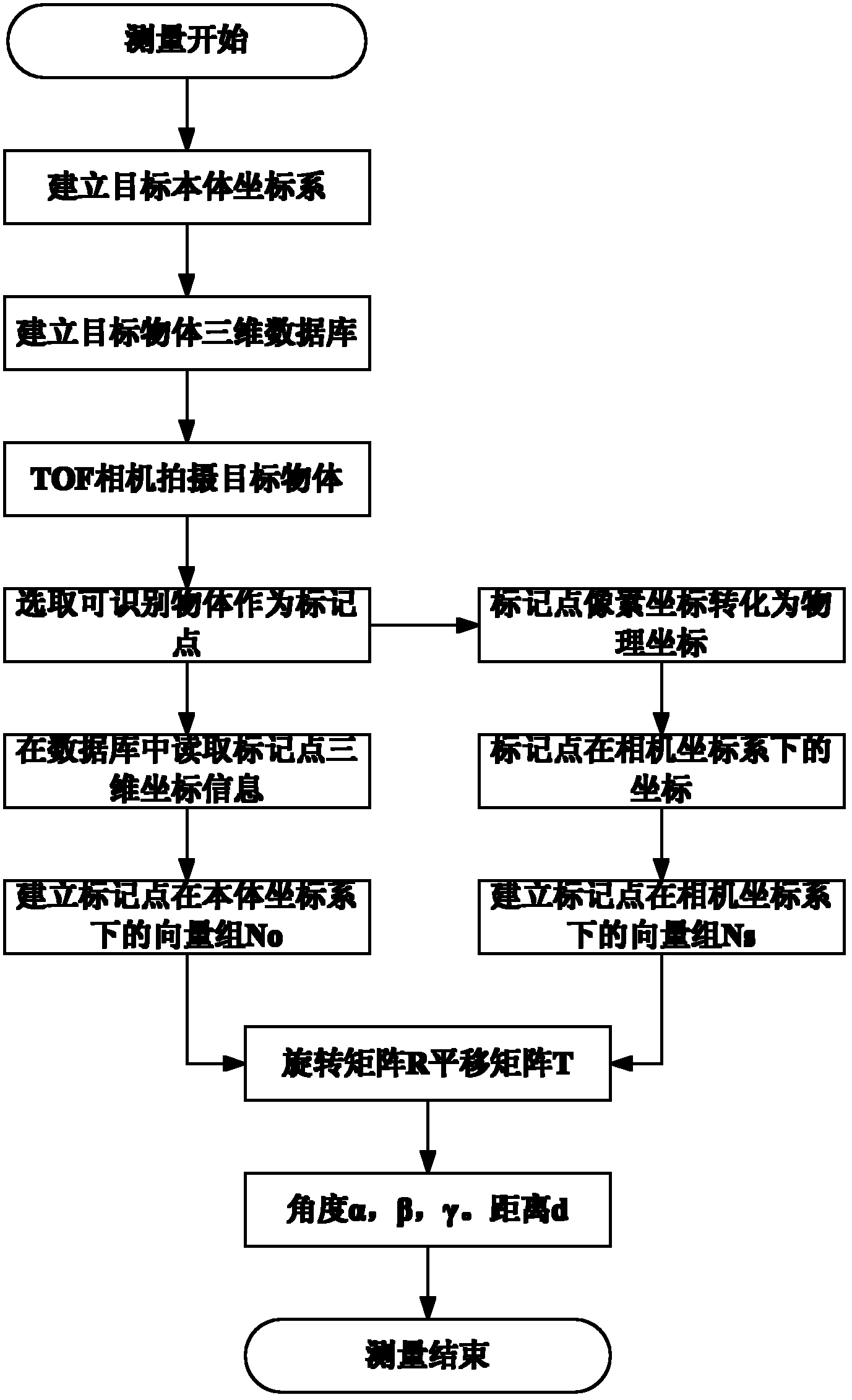

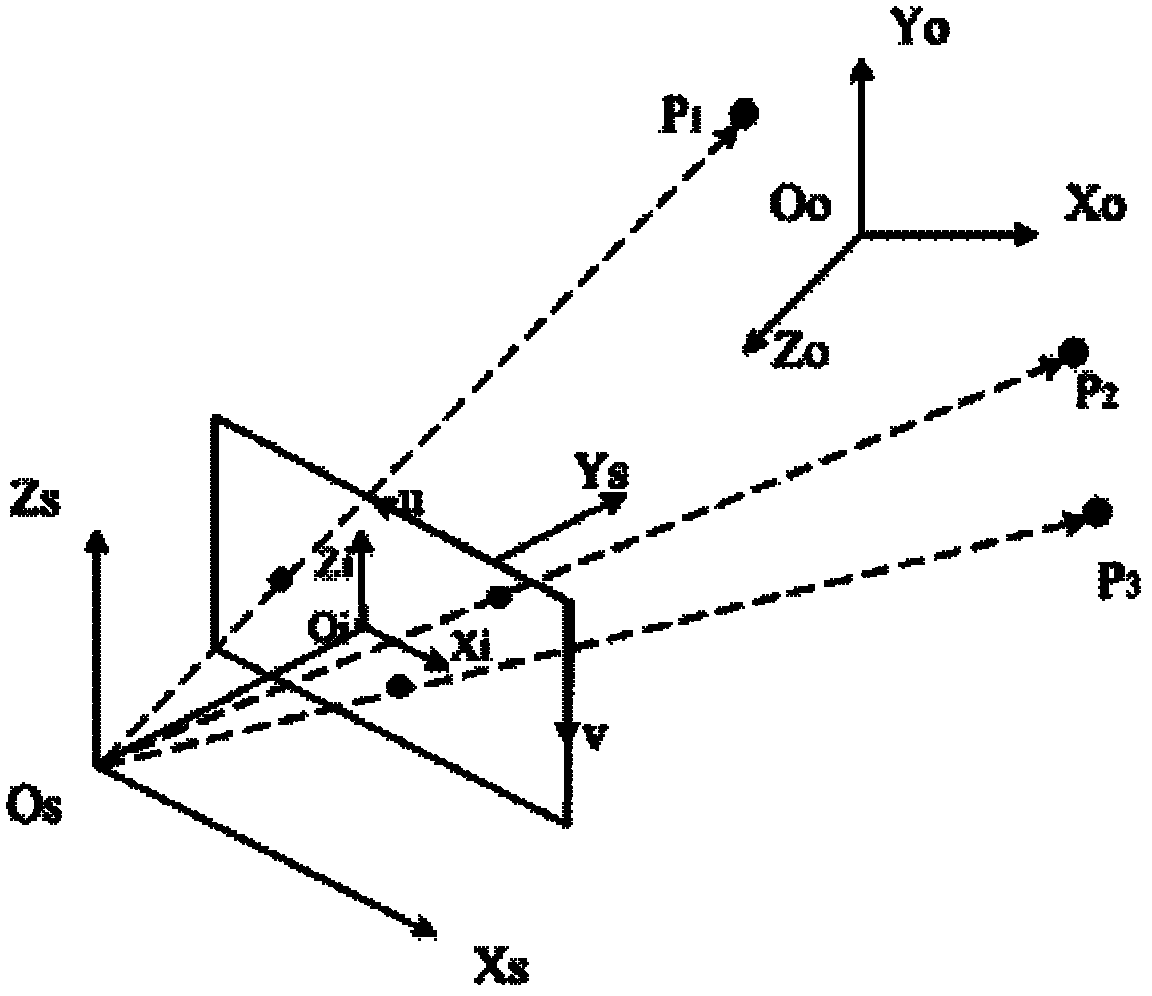

Position and attitude measurement method based on time of flight (TOF) scanning-free three-dimensional imaging

Inactive | CN102252653A | High speed; Small amount of calculation; Angle measurement; Optical rangefinders; Two-vector; Data information

The invention discloses a position and attitude measurement method based on time-of-flight (TOF) scanning-free three-dimensional imaging. The method comprises the following steps:

1. Establishing a three-dimensional coordinate database for a target object.
2. Using a TOF camera as the imaging and distance data acquisition device, selecting three identifiable objects in the captured image as mark points, retrieving the coordinates of the mark points in the target body coordinate system from the database, and establishing a vector group.
3. Obtaining the distances from the optical center to the mark points from the data acquired by the TOF camera, using a non-iterative method for calculating the distance between two points in three-dimensional space, and calculating the coordinates of the mark points in the camera coordinate system.
4. Establishing another vector group and calculating a rotation matrix and a translation matrix from the relationship between the two vector groups. This yields the attitude angles and the translation, that is, the relative pose of the target object.

The method avoids extensive iterative computation, solves for position and attitude quickly, and meets the accuracy requirements for position and attitude parameter determination.

Owner:HEFEI UNIV OF TECH
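One non-iterative way to get a rotation and translation from two vector groups built on the same three mark points is sketched below. Assumptions:

- The camera-frame coordinates are already available from the TOF data.
- Each point set is orthonormalized into a frame; the patent does not spell out its construction.
- The point coordinates are invented.

```python
import math

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def unit(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def frame(p1, p2, p3):
    # Vector group from three non-collinear mark points, orthonormalized
    e1 = unit(sub(p2, p1))
    e3 = unit(cross(e1, sub(p3, p1)))
    return [e1, cross(e3, e1), e3]

def pose(obj_pts, cam_pts):
    # R maps target-body coordinates to camera coordinates: R = sum_k fc_k fo_k^T
    fo, fc = frame(*obj_pts), frame(*cam_pts)
    R = [[sum(fc[k][i] * fo[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
    # Translation from the first mark point: t = c1 - R p1
    t = [cam_pts[0][i] - sum(R[i][j] * obj_pts[0][j] for j in range(3)) for i in range(3)]
    return R, t

obj = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]   # body-frame coordinates
cam = [(1.0, 2.0, 3.0), (1.0, 3.0, 3.0), (0.0, 2.0, 3.0)]   # same points, camera frame
R, t = pose(obj, cam)
print(R, t)   # expected: a 90° rotation about z and t = (1, 2, 3)
```

Using exactly three points keeps the solution closed-form. With noisy data, a least-squares fit over more points would usually be more accurate.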

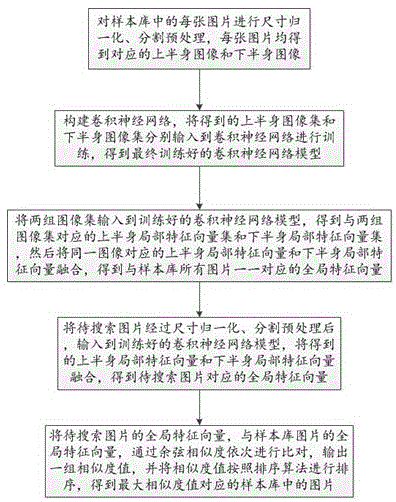

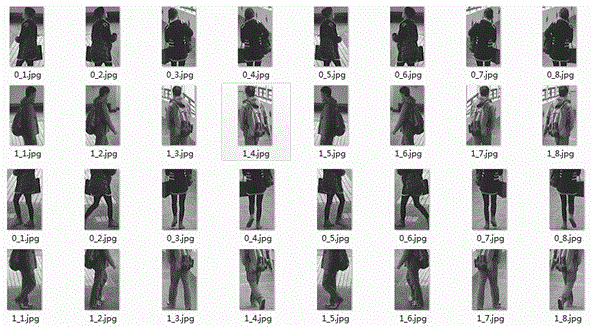

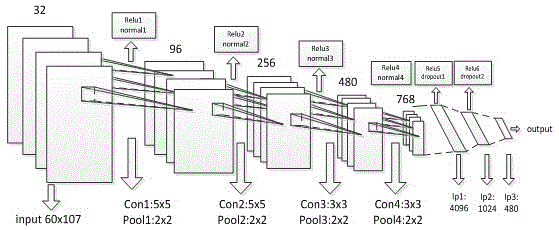

Cross-scene pedestrian searching method based on deep learning

Inactive | CN105631413A | Strong feature robustness; Improve feature performance; Character and pattern recognition; Cosine similarity; Feature vector

The present invention discloses a cross-scene pedestrian searching method based on deep learning. The method comprises the following steps:

1. Preprocessing each image in a sample library.
2. Constructing and training a convolutional neural network.
3. Extracting an upper-body local feature vector set and a lower-body local feature vector set from the two groups of preprocessed images, and fusing the two sets into global feature vectors.
4. Preprocessing an image to be searched, extracting its upper-body and lower-body local feature vectors, and fusing the two vectors into a global feature vector.
5. Comparing, in turn, the global feature vector of the image to be searched with those of the sample library images using cosine similarity, outputting a group of similarity values, and sorting them with a sorting algorithm.

With pedestrian images obtained from surveillance video as the sample library, the method requires no hand-designed features, is highly robust, and achieves high accuracy in actual searches.

Owner:CHINACCS INFORMATION IND
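The fuse-then-rank step can be sketched as follows. The abstract does not state the fusion rule, so concatenation is assumed. The library entries and feature values are invented.

```python
import math

def fuse(upper, lower):
    # Hypothetical fusion: concatenate the upper- and lower-body local vectors
    return upper + lower

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def rank_library(query, library):
    # Compare the query with every library vector and sort by descending similarity
    scored = [(cosine(query, vec), name) for name, vec in library.items()]
    return sorted(scored, reverse=True)

library = {"p1": fuse([1.0, 0.0], [1.0, 0.0]),
           "p2": fuse([0.0, 1.0], [0.0, 1.0]),
           "p3": fuse([1.0, 0.0], [0.0, 1.0])}
ranked = rank_library(fuse([1.0, 0.0], [1.0, 0.0]), library)
print([name for _, name in ranked])   # ['p1', 'p3', 'p2']
```

Candidate `p3` matches the query only on the upper body, so it ranks between the full match and the full mismatch.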

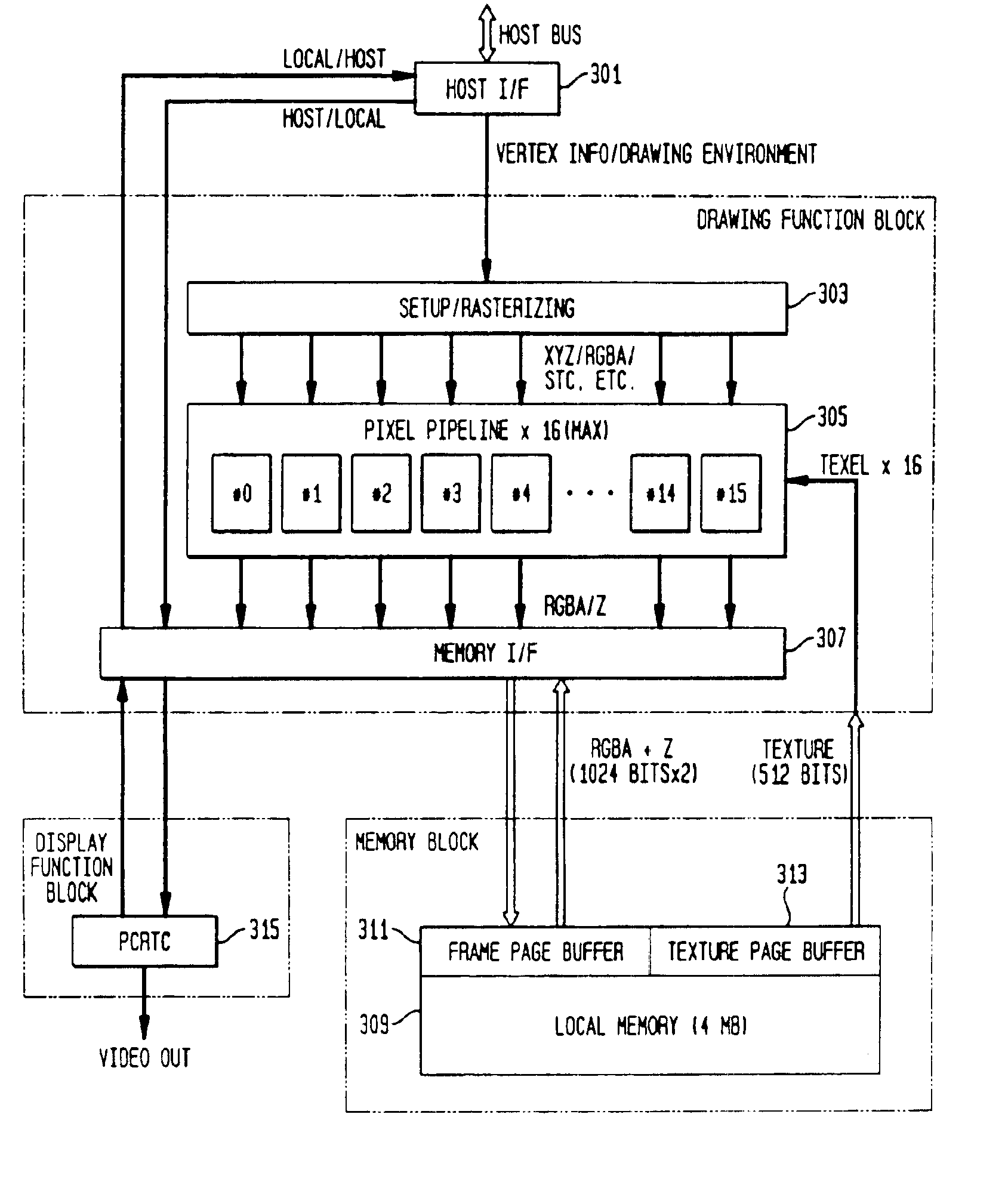

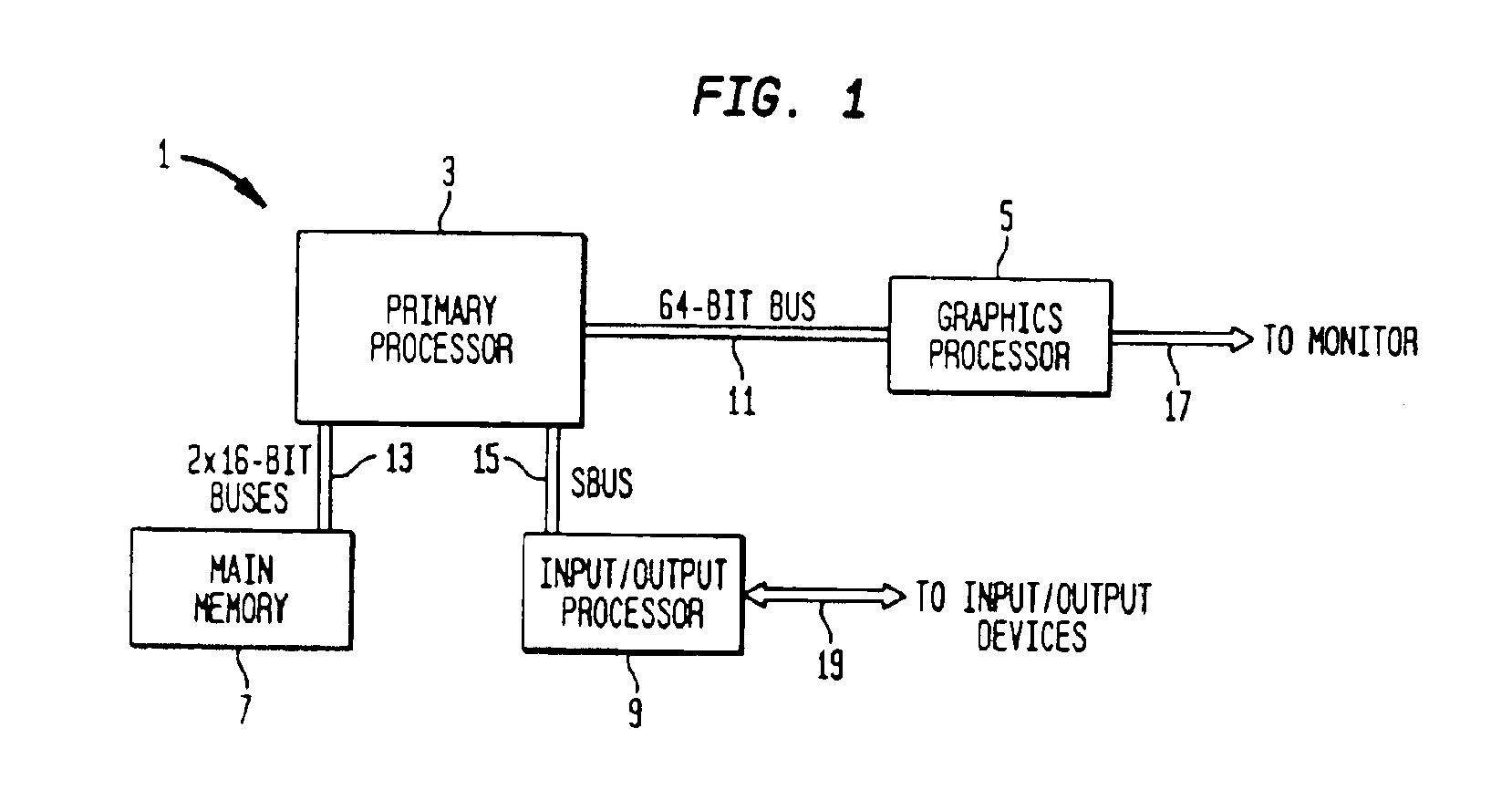

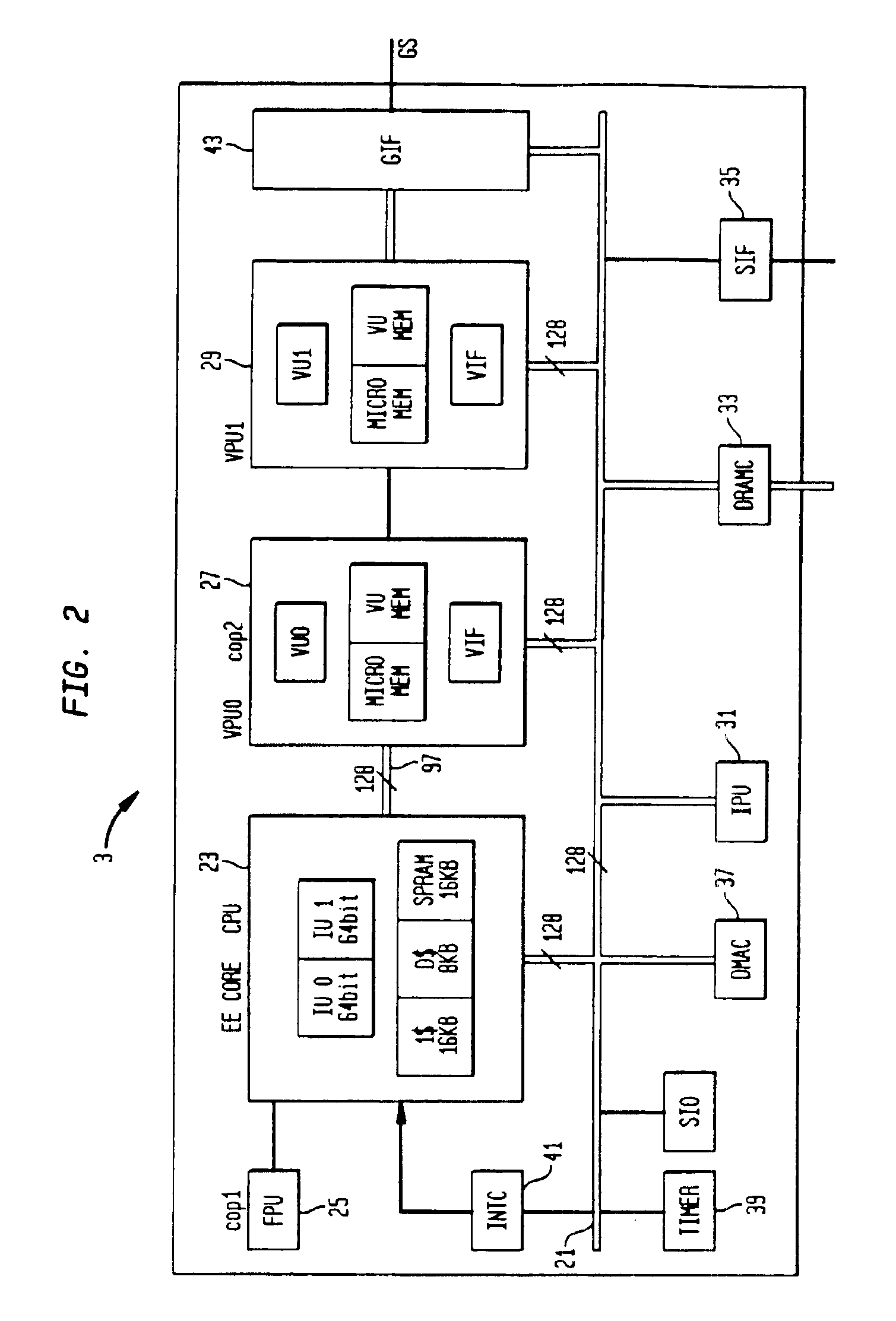

Game system with graphics processor

Inactive | US6891544B2 | Reduce data volume; Hide main memory latency; Indoor games; Digital computer details; Fill rate; Data stream

The present invention relates to the architecture and use of a computer system optimized for efficient graphics modeling. The computer system has a primary processor and a graphics processor. The primary processor contains two vector processor units, one of which is closely connected to the central processing unit (CPU). Complex modeling calculations run on the first vector processor and the CPU while geometry transformation calculations run simultaneously on the second vector processor, which allows efficient modeling of graphics. Furthermore, the graphics processor is optimized to switch rapidly between data flows from the two vector processors. In addition, the graphics processor can render many pixels simultaneously. It also has a local memory on the graphics processor chip that acts as a frame buffer, texture buffer and z-buffer, which allows a high fill rate to the frame buffer.

Owner:SONY COMPUTER ENTERTAINMENT INC

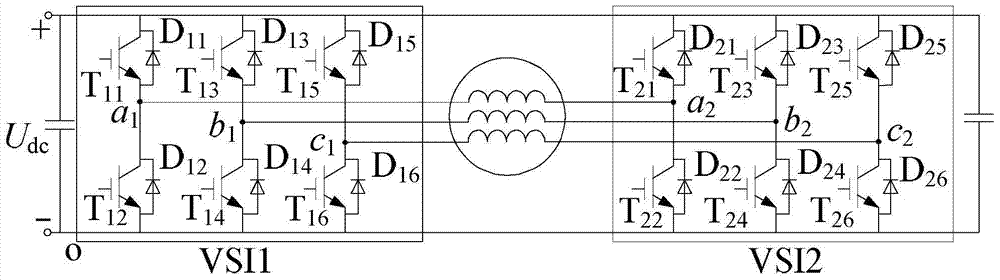

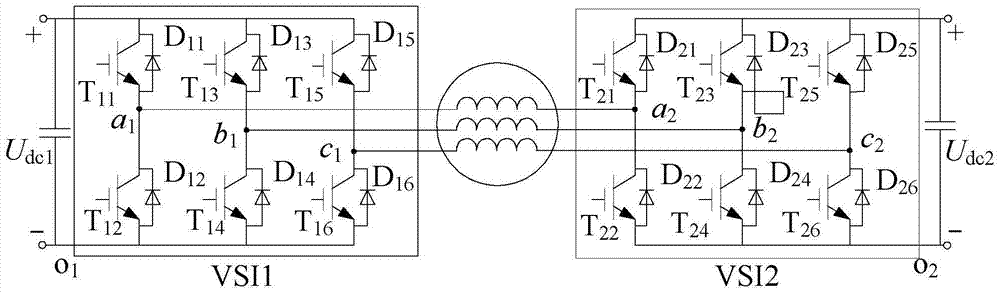

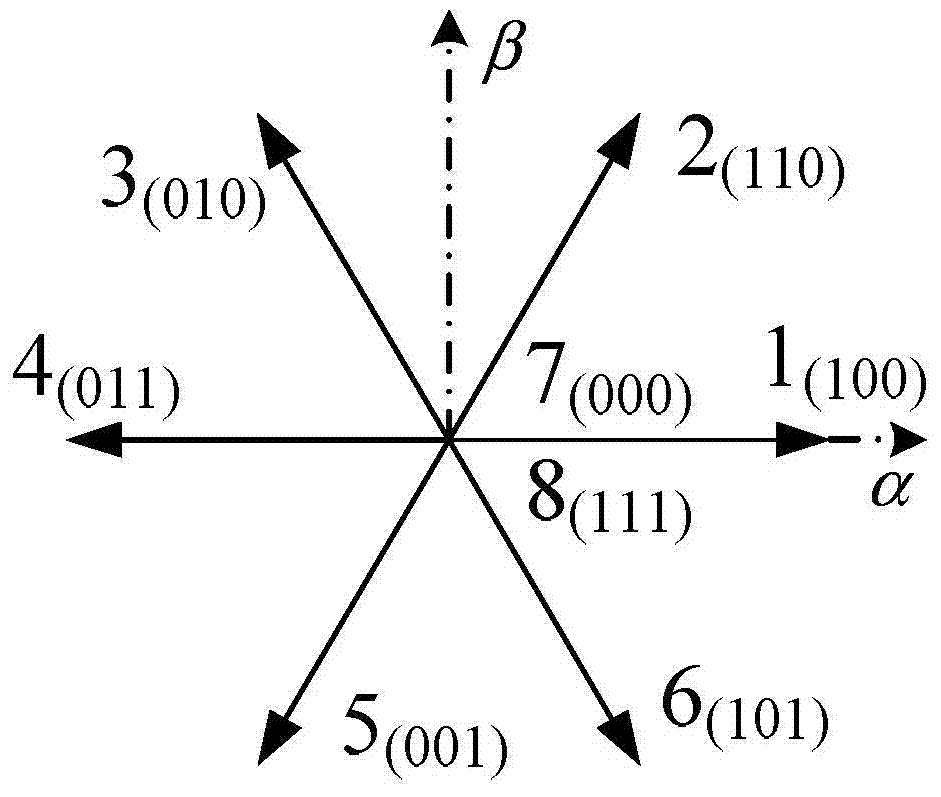

Method for modulating space vectors of double inverters

Inactive | CN104506070A | Reject common mode voltage; Increase profit; Electronic commutation motor control; AC motor control; Voltage vector; Two-vector

The invention discloses a method for modulating the space vectors of double inverters and belongs to the field of inverter control. In the prior art, when basic space voltage vectors that do not generate common-mode voltage are selected for voltage modulation, utilization of the DC bus voltage is low; the method addresses this problem. It works as follows:

- The space voltage vector plane is divided into six sectors, and the reference voltage vector is split into two vectors. The first vector is equivalent to clamping one of the two inverters in a specific switching state. The second is synthesized by the other inverter, which modulates it within its six sub-sectors.
- Modulation is performed over the maximum voltage range, and an equivalent zero-vector distribution factor is introduced when the vectors are synthesized. The common-mode voltage produced while synthesizing the basic voltage vectors is thus cancelled by the common-mode voltage produced by the zero vector, which suppresses the common-mode voltage of the double inverters.

Owner:HARBIN INST OF TECH

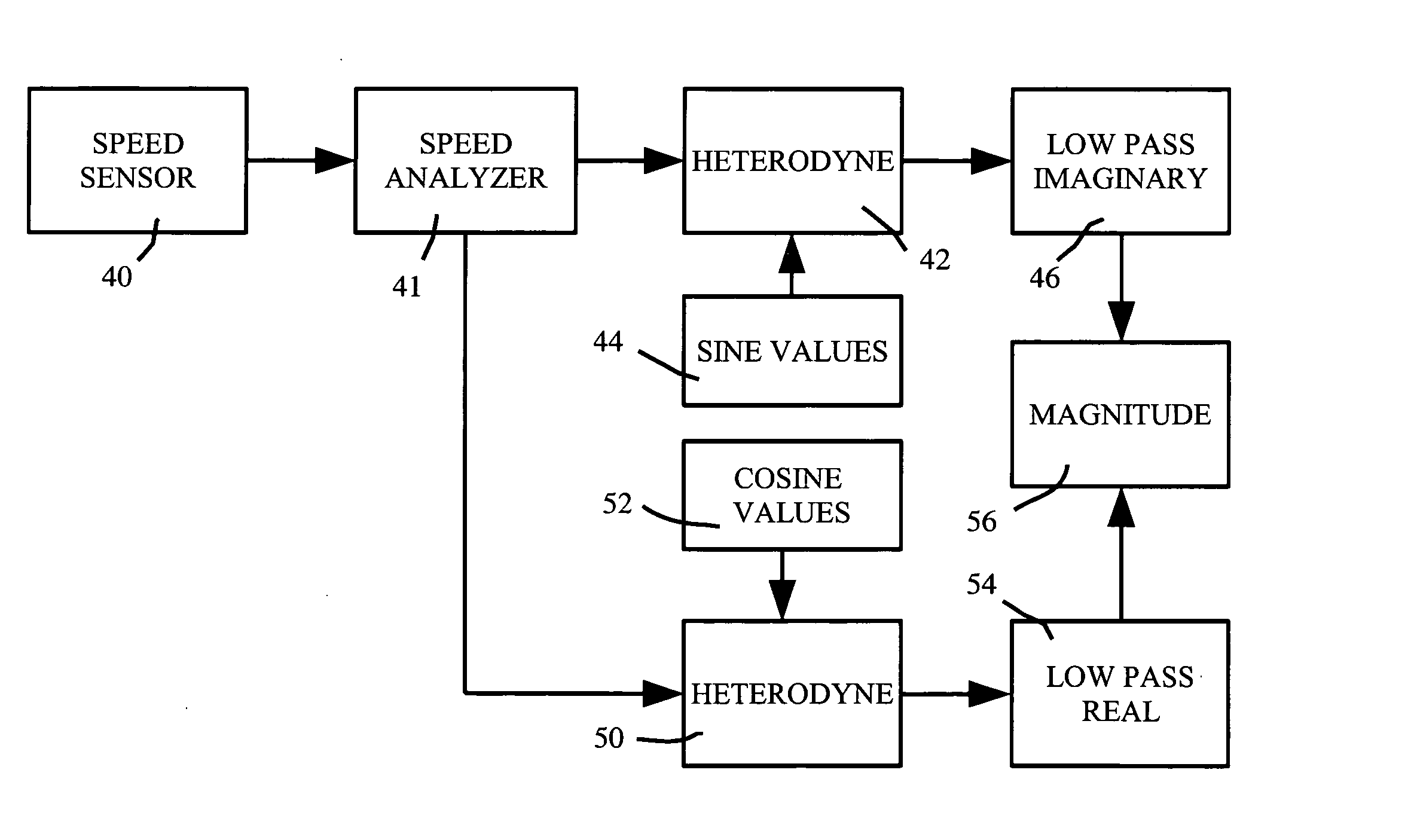

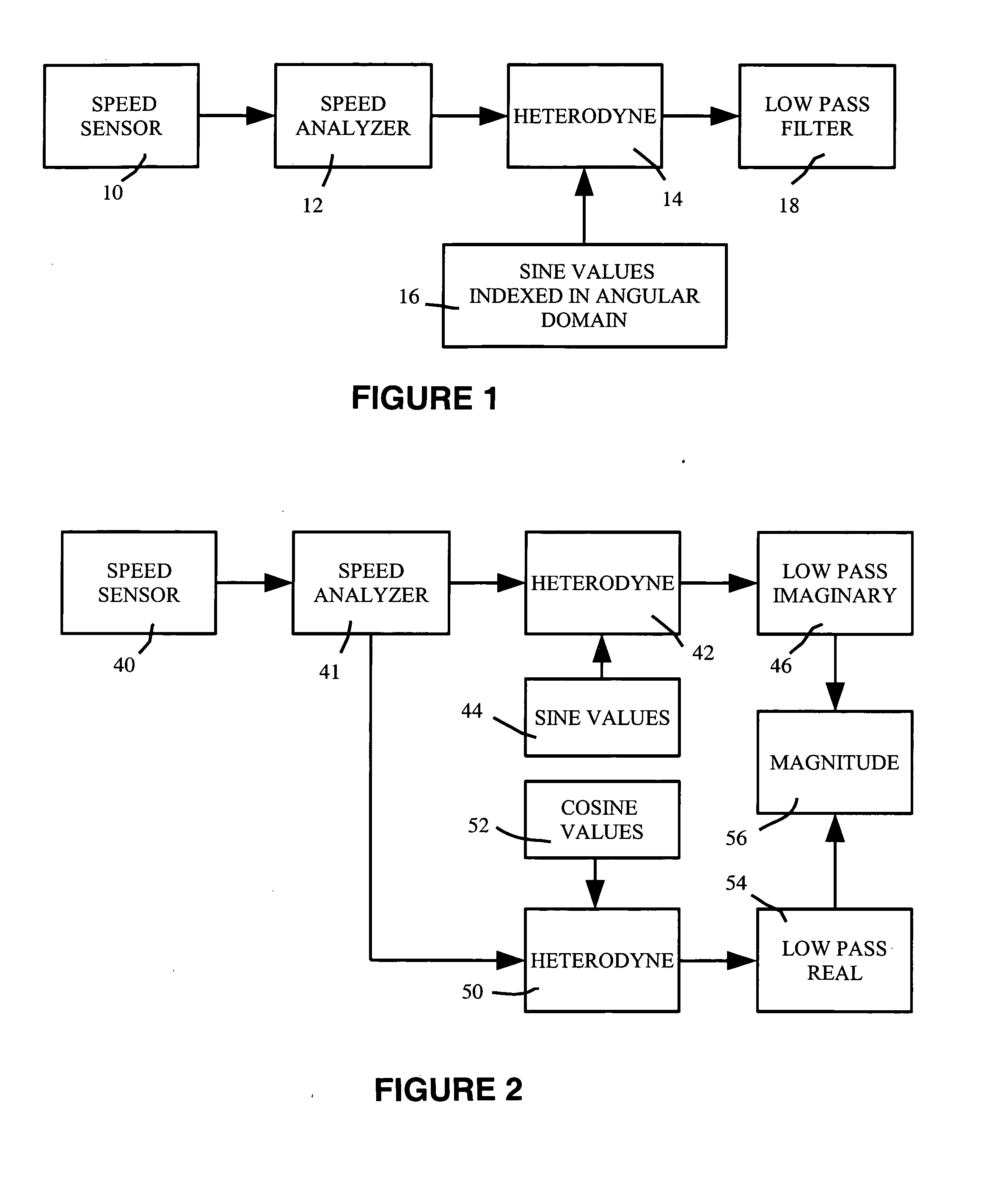

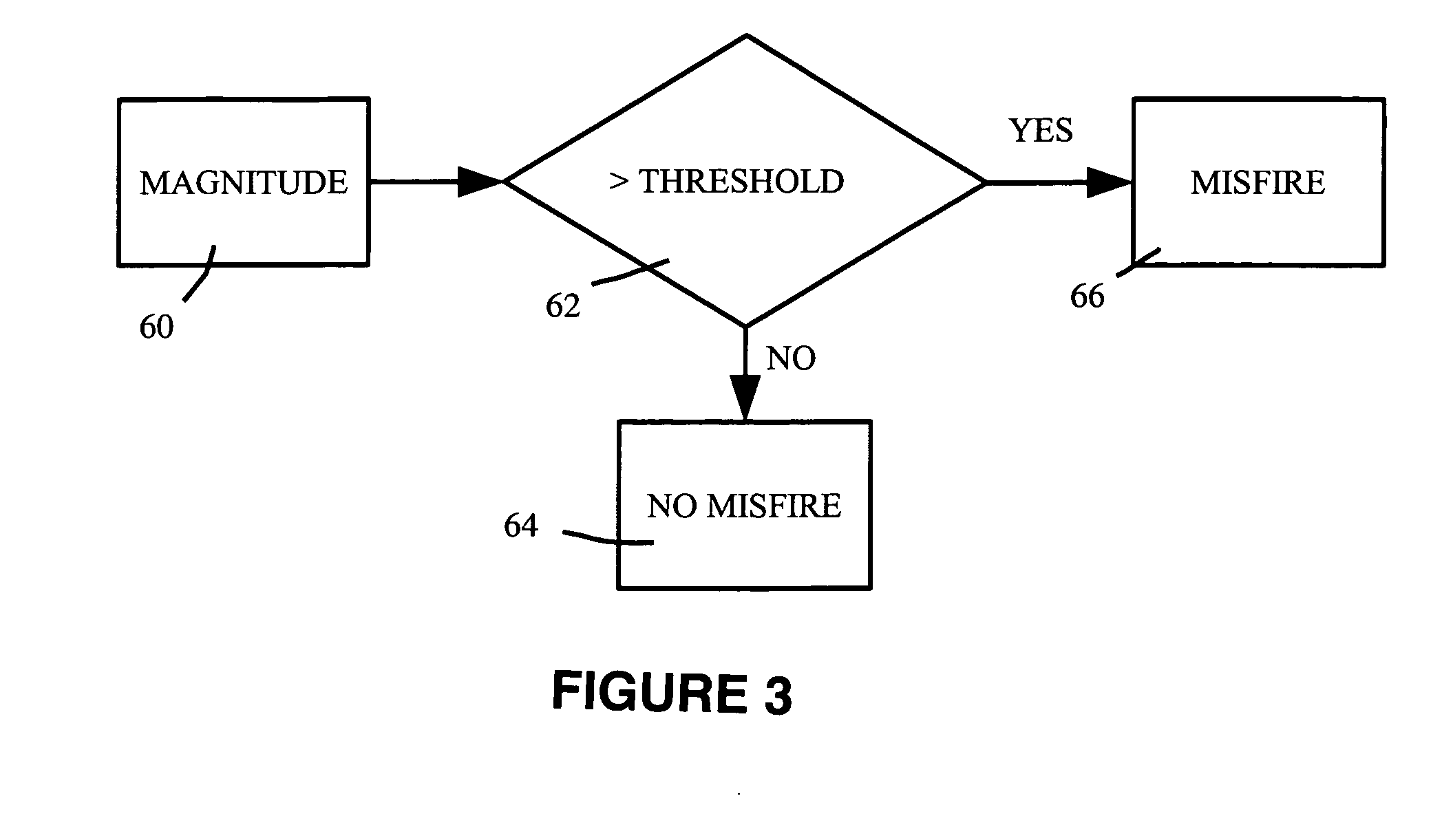

Engine misfire detection

Inactive | US20060101902A1 | Internal-combustion engine testing; Analogue computers for vehicles; Two-vector; Low-pass filter

A method of detecting an engine malfunction such as a misfire has four steps. It determines engine speed values at each of a plurality of angular measurement positions and heterodynes them with sine and cosine functions indexed in the angular domain. The heterodyned results are then passed through a low-pass filter, and a magnitude is computed from the two resulting vectors. An apparatus for detecting an engine malfunction such as a misfire includes an engine speed analyzer, a multiplier and a low-pass filter.

Owner:LOTUS ENG
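The heterodyne-and-filter idea can be illustrated with a sketch that multiplies crank-angle-indexed speed samples by the cosine and sine of a chosen engine order. It then low-pass filters the two products and takes the magnitude. Assumptions:

- The low-pass filter is a plain average over a window spanning whole cycles.
- The synthetic speed signal is invented.
- Half engine order is chosen because a single-cylinder misfire in a four-stroke engine recurs once per engine cycle (two crankshaft revolutions).

```python
import math

def order_magnitude(speeds, angles, order):
    # Heterodyne the speed samples with cos/sin of order*angle, low-pass filter the
    # products (here: a plain average over the window, an assumption), and return
    # the amplitude of the speed fluctuation at that order
    n = len(speeds)
    i_part = sum(s * math.cos(order * a) for s, a in zip(speeds, angles)) / n
    q_part = sum(s * math.sin(order * a) for s, a in zip(speeds, angles)) / n
    return 2.0 * math.hypot(i_part, q_part)

# Simulated crank-angle-domain speed: 1000 rpm plus a 5 rpm half-order ripple
N = 720
angles = [8 * math.pi * k / N for k in range(N)]   # two engine cycles (four revolutions)
speeds = [1000 + 5 * math.cos(0.5 * a + 0.3) for a in angles]
print(round(order_magnitude(speeds, angles, 0.5), 6))   # 5.0
```

Averaging over whole cycles rejects both the mean speed and components at other orders. The resulting magnitude is the ripple amplitude at the chosen order, independent of its phase.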

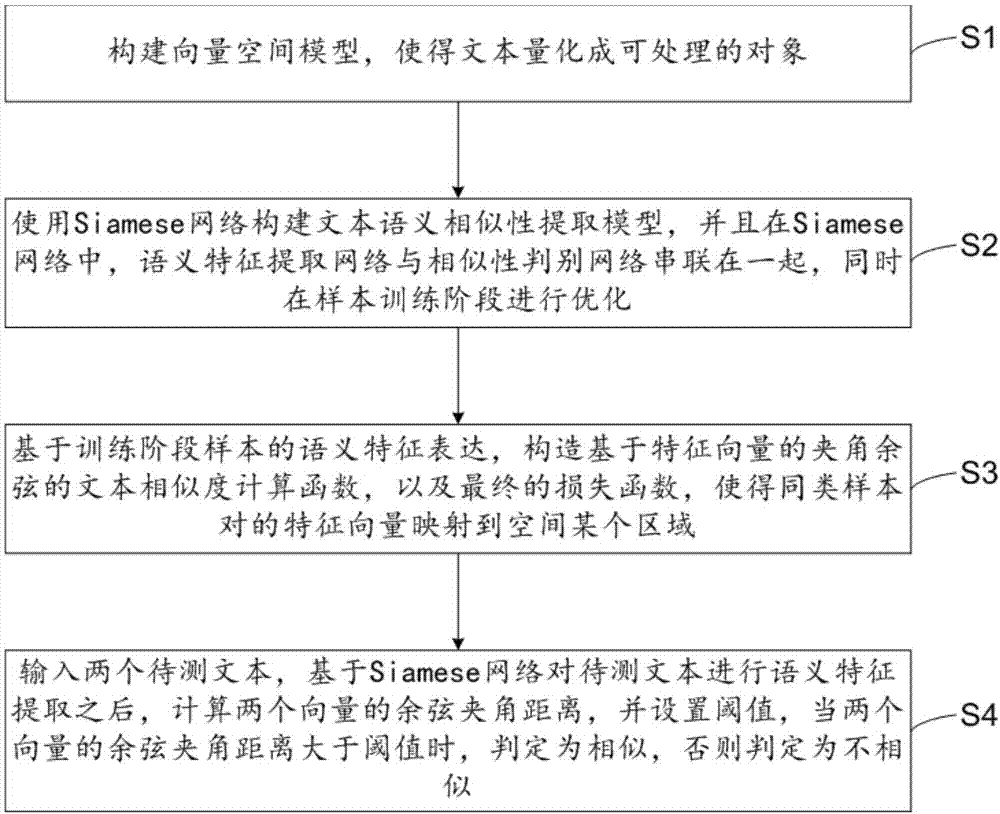

Method and system for judging text similarity

Inactive | CN107967255A | No computational difficulties; No difficulty; Semantic analysis; Special data processing applications; Two-vector; Text categorization

The invention belongs to the technical field of text classification and provides a method and system for judging text similarity. It is intended to overcome the respective shortcomings of three kinds of prior-art text similarity algorithms. The method comprises the following steps:

1. Establishing a vector space model and quantizing text into processable objects.
2. Using a Siamese network to establish a text semantic similarity extraction model. In the Siamese network, a text semantic extraction network and a similarity judgment network are connected in series and optimized jointly at the sample training stage.
3. Based on the semantic feature representations of the samples at the training stage, establishing a text similarity function based on the cosine of the angle between feature vectors, together with a final loss function.
4. Inputting two pieces of text to be tested, extracting their semantic features with the Siamese network, calculating the cosine of the angle between the two vectors, and comparing it with a set threshold. When the cosine exceeds the threshold, the texts are judged similar; otherwise, they are judged dissimilar.

Owner:CHINA TECHENERGY +1

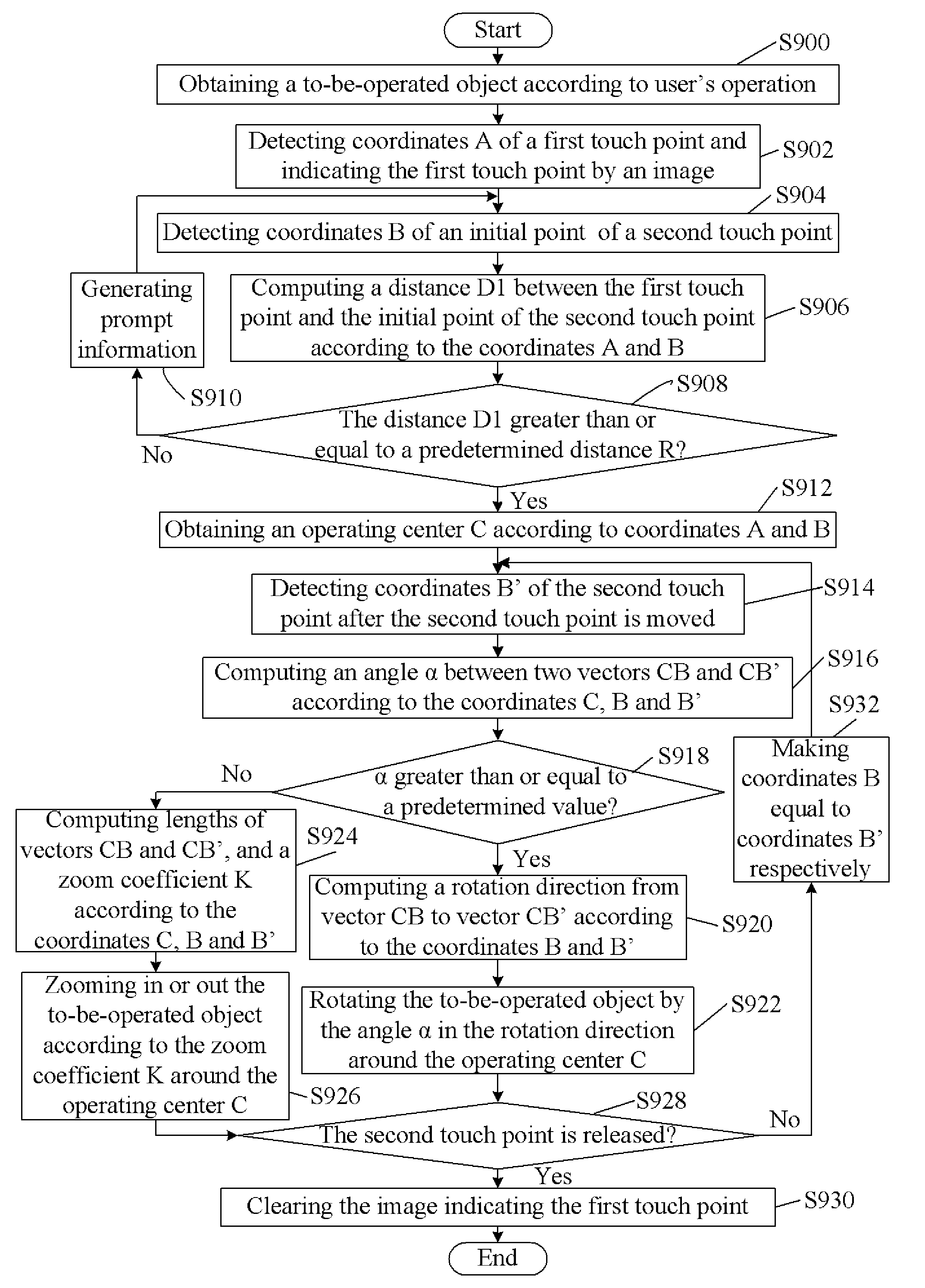

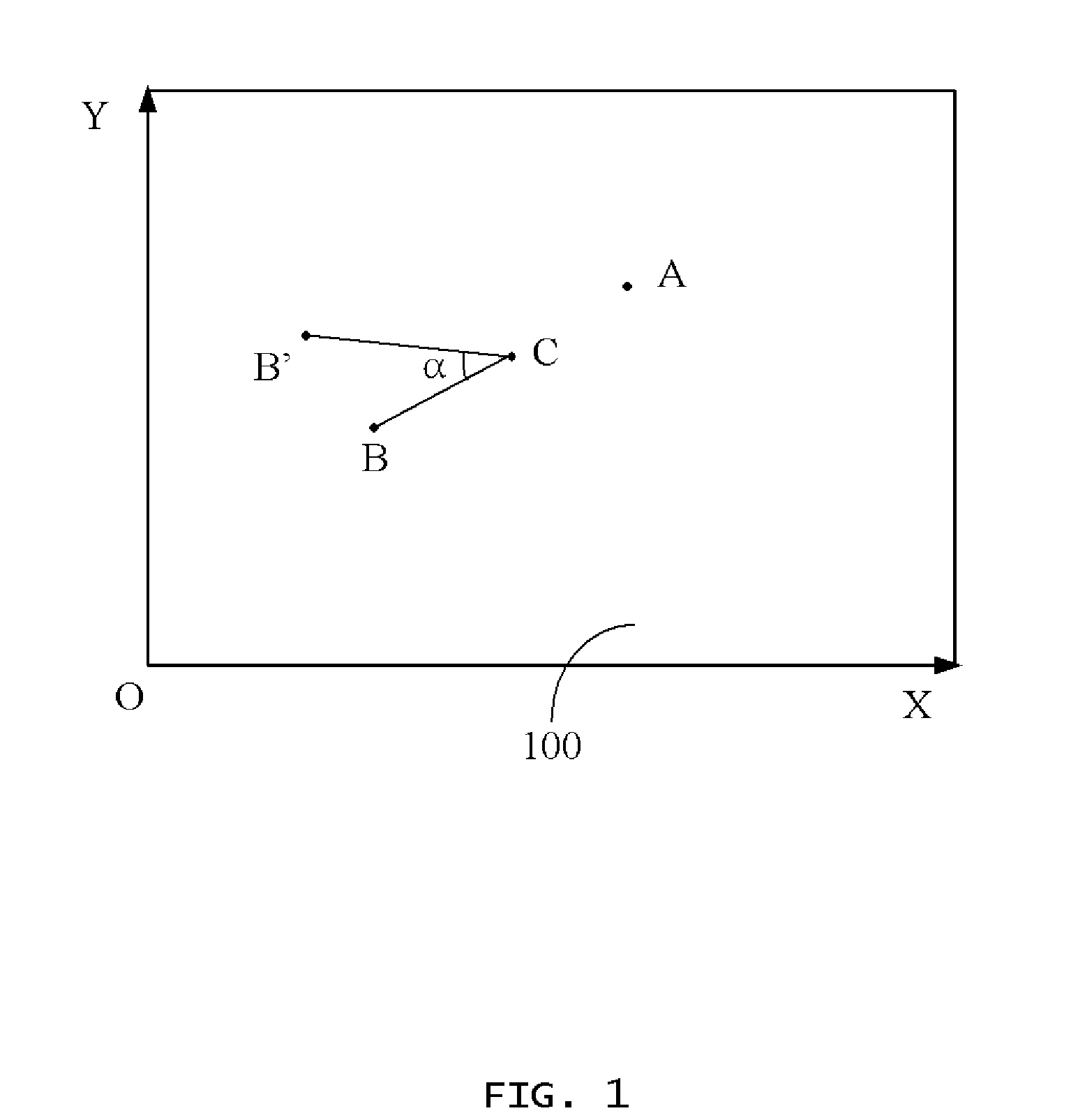

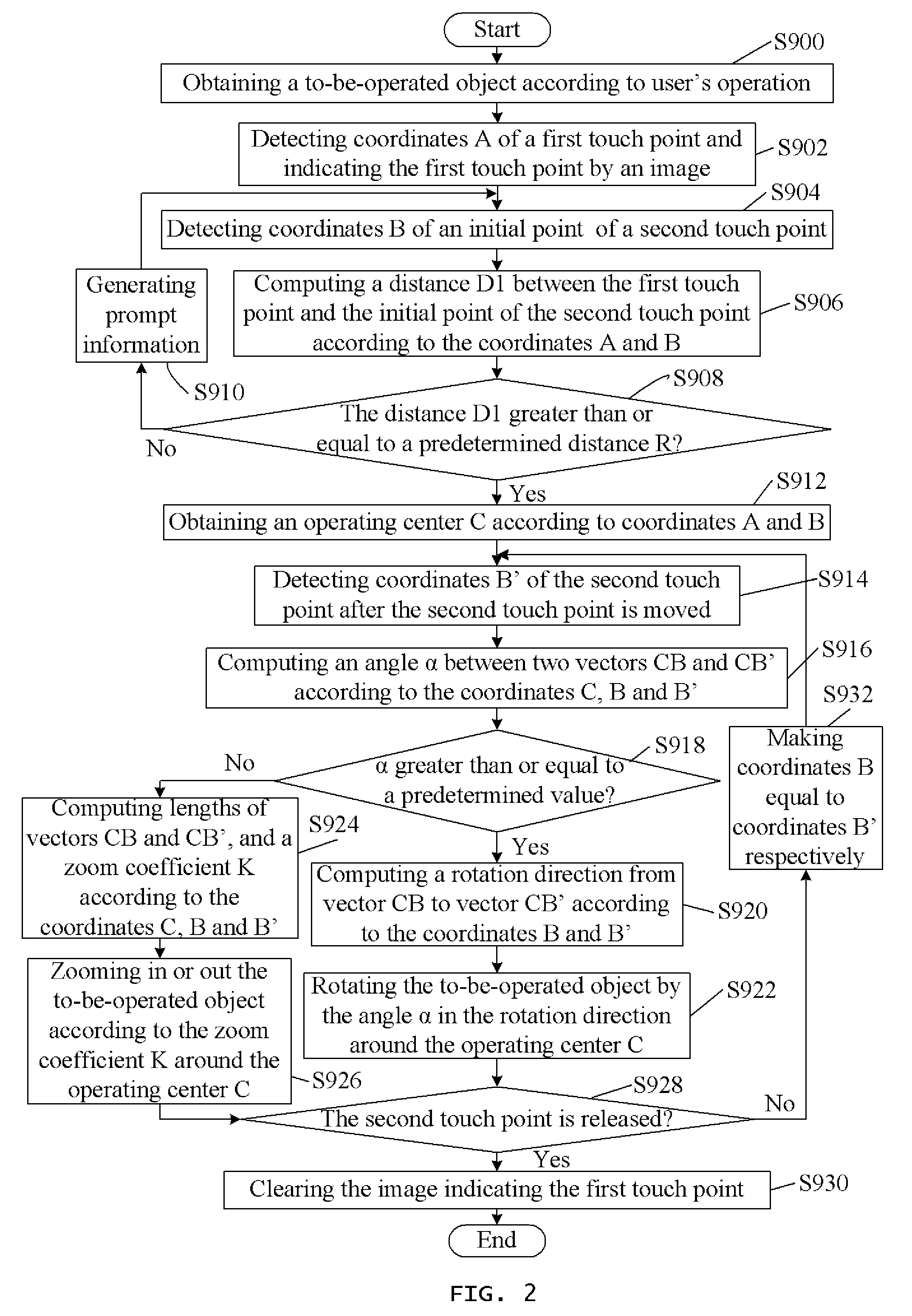

Touch control method

Inactive · US20110012927A1 · Cathode-ray tube indicators · Input/output processes for data processing · Two-vector · Human–computer interaction

A touch control method for operating a touch screen includes: obtaining a to-be-operated object according to the user's operations; detecting coordinates A(XA, YA) of a first touch point with respect to the to-be-operated object on the touch screen; detecting coordinates B(XB, YB) of an initial point of a second touch point; obtaining an operating center C(XC, YC) according to the coordinates A(XA, YA) and B(XB, YB); detecting coordinates B′(XB′, YB′) of the second touch point after the second touch point is moved; computing the lengths of the two vectors CB and CB′ from the coordinates C(XC, YC), B(XB, YB), and B′(XB′, YB′), and computing a zoom coefficient K from the lengths of CB and CB′; and zooming the to-be-operated object in or out by the zoom coefficient K around the operating center C(XC, YC).
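
The abstract does not say how C is derived from A and B, or exactly how K depends on the two lengths. The sketch below assumes C is the midpoint of A and B and that K = |CB′| / |CB|, then scales a point of the object about C; both choices are illustrative assumptions.

```python
import math

def operating_center(a, b):
    # Assumption: C is taken as the midpoint of A and B
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0)

def zoom_coefficient(c, b, b_moved):
    # Assumption: K is the ratio of the vector lengths |CB'| / |CB|
    len_cb = math.hypot(b[0] - c[0], b[1] - c[1])
    len_cb_moved = math.hypot(b_moved[0] - c[0], b_moved[1] - c[1])
    if len_cb == 0.0:
        raise ValueError("B coincides with C; zoom coefficient undefined")
    return len_cb_moved / len_cb

def zoom_point(p, c, k):
    # Scale point P about C by factor K (K > 1 enlarges, K < 1 shrinks)
    return (c[0] + k * (p[0] - c[0]), c[1] + k * (p[1] - c[1]))
```

For A = (0, 0) and B = (2, 0), C is (1, 0); dragging B to B′ = (3, 0) gives K = 2, and the point (2, 0) moves to (3, 0).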

Owner:HON HAI PRECISION IND CO LTD

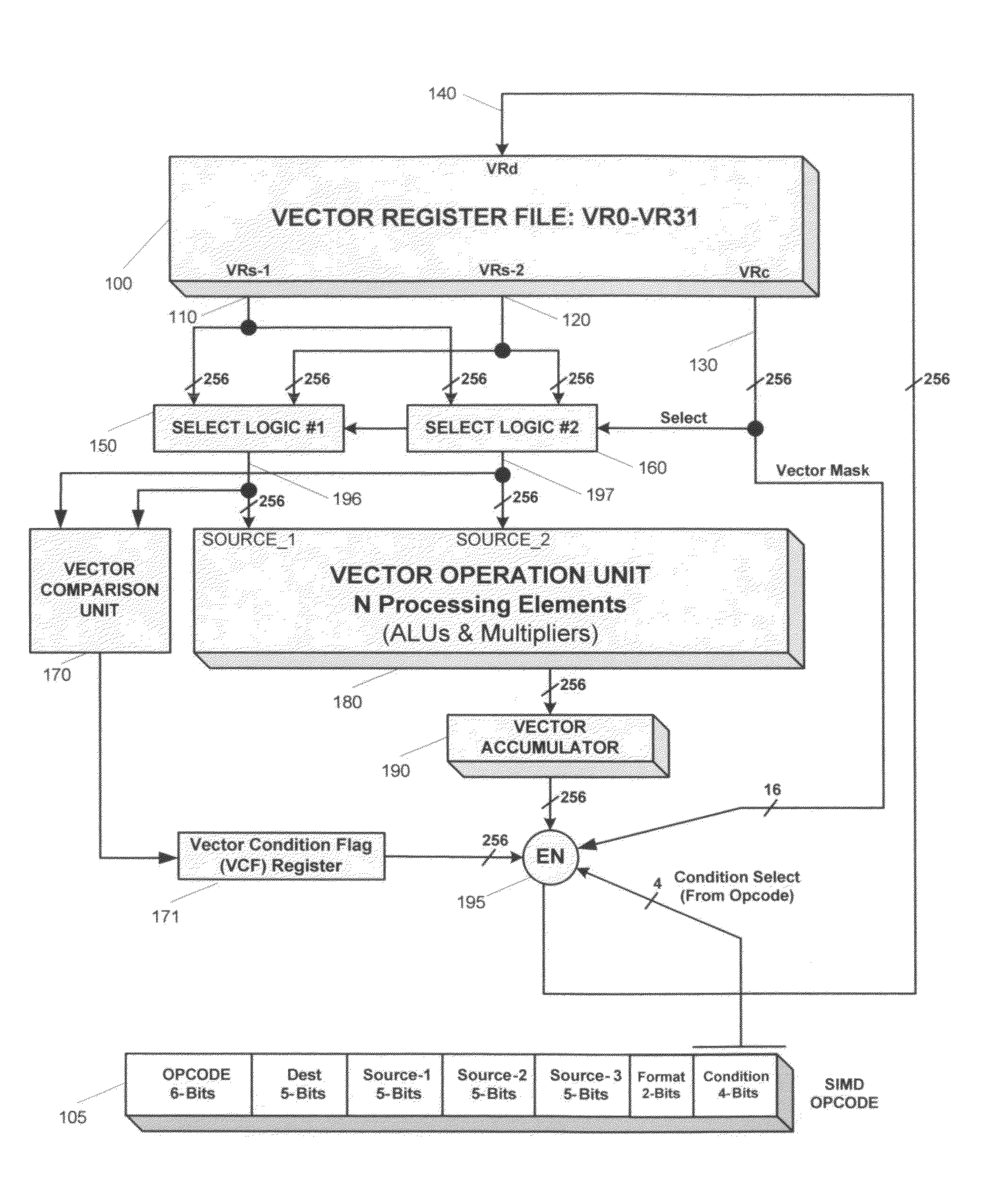

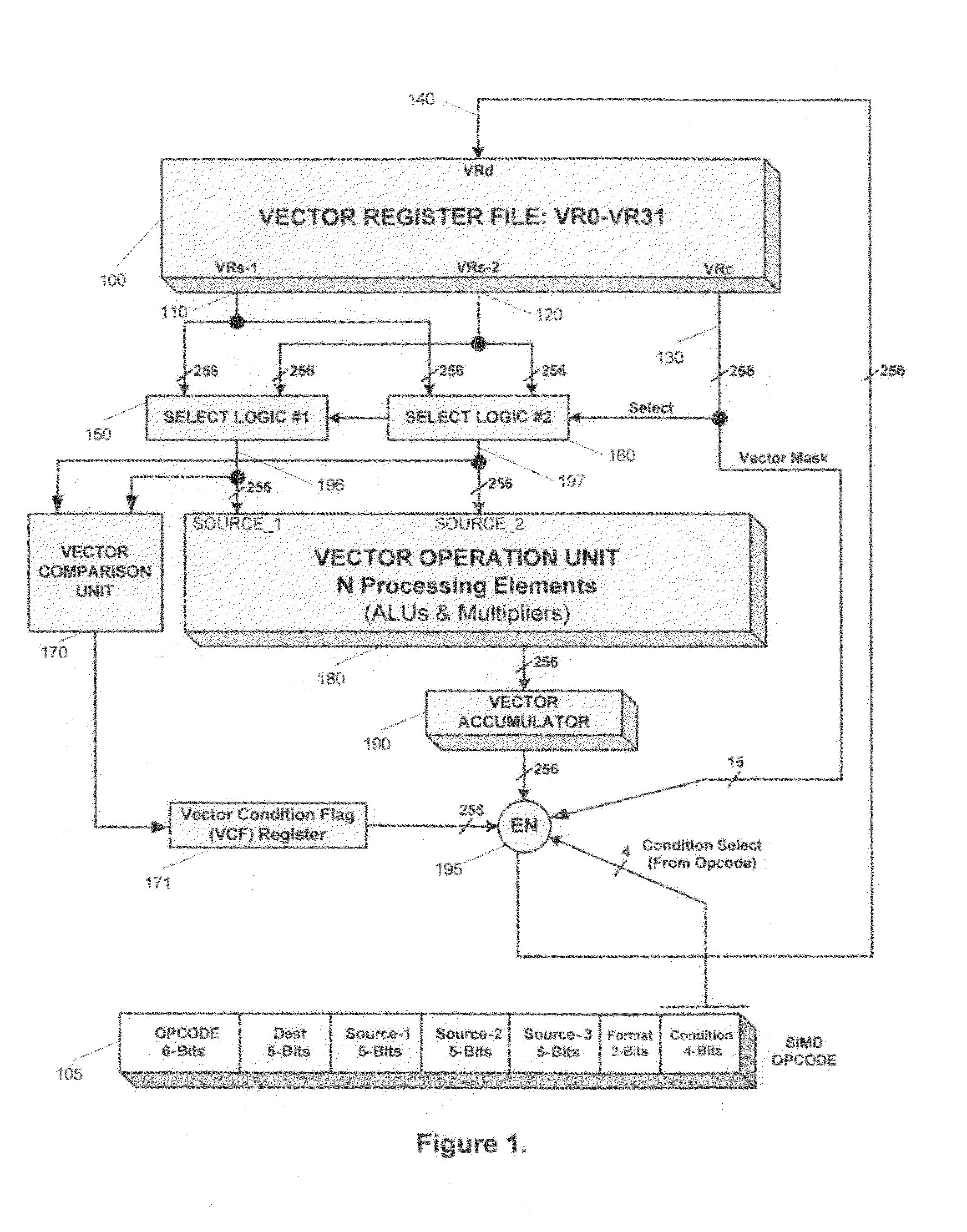

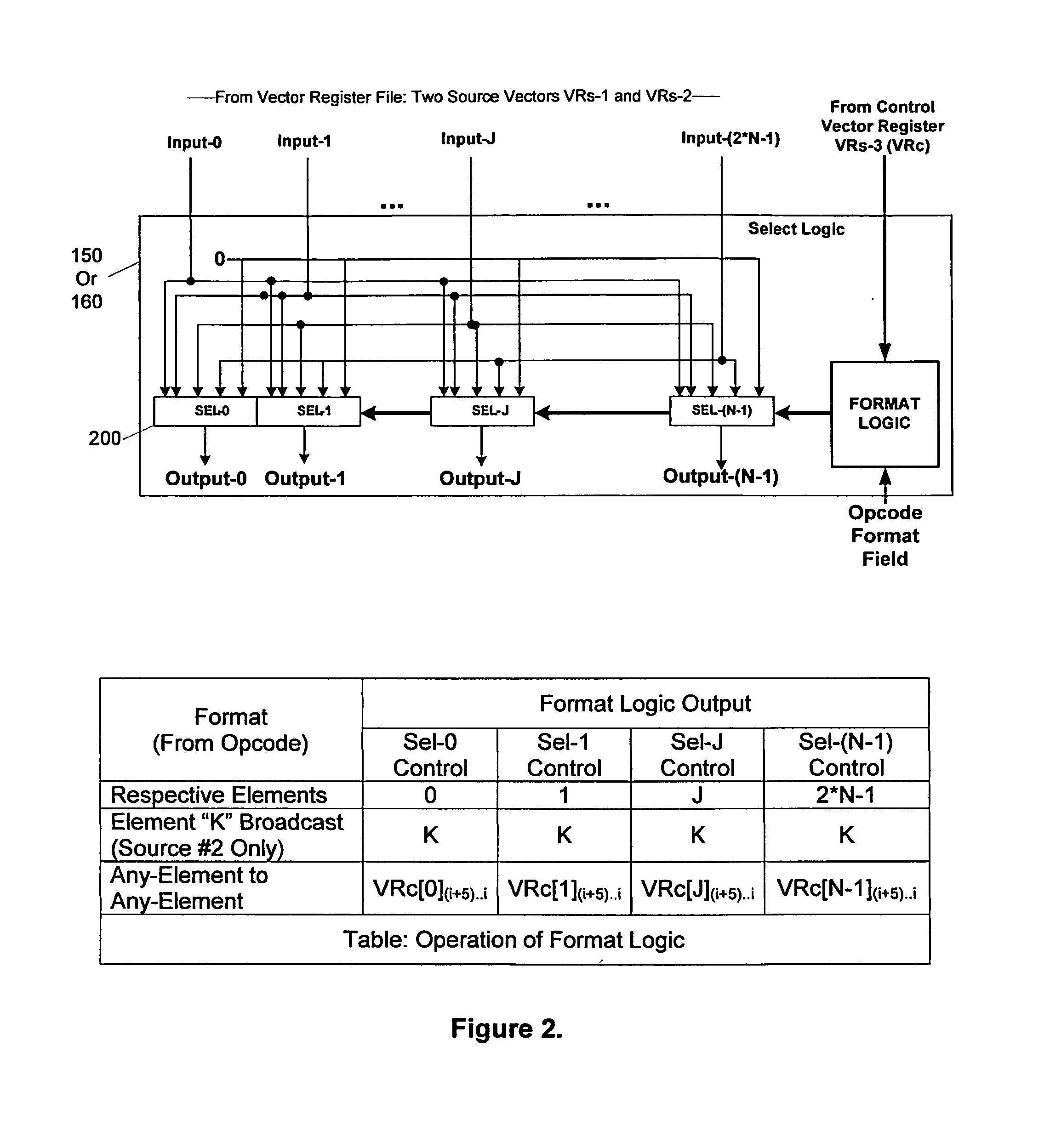

Method for efficient data array sorting in a programmable processor

Inactive · US20130212354A1 · Avoid change · Efficient sorting · General purpose stored program computer · Data sorting · Two-vector · Sorting algorithm

The present invention provides a method for sorting an array of vector elements on an N-wide SIMD processor that is accelerated by a factor of about N/2 over a scalar implementation, excluding scalar load/store instructions. A vector compare instruction that can compare any two vector elements in accordance with optimized data-array sorting algorithms, followed by a vector multiplex instruction that exchanges vector elements according to the condition flags generated by the vector compare instruction, provides an efficient yet programmable way to sort data with roughly N/2 acceleration. A mask bit prevents changes to elements that are not involved in a given stage of sorting.

Owner:MIMAR TIBET

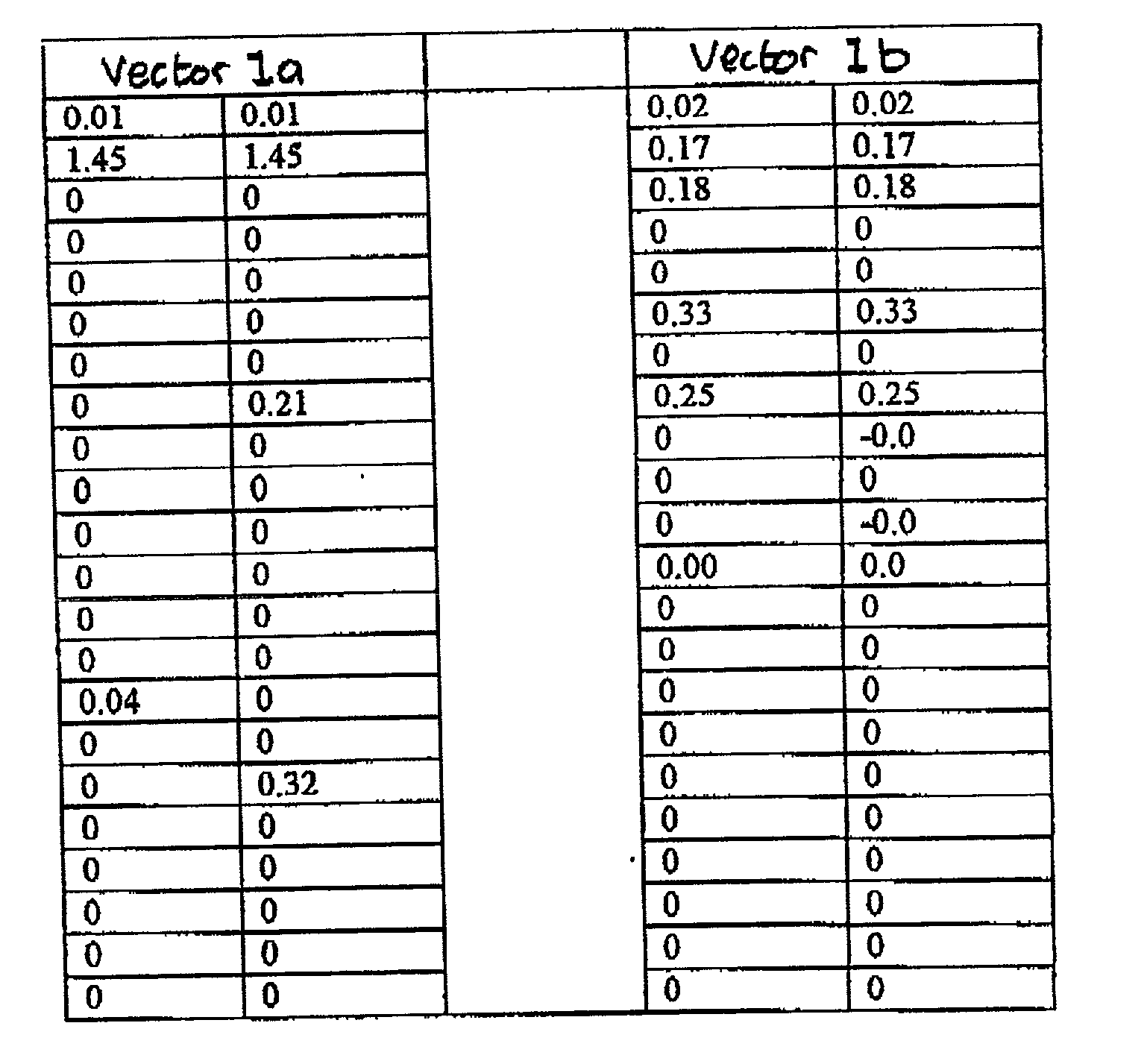

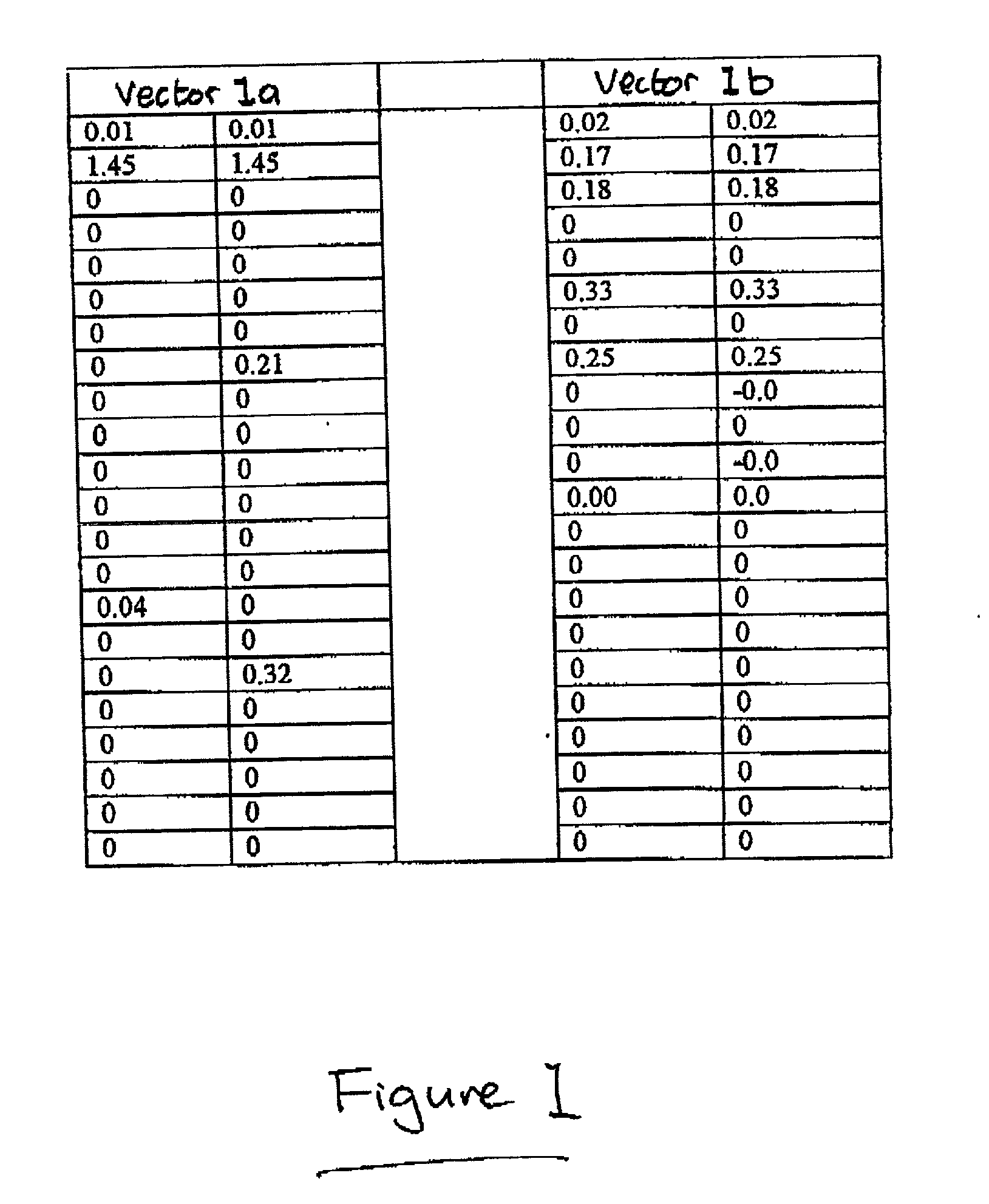

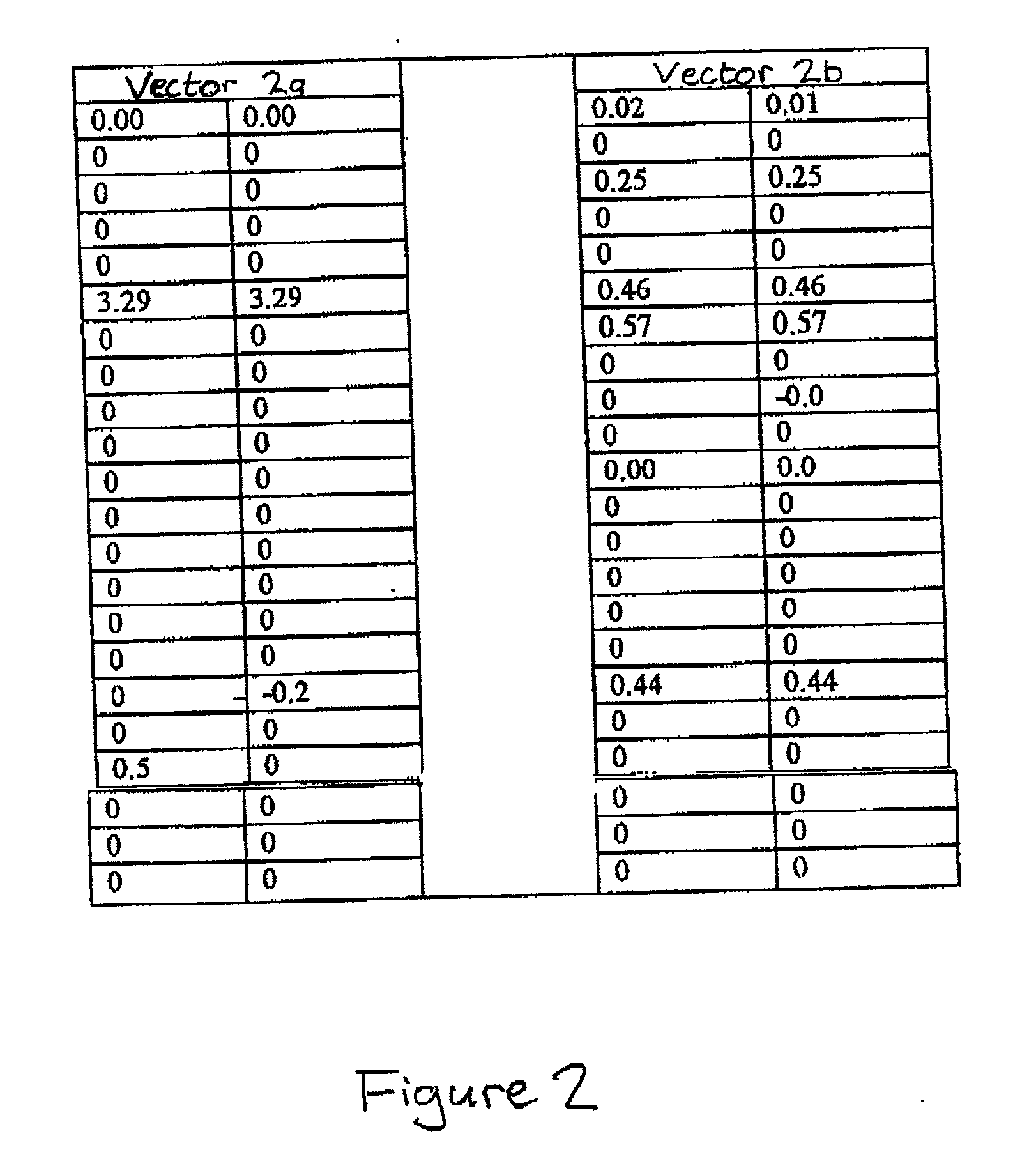

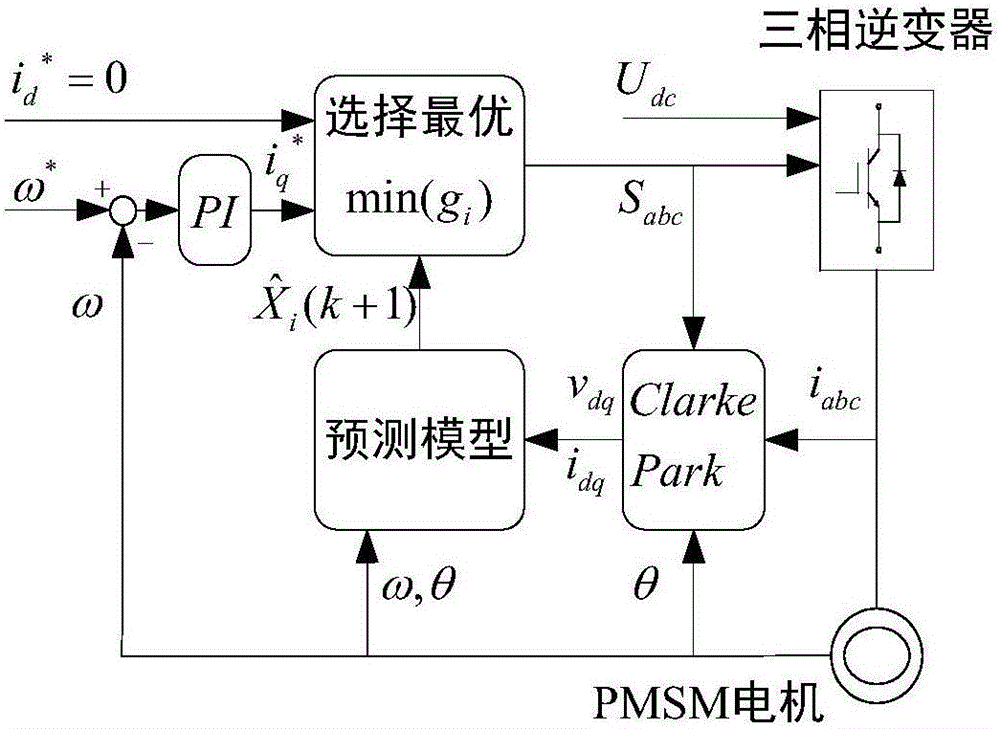

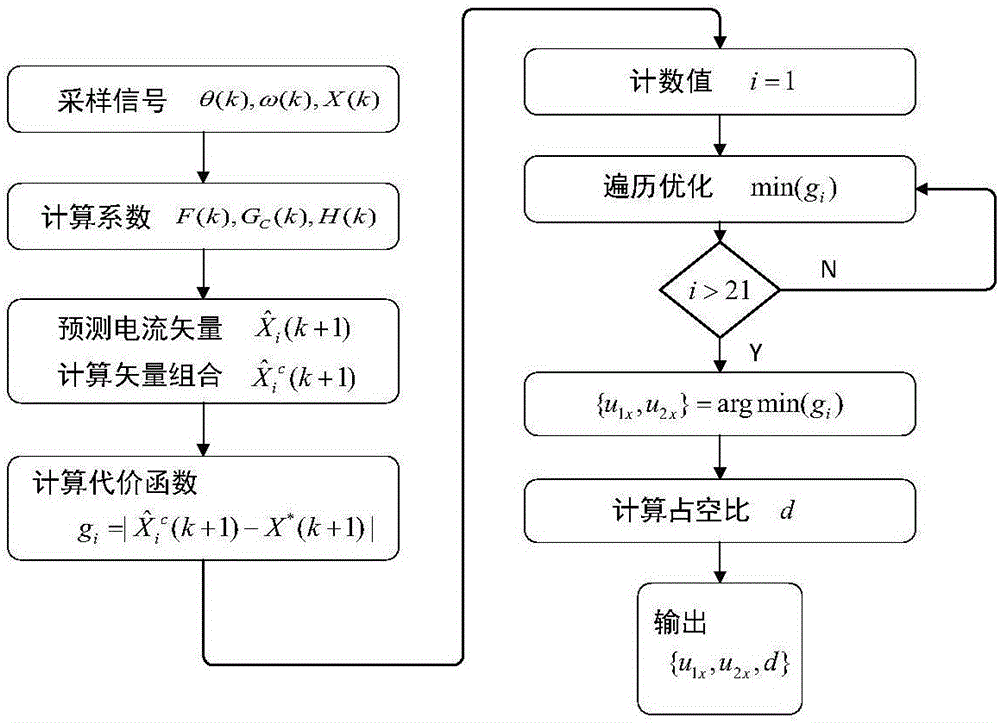

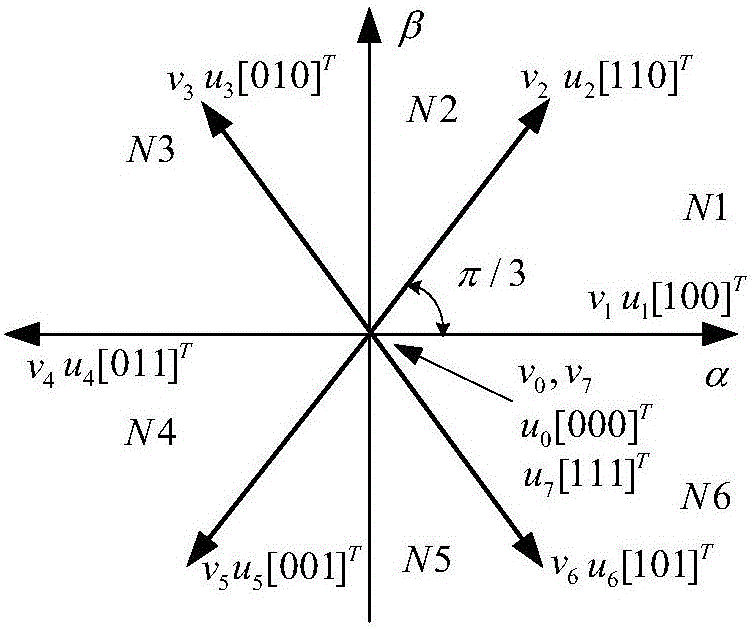

Optimal two-vector combination-based model predictive control method and system

Active · CN106788027A · Small amount of calculation · Increased current capability · Electronic commutation motor control · AC motor control · Two-vector · Control system

The invention discloses an optimal two-vector combination-based model predictive control method and system. The method is applied to a permanent-magnet synchronous motor control system driven by a three-phase two-level inverter. A model predictive current control strategy is adopted: all two-vector combinations, together with the sets of resultant vectors obtained by varying their action times, are considered simultaneously, the cost function is evaluated, and the optimal resultant vector is selected from all candidate sets. To simplify the optimization, an equivalent voltage equation is given, and a sector transformation method maps the candidate two-vector combination sets onto multiple fixed line segments; a fast algorithm then moves part of the complex computation offline, which effectively reduces the real-time computational load of the method. The disclosed model predictive control method is simple in structure, requires little real-time computation, and is easy to implement; the motor responds quickly, current ripple and distortion are small, the switching frequency is low, and the dynamic and static performance of the system is excellent.
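
The geometric core of the method, choosing the best resultant vector from all two-vector combinations, can be sketched as a nearest-point search over line segments. The sketch assumes a two-level inverter's six active vectors of magnitude 2Vdc/3 plus one zero vector, represents the αβ plane with complex numbers, and uses the squared voltage-vector error against a hypothetical reference `v_ref` as a stand-in cost; the patent's actual cost function, sector transformation, and offline tables are not reproduced.

```python
import cmath
import math

def basic_vectors(vdc):
    # Six active vectors (2*Vdc/3, 60 degrees apart) plus one zero vector,
    # as complex numbers in the alpha-beta plane (amplitude-invariant scaling)
    active = [2.0 / 3.0 * vdc * cmath.exp(1j * k * math.pi / 3.0) for k in range(6)]
    return active + [0j]

def best_two_vector(v_ref, vdc):
    """Return (cost, i, j, duty, v_out) for the pair (i, j) and duty of V_j
    whose resultant v_out = (1 - duty)*V_i + duty*V_j is closest to v_ref."""
    vecs = basic_vectors(vdc)
    best = None
    for i in range(len(vecs)):
        for j in range(i + 1, len(vecs)):
            a, d = vecs[i], vecs[j] - vecs[i]
            # Each pair spans a fixed line segment; project v_ref onto it
            duty = ((v_ref - a) * d.conjugate()).real / abs(d) ** 2
            duty = min(1.0, max(0.0, duty))  # action-time ratio limited to [0, 1]
            v_out = a + duty * d
            cost = abs(v_ref - v_out) ** 2  # assumed cost: squared voltage error
            if best is None or cost < best[0]:
                best = (cost, i, j, duty, v_out)
    return best
```

A reference lying on a segment between two basic vectors is reproduced with zero error, while a reference outside the hexagon is clipped to the nearest reachable resultant vector.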

Owner:HUAZHONG UNIV OF SCI & TECH

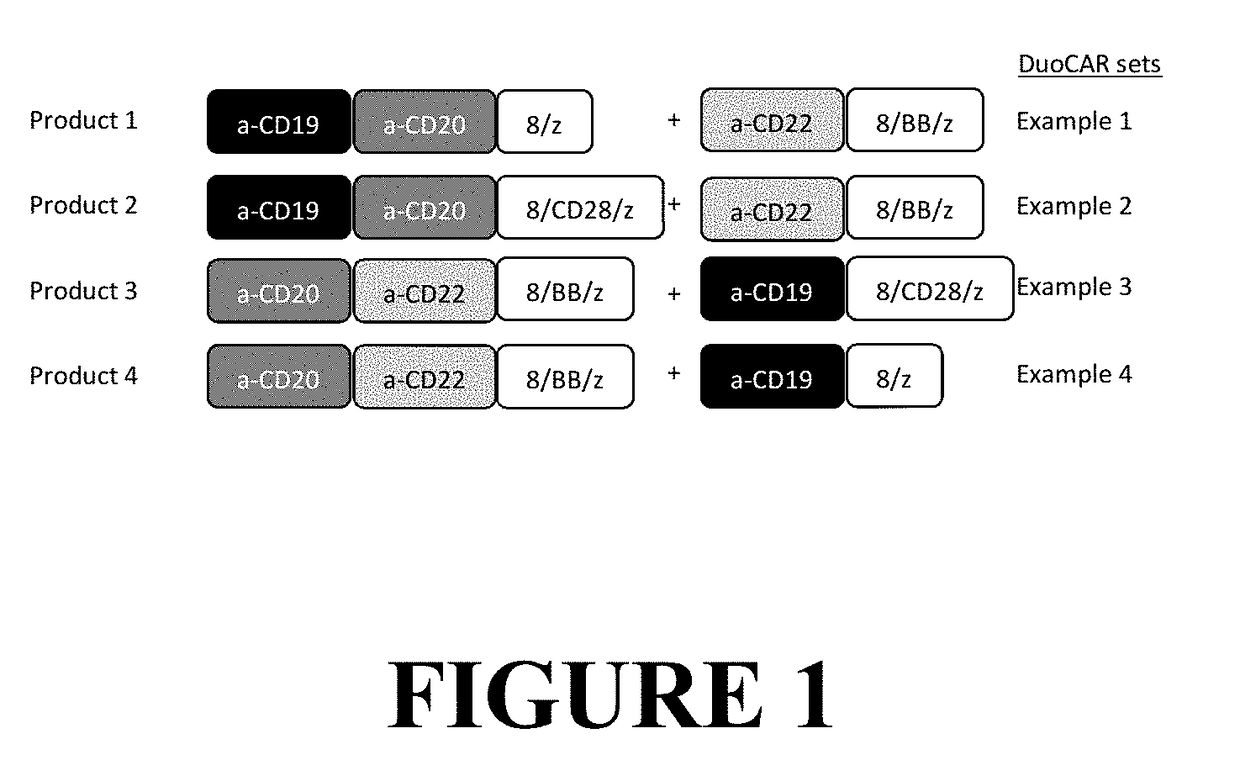

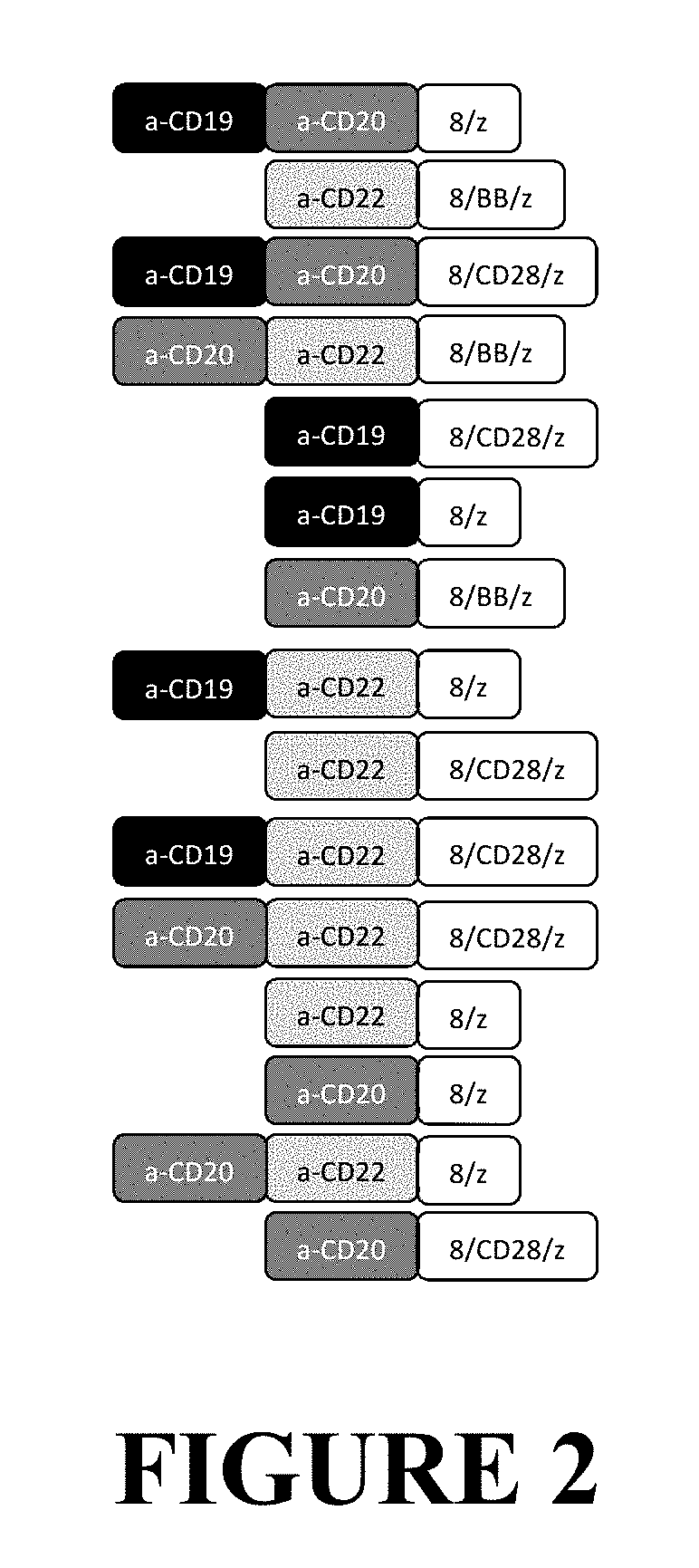

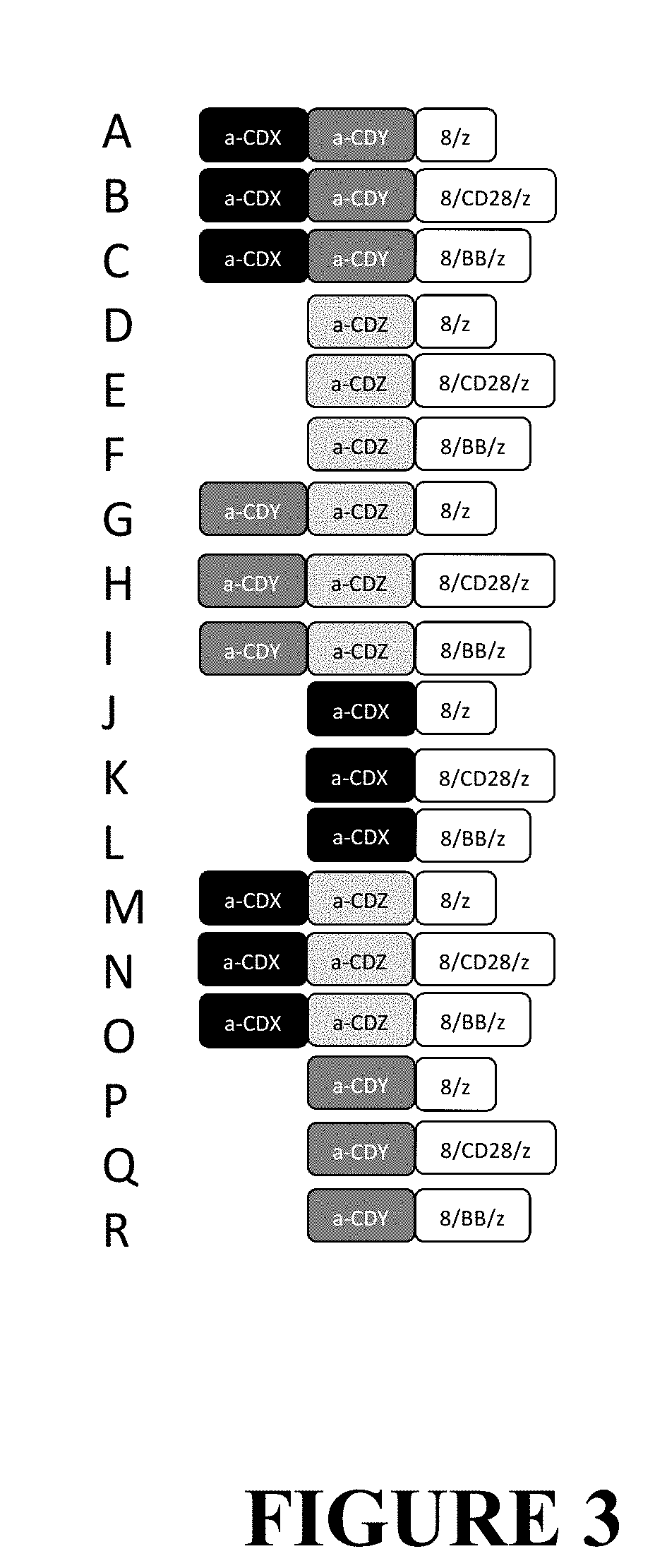

Compositions and Methods for Treating Cancer with DuoCARs

Active · US20190083596A1 · Prevent and ameliorate relapse · Antibody mimetics/scaffolds · Immunoglobulins against cell receptors/antigens/surface-determinants · Intracellular signalling · Disease

Novel therapeutic immunotherapy compositions are provided herein that comprise at least two vectors, each encoding a functional CAR, such that the combination of vectors results in the expression of two or more non-identical binding domains; the binding domain(s) encoded by each vector are covalently linked to a transmembrane domain and to one or more non-identical intracellular signaling motifs. Also provided are methods of using these compositions in patient-specific immunotherapy to treat cancers and other diseases and conditions.

Owner:LENTIGEN TECH INC

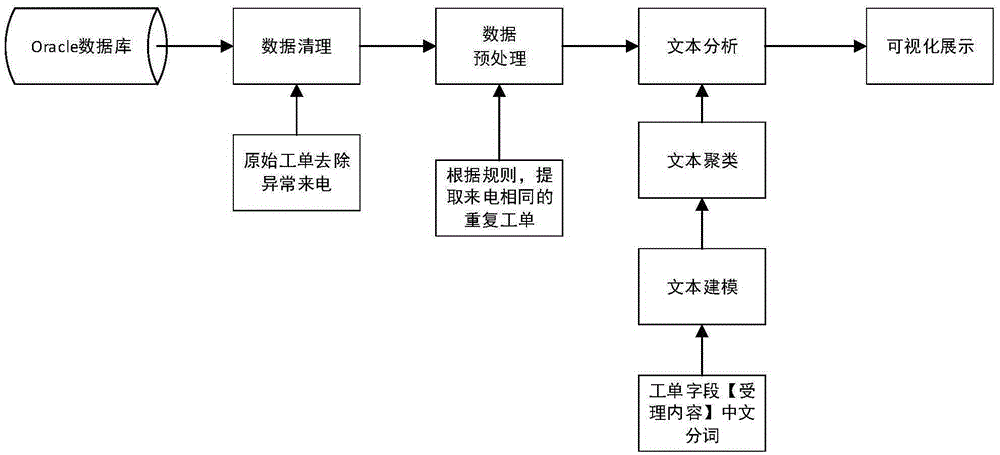

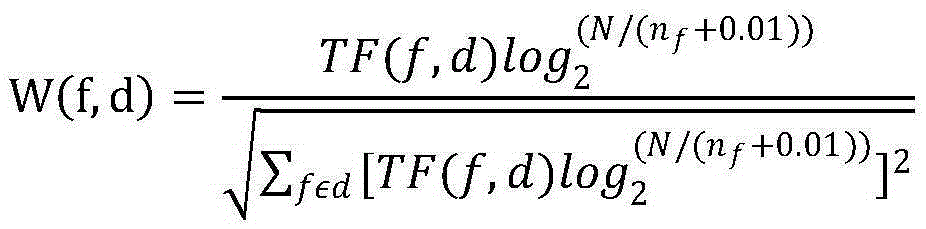

Customer service repeated call treatment method based on cosine similarity text mining algorithm

Active · CN105335496A · Increase work rate · Improve work efficiency · Special data processing applications · Text mining · Two-vector

The invention discloses a method for handling repeated customer service calls based on a cosine similarity text mining algorithm. The method comprises the following steps: extracting 95598 work-order texts, cleaning the data, and removing abnormal call IDs; preprocessing the data, extracting the work orders of the same call, and constructing a text set of repeated-call work orders; converting the text into a machine-readable form by establishing a vector space model and representing each whole text as a feature vector whose components are the weights of its feature terms; measuring the similarity between documents as the cosine of the angle between two such vectors in the feature-vector space; using hierarchical clustering to extract repeated-call work orders with similar content from the same user, and analyzing their semantic features; and promptly recording the problem reported in each repeated-call work order, dispatching an order, and tracking the record. The disclosed method replaces manual searching for repeated-call work orders and can improve the operating rate.
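
The vector-space and clustering steps can be sketched as follows. The sketch assumes the work-order texts are already segmented into tokens, uses raw term frequency as the feature-term weight (the abstract does not name the weighting scheme), and applies single-linkage agglomerative clustering that stops merging below a hypothetical cosine `threshold`.

```python
import math
from collections import Counter

def tf_vector(tokens, vocab):
    # Assumption: raw term frequency is used as the feature-term weight
    counts = Counter(tokens)
    return [counts[term] for term in vocab]

def cosine(u, v):
    # Cosine of the angle between two vectors; 0.0 if either is all zeros
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def cluster_work_orders(docs, threshold):
    """Single-linkage agglomerative clustering of tokenized work orders.
    Merging stops when the most similar pair of clusters falls below the
    hypothetical cosine `threshold`. Returns lists of document indices."""
    vocab = sorted({term for doc in docs for term in doc})
    vecs = [tf_vector(doc, vocab) for doc in docs]
    clusters = [[i] for i in range(len(docs))]

    def linkage(c1, c2):
        # Single linkage: similarity of the closest pair across two clusters
        return max(cosine(vecs[i], vecs[j]) for i in c1 for j in c2)

    while len(clusters) > 1:
        best_sim, best_a, best_b = -1.0, 0, 1
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                sim = linkage(clusters[a], clusters[b])
                if sim > best_sim:
                    best_sim, best_a, best_b = sim, a, b
        if best_sim < threshold:
            break
        clusters[best_a] = clusters[best_a] + clusters[best_b]
        del clusters[best_b]  # best_b > best_a, so best_a's index is unaffected
    return clusters
```

Clusters with more than one member are the candidate repeated-call groups; following the abstract, the work orders would first be grouped by call before clustering.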

Owner:State Grid Shandong Electric Power Company Marketing Service Center (Metering Center) +2

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com