A method and system for realizing a visual SLAM semantic mapping function based on a dilated (atrous) convolution deep neural network

A deep neural network and semantic mapping technology, applied in the field of visual SLAM semantic mapping based on deep convolutional neural networks, that can solve problems such as difficulty of deployment on embedded systems, poor real-time computing performance, and false detections.

Embodiment Construction

[0092] In order to describe the technical content of the present invention more clearly, further description will be given below in conjunction with specific embodiments.

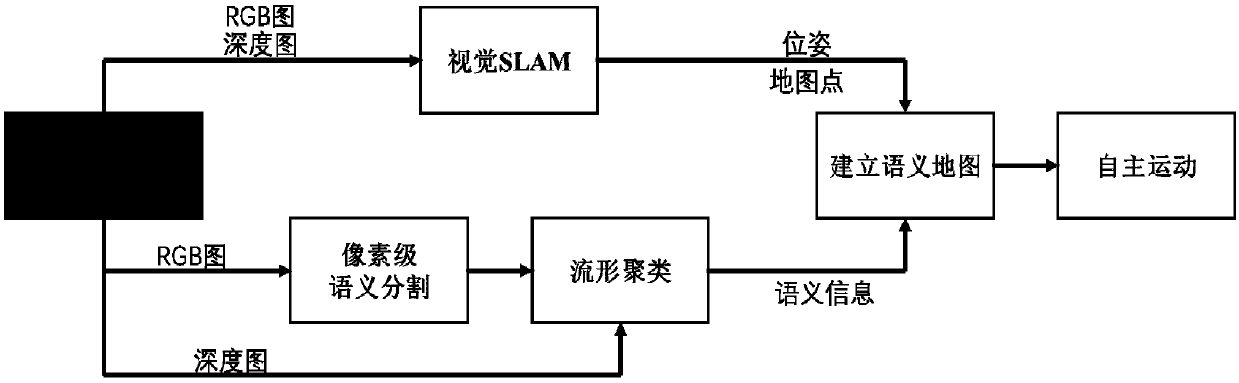

[0093] The method for realizing the visual SLAM semantic mapping function based on the dilated convolution deep neural network comprises the following steps:

[0094] (1) The embedded development processor obtains the color information and depth information of the current environment through the RGB-D camera;

[0095] (2) Obtain feature point matching pairs from the collected images, perform pose estimation, and obtain point cloud data of the scene space;

[0096] (2.1) Extract image feature points through visual SLAM technology, and perform feature matching to obtain feature point matching pairs;

[0097] (2.2) Solve for the current pose of the camera from the matched 3D point pairs;

[0098] (2.3) Refine the pose estimate through graph-optimization-based Bundle Adjustment;
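As an illustration of steps (1) and (2.2), the following minimal sketch back-projects RGB-D pixels into 3D points and solves for a rigid camera motion from matched 3D point pairs. The text above does not name a solver; the SVD-based least-squares alignment used here is one common choice and is an assumption, as are the pinhole intrinsics `fx`, `fy`, `cx`, `cy` and the hypothetical function names `backproject` and `estimate_rigid_transform`. Feature extraction, matching, and the Bundle Adjustment refinement of step (2.3) are not shown.

```python
import numpy as np


def backproject(u, v, depth, fx, fy, cx, cy):
    """Back-project pixels (u, v) with metric depth into camera-frame 3D points.

    Assumes an ideal pinhole camera; fx, fy, cx, cy are hypothetical intrinsics.
    Returns an array of shape (N, 3).
    """
    z = np.asarray(depth, dtype=float)
    x = (np.asarray(u, dtype=float) - cx) * z / fx
    y = (np.asarray(v, dtype=float) - cy) * z / fy
    return np.stack([x, y, z], axis=-1)


def estimate_rigid_transform(src, dst):
    """Least-squares rotation R and translation t such that dst ~= R @ src + t.

    src, dst: (N, 3) arrays of matched 3D points (N >= 3, not all collinear).
    Uses SVD-based alignment; this solver is an assumption, not taken from the text.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_centroid = src.mean(axis=0)
    dst_centroid = dst.mean(axis=0)

    # Cross-covariance of the centered point sets.
    H = (src - src_centroid).T @ (dst - dst_centroid)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection (determinant -1) in the recovered rotation.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_centroid - R @ src_centroid
    return R, t
```

In a pipeline of this kind, `src` and `dst` would hold the back-projected 3D positions of the matched feature points in two frames, and the resulting `R`, `t` would serve as the initial pose that a later optimization stage refines.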

[0099]...
