Pose estimation method based on RGB-D and IMU information fusion

A pose estimation and residual technique, applied in the field of pose estimation based on RGB-D and IMU information fusion.


Examples

Embodiment 1

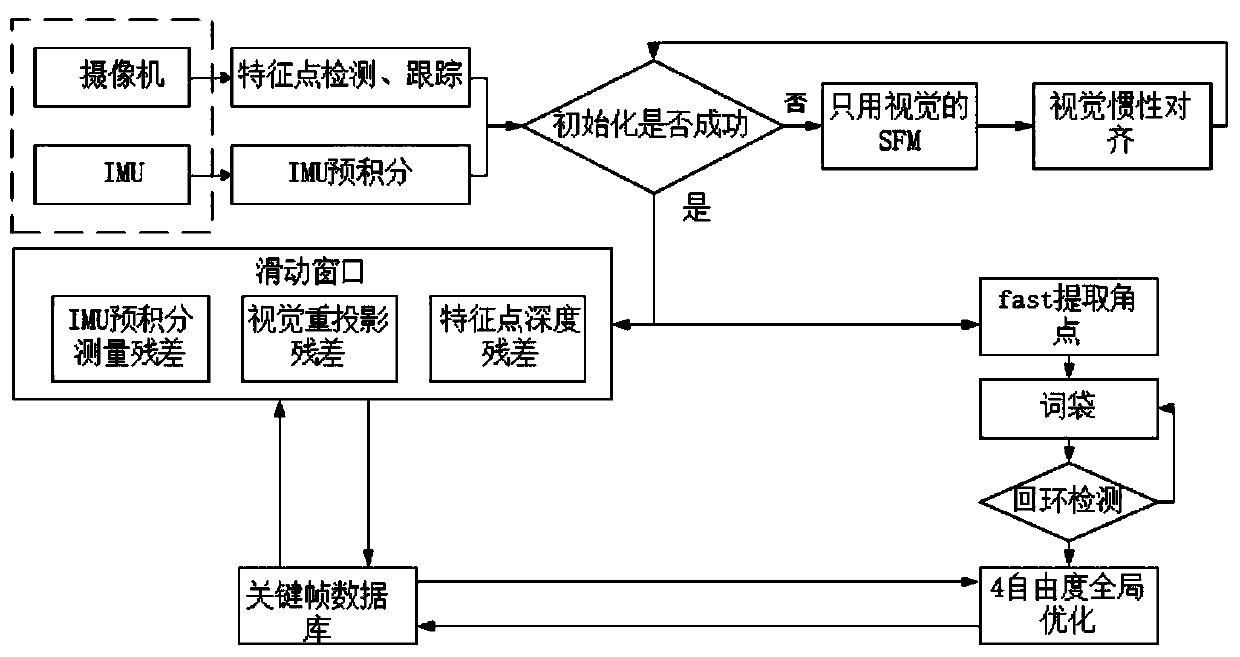

[0111] The overall framework of the pose estimation method based on RGB-D and IMU information fusion is shown in Figure 1.

[0112] The pose estimation algorithm of the RGB-D and IMU information fusion pose estimation system (hereinafter "the system") is divided into four parts: data preprocessing, visual-inertial navigation initialization, back-end optimization, and loop closure detection. Each part is an independent module and can be improved separately as requirements dictate.
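Because the four parts are described as independent, replaceable modules, a minimal sketch of how such a pipeline might be wired together follows. The class name `PoseEstimationSystem`, the callable signatures, and the policy of buffering frames until initialization succeeds are all hypothetical illustrations, not the patent's implementation; the stub lambdas stand in for real modules.

```python
from typing import Any, Callable, List, Optional

# Hypothetical module signatures (not from the patent): each stage is a callable.
Preprocess = Callable[[Any], Any]            # raw RGB-D + IMU data -> measurements
Initialize = Callable[[List[Any]], bool]     # buffered measurements -> success?
Optimize = Callable[[Any], Any]              # measurements -> pose estimate
DetectLoop = Callable[[Any, Any], bool]      # measurements, pose -> loop found?


class PoseEstimationSystem:
    """Chains four independent, swappable modules (illustrative sketch)."""

    def __init__(self, preprocess: Preprocess, initialize: Initialize,
                 optimize: Optimize, detect_loop: DetectLoop) -> None:
        self.preprocess = preprocess
        self.initialize = initialize
        self.optimize = optimize
        self.detect_loop = detect_loop
        self.initialized = False
        self.buffer: List[Any] = []
        self.loop_count = 0

    def process_frame(self, raw: Any) -> Optional[Any]:
        measurements = self.preprocess(raw)
        if not self.initialized:
            # Assumed policy: buffer measurements until initialization succeeds.
            self.buffer.append(measurements)
            self.initialized = self.initialize(self.buffer)
            return None
        pose = self.optimize(measurements)
        if self.detect_loop(measurements, pose):
            self.loop_count += 1
        return pose


# Usage with stub modules; any one of them can be replaced independently.
system = PoseEstimationSystem(
    preprocess=lambda raw: raw * 2,
    initialize=lambda buf: len(buf) >= 3,
    optimize=lambda m: ("pose", m),
    detect_loop=lambda m, pose: m == 10,
)
outputs = [system.process_frame(i) for i in range(6)]
```

Passing each module as a plain callable keeps the stages decoupled, which mirrors the stated goal that each part can be improved without touching the others.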

[0113] Data preprocessing part: this part processes the grayscale and depth images captured by the RGB-D camera, together with the acceleration and angular velocity measured by the IMU. Its inputs are the grayscale image, the depth image, and the IMU acceleration and angular velocity; its outputs are the matched feature points between adjacent frames and the IMU state increment.
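This excerpt does not say how the "IMU state increment" is computed. A common choice in visual-inertial systems is IMU preintegration, which accumulates the relative rotation, velocity and position change between two image frames. The sketch below assumes that technique, uses simple Euler integration, and ignores sensor biases, noise and gravity; the function names (`preintegrate`, `so3_exp`, etc.) are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec = List[float]
Mat = List[List[float]]


def mat_mul(a: Mat, b: Mat) -> Mat:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(a: Mat, v: Sequence[float]) -> Vec:
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def skew(w: Sequence[float]) -> Mat:
    return [[0.0, -w[2], w[1]],
            [w[2], 0.0, -w[0]],
            [-w[1], w[0], 0.0]]


def so3_exp(phi: Sequence[float]) -> Mat:
    """Rodrigues' formula: rotation vector -> rotation matrix."""
    theta = math.sqrt(sum(x * x for x in phi))
    eye = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    if theta < 1e-9:
        k = skew(phi)  # first-order approximation I + [phi]x
        return [[eye[i][j] + k[i][j] for j in range(3)] for i in range(3)]
    k = skew([x / theta for x in phi])
    k2 = mat_mul(k, k)
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    return [[eye[i][j] + s * k[i][j] + c * k2[i][j] for j in range(3)] for i in range(3)]


def preintegrate(samples: Sequence[Tuple[float, Vec, Vec]]) -> Tuple[Mat, Vec, Vec]:
    """Accumulate (dR, dv, dp) between two image frames.

    Each sample is (dt, accel, gyro) in the IMU body frame. Biases, noise and
    gravity are ignored in this sketch; gravity is usually reintroduced when
    the increment is compared against the states of two keyframes.
    """
    d_r = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    d_v = [0.0, 0.0, 0.0]
    d_p = [0.0, 0.0, 0.0]
    for dt, acc, gyro in samples:
        a_rot = mat_vec(d_r, acc)  # acceleration expressed in the first frame
        d_p = [d_p[i] + d_v[i] * dt + 0.5 * a_rot[i] * dt * dt for i in range(3)]
        d_v = [d_v[i] + a_rot[i] * dt for i in range(3)]
        d_r = mat_mul(d_r, so3_exp([w * dt for w in gyro]))
    return d_r, d_v, d_p


# Usage: 10 samples at 100 Hz with a constant 1 m/s^2 along x and no rotation.
samples = [(0.01, [1.0, 0.0, 0.0], [0.0, 0.0, 0.0])] * 10
d_r, d_v, d_p = preintegrate(samples)
```

Position and velocity are updated with the rotation from before the current sample, and only then is the rotation advanced; this matches the usual discrete preintegration order.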

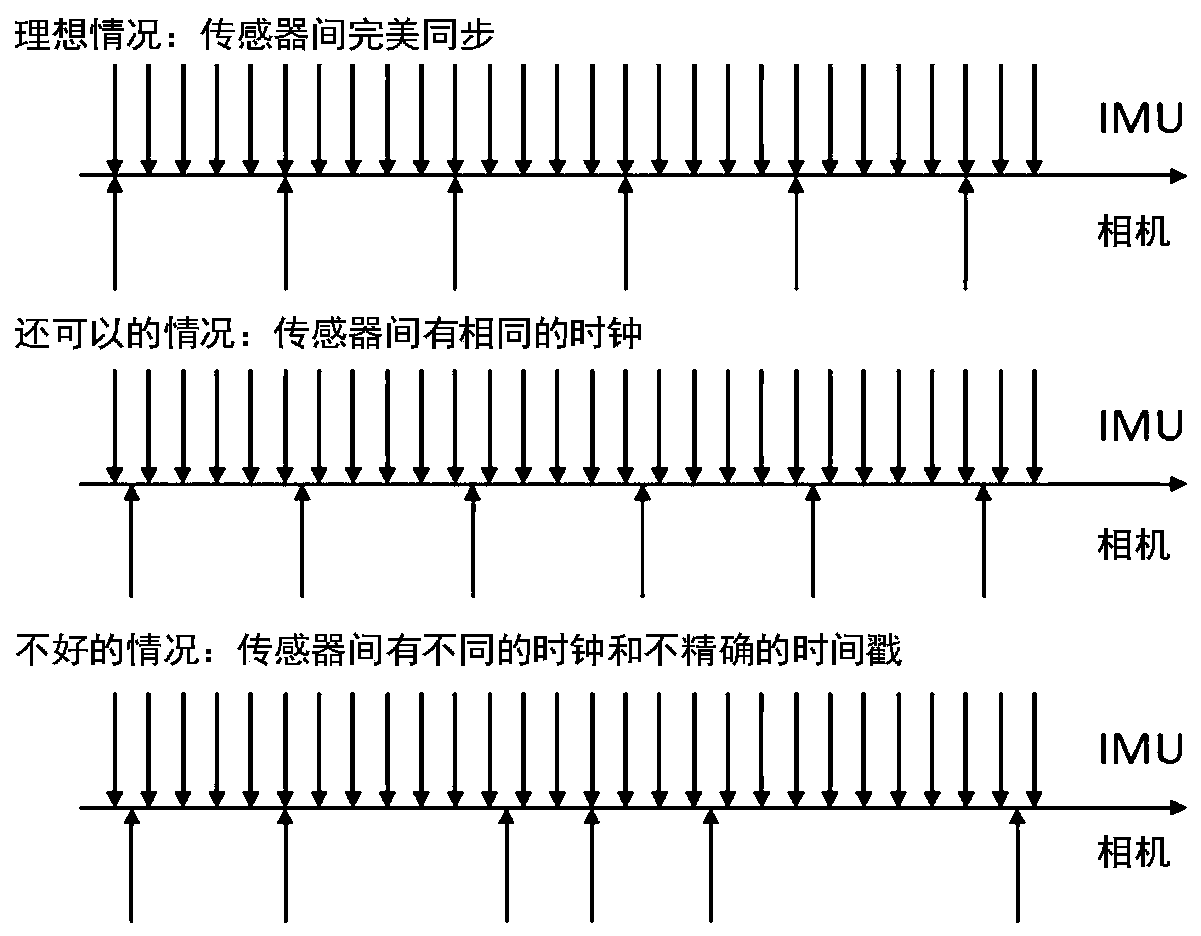

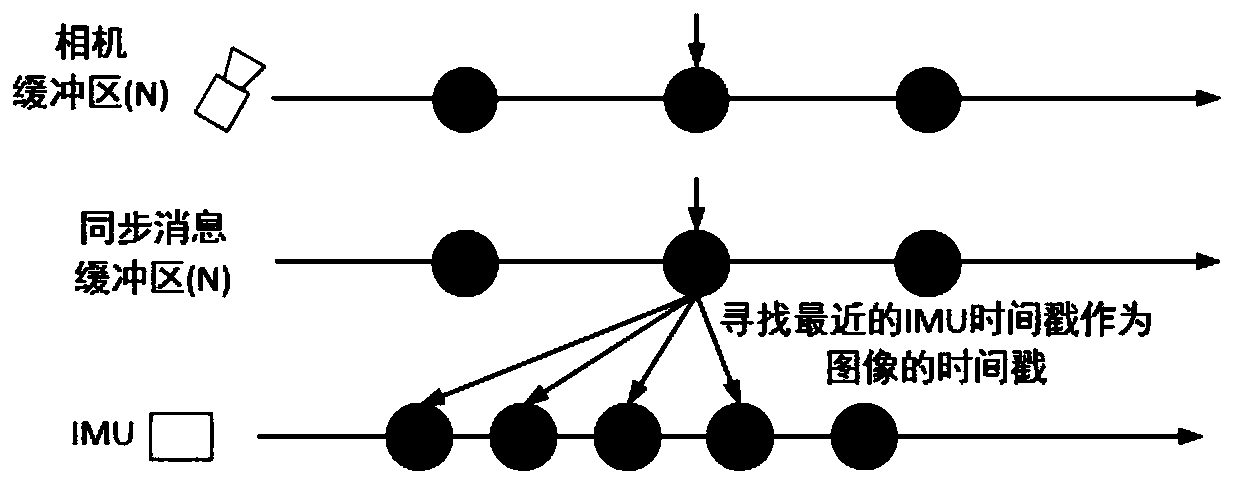

[0114] Specifically, since the camera and the IMU use separate clock sources,...
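The excerpt breaks off before explaining how the two clocks are reconciled. Purely as an illustration, and not necessarily the method paragraph [0114] goes on to describe, the sketch below assumes a known constant offset `td` (with the hypothetical convention t_imu = t_cam + td), shifts the image timestamps into the IMU clock, and collects the IMU samples between each pair of consecutive frames, linearly interpolating a reading at each frame boundary. All names are hypothetical.

```python
from bisect import bisect_left, bisect_right
from typing import List, Sequence, Tuple

ImuSample = Tuple[float, List[float], List[float]]  # (t, accel, gyro)


def interpolate(s0: ImuSample, s1: ImuSample, t: float) -> ImuSample:
    """Linearly interpolate an IMU reading at time t, with s0[0] <= t <= s1[0]."""
    alpha = (t - s0[0]) / (s1[0] - s0[0])
    acc = [a + alpha * (b - a) for a, b in zip(s0[1], s1[1])]
    gyr = [a + alpha * (b - a) for a, b in zip(s0[2], s1[2])]
    return (t, acc, gyr)


def imu_between(imu: Sequence[ImuSample], t0: float, t1: float) -> List[ImuSample]:
    """Return IMU samples covering [t0, t1] (t0 < t1), with interpolated endpoints."""
    times = [s[0] for s in imu]
    i0 = bisect_right(times, t0)  # first sample strictly after t0
    i1 = bisect_left(times, t1)   # first sample at or after t1
    if i0 == 0 or i1 >= len(imu):
        raise ValueError("IMU data does not bracket the frame interval")
    window = [interpolate(imu[i0 - 1], imu[i0], t0)]
    window += list(imu[i0:i1])    # samples strictly inside (t0, t1)
    window.append(interpolate(imu[i1 - 1], imu[i1], t1))
    return window


def frame_windows(frame_times: Sequence[float], imu: Sequence[ImuSample],
                  td: float = 0.0) -> List[List[ImuSample]]:
    """Group IMU data per pair of consecutive frames after applying offset td (s)."""
    shifted = [t + td for t in frame_times]
    return [imu_between(imu, a, b) for a, b in zip(shifted, shifted[1:])]


# Usage: 100 Hz IMU whose x-acceleration equals the sample index.
imu = [(k * 0.01, [float(k), 0.0, 0.0], [0.0, 0.0, 0.0]) for k in range(11)]
windows = frame_windows([0.025, 0.055], imu)
```

Each returned window starts and ends exactly at a frame timestamp, so an increment integrated over it (for example by preintegration) spans precisely the interval between the two images.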
