2051 results on how to "Improve parallelism" in patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

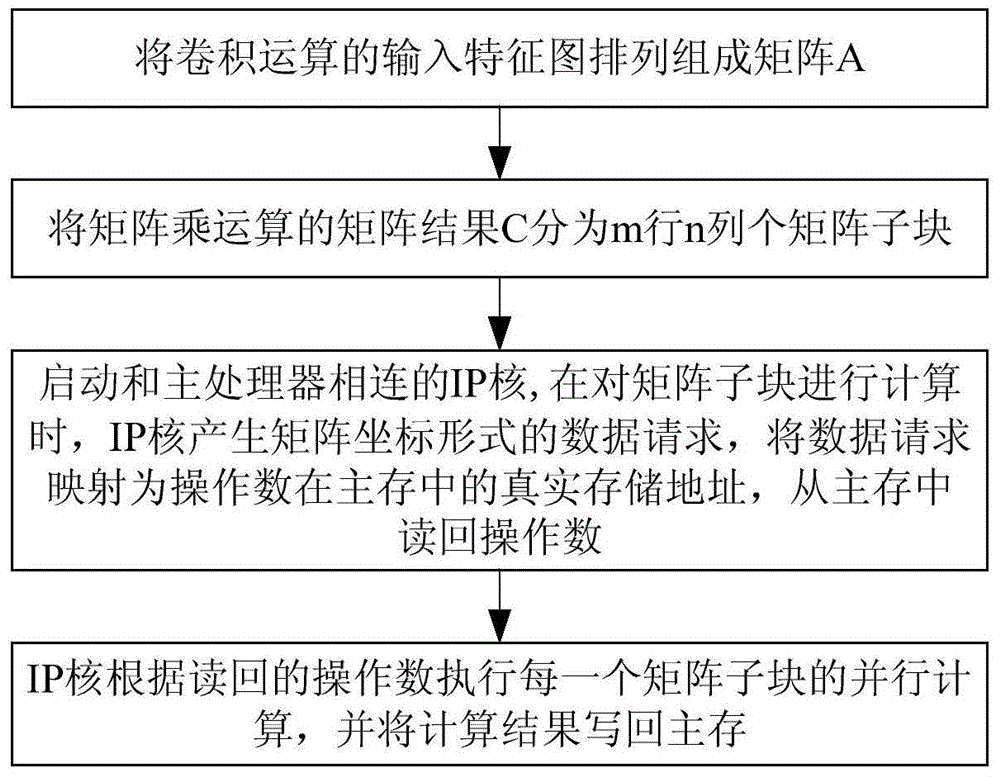

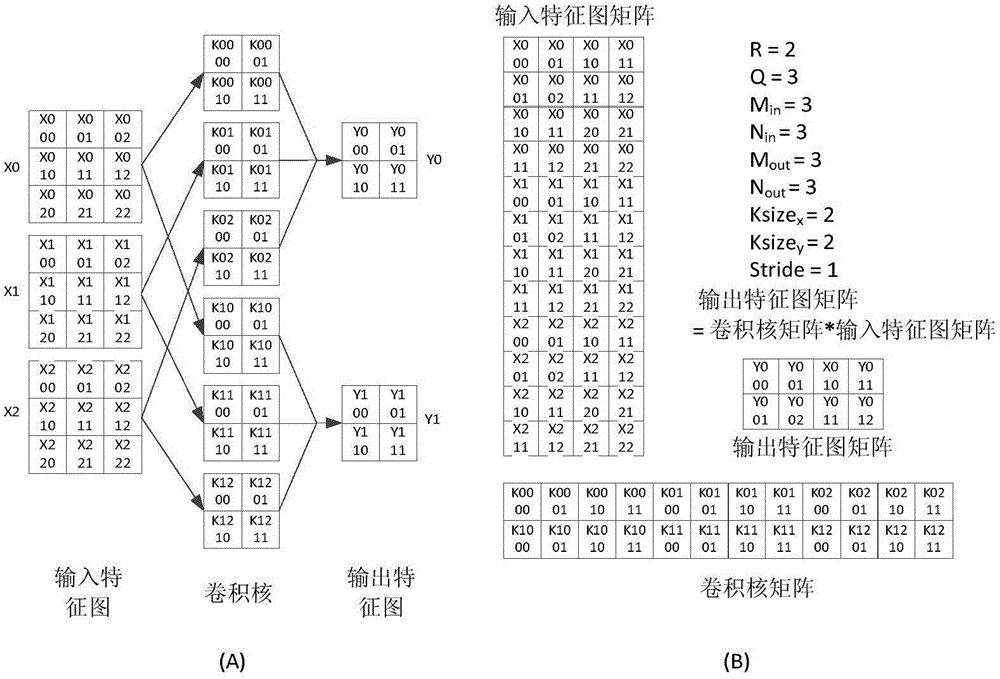

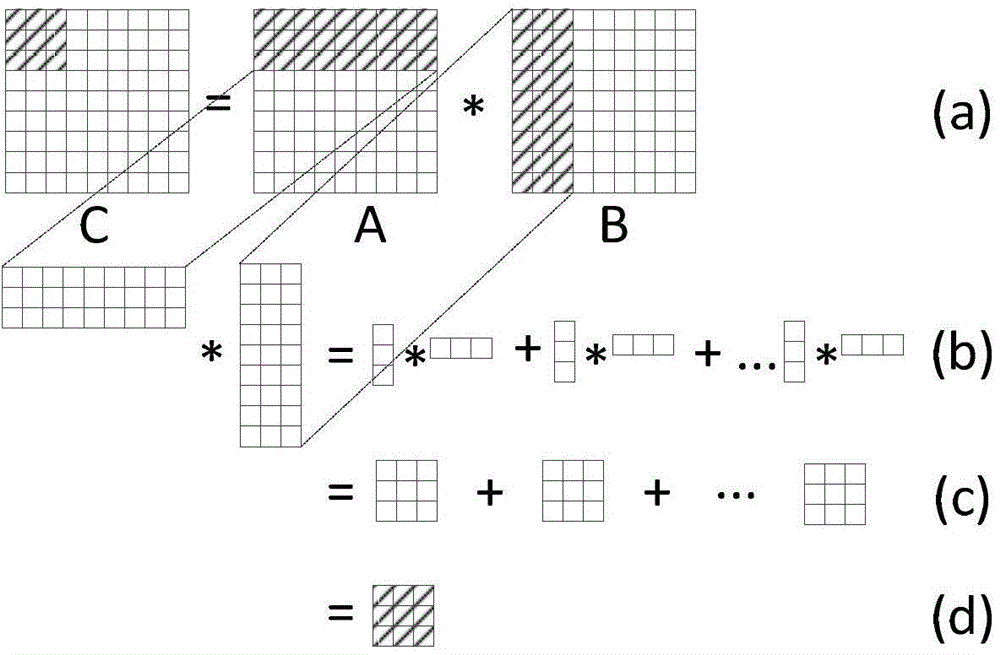

Method for accelerating convolutional neural network hardware and AXI bus IP core thereof

Active | CN104915322A | Improve adaptability, Increase flexibility, Resource allocation, Multiple digital computer combinations, Chain structure, Matrix multiplier

The invention discloses a method for accelerating convolutional neural network hardware and an AXI bus IP core for it. The method has four steps: first, the convolution layer is converted into the multiplication of a matrix A with m rows and K columns by a matrix B with K rows and n columns; second, the result matrix is divided into sub-blocks of m rows and n columns; third, a matrix multiplier is started to prefetch the operands of the sub-blocks; and fourth, the matrix multiplier computes the sub-blocks and writes the results back to main memory. The IP core comprises an AXI bus interface module, a prefetch unit, a flow mapper and a matrix multiplier. The matrix multiplier comprises a chained DMA and a processing-unit array in which the processing units are arranged in a chain, with the processing unit at the head of the chain connected to the chained DMA. The method supports a variety of convolutional neural network structures and offers high computational efficiency and performance, low demand for on-chip storage and off-chip memory bandwidth, low communication overhead, easy upgrading and improvement of individual components, and good generality.
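The first, second and fourth steps can be sketched in NumPy as a software model of the data flow. This is a minimal sketch under stated assumptions (stride 1, no padding, one input image); `conv_as_matmul` and `blocked_matmul` are hypothetical names, the tile sizes `bm` and `bn` are arbitrary, and nothing here models the AXI interface, prefetch unit or chained DMA.

```python
import numpy as np

def conv_as_matmul(x, w):
    """Lower a stride-1, unpadded convolution to one matrix product A @ B.

    x: input feature map, shape (C_in, H, W)
    w: kernels, shape (C_out, C_in, kh, kw)
    A has m = C_out rows and K = C_in*kh*kw columns; B has K rows and
    n = H_out*W_out columns, one column per output pixel.
    """
    c_in, h, wd = x.shape
    c_out, _, kh, kw = w.shape
    h_out, w_out = h - kh + 1, wd - kw + 1
    a = w.reshape(c_out, -1)                                # m x K
    cols = [x[:, i:i + kh, j:j + kw].ravel()                # each patch is a K-vector
            for i in range(h_out) for j in range(w_out)]
    b = np.stack(cols, axis=1)                              # K x n
    return a, b, (c_out, h_out, w_out)

def blocked_matmul(a, b, bm, bn):
    """Compute a @ b one bm x bn output sub-block at a time."""
    m, _ = a.shape
    _, n = b.shape
    c = np.zeros((m, n), dtype=np.result_type(a, b))
    for i0 in range(0, m, bm):
        for j0 in range(0, n, bn):
            # Fetch the operands this sub-block needs: a row panel of A
            # and a column panel of B.
            a_panel = a[i0:i0 + bm, :]
            b_panel = b[:, j0:j0 + bn]
            # Compute the sub-block and write it back into the result.
            c[i0:i0 + bm, j0:j0 + bn] = a_panel @ b_panel
    return c

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 6, 6))
w = rng.standard_normal((4, 3, 3, 3))
a, b, out_shape = conv_as_matmul(x, w)
y = blocked_matmul(a, b, bm=2, bn=5).reshape(out_shape)
```

Because every output sub-block depends only on one row panel of A and one column panel of B, sub-blocks can be computed independently, which is what makes operand prefetching per sub-block possible.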

Owner: NAT UNIV OF DEFENSE TECH

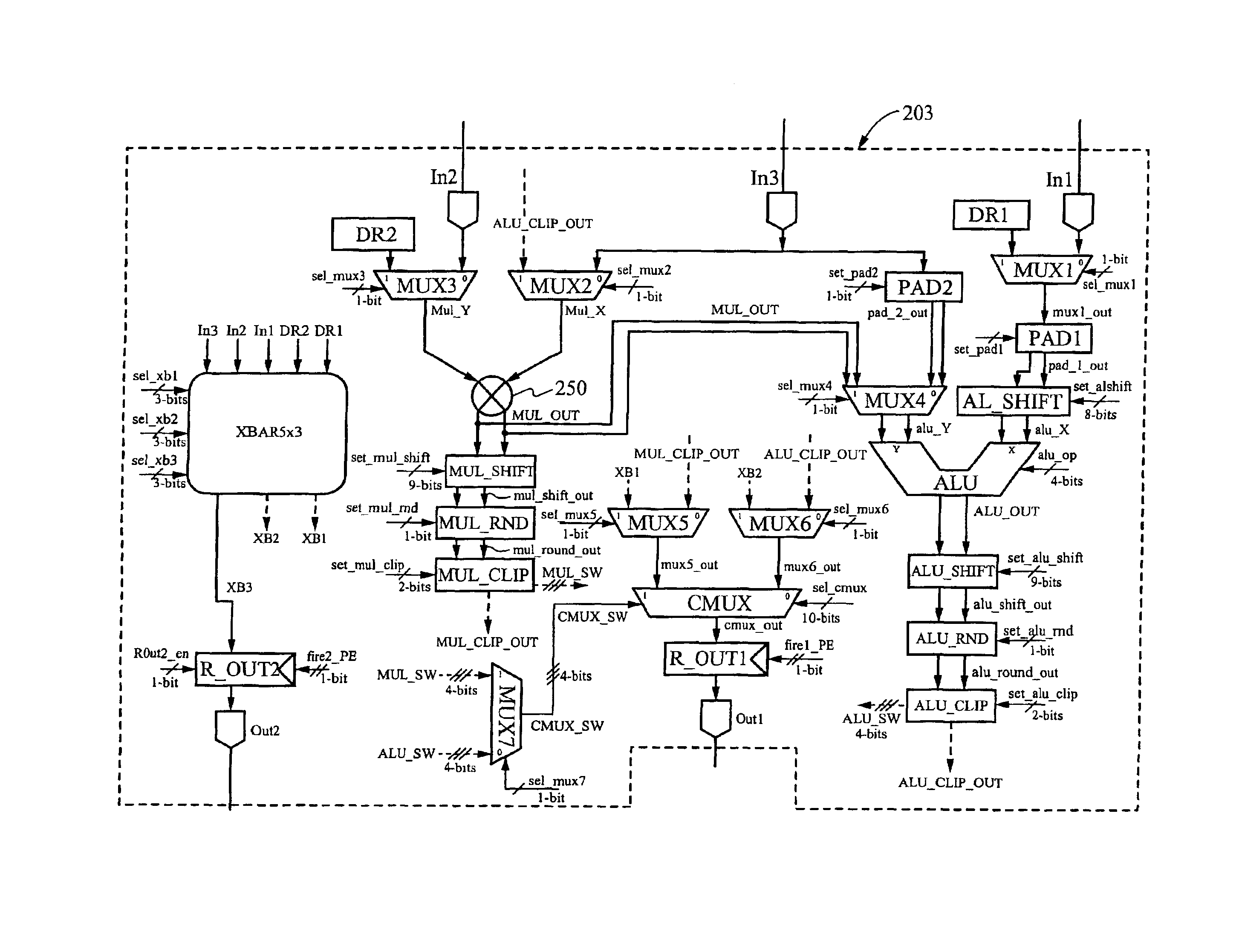

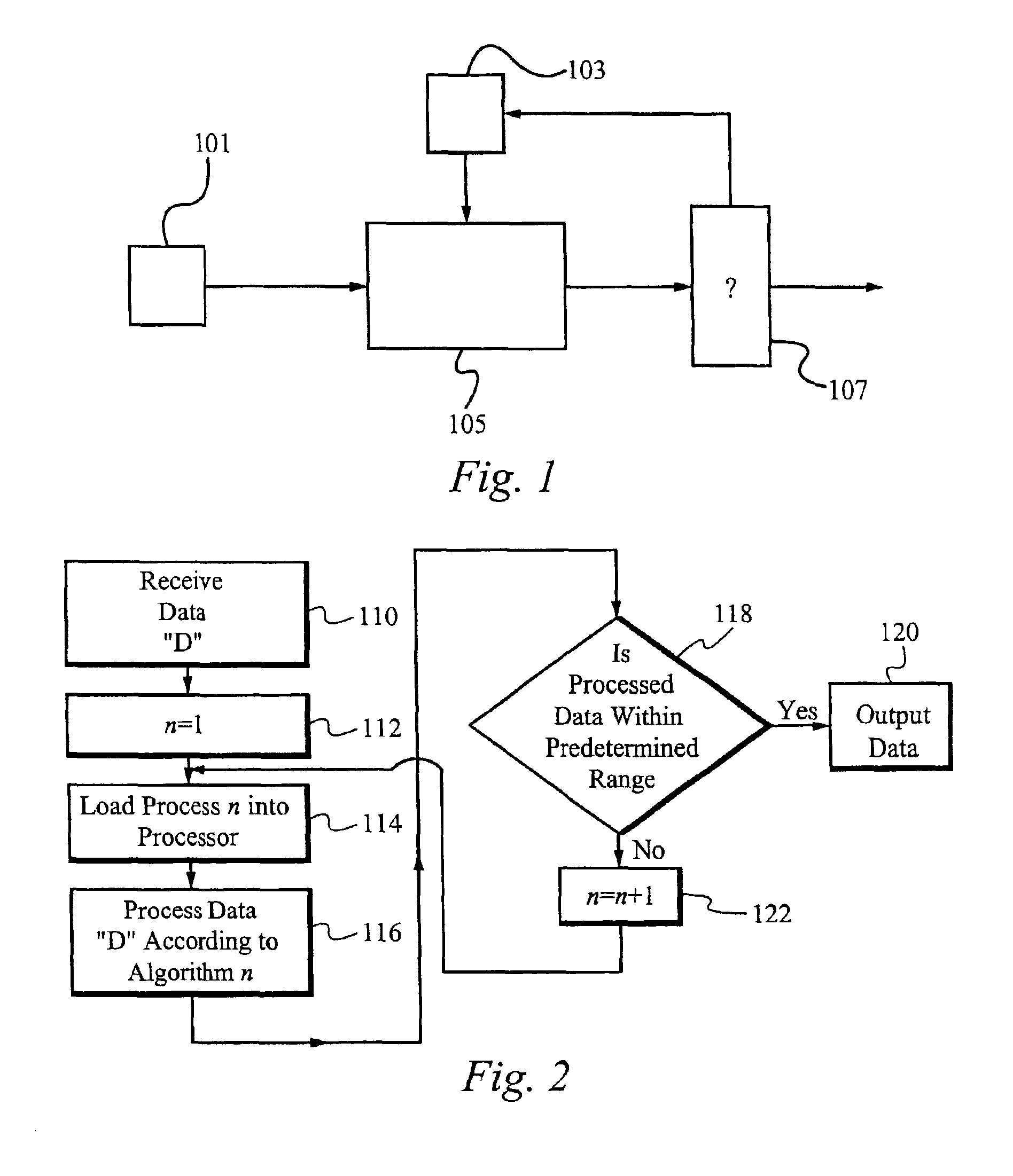

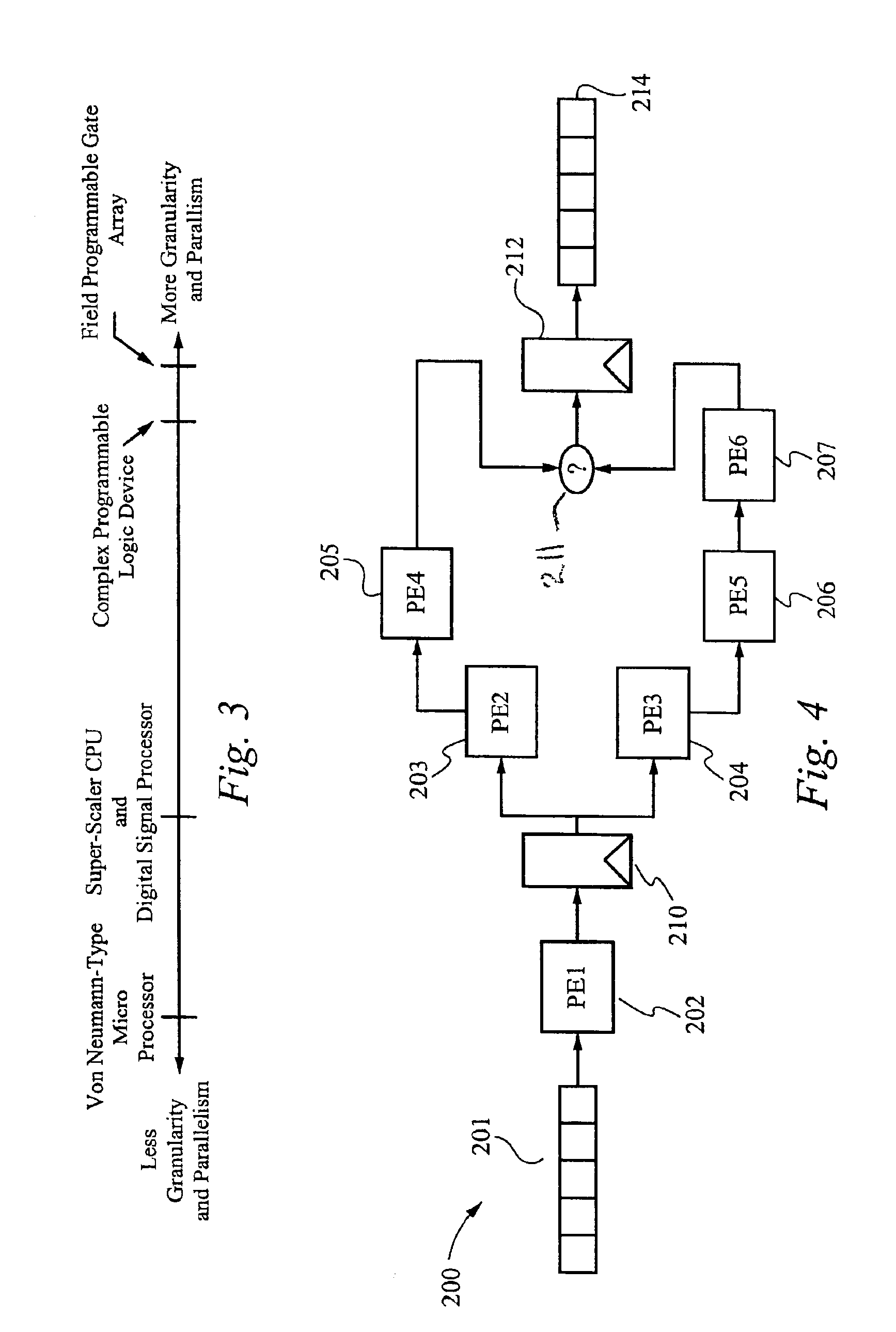

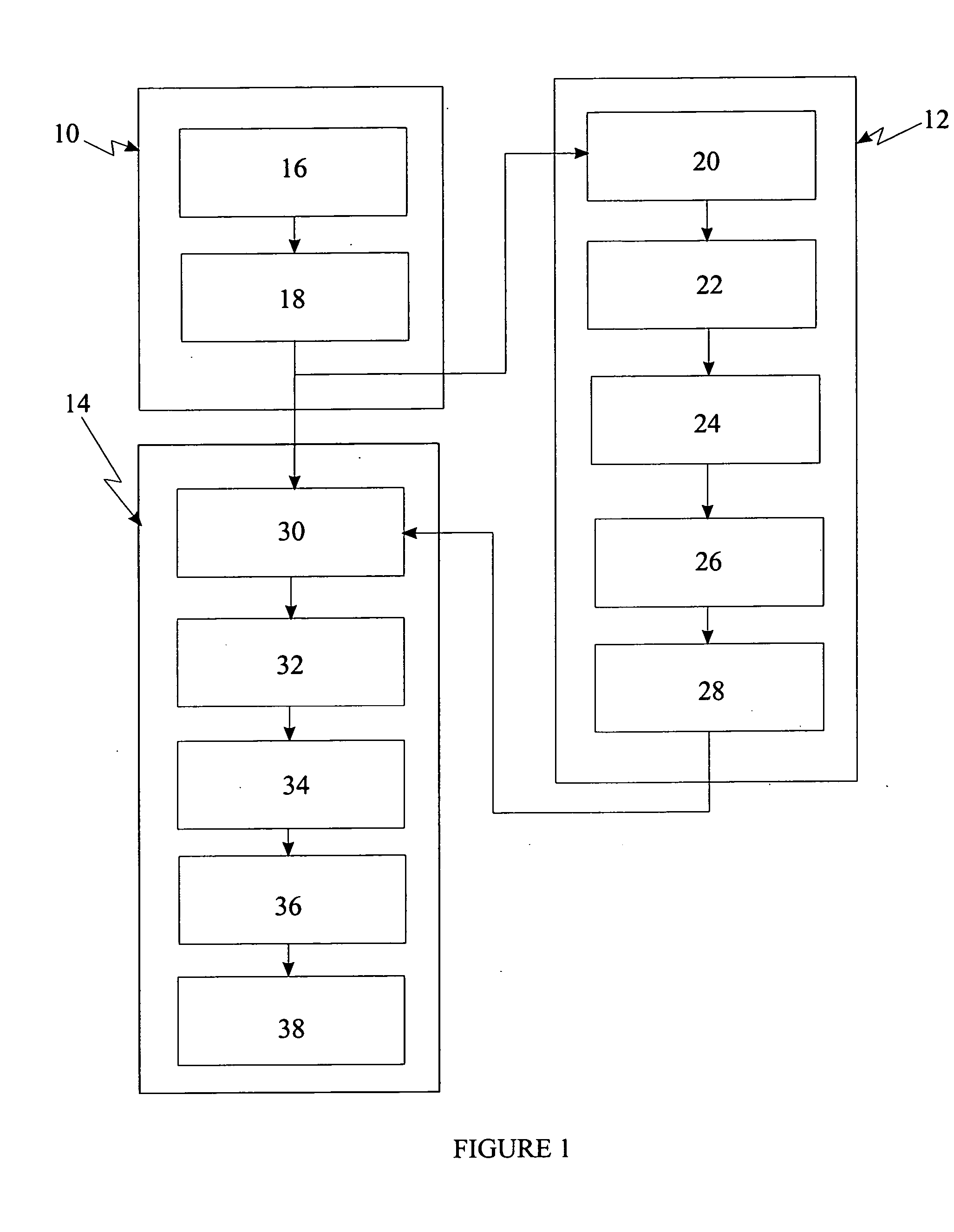

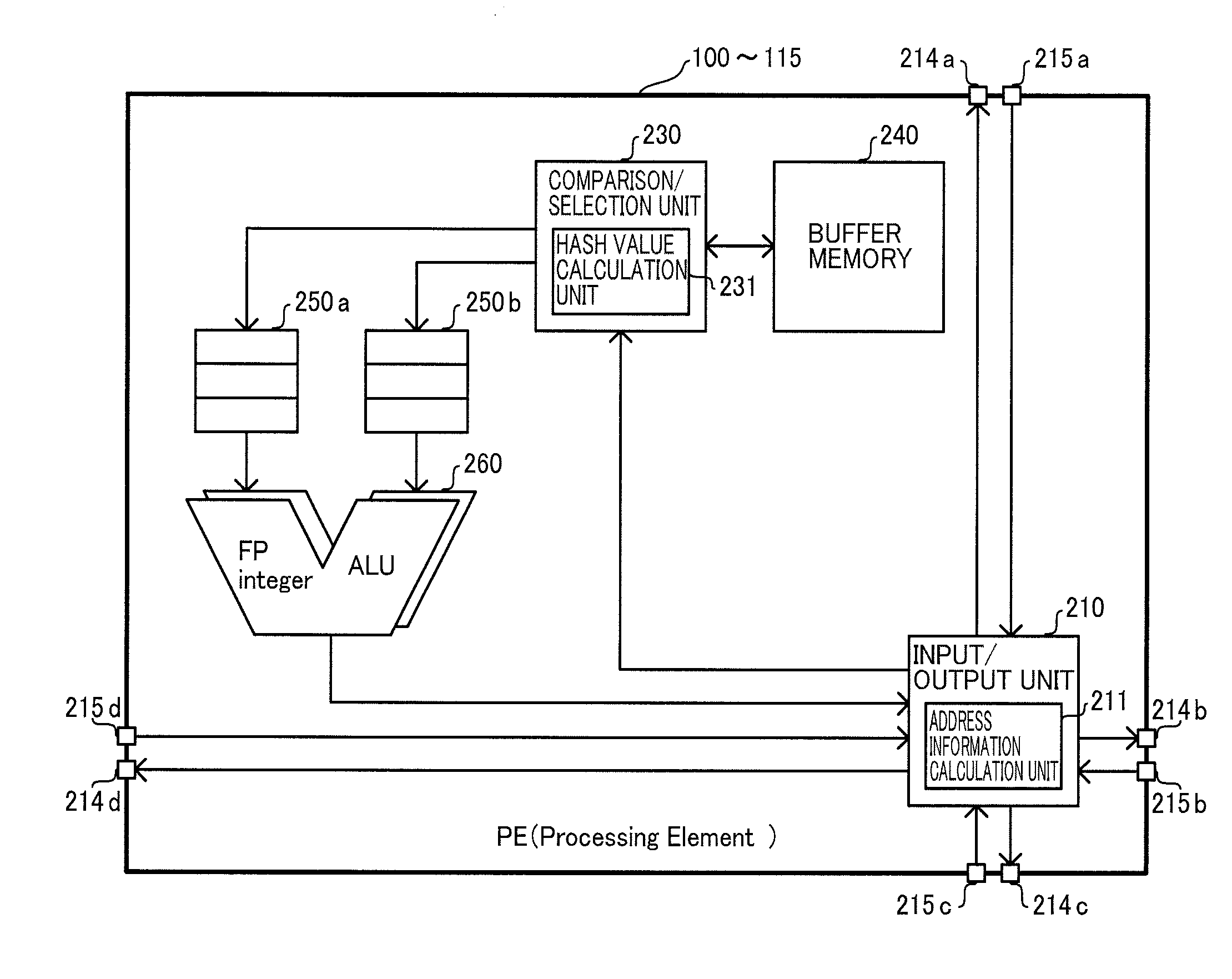

Reconfigurable data path processor

Inactive | US6883084B1 | Eliminates branching, Cycle simple, Energy efficient ICT, Conditional code generation, Multiplexer, Processing element

A reconfigurable data path processor comprises a plurality of independent processing elements, which advantageously share an identical architecture. Each processing element comprises a plurality of data processing means for generating a potential output, and can also pass an input through as a potential output with little or no processing. Each processing element comprises a conditional multiplexer having a first conditional multiplexer input, a second conditional multiplexer input and a conditional multiplexer output. A first potential output value is transmitted to the first conditional multiplexer input, and a second potential output value is transmitted to the second conditional multiplexer input. The conditional multiplexer couples either the first or the second conditional multiplexer input to the conditional multiplexer output according to an output control command, which is generated by processing a set of arithmetic status bits through a logical mask. The conditional multiplexer output is coupled to a first processing element output. A first set of arithmetic status bits is generated by processing the first processable value, and a second set may be generated by a second processing operation. An arithmetic-status-bit multiplexer selects the desired set from among the first and second sets of arithmetic status bits, and the conditional multiplexer evaluates the selected status bits according to a logical mask that defines an algorithm for evaluating them.
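The selection logic described above can be modeled in a few lines. This is a minimal behavioral sketch, not the patented circuit: the 8-bit width, the status-bit layout (`ZERO`, `NEGATIVE`, `CARRY`) and the helper names are assumptions, and the Python `if` in `conditional_mux` stands in for the hardware select line. It shows how two data-dependent choices (saturation and max) become "compute both candidates, then select" without a branch in the instruction stream.

```python
WIDTH = 8
ZERO, NEGATIVE, CARRY = 0b001, 0b010, 0b100   # assumed status-bit layout

def alu(op, a, b, width=WIDTH):
    """Add or subtract two unsigned values; return (result, status bits)."""
    raw = a + b if op == "add" else a - b
    result = raw & ((1 << width) - 1)
    status = 0
    if result == 0:
        status |= ZERO
    if result >> (width - 1):
        status |= NEGATIVE
    # For "add", CARRY means carry-out; for "sub", it means a borrow occurred.
    if (op == "add" and raw >> width) or (op == "sub" and raw < 0):
        status |= CARRY
    return result, status

def conditional_mux(first, second, status_sets, select, mask, want):
    """Model of the conditional multiplexer.

    status_sets: candidate status-bit words from different operations
    select:      which set the arithmetic-status-bit multiplexer passes on
    mask, want:  the logical mask picks the relevant bits and the value they
                 must have for `first` to be routed to the output
    """
    bits = status_sets[select]
    take_first = (bits & mask) == want     # the output control command
    return first if take_first else second

def saturating_add(a, b):
    s, add_bits = alu("add", a, b)
    _, sub_bits = alu("sub", a, b)
    # Use the add status set; on carry-out, output the maximum value instead of the wrapped sum.
    return conditional_mux((1 << WIDTH) - 1, s, [add_bits, sub_bits],
                           select=0, mask=CARRY, want=CARRY)

def unsigned_max(a, b):
    _, add_bits = alu("add", a, b)
    _, sub_bits = alu("sub", a, b)
    # Use the subtract status set; a borrow from a - b means a < b.
    return conditional_mux(b, a, [add_bits, sub_bits],
                           select=1, mask=CARRY, want=CARRY)
```

For example, `saturating_add(200, 100)` selects 255 because the add sets the carry bit, while `saturating_add(20, 30)` passes the sum 50 through.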

Owner: STC UNM +1

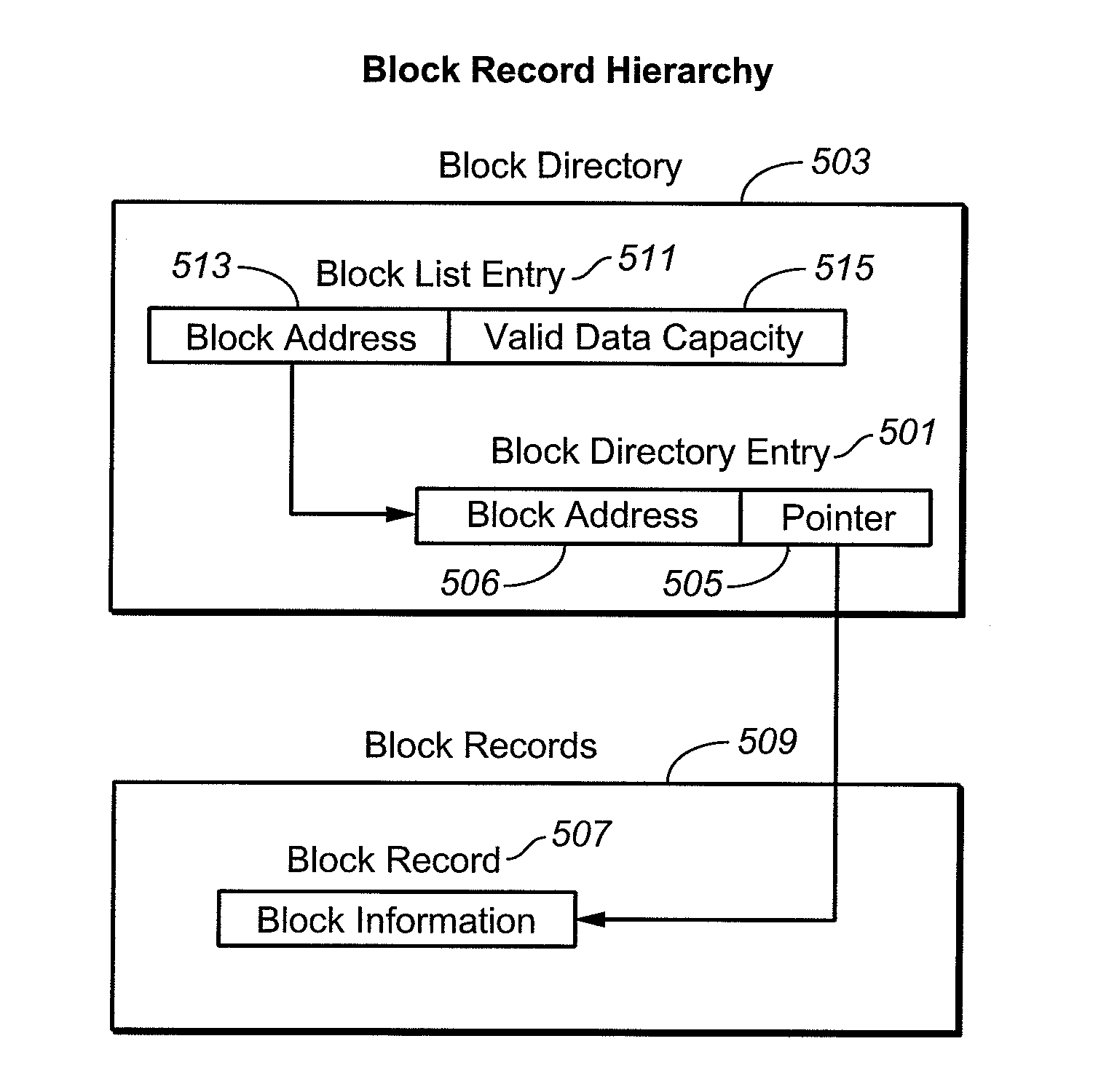

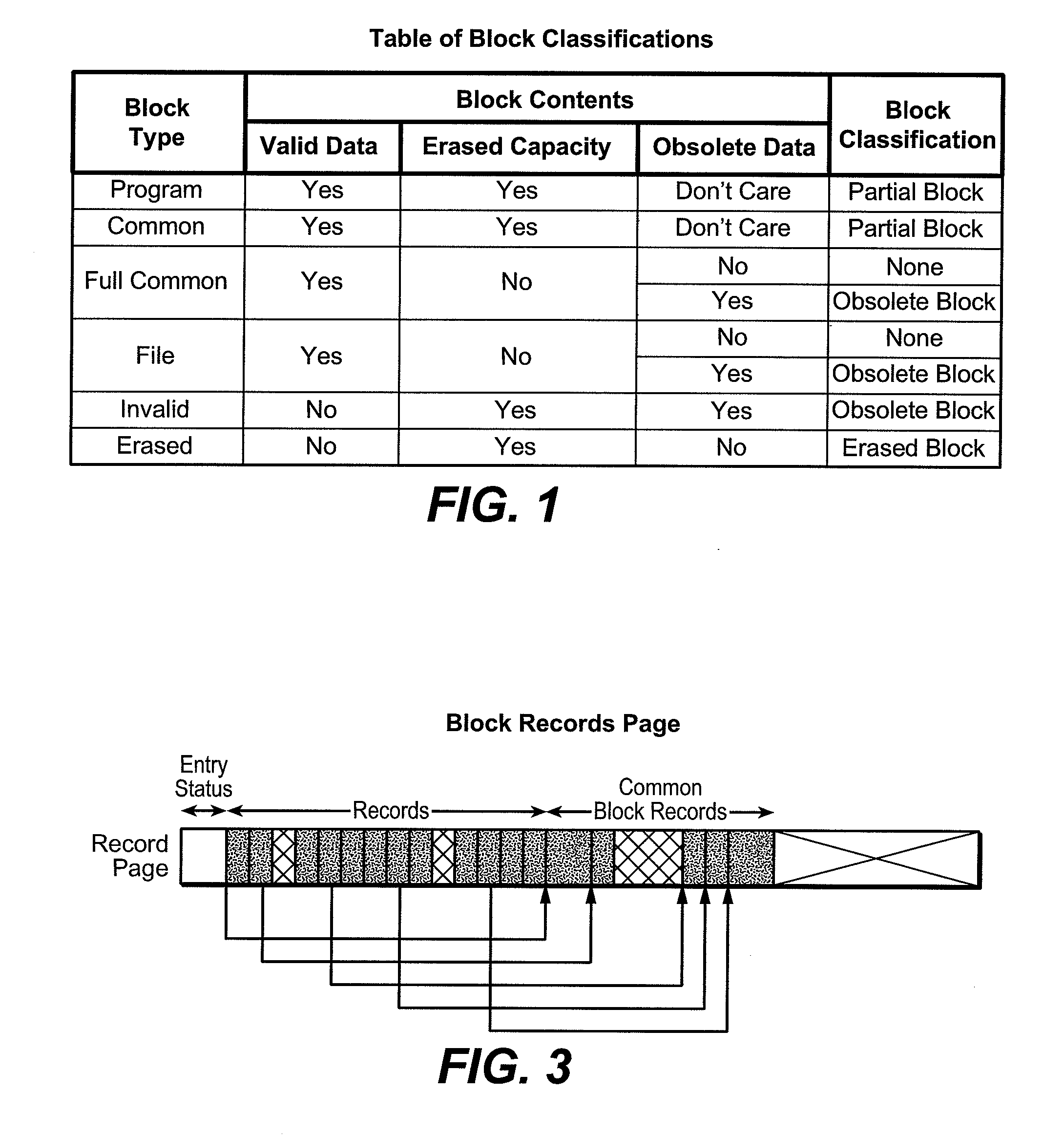

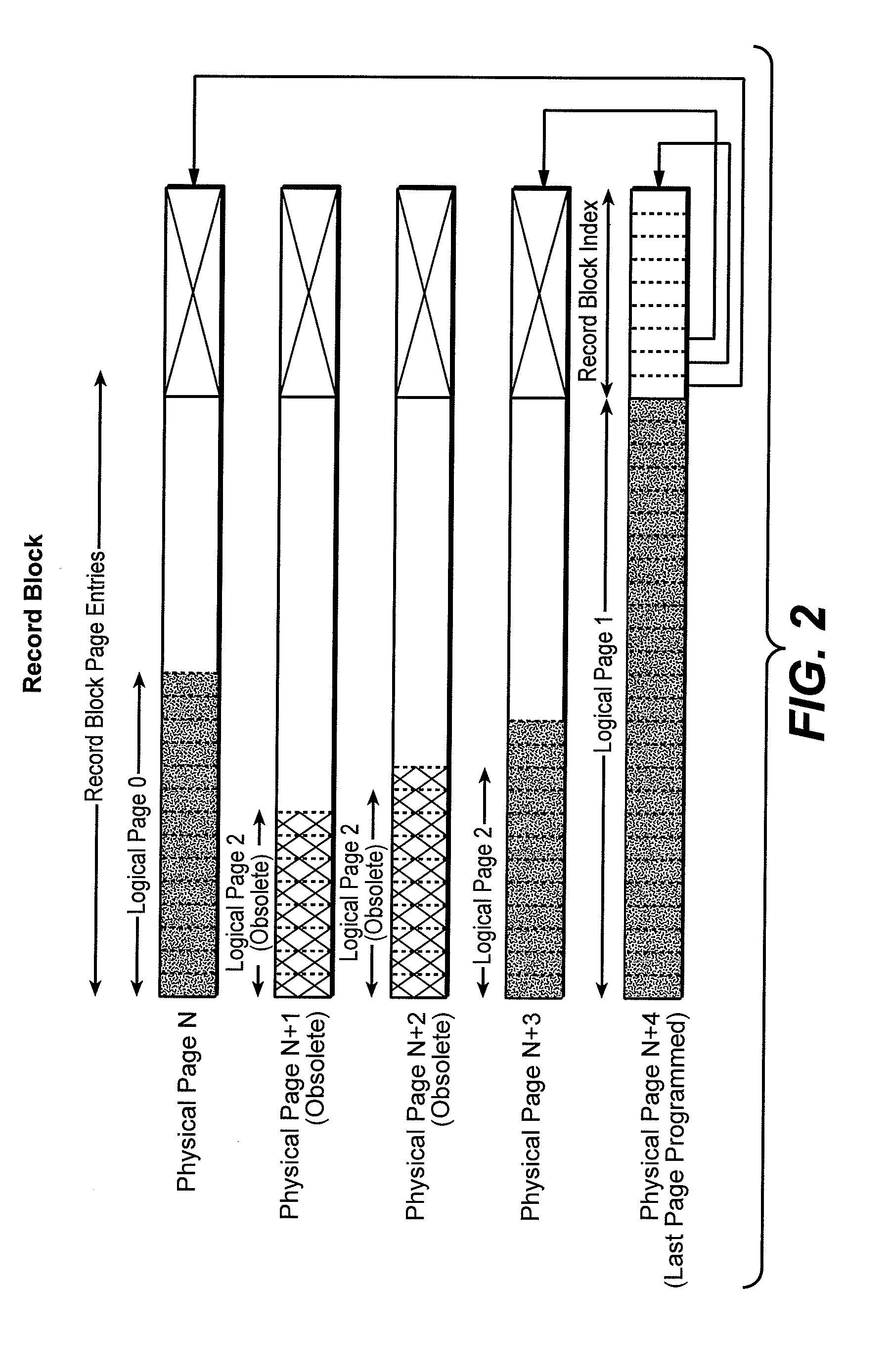

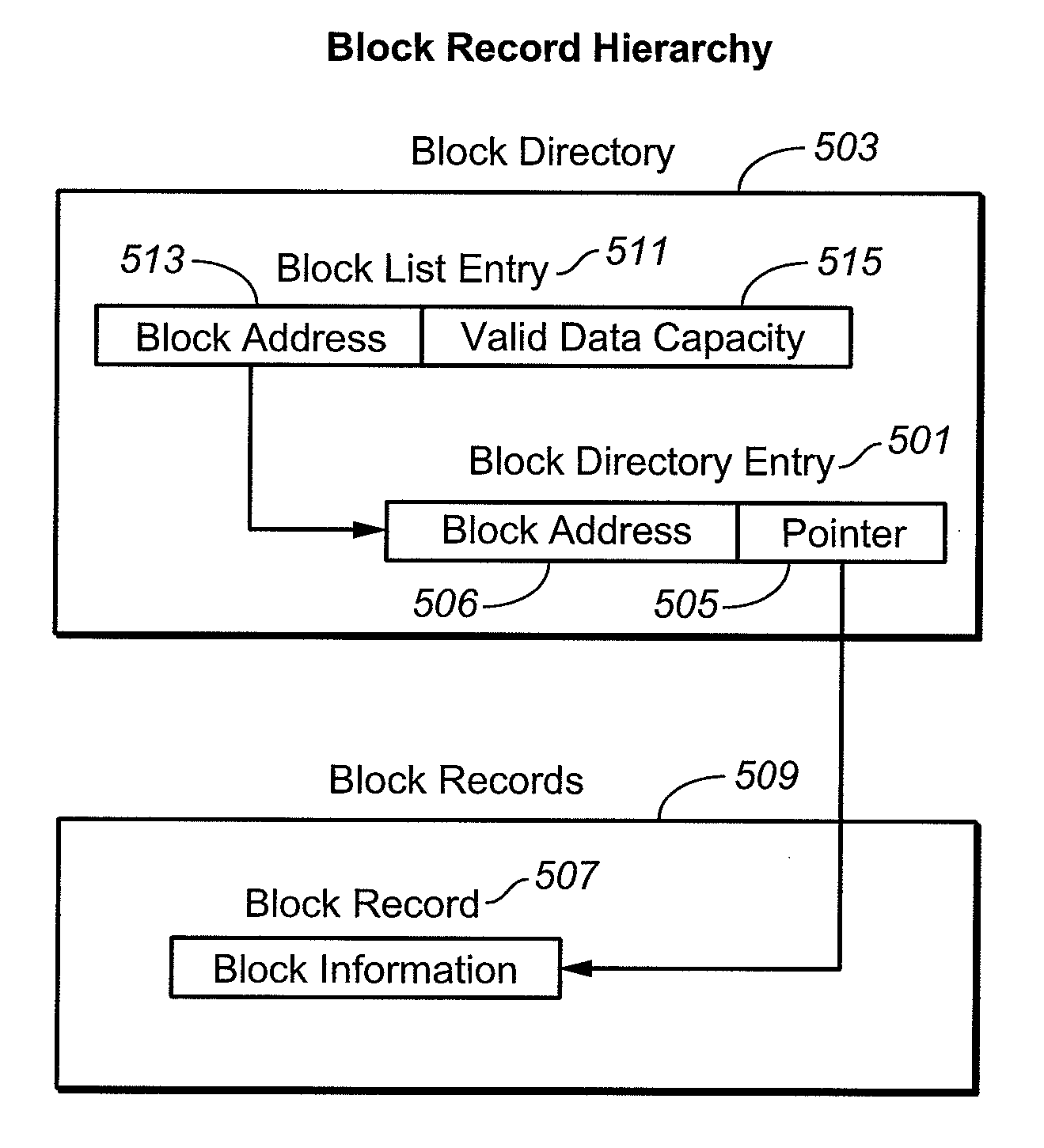

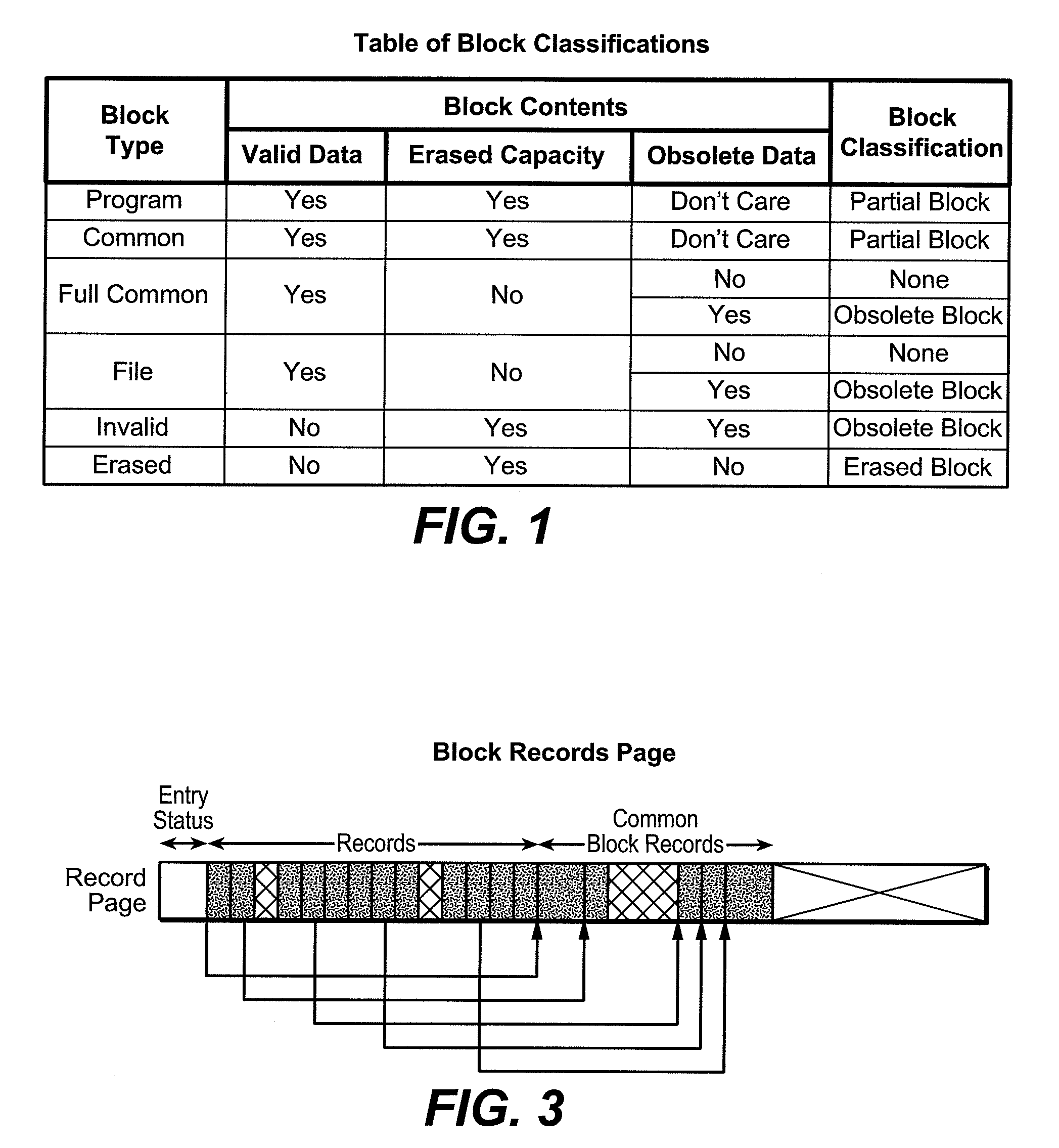

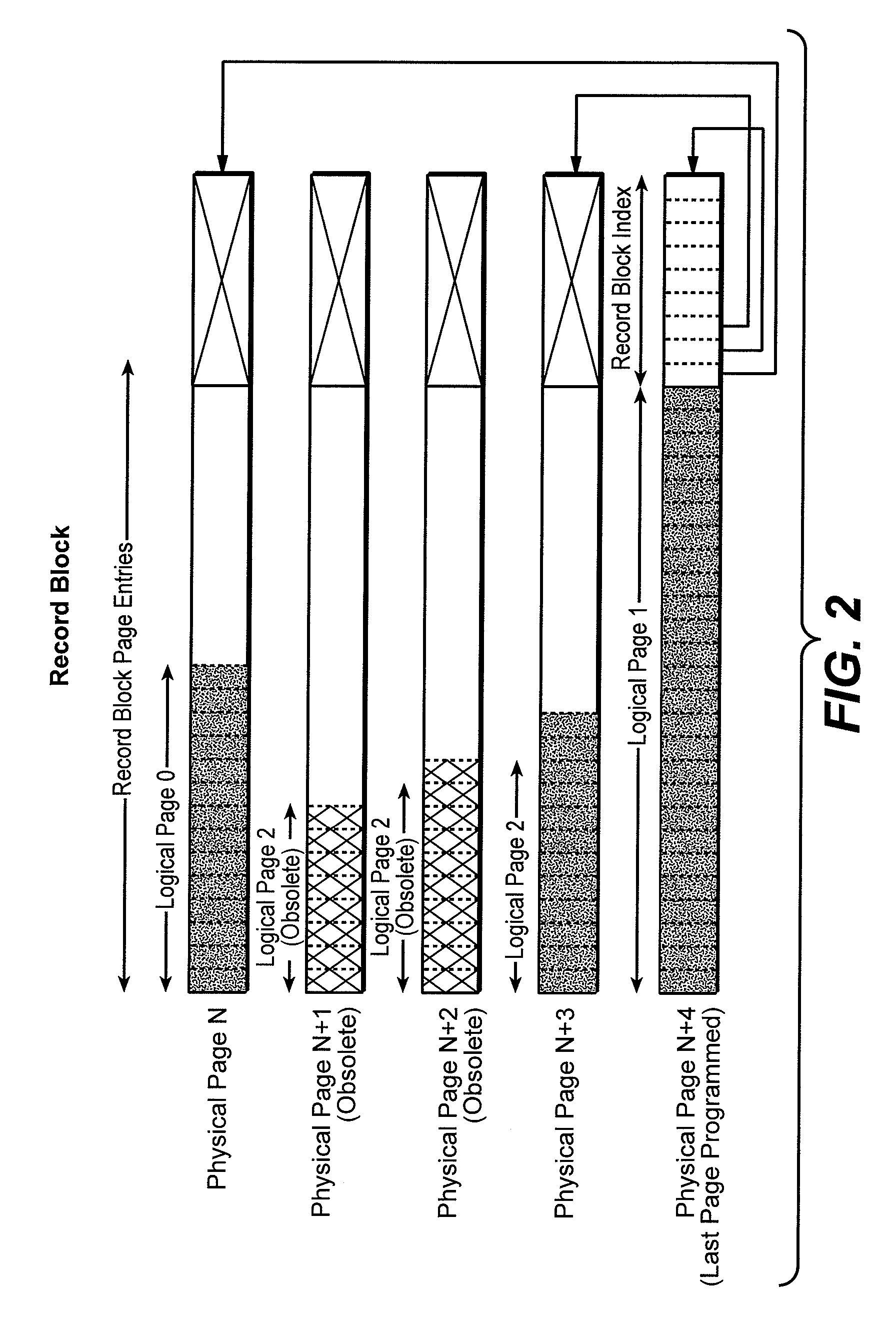

Methods of Managing Blocks in NonVolatile Memory

Active | US20070033332A1 | Memory can be managed effectively, Reduce copy, Memory systems, Parallel computing, Rapid identification

In a nonvolatile memory system that includes a block-erasable memory array, records are individually maintained for certain classifications of blocks. One or more lists may be maintained for the blocks, an individual list ordered according to a descriptor value. Such ordered lists allow rapid identification of a block by descriptor value.
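An ordered per-classification list of this kind can be sketched with Python's `bisect` module. The sketch assumes the descriptor is an erase count and that the classification is "erased blocks"; both are hypothetical choices for illustration, as are the class and method names. Keeping the list sorted lets the lowest or highest block, or the first block above a threshold, be found without scanning.

```python
import bisect

class OrderedBlockList:
    """Blocks of one classification (e.g. erased blocks), kept sorted by descriptor value."""

    def __init__(self):
        self._entries = []                       # sorted (descriptor, block_id) pairs

    def add(self, block_id, descriptor):
        bisect.insort(self._entries, (descriptor, block_id))

    def remove(self, block_id, descriptor):
        i = bisect.bisect_left(self._entries, (descriptor, block_id))
        if i == len(self._entries) or self._entries[i] != (descriptor, block_id):
            raise KeyError(block_id)
        del self._entries[i]

    def lowest(self):
        """Block with the smallest descriptor, e.g. the least-worn erased block."""
        return self._entries[0][1]

    def highest(self):
        return self._entries[-1][1]

    def first_at_least(self, descriptor):
        """First block whose descriptor is >= the given value, or None (binary search)."""
        i = bisect.bisect_left(self._entries, (descriptor,))
        return self._entries[i][1] if i < len(self._entries) else None

# Hypothetical example: erased blocks ordered by erase count.
erased = OrderedBlockList()
for block_id, erase_count in [(7, 120), (3, 15), (9, 64), (4, 15)]:
    erased.add(block_id, erase_count)
```

Here `erased.lowest()` returns block 3 and `erased.first_at_least(50)` returns block 9. Insertion is linear in the list length because of the underlying Python list; a firmware implementation would likely use a different structure.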

Owner: SANDISK TECH LLC

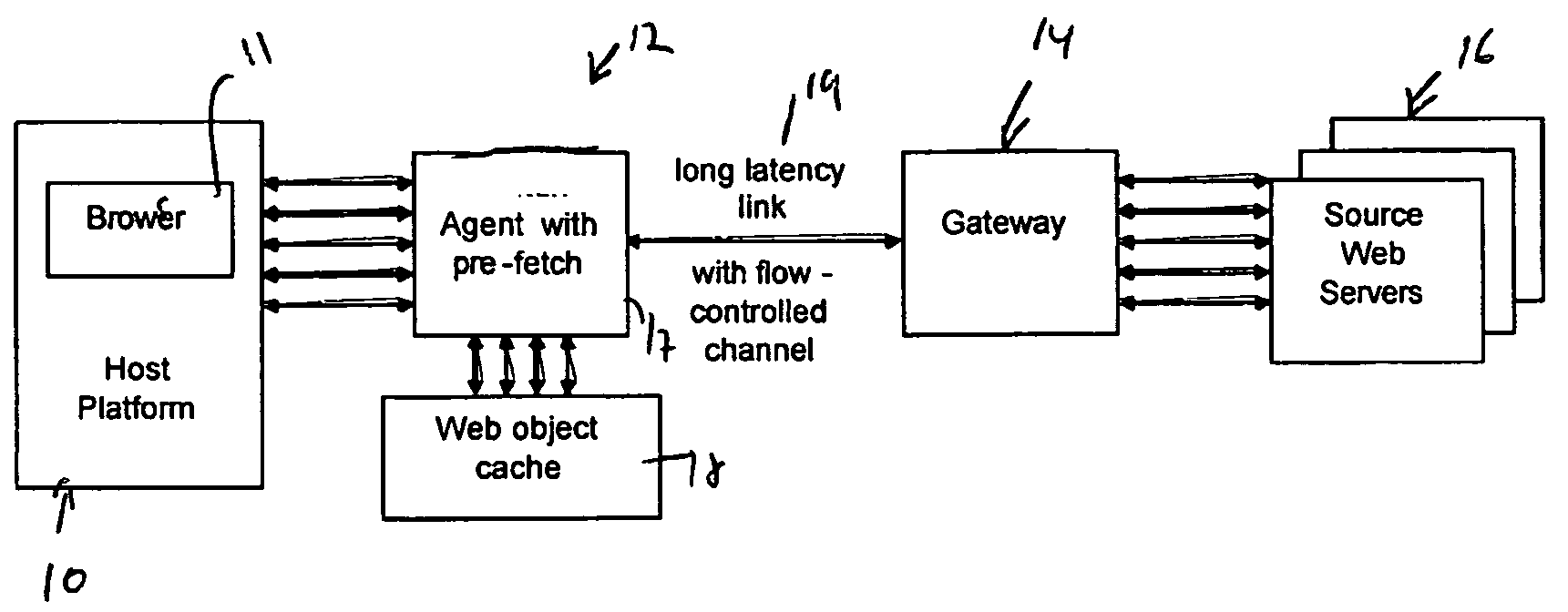

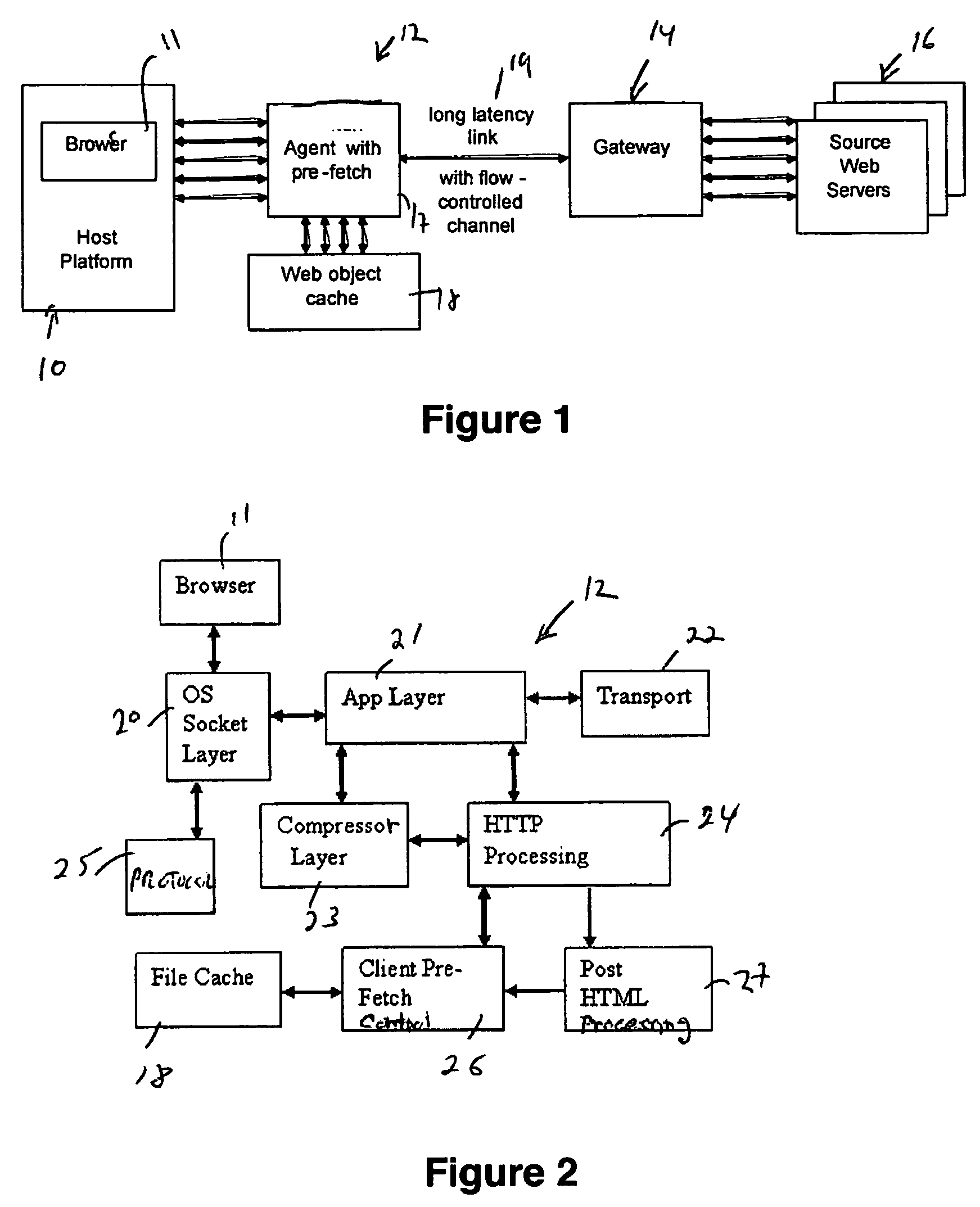

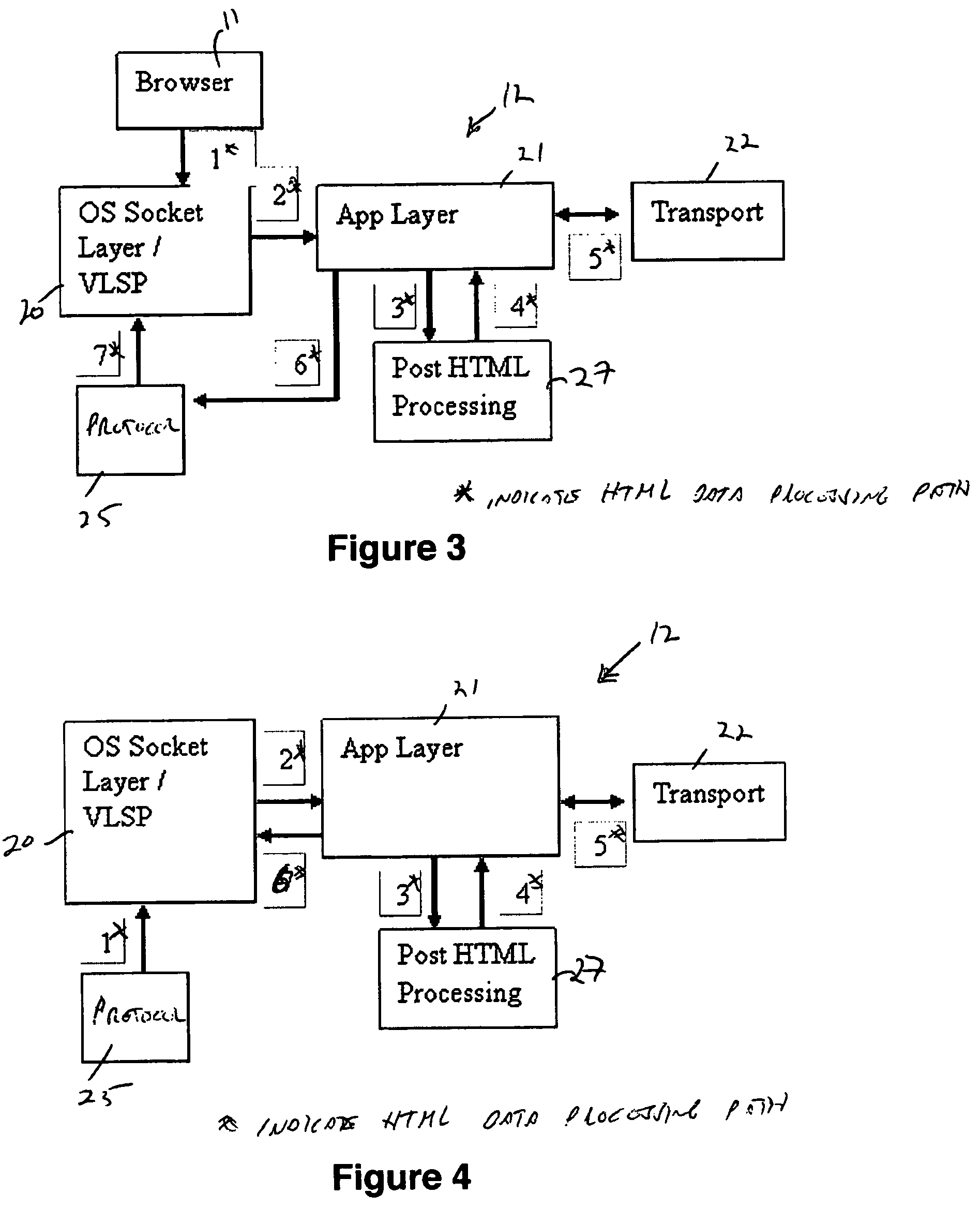

Method and apparatus for increasing performance of HTTP over long-latency links

Active | US7694008B2 | Reduce negative impact, No latency, Digital data information retrieval, Multiprogramming arrangements, Web site, Web browser

The invention increases the performance of HTTP over long-latency links by prefetching objects concurrently over aggregated, flow-controlled channels. An agent and a gateway together help a Web browser fetch HTTP content from Internet Web sites faster over long-latency data links. The gateway and the agent coordinate the fetching of selected embedded objects so that each object is ready and available on the host platform before the resident browser requests it. Because objects appear to be available instantaneously, the browser can finish processing one object and request the next with little waiting. Without this, the browser must wait for each request and its response to traverse the long-latency link.
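The benefit of concurrent, flow-controlled prefetching can be illustrated with `asyncio`. This is a minimal sketch, assuming a simulated link (`SimulatedLink` with a fixed delay) and a semaphore as the flow-control mechanism; the agent/gateway split and the real HTTP transport are not modeled, and all names are hypothetical. With ten objects and four channels, the fetches complete in about three round trips instead of ten.

```python
import asyncio

async def prefetch_all(urls, fetch, max_channels):
    """Fetch all URLs concurrently, with at most max_channels requests in flight."""
    gate = asyncio.Semaphore(max_channels)      # simple flow control across channels

    async def fetch_one(url):
        async with gate:
            return url, await fetch(url)

    return dict(await asyncio.gather(*(fetch_one(u) for u in urls)))

class SimulatedLink:
    """Stand-in for a long-latency link; records the peak number of concurrent requests."""

    def __init__(self, delay):
        self.delay, self.in_flight, self.peak = delay, 0, 0

    async def fetch(self, url):
        self.in_flight += 1
        self.peak = max(self.peak, self.in_flight)
        await asyncio.sleep(self.delay)          # one round trip over the slow link
        self.in_flight -= 1
        return f"<body of {url}>"

link = SimulatedLink(delay=0.05)
embedded = [f"/img/{i}.png" for i in range(10)]
cache = asyncio.run(prefetch_all(embedded, link.fetch, max_channels=4))
```

Once `cache` is filled, a browser-side lookup for any of the embedded objects is a local dictionary access rather than a round trip.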

Owner: VENTURI WIRELESS

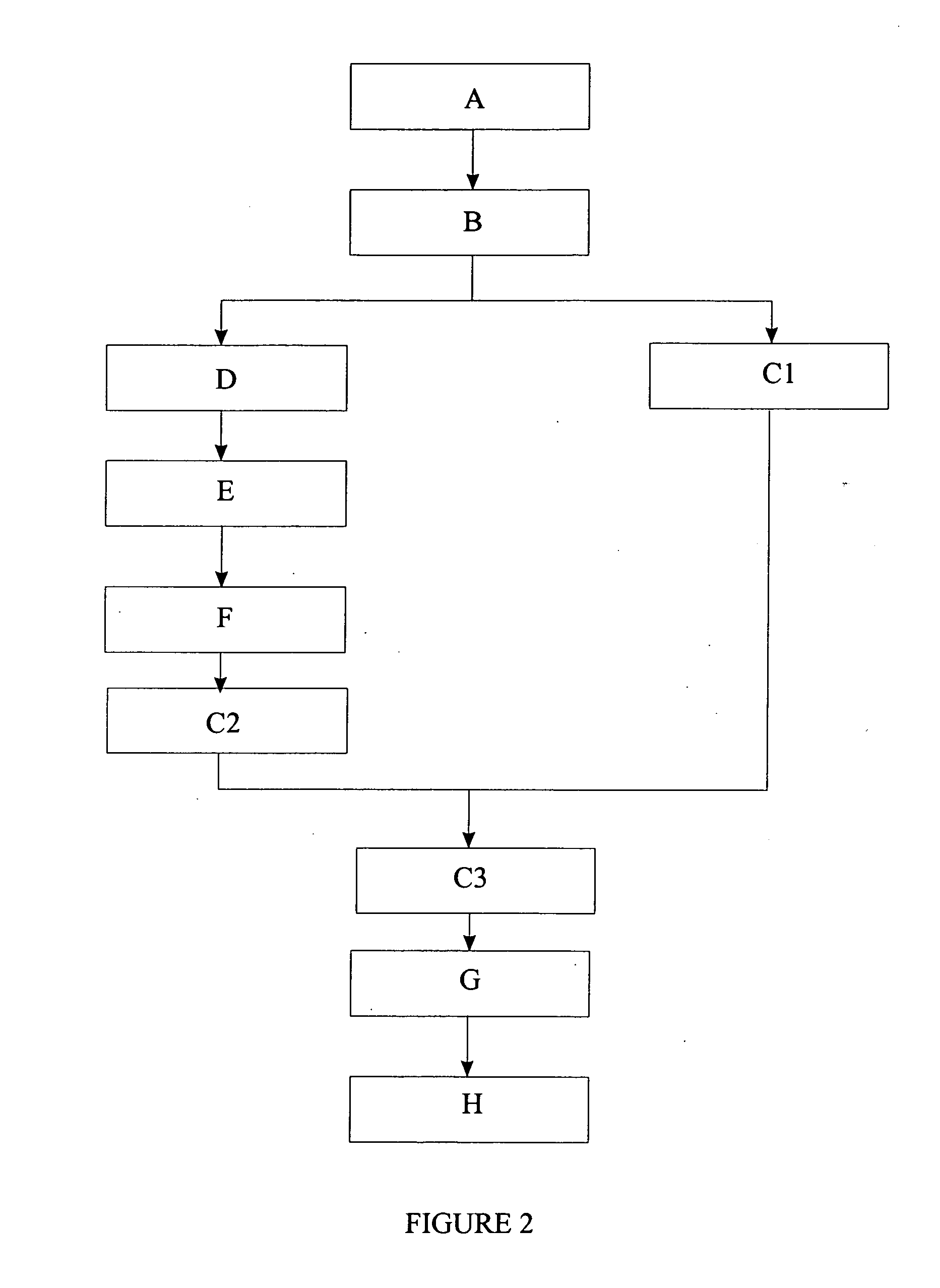

Enabling intra-partition parallelism for partition-based operations

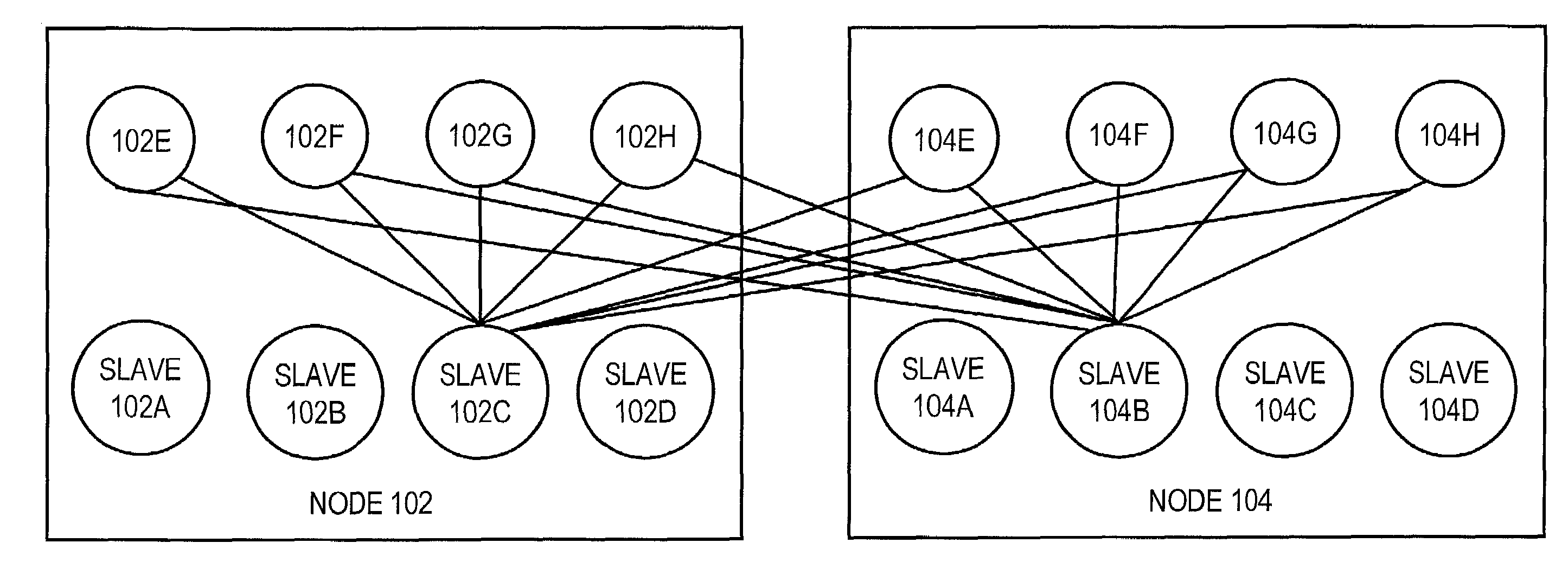

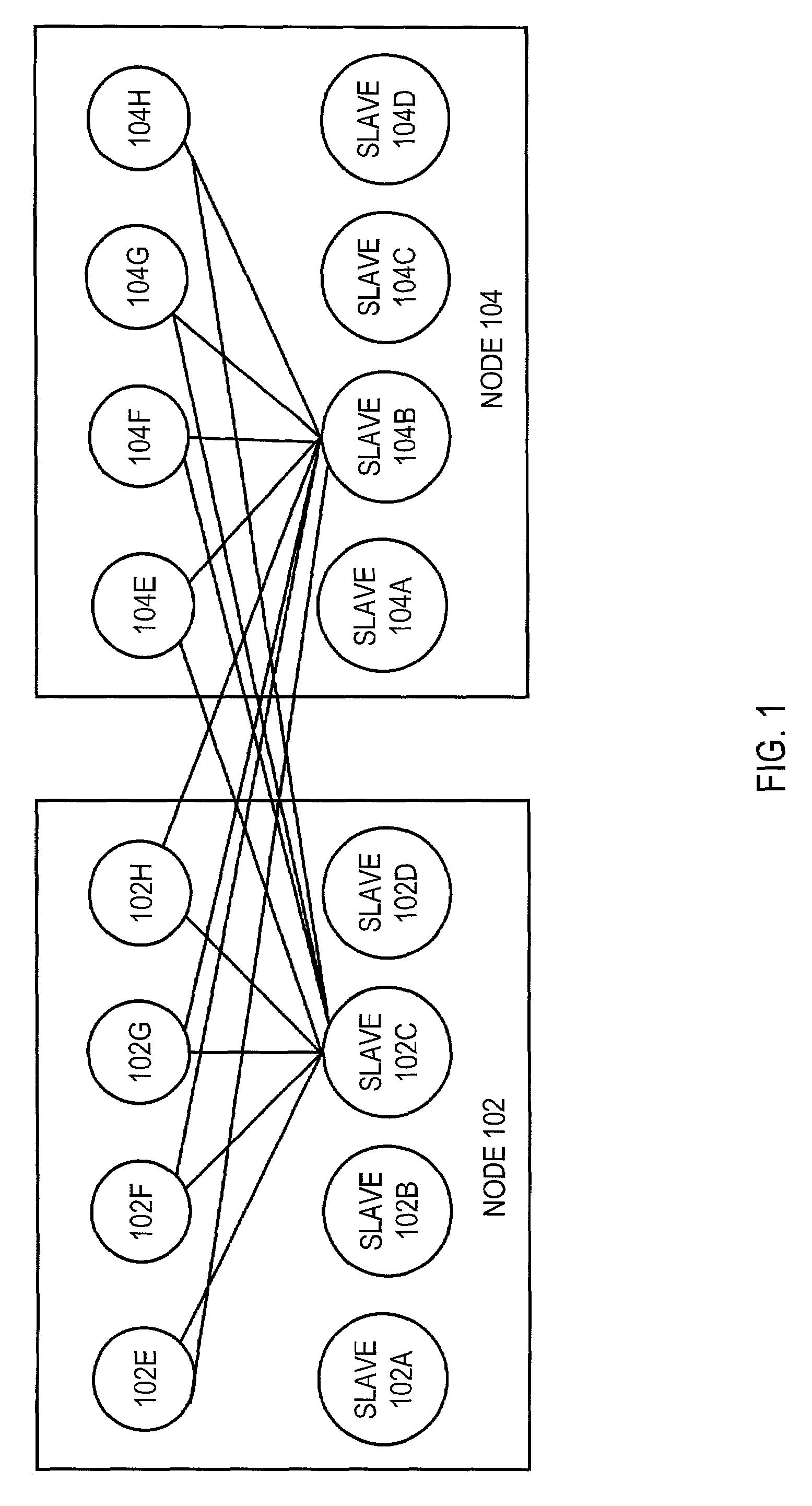

Inactive | US6954776B1 | Without incurring overhead cost, Improve parallelism, Digital data information retrieval, Resource allocation, Hash function, Theoretical computer science

Techniques are provided for increasing the degree of parallelism without incurring overhead costs associated with inter-nodal communication for performing parallel operations. One aspect of the invention is to distribute-phase partition-pairs of a parallel partition-wise operation on a pair of objects among the nodes of a database system. The -phase partition-pairs that are distributed to each node are further partitioned to form a new set of-phase partition-pairs. One -phase partition-pair from the set of new-phase partition-pairs is assigned to each slave process that is on a given node. In addition, a target object may be partitioned by applying an appropriate hash function to the tuples of the target object. The parallel operation is performed by broadcasting each tuple from a source table only to the group of slave processes that is working on the static partition to which the tuple is mapped.
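The idea of partitioning a partition pair a second time within a node can be sketched as a two-level hash-partitioned join. This is a minimal sketch under assumptions: rows are tuples, the join key is a column index, both levels use Python's `hash` with a different salt, and one loop iteration stands in for one slave process. All function names are hypothetical. Because both inputs are split with the same function at each level, matching rows always land in the same sub-pair, so the sub-pairs can be joined independently without inter-node traffic.

```python
from collections import defaultdict

def hash_partition(rows, key, parts, salt=0):
    """Split rows into `parts` buckets by hashing the join-key column."""
    buckets = [[] for _ in range(parts)]
    for row in rows:
        buckets[hash((salt, row[key])) % parts].append(row)
    return buckets

def local_hash_join(left, right, key):
    index = defaultdict(list)
    for rrow in right:
        index[rrow[key]].append(rrow)
    return [(lrow, rrow) for lrow in left for rrow in index.get(lrow[key], [])]

def partition_wise_join(left, right, key, nodes, slaves_per_node):
    results = []
    # Level 1: both inputs are partitioned the same way; one partition pair per node.
    for l_part, r_part in zip(hash_partition(left, key, nodes),
                              hash_partition(right, key, nodes)):
        # Level 2: each node splits its pair again (different salt) so every
        # slave process gets an independent sub-pair; no rows cross nodes.
        l_sub = hash_partition(l_part, key, slaves_per_node, salt=1)
        r_sub = hash_partition(r_part, key, slaves_per_node, salt=1)
        for l_piece, r_piece in zip(l_sub, r_sub):   # one iteration = one slave's work
            results.extend(local_hash_join(l_piece, r_piece, key))
    return results

orders = [(k % 7, f"order{k}") for k in range(30)]
customers = [(c, f"cust{c}") for c in (0, 2, 4, 6)] + [(3, "cust3b")]
joined = partition_wise_join(orders, customers, key=0, nodes=3, slaves_per_node=2)
```

The result contains the same pairs as a plain nested-loop join; only the order differs.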

Owner: ORACLE INT CORP

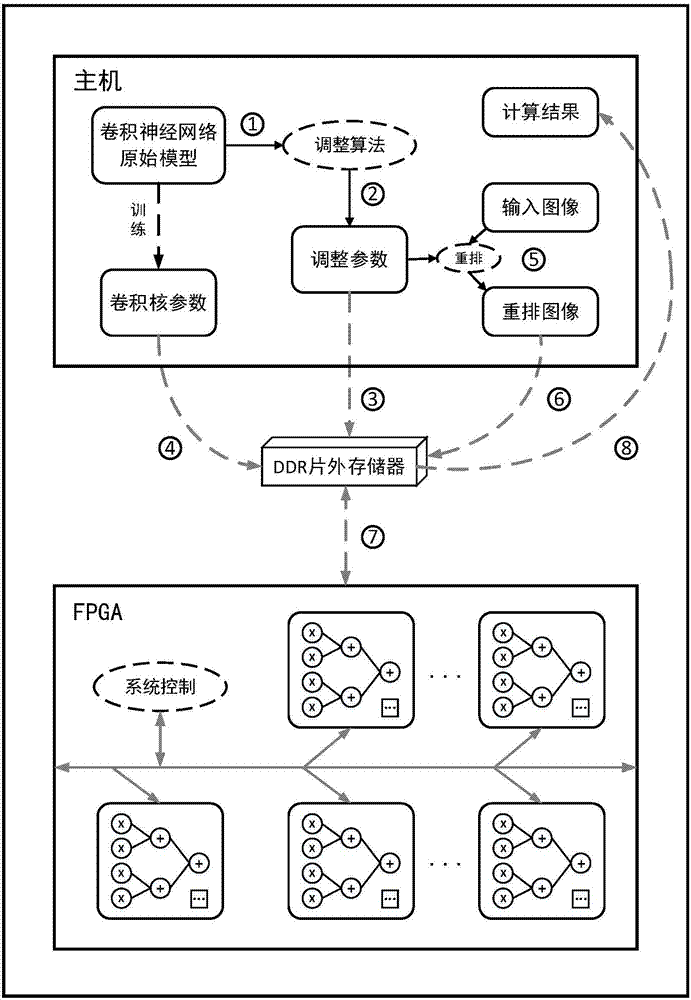

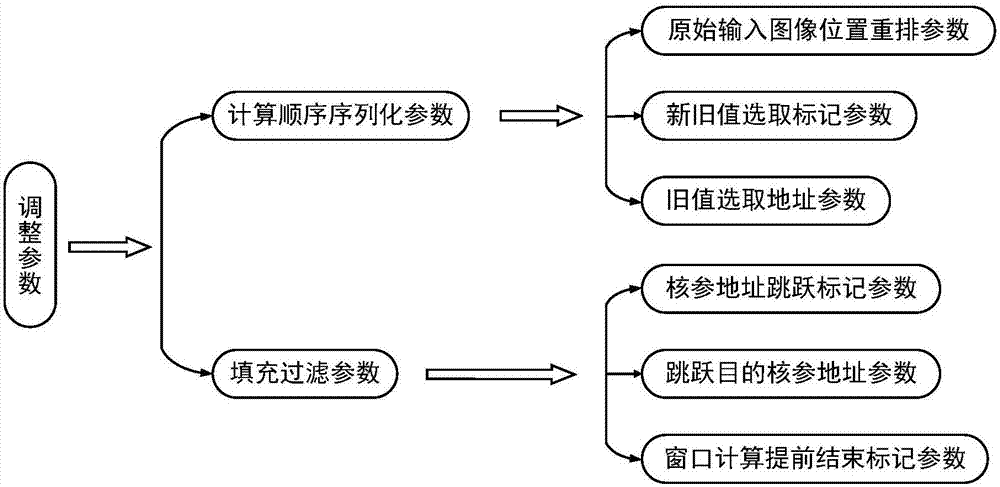

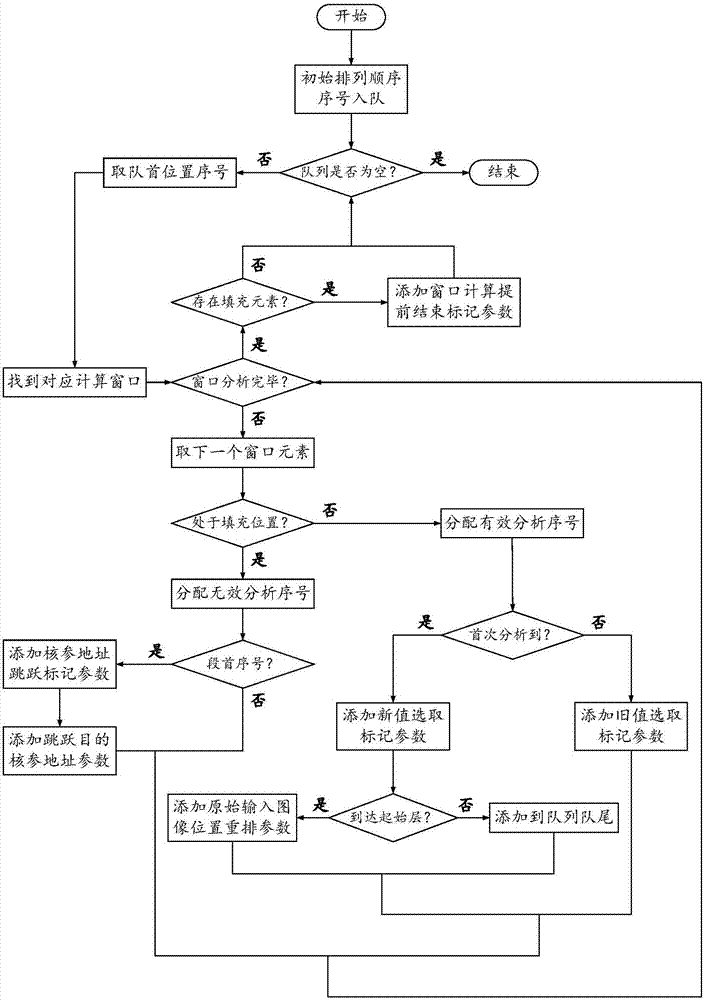

Pipelined acceleration system for an FPGA-based deep convolutional neural network

Active | CN106875012A | Processing speed, Highly integrated, Physical realisation, Neural learning methods, Computer science

The invention proposes a pipelined acceleration system for an FPGA-based deep convolutional neural network. The system mainly consists of an input data distribution control module, an output data distribution control module, a convolution computation serialization module, a convolution computation module, a pooling computation serialization module, a pooling computation module and a convolution result distribution control module, and it also provides an internal cascade interface. With this design, an efficient parallel pipeline can be realized on an FPGA: the resource waste and computation delays caused by padding operations are avoided, system power consumption is reduced, and processing speed is greatly increased.

Owner: 武汉魅瞳科技有限公司

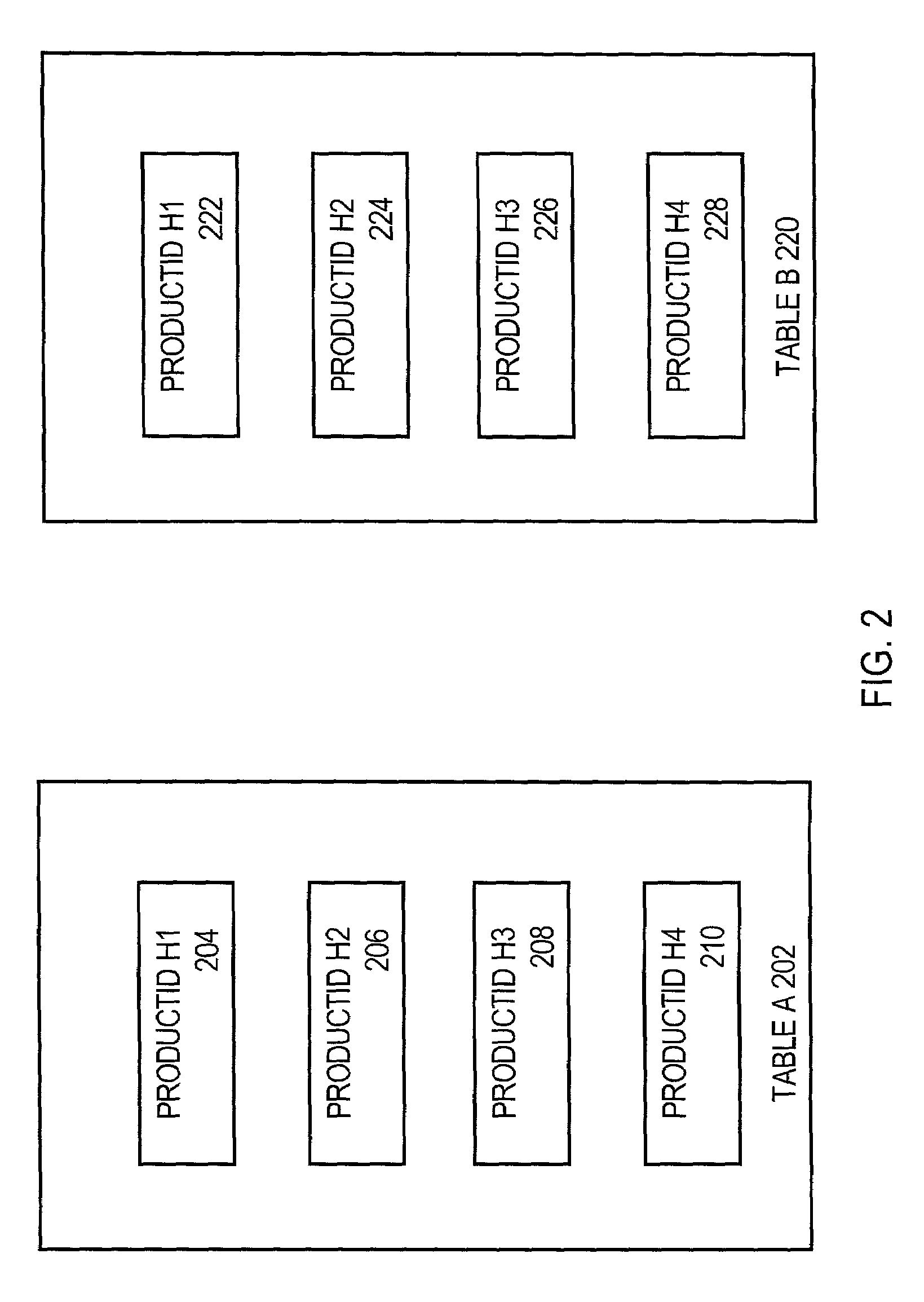

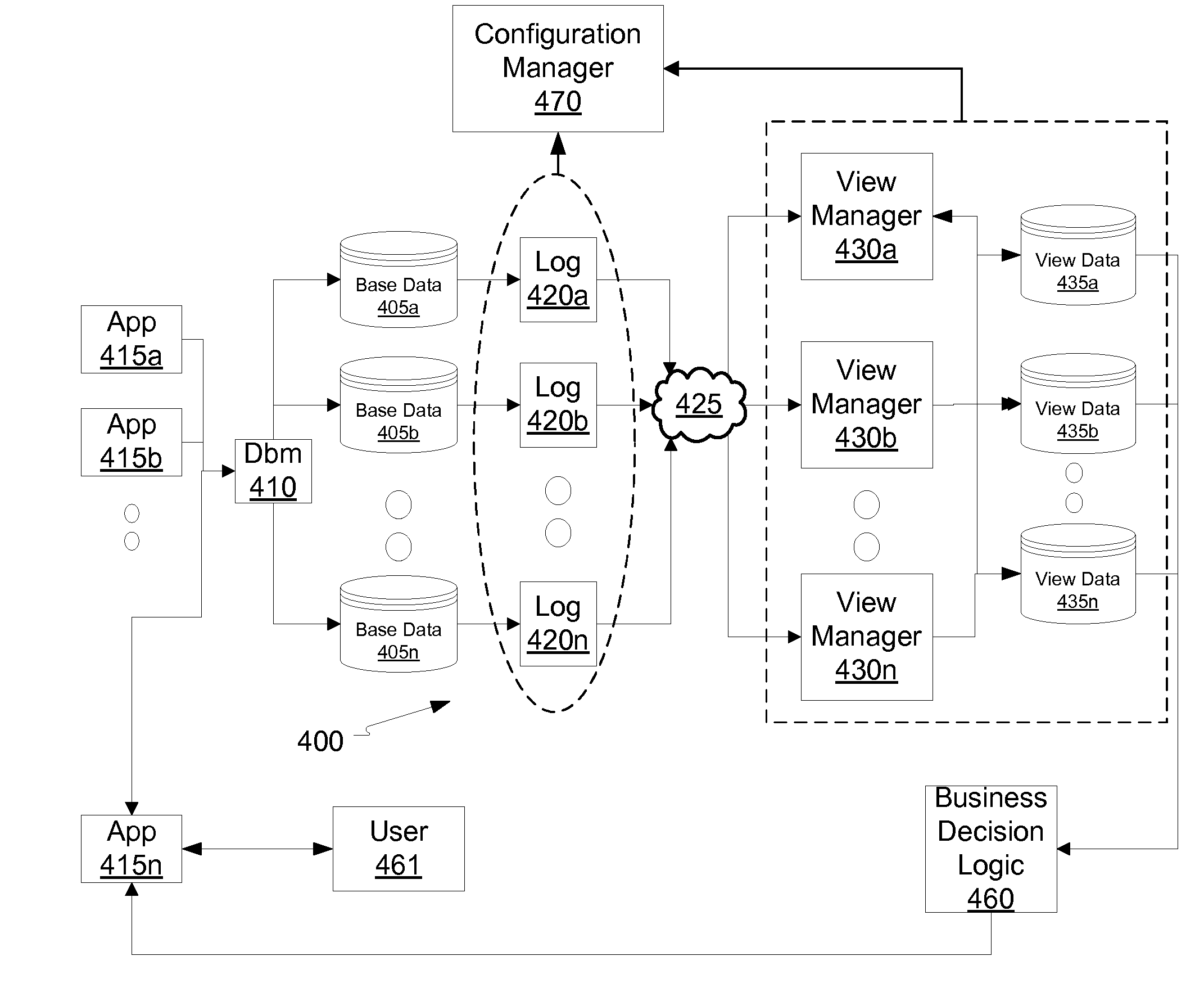

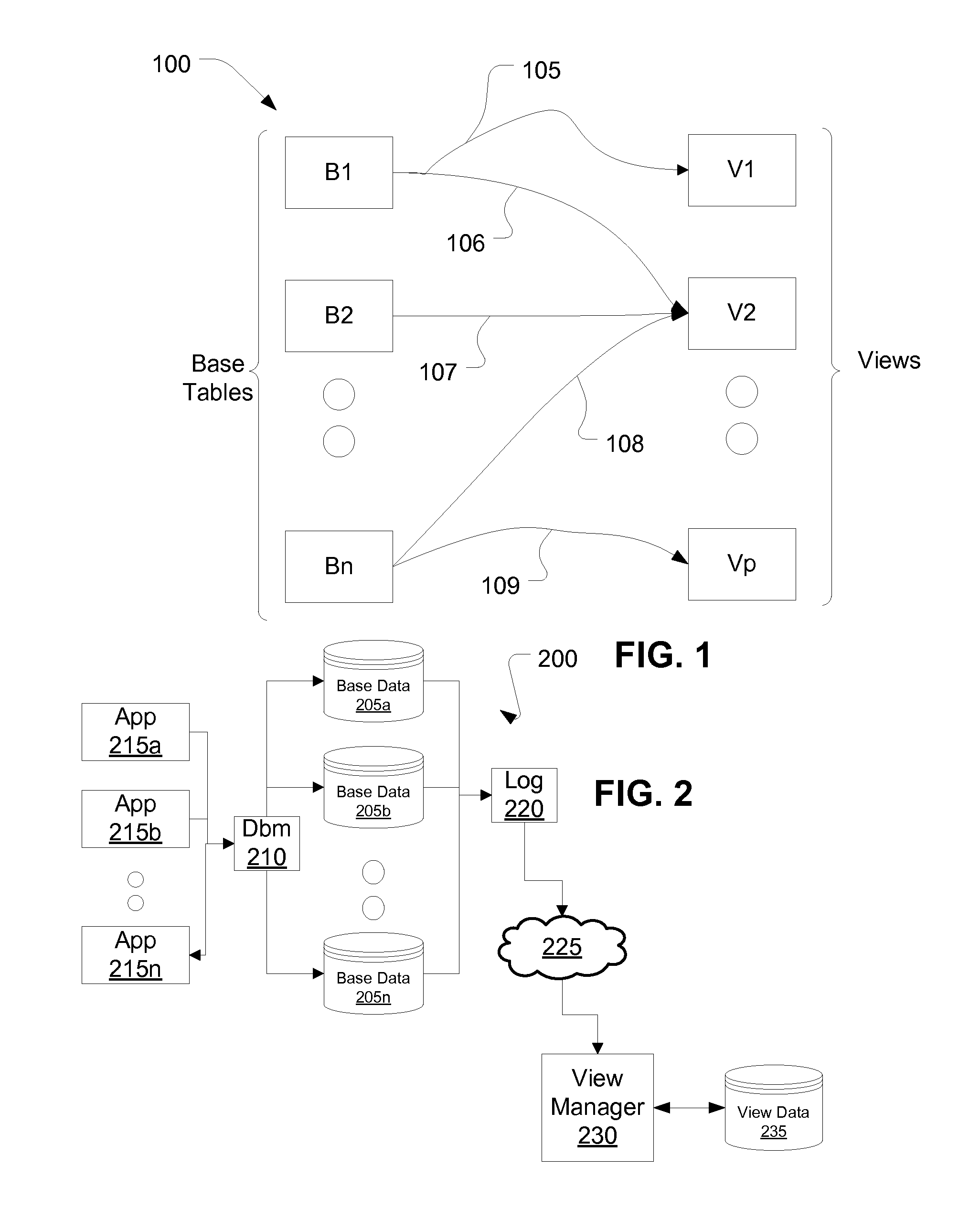

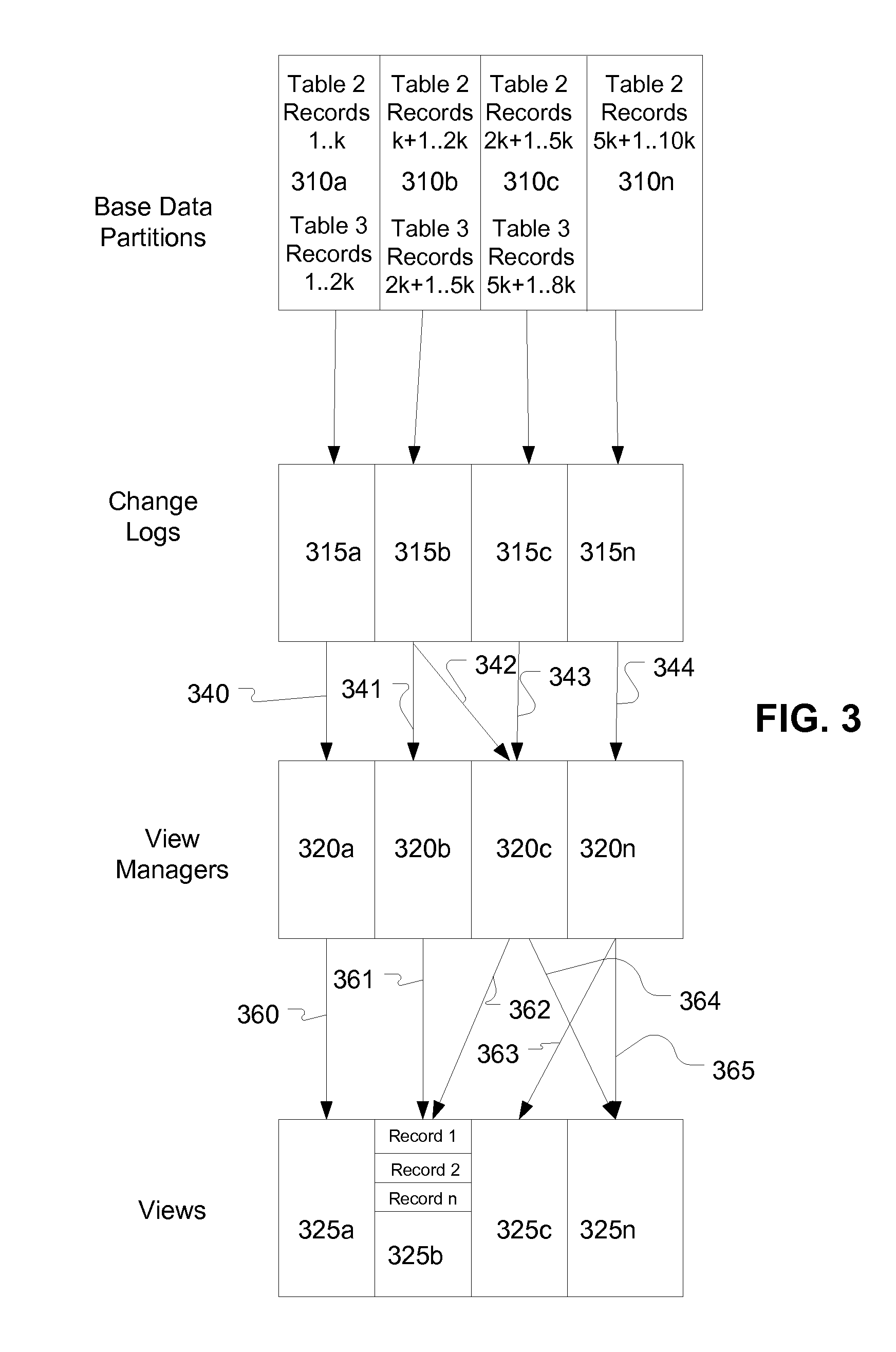

Controlled parallel propagation of view table updates in distributed database systems

Inactive | US20100049715A1 | Improve parallelism, Reduce usage, Digital data processing details, Database distribution/replication, Analysis data, Distributed database

Aspects include mechanisms for designing and analyzing the flow of information in a database system from updates to base table records, through one or more log segments, to a plurality of view managers that each execute operations to update view table records. The mechanisms allow any base table record to be used by any view manager, as long as the view managers use that base table record to update different view table records. They also allow any number of view table records to be updated by any number of view managers, based on the respective base table records. The mechanisms prevent the same base table record from being used by more than one view manager to update the same view table record, thereby avoiding a conflict in which updated information from one base table record is applied more than once to a single view table record.
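One simple way to satisfy the no-conflict rule is to route every update by the view record it touches, so each view record has exactly one owning manager while a base record may still feed several managers. This is a minimal sketch of that stricter routing policy, which is an assumption rather than the mechanism claimed; the sales/region schema and all function names are hypothetical.

```python
from collections import defaultdict

def route_view_updates(base_updates, view_keys_for, managers):
    """Queue each (view record, base record) update for exactly one view manager.

    Routing on the view key means a given view record is only ever updated by
    one manager, while a single base record may still feed several managers.
    """
    queues = defaultdict(list)
    for base_key, value in base_updates:
        for view_key in view_keys_for(base_key, value):
            queues[hash(view_key) % managers].append((view_key, base_key, value))
    return queues

def apply_queues(queues):
    views = defaultdict(int)
    for queue in queues.values():           # each queue could be drained by its own manager
        for view_key, _base_key, (_region, amount) in queue:
            views[view_key] += amount
    return views

def owners(queues):
    """Which managers touch each view record."""
    seen = defaultdict(set)
    for manager, queue in queues.items():
        for view_key, _, _ in queue:
            seen[view_key].add(manager)
    return seen

# Hypothetical base table: order id -> (region, amount); views: per-region total and grand total.
updates = [("o1", ("east", 10)), ("o2", ("west", 5)), ("o3", ("east", 7))]

def view_keys_for(_base_key, value):
    region, _amount = value
    return [("by_region", region), ("total",)]

queues = route_view_updates(updates, view_keys_for, managers=3)
views = apply_queues(queues)
```

Each base update fans out to two view records, but every view record appears in exactly one queue, so no single base update can be applied twice to the same view record by different managers.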

Owner: OATH INC

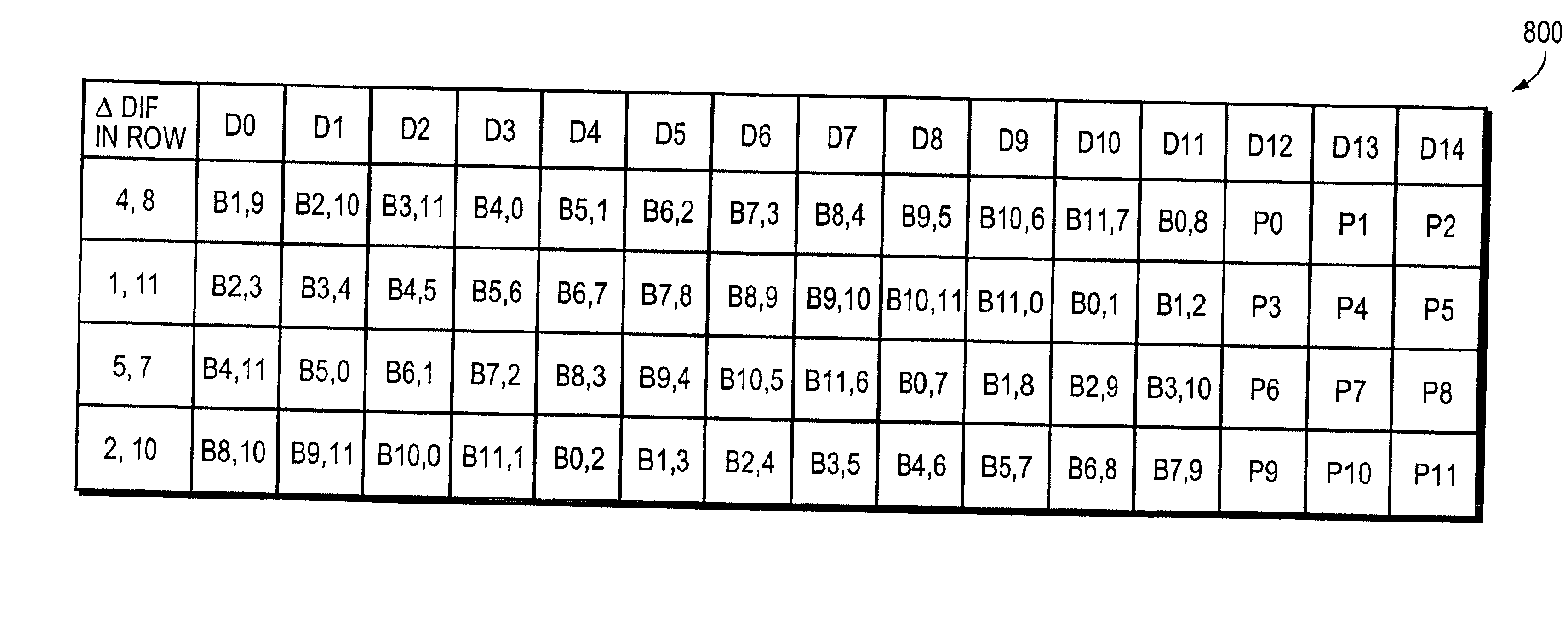

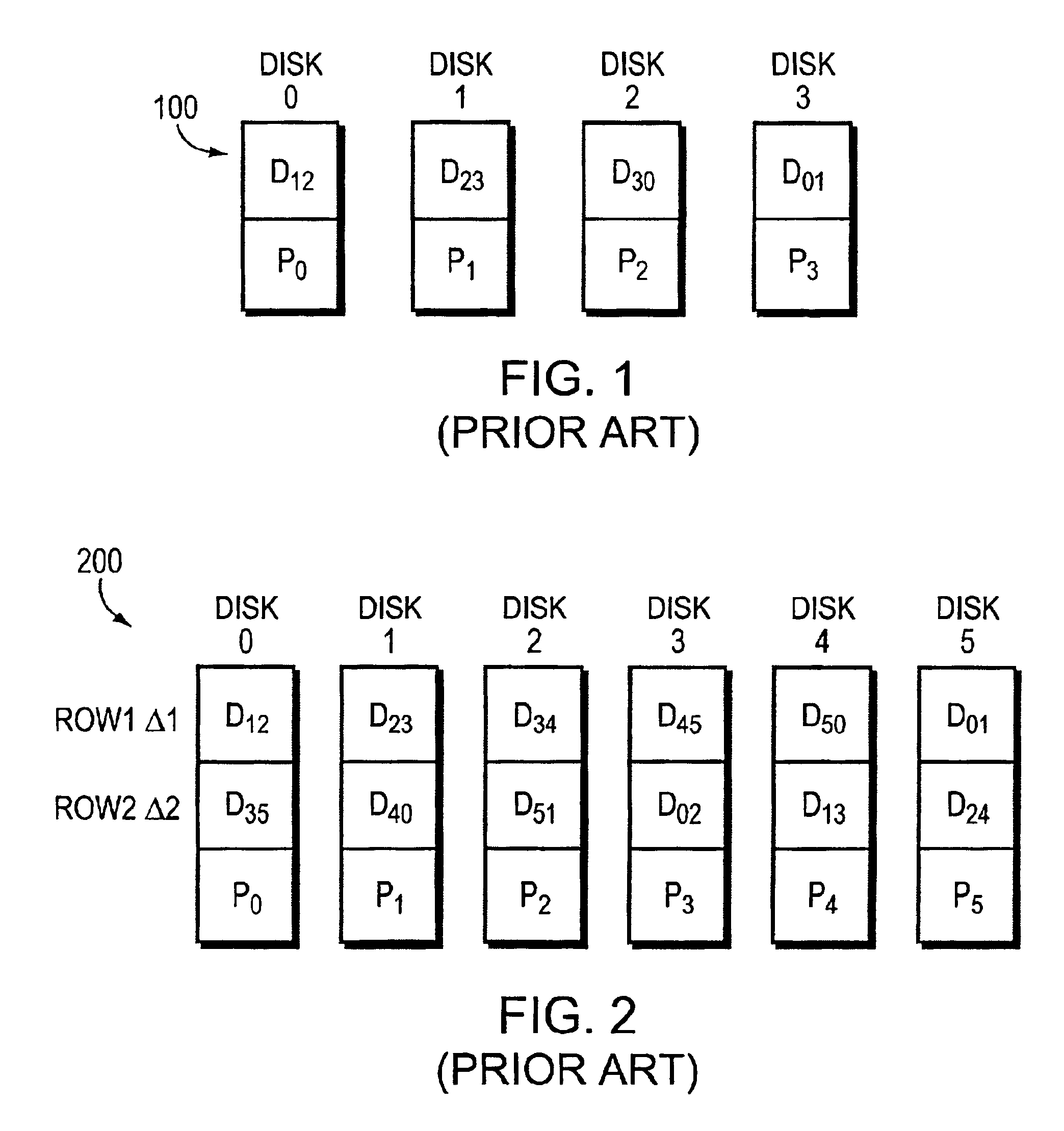

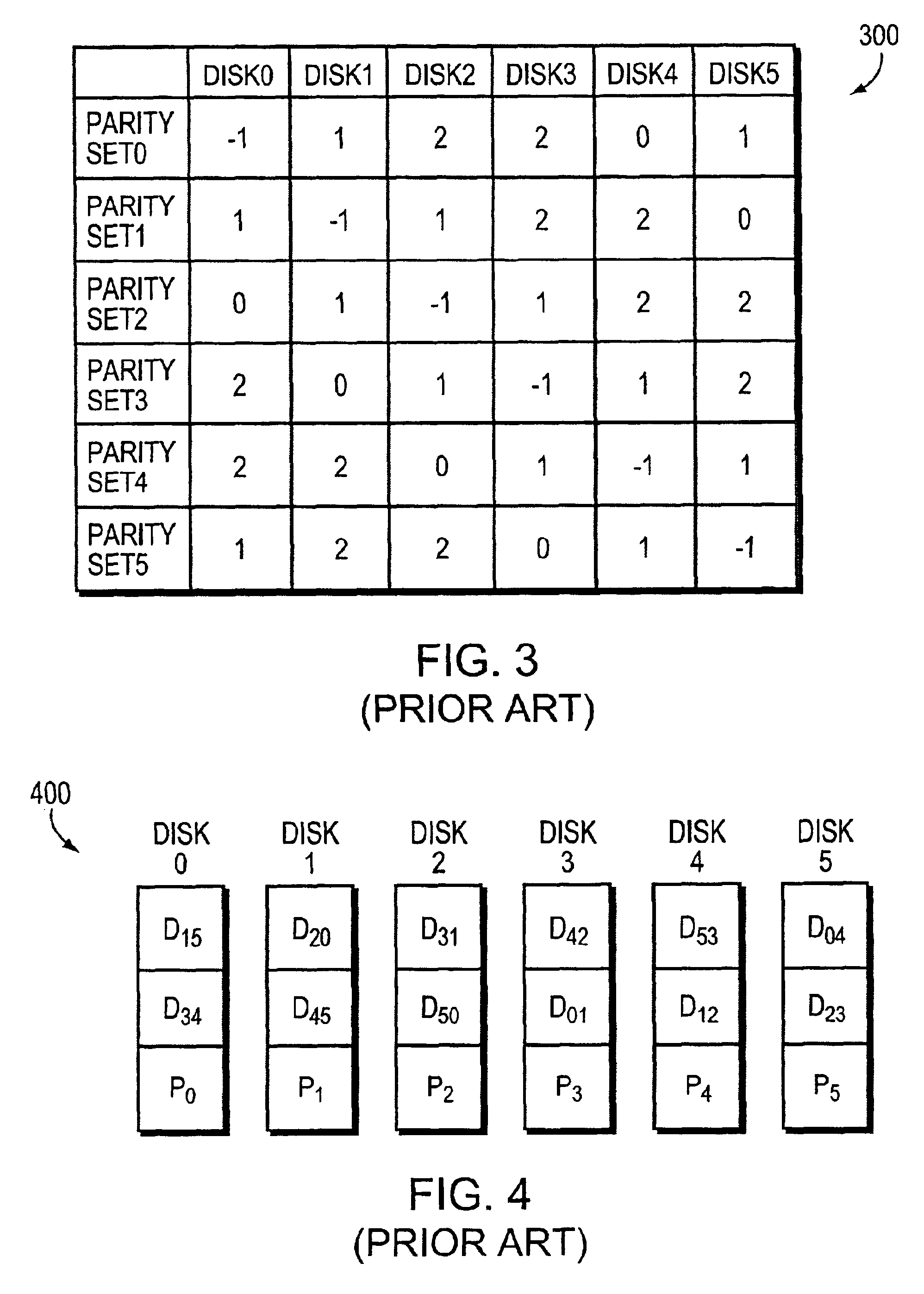

Concentrated parity technique for handling double failures and enabling storage of more than one parity block per stripe on a storage device of a storage array

Active | US6851082B1 | Increase redundancy, Improve parallelism, Code conversion, Coding details, Device failure, Double fault

Owner: NETWORK APPLIANCE INC

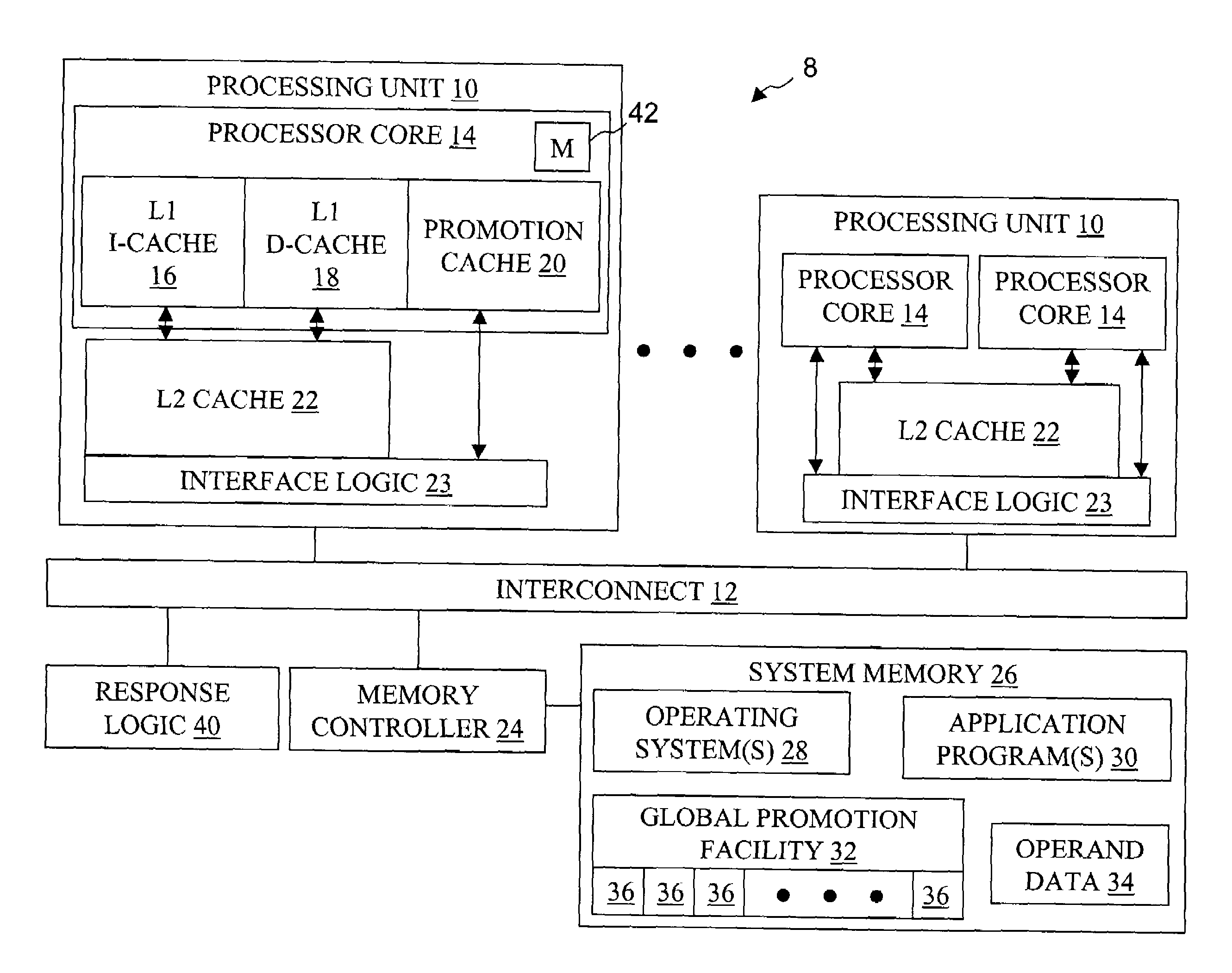

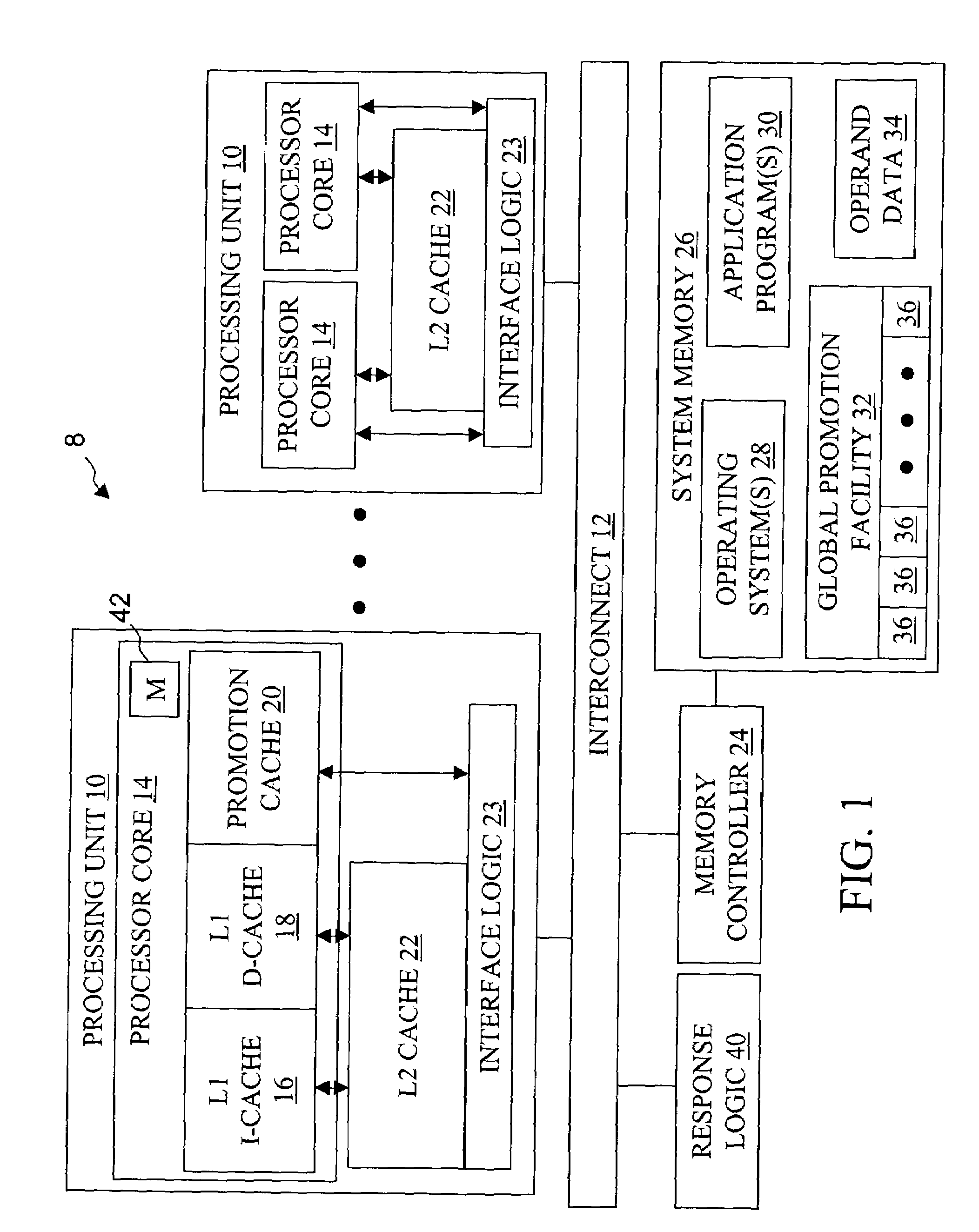

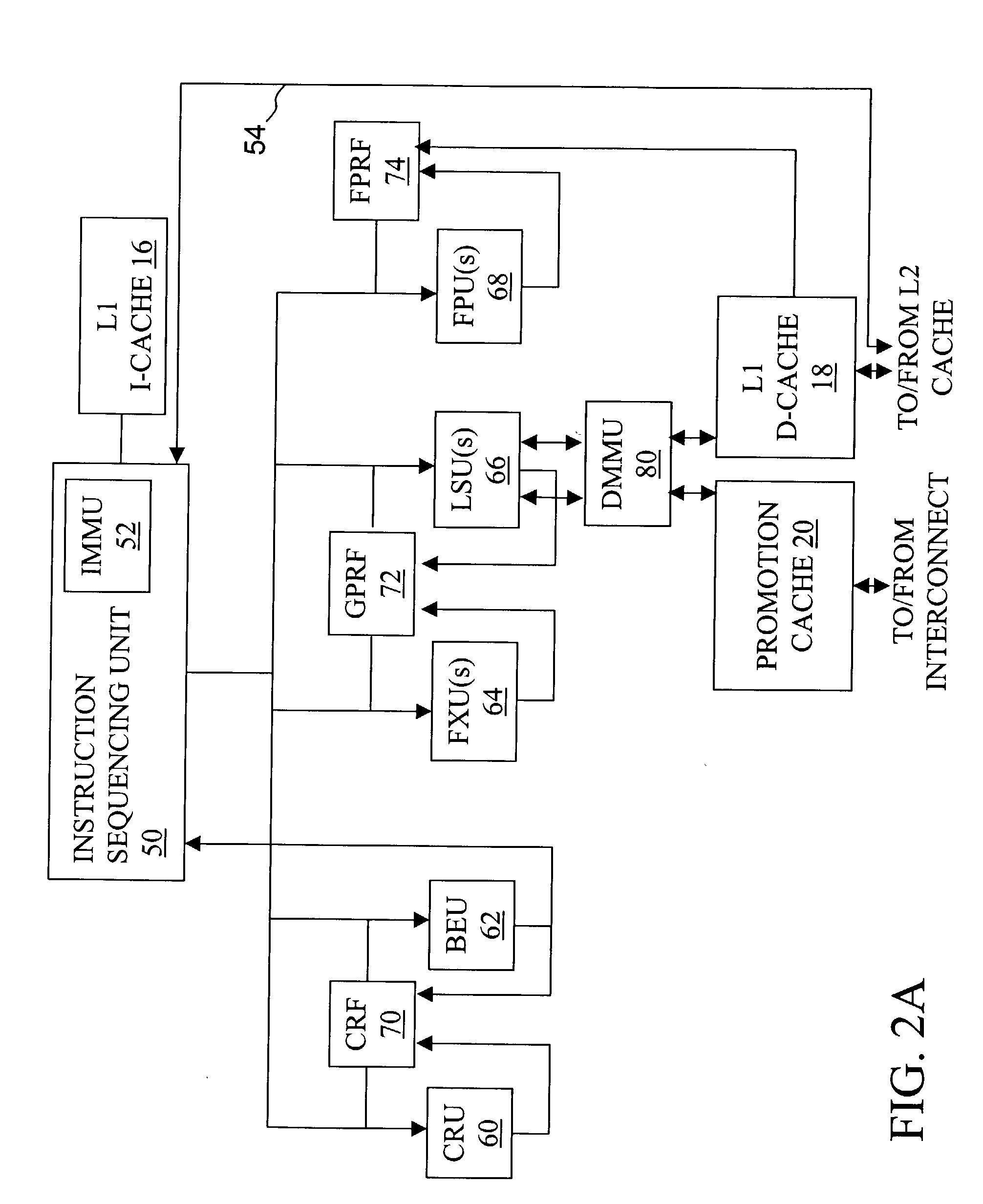

High speed promotion mechanism suitable for lock acquisition in a multiprocessor data processing system

Inactive | US7213248B2 | Reduce percentage, Reduce the amount required, Program synchronisation, Unauthorized memory use protection, Data processing system, Bit field

A multiprocessor data processing system includes a plurality of processors coupled to an interconnect and to a global promotion facility containing at least one promotion bit field. A first processor executes a high speed instruction sequence including a load-type instruction to acquire a promotion bit field within the global promotion facility exclusive of at least a second processor. The request may be made visible to all processors coupled to the interconnect. In response to execution of the load-type instruction, a register of the first processor receives a register bit field indicating whether or not the promotion bit field was acquired by execution of the load-type instruction. While the first processor holds the promotion bit field exclusive of the second processor, the second processor is permitted to initiate a request on the interconnect. Advantageously, promotion bit fields are handled separately from data, and the communication of promotion bit fields does not entail the movement of data cache lines.

Owner: INT BUSINESS MASCH CORP

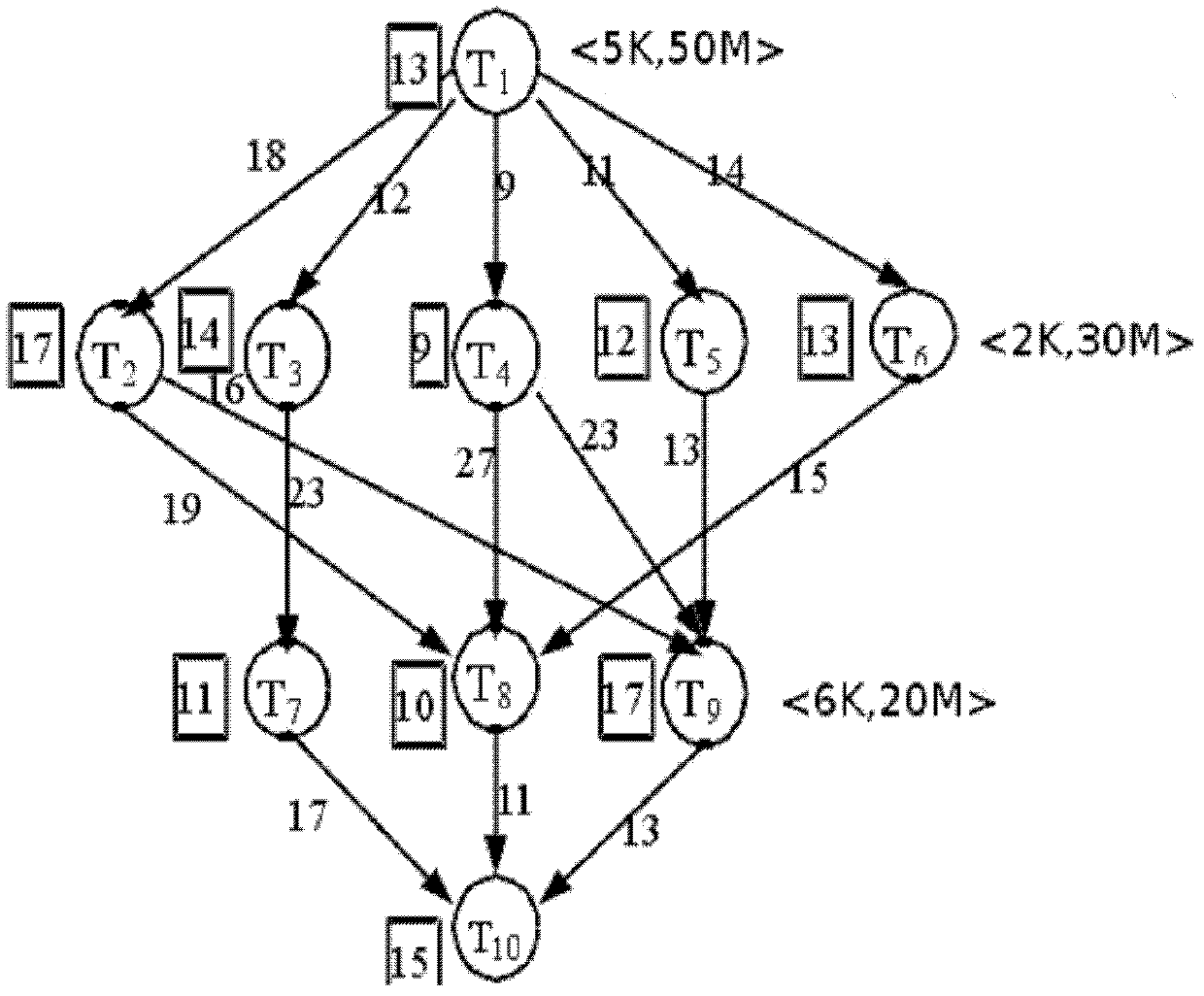

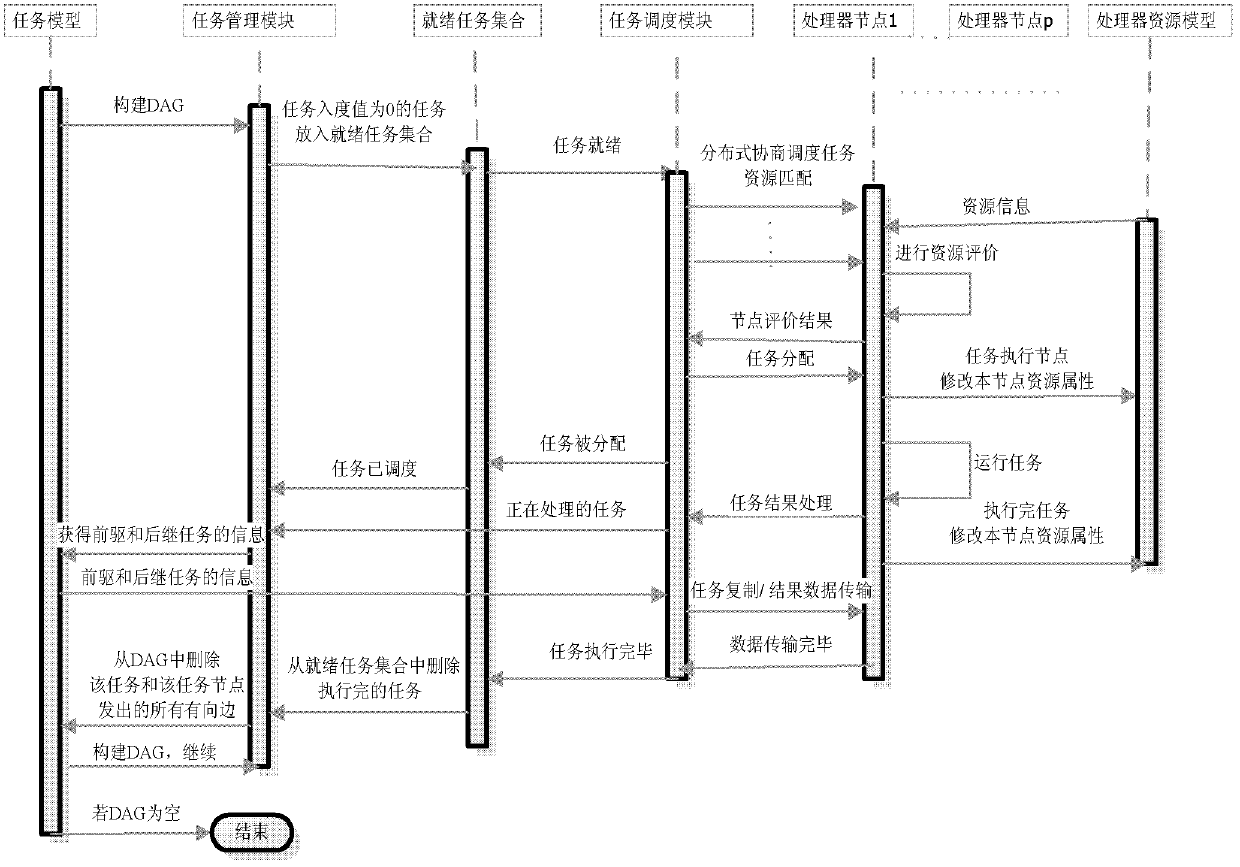

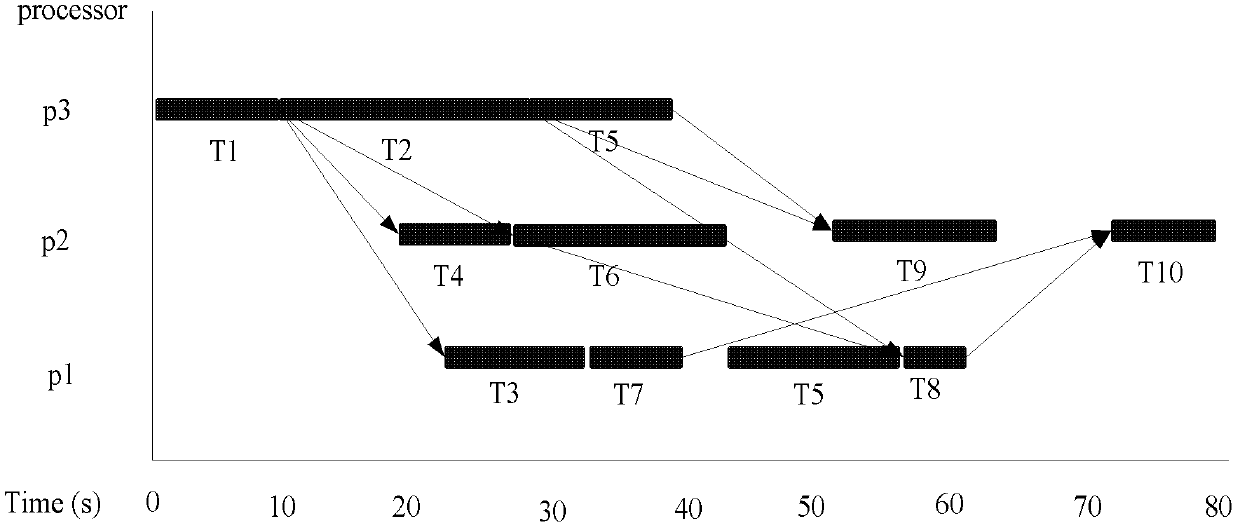

Decoupled parallel scheduling method for dependent tasks in cloud computing

Inactive | CN102591712A | Strong parallelism, Load balancing, Multiprogramming arrangements, Transmission, Parallel computing, Performance index

The invention belongs to the field of cloud computing applications and relates to methods for describing, decoupling and scheduling in parallel the dependency relations between tasks in cloud services. It defines task dependency relations and constructs a decoupled parallel scheduling method for dependent tasks. First, tasks whose in-degree in the dependency graph is zero are decoupled to form a set of ready tasks, which dynamically describes the tasks that can be scheduled in parallel at a given moment. The ready set is then scheduled in a distributed, multi-objective manner according to the resources available in real time, which improves scheduling parallelism. When tasks are distributed, task execution cost and inter-task communication cost (E/C) are also considered to decide whether to replicate a task instead of transmitting the data it depends on, reducing communication overhead. The method schedules multiple tasks from the ready set dynamically and in parallel, balances real-time performance, parallelism, communication overhead and load balancing, and improves overall system performance through its dynamic scheduling strategy.
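The ready-set idea is essentially Kahn's topological sort applied level by level: release every task whose in-degree is zero, then update the in-degrees of its successors. The sketch below shows only that decoupling step plus a toy E/C rule; resource-aware multi-objective placement is not modeled, and `ready_set_waves` and `replicate_instead_of_transfer` are hypothetical names.

```python
def ready_set_waves(tasks, deps):
    """Group tasks into waves; every task in a wave has all its dependencies satisfied.

    deps: (before, after) pairs meaning `after` depends on `before`.
    """
    indegree = {t: 0 for t in tasks}
    children = {t: [] for t in tasks}
    for before, after in deps:
        indegree[after] += 1
        children[before].append(after)

    ready = sorted(t for t in tasks if indegree[t] == 0)   # in-degree zero: schedulable now
    waves = []
    while ready:
        waves.append(ready)                 # these tasks may run in parallel
        released = []
        for t in ready:
            for c in children[t]:
                indegree[c] -= 1
                if indegree[c] == 0:
                    released.append(c)
        ready = sorted(released)
    if sum(len(w) for w in waves) != len(tasks):
        raise ValueError("dependency cycle: some tasks never become ready")
    return waves

def replicate_instead_of_transfer(exec_cost, comm_cost):
    """Hypothetical E/C rule: re-run a producer locally if that is cheaper than shipping its output."""
    return exec_cost < comm_cost

waves = ready_set_waves(["A", "B", "C", "D", "E"],
                        [("A", "C"), ("B", "C"), ("C", "D"), ("A", "E")])
```

Here the waves are `[["A", "B"], ["C", "E"], ["D"]]`: A and B start together, C and E become ready once their predecessors finish, and D runs last. A real dynamic scheduler would release tasks as individual predecessors complete rather than waiting for a whole wave.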

Owner: DALIAN UNIV OF TECH

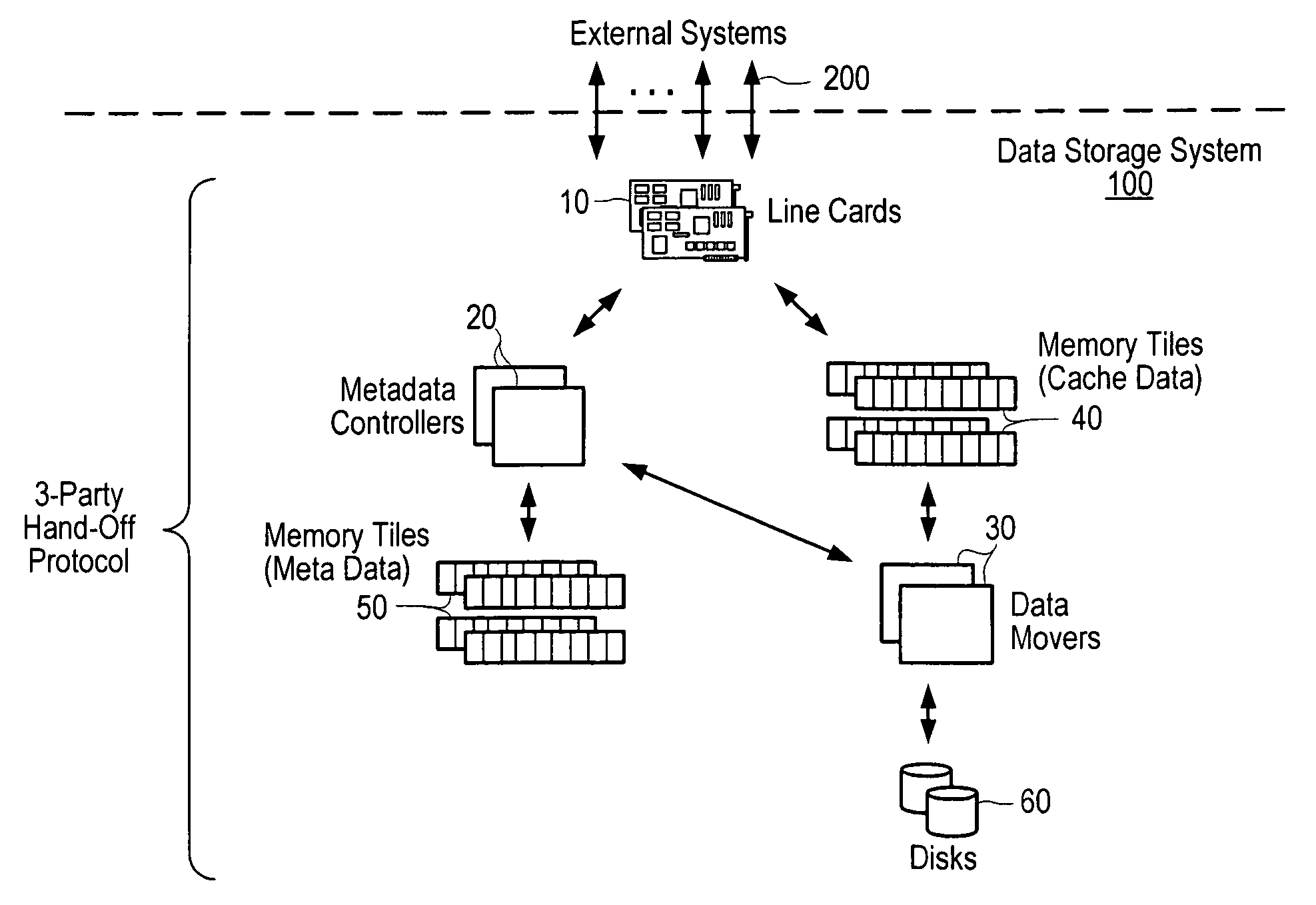

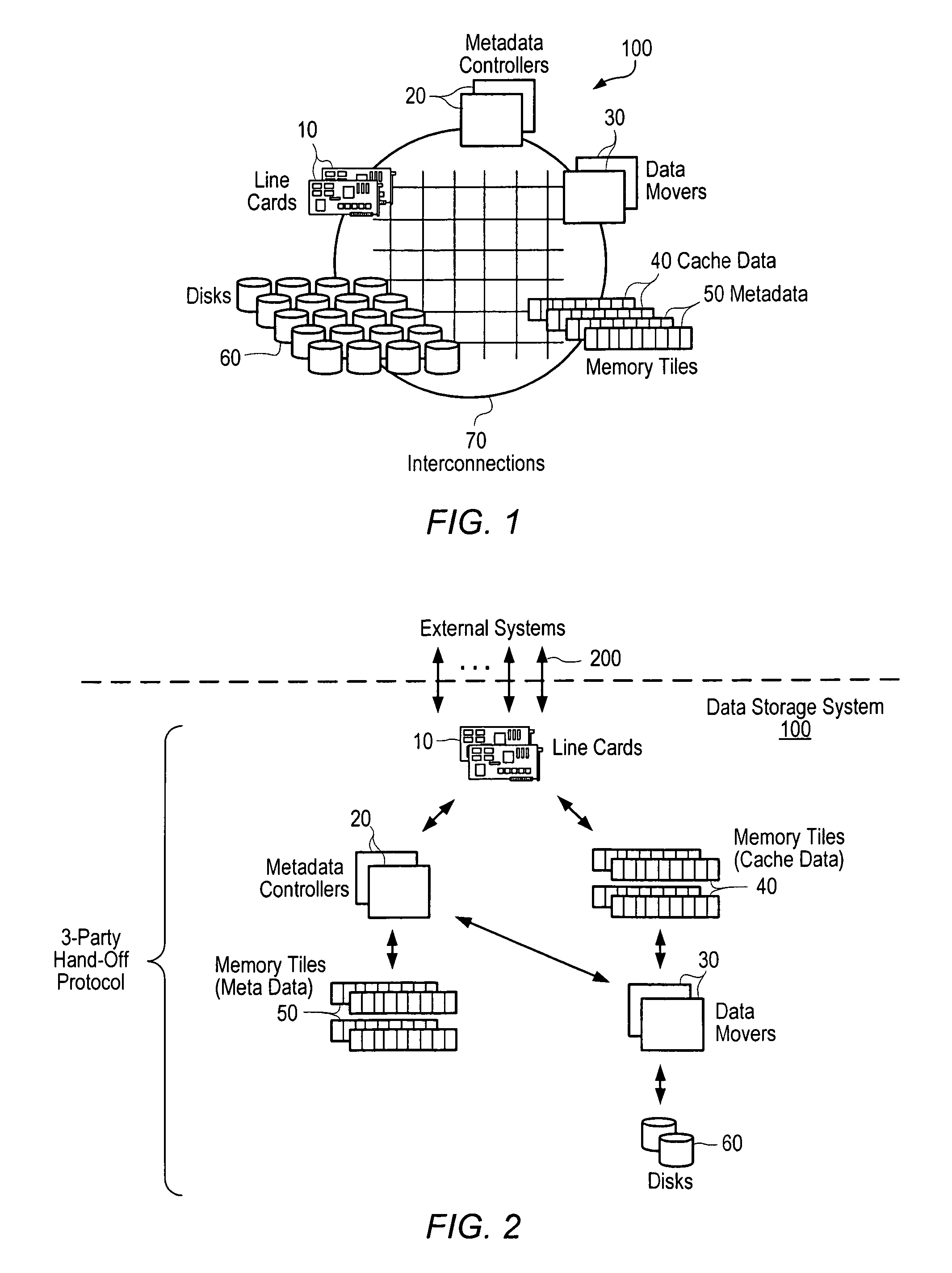

Data storage system using 3-party hand-off protocol to facilitate failure recovery

Active | US7007196B2 | Enhancing scalability and performance and robustness, Promote recovery, Error detection/correction, Transmission, Computer module, Data store

A data storage system is disclosed in which a 3-party hand-off protocol is utilized to maintain a single coherent logical image. In particular, the functionality of the data storage system is separated into distinct processing modules. Each processing module is implemented in a distinct central processing unit (CPU). Alternatively, the first type processing module and the third type processing module can be implemented in a common CPU. Isolating the different functions of the data storage system into distinct CPUs facilitates failure recovery. A characteristic of the 3-party hand-off protocol is that, if an abnormal state occurs, a surviving module has sufficient information to proceed to recover from said abnormal state after detecting the abnormal state, without depending on a failing module, by retrying the data storage operation with another processing module or the failing module or cleaning up after the failed data storage operation, resulting in improved failure recovery.

Owner: ORACLE INT CORP

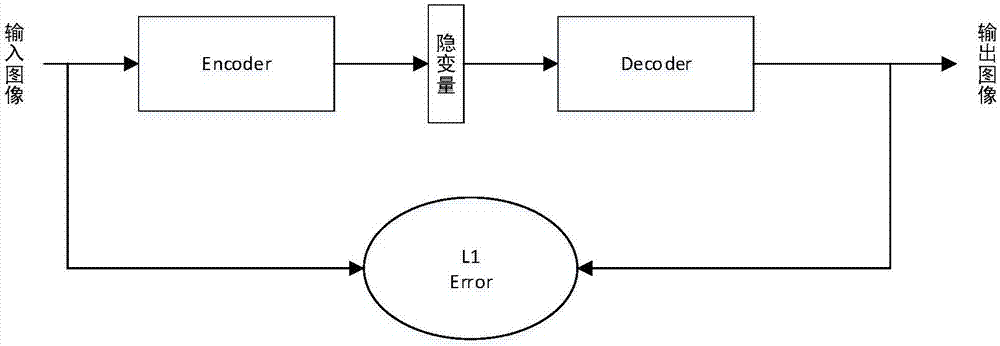

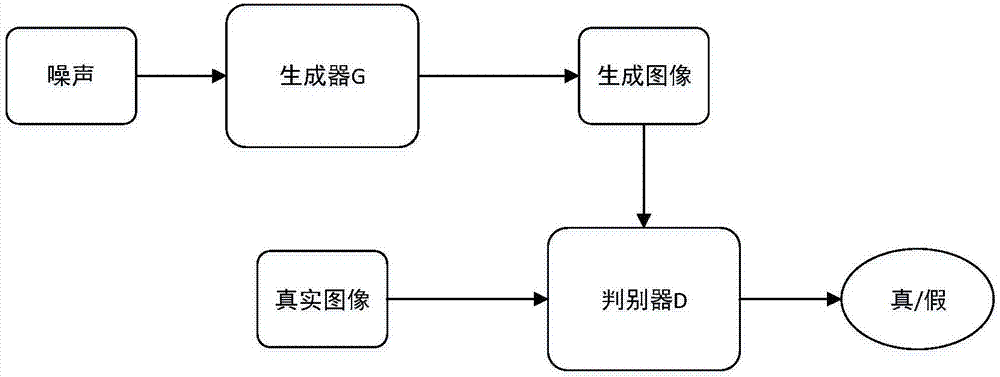

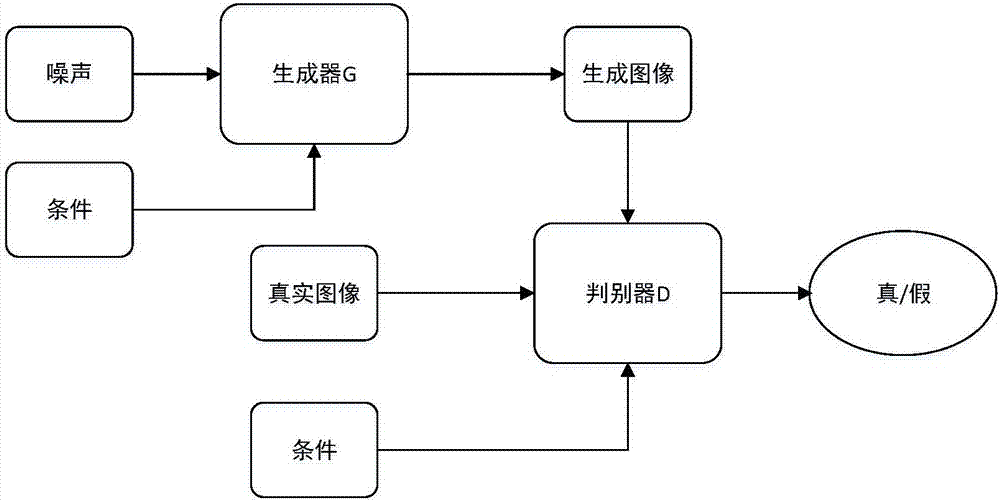

Face image aging synthesis method based on feature separation adversarial network

Inactive | CN107977629A | Strong supervision, Simple calculation, Character and pattern recognition, Neural architectures, Synthesis methods, Generative adversarial network

The invention discloses a face image aging synthesis method based on a feature-separation adversarial network, in the technical field of computer vision. The method assumes that the age features of faces are distributed on a manifold. It extracts latent features of the original image with an auto-encoder, appends the target age condition, and uses a conditional generative adversarial network to synthesize a face image at that age while preserving the identity of the original face. The key innovation is that an intra-class distance measure constrains the latent features output by the encoder, so that they correlate only with identity and the age-related information is removed; this makes the age of the generated image match the target age more closely once the age condition is added. The method can be used for face image aging synthesis, cross-age face recognition and beautification software.

Owner: UNIV OF ELECTRONICS SCI & TECH OF CHINA

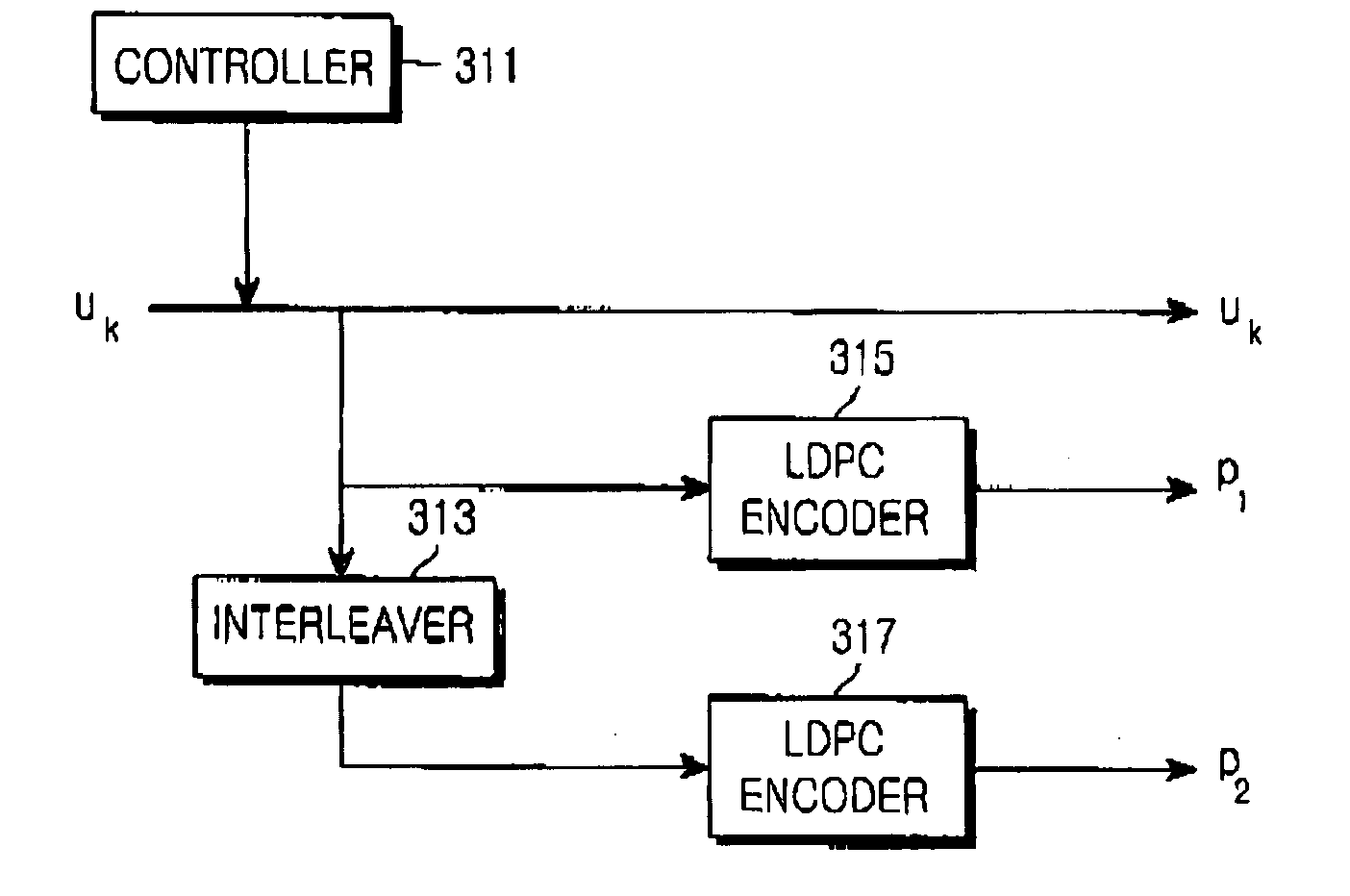

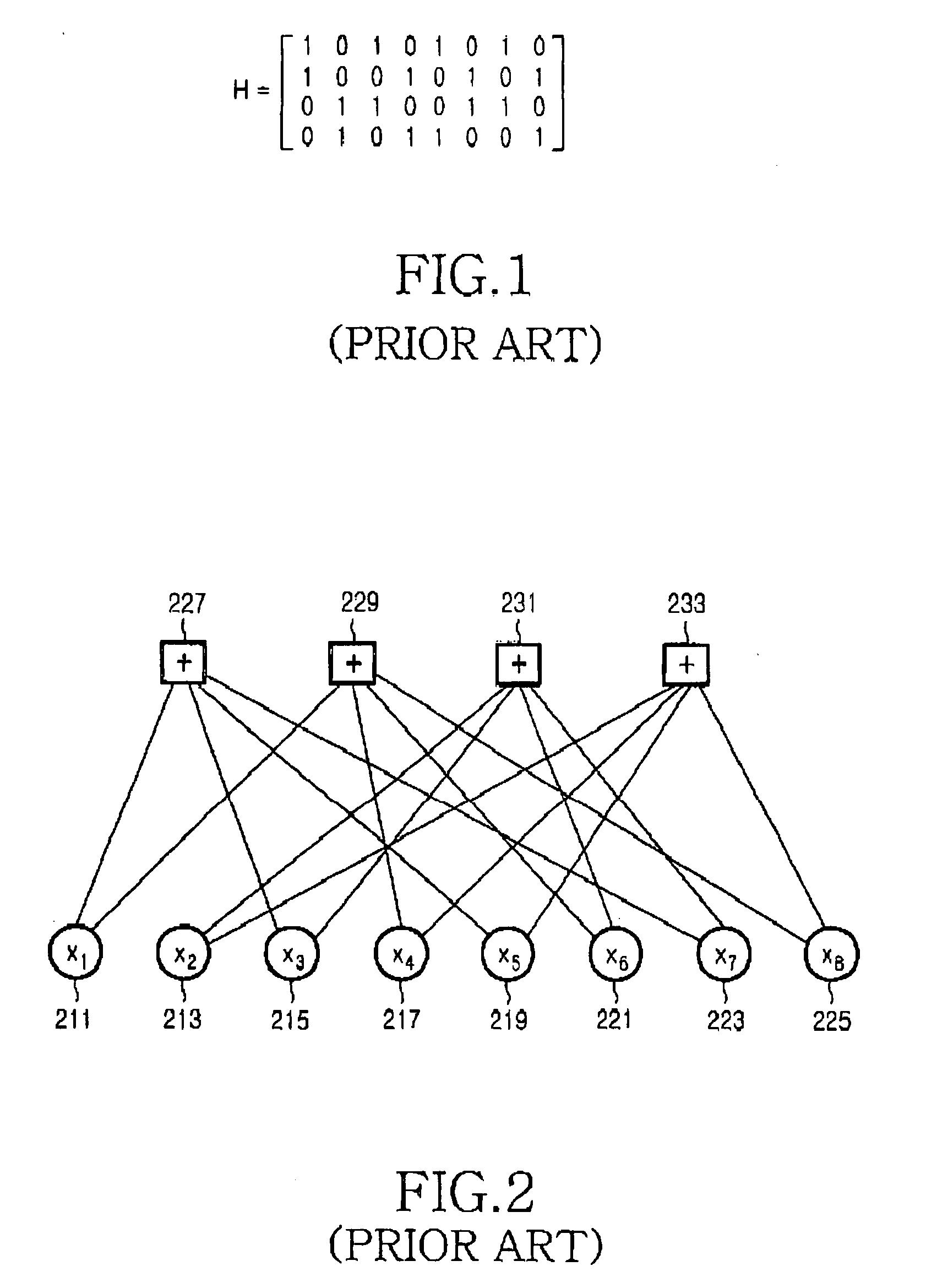

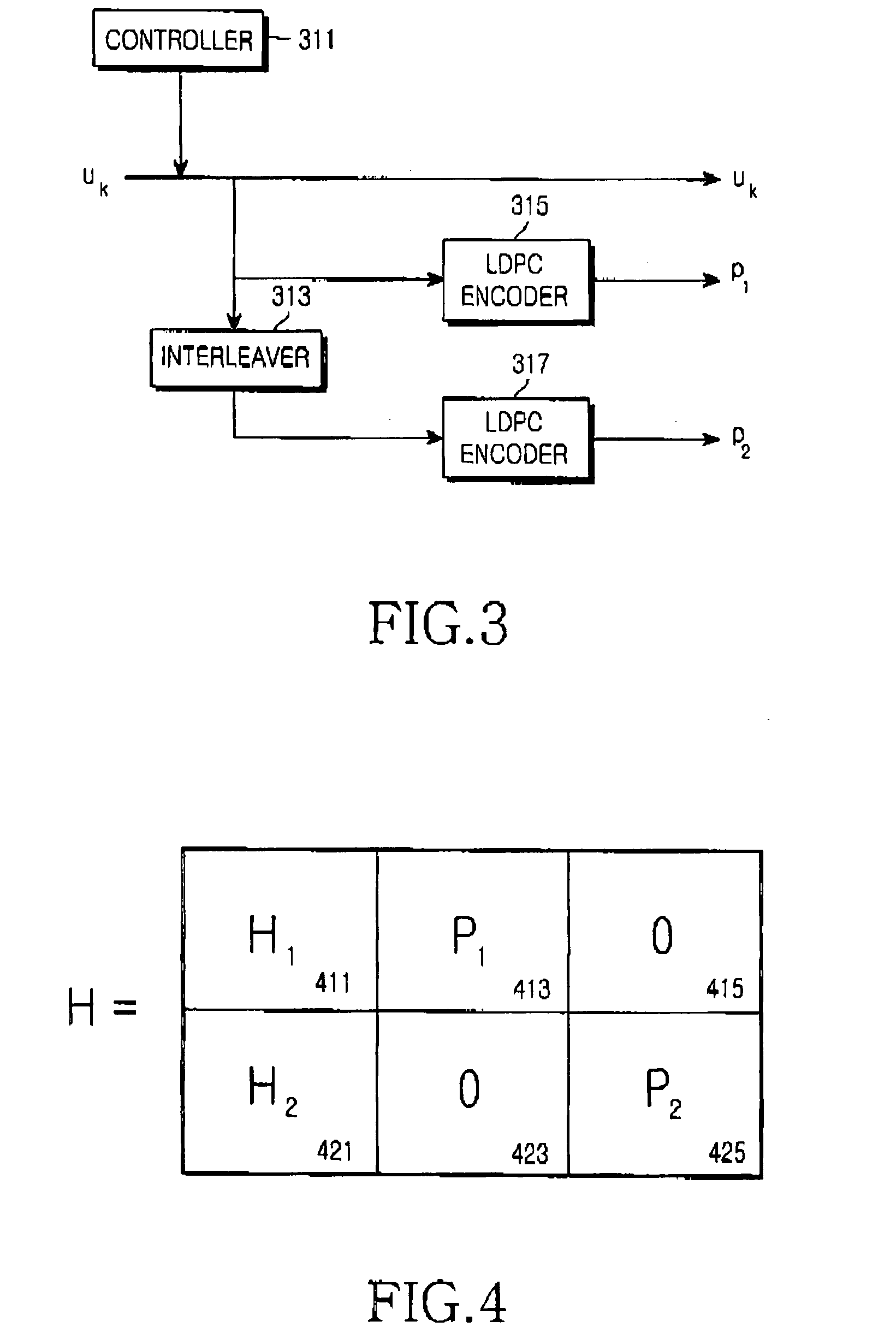

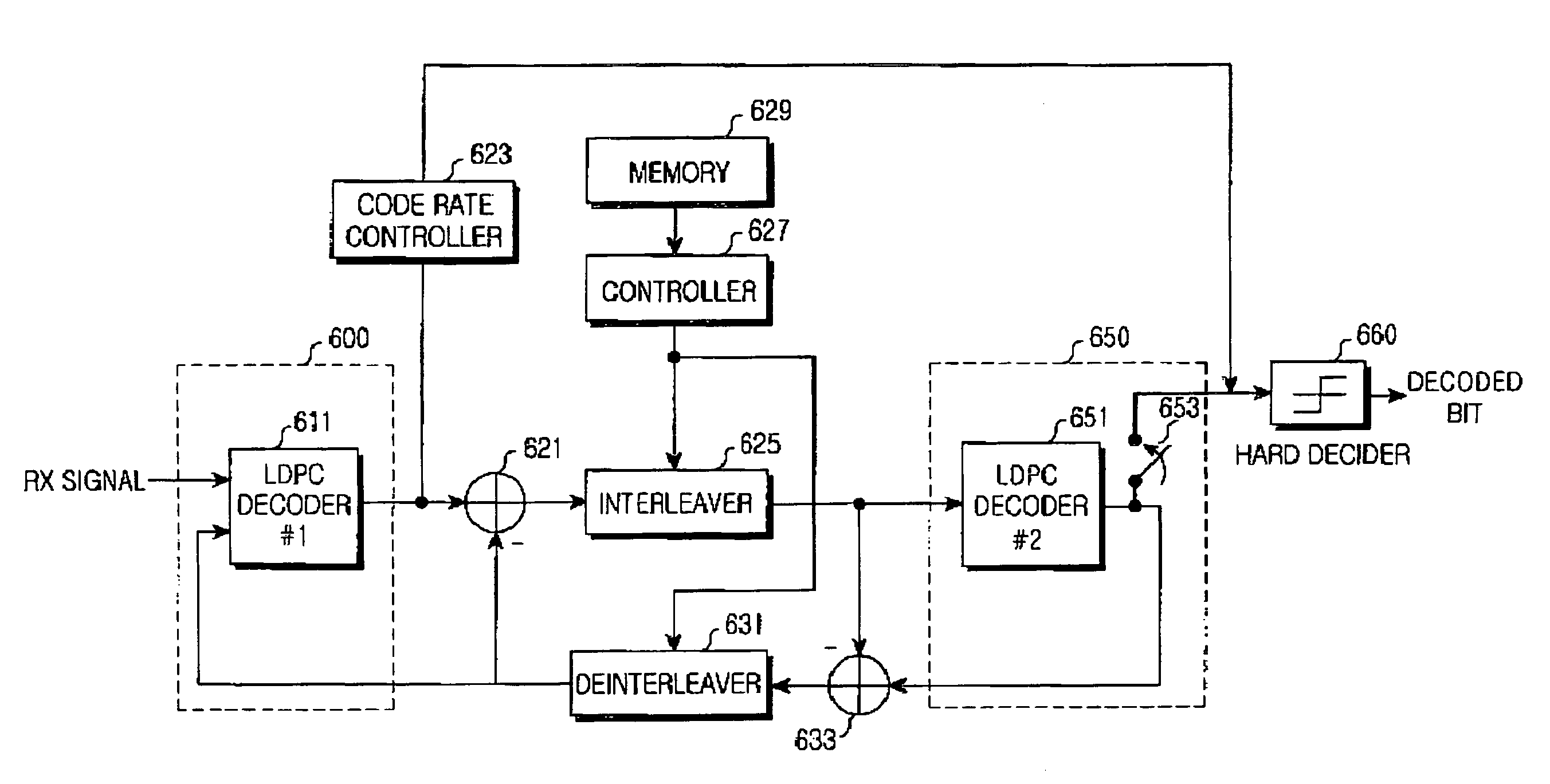

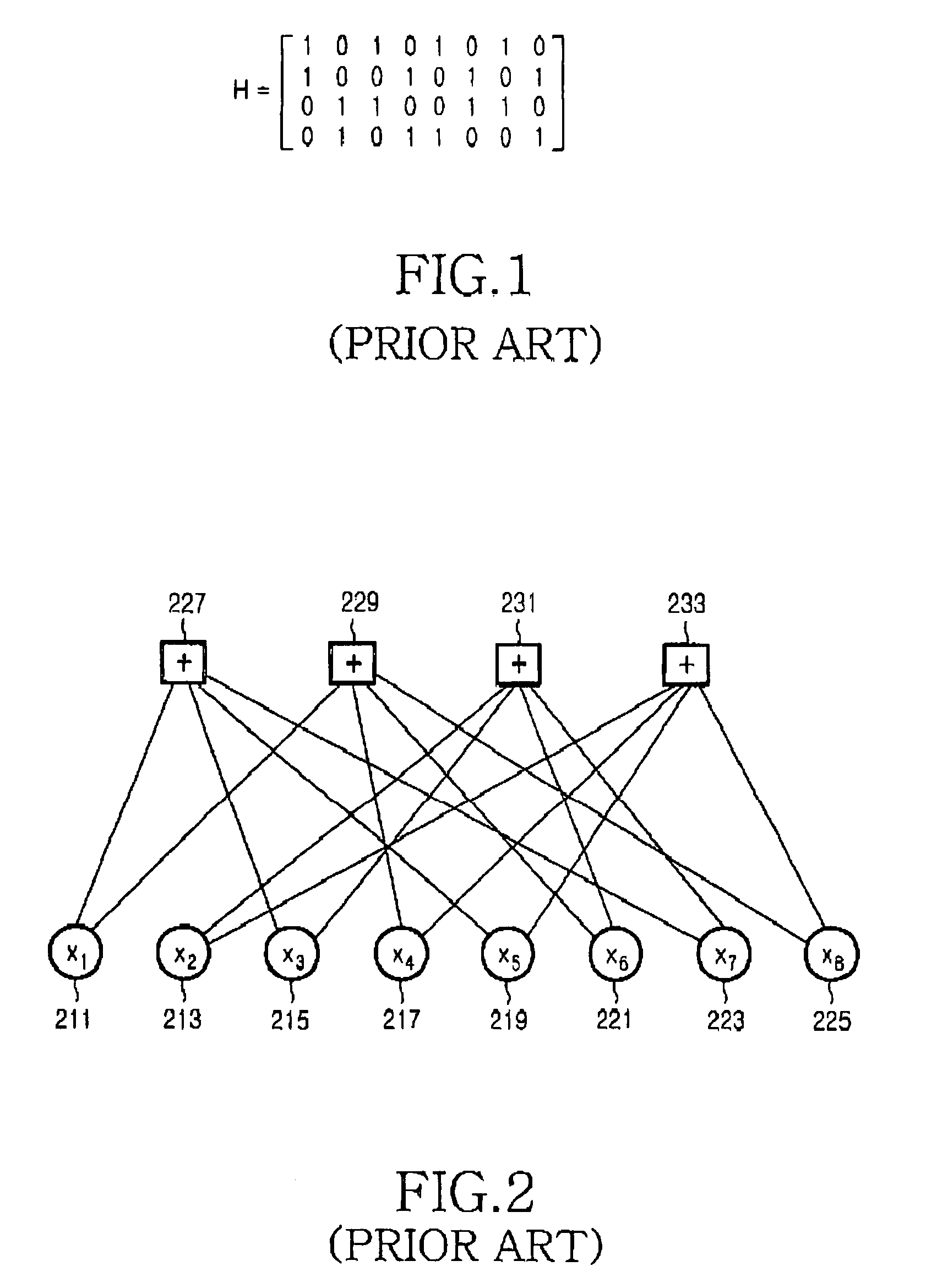

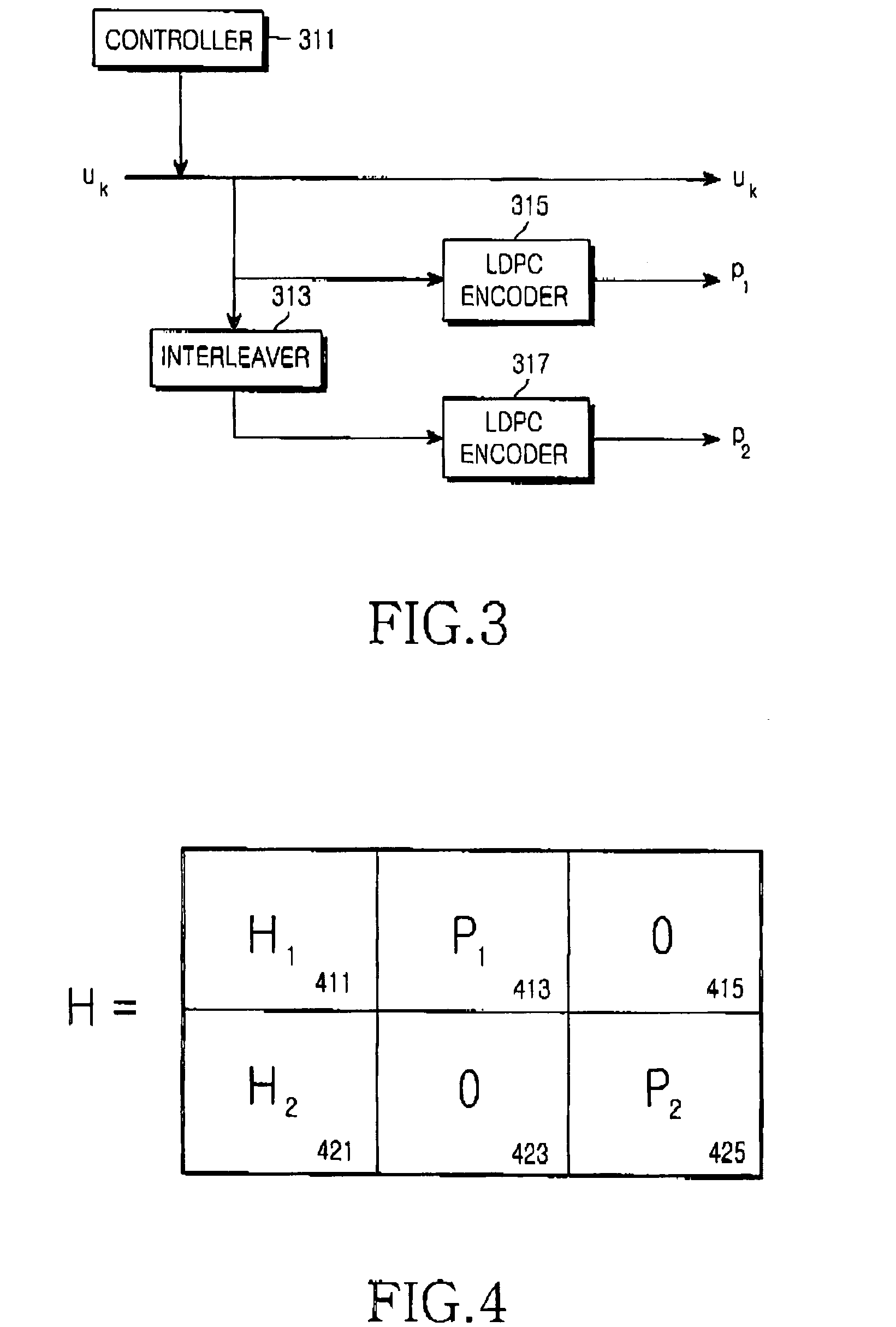

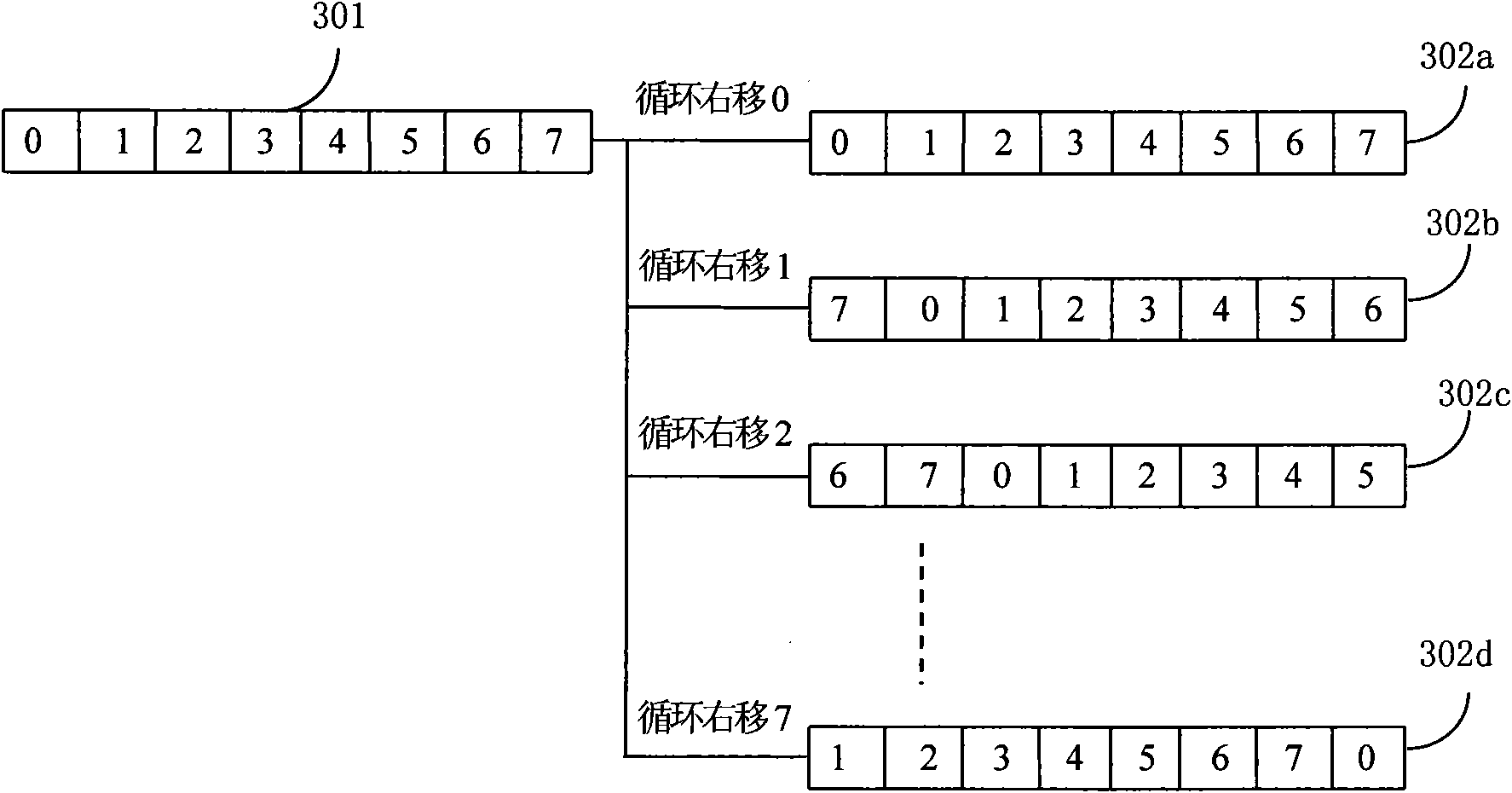

Channel encoding/decoding apparatus and method using a parallel concatenated low density parity check code

Active | US20050149842A1 | Improve parallelism, Other decoding techniques, Error detection/correction, Low-density parity-check code, Low density

A channel encoding apparatus using a parallel concatenated low density parity check (LDPC) code. A first LDPC encoder generates a first component LDPC code according to information bits received. An interleaver interleaves the information bits according to a predetermined interleaving rule. A second LDPC encoder generates a second component LDPC code according to the interleaved information bits. A controller performs a control operation such that the information bits, the first component LDPC code which is first parity bits corresponding to the information bits, and the second component LDPC code which is second parity bits corresponding to the information bits are combined according to a predetermined code rate.
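The encoder structure above (two systematic component encoders, one fed through an interleaver) can be sketched over GF(2) in plain Python. This is a minimal sketch under loud assumptions: the component codes are generated from small random sparse parity matrices `P1`/`P2`, which is a toy stand-in rather than a real LDPC design, the controller's rate-matching step is reduced to plain concatenation (no puncturing), and all names are hypothetical.

```python
import random

def sparse_matrix(rows, cols, ones_per_row, rng):
    """Toy sparse binary matrix; a stand-in, not an optimized LDPC design."""
    mat = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in rng.sample(range(cols), ones_per_row):
            mat[r][c] = 1
    return mat

def parity_bits(info, p):
    """Systematic component encoder over GF(2): parity = info . P (mod 2)."""
    return [sum(info[i] & p[i][j] for i in range(len(info))) % 2
            for j in range(len(p[0]))]

def pc_encode(info, p1, p2, perm):
    first = parity_bits(info, p1)                        # first component code
    interleaved = [info[perm[i]] for i in range(len(info))]
    second = parity_bits(interleaved, p2)                # second component code on interleaved bits
    return info + first + second                         # information bits, then both parity sets

rng = random.Random(1)
K, M = 8, 4                                              # info bits, parity bits per component
P1, P2 = sparse_matrix(K, M, 2, rng), sparse_matrix(K, M, 2, rng)
perm = list(range(K))
rng.shuffle(perm)                                        # the interleaving rule
u = [1, 0, 1, 1, 0, 0, 1, 0]
codeword = pc_encode(u, P1, P2, perm)
rate = K / len(codeword)                                 # 8 / (8 + 4 + 4) = 0.5
```

Because both component encoders and the interleaver are linear over GF(2), the whole encoder is linear: the codeword of `u XOR v` equals the XOR of the codewords of `u` and `v`. A different code rate would be obtained in practice by puncturing parity bits, which this sketch omits.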

Owner: SAMSUNG ELECTRONICS CO LTD

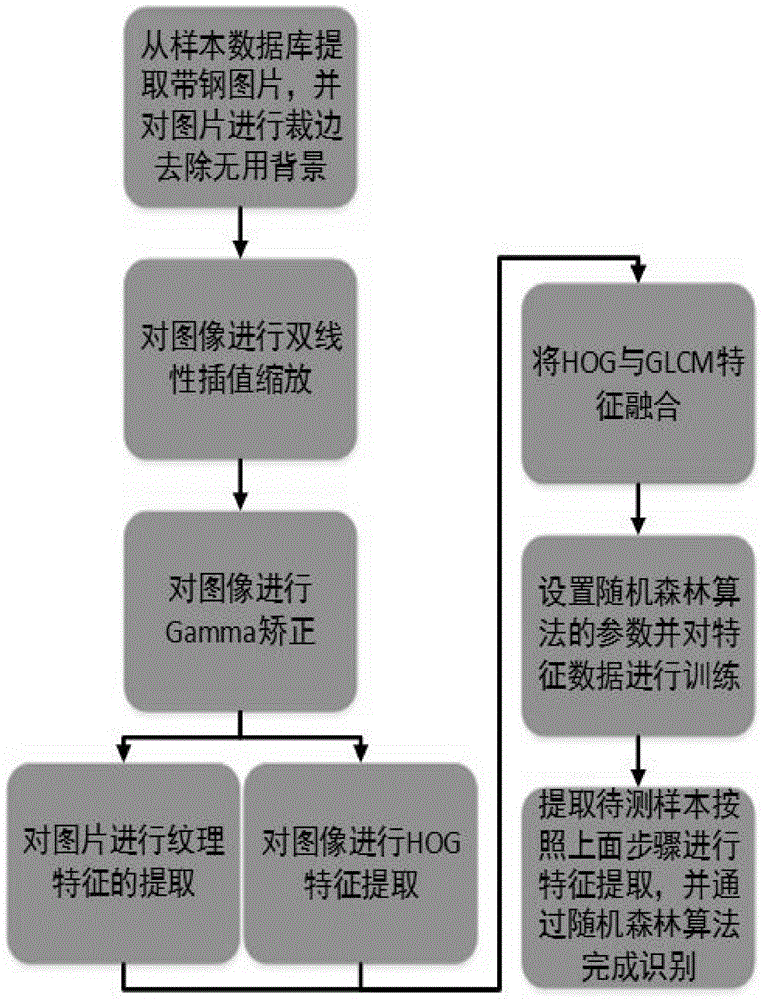

Strip steel surface area type defect identification and classification method

Active | CN104866862A | Easy to handle, Accurate identification, Character and pattern recognition, Feature set, Histogram of oriented gradients

The invention discloses a method for identifying and classifying area-type defects on strip steel surfaces, comprising the following steps: extracting strip steel surface images from a training sample database, removing irrelevant background, and recording each image's category in a corresponding label matrix; scaling the images with bilinear interpolation; normalizing the color space of the scaled images with Gamma correction; extracting histogram of oriented gradients (HOG) features from the corrected images; extracting texture features from the corrected images with a gray-level co-occurrence matrix; combining the HOG features and texture features into a feature set containing these two main kinds of features, which serves as the training database; training on the feature data with an improved random forest classification algorithm; applying bilinear scaling, Gamma correction, HOG feature extraction and texture feature extraction in sequence to the strip steel defect images to be identified; and finally feeding the feature data into the improved random forest classifier to complete identification.

Owner: CENT SOUTH UNIV

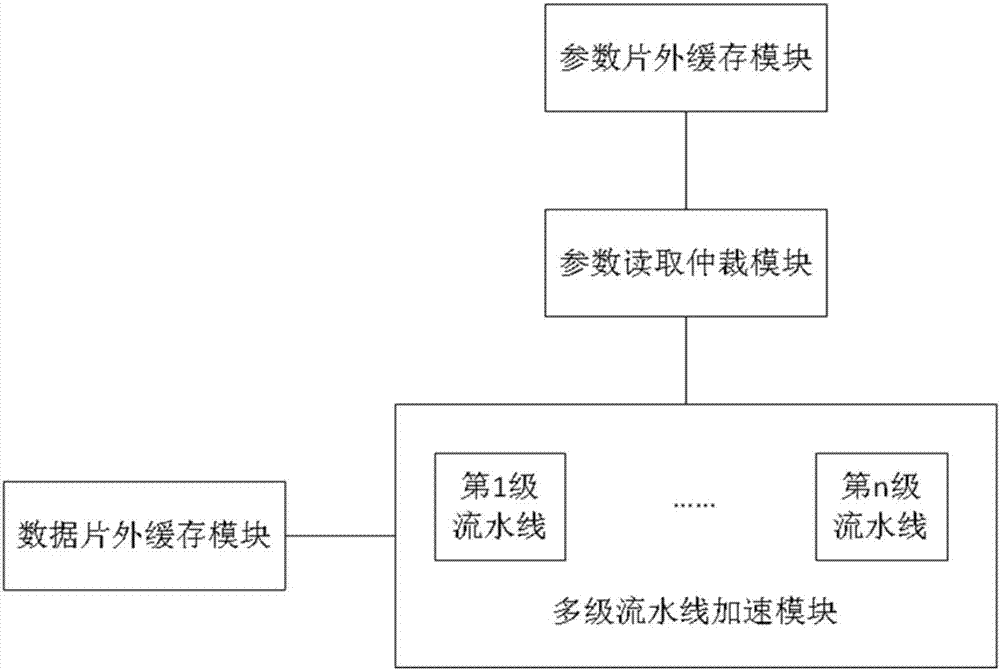

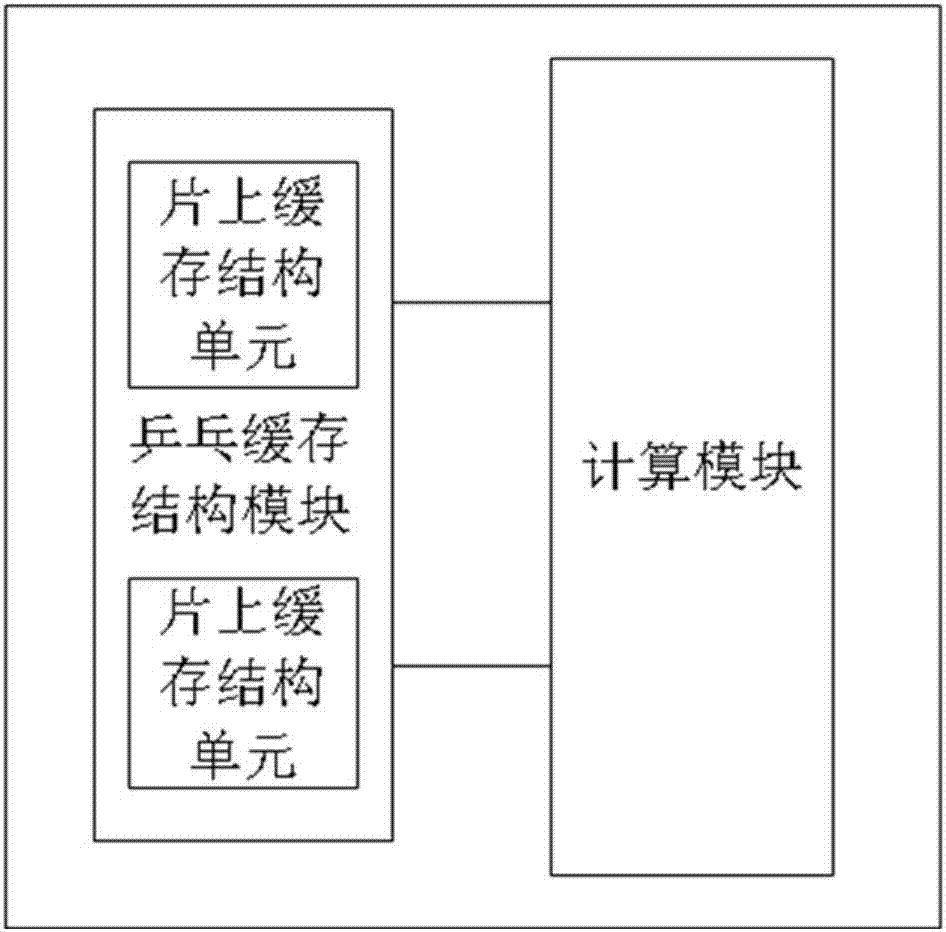

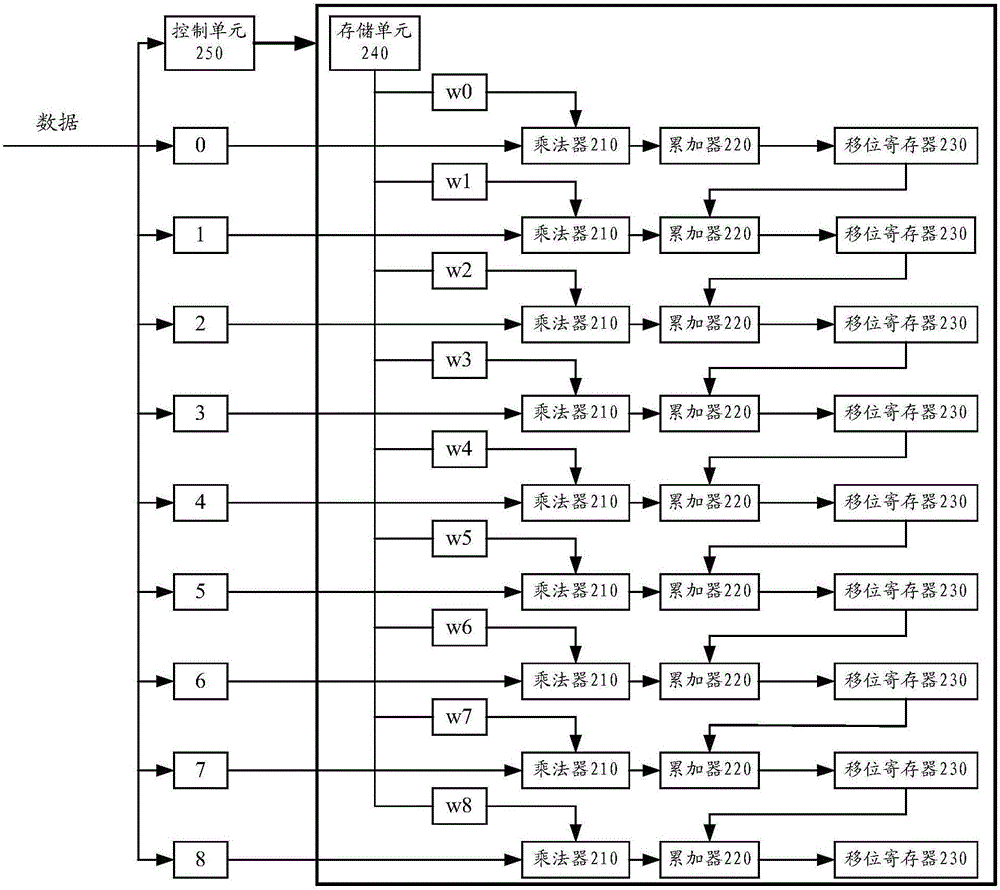

Hardware structure for realizing forward calculation of convolutional neural network

Inactive | CN107066239A | Reduce cache requirements, Improve parallelism, Concurrent instruction execution, Physical realisation, Hardware structure, Hardware architecture

The present application discloses a hardware structure for the forward computation of a convolutional neural network. The structure comprises: a data off-chip caching module, which caches the parameter data of each externally input picture to be processed until it is read by a multi-level pipeline acceleration module; the multi-level pipeline acceleration module, connected to the data off-chip caching module, which reads parameters from it to carry out the core computation of the convolutional neural network; a parameter reading arbitration module, connected to the multi-level pipeline acceleration module, which handles the multiple parameter read requests issued within the pipeline so that the multi-level pipeline acceleration module obtains the parameters it requires; and a parameter off-chip caching module, connected to the parameter reading arbitration module, which stores the parameters required for the forward computation. By implementing the algorithm on a parallel, pipelined hardware architecture, the application achieves higher resource utilization and higher performance.
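The abstract does not say which policy the parameter reading arbitration module uses. As an illustration only, the sketch below assumes a round-robin arbiter, a common choice for sharing one memory port fairly among pipeline stages; `RoundRobinArbiter` and the request counts are hypothetical.

```python
class RoundRobinArbiter:
    """Grant one pending request per cycle; the winner becomes lowest priority next cycle."""

    def __init__(self, n):
        self.n = n
        self.next = 0                 # requester checked first in the next cycle

    def grant(self, requests):
        for offset in range(self.n):
            i = (self.next + offset) % self.n
            if requests[i]:
                self.next = (i + 1) % self.n
                return i
        return None                   # no requests this cycle

# Three pipeline stages with 2, 1 and 3 outstanding parameter reads (made-up numbers).
arbiter = RoundRobinArbiter(3)
pending = [2, 1, 3]
grant_order = []
while (winner := arbiter.grant([p > 0 for p in pending])) is not None:
    grant_order.append(winner)        # this stage's read goes to the parameter cache
    pending[winner] -= 1
```

The grants come out as `[0, 1, 2, 0, 2, 2]`: every stage with an outstanding read is served before any stage is served twice, so no pipeline stage starves while another has a long burst of reads.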

Owner: 智擎信息系统(上海)有限公司

NonVolatile Memory With Block Management

Active | US20070033331A1 | Memory can be managed effectively, Reduce copy, Memory systems, Parallel computing, Rapid identification

In a nonvolatile memory system that includes a block-erasable memory array, records are individually maintained for certain classifications of blocks. One or more lists may be maintained for the blocks, an individual list ordered according to a descriptor value. Such ordered lists allow rapid identification of a block by descriptor value.

Owner: SANDISK TECH LLC

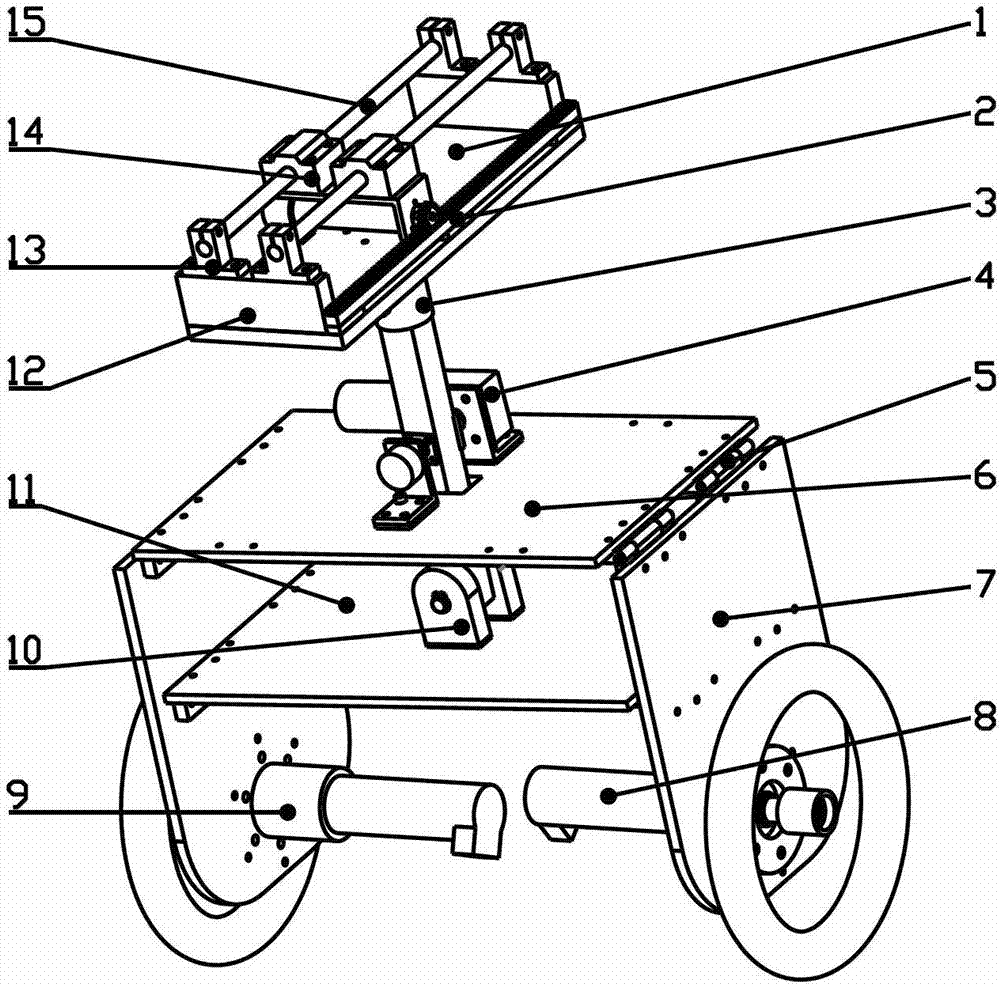

Multi-degree-of-freedom two-wheeled robot with variable gravity center

Inactive | CN102923204A | Smooth Turning Action, Climbing action is easy to control, Vehicles, Engineering, Gravity center

The invention discloses a multi-degree-of-freedom two-wheeled robot with a variable center of gravity. The robot is a modification of a common two-wheeled robot: two degrees of freedom are added to shift the center of gravity so that the vehicle stays better balanced while moving. The first degree of freedom tilts the whole robot to counter the centrifugal force during turning; in a rapid turn, the robot overcomes the centrifugal force by adjusting its tilt angle and so completes the turn more smoothly. The second degree of freedom is a slide block carried on the robot; moving the slide block along the robot's load-carrying plate shifts the center of gravity, which makes climbing easier to control and the robot more stable while climbing.
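The two balancing mechanisms can be quantified with textbook statics. This sketch assumes an idealized point-mass model in a steady circular turn (so the lean angle that balances centrifugal force satisfies tan θ = v²/(g·r)) and a simple weighted average for the combined center of gravity; the speeds, masses and positions are hypothetical, and wheel geometry and dynamics are ignored.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def lean_angle(speed, radius, g=G):
    """Tilt from vertical (radians) at which gravity balances centrifugal force in a steady turn."""
    # Balance condition: tan(theta) = (v^2 / r) / g
    return math.atan(speed ** 2 / (g * radius))

def combined_cg(body_mass, body_x, slider_mass, slider_x):
    """Horizontal center of gravity after moving a slider of given mass to slider_x."""
    return (body_mass * body_x + slider_mass * slider_x) / (body_mass + slider_mass)

theta = math.degrees(lean_angle(2.0, 1.0))   # about 22.2 degrees at 2 m/s on a 1 m radius
```

The lean angle grows with the square of speed, which is why the tilt degree of freedom matters most in rapid turns; the slider shifts the center of gravity in proportion to its share of the total mass.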

Owner: BEIJING INSTITUTE OF TECHNOLOGY

Superabrasive electrodeposited cutting edge and method of manufacturing the same

Inactive | US6098609A | Good precision, Improve working precision, Revolution surface grinding machines, Bonded abrasive wheels, Biomedical engineering, Thin walled

PCT No. PCT/JP96/00206; Sec. 371 Date Jul. 30, 1997; Sec. 102(e) Date Jul. 30, 1997; PCT Filed Feb. 1, 1996; PCT Pub. No. WO96/23630; PCT Pub. Date Aug. 8, 1996.

A cutting edge comprising a mass of superabrasive particles (2) electrodeposited on a thin-walled metallic base member (1) along a border (6) of said base member, wherein said mass (2) forms one or more layers at said border of said base member and is fixed thereto, and each layer contains parts comprising at least five superabrasive particles (3) in a row in an extending direction of said base member from said border, so as to improve free-cut performance, decrease kerf width and prolong the life of the cutting tool.

Owner: ISHIZUKA HIROSHI

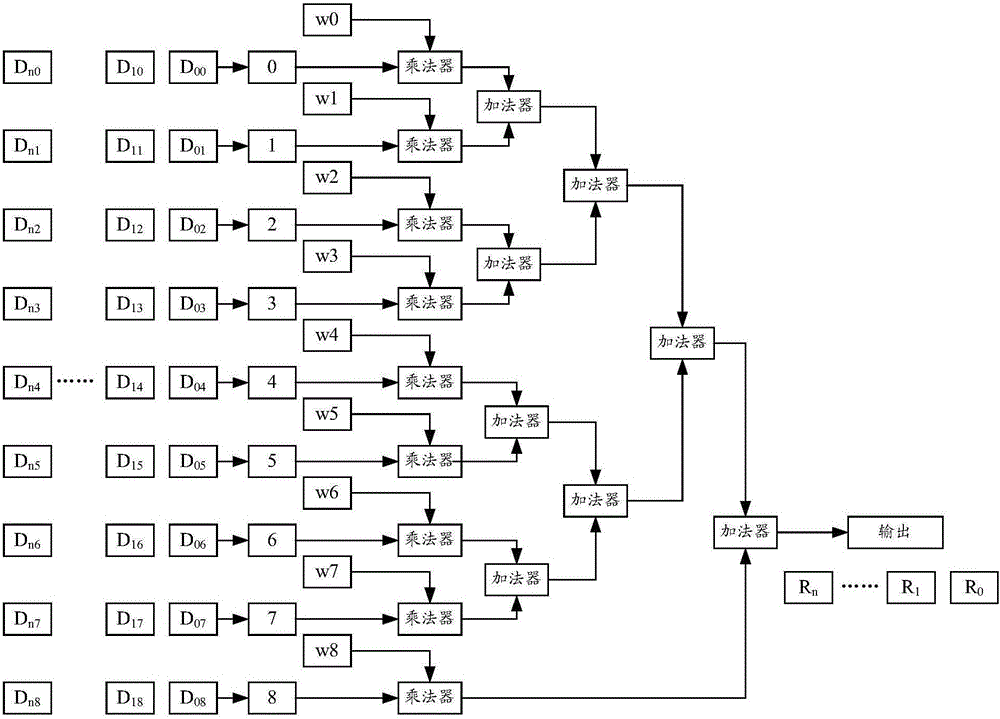

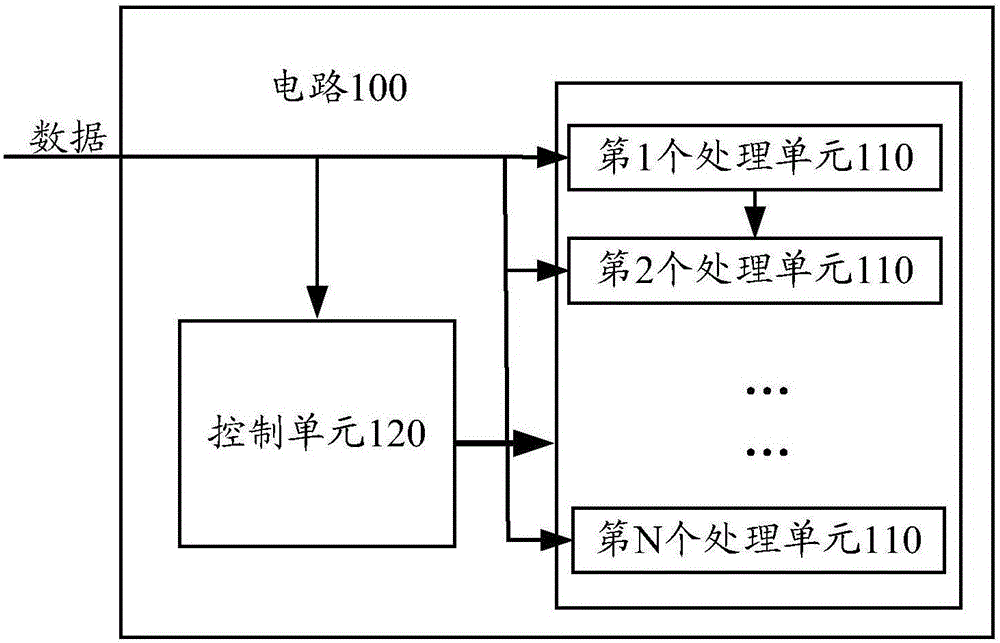

Circuit for processing data, image processing system, and method and apparatus for processing data

Inactive | CN106127302A | Reduce power consumption, Achieve sharing, Digital data processing details, Concurrent instruction execution, Main processing unit, Imaging processing

The embodiments of the invention disclose a circuit for processing data, an image processing system, and a method and apparatus for processing data. The circuit comprises a control unit and N processing units. The control unit and each of the N processing units are connected to a data transmission unit; each processing unit processes the same data output successively by the data transmission unit, and the output of the i-th processing unit is connected to the input of the (i+1)-th processing unit, where N is an integer greater than 1 and i is an integer from 1 to N-1. When the control unit determines that the data to be processed output by the data transmission unit is 0, it switches all N processing units off. The circuit, system, method and apparatus can reduce circuit power consumption while performing convolution operations.
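The effect of switching the chain off for zero inputs can be modeled with a transposed-form FIR filter, a 1-D stand-in for convolution in which every unit sees the same input sample and partial sums move from one unit to the next. This is a minimal behavioral sketch under that assumption; `fir_zero_gated` is a hypothetical name and "gating" is modeled simply as skipping the multiplications while still shifting the partial sums.

```python
def fir_zero_gated(x, w):
    """1-D convolution on a chain of len(w) units, gating all units off for zero inputs.

    Transposed-form FIR: every unit sees the same input sample, and partial sums
    move from unit i+1 to unit i, like the chained outputs in the abstract.
    Returns (outputs, multiply-accumulates performed, samples gated).
    """
    n = len(w)
    partial = [0] * n                # partial[i]: sum waiting at unit i; partial[n-1] stays 0
    out, macs, gated = [], 0, 0
    for sample in x:
        if sample == 0:
            # Control unit sees 0: every product would be 0, so skip all multiplies
            # and just shift the partial sums one unit along the chain.
            out.append(partial[0])
            partial = partial[1:] + [0]
            gated += 1
        else:
            out.append(w[0] * sample + partial[0])
            partial = [w[i + 1] * sample + partial[i + 1] for i in range(n - 1)] + [0]
            macs += n
    return out, macs, gated

y, macs, gated = fir_zero_gated([1, 0, 0, 2, 0, 3], [1, 2, 3])
```

On this input, half the samples are zero, so only 9 of the 18 multiply-accumulates are performed while the output `[1, 2, 3, 2, 4, 9]` matches direct convolution. The saving scales with input sparsity, which is typical after ReLU layers.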

Owner: HANGZHOU HUAWEI DIGITAL TECH

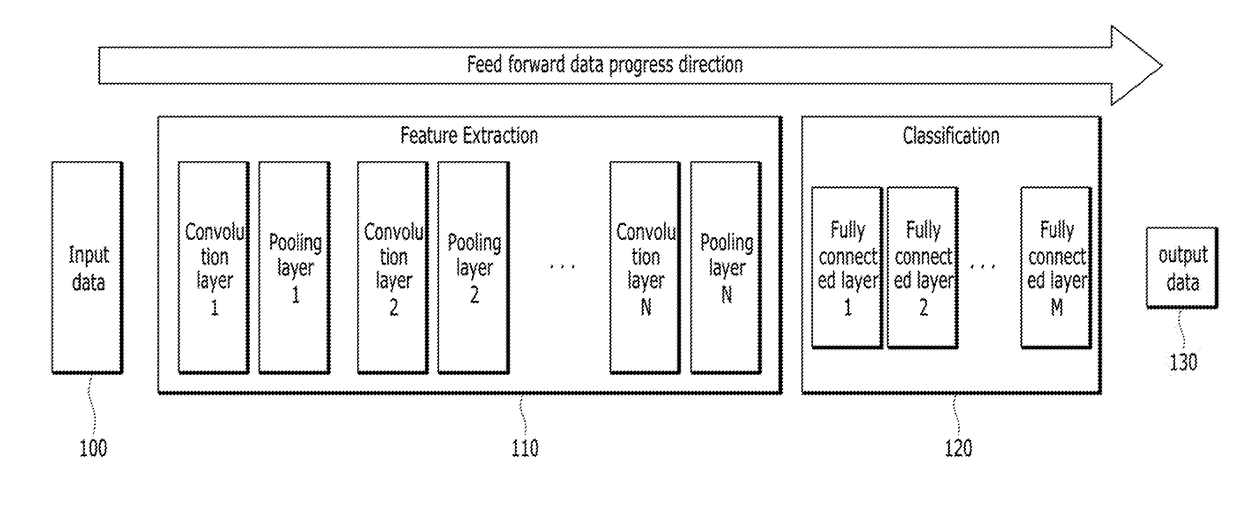

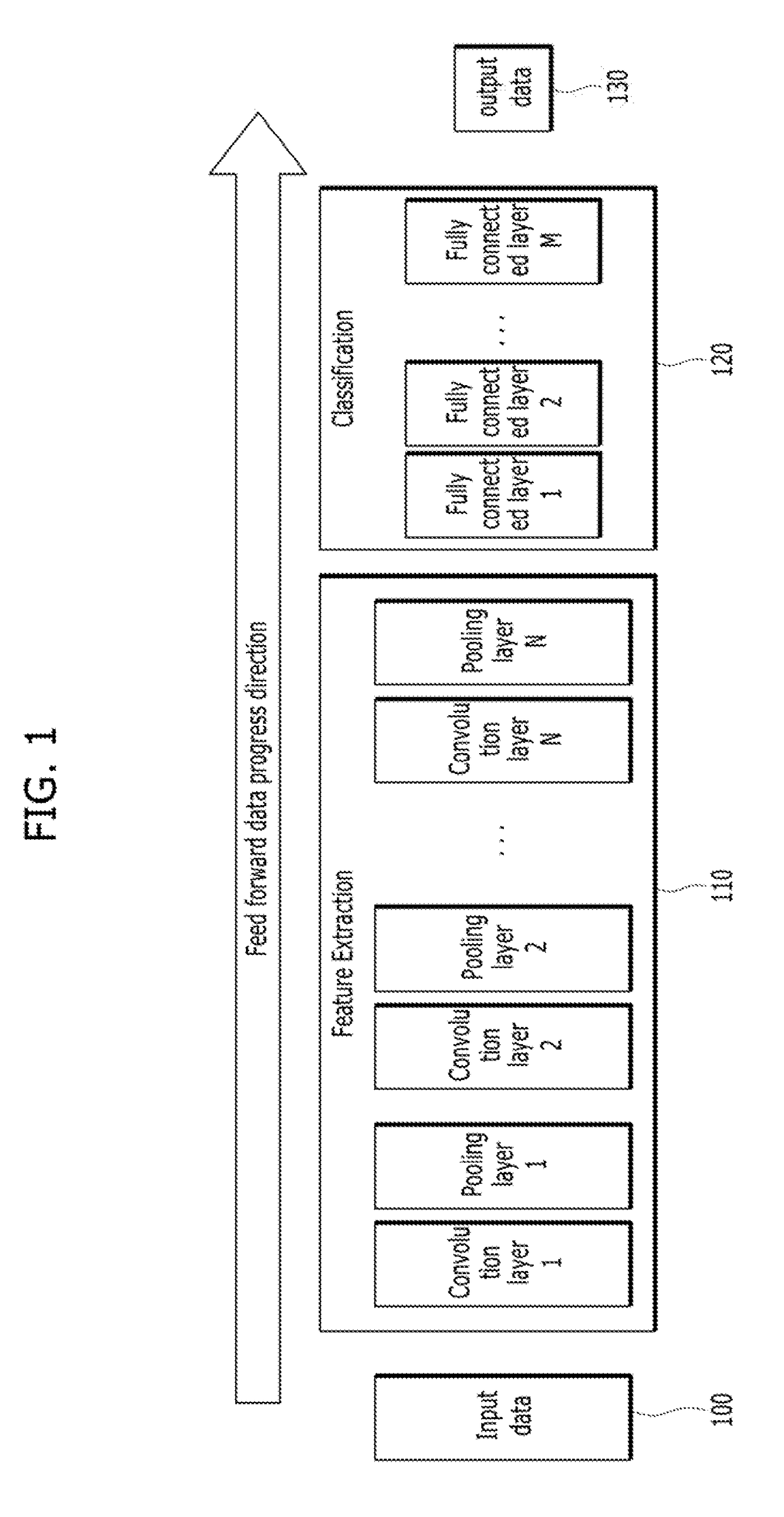

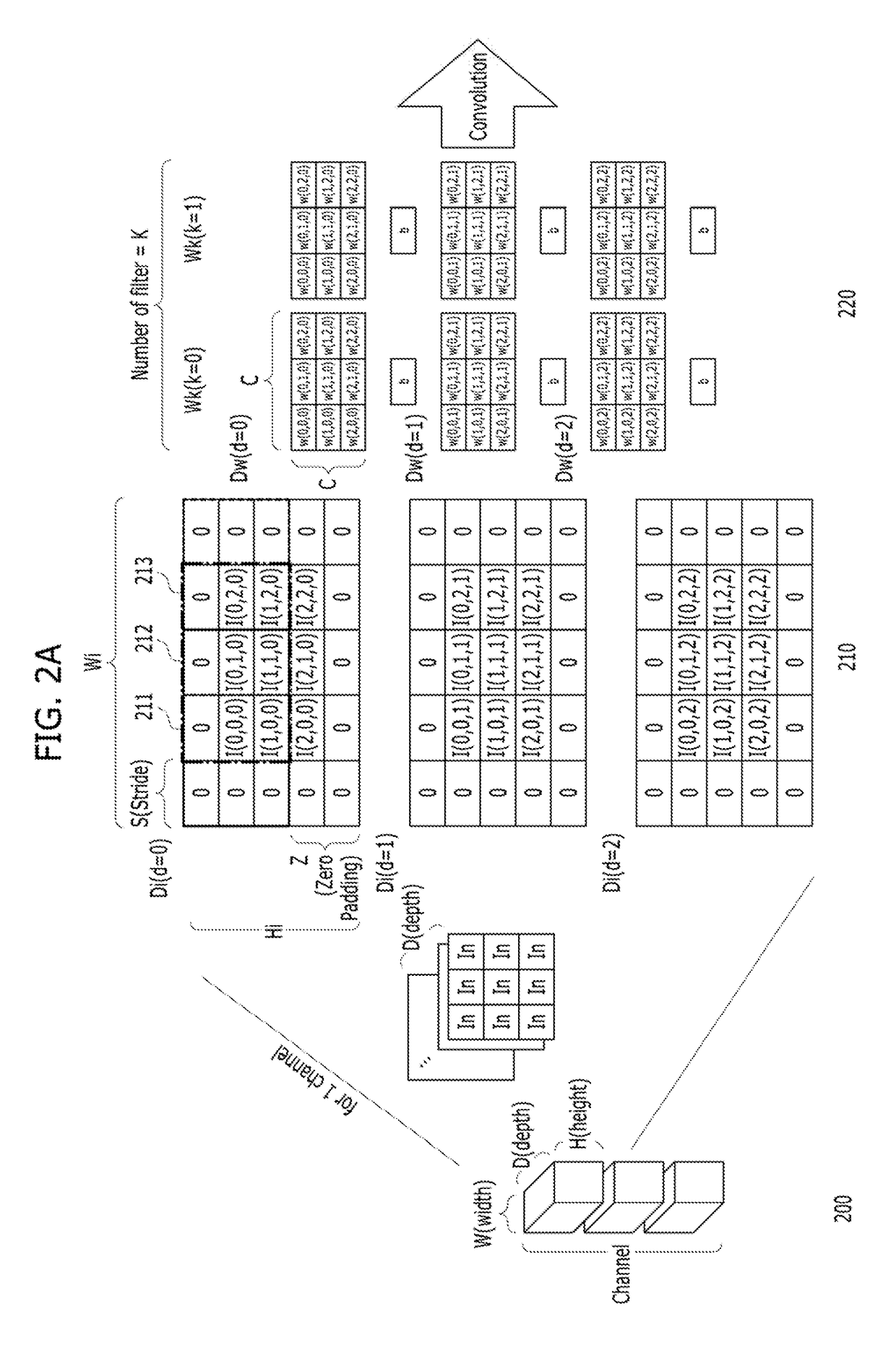

Operation apparatus and method for convolutional neural network

Active | US20180089562A1 | Improve parallelism, Reducing operation latency, Digital data processing details, Neural architectures, Control channel, Feature extraction

Disclosed herein is a convolutional neural network (CNN) operation apparatus, including at least one channel hardware set suitable for performing a feature extraction layer operation and a classification layer operation based on input data and weight data, and a controller coupled to the channel hardware set. The controller may control the channel hardware set to perform the feature extraction layer operation and perform a classification layer operation when the feature extraction layer operation is completed.

Owner: SK HYNIX INC

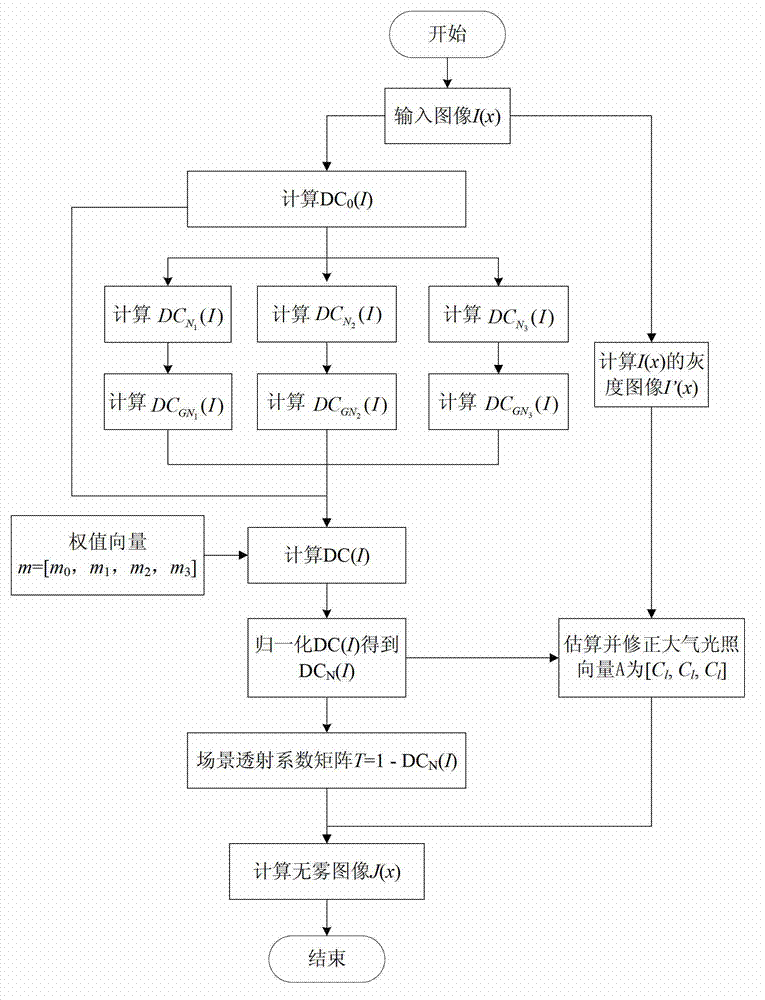

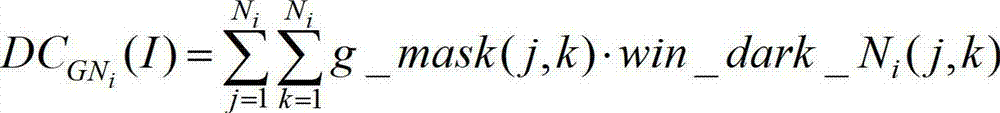

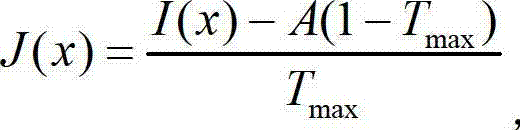

Image defogging method based on dark channel information

Active | CN102968772A | Reduce computational complexity, Avoid handling, Image enhancement, Pattern recognition, Transmission coefficient

The invention discloses an image defogging method based on dark channel information, in the technical field of image processing and computer vision. The method comprises the following steps: computing, with a minimum-value filter, the minimum value at each pixel of each color channel of the input image; computing the dark channel values of the image at several scale parameters and applying Gaussian smoothing to the dark channel values at each scale; assigning different weights to the smoothed dark channel values according to their scale parameters; and combining the dark channel values by weighted optimization to compute the scene transmission coefficient, from which the defogged image is obtained. The method avoids the complex soft-matting optimization step and its computational cost, yields high-quality defogged images, and meets the requirements of real-time processing applications.
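The multi-scale pipeline can be sketched in NumPy. The per-scale steps follow the abstract; the conversion from dark channel to transmission uses the standard dark-channel-prior relation t = 1 - ω·dark(I/A) from He et al., which is an assumption here, as are the scales, weights, ω, the Gaussian σ and the known airlight `A`. Function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(img, size):
    """Minimum over color channels, then over a size x size window (size must be odd)."""
    per_pixel_min = img.min(axis=2)
    padded = np.pad(per_pixel_min, size // 2, mode="edge")
    return sliding_window_view(padded, (size, size)).min(axis=(-2, -1))

def gaussian_blur(a, sigma):
    """Separable Gaussian smoothing with edge padding."""
    radius = max(1, int(3 * sigma))
    xs = np.arange(-radius, radius + 1)
    kernel = np.exp(-xs ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(a, radius, mode="edge")
    rows = sliding_window_view(padded, kernel.size, axis=1) @ kernel    # blur along x
    return sliding_window_view(rows, kernel.size, axis=0) @ kernel      # then along y

def transmission(img, airlight, scales=(3, 7, 15), weights=(0.5, 0.3, 0.2),
                 omega=0.95, sigma=1.0):
    """Weighted, smoothed multi-scale dark channel -> transmission t = 1 - omega * dark."""
    normalized = img / airlight
    dark = sum(wt * gaussian_blur(dark_channel(normalized, s), sigma)
               for s, wt in zip(scales, weights))
    return 1.0 - omega * dark

def defog(img, t, airlight, t_min=0.1):
    """Invert the haze model I = J*t + A*(1 - t) for the scene radiance J."""
    t = np.maximum(t, t_min)[..., None]
    return np.clip((img - airlight) / t + airlight, 0.0, 1.0)

rng = np.random.default_rng(0)
hazy = rng.uniform(0.3, 0.9, size=(20, 24, 3))
A = 1.0                                  # assumed airlight; the abstract does not say how it is estimated
t = transmission(hazy, A)
clear = defog(hazy, t, A)
```

Every step is a local filter, so the cost is linear in the number of pixels, which is consistent with the abstract's claim that skipping soft matting makes real-time use feasible.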

Owner: UNIV OF ELECTRONICS SCI & TECH OF CHINA

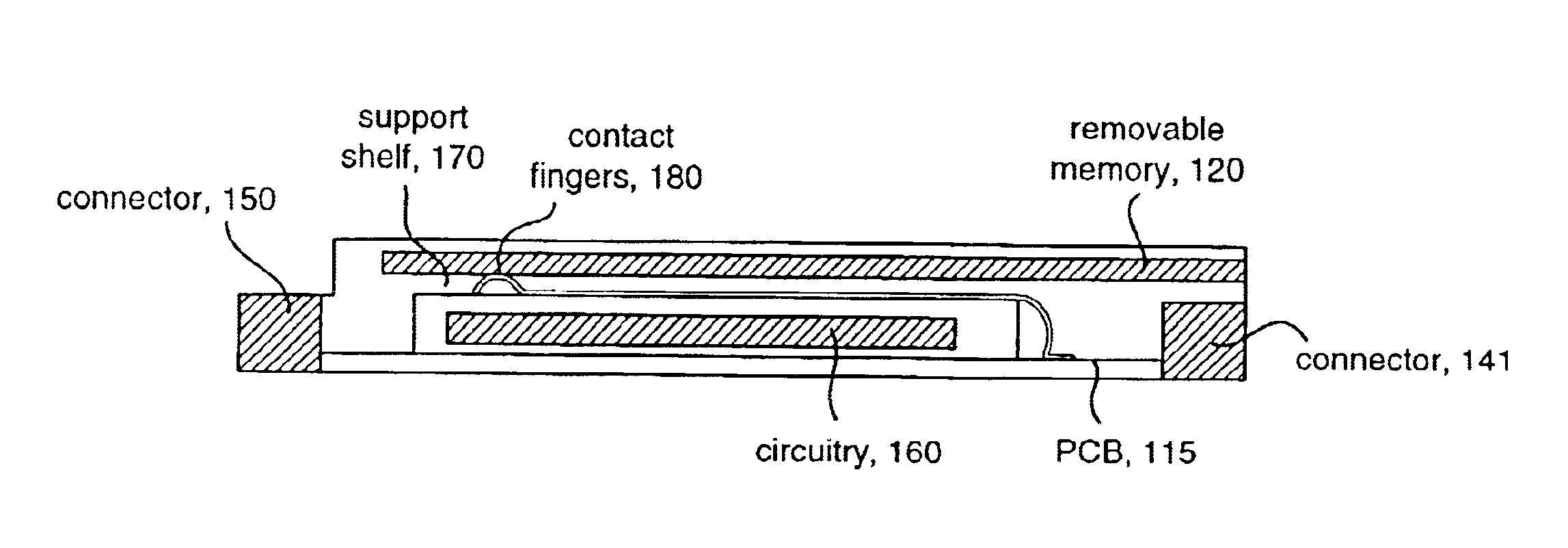

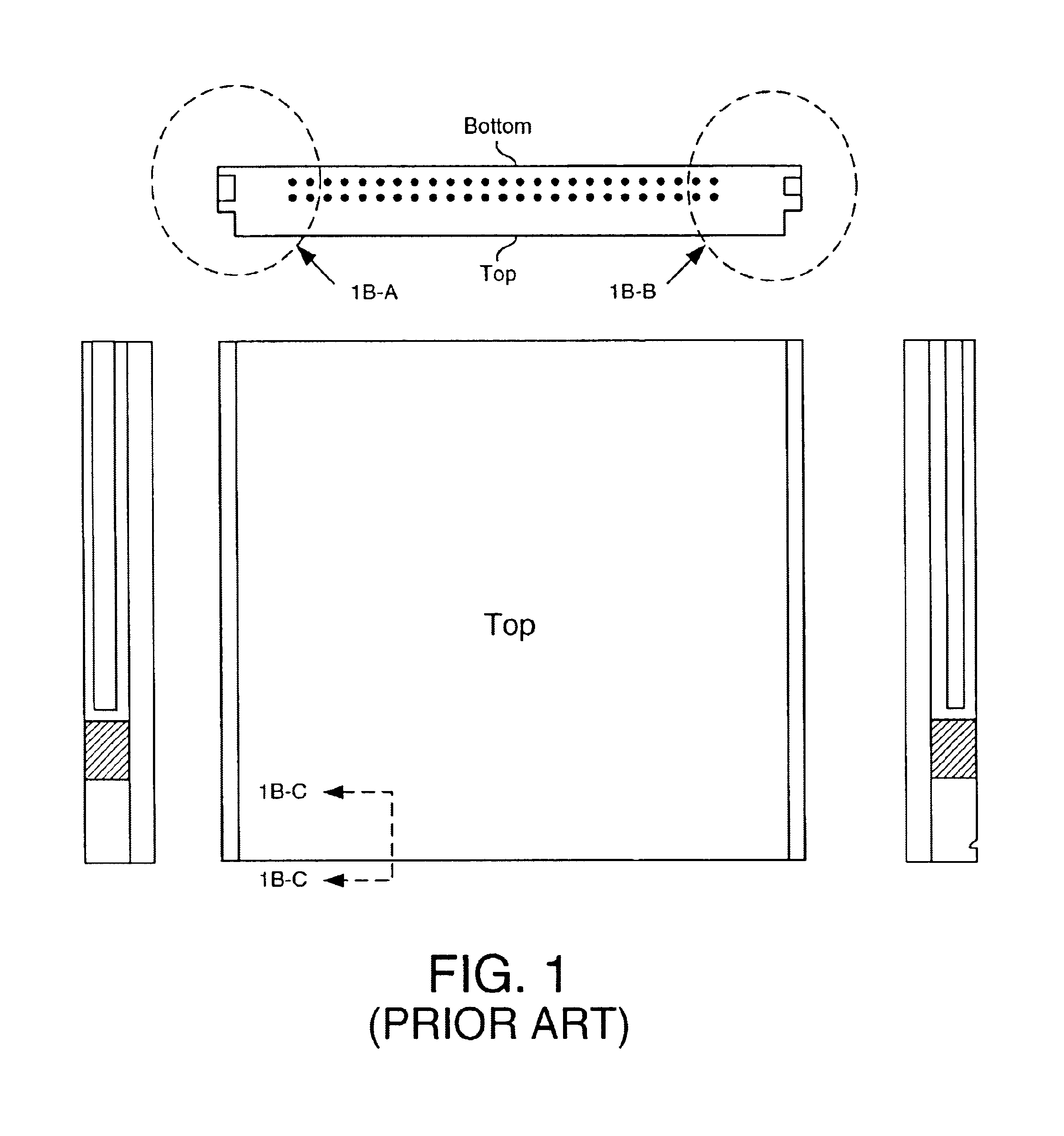

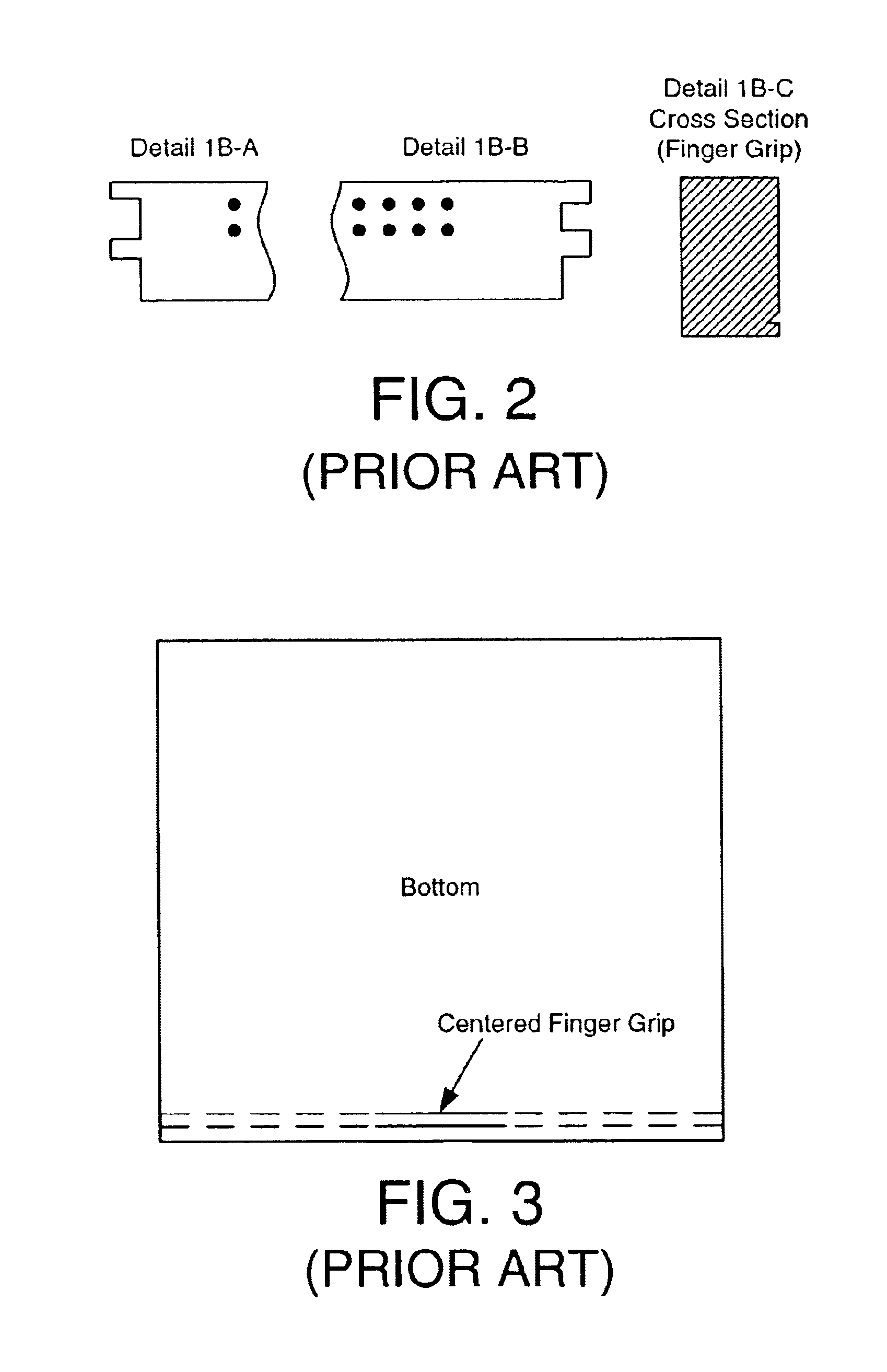

High-density removable expansion module having I/O and second-level-removable expansion memory

Inactive | US6863557B2 | Easy user field selection and upgrade, Improve overall utilization, Navigational calculation instruments, Incorrect coupling prevention, Expansion card, Computer architecture

The utility of portable computer hosts, such as PDAs (or hand-helds), is enhanced by methods and apparatus for removable expansion cards having application specific circuitry, a second-level-removable memory, and optional I/O, in a number of illustrative embodiments. In addition to providing greater expansion utility in a compact and low profile industrial design, the present invention permits memory configuration versatility for application specific expansion cards, permitting easy user field selection and upgrades of the memory used in conjunction with the expansion card. Finally, from a system perspective, the present invention enables increased parallelism and functionality previously not available to portable computer devices.

Owner: SOCKET MOBILE

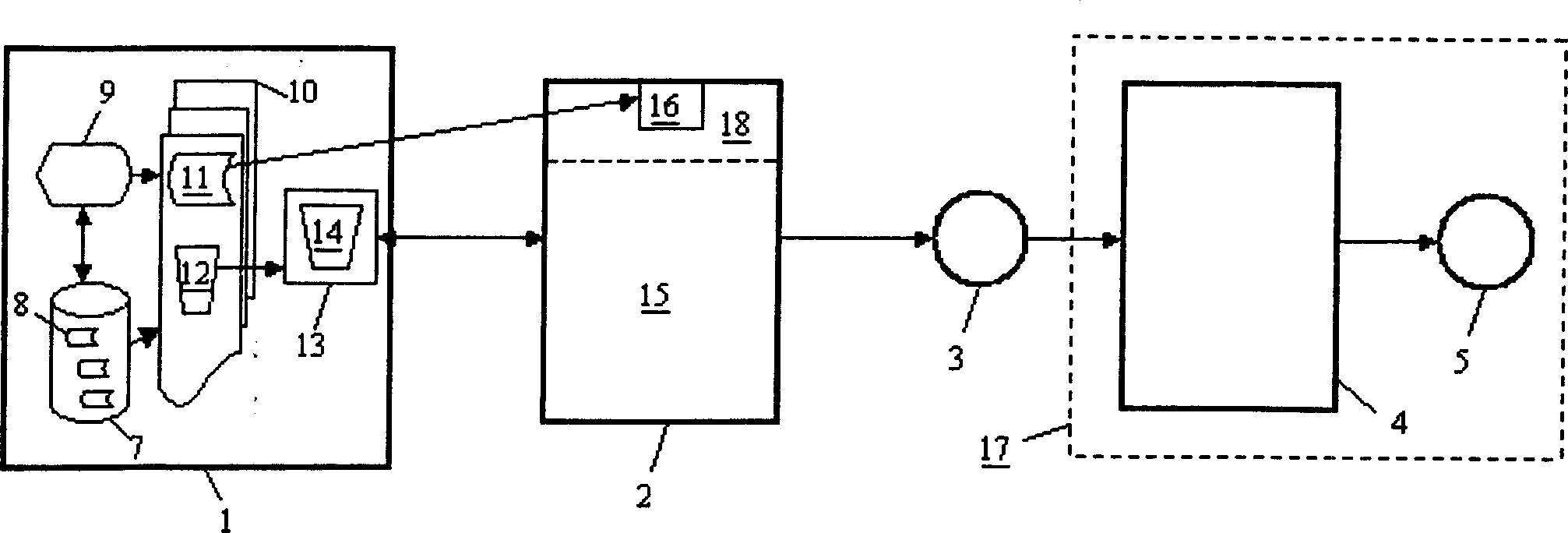

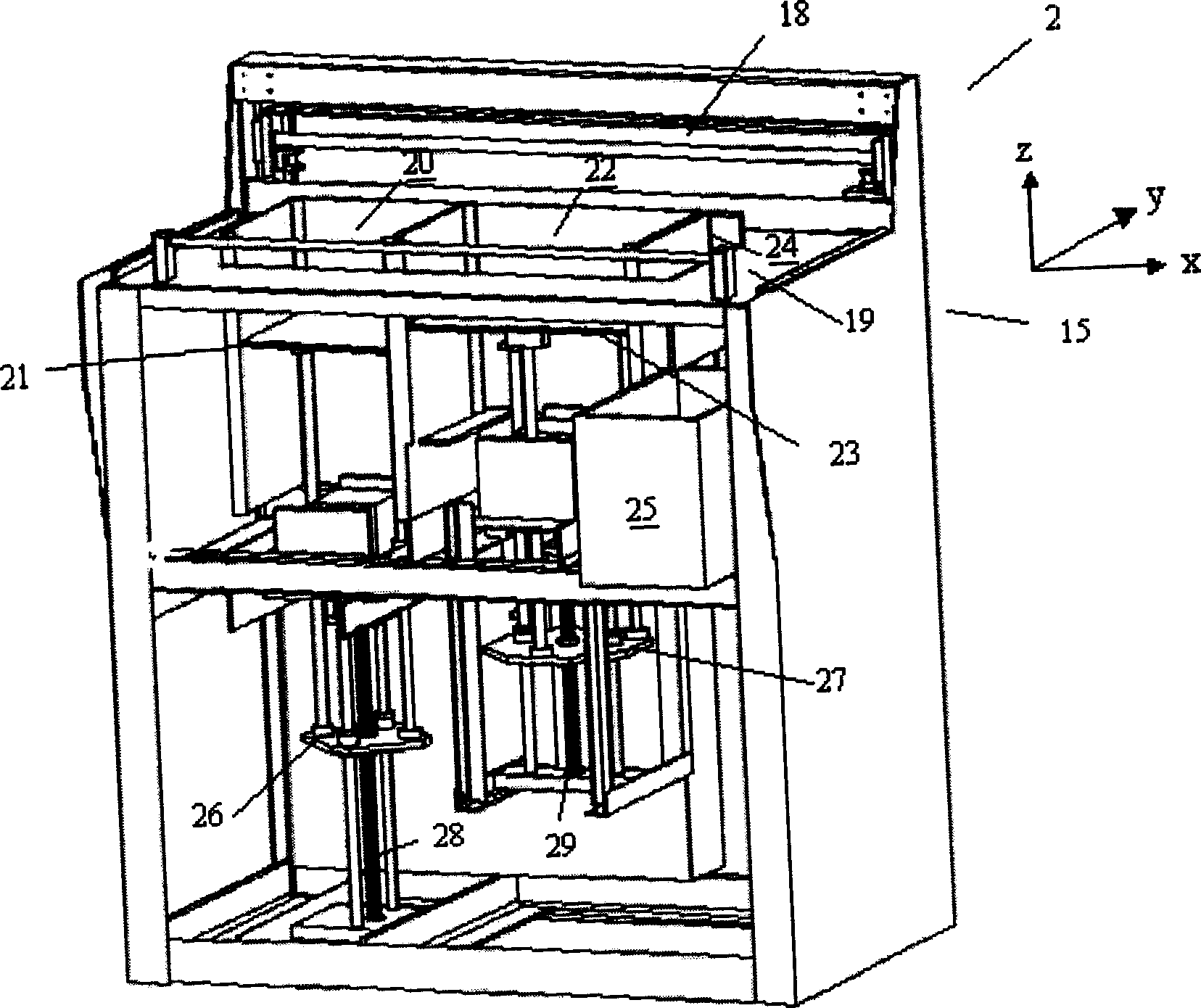

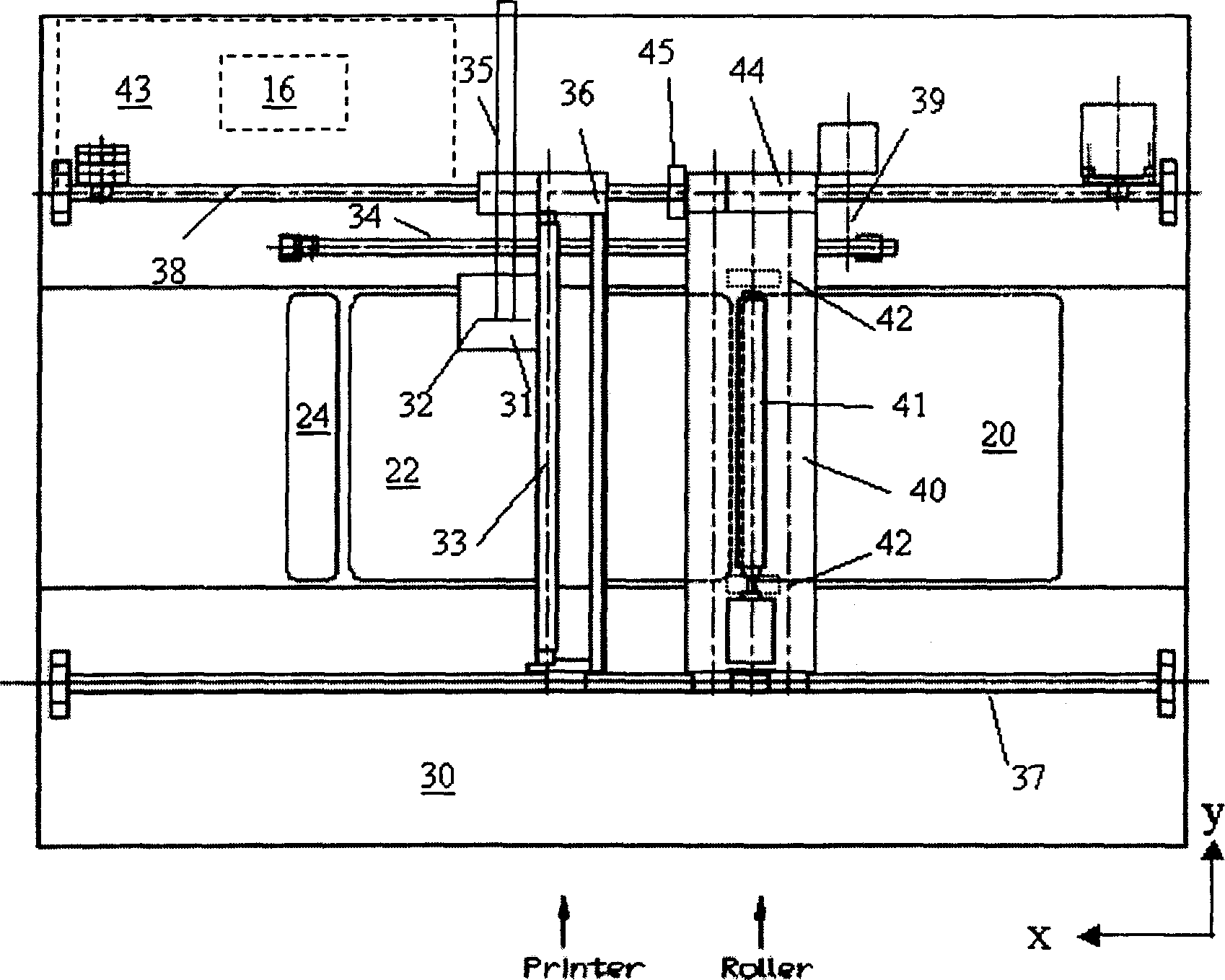

Fast shaping device for making body from image of computer and with printing machine

Inactive | CN1911635A | Improve stability, Improve printing accuracy, Coatings, Printing, Engineering, Rapid prototyping

The present invention is a rapid prototyping apparatus in which a virtual image stored in a computer's memory is sliced into layers (tomographic processing), and the resulting contours are jet-printed layer by layer as fluid onto the powder on the build platform, where the fluid binds the powder to form a three-dimensional physical object. The apparatus comprises the jet-printing mechanism and firmware interfaces of a printer or plotter, a work platform with slicing software and jet-printing control firmware, and a computer whose memory stores the virtual image.

Owner: 赖维祥 +1

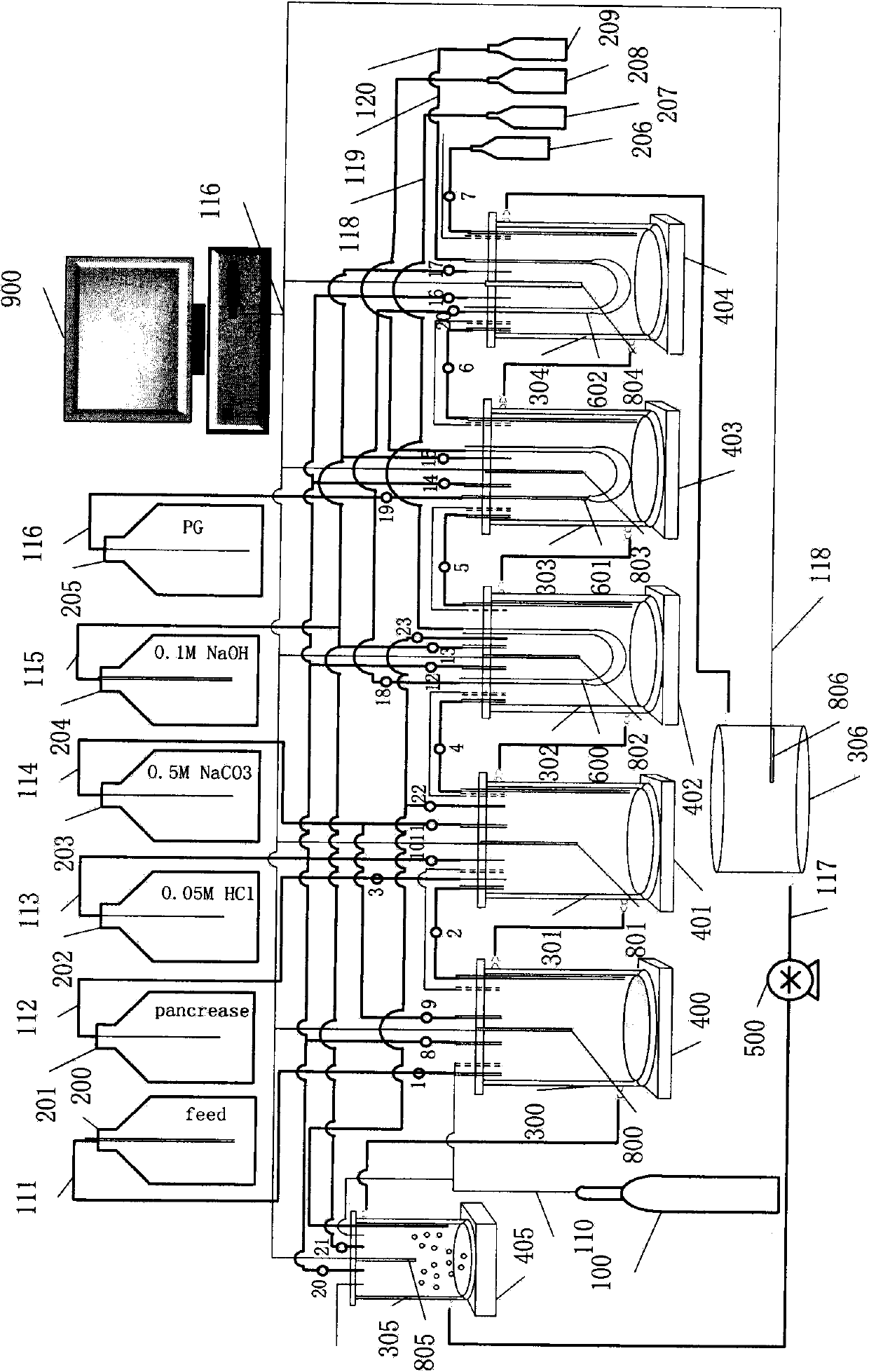

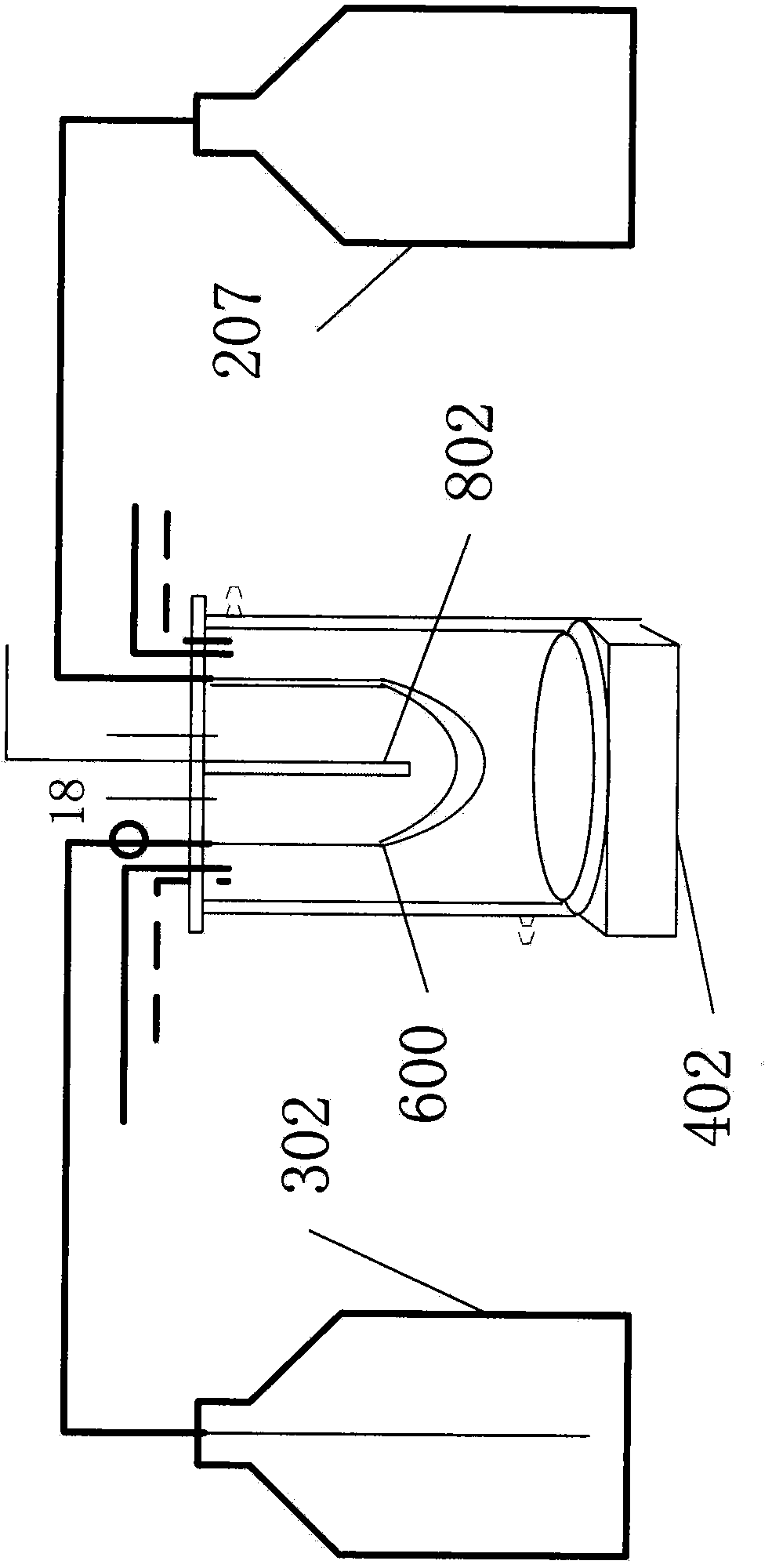

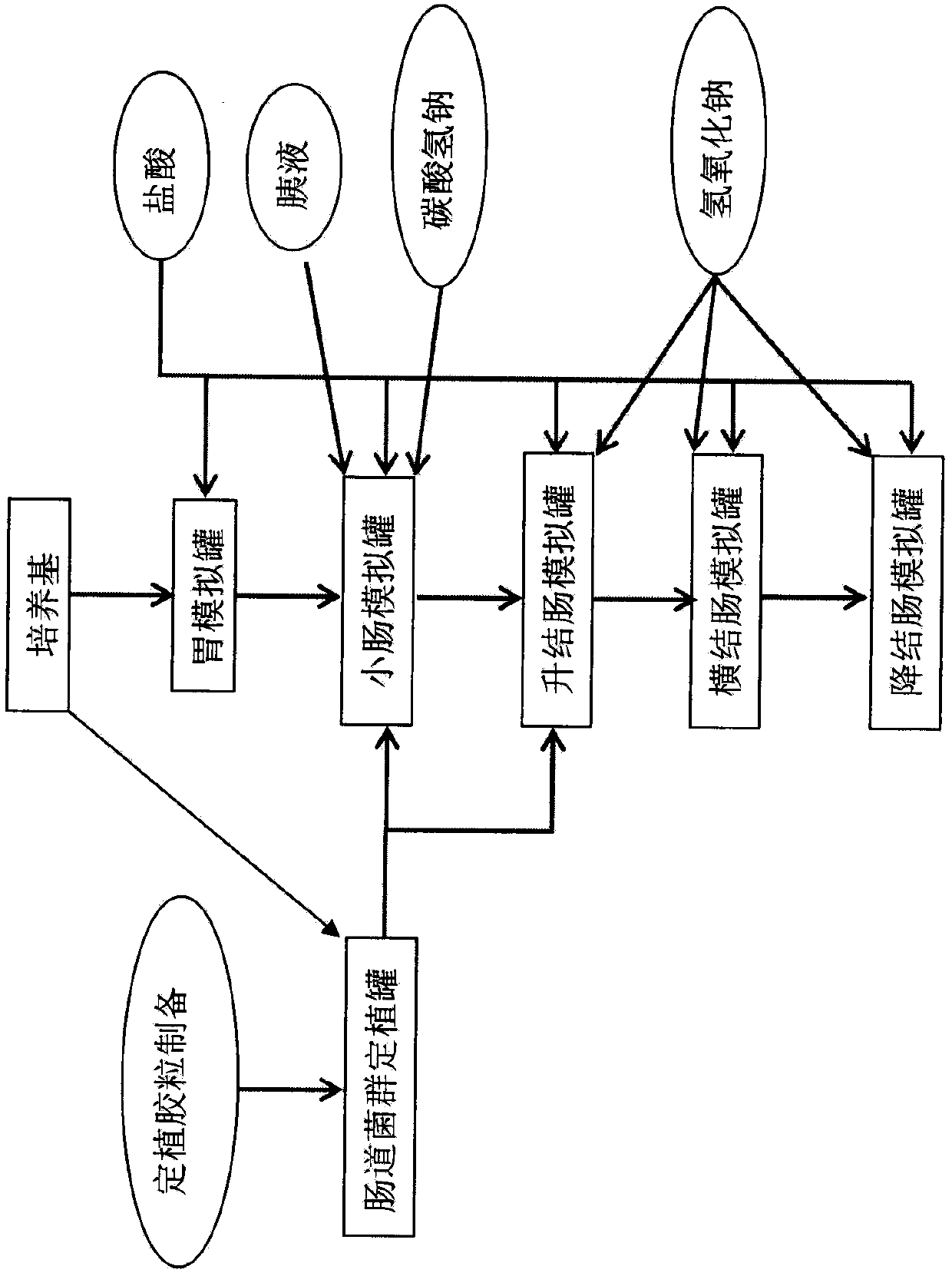

Human body gastrointestinal tract bionic system and simulation experiment method based on system

Active | CN103740589A | Eliminate distractions, Simulate the real, Bioreactor/fermenter combinations, Biological substance pretreatments, Human body, Human gastrointestinal tract

The invention relates to a human gastrointestinal tract bionic system and a simulation experiment method based on the system. The system comprises a system controller, an intestinal flora planting system, a simulated digestion system, a simulated absorption system, a stirring system, a rate control system, a gas path system, a temperature adjustment system, a pH adjustment system, a digestive fluid reagent bottle, an absorption fluid reagent bottle and a collector. The method comprises steps such as setting the control system parameters, preparing the planting colloidal particles, planting the intestinal flora and simulating digestion. The system simulates human gastrointestinal digestion, absorption and intestinal microecology in vitro; because it uses intelligent control, the simulation is realistic and reproducible, and the system is applicable to research in nutrition science, toxicology, physiology, microbiology and related fields.

Owner: 马爱国 +1

Channel encoding/decoding apparatus and method using a parallel concatenated low density parity check code

Active | US7519895B2 | Improve parallelism, Other decoding techniques, Error detection/correction, Low-density parity-check code, Low density

A channel encoding apparatus using a parallel concatenated low density parity check (LDPC) code. A first LDPC encoder generates a first component LDPC code according to information bits received. An interleaver interleaves the information bits according to a predetermined interleaving rule. A second LDPC encoder generates a second component LDPC code according to the interleaved information bits. A controller performs a control operation such that the information bits, the first component LDPC code which is first parity bits corresponding to the information bits, and the second component LDPC code which is second parity bits corresponding to the information bits are combined according to a predetermined code rate.

Owner: SAMSUNG ELECTRONICS CO LTD

Authentication system executing an elliptic curve digital signature cryptographic process

Active | US20060285682A1 | Improve parallelism, Improve performance, Digital data processing details, Digital computer details, Authentication system, Power analysis

An authentication system and a method for signing data are disclosed. The system uses a partitioned hardware/software approach. In its implementation, the system compares favourably in performance and other parameters with a fully hardware or fully software implementation; in particular, it has a reduced gate count. The disclosed system also makes it difficult for attackers to break it using simple power analysis.

Owner: TATA CONSULTANCY SERVICES LTD

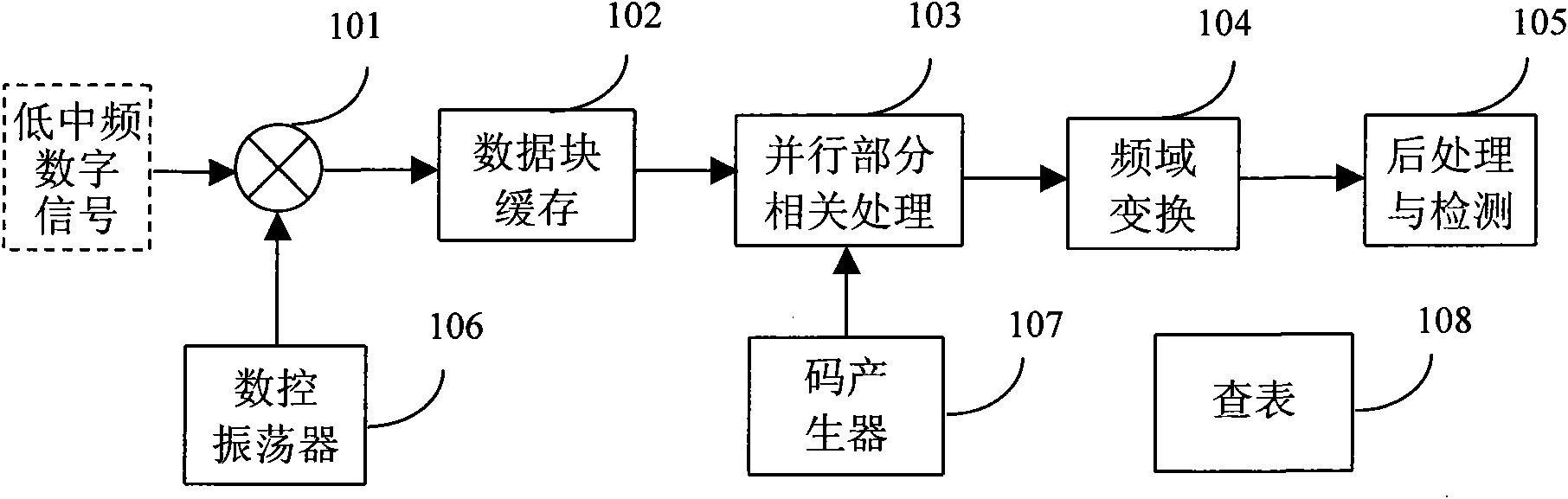

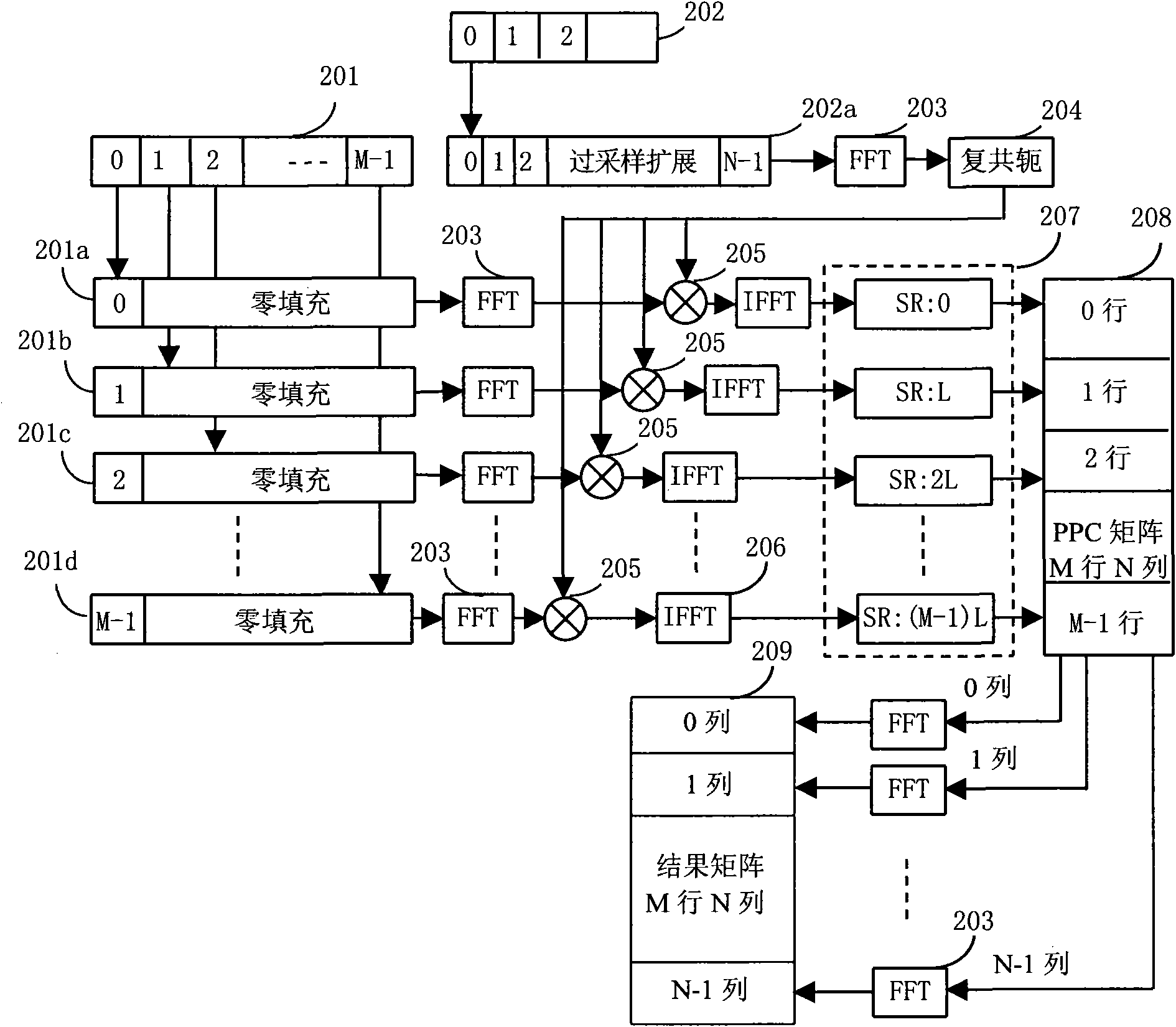

GPS signal large-scale parallel quick capturing method and module thereof

Active | CN101625404A | Low cost, Capture flexible, Beacon systems, Transmission, Data segment, Intermediate frequency

The invention discloses a large-scale parallel fast acquisition method for GPS signals, comprising the following steps: configuring, in the system CPU, large-scale parallel fast acquisition module firmware with submodules including a multiplier, a data block cache, parallel partial correlation, frequency-domain transformation, post-processing, a numerically controlled oscillator and a code generator; through these calls, converting the low-intermediate-frequency digital signal into a baseband signal and assembling it into a data block; zero-padding each equal-length data segment in the data block; then, using FFT-based computation, performing parallel partial correlation (PPC) between each extended data segment and the local spreading code, and applying an FFT to each row of the resulting PPC matrix to obtain a result matrix; and performing coherent or non-coherent integration over the result matrices of several data blocks to increase processing gain and acquisition sensitivity, coarsely determine the code phase and Doppler frequency of the GPS signal, and achieve two-dimensional parallel fast acquisition. The method has high processing efficiency and fast acquisition, and can be applied to a wide range of GPS positioning and navigation aids.
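For orientation, the sketch below shows the textbook FFT-based parallel code-phase search with non-coherent integration, which searches all code phases at once for each Doppler bin. It is not the patent's PPC scheme (which splits blocks into zero-padded sub-segments and applies a second FFT across the PPC matrix). The random ±1 code is an assumed stand-in for a real C/A Gold code, the signal and noise are synthetic, and `acquire` is a hypothetical name.

```python
import numpy as np

def acquire(signal, code, fs, doppler_bins, blocks=1):
    """FFT-based parallel code-phase search over a grid of Doppler bins.

    For each Doppler bin, one FFT correlation tests every code phase at once;
    results from `blocks` consecutive code periods are summed non-coherently.
    """
    n = len(code)
    t = np.arange(n) / fs
    code_fft_conj = np.conj(np.fft.fft(code))
    grid = np.zeros((len(doppler_bins), n))
    for b in range(blocks):
        segment = signal[b * n:(b + 1) * n]
        for row, f in enumerate(doppler_bins):
            baseband = segment * np.exp(-2j * np.pi * f * t)            # remove Doppler
            corr = np.fft.ifft(np.fft.fft(baseband) * code_fft_conj)    # all circular code phases
            grid[row] += np.abs(corr) ** 2                              # non-coherent accumulation
    d, phase = np.unravel_index(np.argmax(grid), grid.shape)
    return doppler_bins[d], int(phase), grid

rng = np.random.default_rng(7)
n, fs = 1023, 1.023e6                          # one sample per chip, 1 ms per code period
code = rng.choice([-1.0, 1.0], size=n)          # random +/-1 code, not a real C/A Gold code
true_delay, true_doppler, blocks = 300, 2000.0, 2
t = np.arange(blocks * n) / fs
rx = np.tile(np.roll(code, true_delay), blocks) * np.exp(2j * np.pi * true_doppler * t)
rx += rng.standard_normal(rx.size) + 1j * rng.standard_normal(rx.size)   # additive complex noise
doppler, phase, _ = acquire(rx, code, fs, np.arange(-5000.0, 5001.0, 500.0), blocks=blocks)
```

The peak of the two-dimensional grid gives the coarse Doppler (here the 2000 Hz bin) and code phase (300 chips). Summing magnitudes across blocks raises sensitivity without requiring carrier-phase continuity between blocks.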

Owner:Hangzhou Zhongke Microelectronics Co., Ltd. (杭州中科微电子有限公司)
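
The two-dimensional search described in this abstract can be illustrated with a small simulation. This is a simplified model under stated assumptions, not the patented firmware: a random ±1 sequence stands in for a real C/A Gold code, Doppler is expressed as a whole number of carrier cycles per code period (so it aliases modulo the segment count), and the NCO, carrier wipe-off and multi-block integration are omitted. `fft`, `ifft`, `make_signal` and `acquire` are hypothetical names. Each segment is zero-padded to the full block length and correlated against the local code for every code phase at once via FFT (one PPC-matrix row per segment); an FFT along the segment axis then resolves Doppler.

```python
import cmath
import math
import random


def fft(x, inverse=False):
    """Recursive radix-2 FFT (unnormalised); len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    sign = 1 if inverse else -1
    even, odd = fft(x[0::2], inverse), fft(x[1::2], inverse)
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * math.pi * k / n) * odd[k]
        out[k], out[k + n // 2] = even[k] + t, even[k] - t
    return out


def ifft(x):
    return [v / len(x) for v in fft(x, inverse=True)]


def make_signal(code, shift, doppler_bin, noise_sigma, rng):
    """Hypothetical baseband model: shifted code x Doppler carrier + complex noise."""
    n = len(code)
    return [
        code[(i - shift) % n] * cmath.exp(2j * math.pi * doppler_bin * i / n)
        + complex(rng.gauss(0, noise_sigma), rng.gauss(0, noise_sigma))
        for i in range(n)
    ]


def acquire(samples, code, num_segments):
    """Return (doppler_bin, code_phase, magnitude) of the strongest search cell."""
    n = len(code)
    seg_len = n // num_segments
    code_conj = [v.conjugate() for v in fft([complex(c) for c in code])]
    ppc = []  # row p: partial correlation of segment p at every code phase
    for p in range(num_segments):
        # Zero-pad segment p to the full block length, then circularly correlate.
        padded = [s if p * seg_len <= i < (p + 1) * seg_len else 0j
                  for i, s in enumerate(samples)]
        ppc.append(ifft([a * b for a, b in zip(fft(padded), code_conj)]))
    best = (0, 0, -1.0)
    for k in range(n):
        # FFT across segments for this code phase gives the Doppler axis.
        for d, v in enumerate(fft([ppc[p][k] for p in range(num_segments)])):
            if abs(v) > best[2]:
                best = (d, k, abs(v))
    return best


if __name__ == "__main__":
    rng = random.Random(7)
    code = [rng.choice((-1, 1)) for _ in range(256)]
    rx = make_signal(code, shift=100, doppler_bin=3, noise_sigma=0.5, rng=rng)
    print(acquire(rx, code, num_segments=8)[:2])
```

With these parameters the coherent peak is several times larger than the noise and code-sidelobe floor, so the search should recover the injected code shift (100) and Doppler bin (3).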

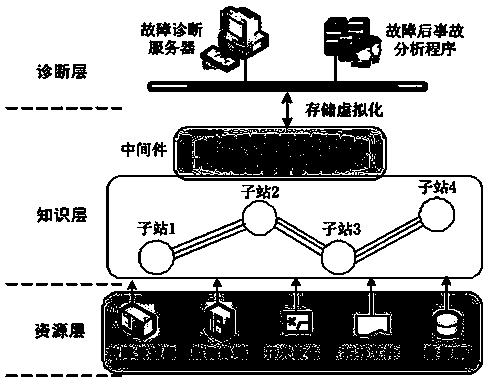

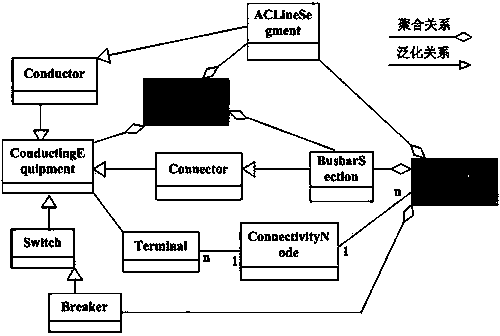

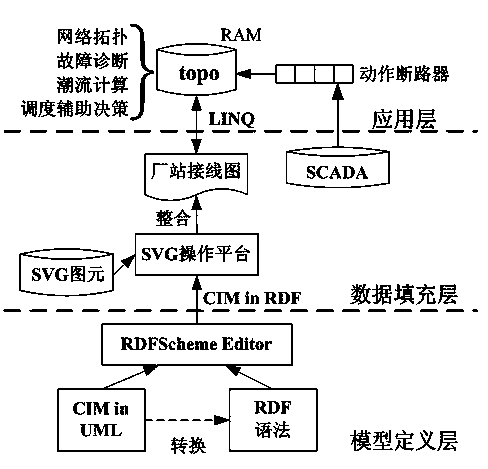

A power distribution network fault diagnosis system based on topology knowledge and a method

Active · CN103675600A · Save human and financial time · Increase topology speed · Fault location · Information technology support system · Wiring diagram · Electric network

The invention relates to a power distribution network fault diagnosis system and method based on topology knowledge. The system comprises a resource layer, a knowledge layer and a diagnosis layer. The resource layer comprises a fault oscilloscope, a monitoring terminal, a distribution equipment ledger, a topology file and a database: the monitoring terminal collects switch status information after a fault; the fault oscilloscope captures the waveforms after the fault; the topology file provides the electrical wiring diagram of the entire network; and the distribution equipment ledger provides the operating conditions of the distribution equipment at the time of the fault. The knowledge layer comprises a knowledge base that supplies information to the diagnosis layer. The diagnosis layer comprises a fault diagnosis server running a fault diagnosis program module. The method uses this system to build topology knowledge and perform fault diagnosis. The system and method can analyze a fault after it occurs and give operating personnel a reference, saving manpower, money and time. The system is also efficient and fast in operation and performs well in use.

Owner:STATE GRID CORP OF CHINA +3
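
The abstract does not detail the diagnosis program, but the idea of combining post-fault switch status with the network topology can be illustrated with a common rule for radial feeders: fault current flows through every switch between the source and the fault, so the faulted section lies just downstream of a switch that saw fault current while none of its direct downstream switches did. This is a minimal sketch of that general rule, not the patented method; the topology, the switch names and `locate_faulted_section` are all hypothetical.

```python
from typing import Dict, List, Set


def locate_faulted_section(
    downstream: Dict[str, List[str]], flagged: Set[str], root: str
) -> List[str]:
    """Return switches whose downstream section most likely contains the fault.

    downstream: radial topology, mapping each switch to its direct downstream switches.
    flagged:    switches whose monitoring terminals reported fault current.
    root:       the source-side switch (e.g. the feeder breaker).
    """
    suspects = []
    stack = [root]
    while stack:  # depth-first walk of the radial feeder
        node = stack.pop()
        children = downstream.get(node, [])
        # Fault current passed this switch but no direct downstream switch.
        if node in flagged and not any(c in flagged for c in children):
            suspects.append(node)
        stack.extend(children)
    return sorted(suspects)


# Hypothetical feeder: S -> A; A -> B and C; C -> D.
TOPOLOGY = {"S": ["A"], "A": ["B", "C"], "C": ["D"]}
```

For instance, if terminals at S, A and C report fault current, `locate_faulted_section(TOPOLOGY, {"S", "A", "C"}, "S")` points to the section below switch C.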

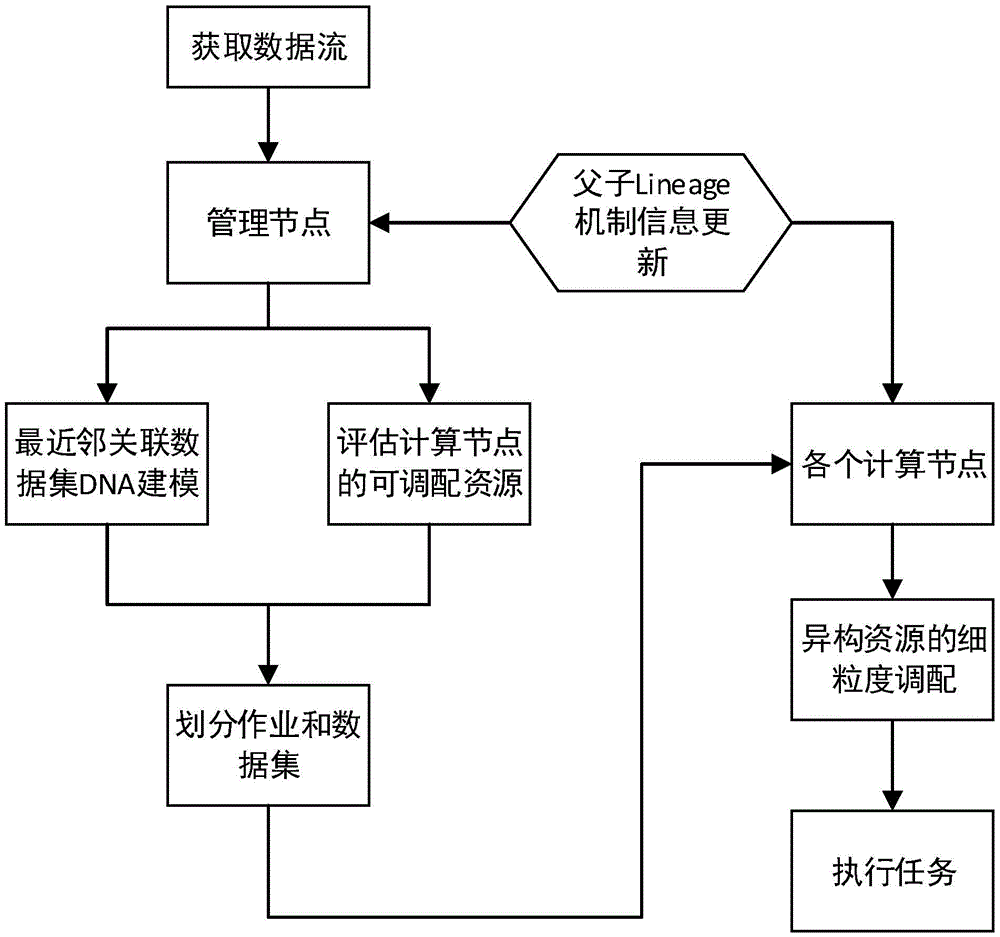

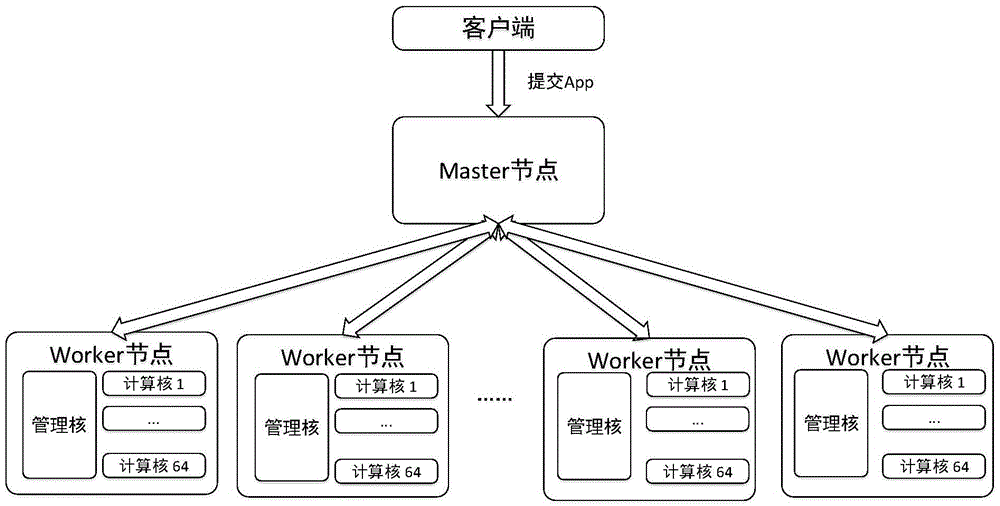

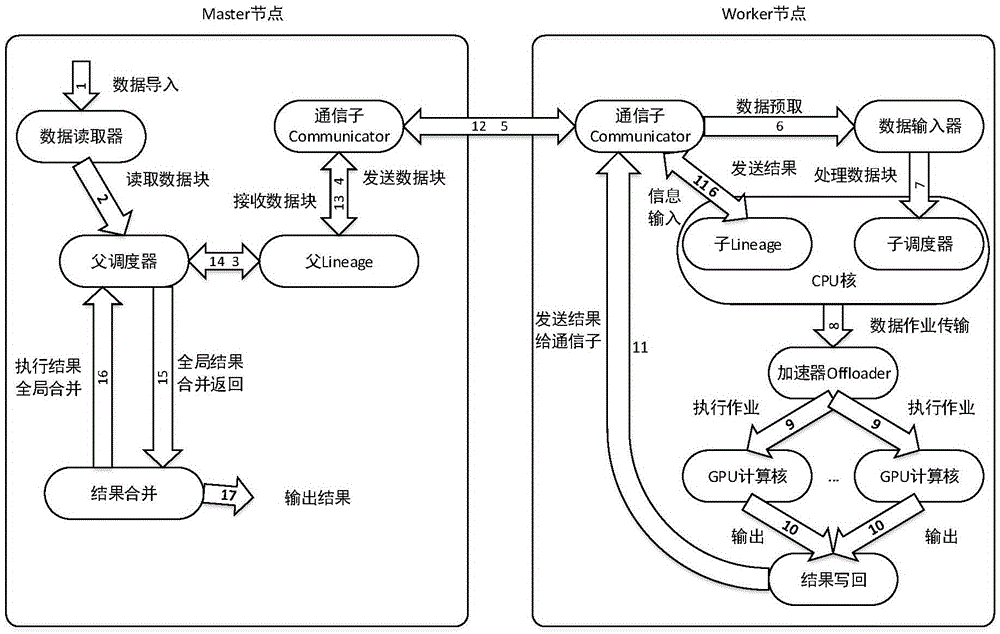

Data parallel processing method and system

Active · CN105677486A · Improve execution efficiency · Improve parallelism · Resource allocation · Data stream · Granularity

The invention provides a data parallel processing method comprising the following steps: (1) a master management node receives data and obtains the association relationships among the data; (2) the master management node calculates the allocatable GPUs and GPU workloads of the worker compute nodes; (3) the master management node partitions the data and distributes the partitions to the worker compute nodes; (4) the worker compute nodes process the received data in parallel and return the results to the master management node; (5) the master management node merges the results and outputs them. The method has the following advantages: it adopts a master-slave architecture for high-performance, large-scale data parallel processing; the specific operations into which an application program is converted are partitioned into operation stages according to DNA feature modeling, and operations are deployed at node granularity according to the partition result; and a thread-level parallel optimization mechanism that makes full use of multiple computing cores improves the execution efficiency of parallel data-flow tasks within a single node.

Owner:SHANGHAI JIAO TONG UNIV +1
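
Steps 2 to 5 of this method follow the familiar master/worker pattern, sketched below under simplifying assumptions: threads stand in for worker compute nodes, a per-worker integer `capacities` list stands in for the allocatable-GPU figures computed in step 2, and the DNA-feature stage partitioning is not modeled. `partition_by_capacity` and `run_master` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def partition_by_capacity(data: Sequence[T], capacities: Sequence[int]) -> List[List[T]]:
    """Step 3: split data into contiguous chunks proportional to worker capacity."""
    total = sum(capacities)
    if total <= 0:
        raise ValueError("total capacity must be positive")
    chunks: List[List[T]] = []
    start = 0
    for i, cap in enumerate(capacities):
        # The last worker absorbs any rounding remainder.
        end = len(data) if i == len(capacities) - 1 else start + len(data) * cap // total
        chunks.append(list(data[start:end]))
        start = end
    return chunks


def run_master(
    data: Sequence[T],
    capacities: Sequence[int],
    work: Callable[[List[T]], R],
    merge: Callable[[List[R]], R],
) -> R:
    """Steps 3-5: distribute partitions, process them in parallel, merge results."""
    chunks = partition_by_capacity(data, capacities)
    with ThreadPoolExecutor(max_workers=len(chunks)) as pool:
        partials = list(pool.map(work, chunks))  # results return in chunk order
    return merge(partials)
```

For example, `run_master(list(range(10)), [1, 2, 2], lambda c: sum(x * x for x in c), sum)` splits the data as `[0, 1]`, `[2, 3, 4, 5]`, `[6, 7, 8, 9]` and returns 285. For CPU-bound Python work a `ProcessPoolExecutor` would avoid the GIL, at the cost of requiring picklable `work` functions.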

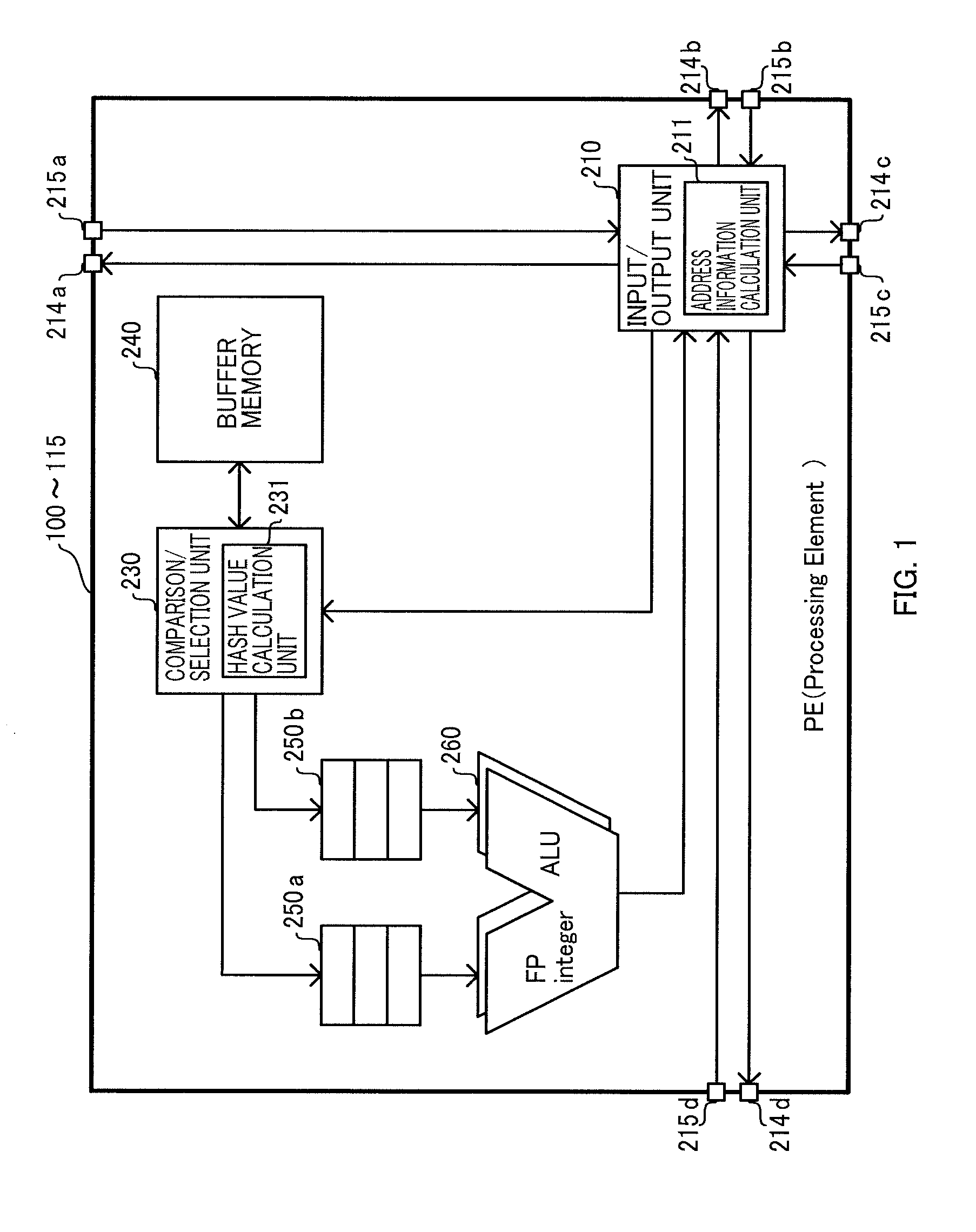

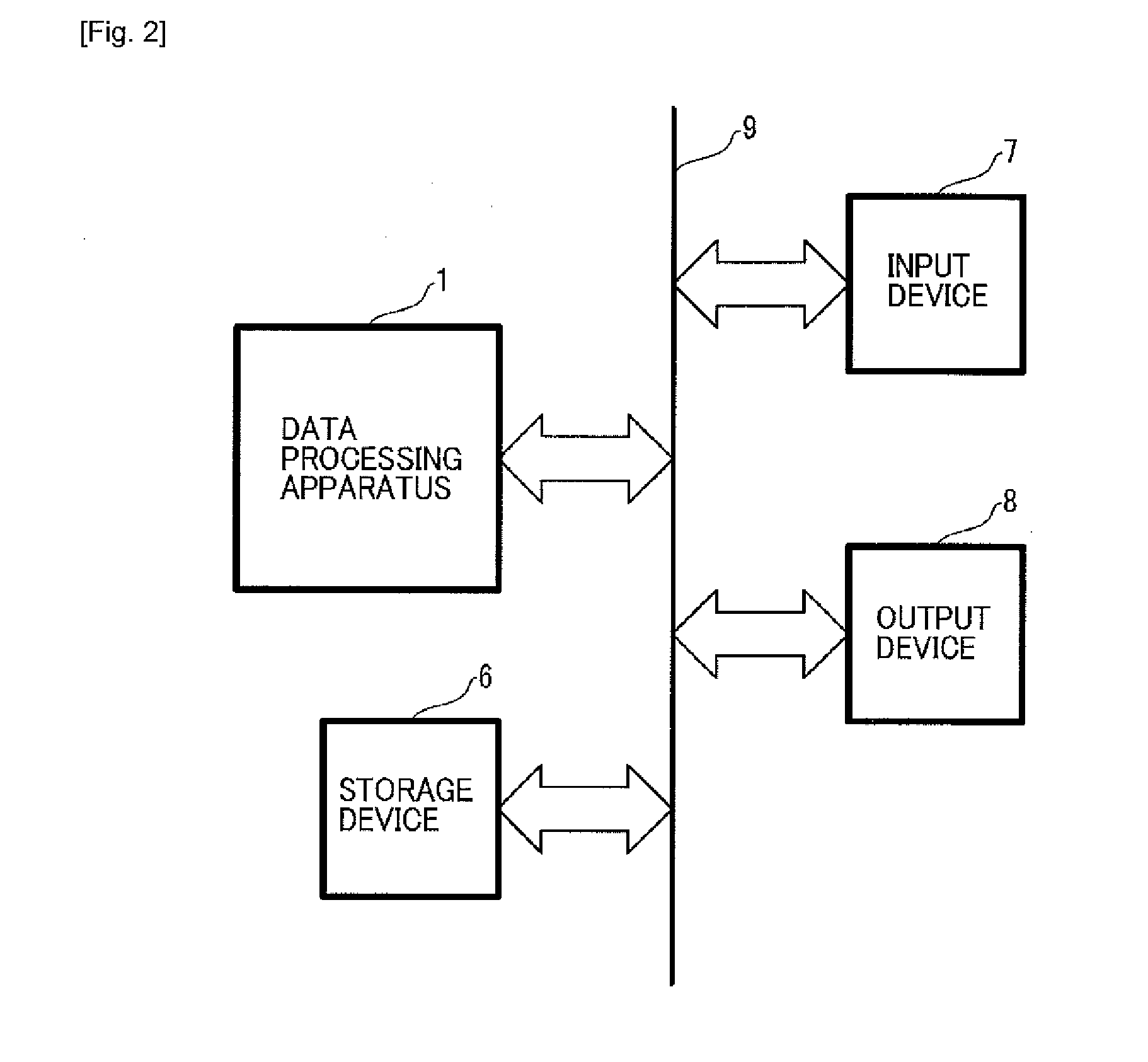

Data processing apparatus, data processing system, packet, recording medium, storage device, and data processing method

Active · US20120311306A1 · Speed up the process · Improve parallelism · Single instruction multiple data multiprocessors · Specific program execution arrangements · Processing Instruction · Data processing system

Processing parallelism can be improved while existing software resources are used substantially as they are. A data processing apparatus includes a plurality of processing units configured to process packets, each packet including data and extended identification information added to the data. The extended identification information includes identification information identifying the data and instruction information indicating one or more processing instructions for the data. Each processing unit includes: an input/output unit configured to obtain, from among the packets, only those packets whose address information, determined in accordance with the extended identification information, designates that processing unit; and an operation unit configured to execute the processing instruction in the packet obtained by the input/output unit.

Owner:MUSH A
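
The packet-addressing scheme in this abstract can be sketched as follows. Every name here is hypothetical, and the address rule (`data_id` modulo the number of units) is an assumption chosen for illustration, since the abstract only says the address is determined from the extended identification information. Each unit scans the same packet stream, keeps only the packets addressed to it, and applies the listed instructions using ordinary functions that stand in for reused existing software.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Stand-ins for existing software resources, reused unchanged.
OPERATIONS: Dict[str, Callable[[int], int]] = {
    "inc": lambda x: x + 1,
    "double": lambda x: x * 2,
}


@dataclass(frozen=True)
class Packet:
    data_id: int                   # identification information for the data
    instructions: Tuple[str, ...]  # instruction information, applied in order
    data: int                      # payload


def address_of(packet: Packet, num_units: int) -> int:
    """Hypothetical rule deriving a unit address from the extended identification."""
    return packet.data_id % num_units


def run_unit(unit_id: int, num_units: int, packets: List[Packet]) -> Dict[int, Tuple[int, int]]:
    results: Dict[int, Tuple[int, int]] = {}
    for packet in packets:
        # Input/output unit: skip packets addressed to other units.
        if address_of(packet, num_units) != unit_id:
            continue
        # Operation unit: execute the packet's processing instructions.
        value = packet.data
        for name in packet.instructions:
            value = OPERATIONS[name](value)
        results[packet.data_id] = (unit_id, value)
    return results


def dispatch(packets: List[Packet], num_units: int) -> Dict[int, Tuple[int, int]]:
    """Run all units concurrently on the same packet stream; map data_id -> (unit, result)."""
    merged: Dict[int, Tuple[int, int]] = {}
    with ThreadPoolExecutor(max_workers=num_units) as pool:
        futures = [pool.submit(run_unit, u, num_units, packets) for u in range(num_units)]
        for future in futures:
            merged.update(future.result())
    return merged
```

With two units, a packet `Packet(0, ("inc", "double"), 5)` is taken only by unit 0 and yields `(5 + 1) * 2 = 12`; adding a unit changes only the address rule's modulus, not the operation functions.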

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com