36 results about how to "Reduce cache requirements" patented technology

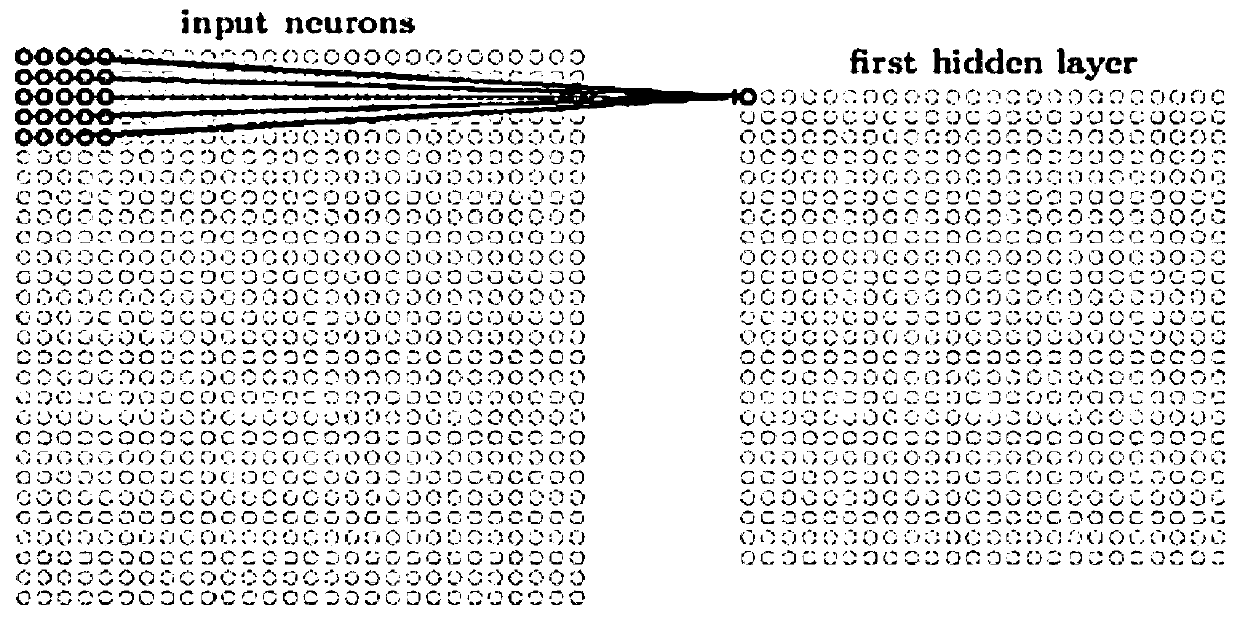

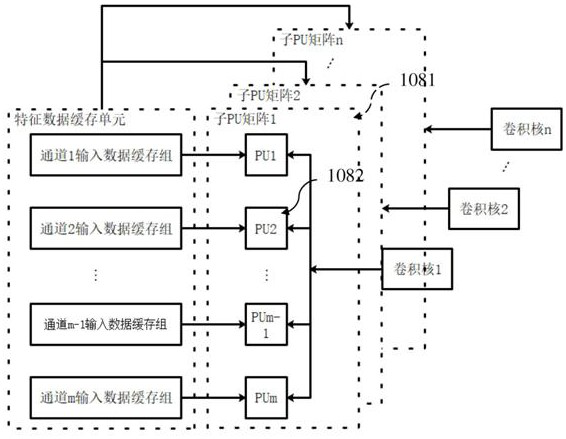

Hardware structure for realizing forward calculation of convolutional neural network

Inactive; CN107066239A; Reduce cache requirements; Improve parallelism; Concurrent instruction execution; Physical realisation; Hardware structure; Hardware architecture

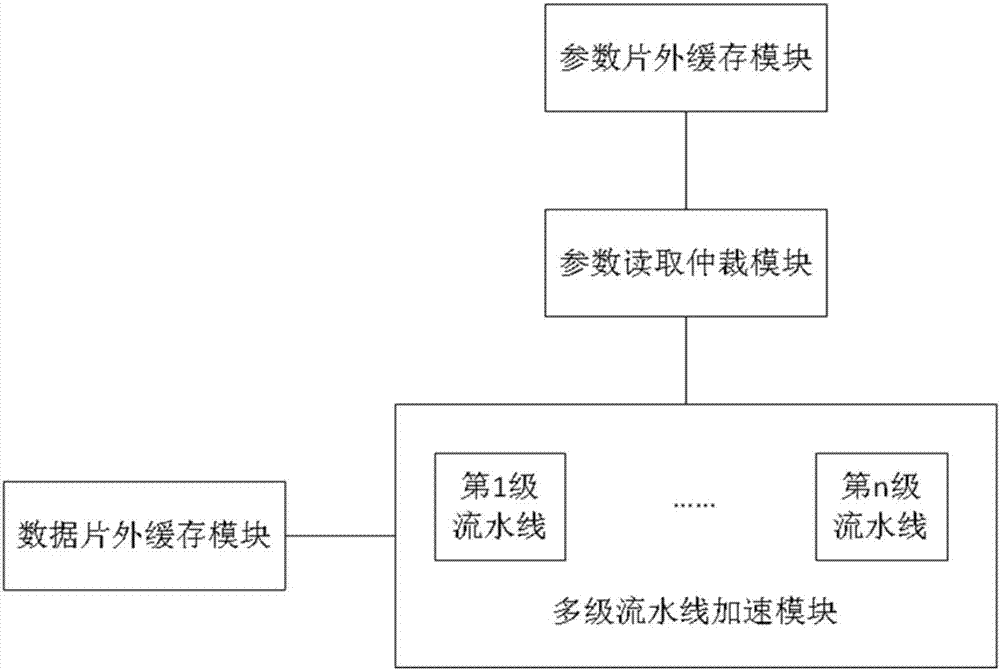

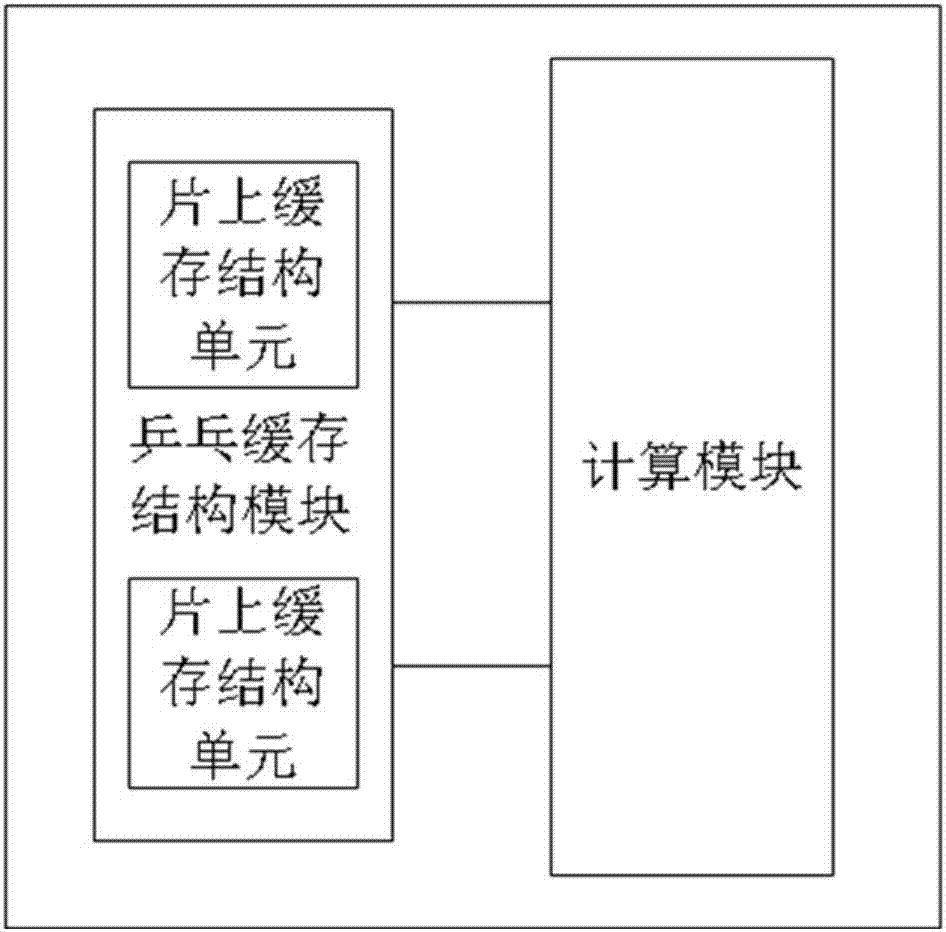

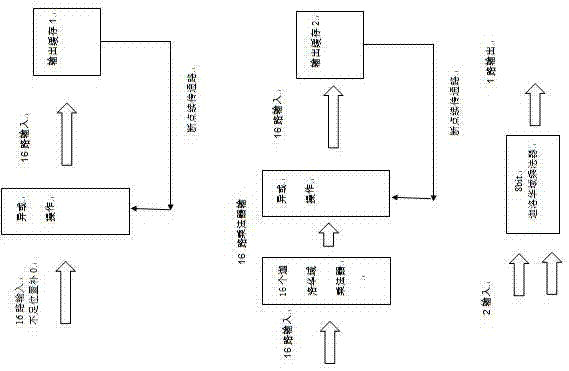

The present application discloses a hardware structure for realizing forward calculation of a convolutional neural network. The hardware structure comprises: a data off-chip caching module, used for caching the parameter data of each to-be-processed picture that is input externally, where the parameter data waits to be read by a multi-level pipeline acceleration module; the multi-level pipeline acceleration module, connected to the data off-chip caching module and used for reading parameters from it, so as to realize the core calculation of a convolutional neural network; a parameter reading arbitration module, connected to the multi-level pipeline acceleration module and used for processing its multiple parameter reading requests, so that the multi-level pipeline acceleration module can obtain the parameters it requires; and a parameter off-chip caching module, connected to the parameter reading arbitration module and used for storing the parameters required for forward calculation of the convolutional neural network. The present application implements the algorithm with a parallel, pipelined hardware architecture, so that higher resource utilization and higher performance are achieved.

Owner:智擎信息系统(上海)有限公司

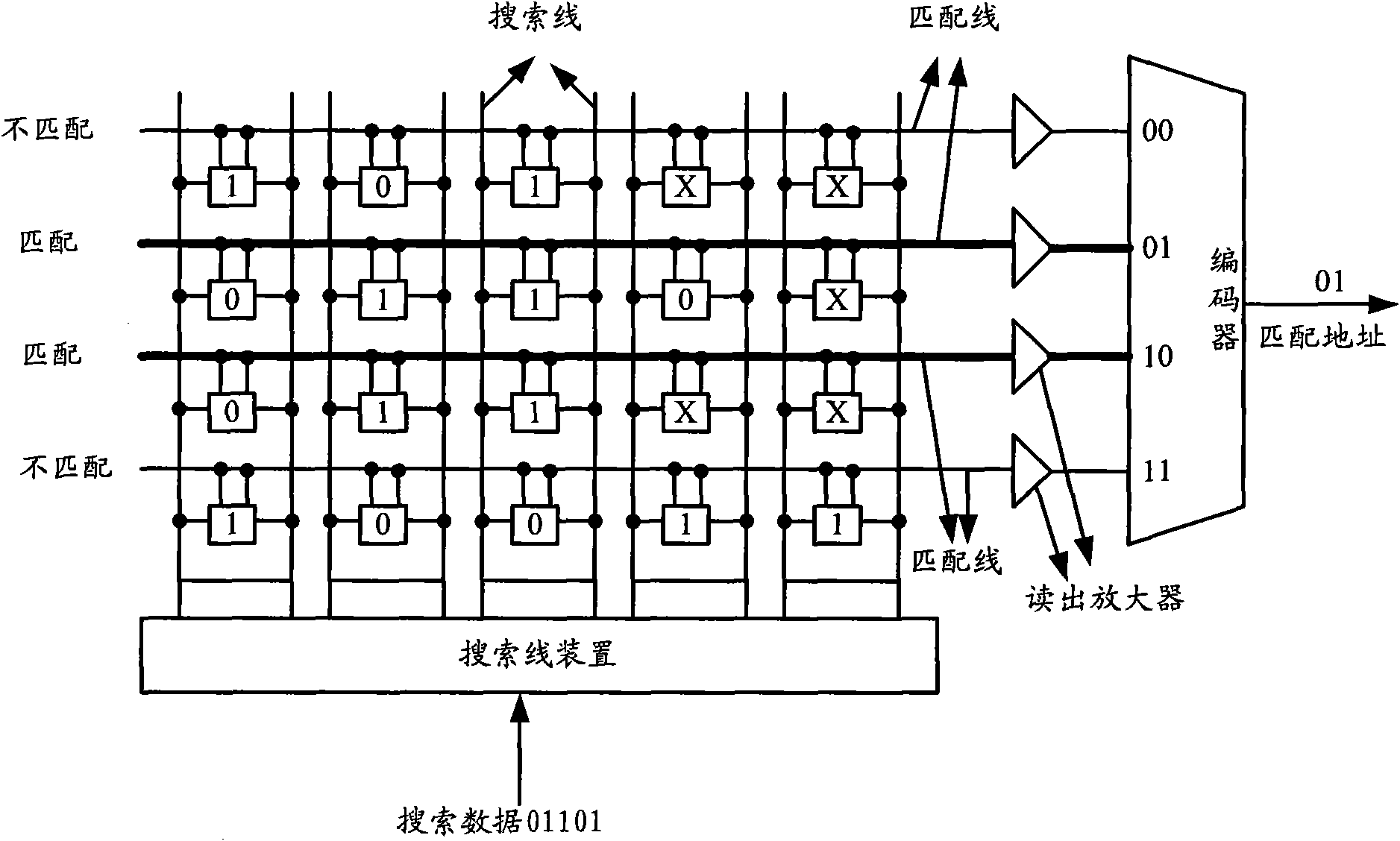

Method and device for managing list item of content addressable memory CAM

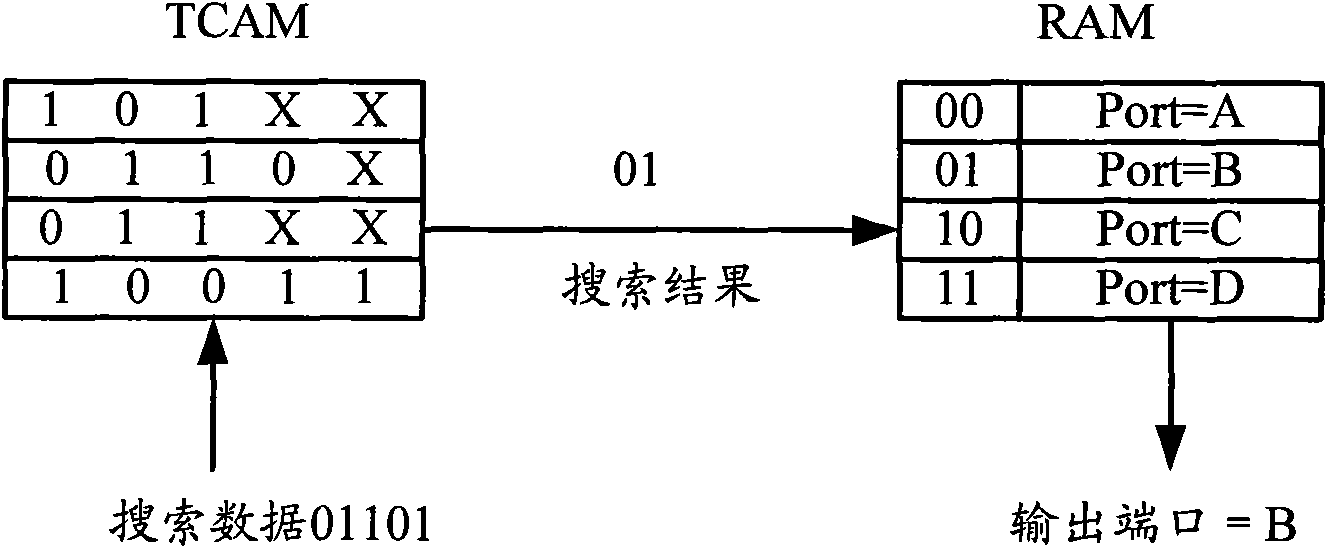

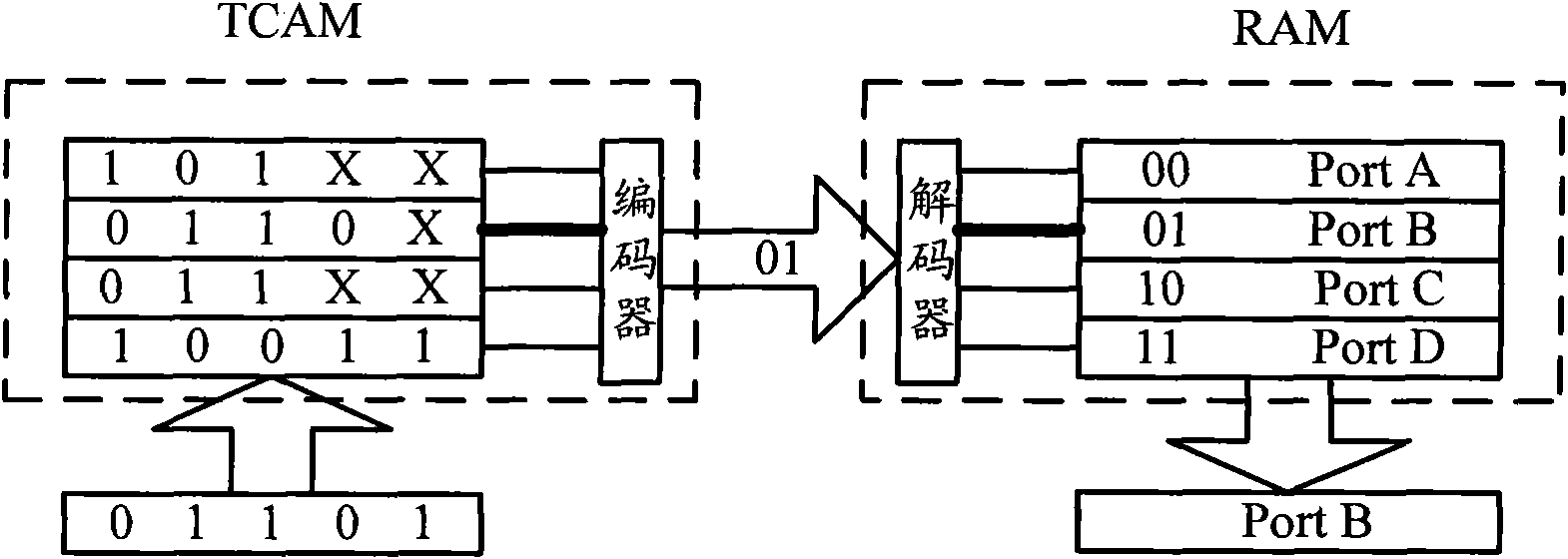

Inactive; CN101620623A; Reduce time spent; Reduce cache requirements; Data switching networks; Special data processing applications; Content-addressable storage; Ternary content addressable memory

The invention discloses a method and a device for managing list items of a content addressable memory CAM. The method comprises the following steps: storing a priority level message which corresponds to each list item of the content addressable memory CAM; and when a plurality of matched list items are obtained by searching data to match the list items of the content addressable memory CAM, selecting one matched list item according to the priority level message which corresponds to each matched list item or the priority level message or a matched address message which corresponds to each matched list item. The invention is easy to realize and has high efficiency.

Owner:NEW H3C TECH CO LTD

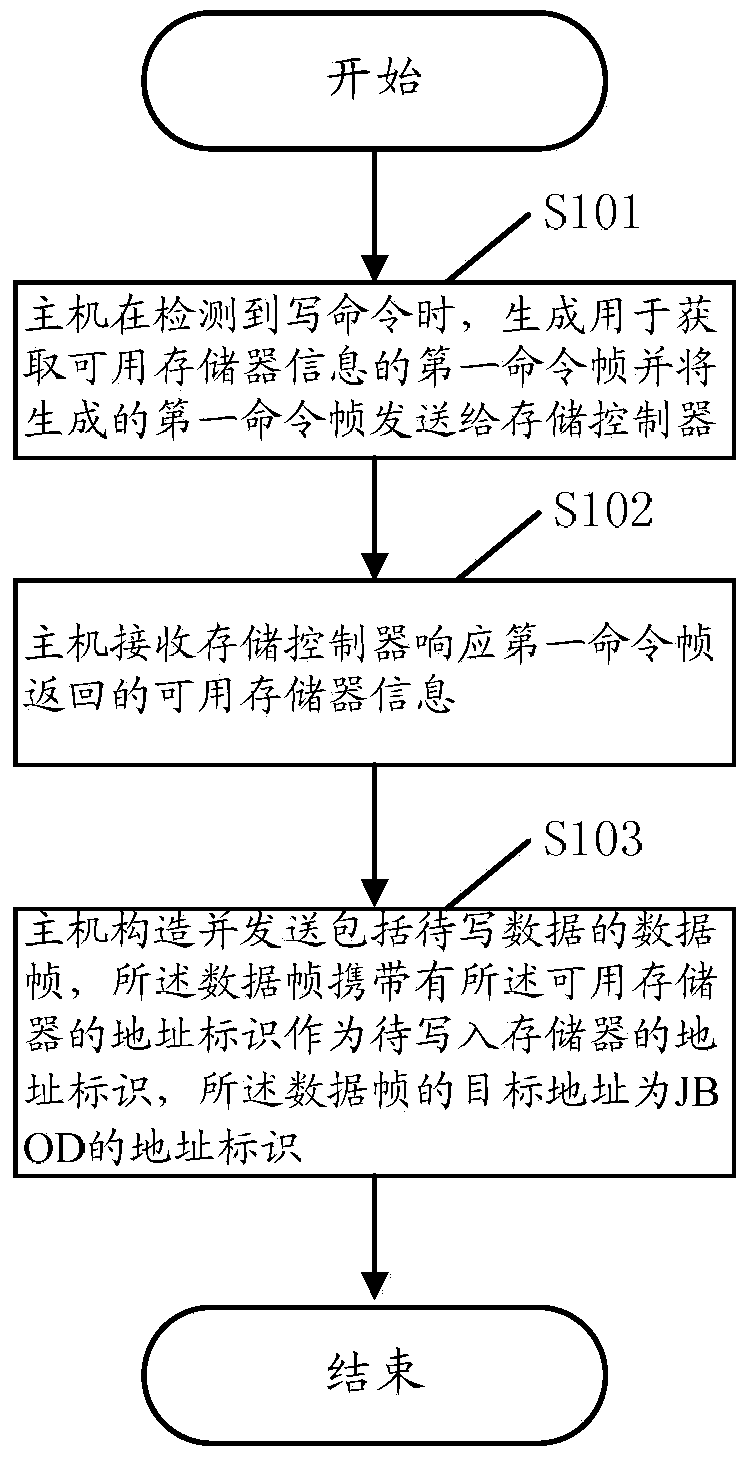

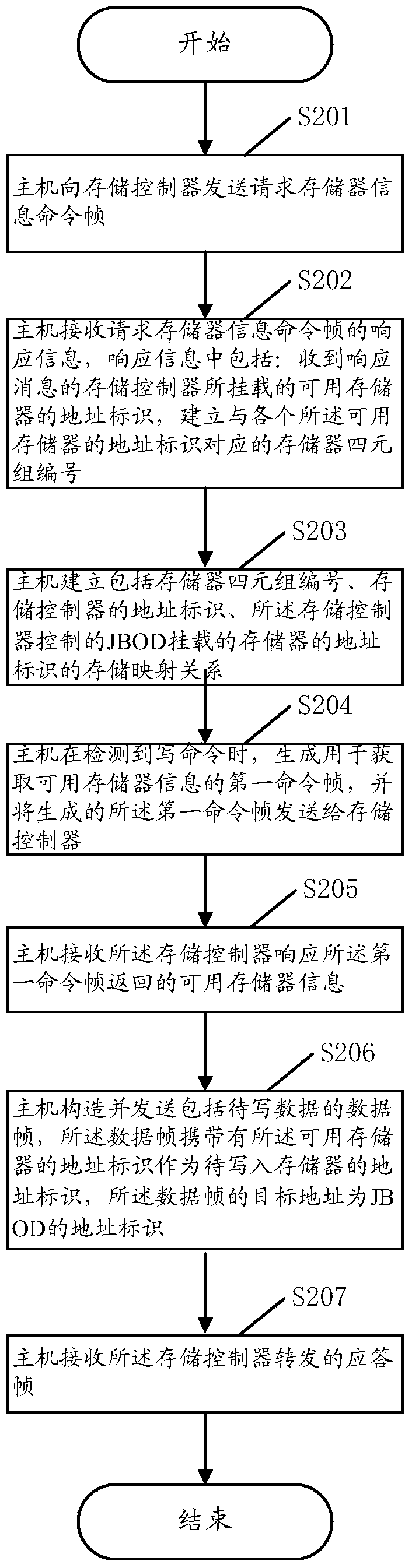

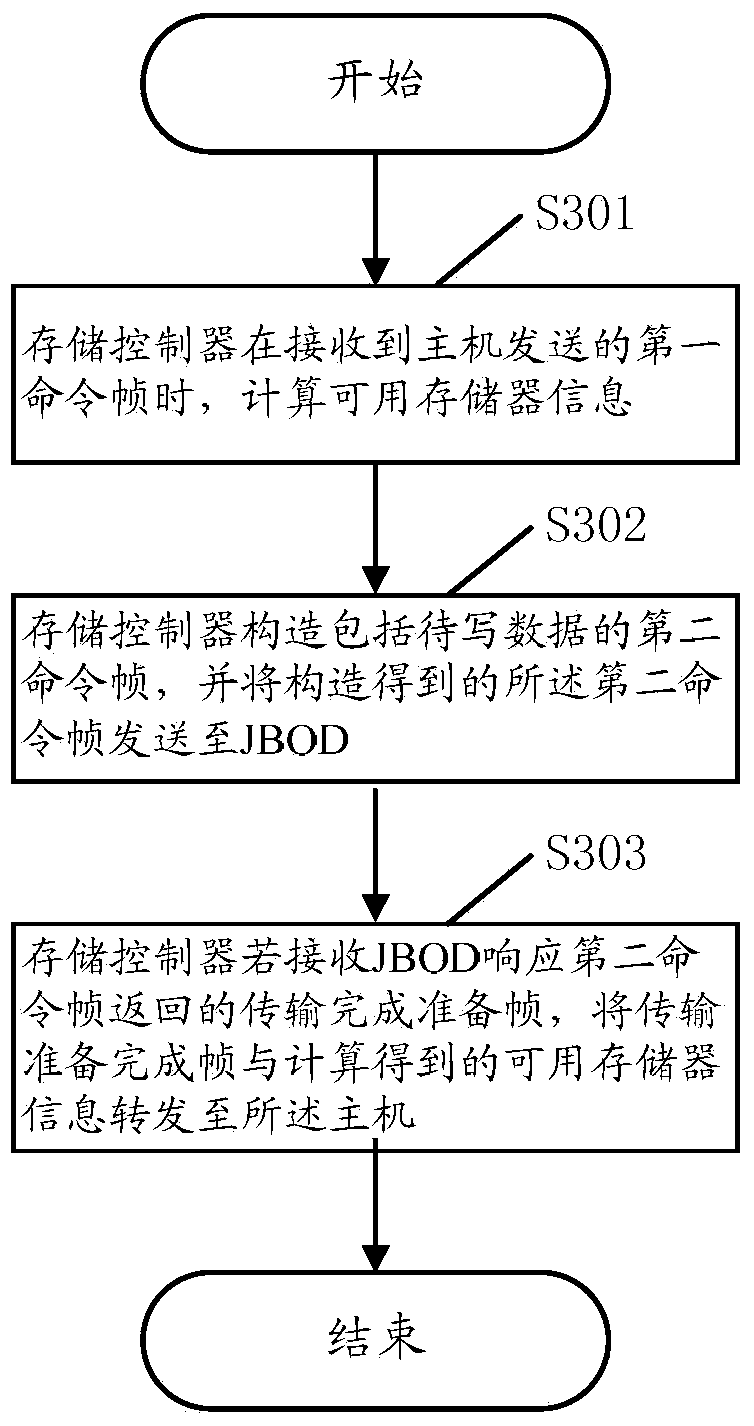

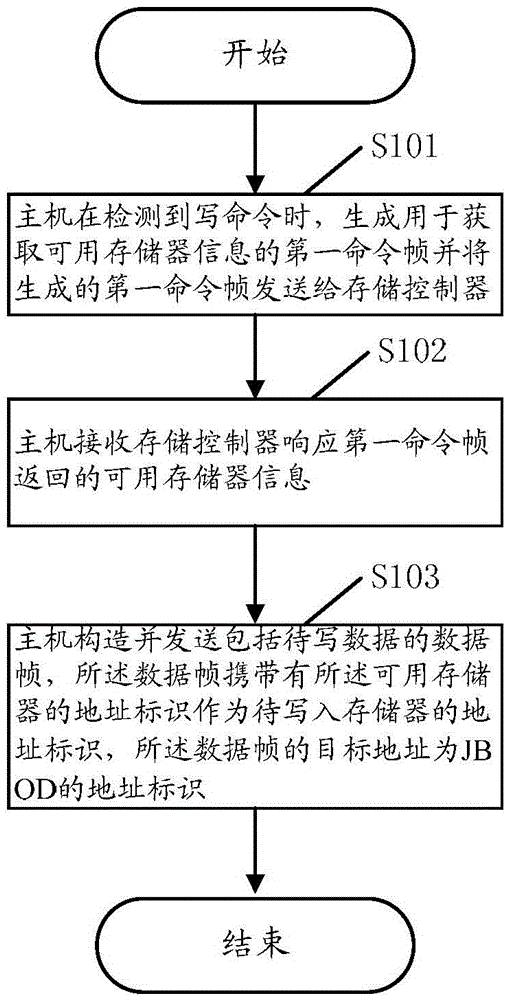

Data storage method, device and system

Active; CN103530066A; Improve storage efficiency; Reduce cache requirements; Input/output to record carriers; Non-RAID drive architectures; Data store

Owner:HUAWEI TECH CO LTD

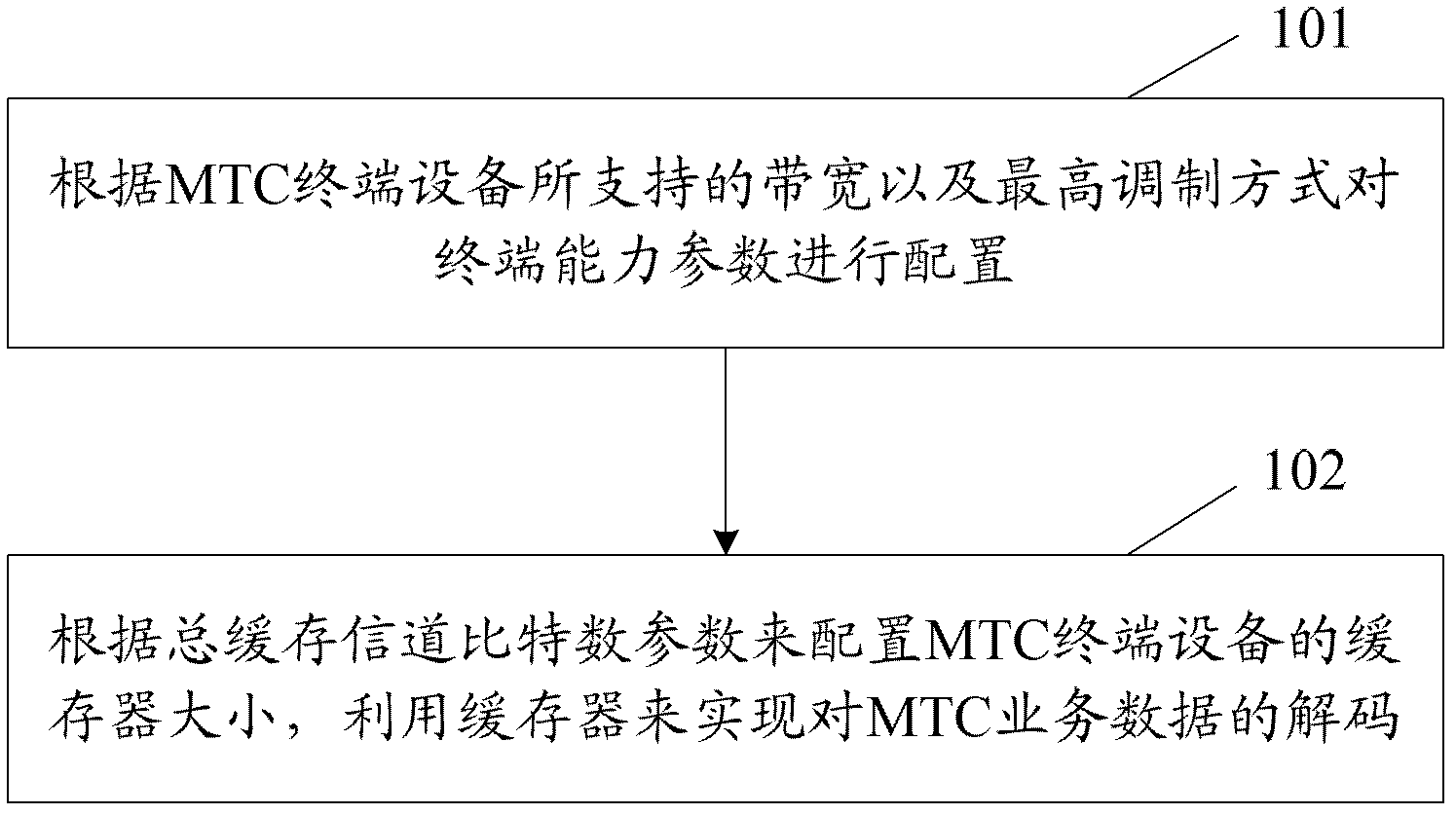

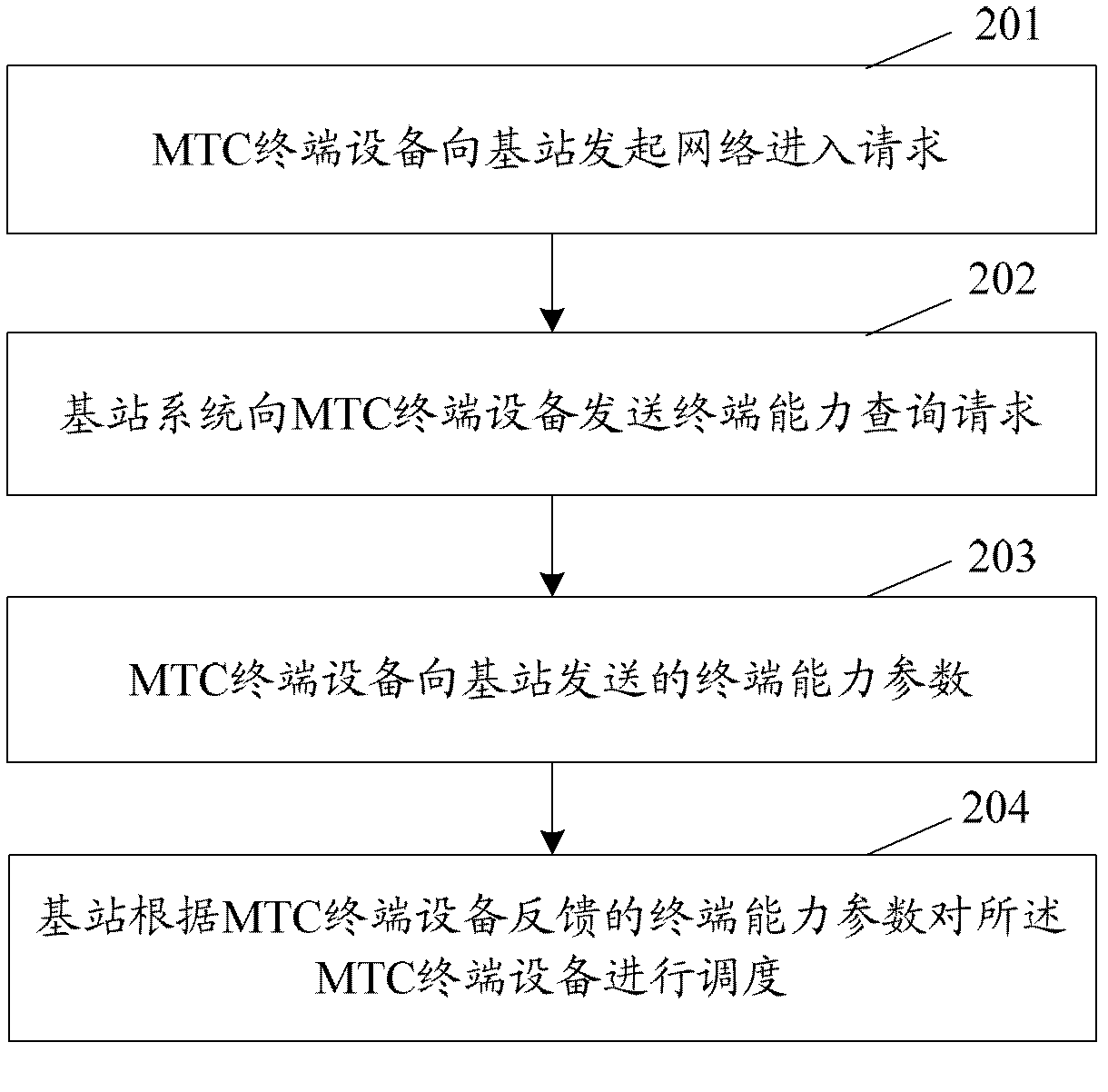

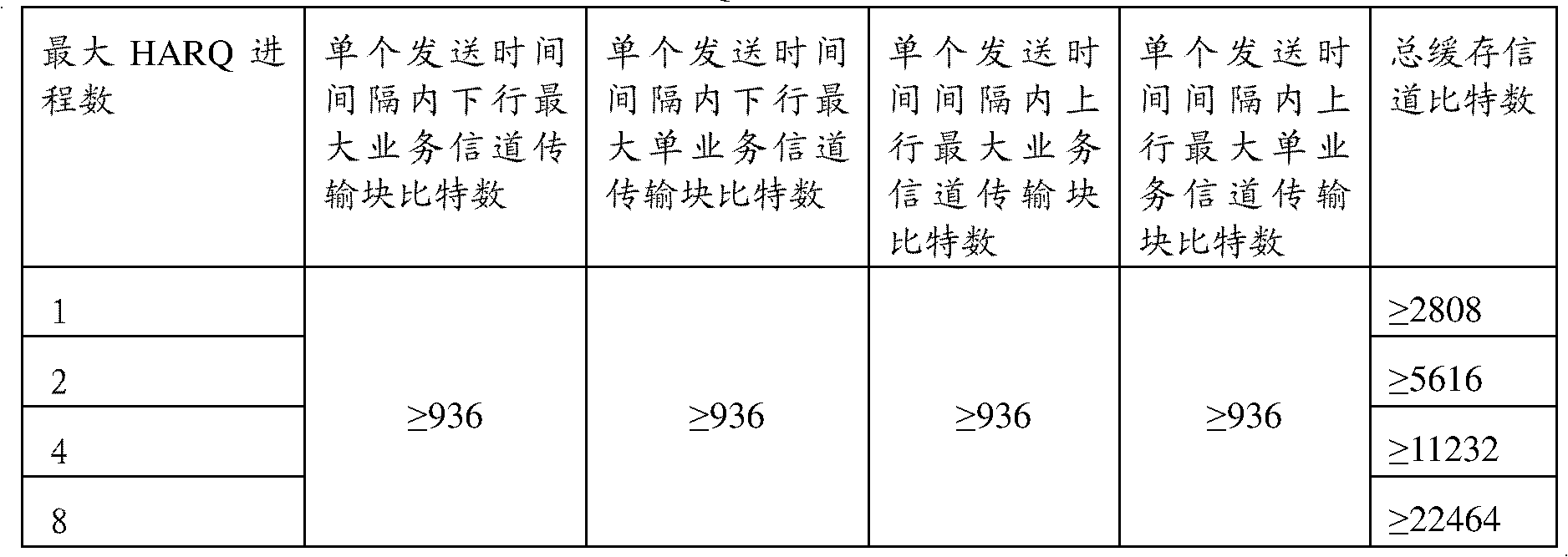

Method and device for configuring machine class communication terminal capacity

Active; CN103220660A; Reduce the total number of buffered channel bits; Reduce cache requirements; Data switching networks; Link quality based transmission modification; Bit numbering; Real-time computing

The invention discloses a method and a device for configuring the capability of machine-type communication (MTC) terminals, intended to promote the migration of machine-to-machine (M2M) services from the Global System for Mobile Communications (GSM) to the Long Term Evolution (LTE) system. Capability parameters of the MTC terminal equipment are configured according to the bandwidth and the highest modulation scheme that the equipment supports, and the capacity of its buffer is configured according to a total buffered channel bit number parameter. Limiting the receive and transmit bandwidth of the MTC terminal equipment, its maximum modulation order, and its maximum supported number of hybrid automatic repeat request (HARQ) processes greatly lowers the total number of buffered channel bits. This lowers the buffering requirement and therefore the cost of the MTC terminal equipment, which promotes the migration of MTC services from GSM to LTE.

Owner:ZTE CORP

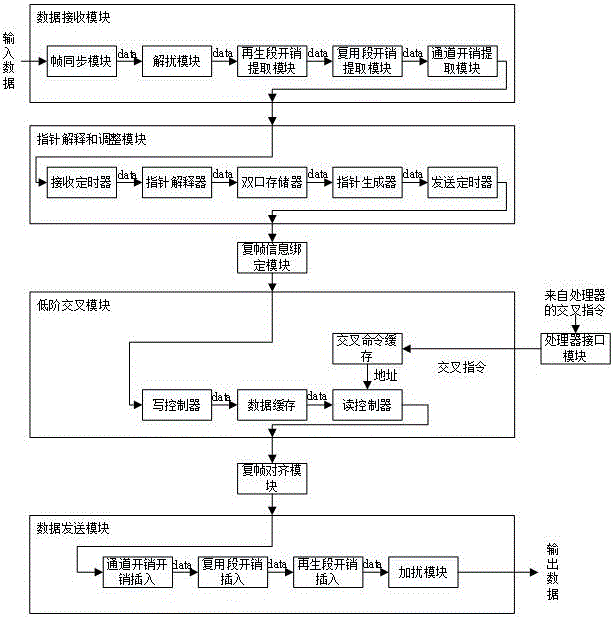

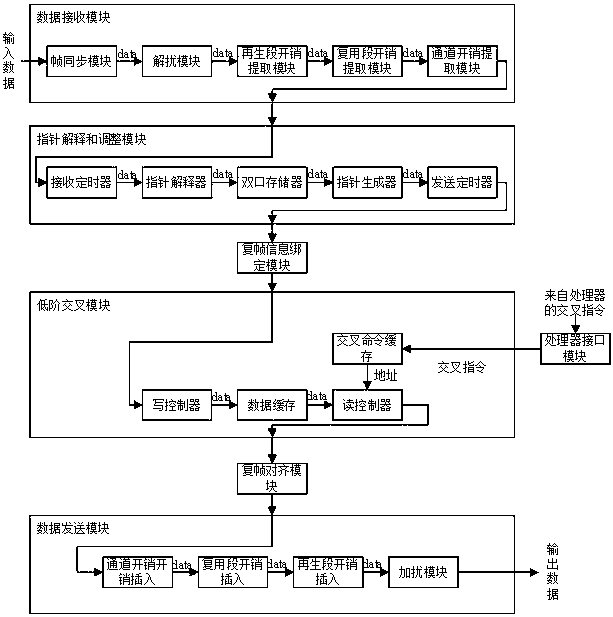

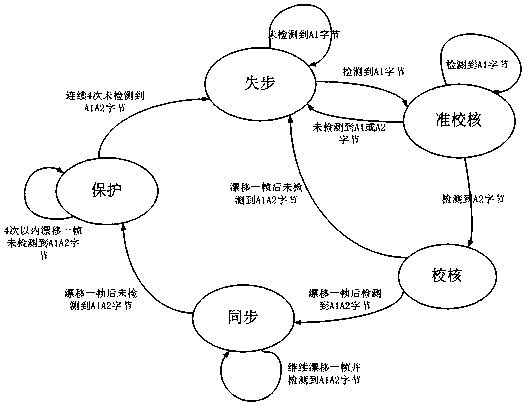

Method for adopting FPGA to carry out low order cross connect on large volume data

Active; CN106487471A; Reduce cache requirements; High bandwidth; Time-division multiplex; Computer architecture; Multiple frame

The invention discloses a method for adopting an FPGA to carry out low order cross connect on large volume data. The method changes a conventional way that multi-frame alignment and cross are needed when the low order cross connect is carried in a synchronous digital system, aims at application characteristics of large input bandwidth and small output bandwidth of an information screening field, and adopts a way of cross, convergence and multi-frame alignment to operate after the accessed large volume data is processed through data reception, pointer interpreter and adjustment and multi-frame information binding modules. According to the method, the requirements of design for internal cache of a chip can be greatly reduced, the data access with the larger bandwidth can be realized, and the stronger access, cross and data handling capacity can be provided. The conventional method only completes the low order capacity of 20G*20G, the method can complete the low order capacity of 80G*20G without any additional external cache, and the method has remarkable advantages in the aspects of hardware cost, access capacity and operability.

Owner:TOEC TECH

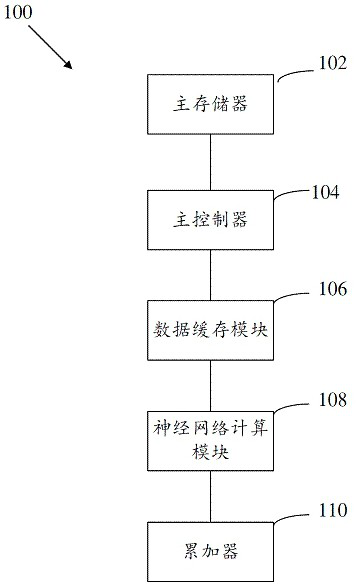

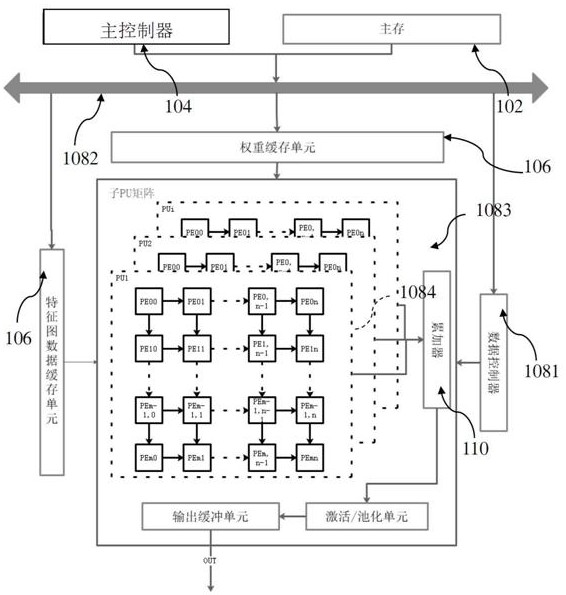

Neural network acceleration device and method and communication equipment

Pending; CN113807509A; Improve energy efficiency of access; Reduce cache requirements; Neural architectures; Physical realisation; Data stream; Engineering

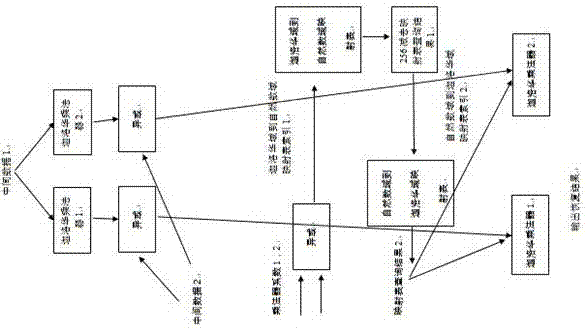

The invention provides a neural network acceleration device and method and communication equipment, and belongs to the field of data processing. Specifically: a main memory receives and stores feature map data and weight data of a to-be-processed image; a main controller generates configuration information and operation instructions according to the structure parameters of the neural network; a data caching module comprises a feature data caching unit for caching feature row data extracted from the feature map data and a convolution kernel caching unit for caching convolution kernel data extracted from the weight data; a data controller adjusts the data path according to the configuration information and the instruction information and controls the data stream extracted by a data extractor to be loaded to the corresponding neural network calculation unit; the neural network calculation unit completes at least the convolution of one convolution kernel with the feature map data, and completes the accumulation of multiple convolution results in at least one cycle, thereby realizing circuit reconstruction and data multiplexing; and an accumulator accumulates the convolution results and outputs the output feature map data corresponding to a convolution kernel.

Owner:绍兴埃瓦科技有限公司 +1

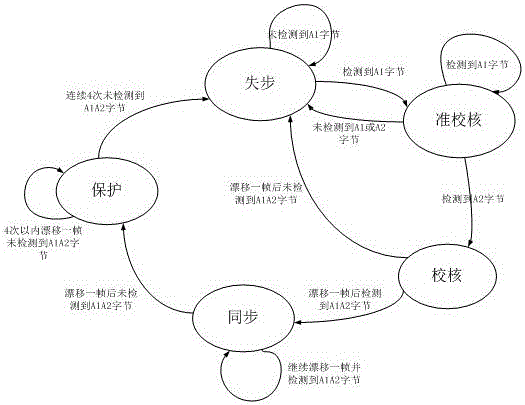

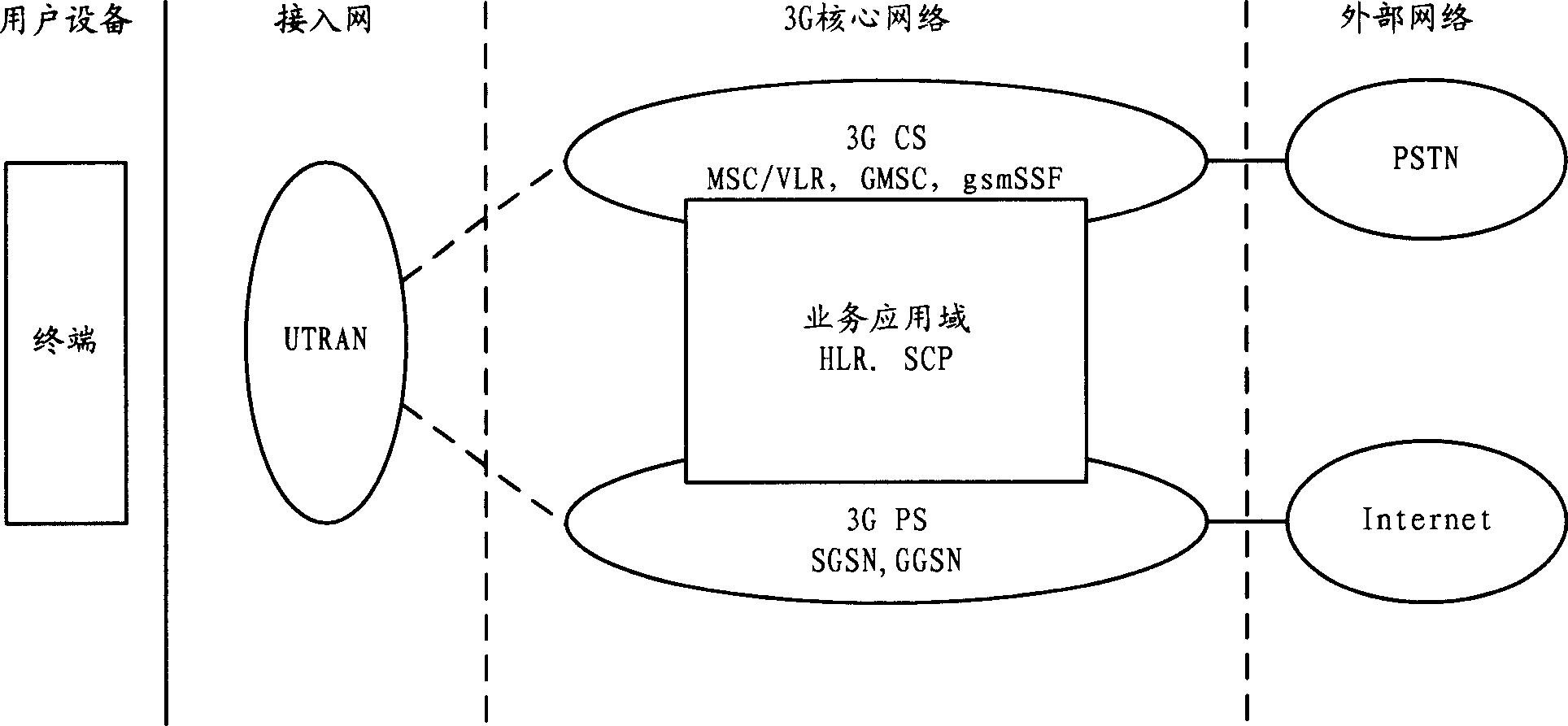

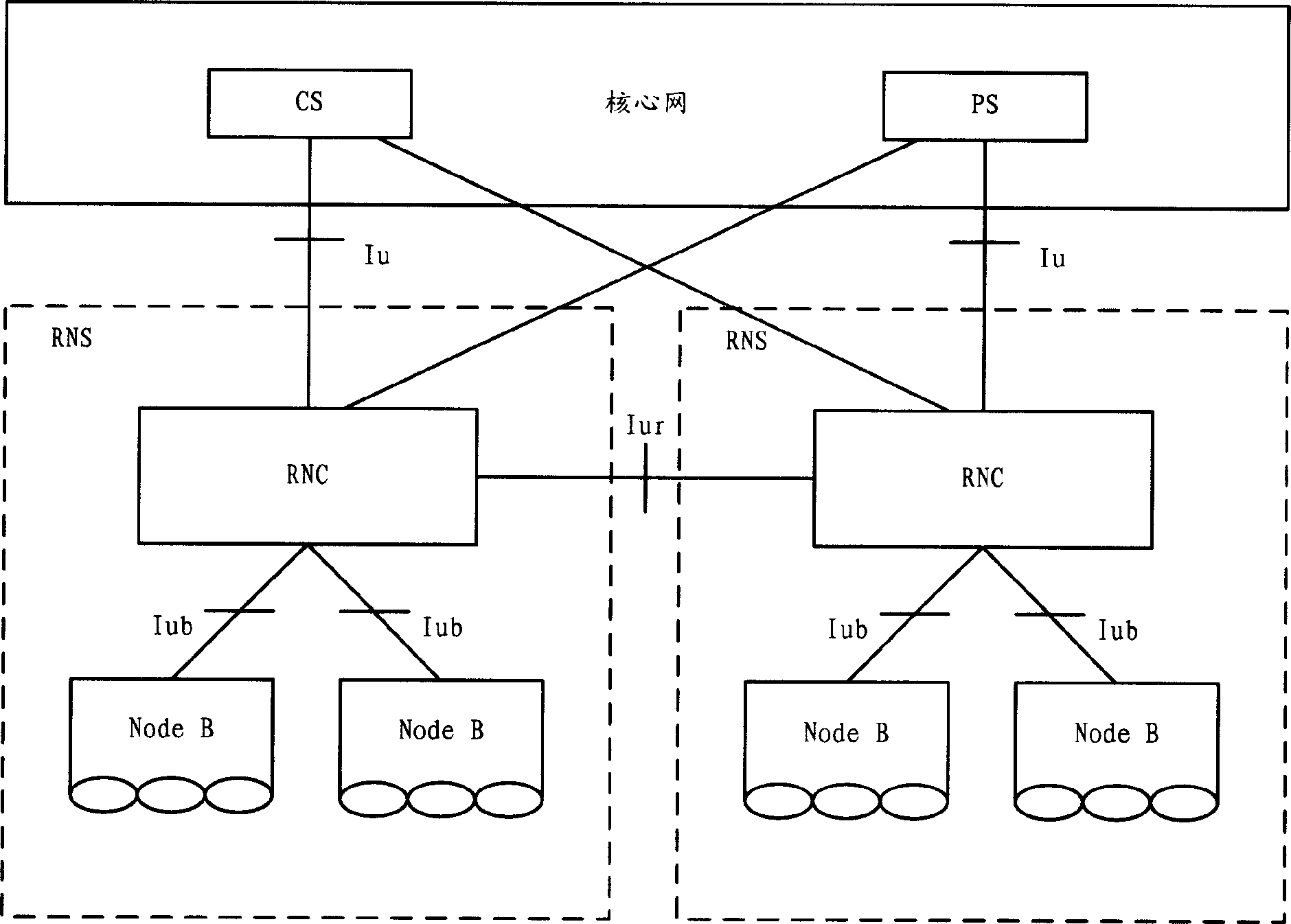

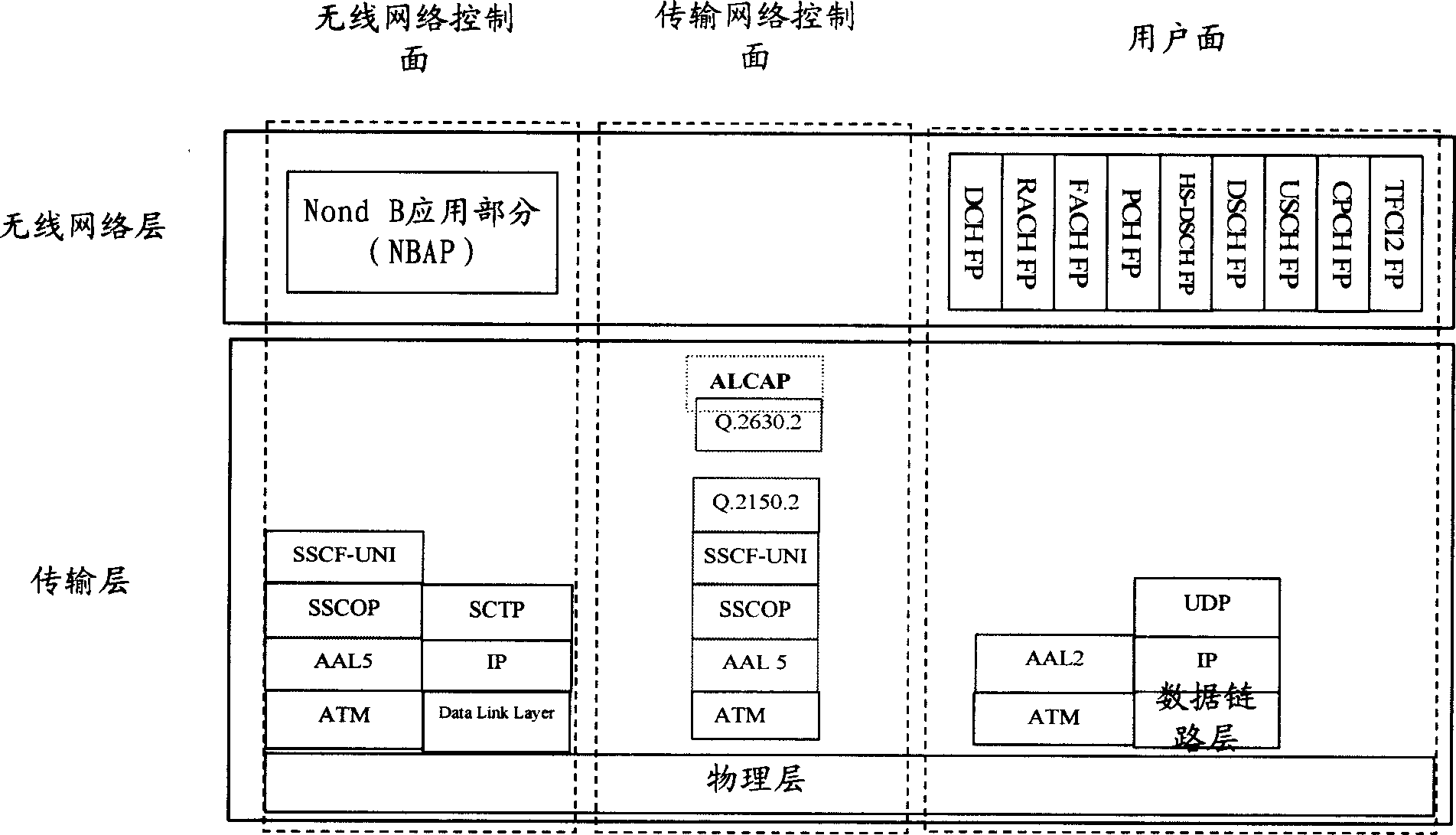

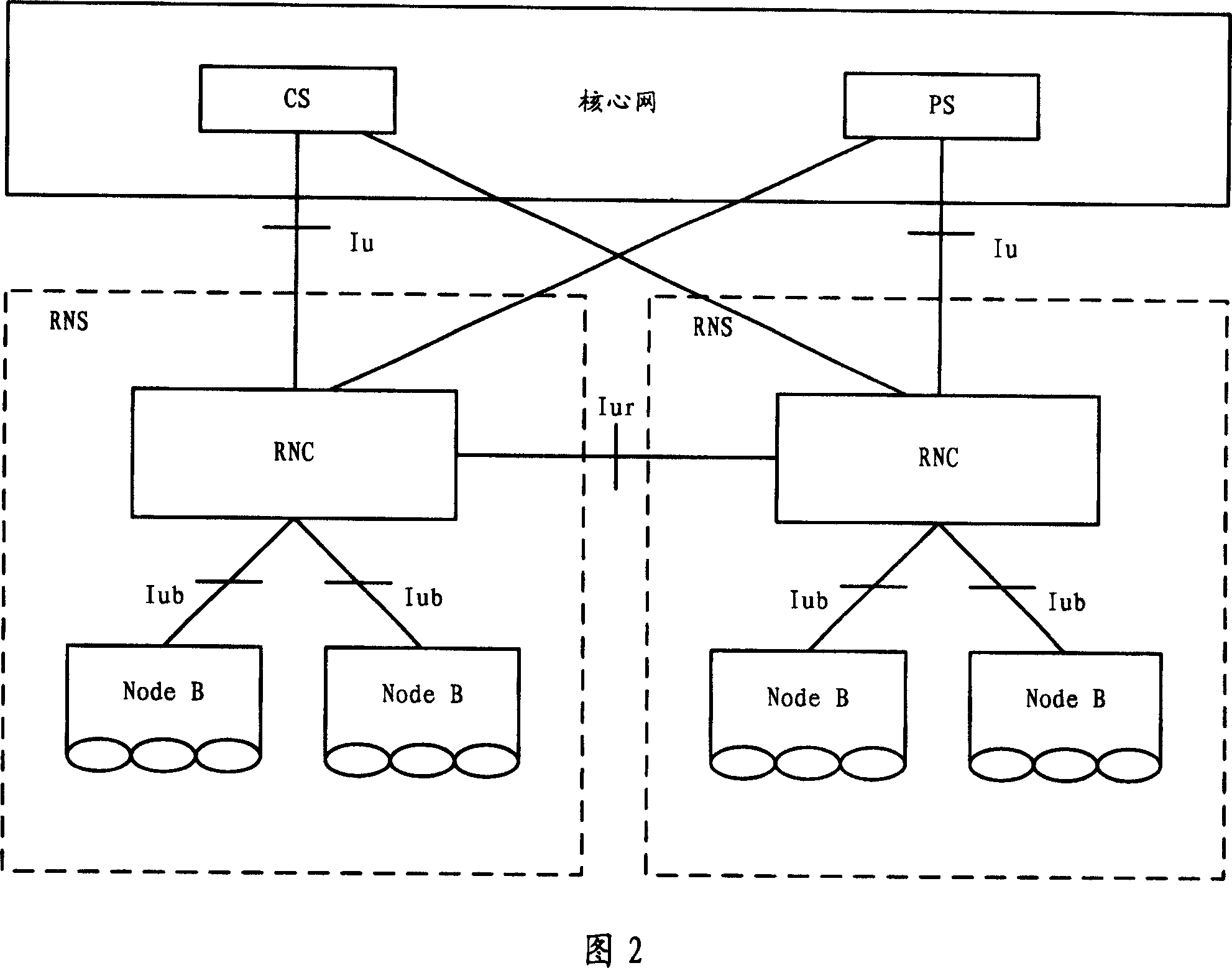

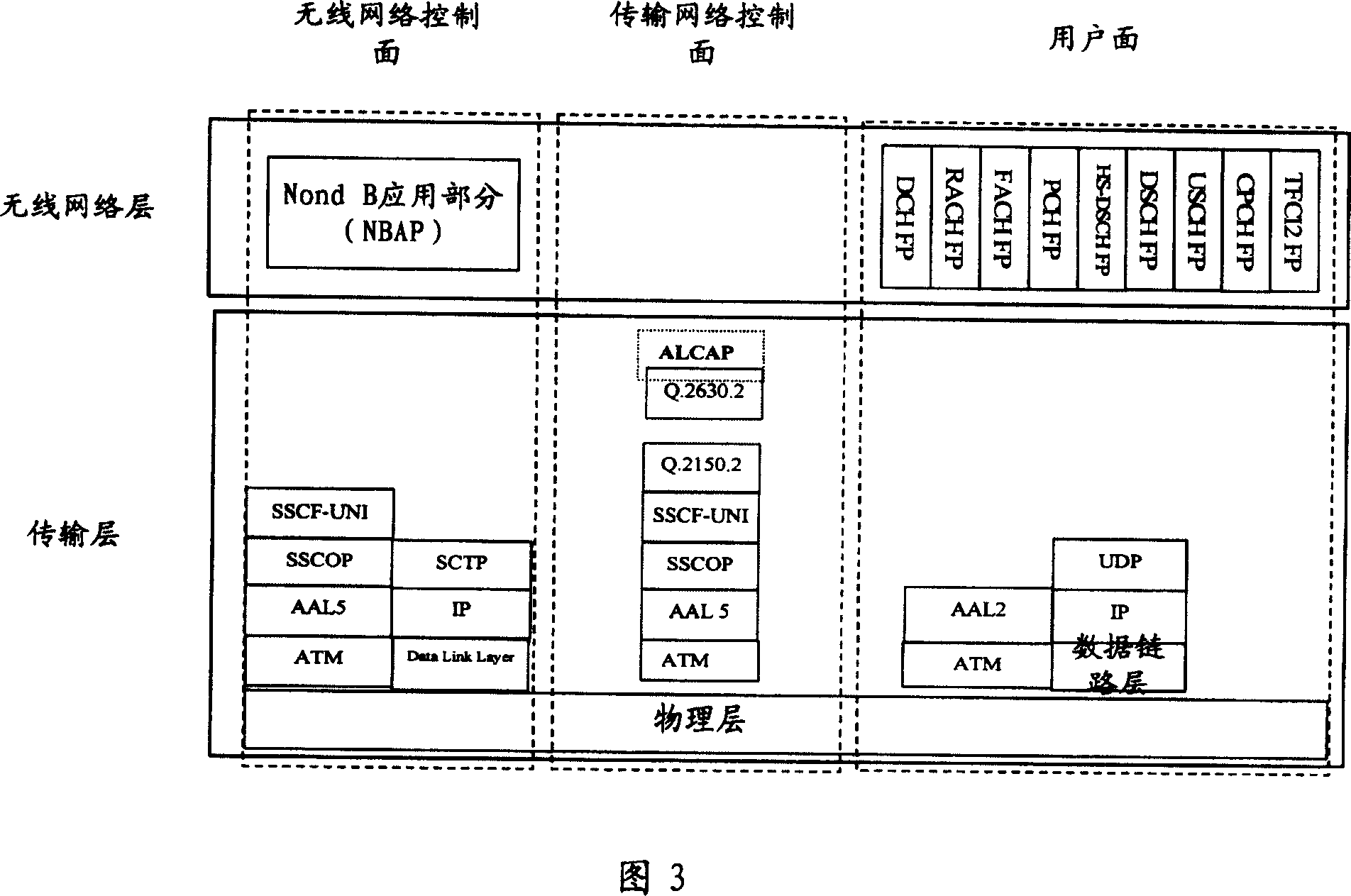

Transmitting system and method between base station and wireless network controller

Active; CN1819675A; Reduce transmission costs; Low cost; Radio/inductive link selection arrangements; Wireless communication; High bandwidth; Transport system

A transmission system and method between a base station and a radio network controller (RNC), in the field of mobile communication technology, that obtains high-bandwidth Iub interface transmission capability at low cost. Two different types of transmission line connect the base station and the RNC: a dedicated transmission line that carries voice service frames, and an IP network that carries data service frames. Different CFNs are set for the voice service frames and the data service frames according to the transmission delay measured in advance, and a larger receiving window is set for the line that carries data service frames. If the dedicated transmission line uses IP transmission, different IP addresses are configured to send the two kinds of service frame over separate paths; if it uses asynchronous transfer mode (ATM), an AAL2 VC and an IP address are configured to send them over separate paths.

Owner:SHANGHAI HUAWEI TECH CO LTD

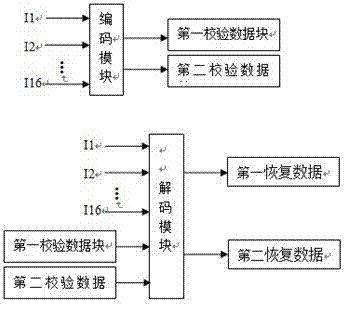

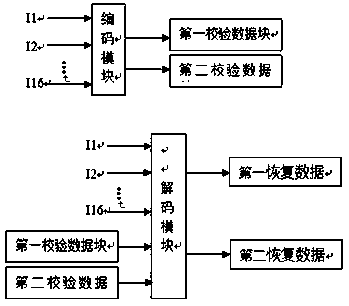

Erasure correcting method and apparatus based on flash memory storage device

Active; CN107193685A; Improve protection; Take up less resources; Redundant data error correction; RAID; Missing data

The invention discloses an erasure correcting method and apparatus based on a flash memory storage device. The apparatus comprises an encoding module and a decoding module: the encoding module processes 16 input data blocks to obtain two verification data blocks, and the decoding module performs erasure correction on received, partially missing data by using the two received verification data blocks. The erasure correcting ability of the apparatus is M + 2, its protection ability is higher than that of traditional RAID, and it occupies few resources. Its erasure correcting delay is lower than that of the traditional RS erasure code: encoding requires only 2 clock cycles and decoding only 4 clock cycles. Breakpoint resume is also supported, and calculation does not have to wait until all data blocks are ready: each data block is processed as soon as it arrives, so the requirements on the system cache are greatly reduced.
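The cache saving comes from updating the verification blocks as each data block arrives, so only two block-sized accumulators are held instead of all 16 inputs. The sketch below illustrates that streaming property only. The abstract does not give the code construction, so a RAID-6-style P/Q parity pair over GF(2^8) is swapped in as a stand-in; the class name, the field polynomial `0x11D`, and the generator 2 are assumptions.

```python
def gf_mul(a: int, b: int, poly: int = 0x11D) -> int:
    """Multiply two bytes in GF(2^8) (assumed field polynomial 0x11D)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= poly
        b >>= 1
    return result


class StreamingParity:
    """Accumulates two parity blocks while data blocks arrive in index order."""

    def __init__(self, block_size: int) -> None:
        self.p = bytearray(block_size)  # plain XOR parity
        self.q = bytearray(block_size)  # weighted parity, weights 2**index
        self.coeff = 1                  # weight of the next block

    def feed(self, block: bytes) -> None:
        # The data block can be discarded after this call returns.
        for i, byte in enumerate(block):
            self.p[i] ^= byte
            self.q[i] ^= gf_mul(self.coeff, byte)
        self.coeff = gf_mul(self.coeff, 2)


def recover_one(p: bytes, surviving_blocks) -> bytes:
    """Rebuild a single missing data block from P and the surviving blocks."""
    missing = bytearray(p)
    for block in surviving_blocks:
        for i, byte in enumerate(block):
            missing[i] ^= byte
    return bytes(missing)


blocks = [bytes((i * 7 + j) % 256 for j in range(8)) for i in range(16)]
encoder = StreamingParity(block_size=8)
for block in blocks:
    encoder.feed(block)
rebuilt = recover_one(encoder.p, blocks[:5] + blocks[6:])
```

Recovering two missing blocks would additionally use `q` and a small linear solve in GF(2^8); that step is omitted here to keep the sketch short.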

Owner:武汉中航通用科技有限公司

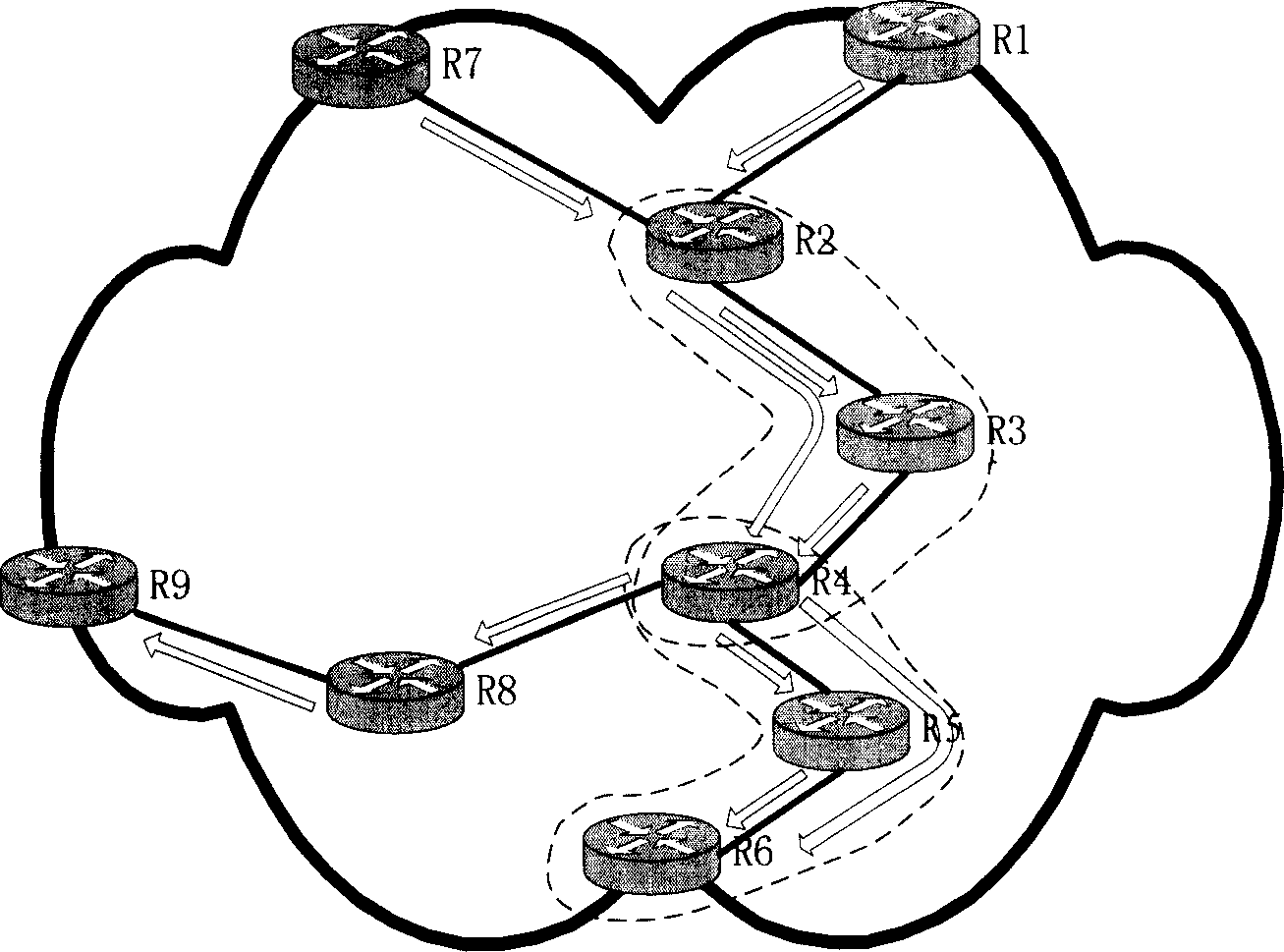

OBS/OPS network performance optimizing method based on virtual node

Inactive; CN1741498A; Improve latency; Improve packet loss rate; Multiplex system selection arrangements; Electromagnetic transmission; Quality of service; Time delays

A method for optimizing OBS/OPS network performance based on virtual nodes. According to the network traffic measured in the last statistics period, a number of physical nodes on the shortest service route are combined into one virtual node, and a dedicated wavelength is set up to connect the head and tail physical nodes of the virtual node. A data packet passing through the virtual node then skips at least two physical nodes with only one round of processing, which lowers the end-to-end delay and the packet loss rate in the network.

Owner:SHANGHAI JIAO TONG UNIV +1

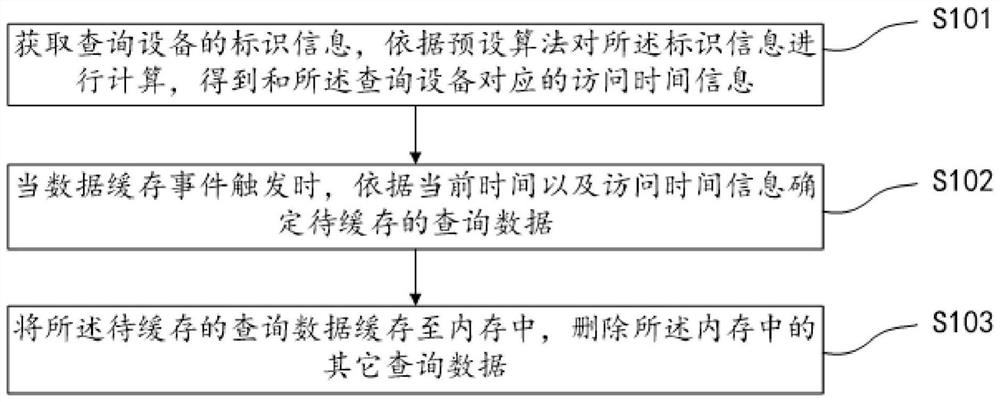

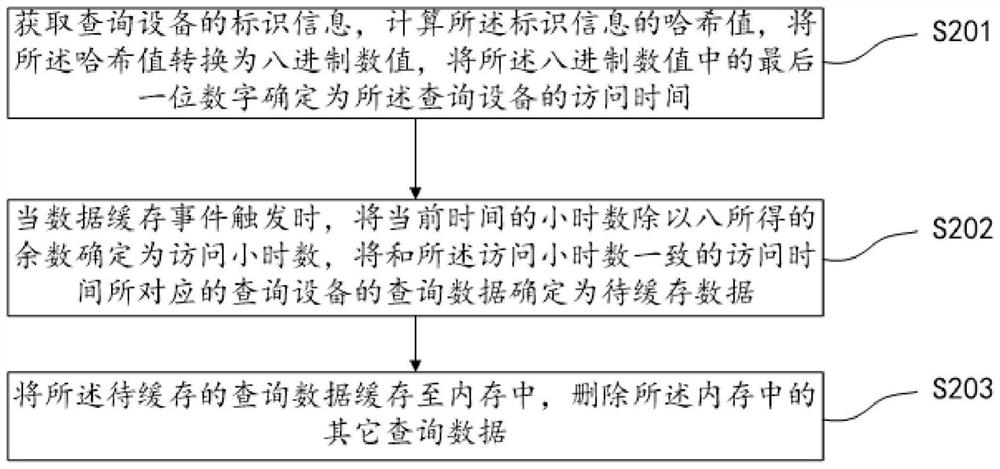

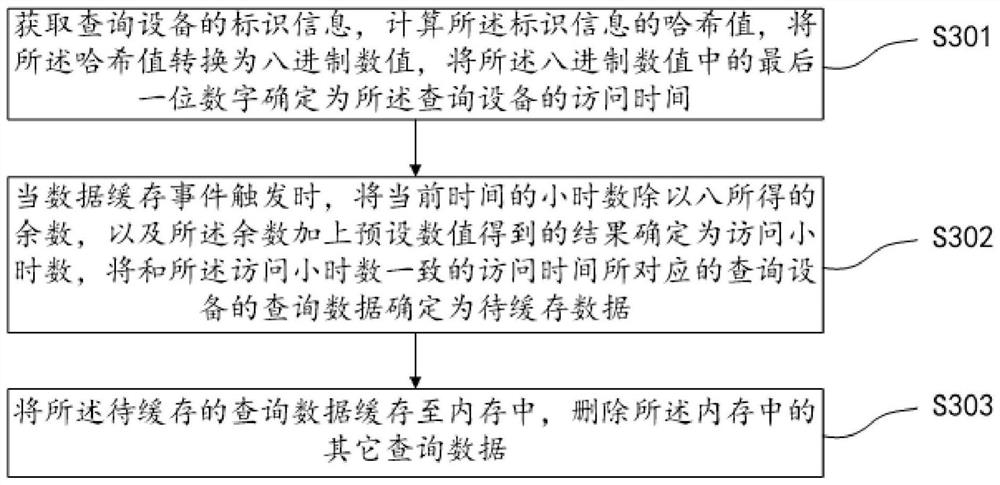

Data processing method and device, terminal and storage medium

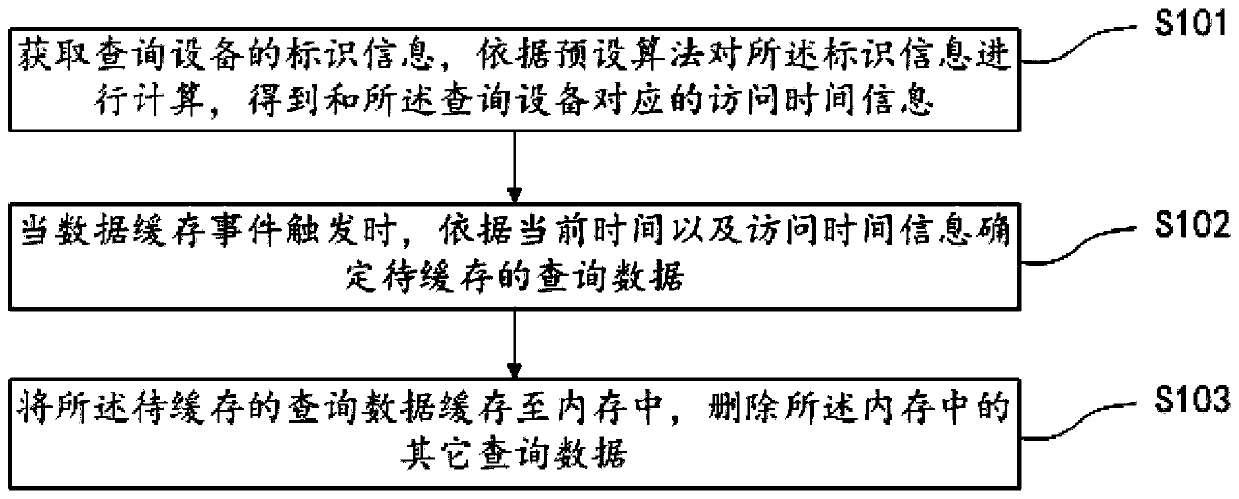

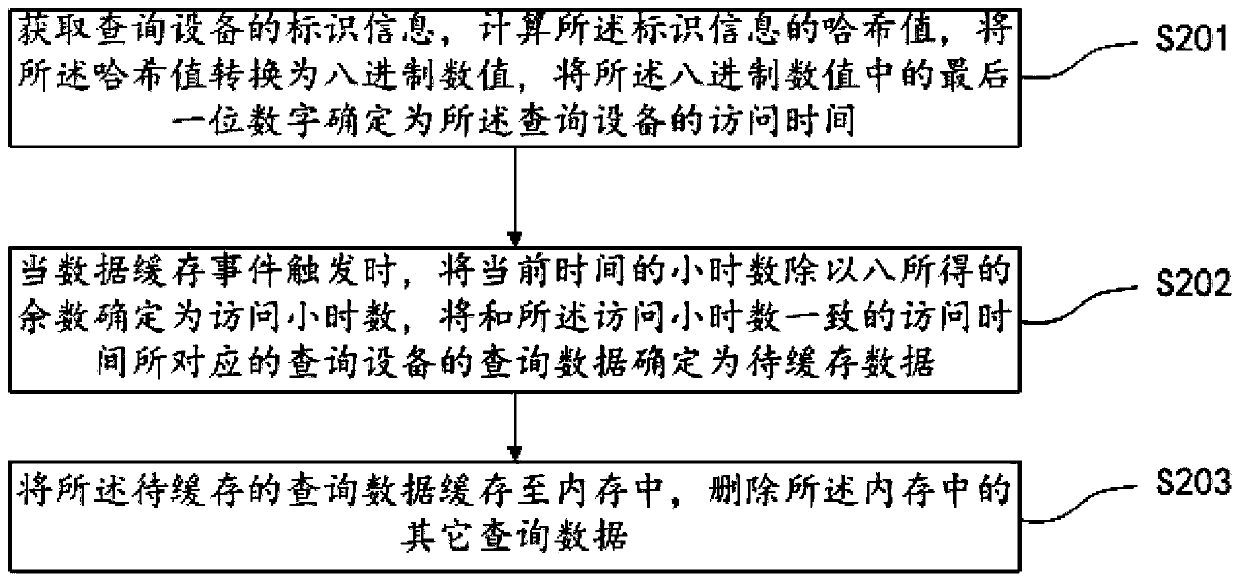

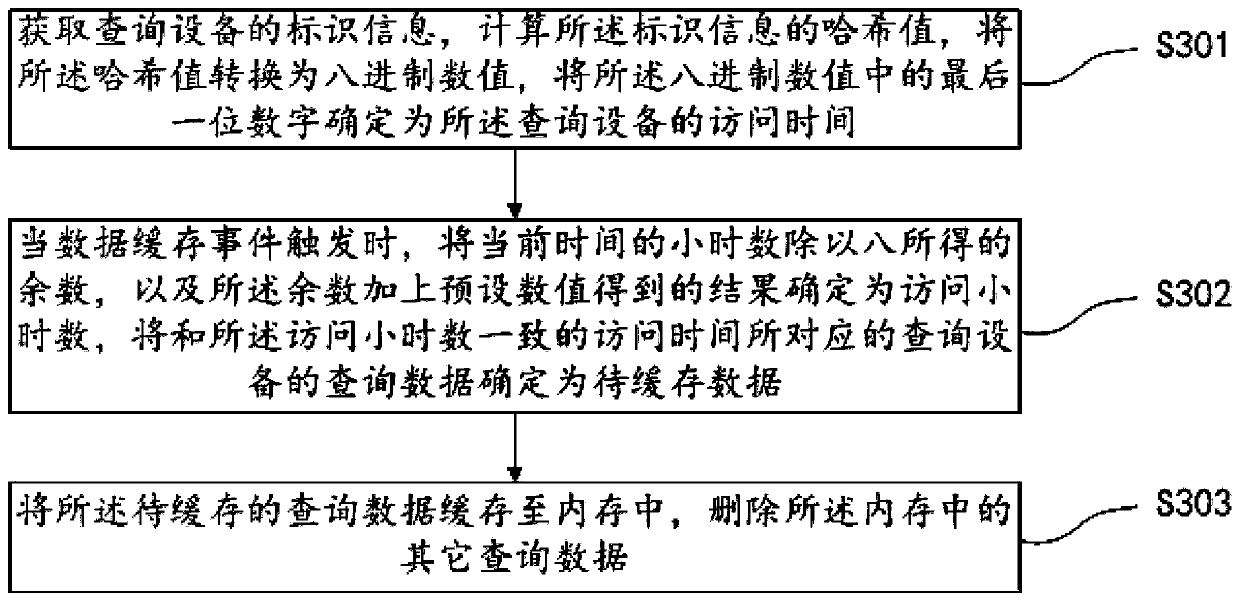

Active; CN109918382A; Reduce cache requirements; Increase flexibility; Special data processing applications; Database indexing; Access time; Computer terminal

The embodiment of the invention discloses a data processing method and device, a terminal and a storage medium, and belongs to the technical field of computers. The method comprises the following steps: identification information of a query device is acquired and processed according to a preset algorithm to obtain access time information corresponding to the query device, the identification information and the query device being in one-to-one correspondence; when a data caching event is triggered, the to-be-cached query data is determined according to the current time and the access time information, the query data being associated with the query device; and the to-be-cached query data is cached into memory, and other query data is evicted from memory. In the embodiment of the invention, reasonable access time information is obtained by calculation on the identifier of the query device, the query data is cached according to that access time information, and other query data is deleted, so that the flexibility of data storage is improved, the data caching requirement is reduced, and the cost is reduced.
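A rough sketch of this flow in Python follows. The abstract does not name the preset algorithm, so everything concrete here is an assumption made for illustration: hashing the device identifier with SHA-256, mapping it to one of 1440 one-minute slots per day, and caching only devices whose slot falls inside a look-ahead window.

```python
import hashlib

SLOTS_PER_DAY = 1440  # assumption: one access slot per minute of the day


def access_slot(device_id: str) -> int:
    """Derive a deterministic access slot from a device identifier."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % SLOTS_PER_DAY


def refresh_cache(cache: dict, store: dict, device_ids, now_slot: int,
                  window: int = 30) -> dict:
    """On a caching event, keep only data for devices due within `window` slots."""
    keep = set()
    for device_id in device_ids:
        distance = (access_slot(device_id) - now_slot) % SLOTS_PER_DAY
        if distance < window:
            keep.add(device_id)
    for key in list(cache):          # evict everything that is not due soon
        if key not in keep:
            del cache[key]
    for device_id in keep:           # load the data that is due soon
        cache[device_id] = store[device_id]
    return cache


devices = ["dev-a", "dev-b", "dev-c"]
store = {d: f"data-{d}" for d in devices}
cache = {"stale": "old data"}
refresh_cache(cache, store, devices, now_slot=access_slot("dev-a"), window=1)
```

Because access times are spread across the day by the hash, only a fraction of all query data needs to sit in memory at any moment, which is the cache reduction the abstract describes.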

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

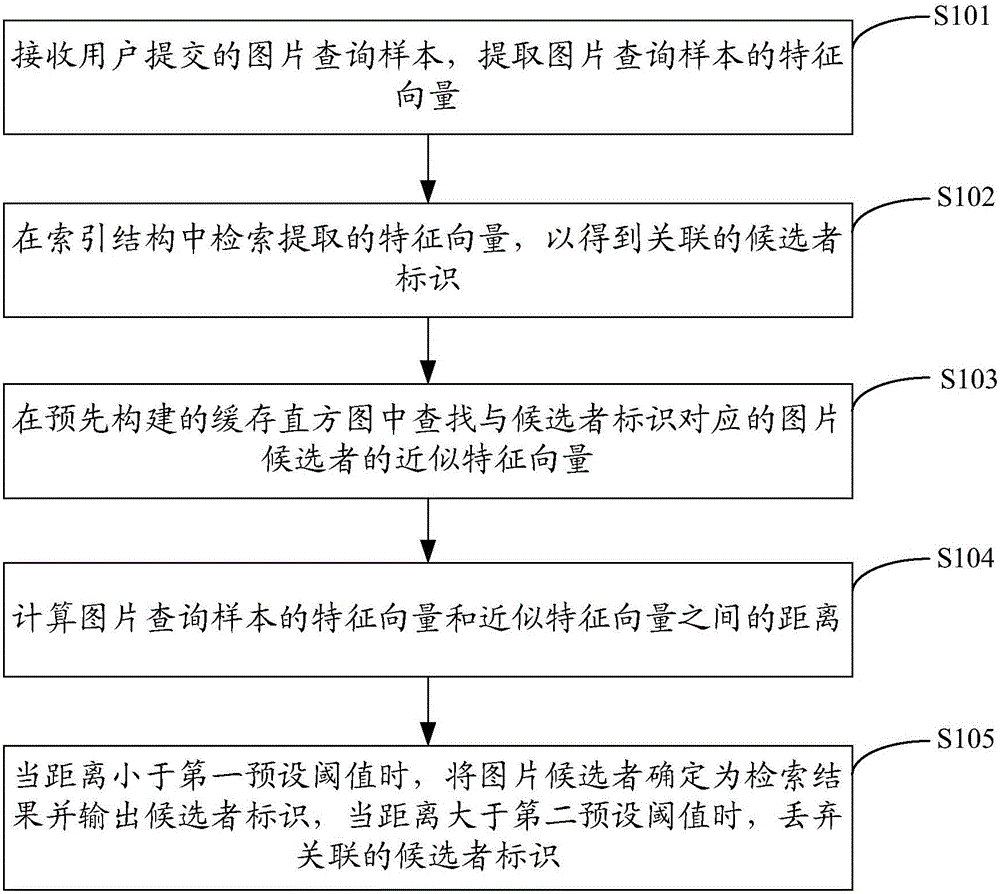

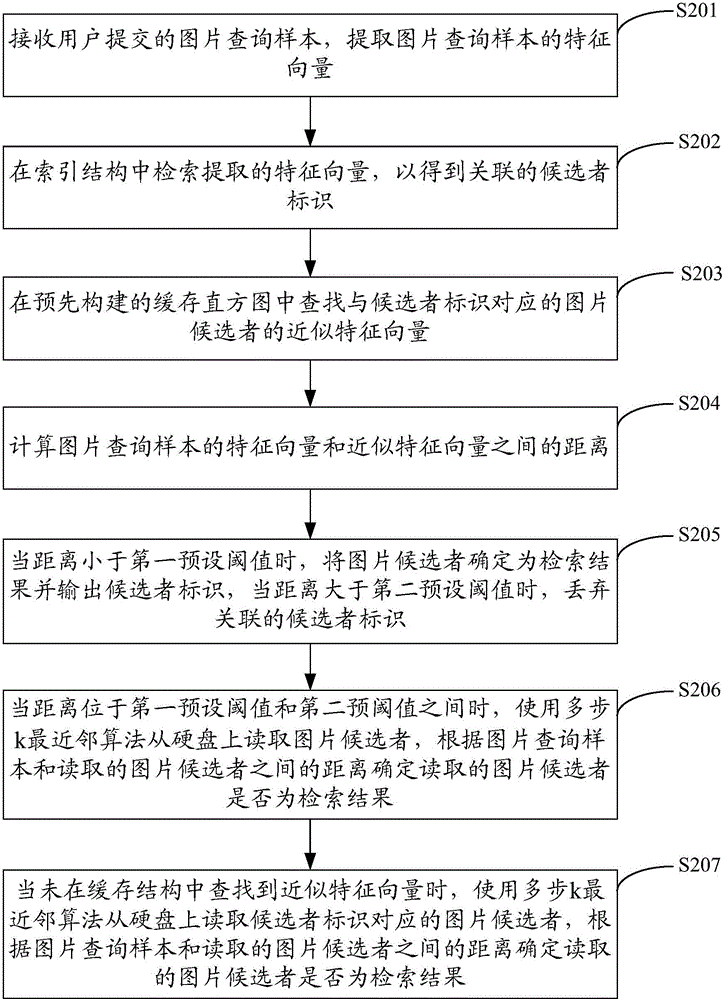

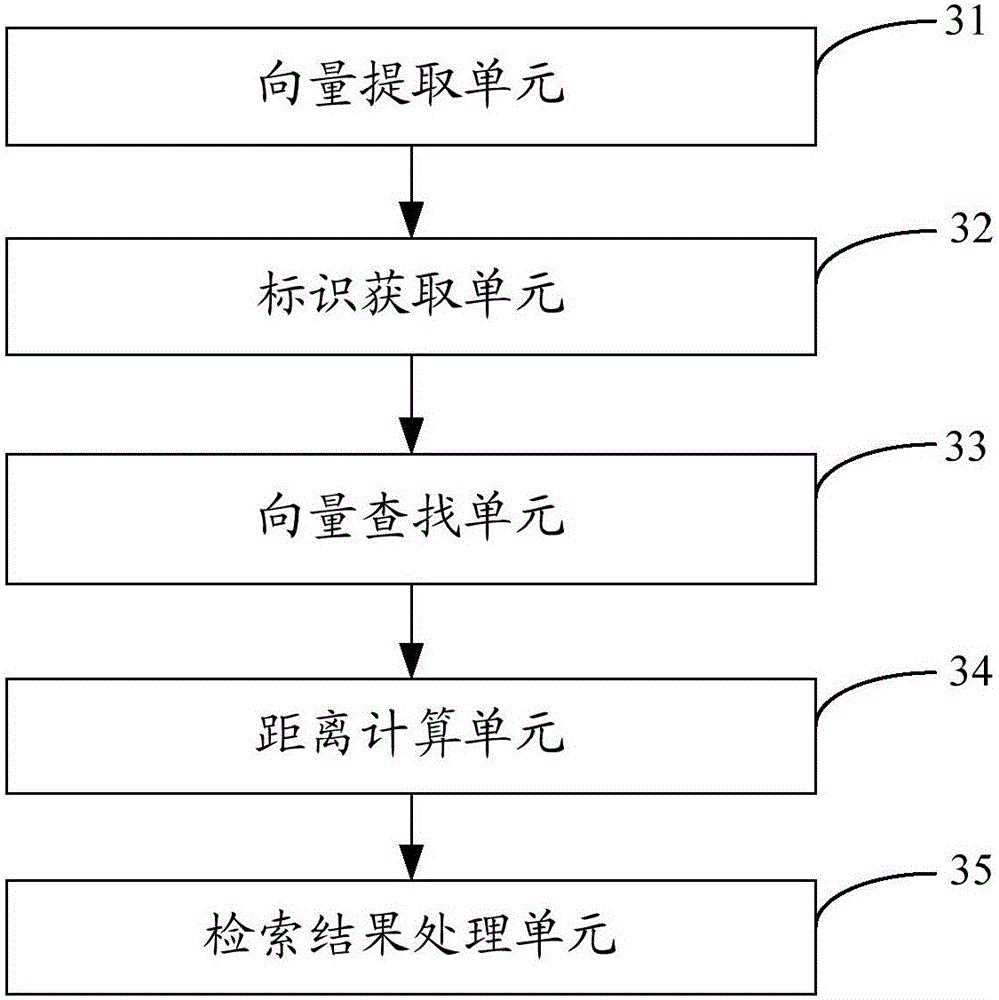

Image searching method and system

Active; CN105930499A; Improve retrieval efficiency; Reduce cache requirements; Special data processing applications; Feature vector; Image retrieval

The invention is applied to the technical field of computers, and provides an image searching method and system. The method comprises the following steps of: receiving an image inquiry sample submitted by a user, extracting a characteristic vector of the image inquiry sample, retrieving the characteristic vector in an index structure so as to obtain a related candidate identifier, searching an approximate characteristic vector of an image candidate corresponding to the candidate identifier from a pre-constructed cache histogram, calculating the distance between the characteristic vector of the image inquiry sample and the approximate characteristic vector, if the distance is less than a first pre-set threshold value, determining the image candidate as a retrieved result and outputting the candidate identifier, and, if the distance is greater than a second pre-set threshold value, abandoning the associated candidate identifier. The image retrieving efficiency is greatly increased; and simultaneously, cache demands for a retrieving system are reduced.
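The two-threshold filtering step can be sketched as follows. This is an illustration only: the function and parameter names are hypothetical, Euclidean distance is assumed, and the handling of candidates that fall between the two thresholds (returned here as "undecided") is not specified in the abstract.

```python
import math


def search(query_vec, candidate_ids, approx_cache, accept_below, reject_above):
    """Filter index candidates using cached approximate feature vectors."""
    accepted, undecided = [], []
    for cid in candidate_ids:
        distance = math.dist(query_vec, approx_cache[cid])
        if distance < accept_below:
            accepted.append(cid)       # close enough: output as a result
        elif distance > reject_above:
            continue                   # clearly too far: discard
        else:
            undecided.append(cid)      # assumption: left for a later check
    return accepted, undecided


approx_cache = {"a": (0.1, 0.0), "b": (3.0, 4.0), "c": (1.0, 0.0)}
accepted, undecided = search(
    (0.0, 0.0), ["a", "b", "c"], approx_cache,
    accept_below=0.5, reject_above=2.0,
)
```

Only the compact approximate vectors need to be cached for this step, which is where the reduced cache demand would come from.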

Owner:SOUTH UNIVERSITY OF SCIENCE AND TECHNOLOGY OF CHINA

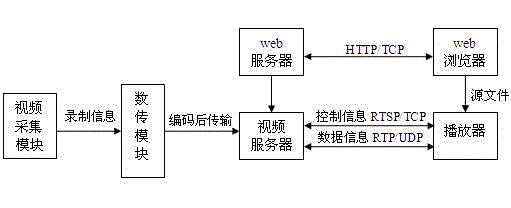

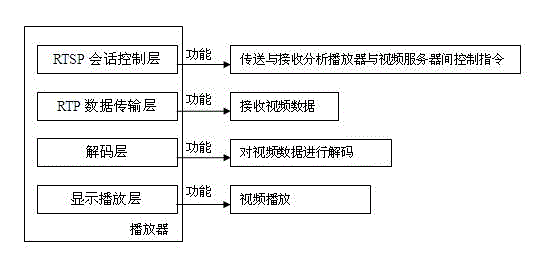

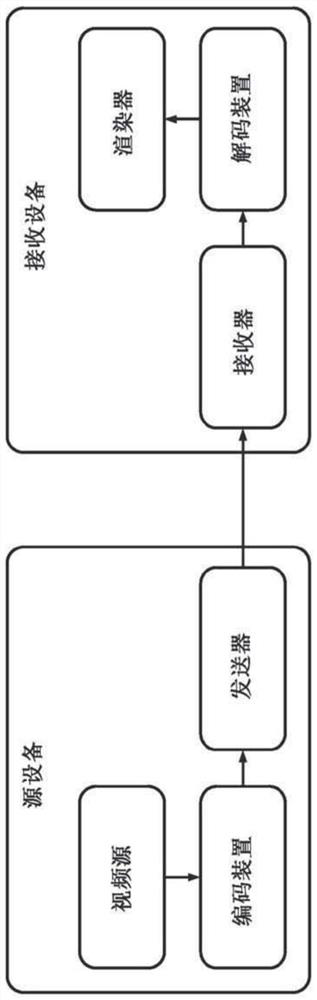

Environmental sanitation operation stream media network video monitoring system

Inactive; CN103945174A; Fast transfer; Reduce the burden on; Closed circuit television systems; Selective content distribution; Video monitoring; Data transmission

The invention provides an environmental sanitation operation stream media network video monitoring system. Through a video acquisition device, acquired video information, after being coded by a data transmission module, is streamed to a video server through a network for storage and is streamed to a monitoring end for playing or real-time live broadcasting. The environmental sanitation operation stream media network video monitoring system performs accompanying monitoring on collecting vehicles in sanitation work so as to deal with problems timely and avoid expansion of harmful effects when the collecting vehicles have problems. The environmental sanitation operation stream media network video monitoring system employs stream transmission so as to alleviate the burden of network transmission, reduce caching requirement for a monitoring end, can realize "playing while downloading, and implementing monitoring", and reduces the waiting time of monitoring people. Since the volume of a stream media file is quite small, the network transmission is quite fast, the obtaining is convenient and time-saving, and the network cost is also saved.

Owner:SUZHOU VORTEX INFORMATION TECH

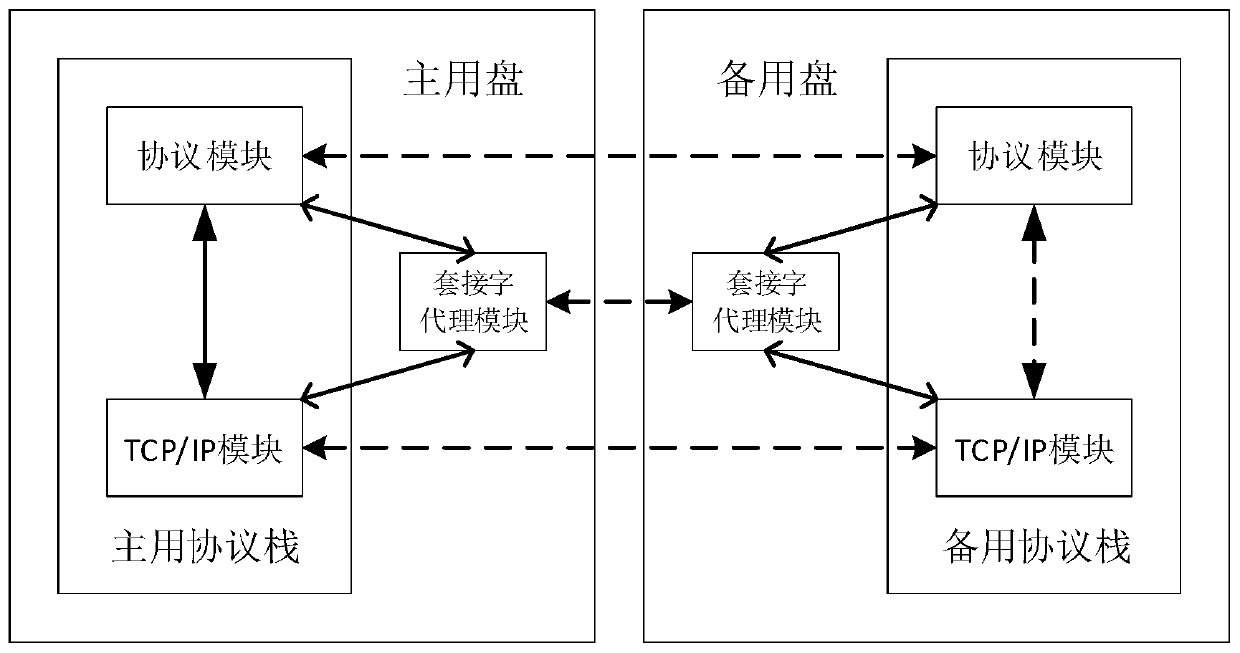

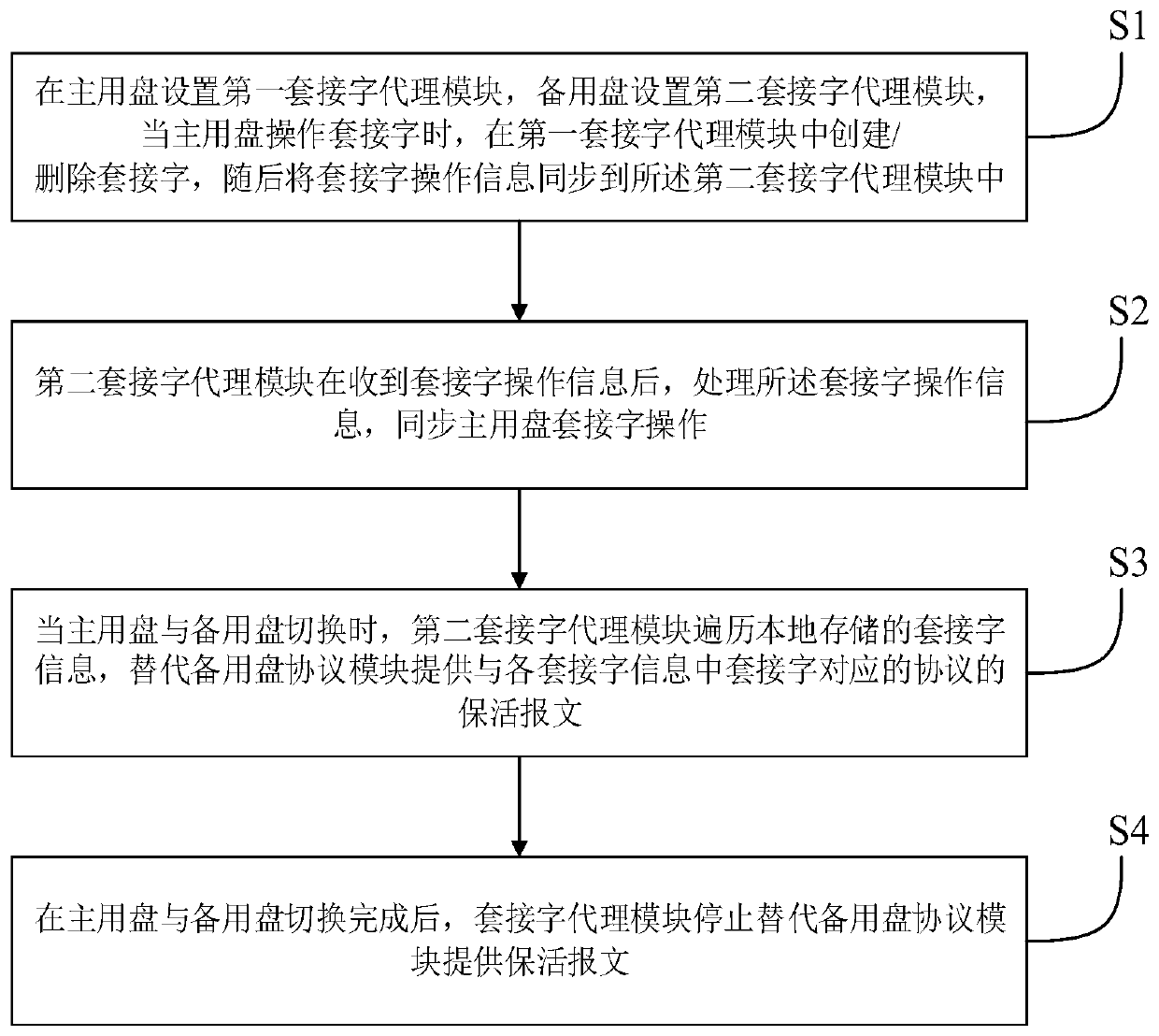

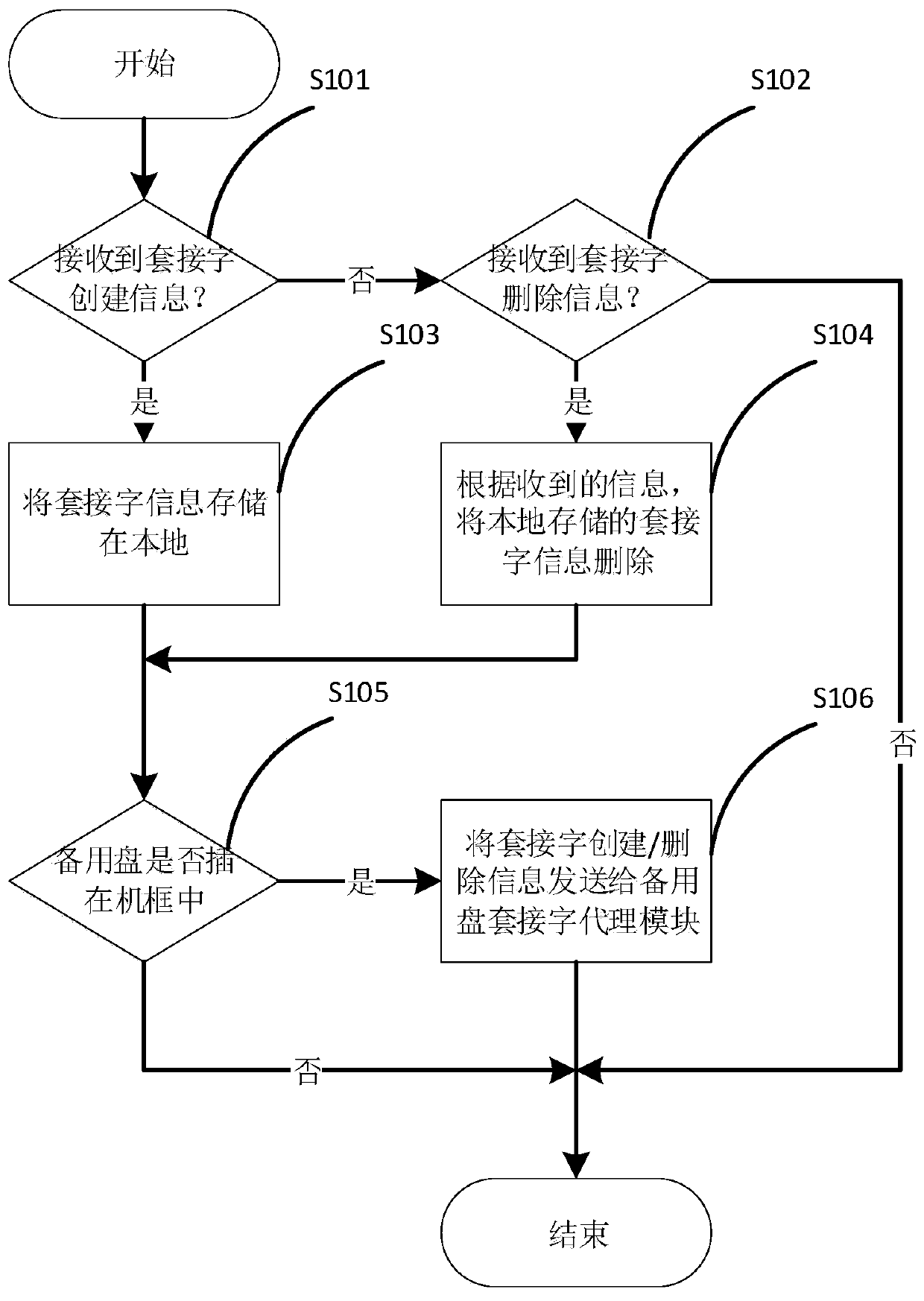

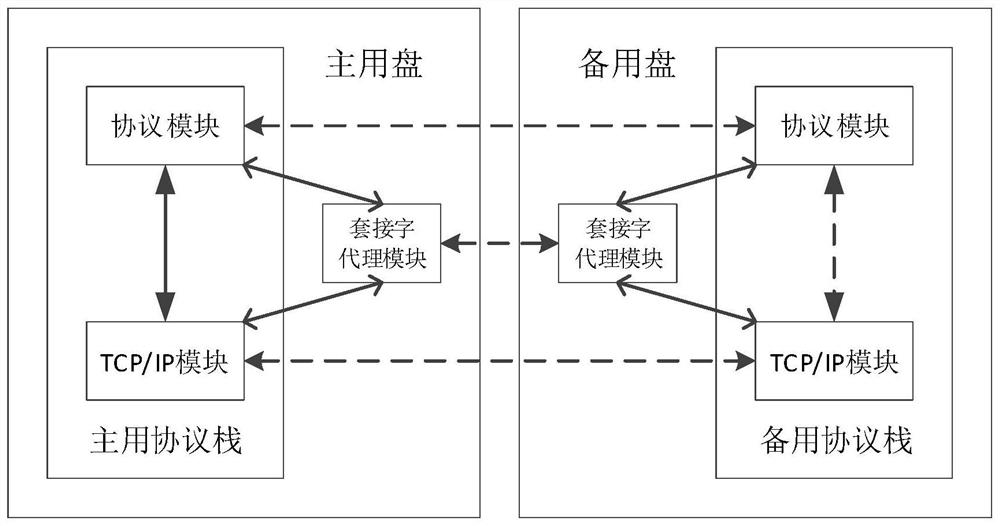

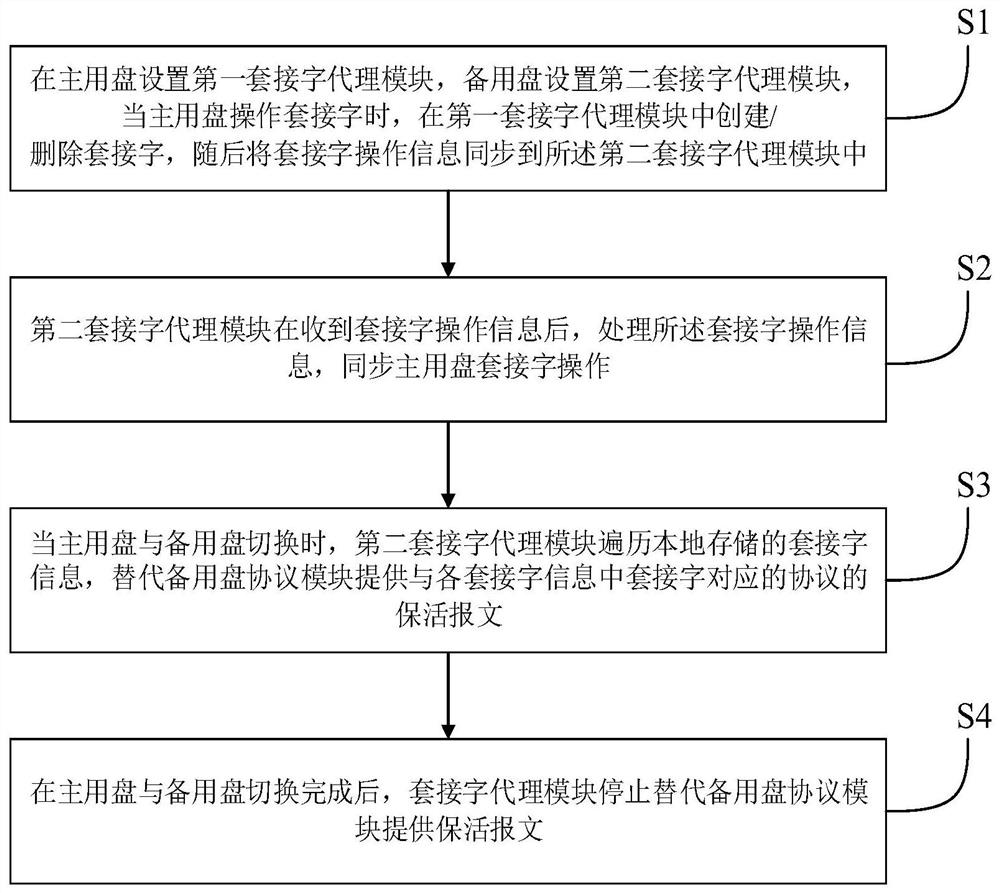

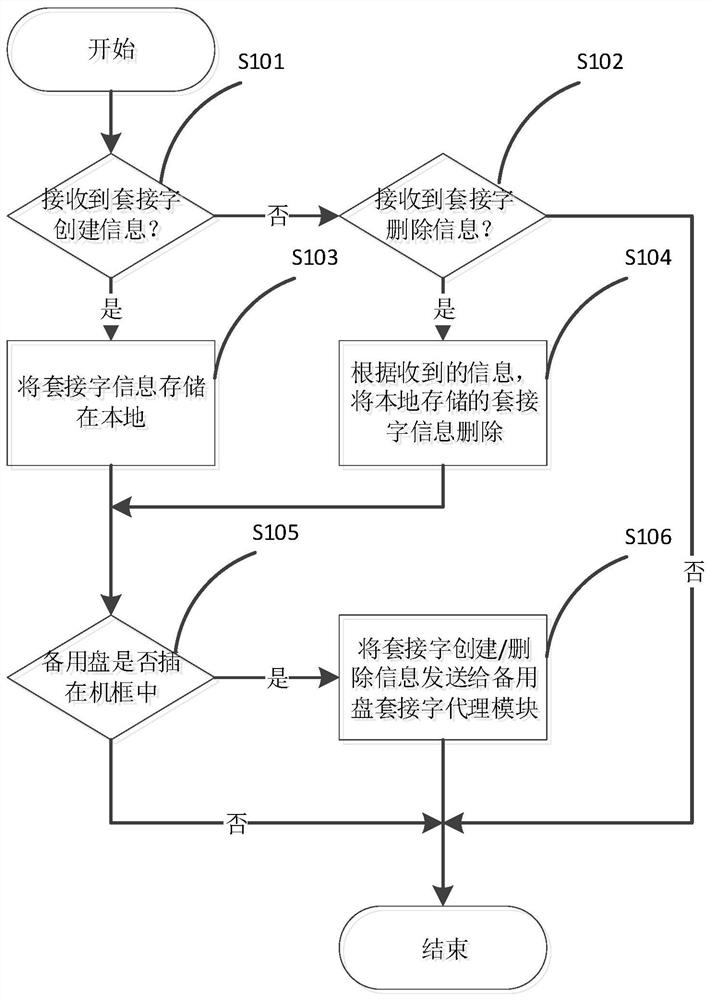

System and method for non-stop routing during switching of main and standby disks of control plane

Active; CN110958176A; Nonstop routing implementation; Implement non-stop routing scheme; Data switching networks; Computer hardware; Engineering

The invention discloses a system and method for non-stop routing during the switching of the main and standby disks of a control plane, and relates to the technical field of communication. The method comprises the following steps: when operating a socket, the main disk stores the related socket information in a first socket proxy module, and then synchronizes the stored socket information to a second socket proxy module; after receiving the socket information, the second socket proxy module stores it and calls a TCP/IP module interface of the standby disk to obtain a corresponding socket; when the main disk and the standby disk are switched, the second socket proxy module traverses the locally stored socket information and, in place of the standby disk protocol module, provides keep-alive messages of the protocols corresponding to the sockets; and after the switching between the main disk and the standby disk is completed, the socket proxy module stops providing the keep-alive messages in place of the standby disk protocol module. With this method, the connection between the standby disk and the protocol modules of neighbor equipment nodes is kept uninterrupted while the standby disk is being promoted, and non-stop routing is realized.

Owner:FENGHUO COMM SCI & TECH CO LTD

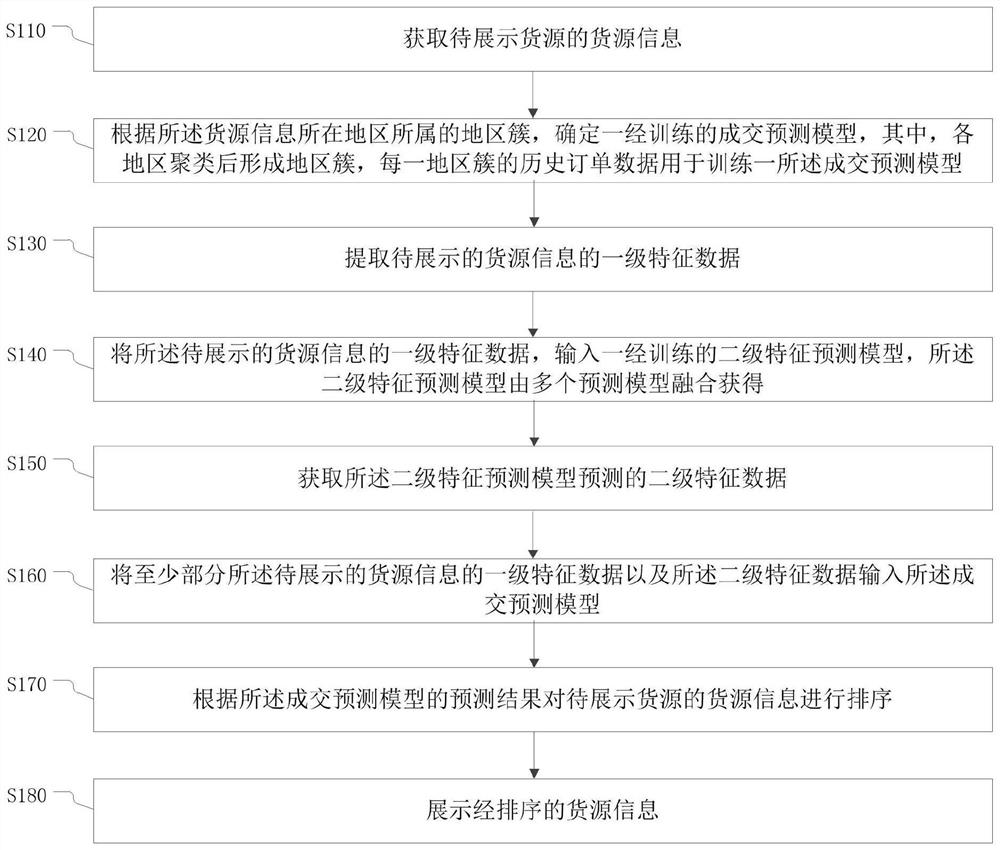

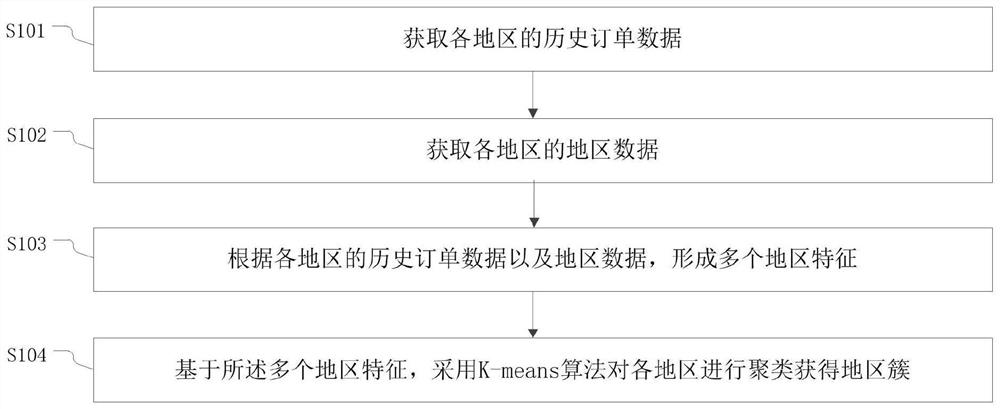

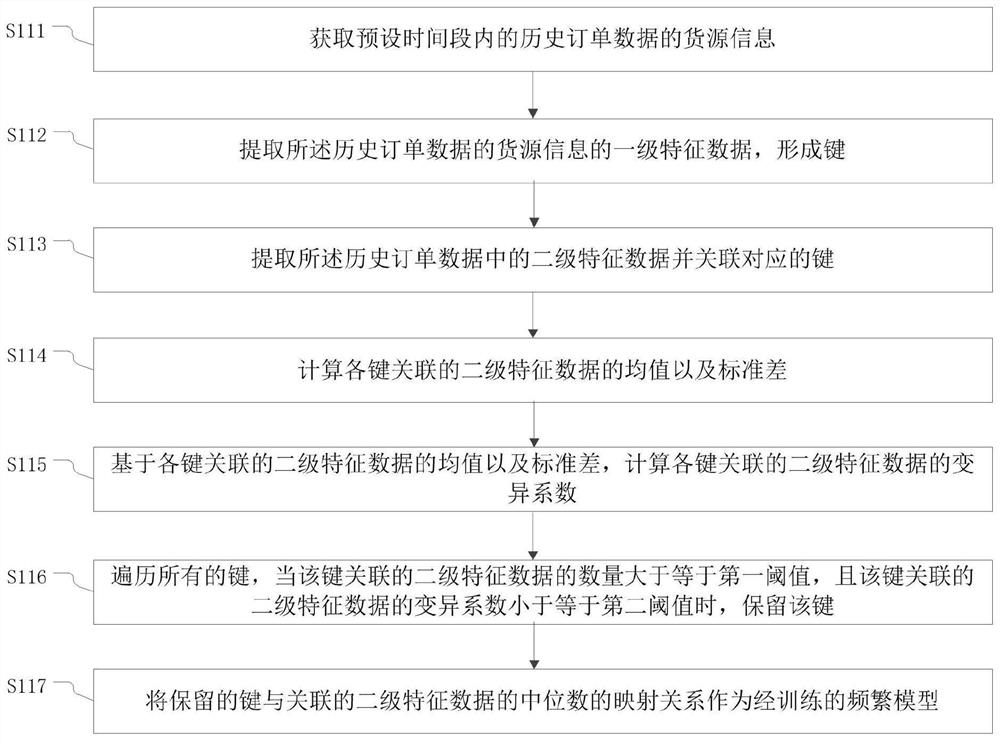

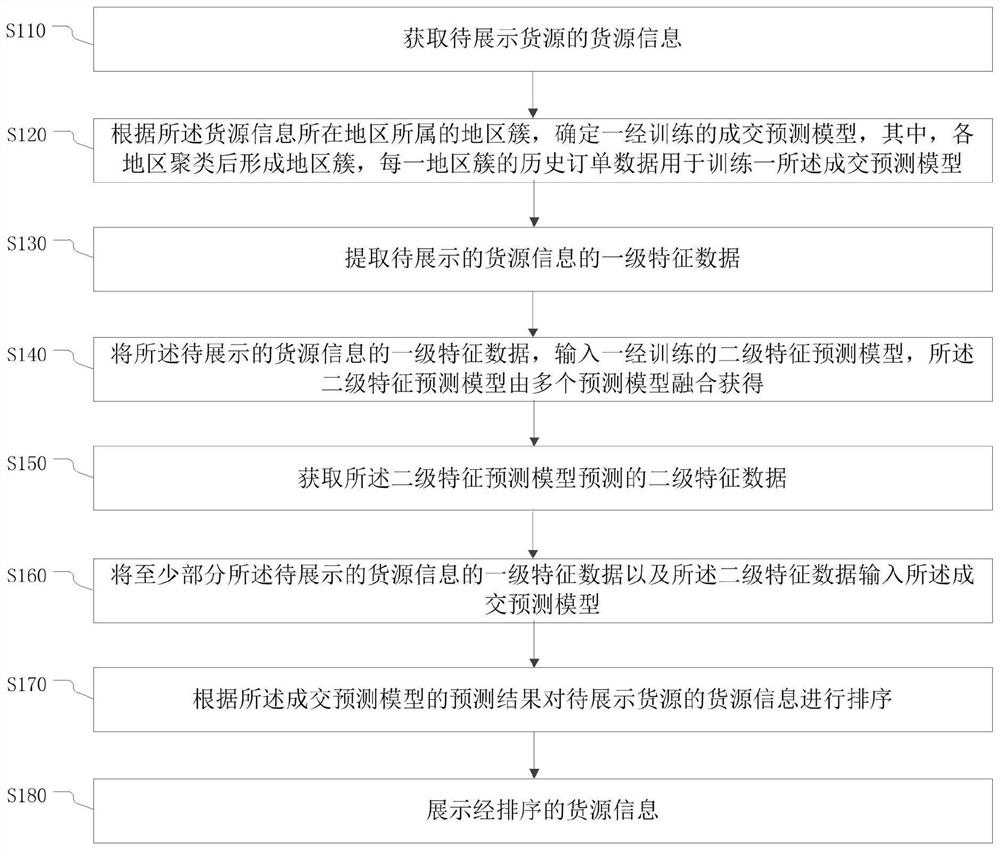

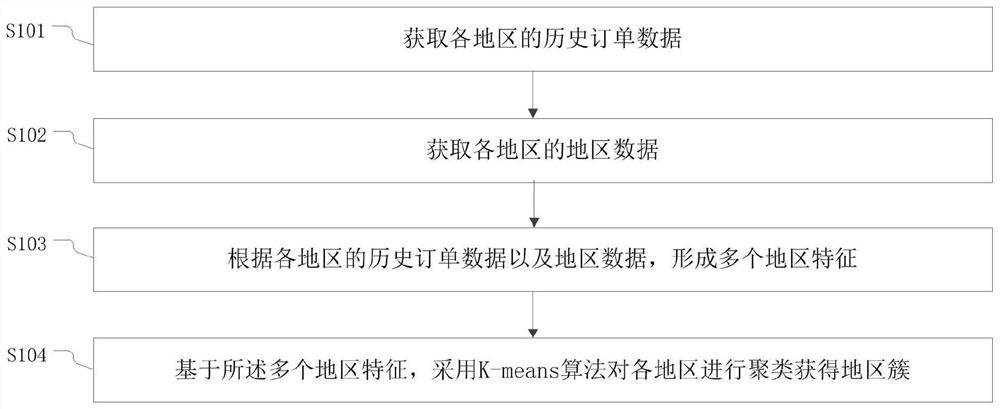

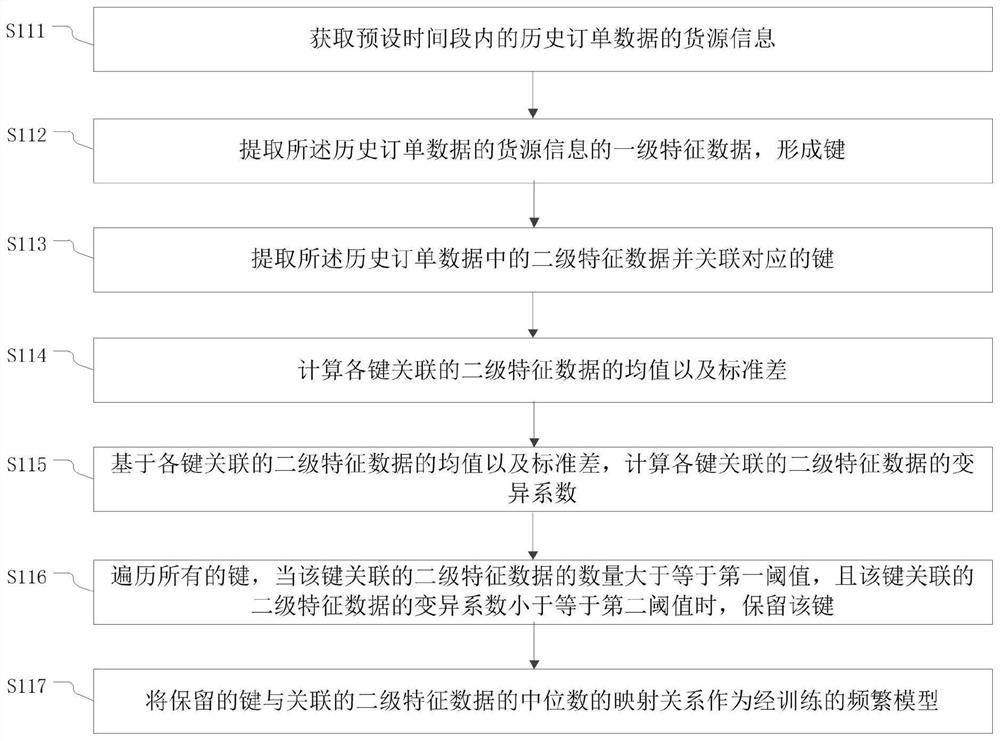

Goods source display method and device, electronic equipment and storage medium

Active; CN112561433A; Save browsing time; Low cost; Forecasting; Character and pattern recognition; Engineering; Feature data

The invention provides a goods source display method and device, electronic equipment and a storage medium. The method comprises the steps of obtaining goods source information of a to-be-displayed goods source; determining a trained transaction prediction model according to a region cluster to which the region where the goods source information is located belongs; extracting first-level feature data of the to-be-displayed goods source information; inputting the first-level feature data of the to-be-displayed goods source information into a trained second-level feature prediction model, the second-level feature prediction model being obtained by fusing a plurality of prediction models; obtaining second-level feature data predicted by the second-level feature prediction model; inputting the first-level feature data and the second-level feature data of at least part of the to-be-displayed goods source information into the transaction prediction model; sorting the goods source information of the to-be-displayed goods source according to the prediction result of the transaction prediction model; and displaying the sorted goods source information. The goods source browsing time of the user is shortened, the order receiving efficiency is improved, and the overall freight transport efficiency of the platform is thereby improved.

Owner:江苏运满满信息科技有限公司

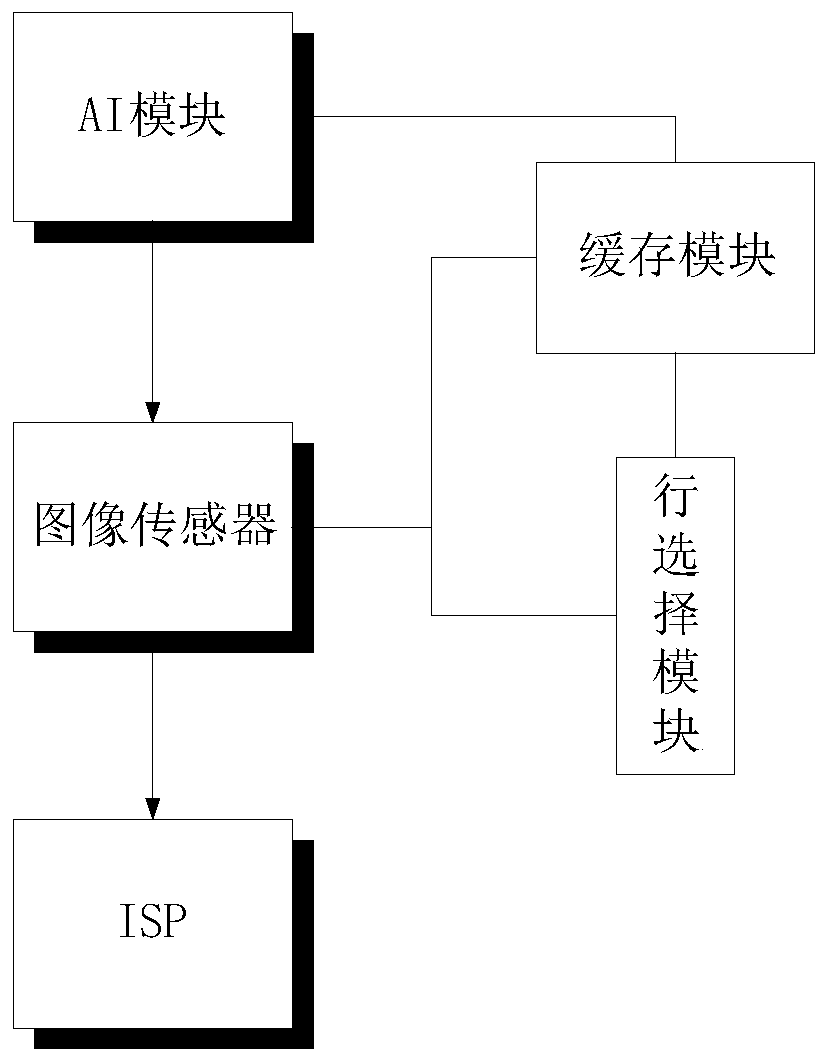

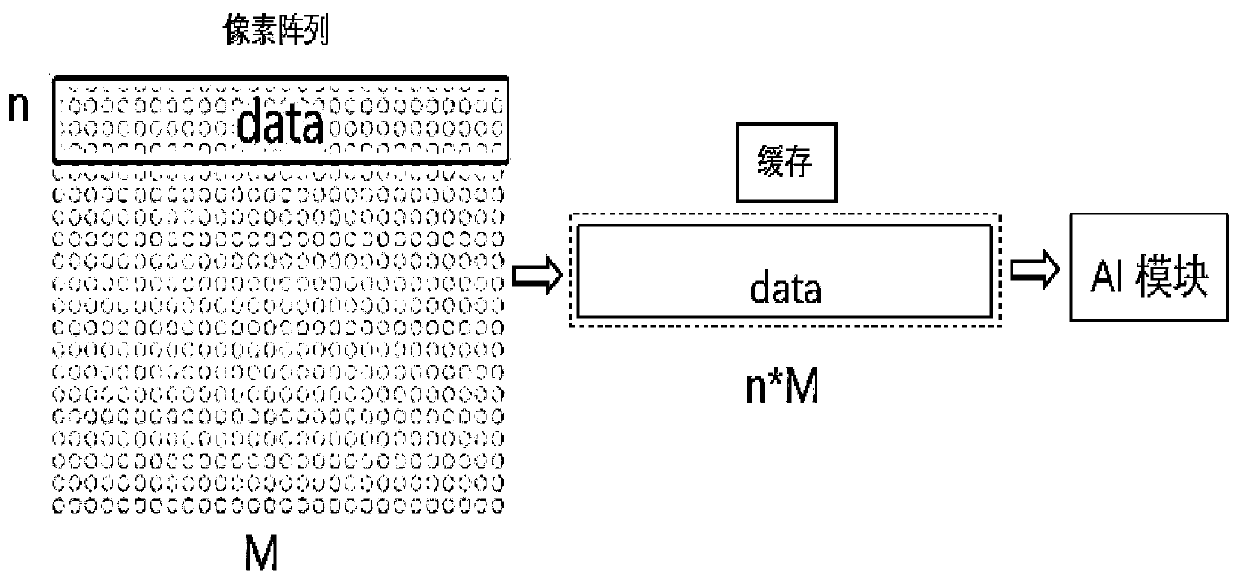

AI-based intelligent image preprocessing method and system

Pending; CN110400250A; Reduce cache requirements; Low cost; Image enhancement; Image analysis; Image sensor; Imaging Signal

The invention discloses an AI-based intelligent image preprocessing method and system. The method comprises the steps of obtaining a sensing signal from an image sensor in batches, carrying out artificial intelligence processing, outputting the processing result to the image sensor for selective optimization, and having the image sensor output the optimized sensing signal to an image signal processor to generate a digital image. According to the invention, the AI processing mode and the final imaging strategy can be flexibly adjusted according to different applications and requirements. In addition, the cache requirement on the image sensor is low: the whole picture can be processed with only a small amount of cache, and hardware cost and power consumption are remarkably reduced. When AI processing is carried out on the input image, more pixel information in the vertical direction can be included as the application requires, so the algorithm performance of an intelligent camera in applications sensitive to vertical context information can be improved without increasing the hardware cost. The AI-based intelligent image preprocessing method can be used for, but is not limited to, face detection, pedestrian detection, vehicle identification and the like.

Owner:淄博凝眸智能科技有限公司

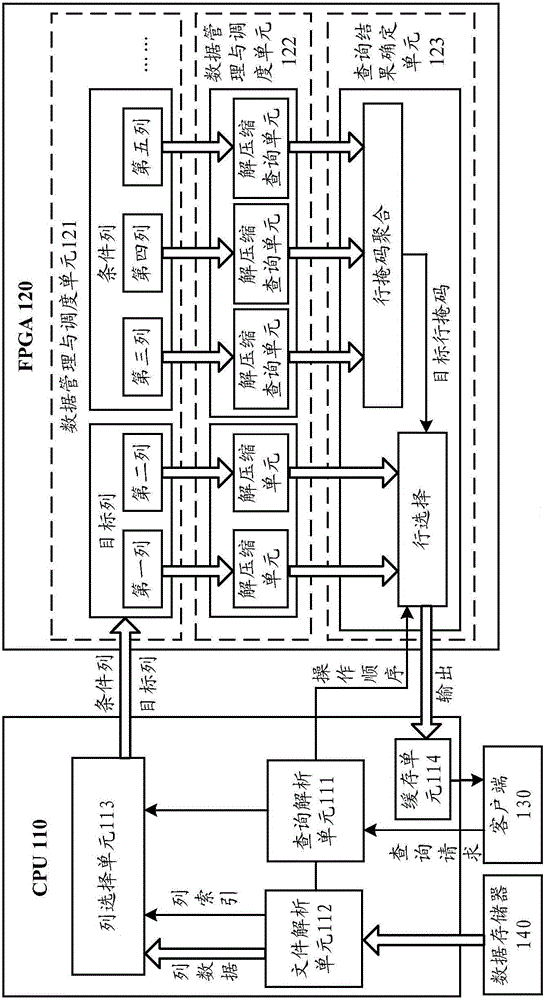

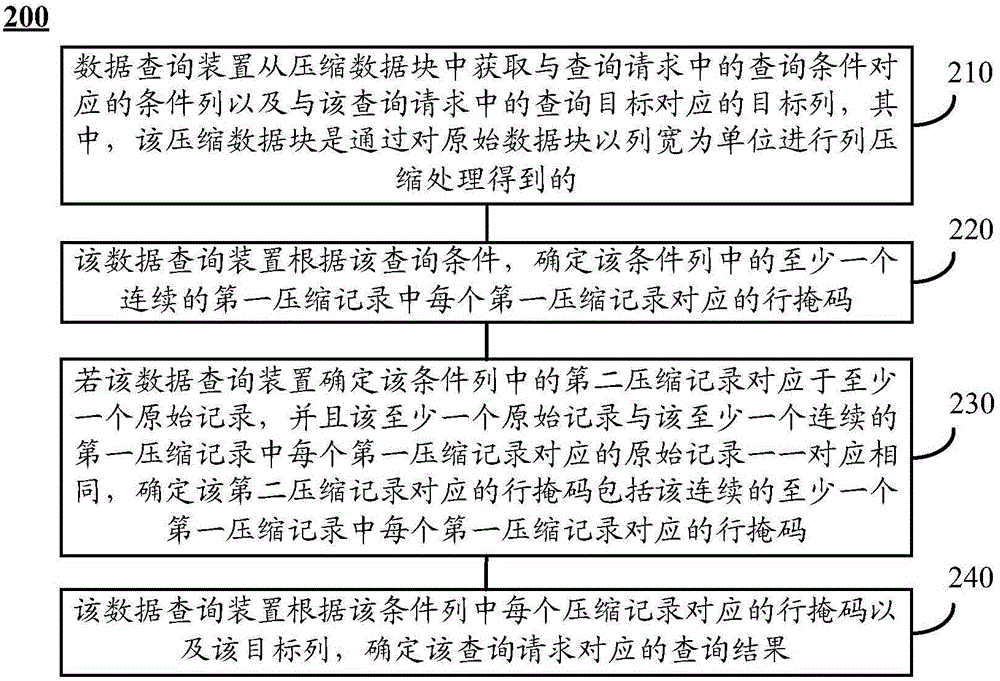

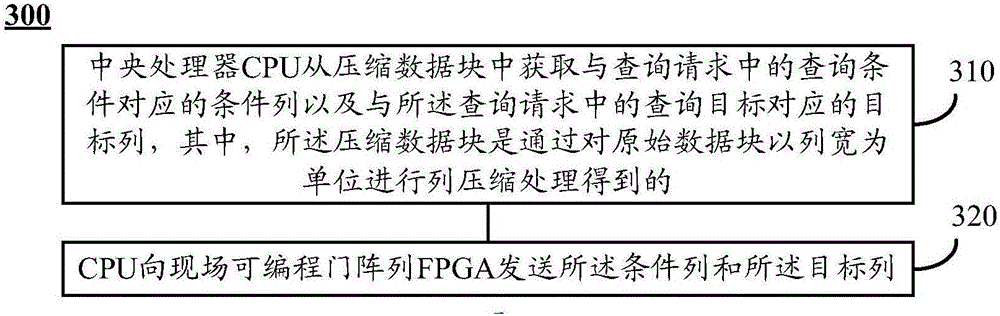

Data query method, device and system

Inactive; CN105975498A; Reduce cache requirements; Improve query performance; Special data processing applications; Data query; Original data

The invention discloses a data query method, device and system. The data query method comprises the following steps: acquiring a condition column corresponding to a query condition in a query request and a target column corresponding to a query target in the query request from a compressed data block through the data query device, wherein the compressed data block is obtained through compressing an original data block by taking column width as a unit; determining a line mask corresponding to at least one continuous first compression record in the condition column according to the query condition; if determining that a second compression record in the condition column corresponds to at least one original record and the at least one original record is correspondingly same as an original record corresponding to the at least one continuous first compression record one by one, determining that a line mask corresponding to the second compression record comprises a line mask corresponding to the at least one continuous first compression record; and determining a query result corresponding to the query request according to the line mask corresponding to each compression record in the condition column and the target column, so as to improve the data query speed.
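The general idea of evaluating the query condition on compressed records and carrying the result as a row mask can be illustrated with a small sketch. The abstract does not state the compression scheme, so run-length encoding is assumed here purely for illustration, and all function names are hypothetical; the patent's reuse of masks across compression records is not reproduced.

```python
def rle_encode(values):
    """Compress a column into (value, run_length) records."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1][1] += 1
        else:
            runs.append([v, 1])
    return [(v, n) for v, n in runs]


def row_mask(condition_runs, predicate):
    """Evaluate the predicate once per compressed record, not once per row."""
    mask = []
    for value, length in condition_runs:
        mask.extend([predicate(value)] * length)
    return mask


def query(condition_runs, target_values, predicate):
    """Select target-column values for the rows whose condition holds."""
    mask = row_mask(condition_runs, predicate)
    return [t for t, keep in zip(target_values, mask) if keep]


condition_column = rle_encode([1, 1, 2, 2, 2, 3])
result = query(condition_column, list("abcdef"), lambda v: v == 2)
```

The condition column is never decompressed into individual rows before the predicate runs, which is the kind of saving in intermediate buffering that the "Reduce cache requirements" tag points to.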

Owner:HUAWEI TECH CO LTD +1

A system and method for uninterrupted routing during master-slave disk switching of control plane

Active; CN110958176B; Nonstop routing implementation; Implement non-stop routing scheme; Transmission; Computer hardware; Engineering

Owner:FENGHUO COMM SCI & TECH CO LTD

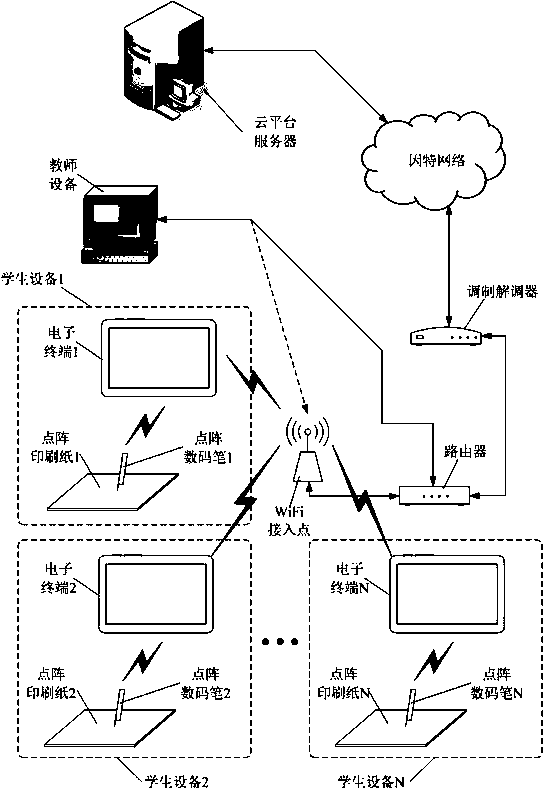

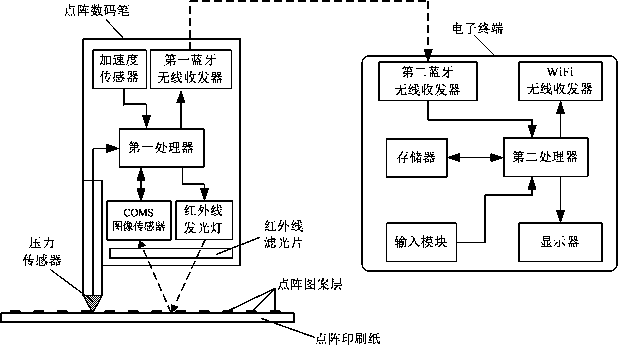

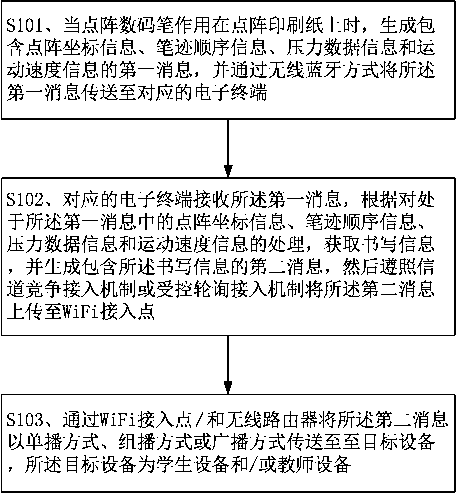

A group teaching system and its working method based on dot-matrix digital pen

Active; CN106057000B; Simple structure; Enhanced interaction; Network topologies; Transmission; Dot matrix; Working set

The invention relates to the field of electronic teaching technology, and discloses a group teaching system based on a dot matrix pen and a working method thereof. The group teaching system comprises a router, a WiFi accessing point, teacher equipment and a plurality of sets of student equipment, wherein each set of student equipment comprises dot matrix printing paper, the dot matrix digital pen and an electronic terminal. A dot matrix pattern layer is printed on the upper surface of the dot matrix printing paper. The dot matrix digital pen is in communication connection with the electronic terminal in a wireless Bluetooth manner. Through combination of the dot matrix digital pen and a wireless local area network, the dot matrix digital pen can be applied in group teaching, and furthermore data transmission in a manner of one-to-one, one-to-many or many-to-one can be realized, thereby facilitating writing information interaction in group teaching, facilitating teaching and electronic teaching development, and realizing convenient popularization and convenient application in reality.

Owner:成都东方闻道科技发展有限公司

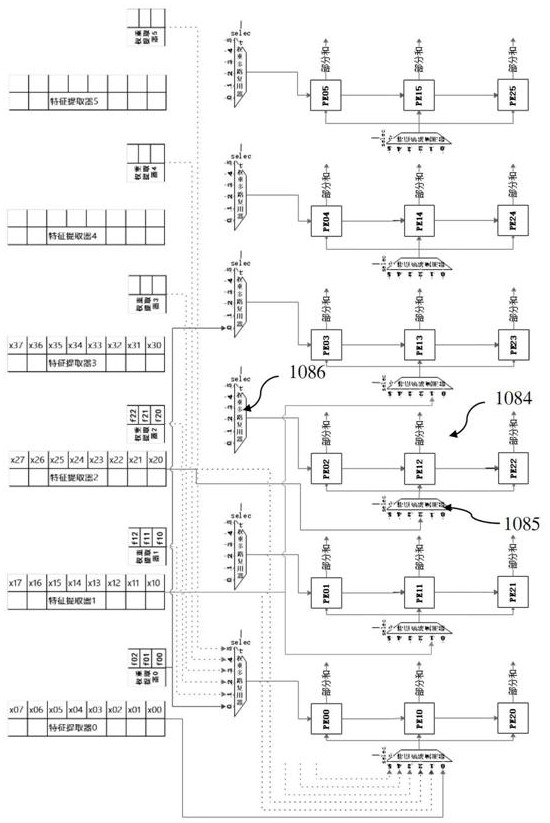

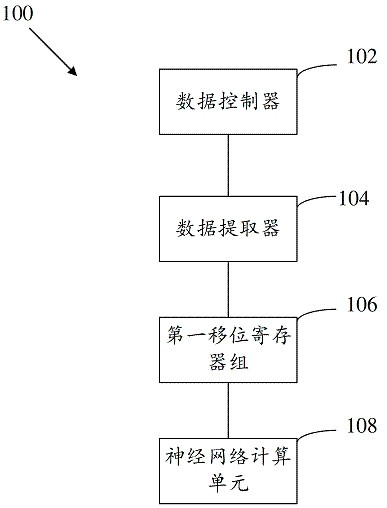

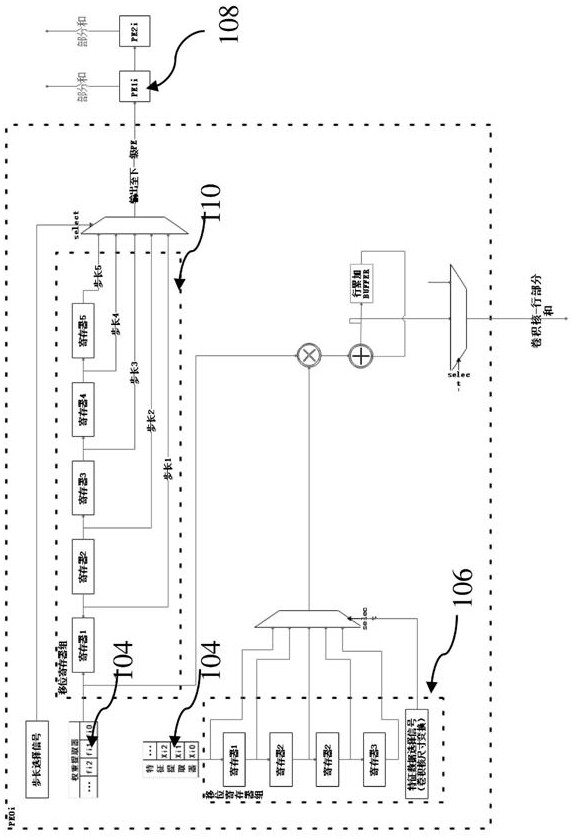

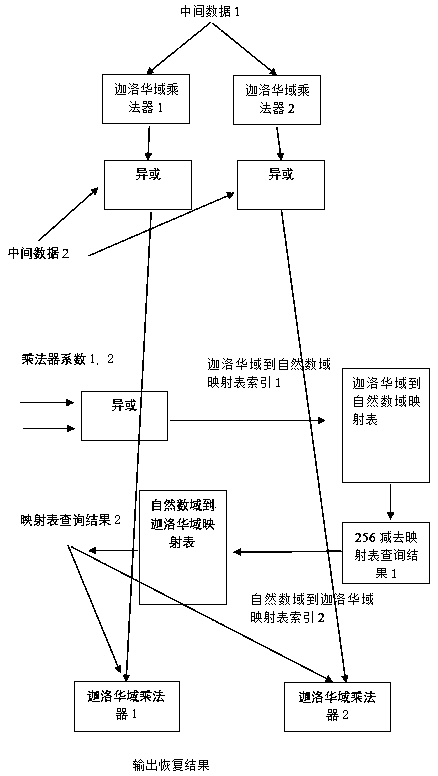

Neural network computing module, method and communication device

Active; CN113537482B; Reduce cache requirements; No delay operation; Neural architectures; Physical realisation; Shift register; Engineering

The present invention provides a neural network calculation module, method and communication equipment, belonging to the field of data processing. The module includes a data controller, a data extractor, a first shift register group and a neural network calculation unit. The data controller adjusts the data path according to configuration information and instruction information, and controls the data extractor to extract feature row data and convolution kernel row data, row by row, from the feature map data of the image to be processed; the first shift register group uses serial input and parallel output to pass the feature row data to the neural network calculation unit; the neural network calculation unit performs multiply-accumulate operations on the input feature row data and the convolution kernel row data to complete the convolution of one convolution kernel with the feature map data, and completes the accumulation of multiple convolution results in at least one cycle, so as to realize circuit reconstruction and data multiplexing.
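The serial-in, parallel-out behaviour of the shift register group can be modelled in software for one feature row and one kernel row. This is a behavioural sketch under assumptions, not the patented circuit: the function name is hypothetical, one value is assumed to enter the register per cycle, and the window is multiplied with the kernel row without flipping it (the usual CNN convention).

```python
from collections import deque


def conv_row(feature_row, kernel_row):
    """Slide a kernel row over a feature row using a K-entry shift register."""
    k = len(kernel_row)
    shift_reg = deque(maxlen=k)
    outputs = []
    for sample in feature_row:        # serial input: one value per cycle
        shift_reg.append(sample)      # the oldest value drops out automatically
        if len(shift_reg) == k:       # register full: parallel output is valid
            acc = 0
            for x, w in zip(shift_reg, kernel_row):
                acc += x * w          # multiply-accumulate over the window
            outputs.append(acc)
    return outputs


row_result = conv_row([1, 2, 3, 4, 5], [1, 0, -1])
```

Only `k` feature values are held at any time, and each value is reused by up to `k` consecutive windows, which is the data reuse that lets the design avoid buffering whole feature rows.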

Owner:绍兴埃瓦科技有限公司

A Method of Low-Order Interleaving for Large-capacity Data Using FPGA

Active; CN106487471B; Reduce cache requirements; High bandwidth; Time-division multiplex; Computer architecture; Multiple frame

The invention discloses a method for adopting an FPGA to carry out low order cross connect on large volume data. The method changes a conventional way that multi-frame alignment and cross are needed when the low order cross connect is carried in a synchronous digital system, aims at application characteristics of large input bandwidth and small output bandwidth of an information screening field, and adopts a way of cross, convergence and multi-frame alignment to operate after the accessed large volume data is processed through data reception, pointer interpreter and adjustment and multi-frame information binding modules. According to the method, the requirements of design for internal cache of a chip can be greatly reduced, the data access with the larger bandwidth can be realized, and the stronger access, cross and data handling capacity can be provided. The conventional method only completes the low order capacity of 20G*20G, the method can complete the low order capacity of 80G*20G without any additional external cache, and the method has remarkable advantages in the aspects of hardware cost, access capacity and operability.

Owner:TOEC TECH

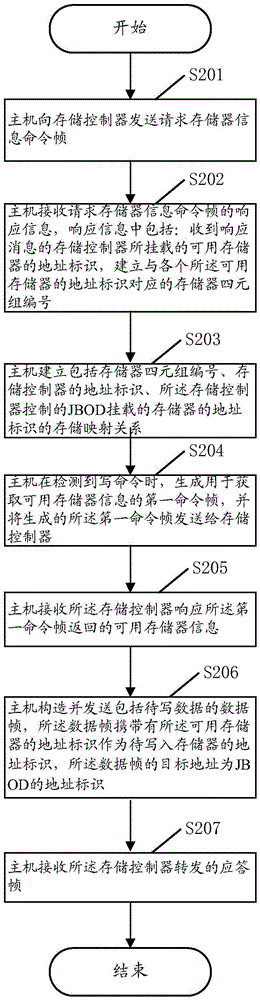

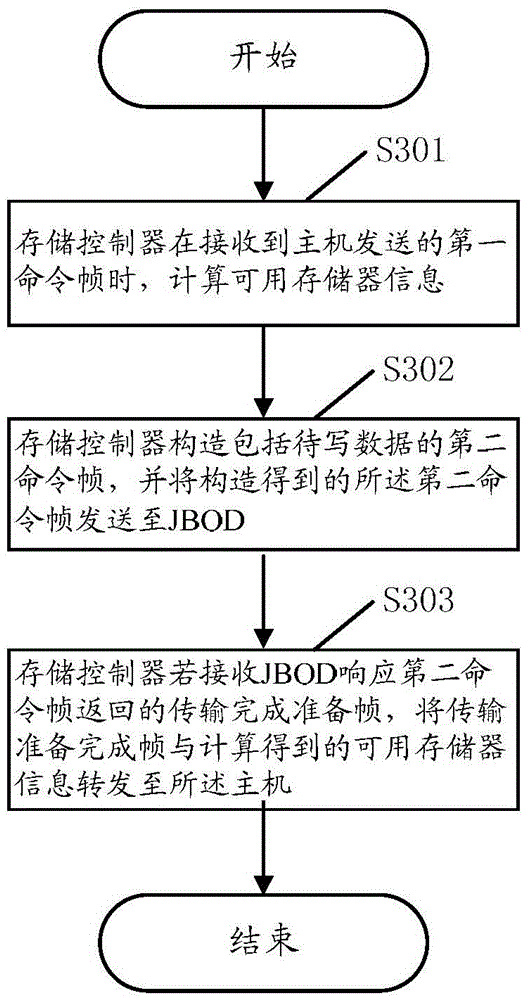

A data storage method, device and system

Active; CN103530066B; Improve storage efficiency; Reduce cache requirements; Input/output to record carriers; Control store; Data store

A data storage method comprises: when a write command is detected, a host generates a first command frame for acquiring available memory information and forwards it to a storage controller; the host receives the available memory information returned by the storage controller in response to the first command frame, the available memory information comprising an address identifier of a JBOD (Just a Bunch Of Disks) and an address identifier of an available memory; and the host constructs and sends a data frame comprising the to-be-written data, the data frame carrying the address identifier of the available memory as the address to be written, and the destination address of the data frame being the address identifier of the JBOD. The data storage method places a low requirement on cache and saves storage cost; in addition, because the host sends the data frame comprising the to-be-written data directly to the to-be-written memory, data storage efficiency is improved.

Owner:HUAWEI TECH CO LTD

Data processing method, device, terminal and storage medium

Active; CN109918382B; Reduce cache requirements; Increase flexibility; Special data processing applications; Database indexing; Time information; Access time

The embodiment of the application discloses a data processing method, device, terminal and storage medium, which belong to the field of computer technology. The method includes: obtaining the identification information of the querying device and processing it according to a preset algorithm to obtain access time information corresponding to the querying device, the identification information being in one-to-one correspondence with the querying device; when a data caching event is triggered, determining the query data to be cached according to the current time and the access time information, the query data being associated with the querying device; and caching the query data to be cached in memory and deleting other query data from memory. In the embodiment of the present application, reasonable access time information is obtained by calculation on the identifier of the querying device, the query data is cached accordingly, and other query data is deleted, which improves the flexibility of data storage, reduces data caching requirements, and reduces costs.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

Method and device for erasure correction based on flash memory storage device

Active; CN107193685B; Improve protection; Take up less resources; Redundant data error correction; RAID; Computer architecture

The invention discloses an erasure correcting method and apparatus based on a flash memory storage device. The apparatus comprises an encoding module and a decoding module: the encoding module processes 16 input data blocks to obtain two verification data blocks, and the decoding module performs erasure correction on received, partially missing data by using the two received verification data blocks. The erasure correcting ability of the apparatus is M + 2, its protection ability is higher than that of traditional RAID, and it occupies few resources. Its erasure correcting delay is lower than that of the traditional RS erasure code: encoding requires only 2 clock cycles and decoding only 4 clock cycles. Breakpoint resume is also supported, and calculation does not have to wait until all data blocks are ready: each data block is processed as soon as it arrives, so the requirements on the system cache are greatly reduced.

Owner:武汉中航通用科技有限公司

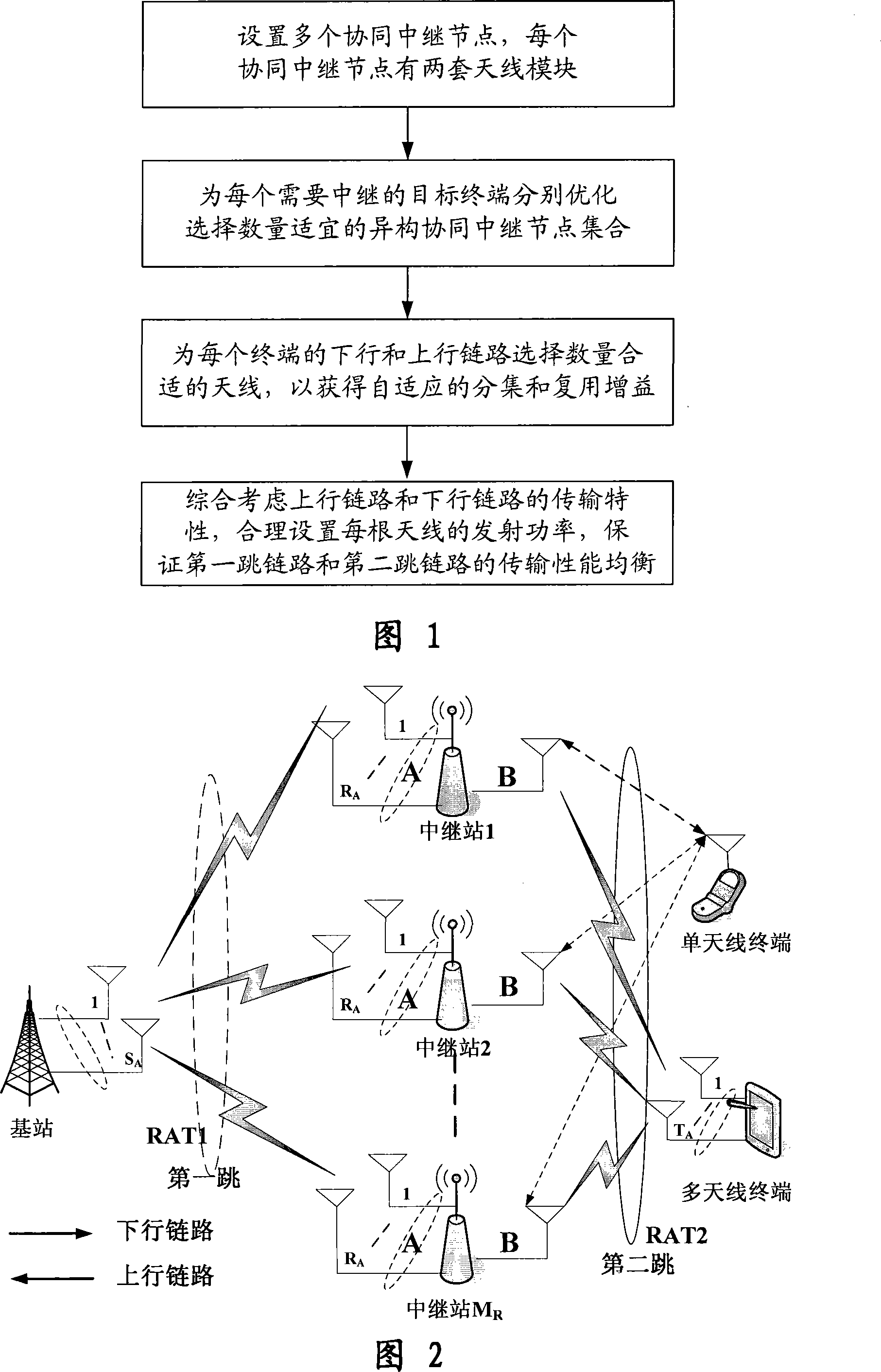

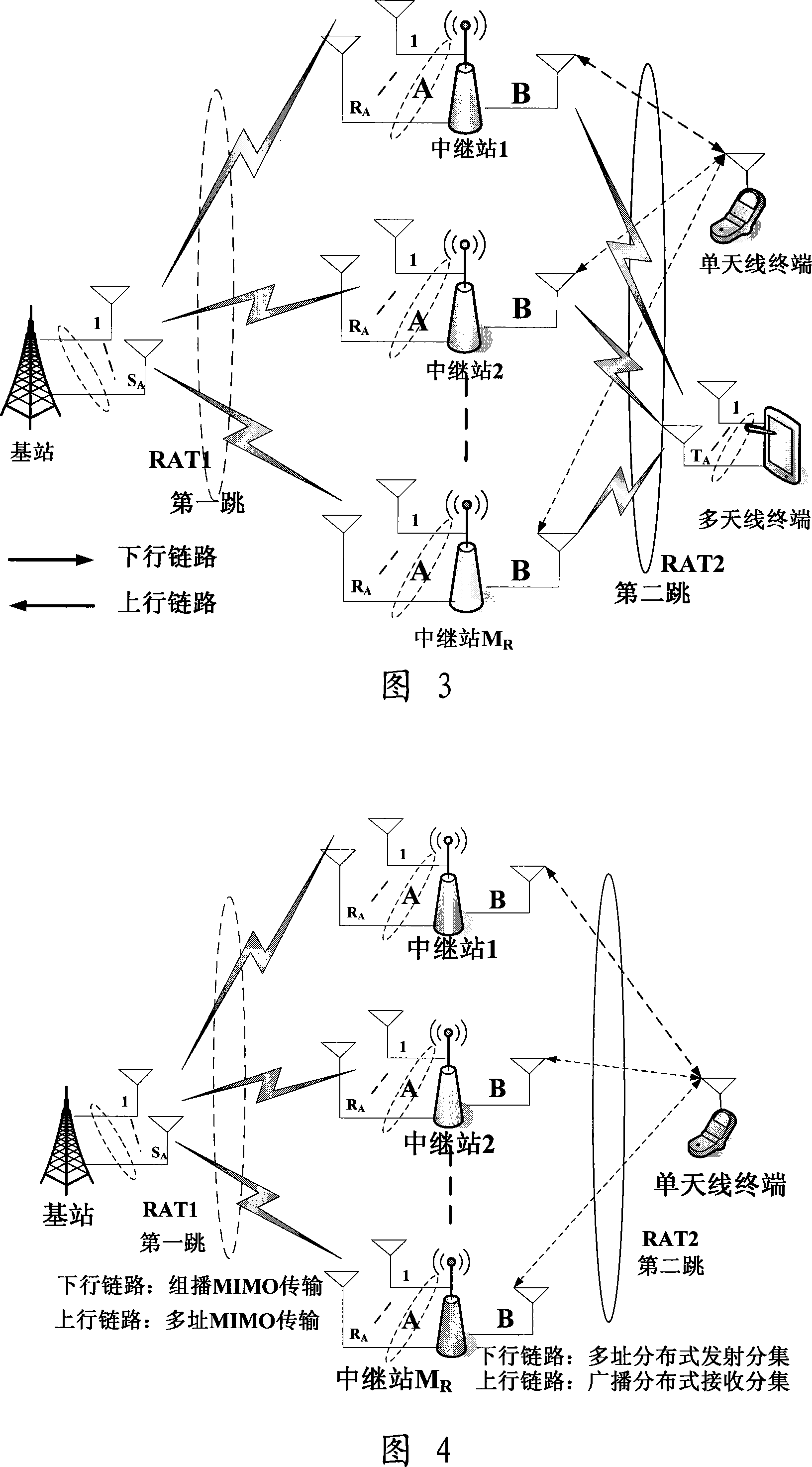

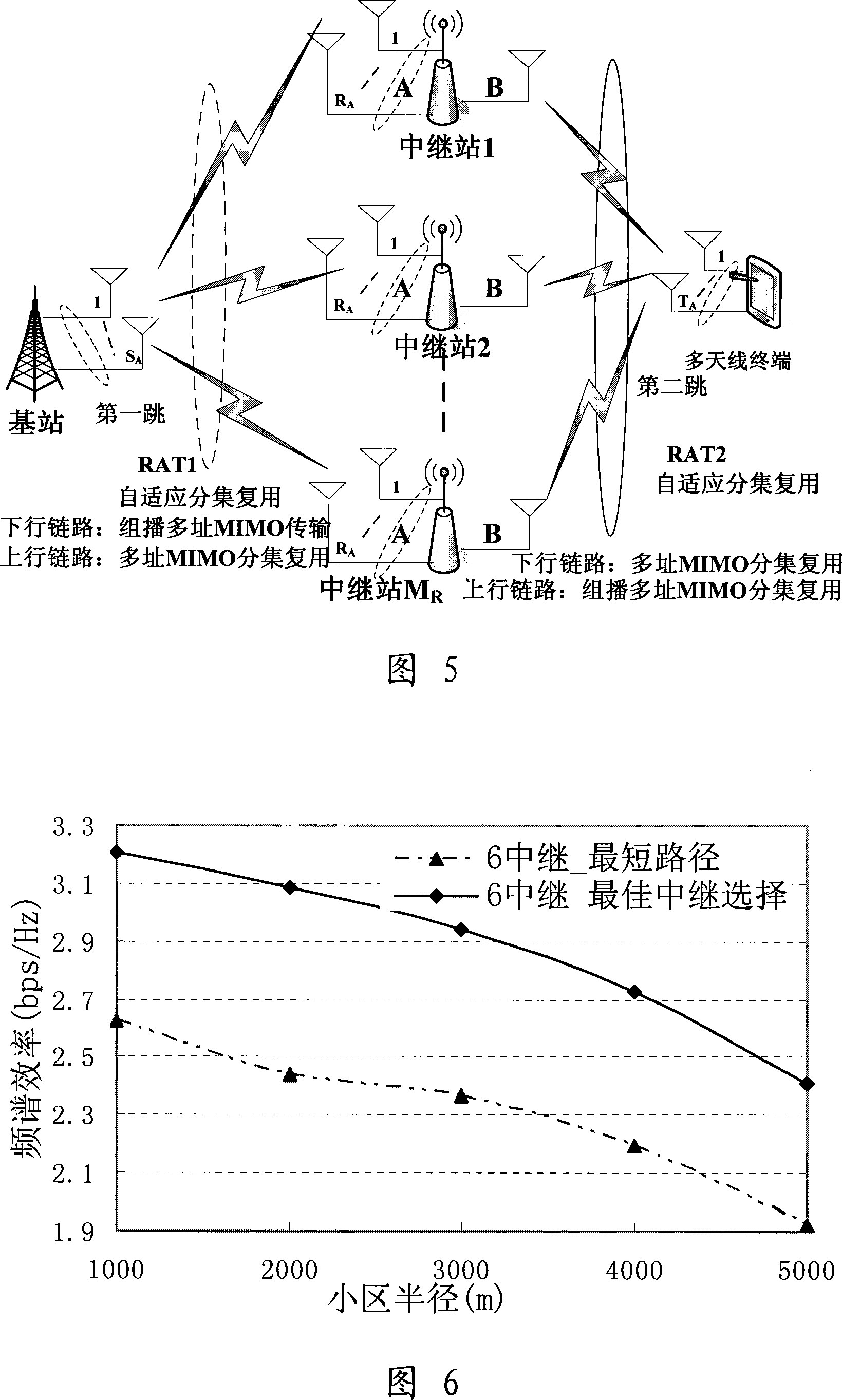

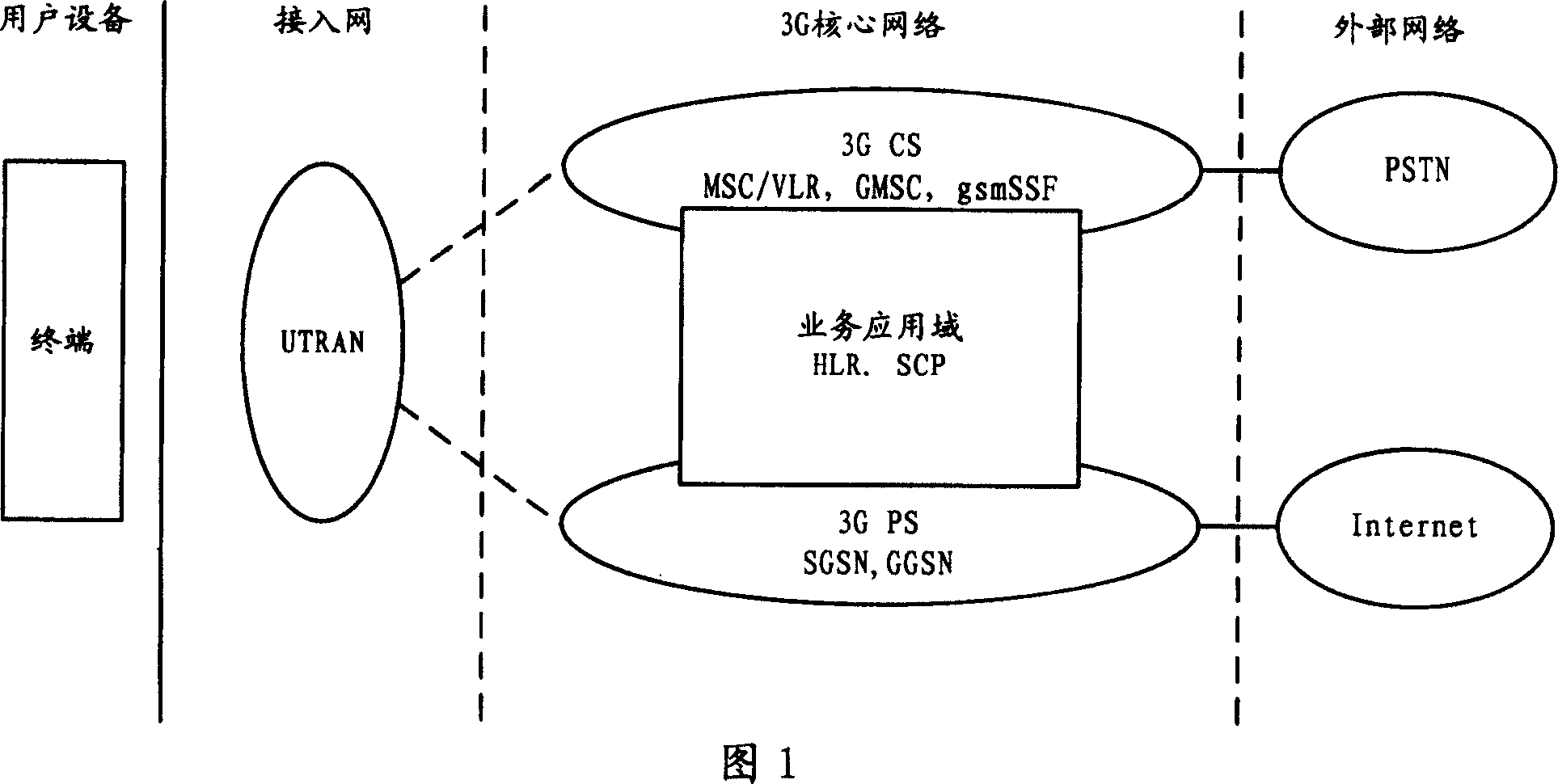

A collaborative transmission method realized in isomerization wireless network with cooperating relay nodes

Inactive; CN101217301B; Realize interconnection; Reduce cache requirements; Spatial transmit diversity; Error prevention/detection by diversity reception; Computer module; Computer terminal

The invention discloses a method for implementing heterogeneous cooperative transmission in a heterogeneous wireless network by using cooperative relay nodes. One or more cooperative relay nodes are arranged, each provided with two sets of antenna modules. For each destination user terminal that needs relaying, a base station selects a suitable set of heterogeneous cooperative relay nodes and determines a suitable number of antennas for the uplink and the downlink of the terminal, which makes it possible to obtain adaptive diversity and multiplexing gain. The transmit power of each antenna is set by jointly considering the transmission characteristics of the uplink and the downlink, which balances the transmission performance of the first-hop and second-hop links and optimizes the overall performance of the system. Taking full account of existing wireless network architectures, the invention uses the two sets of antennas as heterogeneous relay nodes for two-hop link transmission to implement heterogeneous transmission, and thus has the advantages of simple operation, flexibility, practicability, low investment, good effectiveness and good prospects for wide application.

Owner:BEIJING UNIV OF POSTS & TELECOMM

Transmitting system and method between base station and wireless network controller

InactiveCN1332578CRealize branch transmissionReduce transmission costsRadio/inductive link selection arrangementsWireless communicationHigh bandwidthTransport system

A transmission system and method between a radio network controller (RNC) and a base station, belonging to mobile communication technology, that obtains high-bandwidth Iub interface transmission capability at low cost. Two different types of transmission line are provided between the base station and the RNC: a dedicated transmission line that carries voice service frames, and an IP network that carries data service frames. Different CFNs are set for the voice service frames and the data service frames according to the pre-measured transmission delay, and a larger receive window is set for the transmission line that carries the data service frames. If the dedicated transmission line uses IP transmission, different IP addresses are set to realize separate-path transmission of the two kinds of service frame; if the dedicated transmission line uses asynchronous transfer mode (ATM), an AAL2 VC and an IP address are configured to realize separate-path transmission of the two kinds of service frame.

Owner:SHANGHAI HUAWEI TECH CO LTD
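The separate-path idea in the Iub transmission entry above can be sketched as a small routing table: voice frames go to the dedicated line, data frames go to the IP network, and each path gets its own CFN offset and receive window. Everything concrete here is an assumption for illustration: the `Bearer` type, the delay and window values, the 10 ms frame length, and the modulo-256 CFN range are not taken from the abstract.

```python
from dataclasses import dataclass

FRAME_MS = 10      # assumed duration of one CFN step
CFN_MODULO = 256   # assumed CFN range 0..255


@dataclass(frozen=True)
class Bearer:
    """One transport path between base station and RNC (hypothetical type)."""
    name: str
    measured_delay_ms: int   # pre-measured transport delay on this path
    receive_window_ms: int   # how much arrival jitter the receiver tolerates


# Illustrative values only: the IP path is slower, so it gets a larger window.
DEDICATED = Bearer("dedicated-line", measured_delay_ms=10, receive_window_ms=10)
IP_NET = Bearer("ip-network", measured_delay_ms=60, receive_window_ms=80)


def select_bearer(frame_kind: str) -> Bearer:
    """Route voice frames to the dedicated line and data frames to the IP network."""
    if frame_kind == "voice":
        return DEDICATED
    if frame_kind == "data":
        return IP_NET
    raise ValueError(f"unknown frame kind: {frame_kind!r}")


def target_cfn(current_cfn: int, bearer: Bearer) -> int:
    """Stamp a frame far enough ahead to cover the path's measured delay."""
    frames_ahead = -(-bearer.measured_delay_ms // FRAME_MS)  # ceiling division
    return (current_cfn + frames_ahead) % CFN_MODULO


voice_cfn = target_cfn(250, select_bearer("voice"))
data_cfn = target_cfn(250, select_bearer("data"))
```

Because the offset is derived per path, a slow IP network does not force the voice frames to be scheduled further ahead than the dedicated line requires.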

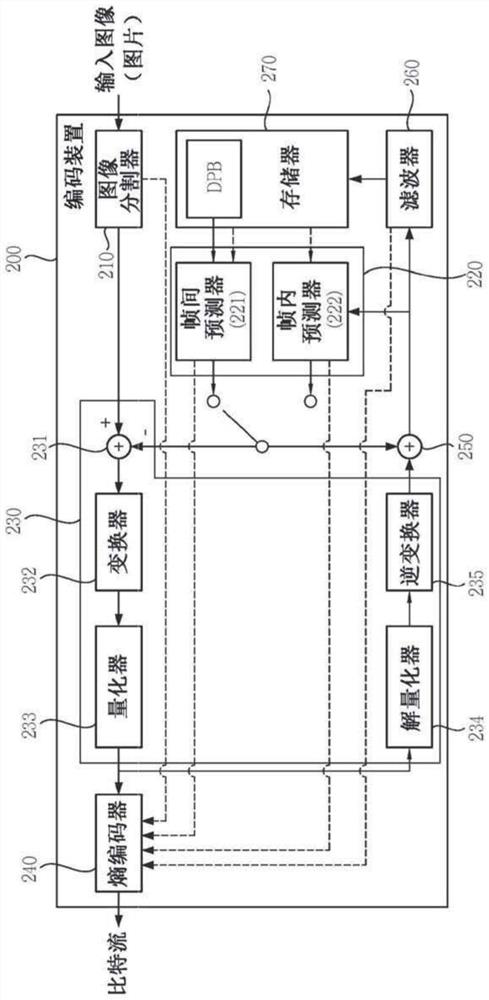

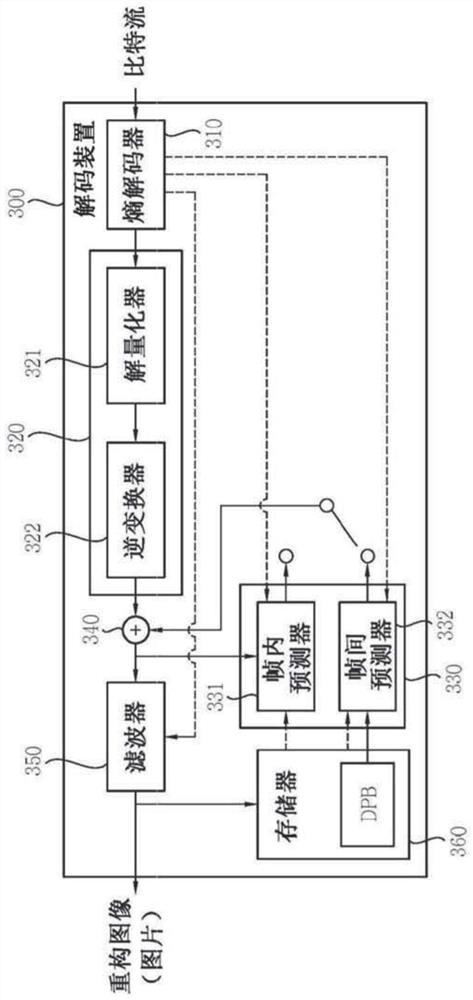

Image decoding method and apparatus therefor

PendingCN114258684ALow costReduce cache requirementsDigital video signal modificationRadiologyComputer vision

Owner:LG ELECTRONICS INC

A Block Matrix Storage Method in Circuit Simulation

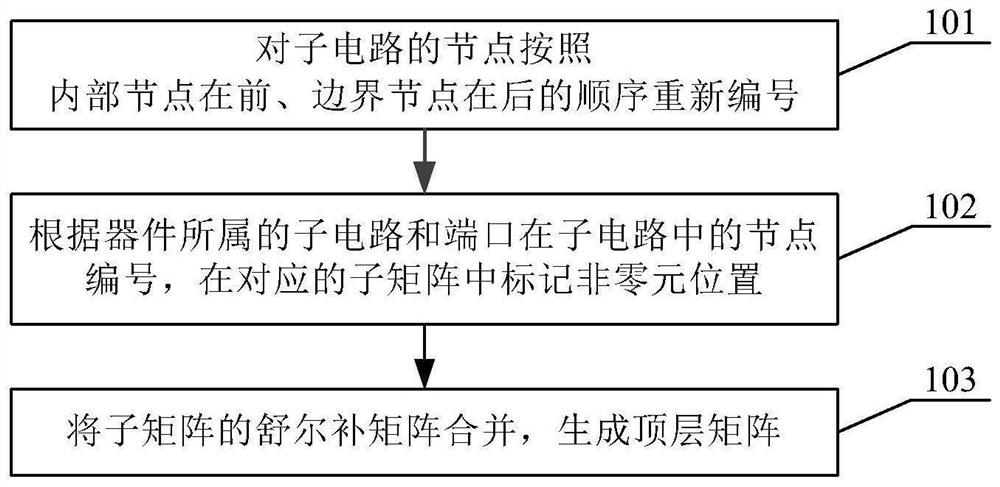

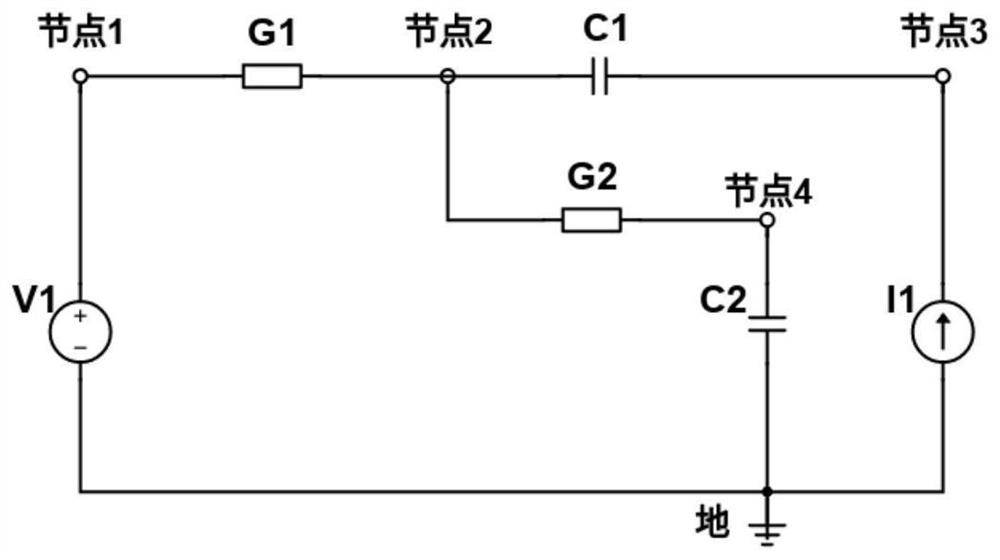

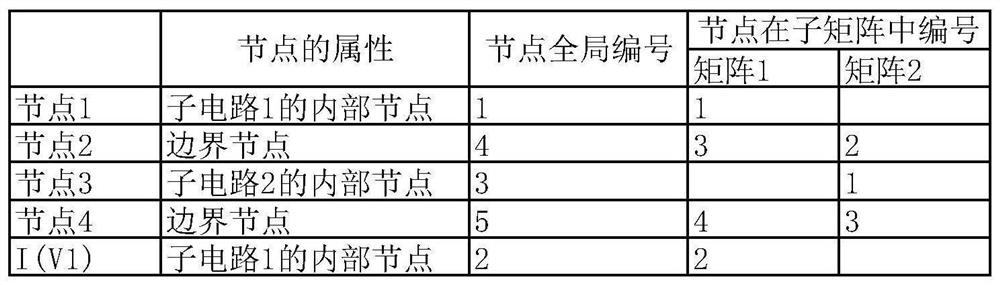

ActiveCN113486616BReduce data volumeReduced CPU cache requirementsComputer aided designComplex mathematical operationsComputational scienceMatrix decomposition

A block matrix storage method in circuit simulation, comprising the following steps: renumbering and sorting the nodes of each sub-circuit to establish a sub-matrix of nodes; marking the non-zero element positions in the corresponding sub-matrix; and calculating the Schur complement matrix of each sub-matrix and merging them to generate the top-level matrix. The method divides the coefficient matrix of the circuit equation system into blocks, so that subsequent matrix calculations, including matrix decomposition and back-substitution, can be performed in parallel, which improves calculation efficiency.

Owner:成都华大九天科技有限公司
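For reference, the Schur-complement step in the block matrix entry above can be written out for a bordered block-diagonal system. The notation is assumed here rather than taken from the patent: $A_k$ is the sub-matrix of sub-circuit $k$, $B_k$ and $C_k$ couple it to the top-level nodes, and $A_t$ is the top-level block.

$$
\begin{bmatrix}
A_1 &        &     & B_1 \\
    & \ddots &     & \vdots \\
    &        & A_n & B_n \\
C_1 & \cdots & C_n & A_t
\end{bmatrix}
\begin{bmatrix} x_1 \\ \vdots \\ x_n \\ x_t \end{bmatrix}
=
\begin{bmatrix} b_1 \\ \vdots \\ b_n \\ b_t \end{bmatrix}
$$

$$
S = A_t - \sum_{k=1}^{n} C_k A_k^{-1} B_k, \qquad
S\,x_t = b_t - \sum_{k=1}^{n} C_k A_k^{-1} b_k, \qquad
x_k = A_k^{-1}\left(b_k - B_k x_t\right)
$$

Each term $C_k A_k^{-1} B_k$ depends on a single sub-circuit, so the terms can be computed independently and then summed into the top-level matrix $S$; the back-substitution for each $x_k$ is likewise independent once $x_t$ is known.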

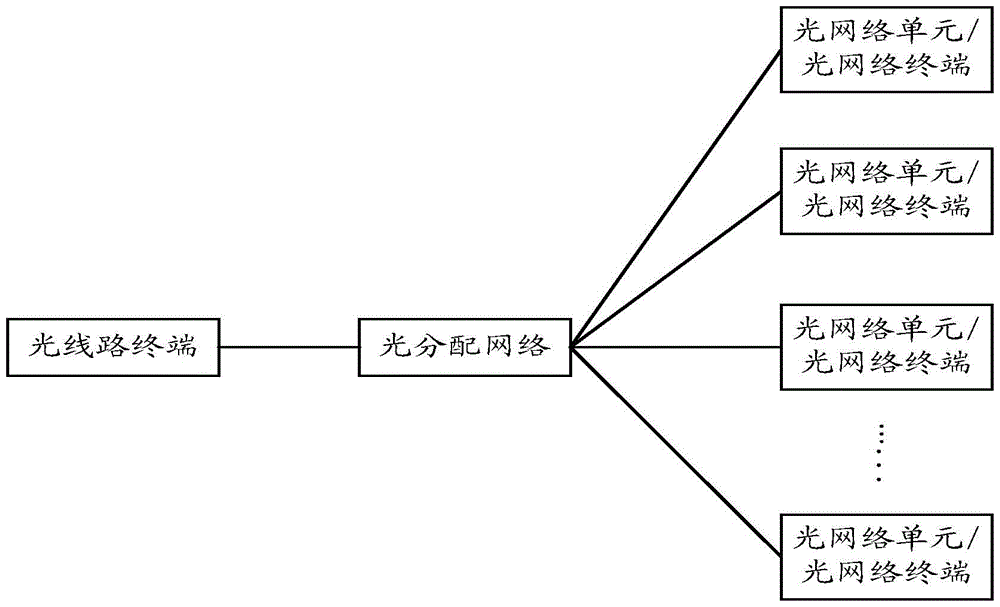

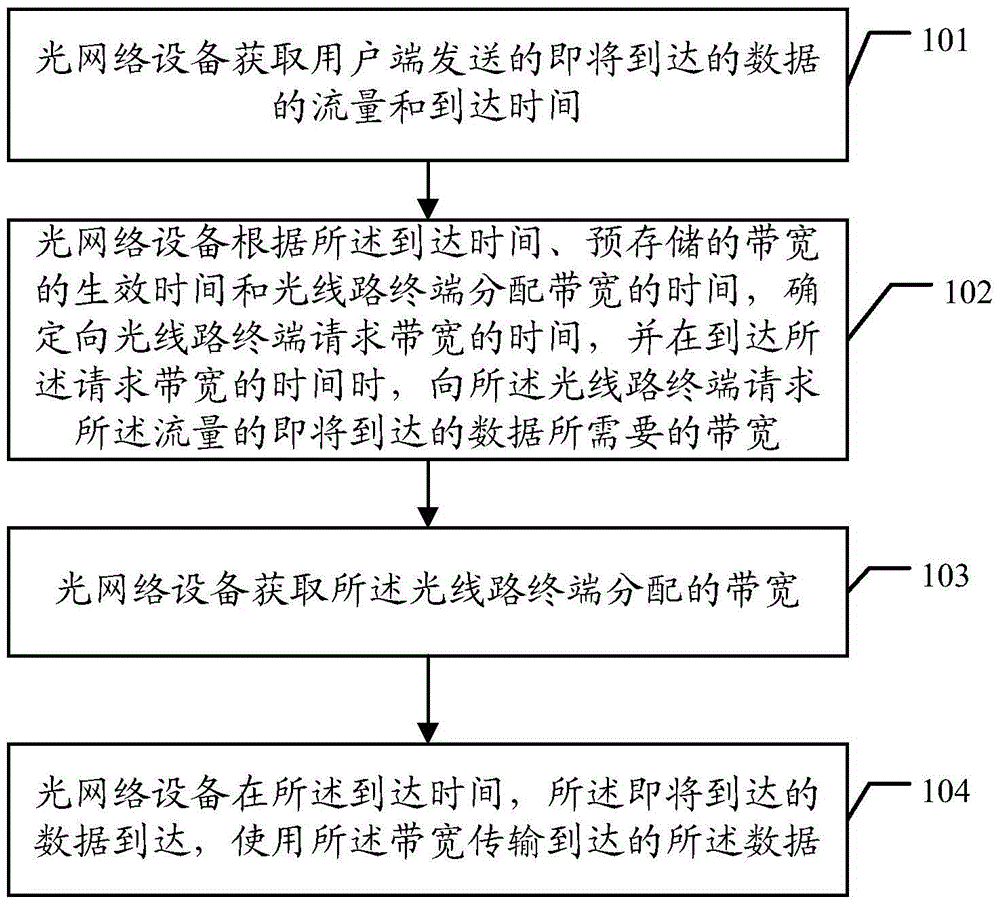

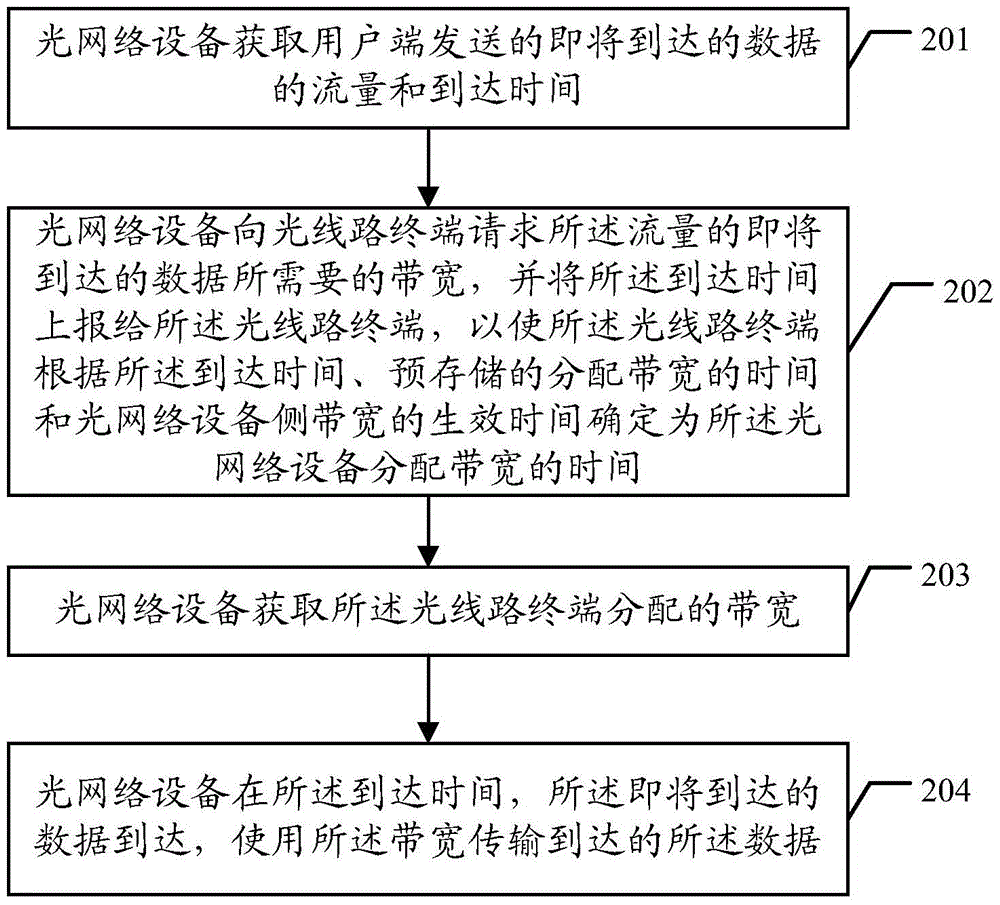

Method, device and system for bandwidth allocation

InactiveCN103518355BSolve the problem of allocation lagReduce latencyMultiplex system selection arrangementsData switching networksTraffic capacityDynamic bandwidth allocation

The invention discloses a bandwidth allocation method, which includes: acquiring the traffic volume and arrival time of upcoming data sent by a user terminal; determining, according to the arrival time, the time at which to request bandwidth from the optical line terminal and, when that time is reached, requesting from the optical line terminal the bandwidth required for the upcoming data of that traffic volume; obtaining the bandwidth allocated by the optical line terminal; and, when the upcoming data arrives at the arrival time, transmitting it using the allocated bandwidth. Embodiments of the invention also provide corresponding devices and systems. In this technical solution, the optical network equipment can request bandwidth from the optical line terminal in advance according to the arrival time of the upcoming data, which solves the problem of bandwidth allocation lag in non-fiber-to-the-user scenarios and thereby reduces both the cache demand of the ONU and the line delay.

Owner:王丽东
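The timing argument in the bandwidth allocation entry above can be made concrete with a small sketch: if the request is sent early enough to cover the grant latency, the data finds bandwidth waiting and does not sit in the ONU buffer. The names `Announcement`, `request_time_ms`, and `buffering_delay_ms`, and the fixed `grant_latency_ms` model, are assumptions for illustration; the abstract does not say how the request time is derived.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Announcement:
    """What the user terminal reports about upcoming data (hypothetical type)."""
    size_bytes: int
    arrival_time_ms: float


def request_time_ms(ann: Announcement, grant_latency_ms: float) -> float:
    """Latest time to request bandwidth so the grant is ready when the data arrives."""
    return ann.arrival_time_ms - grant_latency_ms


def buffering_delay_ms(ann: Announcement, request_sent_ms: float,
                       grant_latency_ms: float) -> float:
    """How long the data waits in the ONU buffer before it can be sent."""
    grant_ready_ms = request_sent_ms + grant_latency_ms
    return max(0.0, grant_ready_ms - ann.arrival_time_ms)


ann = Announcement(size_bytes=1500, arrival_time_ms=20.0)
latency = 2.0  # assumed request-to-grant round trip

early = request_time_ms(ann, latency)
proactive_wait = buffering_delay_ms(ann, early, latency)
reactive_wait = buffering_delay_ms(ann, ann.arrival_time_ms, latency)
```

In this model the reactive case, where the request is sent only once the data has arrived, buffers the data for the full grant latency, while the proactive case buffers it for none.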

Display method, device, electronic device, and storage medium of source of goods

ActiveCN112561433BSave browsing timeLow costForecastingCharacter and pattern recognitionEngineeringFeature data

The present invention provides a method, device, electronic device and storage medium for displaying sources of goods. The method includes: acquiring the source-of-goods information to be displayed; determining a trained transaction prediction model according to the region cluster to which the region of the source-of-goods information belongs; acquiring the first-level feature data of the source-of-goods information to be displayed; inputting that first-level feature data into a trained second-level feature prediction model, which is obtained by fusing multiple prediction models; obtaining the second-level feature data predicted by the second-level feature prediction model; inputting at least part of the first-level feature data, together with the second-level feature data, into the transaction prediction model; sorting the source-of-goods information to be displayed according to the prediction result of the transaction prediction model; and displaying the sorted source-of-goods information. The invention reduces the time users spend browsing sources of goods, improves order-taking efficiency, and further improves the overall freight efficiency of the platform.

Owner:江苏运满满信息科技有限公司

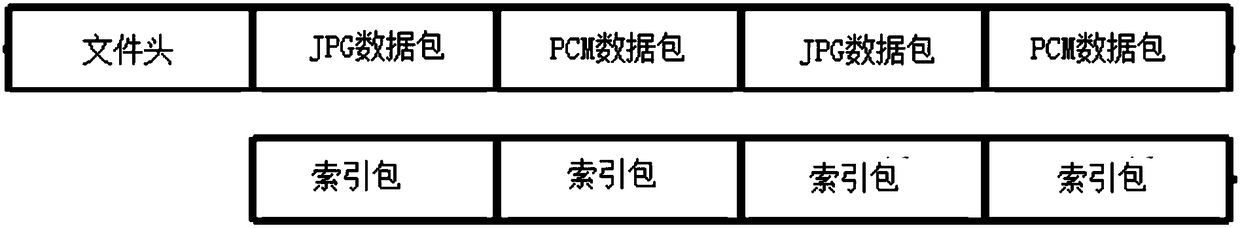

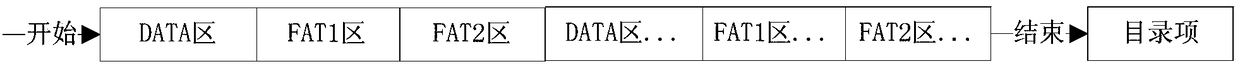

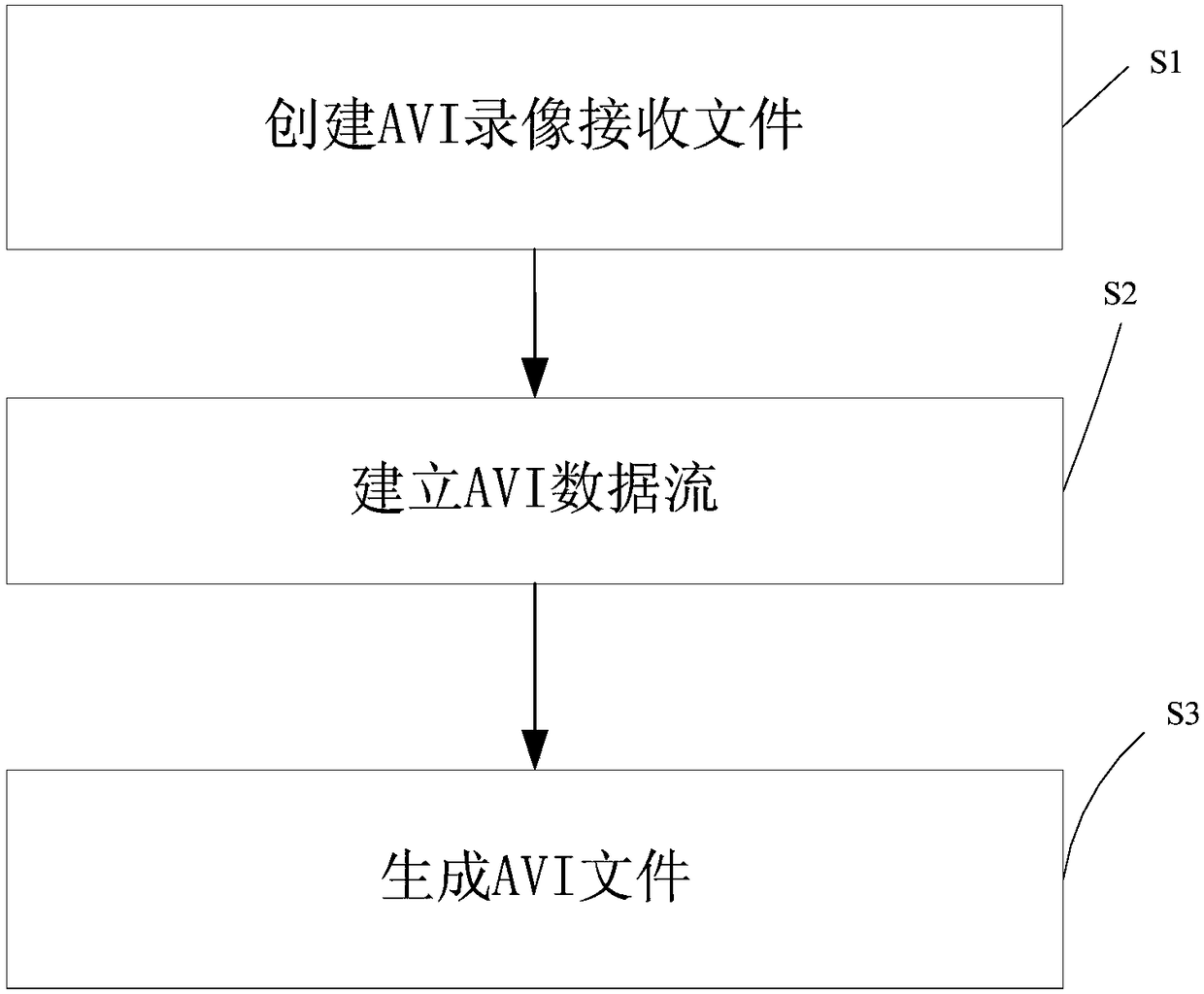

Video record processing method, system and chip and storage device

ActiveCN109120986AImprove storage efficiencyReduce cache requirementsTelevision system detailsColor television detailsData streamNetwork packet

The invention provides a video record processing method, system and chip and a storage device. The video record processing method comprises: an AVI record receiving file establishing step, in which an AVI record receiving file is established in the storage device at the beginning of the video recording; an AVI data stream establishing step, in which an AVI data stream is established according to a video stream provided by a video recording device in real time and is written into the AVI record receiving file, wherein the video stream is composed of continuous streaming media data packets, and the AVI data stream is composed of a file header, streaming media data packets and an index packet corresponding to the streaming media data packets; and an AVI file generating step, in which the streaming media data packets and the index packets in the AVI record receiving file are parsed out and a file in the AVI format is generated. Adopting this technical scheme reduces cache occupation during video recording.

Owner:APPOTECH
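The write-then-parse flow in the video record entry above can be sketched as follows: each media packet is written to the receiving file together with its index record as soon as it arrives, so neither the video nor the index has to be held in memory, and a later pass separates the two. The record layout below (a 1-byte tag, a 4-byte length, and the `RECV` header) is a simplified stand-in invented for illustration; it is not the RIFF/AVI layout and not the layout described in the patent.

```python
import io
import struct

HEADER = b"RECV"       # placeholder file header (assumed)
MEDIA, INDEX = 0, 1    # record tags (assumed)


def write_packet(sink, payload: bytes) -> None:
    """Append one media packet followed immediately by its index record."""
    offset = sink.tell()
    sink.write(struct.pack("<BI", MEDIA, len(payload)) + payload)
    entry = struct.pack("<II", offset, len(payload))
    sink.write(struct.pack("<BI", INDEX, len(entry)) + entry)


def parse_receiving_file(raw: bytes):
    """Split the receiving file back into media packets and index entries."""
    if raw[:len(HEADER)] != HEADER:
        raise ValueError("missing header")
    pos = len(HEADER)
    packets, index = [], []
    while pos < len(raw):
        tag, size = struct.unpack_from("<BI", raw, pos)
        pos += struct.calcsize("<BI")
        body = raw[pos:pos + size]
        pos += size
        if tag == MEDIA:
            packets.append(body)
        else:
            index.append(struct.unpack("<II", body))
    return packets, index


sink = io.BytesIO()  # stands in for the receiving file on the storage device
sink.write(HEADER)
for payload in (b"frame-0", b"frame-1-longer"):
    write_packet(sink, payload)  # written on arrival, nothing kept in memory

packets, index = parse_receiving_file(sink.getvalue())
```

A real implementation would emit proper AVI chunks in the final step; the point of the sketch is only that the index travels with the data instead of accumulating in a buffer.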
