38 results for "Locality of reference" patented technology

In computer science, locality of reference, also known as the principle of locality, is the tendency of a processor to access the same set of memory locations repeatedly over a short period of time. There are two basic types of reference locality: temporal and spatial locality. Temporal locality refers to the reuse of specific data and/or resources within a relatively short time. Spatial locality refers to the use of data elements stored at relatively close locations. Sequential locality, a special case of spatial locality, occurs when data elements are arranged and accessed linearly, such as when traversing the elements of a one-dimensional array.
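
To make spatial locality concrete, here is a toy direct-mapped cache simulator. It is a sketch under stated assumptions: 64 cache lines of 64 bytes, 8-byte array elements, and a 64 x 64 row-major array. Real caches are set-associative and prefetch, so the counts are illustrative only, and all names are hypothetical.

```python
def simulate_direct_mapped(addresses, num_lines=64, line_size=64):
    """Return (hits, misses) for a byte-address trace in a direct-mapped cache."""
    slots = [None] * num_lines  # memory block currently held by each cache line
    hits = misses = 0
    for addr in addresses:
        block = addr // line_size   # memory block containing this byte
        index = block % num_lines   # the one slot this block may occupy
        if slots[index] == block:
            hits += 1
        else:
            misses += 1
            slots[index] = block    # evict whatever was there
    return hits, misses


ROWS = COLS = 64
ELEM = 8  # assumed element size in bytes, e.g. a double


def address(r, c):
    """Byte offset of element (r, c) in a row-major 2-D array."""
    return (r * COLS + c) * ELEM


# Row-major traversal walks memory sequentially (sequential/spatial locality).
row_major = [address(r, c) for r in range(ROWS) for c in range(COLS)]
# Column-major traversal jumps COLS * ELEM = 512 bytes on every access.
col_major = [address(r, c) for c in range(COLS) for r in range(ROWS)]

print(simulate_direct_mapped(row_major))  # (3584, 512)
print(simulate_direct_mapped(col_major))  # (0, 4096)
```

Row-major traversal misses once per 64-byte line and then hits seven times. Column-major traversal strides 512 bytes per access, and in this configuration every access misses because rows eight apart collide in the same cache slot.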

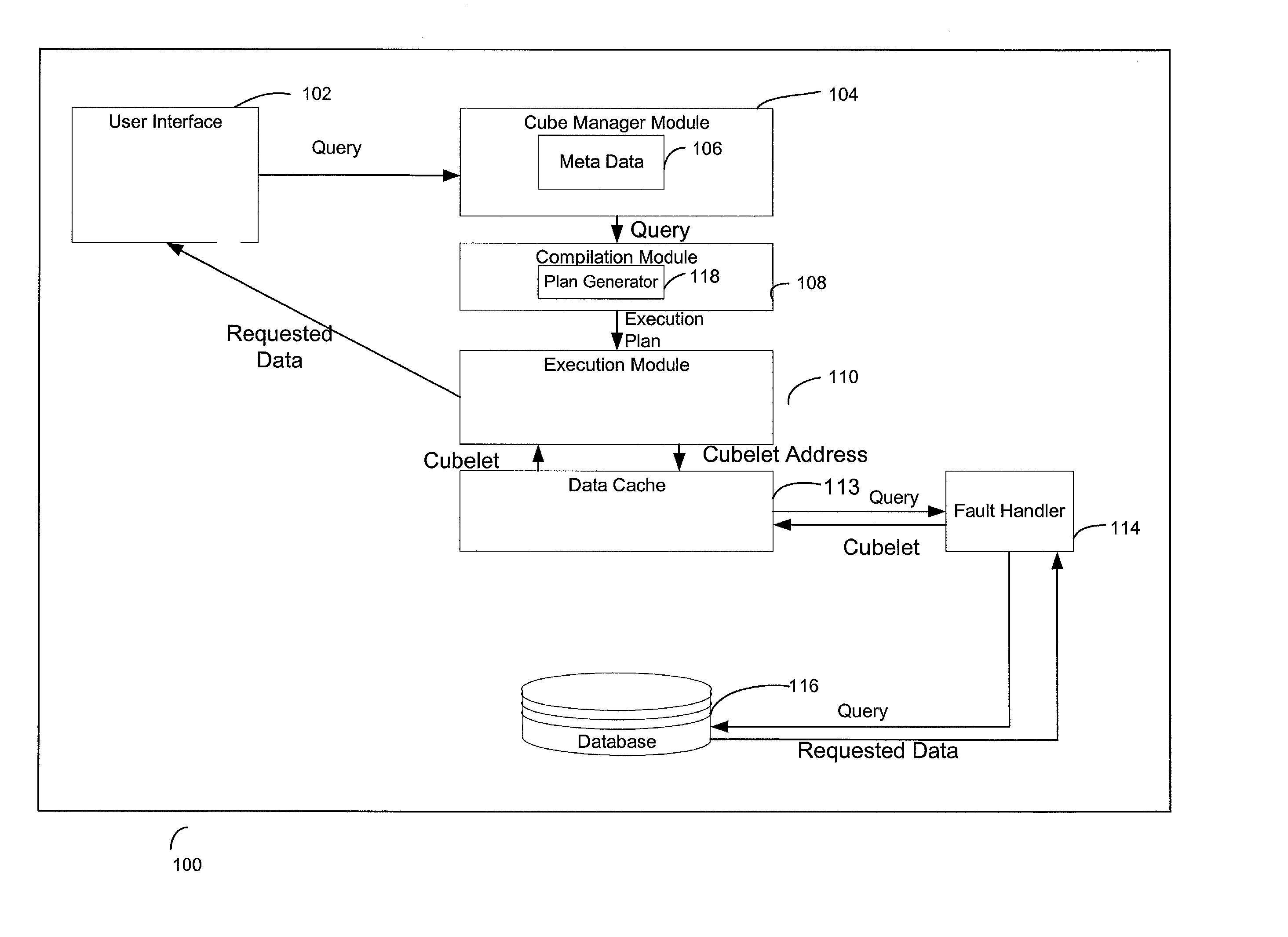

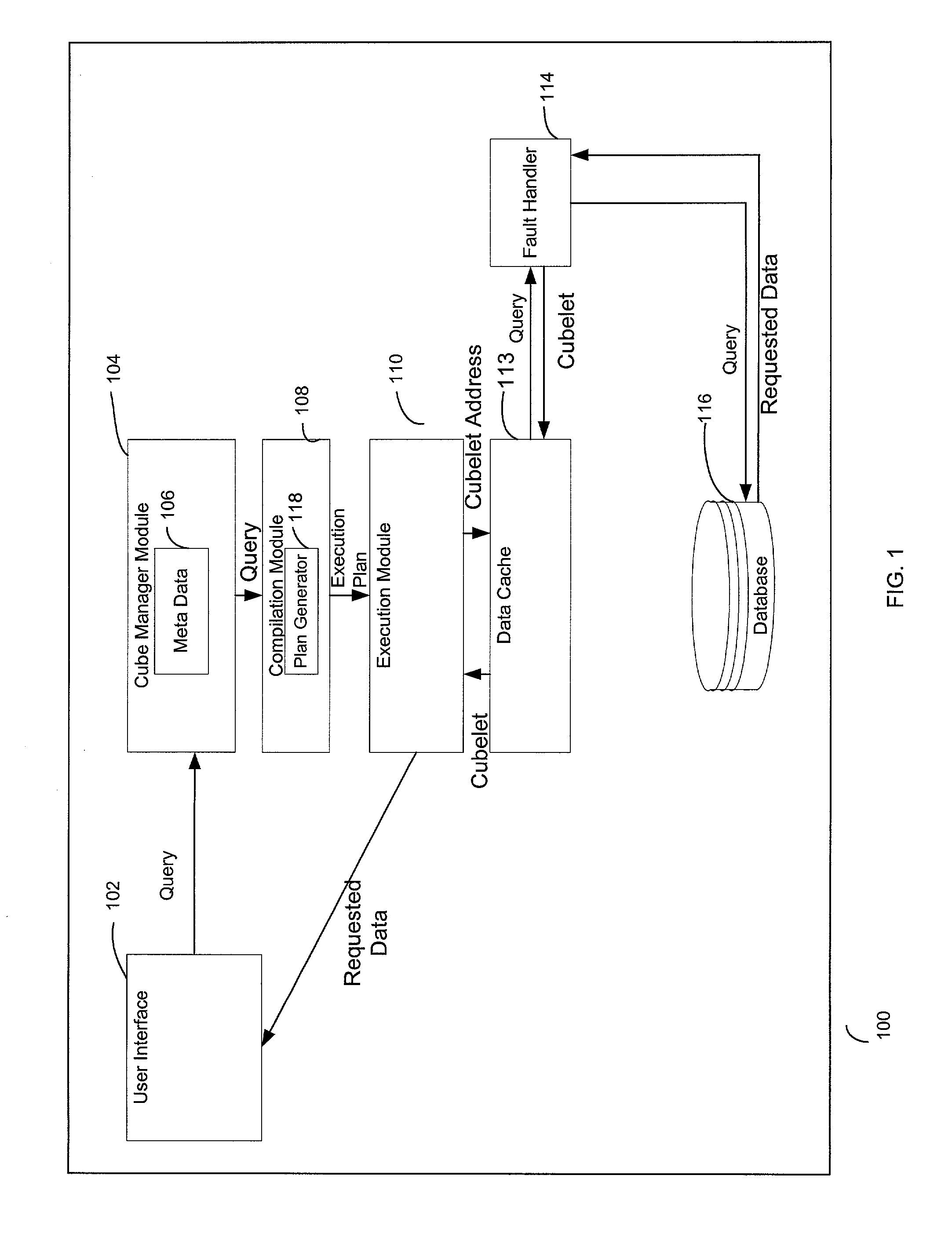

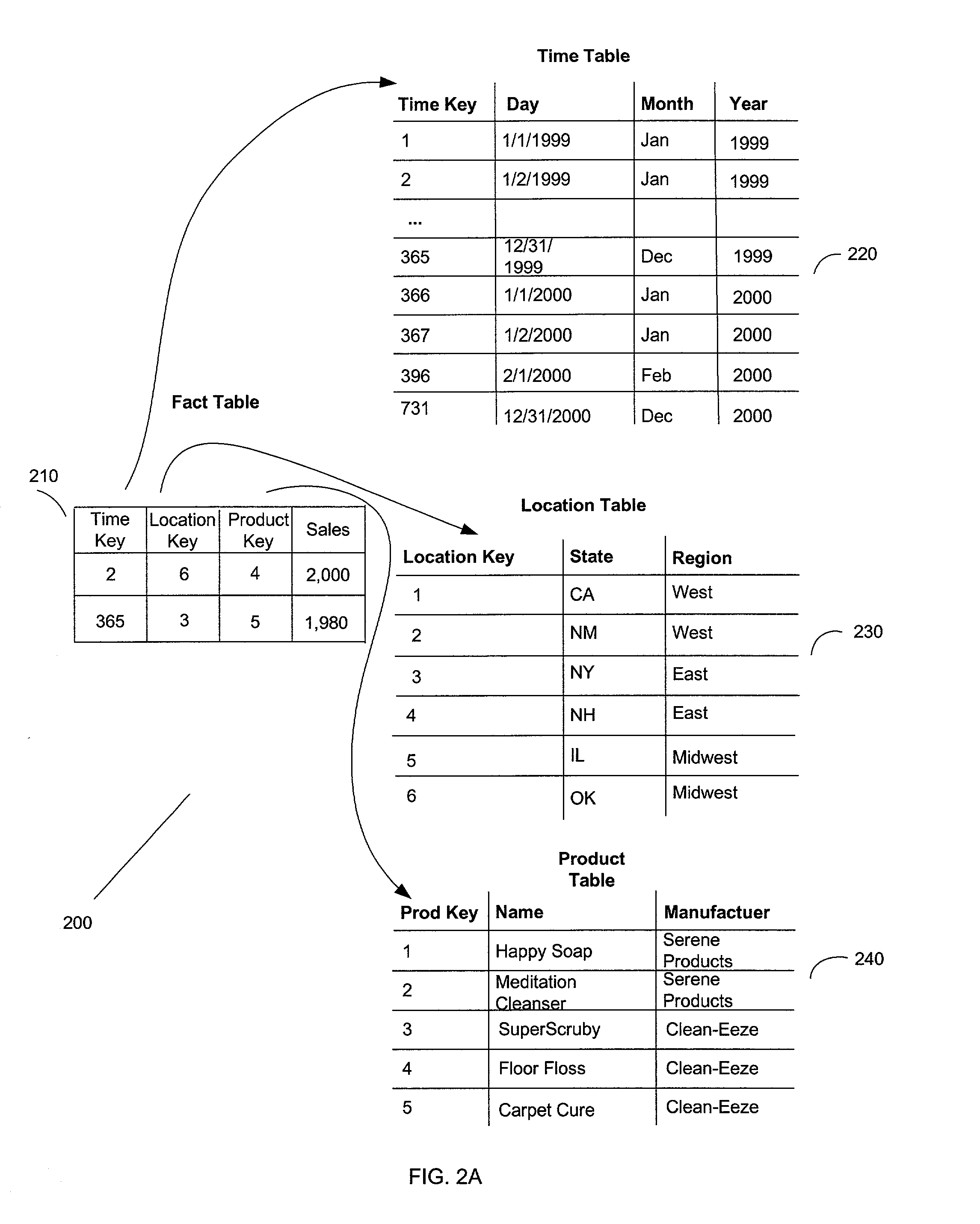

Caching scheme for multi-dimensional data

Inactive | US20020126545A1 | Data processing applications; Digital storage; Locality of reference; Error processing

A system, method, and computer program product for caching multi-dimensional data based on an assumption of locality of reference. A user sends a query for data. A compilation module converts the query into a set of cubelet addresses and canonical addresses. In the described embodiment, if the data corresponding to the cubelet address is found in a data cache, the data cache returns the cubelet, which may contain the requested data and data for "nearby" cells. The data corresponding to the canonical addresses is extracted from the returned cubelet. If the data is not found in the data cache, a fault handler queries a back-end database for the cubelet identified by the cubelet address. This cubelet includes the requested data and data for "nearby" cells, in the form of values of measure attributes and their associated canonical addresses. The returned cubelet is then cached, and the data corresponding to the canonical addresses is extracted.
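
A minimal sketch of cubelet caching, assuming a cubelet is an aligned block of cells whose address is obtained by integer-dividing each cell coordinate by the cubelet shape. The class `CubeletCache`, the `fake_backend` stand-in for the back-end database, and the 4 x 4 cubelet shape are hypothetical, not taken from the patent.

```python
from itertools import product
from typing import Callable, Dict, Tuple

Coord = Tuple[int, ...]
Cubelet = Dict[Coord, float]   # canonical cell address -> measure value


class CubeletCache:
    """Cache of cubelets keyed by cubelet address, filled on demand by a fault handler."""

    def __init__(self, shape: Coord, fetch: Callable[[Coord], Cubelet]) -> None:
        self.shape = shape
        self.fetch = fetch              # stands in for the back-end database query
        self.cubelets: Dict[Coord, Cubelet] = {}
        self.faults = 0                 # number of back-end queries issued

    def cubelet_address(self, cell: Coord) -> Coord:
        # Assumption: cubelets are aligned blocks, so neighbouring cells share an address.
        return tuple(x // s for x, s in zip(cell, self.shape))

    def get(self, cell: Coord) -> float:
        addr = self.cubelet_address(cell)
        cubelet = self.cubelets.get(addr)
        if cubelet is None:             # miss: fault handler fetches the whole cubelet
            self.faults += 1
            cubelet = self.fetch(addr)
            self.cubelets[addr] = cubelet
        return cubelet[cell]            # extract the requested cell


SHAPE = (4, 4)


def fake_backend(addr: Coord) -> Cubelet:
    """Hypothetical back end: returns every cell of one cubelet (value = sum of coords)."""
    ranges = [range(a * s, (a + 1) * s) for a, s in zip(addr, SHAPE)]
    return {cell: float(sum(cell)) for cell in product(*ranges)}


cache = CubeletCache(SHAPE, fake_backend)
values = [cache.get((r, c)) for r in range(4) for c in range(4)]
print(cache.faults)  # 1: a single back-end query served all 16 neighbouring cells
```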

Owner:IBM CORP

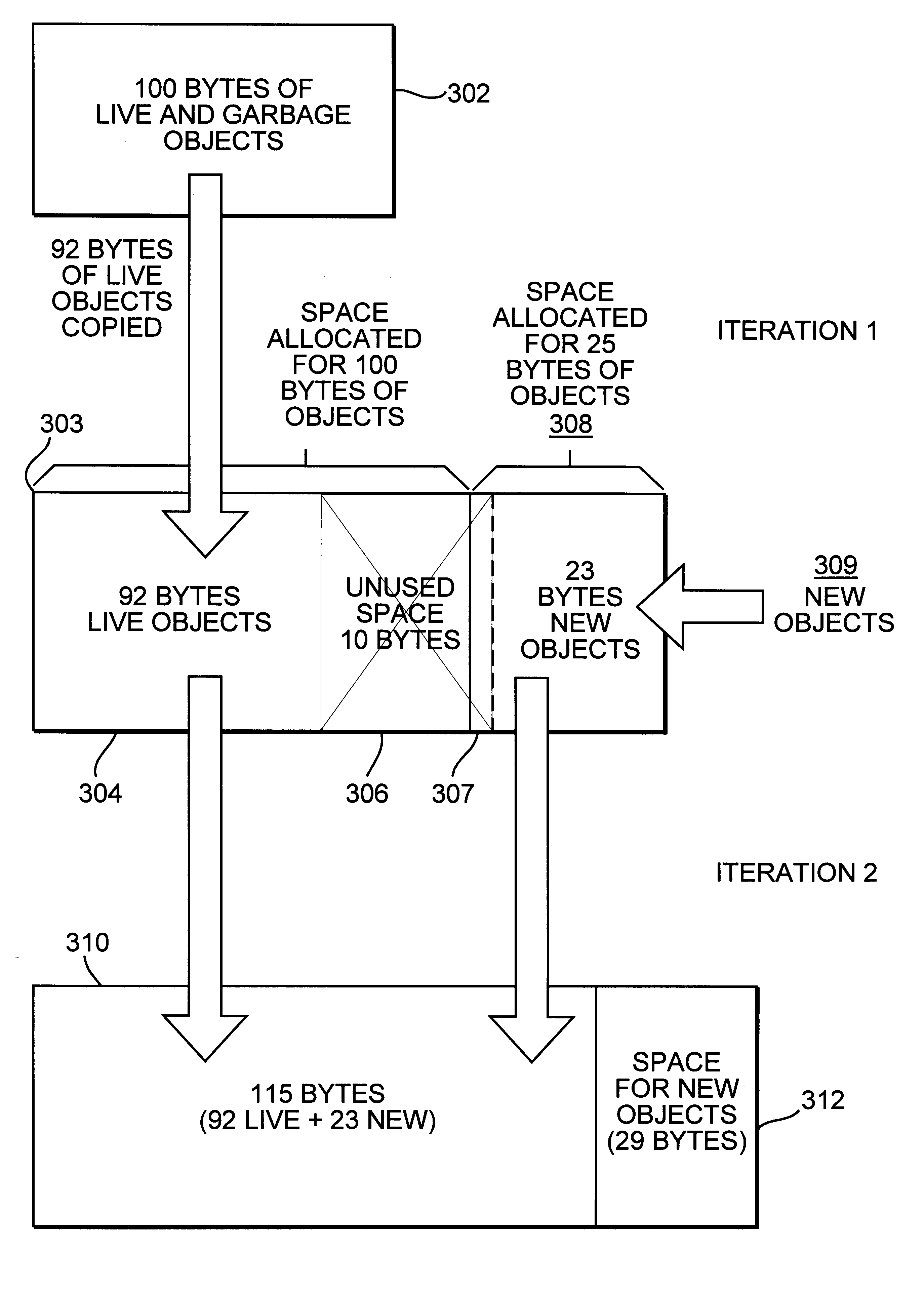

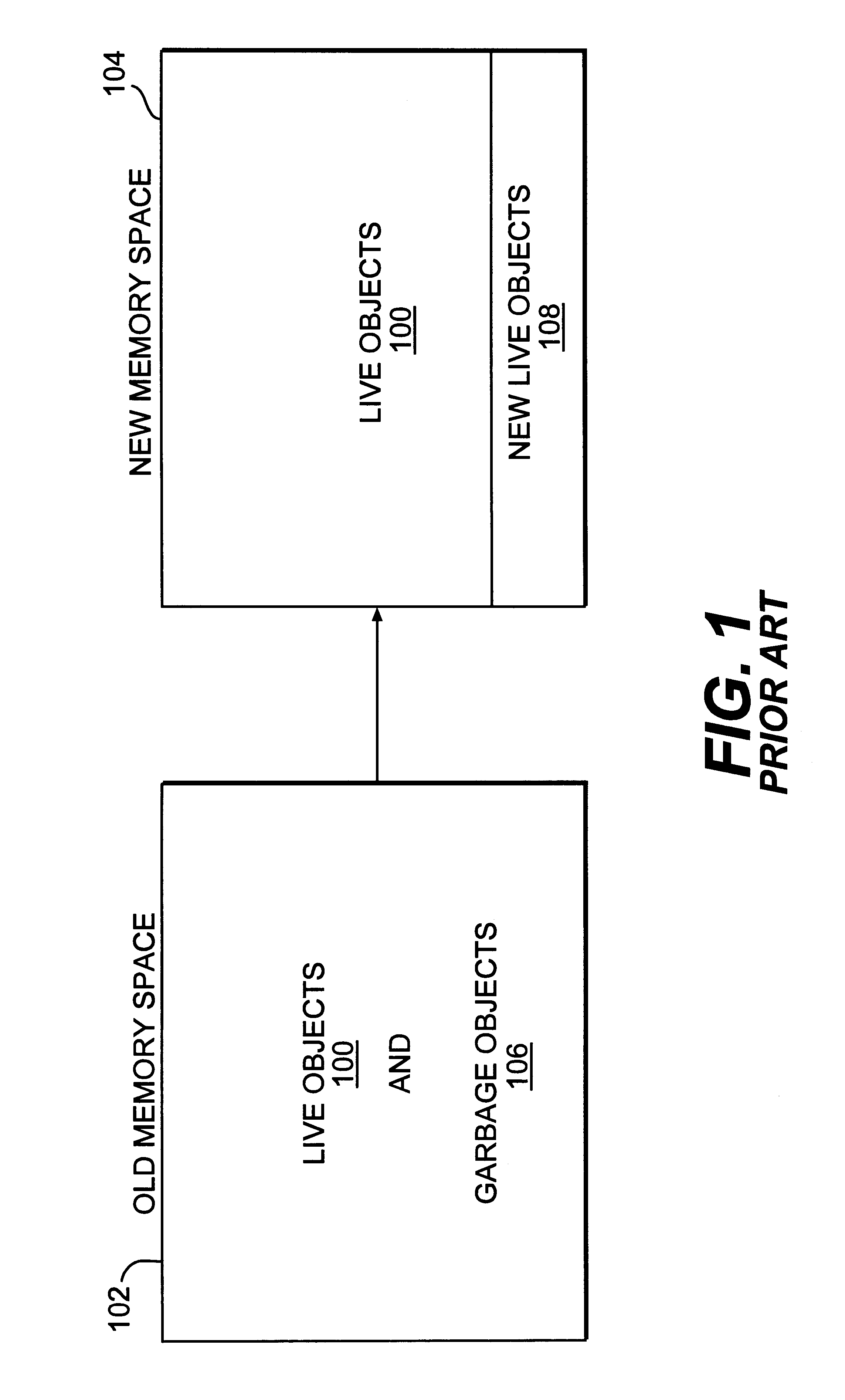

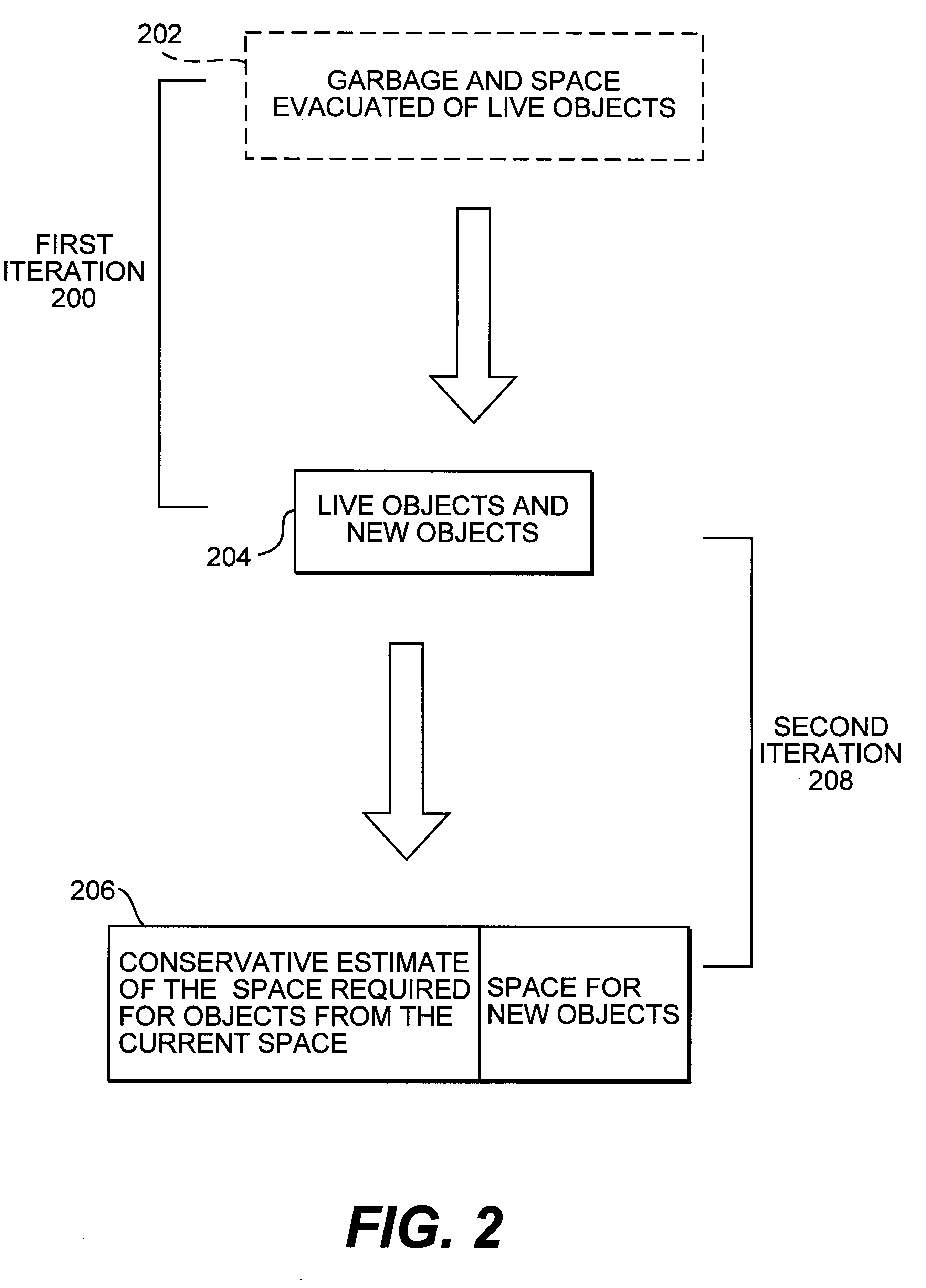

Incremental heap expansion in a real-time garbage collector

Inactive | US6286016B1 | Data processing applications; Memory addressing/allocation/relocation; Locality of reference; Out of memory

A system that performs real-time garbage collection by dynamically expanding and contracting the heap is provided. The collection is real-time in that the system guarantees that garbage collection will not take longer than expected. The system dynamically expands and contracts the heap to match the memory space actually used by live objects. This dynamic resizing expands the heap when the number of objects increases and contracts it, freeing memory for other processes, when the number of objects decreases. Keeping the heap as small as possible frees resources for other processes and increases the application's locality of reference. The resizing also ensures that the new memory space will not run out of memory before all of the live objects from the old memory space are copied, even if every live object in the old memory space survives.

Owner:ORACLE INT CORP

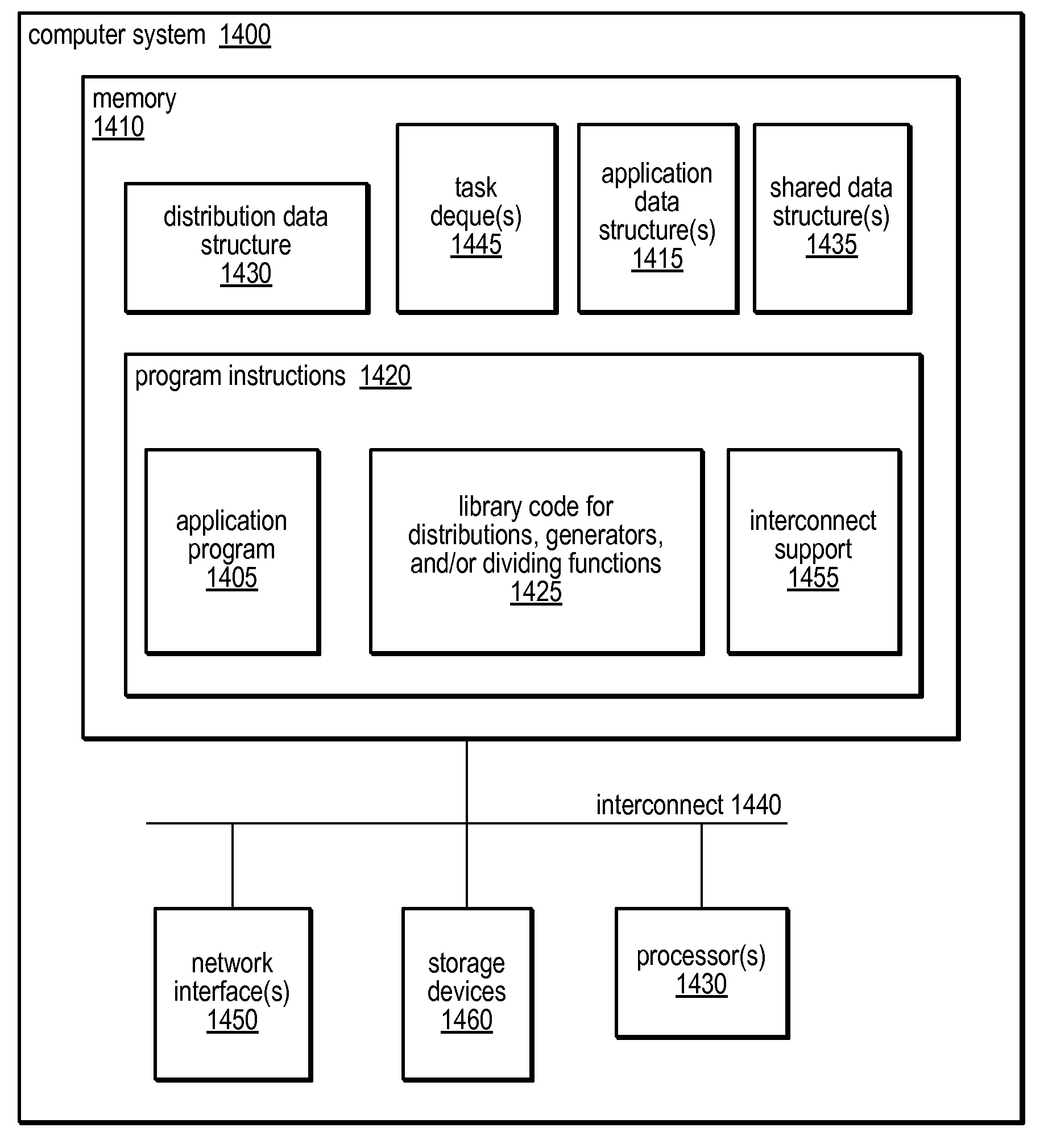

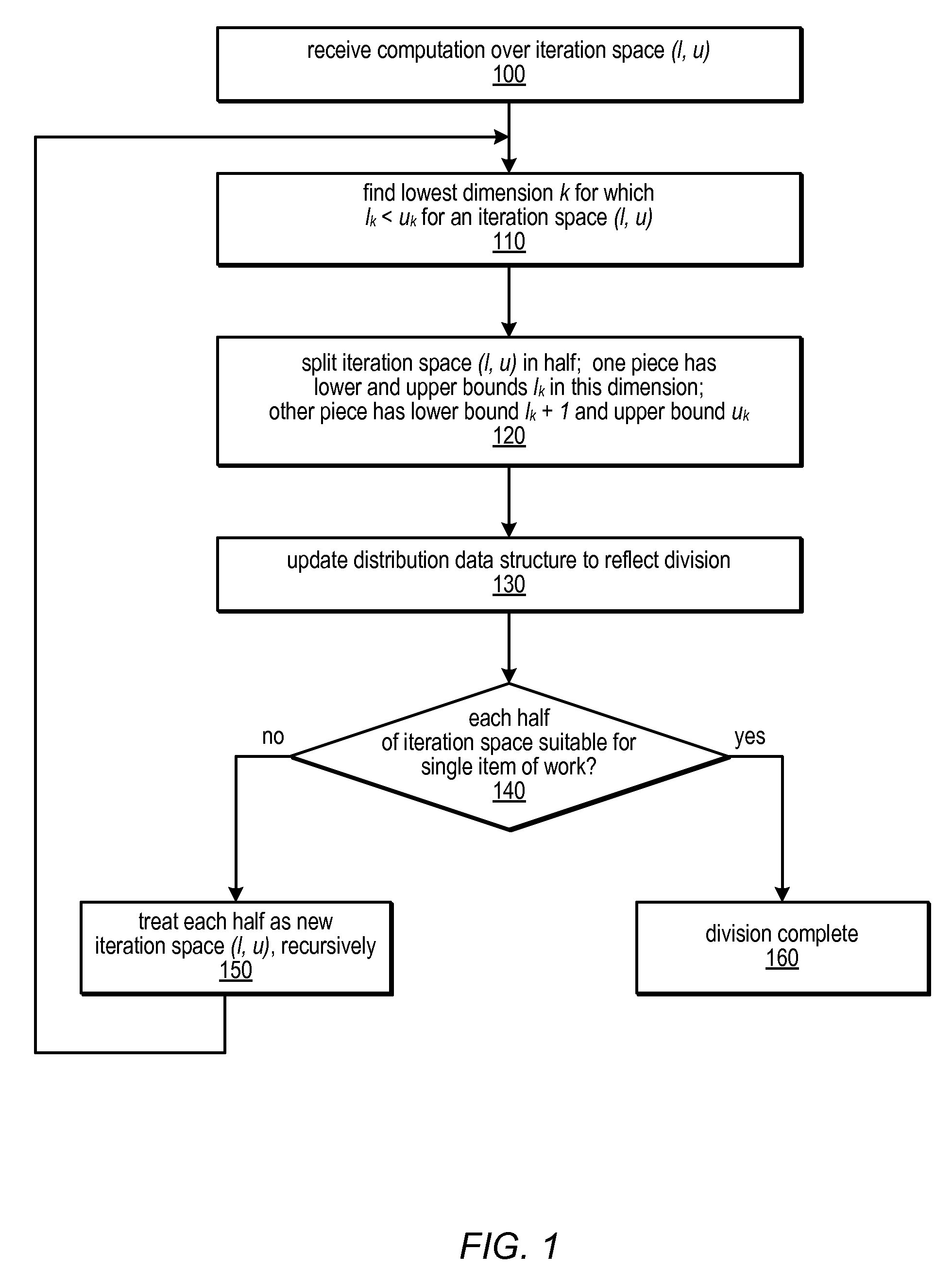

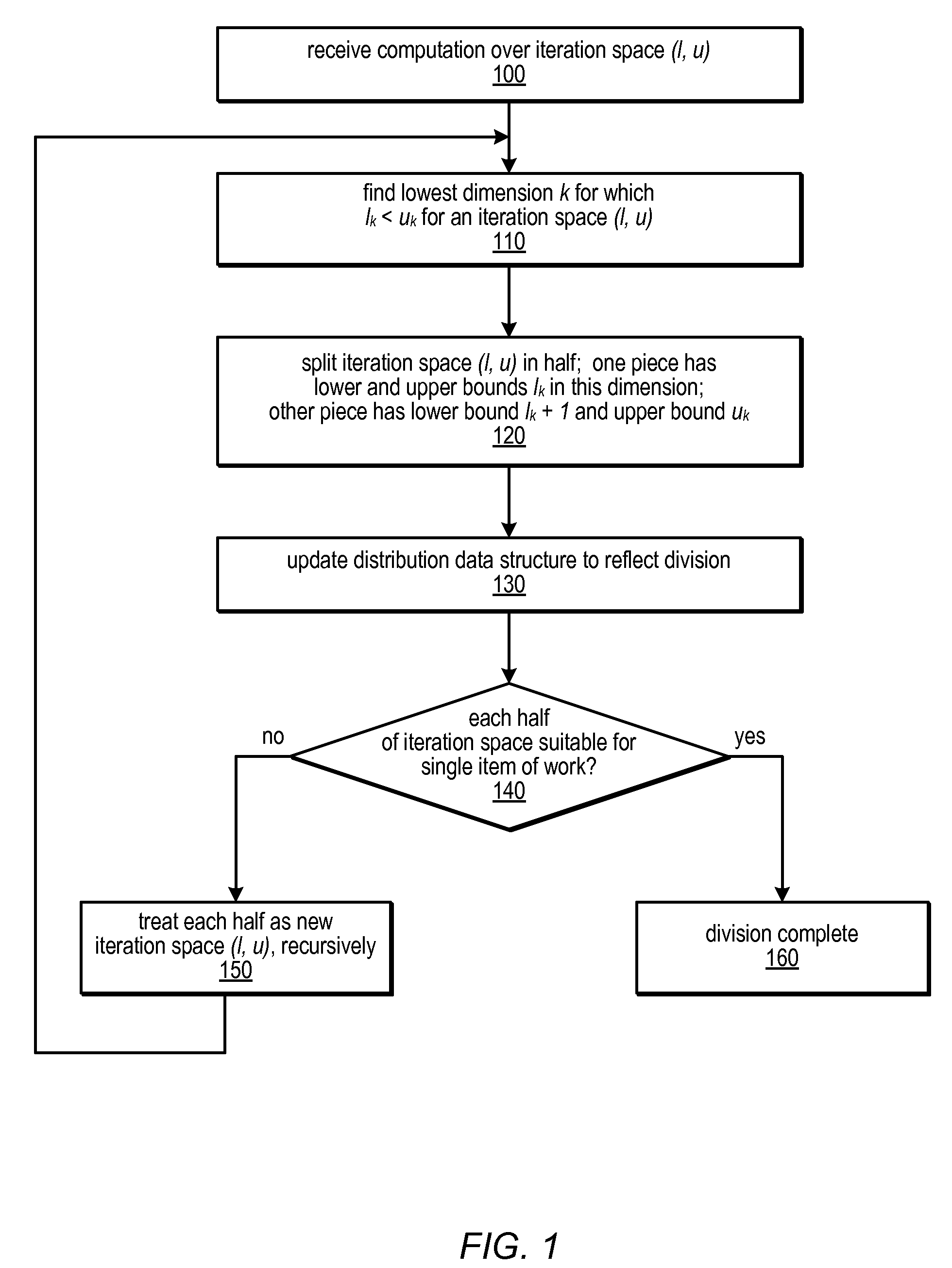

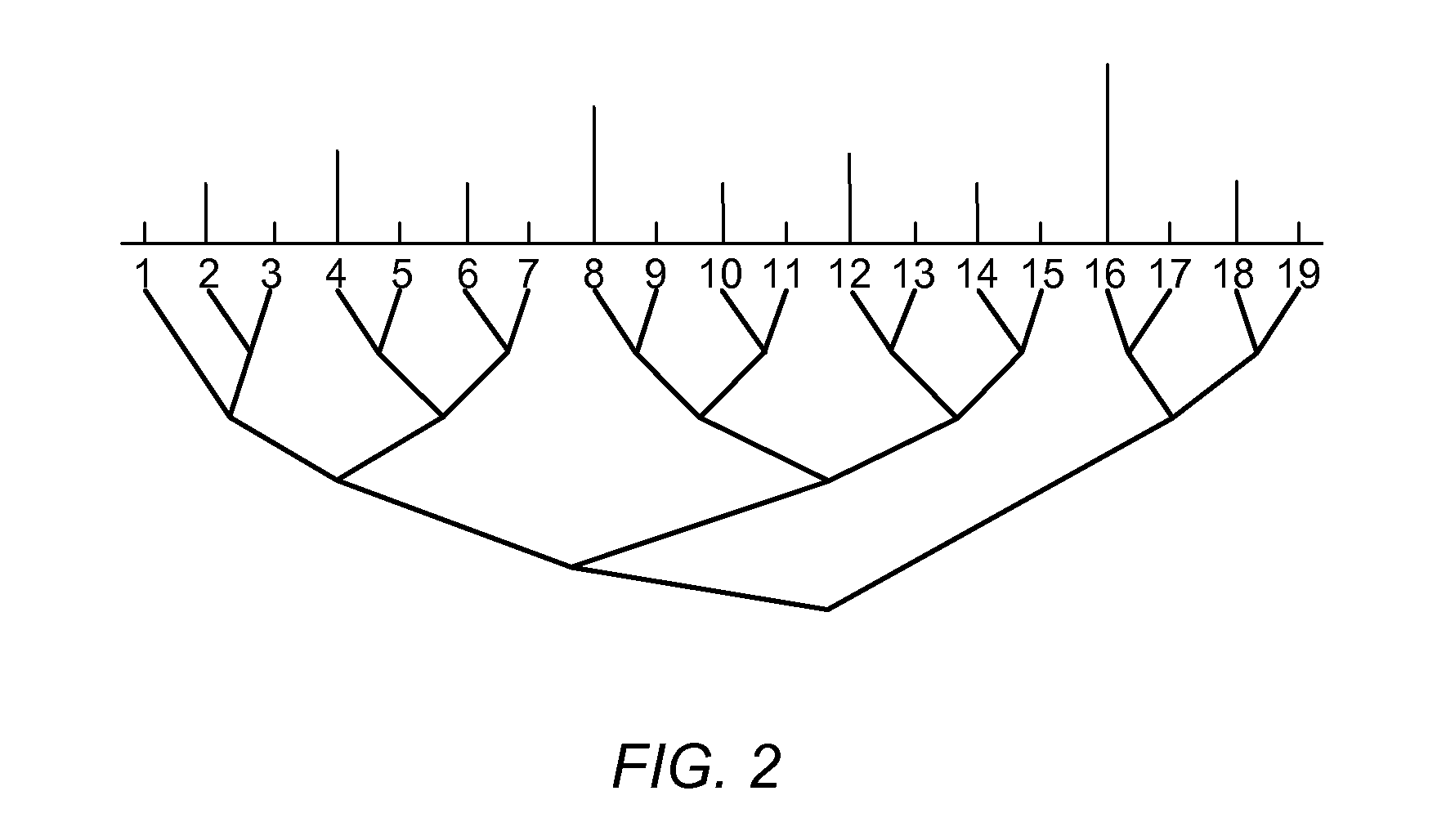

Distribution Data Structures for Locality-Guided Work Stealing

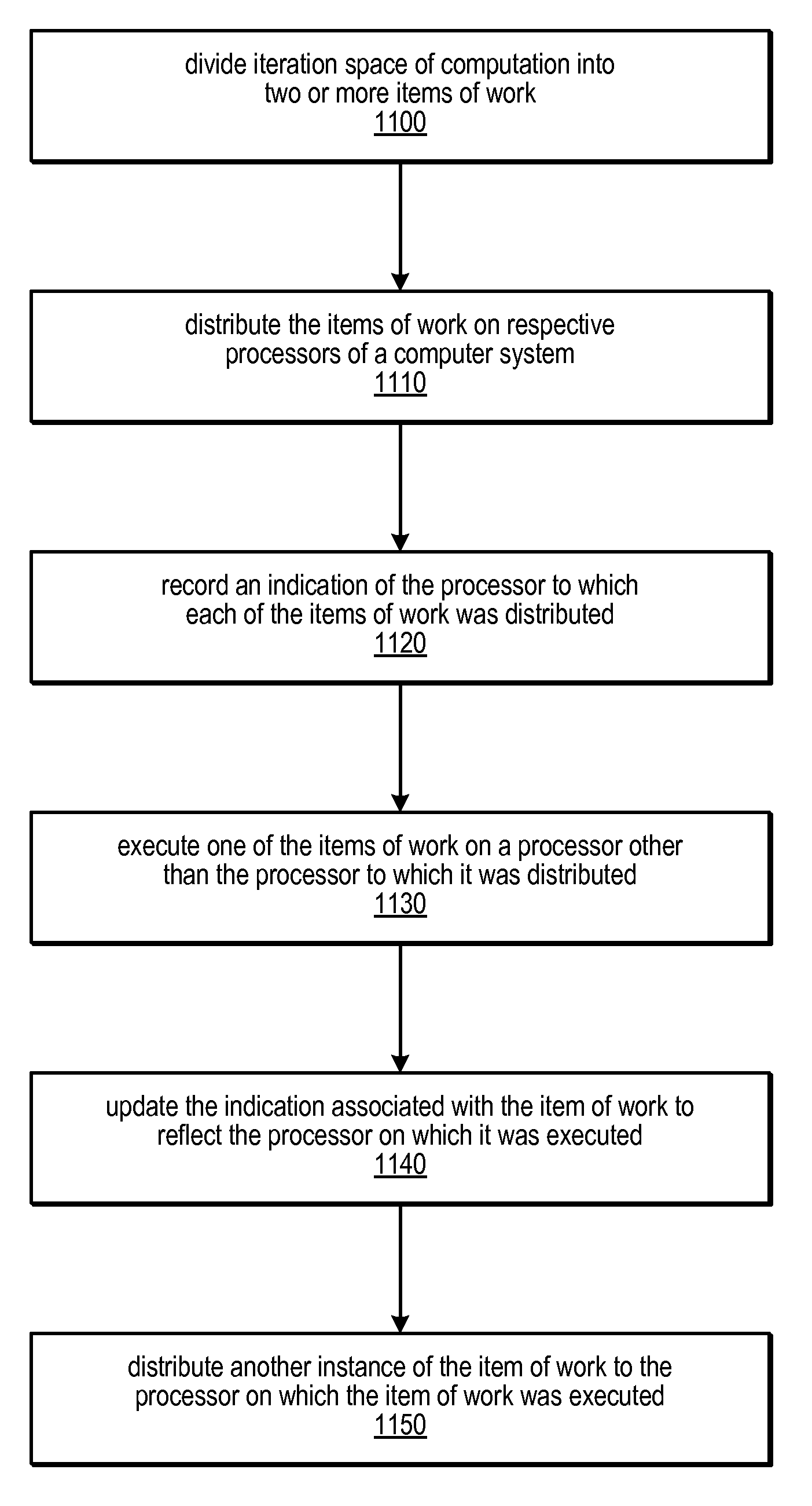

Active | US20100031267A1 | Keep local; Provide load balancing; Resource allocation; Memory systems; Locality of reference; Language construct

A data structure, the distribution, may be provided to track the desired and/or actual location of computations and data that range over a multidimensional rectangular index space in a parallel computing system. Examples of such iteration spaces include multidimensional arrays and counted loop nests. These distribution data structures may be used in conjunction with locality-guided work stealing and may provide a structured way to track load balancing decisions so they can be reproduced in related computations, thus maintaining locality of reference. They may allow computations to be tied to array layout, and may allow iteration over subspaces of an index space in a manner consistent with the layout of the space itself. Distributions may provide a mechanism to describe computations in a manner that is oblivious to precise machine size or structure. Programming language constructs and/or library functions may support the implementation and use of these distribution data structures.
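
The distribution idea can be illustrated with a two-dimensional block distribution. This sketch assumes a simple block layout in which each worker in a `p_rows x p_cols` grid owns one rectangular tile; `BlockDistribution2D` and its methods are hypothetical names, and work stealing itself is not modelled. Because `owner` is a pure function of the index, a scheduler that consults it makes the same placement decision for related loops, which is the reproducibility property the abstract describes.

```python
from dataclasses import dataclass
from typing import Iterator, Tuple


@dataclass(frozen=True)
class BlockDistribution2D:
    """Block distribution of an n_rows x n_cols index space over a
    p_rows x p_cols grid of workers (worker ids are row-major in the grid)."""
    n_rows: int
    n_cols: int
    p_rows: int
    p_cols: int

    @staticmethod
    def _edge(n: int, p: int) -> int:
        return -(-n // p)  # ceiling division: tile edge length

    def owner(self, i: int, j: int) -> int:
        """Worker that owns index (i, j); the same answer on every call."""
        ti = i // self._edge(self.n_rows, self.p_rows)
        tj = j // self._edge(self.n_cols, self.p_cols)
        return ti * self.p_cols + tj

    def local_indices(self, worker: int) -> Iterator[Tuple[int, int]]:
        """Iterate one worker's tile in row-major order, matching the array layout."""
        ti, tj = divmod(worker, self.p_cols)
        er = self._edge(self.n_rows, self.p_rows)
        ec = self._edge(self.n_cols, self.p_cols)
        for i in range(ti * er, min((ti + 1) * er, self.n_rows)):
            for j in range(tj * ec, min((tj + 1) * ec, self.n_cols)):
                yield i, j


dist = BlockDistribution2D(n_rows=8, n_cols=8, p_rows=2, p_cols=2)
print(dist.owner(0, 7), dist.owner(7, 0))  # 1 2
print(list(dist.local_indices(3))[:3])      # [(4, 4), (4, 5), (4, 6)]
```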

Owner:SUN MICROSYSTEMS INC

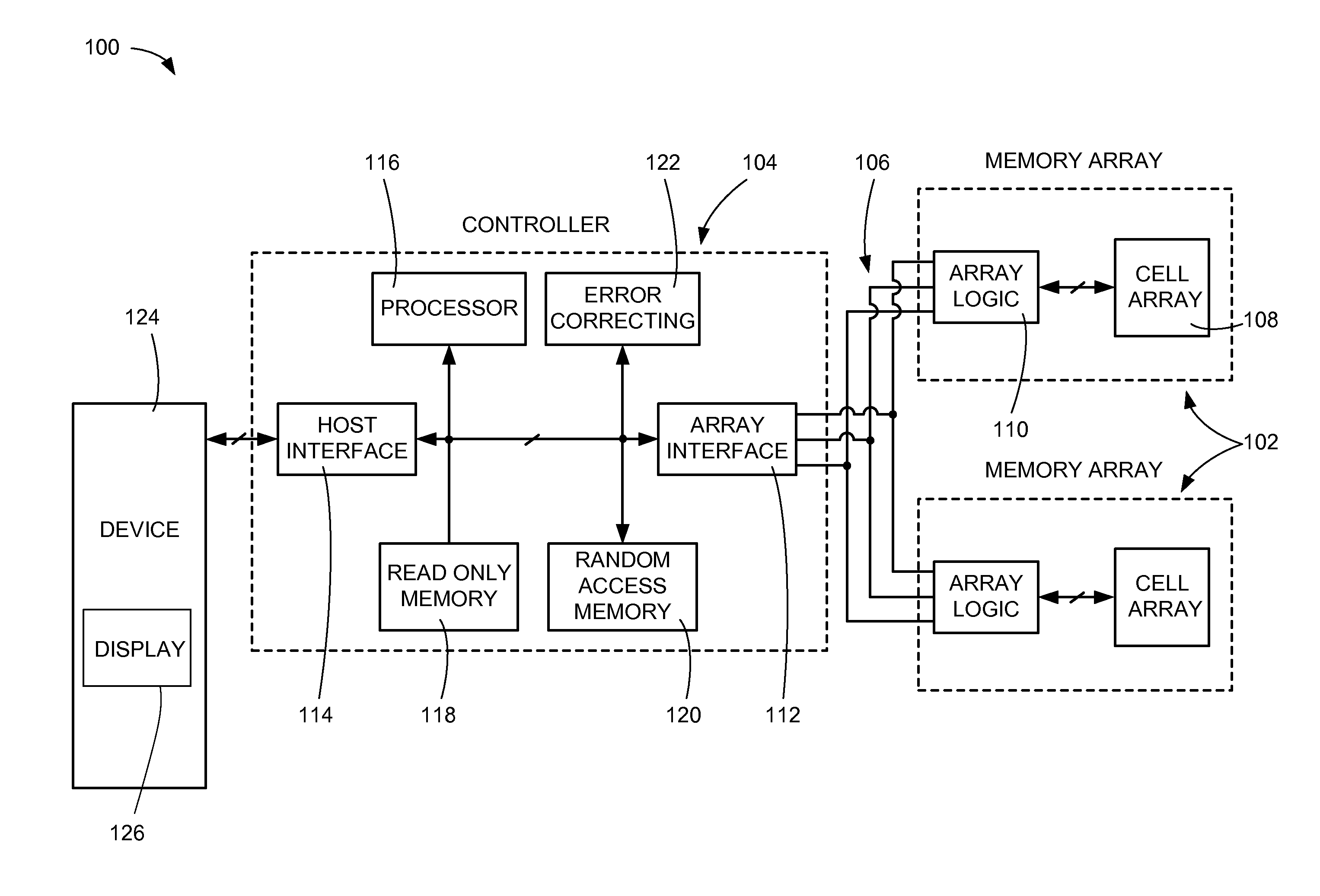

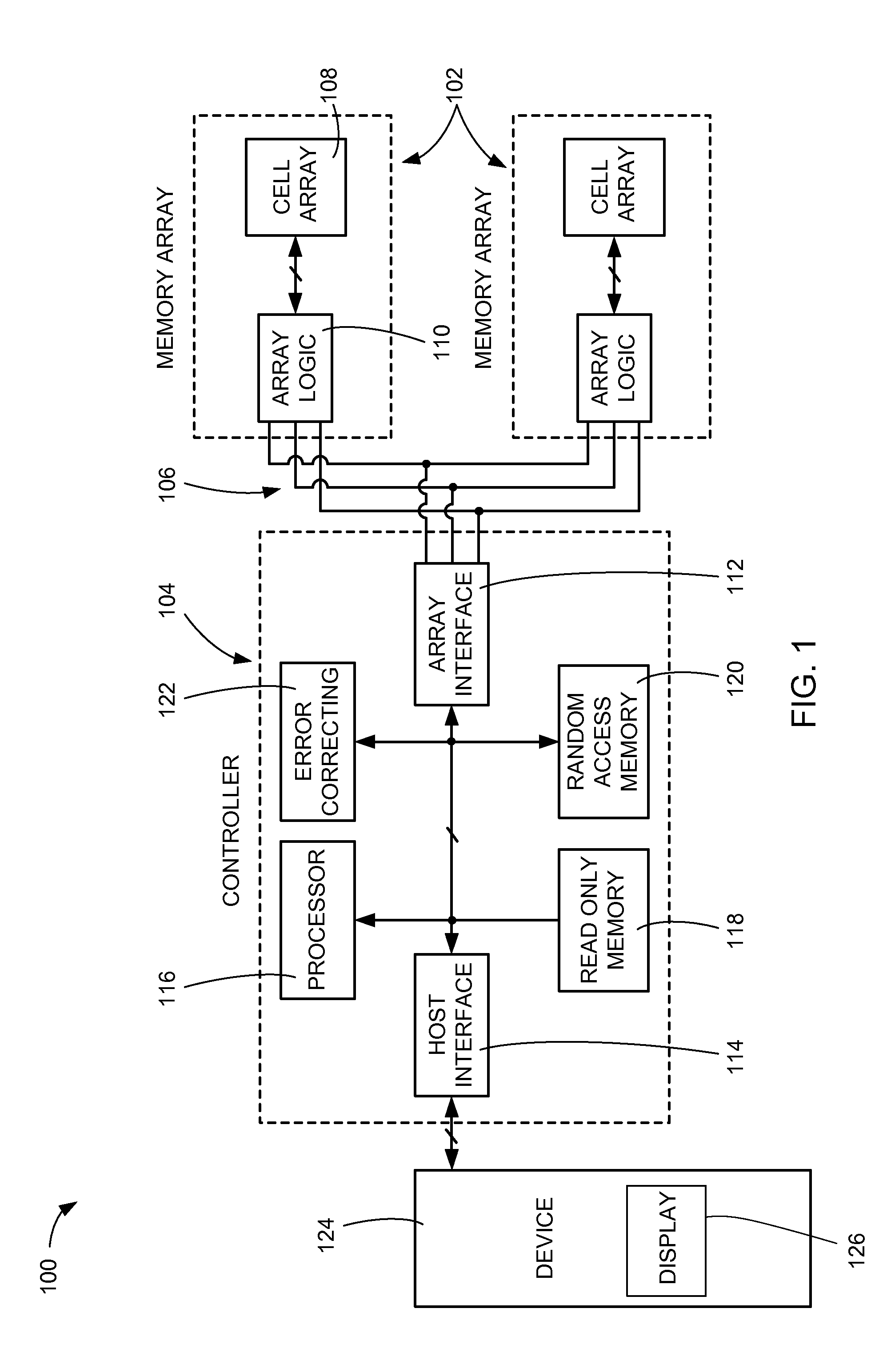

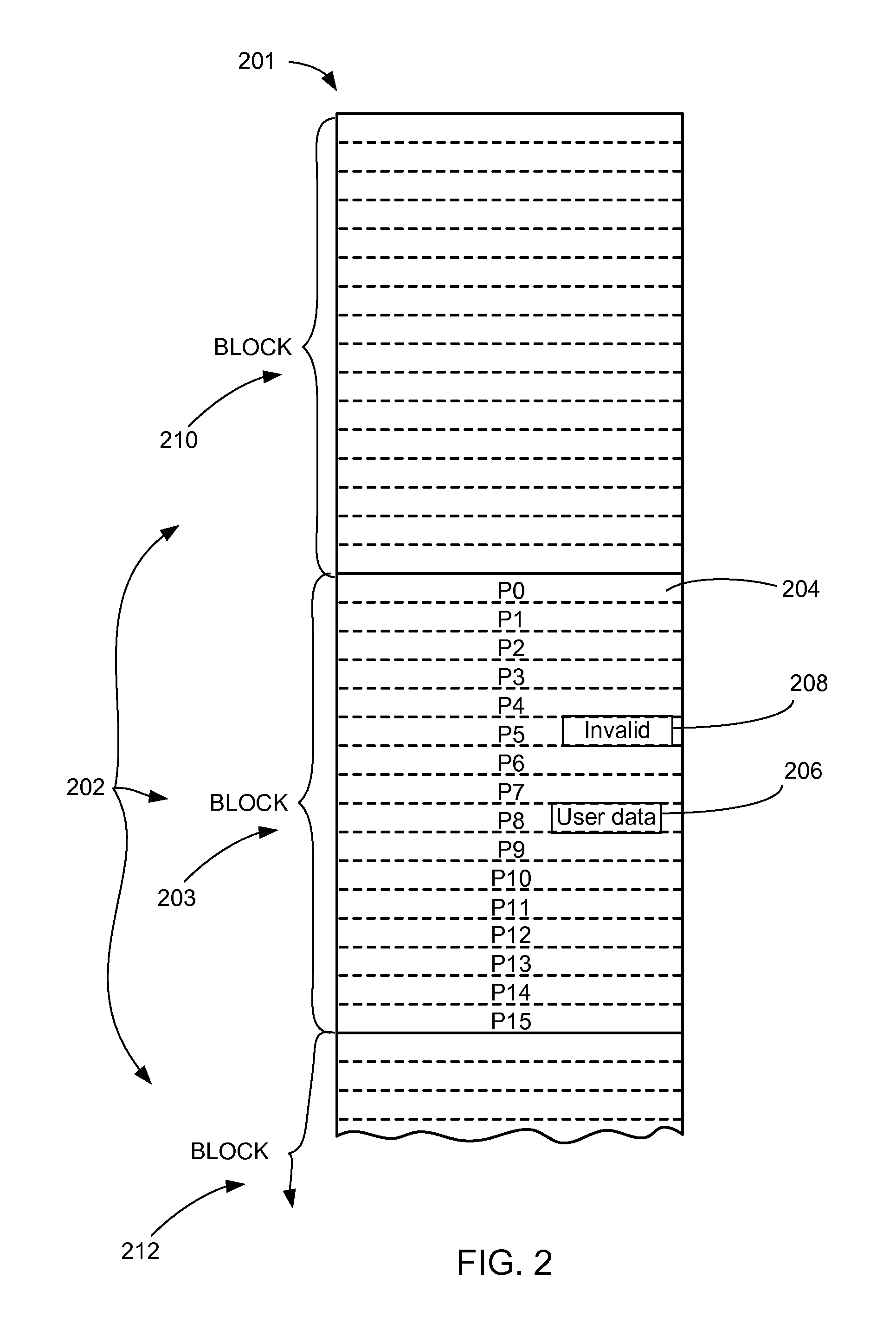

Memory system with tiered queuing and method of operation thereof

Inactive | US20120203993A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Locality of reference; Parallel computing

A method of operation of a memory system includes: providing a memory array having a dynamic queue and a static queue; and grouping user data by a temporal locality of reference having more frequently handled data in the dynamic queue and less frequently handled data in the static queue.
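
A minimal sketch of grouping data by temporal locality. It assumes access frequency is measured with a simple counter over a recent access trace and compared with a fixed threshold. `split_by_temporal_locality`, `hot_threshold`, and the `lba…` keys are hypothetical, and a real flash controller would keep this in firmware metadata rather than Python lists.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def split_by_temporal_locality(accesses: Iterable[str], hot_threshold: int) -> Tuple[List[str], List[str]]:
    """Return (dynamic_queue, static_queue): items handled at least
    `hot_threshold` times go to the dynamic queue, the rest to the static queue."""
    counts = Counter(accesses)
    ranked = counts.most_common()           # most frequently handled first
    dynamic = [key for key, n in ranked if n >= hot_threshold]
    static = [key for key, n in ranked if n < hot_threshold]
    return dynamic, static


trace = ["lba7", "lba2", "lba7", "lba9", "lba7", "lba2"]
print(split_by_temporal_locality(trace, hot_threshold=2))  # (['lba7', 'lba2'], ['lba9'])
```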

Owner:SANDISK TECH LLC

Efficient hardware A-buffer using three-dimensional allocation of fragment memory

Inactive | US7336283B2 | Efficient access; Promotes effective prefetching; Memory addressing/allocation/relocation; Image memory management; Locality of reference; Graphics

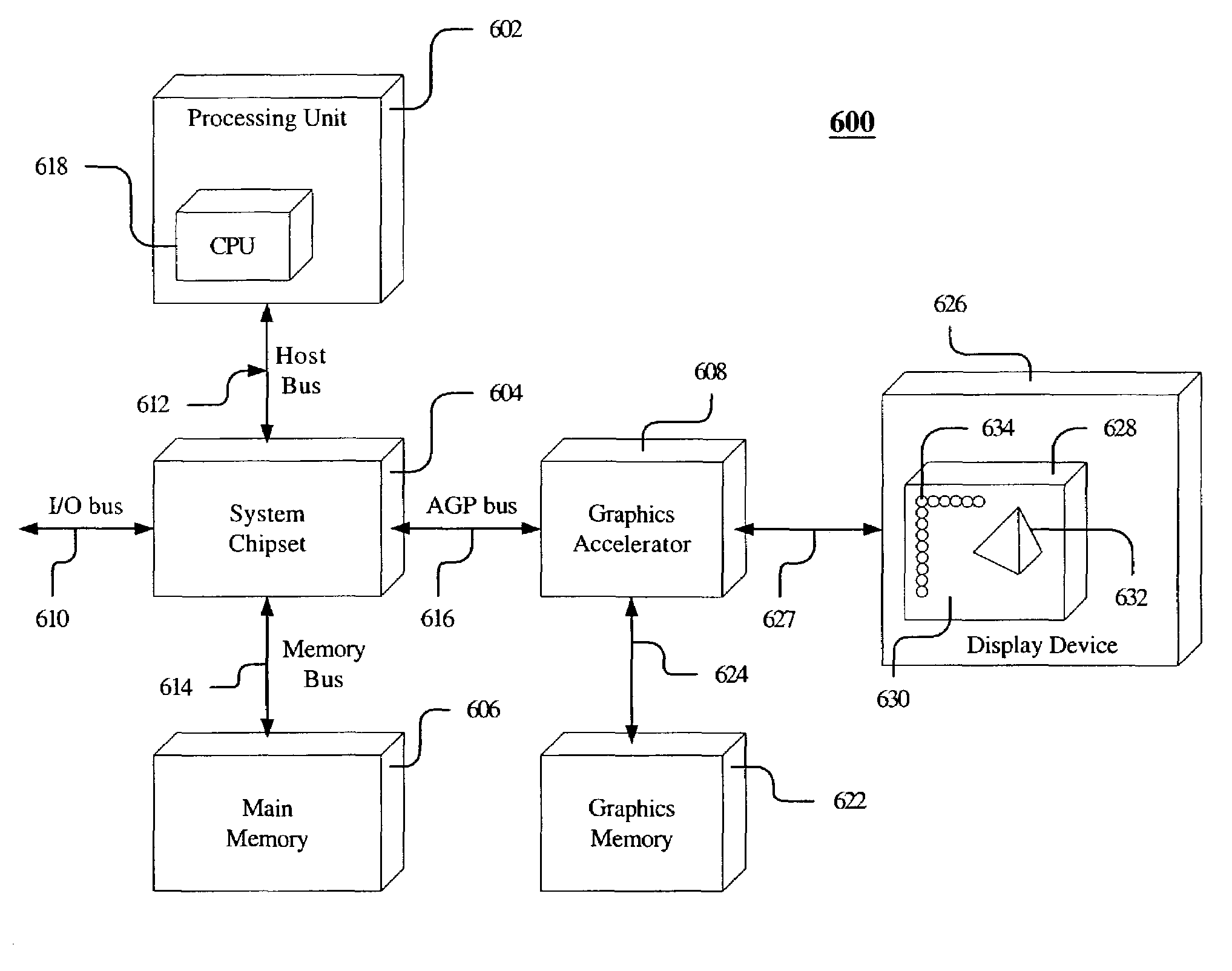

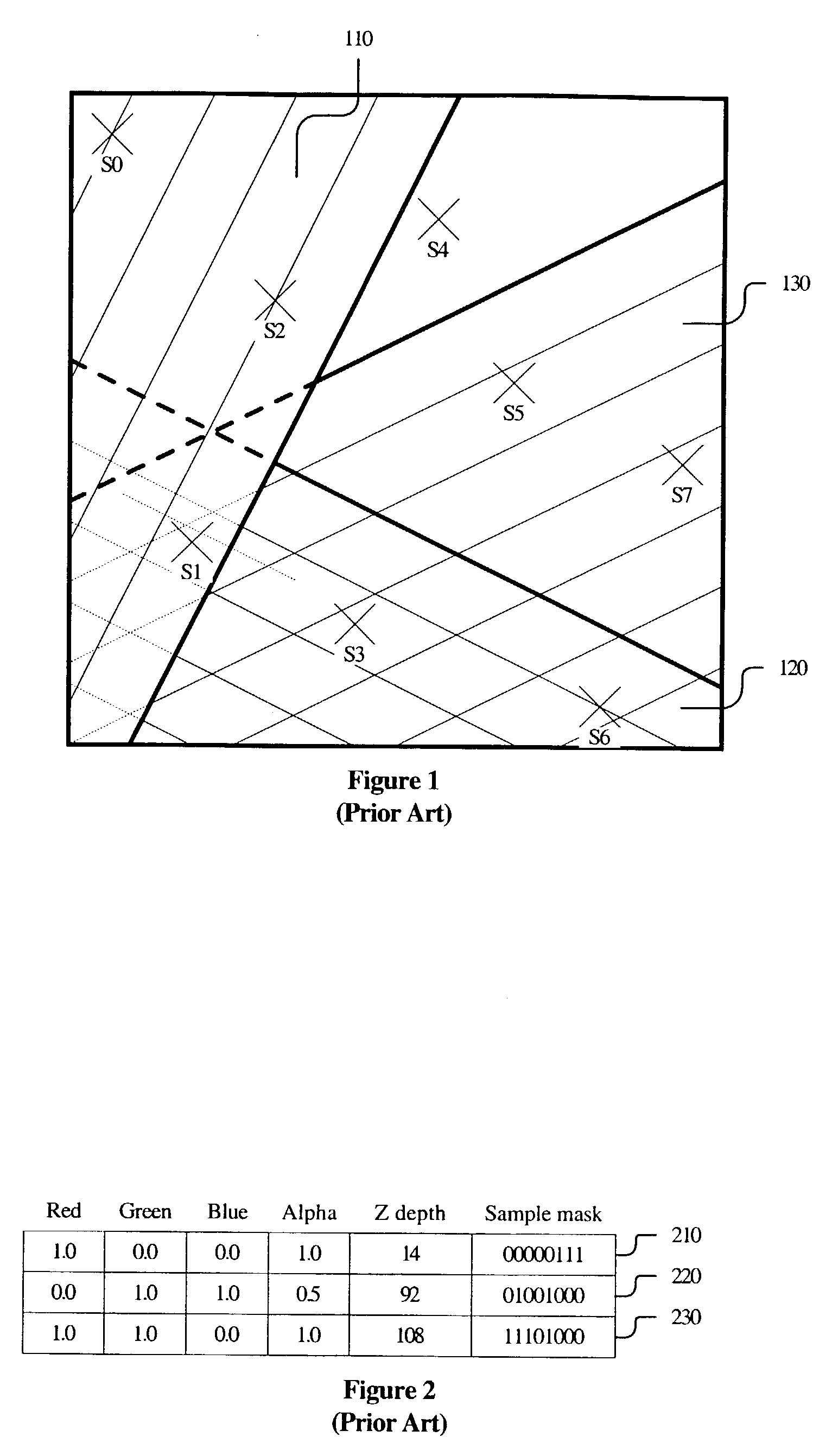

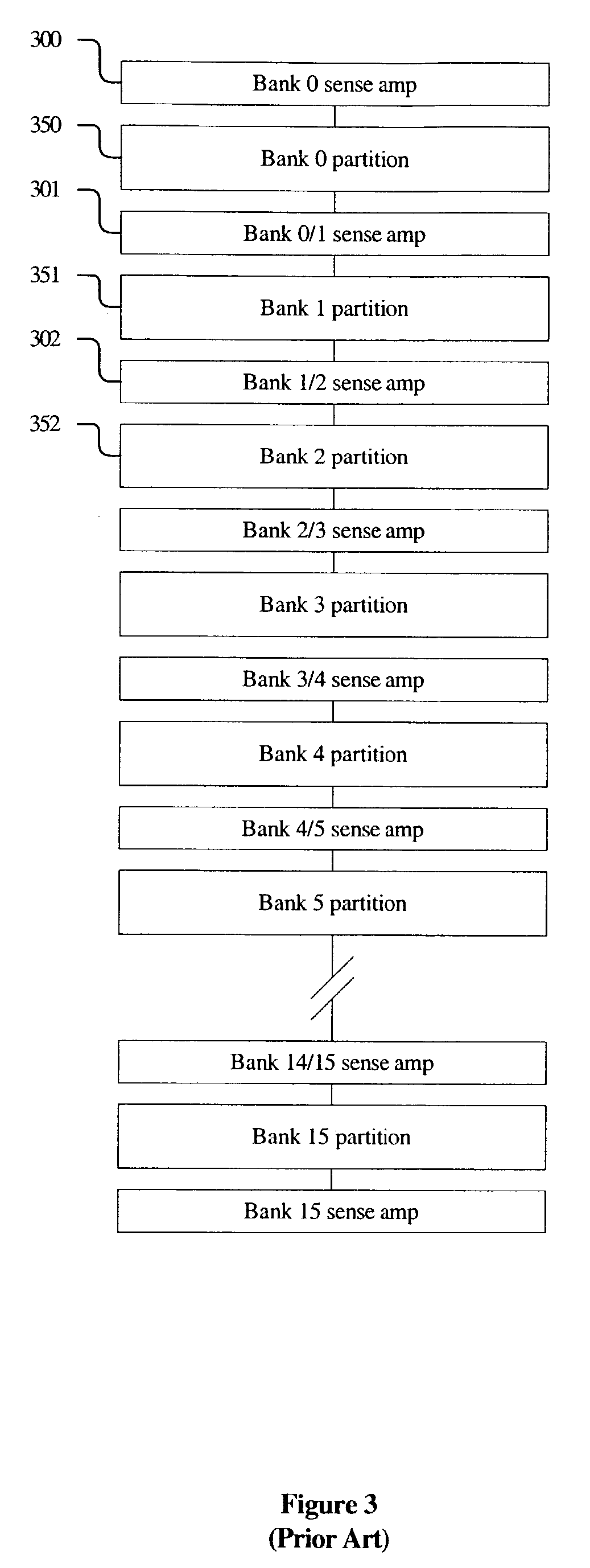

A method and apparatus for arranging fragments in a graphics memory. Each pixel of a display has a corresponding list of fragments in the graphics memory. Each fragment describes a three-dimensional surface at a plurality of sample points associated with the pixel. A predetermined number of fragments is statically allocated to each pixel. Additional space for fragment data is dynamically allocated and deallocated. Each dynamically allocated unit of memory contains fragment data for a plurality of pixels. Fragment data are arranged to exploit modern DRAM capabilities by increasing locality of reference within a single DRAM page, by putting other fragments likely to be referenced soon in pages that belong to non-conflicting banks, and by maintaining bookkeeping structures that allow the relevant DRAM precharge and row activate operations to be scheduled far in advance of access to fragment data.

Owner:HEWLETT PACKARD DEV CO LP

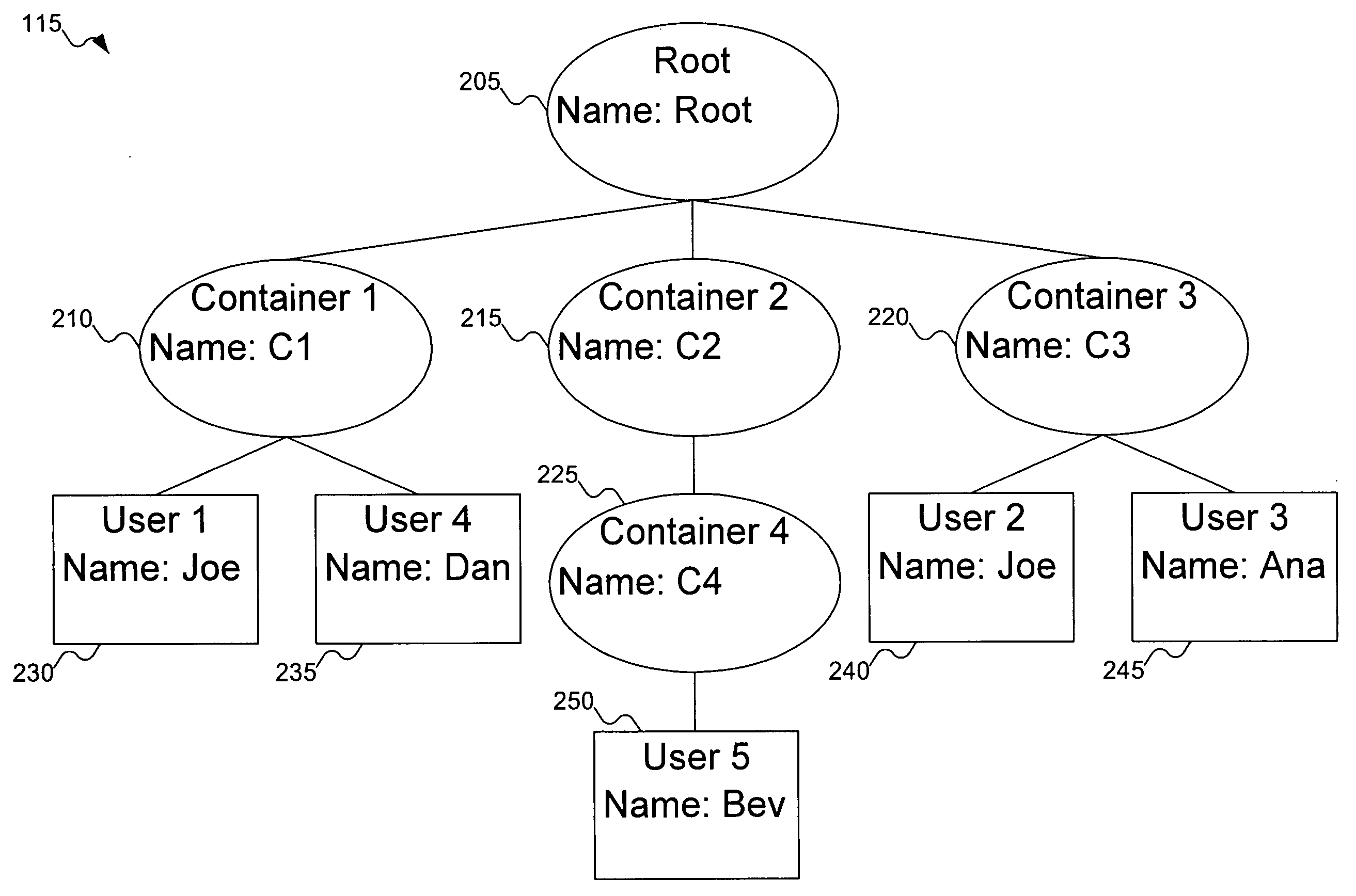

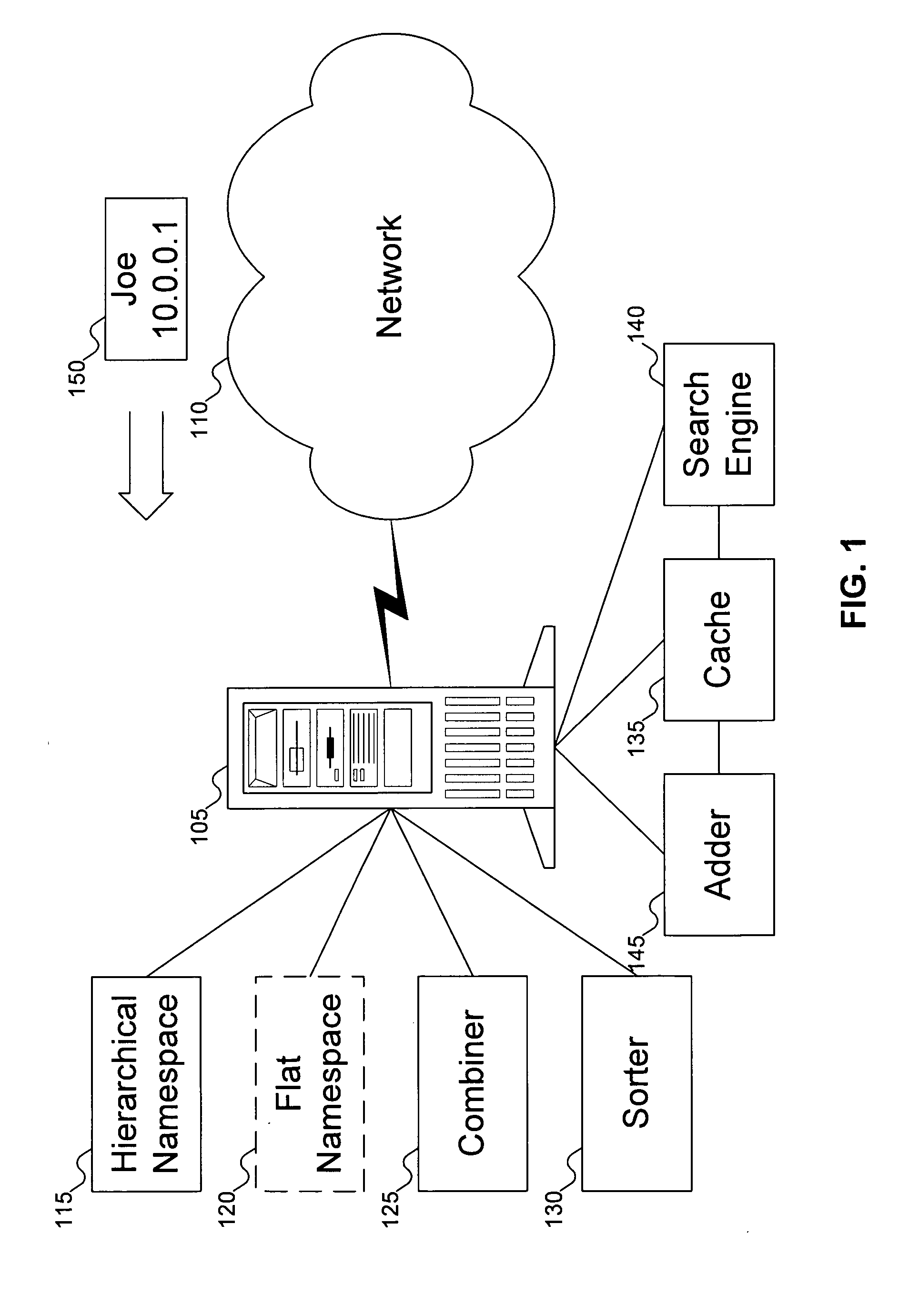

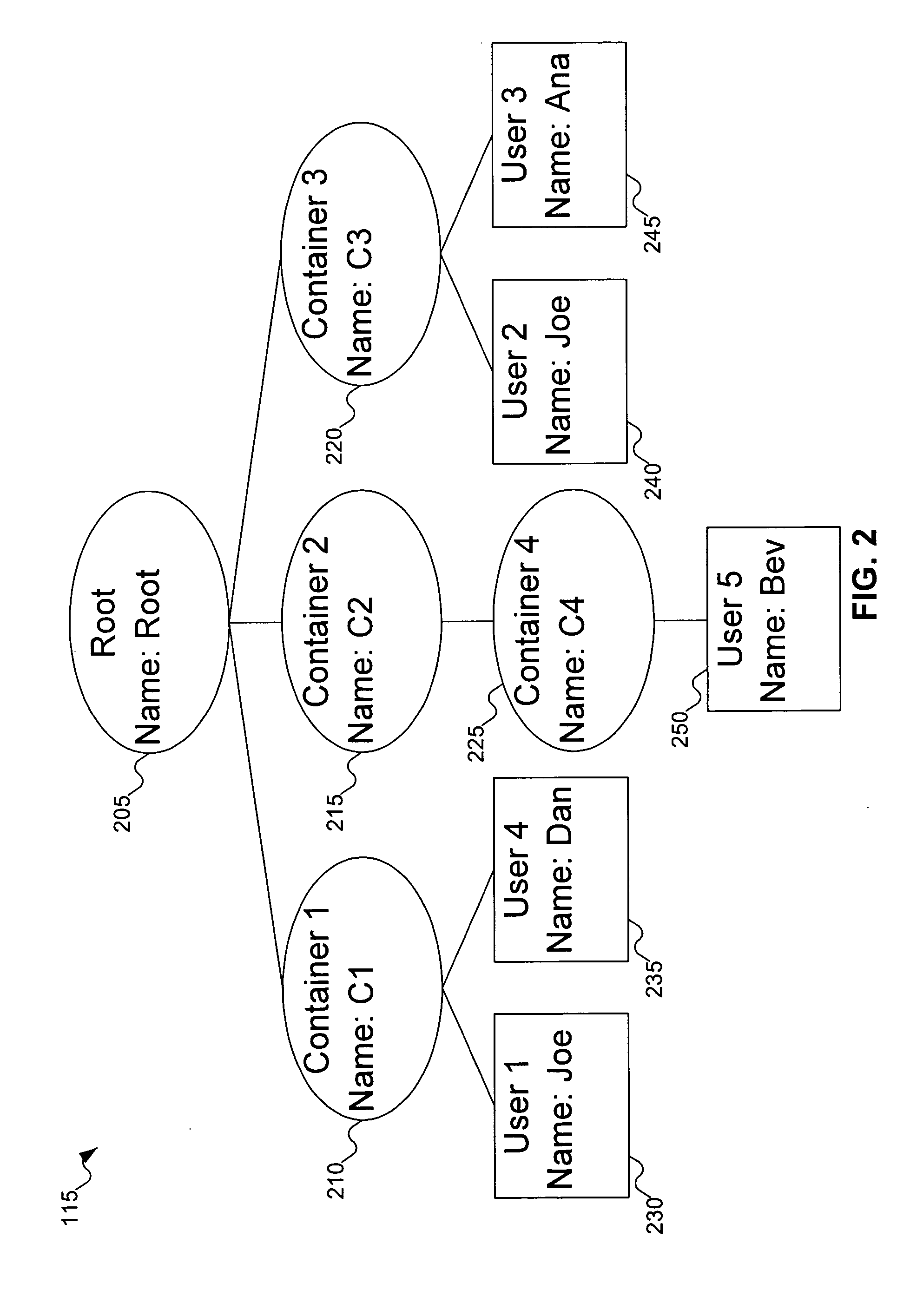

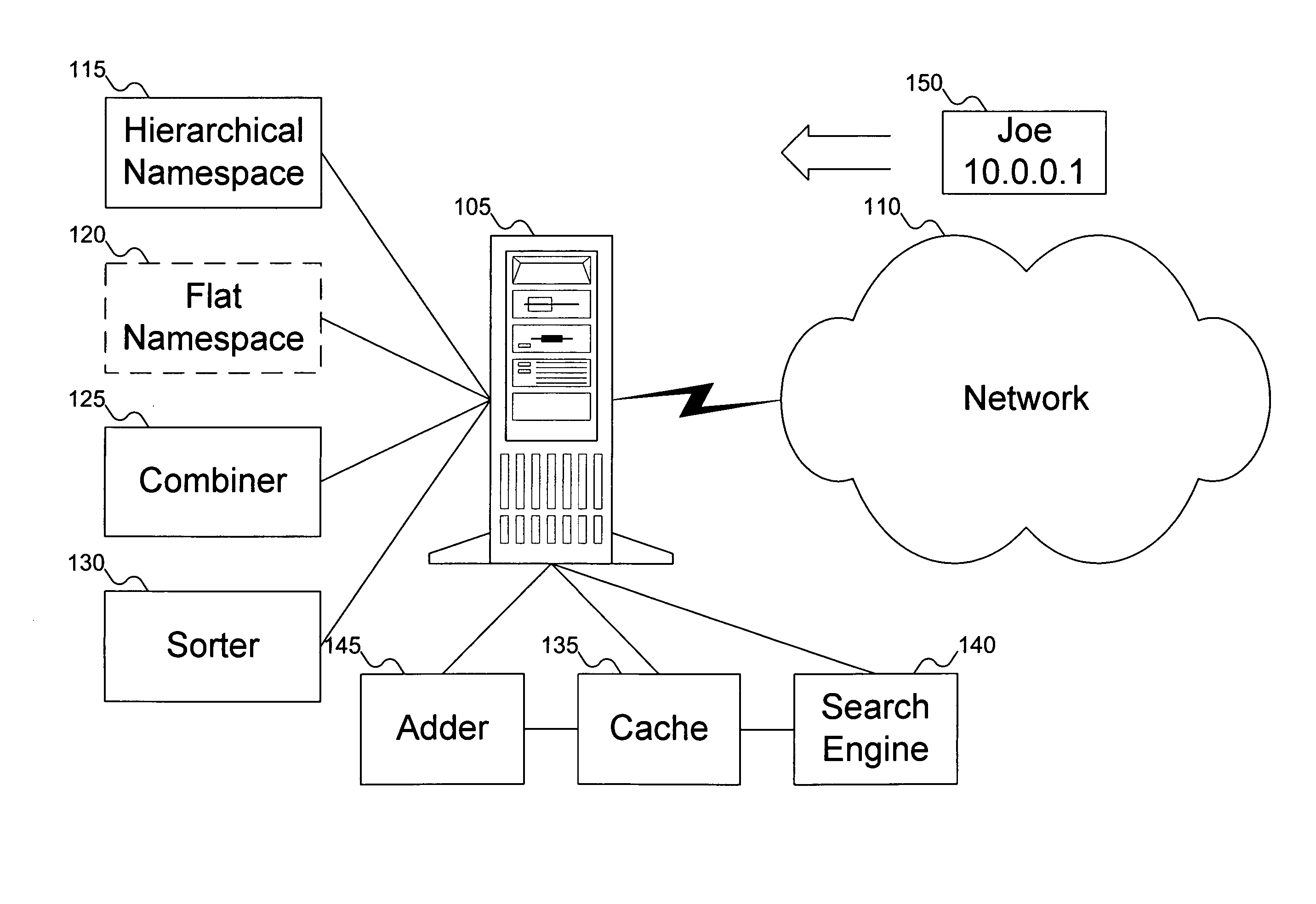

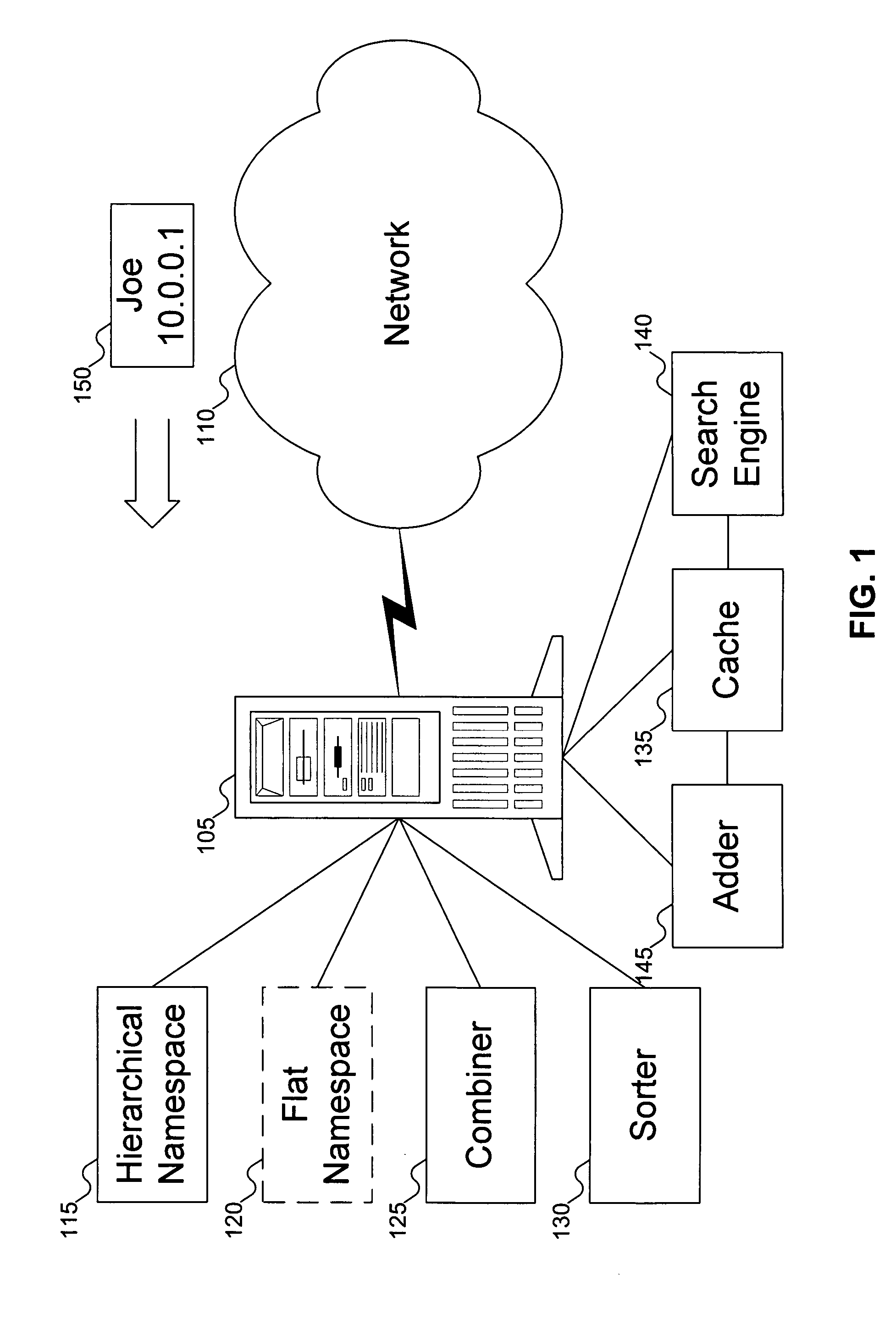

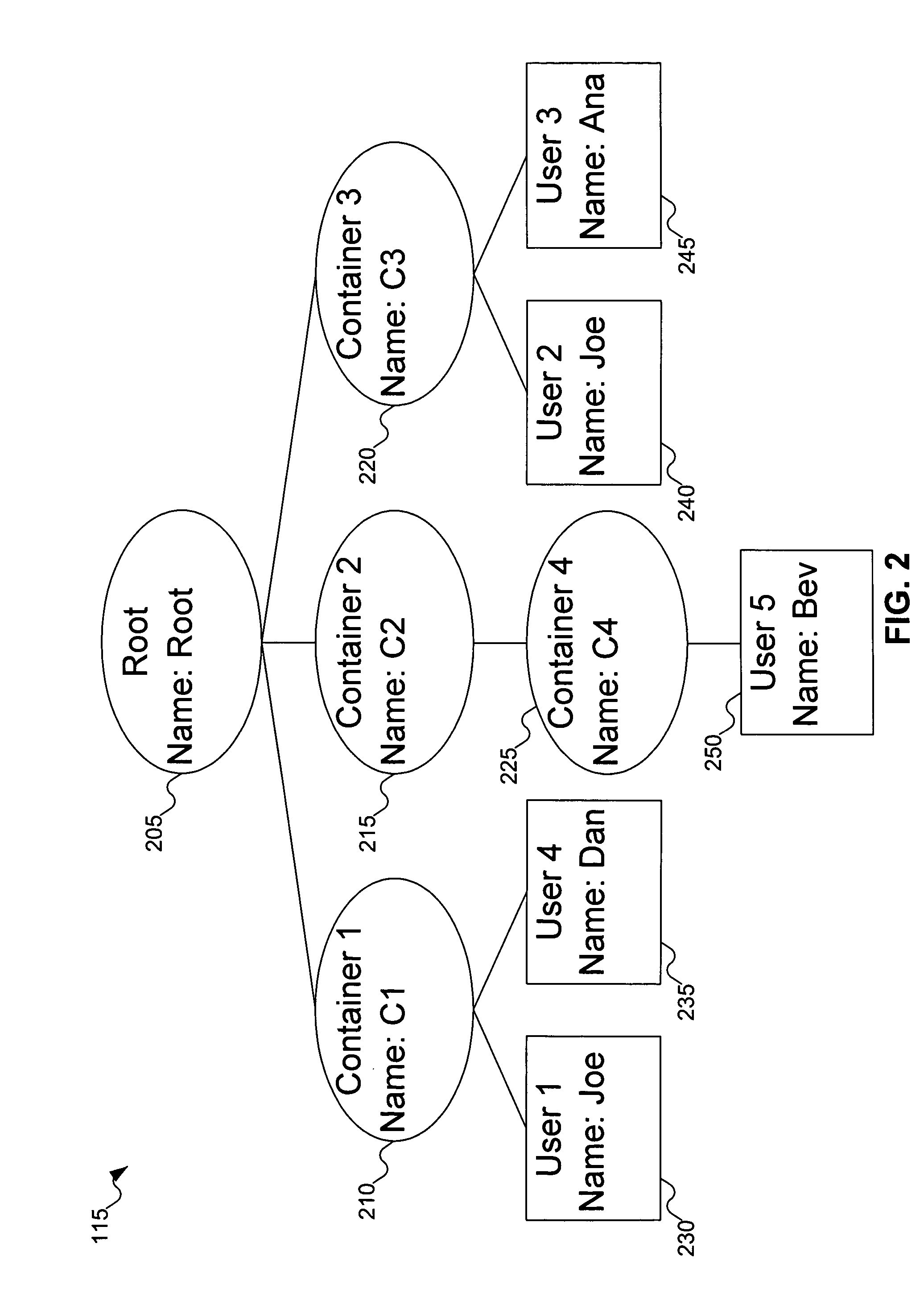

Method for mapping a flat namespace onto a hierarchical namespace using locality of reference cues

Active | US20050097073A1 | Digital data processing details; Digital data authentication; Locality of reference; Computer architecture

A flat namespace includes a flat identifier. A hierarchical namespace includes containers in some organization. A cache includes associations between flat identifiers, locality of reference cues, and containers in the hierarchical namespace. A combiner combines the flat identifier with containers in the hierarchical namespace. The combiner can be used first with containers found in the cache, before trying other containers in the hierarchical namespace.
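
The cache-first lookup can be sketched as a hypothetical model, not EMC's implementation. The cache maps a locality-of-reference cue (for example, a session or working directory) to containers that previously resolved names for that cue, and `exists` stands in for whatever lookup the hierarchical store provides. `NamespaceMapper` and the sample tree are hypothetical.

```python
from typing import Callable, Dict, Iterable, List, Optional


class NamespaceMapper:
    """Resolve flat identifiers into a hierarchical namespace, trying containers
    previously associated with the same locality-of-reference cue first."""

    def __init__(self, containers: Iterable[str], exists: Callable[[str, str], bool]):
        self.containers: List[str] = list(containers)
        self.exists = exists                    # hypothetical lookup: is `name` in `container`?
        self.cache: Dict[str, List[str]] = {}   # cue -> containers that resolved before
        self.probes = 0                         # number of container lookups performed

    def resolve(self, flat_id: str, cue: str) -> Optional[str]:
        cached = self.cache.get(cue, [])
        others = [c for c in self.containers if c not in cached]
        for container in cached + others:       # cached containers are tried first
            self.probes += 1
            if self.exists(flat_id, container):
                if container not in cached:
                    self.cache.setdefault(cue, []).append(container)
                return f"{container}/{flat_id}"  # combine identifier with container
        return None


# Hypothetical namespace: three directories and the names they hold.
tree = {"/a": {"x"}, "/b": {"y"}, "/proj": {"report1", "report2"}}
mapper = NamespaceMapper(tree, lambda name, c: name in tree[c])
print(mapper.resolve("report1", cue="proj-session"))  # /proj/report1 after 3 probes
print(mapper.resolve("report2", cue="proj-session"))  # /proj/report2 after 1 more probe
print(mapper.probes)  # 4
```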

Owner:EMC IP HLDG CO LLC

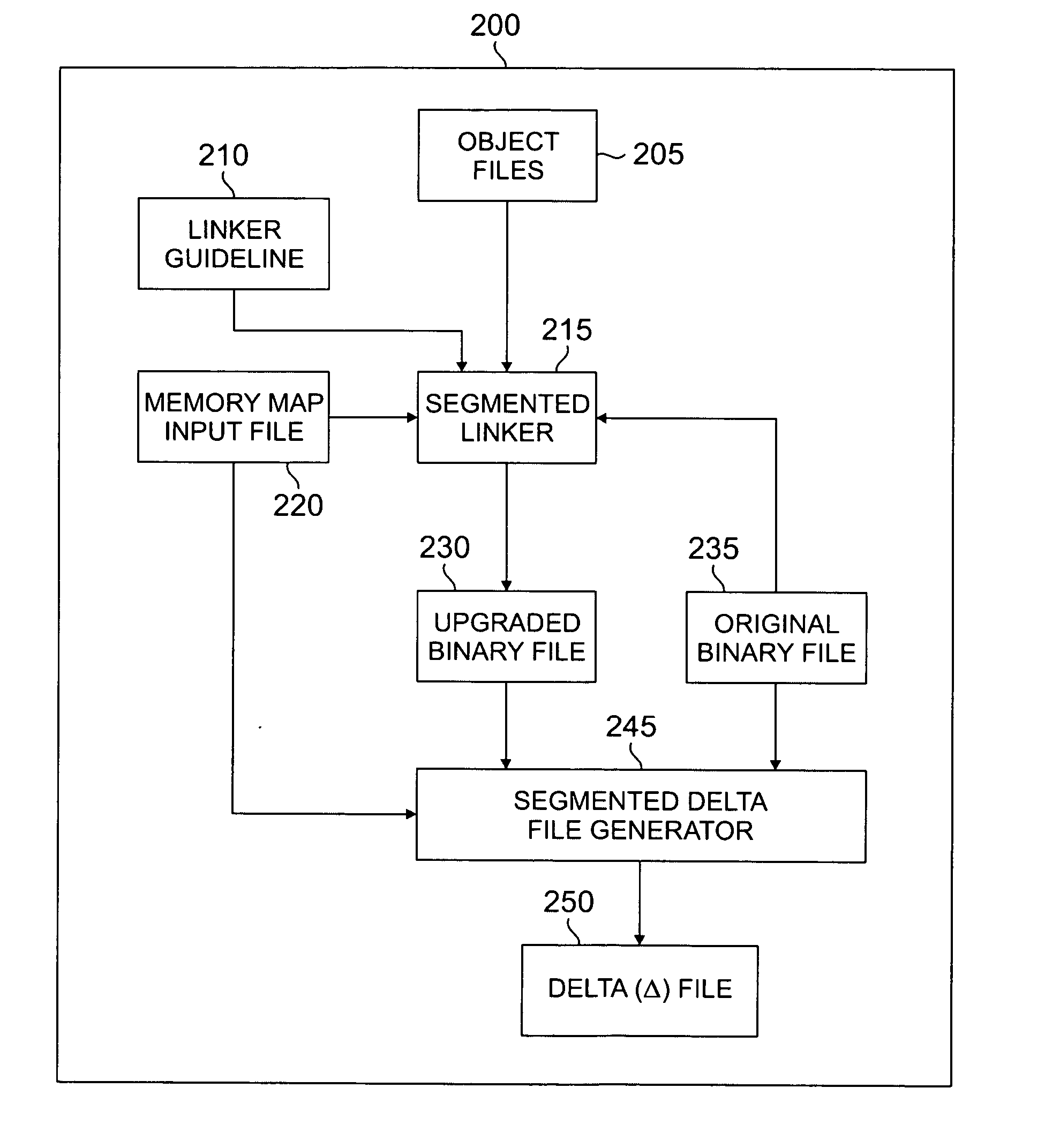

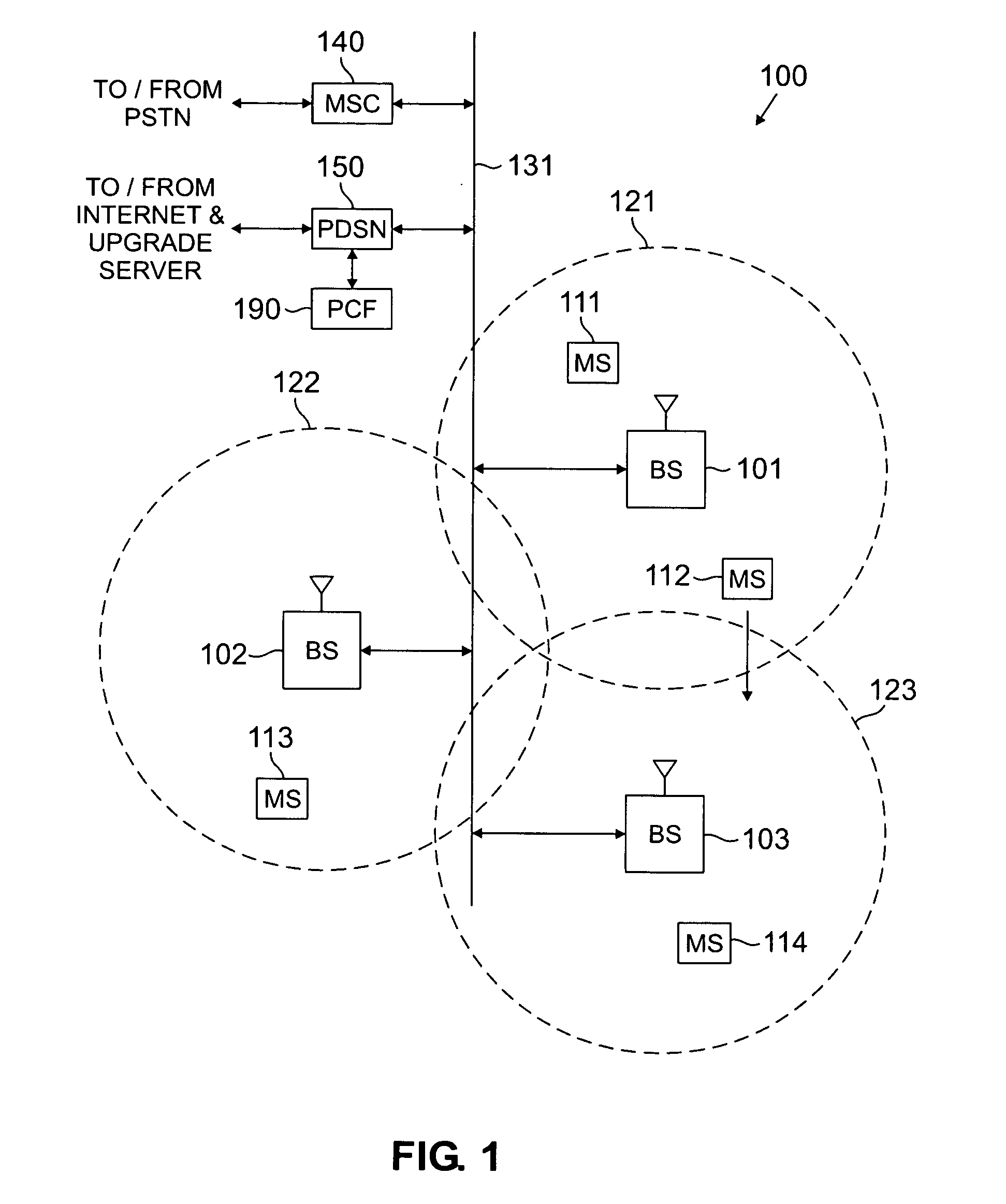

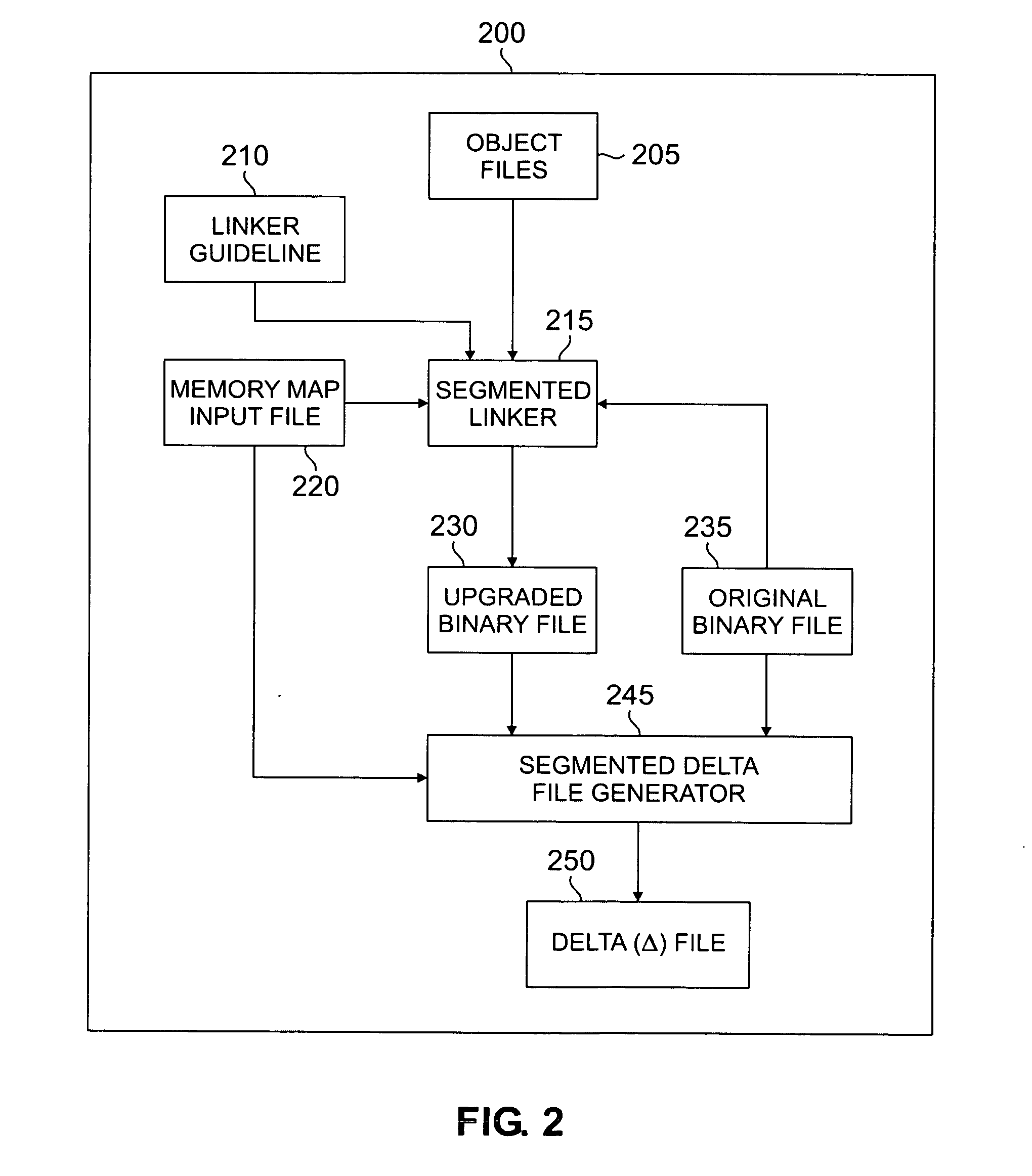

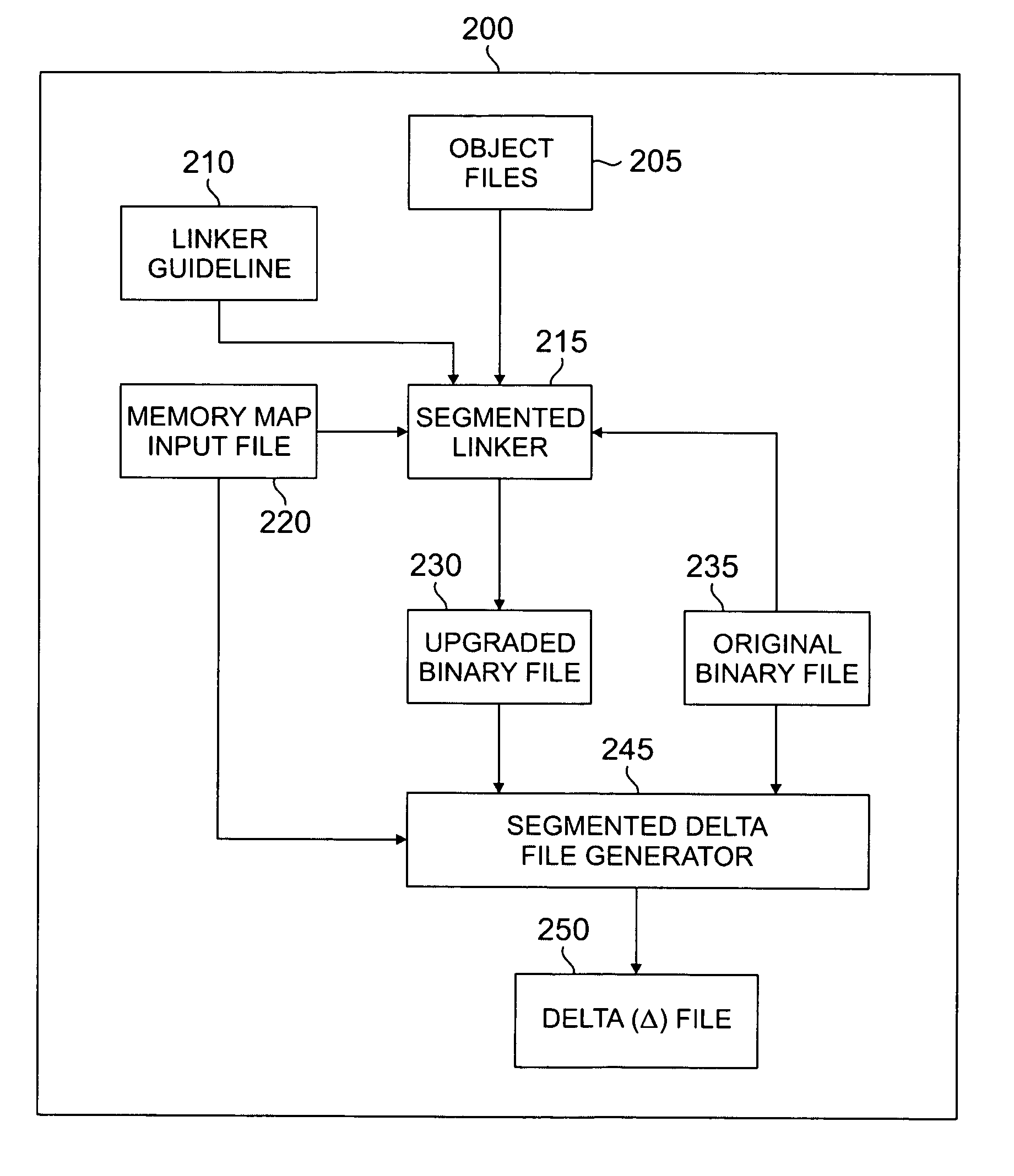

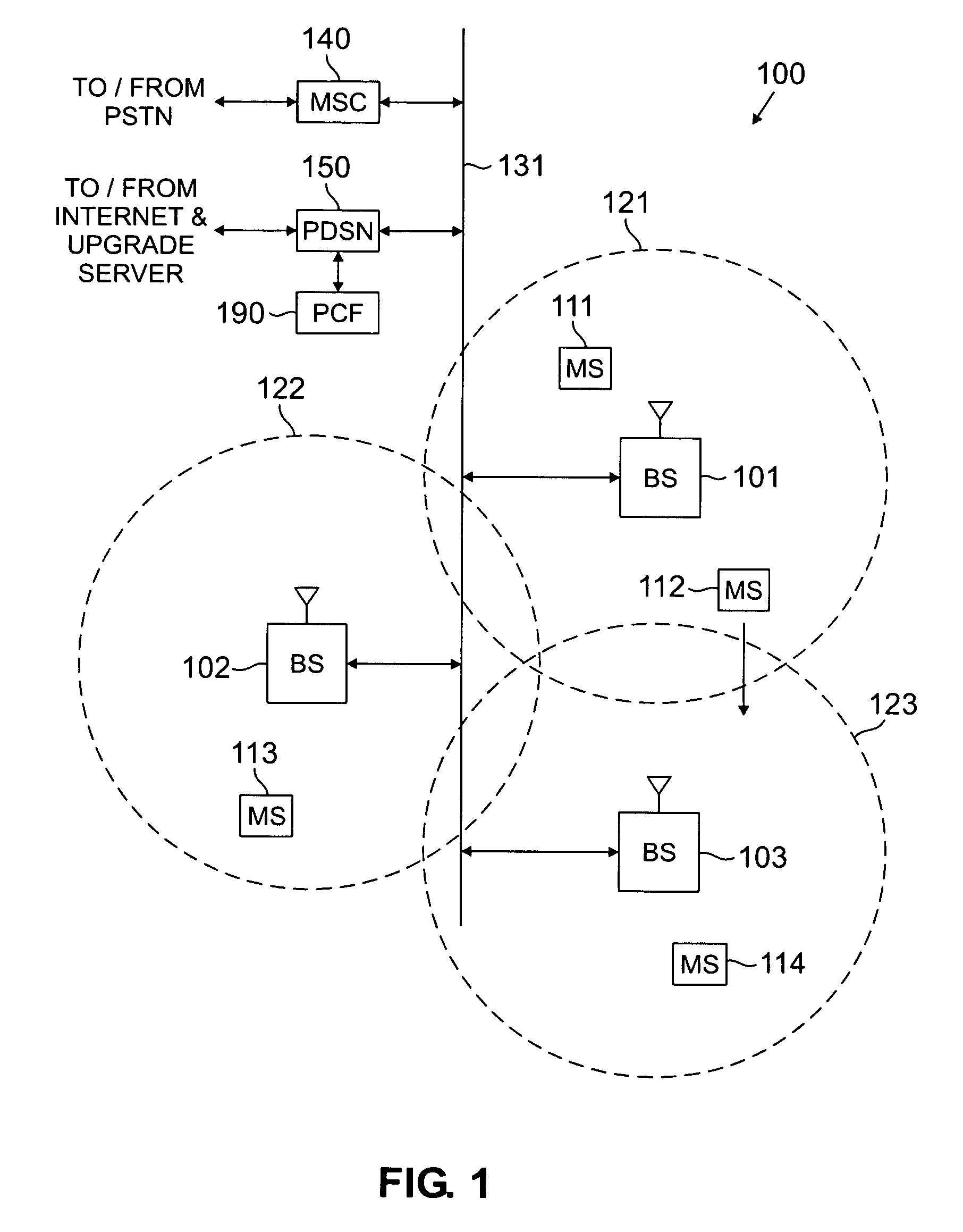

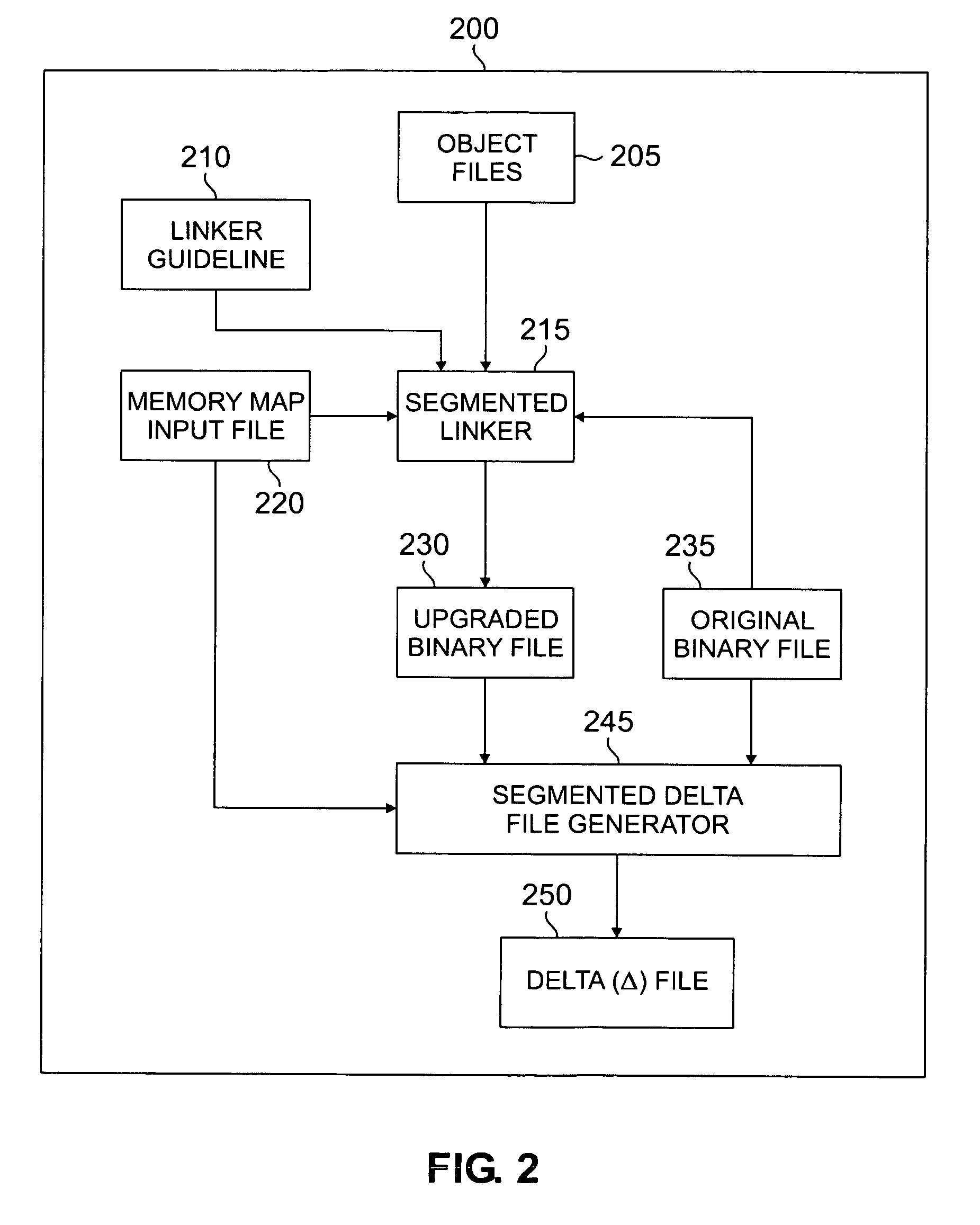

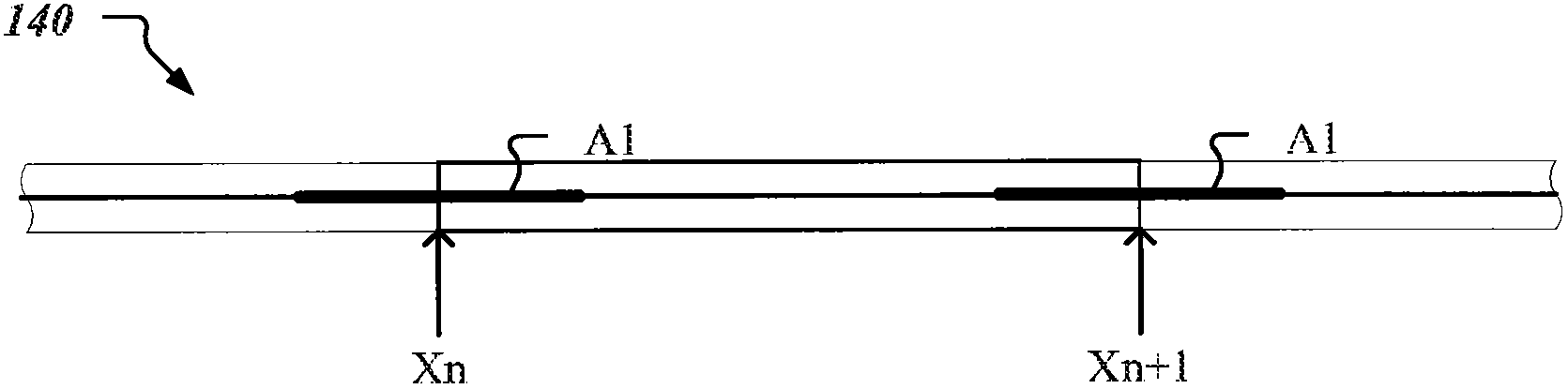

Segmented linker using spatial locality of reference for over-the-air software updates

Inactive | US20050278715A1 | Reduce in quantity; Increase generation; Software engineering; Program loading/initiating; Locality of reference; Reference space

A segmented linker for generating from an original binary file an upgraded binary file suitable for replacing a copy of the original binary file installed in a target device. The segmented linker receives as inputs a plurality of objects, the original binary file, and a memory map input file associated with a target device. The segmented linker preserves in the upgraded binary file at least some of the spatial locality of reference of code in the original binary file. The segmented linker further receives as an input a linker guideline file that defines the layout of objects having spatial locality of reference in the original binary file. The segmented linker uses the linker guideline file to limit the propagation of cascading address references in the upgraded binary file.

Owner:SAMSUNG ELECTRONICS CO LTD

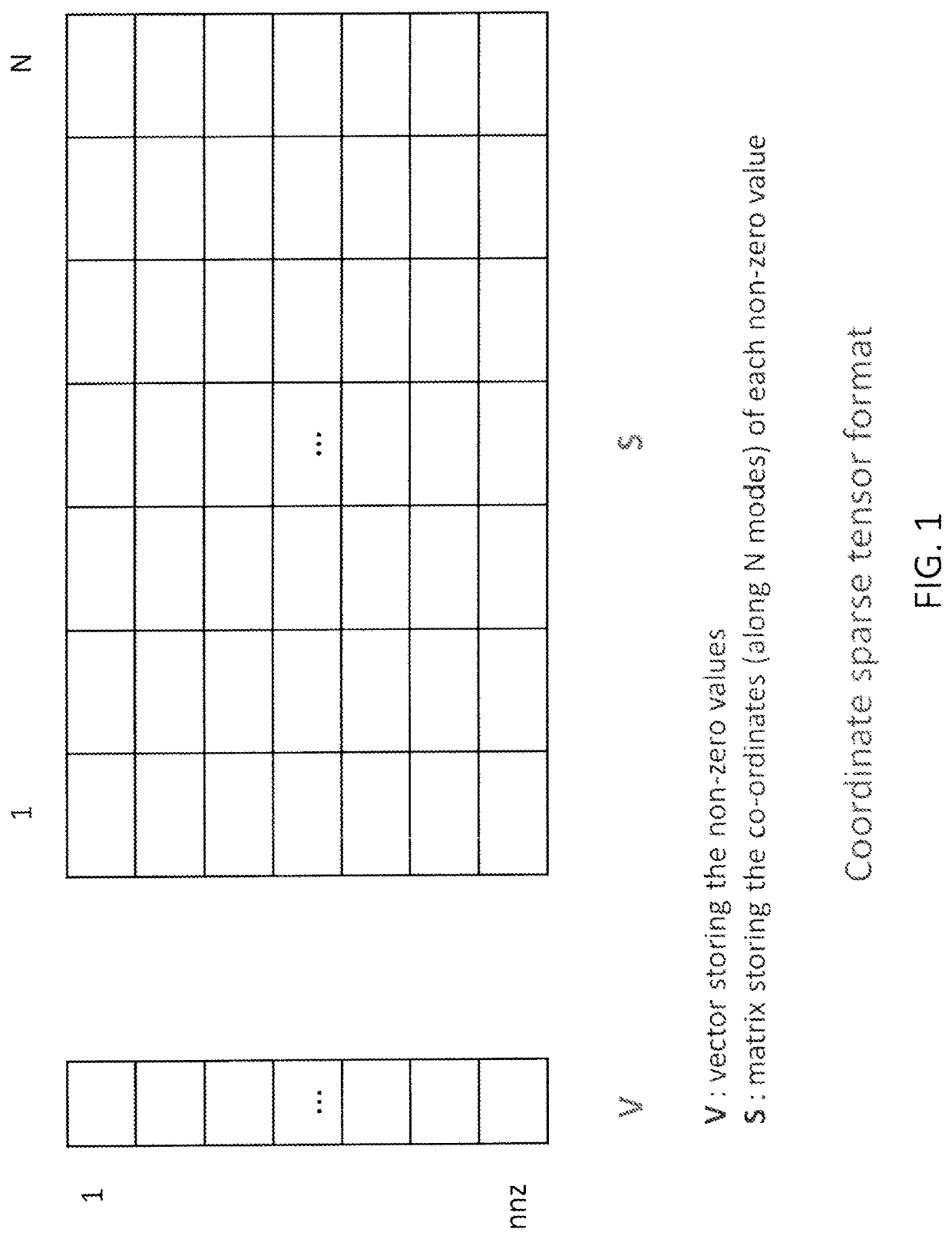

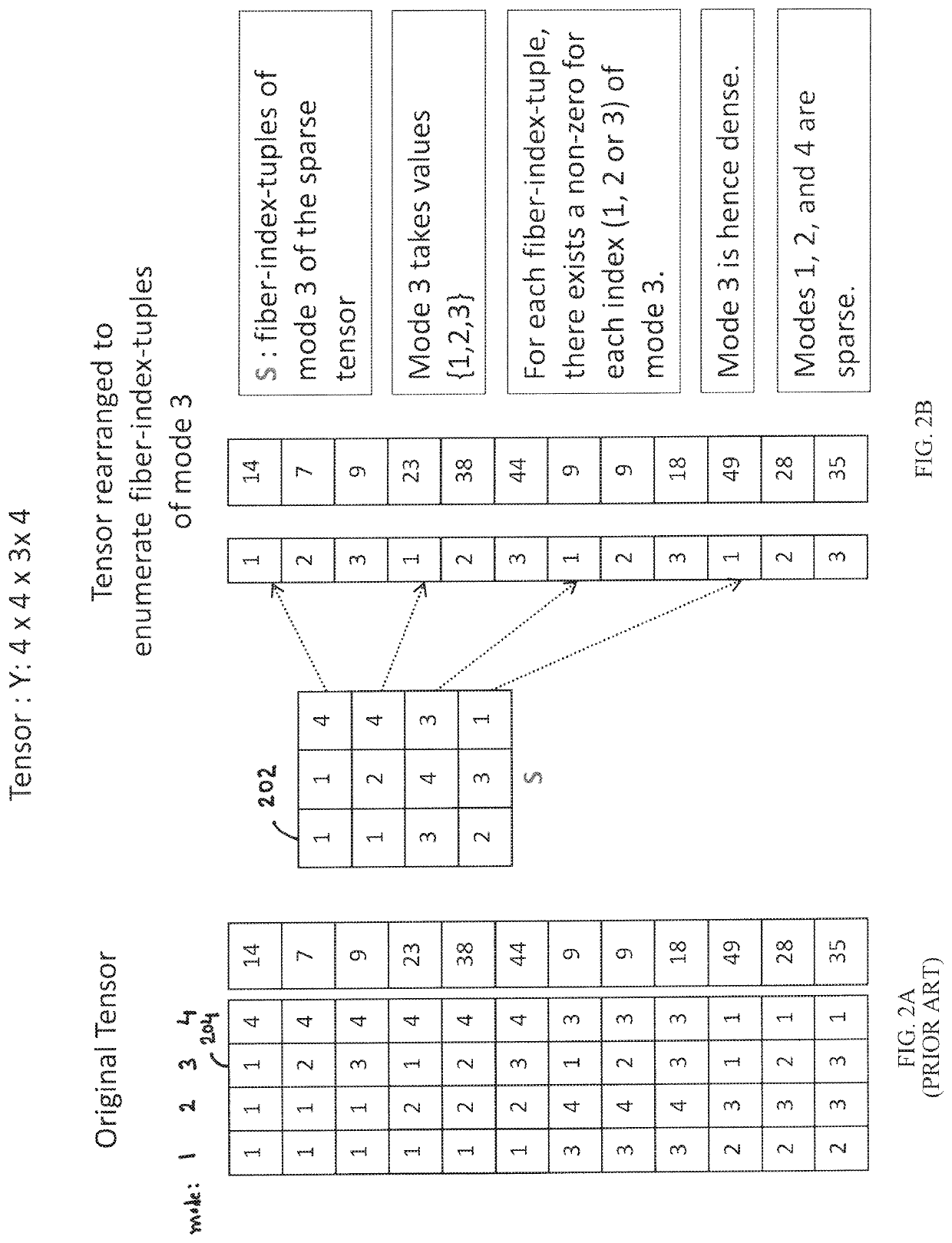

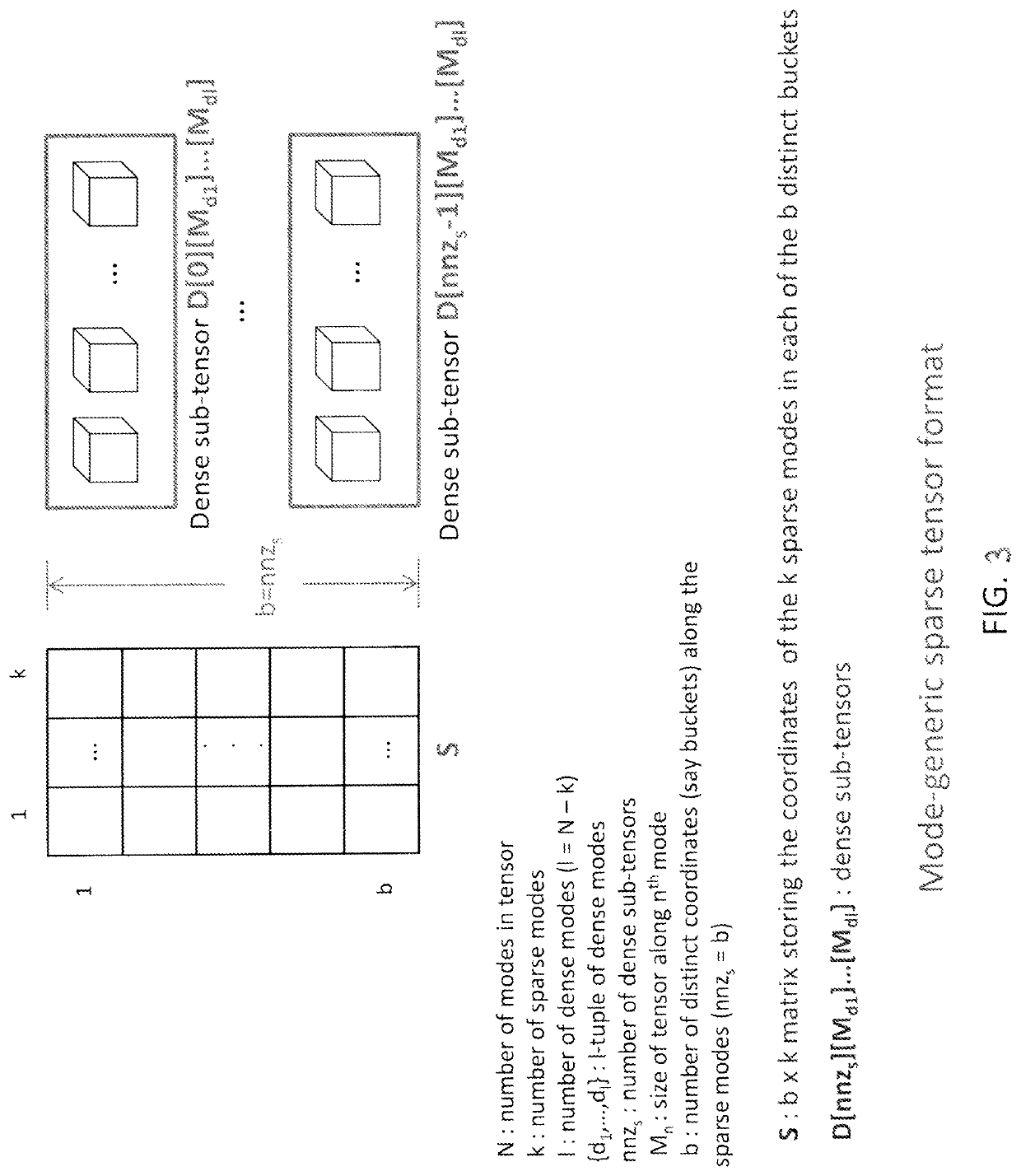

Efficient and scalable computations with sparse tensors

Active | US10936569B1 | Improve data locality; Memory storage benefits; Complex mathematical operations; Database indexing; Locality of reference; Algorithm

In a system for storing in memory a tensor that includes at least three modes, elements of the tensor are stored in a mode-based order for improving locality of references when the elements are accessed during an operation on the tensor. To facilitate efficient data reuse in a tensor transform that includes several iterations, on a tensor that includes at least three modes, a system performs a first iteration that includes a first operation on the tensor to obtain a first intermediate result, and the first intermediate result includes a first intermediate-tensor. The first intermediate result is stored in memory, and a second iteration is performed in which a second operation on the first intermediate result accessed from the memory is performed, so as to avoid a third operation, that would be required if the first intermediate result were not accessed from the memory.
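
Mode-based ordering can be shown on a sparse tensor in coordinate (COO) form. This sketch assumes entries are `(coordinates, value)` pairs and that "mode-based order" means sorting lexicographically by a chosen permutation of the modes. `mode_order` and `slice_sums` are hypothetical helpers, and the intermediate-result reuse described in the second half of the abstract is not modelled.

```python
from itertools import groupby
from typing import Dict, List, Sequence, Tuple

Entry = Tuple[Tuple[int, ...], float]  # (coordinates, value) of one nonzero


def mode_order(entries: List[Entry], modes: Sequence[int]) -> List[Entry]:
    """Sort nonzeros so that modes[0] varies slowest, then modes[1], and so on."""
    return sorted(entries, key=lambda e: tuple(e[0][m] for m in modes))


def slice_sums(ordered: List[Entry], mode: int) -> Dict[int, float]:
    """Sum each slice in one streaming pass; correct only if `ordered` was
    sorted with `mode` first, so every slice is a contiguous run."""
    return {k: sum(v for _, v in grp)
            for k, grp in groupby(ordered, key=lambda e: e[0][mode])}


# A 2 x 2 x 3 tensor with four nonzeros, in arbitrary insertion order.
nonzeros = [((1, 0, 2), 1.0), ((0, 1, 1), 2.0), ((1, 0, 0), 3.0), ((0, 0, 1), 4.0)]
by_mode0 = mode_order(nonzeros, modes=(0, 1, 2))
print([c for c, _ in by_mode0])      # [(0, 0, 1), (0, 1, 1), (1, 0, 0), (1, 0, 2)]
print(slice_sums(by_mode0, mode=0))  # {0: 6.0, 1: 4.0}
```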

Owner:QUALCOMM INC

Segmented linker using spatial locality of reference for over-the-air software updates

Inactive | US7673300B2 | Reduce in quantity; Increase generation; Software engineering; Program loading/initiating; Locality of reference; Reference space

A segmented linker for generating from an original binary file an upgraded binary file suitable for replacing a copy of the original binary file installed in a target device. The segmented linker receives as inputs a plurality of objects, the original binary file, and a memory map input file associated with a target device. The segmented linker preserves in the upgraded binary file at least some of the spatial locality of reference of code in the original binary file. The segmented linker further receives as an input a linker guideline file that defines the layout of objects having spatial locality of reference in the original binary file. The segmented linker uses the linker guideline file to limit the propagation of cascading address references in the upgraded binary file.

Owner:SAMSUNG ELECTRONICS CO LTD

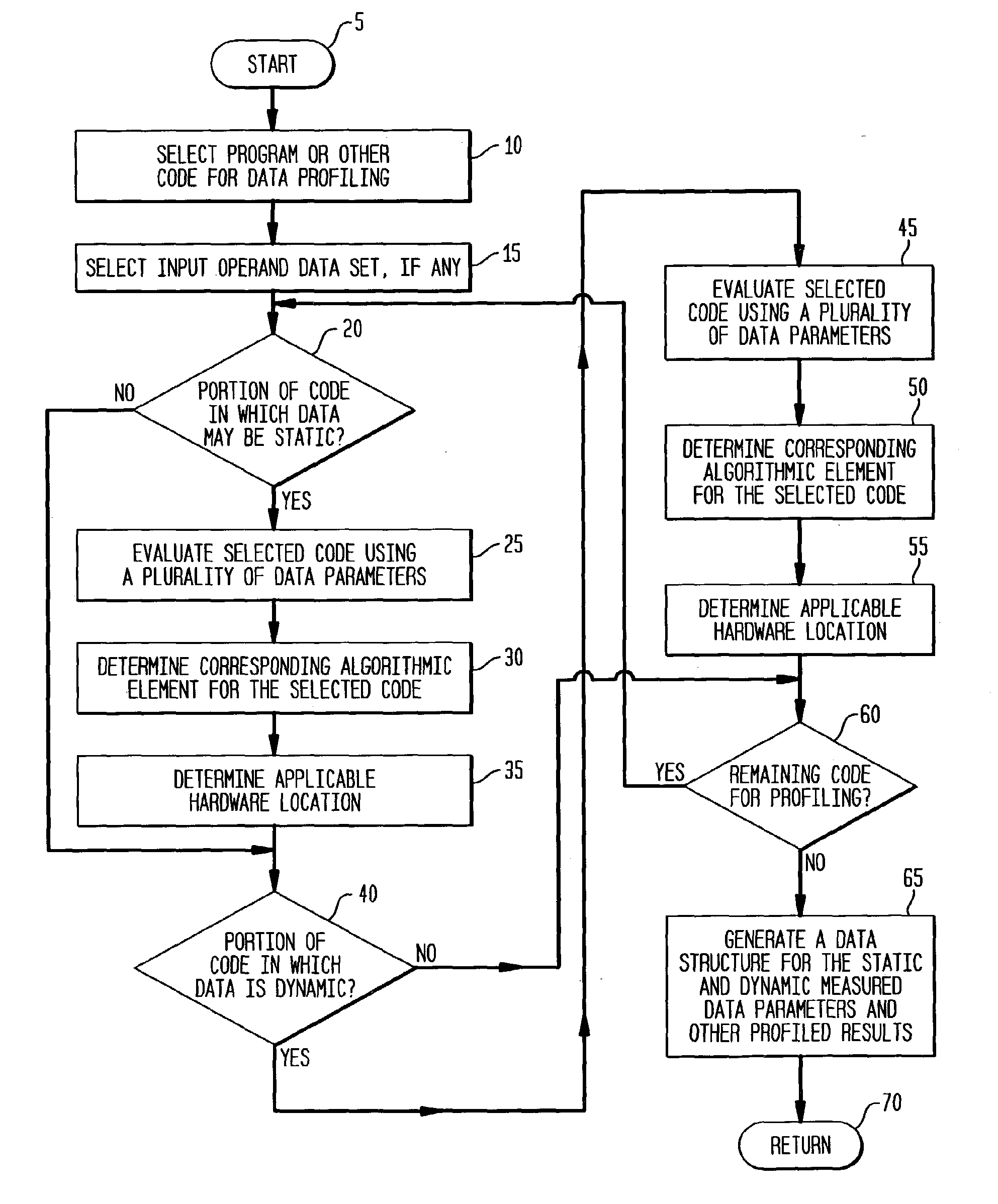

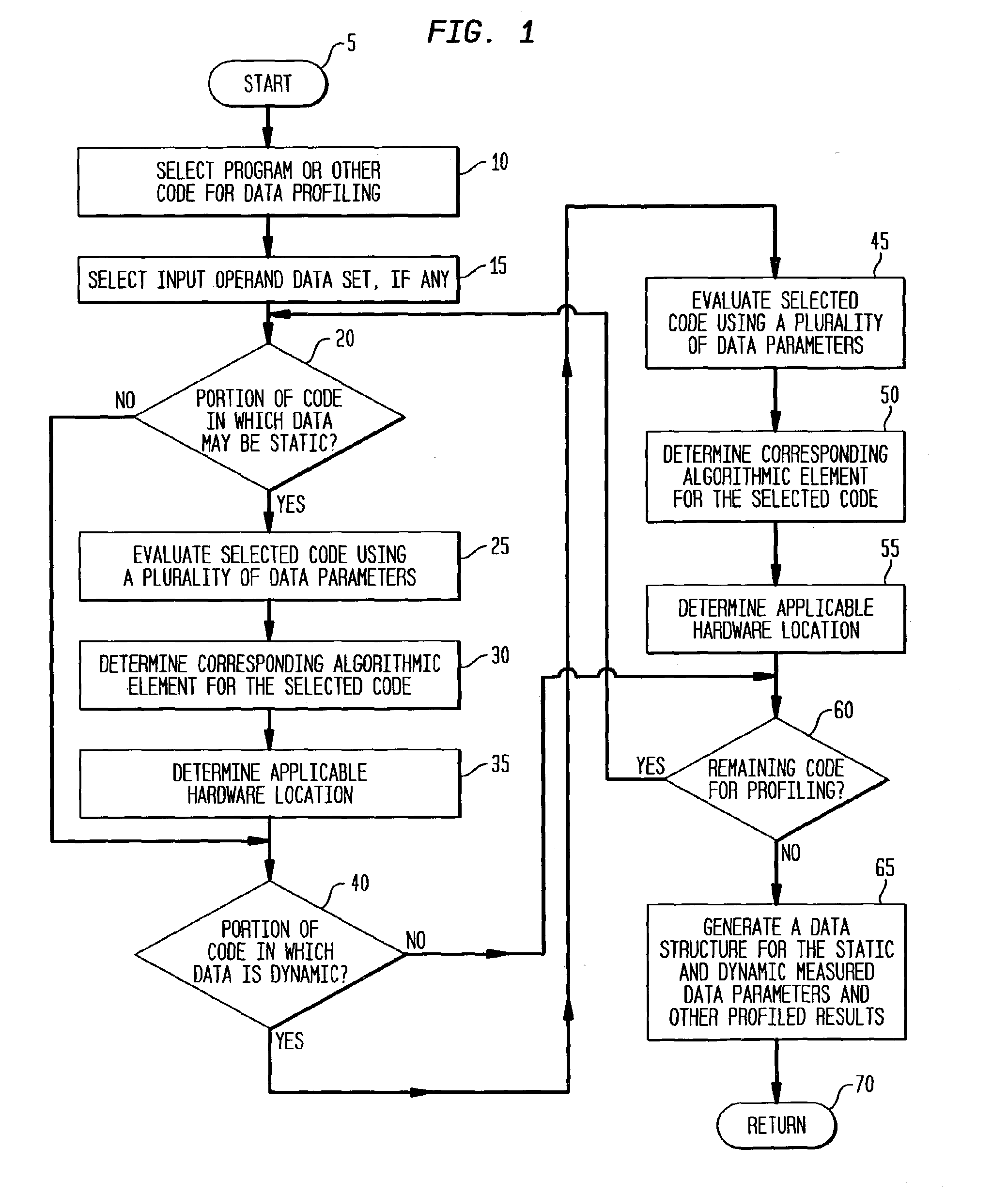

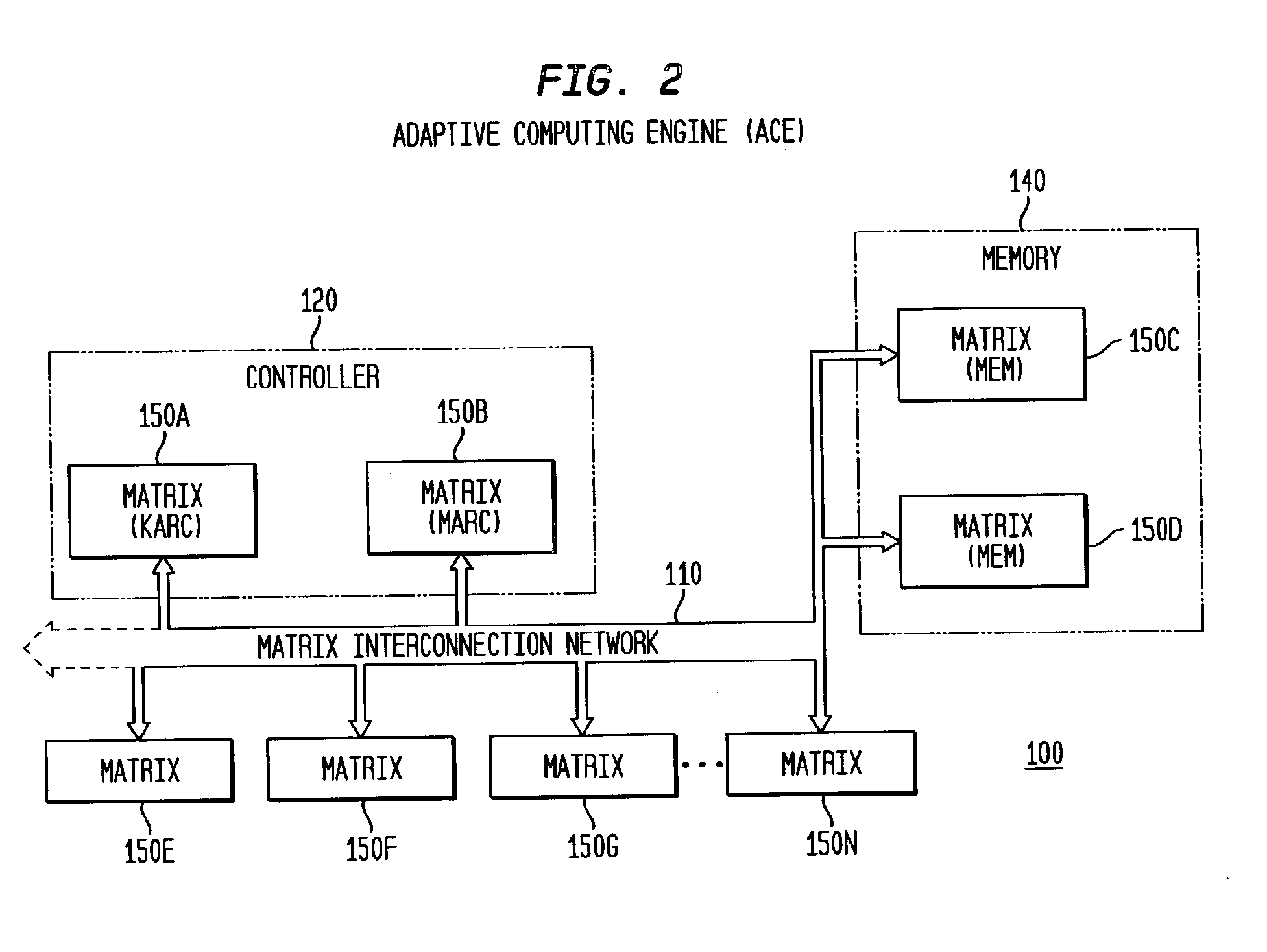

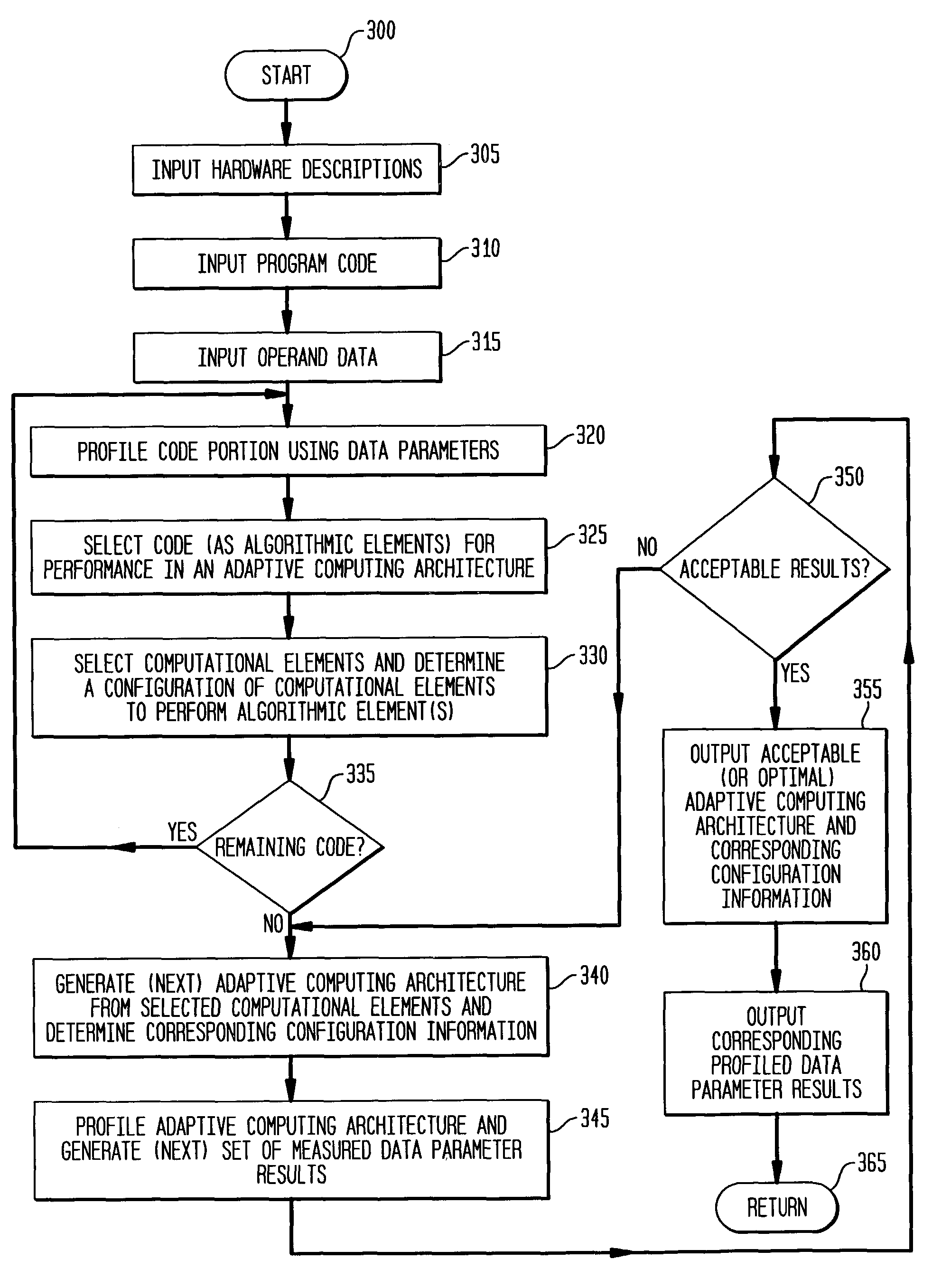

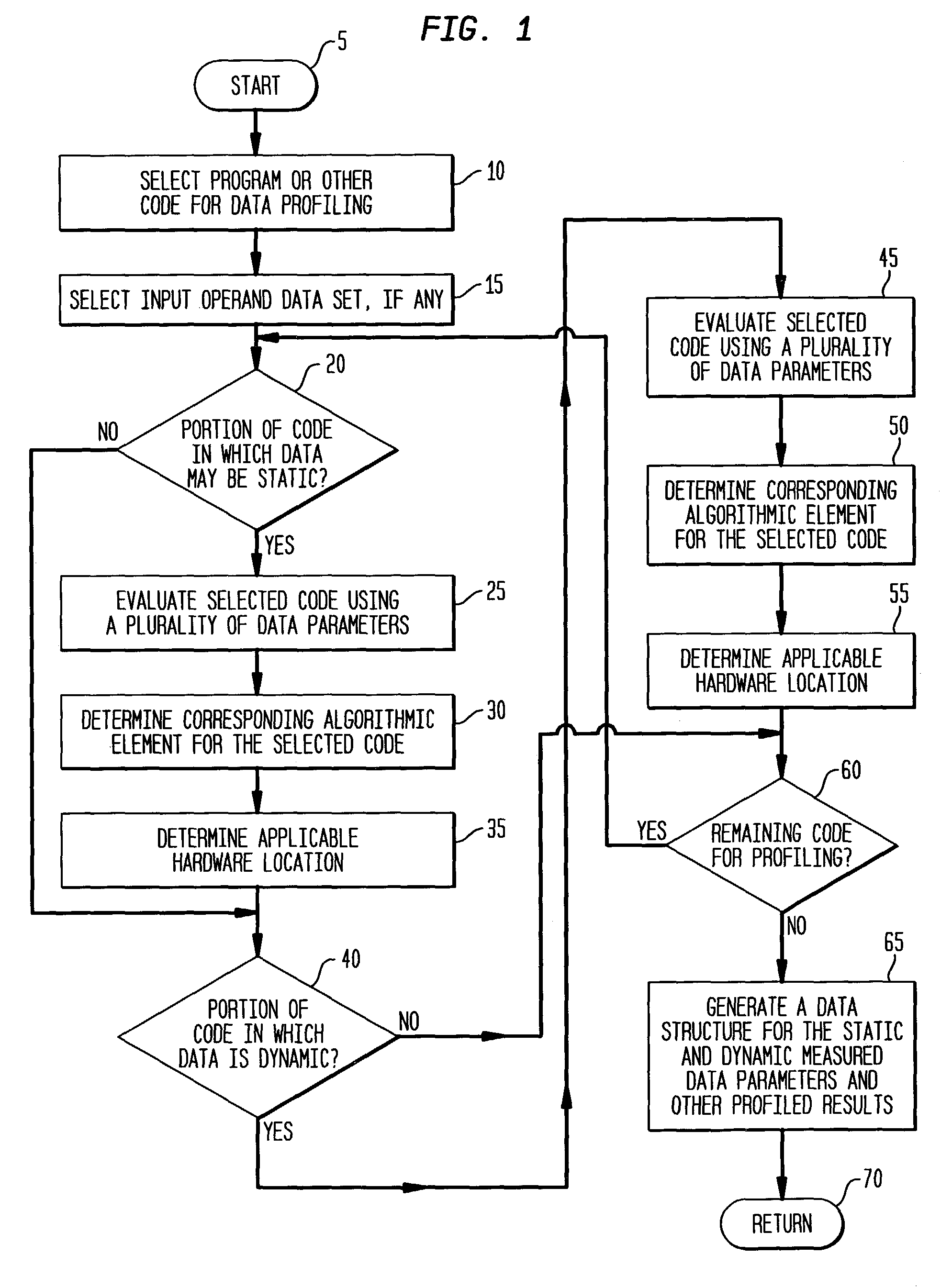

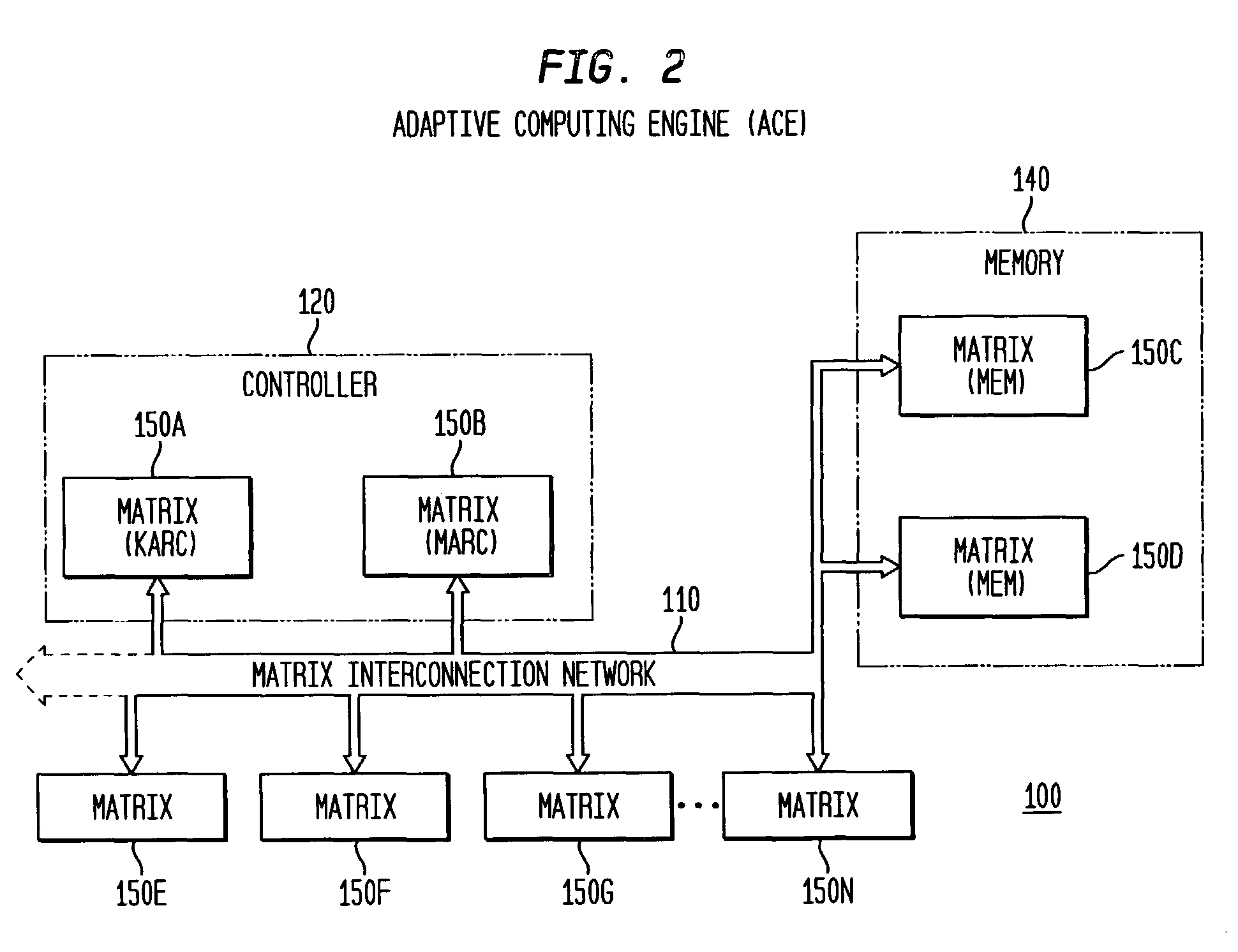

Profiling of software and circuit designs utilizing data operation analyses

The present invention is a method, system, software and data structure for profiling programs, other code, and adaptive computing integrated circuit architectures, using a plurality of data parameters such as data type, input and output data size, data source and destination locations, data pipeline length, locality of reference, distance of data movement, speed of data movement, data access frequency, number of data loads/stores, memory usage, and data persistence. The profiler of the invention accepts a data set as input, and profiles a plurality of functions by measuring a plurality of data parameters for each function, during operation of the plurality of functions with the input data set, to form a plurality of measured data parameters. From the plurality of measured data parameters, the profiler generates a plurality of data parameter comparative results corresponding to the plurality of functions and the input data set. Based upon the measured data parameters, portions of the profiled code are selected for embodiment as computational elements in an adaptive computing IC architecture.

Owner:QST HLDG L L C

Increasing Parallel Program Performance for Irregular Memory Access Problems with Virtual Data Partitioning and Hierarchical Collectives

Inactive | US20120124585A1 | Improve business performance; Multiprogramming arrangements; Memory systems; Locality of reference; Distributed memory

A method for increasing performance of an operation on a distributed memory machine is provided. Asynchronous parallel steps in the operation are transformed into synchronous parallel steps. The synchronous parallel steps of the operation are rearranged to generate an altered operation that schedules memory accesses for increasing locality of reference. The altered operation that schedules memory accesses for increasing locality of reference is mapped onto the distributed memory machine. Then, the altered operation is executed on the distributed memory machine to simulate local memory accesses with virtual threads to check cache performance within each node of the distributed memory machine.

Owner:IBM CORP

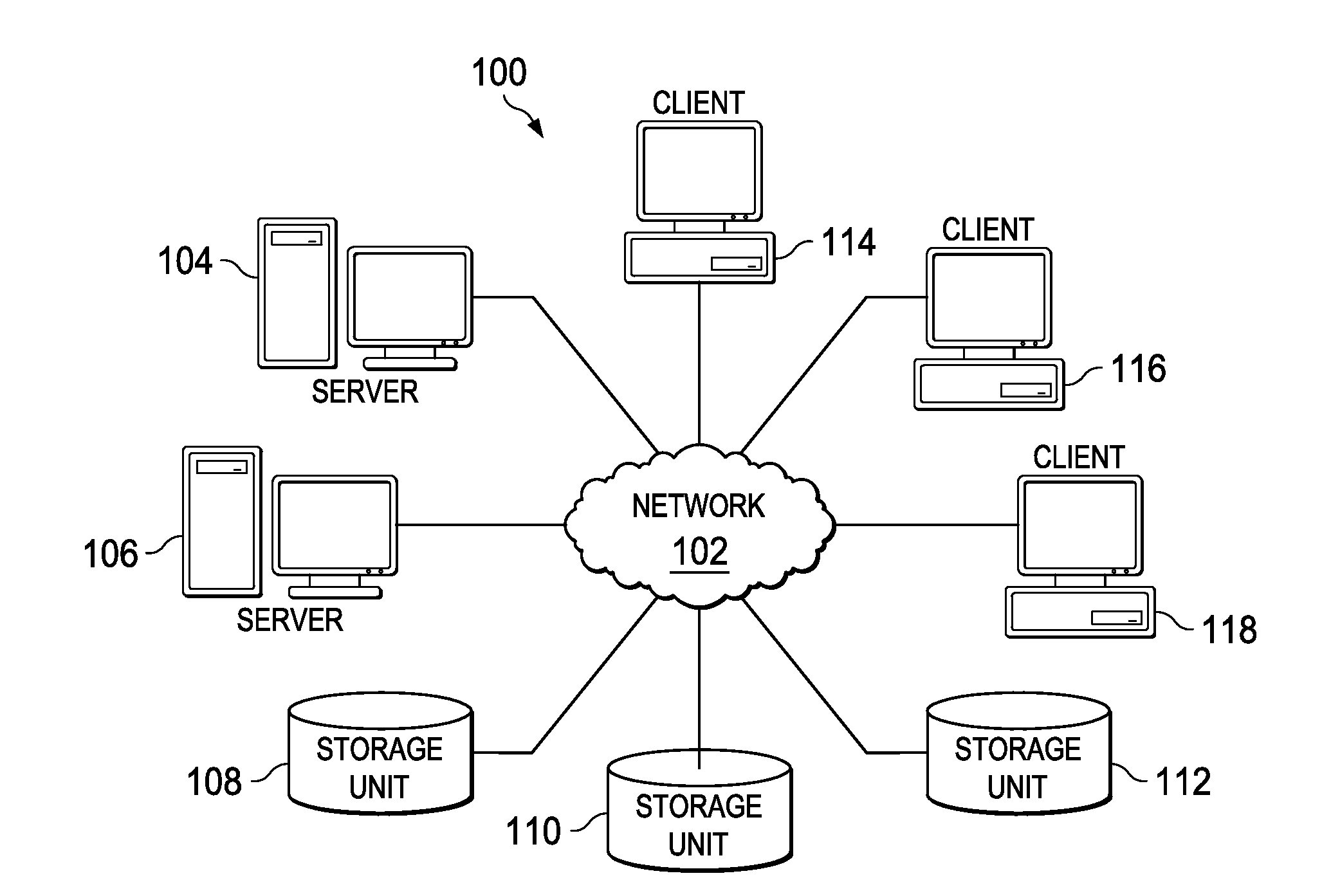

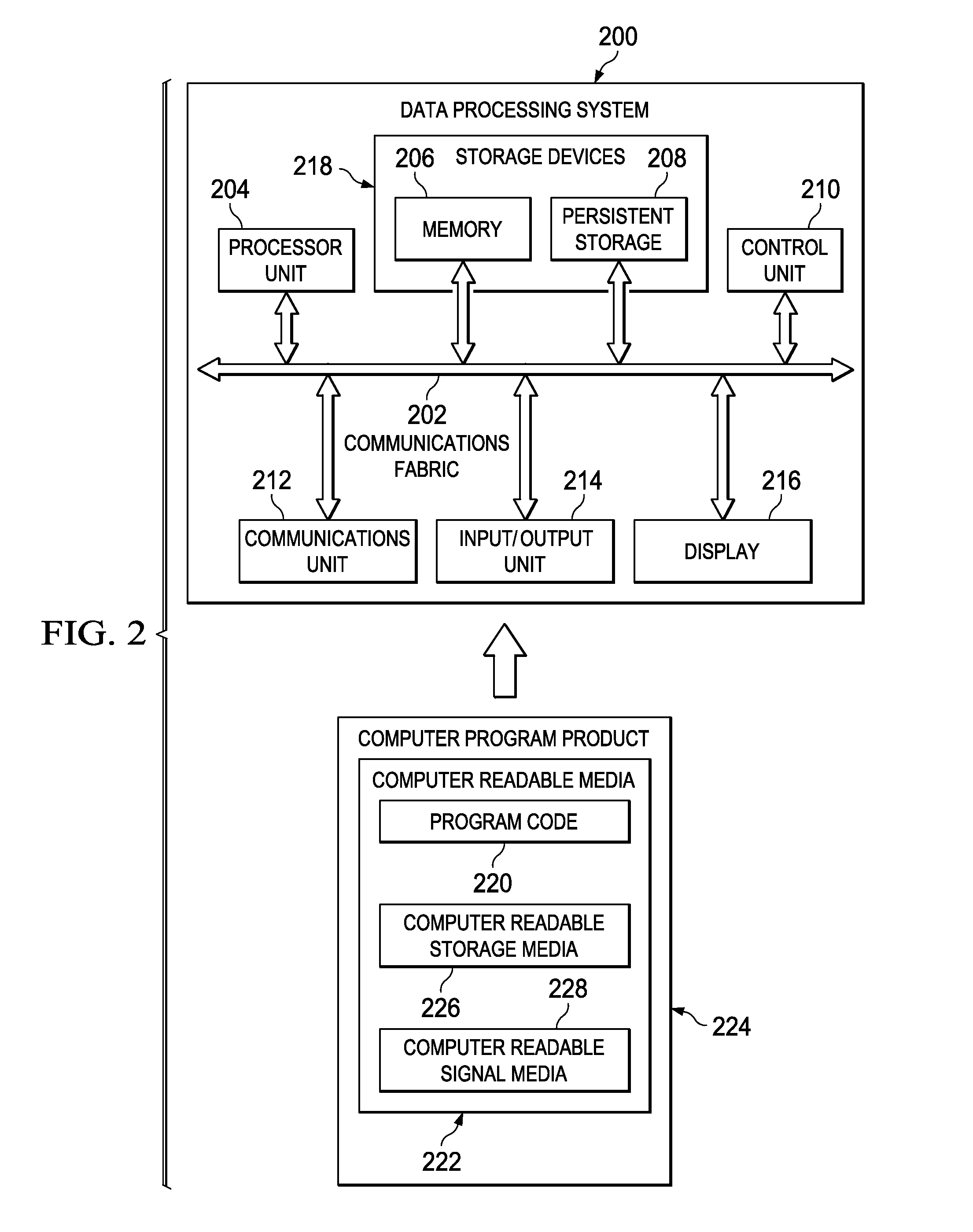

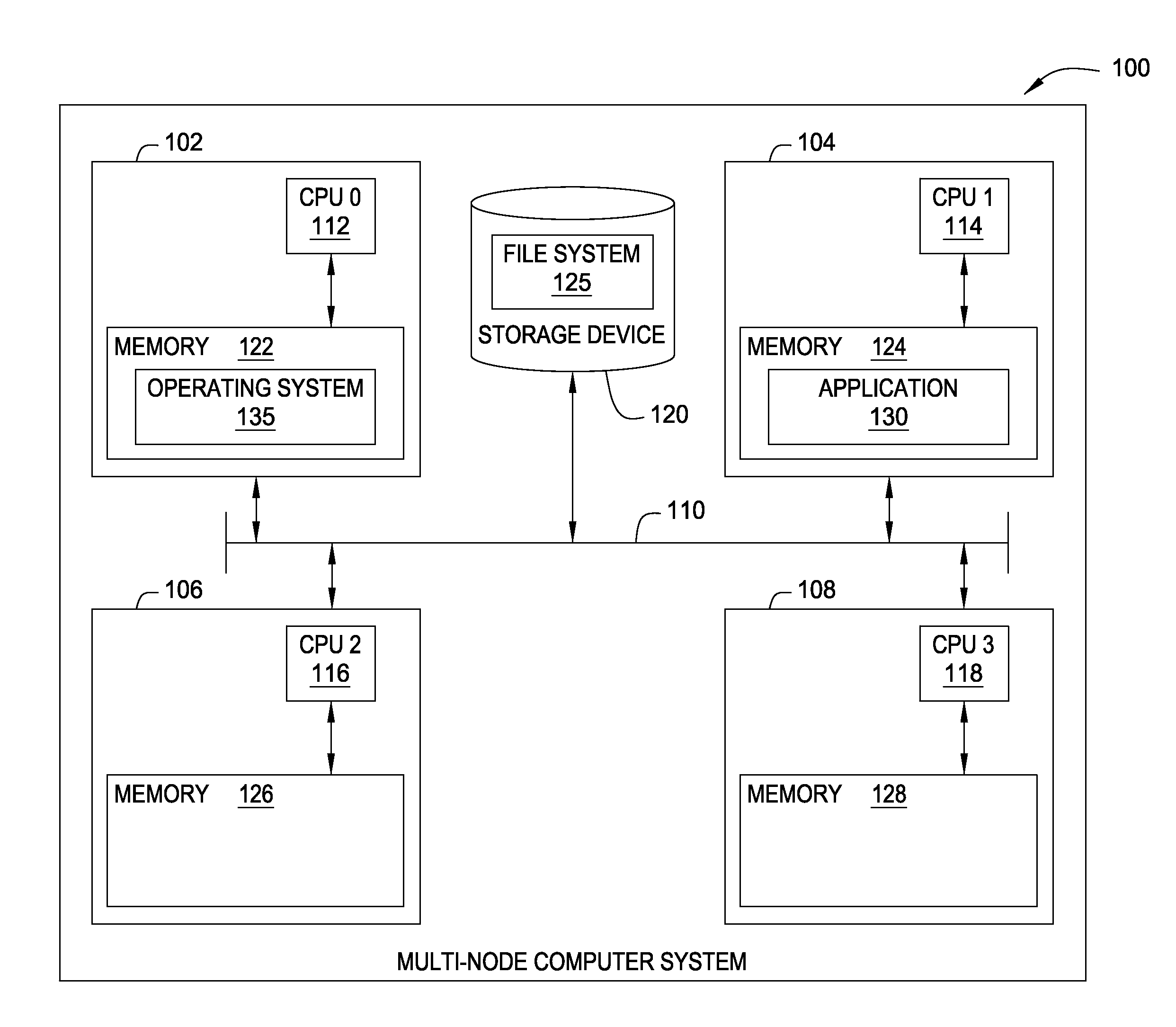

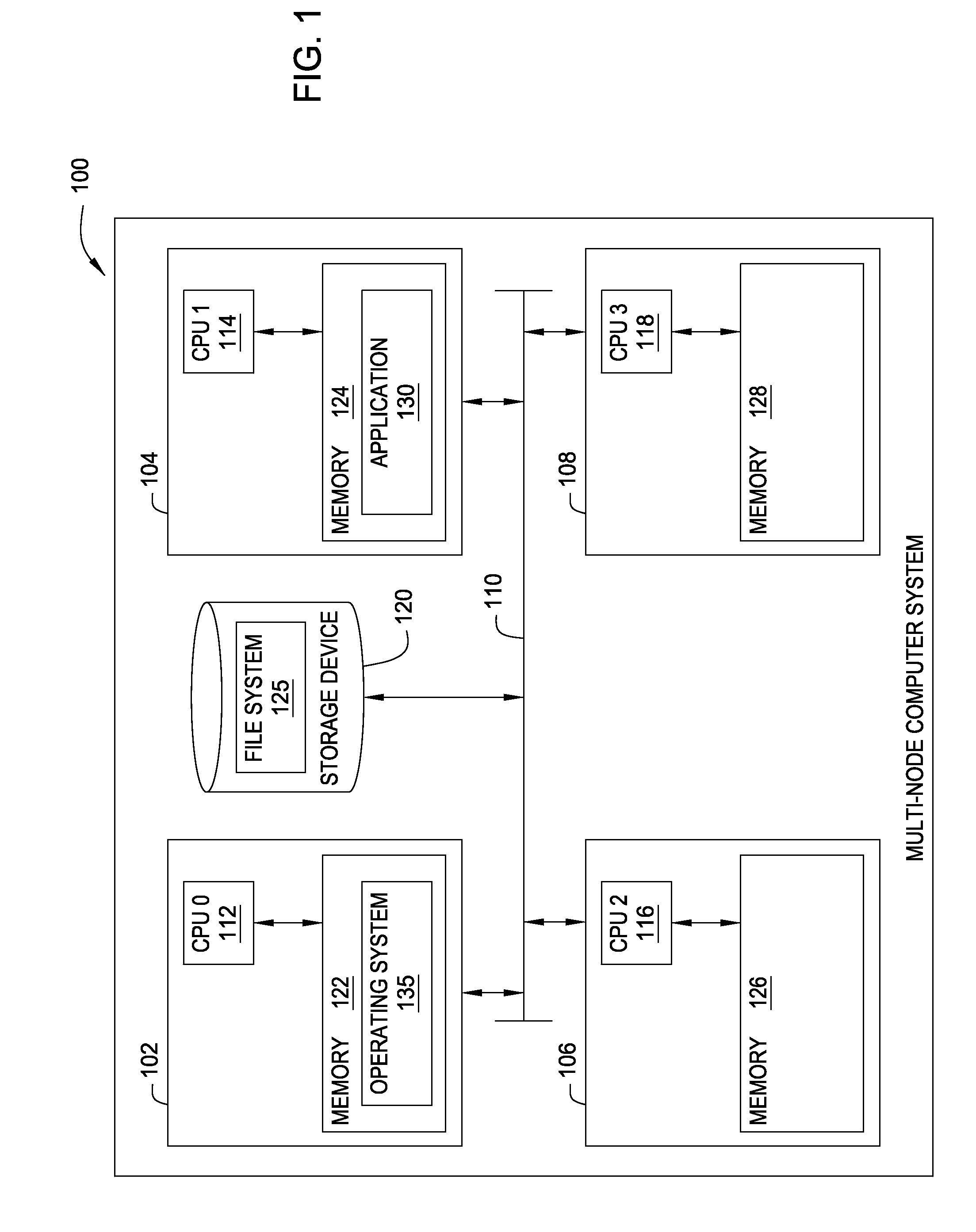

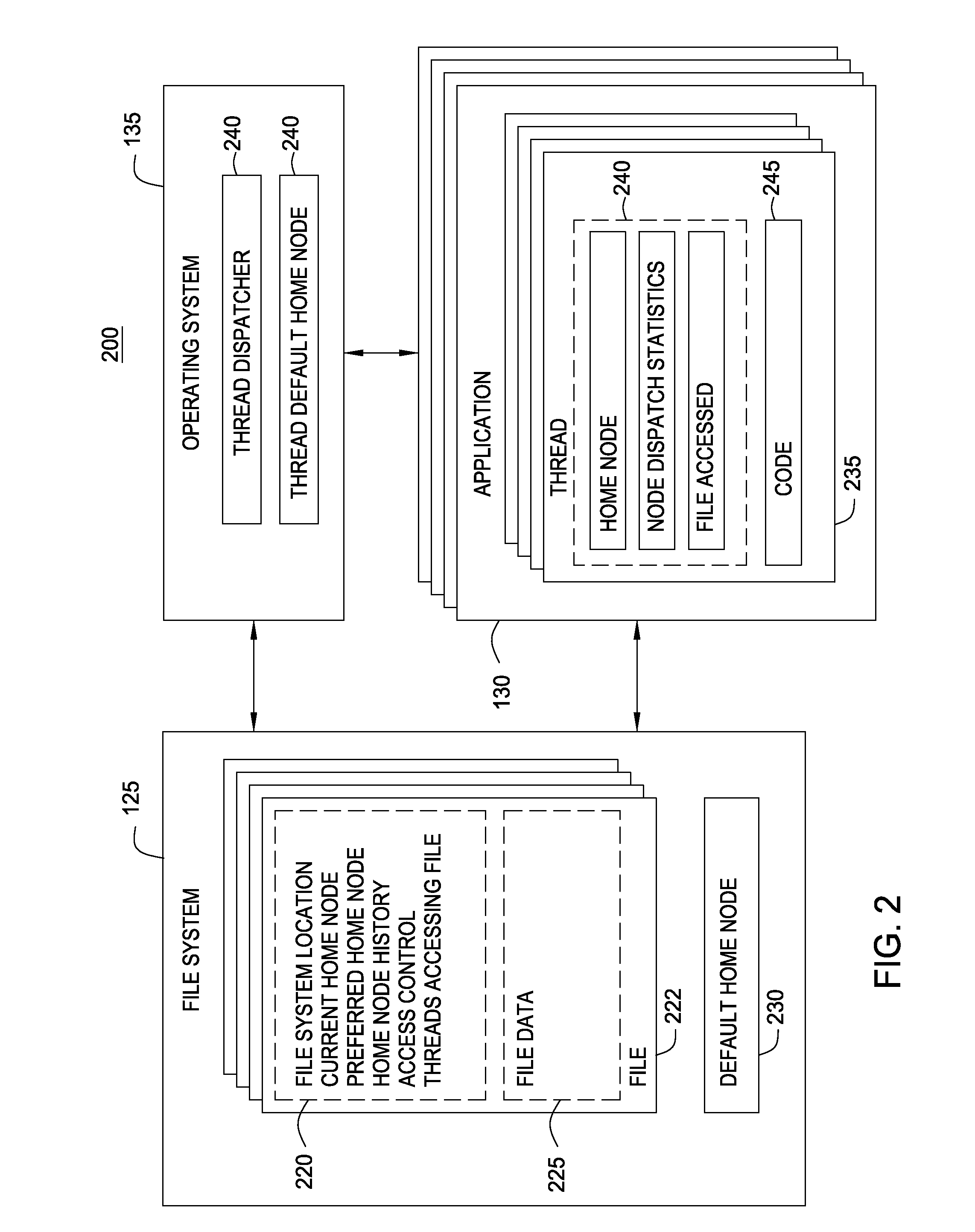

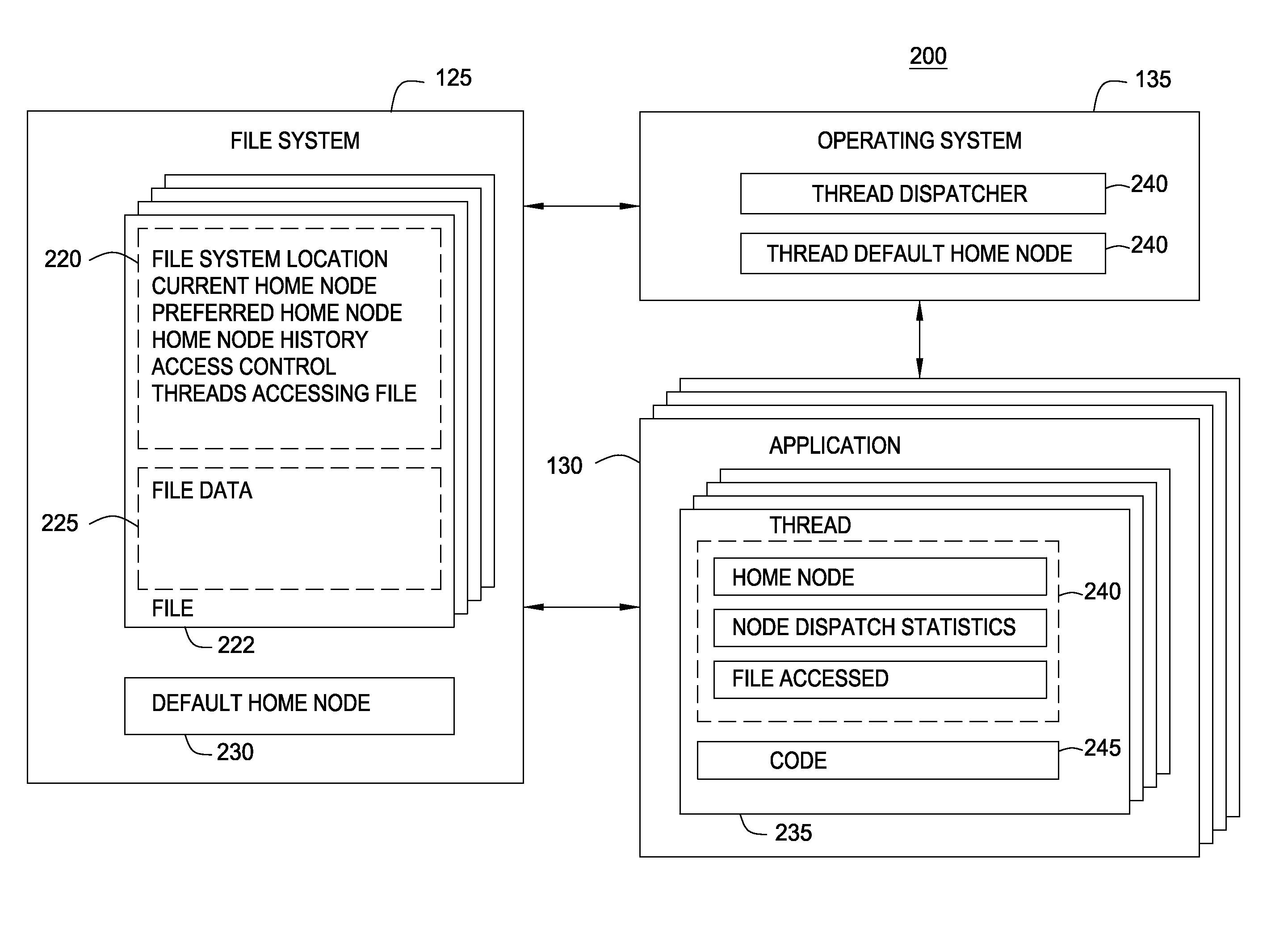

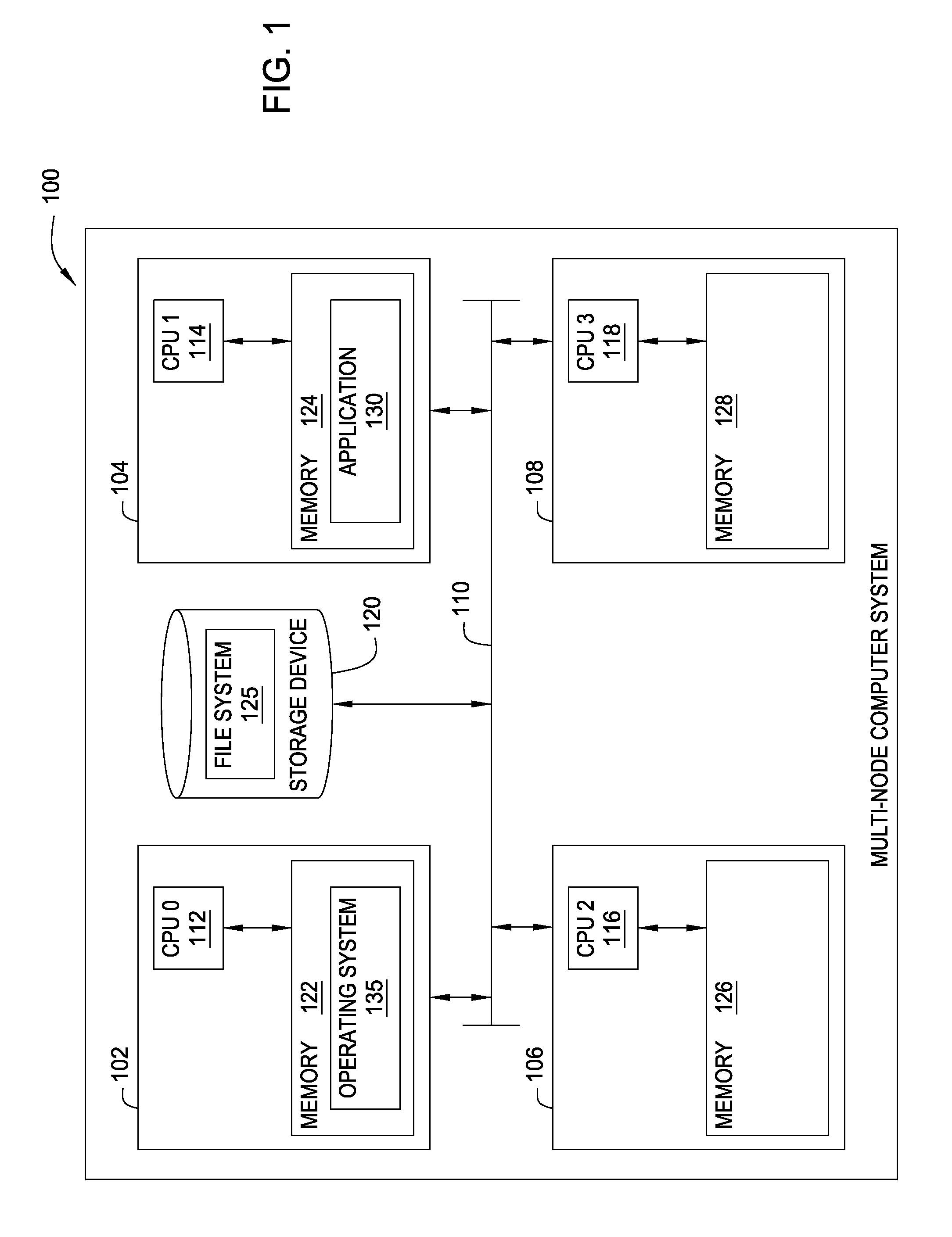

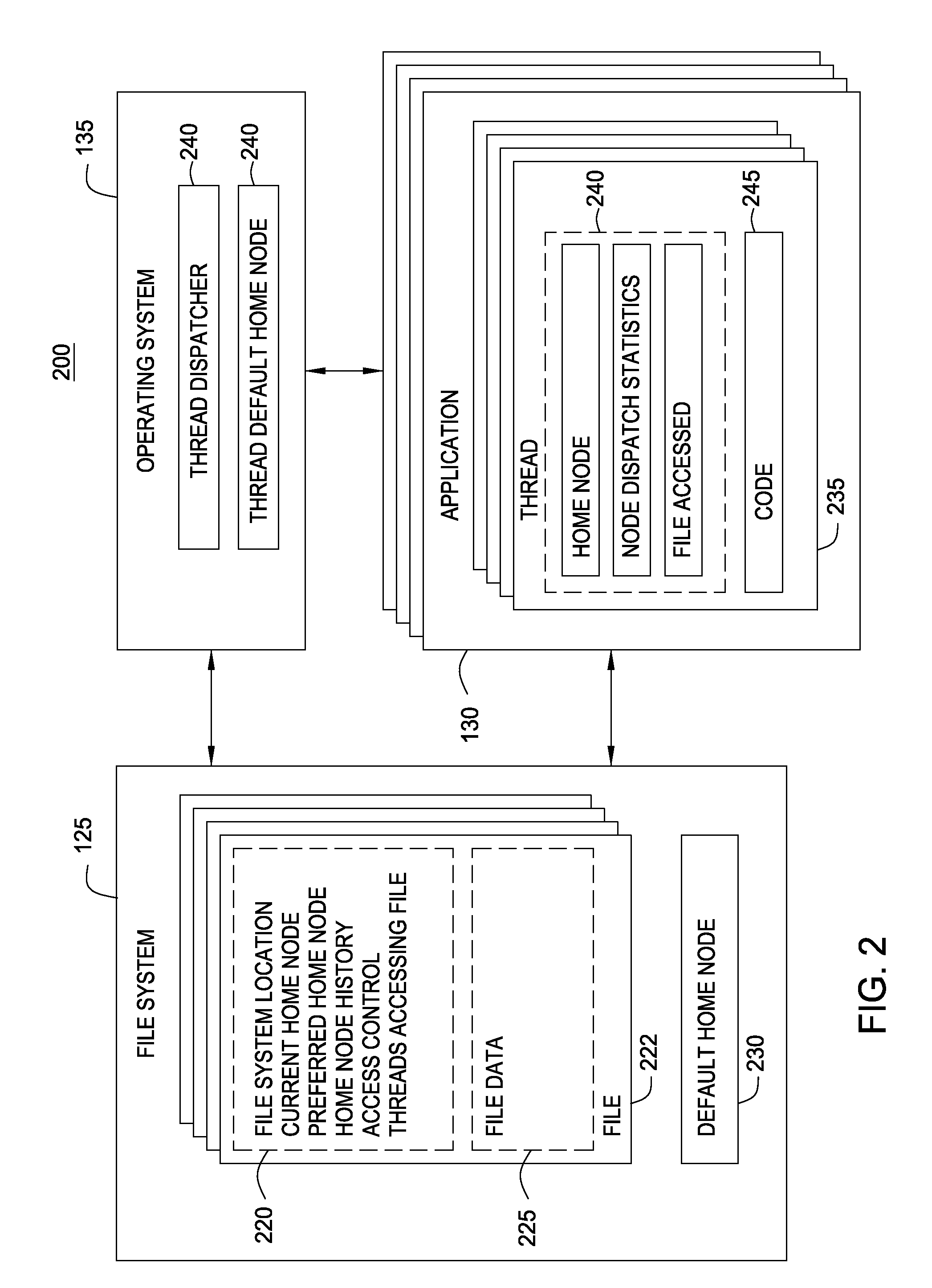

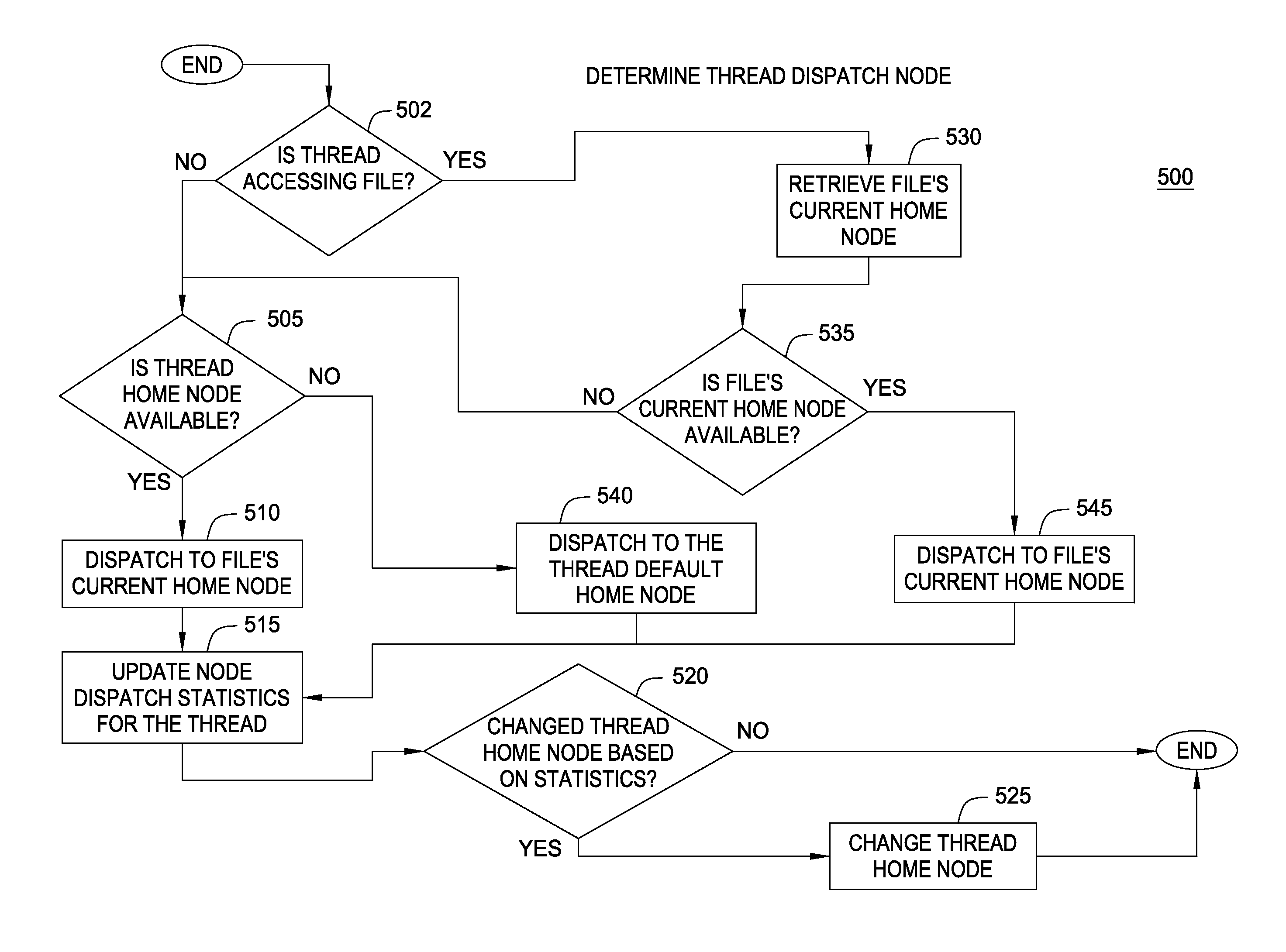

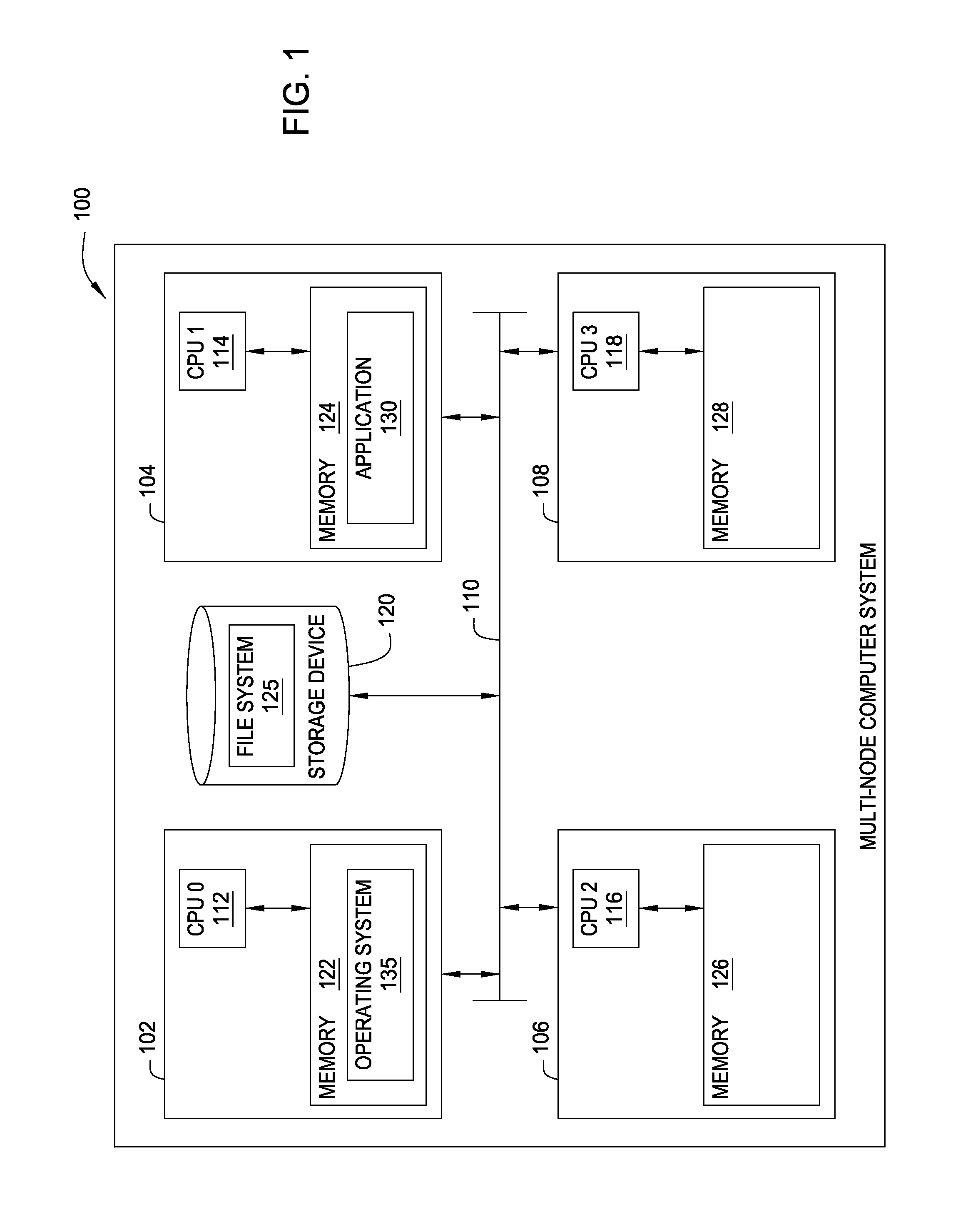

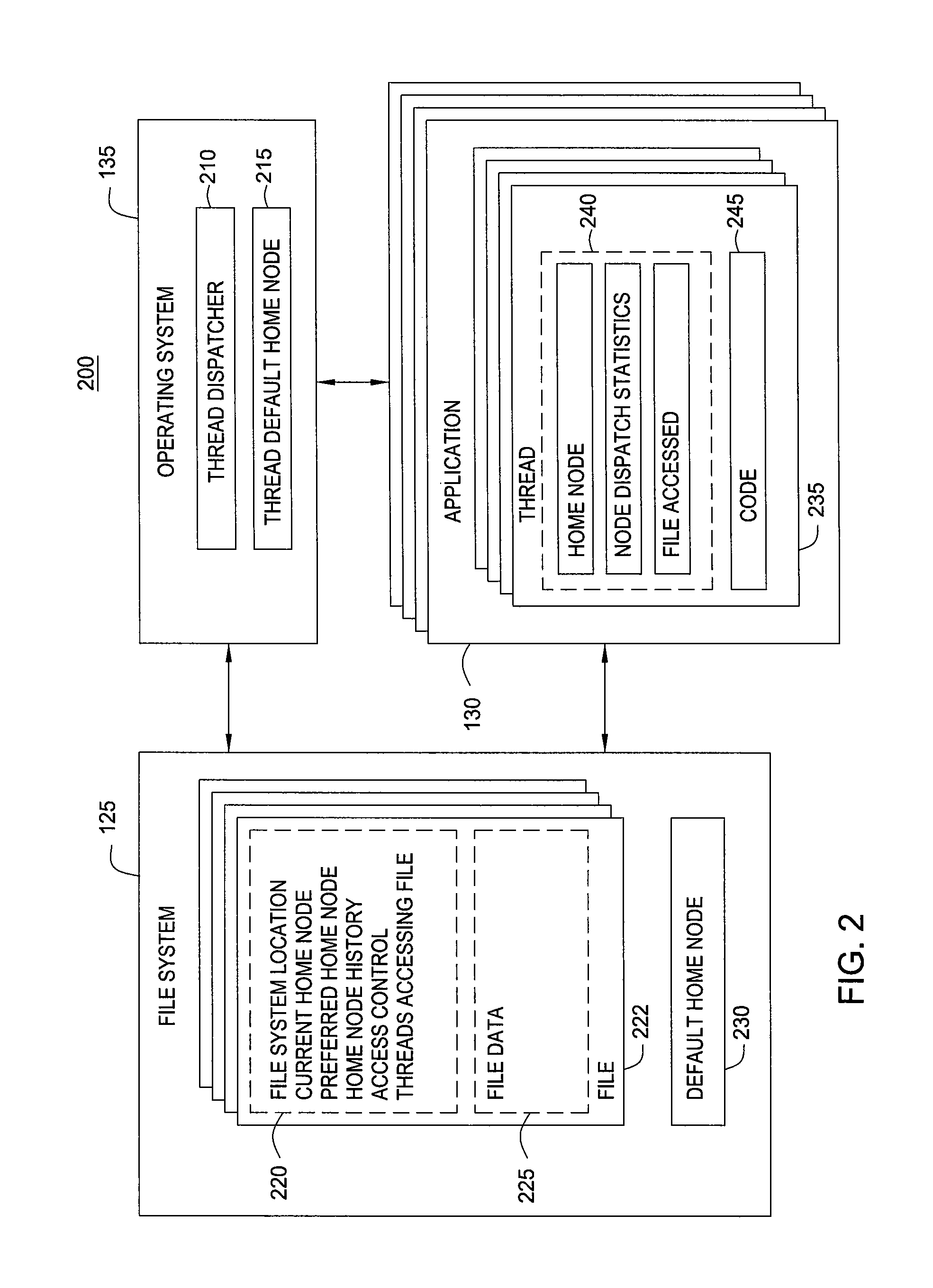

File System Object Node Management

Inactive | US20090320036A1 | Improving locality of reference; Multiprogramming arrangements; Memory systems; Locality of reference; File system

Embodiments of the invention provide a method for assigning a home node to a file system object and using information associated with file system objects to improve locality of reference during thread execution. Doing so may improve application performance on a computer system configured using a non-uniform memory access (NUMA) architecture. Thus, embodiments of the invention allow a computer system to create a nodal affinity between a given file system object and a given processing node.
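
A minimal sketch of home-node assignment, assuming a first-touch policy that places each file-system object on the currently least-loaded NUMA node. `HomeNodeAssigner` is a hypothetical name and the placement policy is an assumption, since the abstract does not say how the home node is chosen.

```python
from typing import Dict, List


class HomeNodeAssigner:
    """Assign each file-system object a home NUMA node on first access."""

    def __init__(self, num_nodes: int) -> None:
        self.objects_on: List[int] = [0] * num_nodes  # objects homed on each node
        self.home: Dict[str, int] = {}

    def home_node(self, path: str) -> int:
        if path not in self.home:
            # Assumed policy: least-loaded node, lowest id on ties.
            node = min(range(len(self.objects_on)), key=self.objects_on.__getitem__)
            self.home[path] = node
            self.objects_on[node] += 1
        return self.home[path]

    def node_for_thread(self, path: str) -> int:
        """Node a thread working on `path` should be dispatched to (nodal affinity)."""
        return self.home_node(path)


assigner = HomeNodeAssigner(num_nodes=2)
print(assigner.home_node("/data/a.db"))        # 0
print(assigner.home_node("/data/b.db"))        # 1
print(assigner.node_for_thread("/data/a.db"))  # 0: same node every time
```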

Owner:IBM CORP

File System Object Node Management

Embodiments of the invention provide a method for assigning a home node to a file system object and using information associated with file system objects to improve locality of reference during thread execution. Doing so may improve application performance on a computer system configured using a non-uniform memory access (NUMA) architecture. Thus, embodiments of the invention allow a computer system to create a nodal affinity between a given file system object and a given processing node.

Owner:IBM CORP

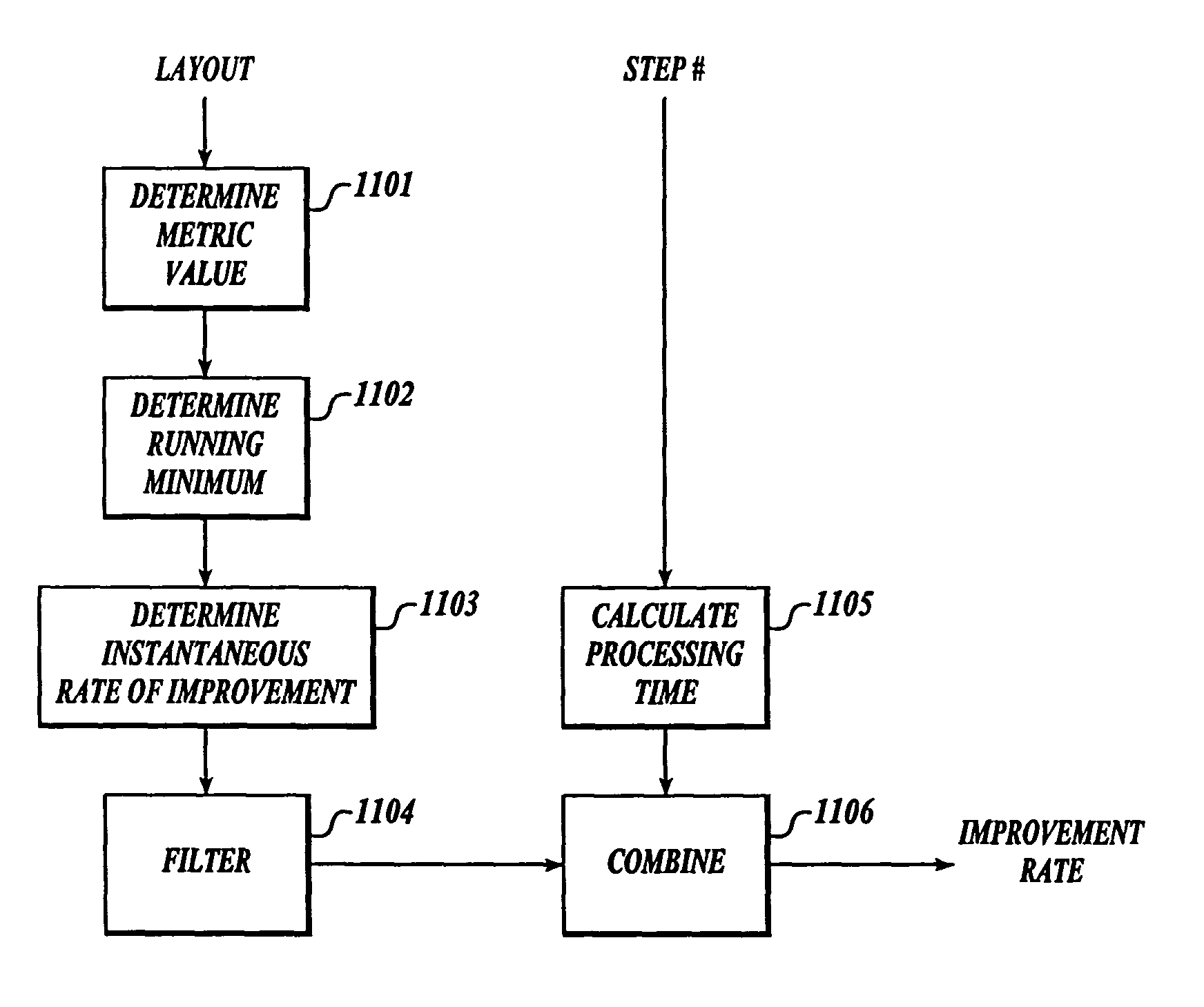

Method and system for controlling the improving of a program layout

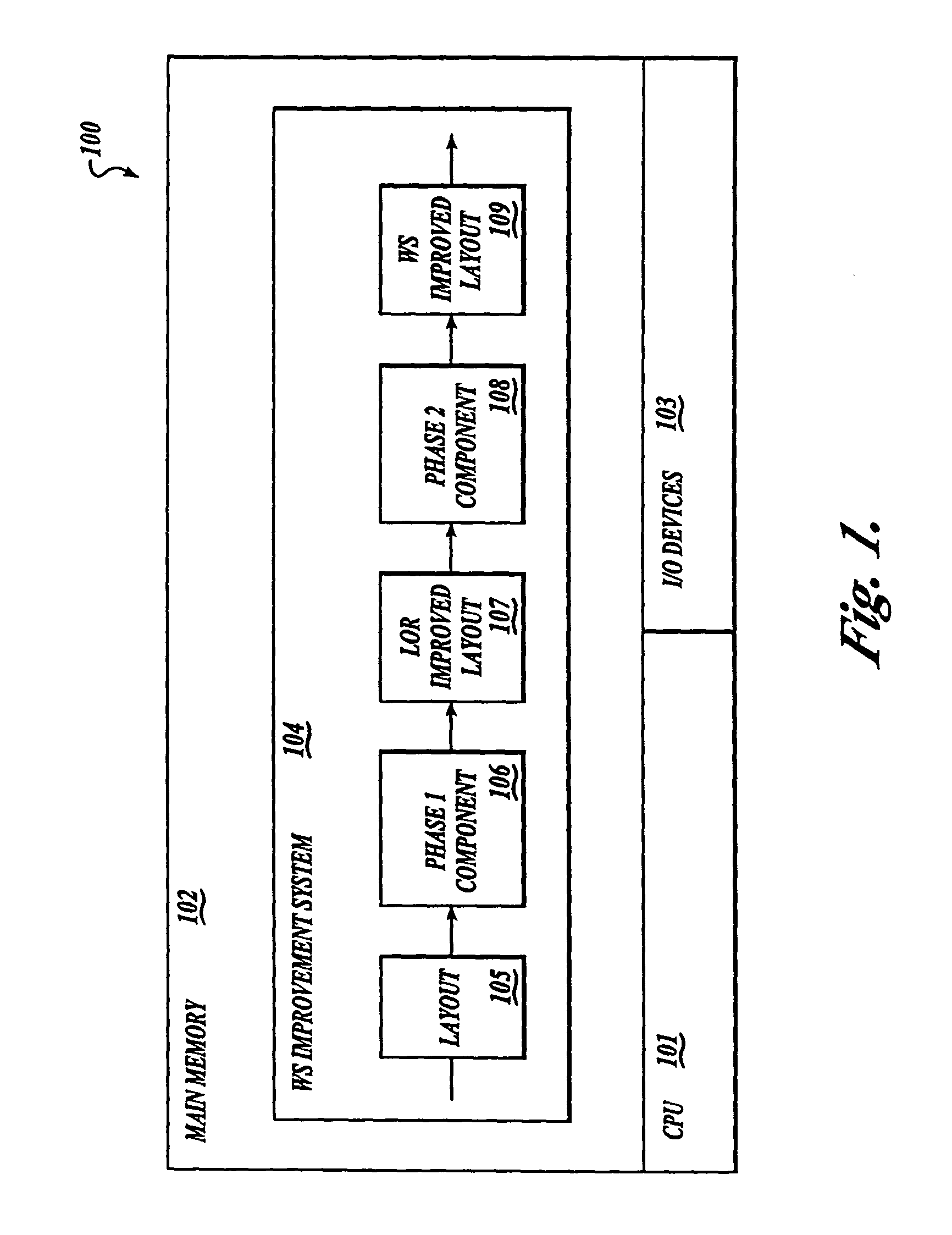

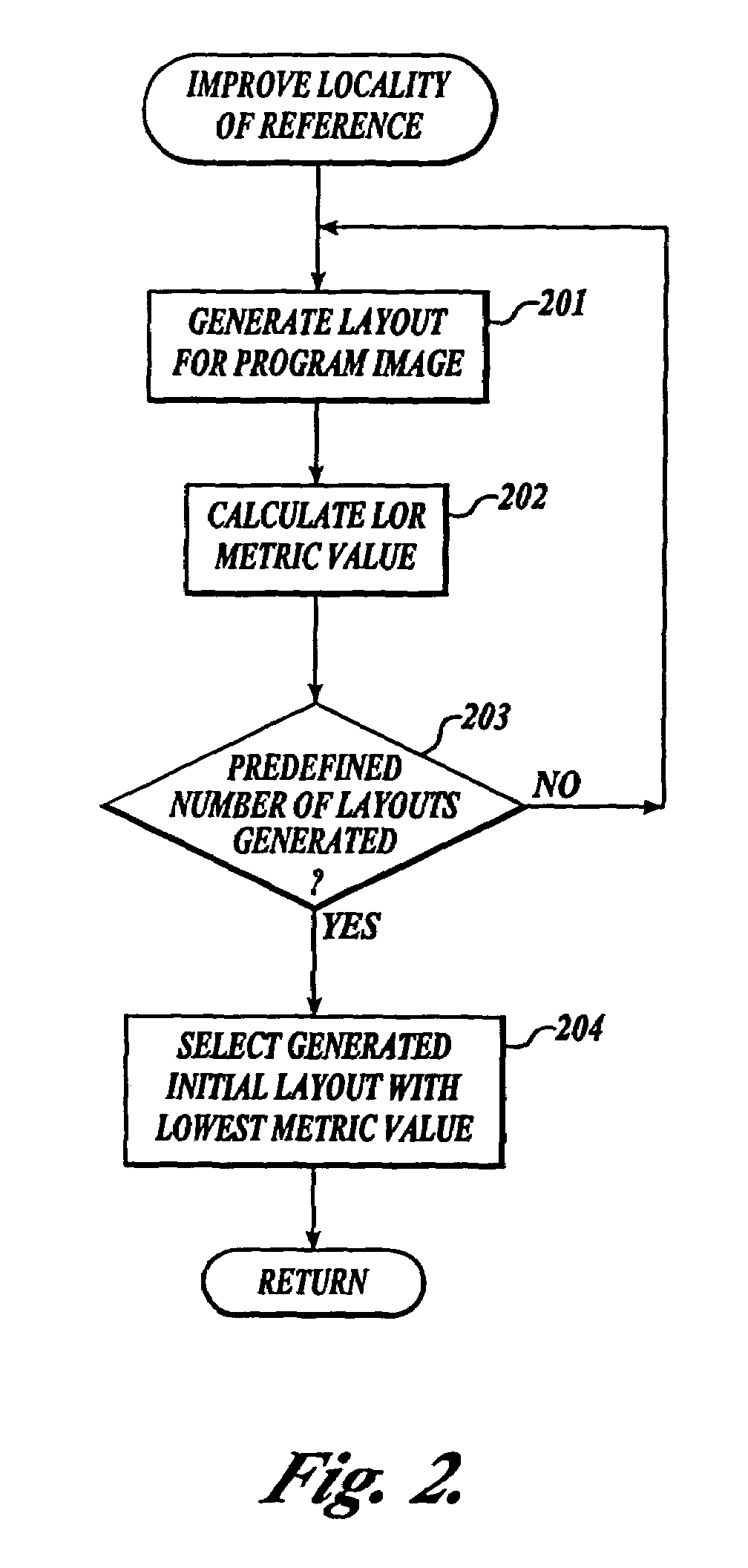

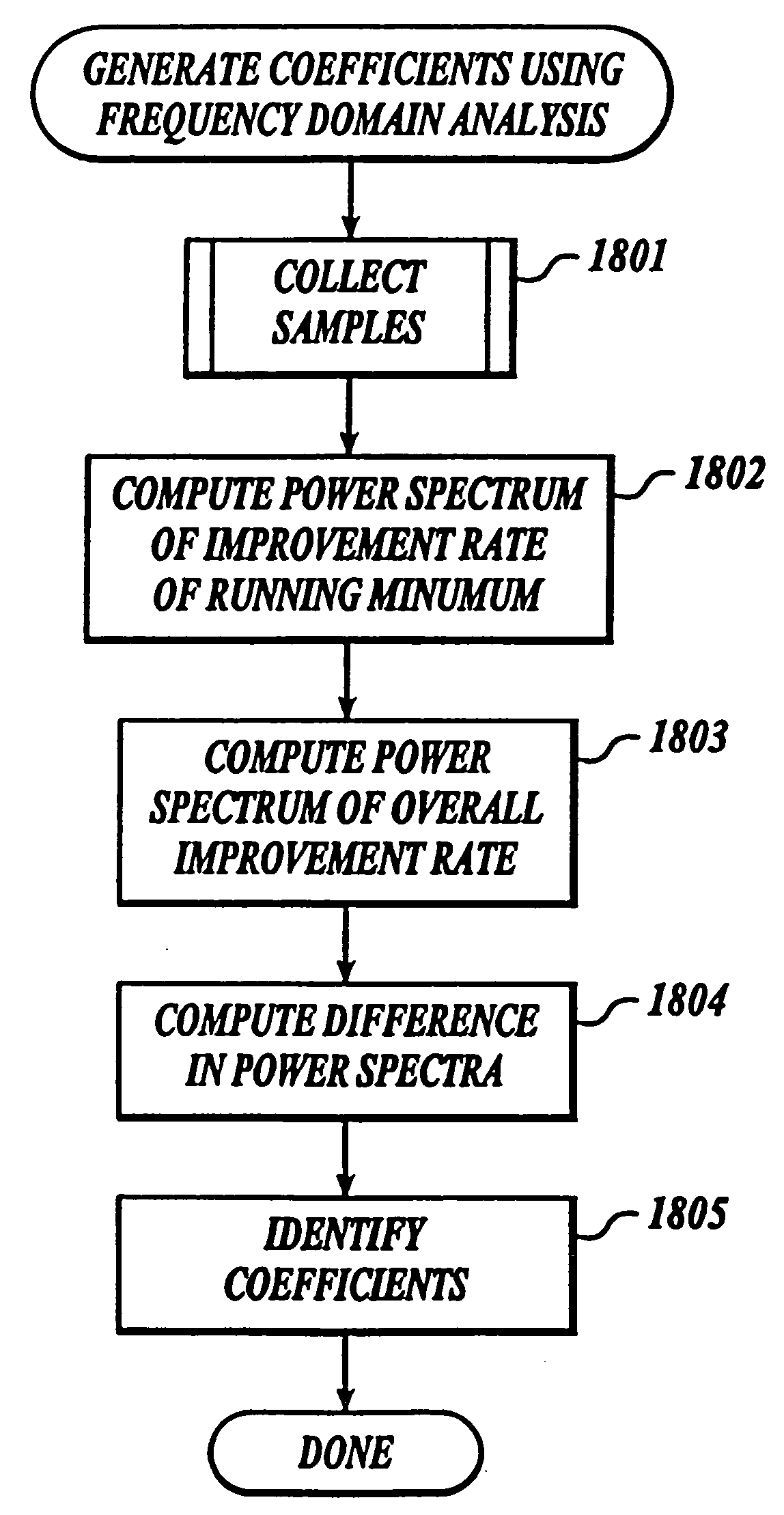

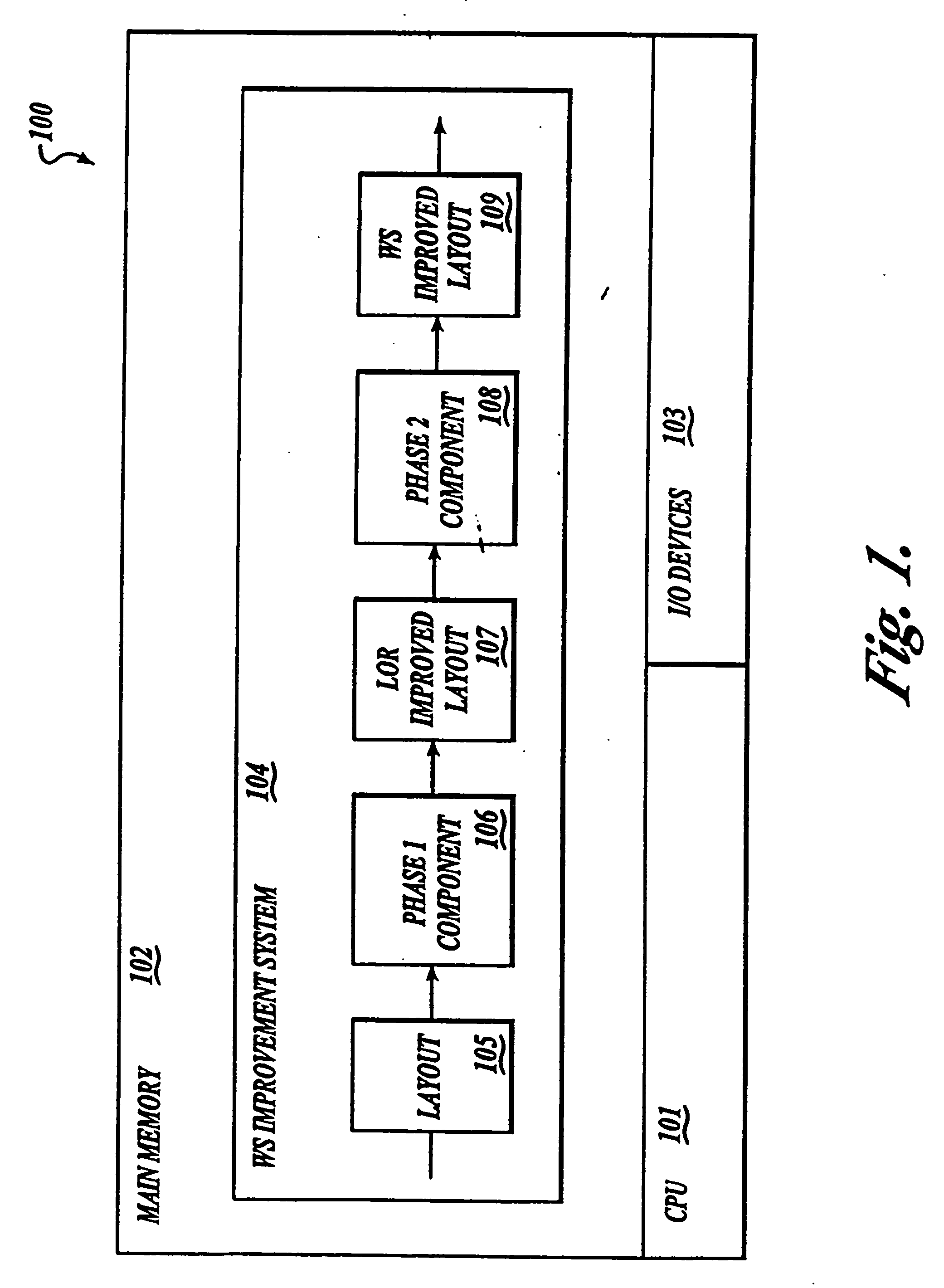

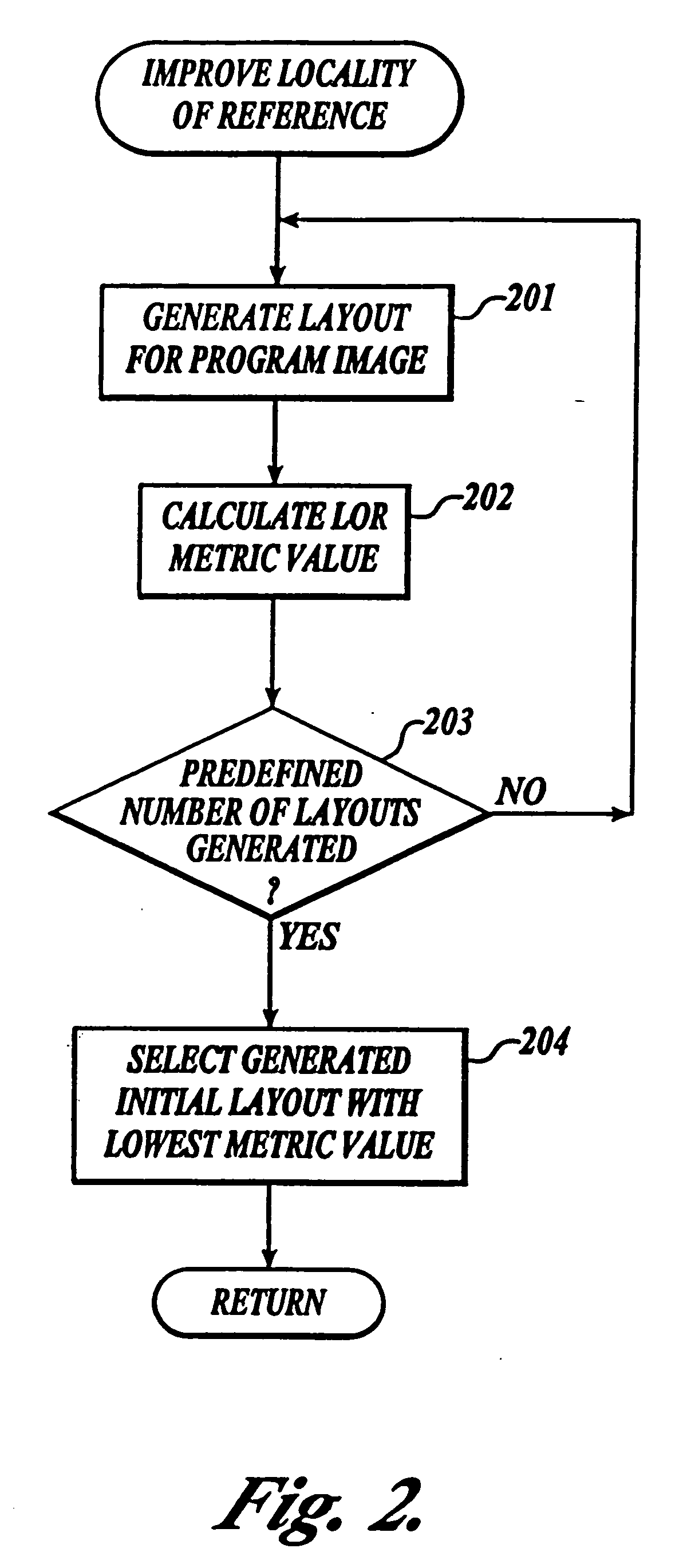

Inactive | US7181736B2 | Add settings; Reduce settings; Nuclear monitoring; Digital computer details; Locality of reference; Working set

Owner:MICROSOFT TECH LICENSING LLC

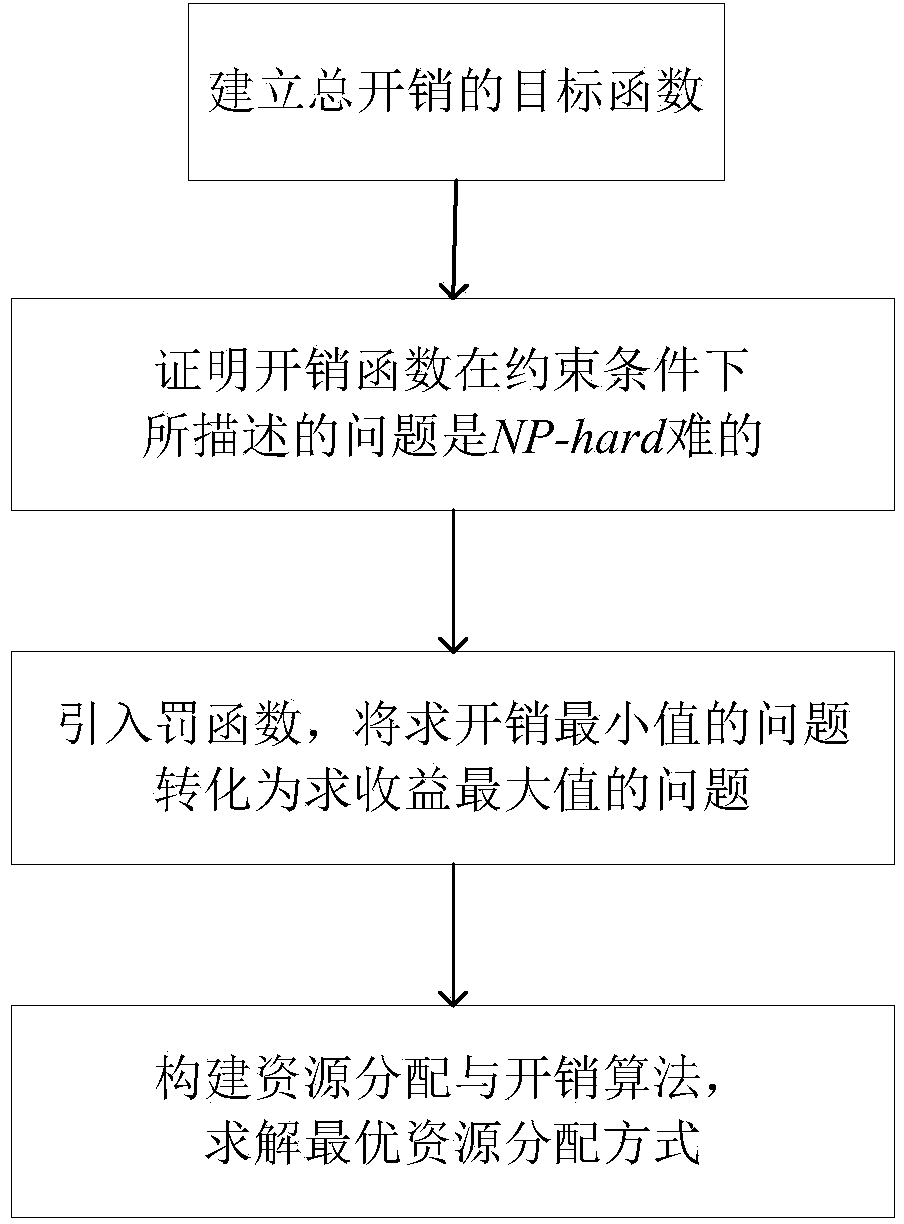

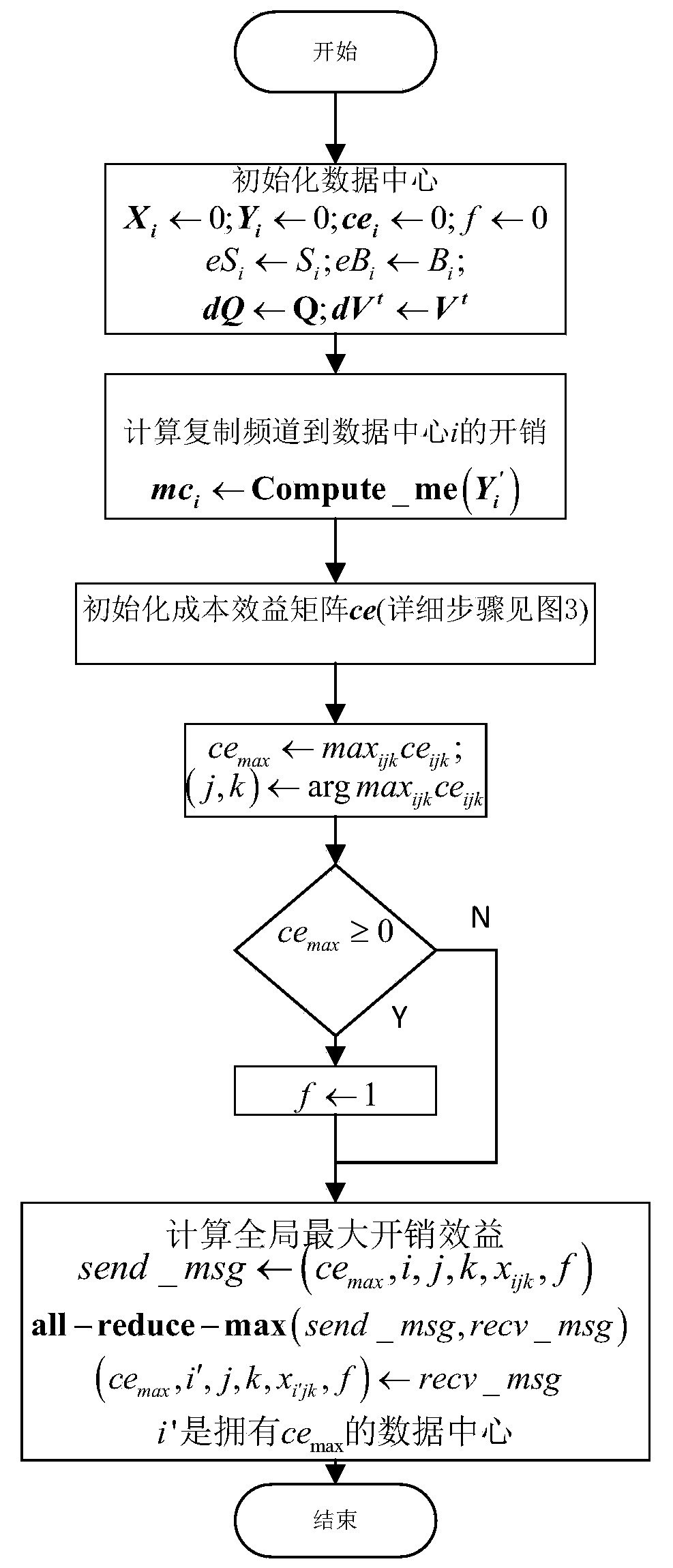

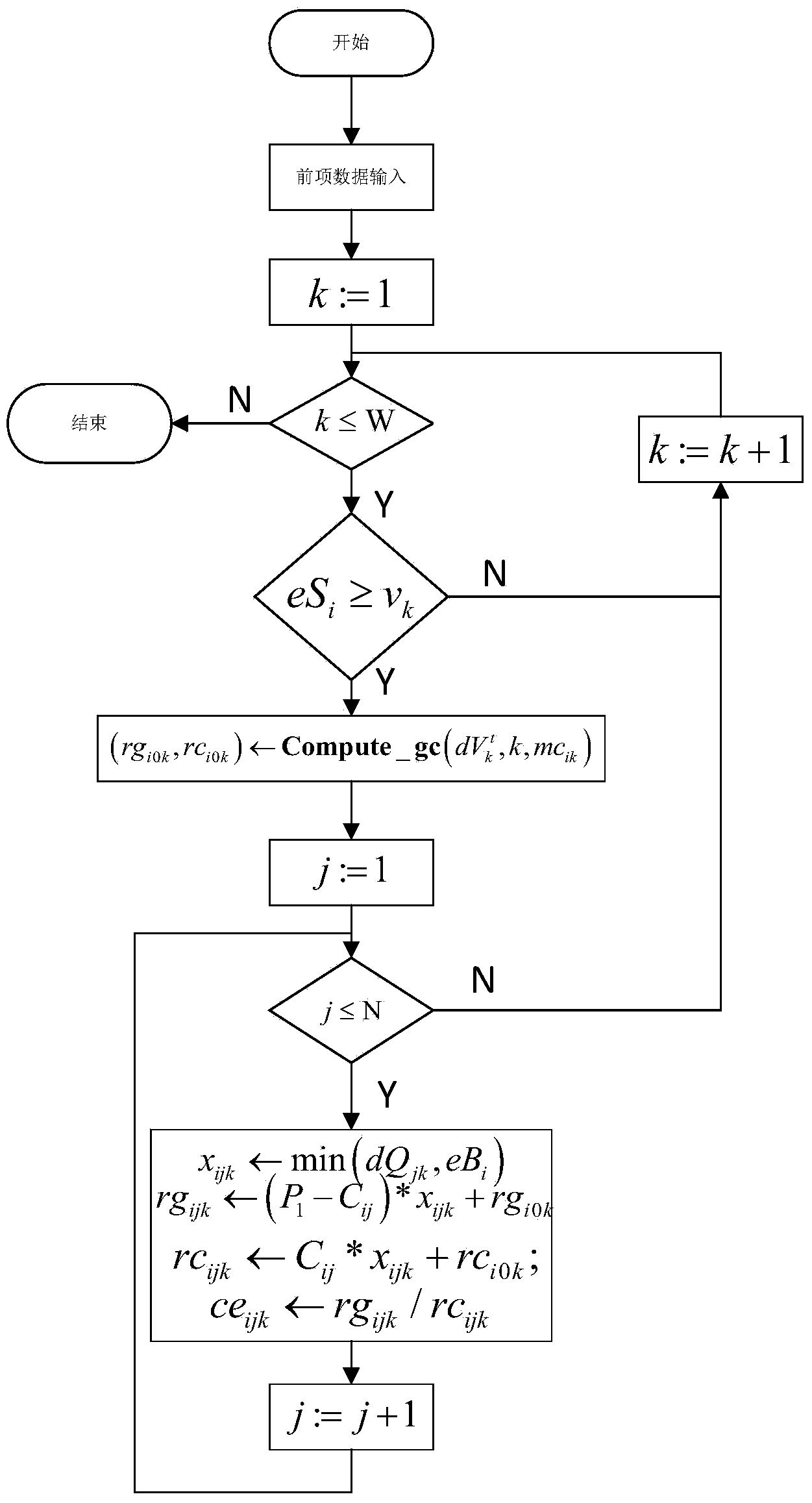

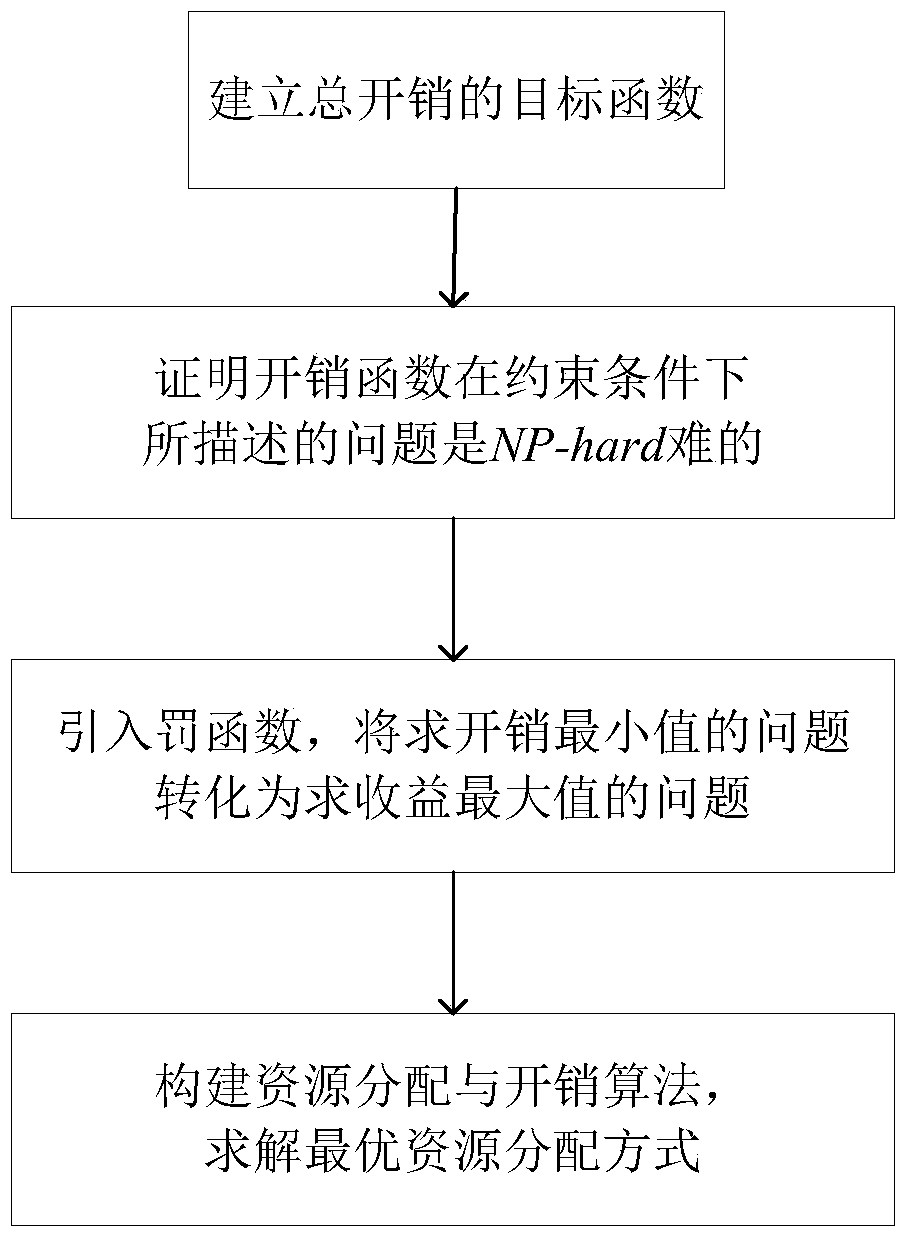

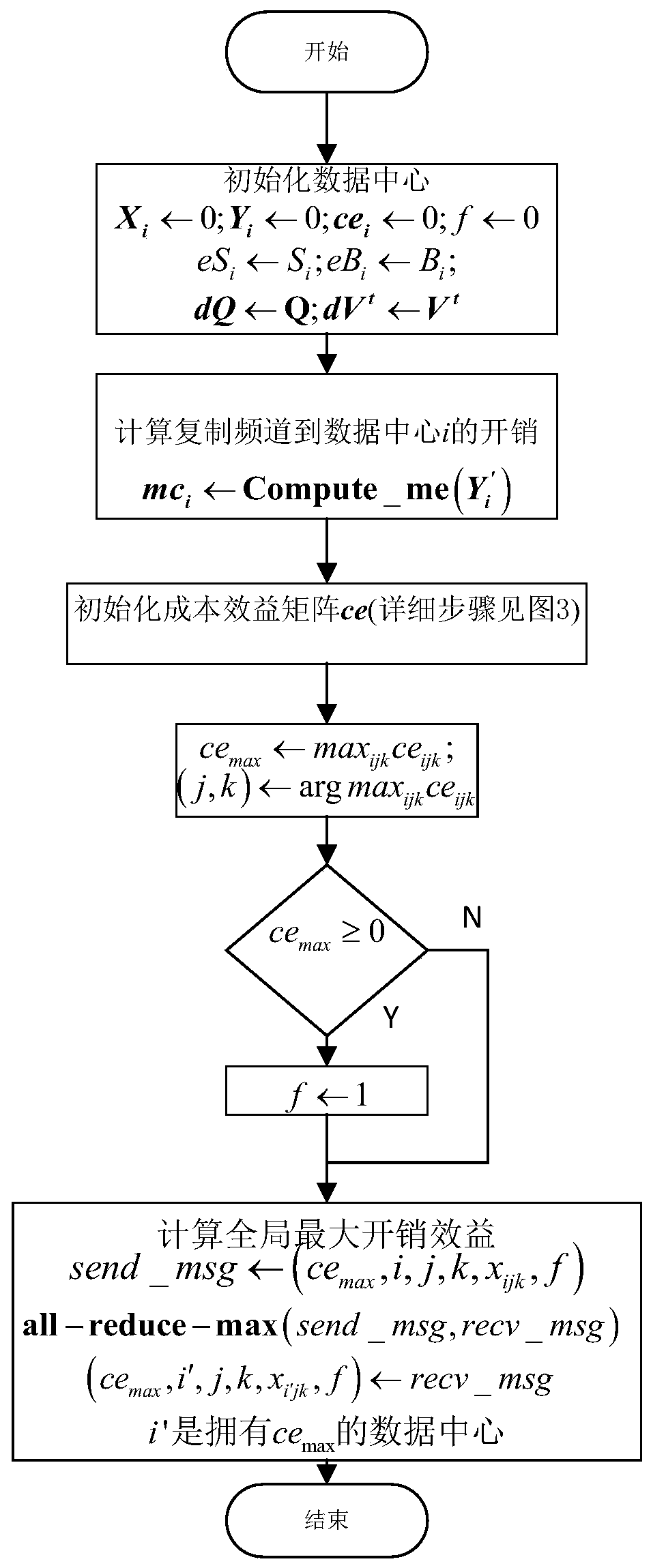

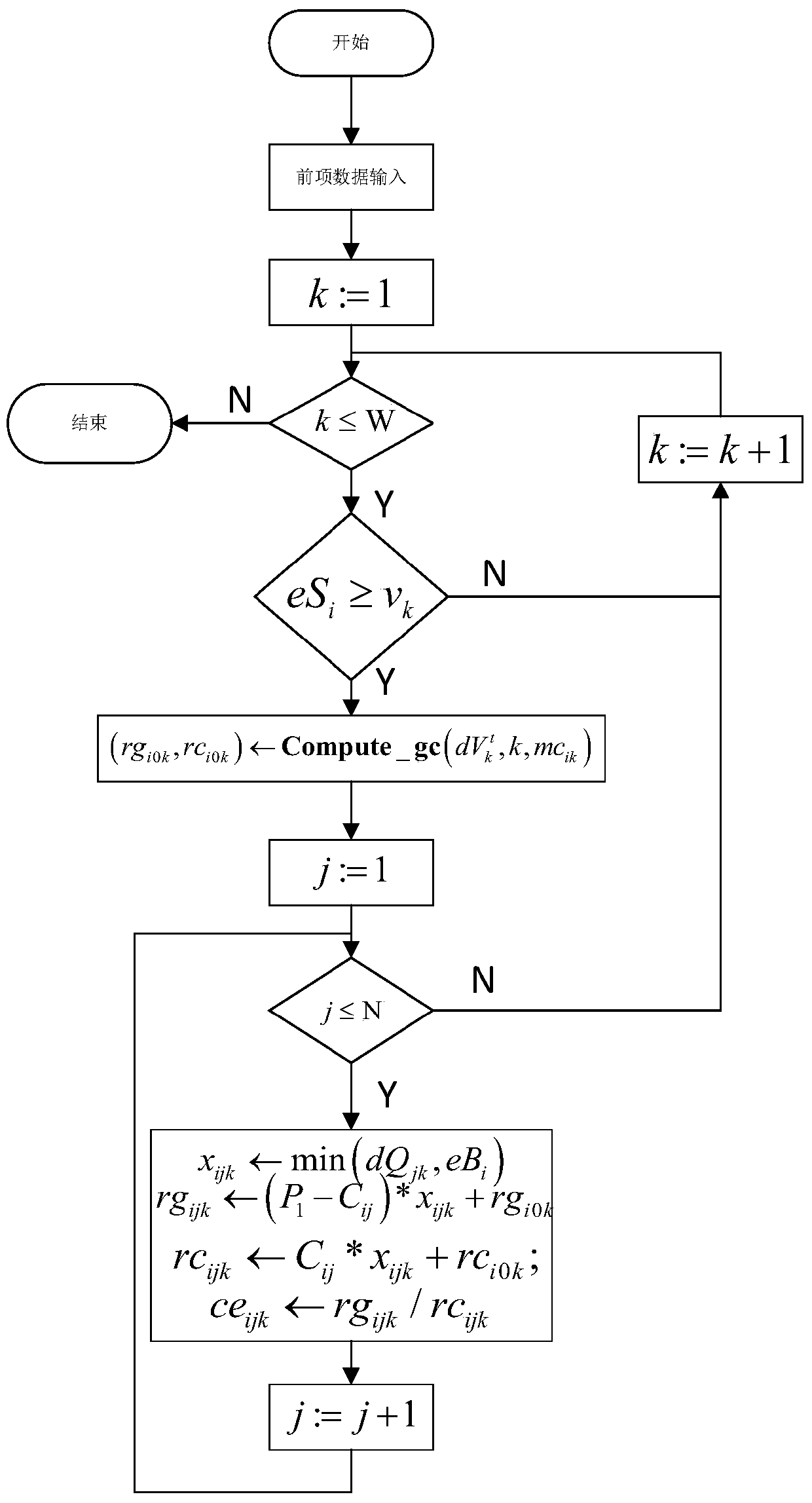

Resource allocation and overhead optimization method for cloud video

Active | CN103973780A | Good data availability; Improve utilization efficiency; Resource allocation; Transmission; Locality of reference; Operational costs

The invention discloses a resource allocation and overhead optimization method for cloud video. The method comprises the following steps: a mathematical model is established to describe the relationships among channel distribution, user bandwidth distribution, total operating cost, and quality of service (QoS); the model is proved to be NP-hard; by introducing a penalty function, the problem of minimizing overhead through channel replication and bandwidth allocation is transformed into the equivalent problem of maximizing benefit through channel replication and bandwidth allocation; a resource allocation and overhead optimization method for cloud data centers, named DREAM, is proposed to solve the reservation and allocation of cloud platform bandwidth and to determine the placement of channel replicas in the cloud data center; and the principle of locality is integrated into the resource allocation and overhead optimization algorithm, yielding the DREAM-L algorithm. Compared with the prior art, the method allows a cloud system to provide satisfactory viewing quality, locality of reference, and data availability for video-on-demand services at low cost.

Owner:HUAZHONG UNIV OF SCI & TECH

Profiling of software and circuit designs utilizing data operation analyses

Inactive | US8276135B2 | CAD circuit design; Specific program execution arrangements; Locality of reference; Data set

The present invention is a method, system, software and data structure for profiling programs, other code, and adaptive computing integrated circuit architectures, using a plurality of data parameters such as data type, input and output data size, data source and destination locations, data pipeline length, locality of reference, distance of data movement, speed of data movement, data access frequency, number of data loads/stores, memory usage, and data persistence. The profiler of the invention accepts a data set as input, and profiles a plurality of functions by measuring a plurality of data parameters for each function, during operation of the plurality of functions with the input data set, to form a plurality of measured data parameters. From the plurality of measured data parameters, the profiler generates a plurality of data parameter comparative results corresponding to the plurality of functions and the input data set. Based upon the measured data parameters, portions of the profiled code are selected for embodiment as computational elements in an adaptive computing IC architecture.

Owner:CORNAMI INC

Method and system for controlling the improving of a program layout

Inactive | US20060247908A1 | Improving working set; Working set is reduced; Program loading/initiating; Memory systems; Locality of reference; Working set

A method and system for improving the working set of a program image. The working set (WS) improvement system of the present invention employs a two-phase technique for improving the working set. In the first phase, the WS improvement system inputs the program image and outputs a program image with the locality of its references improved. In the second phase, the WS improvement system inputs the program image with its locality of references improved and outputs a program image with the placement of its basic blocks in relation to page boundaries improved so that the working set is reduced.
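
The second phase, placing basic blocks relative to page boundaries, can be approximated by packing frequently executed blocks together. This sketch uses a simple hot-first ordering by execution count, which is a swapped-in greedy heuristic rather than Microsoft's algorithm; the block names, sizes, the 4 KiB page size, and the trace are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence

PAGE_SIZE = 4096  # assumed page size in bytes


def pages_touched(layout: Sequence[str], sizes: Dict[str, int],
                  trace: Sequence[str], page_size: int = PAGE_SIZE) -> int:
    """Count distinct pages touched when `trace` runs against `layout`."""
    offset, pages_of = 0, {}
    for block in layout:
        first = offset // page_size
        last = (offset + sizes[block] - 1) // page_size
        pages_of[block] = set(range(first, last + 1))
        offset += sizes[block]
    touched = set()
    for block in trace:
        touched |= pages_of[block]
    return len(touched)


def hot_first(layout: Sequence[str], trace: Sequence[str]) -> List[str]:
    counts = Counter(trace)
    return sorted(layout, key=lambda b: -counts[b])  # stable: ties keep original order


blocks = [f"b{i}" for i in range(8)]
sizes = {b: 2048 for b in blocks}            # hypothetical: every block is half a page
trace = ["b0", "b3", "b5", "b7"] * 10        # hypothetical profile: four hot blocks
print(pages_touched(blocks, sizes, trace))                     # 4
print(pages_touched(hot_first(blocks, trace), sizes, trace))   # 2
```

Interleaved hot and cold blocks spread the hot path across four pages; packing the hot blocks first shrinks the working set to two.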

Owner:MICROSOFT TECH LICENSING LLC

Distribution data structures for locality-guided work stealing

Active | US8813091B2 | Multiprogramming arrangements; Software simulation/interpretation/emulation; Language construct; Locality of reference

A data structure, the distribution, may be provided to track the desired and/or actual location of computations and data that range over a multidimensional rectangular index space in a parallel computing system. Examples of such iteration spaces include multidimensional arrays and counted loop nests. These distribution data structures may be used in conjunction with locality-guided work stealing and may provide a structured way to track load balancing decisions so they can be reproduced in related computations, thus maintaining locality of reference. They may allow computations to be tied to array layout, and may allow iteration over subspaces of an index space in a manner consistent with the layout of the space itself. Distributions may provide a mechanism to describe computations in a manner that is oblivious to precise machine size or structure. Programming language constructs and/or library functions may support the implementation and use of these distribution data structures.

Owner:SUN MICROSYSTEMS INC

File system object node management

Inactive | US8954969B2 | Resource allocation; Memory addressing/allocation/relocation; Locality of reference; File system

Embodiments of the invention provide a method for assigning a home node to a file system object and using information associated with file system objects to improve locality of reference during thread execution. Doing so may improve application performance on a computer system configured using a non-uniform memory access (NUMA) architecture. Thus, embodiments of the invention allow a computer system to create a nodal affinity between a given file system object and a given processing node.

Owner:IBM CORP

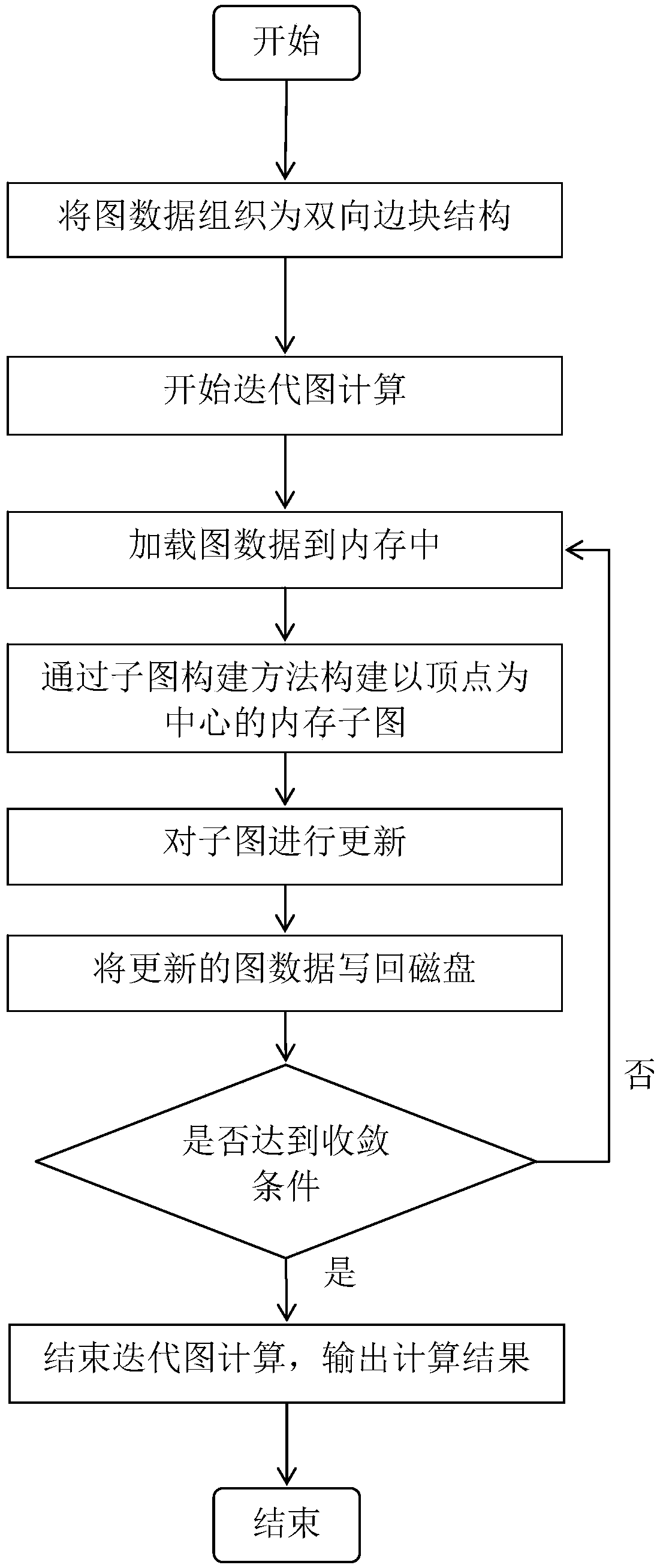

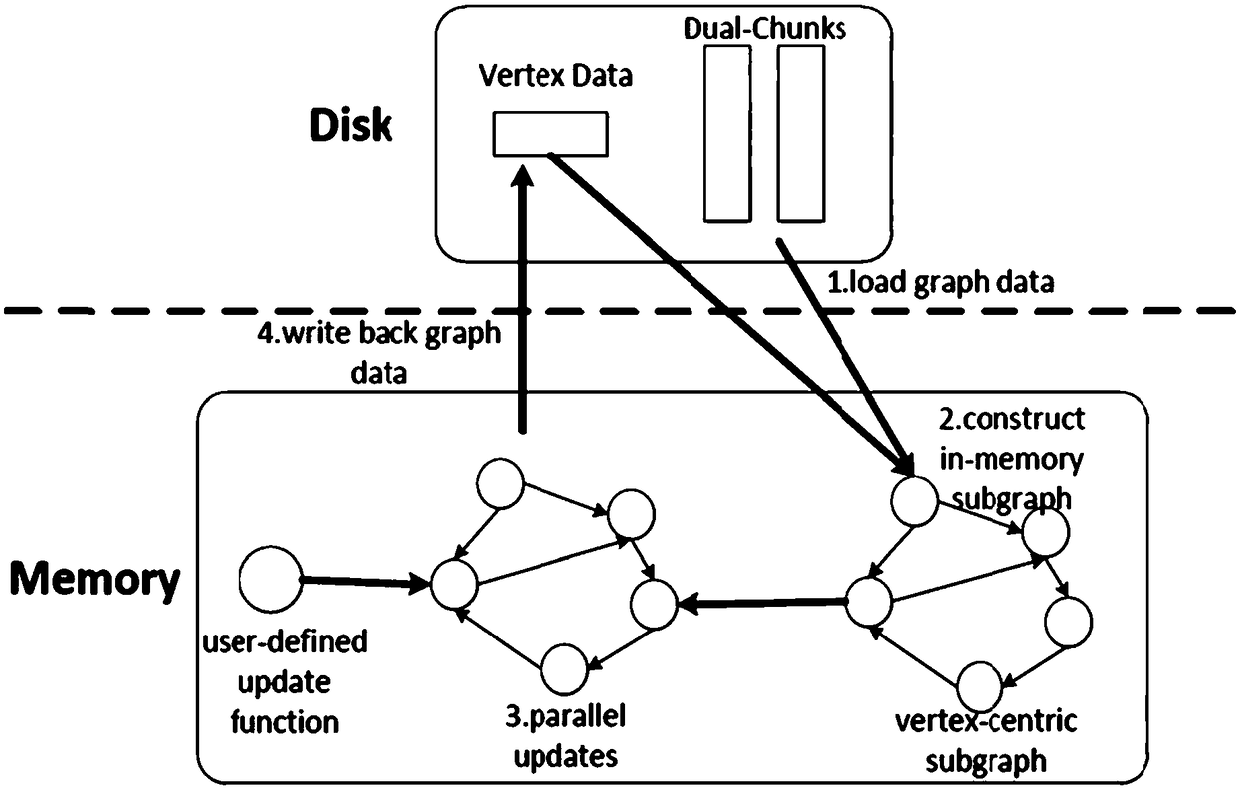

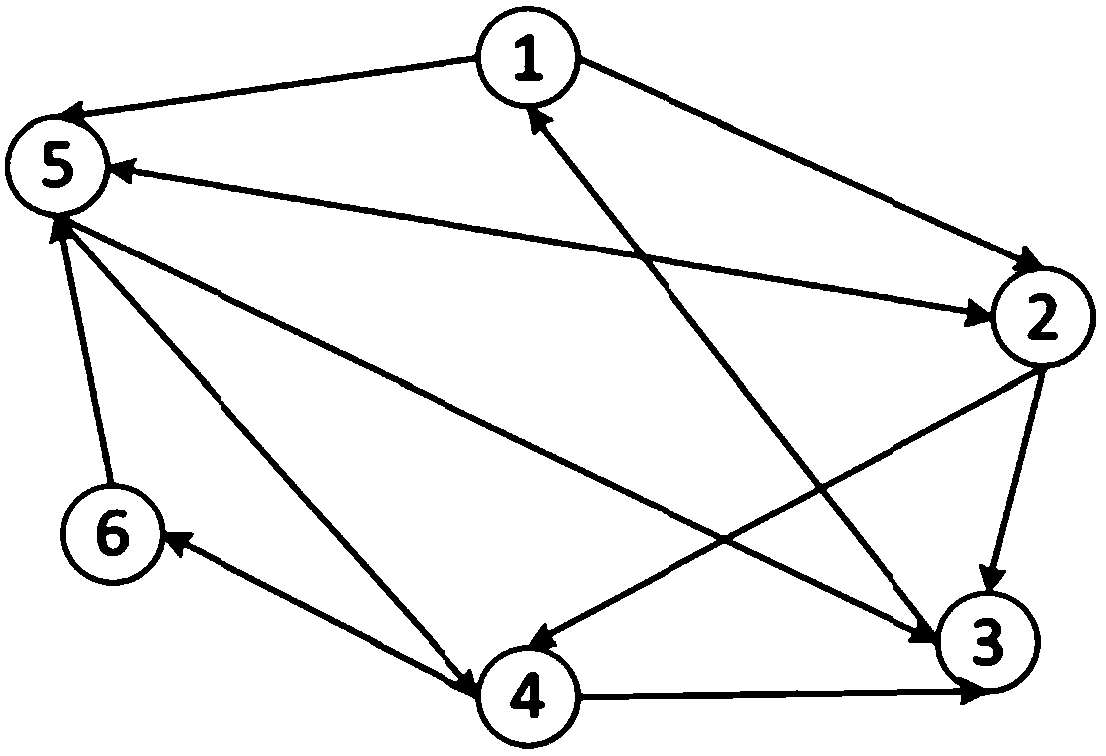

A disk graph processing method and a disk graph processing system based on subgraph construction

Active | CN109254725A | Fix build overhead; Improve performance; Input/output to record carriers; Locality of reference; Block structure

The invention discloses a disk graph processing method and system based on subgraph construction. The method comprises the following steps: organizing graph data into a bidirectional edge block structure; starting iterative graph computation; loading graph data into memory; constructing a vertex-centric in-memory subgraph with an efficient subgraph construction method and updating the subgraph; writing the updated graph data back to disk; checking whether the convergence condition is reached; and ending the iterative graph computation. By organizing the vertices and edges that must be accessed during subgraph construction contiguously, the method ensures that memory access locality is fully exploited during subgraph construction, solves the problem of high subgraph construction overhead in disk-based graph processing systems, and significantly improves overall system performance.

Owner:HUAZHONG UNIV OF SCI & TECH

Method for providing a flat view of a hierarchical namespace without requiring unique leaf names

Active | US7949682B2 | Digital data processing details; Digital data authentication; Locality of reference; Namespace

A set of flat identifiers are received, and a locality of reference cue determined. A cache is searched to locate an association including the combination of the set of flat identifiers and the locality of reference cue. If such an association is found in the cache, the hierarchical object(s) associated with that combination are used first in attempting to map the set of flat identifiers onto a hierarchical namespace.

Owner:EMC IP HLDG CO LLC

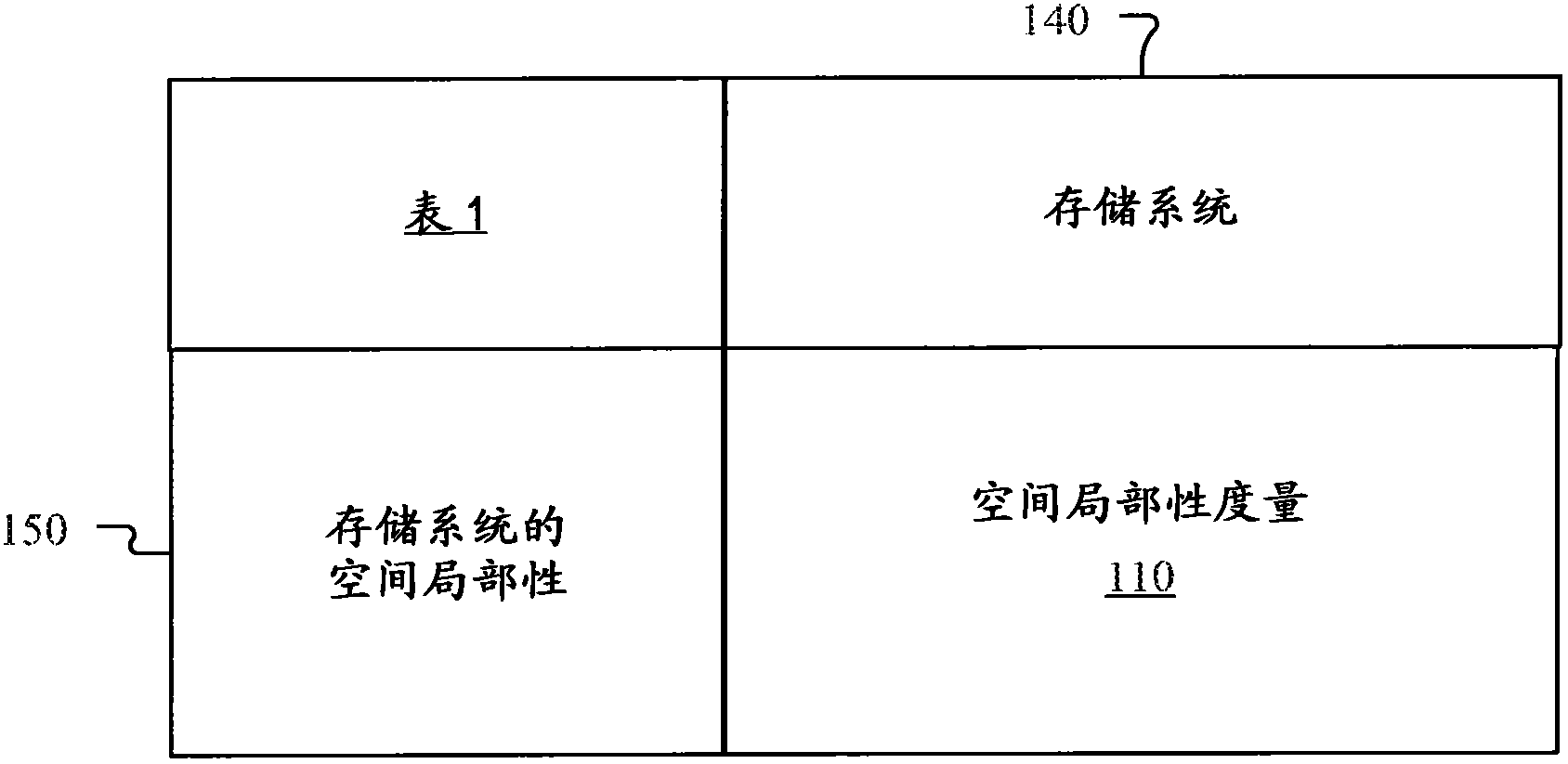

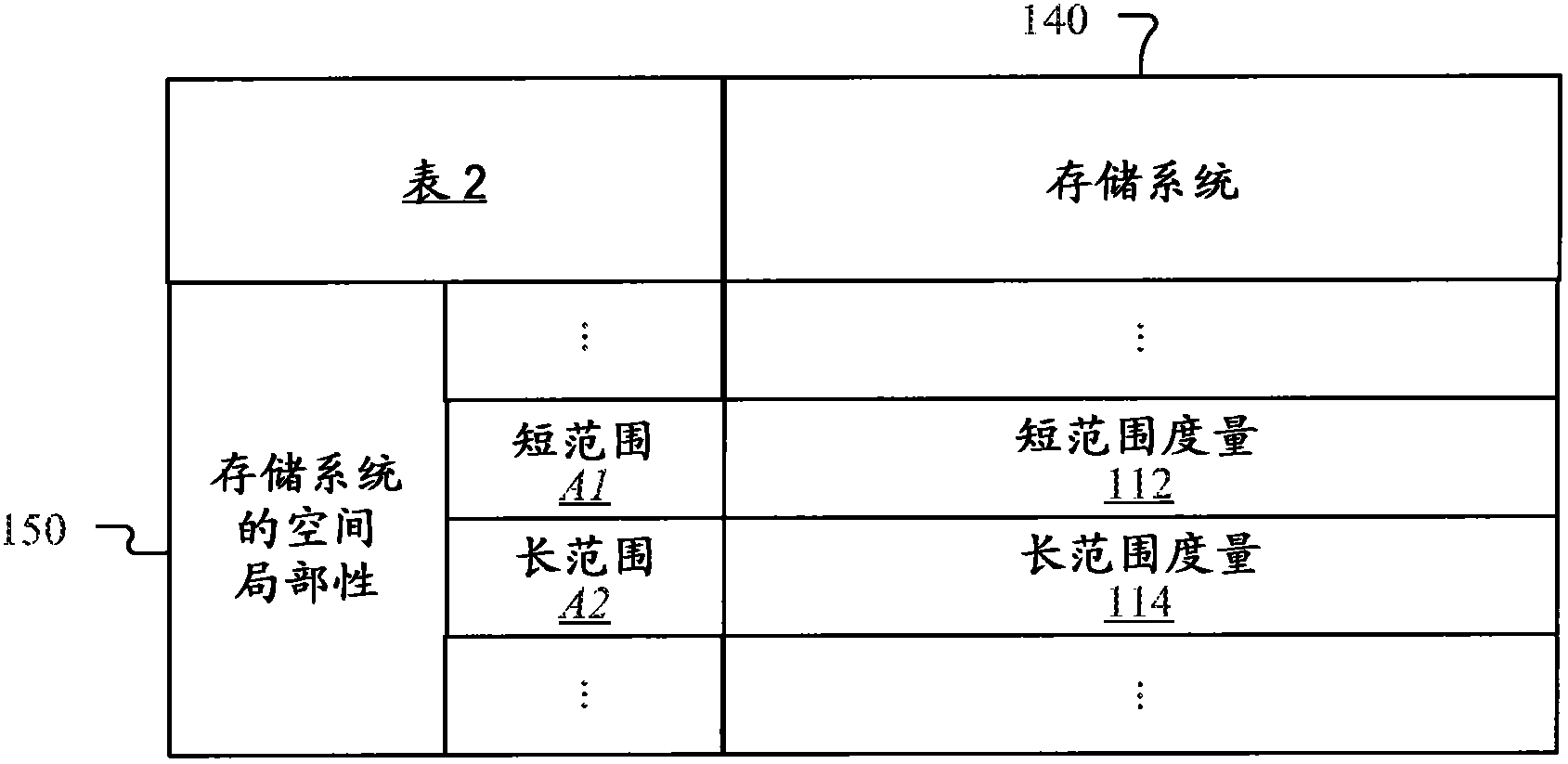

Caching based on spatial distribution of accesses to data storage devices

Active | CN102884512A | Easy to understand; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Locality of reference; Hybrid storage system

Methods, systems, and apparatus, including computer programs encoded on a computer storage medium, for quantifying a spatial distribution of accesses to storage systems and for determining spatial locality of references to storage addresses in the storage systems, are described. In one aspect, a method includes determining a measure of spatial distribution of accesses to a data storage system based on multiple distinct groups of accesses to the data storage system, and adjusting a caching policy used for the data storage system based on the determined measure of spatial distribution.
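
One way to quantify spatial distribution is the fraction of consecutive accesses within a group that land within a small window of block addresses. Both the metric (`spatial_score`) and the policy knob it drives (`choose_policy`, enabling prefetch of neighbouring blocks) are hypothetical illustrations, not Marvell's definitions; the window and threshold values are assumptions.

```python
from typing import Dict, Sequence


def spatial_score(groups: Sequence[Sequence[int]], window: int = 8) -> float:
    """Fraction of consecutive accesses (within each group) at most `window`
    blocks apart; 1.0 means tightly clustered, 0.0 means scattered."""
    near = total = 0
    for group in groups:
        for prev, cur in zip(group, group[1:]):
            total += 1
            if abs(cur - prev) <= window:
                near += 1
    return near / total if total else 0.0


def choose_policy(score: float, threshold: float = 0.5) -> Dict[str, int]:
    """Hypothetical knob: prefetch neighbouring blocks only when accesses cluster."""
    return {"prefetch_blocks": 8 if score >= threshold else 0}


sequential = [100, 101, 102, 103]
random_like = [0, 5000, 12, 9000]
print(spatial_score([sequential]))                              # 1.0
print(spatial_score([random_like]))                             # 0.0
print(choose_policy(spatial_score([sequential, random_like])))  # {'prefetch_blocks': 8}
```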

Owner:MARVELL ASIA PTE LTD

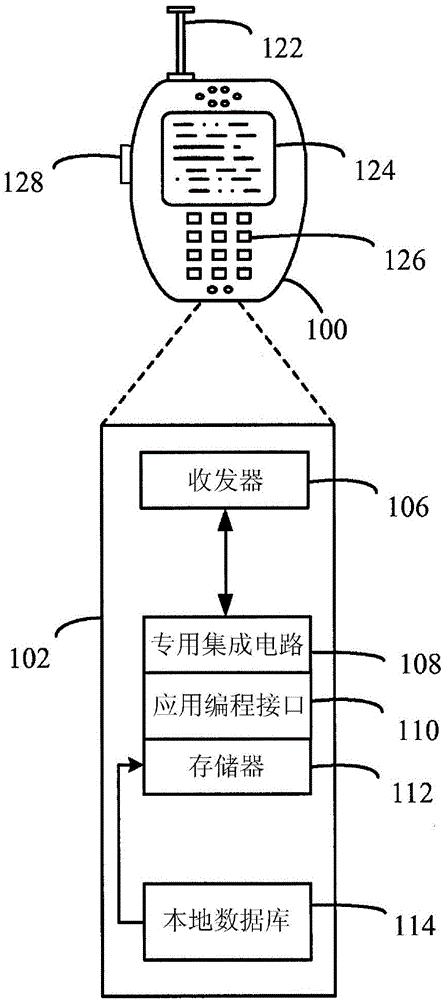

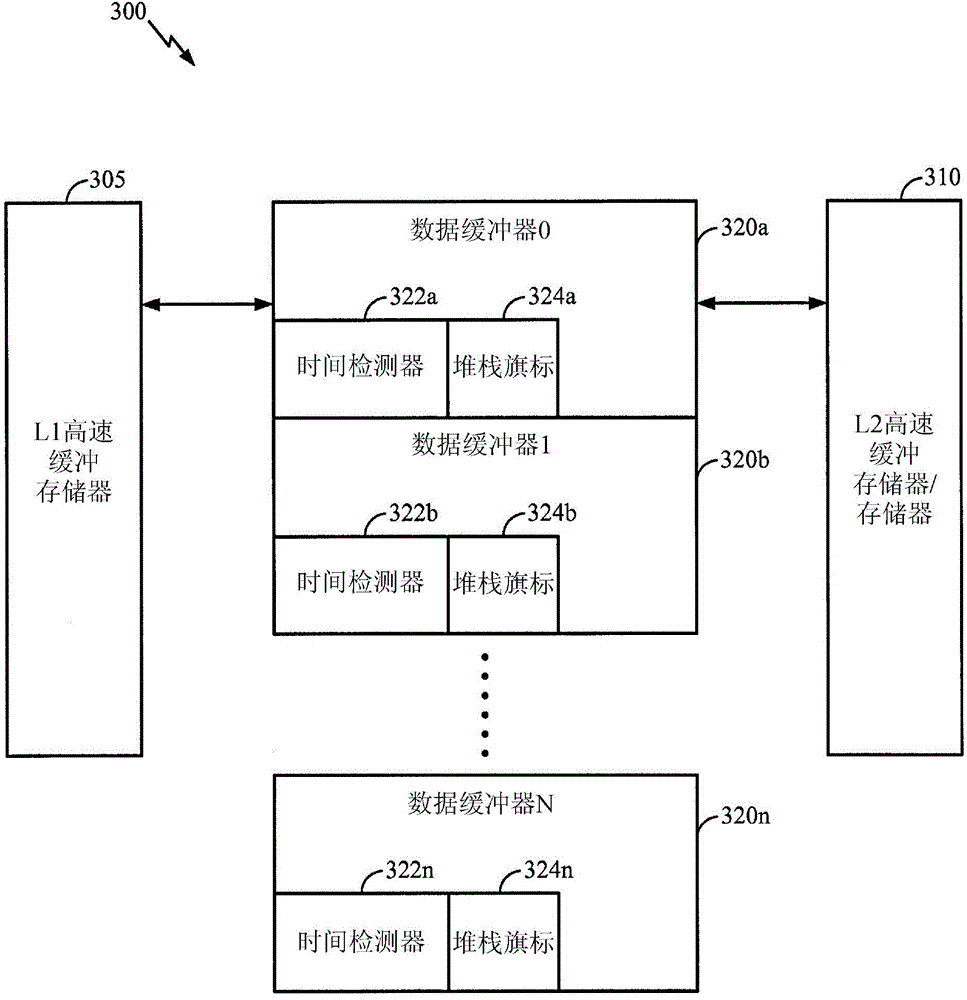

Preventing the displacement of high temporal locality of reference data fill buffers

Inactive | CN104067244A | Memory architecture accessing/allocation; Energy efficient ICT; Locality of reference; Parallel computing

The disclosure relates to accessing memory content with a high temporal locality of reference. An embodiment of the disclosure stores the content in a data buffer, determines that the content of the data buffer has a high temporal locality of reference, and accesses the data buffer for each operation targeting the content instead of a cache storing the content.

Owner:QUALCOMM INC

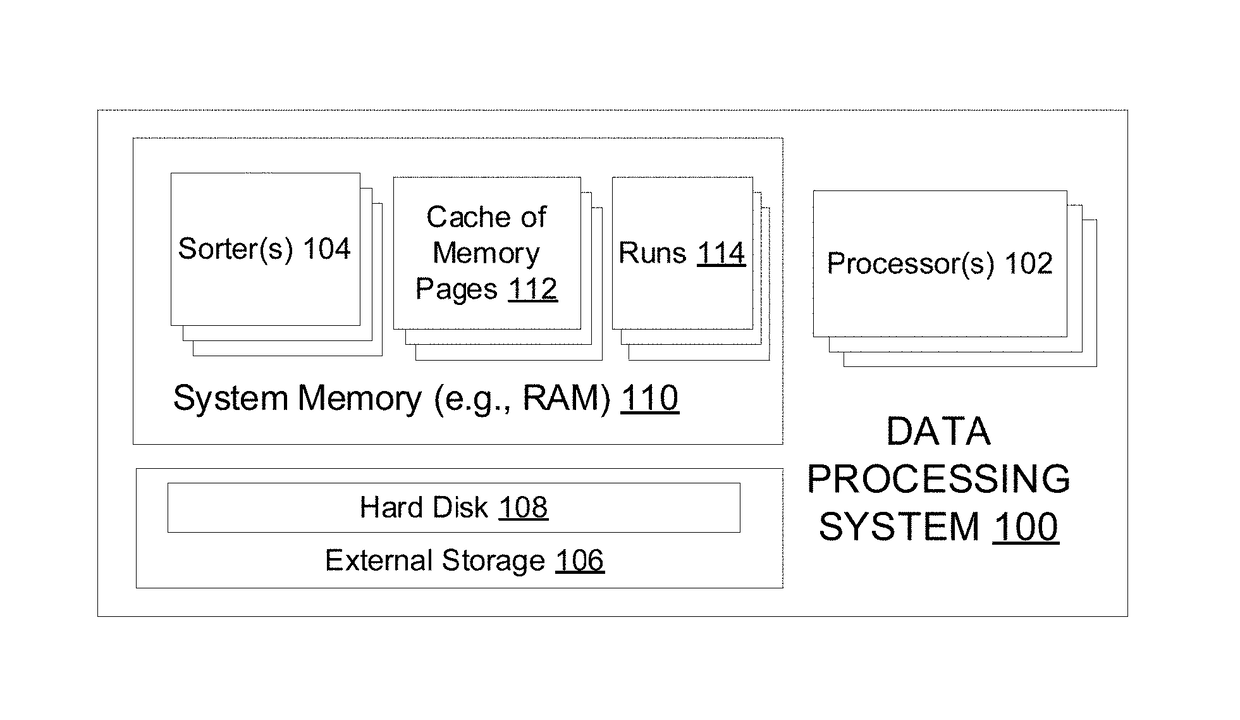

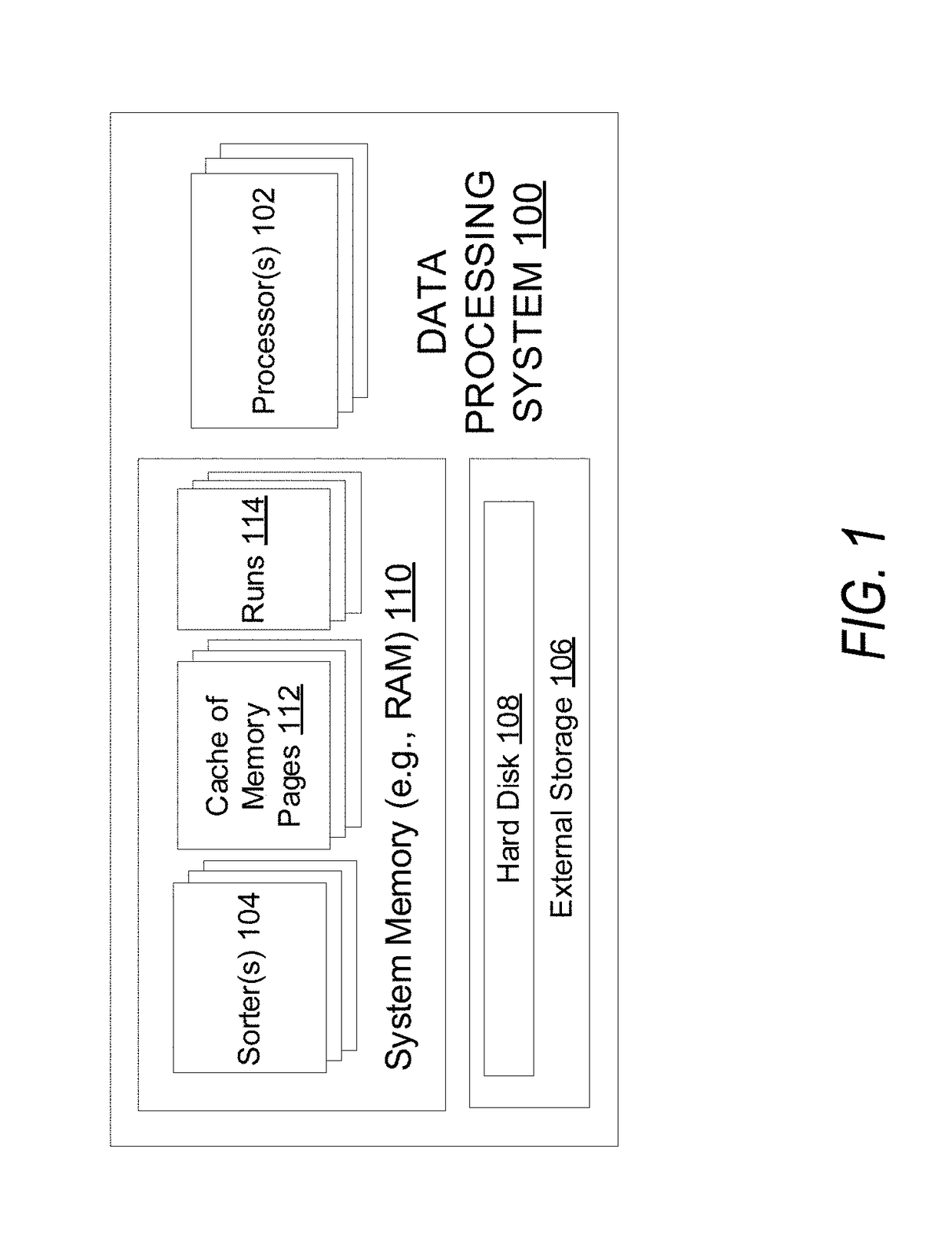

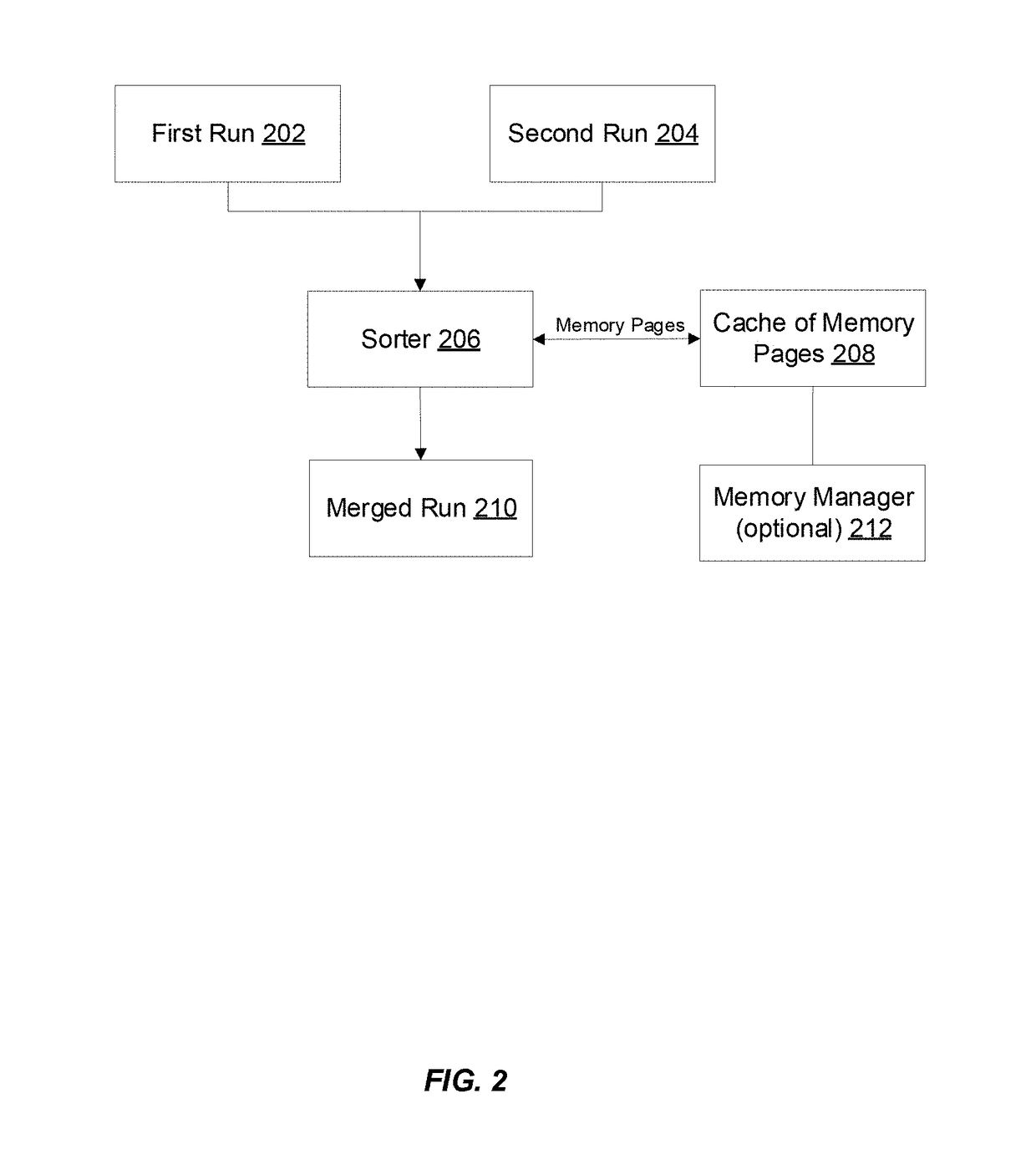

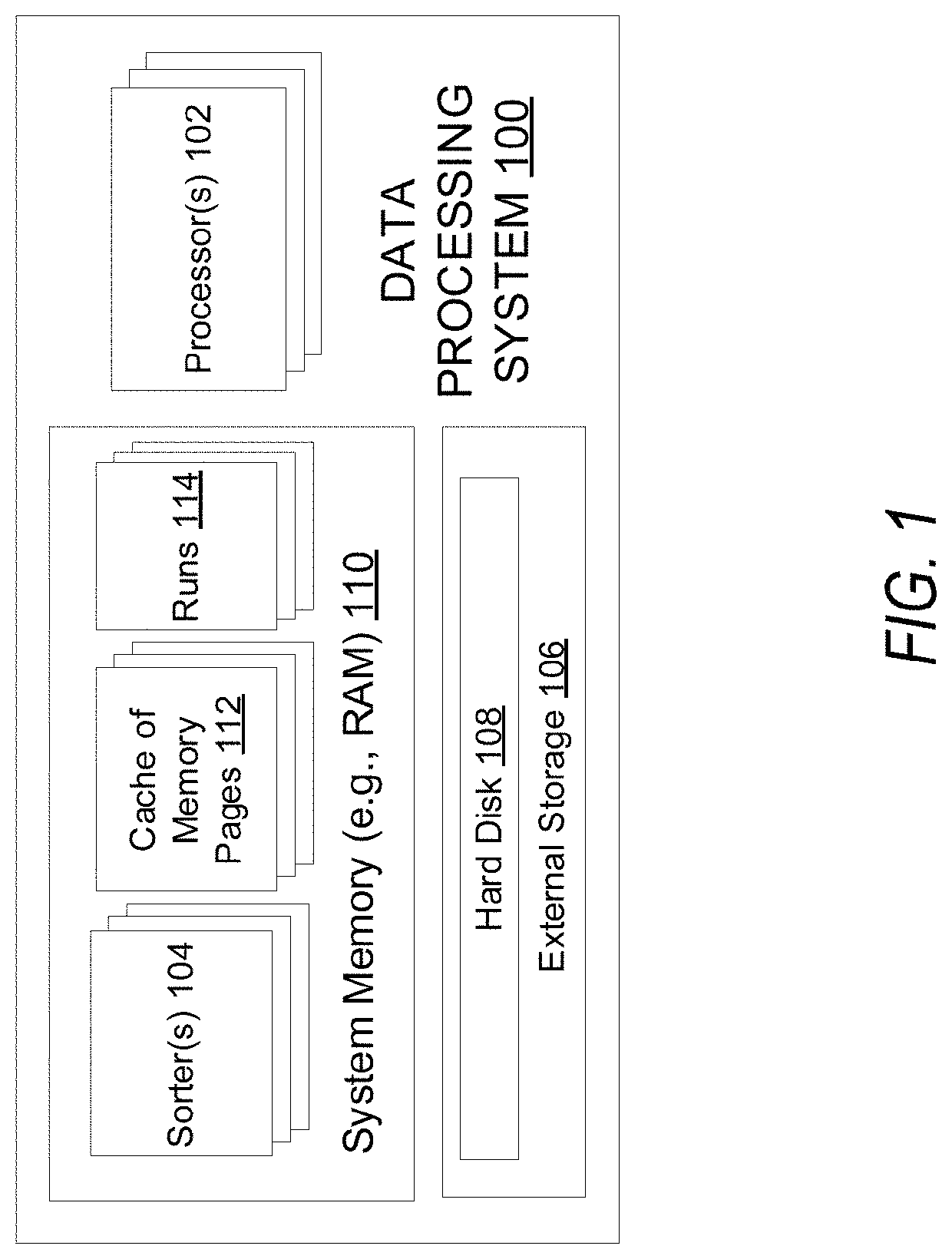

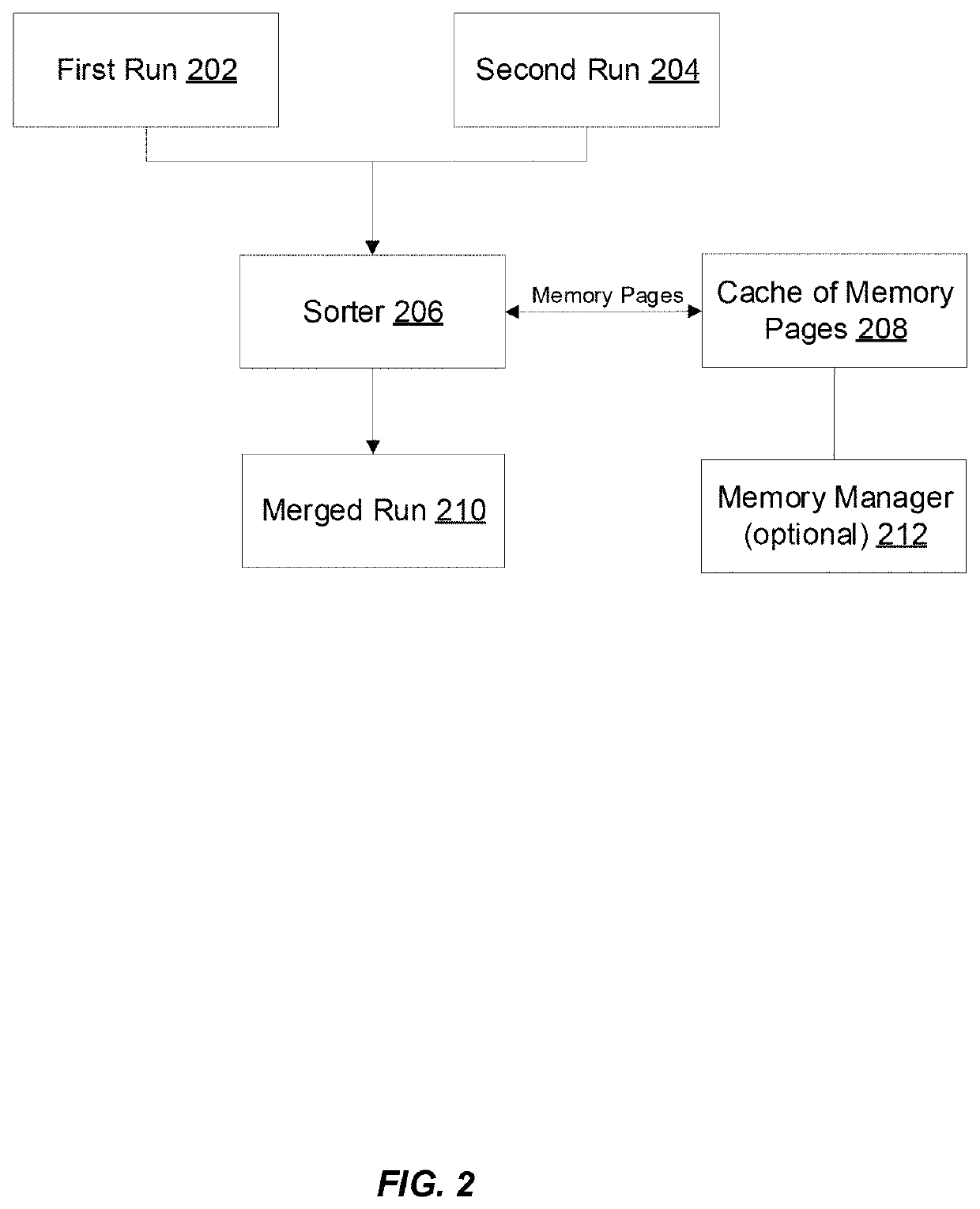

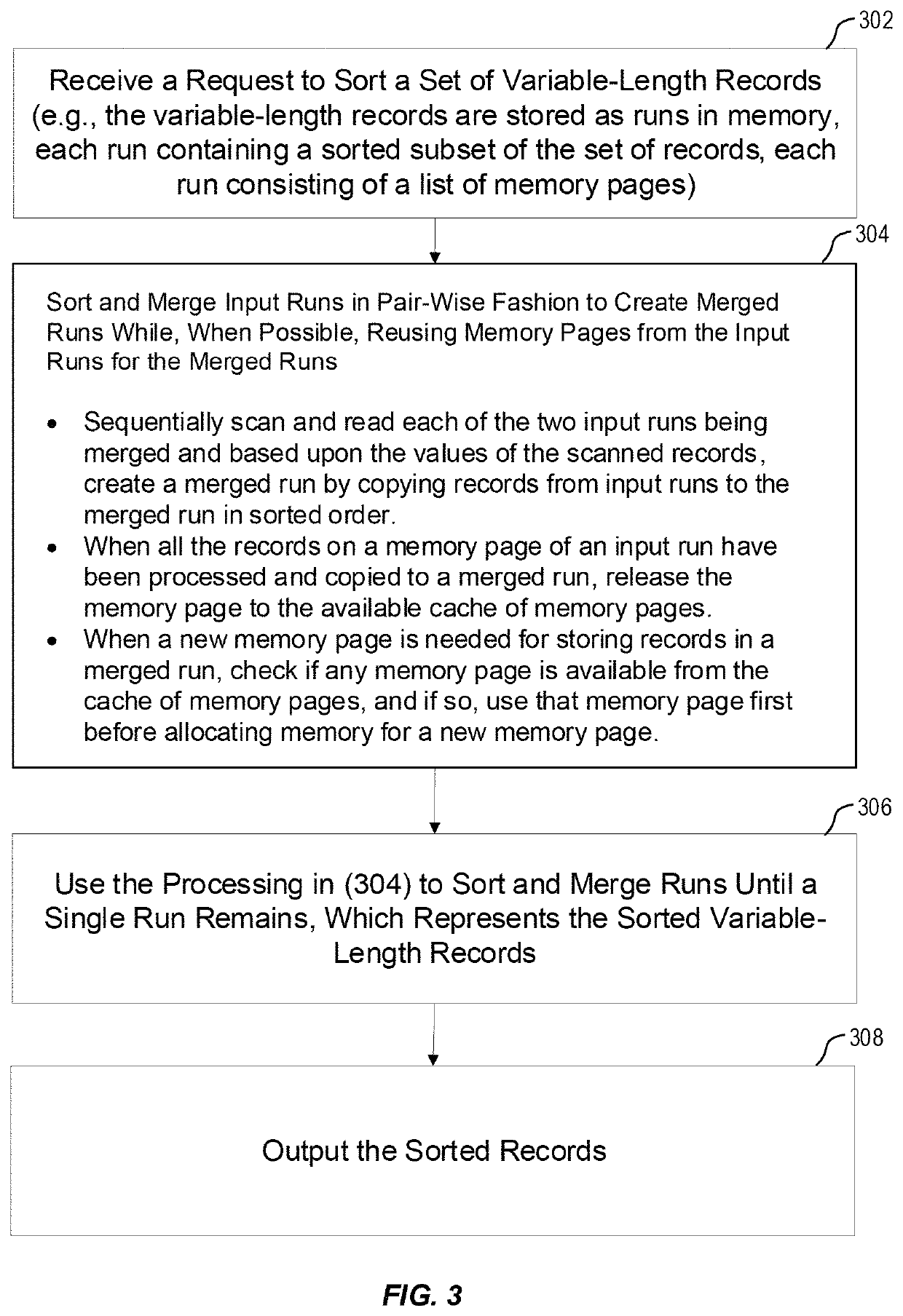

Optimized sorting of variable-length records

Active | US20180314465A1 | Improve processing speed; Improve efficiency; Memory architecture accessing/allocation; Input/output to record carriers; Locality of reference; Parallel computing

Optimized techniques are disclosed for sorting variable-length records using an optimized amount of memory while maintaining good locality of references. The amount of memory required for sorting the variable length records is optimized by reusing some of the memory used for storing the variable length records being sorted. Pairs of input runs storing variable length records may be merged into a merged run that contains the records in a sorted order by incrementally scanning, sorting, and copying the records from the two input runs being merged into memory pages of the merged run. When all the records of a memory page of an input run have been processed or copied to the merged run, that memory page can be emptied and released to a cache of empty memory pages. Memory pages available from the cache of empty memory pages can then be used for generating the merged run.
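
The page-recycling merge can be sketched as follows. Assumptions: records are byte strings, a page holds at most `PAGE_BYTES` bytes (16 here, deliberately tiny), runs are lists of pages already in sorted order, and only a two-way merge is shown. `PagePool`, `paginate`, and `merge_runs` are hypothetical names, and `merge_runs` consumes its input runs as it goes.

```python
from typing import List

PAGE_BYTES = 16  # assumed, deliberately tiny so the demo spans several pages


class PagePool:
    """Cache of empty pages; counts how many pages had to be freshly allocated."""

    def __init__(self) -> None:
        self.free: List[list] = []
        self.allocated = 0

    def get(self) -> list:
        if self.free:
            return self.free.pop()   # reuse an emptied page
        self.allocated += 1
        return []

    def release(self, page: list) -> None:
        page.clear()
        self.free.append(page)


def paginate(records: List[bytes], pool: PagePool) -> List[list]:
    """Pack variable-length records into pages of at most PAGE_BYTES bytes."""
    pages, page, used = [], pool.get(), 0
    for rec in records:
        if page and used + len(rec) > PAGE_BYTES:
            pages.append(page)
            page, used = pool.get(), 0
        page.append(rec)
        used += len(rec)
    if page:
        pages.append(page)
    else:
        pool.release(page)
    return pages


def merge_runs(a: List[list], b: List[list], pool: PagePool) -> List[list]:
    """Merge two sorted runs, releasing each input page once it is consumed."""
    runs, pos = [a, b], [0, 0]       # pos[k]: next record in the head page of run k
    out, page, used = [], pool.get(), 0
    while any(runs):
        rec, k = min((run[0][pos[i]], i) for i, run in enumerate(runs) if run)
        pos[k] += 1
        if pos[k] == len(runs[k][0]):    # head page fully copied: recycle it
            pool.release(runs[k].pop(0))
            pos[k] = 0
        if page and used + len(rec) > PAGE_BYTES:
            out.append(page)
            page, used = pool.get(), 0   # may hand back a just-released input page
        page.append(rec)
        used += len(rec)
    if page:
        out.append(page)
    return out


pool = PagePool()
run_a = paginate(sorted([b"apple", b"kiwi", b"plum", b"watermelon"]), pool)
run_b = paginate(sorted([b"banana", b"fig", b"grape", b"peach"]), pool)
merged = merge_runs(run_a, run_b, pool)
print([r for p in merged for r in p])
print(pool.allocated)  # 5: four input pages plus only one fresh output page
```

Only one fresh page is allocated for the output; every later output page is an input page that was emptied and returned to the pool.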

Owner:ORACLE INT CORP

Optimized sorting of variable-length records

Active | US10824558B2 | Improve processing speed; Improve efficiency; Input/output to record carriers; Digital data processing details; Locality of reference; Parallel computing

Optimized techniques are disclosed for sorting variable-length records using an optimized amount of memory while maintaining good locality of references. The amount of memory required for sorting the variable length records is optimized by reusing some of the memory used for storing the variable length records being sorted. Pairs of input runs storing variable length records may be merged into a merged run that contains the records in a sorted order by incrementally scanning, sorting, and copying the records from the two input runs being merged into memory pages of the merged run. When all the records of a memory page of an input run have been processed or copied to the merged run, that memory page can be emptied and released to a cache of empty memory pages. Memory pages available from the cache of empty memory pages can then be used for generating the merged run.

Owner:ORACLE INT CORP

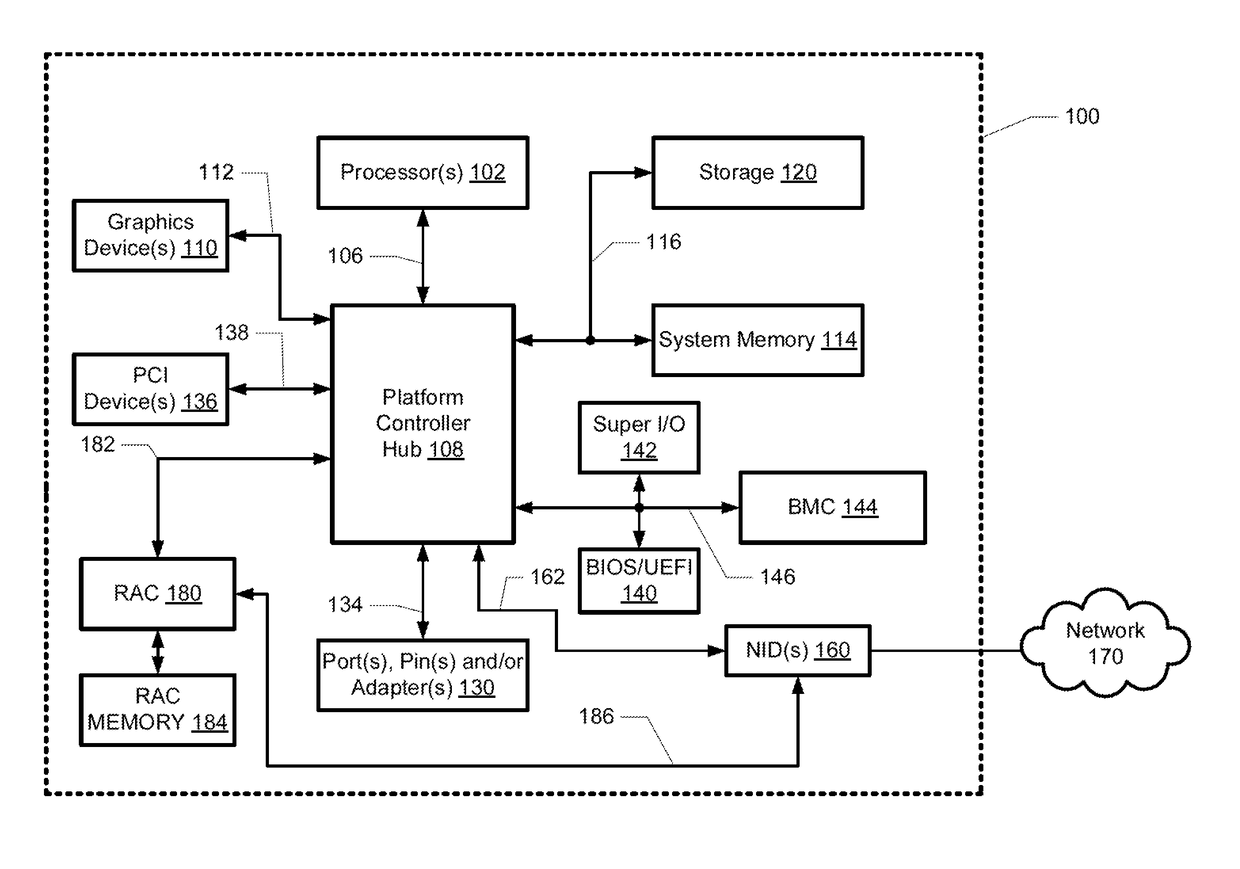

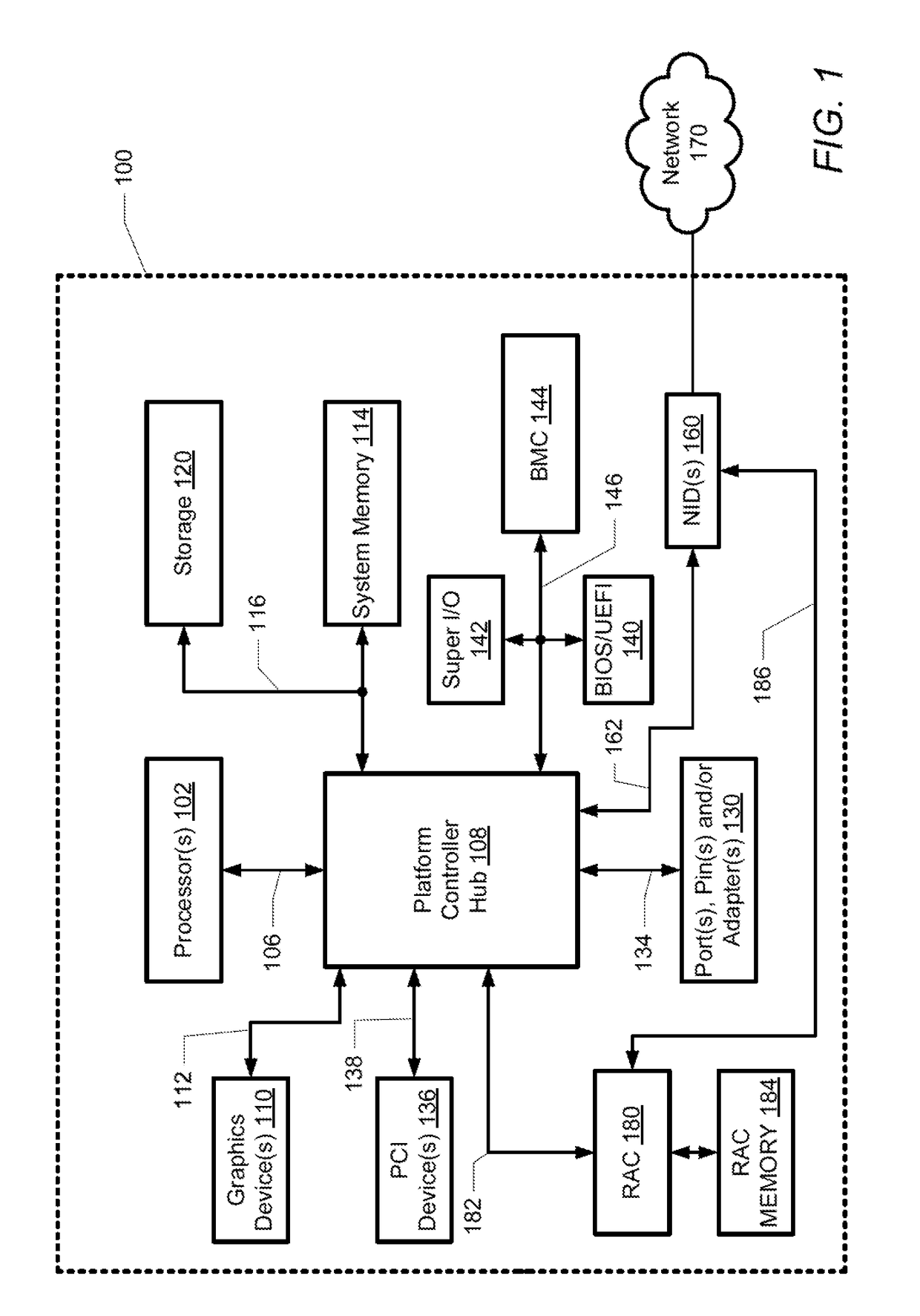

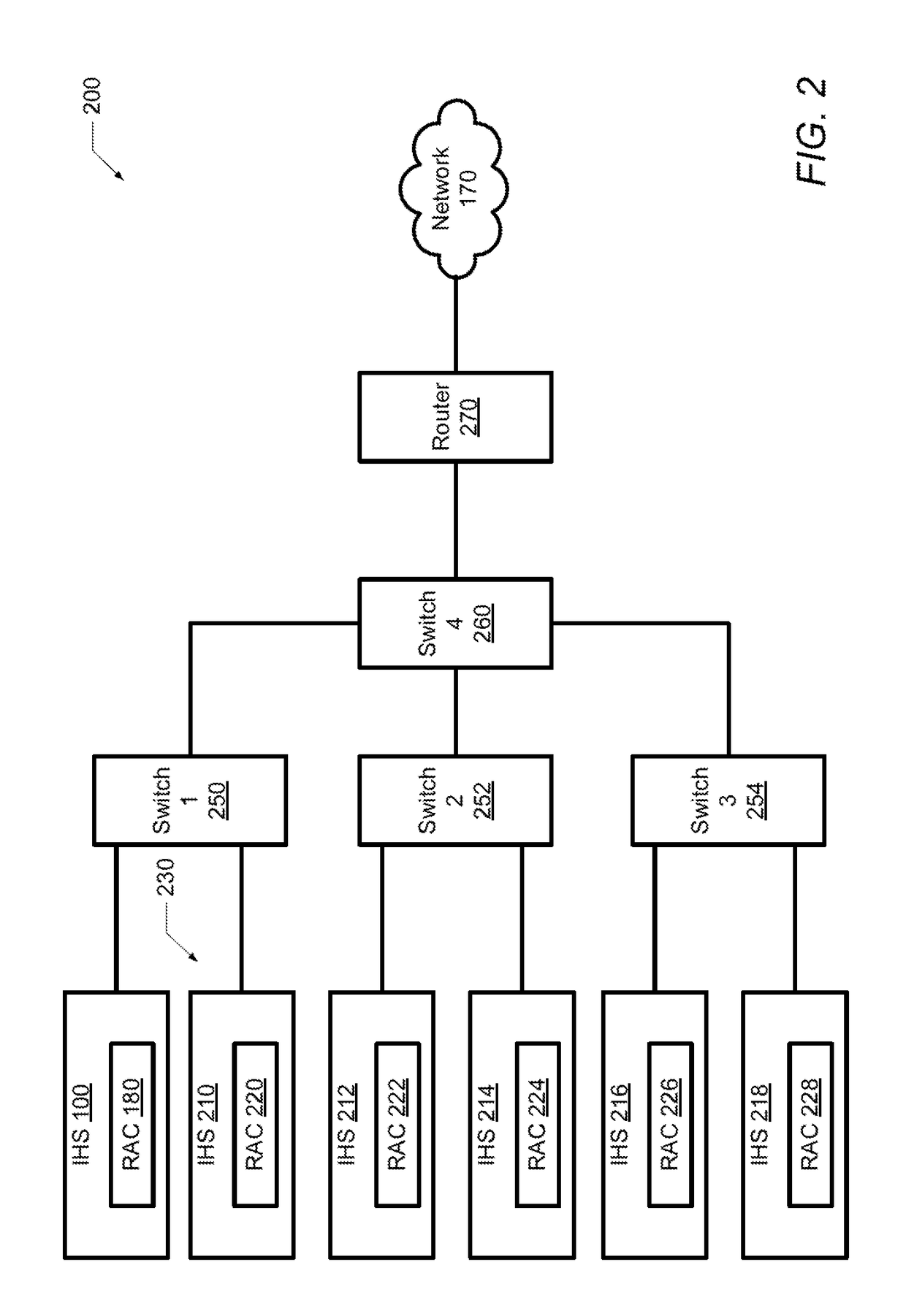

System and method for determining a master remote access controller in an information handling system

Active | US20170085418A1 | Error detection/correction; Data switching networks; Locality of reference; Handling system

A method and information handling system (IHS) determines a master remote access controller (RAC) in a distributed IHS having multiple communicatively-connected computing nodes with corresponding RACs. The method includes transmitting a first set of RAC parameters from a first RAC to several other RACs. The first set of RAC parameters includes a locality of reference (LOR) value for the first RAC. Several other sets of RAC parameters are received from the other RACs. A first list of all of the RACs is generated including the associated LOR values. The first list is sorted based on the LOR values and the RAC having the highest LOR value in the first list is designated as a first master RAC candidate.
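
The candidate-selection step reduces to a sort. This sketch assumes each RAC's parameters are already collected into a list and that the LOR value is an integer; how the LOR value is computed is not described in the abstract, and breaking ties by RAC identifier is an added assumption. `RacParams` and `master_candidate` are hypothetical names.

```python
from typing import List, NamedTuple


class RacParams(NamedTuple):
    rac_id: str
    lor: int  # locality-of-reference value reported by this RAC


def master_candidate(params: List[RacParams]) -> RacParams:
    """Sort by LOR value (highest first) and return the top entry."""
    ranked = sorted(params, key=lambda p: (-p.lor, p.rac_id))  # tie-break by id: assumption
    return ranked[0]


received = [RacParams("rac-1", 3), RacParams("rac-2", 7), RacParams("rac-3", 7)]
print(master_candidate(received).rac_id)  # rac-2
```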

Owner:DELL PROD LP

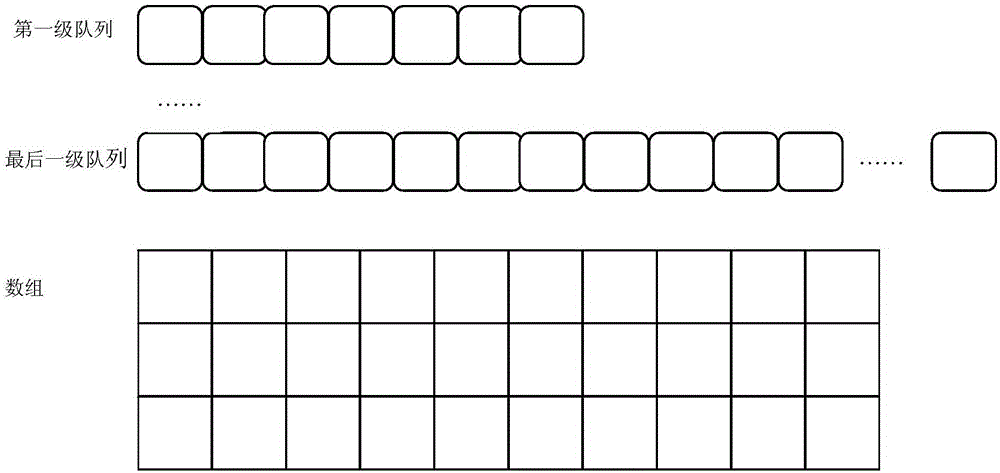

Memory pool and memory allocation method

Active | CN105138475A | Won't swap out; Avoid failure; Memory addressing/allocation/relocation; Locality of reference; Memory object

The invention discloses a memory pool. The memory pool comprises at least two stages of queues, which correspond to the memory objects in the memory pool and are used to allocate and release those memory objects. The queues include a first-stage queue; when an external module requests memory objects from the memory pool, the first-stage queue is used preferentially to allocate them. The memory pool therefore follows the principle of access locality: even during high-throughput network data forwarding, the system does not frequently access memory objects spread over a wide range, so the CPU cache is not invalidated when memory objects are accessed, useful data in the CPU cache is not swapped out, and the CPU cache hit rate increases substantially.
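
A sketch of a two-stage pool, with objects represented by integer ids. The abstract only says that the first-stage queue is used preferentially; making it a small LIFO of recently freed objects (so the most recently used, likely cache-resident object is handed out next) and bounding it with `stage1_limit` are assumptions, and `TwoStagePool` is a hypothetical name.

```python
from collections import deque
from typing import Deque, List


class TwoStagePool:
    """Two-stage pool of preallocated objects (represented by integer ids)."""

    def __init__(self, capacity: int, stage1_limit: int) -> None:
        self.stage1: List[int] = []                        # hot queue, reused LIFO
        self.stage2: Deque[int] = deque(range(capacity))   # bulk of the pool, FIFO
        self.stage1_limit = stage1_limit

    def alloc(self) -> int:
        if self.stage1:                 # first-stage queue is used preferentially
            return self.stage1.pop()    # most recently freed, likely still cached
        return self.stage2.popleft()    # raises IndexError if the pool is exhausted

    def release(self, obj: int) -> None:
        if len(self.stage1) < self.stage1_limit:
            self.stage1.append(obj)
        else:
            self.stage2.append(obj)


pool = TwoStagePool(capacity=8, stage1_limit=2)
a, b = pool.alloc(), pool.alloc()       # 0, 1 from stage 2
pool.release(a)
pool.release(b)
print(pool.alloc(), pool.alloc(), pool.alloc())  # 1 0 2
```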

Owner:NEUSOFT CORP

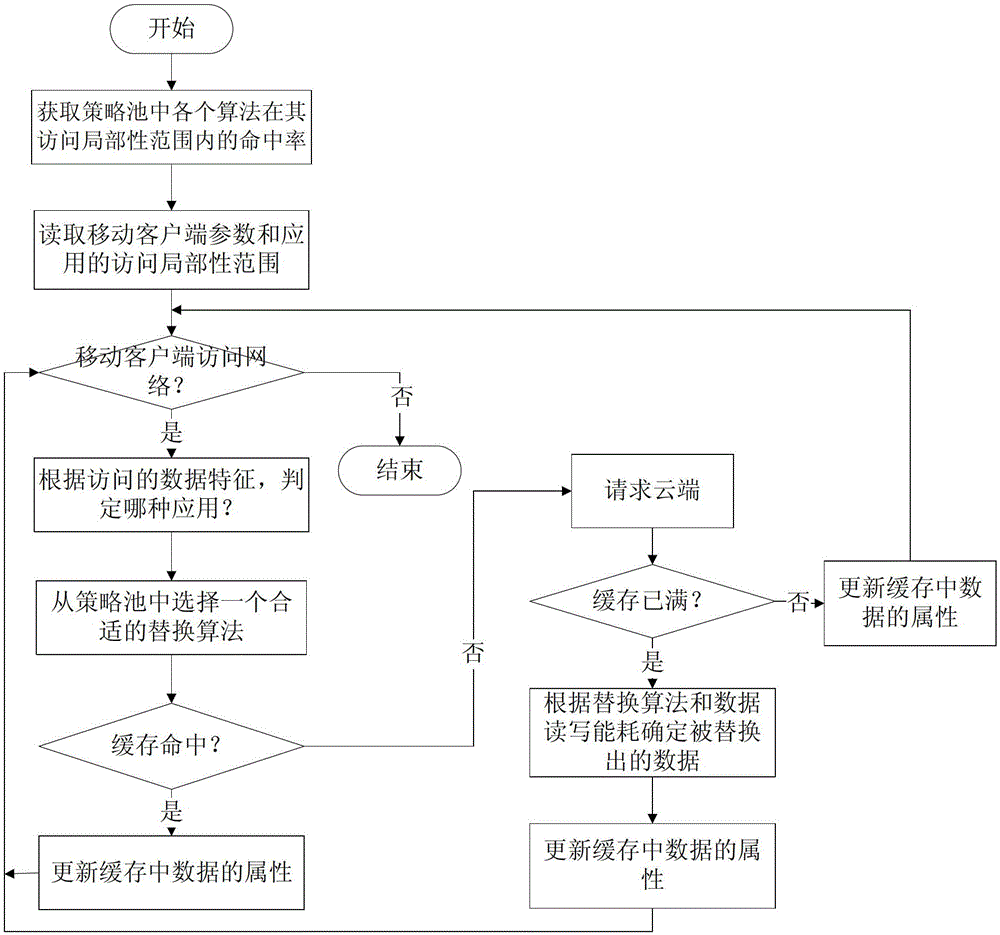

Low energy consumption-based data cache method in mobile cloud computing environment

The invention relates to a low-energy data caching method for a mobile cloud computing environment. The method comprises the following steps: step 1, obtain the hit rate of each algorithm in a strategy pool within each access locality range; step 2, read the parameters of the mobile client and the access locality range of each application; step 3, when the mobile client accesses the network, identify the application in use from the characteristics of the accessed data and, according to that application's access locality range, select the replacement algorithm in the strategy pool with the highest hit rate within that range; step 4, the mobile client first queries its local cache: on a cache hit, the attributes of the cached data are updated directly and the method returns to step 3; on a cache miss, the data is requested from the cloud, the attributes of the cached data are updated according to the selected replacement algorithm, and the method returns to step 3. Compared with the prior art, the method effectively reduces energy consumption while still meeting system performance requirements.
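
Steps 1 and 3 amount to measuring each replacement algorithm's hit rate on a representative trace and picking the best. This sketch assumes a strategy pool of just LRU and FIFO, a fixed cache capacity, and a single sample trace standing in for an application's access locality range; the function names are hypothetical and energy consumption is not modelled.

```python
from collections import OrderedDict, deque
from typing import Dict, Hashable, Sequence, Tuple


def lru_hits(trace: Sequence[Hashable], capacity: int) -> int:
    cache = OrderedDict()
    hits = 0
    for key in trace:
        if key in cache:
            hits += 1
            cache.move_to_end(key)          # mark as most recently used
        else:
            cache[key] = None
            if len(cache) > capacity:
                cache.popitem(last=False)   # evict least recently used
    return hits


def fifo_hits(trace: Sequence[Hashable], capacity: int) -> int:
    cache, order, hits = set(), deque(), 0
    for key in trace:
        if key in cache:
            hits += 1                       # a hit does not change FIFO order
        else:
            cache.add(key)
            order.append(key)
            if len(order) > capacity:
                cache.discard(order.popleft())  # evict oldest insertion
    return hits


POLICIES = {"LRU": lru_hits, "FIFO": fifo_hits}  # hypothetical strategy pool


def pick_policy(trace: Sequence[Hashable], capacity: int) -> Tuple[str, Dict[str, float]]:
    rates = {name: hits(trace, capacity) / len(trace) for name, hits in POLICIES.items()}
    return max(rates, key=rates.get), rates


best, rates = pick_policy(list("ABACABAC"), capacity=2)
print(best, rates)  # LRU {'LRU': 0.375, 'FIFO': 0.25}
```

On this trace LRU keeps the frequently reused key `A` resident and scores 3/8 hits versus 2/8 for FIFO.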

Owner:TONGJI UNIV

A resource allocation and cost optimization method for cloud video

Active | CN103973780B | Good data availability; Improve utilization efficiency; Resource allocation; Transmission; Locality of reference; Operational costs

The invention discloses a resource allocation and overhead optimization method for cloud video. The method comprises the following steps: a mathematical model is established to describe the relationships among channel distribution, user bandwidth distribution, total operating cost, and quality of service (QoS); the model is proved to be NP-hard; by introducing a penalty function, the problem of minimizing overhead through channel replication and bandwidth allocation is transformed into the equivalent problem of maximizing benefit through channel replication and bandwidth allocation; a resource allocation and overhead optimization method for cloud data centers, named DREAM, is proposed to solve the reservation and allocation of cloud platform bandwidth and to determine the placement of channel replicas in the cloud data center; and the principle of locality is integrated into the resource allocation and overhead optimization algorithm, yielding the DREAM-L algorithm. Compared with the prior art, the method allows a cloud system to provide satisfactory viewing quality, locality of reference, and data availability for video-on-demand services at low cost.

Owner:HUAZHONG UNIV OF SCI & TECH

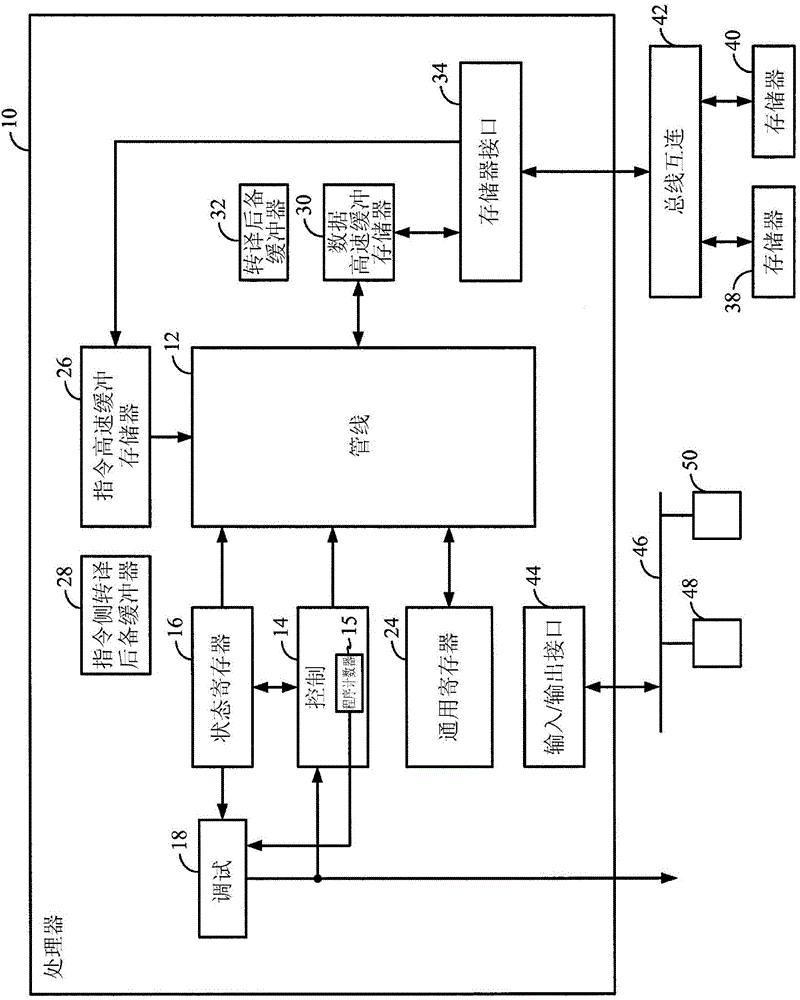

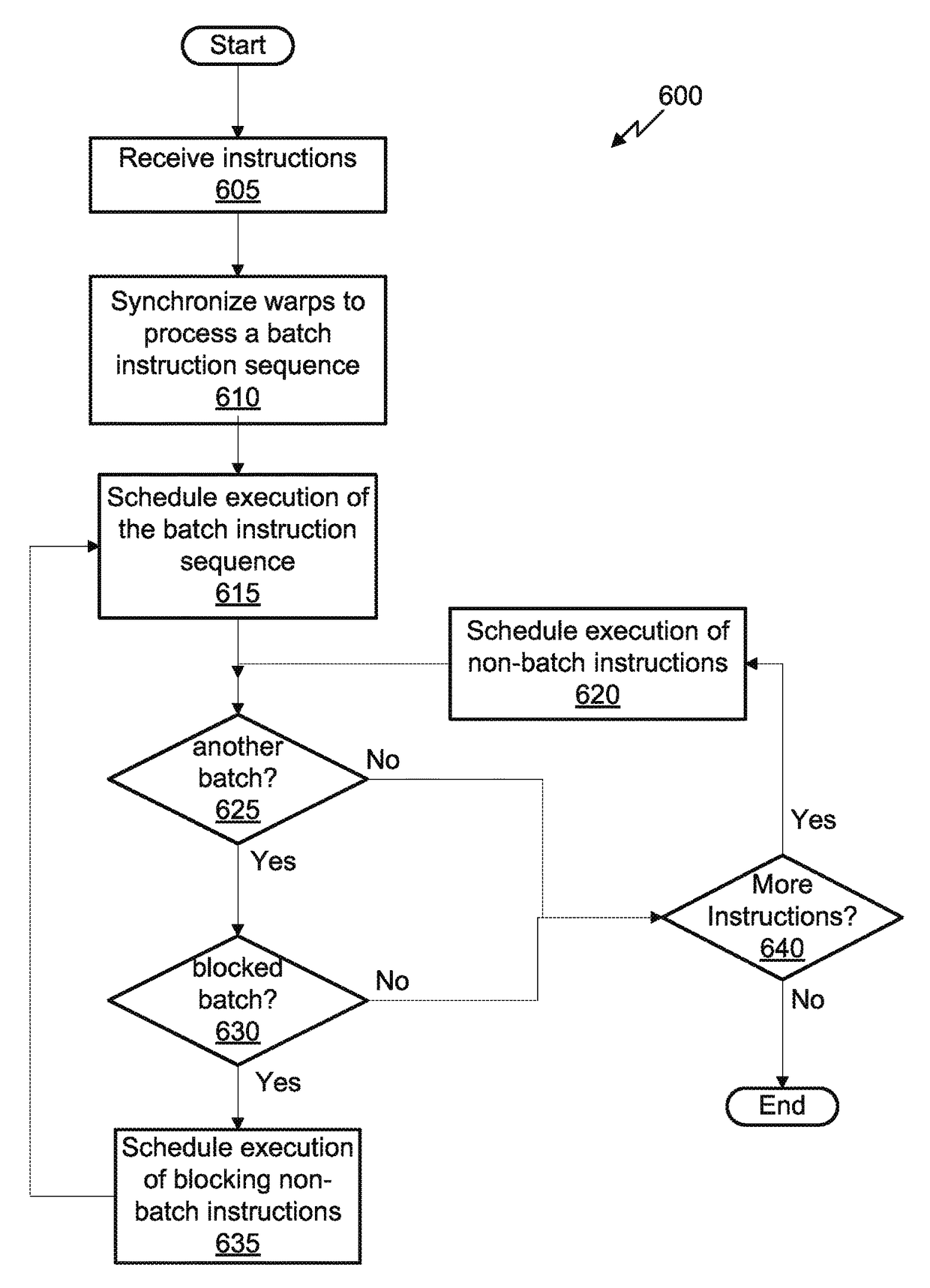

Reordering buffer for memory access locality

Active | US9798544B2 | Instruction analysis; Concurrent instruction execution; Locality of reference; Batch processing

Owner:NVIDIA CORP

© 2025 PatSnap. All rights reserved.