194 results for "Memory object" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

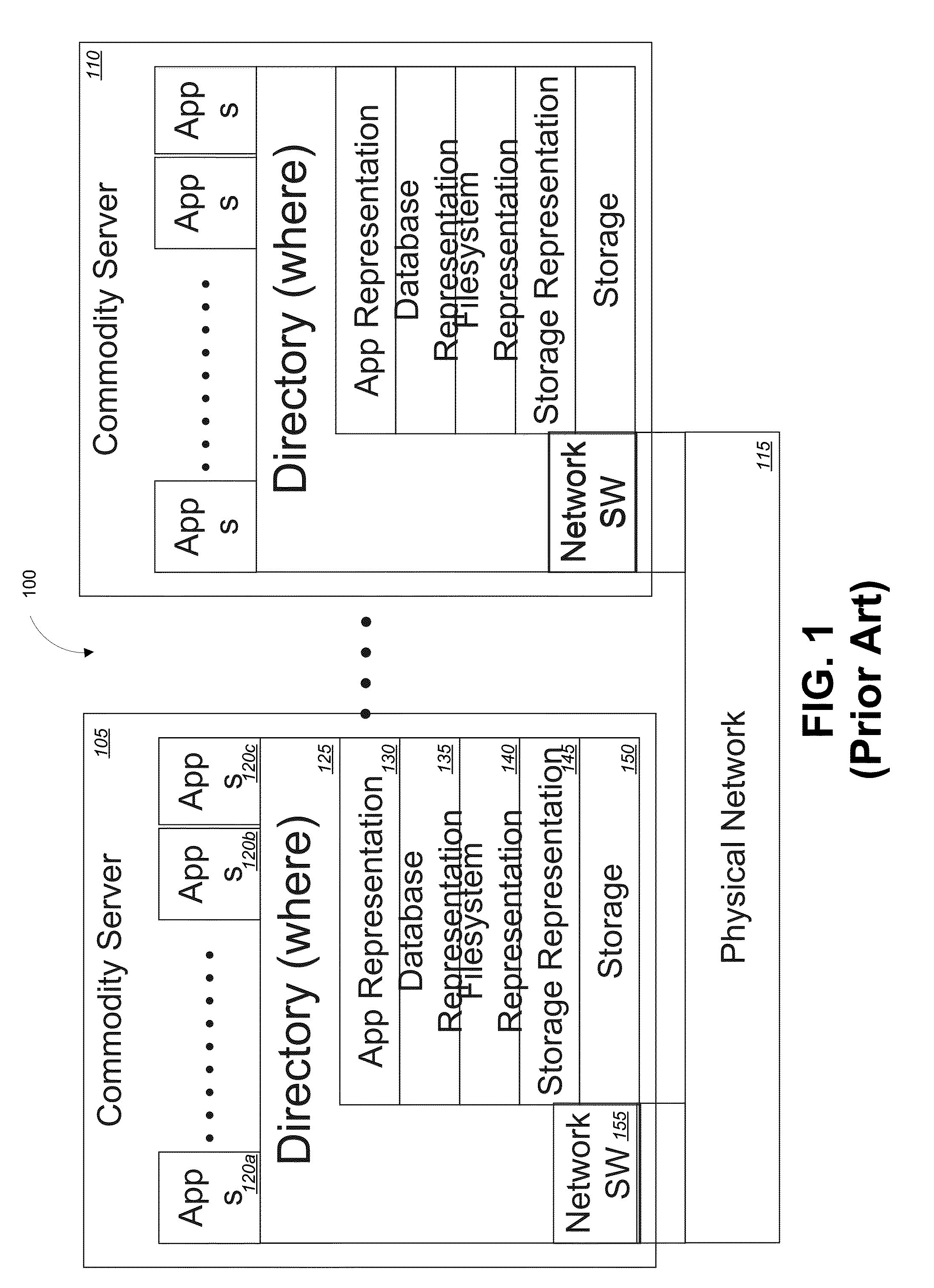

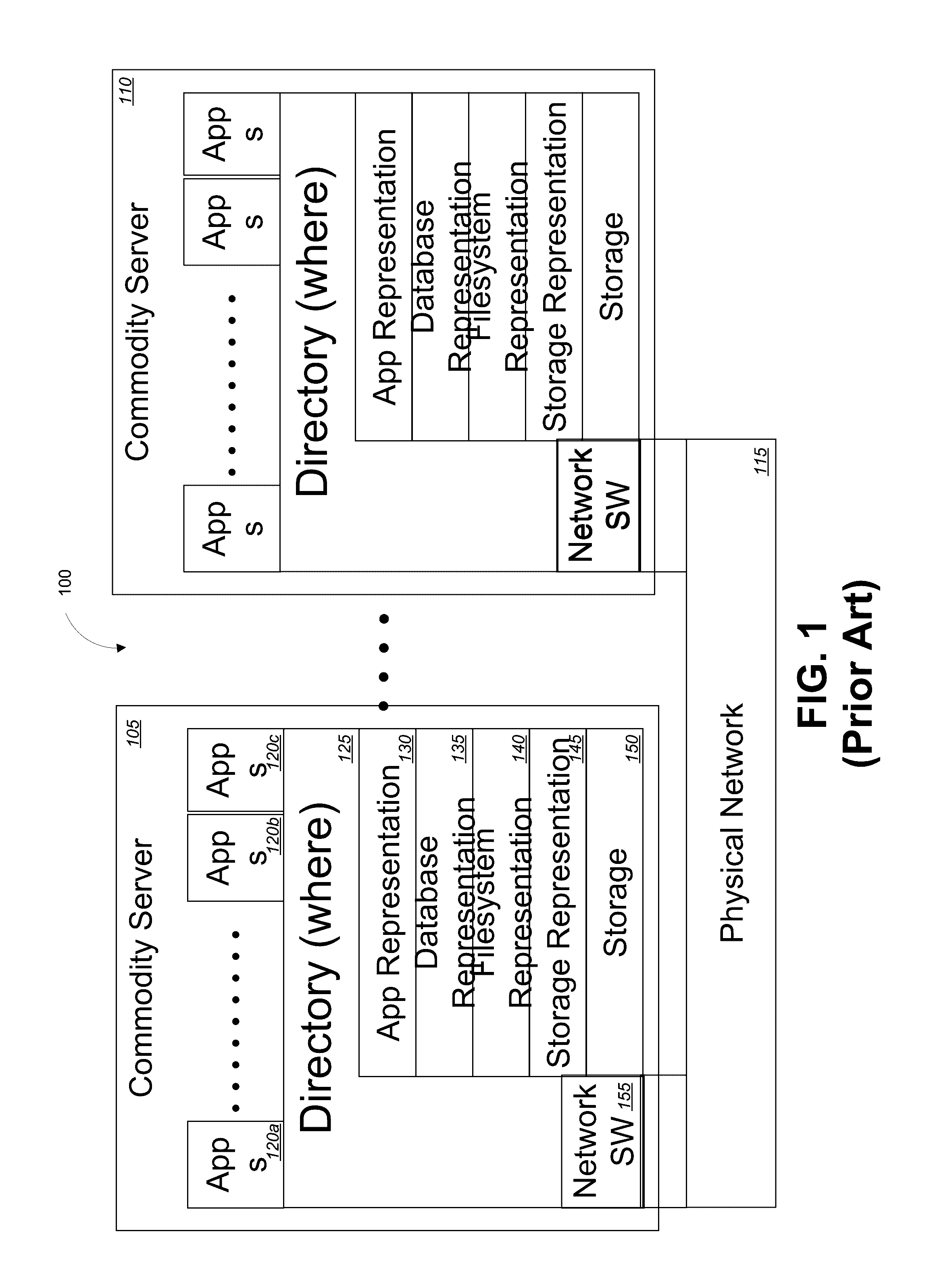

Object memory involves processing features of an object or material such as texture, color, size, and orientation. It is processed mainly in the ventral regions of the brain. A few studies have found that, on average, people can recall up to four items, each with four different visual features.

Method for combining card marking with remembered sets for old area of a memory heap

Status: Inactive | US6148310A | Tags: Data processing applications; Memory addressing/allocation/relocation; Waste collection; Memory object

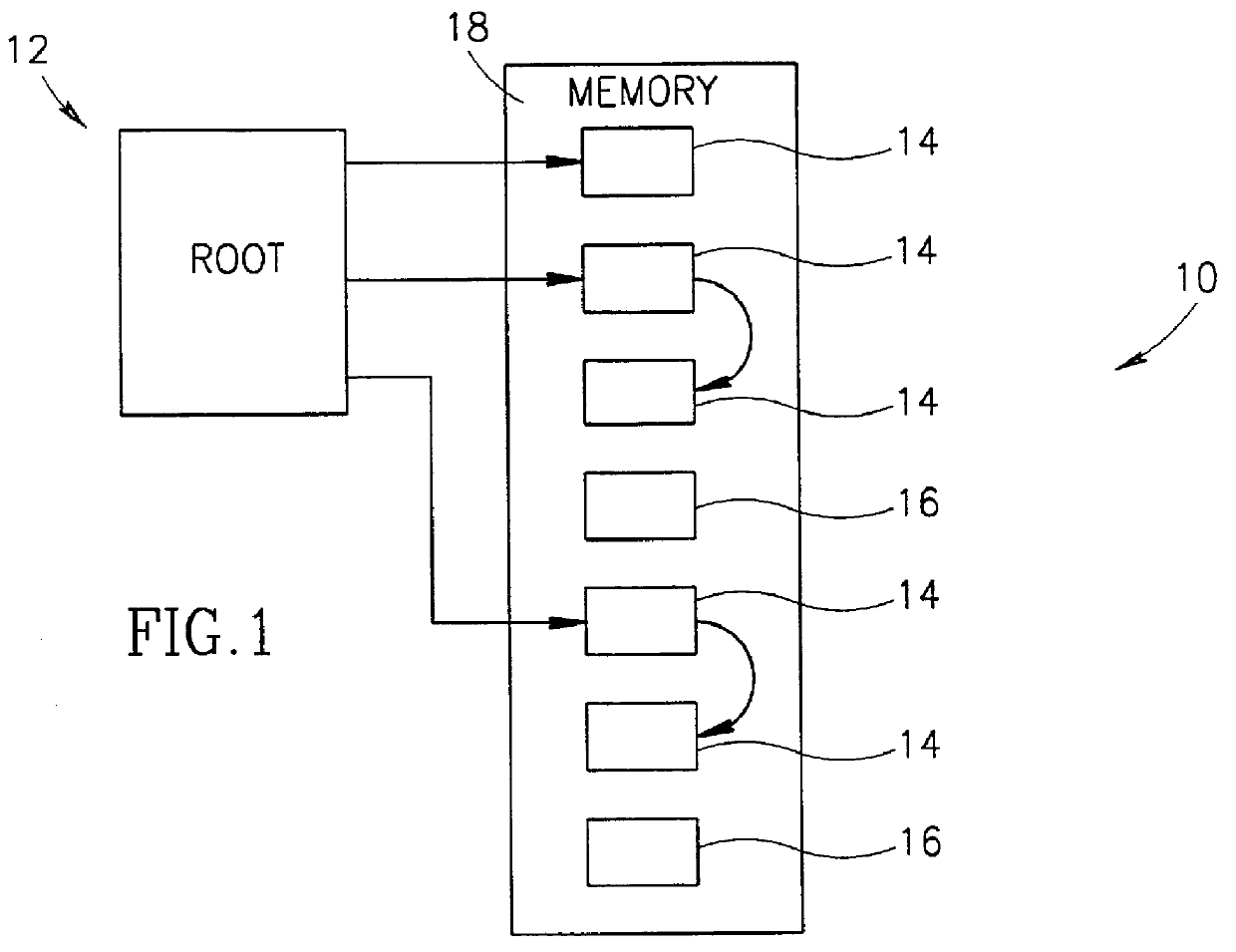

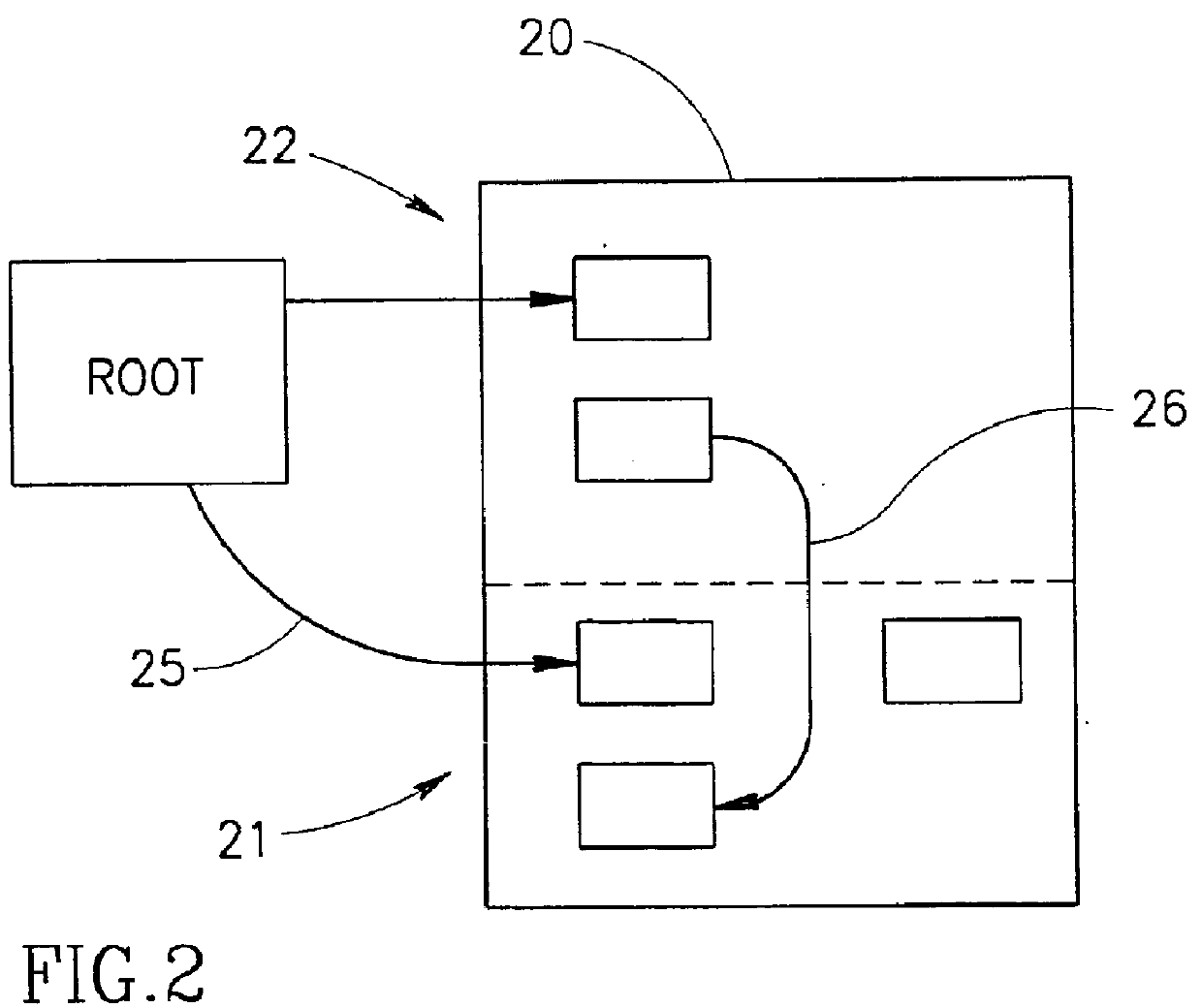

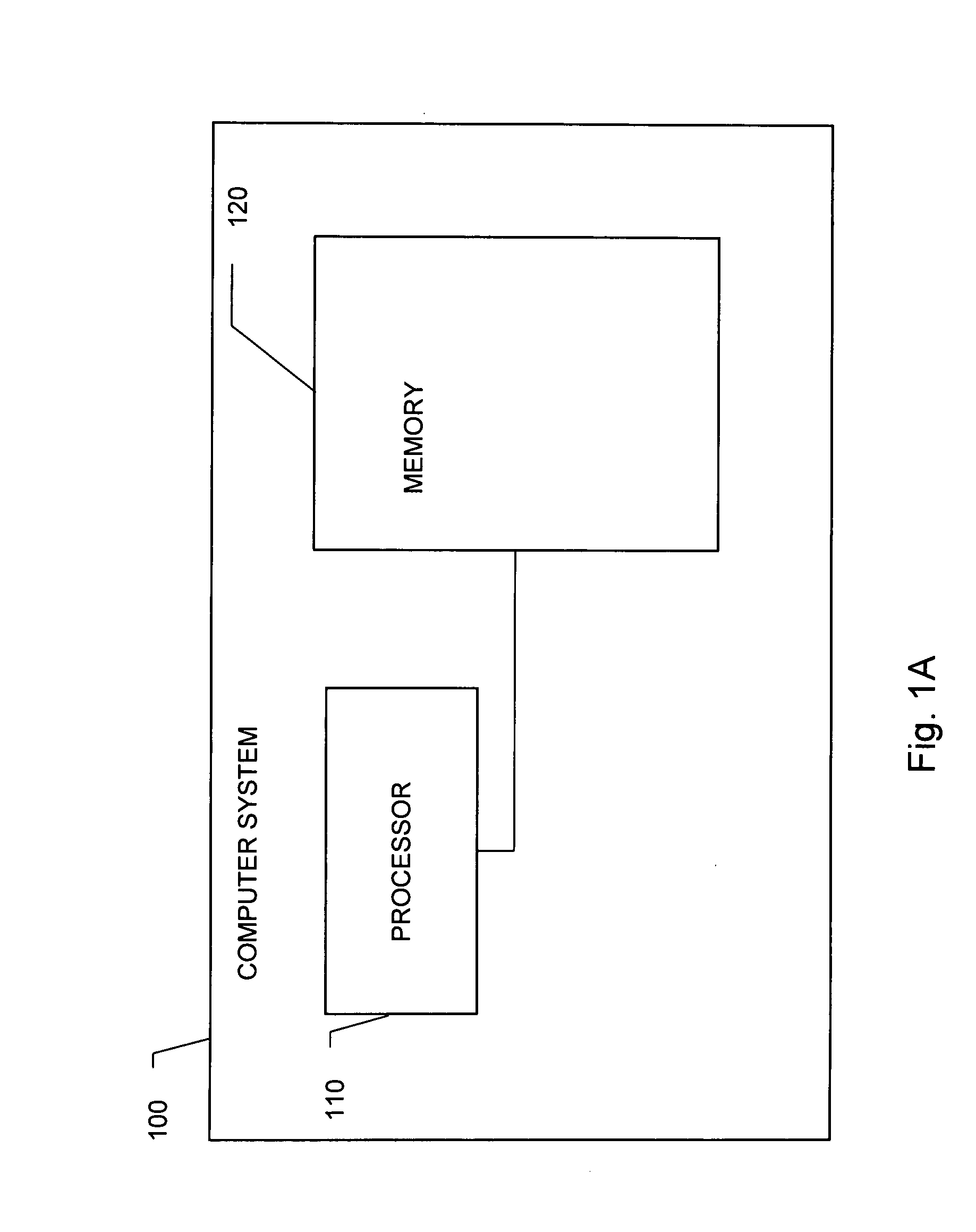

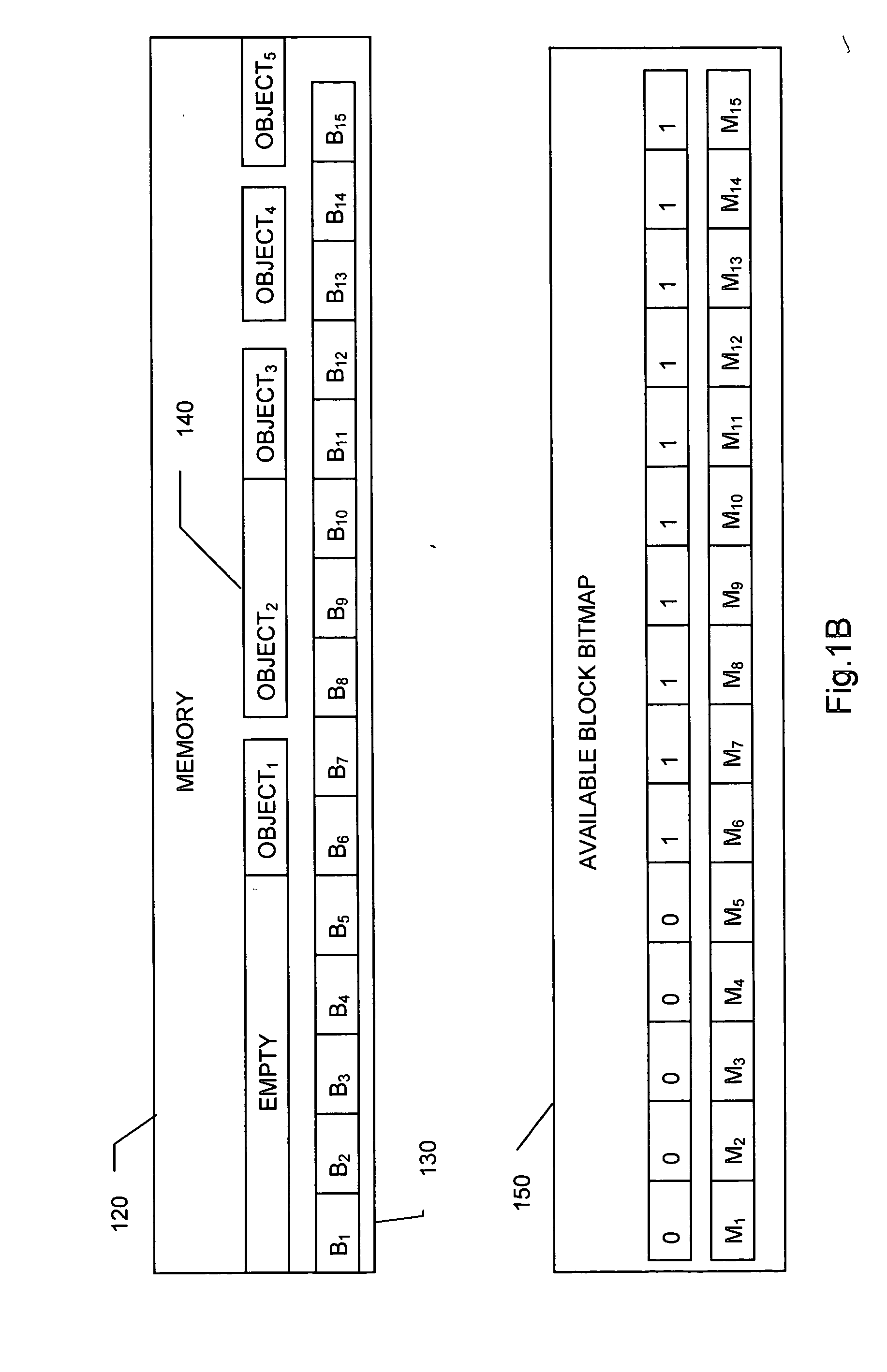

A system for garbage collection of memory objects in a memory heap. The system includes a memory heap that is partitioned into old and young areas. The old area is partitioned into cars and is further associated with card-marking and remembered-set data structures. The card markings include, for each card, a card time stamp that represents the time the card was updated. Each car has a car time stamp that represents the time the car's remembered set was updated. The system further includes a processor that communicates with the memory and is capable of identifying all cards that were updated later than the remembered set of a selected car. For each such card, the processor identifies changes in pointers that refer from the card to memory objects in the selected car and, in response to the identified changes, updates the car's remembered set with the identified pointers. The processor is further capable of updating the car time stamp of the selected car.

Owner:IBM CORP
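
The card/remembered-set interplay described above can be illustrated with a small in-memory model. This is a hedged sketch, not IBM's implementation: the `Card`, `Car`, and `OldArea` names, the integer "addresses", and the logical clock are all hypothetical stand-ins. It shows the key idea that only cards stamped later than a car's remembered set need rescanning.

```python
from dataclasses import dataclass, field


@dataclass
class Card:
    start: int
    end: int
    time: int = 0  # when a write barrier last dirtied this card


@dataclass
class Car:
    start: int
    end: int
    remembered_set: set = field(default_factory=set)  # slots that point into this car
    rs_time: int = 0  # when the remembered set was last brought up to date


class OldArea:
    def __init__(self, heap_size, card_size, car_size):
        self.card_size = card_size
        self.slots = {}  # slot address -> address of the object it points to
        self.cards = [Card(a, a + card_size) for a in range(0, heap_size, card_size)]
        self.cars = [Car(a, a + car_size) for a in range(0, heap_size, car_size)]
        self.clock = 0  # logical time

    def write(self, slot, target):
        # Write barrier: store the pointer and time-stamp the card holding the slot.
        self.clock += 1
        self.slots[slot] = target
        self.cards[slot // self.card_size].time = self.clock

    def refresh_remembered_set(self, car):
        # Only cards stamped later than the car's remembered set need rescanning.
        for card in self.cards:
            if card.time <= car.rs_time:
                continue
            for slot in range(card.start, card.end):
                target = self.slots.get(slot)
                if target is not None and car.start <= target < car.end:
                    car.remembered_set.add(slot)
                else:
                    car.remembered_set.discard(slot)
        car.rs_time = self.clock  # the car's time stamp now covers every write so far
```

Re-pointing a slot away from the car and refreshing again removes it from the remembered set, because the card's newer time stamp forces a rescan.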

System and method for memory reclamation

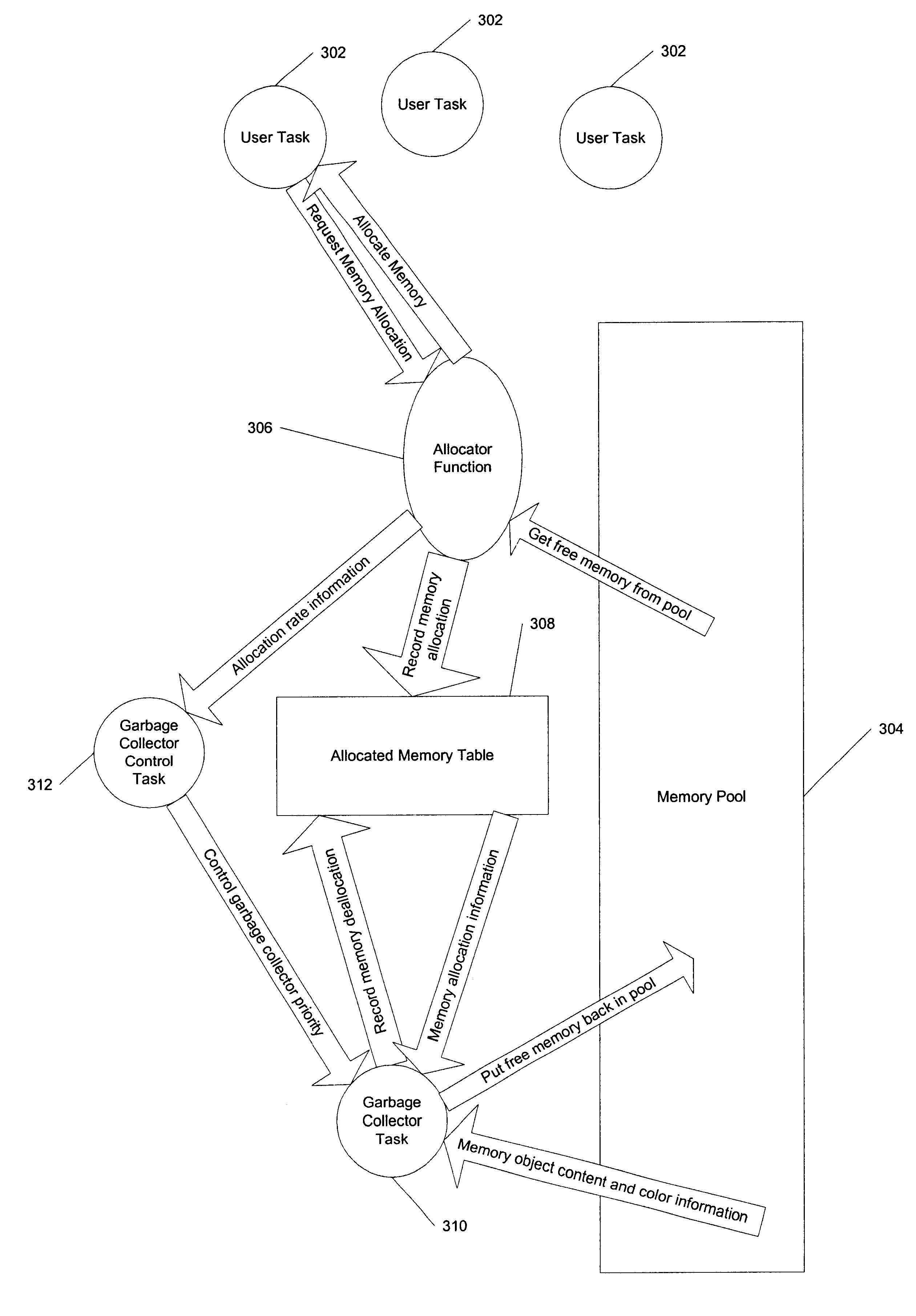

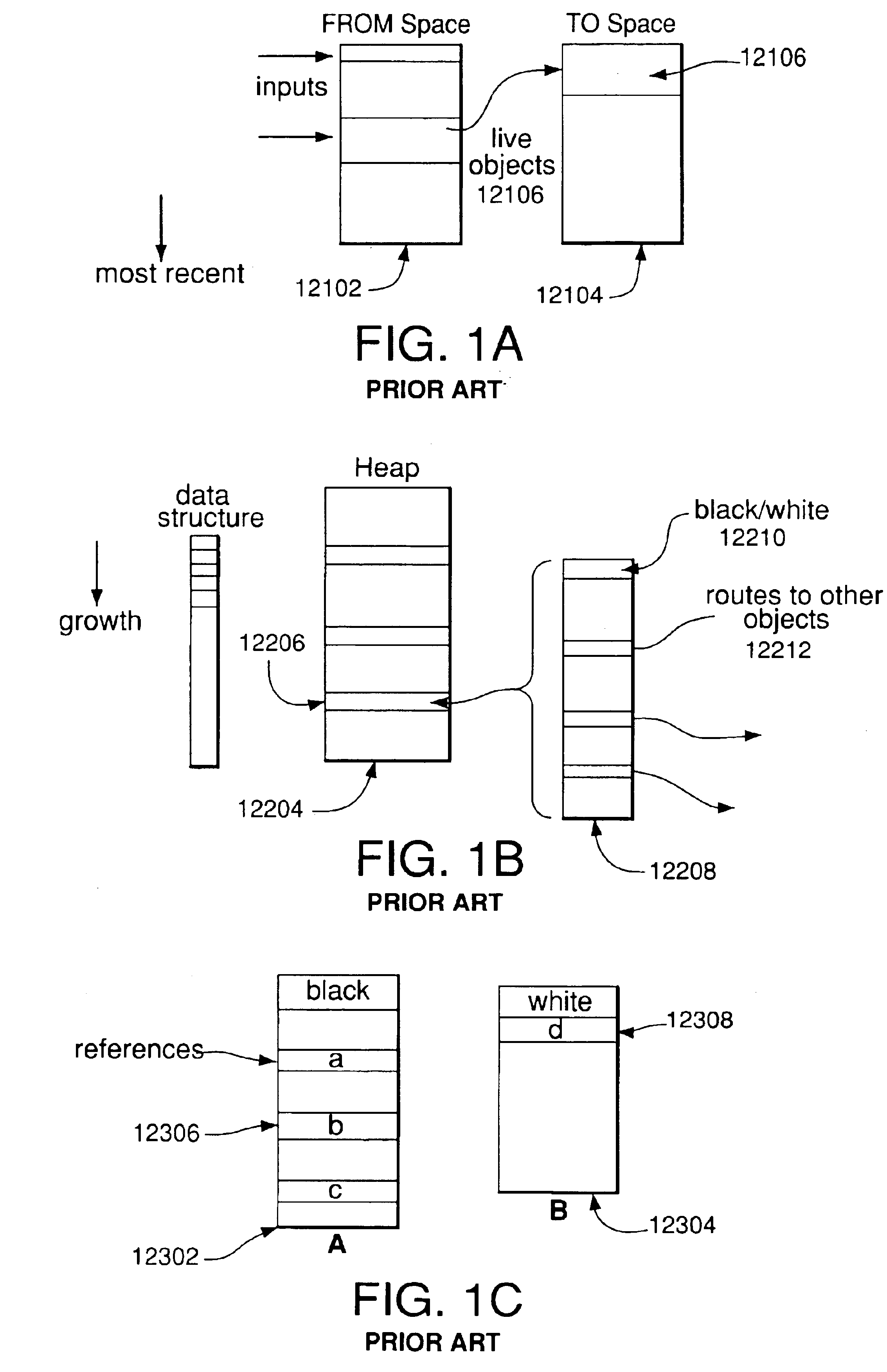

A method for memory reclamation is disclosed that includes marking a memory object when a software write barrier detects an attempt to alter a reference to that object. Marking uses color representations (“black,” “white,” “gray”) stored as part of a garbage collection information data structure associated with each memory object. Initially, all allocated memory objects are marked white. Objects referenced by pointers are then marked gray. Each gray object is then processed to find the objects referenced by pointers in it, and those objects are marked gray. When all objects referenced by pointers in the selected gray object have been processed, the selected gray object is marked black. When all processing has been completed, objects still marked white may be reclaimed. Also described is a garbage collector that runs as a task concurrently with other tasks. The priority of the garbage collector may be raised to prevent interruption during certain parts of the garbage collection procedure.

Owner:WIND RIVER SYSTEMS
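
The white/gray/black procedure above is the classic tri-color marking scheme. The sketch below shows only the stop-the-world marking and the "white means reclaimable" rule, under the assumption that the heap is a dict from object id to referenced ids; the write barrier and the concurrent, priority-boosted collector task are not modeled, and the function names are hypothetical.

```python
WHITE, GRAY, BLACK = "white", "gray", "black"


def tricolor_mark(heap, roots):
    """heap maps each object id to the ids it references; returns each object's final color."""
    color = {obj: WHITE for obj in heap}  # every allocated object starts white
    gray = []
    for root in roots:
        if color[root] == WHITE:
            color[root] = GRAY
            gray.append(root)
    while gray:
        obj = gray.pop()
        for child in heap[obj]:
            if color[child] == WHITE:  # newly discovered: shade it gray
                color[child] = GRAY
                gray.append(child)
        color[obj] = BLACK  # all of obj's references have been examined
    return color


def reclaimable(color):
    """Objects still white after marking are unreachable and may be reclaimed."""
    return {obj for obj, c in color.items() if c == WHITE}
```

Note that a self-referencing object that no root reaches (such as `"e": ["e"]`) stays white, which is the advantage of tracing over plain reference counting.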

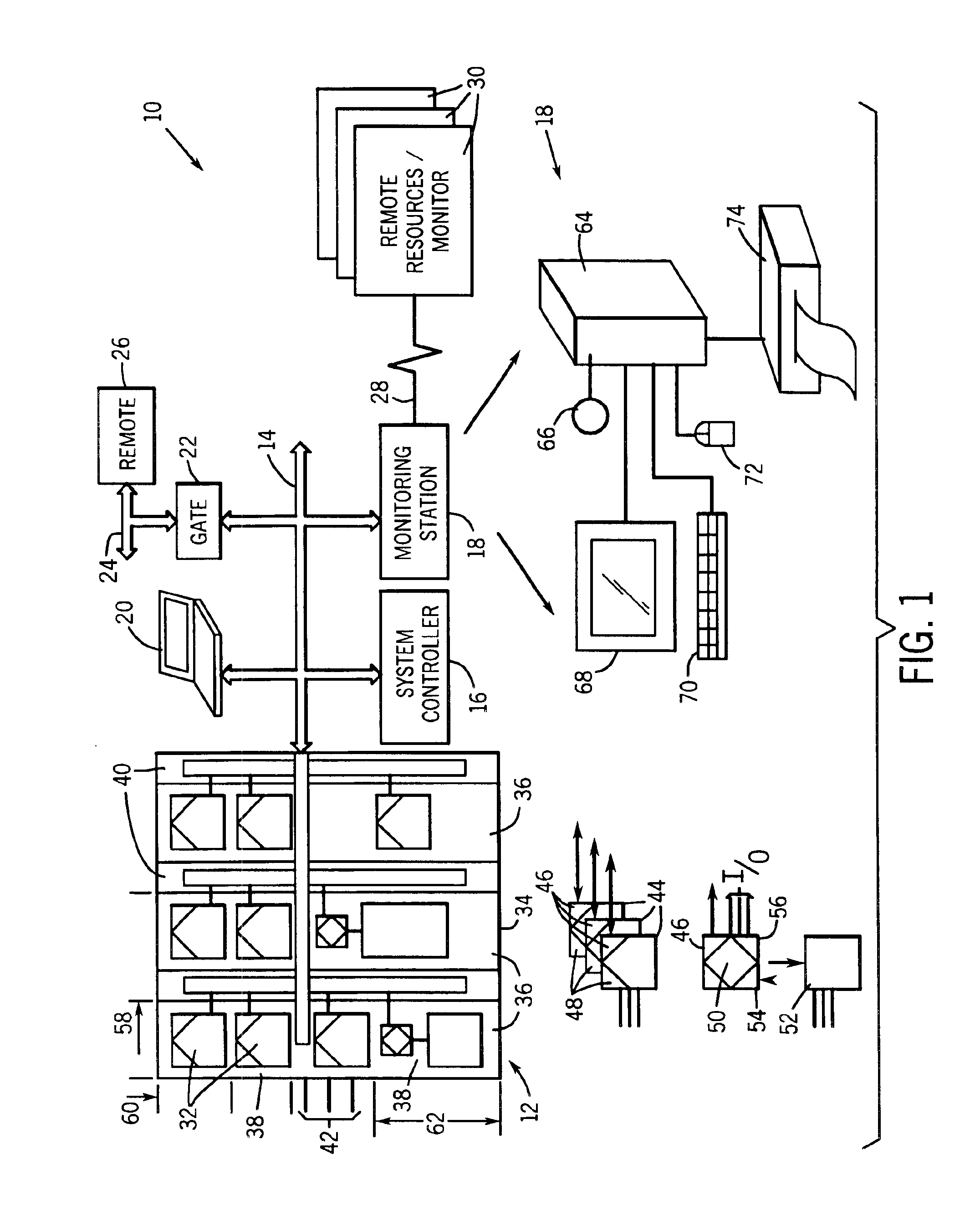

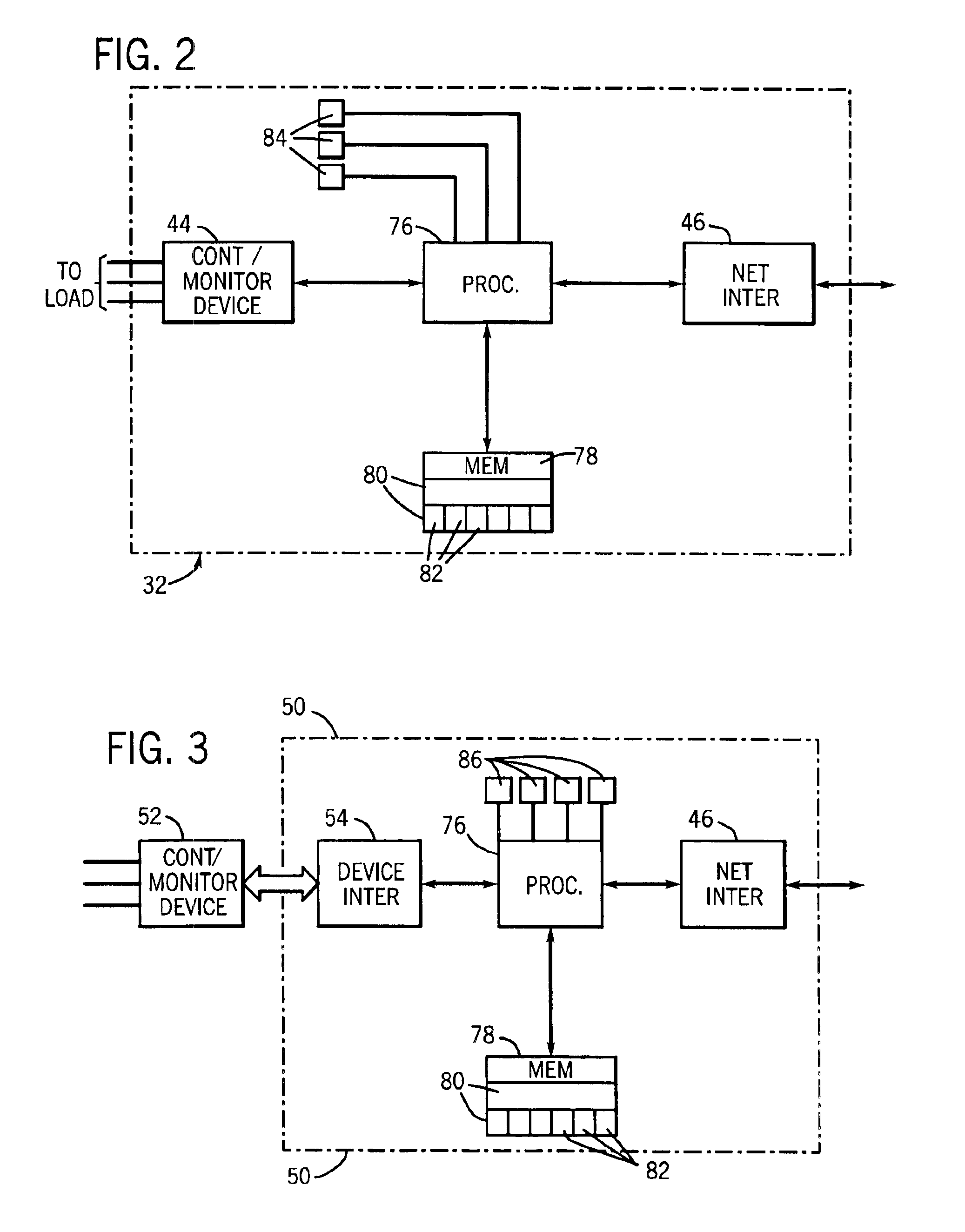

Electrical control system configuration method and apparatus

A technique for configuring programmable, networked electrical components of a system includes downloading data into each component from a configurator, based upon a database for the system. The components include memory objects which are designed to store data descriptive of the system, the component, the component location in the system, and the component configuration. The configurator may load the information prior to, during or following assembly of the components in the system. The configurator may also modify system and component configurations following installation of the system by modifying the data downloaded into the individual components.

Owner:ROCKWELL TECH

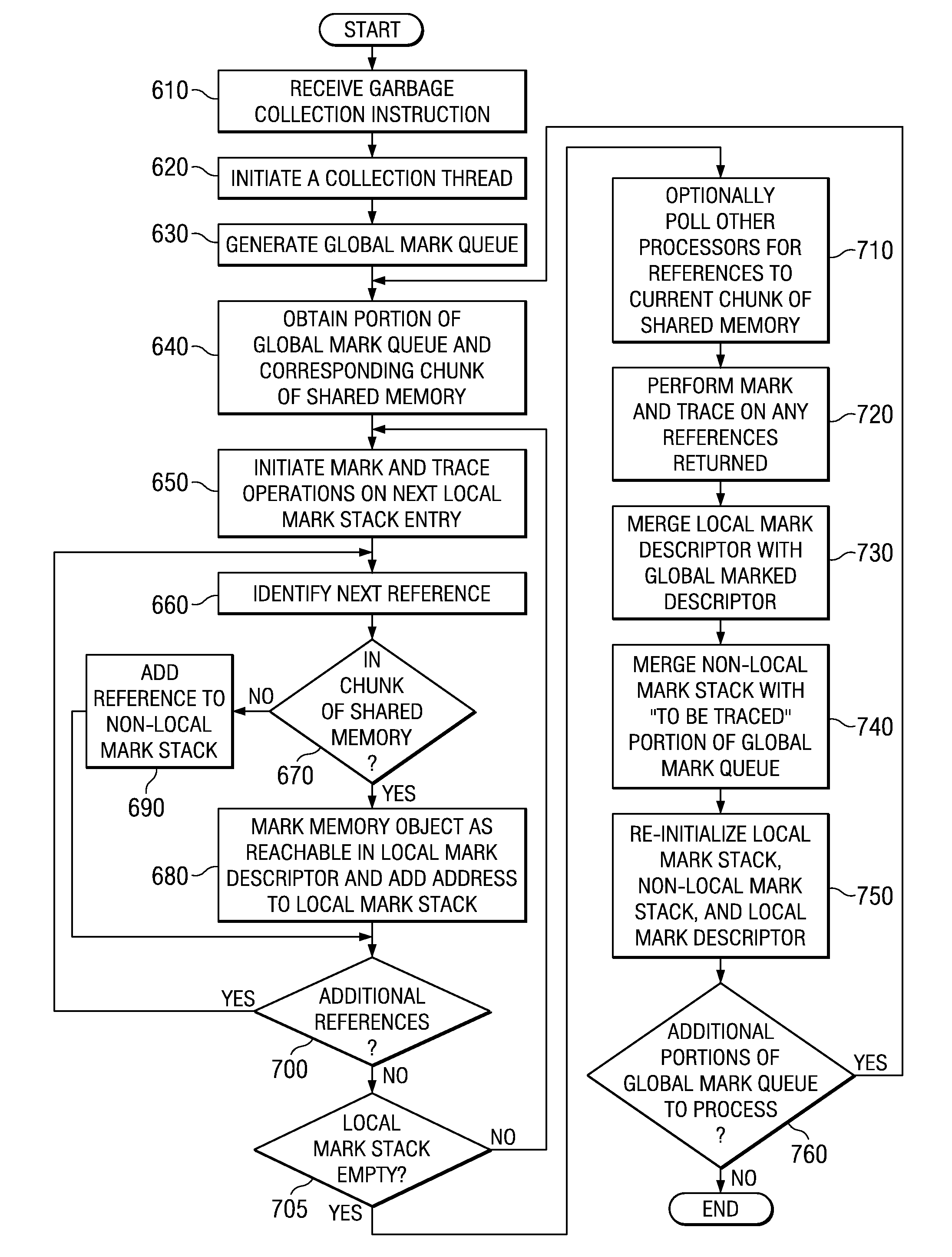

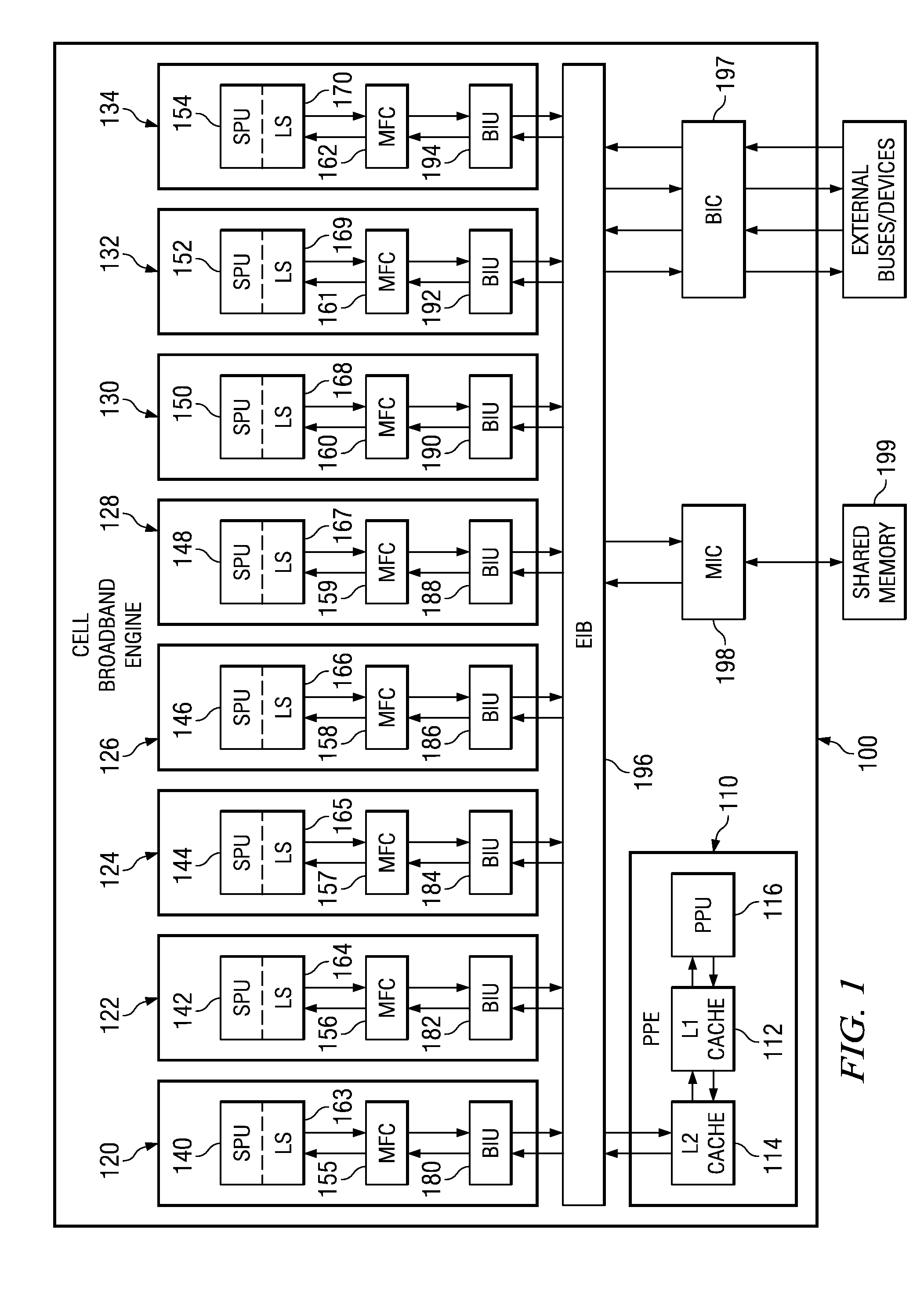

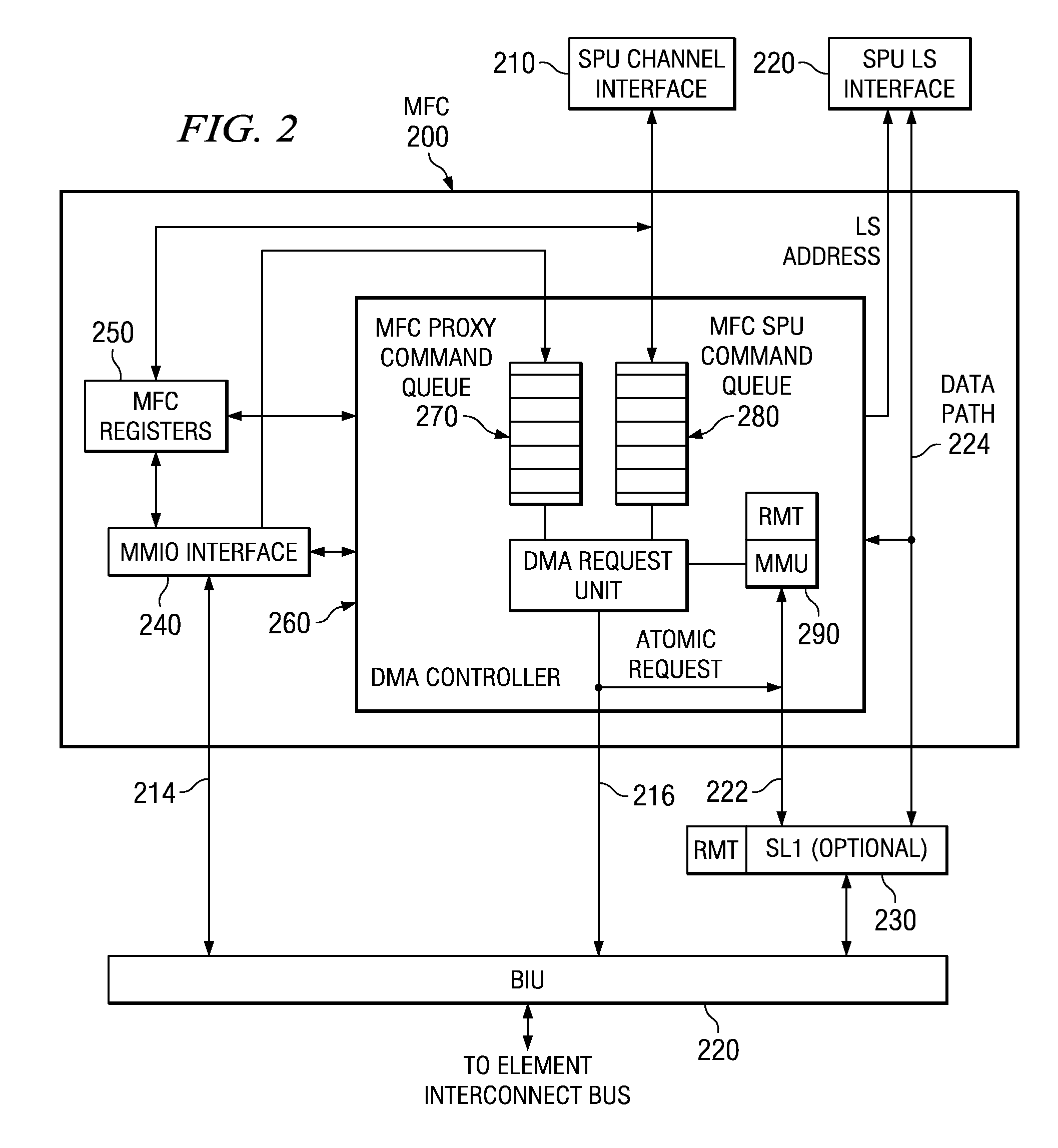

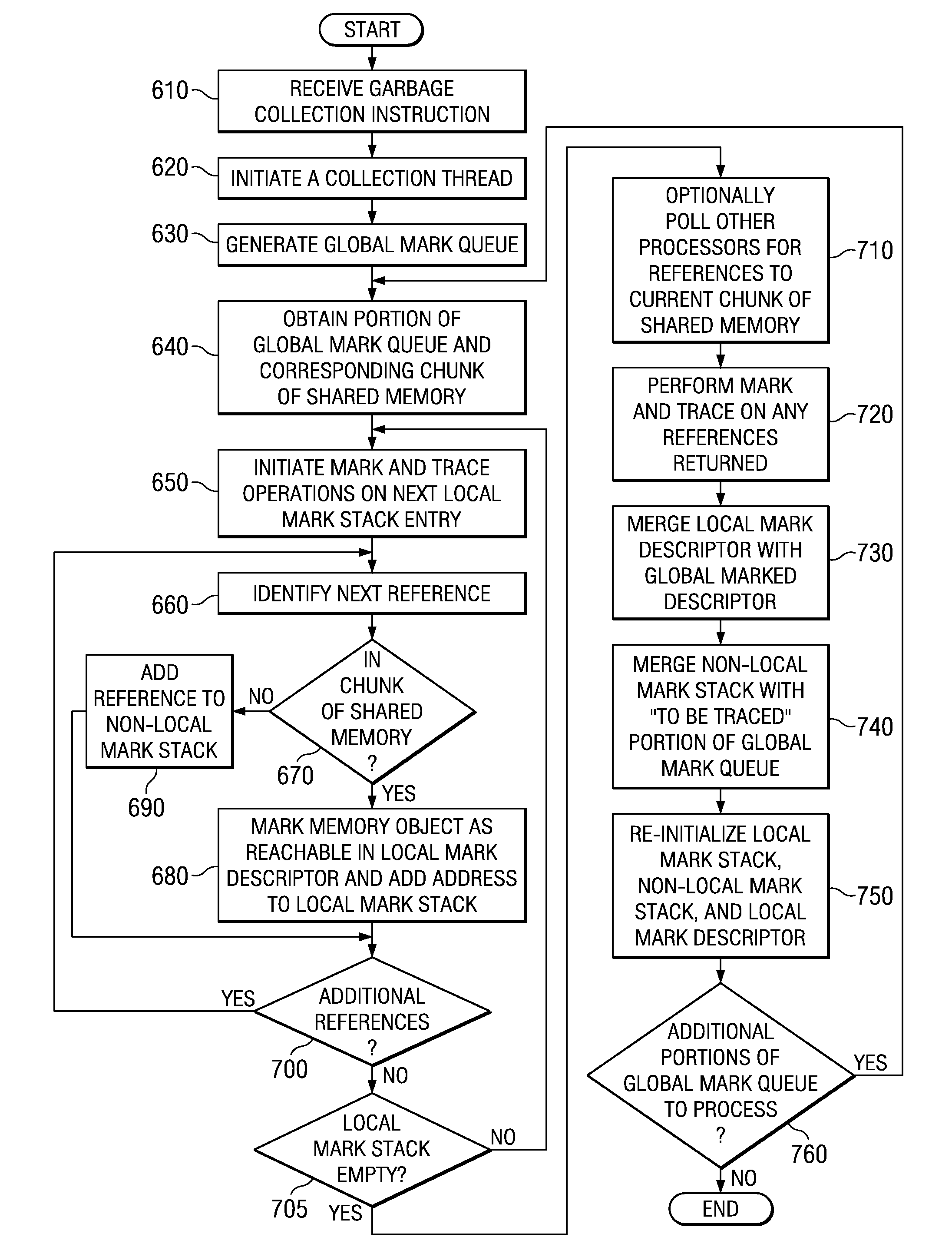

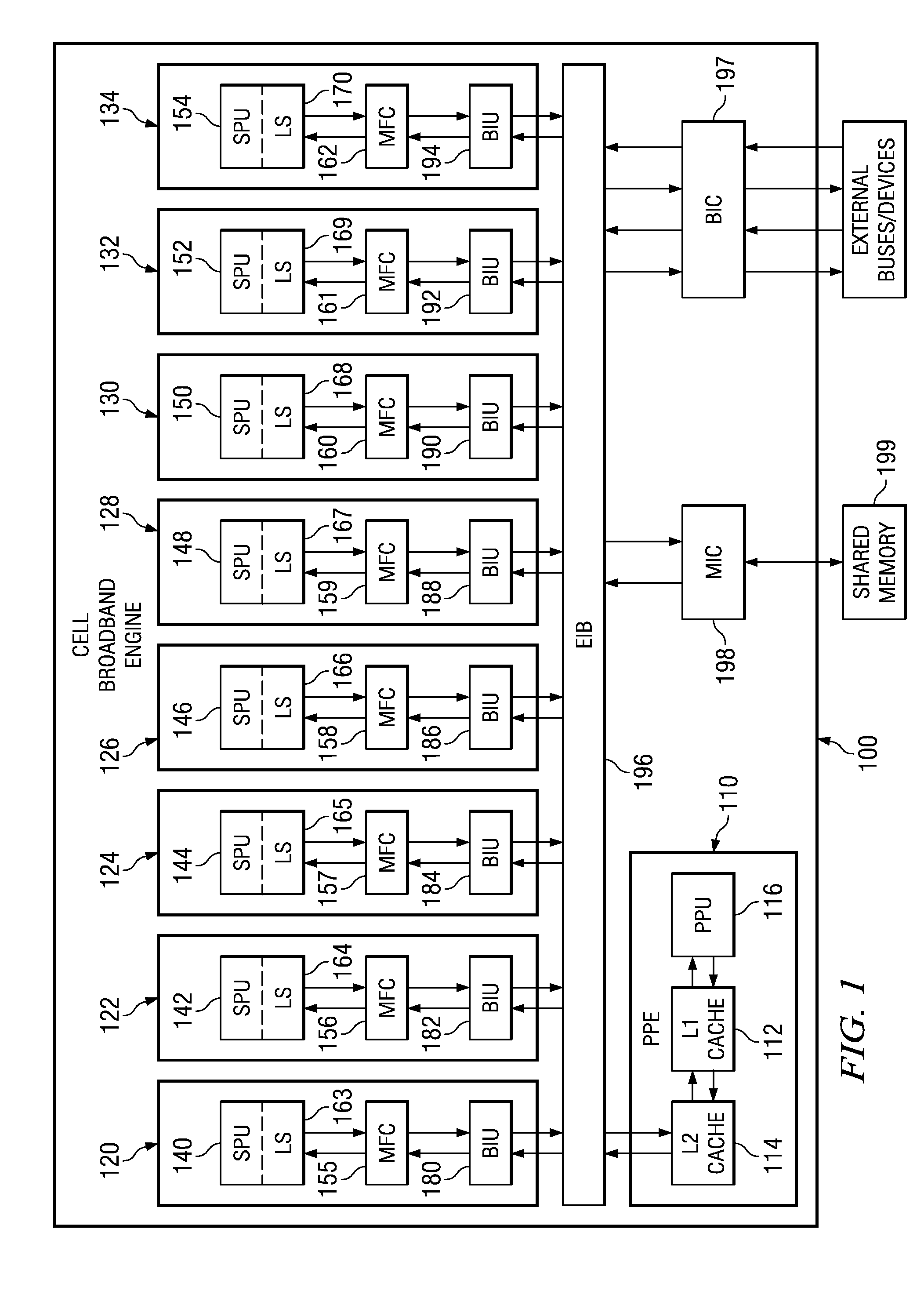

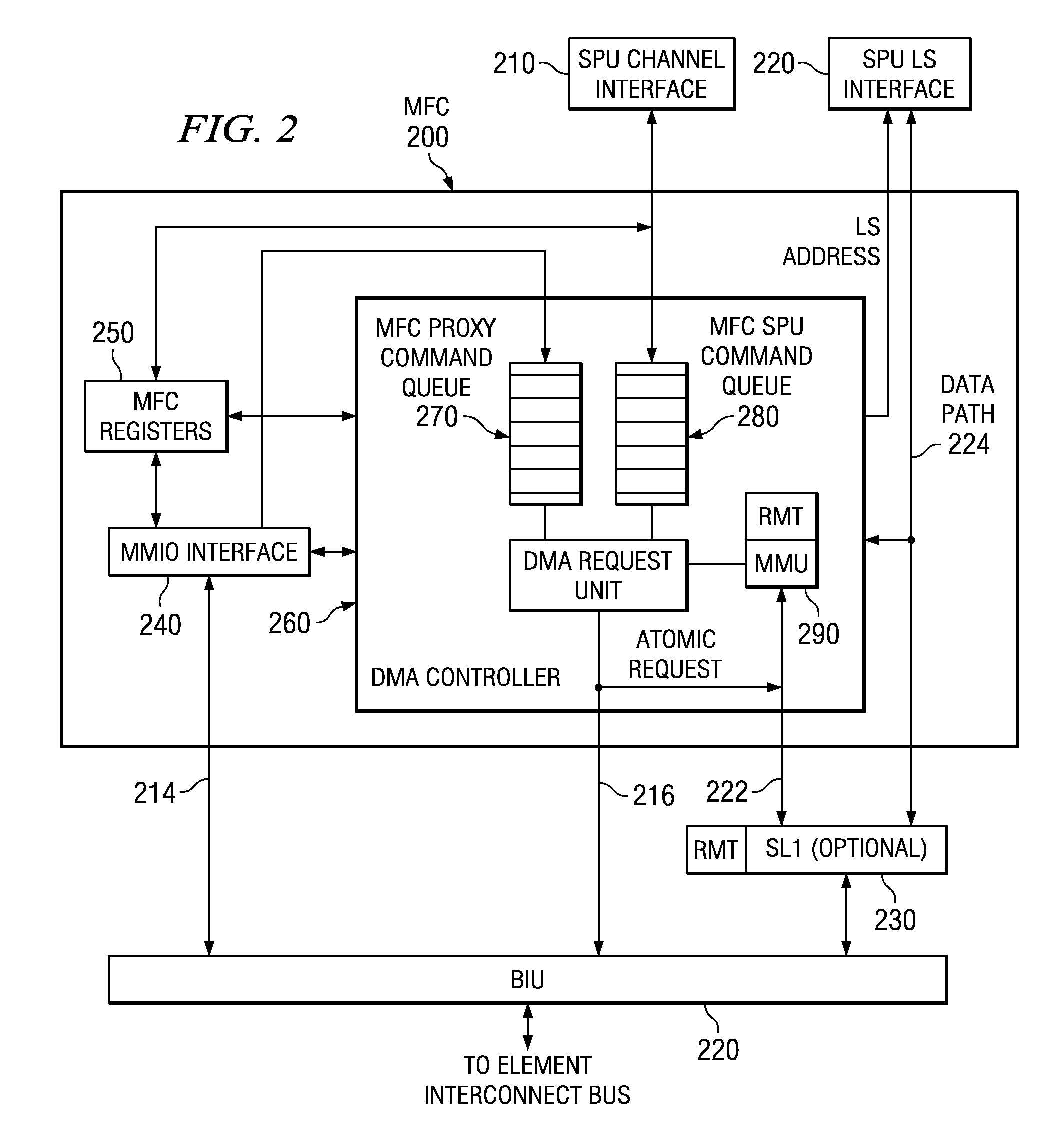

System and method for garbage collection in heterogeneous multiprocessor systems

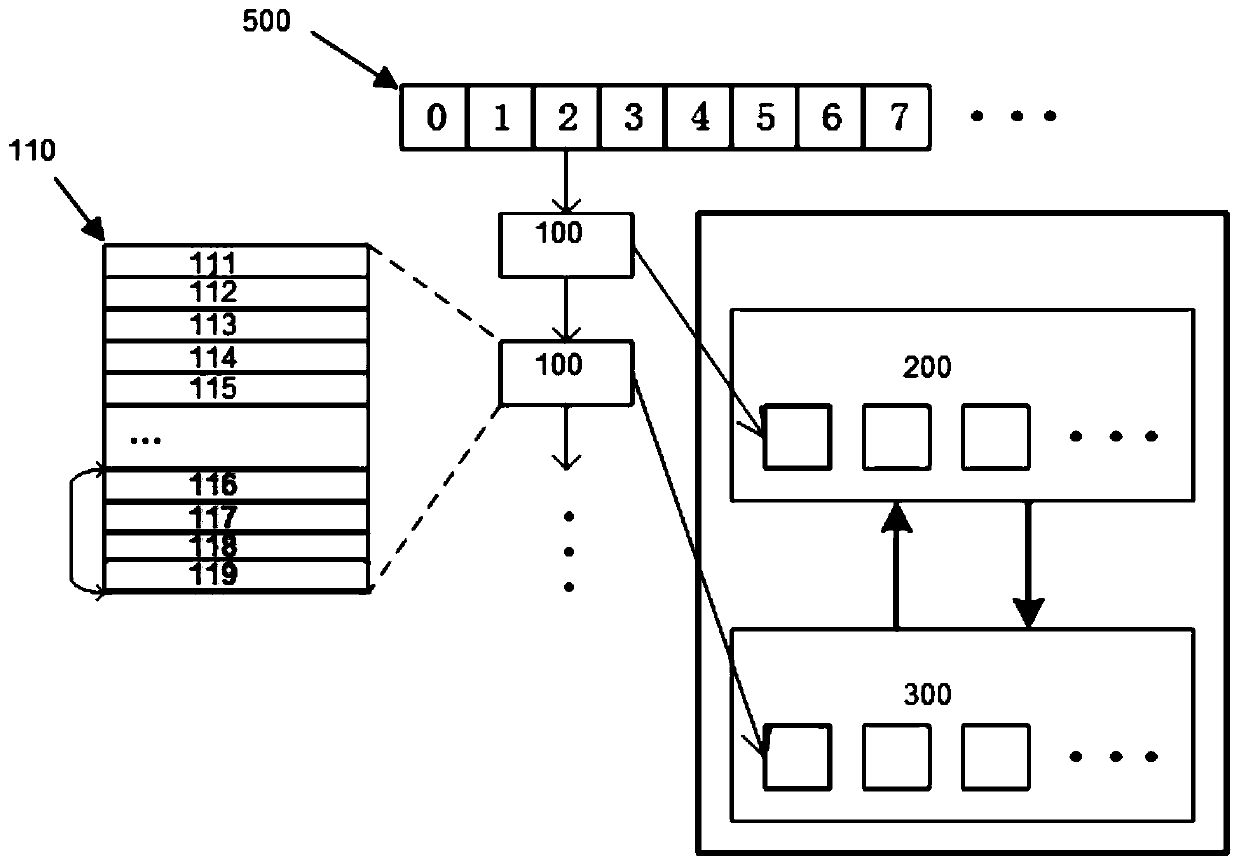

A system and method for garbage collection in heterogeneous multiprocessor systems are provided. In some illustrative embodiments, garbage collection operations are distributed across a plurality of the processors in the heterogeneous multiprocessor system. Portions of a global mark queue are assigned to processors of the heterogeneous multiprocessor system along with corresponding chunks of a shared memory. The processors perform garbage collection on their assigned portions of the global mark queue and the corresponding chunks of shared memory, marking memory object references as reachable or adding memory object references to a non-local mark stack. The marked memory objects are merged with a global mark stack, and memory object references in the non-local mark stack are merged with a “to be traced” portion of the global mark queue for re-checking using a garbage collection operation.

Owner:IBM CORP
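
The local/non-local split can be simulated sequentially to see how references hop between processors. This is a simplified sketch under stated assumptions: `owner` is a hypothetical map from each object to the processor whose shared-memory chunk holds it, the processors run one after another rather than in parallel, and a single shared `marked` set stands in for the global mark stack.

```python
def distributed_mark(heap, roots, owner):
    """heap: object -> referenced objects; owner: object -> processor id owning its chunk."""
    marked = set()        # stand-in for the merged global mark stack
    to_trace = list(roots)  # "to be traced" portion of the global mark queue
    rounds = 0
    while to_trace:
        rounds += 1
        # Hand each queued reference to the processor that owns its chunk.
        portions = {}
        for ref in to_trace:
            portions.setdefault(owner[ref], []).append(ref)
        to_trace = []
        for proc, local in portions.items():
            non_local = []  # references into other processors' chunks
            while local:
                ref = local.pop()
                if ref in marked:
                    continue
                marked.add(ref)
                for child in heap[ref]:
                    (local if owner[child] == proc else non_local).append(child)
            # Merge this processor's non-local stack back into the global queue.
            to_trace.extend(non_local)
    return marked, rounds
```

Each round corresponds to one merge of non-local stacks into the global queue; tracing ends when no processor produces new non-local references.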

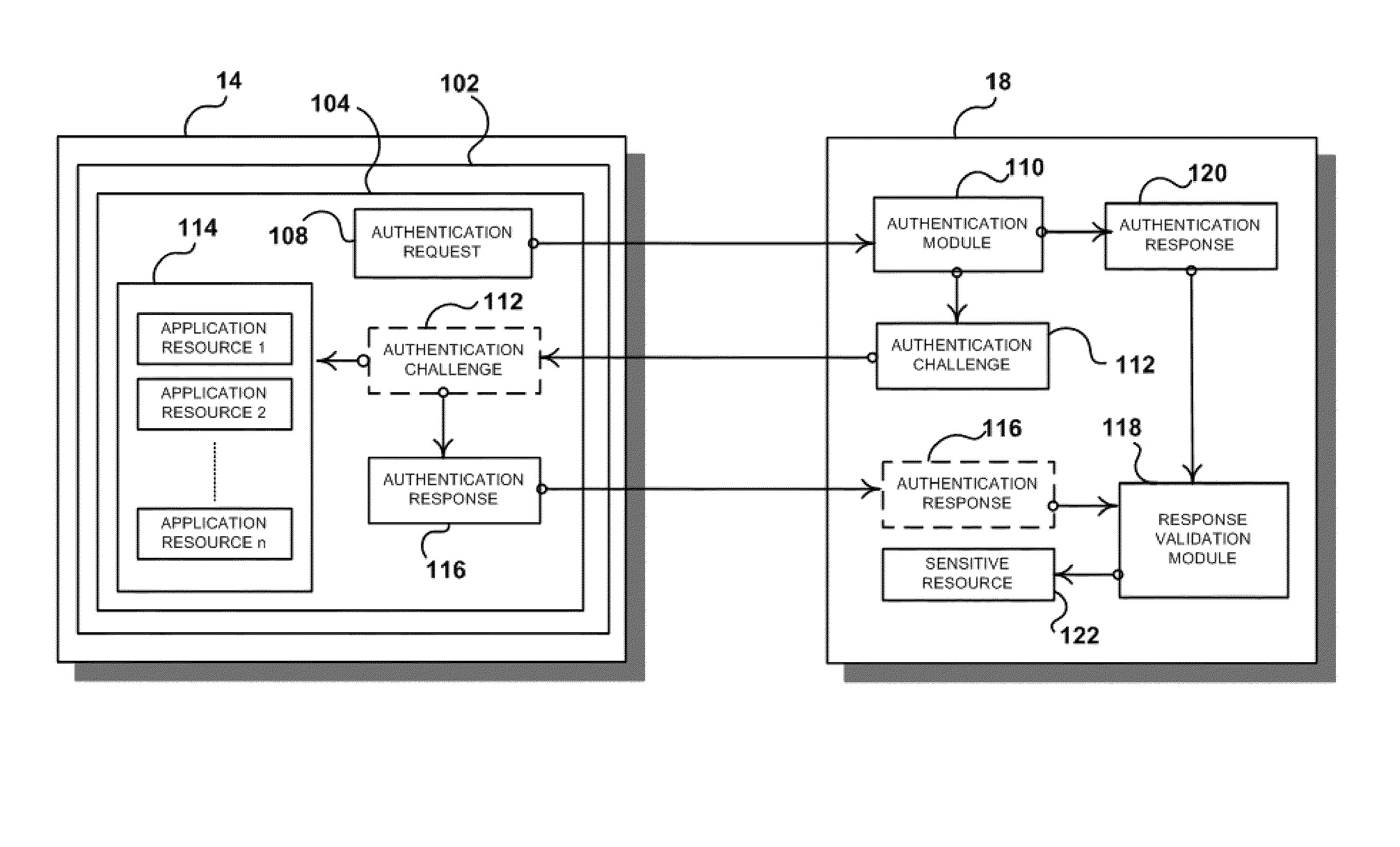

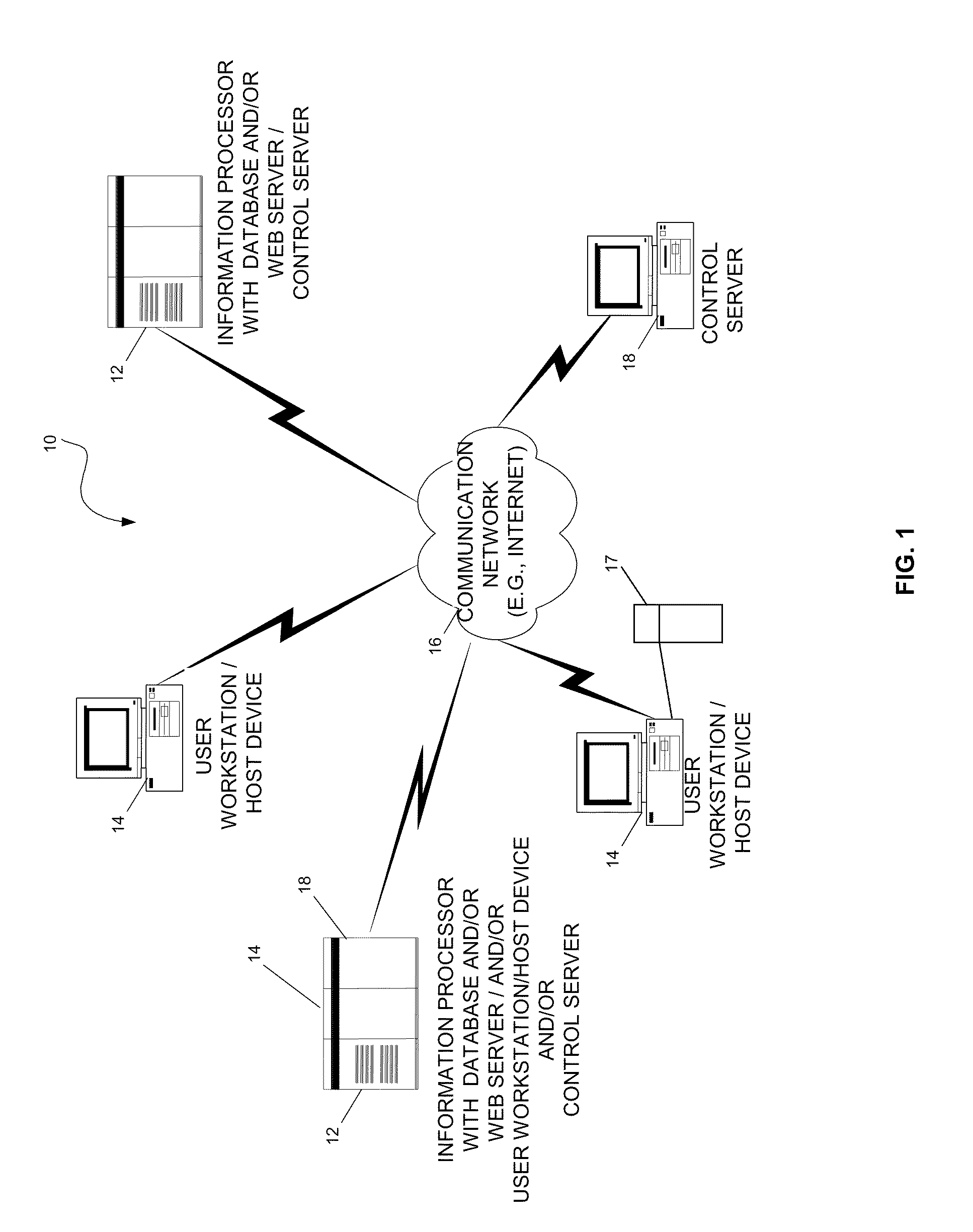

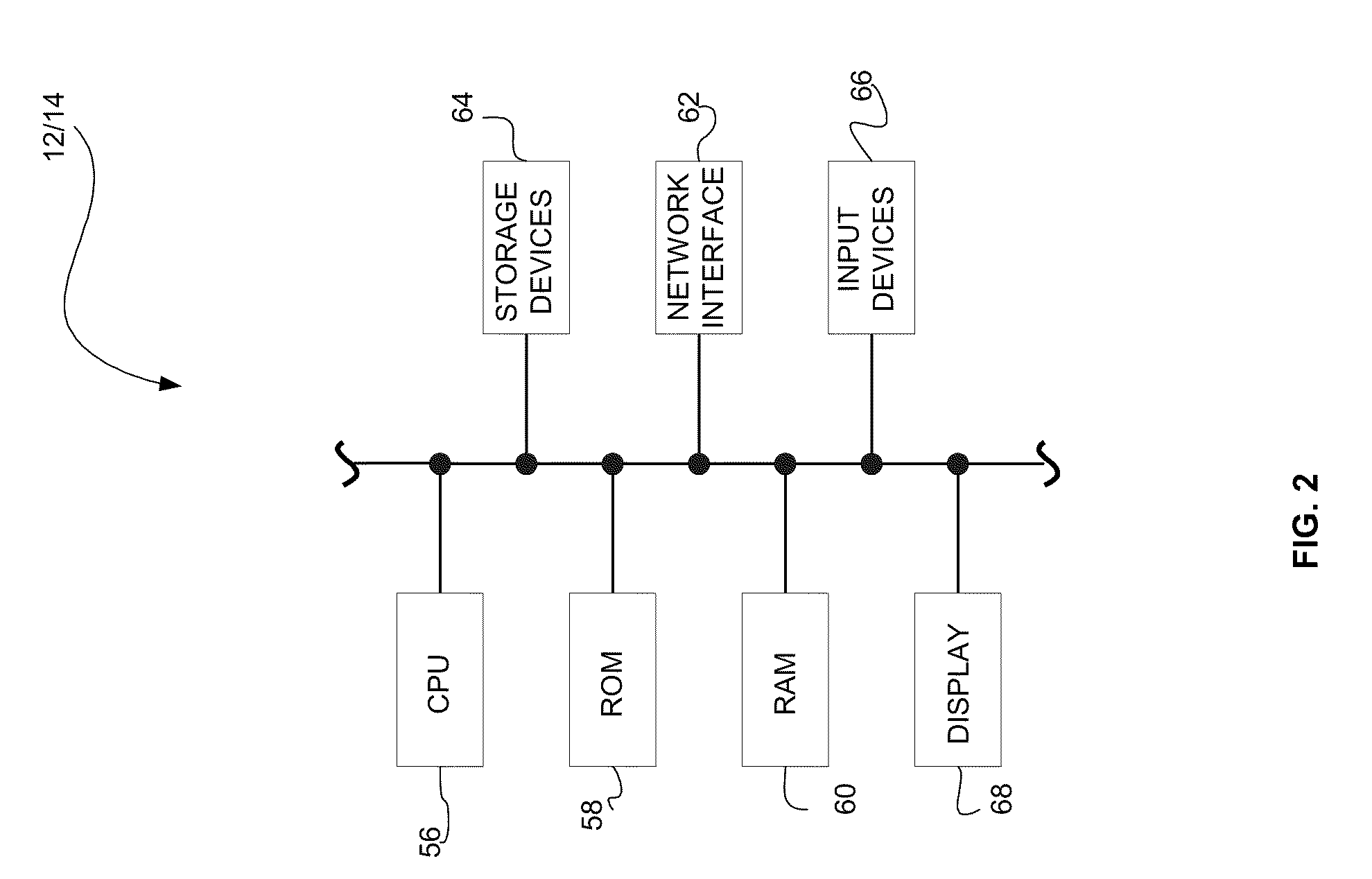

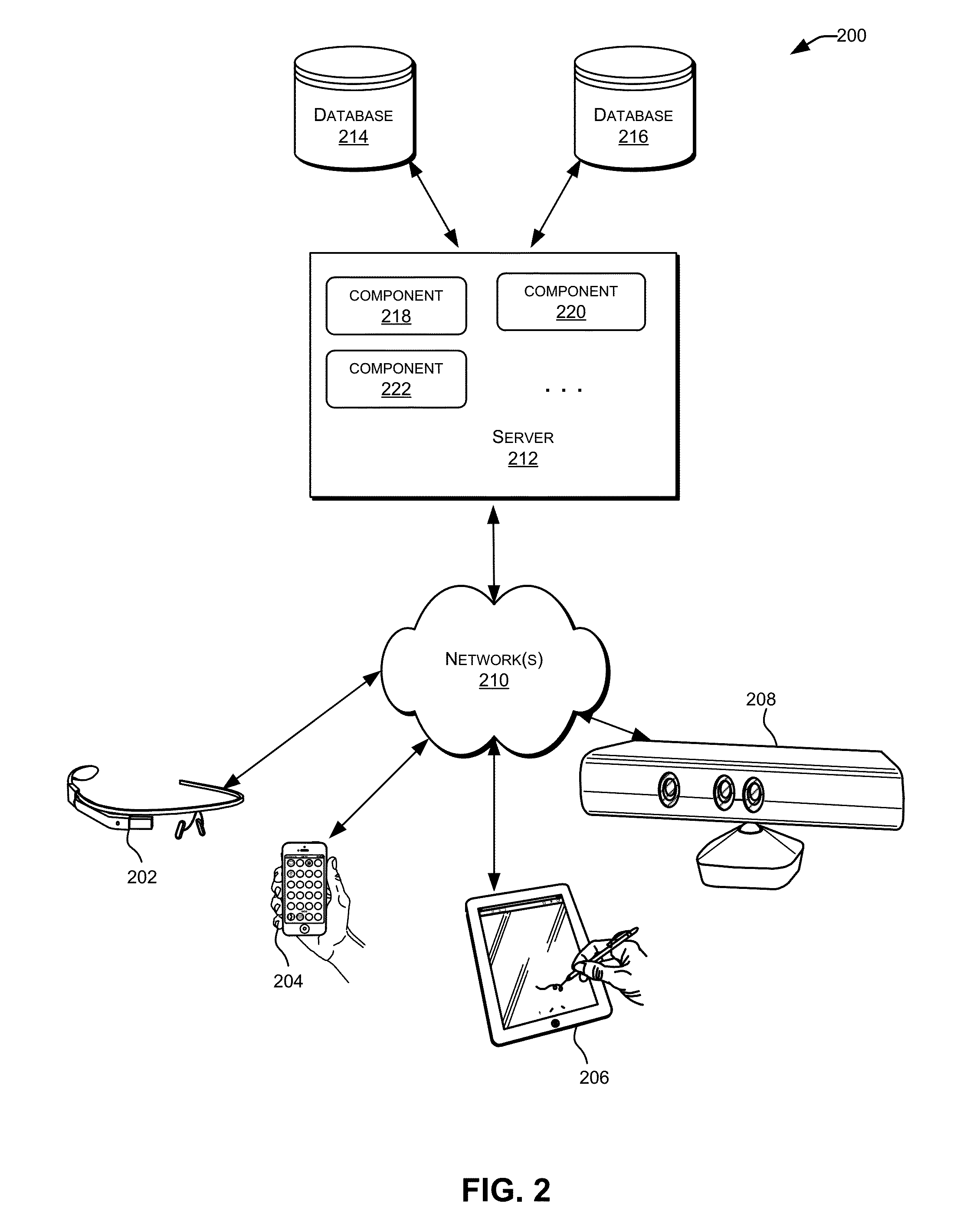

Application authentication system and method

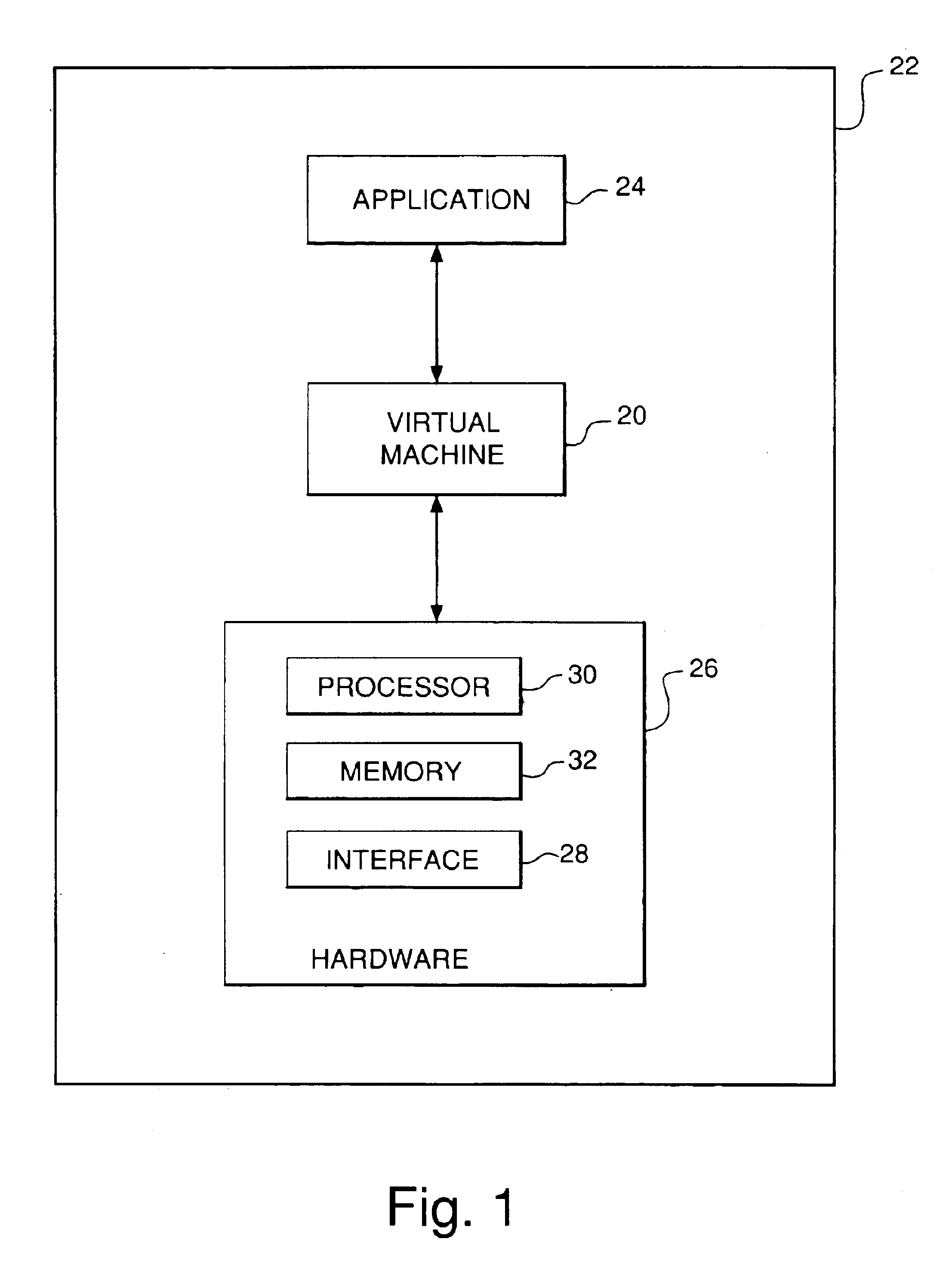

Status: Inactive | US20110030040A1 | Tags: Digital data processing details; User identity/authority verification; Program instruction; Memory object

A system and method are provided for validating executable program code operating on at least one computing device. Program instructions that include a request for access to sensitive electronic information are executed on a first computing device. An authentication request for access to the electronic information is sent from the first computing device to a second computing device. In response to the authentication request, the second computing device sends a challenge to the first computing device. The first computing device executes the challenge and generates an authentication response that includes at least one memory object associated with the program instructions. The first computing device sends the response to the second computing device, which generates and sends a verification to the first computing device confirming that at least some of the program instructions have not been altered or tampered with, and grants the first computing device access to at least some of the electronic information.

Owner:EISST
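
A generic challenge-response check over in-memory code bytes gives a feel for the exchange described above. This is an assumption-laden sketch, not the patented protocol: it uses an HMAC-SHA256 keyed by a fresh random challenge, and the function names are hypothetical. On its own, this does not stop a client that keeps an untampered copy of the code to answer with.

```python
import hashlib
import hmac
import secrets


def make_challenge():
    # Second device: a fresh random nonce prevents replaying an old response.
    return secrets.token_bytes(16)


def respond(challenge, code_bytes):
    # First device: bind the challenge to the bytes of the loaded program image.
    return hmac.new(challenge, code_bytes, hashlib.sha256).digest()


def verify(challenge, response, reference_code):
    # Second device: recompute from a known-good copy and compare in constant time.
    expected = hmac.new(challenge, reference_code, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)
```

Any change to the code bytes, or reuse of a response under a new challenge, makes `verify` return `False`.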

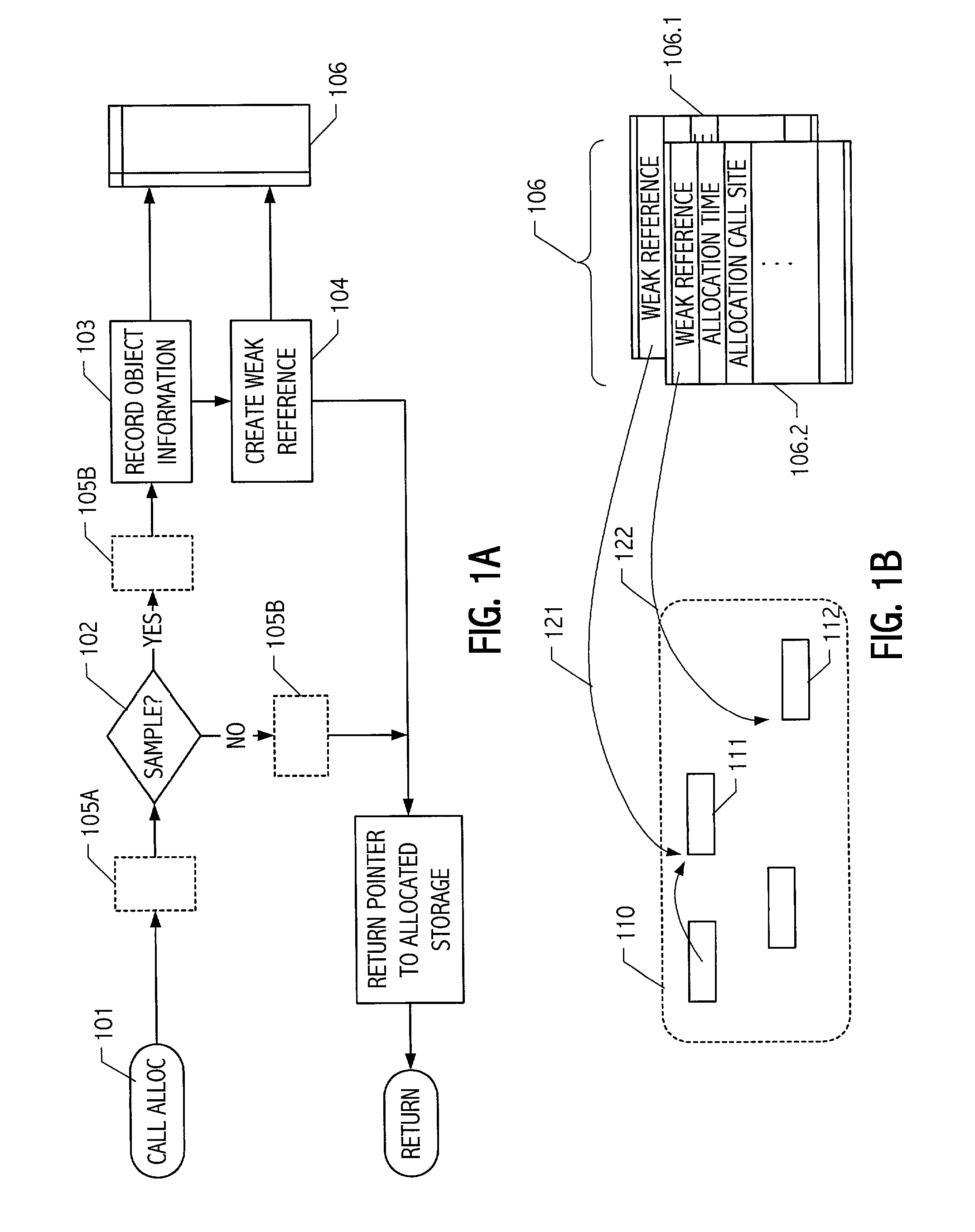

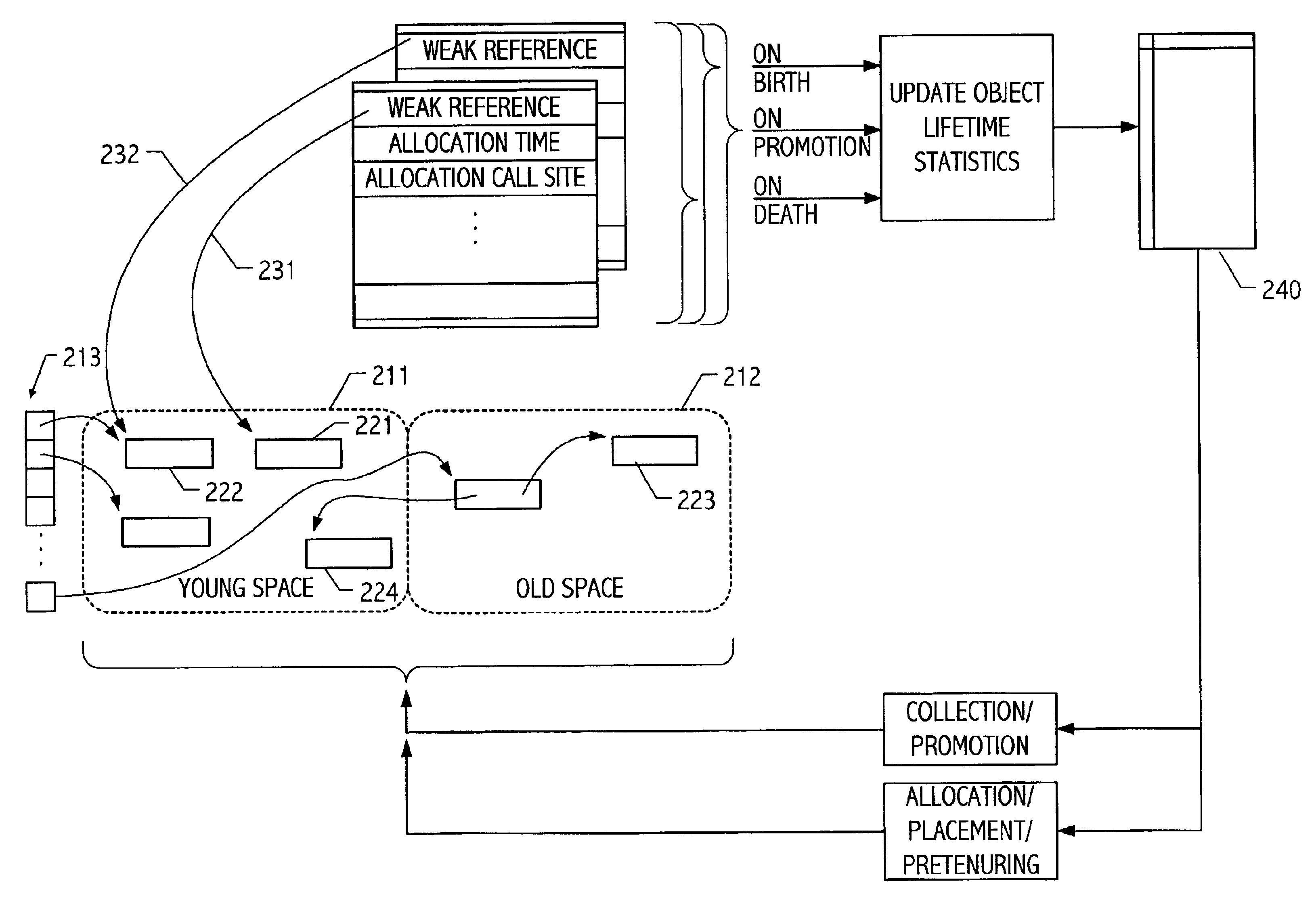

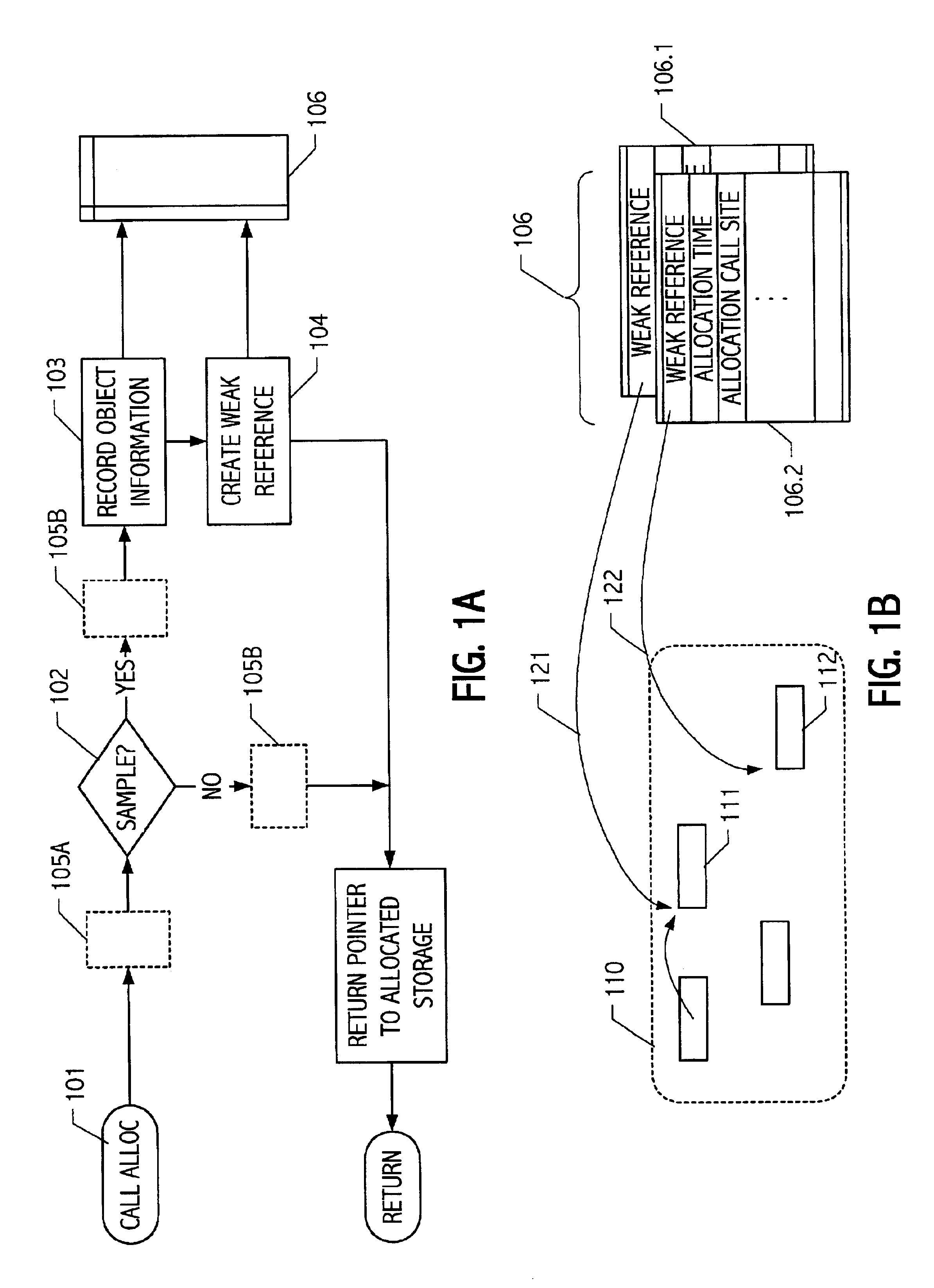

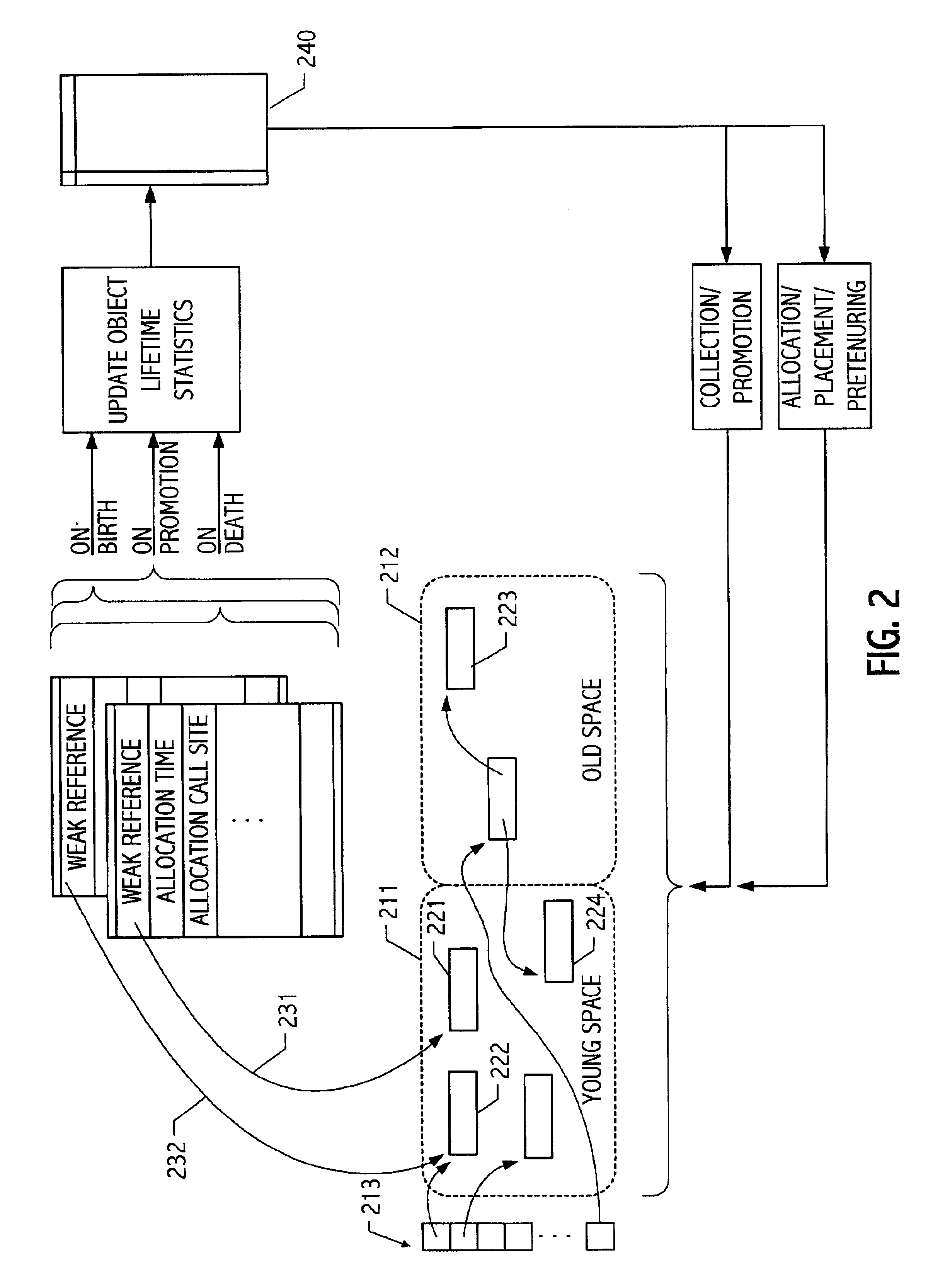

Dynamic adaptive tenuring of objects

Status: Inactive | US20010044856A1 | Tags: Data processing applications; Memory addressing/allocation/relocation; Object lifetime; Memory object

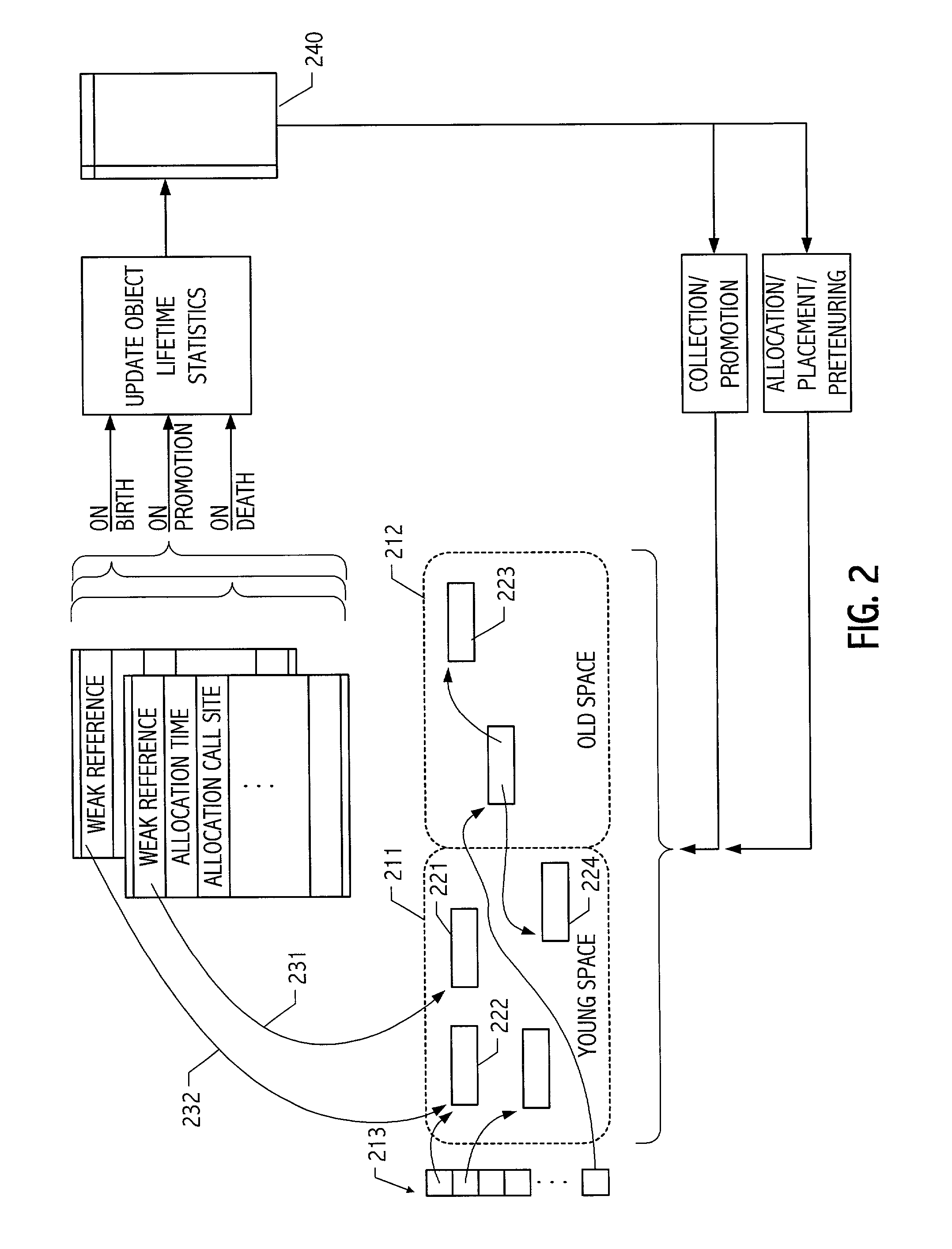

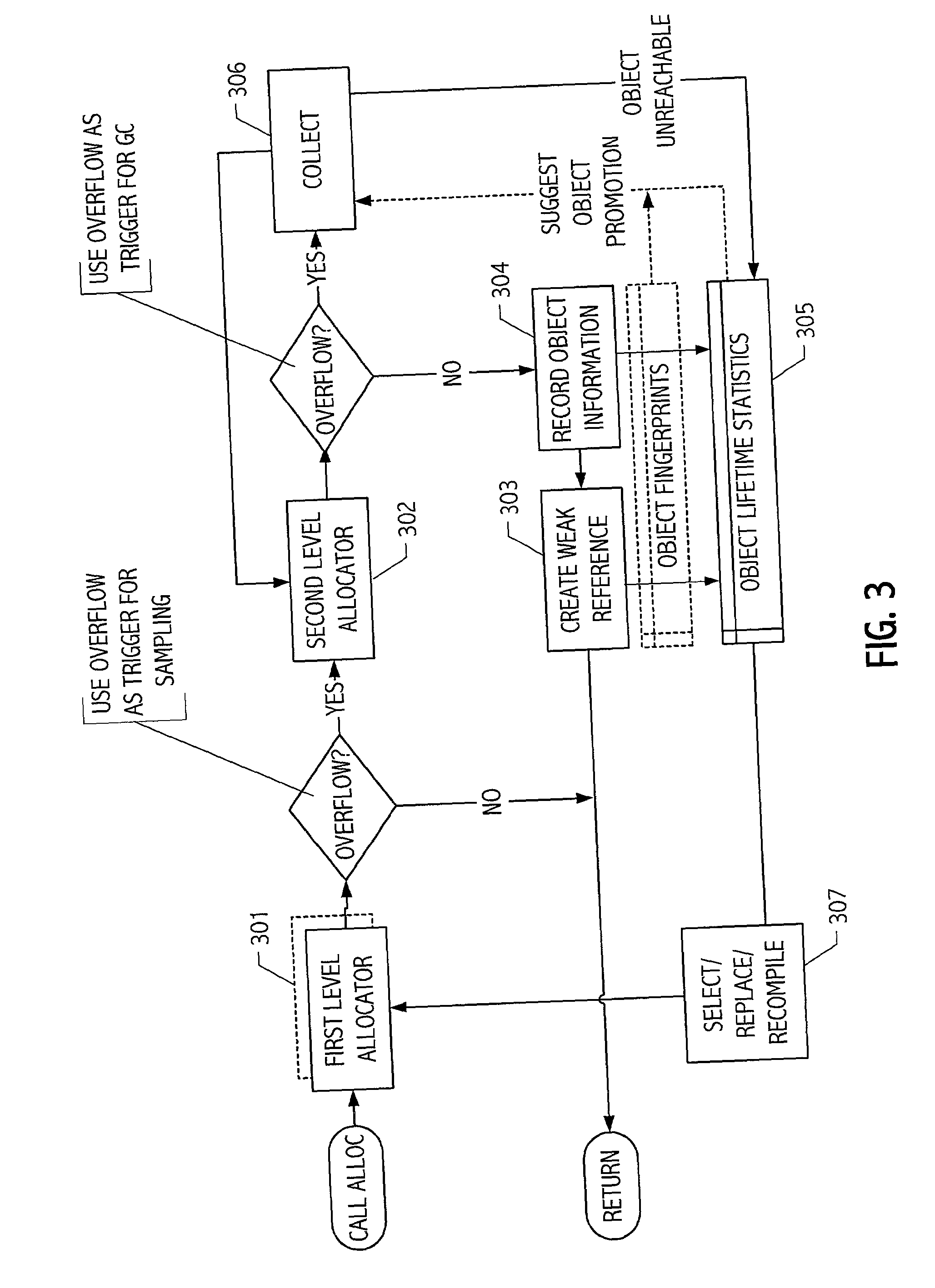

Run-time sampling techniques have been developed whereby representative object lifetime statistics may be obtained and employed to adaptively affect tenuring decisions, memory object promotion and/or storage location selection. In some realizations, object allocation functionality is dynamically varied to achieve desired behavior on an object category-by-category basis. In some realizations, phase behavior affects sampled lifetimes (e.g., for objects allocated at different phases of program execution), and the dynamic facilities described herein provide phase-specific adaptation of tenuring decisions, memory object promotion and/or storage location selection. In some realizations, reversal of such decisions is provided.

Owner:ORACLE INT CORP

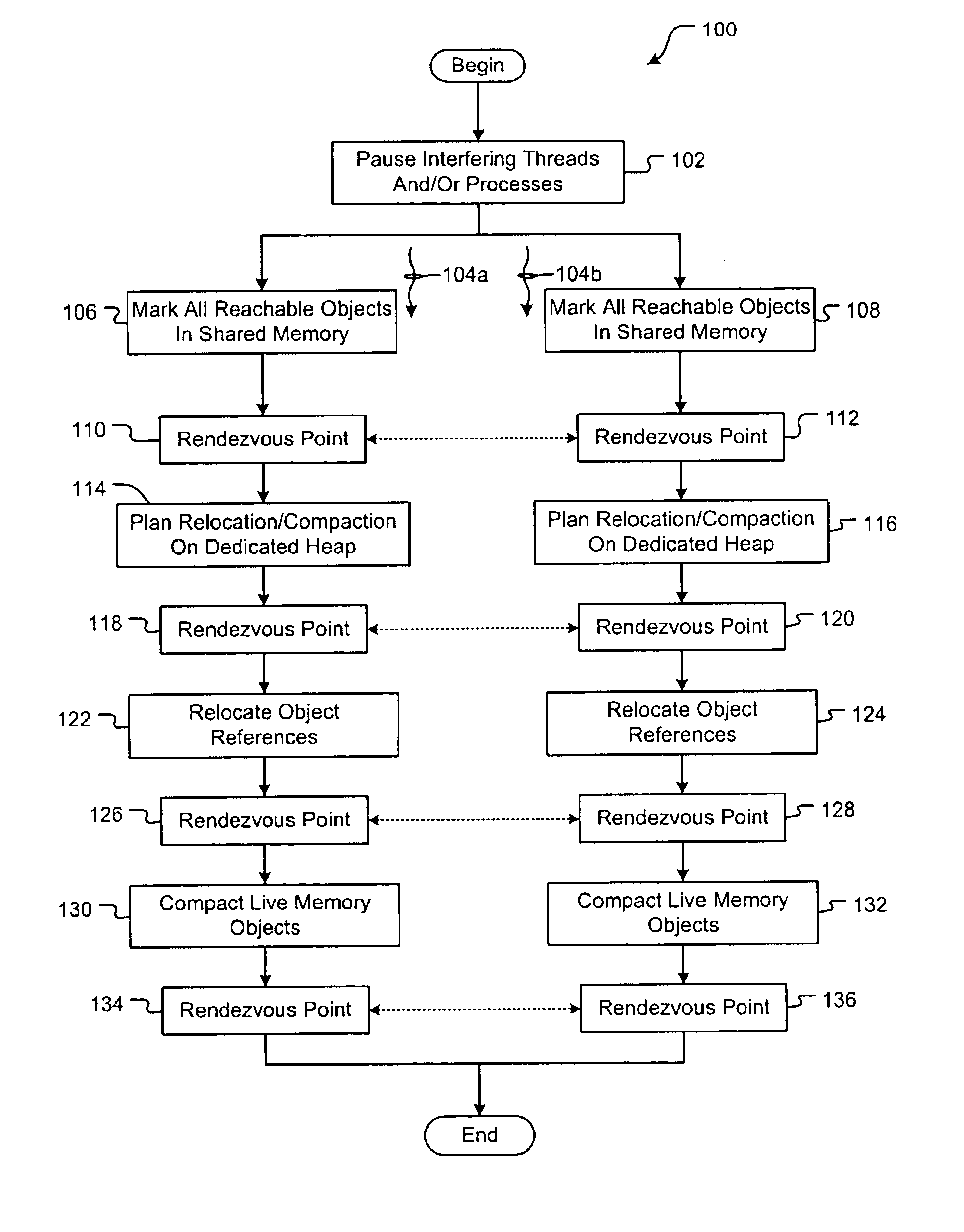

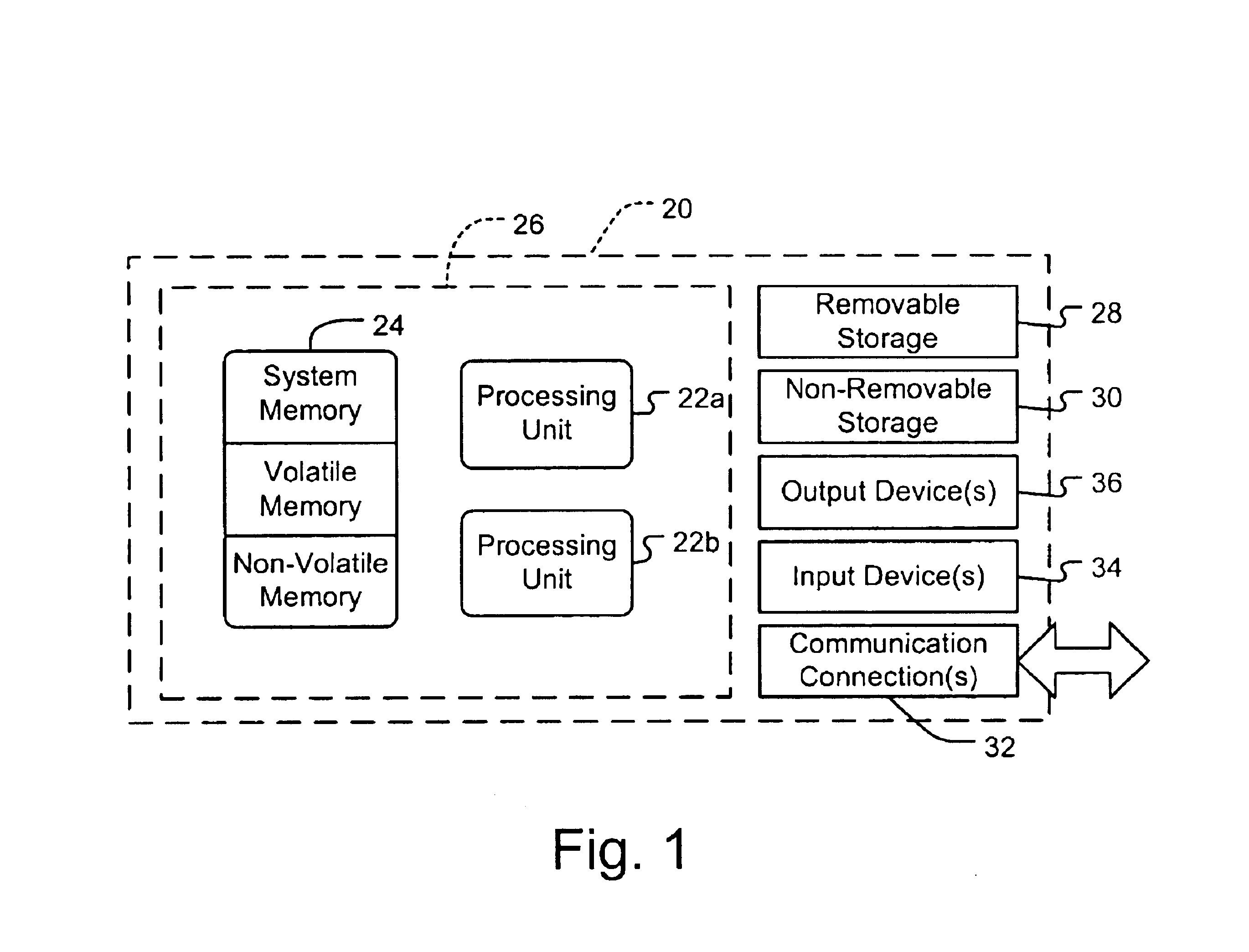

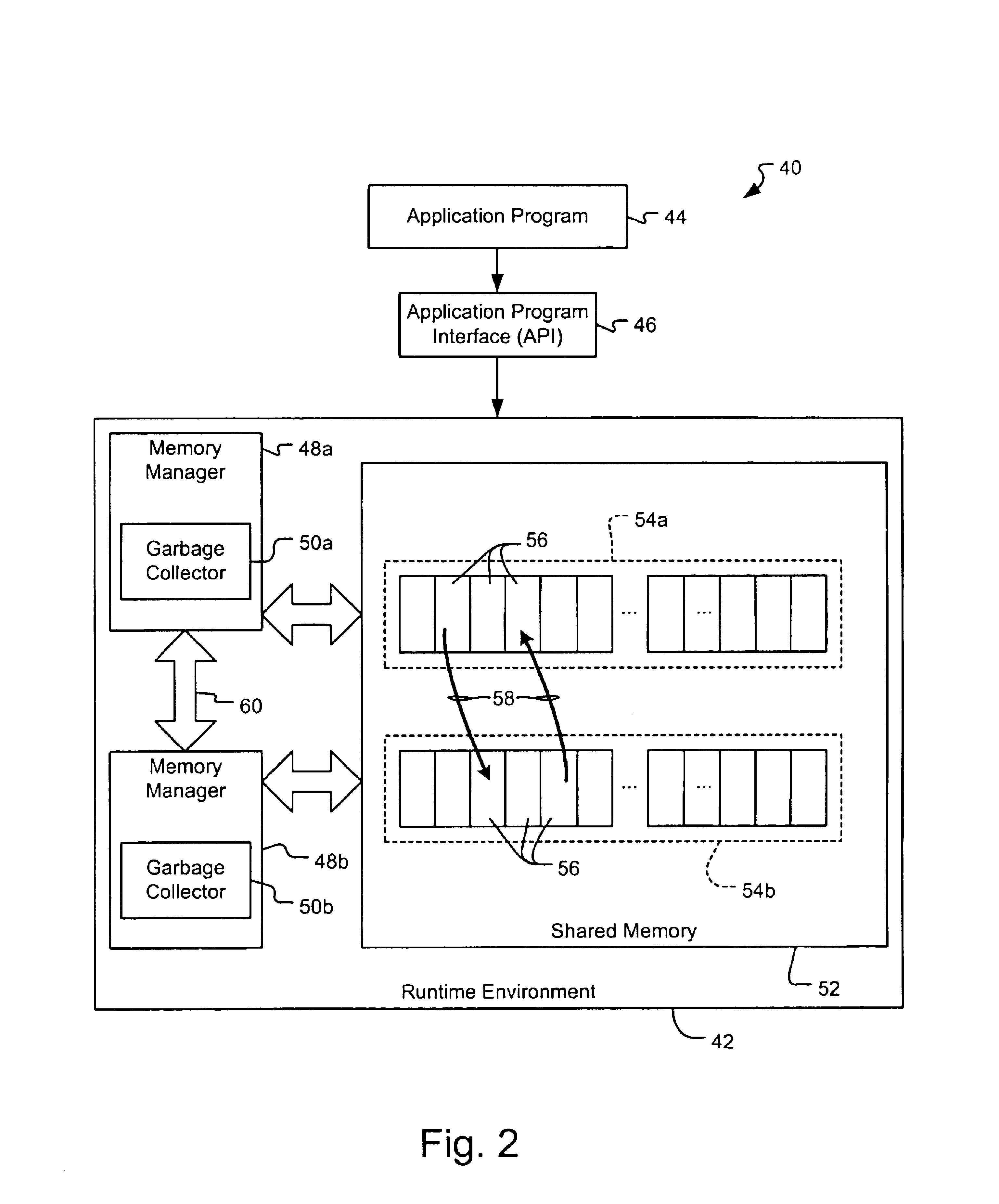

Method and system for multiprocessor garbage collection

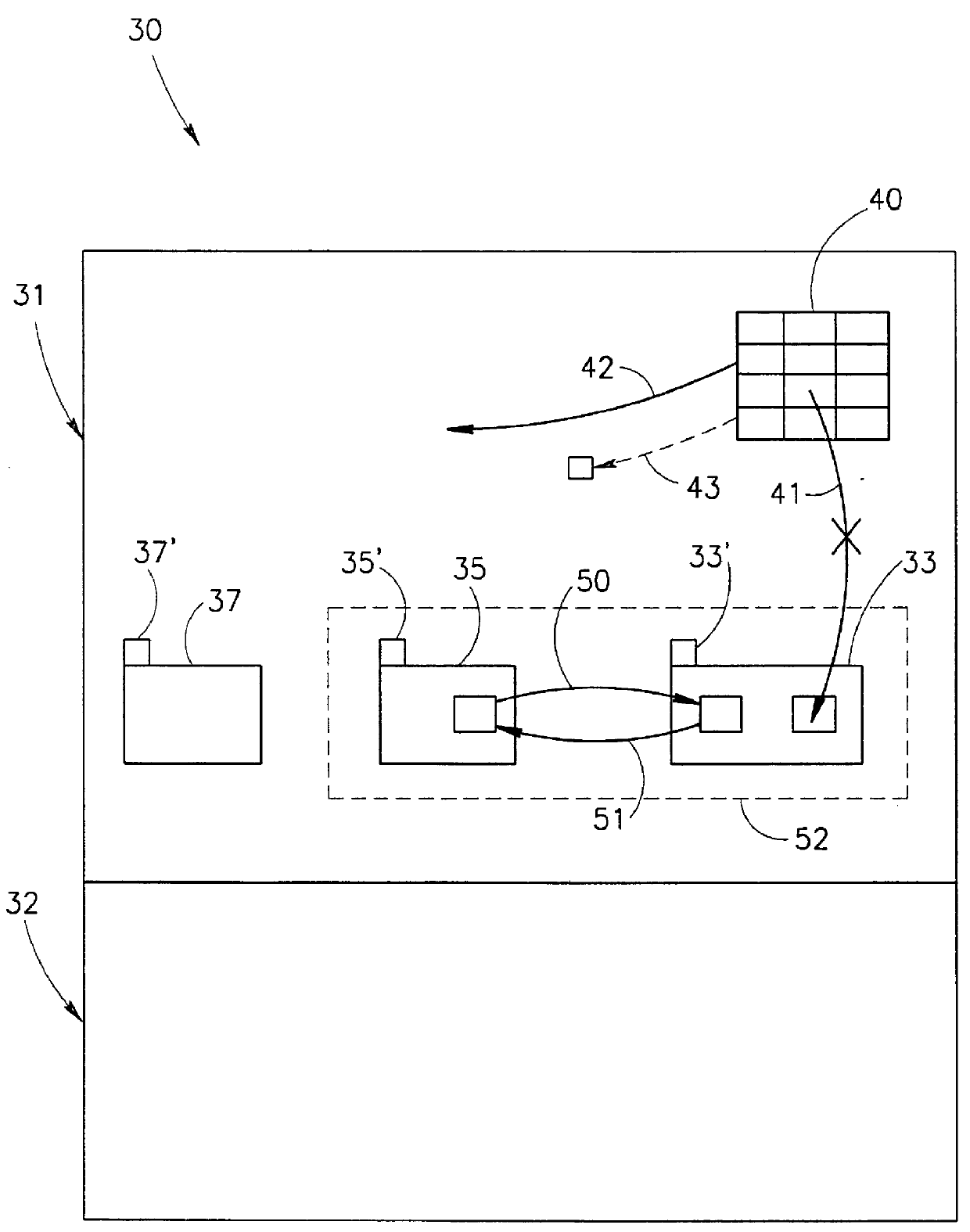

Status: Inactive | US6865585B1 | Tags: Data processing applications; Memory addressing/allocation/relocation; Refuse collection; Multi processor

A garbage collection system and method for a multiprocessor environment with shared memory, in which two or more processing units participate in the reclamation of garbage memory objects. The shared memory is divided into regions, or heaps, and each heap is dedicated to one of the participating processing units. Each processing unit generally performs garbage collection operations (i.e., runs a collection thread) on the heap or heaps dedicated to it. However, the processing units are also allowed to access and modify memory objects in other heaps when those objects are referenced by, and can therefore be traced back to, memory objects within the processing unit's dedicated heap. The processors are synchronized at rendezvous points to prevent reclamation of memory objects that are still in use.

Owner:MICROSOFT TECH LICENSING LLC

Dynamic adaptive tenuring of objects

Status: Inactive | US6839725B2 | Tags: Data processing applications; Memory addressing/allocation/relocation; Object lifetime; Memory object

Run-time sampling techniques have been developed whereby representative object lifetime statistics may be obtained and employed to adaptively affect tenuring decisions, memory object promotion and/or storage location selection. In some realizations, object allocation functionality is dynamically varied to achieve desired behavior on an object category-by-category basis. In some realizations, phase behavior affects sampled lifetimes (e.g., for objects allocated at different phases of program execution), and the dynamic facilities described herein provide phase-specific adaptation of tenuring decisions, memory object promotion and/or storage location selection. In some realizations, reversal of such decisions is provided.

Owner:ORACLE INT CORP

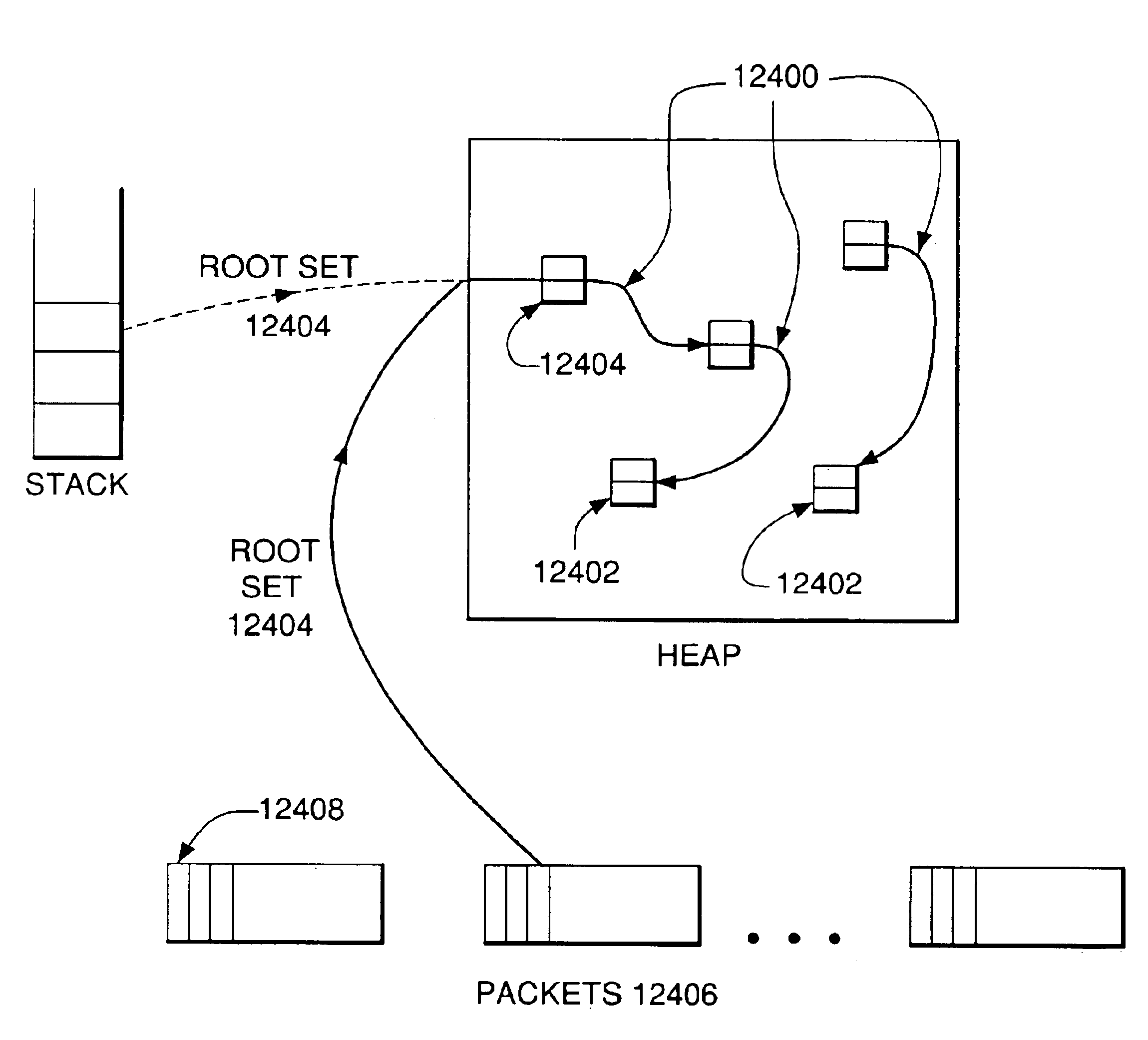

Method and system for concurrent garbage collection

Status: Inactive | US7310655B2 | Tags: Data processing applications; Digital data processing details; Refuse collection; Waste collection

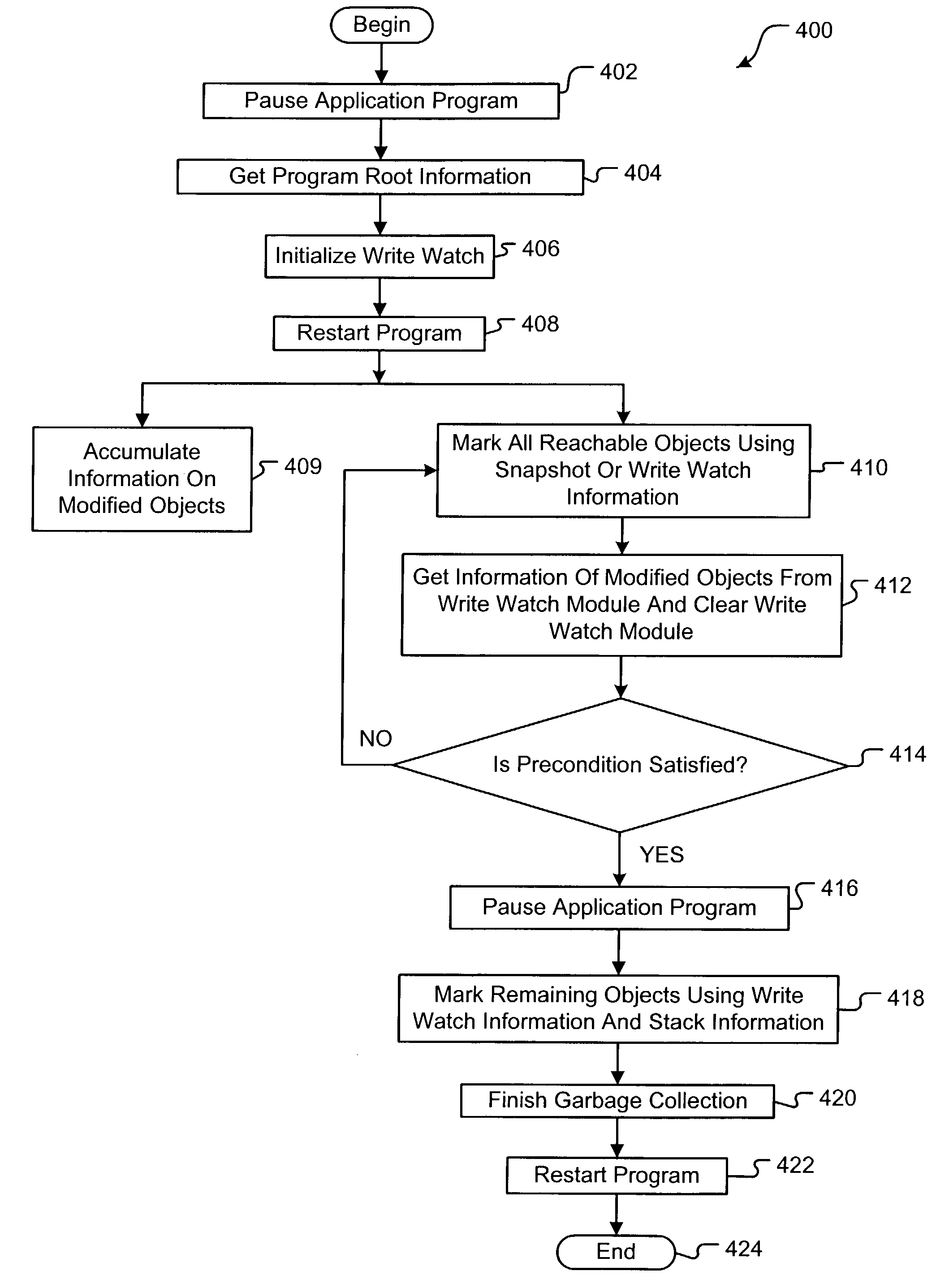

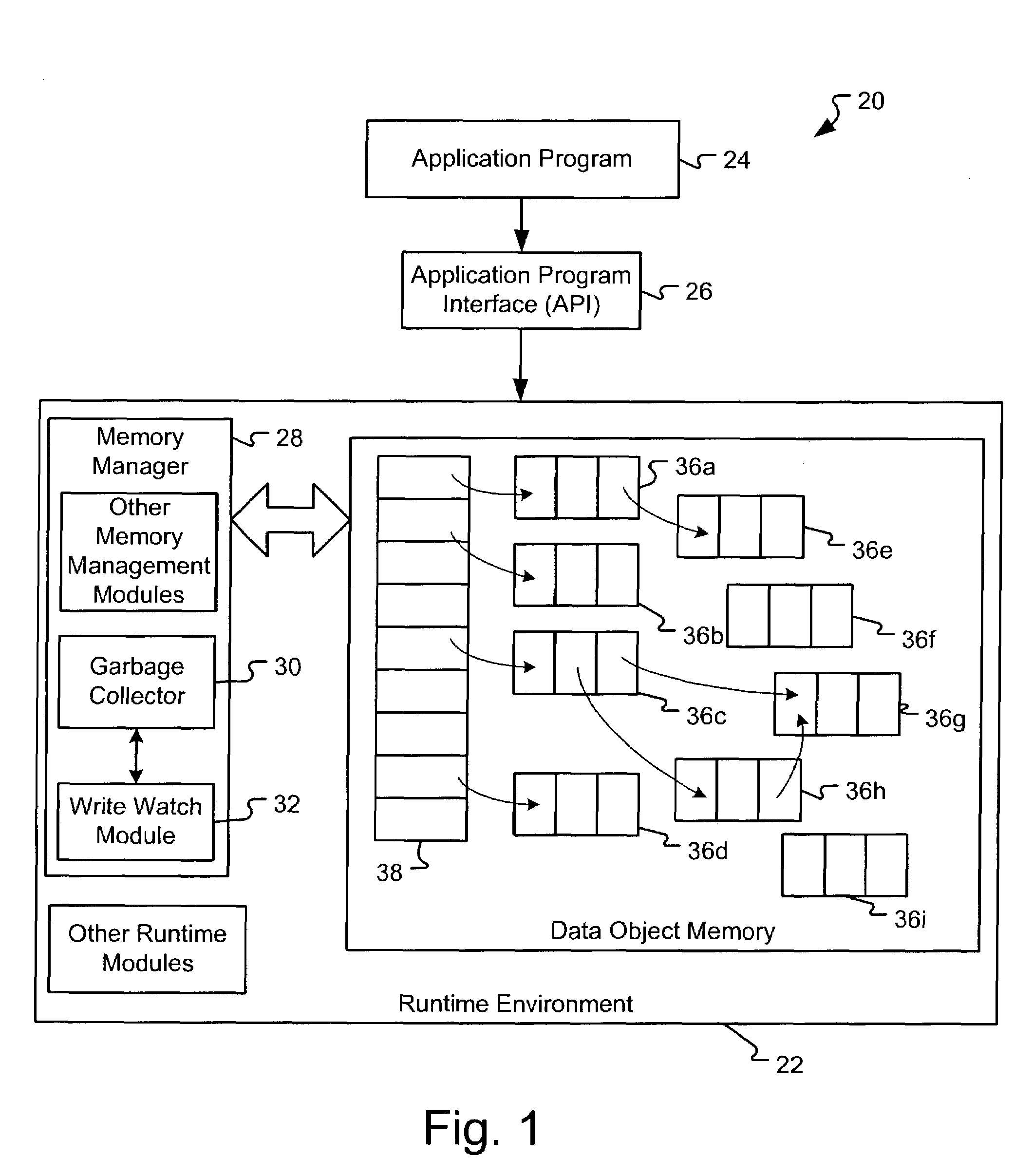

A method and system for concurrent garbage collection wherein live memory objects (i.e., objects that are not garbage) can be marked while an application executes. Root information is gleaned by taking a snapshot of the program roots or by arranging for the stack to be scanned during execution of the program. Next, a first marking act is performed using the root information while the program executes. Modifications to the memory structure that occur during the concurrent marking act are logged or accumulated by a write watch module. The application is then paused or stopped to perform a second marking act using information from the write watch module. Following the second marking act, the garbage collection may be completed using various techniques.

Owner:MICROSOFT TECH LICENSING LLC
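
The two marking acts can be sketched by simulating "mutations that happened during marking" as a callback run between them. This is a minimal, hypothetical model: `write` stands in for the write watch module by logging every modified object, and the pause is implicit because the second act runs after the mutator returns. Rescanning children of every logged object is conservative, so some floating garbage may survive one cycle.

```python
def trace(heap, work, marked):
    """Mark everything reachable from `work` that is not already marked."""
    stack = list(work)
    while stack:
        obj = stack.pop()
        if obj not in marked:
            marked.add(obj)
            stack.extend(heap[obj])


def concurrent_collect(heap, roots, mutator):
    marked, dirty = set(), set()

    def write(src, refs):
        # Stand-in for the write watch module: record every modified object.
        heap[src] = list(refs)
        dirty.add(src)

    trace(heap, list(roots), marked)  # first marking act (application running)
    mutator(heap, write)              # modifications made while marking ran
    # Second marking act (application paused): rescan roots and logged objects.
    rescan = list(roots) + [child for src in dirty for child in heap[src]]
    trace(heap, rescan, marked)
    return set(heap) - marked         # unmarked objects are garbage
```

For example, an object allocated and linked from an already-marked object during the first act would be missed without the second act, but the write log lets the pause find it.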

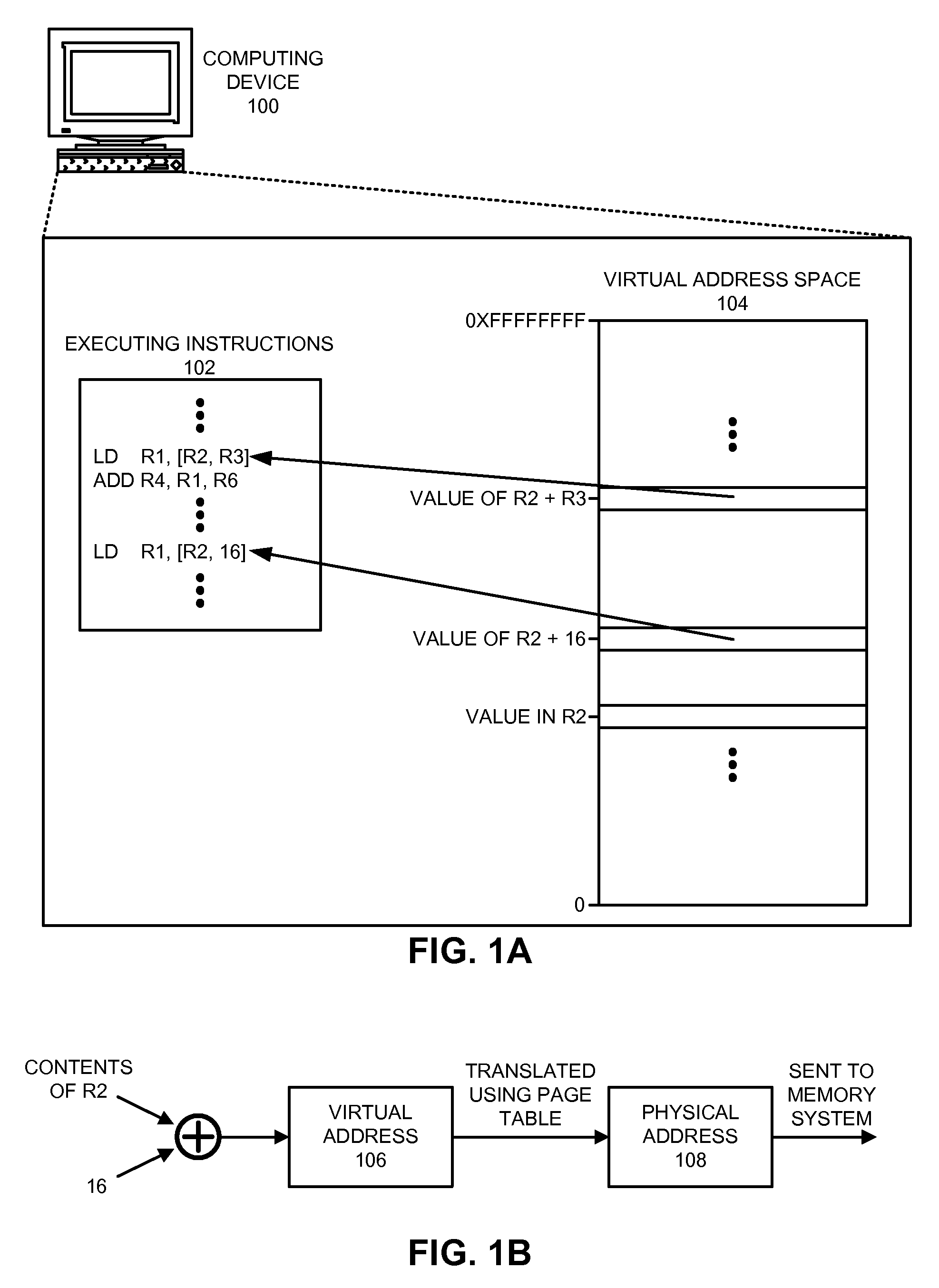

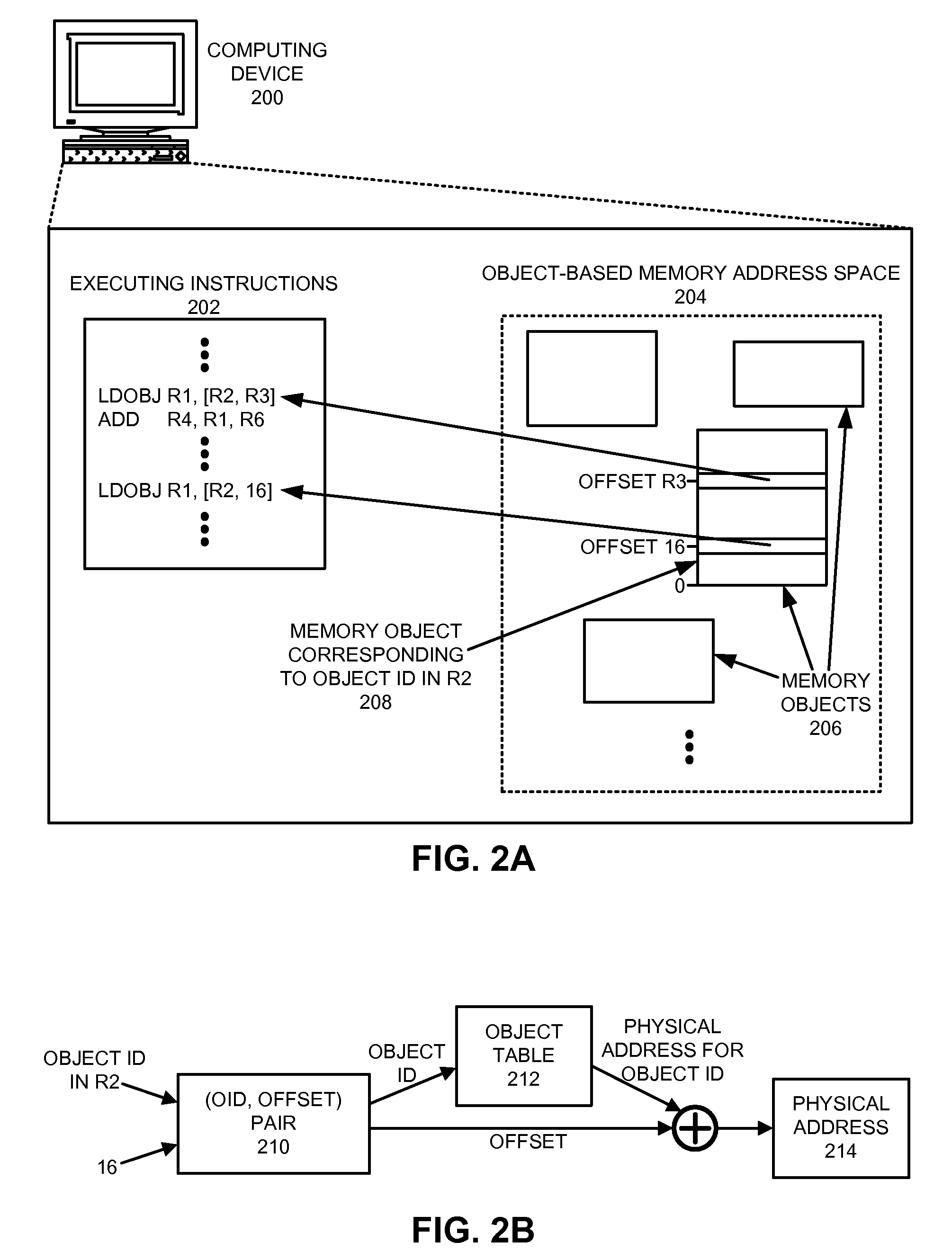

Accessing memory locations for paged memory objects in an object-addressed memory system

Status: Active | US20100228936A1 | Tags: Easy access; Facilitating cross-privilege and cross-domain inlining; Memory addressing/allocation/relocation; Unauthorized memory use protection; Parallel computing; Memory object

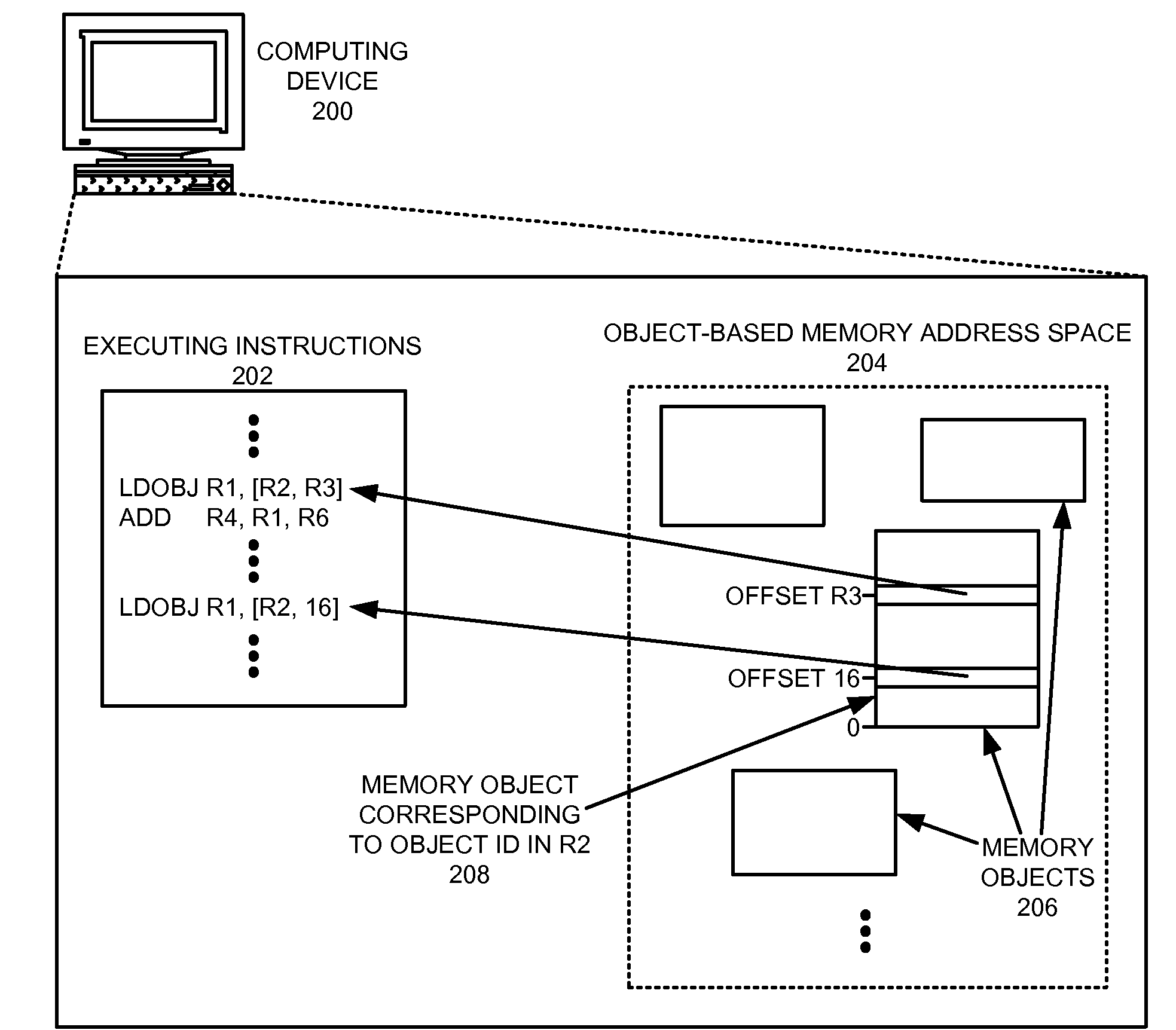

One embodiment of the present invention provides a system that accesses memory locations in an object-addressed memory system. During a memory access in the object-addressed memory system, the system receives an object identifier and an address. The system then uses the object identifier to identify a paged memory object associated with the memory access. Next, the system uses the address and a page table associated with the paged memory object to identify a memory page associated with the memory access. After determining the memory page, the system uses the address to access a memory location in the memory page.

Owner:ORACLE INT CORP
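
The three-step lookup above (object identifier, then the object's page table, then the page offset) can be modeled in a few lines. This is an illustrative sketch only: `PAGE_SIZE`, the class names, and allocating pages on first store are assumptions, and real hardware would do this translation in an MMU-like unit rather than in dictionaries.

```python
PAGE_SIZE = 4096  # hypothetical page size in bytes


class PagedMemoryObject:
    def __init__(self):
        self.page_table = {}  # page number -> bytearray backing that page

    def page_for(self, address, allocate=False):
        page_no, offset = divmod(address, PAGE_SIZE)
        page = self.page_table.get(page_no)
        if page is None:
            if not allocate:
                raise KeyError(f"page {page_no} is not mapped")
            page = self.page_table[page_no] = bytearray(PAGE_SIZE)
        return page, offset


class ObjectAddressedMemory:
    def __init__(self):
        self.objects = {}  # object identifier -> PagedMemoryObject

    def store(self, oid, address, value):
        obj = self.objects.setdefault(oid, PagedMemoryObject())
        page, offset = obj.page_for(address, allocate=True)
        page[offset] = value

    def load(self, oid, address):
        obj = self.objects[oid]                # 1. object identifier -> paged memory object
        page, offset = obj.page_for(address)   # 2. address + page table -> memory page
        return page[offset]                    # 3. address offset -> location in the page
```

Because each object has its own page table, the same address can refer to different storage in different objects, and objects need only map the pages they actually use.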

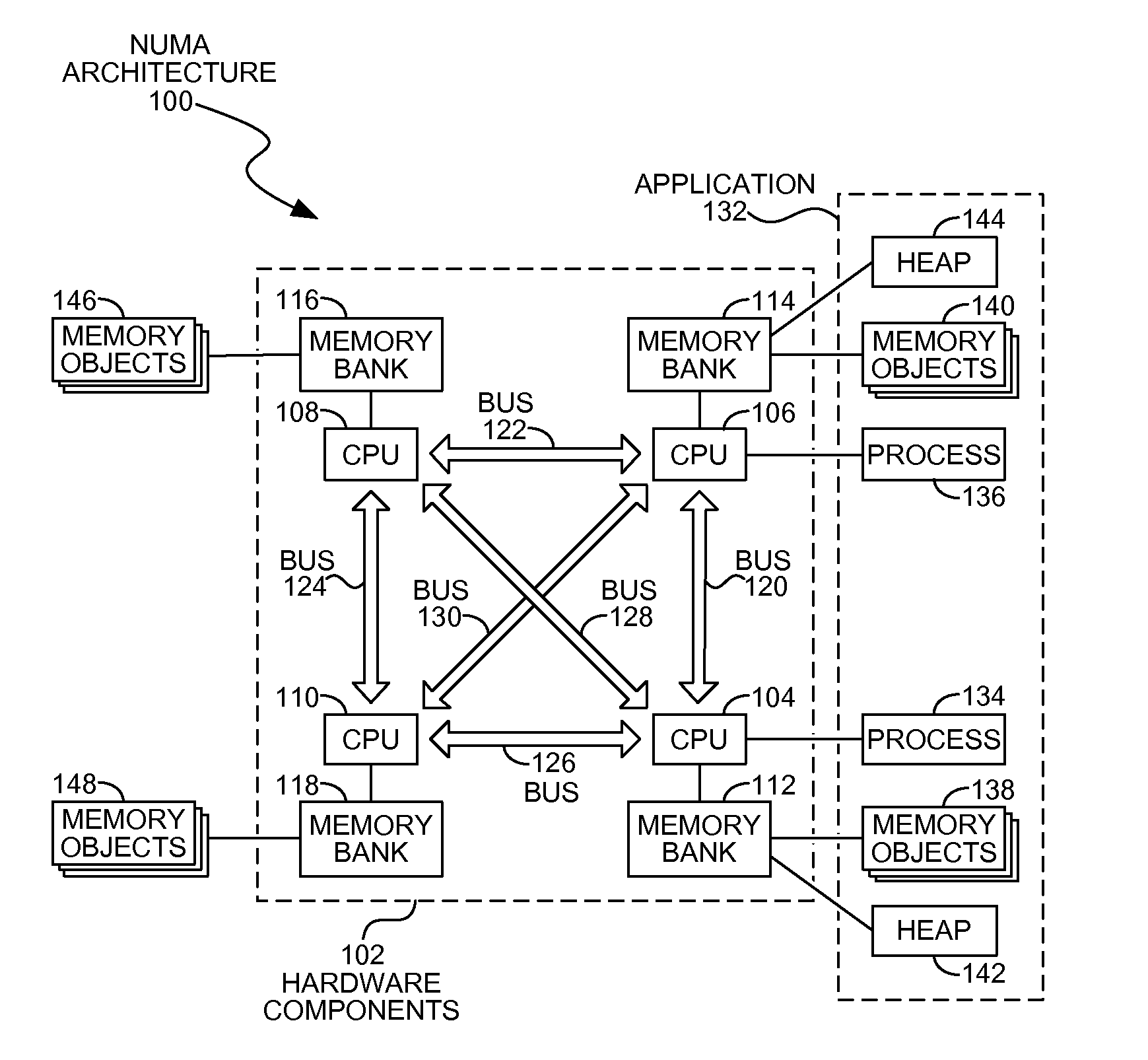

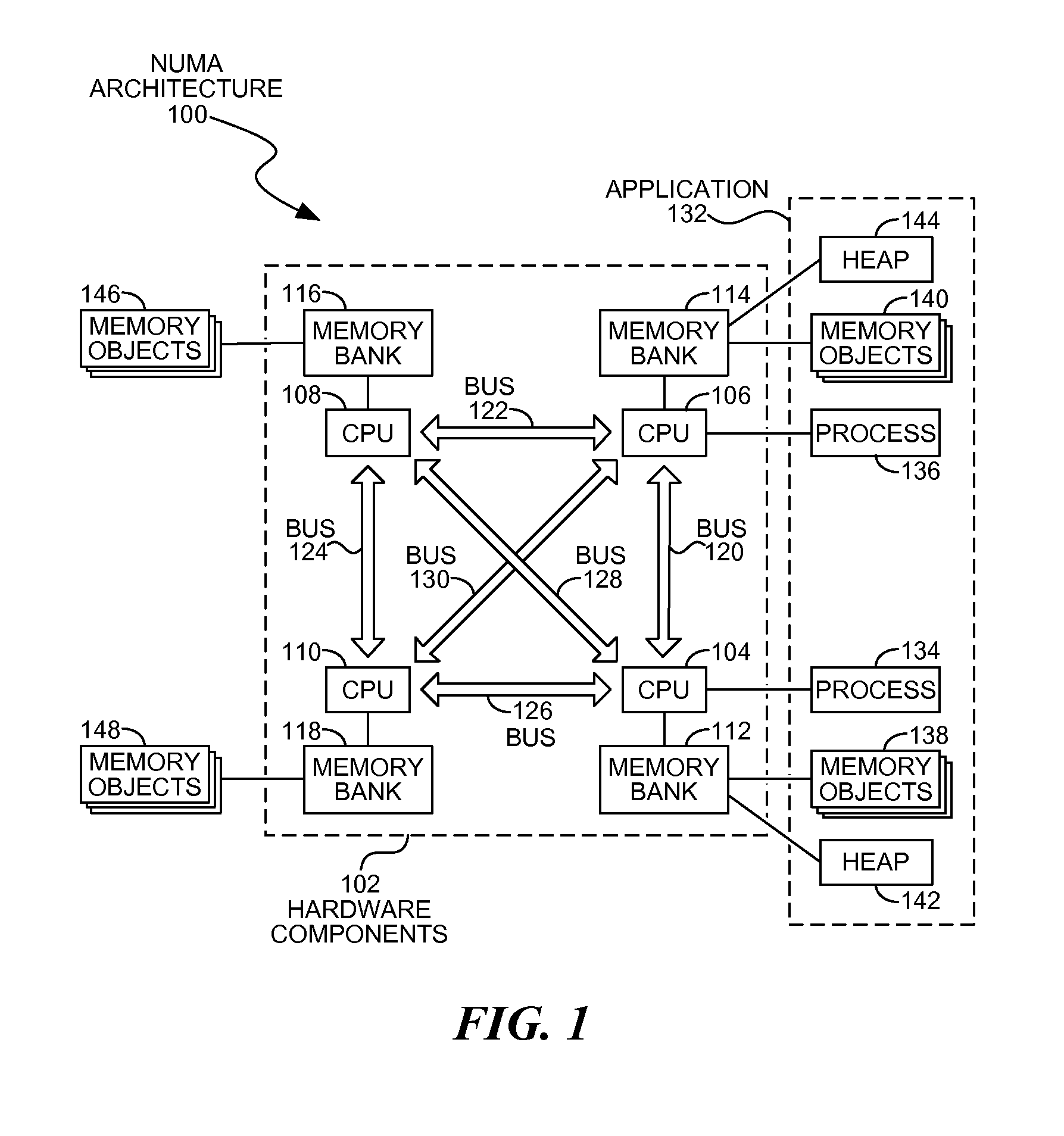

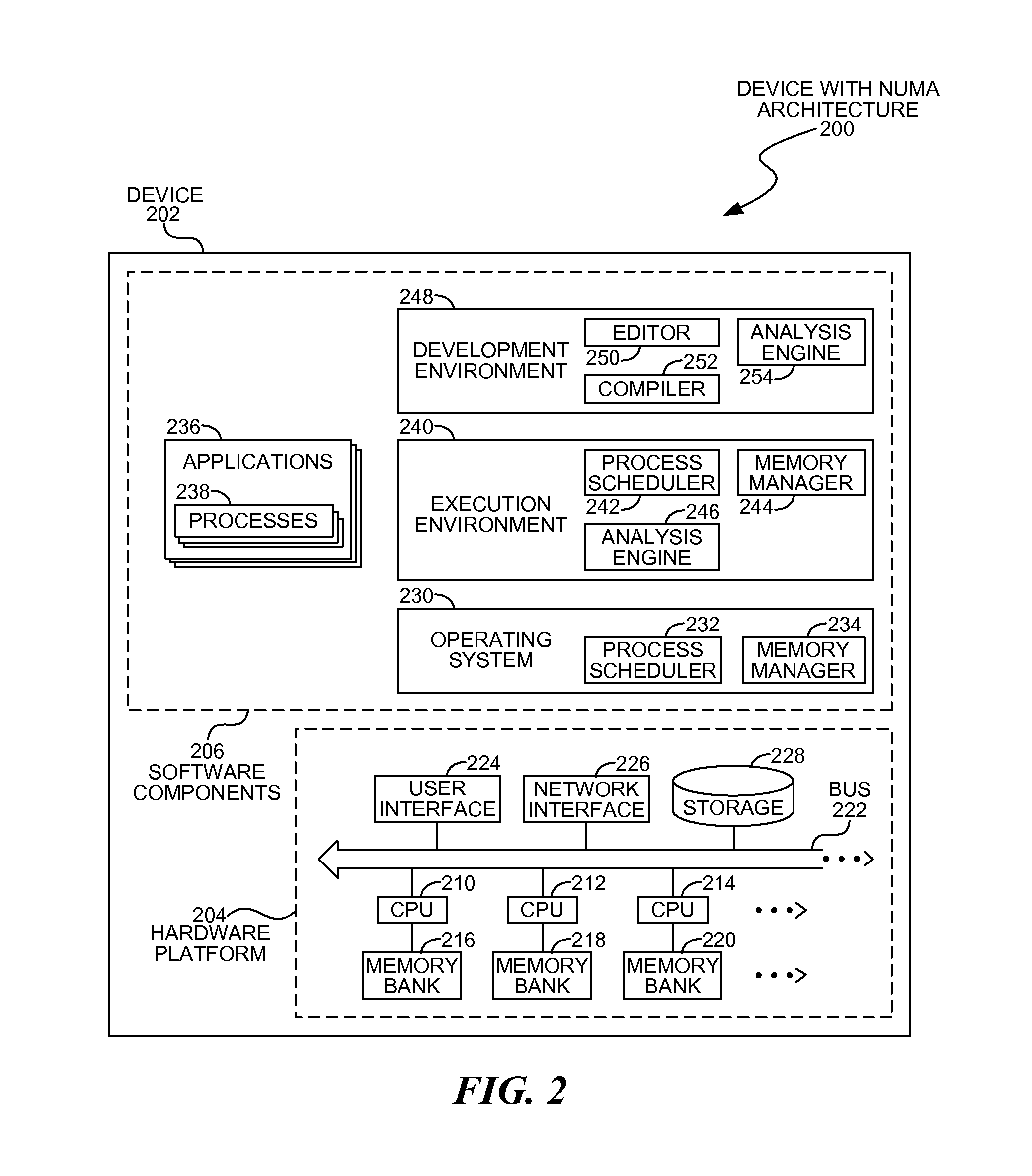

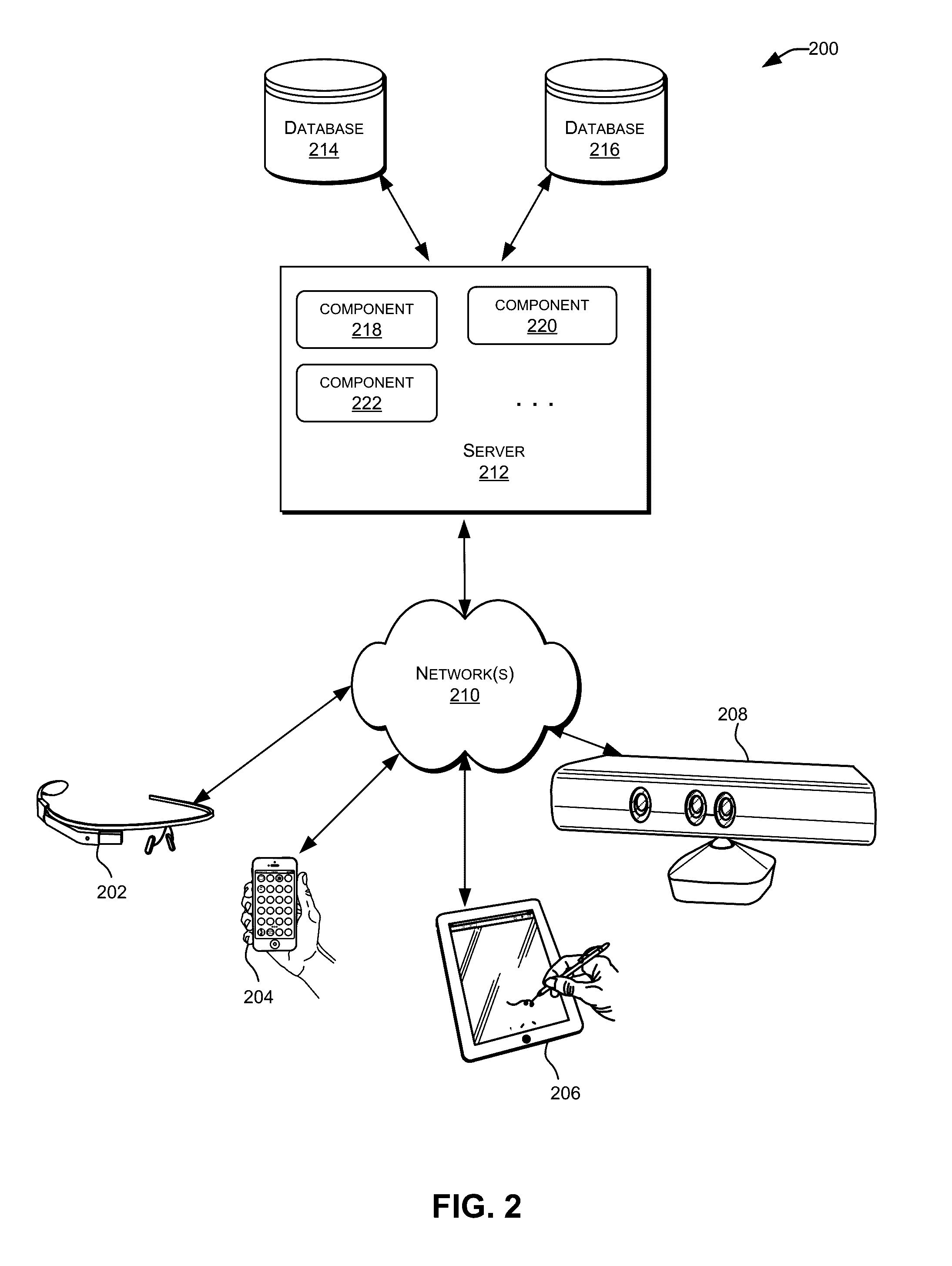

Usage Aware NUMA Process Scheduling

Processes may be assigned to specific processors when the memory objects they consume are located in memory banks closely associated with those processors. When assigning processes to threads operating in a multiprocessor NUMA architecture system, an analysis of the memory objects accessed by a process may identify the processor or group of processors that minimizes the memory access time of the process. The selection may take into account the connections between memory banks and processors to identify the shortest communication path between the memory objects and the process. The processes may be pre-identified as functional processes that make few or no changes to memory objects other than the information passed to or from them.

Owner:MICROSOFT TECH LICENSING LLC
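
One simple way to express "pick the processor that minimizes memory access time" is a weighted-distance cost per NUMA node. This is a hedged sketch: weighting each memory object by its size, the `distance` matrix, and the function name are assumptions for illustration, not details from the filing.

```python
def best_node(object_sizes, object_bank, distance):
    """Pick the NUMA node with the smallest total size-weighted distance to a process's memory objects.

    object_sizes: memory object -> bytes accessed by the process
    object_bank:  memory object -> memory bank (node) holding it
    distance:     node -> {bank -> relative access distance}
    """
    def cost(node):
        return sum(size * distance[node][object_bank[obj]] for obj, size in object_sizes.items())

    return min(distance, key=cost)
```

With illustrative distances of 10 for local and 21 for remote access, a process whose larger object lives in bank 1 is scheduled on node 1.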

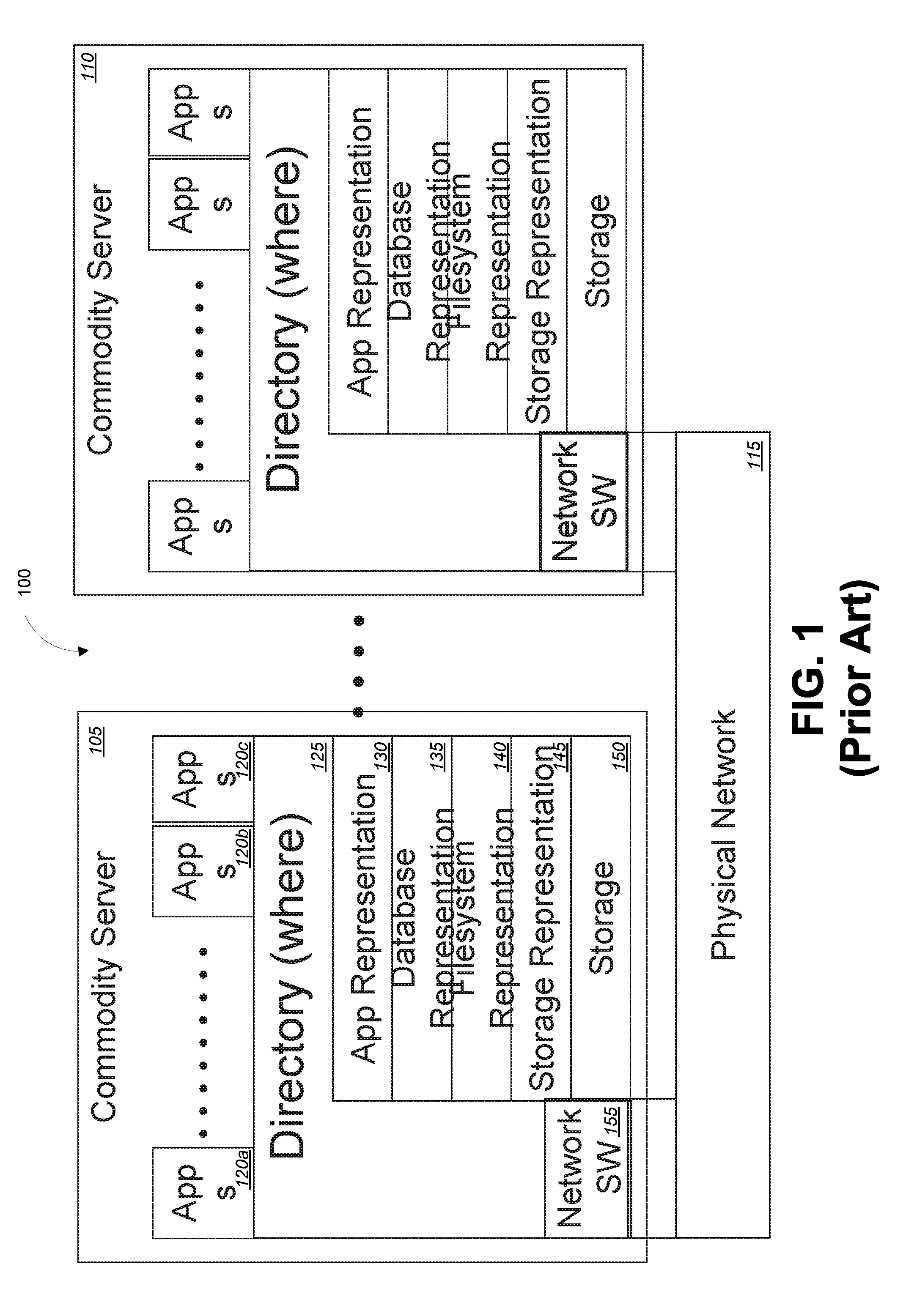

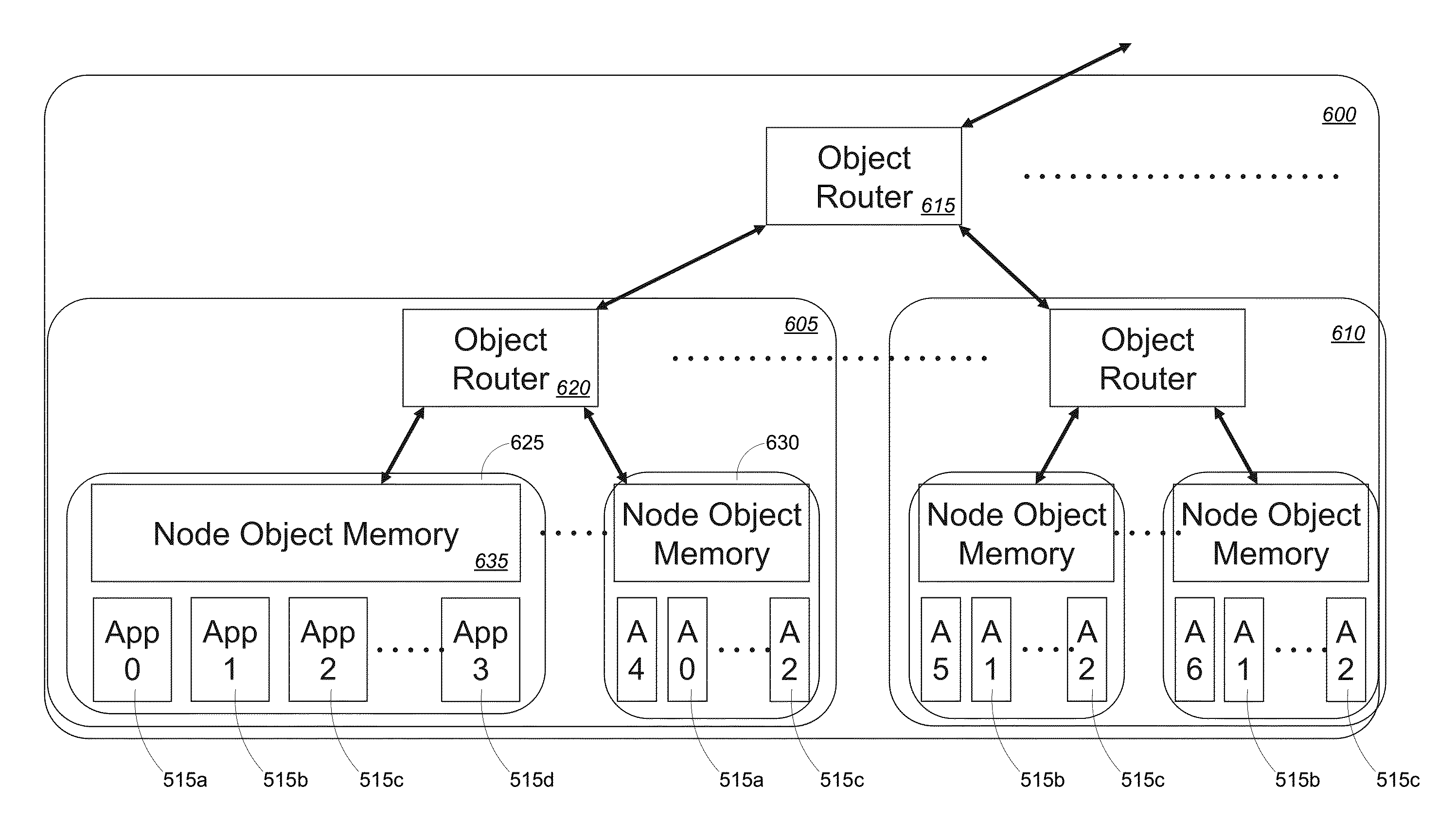

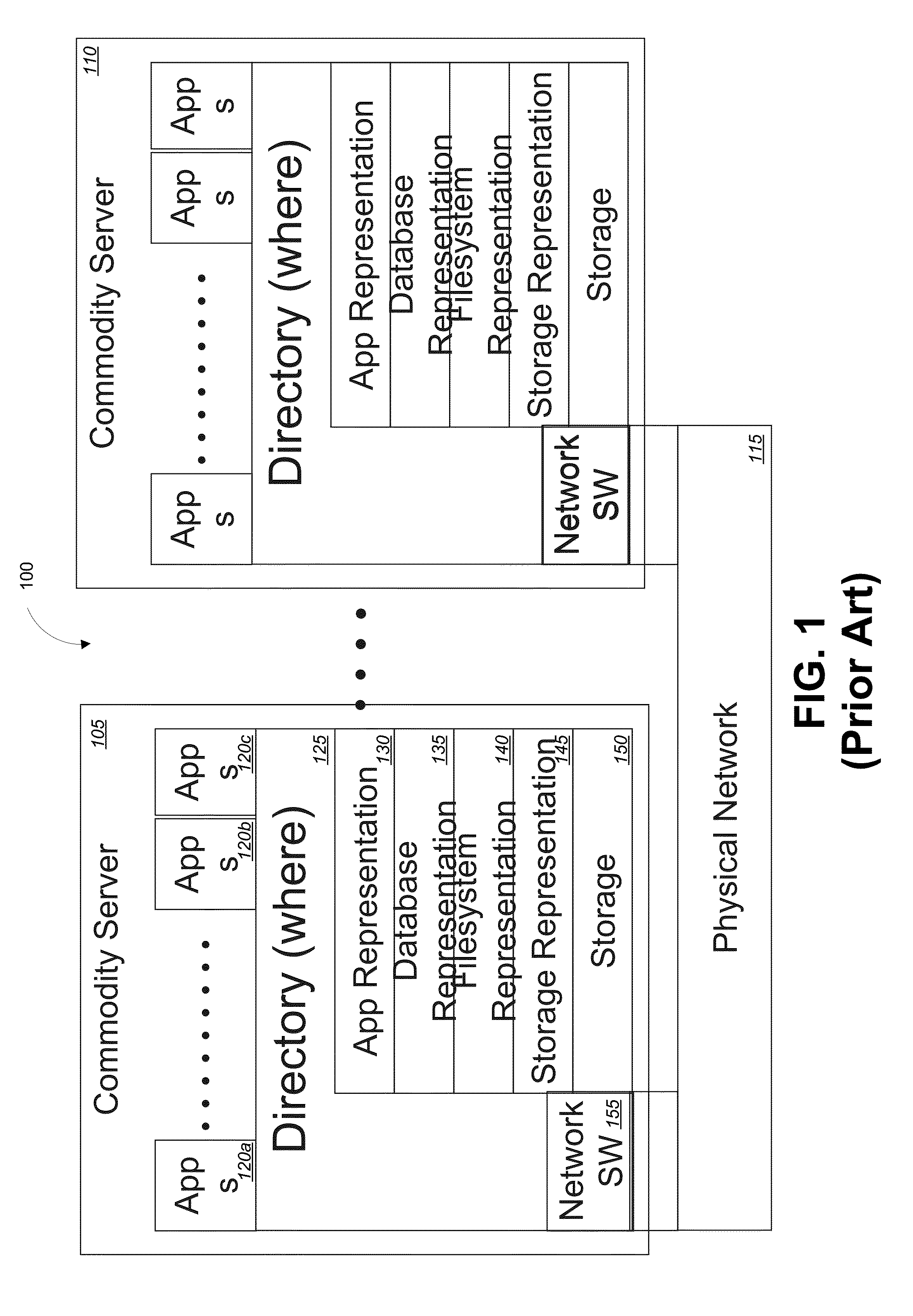

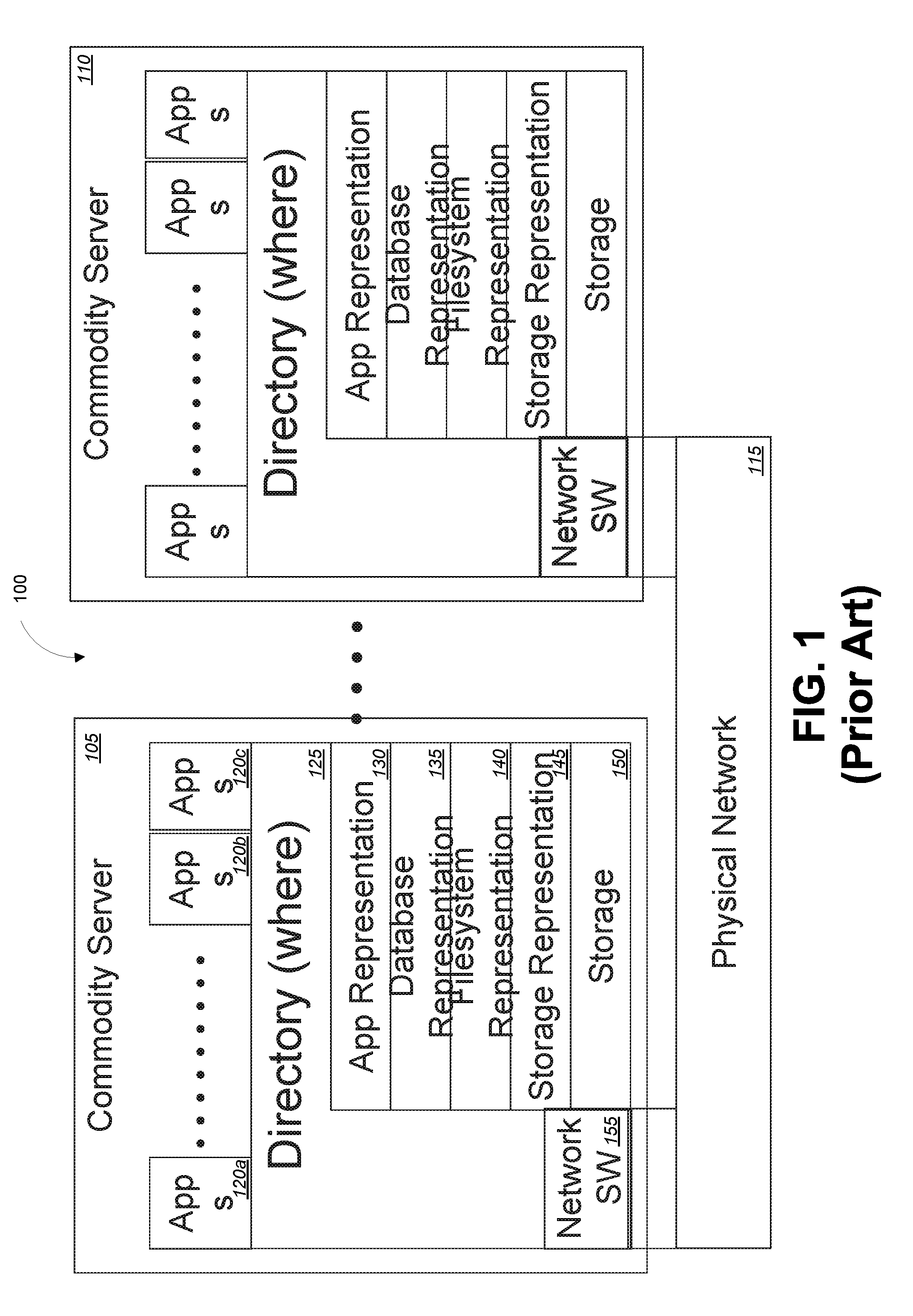

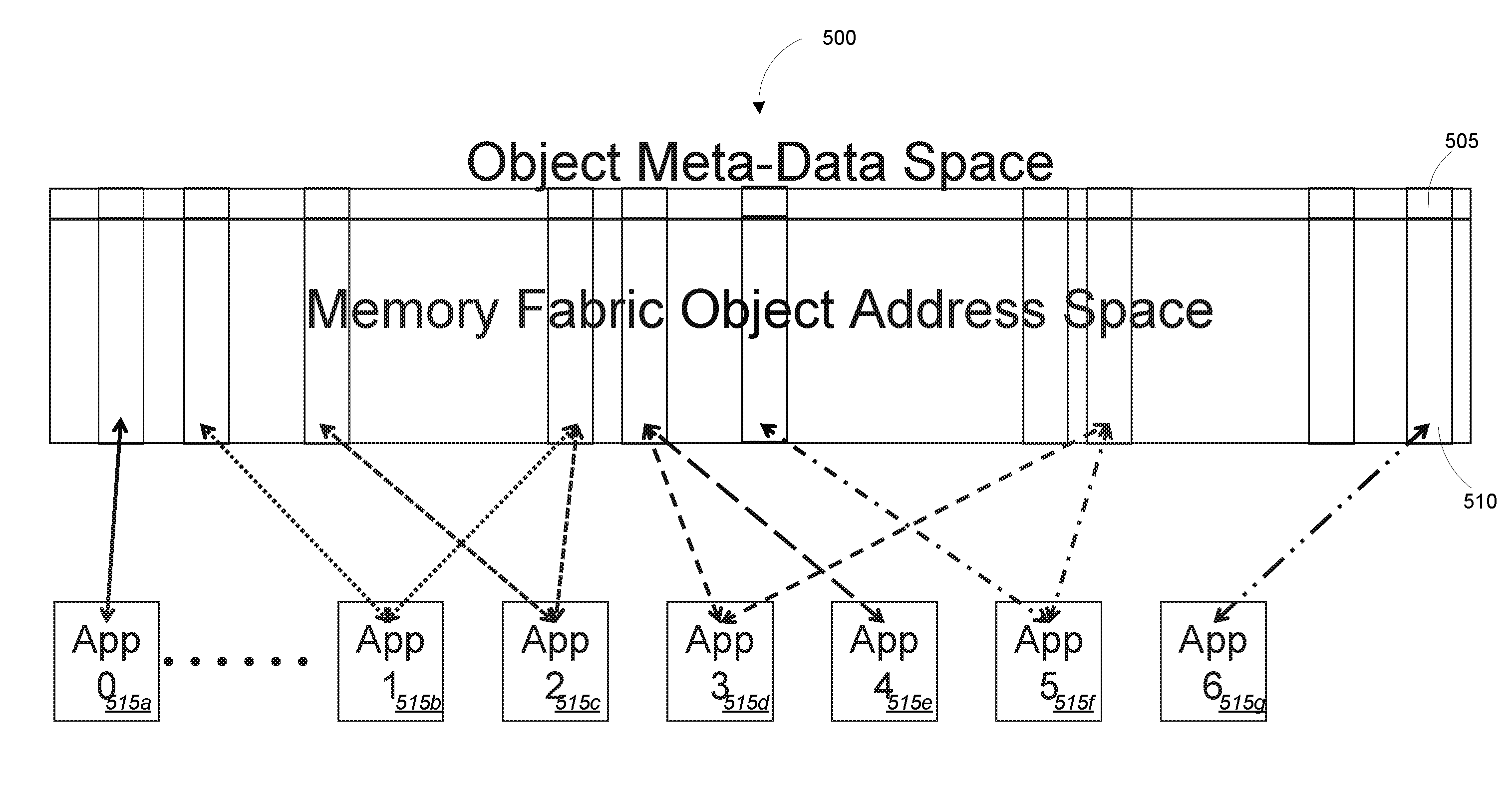

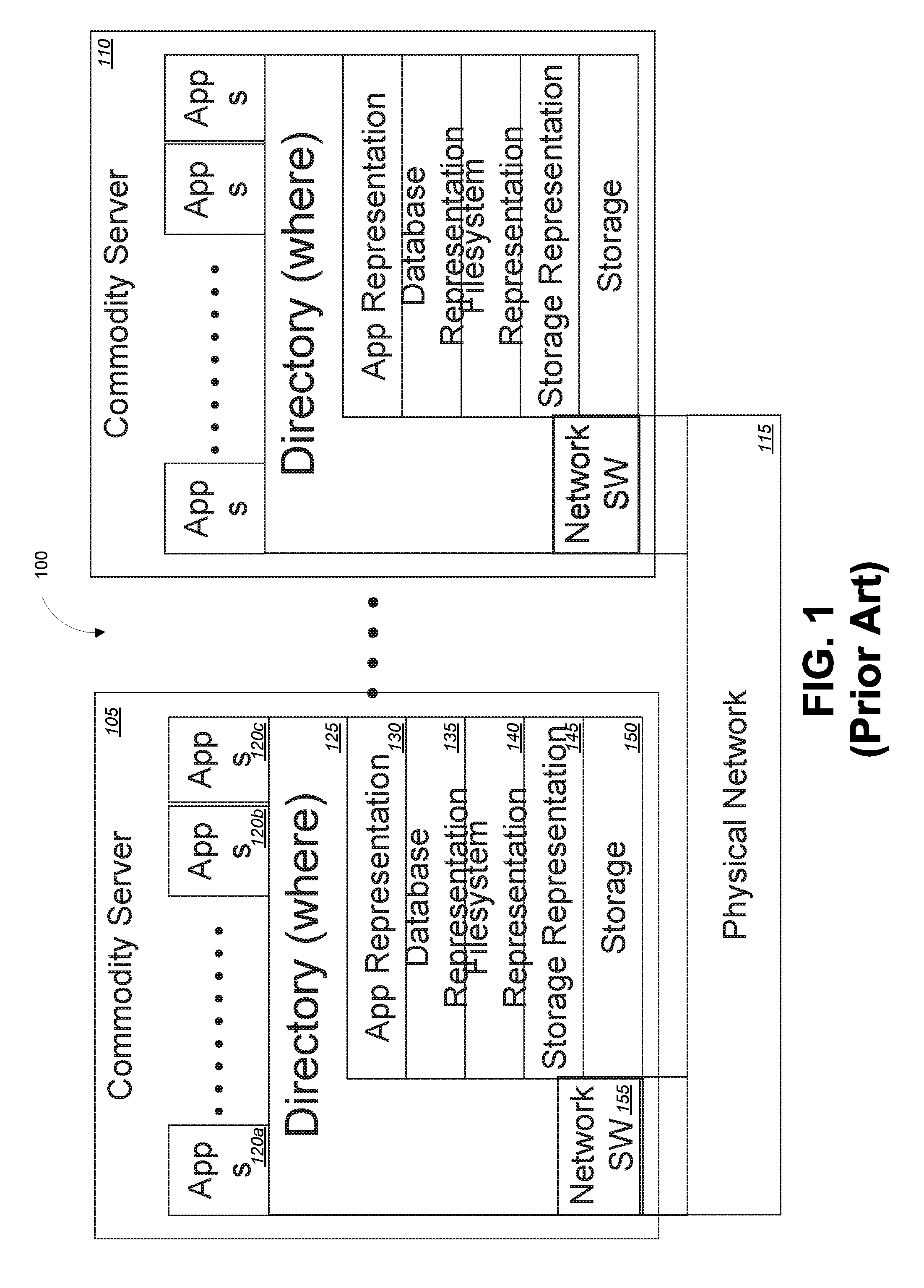

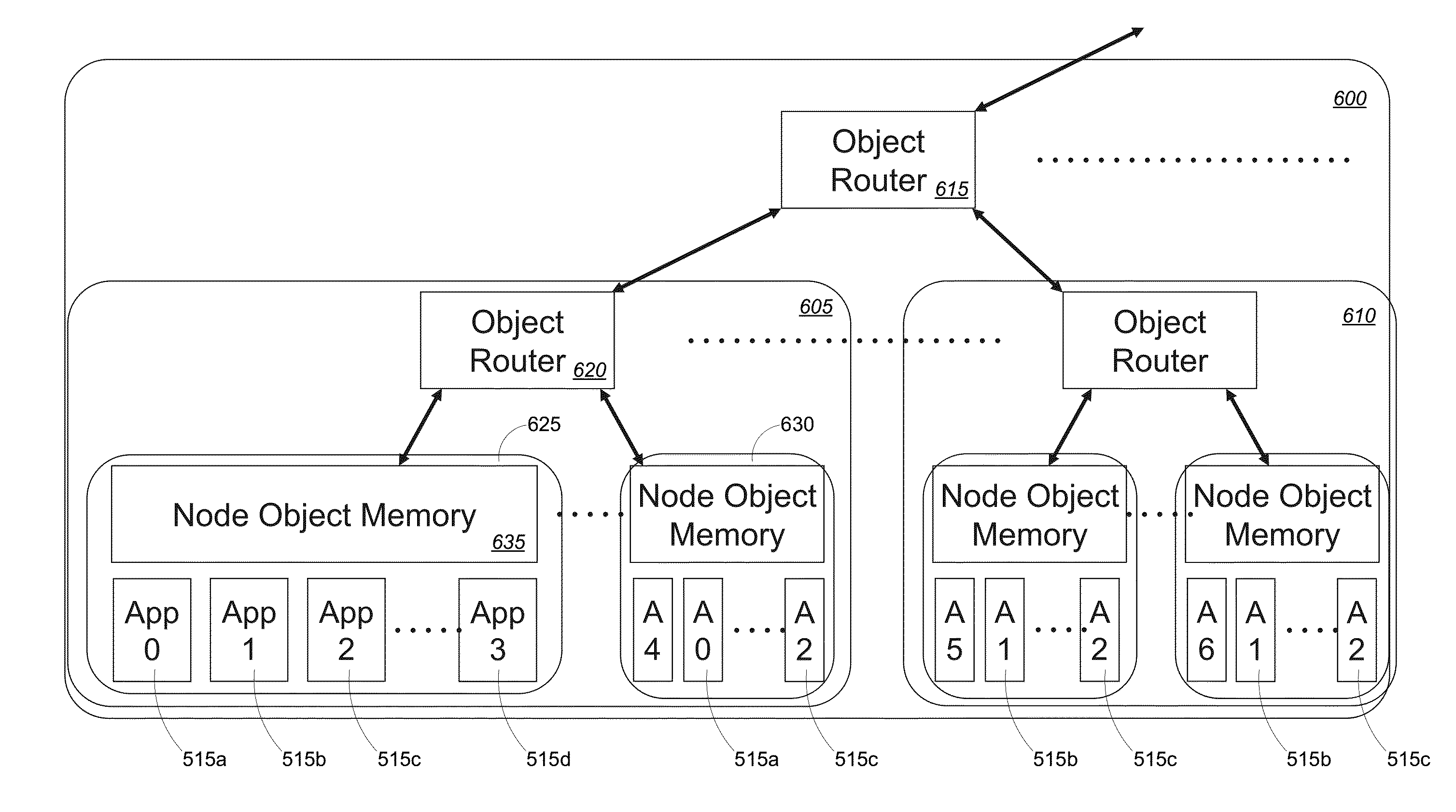

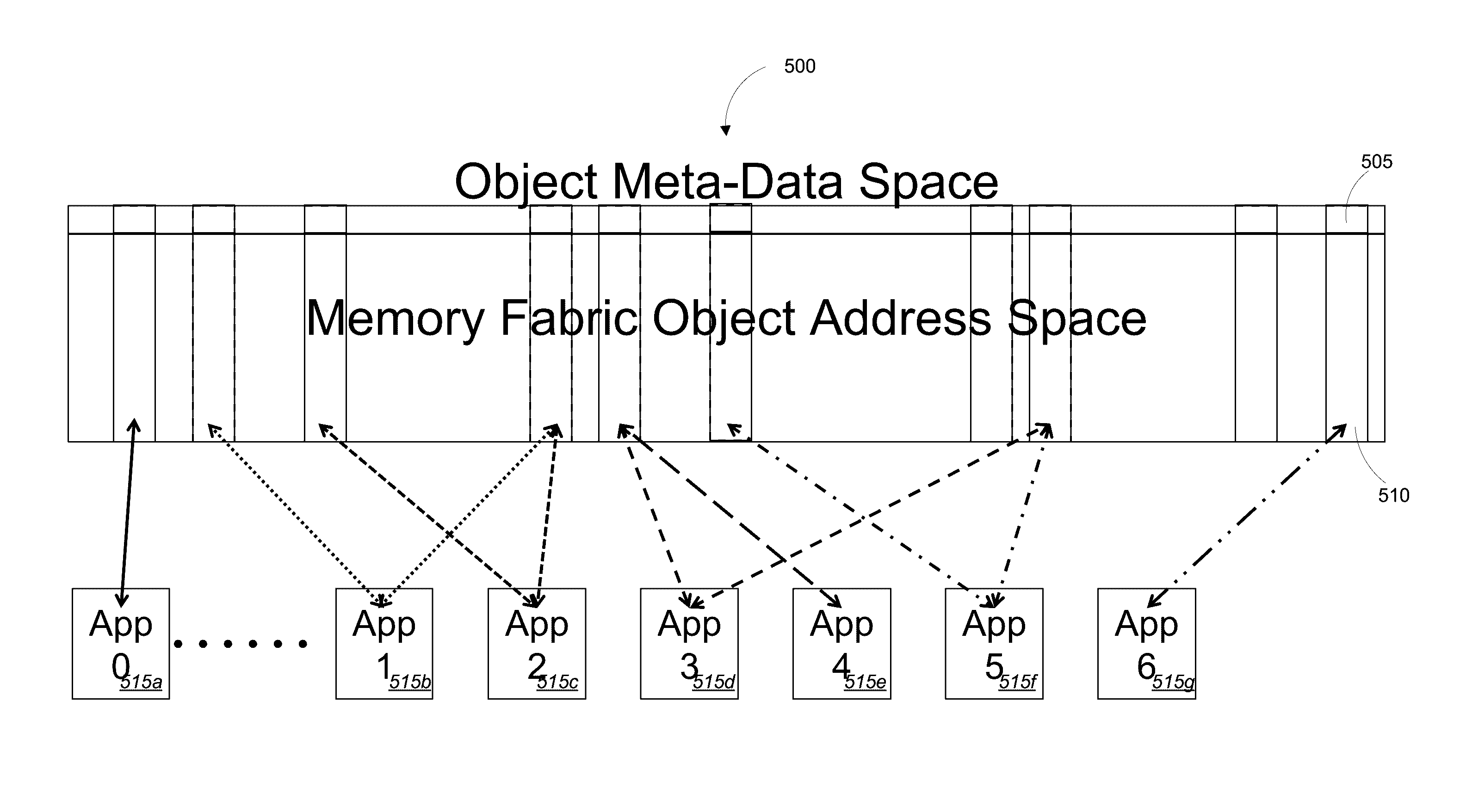

Implementation of an object memory centric cloud

Status: Pending | US20160210082A1 | Tags: Improve compatibility; Improve performance; Memory architecture accessing/allocation; Input/output to record carriers; Physical address; Parallel computing

Embodiments of the invention provide systems and methods to implement an object memory fabric including hardware-based processing nodes with memory modules that store and manage memory objects. The memory objects are created natively within the memory modules and managed by the memory modules at a memory layer, where the physical addresses of memory and storage are managed with the memory objects based on an object address space that is allocated on a per-object basis with an object addressing scheme. Each node may use the object addressing scheme to couple to additional nodes and operate as a set of nodes, so that all memory objects of the set are accessible through the object addressing scheme. The scheme defines object addresses for the memory objects that are invariant with respect to physical storage locations and to changes of those locations, both within a memory module and across all modules interfacing with the object memory fabric.

Owner:ULTRATA LLC

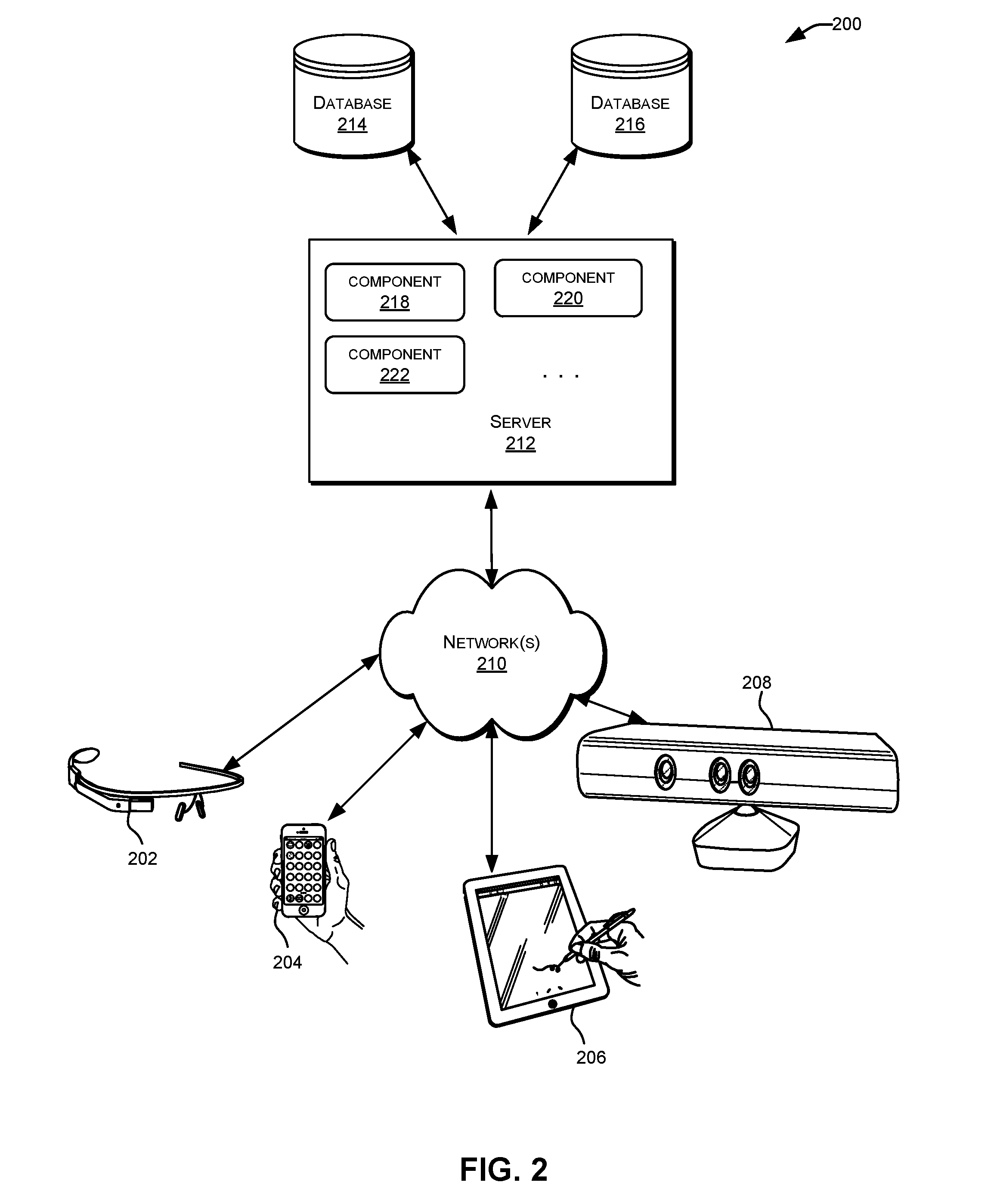

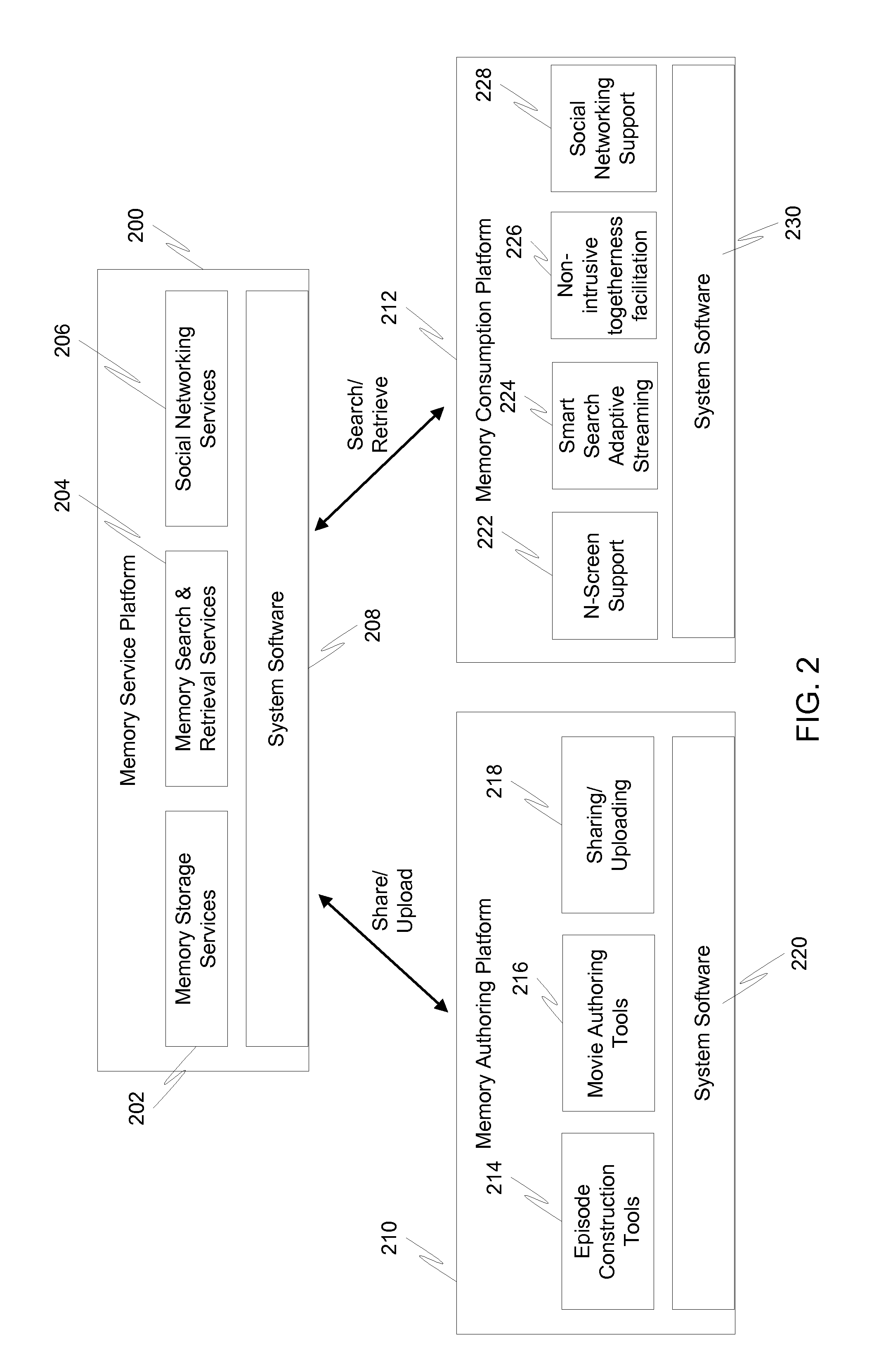

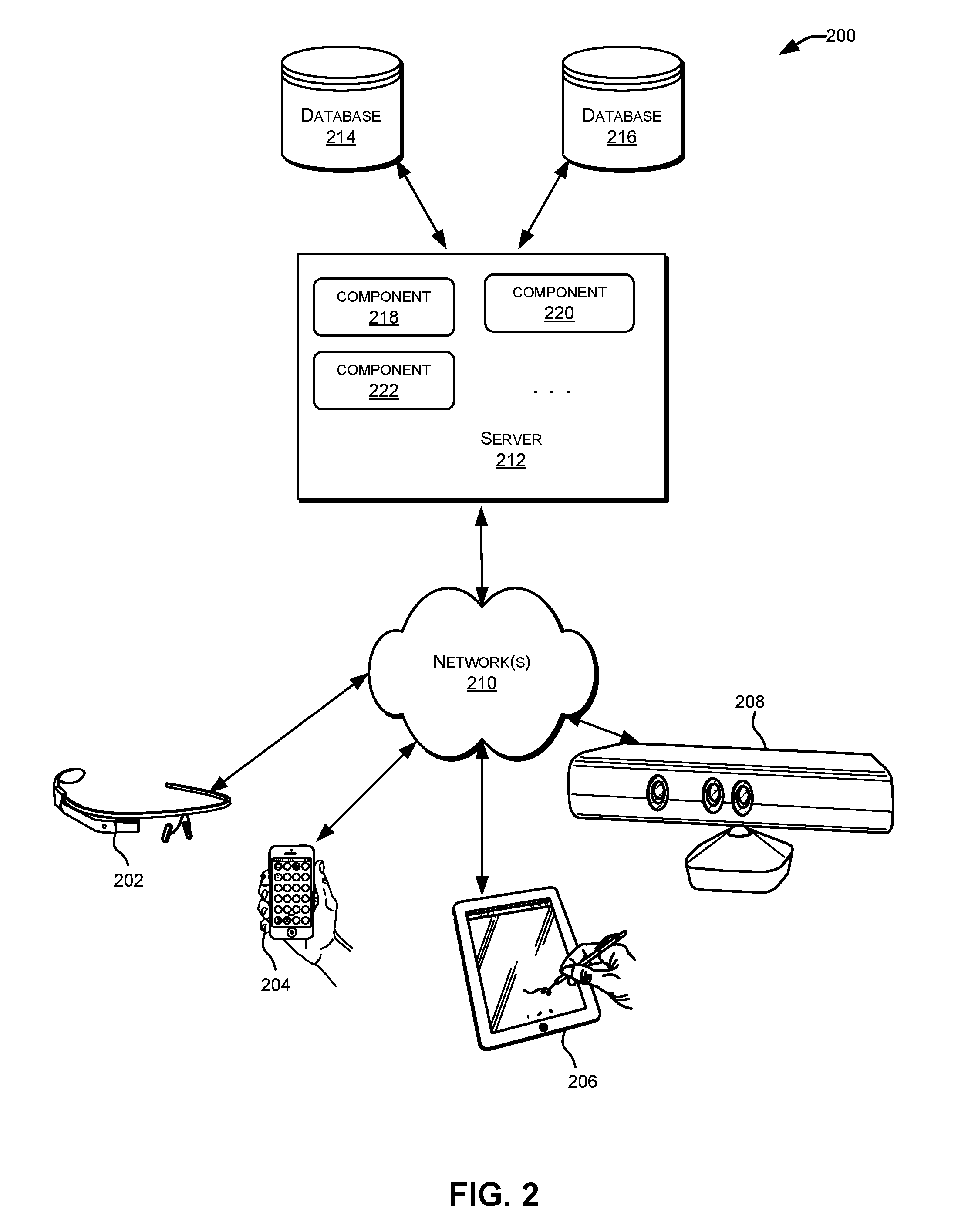

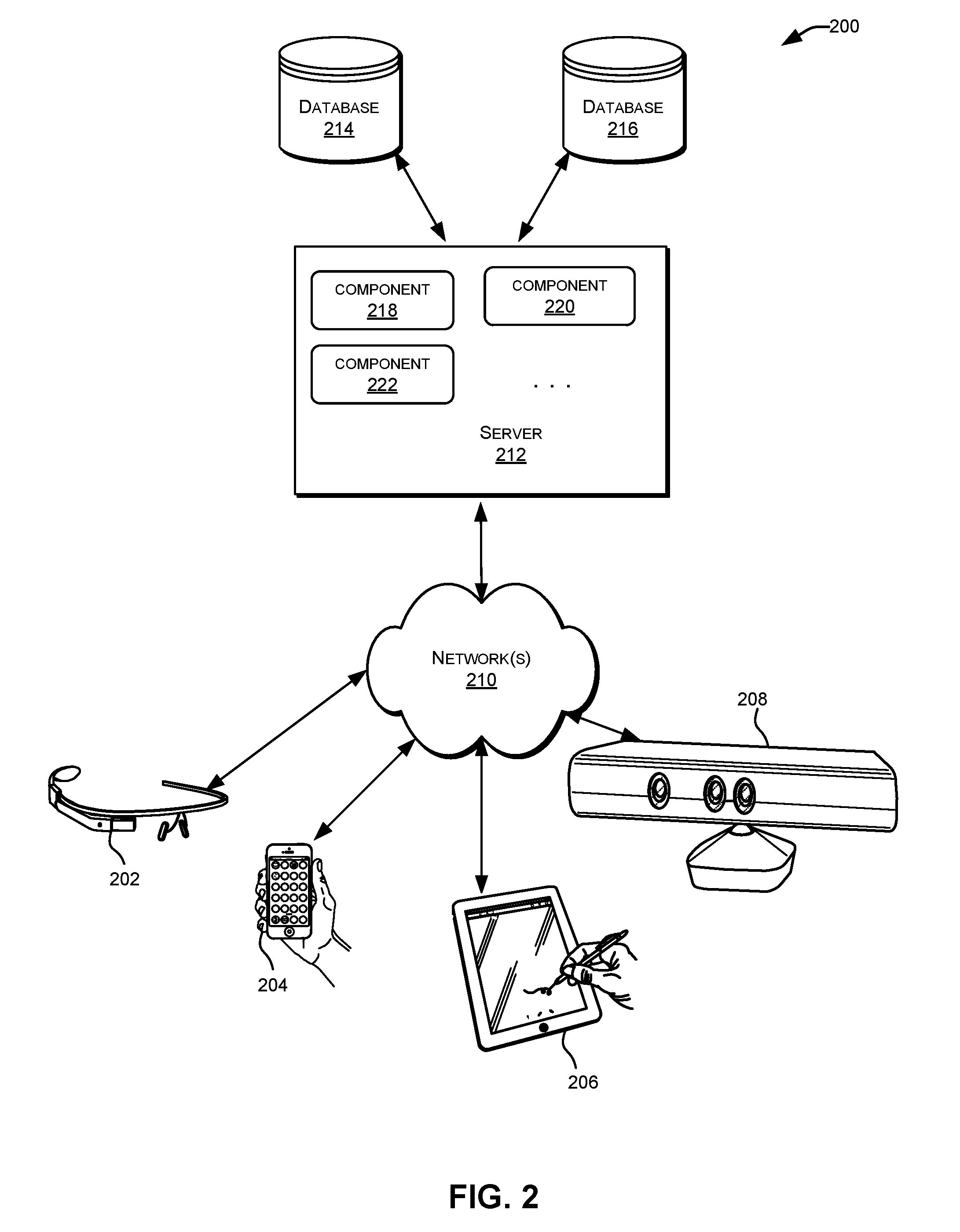

Life-logging and memory sharing

Status: Inactive | US20130038756A1 | Tags: Television system details; Input/output to record carriers; Memory object; Memory sharing

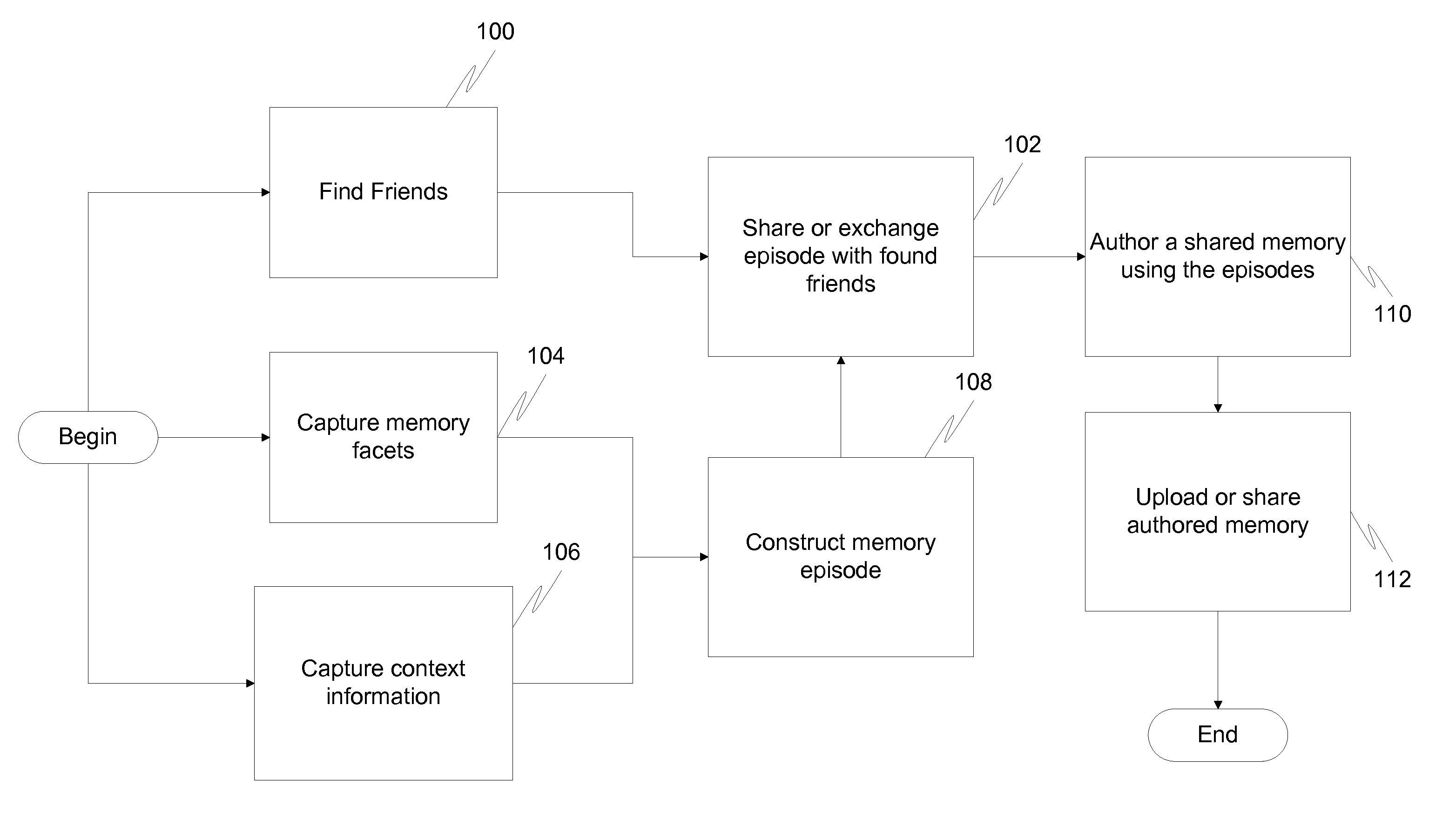

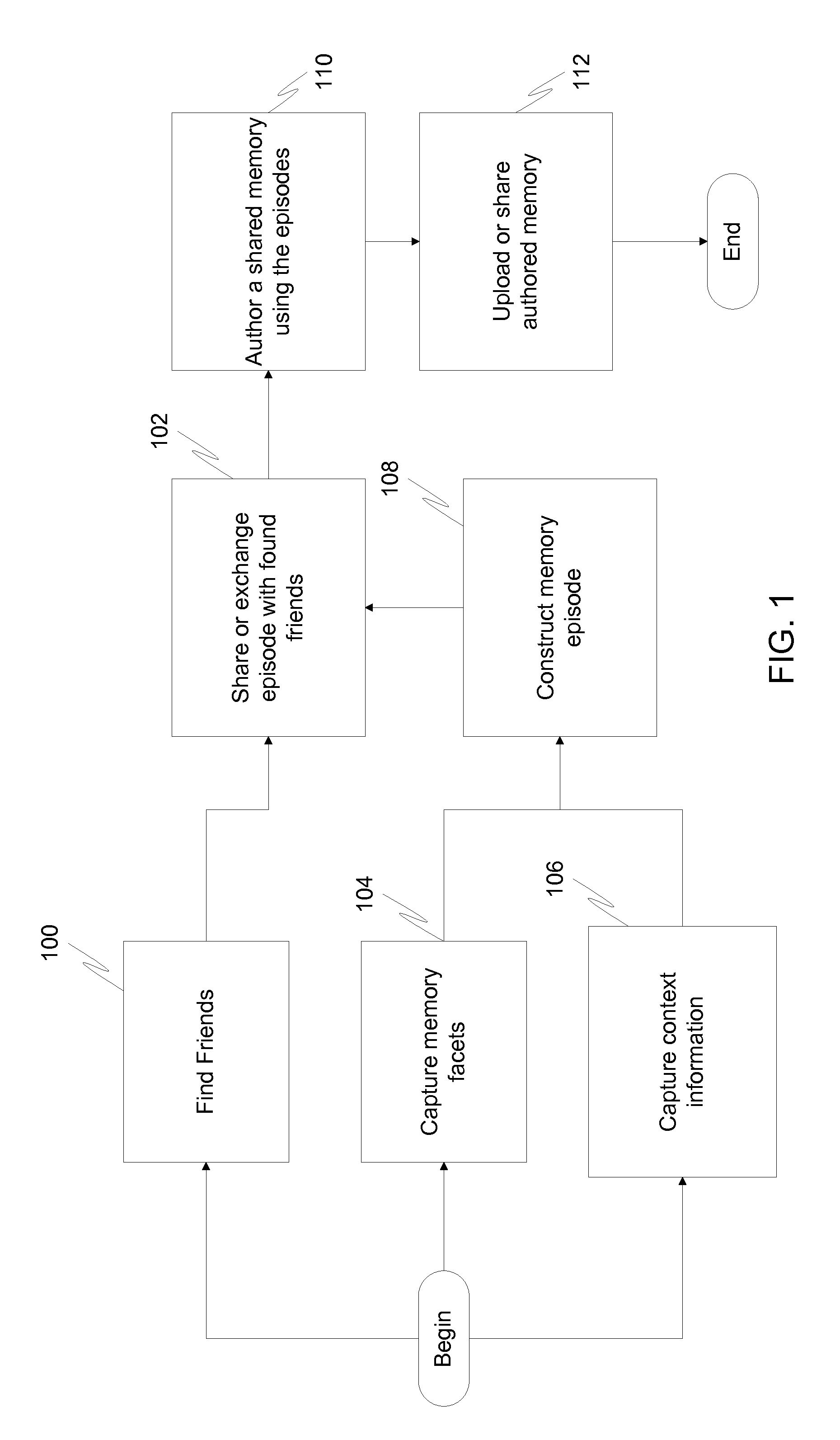

In a first embodiment of the present invention, a method for creating a memory object on an electronic device is provided, comprising: capturing a facet using the electronic device; recording sensor information relating to an emotional state of a user of the electronic device at the time the facet was captured; determining an emotional state of the user based on the recorded sensor information; and storing the facet along with the determined emotional state as a memory object.

Owner:SAMSUNG ELECTRONICS CO LTD

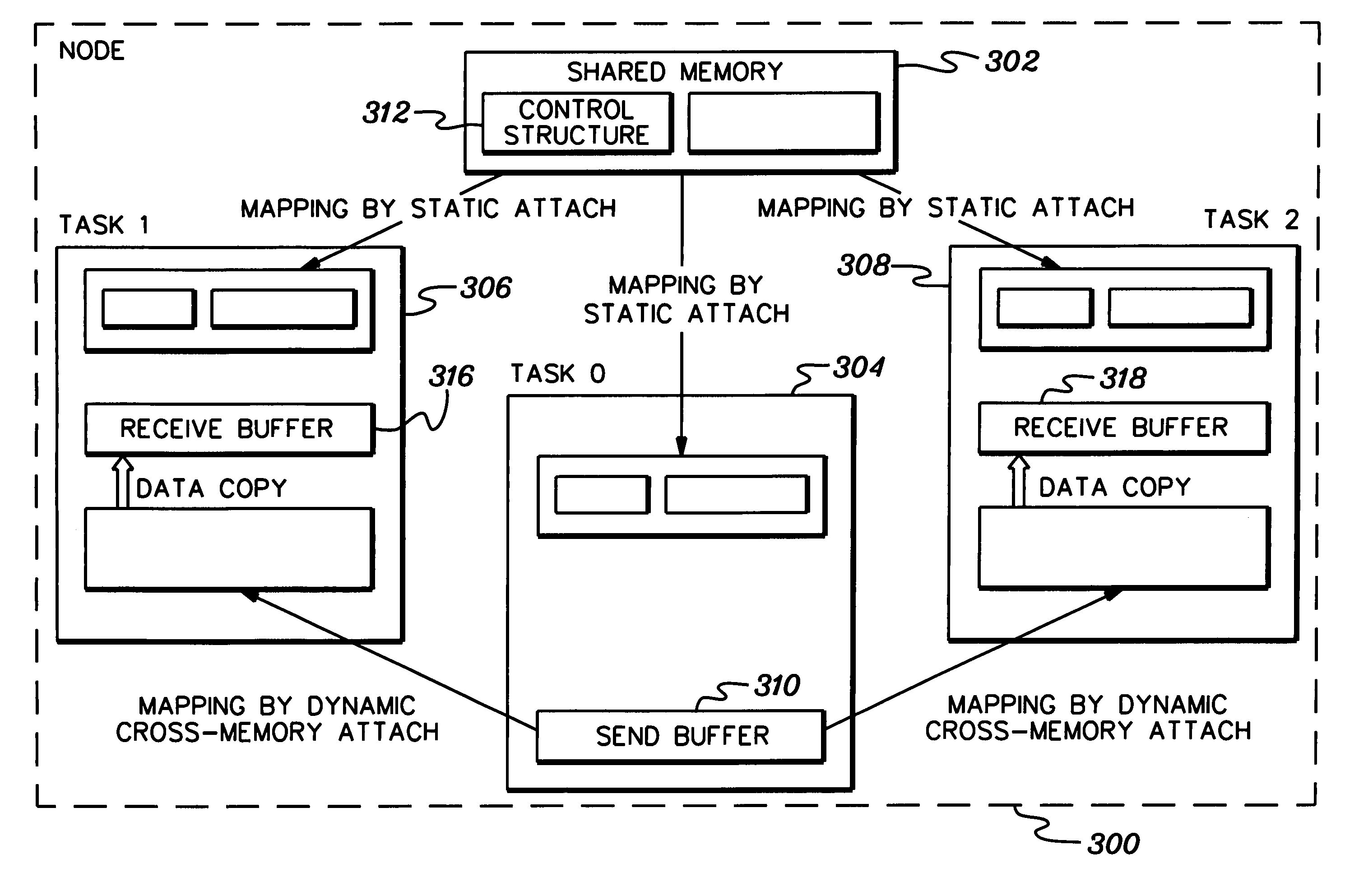

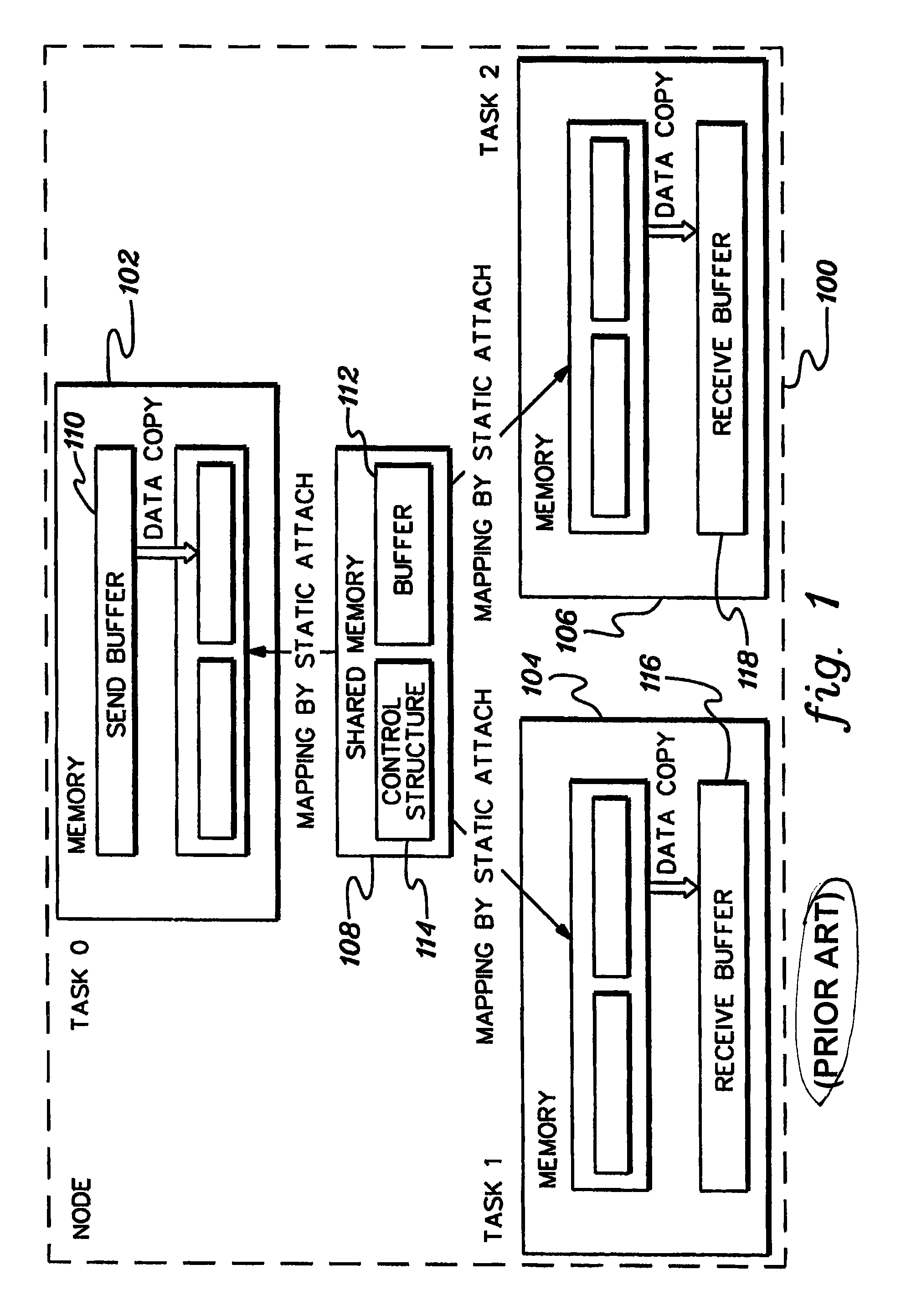

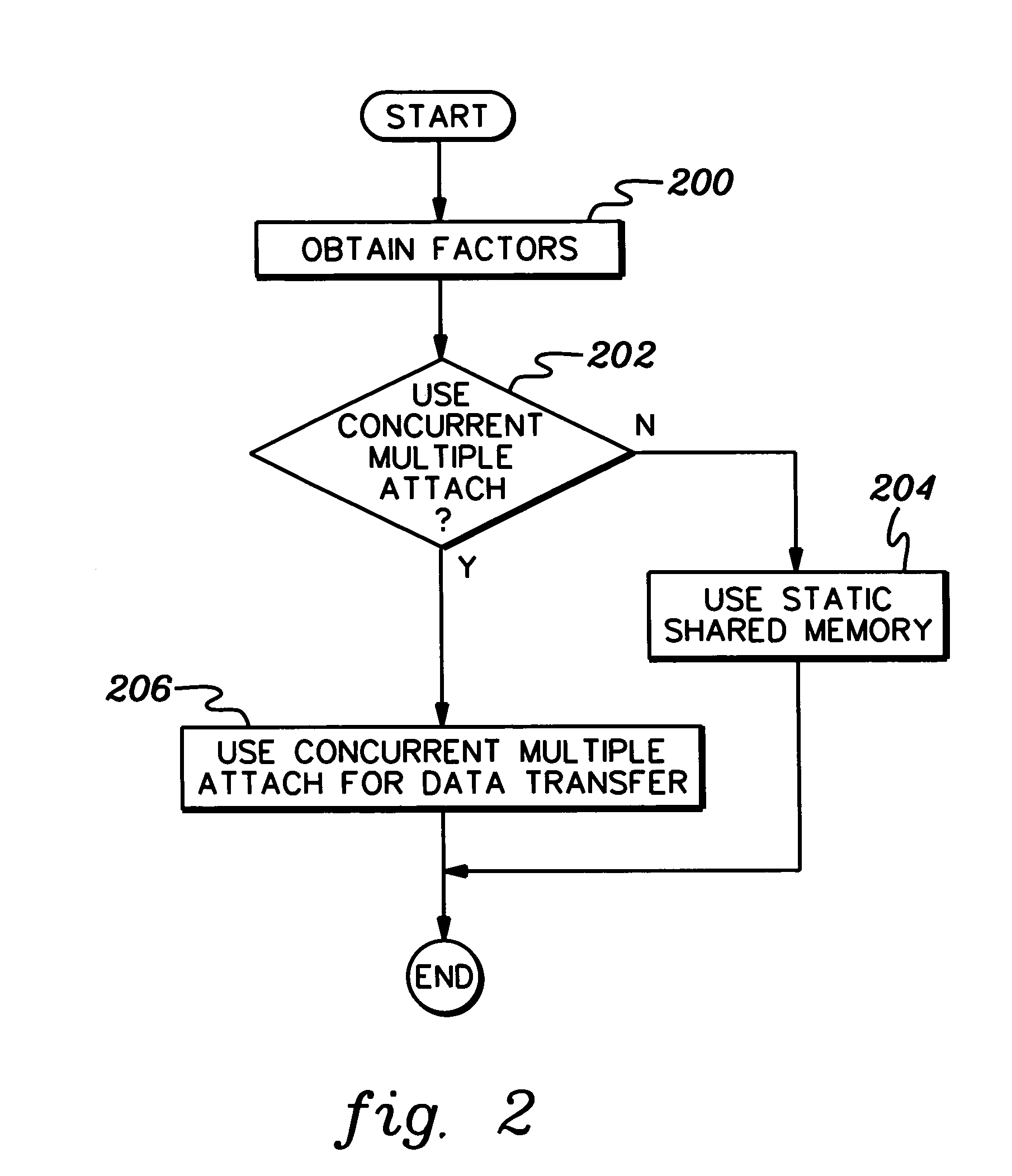

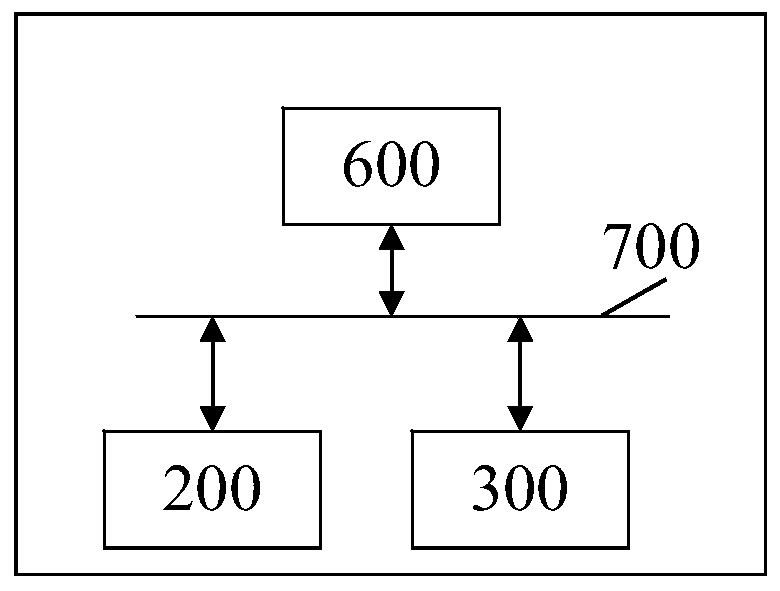

Facilitating intra-node data transfer in collective communications

Intra-node data transfer in collective communications is facilitated. A memory object of one task of a collective communication is concurrently attached to the address spaces of a plurality of other tasks of the communication. Those tasks that attach the memory object can access the memory object as if it were their own. Data can be written directly into or read directly from an application data structure of the memory object by the attaching tasks, without copying the data to or from shared memory.

Owner:INT BUSINESS MASCH CORP

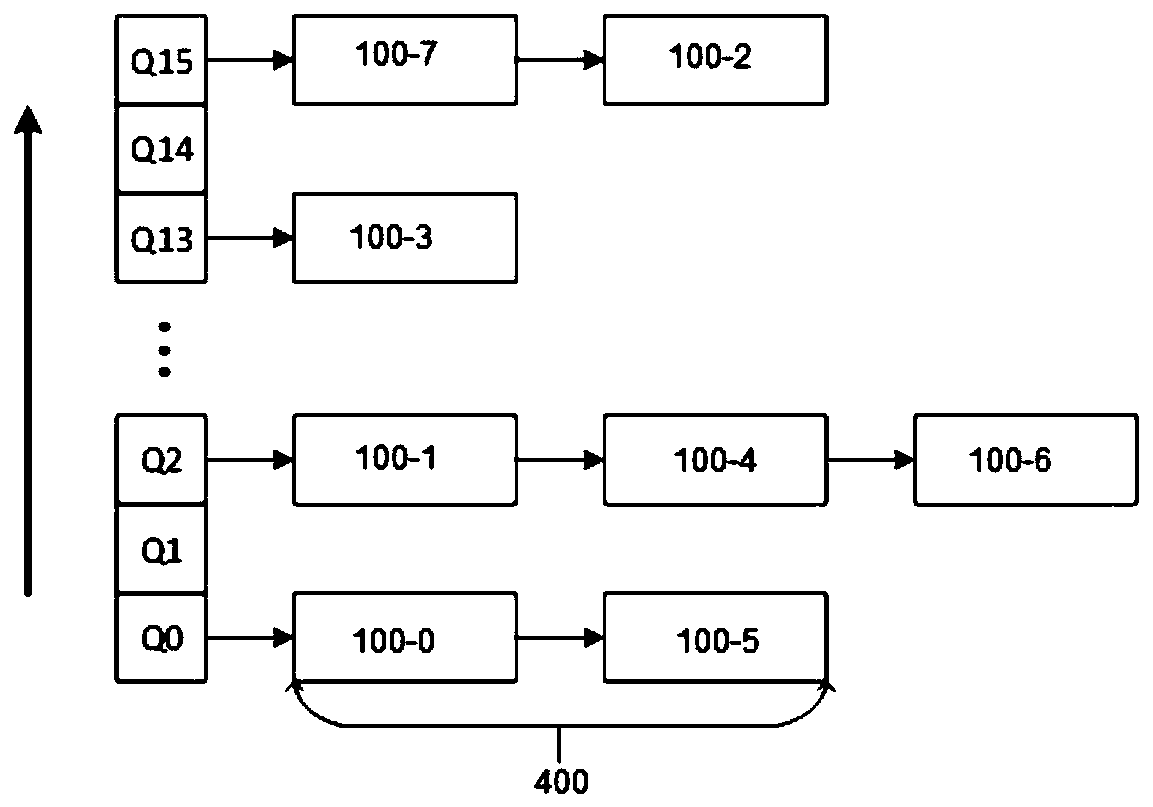

Object memory data flow instruction execution

Status: Active | US20160210080A1 | Tags: Improve efficiency; Improve performance; Memory architecture accessing/allocation; Input/output to record carriers; Memory object; Data access

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. More specifically, embodiments of the present invention are directed to an instruction set of an object memory fabric. This object memory fabric instruction set can be used to provide a unique instruction model based on triggers defined in metadata of the memory objects. This model represents a dynamic dataflow method of execution in which processes are performed based on actual dependencies of the memory objects. This provides a high degree of memory and execution parallelism which in turn provides tolerance of variations in access delays between memory objects. In this model, sequences of instructions are executed and managed based on data access. These sequences can be of arbitrary length but short sequences are more efficient and provide greater parallelism.

Owner:ULTRATA LLC

Method and Apparatus for Efficient Memory Placement

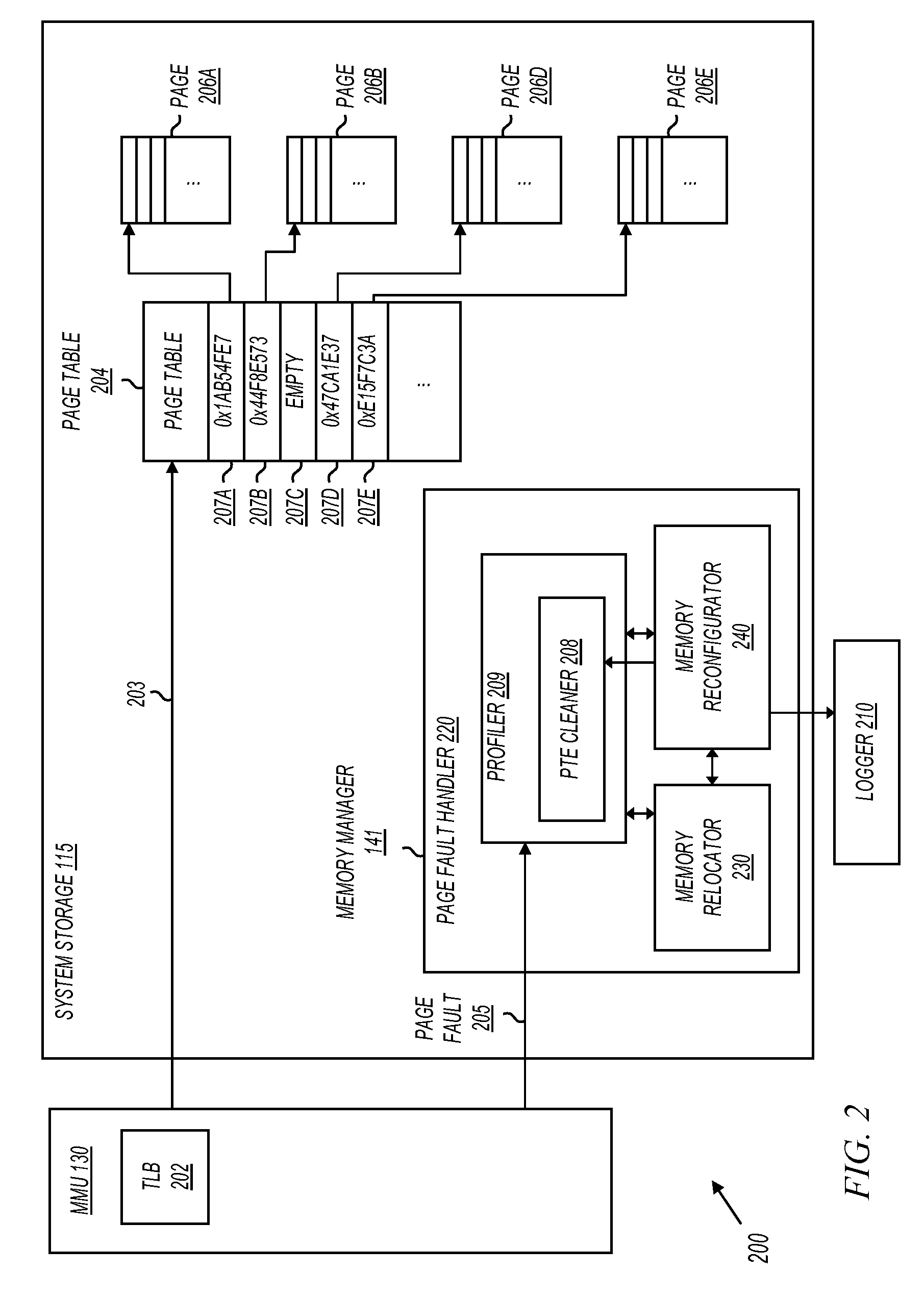

Status: Inactive | US20100169602A1 | Tags: Memory architecture accessing/allocation; Memory systems; Memory profiling; Memory type

A memory profiling system profiles memory objects in various memory devices and identifies memory objects as candidates to be moved to a more efficient memory device. Memory object profiles include historical read frequency, write frequency, and execution frequency. The memory object profile is compared to parameters describing read and write performance of memory types to determine candidate memory types for relocating memory objects. Memory objects with high execution frequency may be given preference when relocating to higher performance memory devices.

Owner:MICRON TECH INC
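
The profile-versus-memory-type comparison can be sketched as a greedy planner. Everything here is an assumption for illustration: the cost units, the capacity model, counting instruction fetches as reads, and using write counts as a tie-breaker after execution frequency. It only shows how read, write, and execution frequencies might steer objects toward suitable memory devices.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    name: str
    size: int
    reads: int
    writes: int
    executes: int  # instruction fetches from the object


@dataclass
class MemoryType:
    name: str
    read_cost: float   # relative cost per read (illustrative units)
    write_cost: float  # relative cost per write
    capacity: int


def plan_placement(profiles, memory_types):
    free = {m.name: m.capacity for m in memory_types}
    plan = {}
    # Heavily executed objects choose first; write-heavy objects break ties.
    for p in sorted(profiles, key=lambda q: (q.executes, q.writes), reverse=True):
        fits = [m for m in memory_types if free[m.name] >= p.size]
        if not fits:
            raise MemoryError(f"no memory device can hold {p.name}")
        # Instruction fetches are treated as reads when estimating cost.
        best = min(fits, key=lambda m: (p.reads + p.executes) * m.read_cost + p.writes * m.write_cost)
        free[best.name] -= p.size
        plan[p.name] = best.name
    return plan
```

With a small fast SRAM, a mid-sized DRAM, and a NOR flash that is slow to write, hot code lands in SRAM, the write-heavy log in DRAM, and the read-only table in NOR.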

Object memory fabric performance acceleration

Status: Inactive | US20160210079A1 | Tags: Improve efficiency; Improve performance; Input/output to record carriers; Memory addressing/allocation/relocation; Parallel computing; Memory object

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. Embodiments described herein can provide transparent and dynamic performance acceleration, especially for big data or other memory-intensive applications, by reducing or eliminating the overhead typically associated with memory management, storage management, networking, and data directories. Instead, embodiments manage memory objects at the memory level, which can significantly shorten the pathways between storage and memory and between memory and processing, thereby eliminating the overhead associated with each of those pathways.

Owner:ULTRATA LLC

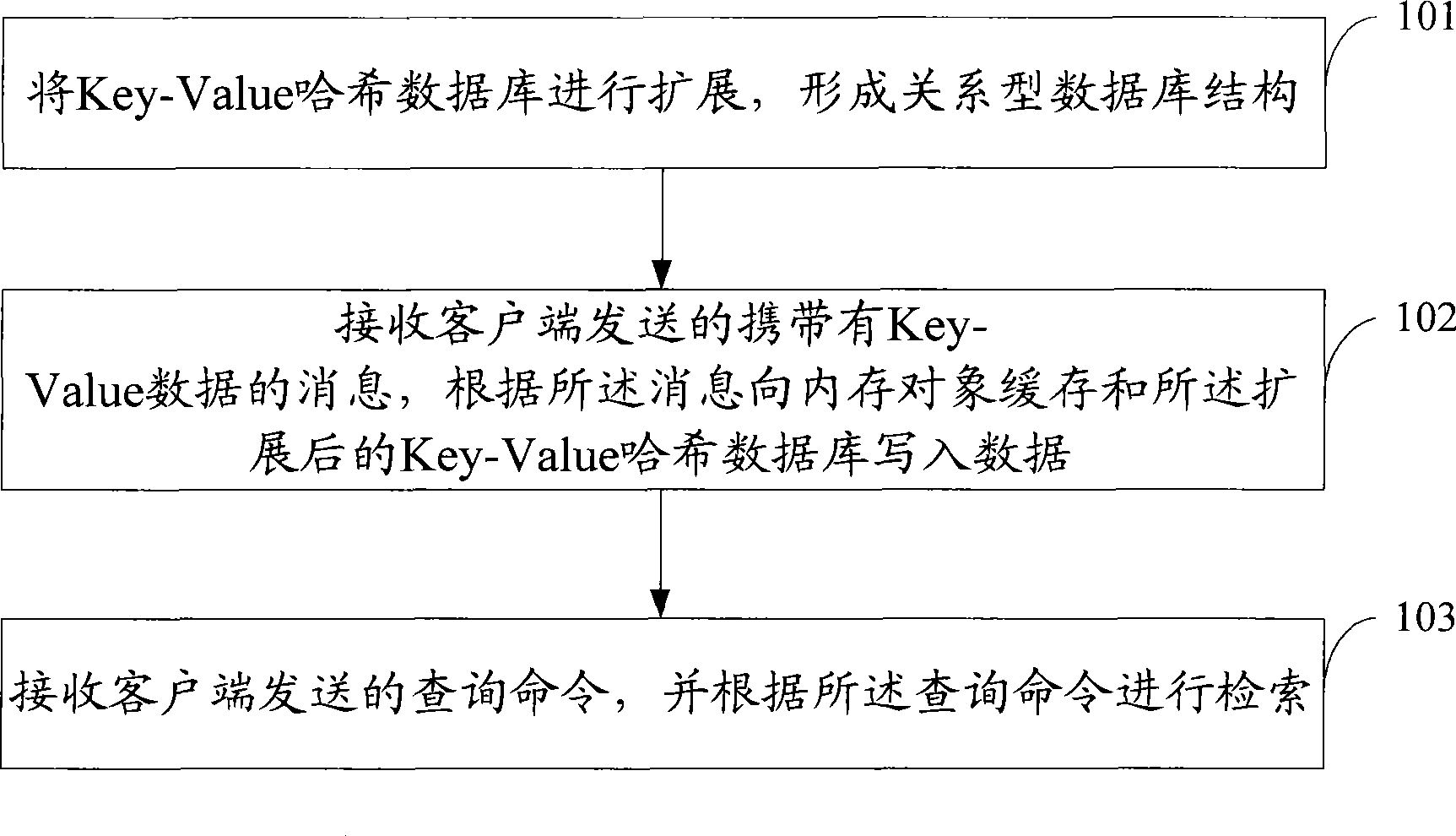

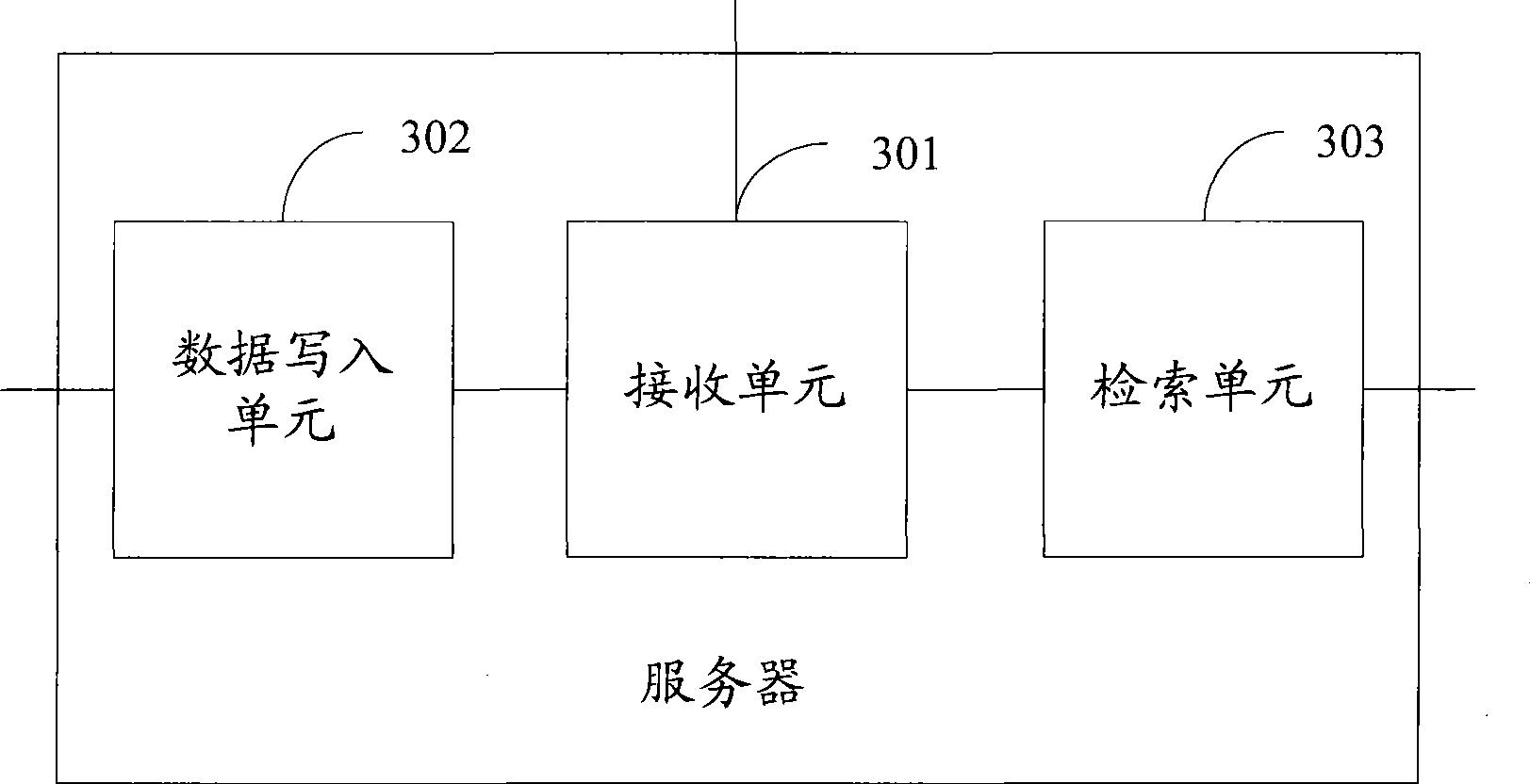

Method, system and server for implementing real time search

Status: Active | CN101510209A | Tags: Fast read and write; Guaranteed not to lose; Special data processing applications; Relational database; Memory object

An embodiment of the invention discloses a method for implementing real-time retrieval. After a Key-Value hash database is extended into a relational database structure, the method comprises: receiving information carrying Key-Value data sent by a client; writing the data into memory object caches and the extended Key-Value hash database according to that information; receiving query commands sent by the client; and performing retrieval according to the query commands. A corresponding system and server for implementing real-time retrieval are also disclosed. By adding the Key-Value database as persistent storage for the memory object caches, the invention can read and write data quickly under highly concurrent access and prevents data loss during retrieval. In addition, because the Key-Value database is extended into a relational database structure, queries on complex conditions are possible.

Owner:BEIJING KINGSOFT SOFTWARE +1
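
The pairing of an in-memory object cache with a persistent store that also supports relational queries can be illustrated with Python's standard `sqlite3` module. This is a sketch under loud assumptions: SQLite stands in for the extended Key-Value hash database, and the `RealTimeIndex` class and its `category`/`price` columns are hypothetical examples of "complex conditions".

```python
import sqlite3


class RealTimeIndex:
    def __init__(self, path=":memory:"):
        self.cache = {}                  # in-memory object cache for fast reads
        self.db = sqlite3.connect(path)  # persistent store standing in for the extended KV database
        self.db.execute("CREATE TABLE IF NOT EXISTS kv (k TEXT PRIMARY KEY, category TEXT, price REAL)")

    def put(self, key, category, price):
        # Persist first so that losing the cache never loses data.
        with self.db:  # commits the transaction on success
            self.db.execute("INSERT OR REPLACE INTO kv VALUES (?, ?, ?)", (key, category, price))
        self.cache[key] = (category, price)

    def get(self, key):
        if key in self.cache:
            return self.cache[key]
        row = self.db.execute("SELECT category, price FROM kv WHERE k = ?", (key,)).fetchone()
        if row is not None:
            self.cache[key] = row  # repopulate the cache on a miss
        return row

    def query(self, category, max_price):
        # The relational view allows multi-condition queries over key-value data.
        rows = self.db.execute(
            "SELECT k FROM kv WHERE category = ? AND price <= ? ORDER BY k", (category, max_price)
        )
        return [k for (k,) in rows]
```

Point lookups are served from the cache, while condition-based queries go to the relational layer.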

Object memory data flow triggers

Status: Active | US20160210048A1 | Tags: Improve efficiency; Improve performance; Memory architecture accessing/allocation; Input/output to record carriers; Memory object; Object storage

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. More specifically, embodiments of the present invention are directed to an instruction set of an object memory fabric. This object memory fabric instruction set can include trigger instructions defined in metadata for a particular memory object. Each trigger instruction can comprise a single instruction and action based on reference to a specific object to initiate or perform defined actions such as pre-fetching other objects or executing a trigger program.

Owner:ULTRATA LLC

Universal single level object memory address space

Status: Inactive | US20160210078A1 | Tags: Improve efficiency; Improve performance; Input/output to record carriers; Memory addressing/allocation/relocation; Memory address; Memory object

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. Embodiments described herein can eliminate typical size constraints on memory space of commodity servers and other commodity hardware imposed by address sizes. Rather, physical addressing can be managed within the memory objects themselves and the objects can be in turn accessed and managed through the object name space.

Owner:ULTRATA LLC

Low-contention grey object sets for concurrent, marking garbage collection

Status: Inactive | US6925637B2 | Tags: Limited amount; Improve performance; Data processing applications; Program initiation/switching; Waste collection; Computerized system

A method and system of carrying out garbage collection in a computer system. Specifically, the method and system utilize low contention grey object sets for concurrent marking garbage collection. A garbage collector traces memory objects and identifies memory objects according to a three-color abstraction, identifying a memory object with a certain color if that memory object itself has been encountered by the garbage collector, but some of the objects to which the memory object refers have not yet been encountered. A packet manager organizes memory objects identified with the certain color into packets, provides services to obtain empty or partially full packets, and obtain full or partially full packets, and verifies whether a packet of the certain color is being accessed by one of the threads of the garbage collector.

Owner:RPX CORP

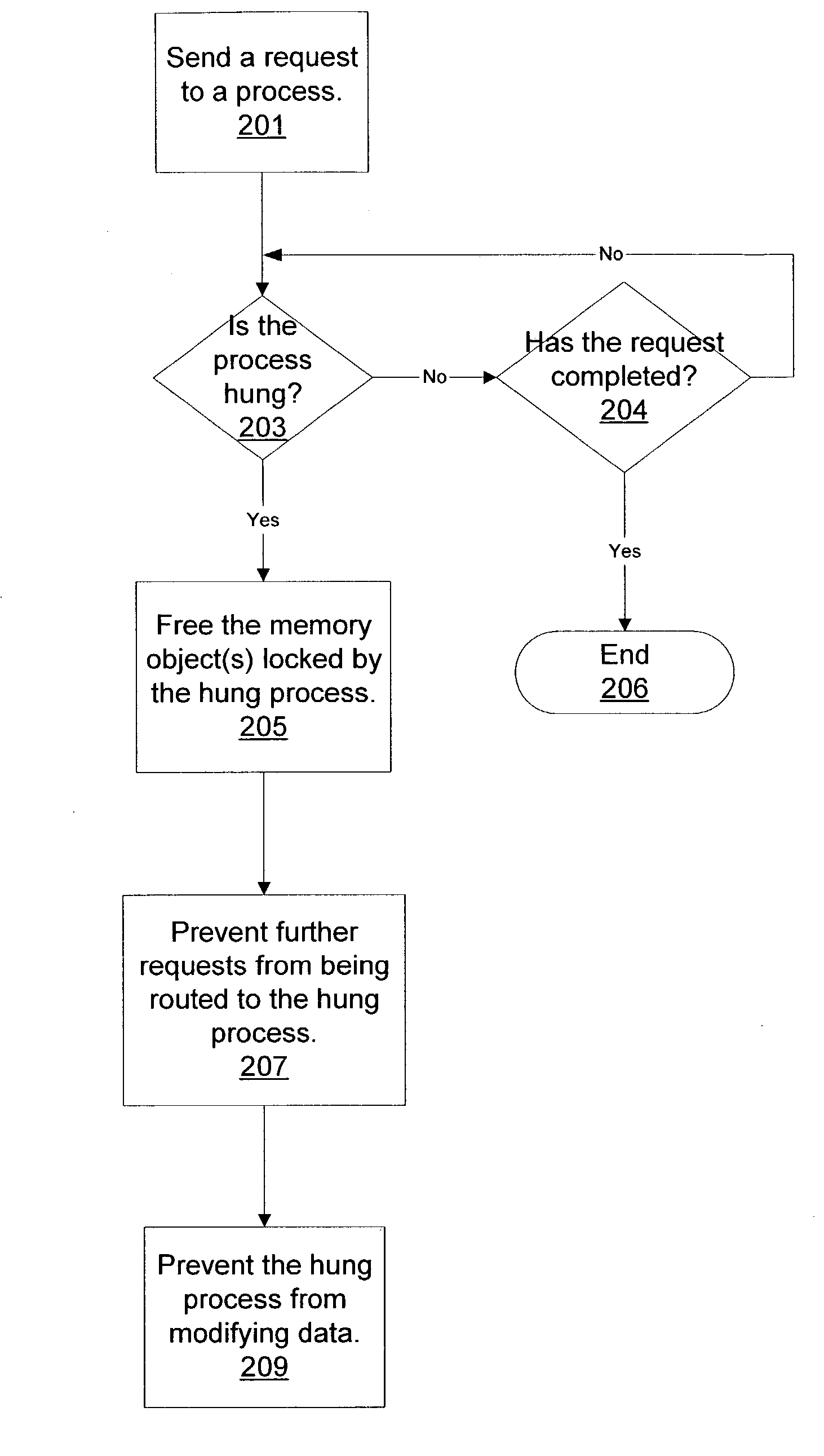

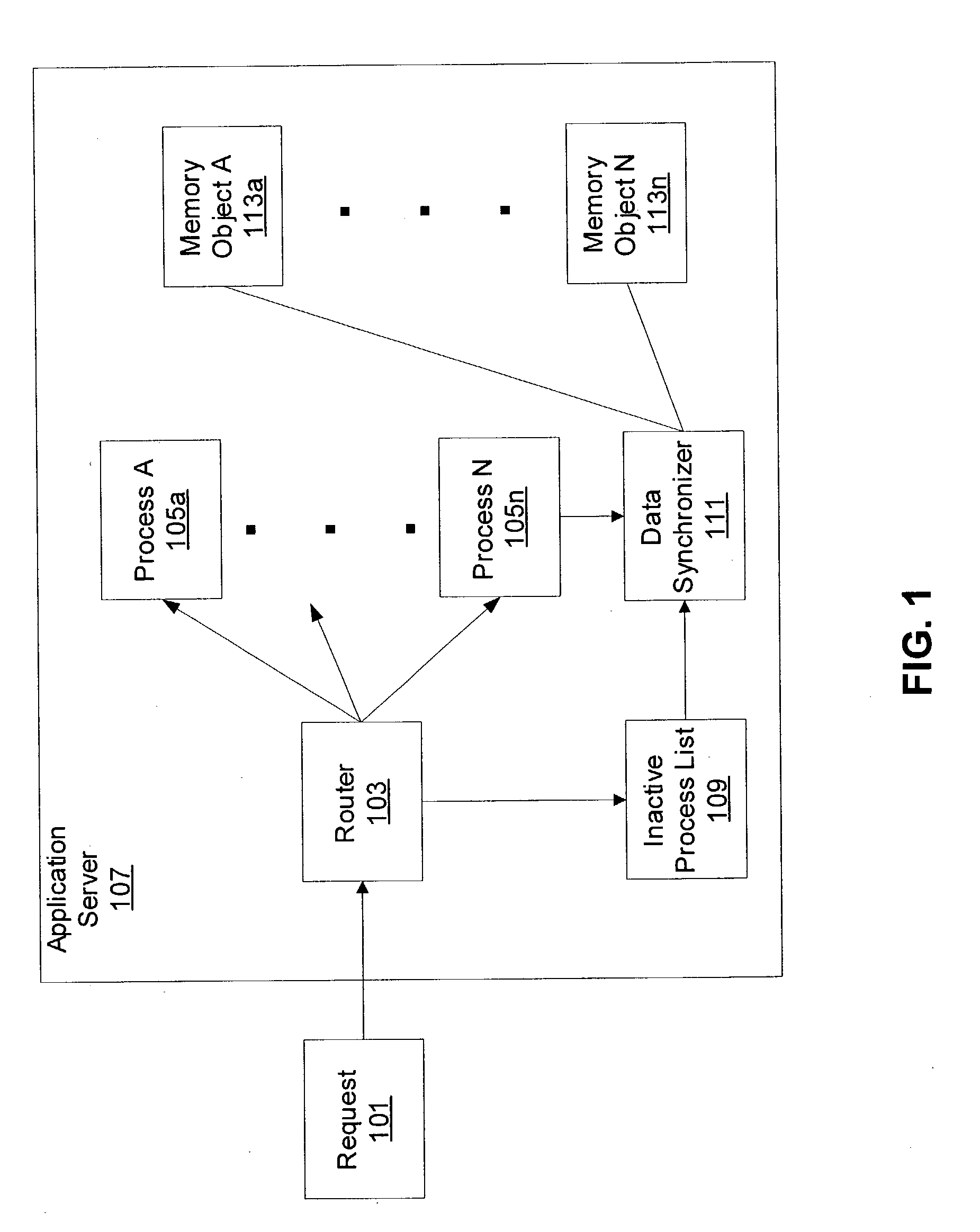

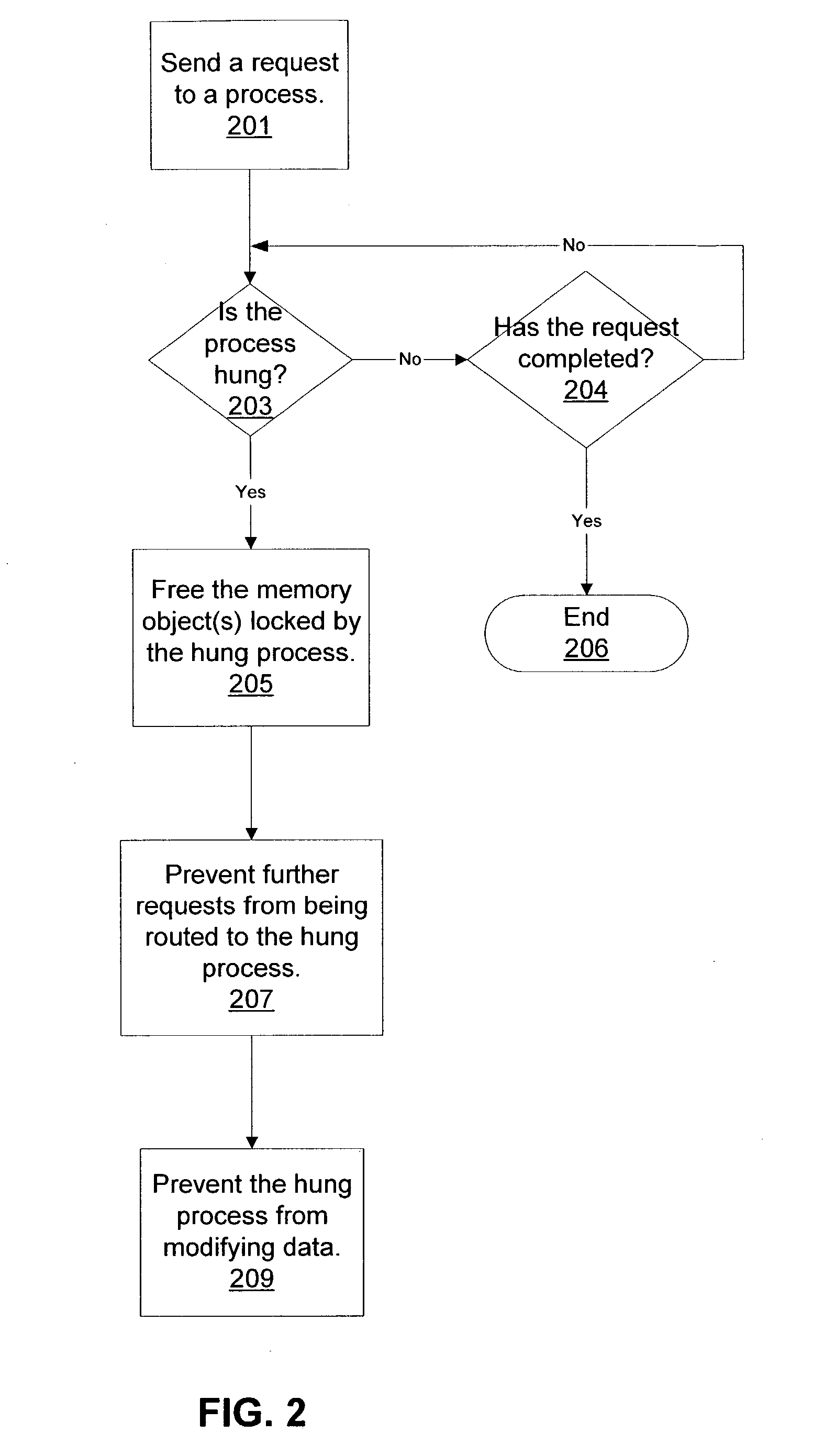

System and method for request routing

Status: Active | US20040225922A1 | Tags: Reduce processing steps; Data processing applications; Unauthorized memory use protection; Data synchronization; Memory object

A method and a system for request routing may include a router configured to forward a request to a process. The process may acquire a lock on a memory object. If the process becomes hung, a data synchronizer may release the lock on the memory object assigned to the process. The lock may be released after the process is detected to be hung and after another process requests a lock on the memory object. The router may maintain a list of inactive processes and, if the process is hung, add it to that list. The router may be configured to check the inactive list and not route requests to processes on it. The data synchronizer may be configured to prevent processes on the inactive list from modifying data.

Owner:ORACLE INT CORP
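
The release rule above (free a lock only when its holder is hung and another process wants it) can be sketched with heartbeat-based hang detection. The heartbeat mechanism, the explicit `now` parameter, and the `LockTable`/`route` names are assumptions chosen to keep the example deterministic; the filing does not specify how hangs are detected.

```python
class LockTable:
    def __init__(self, hang_timeout):
        self.hang_timeout = hang_timeout
        self.owners = {}       # memory object -> process id holding its lock
        self.heartbeat = {}    # process id -> last time it showed progress
        self.inactive = set()  # hung processes: not routed to, not allowed to modify data

    def beat(self, pid, now):
        self.heartbeat[pid] = now

    def is_hung(self, pid, now):
        return now - self.heartbeat.get(pid, now) > self.hang_timeout

    def acquire(self, pid, obj, now):
        if pid in self.inactive:
            return False  # processes on the inactive list may not modify data
        holder = self.owners.get(obj)
        if holder is not None and holder != pid:
            if not self.is_hung(holder, now):
                return False
            # The holder is hung AND another process wants the lock: release it.
            self.inactive.add(holder)
        self.owners[obj] = pid
        self.beat(pid, now)
        return True


def route(processes, inactive):
    """Router: forward to the first process not on the inactive list (None if all are inactive)."""
    return next((p for p in processes if p not in inactive), None)
```

A stalled holder keeps its lock until a competing request arrives after the timeout; only then is it moved to the inactive list and bypassed by the router.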

Method for garbage collection in heterogeneous multiprocessor systems

Garbage collection in heterogeneous multiprocessor systems is provided. In some illustrative embodiments, garbage collection operations are distributed across a plurality of the processors in the heterogeneous multiprocessor system. Portions of a global mark queue are assigned to processors of the heterogeneous multiprocessor system along with corresponding chunks of a shared memory. The processors perform garbage collection on their assigned portions of the global mark queue and the corresponding chunks of shared memory, marking memory object references as reachable or adding memory object references to a non-local mark stack. The marked memory objects are merged with a global mark stack, and memory object references in the non-local mark stack are merged with a “to be traced” portion of the global mark queue for re-checking using a garbage collection operation.

Owner:IBM CORP

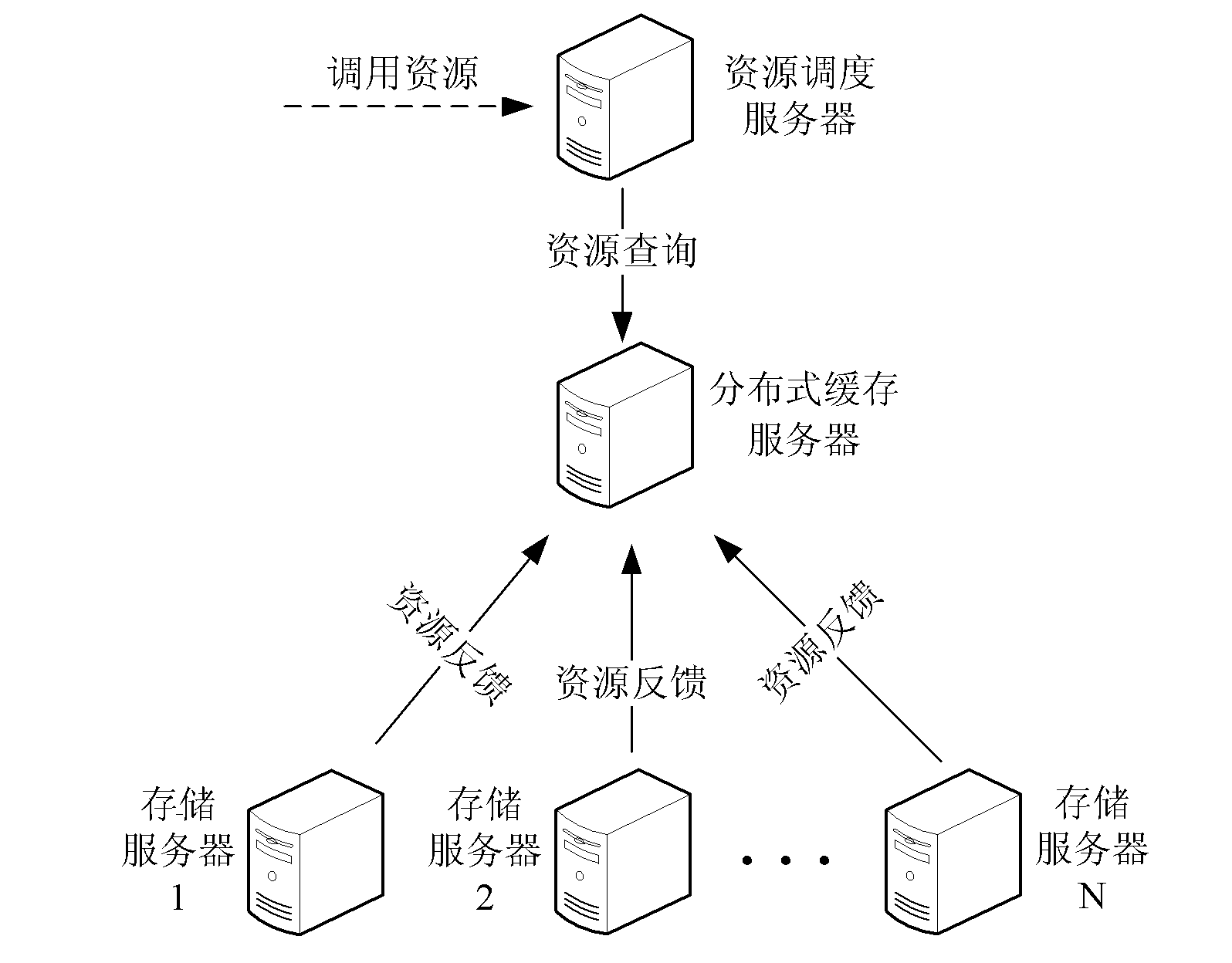

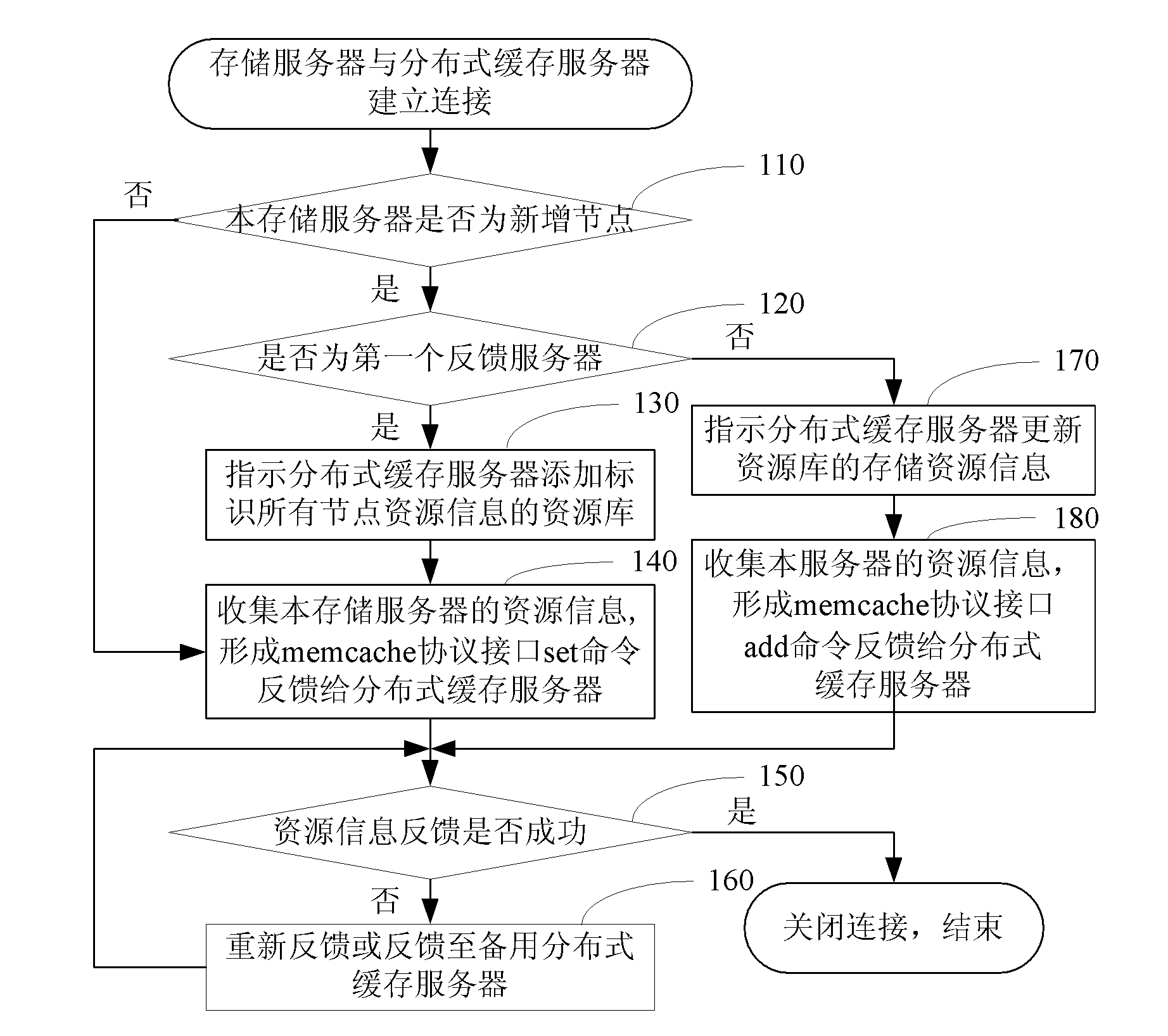

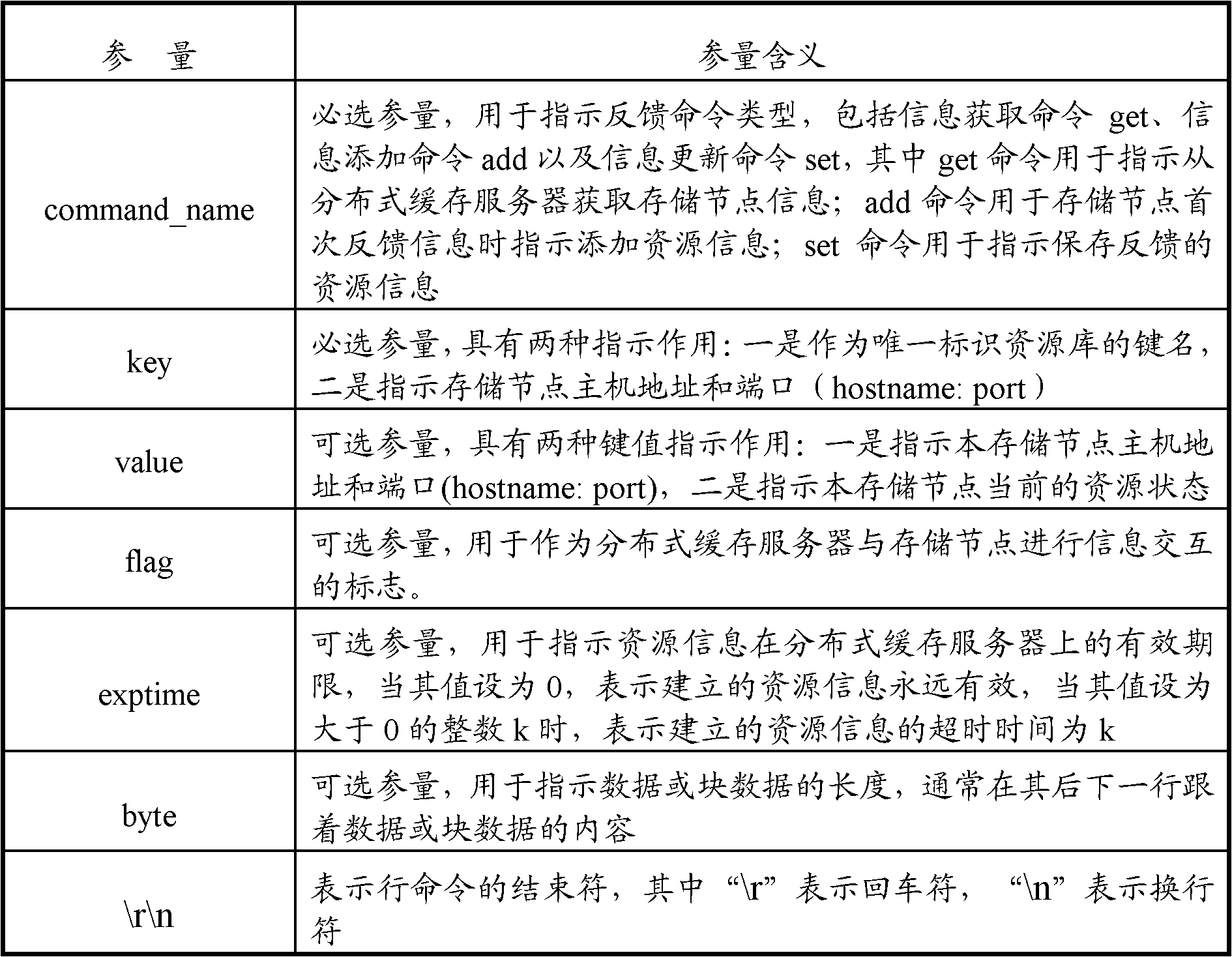

System and method for scheduling cloud storage resource

Status: Inactive | CN102130959A | Tags: Reliable scheduling; Reasonable scheduling; Transmission; Special data processing applications; Resource information; Distributed object

The invention discloses a system and a method for scheduling cloud storage resources. The system comprises at least a plurality of storage servers and a distributed cache server. The storage servers report their storage resource information to the distributed cache server through a distributed object cache interface at set intervals, and the distributed cache server stores the storage resource information reported by each storage server. Through the protocol interface of a distributed cache memory object, the resource information of each storage node is organized into a data format that conforms to the protocol interface so that it can be reported quickly and promptly, allowing the cloud storage system to schedule storage resources reliably and rationally.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

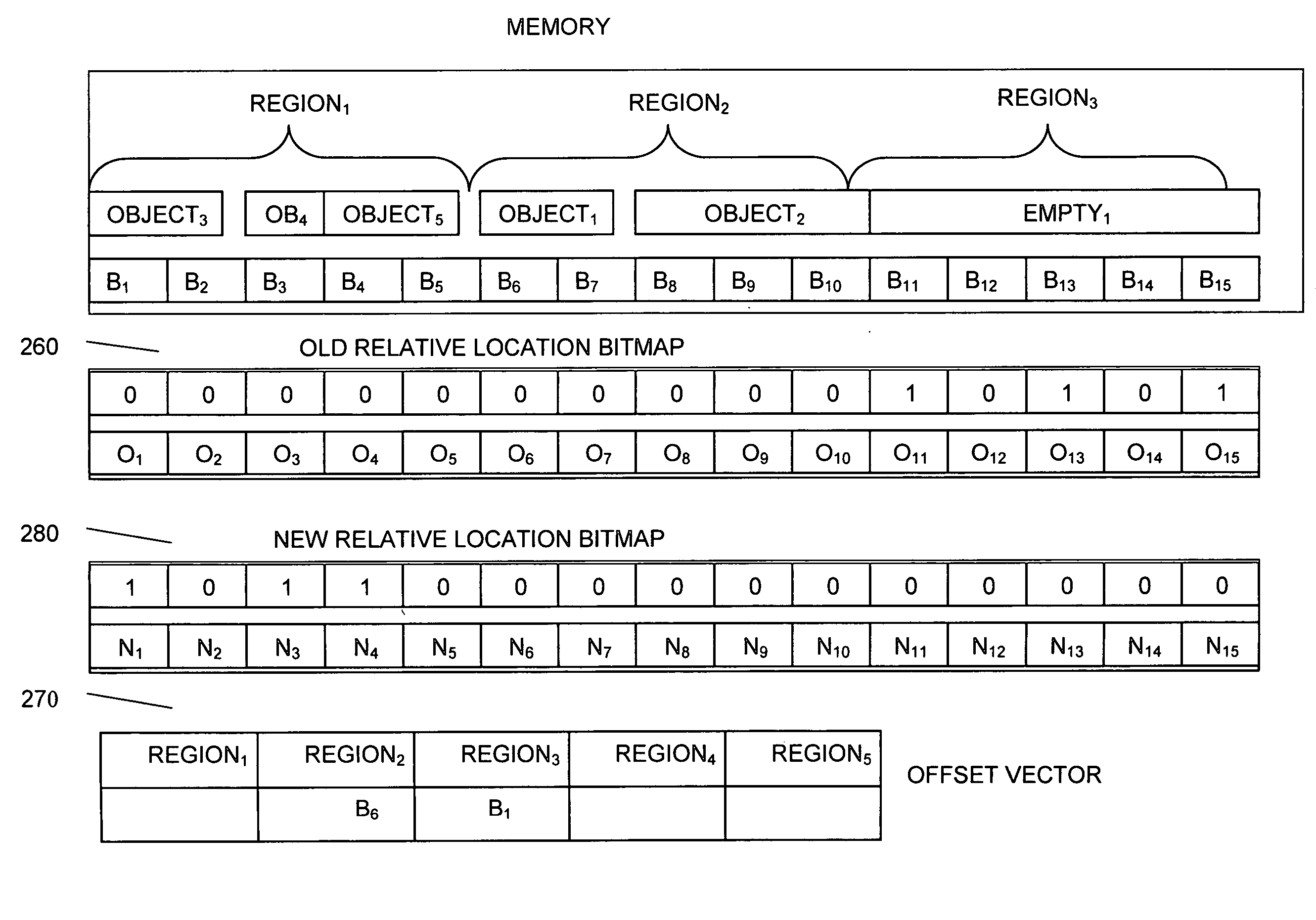

Relative positioning and access of memory objects

Status: Inactive | US20050138092A1 | Tags: Memory addressing/allocation/relocation; Special data processing applications; Memory object; Parallel computing

A garbage collector including a bit mapper operative to designate a plurality of regions within a memory, associate any of a plurality of objects with any of the regions if the start address of the object to be associated lies within the region, and record the relative location of a group of objects within any of the regions, a mover operative to relocate any of the groups of objects found within a source region from among the regions to begin at a destination address, and a fixer operative to record the destination address at an index corresponding to the source region.

Owner:LINKEDIN
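
The region-based scheme above (group objects by the region their start address falls in, record their relative positions, then record one destination per region) yields a simple forwarding formula: destination of the region plus the object's relative offset. The sketch below assumes a sliding compactor, a hypothetical `REGION_SIZE`, and dicts in place of the bit mapper's bitmap.

```python
REGION_SIZE = 256  # hypothetical region granularity in bytes


def plan_compaction(live_objects):
    """live_objects: (start_address, size) pairs sorted by address.

    Returns the fixer's region -> destination table and each object's offset within its region's group.
    """
    relative = {}    # object start -> offset within its region's packed group
    group_size = {}  # region index -> bytes of live objects starting in that region
    for start, size in live_objects:
        region = start // REGION_SIZE  # an object belongs to the region holding its start address
        relative[start] = group_size.get(region, 0)
        group_size[region] = relative[start] + size
    destination = {}  # fixer table: region index -> address the group slides to
    free = 0
    for region in sorted(group_size):
        destination[region] = free
        free += group_size[region]
    return destination, relative


def forward(address, destination, relative):
    # New address = destination recorded for the source region + relative offset.
    return destination[address // REGION_SIZE] + relative[address]
```

Only one destination per region is stored, so the per-object forwarding address is computed on demand rather than kept in each object.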

Extensible memory object storage system based on heterogeneous memory

Status: Active | CN110134514A | Tags: Realize dynamic usage; Eliminate overhead; Memory architecture accessing/allocation; Resource allocation; Memory object; Heterogeneous network

The invention relates to an extensible memory object storage system based on heterogeneous memory comprising DRAM (Dynamic Random Access Memory) and NVM (Non-Volatile Memory). The system is configured to: perform memory allocation through a Slab-based memory allocation mechanism and divide each Slab Class into a DRAM domain and an NVM domain; monitor the access heat of each memory object at the application layer; and dynamically adjust where the corresponding key-value data in each Slab Class is stored based on that access heat, storing the key-value data of memory objects with relatively high access heat in the DRAM domain and the key-value data of memory objects with relatively low access heat in the NVM domain. This achieves dynamic use of the DRAM/NVM heterogeneous memory and, compared with traditional methods that monitor application access heat at the hardware or operating system level, eliminates substantial hardware and operating system overhead.

Owner:HUAZHONG UNIV OF SCI & TECH
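
Per-Slab-Class hot/cold placement driven by application-level access counts can be sketched as follows. The fixed DRAM slot budget per class, placing new keys in NVM first, and the absence of any heat decay are assumptions made to keep the example small; the filing does not state these details.

```python
from collections import Counter


class SlabClass:
    def __init__(self, dram_slots):
        self.dram_slots = dram_slots  # capacity of this class's DRAM domain (in items)
        self.heat = Counter()         # application-level access counts per key
        self.dram, self.nvm = set(), set()

    def access(self, key):
        self.heat[key] += 1
        if key not in self.dram:
            self.nvm.add(key)  # assumption: new or cold items start in the NVM domain

    def rebalance(self):
        # The hottest keys get the DRAM domain; everything else goes to NVM.
        ranked = sorted(self.dram | self.nvm, key=lambda k: self.heat[k], reverse=True)
        self.dram = set(ranked[: self.dram_slots])
        self.nvm = set(ranked[self.dram_slots:])
```

Because heat is counted inside the application, no hardware or operating-system page tracking is needed to decide which key-value items deserve DRAM.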

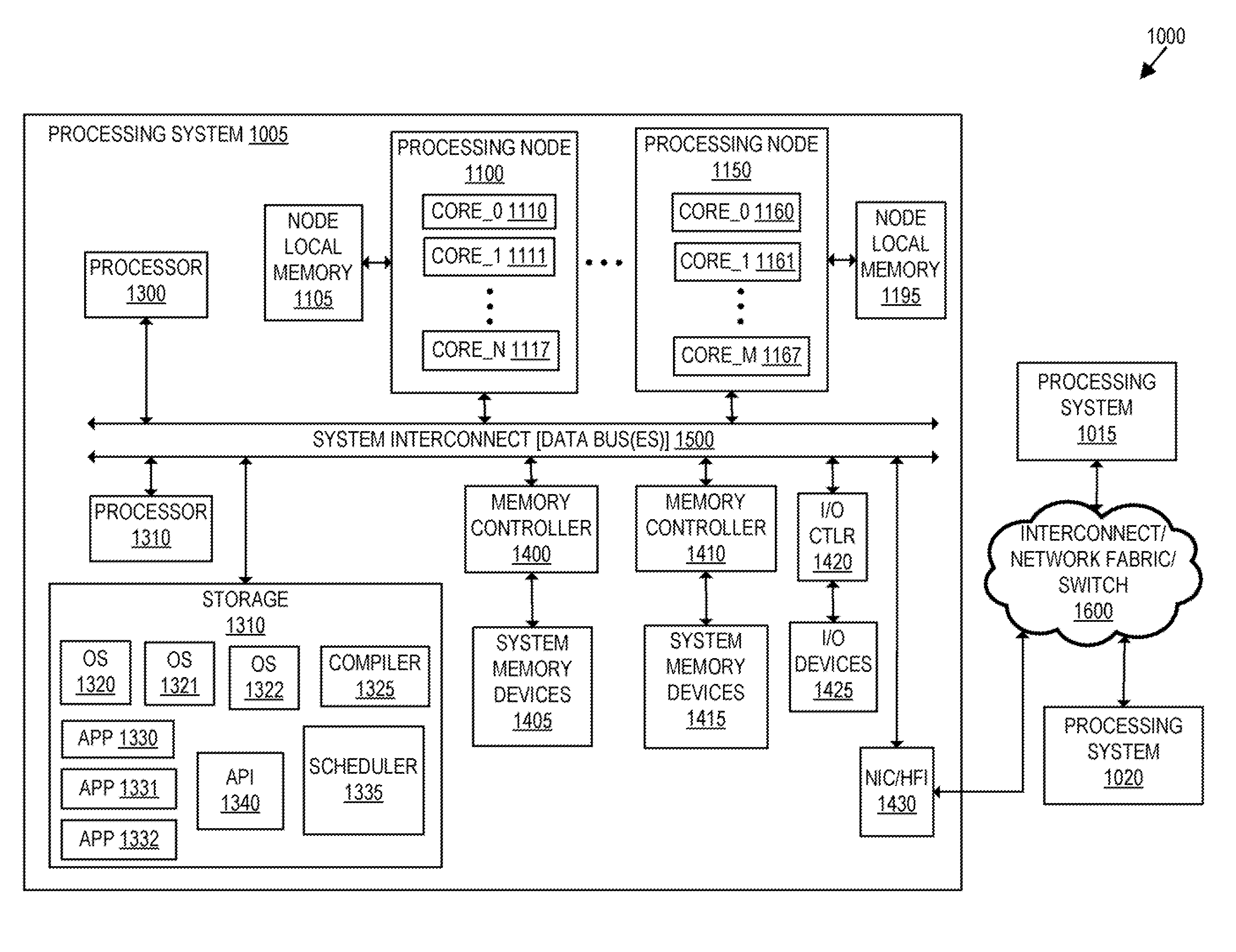

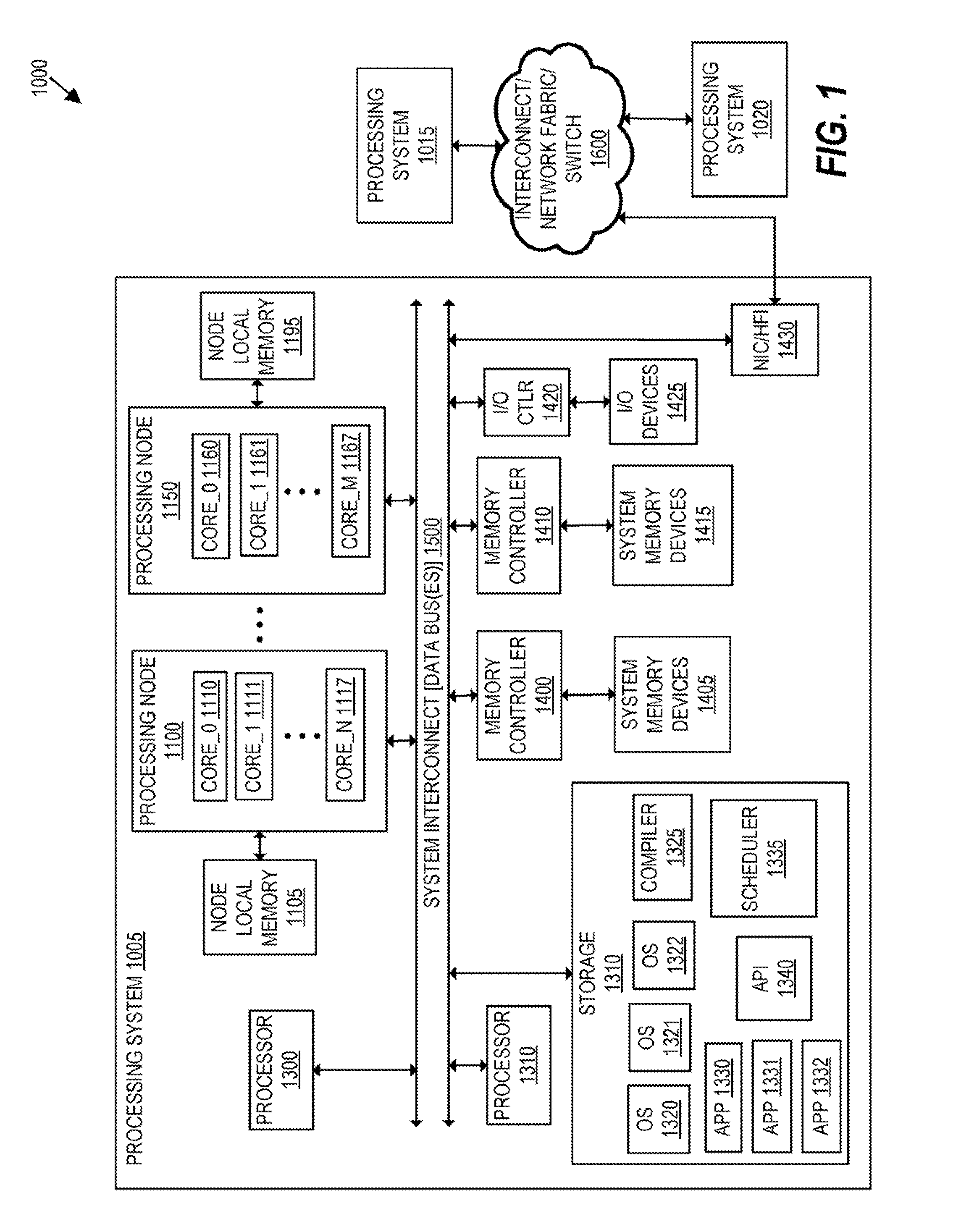

Infinite memory fabric hardware implementation with memory

Status: Active | US20160364172A1 | Tags: Improve efficiency; Improve performance; Input/output to record carriers; Interprogram communication; Data set; Memory object

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. More specifically, embodiments of the present invention are directed to a hardware-based processing node of an object memory fabric. The processing node may include a memory module storing and managing one or more memory objects, the one or more memory objects each include at least a first memory and a second memory, wherein the first memory has a lower latency than the second memory, and wherein each memory object is created natively within the memory module, and each memory object is accessed using a single memory reference instruction without Input / Output (I / O) instructions, wherein a set of data is stored within the first memory of the memory module; wherein the memory module is configured to receive an indication of a subset of the set of data that is eligible to be transferred between the first memory and the second memory; and wherein the memory module dynamically determines which of the subset of data will be transferred to the second memory based on access patterns associated with the object memory fabric.

Owner:ULTRATA LLC

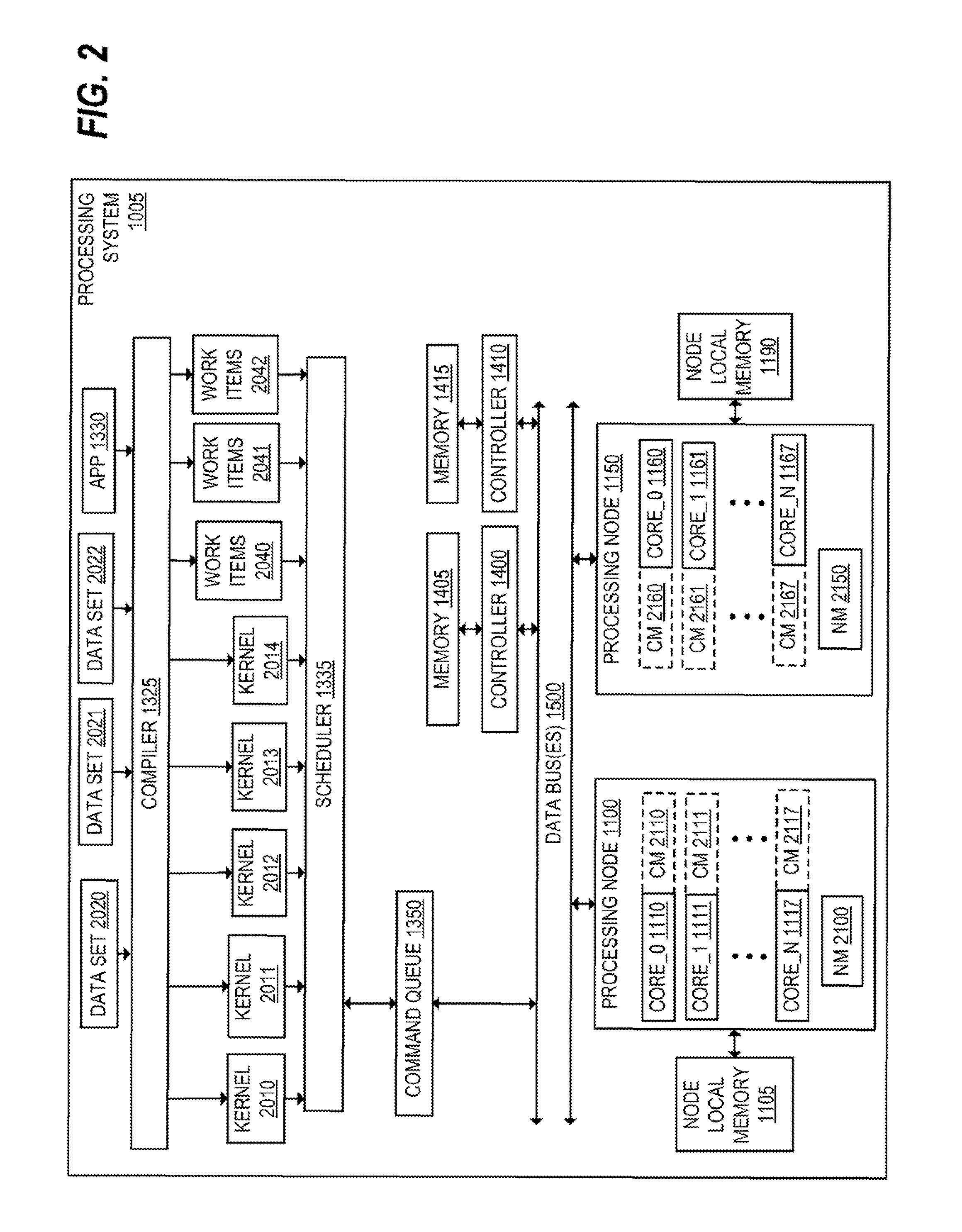

Method to customize function behavior based on cache and scheduling parameters of a memory argument

Status: Inactive | US20110161608A1 | Tags: Memory architecture accessing/allocation; Memory loss protection; Data processing system; Parallel computing

Disclosed are a method, a system and a computer program product of operating a data processing system that can include or be coupled to multiple processor cores. In one or more embodiments, each of multiple memory objects can be populated with work items and can be associated with attributes that can include information which can be used to describe data of each memory object and / or which can be used to process data of each memory object. The attributes can be used to indicate one or more of a cache policy, a cache size, and a cache line size, among others. In one or more embodiments, the attributes can be used as a history of how each memory object is used. The attributes can be used to indicate cache history statistics (e.g., a hit rate, a miss rate, etc.).

Owner:IBM CORP

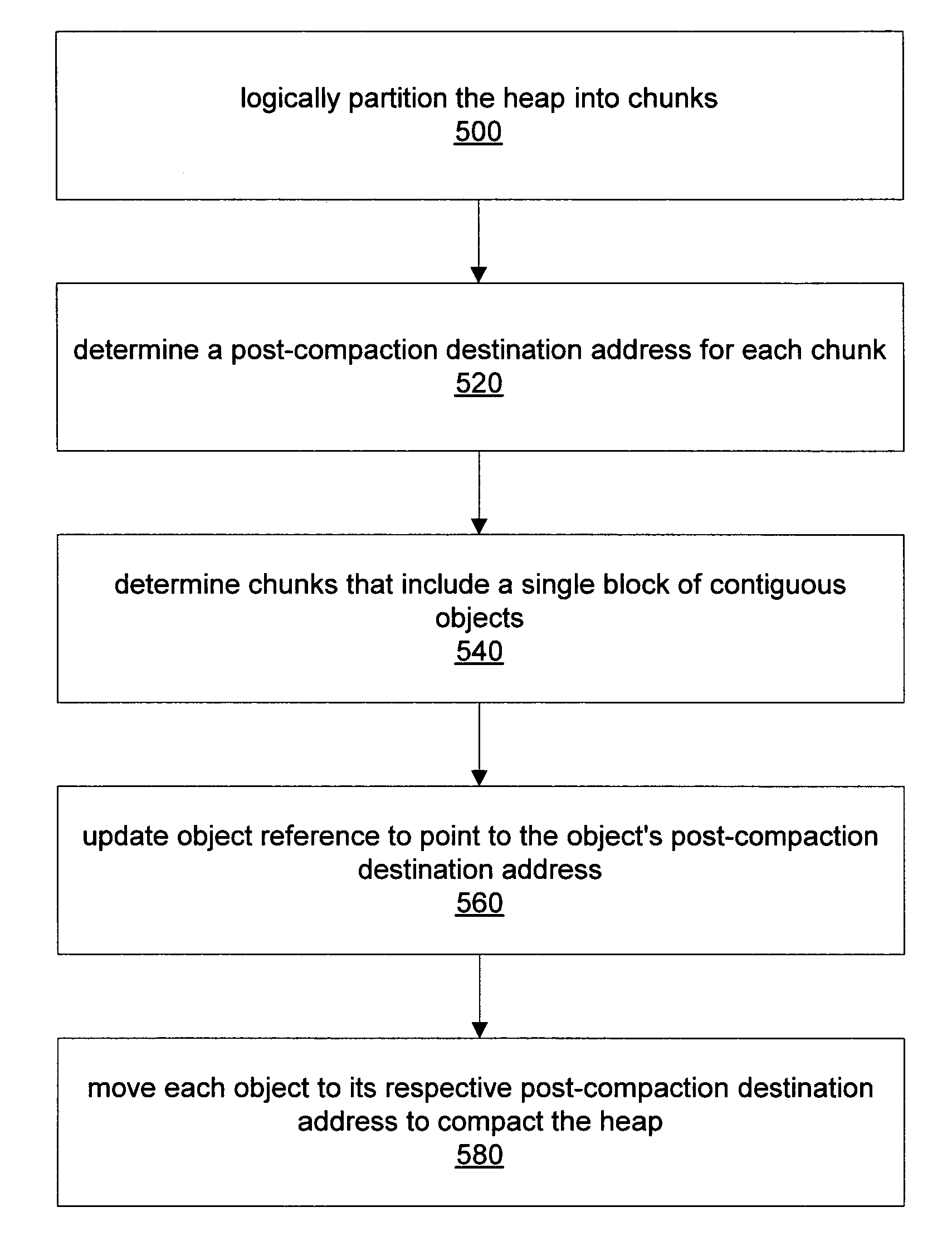

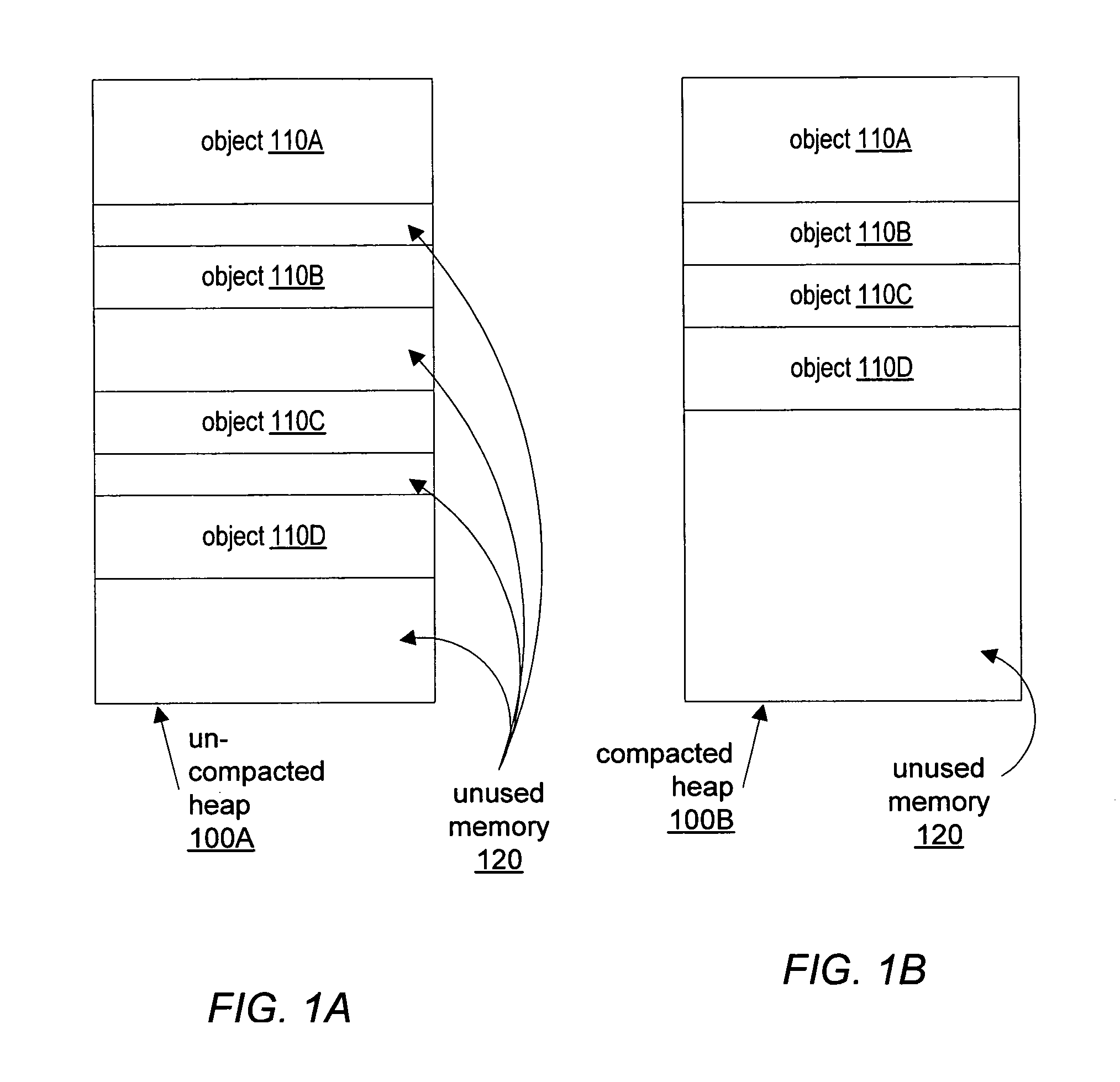

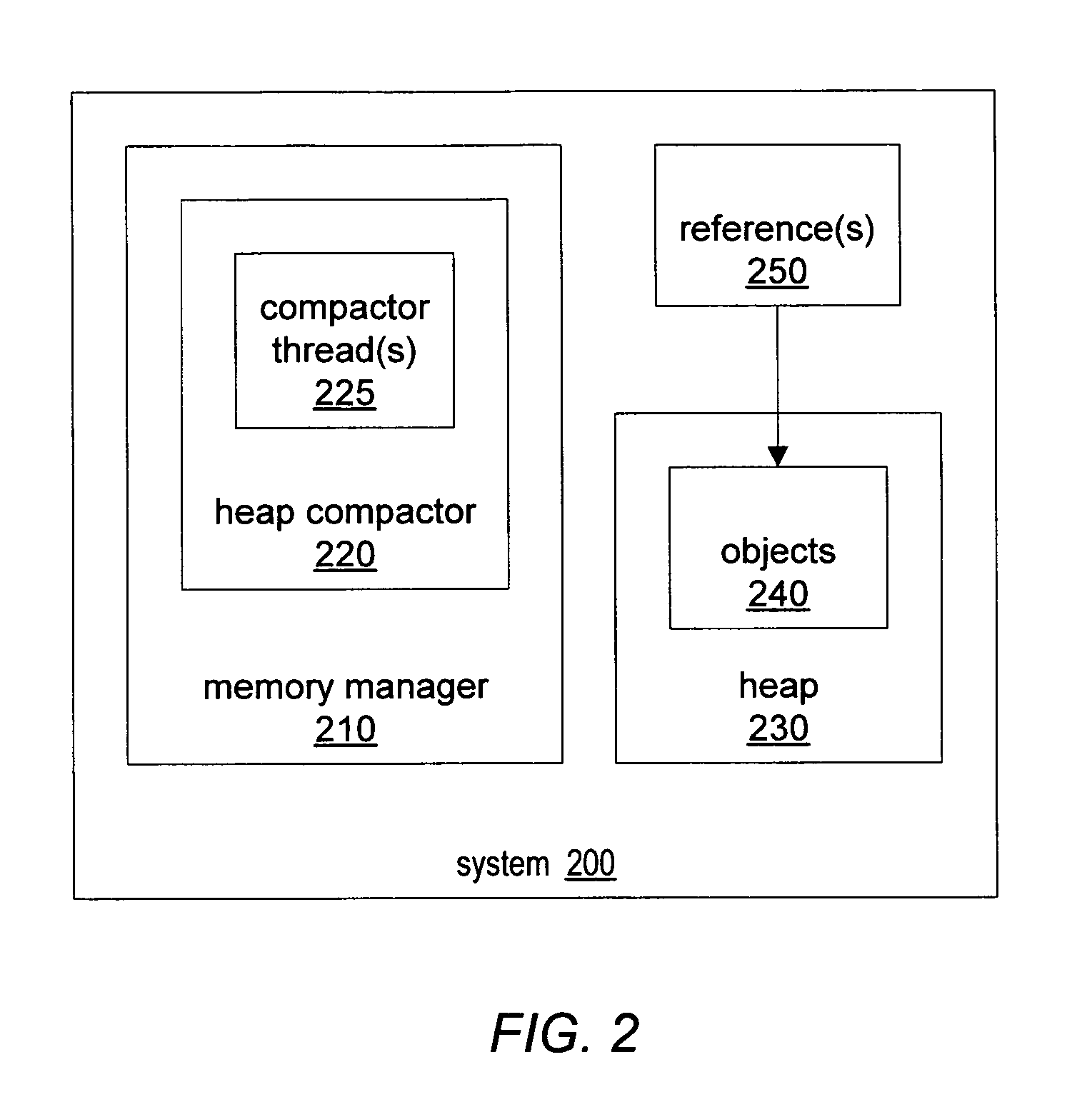

Offset-based forward address calculation in a sliding-compaction garbage collector

Owner:ORACLE INT CORP

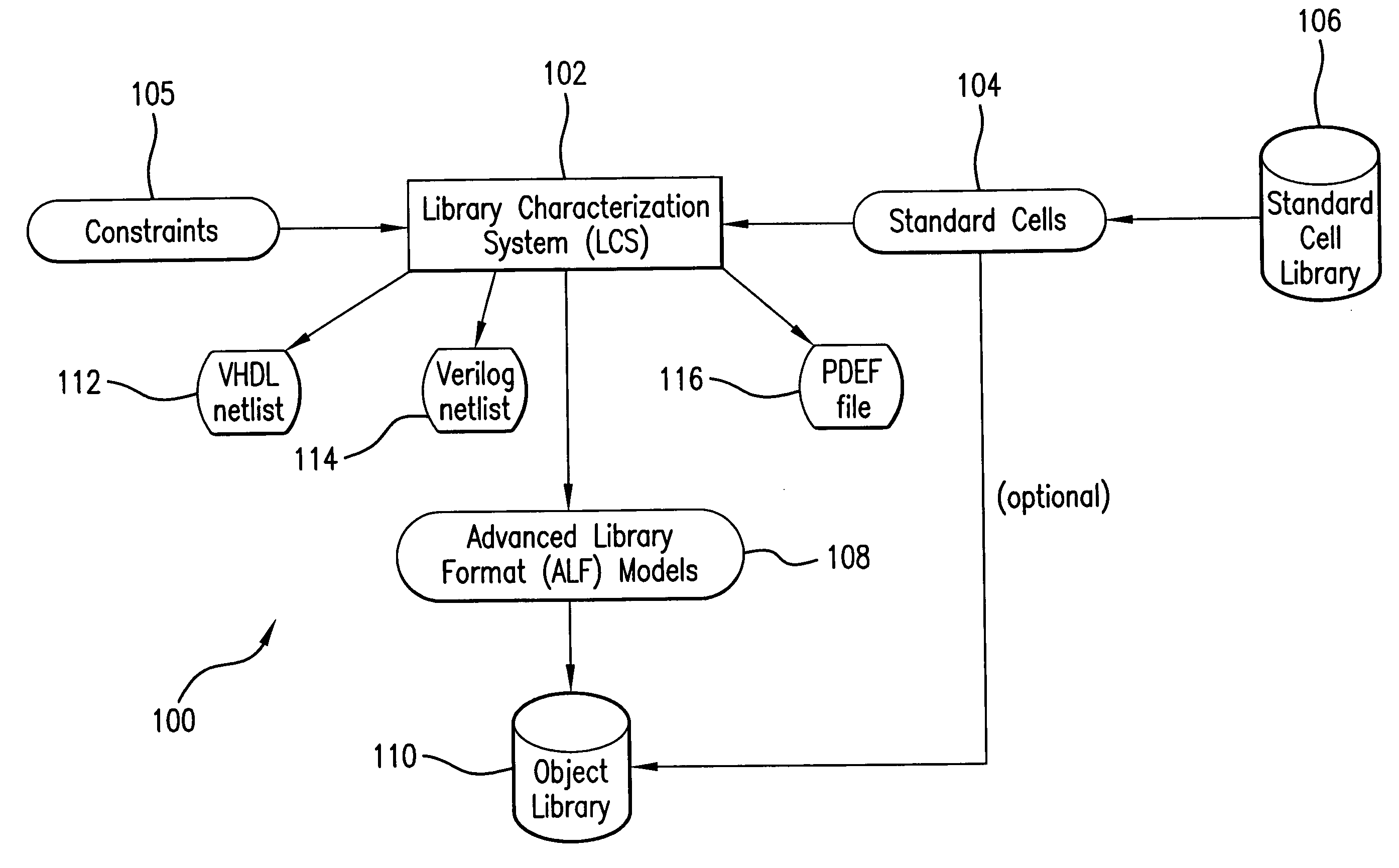

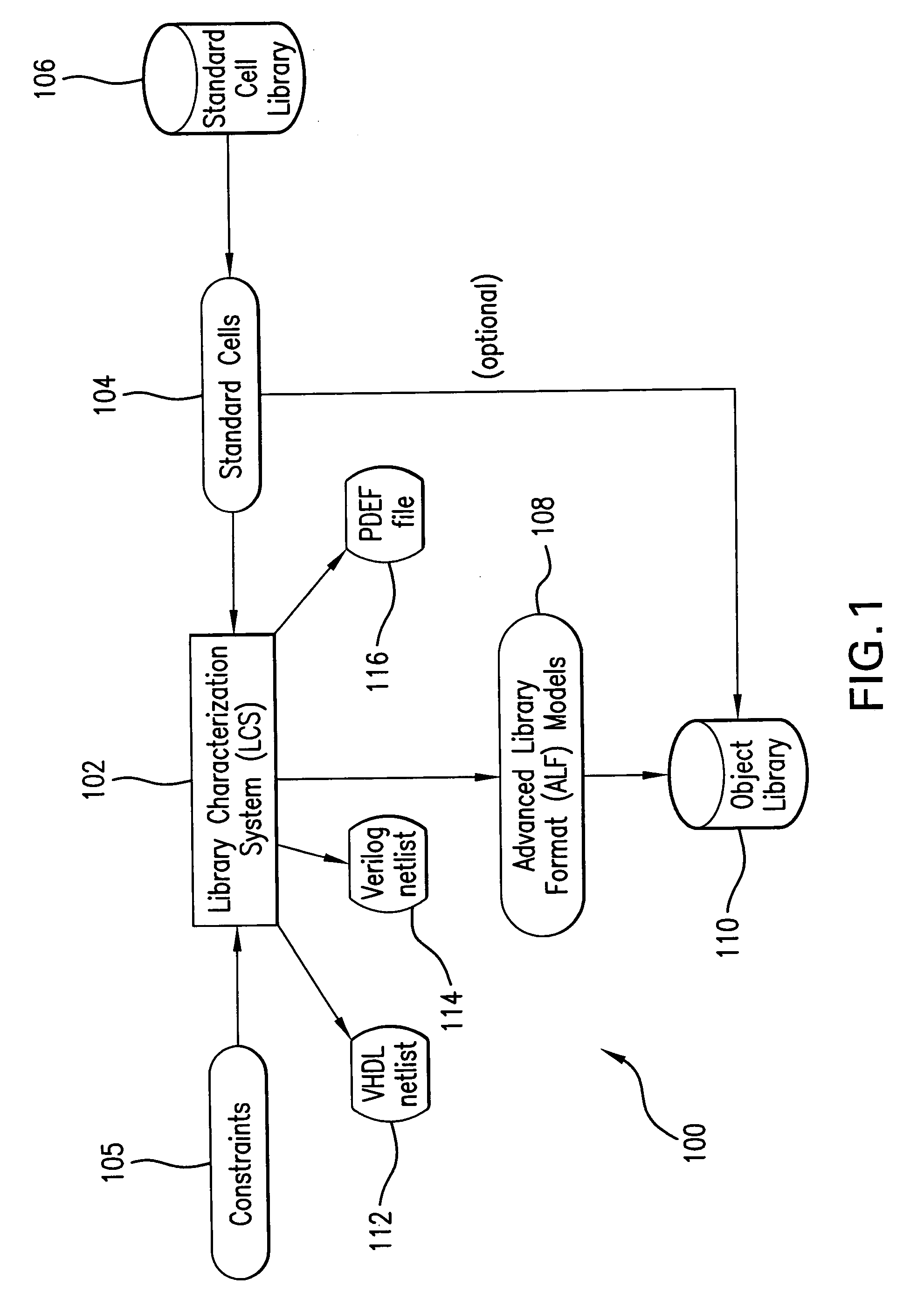

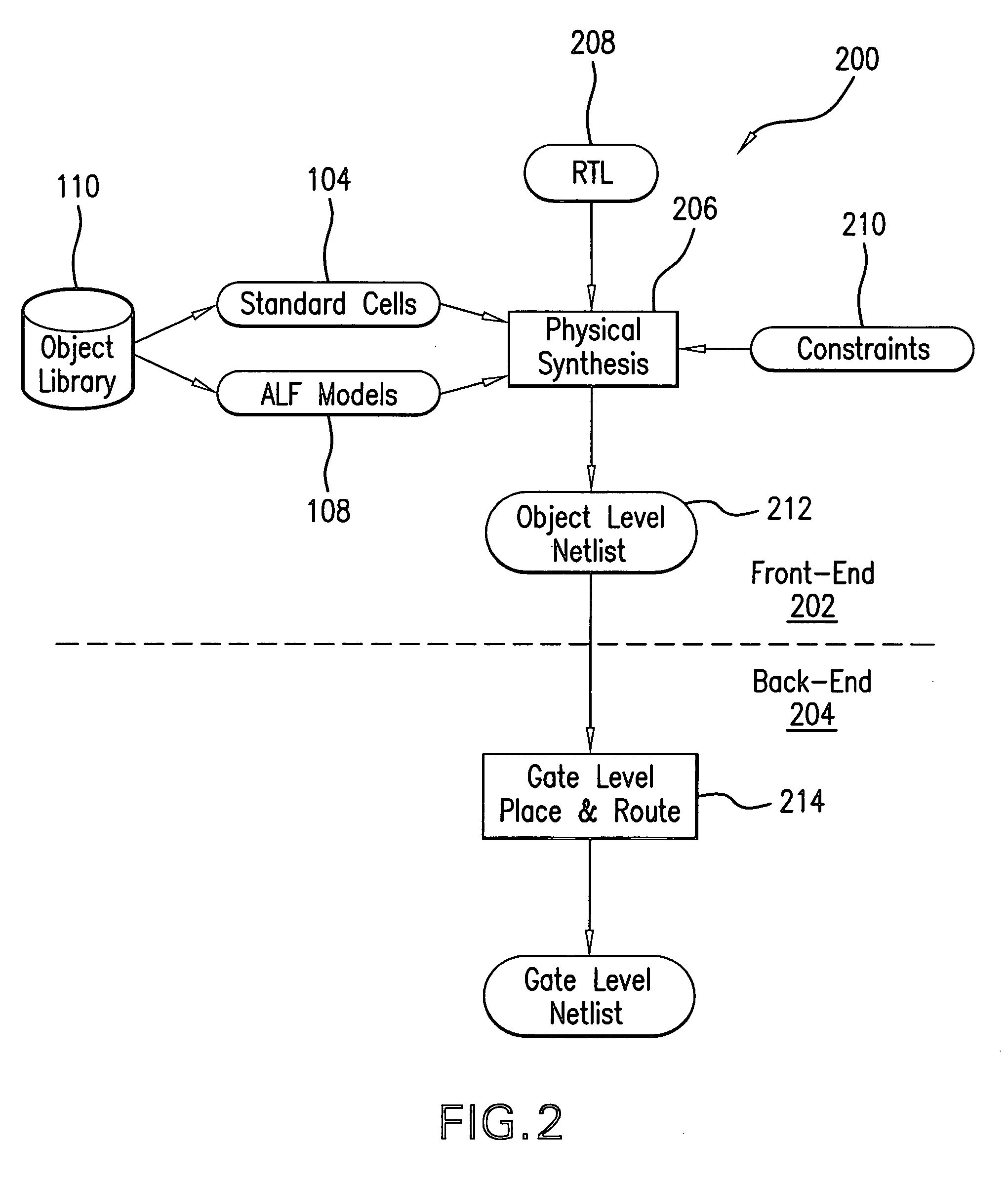

Methods and systems for structured ASIC electronic design automation

Status: Inactive | US20050268268A1 | Tags: Minimize the number; CAD circuit design; Program control; Computer architecture; Memory object

Electronic design automation (“EDA”) methods and systems for structured ASICs include accessing or receiving objects representative of source code for a structured ASIC. The objects are flattened to remove hierarchies associated with the source code, such as functional RTL hierarchies. The flattened objects are clustered to accommodate design constraints associated with the structured ASIC. The clustered objects are floorplanned within a design area of the structured ASIC. The objects are then placed within the portions of the design area assigned to the corresponding clusters. The objects optionally include logic objects and one or more memory objects and/or proprietary objects, wherein the one or more memory objects and/or proprietary objects are placed concurrently with the logic objects.

Owner:TERA SYST

© 2025 PatSnap. All rights reserved.