261 results about how to "Eliminate overhead": patented technology

Transaction system

Status: Inactive | Publication: US6236981B1 | Tags: Improve security; Improve the level of; User identity/authority verification; Computer security arrangements; Service provision; The Internet

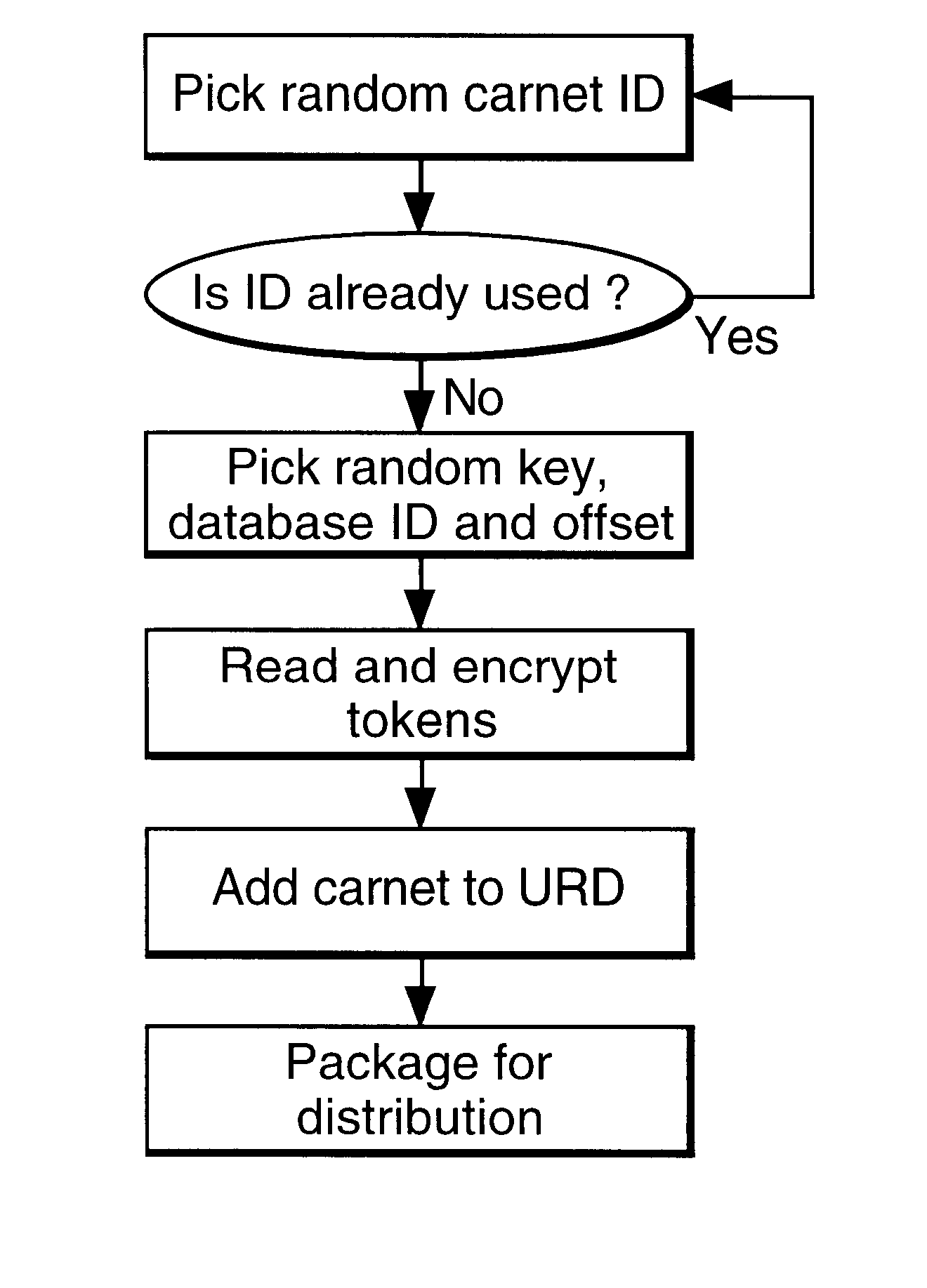

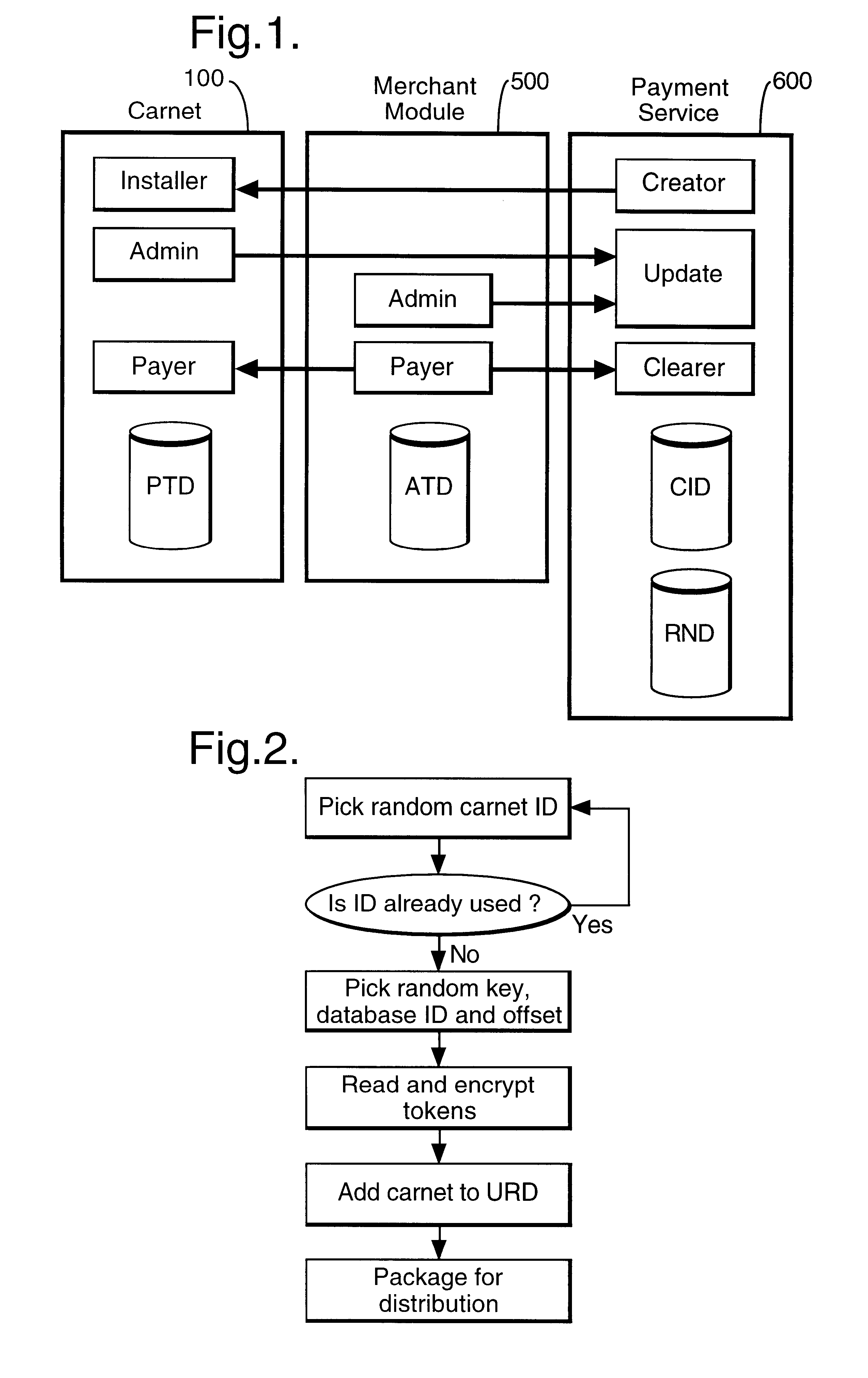

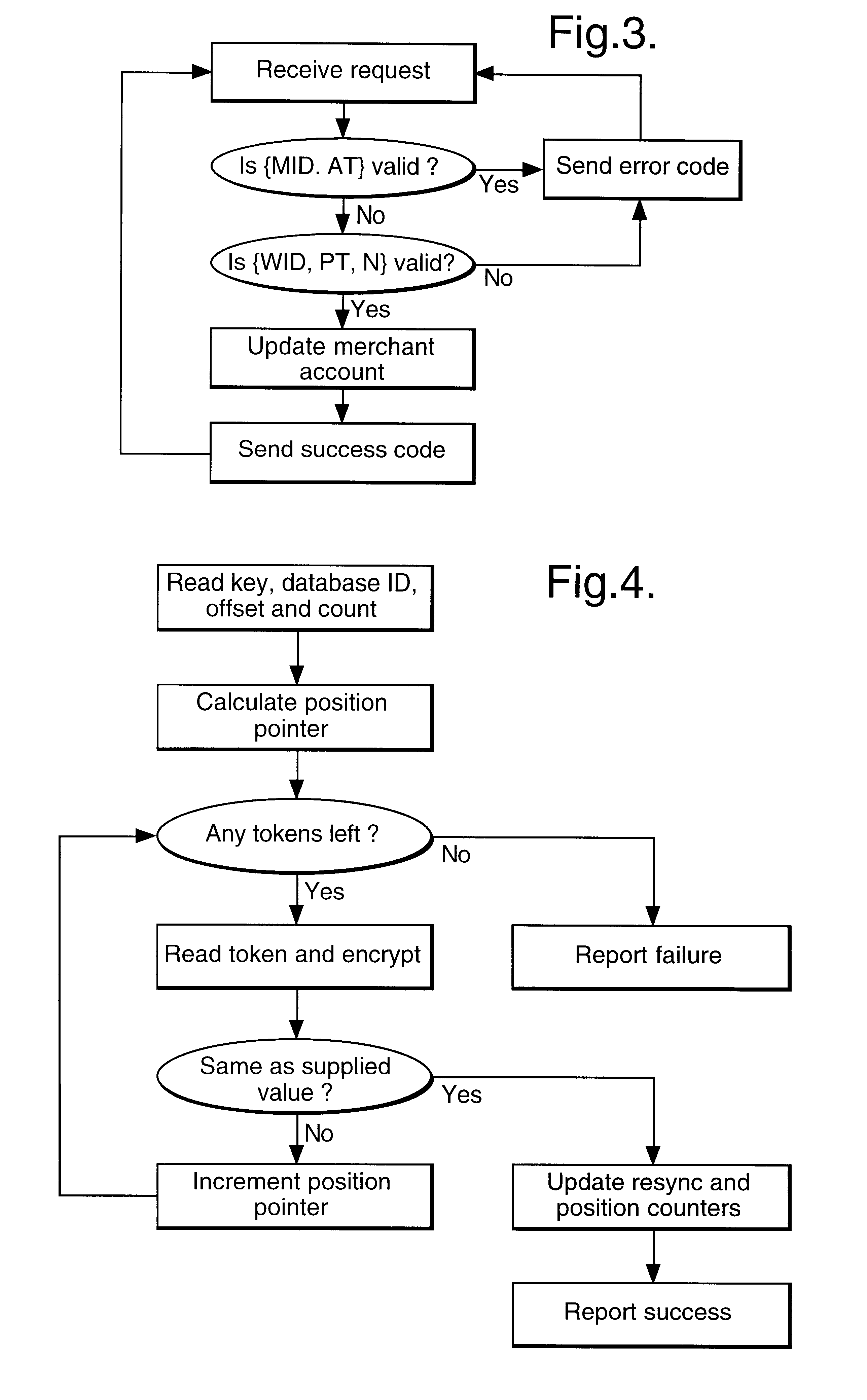

In a digital payment system, a sequence of random numbers is stored at a payment service. A set of digitally encoded random numbers (tokens) derived from the stored sequence is issued to the user in return for payment. The tokens are stored in a Carnet. The user can then spend the tokens by transferring them to a merchant, for example an online service provider. The merchant returns each token received to the payment server, which authenticates the token and transmits an authentication message to the merchant. The merchant, payment server, and user may be linked by Internet connections.

Owner:BRITISH TELECOMM PLC
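
The token flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not British Telecom's implementation: `PaymentServer`, `issue`, and `authenticate` are hypothetical names, tokens are assumed to be 128-bit random hex strings taken in order from the stored sequence, and authentication is modeled as a single-use membership check so that a replayed token is rejected.

```python
import secrets


class PaymentServer:
    """Hypothetical payment server holding a stored sequence of random numbers."""

    def __init__(self, size: int) -> None:
        # Stored sequence from which tokens are derived (assumed: 128-bit hex strings).
        self._sequence = [secrets.token_hex(16) for _ in range(size)]
        self._next = 0
        self._outstanding = set()  # tokens issued but not yet redeemed

    def issue(self, count: int) -> list:
        """Issue `count` tokens to a user in return for payment."""
        if self._next + count > len(self._sequence):
            raise ValueError("stored sequence exhausted")
        tokens = self._sequence[self._next:self._next + count]
        self._next += count
        self._outstanding.update(tokens)
        return tokens

    def authenticate(self, token: str) -> bool:
        """Accept a token returned by a merchant exactly once."""
        if token in self._outstanding:
            self._outstanding.remove(token)
            return True
        return False


server = PaymentServer(size=100)
carnet = server.issue(3)            # the user's wallet of tokens
spent = carnet.pop()                # the user pays a merchant with one token
print(server.authenticate(spent))   # merchant forwards it to the server: True
print(server.authenticate(spent))   # a replay of the same token: False
```

Modeling redemption as removal from an "outstanding" set is one simple way to make each token single-use; the abstract does not say how the server detects reuse.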

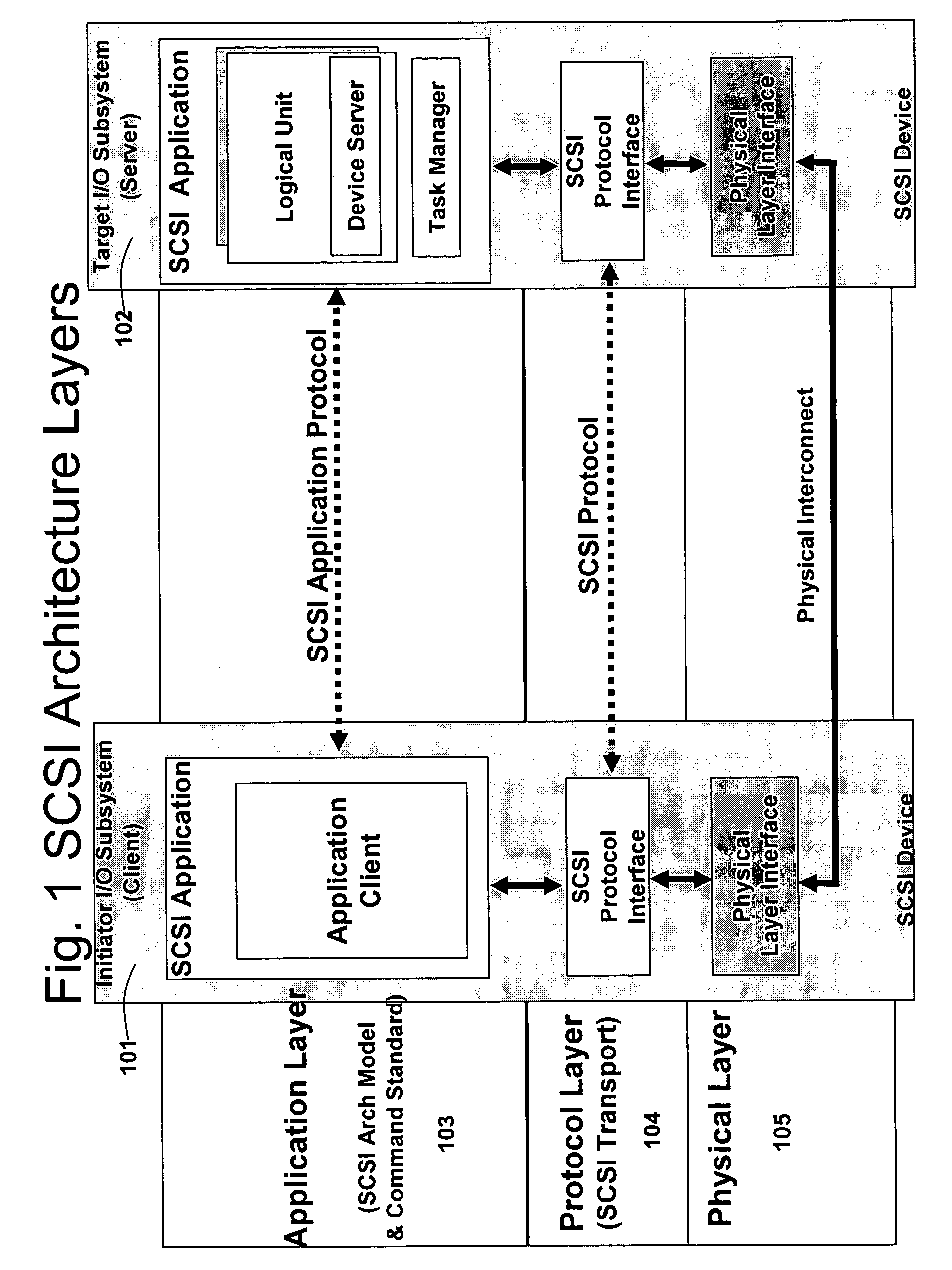

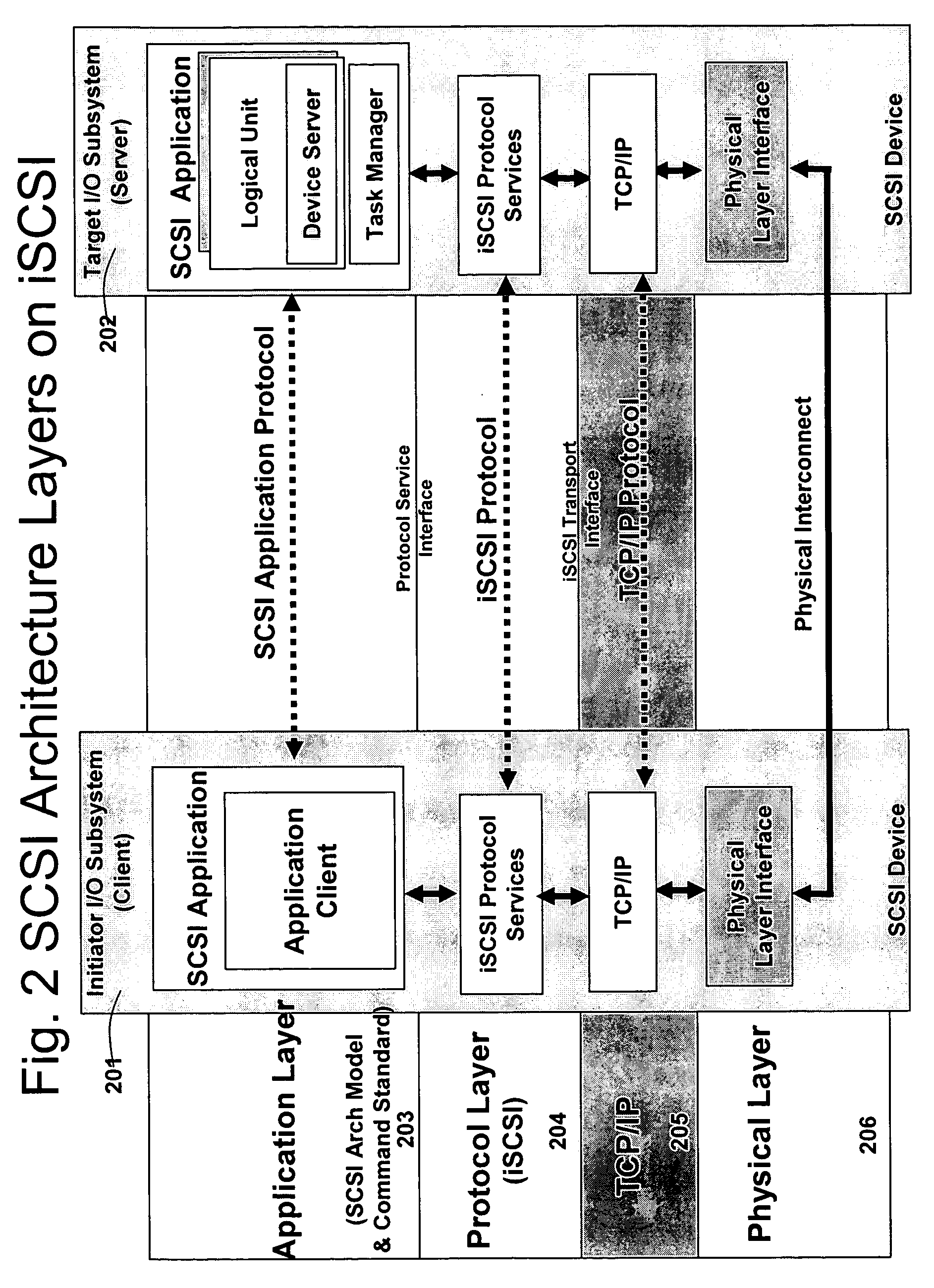

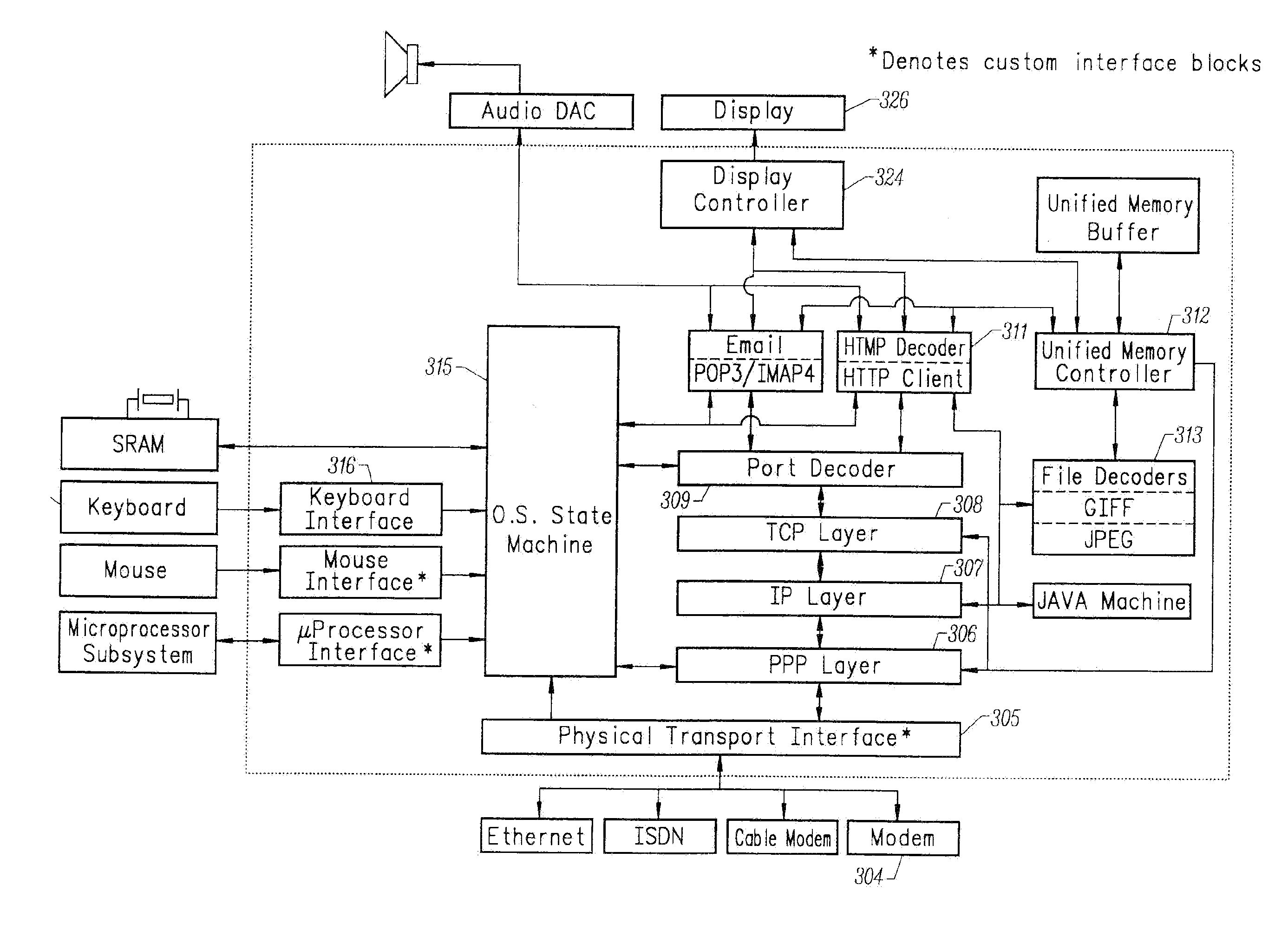

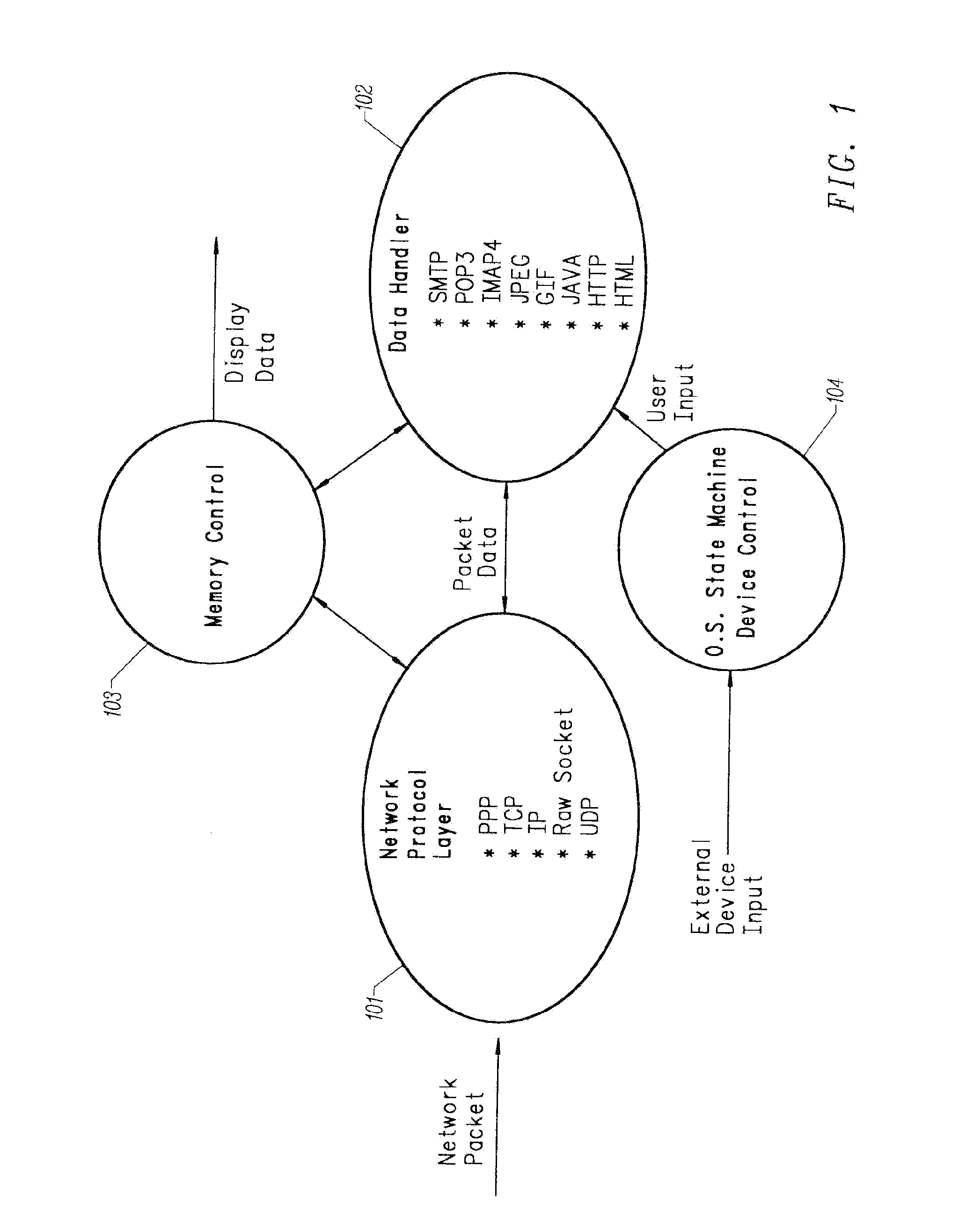

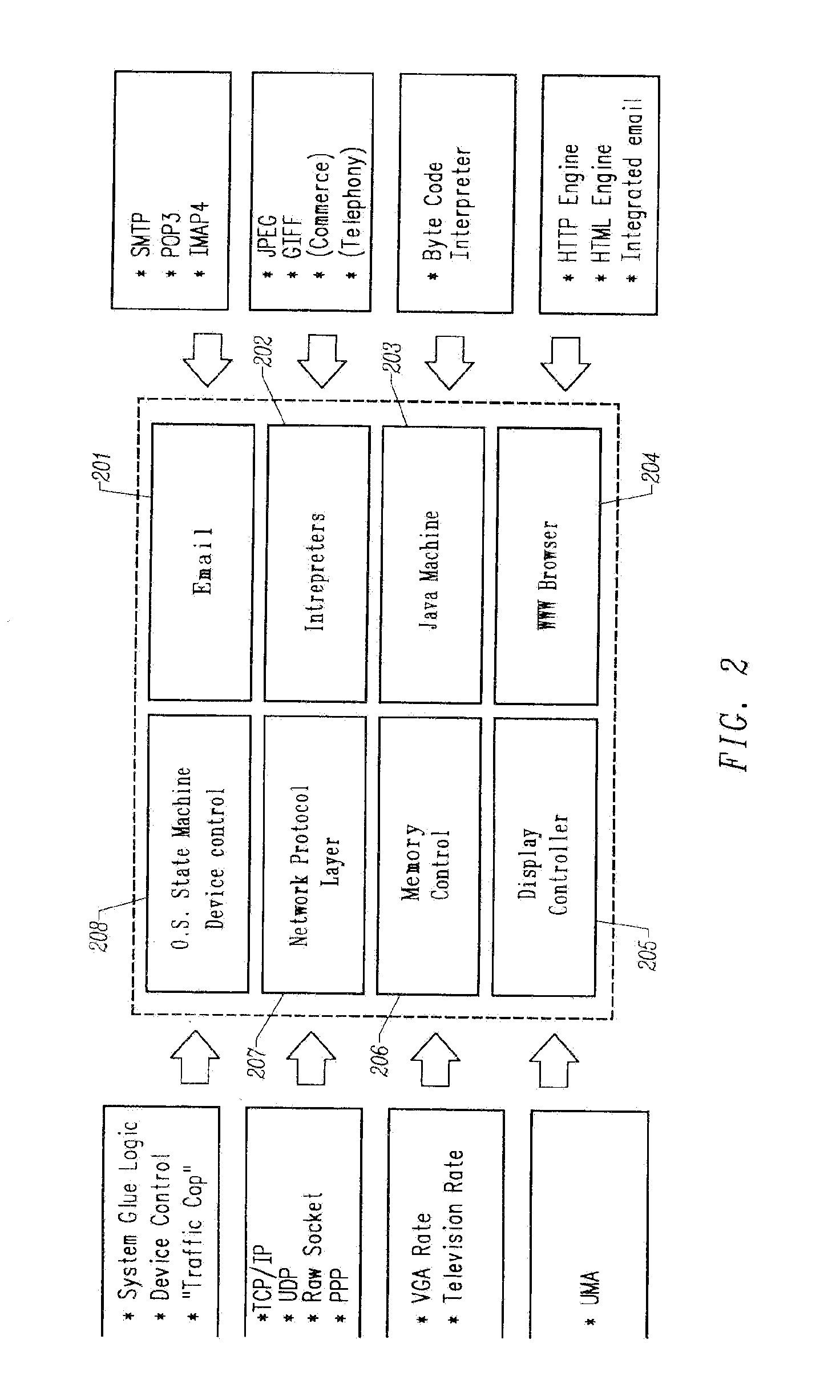

Multiple network protocol encoder/decoder and data processor

Status: Inactive | Publication: US6034963A | Tags: Reduce system cost; Low cost; Time-division multiplex; Data switching by path configuration; Raw socket; Byte

A multiple network protocol encoder/decoder comprises network protocol layer, data handler, OS, and memory manager state machines implemented at the hardware gate level. Network packets are received from a physical transport-level mechanism by the network protocol layer state machine, which decodes protocols such as TCP, IP, User Datagram Protocol (UDP), PPP, and Raw Socket concurrently as each byte is received. Each protocol handler parses and strips header information from the packet immediately, so no intermediate memory is required. The resulting data are passed to the data handler, which consists of data state machines that decode data formats such as email, graphics, Hypertext Transfer Protocol (HTTP), Java, and Hypertext Markup Language (HTML). Each data state machine acts on the data pertinent to it. Data required by more than one data state machine are provided to each of them concurrently, and data required more than once by a specific data state machine are placed in a specific memory location with a pointer designating them, which keeps memory usage minimal. Resulting display data are passed immediately to a display controller. Outgoing network packets are created by the data state machines and passed through the network protocol state machine, which adds header information and forwards the resulting packet via a transport-level mechanism.

Owner:NVIDIA CORP
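
To make per-byte header stripping concrete, here is a software sketch of a single protocol handler written as a state machine. It is an illustration, not NVIDIA's gate-level design: the class name `UdpStreamDecoder` is hypothetical, it handles only the 8-byte UDP header (source port, destination port, length, checksum), and it assumes well-formed datagrams. Header fields are shifted in as bytes arrive, and payload bytes are forwarded immediately, so the datagram is never buffered whole.

```python
import struct
from typing import Callable, Optional, Tuple


class UdpStreamDecoder:
    """Byte-at-a-time UDP header decoder (software stand-in for a hardware state machine)."""

    HEADER_LEN = 8

    def __init__(self, on_payload: Callable[[int], None]) -> None:
        self.on_payload = on_payload
        self.last_header: Optional[Tuple[int, int, int, int]] = None
        self.reset()

    def reset(self) -> None:
        self.pos = 0                 # byte offset within the current datagram
        self.fields = [0, 0, 0, 0]   # source port, destination port, length, checksum

    def feed(self, byte: int) -> None:
        if self.pos < self.HEADER_LEN:
            i = self.pos // 2                               # which 16-bit field
            self.fields[i] = (self.fields[i] << 8) | byte   # big-endian shift-in
        else:
            self.on_payload(byte)                           # header already stripped
        self.pos += 1
        # The UDP length field covers header plus payload; reset at the datagram's end.
        if self.pos >= self.HEADER_LEN and self.pos == self.fields[2]:
            self.last_header = tuple(self.fields)
            self.reset()


payload = []
decoder = UdpStreamDecoder(payload.append)
datagram = struct.pack("!HHHH", 5000, 53, 8 + 3, 0) + b"abc"
for b in datagram:
    decoder.feed(b)
print(bytes(payload))        # b'abc'
print(decoder.last_header)   # (5000, 53, 11, 0)
```

A hardware version would run one such machine per protocol concurrently on the same byte stream; the sketch shows only the "decode as each byte arrives" idea.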

Shape-based geometry engine to perform smoothing and other layout beautification operations

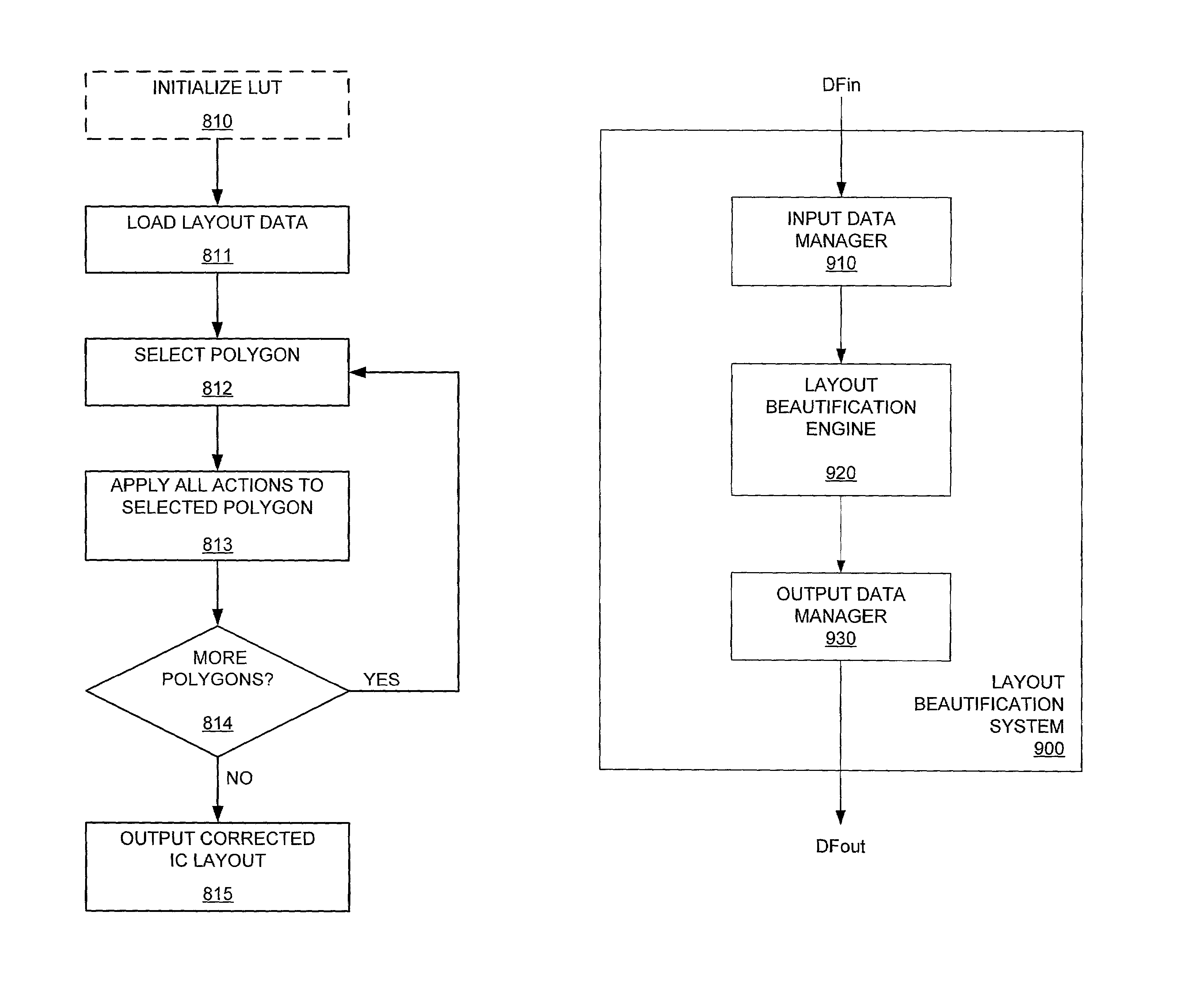

Status: Active | Publication: US7159197B2 | Tags: Efficient execution; Data be eliminated; Originals for photomechanical treatment; Computer aided design; Data file; Engineering

A shape-based layout beautification operation can be performed on an IC layout to correct layout imperfections. A shape is described by edges (and vertices) related according to specified properties. Each shape can be configured to match specific layout imperfection types. Corrective actions can then be associated with the shapes, advantageously enabling efficient formulation and precise application of those corrective actions. Corrective actions can include absolute, adaptive, or replacement-type modifications to the detected layout imperfections. A concurrent processing methodology can be used to minimize processing overhead during layout beautification, and the actions can also be incorporated into a lookup table to further reduce runtime. A layout beautification system can also be connected to a network across which shapes, actions, and IC layout data files can be accessed and retrieved.

Owner:SYNOPSYS INC

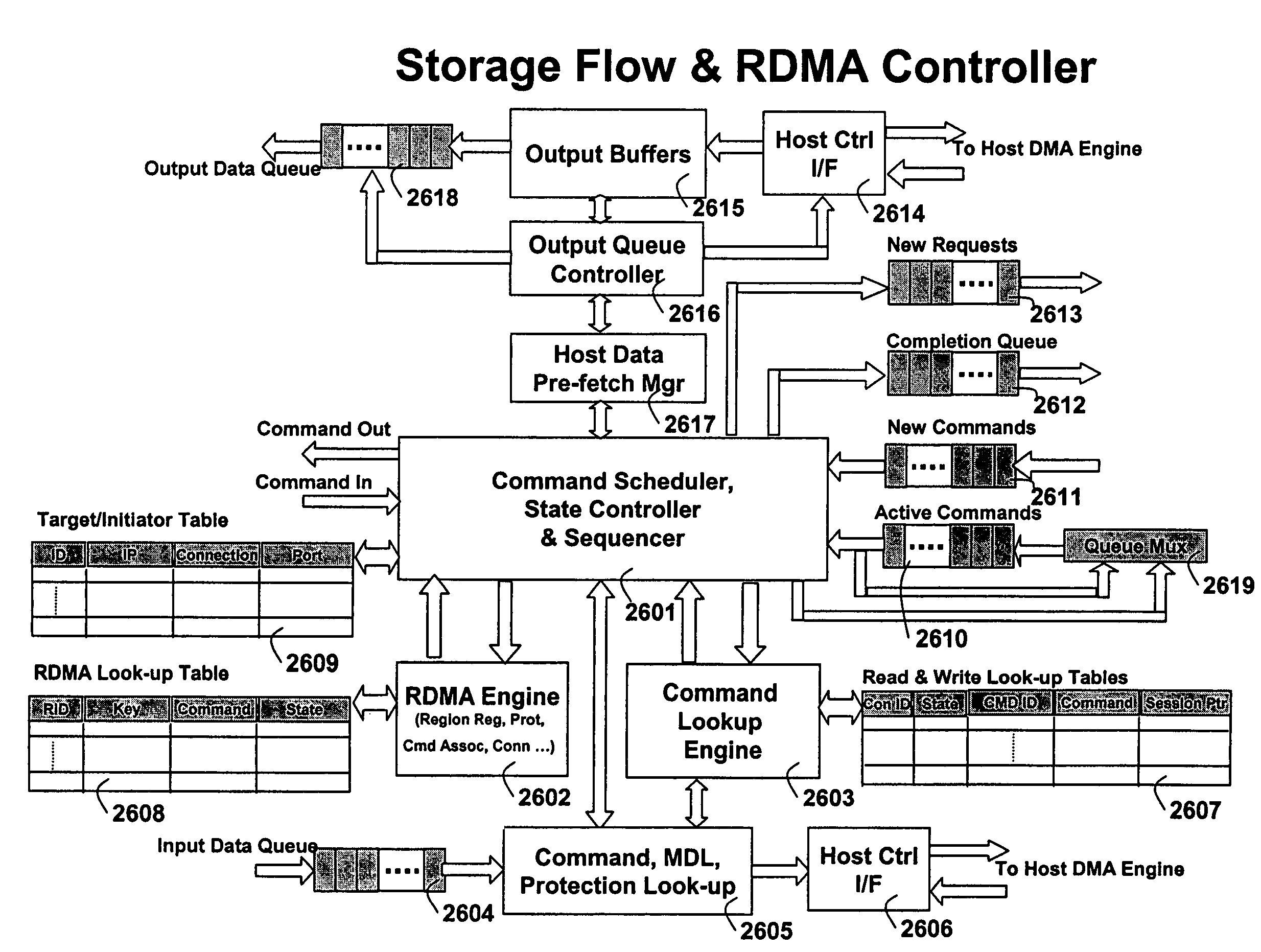

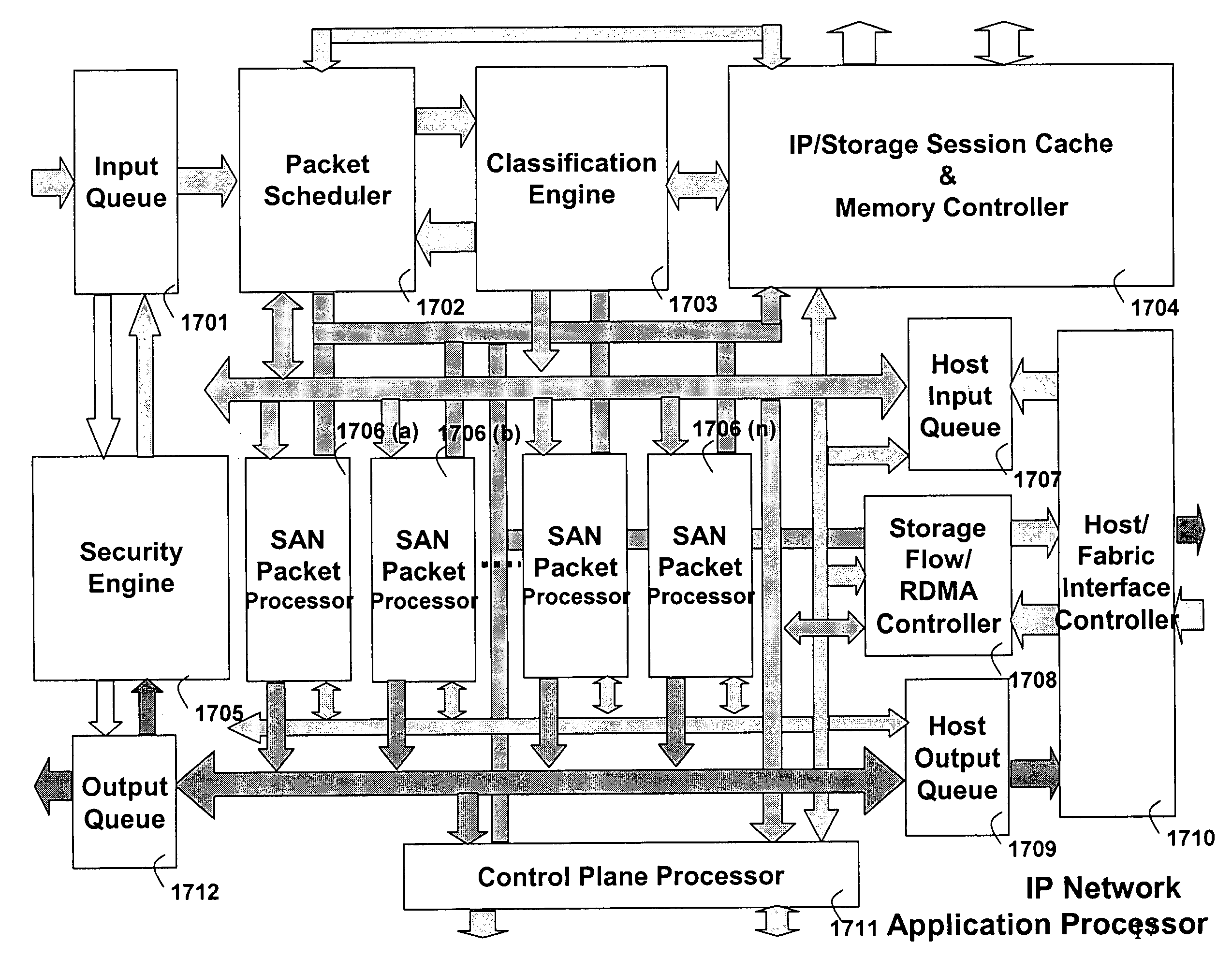

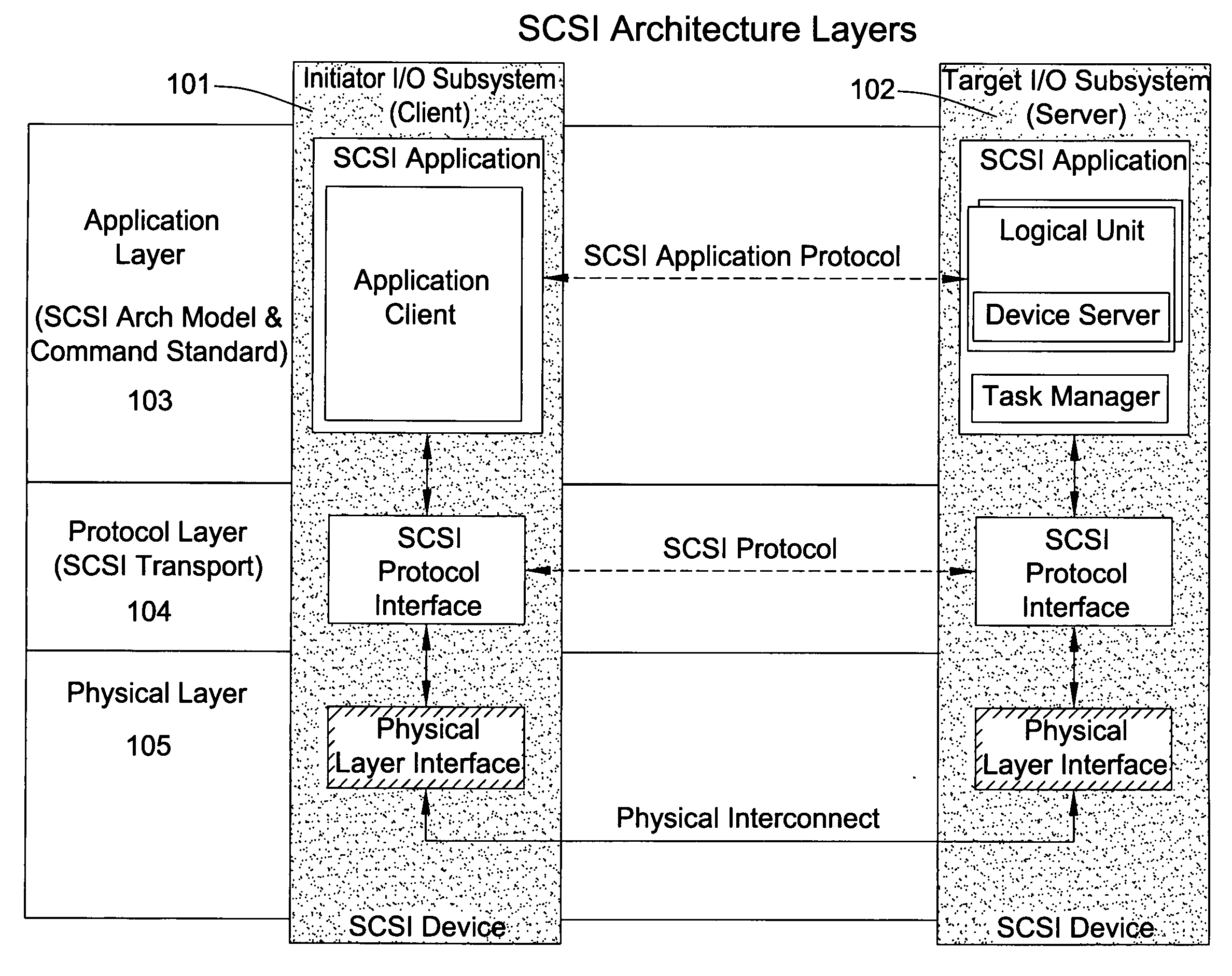

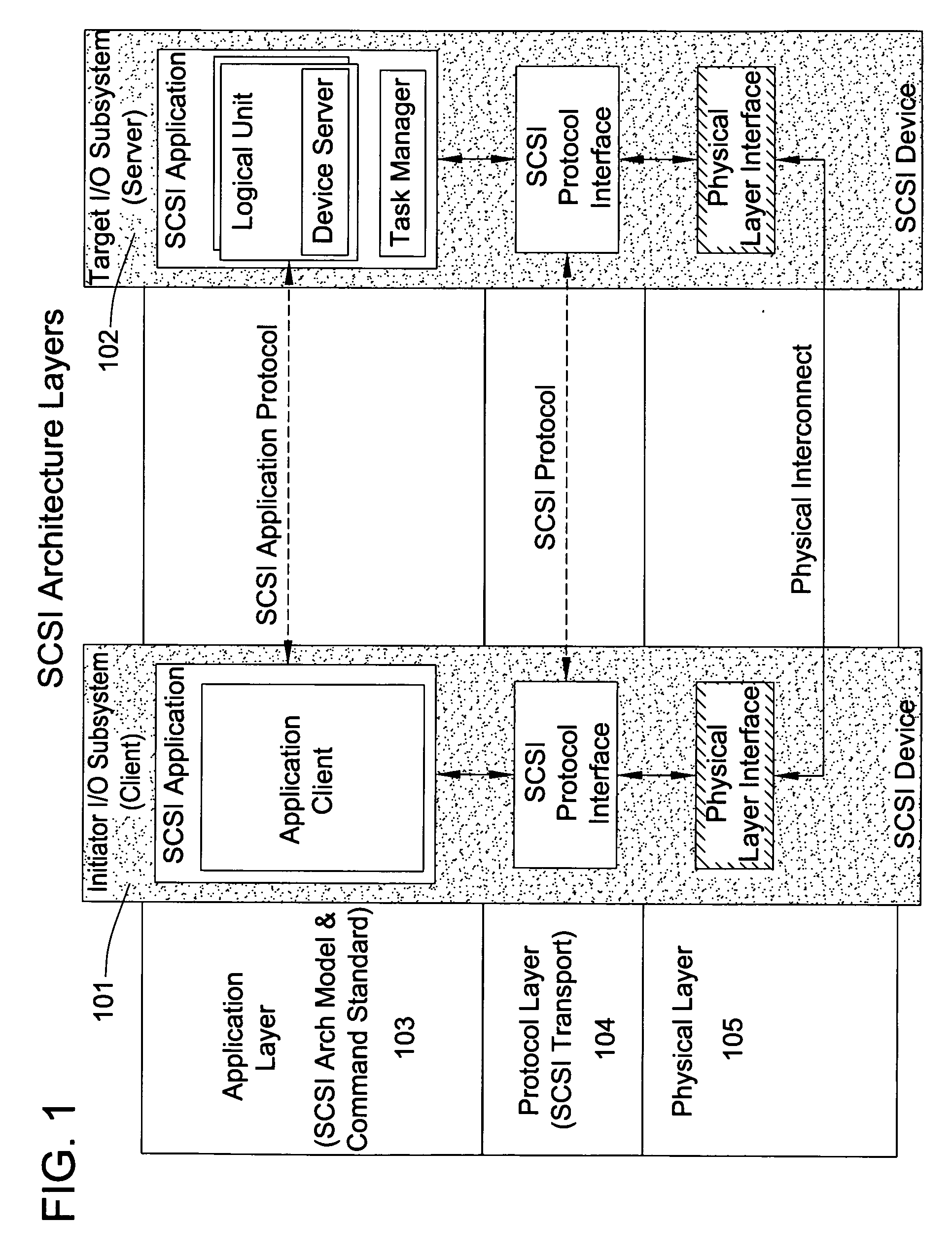

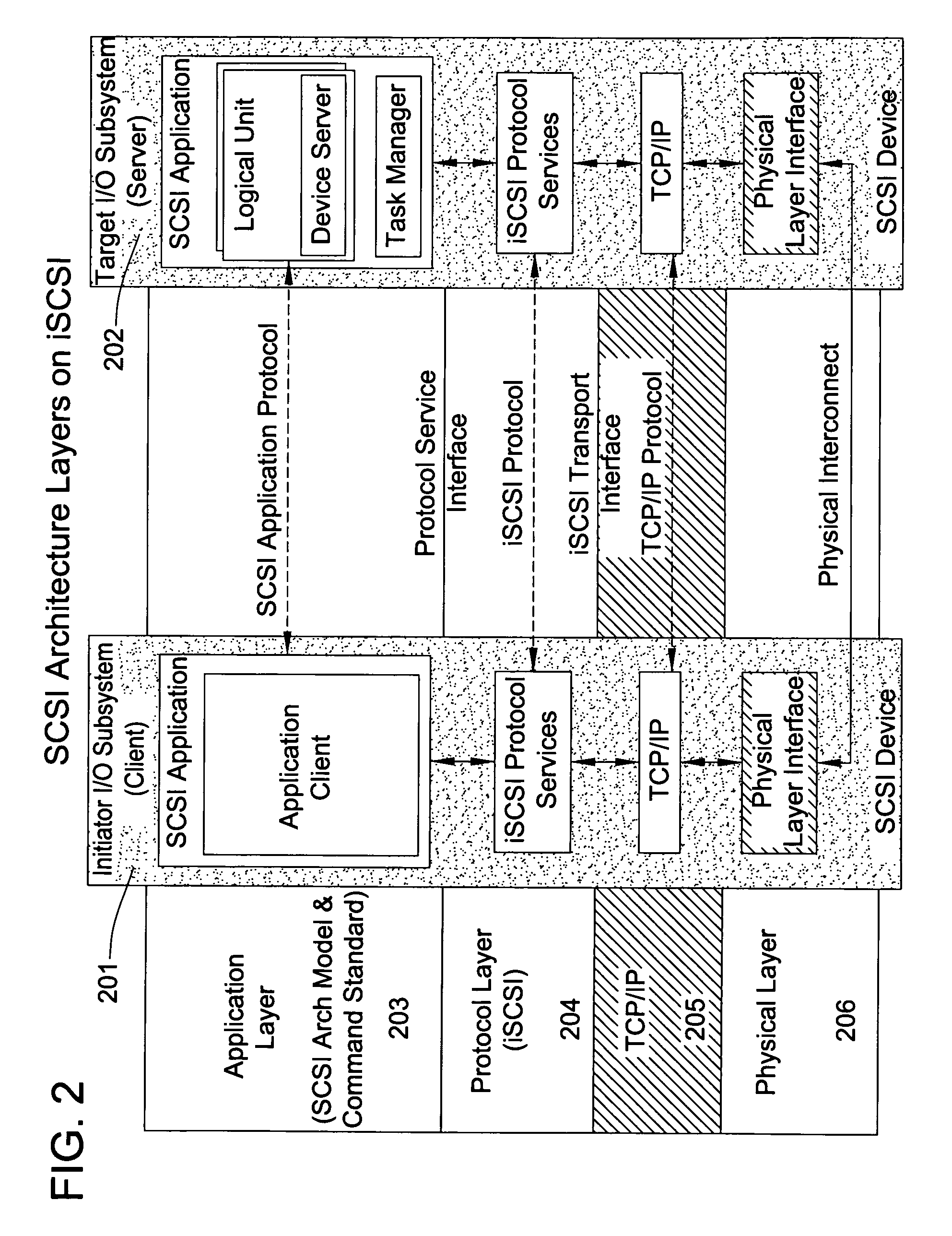

TCP/IP processor and engine using RDMA

Status: Active | Publication: US7376755B2 | Tags: Sharply reduces TCP/IP protocol stack overhead; Improve performance; Multiplex system selection arrangements; Memory addressing/allocation/relocation; Transmission protocol; Internal memory

A TCP/IP processor, and data processing engines for use in it, are disclosed. The TCP/IP processor can transport the data payloads of Internet Protocol (IP) packets using an architecture that transports and processes IP packets from Layer 2 through the transport protocol layer and may also provide packet inspection through Layer 7. The engines may perform pass-through packet classification, policy processing, and/or security processing, enabling packets to stream through the architecture at nearly the full line rate. A scheduler assigns packets to packet processors. An internal memory, or local session database cache, stores a TCP/IP session information database and may also store a storage information session database for a certain number of active sessions; session information that is not in the internal memory is stored in and retrieved from an additional memory. In certain instantiations, an application running on an initiator or target can register a region of memory that its peer(s) can then access directly through RDMA data transfer, without substantial host intervention.

Owner:MEMORY ACCESS TECH LLC
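
The split between the internal session cache and the additional memory behaves like a two-level store. The sketch below models it under stated assumptions: `SessionStore` is a hypothetical name, the eviction policy is assumed to be least-recently-used, and a session record is reduced to a byte counter.

```python
from collections import OrderedDict


class SessionStore:
    """Two-level session database: a small LRU cache backed by a larger memory.

    `cache` stands in for the internal memory and `external` for the additional
    memory; the LRU policy and the record format are assumptions.
    """

    def __init__(self, cache_capacity: int) -> None:
        self.capacity = cache_capacity
        self.cache = OrderedDict()   # session key -> session record (internal memory)
        self.external = {}           # session key -> session record (additional memory)

    def get(self, key: tuple) -> dict:
        """Return the session record for `key`, fetching or creating it on a miss."""
        if key in self.cache:
            self.cache.move_to_end(key)            # mark as most recently used
            return self.cache[key]
        session = self.external.pop(key, None)     # retrieve from additional memory
        if session is None:
            session = {"bytes_seen": 0}            # first packet of a new session
        self.cache[key] = session
        if len(self.cache) > self.capacity:
            old_key, old_session = self.cache.popitem(last=False)  # evict LRU entry
            self.external[old_key] = old_session                   # spill it to external memory
        return session


store = SessionStore(cache_capacity=2)
flow = ("10.0.0.1", 40000, "10.0.0.2", 80)   # hypothetical 4-tuple session key
store.get(flow)["bytes_seen"] += 1460
print(store.get(flow))   # {'bytes_seen': 1460}
```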

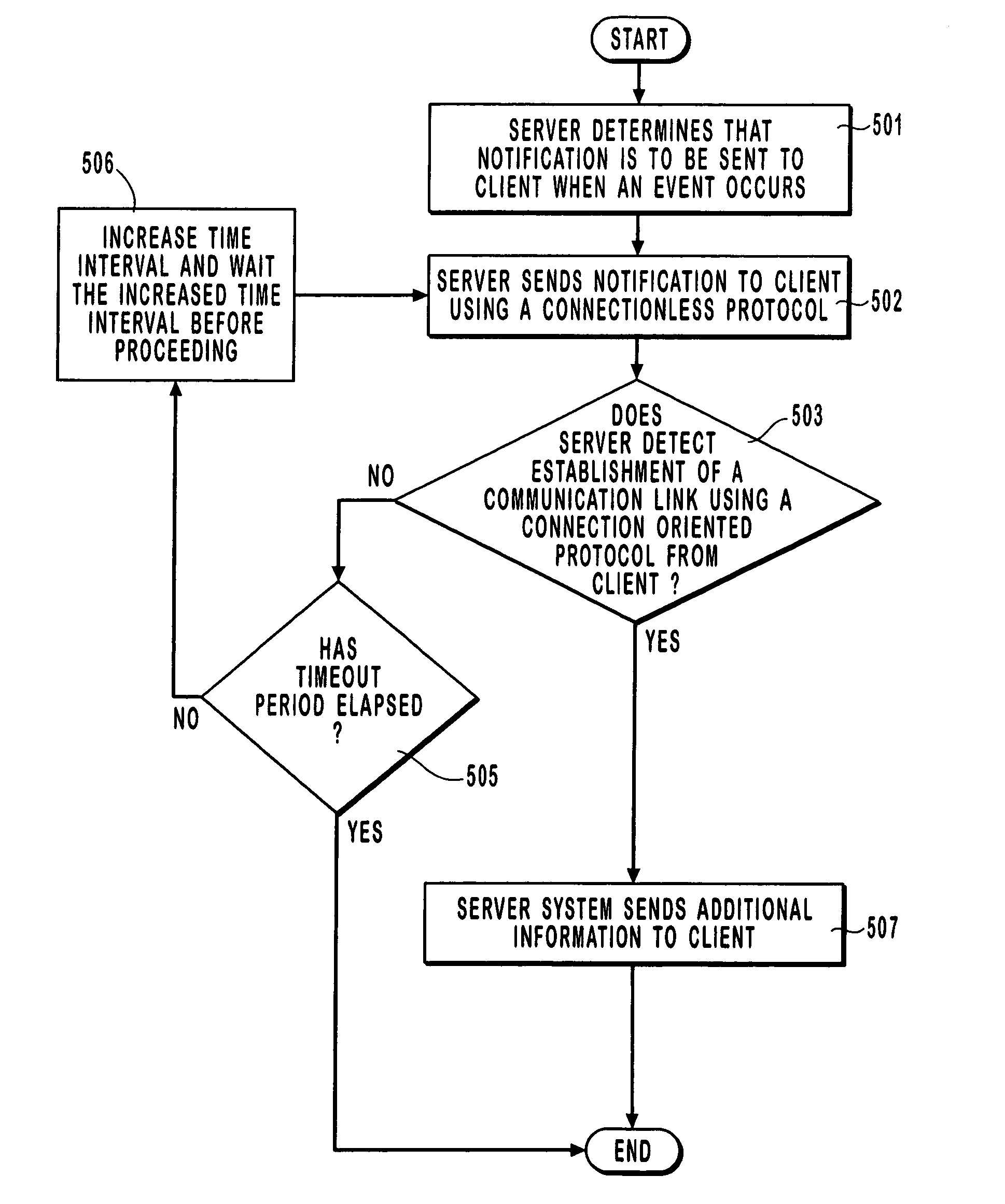

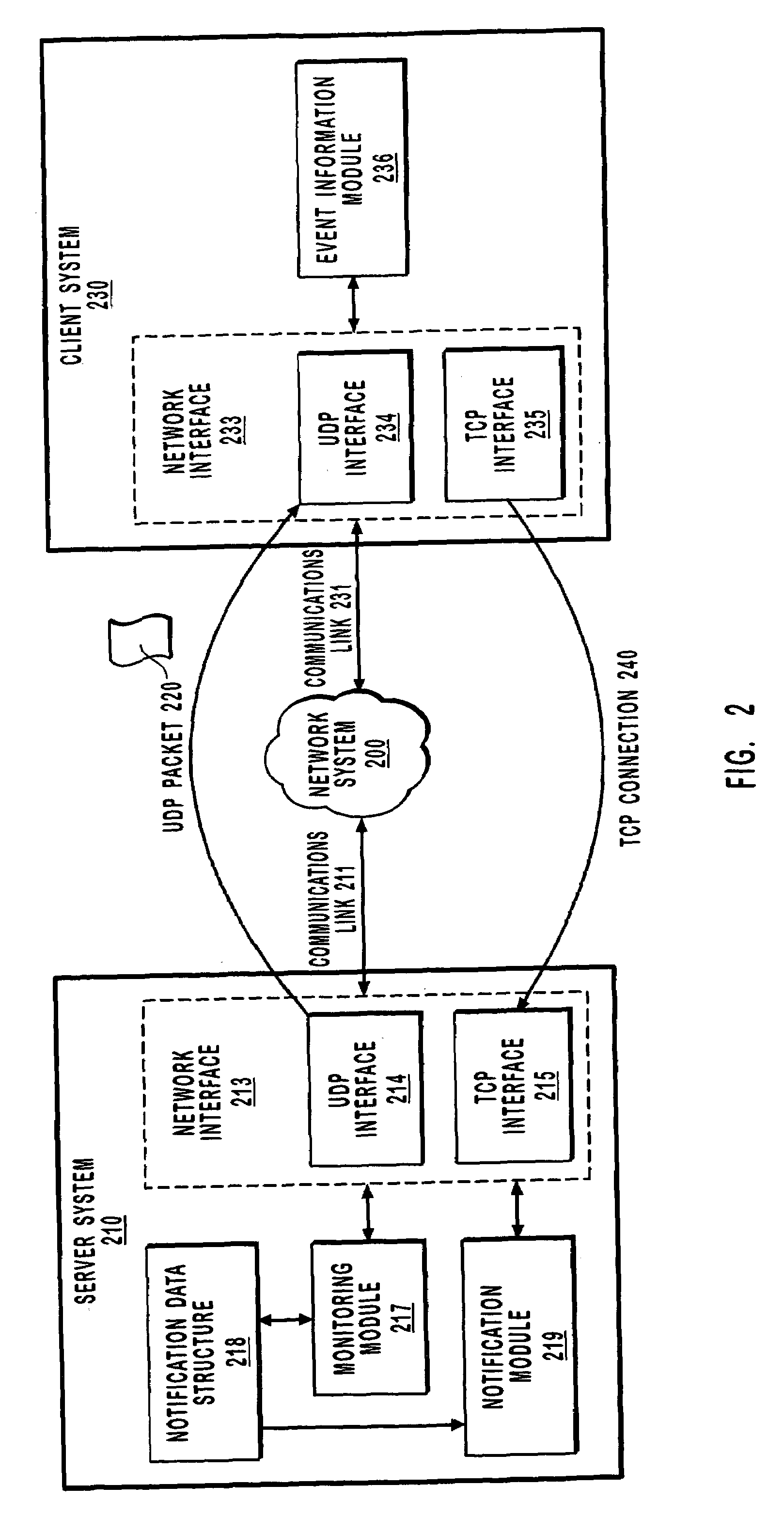

Efficiently sending event notifications over a computer network

Status: Inactive | Publication: US6999992B1 | Tags: Reduce data volume; Eliminate overhead; Multiple digital computer combinations; Transmission; Application software; Client-side

A method for efficiently sending notifications over a network. A client system requests to be notified when an event occurs. A server system receives the request and monitors for the event. When the event occurs, the server sends the client a single packet using a connectionless protocol (such as User Datagram Protocol) to notify it. Using a connectionless protocol for notification reduces the overall amount of data on the network, and thus reduces network congestion and the processing capacity required of the server and client. When the client system receives the notification, it attempts to establish a connection using a connection-oriented protocol, and additional data associated with the event are transferred over that connection. The server may repeatedly send the notification over the connectionless protocol until the connection-oriented connection is established. The server may notify the client of multiple events within a single packet, and may also notify multiple applications of an event using a single notification.

Owner:MICROSOFT TECH LICENSING LLC
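
Notifying a client of several events in one connectionless packet comes down to a compact wire format. The sketch below assumes one (it is not Microsoft's): a 2-byte event count followed by 4-byte big-endian event IDs. `encode_notification` and `decode_notification` are hypothetical names, and the actual send is omitted to keep the sketch self-contained; a server would pass the bytes to a UDP socket, for example `socket.sendto` on a `SOCK_DGRAM` socket, and the client would then open a TCP connection to fetch the event data.

```python
import struct

# Assumed wire format: 2-byte count, then one 4-byte big-endian ID per event.
HEADER = struct.Struct("!H")
EVENT = struct.Struct("!I")


def encode_notification(event_ids: list) -> bytes:
    """Pack several event notifications into a single datagram payload."""
    return HEADER.pack(len(event_ids)) + b"".join(EVENT.pack(e) for e in event_ids)


def decode_notification(packet: bytes) -> list:
    """Recover the event IDs carried by one notification datagram."""
    (count,) = HEADER.unpack_from(packet, 0)
    return [EVENT.unpack_from(packet, HEADER.size + i * EVENT.size)[0] for i in range(count)]


packet = encode_notification([7, 42, 1001])
print(len(packet))                  # 14 (2-byte header + 3 x 4-byte IDs)
print(decode_notification(packet))  # [7, 42, 1001]
```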

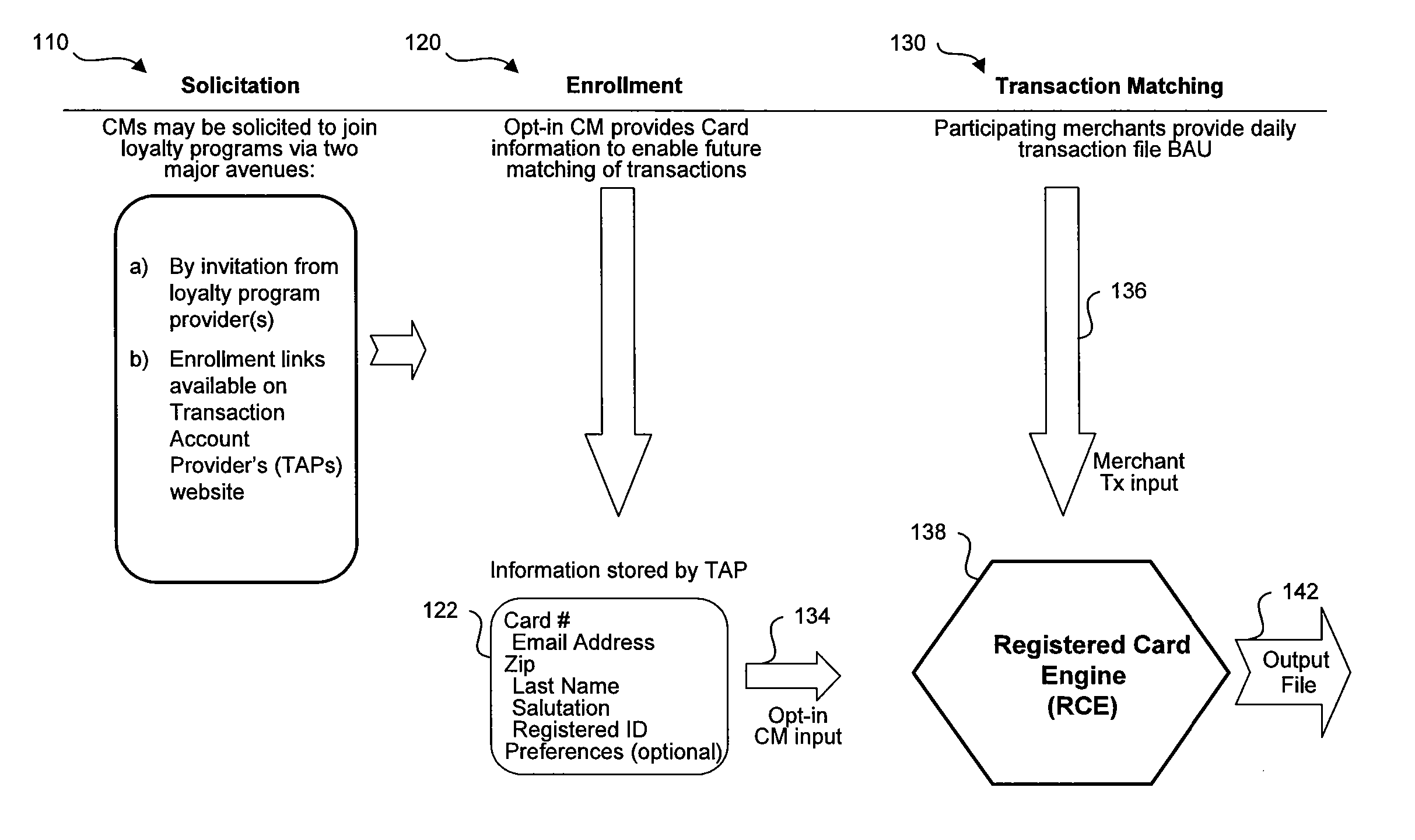

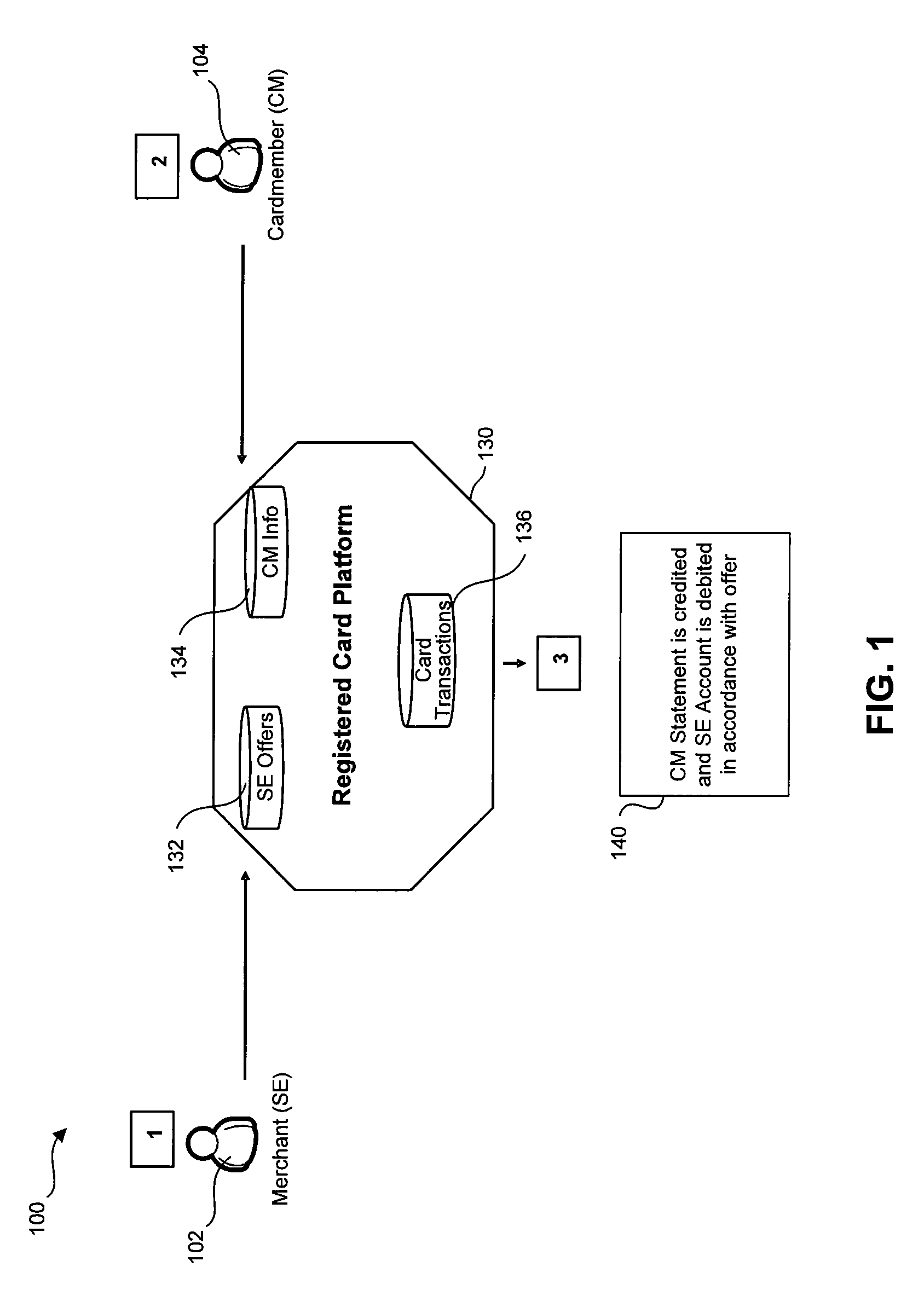

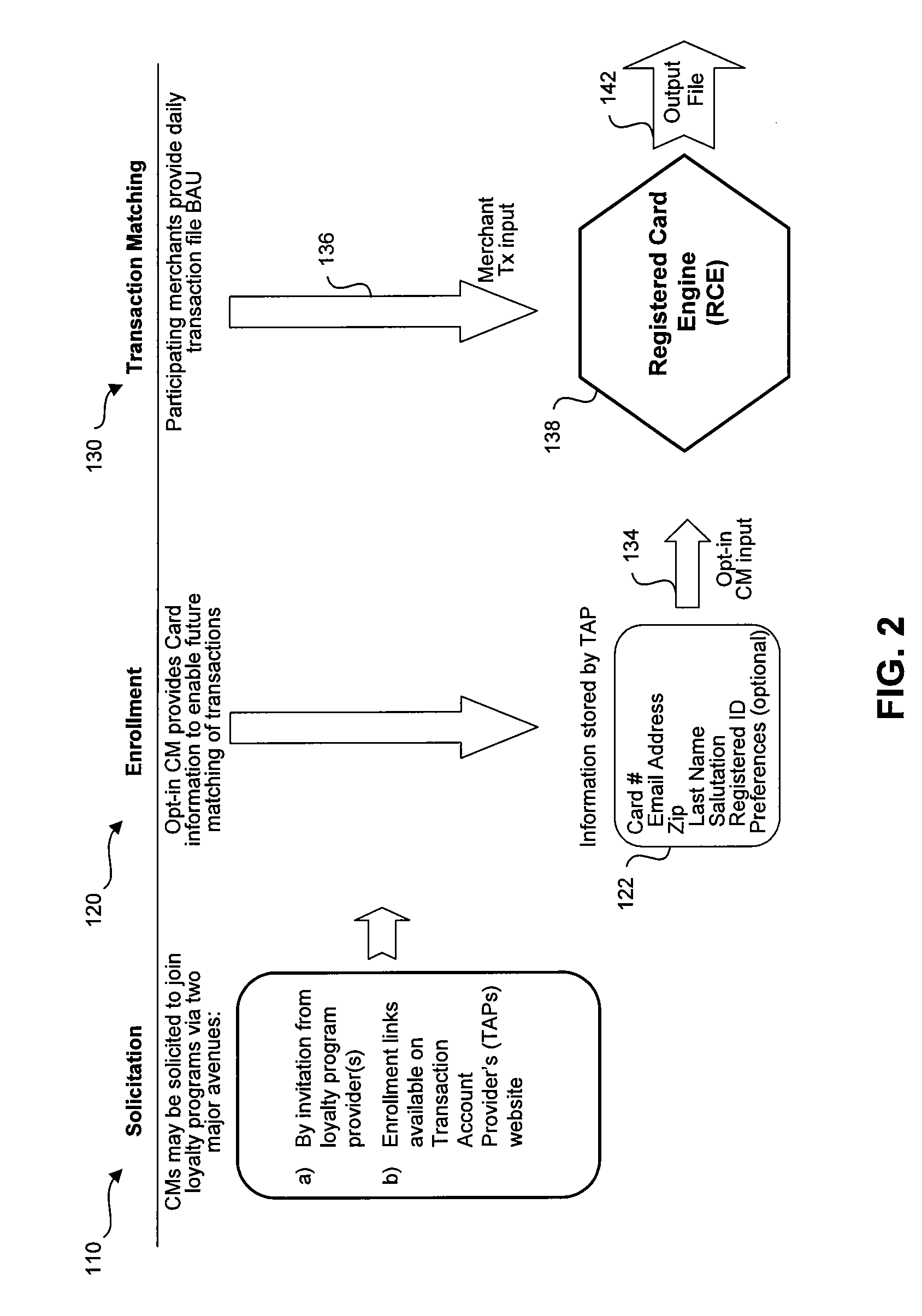

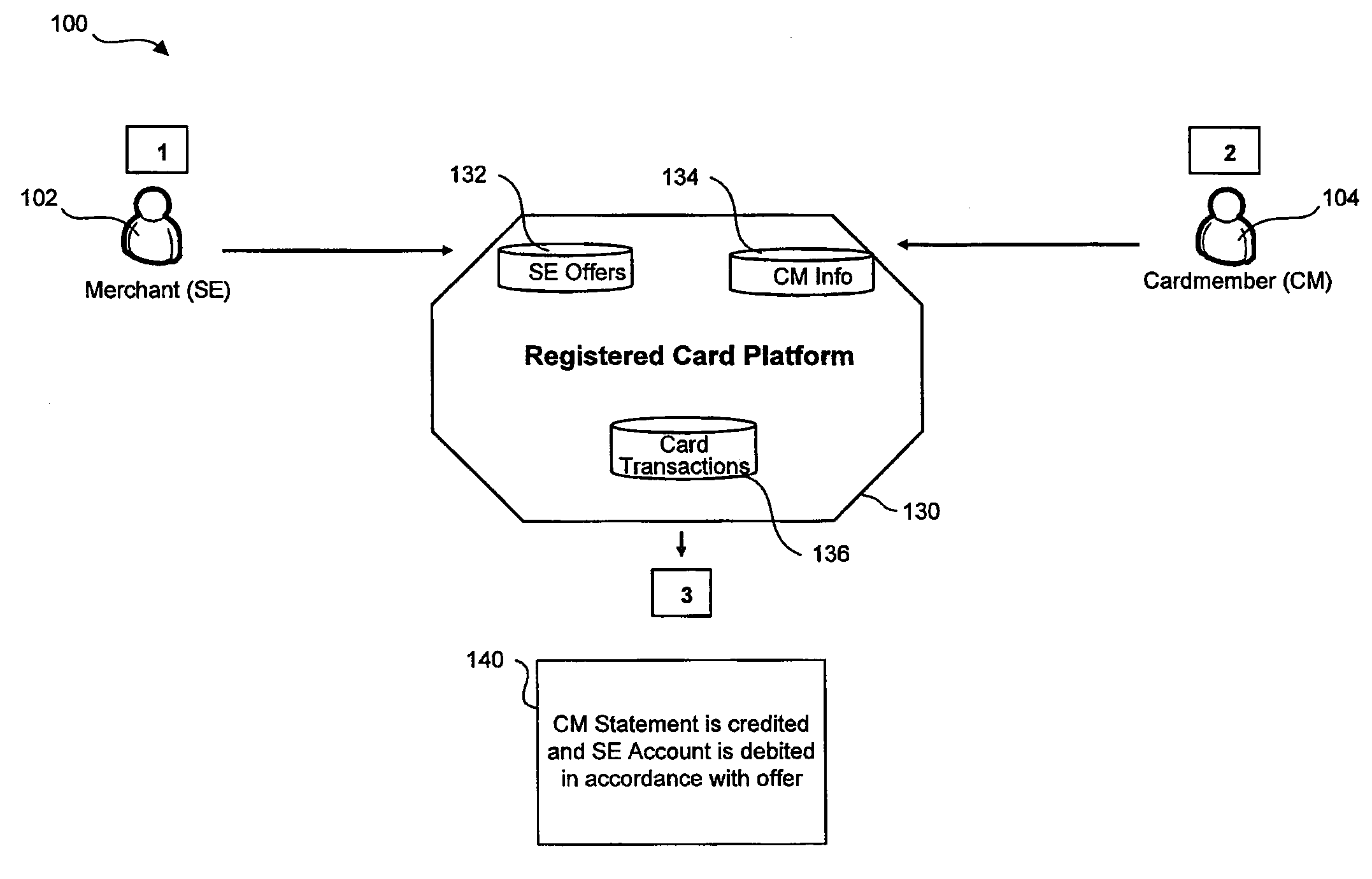

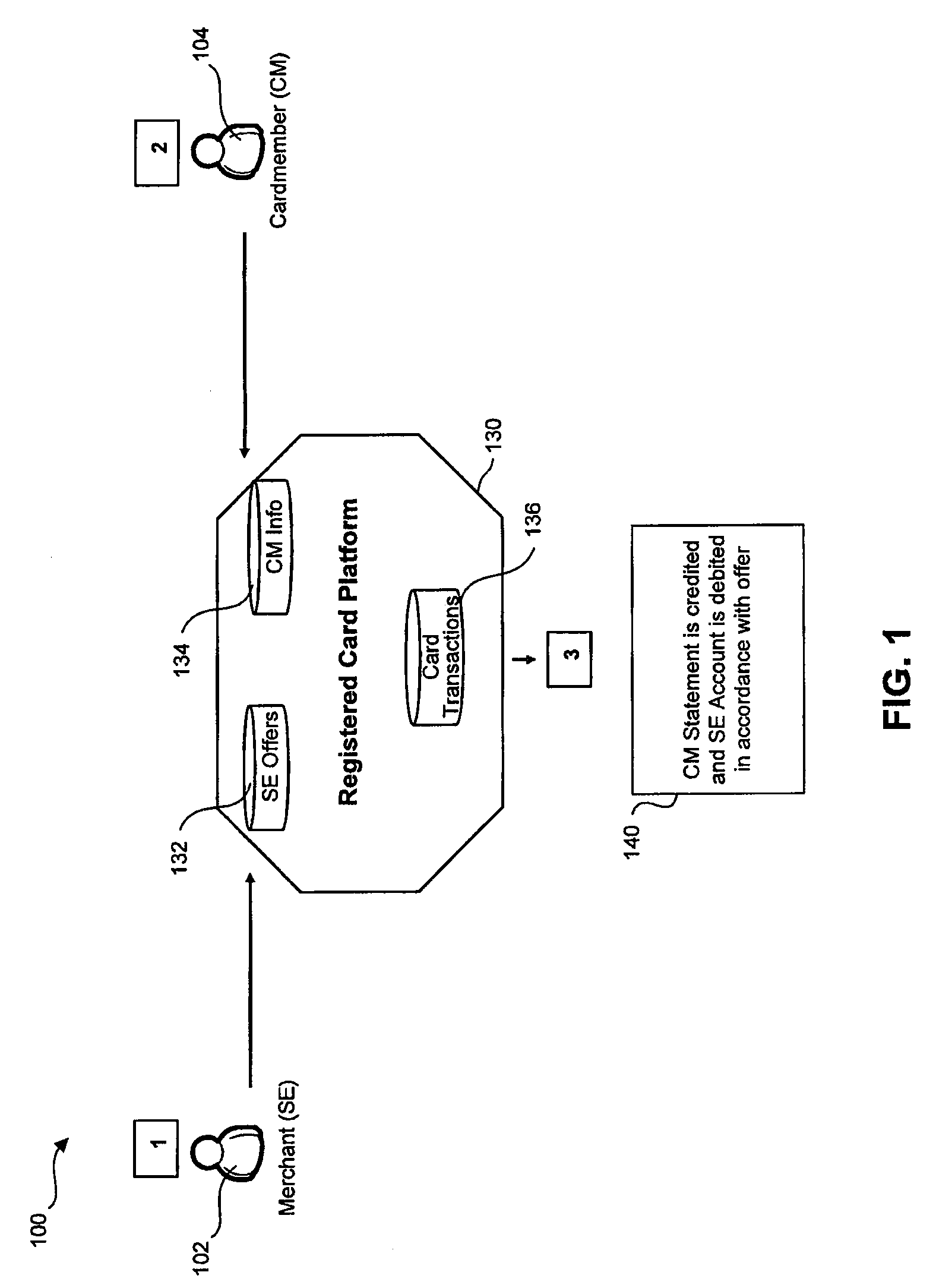

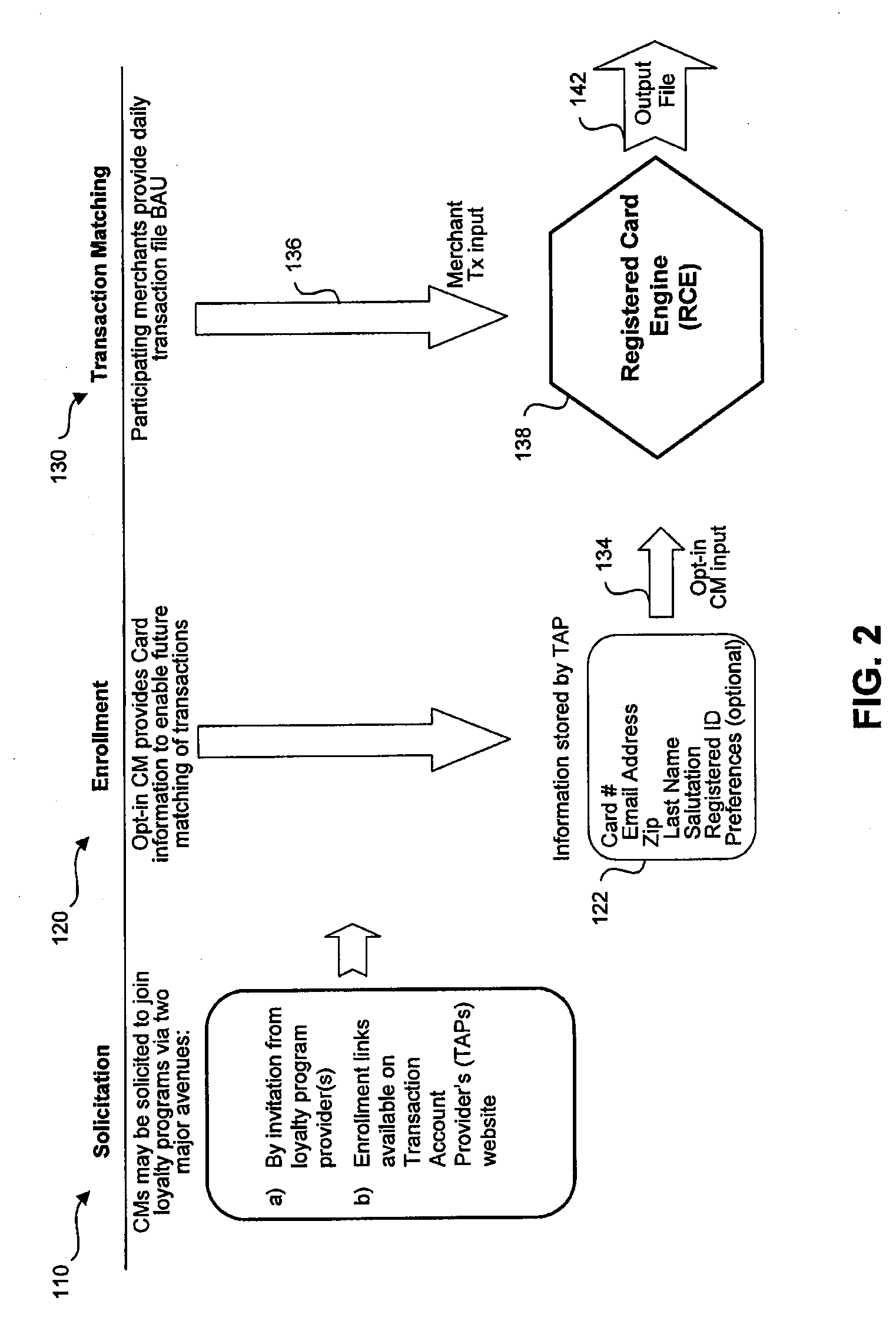

Loyalty Incentive Program Using Transaction Cards

Status: Active | Publication: US20080021772A1 | Tags: Increase sales; Eliminate overhead; Finance; Payment architecture; Transaction account; Database

A system and method provide rewards or loyalty incentives to card member customers. The system includes an enrolled card member customer database, an enrolled merchant database, a participating merchant offer database, and a registered card processor. The enrolled card member customer database includes the transaction accounts of card member customers enrolled in a loyalty incentive program. The enrolled merchant database lists the merchants participating in the program, and the participating merchant offer database holds their loyalty incentive offers. The registered card processor receives a record of charge for a purchase made by an enrolled card member customer with an enrolled merchant and uses it to determine whether the purchase qualifies for a rebate credit under a discount offer from that merchant. If it does, the registered card processor credits the rebate to the enrolled card member customer's account. The system gives merchants a coupon-less way to provide incentive discounts to enrolled customers.

Owner:AMERICAN EXPRESS TRAVEL RELATED SERVICES CO INC
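
The qualification check performed by the registered card processor can be sketched as a single function. Everything here is illustrative and not American Express's system: `RecordOfCharge`, `Offer`, and `process_charge` are hypothetical names. Offers are assumed to be a minimum spend plus a percentage rebate, and amounts are kept in integer cents to avoid floating-point rounding.

```python
from dataclasses import dataclass


@dataclass
class RecordOfCharge:
    card_id: str
    merchant_id: str
    amount_cents: int


@dataclass
class Offer:
    merchant_id: str
    min_spend_cents: int
    rebate_percent: int


def process_charge(roc, enrolled_cards, offers, credits):
    """Credit a rebate if the charge qualifies; return the rebate in cents (0 if none)."""
    if roc.card_id not in enrolled_cards:
        return 0                                    # card member is not enrolled
    offer = offers.get(roc.merchant_id)
    if offer is None or roc.amount_cents < offer.min_spend_cents:
        return 0                                    # no offer from this merchant, or too small
    rebate = roc.amount_cents * offer.rebate_percent // 100   # integer cents, rounded down
    credits[roc.card_id] = credits.get(roc.card_id, 0) + rebate
    return rebate


enrolled = {"card-1"}
offers = {"m-1": Offer("m-1", min_spend_cents=5000, rebate_percent=10)}
credits = {}
print(process_charge(RecordOfCharge("card-1", "m-1", 7500), enrolled, offers, credits))  # 750
print(process_charge(RecordOfCharge("card-2", "m-1", 7500), enrolled, offers, credits))  # 0
```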

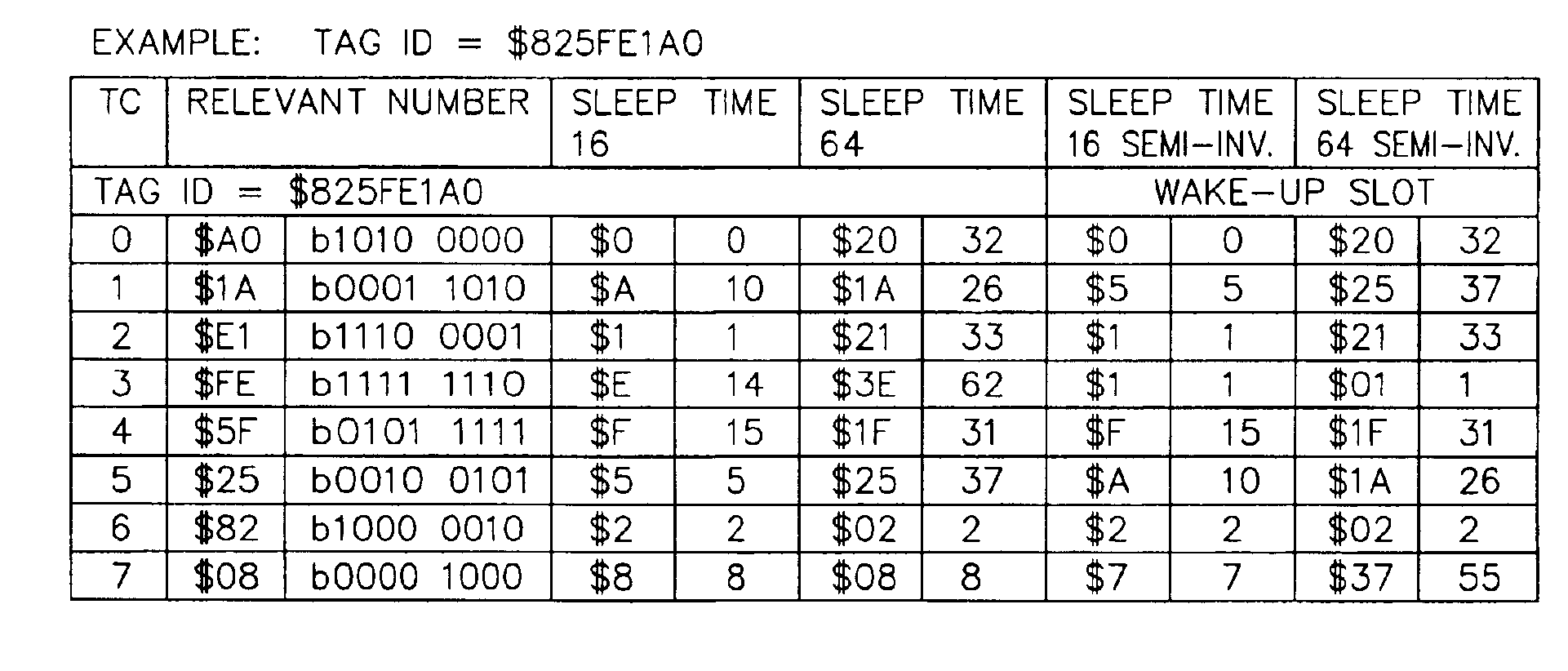

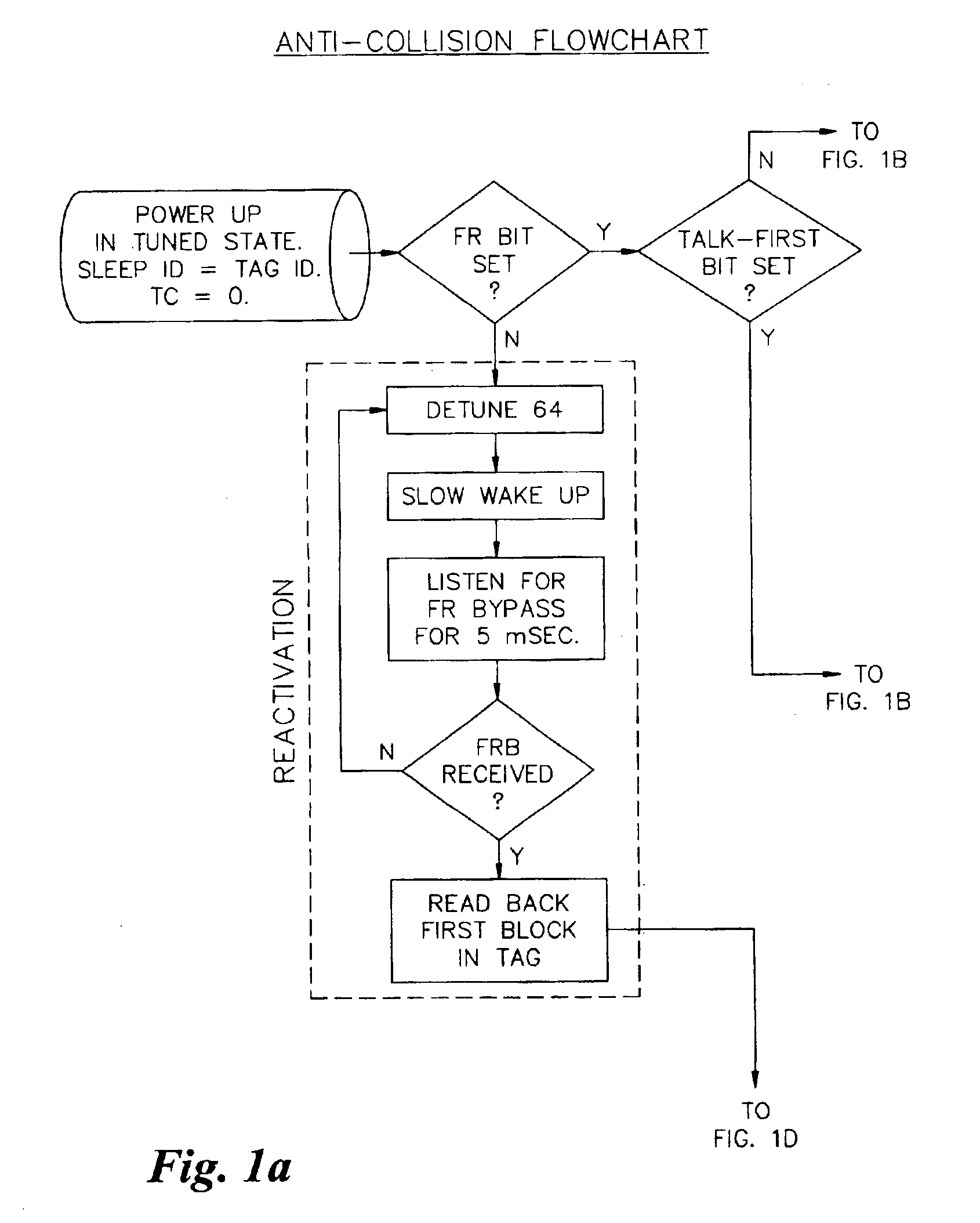

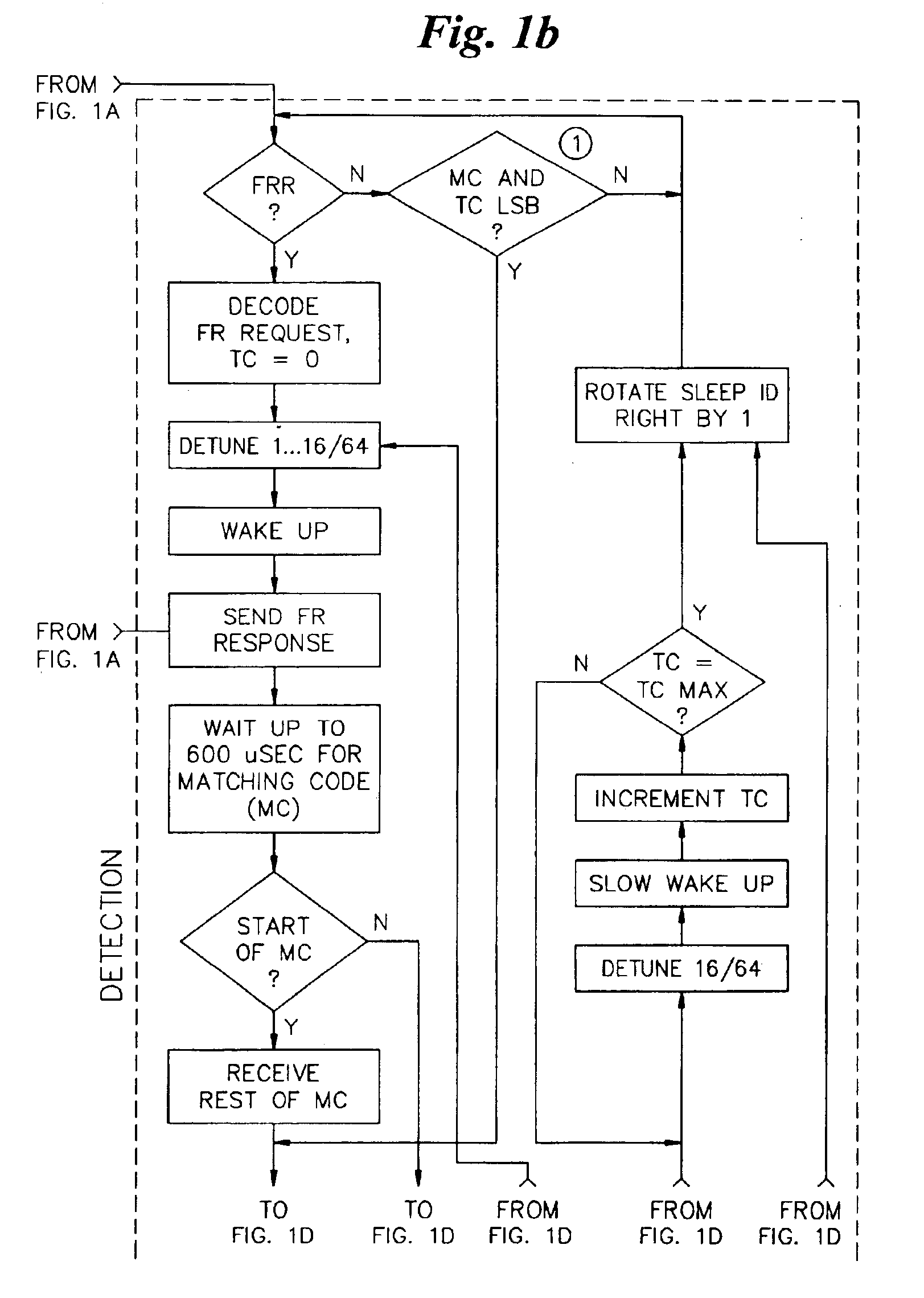

Anticollision protocol with fast read request and additional schemes for reading multiple transponders in an RFID system

Status: Inactive | Publication: US6963270B1 | Tags: Fast read; Minimize overhead; Transmission systems; Memory record carrier reading problems; Data rate; Communication control

Arbitration of multiple transponders, such as RFID tags, occurs in an interrogation field. The arbitration process is custom-tailored for individual applications, under software control, by the transponder reader or tag reader. Different wake-up slots are calculated for each tag during successive transmission cycles based on the tag ID and the transmission cycle number. The tag reader may send a tag a special fast read command that includes a read request and communication control parameters: the number of time slots for transponder communications, the number of transmissions an individual transponder is allowed to issue, and the data rate at which the reader communicates with the transponder. The tag reader may also send a tag a special command to read its data and cause it to become decoupled from the environment. Additional schemes halve the number of active tags in each transmission cycle and selectively inactivate designated groups of tags, improving discrimination of the tags in the interrogation field. The tags may be selectively placed in either a tag-talk-first mode or a reader-talk-first mode.

Owner:CHECKPOINT SYST INC +2
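
A wake-up slot that depends on both tag ID and cycle number means two tags that collide in one cycle usually land in different slots in the next. The sketch below assumes a SHA-256-based slot function, which the abstract does not specify. `wake_up_slot` and `read_tags` are hypothetical names, and a tag is treated as read whenever it is alone in its slot.

```python
import hashlib
from collections import defaultdict


def wake_up_slot(tag_id: int, cycle: int, num_slots: int) -> int:
    """Pseudo-random wake-up slot derived from the tag ID and transmission cycle number."""
    digest = hashlib.sha256(f"{tag_id}:{cycle}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % num_slots


def read_tags(tag_ids, num_slots: int, max_cycles: int = 20) -> list:
    """Run transmission cycles until every tag has answered alone in some slot."""
    unread = set(tag_ids)
    order = []
    for cycle in range(max_cycles):
        if not unread:
            break
        slots = defaultdict(list)
        for tag in unread:
            slots[wake_up_slot(tag, cycle, num_slots)].append(tag)
        for slot in sorted(slots):
            if len(slots[slot]) == 1:        # no collision: the reader decodes this tag
                tag = slots[slot][0]
                order.append(tag)
                unread.discard(tag)          # a read tag is told to stay quiet
    return order


print(read_tags([5], num_slots=4))           # [5]
print(sorted(read_tags(range(8), num_slots=16)))
```

Silencing each tag once it has been read shrinks the contention in later cycles, which is the same effect the halving and group-inactivation schemes aim for.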

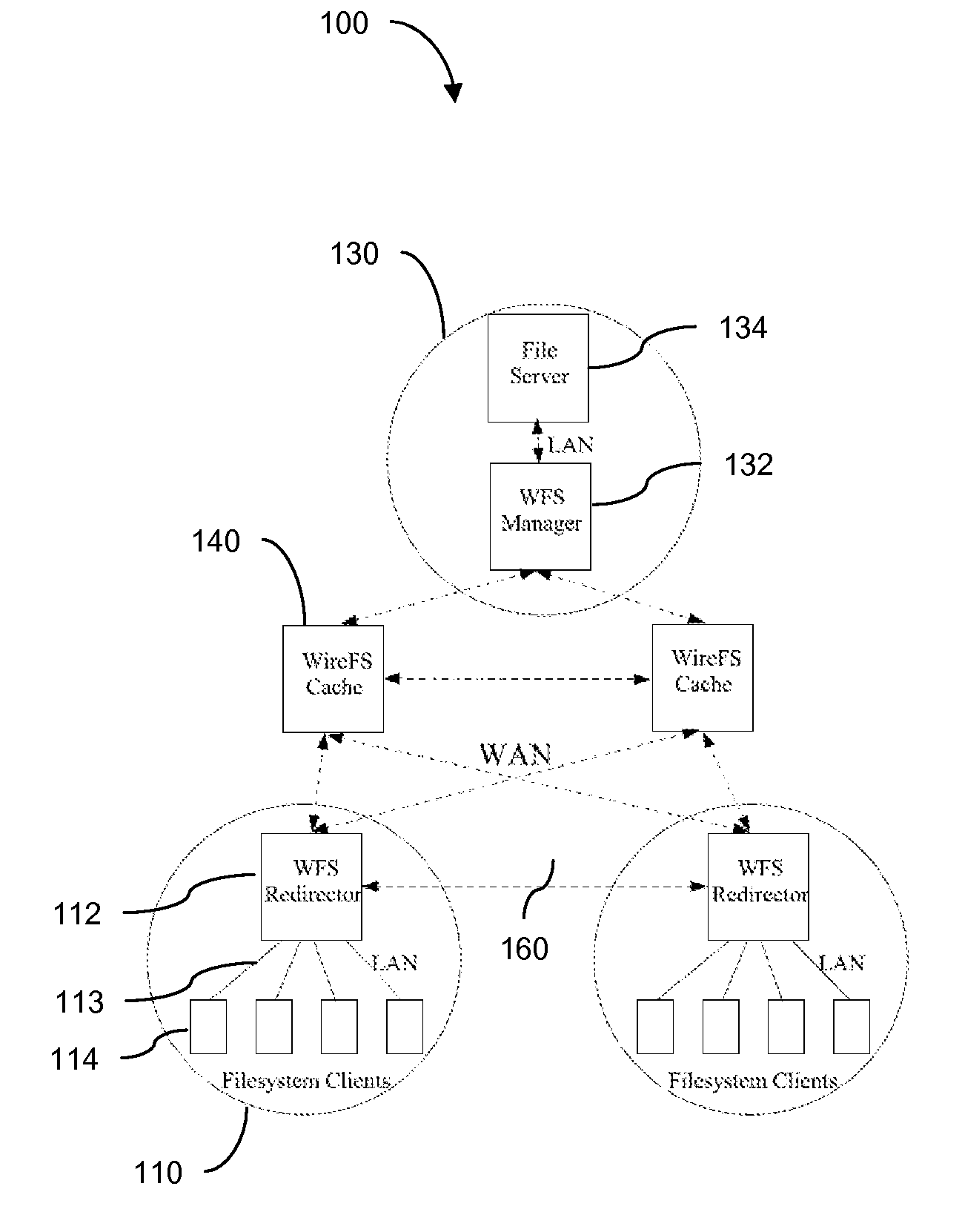

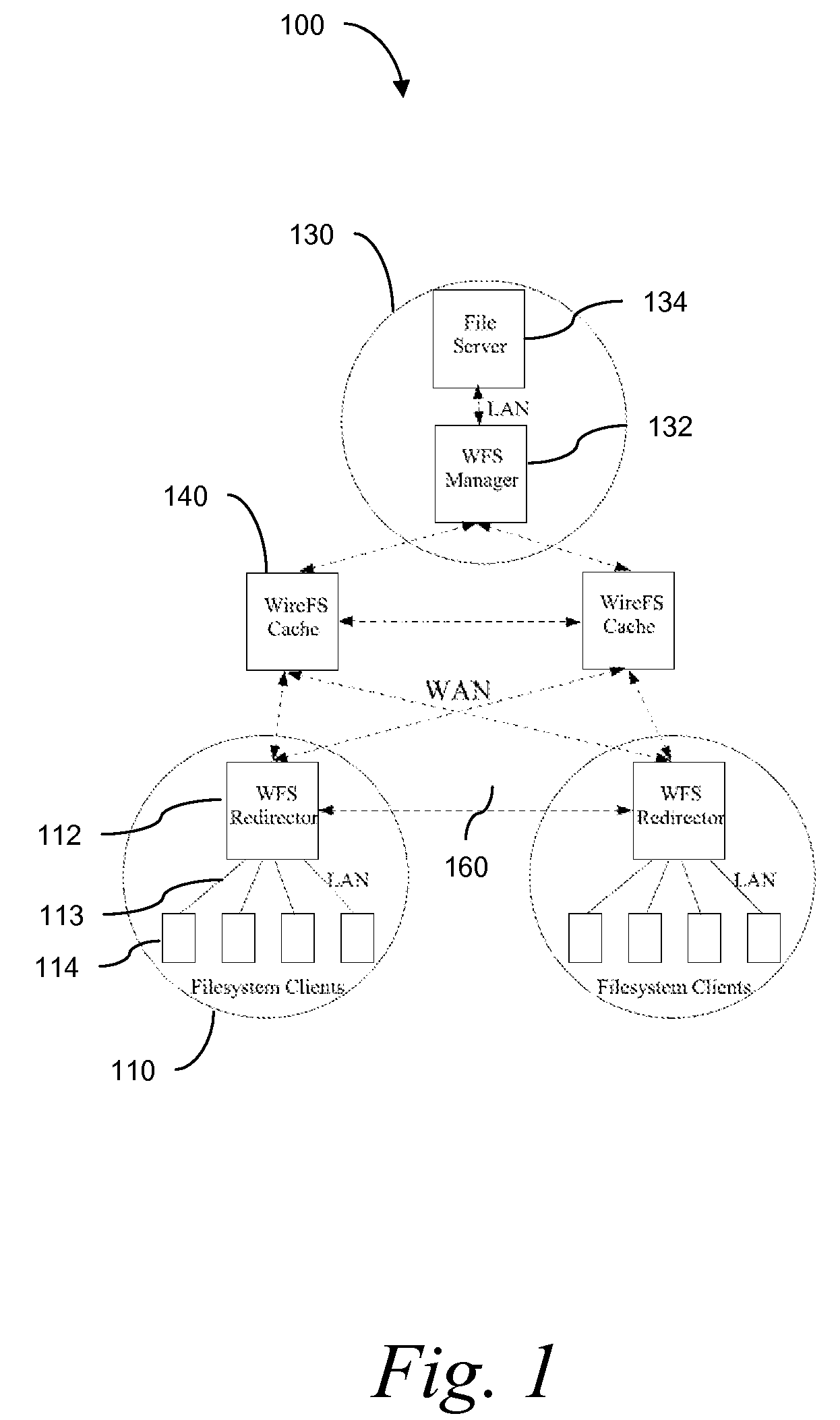

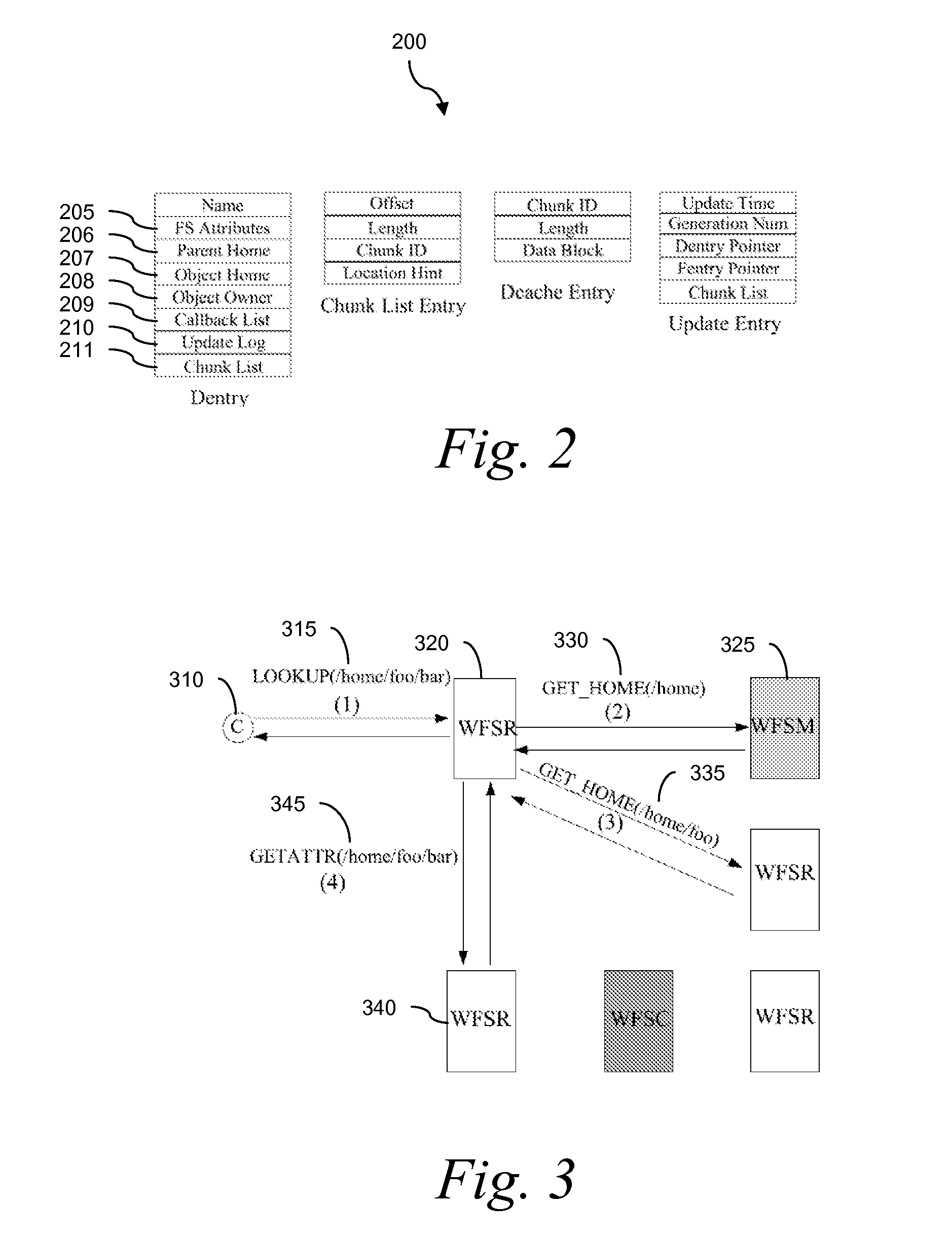

Wide Area Networked File System

Status: Inactive | Publication: US20070162462A1 | Tags: Eliminate overhead; Alleviates the bottleneck at the central server; Special data processing applications; Memory systems; Wide area; Randomized algorithm

Traditional networked file systems such as NFS do not extend to wide-area networks because of the network latency and dynamics of the WAN environment. To address that problem, a wide-area networked file system, WireFS, builds on a traditional networked file system (NFS/CIFS) and extends it to the WAN by introducing a file redirector infrastructure between the central file server and its clients. The redirector infrastructure is invisible to both the central server and the clients, so the changes to NFS are minimal, which minimizes disruption to the existing file service when WireFS is deployed on top of NFS. The system includes an architecture for an enterprise-wide read/write wide-area network file system; protocols and data structures for metadata and data management in this system; history-based prefetching algorithms that minimize access latency for metadata operations; and a distributed randomized algorithm implementing a global LRU cache-replacement scheme.

Owner:NEC CORP

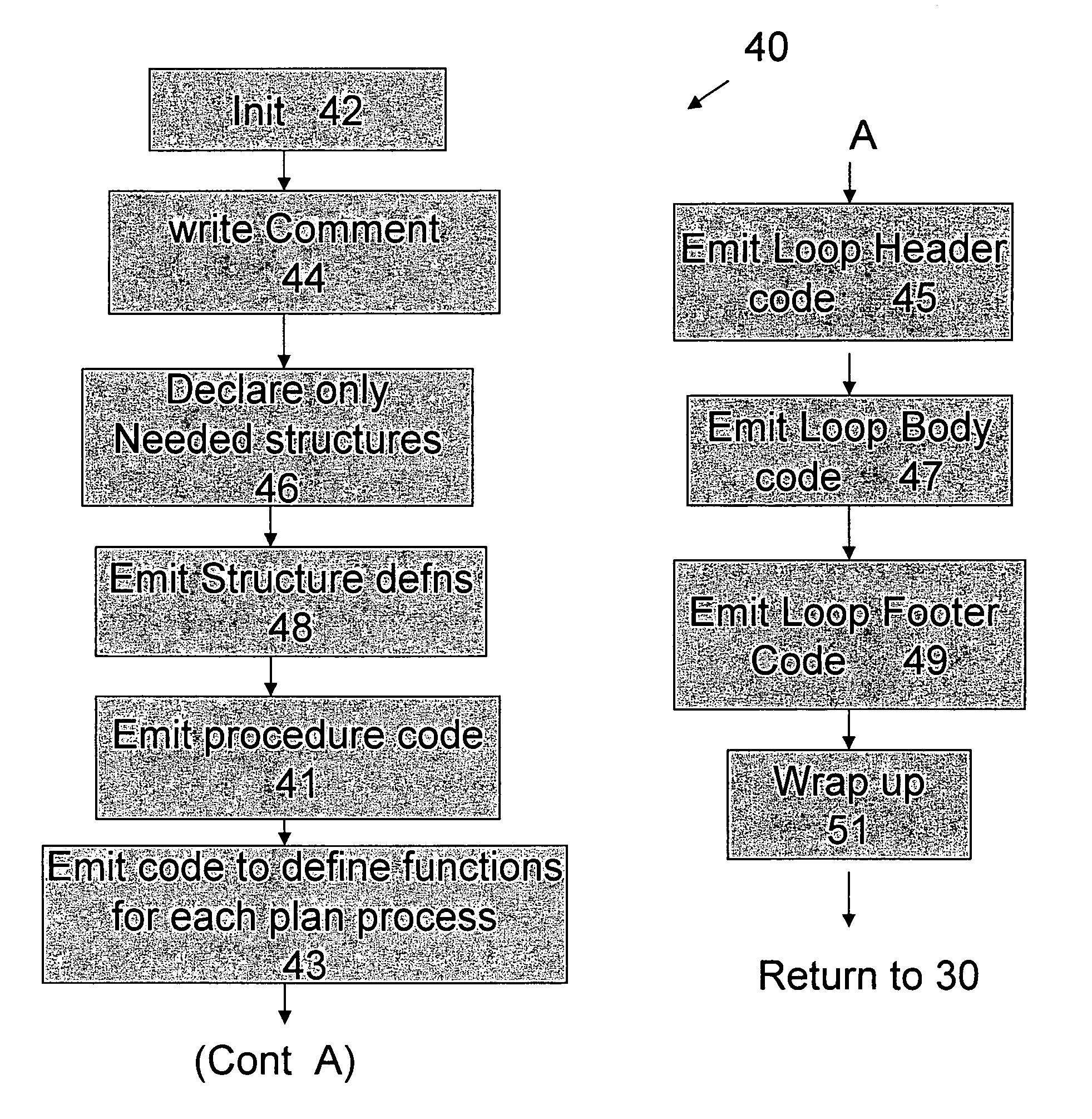

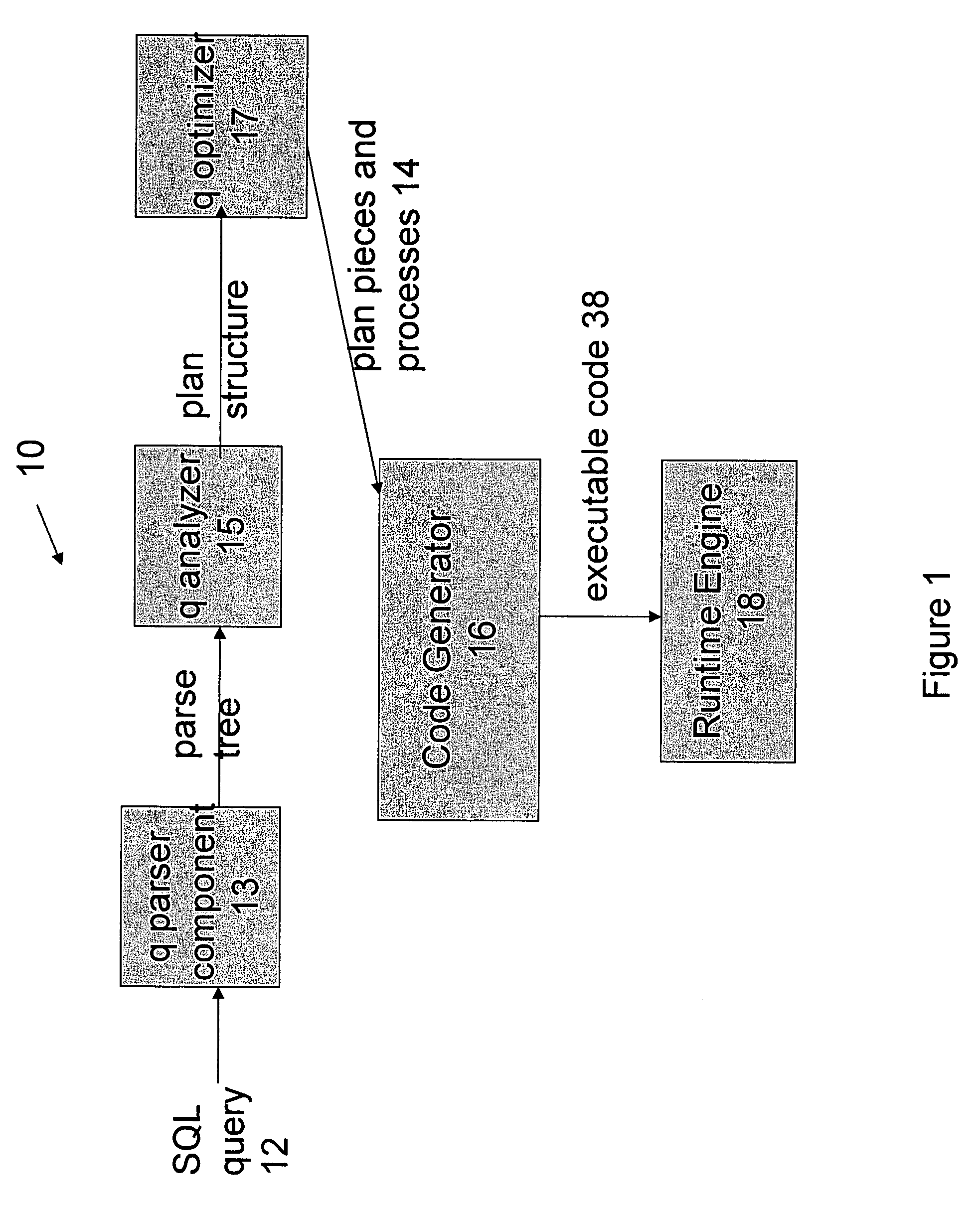

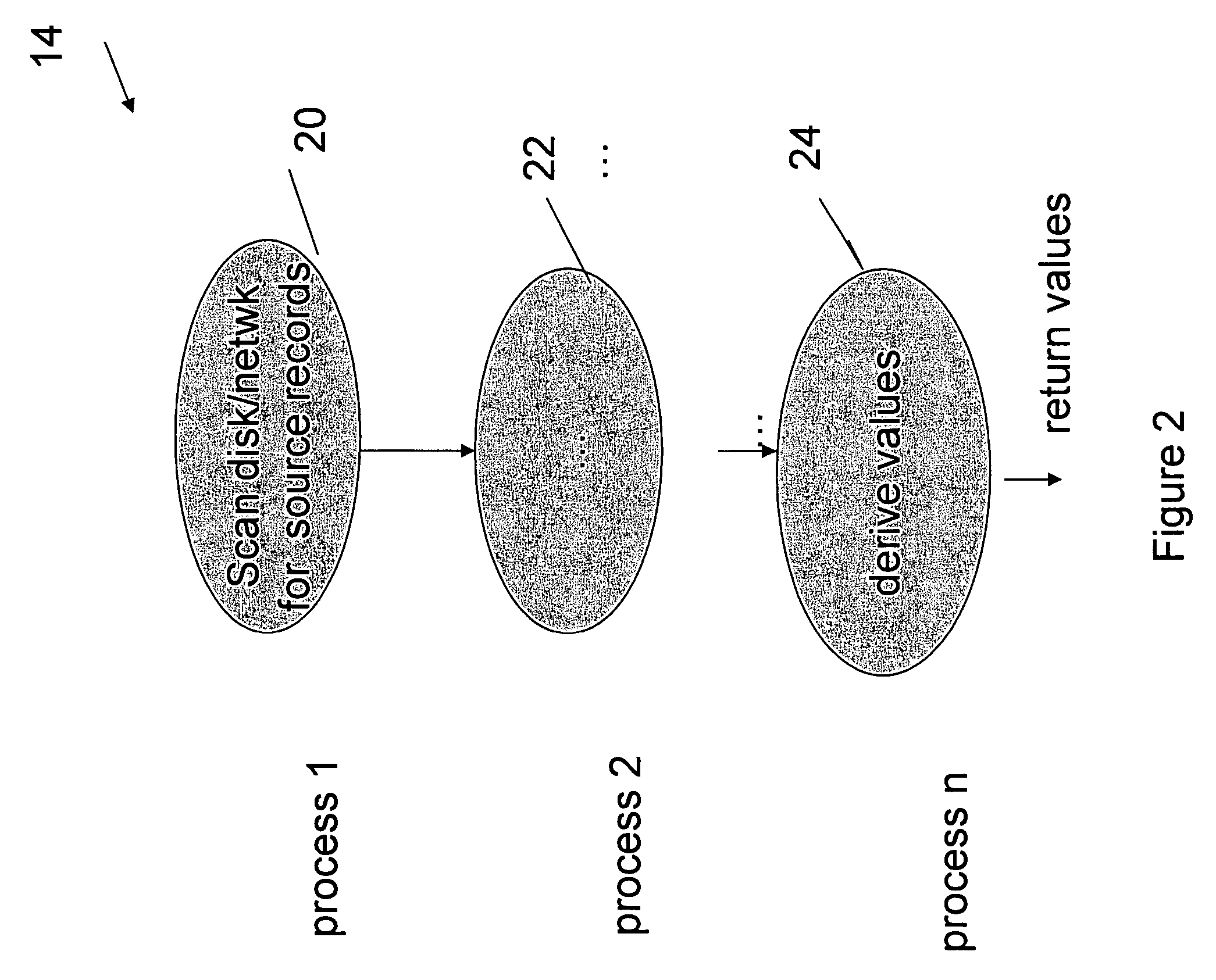

Optimized SQL code generation

Status: Active | Publication: US7430549B2 | Tags: Reduce and minimize compilation time; Reduce and minimize execution time; Digital data information retrieval; Data processing applications; Execution plan; Code generation

This invention relates generally to a system for processing database queries, and more particularly to a method for generating high level language or machine code to implement query execution plans. The present invention provides a method for generating executable machine code for query execution plans, that is adaptive to dynamic runtime conditions, that is compiled just in time for execution and most importantly, that avoids the bounds checking, pointer indirection, materialization and other similar kinds of overhead that are typical in interpretive runtime execution engines.

Owner:INT BUSINESS MASCH CORP
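
The core idea of generating code for a query plan instead of interpreting it can be illustrated in Python, with an important caveat: Python's `compile` produces bytecode, not machine code, so this is only an analogy to the native code generation described above. `compile_filter` is a hypothetical name. It turns a conjunction of (column, operator, constant) predicates into source text, compiles it once, and returns a function in which column positions and constants are hard-coded. Constants are restricted to numbers and strings so their `repr` is safe to embed.

```python
def compile_filter(predicates):
    """Generate and compile a row filter specialised to a conjunction of predicates.

    `predicates` is a list of (column_index, operator, constant) tuples,
    e.g. [(0, ">", 10), (2, "=", "x")]. Returns (filter_function, source_text).
    """
    ops = {"<": "<", "<=": "<=", "=": "==", ">": ">", ">=": ">="}
    terms = []
    for col, op, value in predicates:
        if not isinstance(value, (int, float, str)):
            raise TypeError("only numeric and string constants are supported")
        terms.append(f"row[{int(col)}] {ops[op]} {value!r}")
    condition = " and ".join(terms) or "True"      # an empty plan keeps every row
    source = f"def _filter(rows):\n    return [row for row in rows if {condition}]\n"
    namespace = {}
    exec(compile(source, "<generated-plan>", "exec"), namespace)
    return namespace["_filter"], source


filt, src = compile_filter([(0, ">", 10), (2, "=", "x")])
rows = [(5, "a", "x"), (12, "b", "x"), (20, "c", "y")]
print(filt(rows))   # [(12, 'b', 'x')]
```

The per-row work is now a fixed comparison chain, with no dispatch on operator codes or walk of a predicate tree, which is the kind of interpretive overhead the abstract says generated code avoids.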

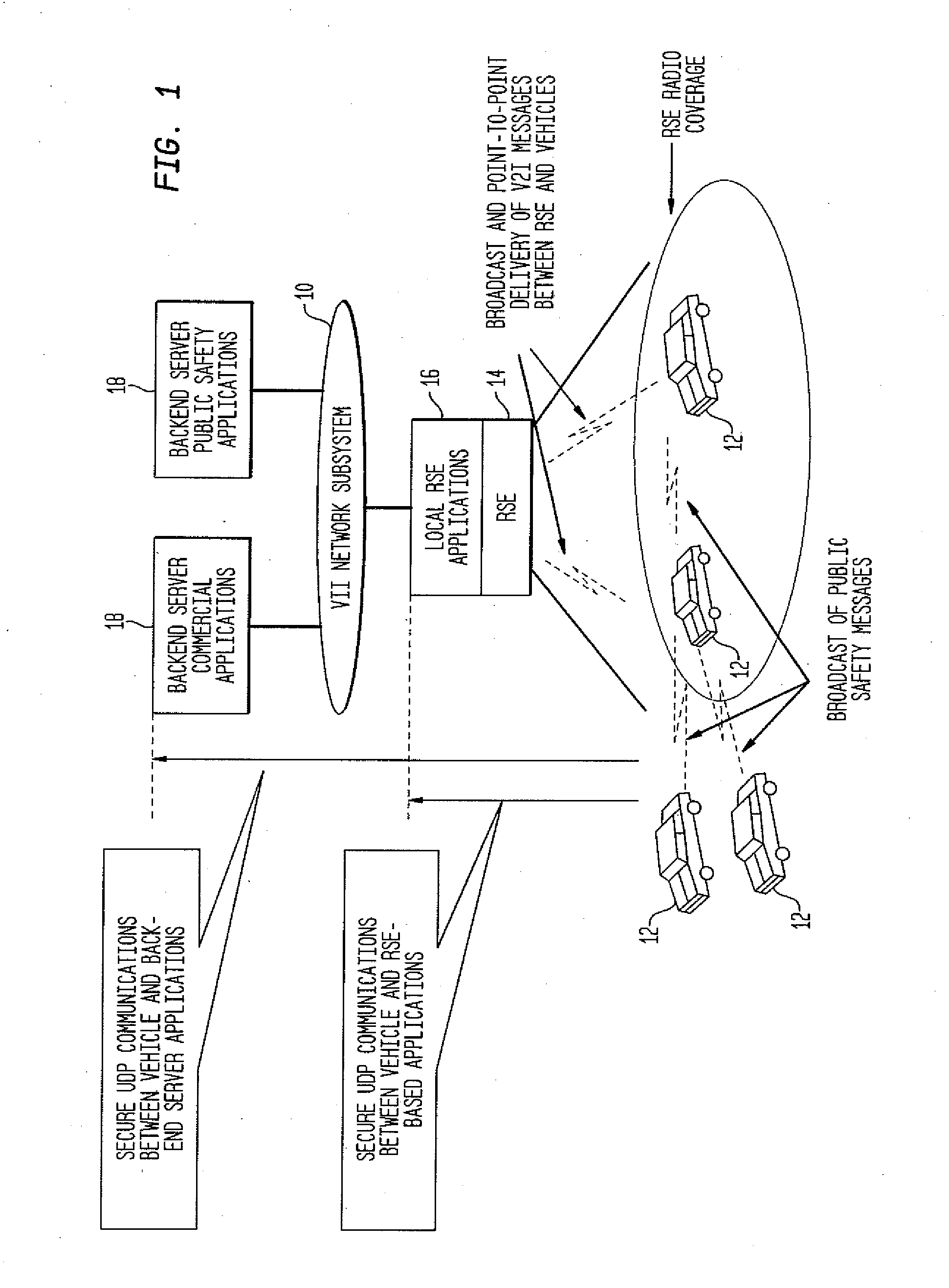

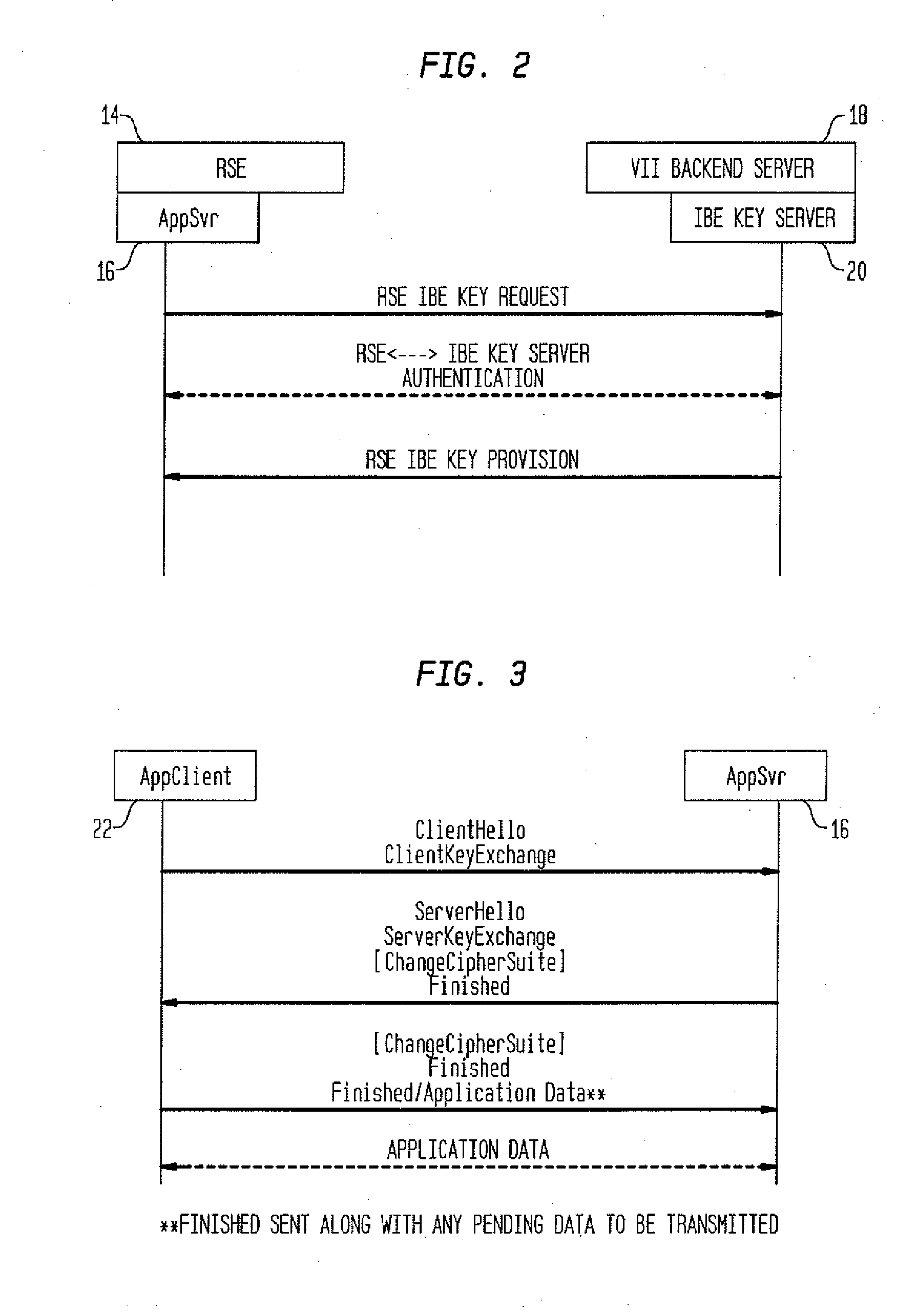

Method and System for Secure Session Establishment Using Identity-Based Encryption (VDTLS)

Status: Active | Publication: US20100031042A1 | Tags: Communication security; Privacy protection; User identity/authority verification; Security arrangement; ID-based encryption; Secure communication

The inventive system for providing strong security for UDP communications in networks comprises a server, a client, and a secure communication protocol wherein authentication of client and server, either unilaterally or mutually, is performed using identity based encryption, the secure communication protocol preserves privacy of the client, achieves significant bandwidth savings, and eliminates overheads associated with certificate management. VDTLS also enables session mobility across multiple IP domains through its session resumption capability.

Owner:TELCORDIA TECHNOLOGIES INC

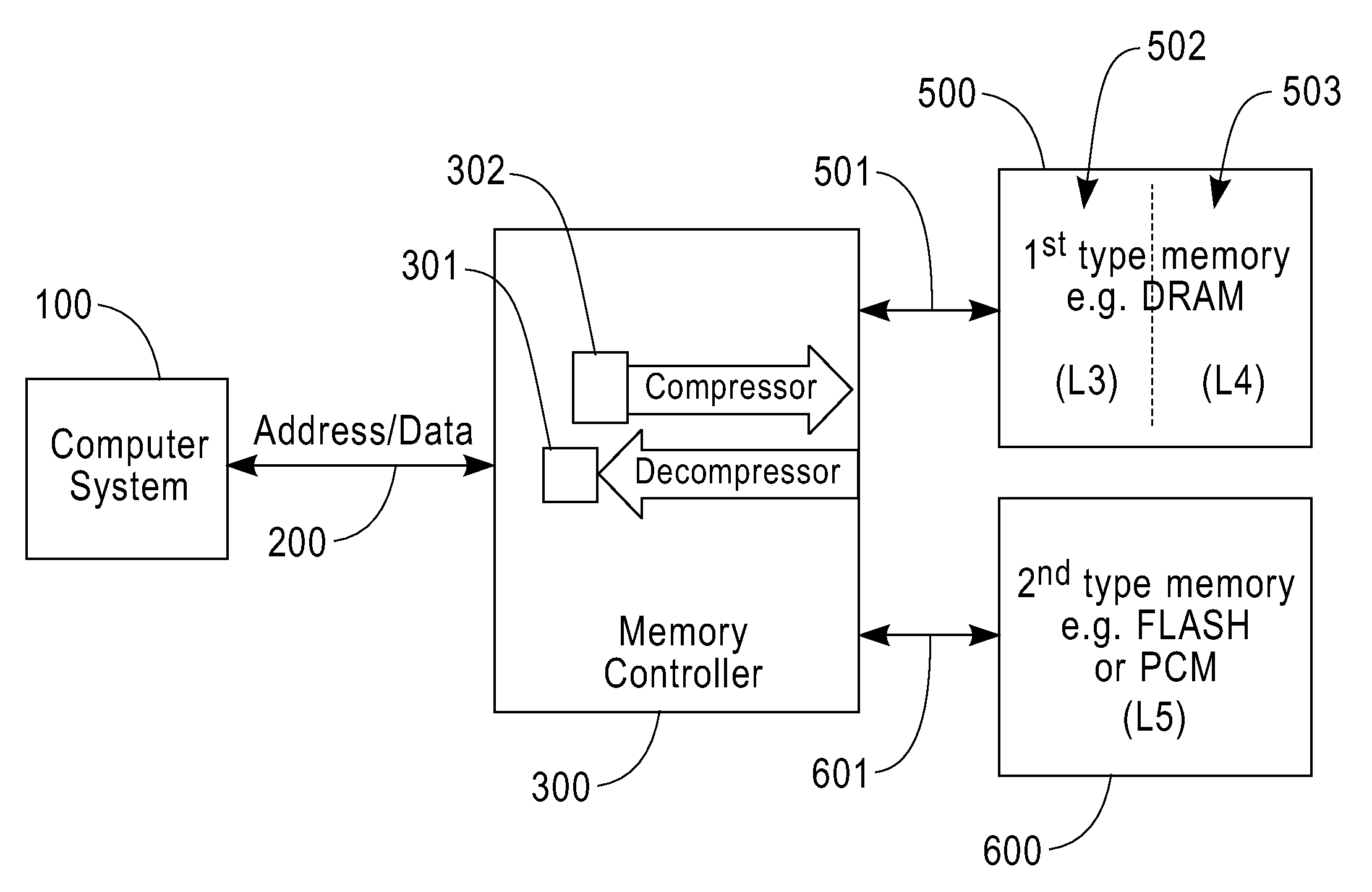

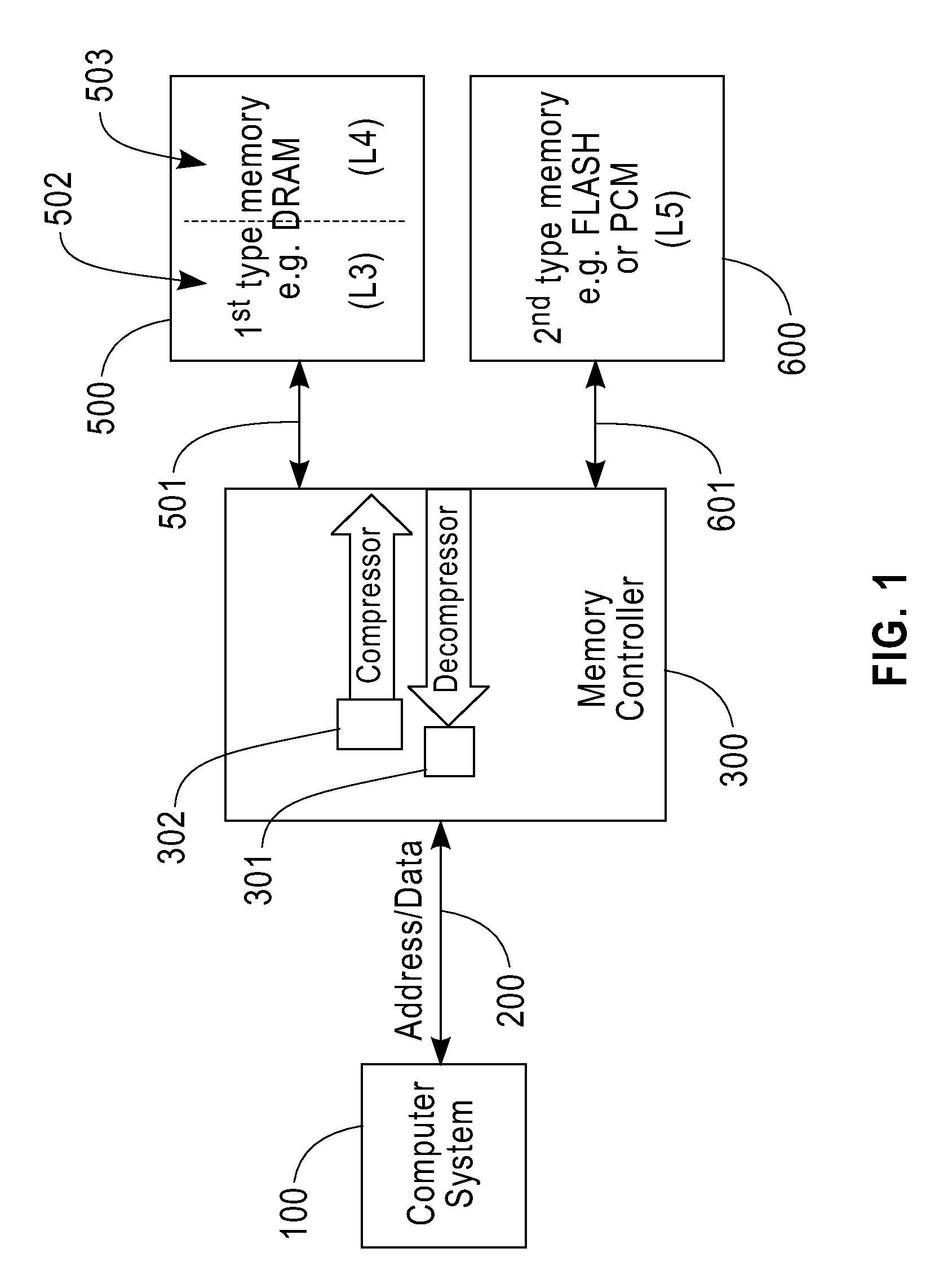

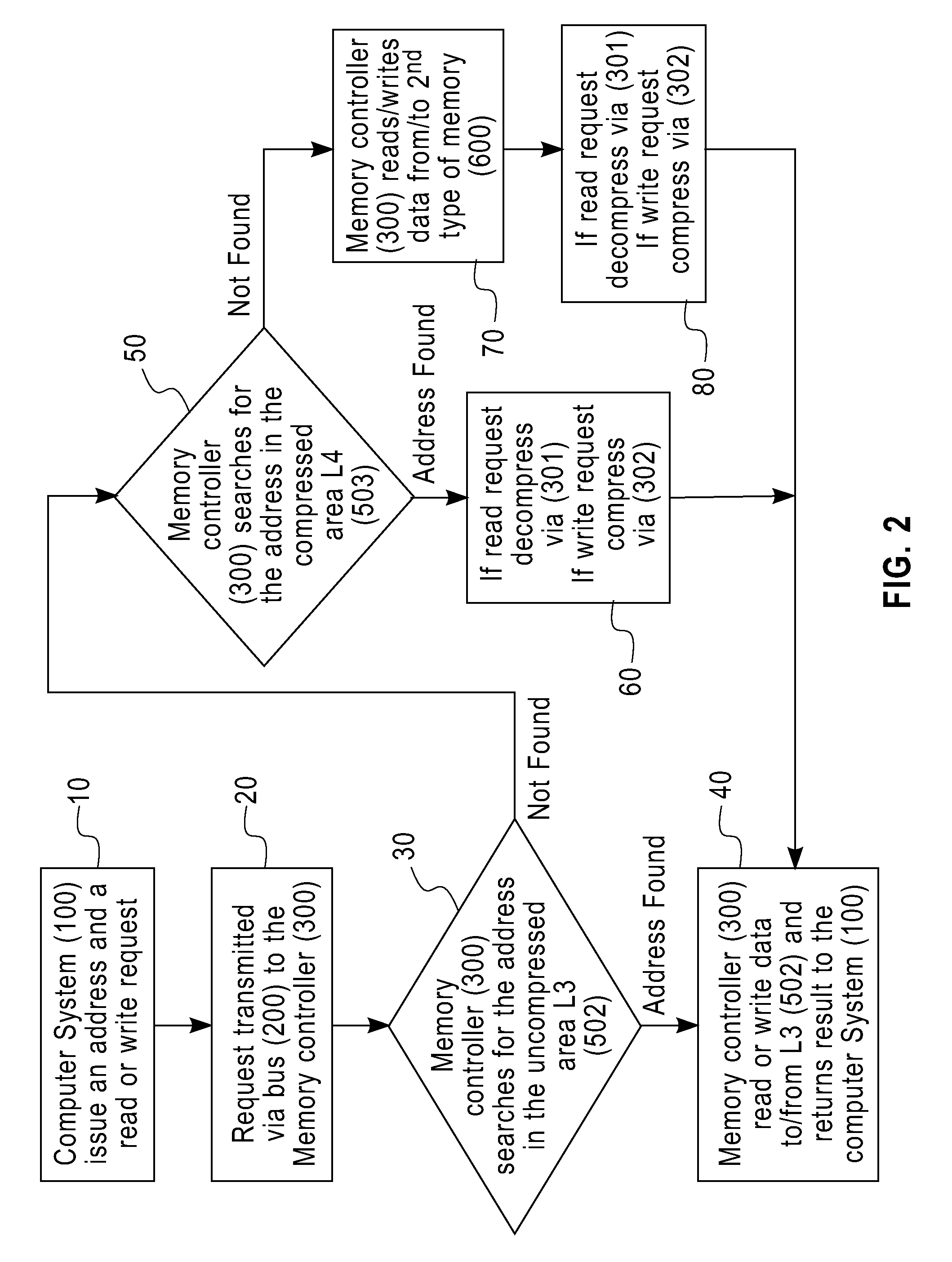

Bus attached compressed random access memory

Status: Inactive | Publication: US20090254705A1 | Tags: Eliminate the problem; Low cost; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Three level; Memory hierarchy

A computer memory system having a three-level memory hierarchy structure is disclosed. The system includes a memory controller, a volatile memory, and a non-volatile memory. The volatile memory is divided into an uncompressed data region and a compressed data region.

Owner:IBM CORP
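
The three-level hierarchy (uncompressed volatile region, compressed volatile region, non-volatile memory) can be modeled with `zlib` to show how a page moves between levels. This is a sketch only, not IBM's design. `ThreeLevelMemory` is a hypothetical name, capacities are counted in pages, both volatile regions are assumed to evict least-recently-used pages, and a page that is read is promoted back to the uncompressed region.

```python
import zlib
from collections import OrderedDict


class ThreeLevelMemory:
    """Uncompressed region -> compressed region -> non-volatile memory (LRU assumed)."""

    def __init__(self, uncompressed_pages: int, compressed_pages: int) -> None:
        self.limits = (uncompressed_pages, compressed_pages)
        self.uncompressed = OrderedDict()   # page number -> raw bytes
        self.compressed = OrderedDict()     # page number -> zlib-compressed bytes
        self.nonvolatile = {}               # page number -> raw bytes

    def write(self, page: int, data: bytes) -> None:
        self.compressed.pop(page, None)     # drop any stale copy in lower levels
        self.nonvolatile.pop(page, None)
        self.uncompressed[page] = data
        self.uncompressed.move_to_end(page)
        self._rebalance()

    def read(self, page: int) -> bytes:
        if page in self.uncompressed:
            data = self.uncompressed[page]
        elif page in self.compressed:
            data = zlib.decompress(self.compressed.pop(page))
        else:
            data = self.nonvolatile.pop(page)   # KeyError if the page was never written
        self.write(page, data)                  # promote to the uncompressed region
        return data

    def _rebalance(self) -> None:
        while len(self.uncompressed) > self.limits[0]:
            page, data = self.uncompressed.popitem(last=False)
            self.compressed[page] = zlib.compress(data)
        while len(self.compressed) > self.limits[1]:
            page, blob = self.compressed.popitem(last=False)
            self.nonvolatile[page] = zlib.decompress(blob)


mem = ThreeLevelMemory(uncompressed_pages=1, compressed_pages=1)
for page, fill in enumerate(b"abc"):
    mem.write(page, bytes([fill]) * 4096)
print(list(mem.uncompressed), list(mem.compressed), list(mem.nonvolatile))  # [2] [1] [0]
```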

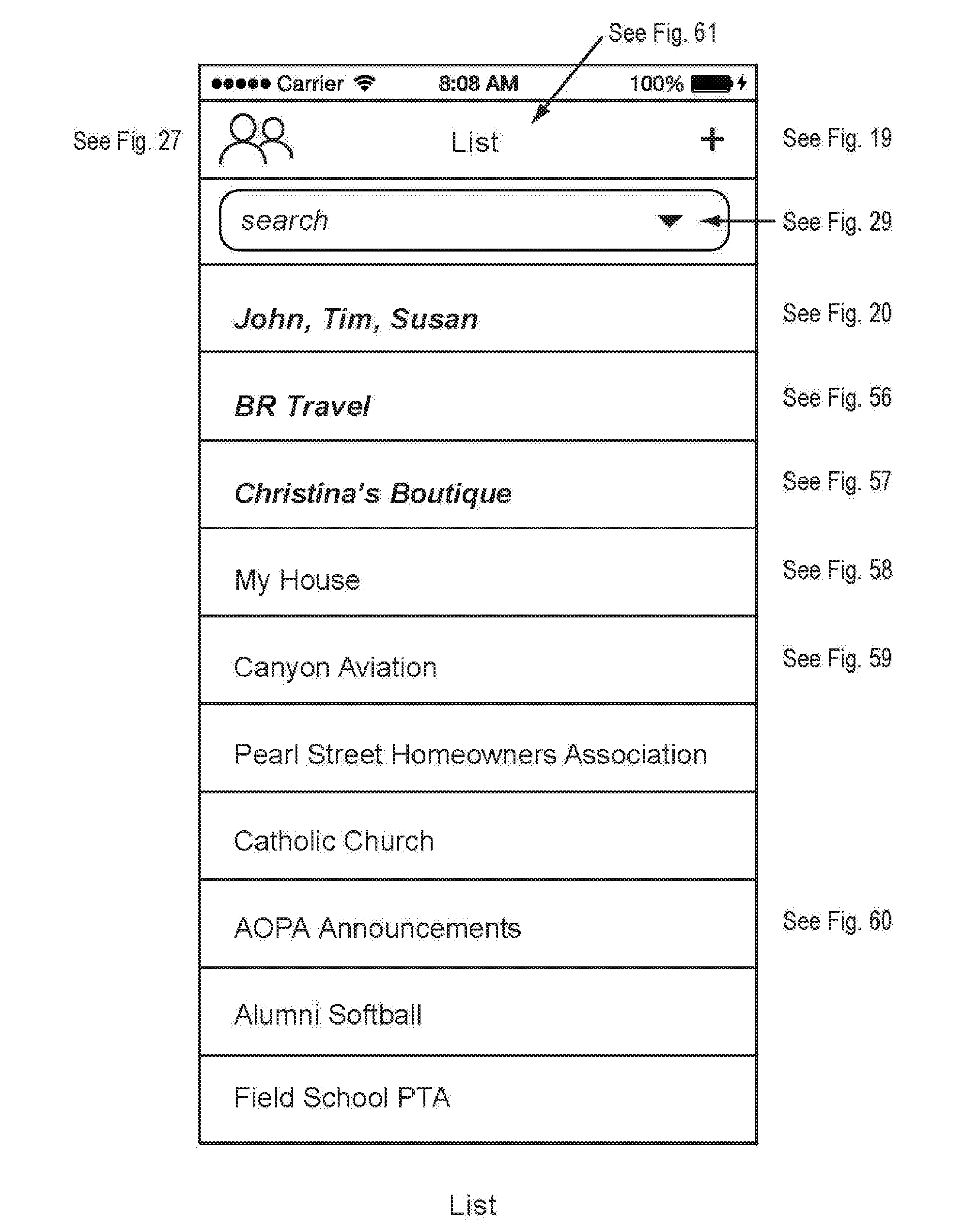

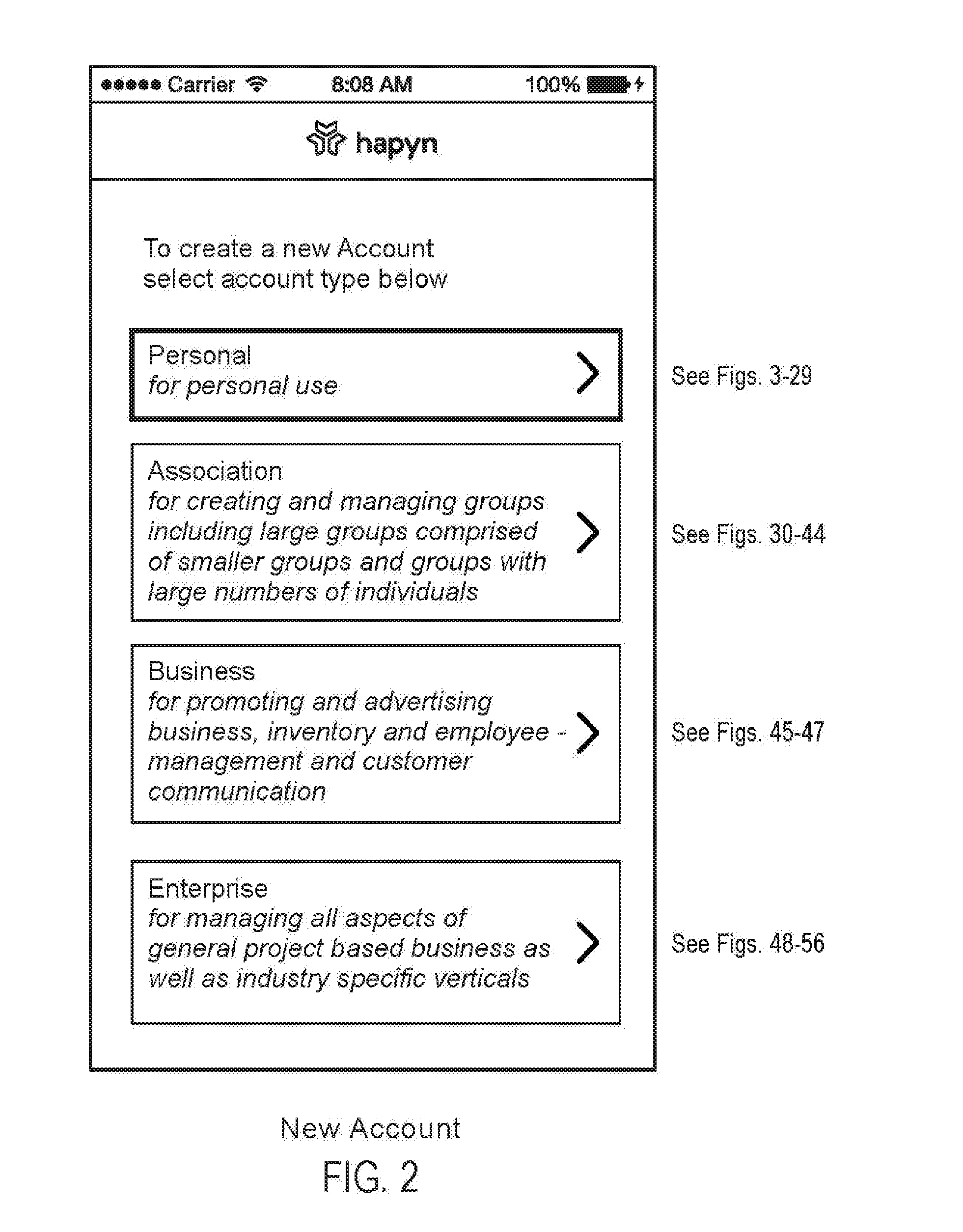

Online Systems and Methods for Advancing Information Organization Sharing and Collective Action

Status: Active | Publication: US20170024091A1 | Tags: Conveniently and selectively engage; Highly organized and efficient bilateral; Information format; Services signalling; Knowledge organization; Mobile device

Methods and systems and mobile device interfaces for creating, joining, organizing and managing via mobile devices affinity groups in a cloud computing environment for social and business purposes.

Owner:HOSIER JR GERALD DOUGLAS

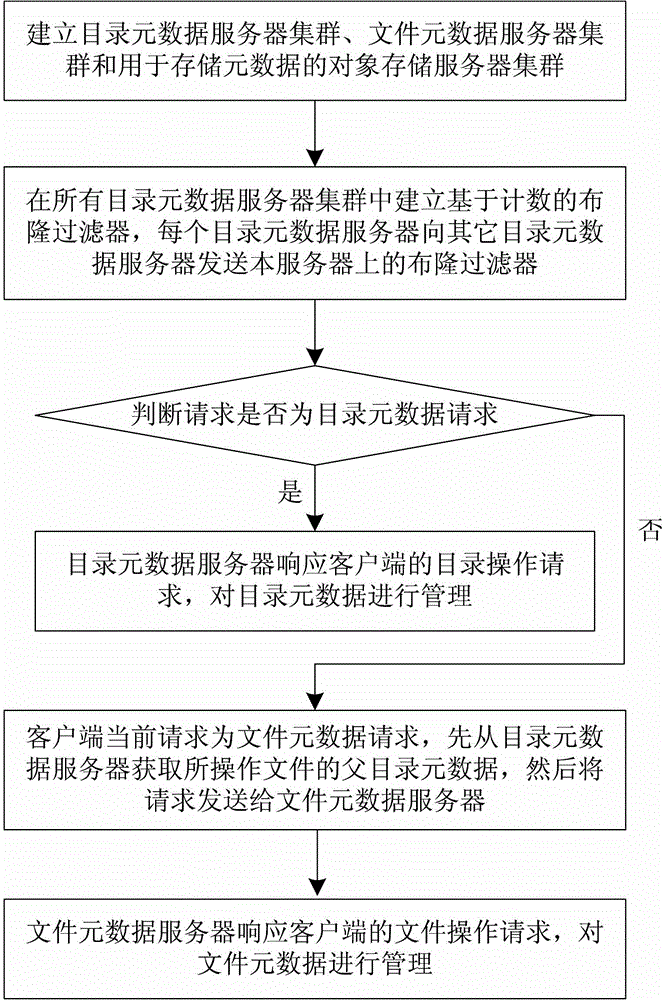

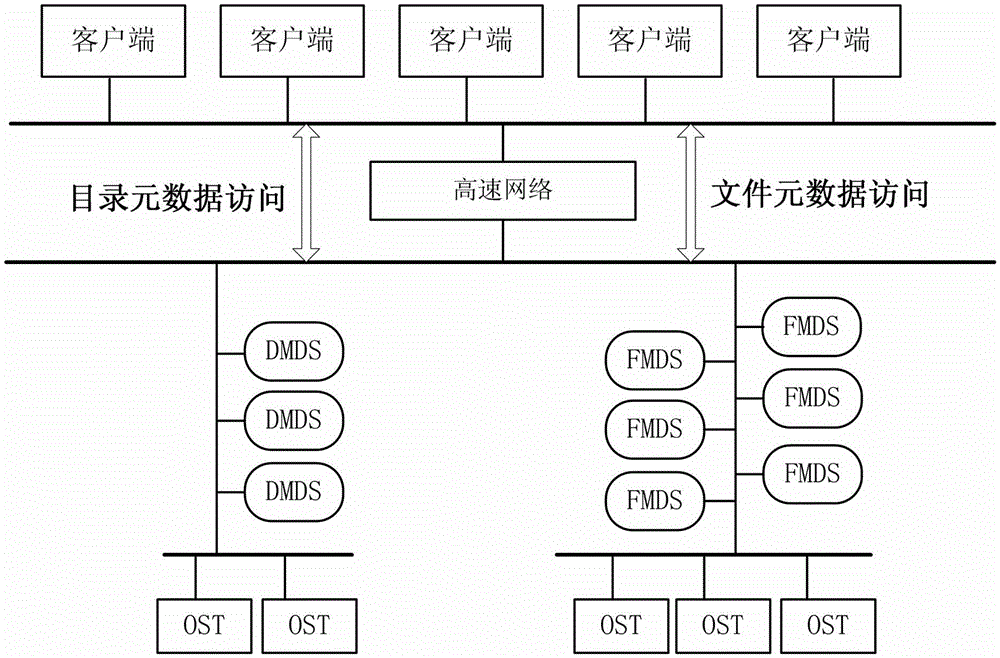

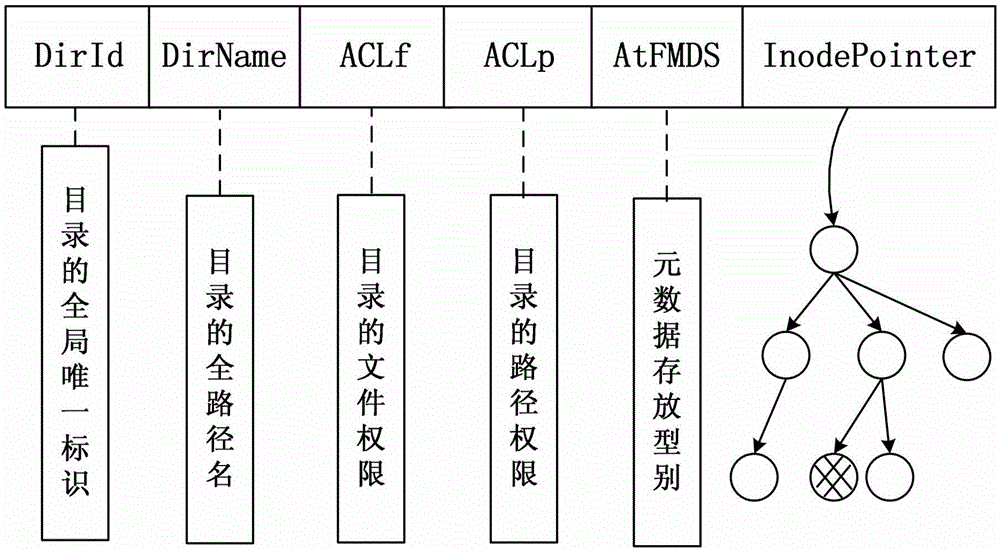

Distributed file system metadata management method facing to high-performance calculation

Status: Active | Publication: CN103150394A | Tags: Inhibit migration; Load balancing; Transmission; Special data processing applications; Distributed File System; Metadata management

The invention discloses a distributed file system metadata management method for high-performance computing. The method comprises the following steps: 1) establishing a directory (catalogue) metadata server cluster, a file metadata server cluster, and an object storage server cluster; 2) establishing a global counting Bloom filter in the directory metadata server cluster; 3) when a client operation request arrives, proceeding to step 4) or step 5); 4) the directory metadata server cluster responds to the client's directory operation request and manages the directory metadata; and 5) the file metadata server cluster responds to the client's file operation request and manages the file metadata. The method effectively solves the metadata migration problem caused by directory renaming, and offers high storage performance, low maintenance overhead, high load capacity, no bottleneck, good scalability, and balanced load.

Owner:NAT UNIV OF DEFENSE TECH
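
Step 2 relies on a counting Bloom filter, a Bloom filter that keeps a small counter per position instead of a single bit, so entries can be removed as well as added. The sketch below shows the data structure only. `CountingBloomFilter` is a hypothetical name, and its size, hash count, and SHA-256-based hashing are illustrative assumptions. How the cluster distributes and consults the filter is not specified in the abstract.

```python
import hashlib


class CountingBloomFilter:
    """Bloom filter with counters, so entries can be removed as well as added."""

    def __init__(self, num_counters: int = 1024, num_hashes: int = 4) -> None:
        self.counters = [0] * num_counters
        self.num_hashes = num_hashes

    def _positions(self, key: str):
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % len(self.counters)

    def add(self, key: str) -> None:
        for p in self._positions(key):
            self.counters[p] += 1

    def remove(self, key: str) -> None:
        """Only remove keys that were previously added, or counts become inconsistent."""
        for p in self._positions(key):
            self.counters[p] -= 1

    def might_contain(self, key: str) -> bool:
        # False means definitely absent; True may be a false positive.
        return all(self.counters[p] > 0 for p in self._positions(key))


dirs = CountingBloomFilter()
dirs.add("/proj/data")
print(dirs.might_contain("/proj/data"))     # True
# Hypothetical rename: drop the old path and add the new one.
dirs.remove("/proj/data")
dirs.add("/proj/archive")
print(dirs.might_contain("/proj/archive"))  # True
```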

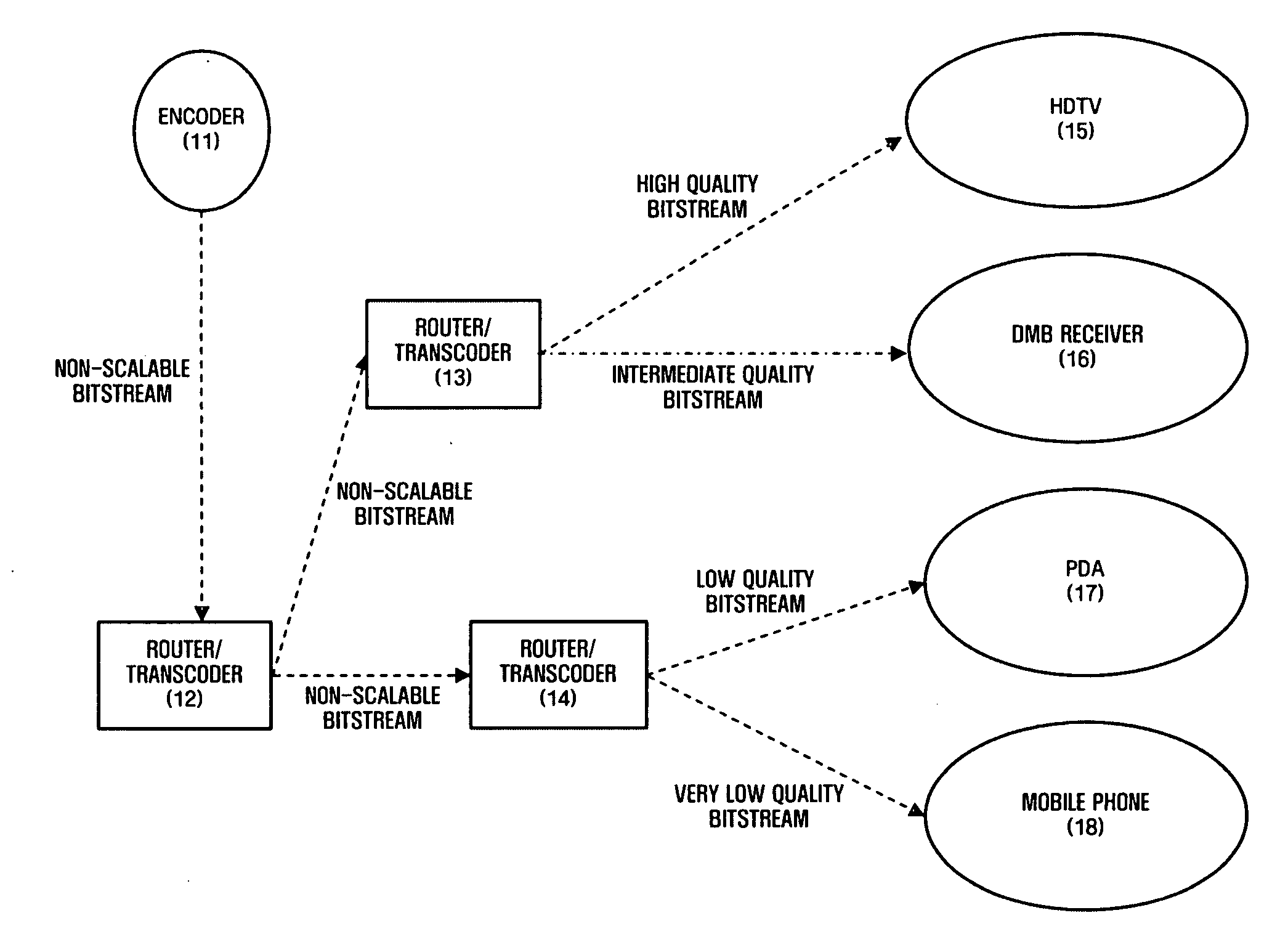

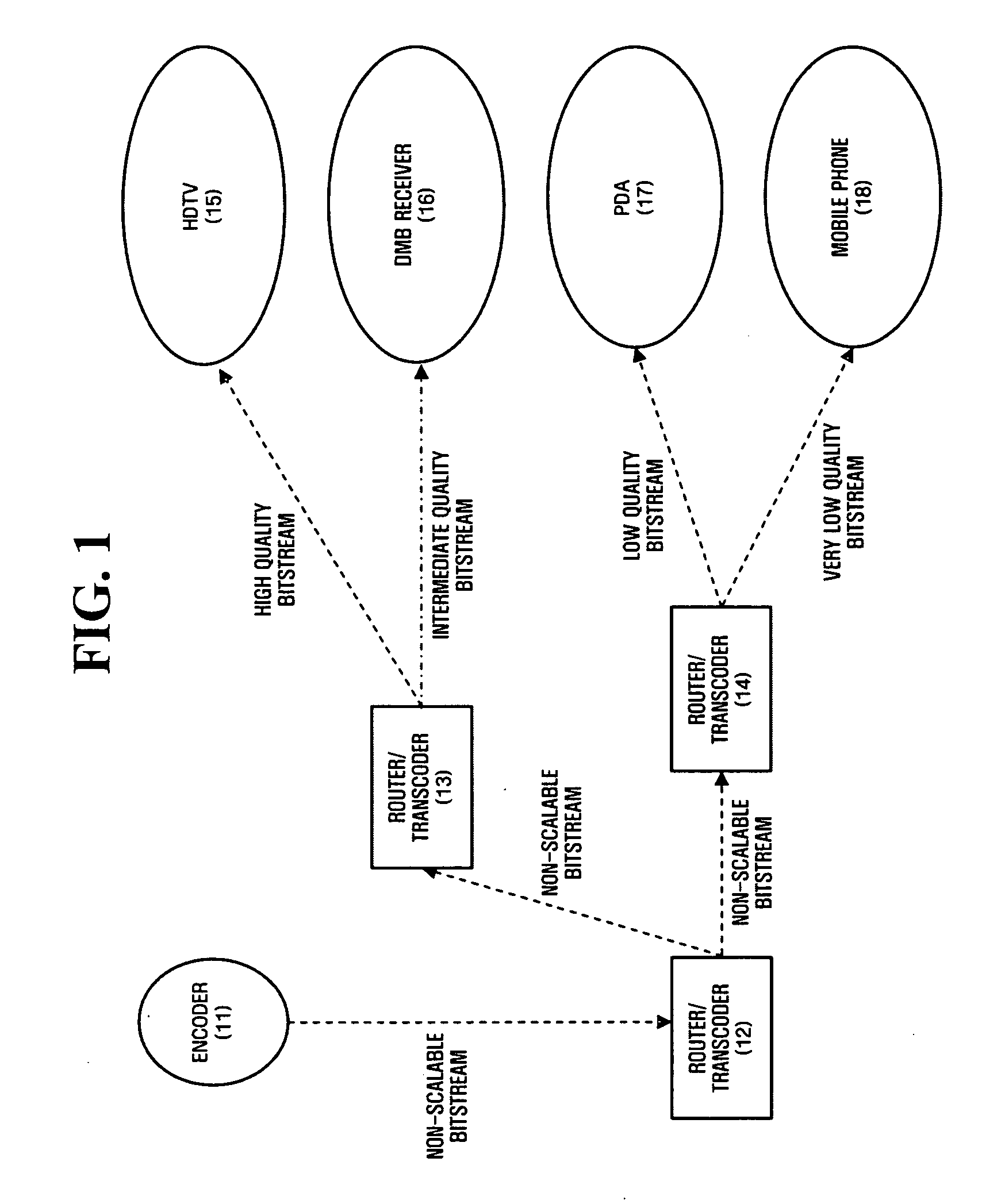

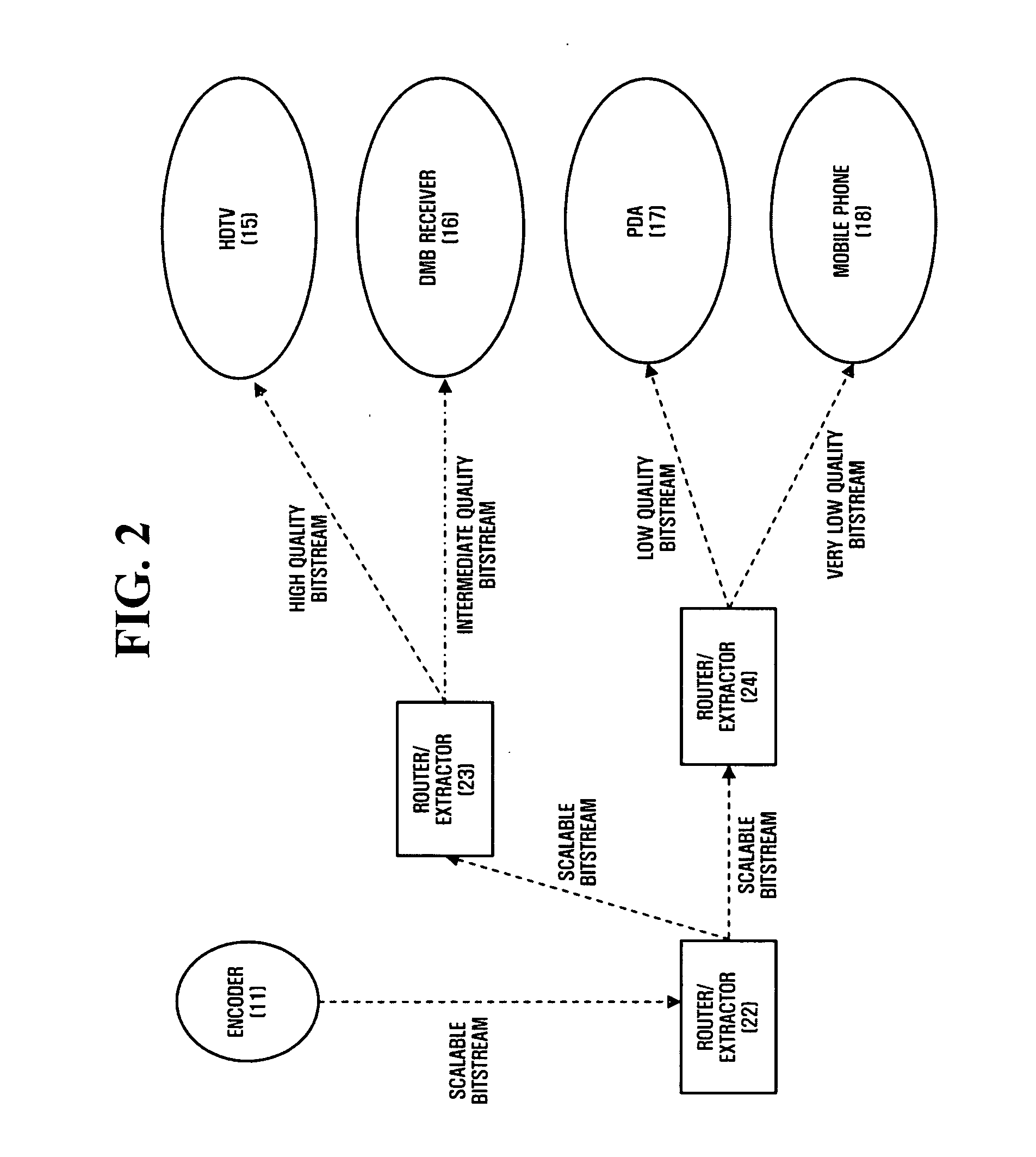

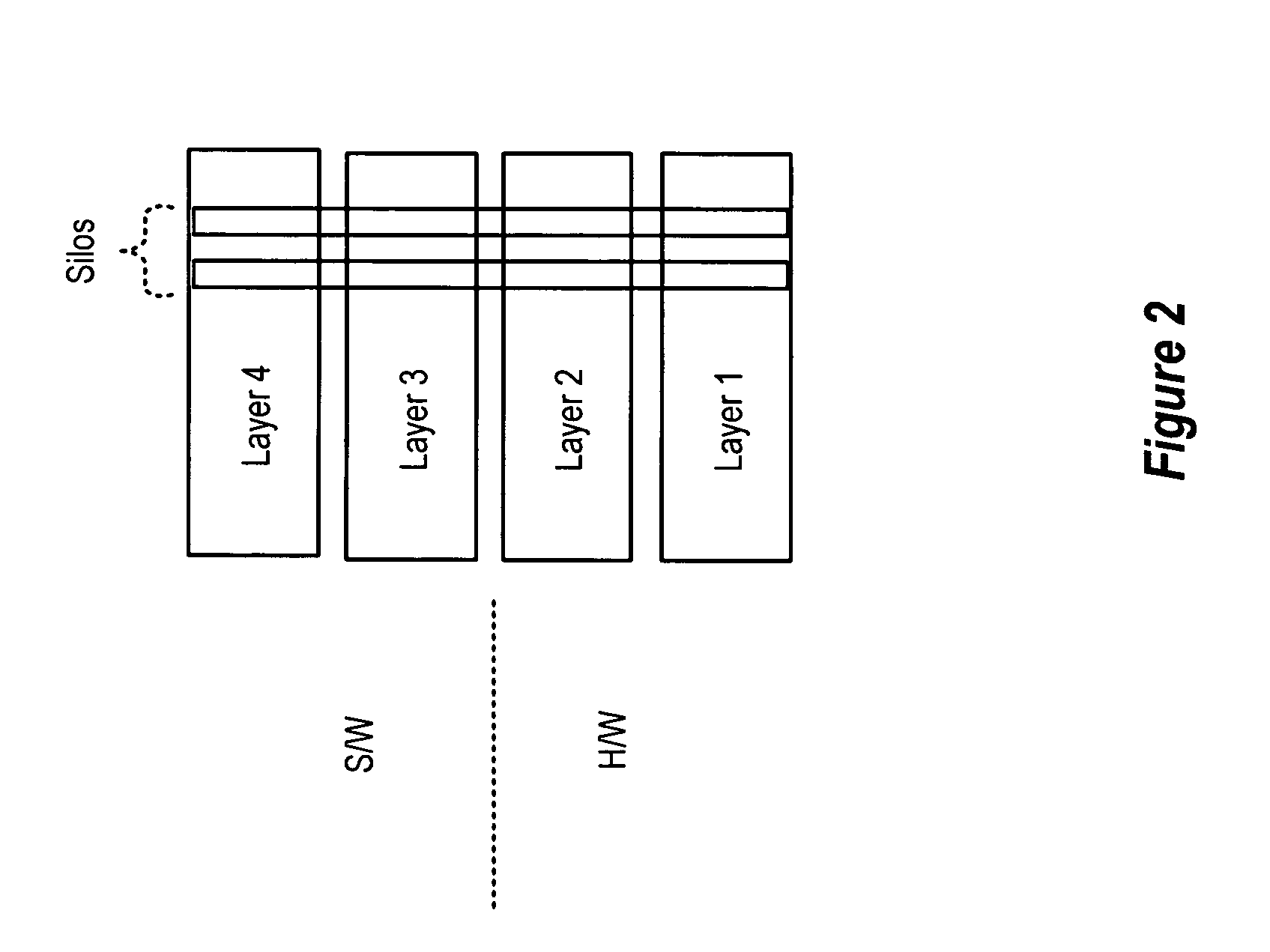

Scalable video coding method and apparatus based on multiple layers

Status: Inactive | Publication: US20070121723A1 | Tags: Improve encoding performance; Eliminate overhead; Programme-controlled manipulator; Pulse modulation television signal transmission; Computer architecture; Video encoding

A scalable video encoding method and apparatus based on a plurality of layers are provided. The video encoding method for encoding a video sequence having a plurality of layers includes coding a residual of a first block existing in a first layer among the plurality of layers; recording the coded residual of the first block on a non-discardable region of a bitstream, if a second block is coded using the first block, the second block existing in a second layer among the plurality of layers and corresponding to the first block; and recording the coded residual of the first block on a discardable region of the bitstream, if a second block is coded without using the first block.

Owner:SAMSUNG ELECTRONICS CO LTD

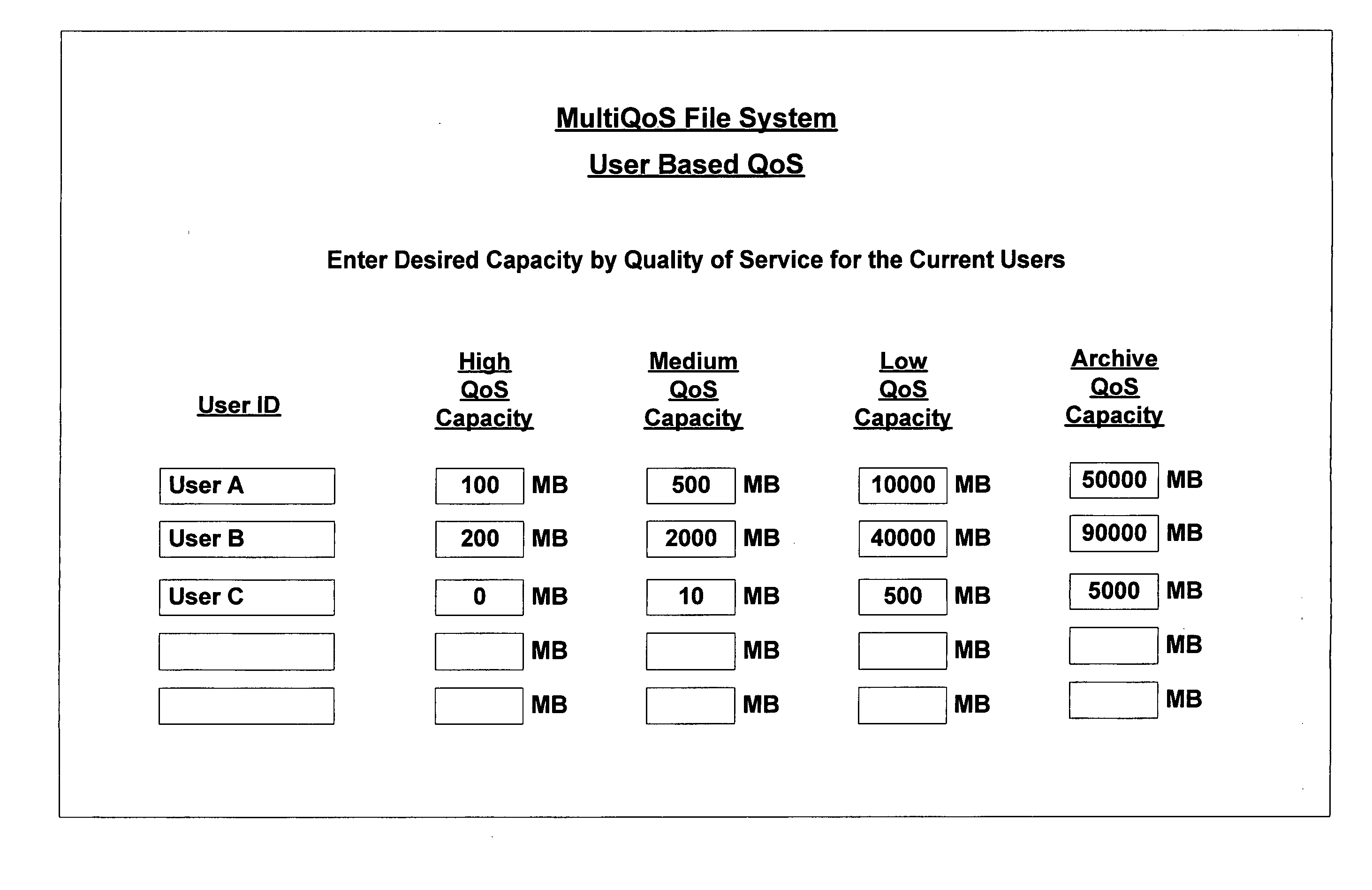

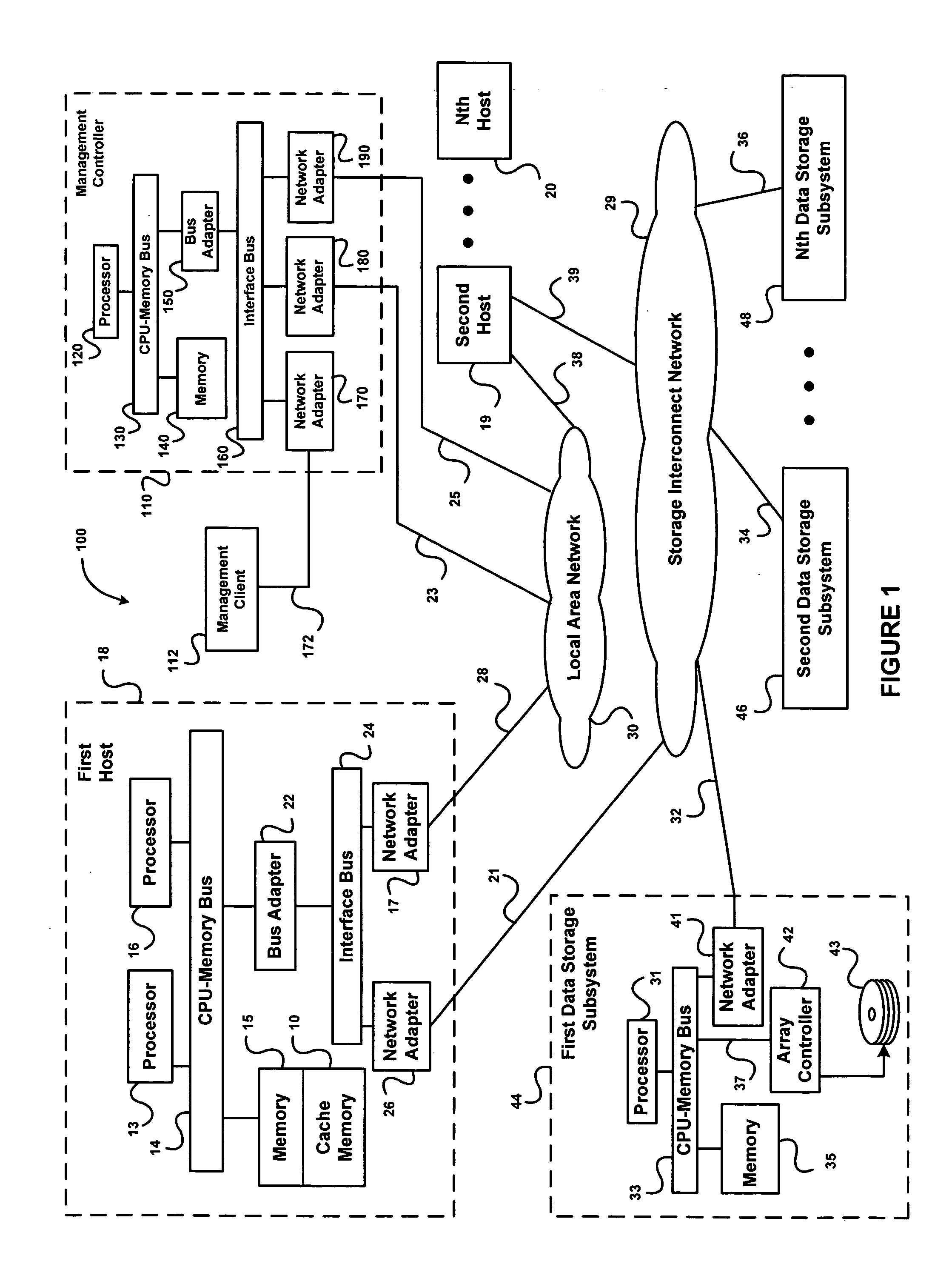

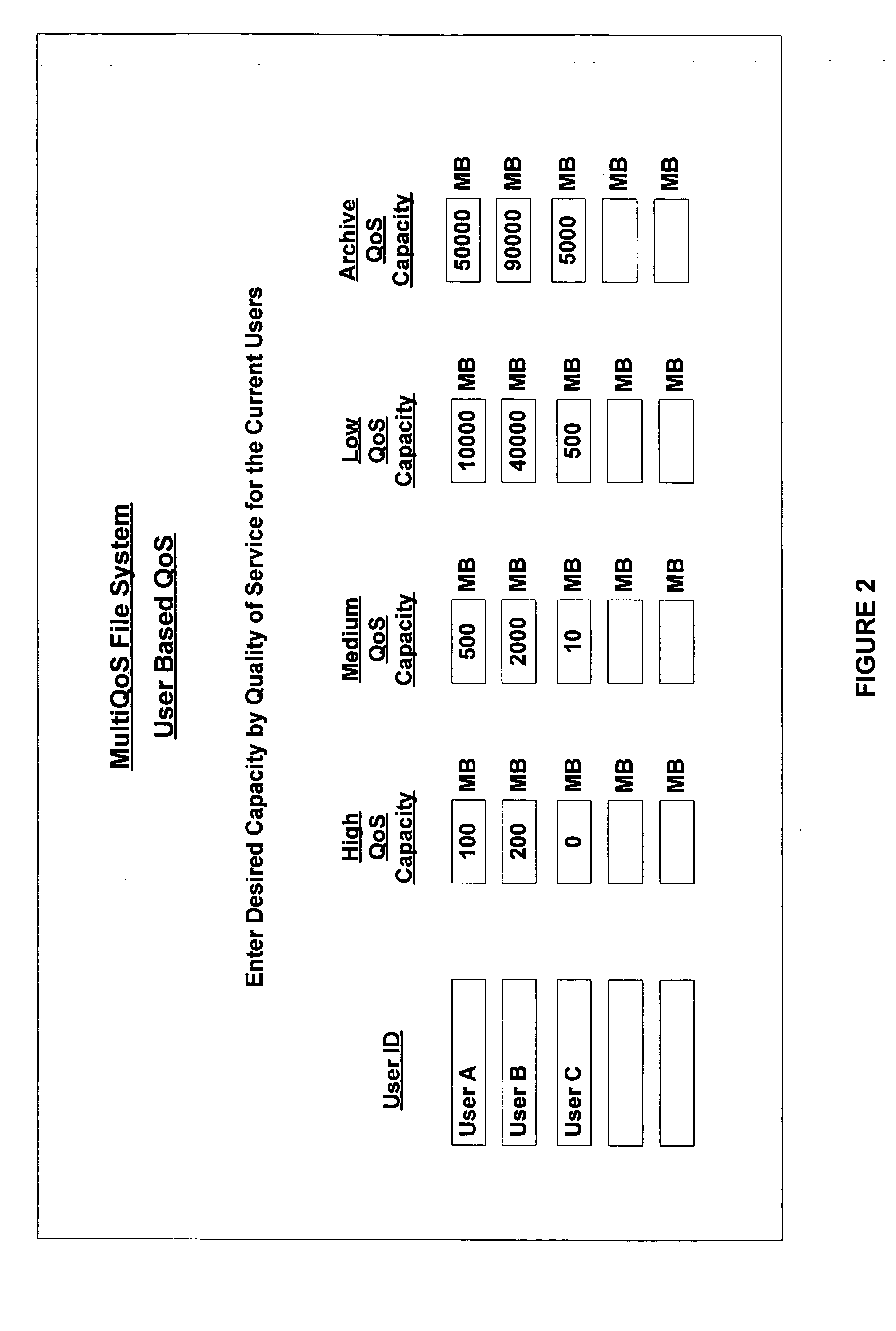

Multiple quality of service file system

Status: Inactive | Publication: US20070083482A1 | Tags: Enhances descriptive information; Increasing the IT administrator workload; Digital data information retrieval; Special data processing applications; Quality of service; File system

The invention relates to a multiple QoS file system and methods of processing files at different QoS according to rules. The invention allocates multiple VLUNs at different qualities of service to the multiQoS file system. Using the rules, the file system chooses an initial QoS for a file when created. Thereafter, the file system moves files to different QoS using rules. Users of the file system see a single unified space of files, while administrators place files on storage with the new cost and performance according to attributes of the files. A multiQoS file system enhances the descriptive information for each file to contain the chosen QoS for the file.

Owner:PILLAR DATA SYSTEMS

Distributed network security system and a hardware processor therefor

Status: Active | Publication: US7415723B2 | Tags: High line rate; High performance; Data switching by path configuration; Multiple digital computer combinations; Network security policy; Packet processing

An architecture provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through the transport protocol layer and may also provide packet inspection through Layer 7. A set of engines may perform pass-through packet classification, policy processing, and/or security processing, enabling packets to stream through the architecture at nearly the full line rate. A scheduler assigns packets to packet processors. An internal memory, or local session database cache, stores a session information database for a certain number of active sessions; session information that is not in the internal memory is stored in and retrieved from an additional memory. In certain instantiations, an application running on an initiator or target can register a region of memory that its peer(s) can then access directly through RDMA data transfer, without substantial host intervention. A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner, using protocol-processing hardware with appropriate security features.

Owner:BLUE LOBSTER SERIES 110 OF ALLIED SECURITY TRUST I

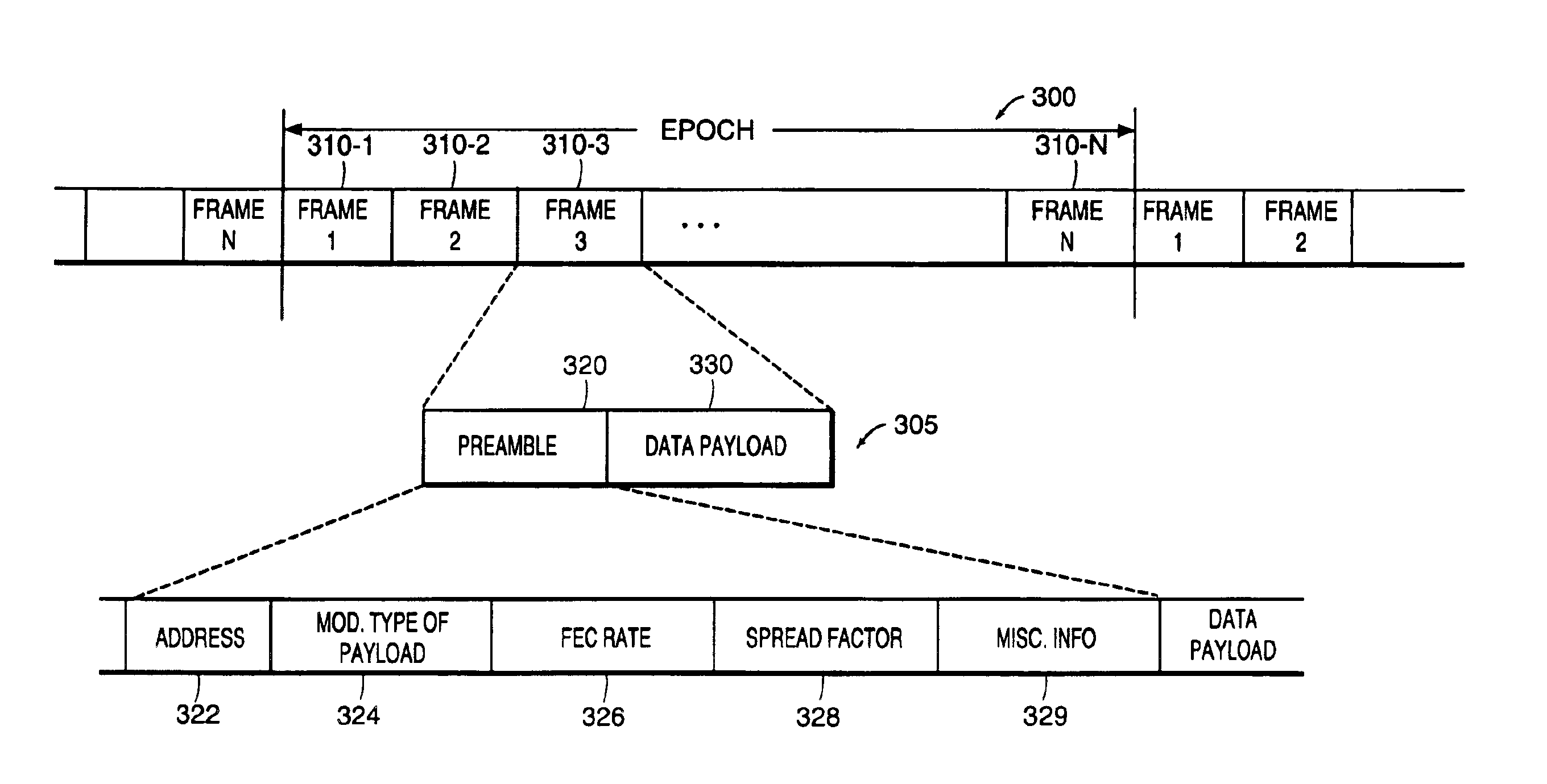

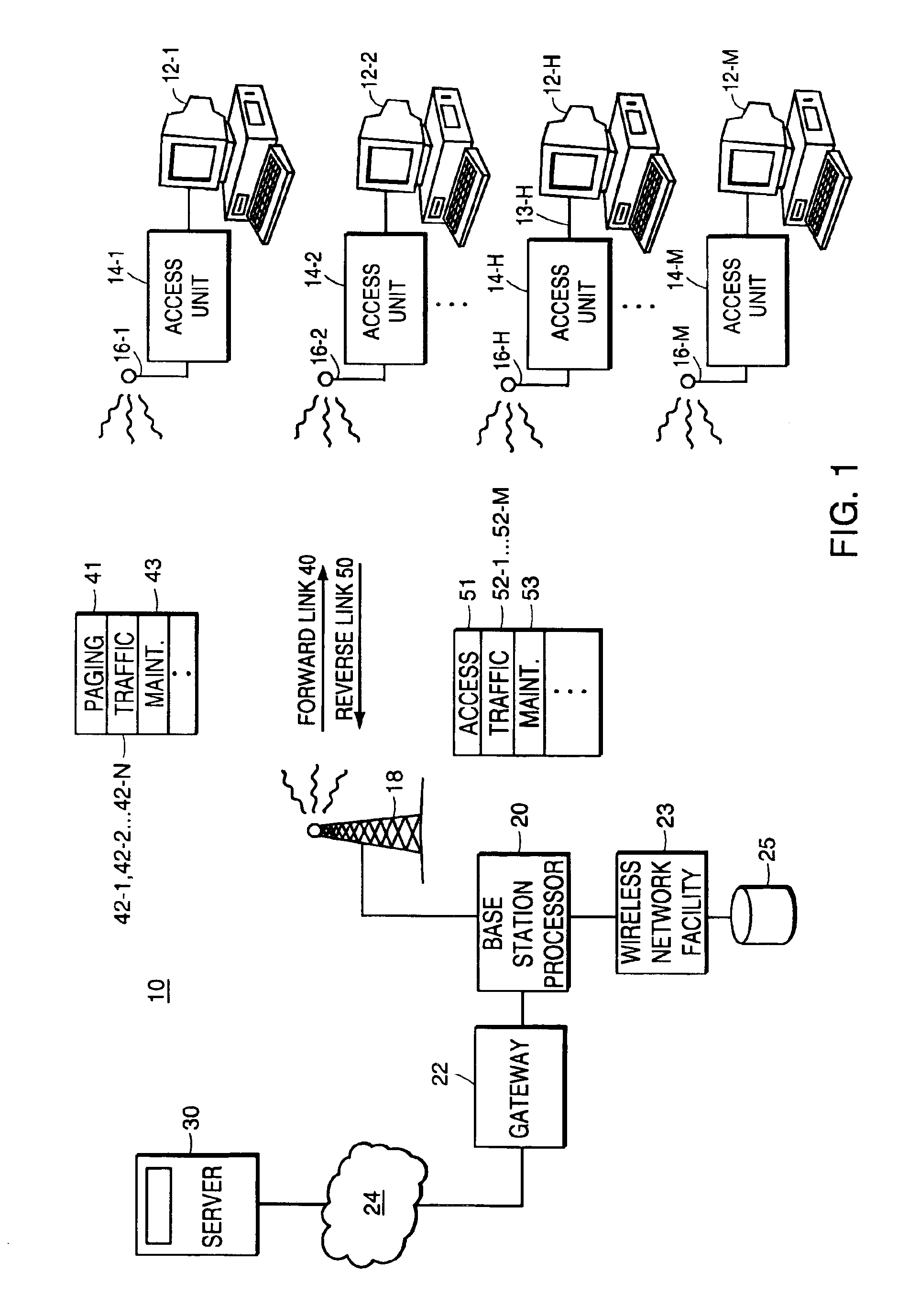

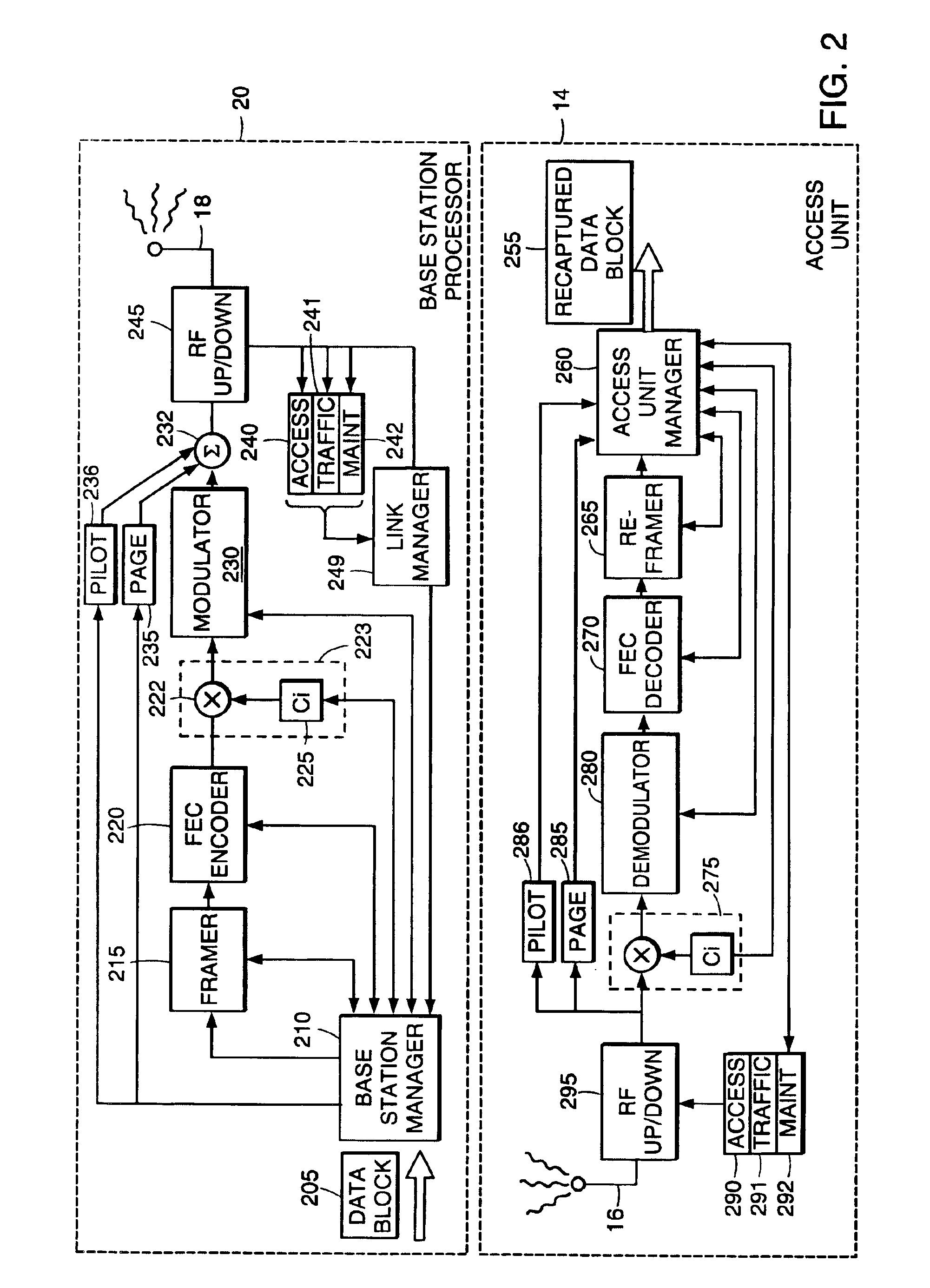

Time-slotted data packets with a preamble

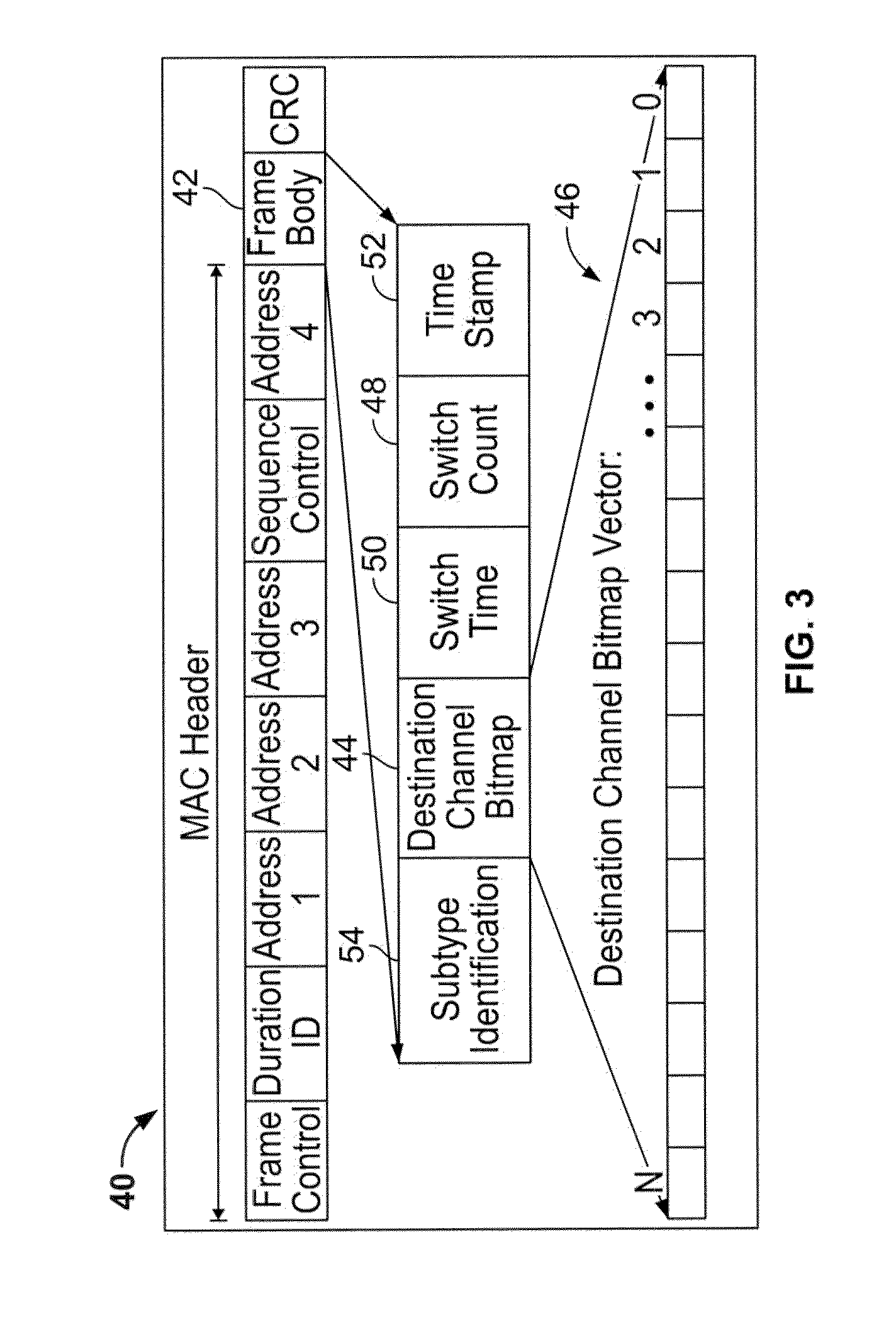

Status: Active | Publication: US6925070B2 | Tags: Eliminate overhead; Quickly respond; Network traffic/resource management; Time-division multiplex; Preamble; Real-time computing

An illustrative embodiment of the present invention supports the transmission of data to a user on an as-needed basis over multiple allocated data channels. Data packets are transmitted in time-slots of the allocated data channels to corresponding target receivers without the need for explicitly assigning particular time-slots to a target user well in advance of transmitting any data packets in the time-slots. Instead, each data packet transmitted in a time-slot includes a header label or preamble indicating to which of multiple possible receivers a data packet is directed. The preamble also preferably includes decoding information indicating how a corresponding data payload of the data packet is to be processed for recapturing transmitted raw data.

Owner:APPLE INC
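
Because each time-slot packet carries its own preamble, a receiver can pick out its packets without any advance slot assignment. The sketch below assumes a 2-byte preamble: a 1-byte receiver ID followed by a 1-byte decoding-scheme ID. The real preamble format is not given in the abstract. `build_slot`, `receive`, and `Packet` are hypothetical names.

```python
import struct
from typing import NamedTuple

PREAMBLE = struct.Struct("!BB")  # assumed layout: receiver id, decoding-scheme id


class Packet(NamedTuple):
    receiver: int
    scheme: int      # tells the receiver how to decode the payload
    payload: bytes


def build_slot(receiver: int, scheme: int, payload: bytes) -> bytes:
    """Prefix a payload with the preamble that names its target receiver."""
    return PREAMBLE.pack(receiver, scheme) + payload


def receive(slots: list, my_id: int) -> list:
    """Keep only packets whose preamble names this receiver."""
    mine = []
    for slot in slots:
        receiver, scheme = PREAMBLE.unpack_from(slot, 0)
        if receiver == my_id:
            mine.append(Packet(receiver, scheme, slot[PREAMBLE.size:]))
    return mine


frames = [build_slot(1, 0, b"aa"), build_slot(2, 3, b"bb"), build_slot(1, 2, b"cc")]
print([p.payload for p in receive(frames, my_id=1)])   # [b'aa', b'cc']
```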

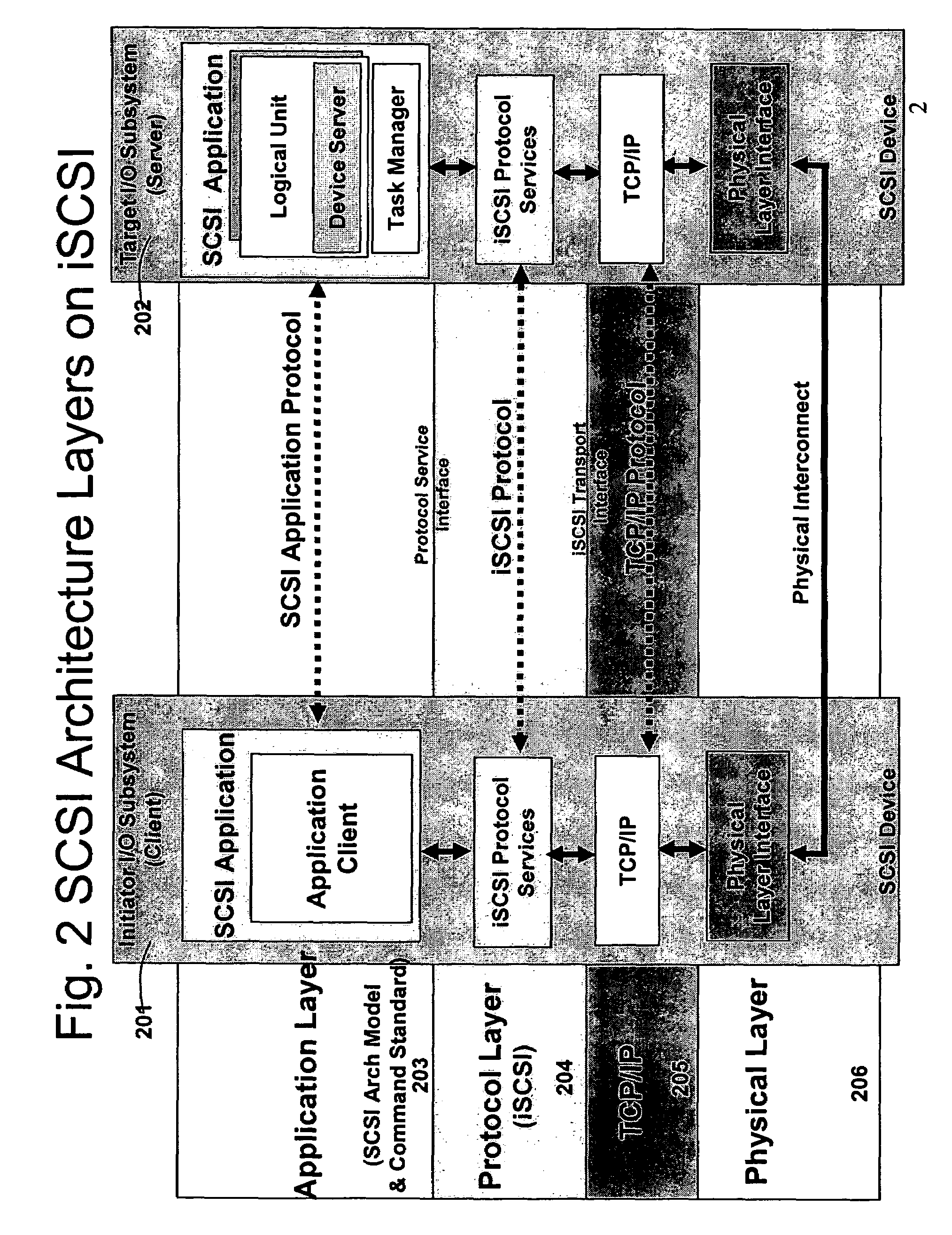

Gigabit Ethernet Adapter

Status: Inactive | Publication: US20070253430A1 | Tags: Fast communication speed; Compact solution; Wide area networks; Raw socket; Small form factor

A gigabit Ethernet adapter provides a low-cost, low-power, easily manufacturable, small form-factor network access module that has a low memory demand and provides highly efficient protocol decoding. The invention comprises a hardware-integrated system that decodes multiple network protocols concurrently in a byte-streaming manner and processes packet data in one pass, reducing system memory and form-factor requirements while also eliminating software CPU overhead. A preferred embodiment comprises a plurality of protocol state machines that decode network protocols such as TCP, IP, User Datagram Protocol (UDP), PPP, Raw Socket, RARP, ICMP, IGMP, iSCSI, RDMA, and FCIP concurrently as each byte is received. Each protocol handler parses, interprets, and strips header information from the packet immediately, requiring no intermediate memory. The invention provides an internet tuner core, peripherals, and external interfaces. A network stack processes, generates, and receives network packets. An internal programmable processor controls the network stack and handles any other types of ICMP packets, IGMP packets, or packets of other protocols not supported directly by dedicated hardware. A virtual memory manager is implemented in optimized, hardwired logic; it allows a virtual number of network connections limited only by the amount of internal and external memory available.

Owner:NVIDIA CORP

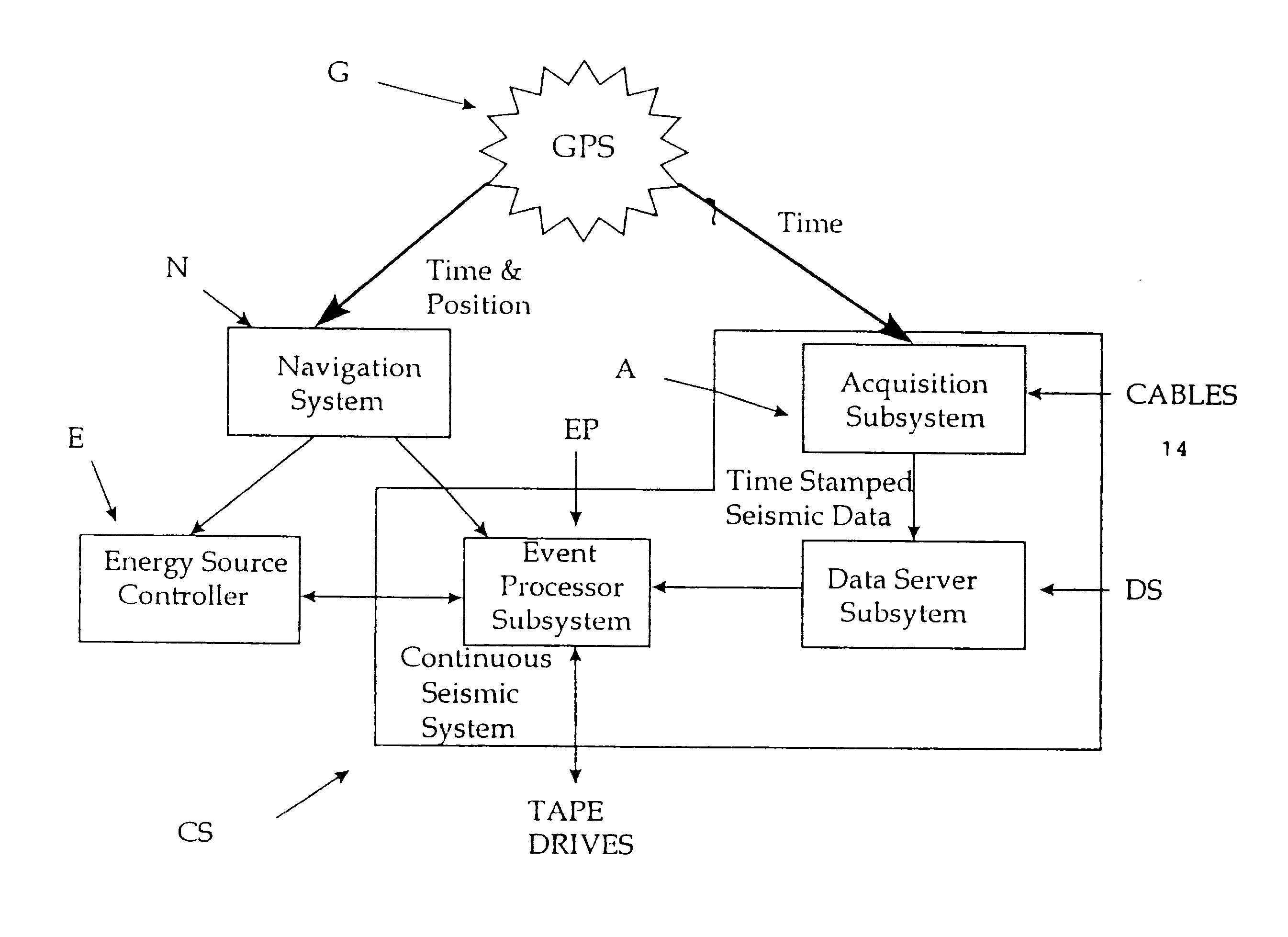

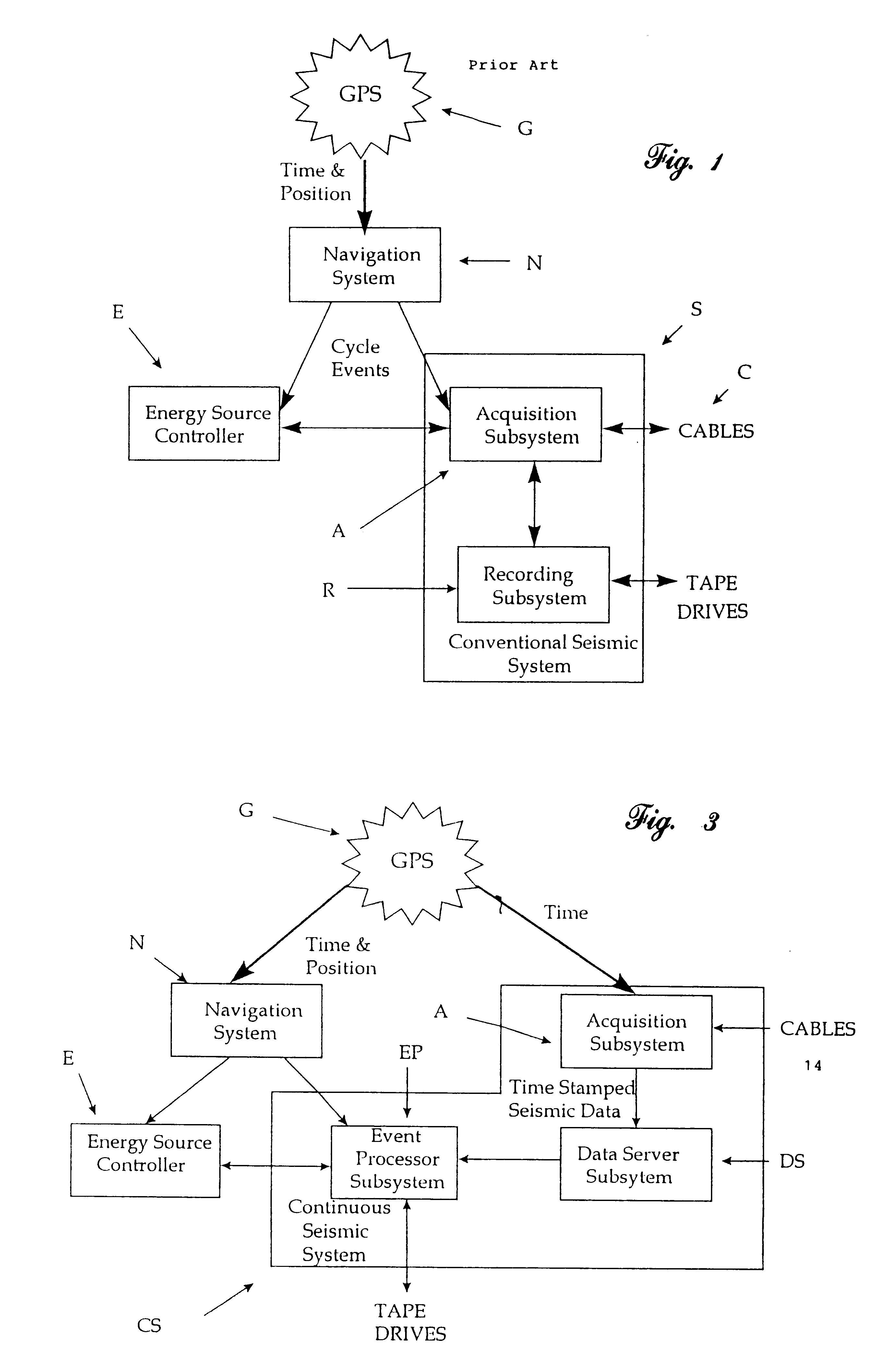

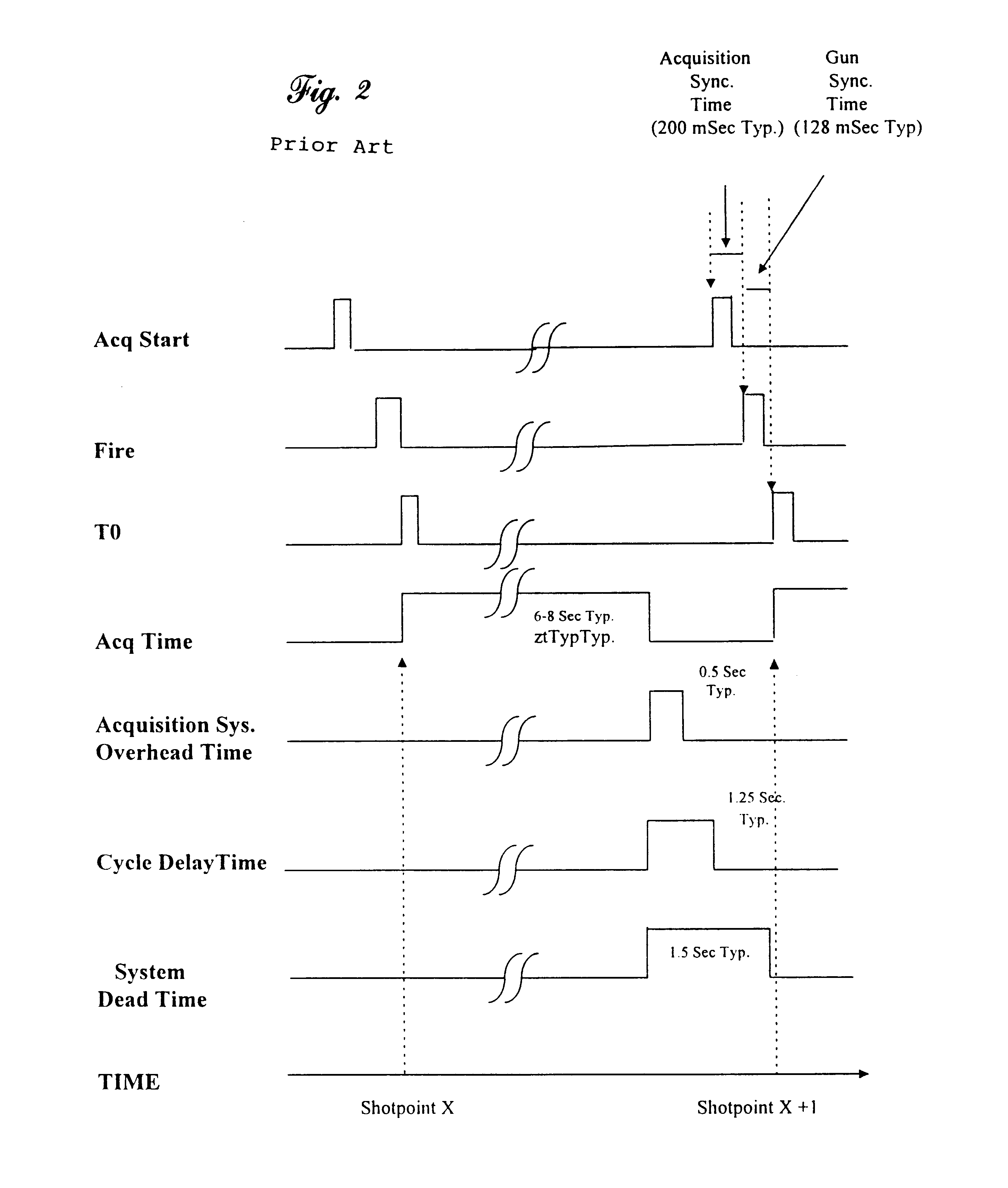

Continuous data seismic system

Status: Inactive | Publication: US6188962B1 | Tags: Eliminate dead time; Eliminate overhead; Beacon systems using ultrasonic/sonic/infrasonic waves; Seismic data acquisition; Continuous flow; Marine navigation

A system for seismic data acquisition has been invented having, in certain aspects, one or more distributed data acquisition subsystems that are independent of energy source controller and/or navigation system operation. The distributed acquisition system(s) therefore do not require a complicated and burdensome interface to the energy source controller or navigation systems. The seismic data acquisition system supplies a continuous flow of data, which is buffered in a centralized data repository for short-term storage until required by non-real-time processes. A seismic data acquisition method has also been invented that replaces the conventional closely coupled interaction between seismic acquisition systems, the energy source controller, and the navigation systems by applying GPS time stamps both to the data and to the cycling events of the energy source controller and/or navigation systems, ensuring precise association of seismic data with cycling events. Because data are acquired continuously with no interruption in the data flow, association with energy source or navigation events can be performed at a later point in time rather than during the real-time operation of the acquisition subsystem.

Owner:REFLECTION MARINE NORGE AS
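
Deferred association of continuous data with source events reduces to a sorted search over GPS timestamps. The sketch below shows one way to do it. `associate_events` is a hypothetical name. Timestamps are assumed to be integer microseconds, which avoids floating-point equality problems. Each event is assumed to map to a fixed-length window of samples starting at the first sample at or after the event time.

```python
from bisect import bisect_left


def associate_events(sample_times: list, event_times: list, window: int) -> dict:
    """Map each event's GPS time to the slice of continuously recorded samples after it.

    `sample_times` must be sorted. Returns {event_time: (start_index, end_index)};
    events later than the last sample are skipped. Runs offline, not in real time.
    """
    result = {}
    for t in event_times:
        start = bisect_left(sample_times, t)   # first sample at or after the event
        if start < len(sample_times):
            result[t] = (start, min(start + window, len(sample_times)))
    return result


samples = [i * 2000 for i in range(1000)]      # 500 Hz, GPS time in microseconds
print(associate_events(samples, [100_000, 101_000], window=10))
# {100000: (50, 60), 101000: (51, 61)}
```

Because the lookup needs only the recorded timestamps, it can run whenever the buffered data are processed, which is the decoupling from real-time source control that the abstract describes.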

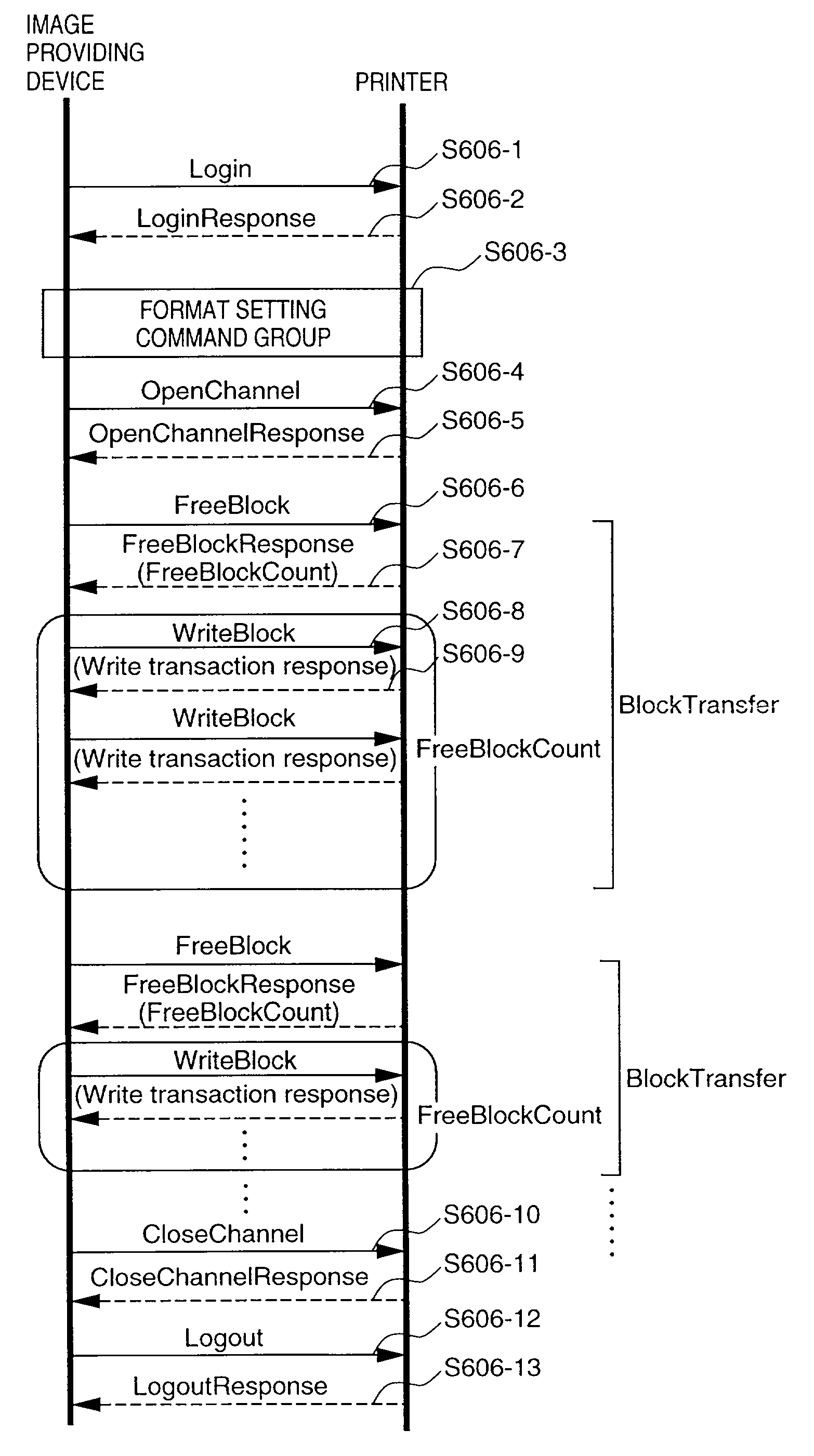

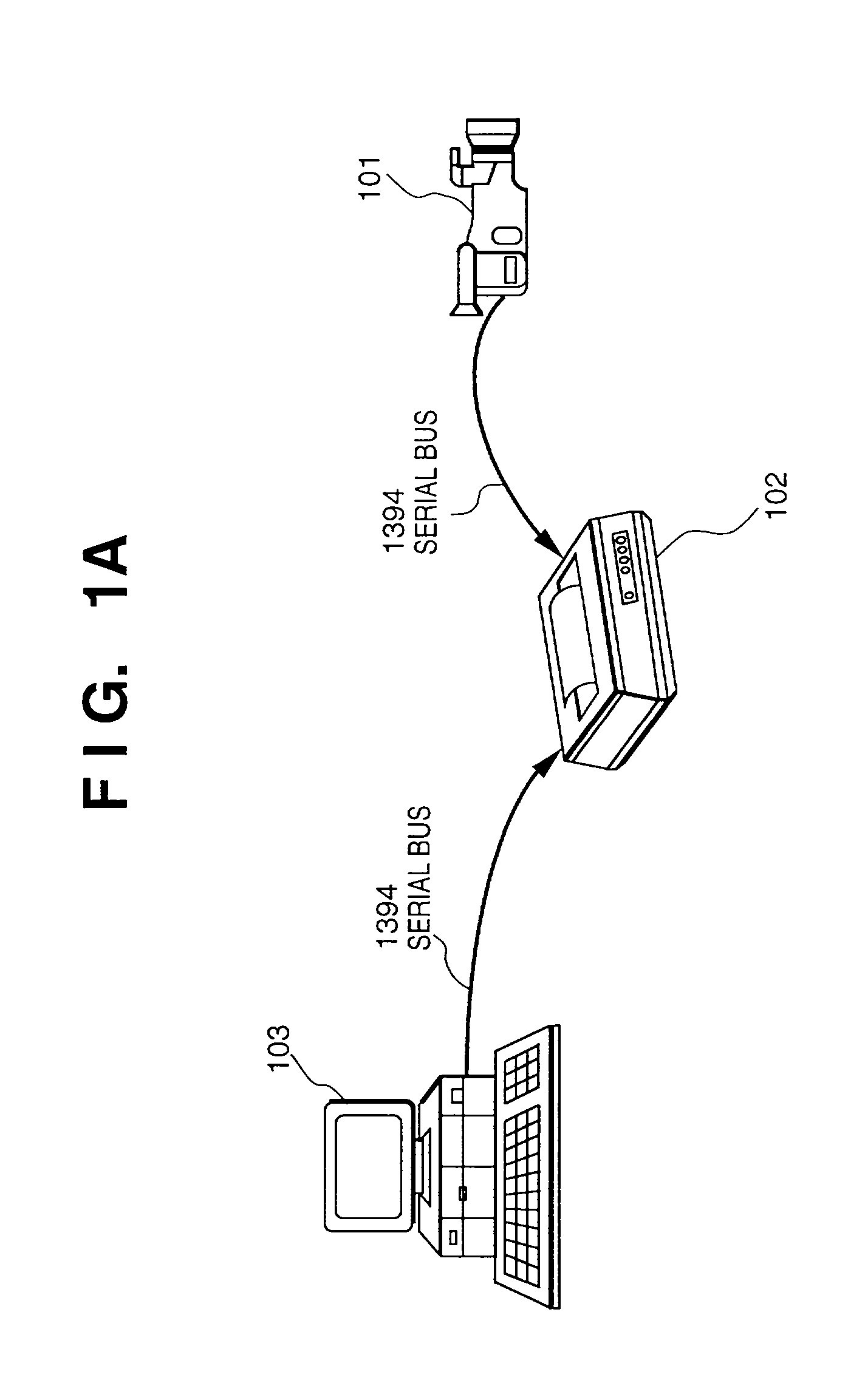

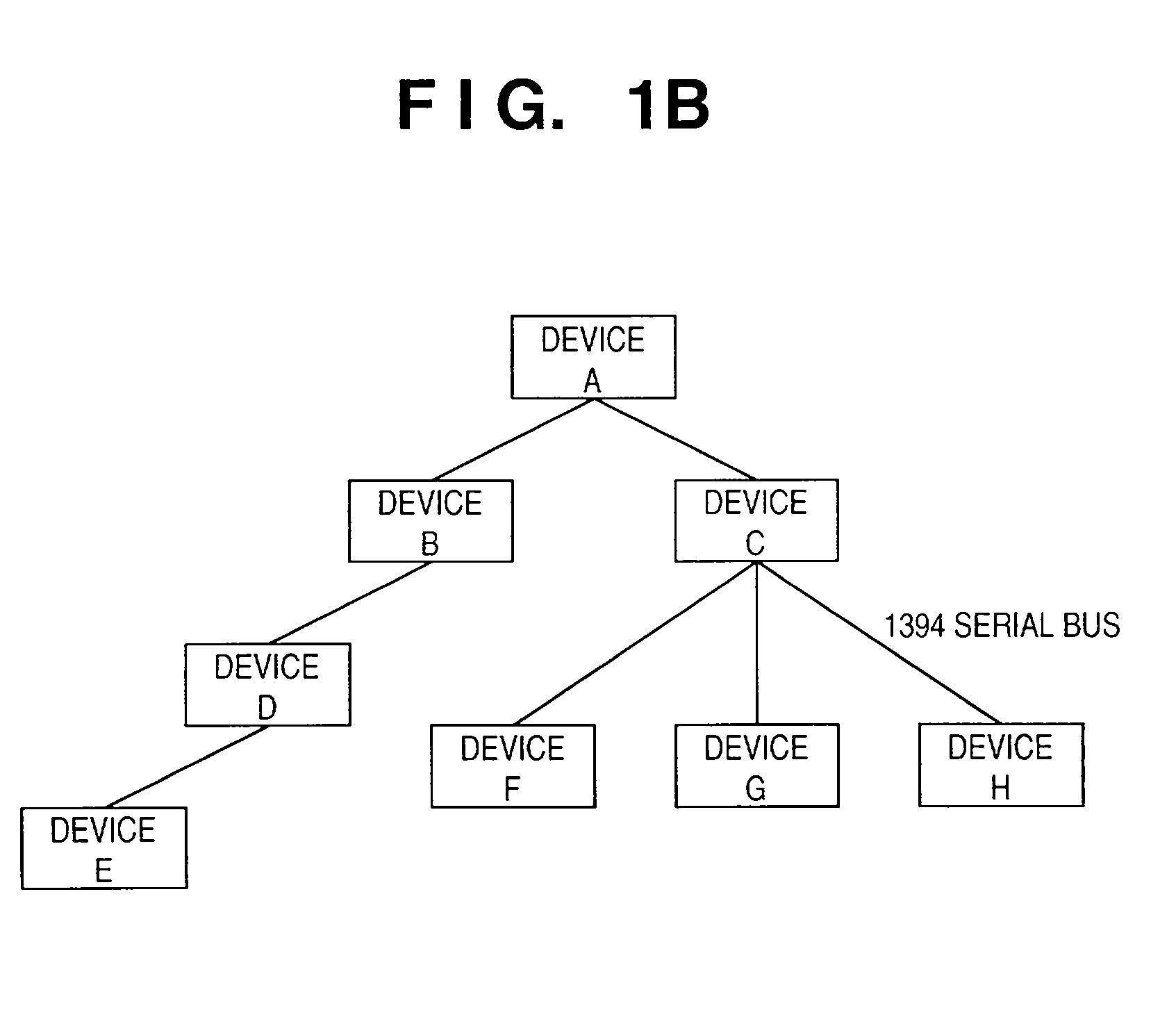

Data transmission apparatus, system and method, and image processing apparatus

Status: Inactive | Publication: US20030158979A1 | Tags: High transfer efficiency; Eliminate overhead; Pulse modulation television signal transmission; Hybrid switching systems; Imaging processing; Computer graphics (images)

In a system where a digital still camera 1101 is connected to a printer 1102 on a 1394 serial bus, when the digital still camera 1101 as a data transfer originator node transfers image data to the printer 1102 as a data transfer destination node, the printer 1102 sends information on the data processing capability of the printer 1102 to the digital still camera 1101 prior to data transfer. The digital still camera 1101 determines the block size of image data to be transferred in accordance with the data processing capability of the printer 1102, and sends image data of the block size to the printer 1102. The information indicates the capacity of a memory of the printer for storing print data, or the printing capability of a printer engine, i.e., printing resolution, printing speed and the like. Thus, data transfer is always efficiently performed regardless of the data processing capability of the printer 1102.

Owner:CANON KK
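
The camera's block-size decision can be sketched as a small function of the capability the printer reports. The rule used here is an assumption, not Canon's: fill the printer's reported buffer with as many whole bus packets as fit, or send just what fits if the buffer is smaller than one packet. `choose_block_size` and `split_into_blocks` are hypothetical names, and the 2048-byte packet payload in the example is only an illustrative value.

```python
def choose_block_size(printer_buffer: int, packet_payload: int) -> int:
    """Pick a block size from the printer's reported buffer capacity (assumed rule)."""
    if printer_buffer <= 0 or packet_payload <= 0:
        raise ValueError("capacities must be positive")
    whole_packets = printer_buffer // packet_payload
    # Fill the buffer with whole packets when possible; otherwise send what fits.
    return whole_packets * packet_payload if whole_packets else printer_buffer


def split_into_blocks(image: bytes, block_size: int) -> list:
    """Cut the image data into blocks of the negotiated size (the last may be shorter)."""
    return [image[i:i + block_size] for i in range(0, len(image), block_size)]


print(choose_block_size(1_000_000, 2048))                  # 999424
print(len(split_into_blocks(b"\x00" * 5000, 2048)))        # 3
```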

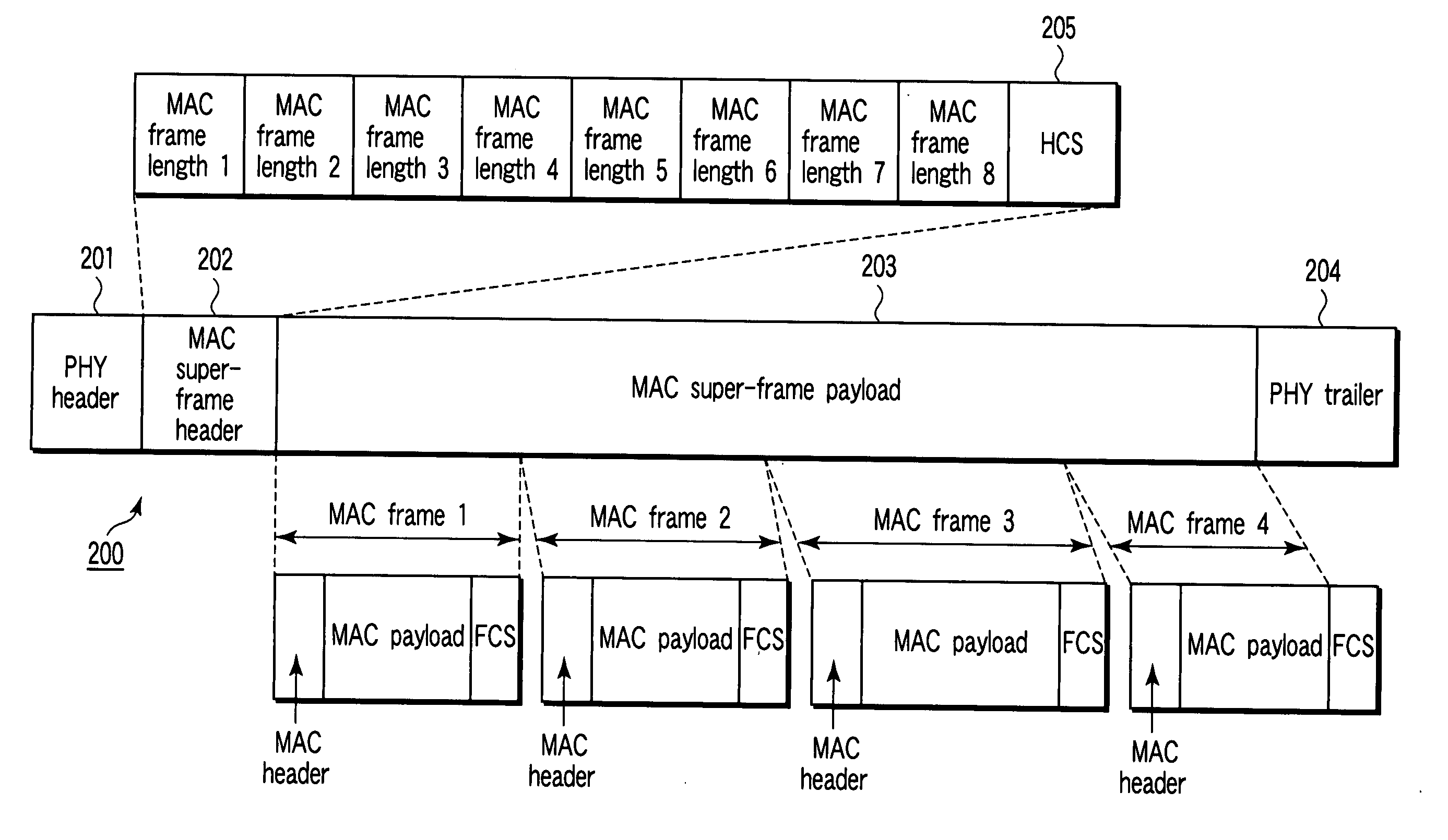

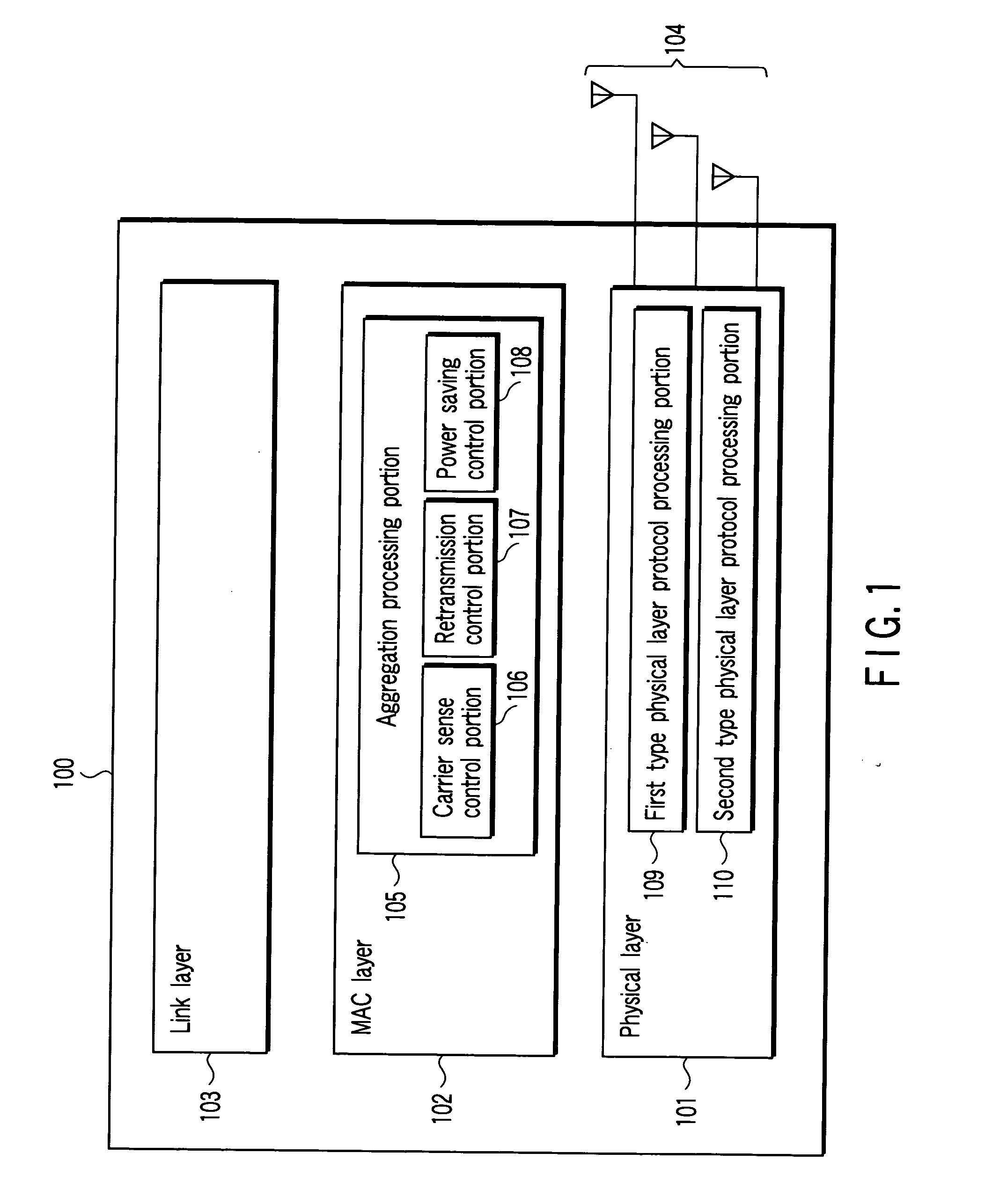

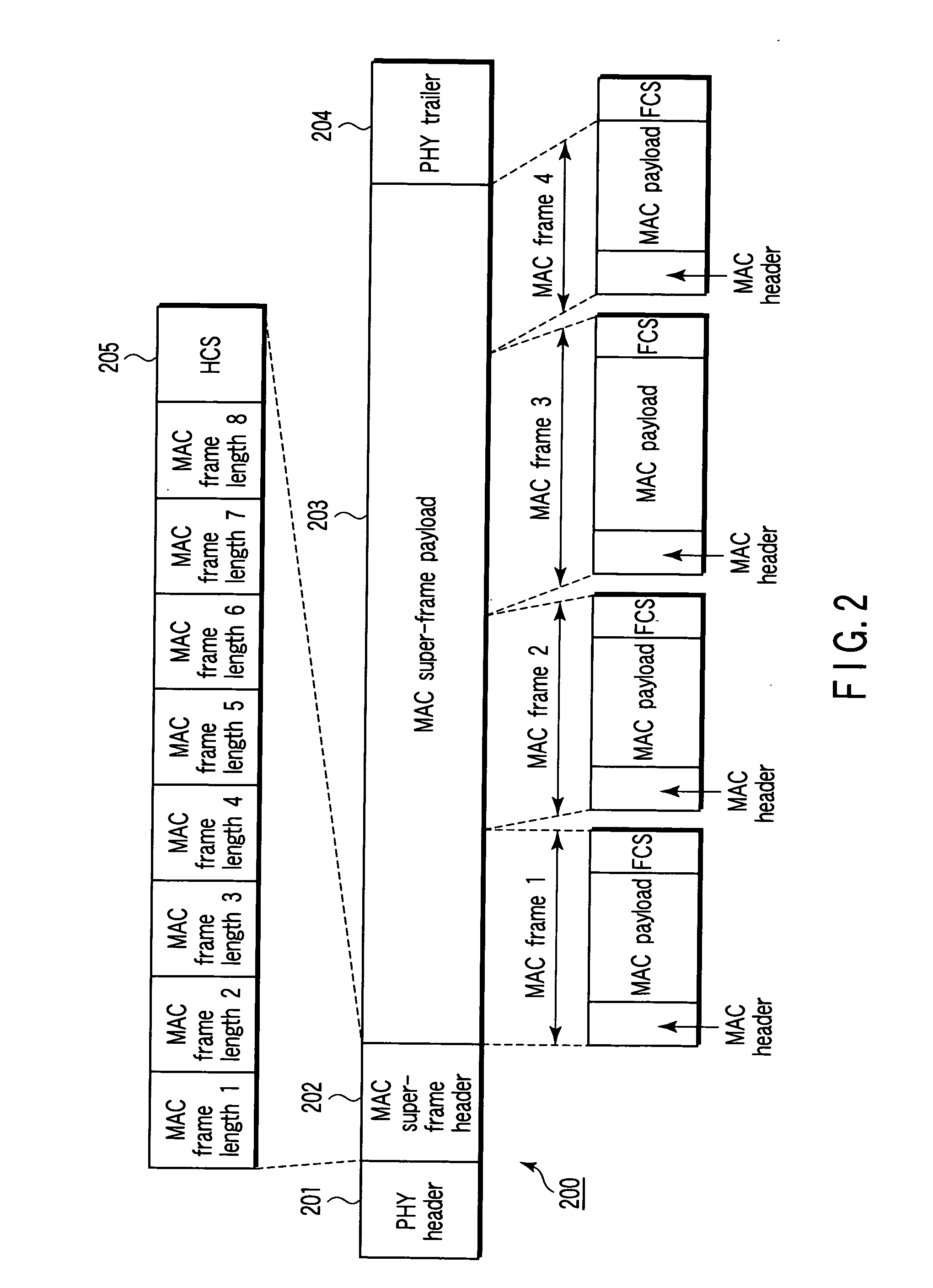

Communication apparatus, communication method, and communication system

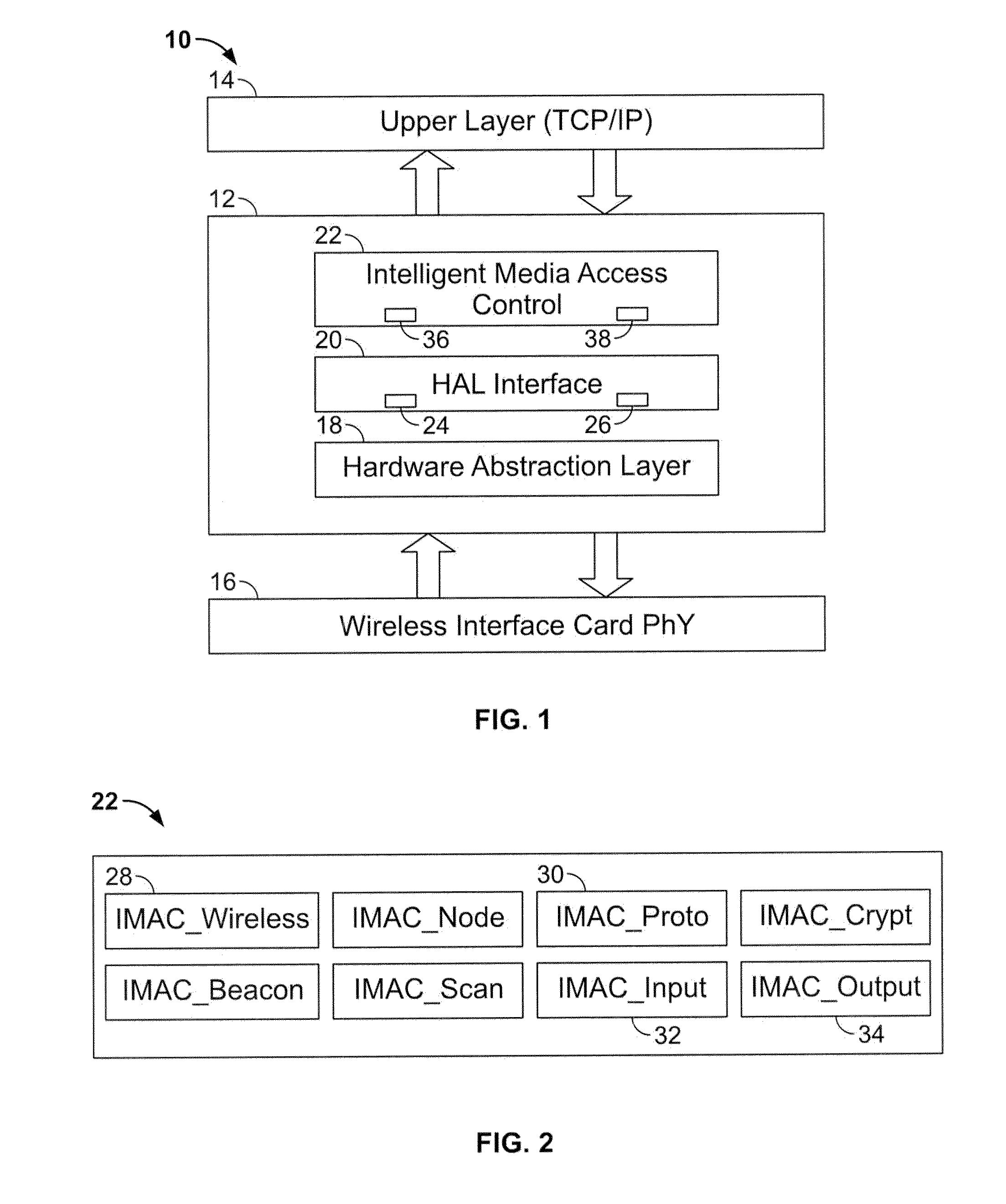

Status: Active | Publication: US20050165950A1 | Tags: Eliminate overhead; Improve substantial communication throughput; Error prevention; Signal allocation; Computer hardware; Communications system

A physical frame is constructed that includes a medium access control (MAC) super-frame payload, which in turn contains a plurality of MAC frames. In the constructed physical frame, virtual carrier sense information is set in each of the MAC frames so that virtual carrier sense based on any of the MAC frames in the super-frame payload yields the same result. The physical frame with the virtual carrier sense information set is then transmitted to the destination communication apparatus.

Owner:PALMIRA WIRELESS AG

TCP/IP processor and engine using RDMA

Status: Inactive | Publication: US20080253395A1 | Tags: Sharply reduces TCP/IP protocol stack overhead; Improve performance; Digital computer details; Time-division multiplex; Internal memory; Transmission protocol

A TCP/IP processor, and data processing engines for use in it, are disclosed. The TCP/IP processor can transport the data payloads of Internet Protocol (IP) packets using an architecture that transports and processes IP packets from Layer 2 through the transport protocol layer and may also provide packet inspection through Layer 7. The engines may perform pass-through packet classification, policy processing, and/or security processing, enabling packets to stream through the architecture at nearly the full line rate. A scheduler assigns packets to packet processors. An internal memory, or local session database cache, stores a TCP/IP session information database and may also store a storage information session database for a certain number of active sessions; session information that is not in the internal memory is stored in and retrieved from an additional memory. In certain instantiations, an application running on an initiator or target can register a region of memory that its peer(s) can then access directly through RDMA data transfer, without substantial host intervention.

Owner:MEMORY ACCESS TECH LLC

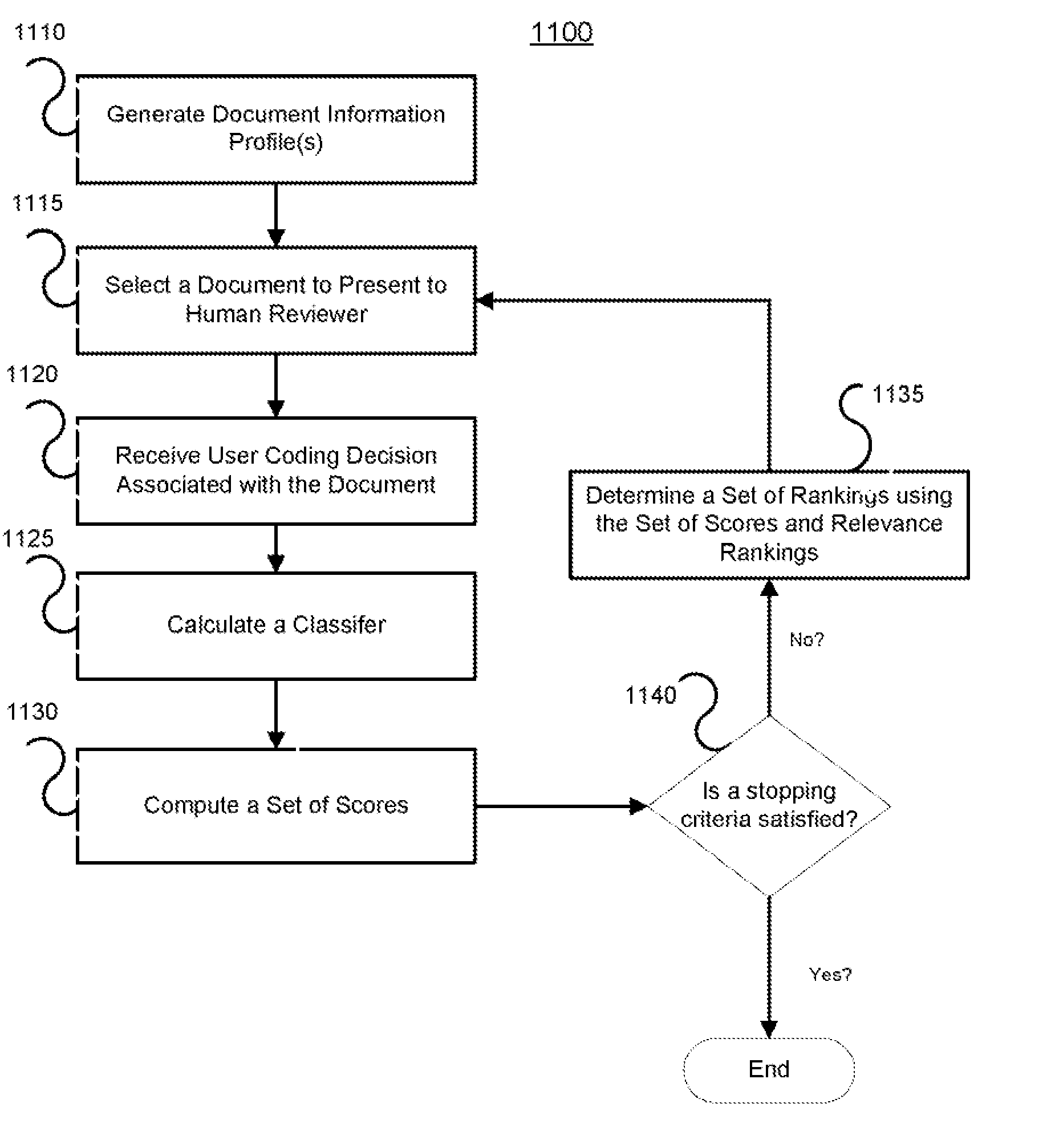

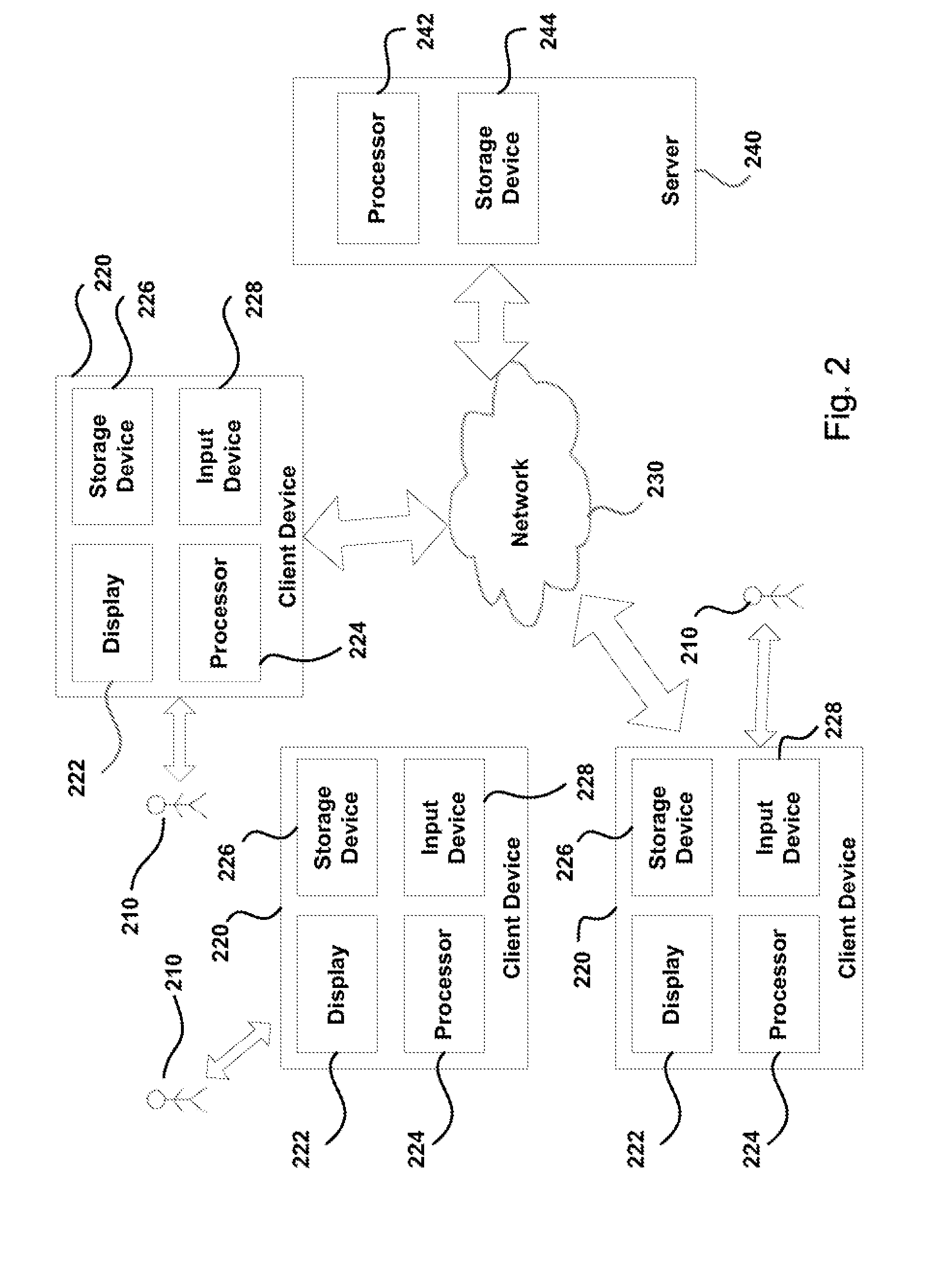

Systems and methods for classifying electronic information using advanced active learning techniques

Status: Active | Publication: US8620842B1 | Tags: Easy to transport; Reduce complexity; Digital computer details; Relational databases; Electronic information; Data mining

Systems and methods for classifying electronic information or documents into a number of classes and subclasses are provided through an active learning algorithm. In certain embodiments, the active learning algorithm forks a number of classification paths corresponding to predicted user coding decisions for a selected document. The active learning algorithm determines an order in which the documents of the collection may be processed and scored by the forked classification paths. Such document classification systems are easily scalable for large document collections, require less manpower and can be employed on a single computer, thus requiring fewer resources. Furthermore, the classification systems and methods described can be used for any pattern recognition or classification effort in a wide variety of fields.

Owner:CORMACK GORDON VILLY

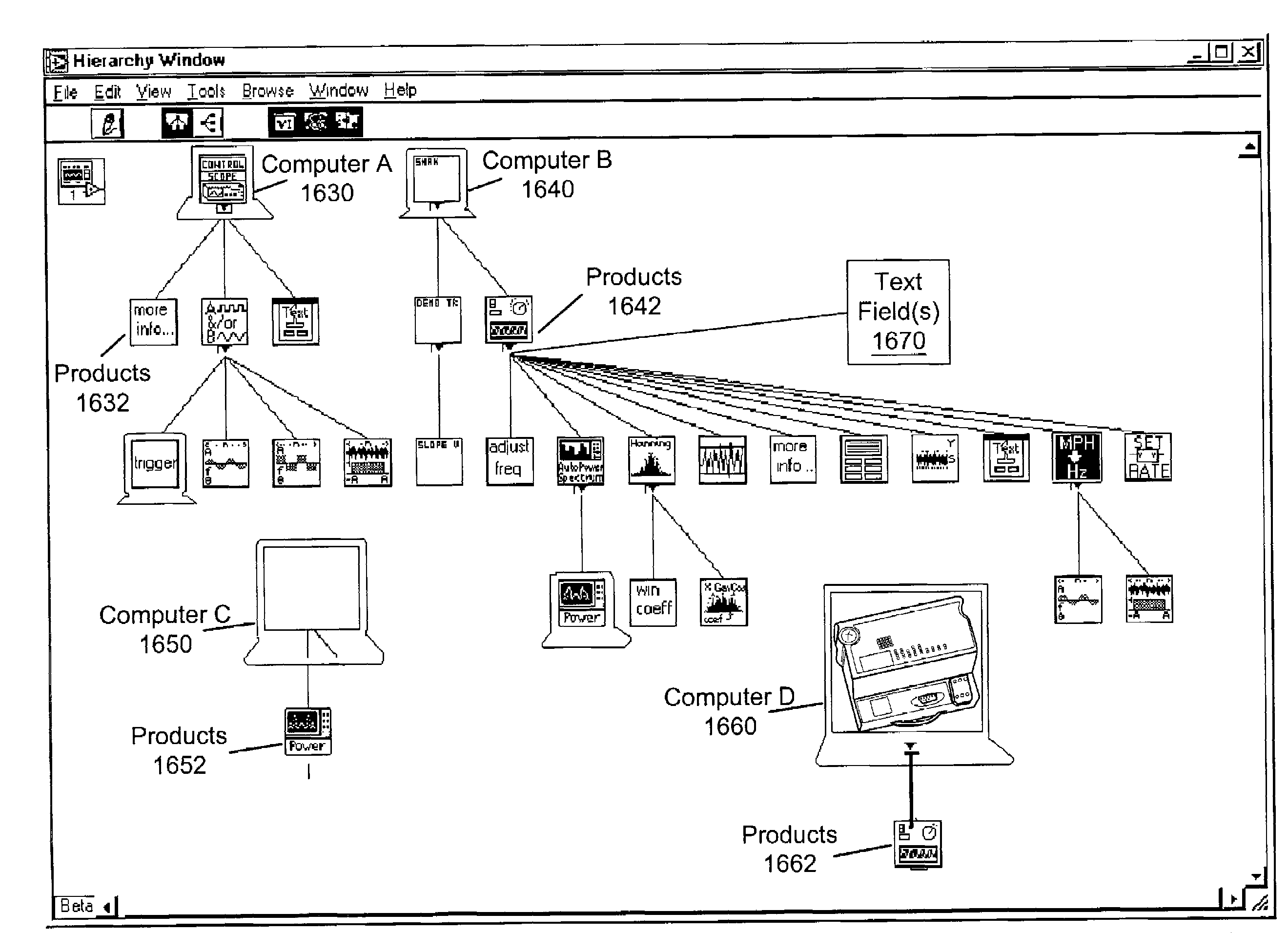

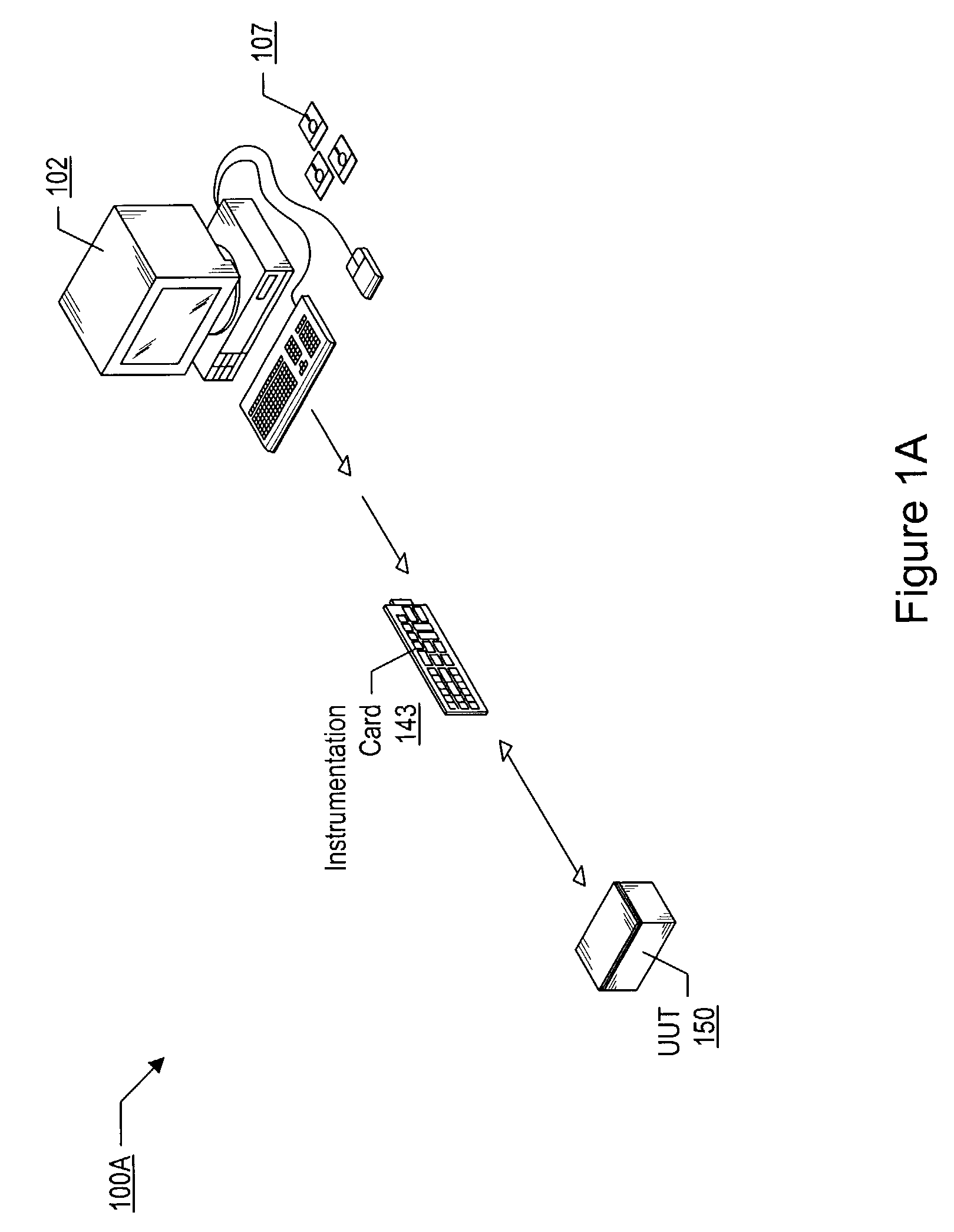

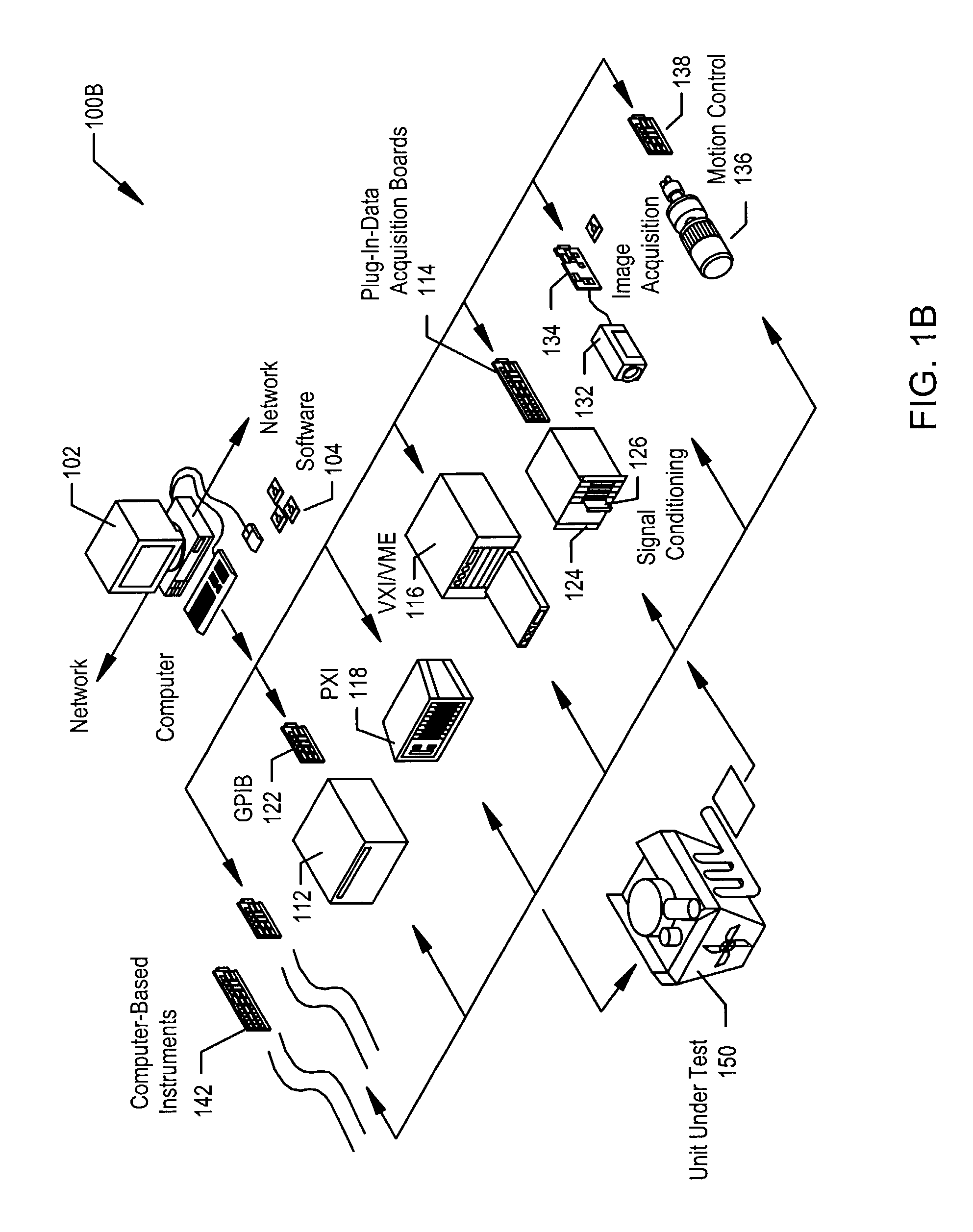

Filtering graphical program elements based on configured or targeted resources

Status: Active | Publication: US7120874B2 | Tags: Improve system efficiency; Eliminate overhead; Digital computer details; Visual/graphical programming; Graphics; User input

System and method for filtering attributes of a graphical program element (GPE) in a graphical program or diagram, e.g., a property node, menu, property page, icon palette, etc., based on targeted or configured resources. Input is received specifying or selecting a filter option from presented filter options. The filter options include 1) display all attributes of the GPE; 2) display attributes of the GPE associated with configured resources; and 3) display attributes of the GPE associated with selected configured resources. User input is received to access the GPE. Attributes for the GPE associated with the resources are retrieved from the database and displayed in accordance with the selected filtering option. The filtered attributes of the element are then selectable by a user for various operations, e.g., to configure the graphical program, to configure resources, to initiate a purchase or order for the resources, and / or to install the resources, among others.

Owner:NATIONAL INSTRUMENTS

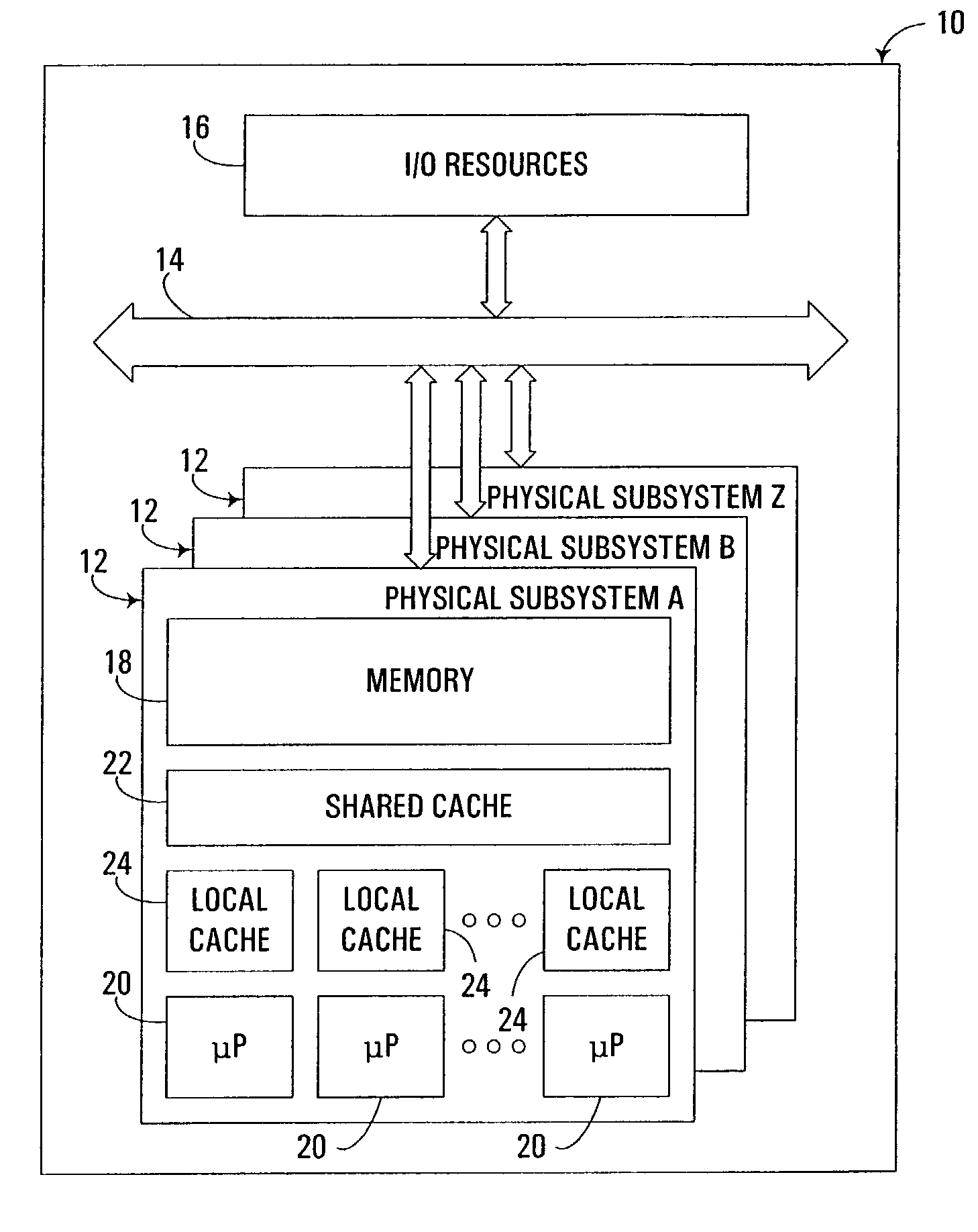

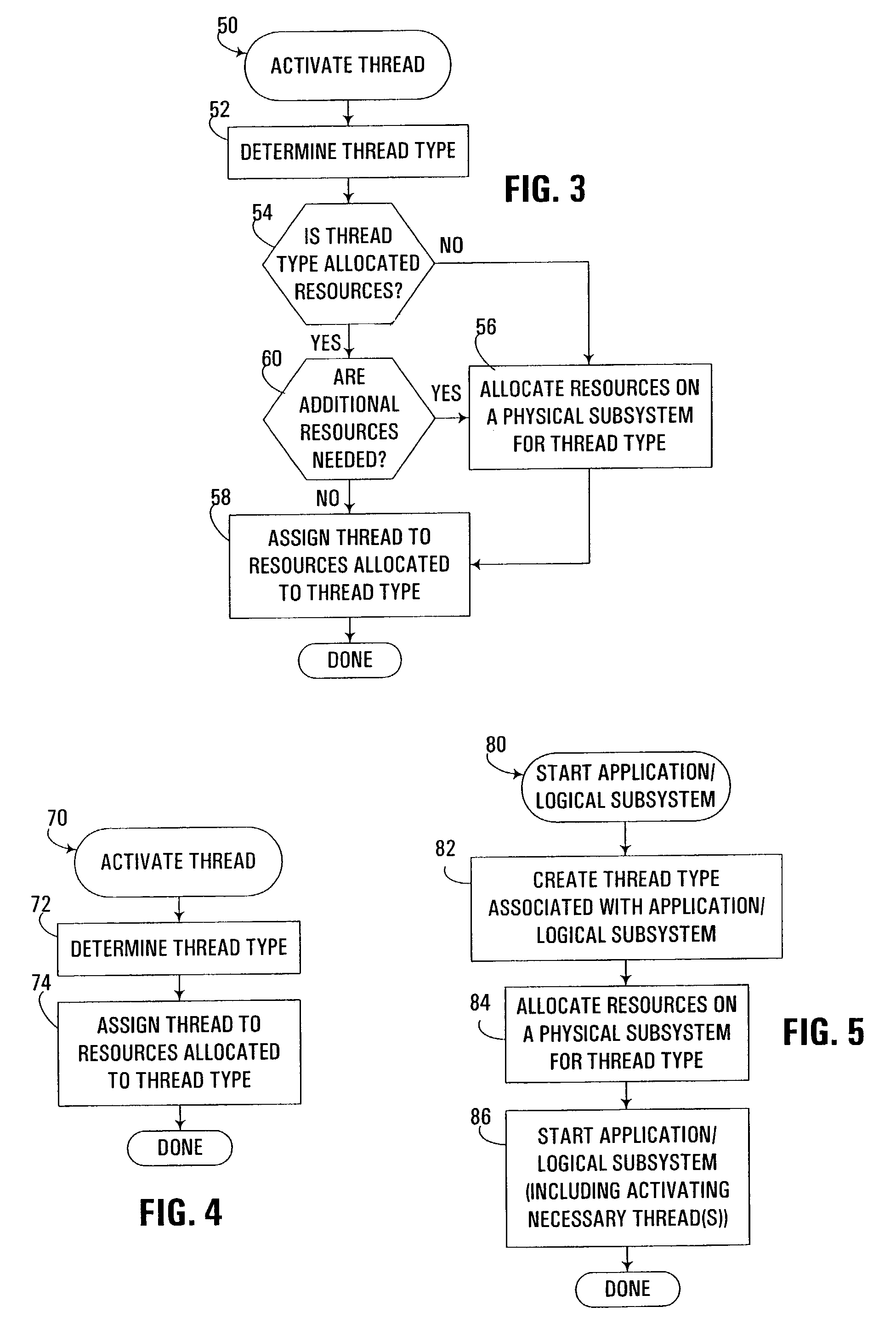

Dynamic allocation of computer resources based on thread type

Status: Active | Publication: US7222343B2 | Tags: Reduce need; Eliminate overhead; Resource allocation; Memory addressing/allocation/relocation; Computer resources; Traffic capacity

An apparatus, program product and method dynamically assign threads to computer resources in a multithreaded computer including a plurality of physical subsystems based upon specific “types” associated with such threads. In particular, thread types are allocated resources that are resident within the same physical subsystem in a computer, such that newly created threads and / or reactivated threads of those particular thread types are dynamically assigned to the resources allocated to their respective thread types. As such, those threads sharing the same type are generally assigned to computer resources that are resident within the same physical subsystem of a computer, which often reduces cross traffic between multiple physical subsystems resident in a computer, and thus improves overall system performance.

Owner:IBM CORP
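
Type-based placement can be sketched as a table from thread type to a "home" physical subsystem, so that every thread of a type lands on the same subsystem. `TypeAffinityAllocator` is a hypothetical name. The policy for choosing a type's home, the least-loaded subsystem when the type is first seen, is an assumption made for illustration. Ties go to the lowest subsystem number.

```python
class TypeAffinityAllocator:
    """Assign each thread *type* a home subsystem; every thread of that type goes there."""

    def __init__(self, num_subsystems: int) -> None:
        self.type_home = {}                                  # thread type -> subsystem id
        self.load = {s: 0 for s in range(num_subsystems)}    # threads placed per subsystem

    def place(self, thread_type: str) -> int:
        """Return the subsystem for a newly created or reactivated thread of this type."""
        if thread_type not in self.type_home:
            # First thread of this type: home it on the least-loaded subsystem.
            self.type_home[thread_type] = min(self.load, key=lambda s: (self.load[s], s))
        home = self.type_home[thread_type]
        self.load[home] += 1
        return home


alloc = TypeAffinityAllocator(num_subsystems=2)
print([alloc.place(t) for t in ["db-worker", "web", "db-worker", "batch"]])  # [0, 1, 0, 1]
```

Keeping same-type threads on one subsystem is what limits cross-subsystem traffic. A production allocator would also need to rebalance when a subsystem becomes overloaded, which this sketch does not attempt.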

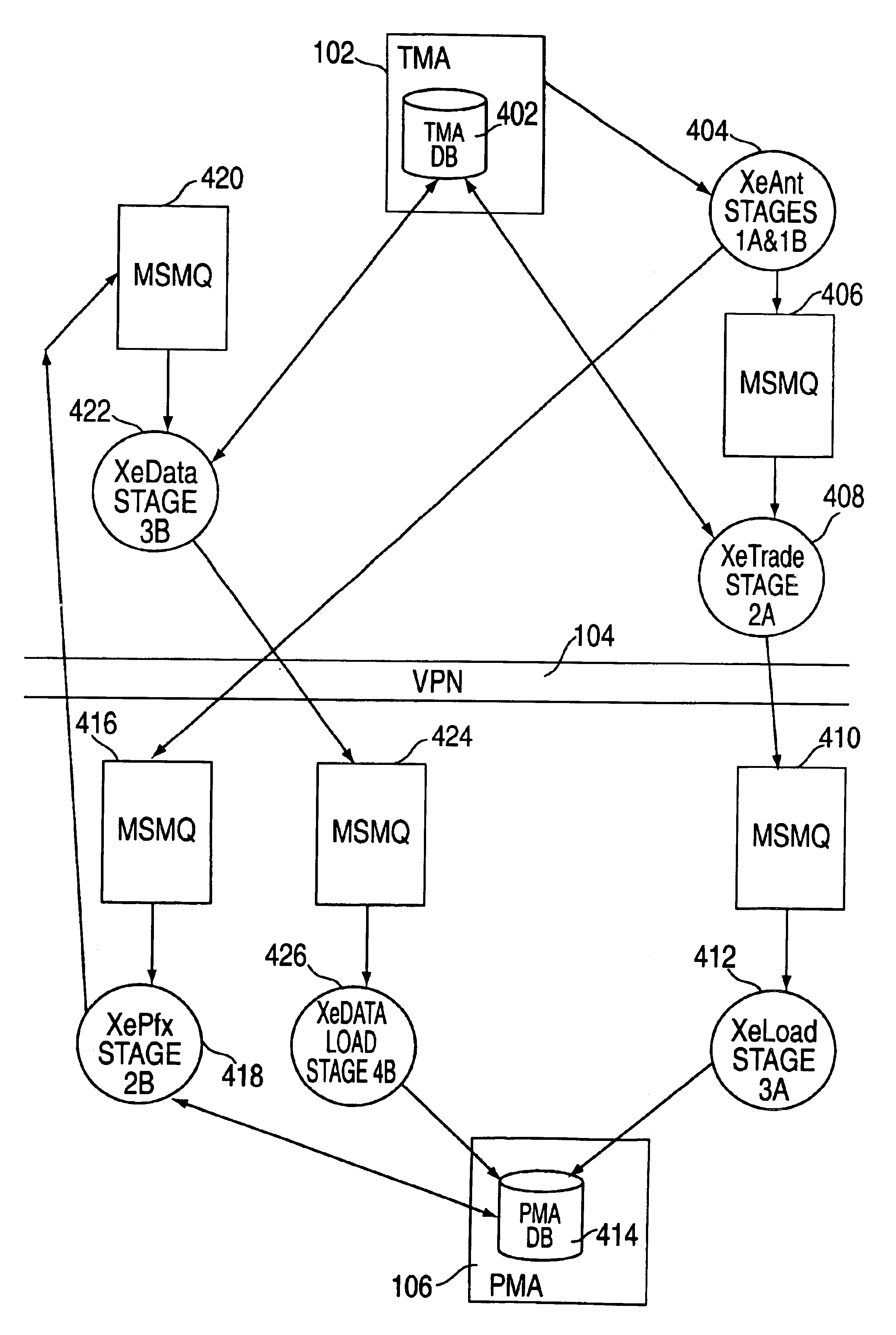

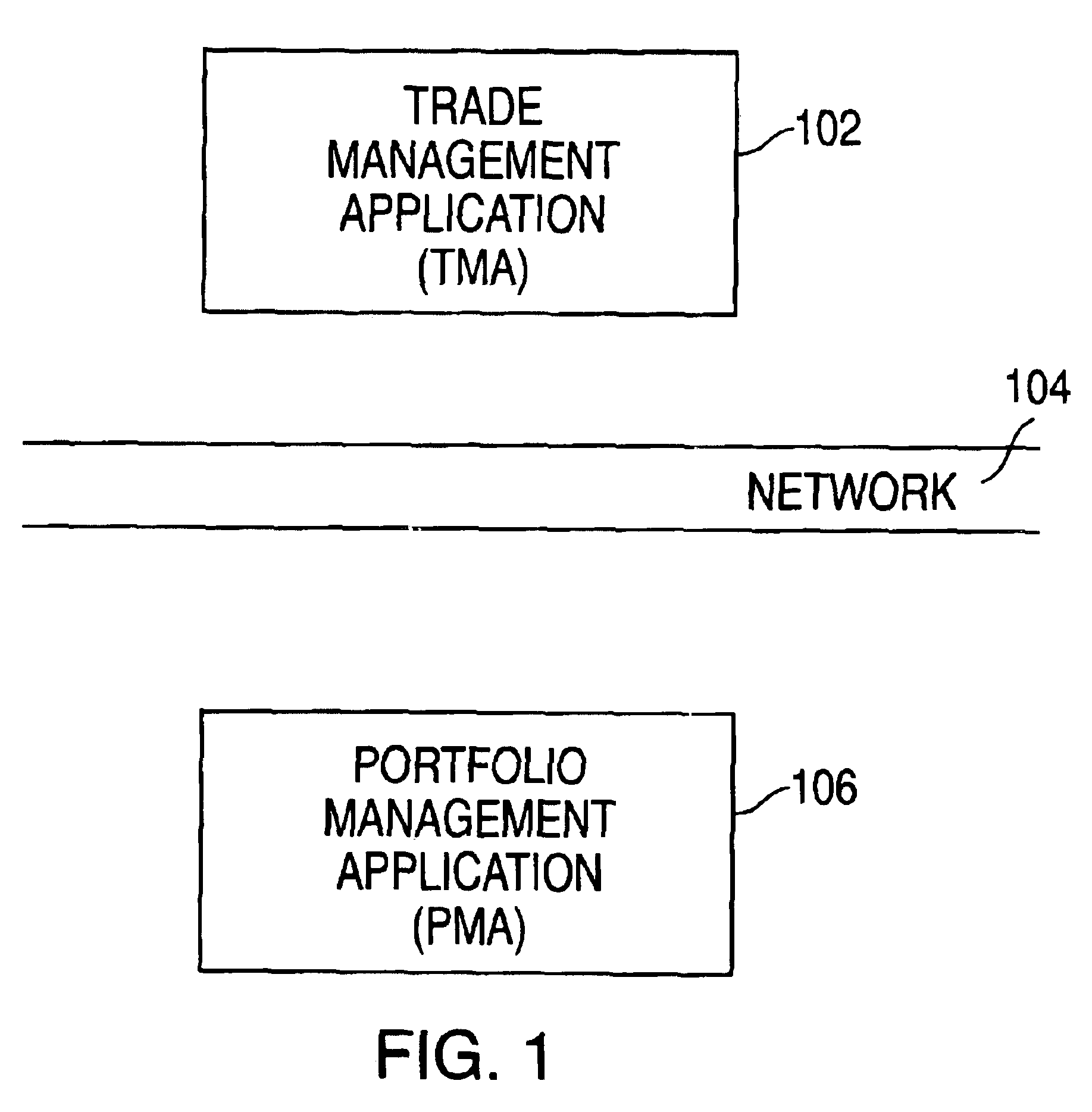

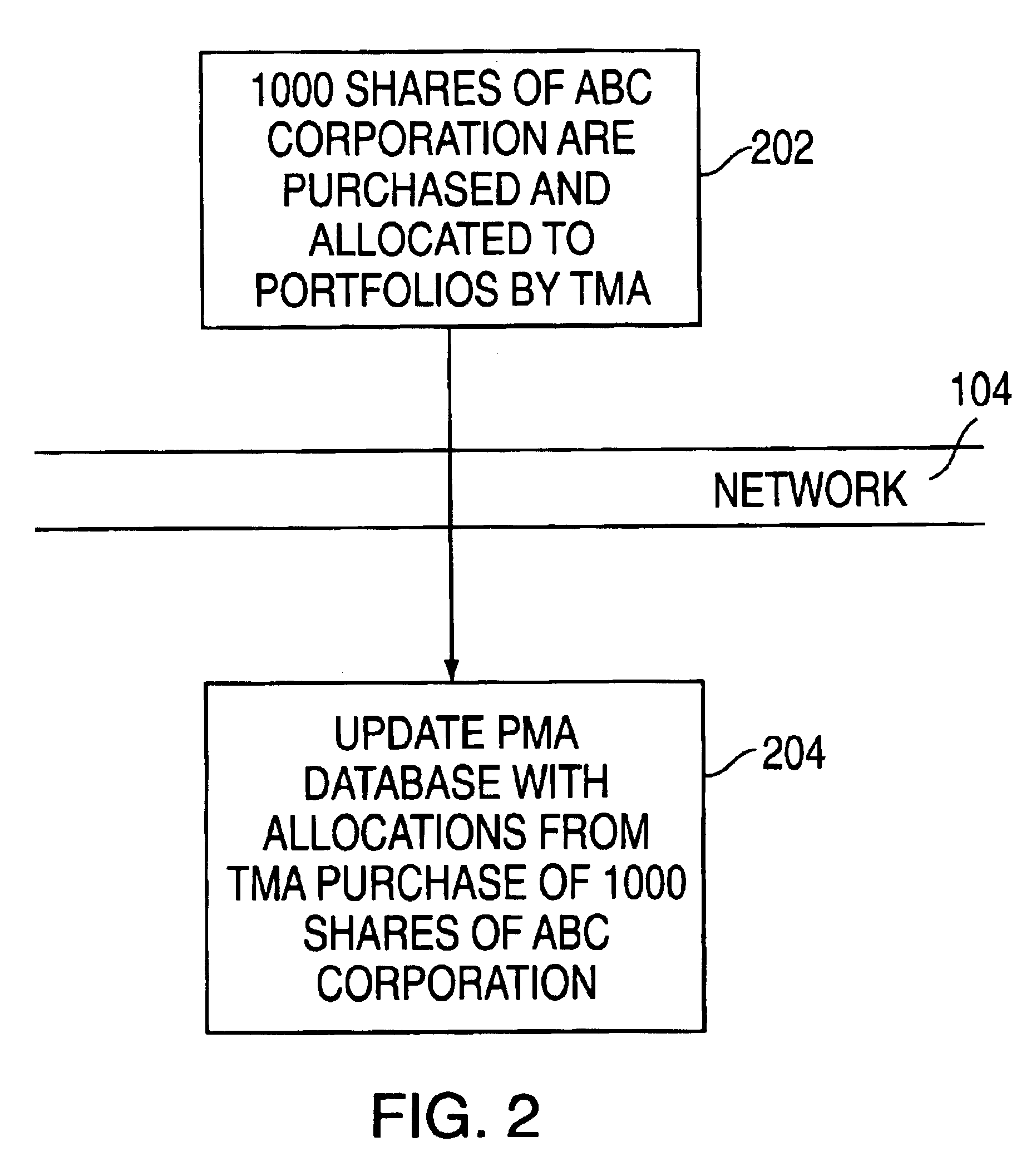

Method and system for straight through processing

Status: Inactive | Publication: US6845507B2 | Tags: Simple development; Simplify deployment; Finance; Interprogram communication; Message passing; Straight-through processing

A method and system for performing straight through processing is presented. The method includes monitoring a queue in order to detect a specific message. This message is parsed to take it from an external format into an internal format. The contents of the message include stages, with one stage being marked as active, and each stage having at least one step and a queue identifier. The processing specified in the steps contained in the active stage is performed, the active stage is marked inactive, and a new stage is marked active. The message is parsed back into the external format and directed to the queue specified by the queue identifier. Additional embodiments include a storage medium and a signal propagated over a propagation medium for performing computer messaging.

Owner:SS&C TECHNOLOGIES

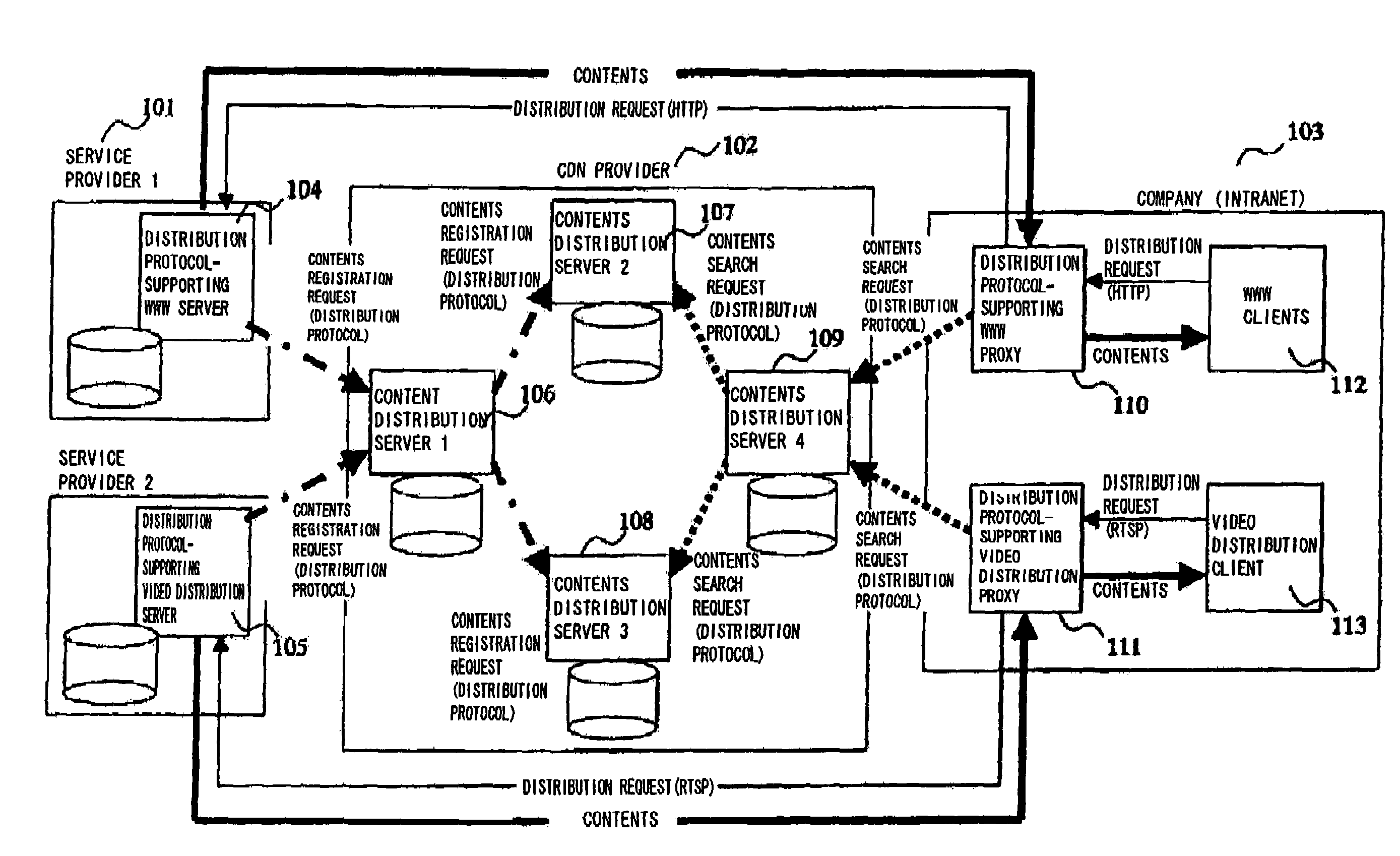

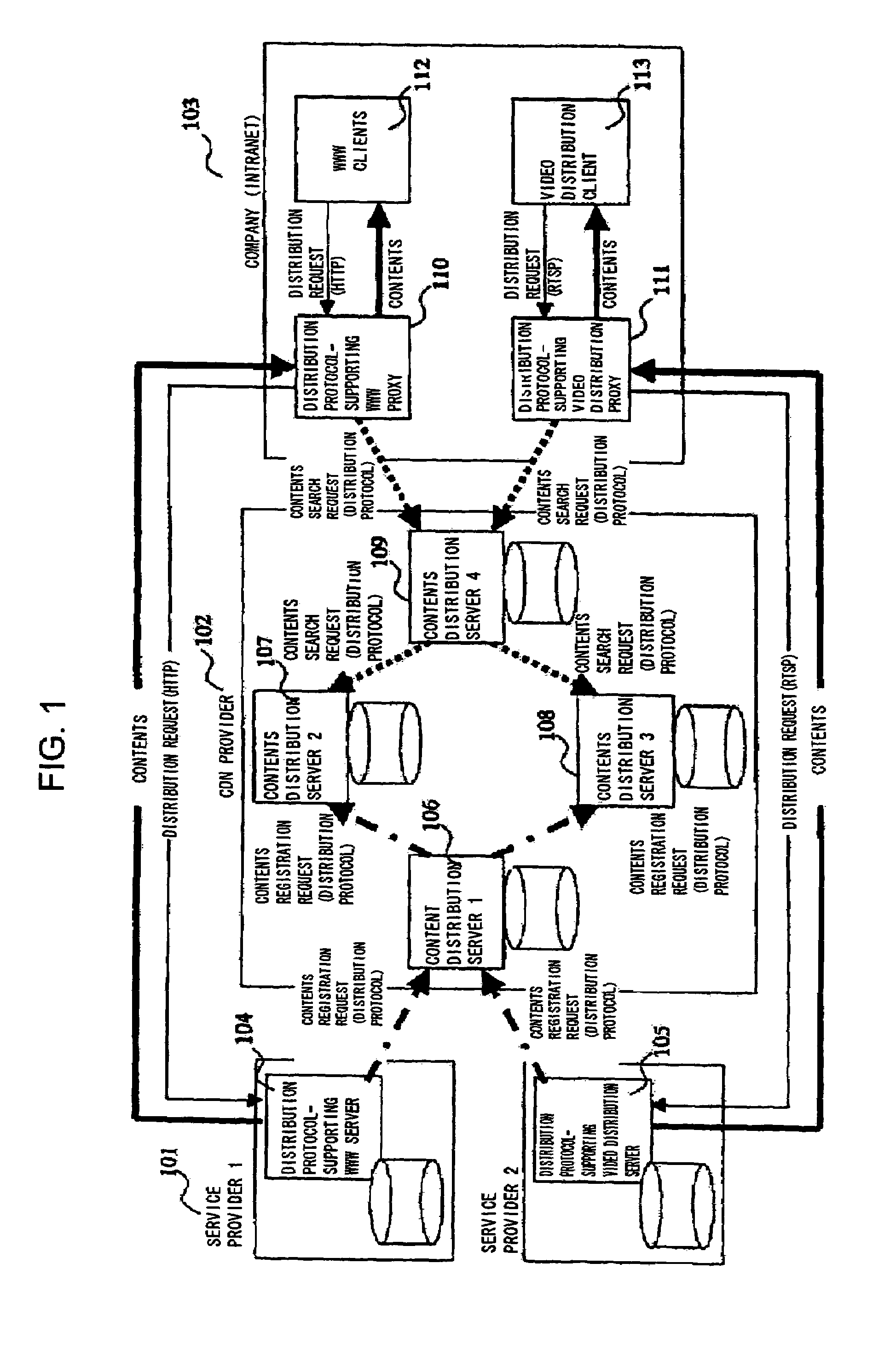

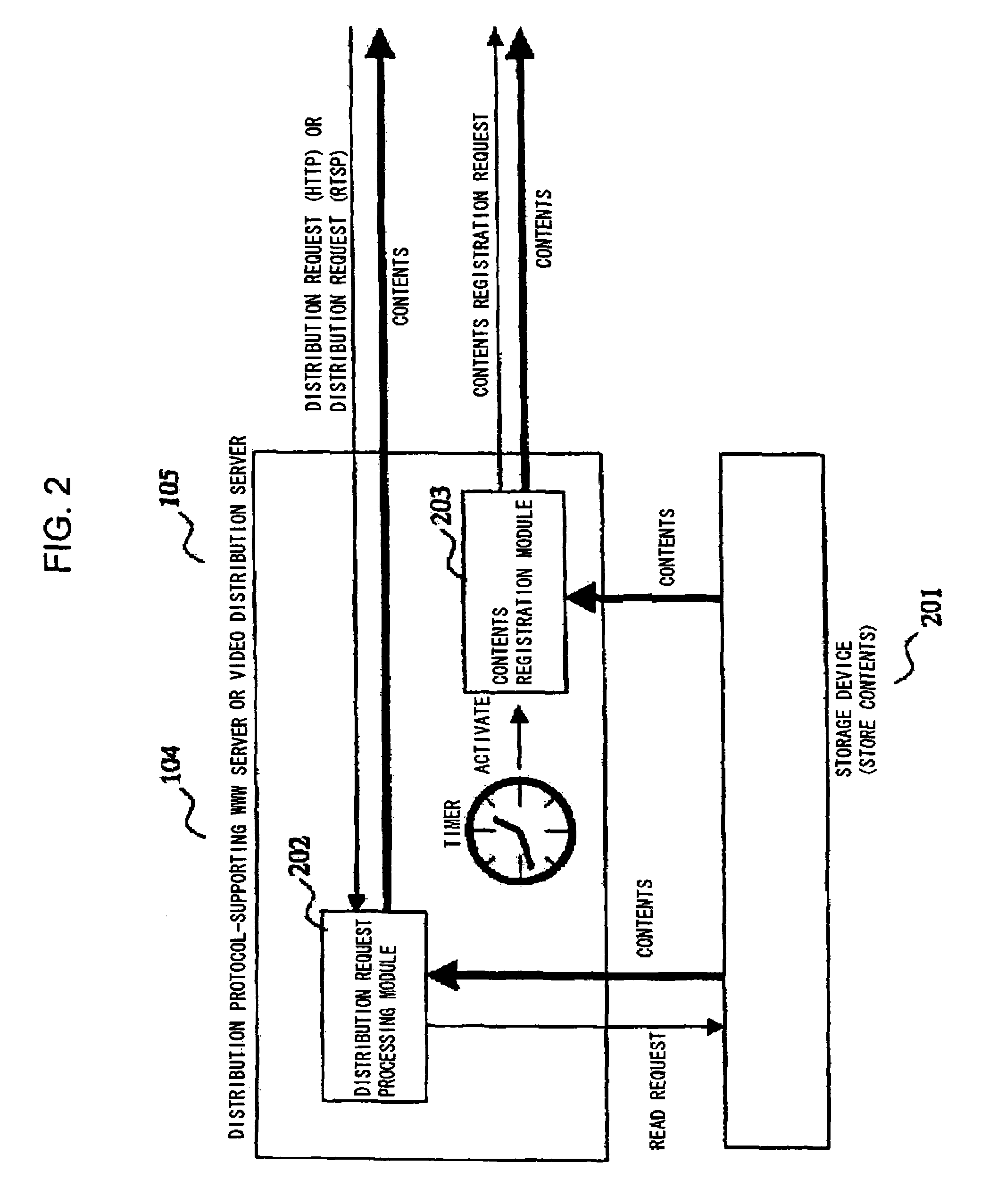

Data distribution server

Inactive · US7404201B2 · Reduce necessity · Eliminate overhead · Analogue secrecy/subscription systems · Multiple digital computer combinations · Supplicant · Data Distribution Service

Owner:HITACHI LTD

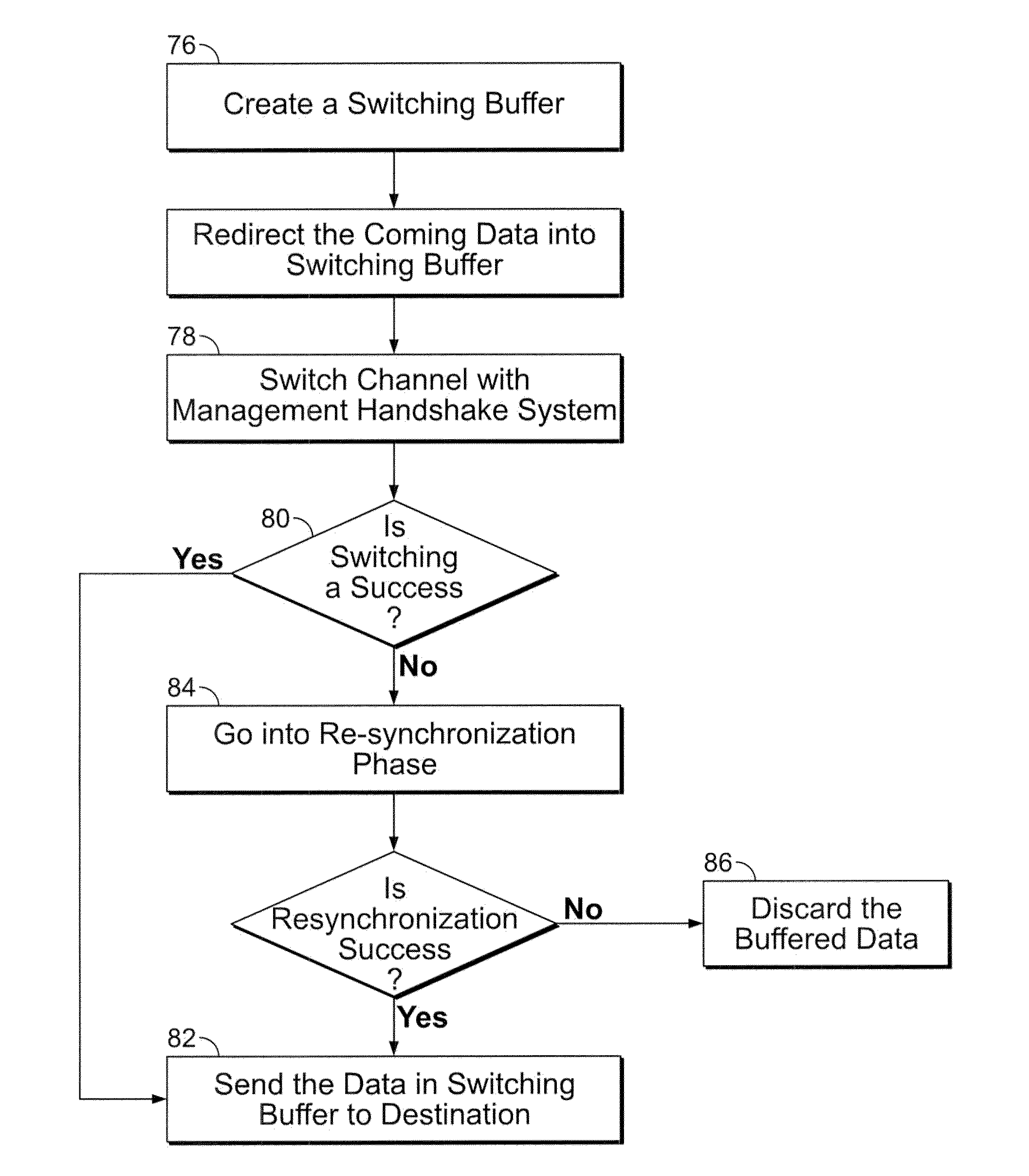

Method and apparatus for dynamic spectrum access

Inactive · US20100135226A1 · Increase robustness · Reduce overhead · Wireless communication services · Transmission · Telecommunications link · Self adaptive

Methods and apparatus are provided for the efficient use of cognitive radios in dynamic spectrum access. These include spectrum sensing algorithms, adaptive optimization of sensing parameters based on changing radio environments, and identification of vacant channels. Protocols are also provided for switching communication links to different channels and for synchronizing communicating nodes, in order to maintain reliable connectivity while maximizing spectrum usage.
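The abstract does not say which spectrum-sensing algorithm is used. As an illustration only, the sketch below swaps in plain energy detection, a common textbook technique for identifying vacant channels: a channel is reported vacant when its average sample power stays below an assumed noise floor plus a margin. The function name `vacant_channels`, the 3 dB default margin, and the input layout are assumptions, not the Stevens Institute method.

```python
def vacant_channels(samples_by_channel, noise_power, margin_db=3.0):
    """Energy detection (assumed technique): return the sorted ids of channels
    whose average sample power is below noise_power raised by margin_db.

    samples_by_channel: mapping of channel id -> list of (complex) baseband samples.
    """
    threshold = noise_power * 10 ** (margin_db / 10)  # dB margin -> linear factor
    vacant = []
    for channel, samples in samples_by_channel.items():
        if not samples:
            continue  # no measurement: leave the channel's status unknown
        energy = sum(abs(s) ** 2 for s in samples) / len(samples)
        if energy < threshold:
            vacant.append(channel)
    return sorted(vacant)
```

A fixed threshold is the simplest choice. The adaptive parameter optimization mentioned in the abstract would correspond to tuning `noise_power`, `margin_db`, or the number of samples as the radio environment changes.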

Owner:STEVENS INSTITUTE OF TECHNOLOGY

System and Method for Prepaid Rewards

Active · US20100312629A1 · Increase sales · Eliminate overhead · Pre-payment schemes · Marketing · Transaction account · Database

A system and method provide rewards or loyalty incentives to card member customers. The system includes an enrolled card member customer database, an enrolled merchant database, a participating merchant offer database and a registered card processor. The enrolled card member customer database includes transaction accounts of card member customers enrolled in a loyalty incentive program. The enrolled merchant database includes a list of merchants participating in the loyalty incentive program. The participating merchant offer database includes loyalty incentive offers from participating merchants. The registered card processor receives a record of charge for a purchase made with an enrolled merchant by an enrolled card member customer and uses the record of charge to determine whether the purchase qualifies for a rebate credit in accordance with a discount offer from the enrolled merchant. If the purchase qualifies, the registered card processor provides the rebate credit to an account of the enrolled card member customer. The registered card processor also provides electronic notification of reward offers, or of credits to prepaid cards, in response to purchases conforming to a specific set of merchant criteria. The system provides a coupon-less way for merchants to provide incentive discounts and/or credits to enrolled customers, along with notifying customers of other available incentive offers.
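The rebate decision made by the registered card processor reduces to a few membership and threshold checks. The sketch below assumes amounts in integer cents, a single offer per merchant with a minimum spend and a whole-number rebate percentage, and in-memory sets and dicts standing in for the three databases. `Offer`, `ChargeRecord`, and `process_charge` are hypothetical names, not American Express's design.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    merchant_id: str
    min_spend_cents: int  # assumed qualifying criterion
    rebate_percent: int   # assumed discount, e.g. 10 for 10%


@dataclass
class ChargeRecord:
    card_id: str
    merchant_id: str
    amount_cents: int


def process_charge(record, enrolled_cards, enrolled_merchants, offers, balances):
    """Credit a rebate to the card member's account if the purchase qualifies.

    Returns the credit in cents (0 when the purchase does not qualify).
    """
    # Both the card member and the merchant must be enrolled in the program.
    if record.card_id not in enrolled_cards or record.merchant_id not in enrolled_merchants:
        return 0
    # The purchase must satisfy an offer from that merchant.
    offer = offers.get(record.merchant_id)
    if offer is None or record.amount_cents < offer.min_spend_cents:
        return 0
    # Integer arithmetic avoids float rounding on money; fractions of a cent are dropped.
    credit = record.amount_cents * offer.rebate_percent // 100
    balances[record.card_id] = balances.get(record.card_id, 0) + credit
    return credit
```

Because the rebate is computed from the record of charge itself, the customer never presents a coupon. This mirrors the "coupon-less" property claimed in the abstract.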

Owner:AMERICAN EXPRESS TRAVEL RELATED SERVICES CO INC

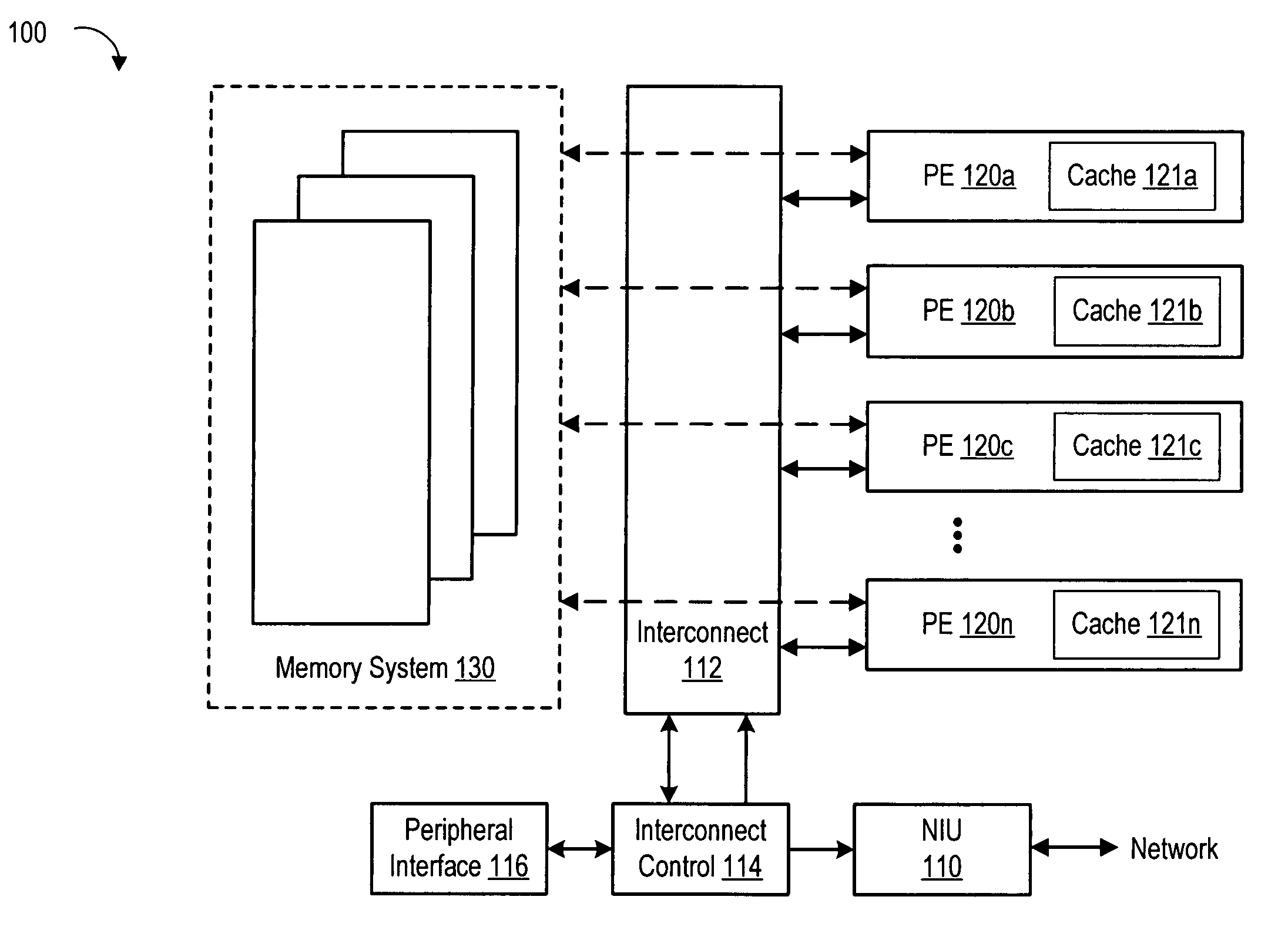

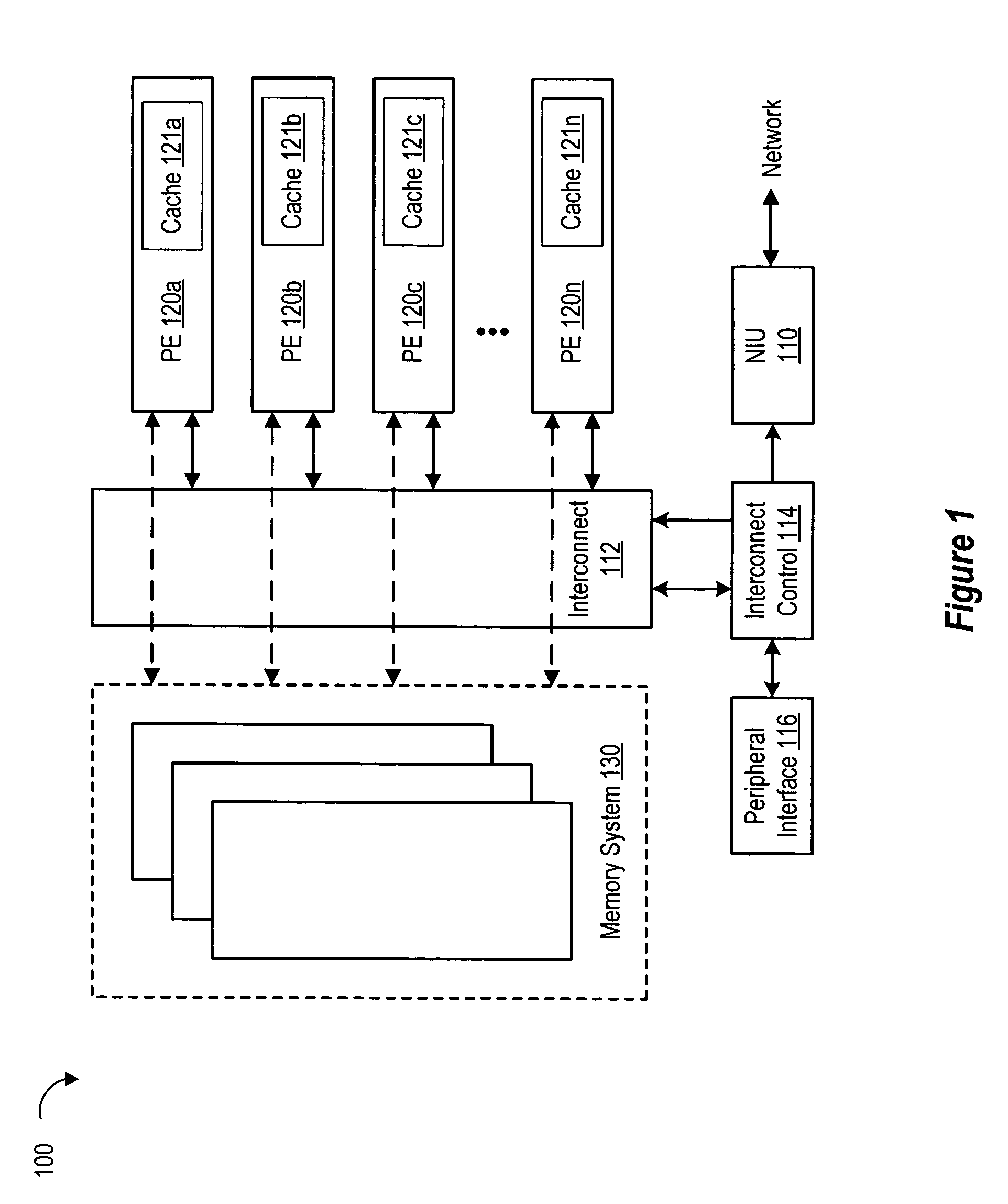

Network system including packet classification for partitioned resources

Active · US7567567B2 · Lower latency · High bandwidth · Data switching by path configuration · Store-and-forward switching systems · Networked system · Memory systems

A network system includes a plurality of processing entities, an interconnect device coupled to the processing entities, a memory system coupled to the interconnect device and the processing entities, and a network interface unit coupled to the processing entities and the memory system via the interconnect device. The network interface unit includes a memory access module and a packet classifier. The memory access module includes a plurality of parallel memory access channels. The packet classifier provides a flexible association between packets and the processing entities via the memory access channels.
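The abstract leaves the classification policy open, describing only "a flexible association". One common way to realize it is shown here purely as an assumed example. Explicit rules pin some traffic to a channel, and the remaining flows are hashed so every packet of a flow reaches the same memory access channel, and thus the same processing entity. `PacketClassifier`, the destination-port rule format, and the CRC-32 flow hash are assumptions, not Oracle's design.

```python
import zlib
from collections import defaultdict


class PacketClassifier:
    """Hypothetical sketch: steer packets to parallel memory access channels.

    Explicit rules (destination port -> channel) take priority; other packets
    are spread by hashing the flow 4-tuple, so one flow always maps to one channel.
    """

    def __init__(self, num_channels, rules=None):
        self.num_channels = num_channels
        self.rules = dict(rules or {})     # dst_port -> channel index (assumed rule form)
        self.channels = defaultdict(list)  # channel index -> packets delivered on it

    def classify(self, pkt):
        if pkt["dst_port"] in self.rules:
            return self.rules[pkt["dst_port"]]
        flow = f'{pkt["src_ip"]}|{pkt["dst_ip"]}|{pkt["src_port"]}|{pkt["dst_port"]}'
        return zlib.crc32(flow.encode()) % self.num_channels

    def deliver(self, pkt):
        """Classify a packet and queue it on the chosen channel."""
        channel = self.classify(pkt)
        self.channels[channel].append(pkt)
        return channel
```

Hashing keeps per-flow ordering on a single channel without any per-flow state, while the rule table provides the "flexible" override. A hardware classifier would use its own match tables instead of a Python dict.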

Owner:ORACLE INT CORP

© 2025 PatSnap. All rights reserved.