TCP/IP processor and engine using RDMA

A processor and engine technology, applied in the field of storage networking semiconductors, that addresses the problems of lower line rates, lower-performance host processors, and prohibitive software stack overhead, and achieves the effect of low cost.

Embodiment Construction

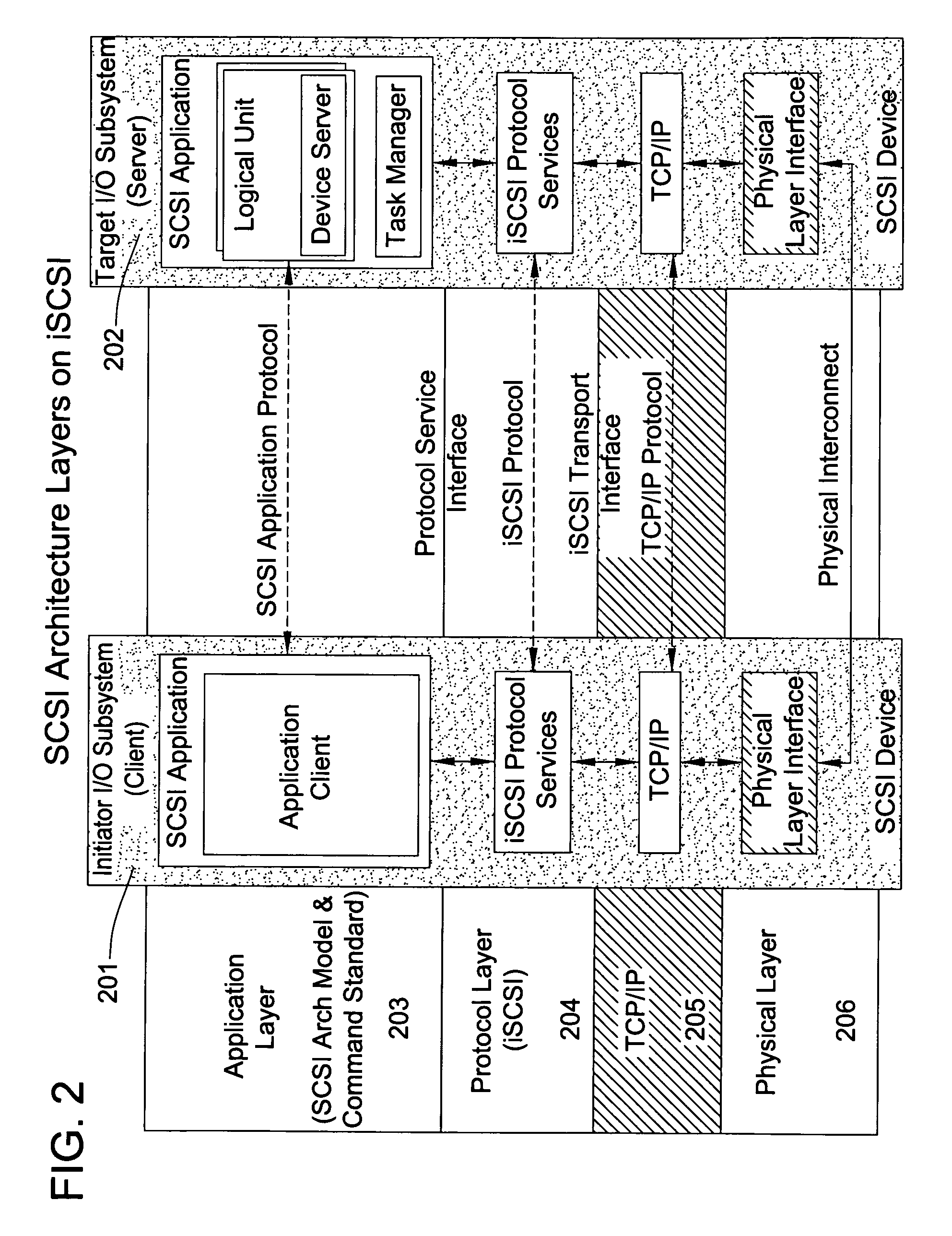

[0067] I provide a new high-performance, low-latency way of implementing a TCP/IP stack in hardware to relieve the host processor of the severe performance impact of a software TCP/IP stack. This hardware TCP/IP stack is then interfaced with additional processing elements to enable high-performance, low-latency IP-based storage applications. This can be implemented in a variety of forms to provide the benefits of TCP/IP termination, high-performance and low-latency IP storage capabilities, remote DMA (RDMA) capabilities, security capabilities, programmable classification and policy processing features, and the like. The following are some of the embodiments that can implement this:

[0068] Server

[0069] The described architecture may be embodied in a high-performance server environment, providing hardware-based TCP/IP functions that relieve the host server processor or processors of TCP/IP software and performance overhead. The IP processor may be a companion processor to a server chipset, pr...
