595 results about "Cache controller" patented technology

Cache controller. The cache controller is built from a Xilinx 3064, supported by a Xilinx 3020 and some fast PALs. It detects cache misses and controls sending and receiving the cells. This device also controls the perhaps interface; in the case of contention for transmission to the fabric, the cache section always wins.

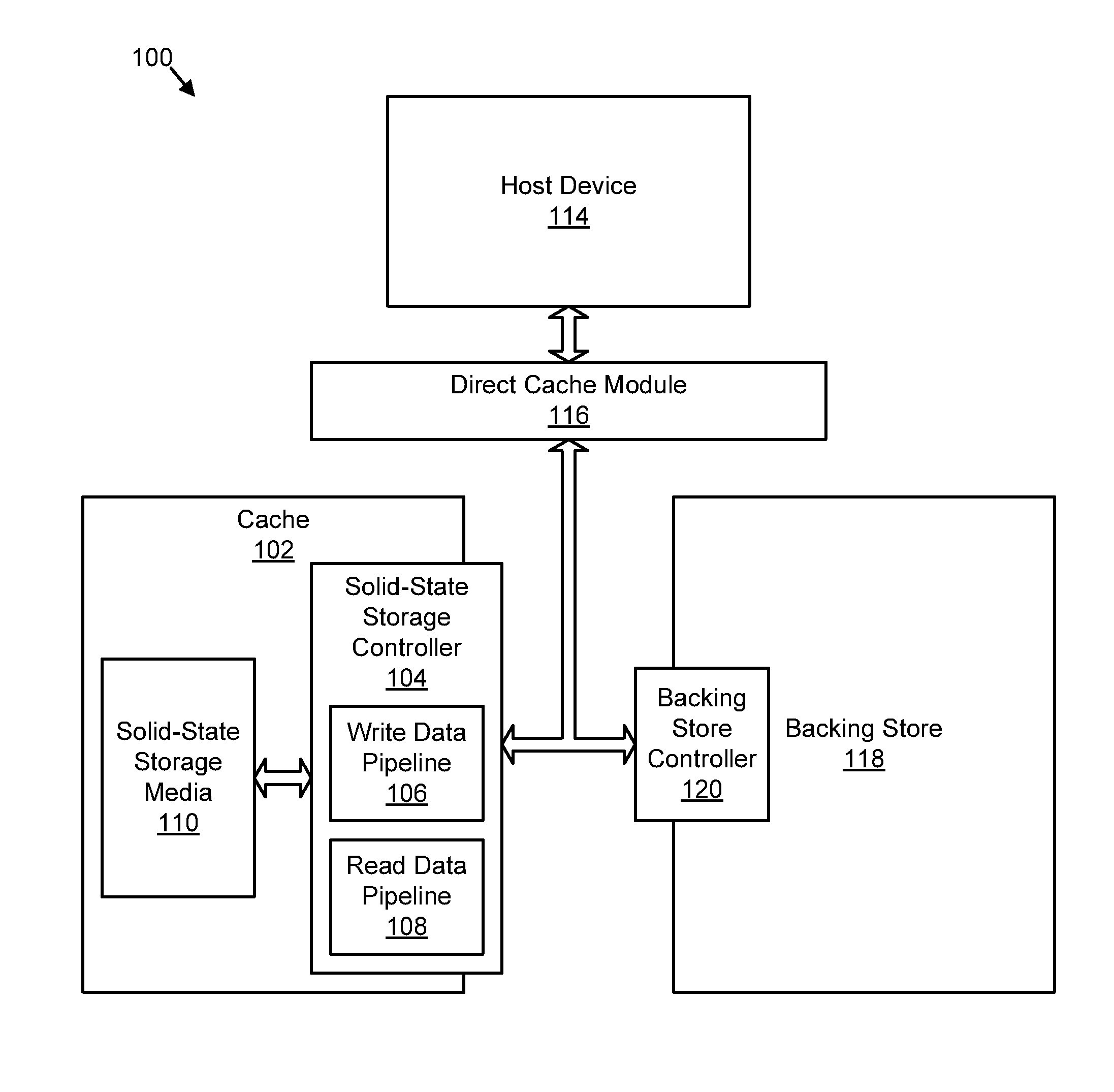

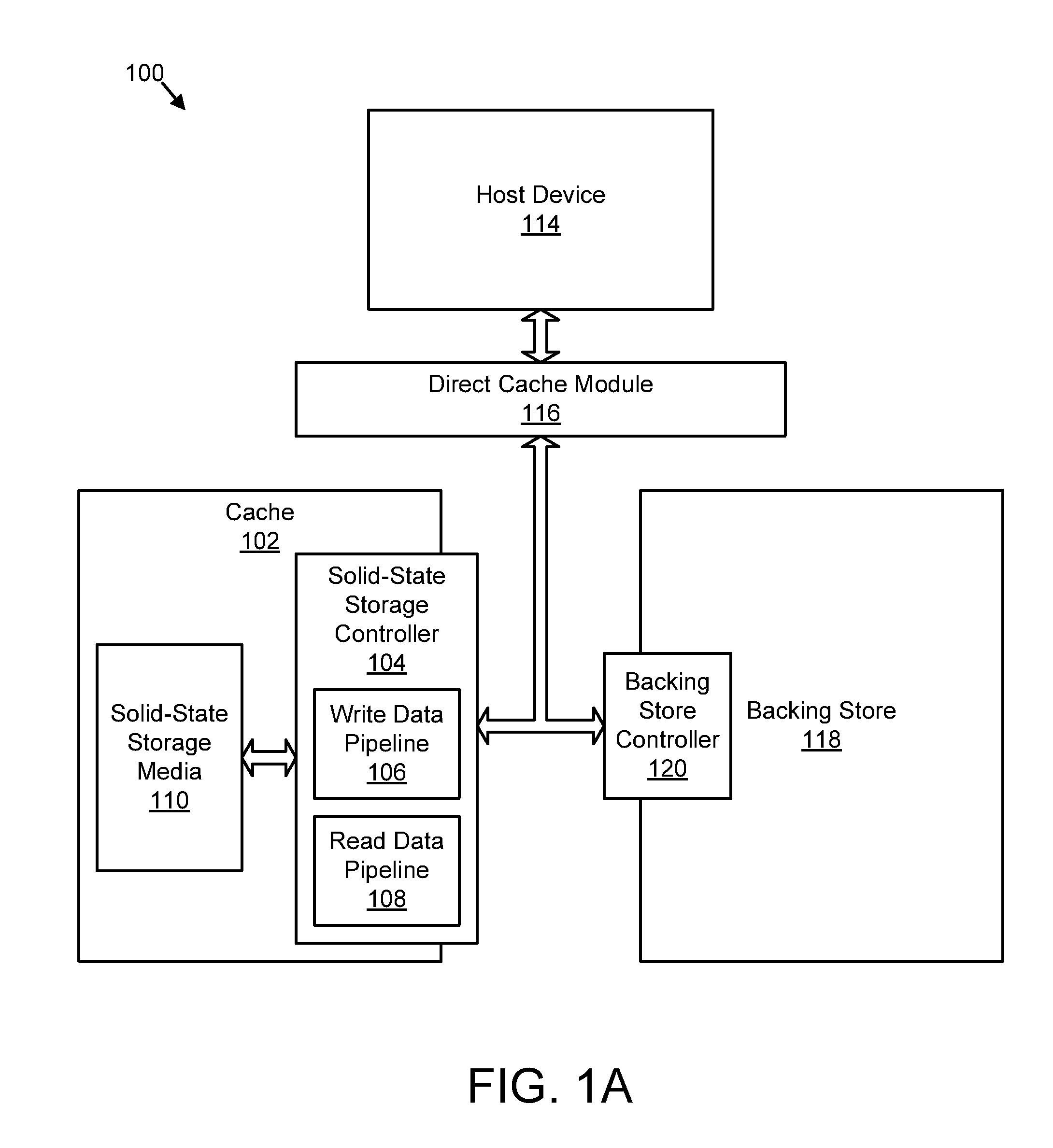

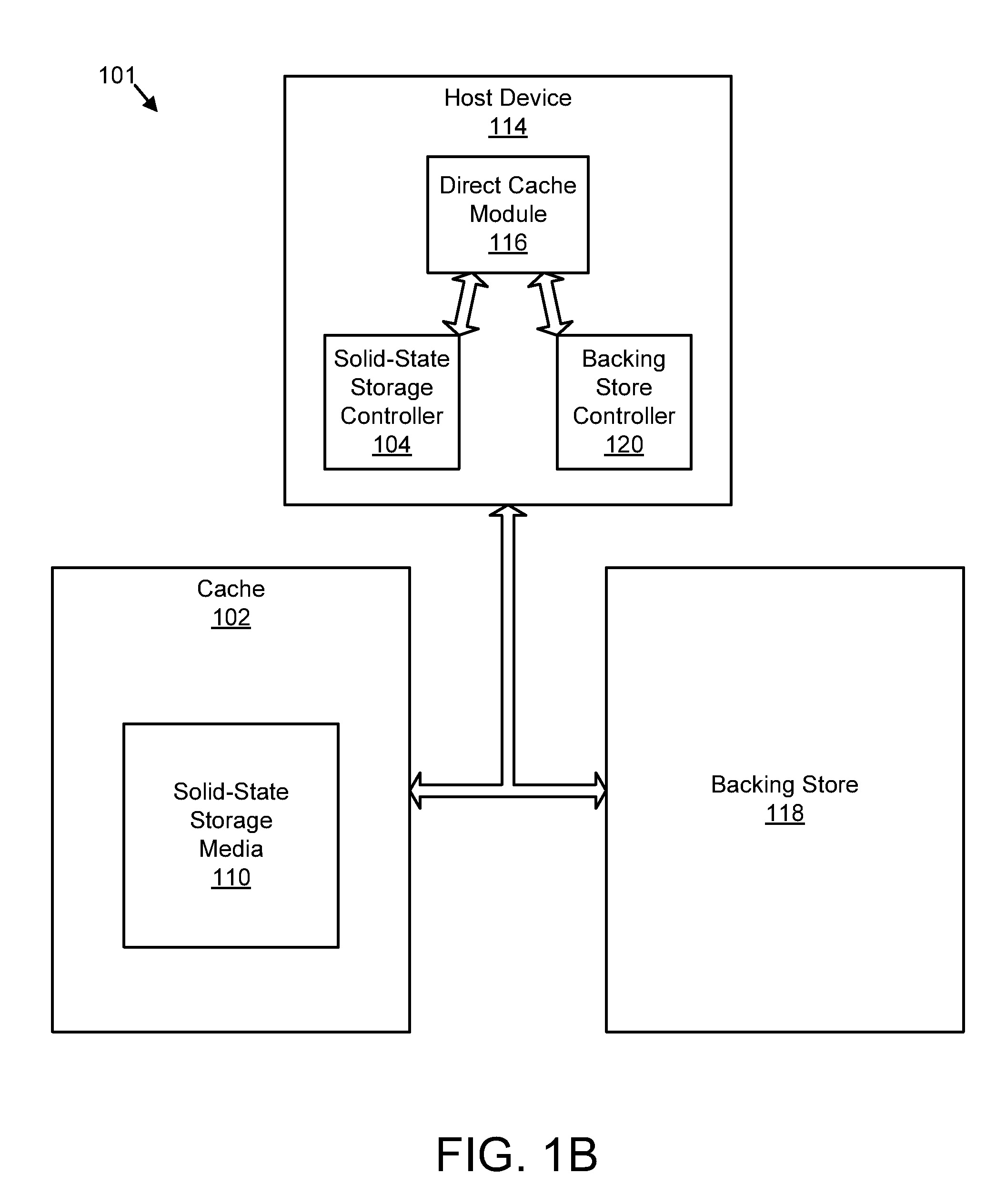

Apparatus, system, and method for destaging cached data

Inactive | US20110258391A1 | Memory architecture accessing/allocation | Error detection/correction | Solid-state storage | Control store

An apparatus, system, and method are disclosed for destaging cached data. A controller detects one or more write requests to store data in a backing store. The cache controller sends the write requests to a storage controller for a nonvolatile solid-state storage device. The storage controller receives the write requests and caches the data associated with the write requests in the nonvolatile solid-state storage device by appending the data to a log of the nonvolatile solid-state storage device. The log includes a sequential, log-based structure preserved in the nonvolatile solid-state storage device. The cache controller receives at least a portion of the data from the storage controller in a cache log order and destages the data to the backing store in the cache log order. The cache log order comprises an order in which the data was appended to the log of the nonvolatile solid-state storage device.

Owner:SANDISK TECH LLC
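
The destaging flow in the abstract above can be illustrated with a toy model. This is a minimal sketch, not the patented implementation: the `LogCache` class, its method names, and the dictionary standing in for the backing store are all assumptions made for illustration. It shows only the ordering property, namely that data reaches the backing store in the order it was appended to the log.

```python
class LogCache:
    """Toy append-only cache log (hypothetical names, not from the patent)."""

    def __init__(self):
        self.log = []  # (address, data) entries in append order

    def write(self, address, data):
        # Cache the write by appending it to the tail of the log.
        self.log.append((address, data))

    def destage(self, backing_store, count):
        # Destage the oldest `count` entries in the order they were appended.
        batch, self.log = self.log[:count], self.log[count:]
        for address, data in batch:
            backing_store[address] = data
        return [address for address, _ in batch]
```

Because entries are replayed oldest first, a later write to the same address overwrites an earlier one in the backing store, which matches what the writer intended.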

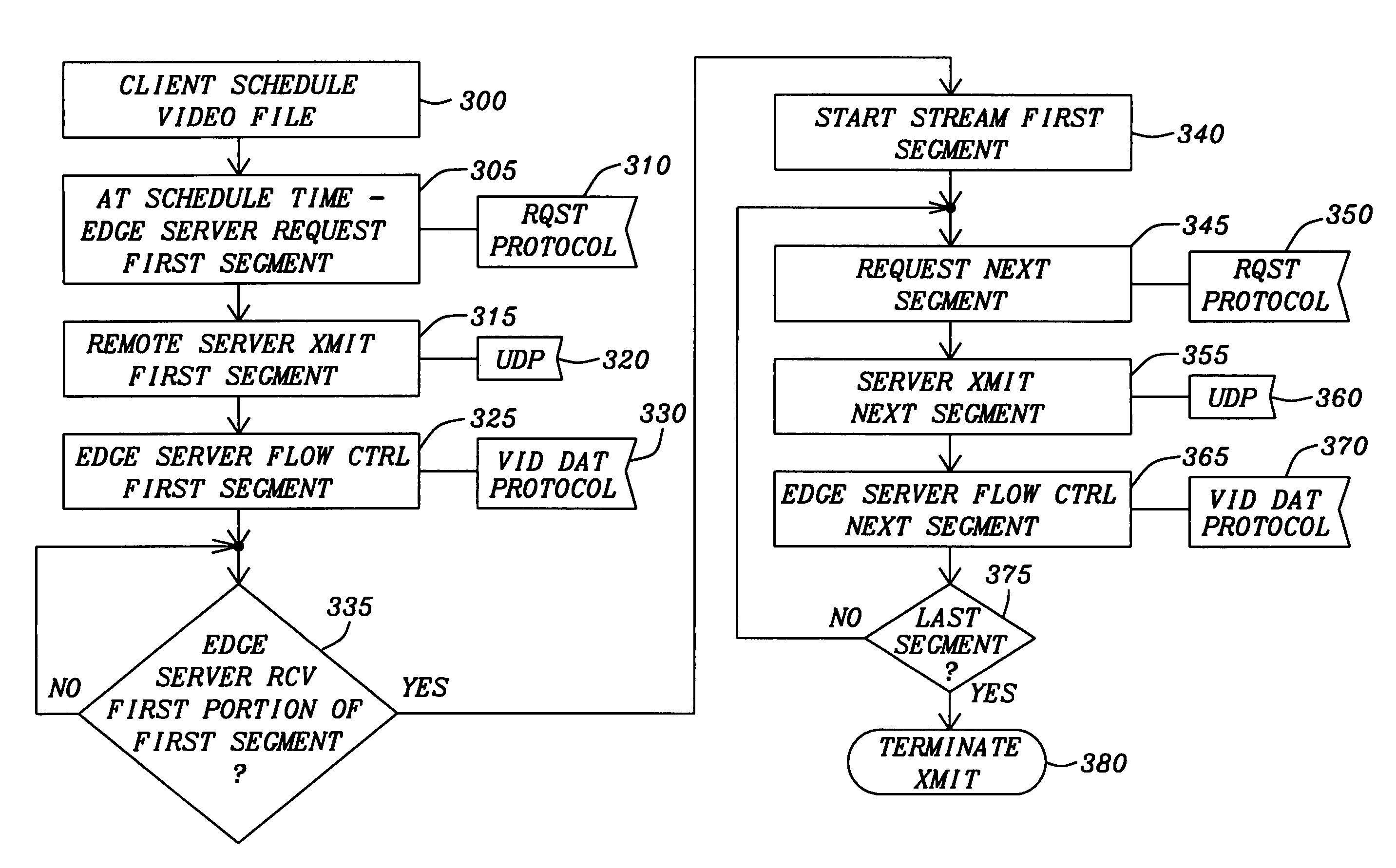

Streaming while fetching broadband video objects using heterogeneous and dynamic optimized segmentation size

Active | US7324555B1 | Inhibit transfer | Time-division multiplex | Closed circuit television systems | Digital data | Server replication

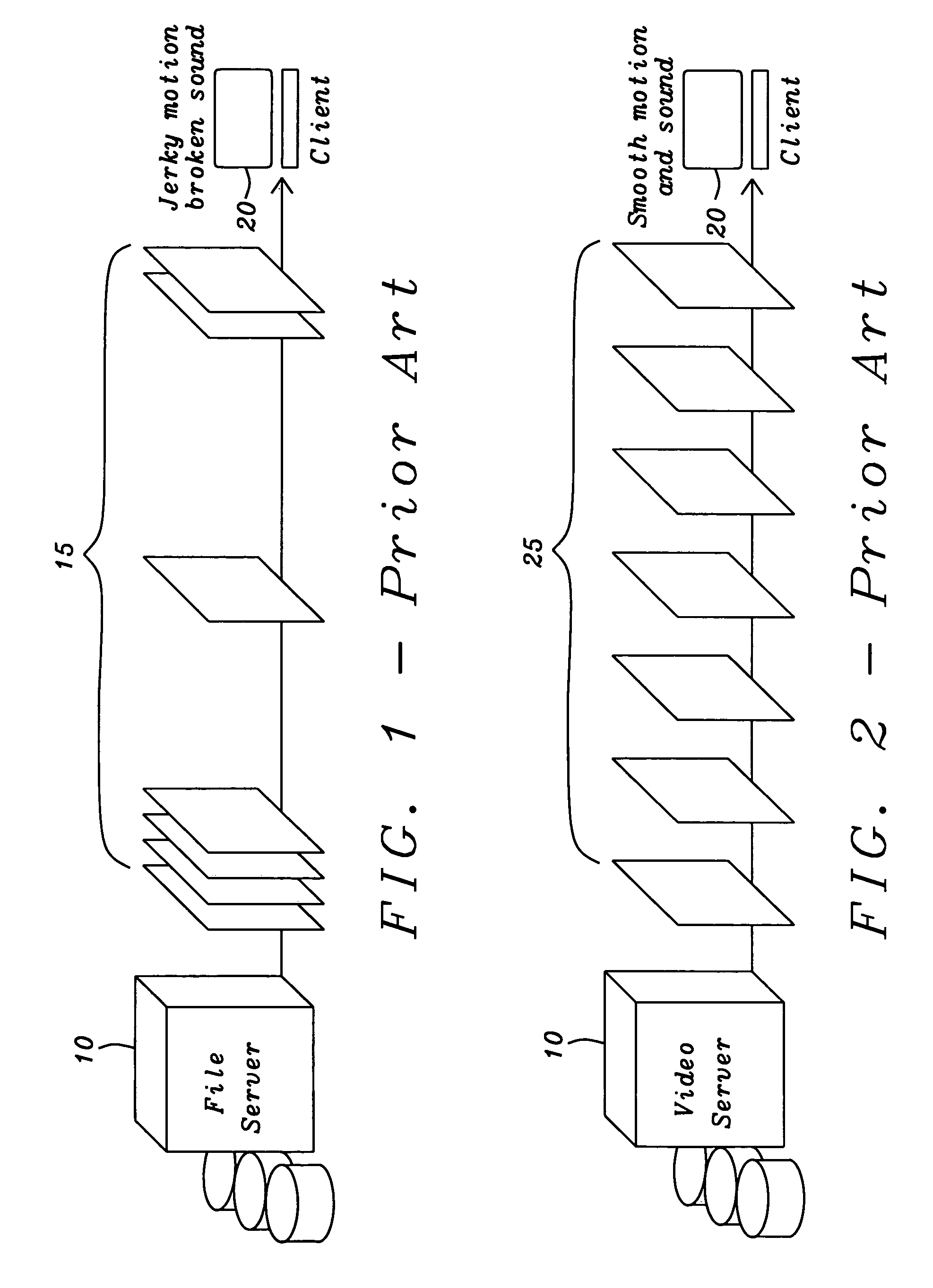

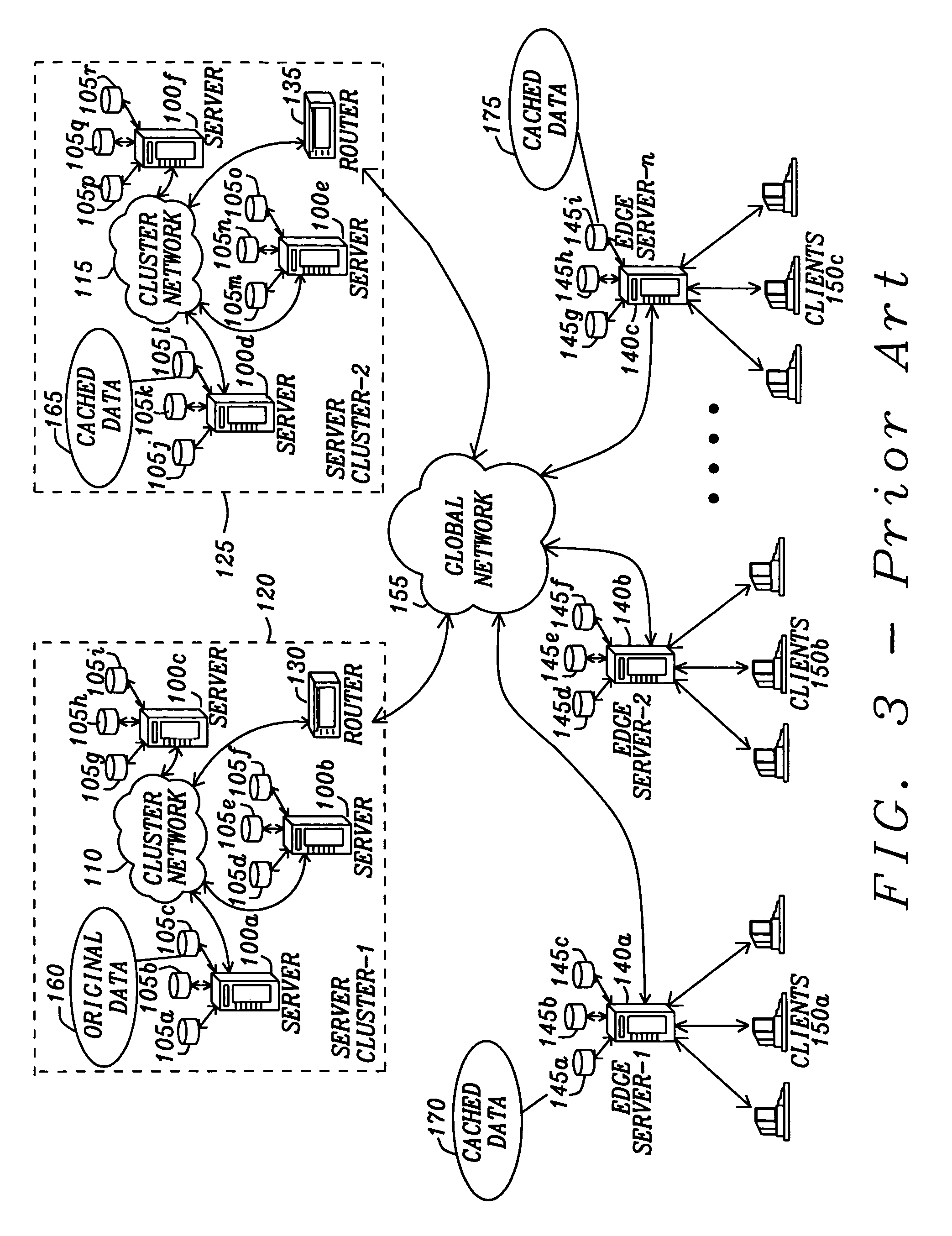

A video data object distribution system for transferring video data objects includes a network of digital data file servers. The network of digital data file servers communicates with a client system to transfer video data objects. A scheduling apparatus schedules the transfer of the video data objects. A client streaming device within the client begins transfer of a first segment such that streaming of the video data object starts prior to reception of the totality of the first segment. An ordered sequential transfer device orders and sequentially transfers segments of the video data object to the client system. A preemption device allows persistent transfer of a video data object without resending the video data object. A hierarchical caching controller copies segments of any of the video data objects from a central distribution server to any of the network of data file servers.

Owner:INFOVALUE COMPUTING

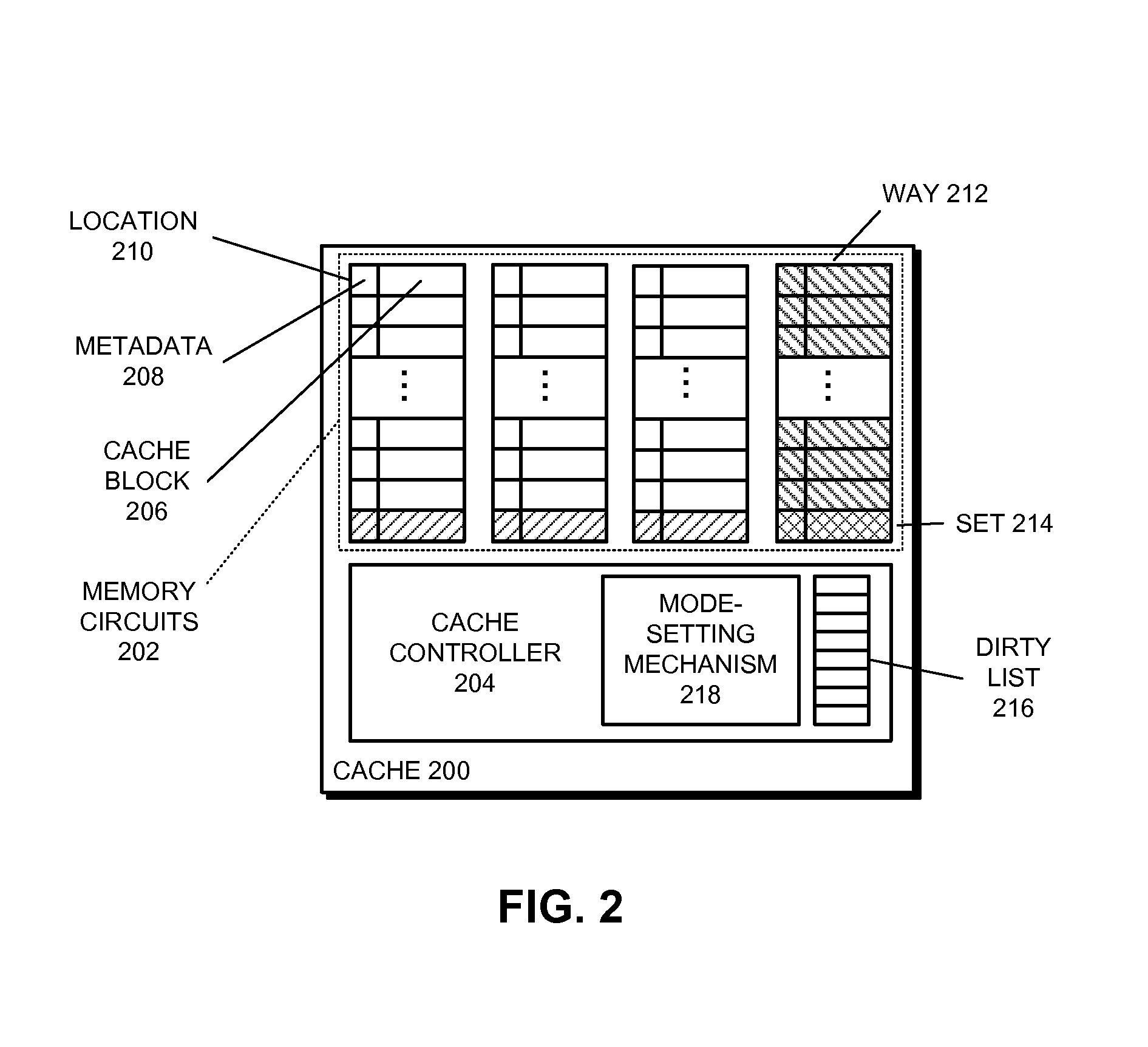

Dynamically Configuring Regions of a Main Memory in a Write-Back Mode or a Write-Through Mode

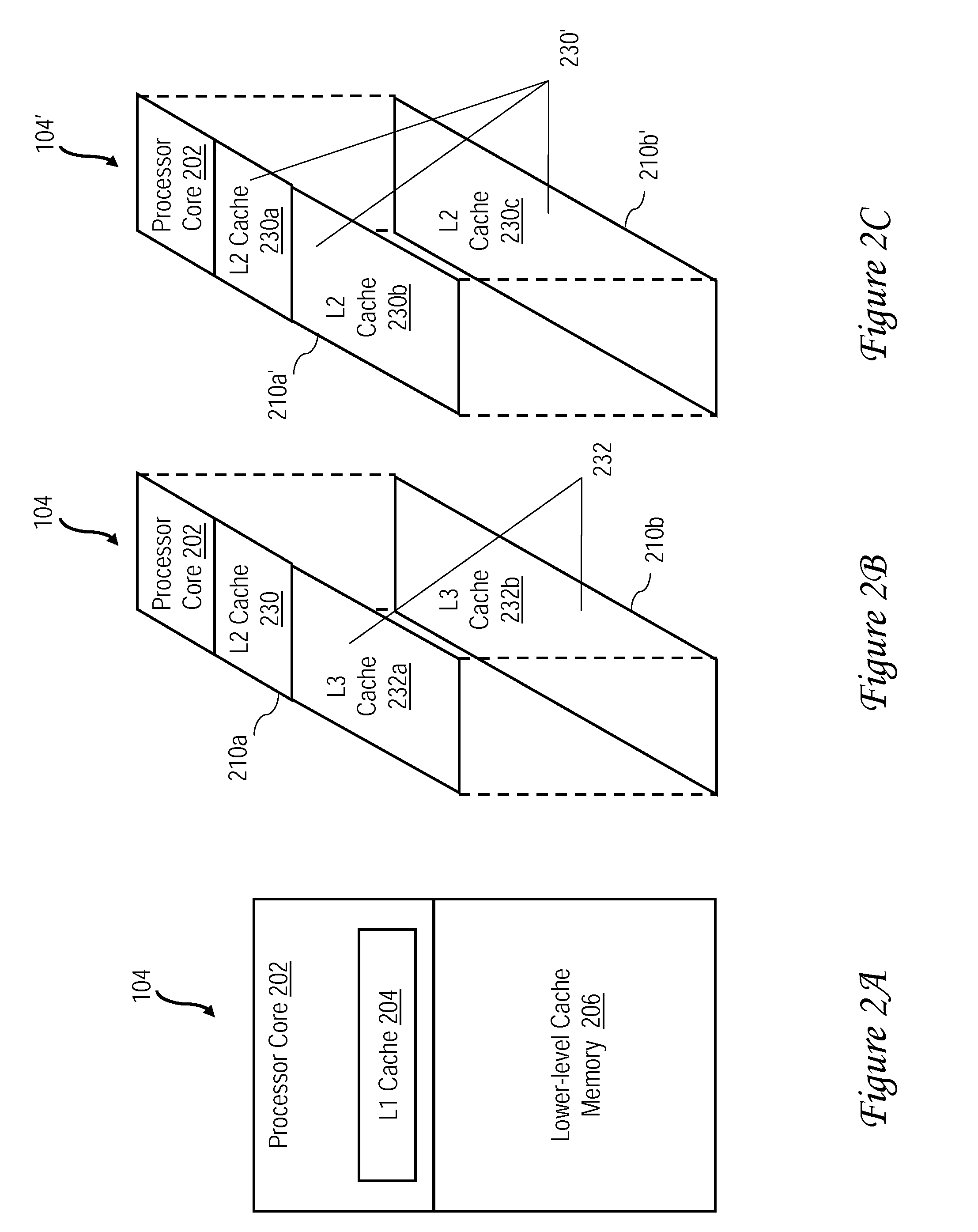

Active | US20140143505A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing | Cache controller

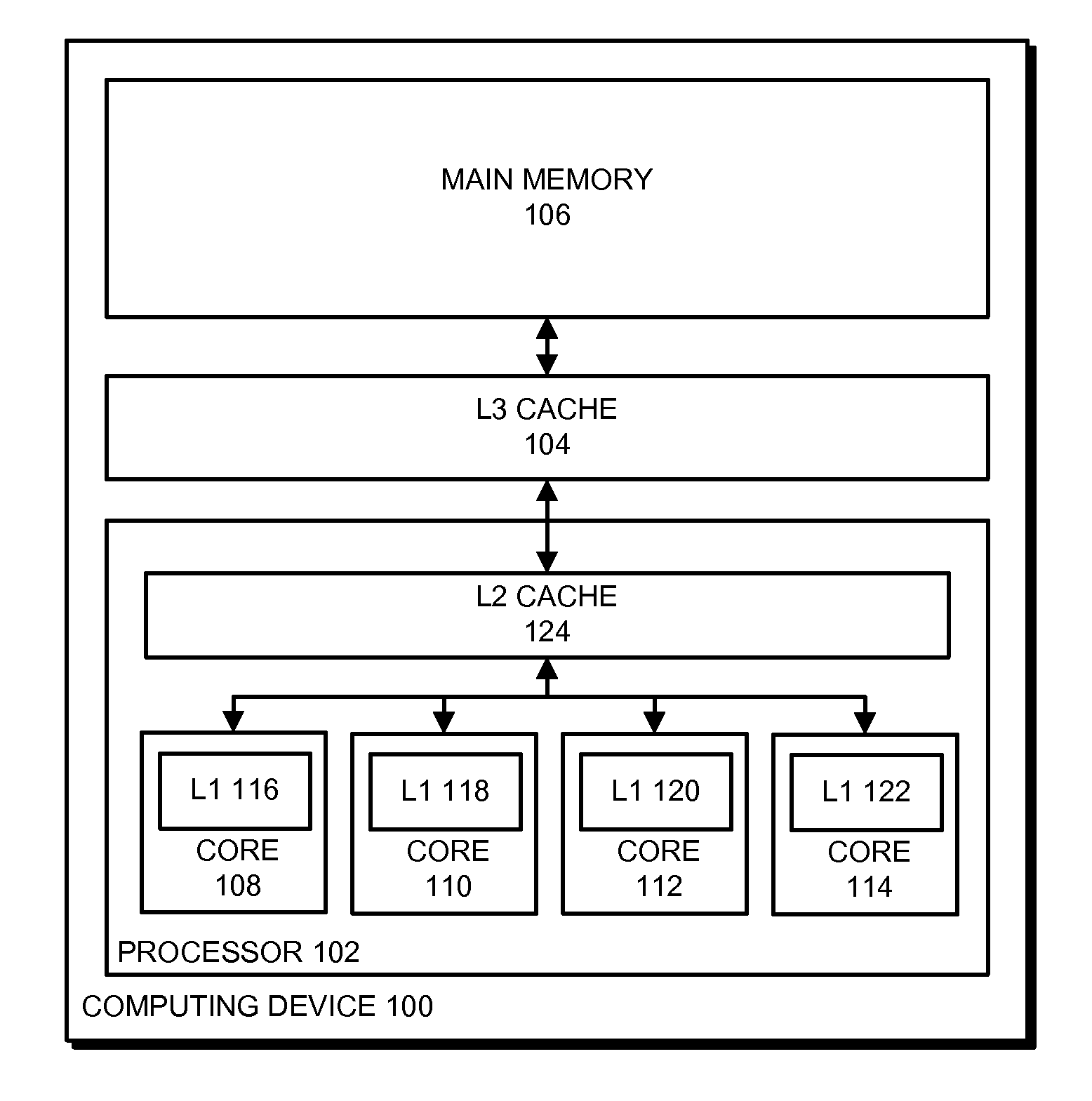

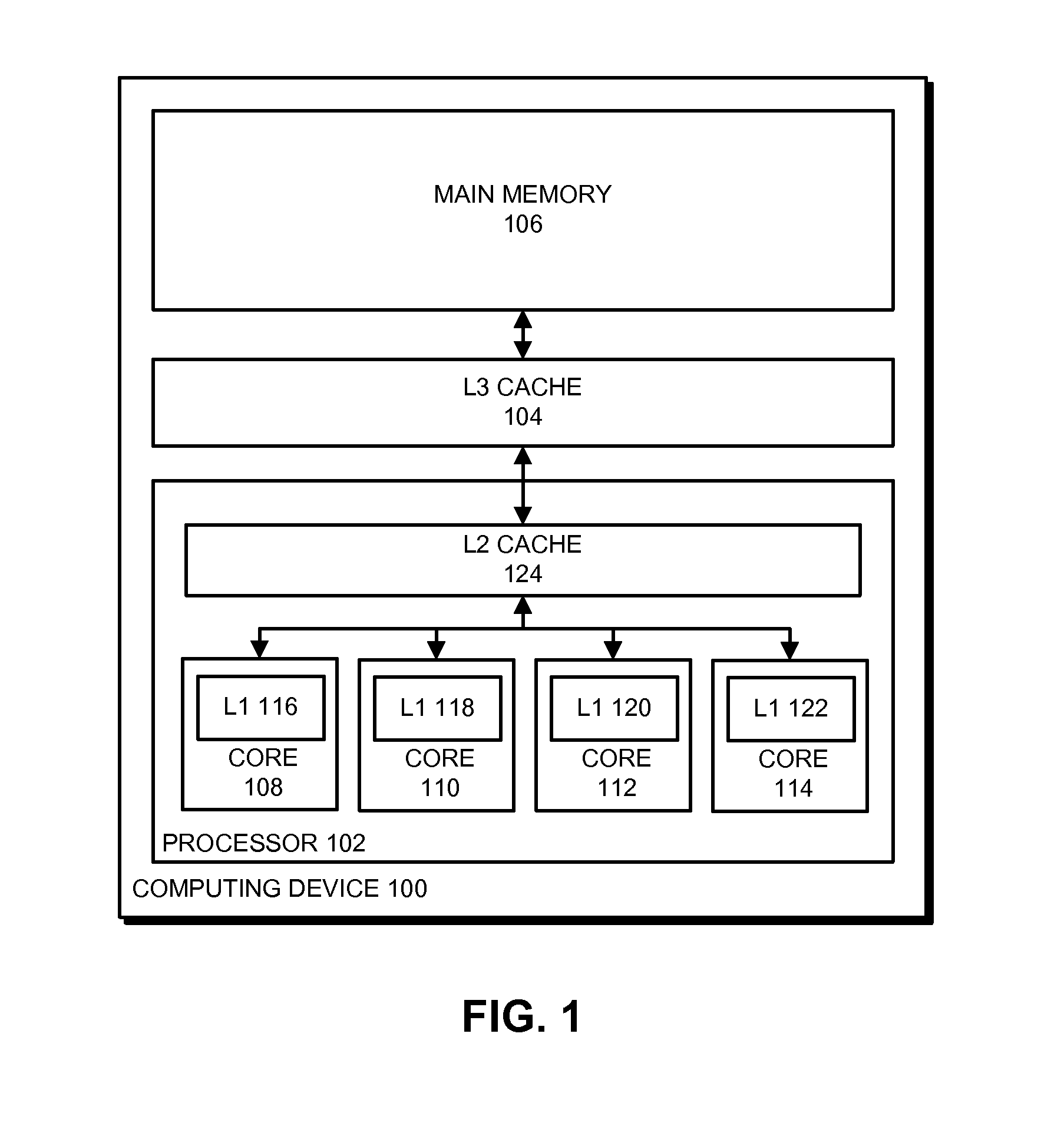

The described embodiments include a main memory and a cache memory (or “cache”) with a cache controller that includes a mode-setting mechanism. In some embodiments, the mode-setting mechanism is configured to dynamically determine an access pattern for the main memory. Based on the determined access pattern, the mode-setting mechanism configures at least one region of the main memory in a write-back mode and configures other regions of the main memory in a write-through mode. In these embodiments, when performing a write operation in the cache memory, the cache controller determines whether a region in the main memory where the cache block is from is configured in the write-back mode or the write-through mode and then performs a corresponding write operation in the cache memory.

Owner:ADVANCED MICRO DEVICES INC
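
The per-region write policy in the abstract above can be sketched as follows. This is a simplified illustration under stated assumptions: the region size, the fixed set of write-back regions, and every name in `RegionModeCache` are hypothetical, and the dynamic access-pattern detection that the abstract describes is not modeled.

```python
class RegionModeCache:
    """Toy cache whose write policy depends on the main-memory region.

    Region size, the mode table, and all names are assumptions for illustration.
    """

    def __init__(self, region_size, write_back_regions):
        self.region_size = region_size
        self.write_back_regions = set(write_back_regions)
        self.lines = {}     # address -> data held in the cache
        self.dirty = set()  # addresses whose cached copy is newer than memory
        self.memory = {}    # stand-in for main memory

    def mode(self, address):
        region = address // self.region_size
        return "write-back" if region in self.write_back_regions else "write-through"

    def write(self, address, data):
        self.lines[address] = data
        if self.mode(address) == "write-back":
            # Defer the memory update until the line is flushed.
            self.dirty.add(address)
        else:
            # Write-through: update main memory immediately.
            self.memory[address] = data

    def flush(self):
        # Write every dirty line back to main memory.
        for address in sorted(self.dirty):
            self.memory[address] = self.lines[address]
        self.dirty.clear()
```

A write to a write-through region is visible in `memory` at once, while a write to a write-back region appears there only after `flush()`.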

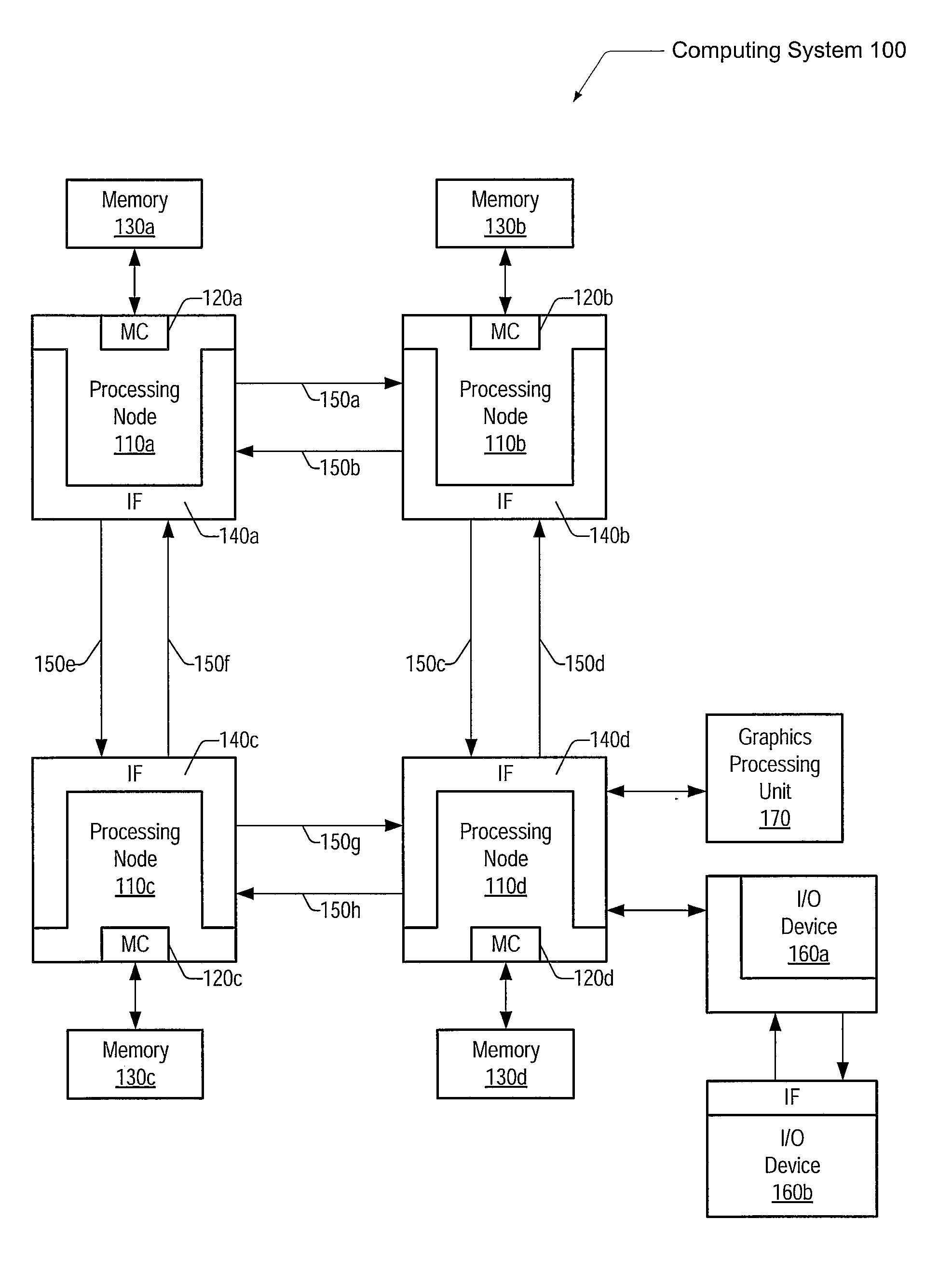

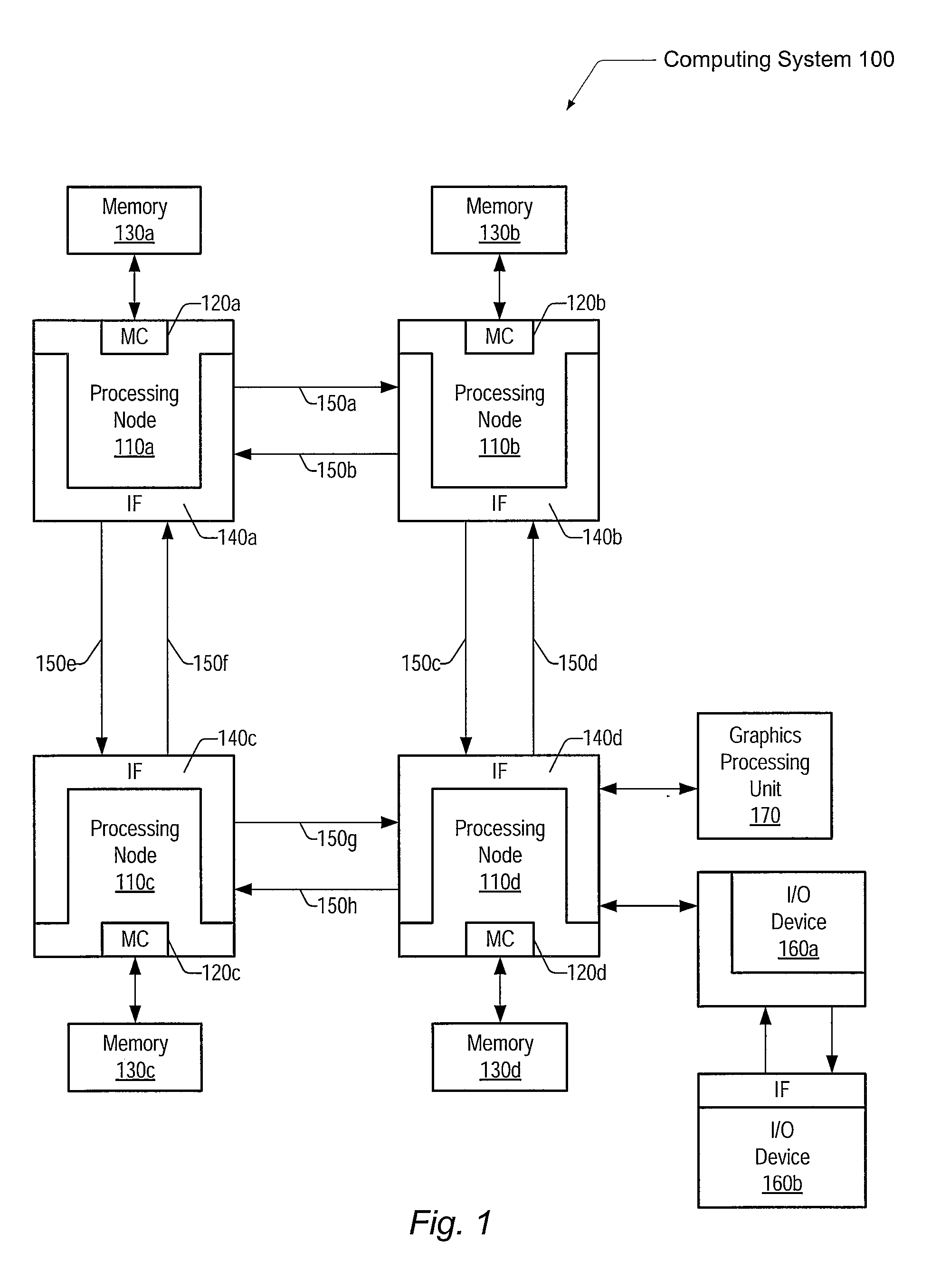

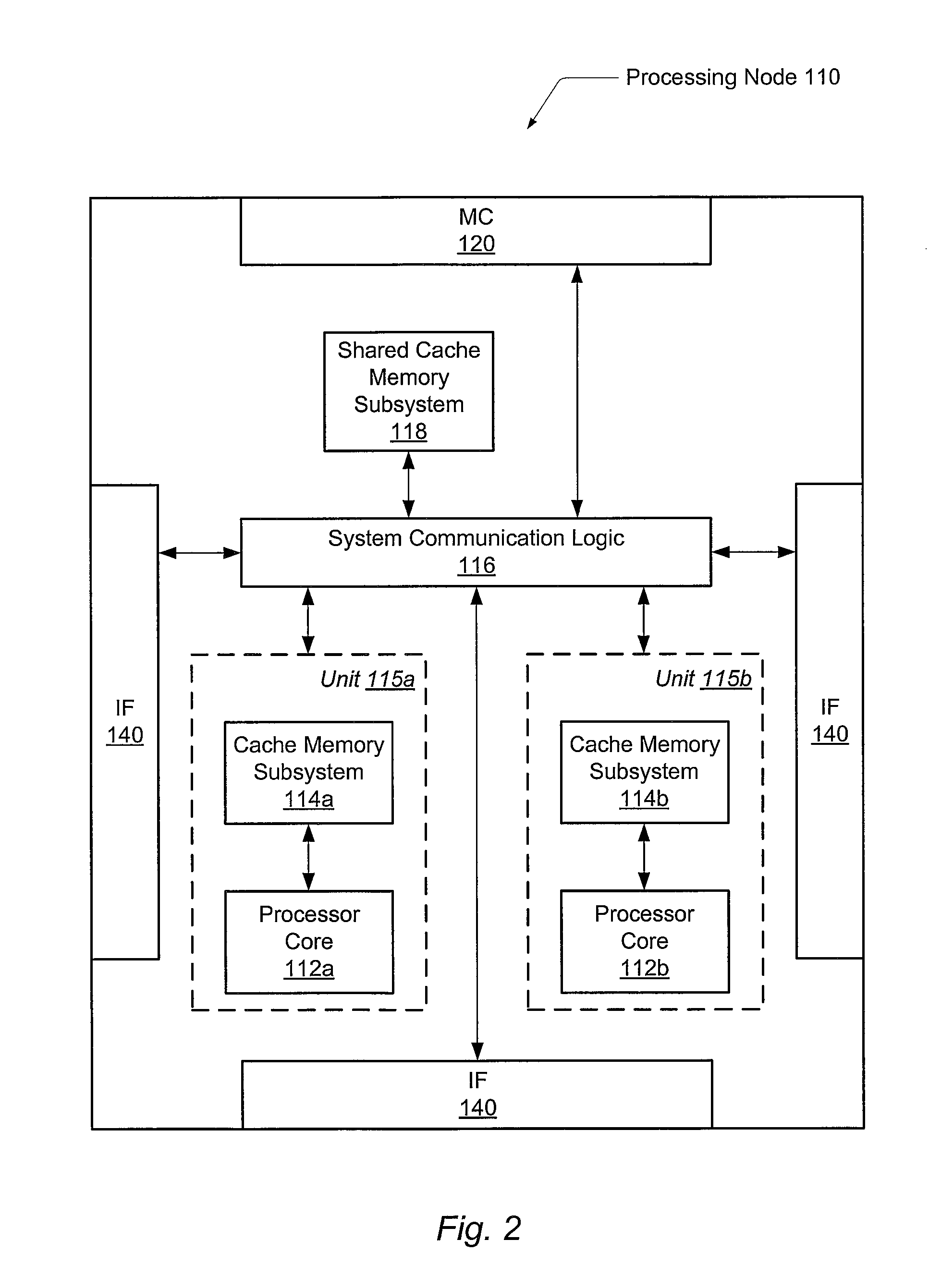

Method for way allocation and way locking in a cache

Active | US20100250856A1 | Small size | Energy efficient ICT | Memory addressing/allocation/relocation | Graphics | Graphics processing unit

A system and method for data allocation in a shared cache memory of a computing system are contemplated. Each cache way of a shared set-associative cache is accessible to multiple sources, such as one or more processor cores, a graphics processing unit (GPU), an input/output (I/O) device, or multiple different software threads. A shared cache controller enables or disables access separately to each of the cache ways based upon the corresponding source of a received memory request. One or more configuration and status registers (CSRs) store encoded values used to alter accessibility to each of the shared cache ways. The control of the accessibility of the shared cache ways via altering stored values in the CSRs may be used to create a pseudo-RAM structure within the shared cache and to progressively reduce the size of the shared cache during a power-down sequence while the shared cache continues operation.

Owner:ADVANCED MICRO DEVICES INC
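
One way to picture the CSR-controlled way access described above is a per-source bit mask. The sketch below is an assumption-laden illustration: the eight-way cache, the `way_enable_csr` table, and the one-bit-per-way encoding are hypothetical and are not taken from the patent.

```python
NUM_WAYS = 8  # assumed associativity

# Hypothetical CSR contents: one bit per way, set = source may use that way.
way_enable_csr = {
    "cpu": 0b00001111,
    "gpu": 0b11110000,
}


def allowed_ways(source):
    """Return the ways that the given source is currently allowed to use."""
    mask = way_enable_csr[source]
    return [way for way in range(NUM_WAYS) if mask & (1 << way)]


def shrink_cache(ways_to_disable):
    """Clear the given ways for every source, e.g. during a power-down sequence."""
    clear = 0
    for way in ways_to_disable:
        clear |= 1 << way
    for source in way_enable_csr:
        way_enable_csr[source] &= ~clear
```

Calling `shrink_cache` repeatedly with more ways models the progressive size reduction while the remaining ways stay usable.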

System and method for performing scalable embedded parallel data decompression

Inactive | US7129860B2 | Remove system bottleneck | Improve performance | Memory addressing/allocation/relocation | Code conversion | Digital data | Solid-state storage

A parallel decompression system and method that decompresses input compressed data in one or more decompression cycles, with a plurality of tokens typically being decompressed in each cycle in parallel. A parallel decompression engine may include an input for receiving compressed data, a history window, and a plurality of decoders for examining and decoding a plurality of tokens from the compressed data in parallel in a series of decompression cycles. Several devices are described that may include the parallel decompression engine, including intelligent devices, network devices, adapters and other network connection devices, consumer devices, set-top boxes, digital-to-analog and analog-to-digital converters, digital data recording, reading and storage devices, optical data recording, reading and storage devices, solid state storage devices, processors, bus bridges, memory modules, and cache controllers.

Owner:INTELLECTUAL VENTURES I LLC

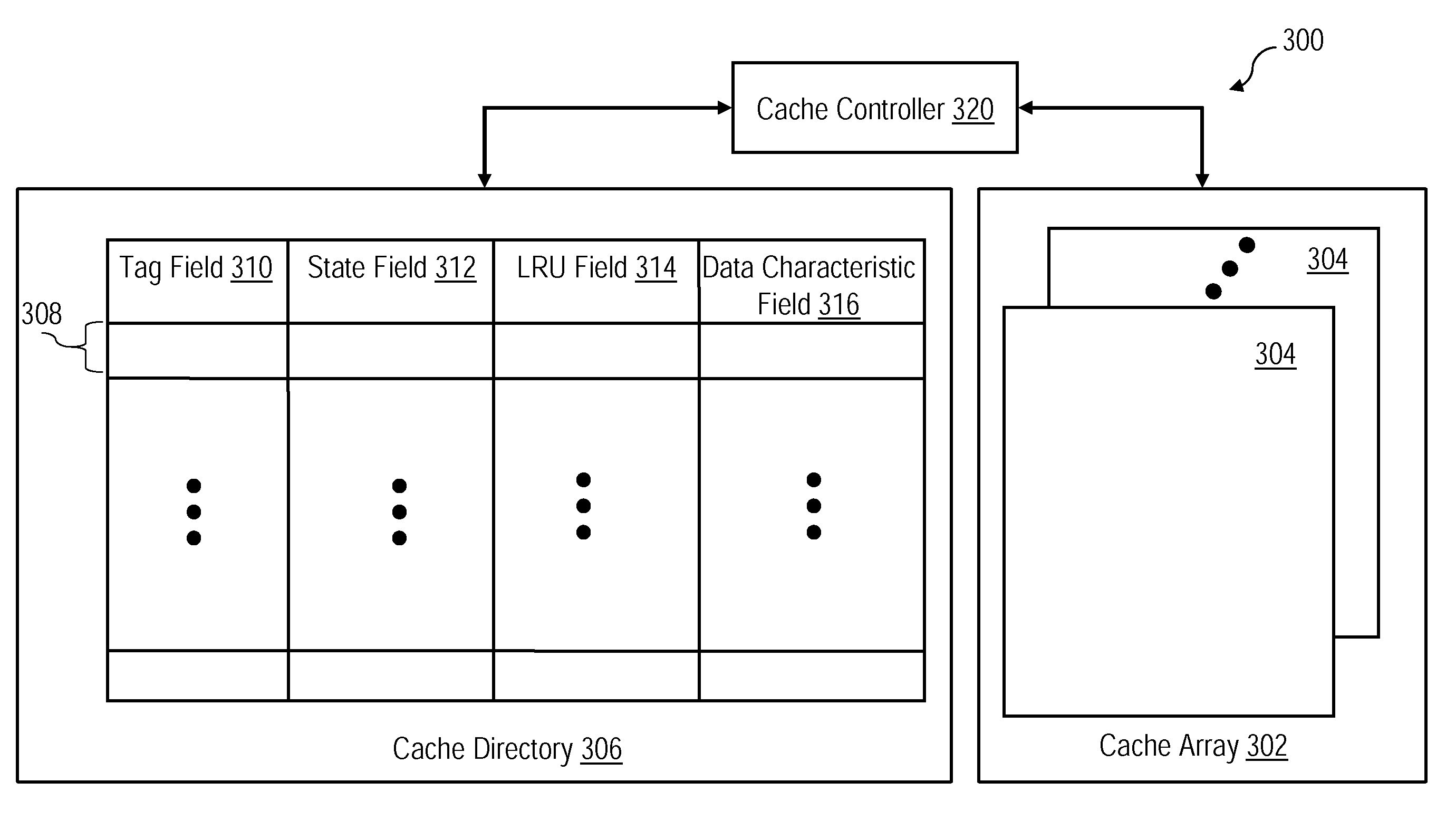

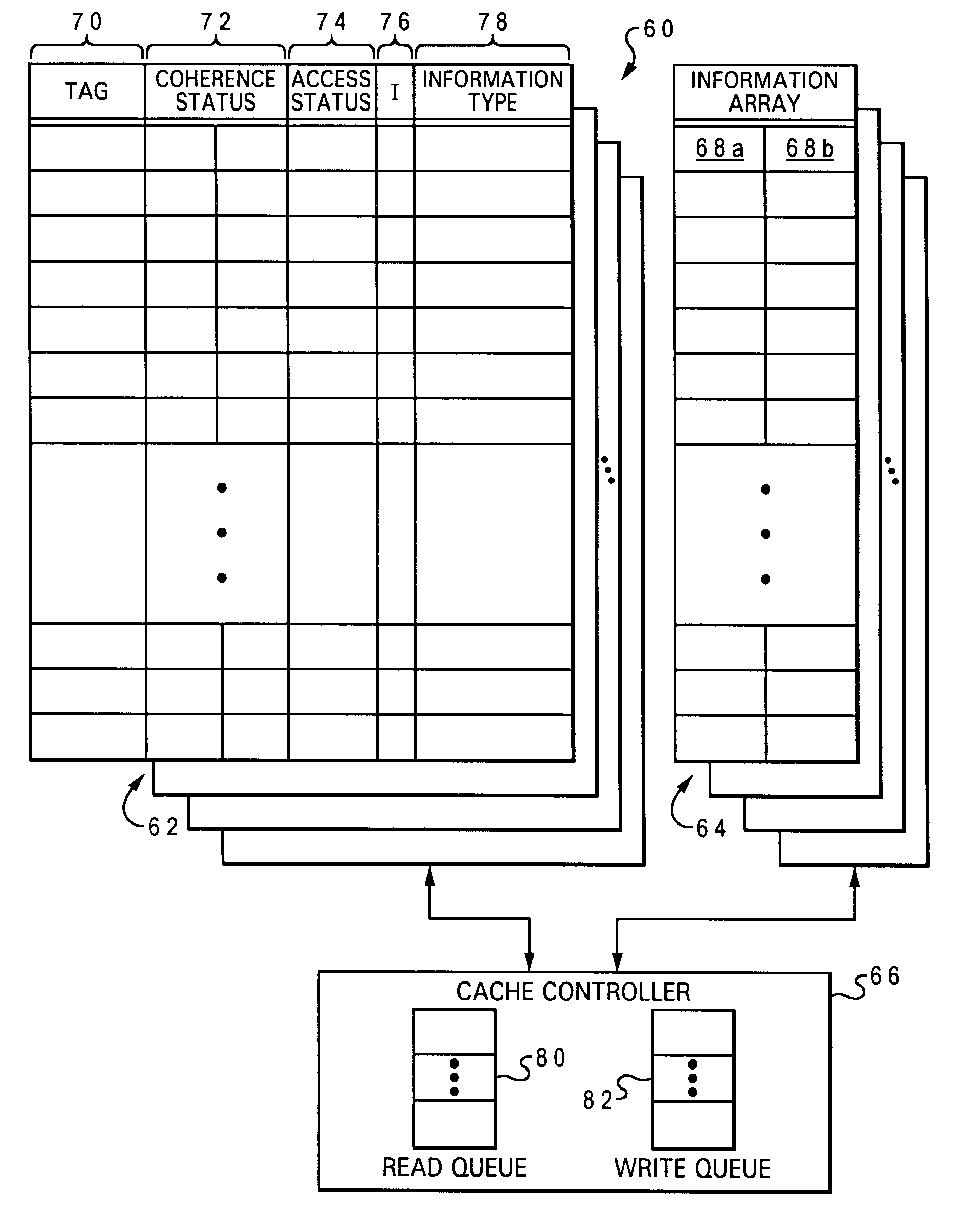

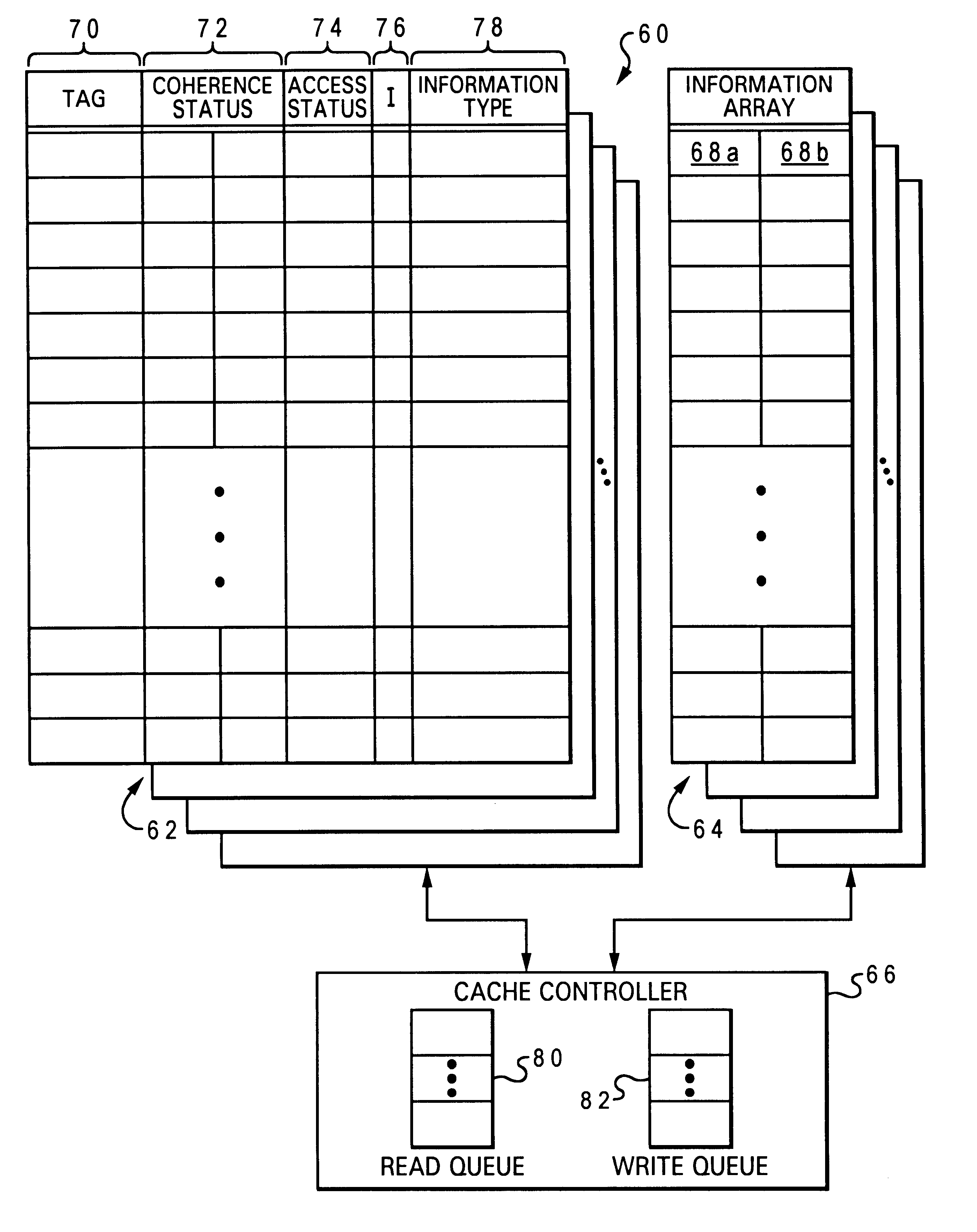

Method of cache management to dynamically update information-type dependent cache policies

A set associative cache includes a cache controller, a directory, and an array including at least one congruence class containing a plurality of sets. The plurality of sets are partitioned into multiple groups according to which of a plurality of information types each set can store. The sets are partitioned so that at least two of the groups include the same set and at least one of the sets can store fewer than all of the information types. To optimize cache operation, the cache controller dynamically modifies a cache policy of a first group while retaining a cache policy of a second group, thus permitting the operation of the cache to be individually optimized for different information types. The dynamic modification of cache policy can be performed in response to either a hardware-generated or software-generated input.

Owner:IBM CORP

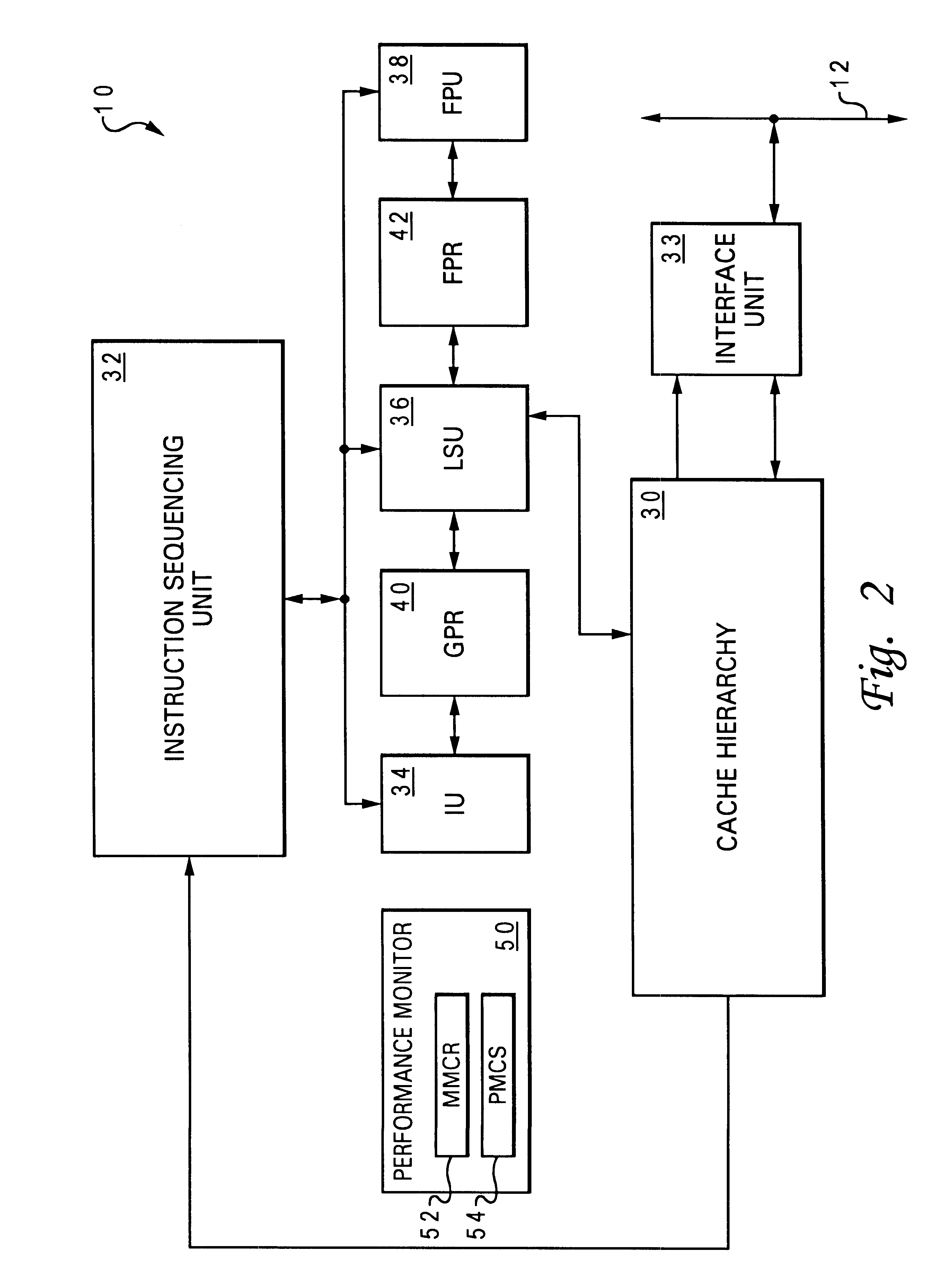

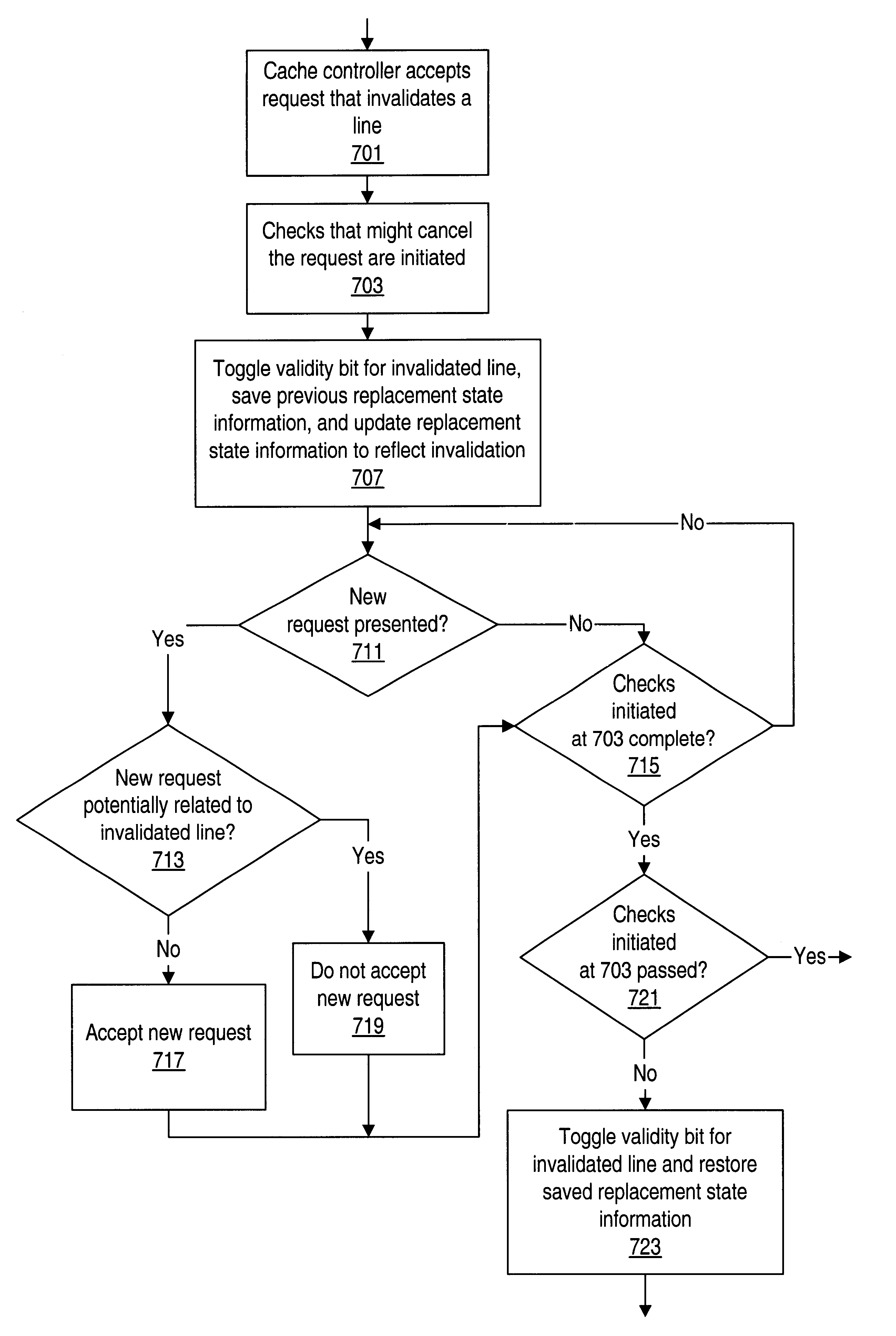

Method and system for speculatively invalidating lines in a cache

Inactive | US6725337B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Error check | Parallel computing

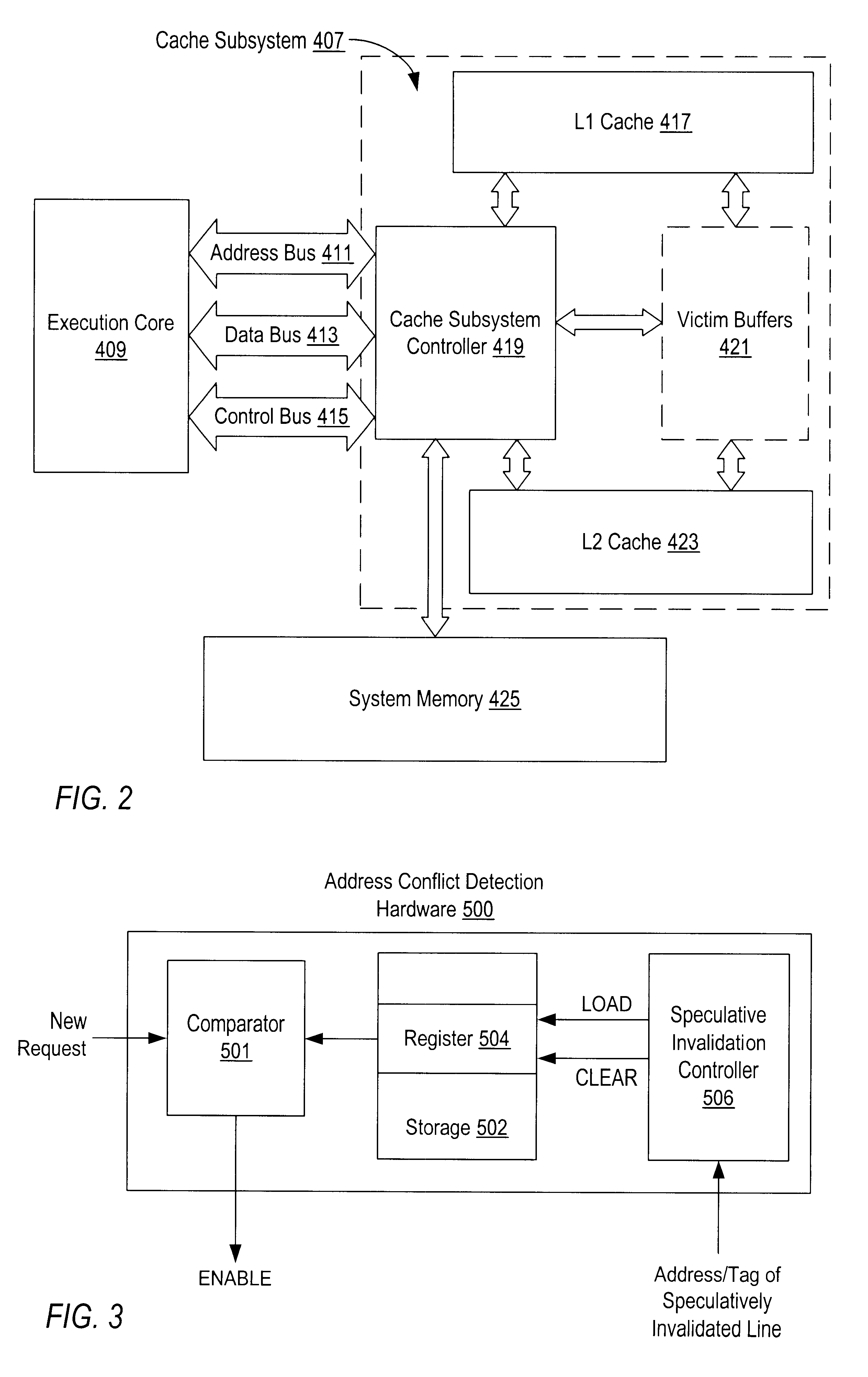

A cache controller configured to speculatively invalidate a cache line may respond to an invalidating request or instruction immediately instead of waiting for error checking to complete. In case the error checking determines that the invalidation is erroneous and thus should not be performed, the cache controller protects the speculatively invalidated cache line from modification until error checking is complete. This way, if the invalidation is later found to be erroneous, the speculative invalidation can be reversed. If error checking completes without detecting any errors, the speculative invalidation becomes non-speculative.

Owner:GLOBALFOUNDRIES US INC
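
The speculative invalidation described above can be modeled as a small state machine on a single cache line. This is a rough sketch; the `SpeculativeLine` class and its `protected` flag are hypothetical names used to illustrate the idea that a speculatively invalidated line is shielded from modification until error checking resolves.

```python
class SpeculativeLine:
    """Toy cache line supporting reversible invalidation (hypothetical names)."""

    def __init__(self, data):
        self.data = data
        self.valid = True
        self.protected = False  # True while an invalidation is still speculative

    def speculative_invalidate(self):
        # Respond immediately; keep the old data in case of rollback.
        self.valid = False
        self.protected = True

    def try_overwrite(self, data):
        # Refuse to modify the line while the invalidation is speculative.
        if self.protected:
            return False
        self.data, self.valid = data, True
        return True

    def resolve(self, error_detected):
        # On error, reverse the invalidation; otherwise it becomes final.
        if error_detected:
            self.valid = True
        self.protected = False
```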

Multi-Domain Management of a Cache in a Processor System

Inactive | US20100235580A1 | Memory addressing/allocation/relocation | Computer security arrangements | Memory address | Parallel computing

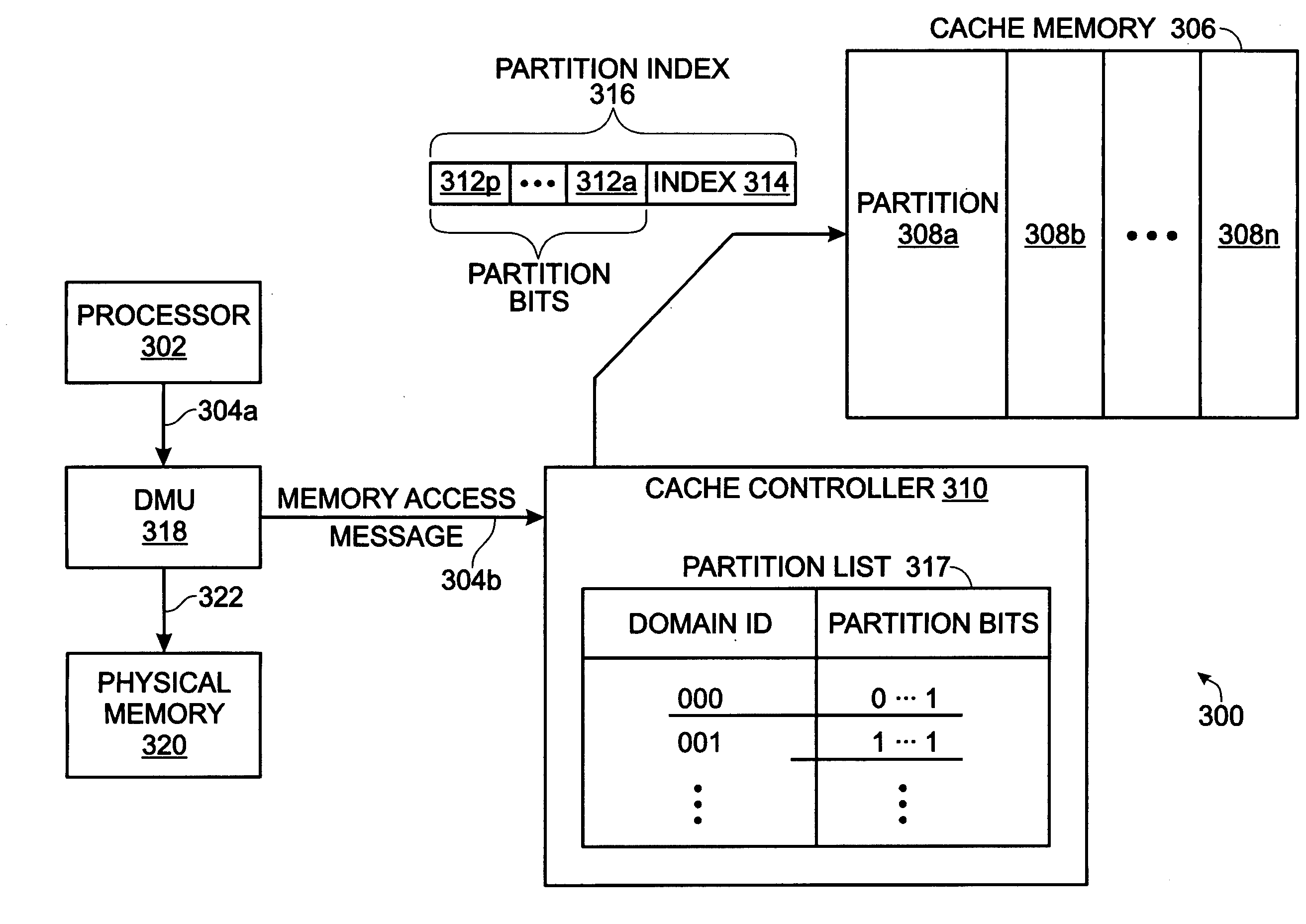

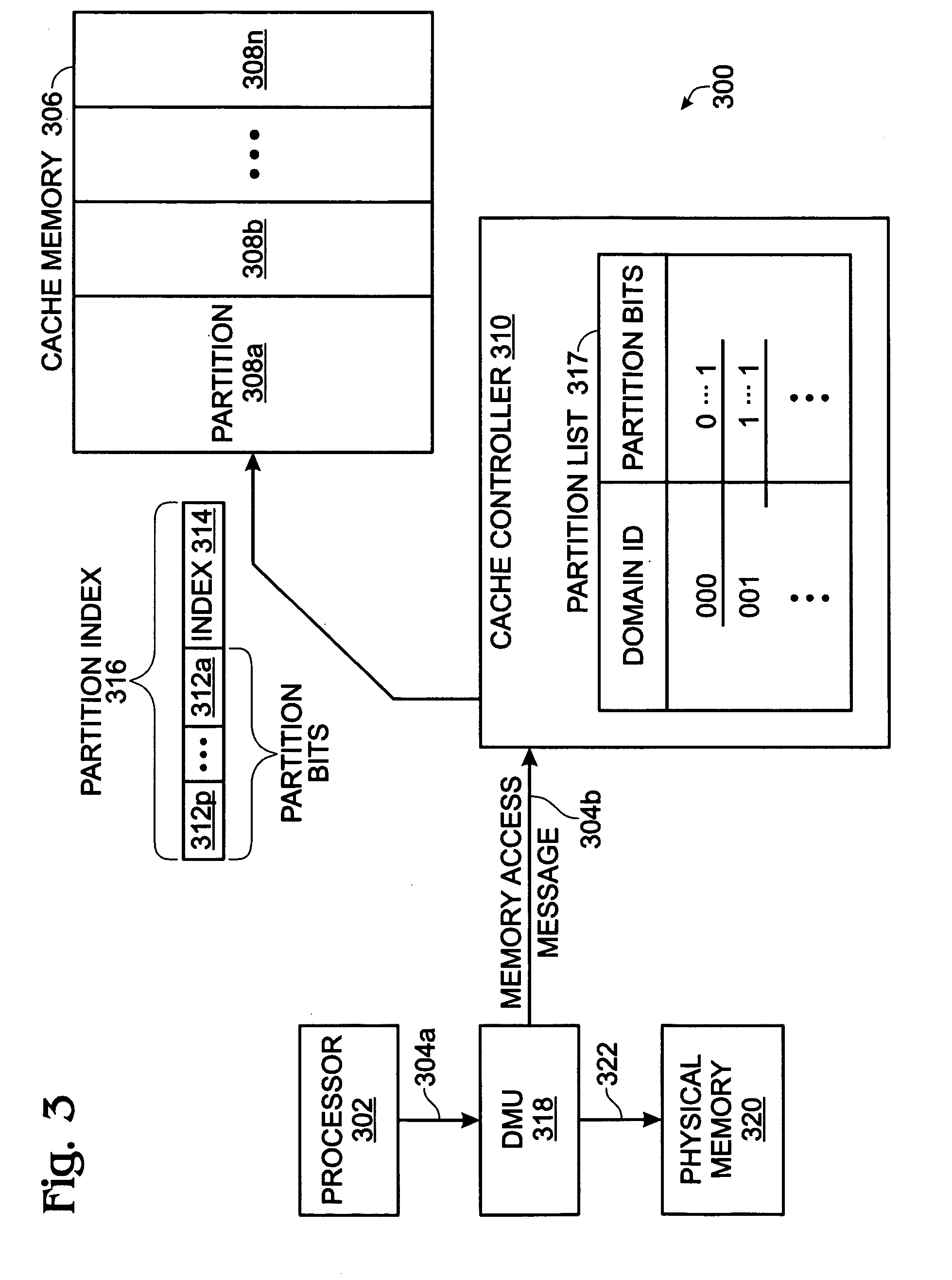

A system and method are provided for managing cache memory in a computer system. A cache controller portions a cache memory into a plurality of partitions, where each partition includes a plurality of physical cache addresses. Then, the method accepts a memory access message from the processor. The memory access message includes an address in physical memory and a domain identification (ID). A determination is made as to whether the address in physical memory is cacheable. If cacheable, the domain ID is cross-referenced to a cache partition identified by partition bits. An index is derived from the physical memory address, and a partition index is created by combining the partition bits with the index. A processor is granted access (read or write) to an address in cache defined by the partition index.

Owner:MACOM CONNECTIVITY SOLUTIONS LLC
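
The partition-index construction in the abstract above amounts to simple bit manipulation. The following sketch assumes particular field widths and a hypothetical `domain_to_partition` table; the abstract does not specify where the partition bits are placed, so putting them above the address-derived index is an assumption.

```python
LINE_BITS = 6    # 64-byte cache lines (assumed)
INDEX_BITS = 8   # index bits taken from the physical address (assumed)

# Hypothetical table mapping a domain ID to its partition bits.
domain_to_partition = {0: 0b00, 1: 0b01, 2: 0b10}


def partition_index(physical_address, domain_id):
    """Combine the domain's partition bits with the address-derived index."""
    index = (physical_address >> LINE_BITS) & ((1 << INDEX_BITS) - 1)
    partition = domain_to_partition[domain_id]
    # Place the partition bits above the address-derived index.
    return (partition << INDEX_BITS) | index
```

With these widths, the same physical address maps to a different cache location for each domain, so domains cannot evict one another's lines.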

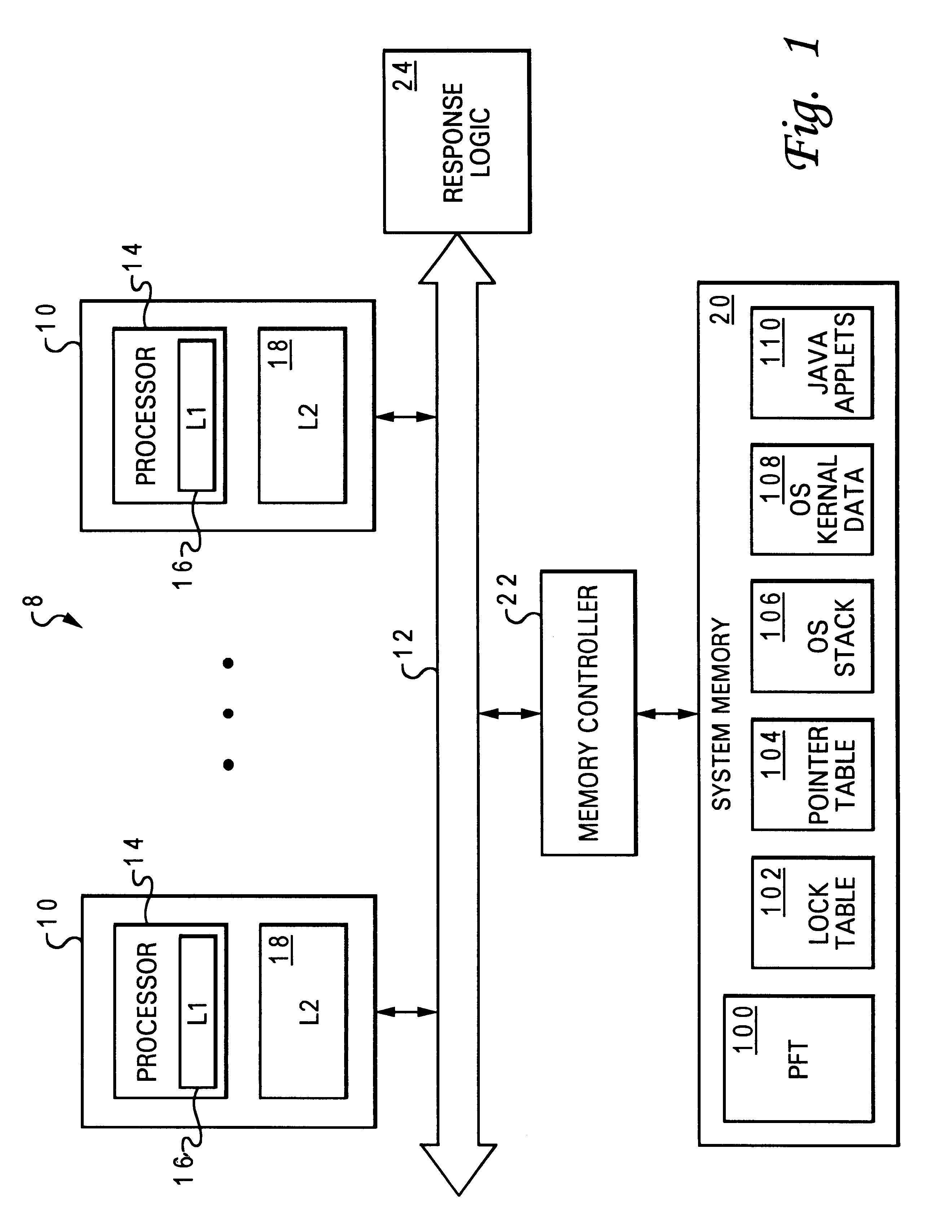

Data processing system, cache, and method that select a castout victim in response to the latencies of memory copies of cached data

A data processing system includes a processing unit, a distributed memory including a local memory and a remote memory having differing access latencies, and a cache coupled to the processing unit and to the distributed memory. The cache includes a congruence class containing a plurality of cache lines and a plurality of latency indicators that each indicate an access latency to the distributed memory for a respective one of the cache lines. The cache further includes a cache controller that selects a cache line in the congruence class as a castout victim in response to the access latencies indicated by the plurality of latency indicators. In one preferred embodiment, the cache controller preferentially selects as castout victims cache lines having relatively short access latencies.

Owner:IBM CORP
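
The preferred embodiment above, which favors evicting lines that are cheap to refetch, can be sketched in a few lines. The function name, the dictionary of latency indicators, and the tie-break rule are assumptions for illustration only.

```python
def select_castout_victim(latency_indicators):
    """Return the way whose memory copy has the shortest access latency.

    `latency_indicators` maps each way in the congruence class to a latency
    value; the units and the tie-break (lowest way number) are assumptions.
    """
    return min(sorted(latency_indicators), key=lambda way: latency_indicators[way])
```

For example, given ways backed by local memory (short latency) and remote memory (long latency), the function picks a locally backed way, since refetching it later costs less.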

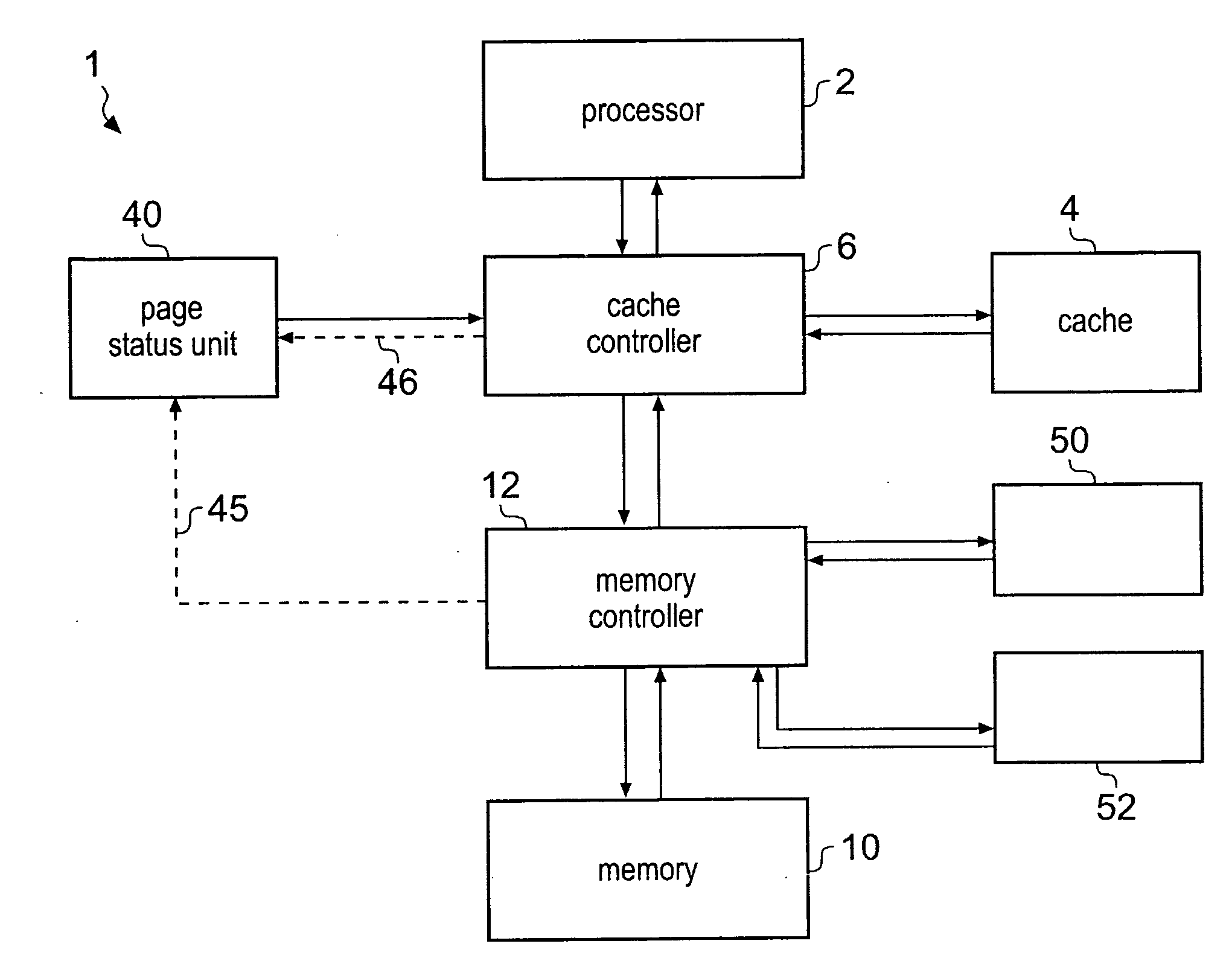

Efficiency of cache memory operations

Inactive | US20090265514A1 | Improve caching efficiency | Easy access | Memory systems | Parallel computing | Cache management

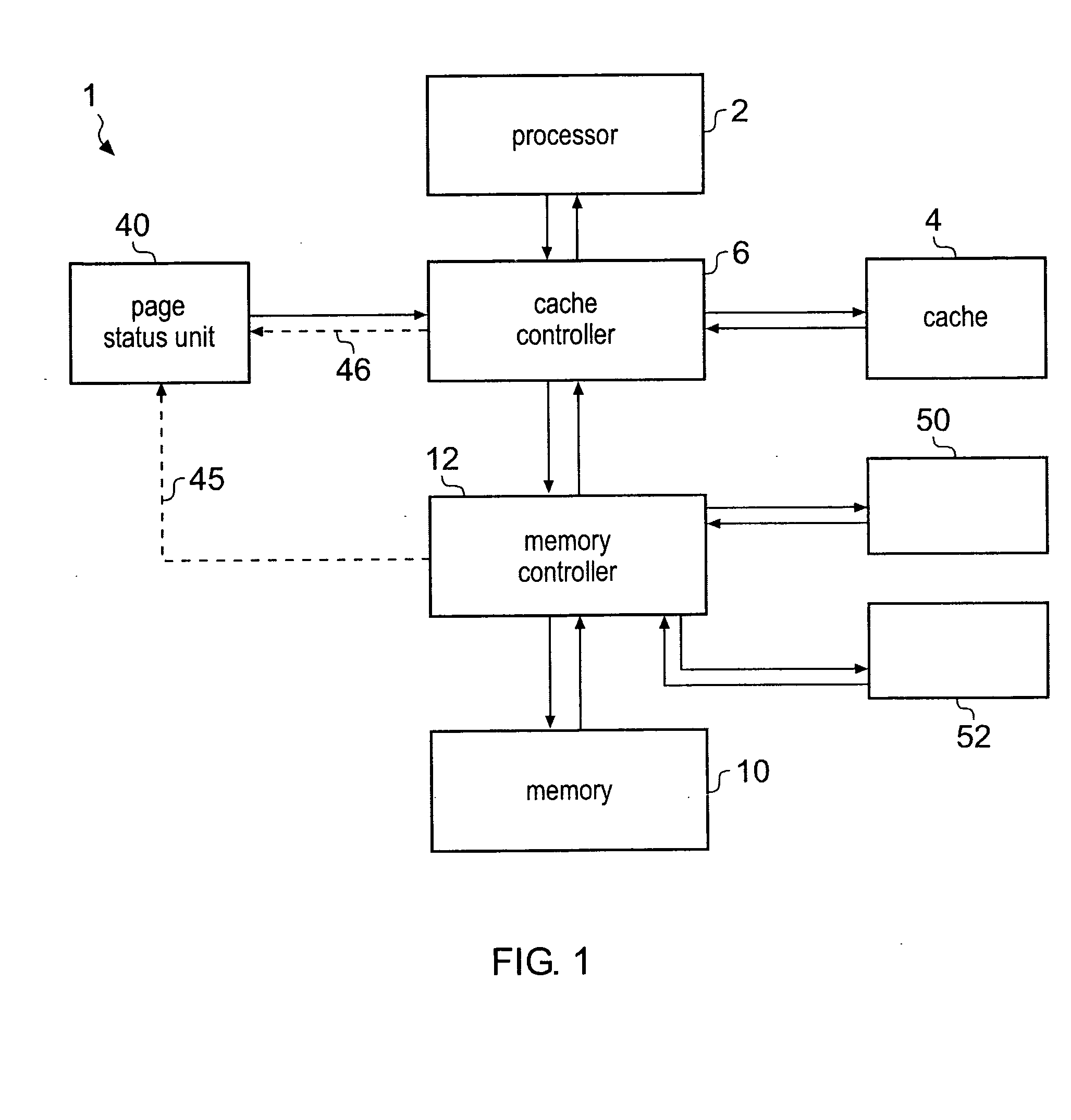

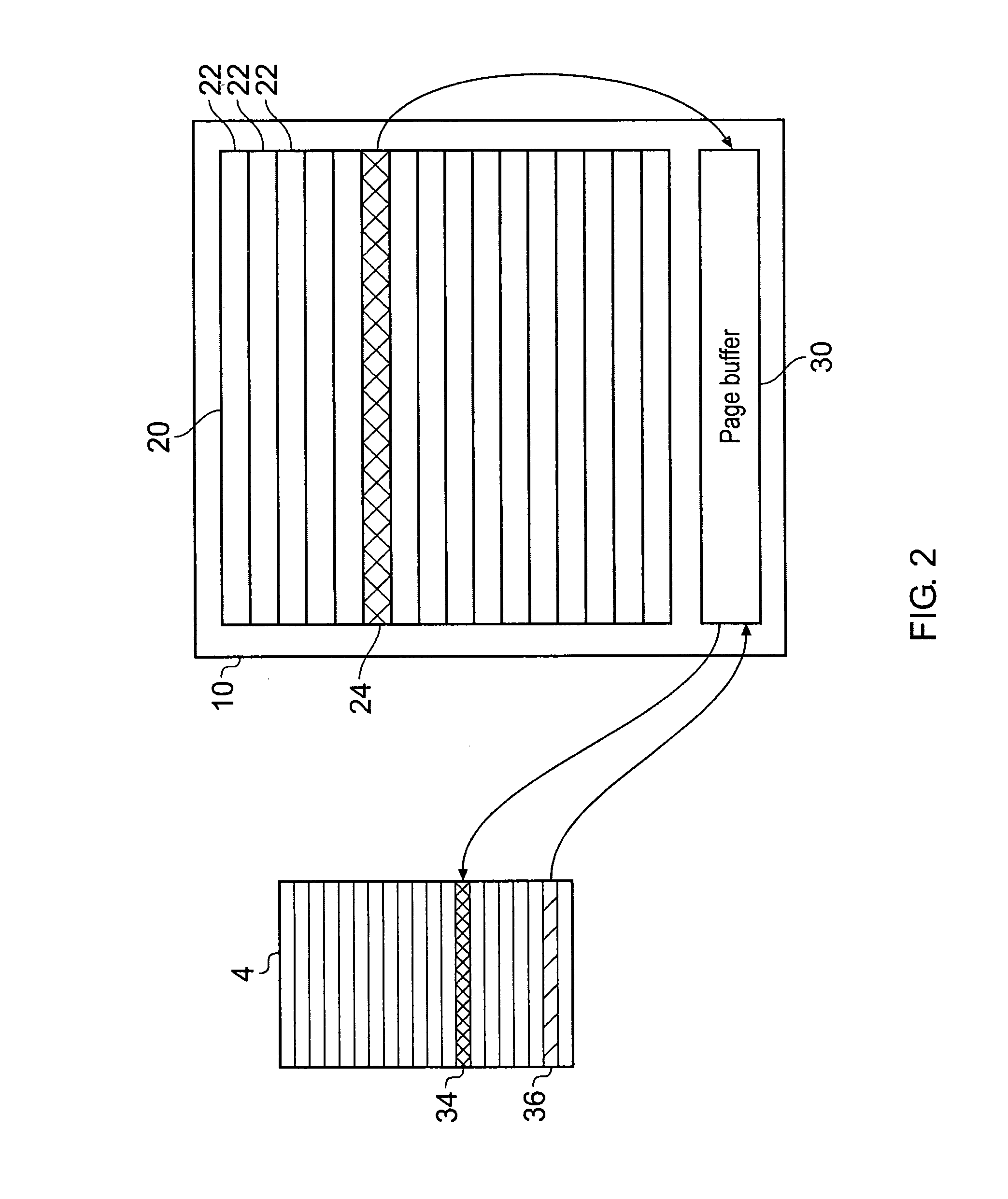

A processing system 1 including a memory 10 and a cache memory 4 is provided with a page status unit 40 for providing a cache controller with a page open indication indicating one or more open pages of data values in memory. At least one of one or more cache management operations performed by the cache controller is responsive to the page open indication so that the efficiency and/or speed of the processing system can be improved.

Owner:ARM LTD

Non-uniform cache architecture (NUCA)

Inactive | US20100115204A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing | Cache controller

In one embodiment, a cache memory includes a cache array including a plurality of entries for caching cache lines of data, where the plurality of entries are distributed between a first region implemented in a first memory technology and a second region implemented in a second memory technology. The cache memory further includes a cache directory of the contents of the cache array and a cache controller that controls operation of the cache memory.

Owner:IBM CORP

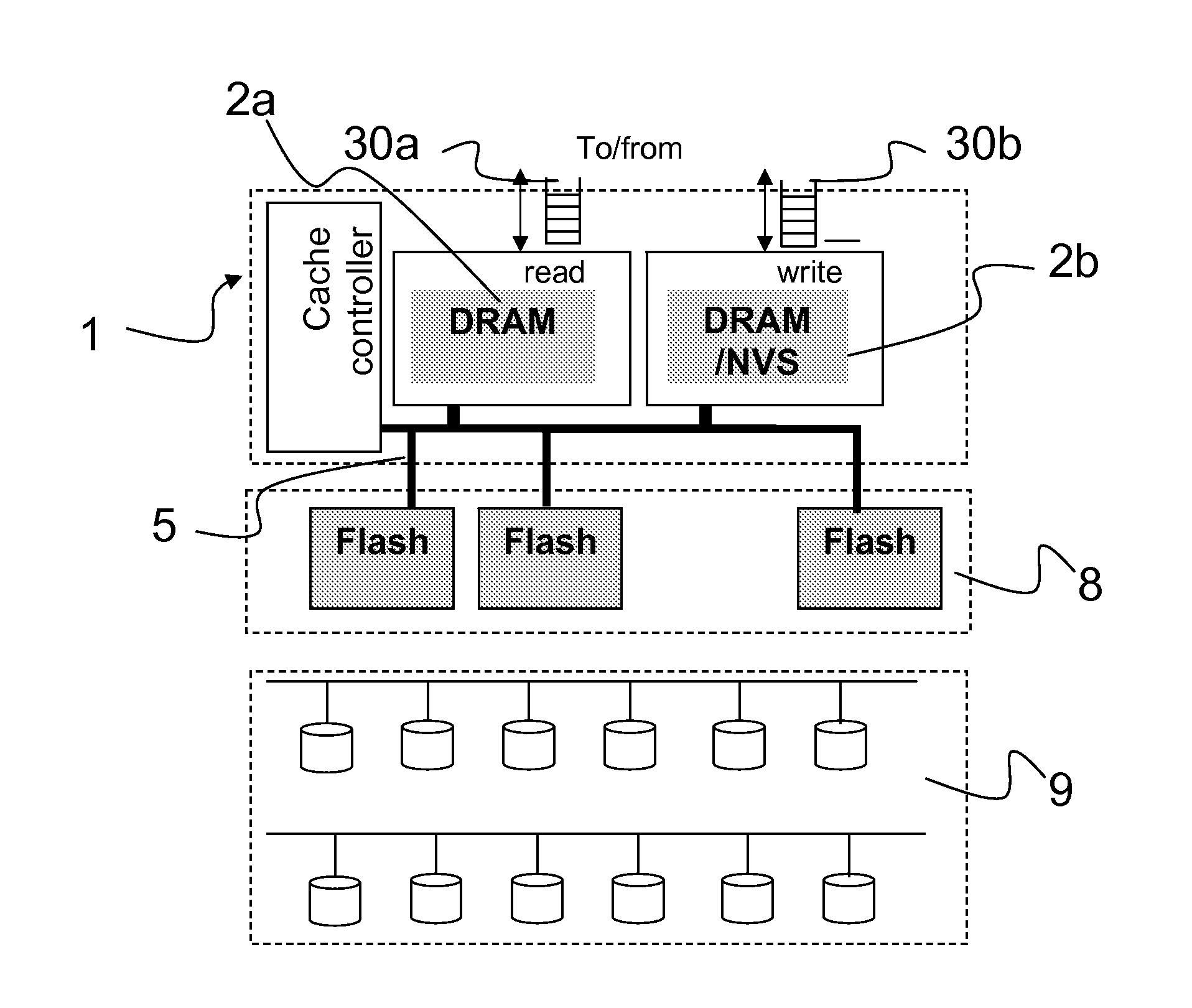

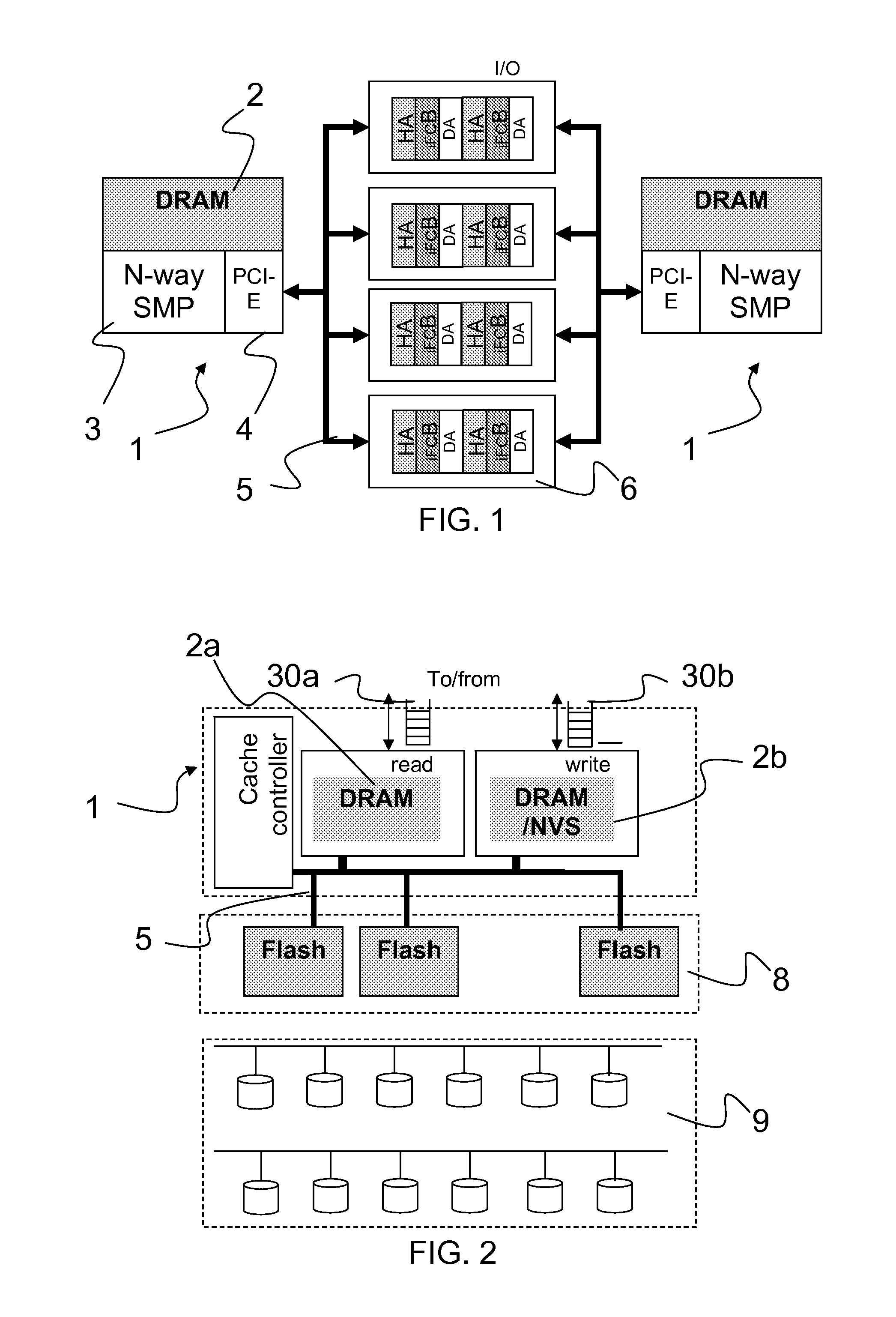

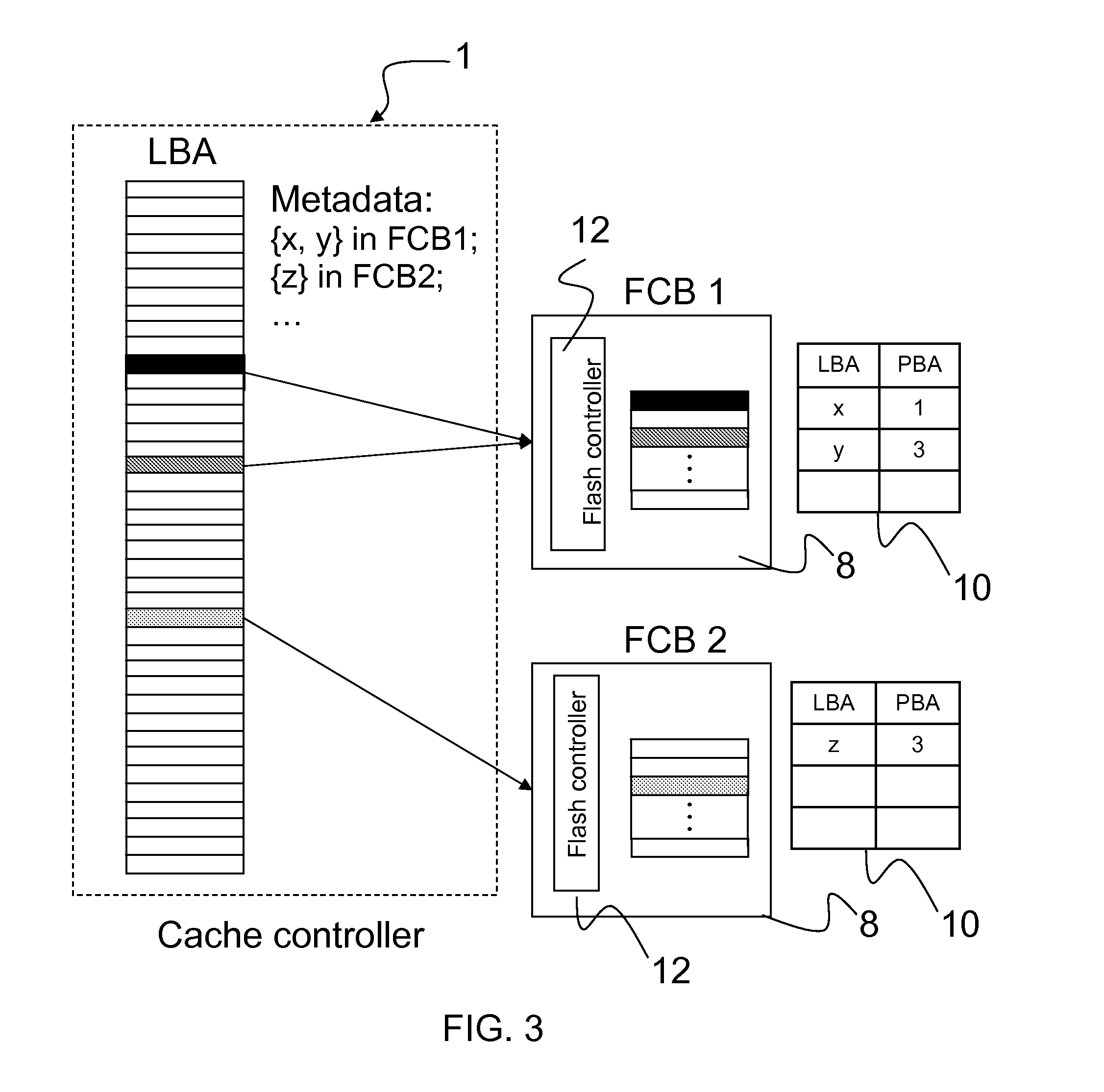

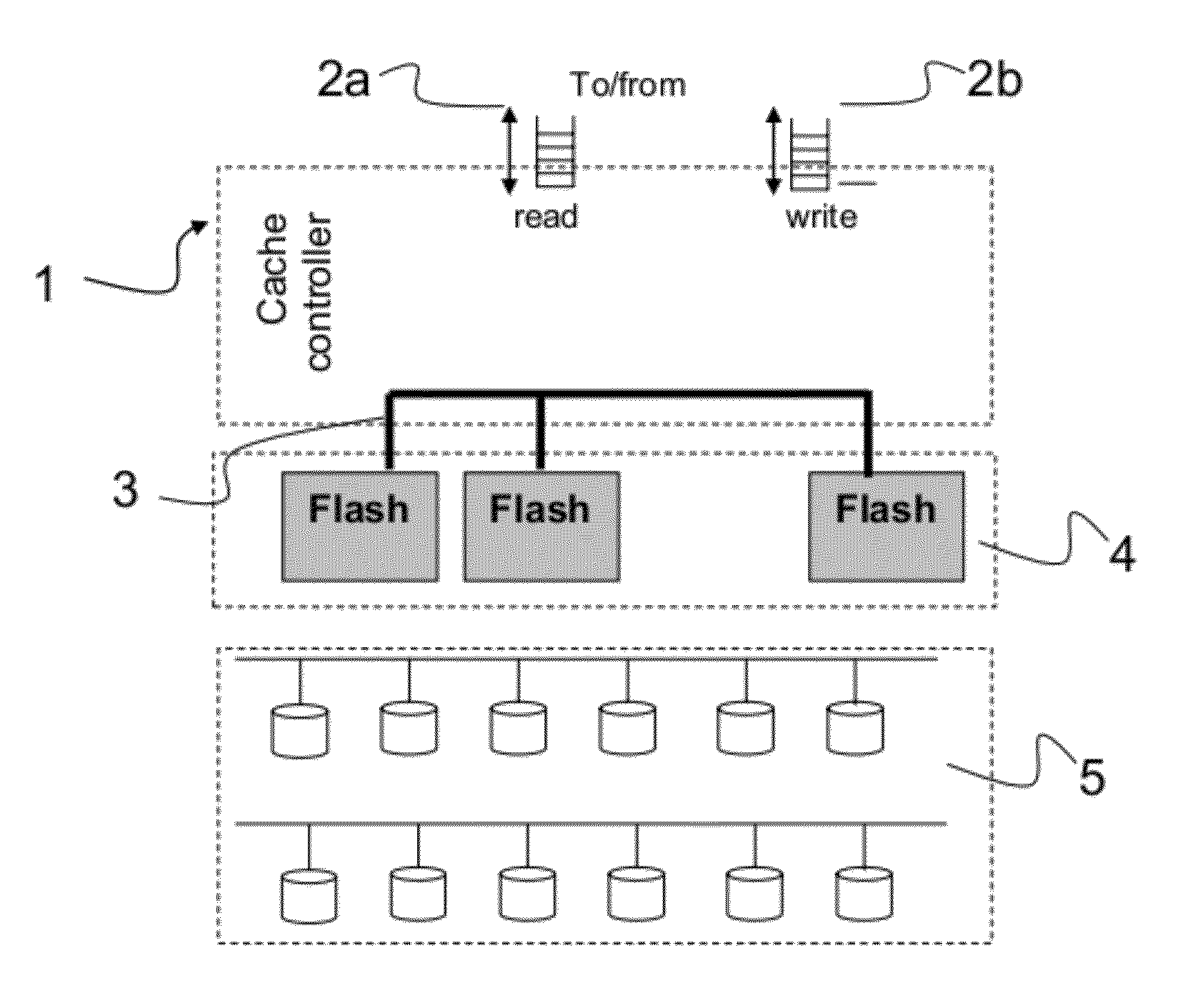

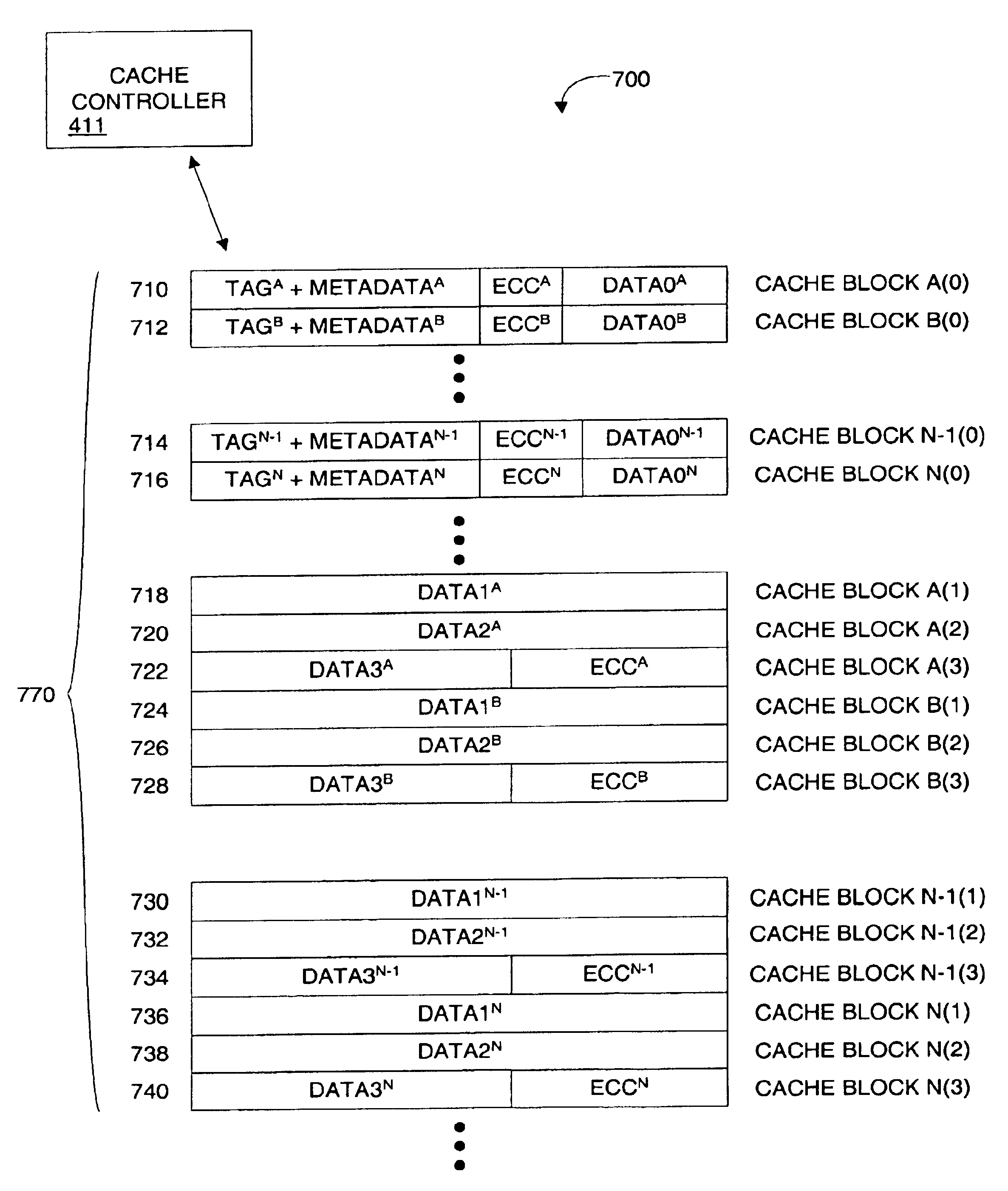

Cache memory management in a flash cache architecture

Inactive | US20130145089A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing | Metadata

Owner:INT BUSINESS MASCH CORP

Method and apparatus for accelerating input/output processing using cache injections

Inactive | US6711650B1 | Memory addressing/allocation/relocation | Input/output processes for data processing | Data processing system | Direct memory access

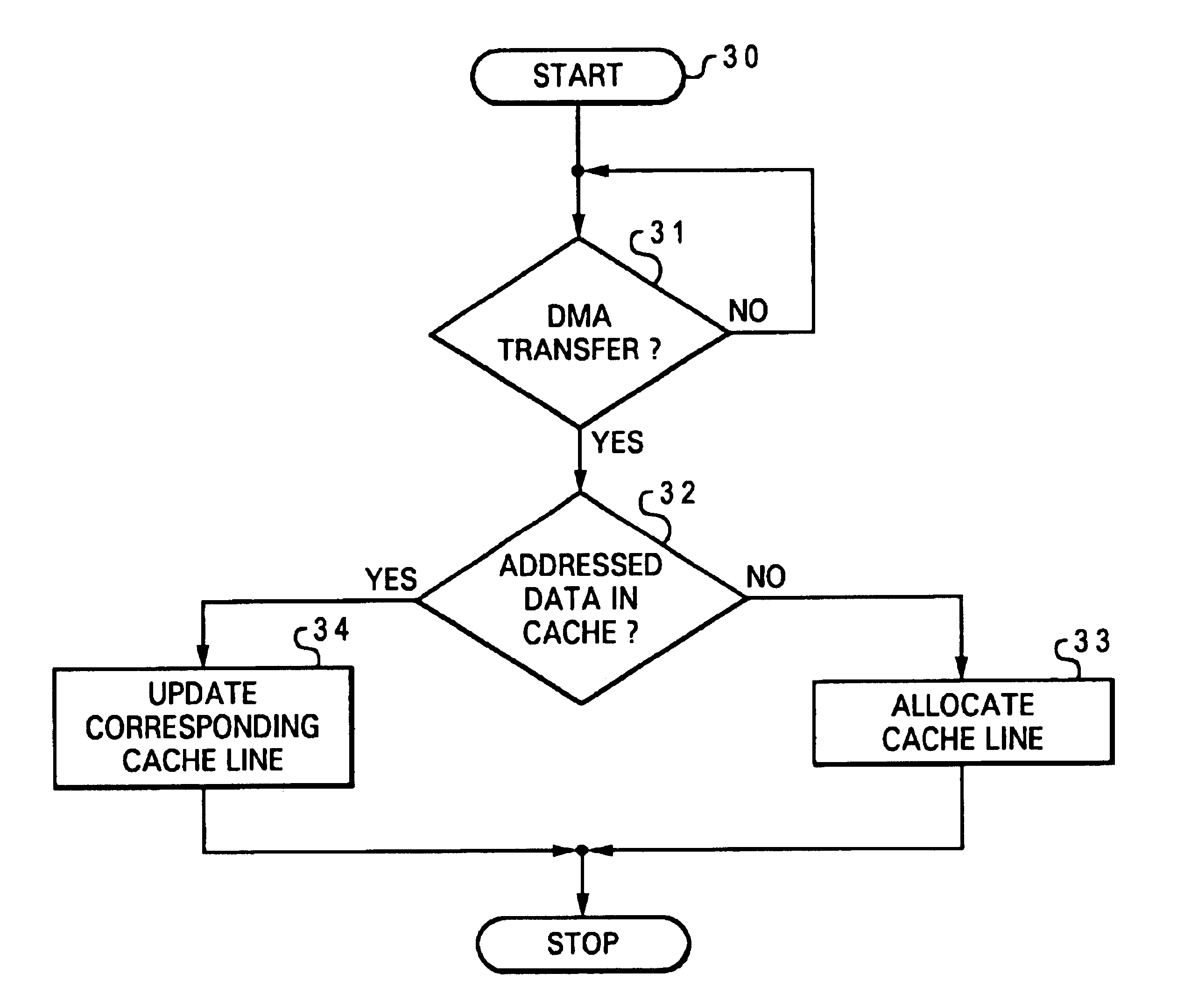

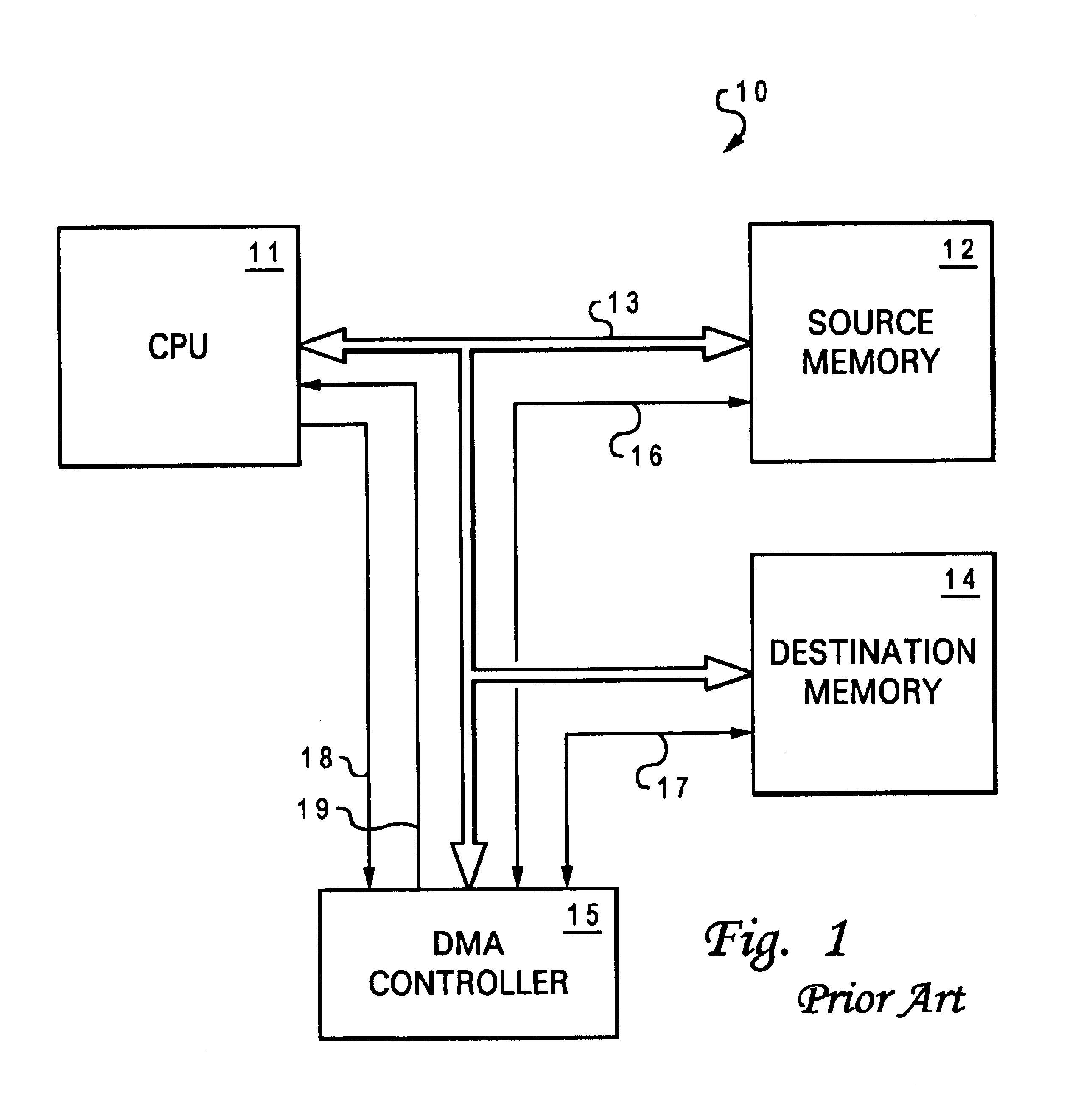

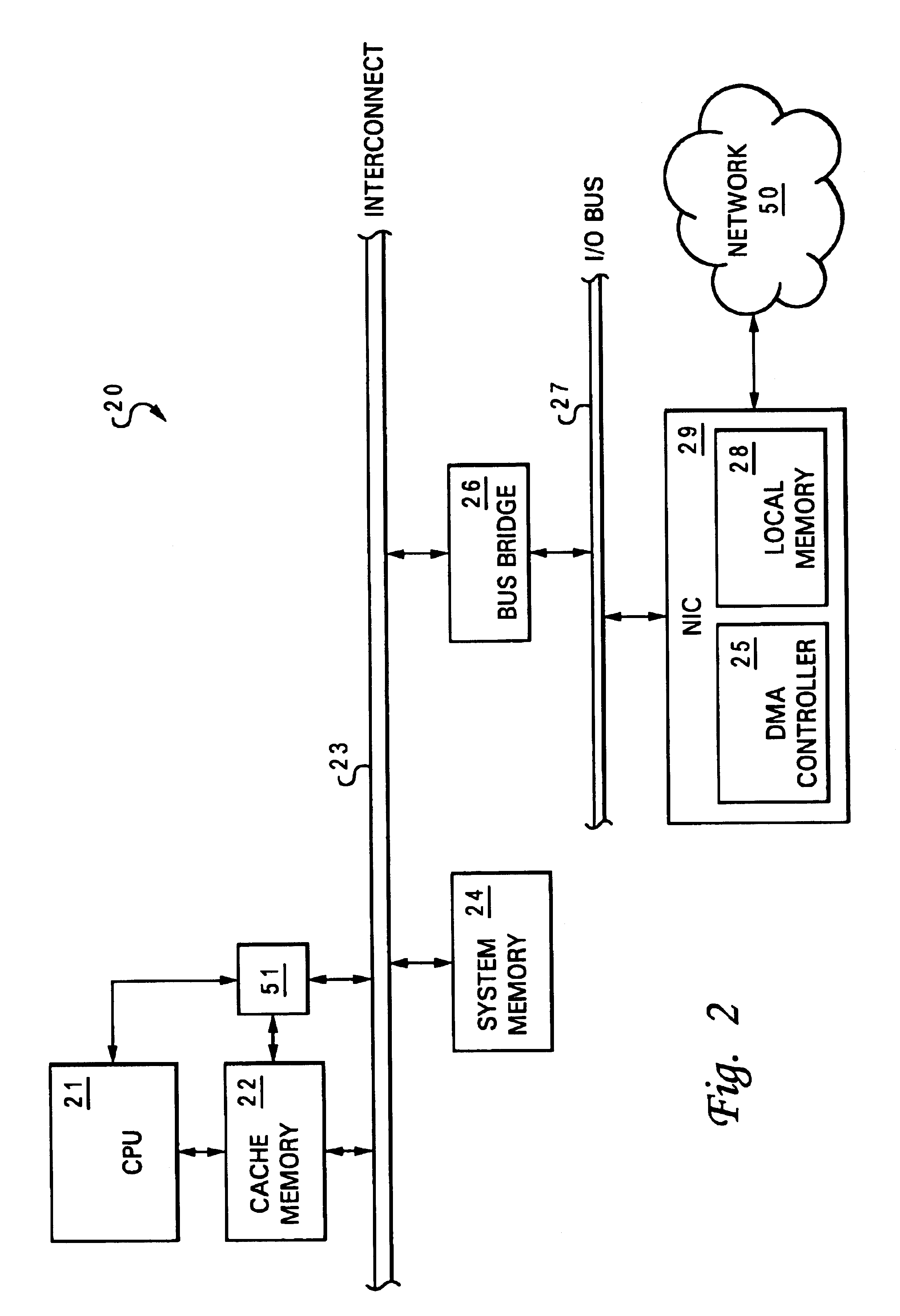

A method for accelerating input/output operations within a data processing system is disclosed. Initially, a determination is made in a cache controller as to whether or not a bus operation is a data transfer from a first memory to a second memory without intervening communications through a processor, such as a direct memory access (DMA) transfer. If the bus operation is such a data transfer, a determination is made in a cache memory as to whether or not the cache memory includes a copy of data from the data transfer. If the cache memory does not include a copy of data from the data transfer, a cache line is allocated within the cache memory to store a copy of data from the data transfer.

Owner:IBM CORP

Management of cache memory in a flash cache architecture

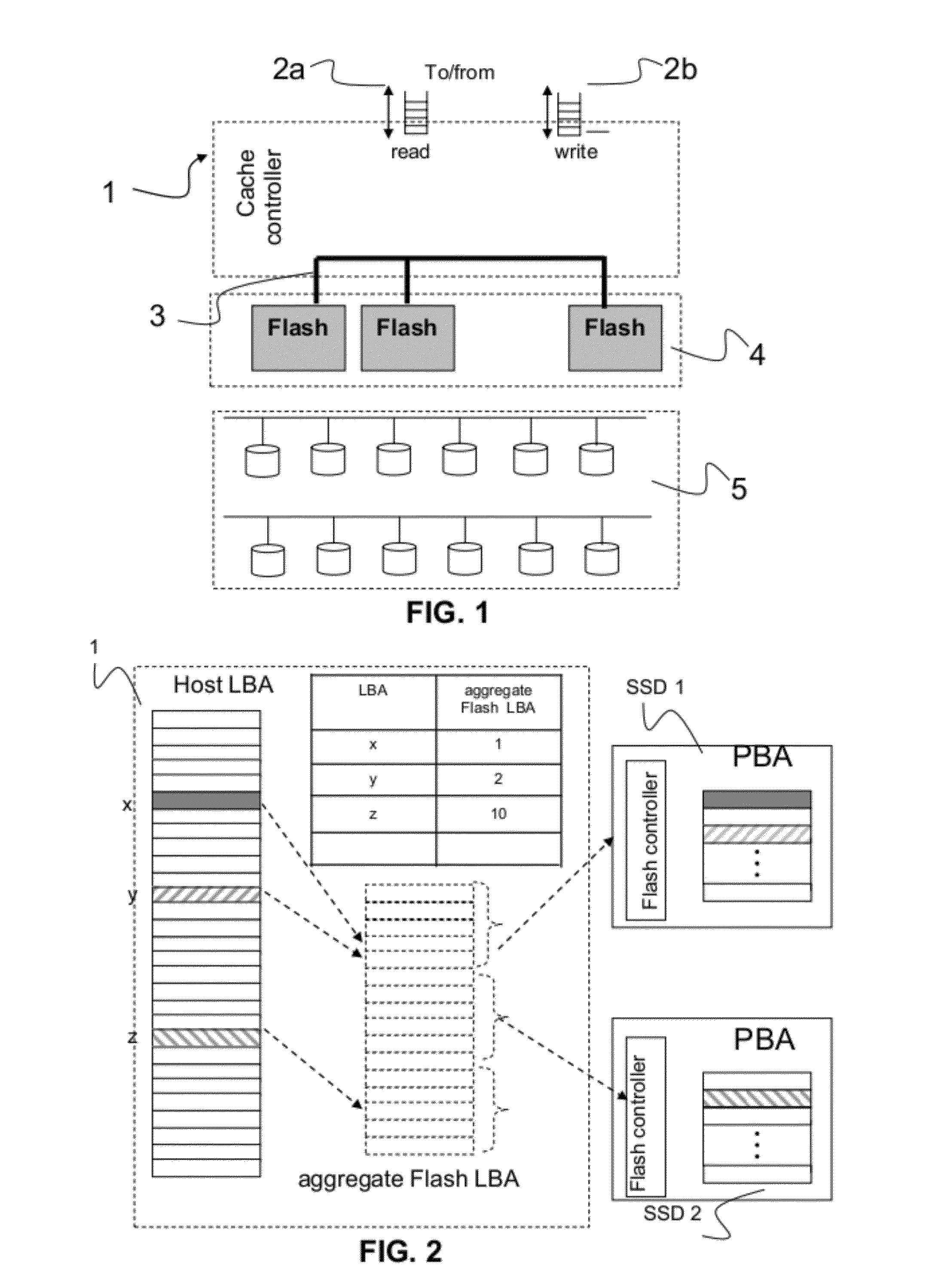

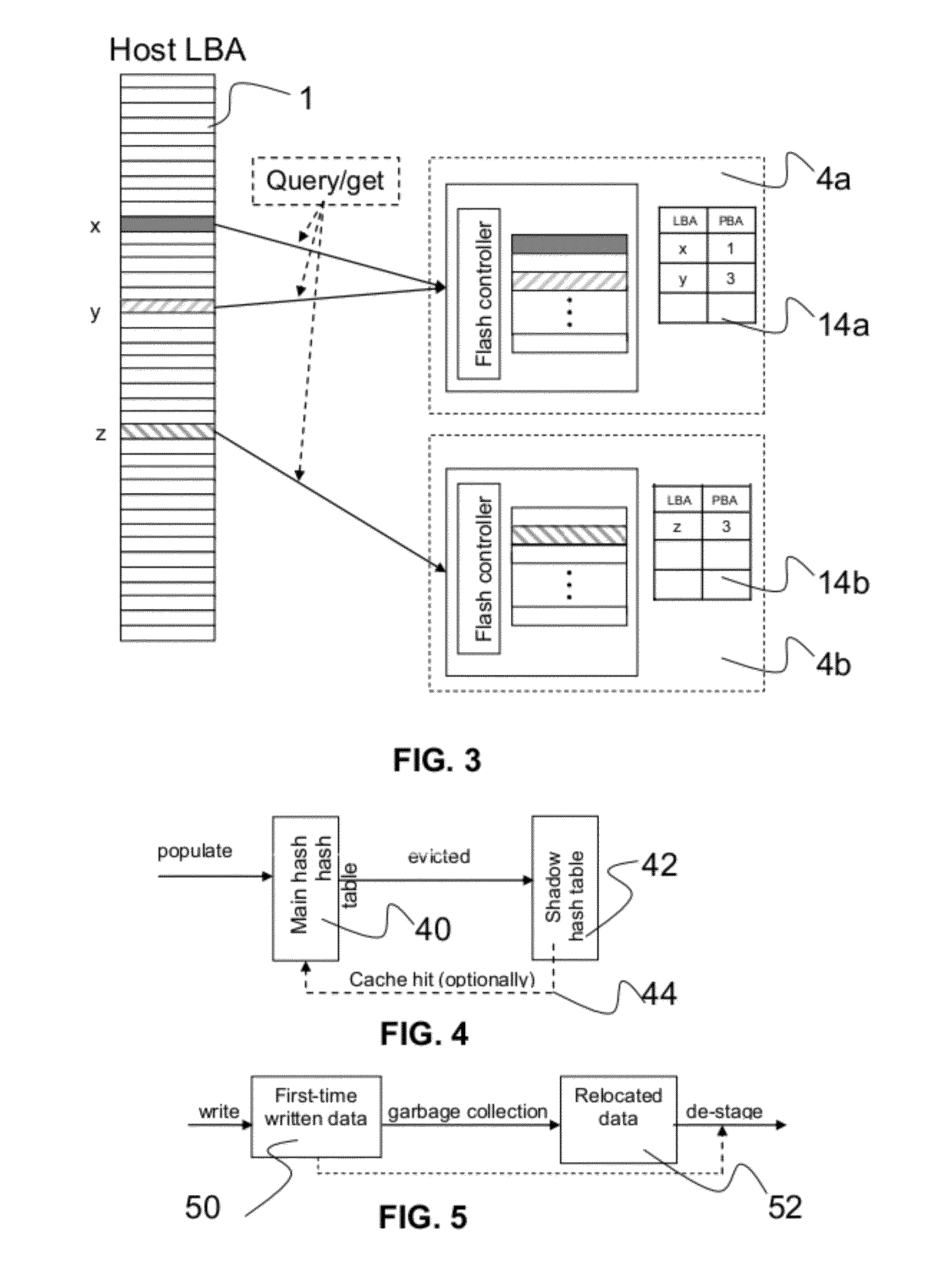

Inactive | US20120110247A1 | Overcome deficiencies | Memory addressing/allocation/relocation | Flash memory controller | Computer science

A method for managing cache memory in a flash cache architecture. The method includes providing a storage cache controller, at least a flash memory comprising a flash controller, and at least a backend storage device, and maintaining read cache metadata for tracking on the flash memory cached data to be read, and write cache metadata for tracking on the flash memory data expected to be cached.

Owner:IBM CORP

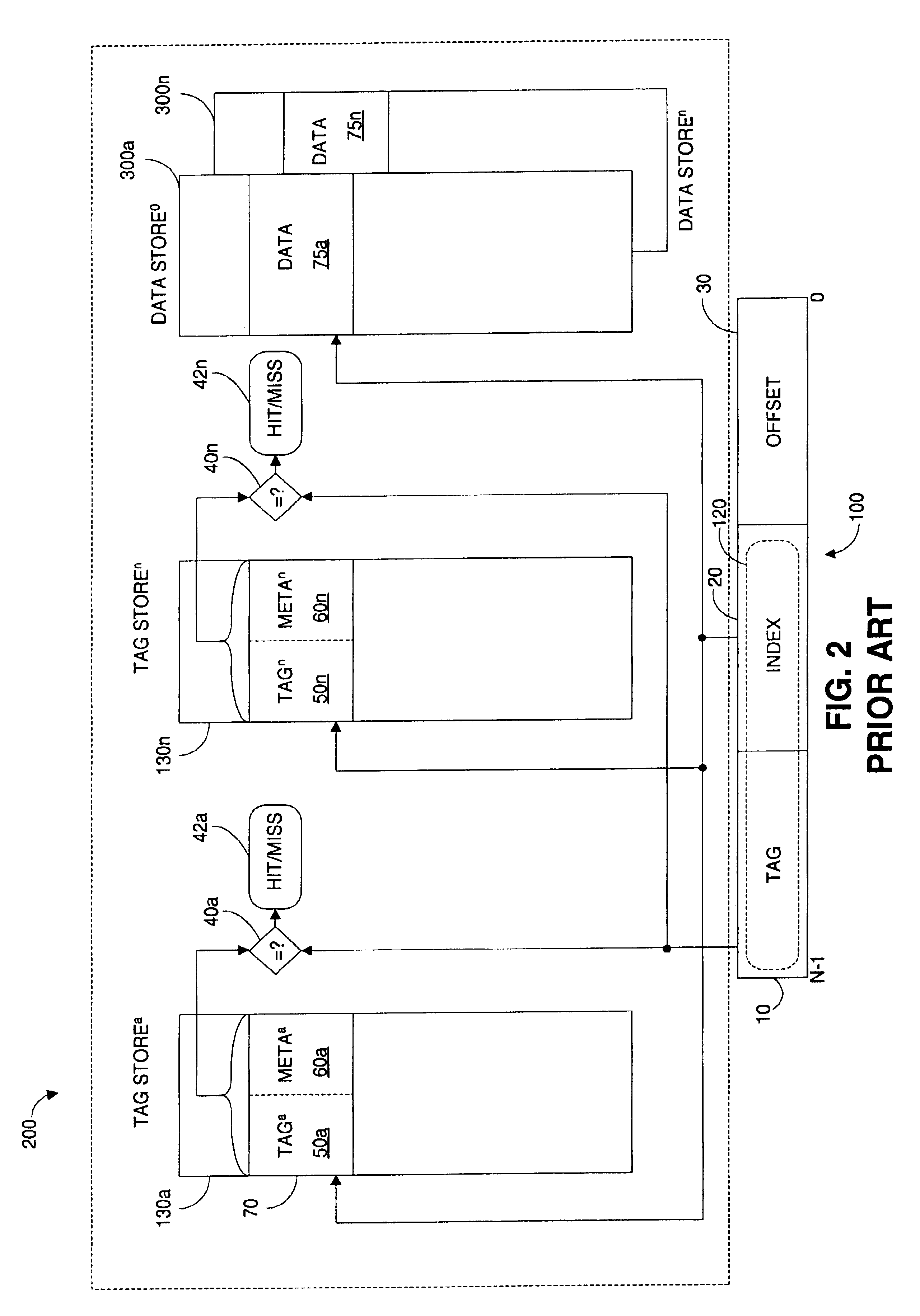

N-way set-associative external cache with standard DDR memory devices

Inactive | US6912628B2 | Increasing snoop bandwidth | High bandwidth | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Double data rate | Burst transmission

A method, cache system, and cache controller are provided. A two-way and n-way cache organization scheme are presented as at least two embodiments of a set-associative external cache that utilizes standard burst memory devices such as DDR (double data rate) memory devices. The set-associative cache organization scheme is designed to fully utilize burst efficiencies during snoop and invalidation operations. Cache lines are interleaved in such a way that a first burst transfer from the cache to the cache controller brings in a plurality of tags.

Owner:ORACLE INT CORP

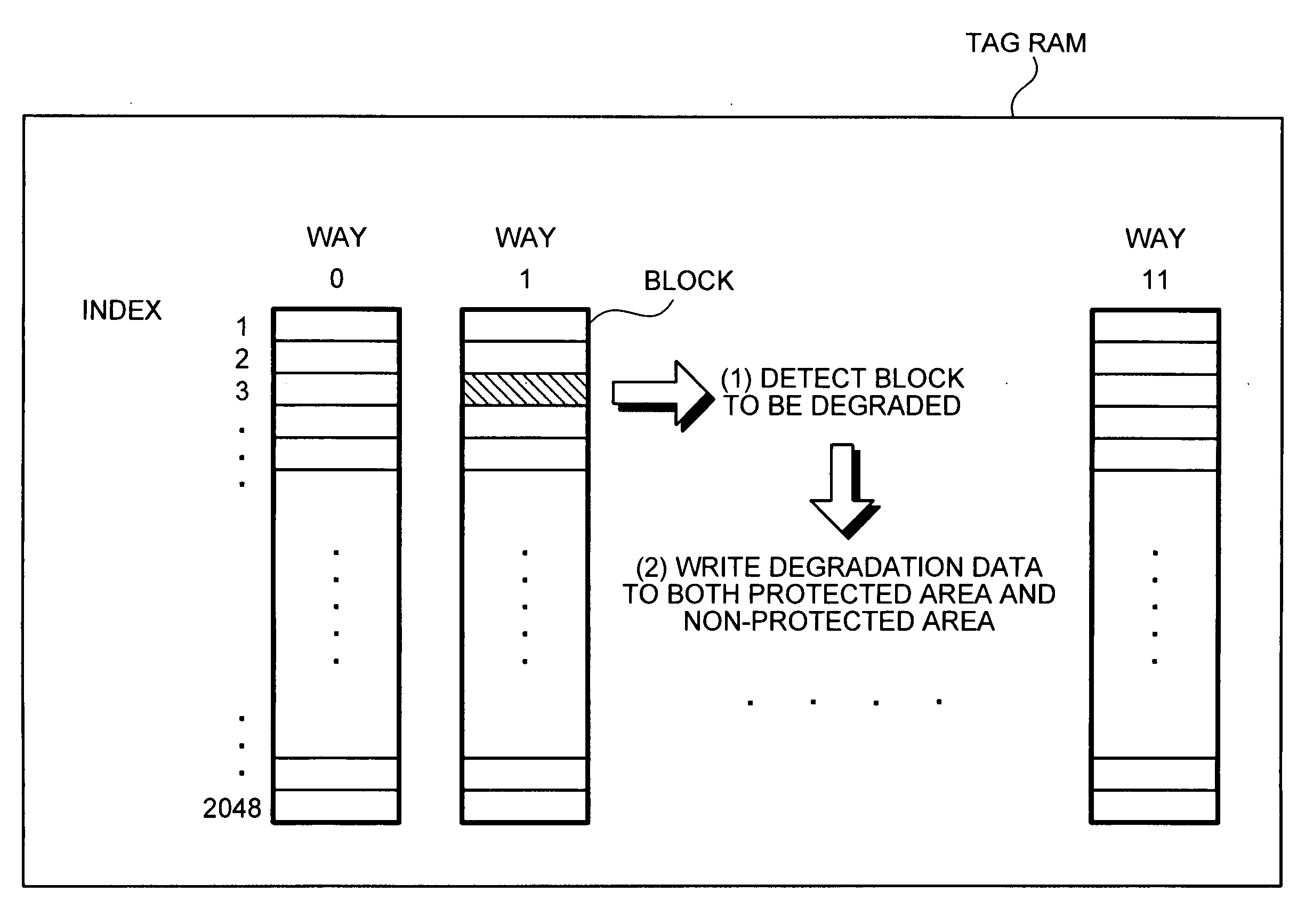

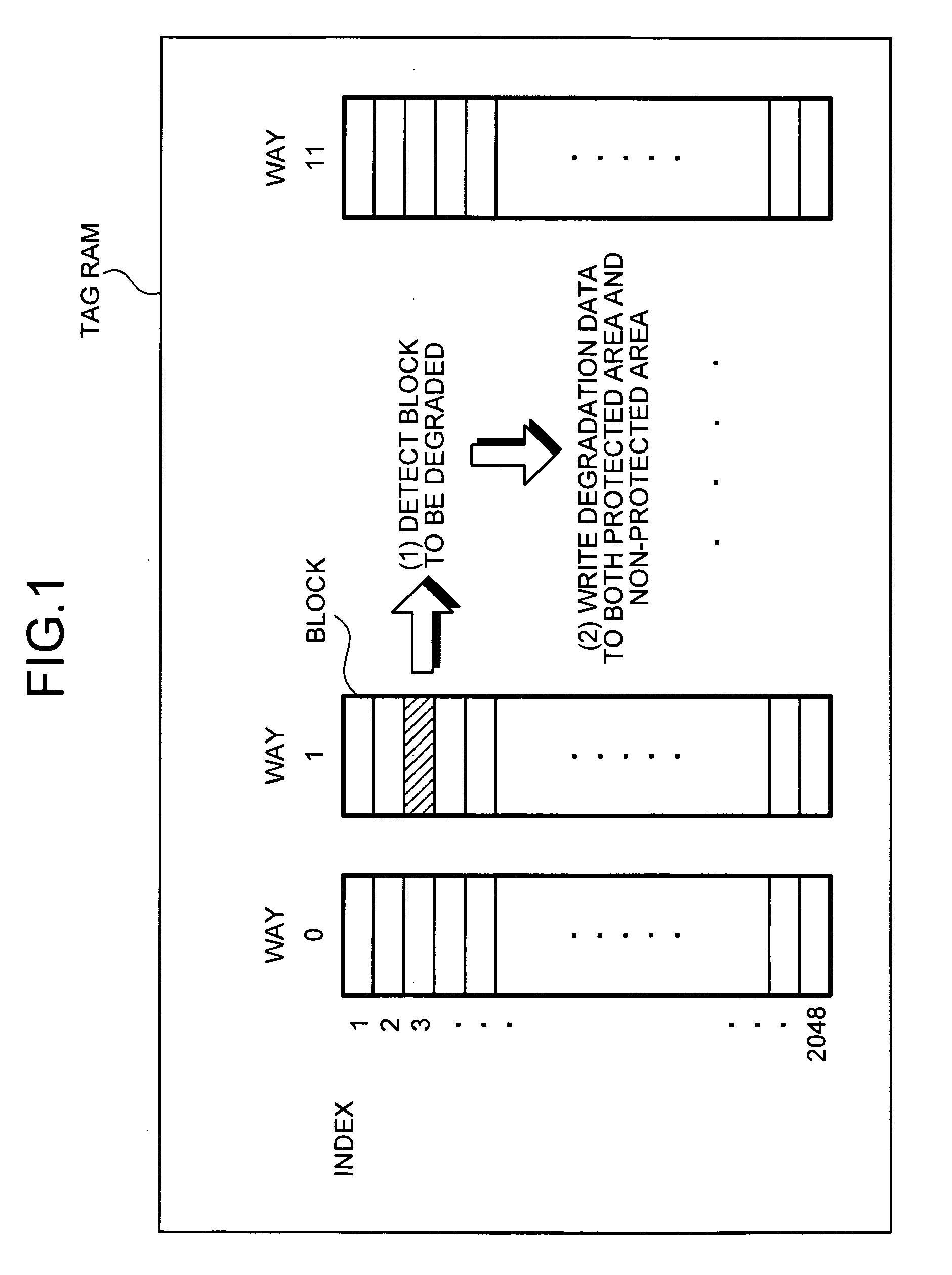

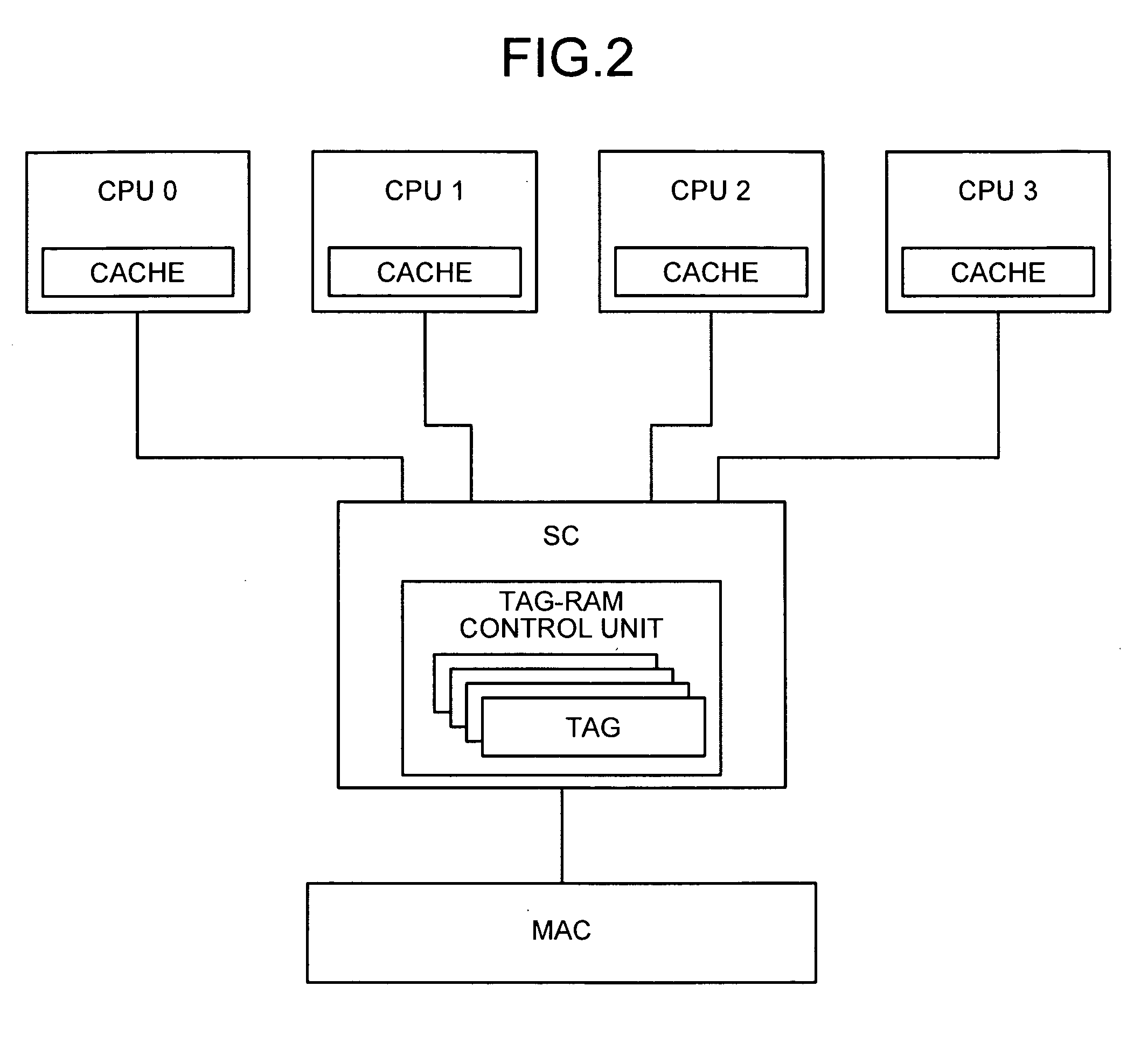

Method and apparatus for controlling cache

Inactive | US20080282037A1 | Solve problems | Error detection/correction | Memory systems | Parallel computing | Cache controller

A cache controller controls at least one cache. The cache includes ways, each including a plurality of blocks that store entry data. A writing unit writes degradation data to a failed block. The degradation data indicates that the failed block is in a degradation state. A reading unit reads entry data from a block. A determining unit determines, if the entry data obtained by the reading unit includes the degradation data, that the block is in the degradation state.

Owner:FUJITSU LTD

Cache management mechanism to enable information-type dependent cache policies

A set associative cache includes a cache controller, a directory, and an array including at least one congruence class containing a plurality of sets. The plurality of sets are partitioned into multiple groups according to which of a plurality of information types each set can store. The sets are partitioned so that at least two of the groups include the same set and at least one of the sets can store fewer than all of the information types. The cache controller then implements different cache policies for at least two of the plurality of groups, thus permitting the operation of the cache to be individually optimized for different information types.

Owner:IBM CORP

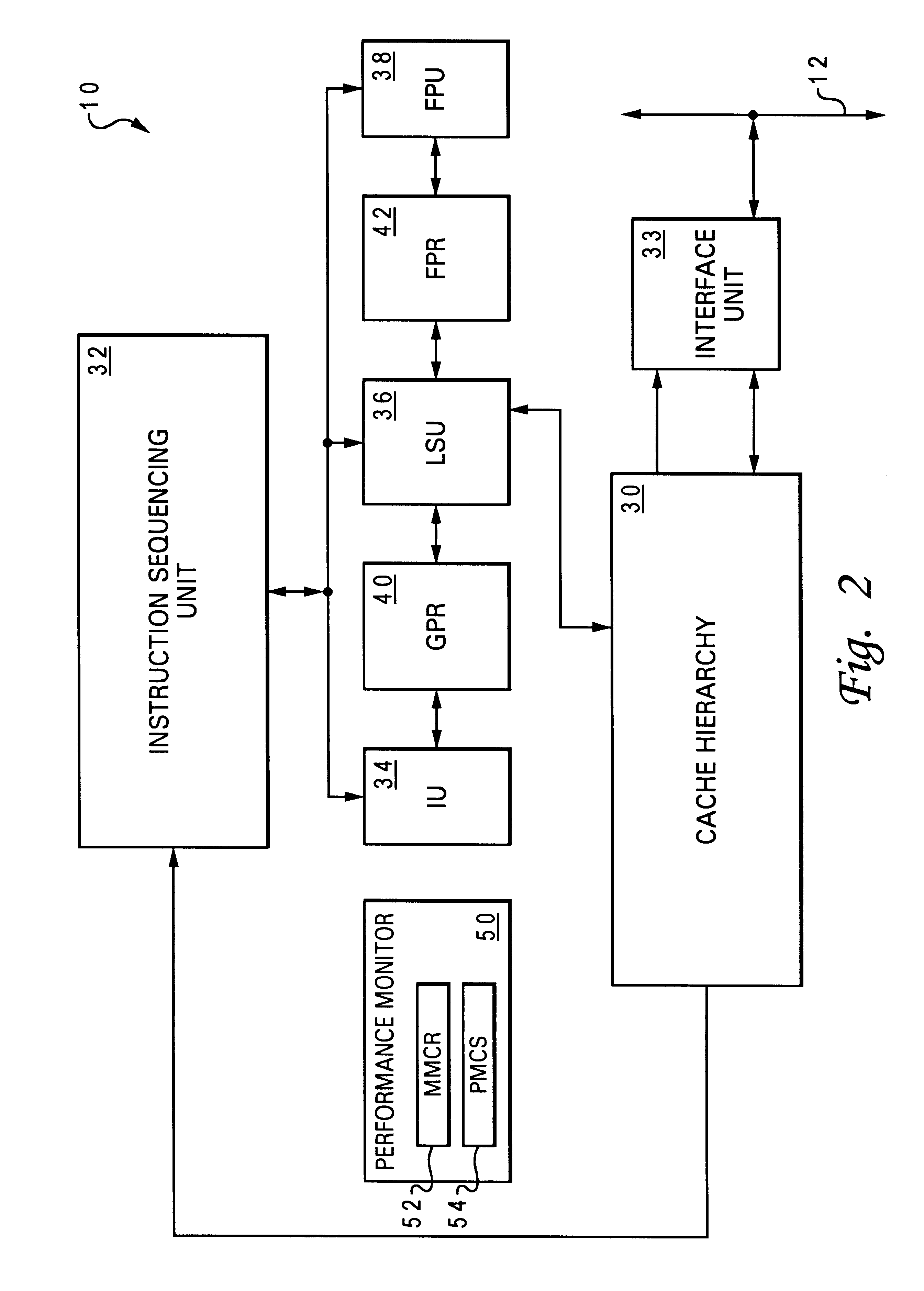

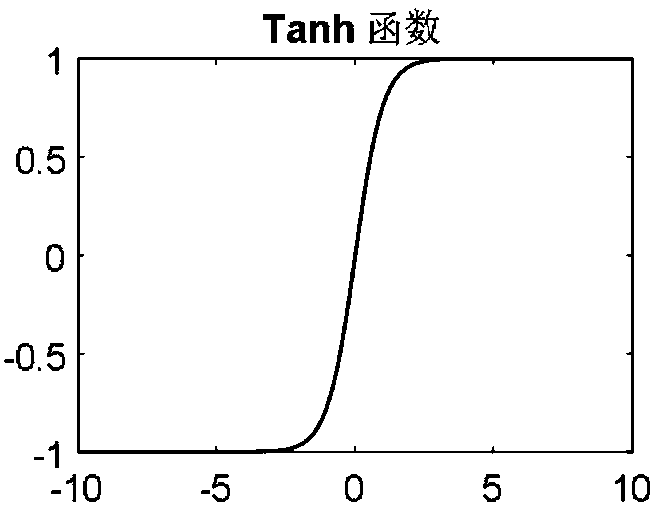

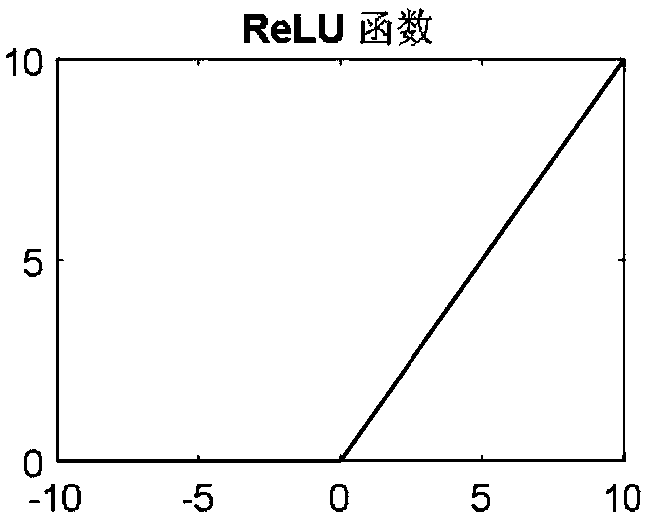

Sparse neural network architecture and realization method thereof

Active | CN107609641A | Calculation balance | Improve resource utilization | Physical realisation | Resource utilization | Parallel computing

The invention discloses a sparse neural network architecture and a realization method thereof. The sparse neural network architecture comprises an external memory controller, a weight cache, an input cache, an output cache, an input cache controller and a computing array, wherein the computing array comprises multiple reconfigurable computing units, each row of reconfigurable computing units in the computing array shares part of the input in the input cache, and each column of reconfigurable computing units shares part of the weights in the weight cache; the input cache controller performs a sparsification operation on the input in the input cache, removing zero values from the input; and the external memory controller stores data of the computing array before and after processing. Through the sparse neural network architecture and the realization method thereof, invalid computing performed when the input is zero can be reduced and even eliminated, the amount of computation is balanced among all the computing units, the hardware resource utilization rate is increased, and meanwhile the shortest computing delay is guaranteed.

Owner:TSINGHUA UNIV

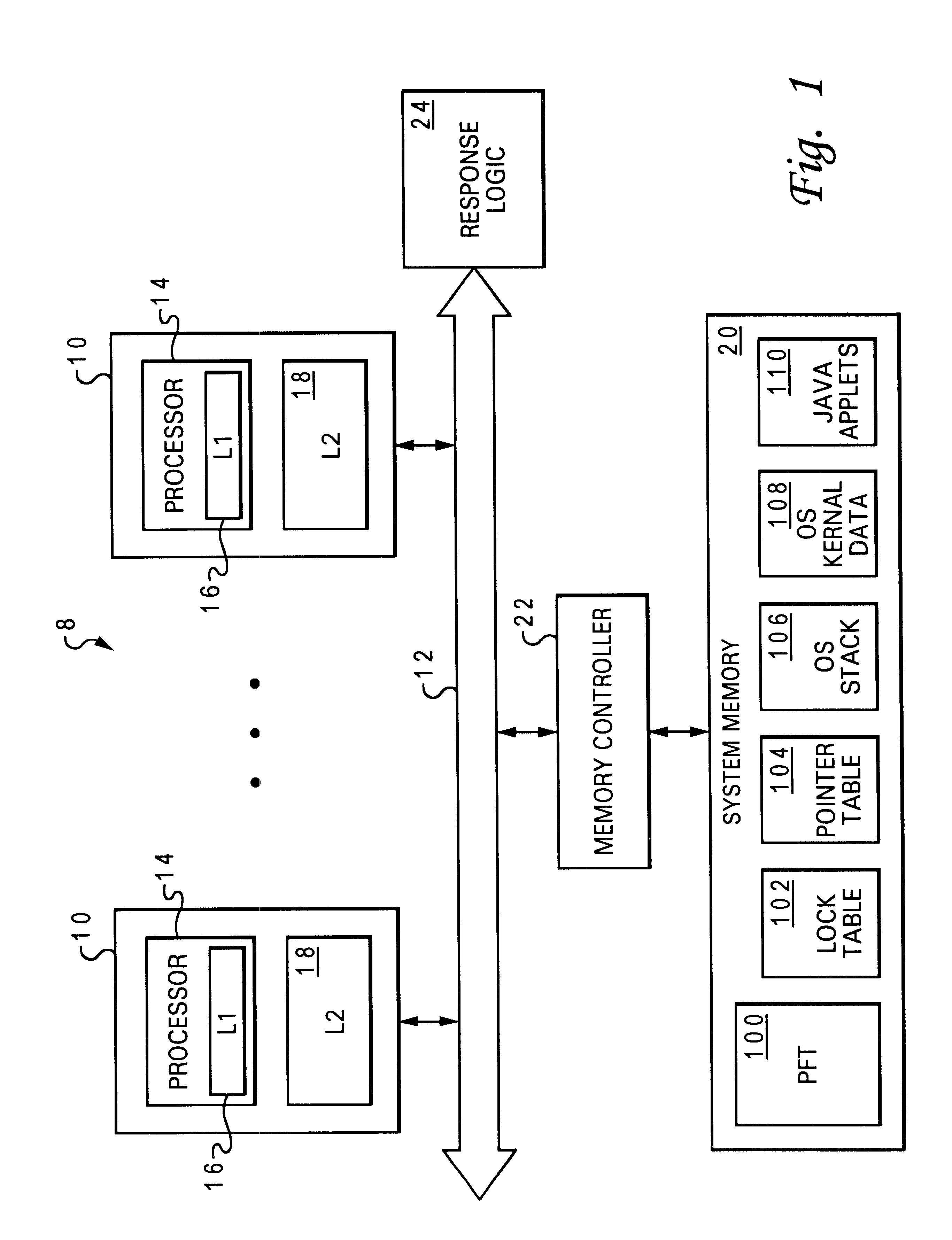

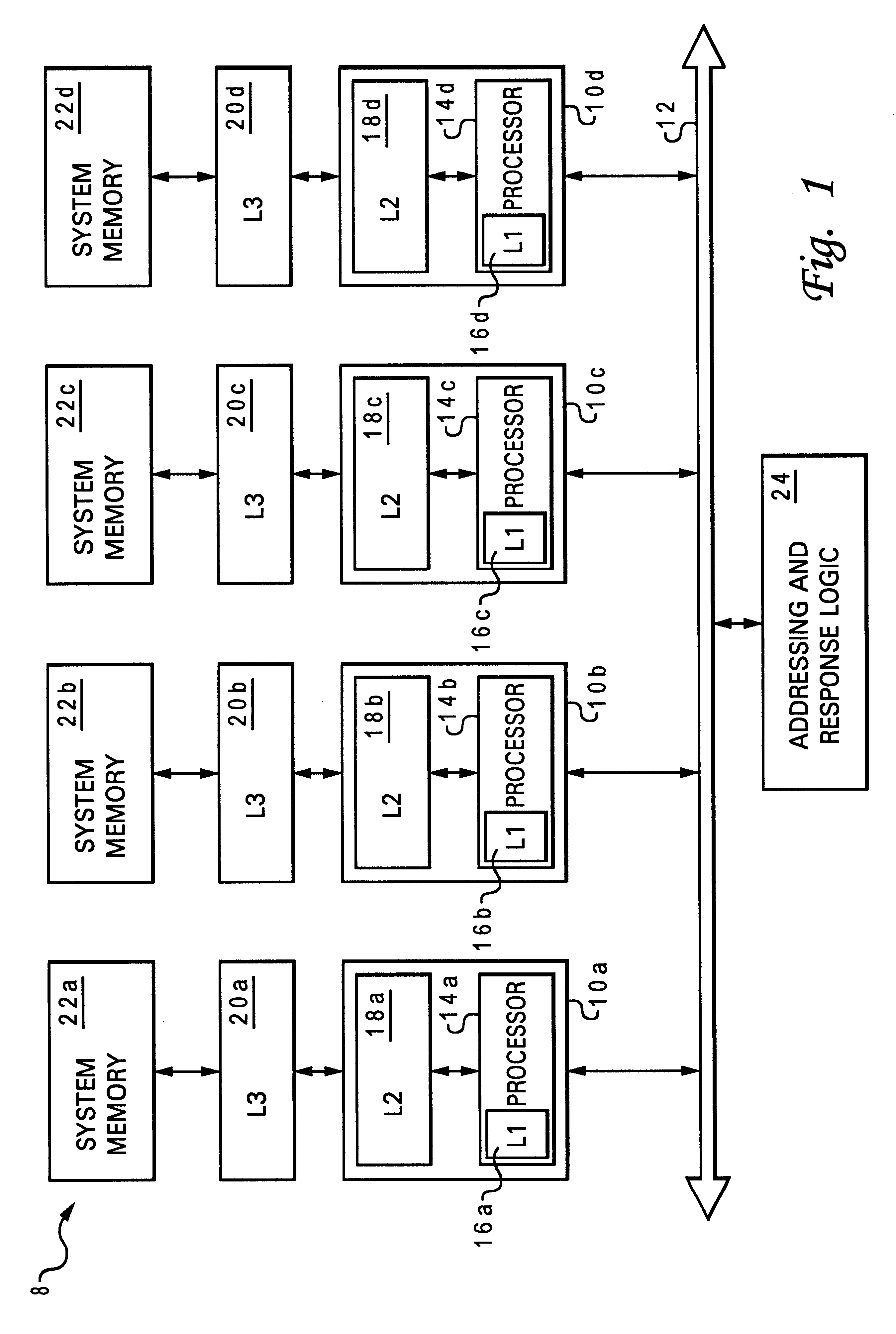

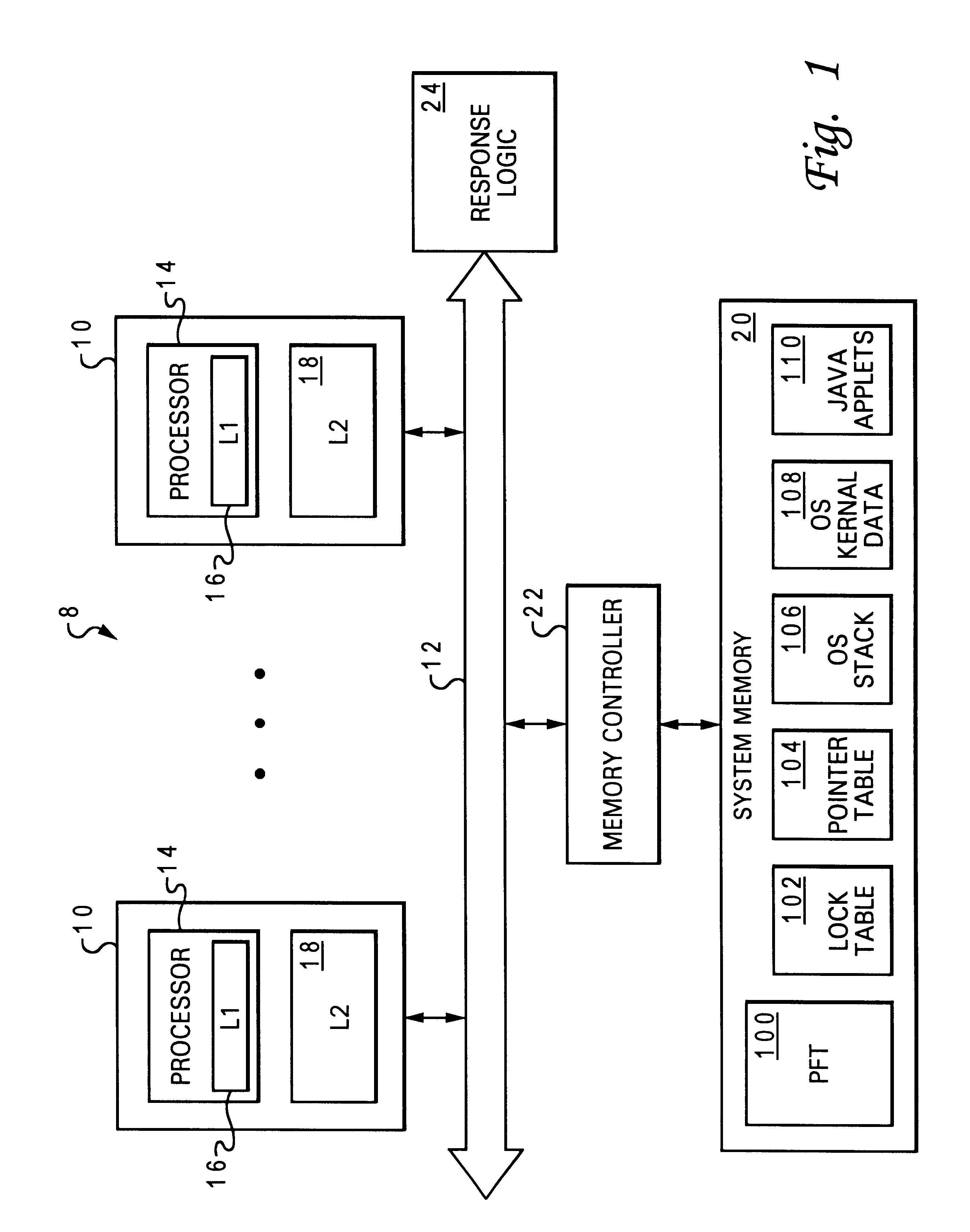

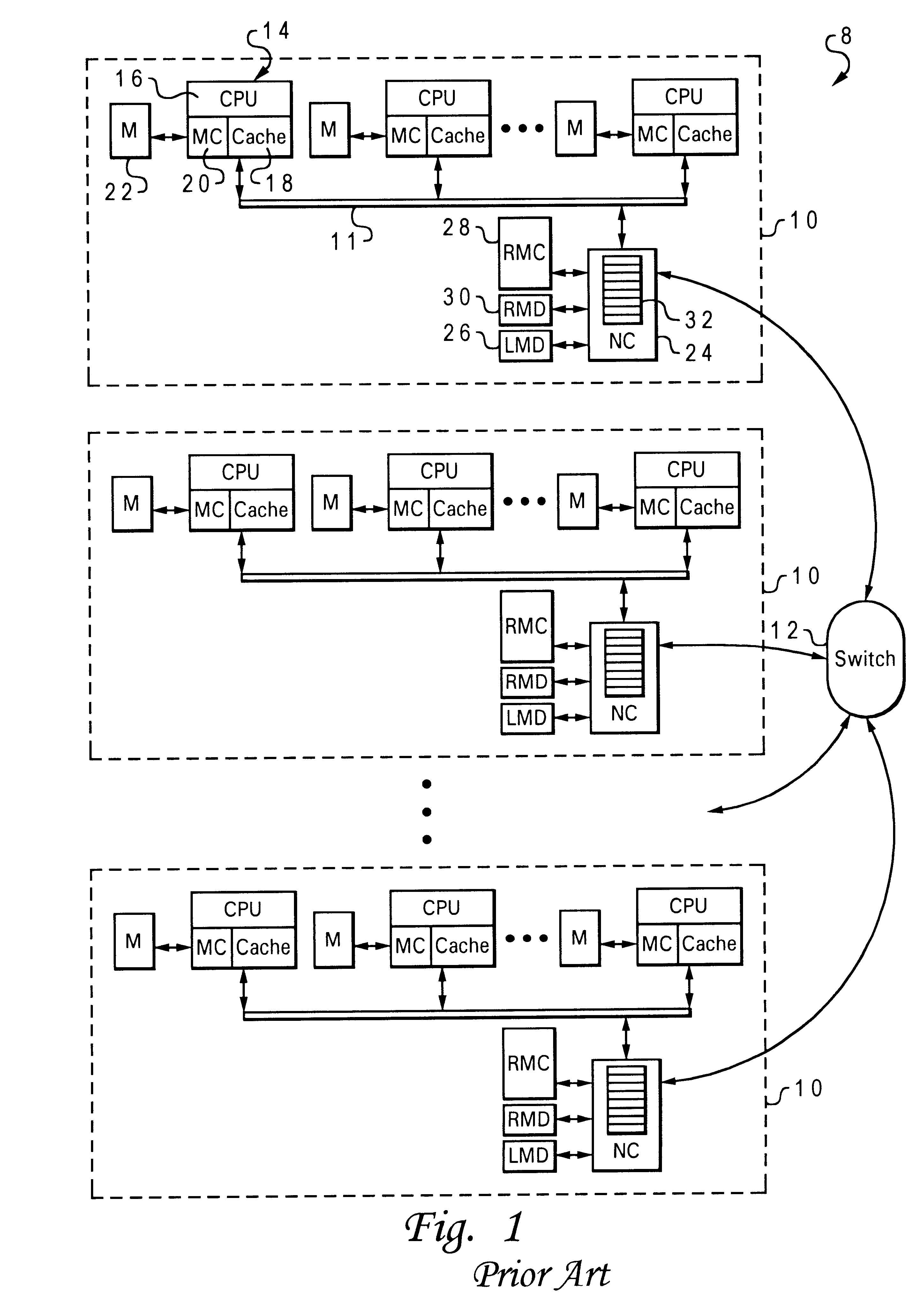

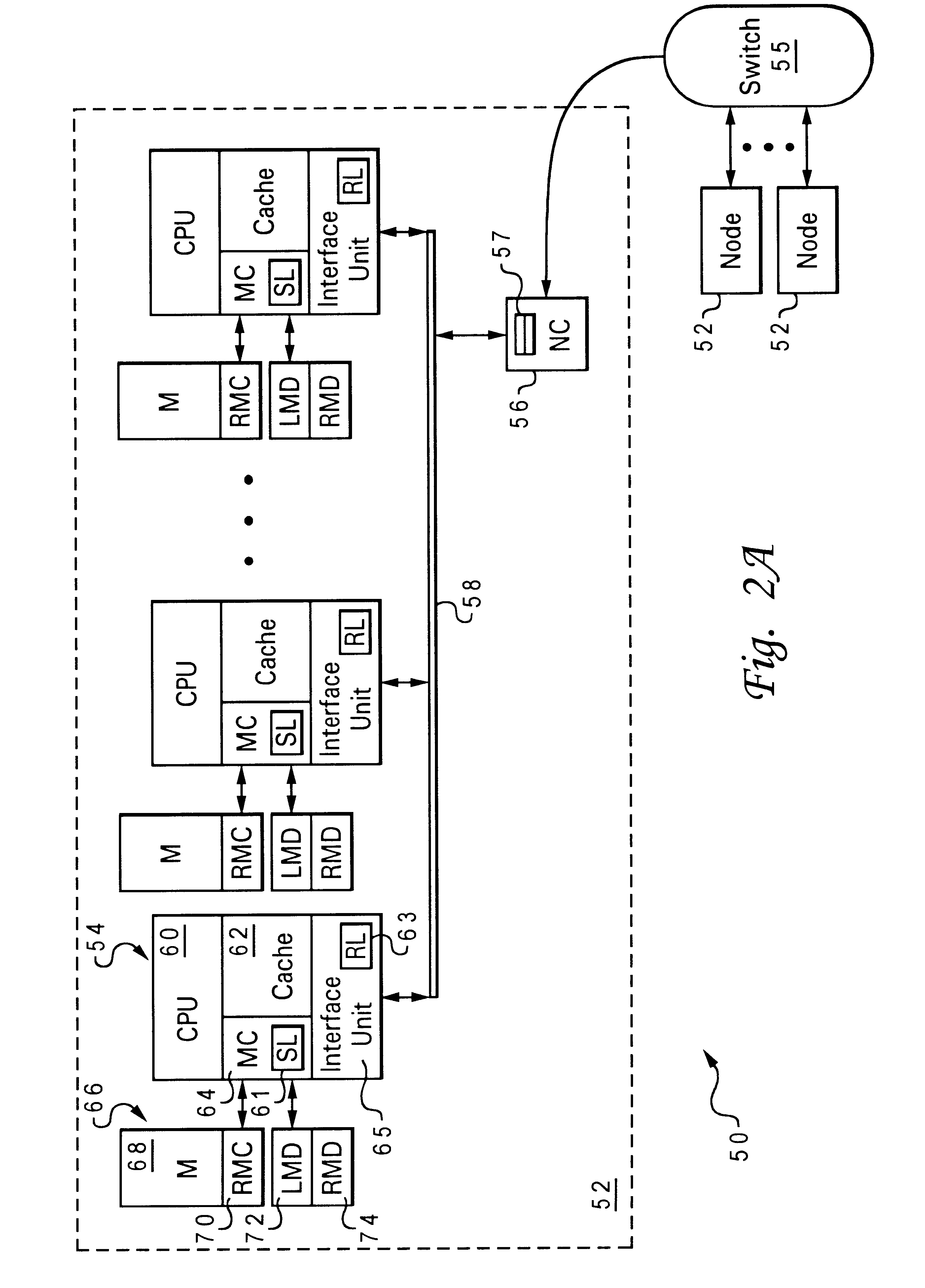

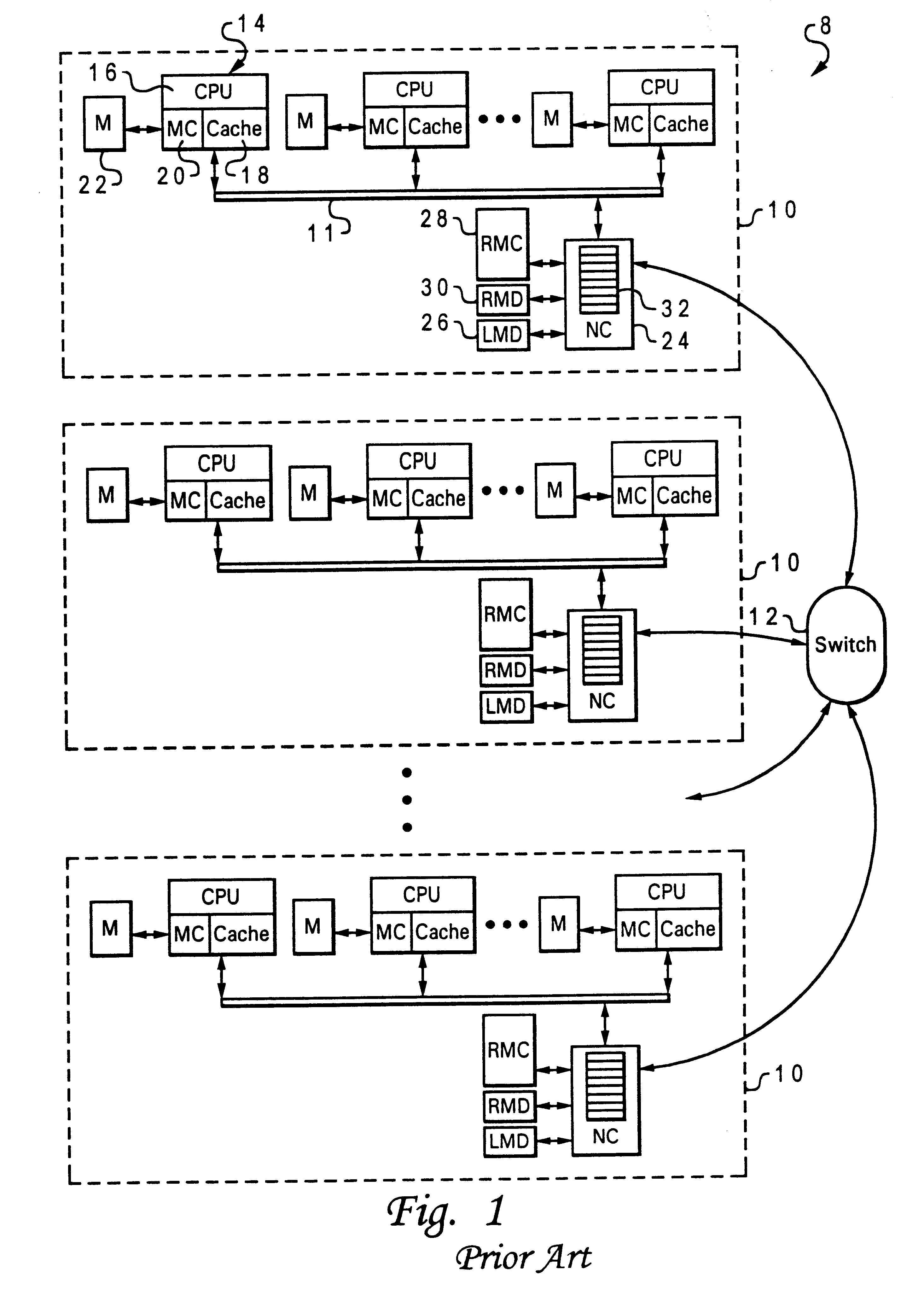

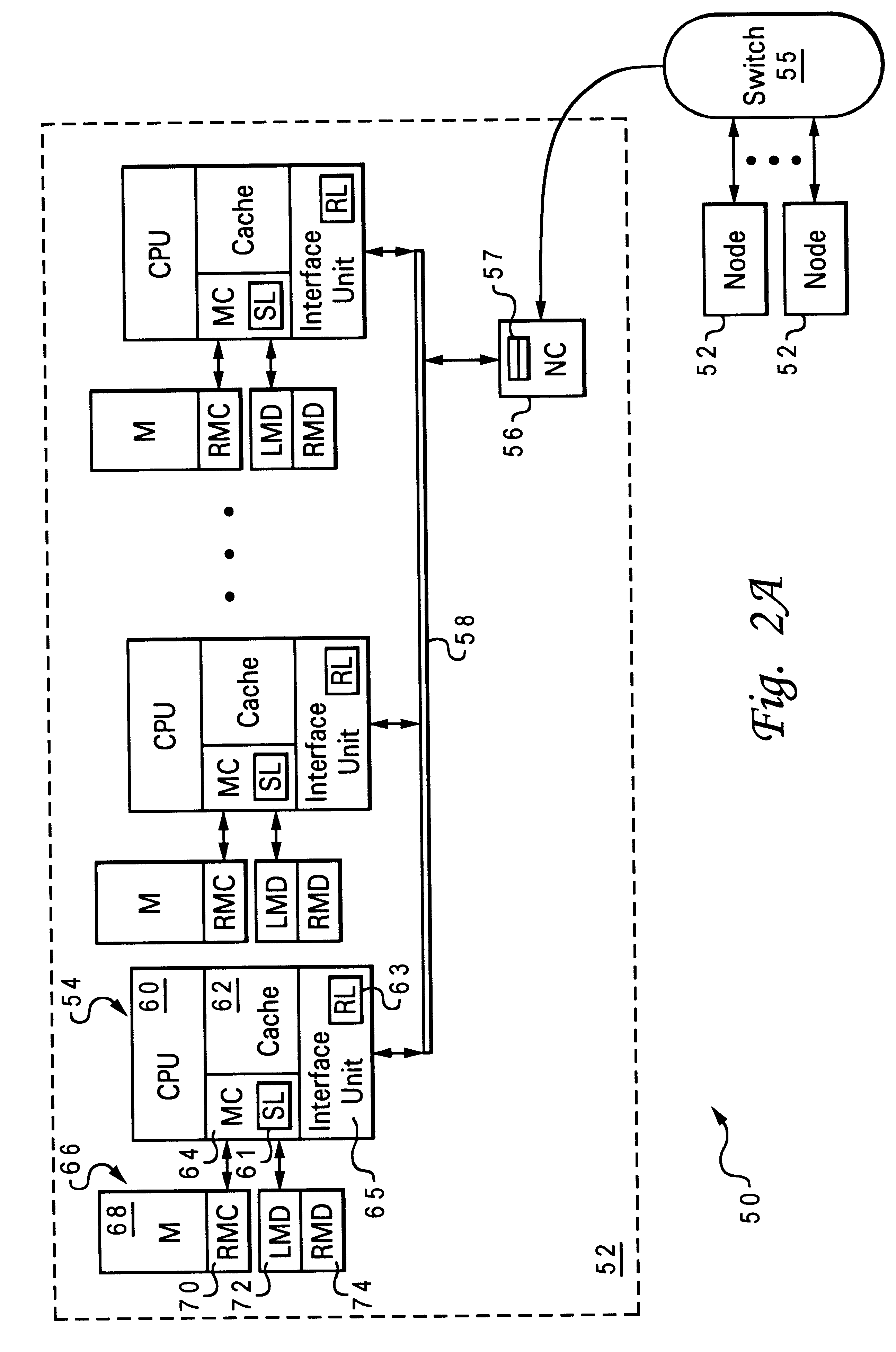

Non-uniform memory access (NUMA) data processing system that provides precise notification of remote deallocation of modified data

Owner:INT BUSINESS MASCH CORP

Method of cache management to store information in particular regions of the cache according to information-type

A set associative cache includes a number of congruence classes that each contain a plurality of sets, a directory, and a cache controller. The directory indicates, for each congruence class, which of a plurality of information types each of the plurality of sets can store. At least one set in at least one of the congruence classes is restricted to storing fewer than all of the information types and at least one set can store multiple information types. When the cache receives information to be stored of a particular information type, the cache controller stores the information into one of the plurality of sets indicated by the directory as capable of storing that particular information type. By managing the sets in which sets information is stored according to information type, an awareness of the characteristics of the various information types can easily be incorporated into the cache's allocation and victim selection policies.

Owner:IBM CORP
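
The directory lookup described above, in which each set of a congruence class is restricted to certain information types, can be sketched as a simple table query. The `directory` contents and the type names are hypothetical and chosen only to satisfy the abstract's constraints: at least one set stores fewer than all types, and at least one stores several.

```python
# Hypothetical directory for one congruence class:
# set number -> information types that set is allowed to store.
directory = {
    0: {"instruction"},
    1: {"data"},
    2: {"instruction", "data"},
    3: {"instruction", "data", "translation"},
}


def eligible_sets(info_type):
    """Return the sets that the directory marks as able to store `info_type`."""
    return sorted(s for s, types in directory.items() if info_type in types)
```

Allocation and victim selection would then choose only among the sets returned for the incoming information type.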

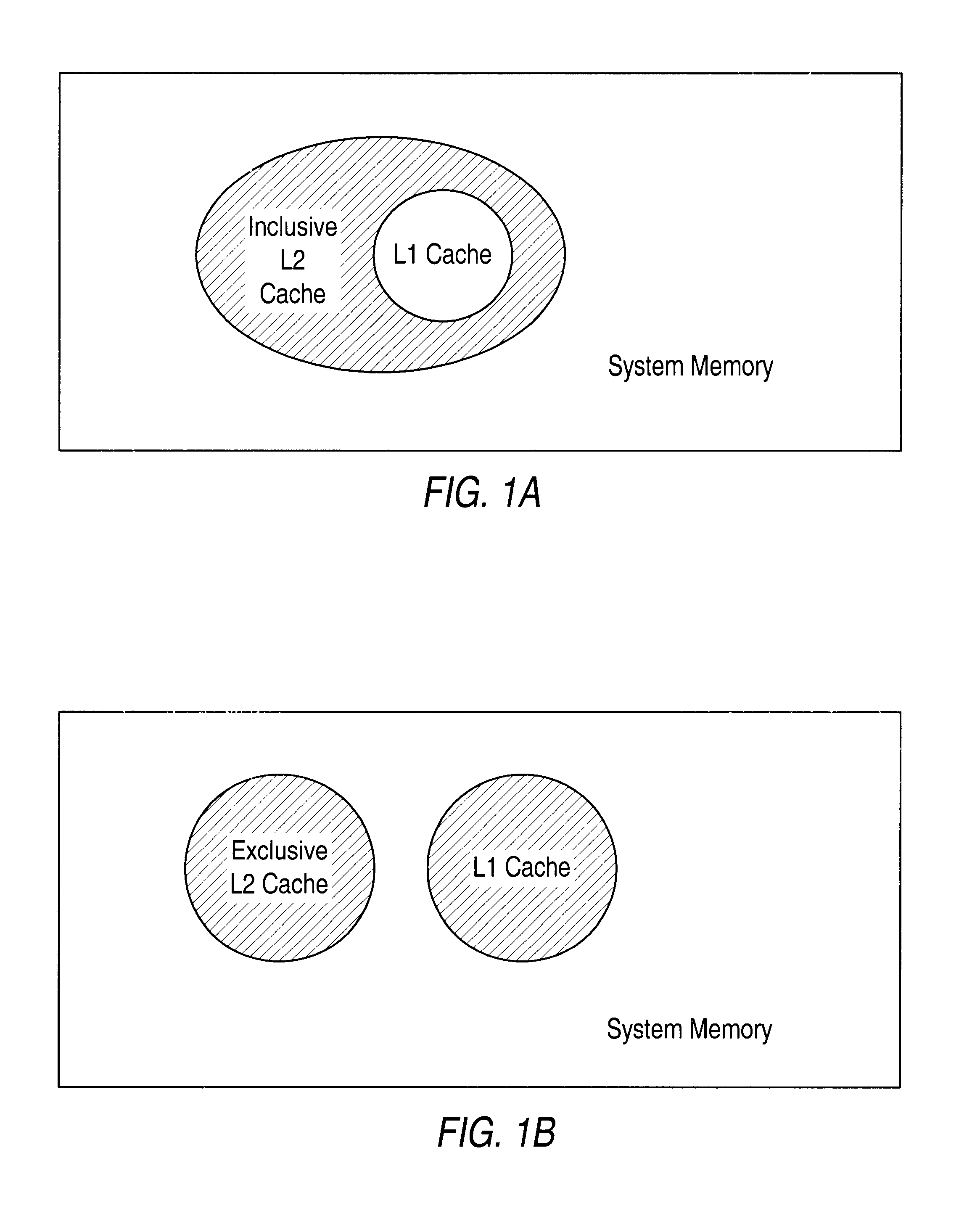

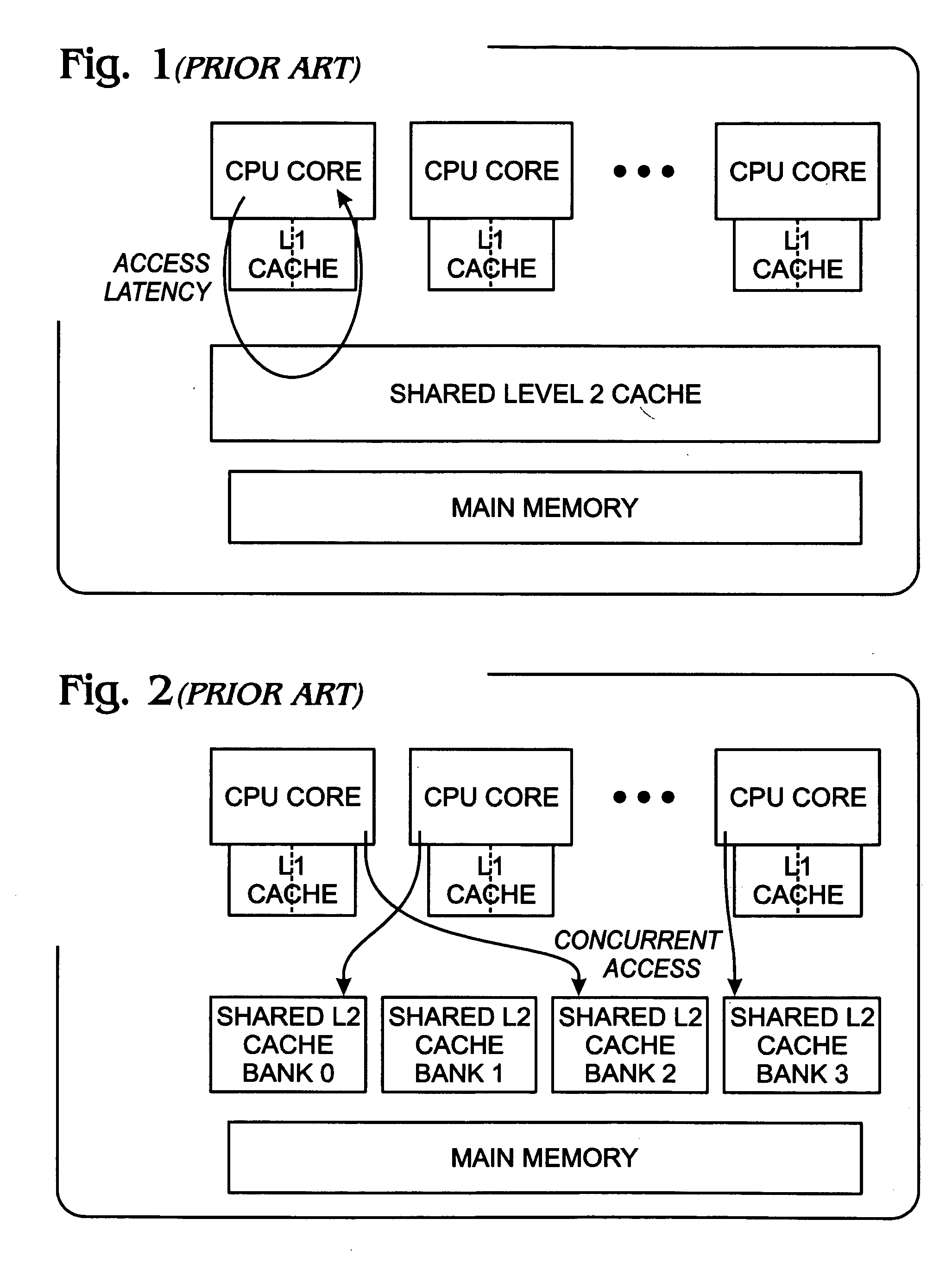

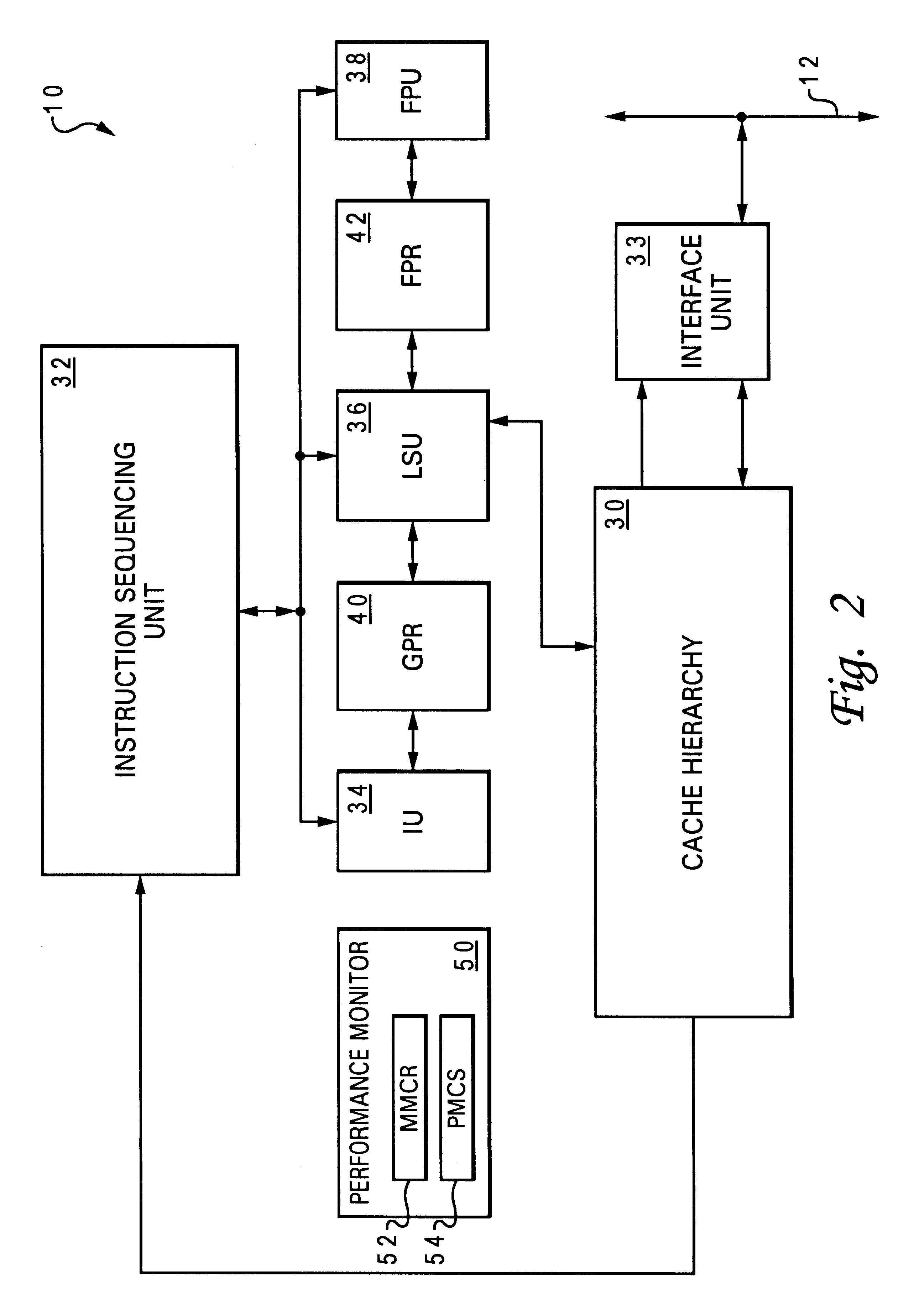

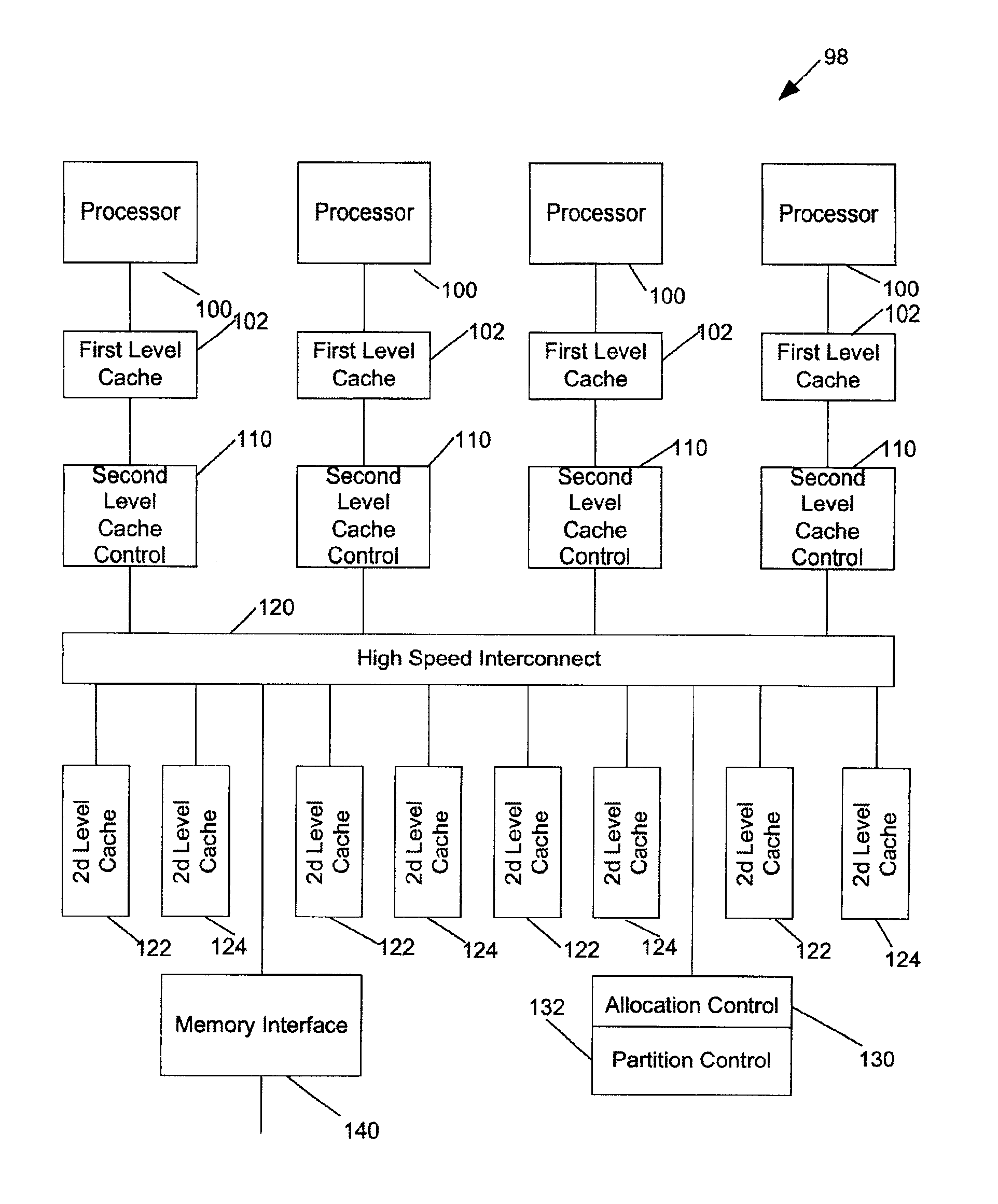

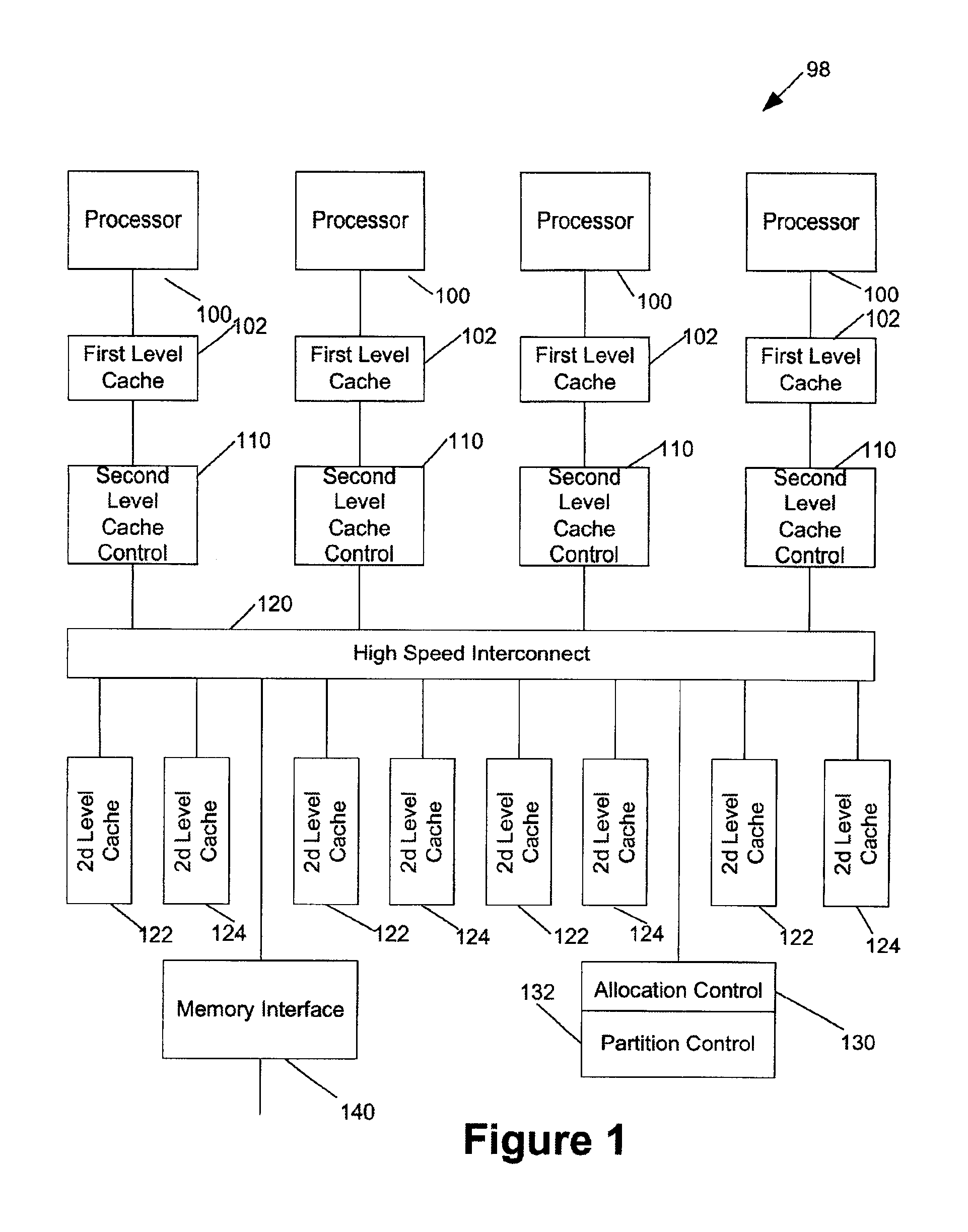

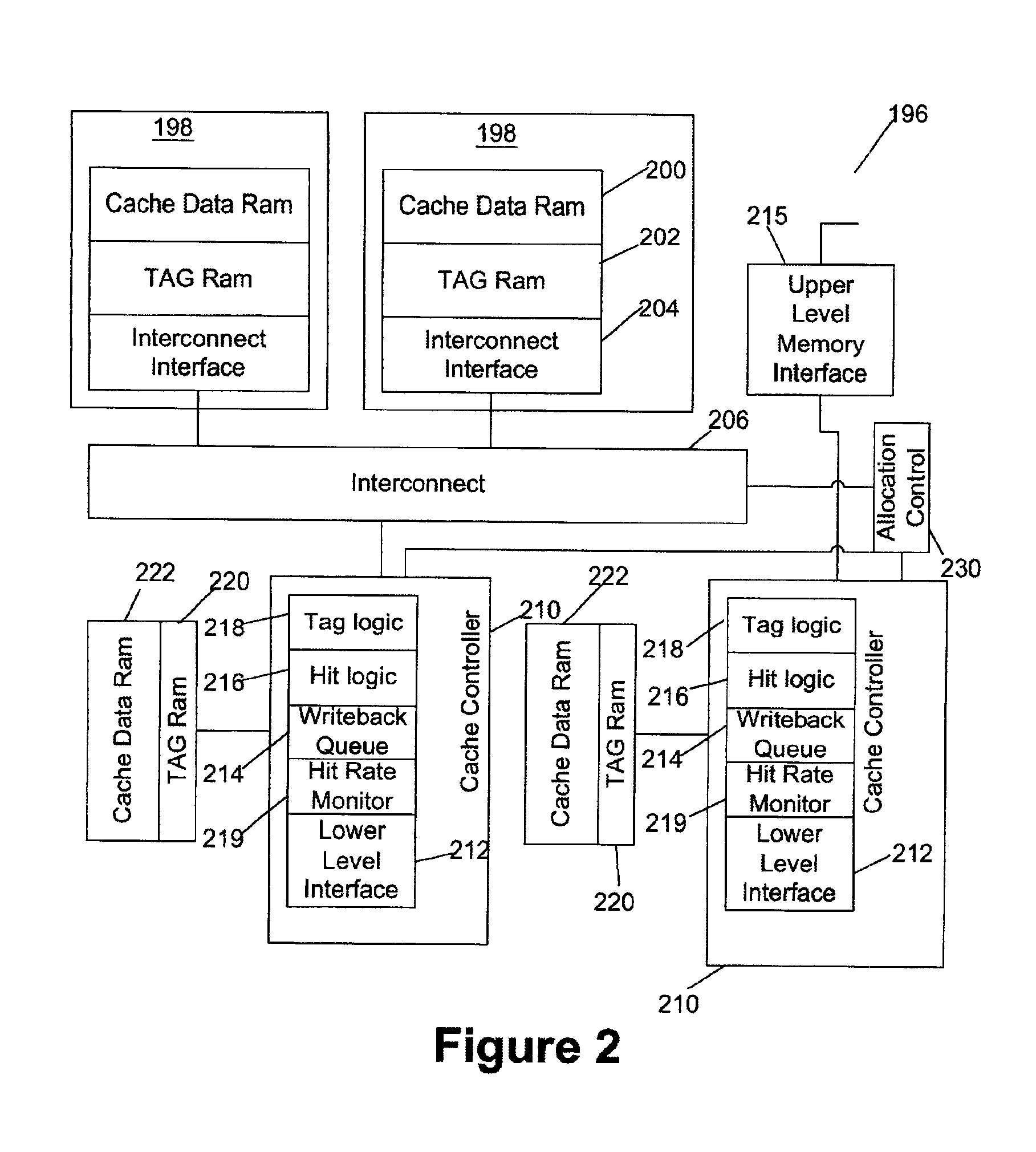

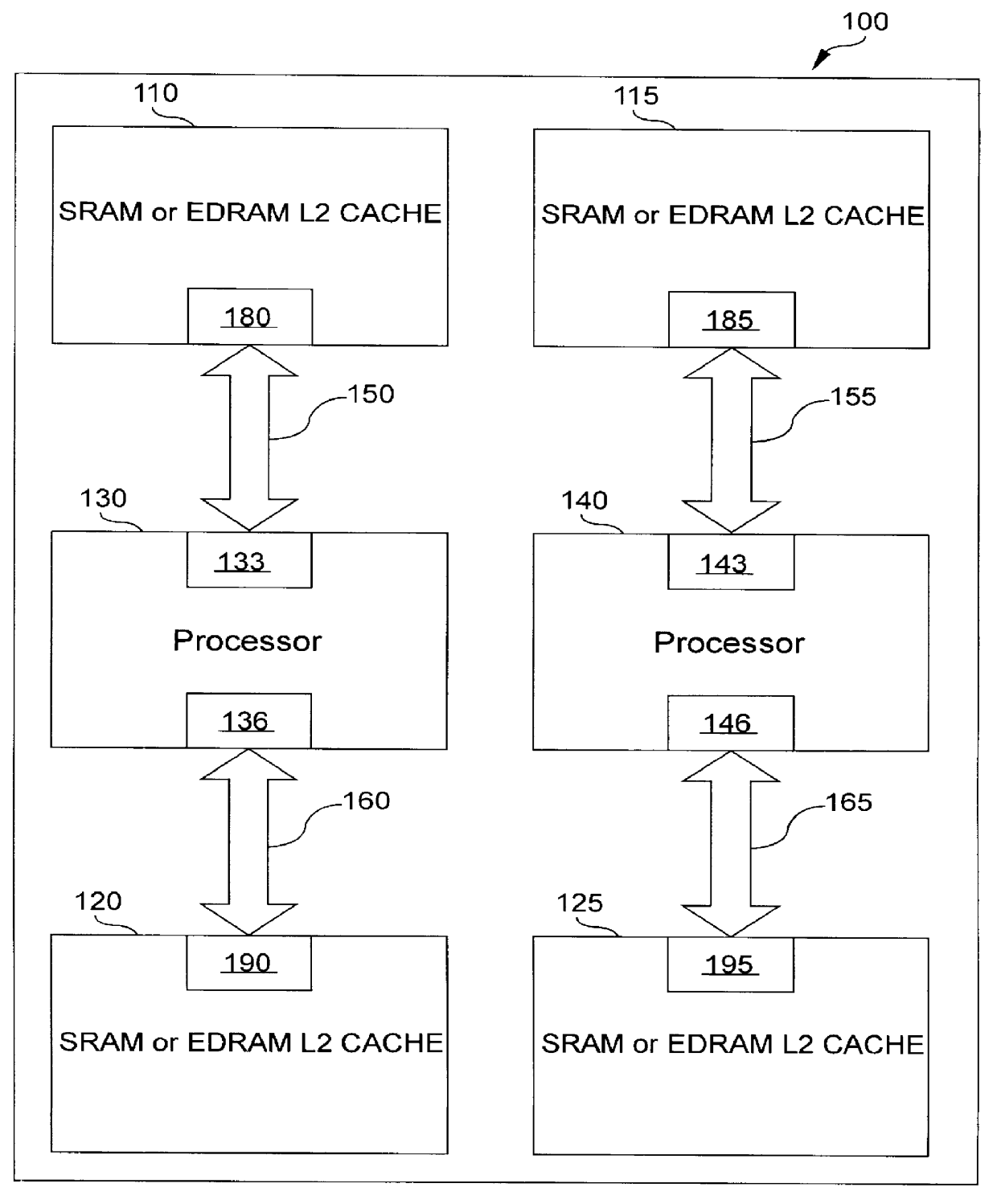

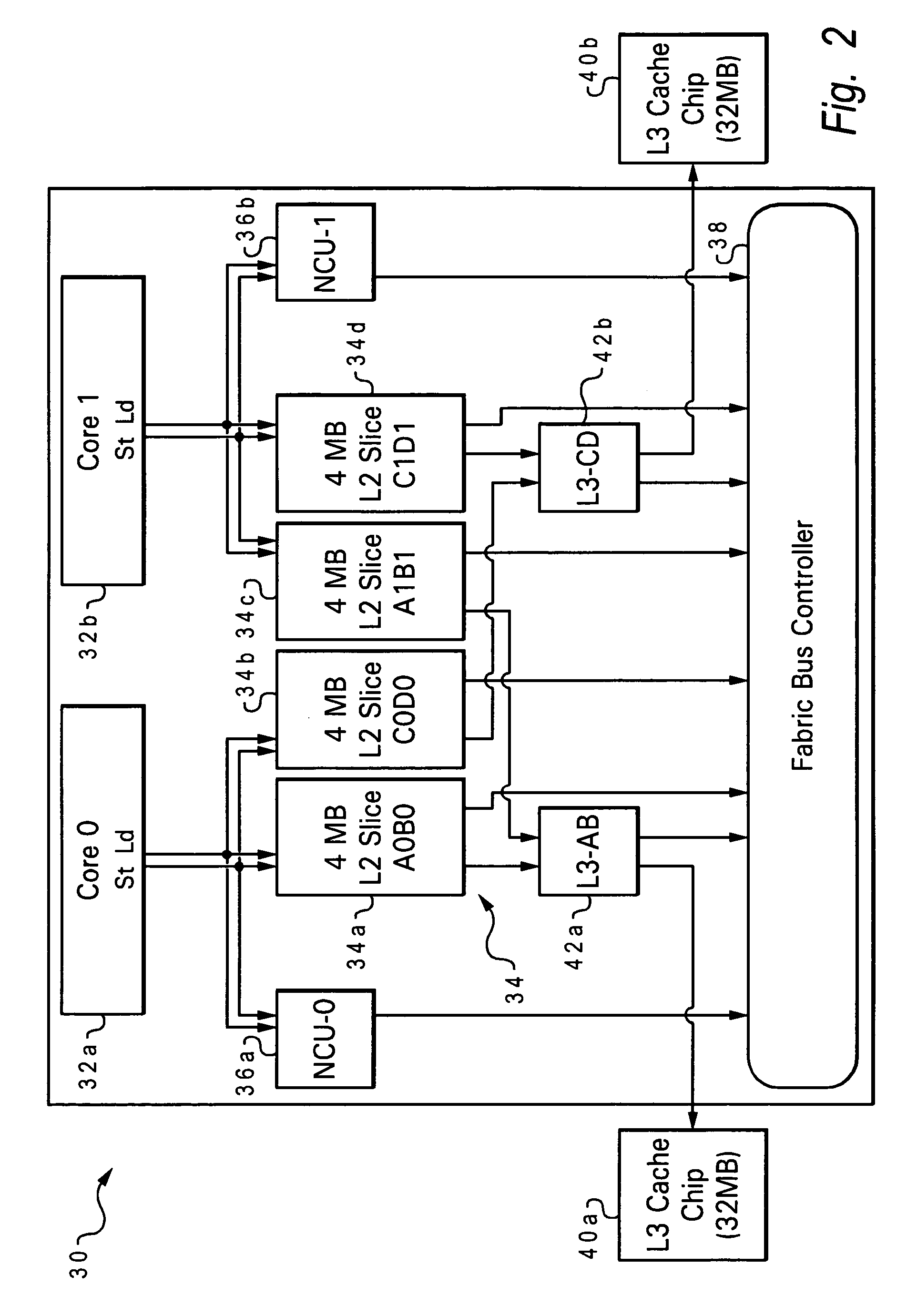

System and method for dynamic processor core and cache partitioning on large-scale multithreaded, multiprocessor integrated circuits

Inactive | US6871264B2 | Improve performance | Memory addressing/allocation/relocation | Multiple digital computer combinations | Multi processor | Memory controller

A processor integrated circuit capable of executing more than one instruction stream has two or more processors. Each processor accesses instructions and data through a cache controller. There are multiple blocks of cache memory. Some blocks of cache memory may optionally be directly attached to particular cache controllers. The cache controllers access at least some of the multiple blocks of cache memory through high speed interconnect, these blocks being dynamically allocable to more than one cache controller. A resource allocation controller determines which cache memory controller has access to the dynamically allocable cache memory block. In an embodiment the cache controllers and cache memory blocks are associated with second level cache, each processor accesses the second level cache controllers upon missing in a first level cache of fixed size.

Owner:VALTRUS INNOVATIONS LTD +1

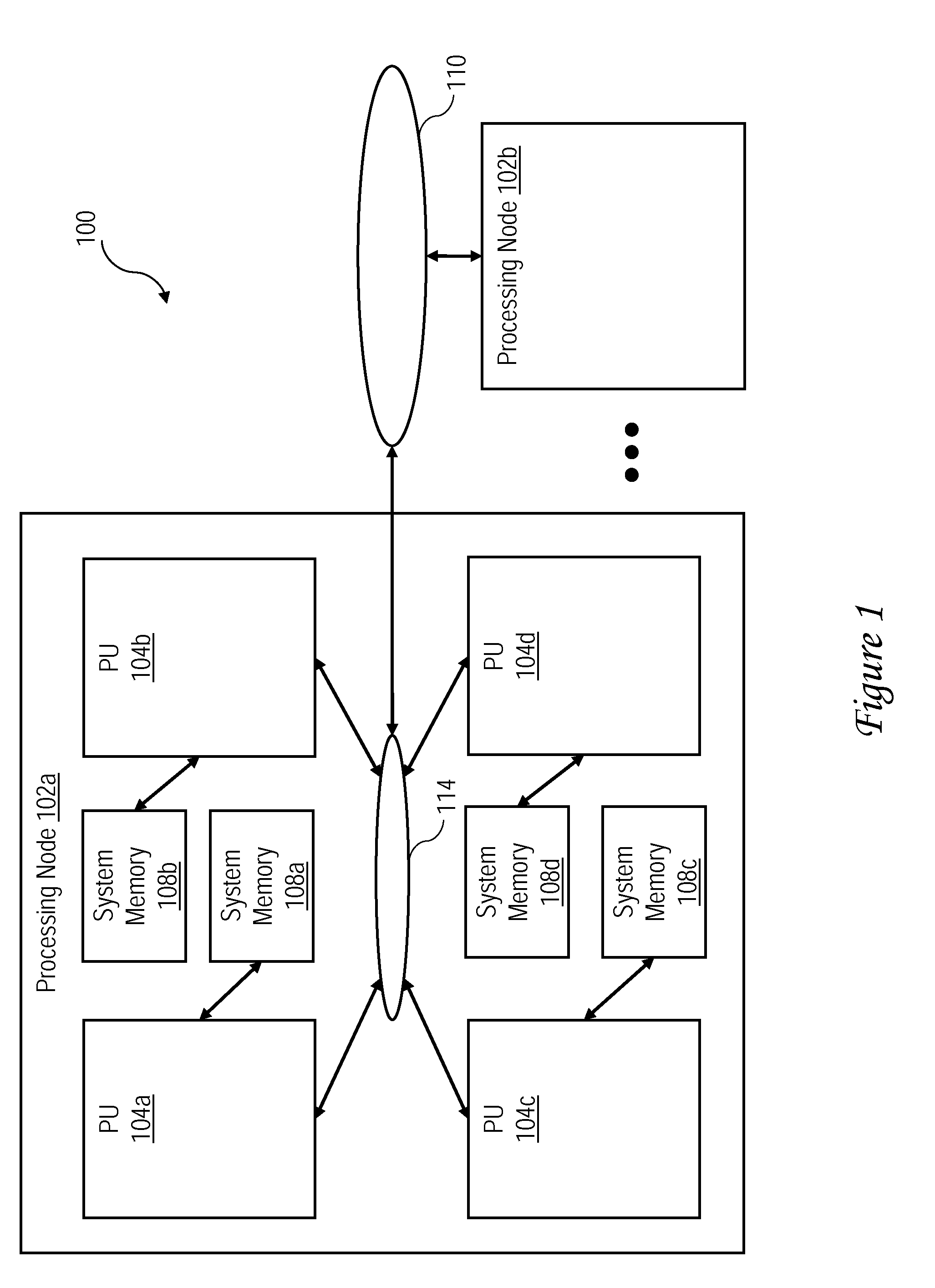

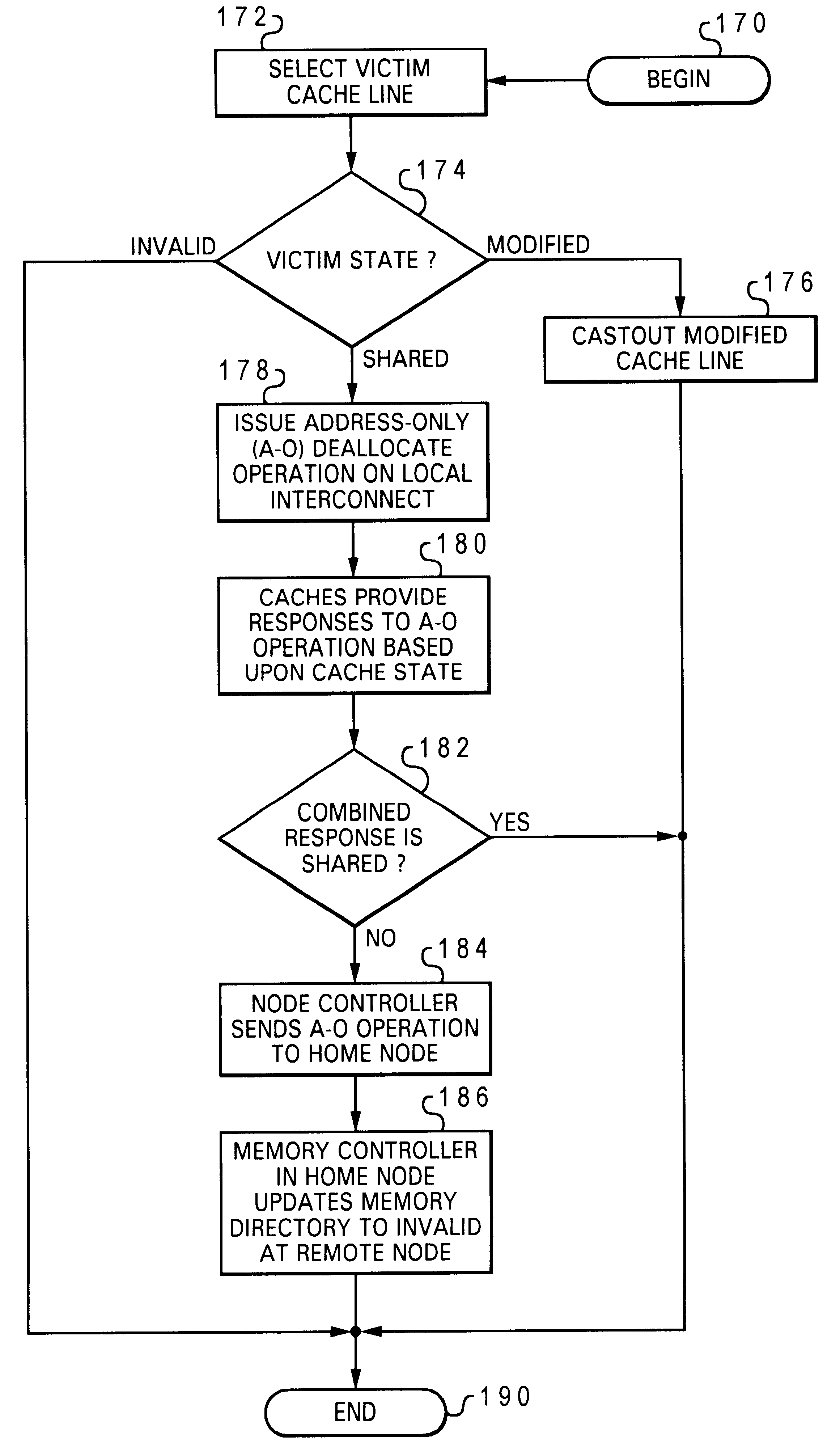

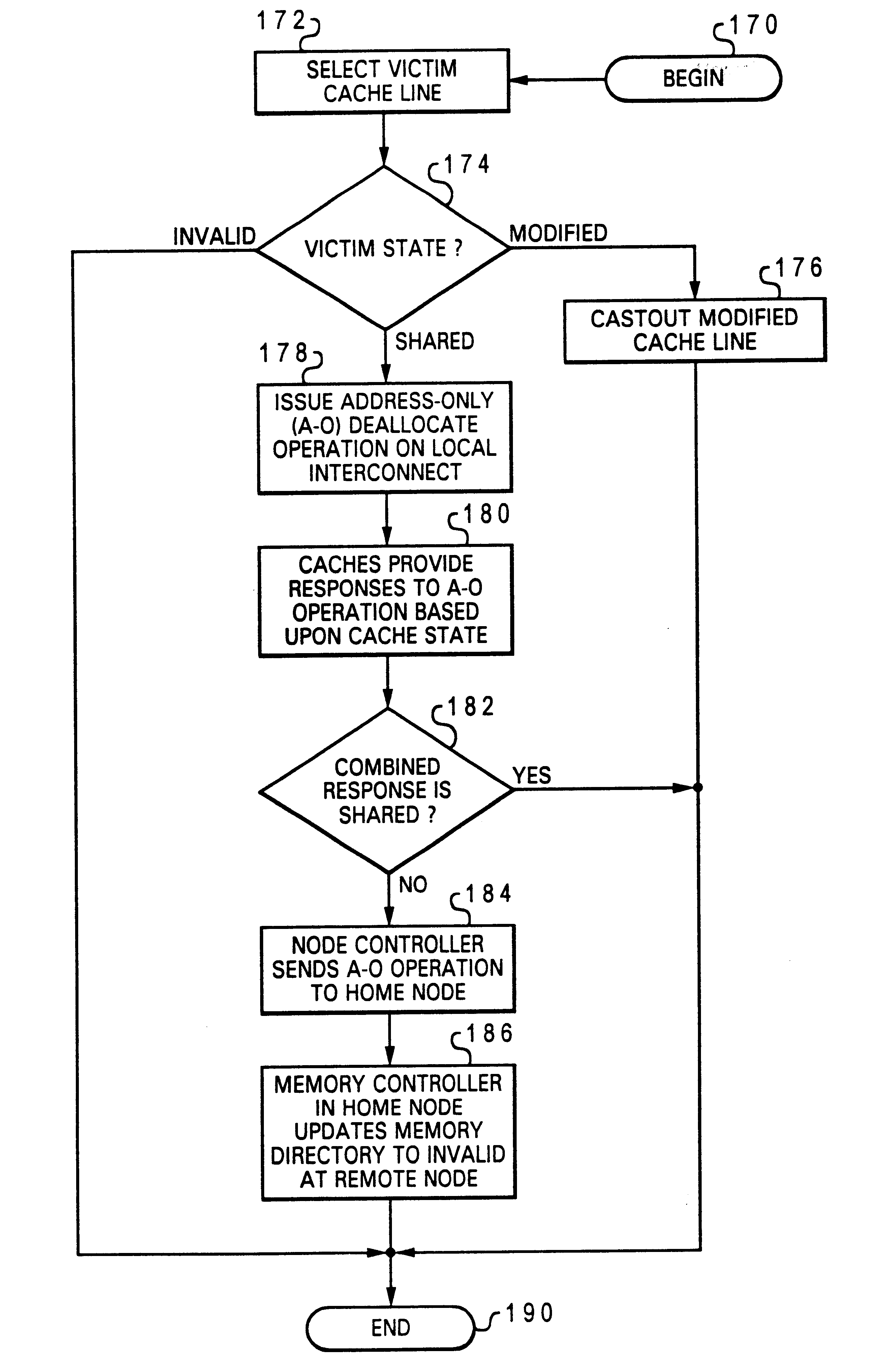

Non-uniform memory access (NUMA) data processing system that provides notification of remote deallocation of shared data

Inactive | US6633959B2 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Computerized system

A non-uniform memory access (NUMA) computer system includes a node interconnect to which a remote node and a home node are coupled. The home node contains a home system memory, and the remote node includes at least one processing unit and a cache. In response to the cache deallocating an unmodified cache line that corresponds to data resident in the home system memory, a cache controller of the cache issues a deallocate operation on a local interconnect of the remote node. In one embodiment, the deallocate operation is further transmitted to the home node via the node interconnect only in response to an indication, such as a combined response, that no other cache in the remote node caches the cache line. In response to receipt of the deallocate operation, a memory controller in the home node updates a local memory directory associated with the home system memory to indicate that the remote node does not hold a copy of the cache line.

Owner:IBM CORP

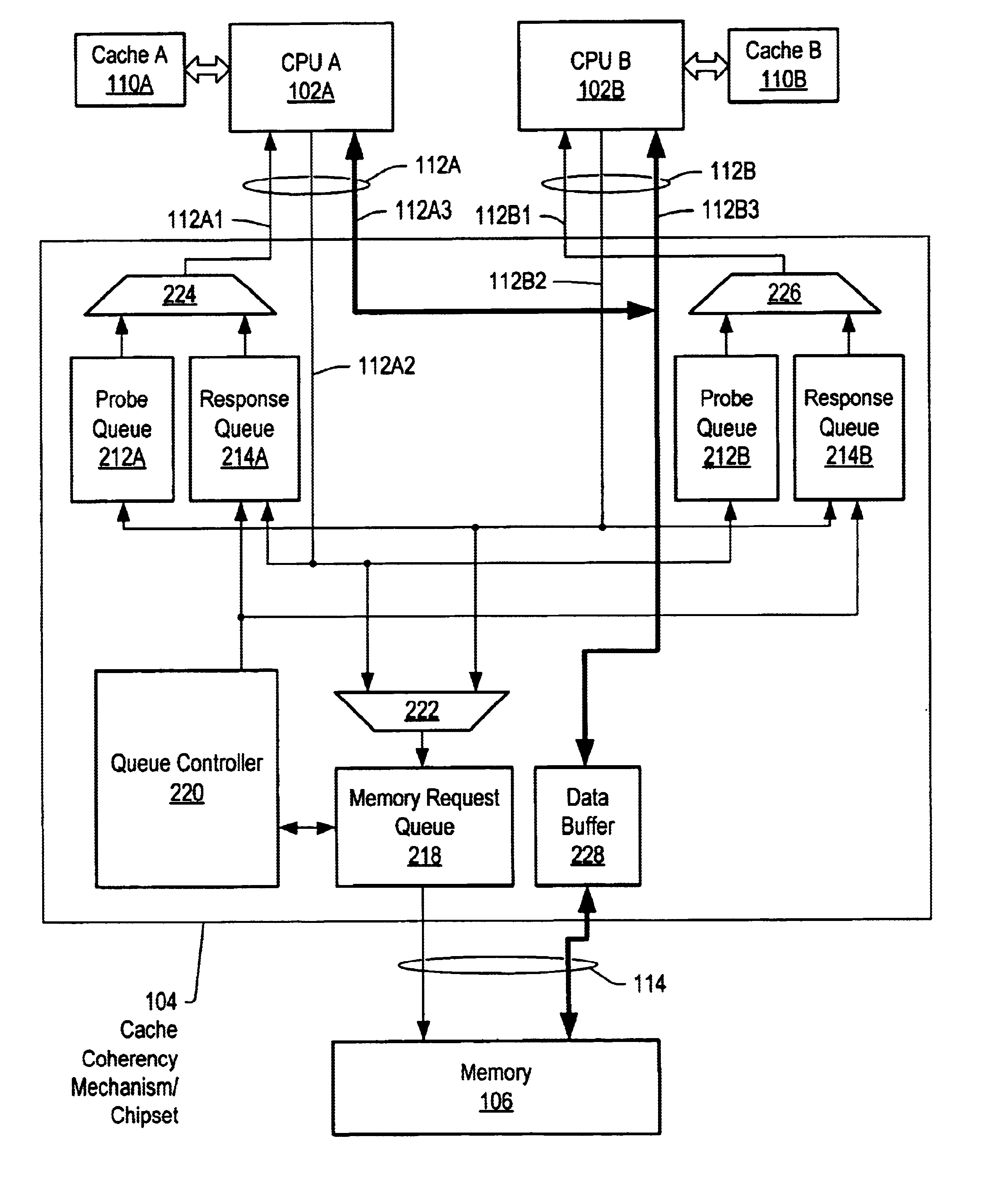

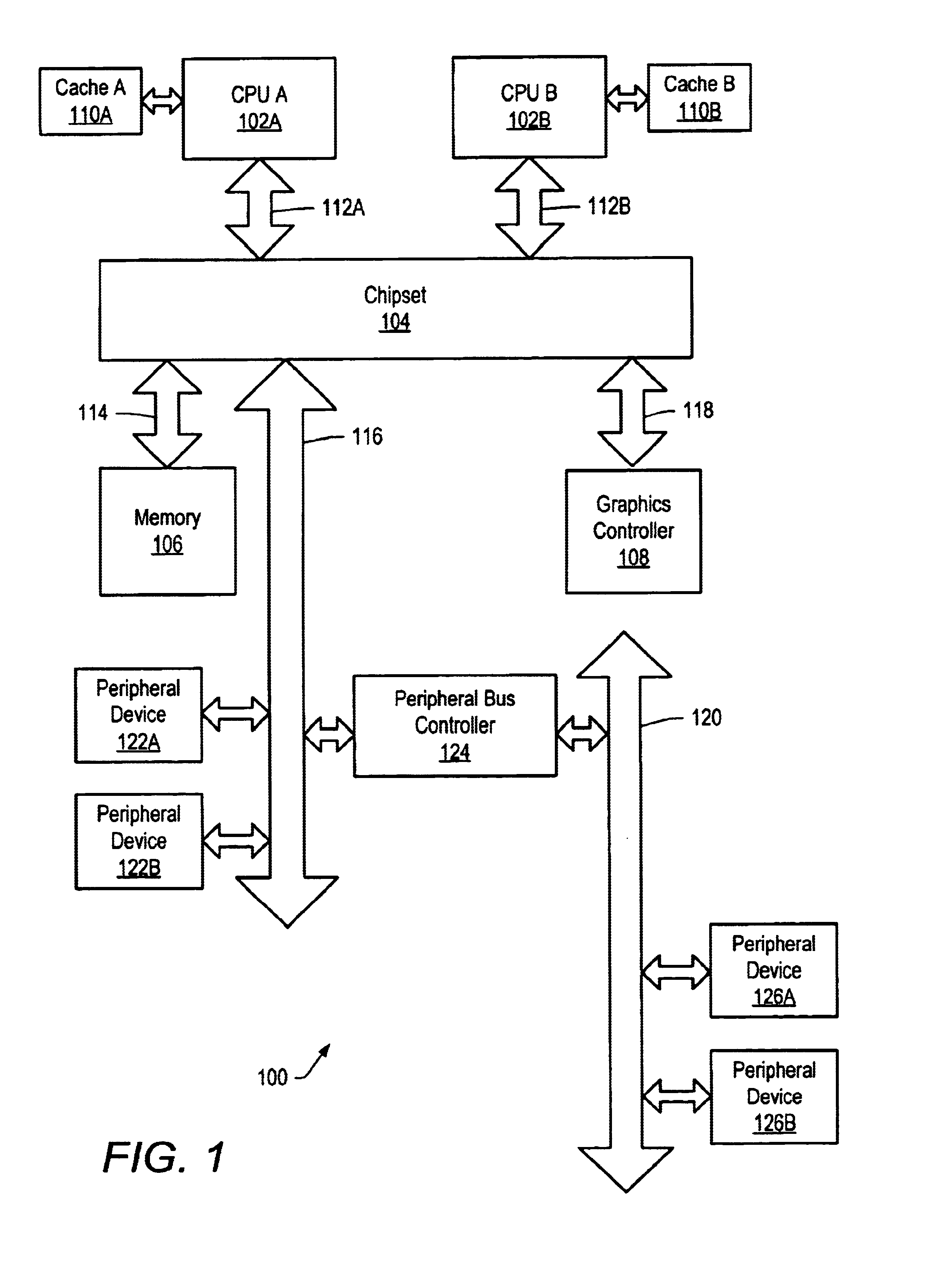

System and method for performing a speculative cache fill

Inactive | US6775749B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Computerized system | Shared memory

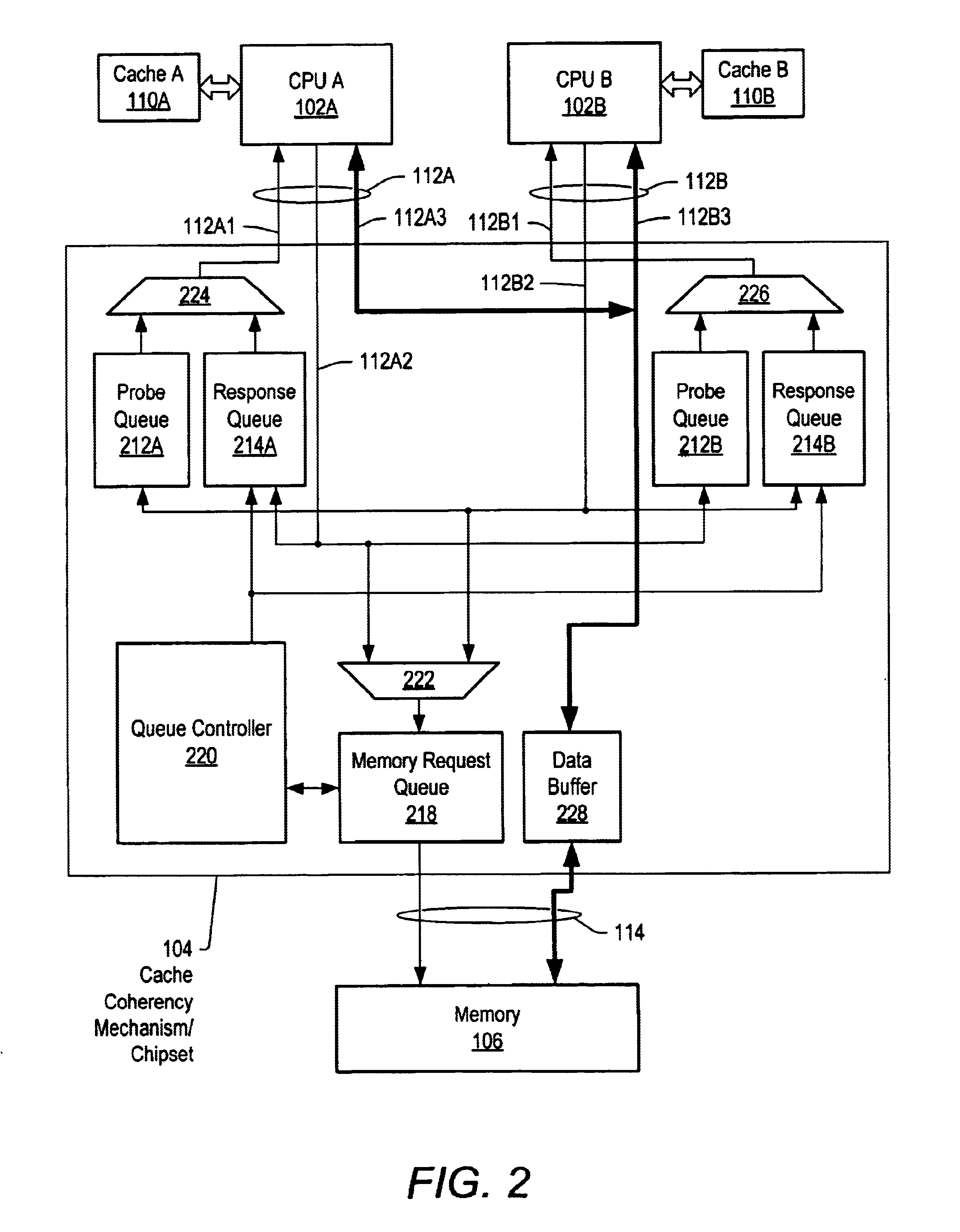

A computer system may include several caches that are each coupled to receive data from a shared memory. A cache coherency mechanism may be configured to receive a cache fill request, and in response, to send a probe to determine whether any of the other caches contain a copy of the requested data. Some time after sending the probe, the cache controller may provide a speculative response to the cache fill request to the requesting device. By delaying providing the speculative response until some time after the probes are sent, it may become more likely that the responses to the probes will be received in time to validate the speculative response.

Owner:GLOBALFOUNDRIES INC

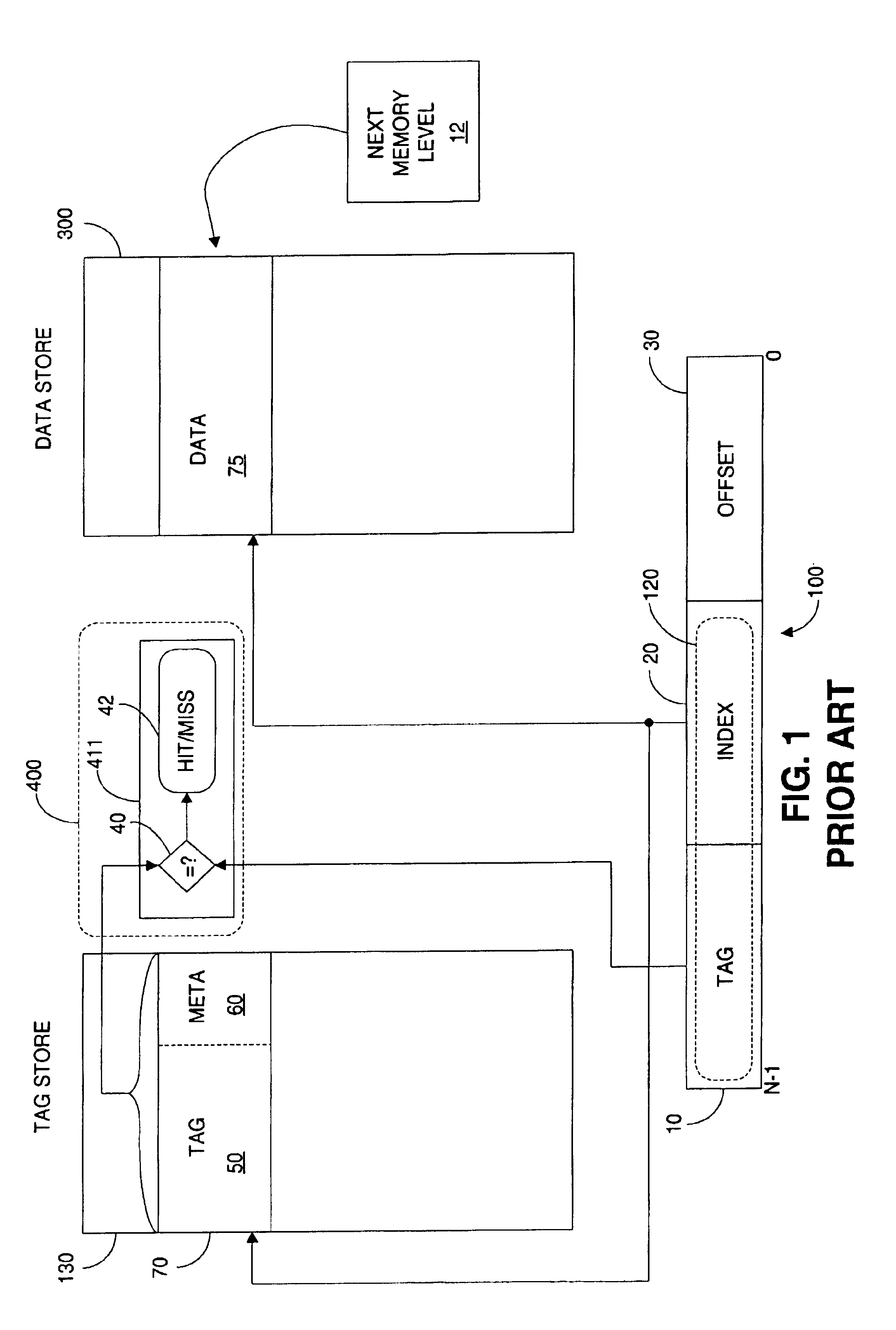

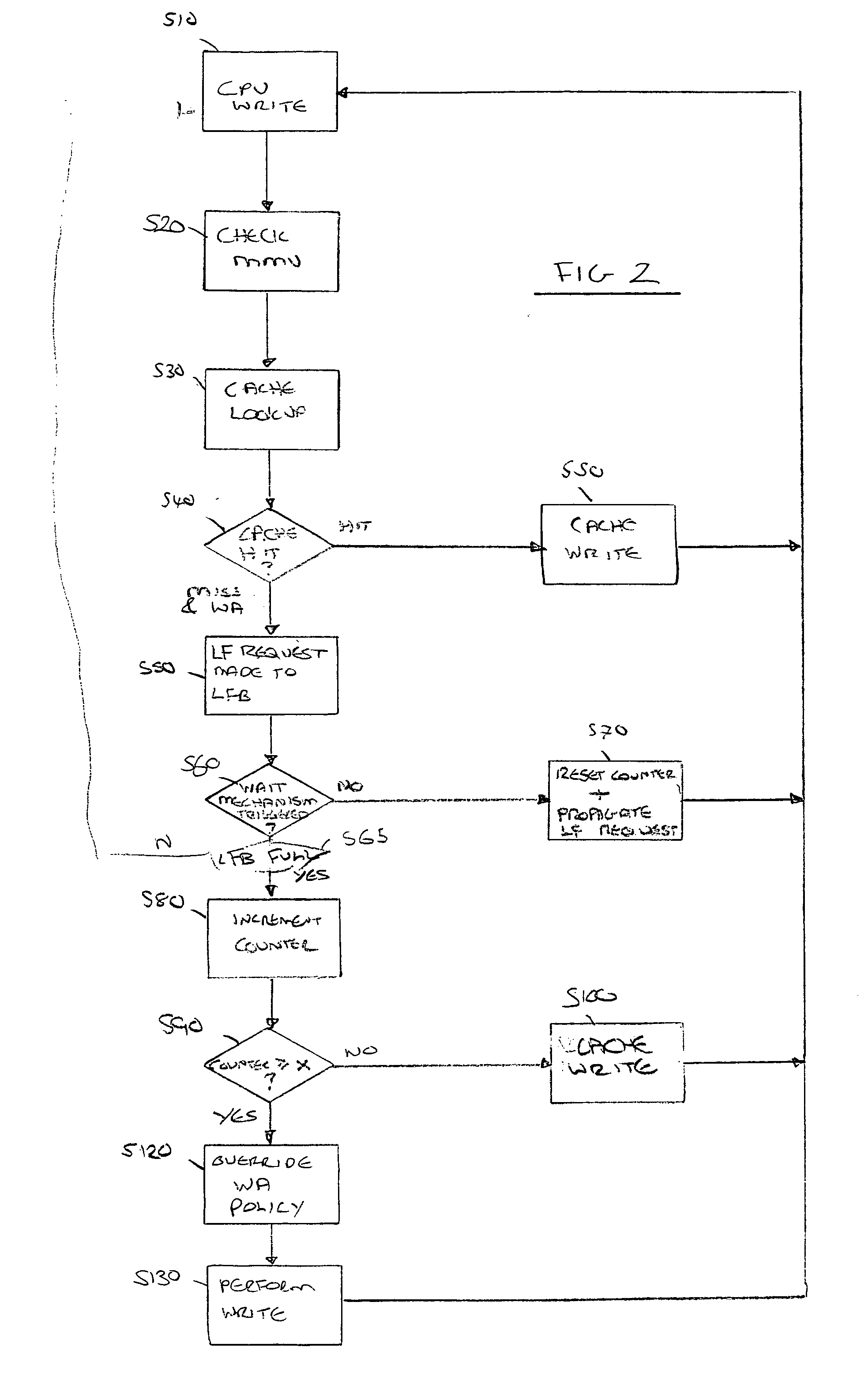

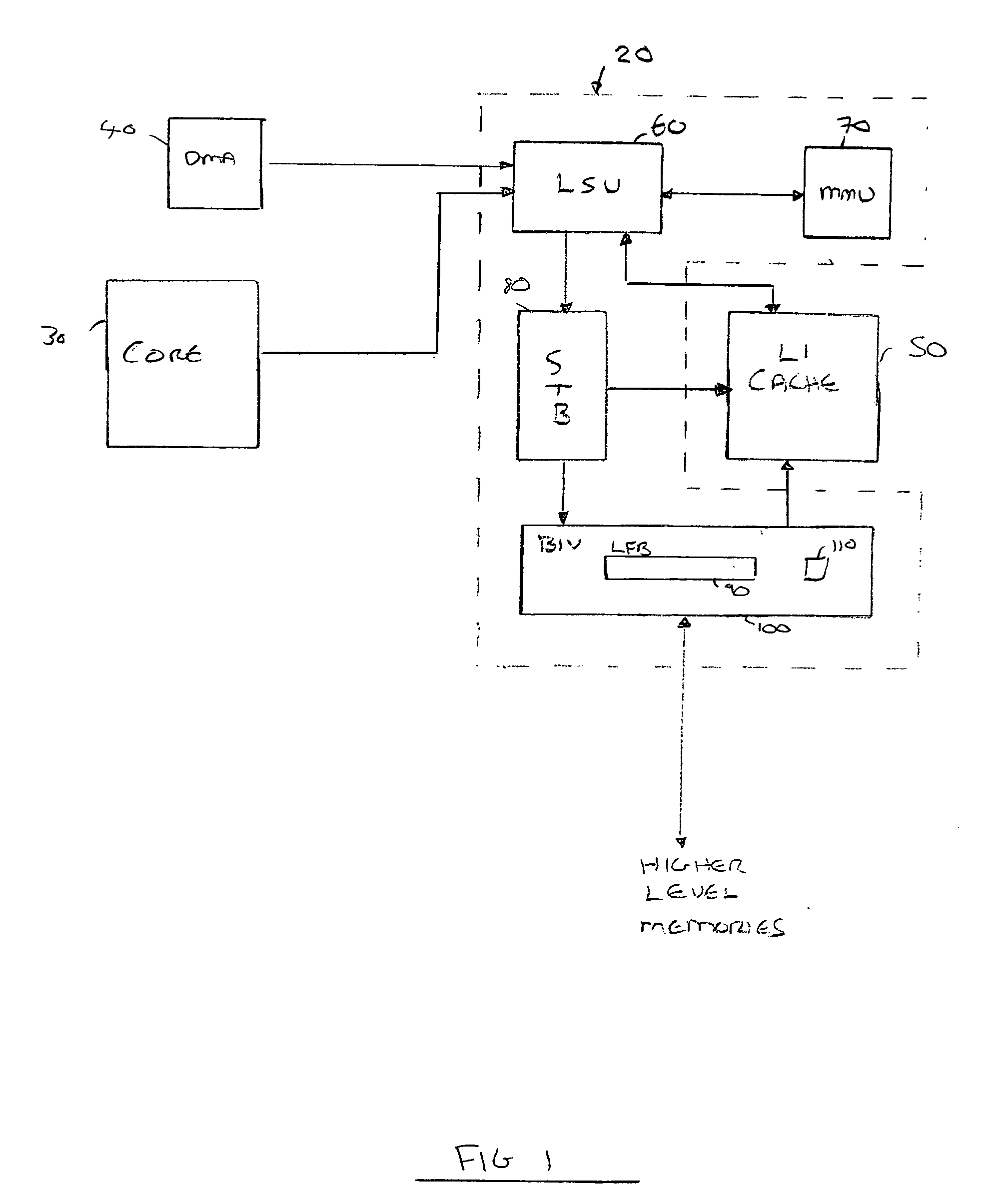

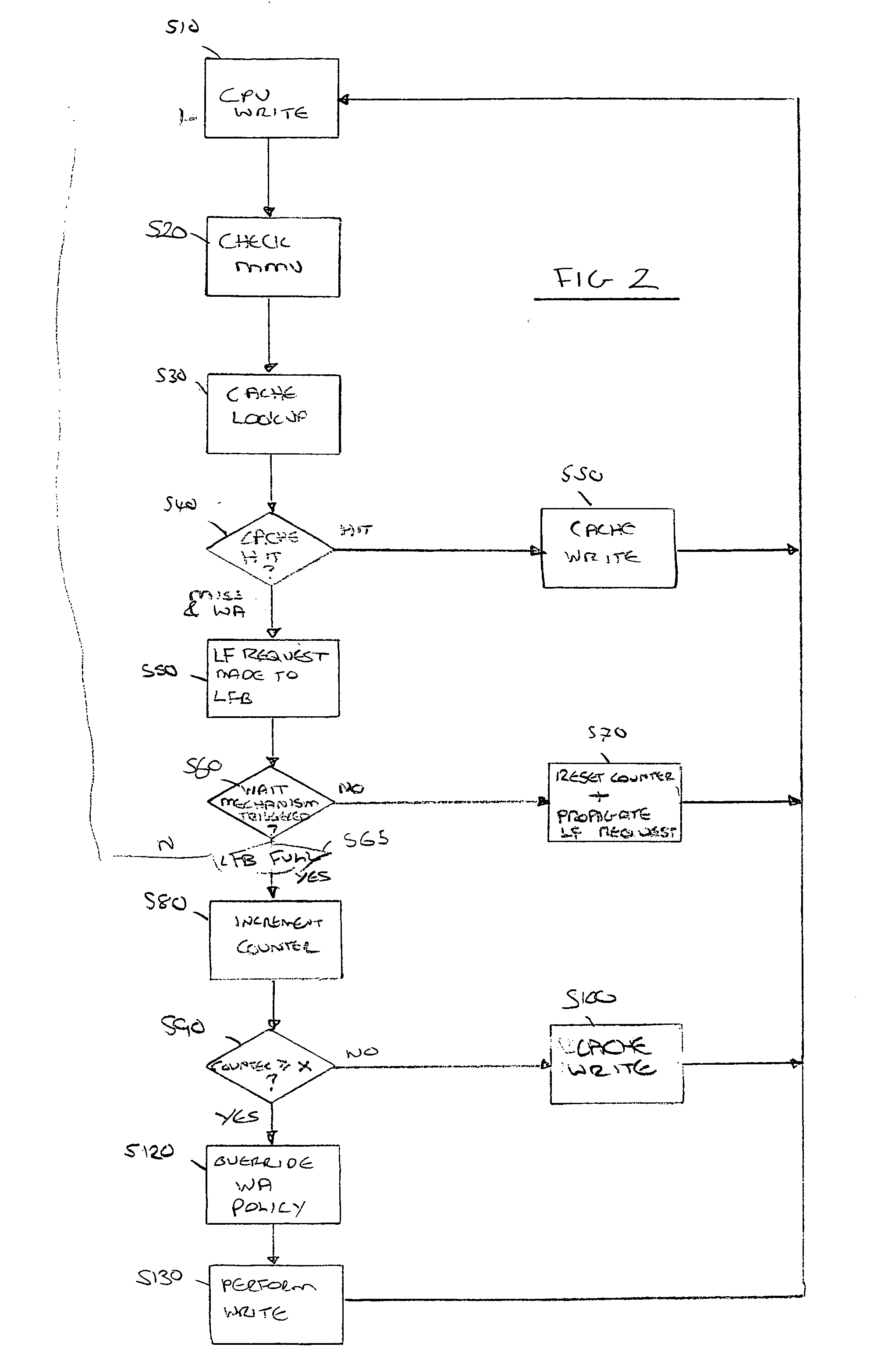

Cache controller

Inactive | US20070079070A1 | Adequate performance balance | Unlikely to result | Memory architecture accessing/allocation | Memory systems | Cache access | Sequential data

A cache controller and a method are provided. The cache controller comprises: request reception logic operable to receive a write request from a data processing apparatus to write a data item to memory; and cache access logic operable to determine whether a caching policy associated with the write request is write allocate, whether the write request would cause a cache miss to occur, whether the write request is one of a number of write requests which together would cause greater than a predetermined number of sequential data items to be allocated in the cache and, if so, the cache access logic is further operable to override the caching policy associated with the write request to non-write allocate. In this way, in the event that the number of consecutive data items to be allocated within the cache exceeds the predefined number, the cache access logic will consider that it is highly likely that the write requests are associated with a block transfer operation and, accordingly, will override the write allocate caching policy. Accordingly, the write request will proceed but without the write allocate caching policy being applied. Hence, the pollution of the cache with these sequential data items is reduced.

Owner:ARM LTD
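
The override logic in the abstract above can be approximated by counting runs of sequential writes. This is a minimal sketch under assumptions: the `WriteAllocateFilter` class, the fixed item size, and the way sequentiality is detected (each address equals the previous address plus the item size) are illustrative choices, not details from the patent.

```python
class WriteAllocateFilter:
    """Tracks runs of sequential writes (hypothetical names and threshold)."""

    def __init__(self, threshold, item_size=4):
        self.threshold = threshold
        self.item_size = item_size
        self.last_address = None
        self.run_length = 0

    def should_allocate(self, address, policy_write_allocate, is_miss):
        # Extend the run if this write follows the previous one sequentially.
        if self.last_address is not None and address == self.last_address + self.item_size:
            self.run_length += 1
        else:
            self.run_length = 1
        self.last_address = address
        # Only a write-allocate miss can allocate a line in the first place.
        if not (policy_write_allocate and is_miss):
            return False
        # Override write allocate once the run exceeds the threshold.
        return self.run_length <= self.threshold
```

A long block copy therefore allocates only its first few items, while an isolated write after the copy is allocated normally again.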

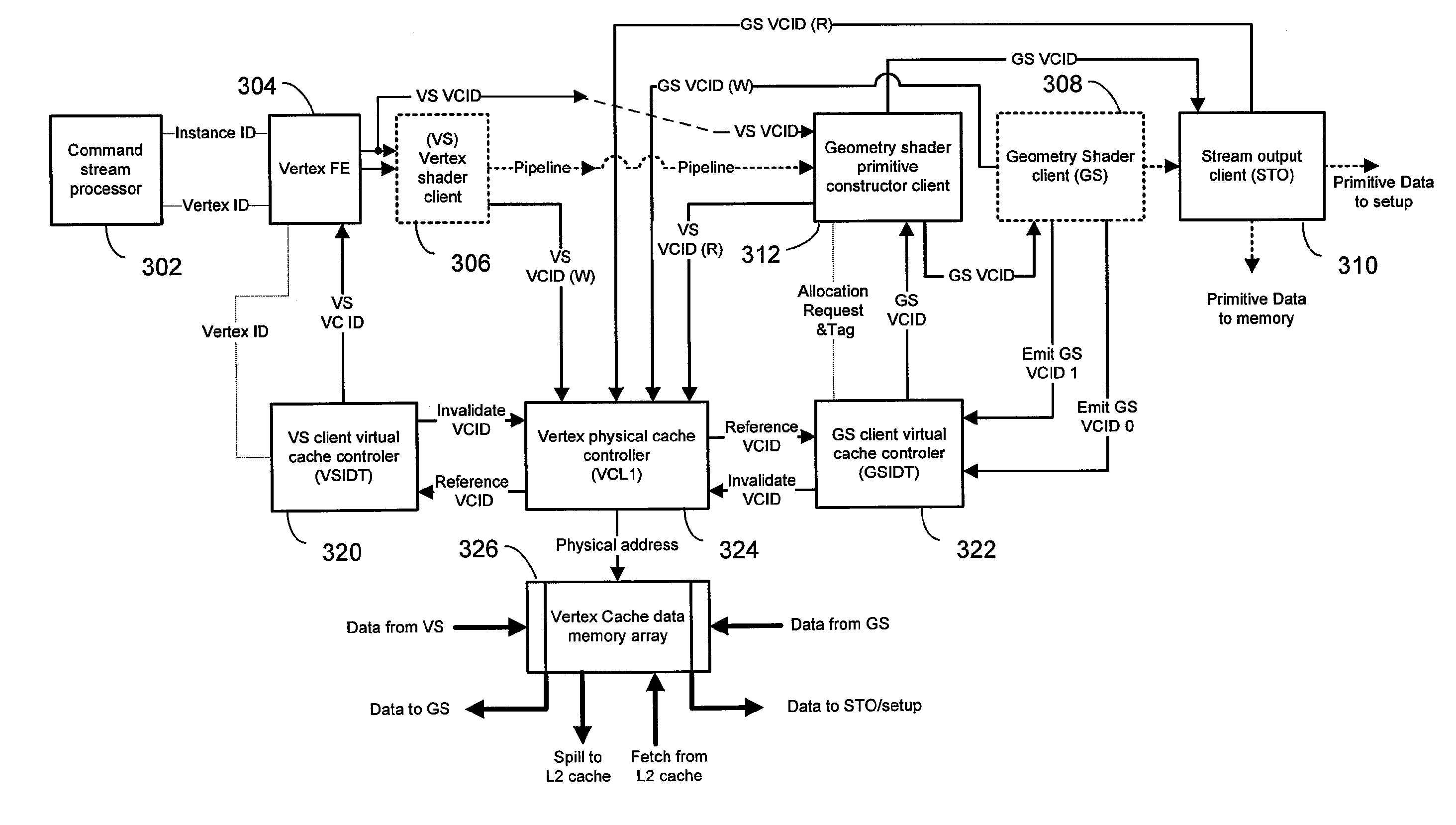

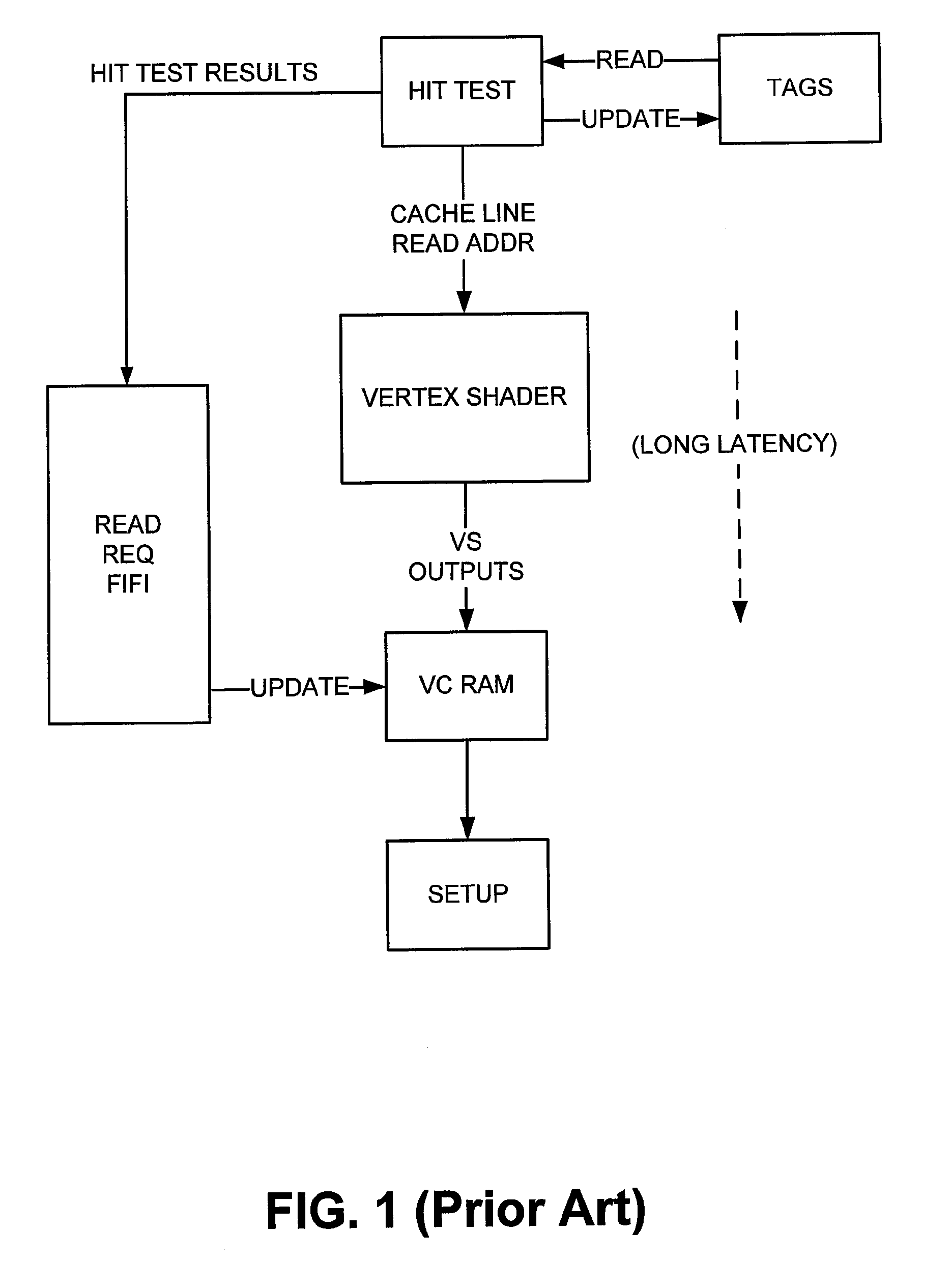

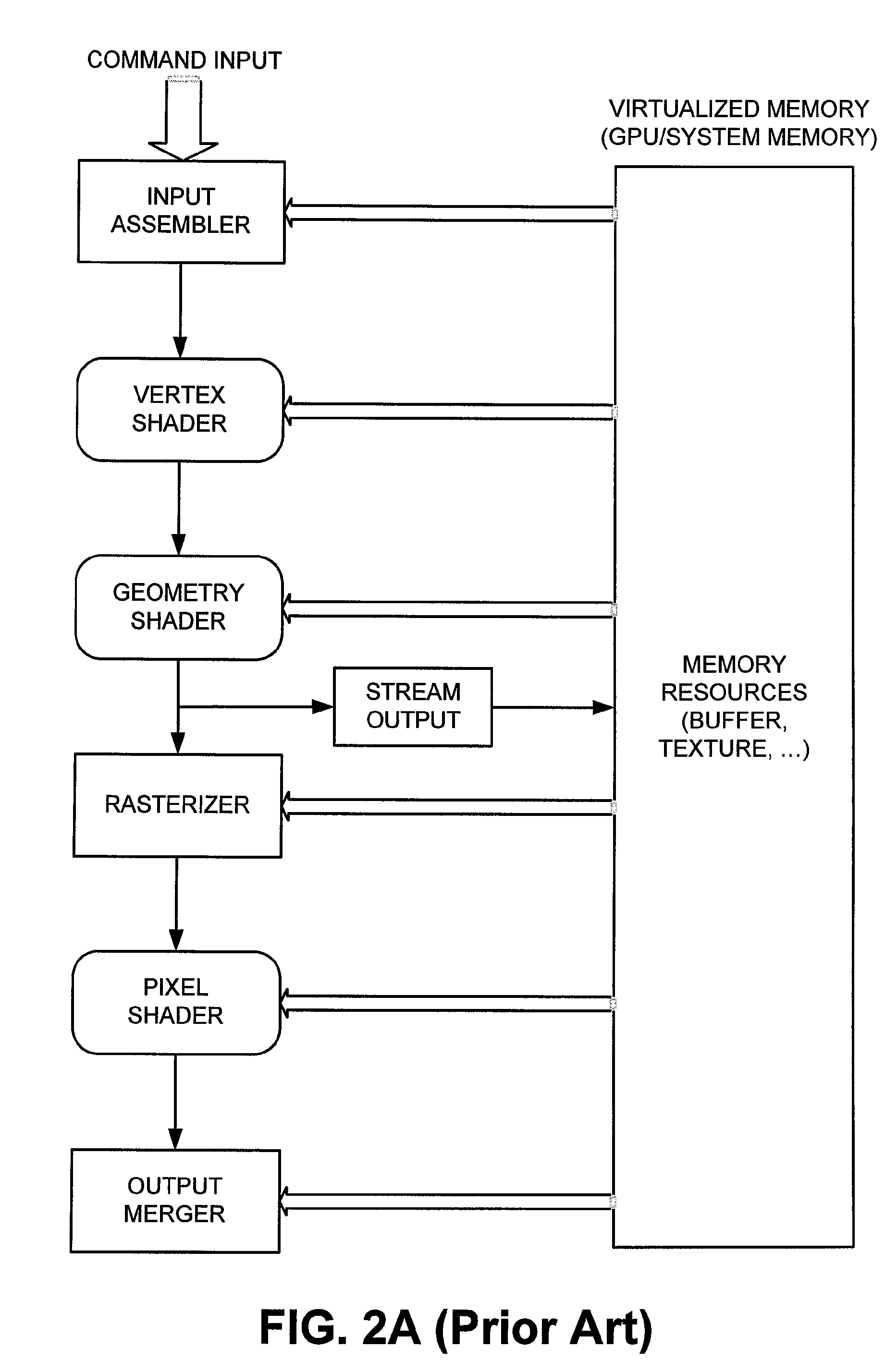

Caching Method and Apparatus for a Vertex Shader and Geometry Shader

Systems and methods for sharing a physical cache among one or more clients in a stream data processing pipeline are described. One embodiment, among others, is directed to a system for sharing caches between two or more clients. The system comprises a physical cache memory having a memory portion accessed through a cache index. The system further comprises at least two virtual cache spaces mapping to the memory portion and at least one virtual cache controller configured to perform a hit-miss test on the active window of the virtual cache space in response to a request from one of the clients for accessing the physical cache memory. In accordance with some embodiments, each of the virtual cache spaces has an active window which has a different size than the memory portion. Furthermore, data is accessed from the corresponding location of the memory portion when the hit-miss test of the cache index returns a hit.

Owner:VIA TECH INC

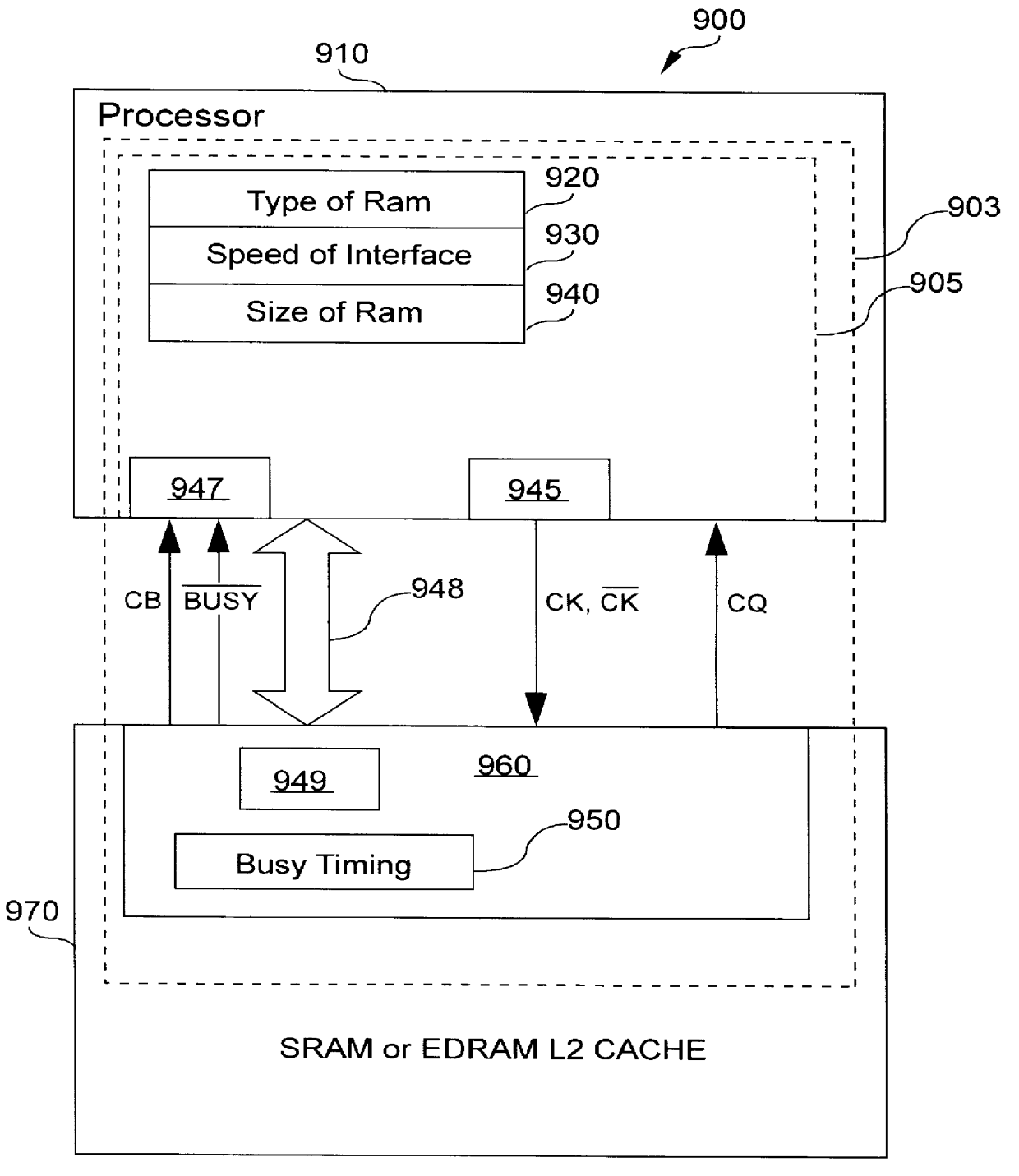

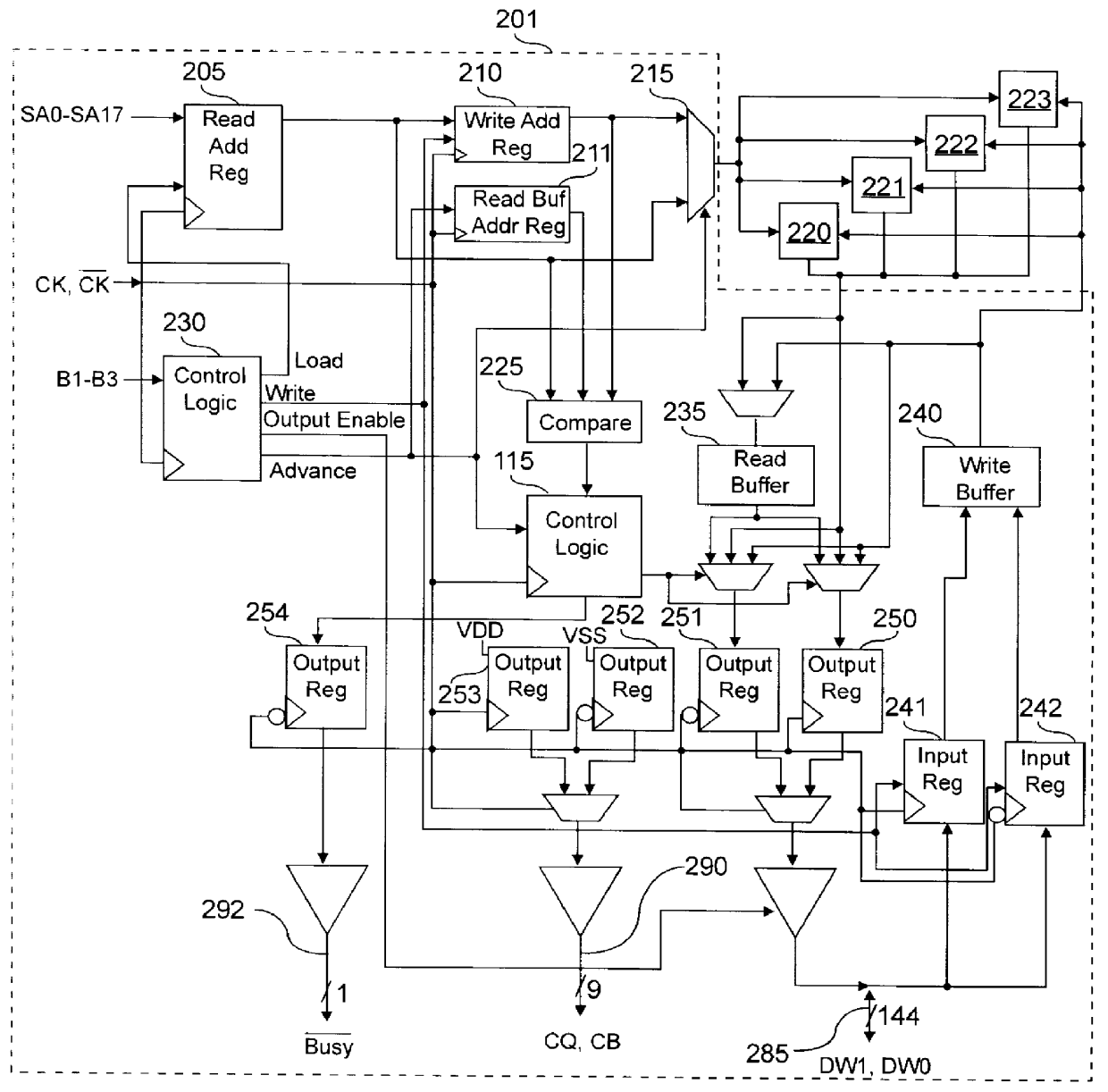

Programmable SRAM and DRAM cache interface with preset access priorities

Inactive | US6151664A | Cache interface portion | Memory addressing/allocation/relocation | Static random-access memory | Parallel computing

A cache interface that supports both Static Random Access Memory (SRAM) and Dynamic Random Access Memory (DRAM) is disclosed. The cache interface preferably comprises two portions, one portion on the processor and one portion on the cache. A designer can simply select which RAM he or she wishes to use for a cache, and the cache controller interface portion on the processor configures the processor to use this type of RAM. The cache interface portion on the cache is simple when being used with DRAM in that a busy indication is asserted so that the processor knows when an access collision occurs between an access generated by the processor and the DRAM cache. An access collision occurs when the DRAM cache is unable to read or write data due to a precharge, initialization, refresh, or standby state. When the cache interface is used with an SRAM cache, the busy indication is preferably ignored by a processor and the processor's cache interface portion. Additionally, the disclosed cache interface allows speed and size requirements for the cache to be programmed into the interface. In this manner, the interface does not have to be redesigned for use with different sizes or speeds of caches.

Owner:IBM CORP

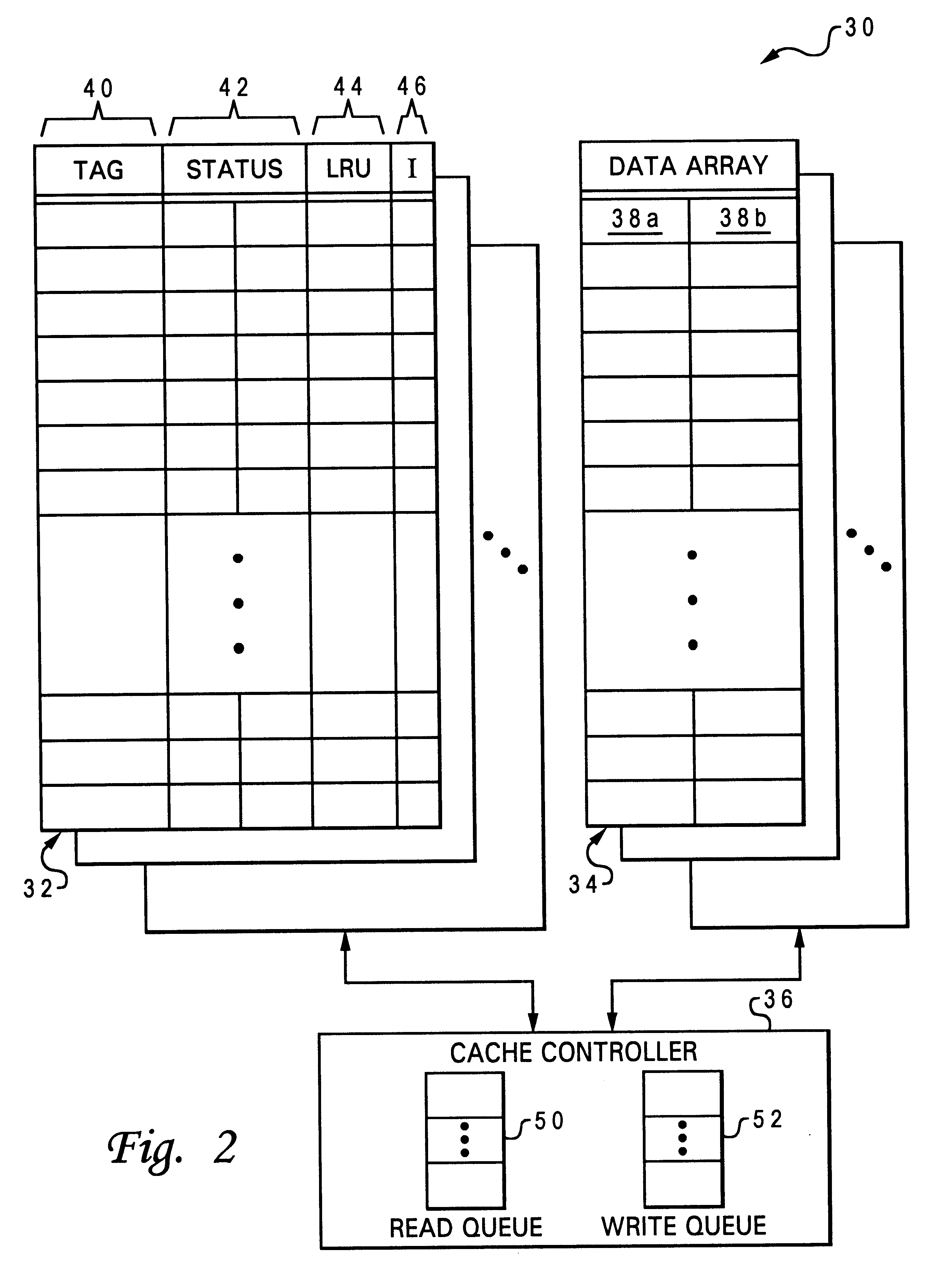

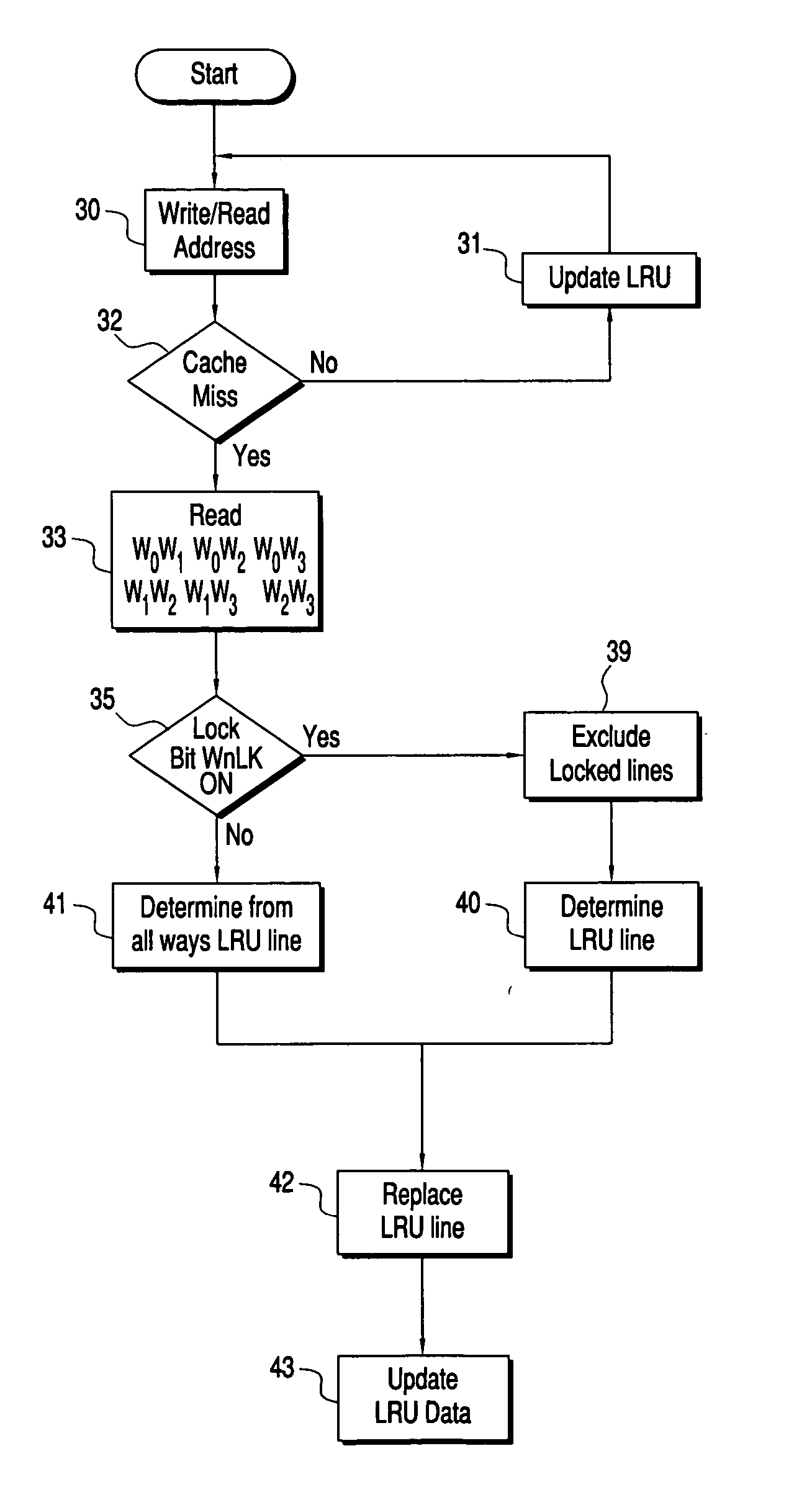

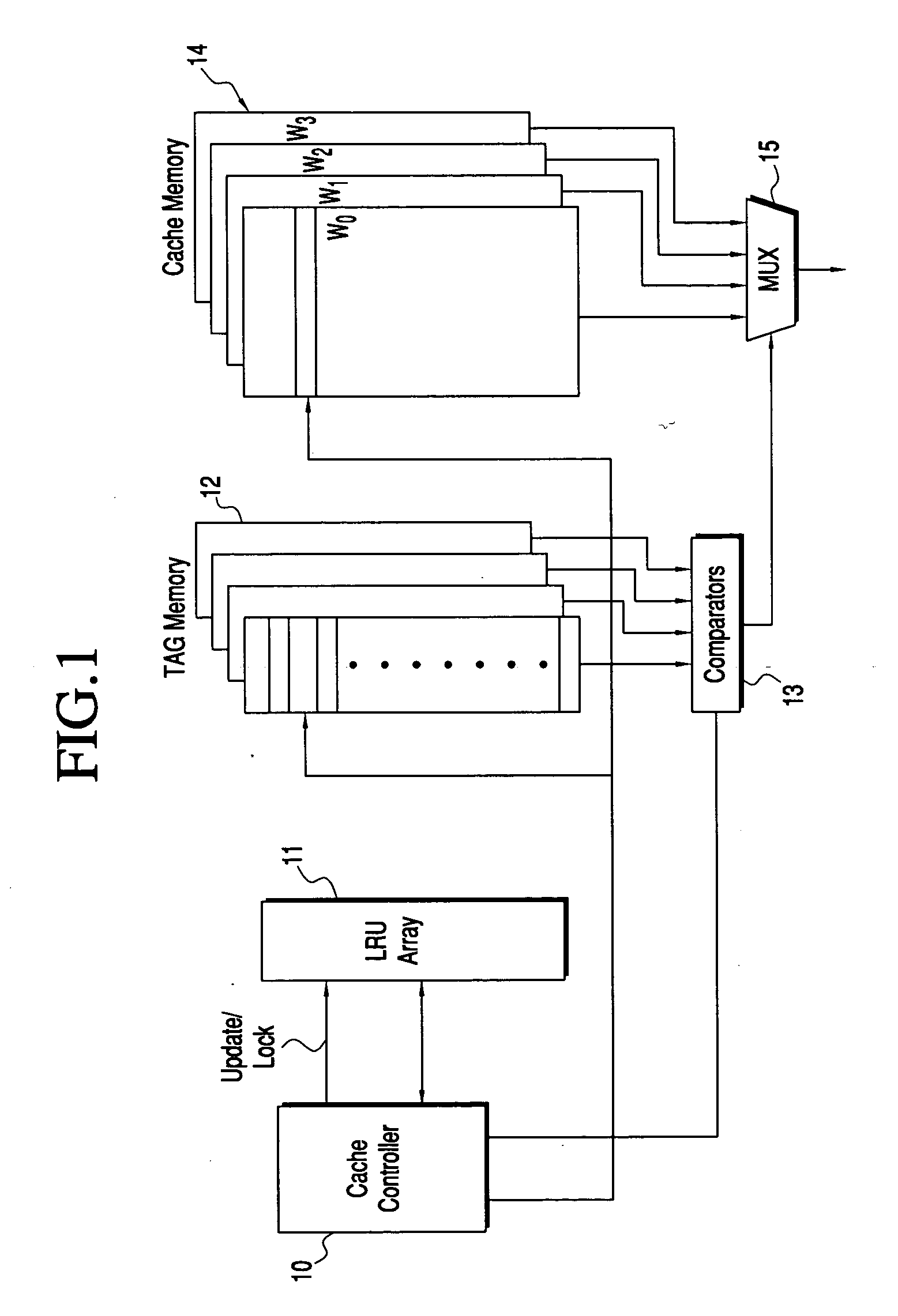

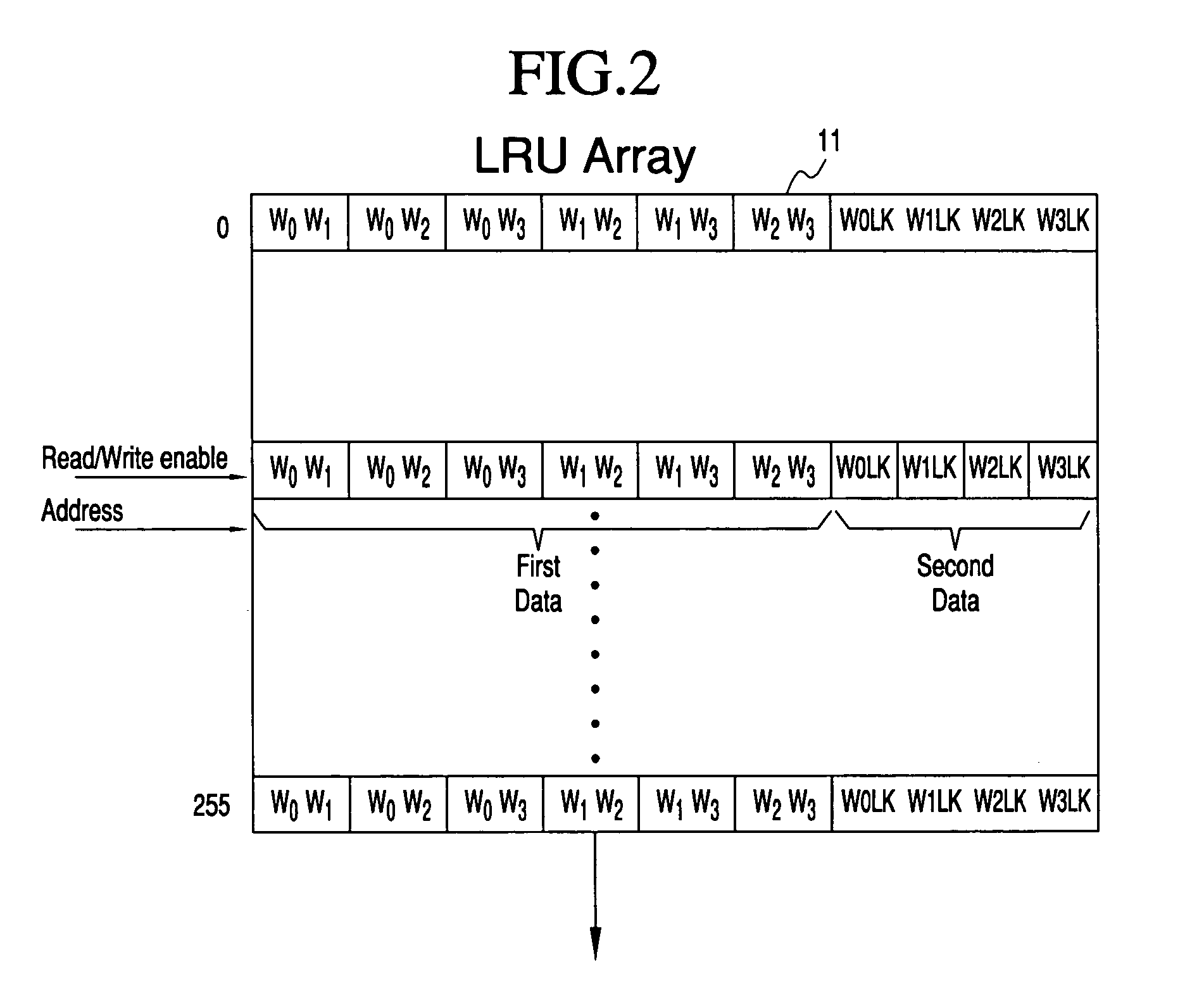

Method for software controllable dynamically lockable cache line replacement system

Inactive | US20060036811A1 | Shorten access time | Speed up access time | Memory systems | Parallel computing | Access line

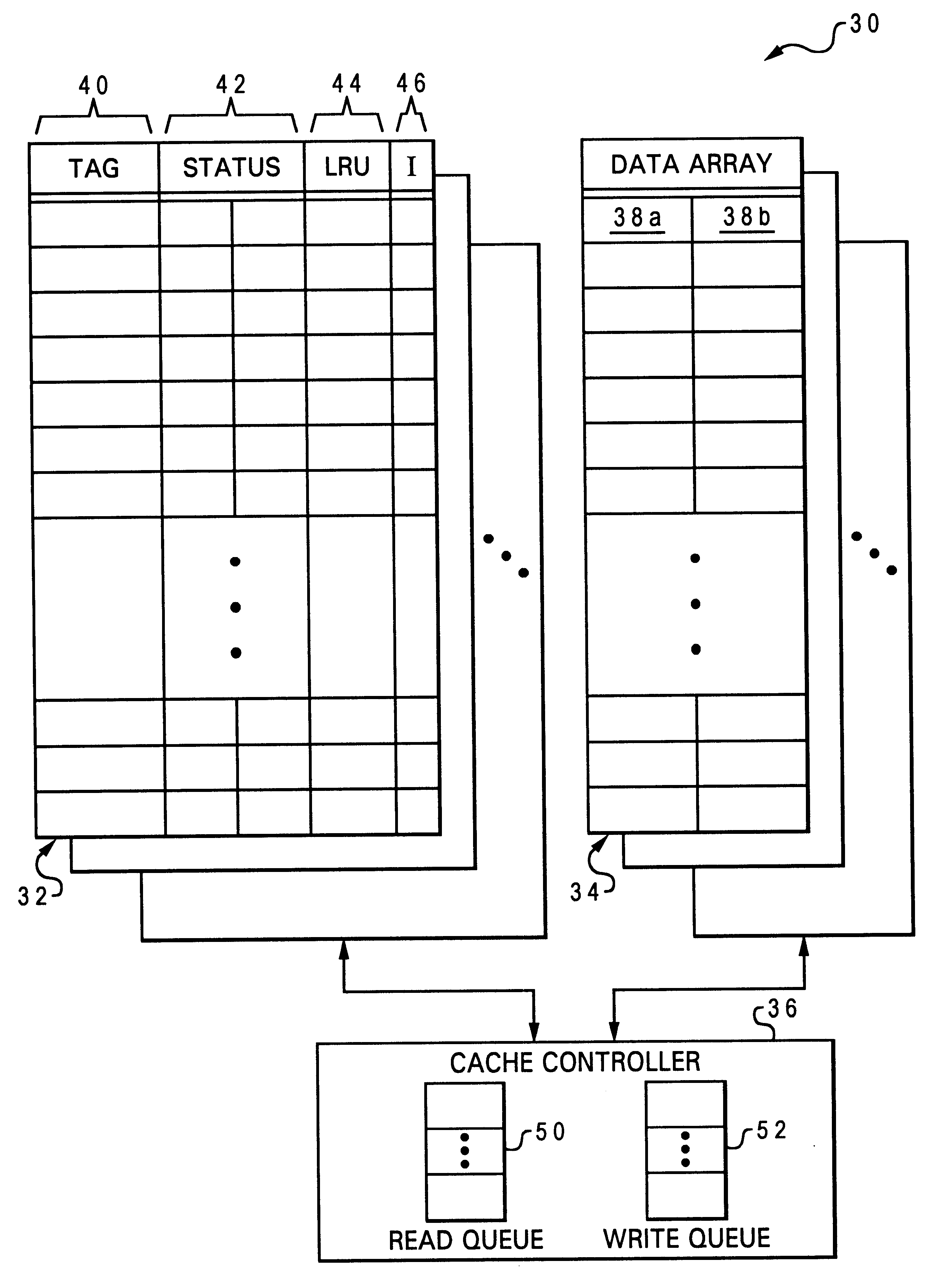

An LRU array and method for tracking the accessing of lines of an associative cache. The most recently accessed lines of the cache are identified in the table, and cache lines can be blocked from being replaced. The LRU array contains a data array having a row of data representing each line of the associative cache, having a common address portion. A first set of data for the cache line identifies the relative age of the cache line for each way with respect to every other way. A second set of data identifies whether a line of one of the ways is not to be replaced. For cache line replacement, the cache controller will select the least recently accessed line using contents of the LRU array, considering the value of the first set of data, as well as the value of the second set of data indicating whether or not a way is locked. Updates to the LRU occur after each pre-fetch or fetch of a line or when it replaces another line in the cache memory.

Owner:GOOGLE LLC
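
The LRU array described above keeps pairwise age information per way plus a lock indication. The sketch below models one row of such an array; the `LockableLRU` class, the boolean matrix encoding, and the initial ordering are assumptions for illustration rather than the patent's exact bit layout.

```python
class LockableLRU:
    """One row of a pairwise-age LRU array with lock bits (hypothetical layout)."""

    def __init__(self, num_ways):
        self.num_ways = num_ways
        # newer[i][j] is True when way i was accessed more recently than way j.
        # Initial order (assumed): way 0 is oldest, the highest way is newest.
        self.newer = [[i > j for j in range(num_ways)] for i in range(num_ways)]
        self.locked = [False] * num_ways

    def touch(self, way):
        # Mark `way` as more recently used than every other way.
        for other in range(self.num_ways):
            if other != way:
                self.newer[way][other] = True
                self.newer[other][way] = False

    def victim(self):
        # Pick the unlocked way that is not newer than any other unlocked way.
        candidates = [w for w in range(self.num_ways) if not self.locked[w]]
        for way in candidates:
            if not any(self.newer[way][other] for other in candidates if other != way):
                return way
        return None  # every way is locked
```

Locking a way removes it from consideration without disturbing the age ordering of the remaining ways.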

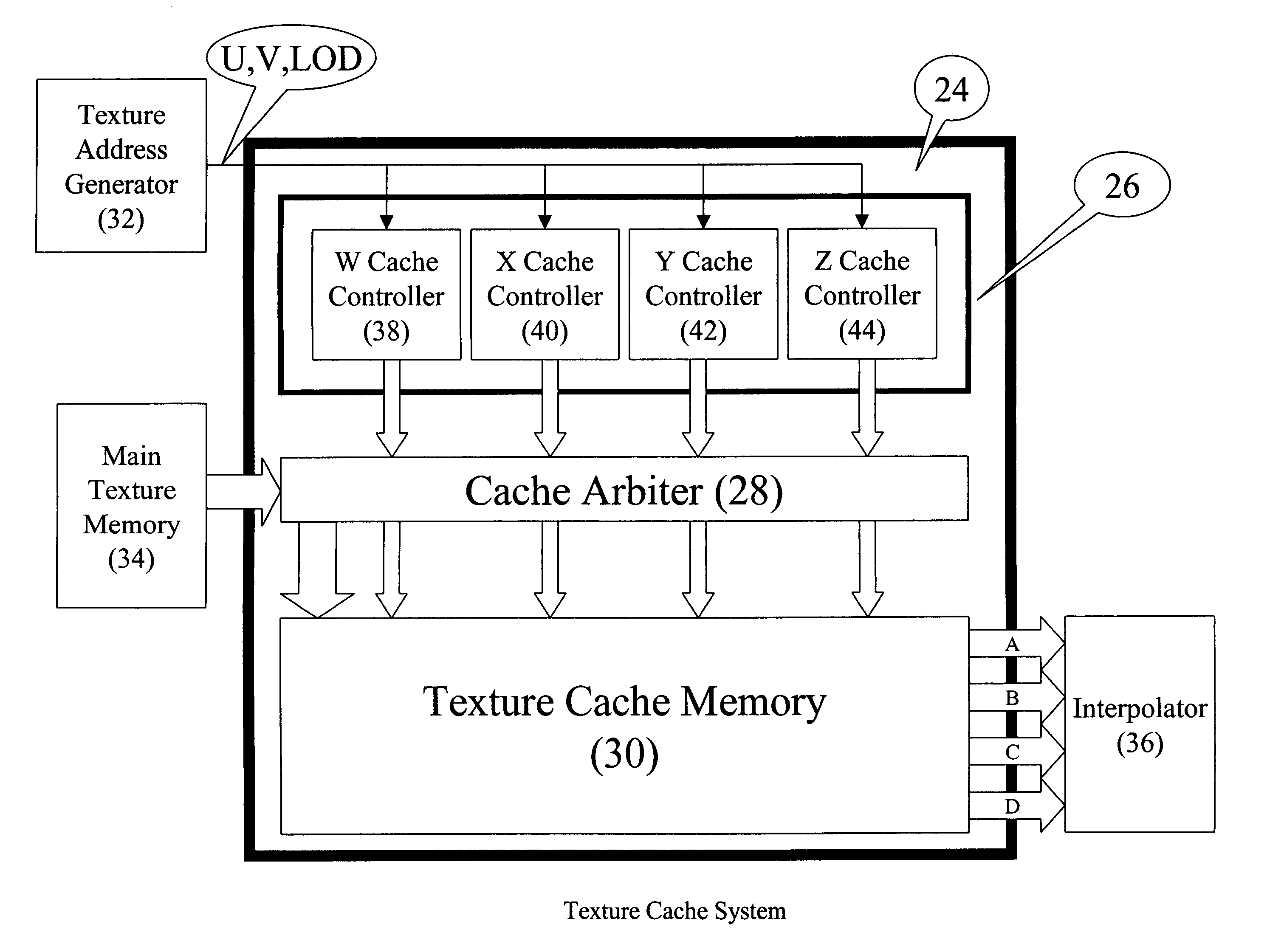

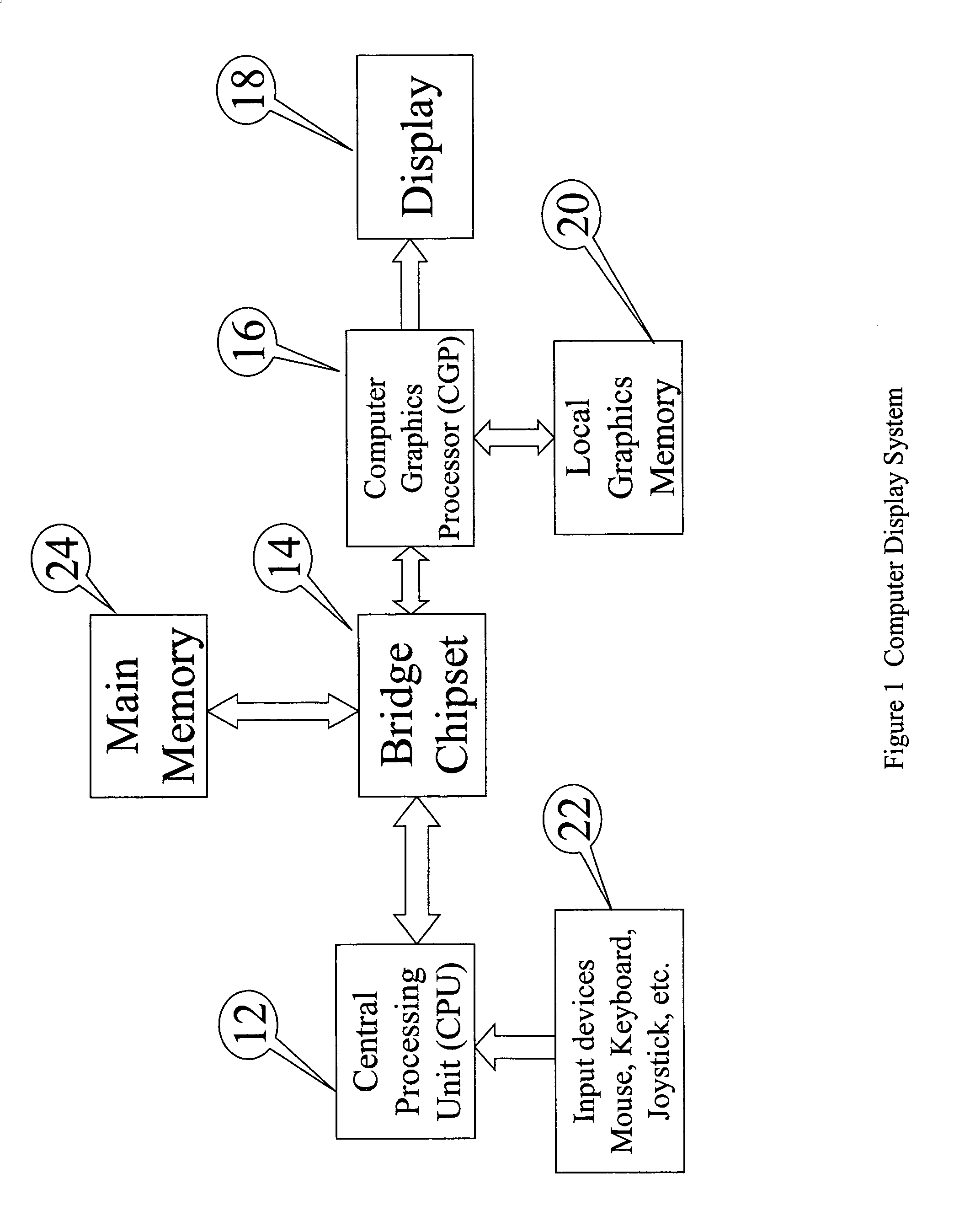

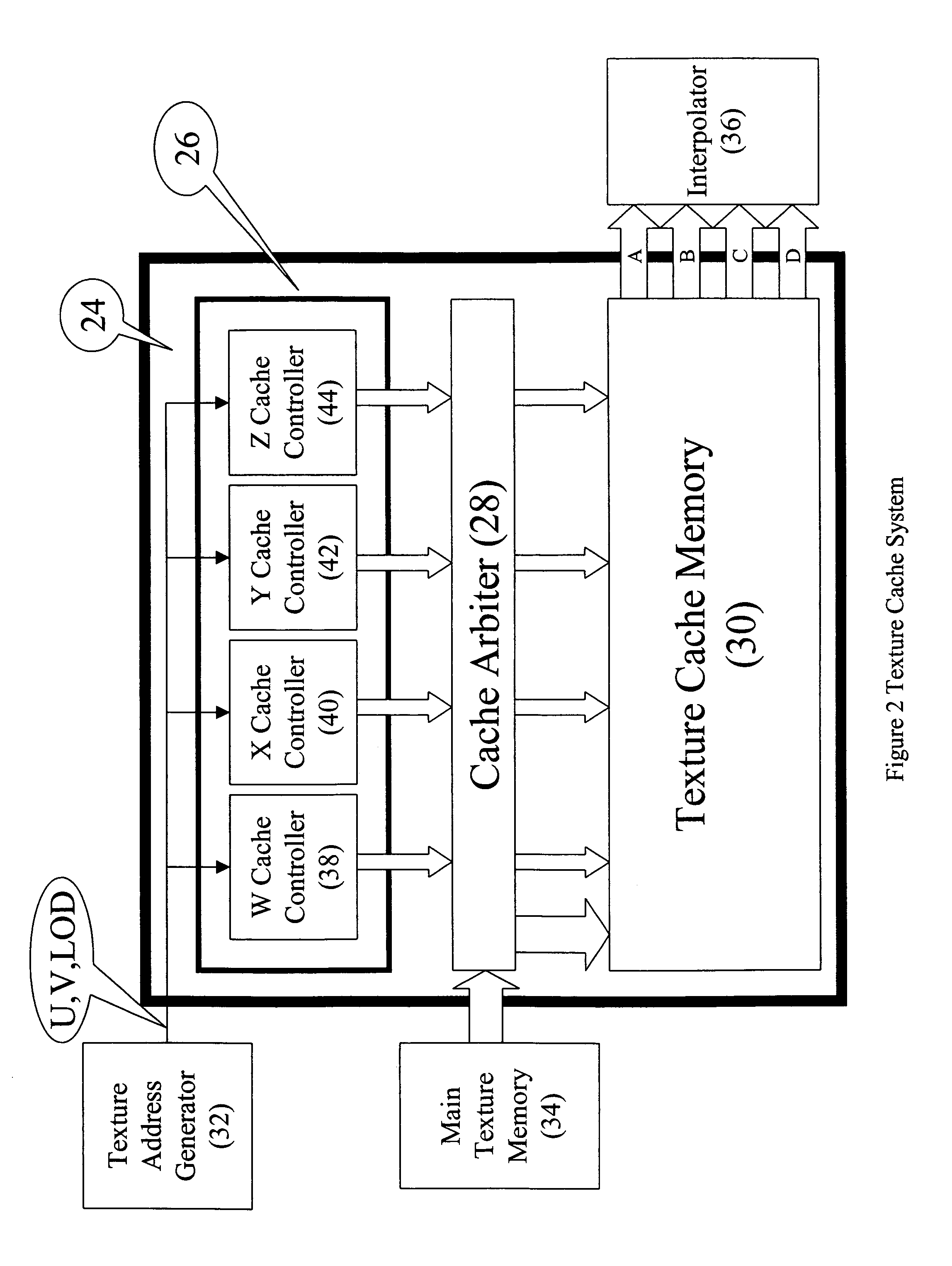

3-D rendering texture caching scheme

Inactive | US7050063B1 | Improve efficiency | Eliminate significant number | Memory addressing/allocation/relocation | Image memory management | Computational science | Parallel computing

A 3D rendering texture caching scheme that minimizes external bandwidth requirements for texture and increases the rate at which textured pixels are available. The texture caching scheme efficiently pre-fetches data at the main memory access granularity and stores it in cache memory. The data in the main memory and texture cache memory is organized in a manner to achieve large reuse of texels with a minimum of cache memory to minimize cache misses. The texture main memory stores a two-dimensional array of texels, each texel having an address and one of N identifiers. The texture cache memory has addresses partitioned into N banks, each bank containing texels transferred from the main memory that have the corresponding identifier. A cache controller determines which texels need to be transferred from the texture main memory to the texture cache memory and which texels are currently in the cache using a least most recently used algorithm. By labeling the texture map blocks (double quad words), a partitioning scheme is developed which allows the cache controller structure to be very modular and easily realized. The texture cache arbiter is used for scheduling and controlling the actual transfer of texels from the texture main memory into the texture cache memory and controlling the outputting of texels for each pixel to an interpolating filter from the cache memory.

Owner:INTEL CORP

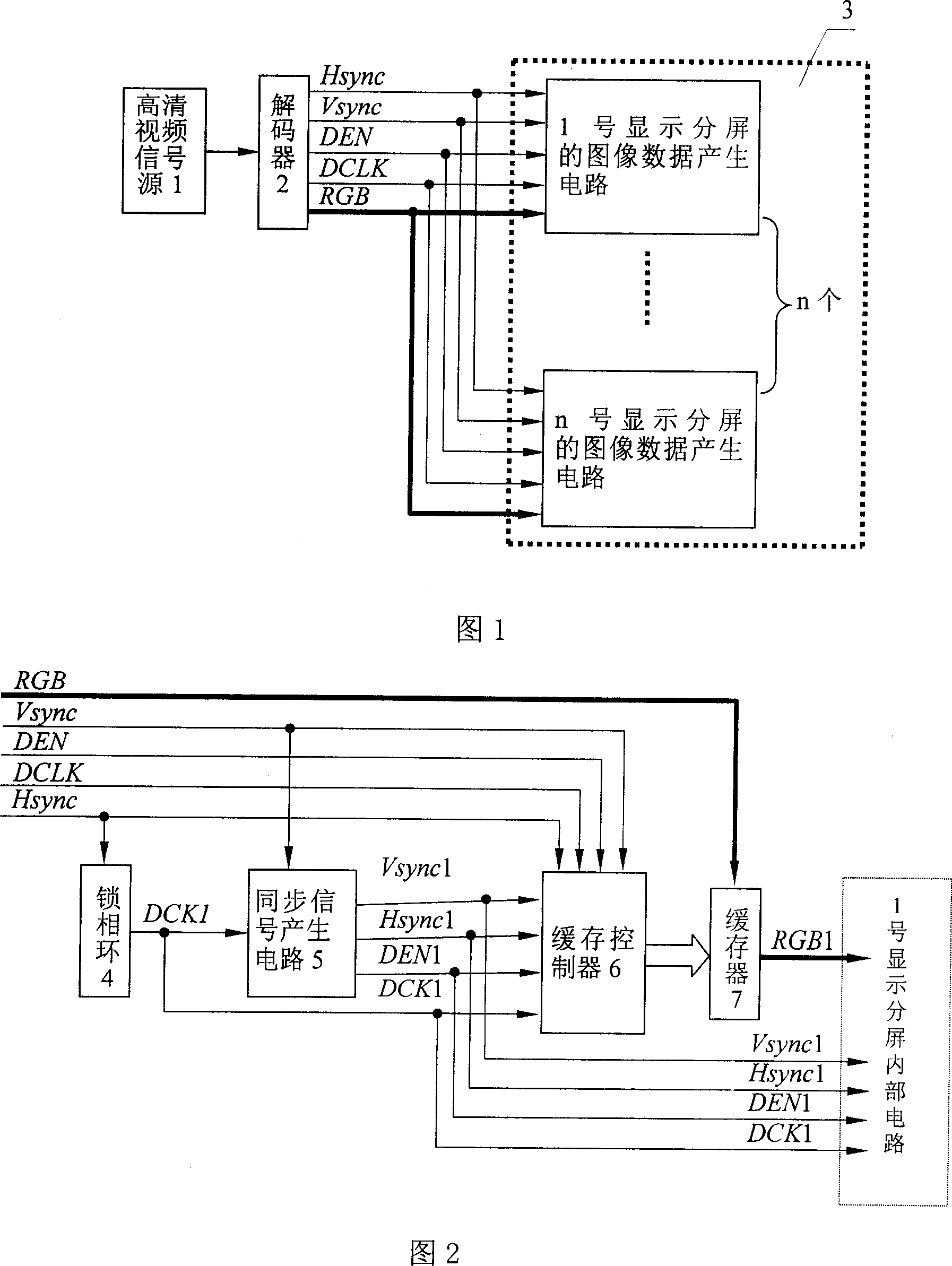

Multi-screen display splicing controller

Active | CN101000755A | Improve anti-interference ability | Improve stability | Pulse automatic control | Cathode-ray tube indicators | Isochronous signal | High-definition video

Owner:KONKA GROUP

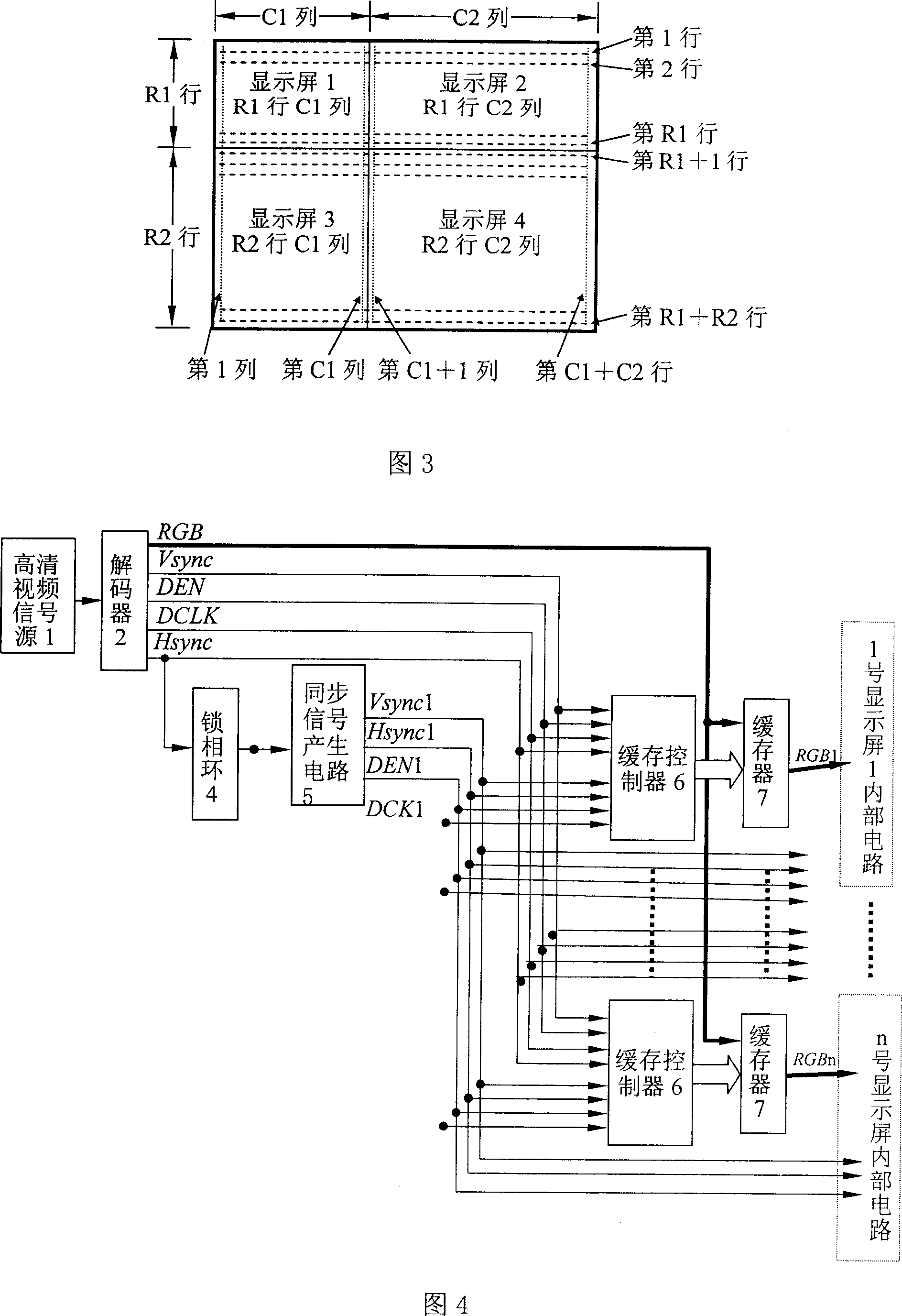

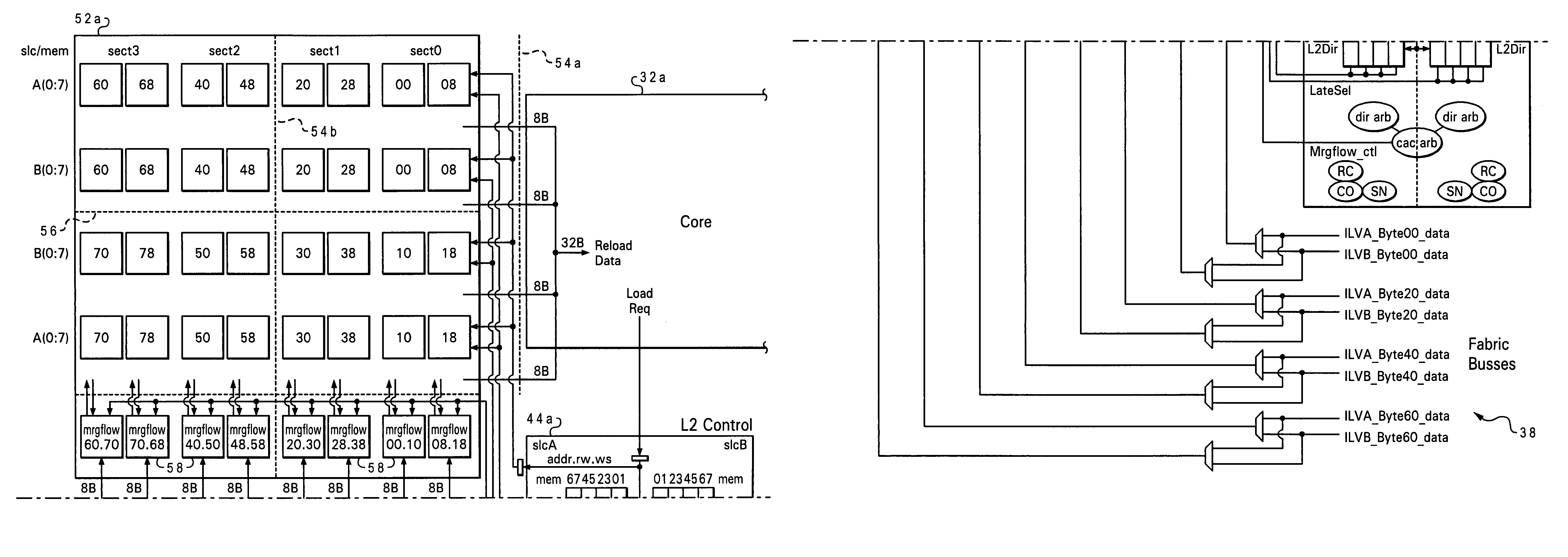

System bus structure for large L2 cache array topology with different latency domains

Inactive | US7469318B2 | Improve latency | Improve scalability | Energy efficient ICT | Memory addressing/allocation/relocation | Data set | Parallel computing

A cache memory which loads two memory values into two cache lines by receiving separate portions of a first requested memory value from a first data bus over a first time span of successive clock cycles and receiving separate portions of a second requested memory value from a second data bus over a second time span of successive clock cycles which overlaps with the first time span. In the illustrative embodiment a first input line is used for loading both a first byte array of the first cache line and a first byte array of the second cache line, a second input line is used for loading both a second byte array of the first cache line and a second byte array of the second cache line, and the transmission of the separate portions of the first and second memory values is interleaved between the first and second data busses. The first data bus can be one of a plurality of data busses in a first data bus set, and the second data bus can be one of a plurality of data busses in a second data bus set. Two address busses (one for each data bus set) are used to receive successive address tags that identify which portions of the requested memory values are being received from each data bus set. For example, the requested memory values may be 32 bytes each, and the separate portions of the requested memory values are received over four successive cycles with an 8-byte portion of each value received each cycle. The cache lines are spread across different cache sectors of the cache memory, wherein the cache sectors have different output latencies, and the separate portions of a given requested memory value are loaded sequentially into the corresponding cache sectors based on their respective output latencies. Merge flow circuits responsive to the cache controller are used to receive the portions of a requested memory value and input those bytes into the cache sector.

Owner:INT BUSINESS MASCH CORP

© 2025 PatSnap. All rights reserved.