335 results about "Reconfigurable computing" patented technology

Reconfigurable computing is a computer architecture that combines some of the flexibility of software with the high performance of hardware by processing with very flexible, high-speed computing fabrics such as field-programmable gate arrays (FPGAs). The principal difference from ordinary microprocessors is the ability to make substantial changes to the datapath itself in addition to the control flow. The main difference from custom hardware, i.e., application-specific integrated circuits (ASICs), is the ability to adapt the hardware at runtime by "loading" a new circuit onto the reconfigurable fabric.

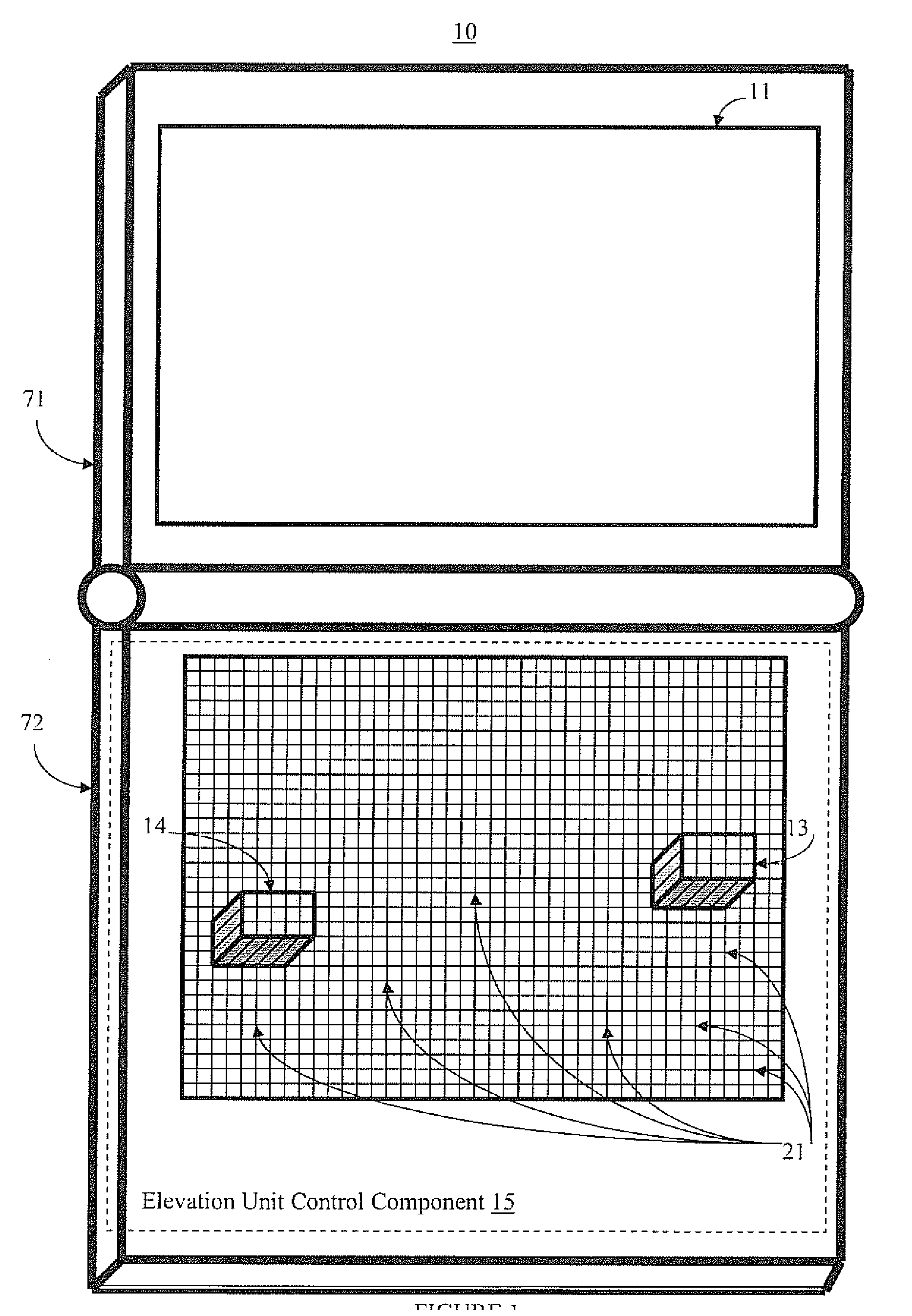

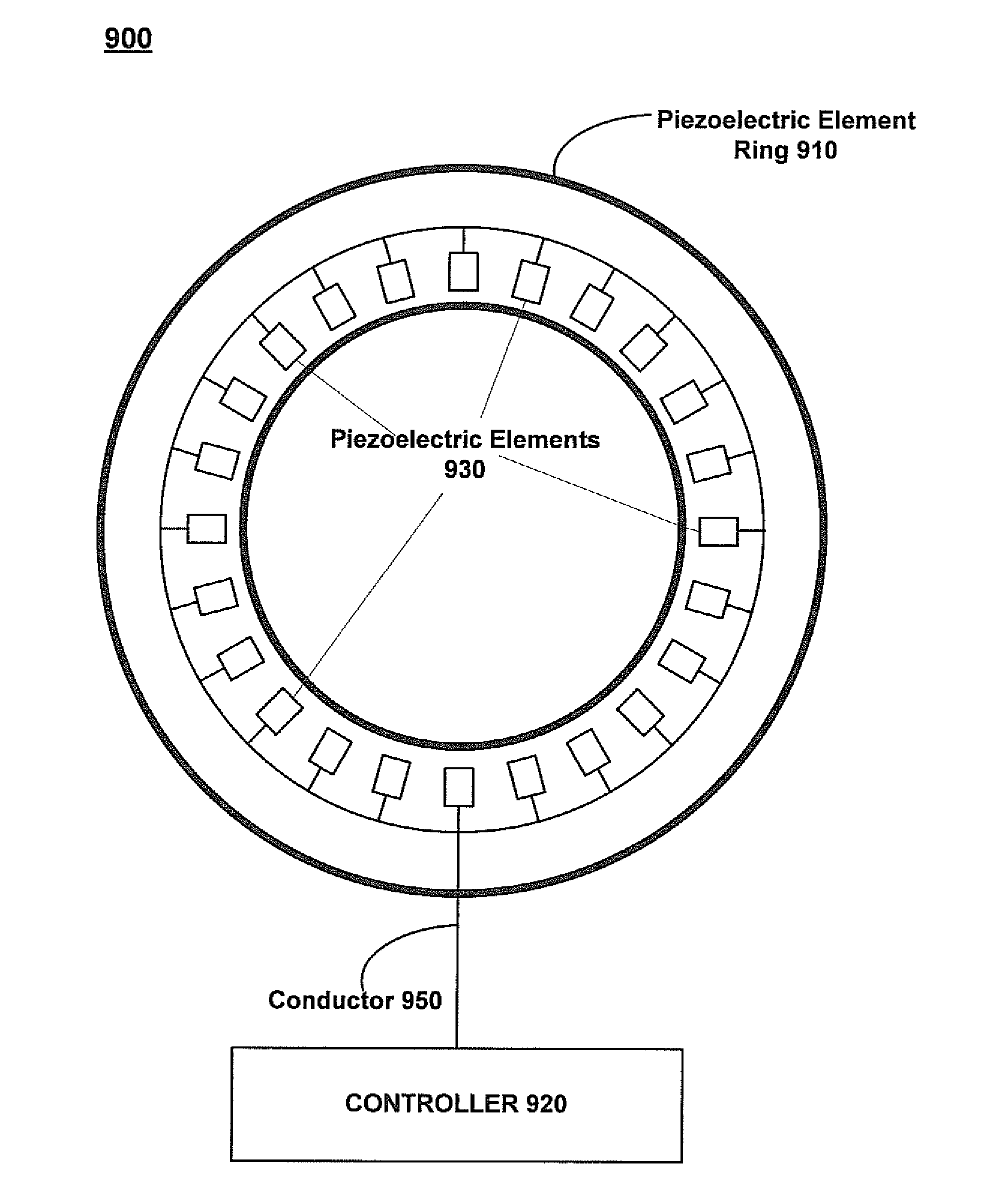

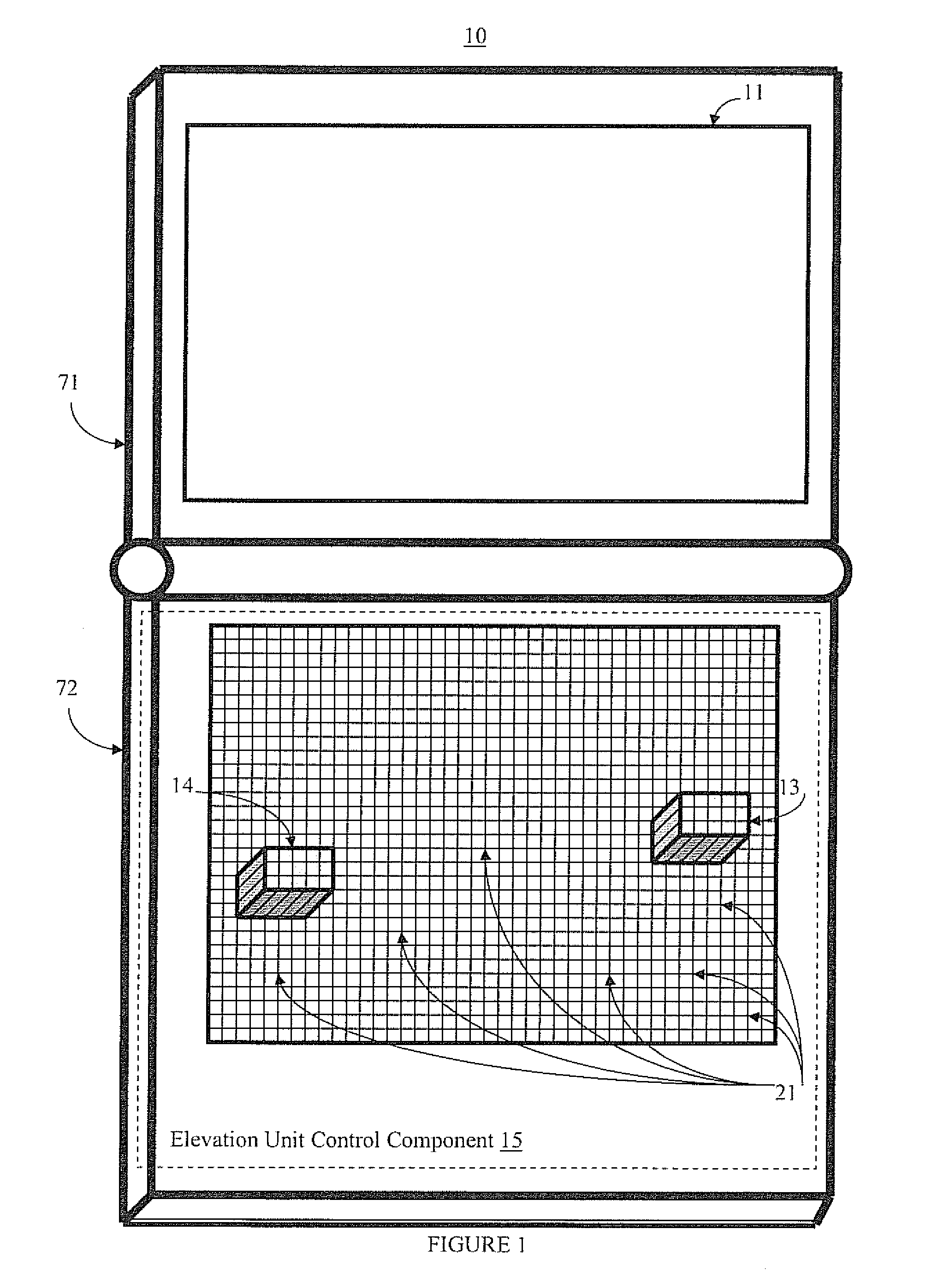

Physically reconfigurable input and output systems and methods

Inactive | US20110234502A1 | Effective interaction | Input/output for user-computer interaction | Cathode-ray tube indicators | User needs | Reconfigurable antenna

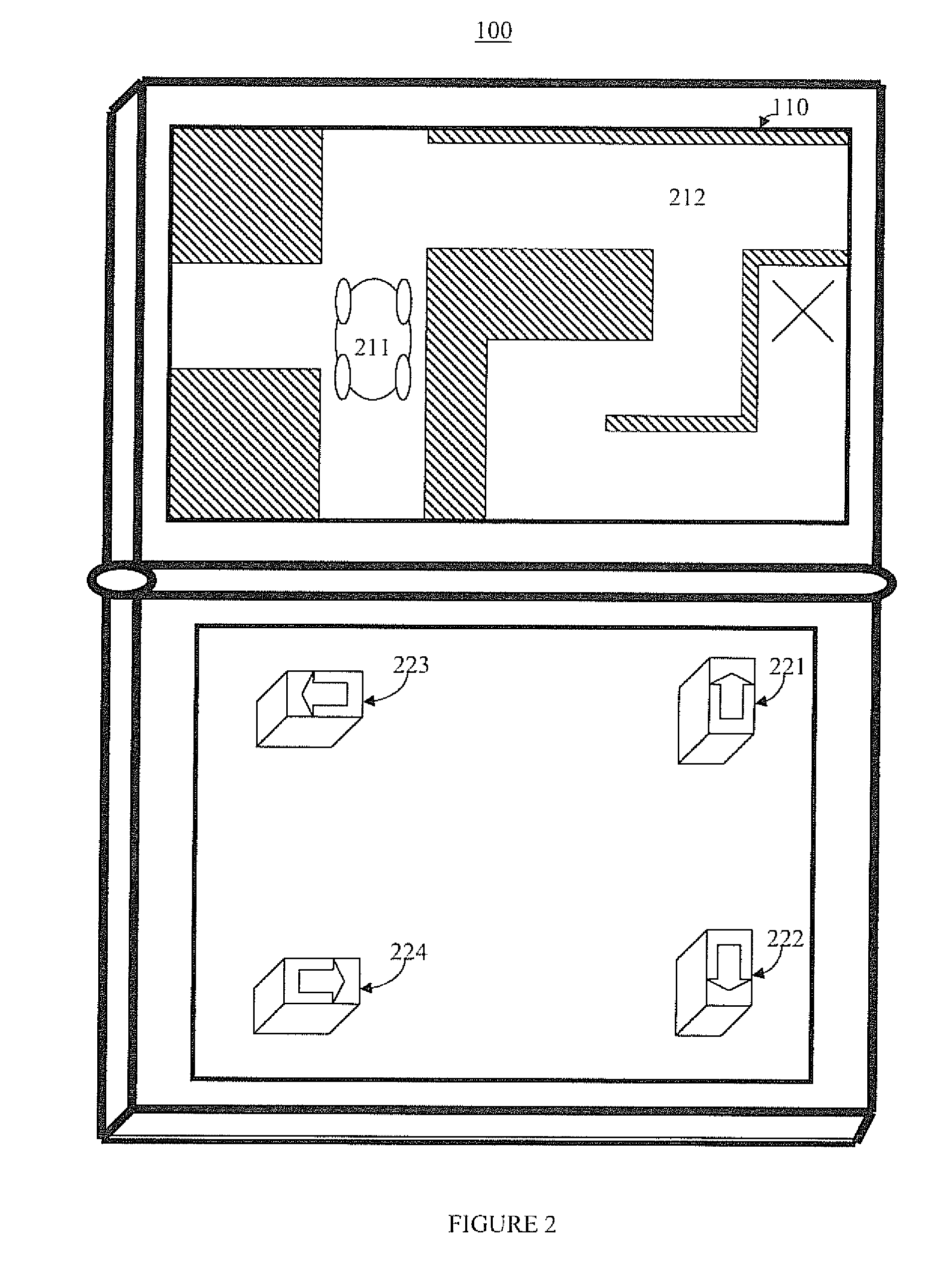

Systems and methods for altering the shape of a reconfigurable surface area are presented. The present systems and methods facilitate efficient and effective interaction with a device or system. In one embodiment, a surface reconfiguration system includes a flexible surface; an elevation unit that creates alterations in the contours of the surface; and an elevation control component that controls adjustments to the elevation unit. Thus, the surface of the device is reconfigurable based on system, application, mode, and / or user needs. Accordingly, the surface can be used to provide input and output functionality. The surface can include touch detection functionality for added input functionality.

Owner:PANASONIC OF NORTH AMERICA

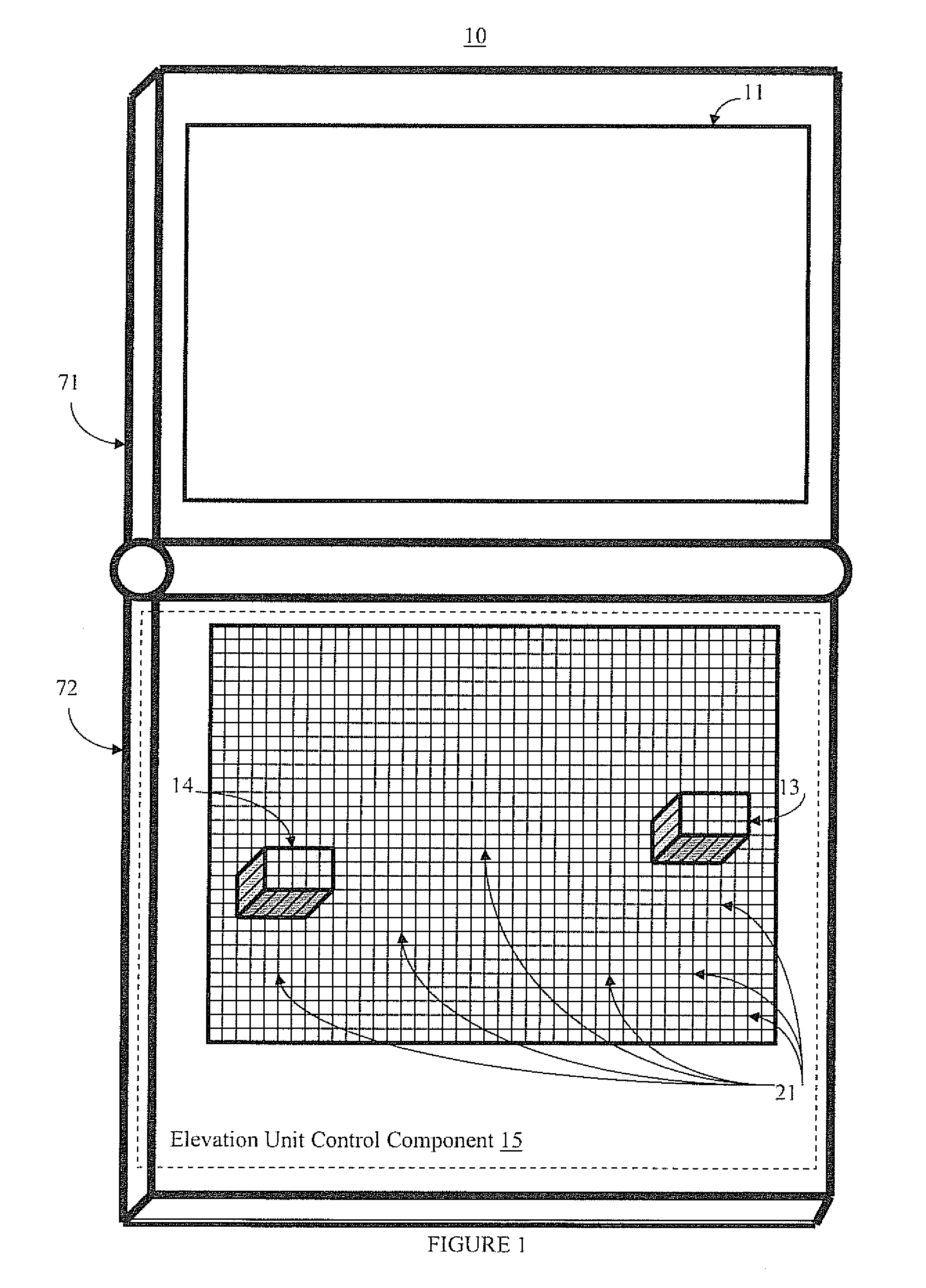

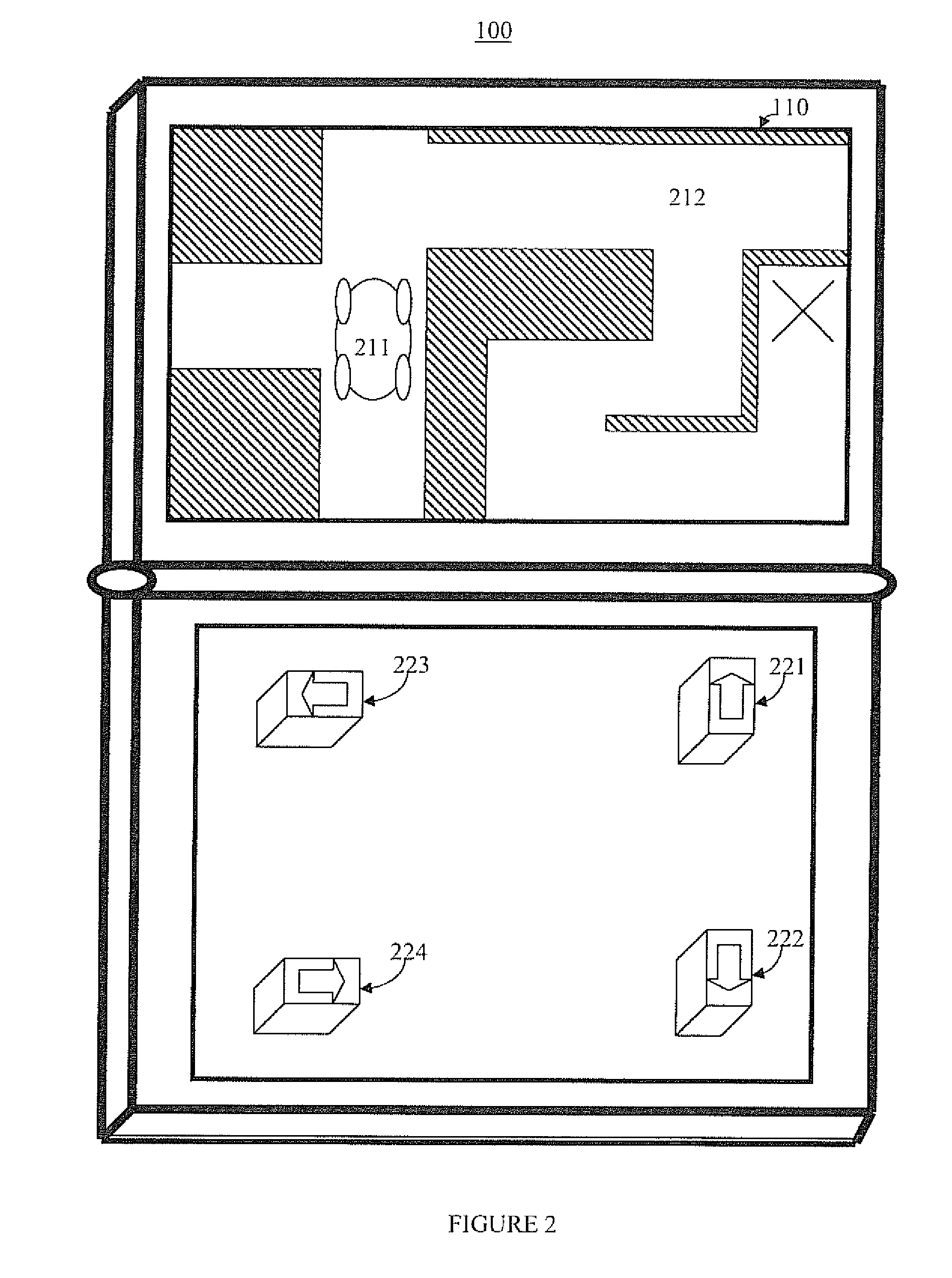

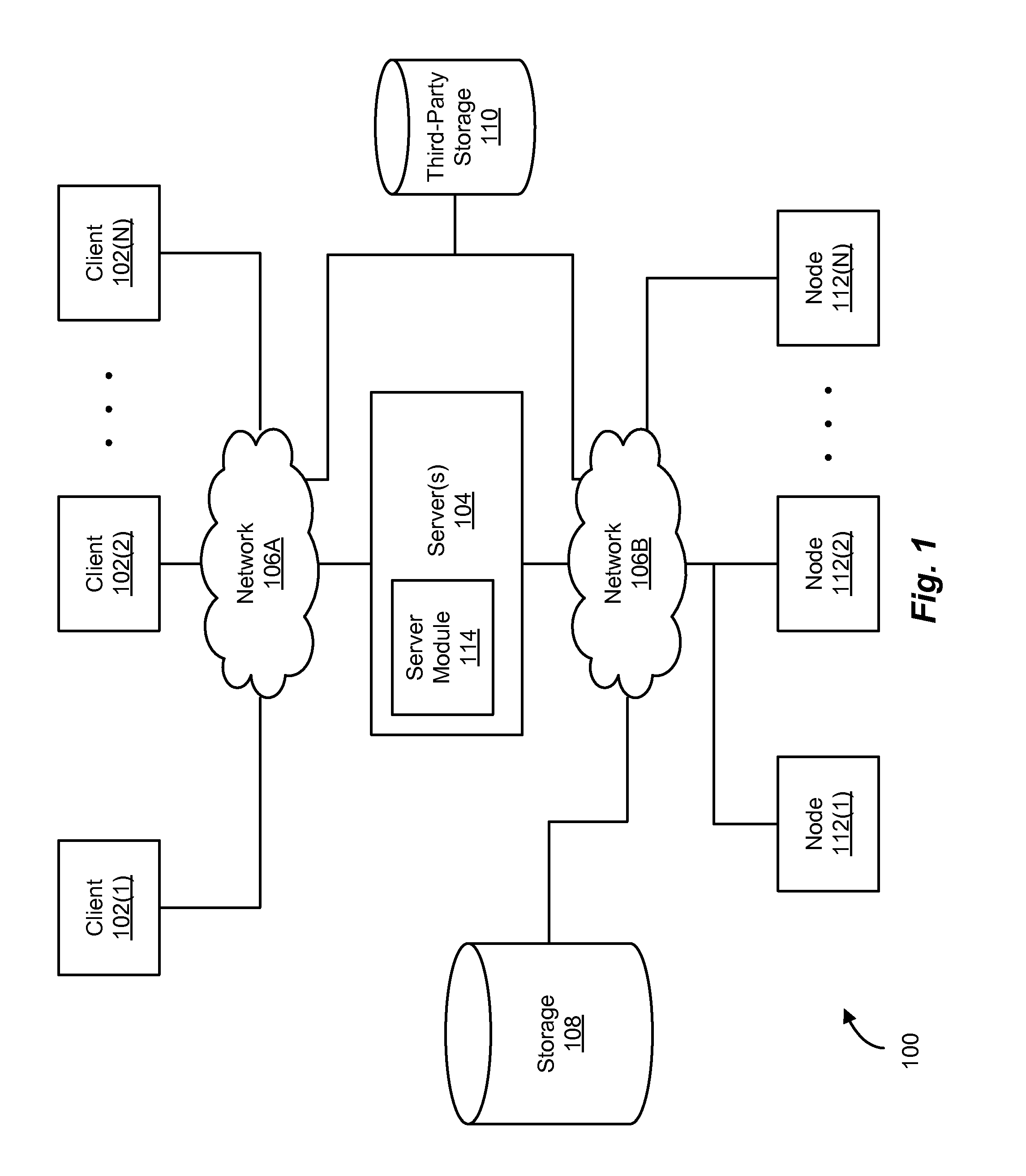

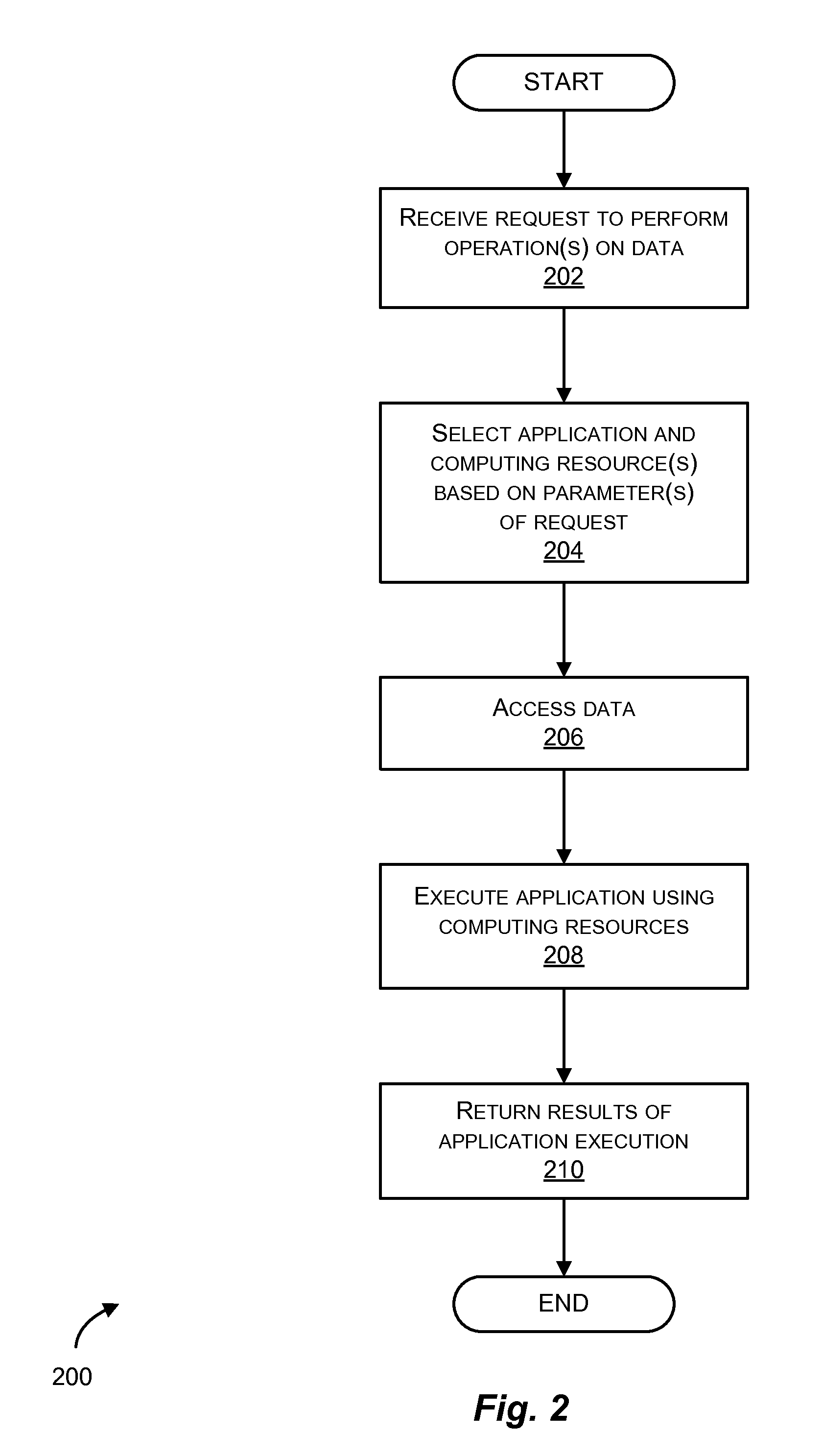

Reconfigurable cloud computing

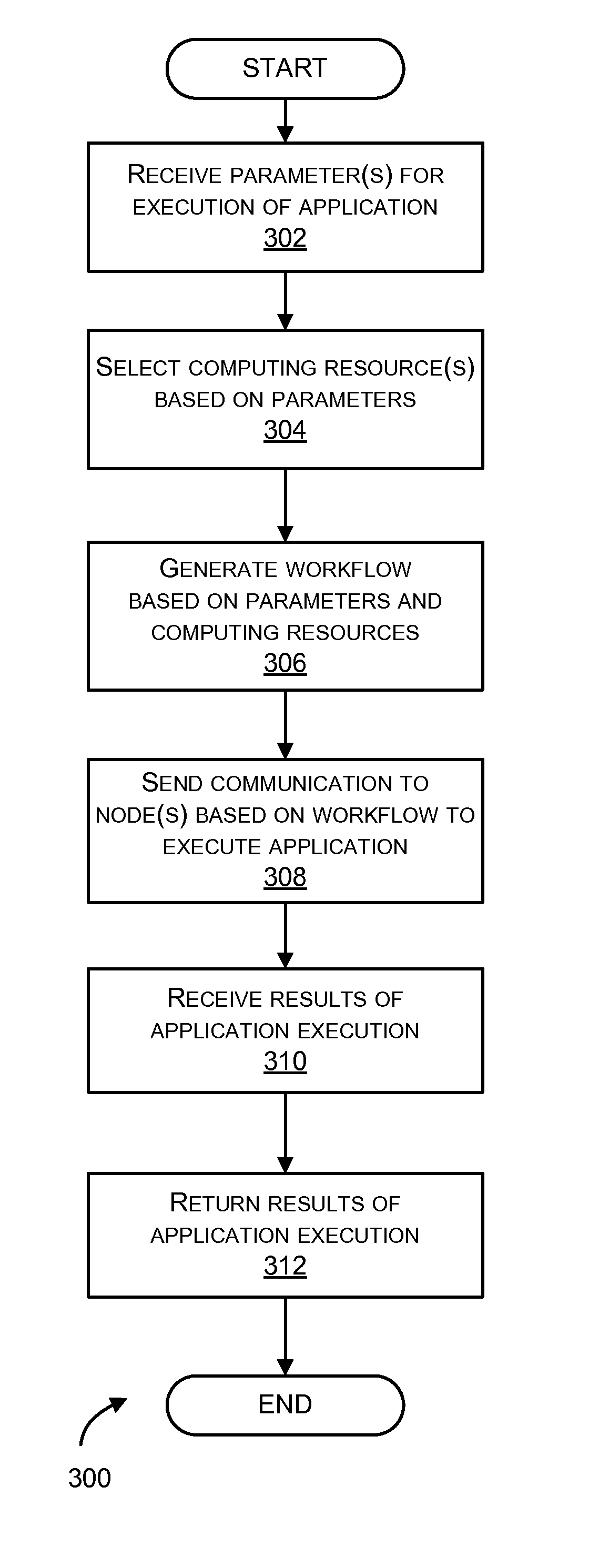

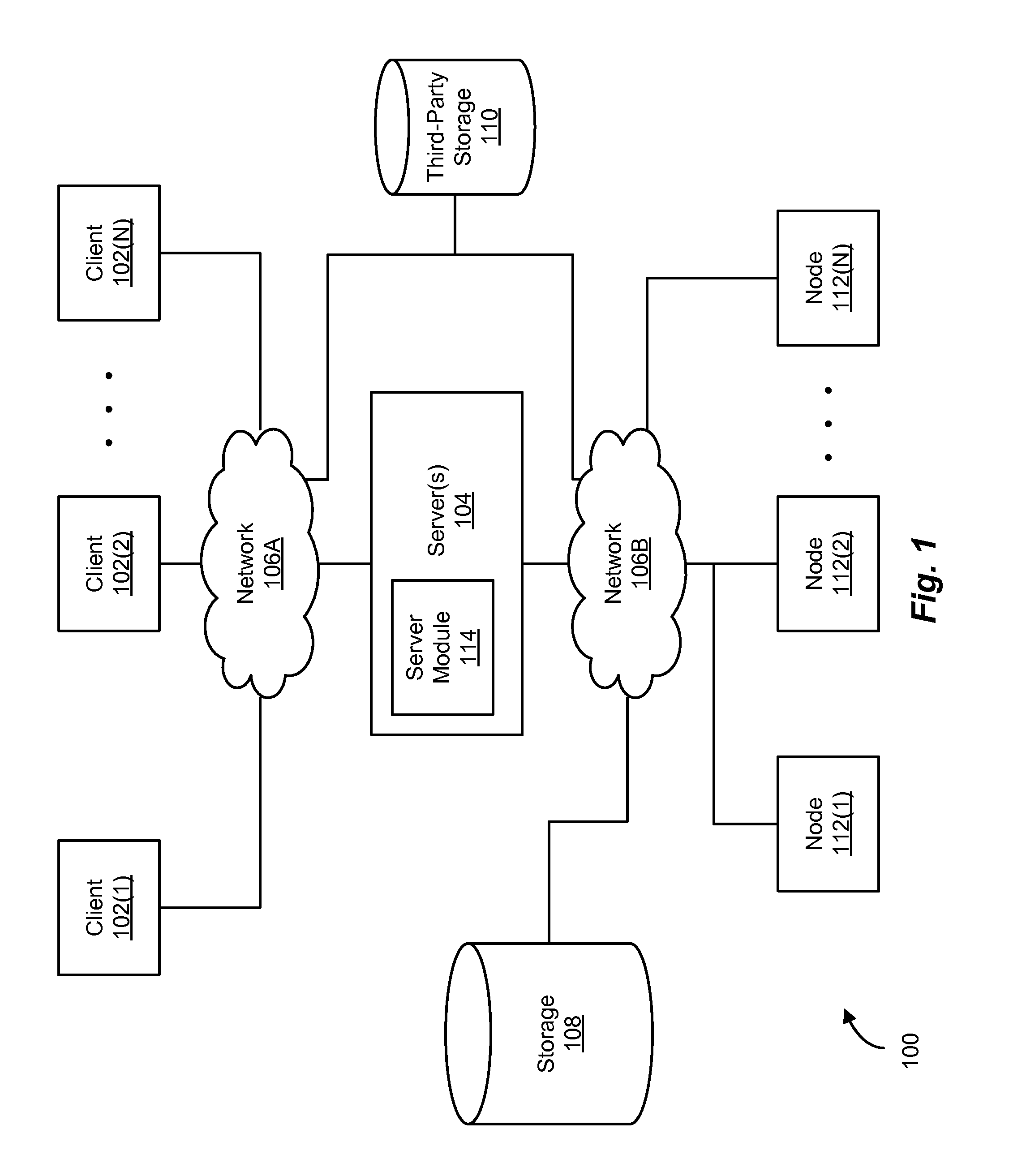

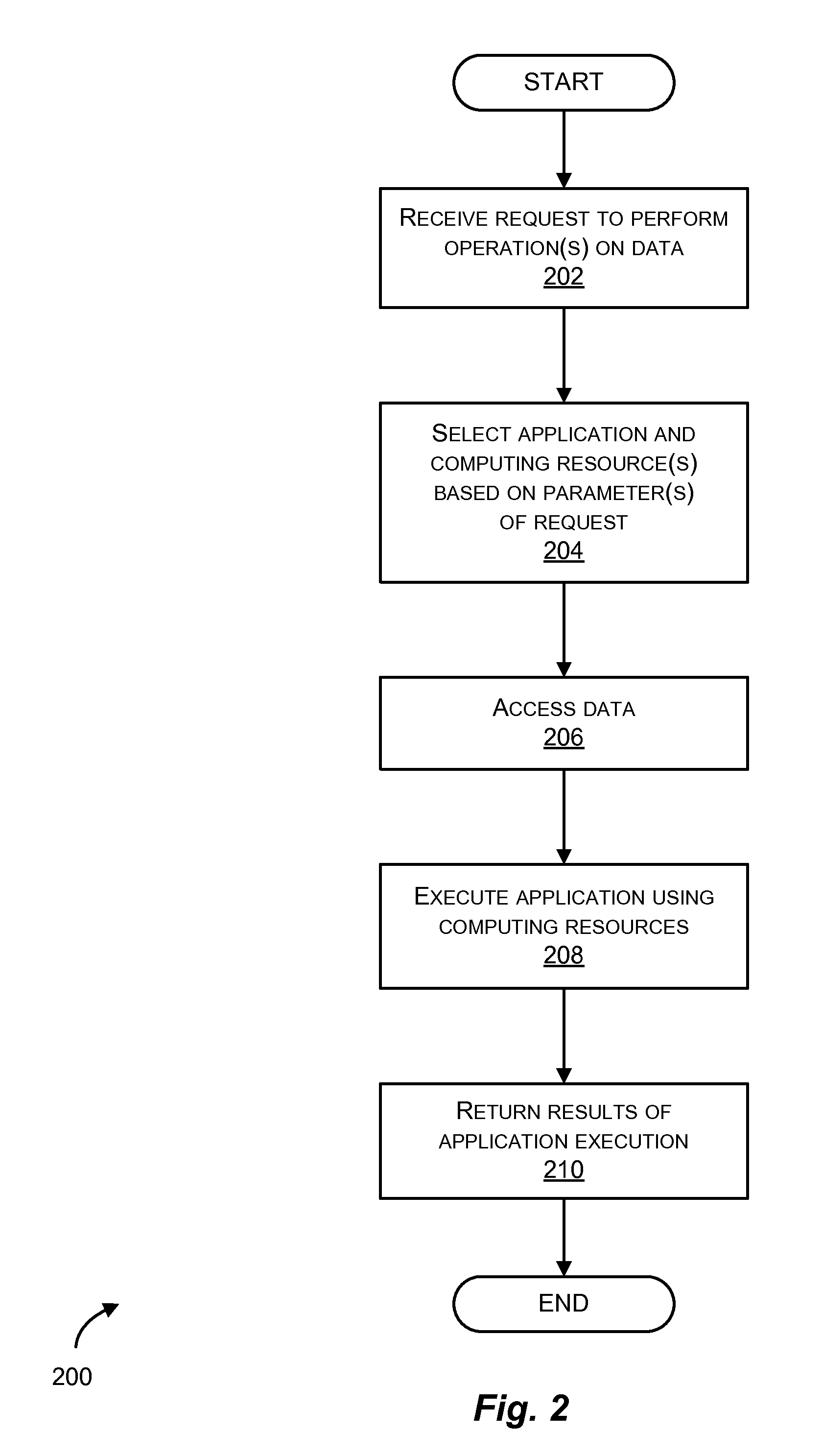

A method, system, and computer-readable storage medium for using a distributed computing system are disclosed. For example, one method involves receiving one or more parameters. The one or more parameters indicate one or more operations. The method also involves selecting one or more computing resources from computing resources. This selecting is based on the parameter(s). An application is configured to be executed using the computing resource(s). The method also involves generating a workflow. The workflow indicates that the application is to be executed using the computing resource(s). The workflow indicates that the application performs the operation(s). The method also involves communicating at least a part of the workflow to one or more nodes, where the node(s) include the computing resource(s).

Owner:AGARIK SAS

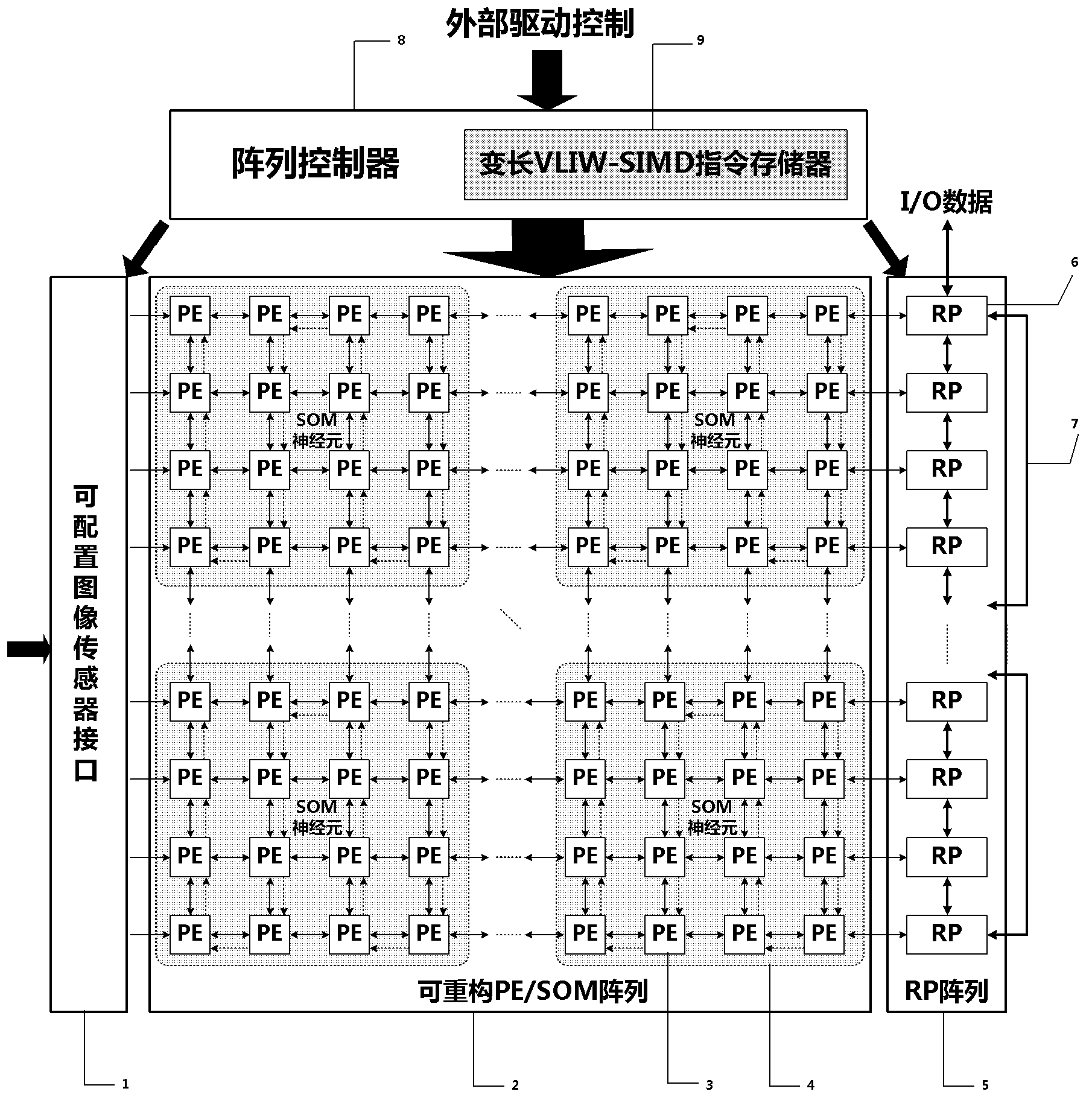

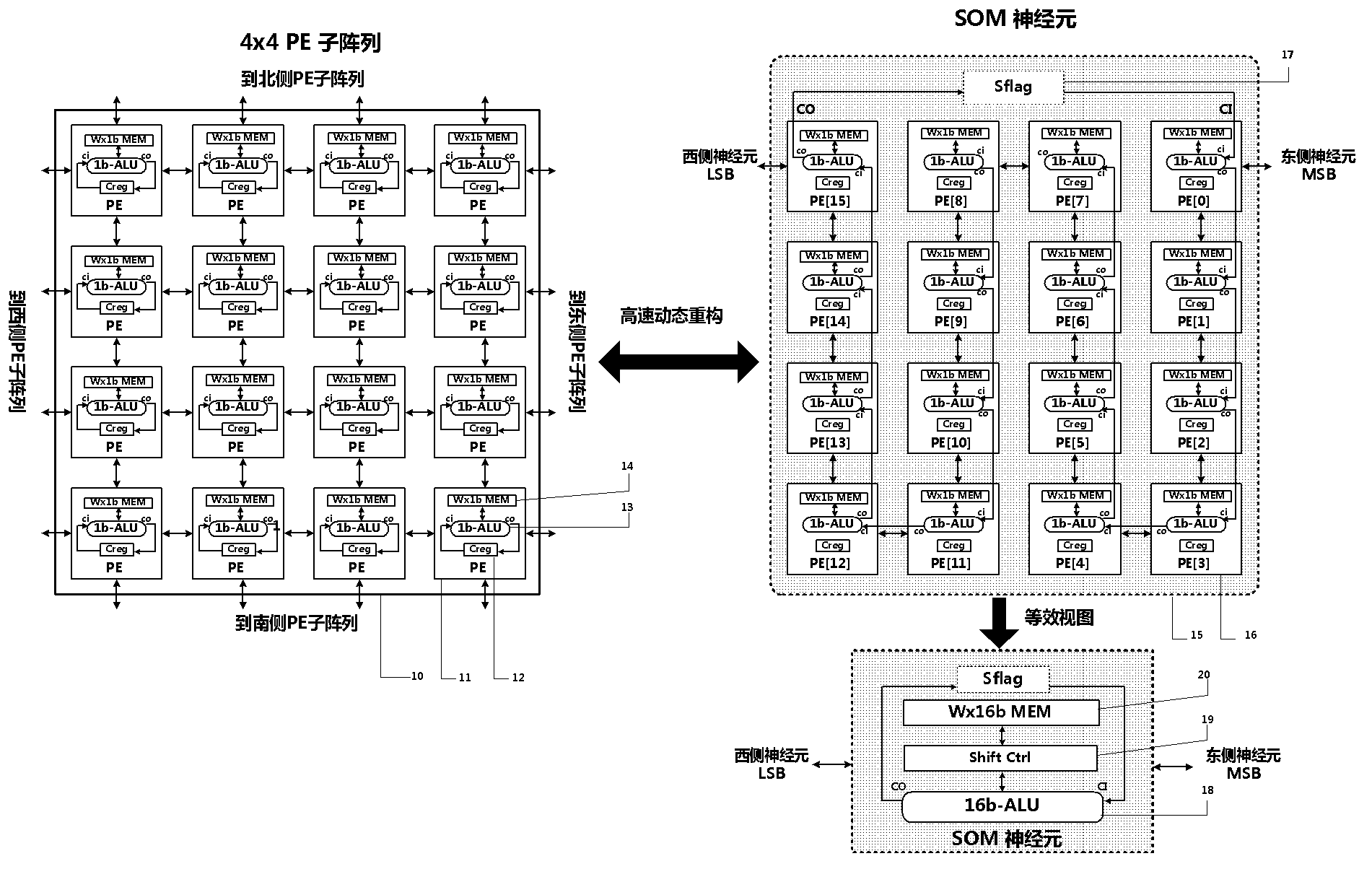

Dynamically reconfigurable multi-stage parallel single instruction multiple data array processing system

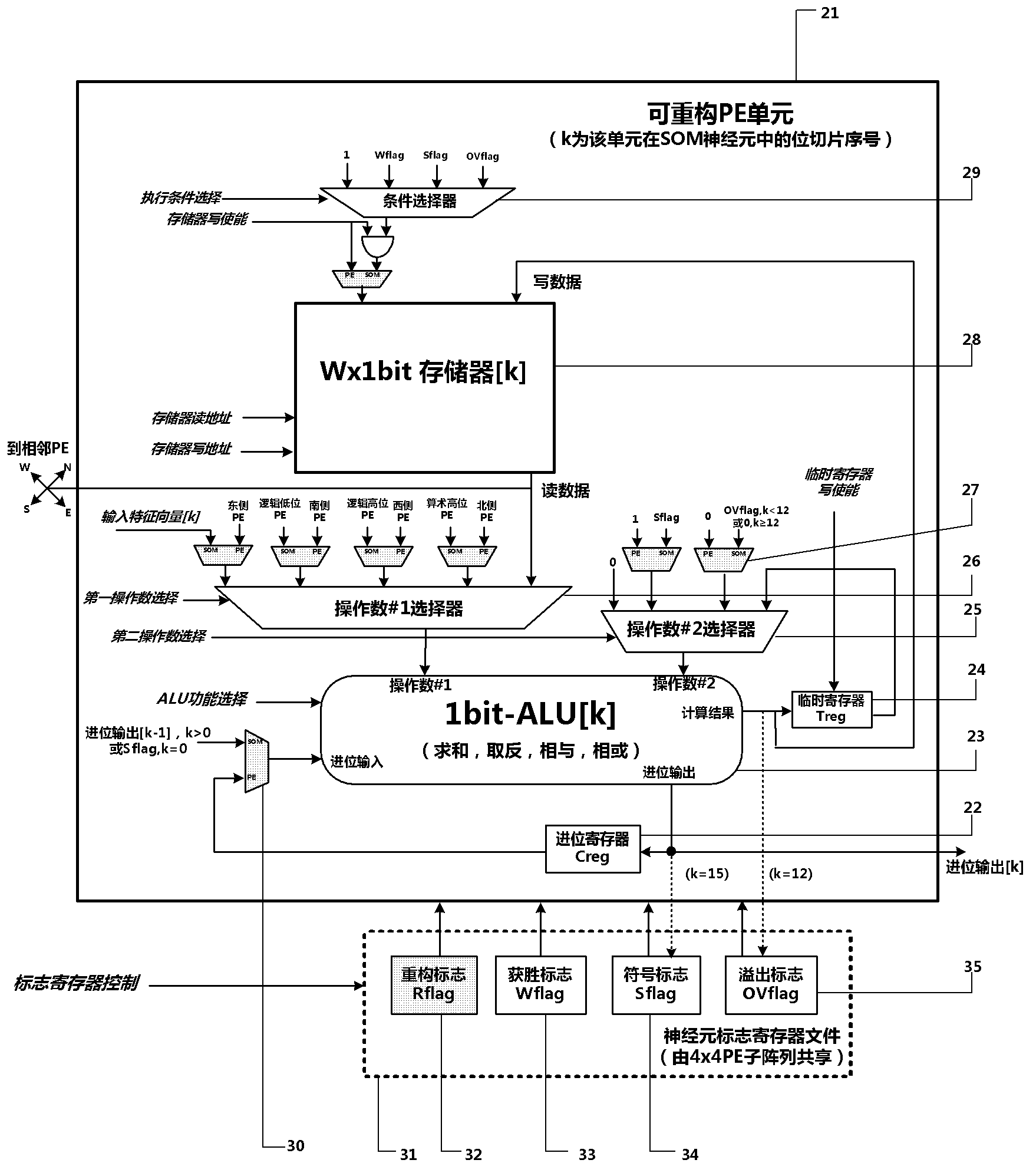

Active | CN103019656A | Functional | Implement image feature extraction | Concurrent instruction execution | Imaging processing | Reconfigurable computing

The invention discloses a dynamically reconfigurable multi-stage parallel single instruction multiple data array processing system comprising a pixel-level parallel processing element (PE) array and a row-parallel row processor (RP) array. The PE array mainly performs the linear operations in low-level and intermediate-level image processing that are suited to parallel execution across all pixels. The RP array performs operations suited to row-parallel execution, as well as complex nonlinear operations, in low-level and intermediate-level processing. In particular, the PE array can also be dynamically reconfigured into a two-dimensional self-organizing map (SOM) neural network with extremely low performance and area overhead; in coordination with the RPs, this neural network can realize advanced image processing functions such as high-speed parallel online training and feature recognition. This overcomes the shortcoming that the pixel-level parallel RP arrays in conventional programmable vision chips and conventional parallel vision processors cannot be used for advanced image processing, and it facilitates the implementation of a fully functional, low-cost, low-power, intelligent, and portable high-speed real-time visual image system on chip.

Owner:INST OF SEMICONDUCTORS - CHINESE ACAD OF SCI

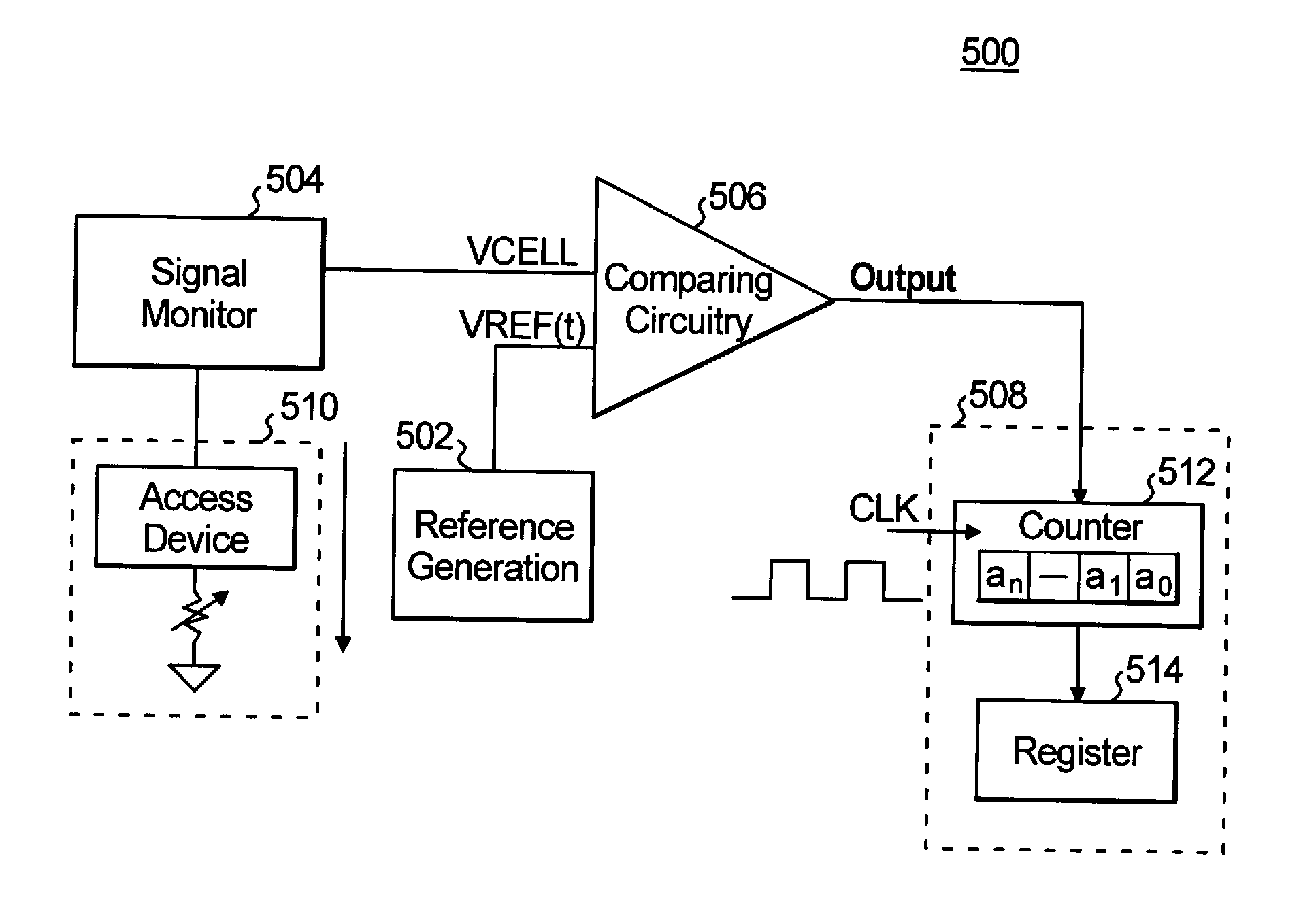

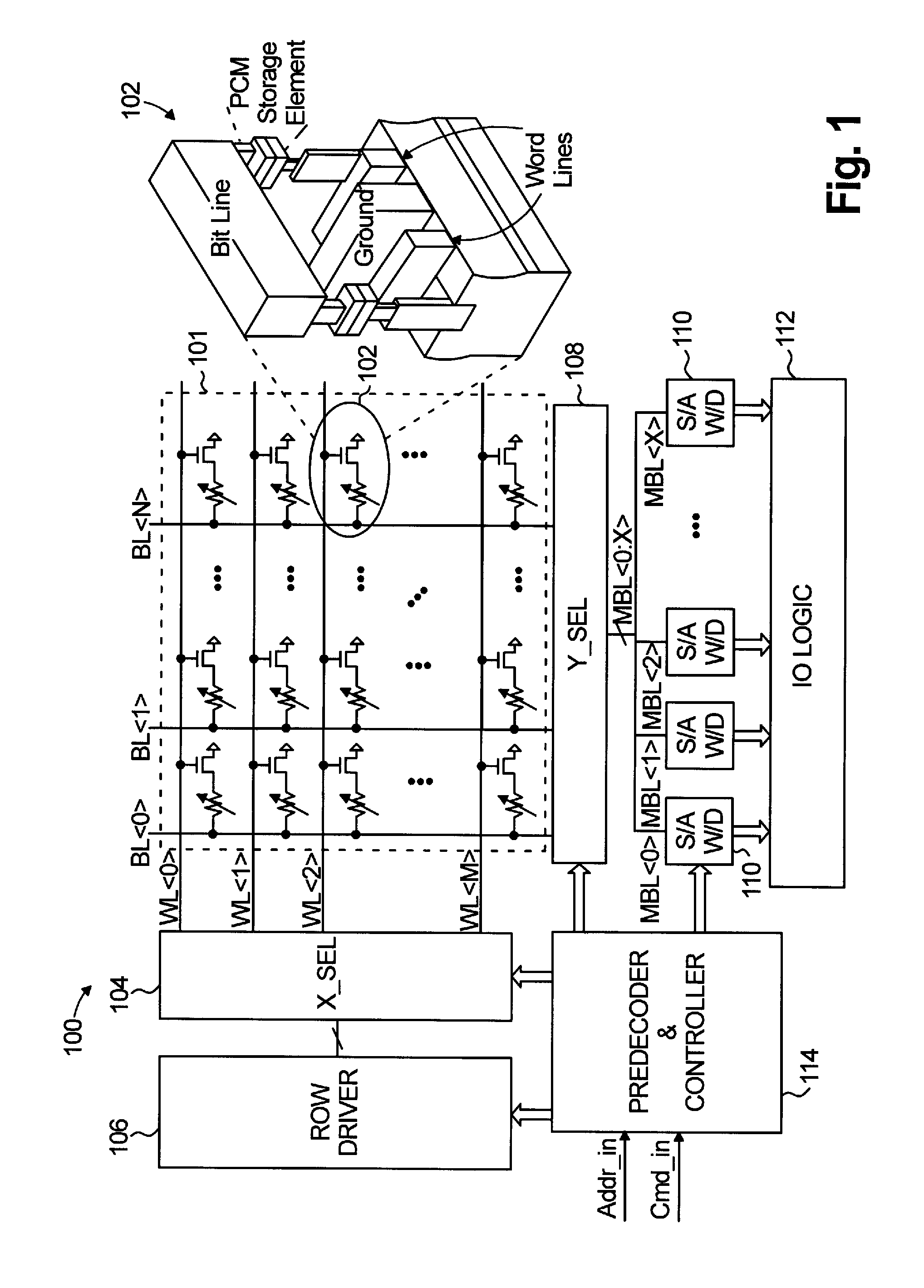

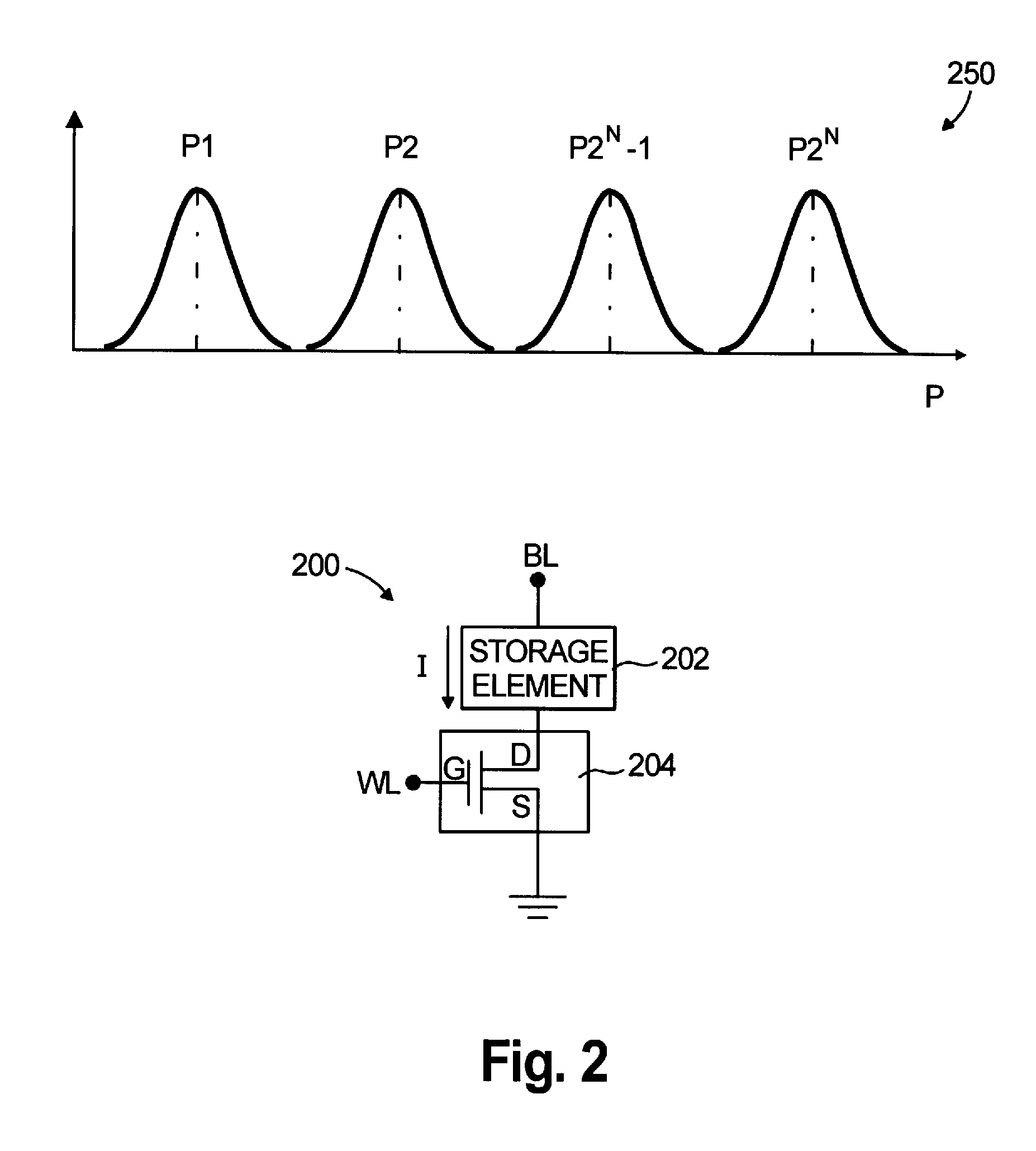

Reconfigurable Multi-level Sensing Scheme for Semiconductor Memories

Active | US20120063195A1 | Simple structure | Reduce chip area | Digital storage | Temporal change | Multilevel memory

A method for sensing at least one parameter indicative of a logical state of a multi-level memory cell includes the steps of: measuring the parameter of the multi-level memory cell; comparing the measured parameter of the multi-level memory cell with a prescribed reference signal, the reference signal having a value which varies as a function of time; and storing a time value corresponding to a point in time at which the reference signal is substantially equal to the measured parameter of the multi-level memory cell, the stored time value being indicative of a sensed logical state of the multi-level memory cell.
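The time-based sensing idea in this abstract can be illustrated with a small simulation. This is only a sketch under stated assumptions: the linear ramp reference, the integer units, the uniform mapping from time to logical state, and the function names `sense_time` and `time_to_state` are all hypothetical and are not taken from the patent.

```python
def sense_time(cell_value, ramp_start, ramp_step, num_steps):
    """Return the first time step at which a rising reference reaches the cell value.

    Assumption: the time-varying reference is a linear ramp in integer units.
    Returns None if the reference never reaches the measured value.
    """
    for t in range(num_steps):
        reference = ramp_start + ramp_step * t
        if reference >= cell_value:
            return t  # the stored time value stands in for the sensed state
    return None


def time_to_state(t, steps_per_level):
    """Map a stored time value to a logical state (hypothetical uniform binning)."""
    return t // steps_per_level


# Example: a cell measured at 350 units against a ramp rising 100 units per step.
t = sense_time(cell_value=350, ramp_start=0, ramp_step=100, num_steps=16)
state = time_to_state(t, steps_per_level=4)
```

With a single ramp, the number of distinguishable levels depends on how the time axis is binned rather than on a bank of fixed reference comparators, which is one plausible reading of the "reduce chip area" tag on this entry.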

Owner:GLOBALFOUNDRIES US INC

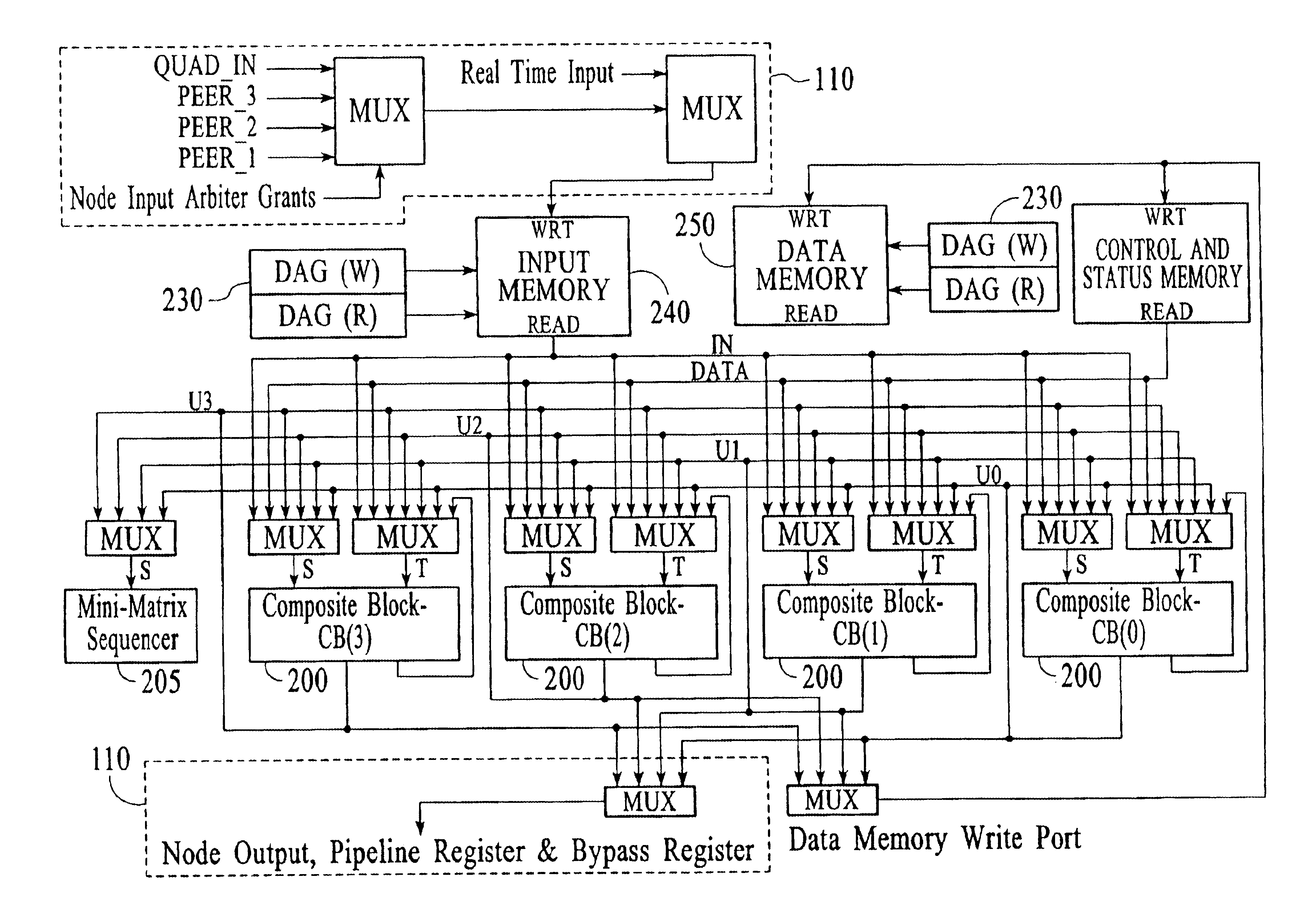

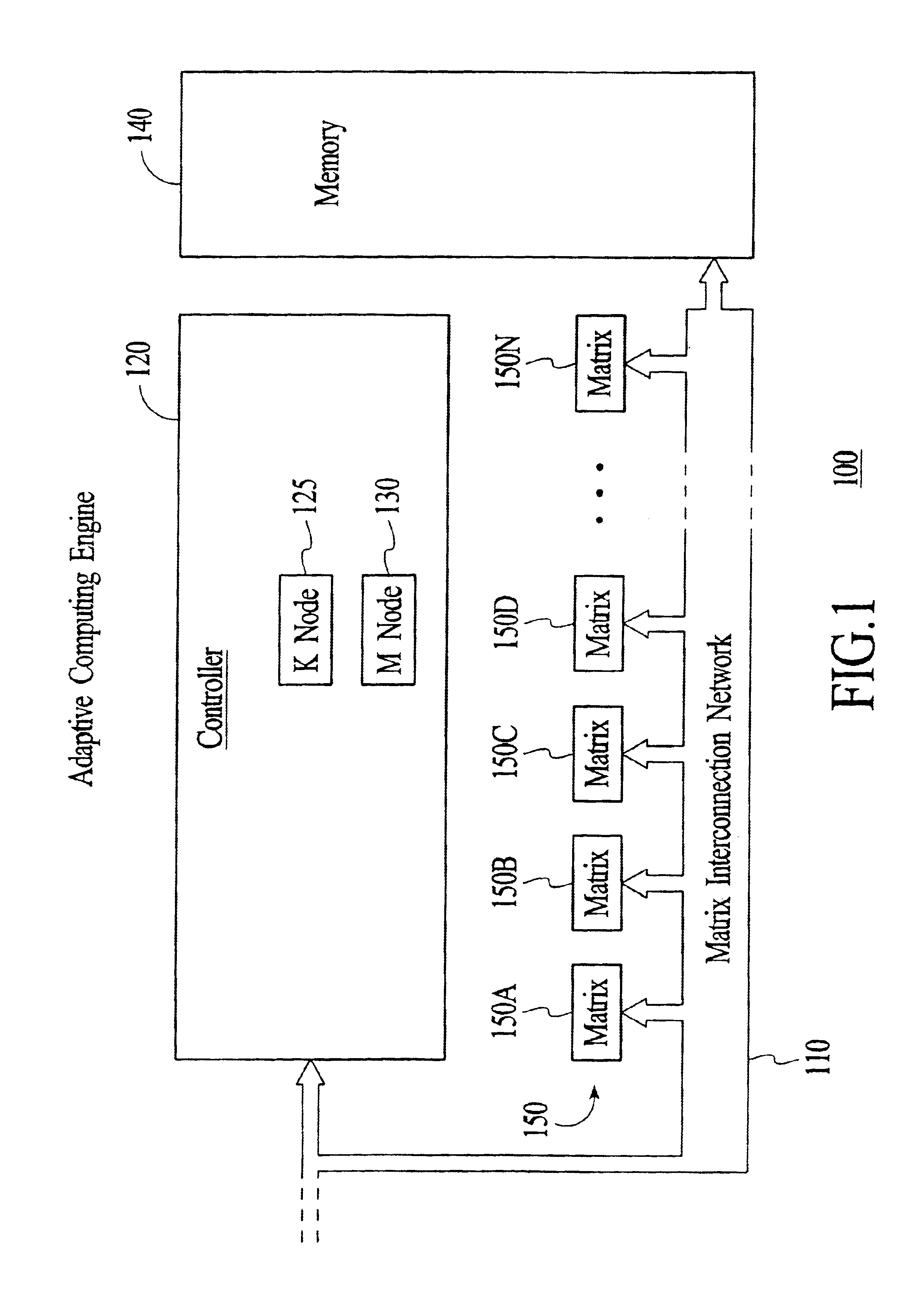

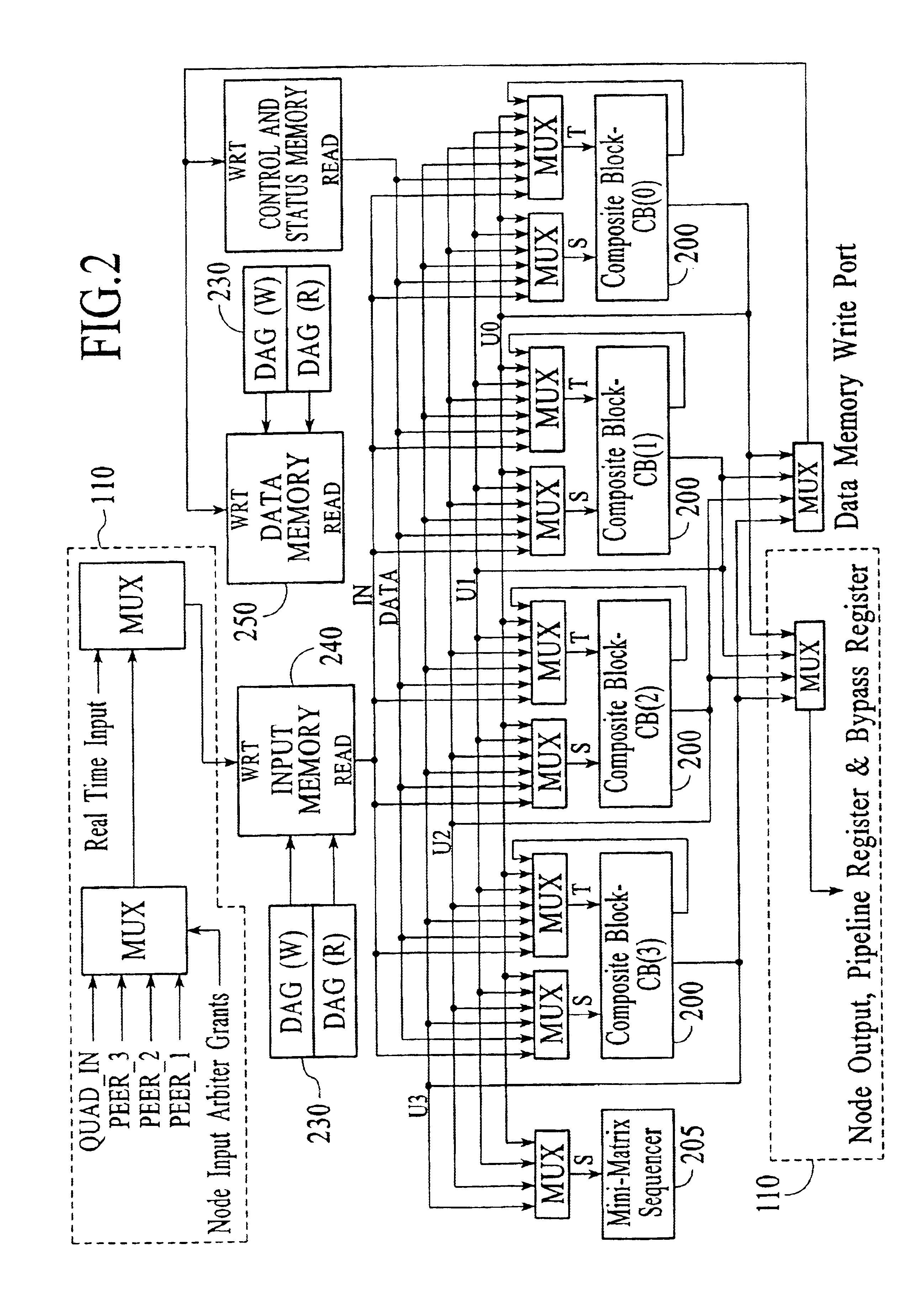

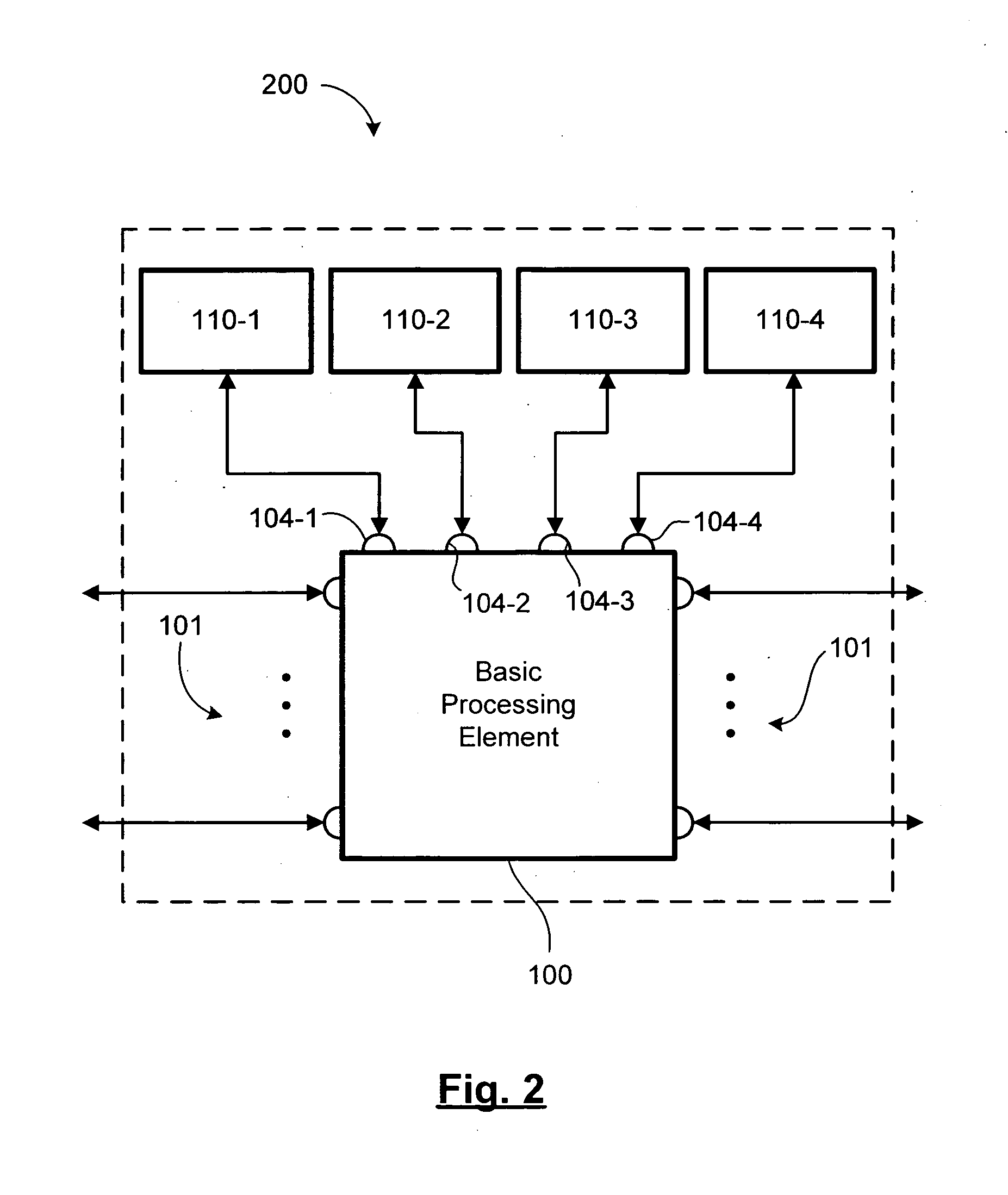

Adaptive computing engine with dataflow graph based sequencing in reconfigurable mini-matrices of composite functional blocks

Inactive | US6874079B2 | Single instruction multiple data multiprocessors | Next instruction address formation | Digital signal processing | Parallel computing

Aspects of a method and system for digital signal processing within an adaptive computing engine are described. These aspects include a mini-matrix, the mini-matrix comprising a set of composite blocks, each composite block capable of executing a predetermined set of instructions. A sequencer is included for controlling the set of composite blocks and directing instructions among the set of composite blocks based on a data-flow graph. Further, a data network is included and transmits data to and from the set of composite blocks and to the sequencer, while a status network routes status word data resulting from instruction execution in the set of composite blocks. With the present invention, an effective combination of hardware resources is provided in a manner that provides multi-bit digital signal processing capabilities for an embedded system environment, particularly in an implementation of an adaptive computing engine. These and other advantages will become readily apparent from the following detailed description and accompanying drawings.

Owner:CORNAMI INC

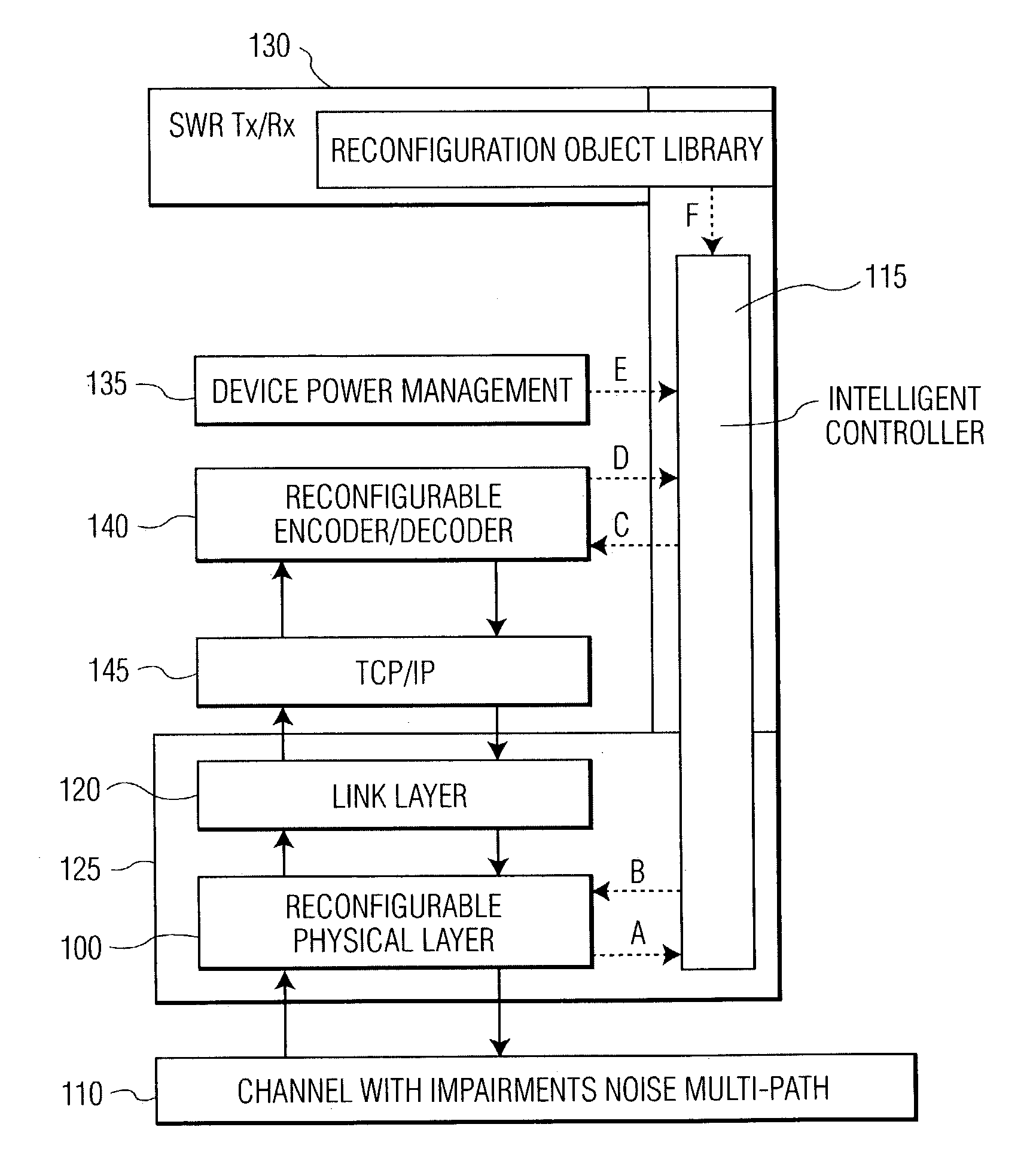

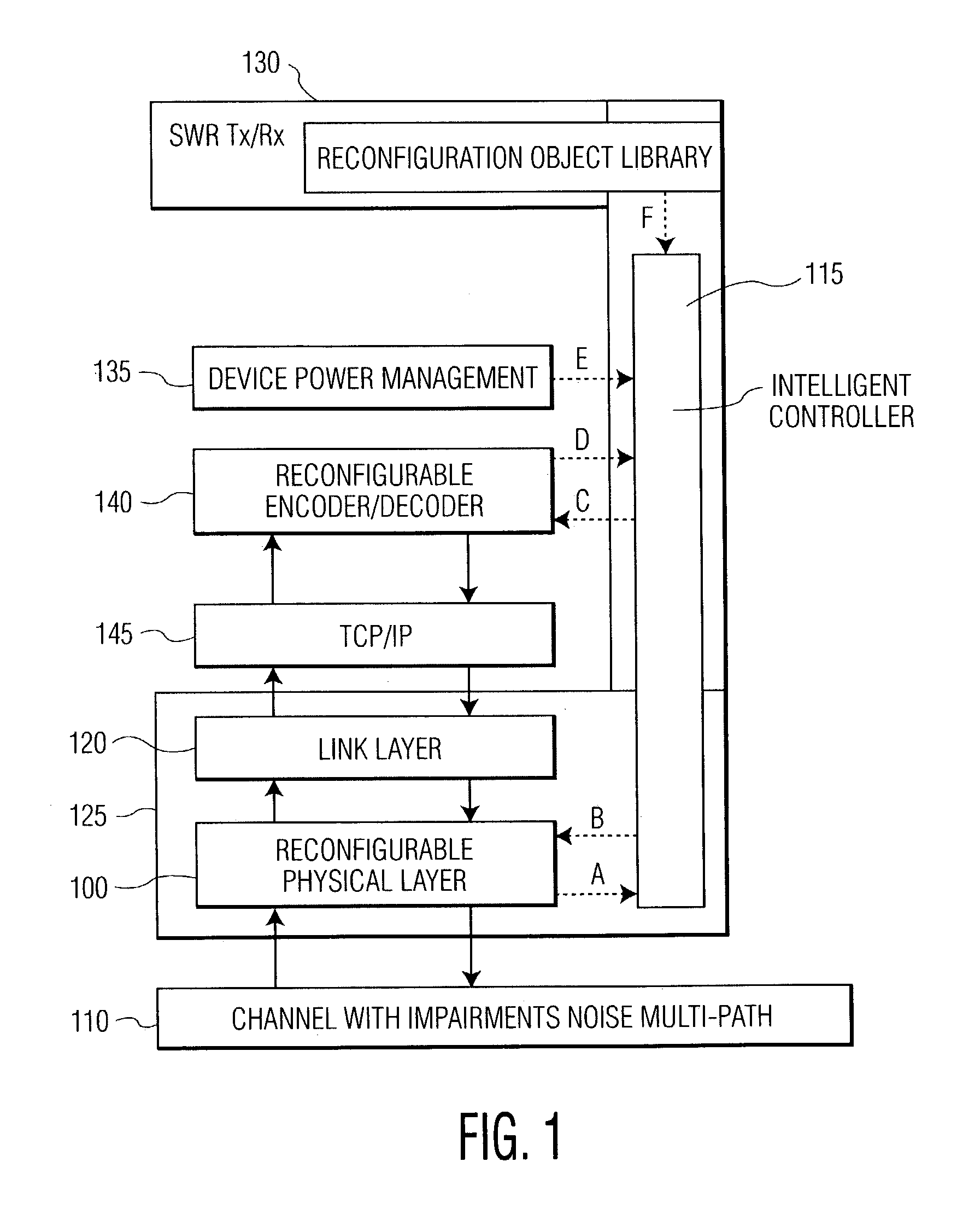

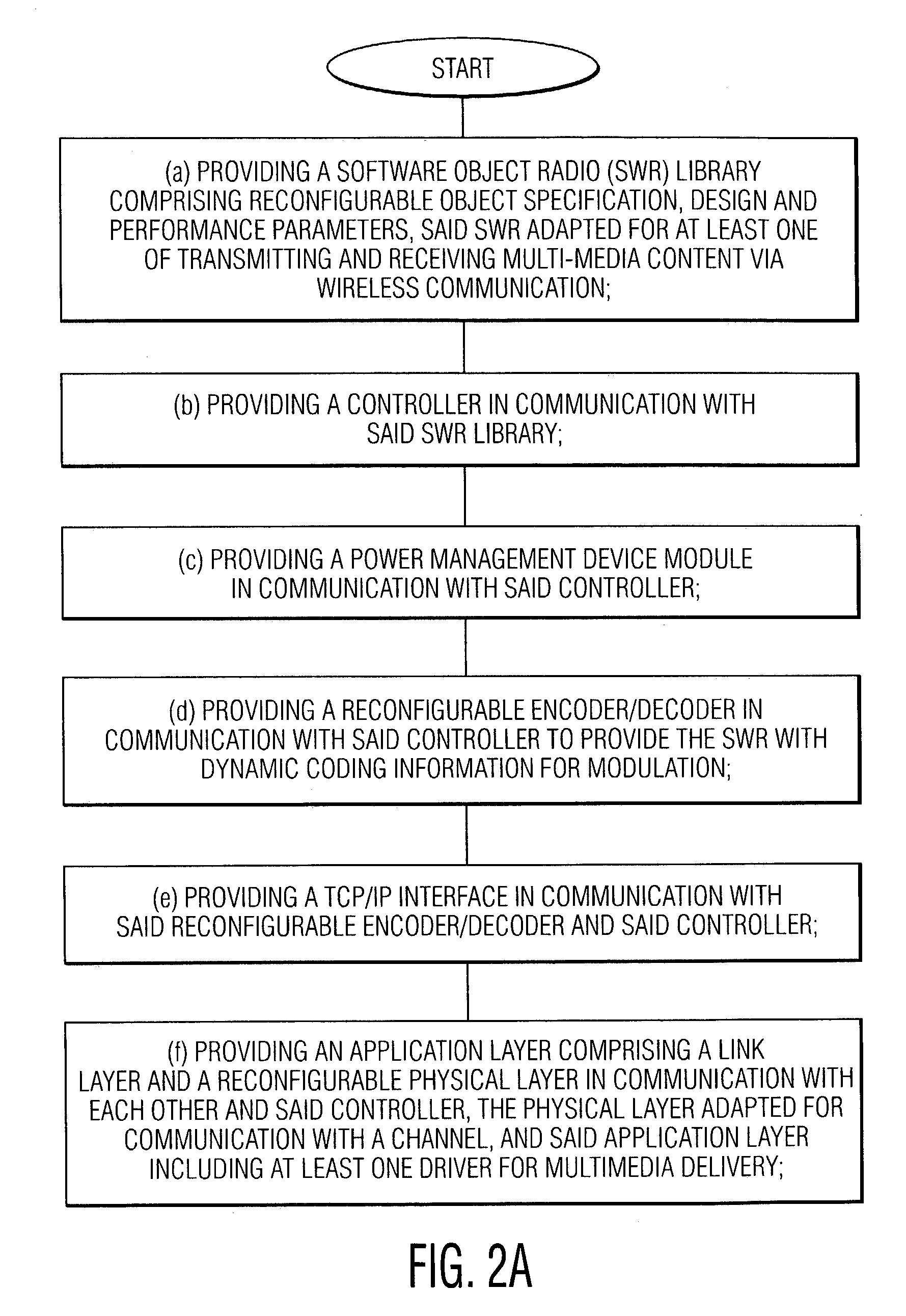

Method and apparatus for a reconfigurable multi-media system

Active | US7016668B2 | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Computer hardware | Multimedia system

A reconfigurable multi-media system, method and device provides monitoring and reconfiguration of a plurality of communication layers of a communications stack to dynamically reconfigure the modulation and coding of software defined radio (SDR). The system includes a software object radio (SWR) library having reconfigurable object specification, design and performance parameters, the SWR is adapted for at least one of transmitting and receiving multi-media content via wireless communication; a controller in communication with the SWR library; a power management device module in communication with said controller; a reconfigurable encoder / decoder in communication with said controller to provide the SWR with dynamic coding information for modulation; a TCP / IP interface in communication with said reconfigurable encoder / decoder and said controller; and an application layer comprising a link layer and a reconfigurable physical layer in communication with each other and said controller, the physical layer adapted for communication with a channel, and the application layer including at least one driver for multi-media delivery. The controller monitors the physical layer and link layer information and the reconfigurable encoder / decoder dynamically reconfigures modulation and coding of multi-media content according to a cross-layer optimization approach.

Owner:UNILOC 2017 LLC

Physically reconfigurable input and output systems and methods

Inactive | US8232976B2 | Effective interaction | Input/output for user-computer interaction | Cathode-ray tube indicators | User needs | Reconfigurable antenna

Systems and methods for altering the shape of a reconfigurable surface area are presented. The present systems and methods facilitate efficient and effective interaction with a device or system. In one embodiment, a surface reconfiguration system includes a flexible surface; an elevation unit that creates alterations in the contours of the surface; and an elevation control component that controls adjustments to the elevation unit. Thus, the surface of the device is reconfigurable based on system, application, mode, and / or user needs. Accordingly, the surface can be used to provide input and output functionality. The surface can include touch detection functionality for added input functionality.

Owner:PANASONIC OF NORTH AMERICA

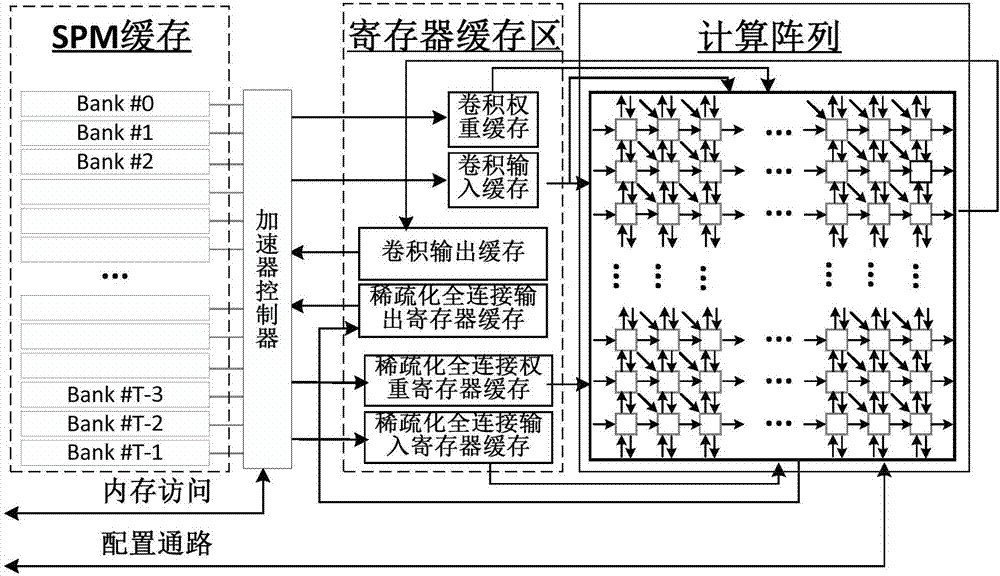

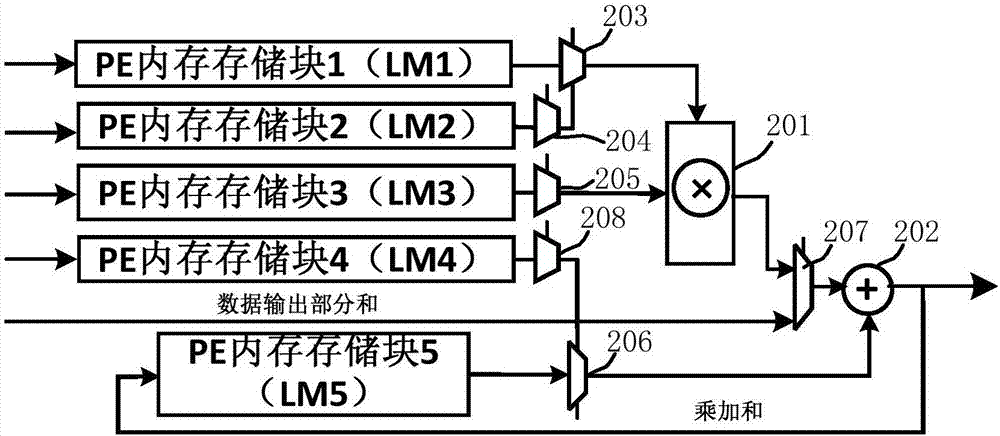

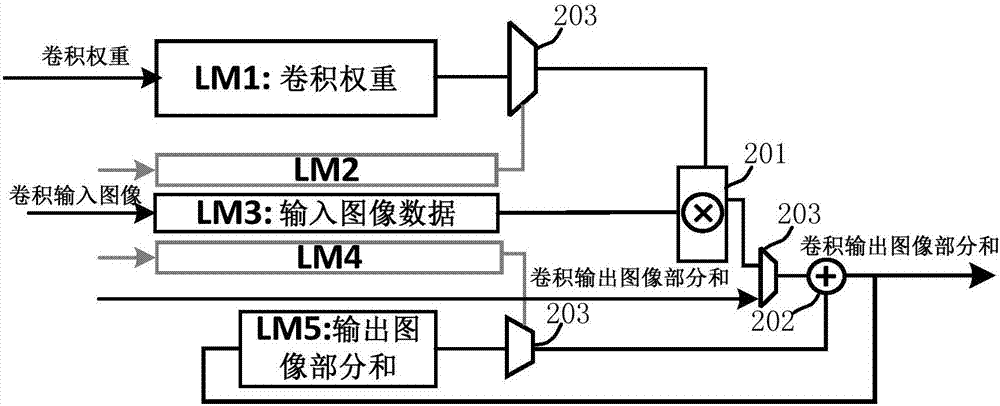

Separable array-based reconfigurable accelerator and realization method thereof

Active | CN107341544A | Increase computing resources | Increase profit | Physical realisation | Neural learning methods | Processor register | Parallel computing

The invention provides a separable array-based reconfigurable accelerator and a realization method thereof. The reconfigurable accelerator comprises a scratchpad memory cache area, separable calculation arrays, and a register cache area. The scratchpad memory cache area enables data reuse for convolution calculation and sparse fully connected calculation. The separable calculation arrays comprise multiple reconfigurable calculation units and are divided into a convolution calculation array and a sparse fully connected calculation array. The register cache area is a storage area formed by multiple registers; it provides input data, weight data, and the corresponding output results for convolution calculation and sparse fully connected calculation. Input data and weight data for convolution calculation are fed into the convolution calculation array, which outputs the convolution result; input data and weight data for sparse fully connected calculation are fed into the sparse fully connected calculation array, which outputs the sparse fully connected result. By fusing the characteristics of the two neural networks, the accelerator improves the utilization of the chip's computing resources and memory bandwidth.

Owner:TSINGHUA UNIV

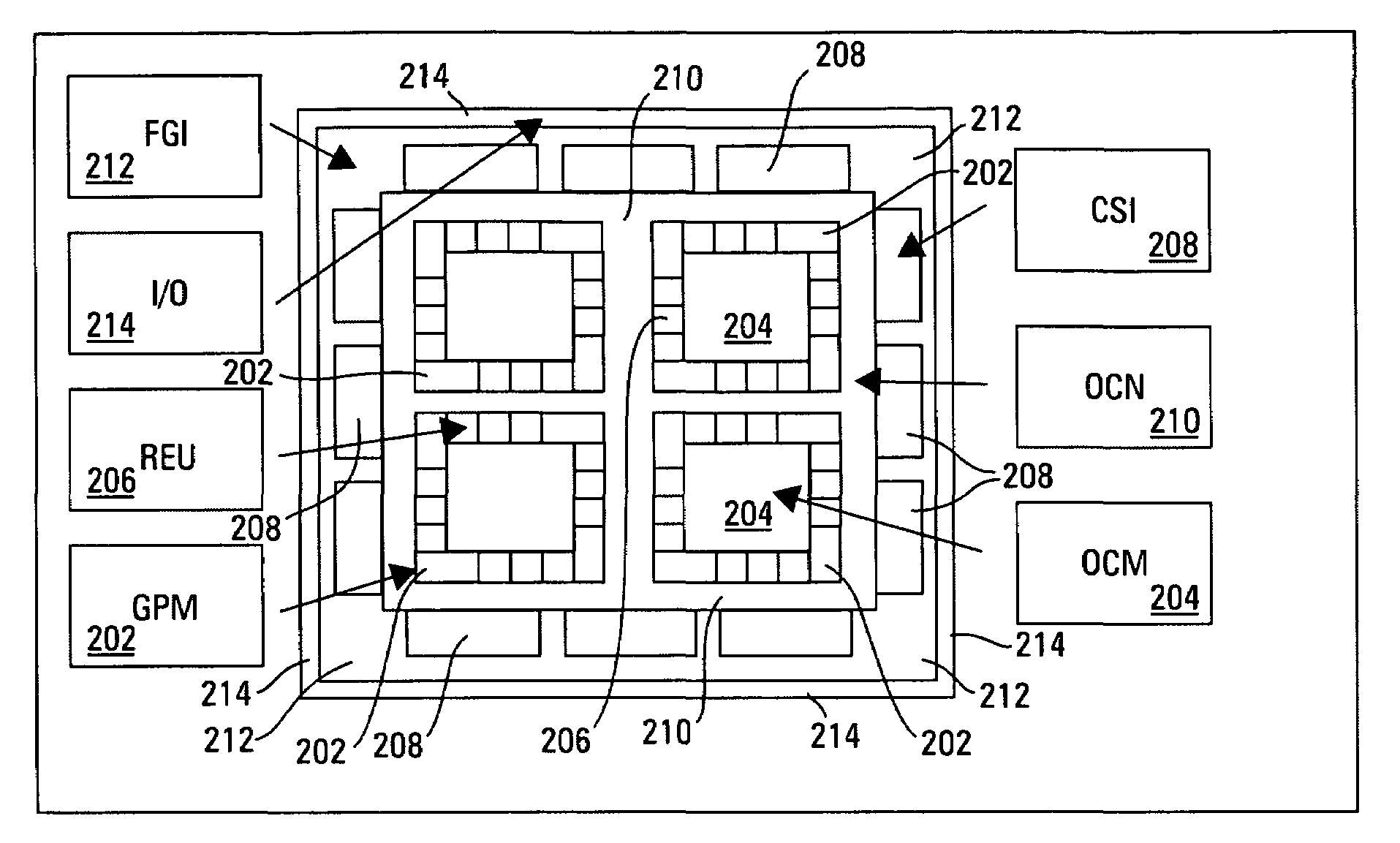

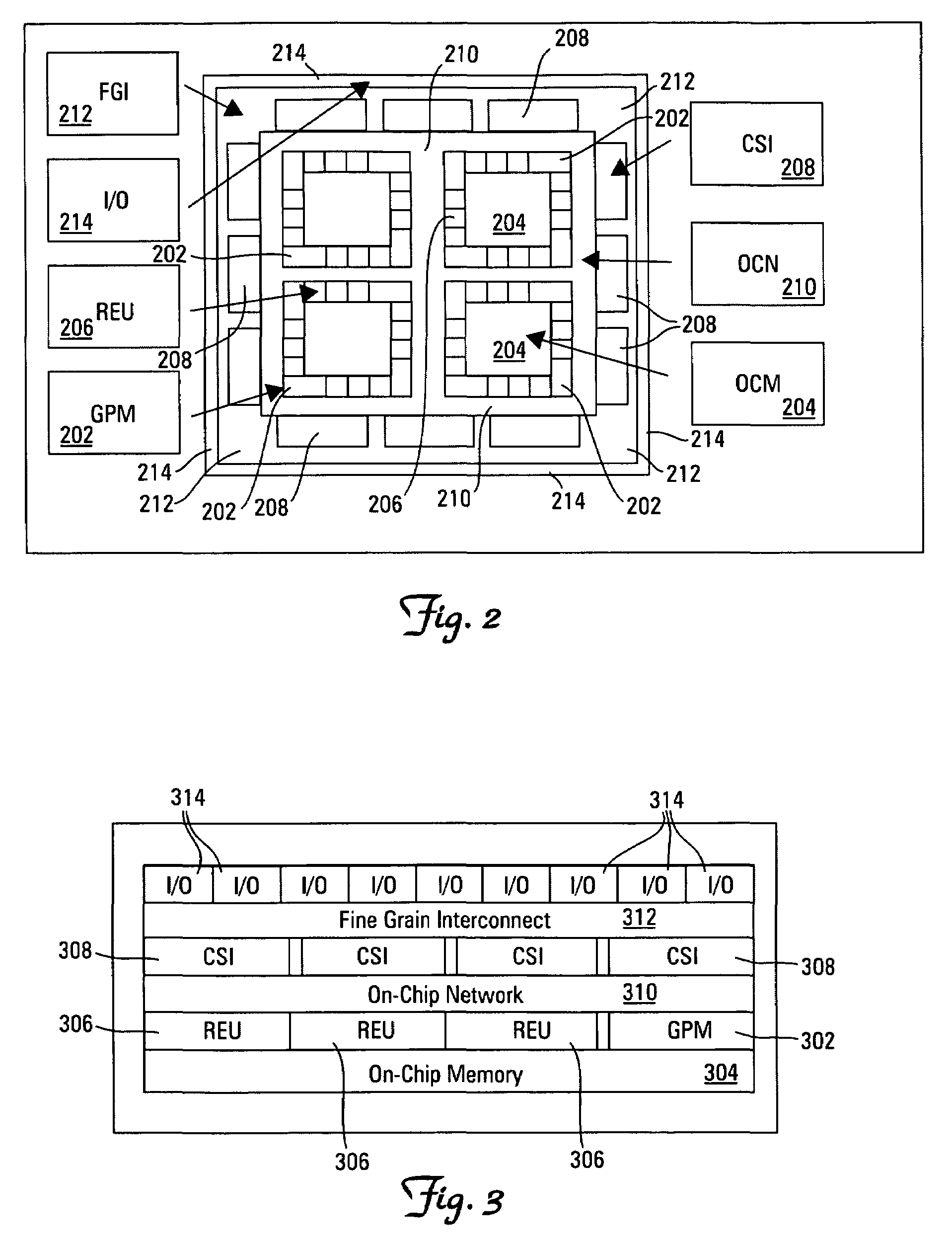

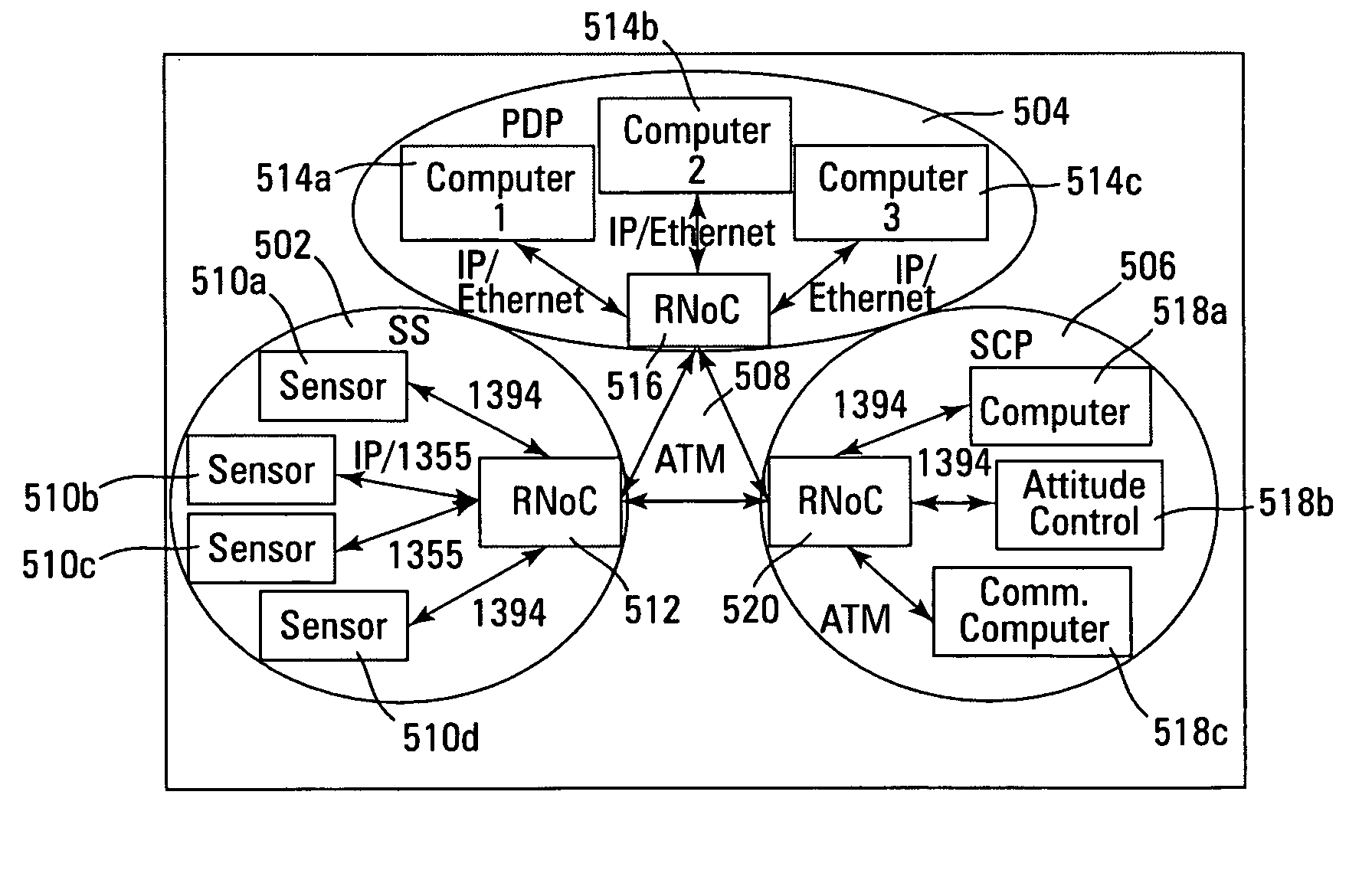

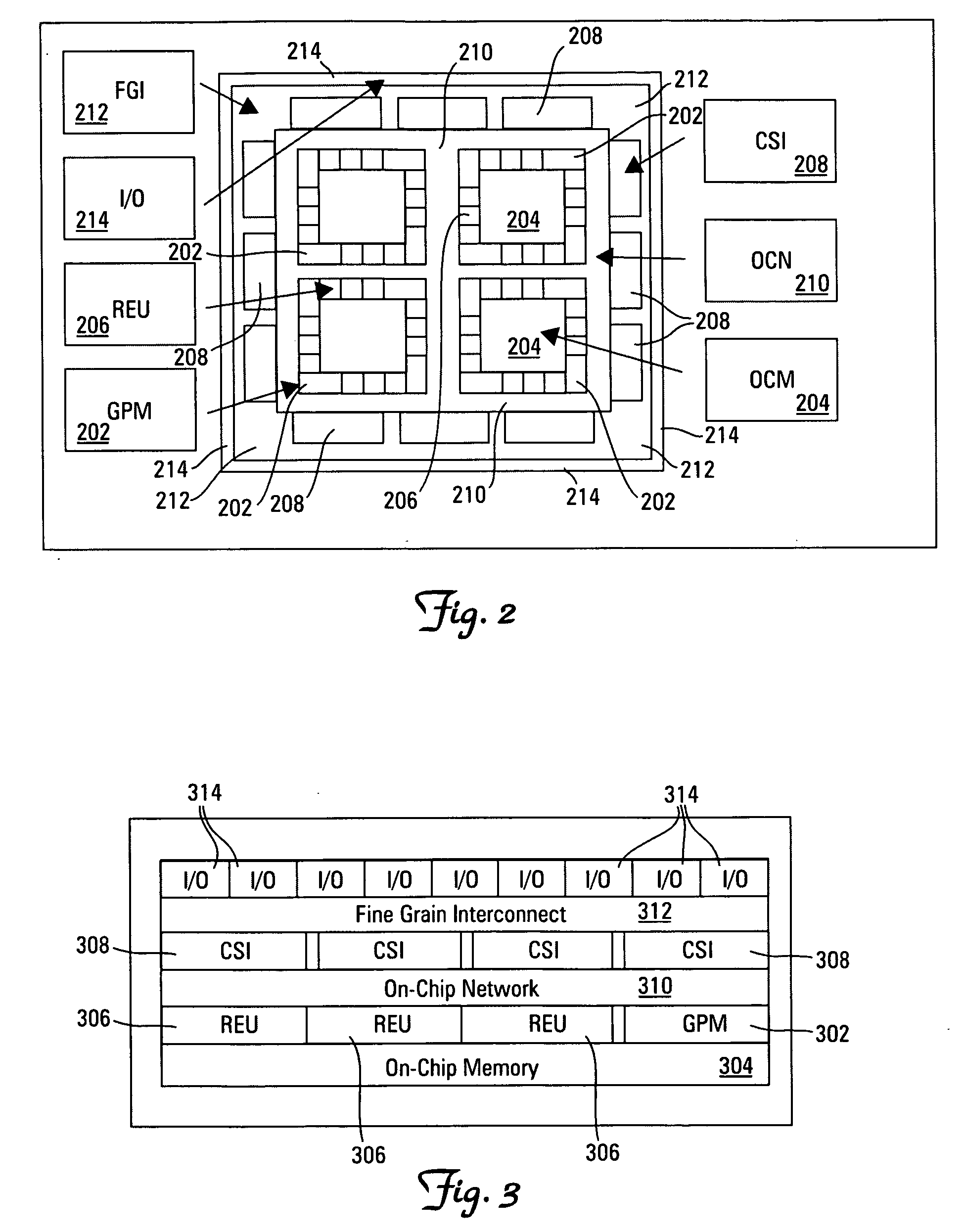

Reconfigurable network on a chip

Active | US7382154B2 | Easy programming | Increase flexibility | Solid-state devices | Digital computer details | Semiconductor chip | Programmable logic array

An architecture for a reconfigurable network that can be implemented on a semiconductor chip is disclosed, which includes a hierarchical organization of network components and functions that are readily programmable and highly flexible. Essentially, a reconfigurable network on a chip is disclosed, which includes aspects of reconfigurable computing, system on a chip, and network on a chip designs. More precisely, a reconfigurable network on a chip includes a general purpose microprocessor for implementing software tasks, a plurality of on-chip memories for facilitating the processing of large data structures as well as processor collaboration, a plurality of reconfigurable execution units including self-contained, individually reconfigurable programmable logic arrays, a plurality of configurable system interface units that provide interconnections between on-chip memories, networks or buses, an on-chip network including a network interconnection interface that enables communication between all reconfigurable execution units, configurable system interface units and general purpose microprocessors, a fine grain interconnect unit that gathers associated input / output signals for a particular interface and attaches them to a designated system interface resource, and a plurality of input / output blocks that supply the link between an on-chip interface resource and a particular external network or device interface. Advantageously, the network minimizes the configuration latency of the reconfigurable execution units and also enables reconfiguration on-the-fly.

Owner:HONEYWELL INT INC

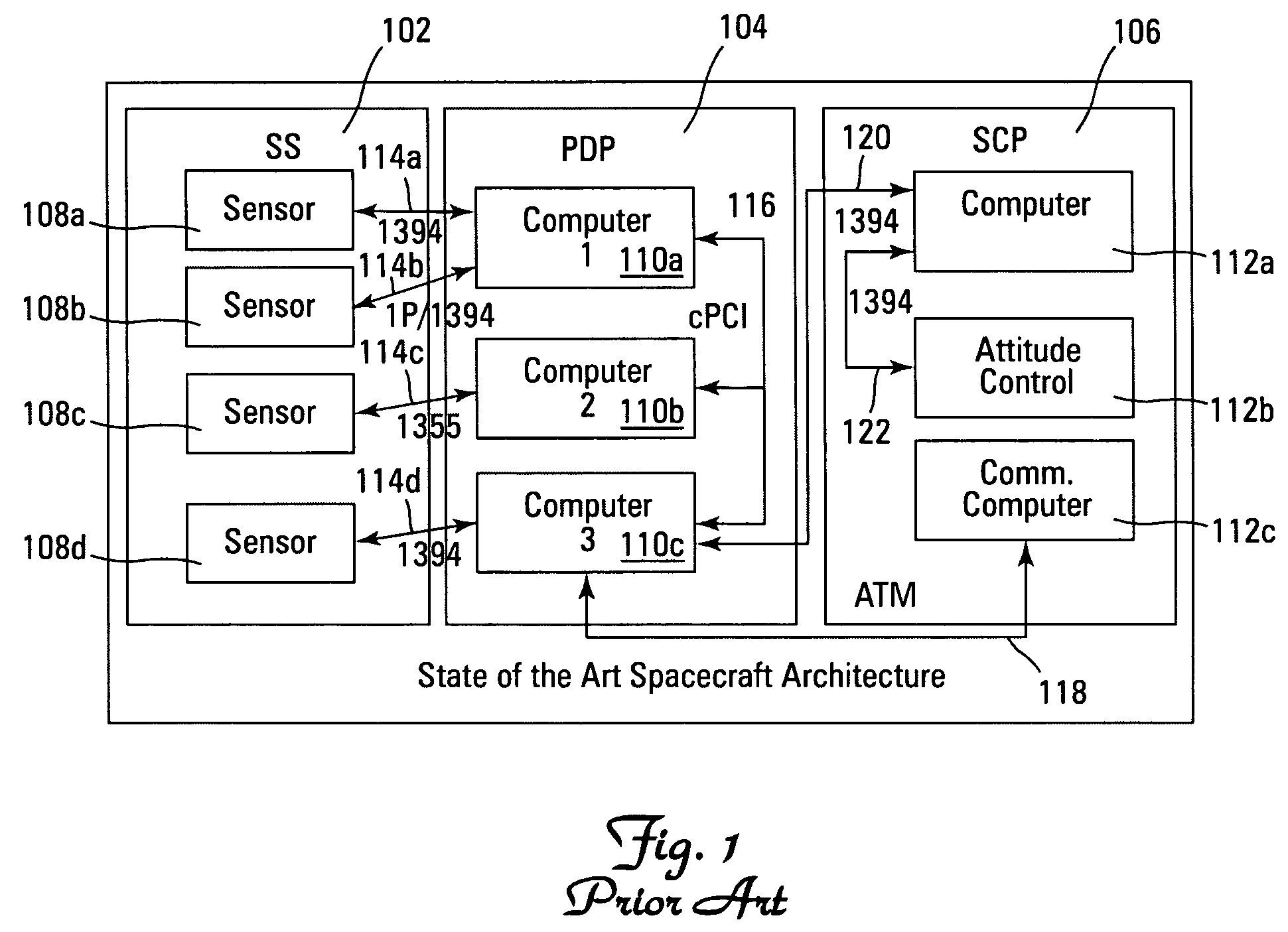

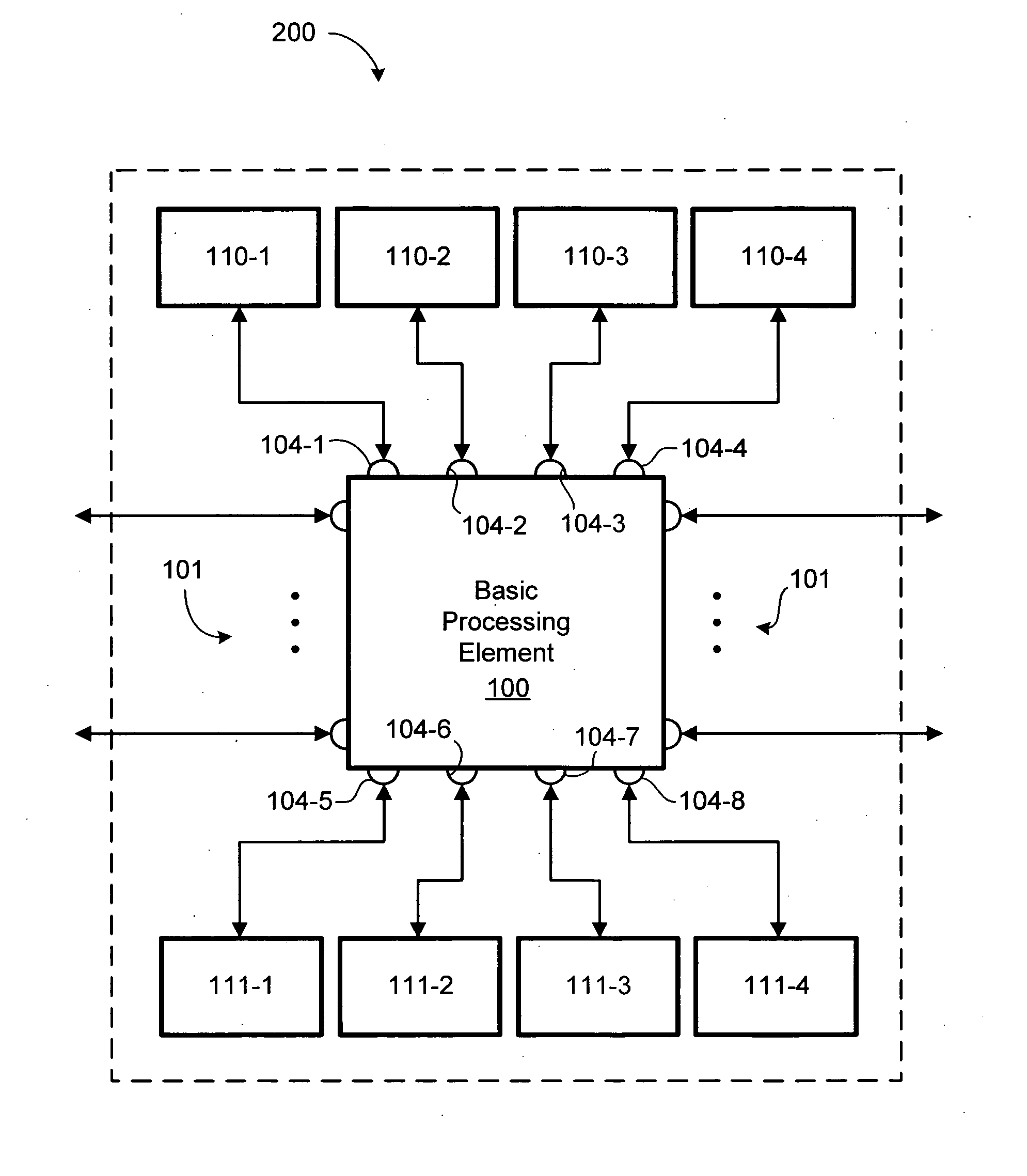

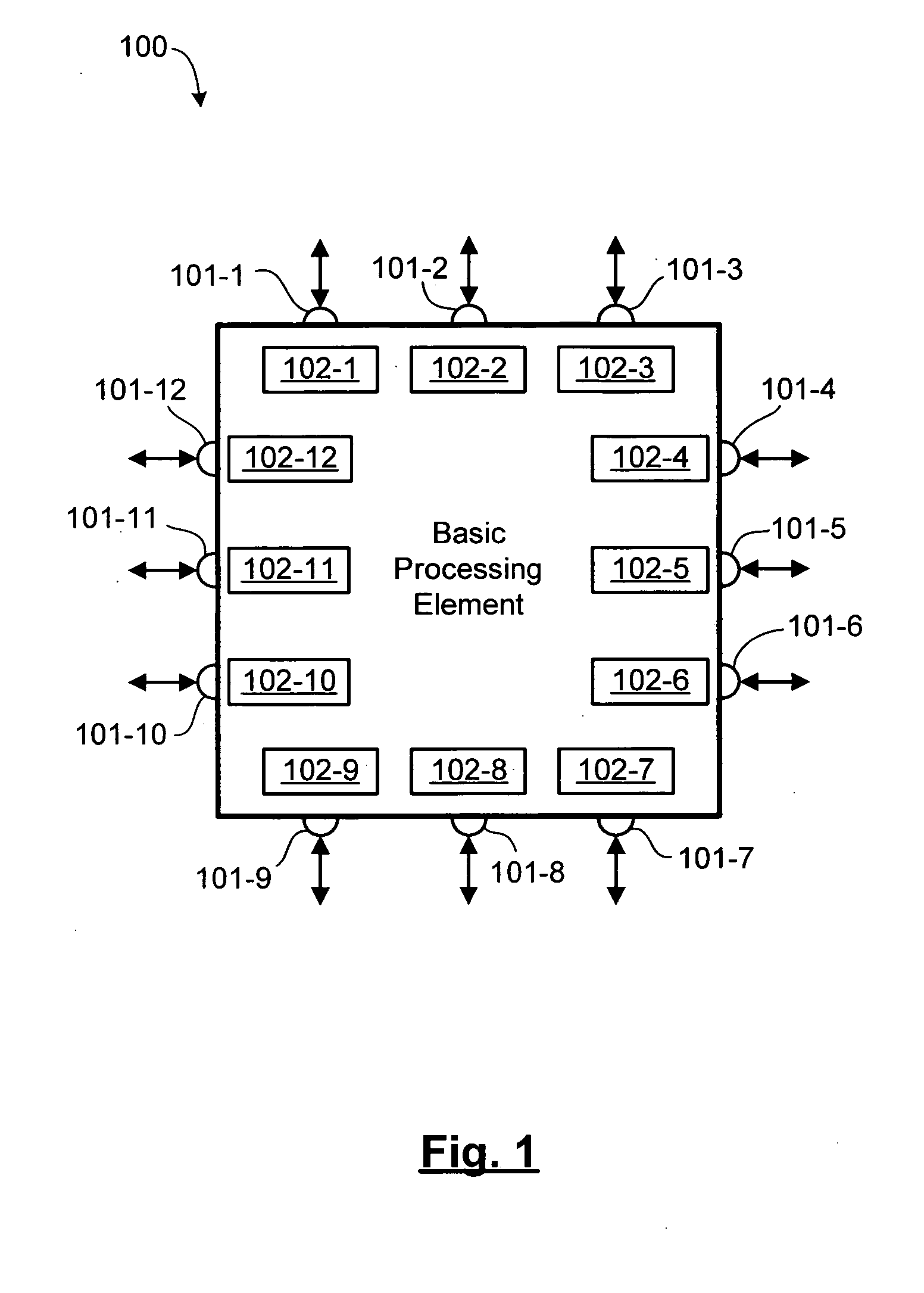

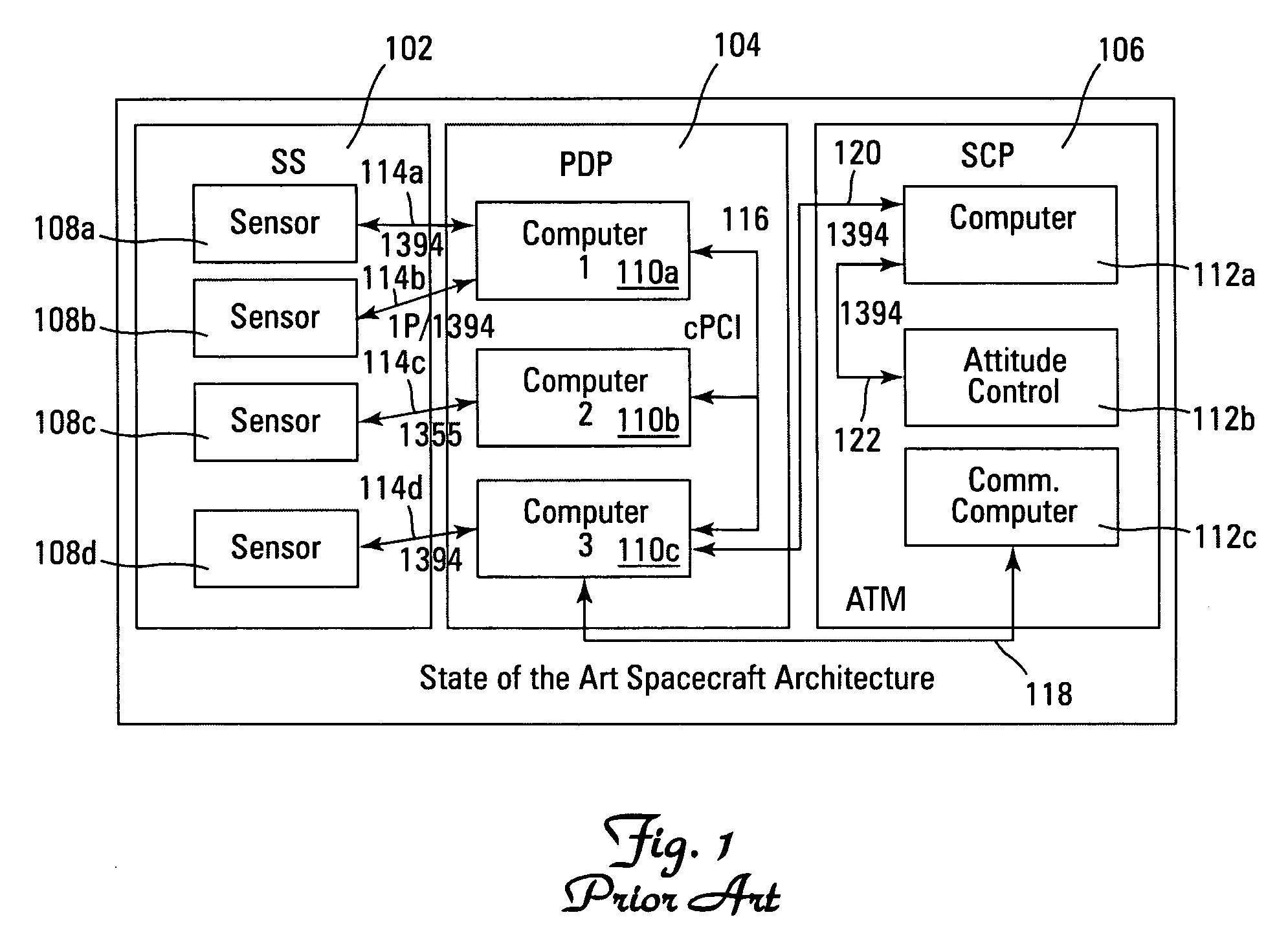

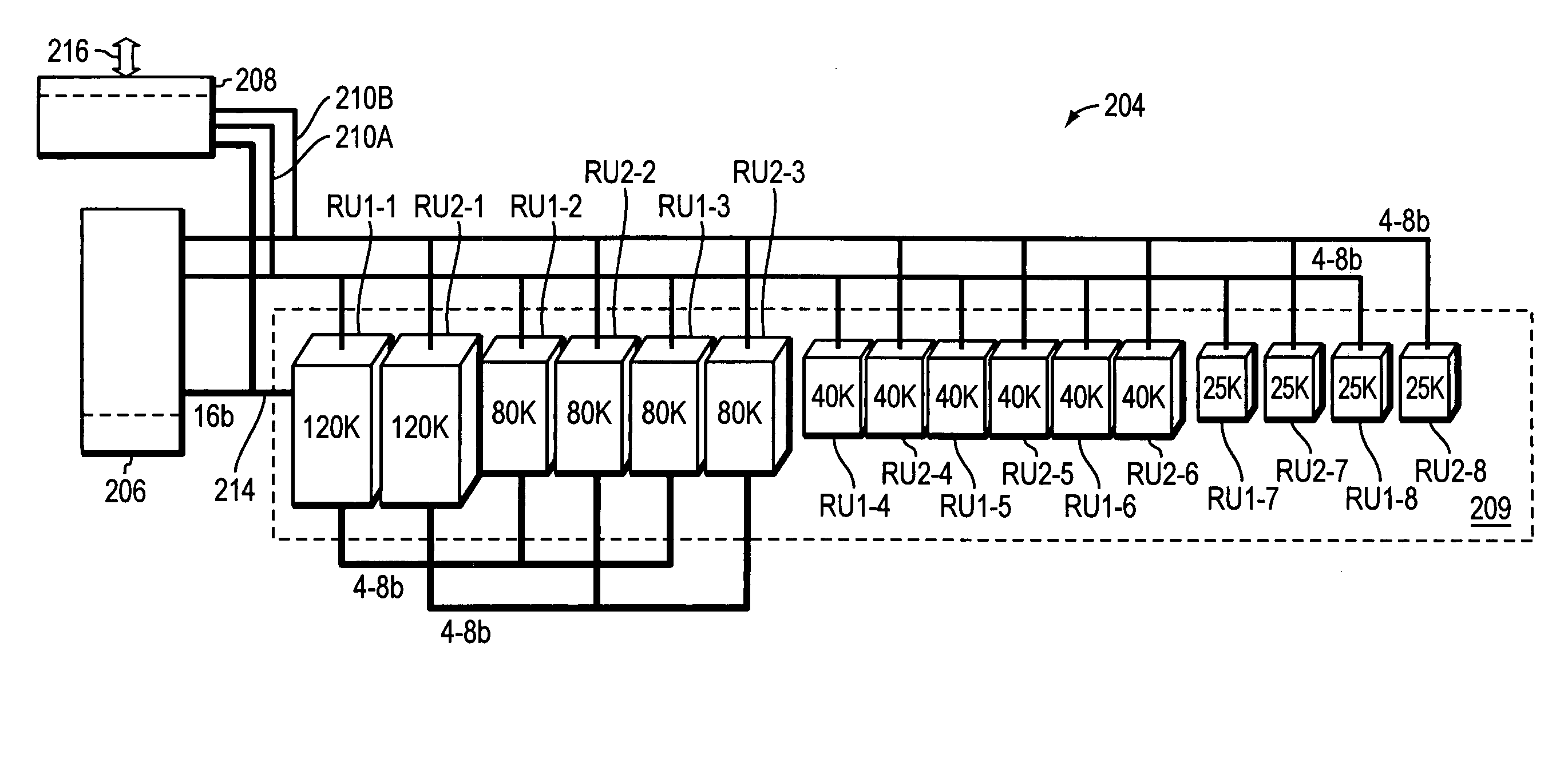

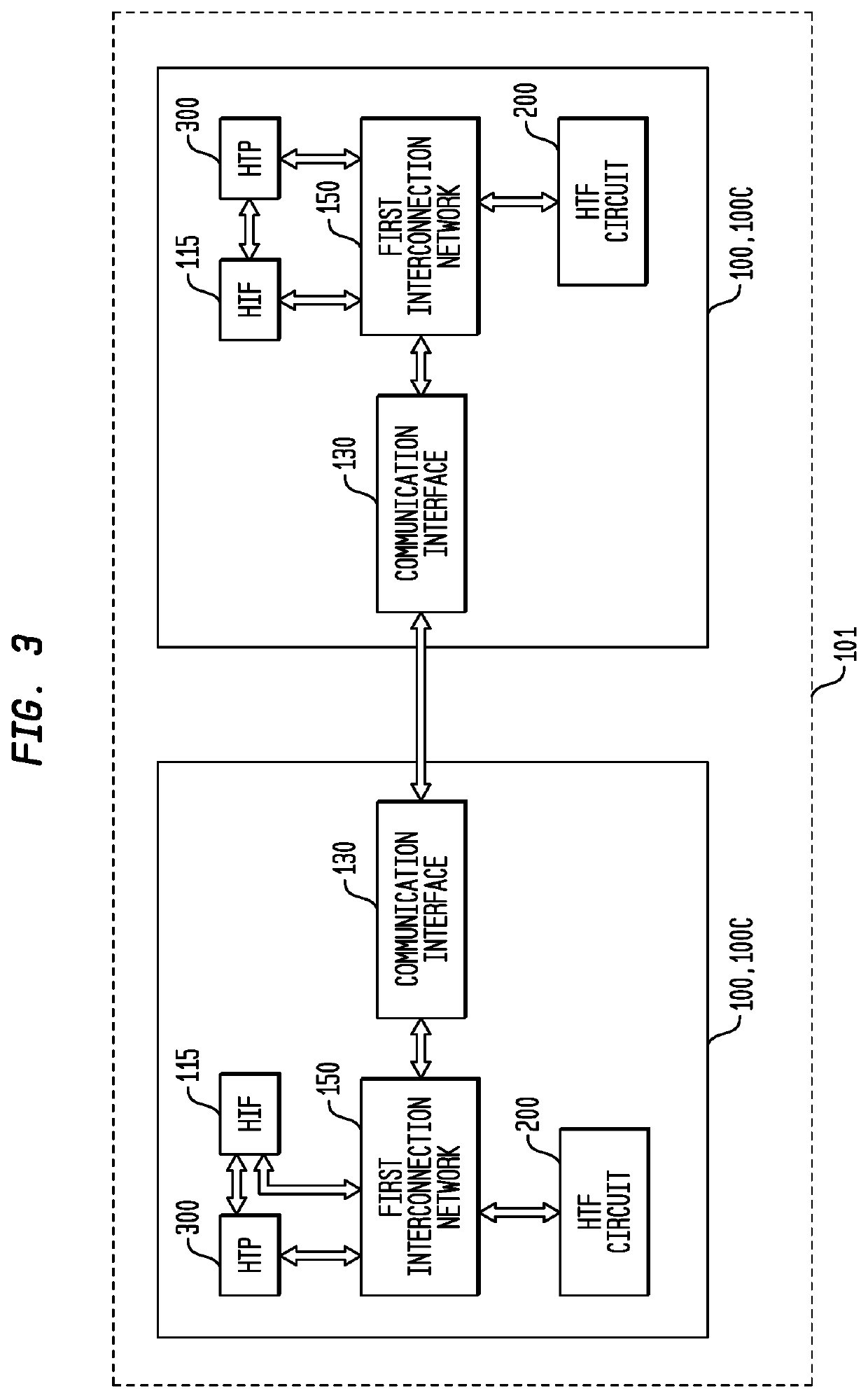

Multi-processor reconfigurable computing system

Active | US20060031659A1 | Reduce communication delay | Improved bandwidth throughput | General purpose stored program computer | Input/output processes for data processing | Cross connection | Multi processor

A reconfigurable multi-processor computing system including a plurality of configurable processing elements each having a plurality of integrated high-speed serial input / output ports. Interconnects link the plurality of processing elements, wherein at least one of the integrated high-speed serial input / output ports of each processing element is connected by at least one interconnect to at least one of the integrated high-speed serial input / output ports of each other processing element, thereby creating a full mesh network. The full mesh network is located on a processor card, multiples of which may be grouped in a shelf having a backplane card with a shelf controller card for providing cross-connects between processor cards. Multiple shelves may be interconnected to form a large computer system.
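The full mesh described in this abstract implies a simple wiring budget: every pair of processing elements needs one interconnect, so each element must dedicate at least one serial port to every other element. The sketch below only illustrates that counting; the function names and the card size of four elements are hypothetical and do not come from the patent.

```python
from itertools import combinations


def full_mesh_links(num_elements):
    """List every point-to-point link needed so each element connects to each other."""
    return list(combinations(range(num_elements), 2))


def ports_needed_per_element(num_elements):
    """Minimum serial ports per element for a full mesh (one per peer)."""
    return num_elements - 1


# Example: a hypothetical processor card with four processing elements.
links = full_mesh_links(4)
ports = ports_needed_per_element(4)
```

The link count grows as n(n-1)/2, which suggests why the abstract confines the full mesh to a single processor card and relies on a backplane with cross-connects between cards.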

Owner:ARCHES COMPUTING SYST

Sparse neural network architecture and realization method thereof

Active | CN107609641A | Calculation balance | Improve resource utilization | Physical realisation | Resource utilization | Parallel computing

The invention discloses a sparse neural network architecture and a realization method thereof. The sparse neural network architecture comprises an external memory controller, a weight cache, an input cache, an output cache, an input cache controller, and a computing array. The computing array comprises multiple computing units: each row of reconfigurable computing units shares part of the input in the input cache, and each column of reconfigurable computing units shares part of the weights in the weight cache. The input cache controller performs a sparse operation on the contents of the input cache, removing zero values from the input, and the external memory controller stores the computing array's data before and after processing. The architecture and method reduce or even eliminate the invalid computation performed when the input is zero, balance the computation load across the computing units, increase hardware resource utilization, and at the same time guarantee the shortest computing delay.
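The zero-removal step in this abstract can be sketched in software as compressing the input to its non-zero entries before any multiplication happens. This is a minimal illustration under stated assumptions, not the patented hardware: the function names and the (index, value) pair format are hypothetical.

```python
def compress_nonzero(inputs):
    """Keep only non-zero inputs, remembering each one's original position."""
    return [(i, x) for i, x in enumerate(inputs) if x != 0]


def sparse_dot(inputs, weights):
    """Dot product that performs a multiply only for non-zero inputs."""
    total = 0
    for i, x in compress_nonzero(inputs):
        total += x * weights[i]
    return total


def dense_dot(inputs, weights):
    """Reference dot product that multiplies every pair, zeros included."""
    return sum(x * w for x, w in zip(inputs, weights))


# Example: half of the inputs are zero, so half of the multiplies are skipped.
inputs = [0, 2, 0, 3]
weights = [5, 1, 7, 2]
result = sparse_dot(inputs, weights)
```

The sparse and dense versions return the same value; the difference is the number of multiply operations issued, which is the quantity the architecture aims to reduce and balance across computing units.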

Owner:TSINGHUA UNIV

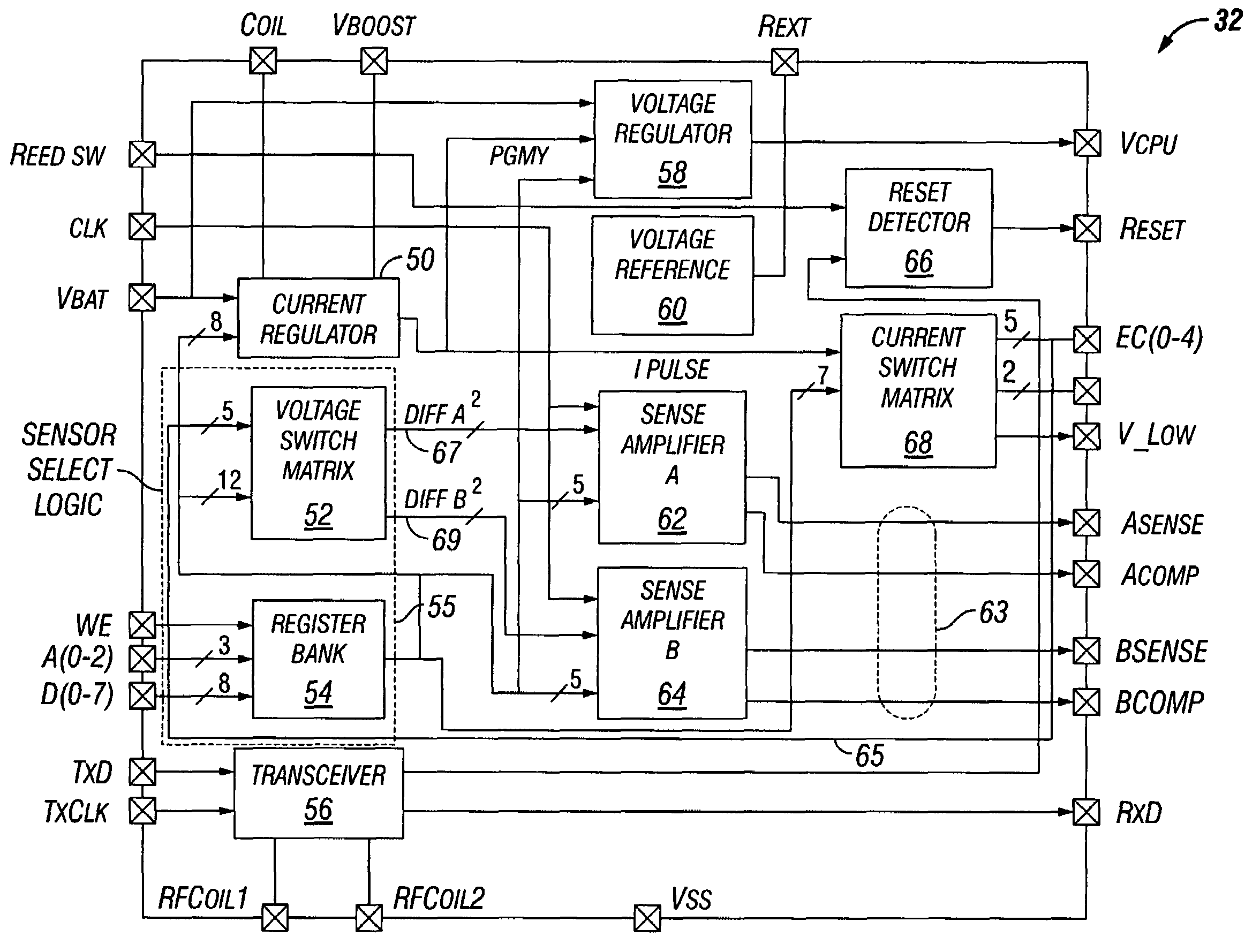

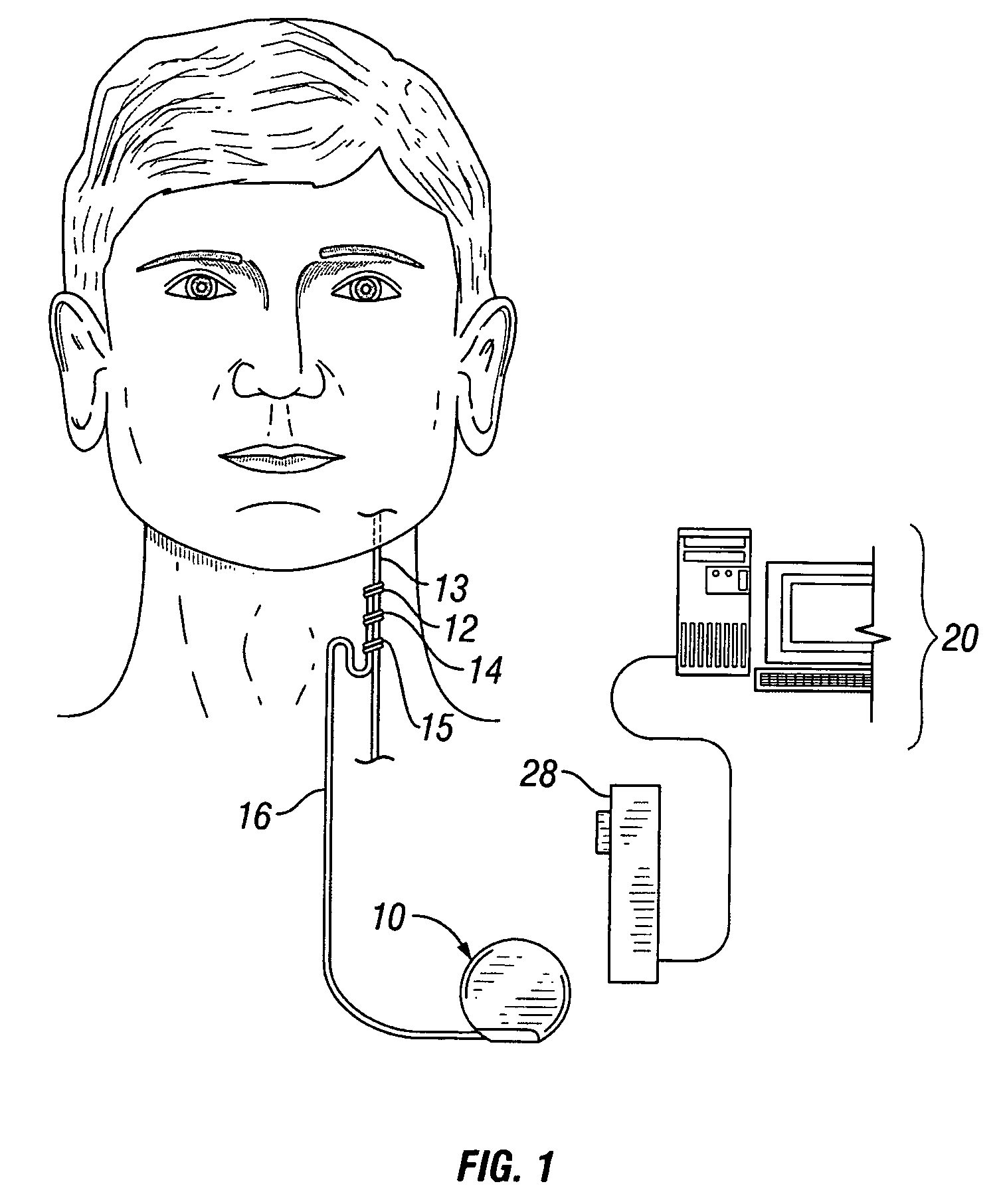

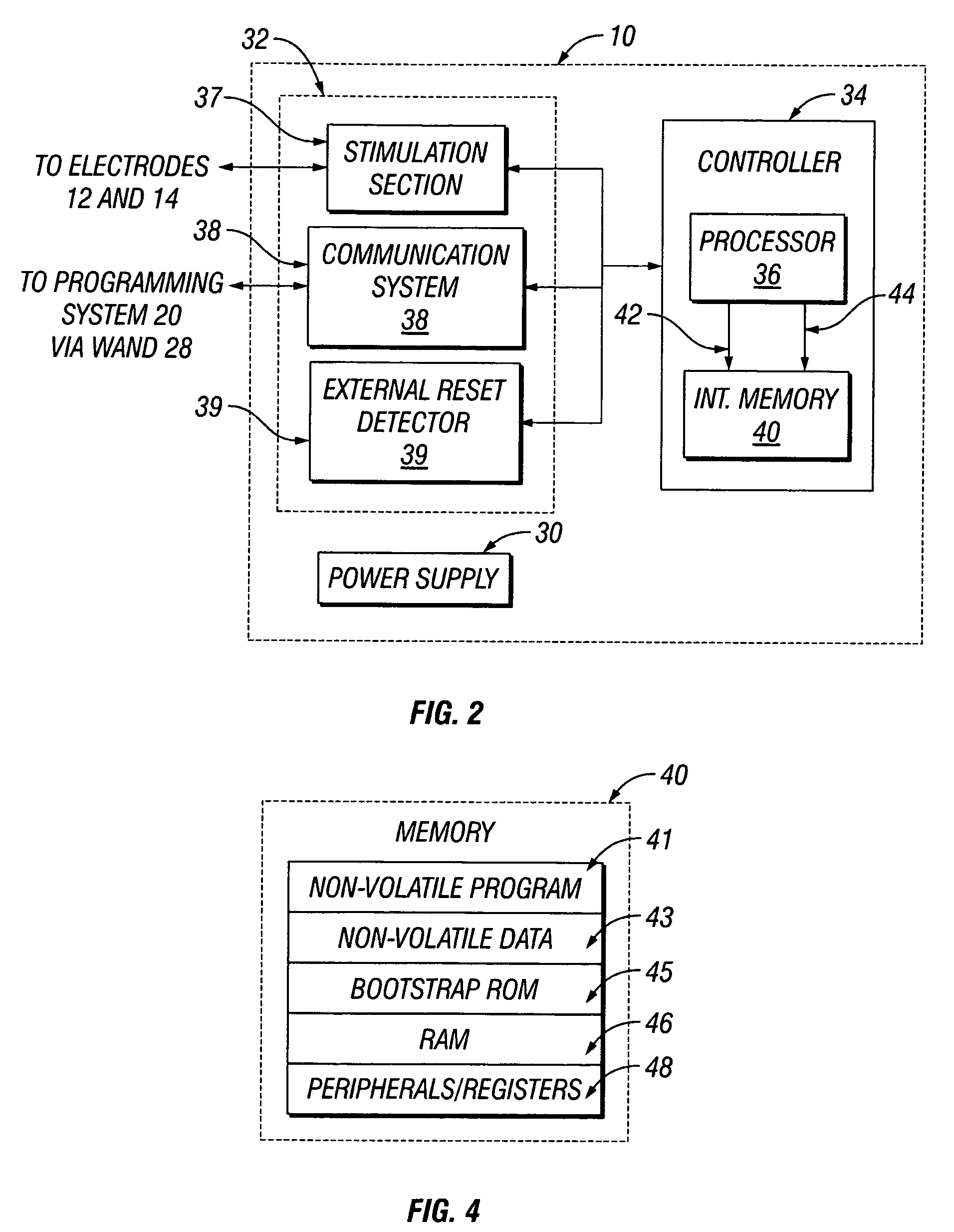

Implantable medical device with reconfigurable non-volatile program

A device comprises a stimulus generator comprising an instruction processor. The stimulus generator is configured to deliver stimuli to a biological tissue. The device also comprises a non-volatile memory for storing instructions directly executable by the instruction processor, the instructions controlling, at least in part, the operation of the device. The instruction processor generates an erase control signal to erase at least a segment of the non-volatile memory and a write control signal to write one or more new instructions to at least a segment of the non-volatile memory, thereby modifying the operation of the device.

Owner:LIVANOVA USA INC

Reconfigurable network on a chip

Active | US20070075734A1 | Easy programming | Increase flexibility | Solid-state devices | Digital computer details | Semiconductor chip | Programmable logic array

An architecture for a reconfigurable network that can be implemented on a semiconductor chip is disclosed, which includes a hierarchical organization of network components and functions that are readily programmable and highly flexible. Essentially, a reconfigurable network on a chip is disclosed, which includes aspects of reconfigurable computing, system on a chip, and network on a chip designs. More precisely, a reconfigurable network on a chip includes a general purpose microprocessor for implementing software tasks, a plurality of on-chip memories for facilitating the processing of large data structures as well as processor collaboration, a plurality of reconfigurable execution units including self-contained, individually reconfigurable programmable logic arrays, a plurality of configurable system interface units that provide interconnections between on-chip memories, networks or buses, an on-chip network including a network interconnection interface that enables communication between all reconfigurable execution units, configurable system interface units and general purpose microprocessors, a fine grain interconnect unit that gathers associated input / output signals for a particular interface and attaches them to a designated system interface resource, and a plurality of input / output blocks that supply the link between an on-chip interface resource and a particular external network or device interface. Advantageously, the network minimizes the configuration latency of the reconfigurable execution units and also enables reconfiguration on-the-fly.

Owner:HONEYWELL INT INC

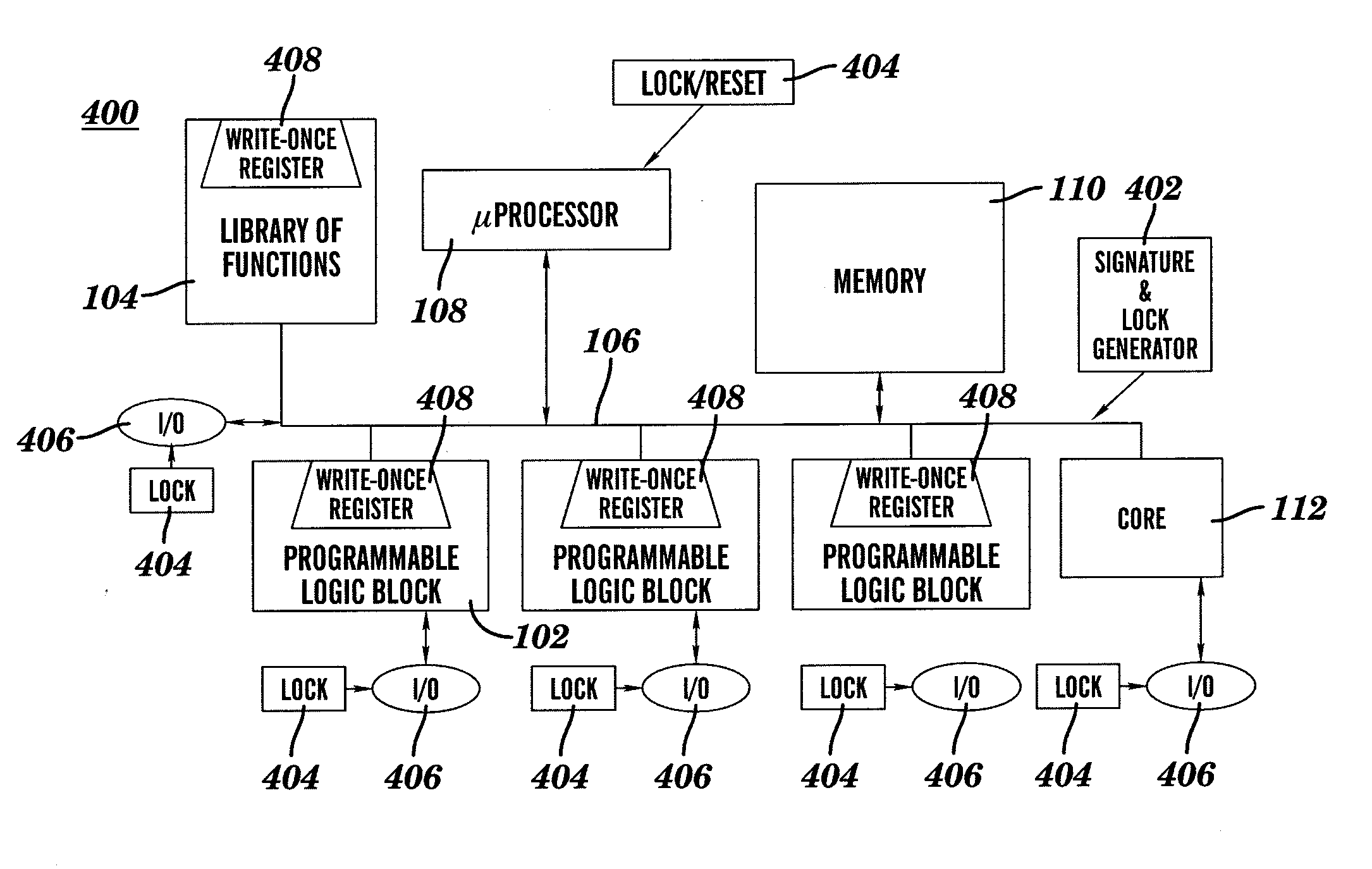

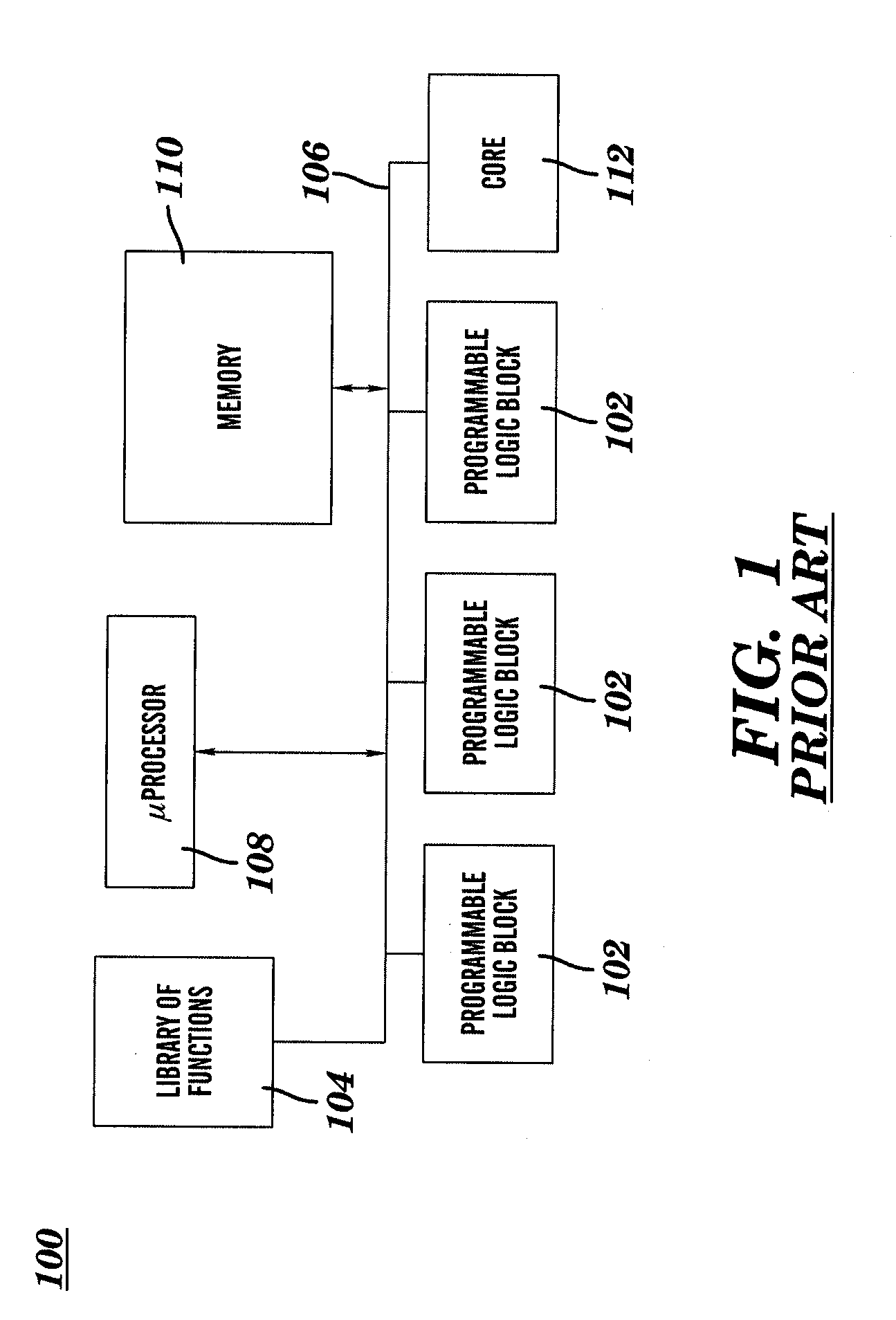

Method for system level protection of field programmable logic devices

Inactive | US20050278551A1 | Avoid access | Digital data processing details | User identity/authority verification | Field programmable logic devices | Programmable logic device

A method for protecting a dynamically reconfigurable computing system includes generating an encoding signature and passing the encoding signature, through a system level bus, to at least one field programmable logic device and to a function library included within the system. The function library contains a plurality of functions for selective programming into the at least one field programmable logic device. A lock is generated so as to prevent external resources with respect to the system from accessing the encoding signature during the passing thereof.

Owner:IBM CORP

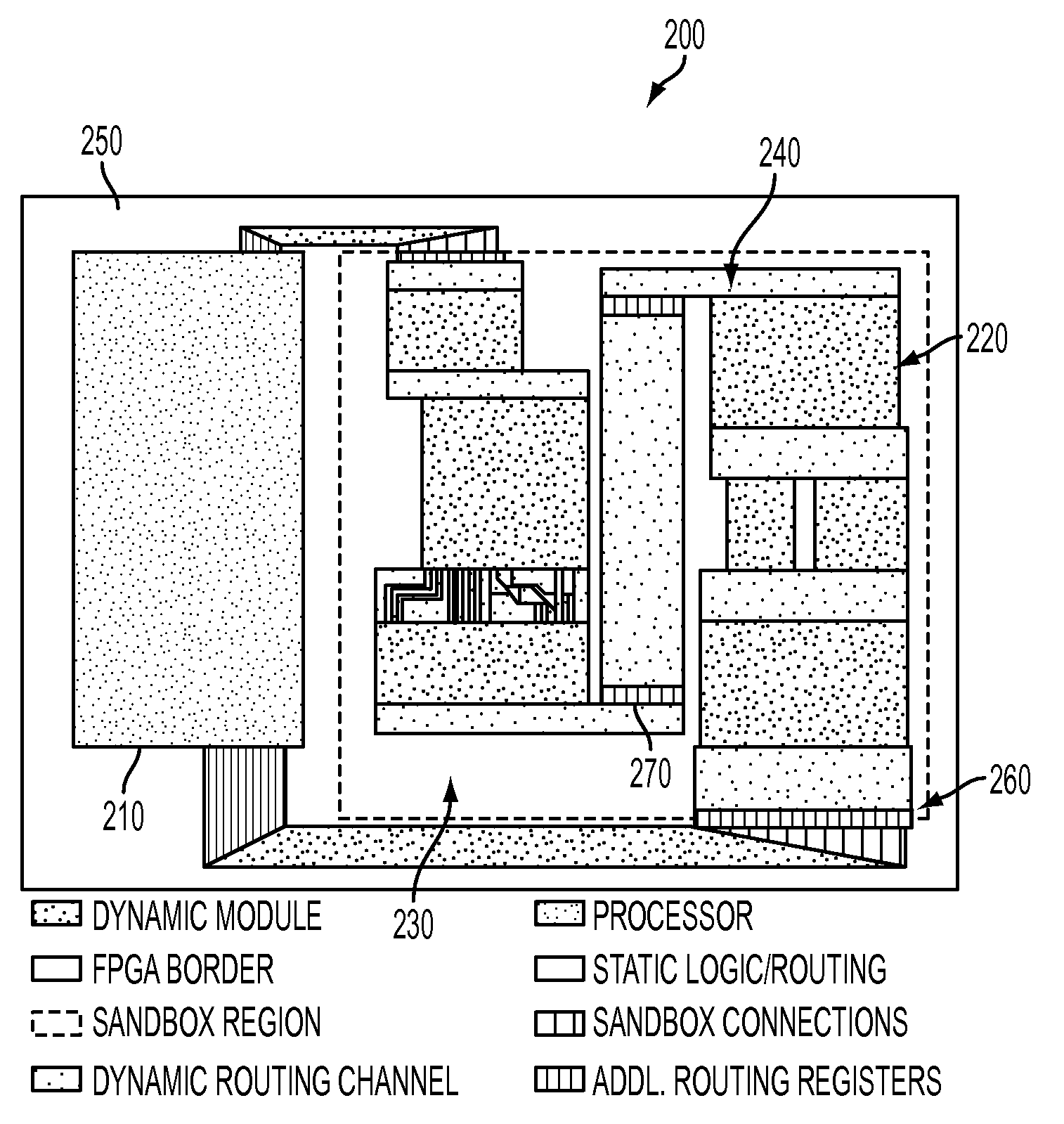

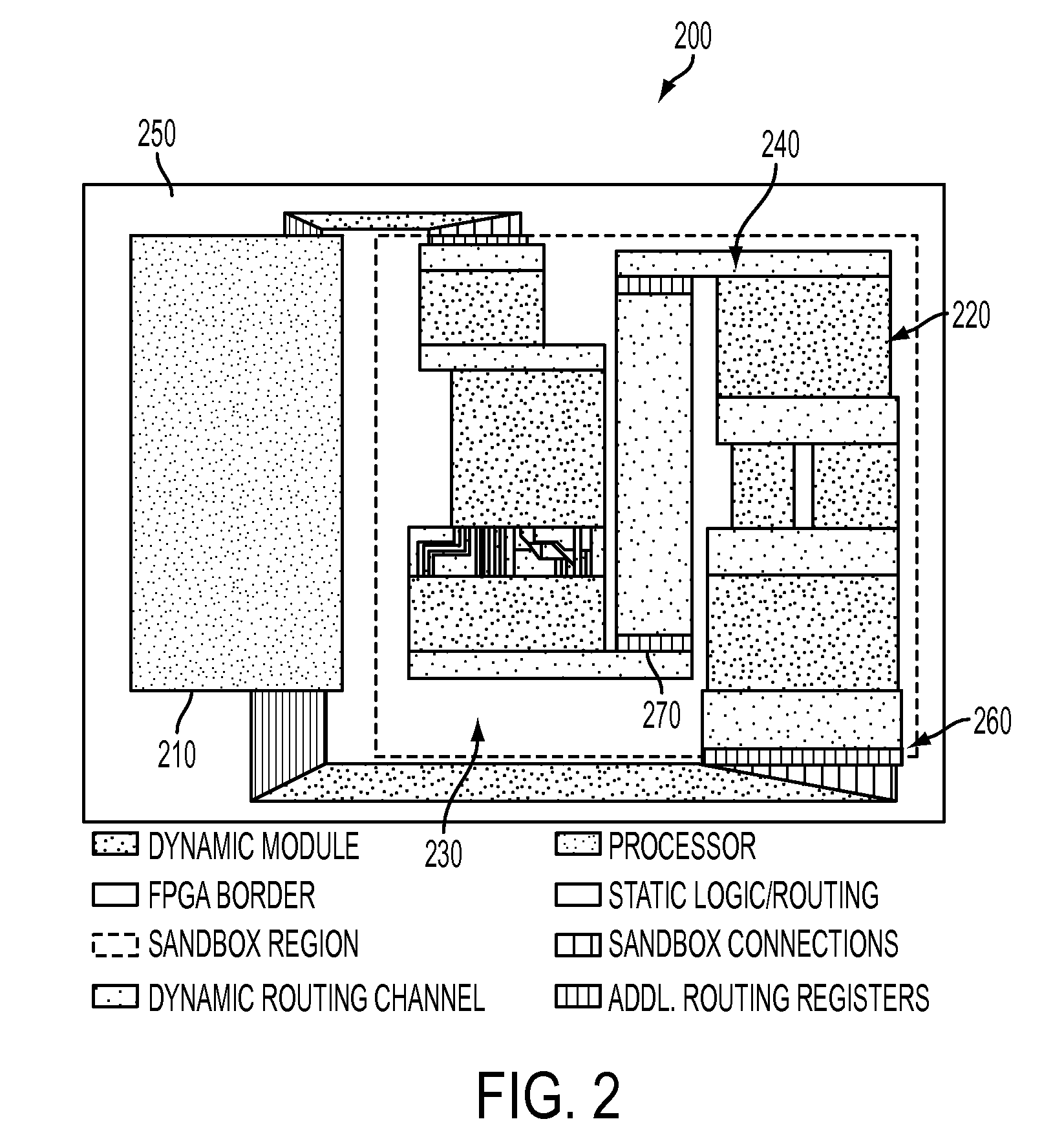

Wires on demand: run-time communication synthesis for reconfigurable computing

Inactive | US7902866B1 | Flexible allocation | Solid-state devices | CAD circuit design | Computer module | Reconfigurable computing

A method, and system, for reconfiguring an FPGA which has a static region and a dynamic region is provided. The method includes the steps of: (a) providing a dynamic module library having information of predetermined modules; (b) receiving a reconfiguration request external to the FPGA; (c) computing reconfiguration of the FPGA at a predetermined location using predetermined module information from the dynamic module library and the reconfiguration request, and generating reconfigurable partial bitstreams; and (d) sending partial bitstreams from the predetermined location to the FPGA to perform the reconfiguration.

Owner:LUNA INNOVATIONS +1

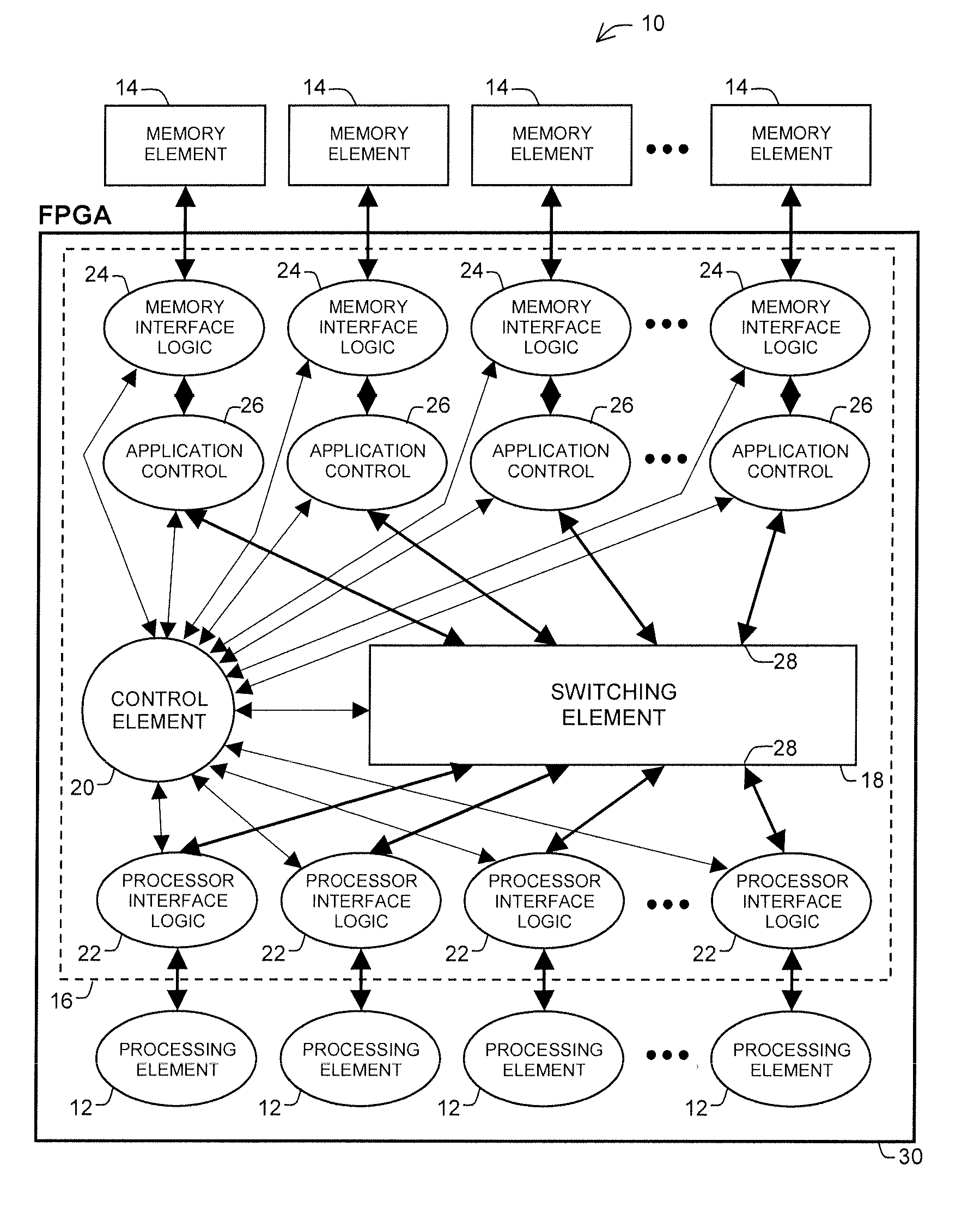

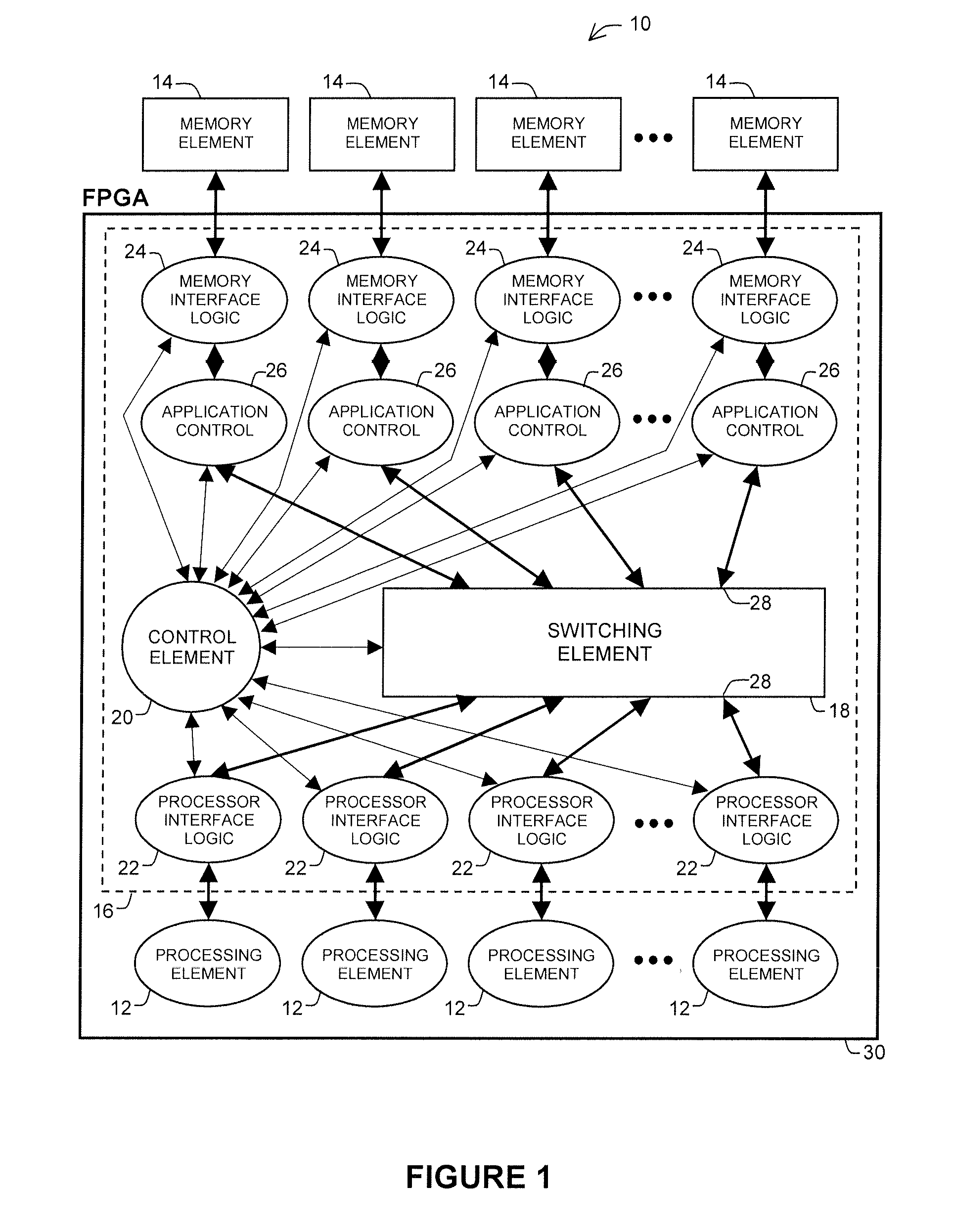

Switch-based parallel distributed cache architecture for memory access on reconfigurable computing platforms

Inactive | US20090178043A1 | Well formed | General purpose stored program computer | Multiprogramming arrangements | Memory interface | Distributed cache

A computing architecture comprises a plurality of processing elements to perform data processing calculations, a plurality of memory elements to store the data processing results, and a reconfigurable interconnect network to couple the processing elements to the memory elements. The reconfigurable interconnect network includes a switching element, a control element, a plurality of processor interface units, a plurality of memory interface units, and a plurality of application control units. In various embodiments, the processing elements and the interconnect network may be implemented in a field-programmable gate array.

Owner:L 3 COMM INTEGRATED SYST

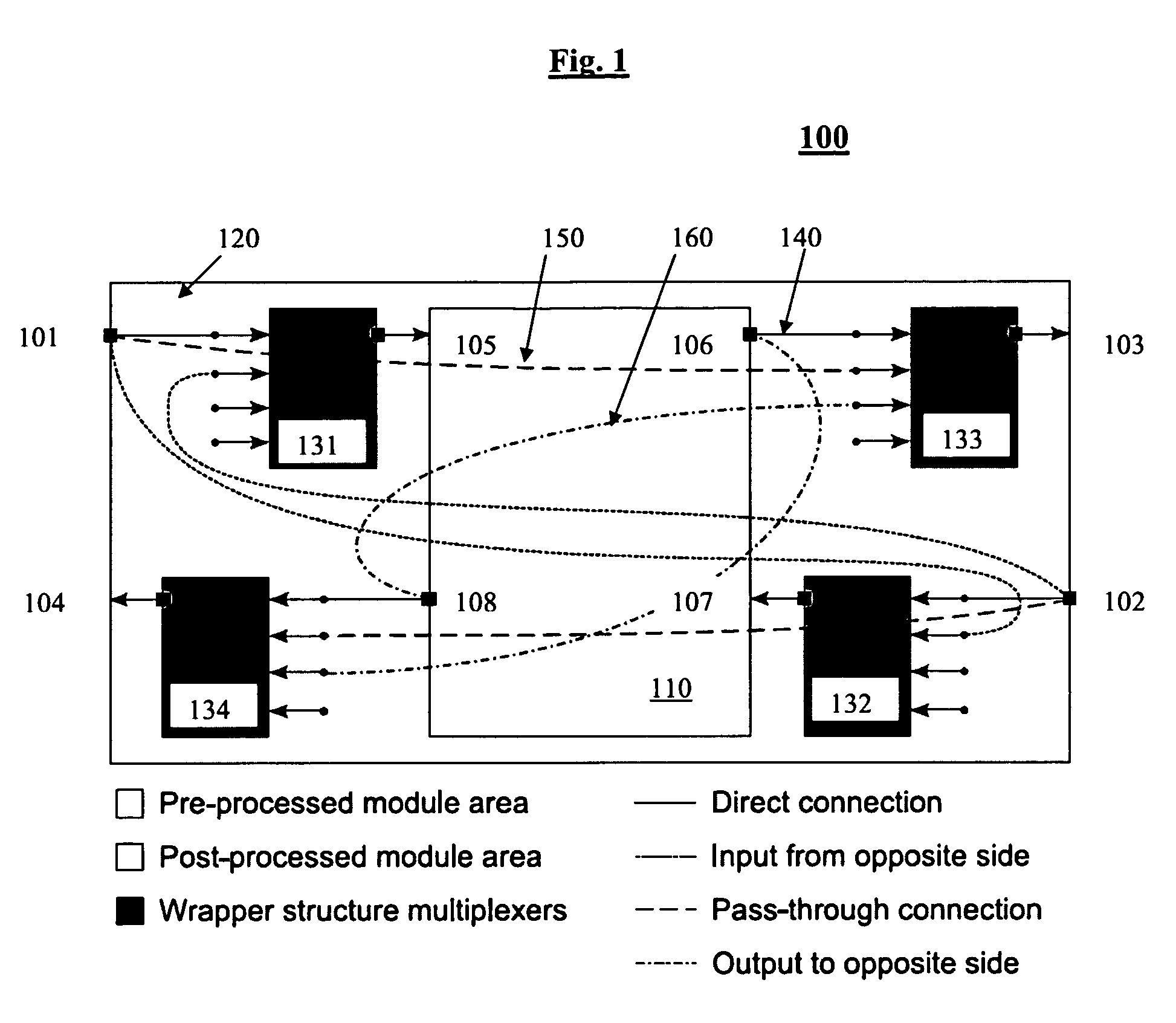

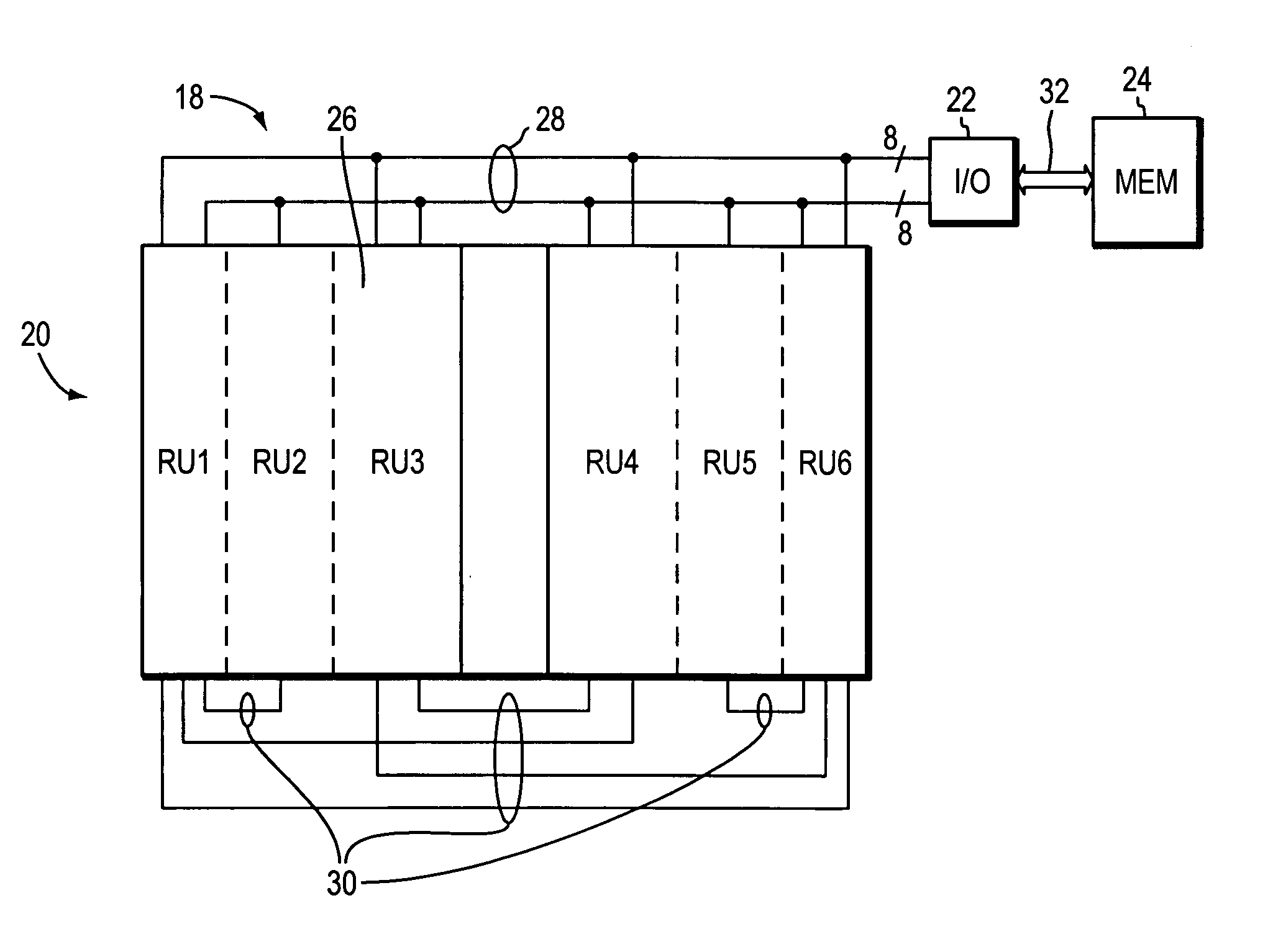

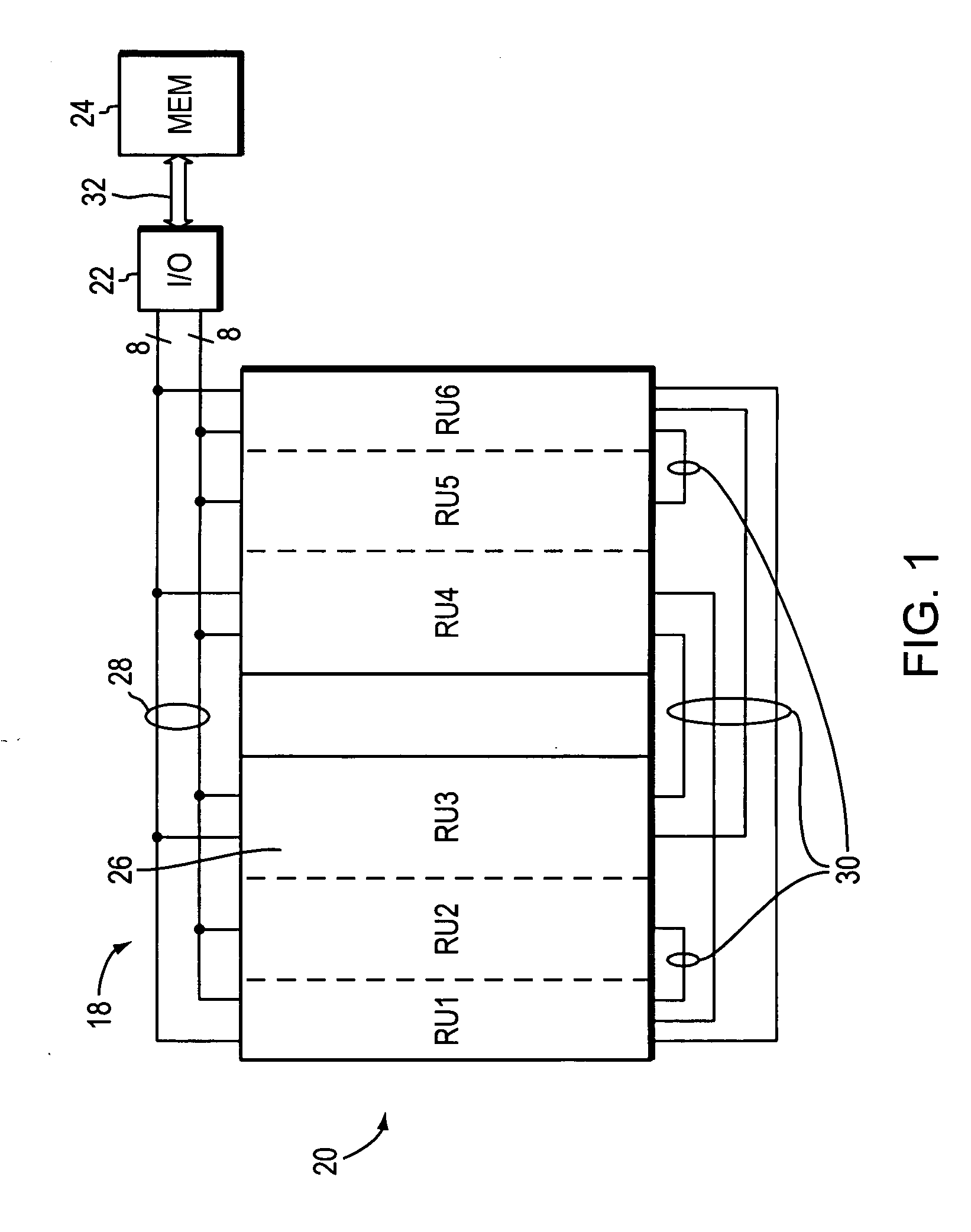

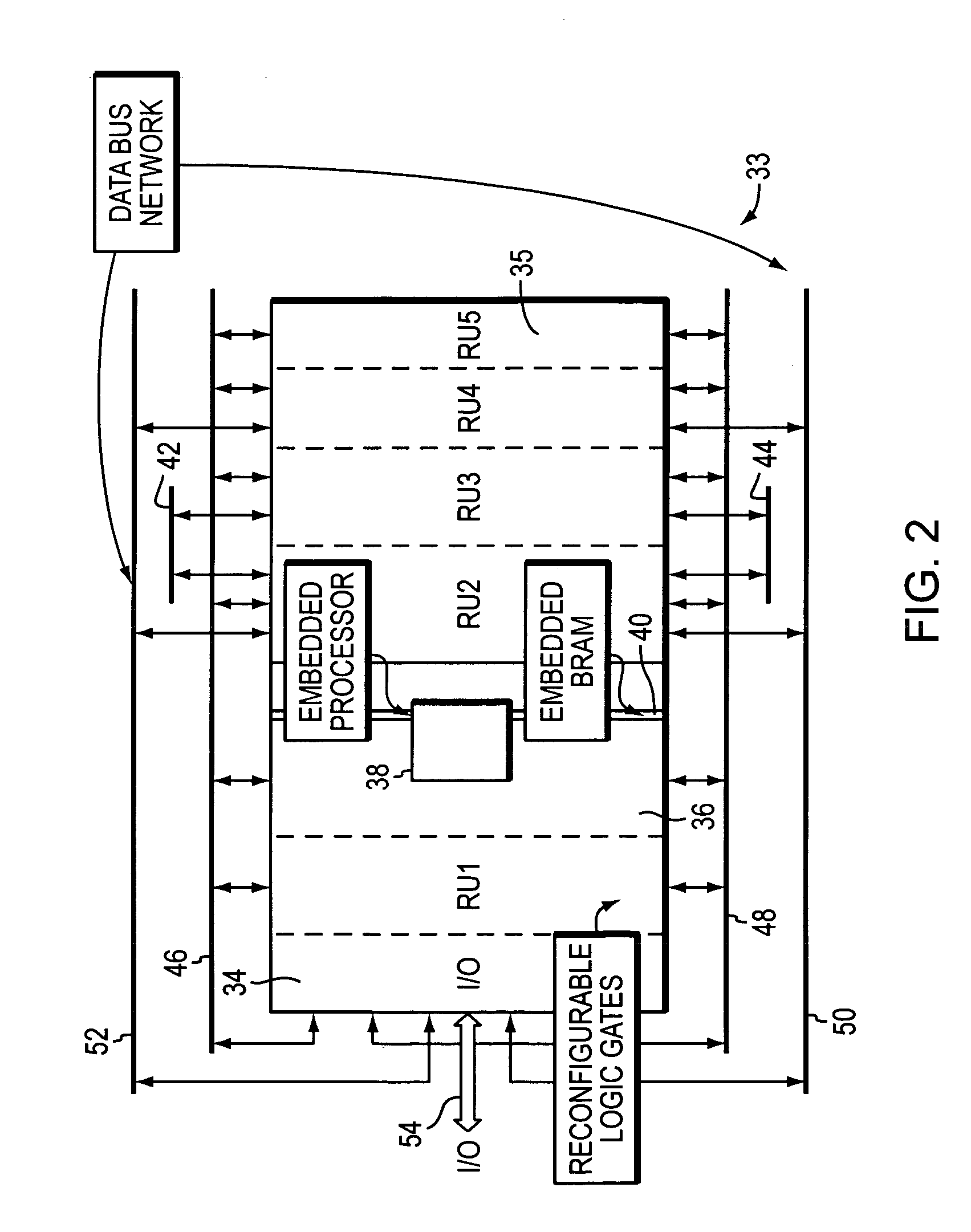

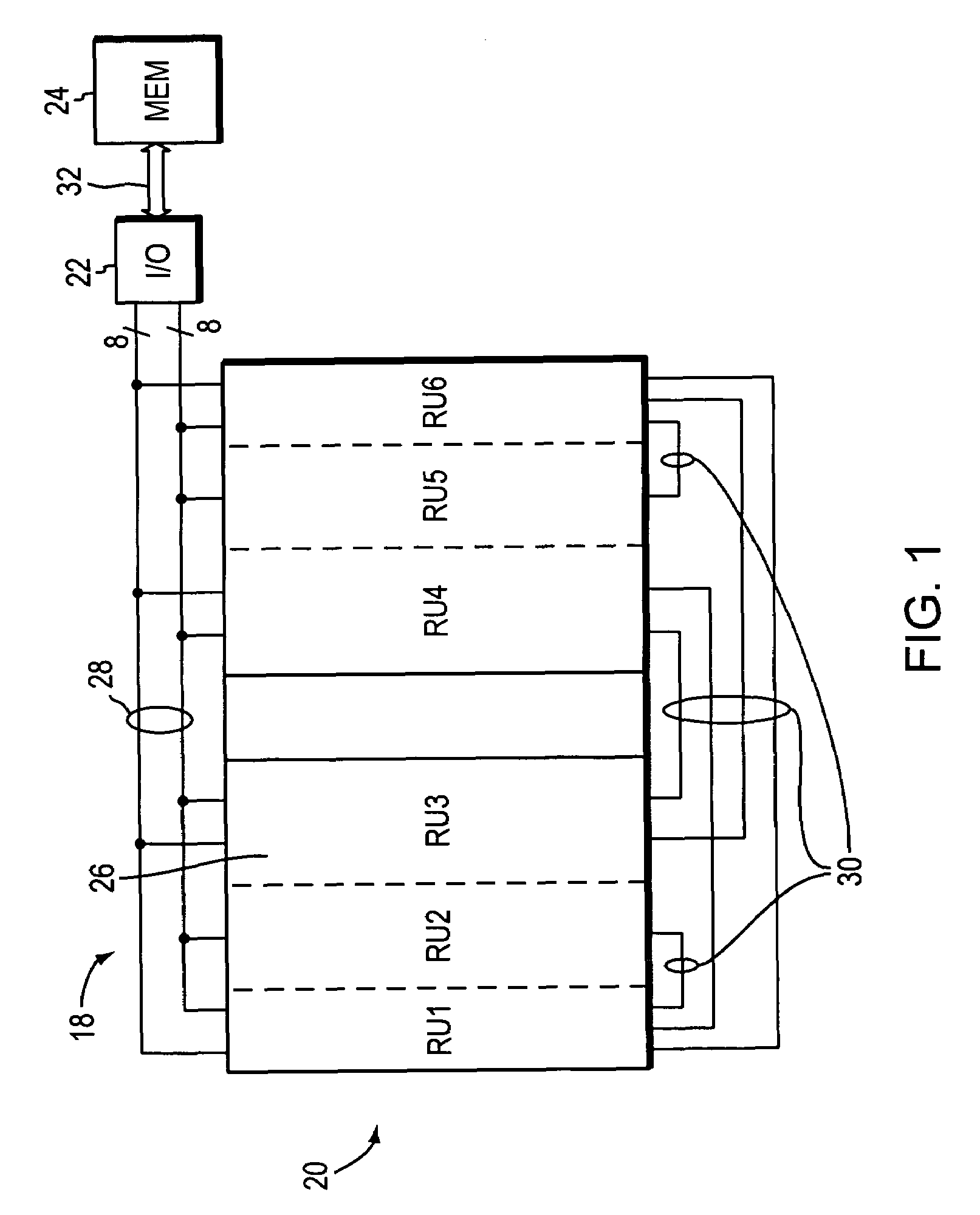

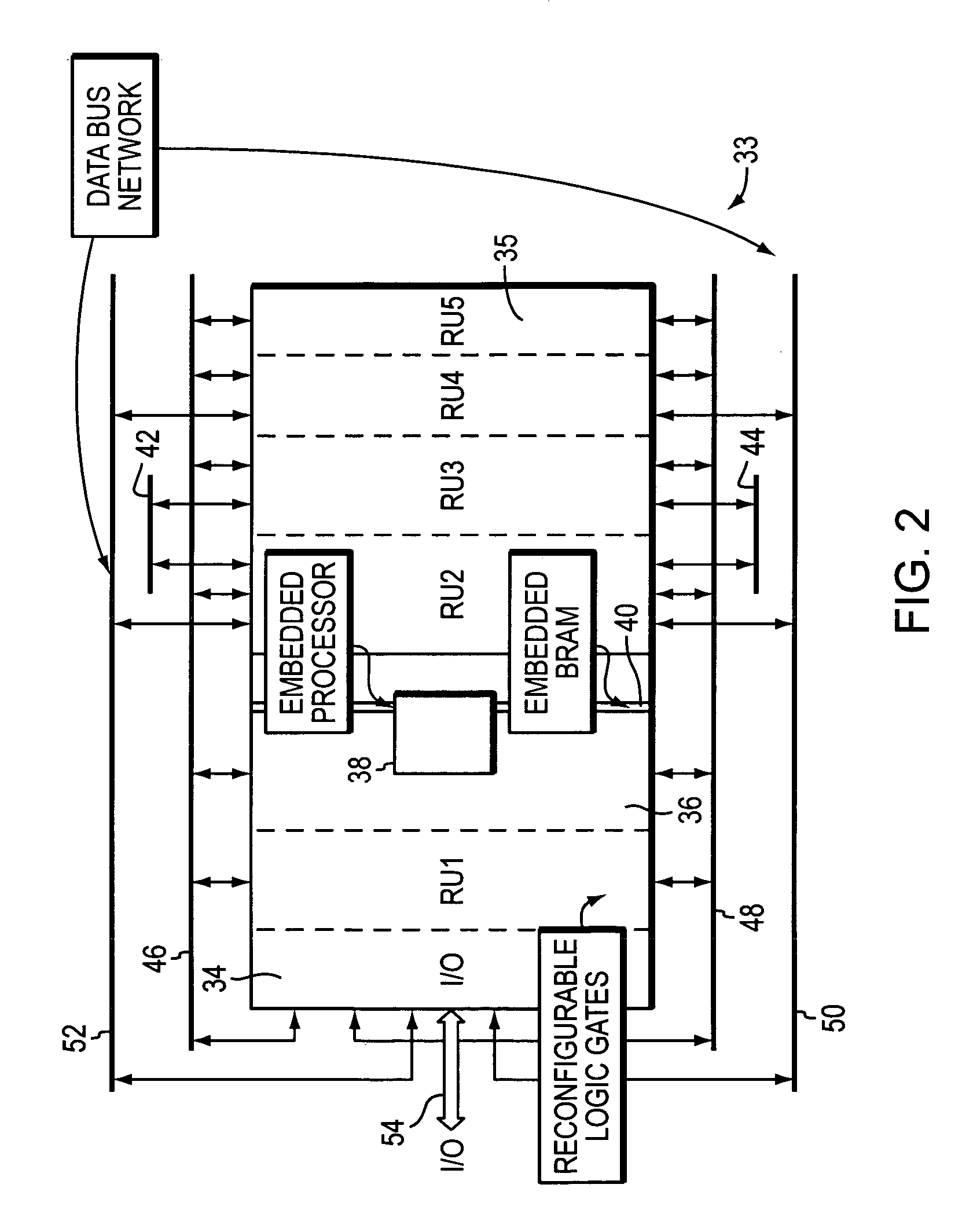

Systems and methods for reconfigurable computing

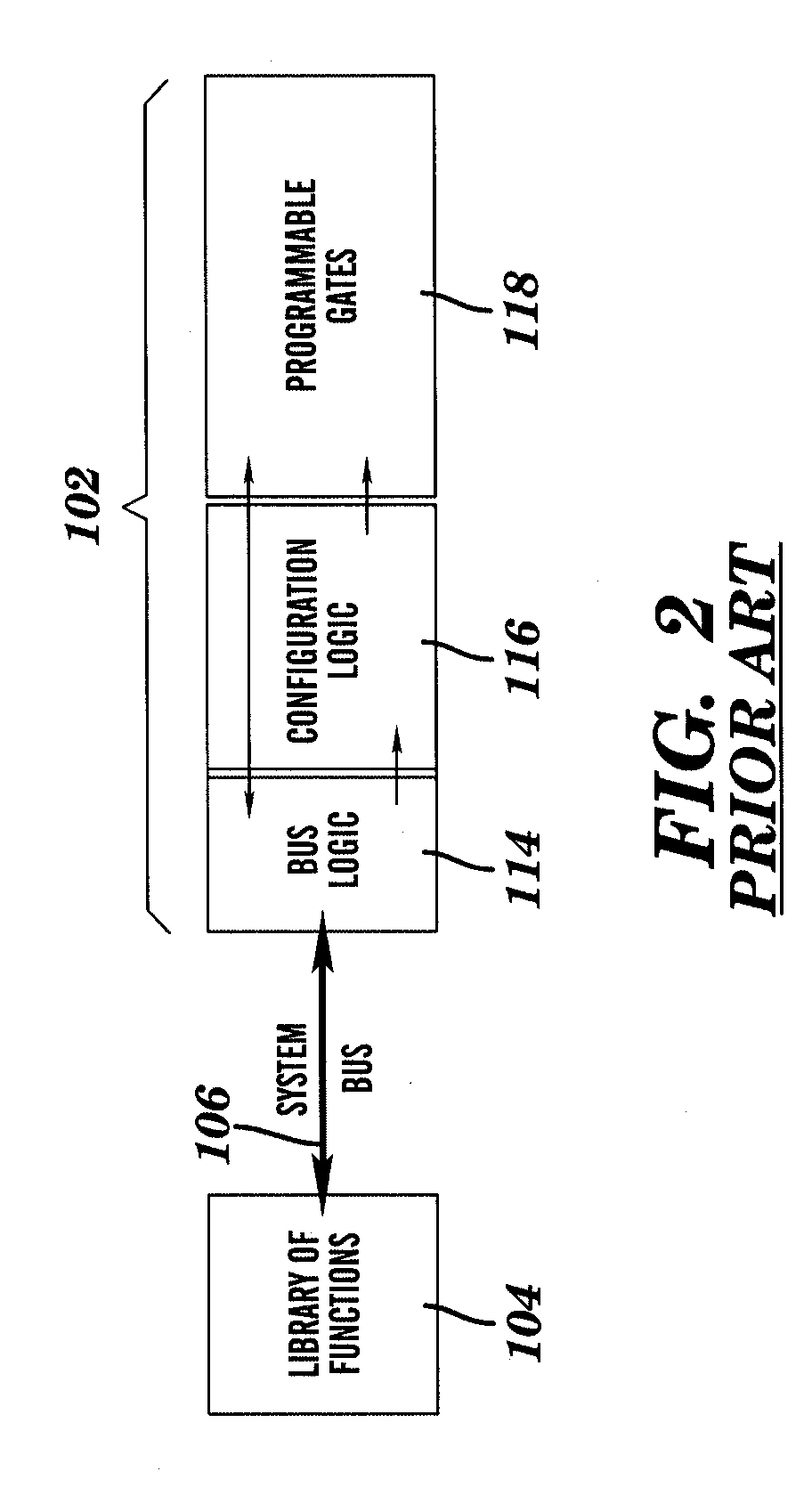

Inactive | US20050235070A1 | Architecture with single central processing unit | Input/output processes for data processing | Reconfigurable computing | Bus mastering

A processing system includes a communication bus, a controller, an Input / Output (“I / O”) block, and reconfigurable logic segments (e.g., reconfigurable units). Individually reconfigurable logic segments are part of a single chip. A communication bus is in electrical communication with the logic segments. A first logic segment communicates to a second logic segment over the communication bus. Reconfiguration can partition a first logic segment into a second and a third logic segment where the smaller logic segments are in electrical communication with the communication bus. Resources are dynamically reallocated when reconfigurable units are either combined or partitioned. More specifically, both partitioning a logic segment and combining two or more logic segments can change the bus width allocated to a reconfigurable unit and the quantity of logic gates in the reconfigured unit. The embedded resources included in a logic segment can also change as a result of reconfiguration. The processing system provides high chip utilization throughout the chip's operation.

Owner:CHARLES STARK DRAPER LABORATORY

Systems and methods for reconfigurable computing

Inactive | US7669035B2 | Program control | Architecture with single central processing unit | Reconfigurable computing | Logic gate

A processing system includes a communication bus, a controller, an Input / Output (“I / O”) block, and reconfigurable logic segments (e.g., reconfigurable units). Individually reconfigurable logic segments are part of a single chip. A communication bus is in electrical communication with the logic segments. A first logic segment communicates to a second logic segment over the communication bus. Reconfiguration can partition a first logic segment into a second and a third logic segment where the smaller logic segments are in electrical communication with the communication bus. Resources are dynamically reallocated when reconfigurable units are either combined or partitioned. More specifically, both partitioning a logic segment and combining two or more logic segments can change the bus width allocated to a reconfigurable unit and the quantity of logic gates in the reconfigured unit. As a result of a reconfiguration, a logic segment's embedded resources can change. The processing system provides high chip utilization throughout the chip's operation.

Owner:CHARLES STARK DRAPER LABORATORY

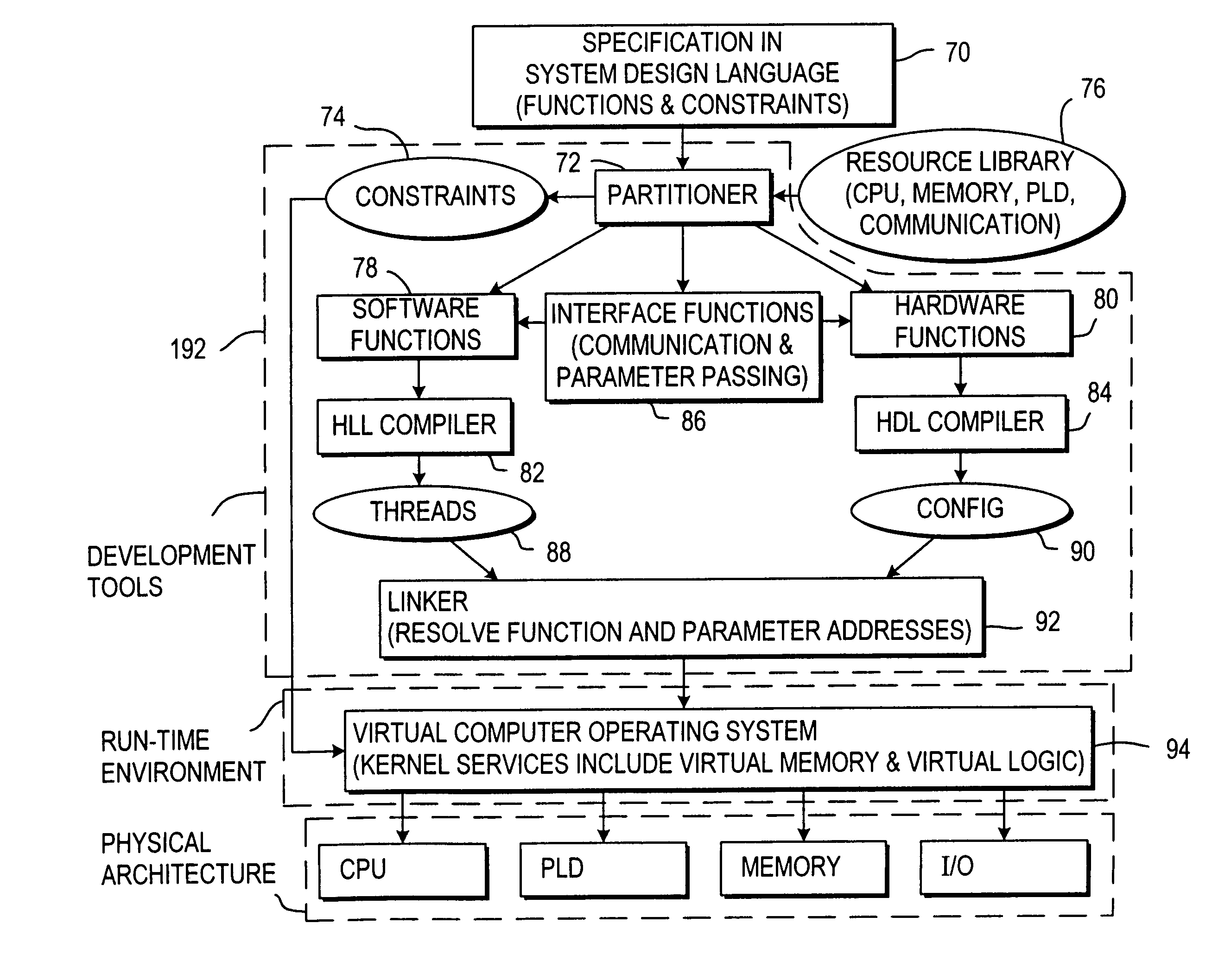

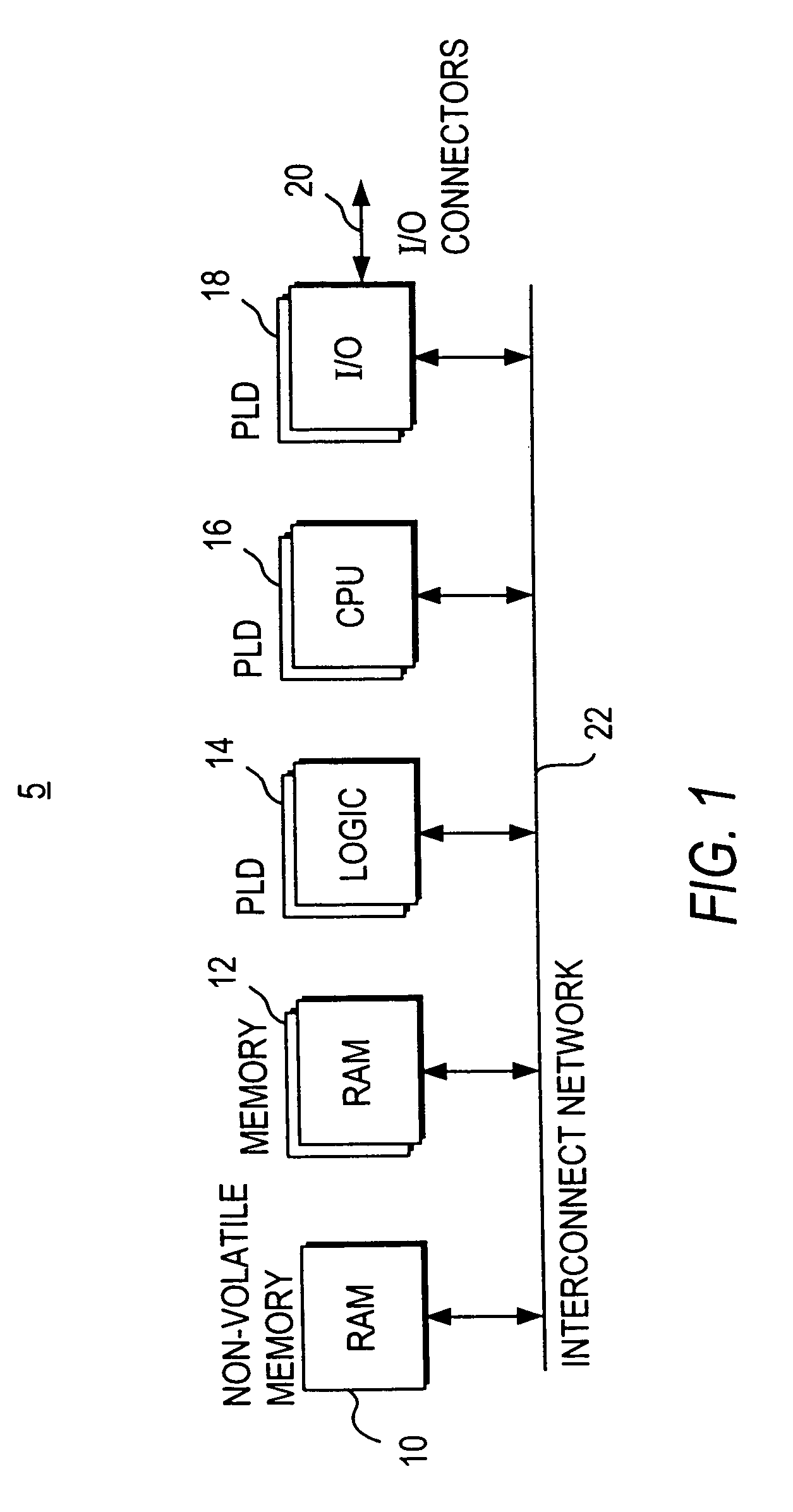

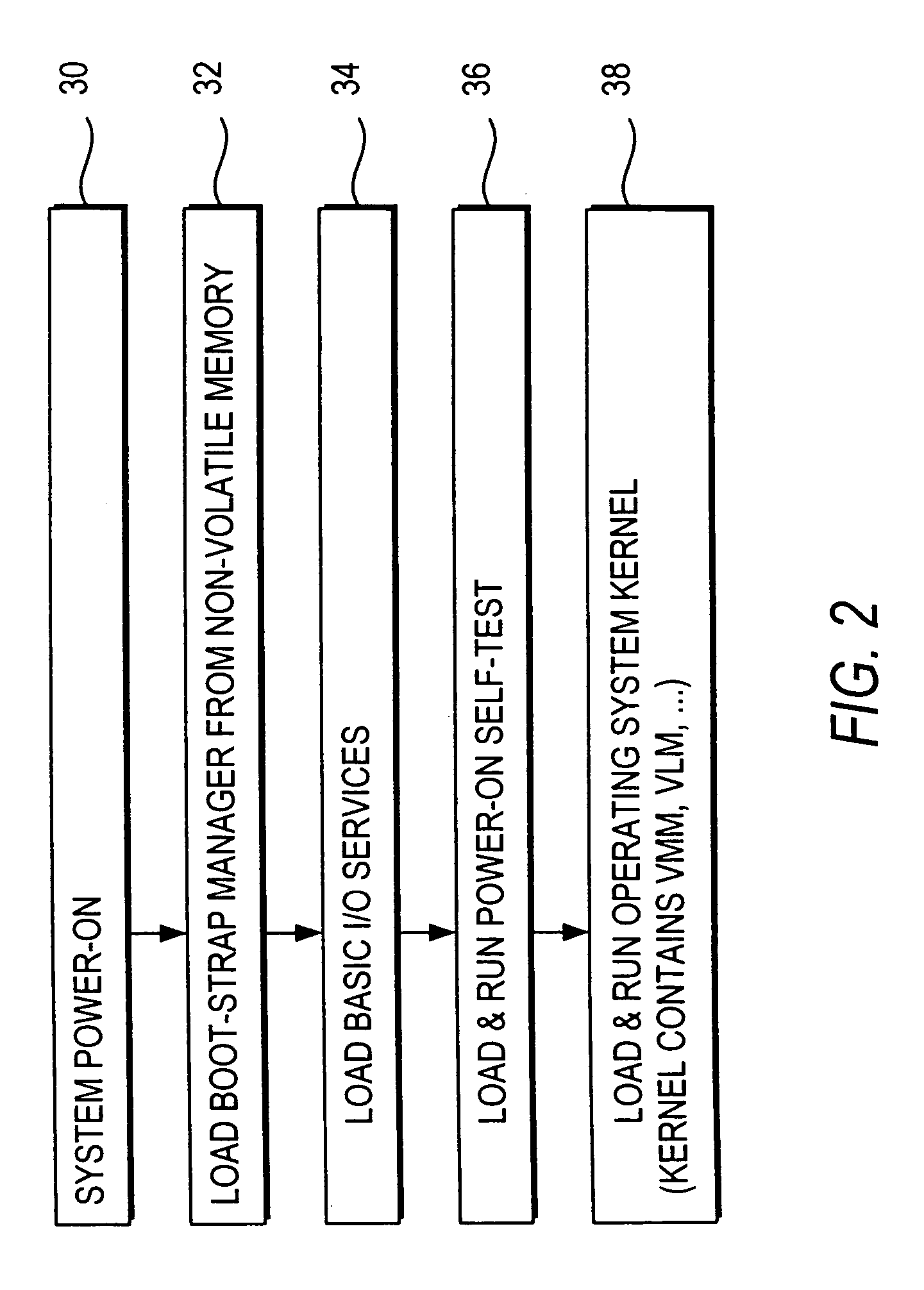

Method for managing resources in a reconfigurable computer having programmable logic resources where automatically swapping configuration data between a secondary storage device and the programmable logic resources

Inactive | US7171548B2 | Improve system performance | Improve performance | Error detection/correction | Data resetting | Operational system | Systems design

A reconfigurable computer system based on programmable logic is provided. A system design language may be used to write applications. The applications may be automatically partitioned into software components and programmable logic resource components. A virtual computer operating system may be provided to schedule and allocate system resources. The virtual computer operating system may include a virtual logic manager that may increase the capabilities of programmable logic resources in the system.

Owner:ALTERA CORP

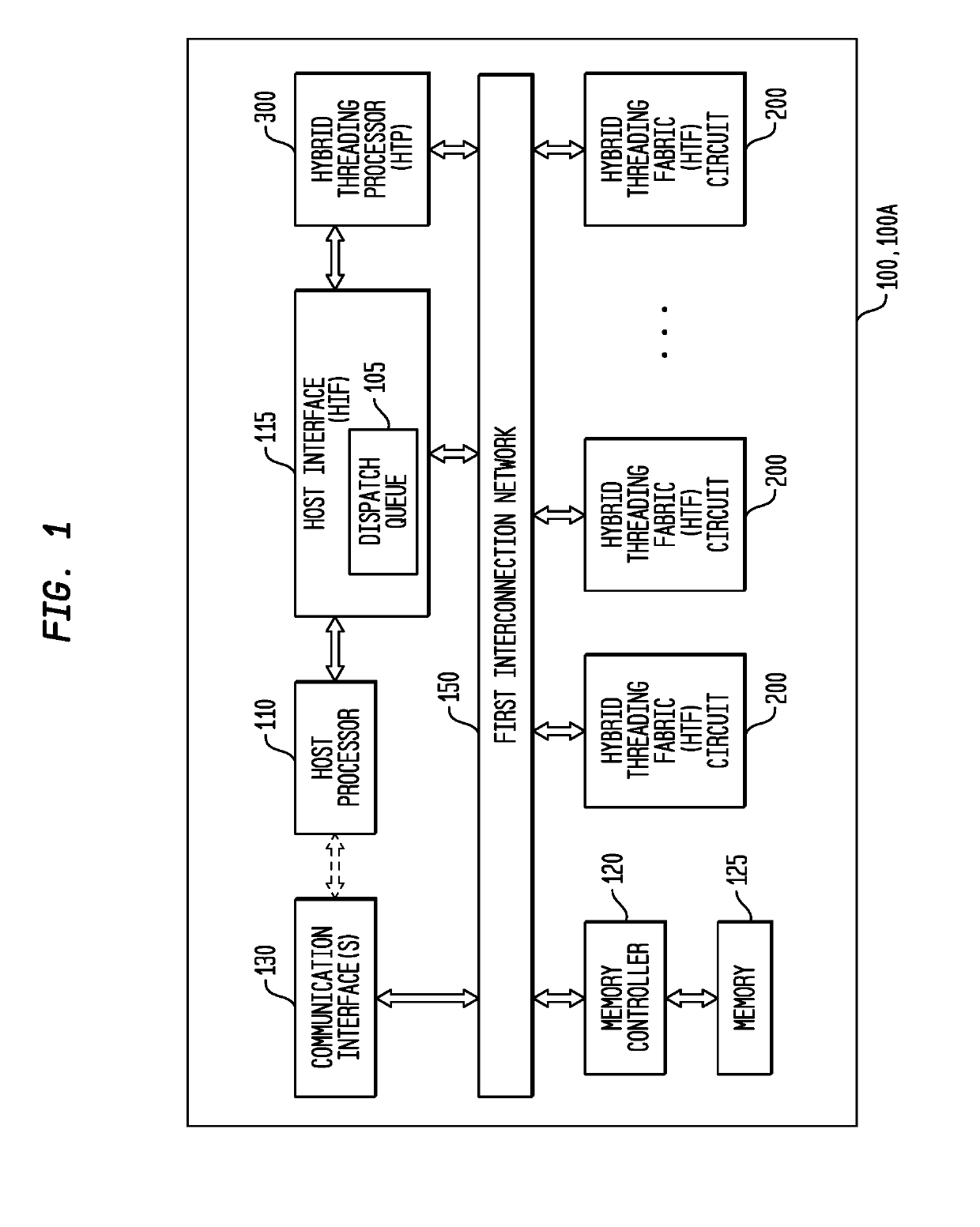

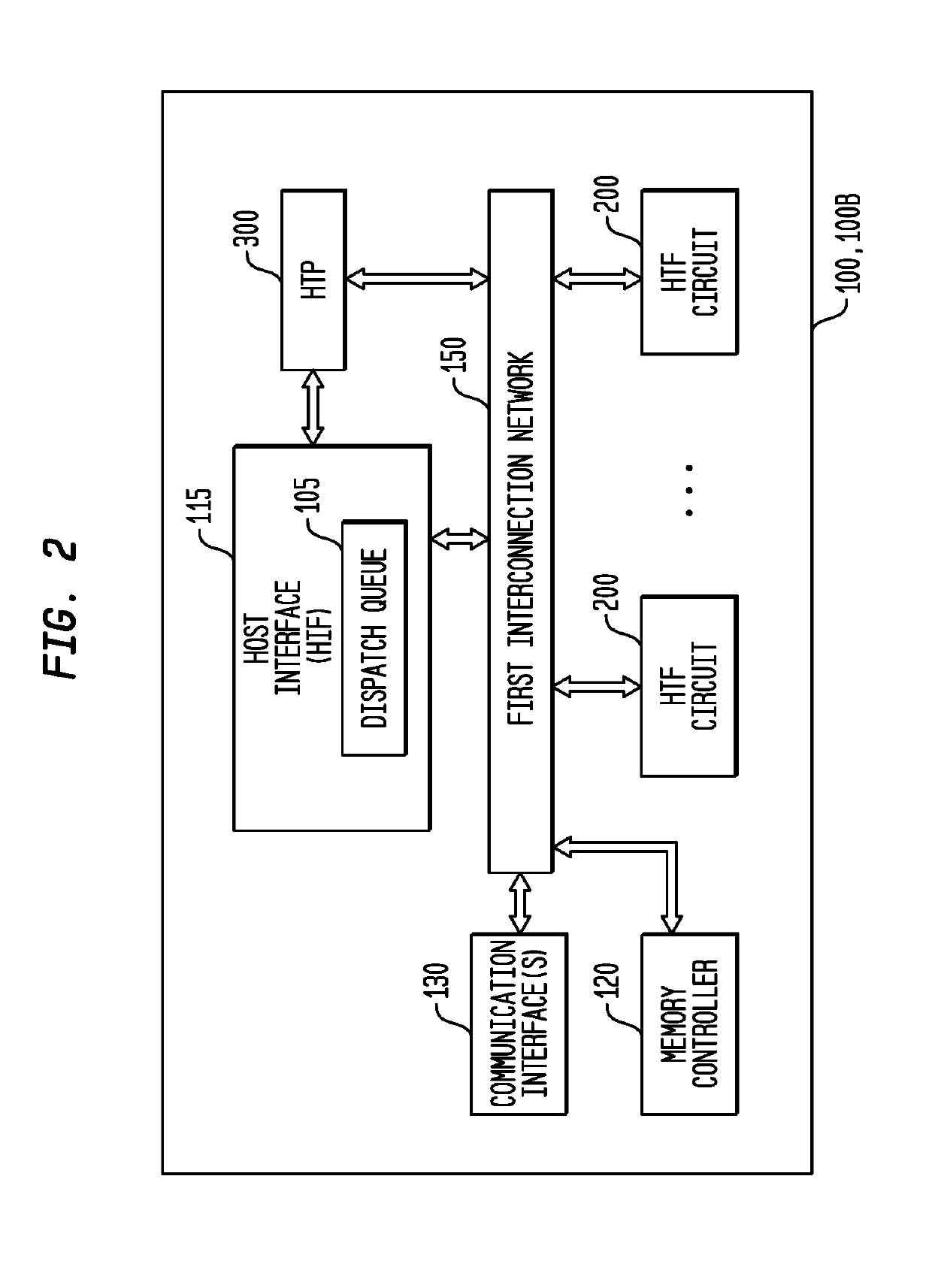

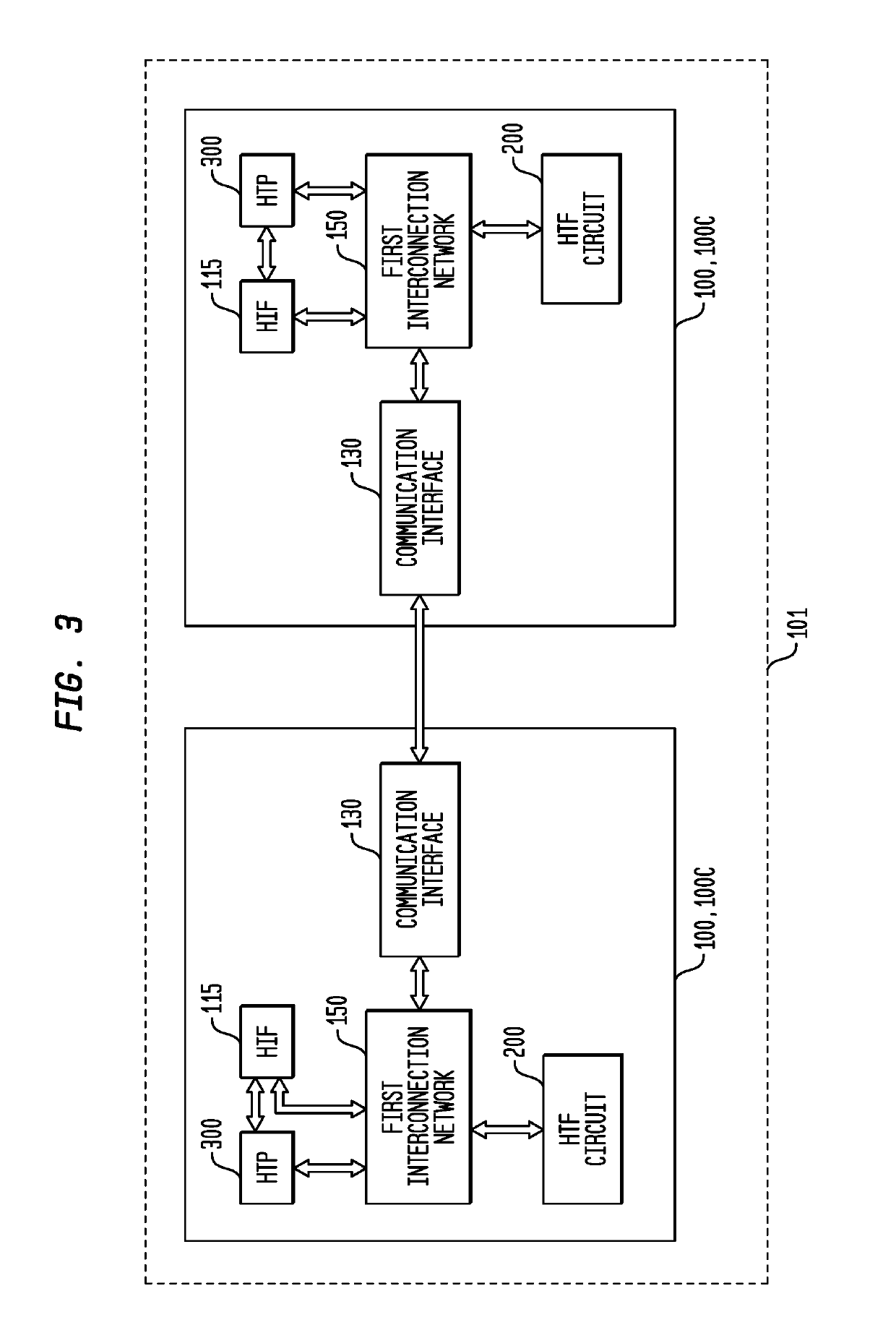

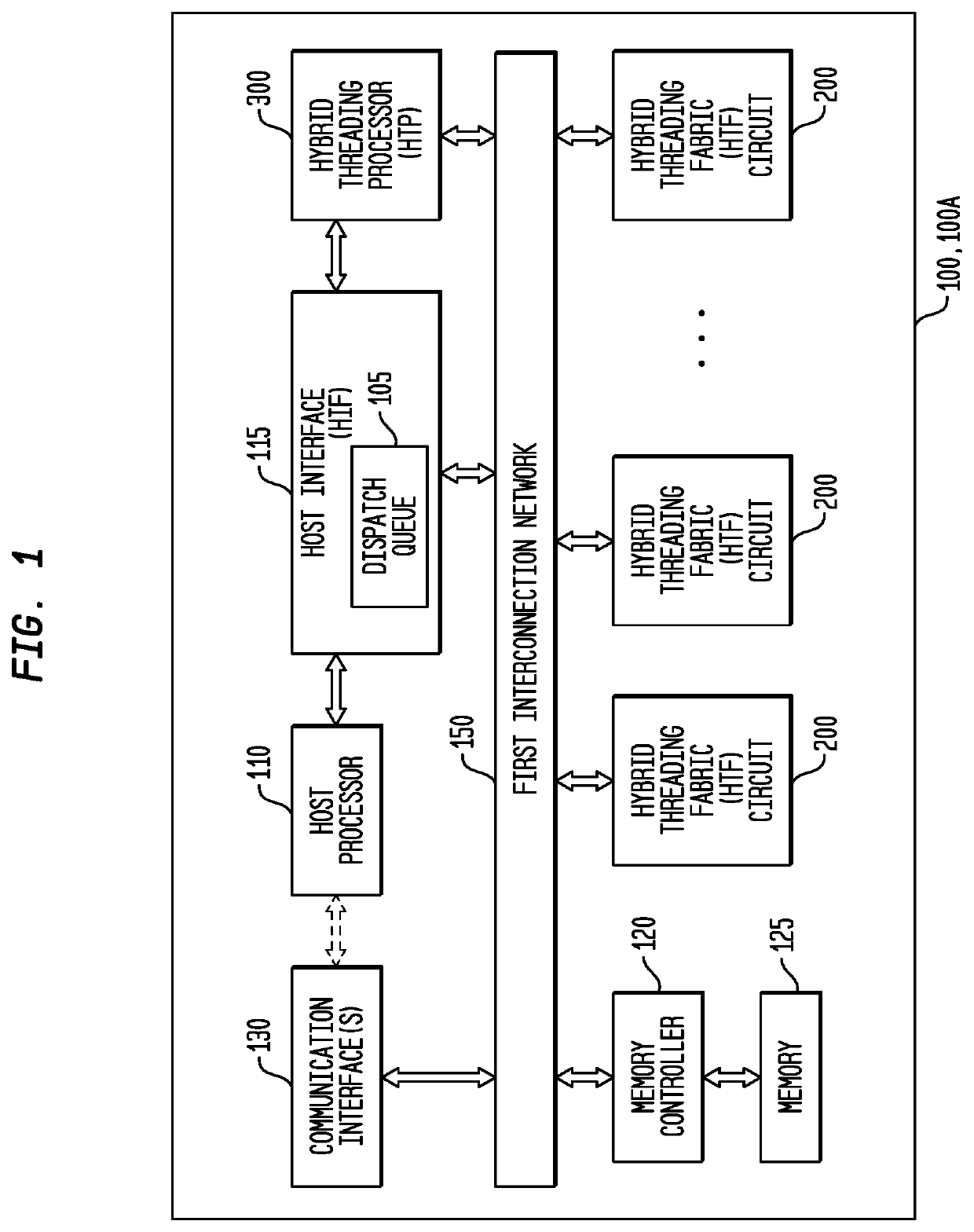

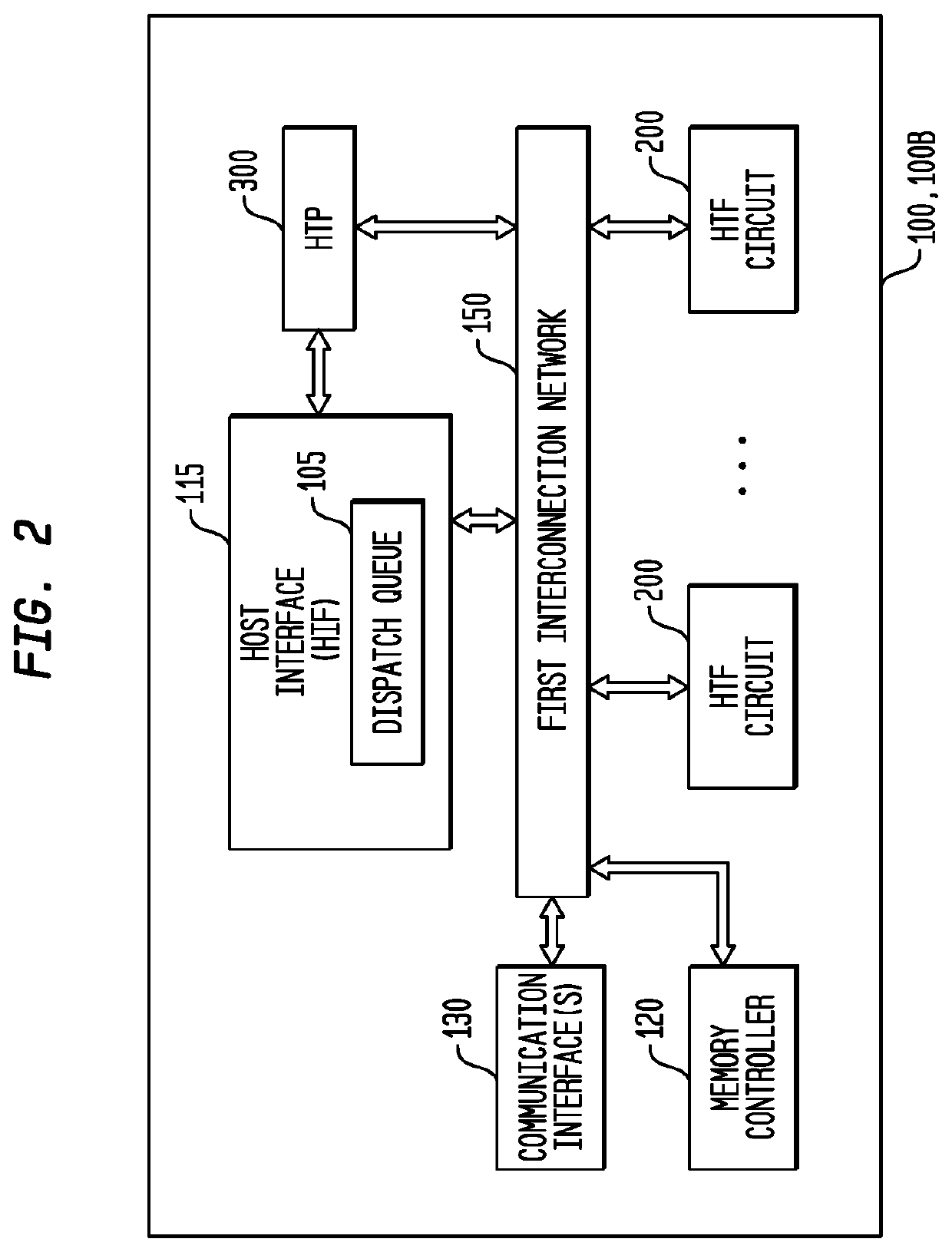

Conditional Branching Control for a Multi-Threaded, Self-Scheduling Reconfigurable Computing Fabric

Active | US20190303154A1 | Fast execution | Register arrangements | Interprogram communication | Instruction memory | Interconnection

Representative apparatus, method, and system embodiments are disclosed for configurable computing. A representative system includes an interconnection network; a processor; and a plurality of configurable circuit clusters. Each configurable circuit cluster includes a plurality of configurable circuits arranged in an array; a synchronous network coupled to each configurable circuit of the array; and an asynchronous packet network coupled to each configurable circuit of the array. A representative configurable circuit includes a configurable computation circuit and a configuration memory having a first, instruction memory storing a plurality of data path configuration instructions to configure a data path of the configurable computation circuit; and a second, instruction and instruction index memory storing a plurality of spoke instructions and data path configuration instruction indices for selection of a master synchronous input, a current data path configuration instruction, and a next data path configuration instruction for a next configurable computation circuit.

Owner:MICRON TECH INC

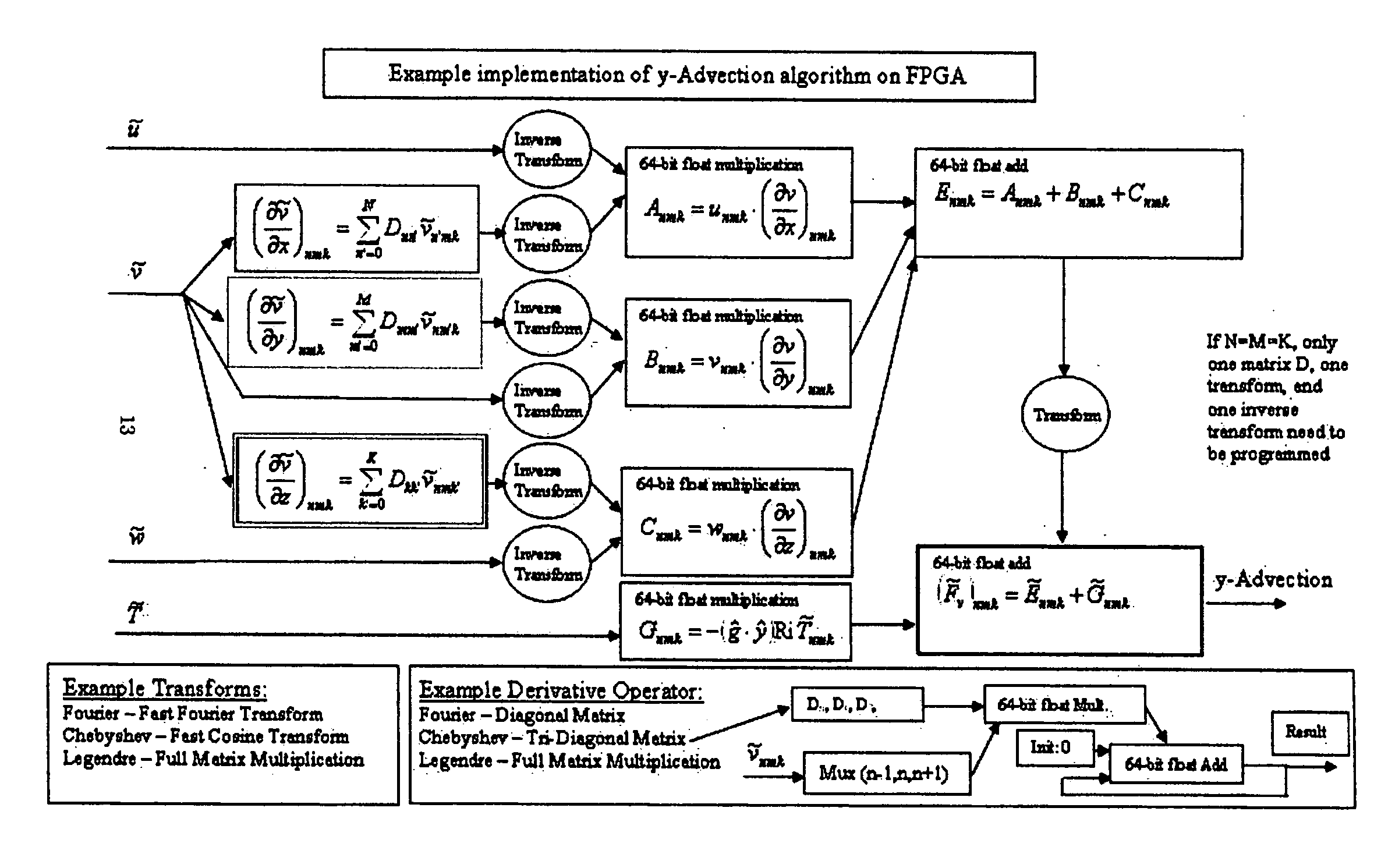

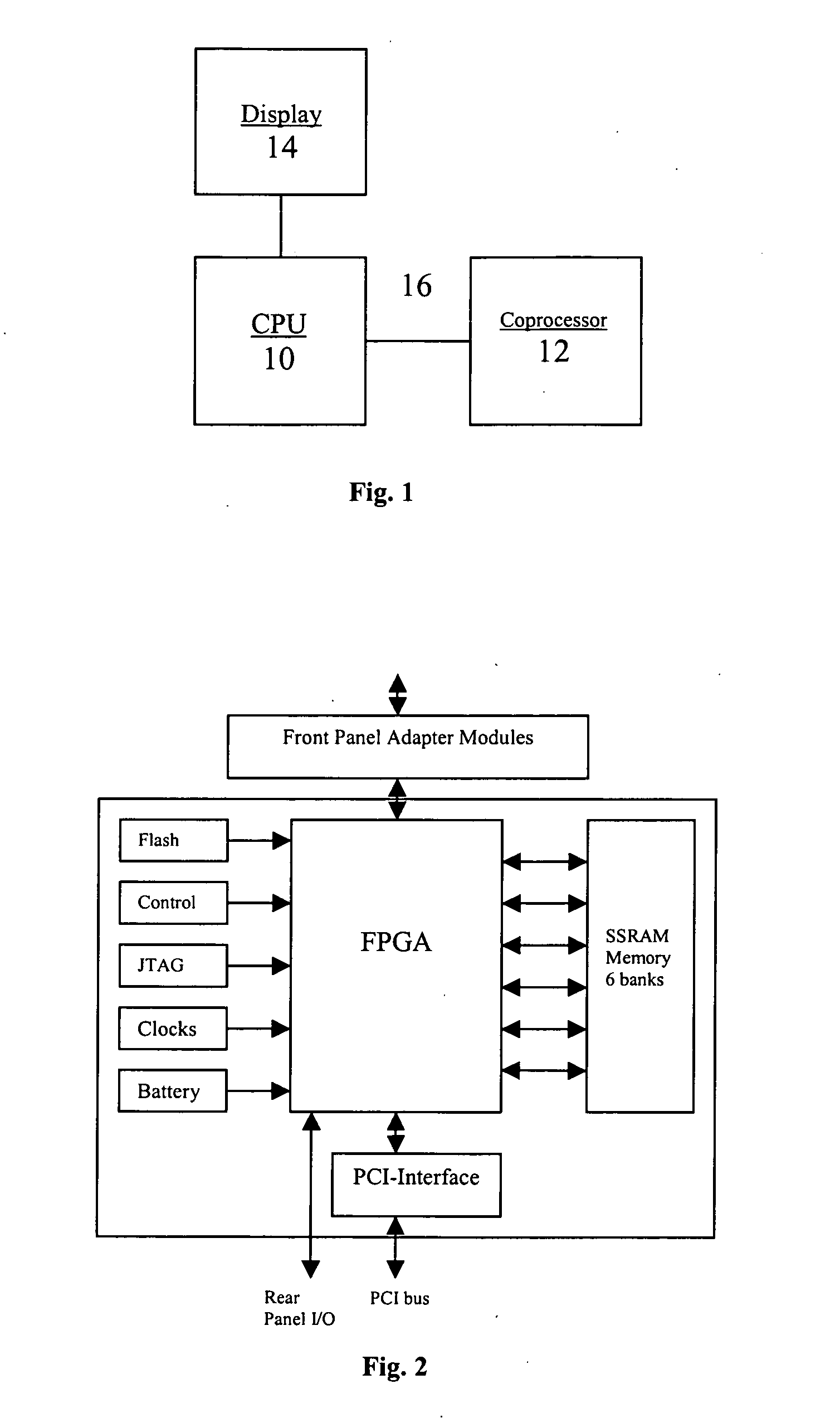

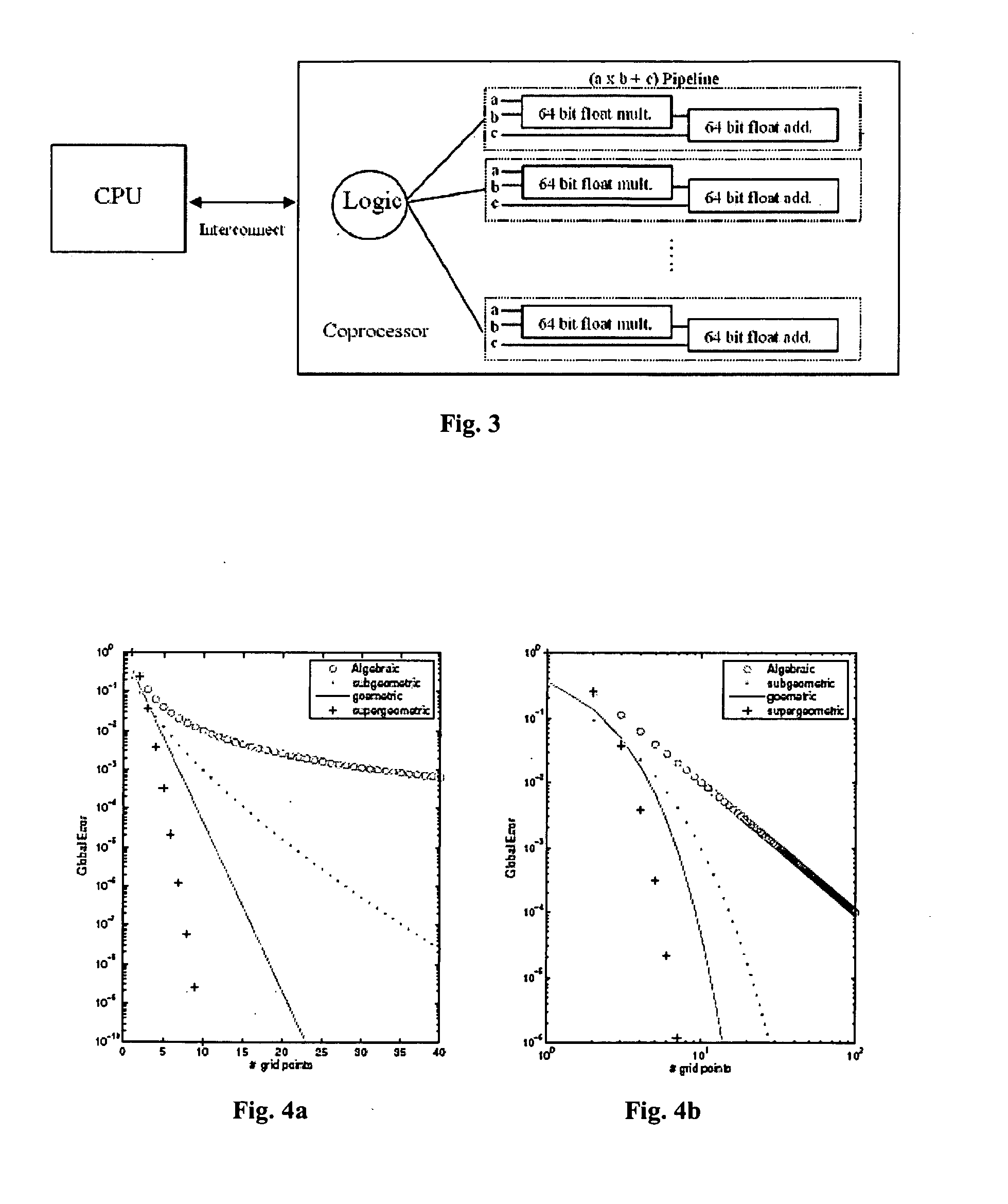

Computational fluid dynamics (CFD) coprocessor-enhanced system and method

Inactive | US20070219766A1 | Cost-effective | Wide variety of us | Design optimisation/simulation | Program control | Coprocessor | Display device

The present invention provides a system, method and product for porting computationally complex CFD calculations to a coprocessor in order to decrease overall processing time. The system comprises a CPU in communication with a coprocessor over a high speed interconnect. In addition, an optional display may be provided for displaying the calculated flow field. The system and method include porting variables of governing equations from a CPU to a coprocessor; receiving calculated source terms from the coprocessor; and solving the governing equations at the CPU using the calculated source terms. In a further aspect, the CPU compresses the governing equations into a combination of higher and / or lower order equations with fewer variables for porting to the coprocessor. The coprocessor receives the variables, iteratively solves for source terms of the equations using a plurality of parallel pipelines, and transfers the results to the CPU. In a further aspect, the coprocessor decompresses the received variables, solves for the source terms, and then compresses the results for transfer to the CPU. The CPU solves the governing equations using the calculated source terms. In a further aspect, the governing equations are compressed and solved using spectral methods. In another aspect, the coprocessor includes a reconfigurable computing device such as a Field Programmable Gate Array (FPGA). In yet another aspect, the coprocessor may be used for specific applications such as Navier-Stokes equations or Euler equations and may be configured to more quickly solve non-linear advection terms with efficient pipeline utilization.

Owner:VIRGINIA TECH INTPROP INC

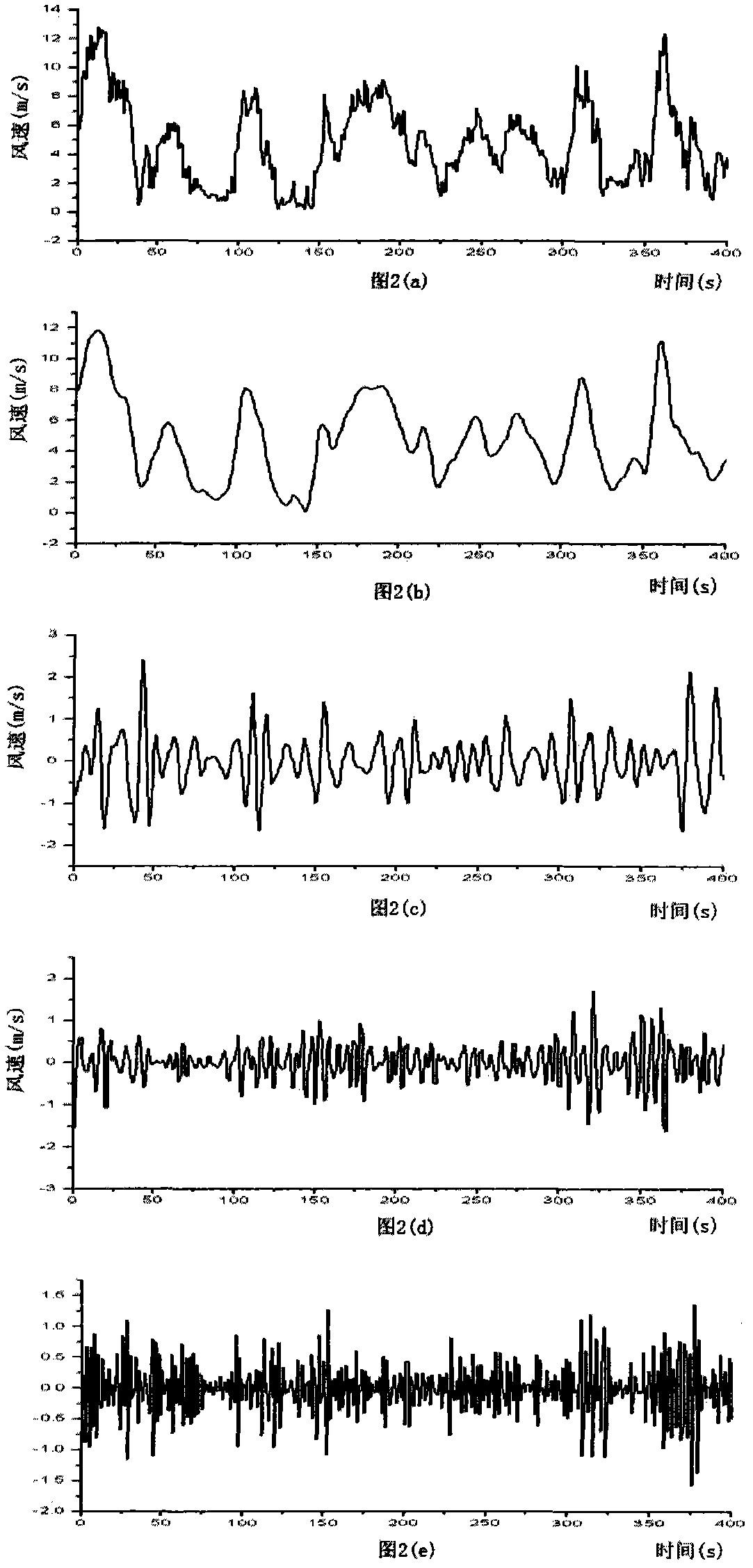

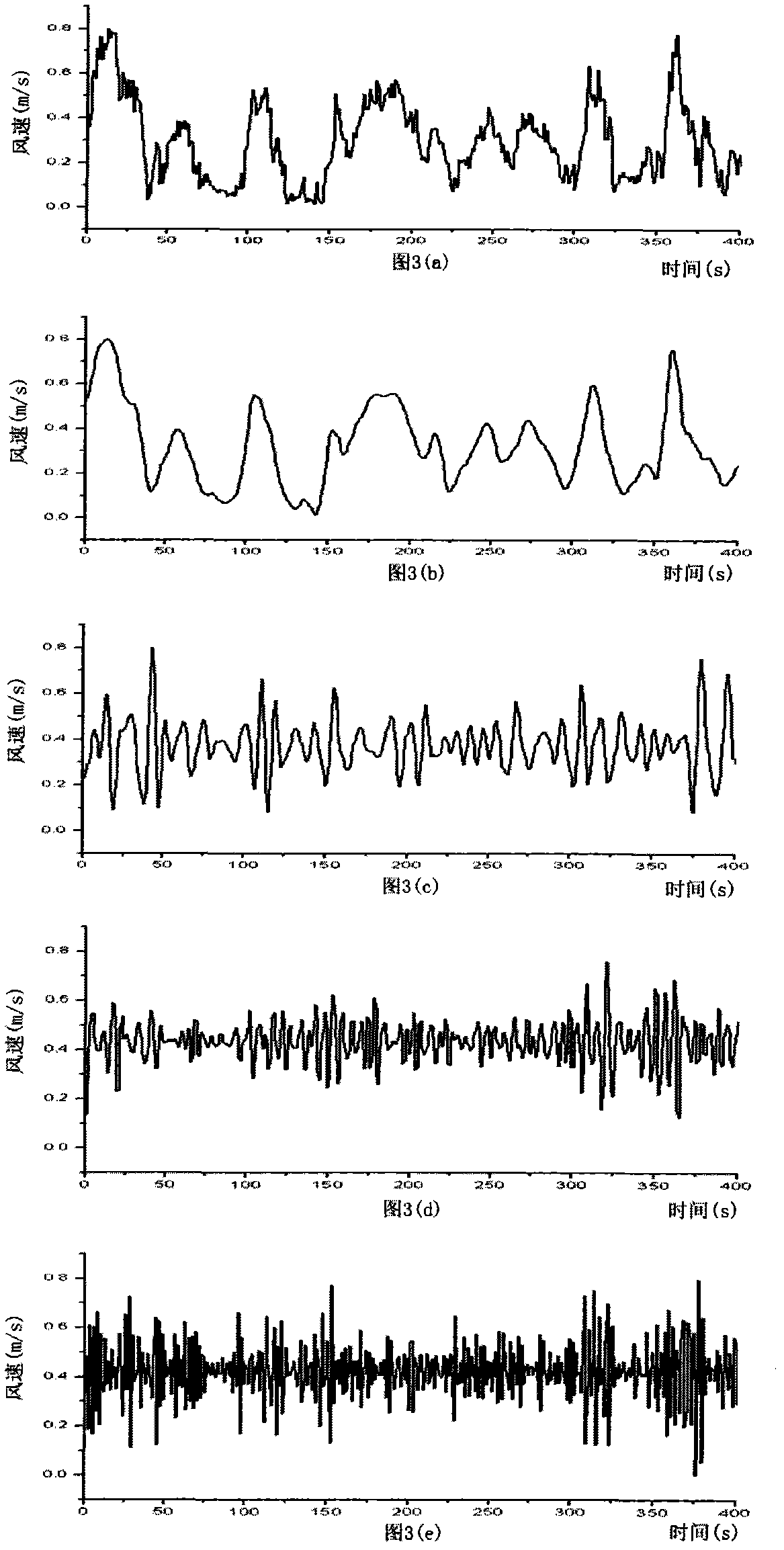

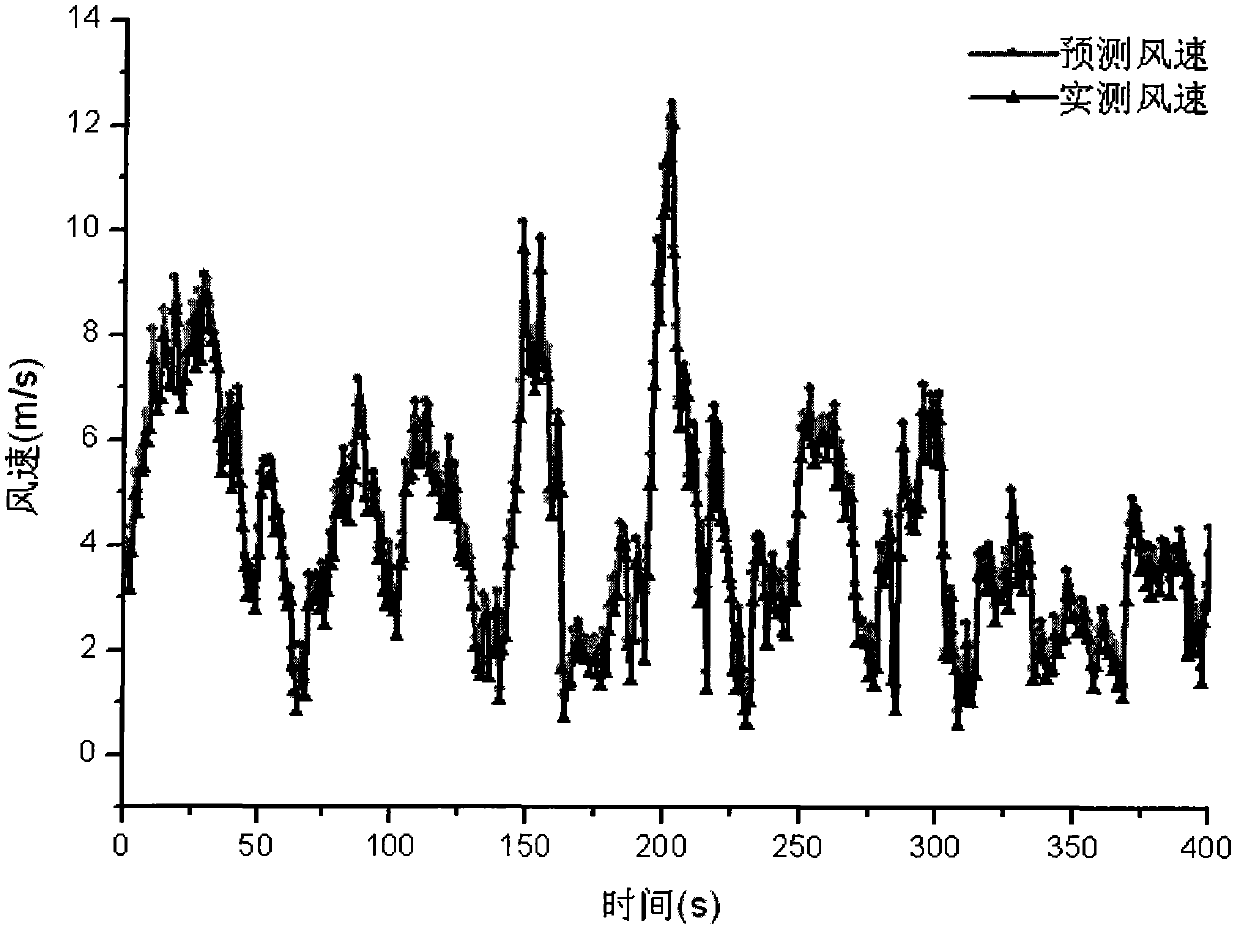

Method for forecasting wind speed of high speed railway line

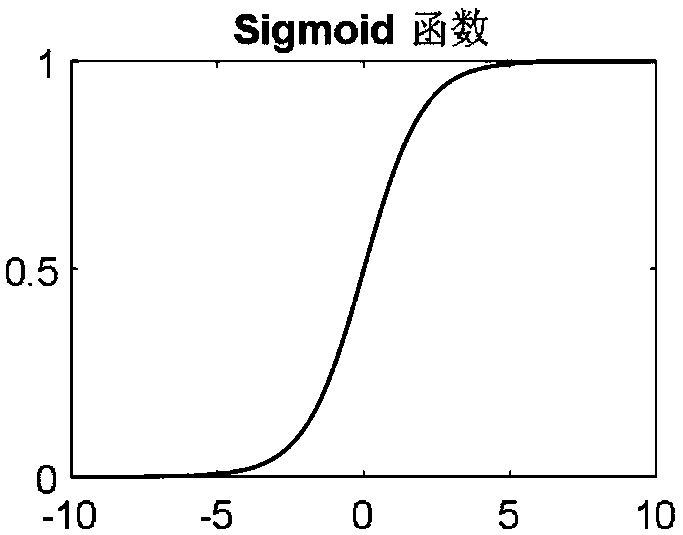

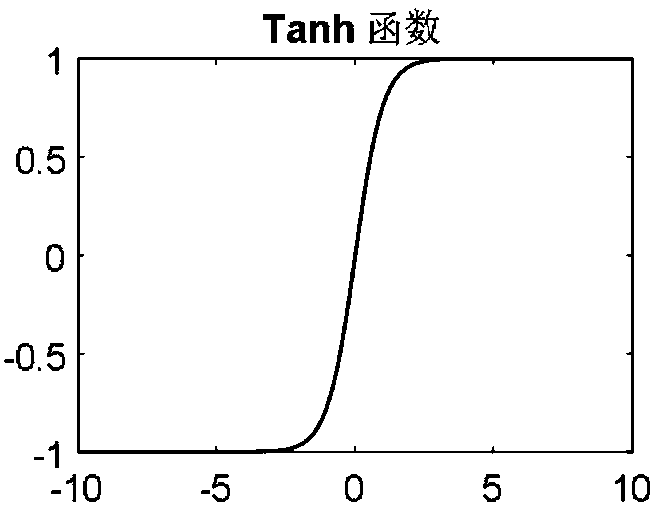

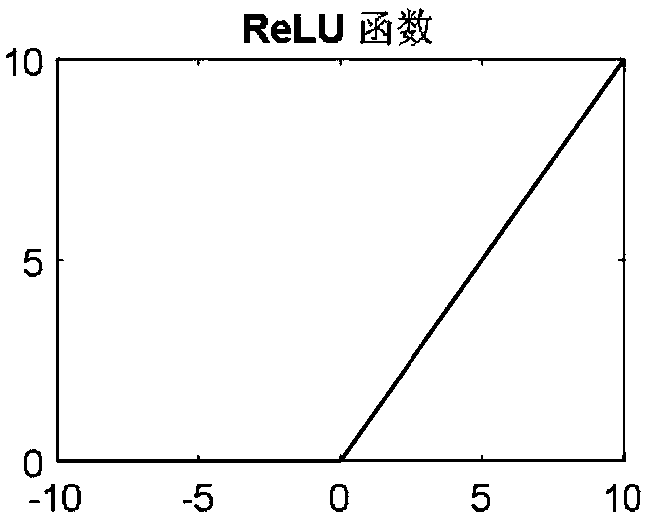

Inactive | CN102063641A | Solve problems in forecasting methods | Guaranteed accuracy | Neural learning methods | Decomposition | Reconfigurable computing

The invention discloses a method for forecasting wind speed of a high speed railway line by combining a wavelet analysis method and a BP (Back Propagation) neural network. In the invention, actually measured wind speed data is subjected to multilayered decomposition and reconstruction through the decomposition and reconstruction algorithms of wavelet analysis so as to decompose an original wind speed sequence into wind speed sequences of different scales. The method comprises the following steps: normalizing all layers of decomposed wind speed sequences; training the neural network by utilizing an error BP (Back Propagation) learning algorithm until it converges; respectively establishing corresponding forecasting models for the wind speed sequences of all the layers for forecasting; and finally, reconstructing the wind speed forecasting values of all the layers to obtain a forecasting value of the original wind speed sequence. The invention overcomes the defects of traditional methods, such as low forecasting precision and overly large time intervals, realizes wind speed forecasting for a high speed railway line under various climate types, has the advantages of short computing time and high forecasting precision, and provides a scientific reference for formulating operation control regulations of the high speed railway.
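The decompose-then-recombine step in this abstract can be illustrated with a one-level wavelet transform. The abstract does not say which wavelet is used, so the sketch below assumes the simplest choice, an unnormalized Haar transform (pairwise averages and differences), on an even-length sequence; the function names and sample values are hypothetical, and the per-layer neural network forecasting is not shown.

```python
def haar_decompose(signal):
    """Split an even-length sequence into a coarse layer and a detail layer.

    Assumption: unnormalized Haar wavelet (pairwise average and half-difference).
    """
    approx = [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / 2 for i in range(0, len(signal), 2)]
    return approx, detail


def haar_reconstruct(approx, detail):
    """Recombine the two layers into the original-resolution sequence."""
    out = []
    for a, d in zip(approx, detail):
        out.extend([a + d, a - d])
    return out


# Example: hypothetical wind speed samples in m/s.
wind = [4.0, 6.0, 10.0, 12.0]
approx, detail = haar_decompose(wind)
restored = haar_reconstruct(approx, detail)
```

In the workflow the abstract describes, each layer would be forecast separately and the per-layer forecasts would then be passed through the recombination step in place of `approx` and `detail`.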

Owner:PEKING UNIV

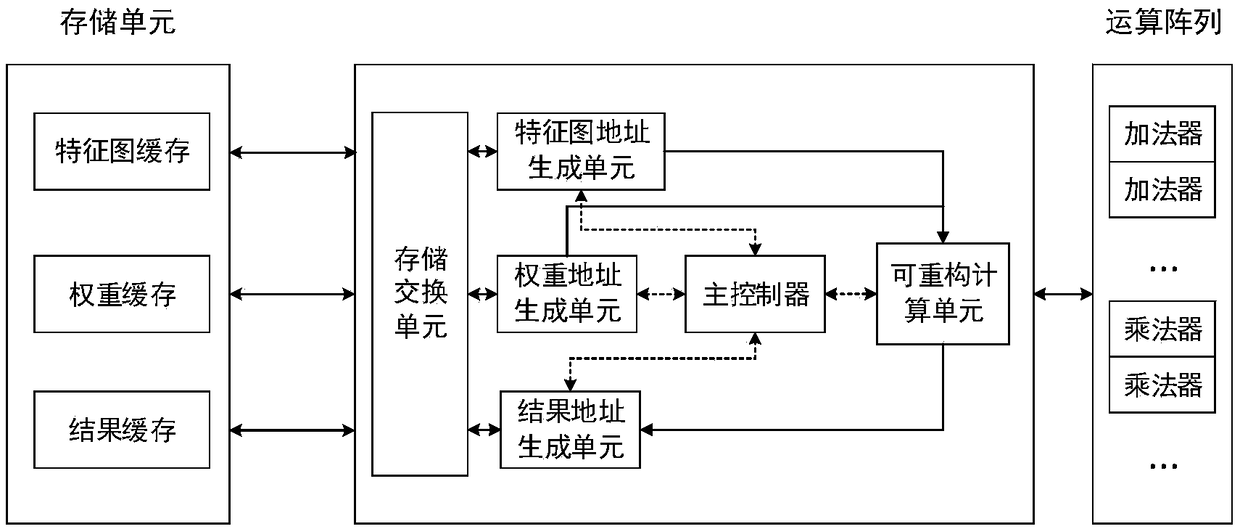

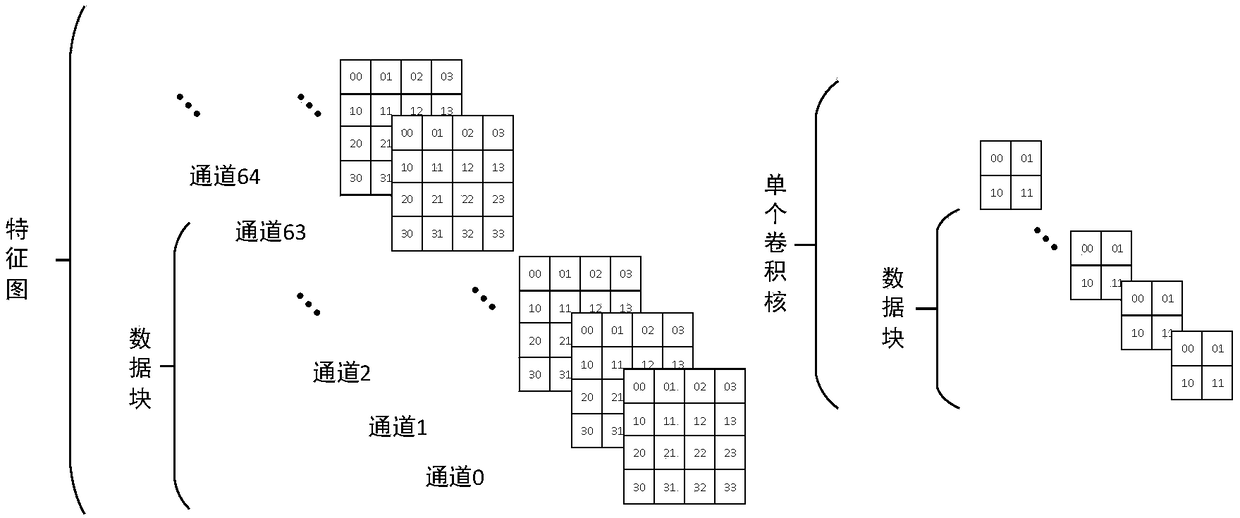

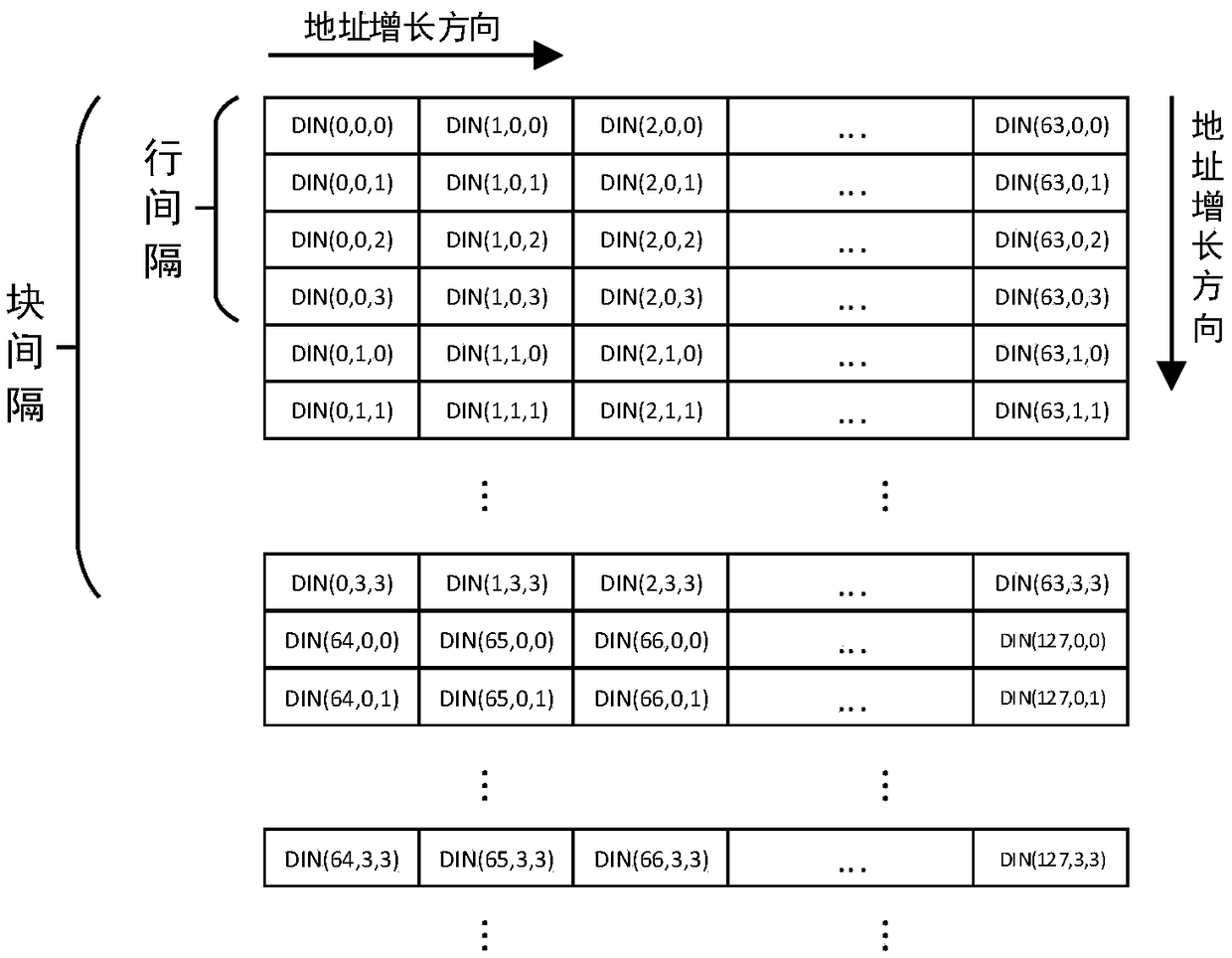

Reconfigurable CNN (Convolutional Neural Network) high concurrency convolution accelerator

Active | CN108805266A | Increase profit | Improve parallelism | Neural architectures | Address generation unit | Reconfigurable computing

The invention provides a reconfigurable CNN (Convolutional Neural Network) high concurrency convolution accelerator, which comprises a weight address generation unit, a result address generation unit, a reconfigurable calculation unit, a characteristic pattern address generation unit, a master controller and a memory exchange unit, wherein the weight address generation unit generates the address of convolution kernel data in a cache; the result address generation unit generates the address of result data in the cache; the reconfigurable calculation unit can reconfigure a calculation array into two multiply-accumulation tree circuits with different particle sizes; the characteristic pattern address generation unit generates the address of characteristic pattern data in the cache; the master controller generates an accumulator resetting signal synchronous with the address, carries out gating on a corresponding circuit in the reconfigurable calculation unit, and generates an interrupt signal for the end of the whole operation; and the memory exchange unit converts an effective characteristic pattern read address and a weight read address into the read operation of a memory unit, and converts an effective result write address and data into a write operation for the memory unit. The accelerator has the beneficial effects that a control part is simplified, the degree of parallelism of a multi-channel convolution operation and memory access efficiency can be greatly improved, and occupied resources are reduced.

Owner:NANJING UNIV

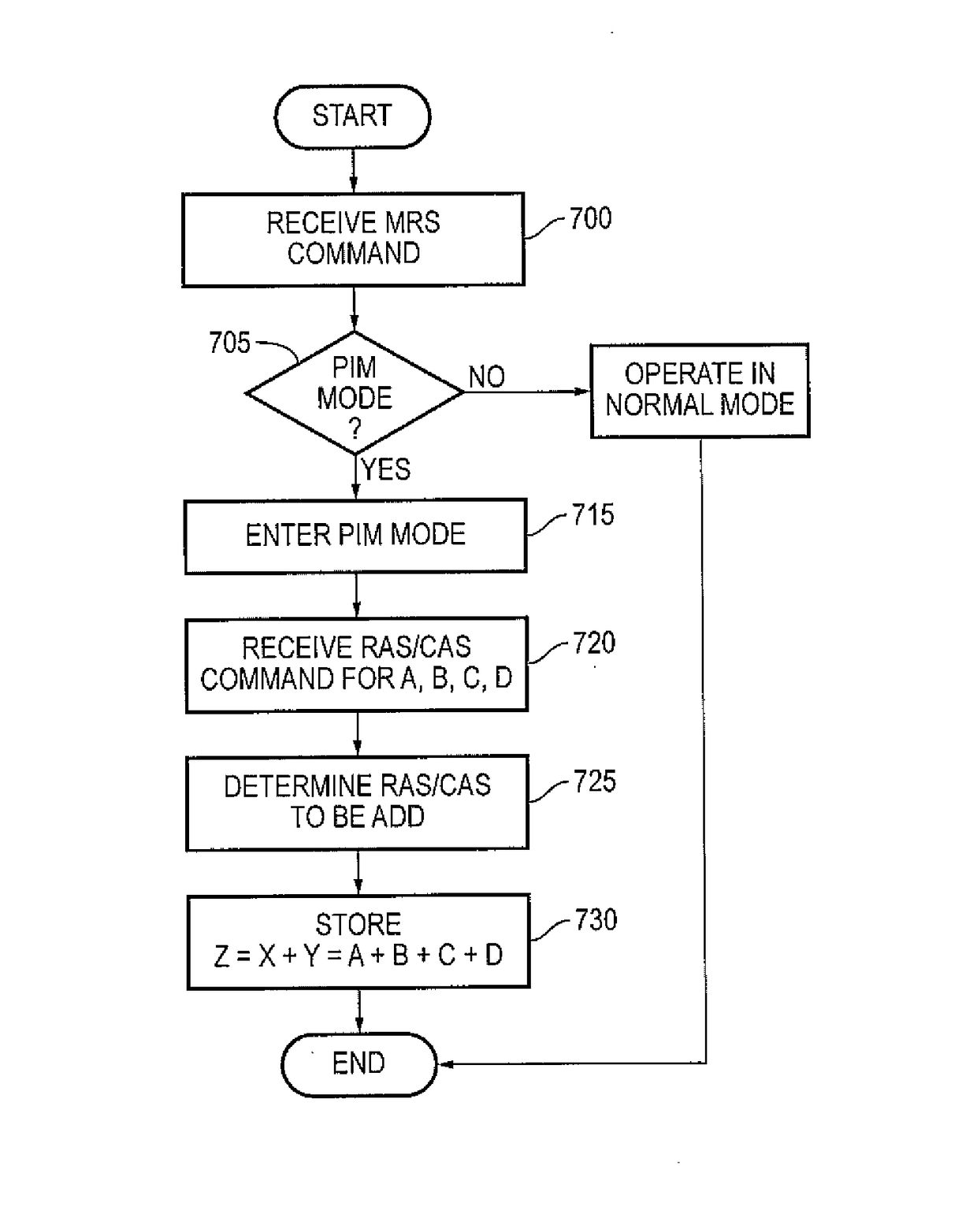

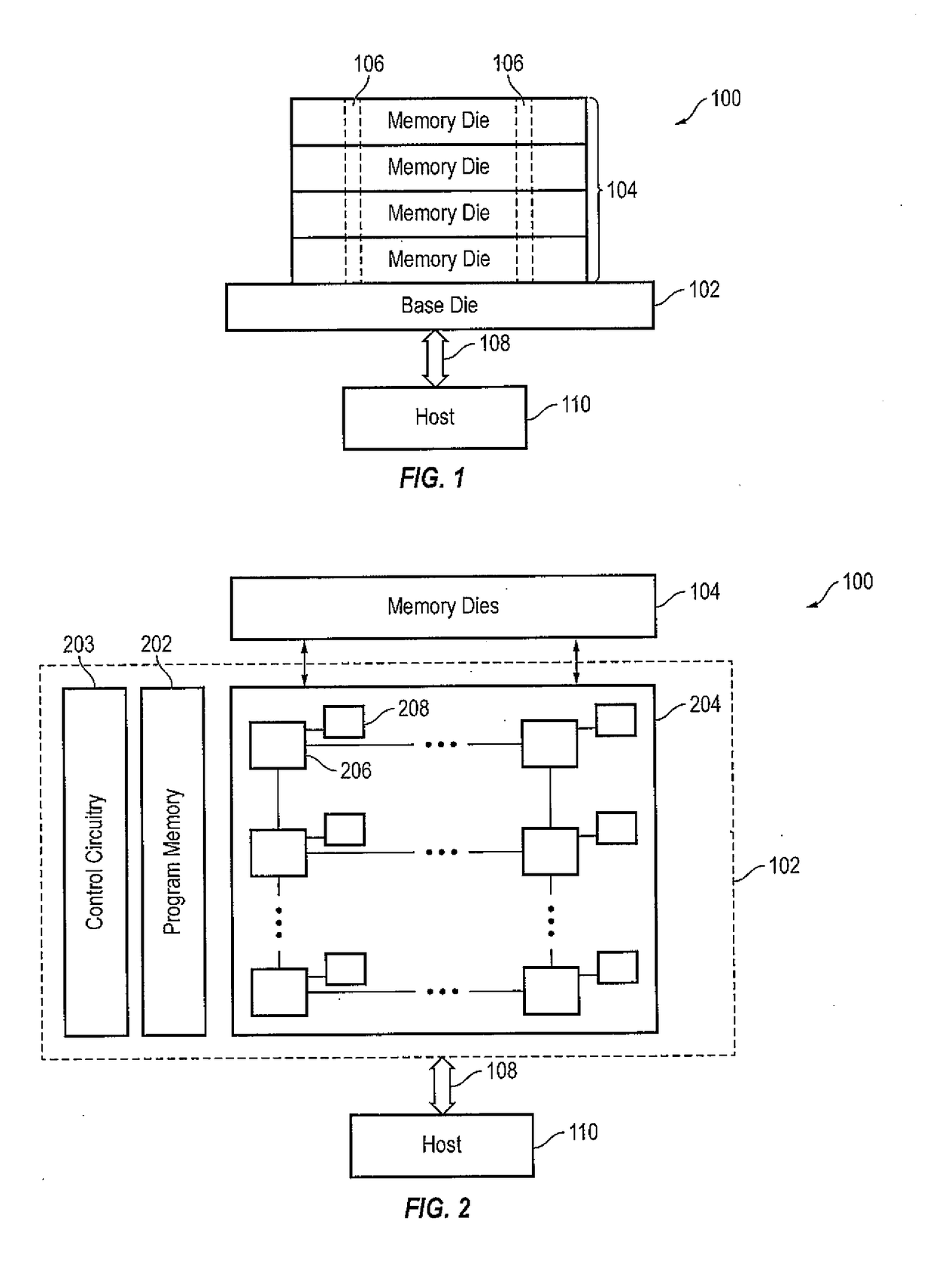

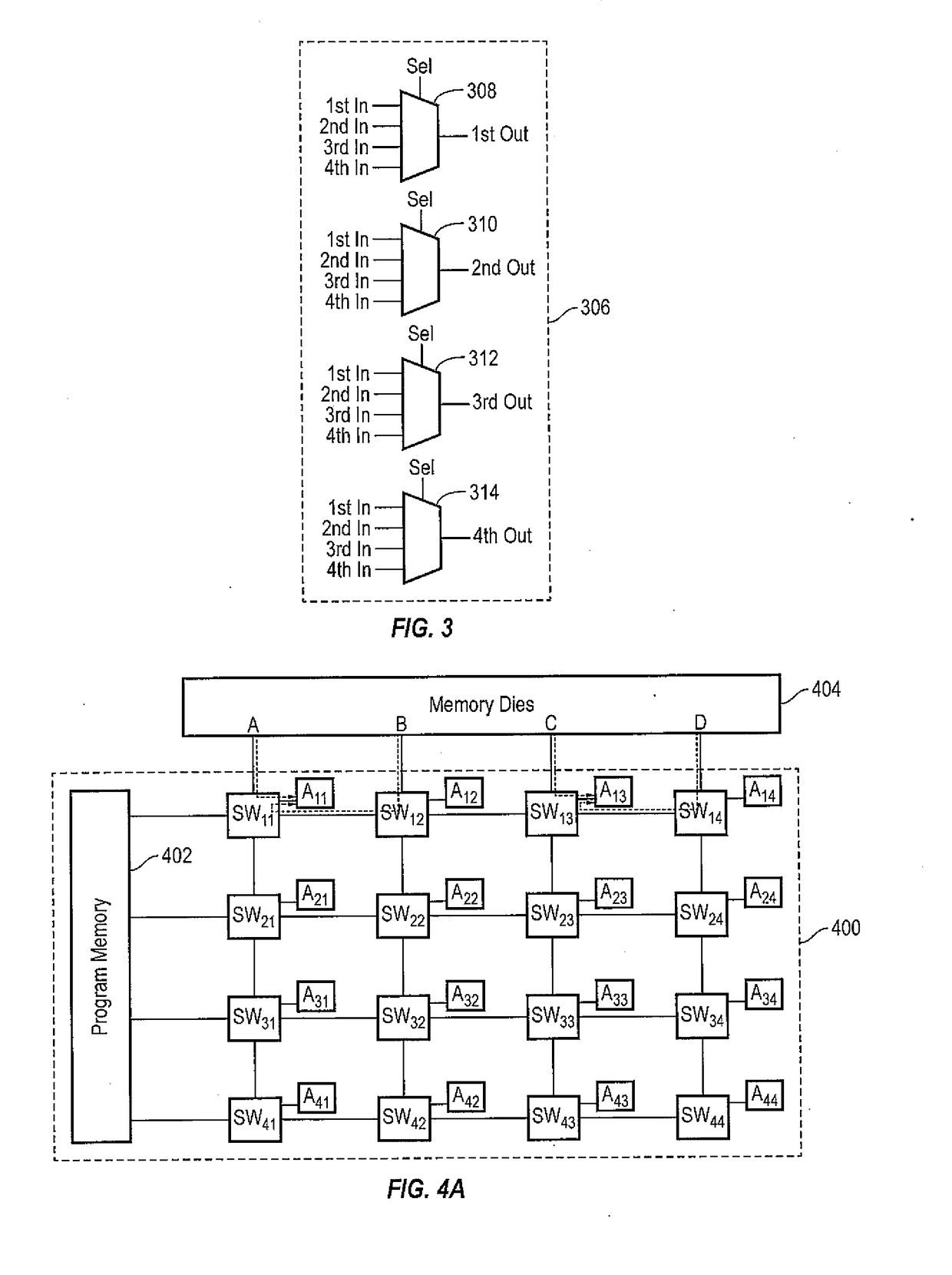
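One way to picture the reconfigurable calculation unit in the accelerator abstract above is a fixed bank of multipliers whose products feed either one wide adder tree or several narrower ones. The sketch below is a software analogy under that assumption. The abstract does not give the actual tree widths or gating scheme, and `adder_tree`, `mac_array` and `tree_width` are hypothetical names.

```python
def adder_tree(values):
    """Sum values pairwise, level by level, the way a hardware adder tree reduces them."""
    level = list(values)
    while len(level) > 1:
        if len(level) % 2:
            level.append(0)  # pad an odd level so every adder has two inputs
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
    return level[0] if level else 0

def mac_array(features, weights, tree_width):
    """Multiply element-wise, then reduce each group of tree_width products with its own tree.

    tree_width stands in for the configuration: a large value gives one coarse-grained
    tree, a smaller value gives several fine-grained trees working in parallel.
    """
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    if tree_width <= 0 or len(features) % tree_width:
        raise ValueError("tree_width must evenly divide the array size")
    products = [f * w for f, w in zip(features, weights)]
    return [adder_tree(products[i:i + tree_width])
            for i in range(0, len(products), tree_width)]

features = [1, 2, 3, 4, 5, 6, 7, 8]
weights = [1, 1, 1, 1, 1, 1, 1, 1]
coarse = mac_array(features, weights, tree_width=8)  # one 8-input tree
fine = mac_array(features, weights, tree_width=4)    # two 4-input trees
```

The same multipliers are reused in both configurations; only the grouping of the reduction changes, which is the general idea behind reconfiguring one array for different convolution shapes.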

3-d stacked memory with reconfigurable compute logic

Active | US20170255390A1 | Input/output to record carriers | Digital computer details | Arithmetic logic unit | Data stream

Owner:SAMSUNG ELECTRONICS CO LTD

Efficient loop execution for a multi-threaded, self-scheduling reconfigurable computing fabric

Representative apparatus, method, and system embodiments are disclosed for configurable computing. A representative system includes an interconnection network; a processor; and a plurality of configurable circuit clusters. Each configurable circuit cluster includes a plurality of configurable circuits arranged in an array; a synchronous network coupled to each configurable circuit of the array; and an asynchronous packet network coupled to each configurable circuit of the array. A representative configurable circuit includes a configurable computation circuit and a configuration memory having a first, instruction memory storing a plurality of data path configuration instructions to configure a data path of the configurable computation circuit; and a second, instruction and instruction index memory storing a plurality of spoke instructions and data path configuration instruction indices for selection of a master synchronous input, a current data path configuration instruction, and a next data path configuration instruction for a next configurable computation circuit.

Owner:MICRON TECH INC

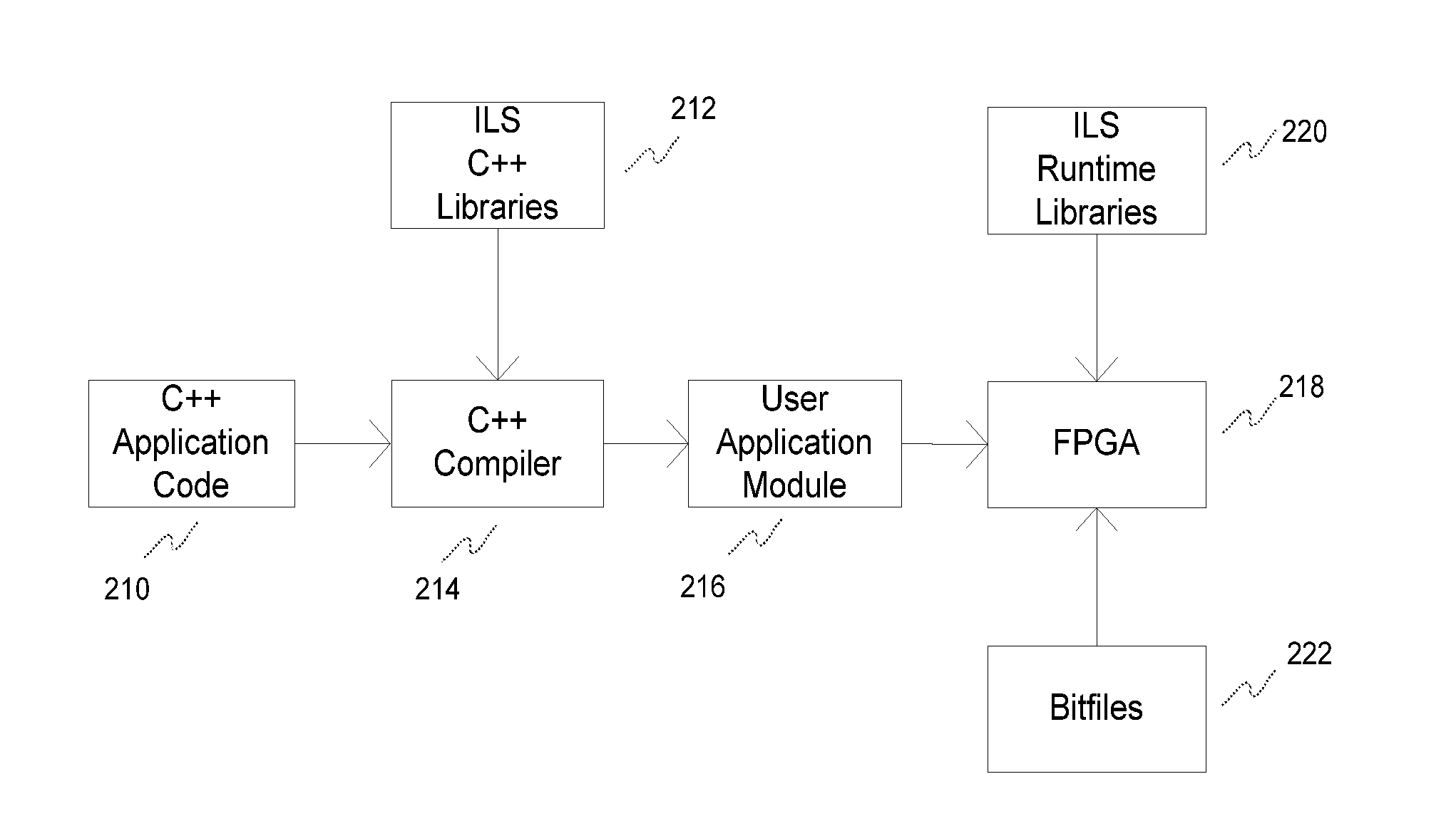

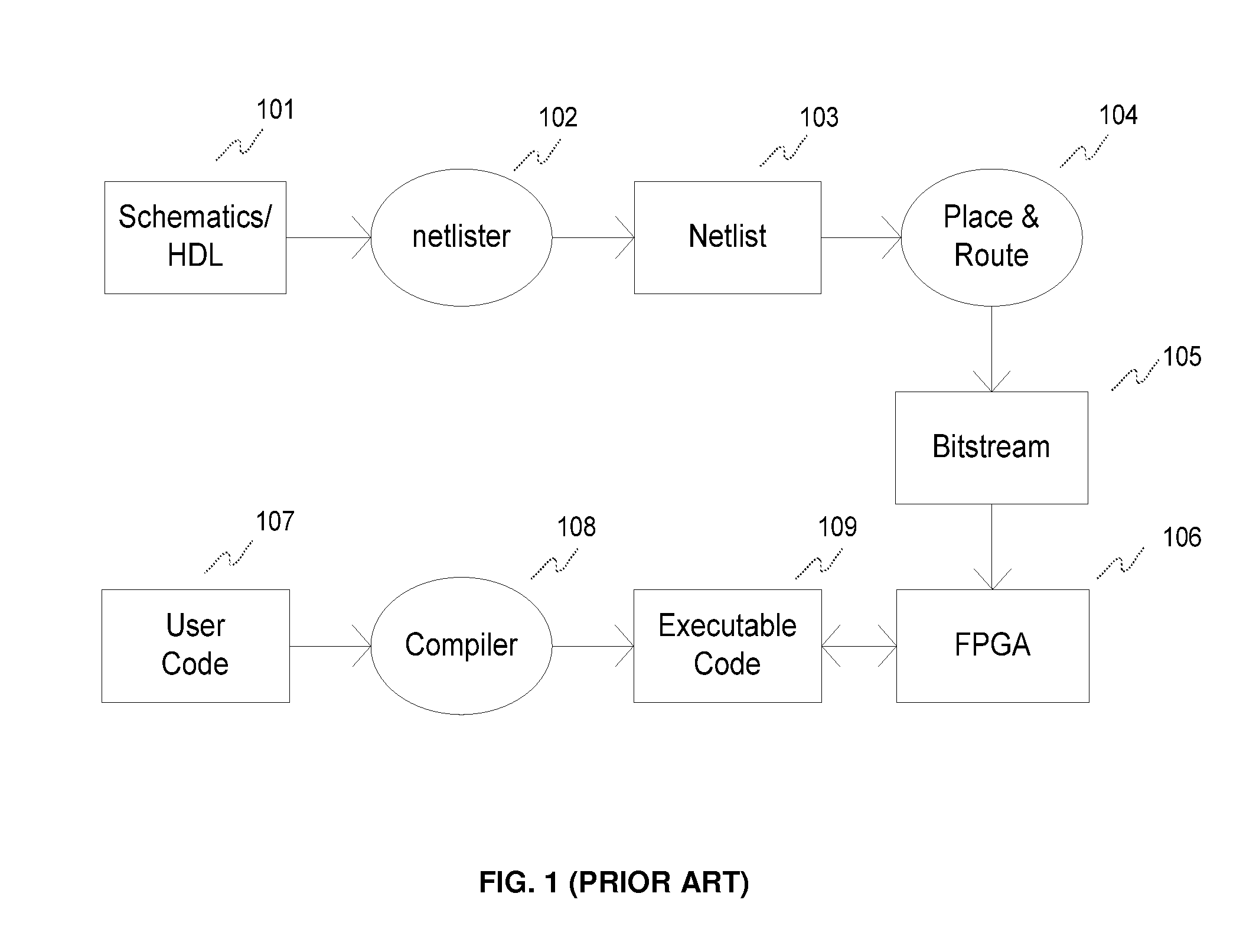

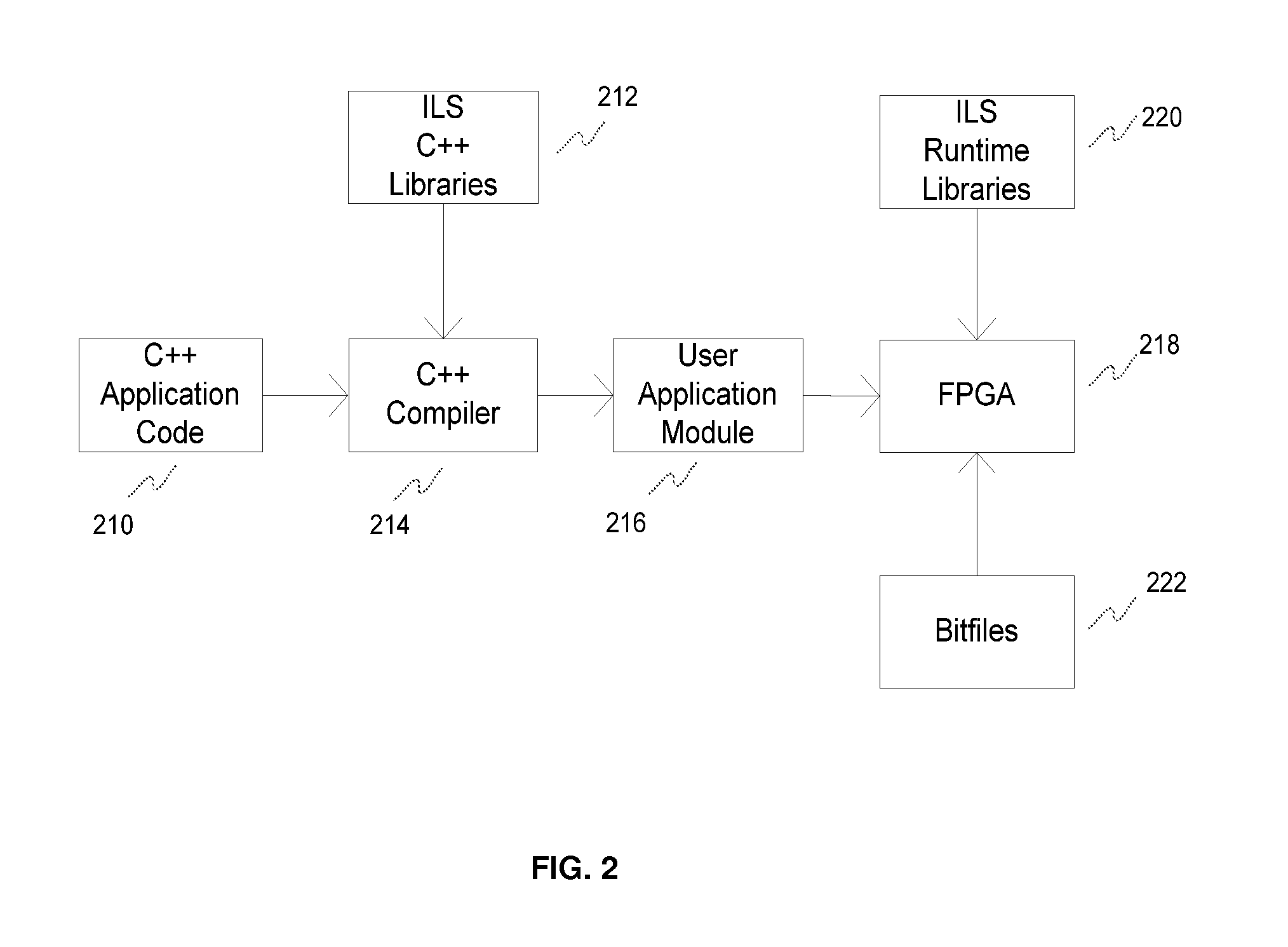

Reconfigurable computing system and method of developing application for deployment on the same

A method of developing an application for deployment on a computing system. The computing system includes a processor and a reconfigurable logic in communication with the processor for configuration thereby. The method includes programming the processor with hardware-neutral instructions in a high-level software programming language. The instructions are representative of an application configured to execute at least partially on the reconfigurable logic. The method further includes instantiating elements from a library of elements compatible with the high-level programming language; and constructing programmatically a generic data graph representative of the application to be mapped at least partially onto the reconfigurable logic. The generic data graph is expressed as streams of records flowing between operators. A computing system is also disclosed. By presenting an instruction-level streaming data processing model that expresses an application as operators and data flows, the invention provides several advantages, such as design portability.

Owner:ISHEBABI HAROLD

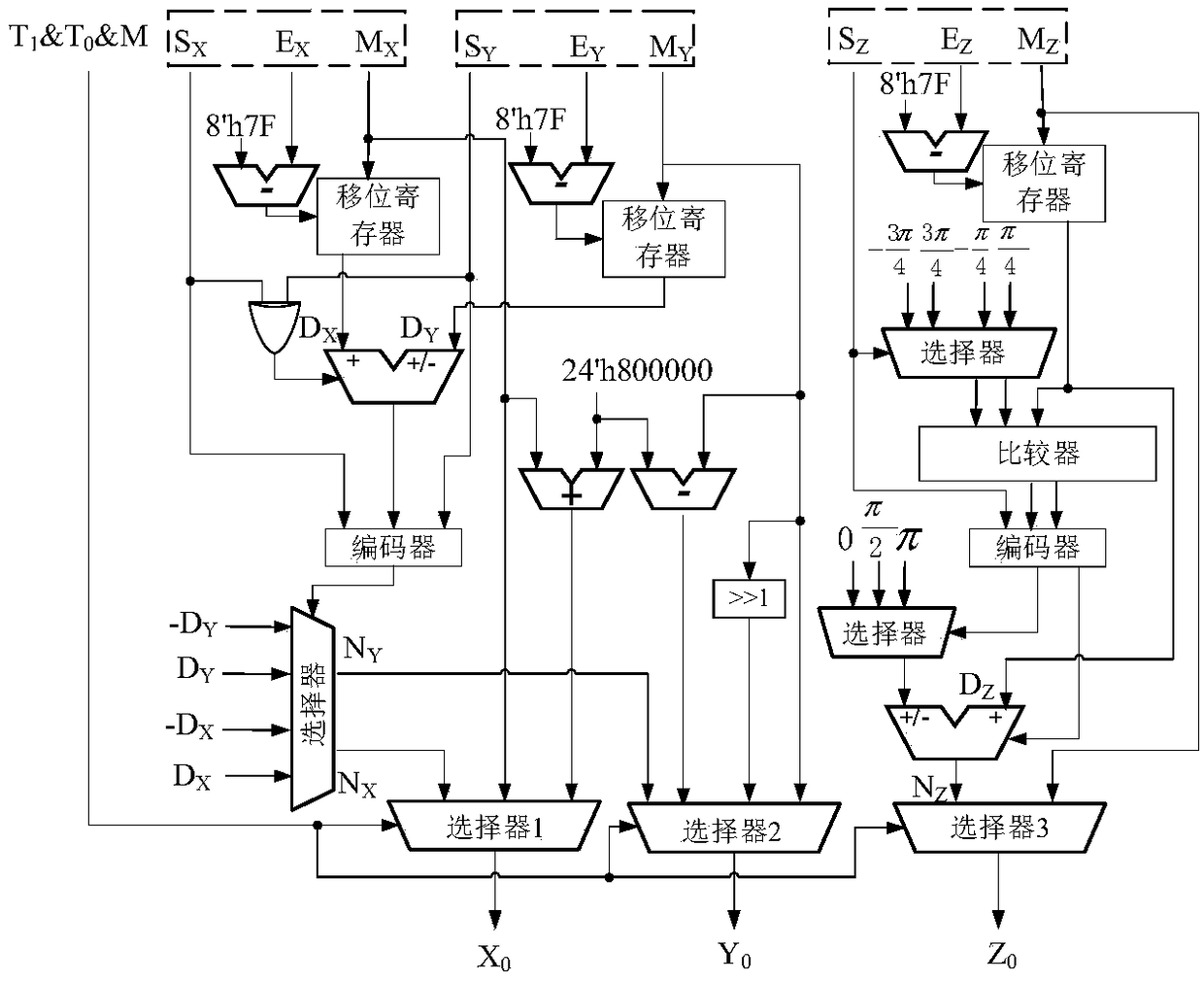

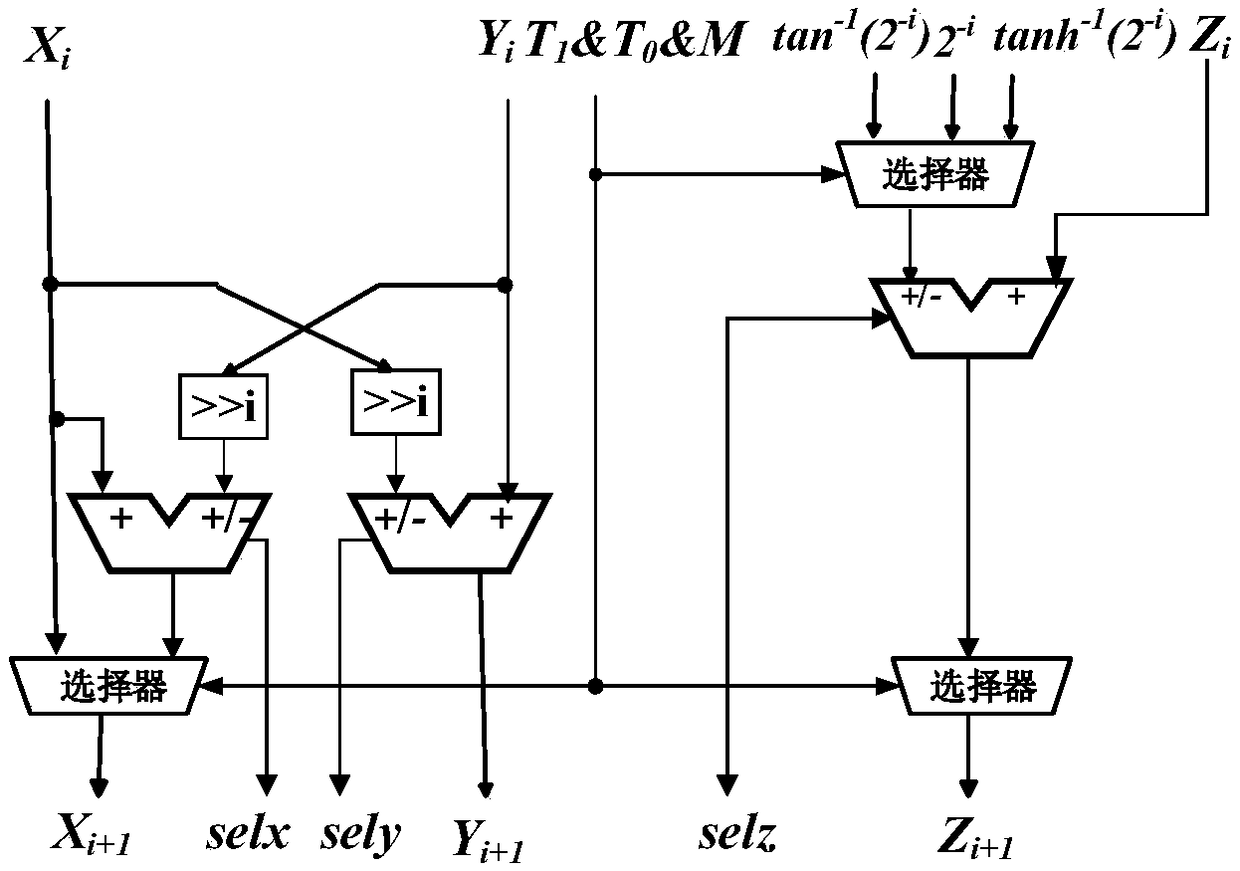
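The phrase "streams of records flowing between operators" in the abstract above can be illustrated with ordinary Python generators. This is only a software analogy of the programming model. It does not map anything onto reconfigurable logic, and the operator names (`source`, `filter_op`, `map_op`, `build_graph`) are hypothetical rather than taken from the patent's library.

```python
from typing import Callable, Dict, Iterable, Iterator

Record = Dict[str, float]

def source(rows: Iterable[Record]) -> Iterator[Record]:
    """Operator that emits records into the graph."""
    for row in rows:
        yield dict(row)

def filter_op(keep: Callable[[Record], bool], stream: Iterable[Record]) -> Iterator[Record]:
    """Operator that passes through only the records accepted by keep."""
    for record in stream:
        if keep(record):
            yield record

def map_op(fn: Callable[[Record], Record], stream: Iterable[Record]) -> Iterator[Record]:
    """Operator that transforms each record independently."""
    for record in stream:
        yield fn(record)

def build_graph(rows: Iterable[Record]) -> Iterator[Record]:
    """Wire operators together; nothing runs until the output stream is consumed."""
    stream = source(rows)
    stream = filter_op(lambda r: r["value"] >= 0, stream)
    stream = map_op(lambda r: {**r, "scaled": r["value"] * 2}, stream)
    return stream

results = list(build_graph([{"value": 1}, {"value": -3}, {"value": 4}]))
```

Because the graph is described independently of how each operator is executed, the same description could in principle be handed to different back ends, which is the design portability the abstract points to.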

Reconfigurable floating-point operation device based on CORDIC algorithm

Active | CN109062540A | Extended region of convergence | Reduce clock cycles | Digital data processing details | Reconfigurable antenna | Computer module

A reconfigurable floating-point arithmetic device based on the CORDIC algorithm comprises: a pre-processing module that takes input data in IEEE-754 format and maps it into the convergence region; a series-parallel hybrid reconfigurable CORDIC iteration unit module; and a post-processing module. The iterative part of the CORDIC algorithm is composed of two rotation modules, A and B: rotation module A uses a serial pipeline structure to maximize module reuse, while rotation module B is based on parallel prediction of the rotation direction and uses a tree adder structure to realize a parallel structure in rotation mode. The post-processing module selects the corresponding result output according to the encoded signal from the pre-processing module and completes mantissa normalization to output the calculation result in single-precision floating-point format. The invention is characterized by a simple principle, low delay, high precision and low hardware cost.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

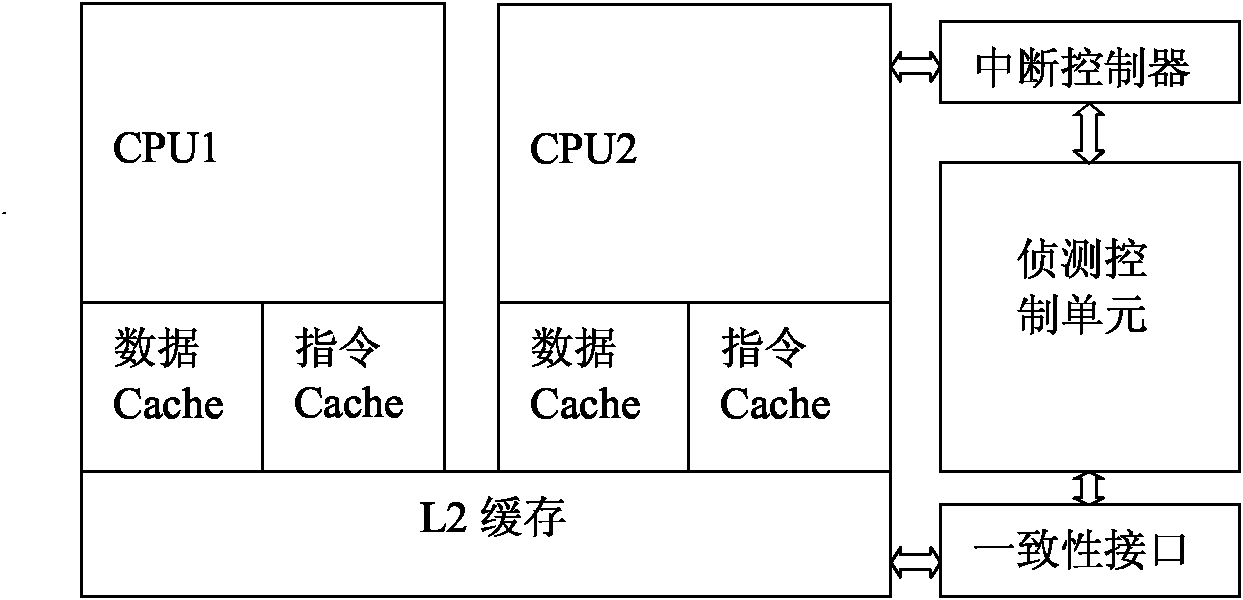

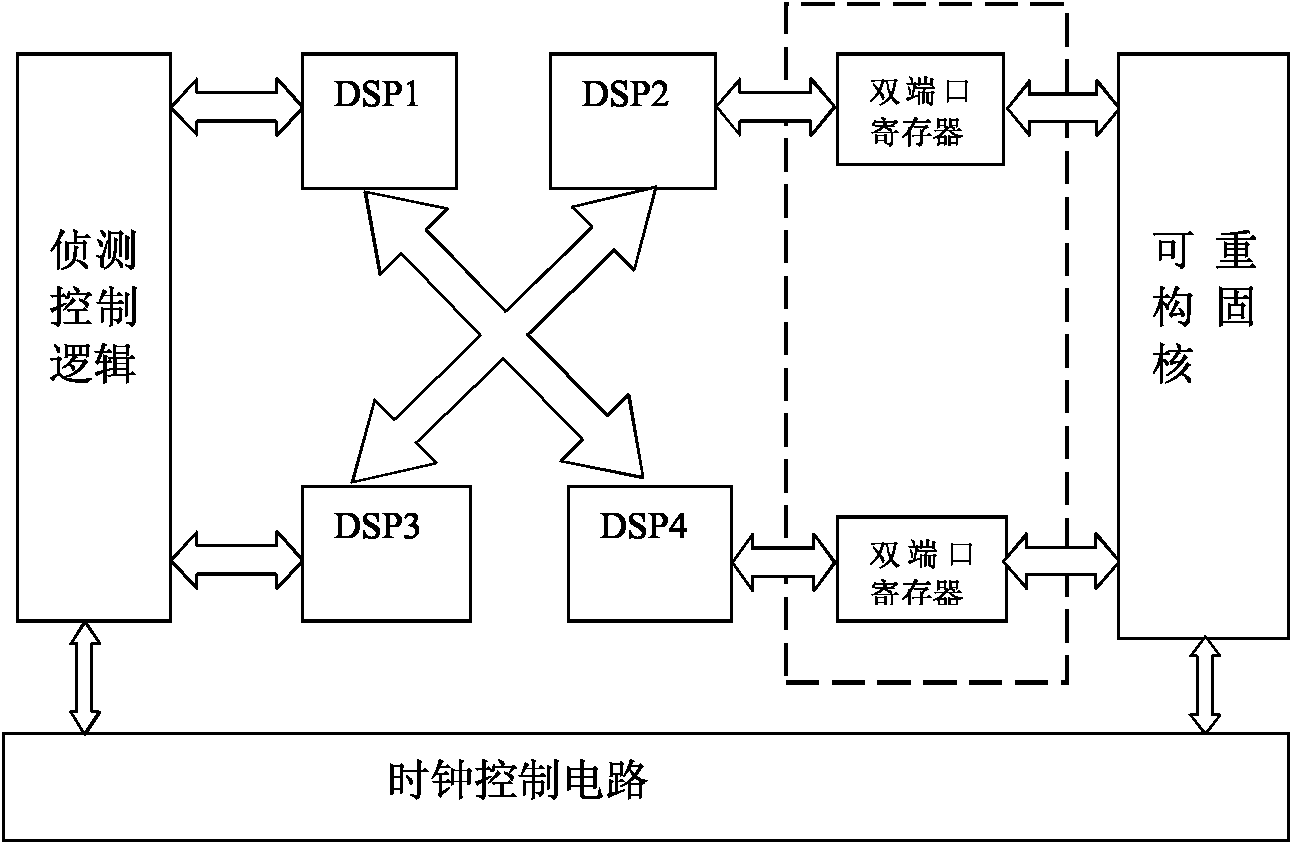
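For readers unfamiliar with CORDIC, the sketch below shows the textbook rotation-mode iteration with a pre-processing step that folds the angle into the convergence region, loosely mirroring the pre-processing, iteration and output stages named in the abstract above. It is a plain serial software version and does not reproduce the patent's series-parallel hybrid structure or its floating-point datapath. `ITERATIONS`, `ANGLES`, `GAIN` and `cordic_sin_cos` are hypothetical names.

```python
import math

ITERATIONS = 24
# Elementary rotation angles atan(2^-i); hardware would hold these in a small table.
ANGLES = [math.atan(2.0 ** -i) for i in range(ITERATIONS)]
# Product of cos(atan(2^-i)); starting from x = GAIN cancels the growth of each micro-rotation.
GAIN = 1.0
for i in range(ITERATIONS):
    GAIN /= math.sqrt(1.0 + 2.0 ** (-2 * i))

def cordic_sin_cos(theta):
    """Return (sin(theta), cos(theta)) using rotation-mode CORDIC."""
    # Pre-processing: fold the angle into [-pi/2, pi/2], inside the convergence region.
    theta = math.remainder(theta, 2.0 * math.pi)  # now in [-pi, pi]
    sign = 1.0
    if theta > math.pi / 2:
        theta -= math.pi
        sign = -1.0
    elif theta < -math.pi / 2:
        theta += math.pi
        sign = -1.0
    # Iteration: rotate by +/- atan(2^-i), steering the residual angle z toward zero.
    x, y, z = GAIN, 0.0, theta
    for i in range(ITERATIONS):
        d = 1.0 if z >= 0.0 else -1.0
        x, y = x - d * y * 2.0 ** -i, y + d * x * 2.0 ** -i
        z -= d * ANGLES[i]
    # Post-processing: undo the half-turn applied during folding.
    return sign * y, sign * x

sine, cosine = cordic_sin_cos(math.pi / 6)
```

In hardware the multiplications by `2.0 ** -i` become bit shifts, which is why CORDIC needs only adders, shifters and a small angle table.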

Multi-kernel DSP reconfigurable special integrated circuit system

Inactive | CN102073481A | Excellent scheduling management ability | Powerful digital signal processing capability | Concurrent instruction execution | Architecture with single central processing unit | Data interface | Integrated circuit

The invention discloses a multi-kernel DSP (digital signal processing) reconfigurable special integrated circuit system, belonging to the technical field of digital signal processing. The system comprises an internal bus and, all connected with the internal bus, a control processor kernel, an enhanced direct memory access, an input and output cache, a DSP multi-kernel array, a configuration information cache, a reconfigurable logic unit and an internal cache. The DSP multi-kernel array is connected with the configuration information cache and the reconfigurable logic unit through a reconfigurable on-chip interconnection and transmits the configuration information and reconfiguration information. The system combines well with the IP reuse technology of SoC (System on a Chip) design: the multi-kernel DSP reconfigurable ASIC (Application Specific Integrated Circuit) takes the DSP multi-kernel array as its core and integrates IP modules such as logic control, embedded memory and data interfaces, so that it can implement large-scale computing flexibly and efficiently.

Owner:SHANGHAI JIAO TONG UNIV +1

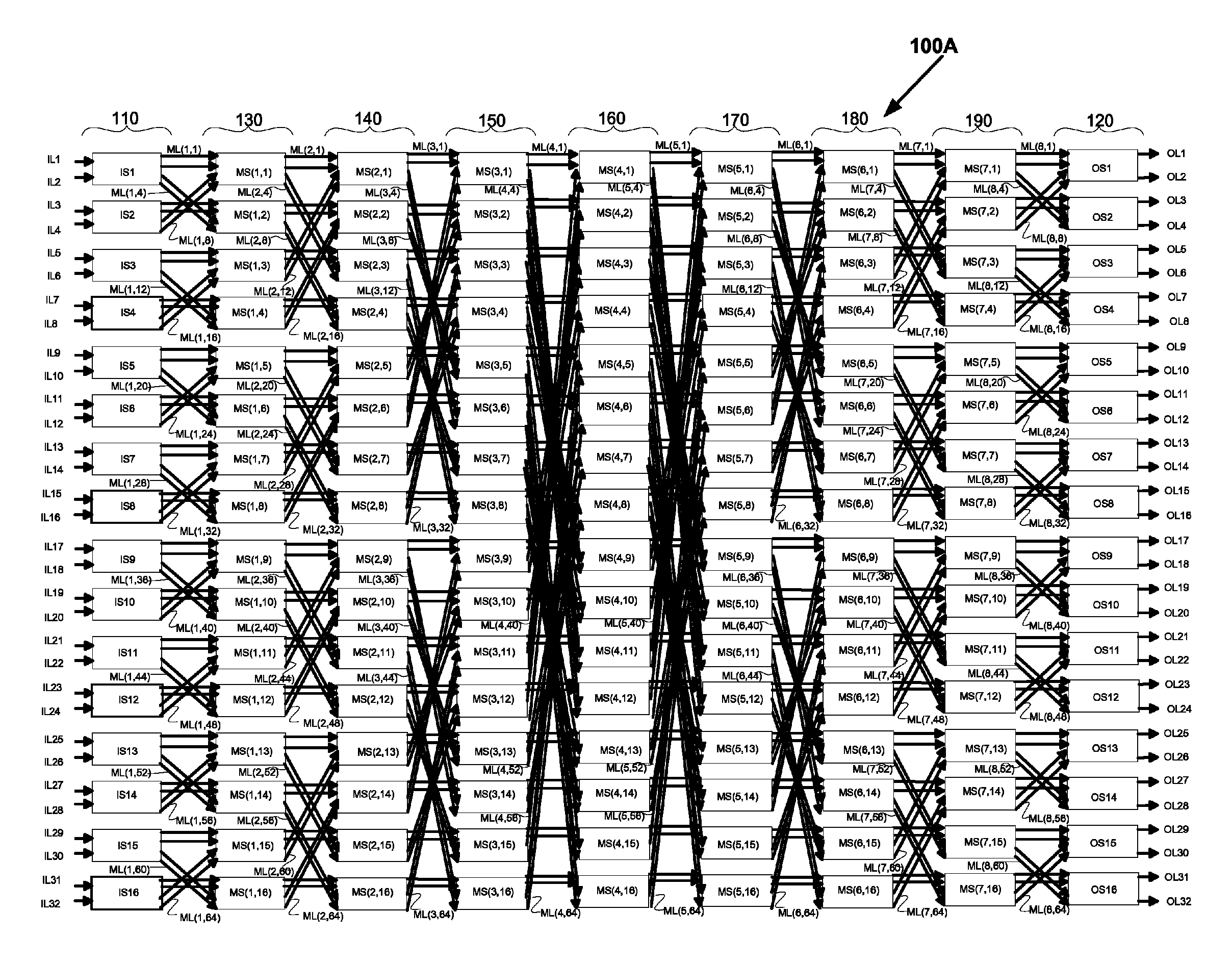

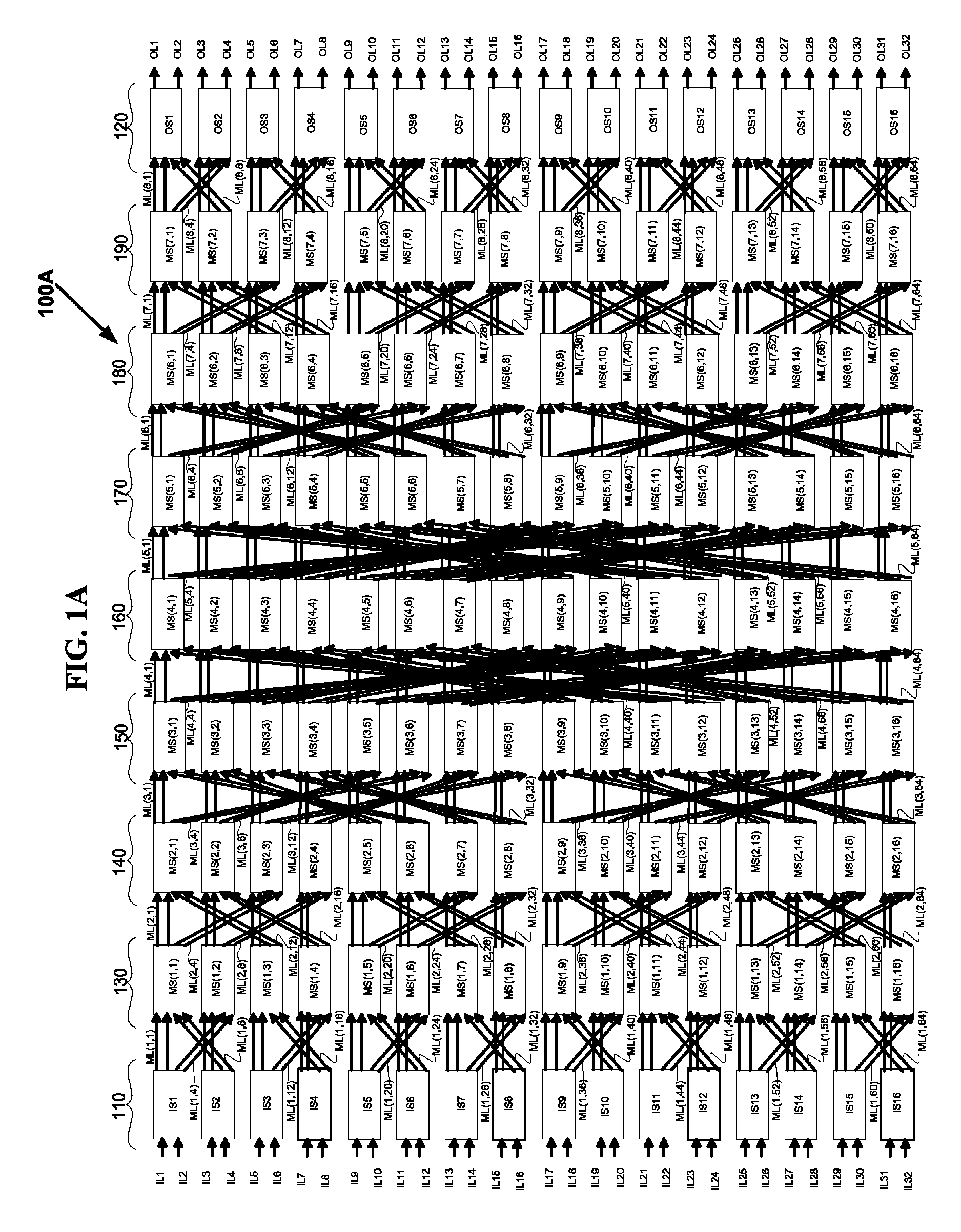

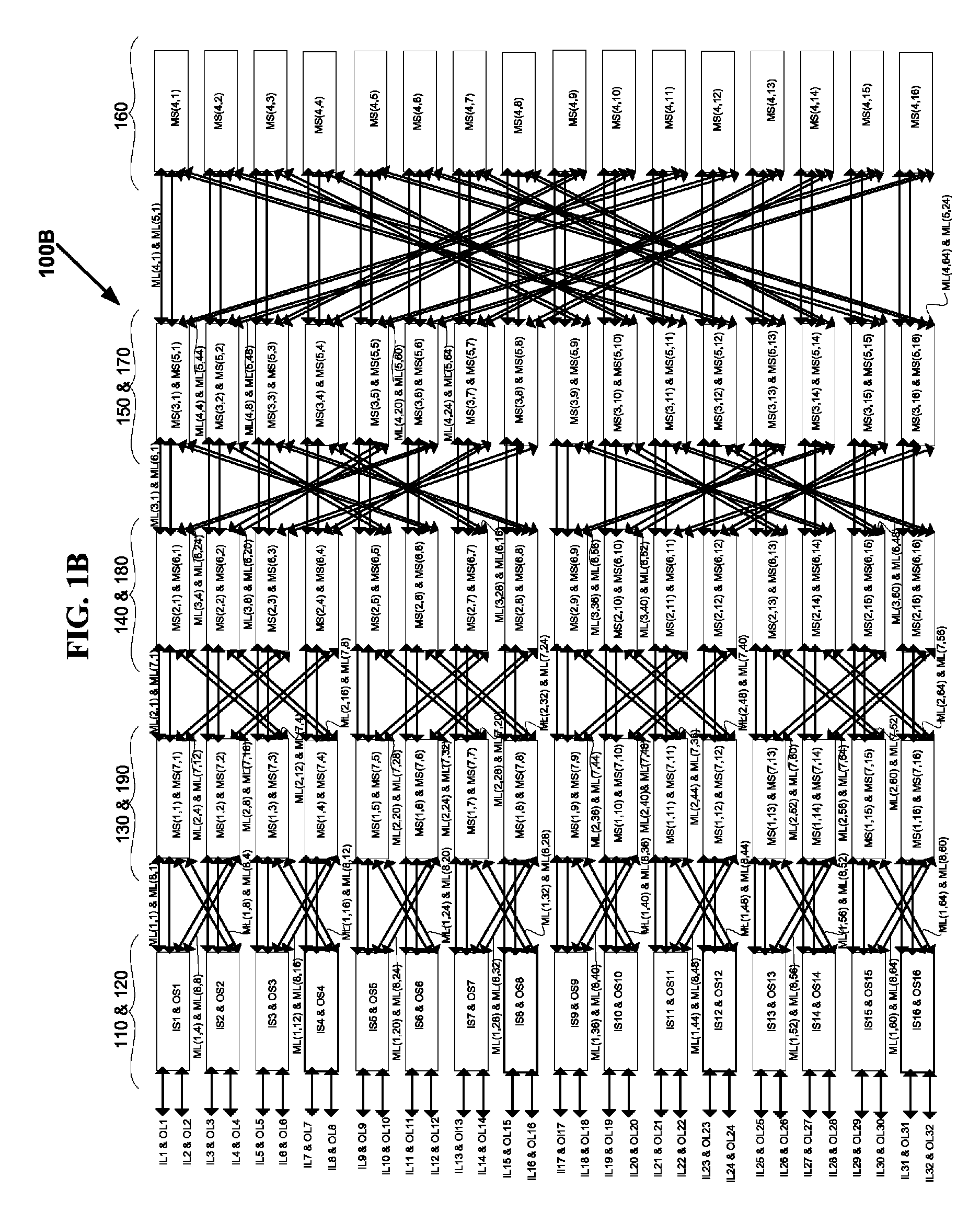

VLSI layouts of fully connected generalized and pyramid networks with locality exploitation

Active | US8898611B2 | Low pour point | Reduce signal delay | Multiplex system selection arrangements | Geometric CAD | Cross-link | Multi link

VLSI layouts of generalized multi-stage and pyramid networks for broadcast, unicast and multicast connections are presented using only horizontal and vertical links with spacial locality exploitation. The VLSI layouts employ shuffle exchange links where outlet links of cross links from switches in a stage in one sub-integrated circuit block are connected to inlet links of switches in the succeeding stage in another sub-integrated circuit block so that said cross links are either vertical links or horizontal and vice versa. Furthermore the shuffle exchange links are employed between different sub-integrated circuit blocks so that spacially nearer sub-integrated circuit blocks are connected with shorter links compared to the shuffle exchange links between spacially farther sub-integrated circuit blocks. In one embodiment the sub-integrated circuit blocks are arranged in a hypercube arrangement in a two-dimensional plane. The VLSI layouts exploit the benefits of significantly lower cross points, lower signal latency, lower power and full connectivity with significantly fast compilation. The VLSI layouts with spacial locality exploitation presented are applicable to generalized multi-stage and pyramid networks, generalized folded multi-stage and pyramid networks, generalized butterfly fat tree and pyramid networks, generalized multi-link multi-stage and pyramid networks, generalized folded multi-link multi-stage and pyramid networks, generalized multi-link butterfly fat tree and pyramid networks, generalized hypercube networks, and generalized cube connected cycles networks for speedup of s≧1. The embodiments of VLSI layouts are useful in wide target applications such as FPGAs, CPLDs, pSoCs, ASIC placement and route tools, networking applications, parallel & distributed computing, and reconfigurable computing.

Owner:KONDA TECH
