232 results about "Speedup" patented technology

In computer architecture, speedup is a number that measures the relative performance of two systems processing the same problem. More technically, it is the improvement in speed of execution of a task executed on two similar architectures with different resources. The notion of speedup was established by Amdahl's law, which was particularly focused on parallel processing. However, speedup can be used more generally to show the effect on performance after any resource enhancement.
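The definition above can be made concrete with a small sketch. It assumes the usual formulation of speedup as a ratio of execution times, and the standard statement of Amdahl's law, in which a fraction `p` of the work is accelerated by a factor `s`. The function and parameter names are illustrative and do not come from the page.

```python
def speedup(t_old: float, t_new: float) -> float:
    """Ratio of execution times for the same task; above 1 means the new system is faster."""
    if t_old <= 0 or t_new <= 0:
        raise ValueError("execution times must be positive")
    return t_old / t_new


def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of the work is accelerated by a factor s."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if s <= 0:
        raise ValueError("s must be positive")
    # The untouched fraction (1 - p) still takes its full time; only p shrinks by s.
    return 1.0 / ((1.0 - p) + p / s)


if __name__ == "__main__":
    print(speedup(120.0, 30.0))       # 4.0
    print(amdahl_speedup(0.9, 8.0))   # well below 8, because 10% of the work is not accelerated
```

The second call illustrates why the serial fraction dominates: as `s` grows without bound, the result approaches `1 / (1 - p)`.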

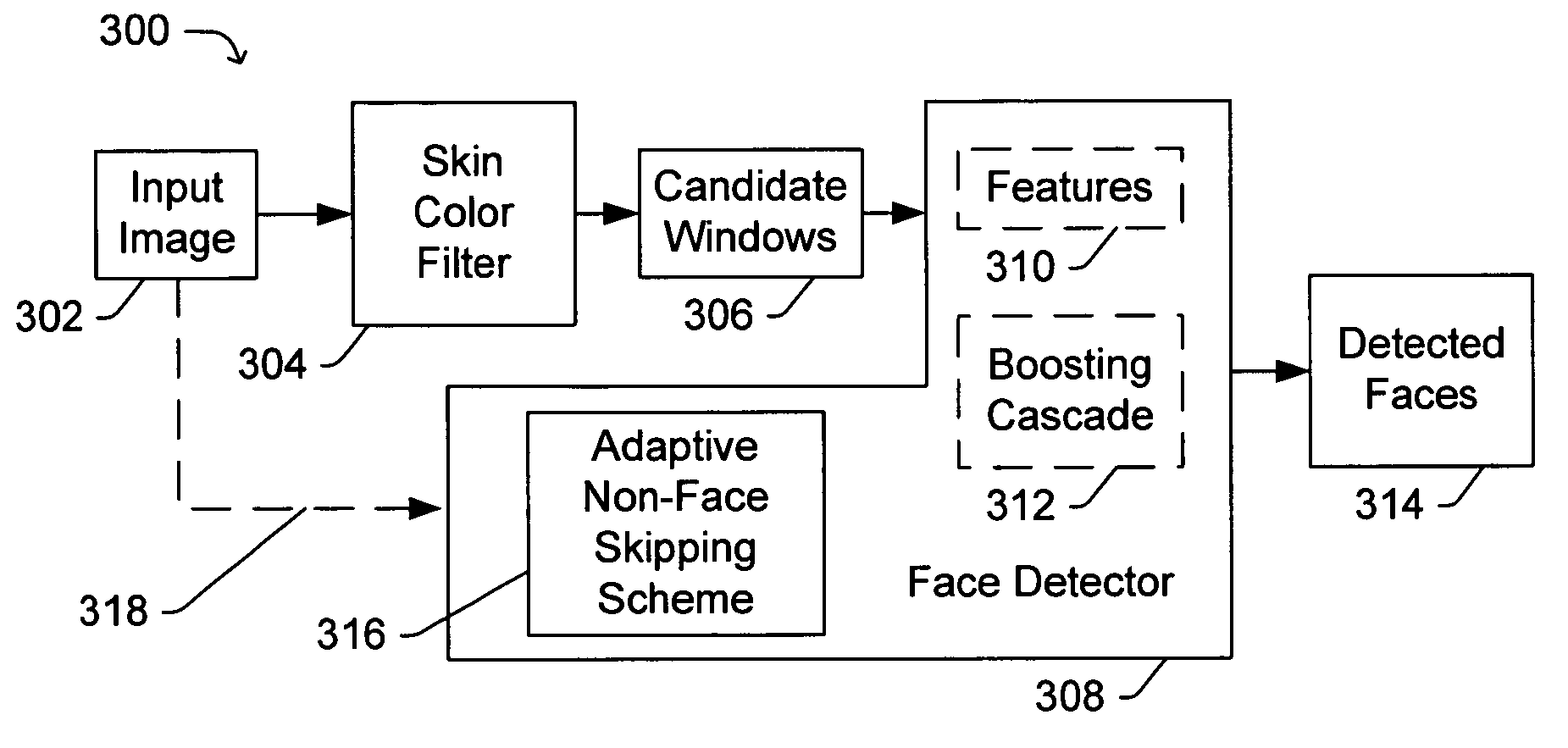

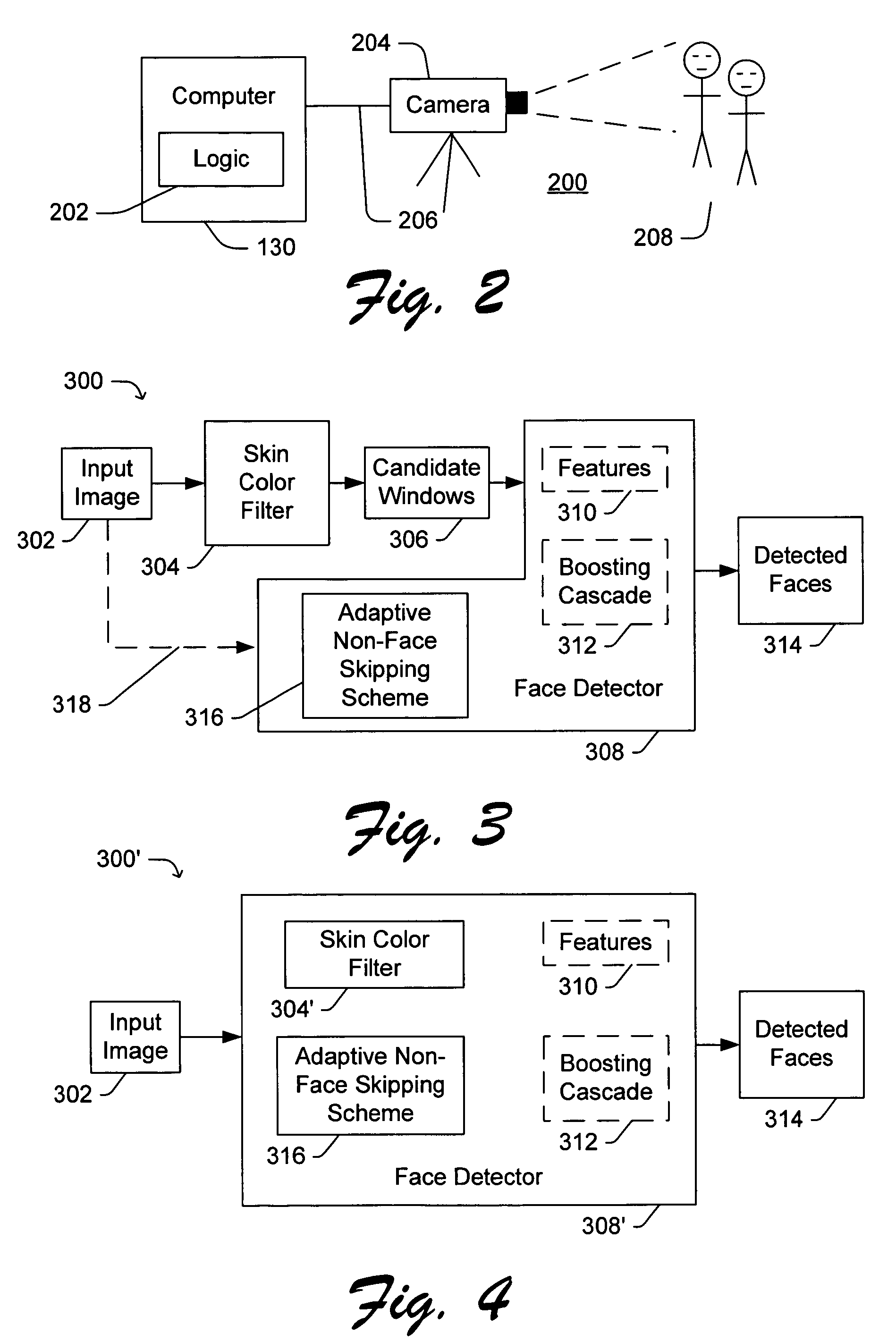

Speedup of face detection in digital images

Active | US7190829B2 | Increase face detection speed | Reduce in quantity | Character and pattern recognition | Face detection | Skin color

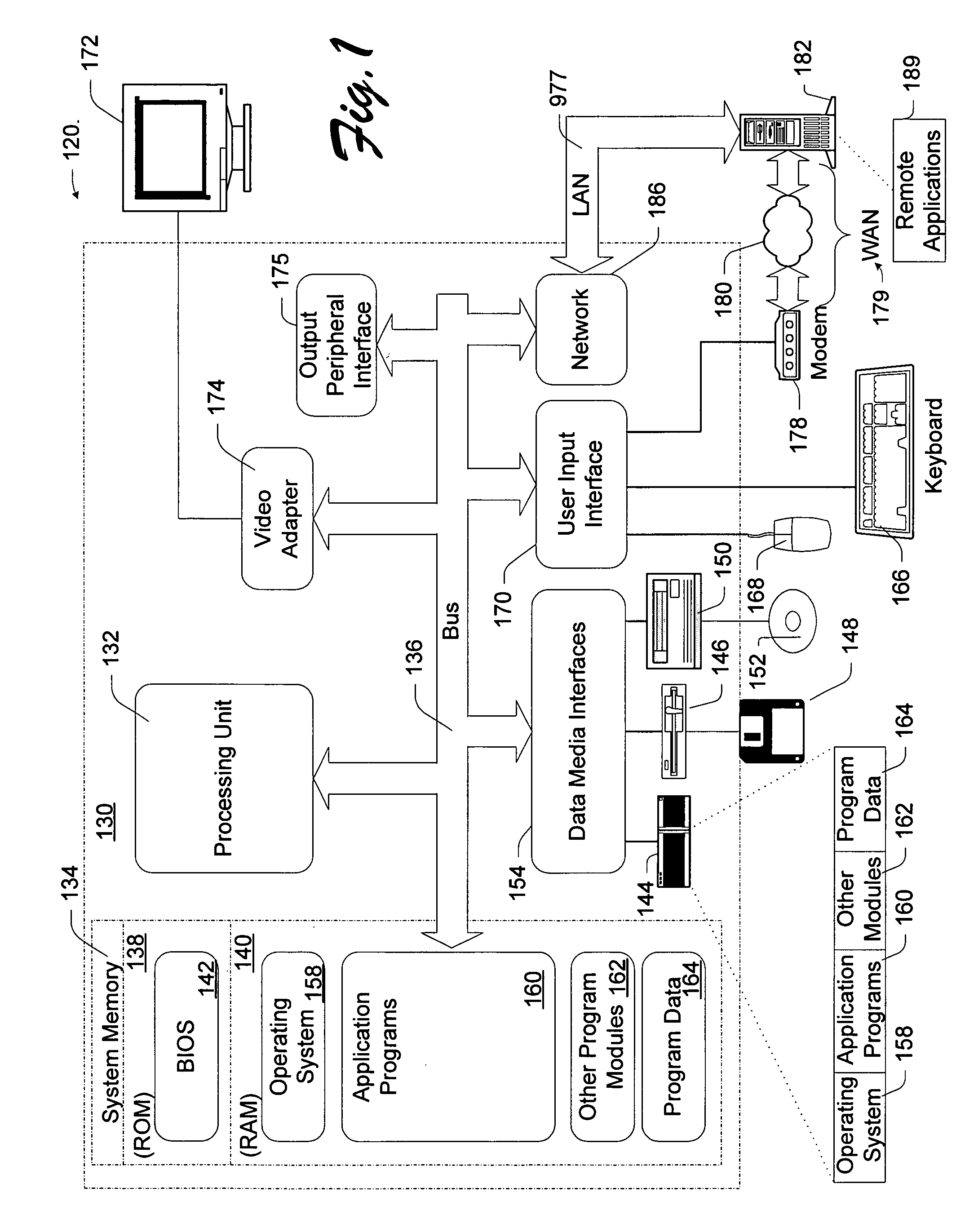

Improved methods and apparatuses are provided for use in face detection. The methods and apparatuses significantly reduce the number of candidate windows within a digital image that need to be processed using more complex and/or time-consuming face detection algorithms. The improved methods and apparatuses include a skin color filter and an adaptive non-face skipping scheme.

Owner:MICROSOFT TECH LICENSING LLC

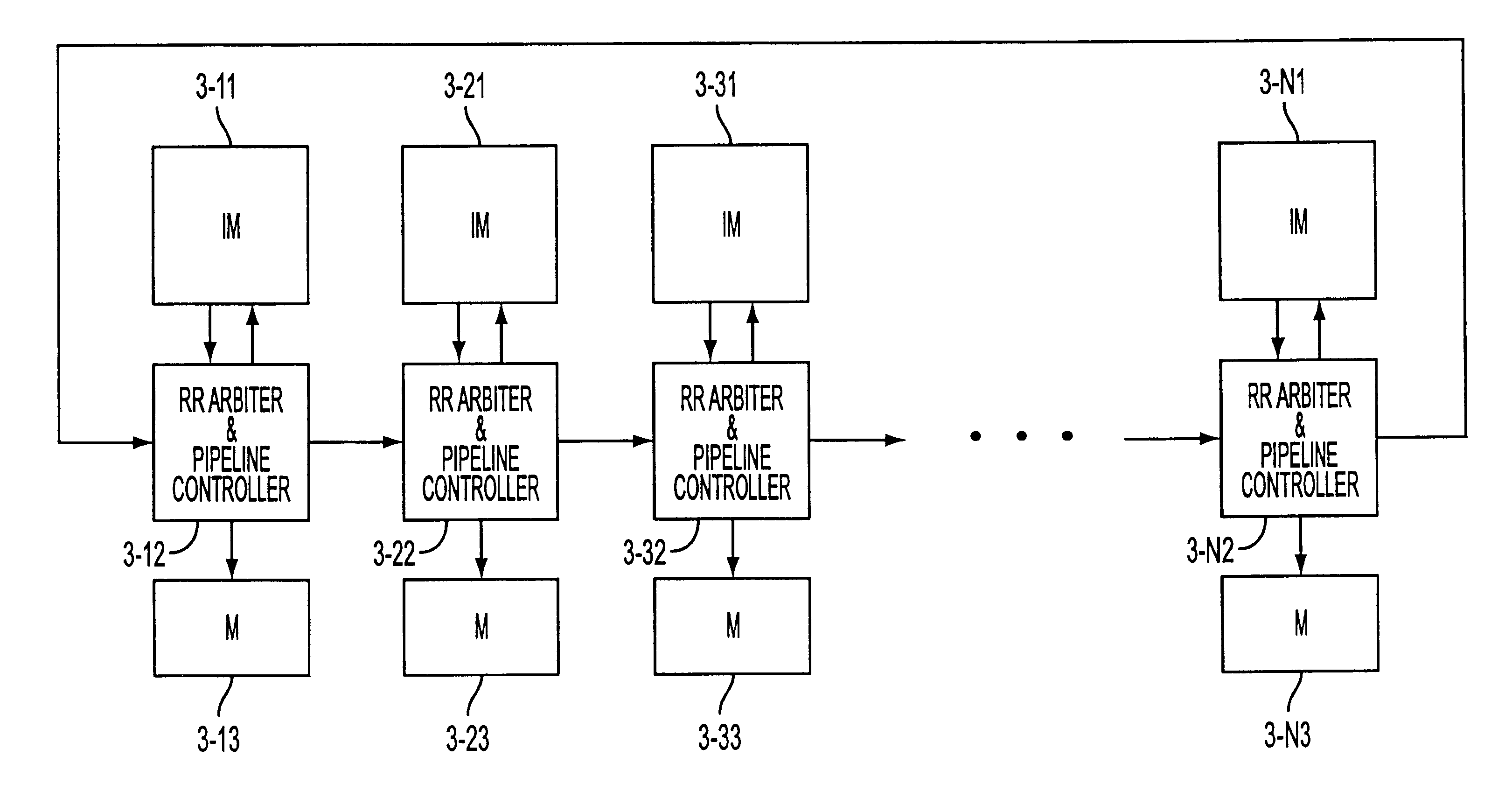

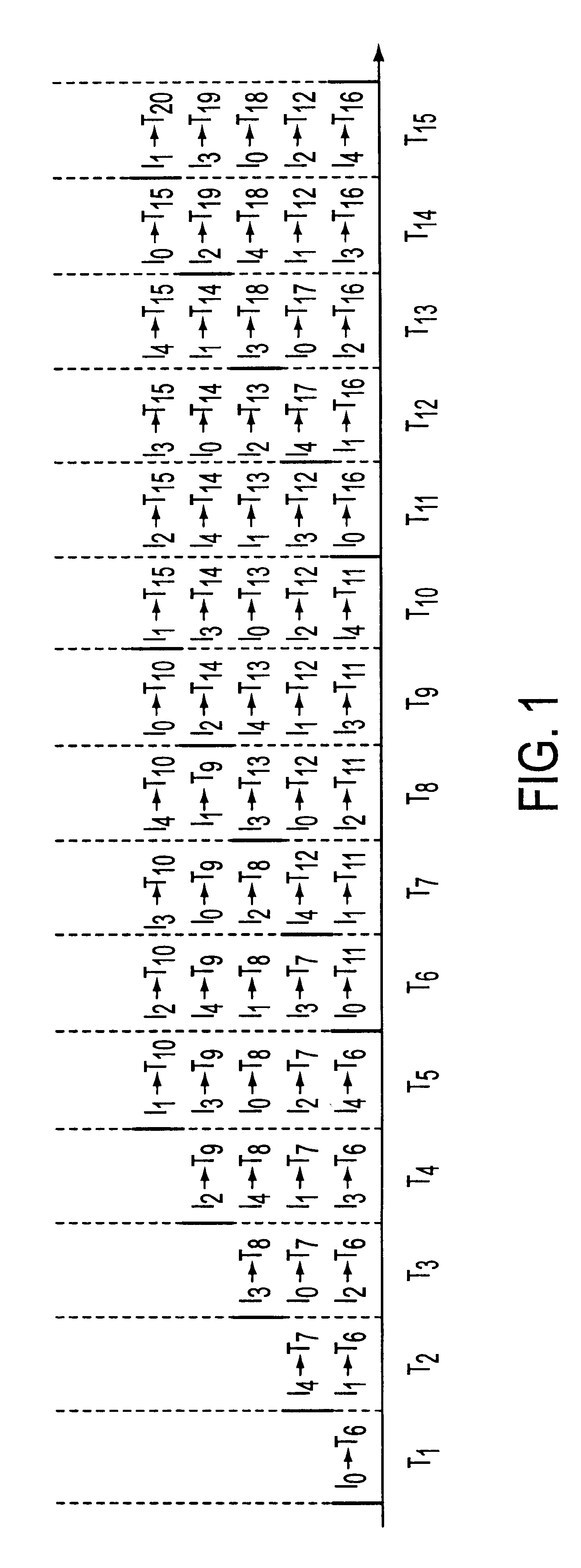

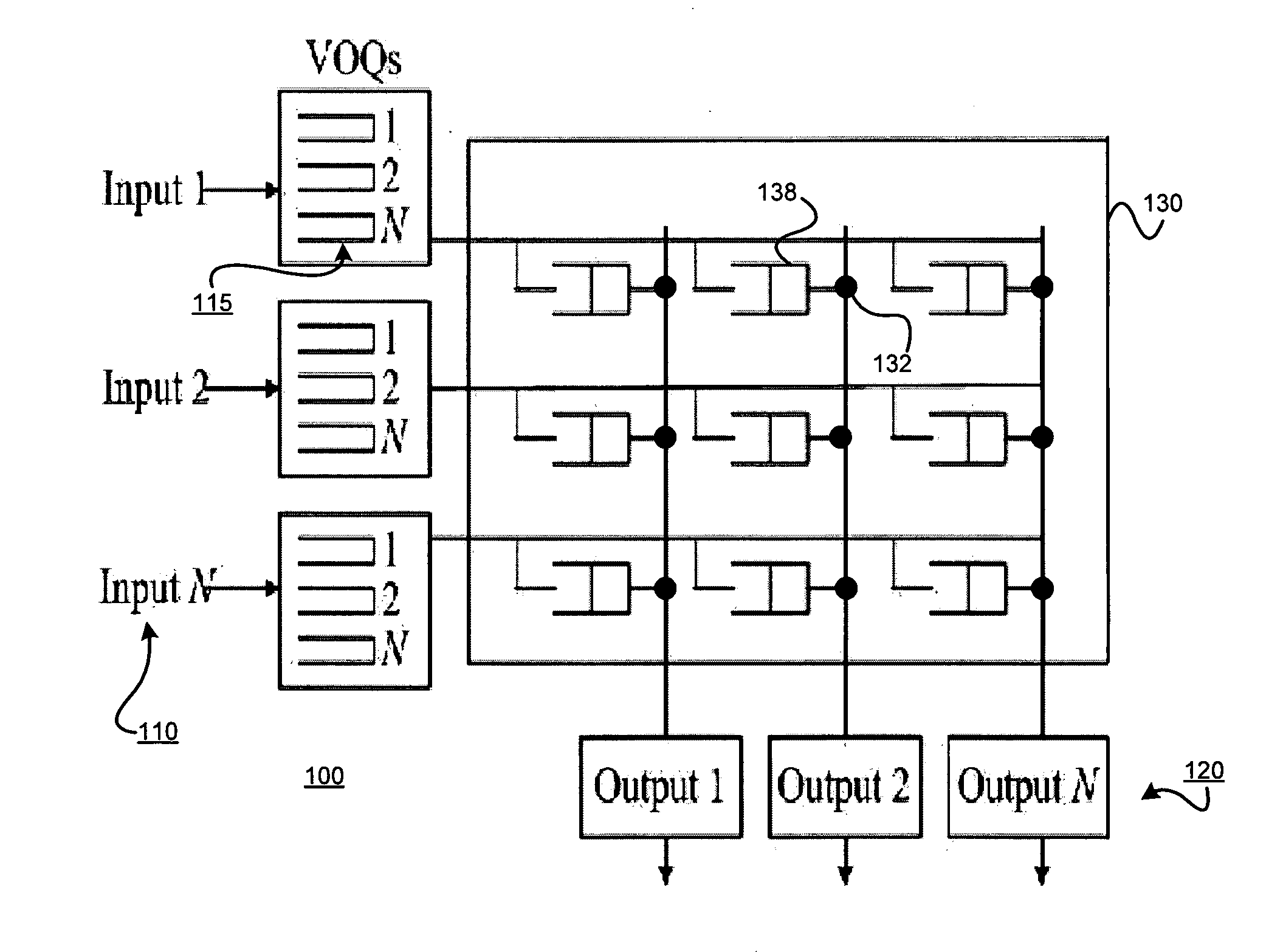

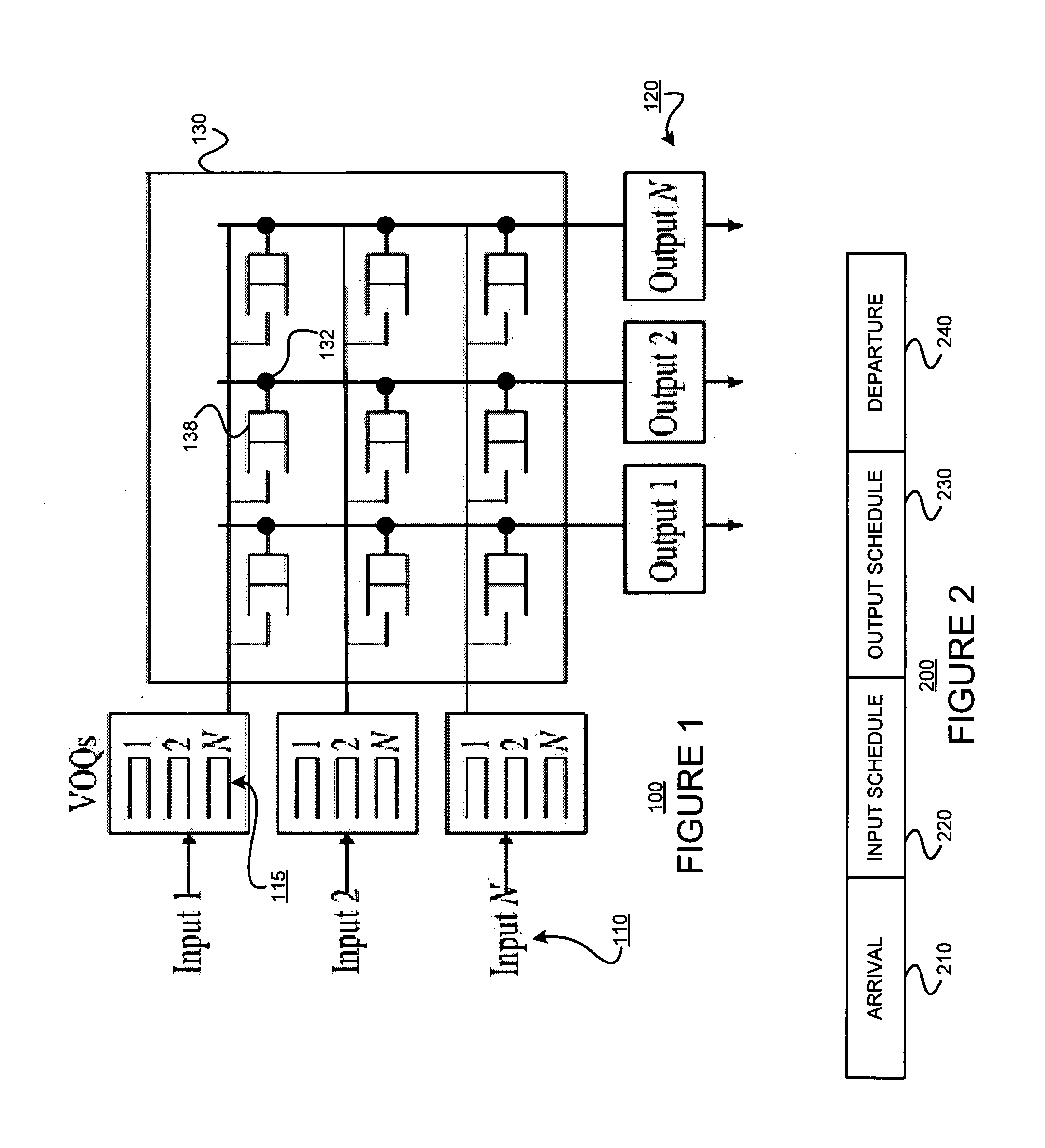

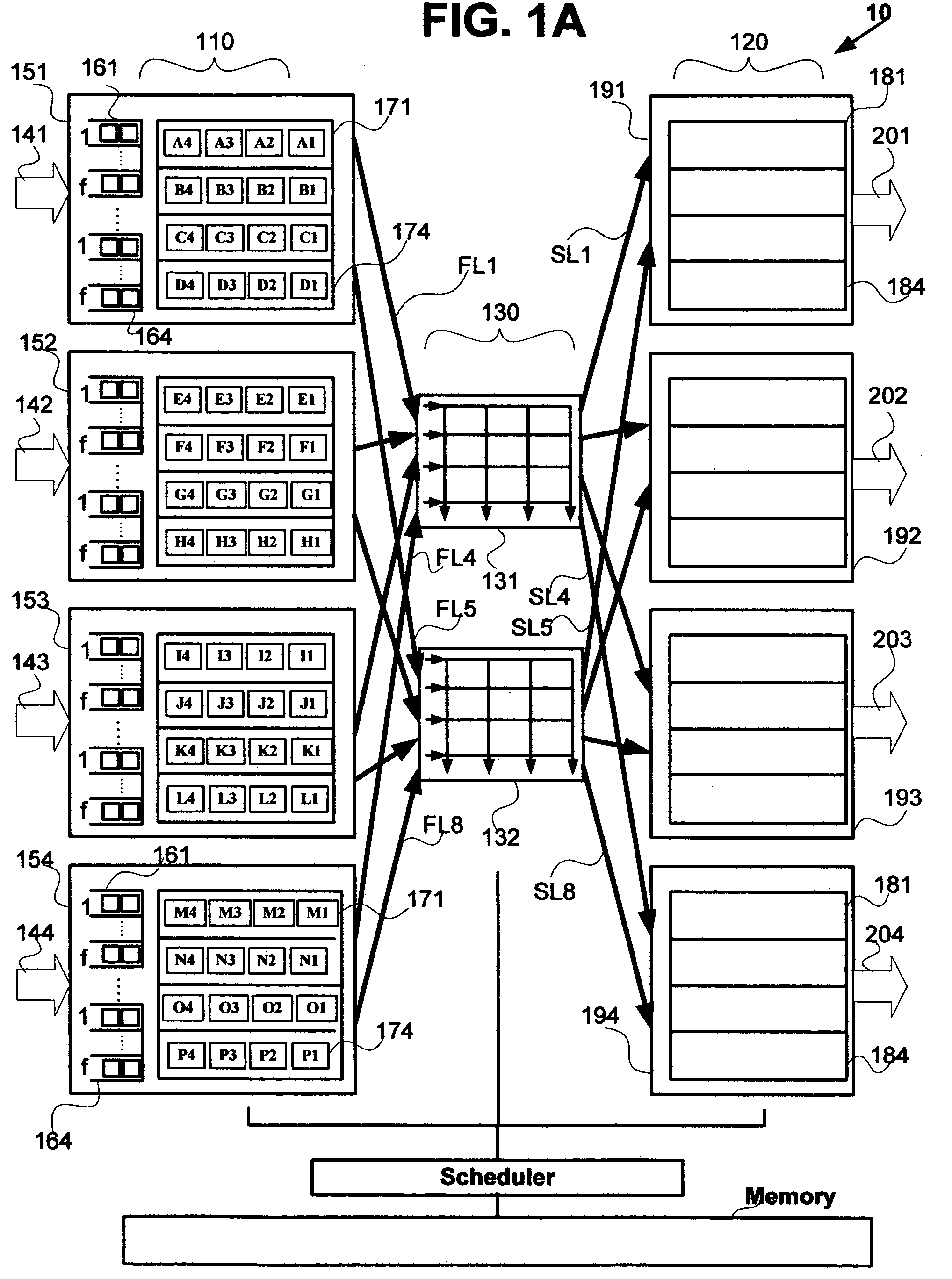

RRGS-round-robin greedy scheduling for input/output terabit switches

Inactive | US6618379B1 | Easy to use | Improve performance | Time-division multiplex | Loop networks | Crossbar switch | Optimal scheduling

A novel protocol for scheduling of packets in high-speed cell based switches is provided. The switch is assumed to use a logical cross-bar fabric with input buffers. The scheduler may be used in optical as well as electronic switches with terabit capacity. The proposed round-robin greedy scheduling (RRGS) achieves optimal scheduling at terabit throughput, using a pipeline technique. The pipeline approach avoids the need for internal speedup of the switching fabric to achieve high utilization.

A method for determining a time slot in an N×N crossbar switch for a round robin greedy scheduling protocol, comprising N logical queues corresponding to N output ports, the input for the protocol being a state of all the input-output queues and the output of the protocol being a schedule, the method comprising: choosing the input corresponding to i=(constant-k-1) mod N; stopping if there are no more inputs, otherwise choosing the next input in a round robin fashion determined by i=(i+1) mod N; choosing an output j such that the pair (i,j) belongs to the set C={(i,j) | there is at least one packet from i to j}, if such a pair (i,j) exists; removing i from the set of inputs and repeating the steps if the pair (i,j) does not exist; removing i from the set of inputs and j from the set of outputs; and adding the pair (i,j) to the schedule and repeating the steps.

Owner:NEC CORP
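The greedy matching described in the RRGS abstract can be sketched for a single time slot as follows. This is a simplified illustration, not the patented method: the starting input is passed in directly rather than derived from the slot index, the tie-break among eligible outputs (lowest index first) is an assumption, and the pipelining across time slots is not modelled. The name `greedy_round_robin_slot` is hypothetical.

```python
def greedy_round_robin_slot(queues, start):
    """Build a schedule for one time slot.

    queues[i][j] is the number of packets waiting at input i for output j.
    Inputs are visited in round-robin order beginning at `start`; each input
    greedily takes a still-free output for which it has at least one packet.
    """
    n = len(queues)
    free_outputs = set(range(n))
    schedule = []
    for step in range(n):
        i = (start + step) % n  # next input in round-robin order
        # Assumption: pick the lowest-numbered free output with a waiting packet.
        for j in sorted(free_outputs):
            if queues[i][j] > 0:
                schedule.append((i, j))
                free_outputs.remove(j)  # each output is matched at most once
                break
    return schedule


if __name__ == "__main__":
    demo_queues = [
        [1, 1, 0],
        [1, 0, 0],
        [0, 1, 1],
    ]
    print(greedy_round_robin_slot(demo_queues, start=0))
    print(greedy_round_robin_slot(demo_queues, start=1))
```

Rotating `start` from slot to slot changes which input gets first choice, which is what gives each input a turn at priority in a round-robin scheme.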

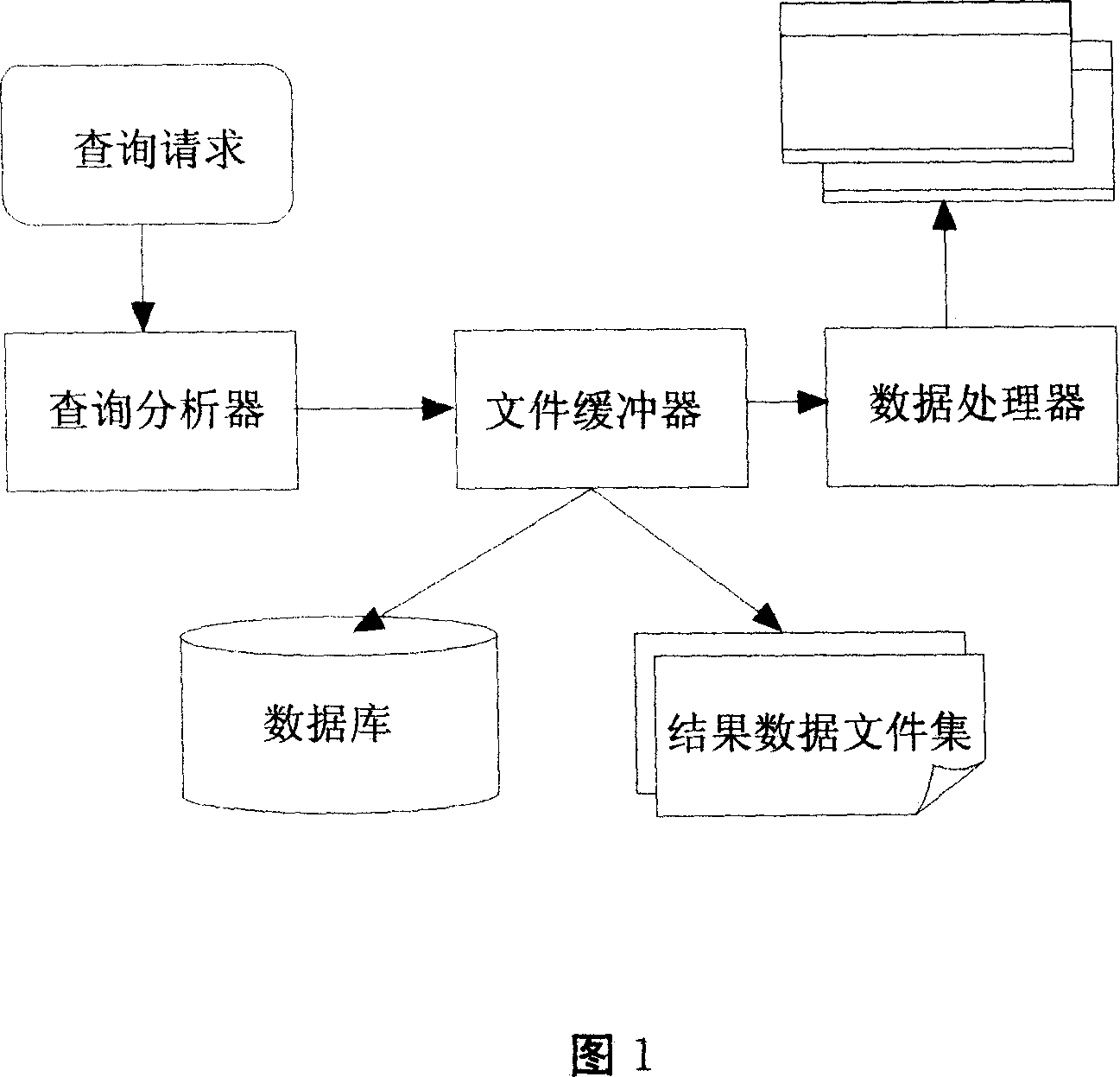

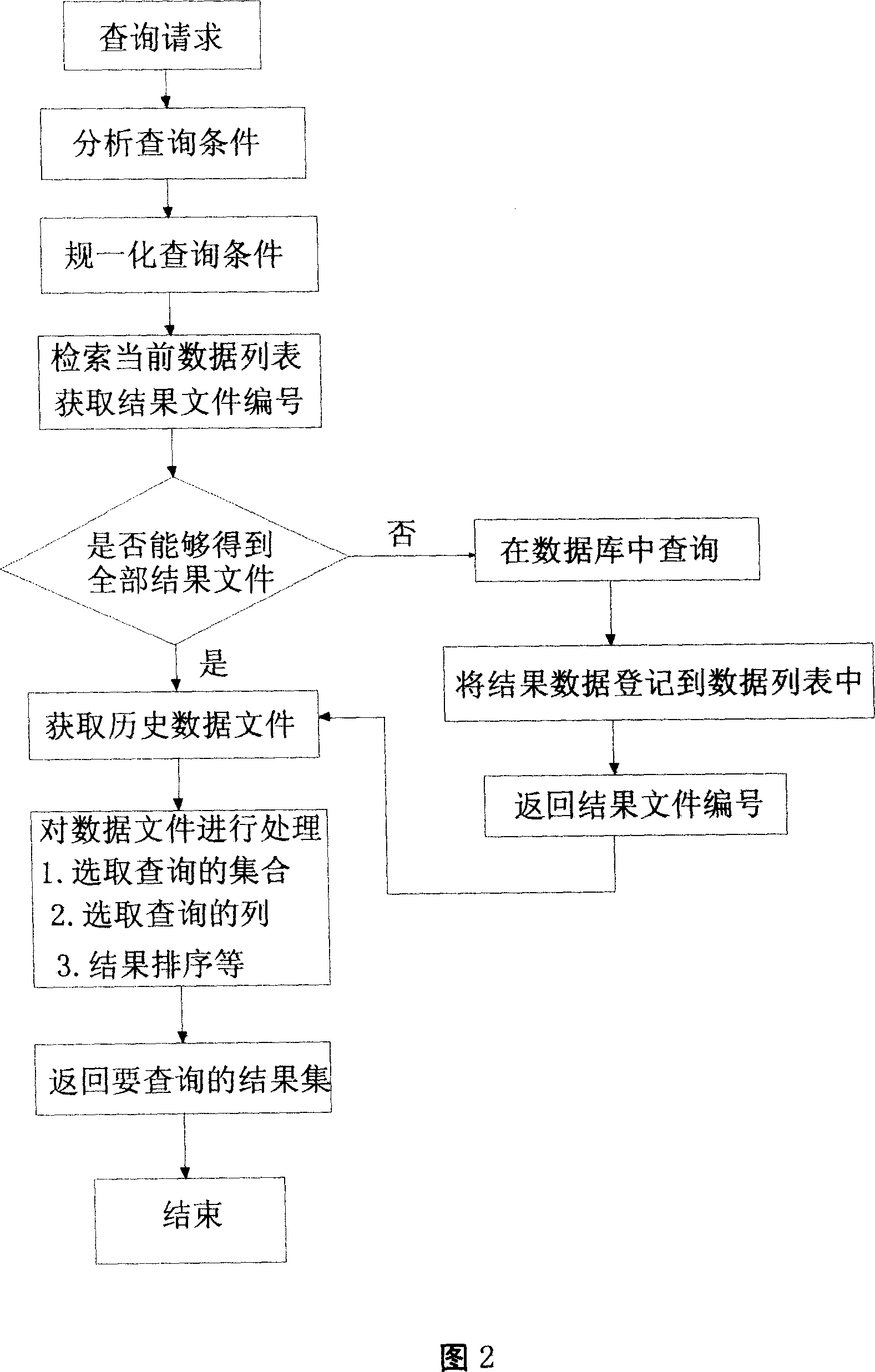

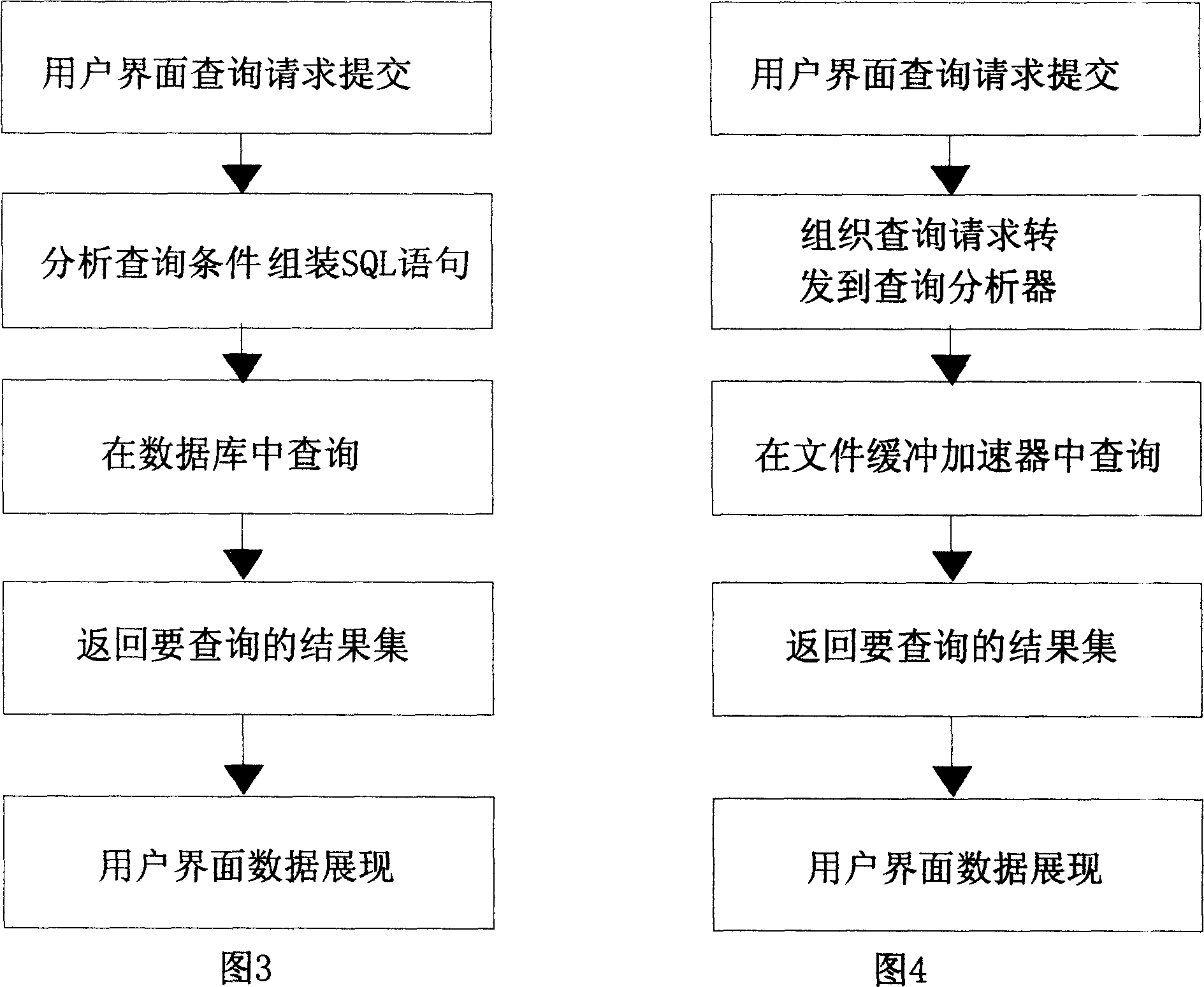

Data speedup query method based on file system caching

Inactive | CN101110074A | Improve hit rate | Increase in inquiries | Special data processing applications | Query analysis | File system

The invention relates to accelerating data queries in a query system. It provides an accelerated data query method based on a file system cache, exploiting two features of the workload: saved historical data does not change, and the same data is queried frequently. The invention saves historical query results and uses them as cached data for later queries, so as to accelerate the query. Upon receiving a query request from the user, the system first passes it to the query analyzer, whose principal function is to analyze and plan the query condition. The request then passes through a file cache, where the system checks whether a cached data file satisfies the present query; if not, the query is carried out in the database and the final result is fed back to a data processor. The data processor then filters and sorts the data according to the final query demand and returns the result file to the upper-layer application system. Therefore, without additional investment in hardware, this query method can greatly reduce the load on the database and system resources, and so improve the query speed.

Owner:INSPUR TIANYUAN COMM INFORMATION SYST CO LTD
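The cache-first flow in the abstract above can be sketched as follows, under several assumptions: query conditions are JSON-serializable dictionaries, each cached result is stored as one JSON file named by a hash of the condition, and the database is represented by a caller-supplied function. The class `FileCachedQuery` and its members are hypothetical names; the query analyzer and the final filter-and-sort step are omitted.

```python
import hashlib
import json
import tempfile
from pathlib import Path


class FileCachedQuery:
    """Answer repeated queries from files on disk before falling back to the database."""

    def __init__(self, cache_dir, run_in_database):
        self.cache_dir = Path(cache_dir)
        self.cache_dir.mkdir(parents=True, exist_ok=True)
        self.run_in_database = run_in_database  # stand-in for the real database call
        self.database_calls = 0

    def _cache_path(self, condition):
        # Canonical JSON so that equal conditions map to the same file name.
        canonical = json.dumps(condition, sort_keys=True).encode("utf-8")
        return self.cache_dir / (hashlib.sha256(canonical).hexdigest() + ".json")

    def query(self, condition):
        path = self._cache_path(condition)
        if path.exists():  # cache hit: historical data is assumed not to change
            return json.loads(path.read_text(encoding="utf-8"))
        self.database_calls += 1  # cache miss: go to the database
        rows = self.run_in_database(condition)
        path.write_text(json.dumps(rows), encoding="utf-8")
        return rows


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        cached = FileCachedQuery(tmp, lambda c: [{"day": c["day"], "total": 3}])
        cached.query({"day": "2007-01-01"})
        cached.query({"day": "2007-01-01"})
        print(cached.database_calls)  # 1
```

Because the cache never invalidates entries, this sketch only suits data that is written once and then read, which matches the "unchanged historical data" premise of the abstract.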

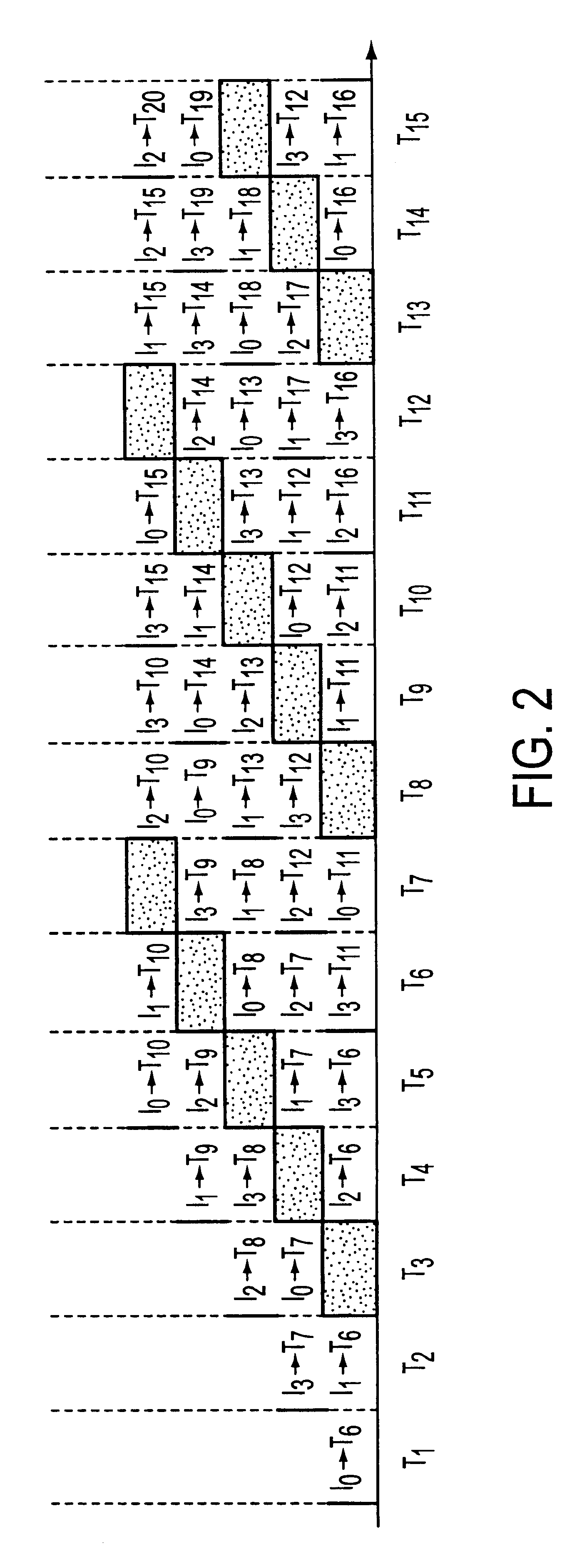

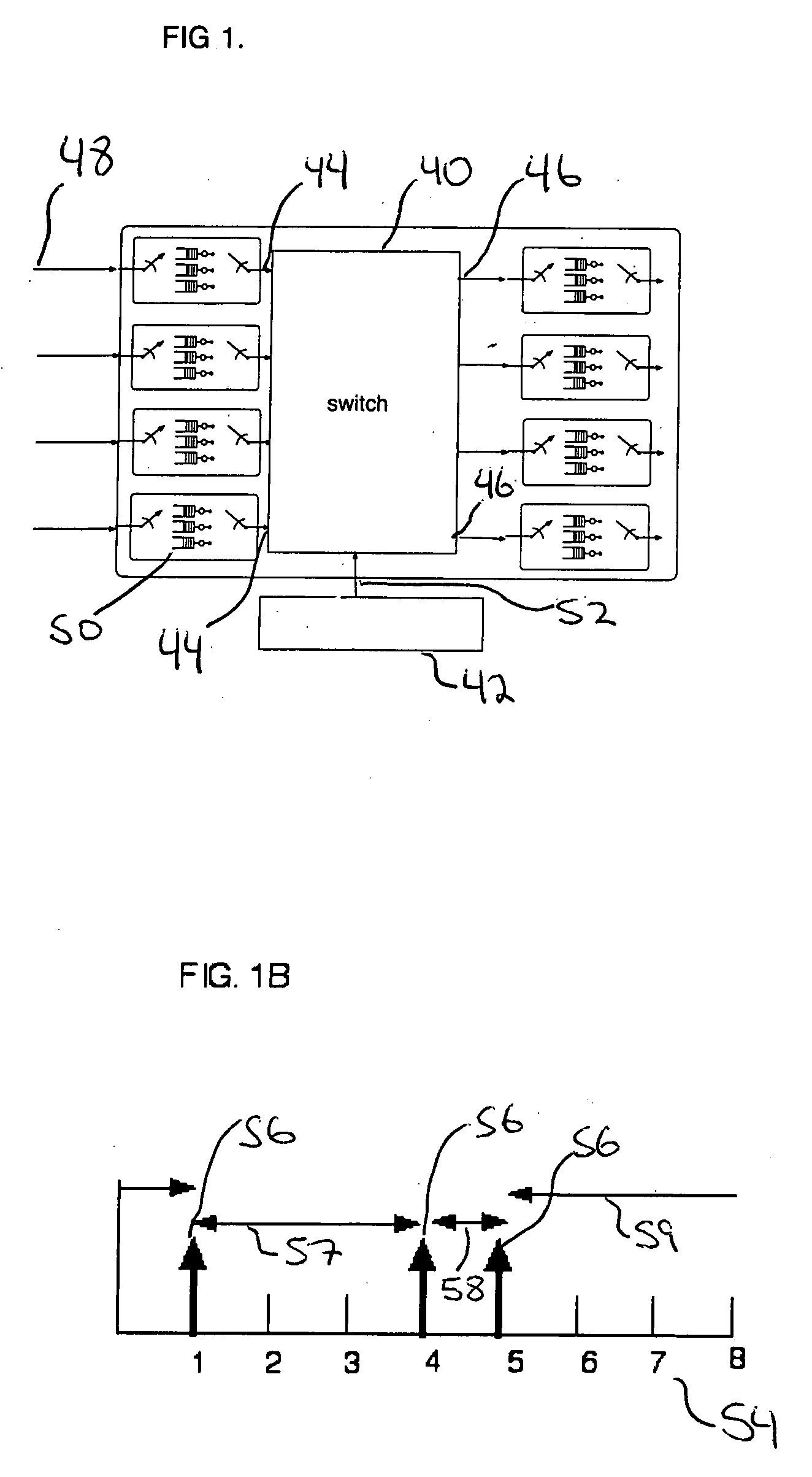

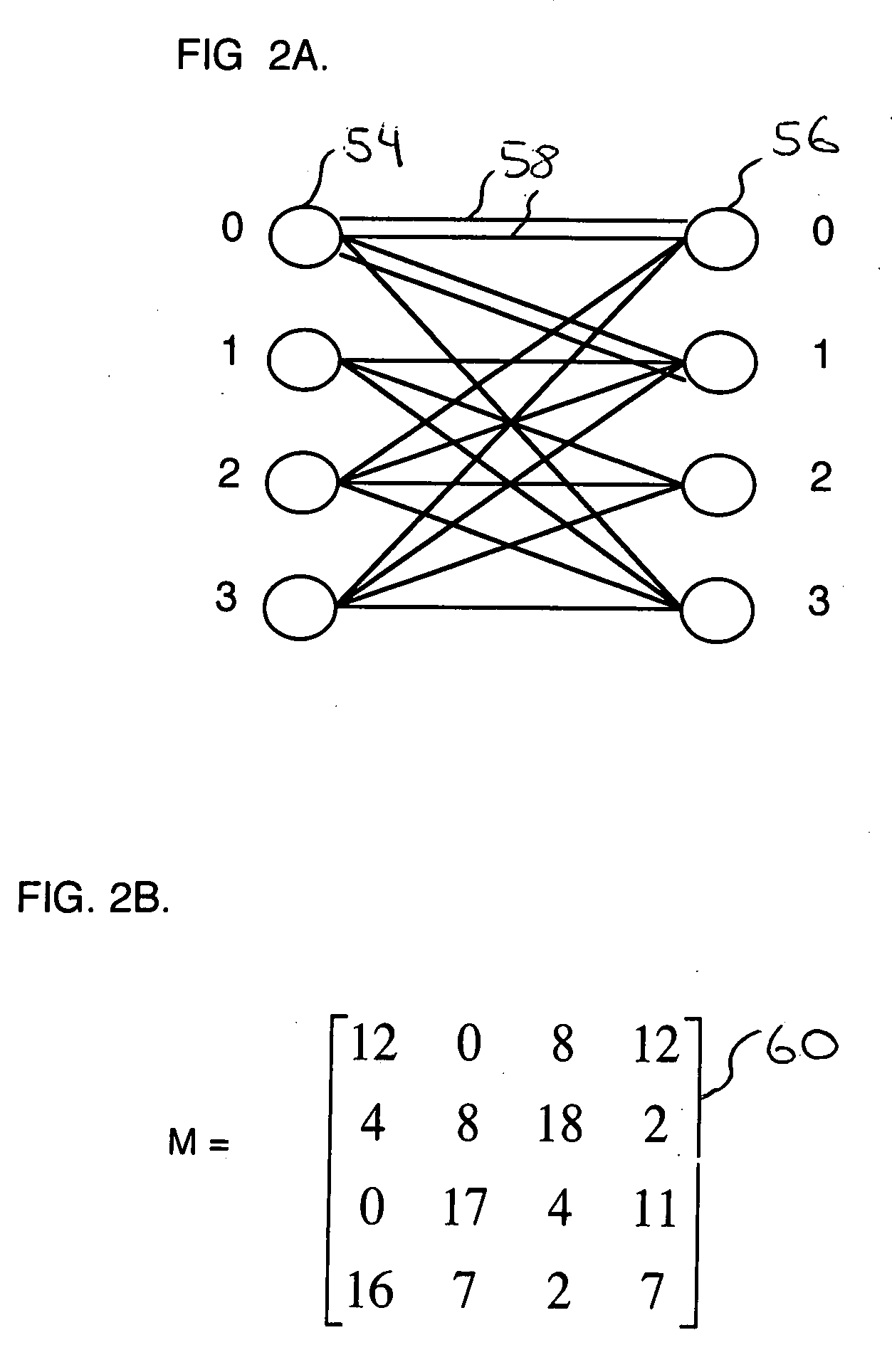

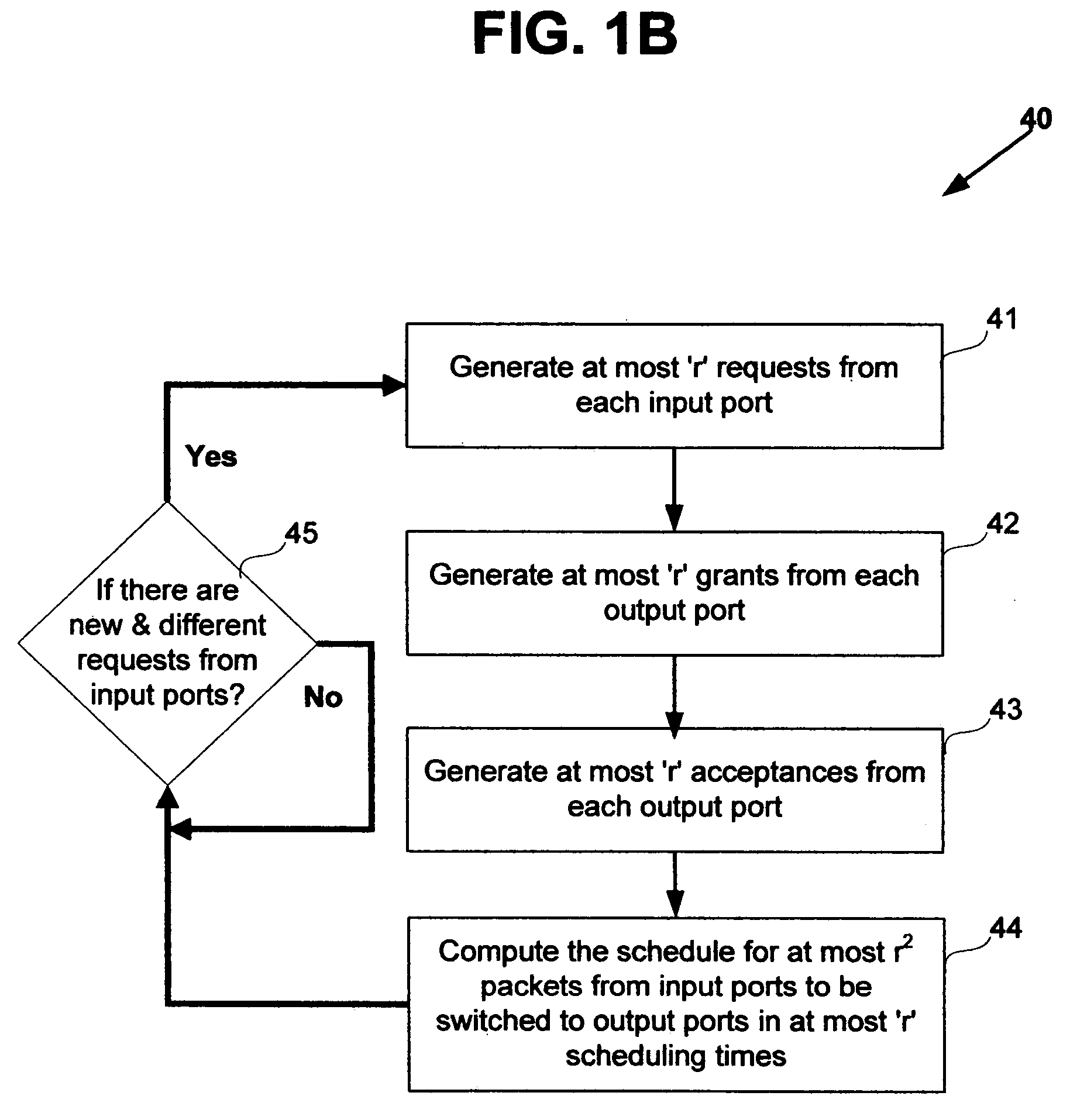

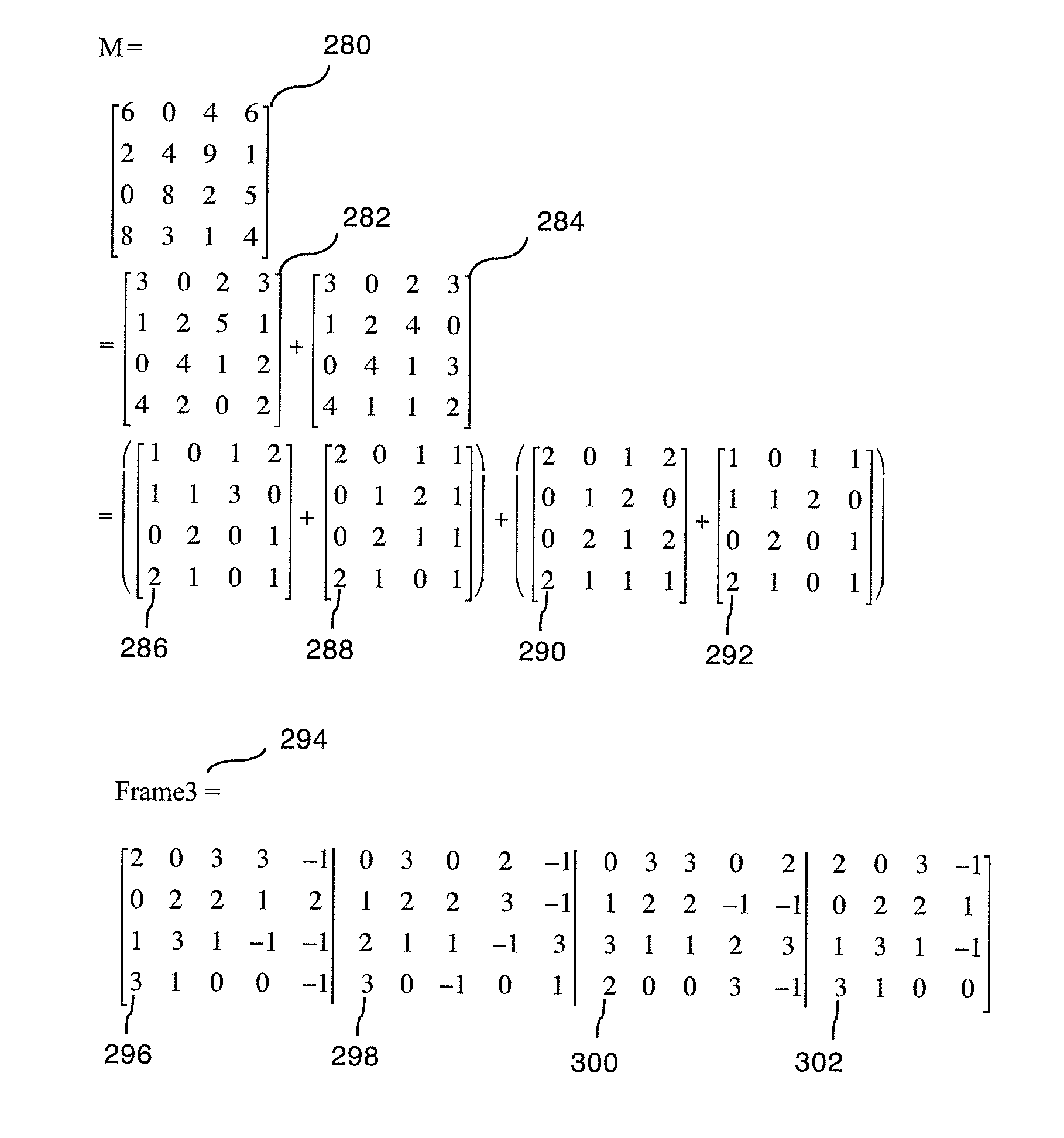

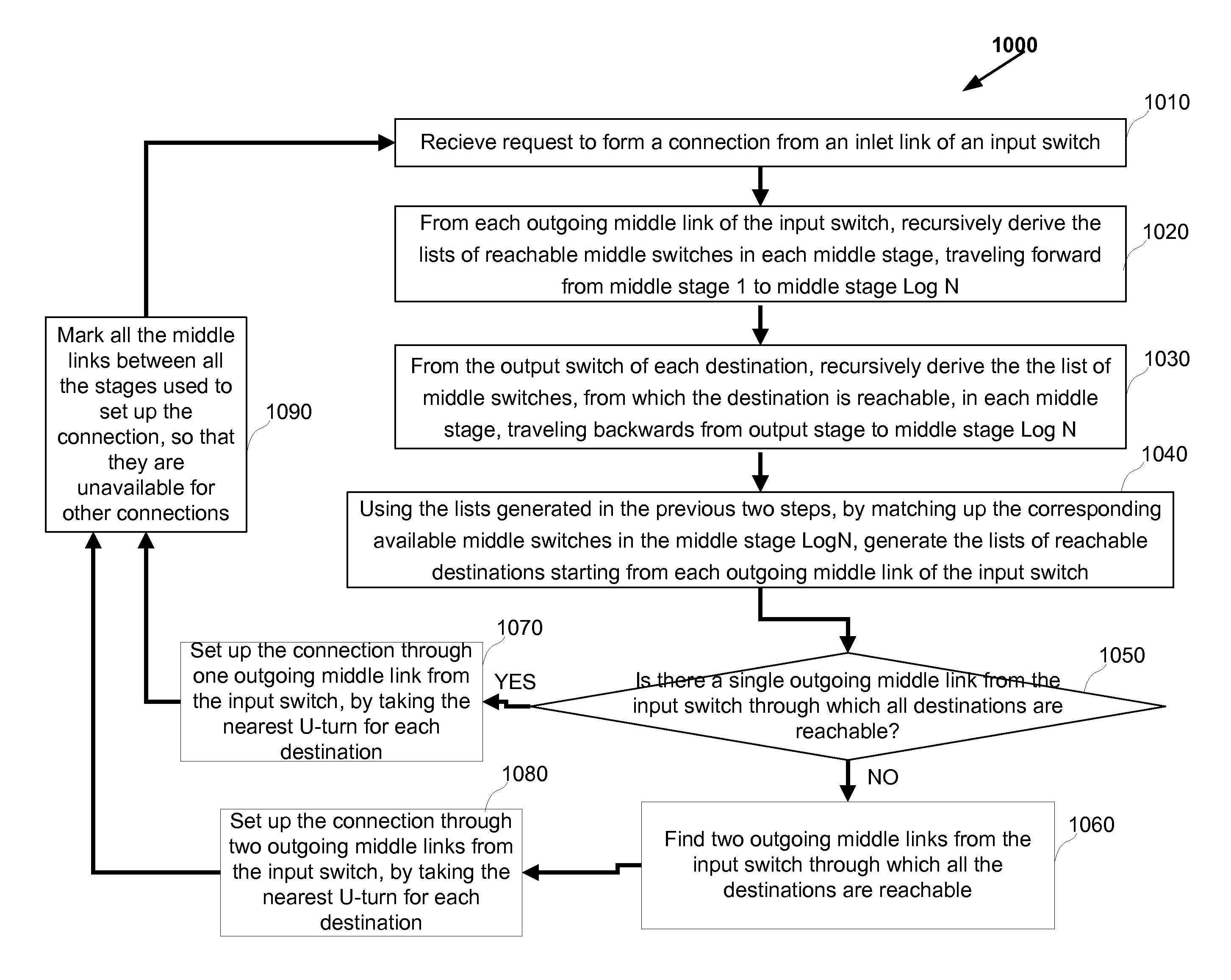

Method and apparatus to schedule packets through a crossbar switch with delay guarantees

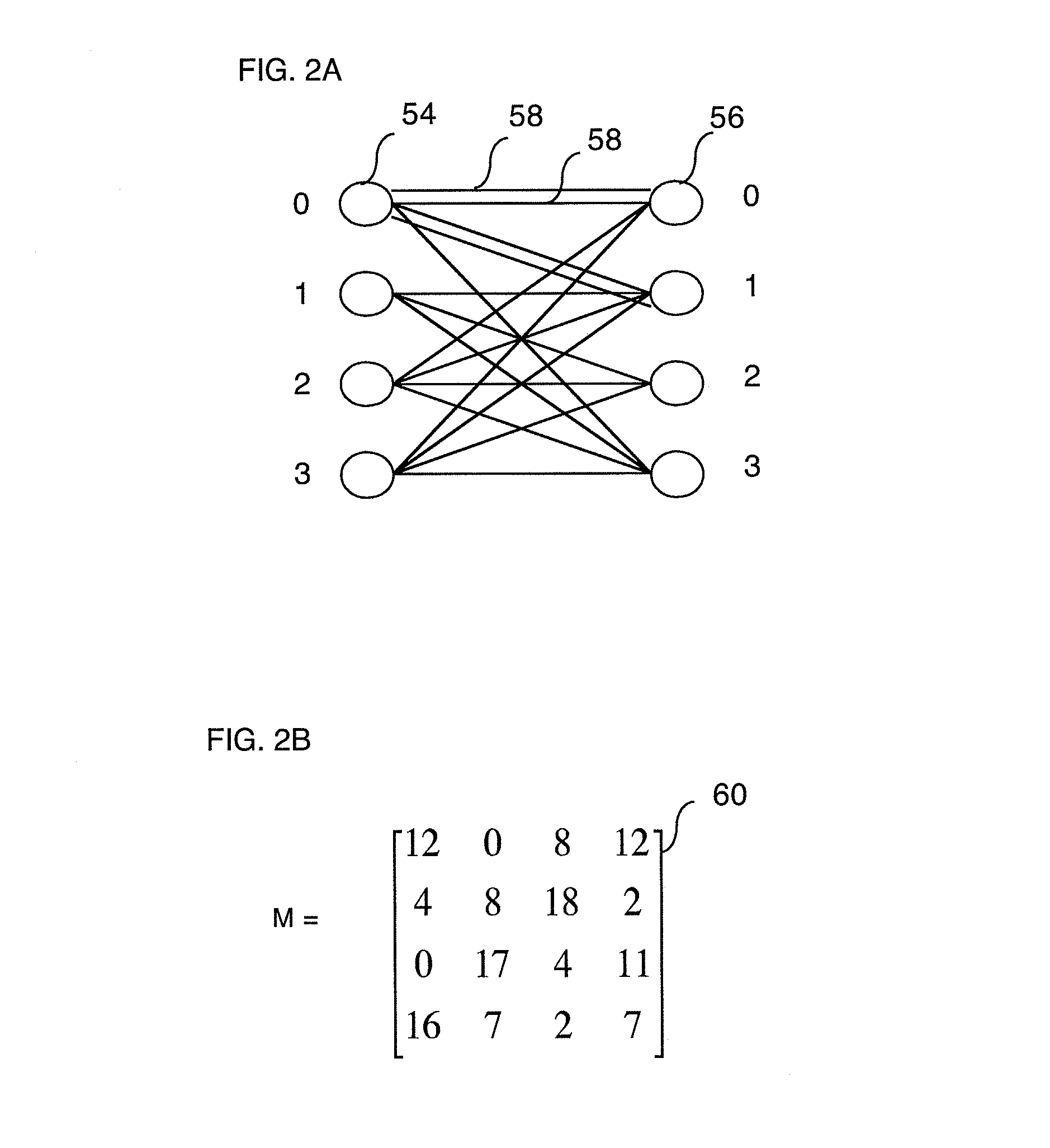

Active | US20070280261A1 | Minimize delay jitter | Meet bandwidth requirements | Multiplex system selection arrangements | Data switching by path configuration | Crossbar switch | Differentiated services

A method for scheduling cell transmissions through a switch with rate and delay guarantees and with low jitter is proposed. The method applies to a classic input-buffered N×N crossbar switch without speedup. The time axis is divided into frames each containing F time-slots. An N×N traffic rate matrix specifies a quantized guaranteed traffic rate from each input port to each output port. The traffic rate matrix is transformed into a permutation with NF elements which is decomposed into F permutations of N elements using a recursive and fair decomposition method. Each permutation is used to configure the crossbar switch for one time-slot within a frame of size F time-slots, and all F permutations result in a Frame Schedule. In the frame schedule, the expected Inter-Departure Time (IDT) between cells in a flow equals the Ideal IDT and the delay jitter is bounded and small. For fixed frame size F, an individual flow can often be scheduled in O(log N) steps, while a complete reconfiguration requires O(N log N) steps when implemented in a serial processor. An RSVP or Differentiated Services-like algorithm can be used to reserve bandwidth and buffer space in an IP-router, an ATM switch or MPLS switch during a connection setup phase, and the proposed method can be used to schedule traffic in each router or switch. Best-effort traffic can be scheduled using any existing dynamic scheduling algorithm to fill the remaining unused switch capacity within each Frame. The scheduling algorithm also supports multicast traffic.

Owner:SZYMANSKI TED HENRYK

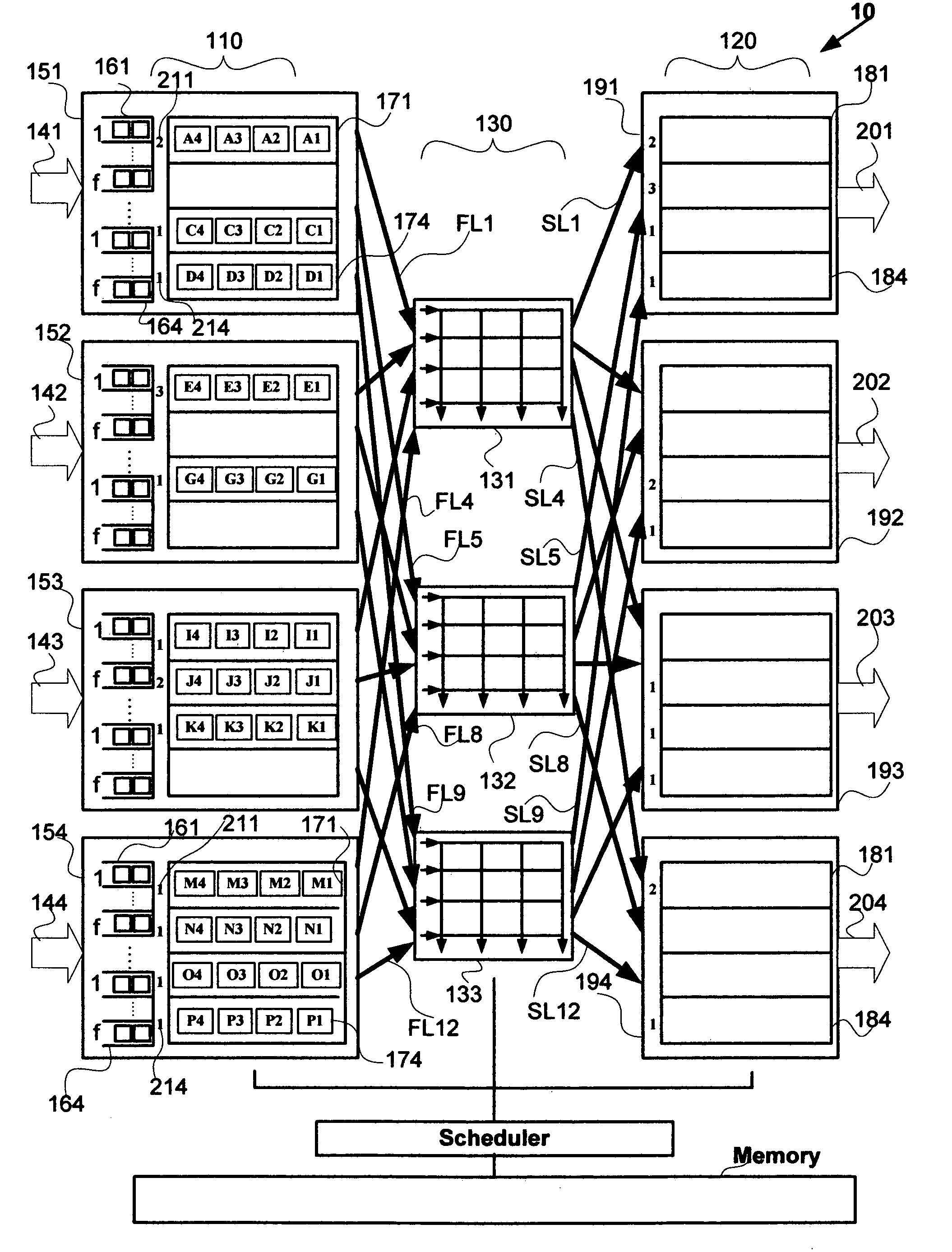

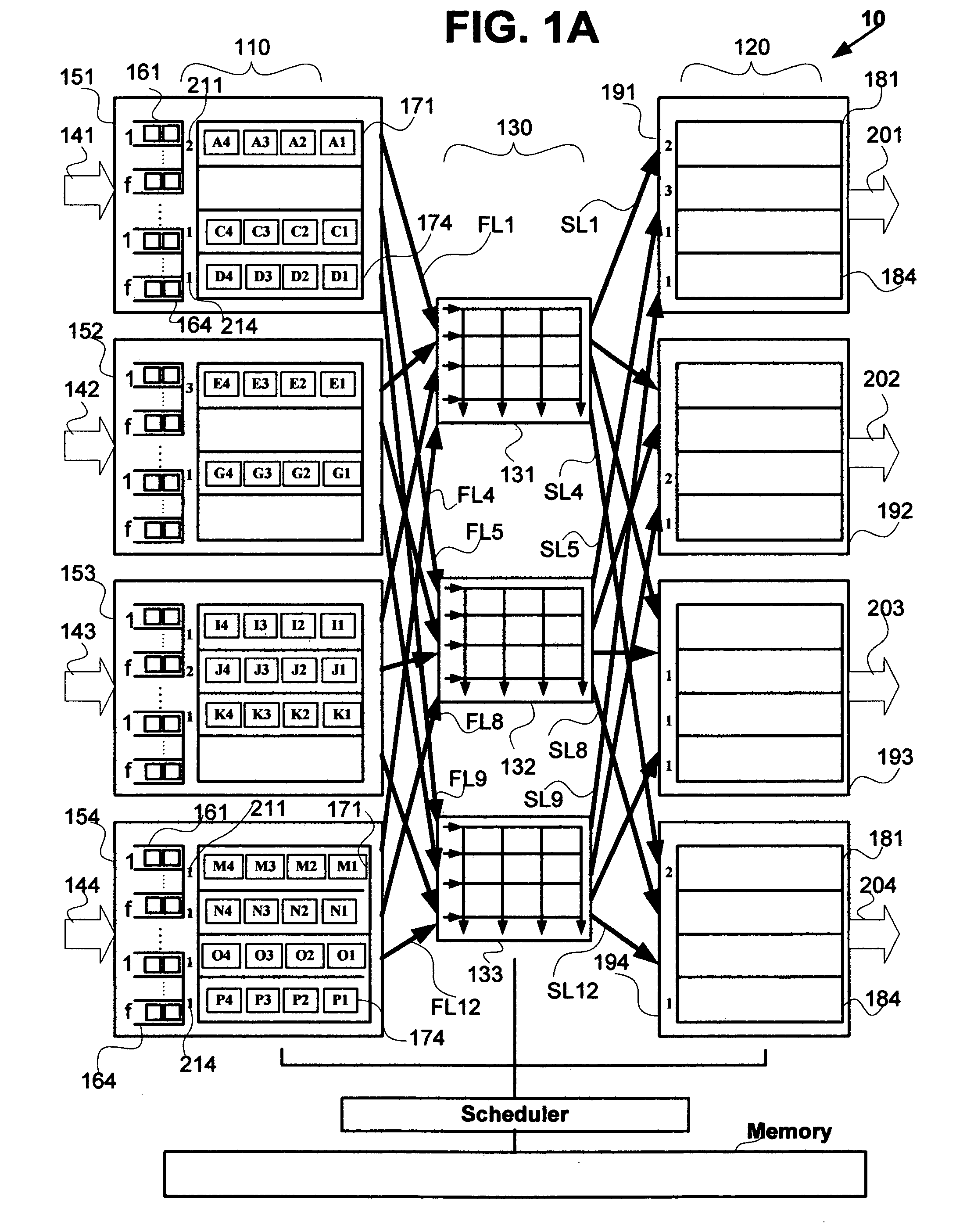

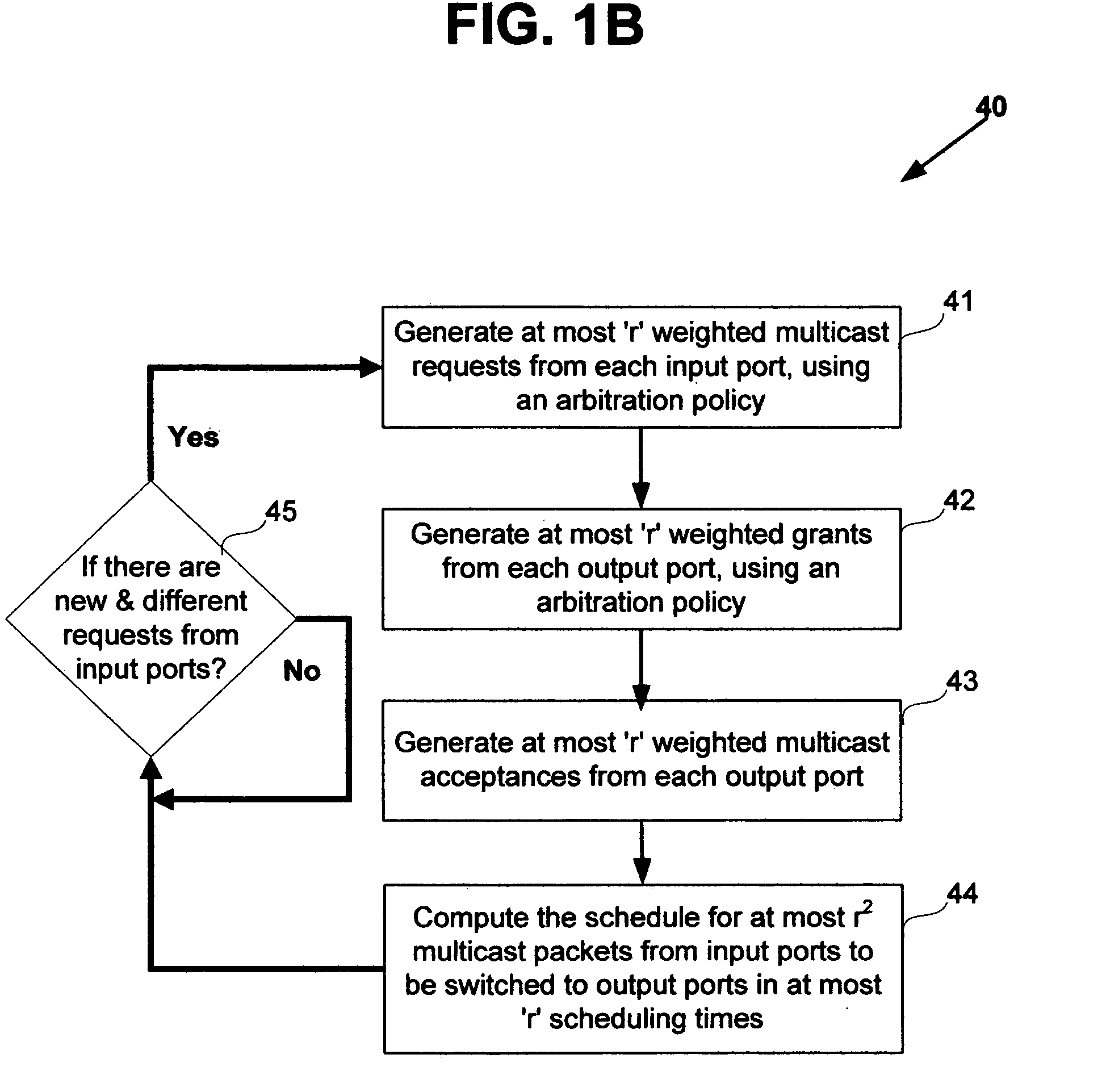

Nonblocking and deterministic multirate multicast packet scheduling

Inactive | US20070053356A1 | Guaranteed bandwidth | Guaranteed Latency | Data switching by path configuration | Switching time | Multi rate

A system for scheduling multirate multicast packets through an interconnection network having a plurality of input ports, a plurality of output ports, and a plurality of input queues, comprising multirate multicast packets with rate weight, at each input port is operated in nonblocking manner in accordance with the invention by scheduling corresponding to the packet rate weight, at most as many packets equal to the number of input queues from each input port to each output port. The scheduling is performed so that each multicast packet is fan-out split through not more than two interconnection networks and not more than two switching times. The system is operated at 100% throughput, work conserving, fair, and yet deterministically thereby never congesting the output ports. The system performs arbitration in only one iteration, with mathematical minimum speedup in the interconnection network. The system operates with absolutely no packet reordering issues, no internal buffering of packets in the interconnection network, and hence in a truly cut-through and distributed manner. In another embodiment each output port also comprises a plurality of output queues and each packet is transferred corresponding to the packet rate weight, to an output queue in the destined output port in deterministic manner and without the requirement of segmentation and reassembly of packets even when the packets are of variable size. In one embodiment the scheduling is performed in strictly nonblocking manner with a speedup of at least three in the interconnection network. In another embodiment the scheduling is performed in rearrangeably nonblocking manner with a speedup of at least two in the interconnection network. The system also offers end to end guaranteed bandwidth and latency for multirate multicast packets from input ports to output ports. 
In all the embodiments, the interconnection network may be a crossbar network, shared memory network, clos network, hypercube network, or any internally nonblocking interconnection network or network of networks.

Owner:TEAK TECH

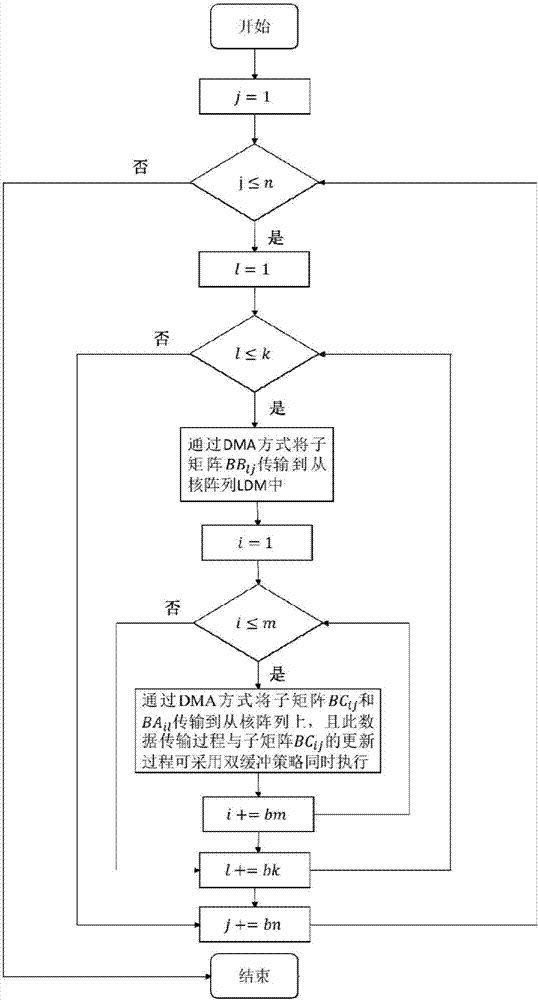

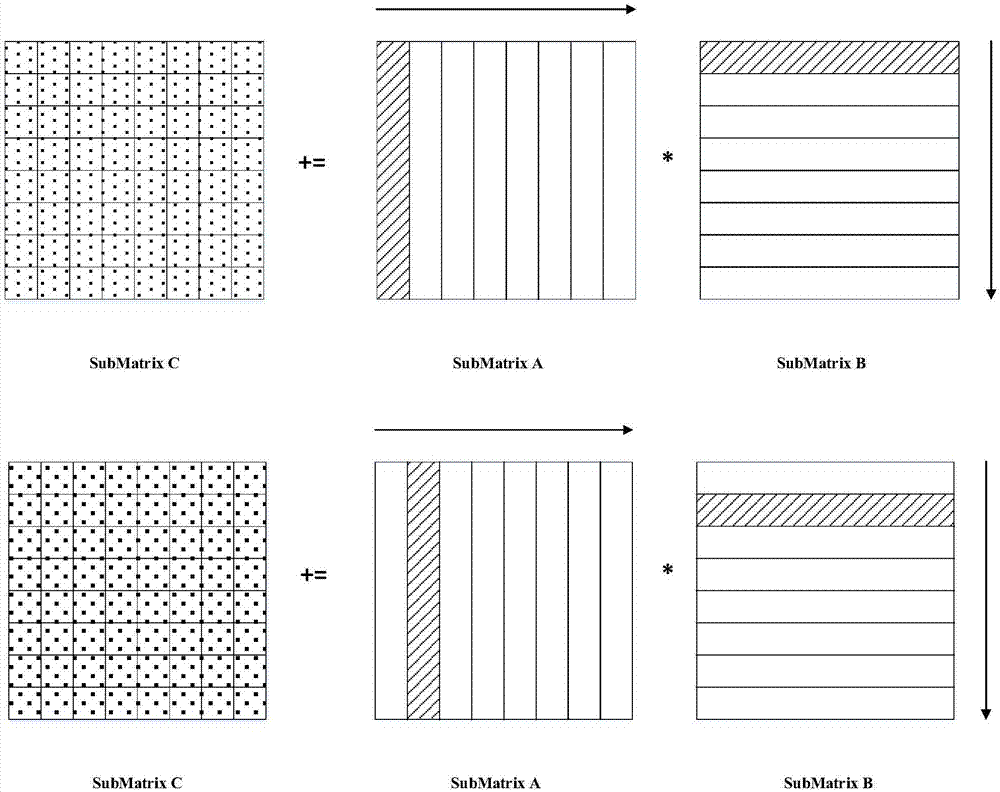

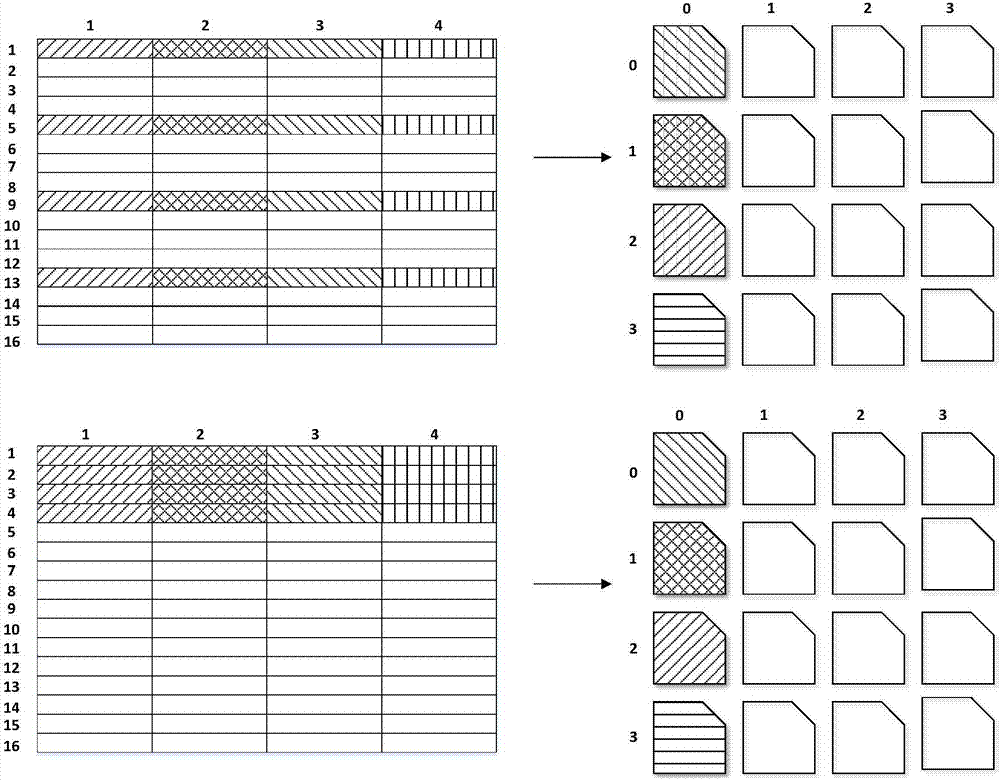

A GEMM (general matrix-matrix multiplication) high-performance realization method based on a domestic SW 26010 many-core CPU

Active | CN107168683A | Solve the problem that the computing power of slave cores cannot be fully utilized | Improve performance | Register arrangements | Concurrent instruction execution | Function optimization | Assembly line

The invention provides a GEMM (general matrix-matrix multiplication) high-performance realization method based on a domestic SW 26010 many-core CPU. For the domestic SW 26010 many-core processor, based on the platform characteristics of its storage structure, memory access, hardware pipelines and register-level communication mechanism, the matrix partitioning and inter-core data mapping method is optimized and a top-down three-level partitioned parallel block matrix multiplication algorithm is designed; a data sharing method for slave-core computing resources is designed based on the register-level communication mechanism, and a double-buffering strategy that overlaps computation with memory access is designed using the master-slave core asynchronous DMA data transmission mechanism; for a single slave core, a loop unrolling strategy and a software pipelining arrangement are designed; function optimization is achieved using an efficient register partitioning mode and SIMD vectorization with multiply-add instructions. Compared with the single-core open-source BLAS math library GotoBLAS, the high-performance GEMM achieves an average speed-up ratio of 227.94 and a highest speed-up ratio of 296.93.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI +1
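Block matrix multiplication, the basic idea behind the partitioning in the GEMM abstract above, can be illustrated with a minimal single-level sketch in plain Python. It is an assumption-laden simplification: the patent describes three levels of partitioning mapped onto master and slave cores with DMA double buffering and SIMD, none of which is modelled here. The name `blocked_gemm` and the `block` parameter are hypothetical.

```python
def blocked_gemm(a, b, block=2):
    """Compute C = A * B by iterating over block-by-block tiles.

    a is an m x k matrix and b is a k x n matrix, both as lists of rows.
    Tiling keeps each working set small, which is what lets a real
    implementation hold a tile in fast local memory while it is reused.
    """
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions do not match")
    n = len(b[0])
    c = [[0.0] * n for _ in range(m)]
    for i0 in range(0, m, block):          # tile rows of C
        for j0 in range(0, n, block):      # tile columns of C
            for k0 in range(0, k, block):  # accumulate over tiles of the inner dimension
                for i in range(i0, min(i0 + block, m)):
                    for kk in range(k0, min(k0 + block, k)):
                        a_ik = a[i][kk]
                        for j in range(j0, min(j0 + block, n)):
                            c[i][j] += a_ik * b[kk][j]
    return c


if __name__ == "__main__":
    a = [[1, 2, 3], [4, 5, 6]]
    b = [[7, 8], [9, 10], [11, 12]]
    print(blocked_gemm(a, b))  # [[58.0, 64.0], [139.0, 154.0]]
```

The `min(...)` bounds handle matrices whose dimensions are not multiples of the block size, so edge tiles are simply smaller.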

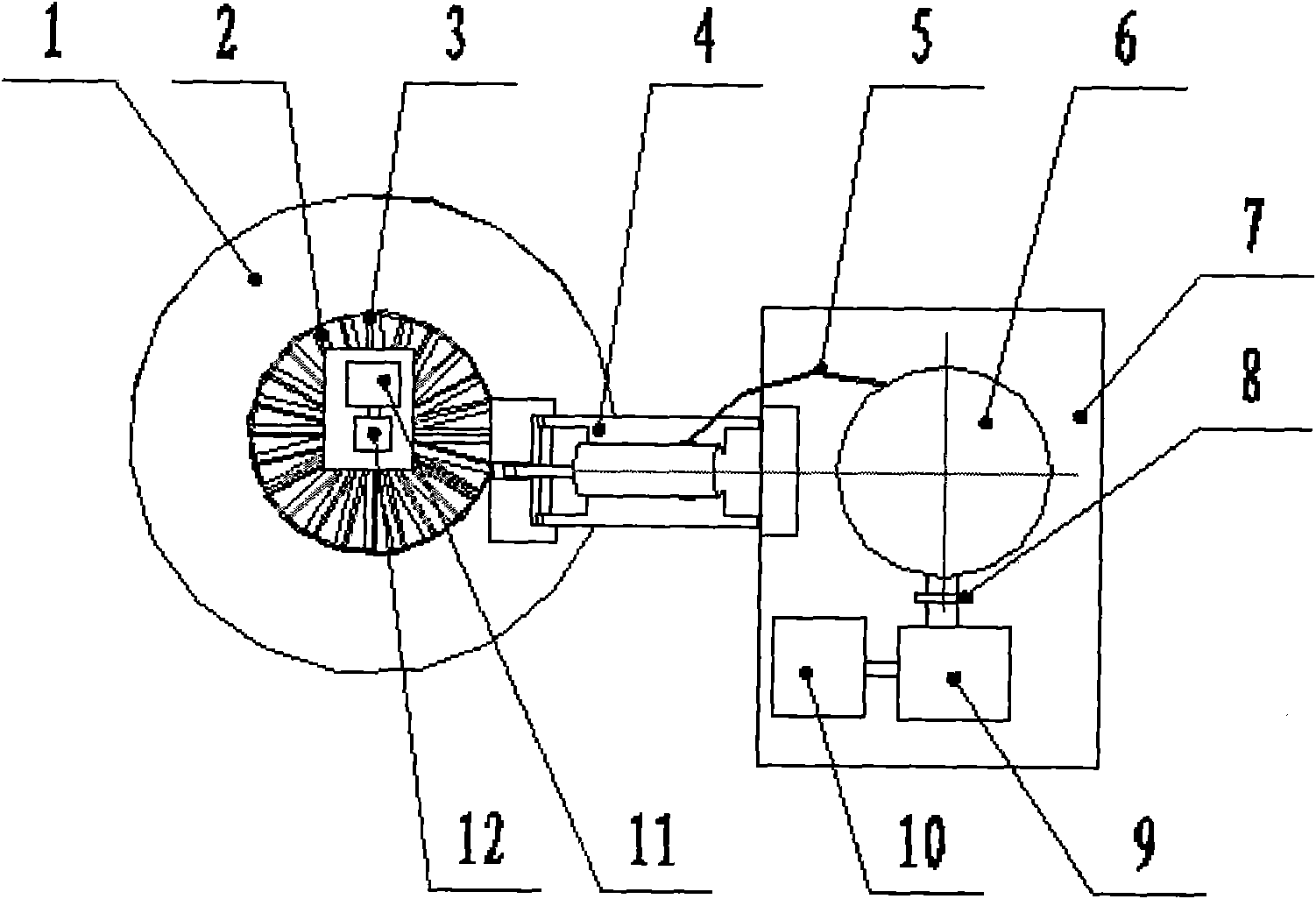

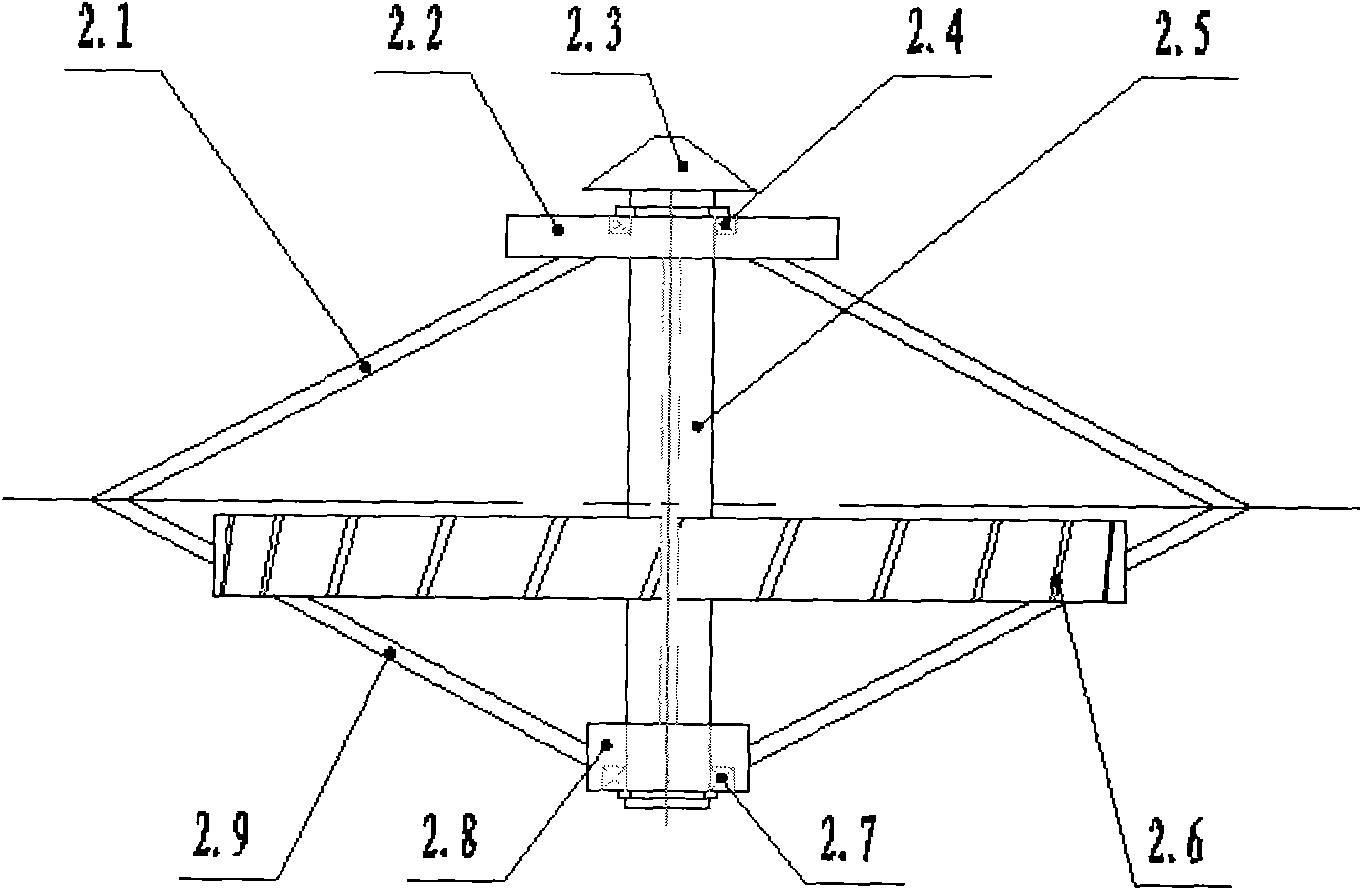

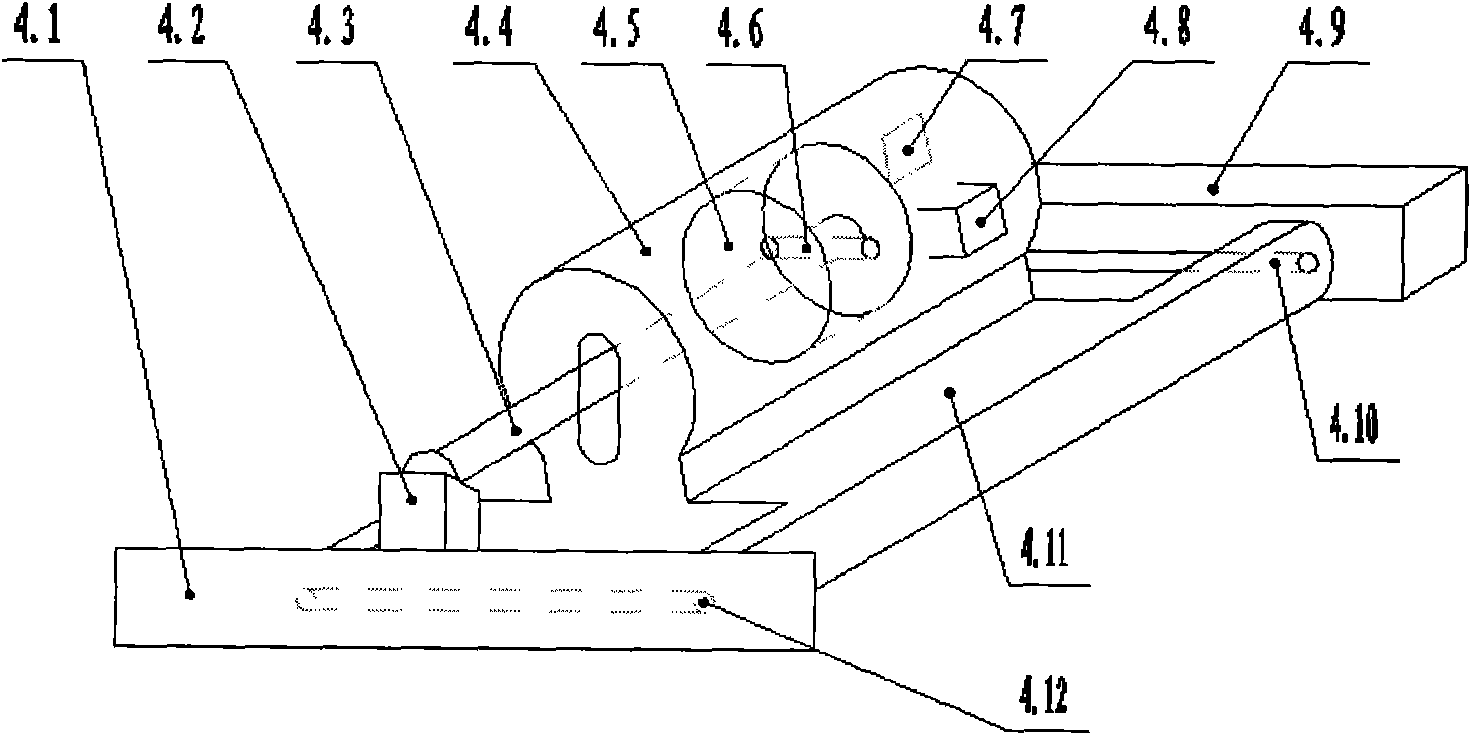

Composite type device utilizing ocean wave energy for generating electricity

Active | CN101614180A | Increase profit | Good power generation stability | Machines/engines | Engine components | Electricity | Impeller

The invention provides a composite type device utilizing ocean wave energy for generating electricity, comprising a first energy acquisition device, a second energy acquisition device and an electricity generation device, wherein the first energy acquisition device comprises a floating body, a vane wheel, a bracket, a speedup transmission device and a supporting platform; and the second energy acquisition device comprises an air cylinder, an air intake one-way valve, an air exhaust one-way valve, an air storage tank, a steam turbine, a fixed platform, etc. The invention is characterized in that: firstly, the floating body, on which the vane wheel is fixed, is arranged on the ocean-facing side. The movement of the waves propels the blades to rotate and drives an intermediate shaft of the vane wheel to rotate with them, which drives the generator through the speedup transmission device to generate electricity; secondly, when the floating body floats upwards, air is injected into the air cylinder by driving a connecting rod and a piston; and when the floating body falls, the air in the air cylinder is compressed into the air storage tank. When the air pressure in the air storage tank reaches a certain value, the pressure valve opens automatically and the air pushes the steam turbine to rotate, which in turn drives the generator to generate electrical energy. The electricity generation device applies two energy acquisition methods, making doubly efficient use of the ocean to generate electricity with good economic benefit.

Owner:ADVANCED MFG TECH CENT CHINA ACAD OF MASCH SCI & TECH

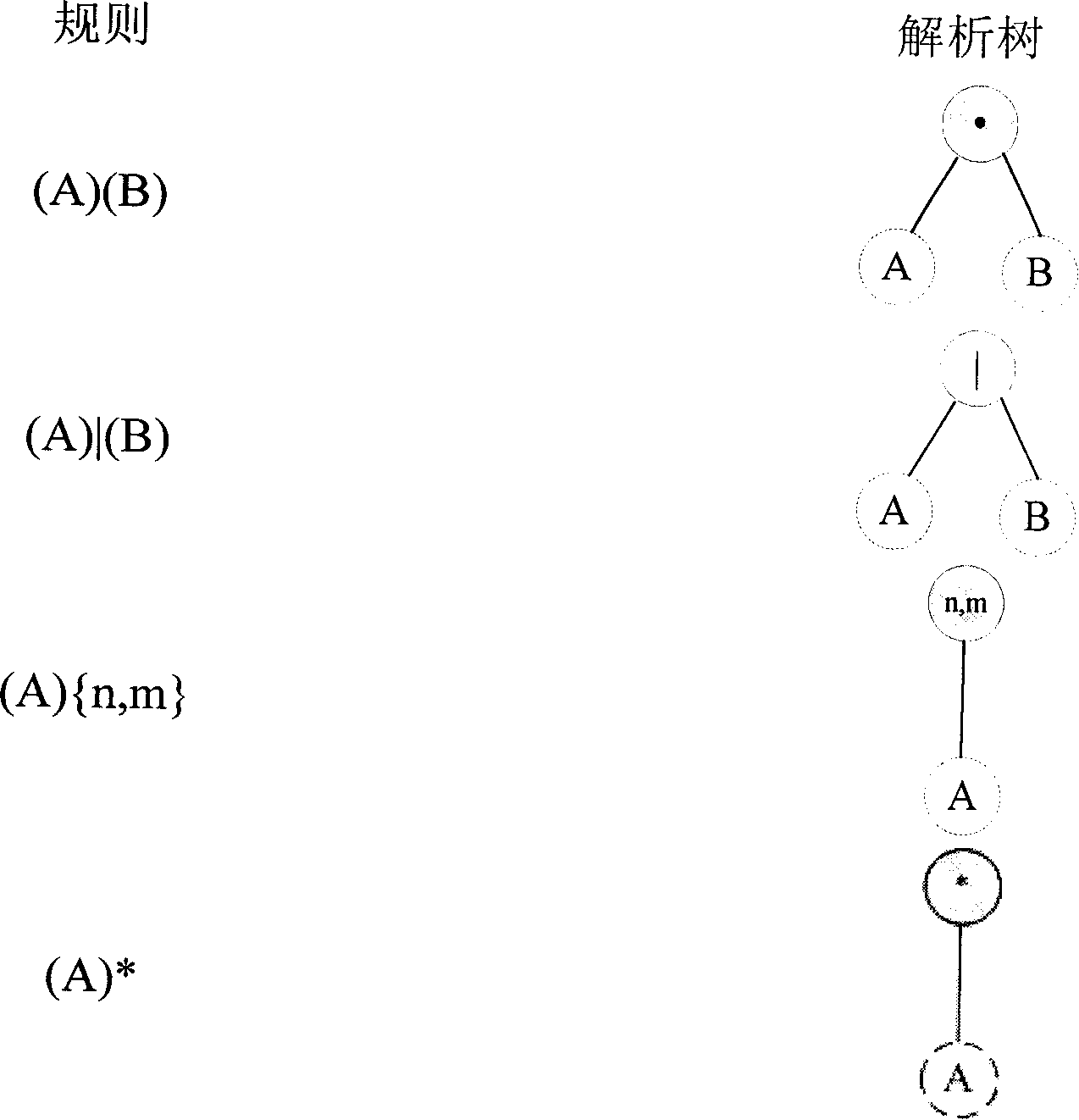

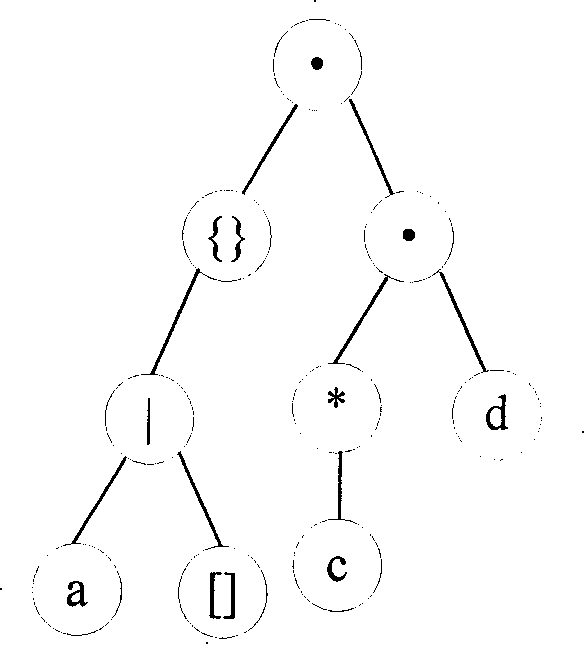

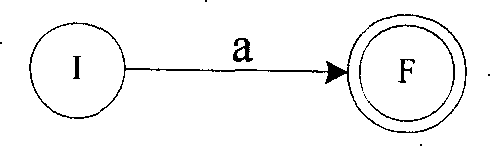

Method for speeding up regular expression matching based on a deterministic finite automaton with memory

Inactive | CN101201836A | Reduce development difficulty | Reduce time spent | Special data processing applications | Memory footprint | Pattern matching

The invention discloses a matching and accelerating method of a regular expression based on a deterministic finite automaton with memory, including a rule compiler of the regular expression and a pattern matching engine; the rule compiler of the regular expression firstly transforms the regular expression into an analytic tree, and then transforms the analytic tree into a nondeterministic finite automaton with memory and the deterministic finite automaton with memory respectively; the pattern matching engine can accelerate pattern matching by using the deterministic finite automaton with memory generated by the rule compiler. The invention has the advantages that: 1) by directly supporting repeat operators, the compiler does not need to unfold the repeat expression, thus the difficulty of the development of the compiler is greatly reduced and the memory occupation and the compile time of the compiler are decreased as well; 2) for the same reason, the volume of a rules database generated by the compiler can be reduced, so the cost and complexity of the pattern matching engine can be lowered.

Owner:ZHEJIANG UNIV

Low complexity scheduling algorithm for a buffered crossbar switch with 100% throughput

Inactive | US20080175259A1 | Data switching by path configuration | Store-and-forward switching systems | Crossbar switch | Round complexity

Scheduling techniques for use with buffered crossbar switches, without speedup, which can provide 100% throughput are described. Each input / output may keep track of the previously served VOQ / crosspoint buffer. The queue lengths of such VOQs and the queue lengths of VOQs corresponding to a uniform probability selection output (e.g., from a Hamiltonian walk schedule) are used to improve the schedule at each time slot.

Owner:POLYTECHNIC INSTITUTE OF NEW YORK UNIVERSITY

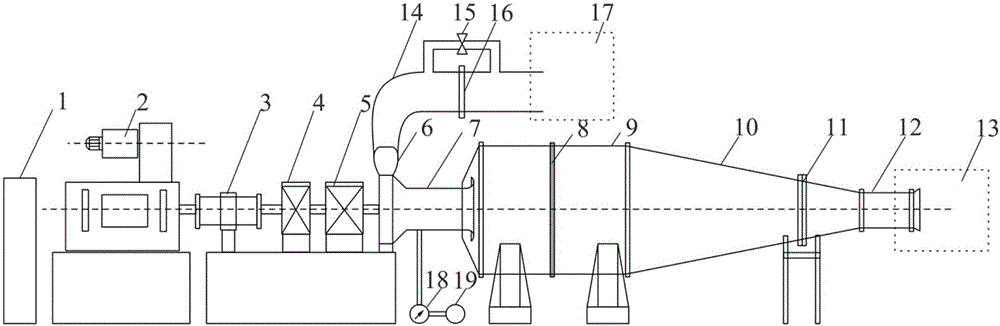

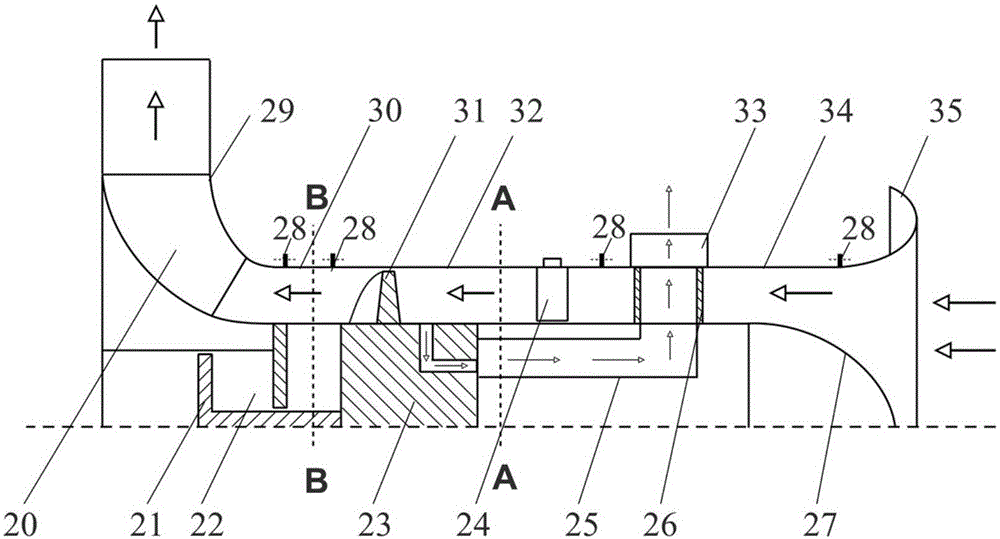

Rotary punching air compressor testing system

Active | CN106198034A | Realize measurement | Solve starting problems | Internal-combustion engine testing | Low speed | Pilot system

The invention discloses a rotary punching air compressor testing system comprising a power system, a torque-measuring apparatus, a speedup system, an air inlet system, an air exhaust system, a rotary punching air compressor test segment, an air discharging device, a vibration measuring device, an auxiliary system and a pneumatic parameter measuring and data collecting system; detailed performance test data of a rotary punching compression rotor which is a core part of a rotary punching air compressor can be obtained, wall surface static pressure can be measured dynamically, a flow field structure of a tip part of the rotary punching compression rotor can be obtained, and requirements for lubrication and safety in high speed conditions and requirements for starting in low speed conditions can be met.

Owner:DALIAN MARITIME UNIVERSITY

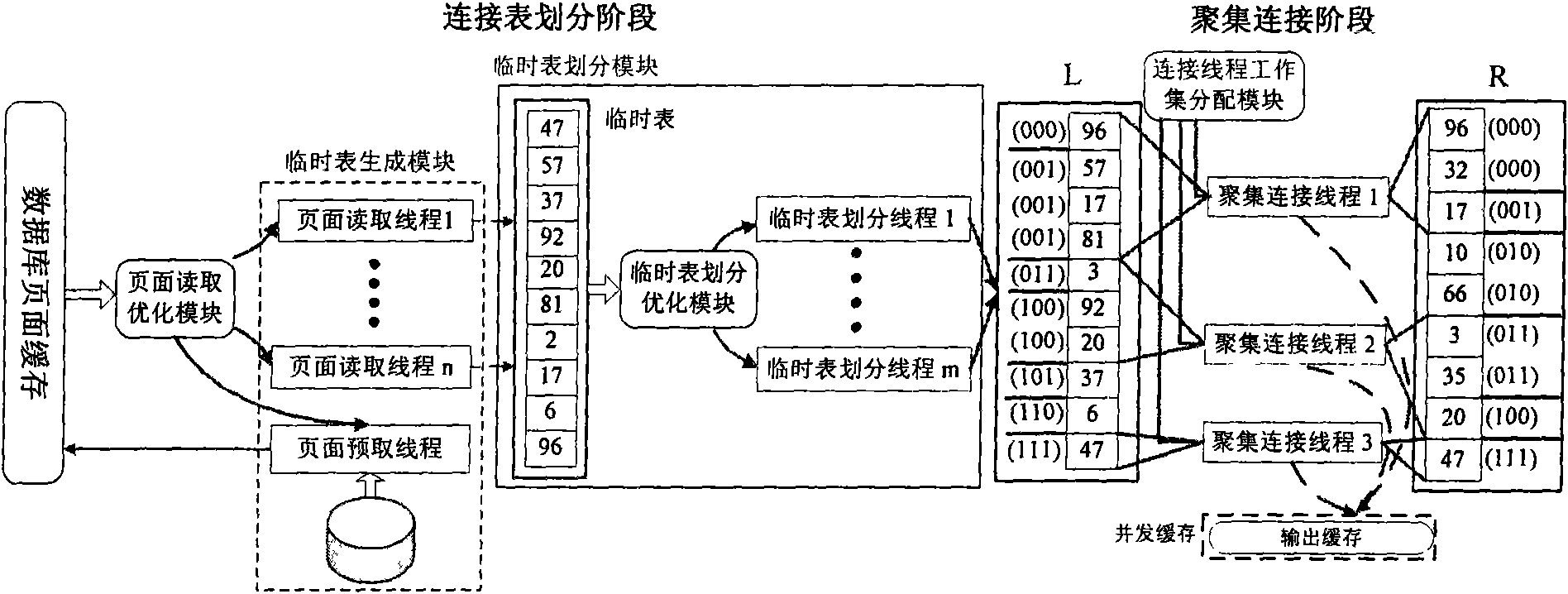

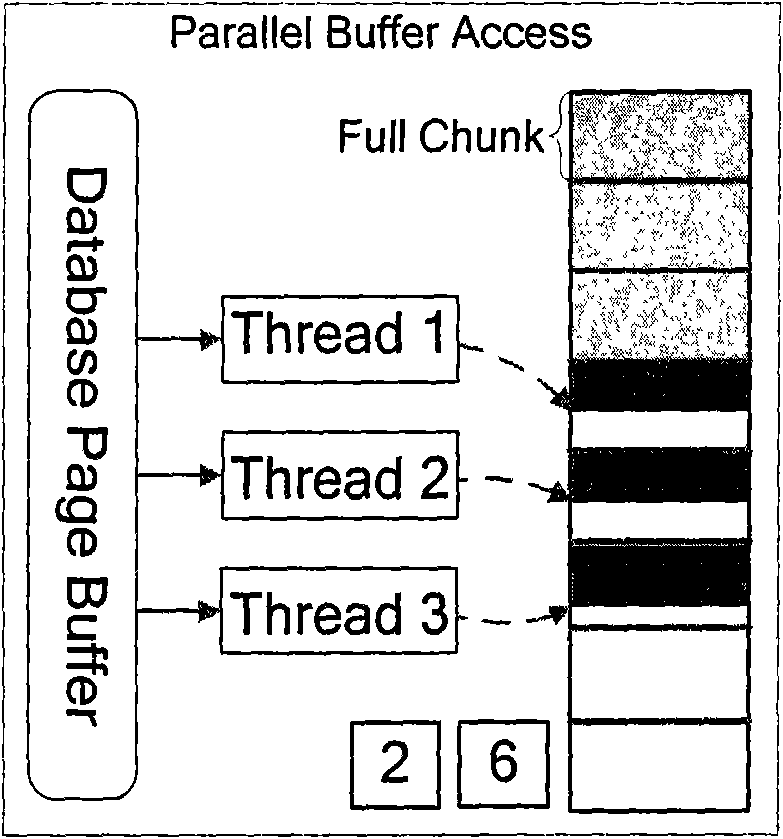

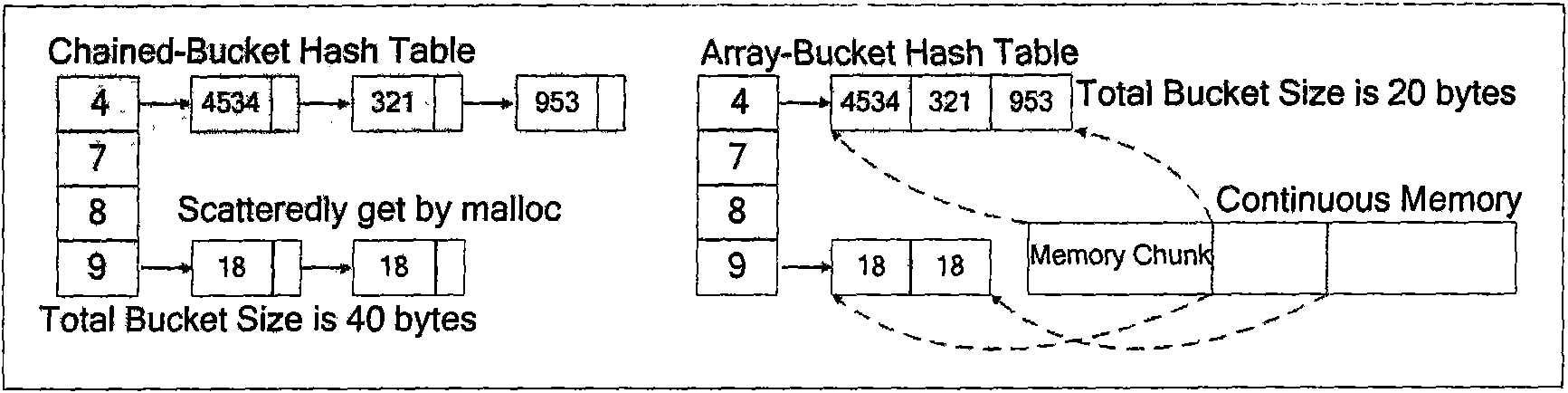

Hash connecting method for database based on shared Cache multicore processor

Inactive | CN101593202A | Reduce Cache access conflicts | Load balancing | Memory addressing/allocation/relocation | Concurrent instruction execution | Cache access | Linked list

The invention discloses a hash connecting (hash join) method for databases based on a shared-Cache multicore processor. The method is divided into a link list division phase and an aggregation connection phase. The link list division comprises the following steps: firstly, a temporary list is generated through a temporary list generation module; secondly, temporary list division is executed on the temporary list by a temporary list division thread; thirdly, before division, a proper data division strategy is determined according to the size of the temporary list; and fourthly, a proper start occasion for the temporary list division thread is determined during temporary list division to reduce Cache access collisions. In aggregation connection, an aggregation connection execution method based on classification of aggregation sizes is adopted, and the memory access in hash connection is optimized. The method ensures that the hash connection fully utilizes the operating resources of a multicore processor, and the speedup ratio of hash connection execution is close to the number of cores of the processor, so as to greatly shorten the execution time of the hash connection.

Owner:NAT UNIV OF DEFENSE TECH +2
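The two phases in the hash join abstract above (divide, then join each piece) resemble a partitioned hash join, which can be sketched as follows. This is a generic textbook-style illustration under stated assumptions, not the patented method: rows are dictionaries, partitions are processed one after another rather than by separate threads, and the strategies for choosing partition sizes and thread start times are not modelled. The function names are hypothetical.

```python
from collections import defaultdict


def partition(rows, key, n_parts):
    """Split rows into n_parts lists so that equal keys always land in the same list."""
    parts = [[] for _ in range(n_parts)]
    for row in rows:
        parts[hash(row[key]) % n_parts].append(row)
    return parts


def partitioned_hash_join(build_rows, probe_rows, key, n_parts=4):
    """Join two row lists on `key`, one partition pair at a time."""
    results = []
    build_parts = partition(build_rows, key, n_parts)
    probe_parts = partition(probe_rows, key, n_parts)
    # Each pair is independent, so a multicore version could hand one pair to each core.
    for build_part, probe_part in zip(build_parts, probe_parts):
        table = defaultdict(list)  # small per-partition hash table
        for row in build_part:
            table[row[key]].append(row)
        for row in probe_part:
            for match in table.get(row[key], []):
                results.append({**match, **row})
    return results


if __name__ == "__main__":
    customers = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}]
    orders = [{"id": 1, "amount": 10}, {"id": 1, "amount": 20}, {"id": 3, "amount": 5}]
    for joined in partitioned_hash_join(customers, orders, "id"):
        print(joined)
```

Keeping each per-partition hash table small is the usual motivation for this layout: a table that fits in cache avoids the misses that a single large table would cause.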

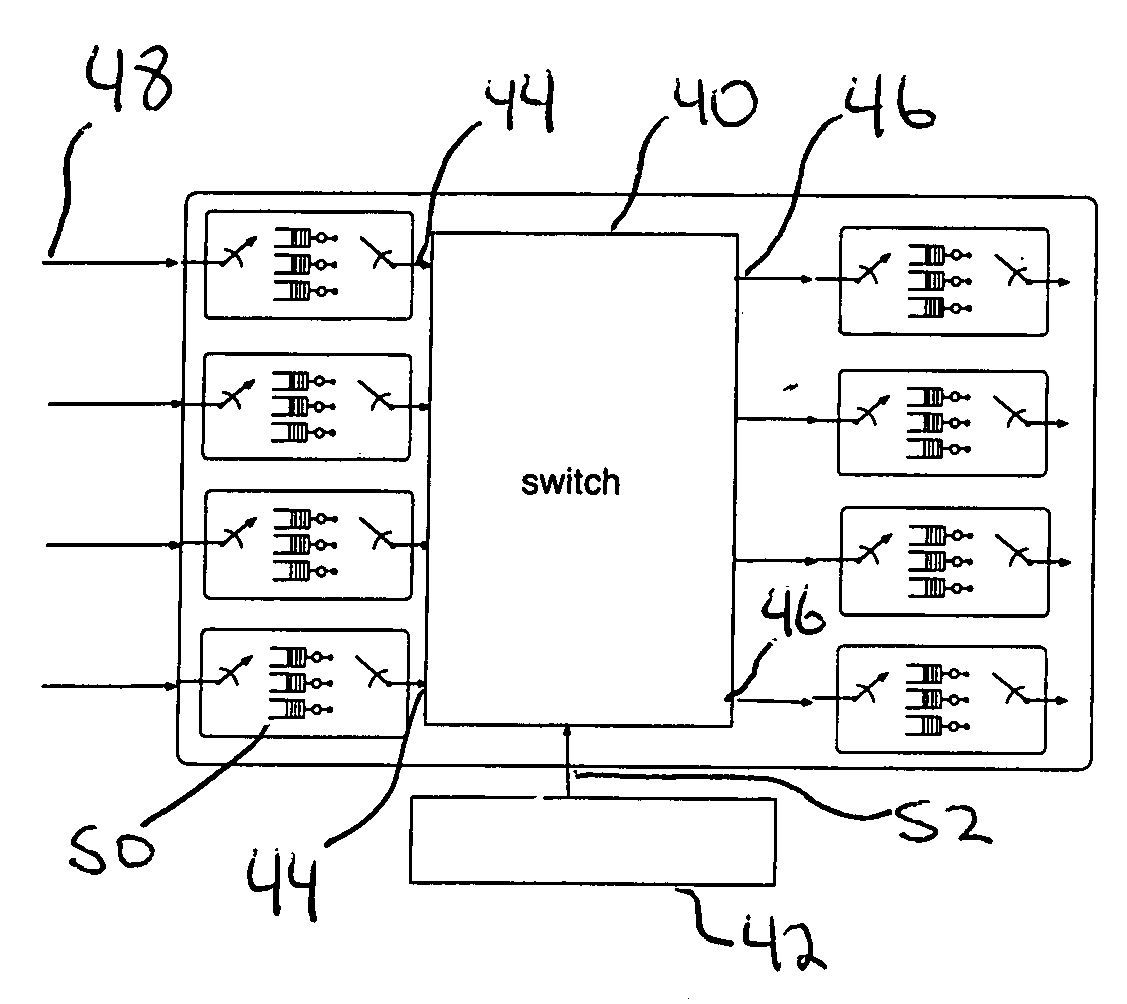

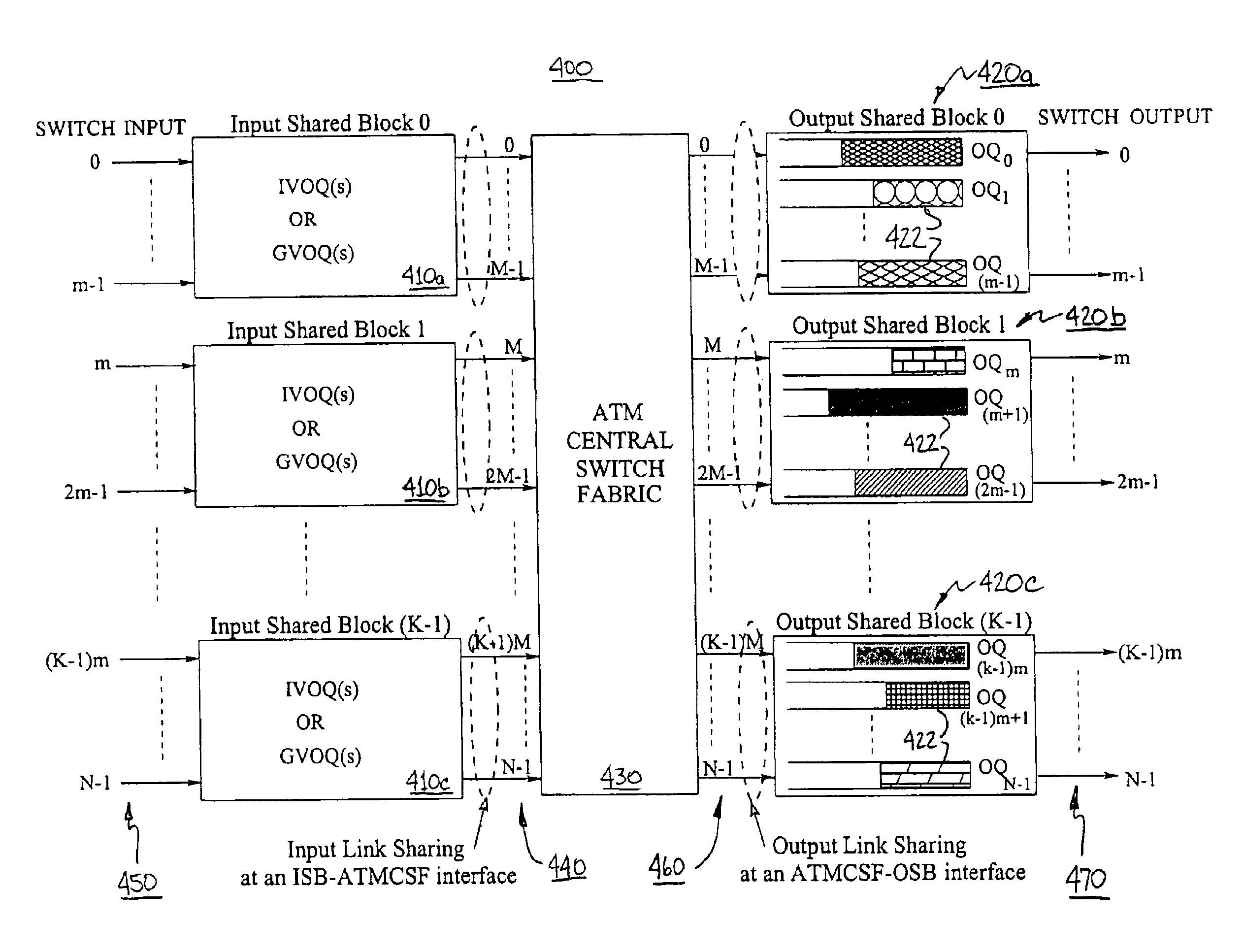

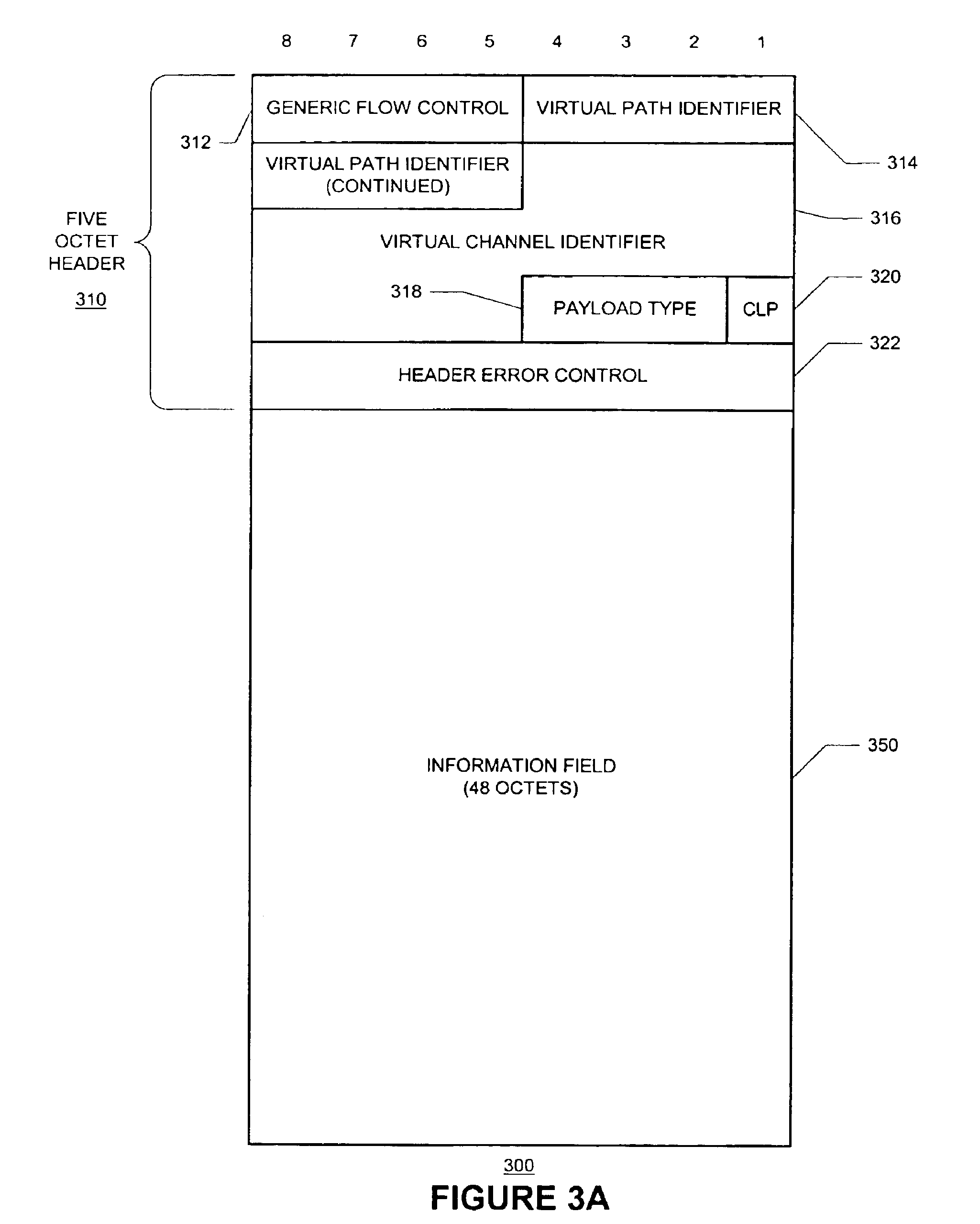

Methods and apparatus for switching packets

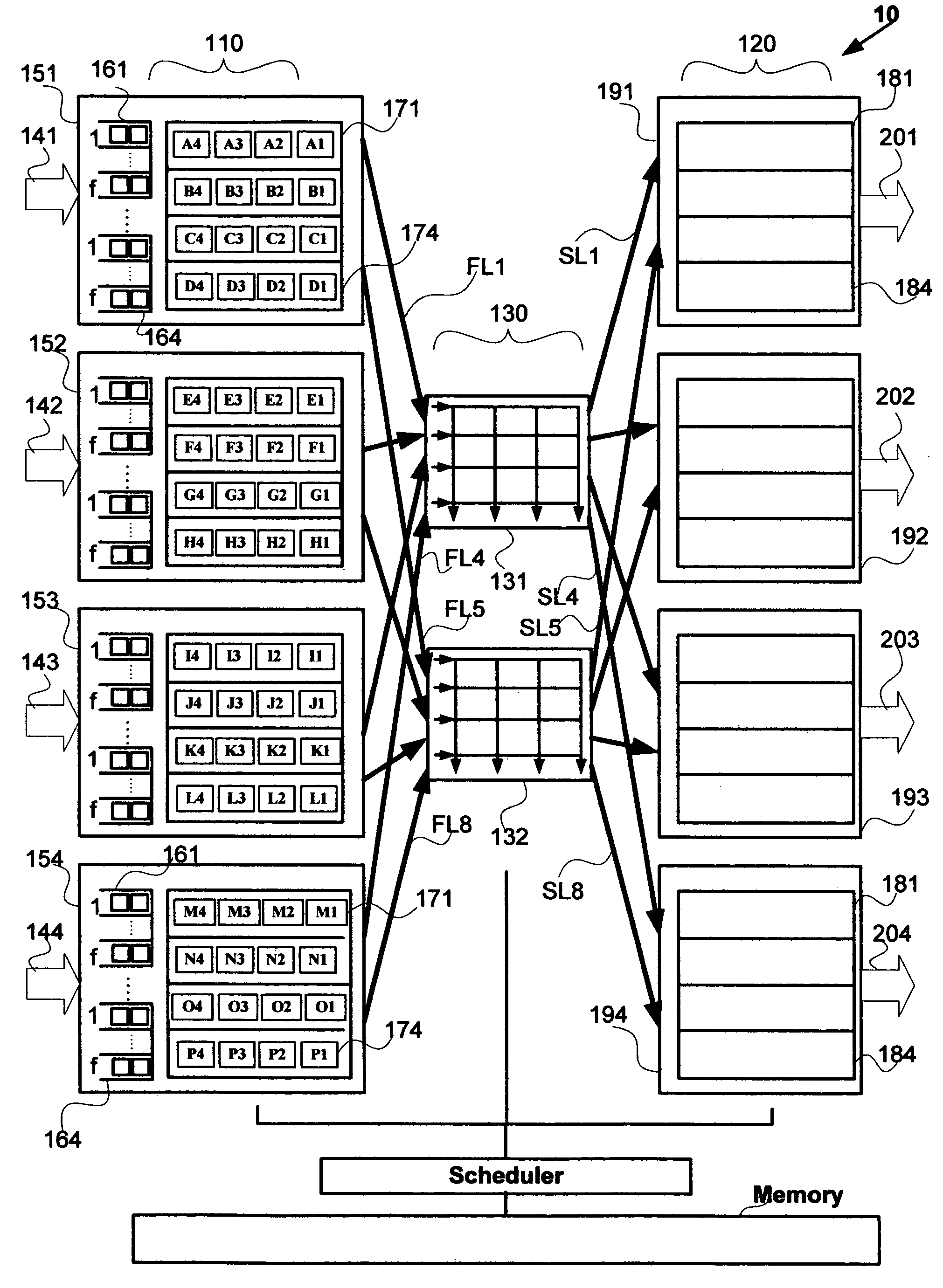

Inactive | US6961342B1 | Can be high | Improve scalability | Error prevention | Frequency-division multiplex details | Cell scheduling | Large size

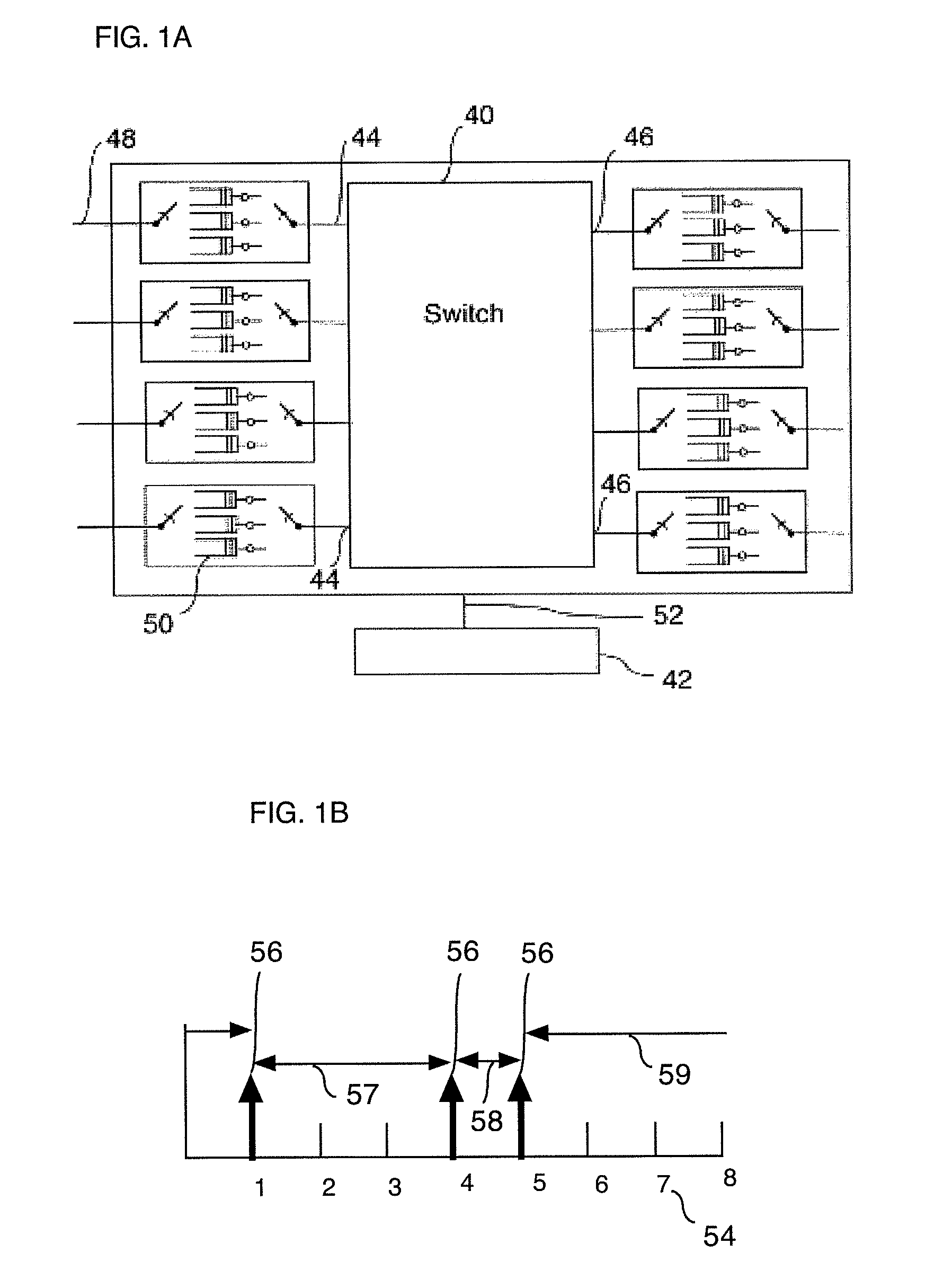

In switches, switch inputs and outputs may be grouped into (e.g., small) modules called input shared blocks (or “ISBs”) and output shared blocks (or “OSBs”), respectively. Each of the switches includes three (3) main parts: (i) input shared blocks (ISBs); (ii) a central switch fabric (or “ATMCSF”); and (iii) output shared blocks (OSBs). Input link sharing at every ISB-ATMCSF interface and output link sharing at every ATMCSF-OSB interface cooperate intelligently to resolve output contention and essentially eliminate any speedup requirement in the central switch fabric. Each of the proposed switches can easily scale to a large size by cascading additional input and output shared blocks (ISBs and OSBs). Instead of using a centralized scheduler to resolve input and output contention, each of the switches applies a distributed link reservation scheme upon which cell scheduling is based. In one embodiment, a dual round robin dynamic link reservation technique, in which an input shared block (ISB) only needs its local available information to arbitrate potential modification for its own link reservation, may be used.

Owner:UZUN NECDET

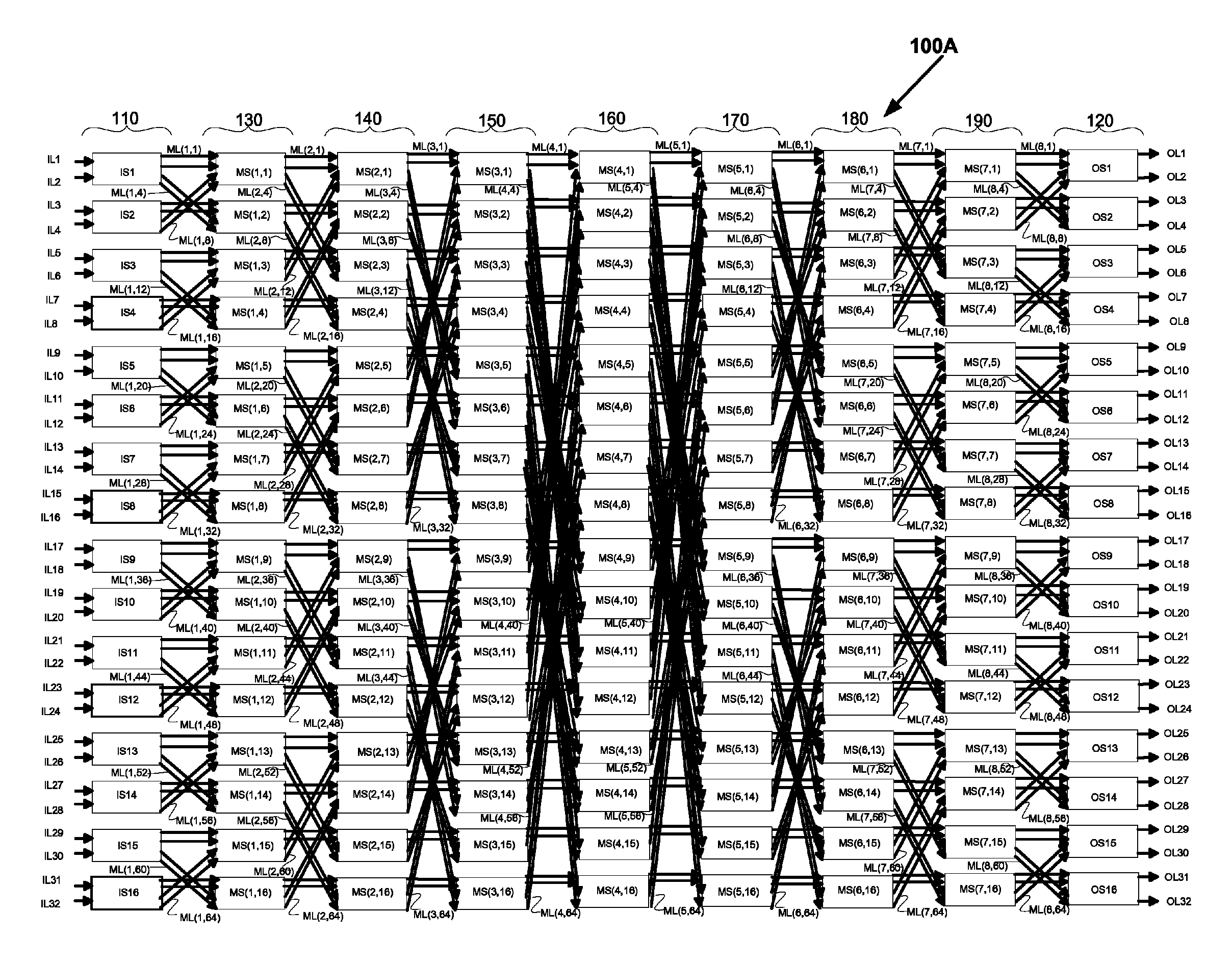

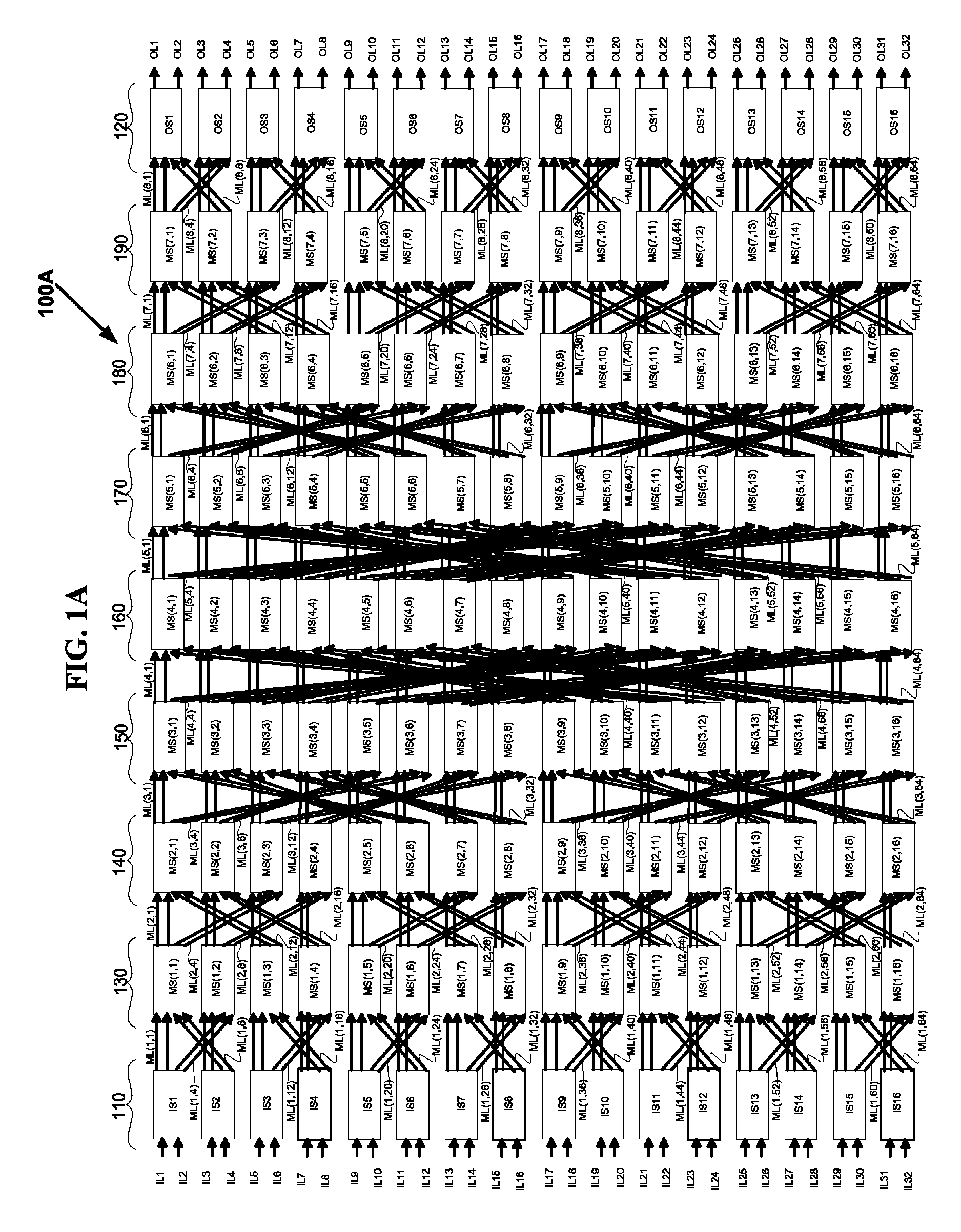

Nonblocking and deterministic unicast packet scheduling

Inactive | US20050117575A1 | Guaranteed bandwidth | Electronic switching | Data switching by path configuration | Crossbar switch | Packet scheduling

A system for scheduling unicast packets through an interconnection network having a plurality of input ports, a plurality of output ports, and a plurality of input queues, comprising unicast packets, at each input port is operated in nonblocking manner in accordance with the invention by scheduling at most as many packets equal to the number of input queues from each input port to each output port. The system is operated at 100% throughput, work conserving, fair, and yet deterministically thereby never congesting the output ports. The system performs arbitration in only one iteration, with mathematical minimum speedup in the interconnection network. The system operates with absolutely no packet reordering issues, no internal buffering of packets in the interconnection network, and hence in a truly cut-through and distributed manner. In another embodiment each output port also comprises a plurality of output queues and each packet is transferred to an output queue in the destined output port in nonblocking and deterministic manner and without the requirement of segmentation and reassembly of packets even when the packets are of variable size. In one embodiment the scheduling is performed in strictly nonblocking manner with a speedup of at least two in the interconnection network. In another embodiment the scheduling is performed in rearrangeably nonblocking manner with a speedup of at least one in the interconnection network. The system also offers end to end guaranteed bandwidth and latency for packets from input ports to output ports. In all the embodiments, the interconnection network may be a crossbar network, shared memory network, clos network, hypercube network, or any internally nonblocking interconnection network or network of networks.

Owner:TEAK TECH

Method and apparatus to schedule packets through a crossbar switch with delay guarantees

Active | US8089959B2 | Multiplex system selection arrangements | Data switching by path configuration | Differentiated services | Crossbar switch

A method for scheduling cell transmissions through a switch with rate and delay guarantees and with low jitter is proposed. The method applies to a classic input-buffered N×N crossbar switch without speedup. The time axis is divided into frames each containing F time-slots. An N×N traffic rate matrix specifies a quantized guaranteed traffic rate from each input port to each output port. The traffic rate matrix is transformed into a permutation with NF elements which is decomposed into F permutations of N elements using a recursive and fair decomposition method. Each permutation is used to configure the crossbar switch for one time-slot within a frame of size F time-slots, and all F permutations result in a Frame Schedule. In the frame schedule, the expected Inter-Departure Time (IDT) between cells in a flow equals the Ideal IDT and the delay jitter is bounded and small. For fixed frame size F, an individual flow can often be scheduled in O(log N) steps, while a complete reconfiguration requires O(N log N) steps when implemented in a serial processor. An RSVP or Differentiated Services-like algorithm can be used to reserve bandwidth and buffer space in an IP-router, an ATM switch or MPLS switch during a connection setup phase, and the proposed method can be used to schedule traffic in each router or switch. Best-effort traffic can be scheduled using any existing dynamic scheduling algorithm to fill the remaining unused switch capacity within each Frame. The scheduling algorithm also supports multicast traffic.

Owner:SZYMANSKI TED HENRYK

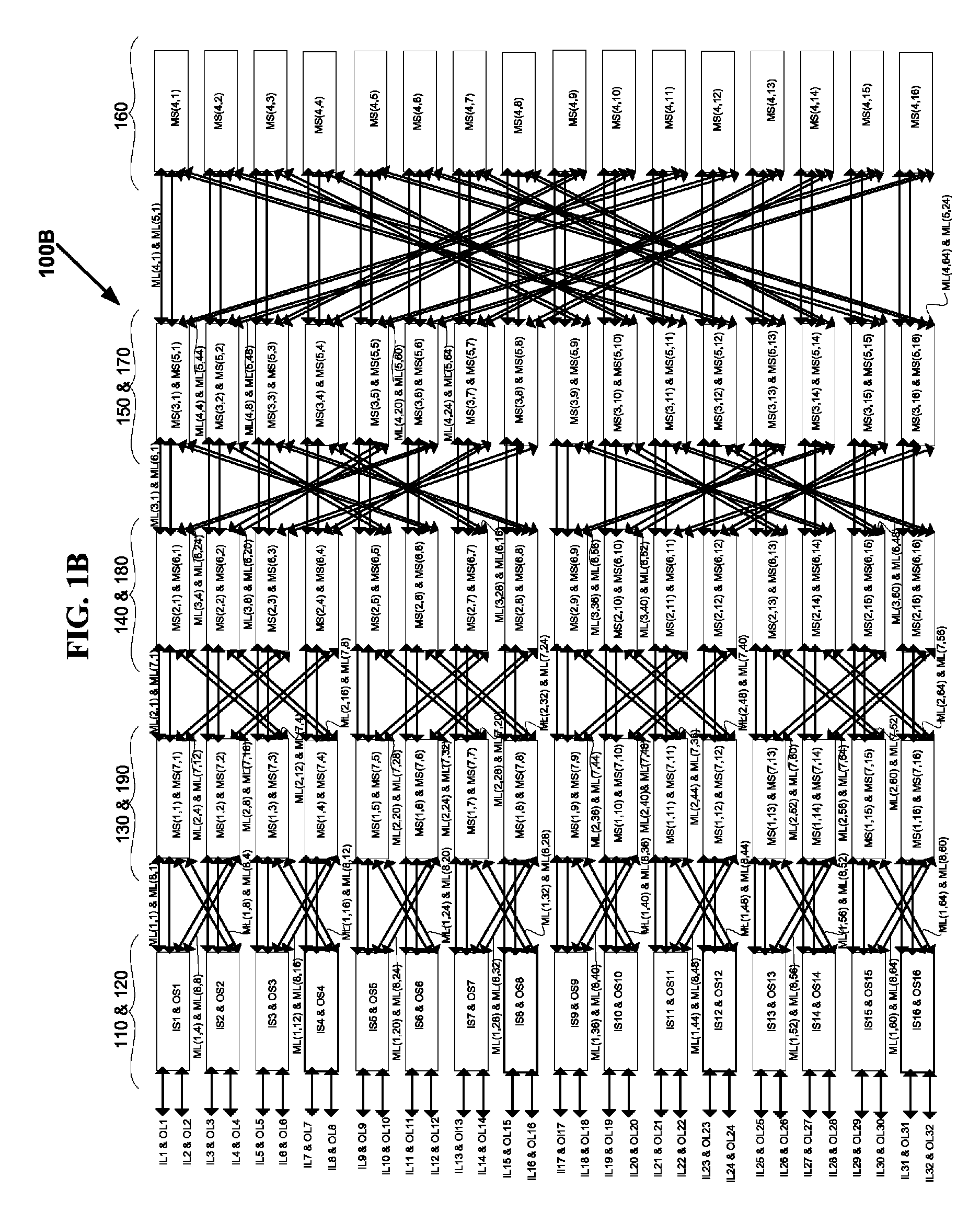

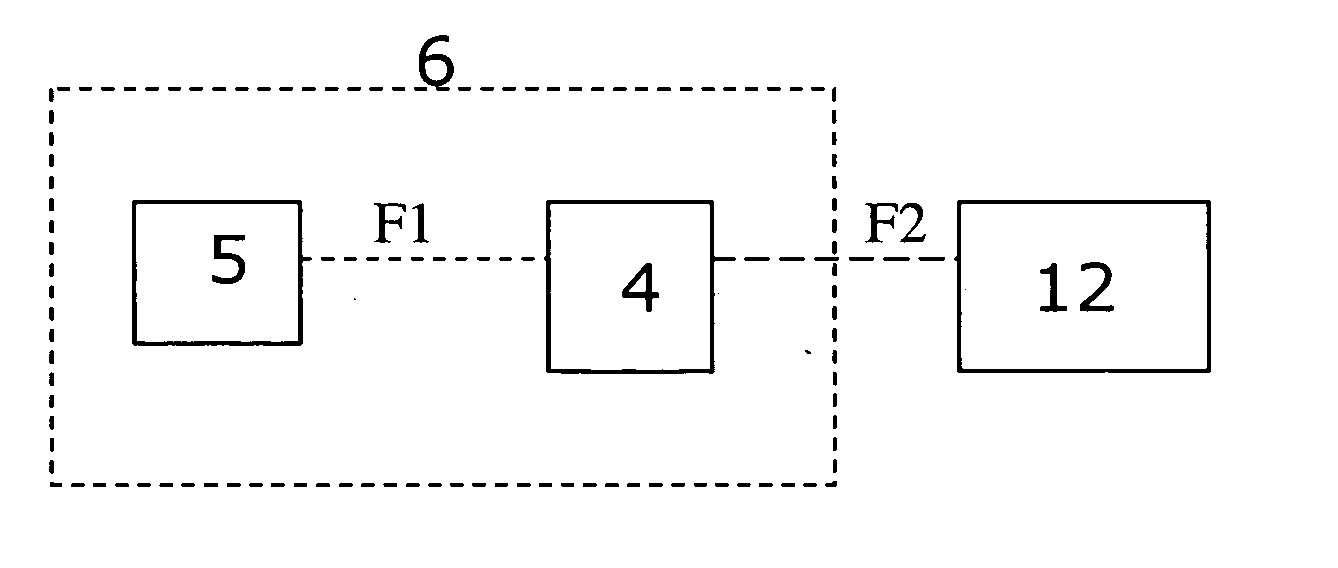

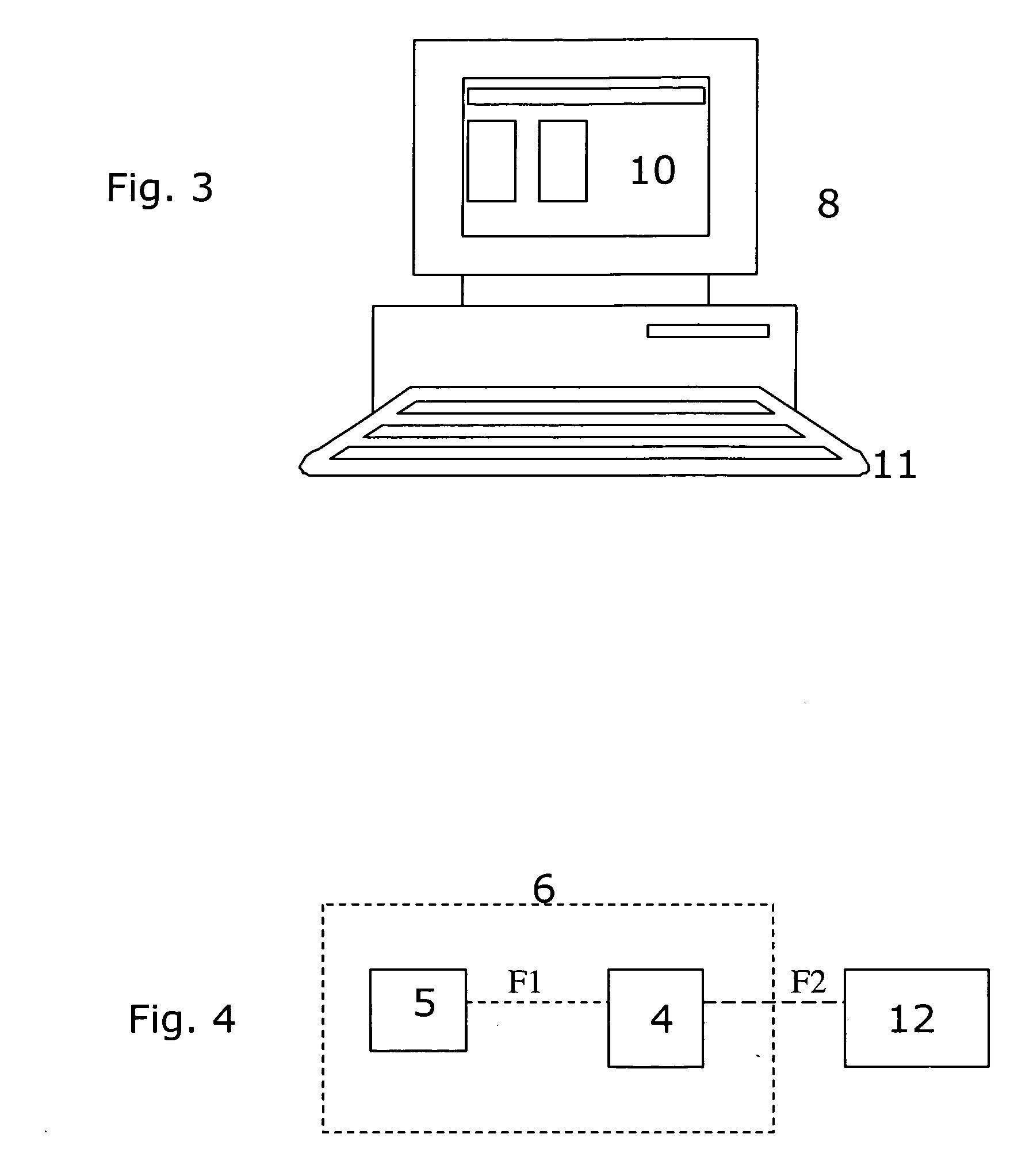

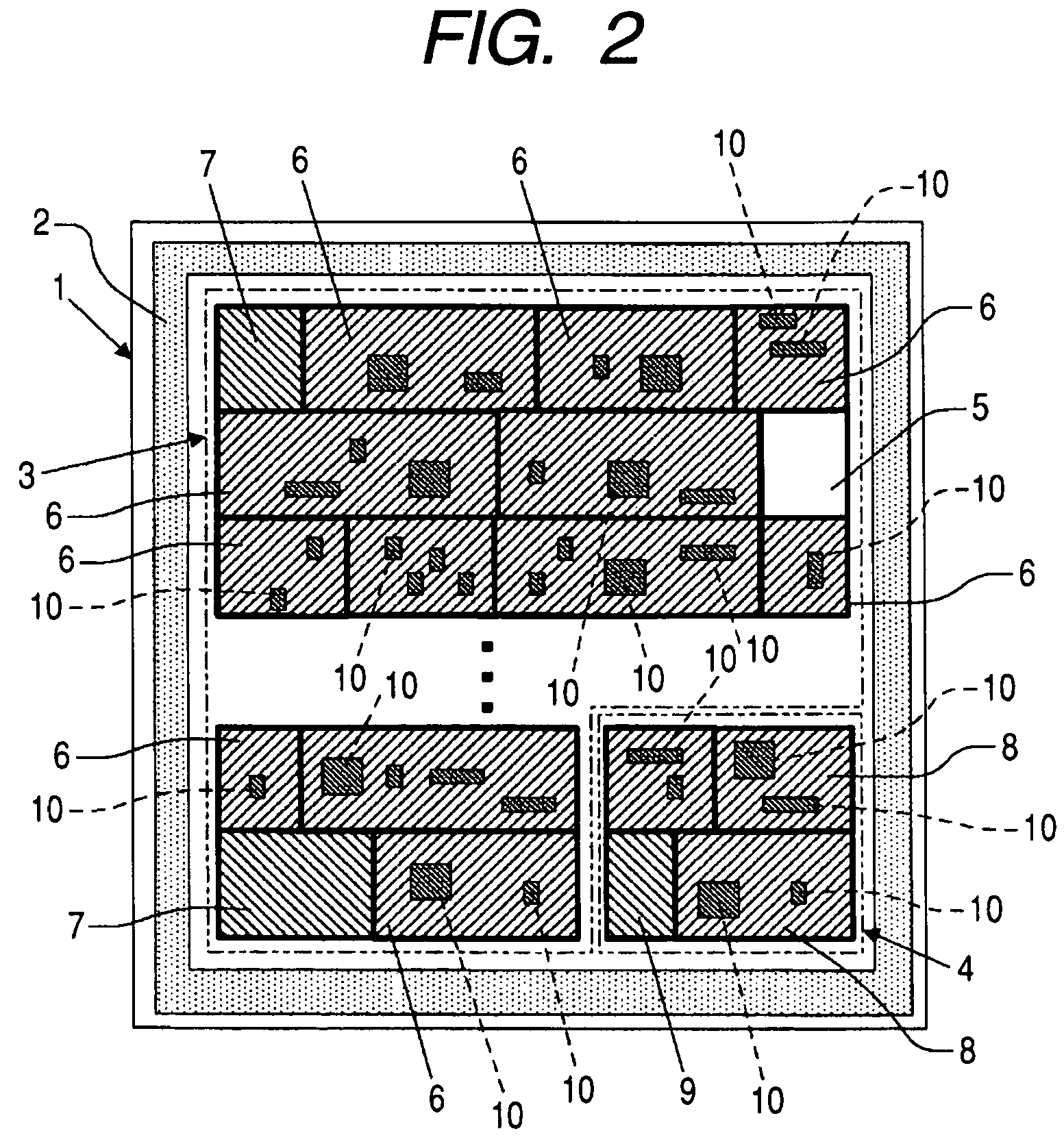

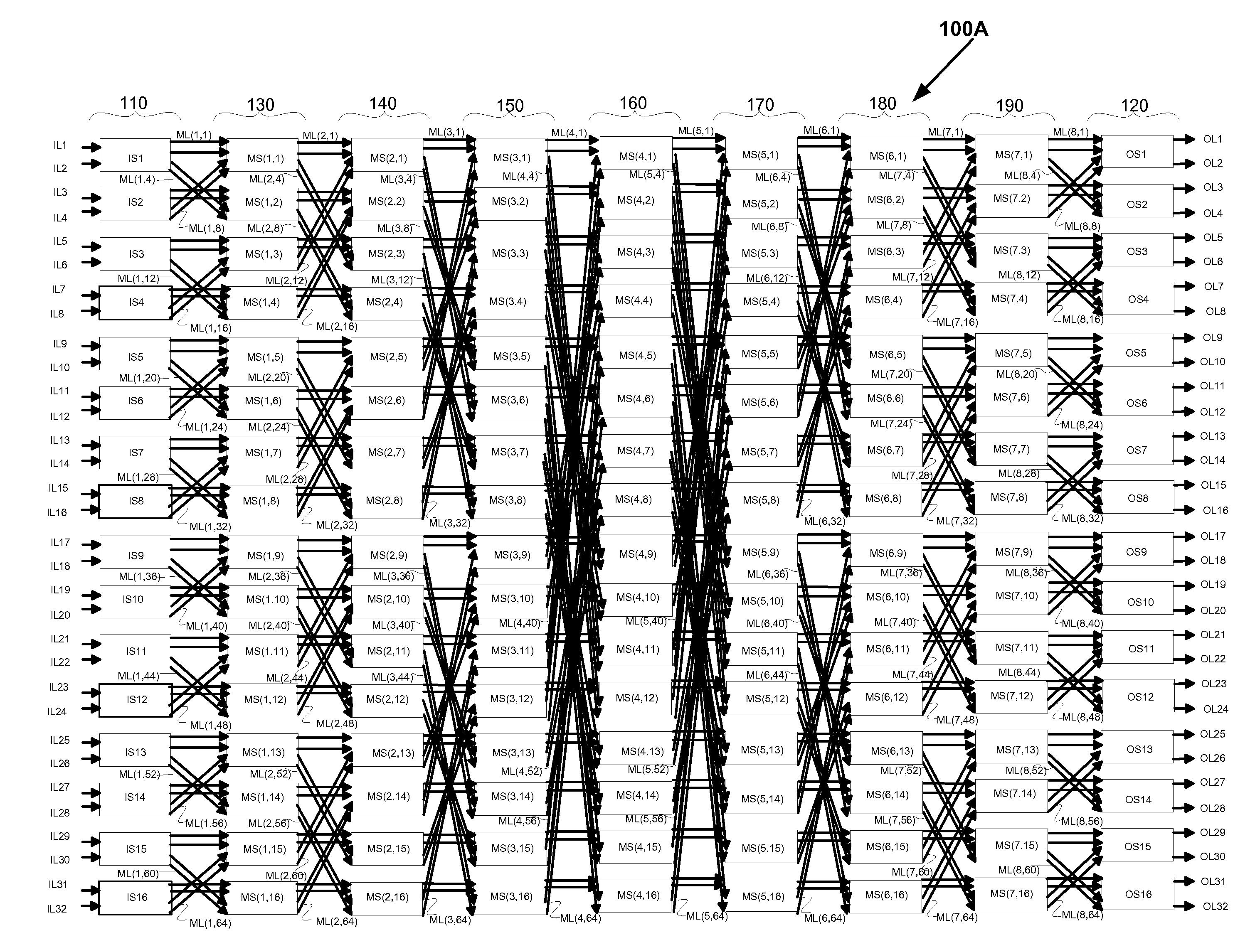

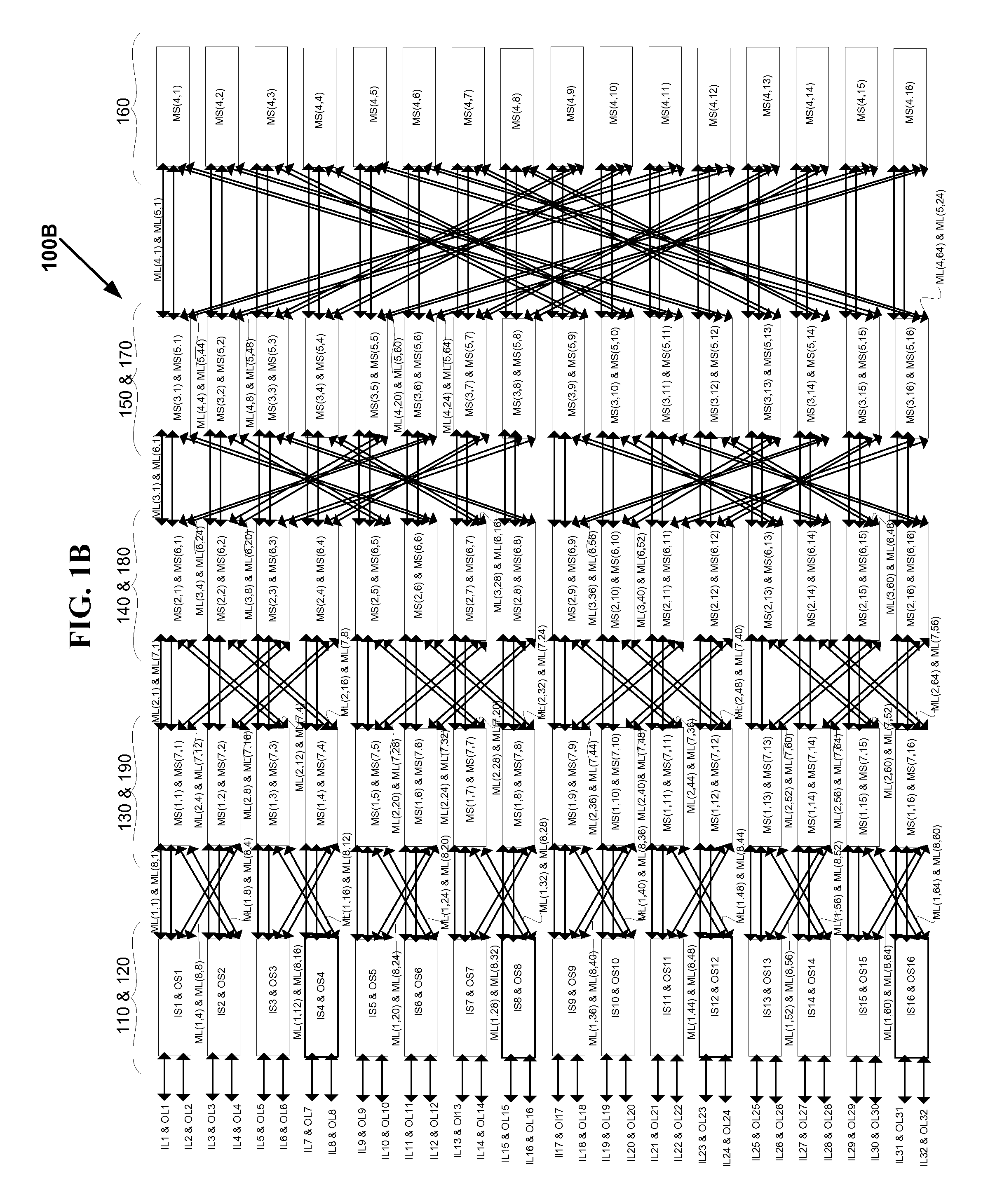

VLSI layouts of fully connected generalized and pyramid networks with locality exploitation

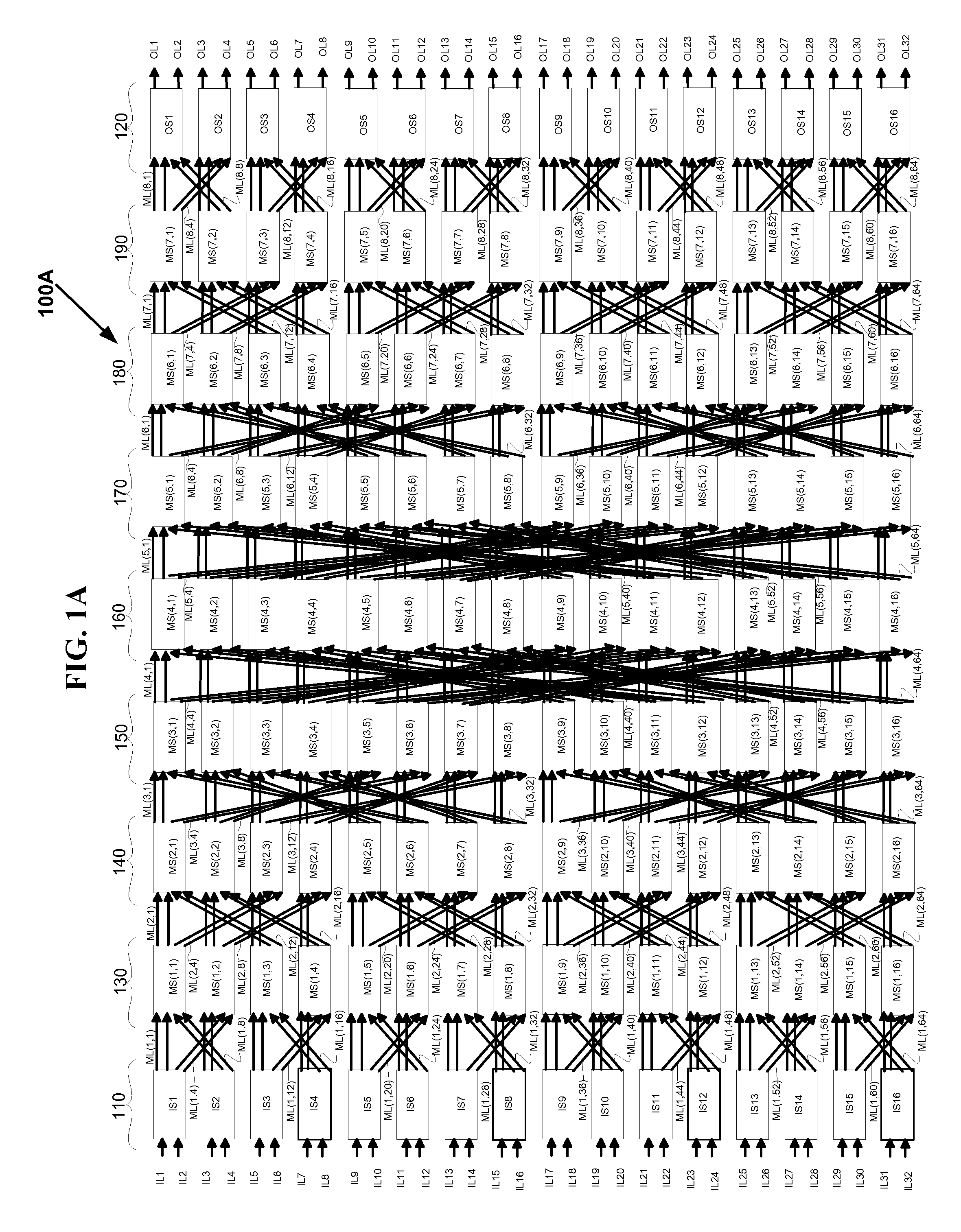

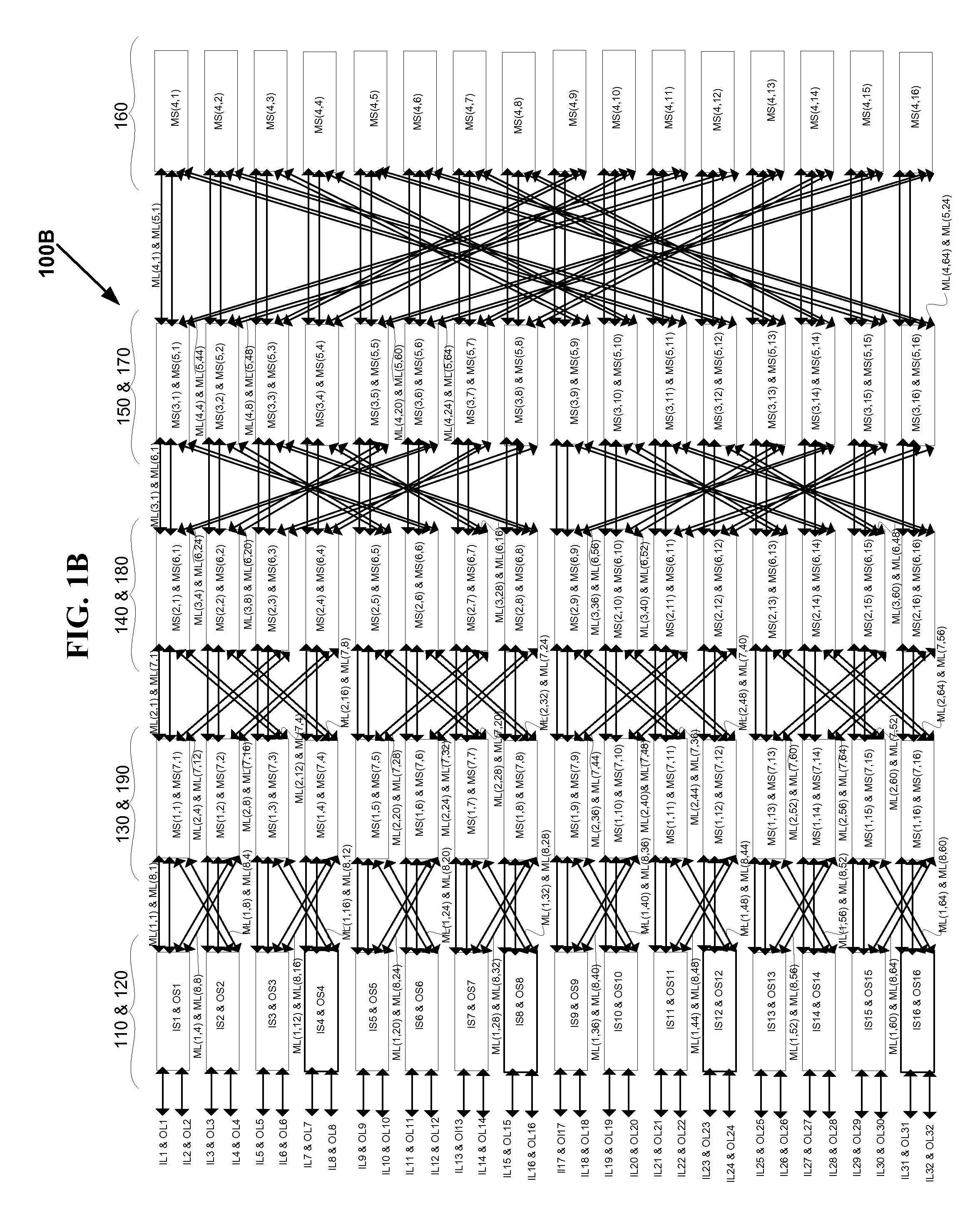

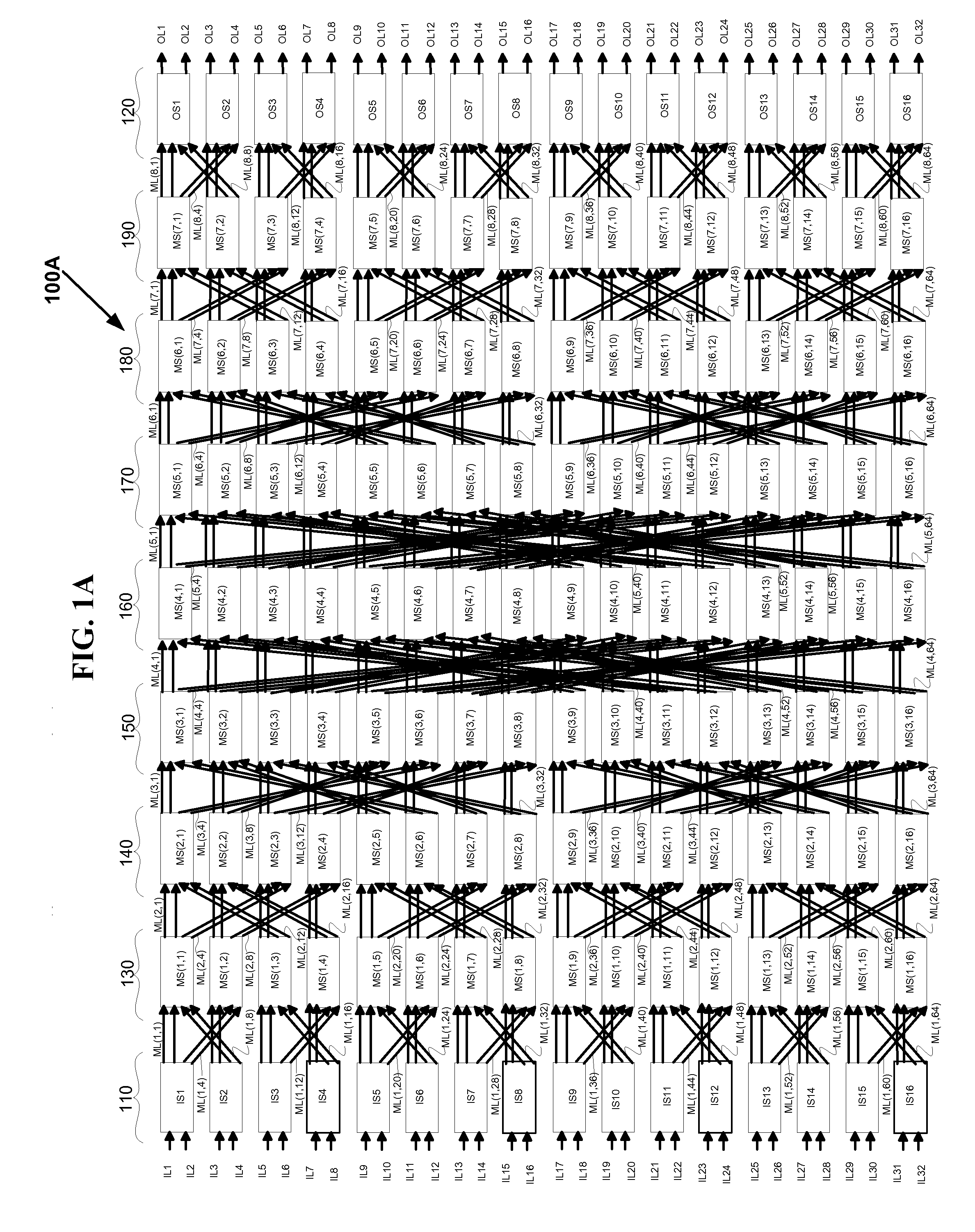

Active | US8898611B2 | Low pour point | Reduce signal delay | Multiplex system selection arrangements | Geometric CAD | Cross-link | Multi link

VLSI layouts of generalized multi-stage and pyramid networks for broadcast, unicast and multicast connections are presented using only horizontal and vertical links with spatial locality exploitation. The VLSI layouts employ shuffle exchange links where outlet links of cross links from switches in a stage in one sub-integrated circuit block are connected to inlet links of switches in the succeeding stage in another sub-integrated circuit block so that said cross links are either vertical links or horizontal and vice versa. Furthermore the shuffle exchange links are employed between different sub-integrated circuit blocks so that spatially nearer sub-integrated circuit blocks are connected with shorter links compared to the shuffle exchange links between spatially farther sub-integrated circuit blocks. In one embodiment the sub-integrated circuit blocks are arranged in a hypercube arrangement in a two-dimensional plane. The VLSI layouts exploit the benefits of significantly lower cross points, lower signal latency, lower power and full connectivity with significantly fast compilation. The VLSI layouts with spatial locality exploitation presented are applicable to generalized multi-stage and pyramid networks, generalized folded multi-stage and pyramid networks, generalized butterfly fat tree and pyramid networks, generalized multi-link multi-stage and pyramid networks, generalized folded multi-link multi-stage and pyramid networks, generalized multi-link butterfly fat tree and pyramid networks, generalized hypercube networks, and generalized cube connected cycles networks for speedup of s≧1. The embodiments of VLSI layouts are useful in wide target applications such as FPGAs, CPLDs, pSoCs, ASIC placement and route tools, networking applications, parallel & distributed computing, and reconfigurable computing.

Owner:KONDA TECH

Application software initiated speedup

Active | US20070186123A1 | Reduce overhead | Cost time | Energy efficient ICT | Volume/mass flow measurement | Software engineering | Application software

The invention discloses an instruction, processor, system and method which allow application-level software to explicitly request a temporary performance boost from computing hardware. More specifically, it relates to advanced management of the working frequency of a processor in order to achieve the performance boost. Preferably, a processor according to the invention may be implemented in electronic exchanges or similar applications where peak periods may occur.

Owner:NASDAQ TECHNOLOGY AB

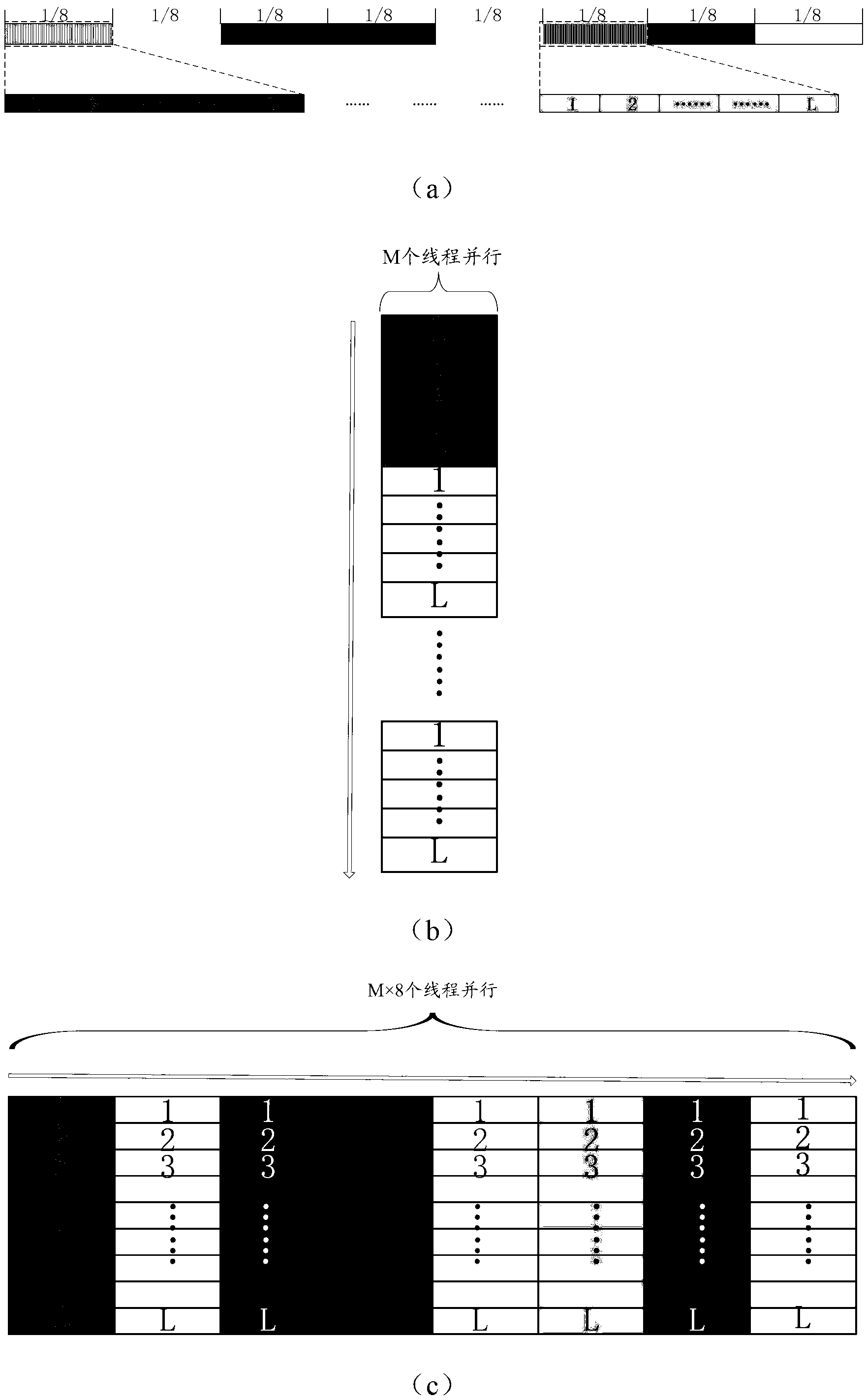

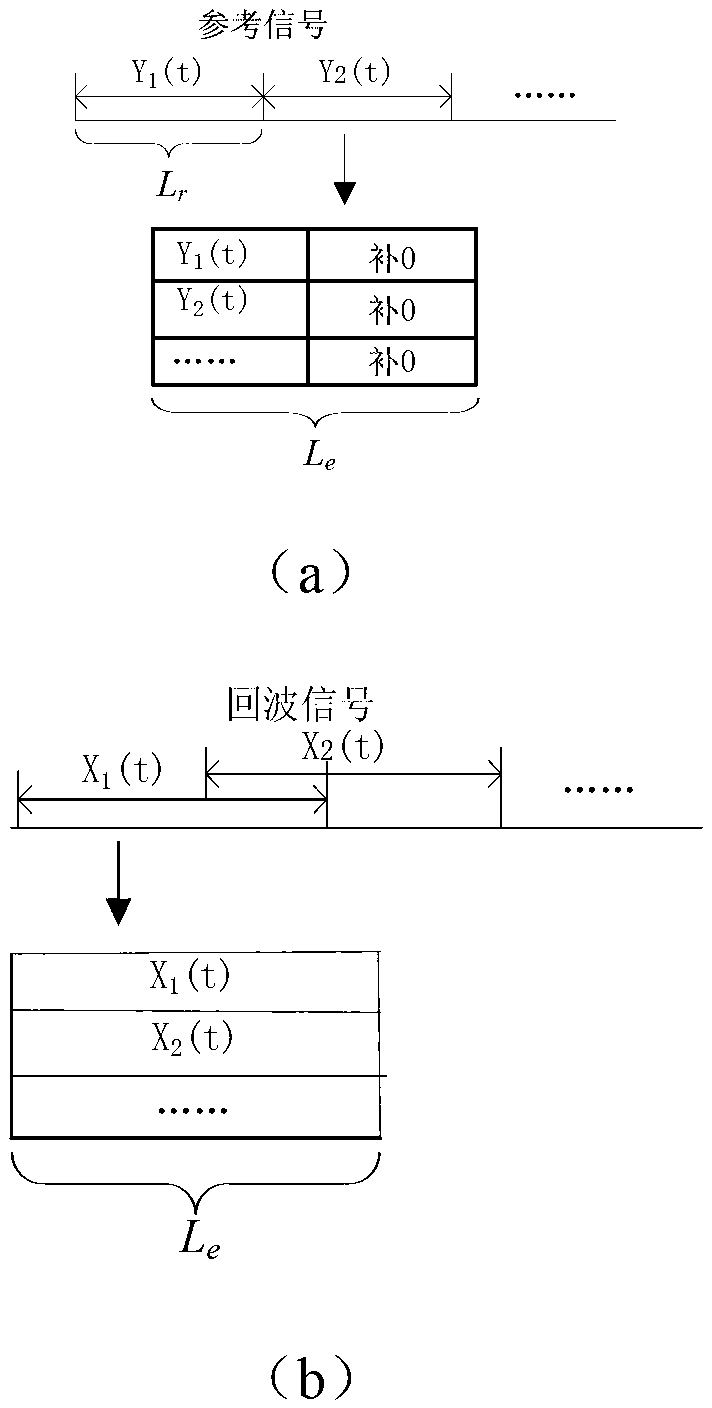

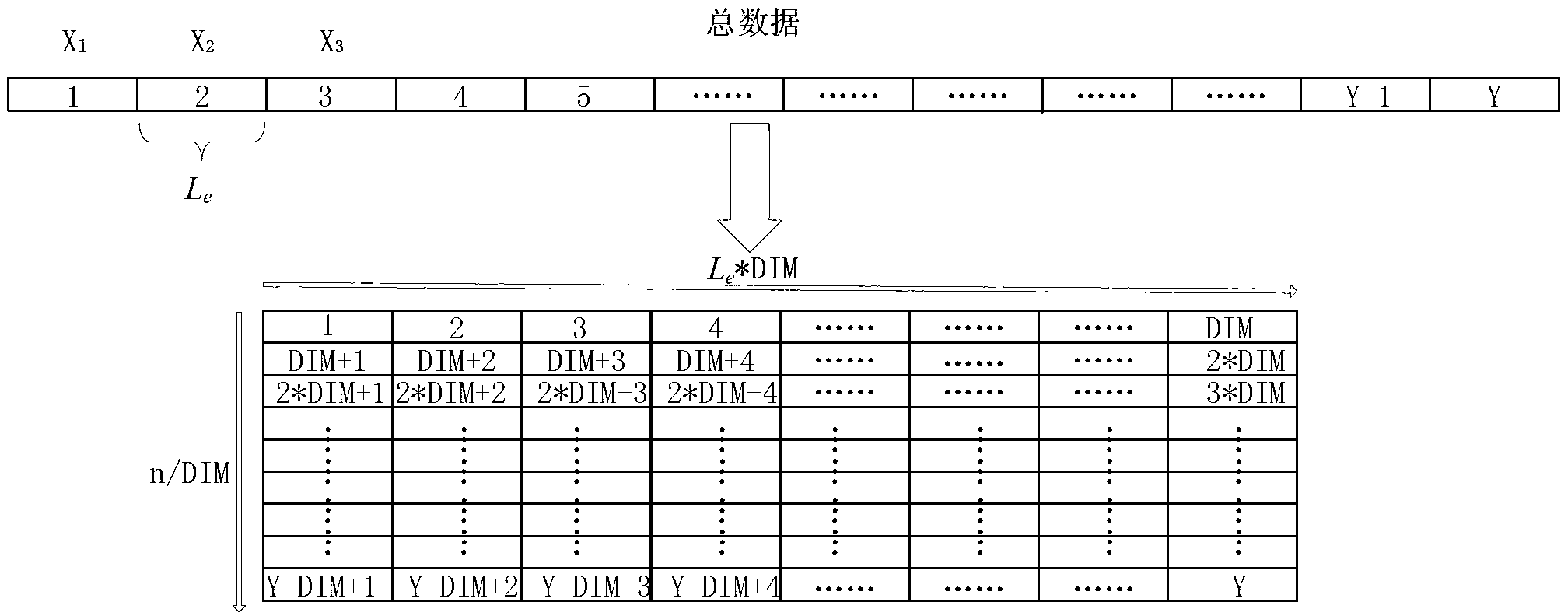

Method for fast realizing signal processing of passive radar based on GPU (Graphics Processing Unit)

Active | CN103308897A | Processing speed | High speedup | Wave based measurement systems | Video memory | Data segment

The invention discloses a method for fast passive radar signal processing based on a GPU (Graphics Processing Unit). The method comprises the following steps: in the direct wave and clutter suppression stage, dividing the whole data into N data blocks and dividing each data block into L data segments, wherein the number of data points within each data segment is M; splicing together the data with the same data segment number from each data block, such that the current segment of the N-th data block is followed by the next segment of the first data block; launching M*N GPU threads for parallel processing; in the coherent accumulation and walk correction stage, dividing all the data into n segments; grouping consecutive segments such that every DIM segments form one group; splicing all groups of data together in sequence and storing the data in a contiguous address space of GPU video memory; and finally, processing each group of data in parallel on the GPU. The method is better suited to GPU parallel processing, and the higher speedup ratio allows real-time processing demands to be met.

Owner:INST OF ELECTRONICS CHINESE ACAD OF SCI

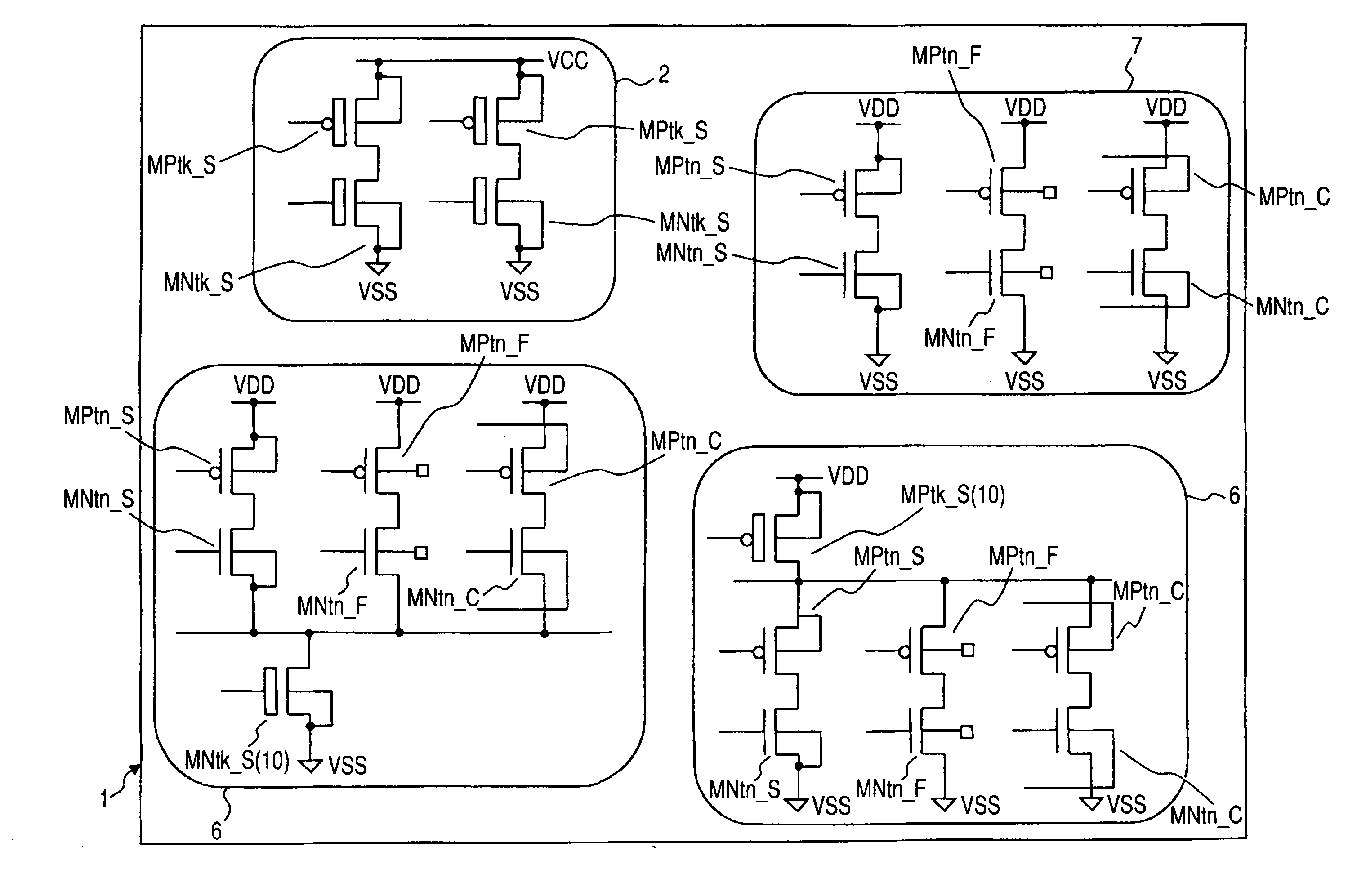

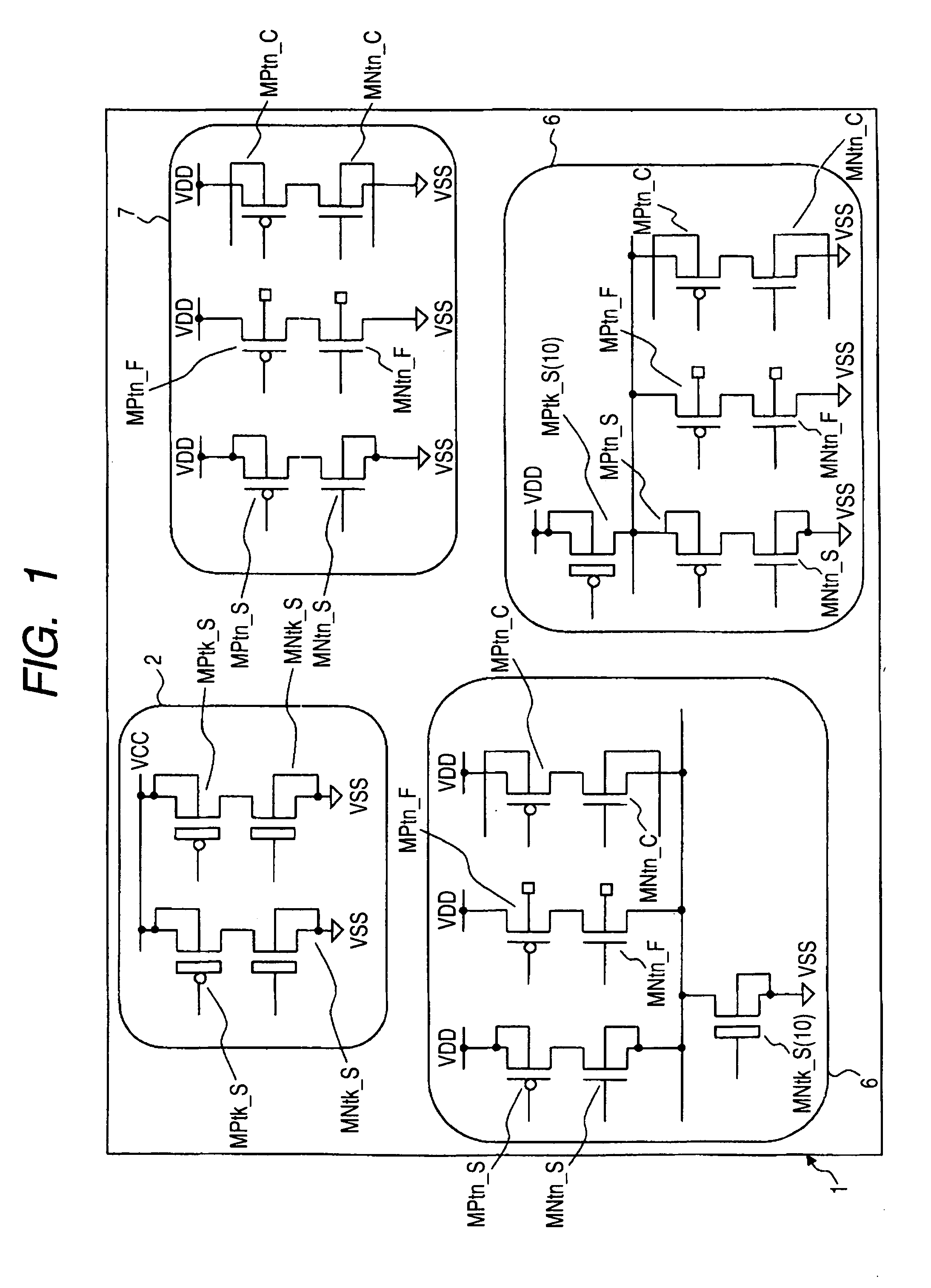

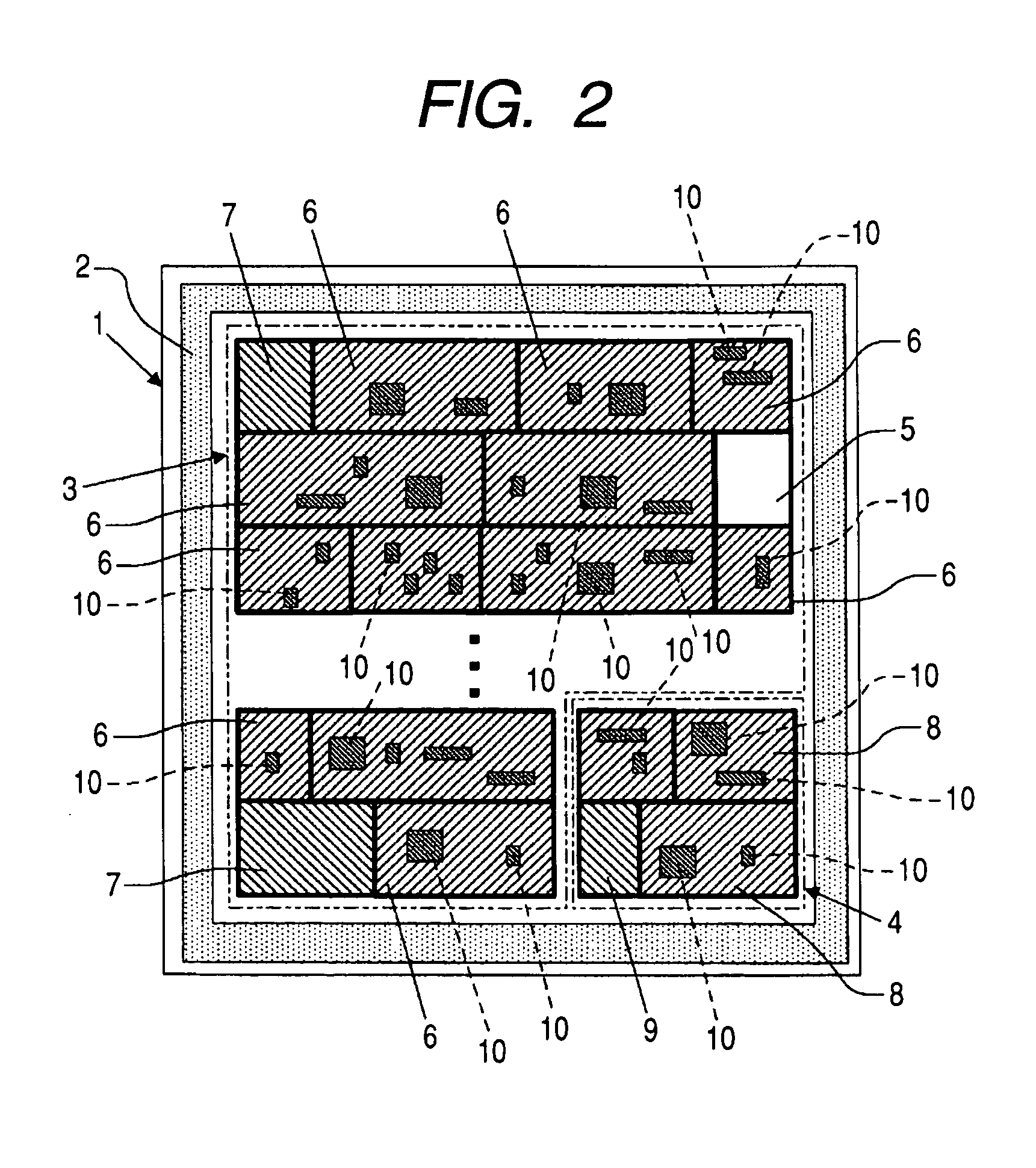

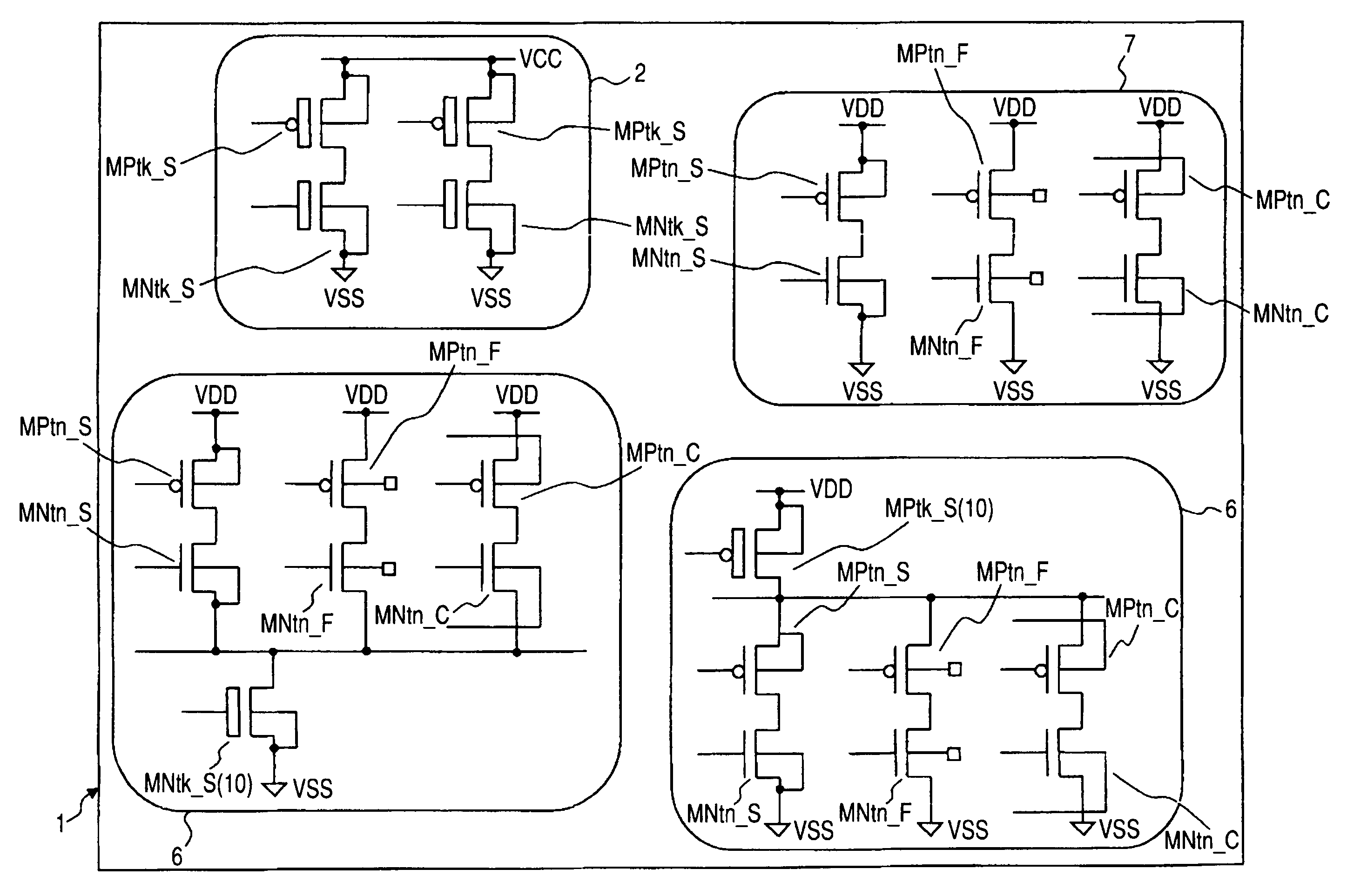

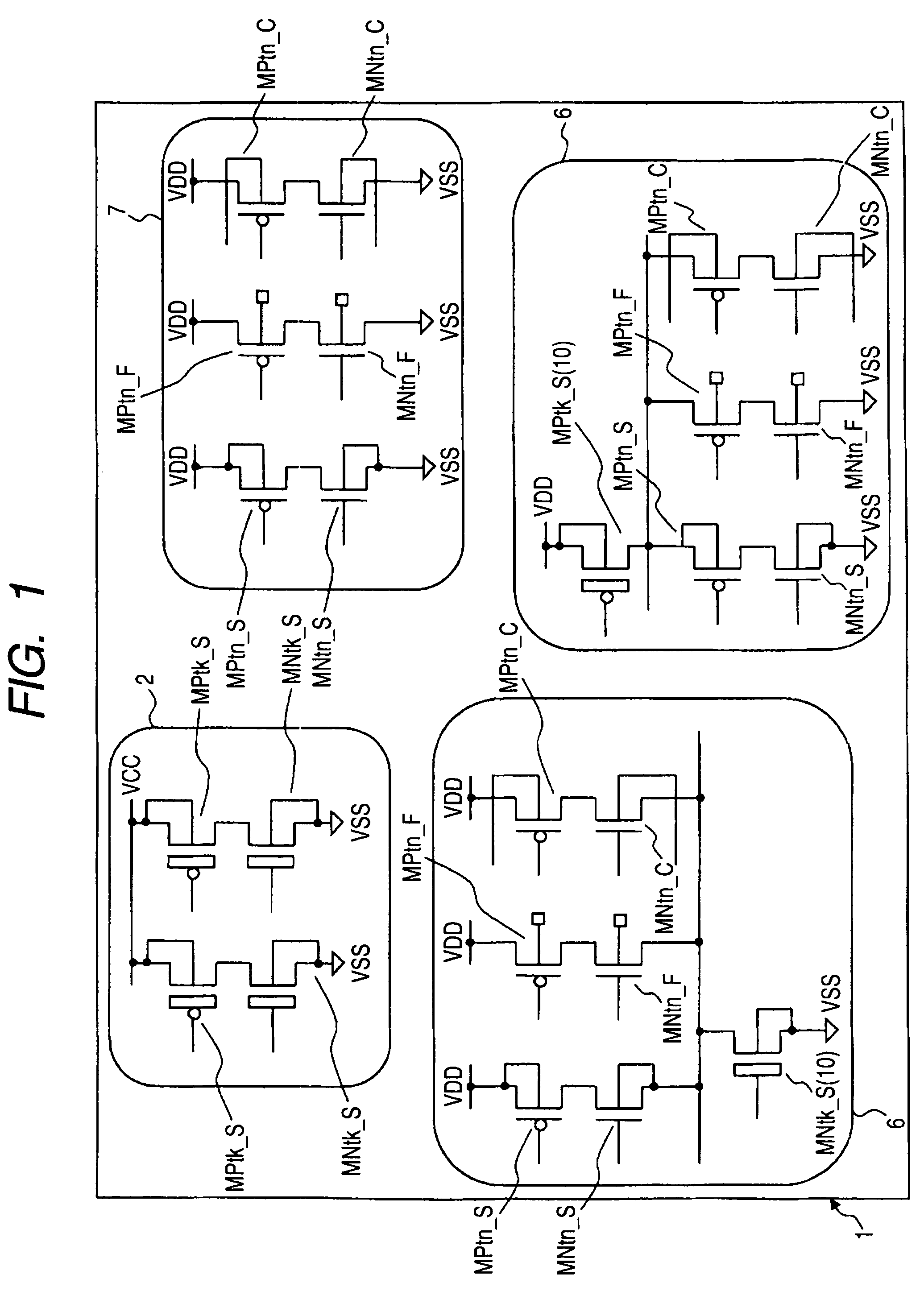

Semiconductor integrated circuit

Inactive | US20070176233A1 | Operation of circuit | Reduce power consumption | Switching acceleration modifications | Reliability increasing modifications | Low voltage | Active state

A plurality of MOS transistors each having an SOI structure includes, in mixed form, those brought into body floating and whose body voltages are fixed and variably set. When a high-speed operation is expected in a logic circuit in which operating power is relatively a low voltage and a switching operation is principally performed, body floating may be adopted. Body voltage fixing may be adopted in an analog system circuit that essentially dislikes a kink phenomenon of a current-voltage characteristic. Body bias variable control may be adopted in a logic circuit that requires the speedup of operation in an active state and needs low power consumption in a standby state. Providing in mixed form the transistors which are subjected to the body floating and the body voltage fixing and which are variably controlled in body voltage, makes it easier to adopt an accurate body bias according to a circuit function and a circuit configuration in terms of the speedup of operation and the low power consumption.

Owner:RENESAS ELECTRONICS CORP

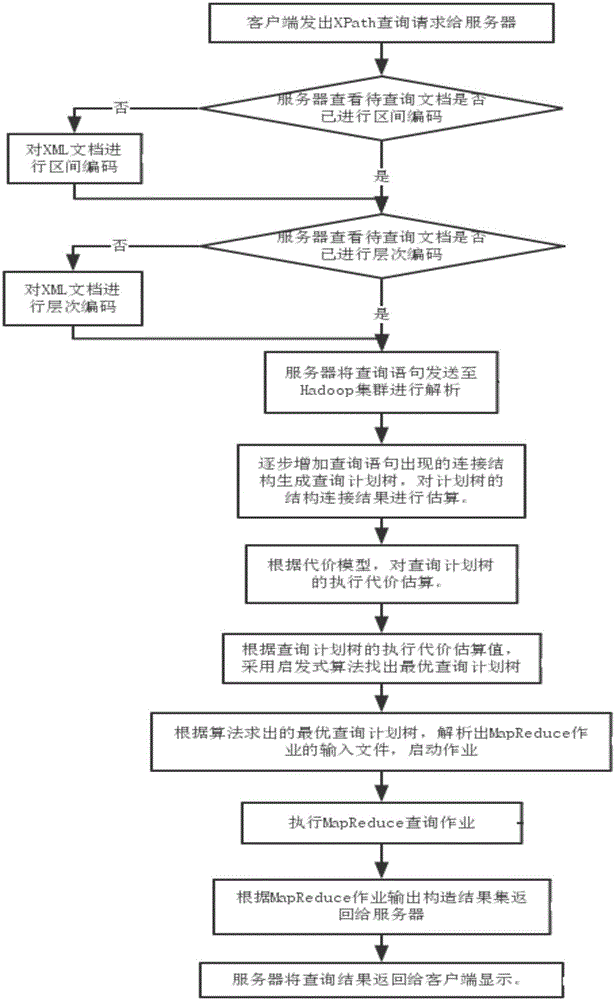

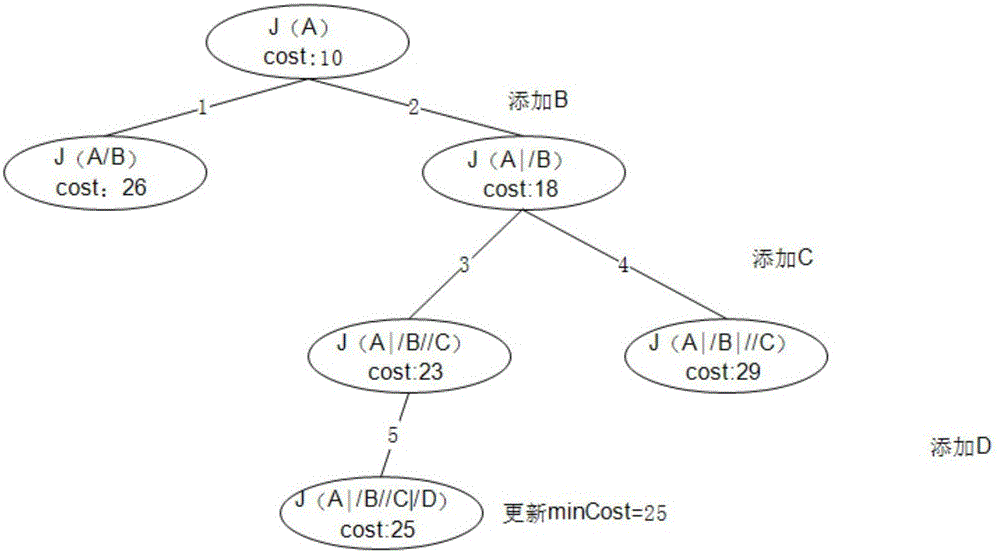

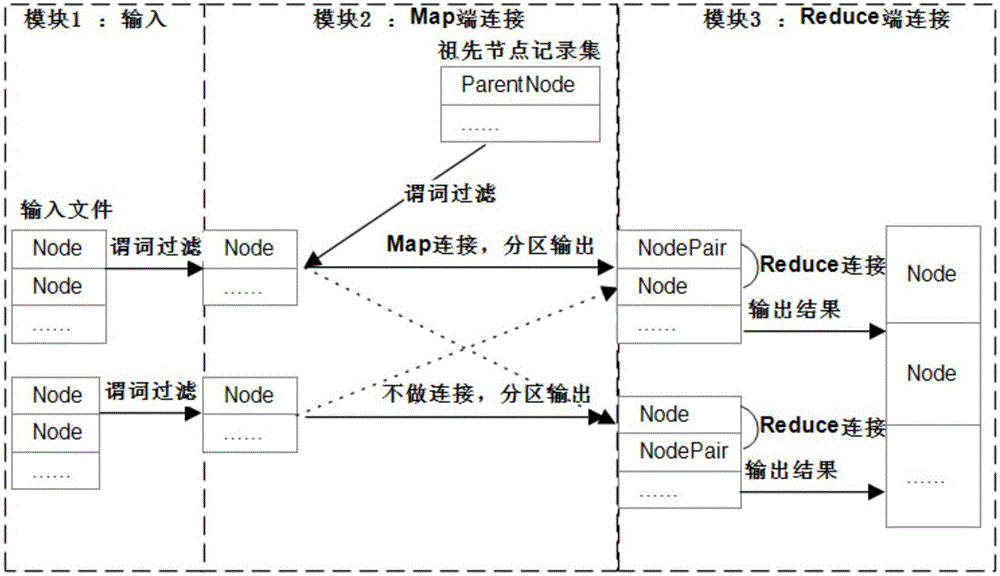

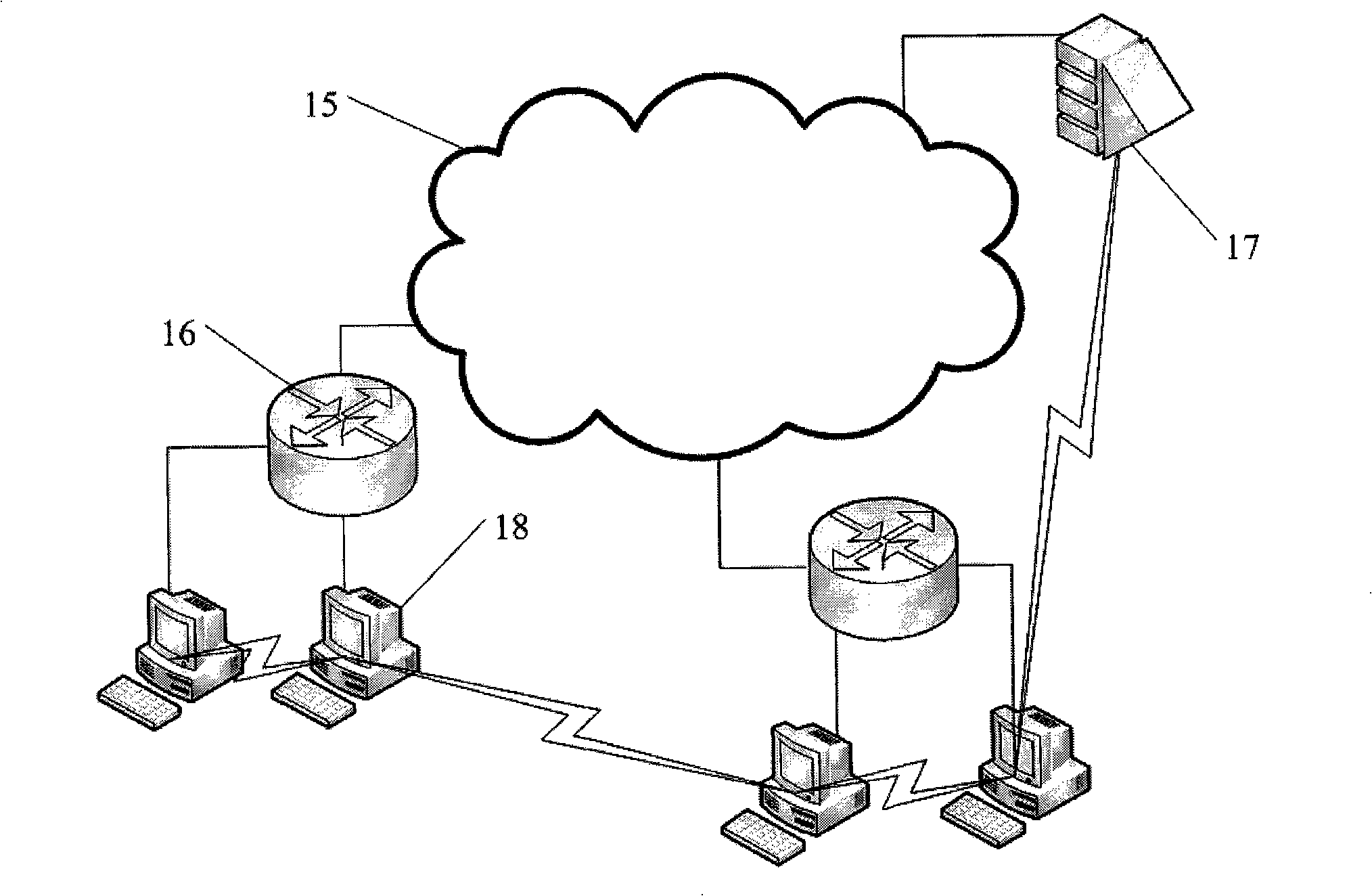

MapReduce based XML data query method and system

Active | CN105005606A | Improve performance | Obvious query speed advantage | Web data indexing | Special data processing applications | Query plan | XPath

The present invention discloses a MapReduce based XML data query method and system. The method comprises the steps of: receiving an XPath query request of a client by a server; checking whether a to-be-queried XML document is subjected to region encoding or not; performing region encoding on the to-be-queried XML document not subjected to the region encoding; checking whether the to-be-queried XML document is subjected to hierarchical encoding by the server; performing hierarchical encoding on the to-be-queried XML document not subjected to the hierarchical encoding; analyzing a query statement in the query request; generating a query plan tree, and performing estimation on a structural connection result; establishing a cost model, and executing cost estimation on the query plan tree; finding an optimal query plan tree; obtaining the optimal query plan tree, and analyzing an input file of a MapReduce task; executing a MapReduce query task; constructing an output file of the MapReduce task into an XML data result as a query result; and returning the XML data query result to the client. The method has the advantages of being relatively high in execution efficiency, high in speedup ratio, good in query processing performance and good in scalability.

Owner:SOUTH CHINA UNIV OF TECH
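The abstract above does not define its region encoding, so the following sketch assumes the common (start, end, level) scheme used for structural joins on XML: each element receives a start number when it is entered and an end number when it is left, and one element is an ancestor of another exactly when its interval strictly contains the other's. The function names are hypothetical, and the MapReduce stages, hierarchical encoding and cost model are not modelled.

```python
import xml.etree.ElementTree as ET


def region_encode(xml_text):
    """Return one {'tag', 'start', 'end', 'level'} record per element, in document order."""
    root = ET.fromstring(xml_text)
    regions = []
    counter = 0

    def visit(elem, level):
        nonlocal counter
        counter += 1  # number assigned on entering the element
        entry = {"tag": elem.tag, "start": counter, "level": level}
        regions.append(entry)
        for child in elem:
            visit(child, level + 1)
        counter += 1  # number assigned on leaving the element
        entry["end"] = counter

    visit(root, 0)
    return regions


def is_ancestor(a, d):
    """True when region a strictly contains region d."""
    return a["start"] < d["start"] and d["end"] < a["end"]


if __name__ == "__main__":
    for region in region_encode("<a><b><c/></b><d/></a>"):
        print(region)
```

With such numbers in hand, an ancestor-descendant step of an XPath query becomes a comparison of integers, which is the kind of structural connection that can be evaluated without walking the tree.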

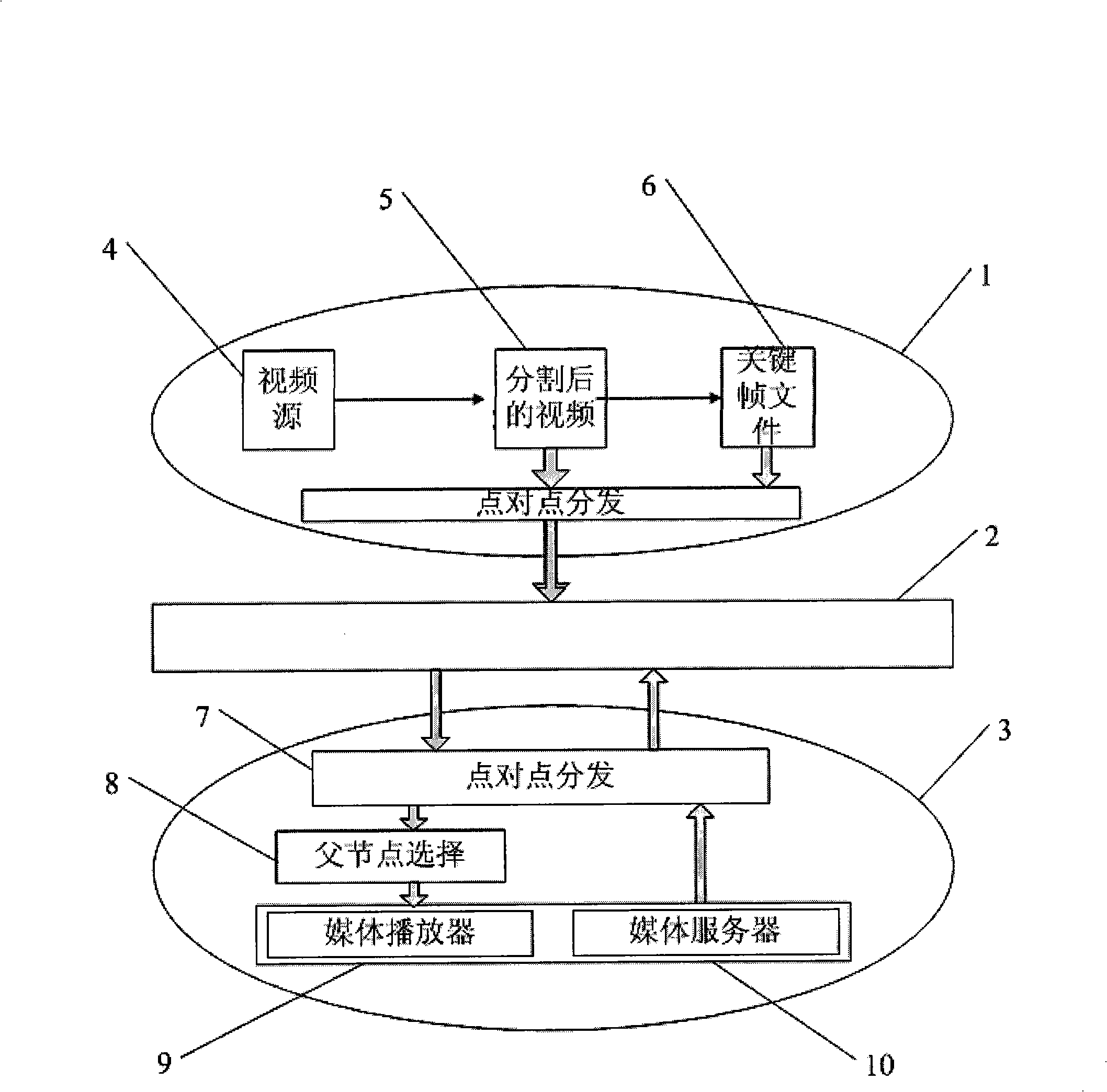

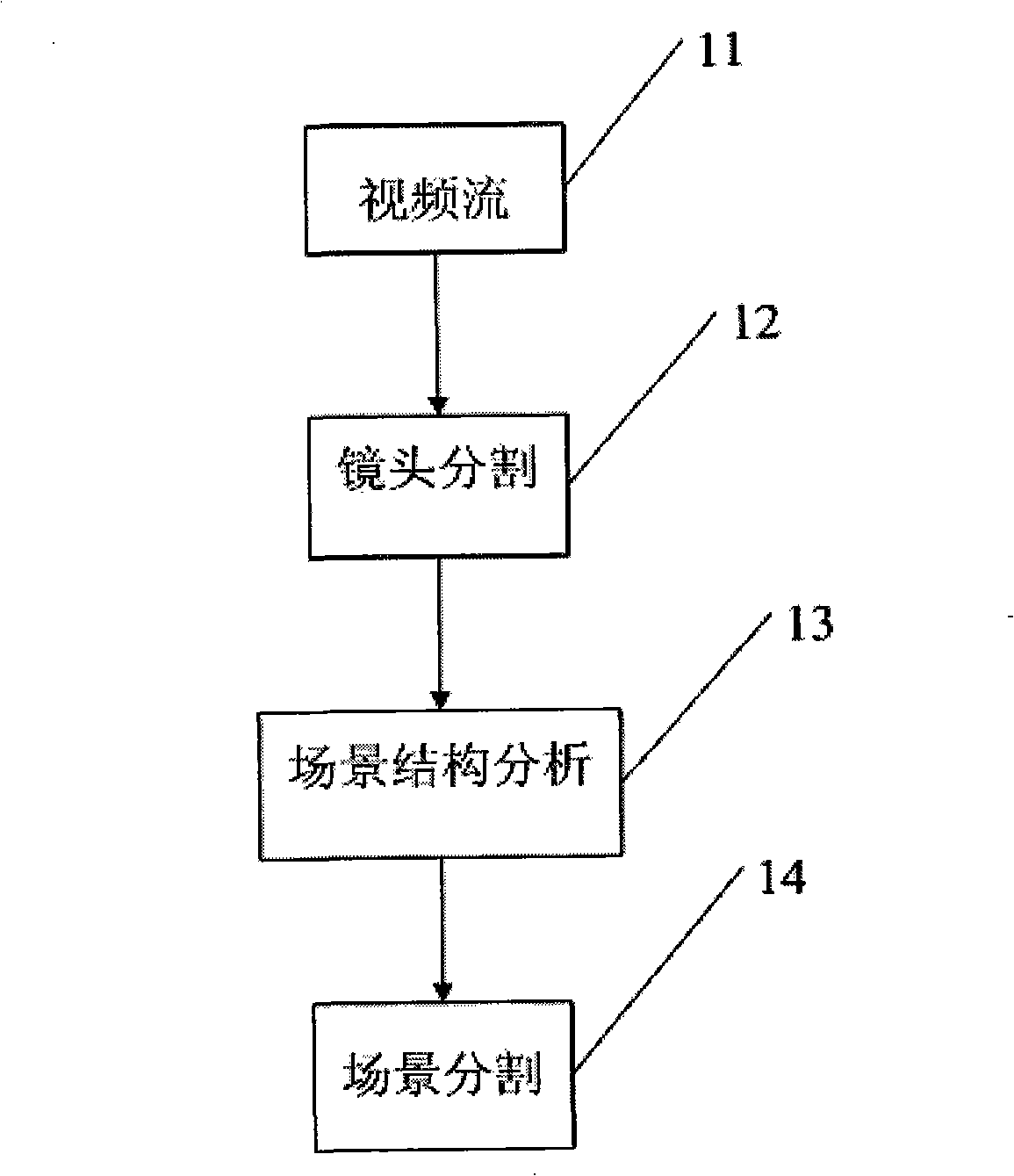

User VCR operation method and stream medium distributing based on video dividing technique

The invention belongs to the technical field of network streaming media, and in particular relates to a streaming media distribution mode and user VCR operations based on video partition technology. The streaming media distribution mode and user VCR operations (including play, pause, interim, speedup and fast backward) that depend on the video partition technology comprise two parts: first, changing the traditional video distribution mode that uses the video frame as its unit; second, providing new speedup and fast backward operations that no longer use simple modes such as 2X, 4X or 8X but instead, according to the result of video partition, operate on the basis of camera and scene, which is greatly convenient for the user.

Owner:FUDAN UNIV

Semiconductor integrated circuit

Inactive | US7714606B2 | Meet high-speed operation | Reduce power consumption | Switching acceleration modifications | Reliability increasing modifications | Low voltage | Engineering

A plurality of MOS transistors each having an SOI structure includes, in mixed form, those brought into body floating and whose body voltages are fixed and variably set. When a high-speed operation is expected in a logic circuit in which operating power is relatively a low voltage and a switching operation is principally performed, body floating may be adopted. Body voltage fixing may be adopted in an analog system circuit that essentially dislikes a kink phenomenon of a current-voltage characteristic. Body bias variable control may be adopted in a logic circuit that requires the speedup of operation in an active state and needs low power consumption in a standby state. Providing in mixed form the transistors which are subjected to the body floating and the body voltage fixing and which are variably controlled in body voltage, makes it easier to adopt an accurate body bias according to a circuit function and a circuit configuration in terms of the speedup of operation and the low power consumption.

Owner:RENESAS ELECTRONICS CORP

VLSI Layouts of Fully Connected Generalized and Pyramid Networks with Locality Exploitation

Active | US20120269190A1 | Low pour point | Full connectivity | Multiplex system selection arrangements | Geometric CAD | Cross-link | Multi link

VLSI layouts of generalized multi-stage and pyramid networks for broadcast, unicast and multicast connections are presented using only horizontal and vertical links with spatial locality exploitation. The VLSI layouts employ shuffle exchange links where outlet links of cross links from switches in a stage in one sub-integrated circuit block are connected to inlet links of switches in the succeeding stage in another sub-integrated circuit block so that said cross links are either vertical links or horizontal and vice versa. Furthermore the shuffle exchange links are employed between different sub-integrated circuit blocks so that spatially nearer sub-integrated circuit blocks are connected with shorter links compared to the shuffle exchange links between spatially farther sub-integrated circuit blocks. In one embodiment the sub-integrated circuit blocks are arranged in a hypercube arrangement in a two-dimensional plane. The VLSI layouts exploit the benefits of significantly lower cross points, lower signal latency, lower power and full connectivity with significantly fast compilation. The VLSI layouts with spatial locality exploitation presented are applicable to generalized multi-stage and pyramid networks, generalized folded multi-stage and pyramid networks, generalized butterfly fat tree and pyramid networks, generalized multi-link multi-stage and pyramid networks, generalized folded multi-link multi-stage and pyramid networks, generalized multi-link butterfly fat tree and pyramid networks, generalized hypercube networks, and generalized cube connected cycles networks for speedup of s≧1. The embodiments of VLSI layouts are useful in wide target applications such as FPGAs, CPLDs, pSoCs, ASIC placement and route tools, networking applications, parallel & distributed computing, and reconfigurable computing.

Owner:KONDA TECH

VLSI layouts of fully connected generalized and pyramid networks with locality exploitation

Active | US9529958B2 | Low pour point | Reduce signal delay | Circuit switching systems | CAD circuit design | Cross-link | Multi link

VLSI layouts of generalized multi-stage and pyramid networks for broadcast, unicast and multicast connections are presented using only horizontal and vertical links with spatial locality exploitation. The VLSI layouts employ shuffle exchange links where outlet links of cross links from switches in a stage in one sub-integrated circuit block are connected to inlet links of switches in the succeeding stage in another sub-integrated circuit block so that said cross links are either vertical links or horizontal and vice versa. Furthermore the shuffle exchange links are employed between different sub-integrated circuit blocks so that spatially nearer sub-integrated circuit blocks are connected with shorter links compared to the shuffle exchange links between spatially farther sub-integrated circuit blocks. In one embodiment the sub-integrated circuit blocks are arranged in a hypercube arrangement in a two-dimensional plane. The VLSI layouts exploit the benefits of significantly lower cross points, lower signal latency, lower power and full connectivity with significantly fast compilation. The VLSI layouts with spatial locality exploitation presented are applicable to generalized multi-stage and pyramid networks, generalized folded multi-stage and pyramid networks, generalized butterfly fat tree and pyramid networks, generalized multi-link multi-stage and pyramid networks, generalized folded multi-link multi-stage and pyramid networks, generalized multi-link butterfly fat tree and pyramid networks, generalized hypercube networks, and generalized cube connected cycles networks for speedup of s≧1. The embodiments of VLSI layouts are useful in wide target applications such as FPGAs, CPLDs, pSoCs, ASIC placement and route tools, networking applications, parallel & distributed computing, and reconfigurable computing.

Owner:KONDA TECH

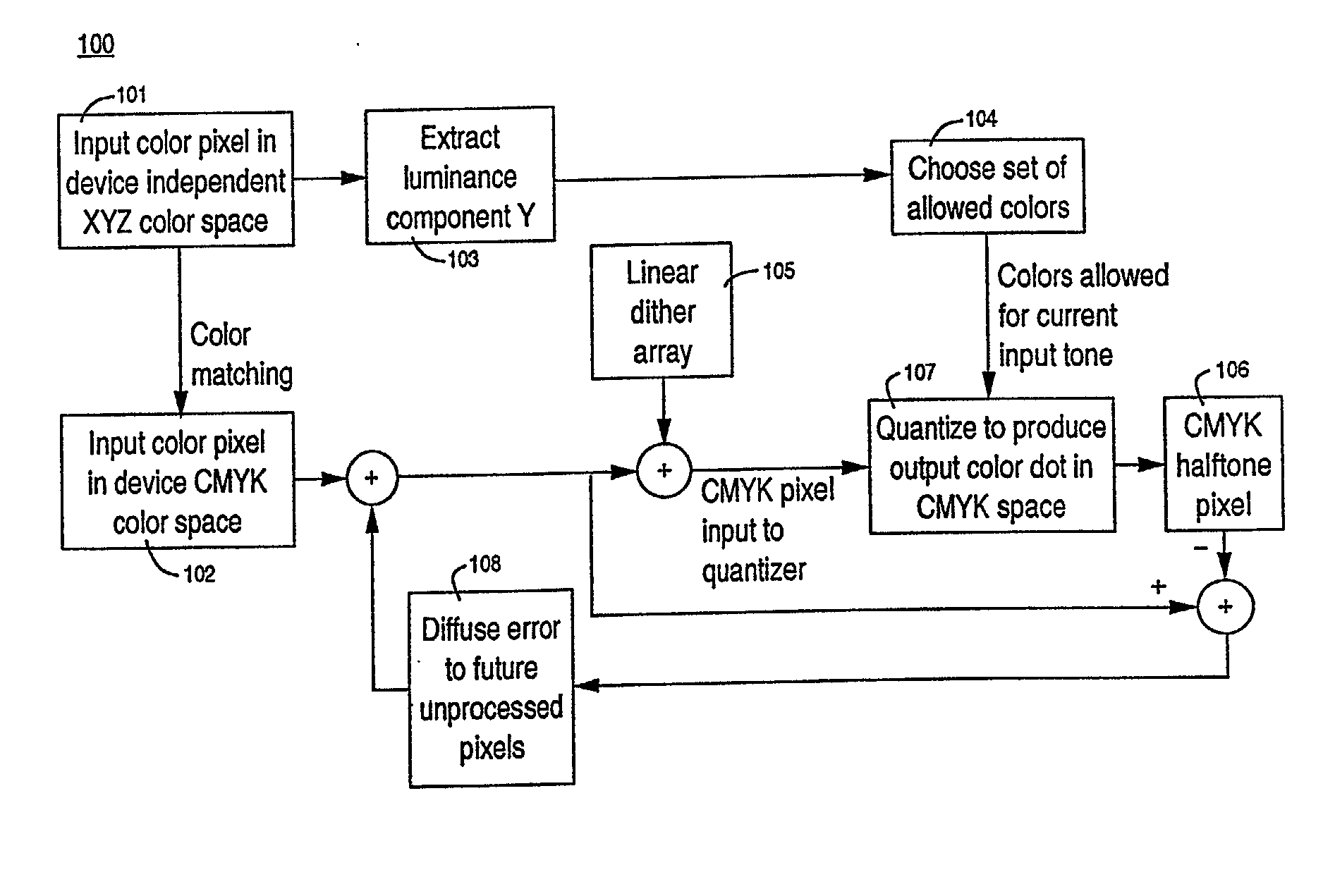

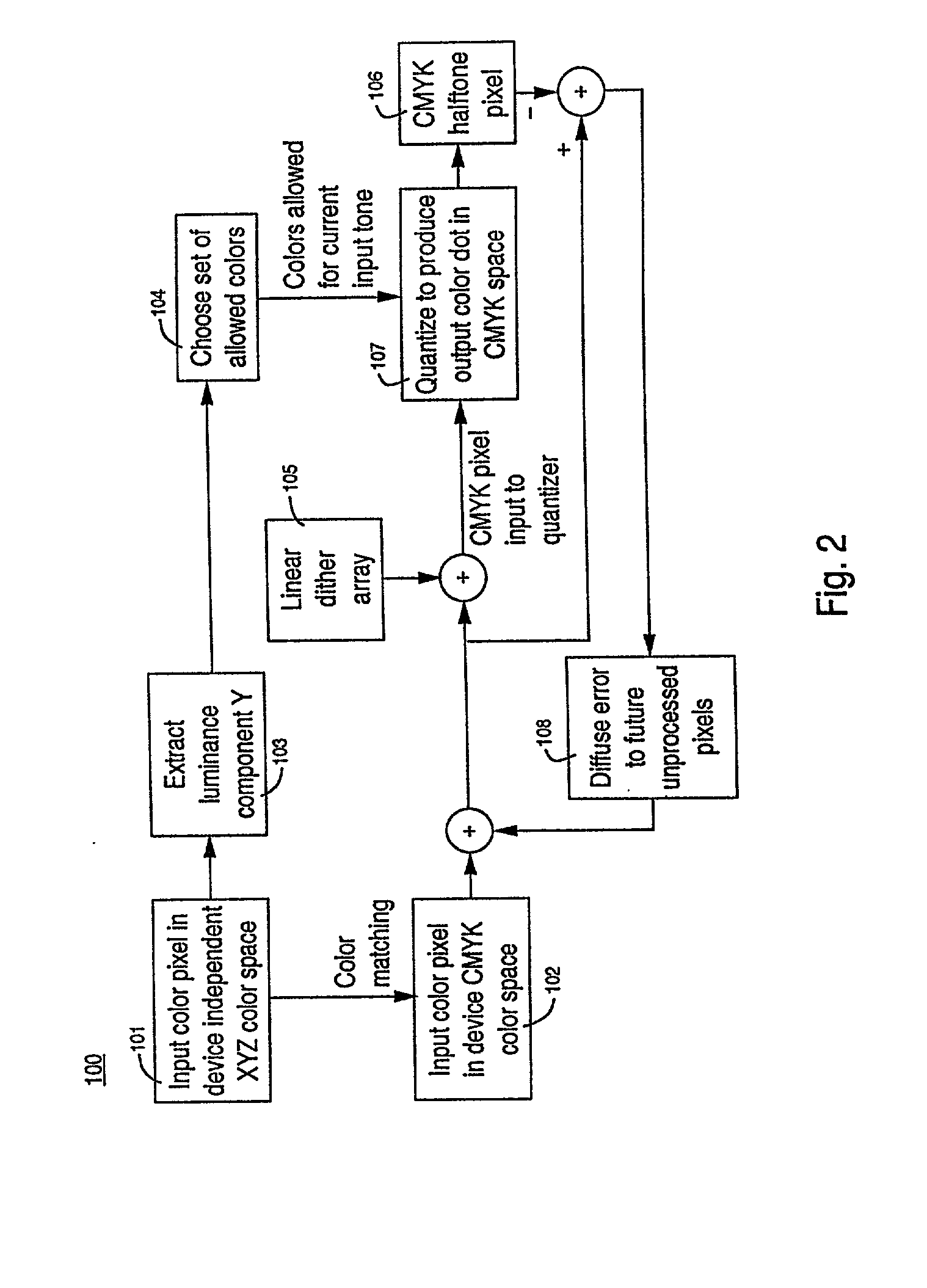

Color smooth error diffusion

Inactive | US20030038953A1 | Minimized color fluctuation | Quality improvement | Digitally marking record carriers | Digital computer details | Pattern recognition | Hue

Embodiments of the present invention include a method for generating a halftone image. This method comprises the steps of choosing a set of allowed colors, processing a color value, quantizing the color value, and outputting a halftone pixel according to the quantizing step. In one embodiment, a look-up table is utilized to provide significant speedup over conventional implementations, especially when implemented in software. The result is high quality imaging with excellent color smoothness and sharpness.

Owner:HEWLETT PACKARD DEV CO LP
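To make the steps in the abstract above concrete (allowed color set, quantization, halftone output, look-up table), here is a minimal sketch. It is not the patented method: it swaps in the classic Floyd-Steinberg error diffusion weights, and the look-up table layout (nearest allowed color precomputed for a coarse 4-bit-per-channel RGB grid) is an assumption chosen for illustration. The names `build_lut` and `halftone` are hypothetical.

```python
def build_lut(palette, bits=4):
    """Precompute the nearest palette index for every coarse RGB grid point.

    The grid has 2**bits levels per channel, so the nearest-color search is
    done once per grid point instead of once per pixel.
    """
    levels = 1 << bits
    step = 255.0 / (levels - 1)
    lut = []
    for r in range(levels):
        for g in range(levels):
            for b in range(levels):
                rgb = (r * step, g * step, b * step)
                dists = [sum((c - p) ** 2 for c, p in zip(rgb, col))
                         for col in palette]
                lut.append(dists.index(min(dists)))
    return lut


def halftone(image, palette, bits=4):
    """Halftone `image` (rows of RGB tuples) onto the allowed `palette`.

    Uses Floyd-Steinberg error diffusion; the quantization step is a single
    table lookup per pixel.
    """
    levels = 1 << bits
    lut = build_lut(palette, bits)
    h, w = len(image), len(image[0])
    work = [[[float(c) for c in px] for px in row] for row in image]
    out = [[None] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Clamp the error-adjusted value, then index the table.
            px = [min(255.0, max(0.0, c)) for c in work[y][x]]
            r, g, b = (int(round(c * (levels - 1) / 255.0)) for c in px)
            chosen = palette[lut[(r * levels + g) * levels + b]]
            out[y][x] = chosen
            # Diffuse the quantization error to unprocessed neighbors.
            err = [c - q for c, q in zip(px, chosen)]
            for dx, dy, wgt in ((1, 0, 7 / 16), (-1, 1, 3 / 16),
                                (0, 1, 5 / 16), (1, 1, 1 / 16)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < w and 0 <= ny < h:
                    for k in range(3):
                        work[ny][nx][k] += err[k] * wgt
    return out


# Usage: a mid-gray patch rendered with only black and white allowed.
palette = [(0, 0, 0), (255, 255, 255)]
gray = [[(128, 128, 128)] * 4 for _ in range(4)]
result = halftone(gray, palette)
```

The table trades a small loss of precision (colors are matched on the coarse grid) for a constant-time lookup per pixel, which is one plausible way a look-up table yields a speedup in software.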

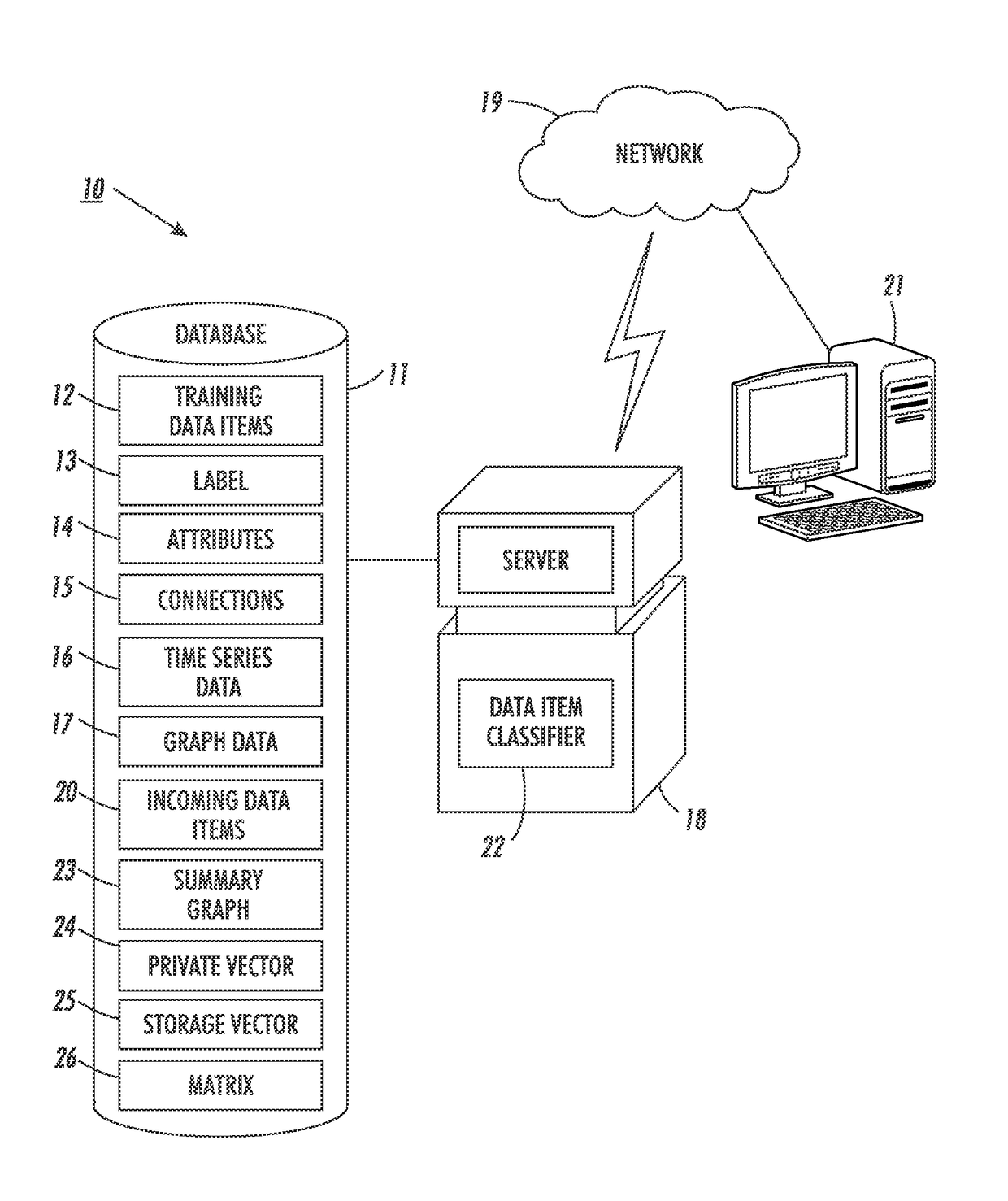

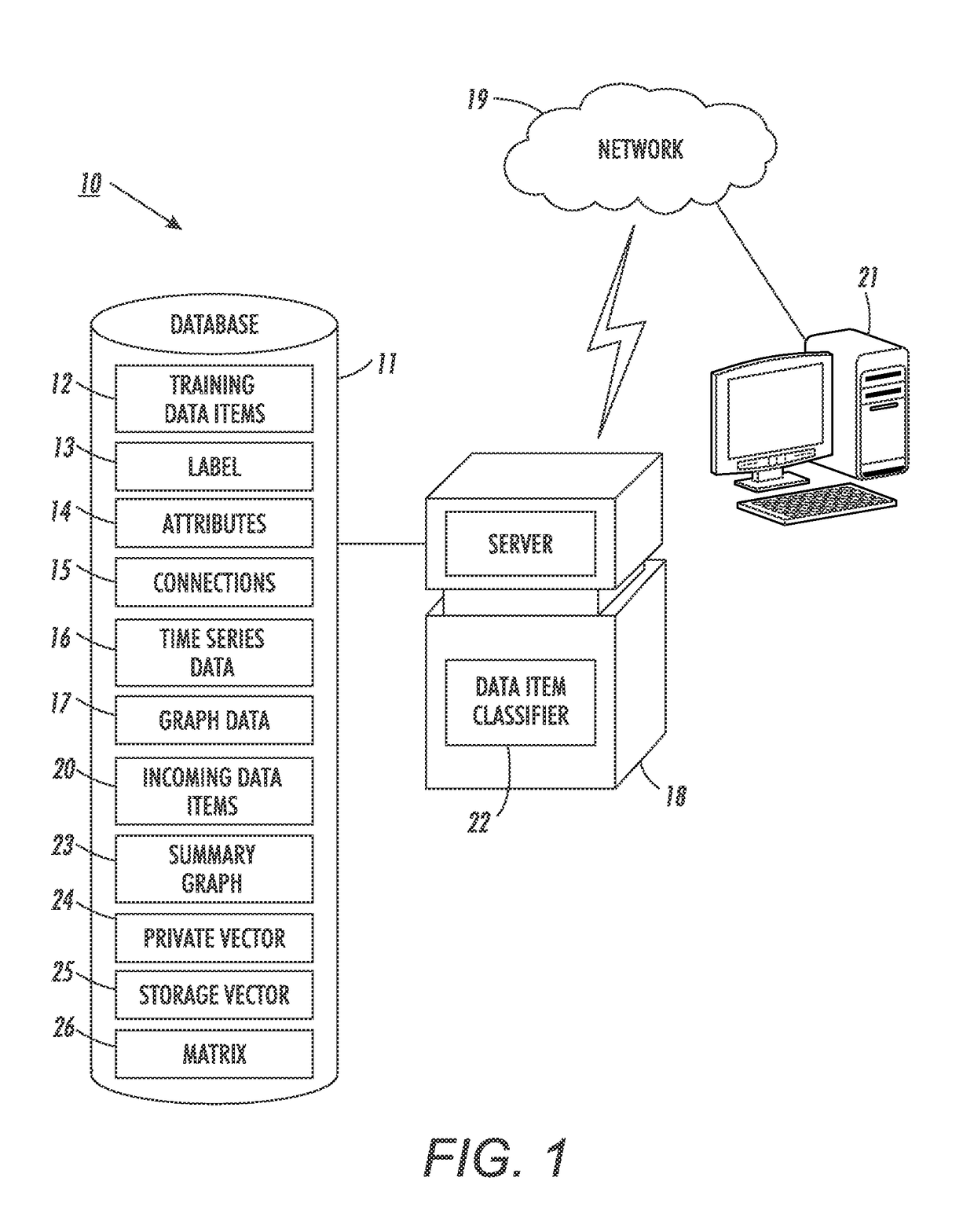

Computer-Implemented System And Method For Relational Time Series Learning

Systems and methods for relational time-series learning are provided. Unlike traditional time series forecasting techniques, which assume either complete time series independence or complete dependence, the disclosed system and method allow time series forecasting to be performed on multivariate time series represented as vertices in graphs with arbitrary structures, and allow predicting a future classification for data items represented by one of the nodes in the graph. The system and methods also utilize non-relational, relational, and temporal data for classification, and allow using fast and parallel classification techniques with linear speedups. The system and methods are well-suited for processing data in a streaming or online setting and naturally handle training data with skewed or unbalanced class labels.

Owner:XEROX CORP

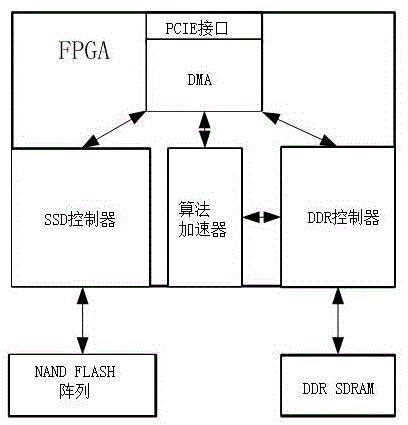

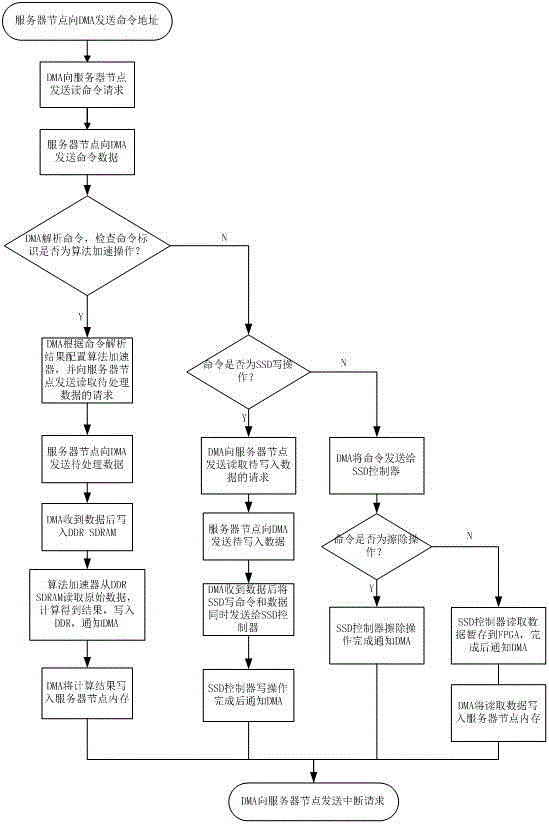

FPGA method achieving computation speedup and PCIE SSD storage simultaneously

Inactive | CN105677595A | Reduce power consumption | Low cost | Energy efficient computing | Architecture with single central processing unit | Disk controller | Embedded system

The invention discloses an FPGA method achieving computation speedup and PCIE SSD storage simultaneously. An SSD controller and an algorithm accelerator are integrated in an FPGA, which also contains a DDR controller and a direct memory access (DMA) module; the DMA module is connected to the SSD controller, the DDR controller, and the algorithm accelerator respectively. With this method, the two functions of computation speedup and SSD storage are achieved on a PCIE device, which reduces layout difficulty, the overall power consumption of server nodes, and the cost to the enterprise.

Owner:FASII INFORMATION TECH SHANGHAI

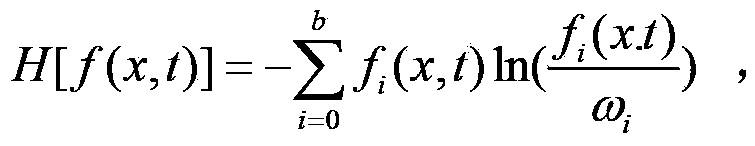

Implementation method of parallel fluid calculation based on entropy lattice Boltzmann model

Active | CN103425833A | Reduced simulation time | Shorten the time | Special data processing applications | Performance index | Iterative method

The invention discloses an implementation method of parallel fluid calculation based on an entropy lattice Boltzmann model (ELBM), and provides a parallel implementation of the model on NVIDIA GPUs, the mainstream graphics cards in current general-purpose computing. By applying CUDA on the NVIDIA GPU, measuring the speedup ratio of the parallel fluid calculation, and comparing the number of lattice sites updated per second as a performance index, the ELBM simulation time is shortened by one third compared with the simulation time on a CPU. Directly approximating the parameter alpha is more efficient than solving for it iteratively, reducing the time by 31.7% on average. The implementation method makes full use of the hardware resources of the system and verifies the parallel calculation mode of the entropy lattice Boltzmann model at the level of actual operation, so the efficiency of the whole parallel fluid calculation is clearly improved.

Owner:HUNAN UNIV
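The abstract above reports results as time reductions, while this page is organized around speedup, so it can help to convert between the two. The sketch below uses the standard definition of speedup as baseline time divided by improved time, plus Amdahl's law for reference. Reading "shortened by one third" as a reduction fraction of 1/3 is an assumption about the abstract's wording, and the function names are hypothetical.

```python
def speedup_from_times(t_baseline, t_improved):
    """Speedup as the ratio of baseline run time to improved run time."""
    return t_baseline / t_improved


def speedup_from_time_reduction(reduction):
    """Convert a fractional time reduction (0 <= reduction < 1) to a speedup.

    If the improved time is (1 - reduction) * t_baseline, the speedup is
    1 / (1 - reduction).
    """
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


def amdahl_speedup(parallel_fraction, n_units):
    """Amdahl's law: overall speedup when only part of the work parallelizes."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / n_units)


# A one-third time reduction corresponds to a 1.5x speedup, and a 31.7%
# reduction to roughly 1.46x, under the reading assumed above.
print(speedup_from_time_reduction(1 / 3))
print(speedup_from_time_reduction(0.317))
```

Note that a time reduction and a speedup are not interchangeable figures: a 50% reduction is a 2x speedup, and the ratio grows without bound as the reduction approaches 100%.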

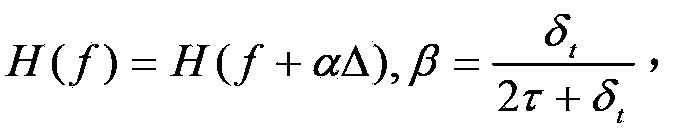

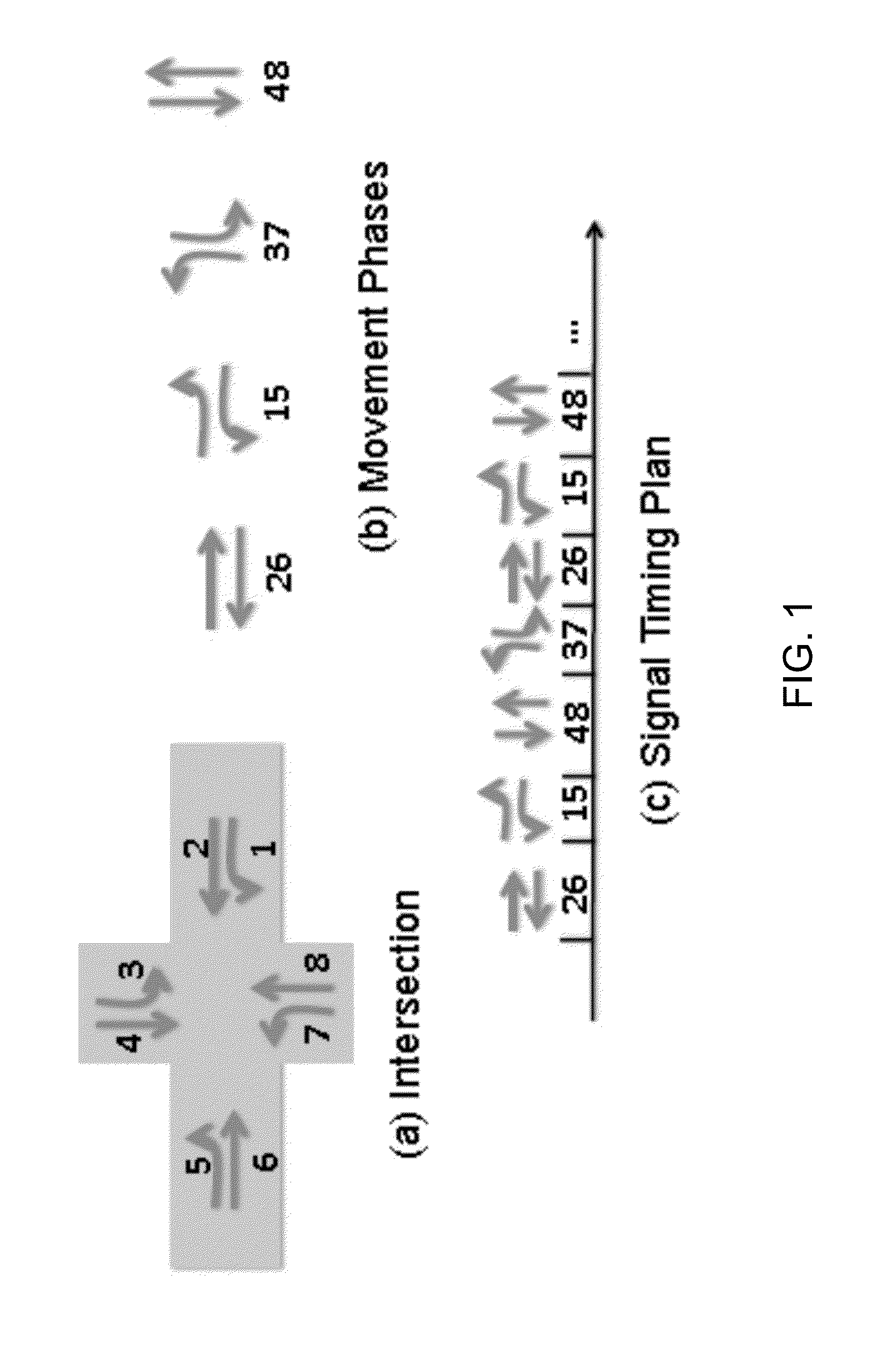

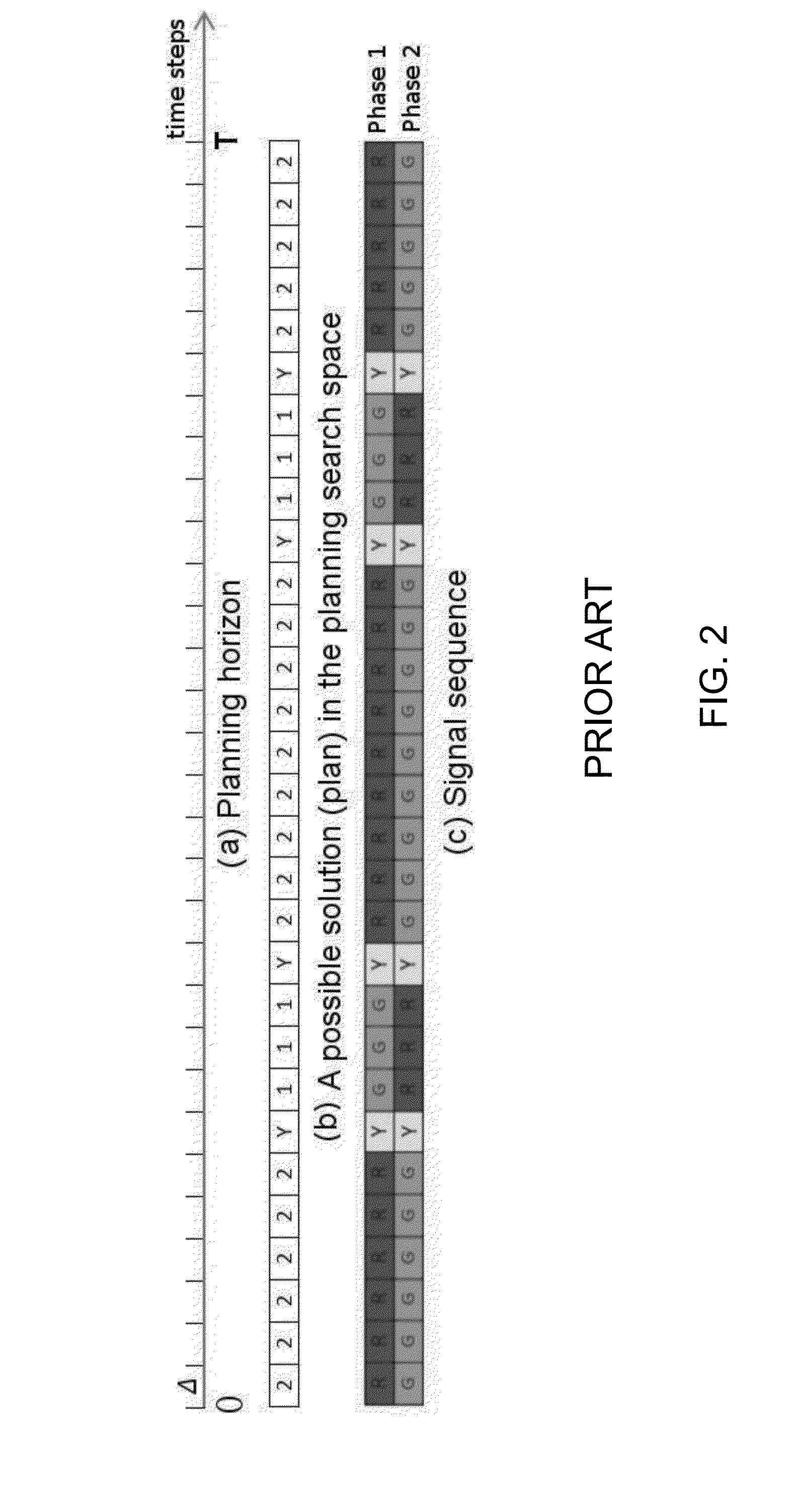

Smart and scalable urban signal networks: methods and systems for adaptive traffic signal control

A scalable urban traffic control system has been developed to address current challenges and offers a new approach to real-time, adaptive control of traffic signal networks. The methods and system described herein exploit a novel conceptualization of the signal network control problem as a decentralized process, where each intersection in the network independently and asynchronously solves a single-machine scheduling problem in a rolling horizon fashion to allocate green time to its local traffic, and intersections communicate planned outflows to their downstream neighbors to increase visibility of future incoming traffic and achieve coordinated behavior. The novel formulation of the intersection control problem as a single-machine scheduling problem abstracts flows of vehicles into clusters, which enables orders-of-magnitude speedup over previous time-based formulations and is what allows truly real-time (second-by-second) response to changing conditions.

Owner:CARNEGIE MELLON UNIV
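The abstract above attributes its speedup to abstracting vehicle flows into clusters, so the scheduler reasons over a handful of jobs instead of every time step. The following is a minimal sketch of one way such an aggregation could look. The abstract does not state the actual clustering rule, so the gap-threshold rule, the `max_gap` parameter, and the `cluster_arrivals` name are all assumptions made for illustration.

```python
def cluster_arrivals(arrival_times, max_gap):
    """Group predicted vehicle arrival times into clusters.

    Consecutive arrivals no more than `max_gap` seconds apart join the same
    cluster. Each cluster is returned as (first_arrival, last_arrival, count).
    """
    clusters = []
    for t in sorted(arrival_times):
        if clusters and t - clusters[-1][1] <= max_gap:
            start, _, count = clusters[-1]
            clusters[-1] = (start, t, count + 1)
        else:
            clusters.append((t, t, 1))
    return clusters


# Six predicted arrivals collapse into three schedulable jobs.
arrivals = [1.0, 2.0, 2.5, 9.0, 10.0, 30.0]
print(cluster_arrivals(arrivals, max_gap=3.0))
```

With this kind of abstraction, the size of the scheduling problem depends on the number of clusters rather than on the length of the planning horizon in seconds.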

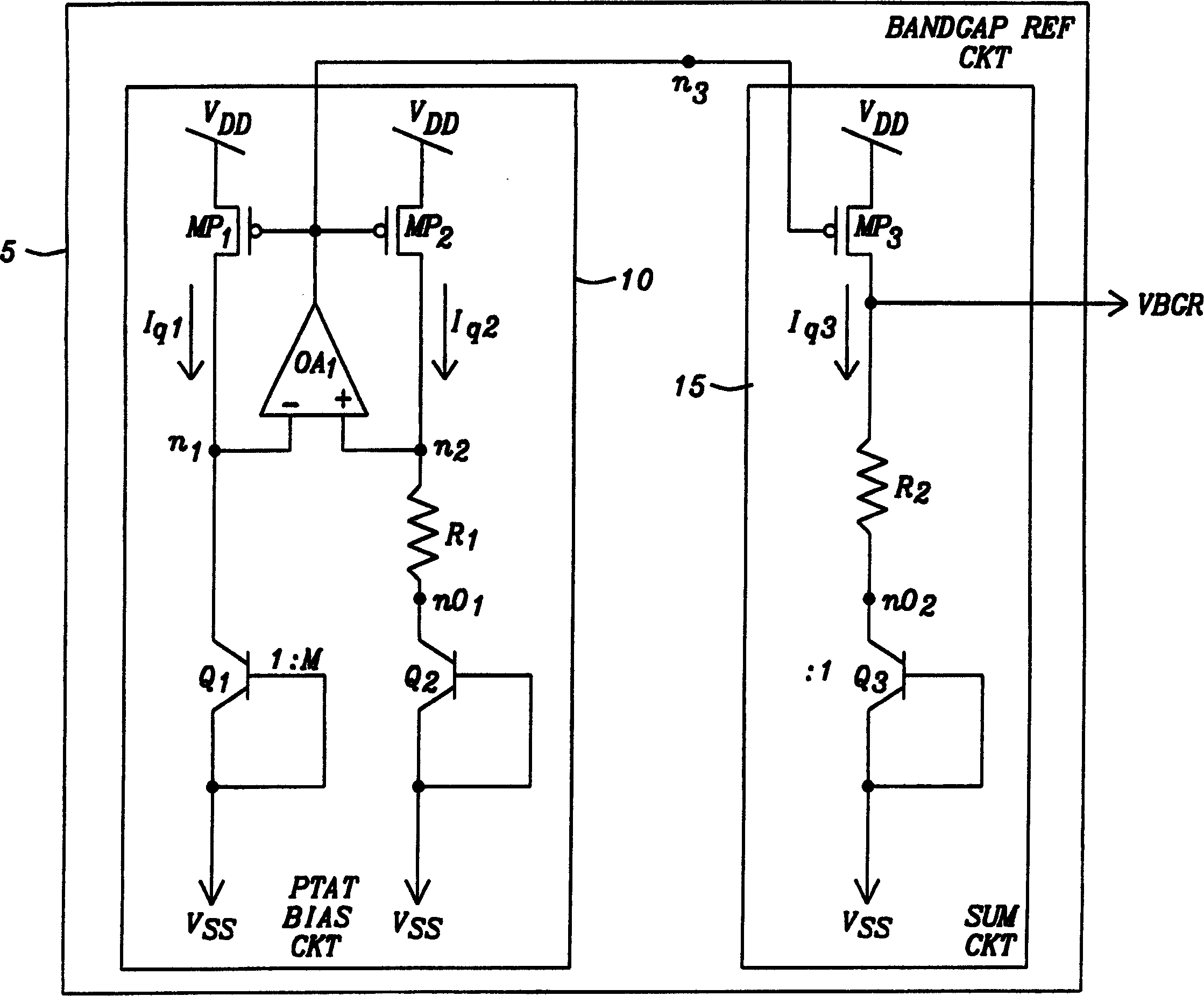

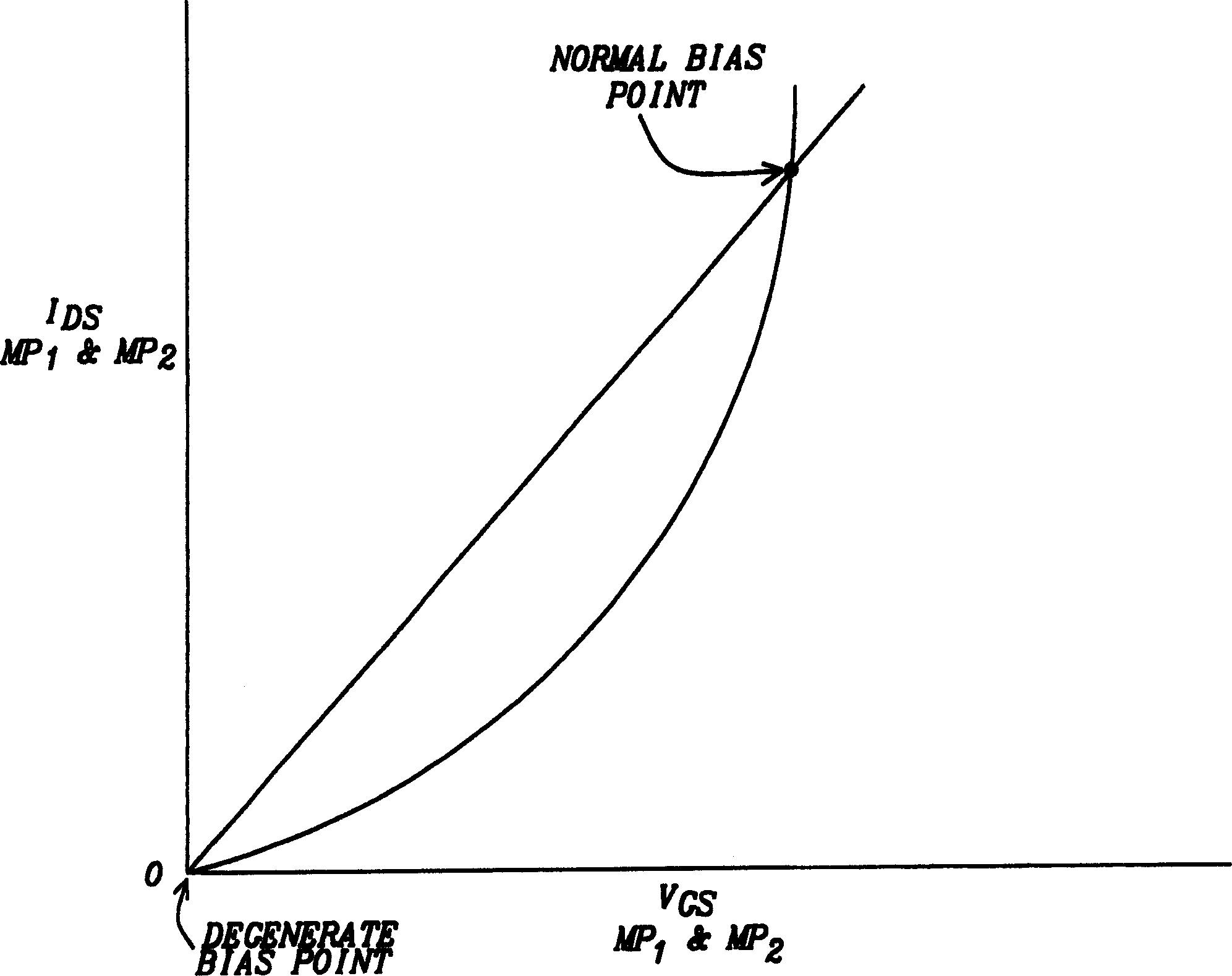

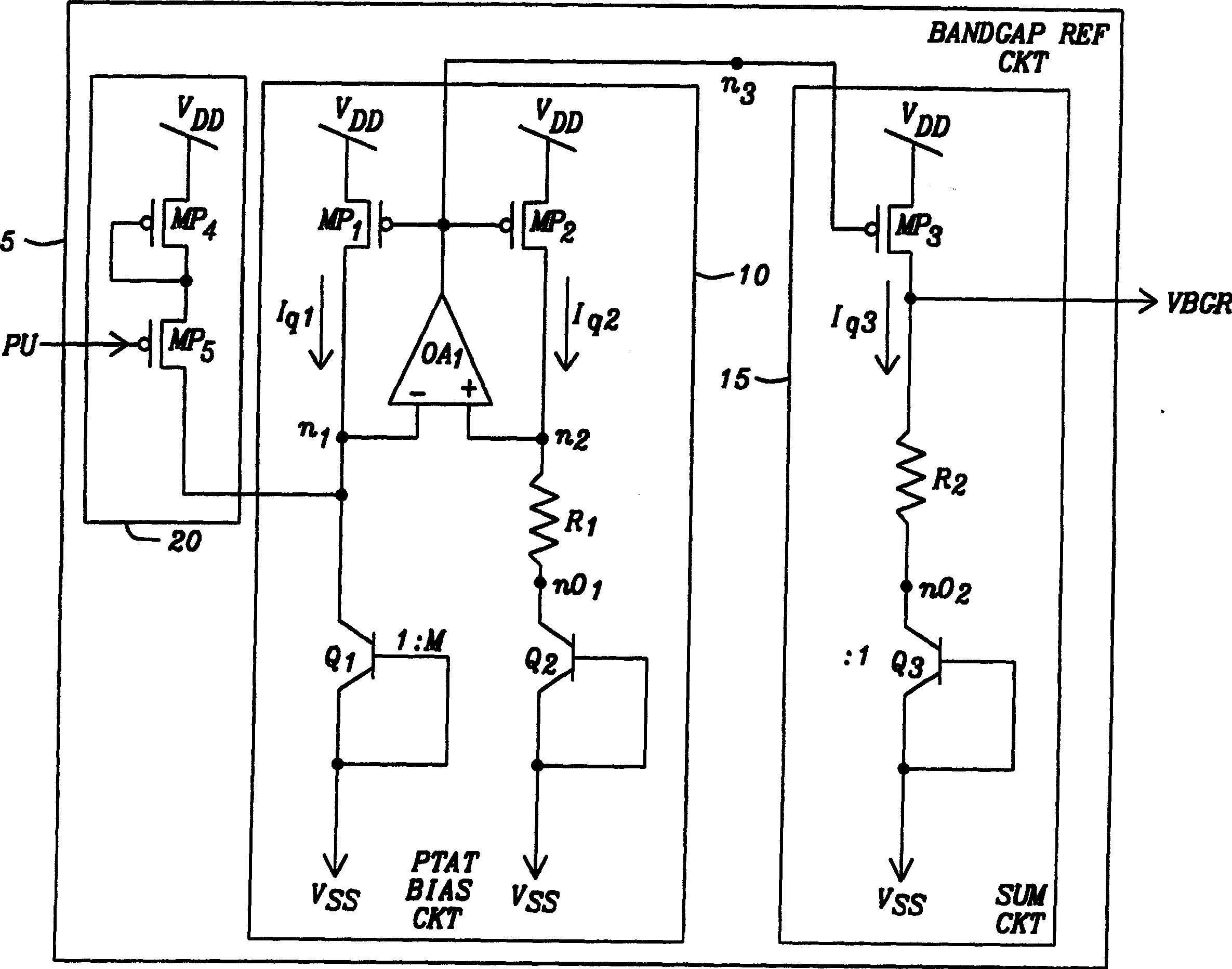

Initial acceleration circuit for bias circuit proportional to absolute temperature

A PTAT biasing circuit for use in a bandgap referenced voltage source includes a startup sub-circuit. Prior to activation of a power up indication signal, the speedup circuit forces the PTAT biasing circuit from a degenerate operating point to a normal operating point. Upon detection of a feedback signal denoting the initiation of the PTAT biasing circuit, the startup sub-circuit terminates its own operation, independent of the activation of the power up indication signal.

Owner:ETRON TECH INC

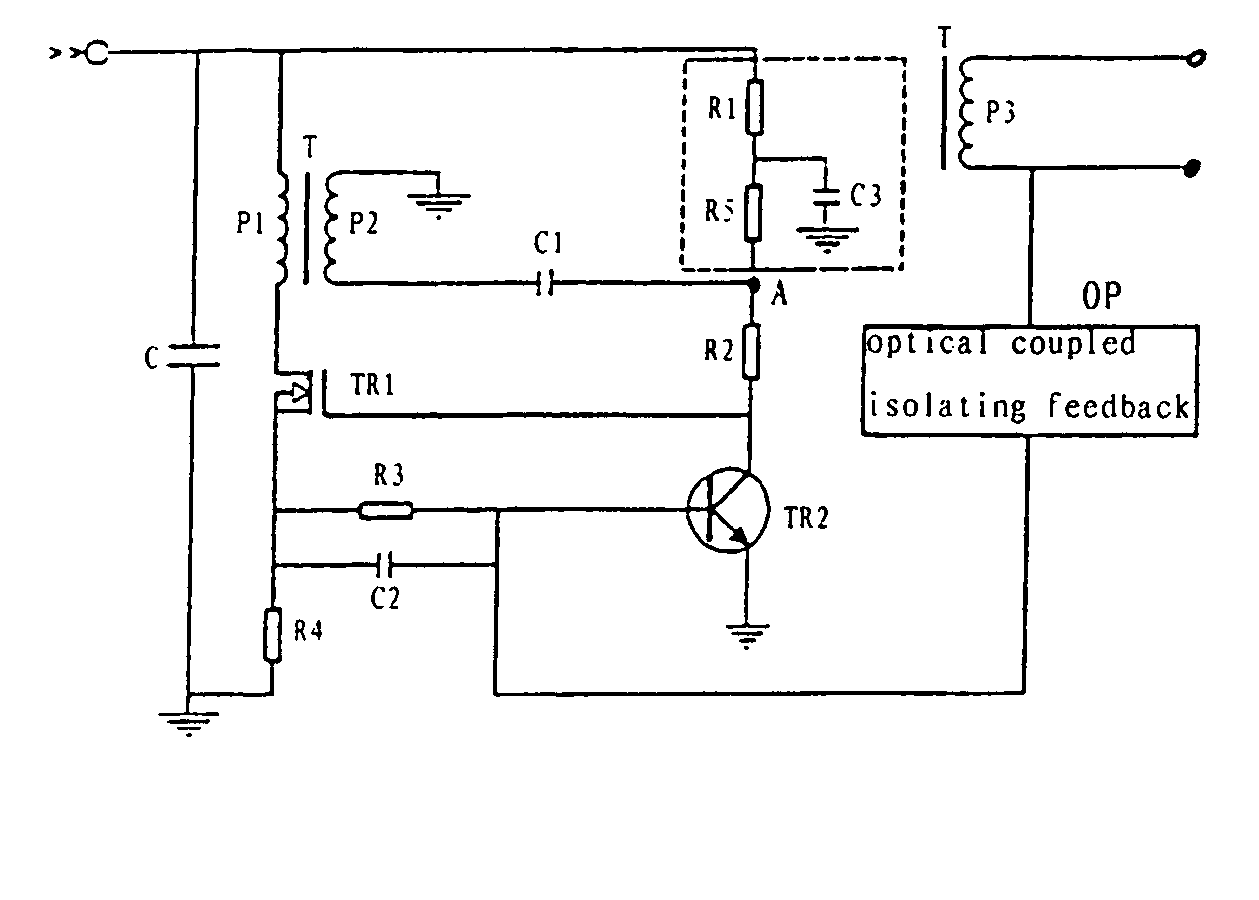

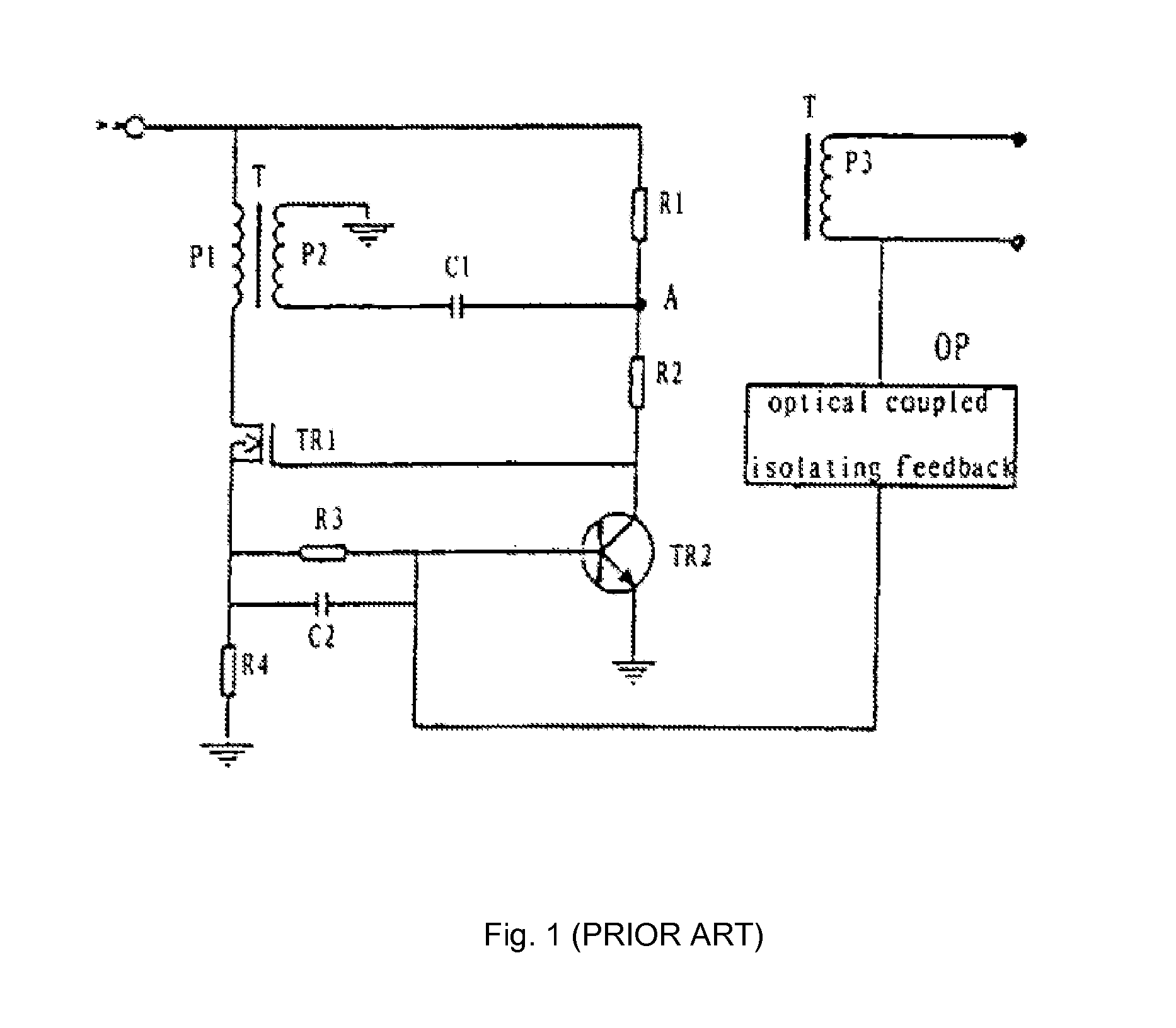

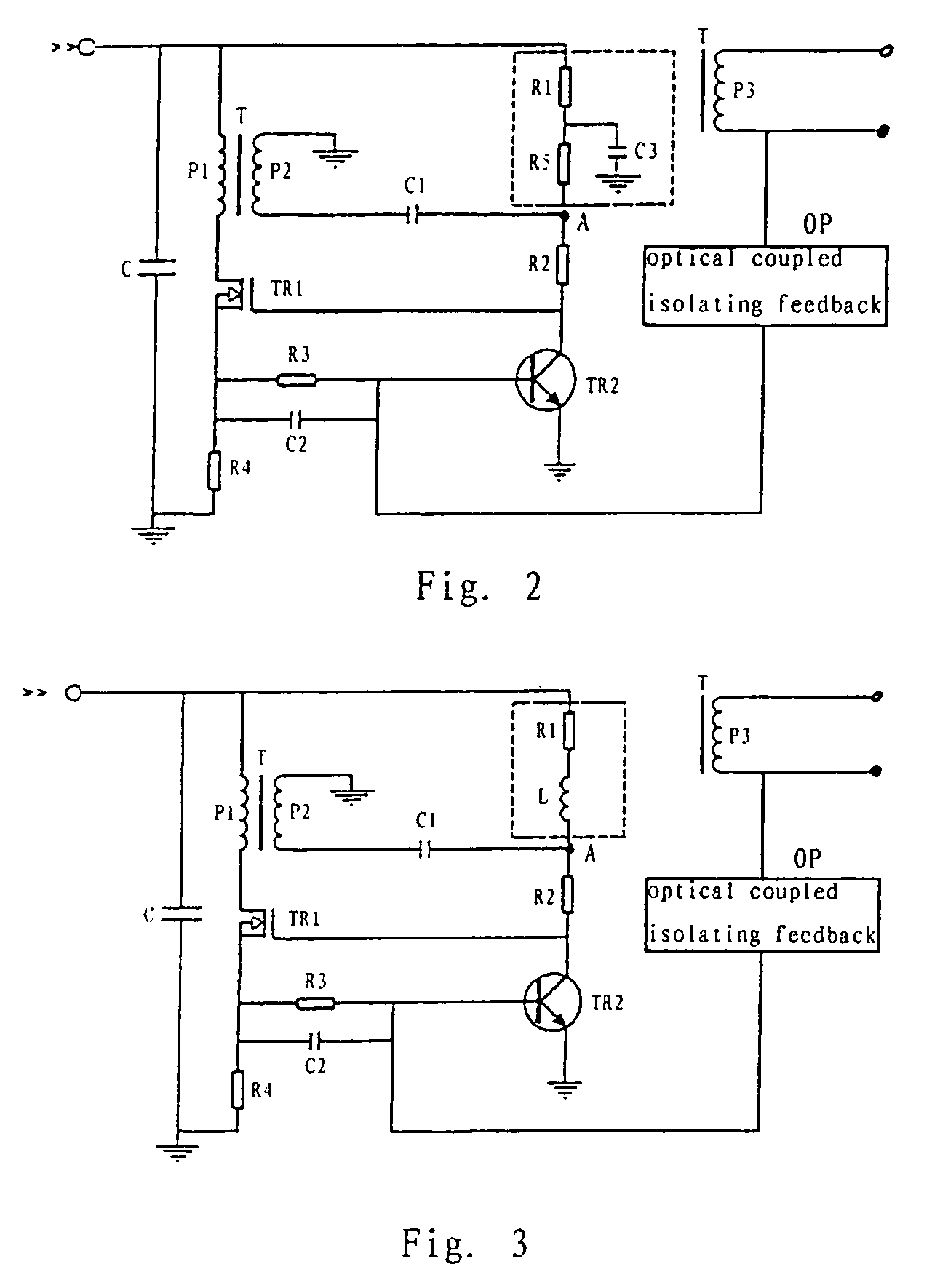

Isolating type self-oscillating flyback converter with a soft start loop

Inactive | US7333353B2 | Small current | Improving start feature | Ac-dc conversion | Dc-dc conversion | Capacitance | Electrical resistance and conductance

An isolating type self-oscillating flyback converter is disclosed, which includes a coupled transformer, a FET, a transistor and an electro-optical coupled isolating feedback unit, wherein the input terminal of the circuit is connected to the source of the FET through a primary winding of the coupled transformer, the input terminal of the circuit is connected to the collector of the transistor through a resistor R1 and another resistor R2, the source of the FET is connected to the collector of the transistor, one branch of the drain of the FET is connected to the ground through a resistor, while the other branch is connected to the base of the transistor through a parallel combination of a resistor and a capacitor, the base of the transistor is connected to the output terminal of a secondary output winding of the coupled transformer through the electro-optical coupled isolating feedback unit; the series connection joint between said resistor R1 and said resistor R2 is connected to the ground through a speedup capacitor and a secondary winding of the coupled transformer; a loop for implementing the soft start is connected between said input terminal of the circuit and the series connection joint. Thus the start-up current of the invention is small and the converter can keep working normally when the input voltage is high.

Owner:MORNSUN GUANGZHOU SCI & TECH

