76 results about "Matrix partitioning" patented technology

Accounting allocation method

Inactive | US6014640A | Quantity minimization; Easy and efficient implementation; Complete banking machines; Finance; Distribution method; Parallel computing

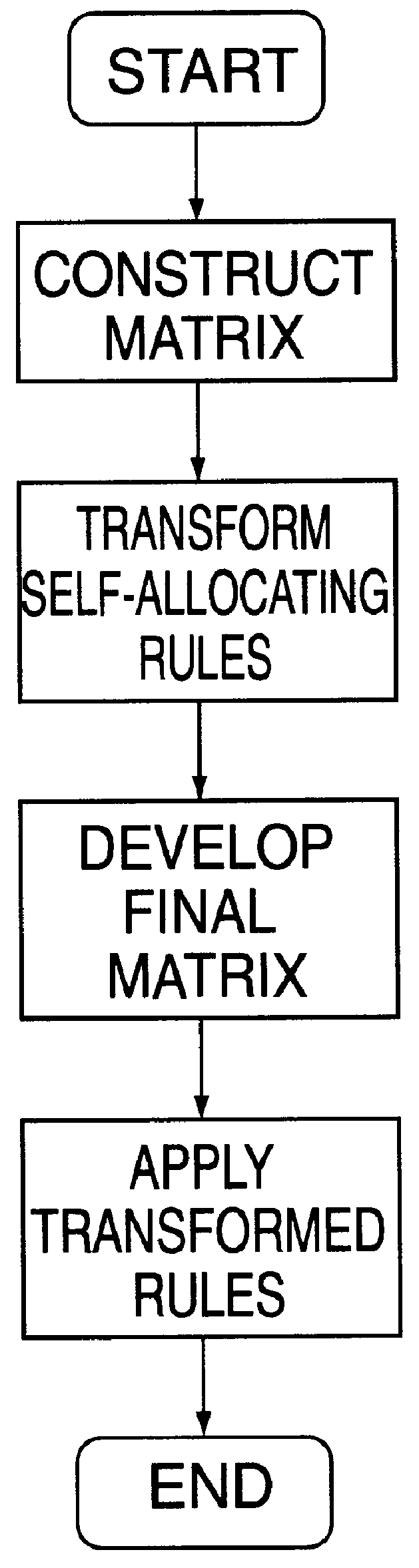

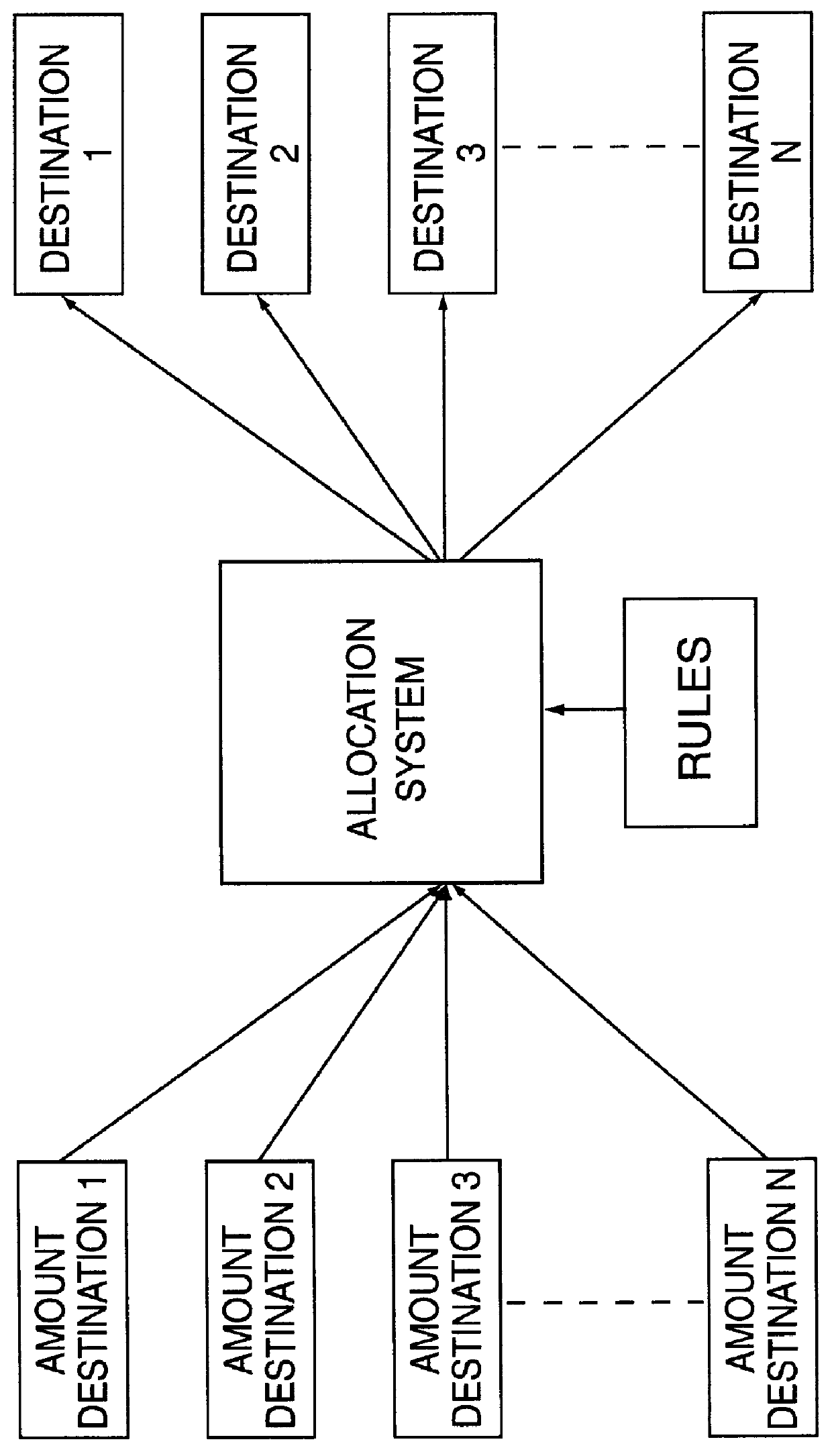

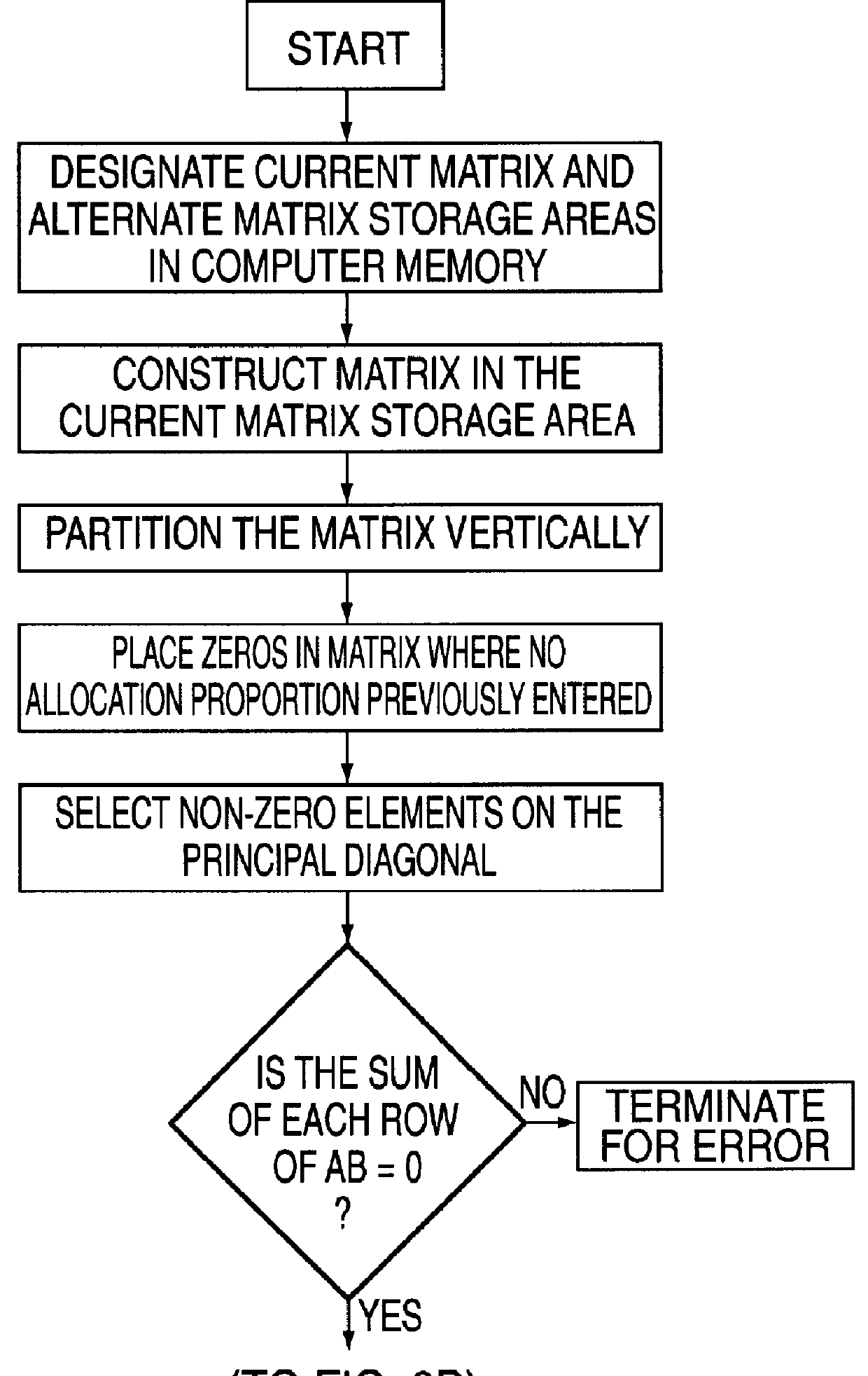

An expense allocation method utilizes matrix multiplication to transform a set of reciprocal or cascading allocation rules into a new set of one-step allocation rules. The new set of rules reallocates amounts directly to the ultimate destination without going through numerous intermediate levels of reallocation. By taking advantage of the specific structure of the matrix, and partitioning the matrix into four (4) subparts, the matrix multiplication process is greatly simplified. The expense allocation method can be easily and efficiently implemented on a computer system, including computers based upon parallel or vector architectures. This invention permits most allocation work to be done before the end of an accounting period, allowing end-of-period accounting work to be completed sooner. Alternatively, expenses can be allocated as they occur, rather than waiting until the end of a period.

Owner:BENT KIRKE M
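
The abstract does not give the matrices themselves. A minimal sketch, assuming a hypothetical layout in which the first s departments are service departments that pass costs on and the remaining ones are final cost centers: the allocation matrix then splits into four blocks, and the one-step rules reduce to the standard reciprocal-allocation product F(I - S)^(-1). Names such as `one_step_rules` are illustrative, not taken from the patent.

```python
from fractions import Fraction

# Hypothetical block layout of the allocation matrix (an assumption):
#   [ S  0 ]   S: s x s, S[i][j] = share of service dept j sent to service dept i
#   [ F  I ]   F: f x s, F[i][j] = share of service dept j sent to final center i
# Final cost centers keep what they receive, hence the 0 and I blocks.


def solve(a, b):
    """Solve a @ x = b exactly by Gauss-Jordan elimination (a is n x n)."""
    n = len(a)
    aug = [[Fraction(v) for v in a[i]] + [Fraction(v) for v in b[i]] for i in range(n)]
    for col in range(n):
        # Pick a row with a non-zero pivot (raises StopIteration if a is singular).
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def one_step_rules(S, F):
    """Return R = F (I - S)^-1: the share of each service dept's direct cost
    that ultimately lands in each final center, with no intermediate steps."""
    s = len(S)
    i_minus_s = [[(1 if i == j else 0) - S[i][j] for j in range(s)] for i in range(s)]
    identity = [[1 if i == j else 0 for j in range(s)] for i in range(s)]
    inv = solve(i_minus_s, identity)  # (I - S)^-1
    return [[sum(F[i][k] * inv[k][j] for k in range(s)) for j in range(s)]
            for i in range(len(F))]
```

Each column of R sums to 1 when every service department eventually passes all of its cost on, so the final-center totals are the direct final costs plus R applied to the direct service costs. Because R depends only on the rules, it can be computed before the period closes.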

Matrix transposition in a computer system

Active | US7031994B2 | Improves speed and parallelability; Easy transposition; Handling data according to predetermined rules; General purpose stored program computer; Algorithm; Computerized system

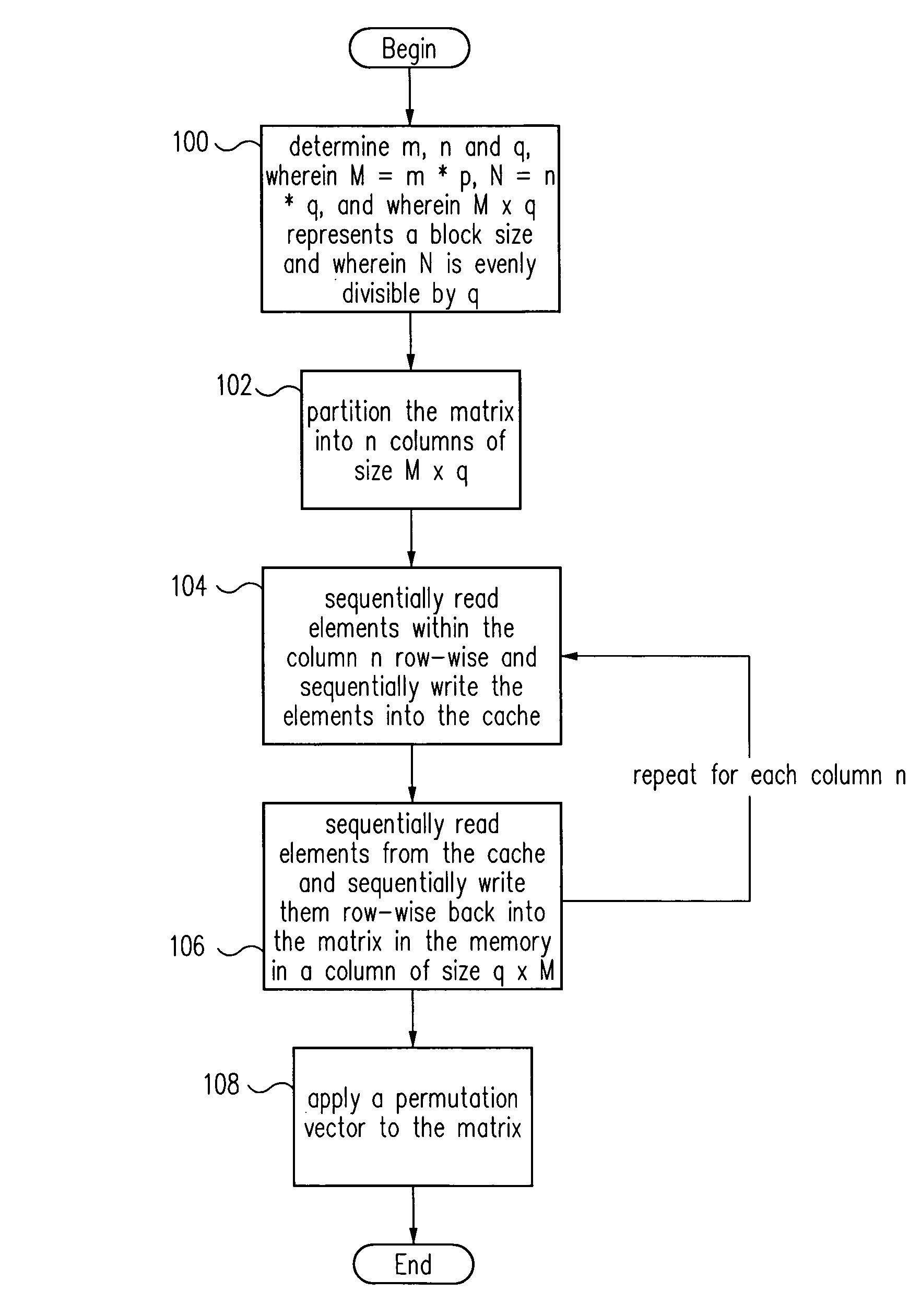

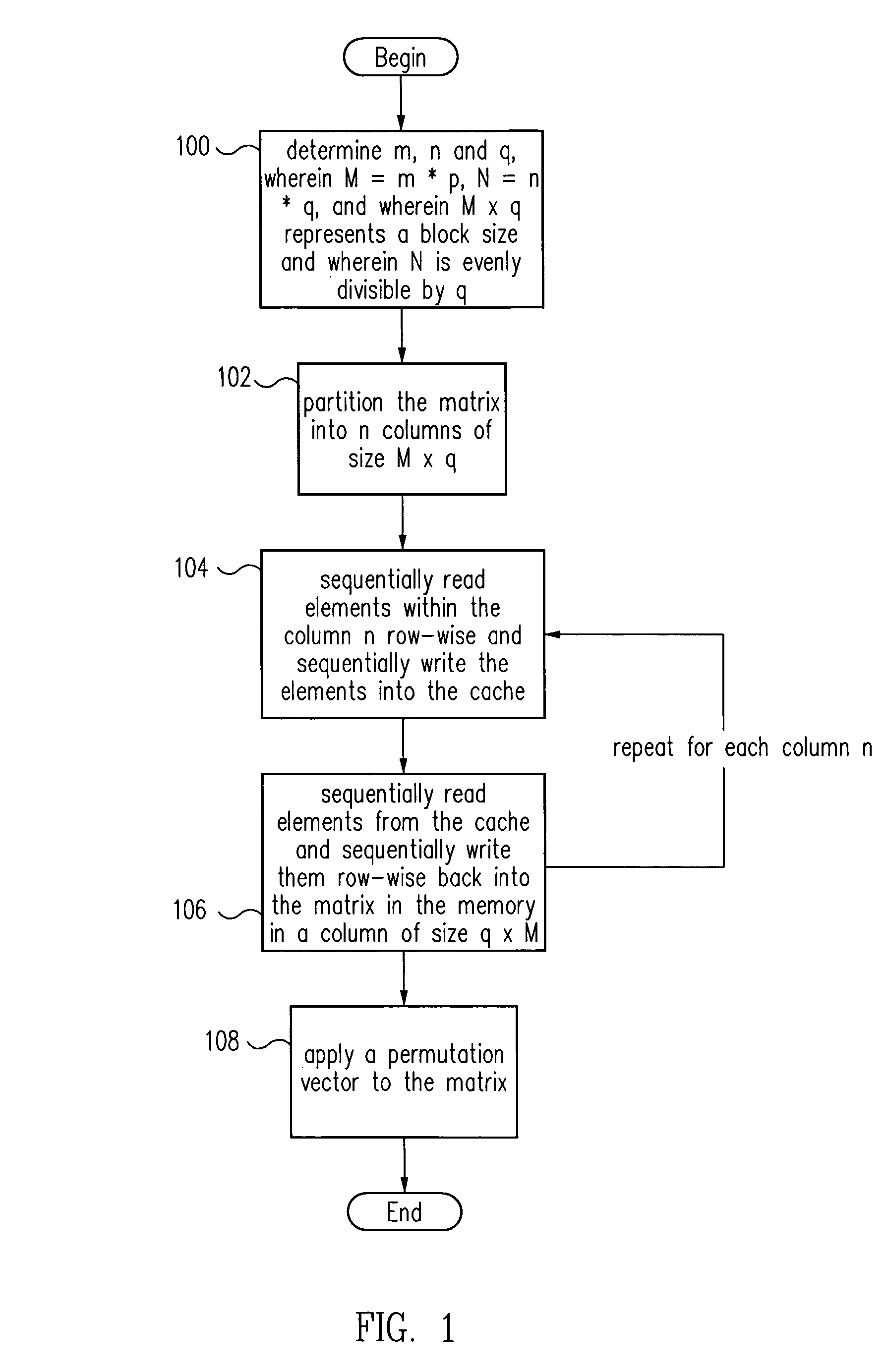

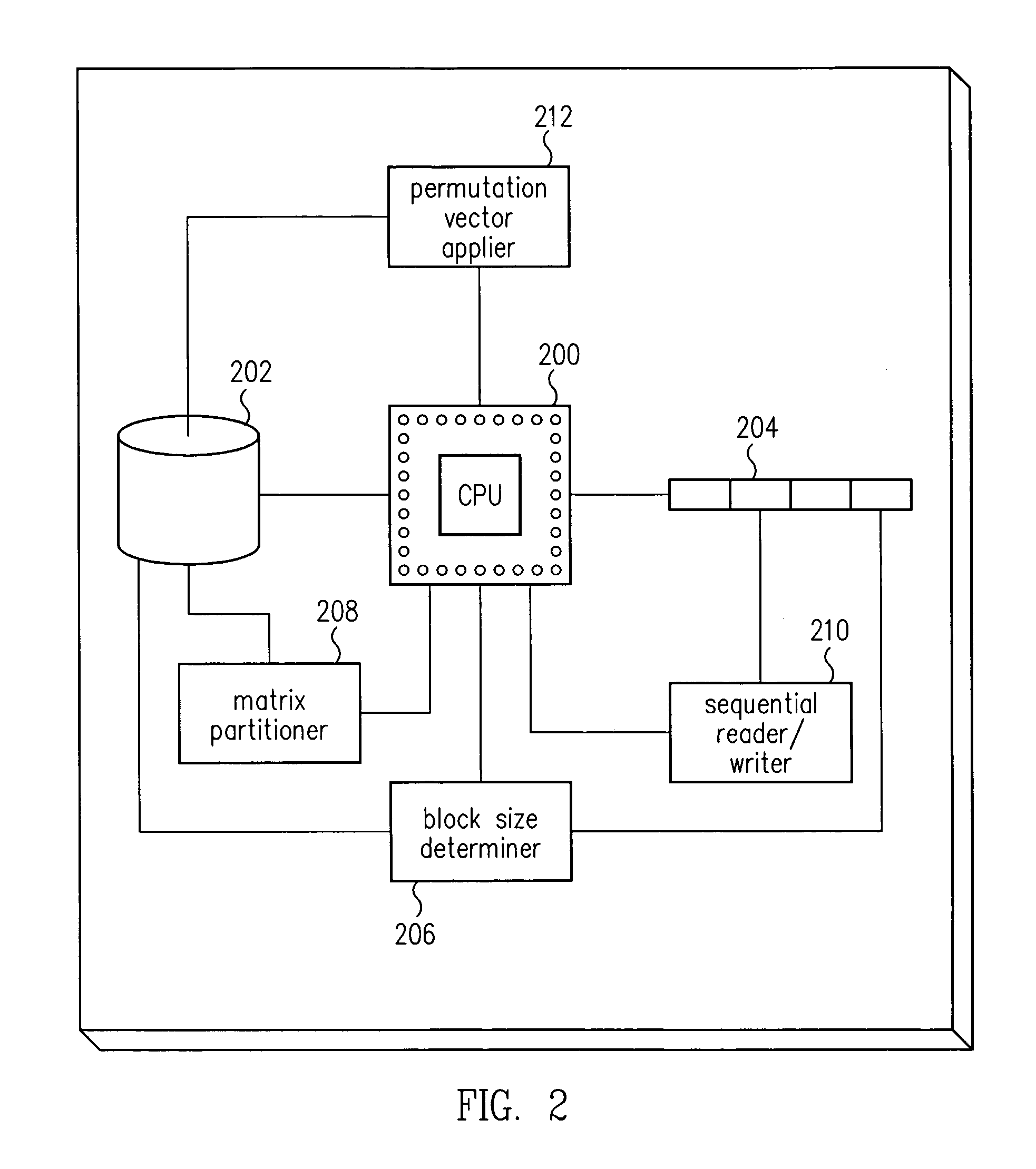

Improved transposition of a matrix in a computer system may be accomplished while utilizing at most a single permutation vector. This greatly improves the speed and parallelability of the transpose operation. For a standard rectangular matrix having M rows and N columns (size M×N), n and q are first determined, wherein N=n*q, M×q represents a block size, and N is evenly divisible by p. The matrix is then partitioned into n columns of size M×q. Then, for each of the n columns, elements within the column are read sequentially row-wise and written sequentially into a cache, then read sequentially from the cache and written sequentially row-wise back into the matrix in memory as a column of size q×M. A permutation vector may then be applied to the matrix to arrive at the transpose. This method may be modified for special cases, such as square matrices, to further improve efficiency.

Owner:ORACLE INT CORP
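
A simplified sketch of the column-block partitioning described above, assuming row-major storage in a flat list. It is out-of-place: each M×q block is copied row-wise into a small buffer (standing in for the cache) and written back as q rows of the transpose, so no permutation vector is needed. The patent's in-place scheme, and the role of p, are not reproduced.

```python
def blocked_transpose(a, rows, cols, q):
    """Transpose a row-major rows x cols matrix stored in the flat list `a`,
    one rows x q column block at a time (cols = N, and cols // q = n blocks)."""
    if cols % q:
        raise ValueError("cols must be divisible by the block width q")
    out = [None] * (rows * cols)           # row-major cols x rows result
    for b in range(cols // q):             # one pass per column block
        c0 = b * q
        # Read the rows x q block row-wise into a buffer (stands in for the cache).
        buf = [a[r * cols + c0 + c] for r in range(rows) for c in range(q)]
        # Write it back as a q x rows block: rows c0..c0+q-1 of the transpose.
        for c in range(q):
            for r in range(rows):
                out[(c0 + c) * rows + r] = buf[r * q + c]
    return out
```

For example, the 2×4 matrix `[0, 1, 2, 3, 4, 5, 6, 7]` with `q = 2` is handled as two 2×2 blocks and becomes the 4×2 matrix `[0, 4, 1, 5, 2, 6, 3, 7]`.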

Systems and methods for a turbo low-density parity-check decoder

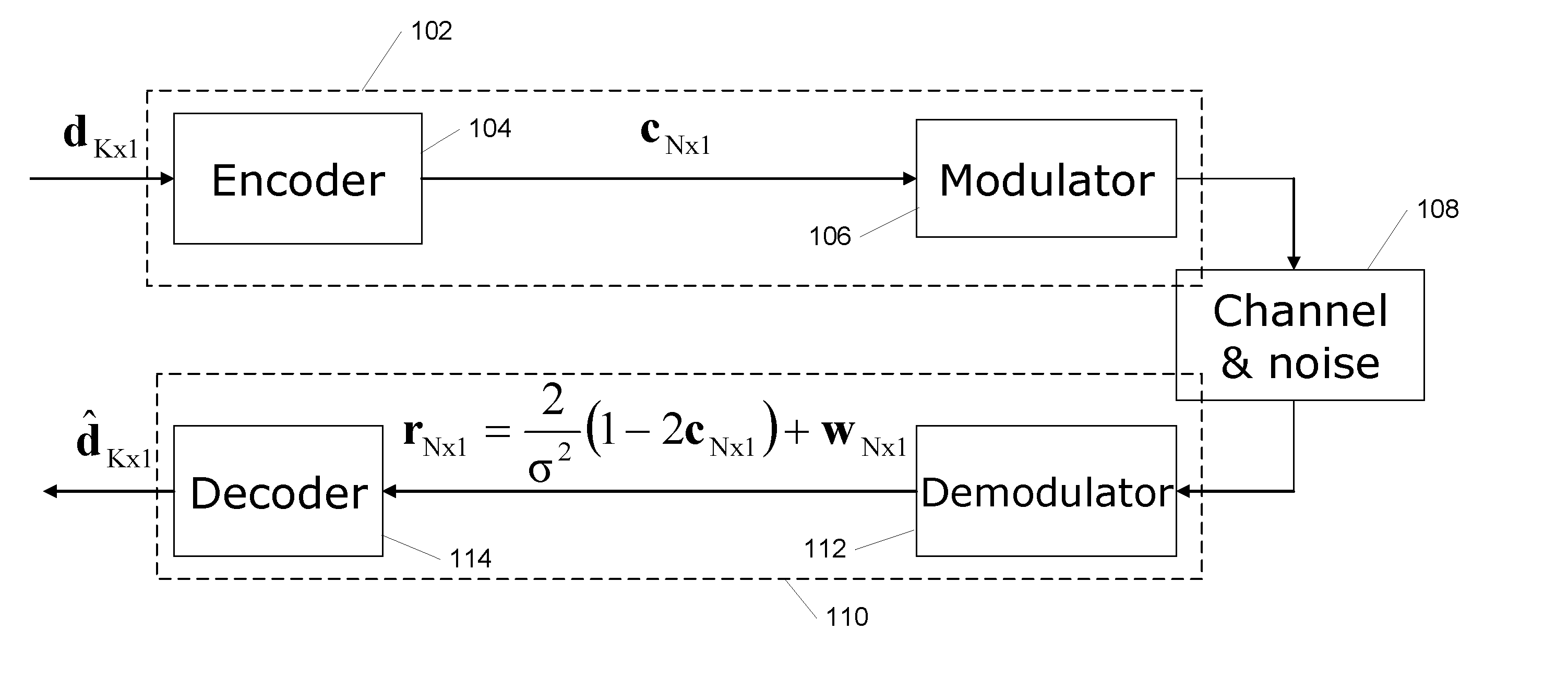

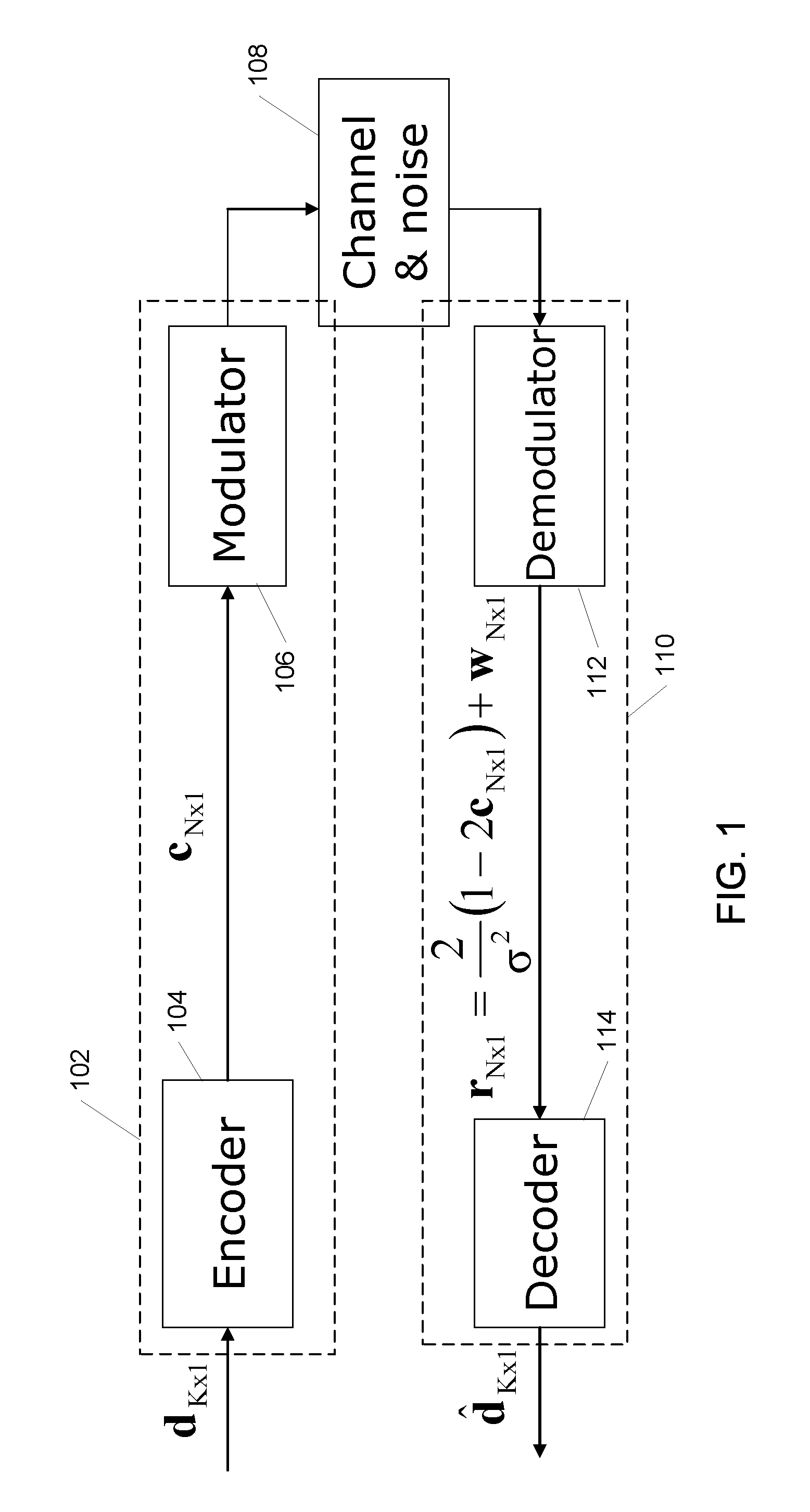

Active | US20070043998A1 | Control complexity; Reduce in quantity; Error detection/correction; Code conversion; Parity-check matrix; Data rate

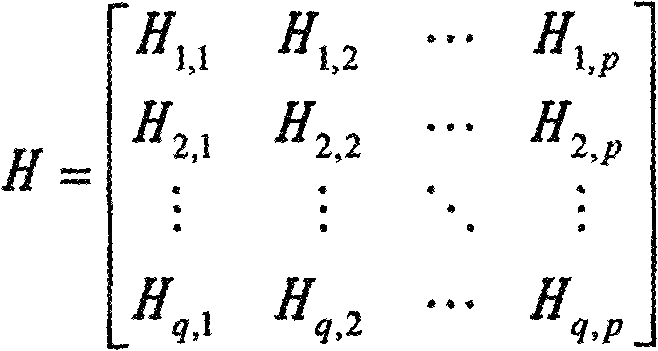

A method for forming a plurality of parity check matrices for a plurality of data rates for use in a Low-Density Parity-Check (LDPC) decoder, comprises establishing a first companion exponent matrix corresponding to a first parity check matrix for a first data rate, and partitioning the first parity check matrix and the first companion exponent matrix into sub-matrices such that the first parity check matrix is defined using a cyclical shift of an identity matrix.

Owner:QUALCOMM INC
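
The abstract describes parity-check matrices built from cyclic shifts of an identity matrix. A minimal sketch of that expansion, assuming the common quasi-cyclic convention in which an exponent e ≥ 0 denotes a z×z identity shifted right by e columns and -1 denotes an all-zero block; the multi-rate companion-matrix construction itself is not modeled.

```python
def expand_exponent_matrix(exp, z):
    """Expand an exponent matrix into a binary parity-check matrix H.

    Entry e >= 0 becomes the z x z identity cyclically shifted right by e;
    entry -1 (an assumed convention) becomes the z x z all-zero block."""
    rows, cols = len(exp), len(exp[0])
    h = [[0] * (cols * z) for _ in range(rows * z)]
    for bi, exp_row in enumerate(exp):
        for bj, e in enumerate(exp_row):
            if e < 0:
                continue                       # zero sub-matrix
            for k in range(z):
                # Row k of the shifted identity has its 1 in column (k + e) mod z.
                h[bi * z + k][bj * z + (k + e) % z] = 1
    return h
```

Storing only the small exponent matrix and expanding on demand is what keeps such decoders compact: every z×z block is fully described by one integer.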

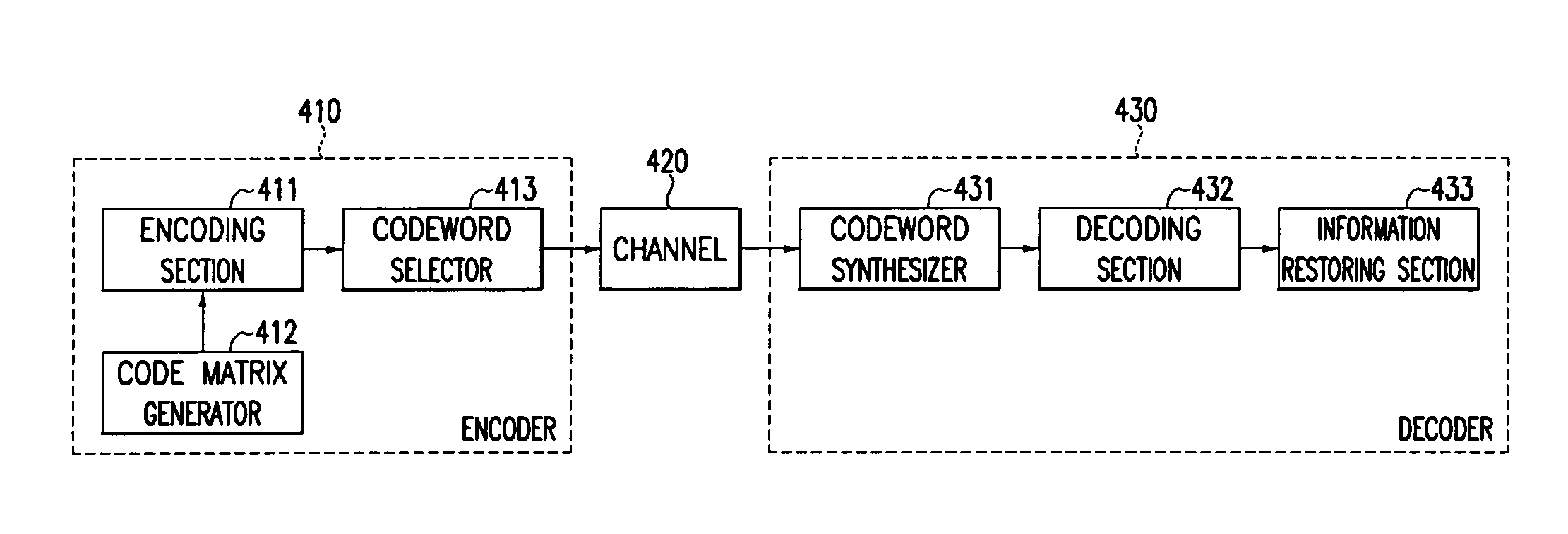

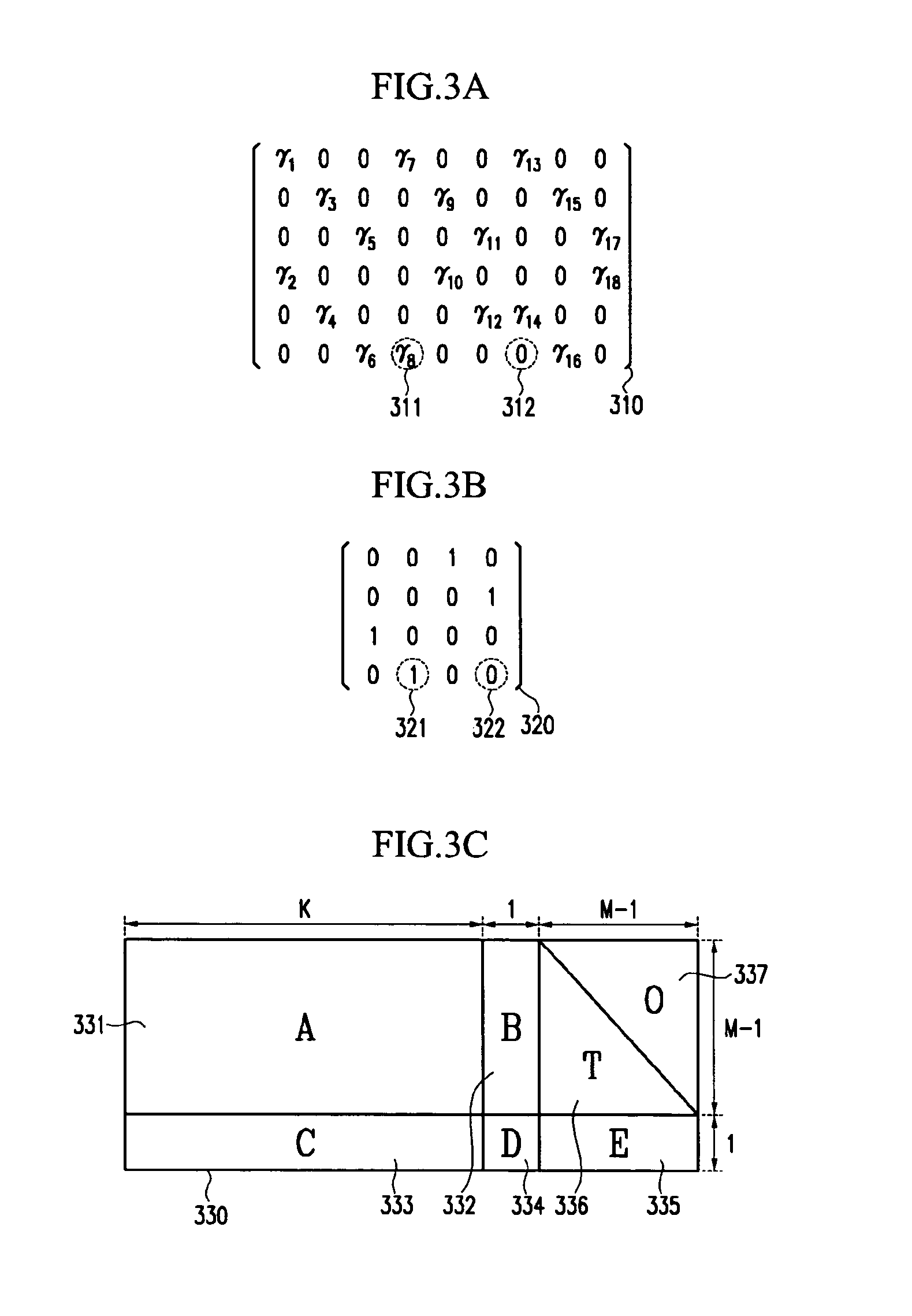

Apparatus for encoding and decoding of low-density parity-check codes, and method thereof

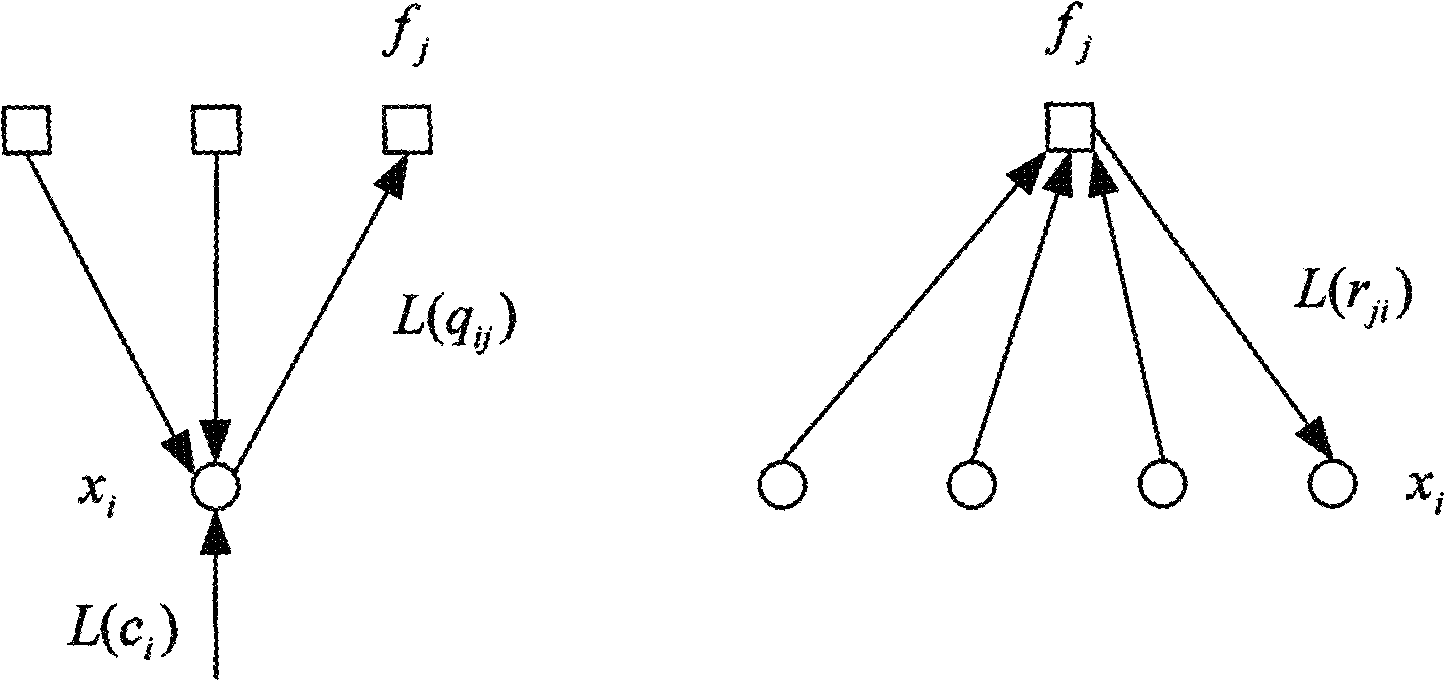

Active | US7395494B2 | Reduce memory size; High-speed and simple hardware; Error detection/correction; Error correction/detection using multiple parity bits; Tanner graph; Parity-check matrix

An LDPC code encoding apparatus includes: a code matrix generator for generating and transmitting a parity-check matrix comprising a combination of square matrices having a unique value on each row and column thereof; an encoding means encoding block LDPC codes according to the parity-check matrix received from the code matrix generator; and a codeword selector for puncturing the encoded result of the encoding means to generate an LDPC codeword. The code matrix generator divides an information word to be encoded into block matrices having a predetermined length to generate a vector information word. The encoding means encodes the block LDPC codes using the parity-check matrix divided into the block matrices and a Tanner graph divided into smaller graphs in correspondence to the parity-check matrix.

Owner:ELECTRONICS & TELECOMM RES INST

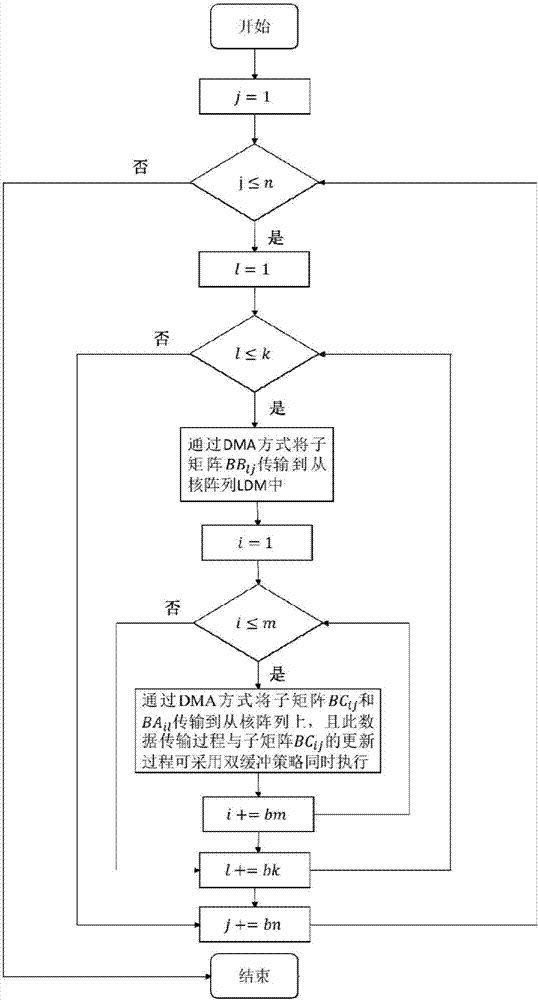

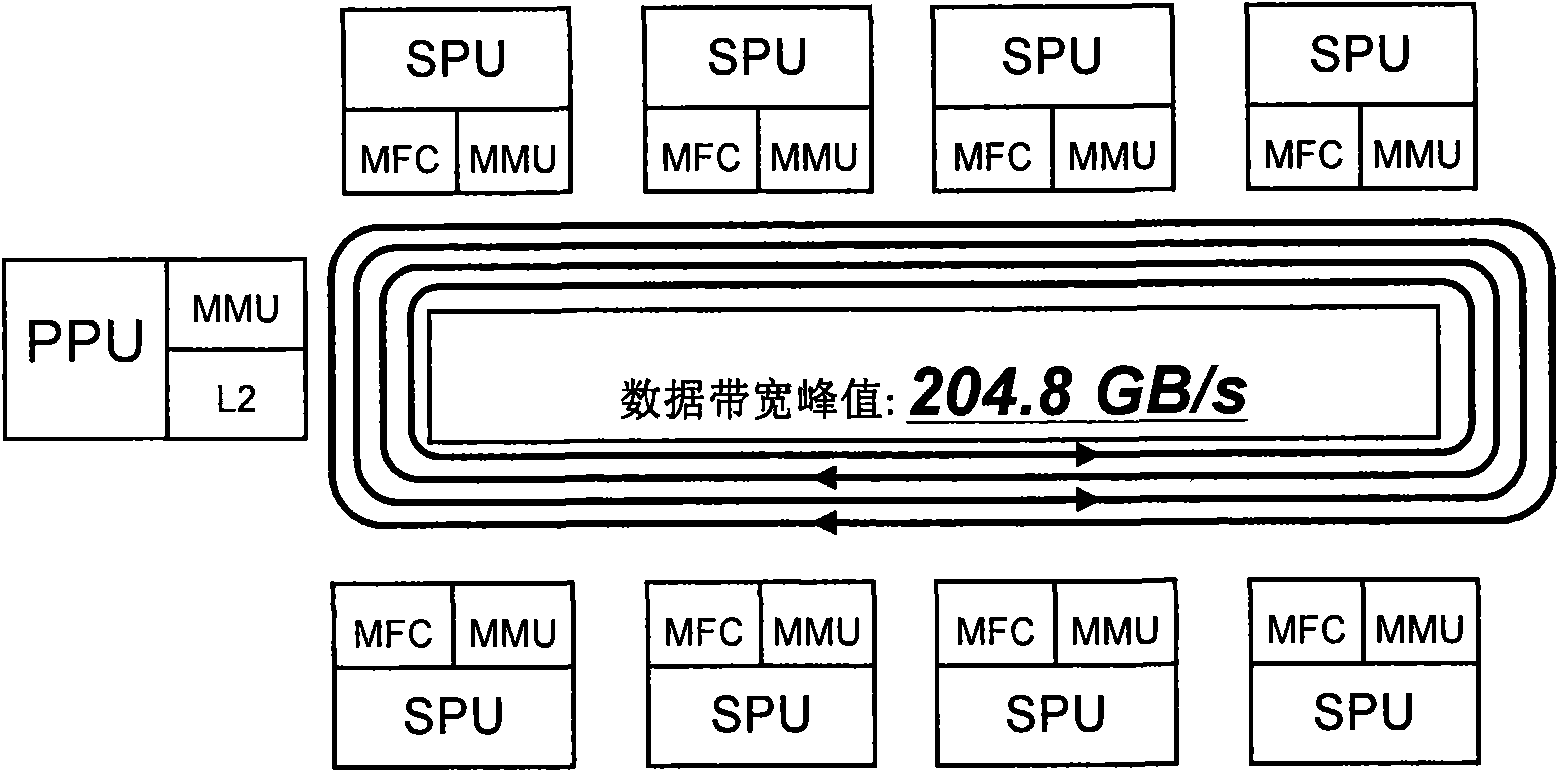

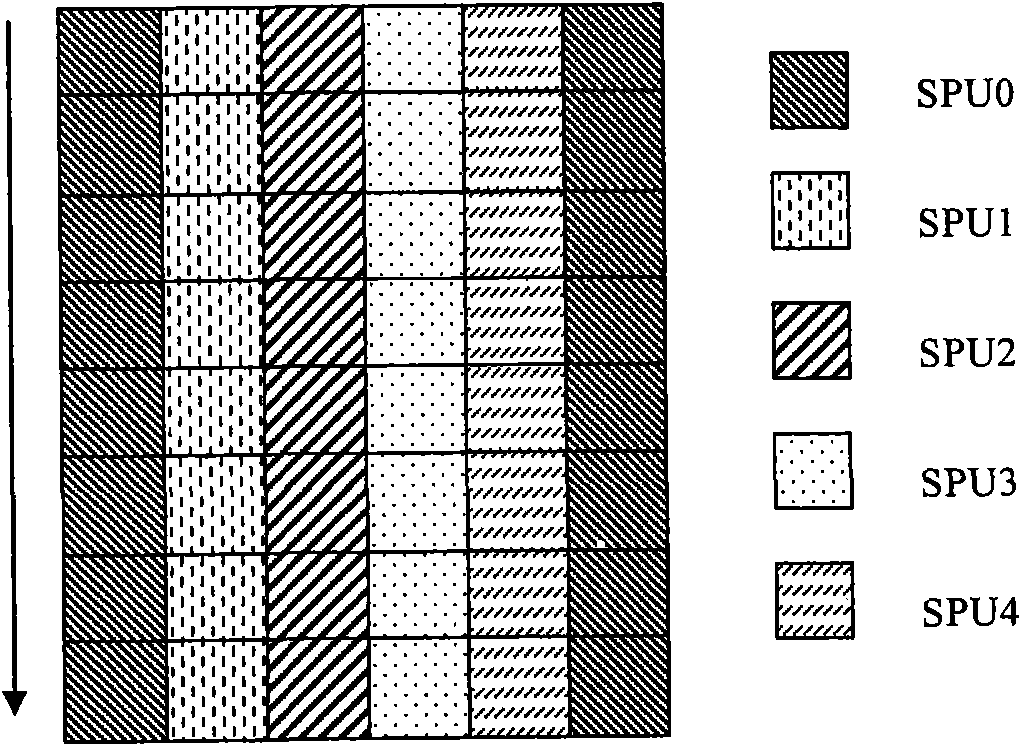

High-performance GEMM (general matrix-matrix multiplication) implementation method for the domestic SW 26010 many-core CPU

Active | CN107168683A | Solve the problem that the computing power of slave cores cannot be fully utilized; Improve performance; Register arrangements; Concurrent instruction execution; Function optimization; Assembly line

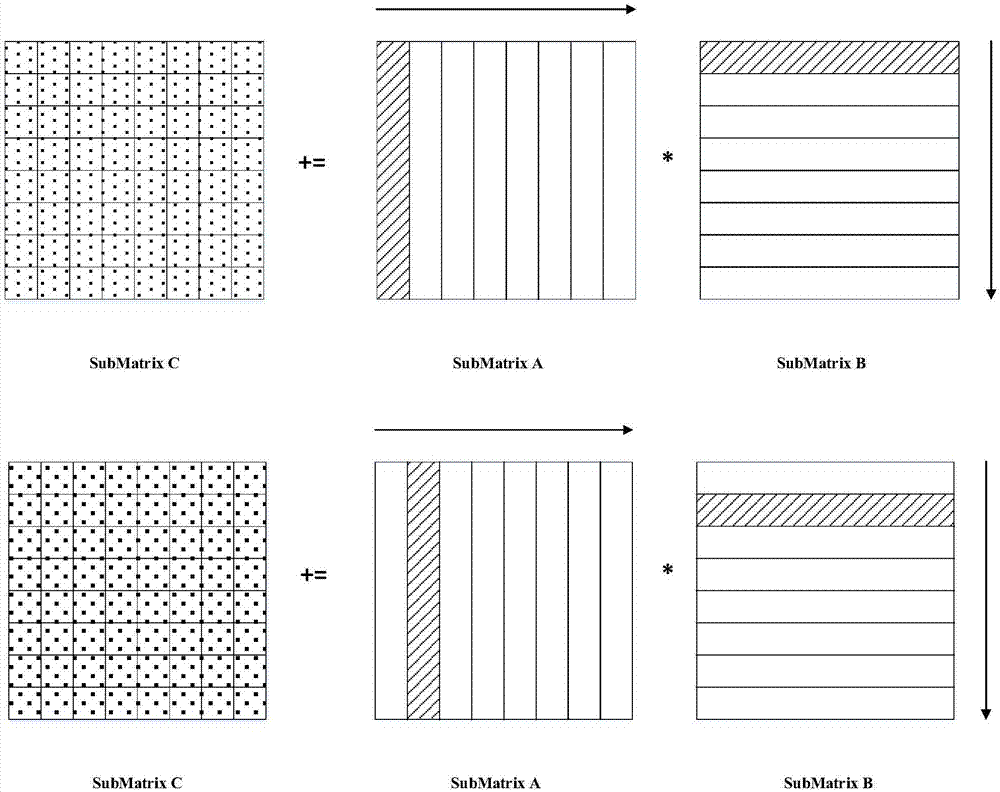

The invention provides a high-performance GEMM (general matrix-matrix multiplication) implementation method for the domestic SW 26010 many-core CPU. Based on the platform characteristics of the SW 26010 (its storage structure, memory access, hardware pipelines and register-level communication mechanism), the matrix partitioning and inter-core data mapping method is optimized and a top-down, three-level partitioned parallel block matrix multiplication algorithm is designed. A data-sharing method for slave-core computing resources is designed on top of the register-level communication mechanism, and a double-buffering strategy that overlaps computation with memory access is designed using the asynchronous master-slave core DMA transfer mechanism. For a single slave core, a loop unrolling strategy and a software pipelining arrangement are designed, and the kernel is optimized using an efficient register partitioning scheme, SIMD vectorization and multiply-add instructions. Compared with the single-core open-source BLAS library GotoBLAS, the high-performance GEMM achieves an average speed-up of 227.94 and a maximum speed-up of 296.93.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI +1
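
A single-level illustration of block partitioning for GEMM in plain Python. It only shows how C is built up tile by tile; the three-level partitioning, DMA double buffering, register-level communication and SIMD kernels described above are specific to the SW 26010 and are not modeled. The tile size `bs` is an arbitrary assumption.

```python
def blocked_matmul(a, b, bs):
    """Return a @ b (lists of lists) using bs x bs tiles.

    Each (i0, j0, k0) step multiplies one tile of A by one tile of B and
    accumulates into one tile of C, the unit of work a core would receive."""
    m, k, n = len(a), len(b), len(b[0])
    c = [[0] * n for _ in range(m)]
    for i0 in range(0, m, bs):
        for j0 in range(0, n, bs):
            for k0 in range(0, k, bs):
                # Tile bounds are clipped so sizes need not be multiples of bs.
                for i in range(i0, min(i0 + bs, m)):
                    for p in range(k0, min(k0 + bs, k)):
                        aip = a[i][p]
                        for j in range(j0, min(j0 + bs, n)):
                            c[i][j] += aip * b[p][j]
    return c
```

The loop order keeps one tile of each operand in use at a time; on real hardware those tiles are what would be staged into local memory before the inner kernel runs.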

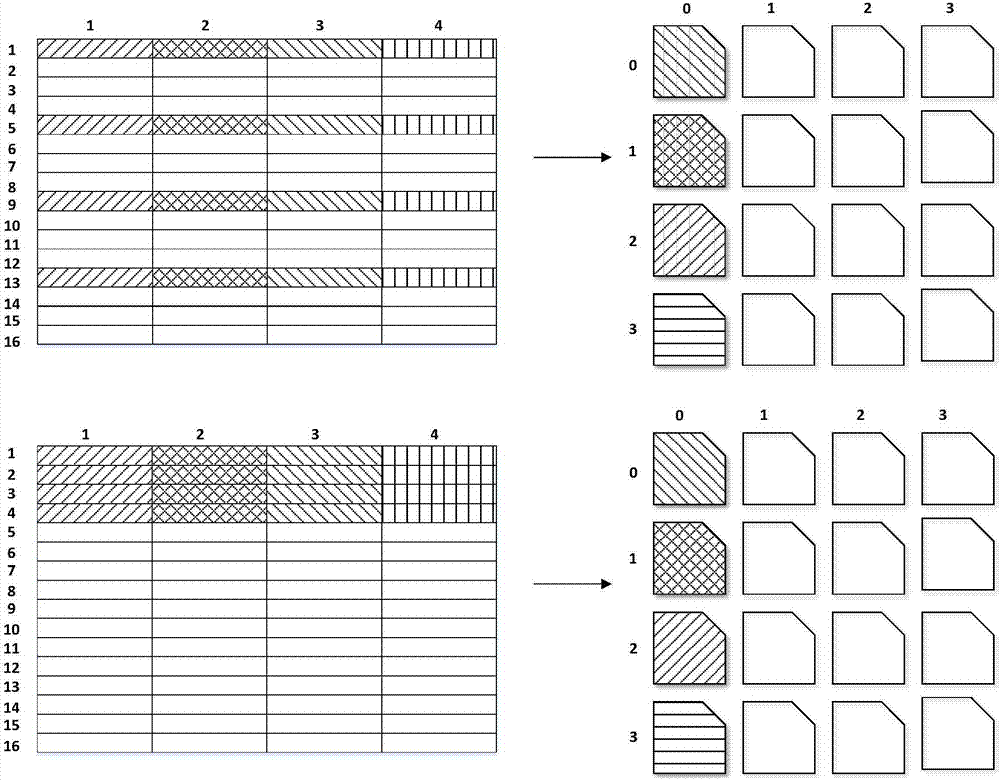

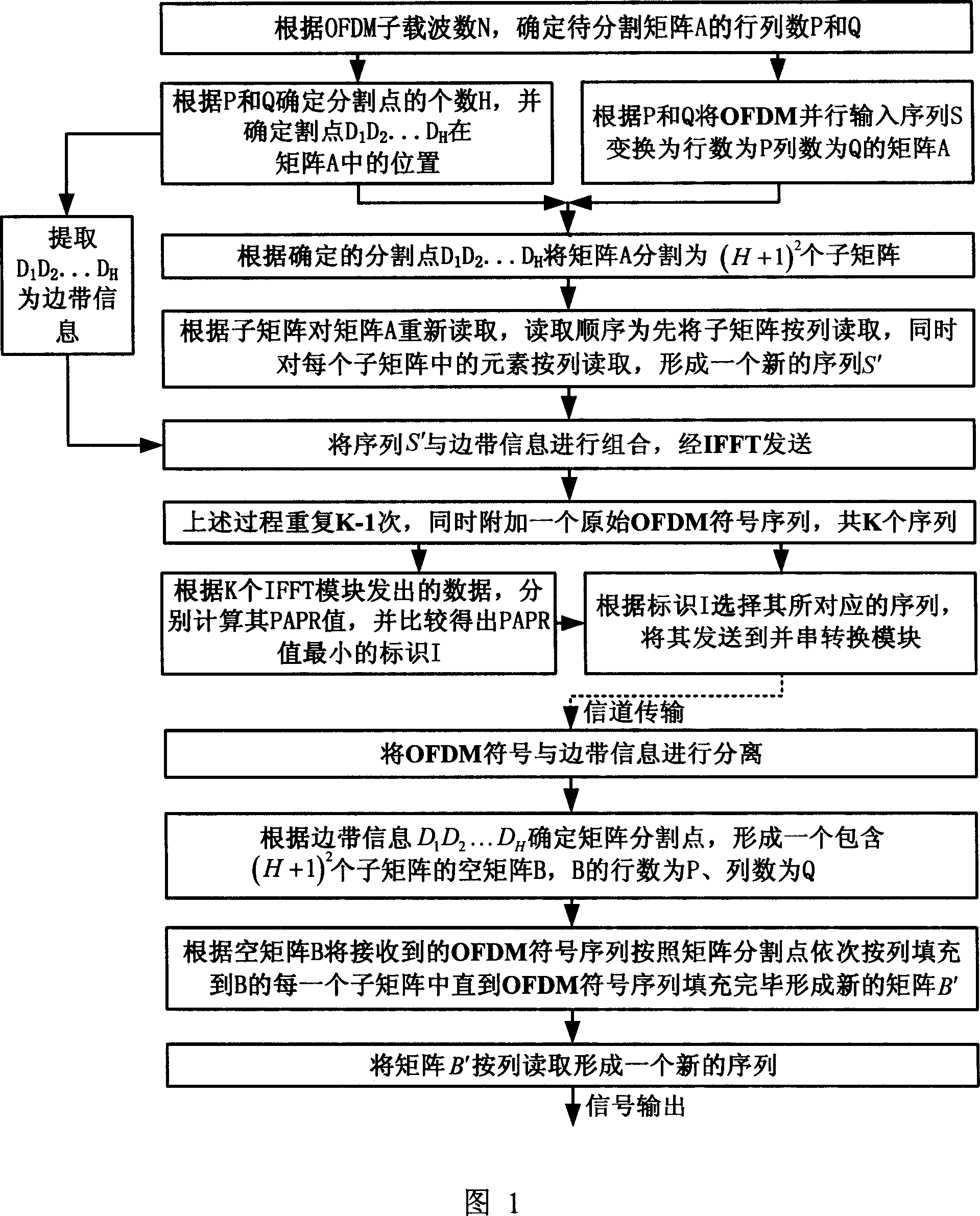

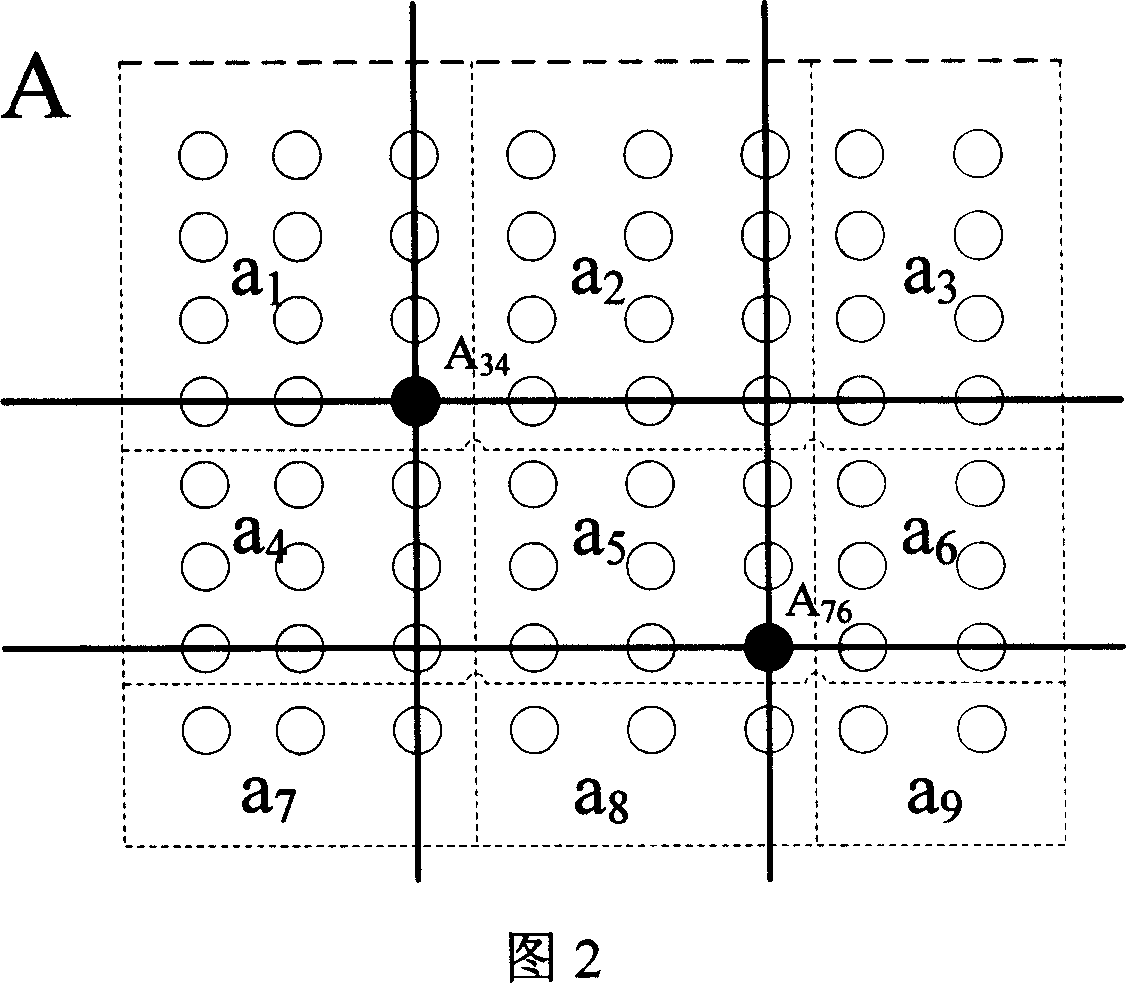

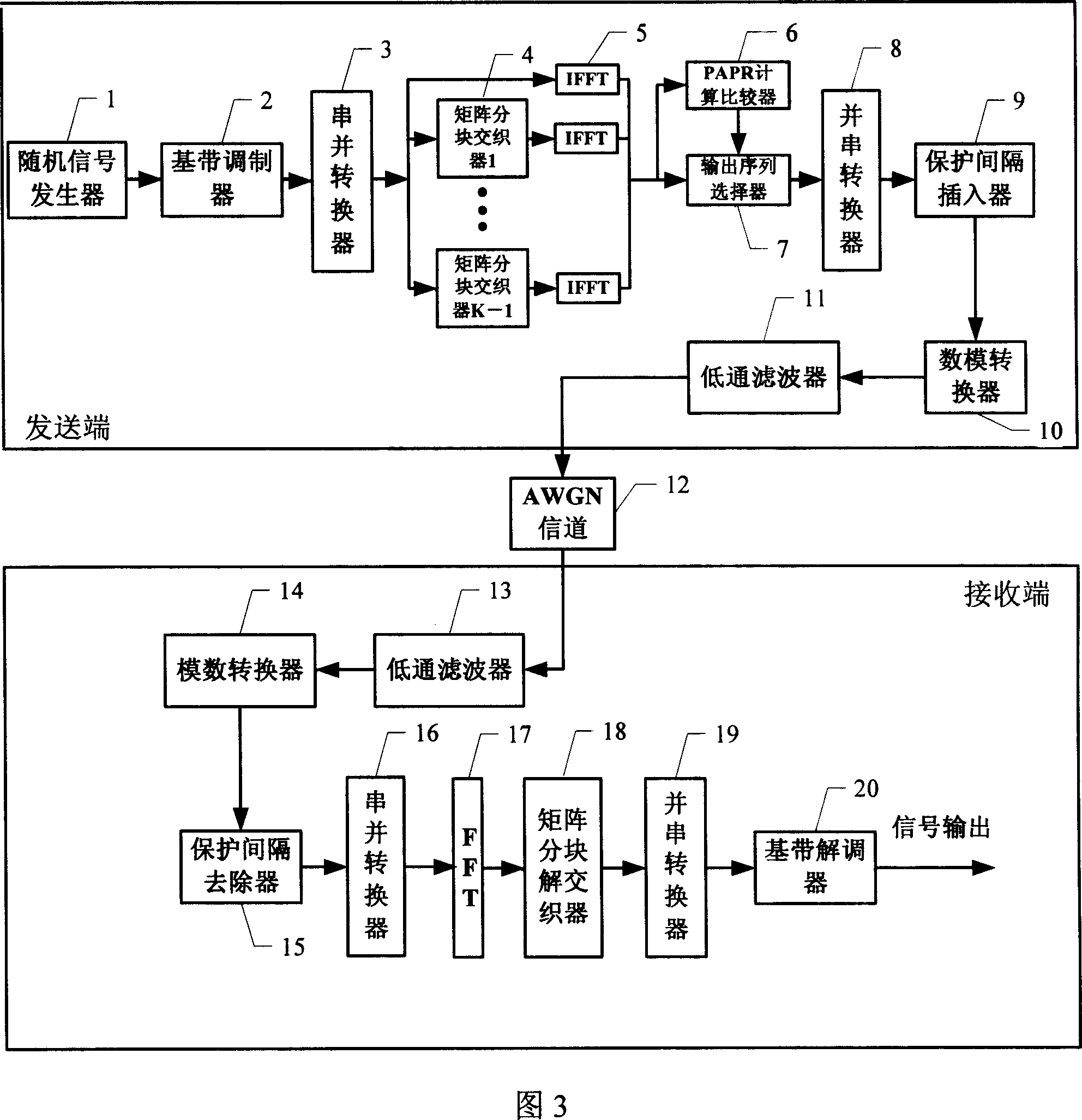

Matrix block interleaving method and device for reducing the peak-to-average power ratio of an OFDM system

Inactive | CN101005478A | Reduce processing delay; Reduce implementation complexity; Error prevention; Baseband system details; Engineering; Matrix partitioning

The method is based on the relationship between the autocorrelation of the OFDM modulated signal and its PAPR. It uses a matrix-partitioning interleaving approach to rearrange the parallel input sequence of the OFDM modulated signal multiple times, and selects the sequence with the lowest correlation, i.e. the one whose aperiodic autocorrelation coefficients have the smallest modulus, so as to effectively reduce the PAPR and the system processing delay. The apparatus comprises a sending end and a receiving end. The sending end mainly comprises a matrix-partitioning interleaving unit, a PAPR calculation comparator and an output sequence selector. The receiving end comprises a matrix-partitioning de-interleaving unit. The matrix-partitioning interleaving unit comprises a matrix forming unit, a partitioning-point selecting unit, a matrix partitioning unit, a matrix reading unit and a combination unit. The matrix-partitioning de-interleaving unit comprises a separation unit, a matrix-partitioning combination unit and a matrix reading unit.

Owner:XIDIAN UNIV

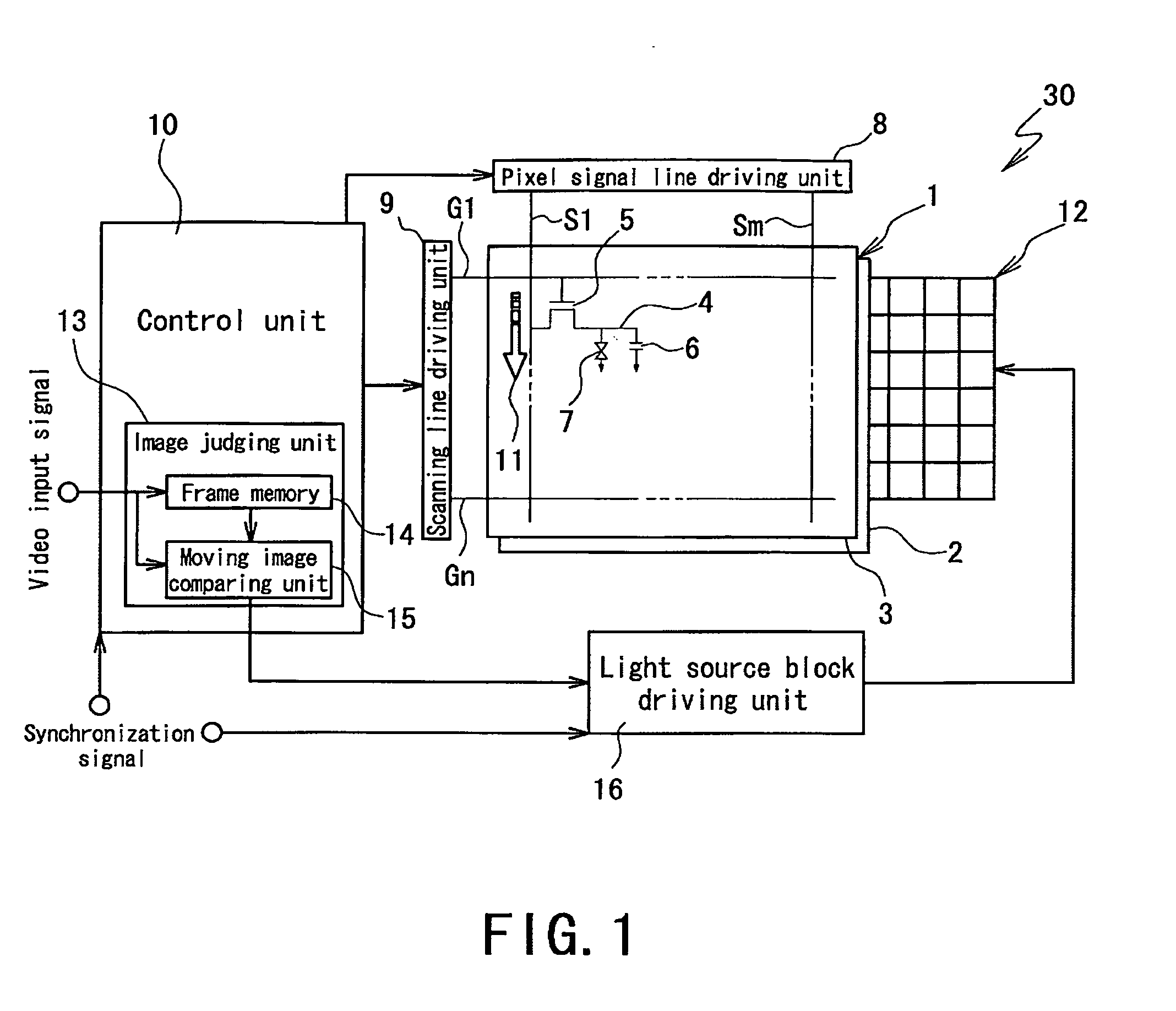

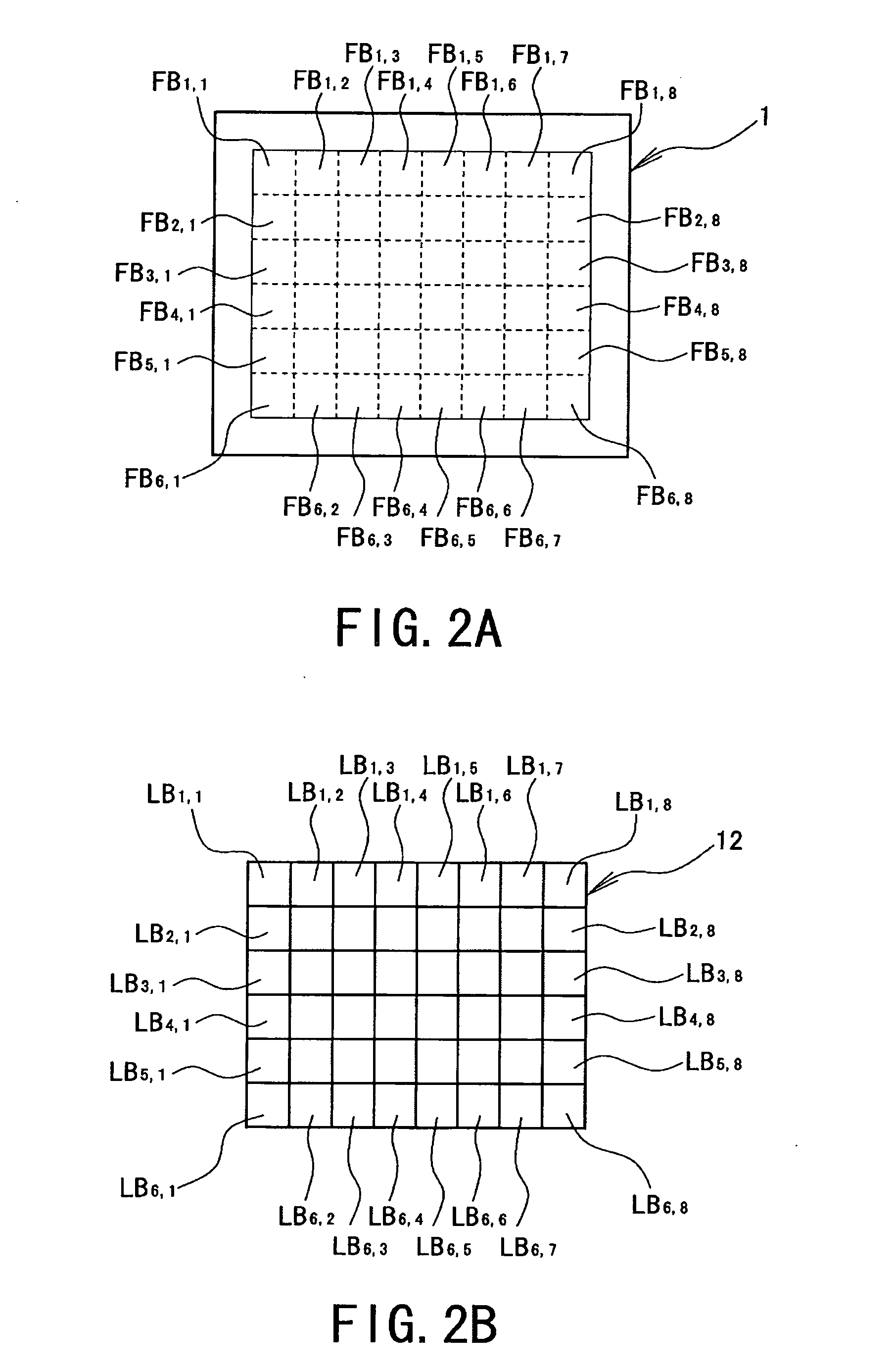

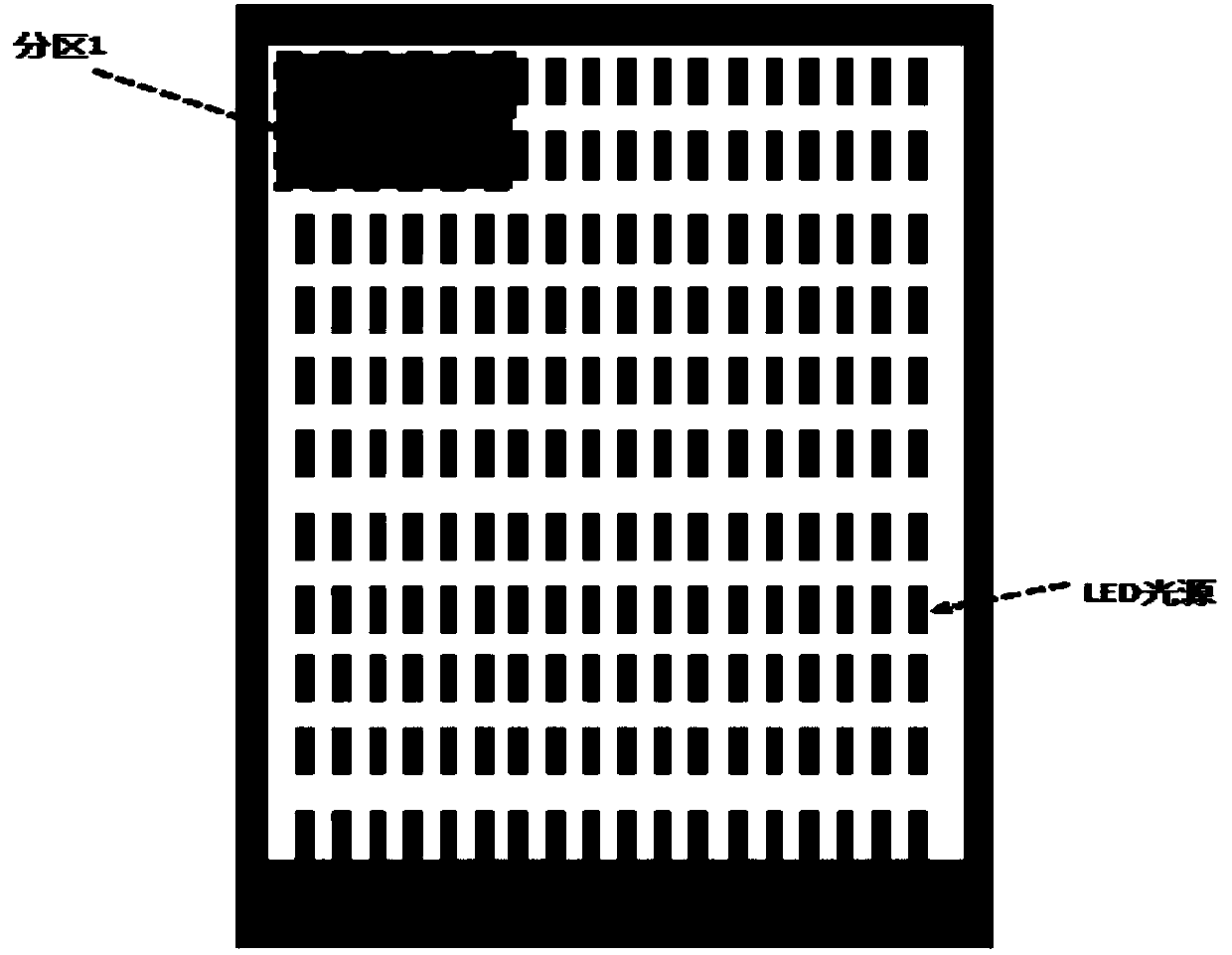

Display device, a receiving device and a method for driving the display device

Inactive | US20100002009A1 | Short time; Increase the amount of light; Cathode-ray tube indicators; Non-linear optics; Matrix partitioning; Light source

One embodiment of the device includes a display panel and a light source placed evenly behind the panel, the light source consisting of light source blocks divided and arranged in a matrix. The device includes an image judging unit, which divides one frame of a video input signal into frame blocks, each corresponding in size to one light source block, and judges whether the image in each frame block is moving or still; and a light source block driving unit, which blinks the light source blocks corresponding to frame blocks judged as moving, upon receiving optical responses from the cells, while keeping the light source blocks corresponding to frame blocks judged as still turned on at all times.

Owner:SHARP KK

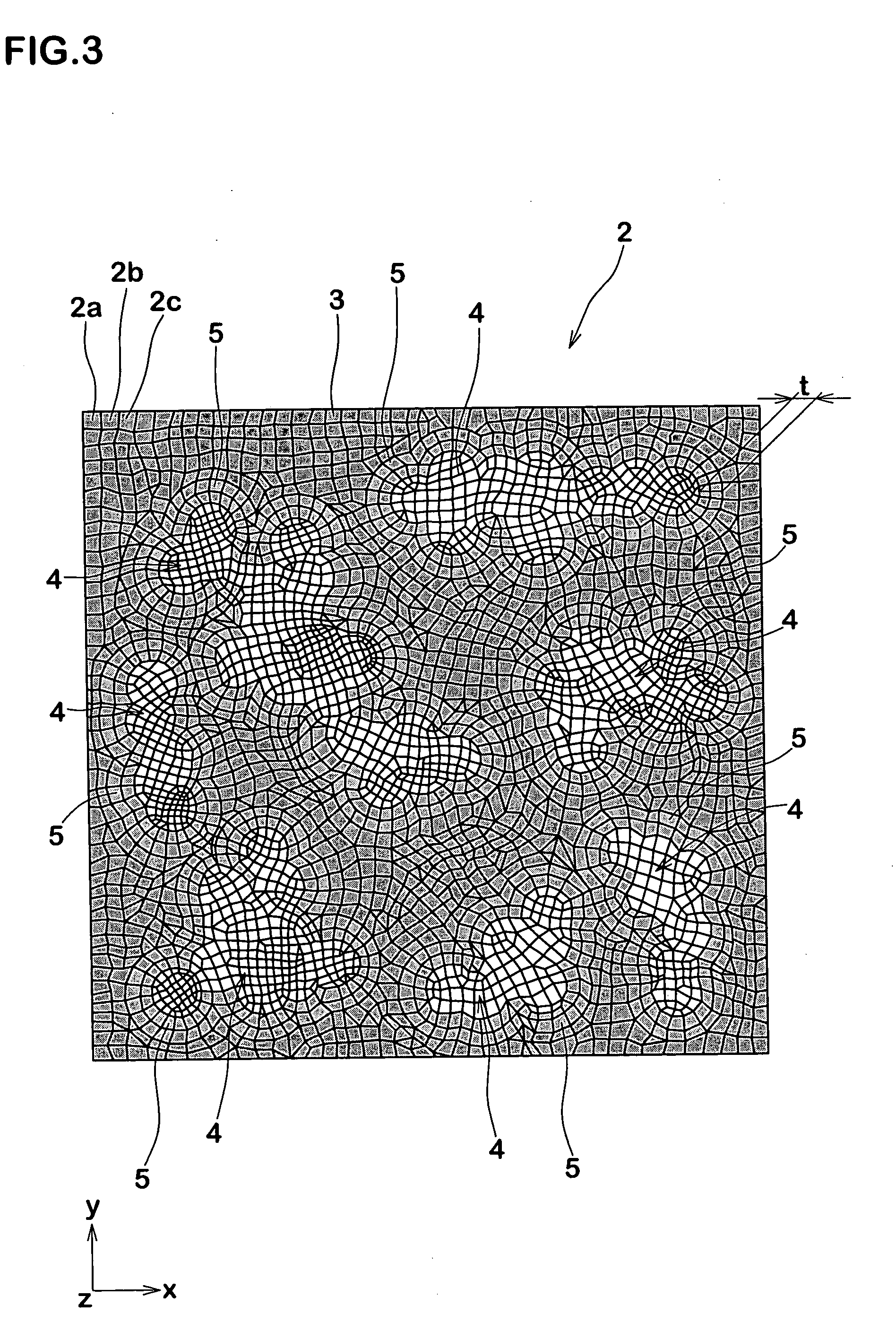

Method of simulating viscoelastic material

Active | US20050086034A1 | Improve accuracy; Flow properties; Force measurement; Formation matrix; Matrix partitioning

The present invention relates to a method of simulating the deformation of a viscoelastic material in which filler is blended into a matrix made of rubber or resin. The method includes a step of dividing the viscoelastic material into a finite number of elements to form a viscoelastic material model, a step of performing a deformation calculation on the viscoelastic material model under predetermined conditions, and a step of acquiring the required physical quantities from the deformation calculation. The step of dividing the viscoelastic material into a finite number of elements includes a step of dividing at least one filler into a finite number of elements to form a filler model, and a step of dividing the matrix into a finite number of elements to form a matrix model.

Owner:SUMITOMO RUBBER IND LTD

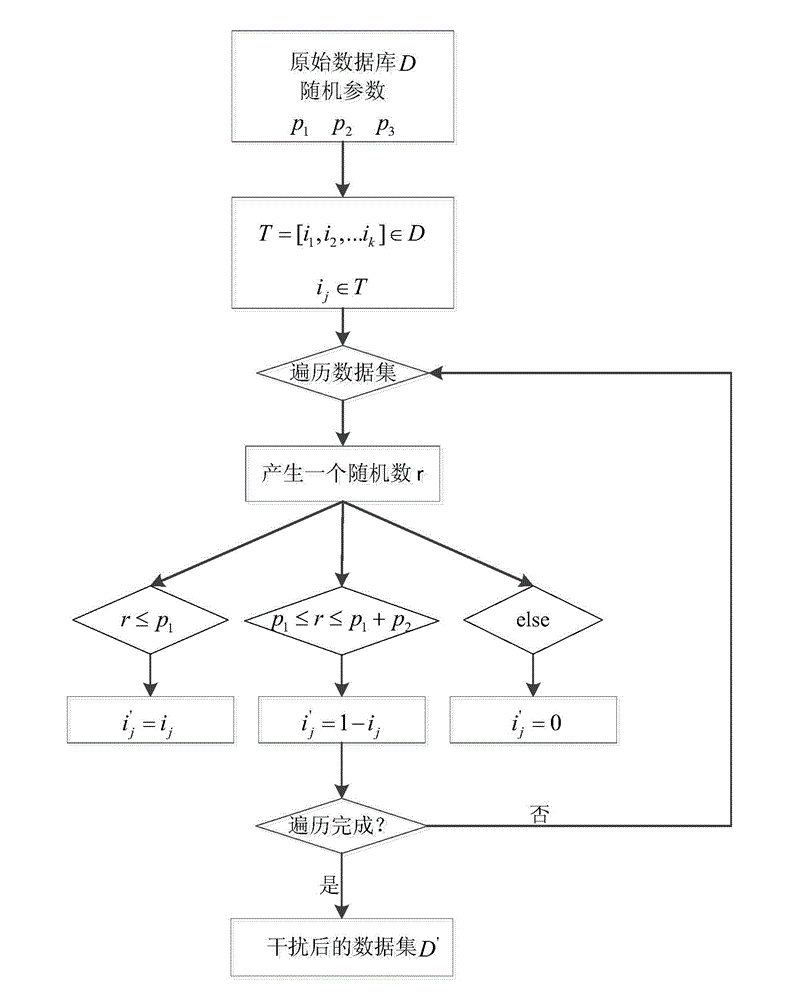

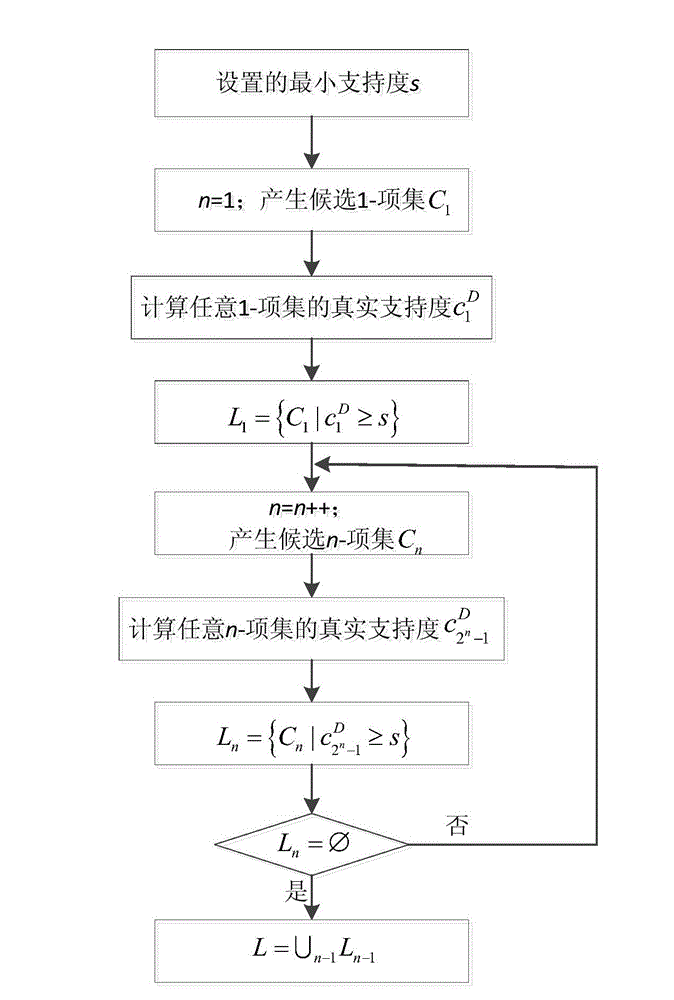

Privacy-preserving association rule data mining method based on multi-parameter interference

Owner:TONGJI UNIV

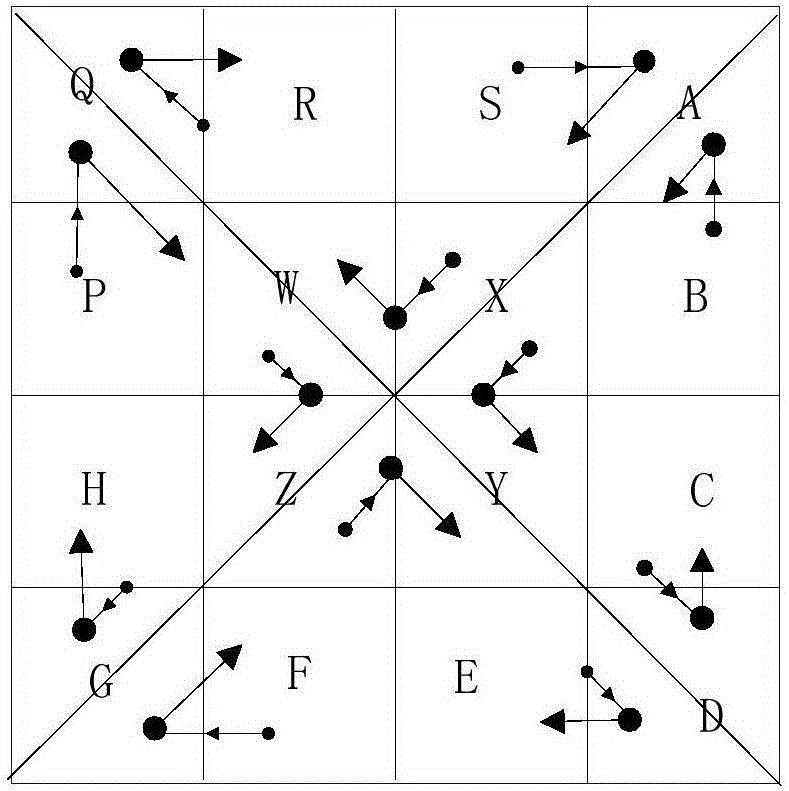

Motion detection method for real-time monitoring and device thereof

Inactive | CN101572770A | Suppress random noise; Improve detection accuracy; Television system details; Image analysis; Video monitoring; Independent vector

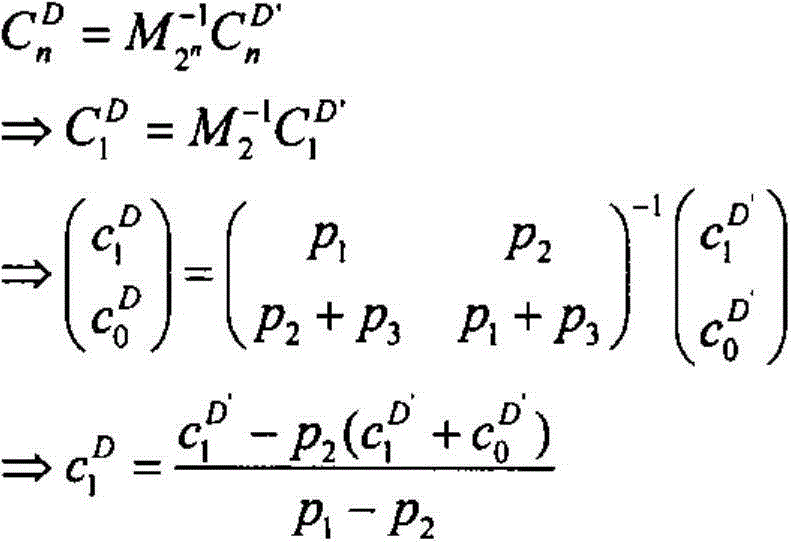

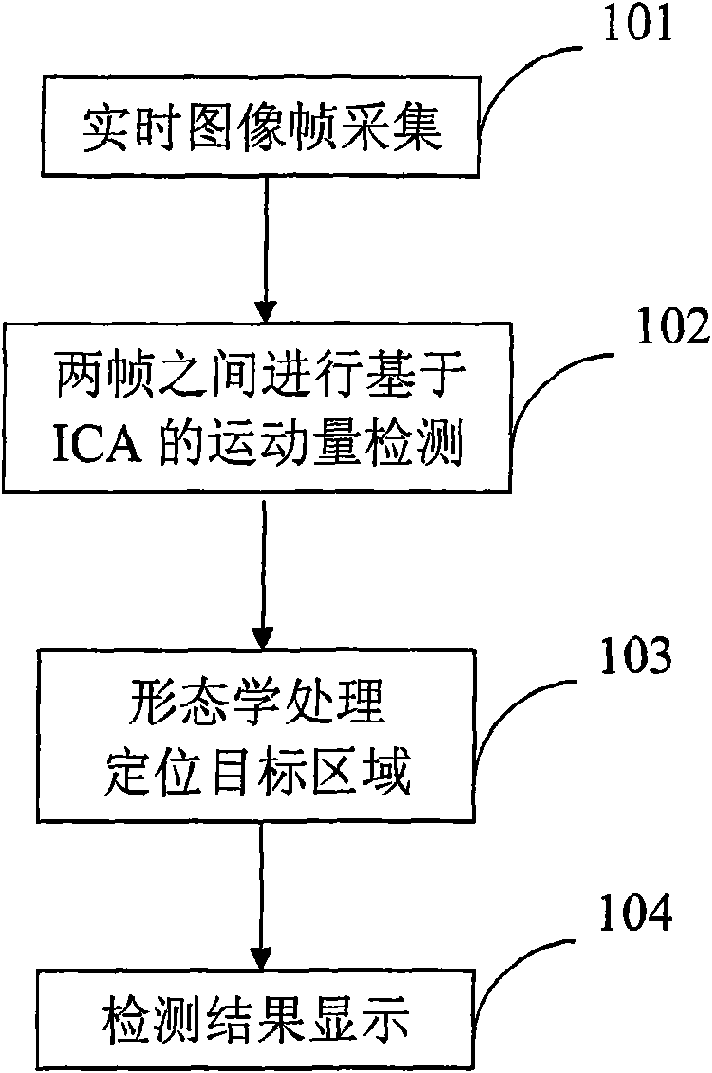

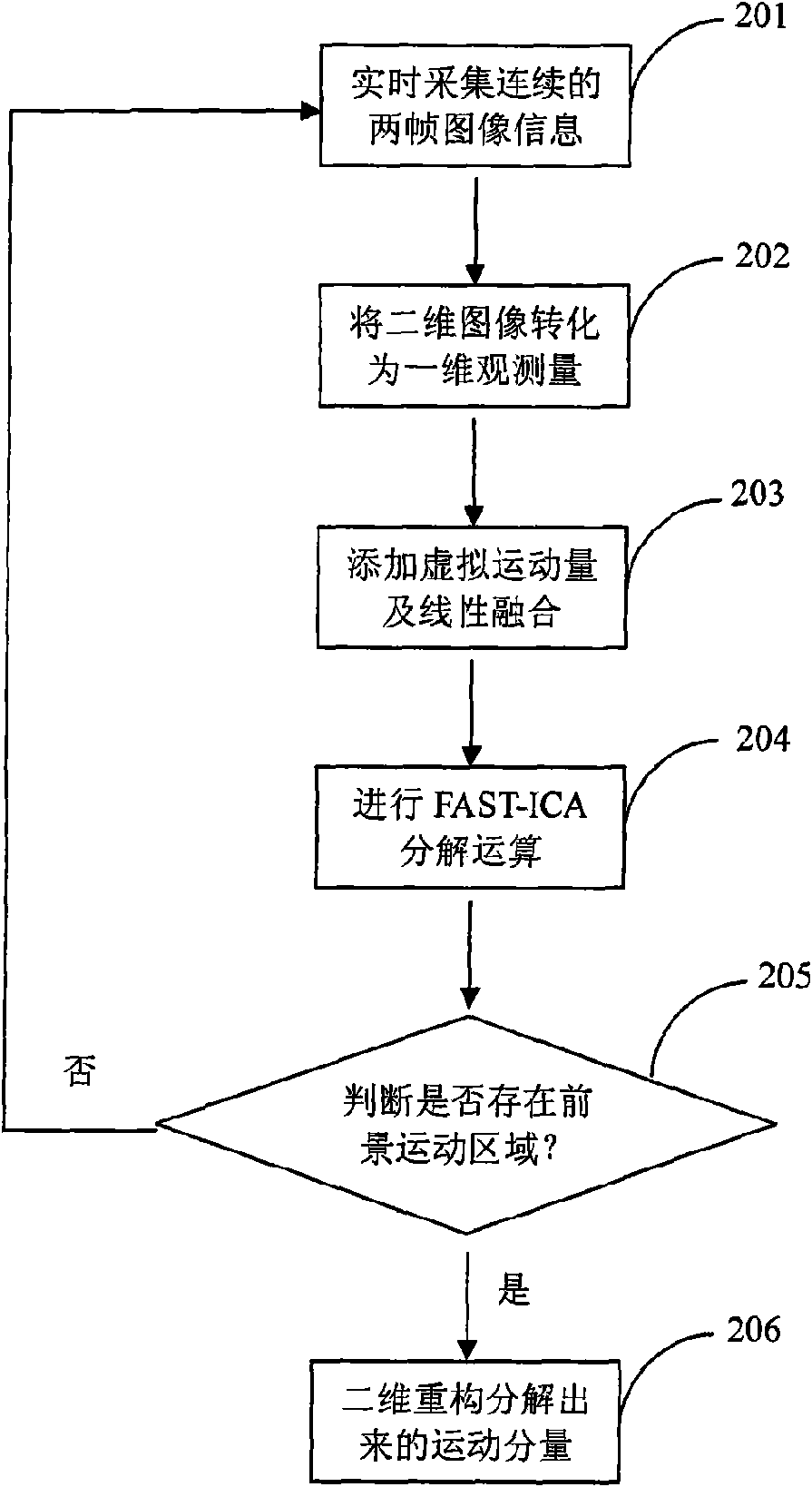

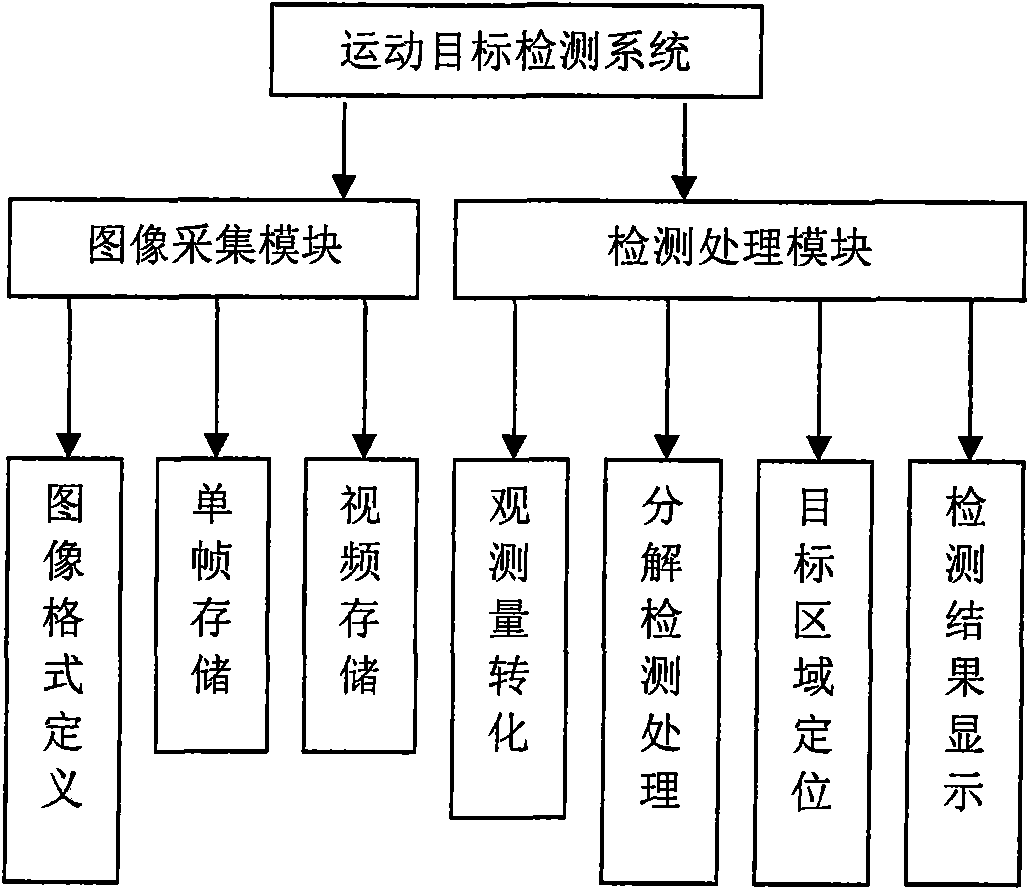

The invention discloses a motion detection method for real-time monitoring. Based on independent vector analysis, the moving foreground and the environmental background are taken as the main components of the whole image; corresponding data fusion is carried out on two observation vectors, and the mixing relationship between vectors that are mutually independent in the statistical sense is adjusted so that the moving foreground and the environmental background are linearly combined. To counter interference from inter-frame random sampling noise, virtual motion is added to the observation vectors and the noise is filtered out after image reconstruction. Finally, the environment vector is separated from the motion vector by a matrix partitioning method to detect the moving object, and the detected object region is marked in the image with a bounding rectangle. The invention also discloses a motion detection device. The invention is applicable to monitoring environments with complex colors; its design uses periodic processing to perform real-time motion monitoring and to locate the object region adaptively, and it is a general-purpose visual processing method for monitoring.

Owner:UNIV OF SCI & TECH OF CHINA

Neural network operation device and method

Active | CN108205700A | Reduce demand; Fast operation; Digital data processing details; Neural architectures; Parallel computing; Matrix partitioning

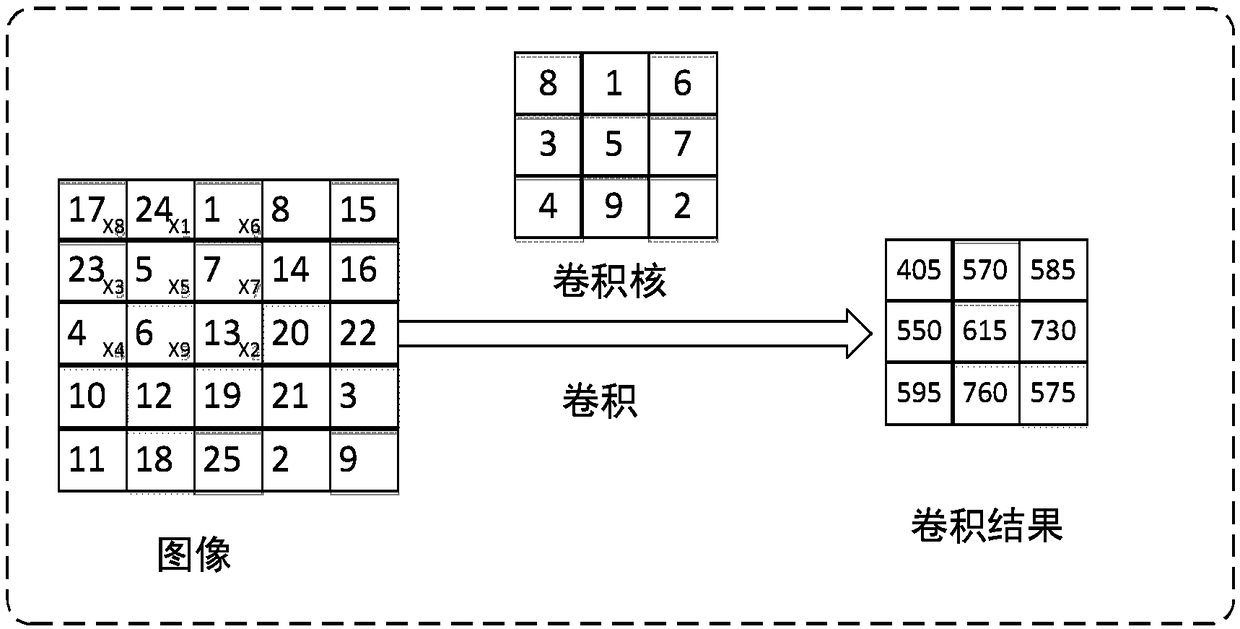

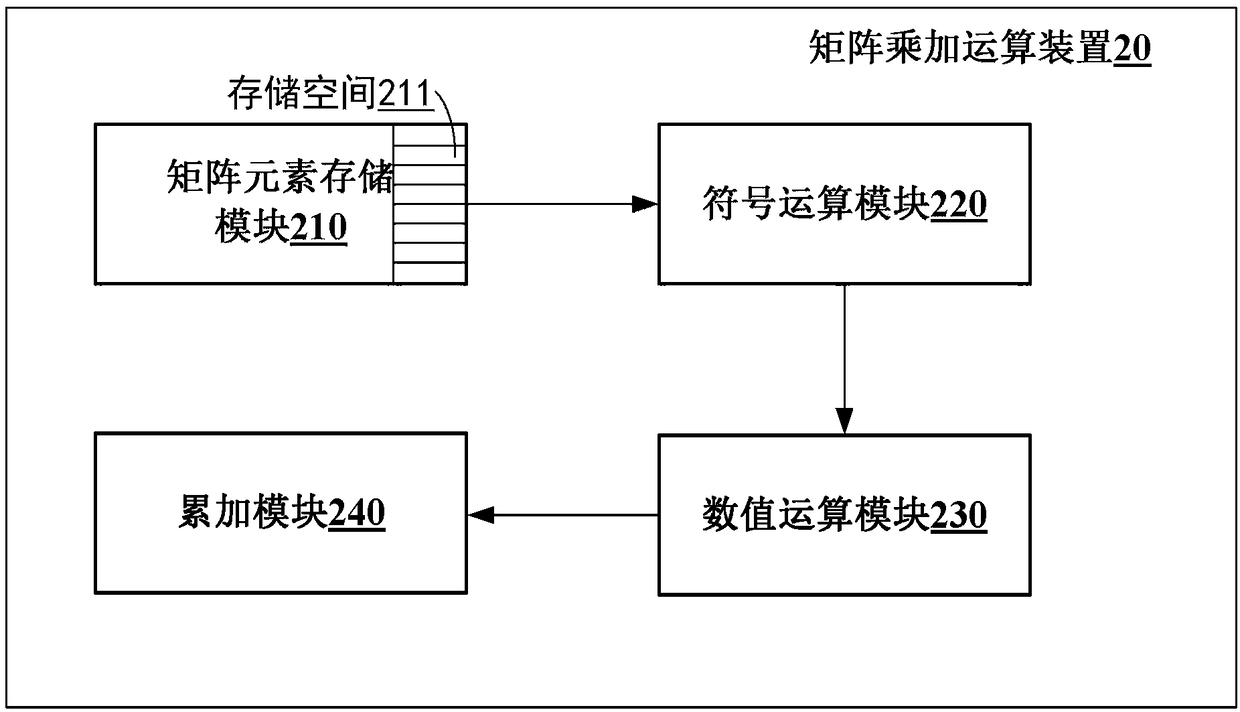

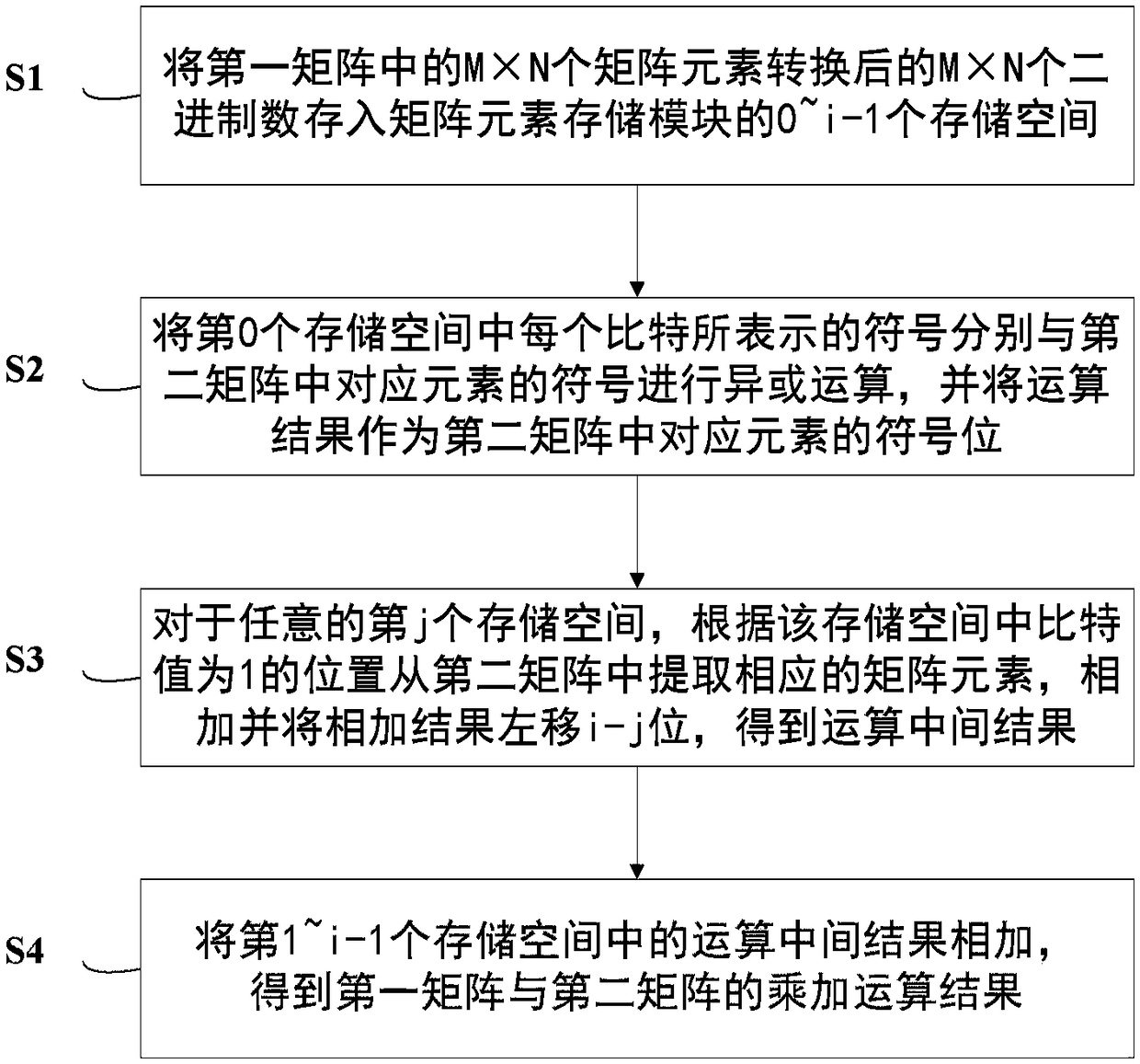

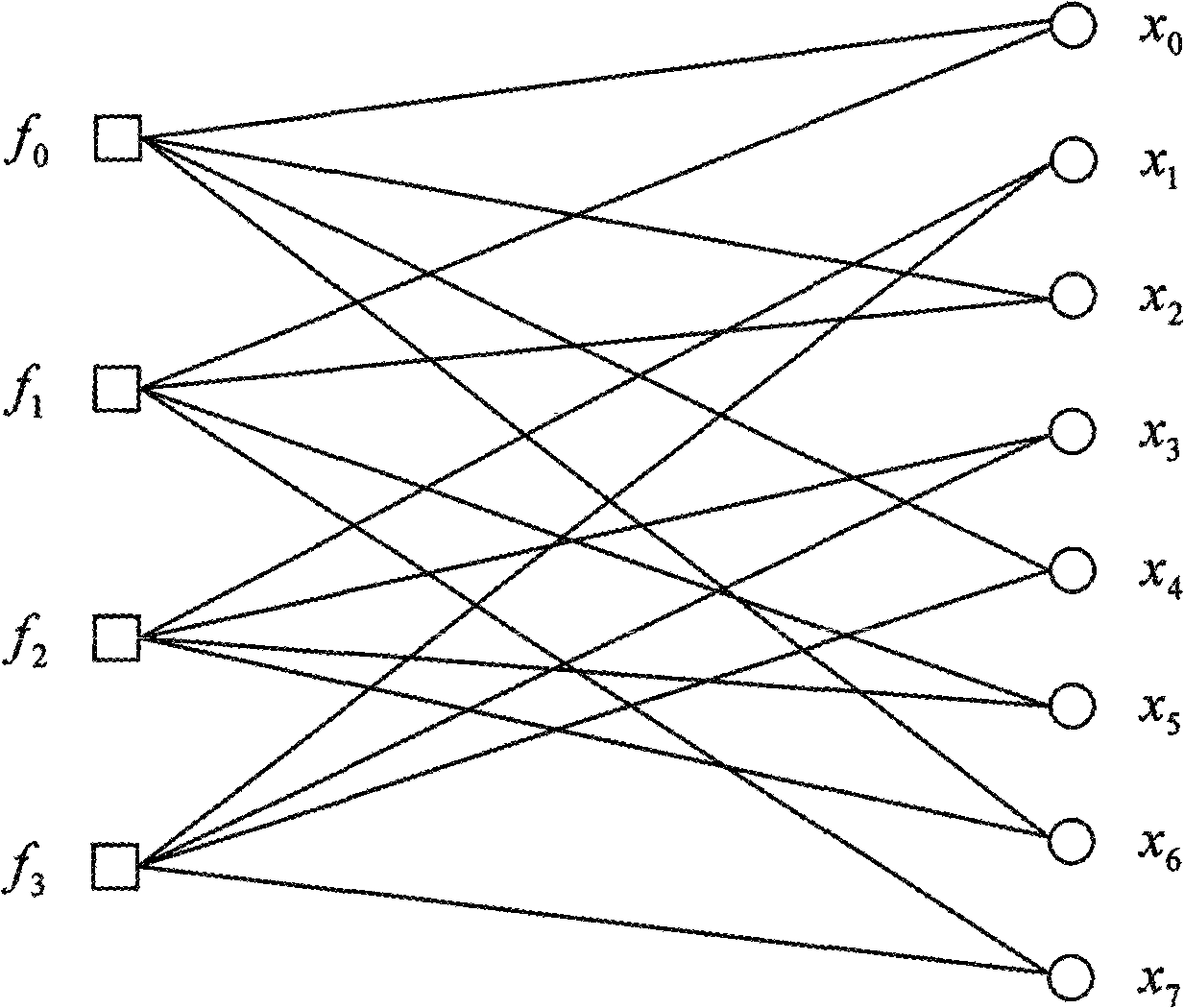

The invention provides a neural network operation device and method. The neural network operation device comprises a submatrix division module, a matrix element storage module, a symbolic operation module, a numerical value operation module, an accumulation module and a convolution result acquisition module. The submatrix division module takes a convolution kernel matrix as a first matrix and each submatrix as a second matrix; the matrix element storage module contains multiple storage spaces and receives the binary representations of the elements of the first matrix according to a rule; the symbolic operation module determines the sign bit of the operation result; the numerical value operation module adds the matrix elements at corresponding positions and shifts the sum left by i-j bits to obtain an intermediate result; the accumulation module adds the intermediate results in the first through (i-1)th storage spaces to obtain the multiply-add result of the first matrix and the second matrix; and the convolution result acquisition module assembles the multiply-add results, in sliding order, into a matrix that forms the convolution result.

Owner:SHANGHAI CAMBRICON INFORMATION TECH CO LTD
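
The bit-level scheme above can be illustrated with a generic shift-and-add multiply-accumulate. In this sketch (an assumption, not the patent's exact storage layout or its i-j shift rule), kernel magnitudes are split into bit planes, the sign of each product is determined separately, and each plane's sum is shifted left by its bit position; a sliding window then yields a valid convolution.

```python
def shift_add_dot(kernel, window):
    """Multiply-accumulate two equal-size integer matrices without multiplies.

    Bit plane i holds the positions whose |kernel element| has bit i set
    (one "storage space" per bit). For each plane, the matching window
    values, signed by the XOR of the operands' signs, are added and the sum
    is shifted left by i."""
    flat_k = [v for row in kernel for v in row]
    flat_x = [v for row in window for v in row]
    total = 0
    width = max(abs(v) for v in flat_k).bit_length()
    for i in range(width):
        plane_sum = 0
        for wk, xv in zip(flat_k, flat_x):
            if (abs(wk) >> i) & 1:
                negative = (wk < 0) != (xv < 0)   # sign bit of the product
                plane_sum += -abs(xv) if negative else abs(xv)
        total += plane_sum << i                    # shift instead of multiply
    return total


def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) in row-major order."""
    kh, kw = len(kernel), len(kernel[0])
    return [[shift_add_dot(kernel, [row[c:c + kw] for row in image[r:r + kh]])
             for c in range(len(image[0]) - kw + 1)]
            for r in range(len(image) - kh + 1)]
```

For instance, `shift_add_dot([[1, -2], [3, 0]], [[4, 5], [-6, 7]])` reproduces the ordinary dot product 4 - 10 - 18 = -24 using only additions and shifts.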

High-speed LDPC decoder implementation method based on matrix partitioning

Inactive | CN101335592A | No increase in capacity; Reduce capacity; Error prevention; Code conversion; Algorithm; Parity-check matrix

The invention relates to a matrix-partitioning-based implementation method for a high-speed LDPC decoder, in the technical field of channel coding. The method comprises the following steps: (1) constructing an LDPC code with a partitioned check matrix: first, a check matrix is constructed algebraically so that it contains no short cycles, which guarantees the performance of the resulting codewords; then the rows of the check matrix are rearranged according to a certain rule so that the rearranged check matrix has a partitioned structure; (2) with the check matrix of the LDPC code in this partitioned structure, high-speed parallel decoding of the LDPC code is realized. The method makes high-speed parallel decoding of LDPC codes practical in engineering; the decoding complexity is very low, and the constructed codes have no short cycles and performance comparable to that of randomly constructed codes.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

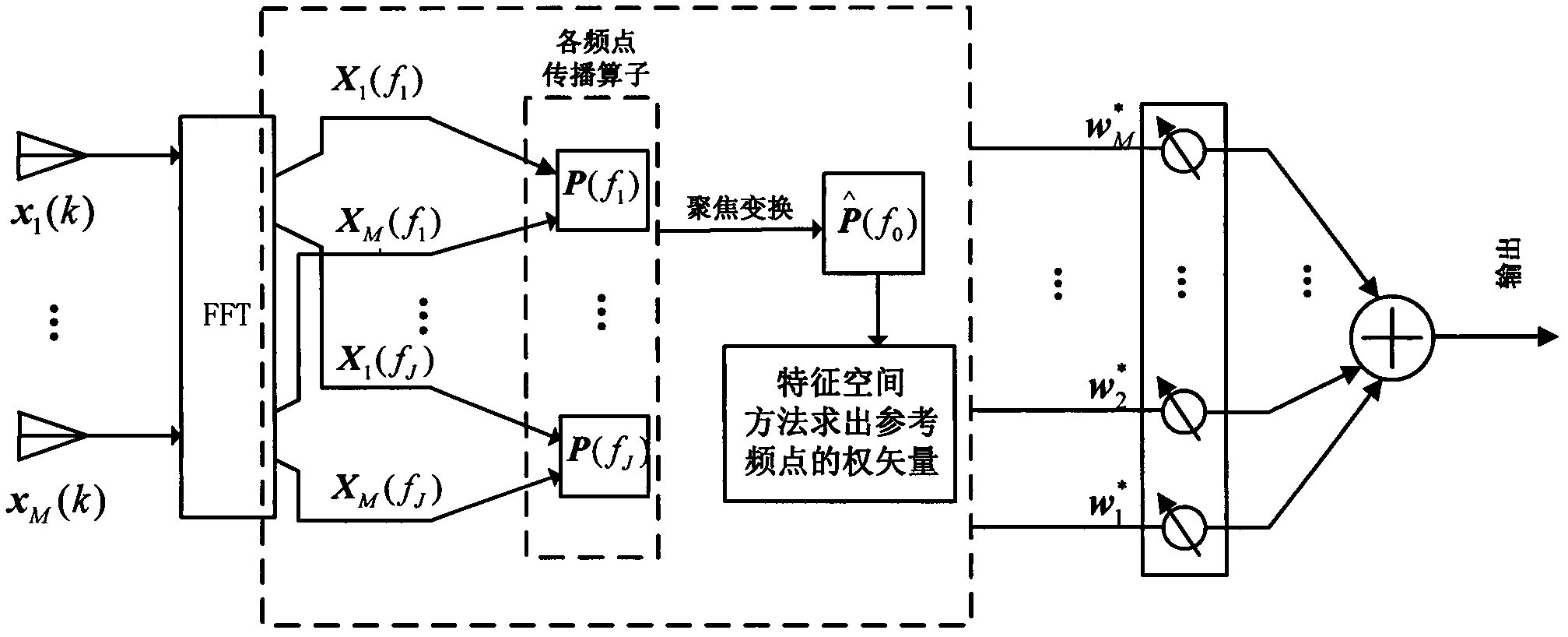

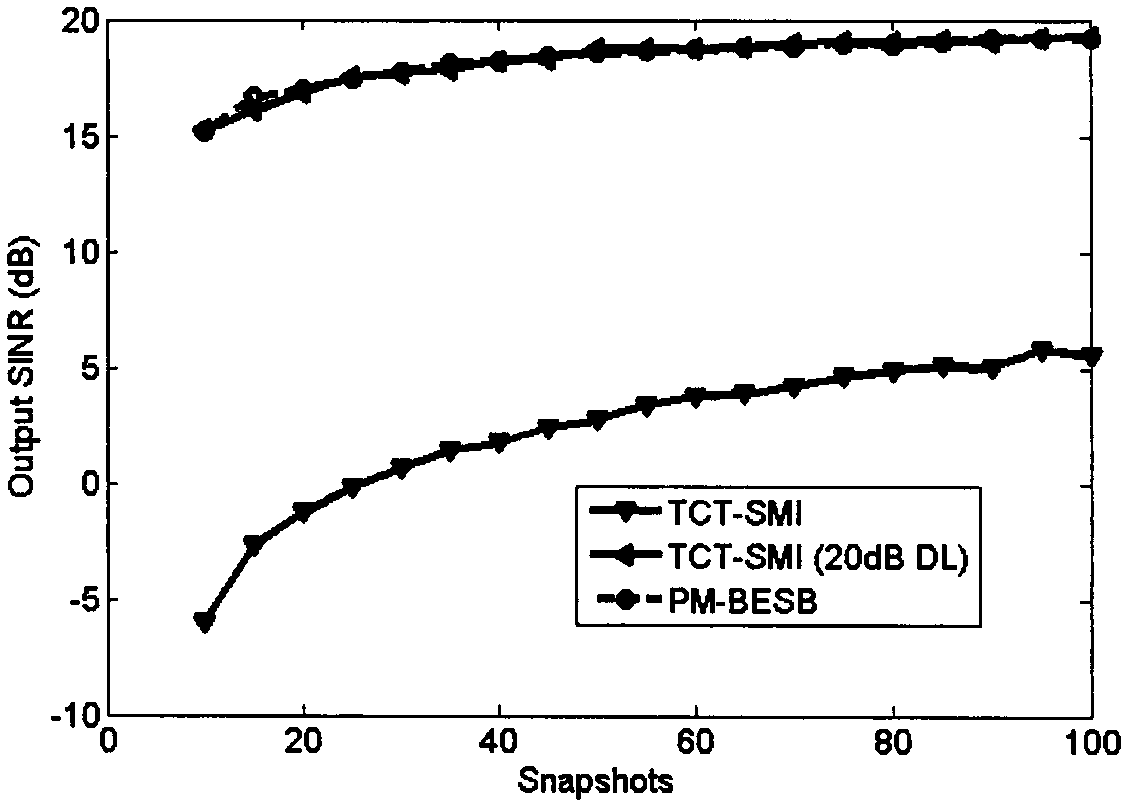

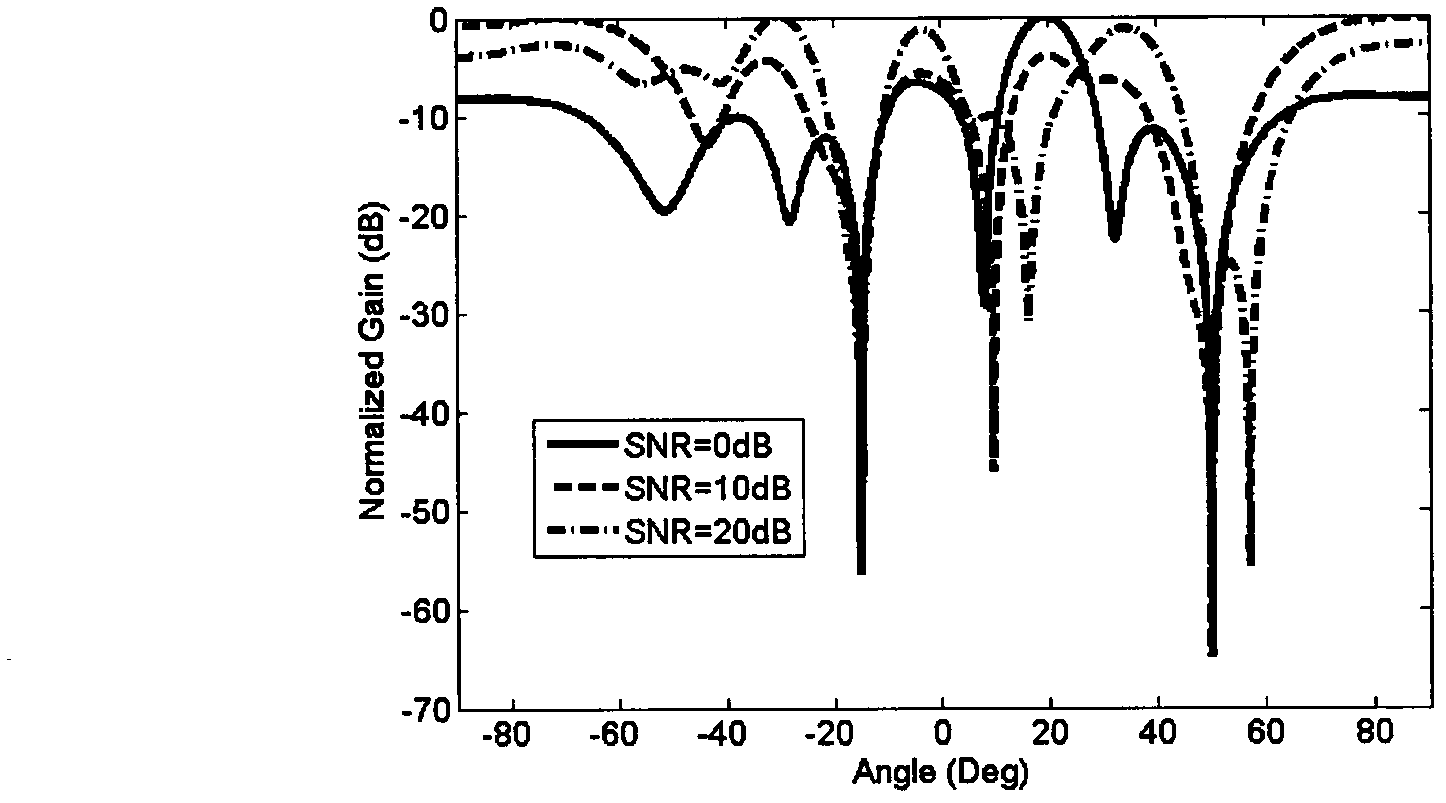

Efficient and robust wideband adaptive beamforming method

Inactive | CN102664666A | Reduce computation; Strong robustness; Spatial transmit diversity; Decomposition; Signal subspace

The invention provides an efficient and robust wideband adaptive beamforming method for the field of wireless communication. It comprises the following steps: performing a fast Fourier transform (FFT) on the array's received data to obtain the received data at different frequency points and the covariance matrix of the received data at each frequency point; choosing the central frequency point as the reference frequency point; using the propagator method, partitioning the covariance matrix of each frequency point and that of the central frequency point to obtain the propagator of each frequency point and of the central frequency point; constructing a focusing transformation matrix that focuses the propagators of the different frequency points onto the reference frequency point, yielding the final propagator estimate and the noise subspace; and combining these with an eigenspace method to compute the weight vector of the wideband beamforming algorithm, realizing robust wideband adaptive beamforming. Compared with the traditional coherent signal subspace method, the method requires no singular value or eigenvalue decomposition and no diagonal loading, and it performs well in environments with few snapshots and strong desired signals. In particular, it is more robust and less complex when the estimate of the desired signal contains some error.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

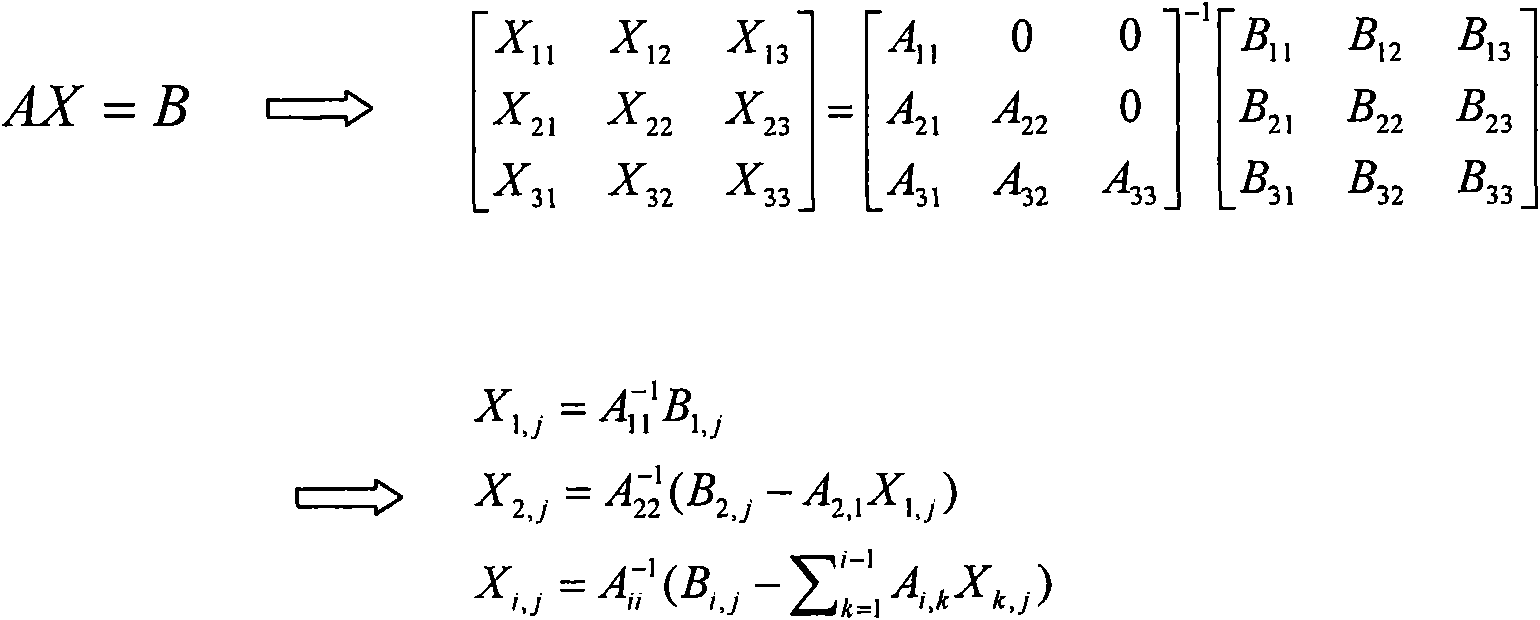

Method and device for solving triangular systems of linear equations on a multiprocessor system

The invention provides a method and a device for solving a triangular system of linear equations on a multiprocessor system comprising at least one core processor and a plurality of accelerators. The method comprises the following steps: dividing the matrix of the triangular system into a plurality of sub-matrices according to the scheduled sub-matrix size; and iteratively calculating and updating the sub-matrices, with the accelerators calculating and updating sub-matrices in parallel, wherein each iteration comprises distributing not-yet-updated sub-matrices to the accelerators in the row direction and in a predefined order of the accelerators. Distributing the matrix to be solved sub-matrix by sub-matrix balances the loads of the accelerators, so that their computing capability is fully utilized and performance approaches its peak.

Owner:IBM CORP
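
A sequential sketch of blocked forward substitution for a lower-triangular system, assuming lists of lists and a block size `bs`. Off-diagonal block updates are logged against hypothetical accelerator IDs in round-robin, row-major order to show how the work could be spread; the patent's actual scheduling and data movement are not reproduced.

```python
def blocked_forward_solve(l, b, bs, n_acc=2):
    """Solve L x = b (L lower triangular) block row by block row.

    Returns x and a log of ((block_row, block_col), accelerator_id) pairs,
    one per off-diagonal block update, assigned round-robin (an assumption)."""
    n = len(b)
    x = [0.0] * n
    log = []
    acc = 0
    for i0 in range(0, n, bs):
        i1 = min(i0 + bs, n)
        rhs = [float(v) for v in b[i0:i1]]
        # Off-diagonal updates: rhs -= L[i-block, j-block] @ x[j-block], j < i.
        for j0 in range(0, i0, bs):
            log.append(((i0 // bs, j0 // bs), acc))
            acc = (acc + 1) % n_acc
            for r in range(i0, i1):
                rhs[r - i0] -= sum(l[r][c] * x[c] for c in range(j0, j0 + bs))
        # Diagonal block: ordinary forward substitution.
        for r in range(i0, i1):
            s = rhs[r - i0] - sum(l[r][c] * x[c] for c in range(i0, r))
            x[r] = s / l[r][r]
    return x, log
```

Within one block row the off-diagonal updates are independent of each other, which is what makes handing them to several accelerators at once possible; only the small diagonal solve has to wait for them.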

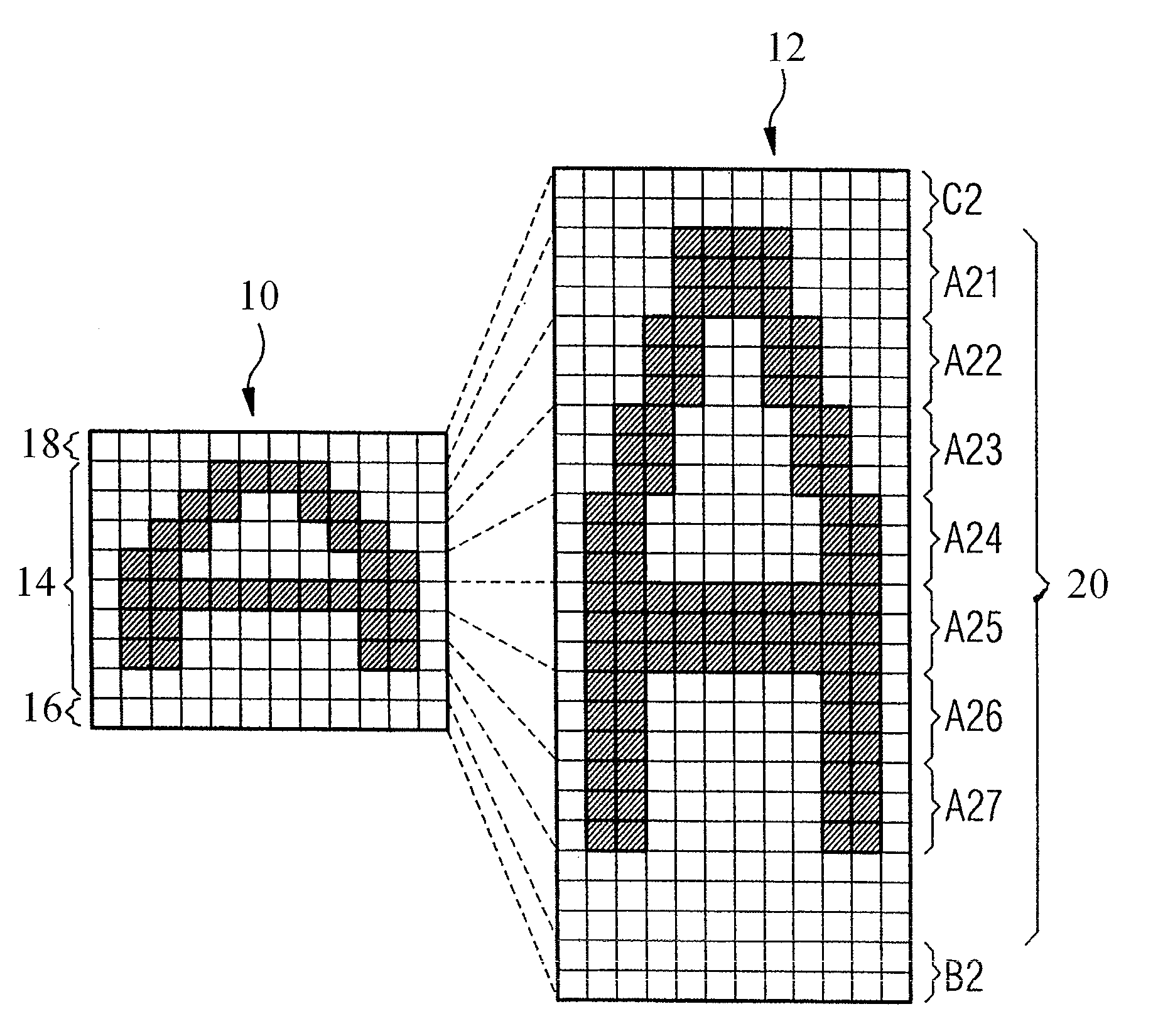

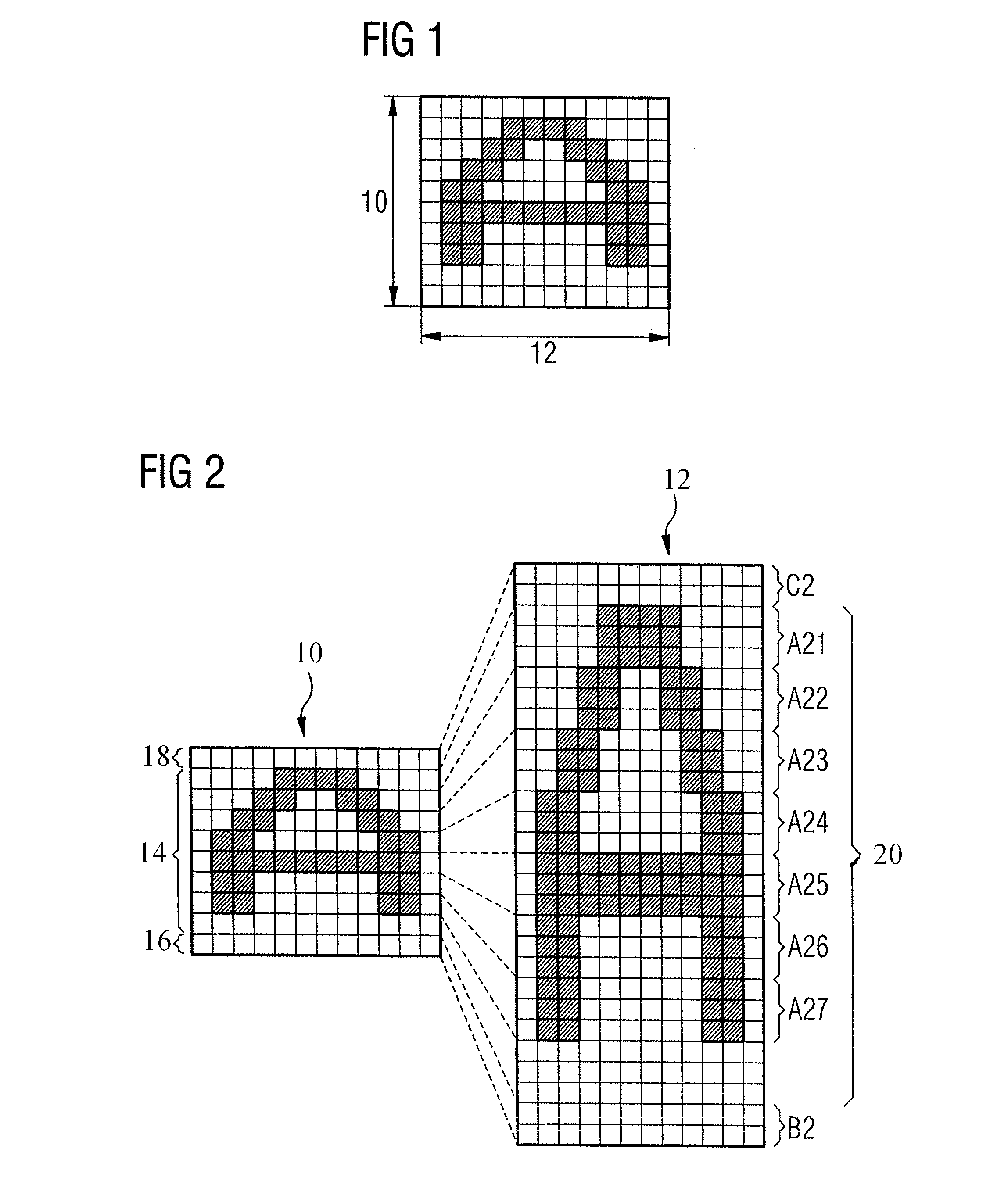

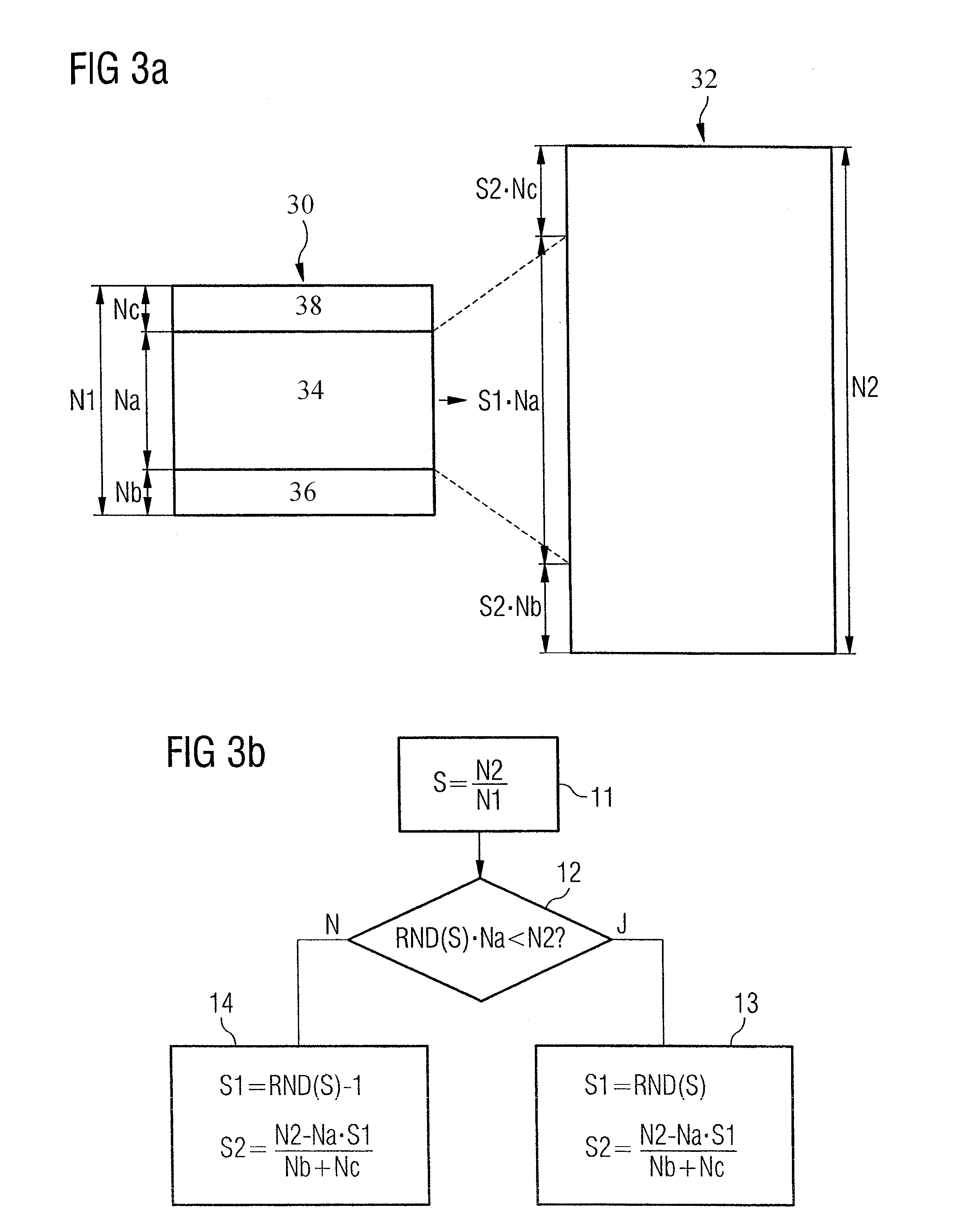

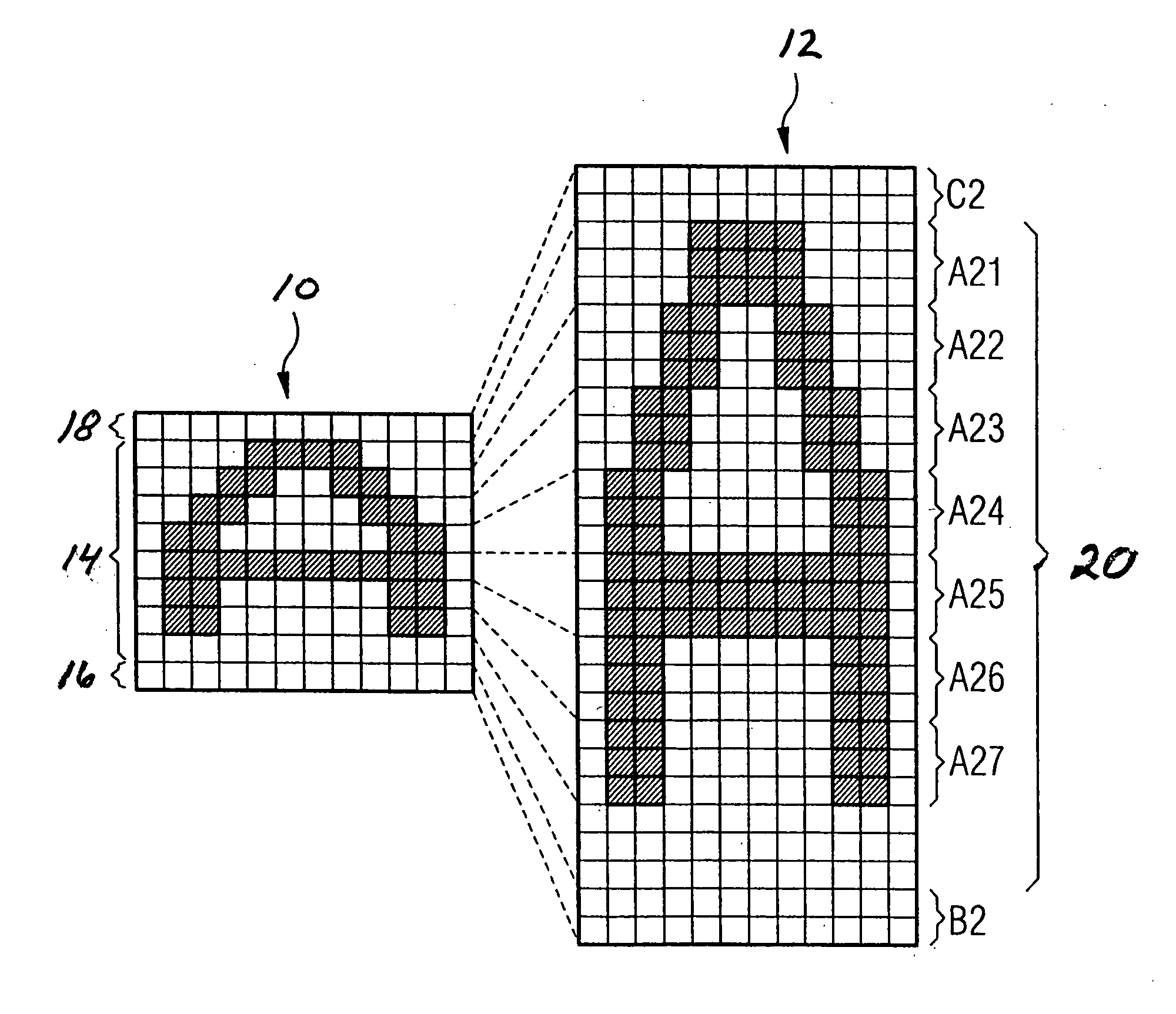

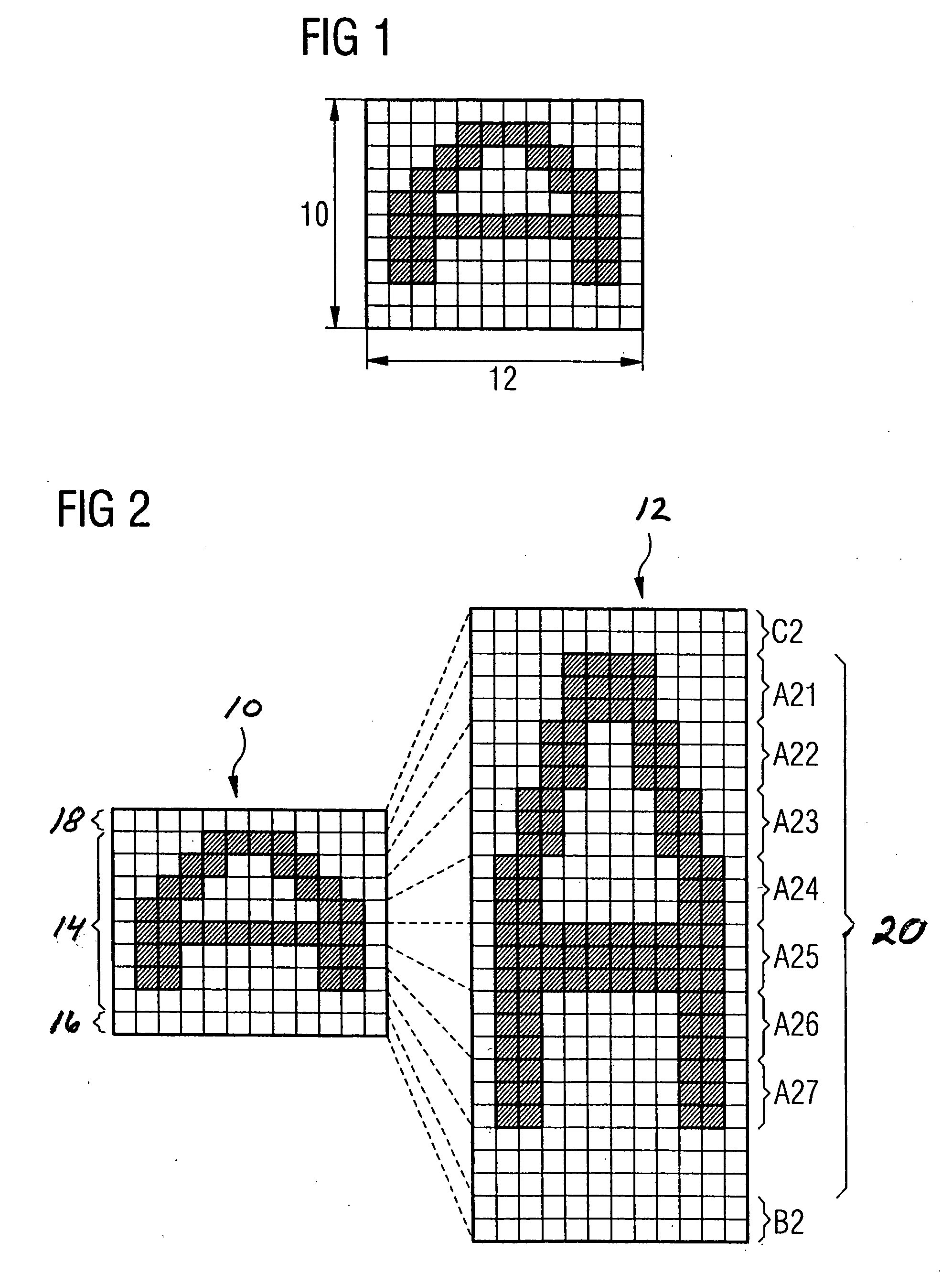

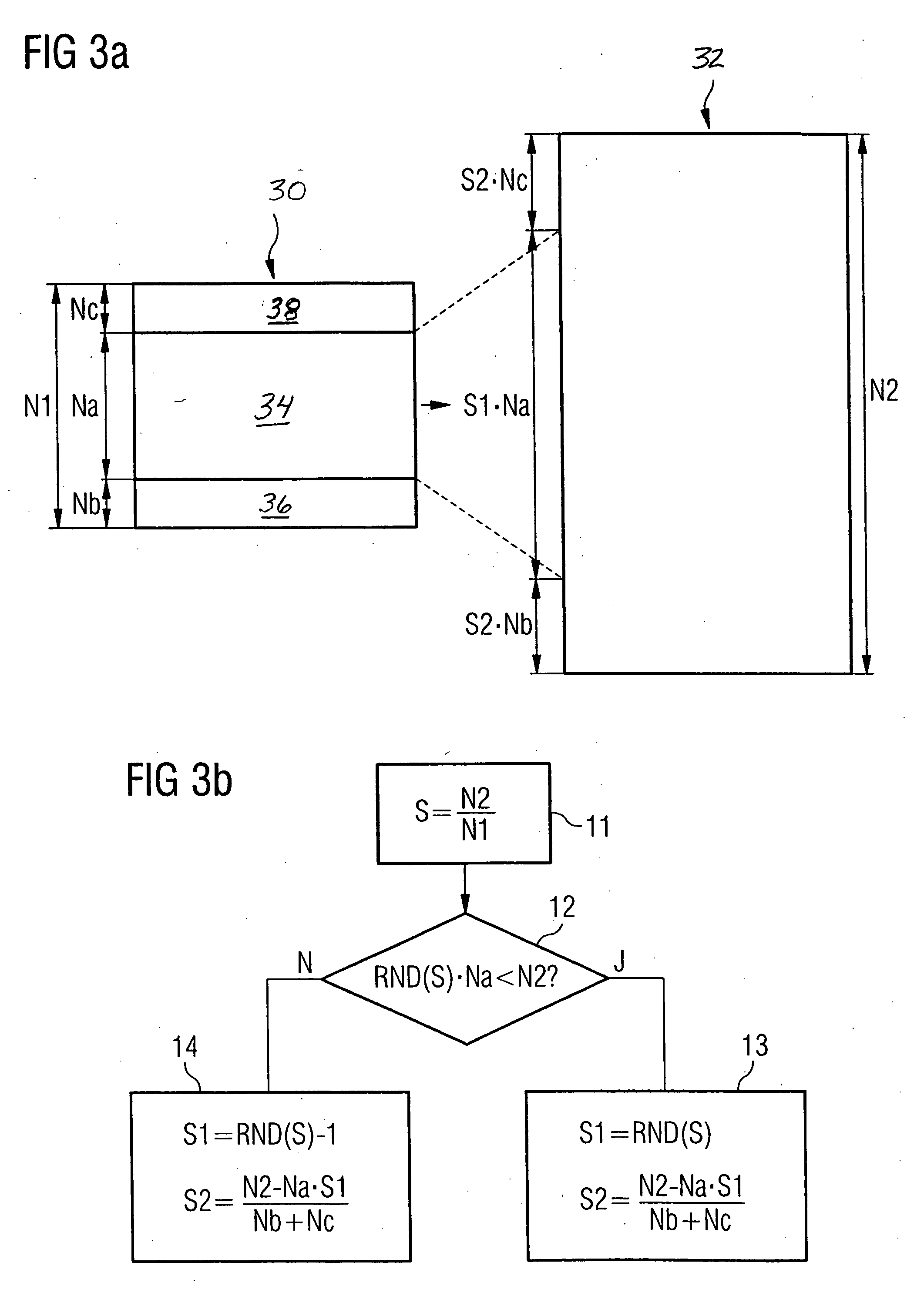

Method of scaling a graphic character

A graphic character that has a character matrix with a number of character units that are indivisible at least in either a horizontal direction or a vertical direction is scaled by dividing the character matrix into one first and at least one second character segment, each comprising at least one of the character units. The first character segment is symmetrically scaled using a first scaling factor and the second character segment is scaled using a second scaling factor different from the first scaling factor.

Owner:DYNAMIC DATA TECH LLC
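
A minimal sketch of two-segment scaling, assuming a binary bitmap split along the horizontal direction and nearest-neighbour resampling; the helper names and the resampling rule are illustrative, and the patent's symmetric scaling of the first segment is not modeled.

```python
def scale_segment(units, factor):
    """Nearest-neighbour resample a run of character units by `factor`."""
    if not units:
        return []
    new_len = max(1, round(len(units) * factor))
    # Output unit i copies source unit floor(i * len / new_len).
    return [units[i * len(units) // new_len] for i in range(new_len)]


def scale_character_rows(bitmap, split, f1, f2):
    """Scale every row: columns [0, split) by f1 and [split, end) by f2."""
    return [scale_segment(row[:split], f1) + scale_segment(row[split:], f2)
            for row in bitmap]
```

Because whole units are replicated or dropped rather than interpolated, indivisible character units stay crisp; the two factors let one part of the glyph (for example a stem) grow while another keeps its width.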

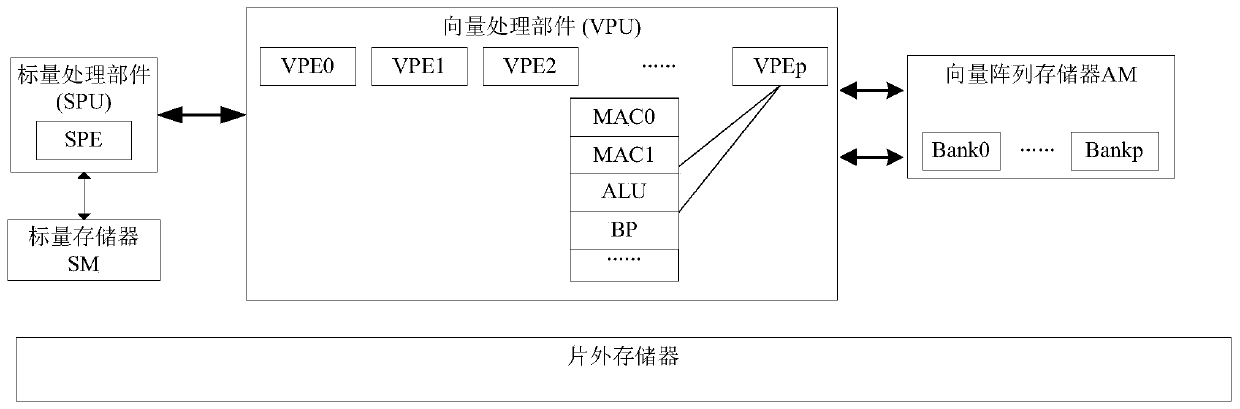

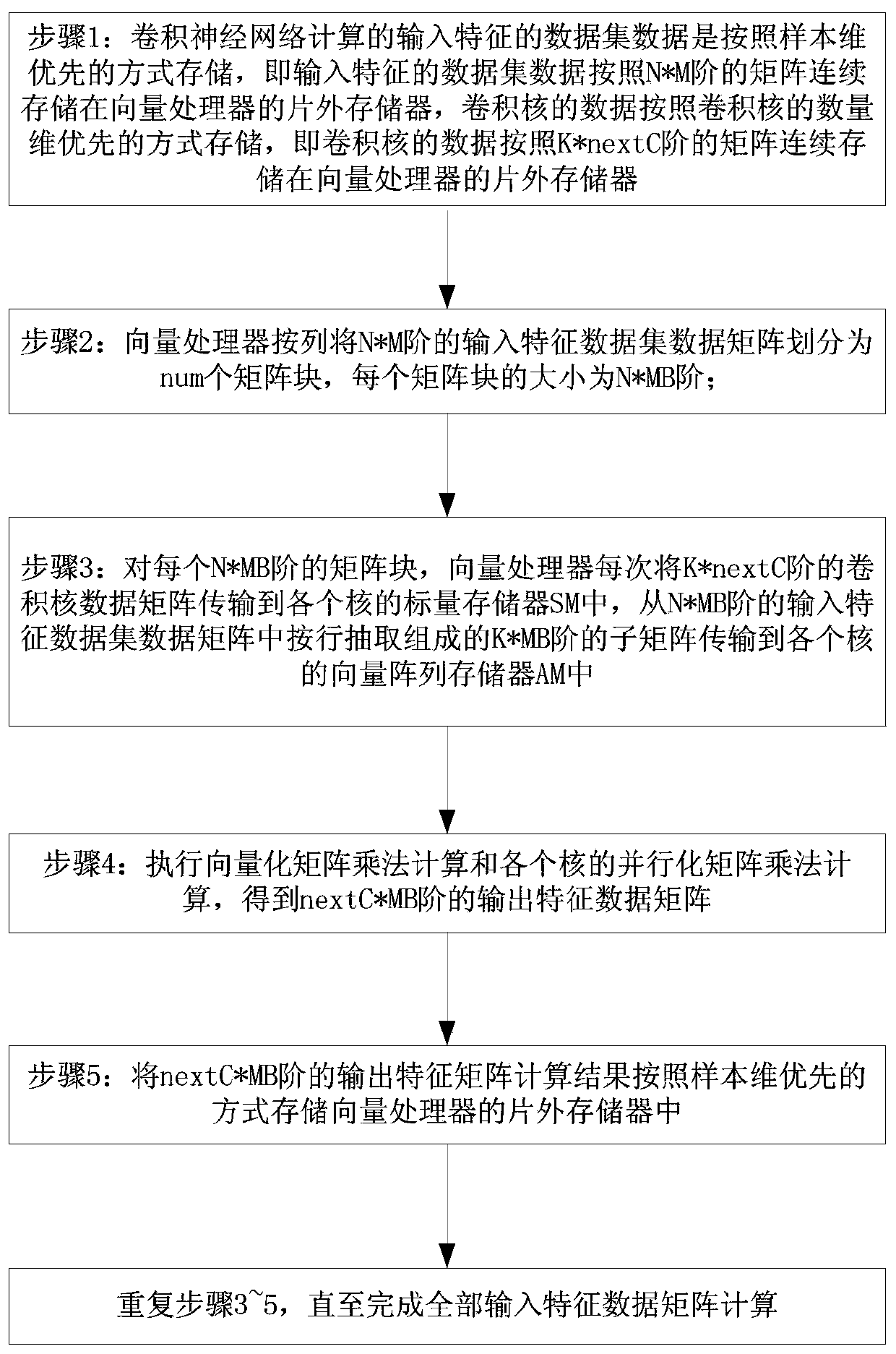

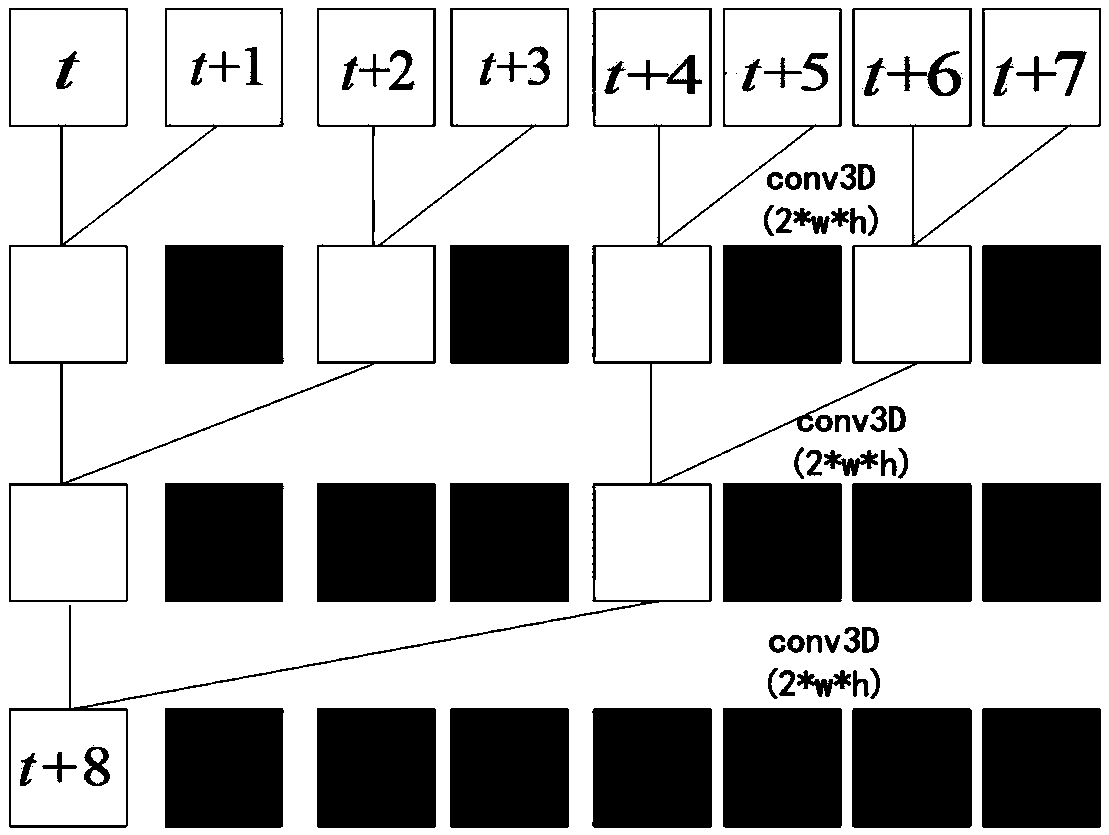

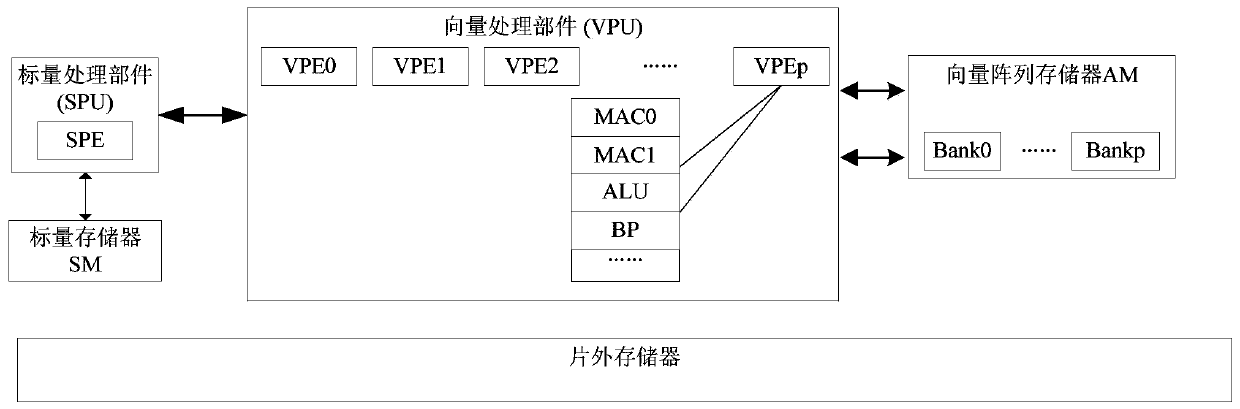

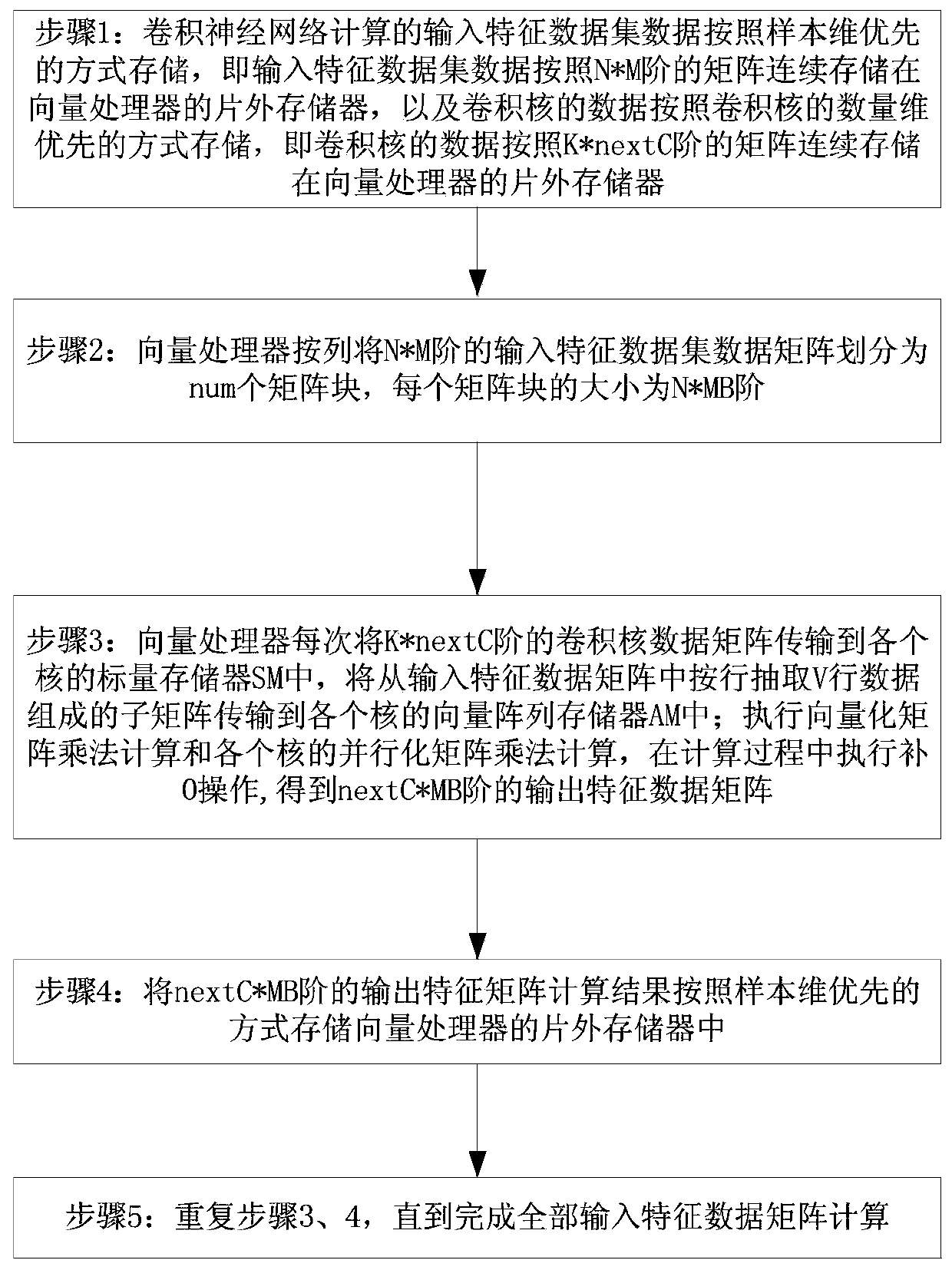

Vectorized implementation method for Valid convolution in convolutional neural networks

Active | CN110796235A | Vectorized implementation; Improve efficiency; Single instruction multiple data multiprocessors; Neural architectures; Data set; Algorithm

The invention discloses a vectorized implementation method for Valid convolution in a convolutional neural network. The method comprises the following steps: 1, storing the input feature dataset in sample-dimension-first order, and storing the convolution kernel data in kernel-number-dimension-first order; 2, dividing the data matrix of the input feature dataset into a plurality of matrix blocks by columns; 3, transmitting the convolution kernel data matrix to the SM of each core each time, and transmitting a sub-matrix formed by extracting K rows of the input feature data matrix to the AM of each core; 4, executing vectorized matrix multiplication and parallel matrix multiplication; 5, storing the output feature matrix in the off-chip memory of the vector processor; and 6, repeating steps 4 and 5 until all input feature data matrices have been processed. The method is simple to implement, has high execution efficiency and precision, and requires little bandwidth.

Owner:NAT UNIV OF DEFENSE TECH
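
The core idea, turning a Valid convolution into matrix multiplication and processing the data matrix in column blocks, can be sketched in plain Python with an im2col-style patch matrix. This is an illustration for one single-channel sample under stated assumptions; the SM/AM data placement and vector-processor specifics are not modeled, and `block_cols` is an arbitrary block width.

```python
def im2col_valid(image, kh, kw):
    """Patch matrix: one column per valid output position, kh*kw rows."""
    h, w = len(image), len(image[0])
    cols = []
    for r in range(h - kh + 1):
        for c in range(w - kw + 1):
            cols.append([image[r + i][c + j] for i in range(kh) for j in range(kw)])
    # Transpose so each flattened patch is a column: (kh*kw) x (#positions).
    return [list(t) for t in zip(*cols)]


def conv_valid_blocked(image, kernels, block_cols=2):
    """Valid convolution (cross-correlation, as usual in CNNs) of one image
    with several kernels, computed as K @ P one column block of P at a time.
    Each output row is one flattened feature map."""
    kh, kw = len(kernels[0]), len(kernels[0][0])
    patches = im2col_valid(image, kh, kw)
    kmat = [[v for row in k for v in row] for k in kernels]   # one kernel per row
    n_pos = len(patches[0])
    out = [[0] * n_pos for _ in kernels]
    for c0 in range(0, n_pos, block_cols):          # one column block at a time
        for c in range(c0, min(c0 + block_cols, n_pos)):
            for o, krow in enumerate(kmat):
                out[o][c] = sum(kv * patches[t][c] for t, kv in enumerate(krow))
    return out
```

Each column block is an independent slice of the product, so blocks can be dispatched to different cores while the small kernel matrix is shared by all of them.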

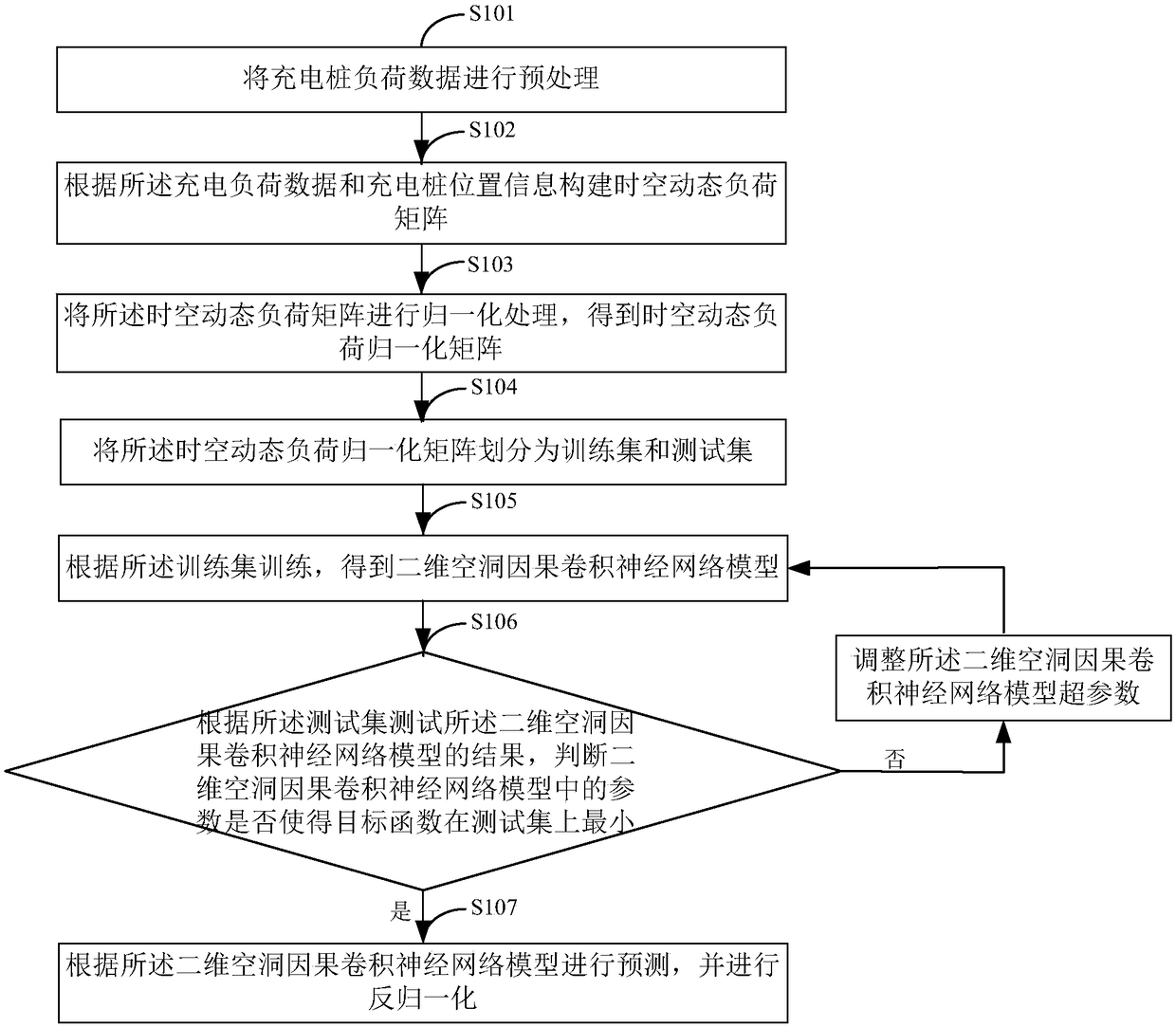

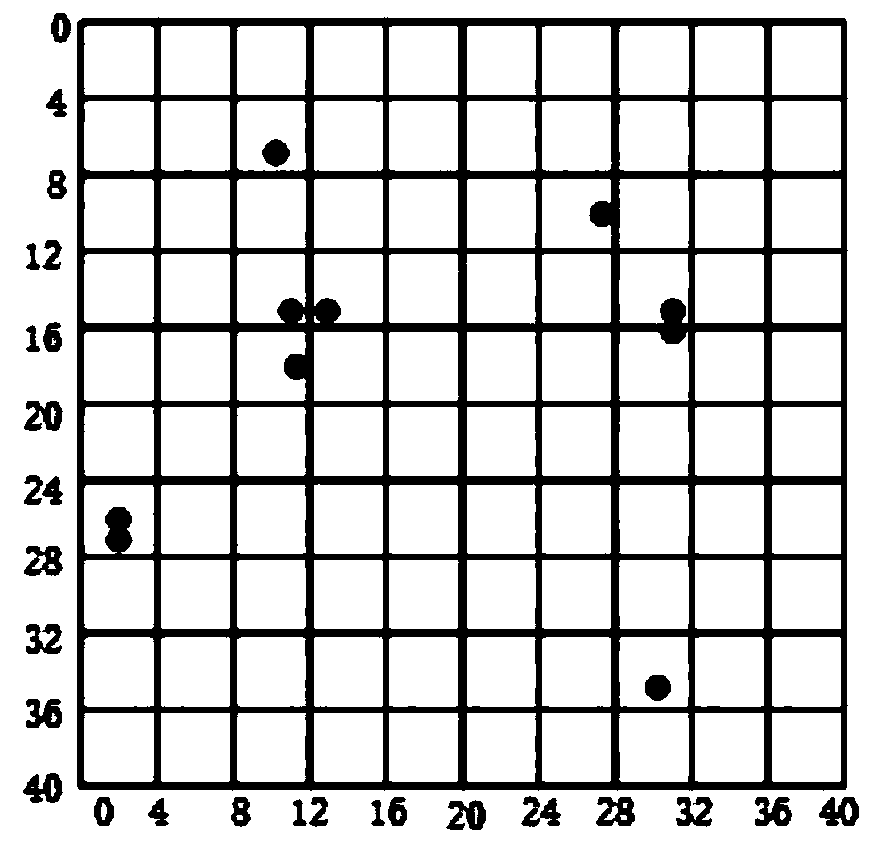

A spatio-temporal dynamic load forecasting method for electric vehicles

The invention discloses a spatio-temporal dynamic load forecasting method for electric vehicles, comprising the following steps: preprocessing the load data of charging piles; constructing a spatio-temporal dynamic load matrix from the charging load data and the charging pile position information; normalizing the spatio-temporal dynamic load matrix to obtain a normalized matrix; dividing the normalized matrix into a training set and a test set; and obtaining a two-dimensional dilated causal convolutional neural network model from the training set. If the model parameters minimize the objective function on the test set, the model is used for forecasting and its output is denormalized; otherwise, the hyperparameters of the model are adjusted and the model is obtained again. Because the two-dimensional dilated causal convolutional neural network model fully accounts for both time and space, it enables accurate spatio-temporal dynamic load forecasting for electric vehicles.

Owner:YUNNAN POWER GRID

Mobile terminal and screen operation control method thereof

Inactive | CN106569674A | Improve experience; Easy to operate; Input/output processes for data processing; Operability; Matrix partitioning

The invention discloses a mobile terminal comprising an acquisition module, a determination module and a control module. The acquisition module acquires the matrix information of the matrix partition that the mobile terminal applies to its display screen; the determination module, when a signal indicating that the user's finger is sliding on the display screen is detected, determines whether the finger slides in a preset direction within a preset region of the display screen; and the control module, when the finger is determined to slide within the preset region in the preset direction, moves the application icons within the preset region of the matrix correspondingly, in the preset direction and in sequence, according to the finger-slide information and the matrix information. The invention also discloses a screen operation control method. The mobile terminal and its screen operation control method make the application icons on the display screen easier to operate: users can operate the full screen while holding the terminal in one hand, which improves the user experience.

Owner:NUBIA TECHNOLOGY CO LTD

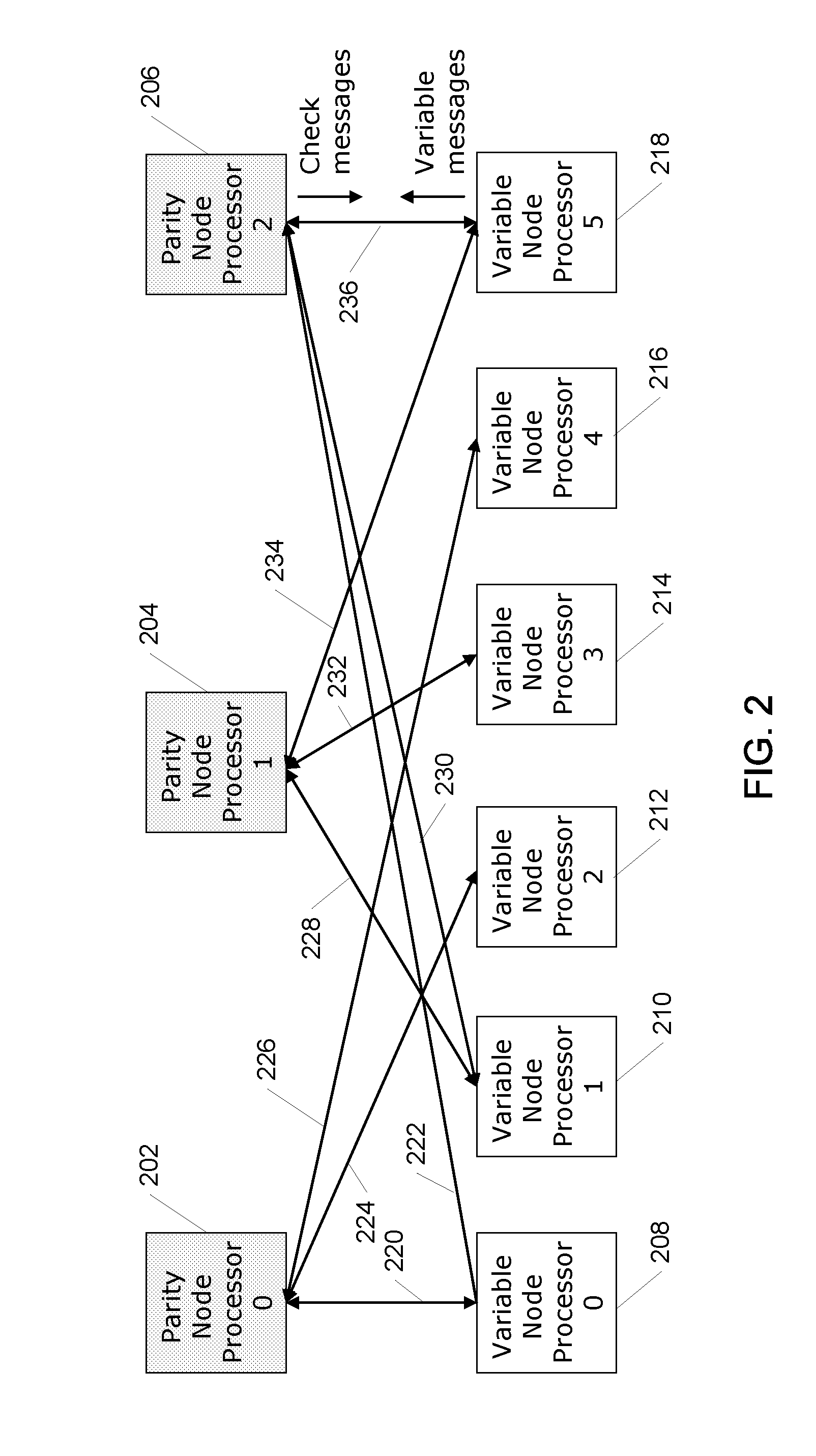

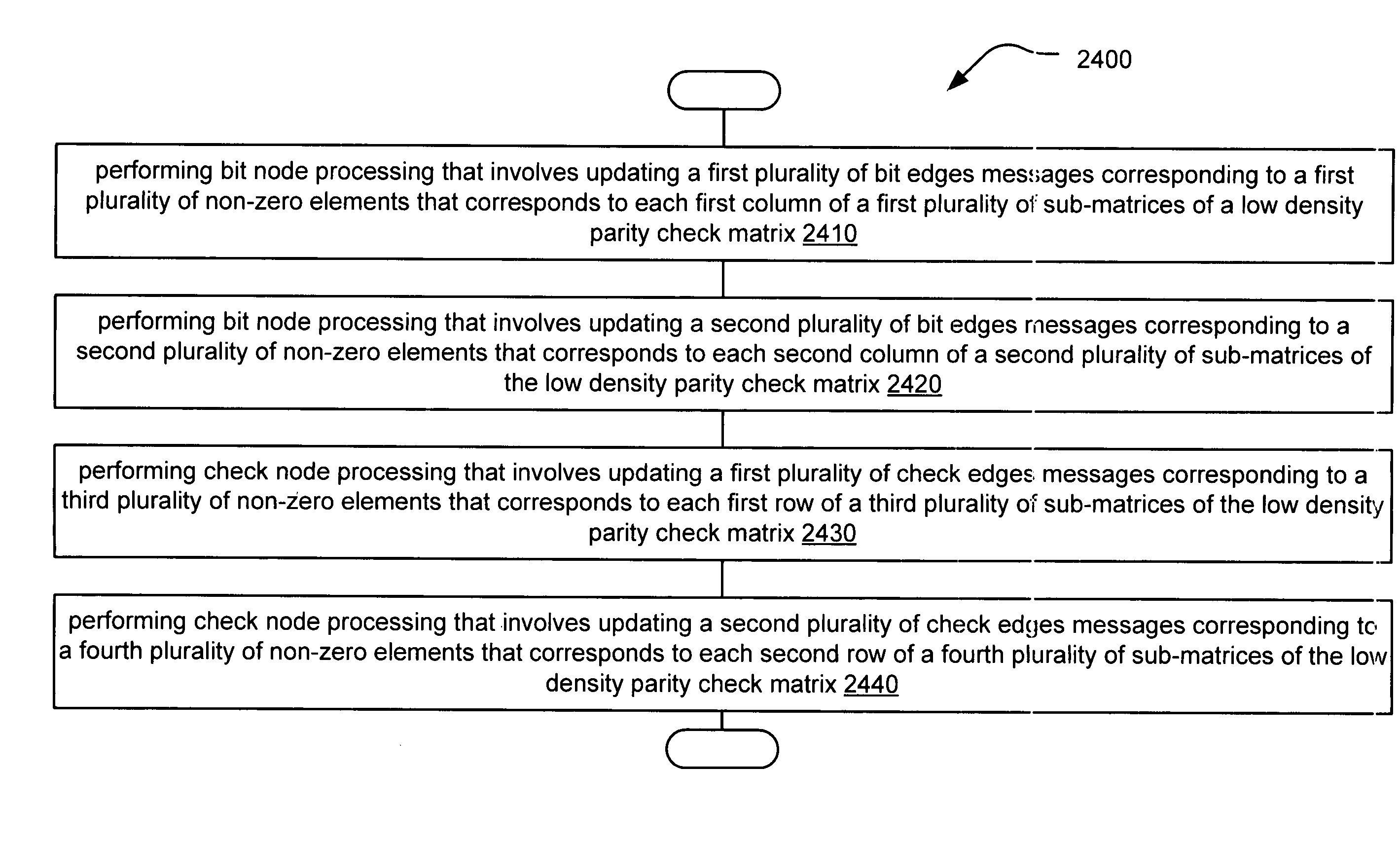

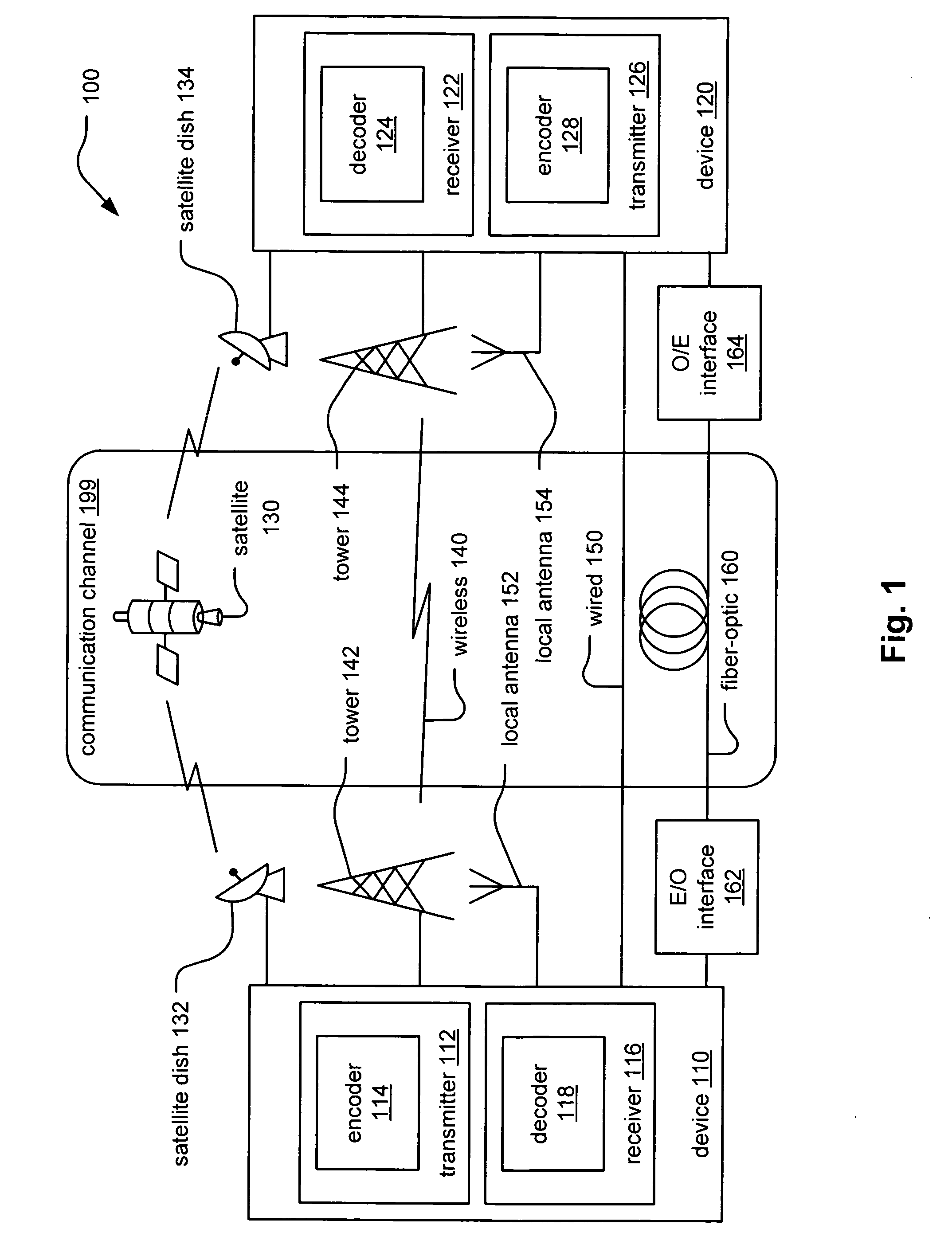

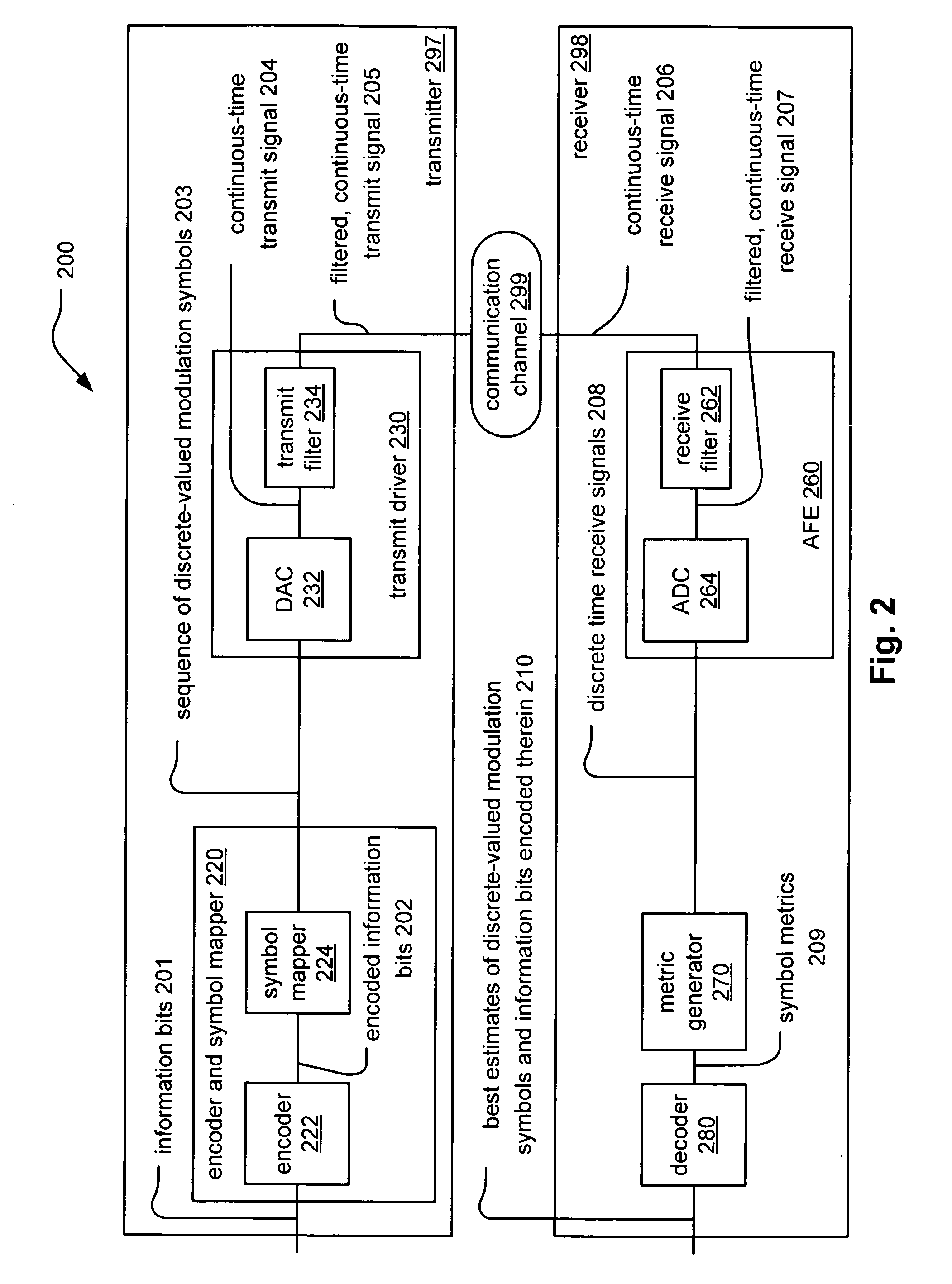

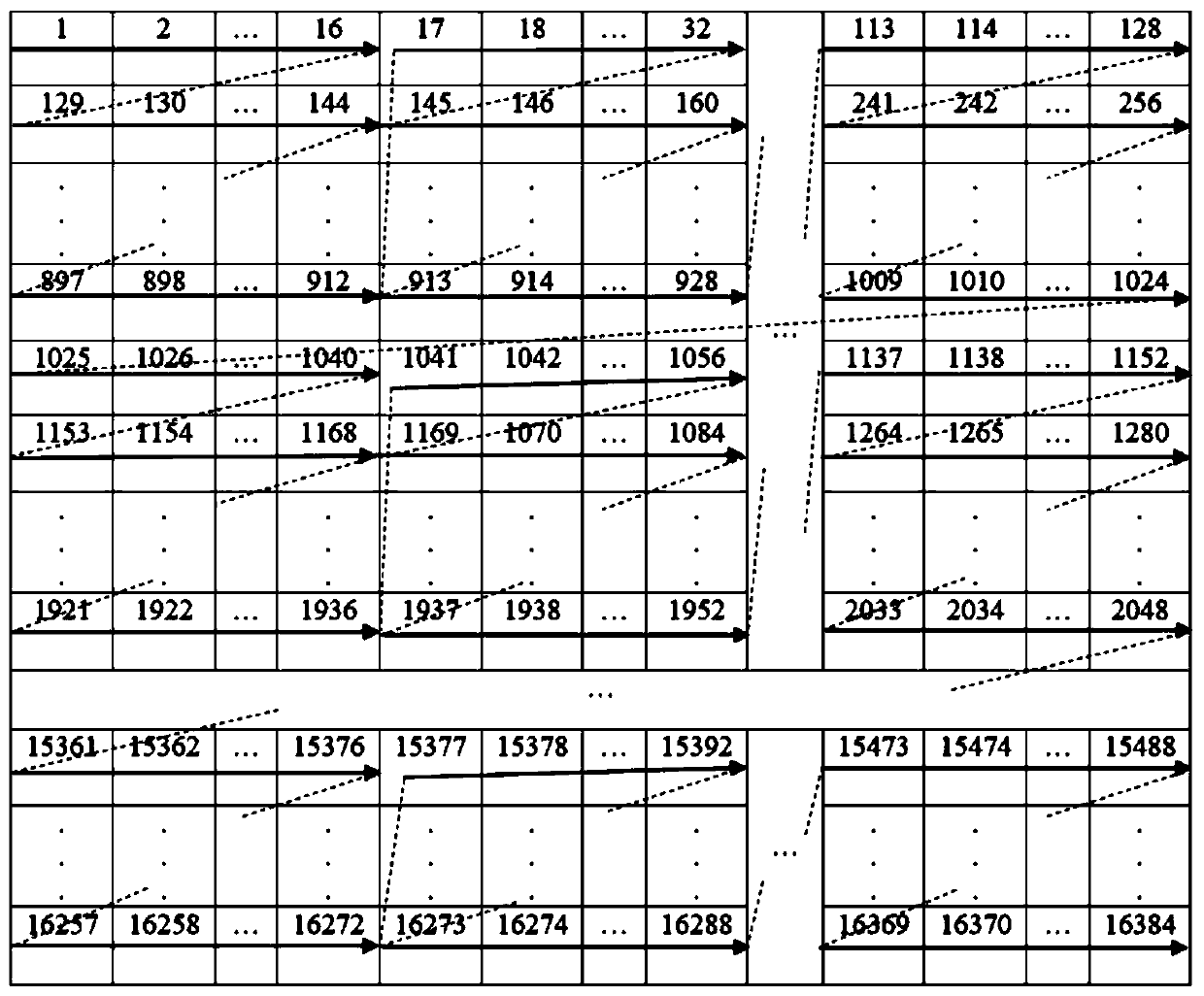

Implementation of LDPC (low density parity check) decoder by sweeping through sub-matrices

Inactive | US7617433B2 | Error correction/detection using LDPC codes; Code conversion; Parity-check matrix; Theoretical computer science

Implementation of LDPC (Low Density Parity Check) decoder by sweeping through sub-matrices. A novel approach is presented by which an LDPC coded signal is decoded by processing the columns and rows of the individual sub-matrices of the low density parity check matrix corresponding to the LDPC code. The low density parity check matrix can be partitioned into rows and columns according to each of its sub-matrices, and each of those sub-matrices also includes corresponding rows and columns. For example, when performing bit node processing, the same columns of 1 or more sub-matrices can be processed together (e.g., all 1st columns in 1 or more sub-matrices, all 2nd columns in 1 or more sub-matrices, etc.). Analogously, when performing check node processing, the same rows of 1 or more sub-matrices can be processed together (e.g., all 1st rows in 1 or more sub-matrices, all 2nd rows in 1 or more sub-matrices, etc.).

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Vectorized implementation method of Same convolution for multi-sample, multi-channel convolutional neural networks

Active | CN110807170A | Achieve sharing; Reduce bandwidth requirements; Neural architectures; Physical realisation; Data set; Algorithm

The invention discloses a vectorized implementation method of Same convolution for multi-sample, multi-channel convolutional neural networks, comprising the steps of: 1, storing the input feature dataset in sample-dimension-first order, and storing the convolution kernel data in kernel-number-dimension-first order; 2, dividing the data matrix of the input feature dataset into a plurality of matrix blocks by columns; 3, transmitting the convolution kernel data matrix to the SM of each core each time, transmitting a sub-matrix formed by extracting rows of the input feature data matrix to the AM of each core, executing vectorized and parallel matrix multiplication, and performing zero padding during the calculation; 4, storing the output feature matrix in off-chip memory; and 5, repeating steps 3 and 4 until all calculations are complete. The invention realizes vectorized Same convolution and is simple to implement, with high execution efficiency and precision and a small bandwidth requirement.

Owner:NAT UNIV OF DEFENSE TECH

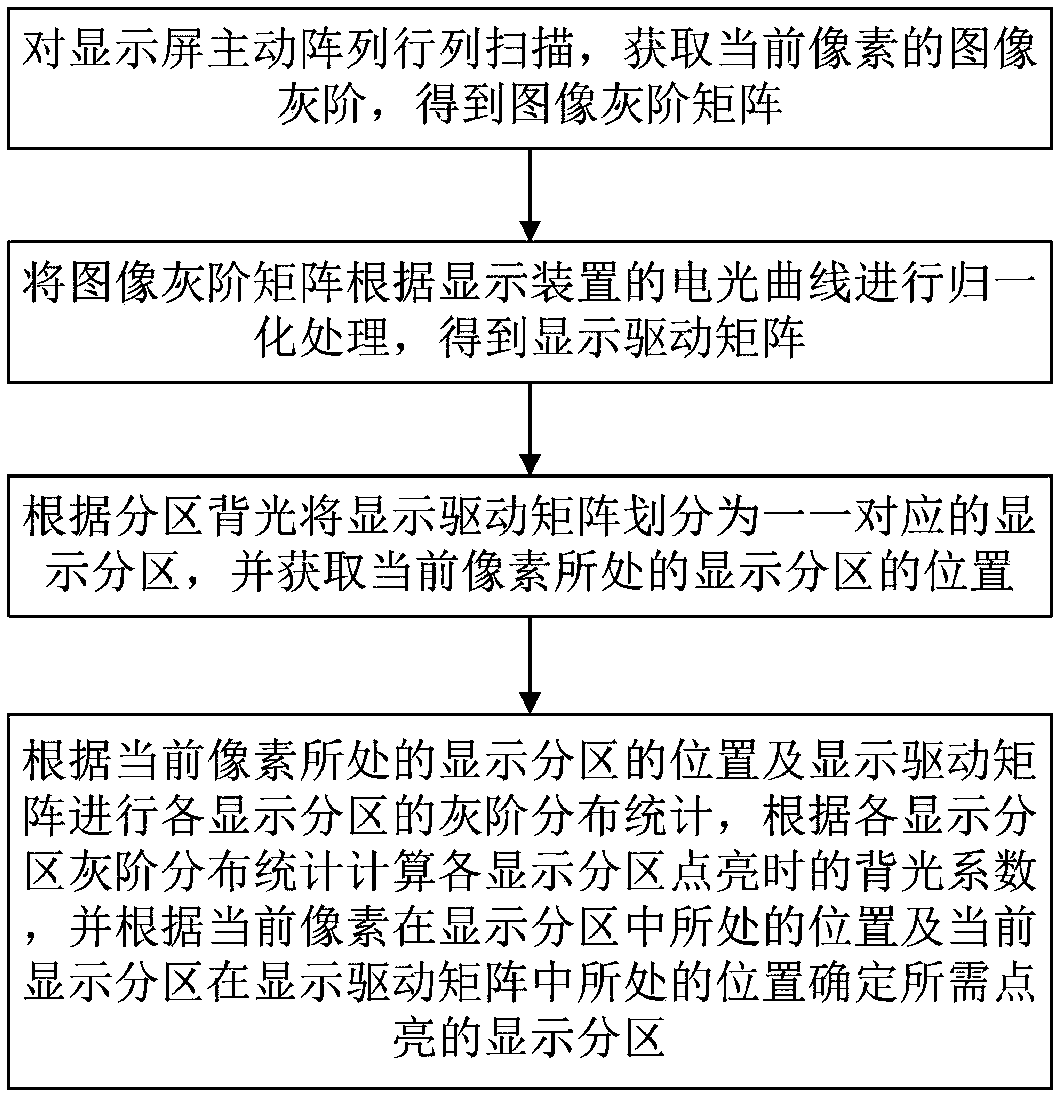

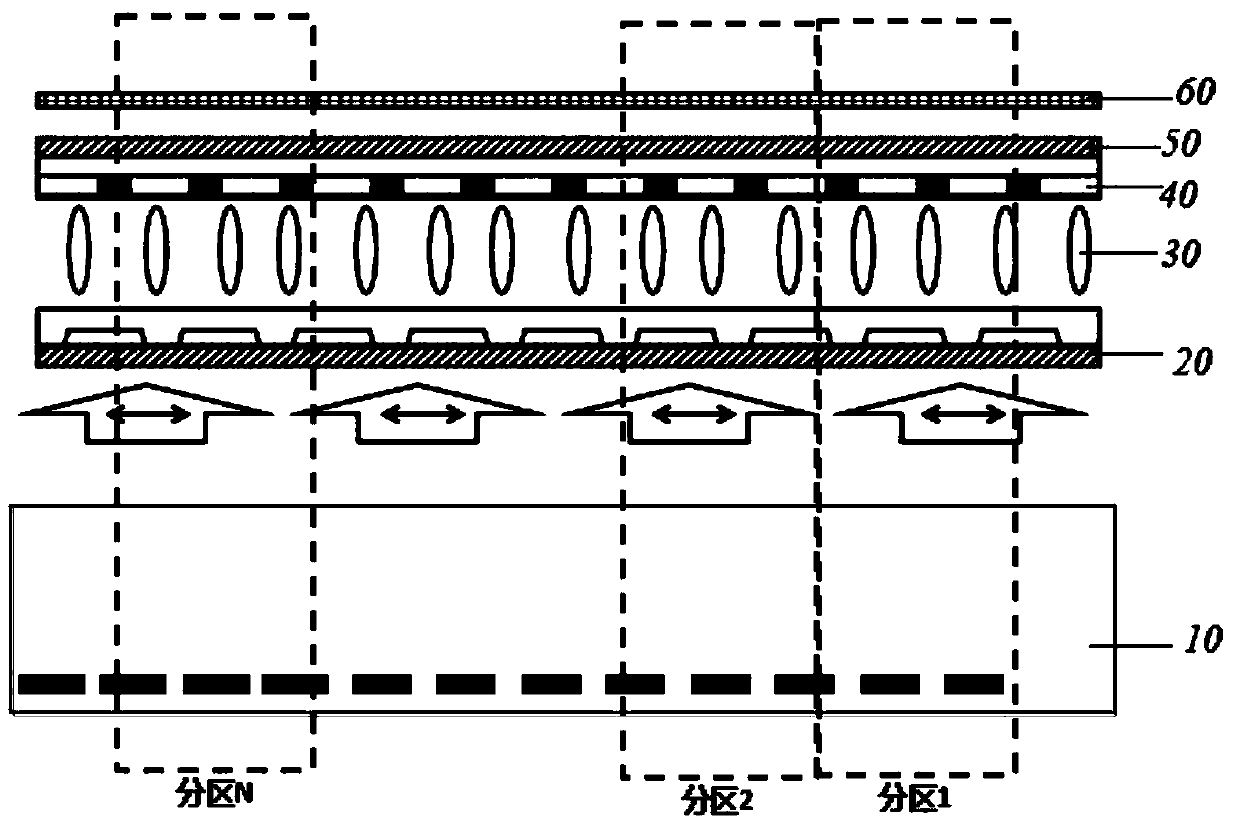

Partition backlight-based low-delay liquid crystal display device and driving method thereof

Active | CN108022563A | Short response time; Solve the lack of brightness; Static indicating devices; Liquid-crystal display; Gray level

The invention discloses a low-delay liquid crystal display device based on partitioned backlight, and a driving method thereof. The liquid crystal display device comprises a plurality of backlight partitions arranged in an array. The driving method comprises the following steps: S1, scanning the active array of the display screen row by row and acquiring the gray level of the current pixel to obtain a gray-level picture matrix; S2, normalizing the gray-level picture matrix according to the electro-optical curve of the display device to obtain a display driving matrix; S3, dividing the display driving matrix into display partitions in one-to-one correspondence with the backlight partitions, and acquiring the position of the display partition containing the current pixel; and S4, computing the gray-level distribution statistics of each display partition from the position of the display partition containing the current pixel and the display driving matrix, calculating the backlight lighting coefficient of each display partition from those statistics, and determining which display partitions need to be lit from the position of the current pixel within its display partition and the position of that display partition within the display driving matrix.

Owner:WUHAN CHINA STAR OPTOELECTRONICS TECH CO LTD
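
Steps S3 and S4 can be sketched as follows, assuming the display driving matrix is a grid of 8-bit gray levels and the backlight is a `zr`×`zc` grid. The rule used here, setting each zone's level from its brightest pixel, is a placeholder assumption; the patent derives the coefficient from the zone's gray-level distribution statistics.

```python
def zone_of(r, c, rows, cols, zr, zc):
    """Zone (row, col) containing pixel (r, c) for a zr x zc backlight grid."""
    return r * zr // rows, c * zc // cols


def backlight_coefficients(gray, zr, zc, max_level=255):
    """Per-zone backlight level in [0, 1] from the brightest pixel of each
    zone (an assumed rule, standing in for the patent's histogram statistics)."""
    rows, cols = len(gray), len(gray[0])
    peak = [[0] * zc for _ in range(zr)]
    for r in range(rows):
        for c in range(cols):
            zi, zj = zone_of(r, c, rows, cols, zr, zc)
            peak[zi][zj] = max(peak[zi][zj], gray[r][c])
    return [[p / max_level for p in row] for row in peak]
```

Using the zone maximum never under-lights a bright pixel; a histogram percentile, closer to what the abstract describes, would dim zones further at the cost of clipping a few highlights.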

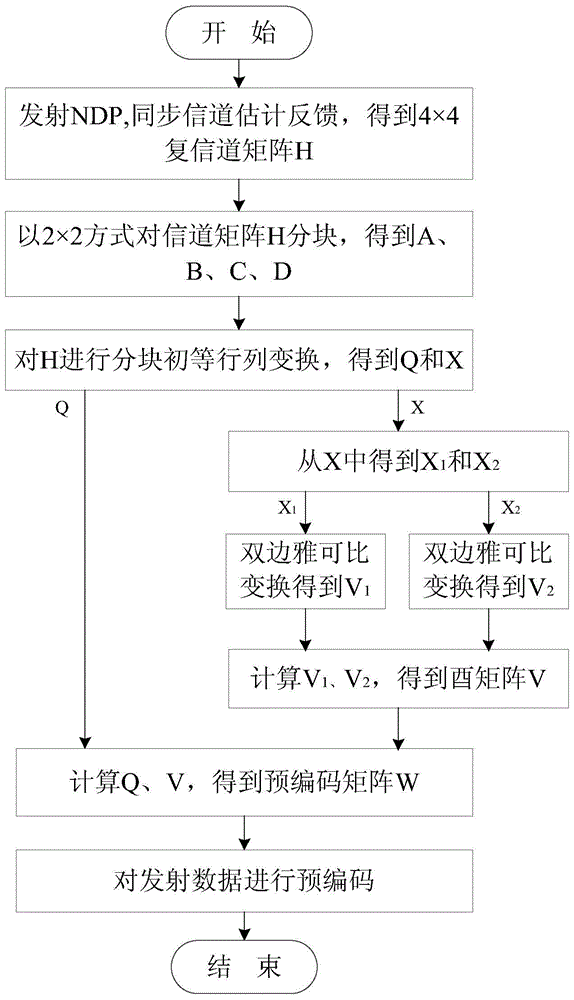

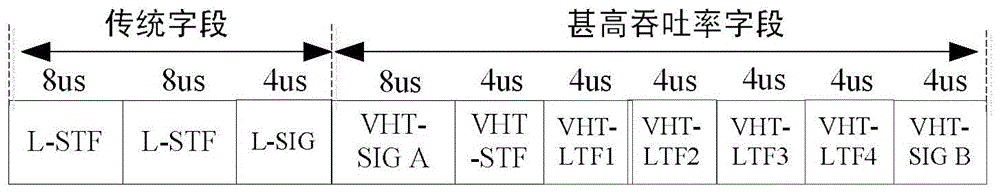

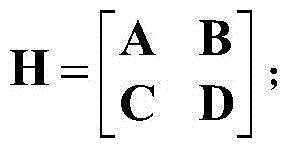

MIMO system precoding method

Active | CN105703813A | Reduce overhead; Reduce complexity; Spatial transmit diversity; Singular value decomposition; Matrix decomposition

The invention discloses a MIMO system precoding method comprising the following steps: first, the channel is detected, its state information is explicitly fed back, and a channel matrix H is obtained; second, the channel matrix H is partitioned, giving a quasi-diagonal matrix X and a partitioned elementary column transformation matrix Q; third, a block-wise singular value decomposition of the quasi-diagonal matrix X gives a unitary matrix V; fourth, a precoding matrix W is obtained from the matrices Q and V, with which precoding can be performed. The precoding matrix is obtained mainly by combining matrix partitioning with SVD of the quasi-diagonal matrix. Without noticeably affecting precoding performance, the method avoids large high-order complex matrix operations and greatly reduces the number of iterations needed for direct matrix decomposition, thereby lowering hardware resource costs.

Owner:SOUTHEAST UNIV

RS erasure rapid decoding method and system based on distributed storage

Active | CN111697976A | Omit steps; Reduce operational complexity; Code conversion; Cyclic codes; Computational science; Decoding methods

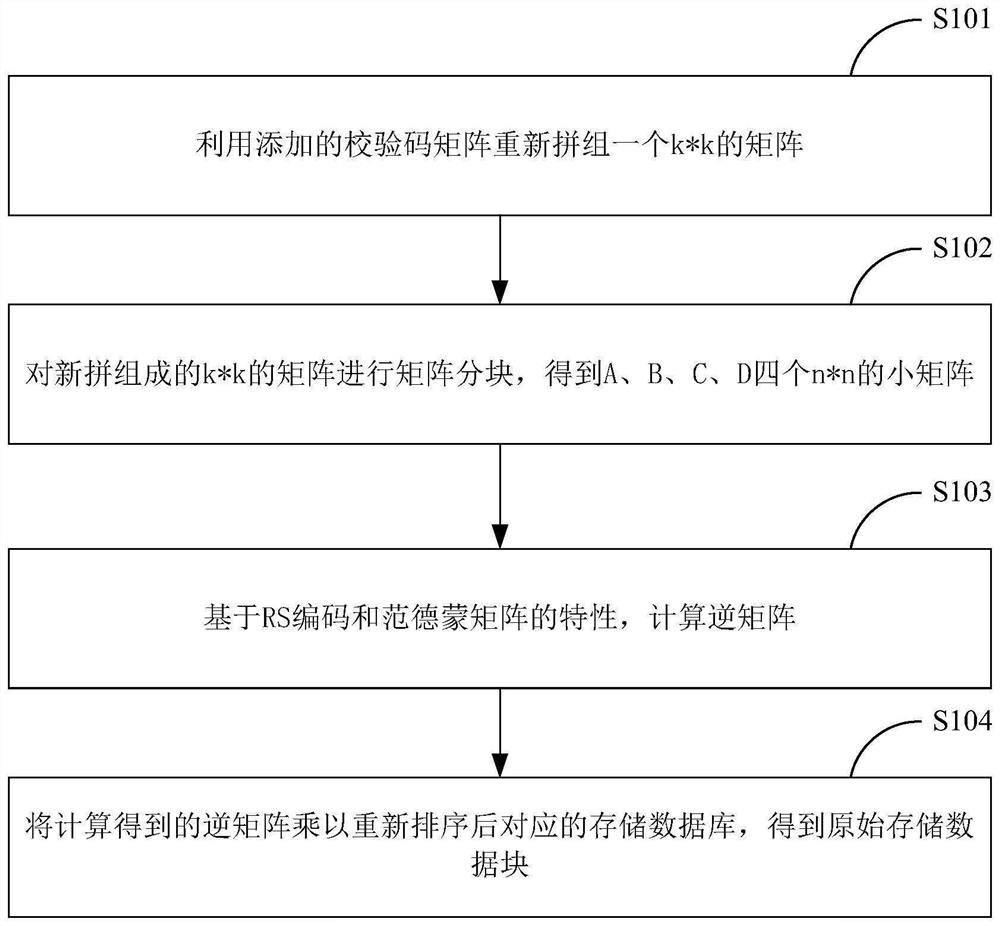

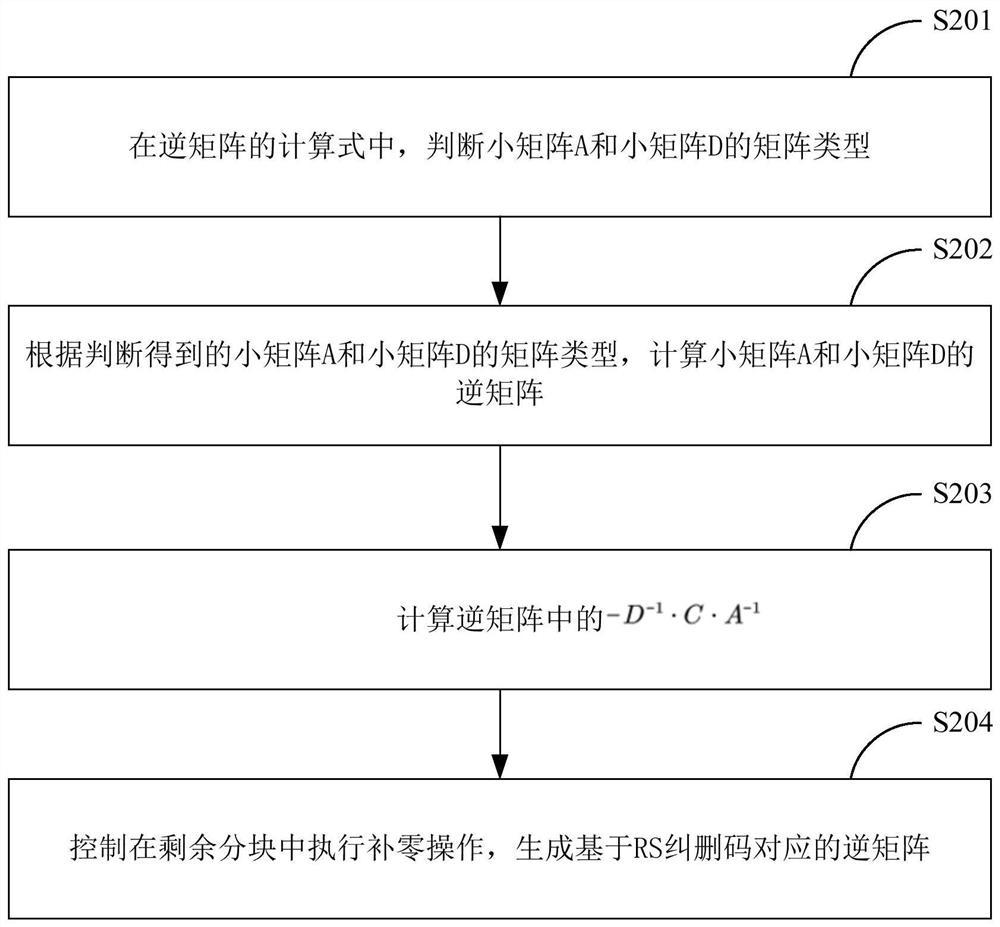

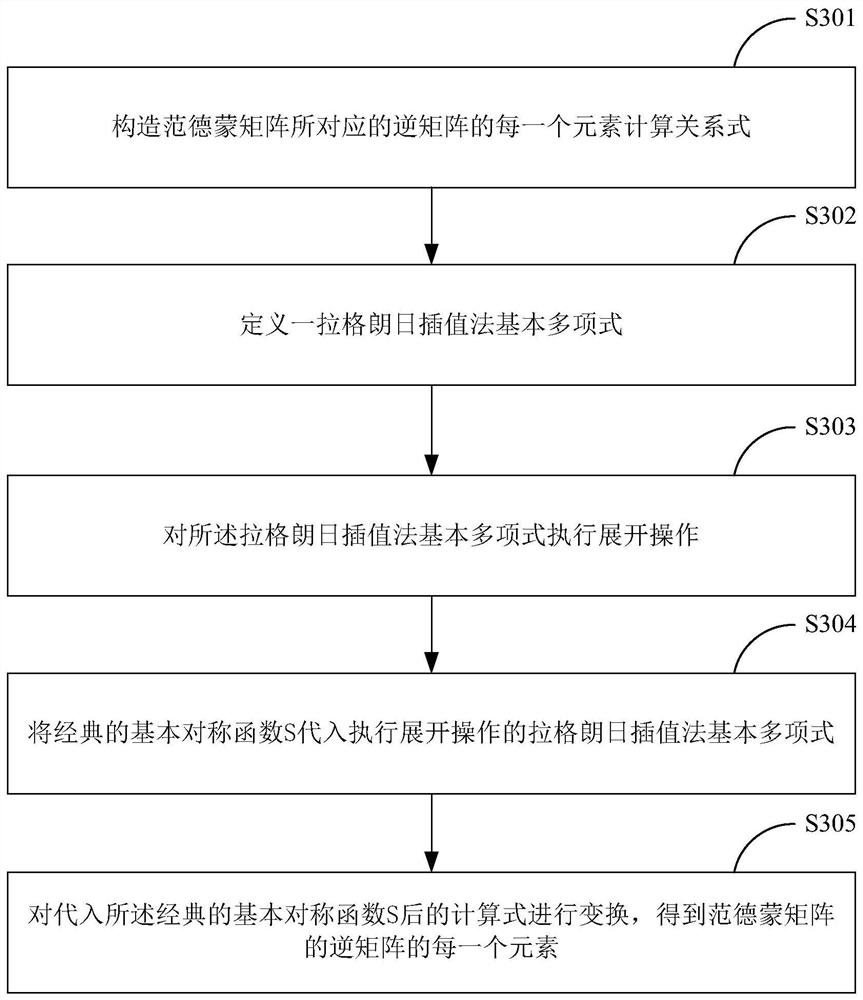

The invention relates to the technical field of server storage and provides an RS erasure-code fast decoding method and system based on distributed storage. The method comprises: using the added check-code matrix to reassemble a k*k matrix; partitioning the newly spliced k*k matrix into four small n*n matrices A, B, C and D; calculating the inverse matrix based on the properties of the RS code and of the Vandermonde matrix; and multiplying the calculated inverse matrix by the correspondingly reordered stored data blocks to obtain the original data blocks. Splitting the matrix into simple small matrices saves most of the operations, reduces operational complexity and improves operation speed.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
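
The abstract does not spell out how the four blocks are inverted. For reference, the standard block (Schur-complement) inverse of a partitioned matrix is shown below; it holds over any field provided A and S are invertible, and over GF(2^8), the field usually used for RS codes, subtraction equals addition, so the minus signs drop out. This is textbook linear algebra, not necessarily the patent's exact procedure.

```latex
M = \begin{pmatrix} A & B \\ C & D \end{pmatrix}, \qquad
S = D - C A^{-1} B,

M^{-1} = \begin{pmatrix}
  A^{-1} + A^{-1} B S^{-1} C A^{-1} & -A^{-1} B S^{-1} \\
  -S^{-1} C A^{-1} & S^{-1}
\end{pmatrix},

\text{and if } A = I,\ B = 0: \quad
M^{-1} = \begin{pmatrix} I & 0 \\ -D^{-1} C & D^{-1} \end{pmatrix}.
```

The last line illustrates where the savings can come from: if the spliced matrix keeps surviving data rows so that A = I and B = 0 (an illustrative assumption), then S = D and only one n×n inverse has to be computed instead of a full k×k one.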

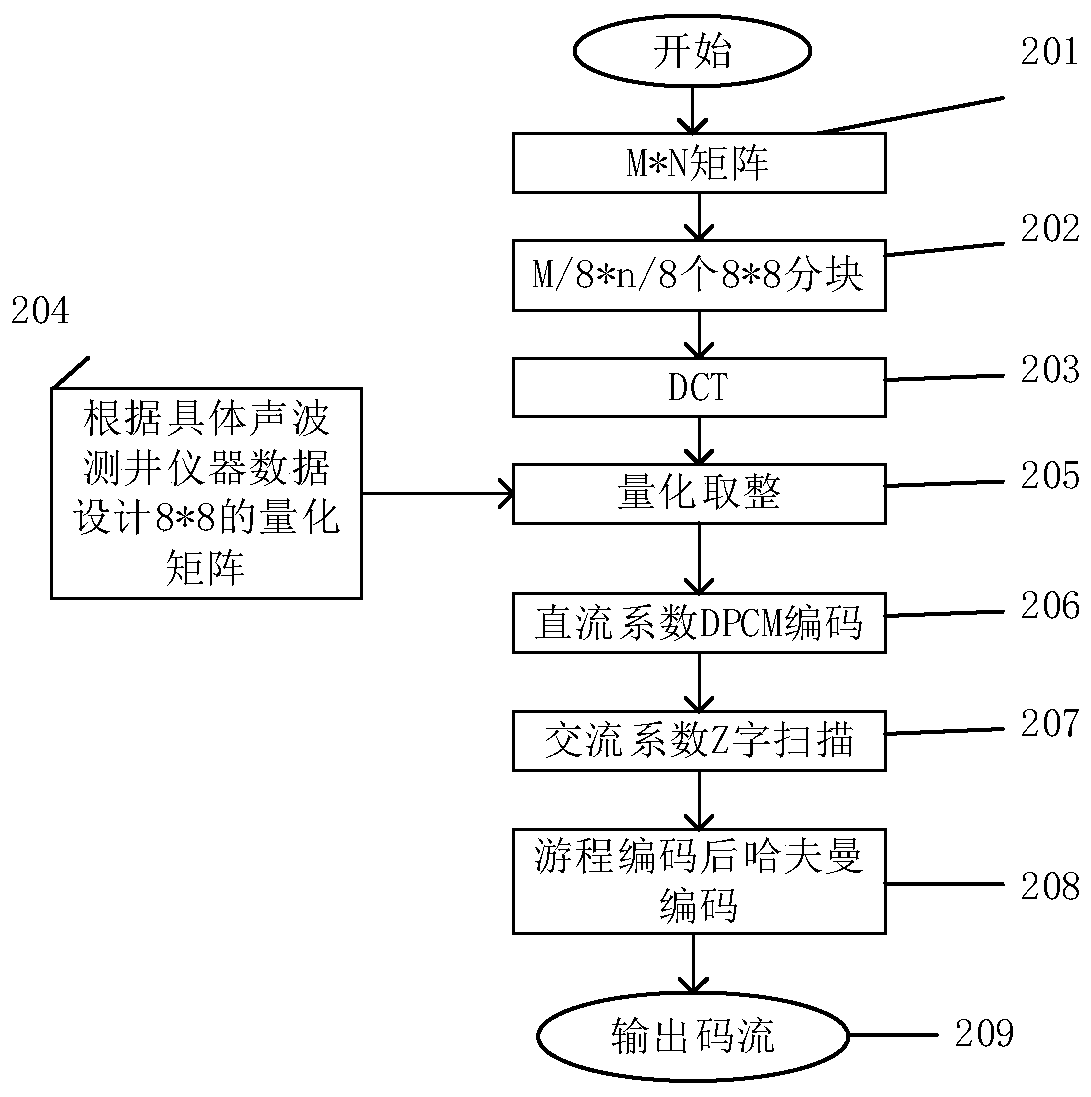

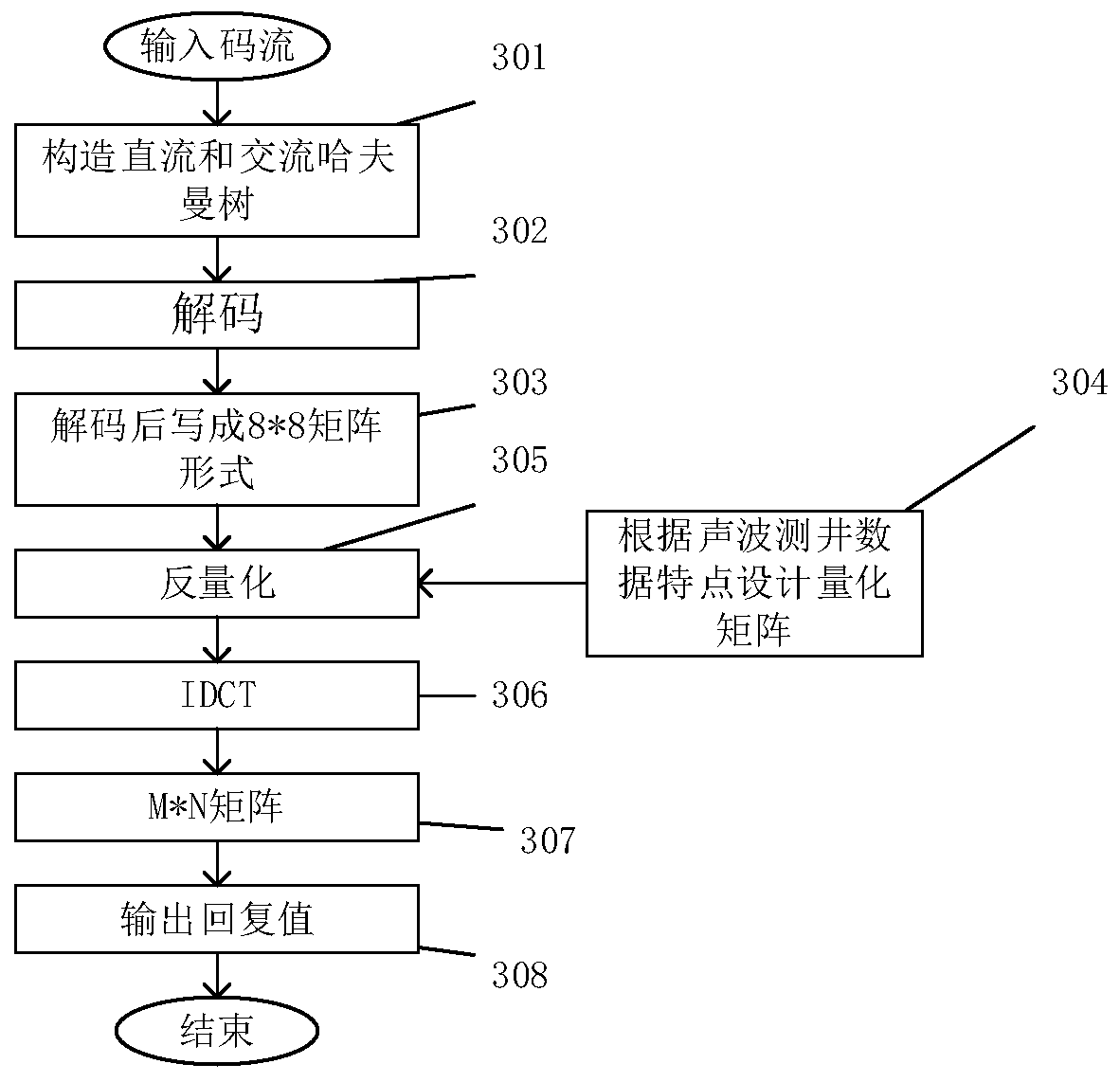

Method for compressing and decompressing acoustic logging data

Inactive | CN111510152A | Increase flexibility; Reduce transfer volume; Survey; Code conversion; Algorithm; Engineering

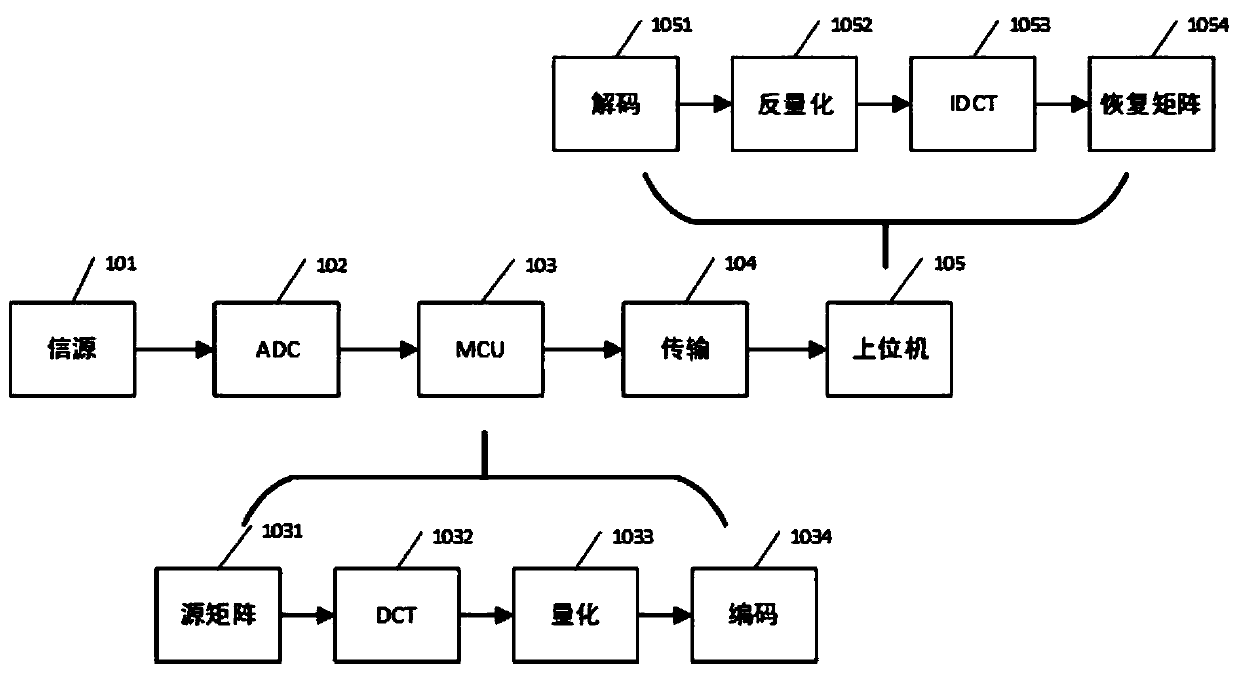

The invention discloses a method for compressing and decompressing acoustic logging data. The compression method comprises the steps of: partitioning the data collected by an acoustic logging instrument into block matrices according to the number of acquisition channels; applying a discrete cosine transform to each block matrix to obtain a DCT coefficient matrix; designing a quantization matrix suited to the characteristics of the acoustic signals and quantizing the DCT coefficient matrix; applying run-length coding and Huffman coding to the quantized matrix; and transmitting the resulting compressed code stream. Building on the improved computing performance of the downhole main control device, the compression method achieves a high compression ratio. The data can be compressed downhole in real time, which greatly reduces the amount of data transmitted and thereby increases the logging speed of the acoustic logging instrument.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI
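
The compression pipeline resembles JPEG-style transform coding. A minimal sketch, assuming one block per acquisition channel, an orthonormal 1-D DCT-II, a per-coefficient list of quantization steps and a simple (zero-run, value) run-length code; the Huffman stage and the patent's quantization matrix design are omitted.

```python
import math


def dct2(x):
    """Orthonormal DCT-II of a 1-D sequence."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(v * math.cos(math.pi * (i + 0.5) * k / n) for i, v in enumerate(x))
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        out.append(scale * s)
    return out


def quantize(coeffs, steps):
    """Divide by per-coefficient step sizes (the 'quantization matrix') and round."""
    return [round(c / q) for c, q in zip(coeffs, steps)]


def run_length(seq):
    """Encode as (zeros_before, value) pairs; trailing zeros become (run, 0)."""
    pairs, zeros = [], 0
    for v in seq:
        if v == 0:
            zeros += 1
        else:
            pairs.append((zeros, v))
            zeros = 0
    if zeros:
        pairs.append((zeros, 0))
    return pairs


def compress_block(channels, steps):
    """channels: one list of samples per acquisition channel (one block each)."""
    return [run_length(quantize(dct2(ch), steps)) for ch in channels]
```

Smooth waveforms concentrate their energy in the first few DCT coefficients, so after quantization most entries are zero and the run-length stage collapses them; larger steps for high-frequency coefficients trade fidelity for a higher compression ratio.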

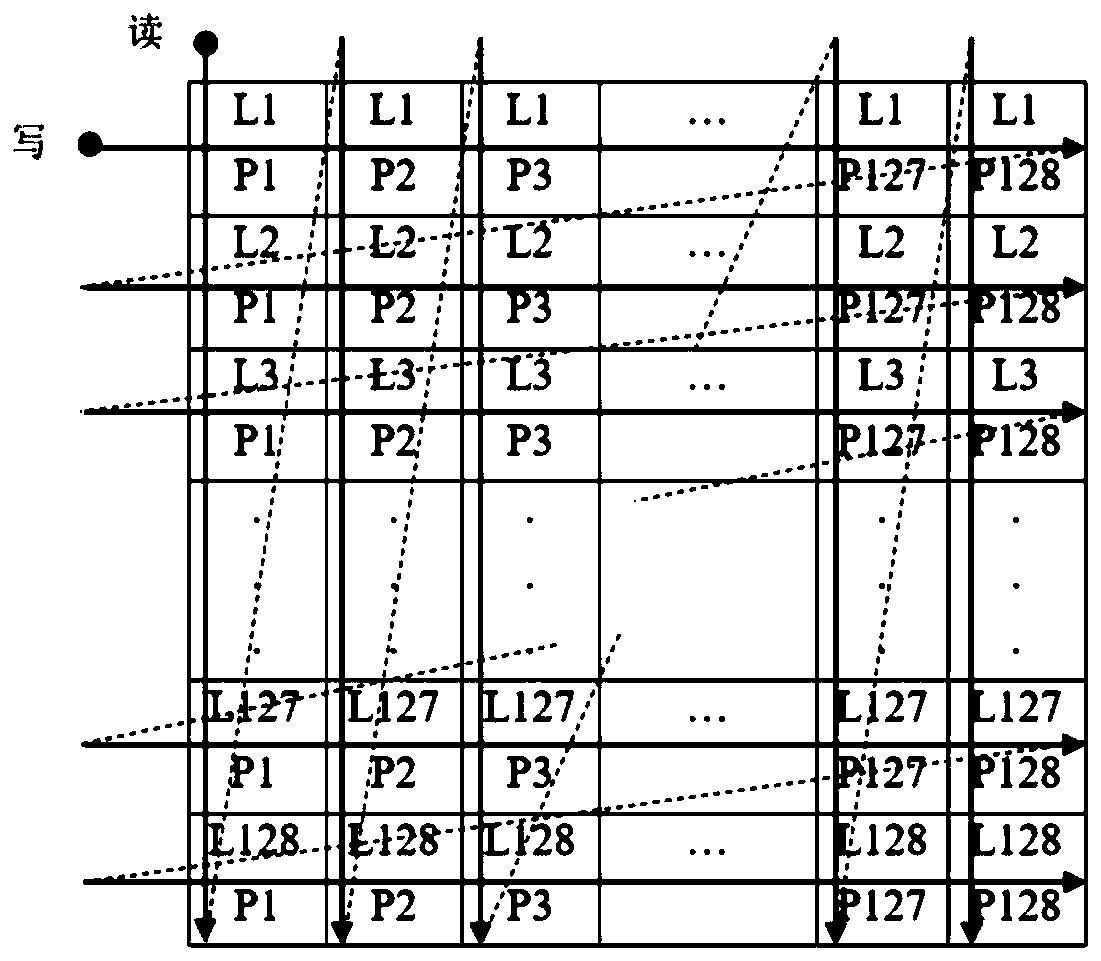

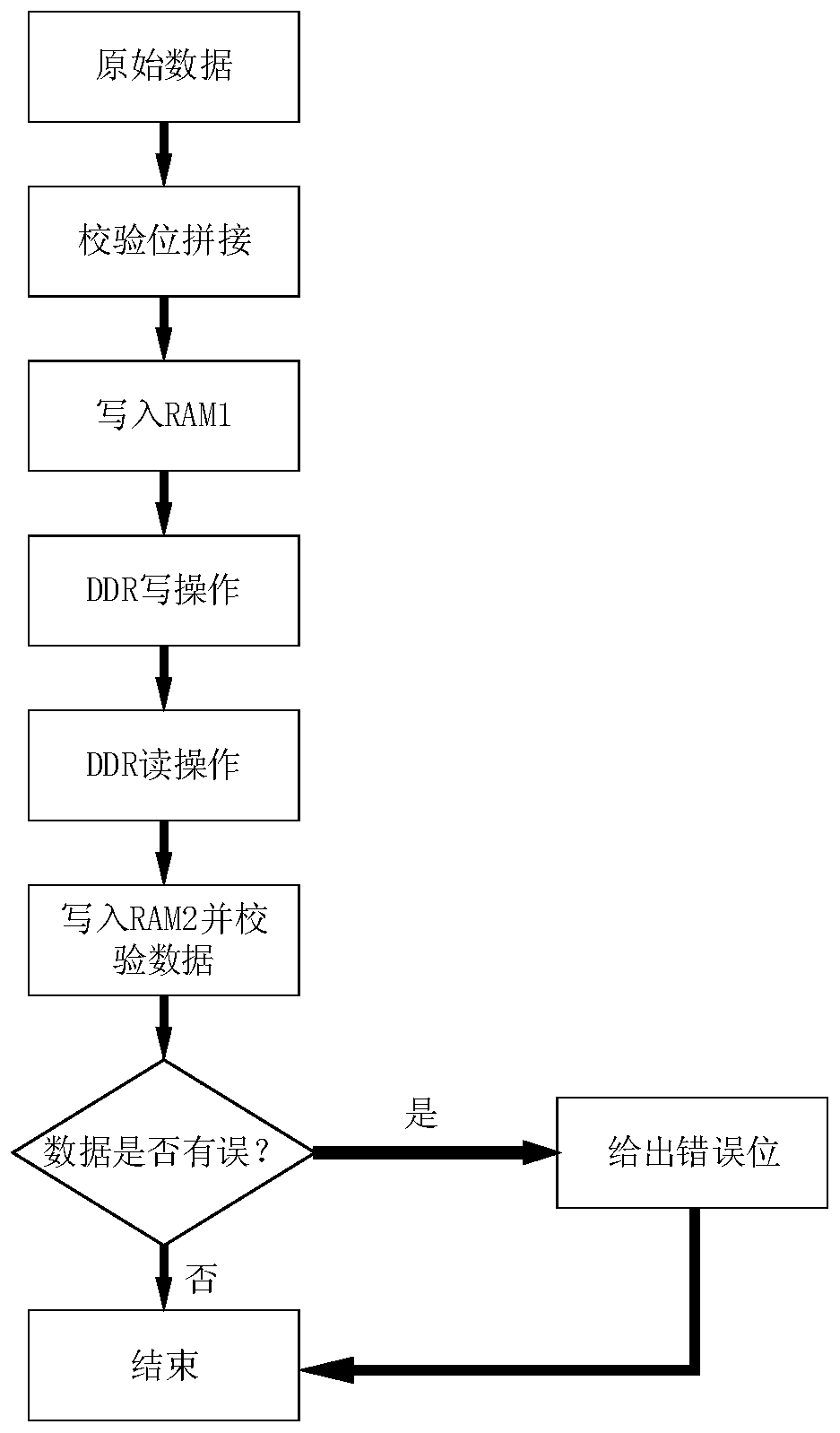

DDR-based high-efficiency matrix transposition processing method

Active | CN110781447A | Improve efficiency; Troubleshoot processing rate drops; Energy efficient computing; Complex mathematical operations; Computer hardware; Computer architecture

The invention discloses a DDR-based high-efficiency matrix transposition processing method. A write RAM and a read RAM are paired with a DDR3 SDRAM IP core; the matrix to be transposed is a 128 × 128 matrix of 64-bit elements, and the data of each row of the matrix is treated as a small matrix. During write operations, 16 data items are written per row-activate signal; during read operations, 8 × 16 data items are read per row-activate signal; and reads from the DDR follow a fixed inter-row and cyclic data-skip rule, so that row activations occur as rarely as possible. The invention solves the problem of reduced overall system throughput caused by non-sequential (jump) accesses to DDR SDRAM when transposing large matrices. Through matrix partitioning, the read rate is greatly increased at the cost of a slight reduction in the write rate, which balances the DDR read and write rates during transposition and thereby improves the average DDR read/write efficiency.

Owner:TIANJIN UNIV
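A toy model helps show why partitioning the matrix reduces row activations. The sketch below is not the patented address mapping: it assumes a single DDR bank whose row holds exactly one 8 × 16 tile (128 elements), ignores bursts, banks, and refresh, and simply counts how often consecutive accesses land in a different row. The names `tiled_addr`, `activations`, and `transpose_tiled` are hypothetical.

```python
N = 128                      # matrix order from the abstract (128 x 128)
TILE_R, TILE_C = 8, 16       # assumed tile shape, echoing "8 x 16 data per active signal"
ROW_SIZE = TILE_R * TILE_C   # hypothetical DDR row capacity in elements (one tile per row)

def row_major_addr(i, j):
    """Address of element (i, j) when the matrix is stored row by row."""
    return i * N + j

def tiled_addr(i, j):
    """Address of element (i, j) when each 8 x 16 tile occupies one DDR row."""
    tile_id = (i // TILE_R) * (N // TILE_C) + (j // TILE_C)
    return tile_id * ROW_SIZE + (i % TILE_R) * TILE_C + (j % TILE_C)

def activations(addresses):
    """Count row activations: one whenever an access falls in a different DDR row than the last."""
    count, open_row = 0, None
    for a in addresses:
        if a // ROW_SIZE != open_row:
            count, open_row = count + 1, a // ROW_SIZE
    return count

def tile_read_order():
    """Visit the matrix one whole tile at a time, which is one DDR row at a time."""
    for ti in range(N // TILE_R):
        for tj in range(N // TILE_C):
            for r in range(TILE_R):
                for c in range(TILE_C):
                    yield ti * TILE_R + r, tj * TILE_C + c

def transpose_tiled(matrix):
    ddr = [None] * (N * N)                     # simulated DDR contents
    for i in range(N):                         # write phase: input arrives row by row
        for j in range(N):
            ddr[tiled_addr(i, j)] = matrix[i][j]
    out = [[None] * N for _ in range(N)]       # on-chip result buffer
    for i, j in tile_read_order():             # read phase: tile by tile
        out[j][i] = ddr[tiled_addr(i, j)]
    return out
```

Under this model, a plain row-major layout needs 128 activations to write but 16384 to read column by column, one per element. The tiled layout raises write activations to 1024 (16 elements per activation) while cutting read activations to 128 (128 elements per activation), which mirrors the trade-off the abstract describes: a slightly slower write in exchange for a much faster read.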

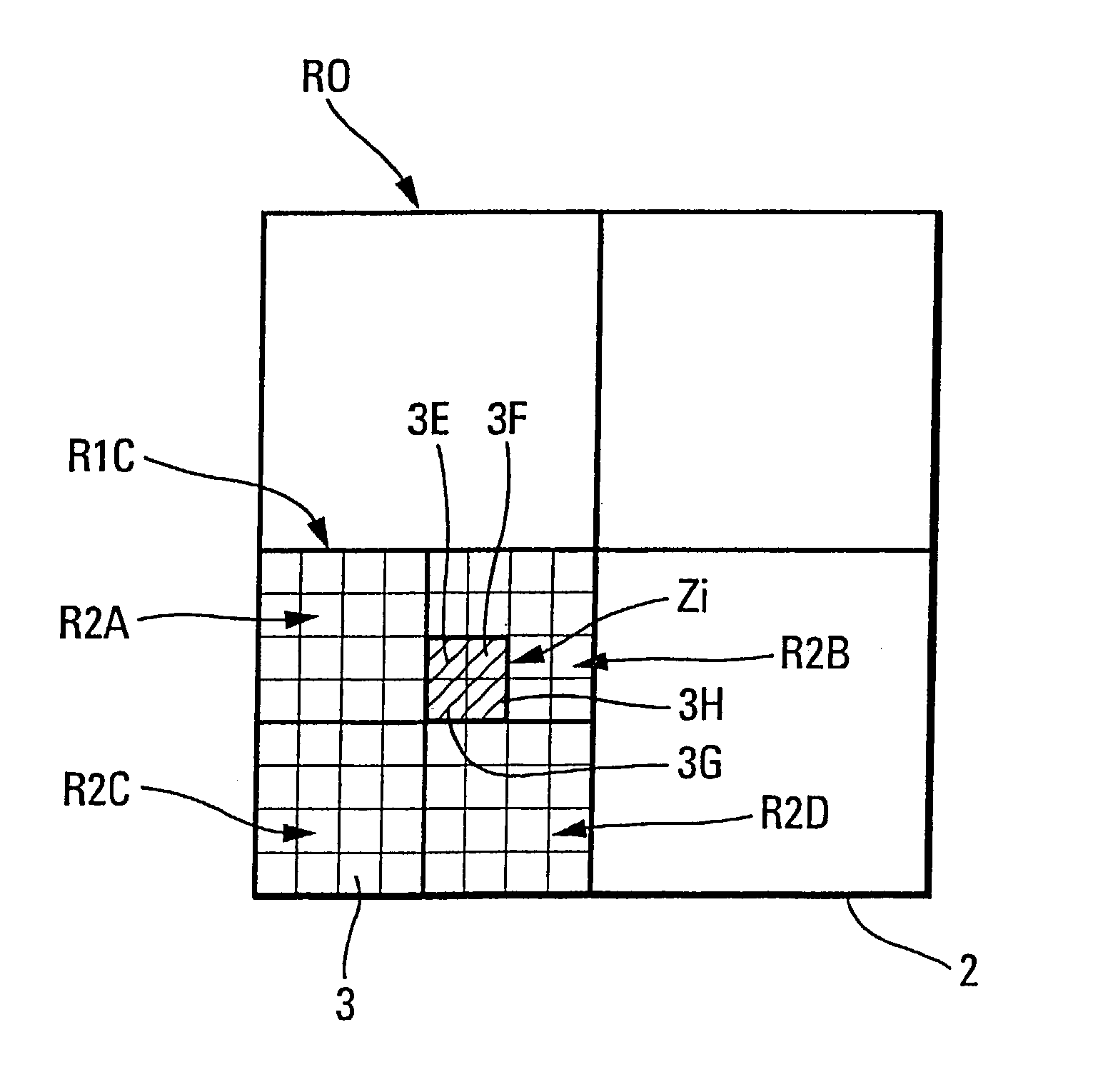

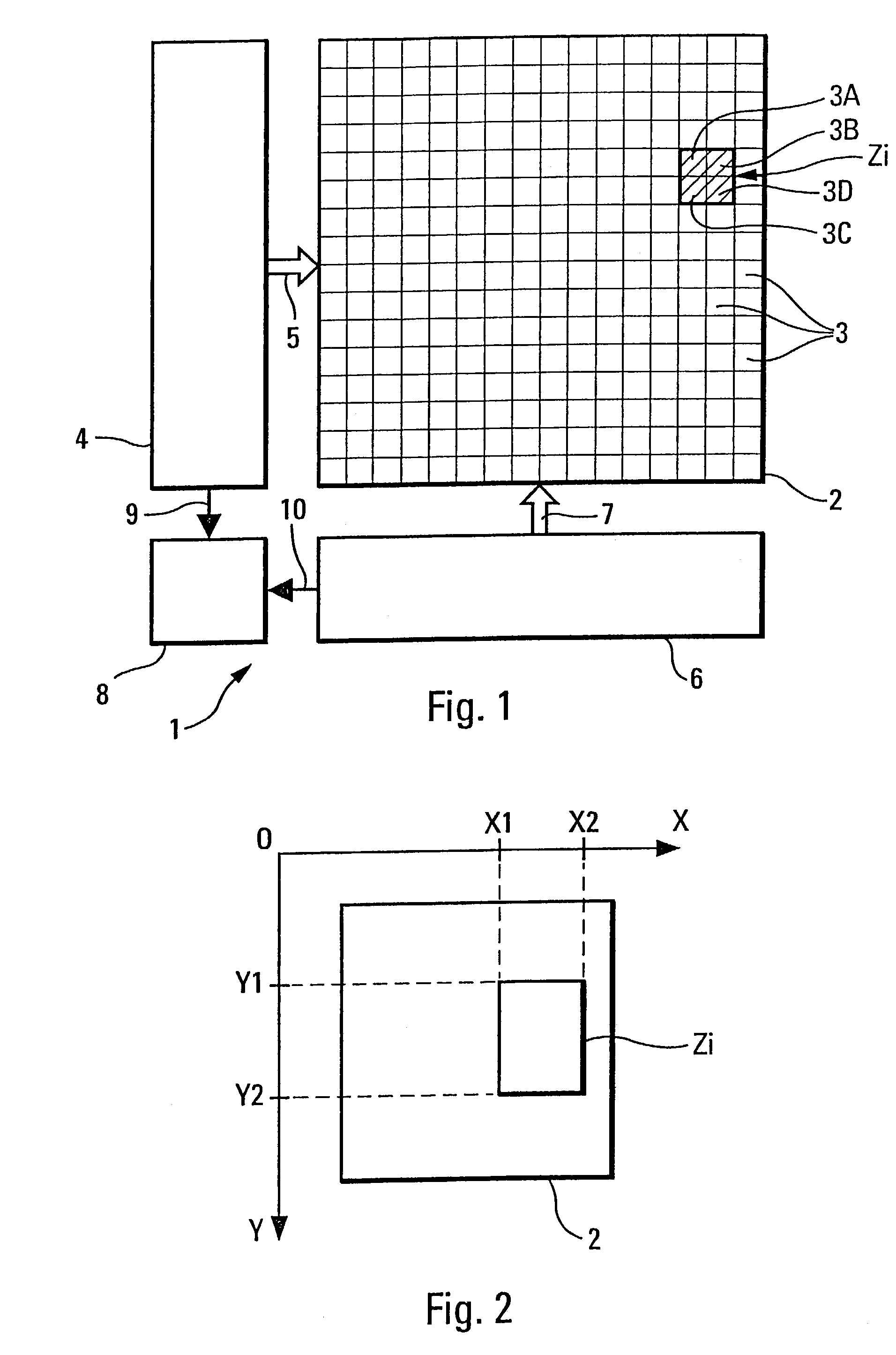

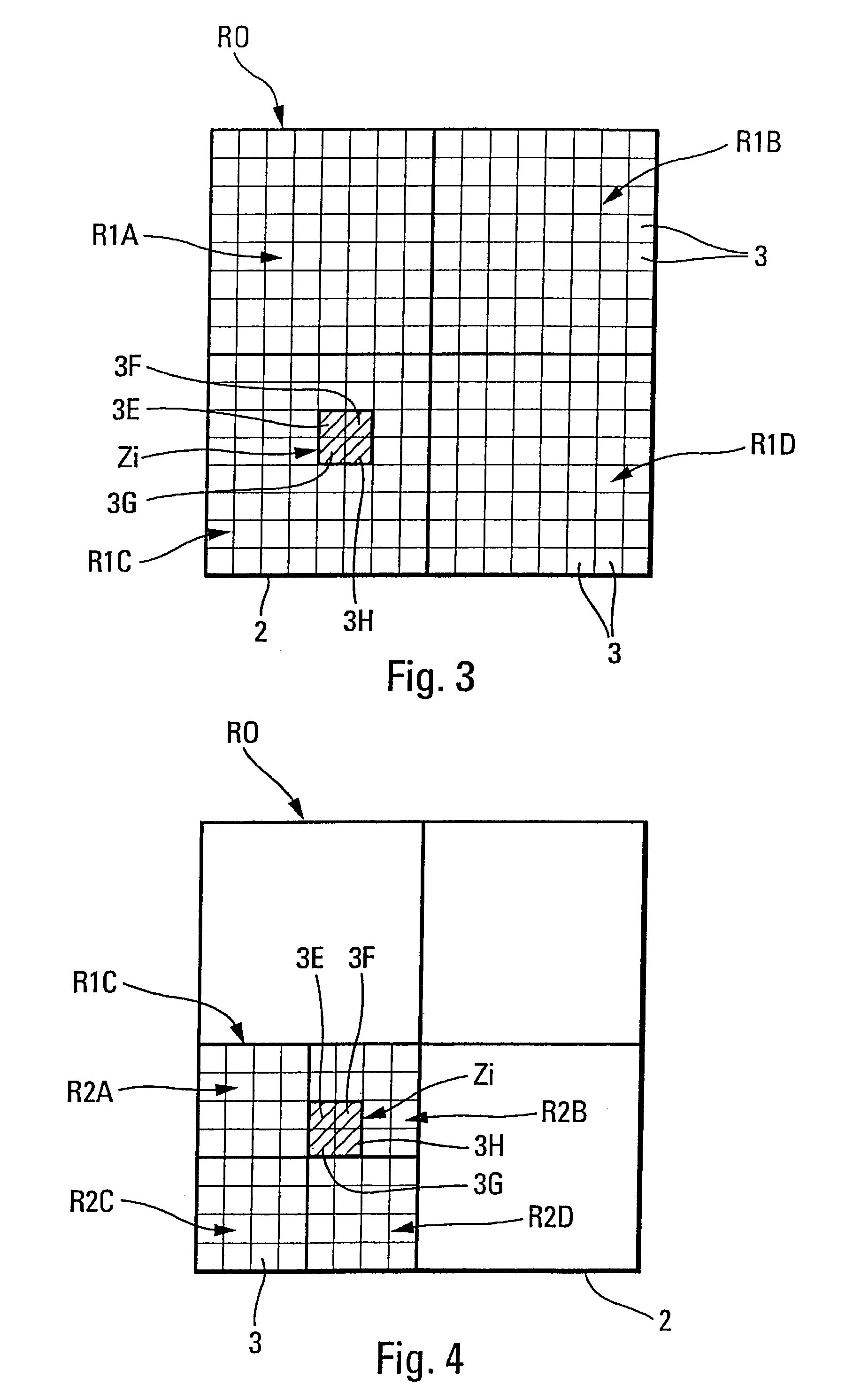

Method for extracting an illuminate zone from a photosensor matrix

Active · US6953924B2 · Quickly and reliably extracted · Television system details · Direction controllers · Engineering · Matrix partitioning

Owner:MBDA FRANCE

Method of scaling a graphic character

A graphic character whose character matrix has a number of character units that are indivisible in at least the horizontal or the vertical direction is scaled by dividing the character matrix into a first character segment and at least one second character segment, each comprising at least one character unit. The first character segment is scaled symmetrically using a first scaling factor, and the second character segment is scaled using a second scaling factor that differs from the first.

Owner:DYNAMIC DATA TECH LLC
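One way to read the segment-based scaling is as non-uniform replication of indivisible units. In this hypothetical sketch only the horizontal direction is scaled, a character unit is one character of a text bitmap, and the split column and factors are chosen by hand; the abstract's notion of "symmetric" scaling of the first segment is not modelled.

```python
def replicate_columns(rows, factor):
    """Scale a bitmap horizontally by repeating every (indivisible) unit `factor` times."""
    return ["".join(unit * factor for unit in row) for row in rows]

def scale_segmented(rows, split, first_factor, second_factor):
    """Scale columns [0, split) by first_factor and columns [split, end) by second_factor."""
    first = replicate_columns([row[:split] for row in rows], first_factor)
    second = replicate_columns([row[split:] for row in rows], second_factor)
    return [a + b for a, b in zip(first, second)]

# A 5-unit-wide letter "N"; '#' is an inked unit, '.' is background.
glyph = [
    "#...#",
    "##..#",
    "#.#.#",
    "#..##",
    "#...#",
]
wide = scale_segmented(glyph, 3, 1, 2)
for row in wide:
    print(row)
```

A 5-unit-wide glyph cannot reach a width of 7 with a single integer factor, but scaling the first three columns by 1 and the remaining two by 2 gives 3 + 2 × 2 = 7 while every unit stays whole.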

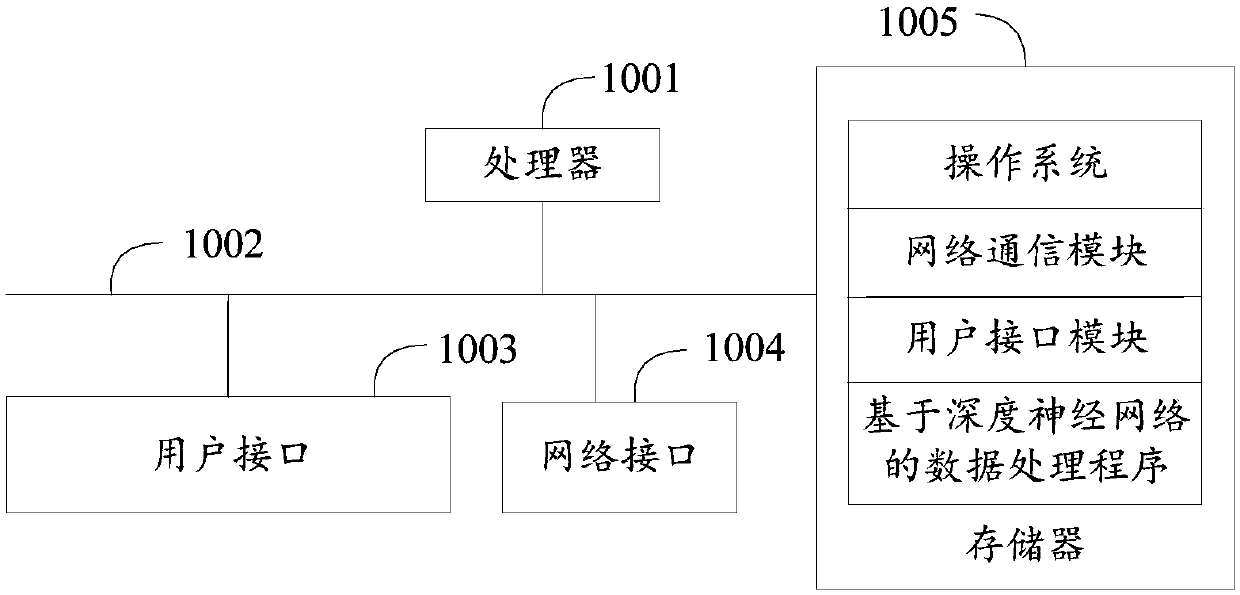

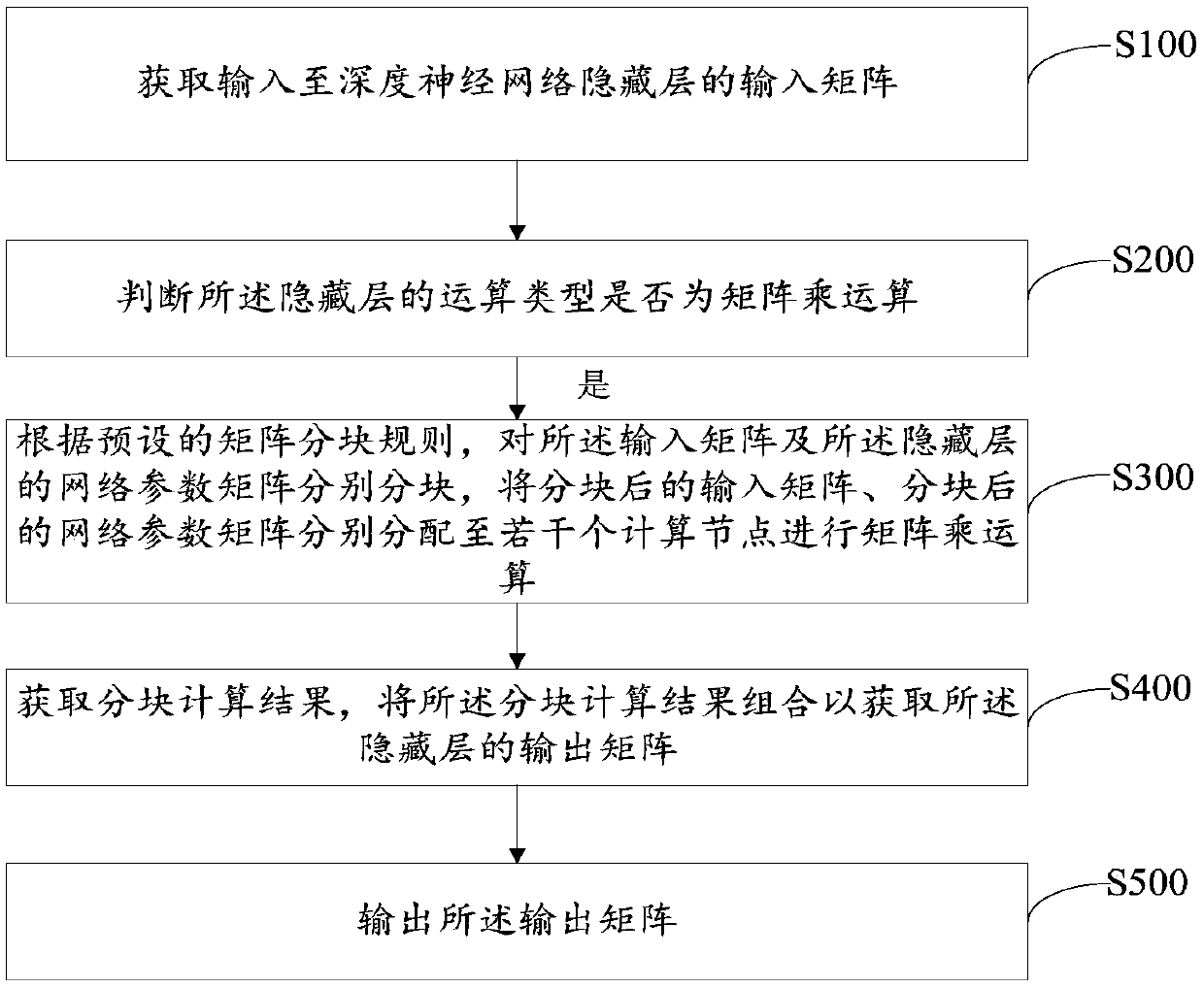

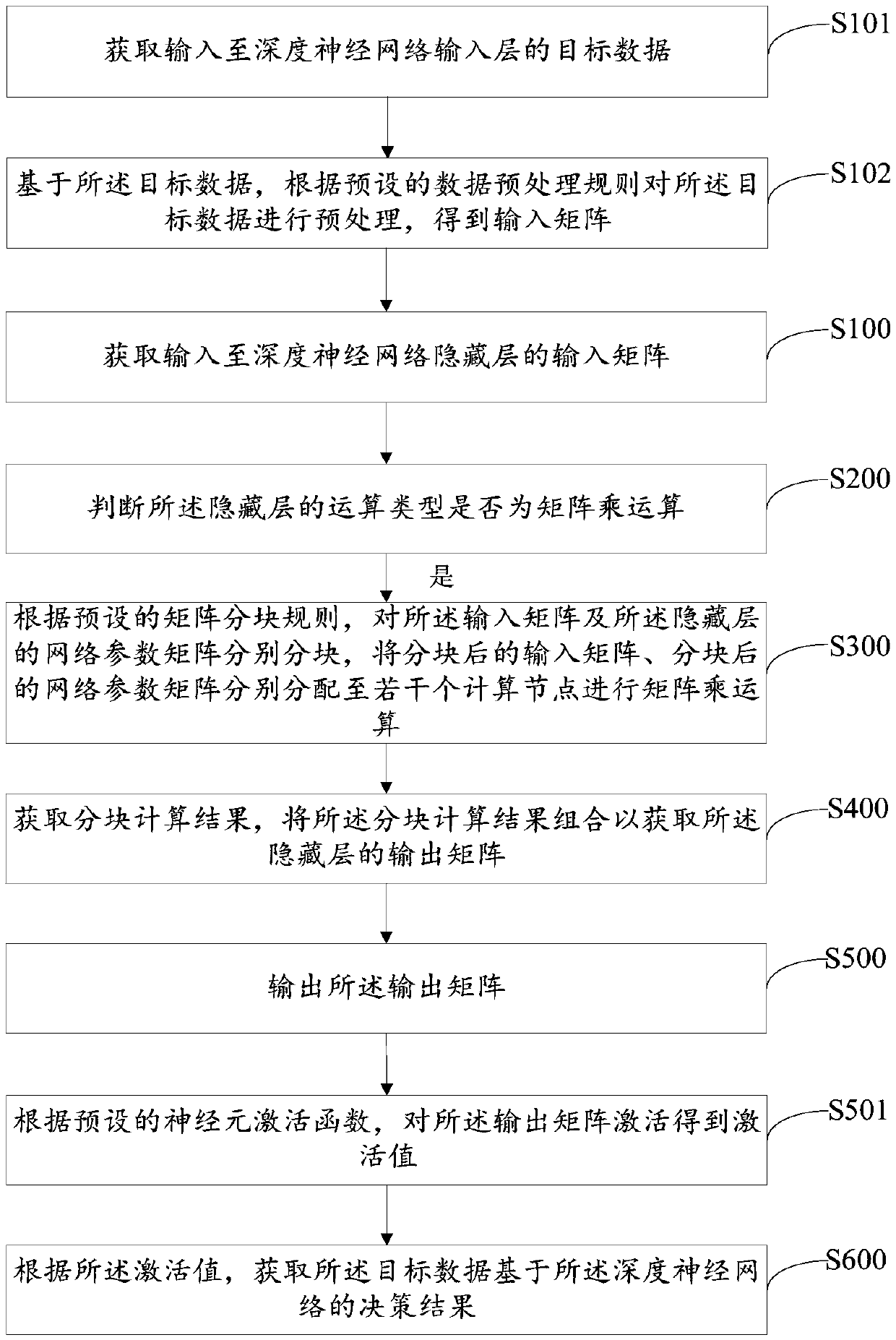

Data processing method and system based on deep neural network, terminal and medium

Active · CN109597965A · Improve computing efficiency · Realize parallel computing · Neural architectures · Energy efficient computing · Data processing system · Hidden layer

The invention discloses a data processing method based on a deep neural network. The method comprises the steps of: obtaining the input matrix fed to a hidden layer of the deep neural network; judging whether the operation type of the hidden layer is matrix multiplication; if so, partitioning the input matrix and the hidden layer's network parameter matrix according to a preset matrix partitioning rule, and distributing the partitioned input matrix and partitioned parameter matrix to a plurality of computing nodes that carry out the matrix multiplication; obtaining the partitioned calculation results and combining them into the output matrix of the hidden layer; and outputting the output matrix. The invention also discloses a data processing system for the deep neural network, a terminal, and a medium. The method increases the speed of matrix multiplication in the deep neural network and thereby shortens the overall computation time.

Owner:SHENZHEN ZTE NETVIEW TECH
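The partition, distribute, and combine steps can be sketched as follows. Assumptions: the "preset matrix partitioning rule" is taken to be splitting the input matrix by rows and the parameter matrix by columns, and threads from `concurrent.futures` stand in for separate computing nodes. In CPython these threads will not actually speed up pure-Python arithmetic, so the sketch only shows the data flow. `partitioned_matmul` and `hidden_layer` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor

def matmul(a, b):
    """Plain triple-loop product of list-of-lists matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def split_rows(m, parts):
    size = -(-len(m) // parts)                      # ceiling division
    return [m[s:s + size] for s in range(0, len(m), size)]

def split_cols(m, parts):
    size = -(-len(m[0]) // parts)
    return [[row[s:s + size] for row in m] for s in range(0, len(m[0]), size)]

def partitioned_matmul(x, w, row_parts=2, col_parts=2, workers=4):
    x_blocks, w_blocks = split_rows(x, row_parts), split_cols(w, col_parts)
    # Each (row block, column block) pair is an independent job for one "node".
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {(bi, bj): pool.submit(matmul, xb, wb)
                   for bi, xb in enumerate(x_blocks)
                   for bj, wb in enumerate(w_blocks)}
        results = {key: f.result() for key, f in futures.items()}
    # Combine: stitch column blocks side by side, then stack the row blocks.
    out = []
    for bi, xb in enumerate(x_blocks):
        for r in range(len(xb)):
            row = []
            for bj in range(len(w_blocks)):
                row.extend(results[(bi, bj)][r])
            out.append(row)
    return out

def hidden_layer(op_type, x, w):
    # Only matrix-multiplication layers take the partitioned path in this sketch.
    if op_type == "matmul":
        return partitioned_matmul(x, w)
    raise NotImplementedError(op_type)
```

Splitting by rows of the input and columns of the parameters means each block product is a disjoint piece of the output, so combining is pure concatenation with no partial sums to add.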

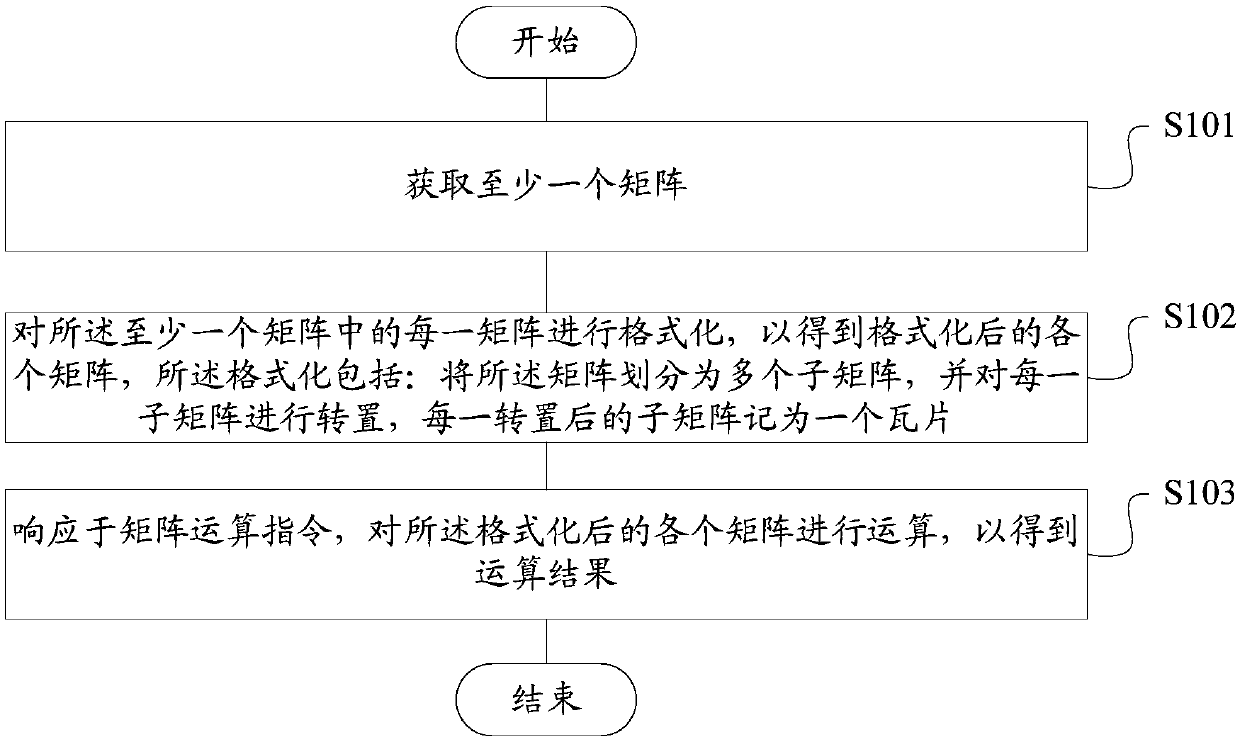

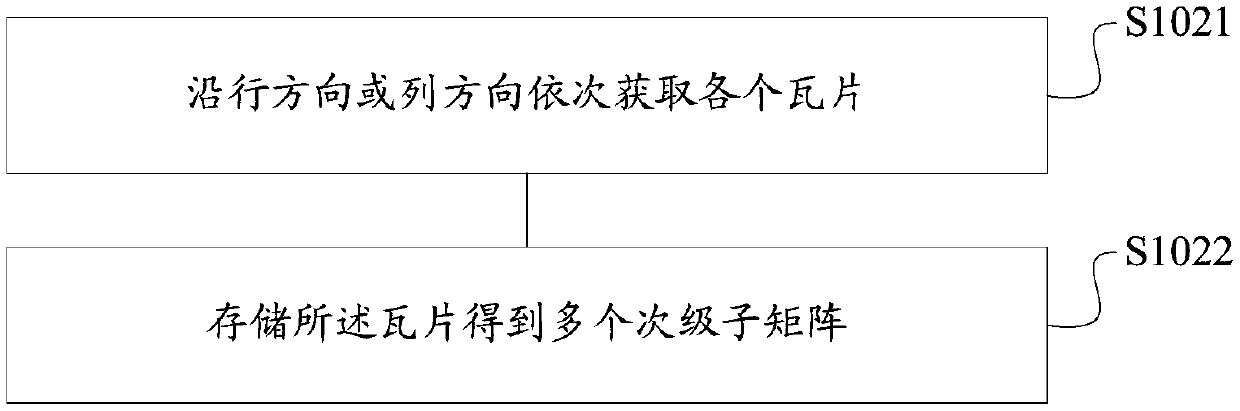

Matrix operation method and device, storage medium and terminal

Active · CN110727911A · Reduce core consumption · Reduce computation · Complex mathematical operations · Computer architecture · Data operations

The invention discloses a matrix operation method and device, a storage medium, and a terminal. The matrix operation method comprises the steps of: obtaining at least one matrix; formatting each matrix to obtain a formatted matrix, where formatting comprises dividing the matrix into a plurality of sub-matrices, transposing each sub-matrix, and marking each transposed sub-matrix as a tile; and, in response to a matrix operation instruction, operating on each formatted matrix to obtain an operation result. With this scheme, matrix operations can be performed on a more efficient data format; especially in multi-process scenarios, the amount of data operated on can be greatly reduced, the transmission bandwidth required between the computer and the memory is optimized, and execution latency during the operation is reduced.

Owner:SPREADTRUM COMM (SHANGHAI) CO LTD
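The formatting step (split into sub-matrices, transpose each, mark it as a tile) can be sketched as below, together with a matrix multiplication carried out directly on the formatted operands. The square tile size `t`, the requirement that `t` divide both dimensions, the dictionary layout, and the choice of multiplication as the example operation are all assumptions made for illustration.

```python
def format_matrix(m, t):
    """Split m into t x t sub-matrices, transpose each, and key the tiles by block position."""
    rows, cols = len(m), len(m[0])
    assert rows % t == 0 and cols % t == 0, "sketch assumes t divides both dimensions"
    tiles = {}
    for bi in range(rows // t):
        for bj in range(cols // t):
            # tile[c][r] holds m[bi*t + r][bj*t + c], i.e. the transposed sub-matrix
            tiles[(bi, bj)] = [[m[bi * t + r][bj * t + c] for r in range(t)] for c in range(t)]
    return {"t": t, "shape": (rows, cols), "tiles": tiles}

def unformat(f):
    """Rebuild the ordinary row-major matrix from its formatted tiles."""
    (rows, cols), t = f["shape"], f["t"]
    m = [[0] * cols for _ in range(rows)]
    for (bi, bj), tile in f["tiles"].items():
        for c in range(t):
            for r in range(t):
                m[bi * t + r][bj * t + c] = tile[c][r]
    return m

def tiled_matmul(fa, fb):
    """C = A @ B computed tile by tile on formatted operands; the result is also formatted."""
    t = fa["t"]
    (ra, ca), (rb, cb) = fa["shape"], fb["shape"]
    assert ca == rb and fb["t"] == t
    out = {}
    for bi in range(ra // t):
        for bj in range(cb // t):
            acc = [[0] * t for _ in range(t)]          # stored transposed: acc[c][r]
            for bk in range(ca // t):
                at, bt = fa["tiles"][(bi, bk)], fb["tiles"][(bk, bj)]
                for c in range(t):
                    for r in range(t):
                        # A[r][k] is at[k][r] and B[k][c] is bt[c][k]
                        acc[c][r] += sum(at[k][r] * bt[c][k] for k in range(t))
            out[(bi, bj)] = acc
    return {"t": t, "shape": (ra, cb), "tiles": out}
```

Because the result stays in tile format, a chain of operations can keep working on tiles and only call `unformat` at the end.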

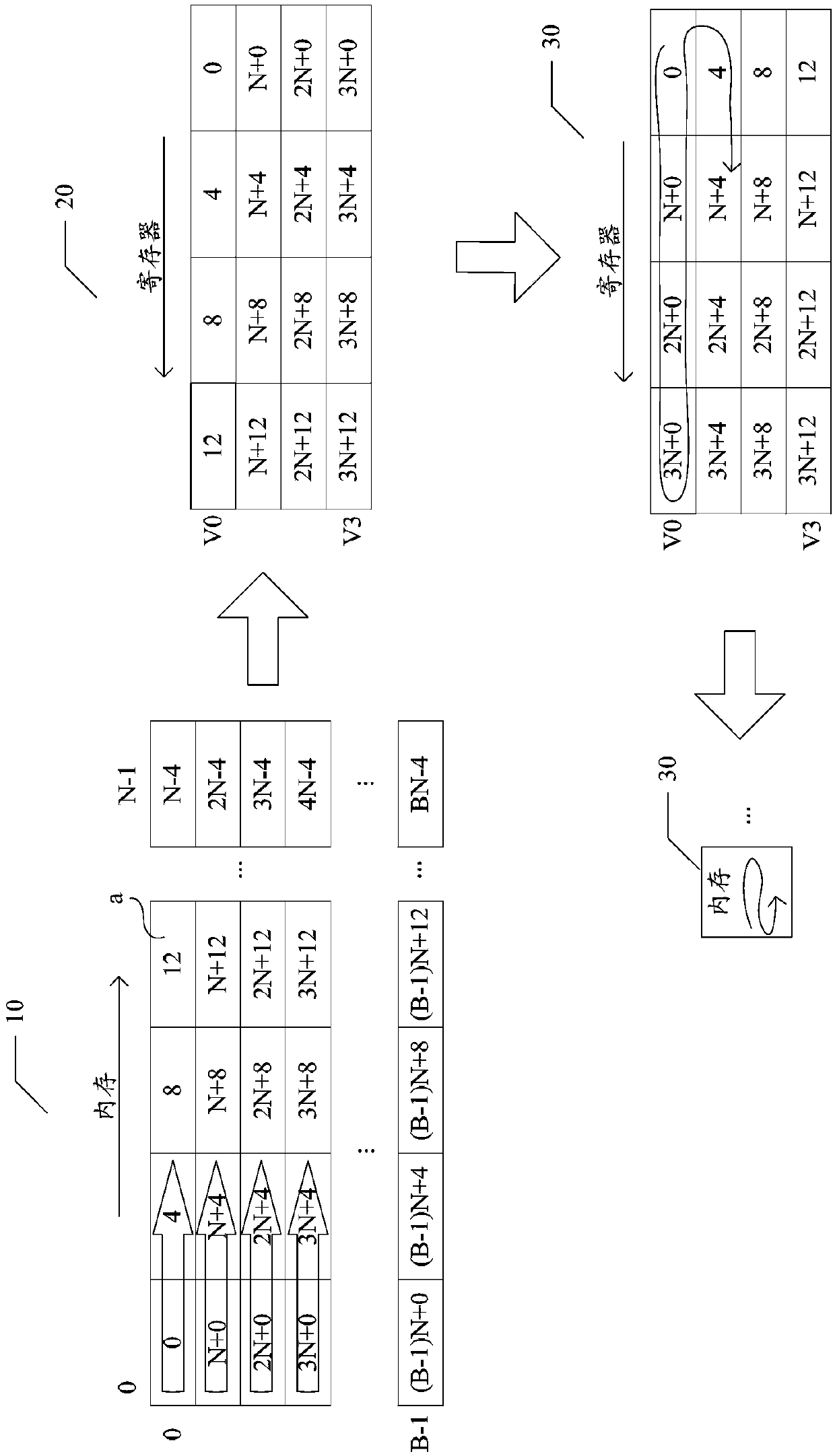

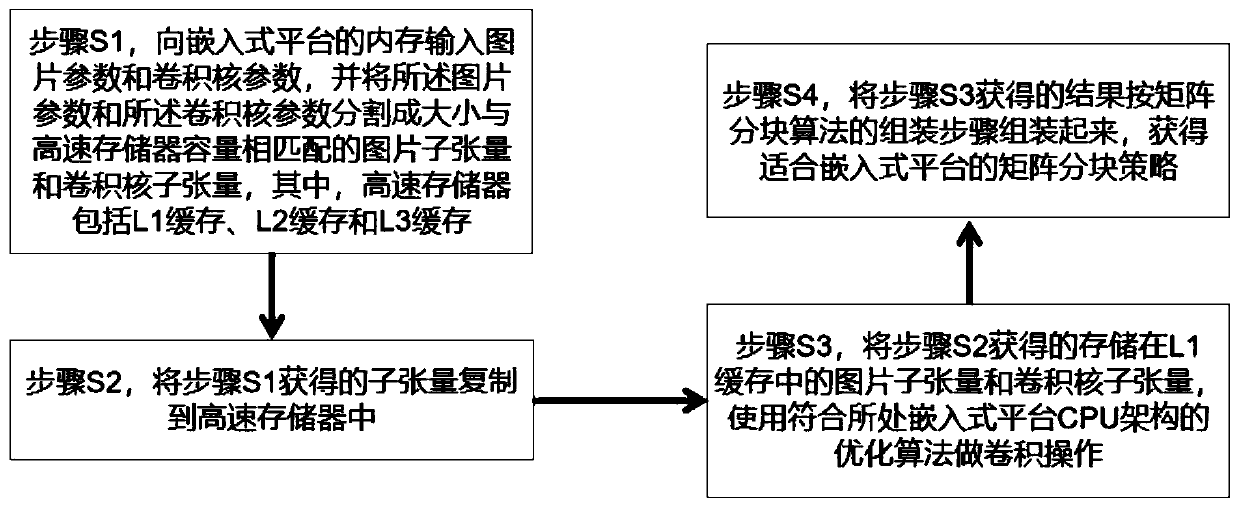

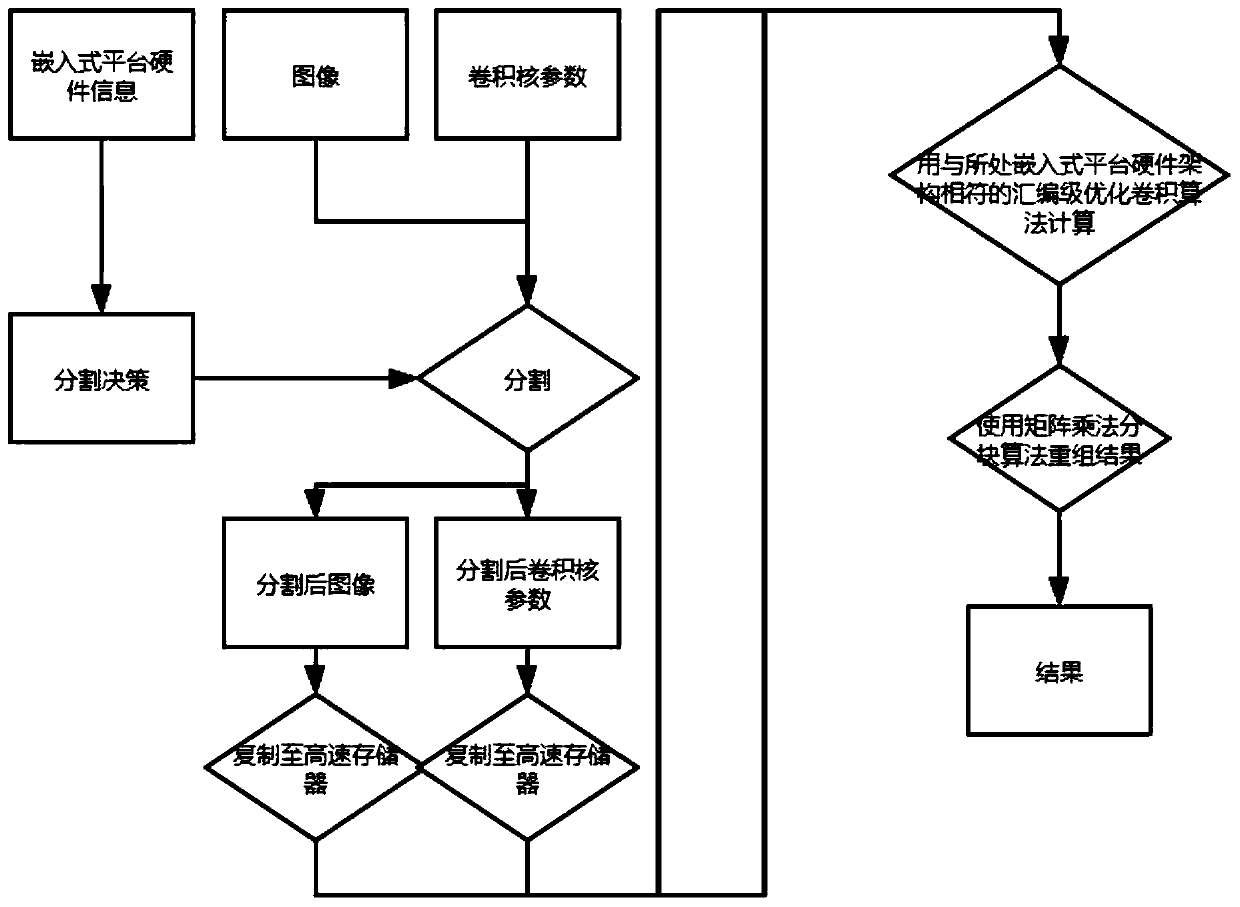

Convolutional operation optimization method and system for efficiently running deep learning task

Active · CN111381968A · Increase computing speed · Easy to implement · Image analysis · Resource allocation · Matrix partitioning · Convolution

The invention discloses a convolution operation optimization method and system for efficiently running deep learning tasks. The method comprises the following steps: obtaining picture parameters and convolution kernel parameters; segmenting them into picture sub-tensors and convolution kernel sub-tensors; copying the segmented sub-tensors into high-speed memory; performing the convolution on the sub-tensors held in the L1 cache; and assembling the convolved sub-tensors according to the assembly step of a matrix partitioning algorithm to obtain the final result. Because the matrix and tensor partitioning strategies are adjusted to the hardware parameters of each embedded platform, more of the operand data is fetched from high-speed memory rather than from slow storage throughout the computation, which increases the operation speed. In addition, a suitable optimization strategy at the assembly-language level of the embedded platform lets the computation make better use of the platform's potential, further increasing the speed. Finally, adopting a matrix partitioning strategy keeps the implementation cost low.

Owner:SUN YAT SEN UNIV
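The segment, copy, convolve, and assemble flow can be illustrated with a spatially tiled 2-D convolution. This sketch is not the patented method: only the picture is tiled (the kernel stays whole, whereas the abstract also segments kernels), a Python list slice stands in for the copy into the L1 cache, and `tile` is a hypothetical tuning parameter that in practice would be derived from the platform's cache size.

```python
def conv2d_direct(img, k):
    """Reference 'valid' 2-D convolution (cross-correlation, as in most deep learning frameworks)."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(img) - kh + 1, len(img[0]) - kw + 1
    return [[sum(img[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
             for j in range(ow)] for i in range(oh)]

def conv2d_tiled(img, k, tile=4):
    """Compute the output tile by tile, each from a small copied input patch."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(img) - kh + 1, len(img[0]) - kw + 1
    out = [[0] * ow for _ in range(oh)]
    for ti in range(0, oh, tile):
        for tj in range(0, ow, tile):
            th, tw = min(tile, oh - ti), min(tile, ow - tj)
            # Copy the input patch this output tile needs (tile plus a kernel-sized halo)
            # into a local buffer, standing in for the cache-resident sub-tensor.
            patch = [row[tj:tj + tw + kw - 1] for row in img[ti:ti + th + kh - 1]]
            partial = conv2d_direct(patch, k)
            # Assemble: place this tile's result at its offset in the full output.
            for a in range(th):
                out[ti + a][tj:tj + tw] = partial[a]
    return out
```

Each patch overlaps its neighbours by `kernel size - 1` rows and columns, so the assembled output matches the untiled convolution exactly; a smaller `tile` keeps each patch in faster memory at the cost of re-reading more halo data.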

© 2025 PatSnap. All rights reserved.