108 results about how to achieve "High speedup" in patented technology

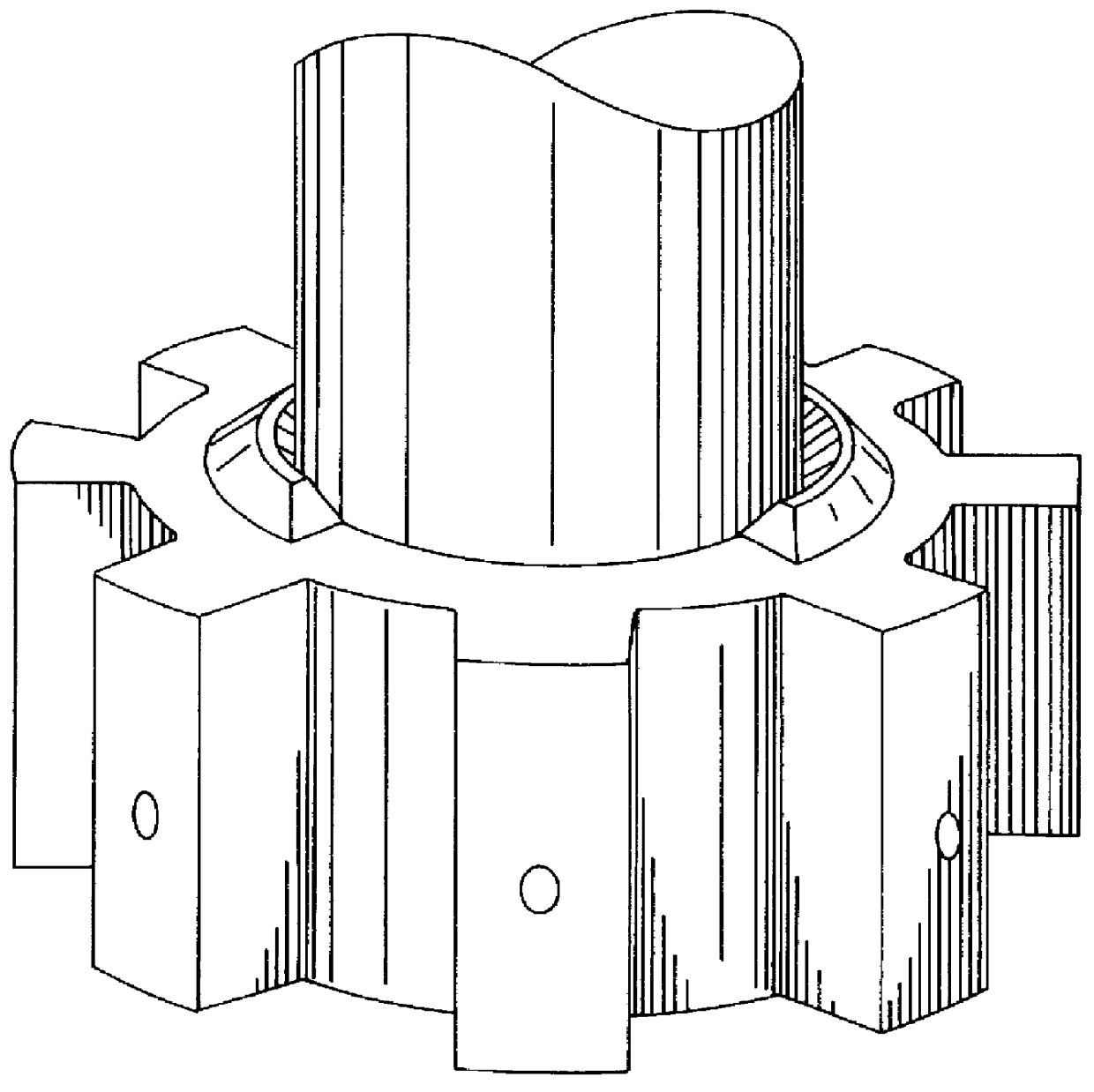

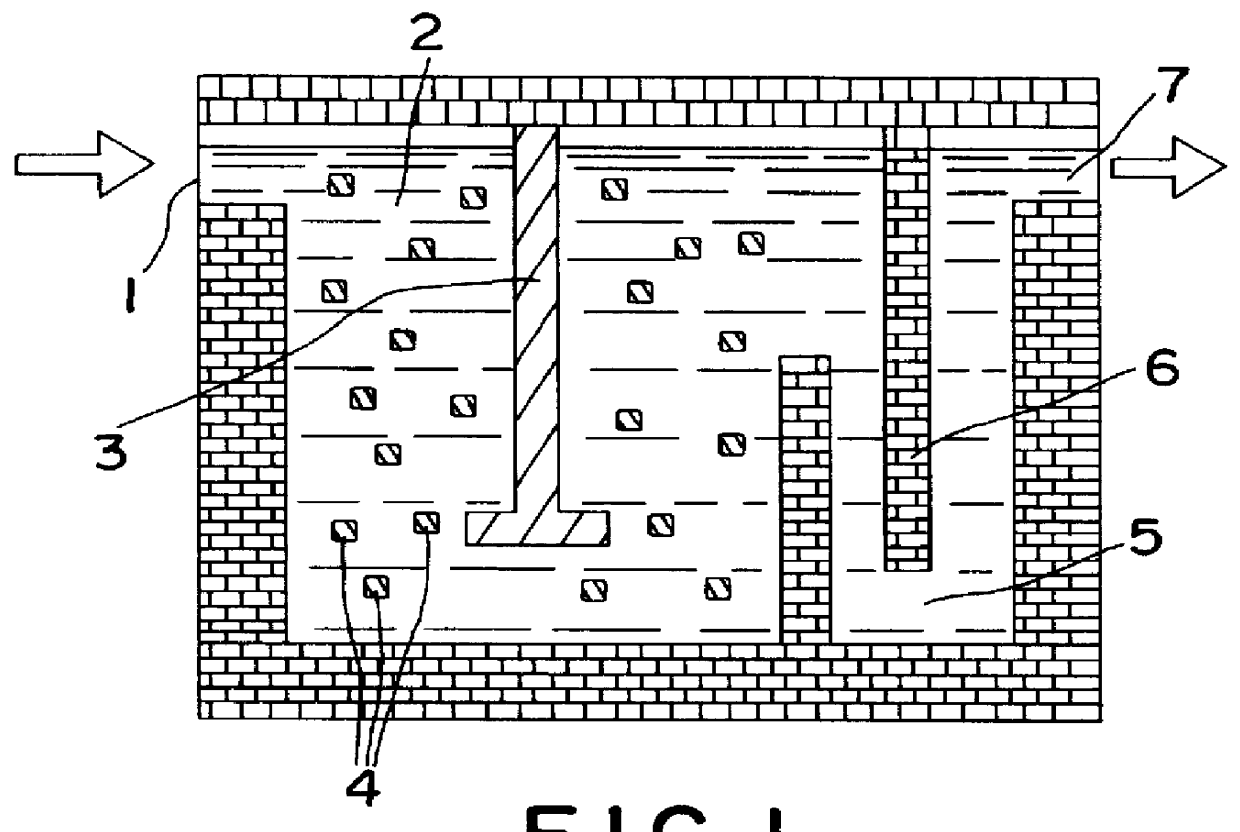

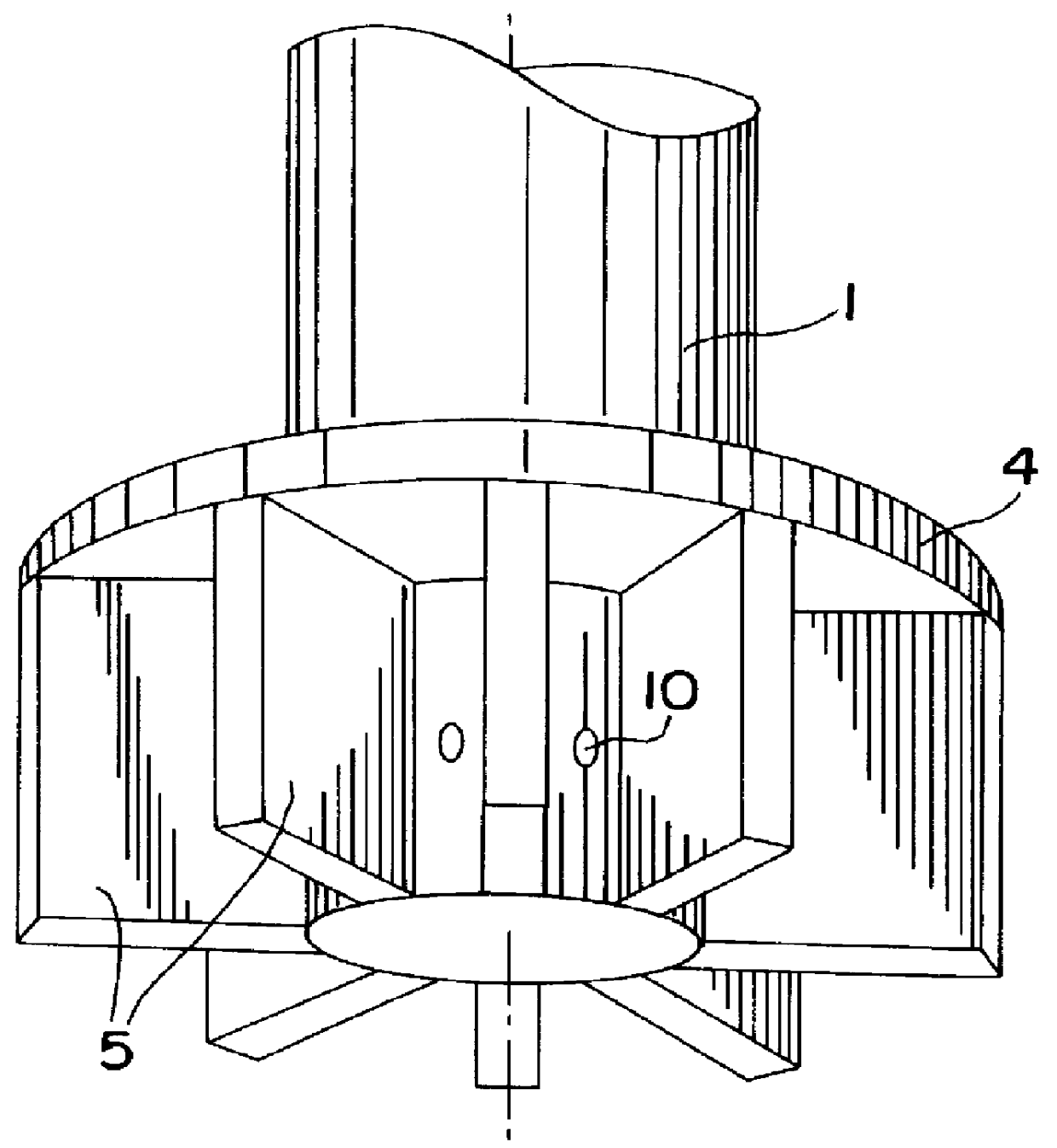

Rotary gas dispersion device for treating a liquid aluminium bath

Inactive | US6060013A | High blade lift ratio | Difficult to manufacture | Mechanical apparatus | Stirring devices | Engineering | Liquid aluminium

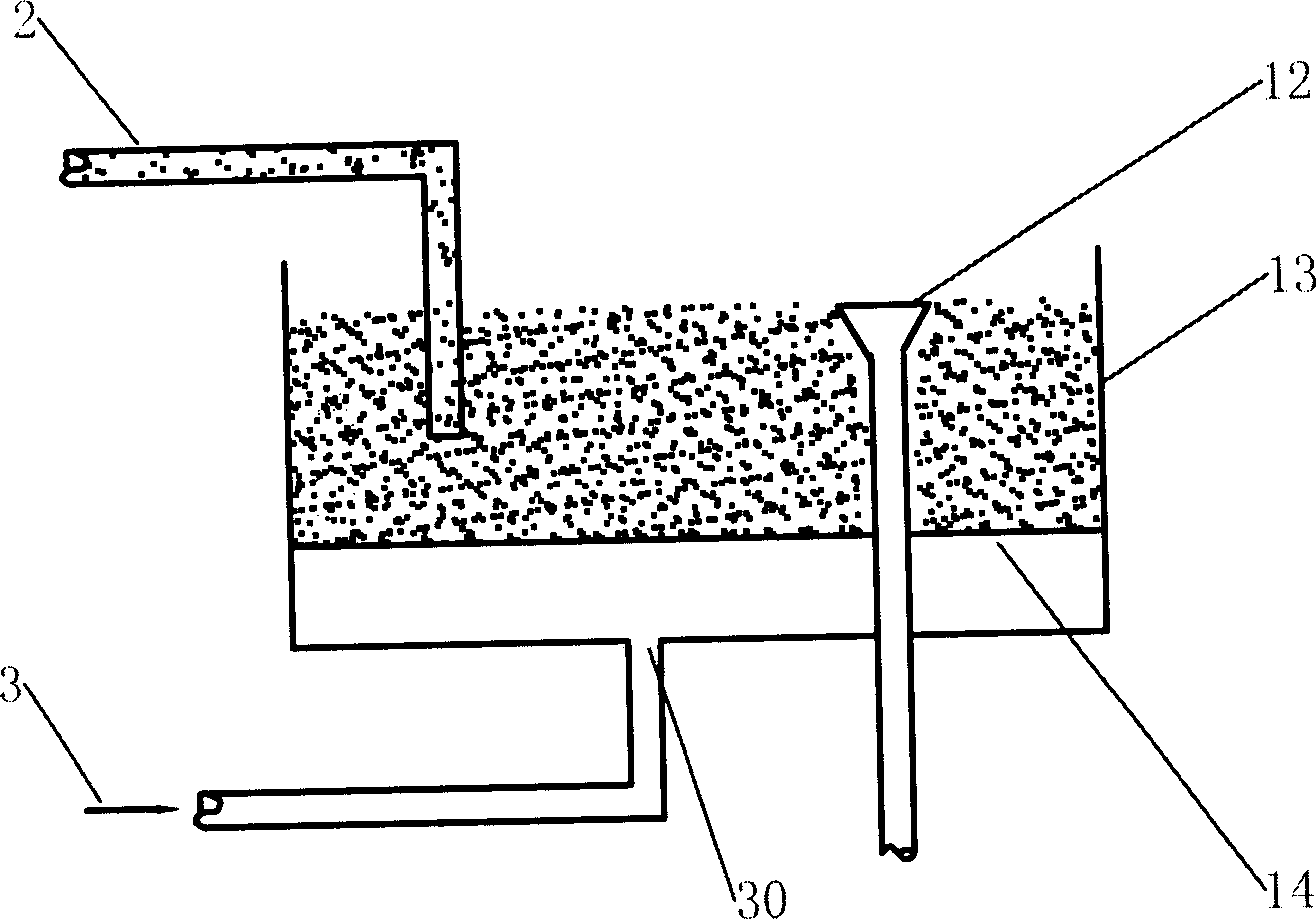

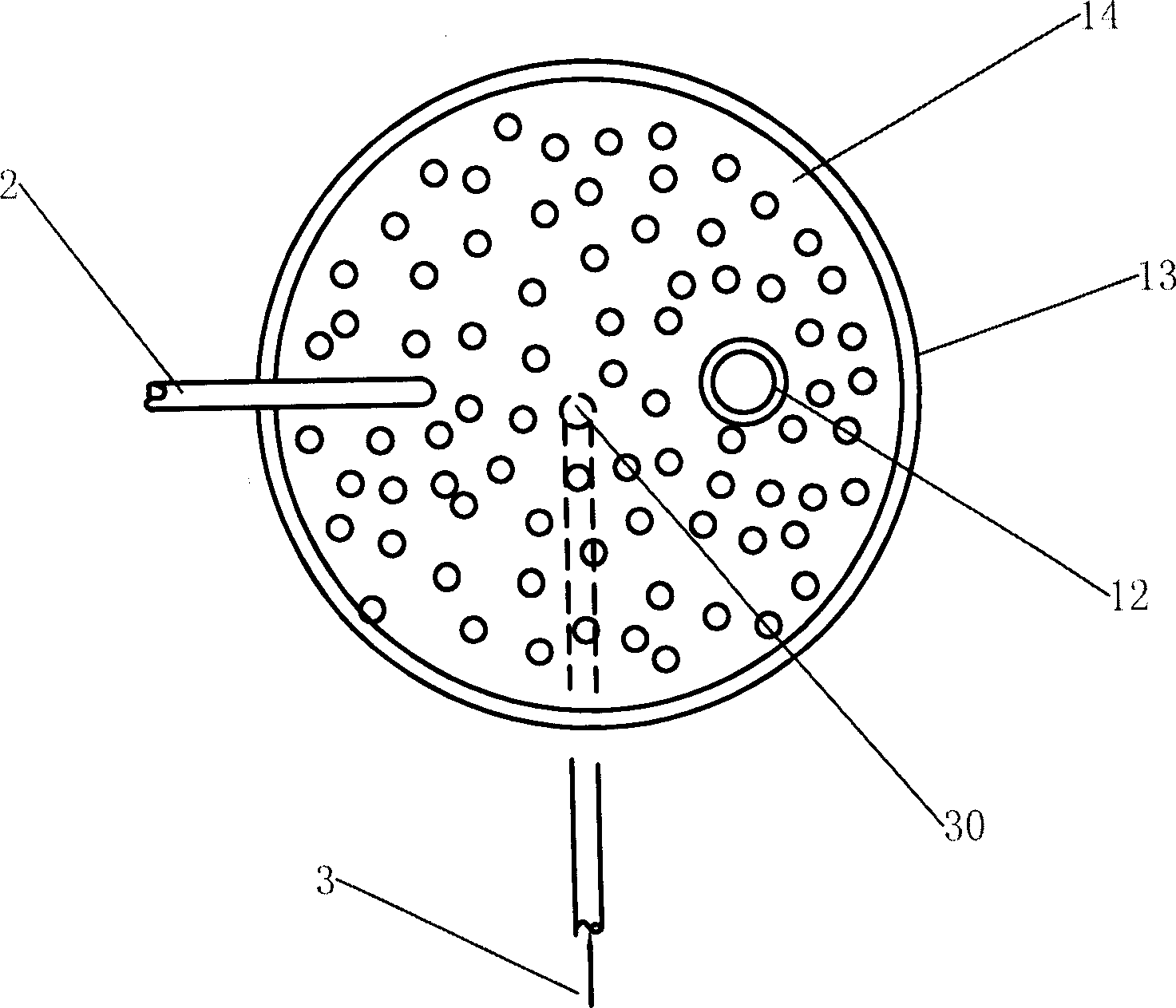

PCT No. PCT/FR97/01367; Sec. 371 Date Mar. 8, 1999; Sec. 102(e) Date Mar. 8, 1999; PCT Filed Jul. 23, 1997; PCT Pub. No. WO98/05915; PCT Pub. Date Feb. 12, 1998.

A rotary gas dispersion device for use in a liquid aluminium treatment vessel is disclosed. The device is useful for reducing surface disturbance, splashing and vortices while maintaining the effectiveness of the treatment. It includes a rotor (1) consisting of a set of blades (5) and a substantially flat disc (4) above them. Gas is injected through the central hub and side ports (10) between the blades. The ratio of the outer diameter of the rotor to the diameter of its central hub is 1.5 to 4.

Owner:NOVELIS INC

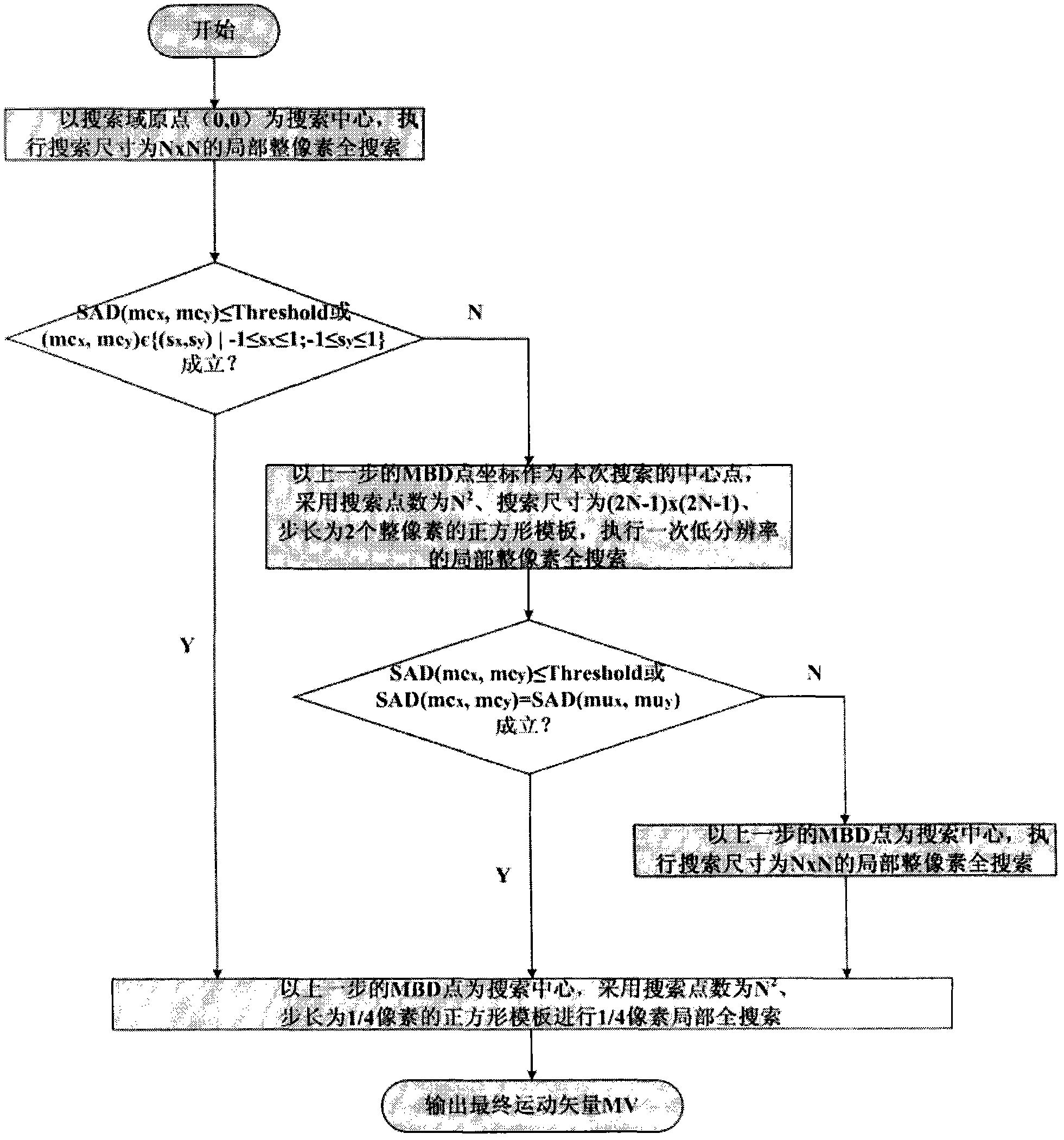

Fast motion estimation method realized based on GPU (Graphics Processing Unit) parallel

Inactive | CN102547289A | High speedup | High precision | Television systems | Digital video signal modification | High density | Image resolution

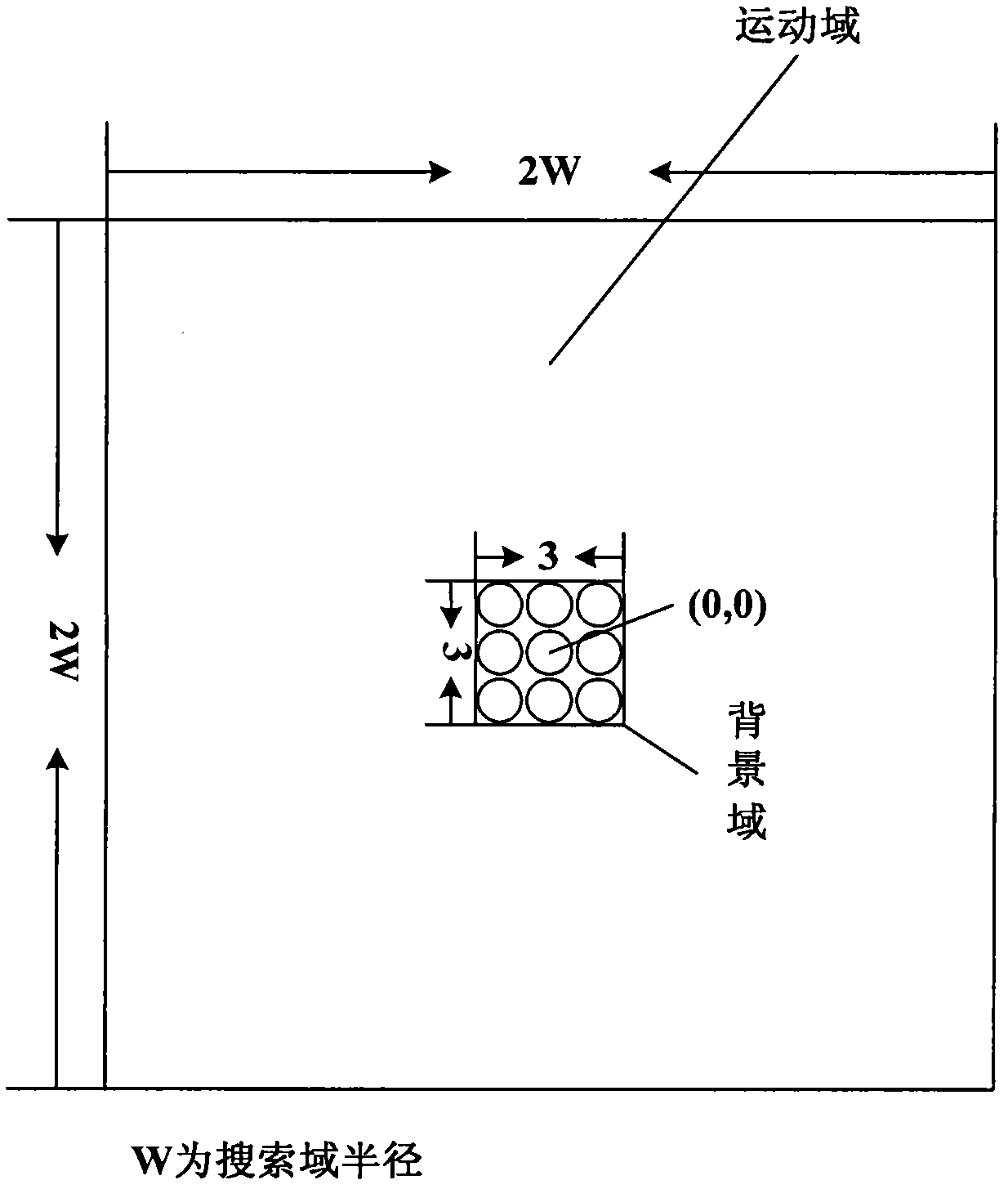

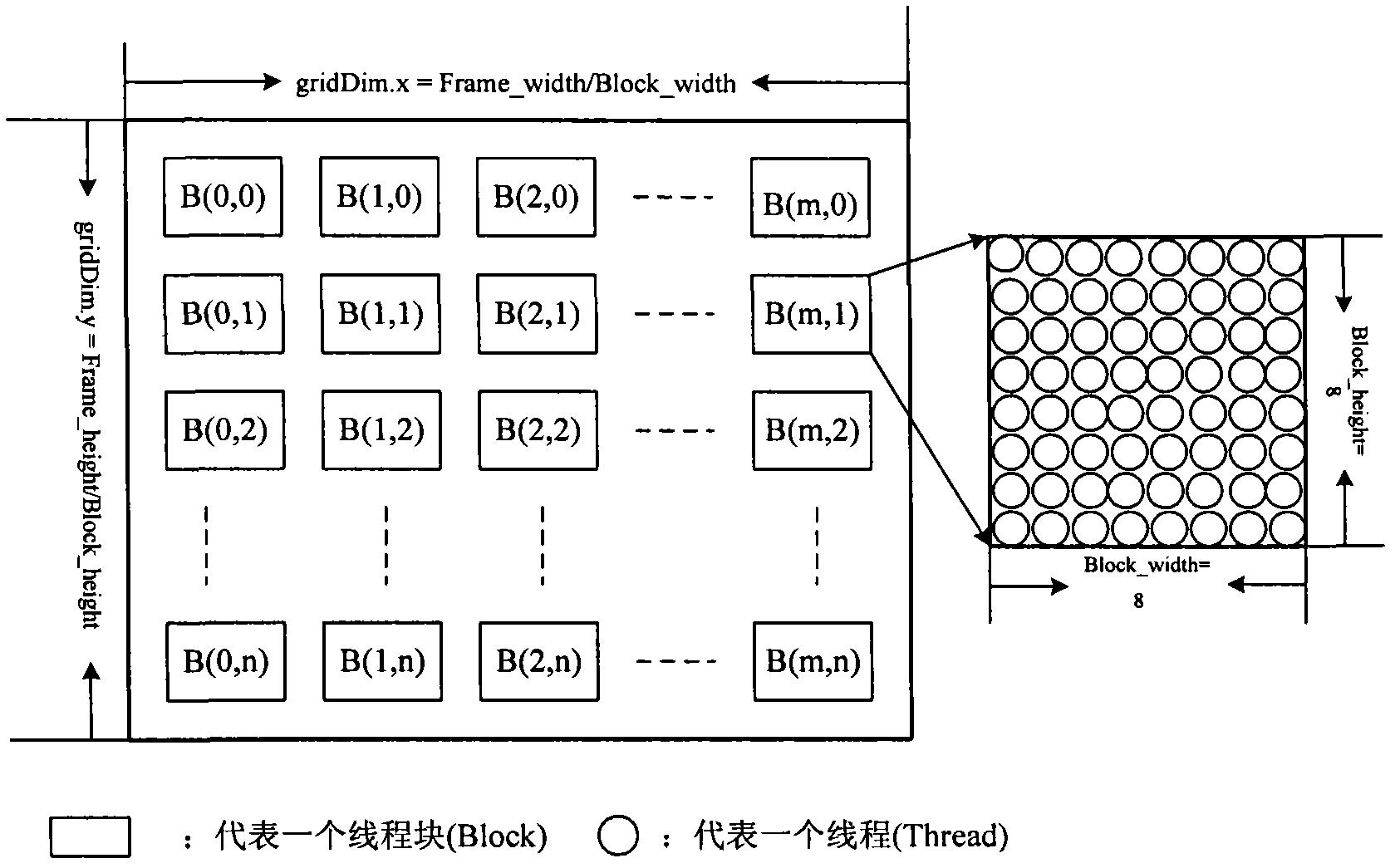

The invention discloses a fast motion estimation method implemented in parallel on a GPU (graphics processing unit). The method comprises the following steps. First, a local full search judges, with maximum probability, whether the current segment belongs to the background domain. Second, if it does, the full-pixel precision search ends; otherwise the search step length is increased by reducing the resolution of the search domain, and a low-resolution local full-pixel full search captures the distribution range of the optimal motion vector (coarse positioning). Third, once a segment in the motion domain has been coarsely positioned, a local full search refines the motion vector distribution range, completing motion estimation at full-pixel precision (refining positioning). Finally, a high-density, high-precision search template refines the motion vector, completing the quarter-pixel precision search and ending motion estimation for the current segment. A termination test is applied during the search: if the minimum-distortion reference segment reaches a set matching precision, the full-pixel search ends. The search points used at each step do not change during the search.

Owner:XIDIAN UNIV
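The coarse-to-fine search in the abstract above can be illustrated with a minimal serial sketch. It is not the patented GPU implementation: the SAD cost, the block size, the window radius, the `good_enough` threshold and all function names are assumptions made for illustration, and the quarter-pixel refinement stage is omitted.

```python
def sad(cur, ref, bx, by, dx, dy, n):
    """Sum of absolute differences between the n x n block of `cur` at (bx, by)
    and the block of `ref` displaced by the candidate vector (dx, dy)."""
    return sum(
        abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
        for y in range(n)
        for x in range(n)
    )


def window_search(cur, ref, bx, by, n, center, radius, step):
    """Full search over a square window around `center`, sampled every `step` pixels.
    `center` itself must be a valid displacement; it seeds the best candidate."""
    height, width = len(ref), len(ref[0])
    best_mv = center
    best_cost = sad(cur, ref, bx, by, center[0], center[1], n)
    for dy in range(center[1] - radius, center[1] + radius + 1, step):
        for dx in range(center[0] - radius, center[0] + radius + 1, step):
            # Skip candidates whose block would fall outside the reference frame.
            if not (0 <= bx + dx <= width - n and 0 <= by + dy <= height - n):
                continue
            cost = sad(cur, ref, bx, by, dx, dy, n)
            if cost < best_cost:
                best_mv, best_cost = (dx, dy), cost
    return best_mv, best_cost


def estimate_motion(cur, ref, bx, by, n=4, radius=4, coarse_step=2, good_enough=0):
    """Return a full-pixel motion vector (dx, dy) for the block at (bx, by)."""
    # Stage 1: small local full search; a good match is treated as background.
    mv, cost = window_search(cur, ref, bx, by, n, (0, 0), 1, 1)
    if cost <= good_enough:
        return mv
    # Stage 2: coarse positioning, a wider window sampled with a larger step.
    mv, cost = window_search(cur, ref, bx, by, n, (0, 0), radius, coarse_step)
    if cost <= good_enough:
        return mv  # early termination: matching precision already reached
    # Stage 3: refine around the coarse result at full-pixel precision.
    mv, _ = window_search(cur, ref, bx, by, n, mv, max(1, coarse_step - 1), 1)
    return mv
```

Each candidate vector in `window_search` is evaluated independently of the others, which is the property a GPU version would exploit by assigning candidates or blocks to separate threads.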

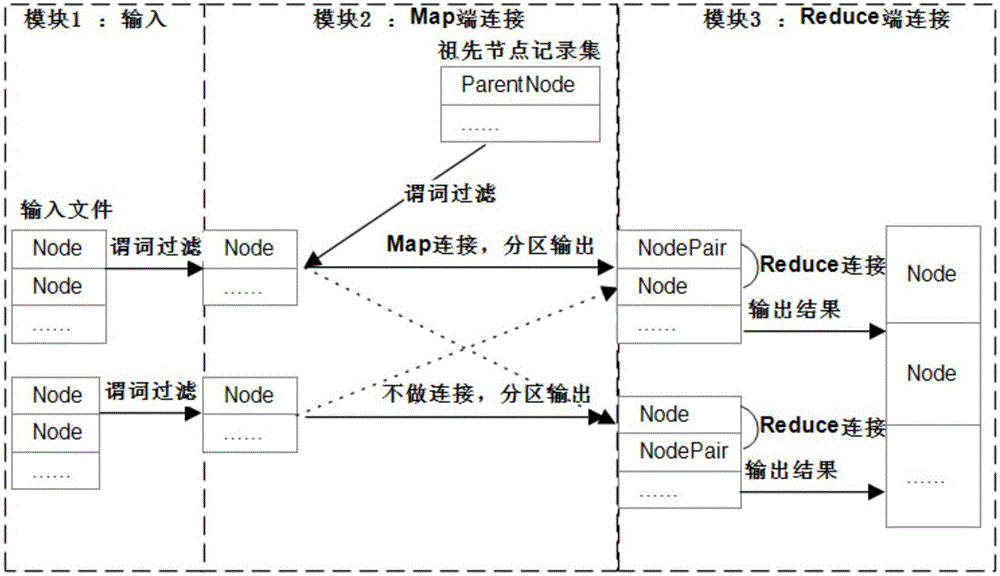

MapReduce based XML data query method and system

Active | CN105005606A | Improve performance | Obvious query speed advantage | Web data indexing | Special data processing applications | Query plan | XPath

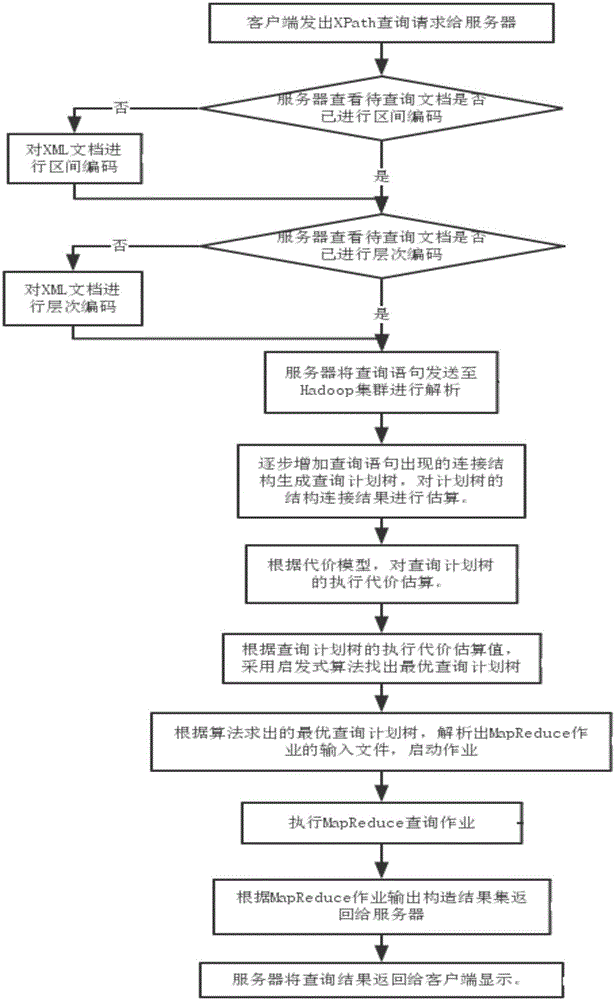

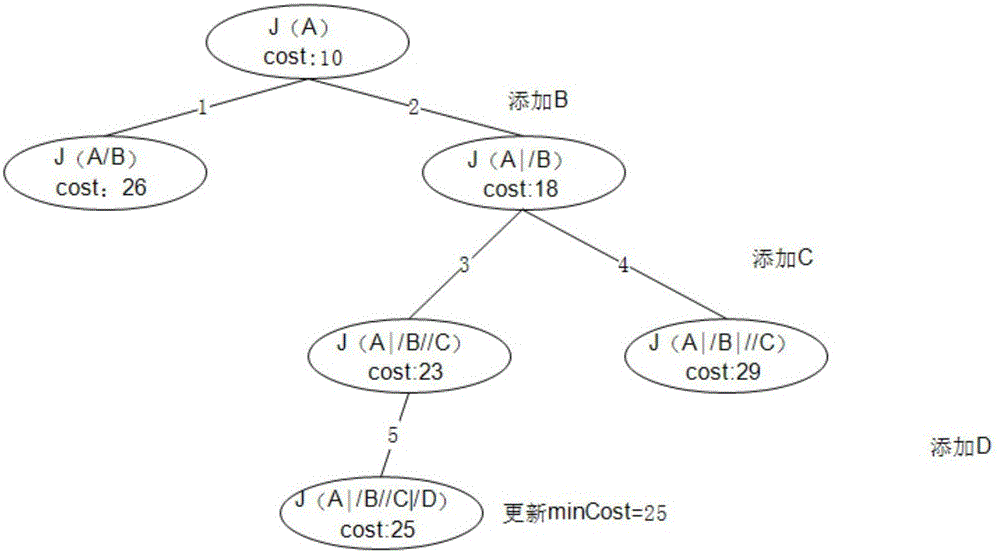

The present invention discloses a MapReduce based XML data query method and system. The method comprises the steps of: receiving, by a server, an XPath query request from a client; checking whether the XML document to be queried has been region encoded; region encoding the document if it has not; checking, by the server, whether the XML document to be queried has been hierarchically encoded; hierarchically encoding the document if it has not; analyzing the query statement in the query request; generating query plan trees and estimating the structural join results; establishing a cost model and estimating the cost of each query plan tree; finding the optimal query plan tree; obtaining the optimal query plan tree and analyzing the input file of the MapReduce task; executing the MapReduce query task; constructing the output file of the MapReduce task into an XML data result as the query result; and returning the XML data query result to the client. The method has the advantages of relatively high execution efficiency, a high speedup ratio, good query processing performance and good scalability.

Owner:SOUTH CHINA UNIV OF TECH
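Region encoding, as named in the abstract above, is commonly realized by giving every element a `(start, end, level)` label from one depth-first pass, so that an ancestor-descendant test becomes a pair of integer comparisons. The sketch below assumes that common scheme; the patent's exact encoding, the function names and the nested-loop join are assumptions for illustration, and no MapReduce distribution is shown.

```python
import xml.etree.ElementTree as ET


def region_encode(root):
    """Label every element with (tag, start, end, level) in document order."""
    labels = []
    counter = 0

    def visit(node, level):
        nonlocal counter
        counter += 1
        start = counter
        slot = len(labels)
        labels.append(None)  # reserve the slot so output stays in document order
        for child in node:
            visit(child, level + 1)
        counter += 1
        labels[slot] = (node.tag, start, counter, level)

    visit(root, 1)
    return labels


def is_ancestor(a, d):
    """True if the region of `a` strictly contains the region of `d`."""
    return a[1] < d[1] and d[2] < a[2]


def descendant_join(labels, anc_tag, desc_tag):
    """Structural join for the path //anc_tag//desc_tag (simple nested loop)."""
    ancestors = [lab for lab in labels if lab[0] == anc_tag]
    descendants = [lab for lab in labels if lab[0] == desc_tag]
    return [(a, d) for a in ancestors for d in descendants if is_ancestor(a, d)]


doc = ET.fromstring("<lib><book><title/><author/></book><title/></lib>")
labels = region_encode(doc)
pairs = descendant_join(labels, "book", "title")
```

Because the test only compares integers, label lists can be partitioned and joined independently, which is what makes the encoding attractive for a distributed query plan.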

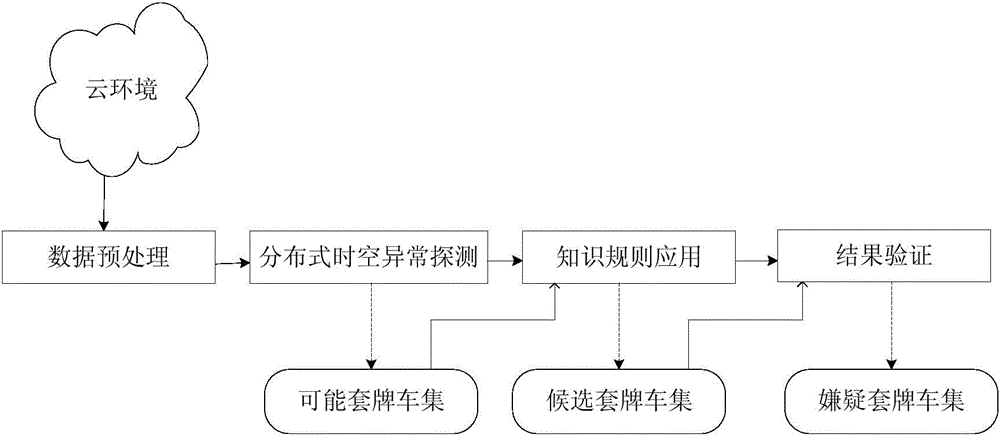

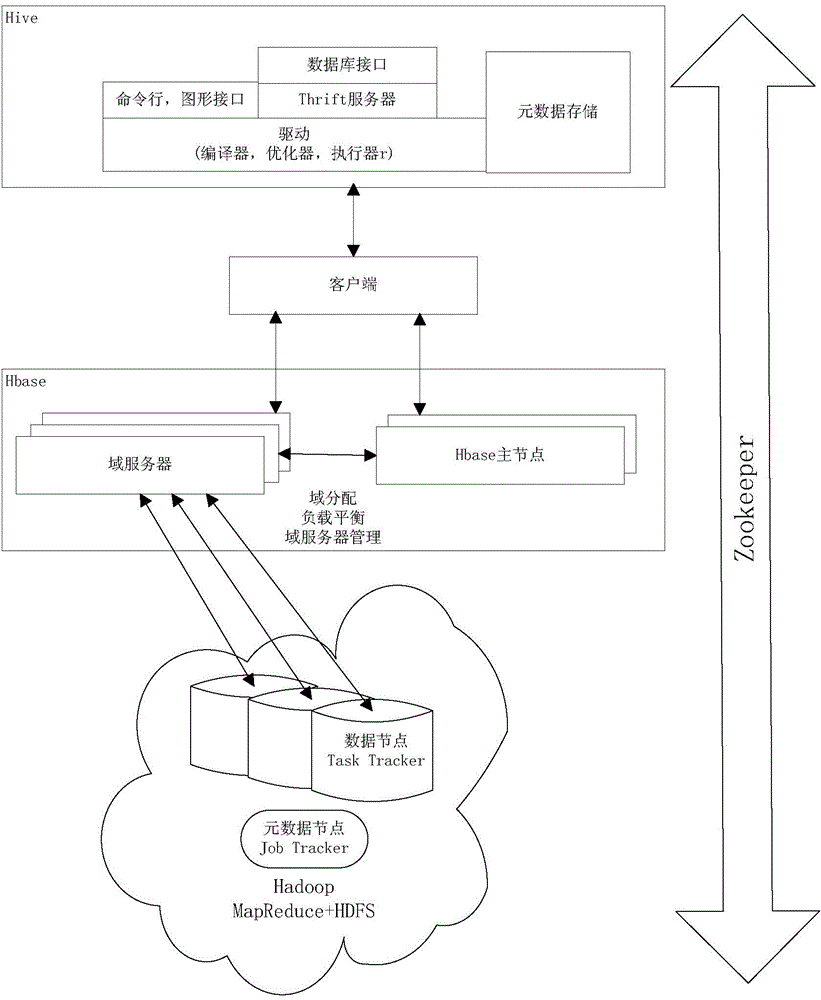

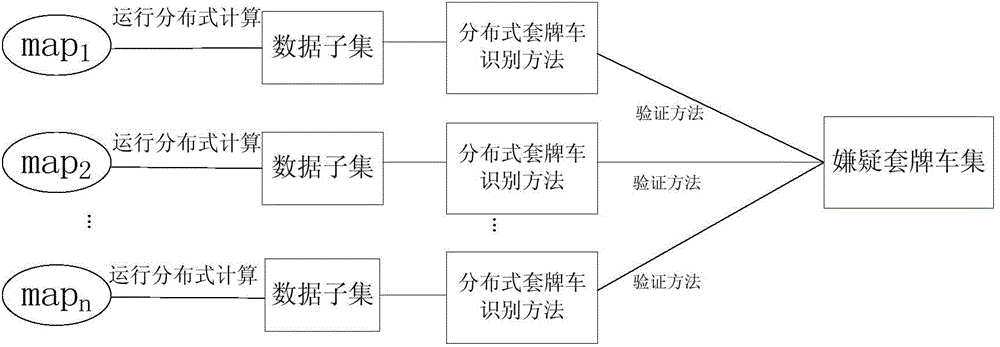

Hadoop-based recognition method for fake-licensed car

Active | CN104035954A | Reduce the number of matches | High operating efficiency and speed-up ratio | Road vehicles traffic control | Relational databases | Weight value | Pattern recognition

The invention discloses a Hadoop-based recognition method for fake-licensed cars. The method takes massive passing records as input and comprises the following steps: transferring the valid passing-car records, after dimension reduction, into HBase on a Hadoop cluster; using Hive to fetch from HBase the passing records of cars that carry the same license and appear at any two monitoring points; grouping and sorting the records by license number and passing time; initializing a weighted graph whose vertex set is the monitoring points and whose edge weights are the distances between pairs of monitoring points; calculating the shortest path between every two monitoring points; combining the monitoring points in pairs and processing the pairs in blocks; creating a plurality of threads; concurrently submitting Hive tasks that recognize fake-licensed cars, according to the fake-licensed car principle, for each block of monitoring-point pairs; and obtaining the final suspected fake-licensed cars by applying correction factors. Compared with the non-optimized method in a traditional environment, the Hadoop-based method raises running efficiency and speed-up ratio and recognizes fake-licensed cars effectively.

Owner:HANGZHOU DIANZI UNIV
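The graph step and the per-license check above can be sketched on a single machine. The abstract does not spell out the "fake-licensed car principle"; the sketch assumes the usual one, namely that one license seen at two monitoring points sooner than the shortest road distance allows is suspicious. The speed limit, record layout and function names are assumptions, and the Hive, HBase and correction-factor stages are left out.

```python
from itertools import groupby


def floyd_warshall(points, edges):
    """All-pairs shortest distances; `edges` holds undirected (a, b, km) triples."""
    inf = float("inf")
    dist = {a: {b: (0.0 if a == b else inf) for b in points} for a in points}
    for a, b, km in edges:
        dist[a][b] = min(dist[a][b], km)
        dist[b][a] = min(dist[b][a], km)
    for k in points:
        for i in points:
            for j in points:
                if dist[i][k] + dist[k][j] < dist[i][j]:
                    dist[i][j] = dist[i][k] + dist[k][j]
    return dist


def suspected_plates(records, dist, max_kmh=120.0):
    """records: iterable of (plate, monitoring_point, time_in_hours).
    A plate is flagged when two consecutive sightings imply an impossible speed."""
    suspects = set()
    ordered = sorted(records, key=lambda r: (r[0], r[2]))  # group by plate, sort by time
    for plate, group in groupby(ordered, key=lambda r: r[0]):
        sightings = list(group)
        for (_, p1, t1), (_, p2, t2) in zip(sightings, sightings[1:]):
            if p1 == p2:
                continue
            elapsed = t2 - t1
            if elapsed <= 0 or dist[p1][p2] / elapsed > max_kmh:
                suspects.add(plate)
    return suspects


points = ["A", "B", "C"]
dist = floyd_warshall(points, [("A", "B", 10), ("B", "C", 20), ("A", "C", 50)])
records = [("X", "A", 0.0), ("X", "C", 0.1), ("Y", "A", 0.0), ("Y", "C", 1.0)]
suspects = suspected_plates(records, dist)
```

The check for each license is independent of every other license, so the grouped records can be split across concurrent tasks, which matches the block-wise submission the abstract describes.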

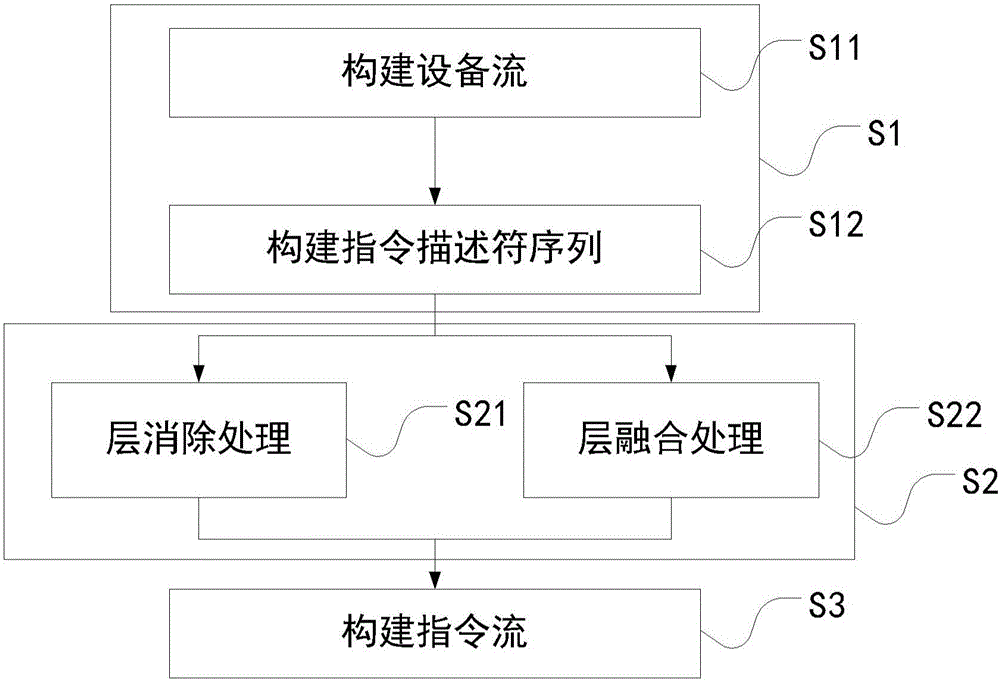

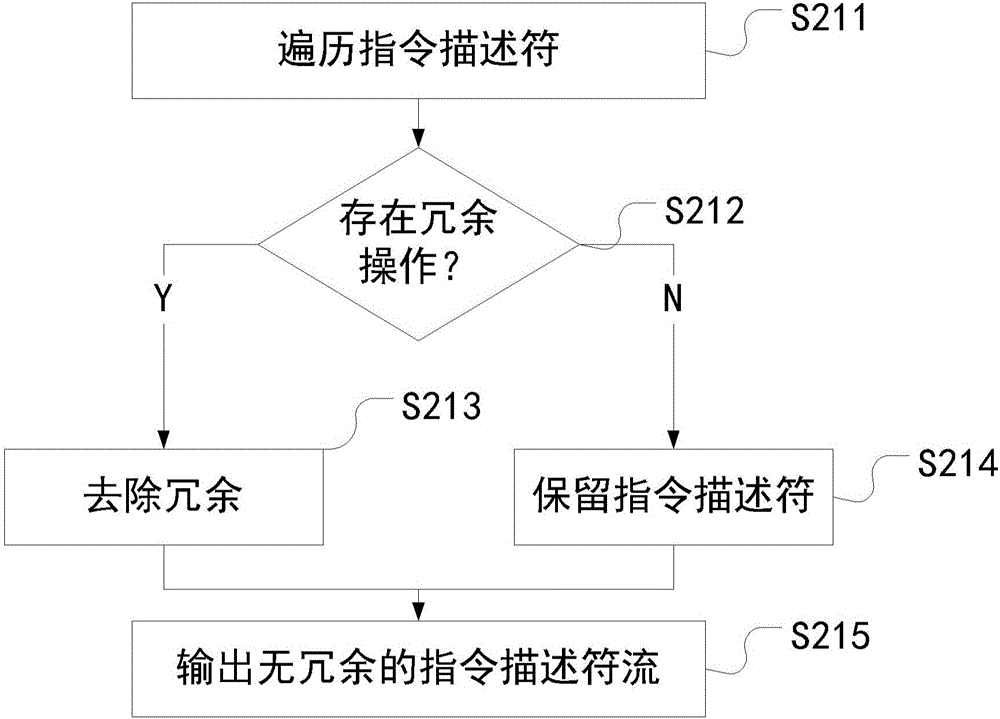

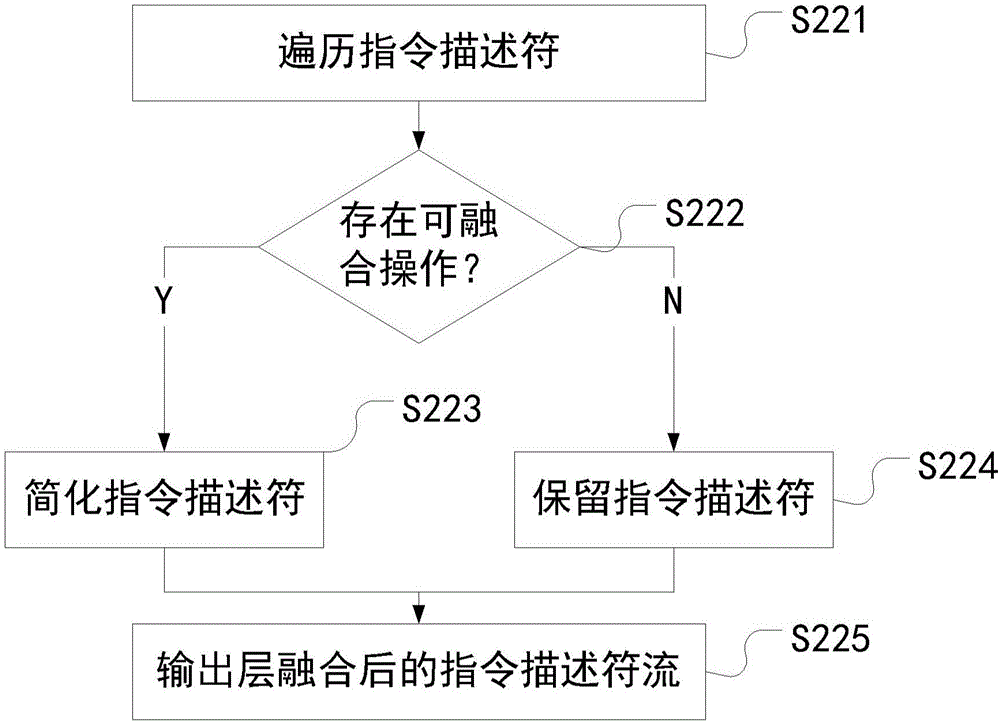

Stream execution method and apparatus

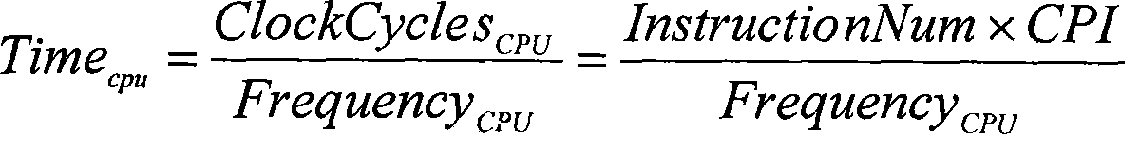

Active | CN106845631A | Efficient Functional Refactoring | High speedup | Concurrent instruction execution | Neural architectures | Instruction stream | Execution time

The invention discloses a stream execution method and apparatus. The method comprises a preprocessing step of obtaining an instruction descriptor stream, a layer optimization step of optimizing the instruction descriptor stream, and an instruction stream construction step of constructing an instruction stream according to the optimized instruction descriptor stream.

Owner:SHANGHAI CAMBRICON INFORMATION TECH CO LTD

Thévenin equivalent overall modeling method of modularized multi-level converter (MMC)

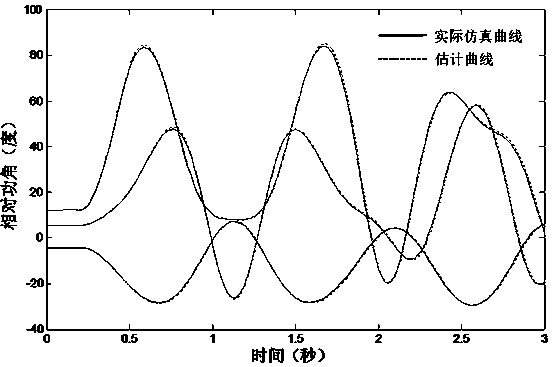

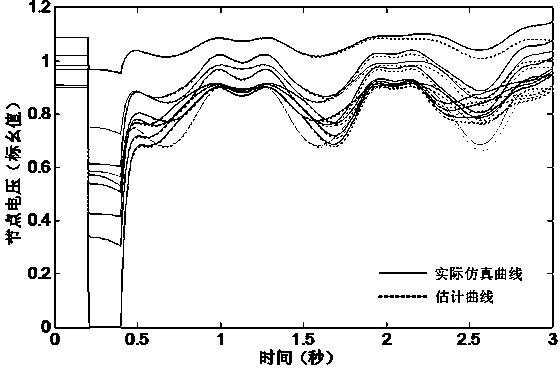

Active | CN103914599A | High computational complexity | High speedup | Special data processing applications | Capacitance | Computation complexity

The invention relates to a Thévenin equivalent overall modeling method for a modularized multi-level converter (MMC), belonging to the technical field of power transmission and distribution. The efficiency of off-line electromagnetic transient simulation of the MMC is determined by the overall computational complexity of its converter part and its modulation and voltage-sharing part, and the overall modeling method optimizes these two parts at three levels. The core technical scheme is as follows: first, a switch element in the Thévenin equivalent model of the MMC is assumed to be equivalent to an infinite resistor (ROFF = infinity) when it is turned off; second, all submodule capacitors in the MMC are discretized with the backward Euler method, replacing the trapezoidal integration method used in the known model; and finally, a novel sorting and voltage-sharing algorithm is provided by combining the infinite turn-off resistance with the backward Euler method, with a computational complexity of the same order as the number of bridge-arm submodules of the MMC. The complexity of the electromagnetic transient simulation of the MMC therefore grows linearly with the number of submodules while simulation precision is preserved, so the overall modeling method is an important reference for researchers in the MMC field.

Owner:NORTH CHINA ELECTRIC POWER UNIV (BAODING)
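The discretization step above can be made concrete with the textbook companion models of a capacitor, i = C dv/dt. This is a generic sketch rather than the patent's model: the function names and the constant-current check are assumptions. Backward Euler gives a Thévenin source that depends only on the previous voltage, whereas the trapezoidal rule also needs the previous current.

```python
def backward_euler_companion(c, dt, v_prev):
    """Thevenin equivalent of a capacitor for one step of backward Euler:
    v_n = v_prev + (dt / c) * i_n, so R_eq = dt / c and V_eq = v_prev."""
    return dt / c, v_prev


def trapezoidal_companion(c, dt, v_prev, i_prev):
    """Thevenin equivalent for the trapezoidal rule:
    v_n = v_prev + (dt / (2 c)) * (i_n + i_prev)."""
    r_eq = dt / (2.0 * c)
    return r_eq, v_prev + r_eq * i_prev


def charge_with_constant_current(c, dt, i_const, steps):
    """Step a capacitor driven by a constant current using backward Euler."""
    v = 0.0
    for _ in range(steps):
        r_eq, v_eq = backward_euler_companion(c, dt, v)
        v = v_eq + r_eq * i_const  # voltage across the Thevenin branch
    return v
```

With a constant current the backward Euler result equals the exact value `i_const * steps * dt / c` up to rounding, which makes it a convenient sanity check.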

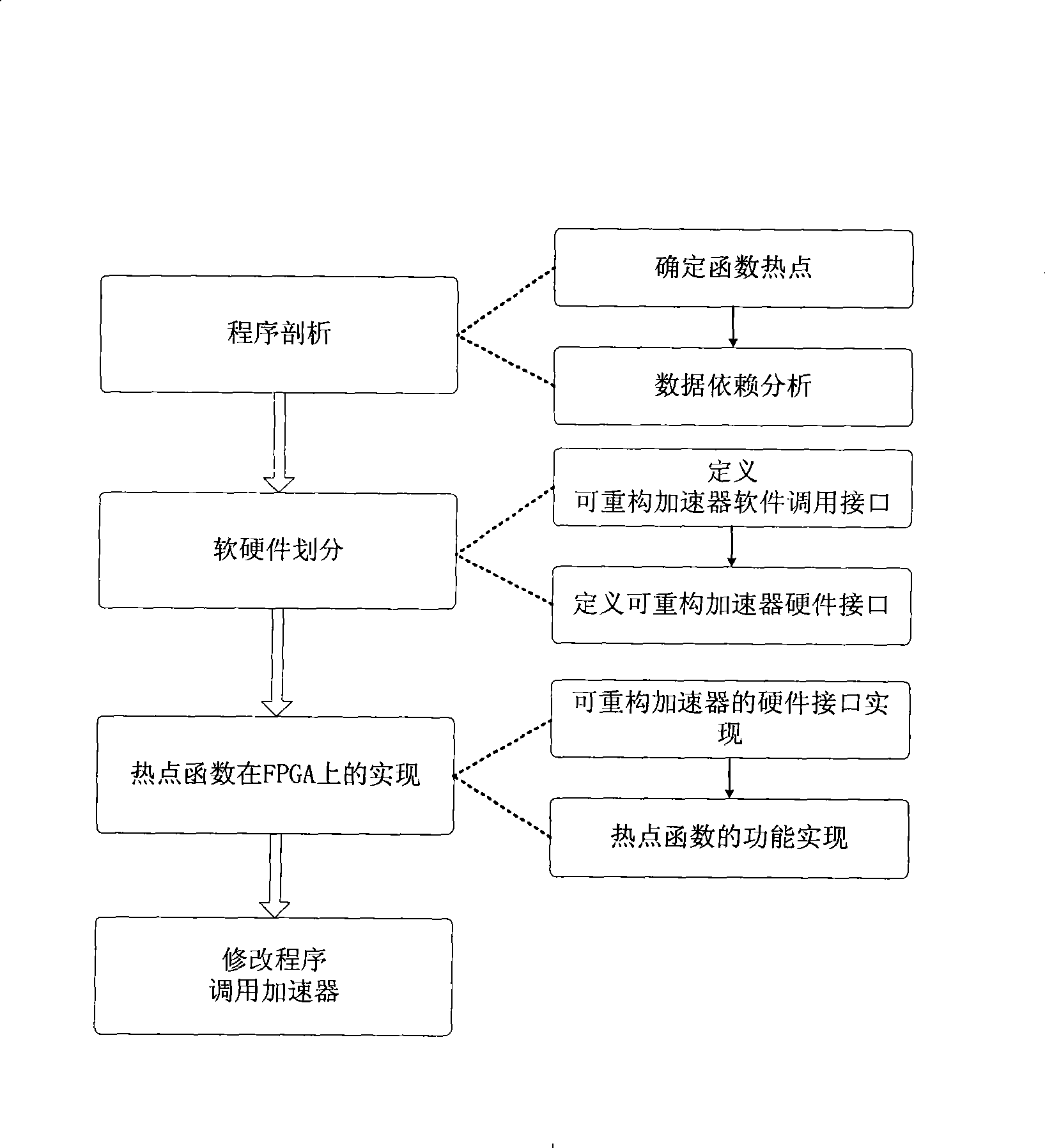

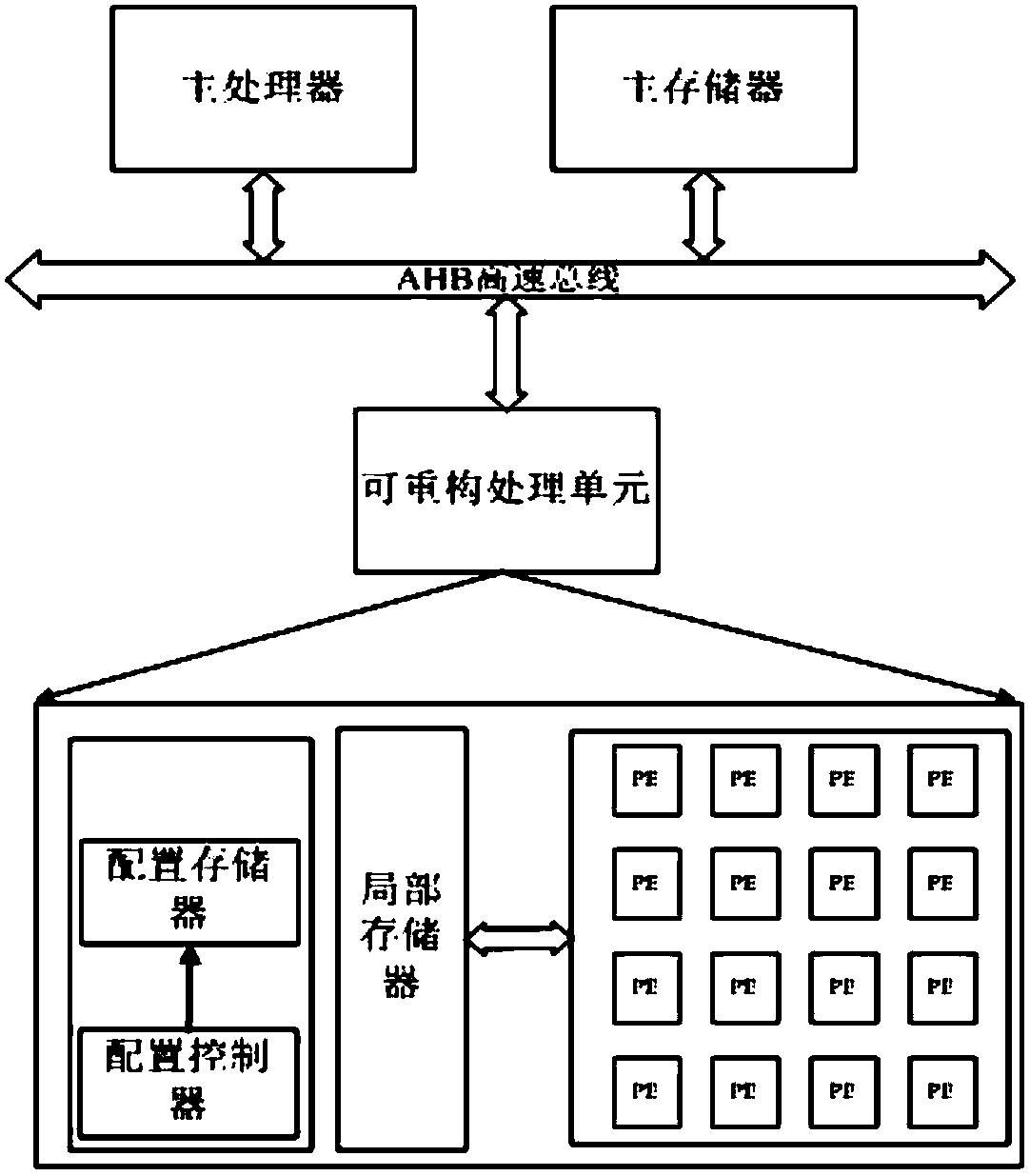

Method for implementing reconfigurable accelerator custom-built for program

Inactive | CN101441564A | High frequency | Improve performance | Specific program execution arrangements | General purpose | Granularity

The invention discloses a method for implementing a reconfigurable accelerator customized for a program. An FPGA is added to an existing general-purpose computer system, and the program-specific reconfigurable accelerator speeds up the program on that FPGA. The method analyzes the program, samples its runtime information at function granularity, identifies the computing-intensive hot spot functions, implements those functions as a reconfigurable accelerator on the FPGA, and rewrites the calls to the hot spot functions as calls to the corresponding accelerator so that their execution is accelerated. By implementing the hot spot functions of the program in the reconfigurable accelerator, the method improves the total speed-up ratio of the program; by implementing the accelerator on an FPGA, it achieves performance close to that of an application-specific integrated circuit while keeping the flexibility of a general-purpose processor.

Owner:ZHEJIANG UNIV
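How much the total speed-up ratio improves when only the hot spot functions are accelerated is bounded by Amdahl's law. The law is general background rather than part of the patent, and the function name and sample fractions below are assumptions chosen for illustration.

```python
def total_speedup(hot_fraction, hot_speedup):
    """Amdahl's law: overall speedup when a fraction `hot_fraction` of the
    original runtime is accelerated by a factor `hot_speedup`."""
    if not 0.0 <= hot_fraction <= 1.0:
        raise ValueError("hot_fraction must be between 0 and 1")
    if hot_speedup <= 0.0:
        raise ValueError("hot_speedup must be positive")
    return 1.0 / ((1.0 - hot_fraction) + hot_fraction / hot_speedup)


# Hot spots taking 80% of the runtime, accelerated 10x on the FPGA:
overall = total_speedup(0.8, 10.0)
```

The untouched 20% dominates once the hot spots are fast, which is why profiling to find the functions with the largest runtime share matters more than the raw accelerator speed.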

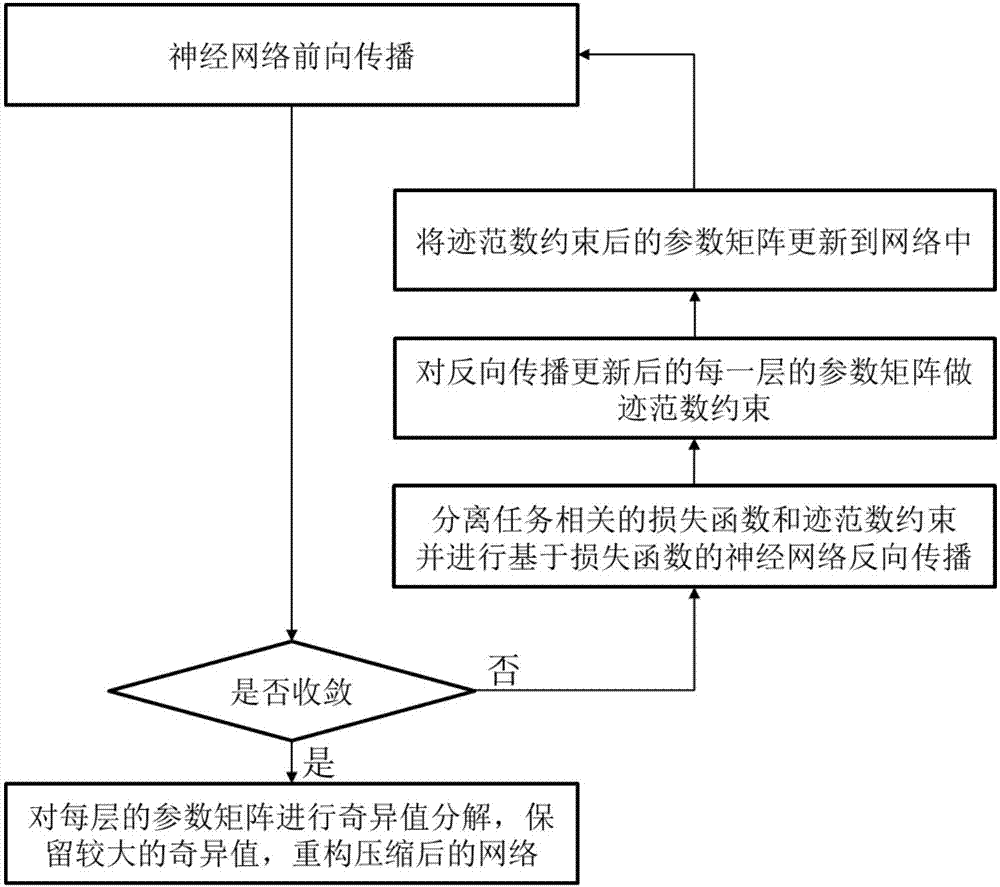

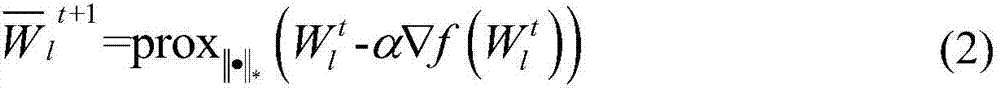

Neural network acceleration and compression method based on trace norm constraints

Inactive | CN107967516A | Good acceleration and compression | Save storage space | Neural learning methods | Singular value decomposition | Phases of clinical research

The invention discloses a neural network acceleration and compression method based on trace norm constraints, which comprises the following steps: forward propagation of the neural network is carried out, and convergence is checked; the task-related loss function and the trace norm constraints are separated, and back propagation based on the loss function is carried out; trace norm constraints are applied to the parameter matrix of each layer after the back propagation update; the constrained parameter matrix is written back to the network; and after the training process of the neural network converges, the final parameter matrix is subjected to singular value decomposition, and the neural network is compressed and reconstructed. By strengthening the low-rank characteristics of the model parameters during the training stage, the method obtains good acceleration and compression effects, is widely applicable, does not affect precision, and meets the practical need to reduce storage space and improve processing speed.

Owner:SEETATECH BEIJING TECH CO LTD
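For reference, the quantities behind the abstract above can be written out. This is the standard formulation, not text from the patent; the symbols $W$ (an $m \times n$ layer parameter matrix), $\sigma_i$ (its singular values) and $k$ (the retained rank) are assumptions introduced here.

```latex
\|W\|_* = \sum_{i=1}^{\min(m,n)} \sigma_i(W),
\qquad
W = U \Sigma V^{\top} \approx U_k \Sigma_k V_k^{\top},
\qquad
\frac{\text{parameters after}}{\text{parameters before}} = \frac{k\,(m+n)}{m\,n}.
```

Keeping only the $k$ largest singular values replaces one $m \times n$ layer with two layers of sizes $m \times k$ and $k \times n$, so storage and multiply-accumulate counts both shrink whenever $k < mn/(m+n)$. A small trace norm pushes many $\sigma_i$ toward zero, which is why the truncation costs little accuracy.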

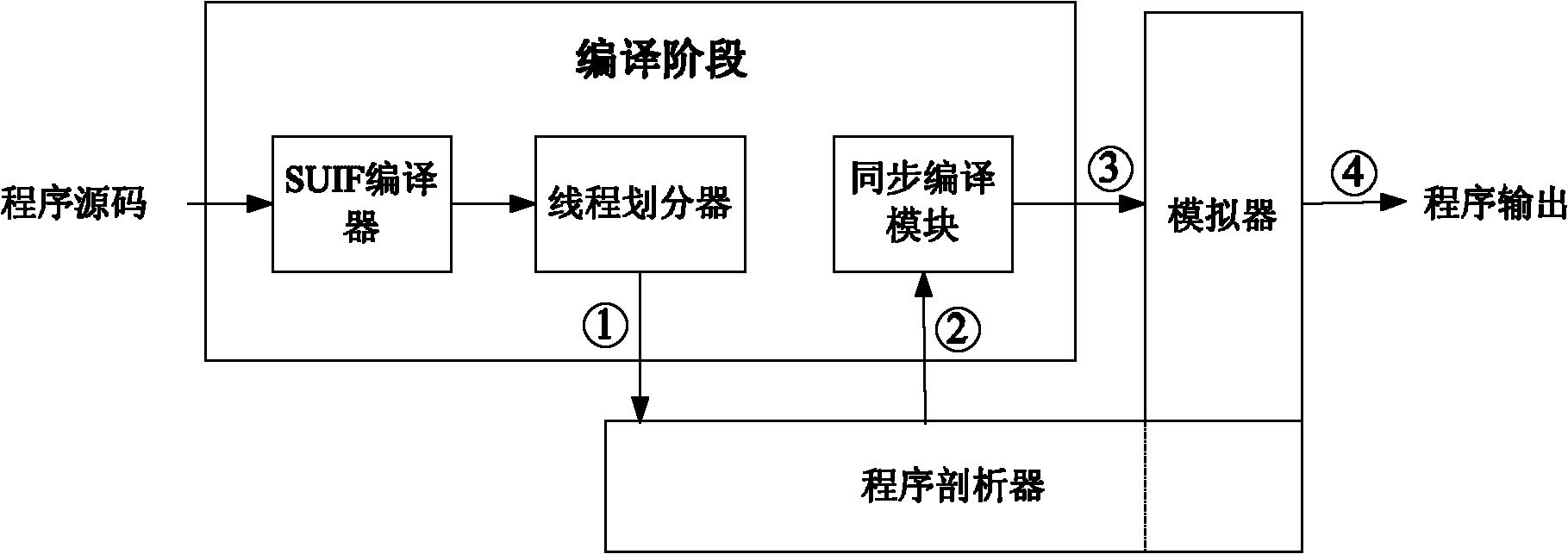

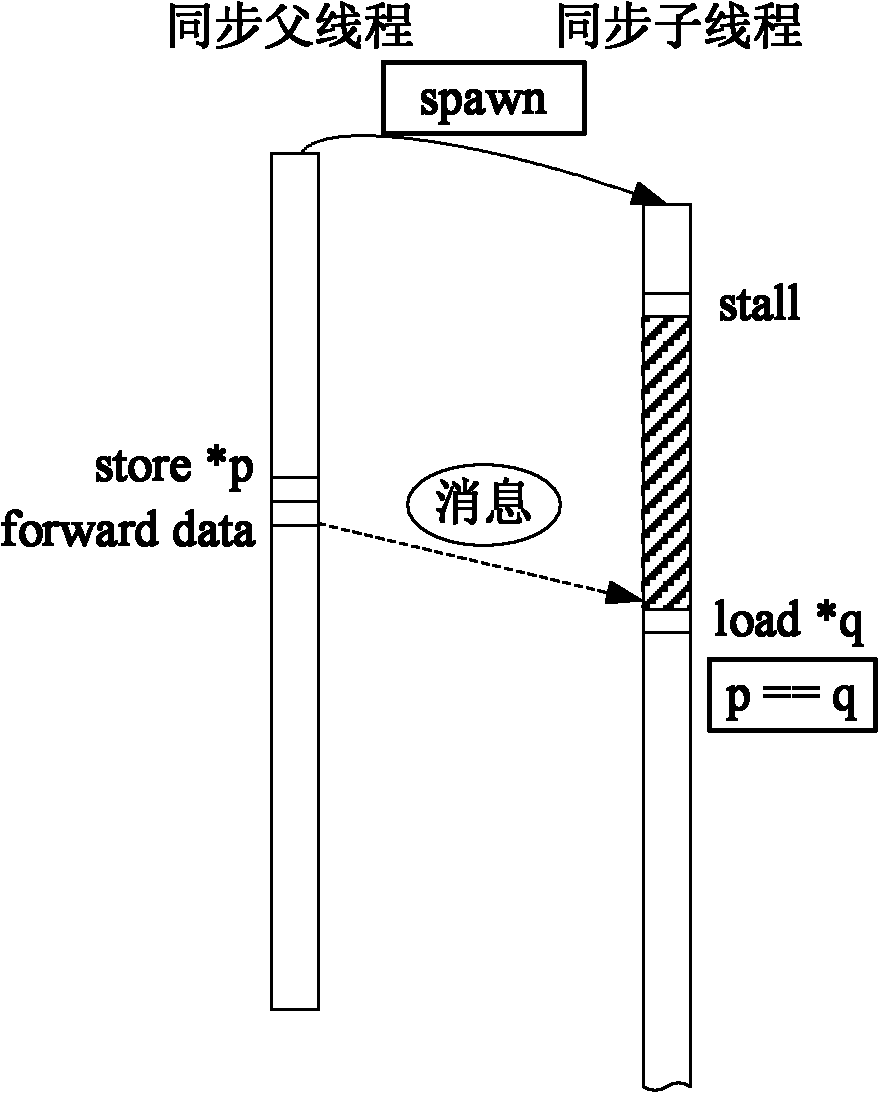

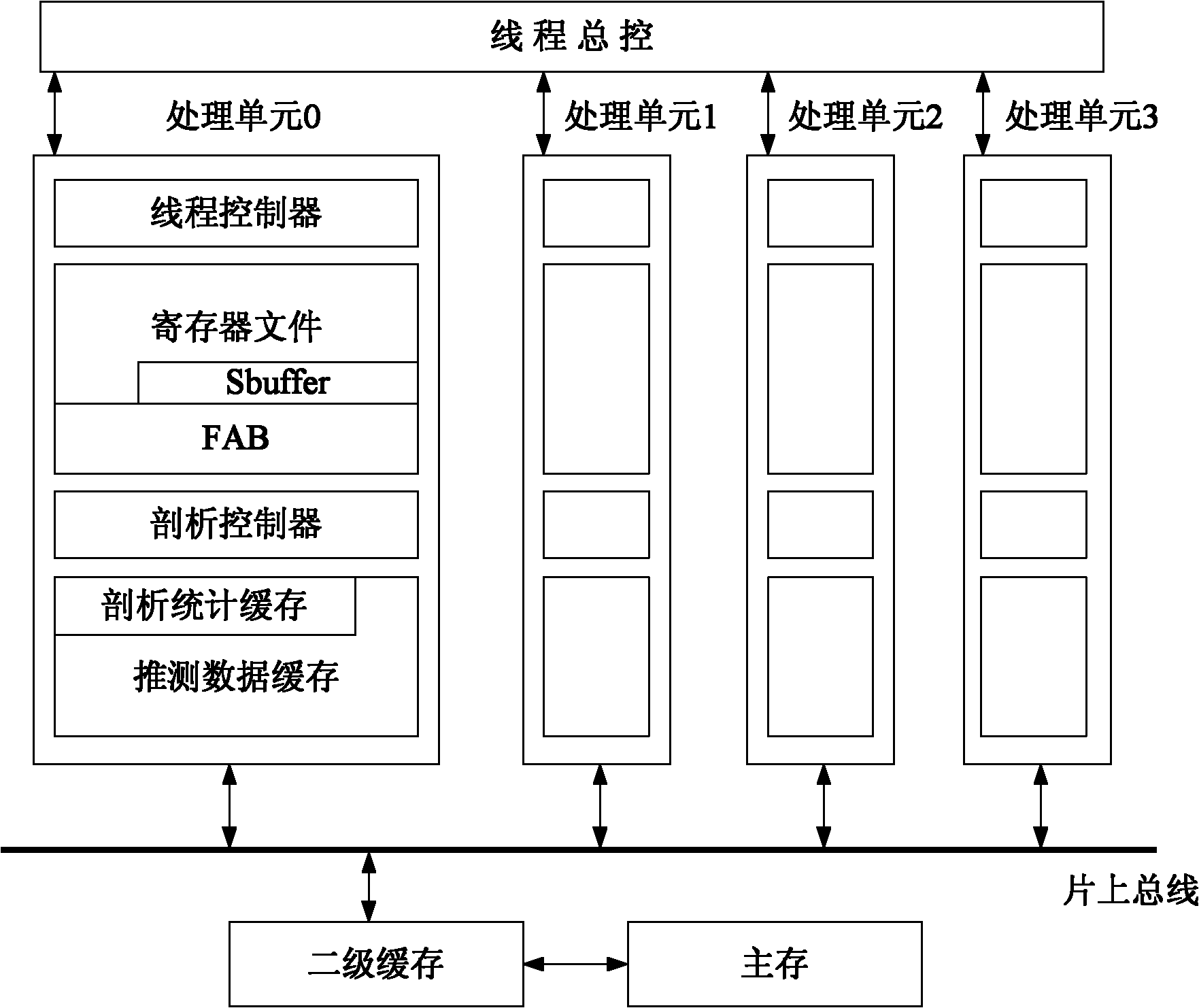

Speculative multithreading memory data synchronous execution method under support of compiler and device thereof

Inactive | CN101833440A | High speedup | Reduce the number of times | Concurrent instruction execution | Data synchronization | Speculative execution

The invention discloses a compiler-supported method, and a corresponding device, for synchronized execution of memory data in speculative multithreading. It synchronizes selected read/write memory instructions while the program runs, reduces the frequency of read/write data dependency violations, and improves the overall speedup ratio of multithreaded synchronous operation. The method comprises the following steps: after a candidate read/write instruction pair is obtained, adding a stall instruction before the read instruction, replacing the read instruction with a synchronous read instruction synload, adding one or more forward instructions after the write instruction, and adding a synset instruction after the spawn instruction that initiates the thread containing the write instruction; then loading the executable file generated by compilation and linking and running it on a simulator; and speculatively executing the multithreaded program in synchronous mode to obtain the result and a higher speedup ratio.

Owner:XI AN JIAOTONG UNIV

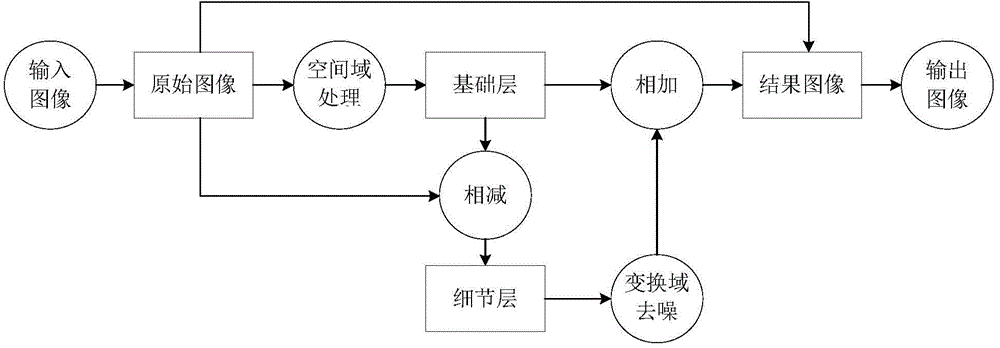

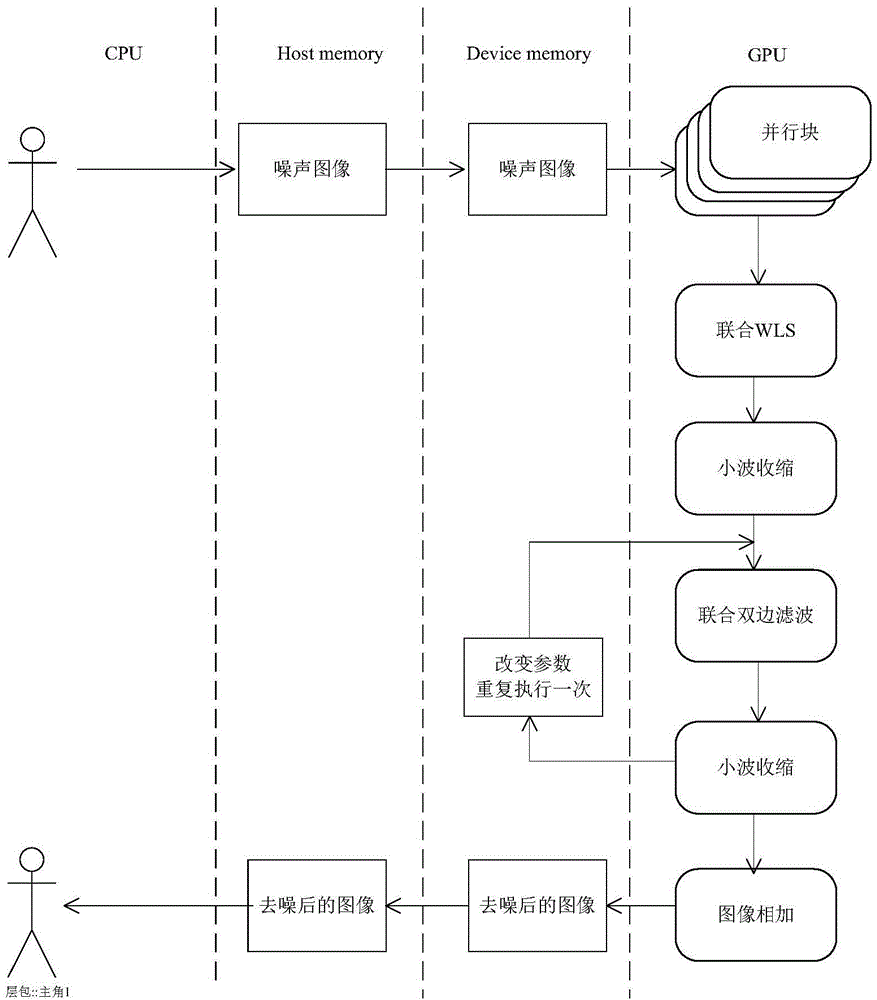

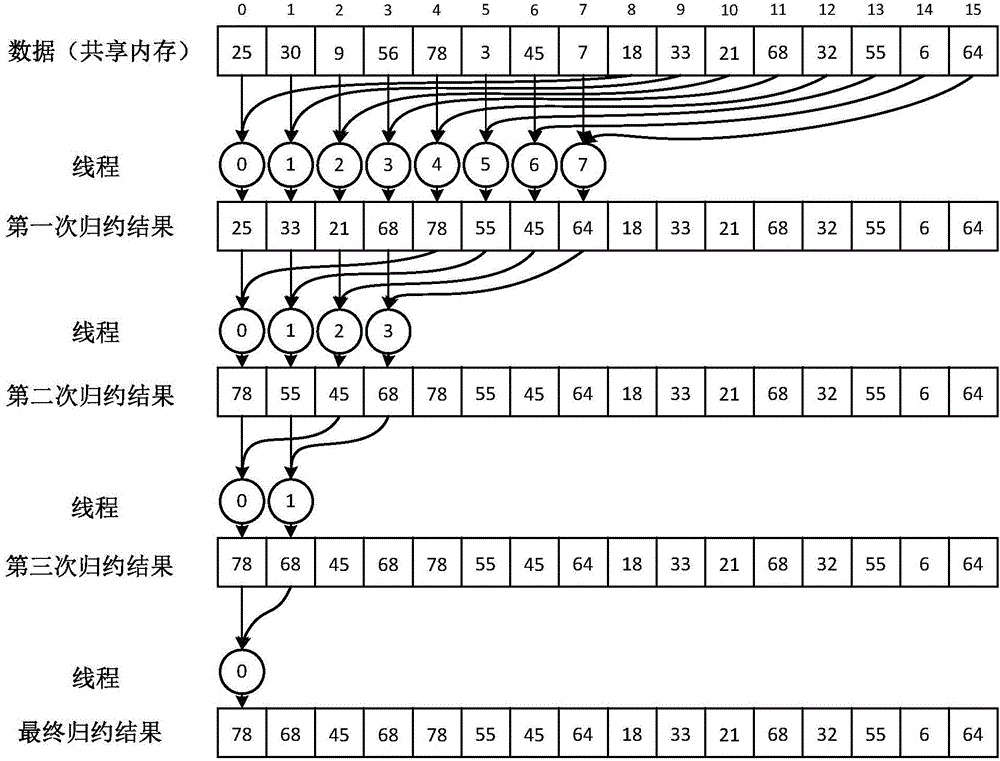

OpenCL-based parallel optimization method of image de-noising algorithm

The invention discloses an OpenCL-based parallel optimization method of an image de-noising algorithm. Following the idea of image layering, an image is divided into a high-contrast base layer and a low-contrast detail layer by using a combined dual-side filtering algorithm and a combined WLS algorithm; de-noising is carried out on the detail layer by using stockham FF; and the image is then restored by changing the frequency spectrum contraction and image adding methods, thereby realizing the de-noising effect. Because obtaining the base layer and de-noising the detail layer both process large volumes of data with high data parallelism, the OpenCL platform model is used to run the image de-noising algorithm in parallel on a GPU. Details of the computation are then tuned, including the use of local memory, the selection of a suitable work-group size, and the use of parallel reduction. The speed-up ratio of the resulting de-noising algorithm can exceed 30 times, which substantially improves the practicability of the algorithm.

Owner:XIDIAN UNIV
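The parallel reduction mentioned in the abstract above follows a well-known tree pattern, shown here as a serial simulation. This is not OpenCL kernel code and not the patent's implementation; the function name and the zero padding are assumptions. In a real kernel the inner loop is executed by the work-items of one work-group on a local-memory buffer, with a barrier between passes.

```python
def tree_reduce_sum(values):
    """Sum `values` with the halving-stride pattern used by GPU reductions."""
    data = list(values)
    size = 1
    while size < len(data):
        size *= 2
    data += [0] * (size - len(data))  # pad to a power of two with the identity
    stride = size // 2
    while stride > 0:
        # Every iteration of this loop is independent, so on a GPU each index i
        # would be handled by a separate work-item in the same pass.
        for i in range(stride):
            data[i] += data[i + stride]
        stride //= 2  # a barrier would separate consecutive passes
    return data[0]
```

The pattern needs about log2(n) passes instead of n - 1 sequential additions, which is where the benefit comes from when the passes run in parallel.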

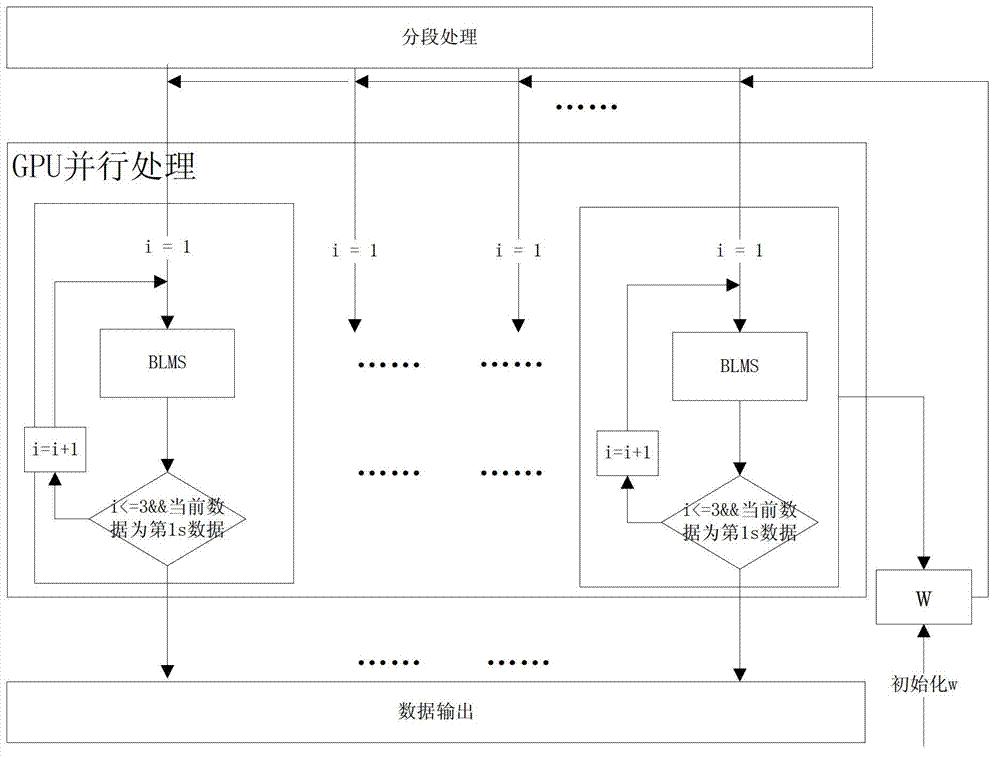

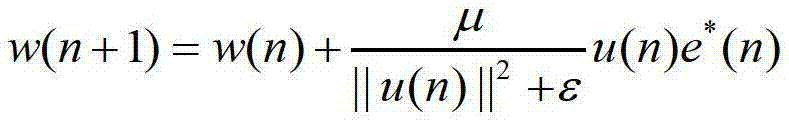

Real-time processing method for cancellation of direct wave and clutter of external radiation source radar based on graphic processing unit (GPU)

Active | CN103197300A | Simple structure | Easy to handle | Wave based measurement systems | Computational science | Weight coefficient

The invention provides a real-time processing method, based on a graphics processing unit (GPU), for cancelling the direct wave and clutter of an external radiation source radar. It builds an efficient parallel processing flow and improves the real-time processing capacity of the algorithm. The method comprises the steps of: (1) processing the signal in parallel by sections, dividing the signal to be processed within T seconds into sections; (2) applying a BLMS method to cancel the direct wave and clutter in each section produced by step (1); (3) using the GPU to process the sections from step (1) in parallel, processing the data in each section with a fast Fourier transform (FFT) algorithm in a multithreaded mode, removing the overlapping regions from the output and splicing the results, while outputting the filter coefficient of the last block of data to serve as the initial weight coefficient for subsequent data processing; and (4) using the filter coefficient w(n) finally obtained by iteration as the initial filter weight for the data of the next second, that is, iterating over the data of the first section K times and processing the subsequent data only once.

Owner:INST OF ELECTRONICS CHINESE ACAD OF SCI

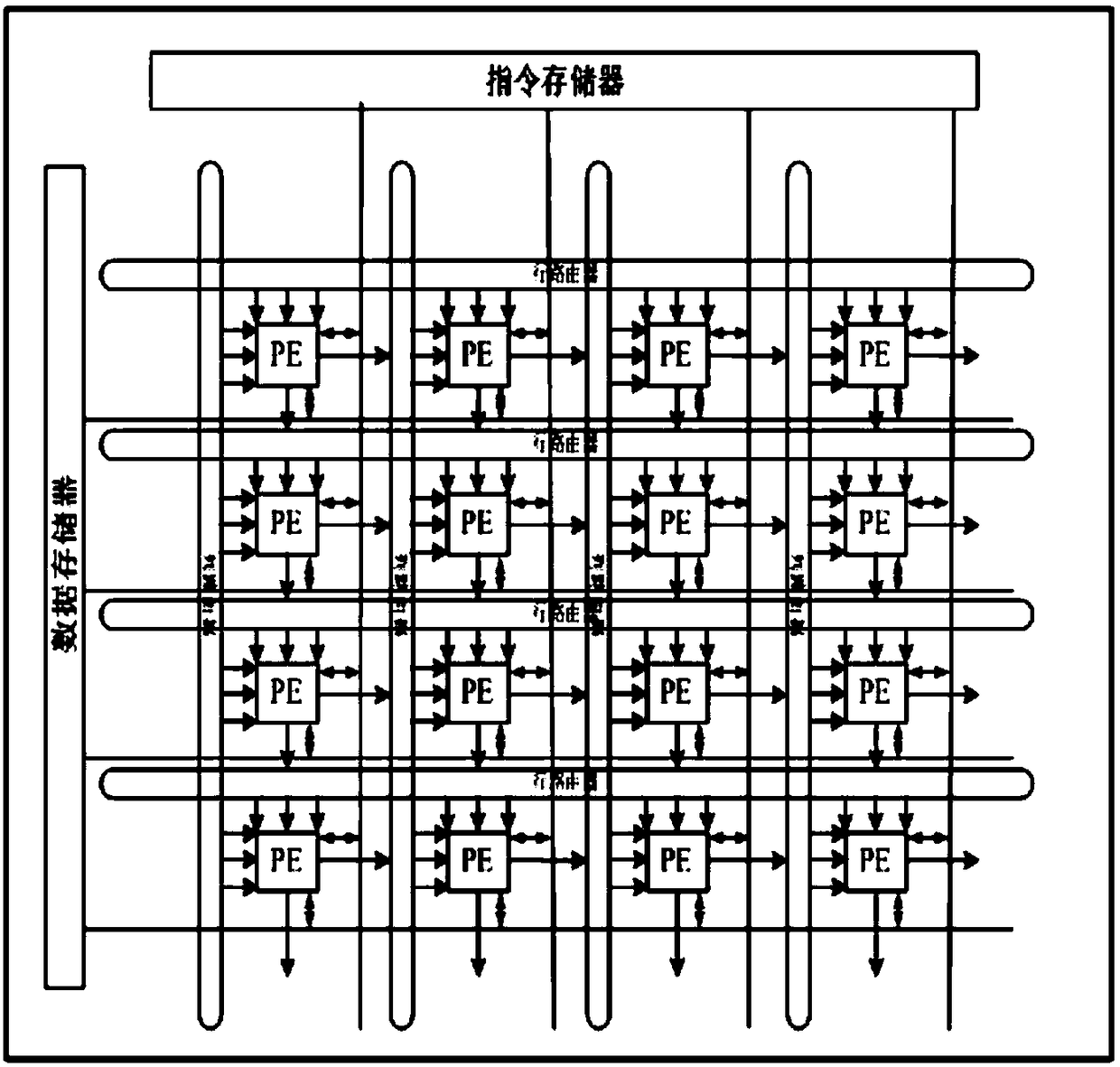

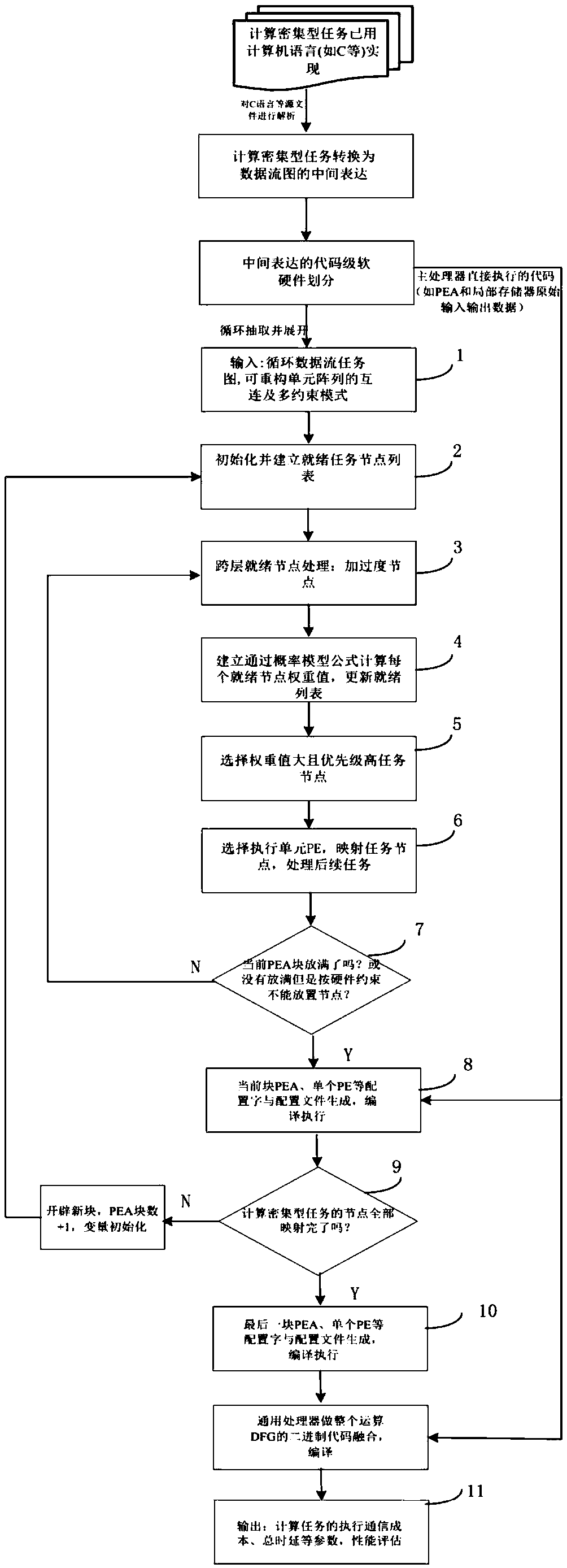

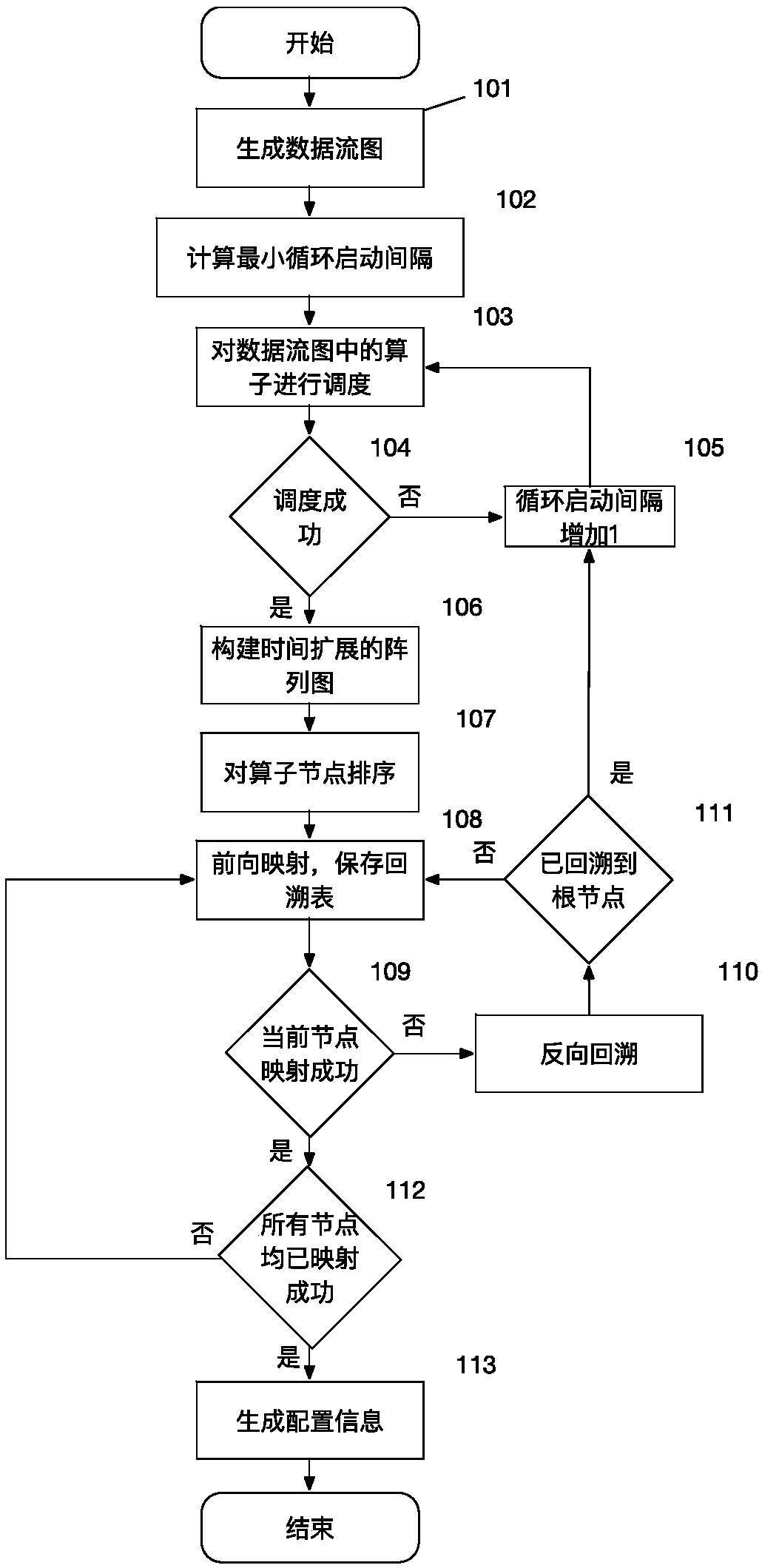

A multi-objective optimization automatic mapping scheduling method for row-column parallel coarse-grained reconfigurable arrays

Active | CN109144702A | High speedup | Easy to optimize | Program initiation/switching | Data stream | Resource utilization

The invention discloses a method for multi-objective optimization automatic mapping and scheduling on row-column parallel coarse-grained reconfigurable arrays. Computing-intensive tasks are described in code such as C, which is translated by semantic parsing into a data flow graph intermediate representation, and hardware and software are then partitioned at the code level. Platform information, such as the interconnection and scale constraints of the reconfigurable cell array, and the task set of the reconfigurable data flow are input through the core loop tool software to initialize the ready task queue. Ready tasks that are cross-layer or misaligned are then removed, the priorities of the operation nodes are calculated, and execution units are selected and mapped one by one. The scheme is based on the tightness of dependencies between task nodes and gives a solution under the parallelism conditions of the task nodes. It effectively solves the problems of traditional methods, namely high communication cost between computing arrays, extended execution time and poor integration with task scheduling, and achieves a higher acceleration ratio, lower configuration cost and higher resource utilization of the reconfigurable units.

Owner:LANZHOU UNIVERSITY

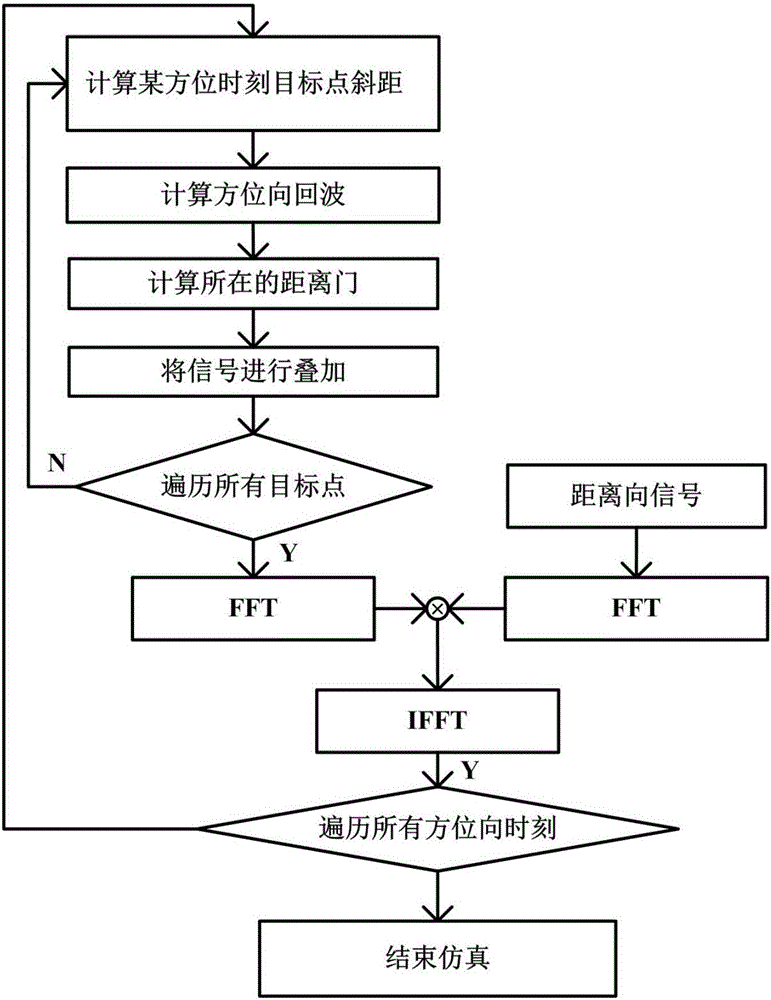

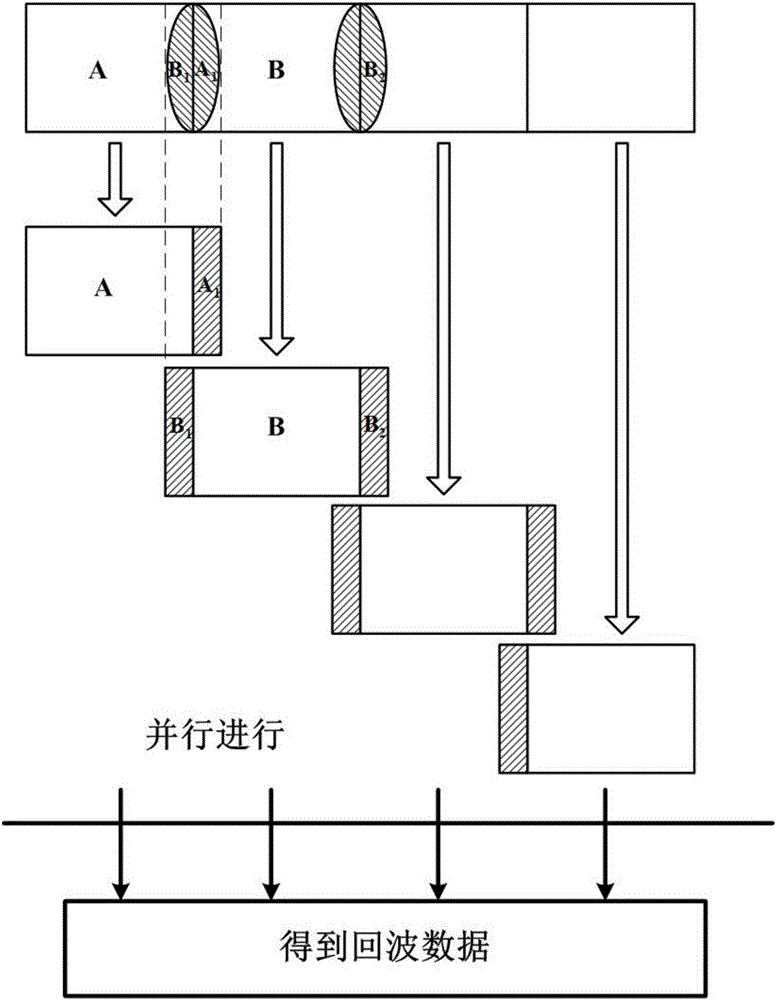

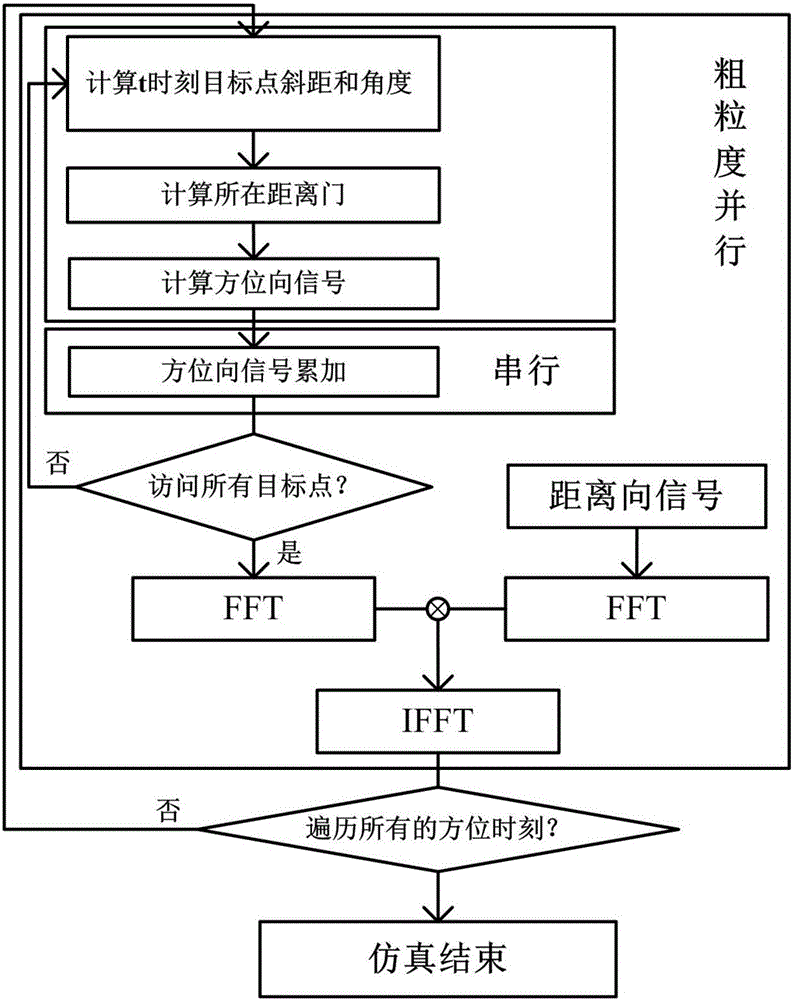

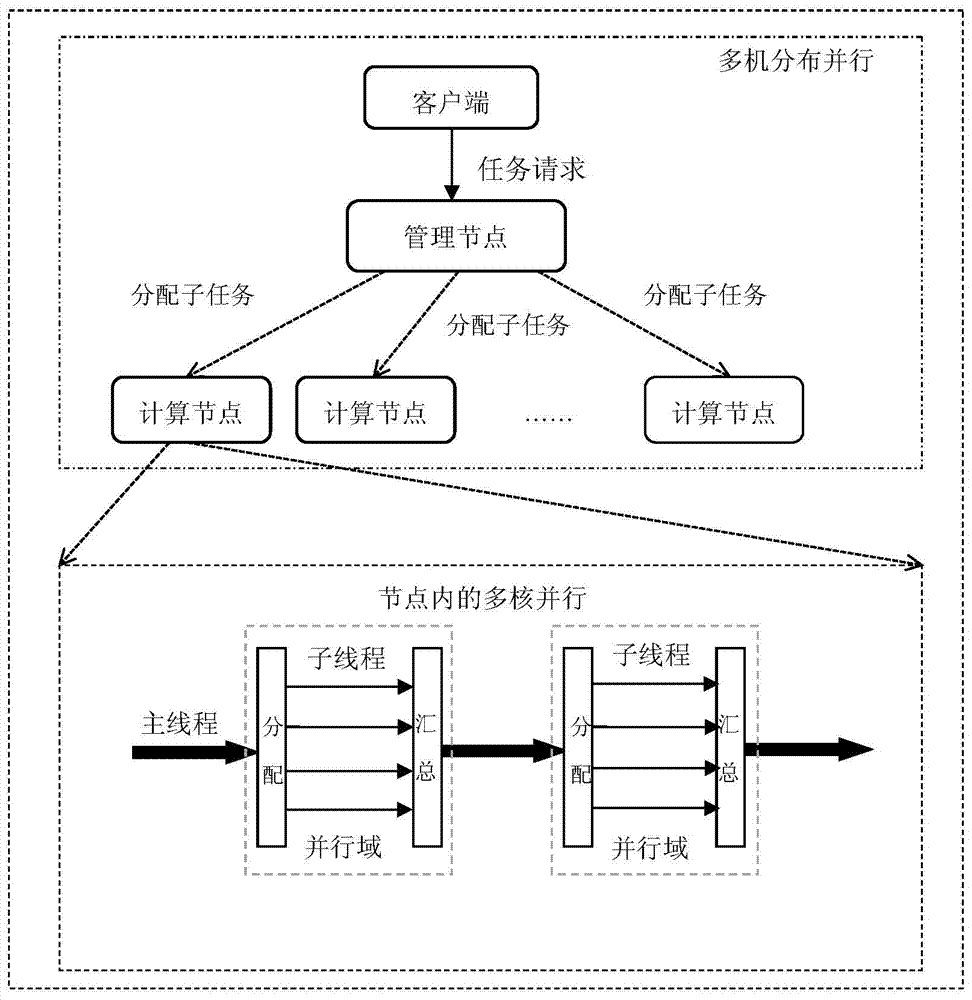

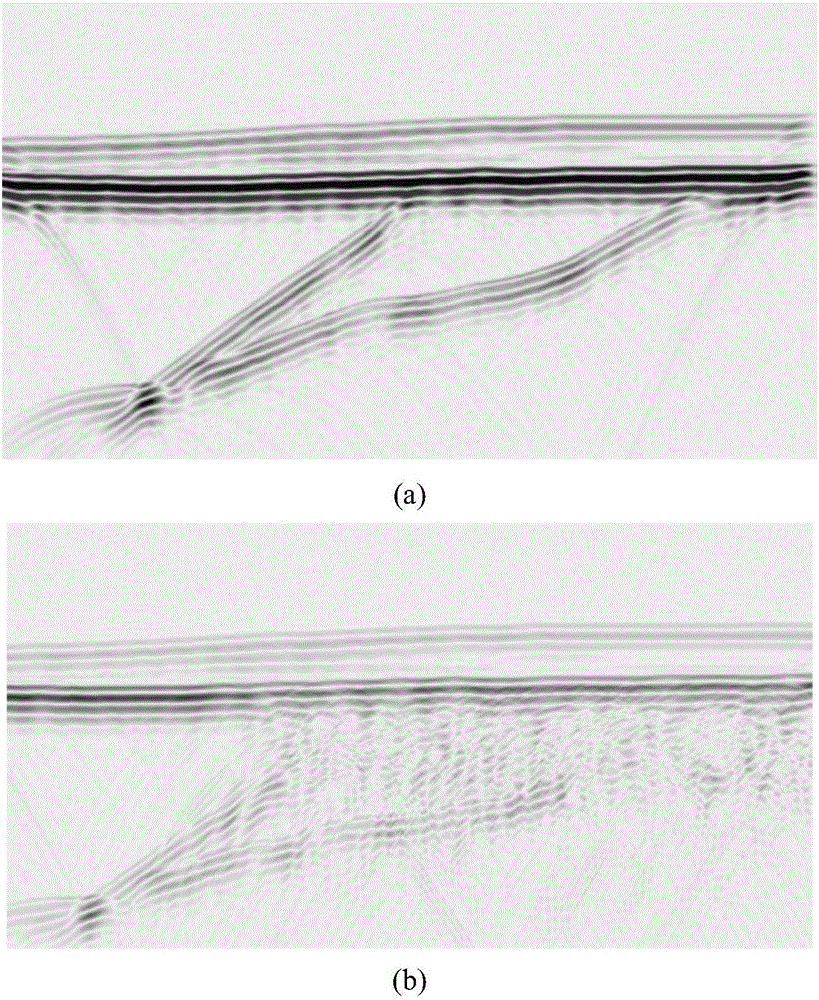

Synthetic aperture radar echo parallel simulation method based on depth cooperation

Active | CN105911532A | Optimize co-processing mode | Collaborative processing mode is obvious | Radio wave reradiation/reflection | Synthetic aperture radar | Radar

The invention discloses a synthetic aperture radar echo parallel simulation method based on depth cooperation, belonging to the technical field of synthetic aperture radar application. In the traditional CPU+GPU heterogeneous computing mode, the CPU generally handles logic-heavy operations, while the GPU handles relatively dense data computations suited to parallel execution. Drawing on an existing satellite-borne SAR echo parallel simulation algorithm, and on a thorough study of the SAR rapid echo simulation literature at home and abroad, the invention provides a satellite-borne SAR rapid echo simulation algorithm based on SIMD heterogeneous parallel processing. The SAR echo simulation process is accelerated in parallel through deep cooperation between a multi-core CPU with vector extensions and a many-core GPU; on this basis, redundant computation is optimized and deep parallel optimization is applied to the irregular computations in the SAR echo process. Experimental results show that, compared with the traditional serial computing method, the optimized CPU/GPU heterogeneous cooperation mode improves computational efficiency by 2 to 3 orders of magnitude.

Owner:BEIJING UNIV OF CHEM TECH

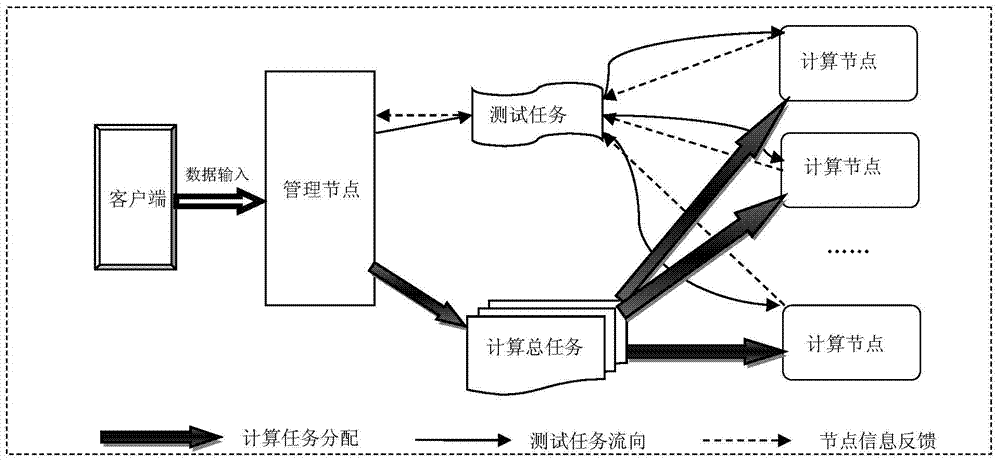

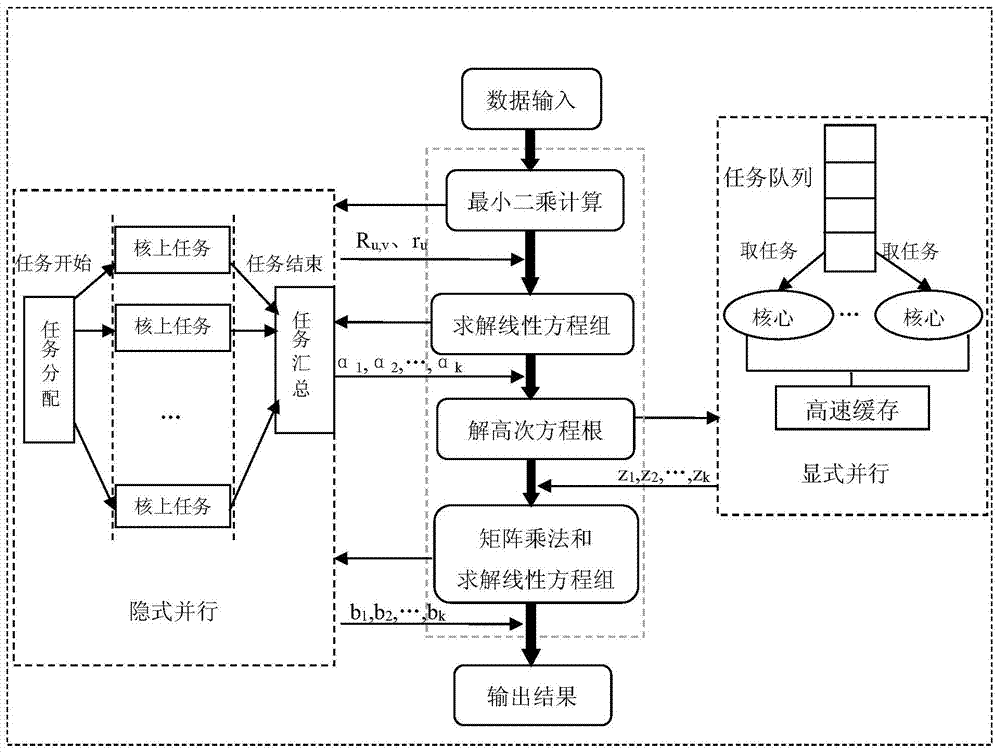

Double parallel computing-based on-line Prony analysis method

Active | CN104504257A | Improve resource utilization and computing efficiency | Calculation speed | Special data processing applications | High performance computation | Power grid

The invention discloses a double parallel computing-based on-line Prony analysis method, and relates to the fields of power system dispatching automation and high-performance computing. Conventional serial Prony-based algorithms suffer from low resource utilization and low computing speed when identifying oscillation parameters during low-frequency oscillation of a large-scale power grid. To address these defects, the method provides distributed parallel processing across multiple computing nodes, so that task scheduling and load balancing are performed effectively and the response time of the system is greatly reduced. A parallel design of the Prony mathematical model is realized for the first time, and multi-thread parallel computing of Prony is realized with multi-thread parallel computing technology. The method can identify on line, and synchronously, parameters such as the oscillation amplitude, frequency, initial phase and attenuation factor of multiple branches and multiple electrical quantities of the power grid. The computing and analysis speed is effectively improved, and the requirement for synchronous on-line computing of a large-scale power grid is better met.

Owner:STATE GRID CORP OF CHINA +1

Data processing method, data processing device and electronic device

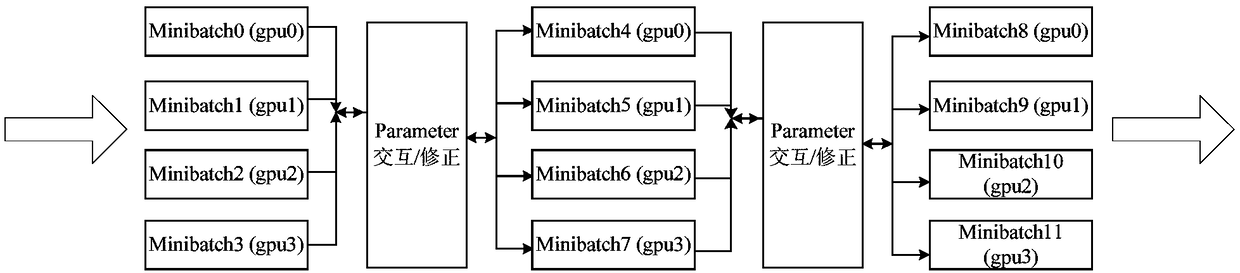

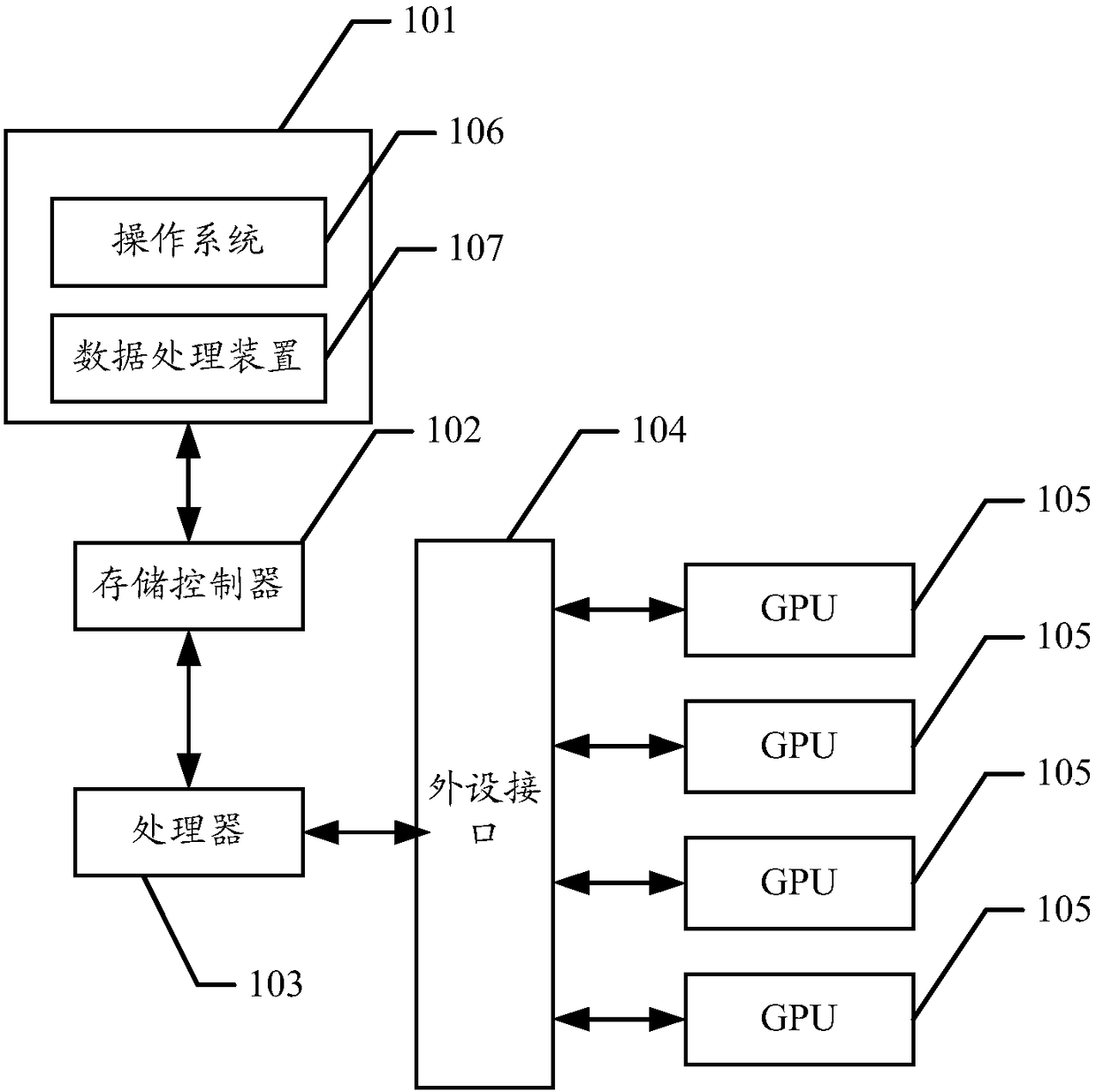

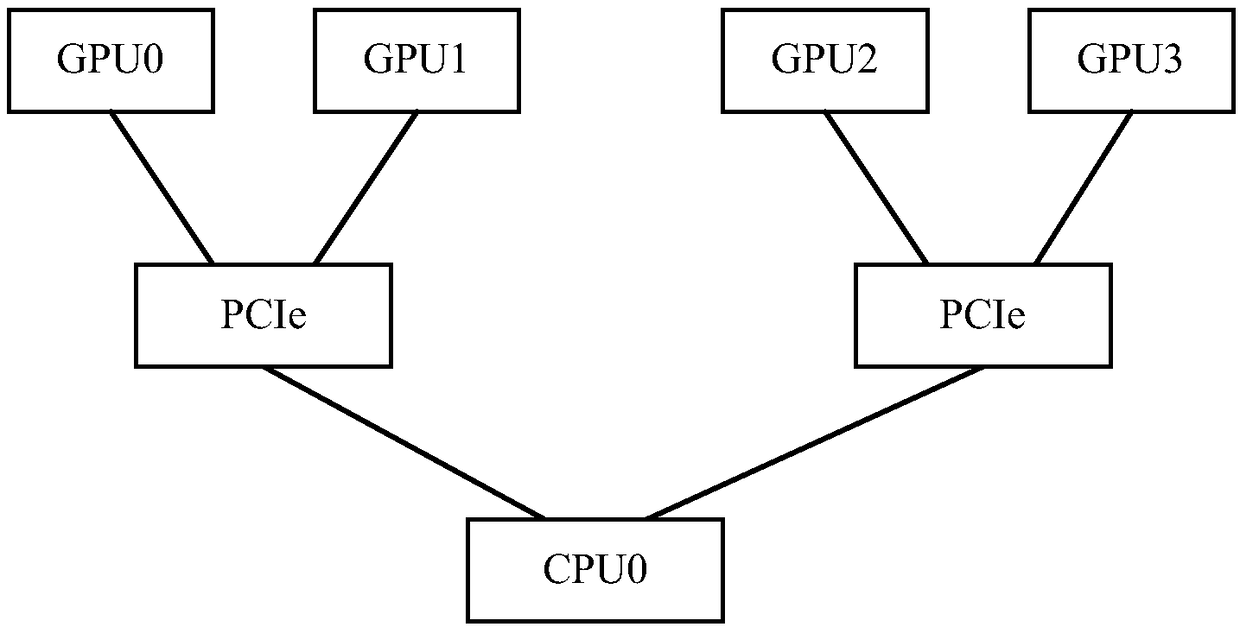

Active | CN108229687A | Reduce waiting time | Shorten the time | Mathematical models | Ensemble learning | Data set | Electric equipment

The invention discloses a data processing method. The method includes: loading different training data sets onto a plurality of GPUs; controlling the GPUs to run in parallel so as to train on the received training data sets, and storing the modification parameters generated in the reverse process in the corresponding video memories; and, while the GPUs are training in parallel on the received training data sets, controlling the GPUs to exchange the modification parameters that have been generated by training but not yet exchanged. Because part of the modification parameters generated by each GPU is exchanged to the other GPUs while training is still in progress, the waiting time of the GPUs is shortened, the time consumed in each round is shortened, and the time consumed by the whole deep learning training is shortened in turn. The acceleration ratio of the equipment is improved. The invention also discloses a corresponding data processing device and an electronic device.

Owner:TENCENT TECH (SHENZHEN) CO LTD

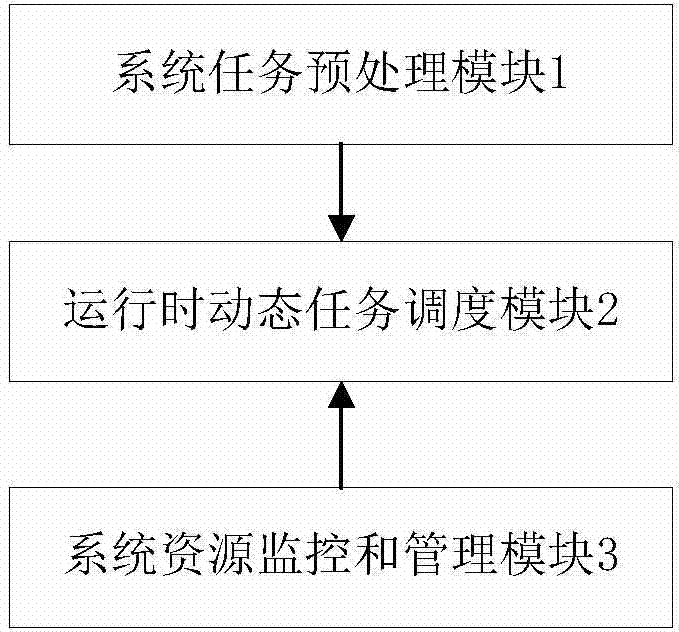

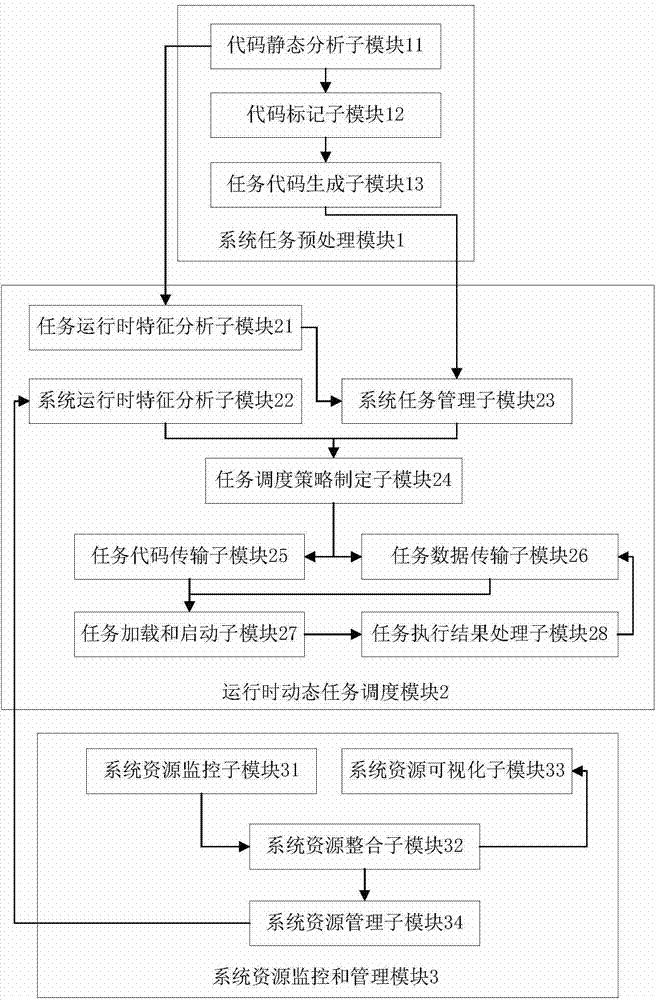

Multi-task runtime collaborative scheduling system under heterogeneous environment

Active | CN103699432A | Reduce intervention | Reduce difficulty and task load | Multiprogramming arrangements | System of record | High energy

The invention discloses a multi-task runtime collaborative scheduling system under a heterogeneous environment. The system comprises a system task preprocessing module, a runtime dynamic task scheduling module and a system resource monitoring and managing module, wherein the system task preprocessing module is used for performing static analysis and marking on a code and generating a task code for performing collaborative scheduling by taking a thread as a unit; the system resource monitoring and managing module is used for monitoring, tidying and recording the use condition of system resources, and providing to the runtime dynamic task scheduling module for performing system runtime feature analysis after processing; the runtime dynamic task scheduling module is used for receiving and managing the task code generated by the system task preprocessing module as well as loading and executing the corresponding task according to the system runtime information received from the system resource monitoring and managing module. By implementing the multi-task runtime collaborative scheduling system, the existing program can be fast transferred to the multi-core-many-core heterogeneous environment under the condition of ensuring higher speed-up ratio, higher energy efficiency ratio and higher system resource utilization ratio.

Owner:HUAZHONG UNIV OF SCI & TECH

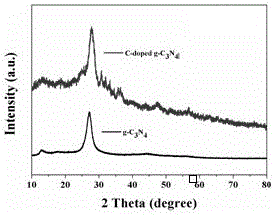

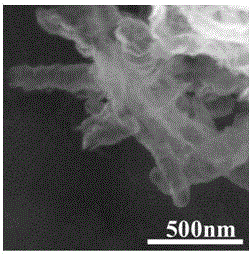

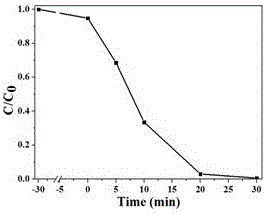

Carbon-doped graphite-phase carbon nitride nanotube and preparation method thereof

Active | CN106744745A | Thin wall | Various shapes | Material nanotechnology | Nitrogen and non-metal compounds | Polystyrene | Photocatalytic degradation

The invention discloses a carbon-doped graphite-phase carbon nitride nanotube and a preparation method thereof. A preparation process of the carbon-doped graphite-phase carbon nitride nanotube comprises the steps of firstly, dispersing melamine into an ethanol solution of aminopropyl trimethoxysilane, carrying out a hydrothermal reaction, and then carrying out centrifugal drying to obtain solid powder; mixing (3-mercaptopropyl) trimethoxysilane with tetraethoxysilane and evenly stirring, adding a mixed solution of ethanol and water into the mixture and stirring again, and carrying out centrifugal separation to obtain a modified polystyrene (MPS)-modified SiO2 solution; adding the solid powder of the pretreated melamine into the MPS-modified SiO2 solution, stirring, then centrifuging, drying, and calcining to obtain a product; etching the product by using a hydrogen fluoride (HF) solution to obtain the carbon-doped graphite-phase carbon nitride nanotube. The preparation process of the carbon-doped graphite-phase carbon nitride nanotube is novel, convenient and fast, and high in controllability; the obtained nanotube has the advantages of being more uniform in size, thinner in tube wall, better in conductivity, excellent in photocatalytic property, and the like; the carbon-doped graphite-phase carbon nitride nanotube can be used for constructing multiple heterostructures, and has good application potential in the aspects of photocatalytic degradation of organic pollutants, water photolysis for hydrogen production, and the like.

Owner:UNIV OF JINAN

Convolutional neural network channel self-selection compression and acceleration method based on knowledge migration

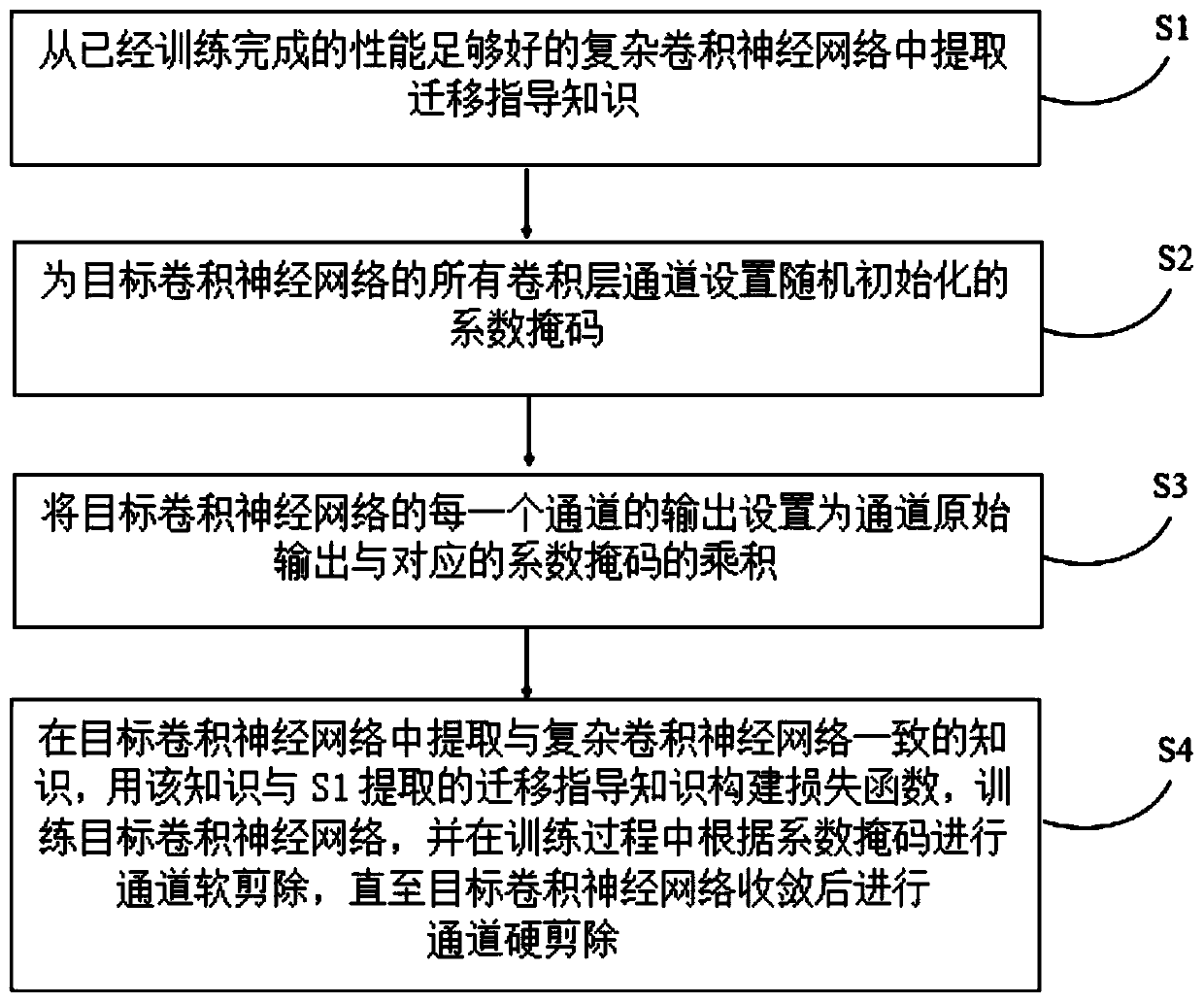

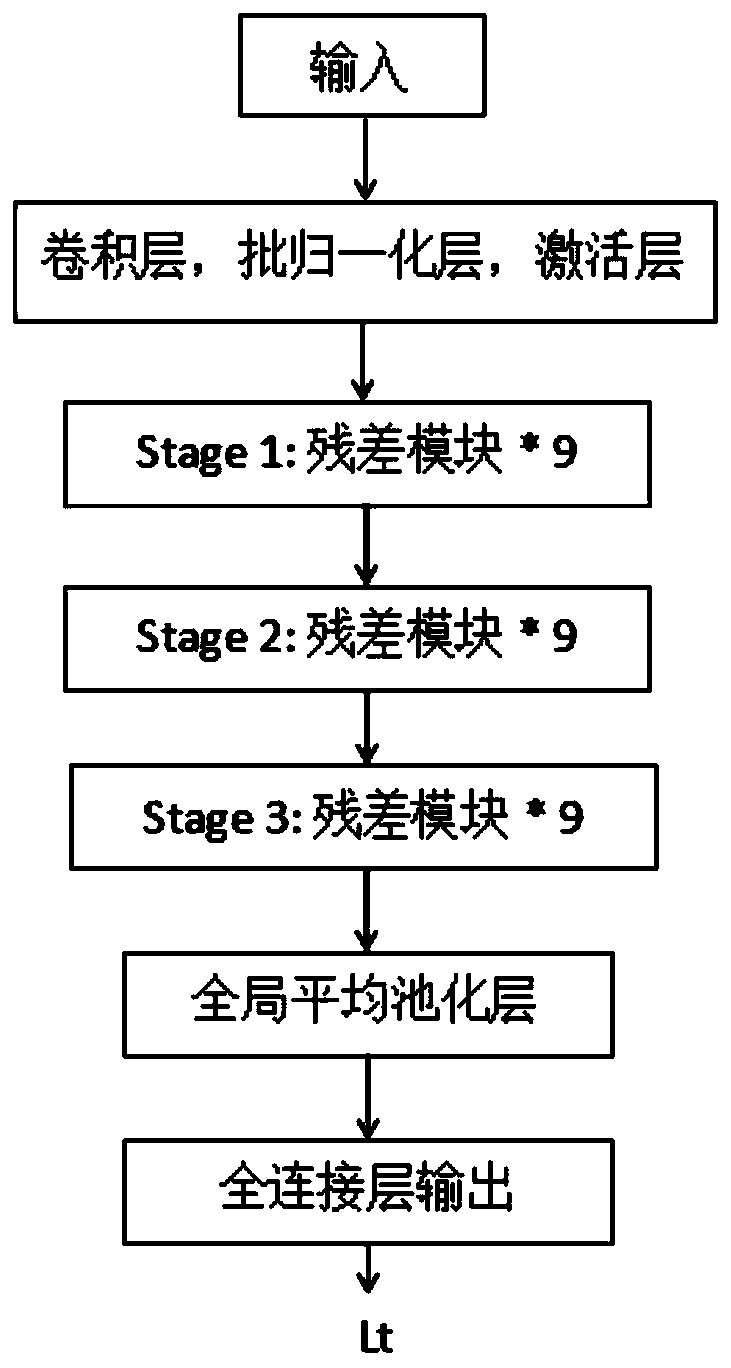

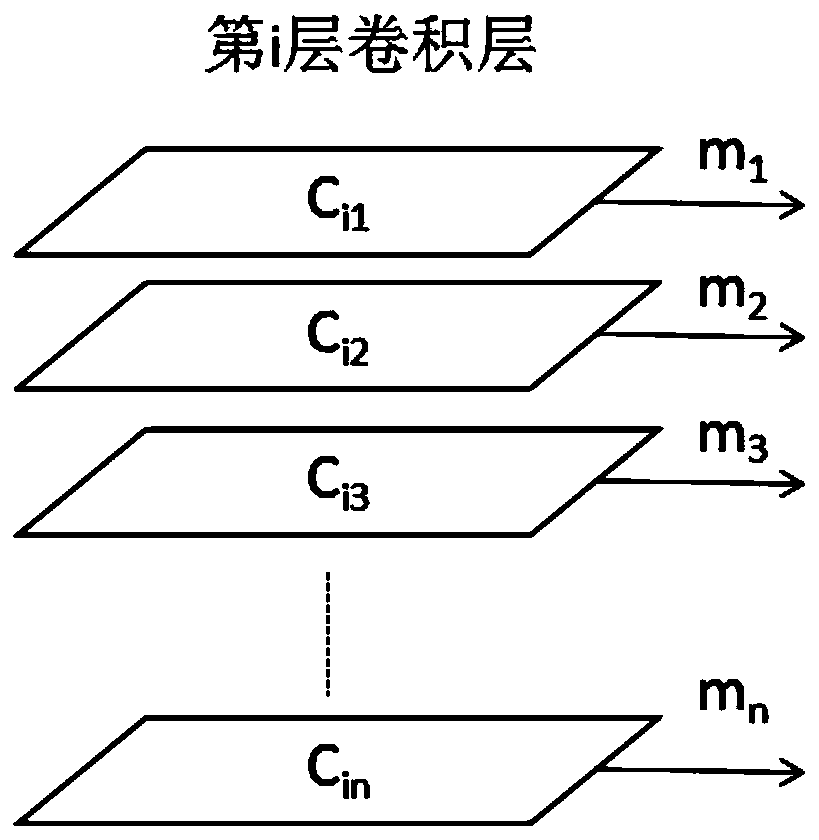

Inactive | CN109993302A | High compression ratio and speed-up ratio | Reserved expression capacity | Neural architectures | Neural learning methods | Convolution | Compression ratio

The invention discloses a convolutional neural network channel self-selection compression and acceleration method based on knowledge migration. The method comprises the following steps: S1, extracting migration guidance knowledge from a trained complex convolutional neural network CN1; S2, setting randomly initialized coefficient masks for all convolutional layer channels of the target convolutional neural network ON1; S3, setting the output of each channel of the target network as the product of the original output of the channel and the corresponding coefficient mask; and S4, optimizing the target network under the guidance of the migration guidance knowledge, carrying out channel soft cutting according to the coefficient mask, and carrying out channel hard cutting once the target network converges. With this method the network automatically selects the channels to be cut off, so manual selection is omitted; the soft cut-off operation and the knowledge migration method preserve the expression capacity of the network and improve its generalization performance, so that a higher compression ratio and a higher acceleration ratio are achieved.

Owner:SOUTH CHINA UNIV OF TECH

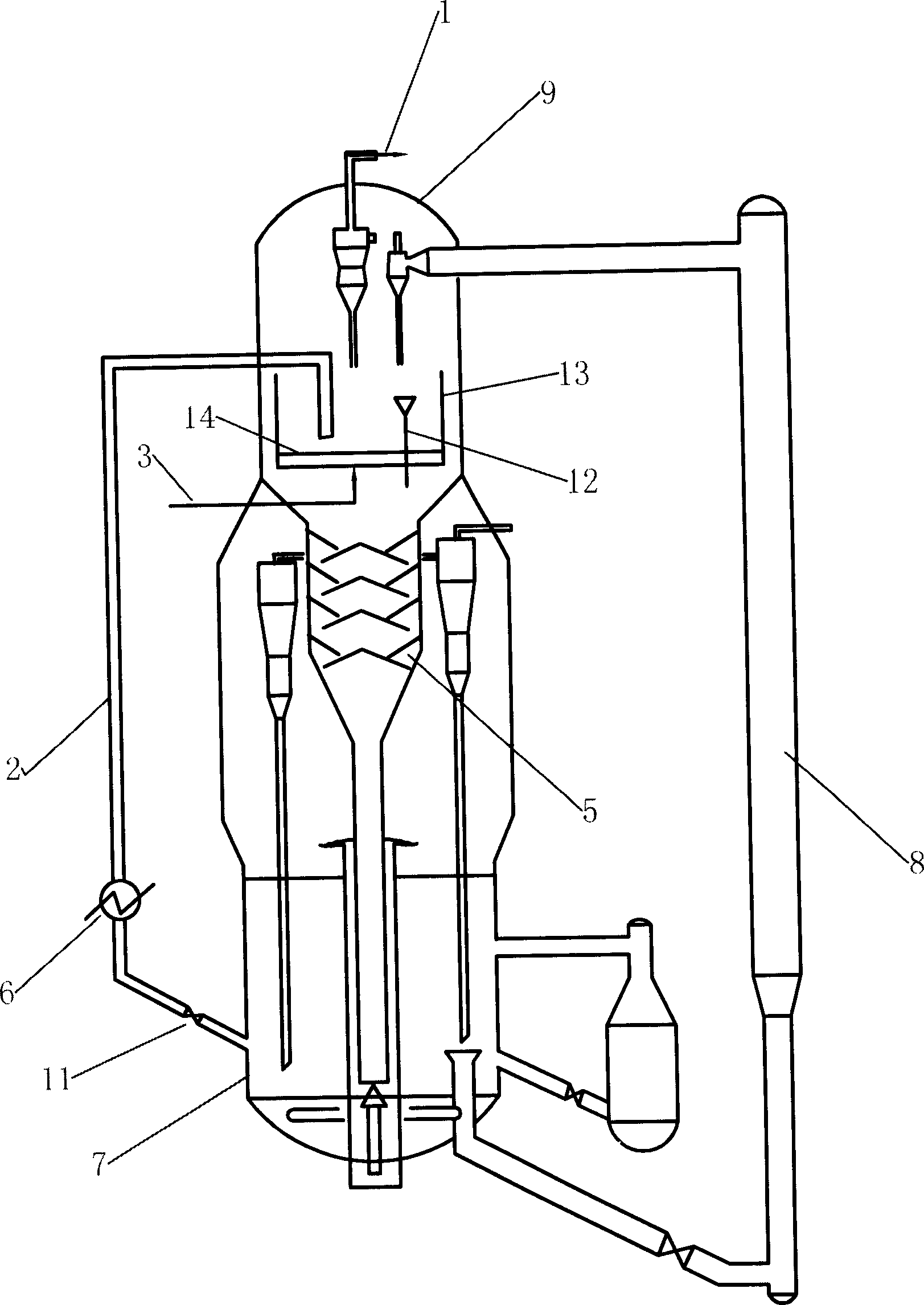

Method and apparatus for catalytic modification of poor gasoline

Inactive | CN1470605A | Reduce sulfur content | Volume content reduction | Catalytic cracking | Gasoline | Lower grade

The present invention discloses a method for catalytically upgrading low-grade gasoline. The method includes a fluidized catalytic cracking process and is characterized in that part of the regenerated catalyst is led out of the regenerator, passed through a catalyst conveying pipe and injected into the bed reactor above the stripper zone of the settler; the low-grade gasoline is fed into the bed reactor and brought into contact with the catalyst bed to carry out the catalytic upgrading treatment. The olefin volume content of the upgraded gasoline can be reduced by 20-50%, and the sulfur content can be reduced by 10-35%.

Owner:CHINA PETROCHEMICAL CORP +1

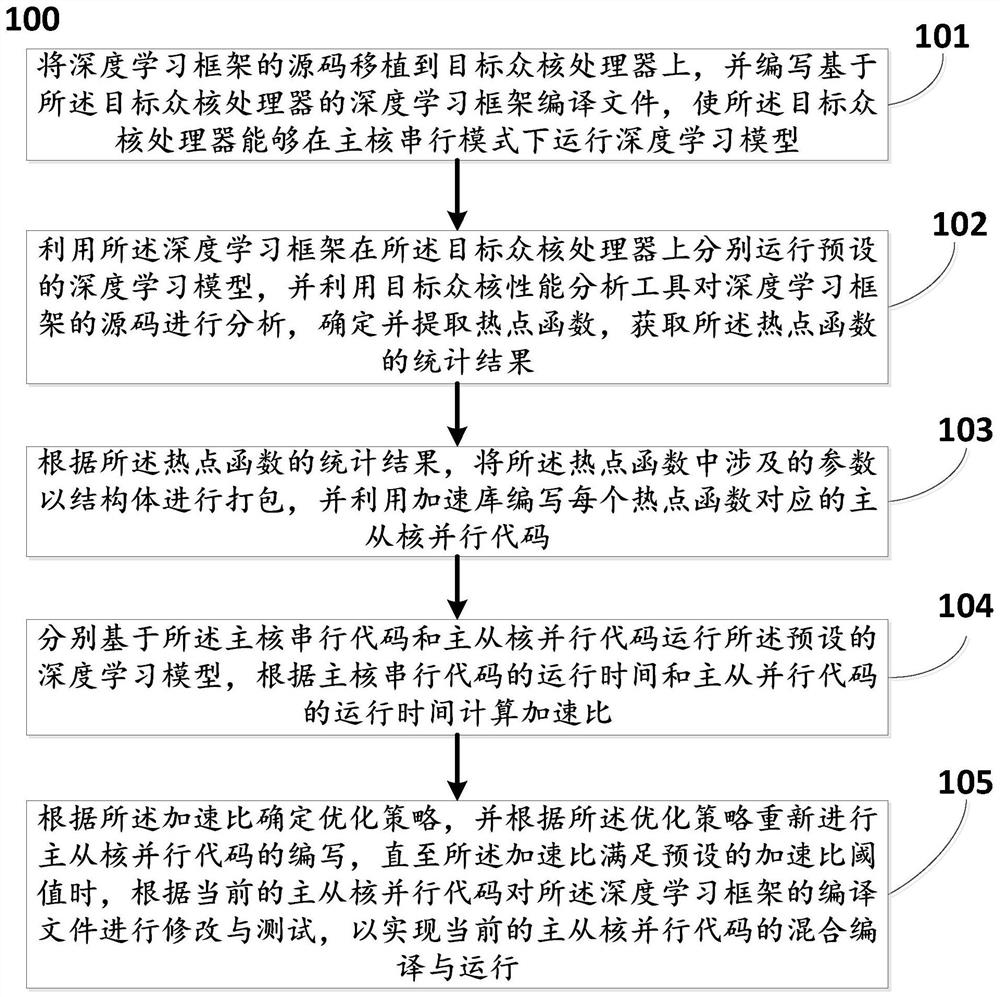

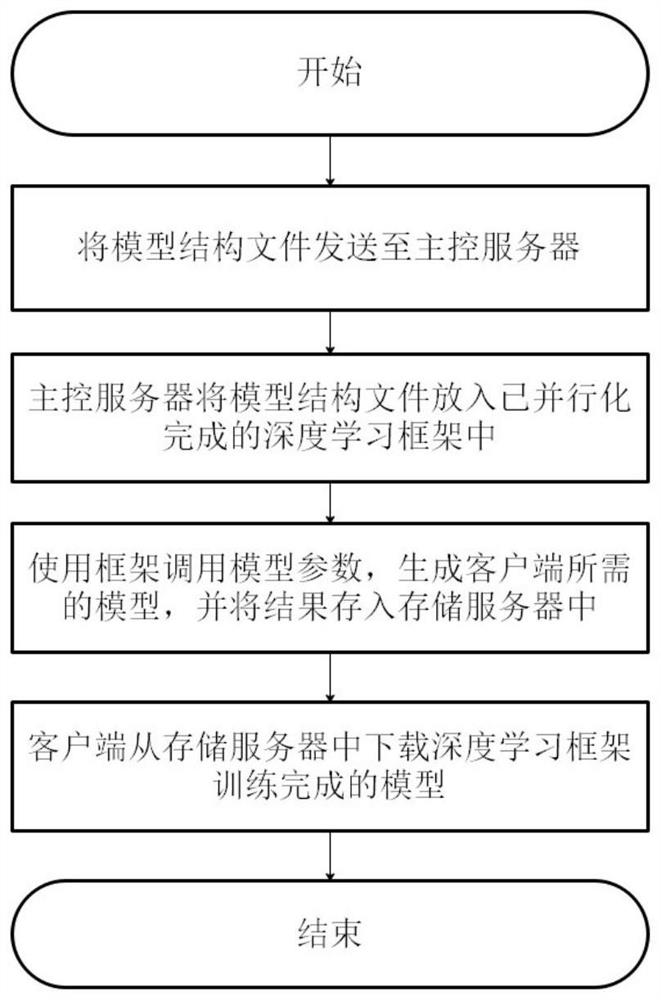

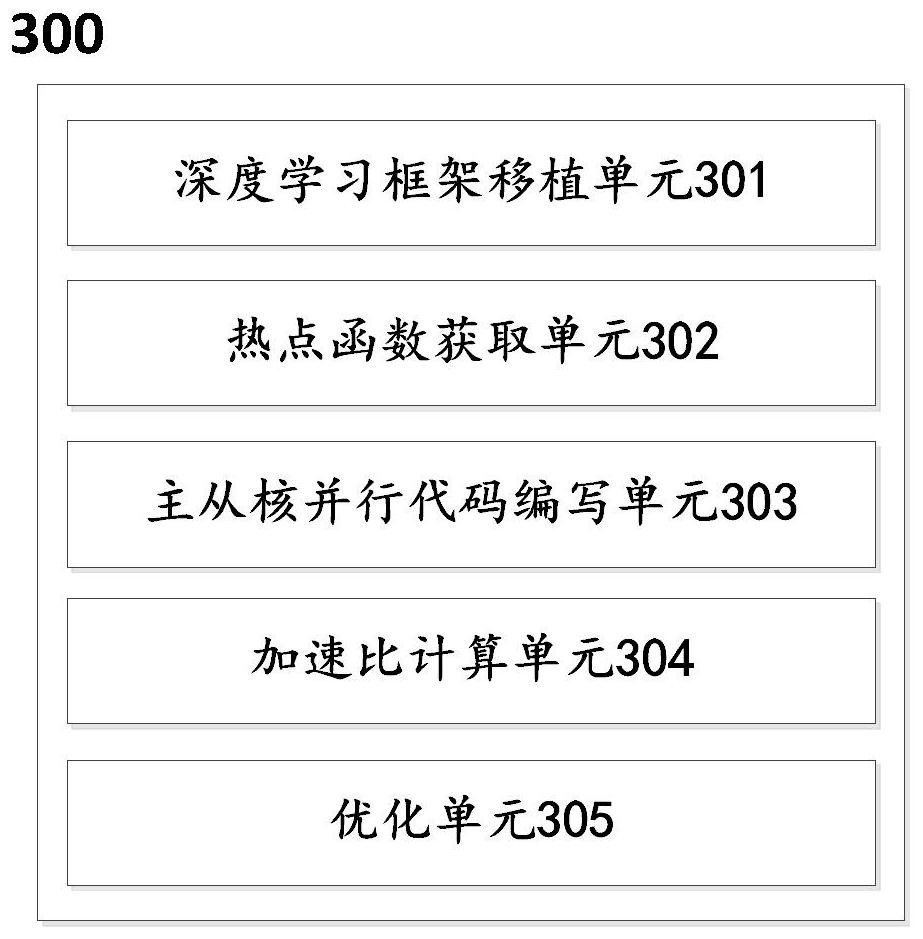

Deep learning framework transplanting and optimizing method and system based on target many-core

Active | CN111667061A | Guaranteed correctness | High speedup | Energy efficient computing | Physical realisation | Algorithm | Theoretical computer science

The invention relates to a deep learning framework transplanting and optimizing method and system based on a target many-core. In the transplanting process, the source code of a deep learning framework is transplanted to a target many-core machine, and the framework is modified and compiled according to the compiling instructions of the target many-core machine so that it meets the operating conditions of that machine. The acceleration optimization process comprises the following steps: using the framework to run a deep learning based functional model on domestic many-cores; analyzing the code with a target many-core performance analysis tool to confirm and extract the hotspot functions; analyzing and testing the features and function parameters of the hotspot functions; accelerating the hotspot functions with a parallel acceleration library; and determining an optimization strategy. The speed-up ratio of the framework is thereby improved while its correctness is ensured. The compiled files of the deep learning framework are modified and tested according to the current master-slave core parallel code, so as to realize hybrid compilation and operation of that code.

Owner:OCEAN UNIV OF CHINA +1

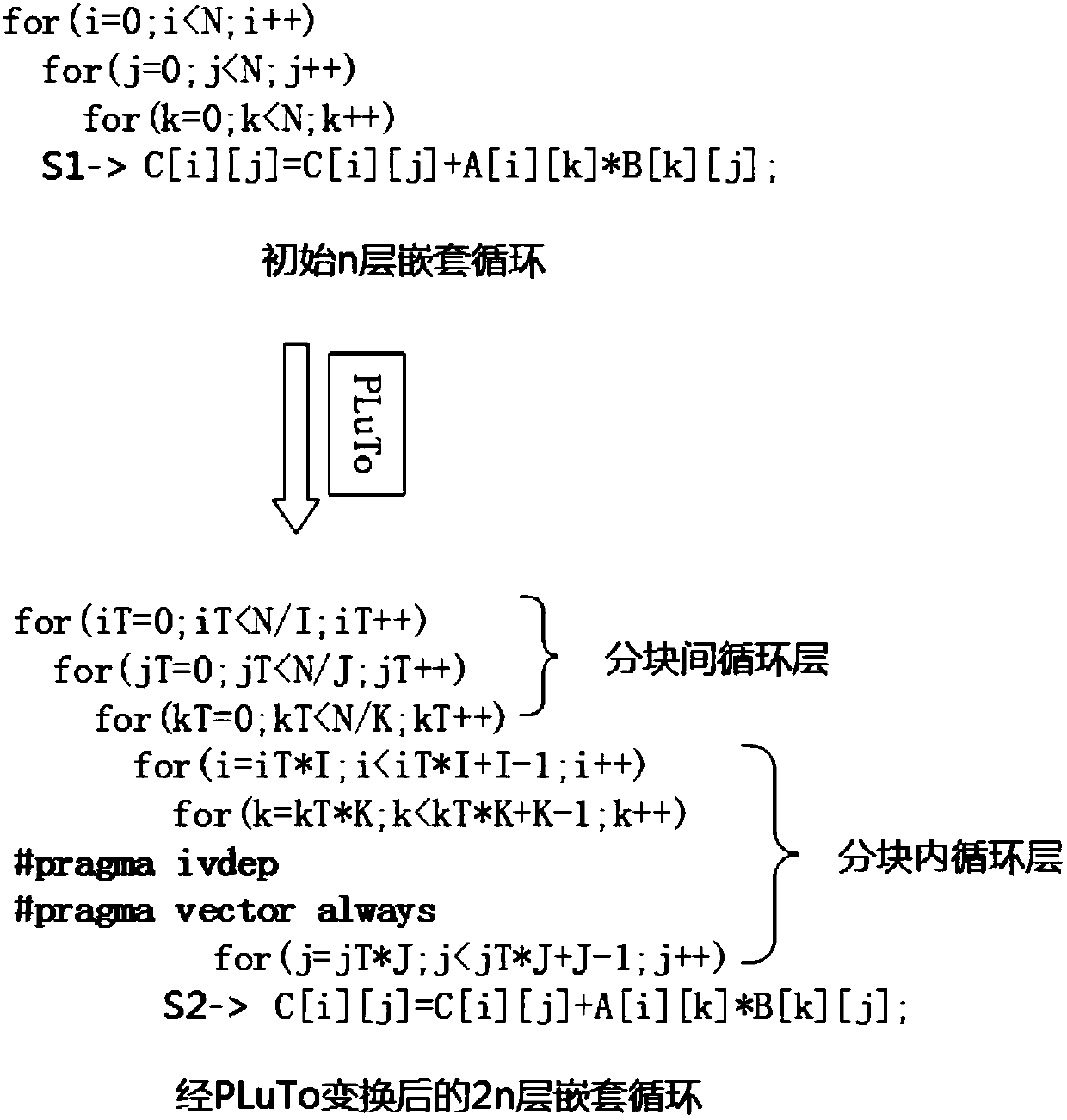

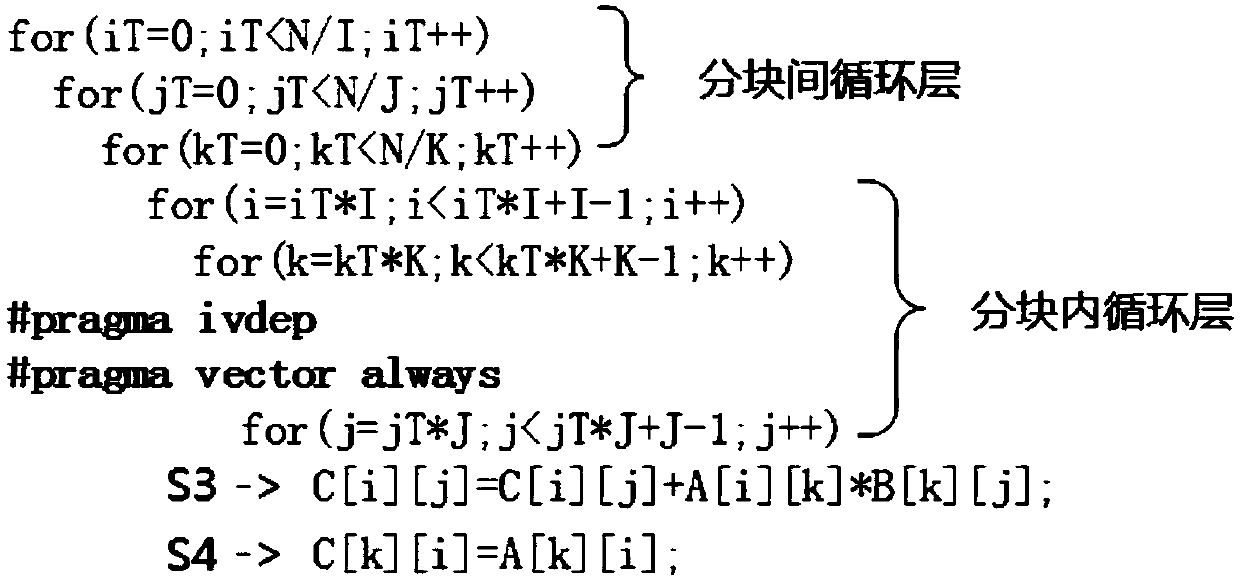

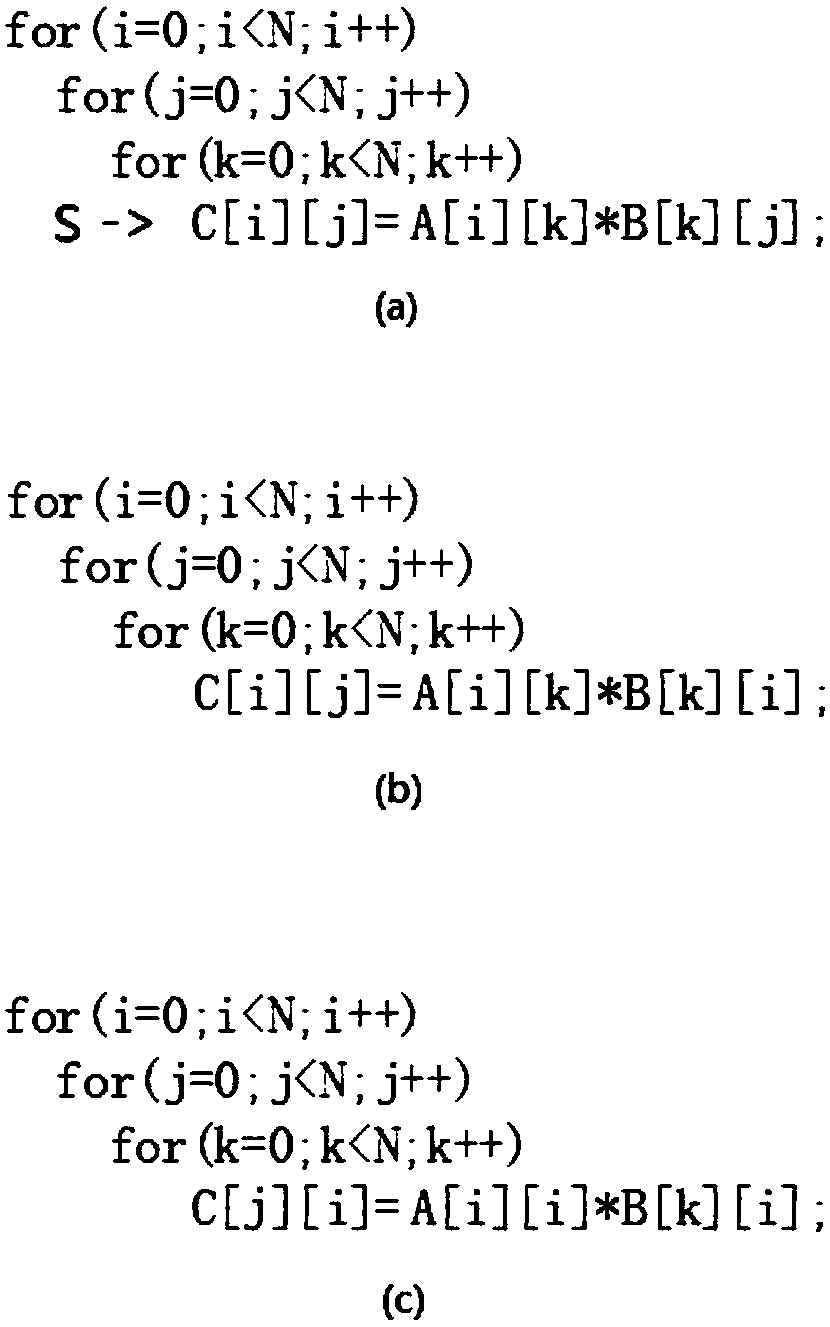

A machine learning-based loop tile size selection method

Active | CN106990995A | Improve performance | High speedup | Software engineering | Program control | Learning based | Algorithm

The invention provides a machine learning-based loop tile size selection method. The method comprises the steps of: constructing synthetic programs for original DOALL loops, using the full permutation of column subscript tuples so that the feature values of the n layers of nested loops in the synthetic programs comprehensively cover the loops found in the original program and in actual application programs; extracting feature values from the innermost n layers of the 2n layers of nested loops obtained by converting the n layers of nested loops, and obtaining the optimal tile size vector of the n layers of nested loops by a global search method, so as to form a neural network training sample set; and obtaining an optimal tile size prediction model through training and performance analysis, which is used to predict tile sizes for the DOALL loops in an actual application program. By combining synthetic program construction and feature value extraction with a machine learning process, the method selects loop tile sizes that perform better than those of the prior art.

Owner:西安汉格尚华网络科技有限公司
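The conversion of n nested loops into 2n nested loops mentioned above is ordinary loop tiling. The sketch below tiles a three-deep matrix multiplication into six loops; the kernel, the single shared `tile` parameter and the function name are assumptions for illustration, and no tile size prediction model is shown.

```python
def matmul_tiled(a, b, tile):
    """Multiply matrices a (n x m) and b (m x p) with all three loops tiled.
    The three outer loops walk over tiles; the three inner loops walk inside one."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0] * p for _ in range(n)]
    for ii in range(0, n, tile):
        for kk in range(0, m, tile):
            for jj in range(0, p, tile):
                # Work on one tile; min() handles sizes not divisible by `tile`.
                for i in range(ii, min(ii + tile, n)):
                    for k in range(kk, min(kk + tile, m)):
                        a_ik = a[i][k]
                        for j in range(jj, min(jj + tile, p)):
                            c[i][j] += a_ik * b[k][j]
    return c
```

The result is the same for every tile size; only the memory access order changes. In a compiled language the best `tile` depends on cache sizes and loop shape, which is the quantity a tile size selection method tries to predict.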

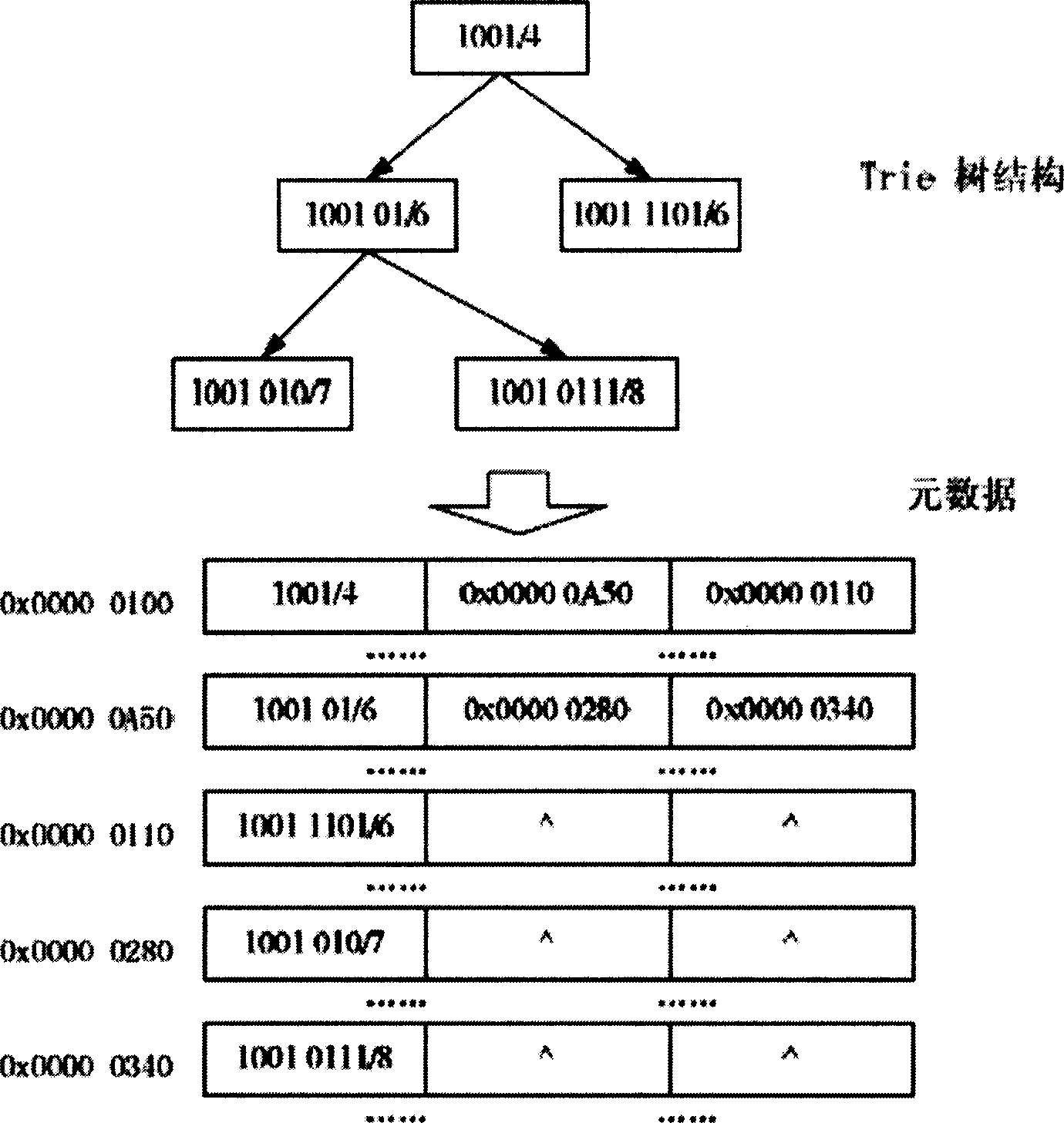

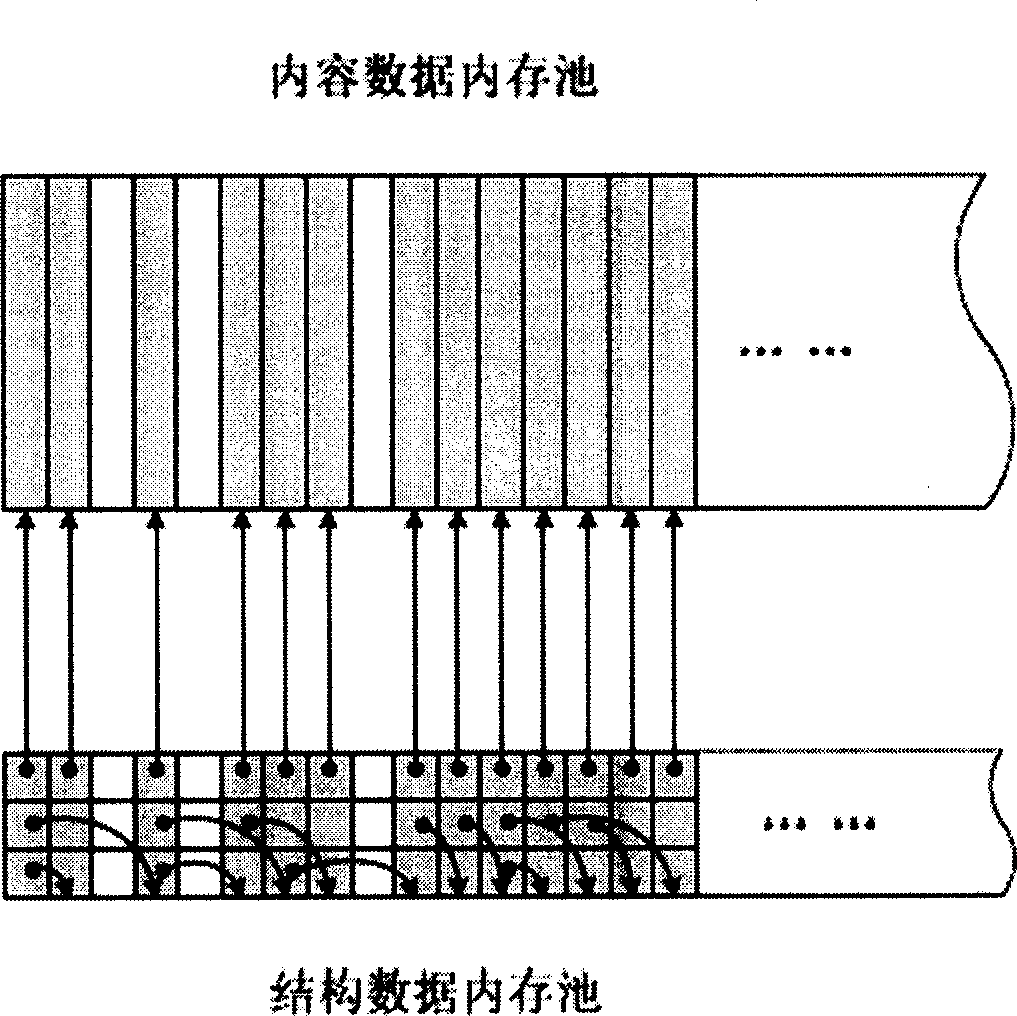

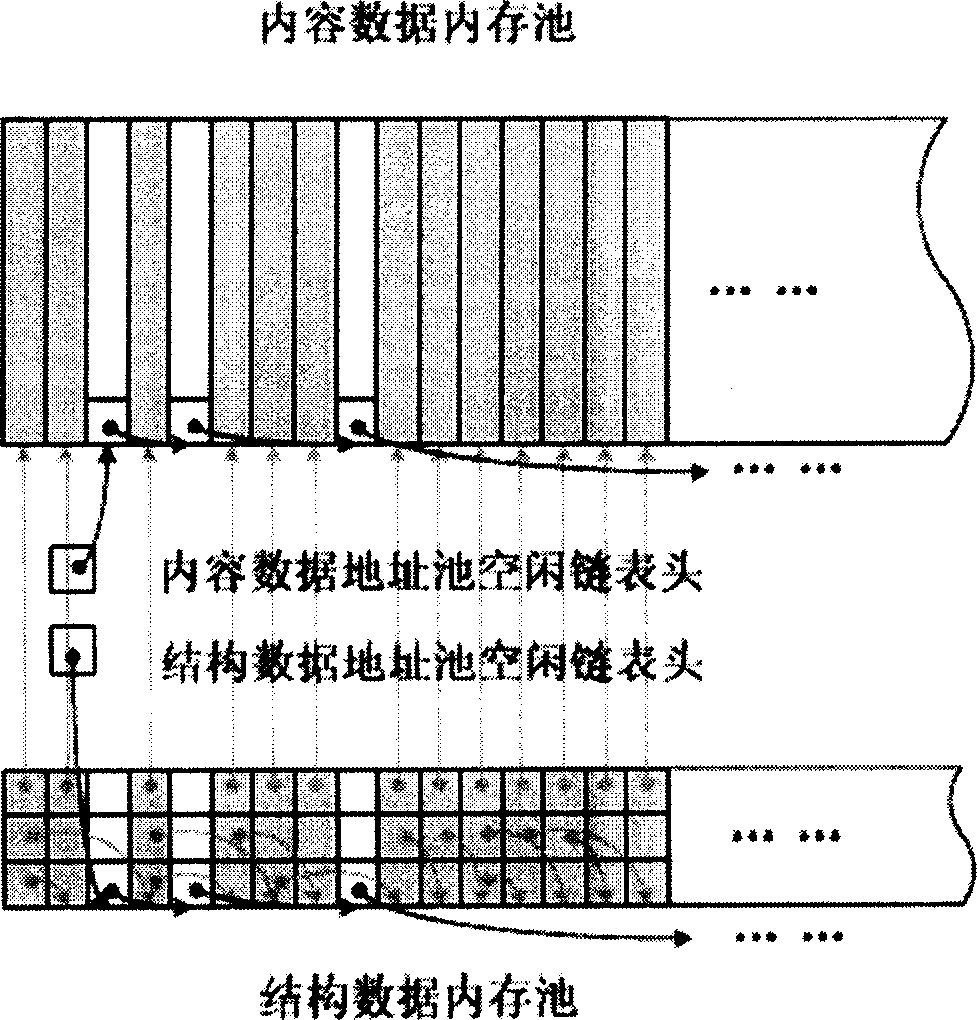

Large data-set task node transferring method based on content and relation separation

Inactive | CN1897570A | Meets linear computational complexity | Extend the movement time | Data switching networks | Special data processing applications | Memory address | Data set

The invention features the following points: sequentially building a content data memory pool that holds routing information and a configuration data memory pool that holds the relations between routing items; obtaining, from the difference between the first addresses of the two memory pools, a mapping between their storage addresses; building a separate idle memory linked list for each memory pool; and, when the routing table is moved to an independent memory area, building a content memory pool and a configuration memory pool in the new memory area according to the size of each memory pool, and rebuilding the mapping relation and the idle memory linked lists in the new memory area. The invention is used to control the memory holes that arise during routing maintenance.

Owner:TSINGHUA UNIV

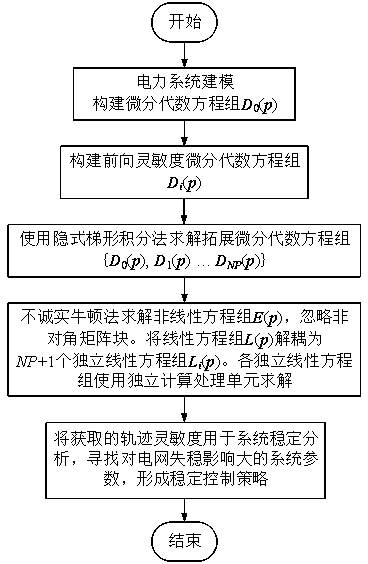

Method for obtaining power system parallelization track sensitivity

Active | CN104036118A | High speedup | Improve parallel efficiency | Special data processing applications | Systems analysis | Structure property

The invention discloses a method for obtaining power system parallelization track sensitivity. Track (trajectory) sensitivity has important application value in power system dynamic performance assessment, stability verification and control, and related fields. In large-scale power system analysis, however, its calculation time is excessively long, so it cannot be applied effectively in existing power systems that rely on real-time control. To solve this problem, a novel method is put forward that obtains power system track sensitivity more quickly by means of parallel computing. By exploiting the structural properties of the Newton iteration matrix in forward sensitivity analysis, a special dishonest Newton iteration strategy is designed so that the linear equation systems in the Newton iteration can be decoupled and deployed on different computing units. Track sensitivity computation is thereby parallelized and computational efficiency is greatly improved, and the method can be used in power system stability analysis, control and related fields.
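The general idea behind a "dishonest" Newton iteration is to reuse an old Jacobian instead of re-evaluating it at every step. The scalar sketch below shows only that generic idea, with the derivative frozen at the starting point; it is not the patent's decoupling strategy, and the name `dishonest_newton` and its parameters are assumptions made for illustration.

```python
def dishonest_newton(f, dfdx, x0, tol=1e-12, max_iter=100):
    """Solve f(x) = 0 with the derivative evaluated once and then frozen."""
    slope = dfdx(x0)  # "dishonest": never updated after this point
    x = x0
    for _ in range(max_iter):
        step = f(x) / slope
        x -= step
        if abs(step) < tol:
            break
    return x


root = dishonest_newton(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.5)
print(root)
```

Freezing the derivative trades the quadratic convergence of a full Newton step for cheaper iterations. In the multi-dimensional case the frozen matrix can also be chosen with a structure that is easier to factor or split across processors, which is the kind of property the abstract refers to.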

Owner:ZHEJIANG UNIV

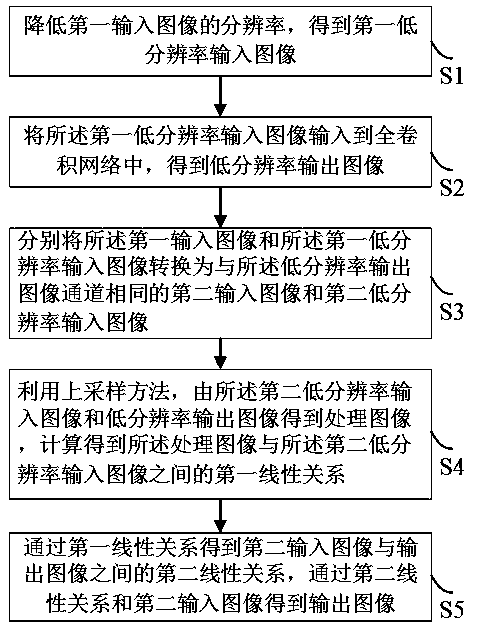

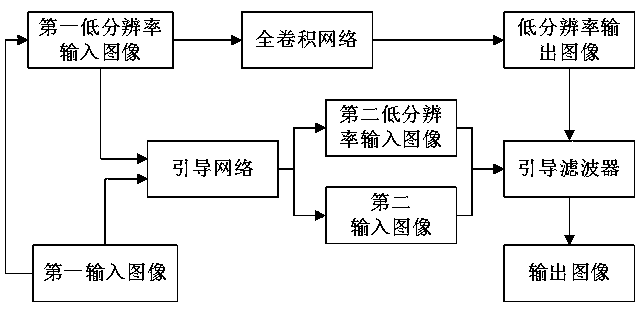

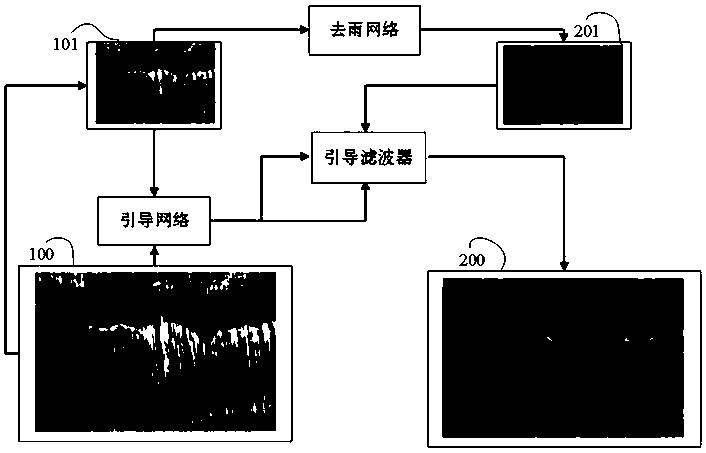

Image processing method and device based on full convolutional network, and computer equipment

Active | CN110211057A | Quality is not affected | Reduce resolution | Image enhancement | Image analysis | Imaging processing | Image resolution

The invention relates to an image processing method and device based on a full convolutional network, and computer equipment. The image processing method comprises the steps of: reducing the resolution of a first input image to obtain a first low-resolution input image, and inputting the first low-resolution input image into the full convolutional network to obtain a low-resolution output image; converting the first input image and the first low-resolution input image into a second input image and a second low-resolution input image, respectively; using an up-sampling method to obtain a processed image from the second low-resolution input image and the low-resolution output image, and calculating a first linear relation between the processed image and the second low-resolution input image; and obtaining a second linear relation between the second input image and the output image from the first linear relation, and obtaining the output image from the second linear relation and the second input image. The image processing method connects seamlessly with existing trained network frameworks, is general-purpose, and achieves an extremely high speedup ratio without affecting image processing quality.

Owner:WUHAN TCL CORP RES CO LTD

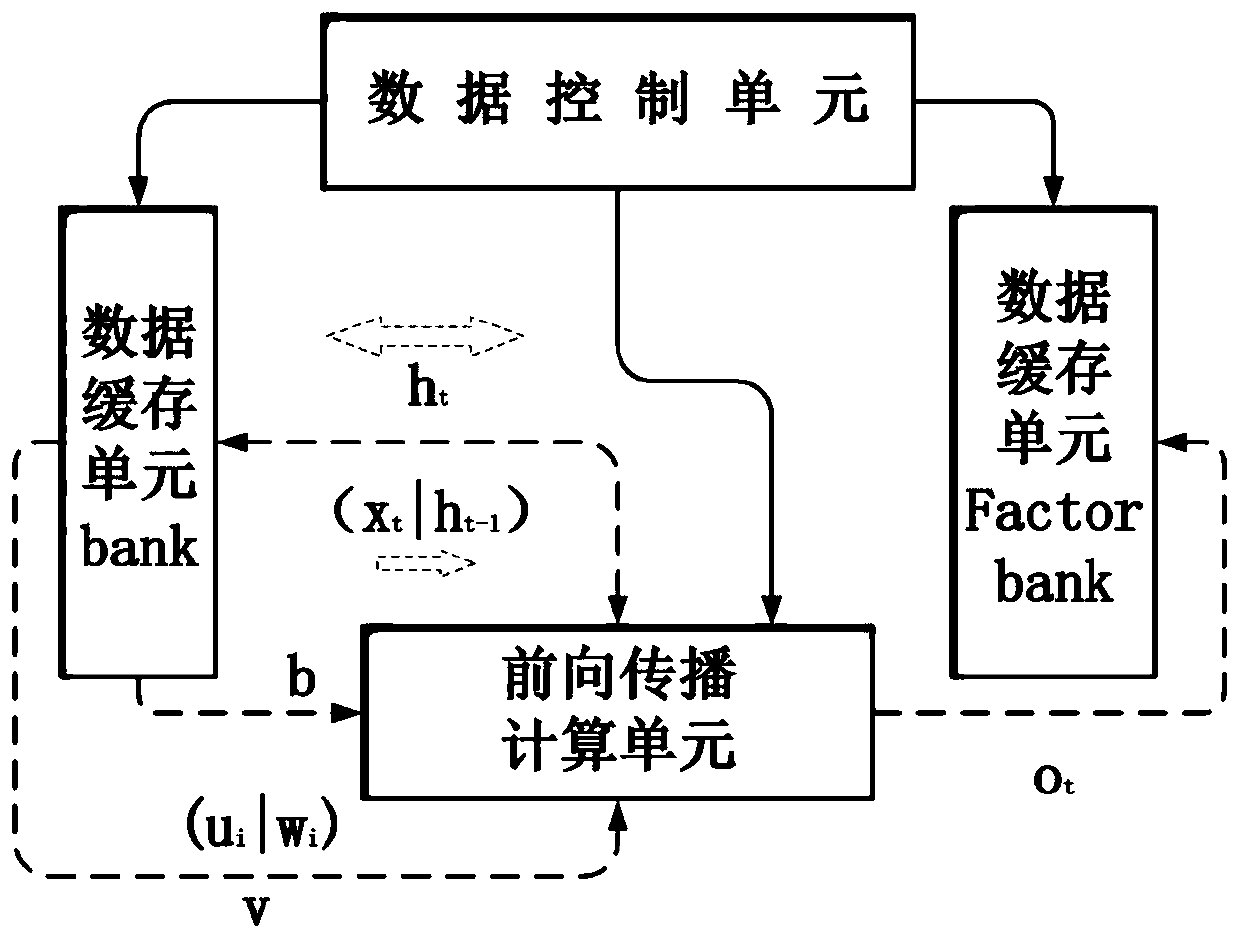

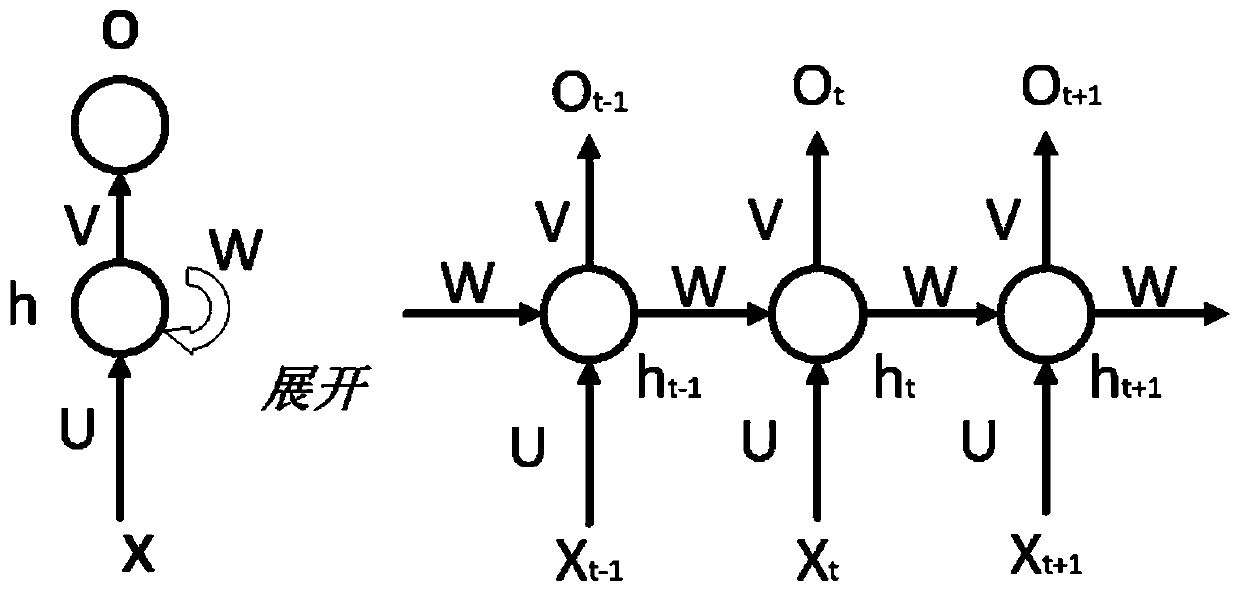

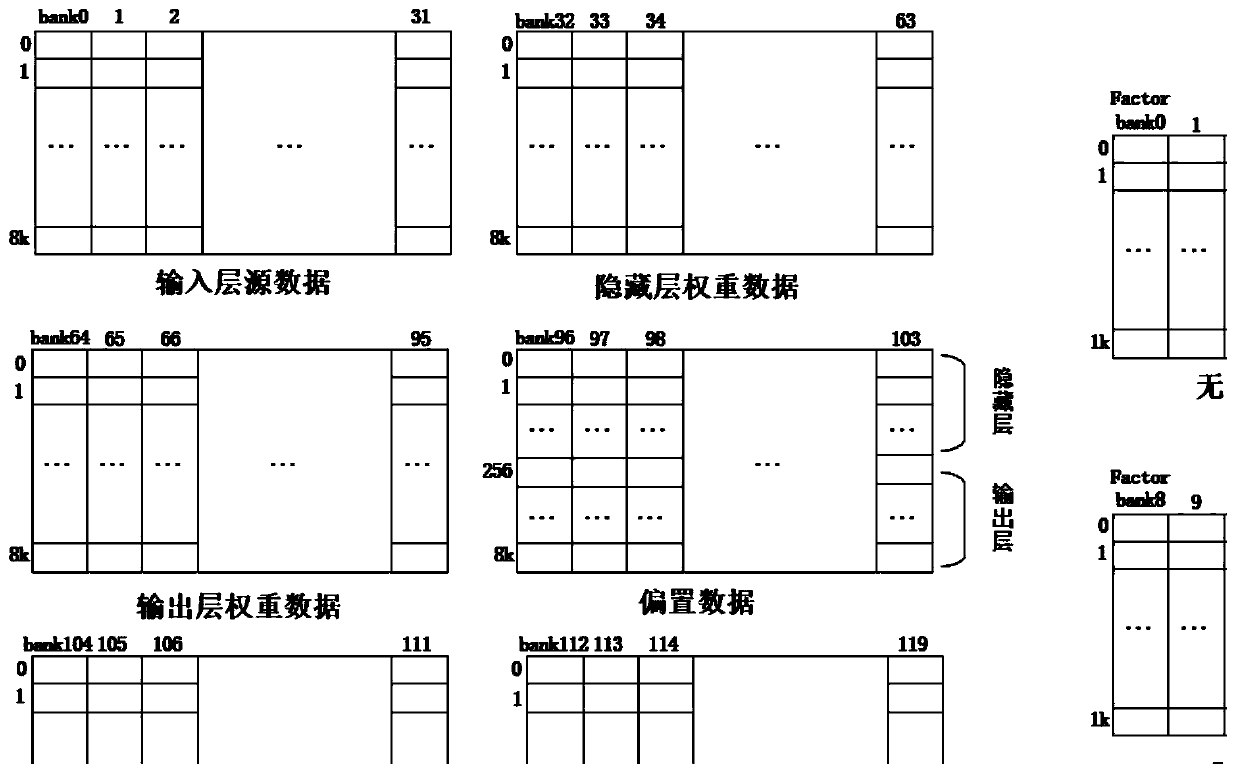

Hardware acceleration implementation system and method for RNN forward propagation model based on transverse pulsation array

Active | CN110826710A | Improve utilization efficiency | Improve scalability | Systolic arrays | Neural architectures | Hidden layer | Activation function

The invention discloses a hardware acceleration implementation system and method for an RNN forward propagation model based on a transverse pulsation (lateral systolic) array. The method comprises: first, configuring the network parameters and initializing the data and the lateral systolic array, with a blocking design adopted for the weights used in the calculation; partitioning the weight matrix used by the hidden layer by rows; performing the matrix-vector multiplication, vector summation and activation function operations to calculate the hidden layer neurons; using the obtained hidden layer neurons to perform matrix-vector multiplication, vector summation and activation function operations that generate the RNN output layer result; and finally, generating the output required by the RNN according to the configured time sequence length. In this method the hidden layer and the output layer are parallelized in multiple dimensions, which improves the pipelining of the calculation. The method also exploits the sharing of weight matrix parameters in the RNN and adopts the partitioned design to further increase the degree of parallelism. Flexibility, scalability, storage resource utilization and the speedup ratio are high, and the amount of computation is greatly reduced.
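The row-wise partitioning described above can be sketched in software as follows. This is a minimal sketch, assuming a standard hidden-state update of the form h_t = tanh(W_xh x_t + W_hh h_(t-1) + b); the abstract does not name the activation function, so `tanh` is an assumption, as are the names `rnn_hidden_step` and `block_rows`. Each row block is independent of the others, which is the property a hardware array can exploit.

```python
import math


def matvec_rows(weight_rows, vector):
    """Multiply a block of matrix rows by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weight_rows]


def rnn_hidden_step(w_xh, w_hh, bias, x, h_prev, block_rows):
    """Compute one hidden-state update, one block of weight rows at a time."""
    h_new = []
    for start in range(0, len(bias), block_rows):
        stop = start + block_rows
        # Each row block produces its own slice of the hidden state.
        from_input = matvec_rows(w_xh[start:stop], x)
        from_state = matvec_rows(w_hh[start:stop], h_prev)
        h_new.extend(
            math.tanh(p + q + b)
            for p, q, b in zip(from_input, from_state, bias[start:stop])
        )
    return h_new


w_xh = [[0.5, -0.2], [0.1, 0.3], [0.0, 0.7]]
w_hh = [[0.1, 0.0, 0.2], [0.0, 0.1, 0.0], [0.3, 0.0, 0.1]]
bias = [0.0, 0.1, -0.1]
print(rnn_hidden_step(w_xh, w_hh, bias, [1.0, 2.0], [0.0, 0.0, 0.0], block_rows=2))
```

Because no block reads another block's result within a time step, the block size only affects how the work is scheduled, not the computed hidden state.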

Owner:NANJING UNIV

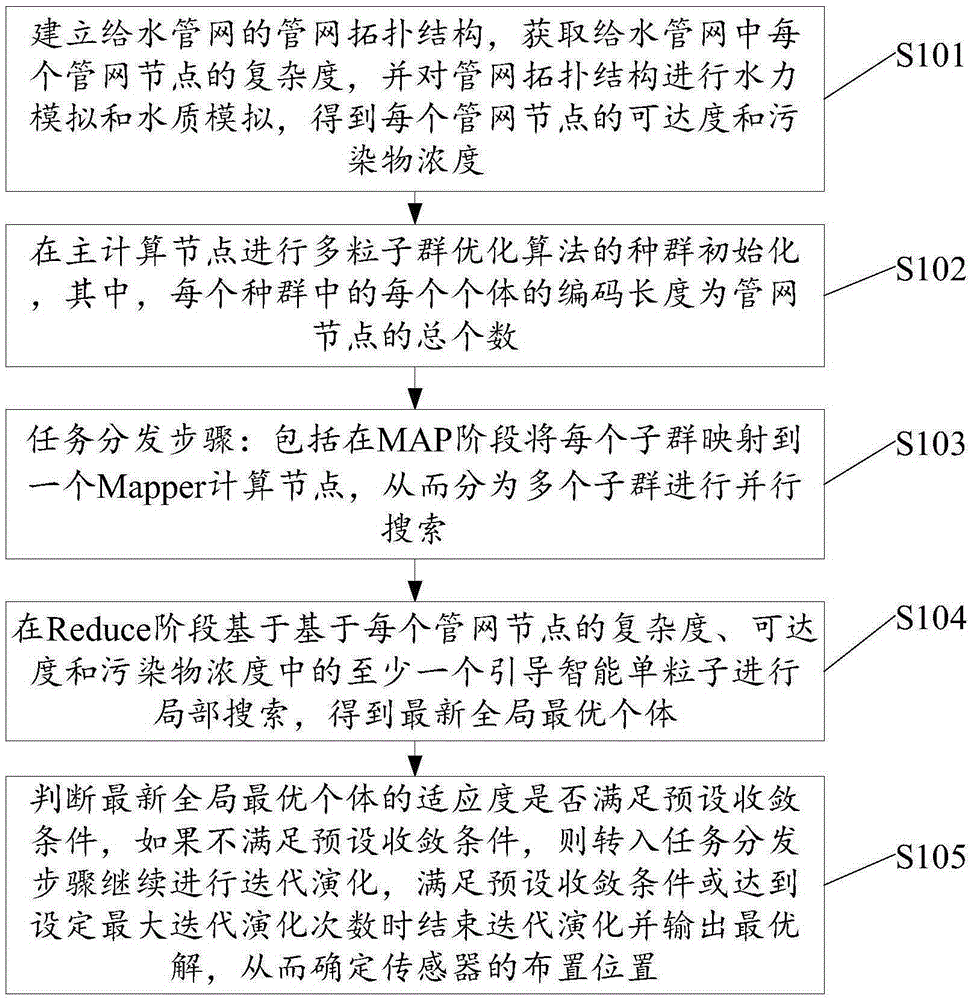

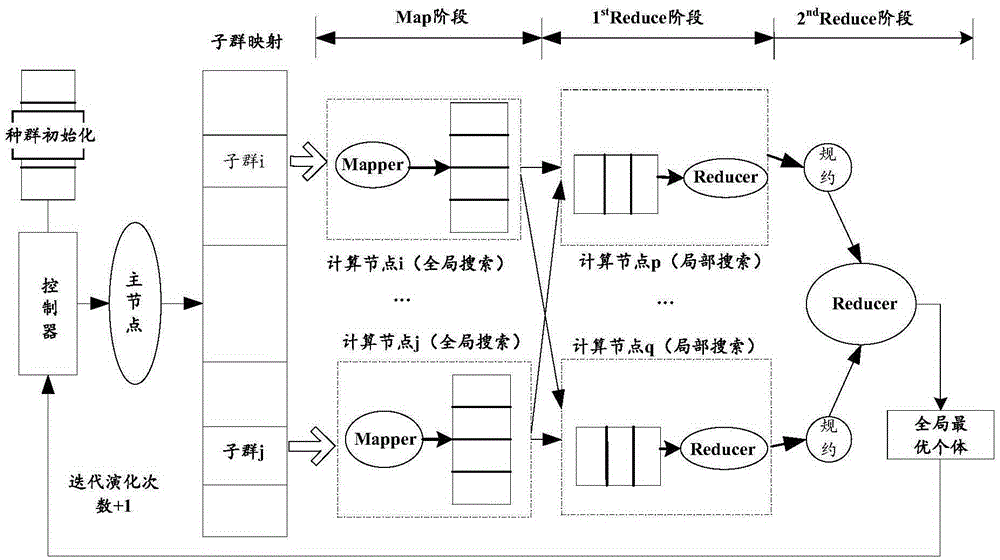

Water supply pipe network sensor arrangement optimization method based on multiple particle swarm optimization algorithm

Inactive | CN105426984A | Solve to maximize the monitoring effect | Avoid security risks | General water supply conservation | Forecasting | Engineering | Water quality modelling

The invention discloses a water supply pipe network sensor arrangement optimization method based on a multiple particle swarm optimization algorithm. The method includes: establishing a pipe network topology structure of the water supply pipe network, obtaining the complexity of each pipe network node, and performing hydraulic simulation and water quality simulation of the topology to obtain the accessibility and the pollutant concentration of each node; initializing the population of the multiple particle swarm optimization algorithm at a main computing node, and conducting global search in the Map stage; conducting local search in the Reduce stage to obtain the newest global optimal individual; and determining whether the fitness of the newest global optimal individual satisfies a preset convergence condition and, if it does not, continuing the iterative evolution by returning to the task distribution step. The method effectively solves the prior-art problem of long optimization times for water supply pipe network sensor arrangement, maximizes the monitoring effect (for example, detecting pollution events in the shortest time), and prevents the safety risks caused by drinking water pollution.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

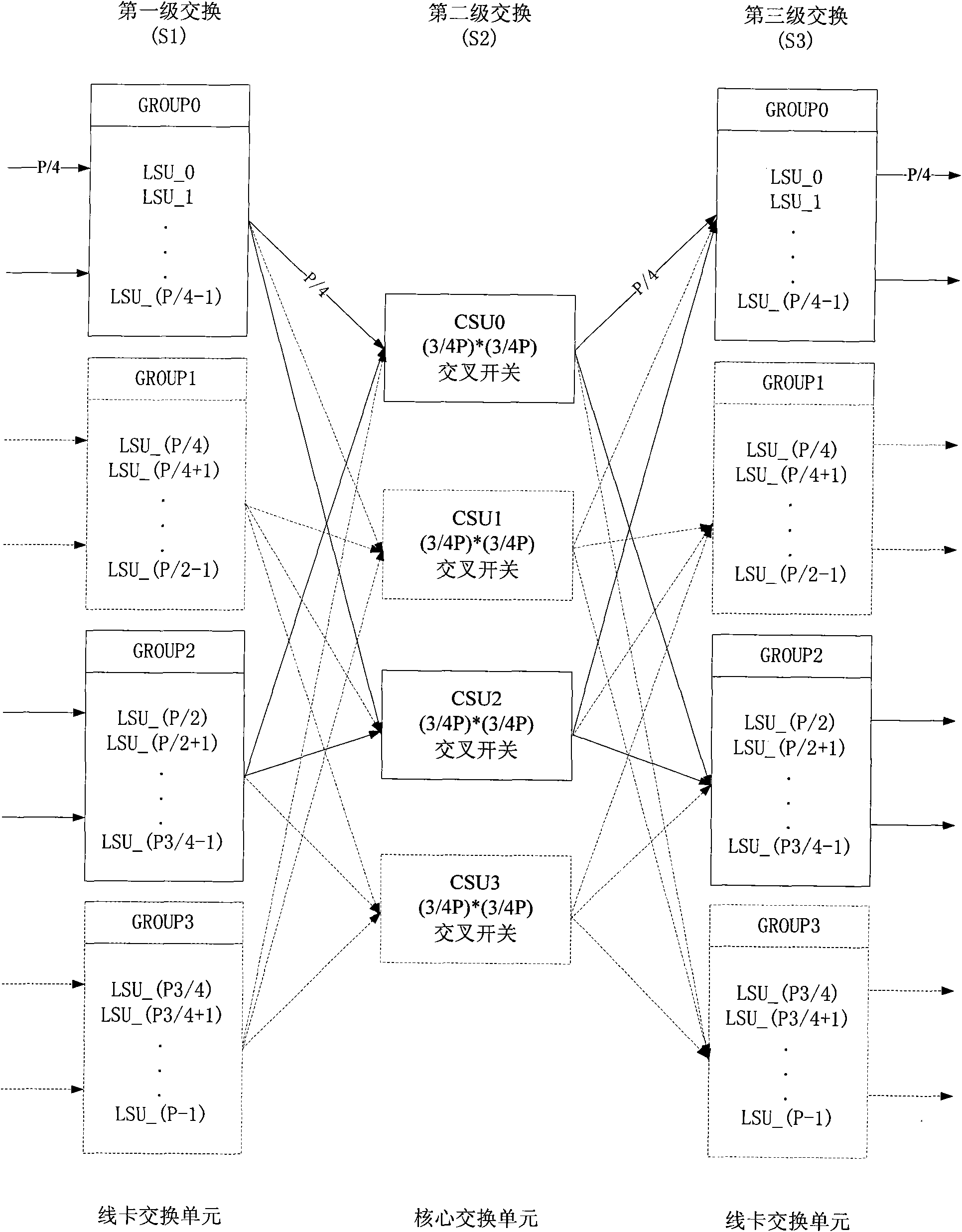

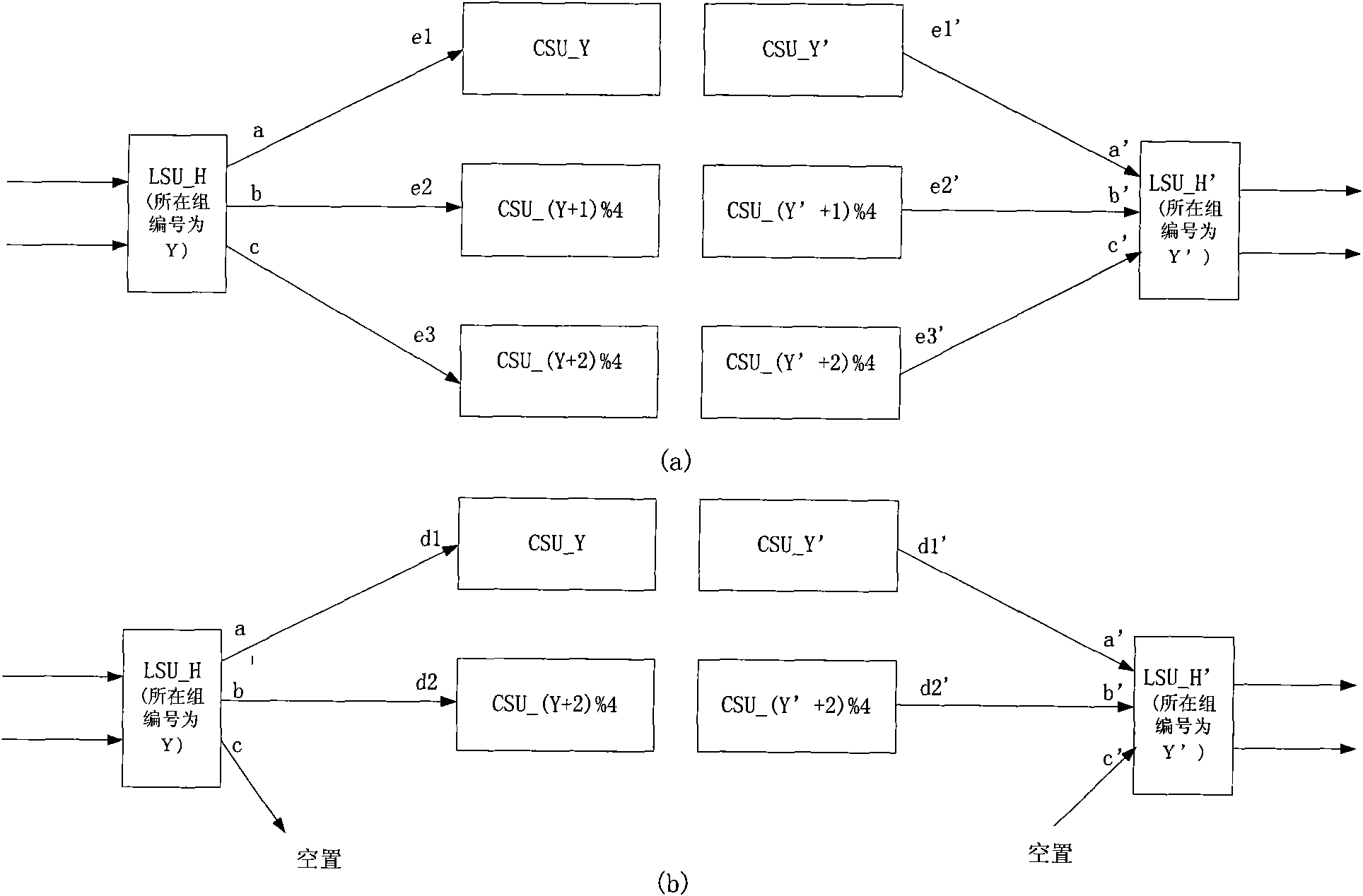

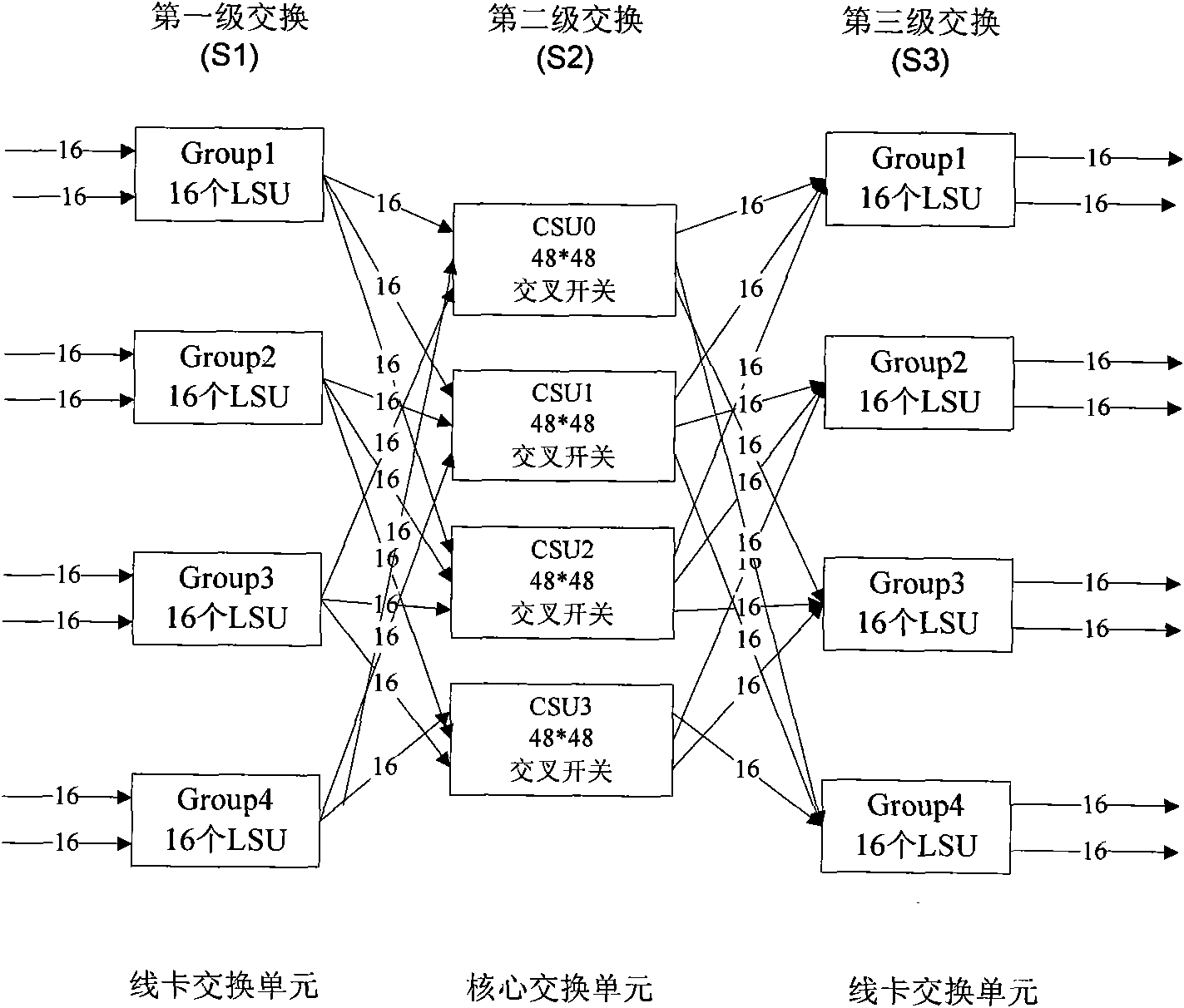

Switched network system structure with adjustable throughput rate

Inactive | CN101778044A | Simple structure | Easy size adjustment | Data switching networks | Crossbar switch | Exchange network

The invention discloses a switched network system structure with adjustable throughput rate. It aims to provide an expandable switched network system structure whose throughput rate is adjustable, so that the reliability, flexibility and controllability of the network can be improved. The technical scheme is as follows: the structure is an improved three-level indirect connection network that has a complete switched capacity mode and a binary switched capacity mode. In the complete switched capacity mode, the first level is formed by P 2*3 crossbar switches, the second level by four Q*Q (Q = 3P / 4) crossbar switches, and the third level by P 3*2 crossbar switches. Each line card is provided with one 2*3 crossbar switch and one 3*2 crossbar switch; the Q*Q crossbar switches of the second level are arranged on a core switching board of a router, and the core switching board is connected with the line cards through a back panel. When only the even-numbered or only the odd-numbered part is configured, the binary switched capacity mode is adopted. The structure of the switched network is simplified, its scale is easy to adjust, and the reliability of data switching is improved.
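The relation Q = 3P / 4 can be checked with simple port arithmetic. The sketch below assumes that "2*3" means 2 inputs and 3 outputs per first-level switch (and "3*2" the reverse at the third level), and that P is a multiple of 4 so that Q is an integer; the function name `stage_port_counts` is hypothetical.

```python
def stage_port_counts(p):
    """Count the ports at each level of the complete switched capacity mode."""
    if p % 4 != 0:
        raise ValueError("P must be a multiple of 4 so that Q = 3P/4 is an integer")
    q = 3 * p // 4
    return {
        "external_inputs": 2 * p,        # P first-level switches, 2 inputs each
        "level1_outputs": 3 * p,         # P first-level switches, 3 outputs each
        "level2_ports_per_side": 4 * q,  # four Q*Q switches
        "level3_inputs": 3 * p,          # P third-level switches, 3 inputs each
        "external_outputs": 2 * p,       # P third-level switches, 2 outputs each
    }


counts = stage_port_counts(8)
print(counts)
```

Under these assumptions the 3P first-level outputs exactly fill the 4Q = 3P second-level inputs, and the middle of the network carries 3 internal links for every 2 external ports.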

Owner:NAT UNIV OF DEFENSE TECH

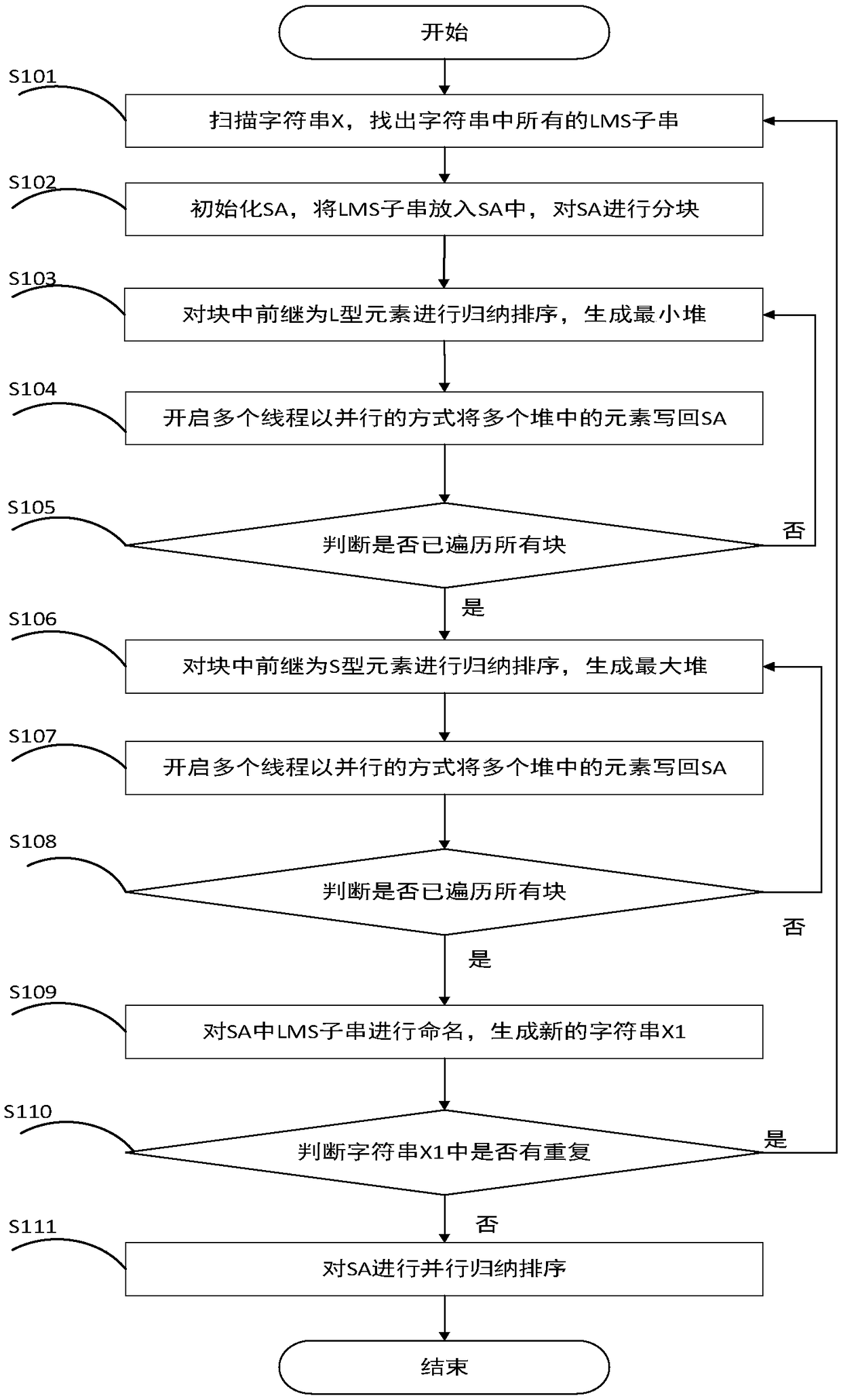

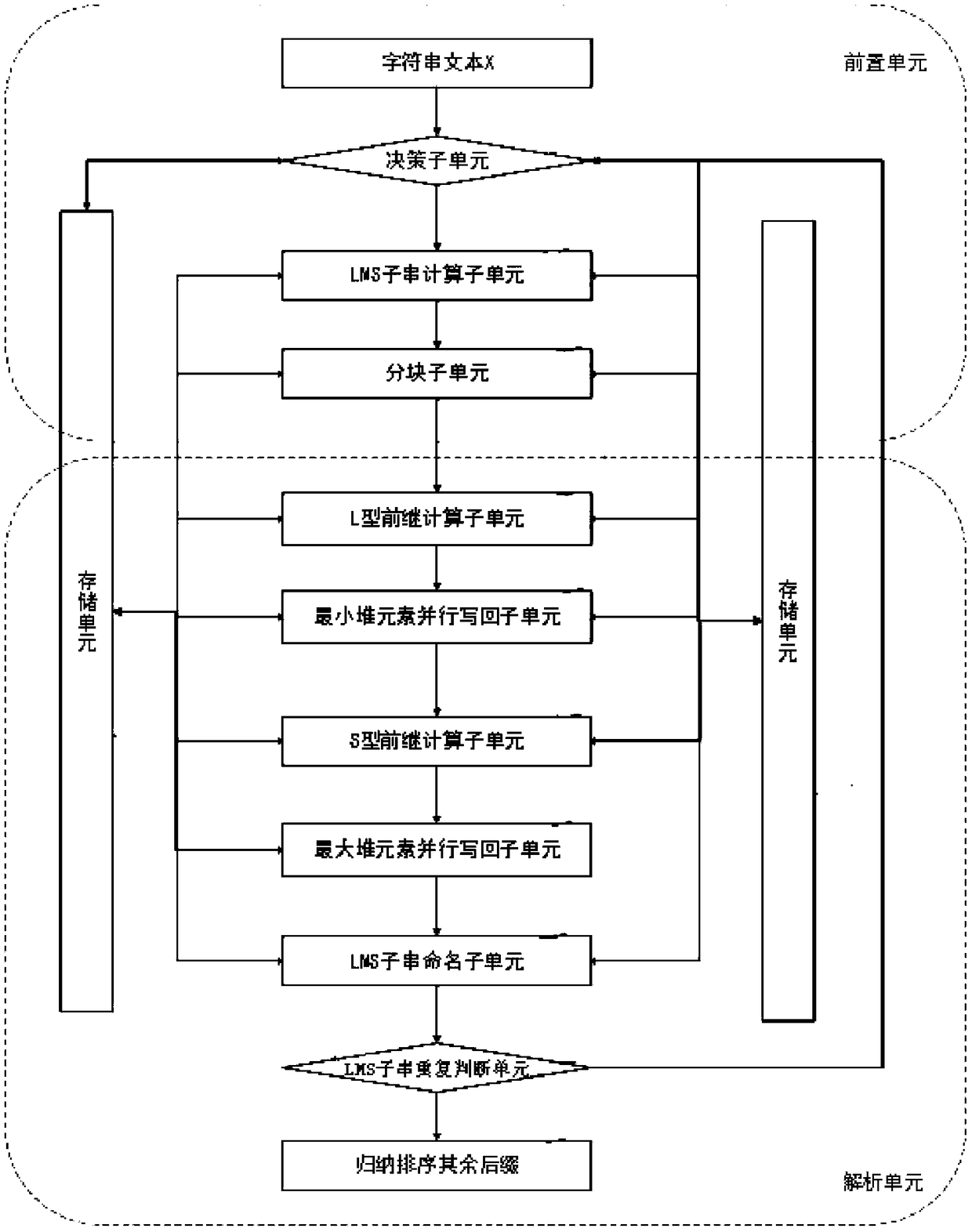

A parallel suffix sorting method and system

Active | CN109375989A | Run fast | High speedup | Digital data information retrieval | Multiprogramming arrangements | Interaction time | Suffix sorting

The invention relates to a parallel suffix sorting method and system. For a string X of length n whose size is much larger than the computer's cache, the suffix array (SA) is divided into blocks to increase the cache hit rate and reduce the number of interactions between the cache and main memory, which greatly reduces the time needed to sort the string. The invention uses the parallel computing resources of modern multi-core computers, parallelizing the data access operations of the sorting process with multiple threads. This effectively improves the running speed of the algorithm and gives the induced sorting process a high degree of parallelism, so the system can obtain a higher speedup ratio and work efficiency is greatly improved.
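For readers unfamiliar with the term, a suffix array lists the starting positions of all suffixes of a string in sorted order. The sketch below builds one naively and then shows one simple way to split the work into independent blocks (buckets keyed by first character) that a thread pool can sort separately. This bucketing scheme is a stand-in chosen for illustration, not the patent's block-divided induced sorting, and the function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def suffix_array_naive(text):
    """Sort suffix start positions by comparing the suffixes directly."""
    return sorted(range(len(text)), key=lambda i: text[i:])


def suffix_array_bucketed(text, workers=4):
    """Group suffixes by first character, then sort each group independently."""
    buckets = {}
    for i, ch in enumerate(text):
        buckets.setdefault(ch, []).append(i)

    def sort_bucket(ch):
        return sorted(buckets[ch], key=lambda i: text[i:])

    # Buckets are visited in character order, so concatenating the sorted
    # buckets gives the full suffix array.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        sorted_buckets = list(pool.map(sort_bucket, sorted(buckets)))
    return [i for bucket in sorted_buckets for i in bucket]


print(suffix_array_naive("banana"))
print(suffix_array_bucketed("banana"))
```

Both functions return the same array. The bucketed version only demonstrates that the sort can be decomposed into blocks with no dependencies between them; a production implementation would use a linear-time construction and native threads.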

Owner:SUN YAT SEN UNIV

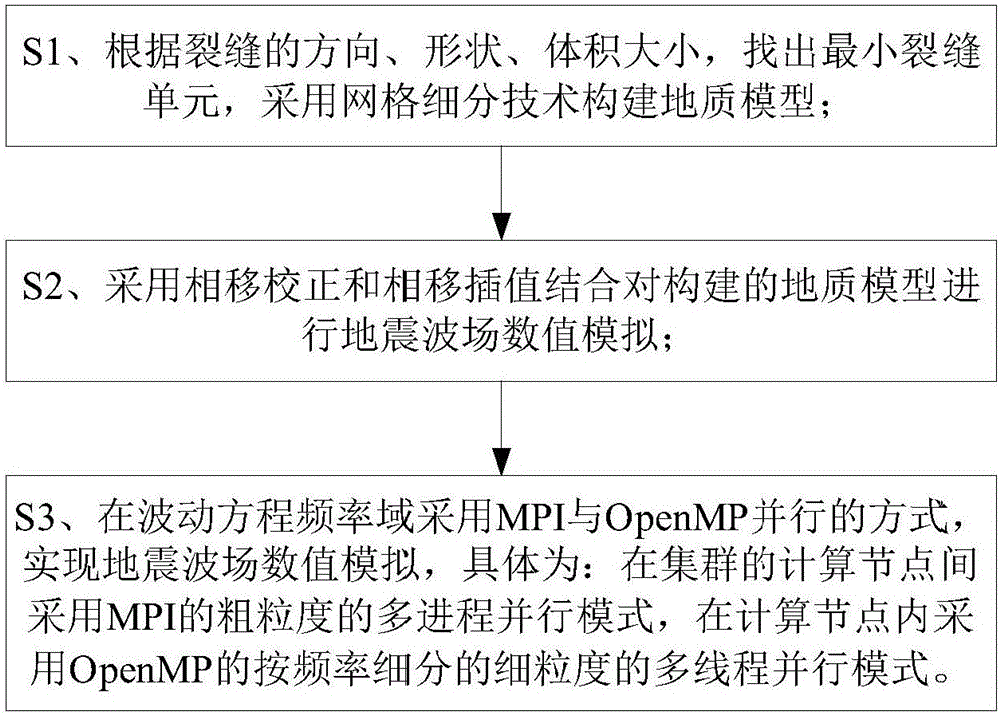

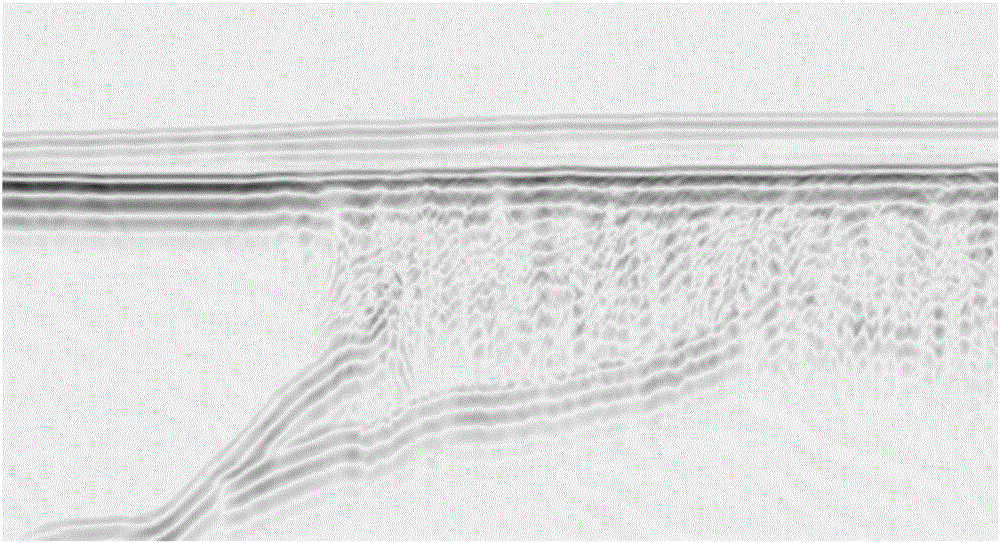

Fractured medium seismic wave field numerical simulation method based on multiple machines and multiple cores

Inactive | CN107526104A | Fast operation | High speedup | Seismic signal processing | Computer cluster | Environmental geology

The invention discloses a fractured medium seismic wave field numerical simulation method based on multiple machines and multiple cores, applied to the simulation of seismic wave fields in fractured media. By introducing cluster processing into wave equation numerical simulation and running MPI (Message Passing Interface) across multiple machines, a computer cluster can be used for finely gridded fractured medium seismic wave field simulation, and the computation speed is greatly improved. Moreover, multithreading makes full use of the multiple cores, memory and idle resources of each node, which further increases the speedup ratio of the algorithm.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

Operator mapping method and system for coarse-grained reconfigurable architecture

Active | CN109471636A | Good mapping performance | High speedup | Code compilation | Operator scheduling | Theoretical computer science

The invention provides an operator mapping method and system for a coarse-grained reconfigurable architecture, comprising a data flow graph generating step, a minimum cycle start interval calculating step, an operator scheduling step, a scheduling judging step, an array graph constructing step, a sorting step, a forward mapping step, a current mapping judging step, a reverse tracing step, an all-mapping judging step and a configuration generating step. The invention achieves better mapping performance in a shorter compile time, obtains a higher speedup ratio, and has smaller area and energy consumption overhead. The sorted operator nodes are mapped forward in turn. When forward mapping fails, a new mapping path is entered by backtracking, so that a successful mapping scheme is found wherever possible without degrading performance.

Owner:SHANGHAI JIAO TONG UNIV
