82 results about "Data parallelism" patented technology

Data parallelism is parallelization across multiple processors in parallel computing environments. It focuses on distributing the data across different nodes, which operate on the data in parallel. It can be applied to regular data structures such as arrays and matrices by working on each element in parallel. It contrasts with task parallelism, another form of parallelism.
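
A minimal Python sketch of the idea (not taken from any listed patent): the data are split into chunks and the same per-element function runs on every chunk concurrently. The helper names and the chunk count are illustrative choices.

```python
from concurrent.futures import ThreadPoolExecutor

def square(x: int) -> int:
    """Per-element operation: the same code runs on every element."""
    return x * x

def chunked(data, n_chunks):
    """Split a list into at most n_chunks contiguous, roughly equal slices."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]

def data_parallel_map(func, data, n_workers=4):
    """Partition the data and apply the same func to every partition concurrently."""
    chunks = chunked(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Each worker processes one chunk; pool.map yields results in chunk order.
        results = pool.map(lambda chunk: [func(x) for x in chunk], chunks)
        return [y for chunk in results for y in chunk]
```

For example, `data_parallel_map(square, list(range(10)))` returns `[0, 1, 4, 9, 16, 25, 36, 49, 64, 81]`. Because of CPython's global interpreter lock, threads do not speed up CPU-bound work like this; for real speedups one would typically swap in `ProcessPoolExecutor` with a module-level function in place of the lambda. Task parallelism, by contrast, would run different functions rather than one function over different pieces of data.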

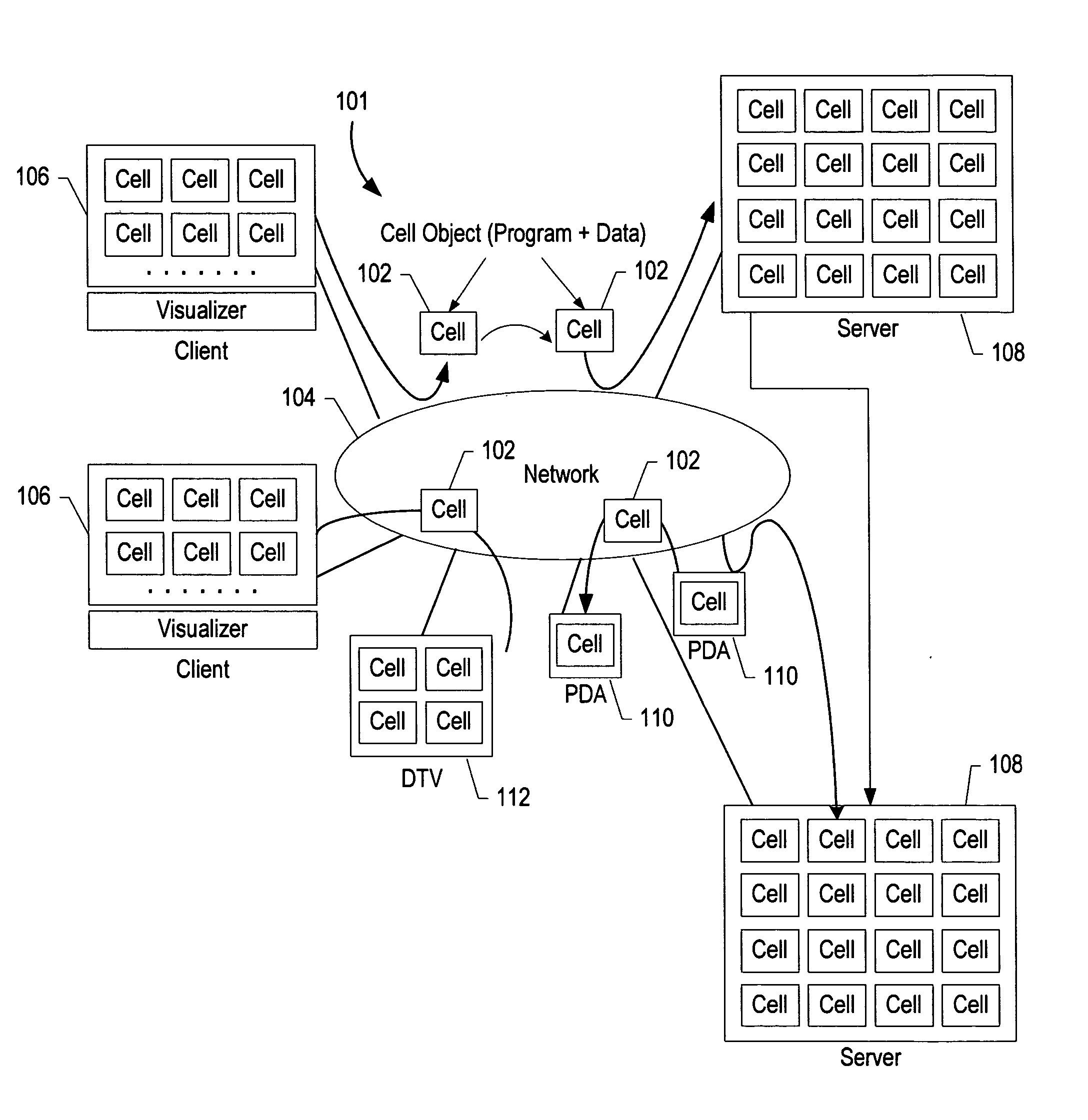

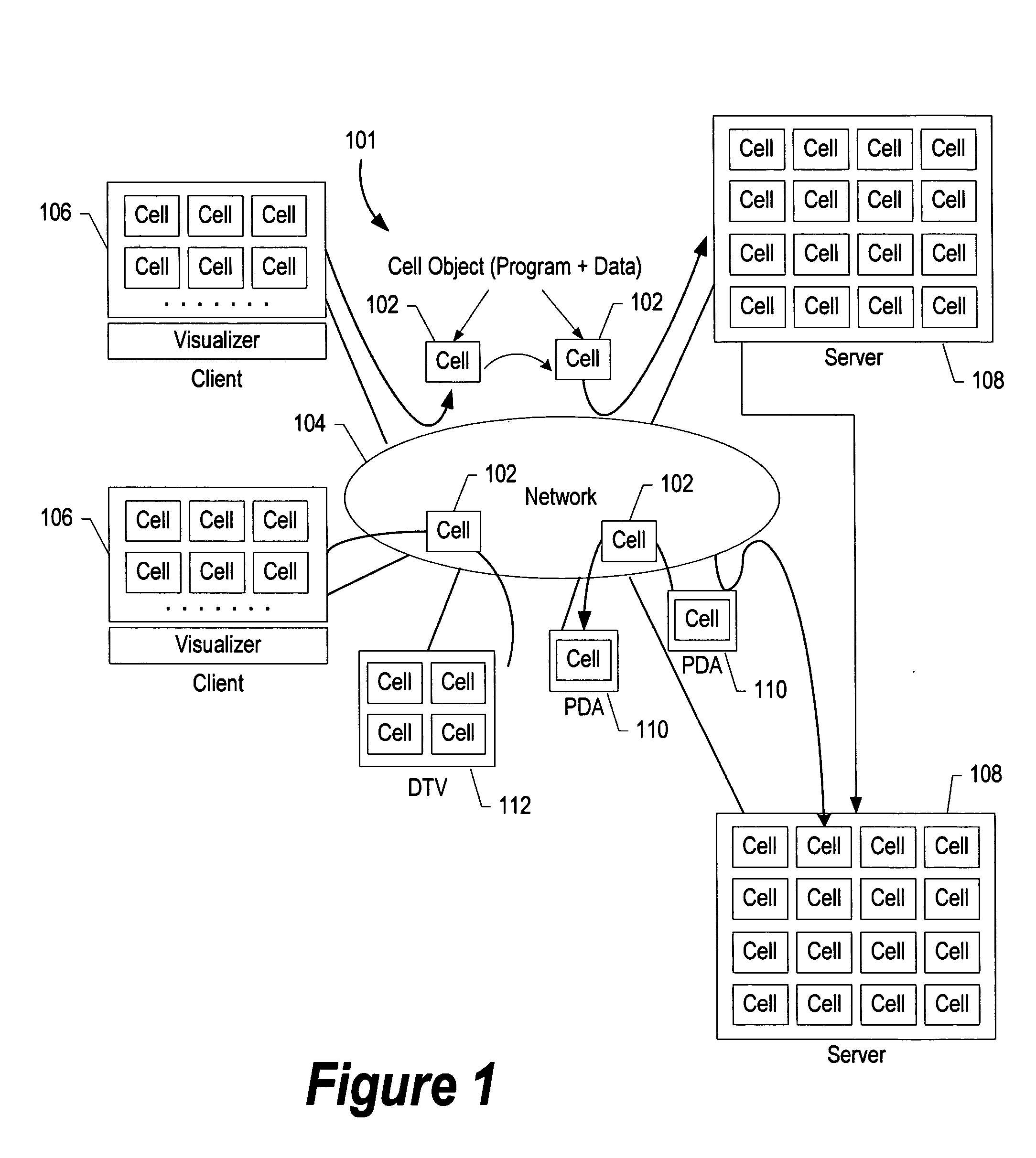

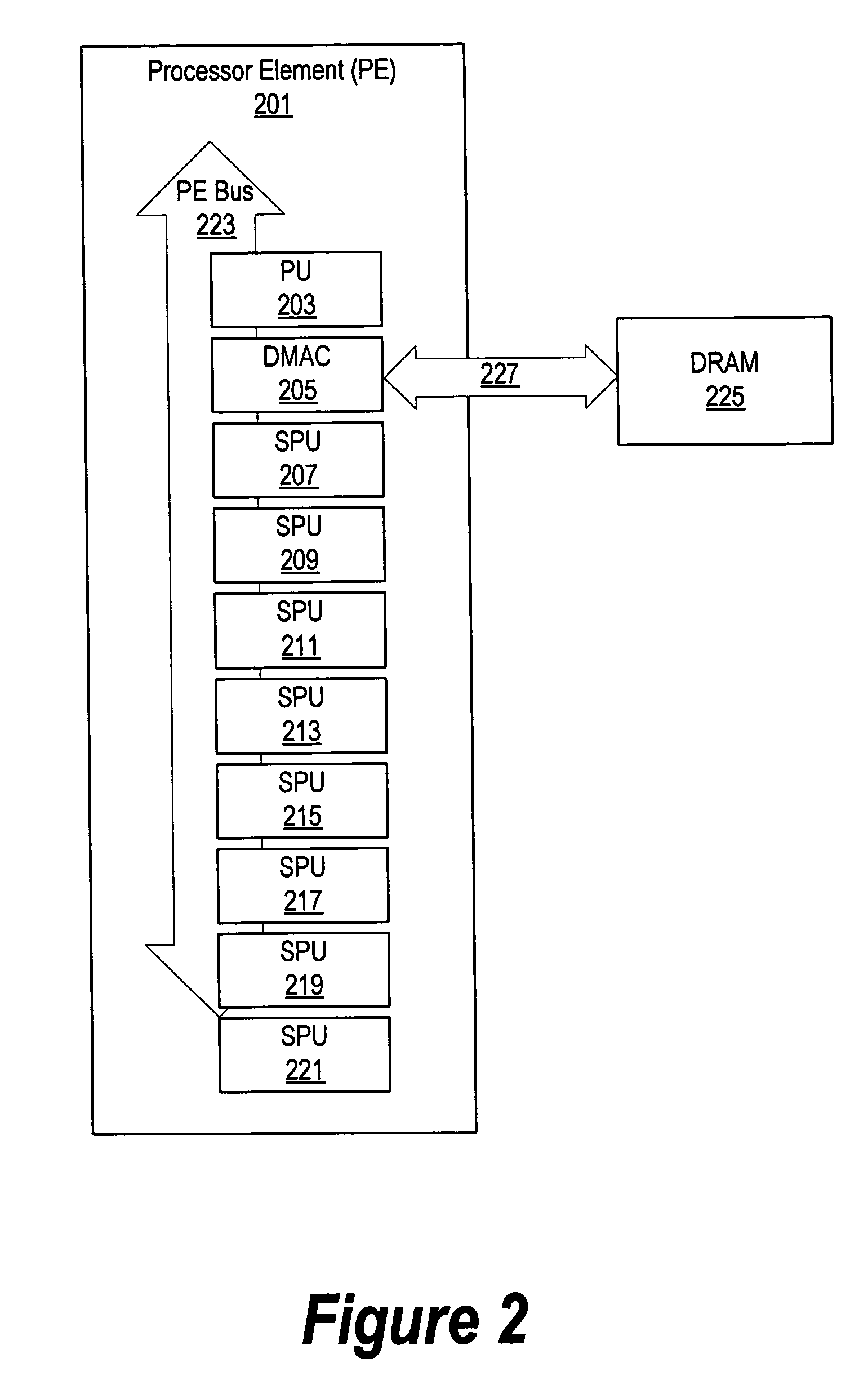

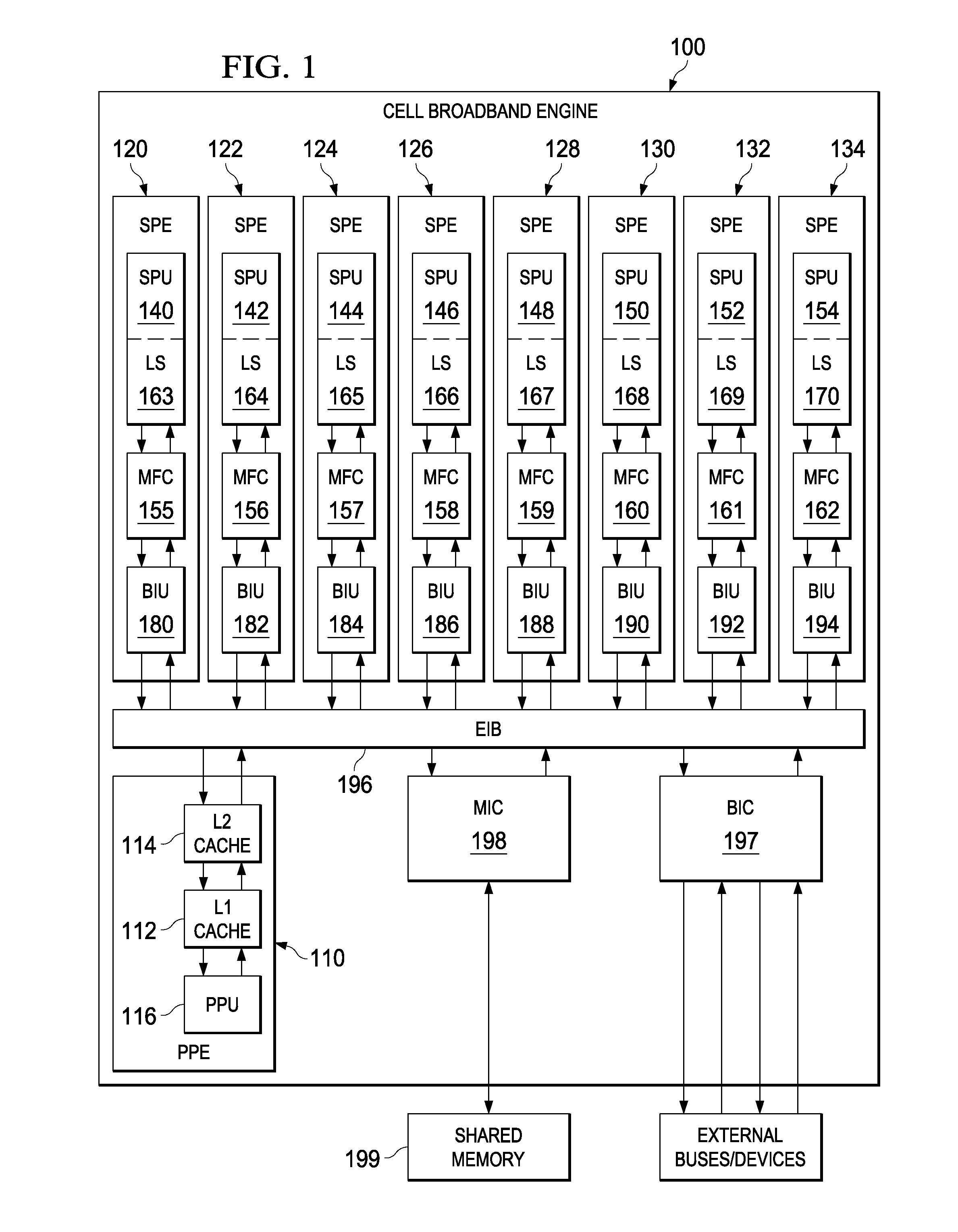

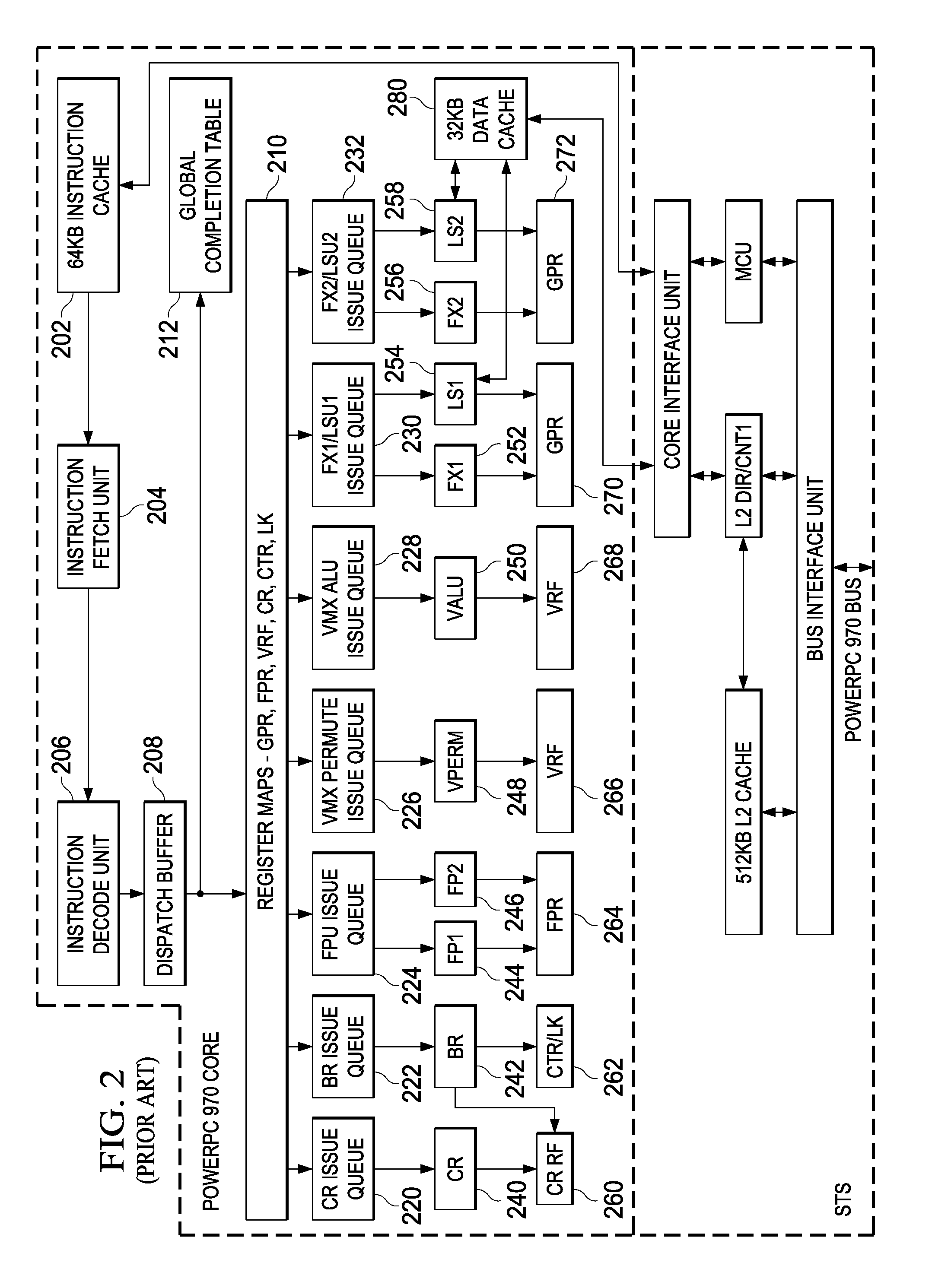

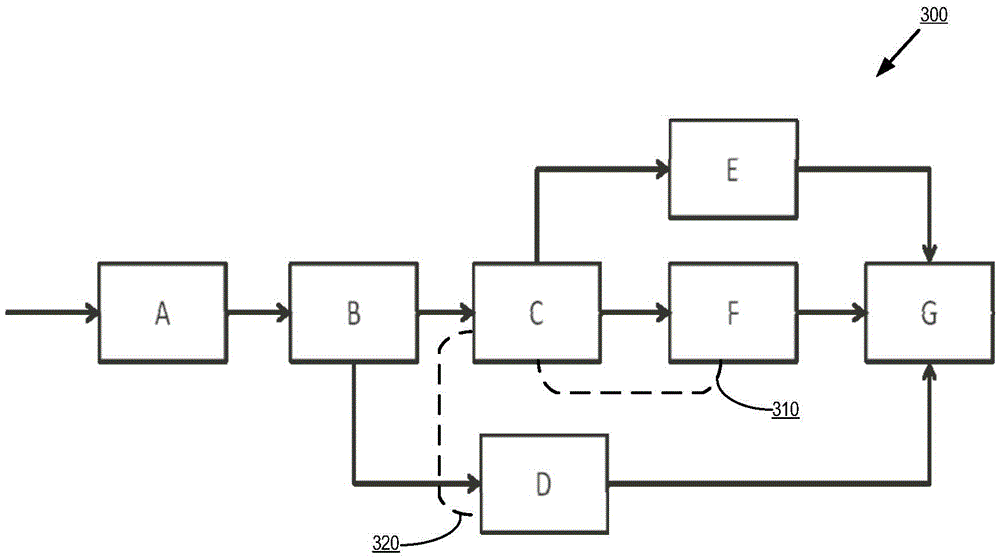

System and method for dynamically partitioning processing across plurality of heterogeneous processors

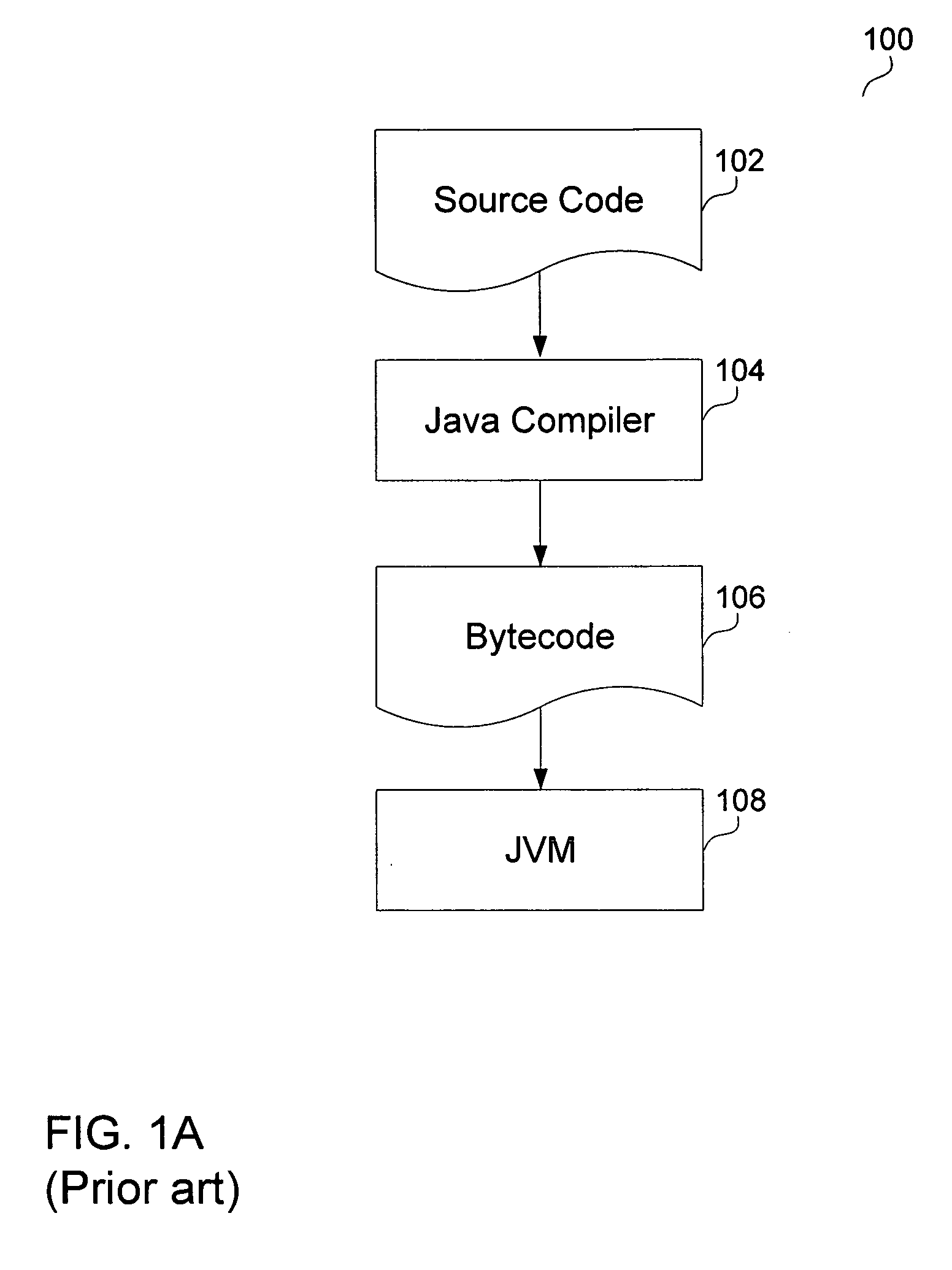

Inactive | US20050081181A1 | Tags: Faster processor, Big amount of data, Resource allocation, Multiple digital computer combinations, Current load, Running time

A program is compiled into at least two object files, one for each of the supported processor environments. During compilation, code characteristics such as data locality, computational intensity, and data parallelism are analyzed and recorded in the object file. At run time, these characteristics are combined with run-time considerations, such as the current load on the processors and the size of the data being processed, to arrive at an overall value, which is then used to determine which processor will be assigned the task. The values are weighted according to the characteristics of the various processors: for example, if one processor is better at handling intensive computations against large streams of data, programs that are highly computationally intensive and process large quantities of data are weighted in favor of that processor. The corresponding object file is then loaded and executed on the assigned processor.

Owner:IBM CORP
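
A hedged sketch of the scoring idea in this abstract: compile-time code characteristics are combined with run-time load and data size into one value per processor, and the task goes to the highest-scoring processor. The trait names, the per-processor weights, and the linear scoring formula are all hypothetical; the abstract does not say how the overall value is computed.

```python
from dataclasses import dataclass

@dataclass
class CodeTraits:
    """Characteristics recorded in the object file at compile time (hypothetical 0..1 scale)."""
    data_locality: float
    compute_intensity: float
    data_parallelism: float

@dataclass
class Processor:
    """A target processor with hypothetical affinity weights and its current load."""
    name: str
    w_locality: float
    w_compute: float
    w_parallel: float
    current_load: float  # run-time consideration: 0.0 = idle, 1.0 = saturated

def overall_value(traits: CodeTraits, proc: Processor, data_size_mb: float) -> float:
    """Combine compile-time traits with run-time load and data size into one score."""
    size_factor = 1.0 + data_size_mb / 100.0  # larger inputs boost the data-parallel term
    fit = (traits.data_locality * proc.w_locality
           + traits.compute_intensity * proc.w_compute
           + traits.data_parallelism * proc.w_parallel * size_factor)
    return fit * (1.0 - proc.current_load)  # busy processors score lower

def assign(traits: CodeTraits, processors: list, data_size_mb: float) -> Processor:
    """Return the processor whose object file should be loaded and executed."""
    return max(processors, key=lambda p: overall_value(traits, p, data_size_mb))
```

With these made-up weights, a compute-heavy, data-parallel program on a large input is steered to a processor with high compute and parallel affinity unless that processor is saturated.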

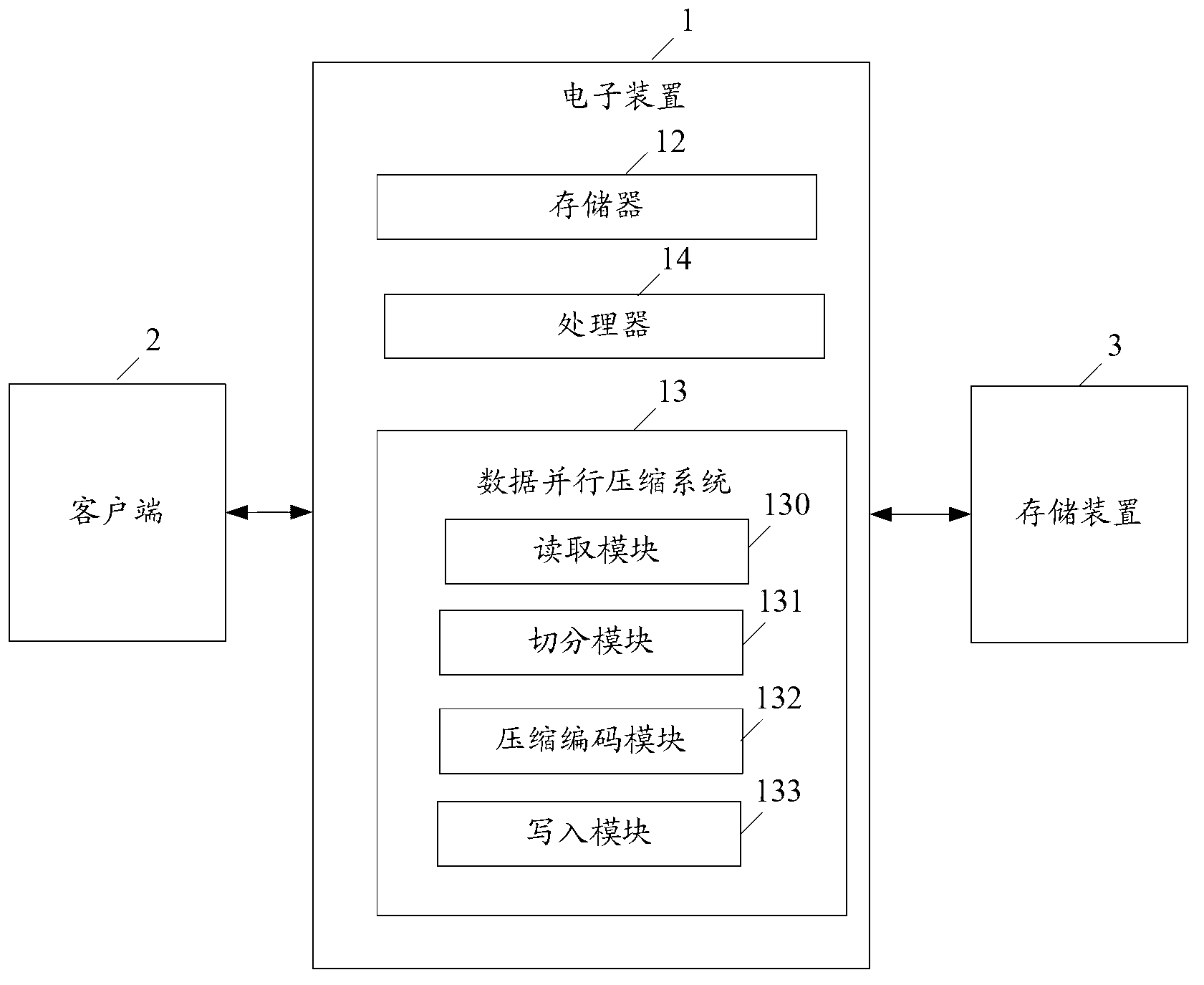

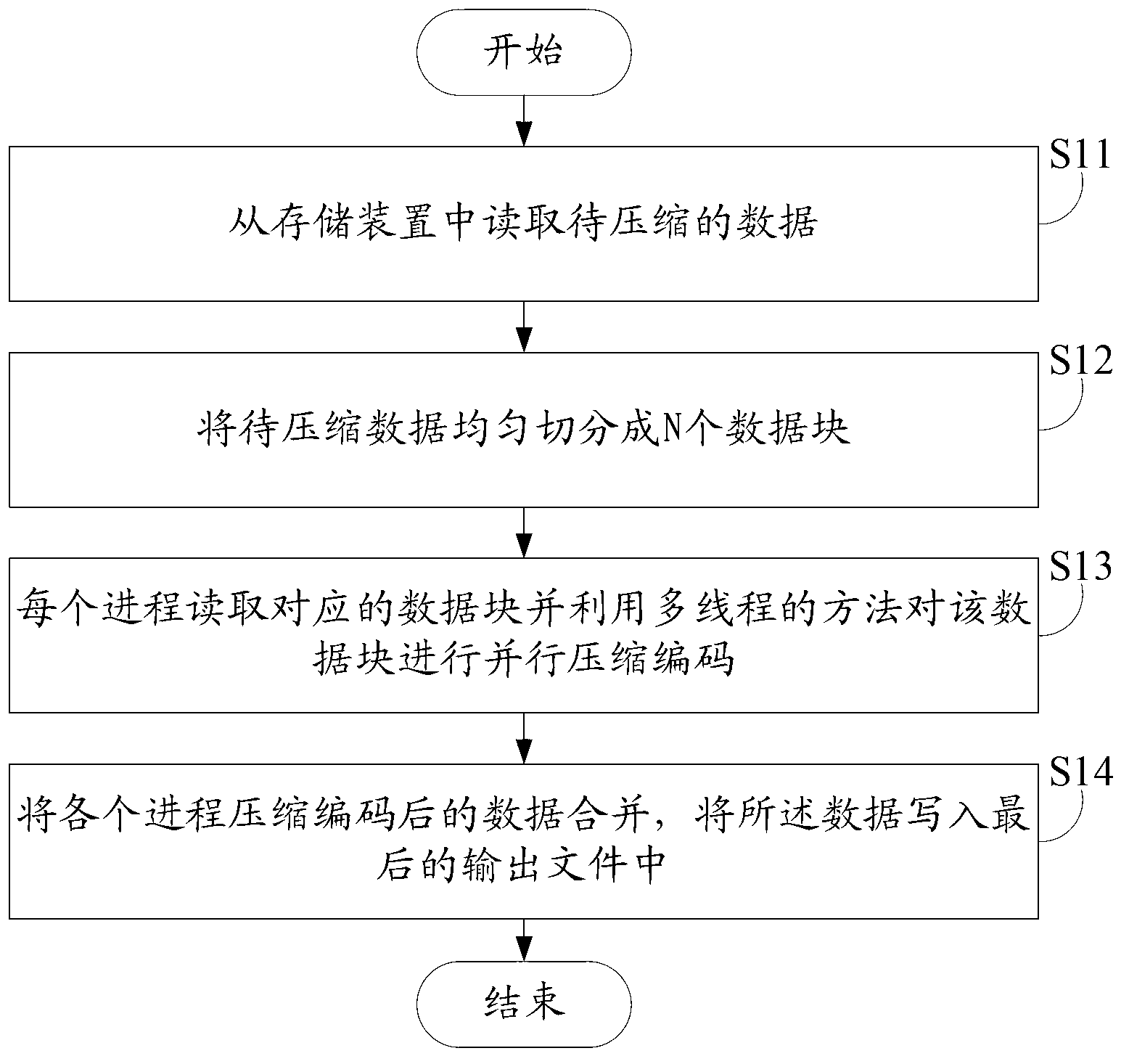

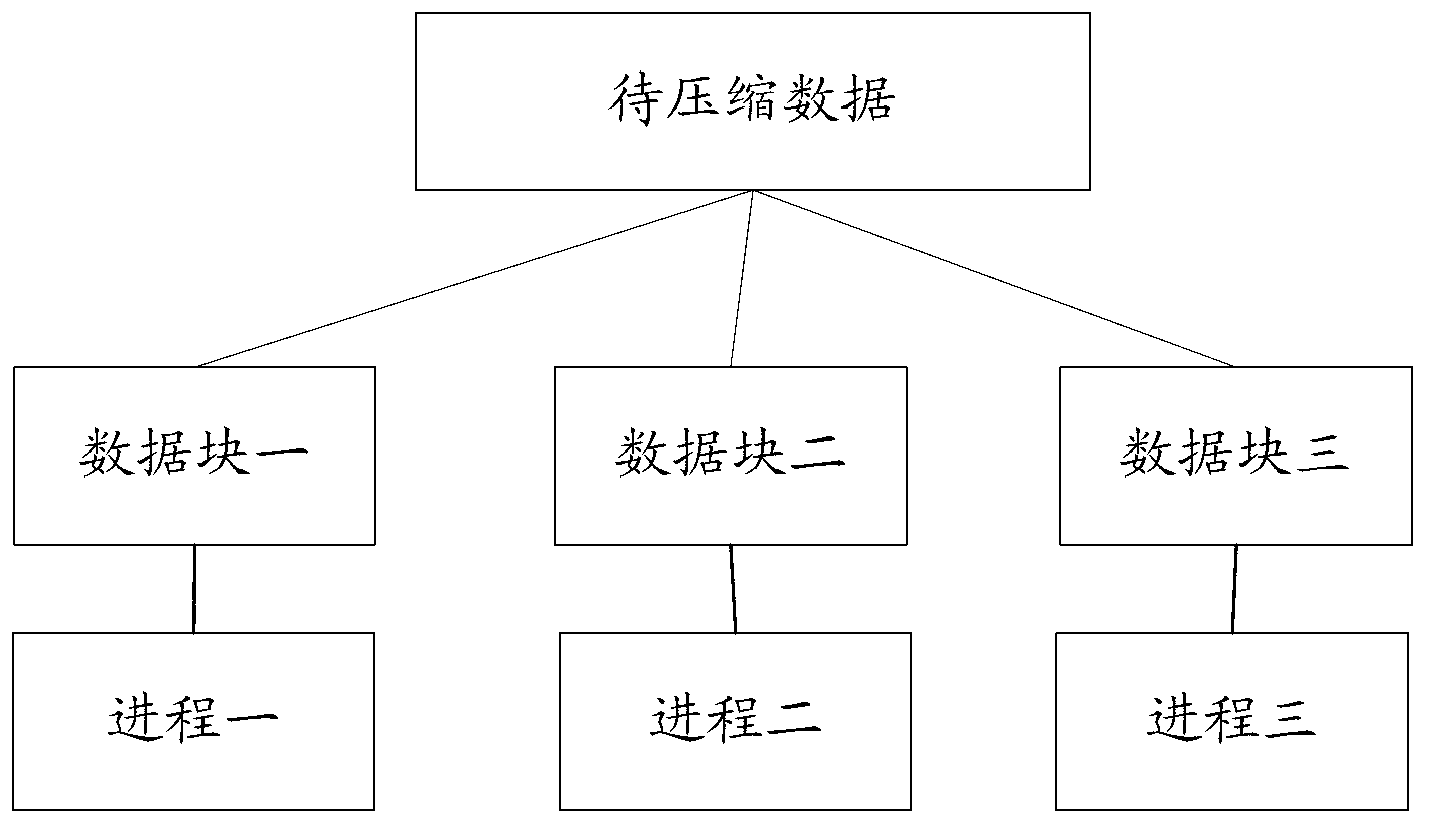

Data parallelism compression method

Active | CN103326730A | Tags: Reduce overhead, Improve parallelism, Code conversion, Parallel compression, Data compression ratio

The invention discloses a data-parallel compression method comprising four steps. Reading: the data to be compressed are read from a storage device through I/O. Segmenting: the data are evenly divided into N data blocks, each stored in its own input buffer. Compressing and encoding: each process handles the data block in its corresponding input buffer, the blocks are compressed and encoded using multiple threads, and the encoded data are stored in an output buffer. Writing: the compressed and encoded data from all processes in the output buffers are merged, and the merged data are written to an output file. The method obtains a satisfactory compression ratio while greatly shortening compression time; it is well suited to cloud storage and database systems, addresses the problem of poor compression timeliness, and improves overall compression performance.

Owner:TSINGHUA UNIV
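
The read, segment, compress-and-encode, and merge-and-write steps can be sketched with Python's standard `zlib`, `struct`, and `concurrent.futures` modules. This is an illustration under assumptions: the block count, the length-prefixed container used to merge blocks, and the use of a thread pool rather than separate processes are choices made here, not details from the patent.

```python
import struct
import zlib
from concurrent.futures import ThreadPoolExecutor

def segment(data: bytes, n_blocks: int) -> list:
    """Split data evenly into at most n_blocks input buffers (the last may be shorter)."""
    size = max(1, -(-len(data) // n_blocks))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]

def parallel_compress(data: bytes, n_blocks: int = 4) -> bytes:
    """Compress each block concurrently, then merge into one length-prefixed blob."""
    blocks = segment(data, n_blocks)
    with ThreadPoolExecutor(max_workers=n_blocks) as pool:
        compressed = list(pool.map(zlib.compress, blocks))  # one output buffer per block
    # Merge: prefix each compressed block with its 4-byte big-endian length.
    return b"".join(struct.pack(">I", len(c)) + c for c in compressed)

def parallel_decompress(blob: bytes) -> bytes:
    """Walk the length prefixes and decompress each block in order."""
    out, pos = [], 0
    while pos < len(blob):
        (length,) = struct.unpack_from(">I", blob, pos)
        pos += 4
        out.append(zlib.decompress(blob[pos:pos + length]))
        pos += length
    return b"".join(out)
```

Compressing blocks independently usually costs a little compression ratio compared with one continuous stream, because each block starts with an empty history; the length prefixes are what let `parallel_decompress` find the block boundaries again.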

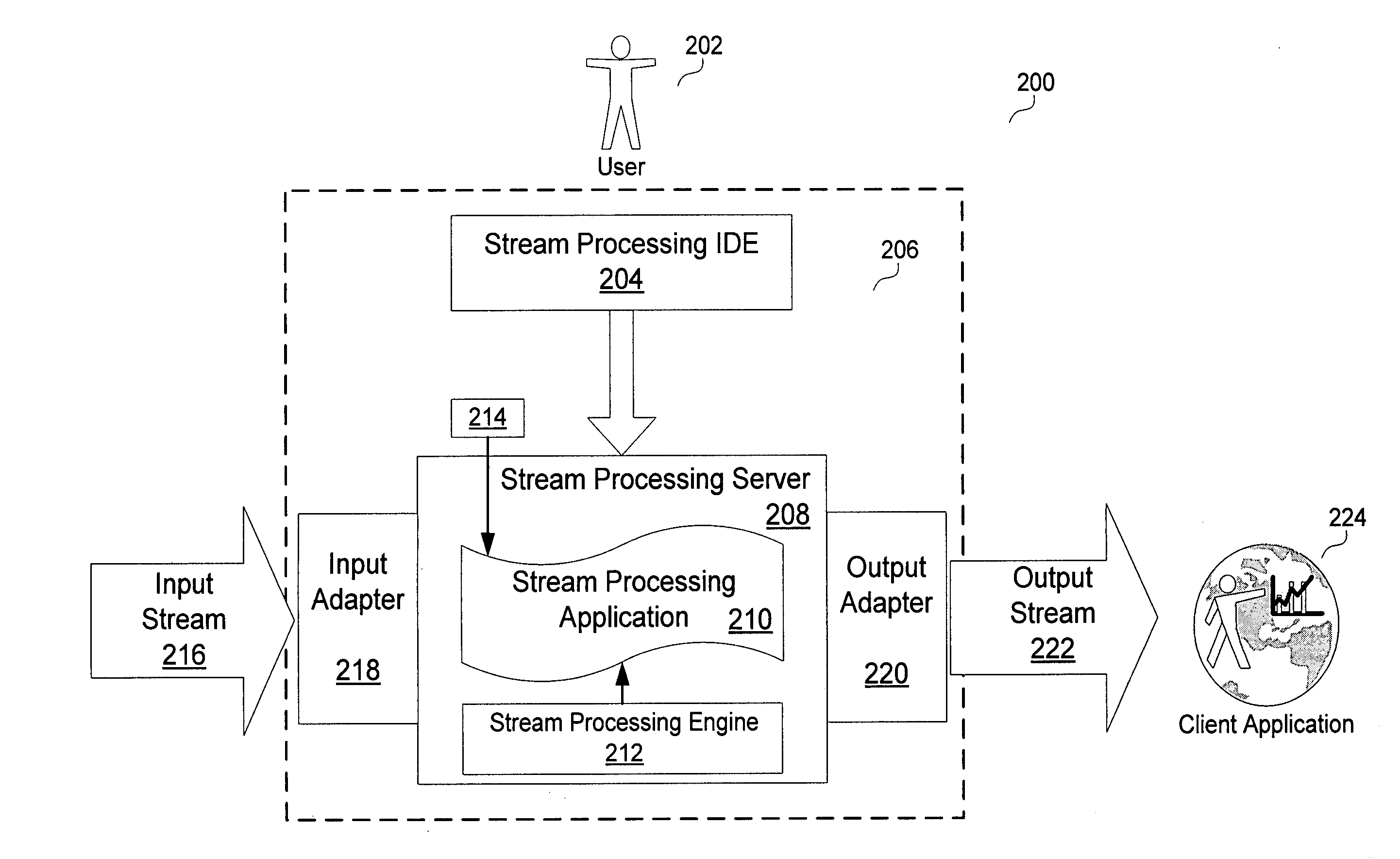

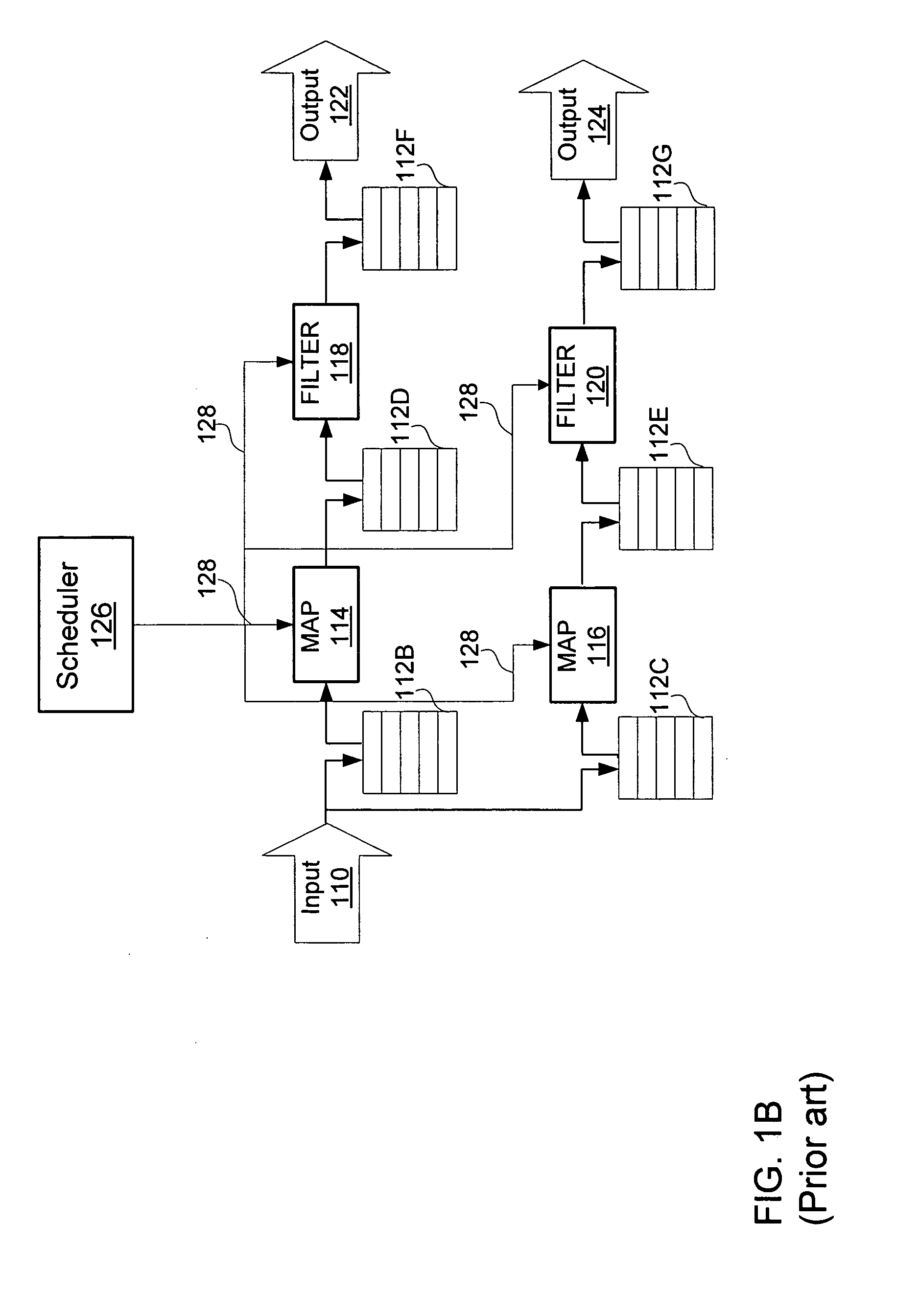

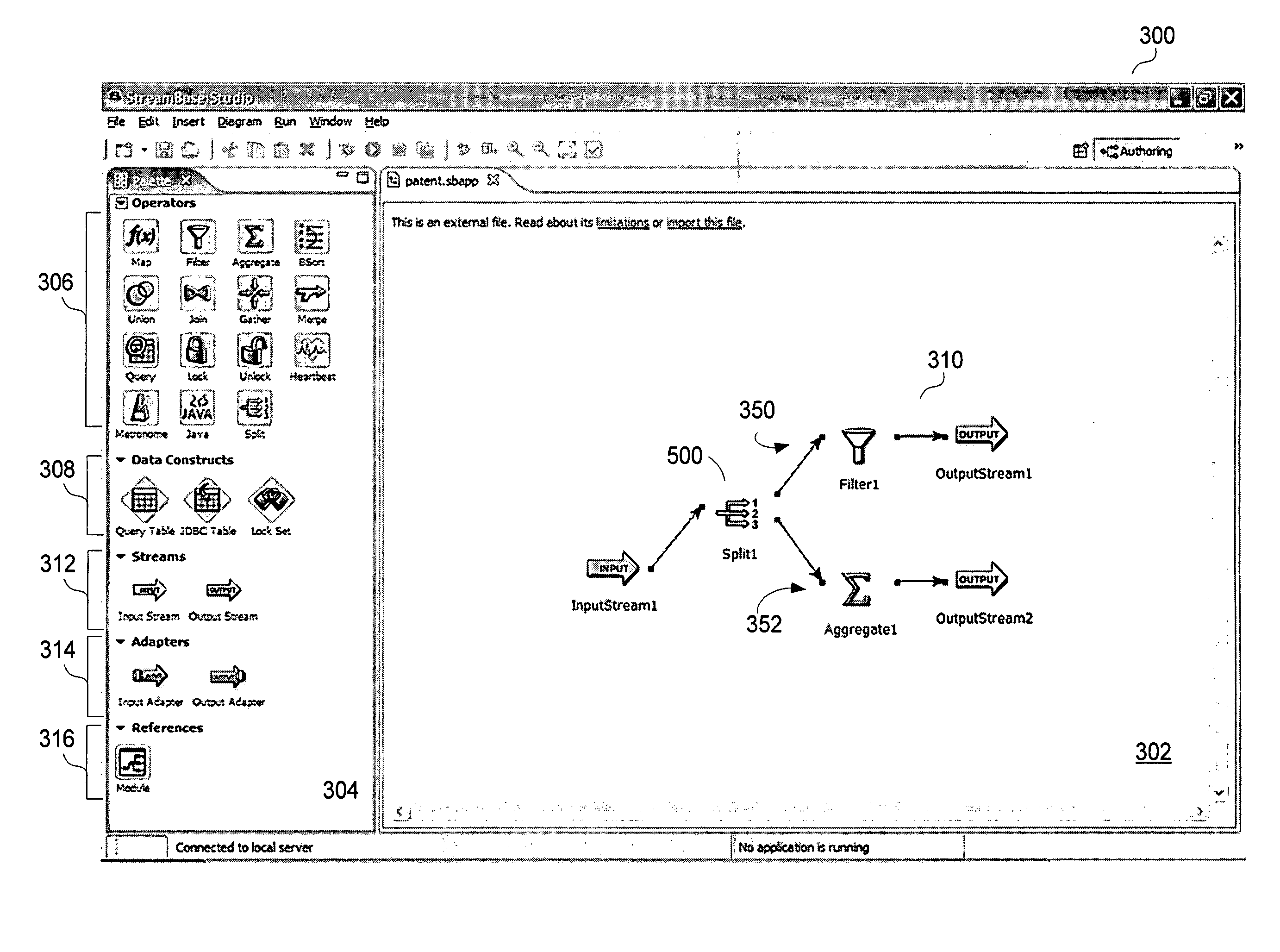

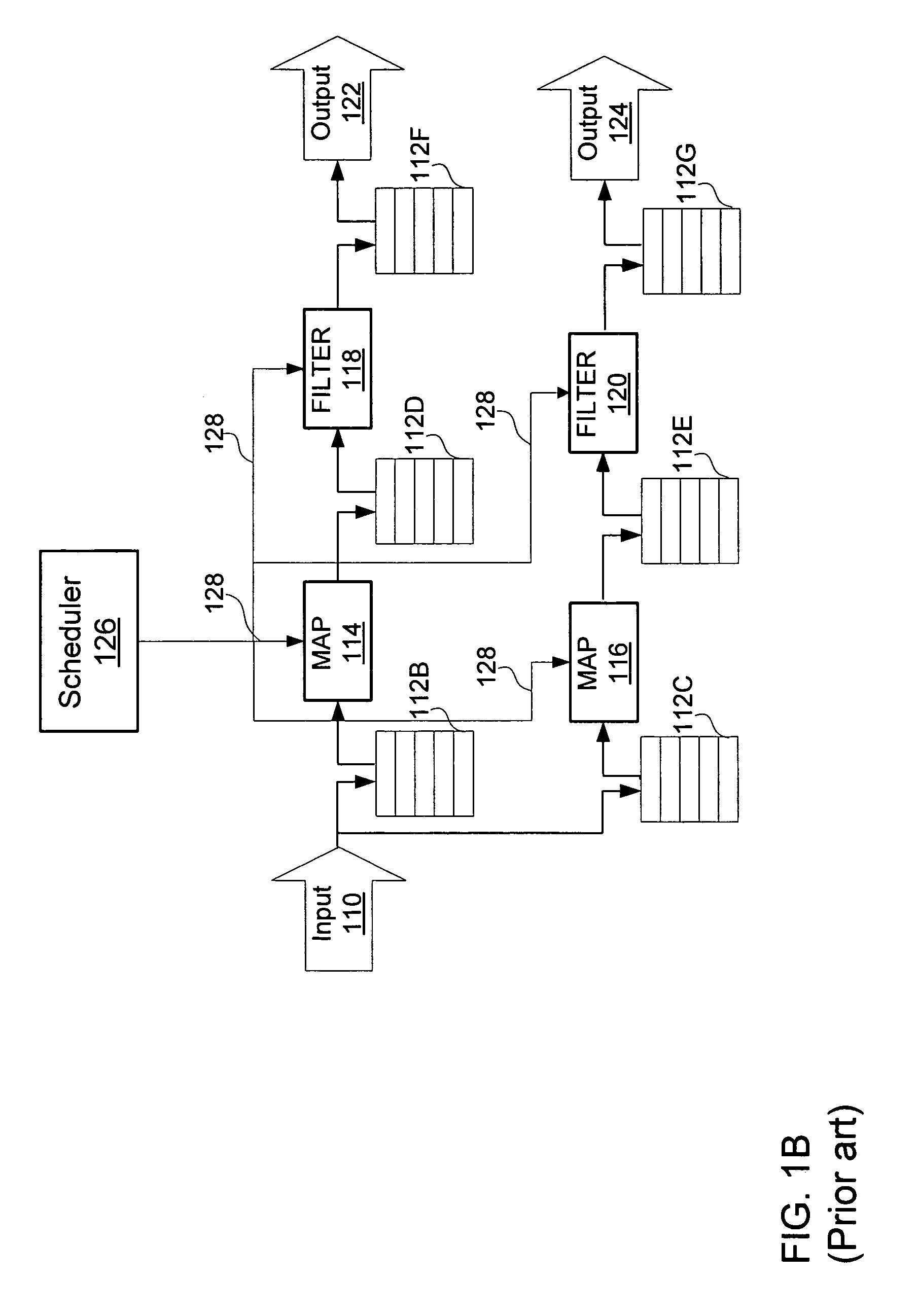

Data parallelism and parallel operations in stream processing

Active | US20080133891A1 | Tags: Digital computer details, Specific program execution arrangements, Application software, Computer science

A stream processing platform that provides fast execution of stream processing applications within a safe runtime environment. The platform includes a stream compiler that converts a representation of a stream processing application into executable program modules for a safe environment. The platform allows users to specify aspects of the program that contribute to the generation of modules that execute as intended. A user may specify aspects that control the type of implementation for loops, the order of execution for parallel paths, whether multiple instances of an operation can be performed in parallel, or whether certain operations should be executed in separate threads. In addition, the stream compiler may generate executable modules in a way that causes a safe runtime environment to allocate memory or otherwise operate efficiently.

Owner:CLOUD SOFTWARE GRP INC

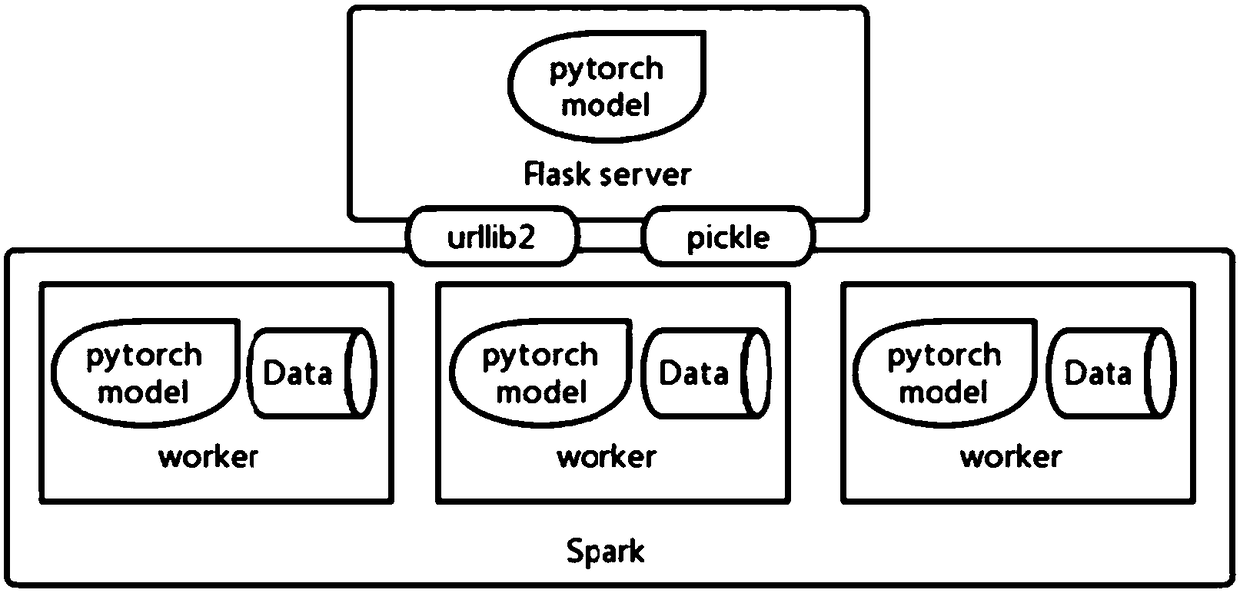

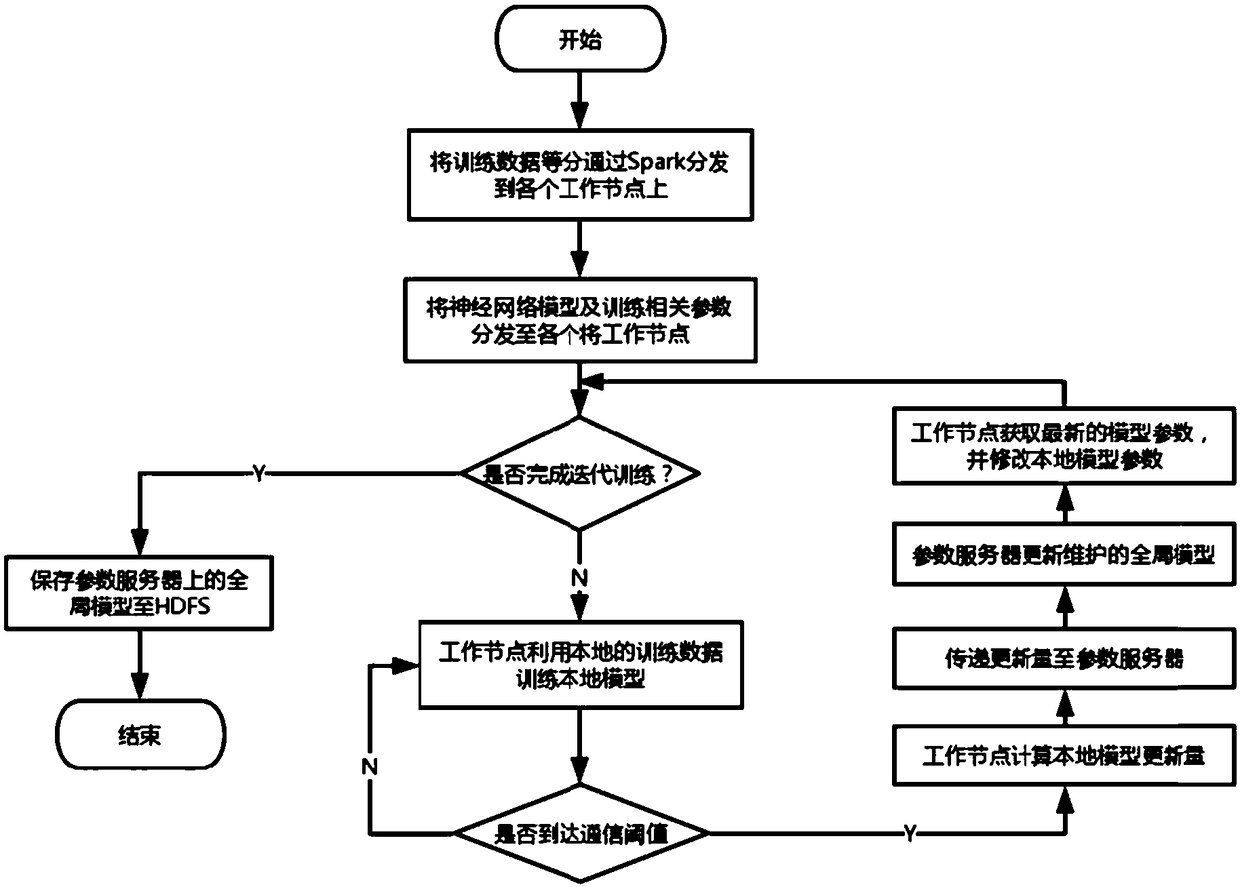

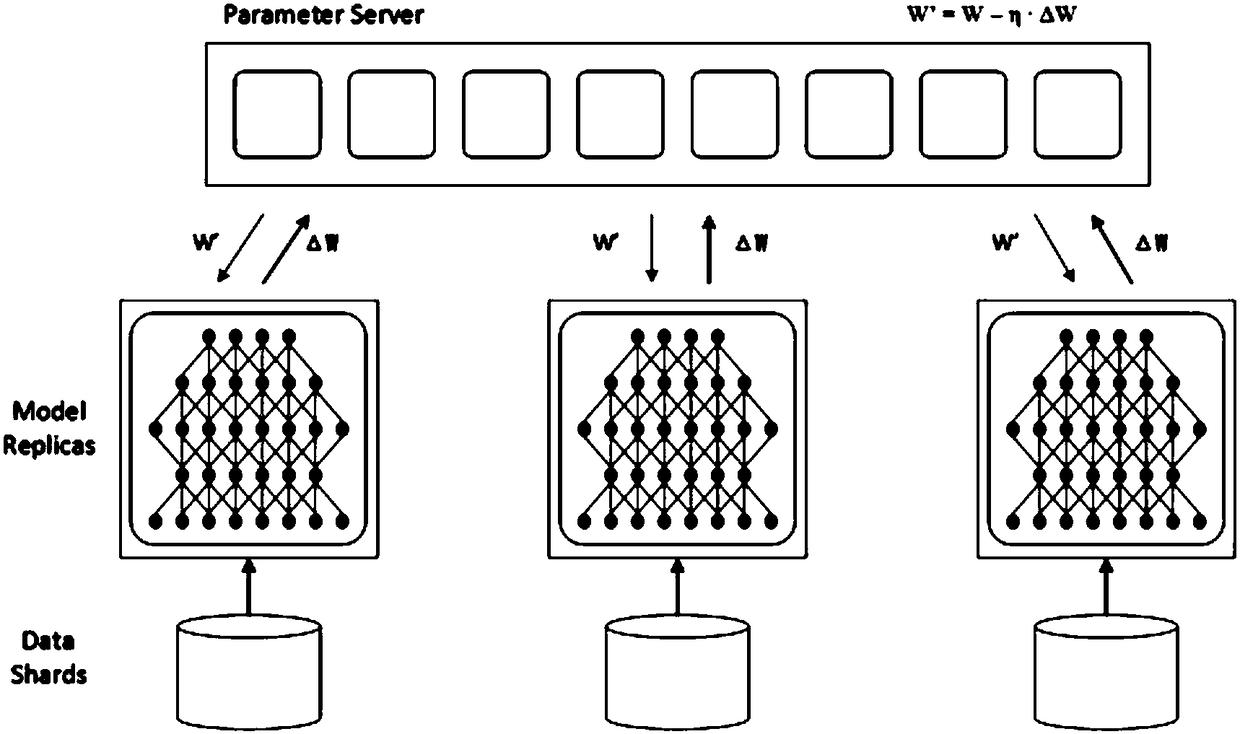

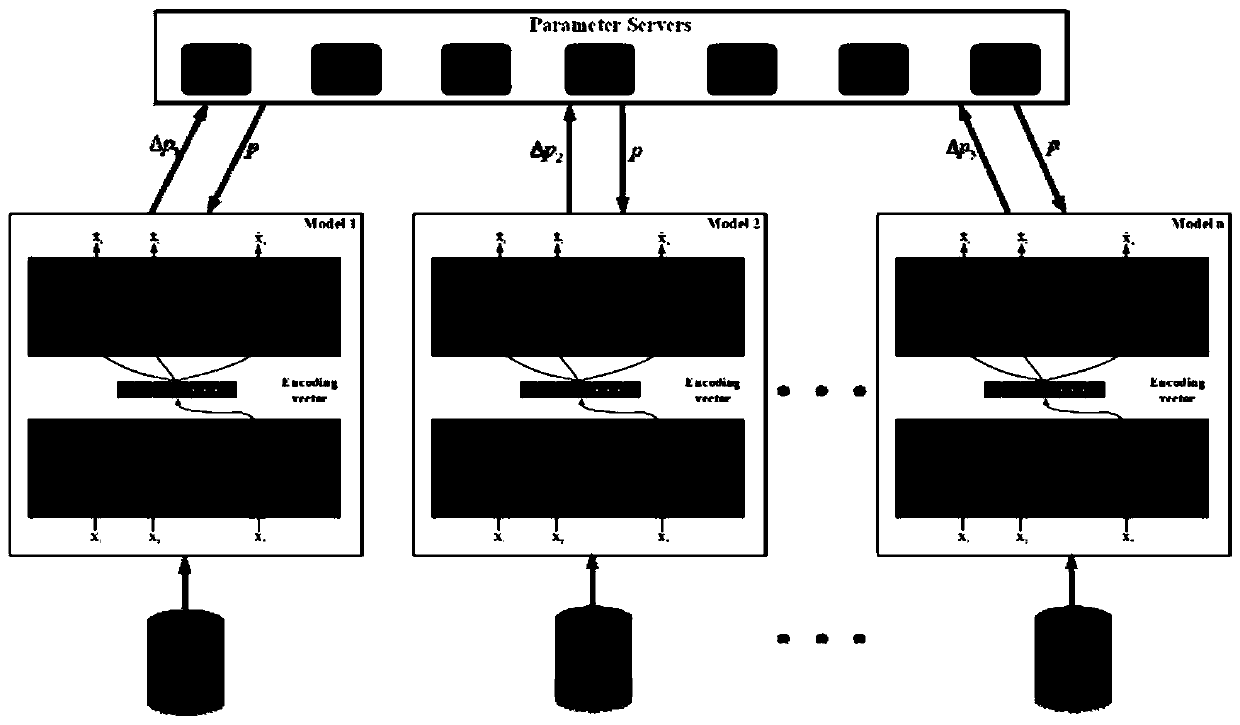

A distributed deep learning method and system based on a data parallel strategy

Active | CN109032671A | Tags: Improve training efficiency, Increase flexibility, Concurrent instruction execution, Neural architectures, Computer module, Network model

The invention discloses a distributed deep learning method and system based on a data-parallel strategy. The system comprises the distributed computing framework Spark, the PyTorch deep learning framework, the lightweight web application framework Flask, pickle, urllib2, and other related components. Spark provides cluster resource management, data distribution, and distributed computing; PyTorch provides the interfaces for defining neural networks and the upper-layer training and computation functions; Flask provides the parameter server functionality; the urllib2 module provides network communication between the worker nodes and the parameter server node; and pickle serializes and deserializes the neural network model's parameters for transmission over the network. The invention combines PyTorch and Spark effectively, using Spark to decouple PyTorch from the underlying distributed cluster so that each contributes its strengths; it provides a convenient training interface and efficiently implements a distributed training process based on data parallelism.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
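
A toy, single-process sketch of the data-parallel pattern described here: each worker holds one shard of the data and the current parameters, computes a gradient on its shard, and a parameter server averages the gradients and sends back new parameters. Spark, PyTorch, Flask, pickle, and urllib2 are replaced by plain Python; the linear model, learning rate, and shard count are assumptions for illustration.

```python
import random

def gradient(w: float, b: float, shard):
    """Mean-squared-error gradient of the model y ~ w*x + b on one worker's shard."""
    n = len(shard)
    gw = sum(2 * (w * x + b - y) * x for x, y in shard) / n
    gb = sum(2 * (w * x + b - y) for x, y in shard) / n
    return gw, gb

class ParameterServer:
    """Holds the global parameters and applies the averaged worker gradients."""

    def __init__(self, lr: float = 0.1):
        self.w, self.b, self.lr = 0.0, 0.0, lr

    def update(self, grads):
        gw = sum(g[0] for g in grads) / len(grads)
        gb = sum(g[1] for g in grads) / len(grads)
        self.w -= self.lr * gw
        self.b -= self.lr * gb
        return self.w, self.b  # new parameters "broadcast" back to the workers

def train(data, n_workers: int = 4, steps: int = 500):
    shards = [data[i::n_workers] for i in range(n_workers)]  # distribute the data
    server = ParameterServer()
    w, b = server.w, server.b
    for _ in range(steps):
        # On a real cluster each worker would compute its gradient concurrently.
        grads = [gradient(w, b, shard) for shard in shards]
        w, b = server.update(grads)
    return w, b

def demo():
    """Fit noiseless synthetic data generated from y = 3x + 0.5."""
    rng = random.Random(0)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(200)]
    return train([(x, 3.0 * x + 0.5) for x in xs])
```

`demo()` should return values very close to `(3.0, 0.5)`. Averaging equal-sized shard gradients gives the same step as full-batch gradient descent, which is why synchronous data parallelism leaves the training result unchanged in this setting.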

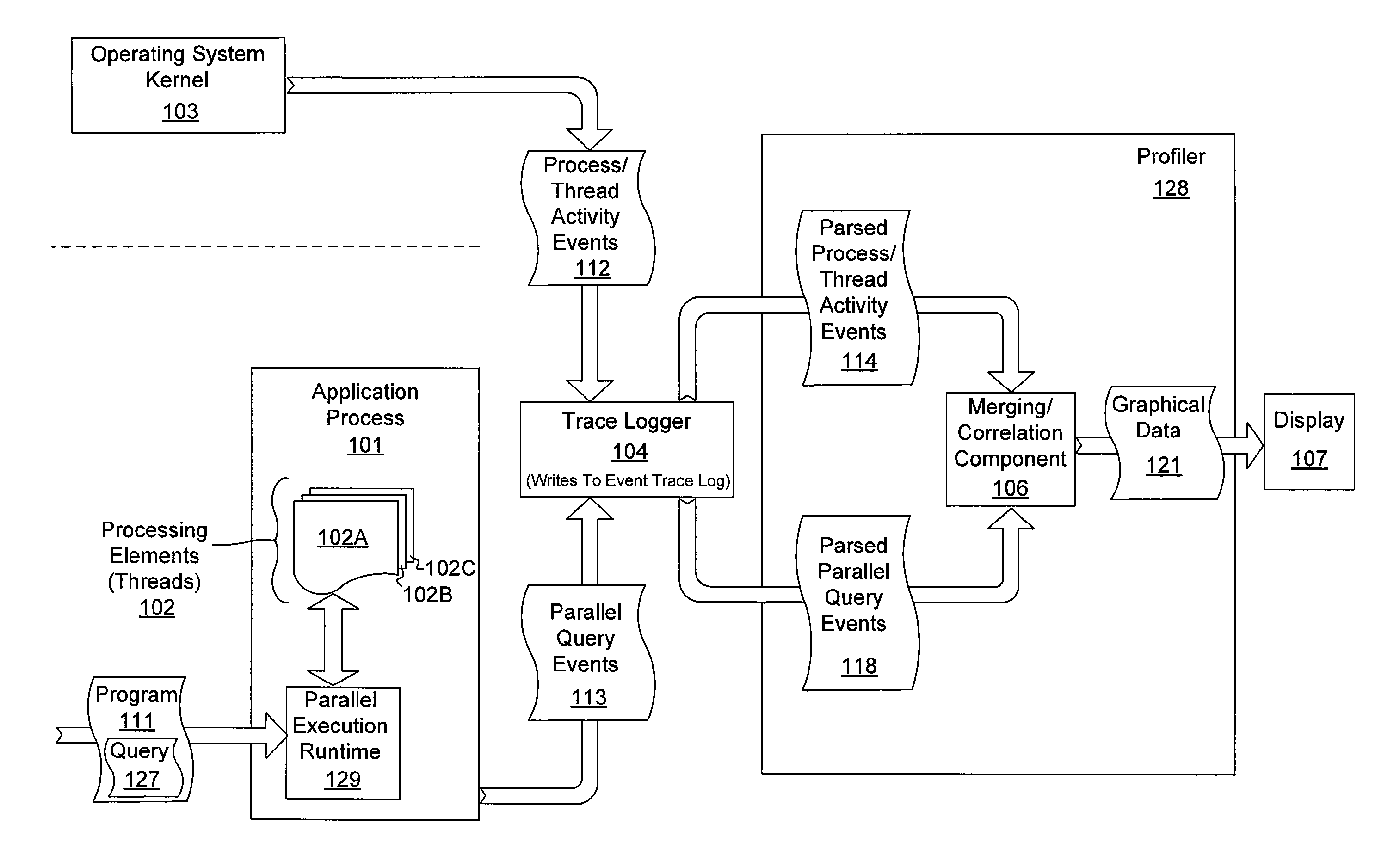

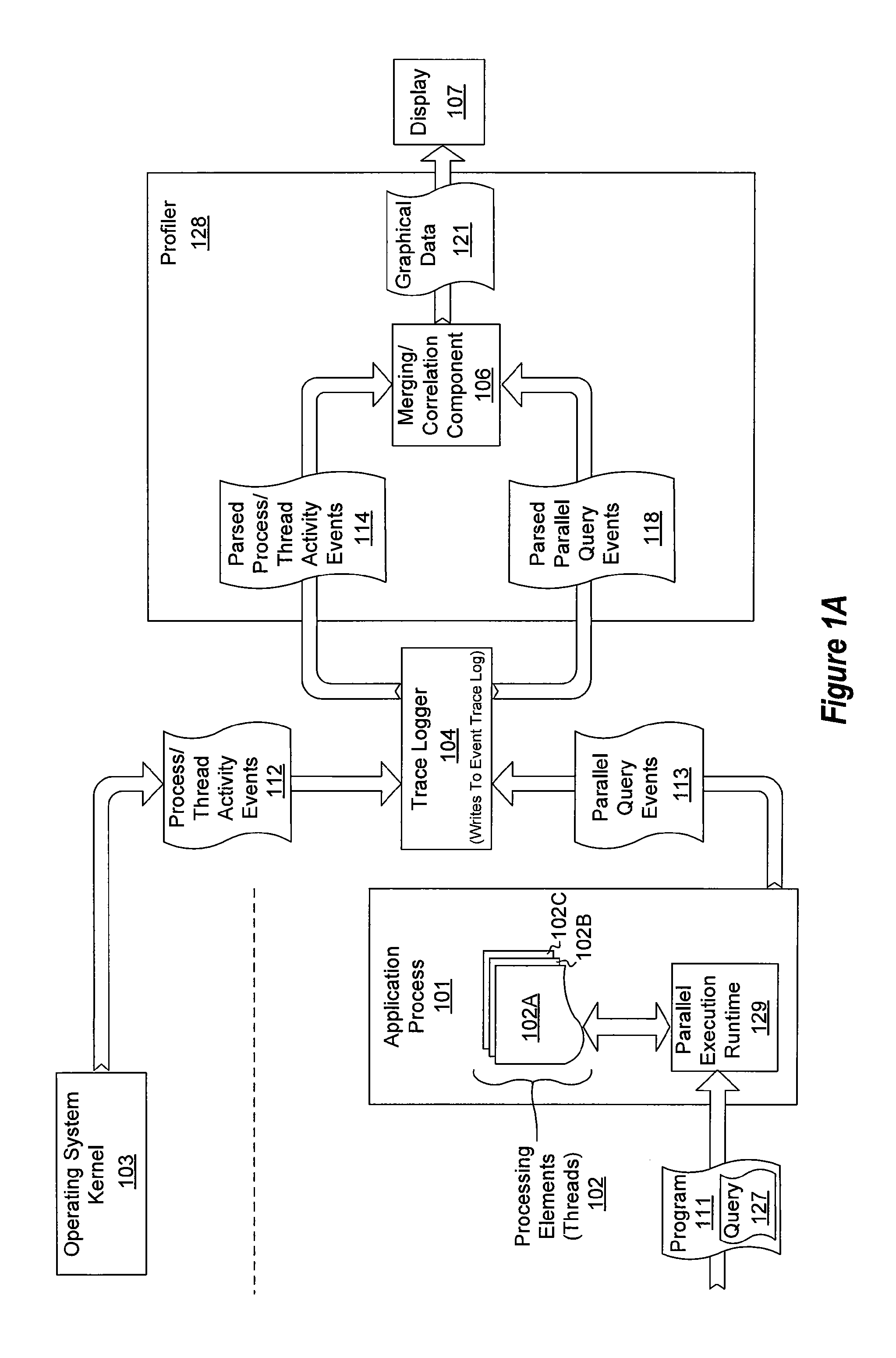

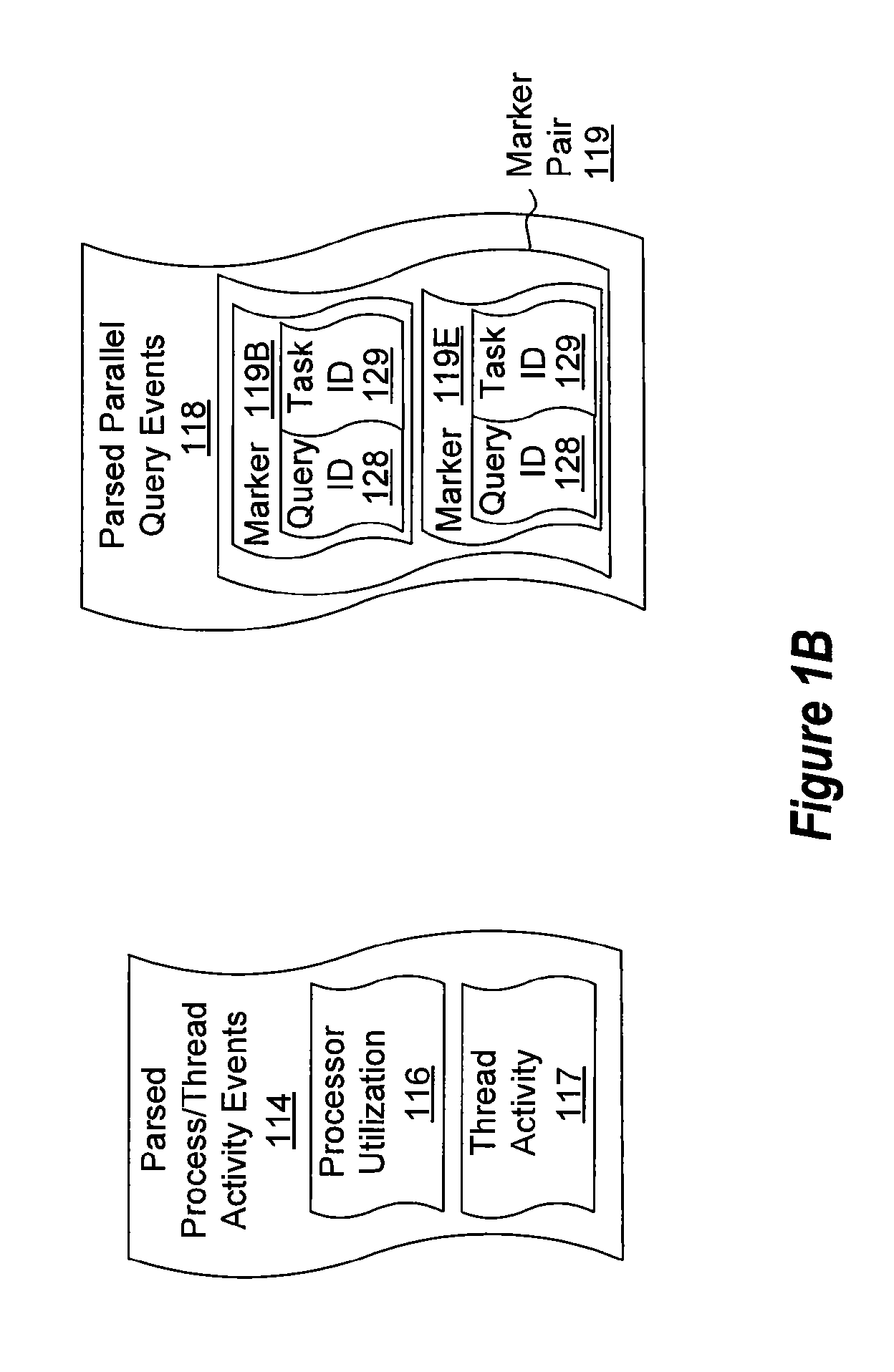

Indicating parallel operations with user-visible events

Active | US20110307905A1 | Tags: Understand performance, Well formed, Error detection/correction, Specific program execution arrangements, Minutiae, Parallel processing

The present invention extends to methods, systems, and computer program products for indicating parallel operations with user-visible events. Event markers can be used to indicate an abstracted outer layer of execution as well as to expose internal specifics of parallel processing systems, including systems that provide data parallelism. Event markers can show a variety of execution characteristics, including higher-level markers that indicate the beginning and end of an execution program (e.g., a query). Inside the execution program (query), individual fork/join operations can be indicated with sub-levels of markers to expose their operations. Additional decisions made by an execution engine can also be exposed, such as when elements initially yield, when queries overlap or nest, when the query is cancelled, when the query bails out to sequential operation, and when premature merging or re-partitioning is needed.

Owner:MICROSOFT TECH LICENSING LLC

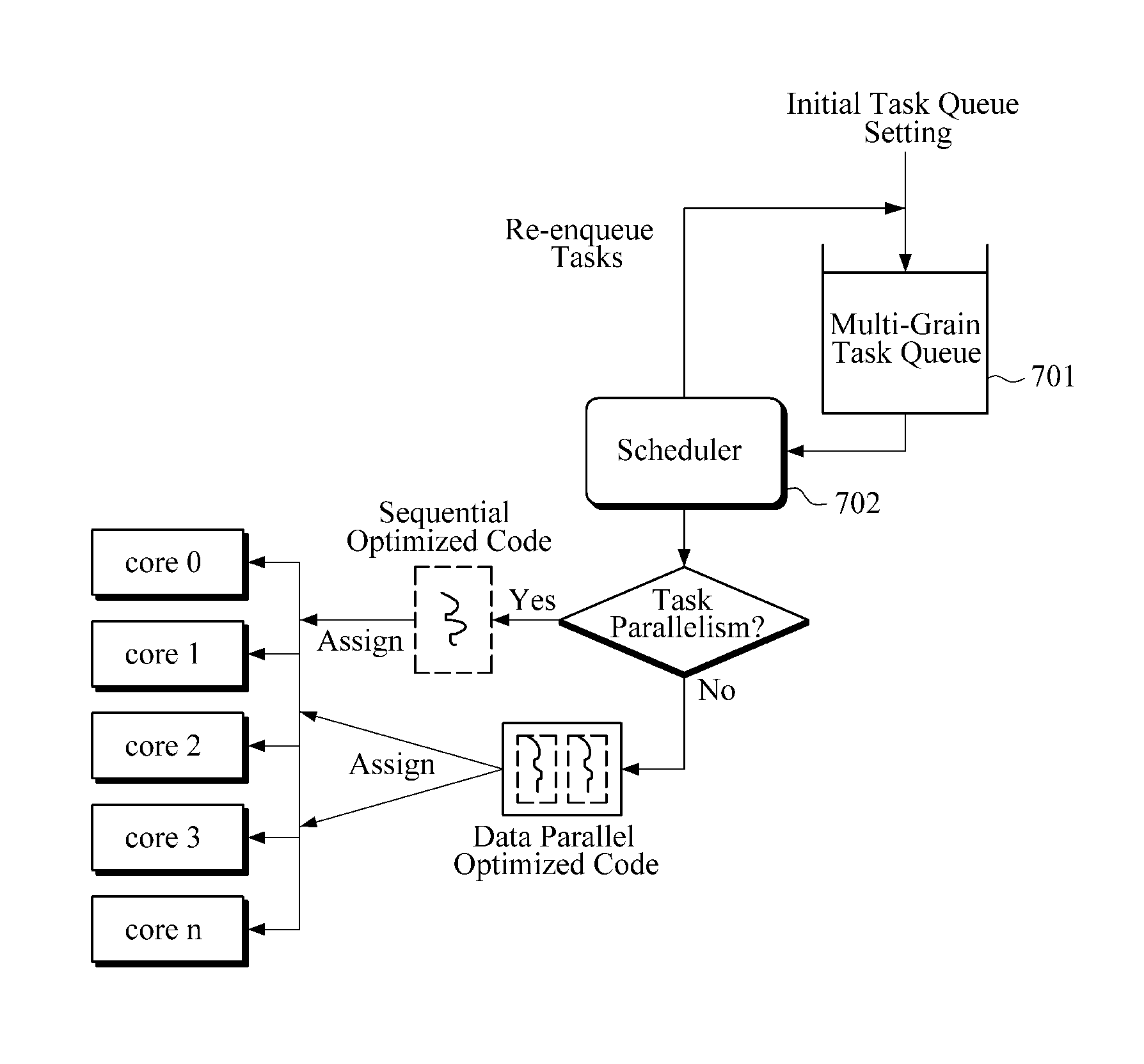

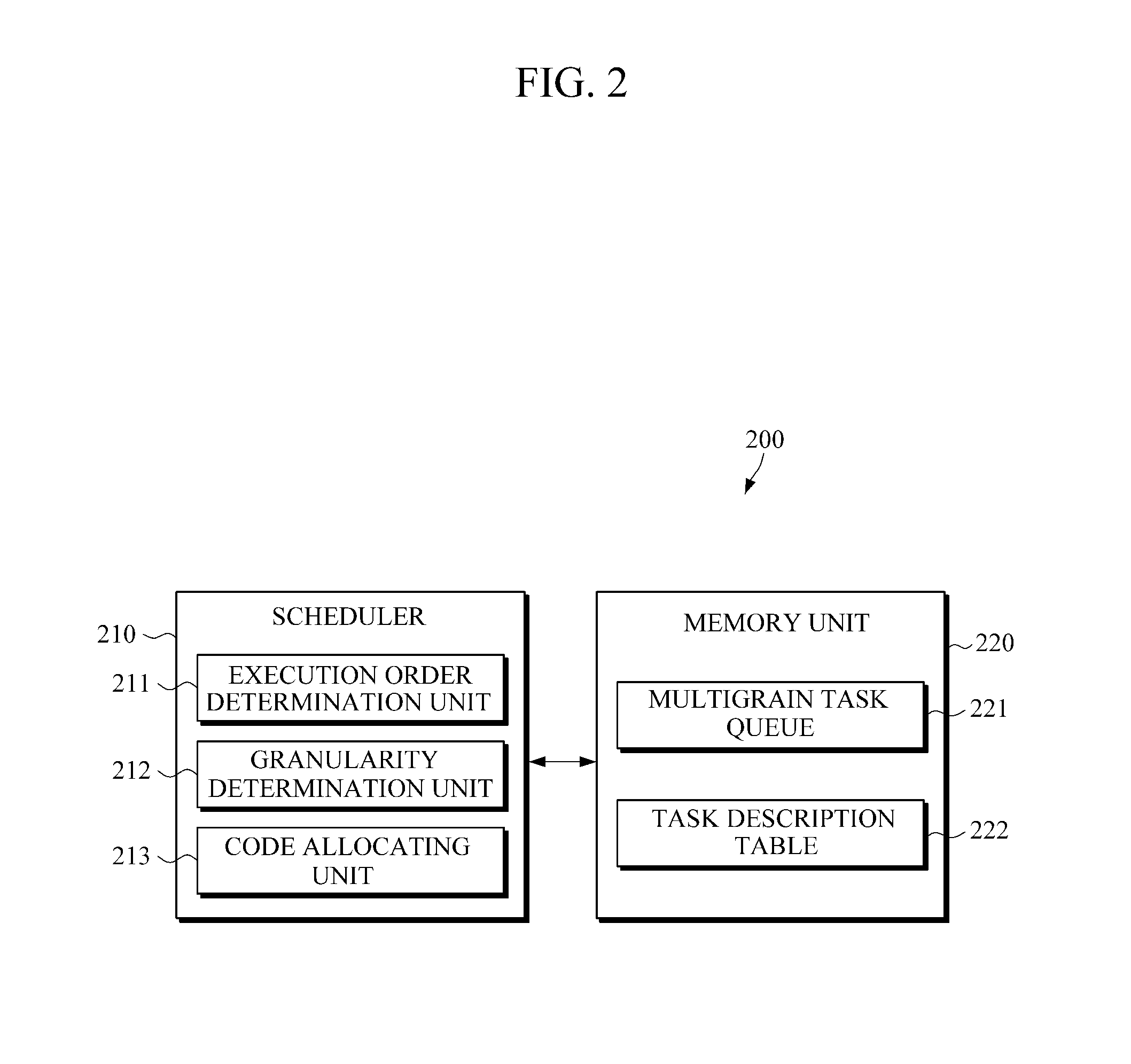

Apparatus and method for parallel processing

Inactive | US20110161637A1 | Tags: Operational speed enhancement, Digital computer details, Parallel processing, Degree of parallelism

An apparatus and method for parallel processing that take the degree of parallelism into account are provided. Either task parallelism or data parallelism is dynamically selected while a job is processed. If task parallelism is selected, a sequential version of the code is allocated to a core or processor to process the job; if data parallelism is selected, a parallel version of the code is allocated to a core or processor to process the job.

Owner:SAMSUNG ELECTRONICS CO LTD

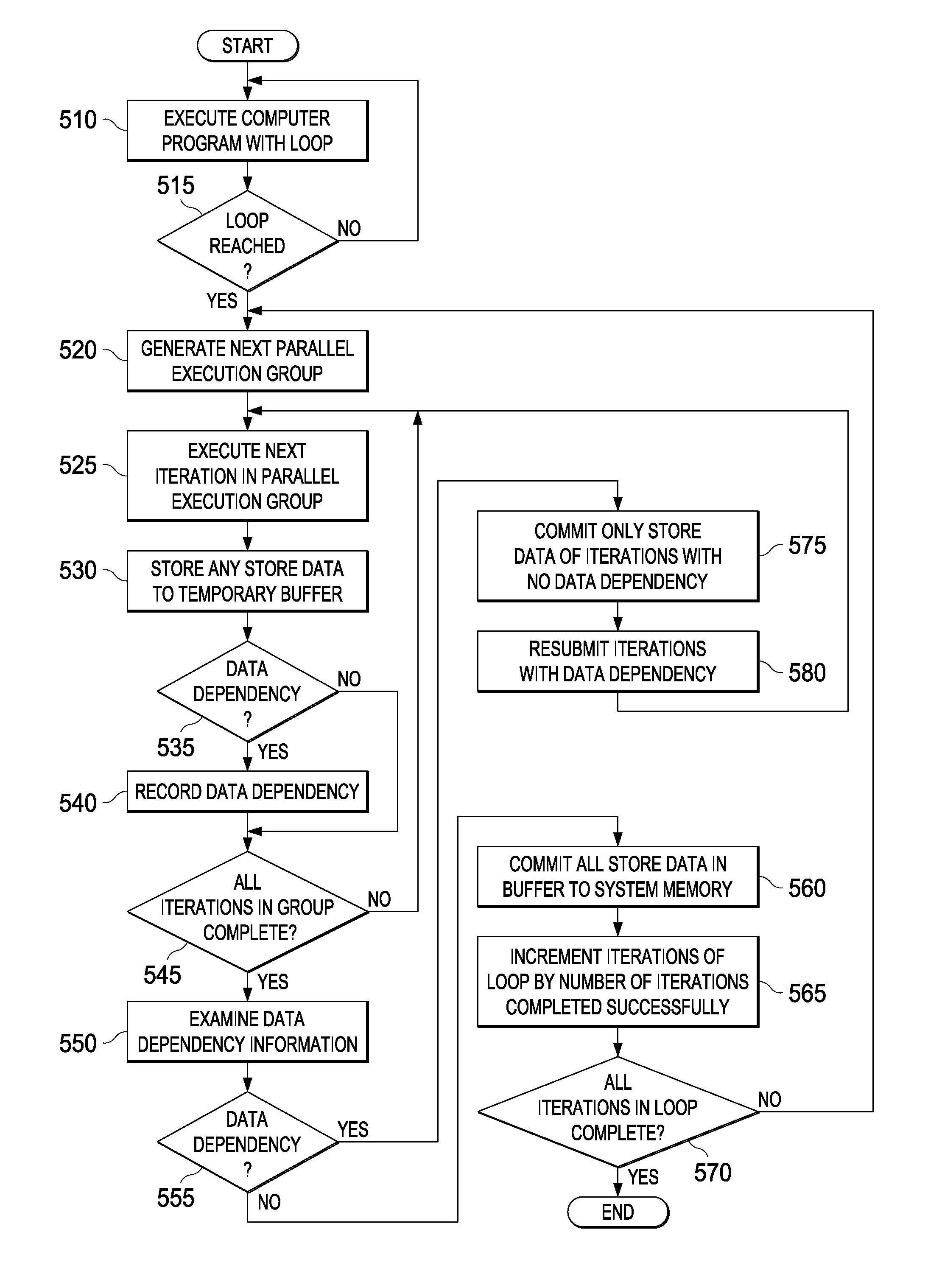

Runtime Extraction of Data Parallelism

Mechanisms for extracting data dependencies at run time are provided. The mechanisms execute a portion of code having a loop and generate, for the loop, a first parallel execution group comprising a subset of the loop's iterations, smaller than the total number of iterations. The mechanisms then execute the first parallel execution group and determine, for each iteration in the subset, whether the iteration has a data dependence. Store data is committed to system memory only for stores performed by iterations in the subset for which no data dependence is determined; store data from iterations for which a data dependence is determined is not committed to system memory.

Owner:IBM CORP
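
A simplified, sequential simulation of speculative loop parallelization in the spirit of this abstract: a group of iterations runs against a snapshot of memory with its stores buffered, each iteration's read is checked against stores made by earlier iterations in the same group, and only dependence-free work is committed. As a conservative simplification chosen here (not stated in the abstract), the sketch commits only the dependence-free prefix of each group and re-executes from the first dependent iteration; the loop body `a[writes[i]] = a[reads[i]] + 1` and the group size are hypothetical.

```python
def run_sequential(a, reads, writes):
    """Reference semantics: a[writes[i]] = a[reads[i]] + 1 for each i, in order."""
    a = list(a)
    for r, w in zip(reads, writes):
        a[w] = a[r] + 1
    return a

def run_speculative(a, reads, writes, group_size=4):
    """Execute iterations in speculative groups, committing only safe stores."""
    a = list(a)
    i, n = 0, len(reads)
    while i < n:
        group = range(i, min(i + group_size, n))
        snapshot = list(a)       # every iteration in the group reads this state
        buffered = []            # (iteration, address, value): stores not yet committed
        written = set()          # addresses stored by earlier iterations in this group
        first_dependent = None
        for k in group:
            if first_dependent is None and reads[k] in written:
                first_dependent = k  # read-after-write on an earlier in-group store
            buffered.append((k, writes[k], snapshot[reads[k]] + 1))
            written.add(writes[k])
        # The first iteration of a group can never be dependent, so stop > i.
        stop = group.stop if first_dependent is None else first_dependent
        for k, addr, value in buffered:
            if k < stop:
                a[addr] = value  # commit in iteration order; later stores are discarded
        i = stop                 # re-execute from the first dependent iteration
    return a
```

Committing in iteration order keeps write-after-write cases correct, and discarding everything from the first dependent iteration onward means no later iteration can have read a stale value.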

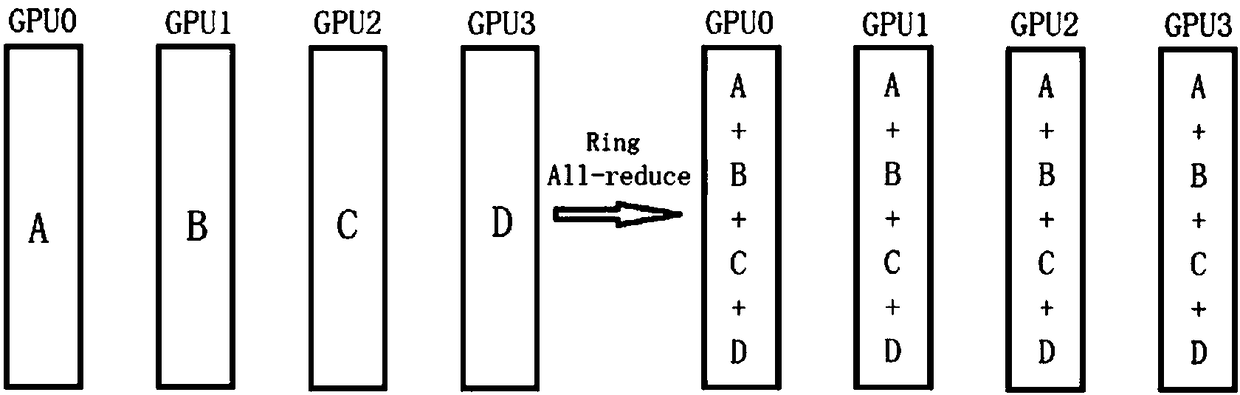

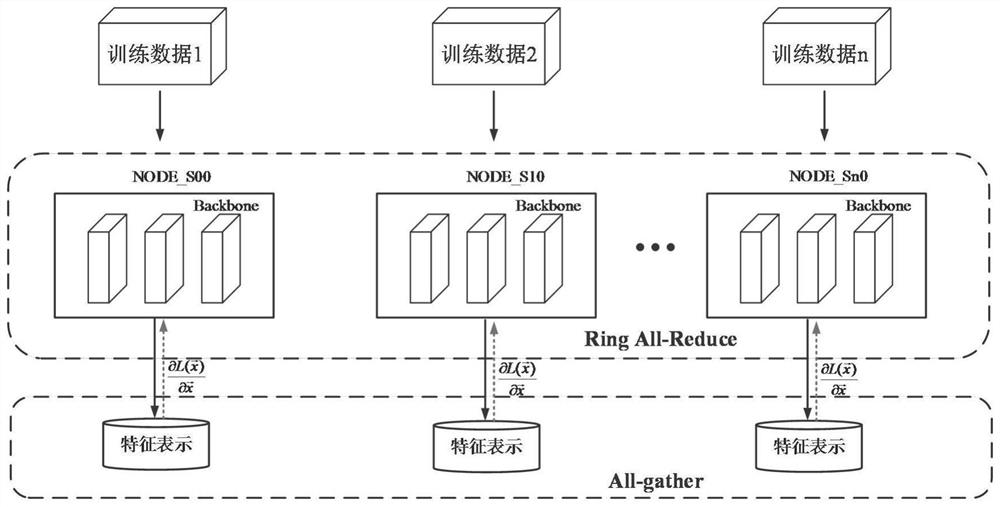

Multi-machine multi-card hybrid parallel asynchronous training method for convolutional neural network

Inactive | CN108460457A | Tags: Reduce overhead, Improve operational efficiency, Neural architectures, Neural learning methods, Multi machine, Algorithm

The invention provides a multi-machine, multi-GPU hybrid parallel asynchronous training method for convolutional neural networks. The method comprises the following steps: a CNN model is constructed and the training parameters are set; the Softmax layer is switched from data parallelism to model parallelism, so that the whole model is divided into multiple pieces, each computed on a different GPU; the source code of the Softmax layer is modified so that, instead of exchanging parameter data before computing the result, a Ring All-reduce communication operation is performed on the computed results; one GPU in the multi-machine, multi-GPU setup is selected as the parameter server and the other GPUs are used for training; in the Parameter Server model, each server is responsible only for its assigned subset of parameters and processing tasks; each sub-node maintains its own parameters and, after updating them, returns the result to the main node for a global update; the main node then transmits the new parameters to the sub-nodes, and training proceeds in sequence until it is complete.

Owner:苏州纳智天地智能科技有限公司
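
Ring all-reduce, named in the abstract, can be simulated in a single process as below. This is a sketch of the generic reduce-scatter plus all-gather algorithm, not of the patent's modified Softmax layer; each list stands in for one GPU's vector, and the vector length is assumed to be divisible by the number of nodes to keep the chunking simple.

```python
def ring_allreduce(buffers):
    """Sum-reduce equal-length vectors held by n ring-connected nodes, in place."""
    n = len(buffers)
    length = len(buffers[0])
    assert length % n == 0, "sketch assumes length divisible by node count"
    size = length // n

    def chunk(c):
        return slice(c * size, (c + 1) * size)

    # Phase 1, reduce-scatter: node i sends chunk (i - step) to node i + 1, which adds it.
    # After n - 1 steps node i holds the complete sum of chunk (i + 1) % n.
    for step in range(n - 1):
        # Build all messages from the pre-step state (list slices are copies).
        msgs = [((i + 1) % n, (i - step) % n, buffers[i][chunk((i - step) % n)])
                for i in range(n)]
        for dst, c, values in msgs:
            s = chunk(c)
            buffers[dst][s] = [x + y for x, y in zip(buffers[dst][s], values)]

    # Phase 2, all-gather: circulate the finished chunks; receivers overwrite.
    for step in range(n - 1):
        msgs = [((i + 1) % n, (i + 1 - step) % n, buffers[i][chunk((i + 1 - step) % n)])
                for i in range(n)]
        for dst, c, values in msgs:
            buffers[dst][chunk(c)] = values
    return buffers
```

Each node sends 2(n - 1) messages of length/n elements, so per-node traffic stays close to twice the vector length regardless of ring size, which is why the algorithm is popular for gradient synchronization.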

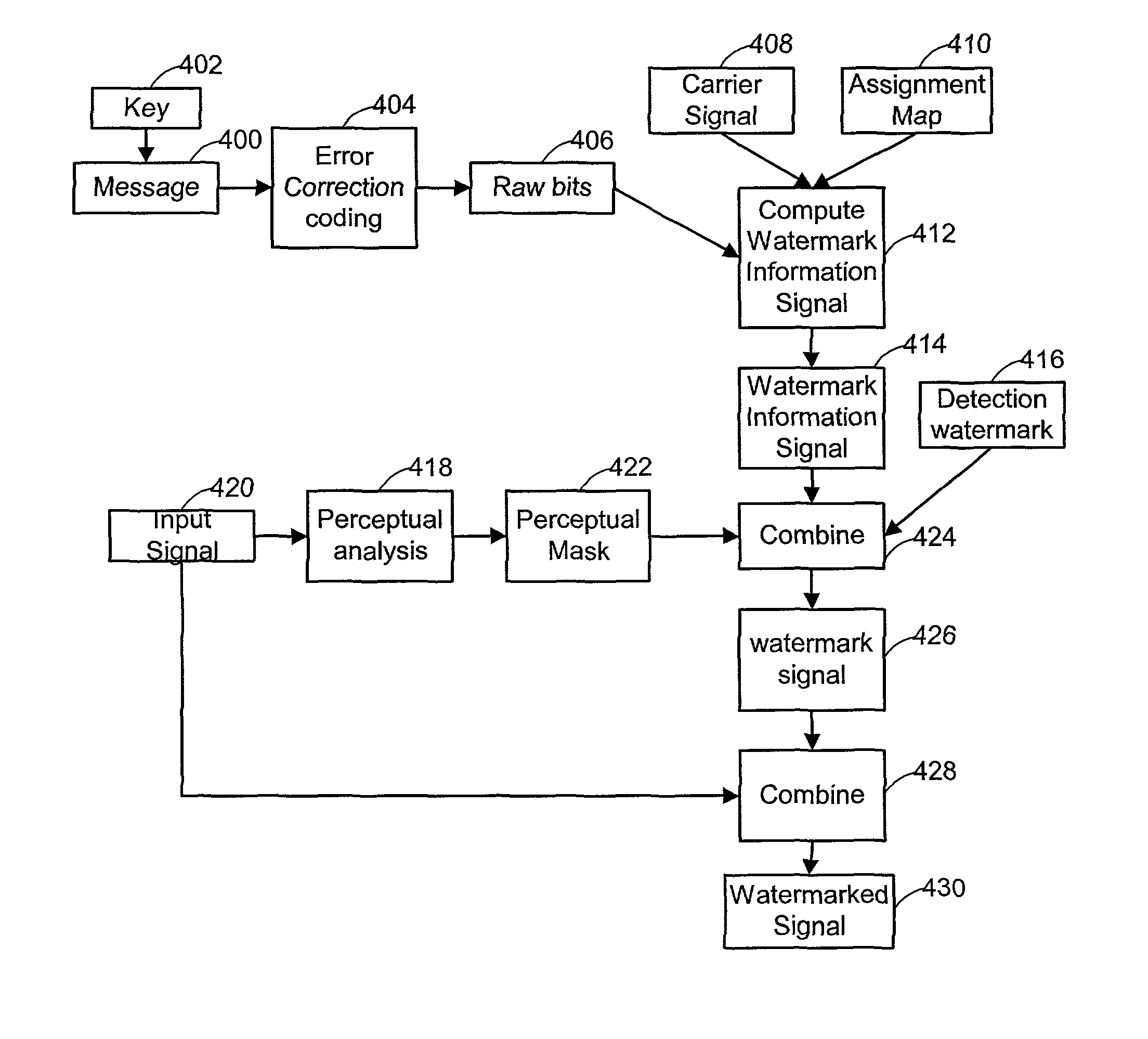

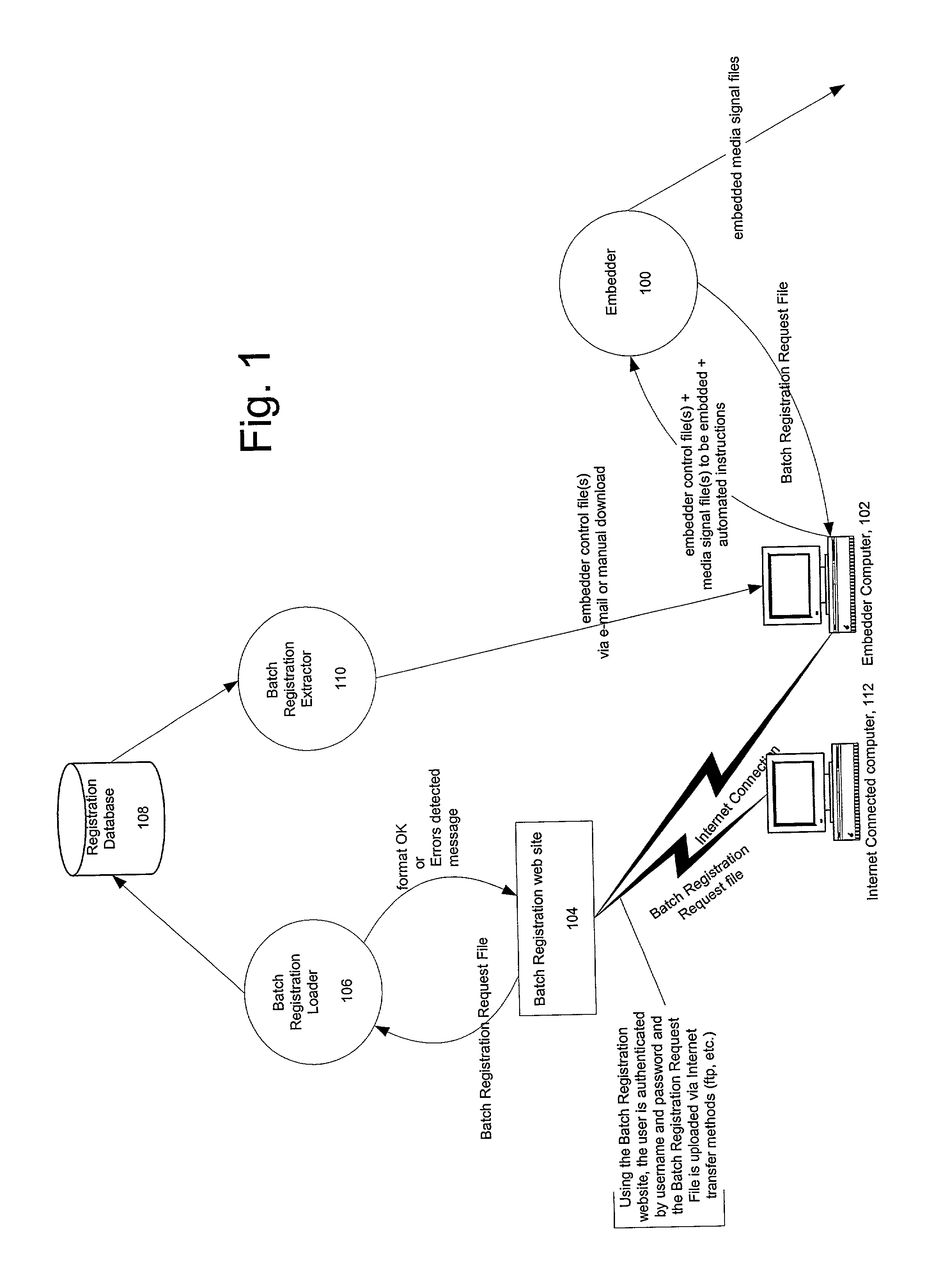

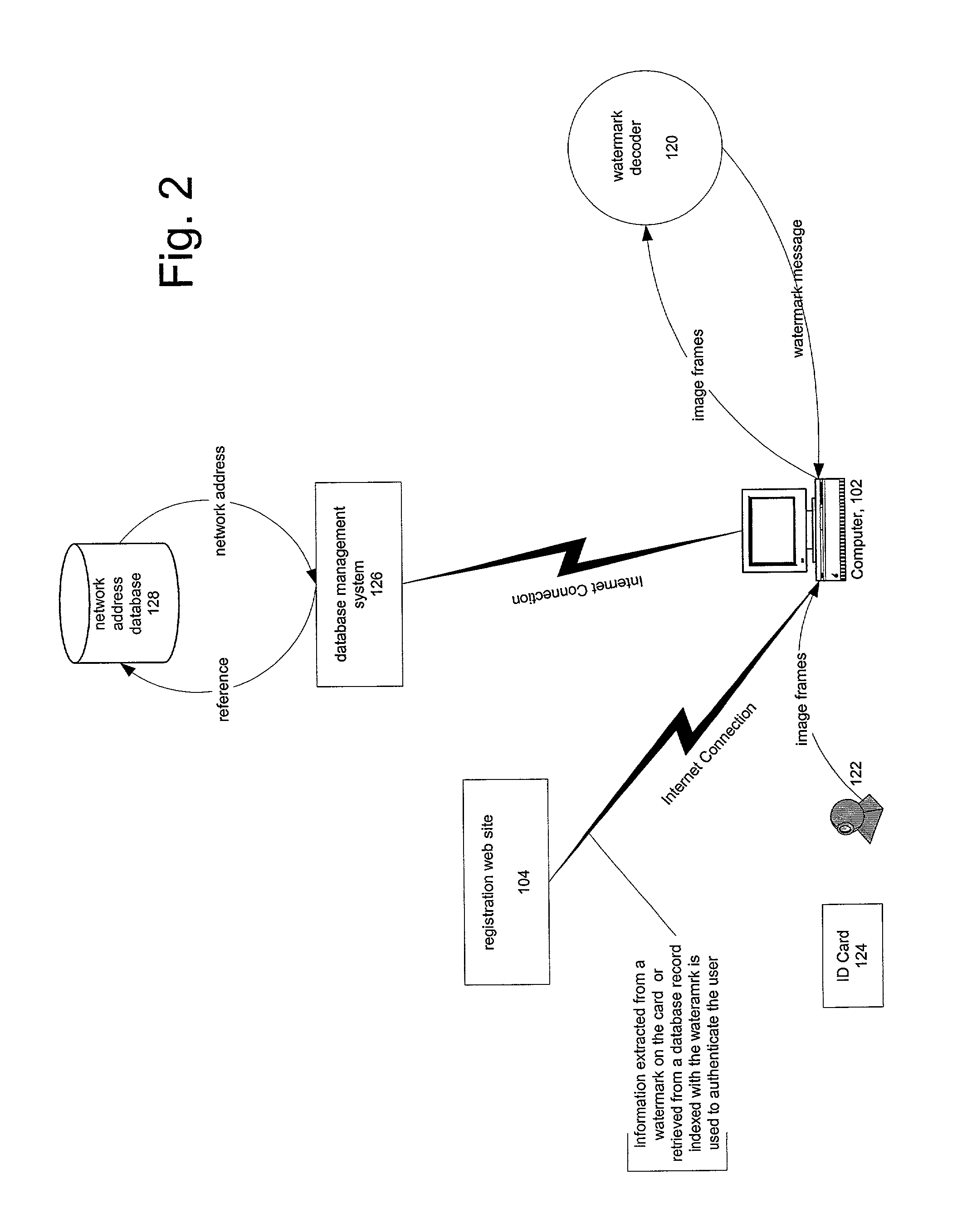

Parallel processing of digital watermarking operations

Active | US8355525B2 | Tags: Facilitate rapid embedding, Facilitate decoding operation, Finance, Road vehicles traffic control, Signal generator, Parallel processing

A method of segmenting a media signal for parallel watermarking operations sub-divides the media signal into segments, distributes the segments to parallel processors, and performs parallel digital watermark operations on the segments in the parallel processors. These parallel processors may comprise separate threads of execution on a processing unit, or several execution threads distributed to several processing units. In one enhancement, the method prioritizes the segments for watermarking operations. This enables finite processing resources to be allocated to segments in order of their priority. Further, processing resources are devoted to segments where the digital watermark is more likely to be imperceptible and / or readable. A system for distributed watermark embedding operations includes a watermark signal generator, a perceptual analyzer and a watermark applicator. The system supports operation-level parallelism in the concurrent operation of watermark embedder modules, and data parallelism in the concurrent operation of these modules on segments of a media signal to be embedded with an imperceptible digital watermark.

Owner:DIGIMARC CORP

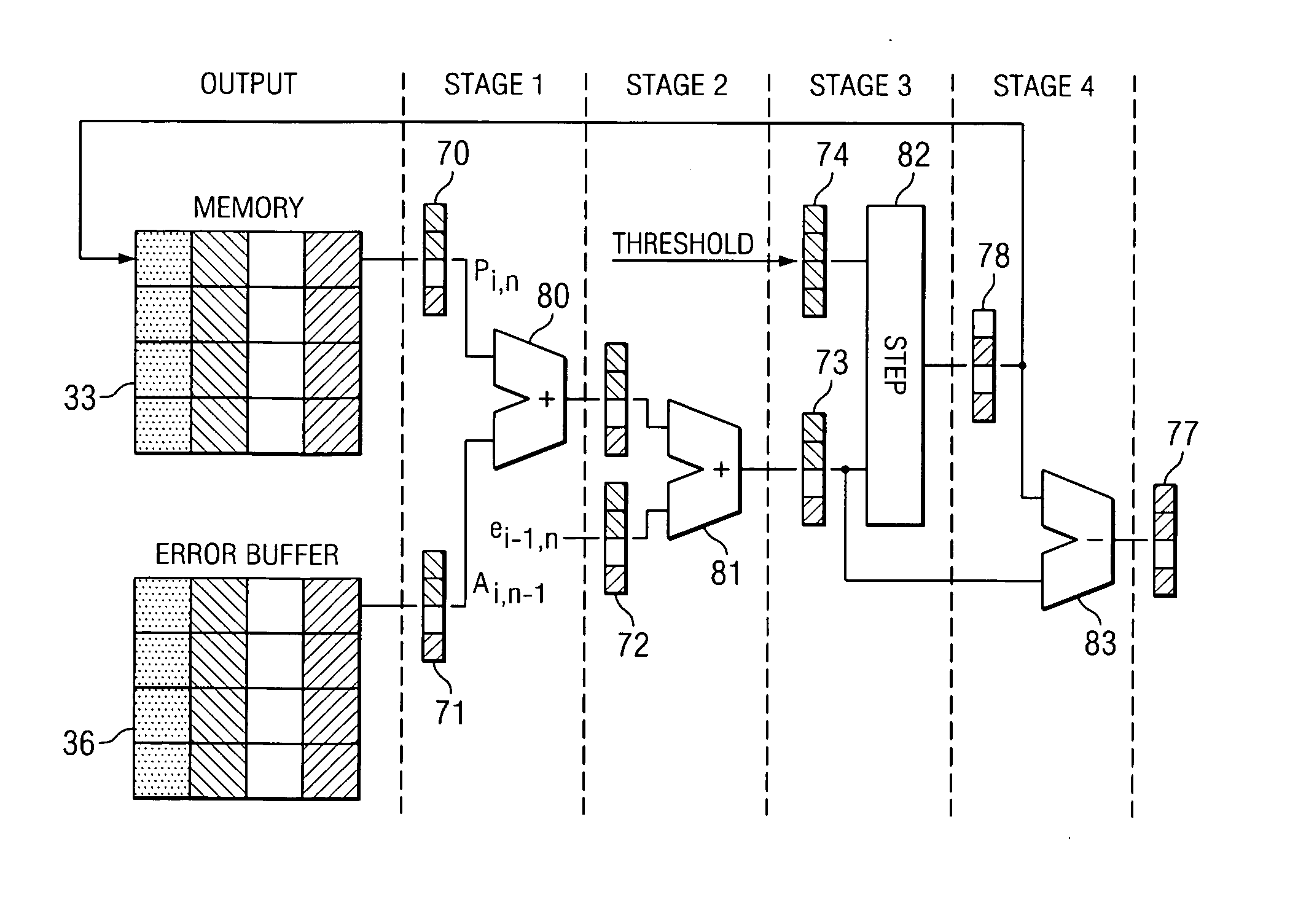

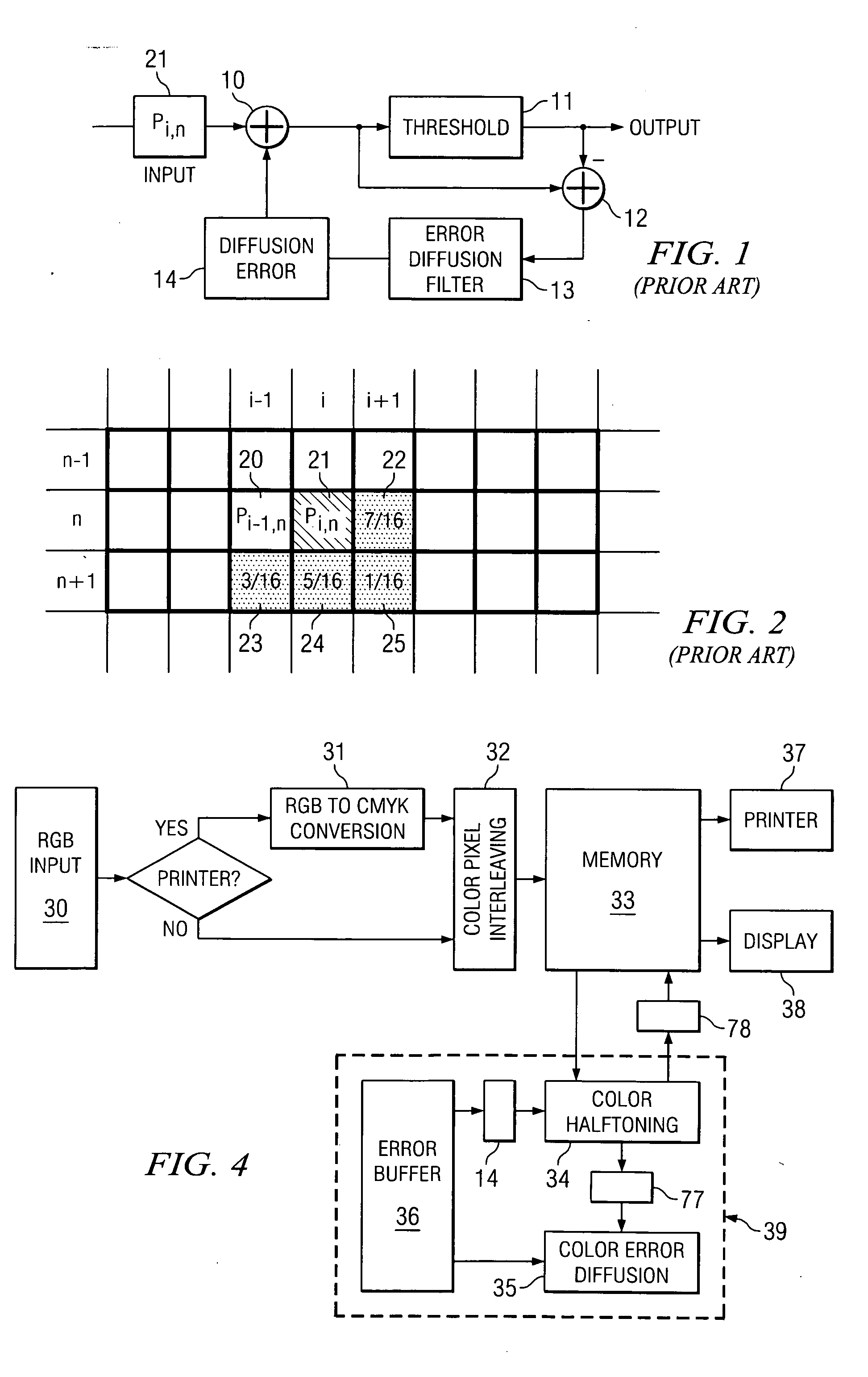

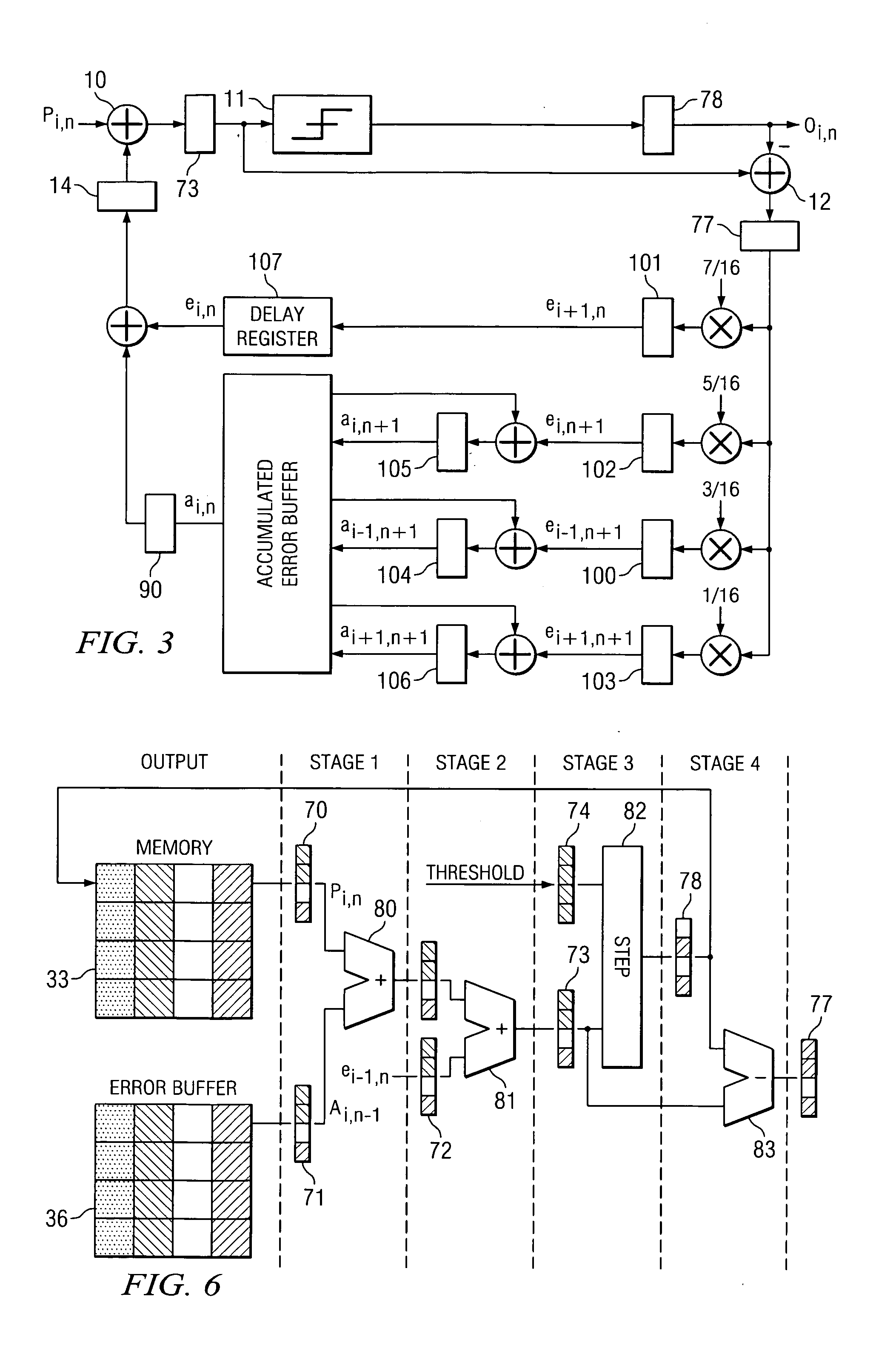

High performance coprocessor for color error diffusion halftoning

Active | US20050094211A1 | Tags: Improve performance, Reduce complexity, Visual presentation, Forme preparation, Coprocessor, Embedded applications

An apparatus and method are provided to accelerate error diffusion for color halftoning for embedded applications. High performance is achieved by utilizing functional parallelism within the halftoning error diffusion process, including exploiting data parallelism in different color planes, reducing the number of memory accesses to the error buffer, accelerating the computation by using a parallel instruction set, and improving the throughput of the system by implementing pipelined architecture. A halftoning coprocessor architecture can implement the foregoing. The architecture can be optimized for high performance, low complexity and small footprint. The coprocessor can be incorporated into embedded systems to accelerate the performance of error diffusion halftoning therein.

Owner:STMICROELECTRONICS SRL

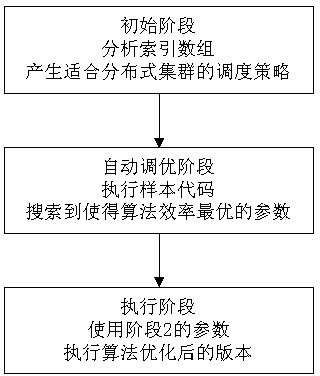

Irregular iteration parallelization method

Inactive | CN102096744A | Tags: Improve good performance, Improve operational efficiency, Special data processing applications, Analysis data, Calculation methods

The invention relates to an irregular iteration parallelization method. In the initialization stage, the data locality and data parallelism of the irregular iterative computation are enhanced by analyzing its data access pattern according to a strategy for generating data blocks and sub-blocks. In the execution stage, they are further enhanced by executing a newly generated scheduling strategy and transformed code. During actual execution, an automatic performance optimizer for the irregular iterative computation is created: it finds the parameter-value combination that gives optimal efficiency by exhaustive search and fixes those parameter values, so that optimal running efficiency can be achieved on the given system architecture. The method offers good parallelization efficiency and scalability.

Owner:HANGZHOU DIANZI UNIV
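
The auto-tuning step, which tries parameter combinations exhaustively and fixes the fastest, might look like the following. The parameter names (`block_size`, `sub_block_size`), their candidate values, and the synthetic cost function standing in for measured run time are assumptions; a real tuner would time the transformed code on the target machine.

```python
import itertools

def exhaustive_tune(cost, space):
    """Evaluate every combination in `space` and return (best_params, best_cost)."""
    names = list(space)
    best_params, best_cost = None, float("inf")
    for values in itertools.product(*(space[name] for name in names)):
        params = dict(zip(names, values))
        c = cost(**params)
        if c < best_cost:
            best_params, best_cost = params, c
    return best_params, best_cost

def synthetic_cost(block_size, sub_block_size):
    """Hypothetical stand-in for a measured run time."""
    if sub_block_size > block_size:
        return float("inf")  # invalid combination: sub-block larger than its block
    # Pretend 256 and 64 are the sizes that best fit this machine's caches.
    return abs(block_size - 256) / 256 + abs(sub_block_size - 64) / 64
```

For example, `exhaustive_tune(synthetic_cost, {"block_size": [64, 128, 256, 512], "sub_block_size": [16, 32, 64, 128]})` returns `({"block_size": 256, "sub_block_size": 64}, 0.0)`. Exhaustive search is only practical while the product of candidate counts stays small.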

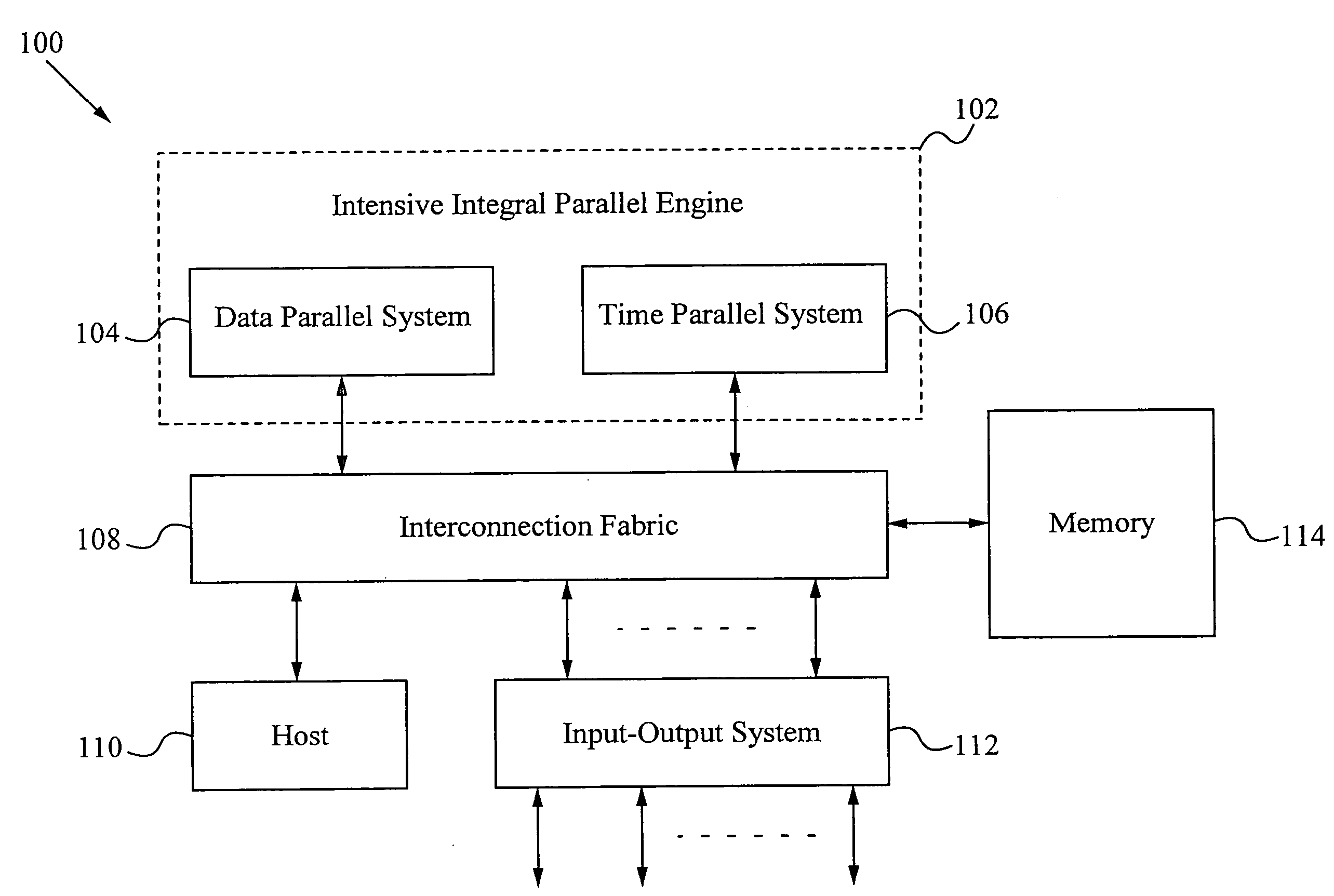

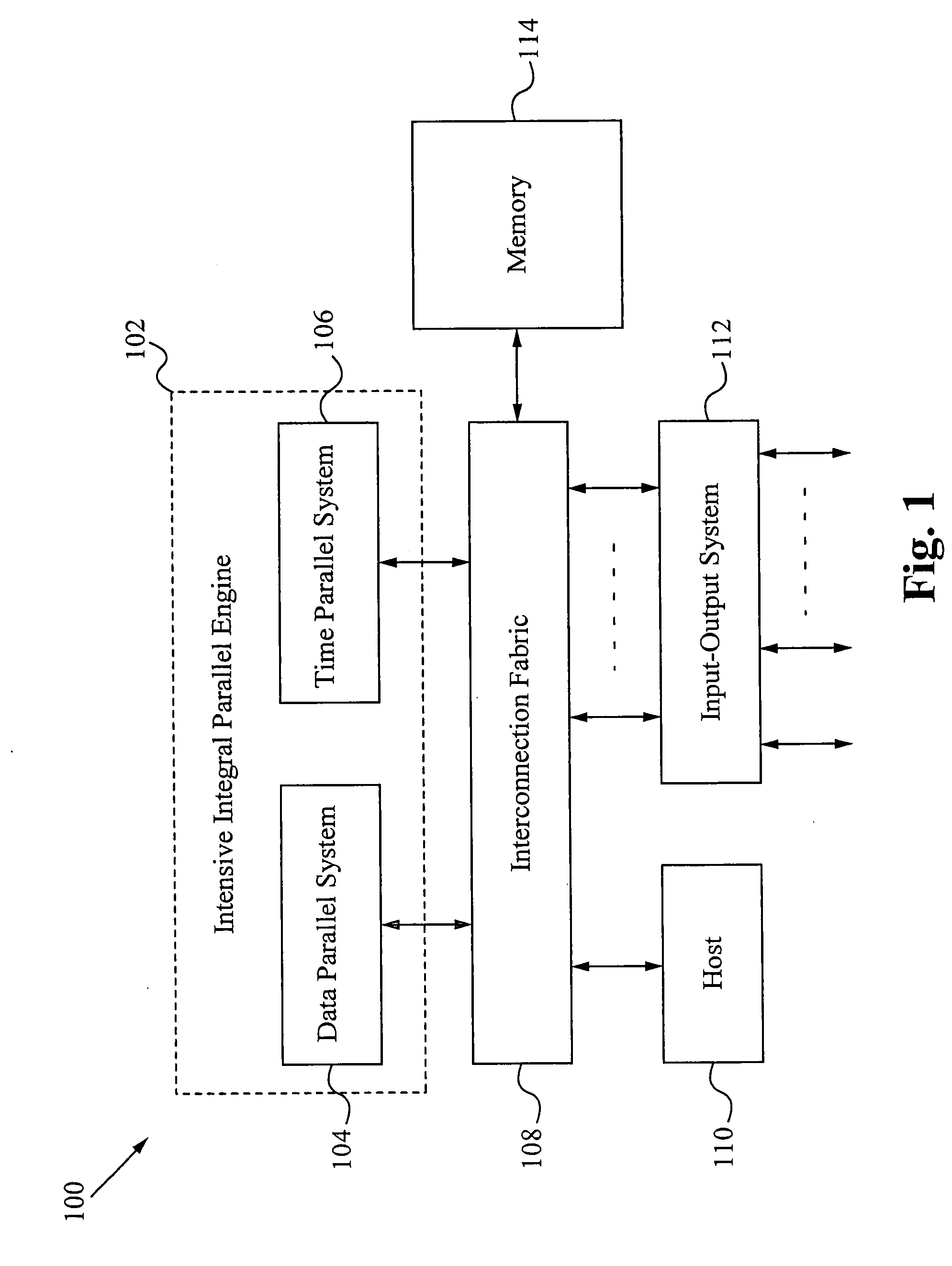

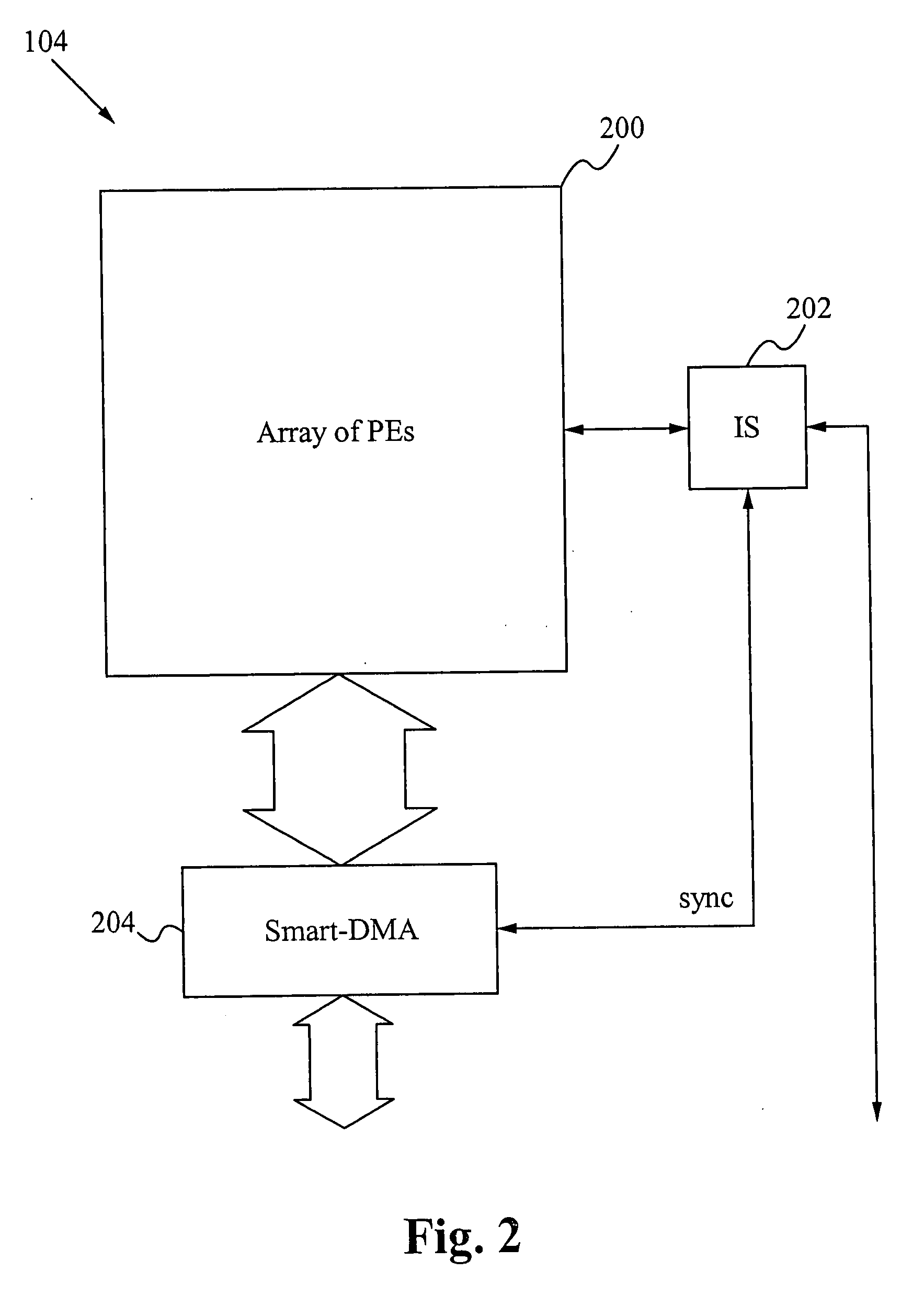

Integral parallel machine

Inactive | US20080059764A1 | Tags: Functionality is very flexible, Single instruction multiple data multiprocessors, Program control using wired connections, Processing element, Sequential function

The present invention is an integral parallel machine for performing intensive computations. By combining data parallelism, time parallelism and speculative parallelism, with data parallelism and time parallelism kept separate, efficient computations can be performed. Specifically, for sequential functions, the time-parallel system in conjunction with an implementation of speculative parallelism is able to handle sequential computations in a parallel manner. Each processing element in the time-parallel system performs a function and receives data from the prior processing element in the pipeline. Thus, after a latency period for filling the pipeline, a result is produced every clock cycle or other desired time period.

Owner:ALLSEARCH SEMI
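
The throughput claim at the end of this abstract (after the pipeline fills, one result per clock cycle) follows from a simple cycle count: with k stages and n inputs, the first result appears after k cycles and the rest follow one per cycle, for k + n - 1 cycles in total. The simulation below checks that count; the stage functions are arbitrary examples and `_BUBBLE` is a helper name introduced here.

```python
_BUBBLE = object()  # marks an empty pipeline slot

def run_pipeline(stages, inputs):
    """Clock a linear pipeline; return (outputs, cycles taken)."""
    k, n = len(stages), len(inputs)
    regs = [_BUBBLE] * k  # output register of each stage
    feed = iter(inputs)
    outputs, cycles = [], 0
    while len(outputs) < n:
        cycles += 1
        # Update from the back so each stage consumes the previous cycle's value.
        for s in range(k - 1, 0, -1):
            prev = regs[s - 1]
            regs[s] = _BUBBLE if prev is _BUBBLE else stages[s](prev)
        x = next(feed, _BUBBLE)  # a new input enters the first stage each cycle
        regs[0] = _BUBBLE if x is _BUBBLE else stages[0](x)
        if regs[-1] is not _BUBBLE:
            outputs.append(regs[-1])
    return outputs, cycles
```

With three stages and five inputs, the run takes 3 + 5 - 1 = 7 cycles: two cycles of fill latency, then one result per cycle.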

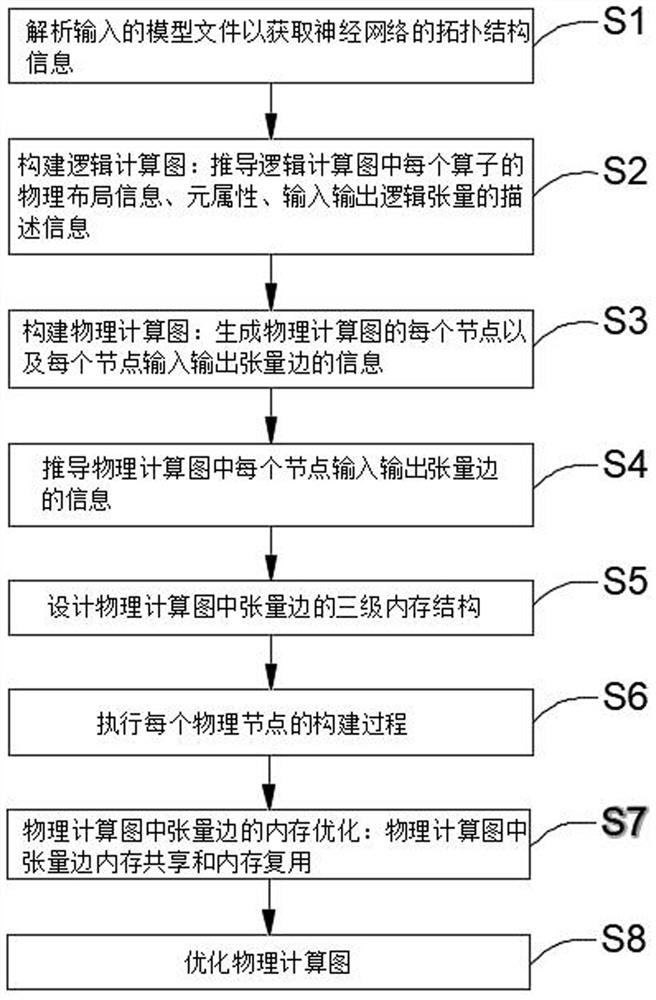

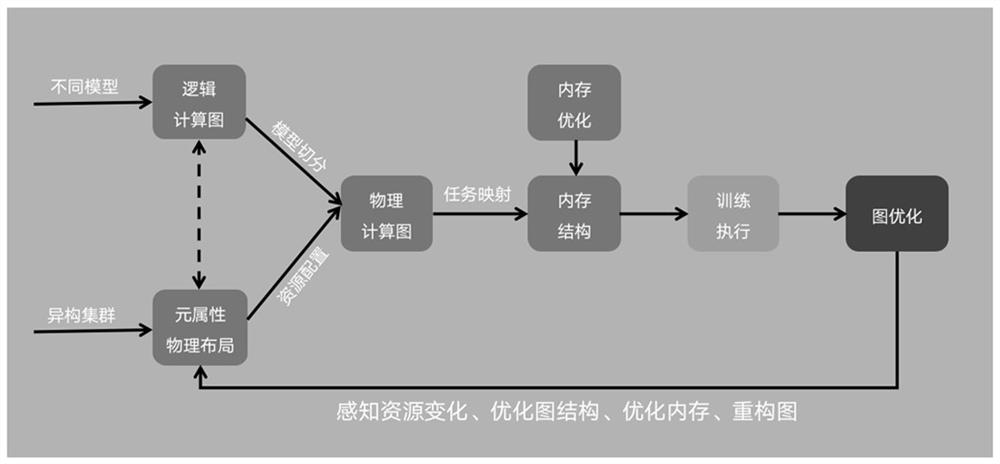

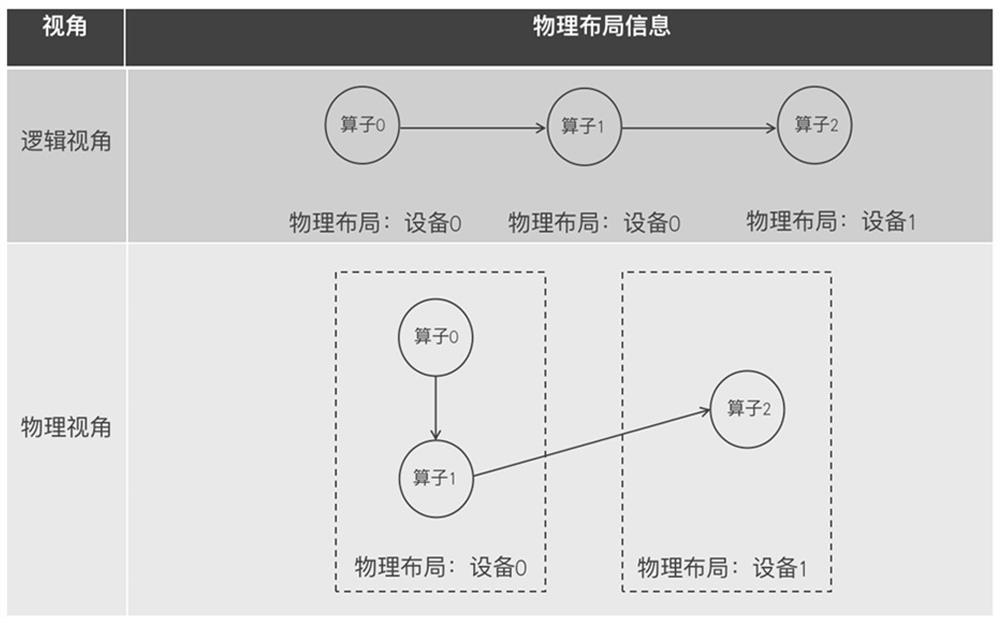

Intermediate representation method and device for neural network model calculation

Active | CN114186687A | Tags: Easy to build, Lower barriers to use, Resource allocation, Neural architectures, Algorithm, Theoretical computer science

The invention discloses an intermediate representation method and device oriented to neural network model computation. The method comprises the following steps: S1, parsing the input model file to obtain the topology of the neural network; S2, constructing a logical computation graph, including S21, deriving the physical layout information of each operator in the logical computation graph, S22, deriving the meta-attributes of each operator in the logical computation graph, and S23, deriving the description information of each operator's input and output logical tensors; and S3, constructing a physical computation graph, including S31, generating the physical computation graph. The meta-attribute-based intermediate representation natively supports data parallelism, model parallelism and pipeline parallelism at the operator level. It takes computation expressions as basic units, treats tensors as the data flowing through the computation graph formed by those expressions, and realizes the computation of the neural network model by composition.

Owner:ZHEJIANG LAB

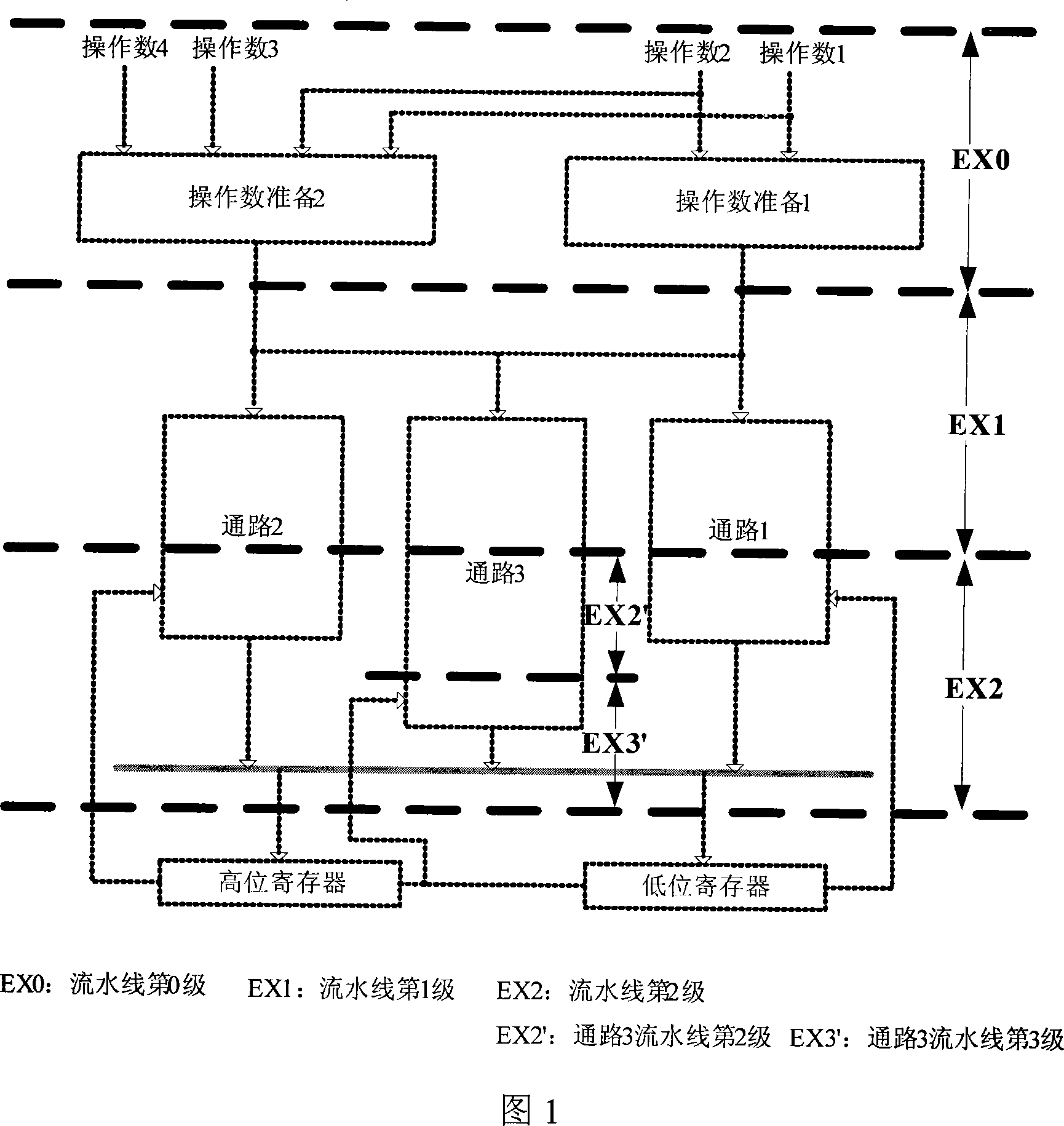

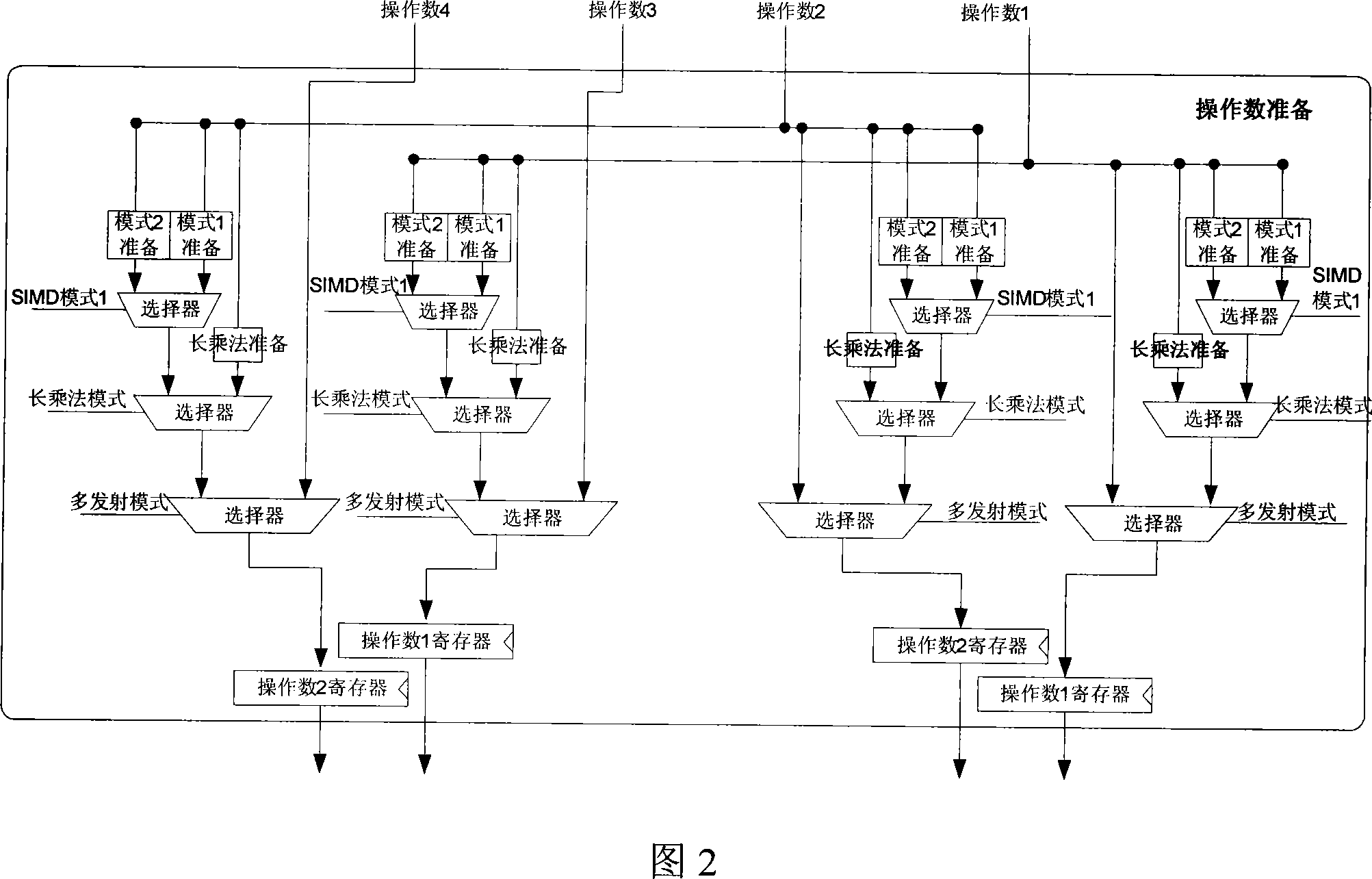

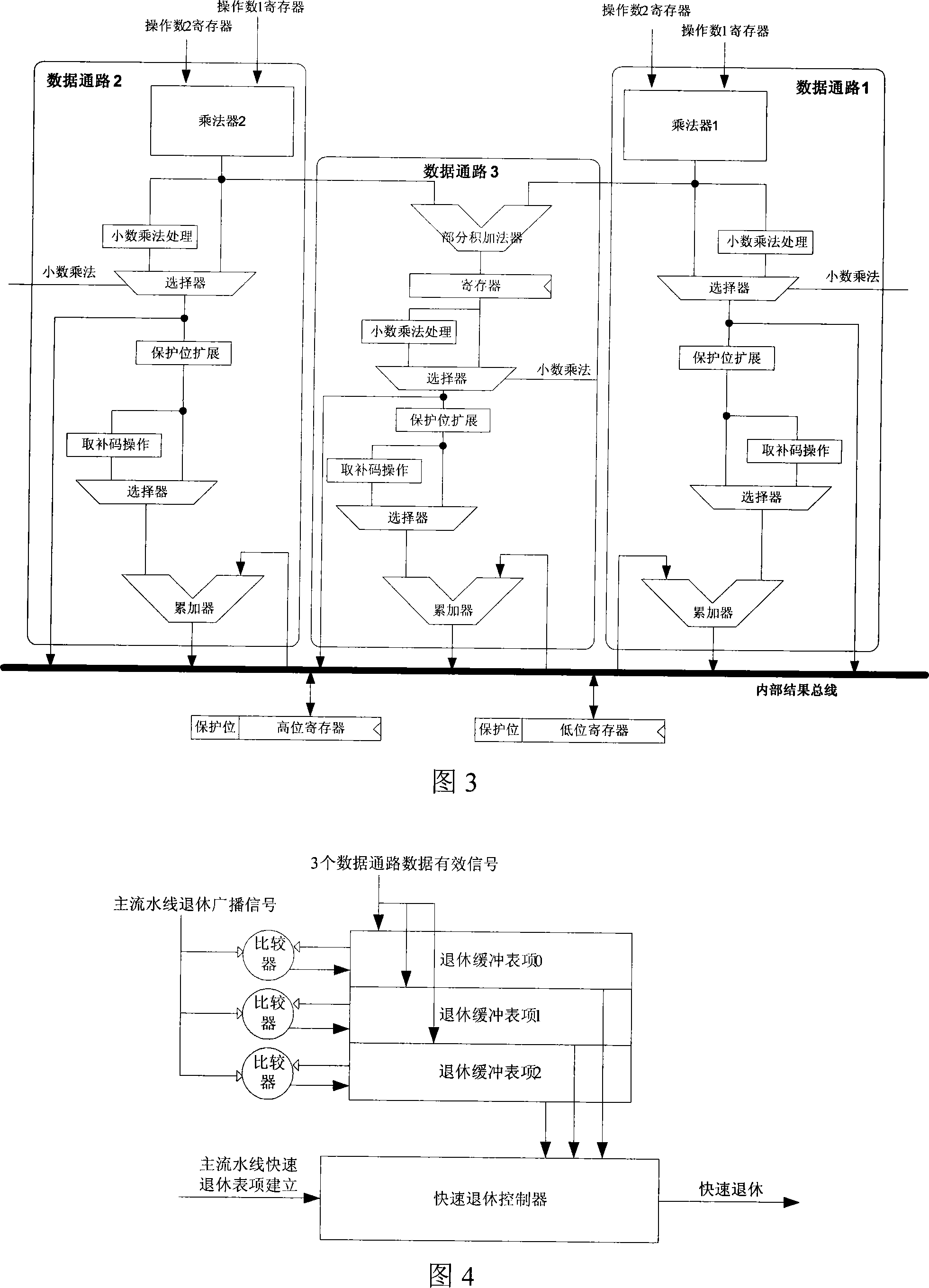

Media-enhanced pipelined multiplication unit design method supporting multiple modes

Inactive | CN101162423A | Tags: Flexible support, Improve computing throughput, Concurrent instruction execution, Memory systems, Digital signal processing, Computer architecture

The invention discloses a design method for a media-enhanced pipelined multiplication unit that supports multiple modes. Besides ordinary multiplication and multiply-accumulate operations, the unit provides a single instruction multiple data (SIMD) mode for the data parallelism in media applications, a multiple-instruction multiple data (SDMD) mode for the non-data-parallel parts of media applications, and a high-precision mode for high-precision data operations. These four multiplication modes support the complex digital signal processing algorithms used in media applications and can be switched dynamically by instructions, effectively reducing algorithm switching time. The multiplication unit applies a pipelined design to each type of multiplication, which raises the speed of all multiplications and greatly improves operating efficiency. When applied to an embedded processor, the multiplication unit improves the processor's digital signal processing capability and widens its application domain.

Owner:ZHEJIANG UNIV +1

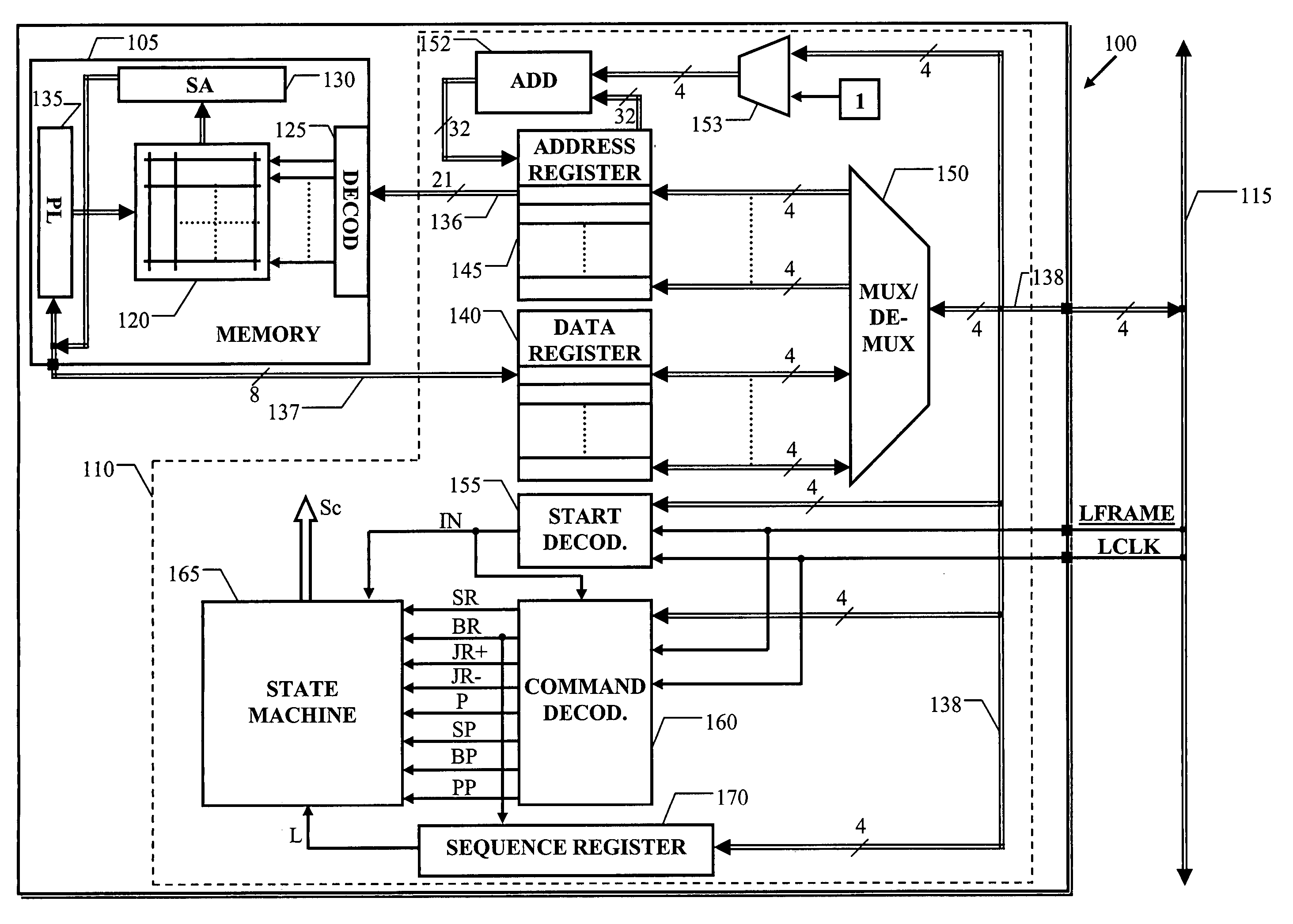

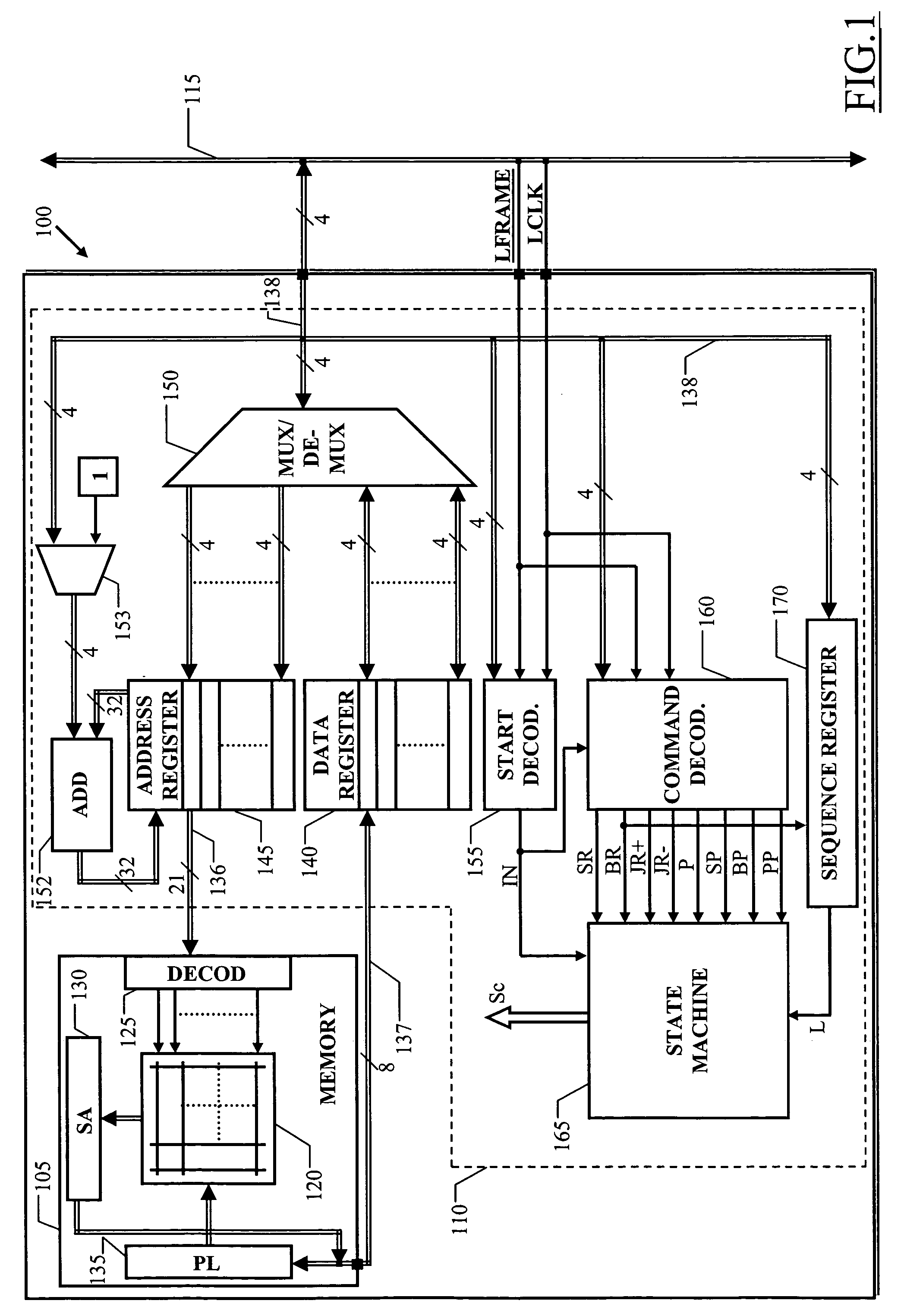

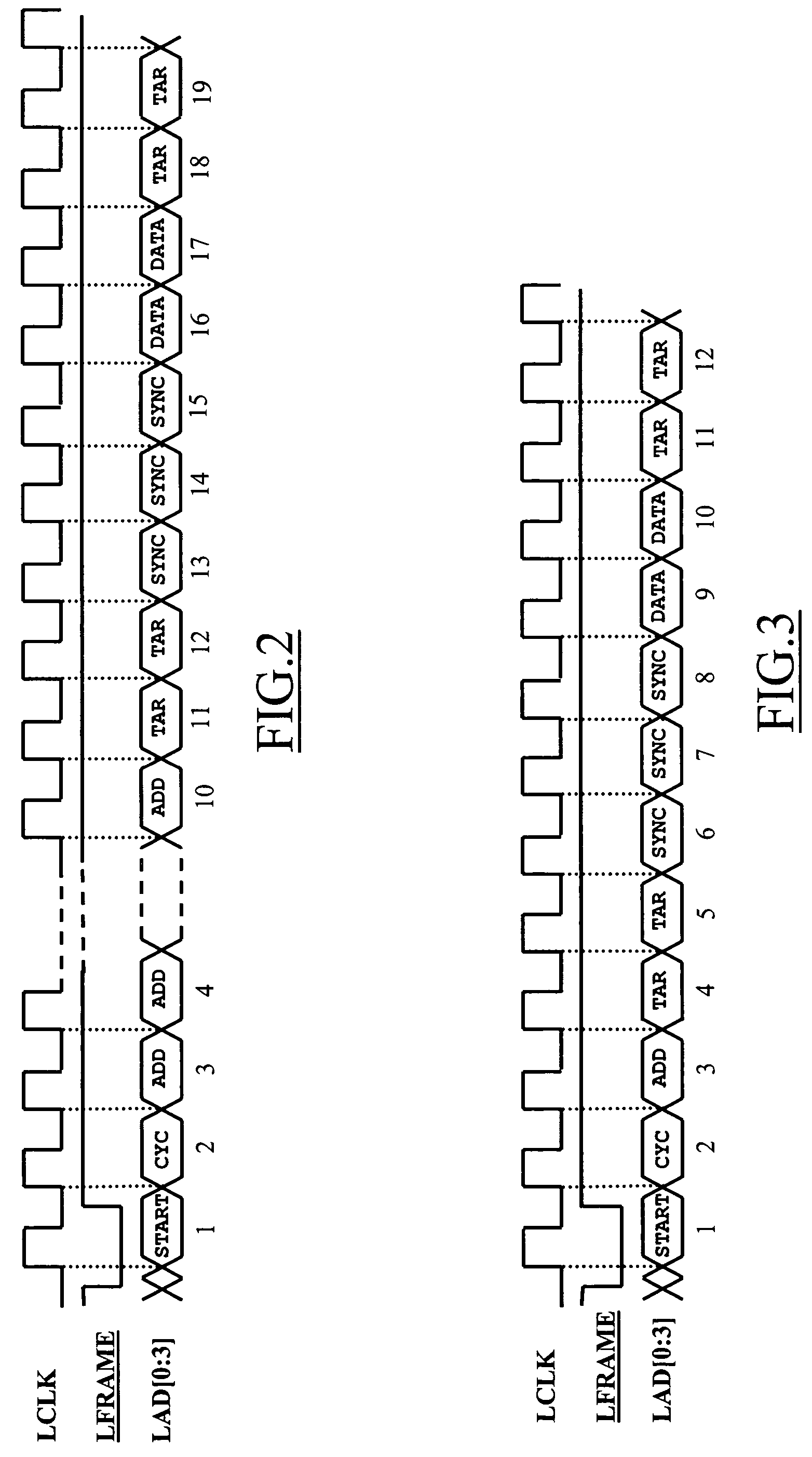

Integrated device with multiple reading and/or writing commands

Active | US7376810B2 | Tags: Memory addressing/allocation/relocation, Read-only memories, Communication interface, Computer science

An integrated device is provided that includes a non-volatile memory having an addressing parallelism and a data parallelism, and a communication interface for interfacing the memory with an external bus. The external bus has a transfer parallelism lower than the addressing parallelism and the data parallelism. The communication interface includes control means for executing multiple reading operations and / or multiple writing operations on the memory according to different modalities in response to corresponding command codes received from the external bus. Also provided is a method of operating such an integrated device.

Owner:STMICROELECTRONICS SRL

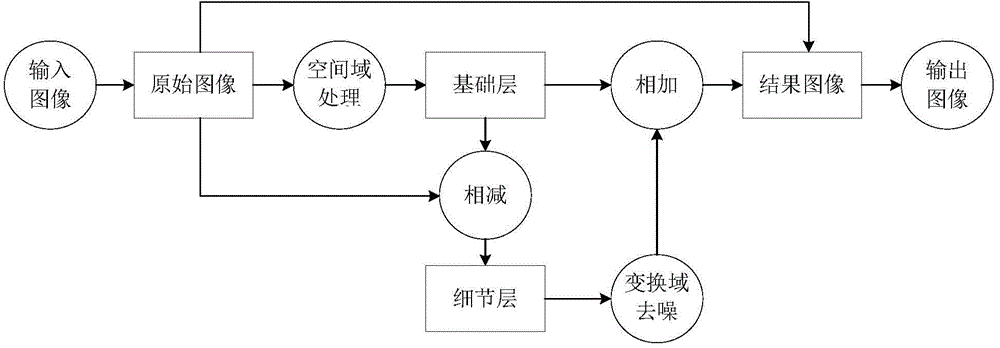

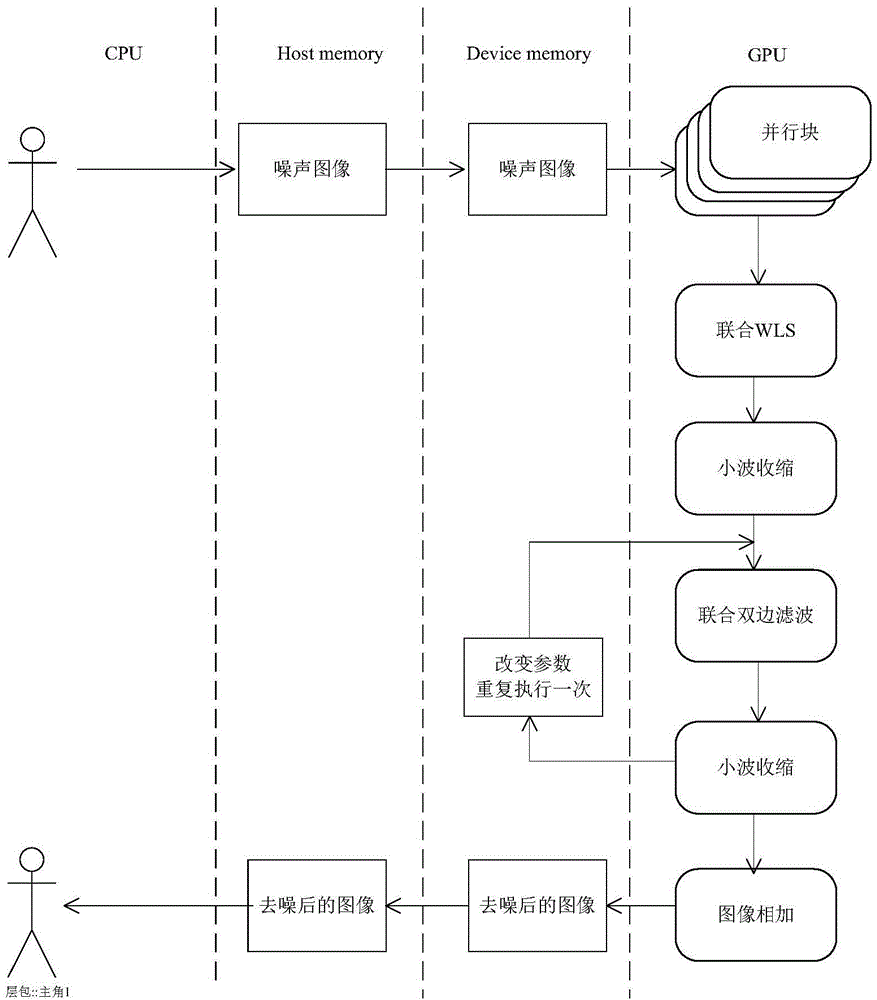

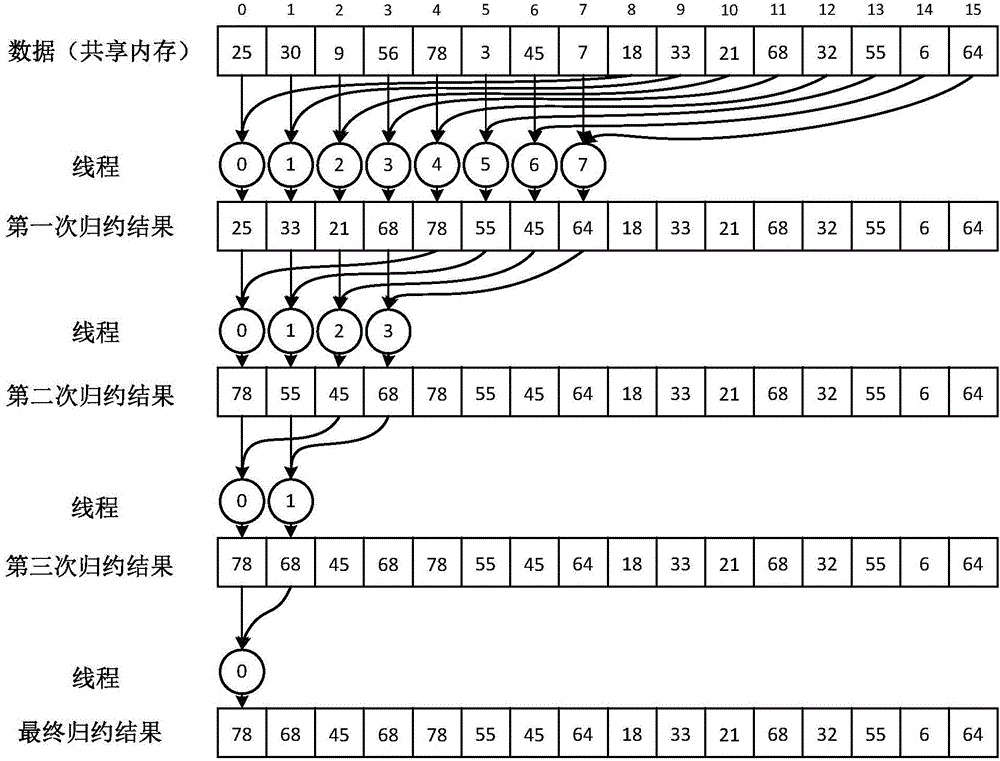

OpenCL-based parallel optimization method of image de-noising algorithm

The invention discloses an OpenCL-based parallel optimization method for an image de-noising algorithm. Following the idea of image layering, an image is divided into a high-contrast base layer and a low-contrast detail layer using a joint bilateral filtering algorithm and a joint WLS algorithm; de-noising is applied to the detail layer using the Stockham FFT; and the image is then restored through spectral shrinkage and image addition, achieving the de-noising effect. Because obtaining the base layer and de-noising the detail layer involve large volumes of data per executed function and a high degree of data parallelism, the invention uses the OpenCL platform model to parallelize the de-noising algorithm on a GPU, and then refines the details of the computation, including the use of local memory, selection of an appropriate work-group size, and the use of parallel reduction. The final implementation achieves a speed-up of more than 30 times, substantially improving the practicality of the algorithm.

Owner:XIDIAN UNIV
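
The "parallel reduction" optimization mentioned above combines values pairwise, needing about log2(n) rounds instead of n - 1 sequential steps. The following sequential Python simulation shows only the access pattern; it is not OpenCL code, and the interleaved pairing used here is one common variant assumed for illustration.

```python
def tree_reduce(values, op=lambda a, b: a + b):
    """Simulate a work-group reduction; returns None for empty input."""
    data = list(values)
    n = len(data)
    stride = 1
    while stride < n:
        # In OpenCL every pair in a round would be handled by a separate work-item,
        # with a barrier between rounds; the pairs within a round never overlap.
        for i in range(0, n - stride, 2 * stride):
            data[i] = op(data[i], data[i + stride])
        stride *= 2
    return data[0] if data else None
```

For example, `tree_reduce(range(1, 101))` returns `5050` after 7 rounds, and any associative operation such as `max` can replace addition.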

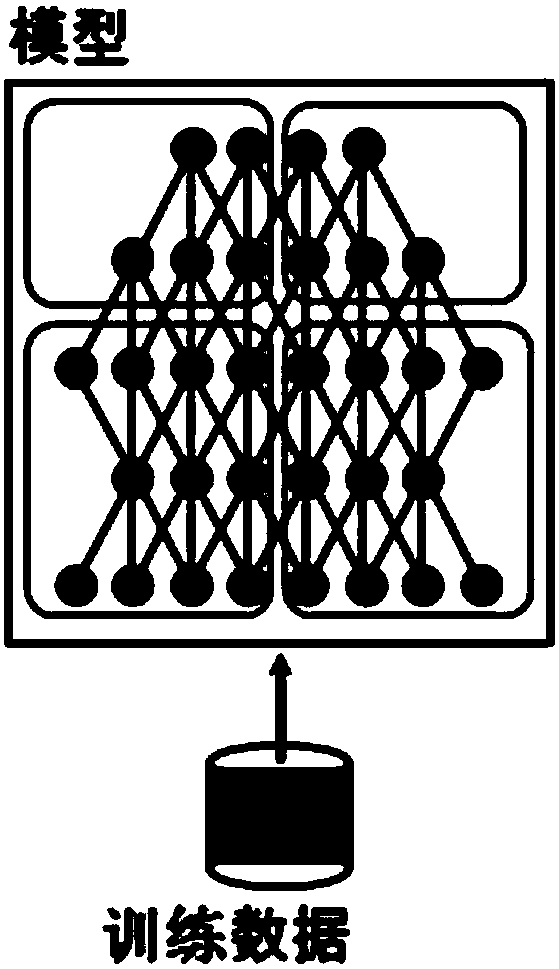

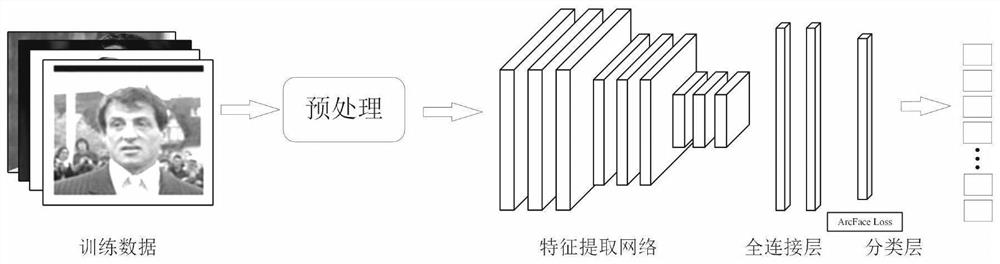

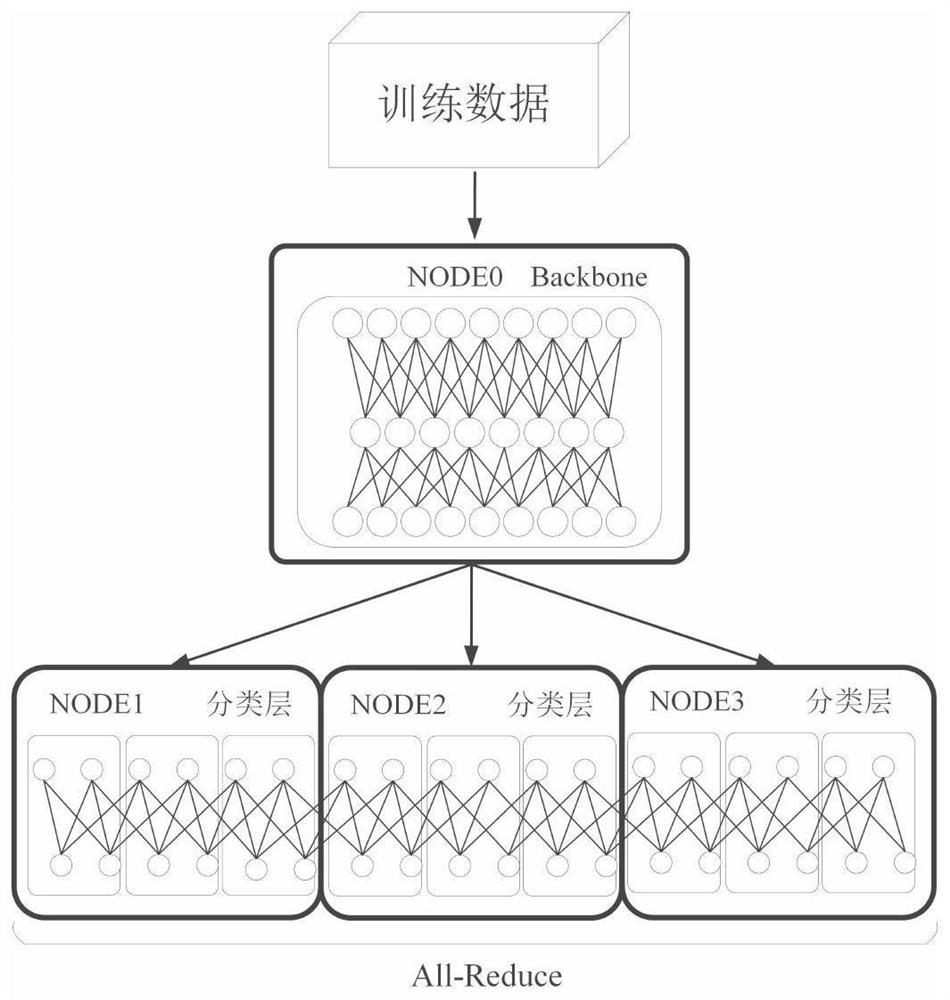

Distributed training method based on hybrid parallelism

Pending | CN112464784A | Tags: Increase training speed, Training Satisfaction, Character and pattern recognition, Neural architectures, Video memory, Concurrent computation

The invention discloses a distributed model training method based on hybrid parallelism, relating to the technical field of deep neural network model training. It addresses the problems below with a hybrid of data parallelism and model parallelism across multiple nodes and multiple GPUs. First, to address long training times, a distributed cluster is used to perform parallel computation on massive data, increasing training speed. Second, to address the classification layer occupying too much GPU memory during training, model parallelism is adopted: the classification layer is partitioned into several parts deployed on multiple GPUs across multiple nodes of the cluster, and the number of nodes can be adjusted dynamically according to the size of the classification layer, enabling classification model training with a large number of IDs. By combining data parallelism and model parallelism in distributed cluster training, the method can greatly improve training efficiency while preserving the original deep learning training quality, and supports classification model training with a large number of IDs.

Owner:西安烽火软件科技有限公司

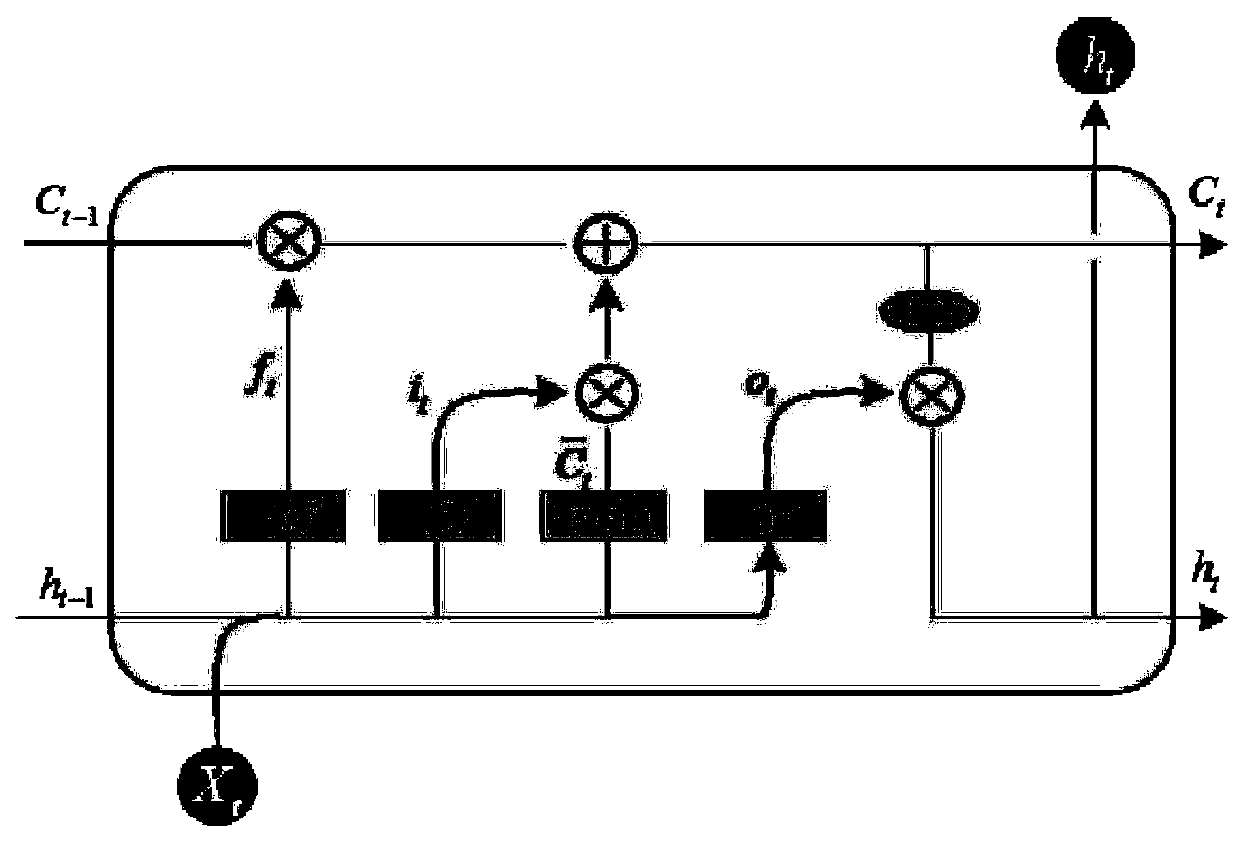

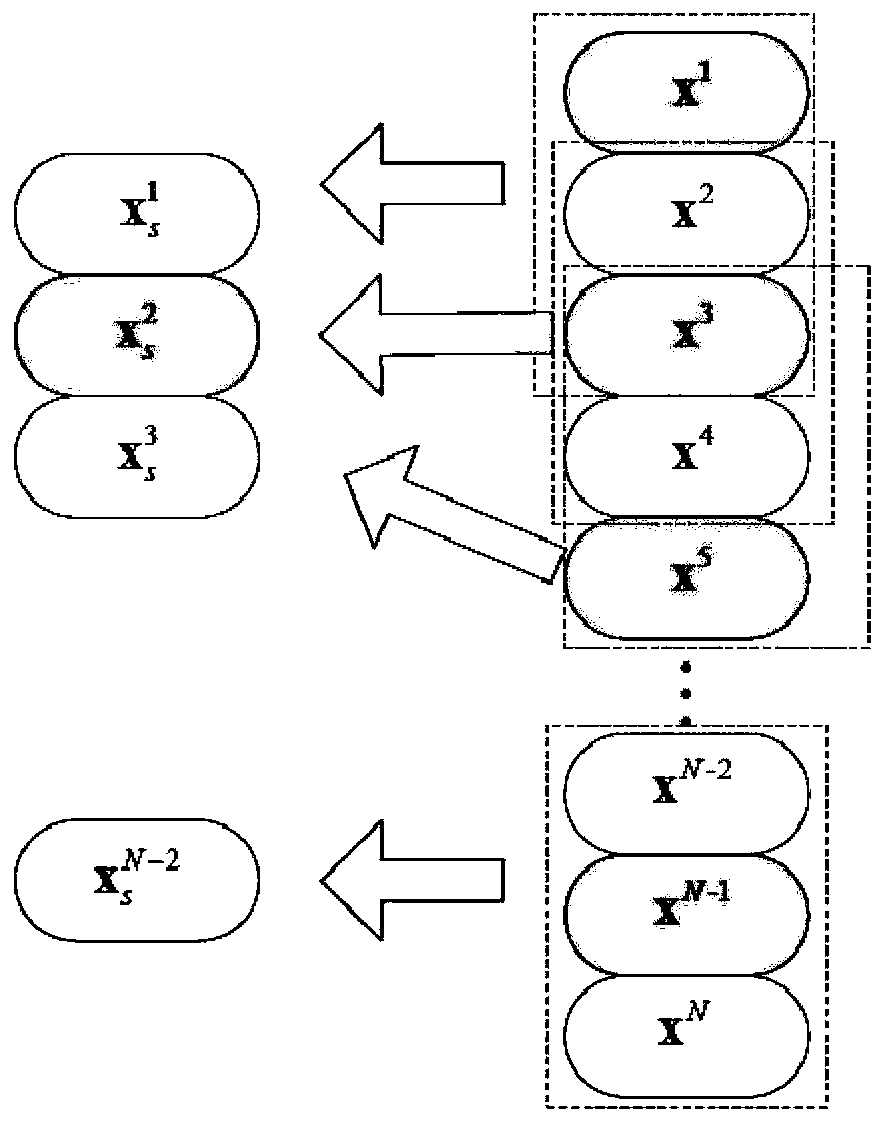

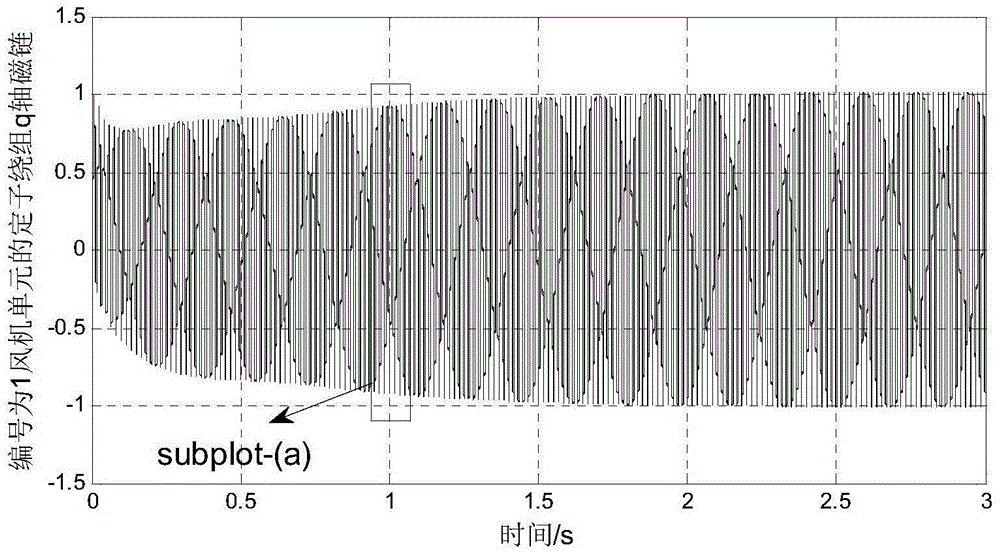

XGBoost soft measurement modeling method based on parallel LSTM auto-encoder dynamic feature extraction

Active | CN110210495A | Tags: Increase training speed, Easy to migrate, Character and pattern recognition, Neural architectures, Feature extraction, Test sample

The invention discloses an XGBoost soft measurement (soft-sensor) modeling method based on dynamic feature extraction with a parallel LSTM auto-encoder, in the field of industrial process prediction and control. The LSTM auto-encoder extracts an encoding vector as a dynamic feature by reconstructing the input sequence, and the network is trained in a distributed manner using both data parallelism and model parallelism, which improves modeling efficiency. The extracted dynamic features are combined with the original features to train an XGBoost model; finally, the test samples are predicted by repeatedly extracting and concatenating features and feeding them into the XGBoost model until all samples have been predicted. The method helps solve dynamic problems in process soft measurement that arise with other models; the use of data parallelism and model parallelism during network training increases training speed; and it ensures that the accuracy of the XGBoost model is unchanged or steadily improved after the dynamic features are introduced, improving the model's robustness.

Owner:ZHEJIANG UNIV

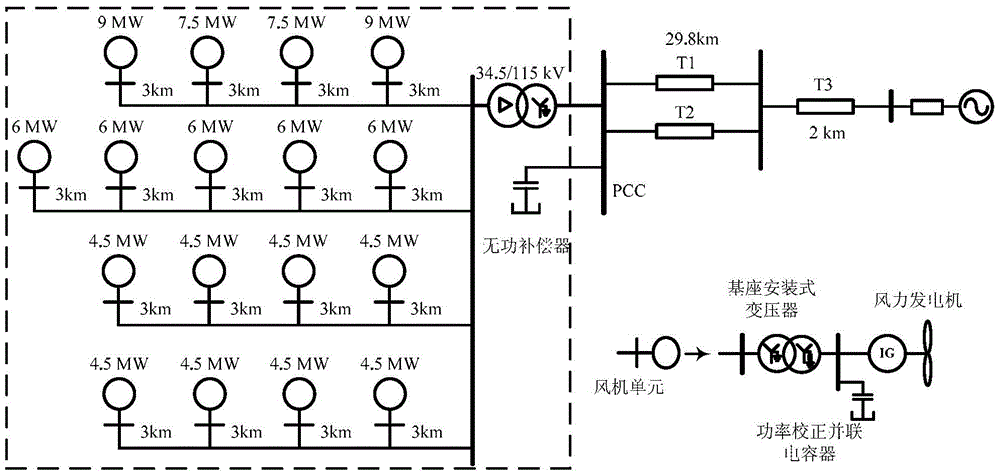

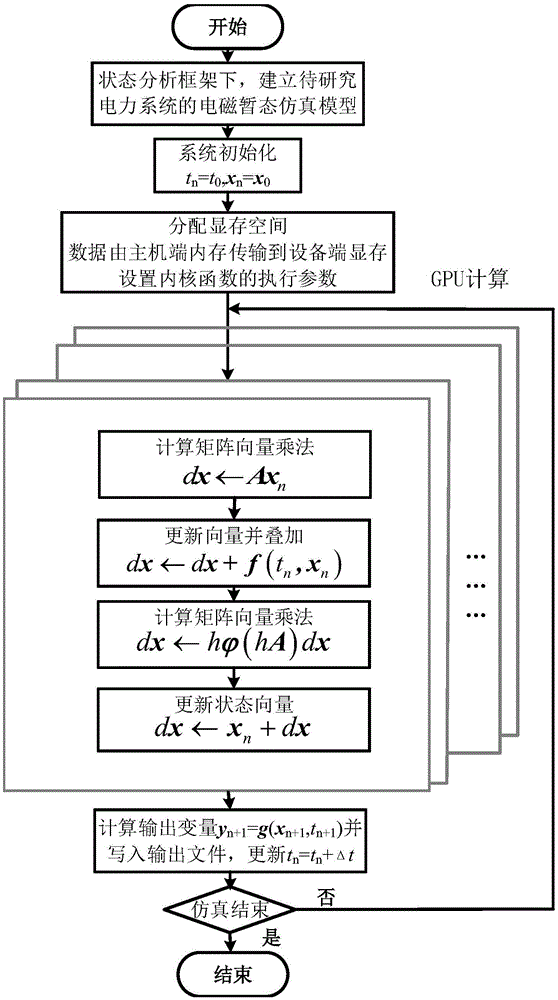

Matrix exponent-based parallel calculation method for electromagnetic transient simulation graphic processor

Inactive | CN105574809A | Tags: Good precision, Easy to handle, Processor architectures/configuration, Details involving image processing hardware, Graphics, Transient state

The invention provides a matrix-exponential-based parallel calculation method for electromagnetic transient simulation on a graphics processor. An overall electromagnetic transient simulation model of the power system under study is built within a state analysis framework, and fast electromagnetic transient simulation of the power system is achieved by combining the data parallelism of the matrix exponential algorithm with the parallel computing performance of the graphics processor. The method retains the good numerical precision and stiffness-handling capability of the matrix exponential integration method, offers general modeling and simulation capability for the nonlinear components of power system elements, and exploits the high data parallelism of the matrix exponential integration algorithm to make it efficient for large-scale electromagnetic transient simulation of power systems. Graphics-processor-parallel simulation of general power-system electromagnetic transient models is thus achieved on the basis of matrix exponential operations within a state analysis framework, improving the computation speed of matrix-exponential-based electromagnetic transient simulation.

Owner:天津天成恒创能源科技有限公司

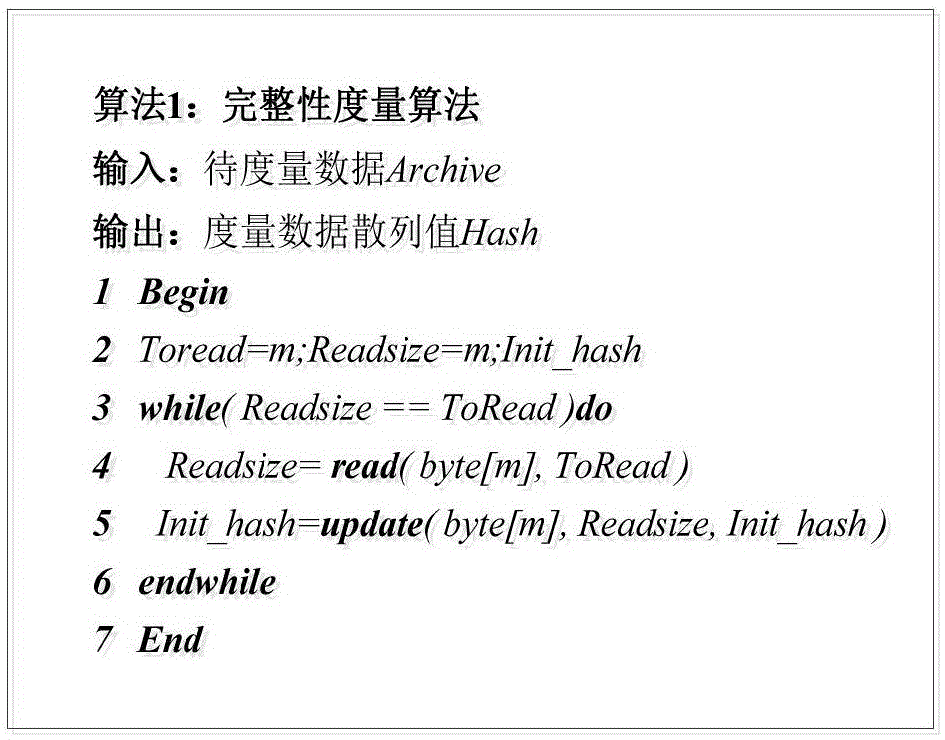

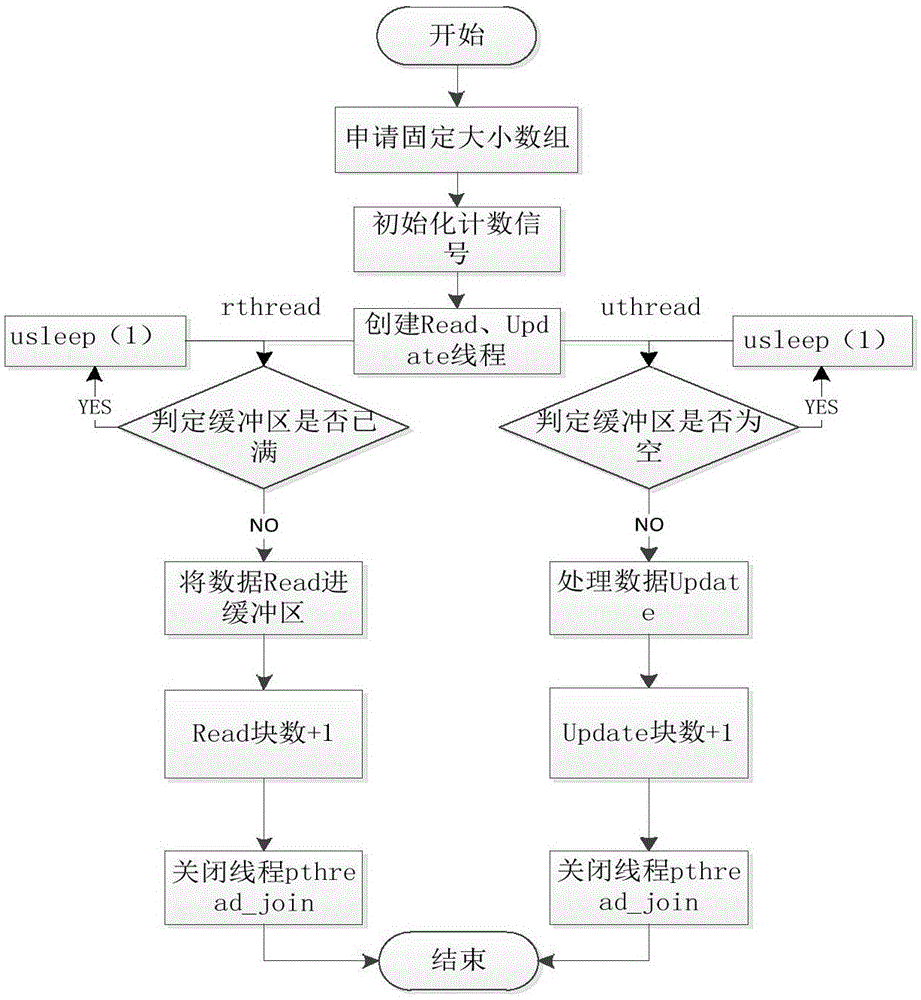

Multi-thread parallelism based integrity measurement hash algorithm optimization method

Active | CN105159654A | Tags: Reduce measurement time, Improve measurement efficiency, Concurrent instruction execution, Array data structure, Algorithm optimization

The present invention relates to a hash algorithm optimization method for integrity measurement based on multi-thread parallelism. The method specifically comprises: 1, dividing the integrity measurement hash algorithm into a Read thread and an Update thread, and initializing counting signals for inter-thread synchronization; 2, the Read thread storing the uniformly partitioned data to be measured into a buffer array by prefetching, and the Update thread sequentially processing the data written into the buffer array; and 3, as the Read thread writes data into a buffer, incrementing the preset counting signals; when the buffer is full, the Read thread stops writing and waits for the Update thread, which processes the data in the buffer in order until the buffer is empty and then waits for the Read thread, so that the two threads are synchronized according to the relationship between the counting signals. The optimization method reduces measurement time and improves measurement efficiency.

Owner:THE PLA INFORMATION ENG UNIV
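
The Read/Update split with counting signals is a bounded producer-consumer buffer. A sketch with Python's `threading.Semaphore` and `hashlib` follows; SHA-256, the chunk size, and the buffer depth are assumptions, since the abstract does not name the hash or the sizes. Because a hash's update step is order-dependent, the Update thread consumes chunks strictly in the order the Read thread produced them, so the digest matches a one-shot hash.

```python
import hashlib
import threading

def pipelined_hash(data: bytes, chunk_size: int = 4096, depth: int = 8) -> str:
    """Hash data with a Read thread and an Update thread sharing a ring buffer."""
    buffer = [None] * depth             # circular buffer array
    empty = threading.Semaphore(depth)  # counting signal: free slots
    full = threading.Semaphore(0)       # counting signal: slots holding data
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    h = hashlib.sha256()

    def reader():
        for idx, chunk in enumerate(chunks):
            empty.acquire()               # block while the buffer is full
            buffer[idx % depth] = chunk   # prefetch the next chunk into a slot
            full.release()                # one more slot ready for the Update thread

    def updater():
        for idx in range(len(chunks)):
            full.acquire()                # block while the buffer is empty
            h.update(buffer[idx % depth]) # sequential, in-order hash update
            empty.release()               # hand the slot back to the Read thread

    threads = [threading.Thread(target=reader), threading.Thread(target=updater)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return h.hexdigest()
```

The overlap comes from the Read thread fetching the next chunks while the Update thread hashes the current one; the hash computation itself stays sequential.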

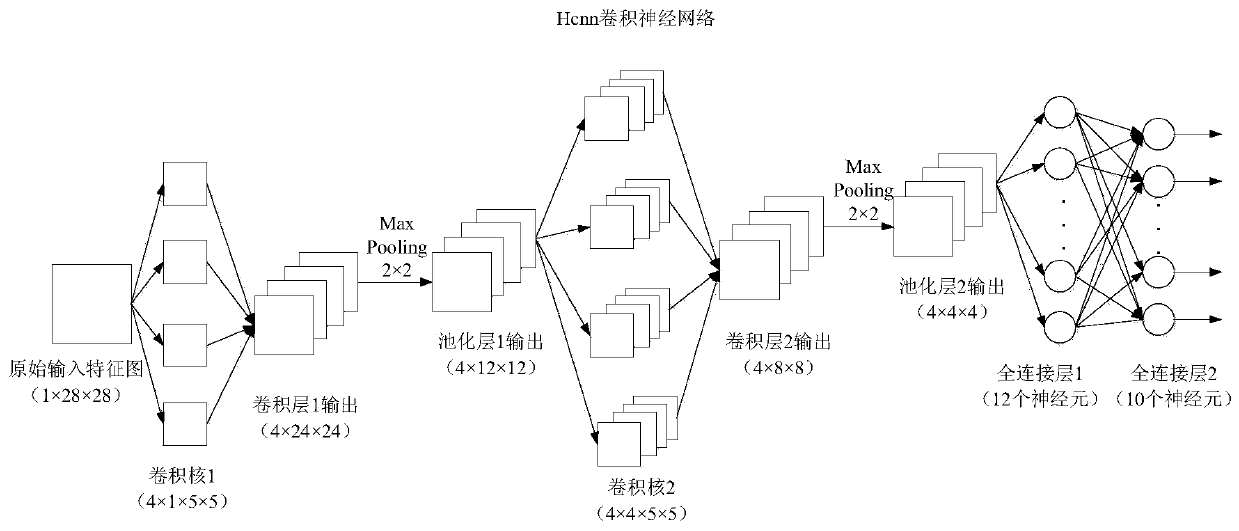

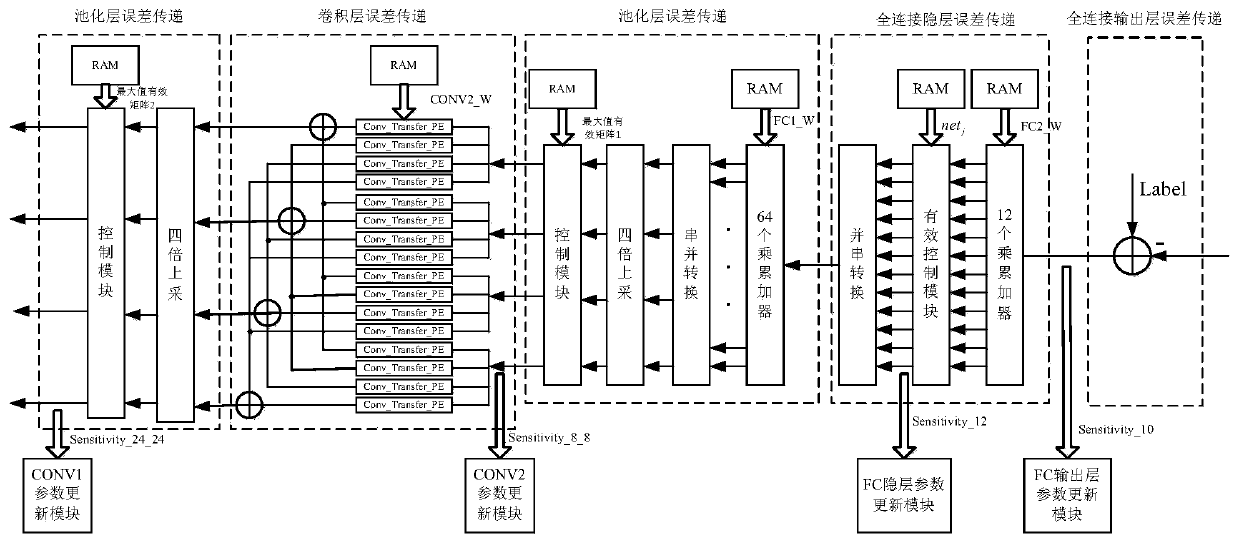

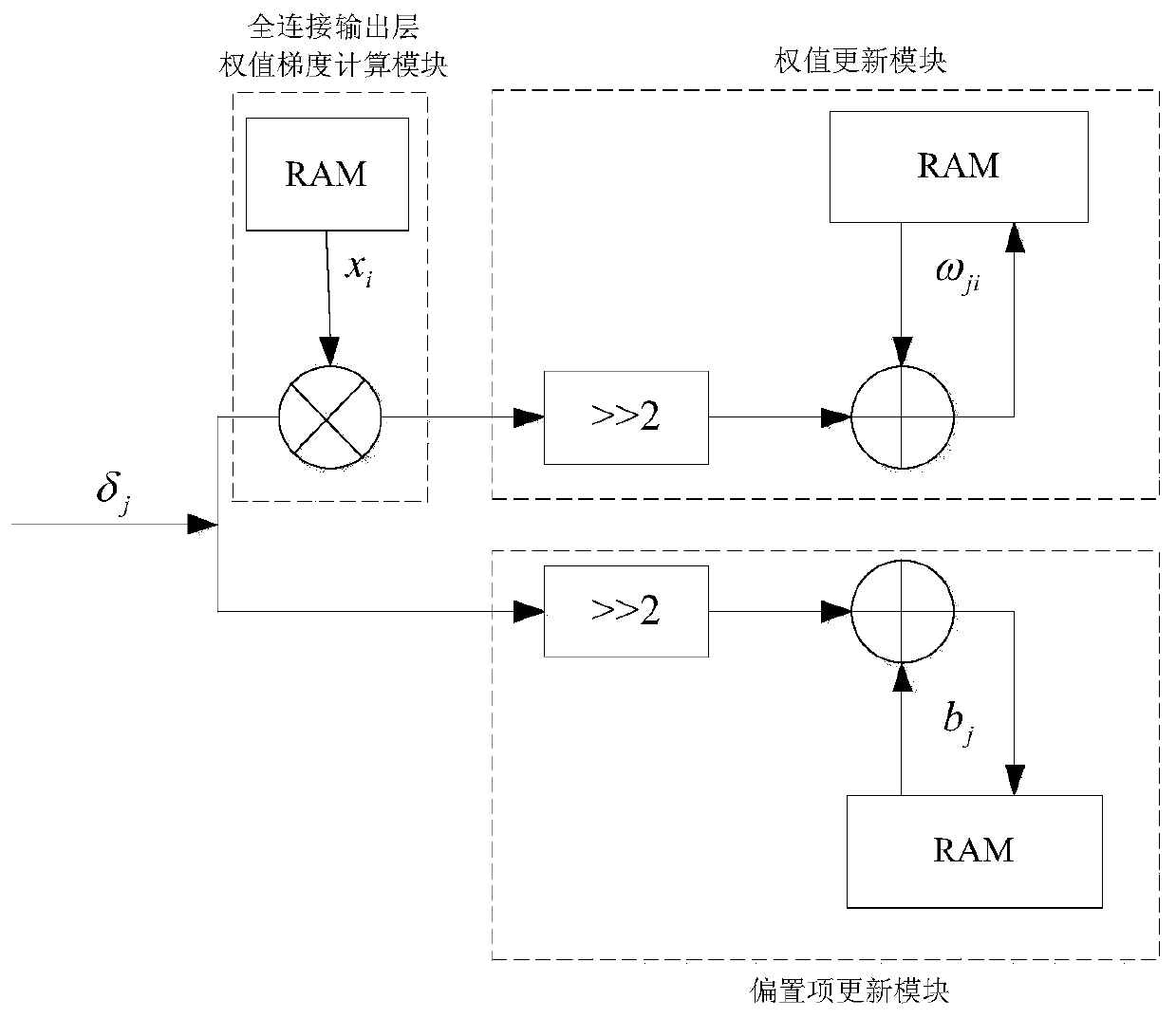

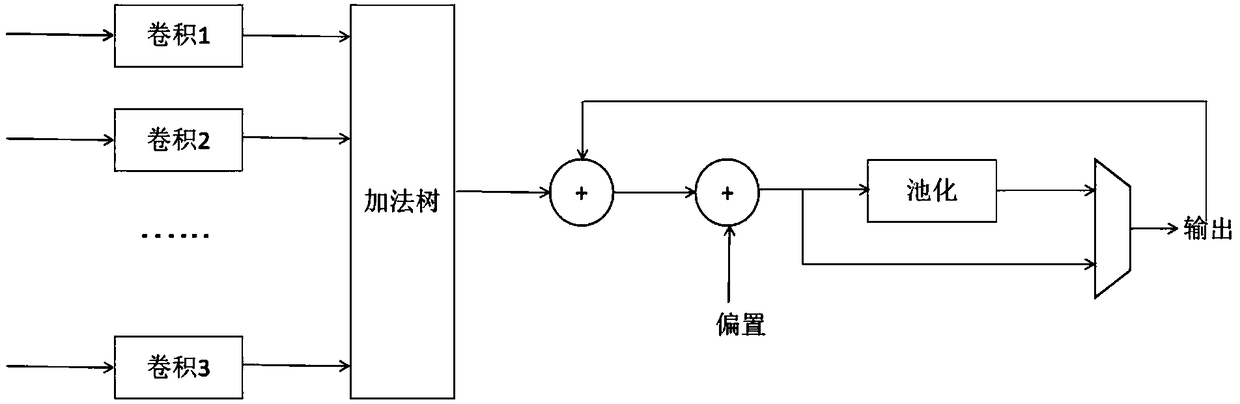

Hardware acceleration implementation architecture for backward training of convolutional neural network based on FPGA

Active | CN110543939A | Tags: Solve the implementation problem, Easy to implement, Neural architectures, Physical realisation, Fpga implementations, Data access

The invention discloses an FPGA-based hardware acceleration architecture for the backward training of convolutional neural networks. Building on basic processing modules for the backward training of each layer of a convolutional neural network, the architecture jointly considers processing time and resource consumption and, following the principle of maximizing parallelism while minimizing resource consumption as far as possible, implements the backward training process of the Hcnn convolutional neural network in a parallel pipelined form using parallel-to-serial conversion, data fragmentation, pipelined resource reuse, and similar methods. The architecture makes full use of the FPGA's data parallelism and pipeline parallelism; the implementation is simple, the structure is more regular, the wiring is more consistent, the clock frequency is greatly improved, and the acceleration effect is significant. More importantly, the architecture uses an optimized systolic array structure to balance I/O reads and writes against computation, improving throughput while consuming less memory bandwidth, and effectively addresses the mismatch between data access speed and data processing speed in FPGA implementations of convolutional neural networks.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

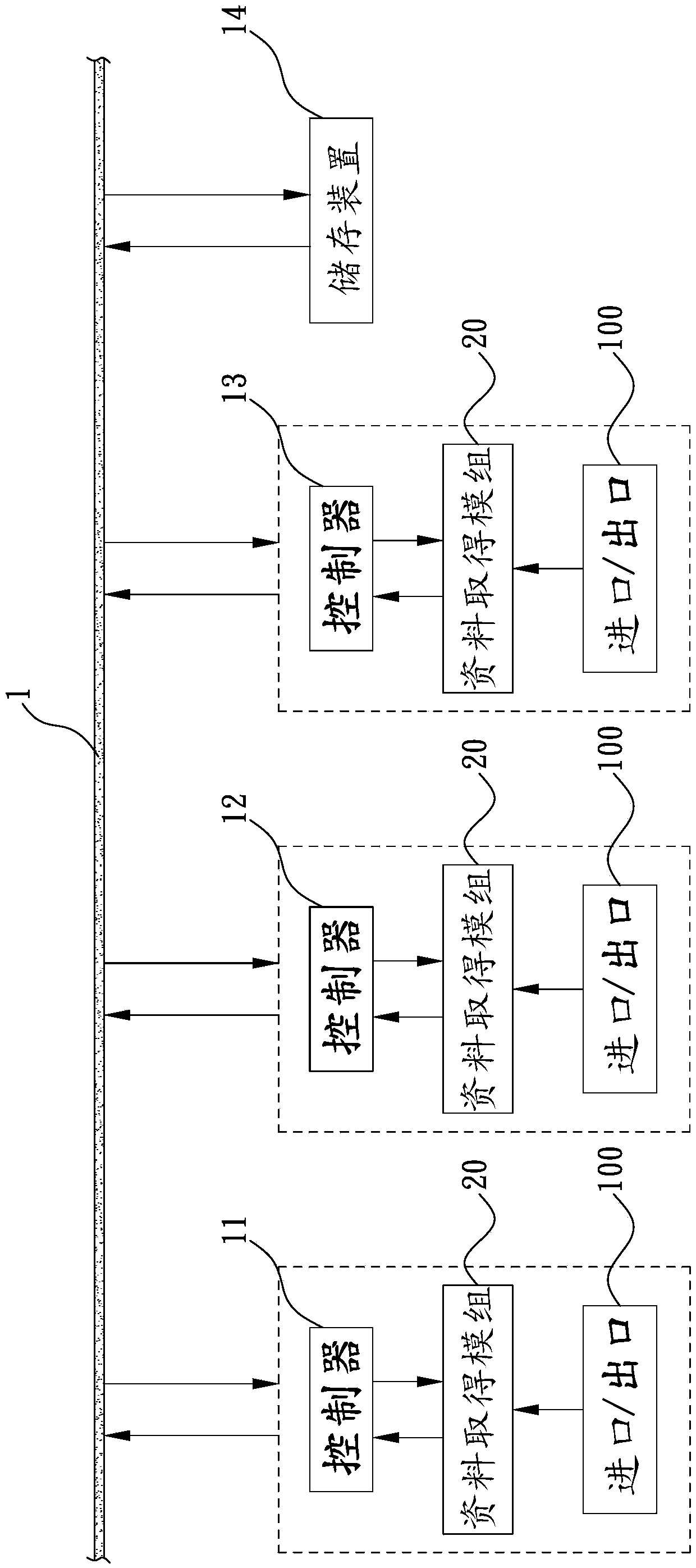

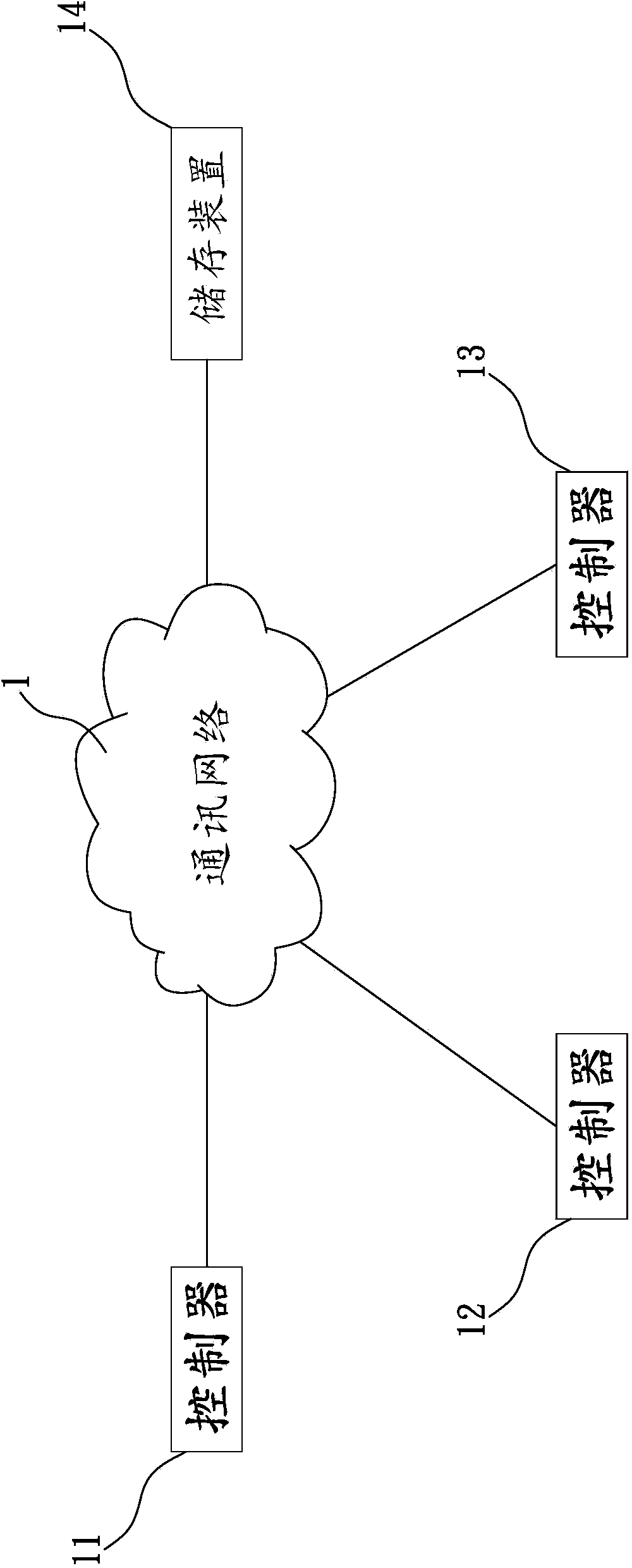

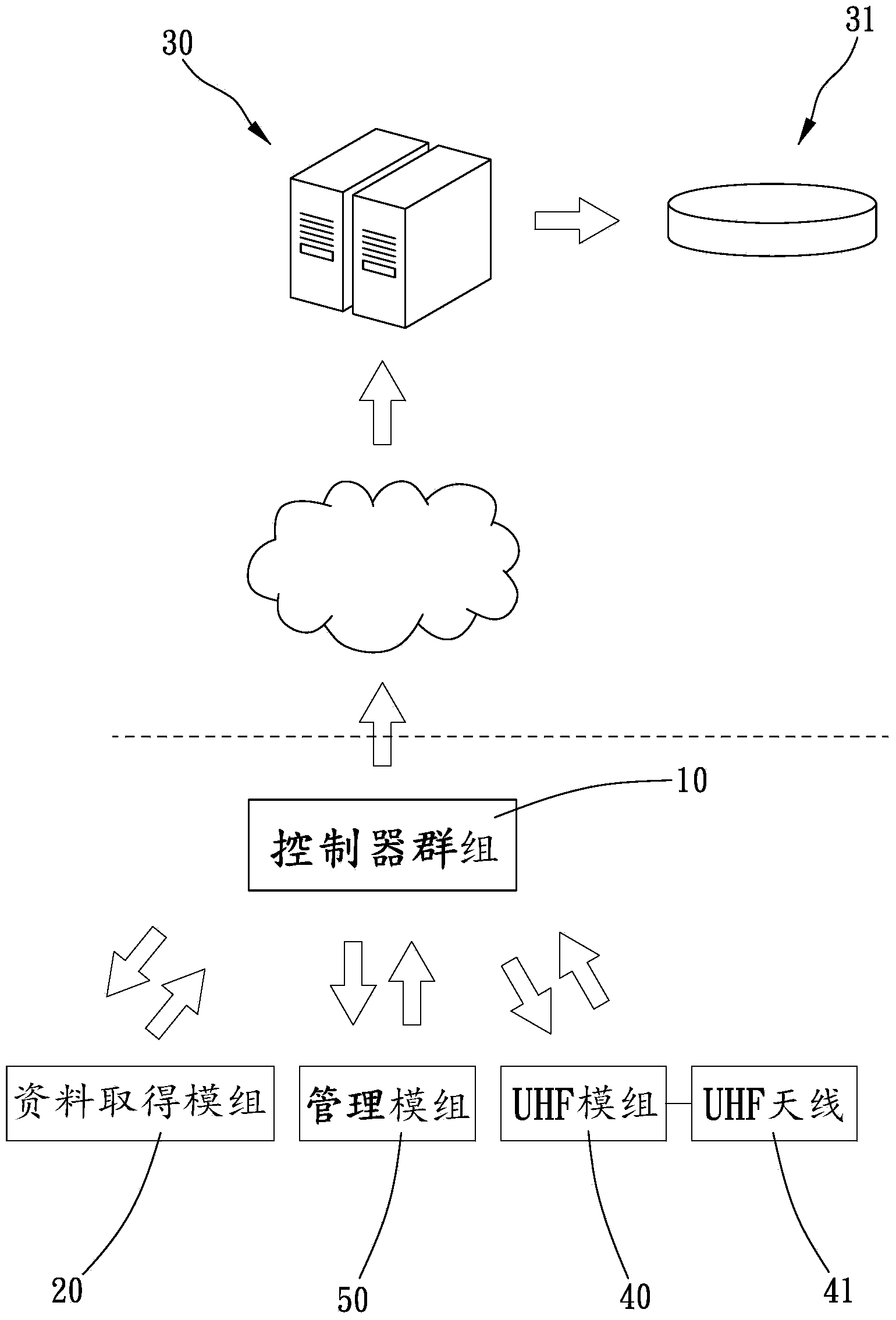

Parking lot system

The invention provides a parking lot system. The system includes a controller group comprising a plurality of controllers that are communicatively connected to at least one communication network, and vehicle-related user site data input to the controller group can be transmitted in parallel to the controllers through the at least one communication network, so that all controllers hold the same data. Because the controllers have synchronized data through the at least one communication network, synchronized data can be obtained from any controller when an abnormality occurs in the system, and situations such as one-in multi-out, multi-in one-out, multi-in multi-out, repeated entry/exit, and vehicle sneaking can be prevented.

Owner:SYRIS TECH CORP

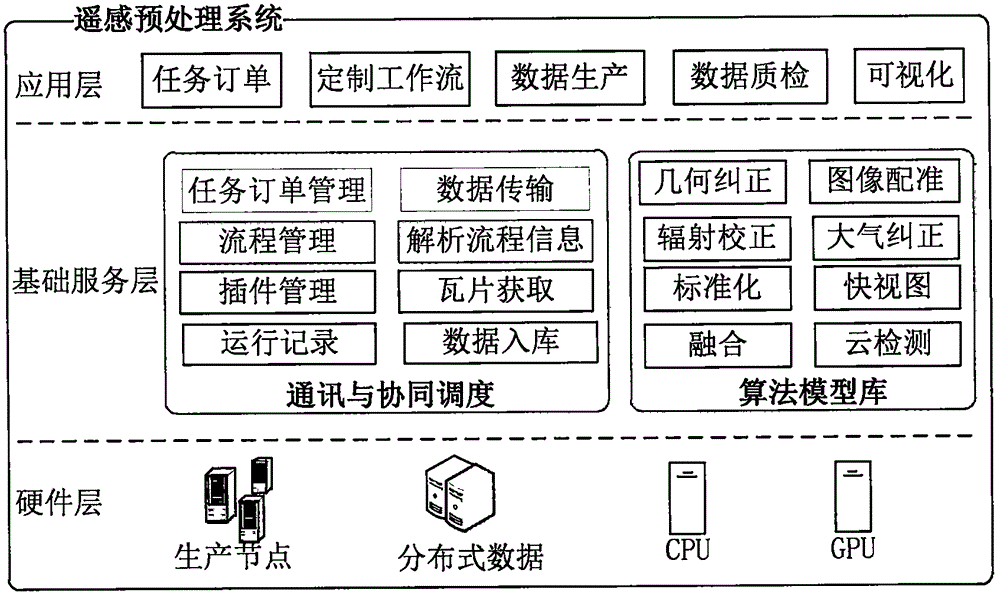

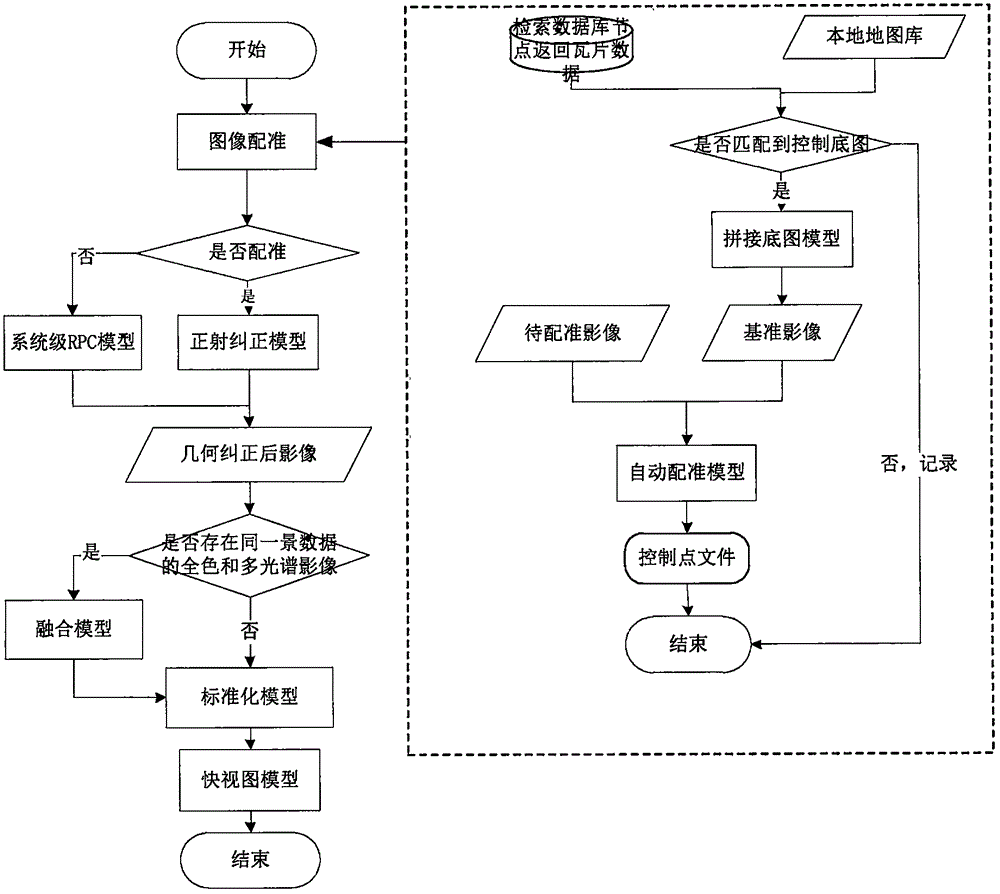

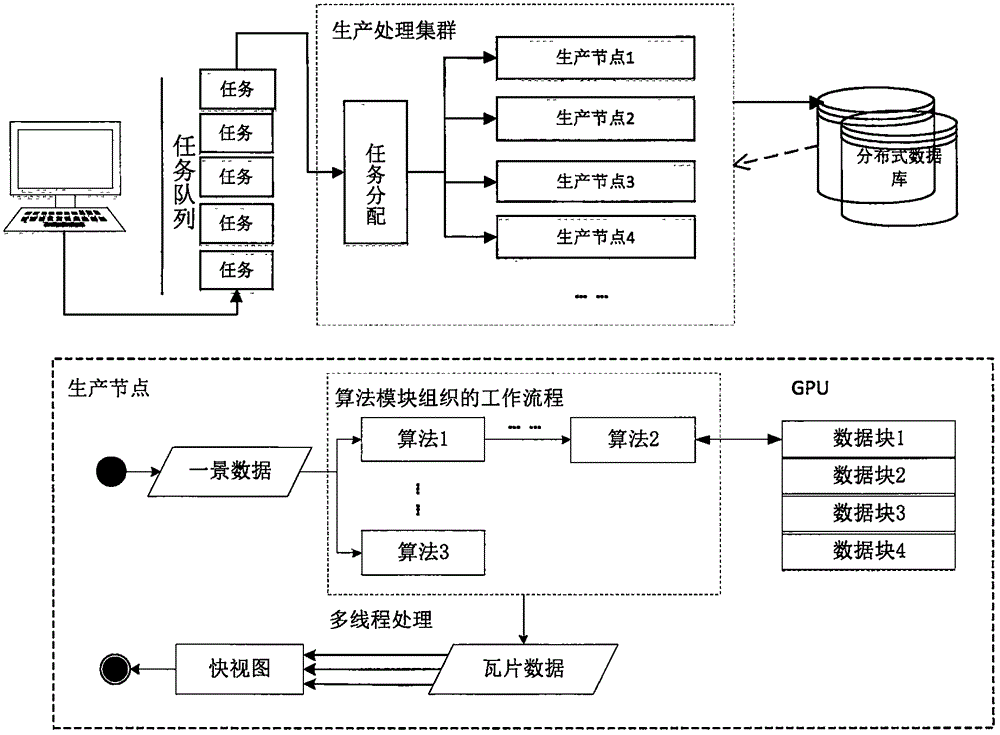

Data intensive computing-based remote sensing image preprocessing system

Inactive | CN106202145A | Tags: Shorten production time, Solve analytical calculations, Image analysis, Still image data indexing, Sensing data, Data-intensive computing

The invention discloses a remote sensing image preprocessing system based on data-intensive computing. To address the automated, operational preprocessing of remote sensing data, various remote sensing algorithm models are organized into workflows that carry out the operational processing of remote sensing data, and adaptive workflows are flexibly built for different kinds of remote sensing data. Combined with the computing environment, a "5 parallelisms + 1 acceleration" computing system is formed from task parallelism, data parallelism, algorithm parallelism, multi-machine parallelism, multi-thread parallelism, and GPU acceleration, shortening the production time of remote sensing image processing. The system can process massive volumes of diverse remote sensing data quickly and in real time, greatly improving production efficiency while maintaining processing precision.

Owner:北京四维新世纪信息技术有限公司

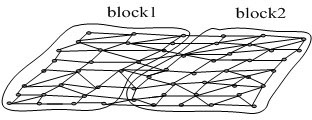

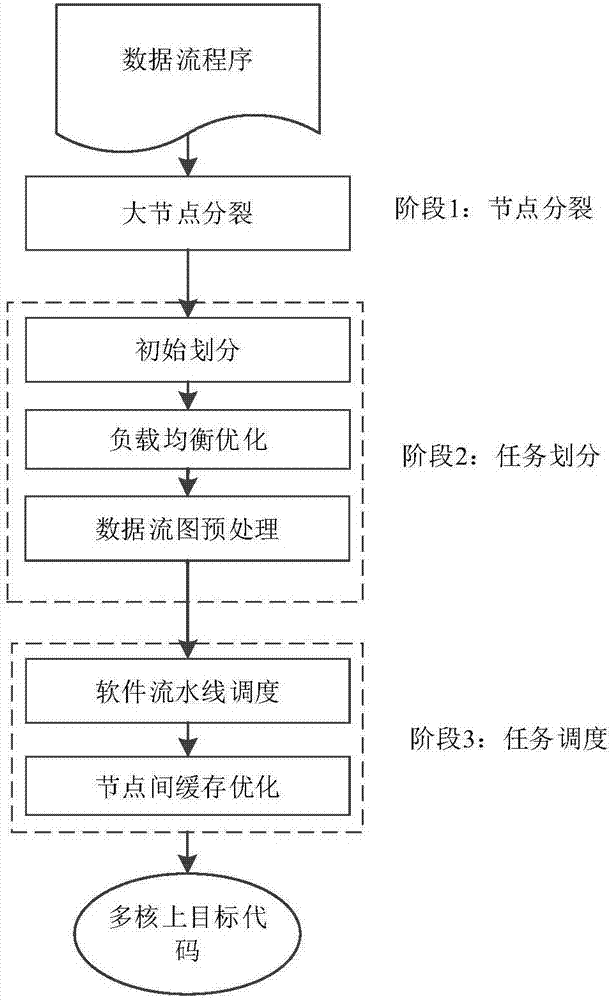

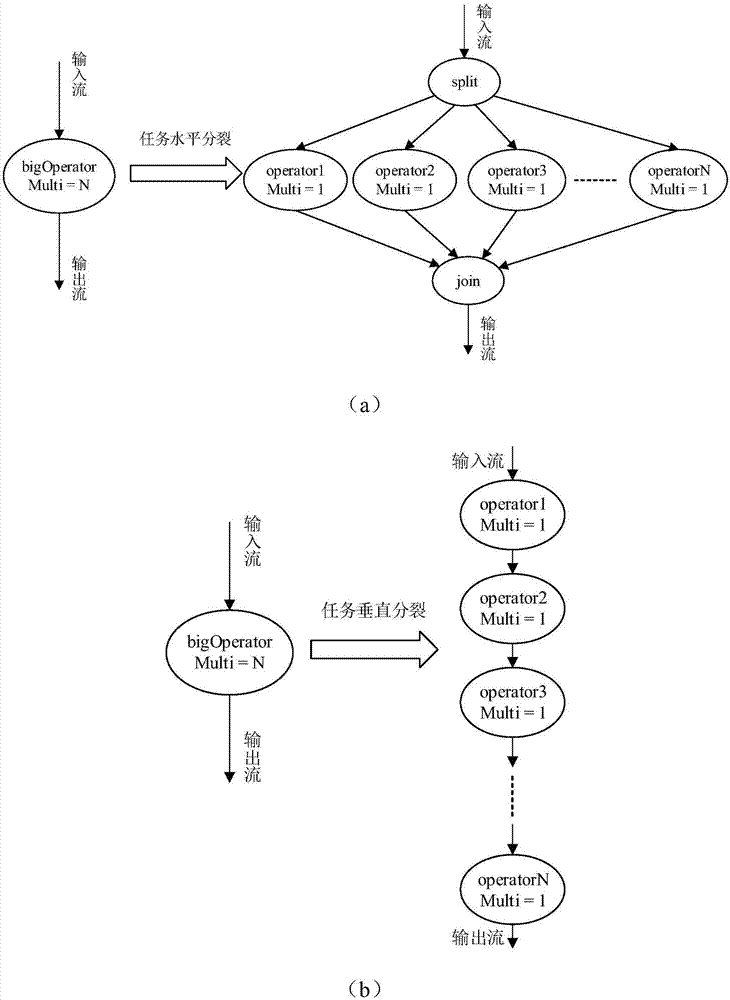

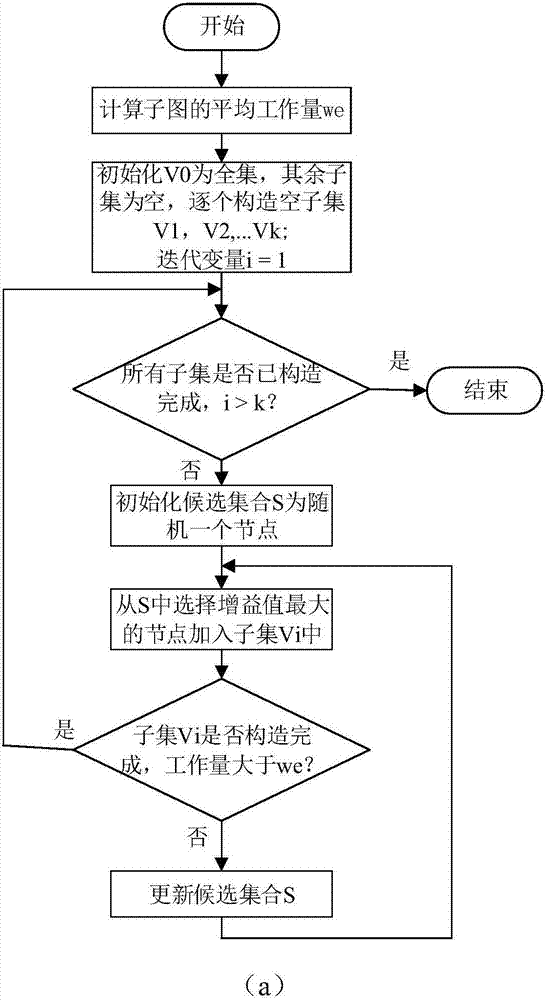

Data flow program task partitioning and scheduling method for multi-core system

Active | CN107247628A | Tags: Small granularity, Improve parallelism, Resource allocation, Interprogram communication, Data flow programming, Multicore architecture

The invention discloses a task partitioning and scheduling method for data flow programs on multi-core systems. The method mainly comprises a splitting algorithm for data flow graph nodes, the GAP task partitioning algorithm, a software pipeline scheduling model, and a double-buffering mechanism for data flow graph nodes. It exploits the data parallelism, task parallelism and software pipeline parallelism inherent in the data flow programming model to maximize program parallelism, schedules the data flow program according to the characteristics of the multi-core architecture, and takes full advantage of the multi-core processor's performance.

Owner:HUAZHONG UNIV OF SCI & TECH
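Software pipeline scheduling and double buffering can be illustrated together. In the minimal sketch below (an assumption, not the patent's GAP-based scheduler), each pipeline stage sits on its own core, and at time step `t` stage `s` processes iteration `t - s`. If stage `s` writes iteration `i` into buffer slot `i % 2`, its consumer, which is one iteration behind, always reads the other slot, so two buffers per edge avoid read/write conflicts.

```python
from typing import List, Tuple

Step = List[Tuple[int, int]]  # (stage, iteration) pairs that run concurrently

def software_pipeline_schedule(n_stages: int, n_iterations: int) -> List[Step]:
    """schedule[t] lists the (stage, iteration) pairs executed in parallel at step t."""
    schedule = []
    for t in range(n_stages + n_iterations - 1):
        step = [(s, t - s) for s in range(n_stages) if 0 <= t - s < n_iterations]
        schedule.append(step)
    return schedule

def double_buffer_conflicts(schedule: List[Step]) -> int:
    """Count steps where a stage writes the buffer slot its consumer is reading.

    Assumed convention: iteration i is written to slot i % 2 of a two-slot buffer.
    """
    conflicts = 0
    for step in schedule:
        active = dict(step)  # stage -> iteration it runs at this step
        for stage, it in step:
            consumer_it = active.get(stage + 1)
            if consumer_it is not None and it % 2 == consumer_it % 2:
                conflicts += 1
    return conflicts
```

With 3 stages and 4 iterations the schedule has 6 steps instead of 12 sequential ones; step 2 runs all three stages at once on iterations 2, 1 and 0.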

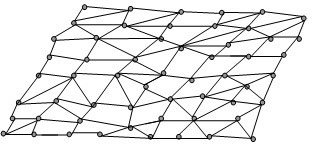

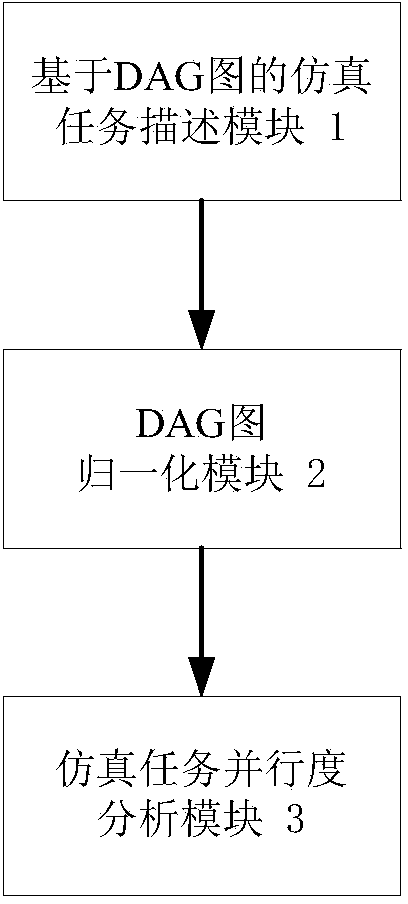

Analysis method for degree of parallelism of simulation task on basis of DAG (Directed Acyclic Graph)

Inactive · CN103778001A · Guaranteed performance · Guaranteed efficiency · Software simulation/interpretation/emulation · Computation complexity · Coupling

The invention discloses a method, based on a DAG (Directed Acyclic Graph), for analyzing the degree of parallelism of a simulation task. A prototype system implementing the method mainly comprises a DAG-based simulation task description module, a DAG normalization module and a simulation task parallelism degree analysis module. The concrete implementation steps are: building the simulation task description and parallelism degree analysis system; using the DAG-based simulation task description module to describe the attributes of the simulation task, namely its computational complexity, communication coupling degree and task causality order; normalizing the simulation task with the DAG normalization module; and, with the parallelism degree analysis module, automatically analyzing inter-task parallelism on the normalized DAG to obtain a quantified parallelism degree value. The method targets high-efficiency simulation of complex systems: inter-task parallelism is analyzed quickly, effectively and automatically from the DAG description of the simulation task, which safeguards the parallel performance and efficiency of the high-efficiency simulation system.

Owner:BEIJING SIMULATION CENT
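The patent does not state its quantification formula here. One standard way to turn a DAG with per-task costs into a single parallelism value is average parallelism: total work divided by the cost of the critical path. The sketch below uses that metric as an assumed stand-in, takes `cost` and `deps` as hypothetical inputs, and ignores the communication coupling term.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, Set

def parallelism_degree(cost: Dict[str, float], deps: Dict[str, Set[str]]) -> float:
    """Average parallelism = total work / critical-path length (work / span).

    cost: task -> computational cost (every task must appear here)
    deps: task -> set of predecessor tasks (causality order); may omit roots
    """
    graph = {task: deps.get(task, set()) for task in cost}
    finish: Dict[str, float] = {}
    for task in TopologicalSorter(graph).static_order():
        # a task can start only after all of its predecessors have finished
        start = max((finish[p] for p in graph[task]), default=0.0)
        finish[task] = start + cost[task]
    work = sum(cost.values())
    span = max(finish.values())
    return work / span
```

For a diamond A→{B, C}→D with costs 2, 3, 3 and 2, the work is 10 and the critical path is 7, giving a degree of about 1.43; three independent unit-cost tasks give 3.0.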

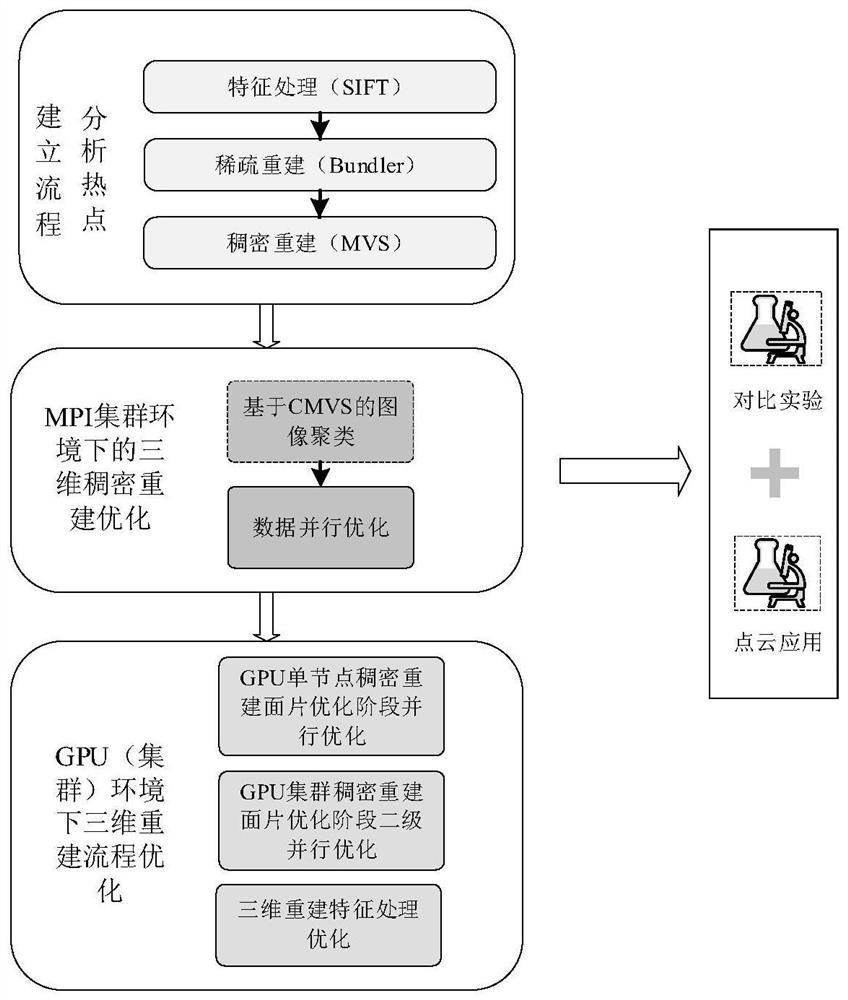

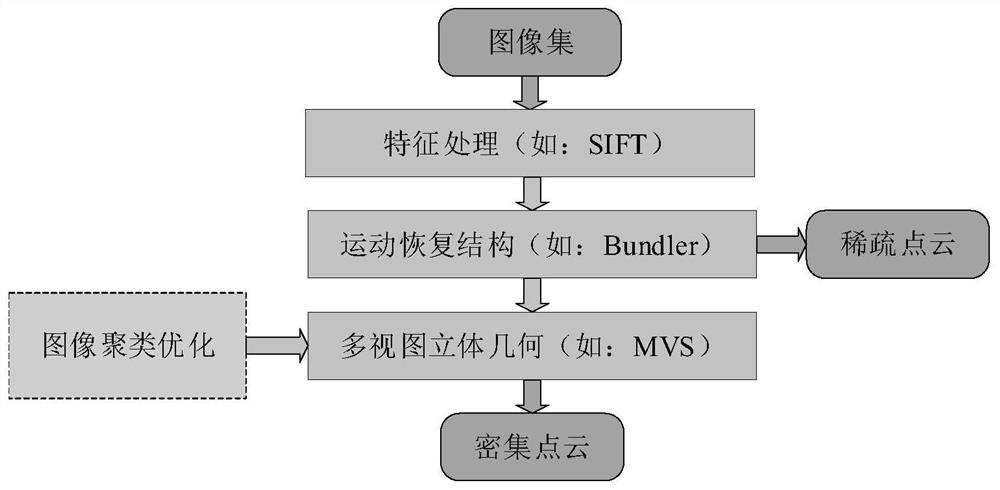

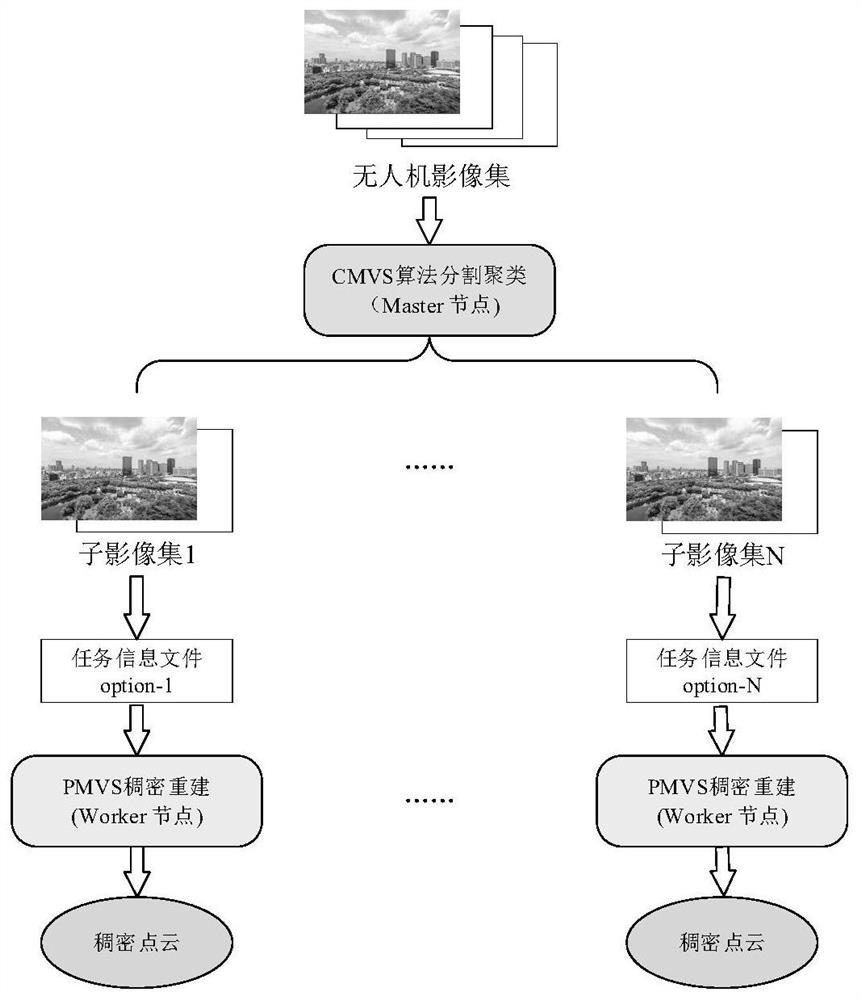

Three-dimensional reconstruction algorithm parallelization method based on GPU cluster

Inactive · CN111968218A · Optimize the rebuild process · Optimize and speed up the rebuilding process · Multiple digital computer combinations · Electric digital data processing · Computational science · Point cloud

The invention discloses a GPU cluster-based method for parallelizing a three-dimensional reconstruction algorithm and relates to the field of computer vision. Building on the SfM (Structure from Motion) algorithm, the method studies the 3D reconstruction workflow for drone imagery and uses a GPU cluster as the processing platform to address the long processing time of dense 3D reconstruction from drone images. Specifically, based on SFM_MVS theory, the method establishes a workflow for reconstructing real scenes in 3D from an image sequence, and applies MPI parallel programming and GPU parallel programming to optimize and accelerate selected stages of that workflow. Operators of the sparse reconstruction algorithm are replaced with cluster-based implementations, which addresses the large data volume and long computation time of unmanned aerial vehicle (UAV) aerial images; and the later dense point cloud reconstruction stage is accelerated through coarse-grained data parallelism in the dense reconstruction algorithm, together with fine-grained parallelism and optimization of the feature extraction step of the dense matching algorithm.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
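Coarse-grained data parallelism over an image sequence usually starts with a block decomposition: each MPI rank owns a contiguous slice of the work items. The sketch below assumes, hypothetically, that the work items are consecutive image pairs to be matched; with mpi4py, `rank` and `n_ranks` would come from `MPI.COMM_WORLD.Get_rank()` and `Get_size()`, but here they are plain arguments so the partitioning logic runs without MPI.

```python
from typing import List, Sequence, Tuple

def block_range(n_items: int, n_ranks: int, rank: int) -> range:
    """Contiguous block of item indices owned by `rank`; block sizes differ by at most one."""
    start = rank * n_items // n_ranks
    stop = (rank + 1) * n_items // n_ranks
    return range(start, stop)

def local_pairs(images: Sequence[str], n_ranks: int, rank: int) -> List[Tuple[str, str]]:
    """Hypothetical work split: each rank matches the consecutive image pairs in its block."""
    pairs = list(zip(images, images[1:]))
    return [pairs[i] for i in block_range(len(pairs), n_ranks, rank)]
```

With 7 images (6 pairs) on 4 ranks, the ranks receive 1, 2, 1 and 2 pairs, and together they cover every pair exactly once.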

Data parallelism and parallel operations in stream processing

Active · US8769485B2 · Digital computer details · Specific program execution arrangements · Application software · Data parallelism

A stream processing platform provides fast execution of stream processing applications within a safe runtime environment. The platform includes a stream compiler that converts a representation of a stream processing application into executable program modules for the safe environment. Users can specify aspects of the program that guide the generation of modules that execute as intended: for example, the type of implementation used for loops, the order of execution for parallel paths, whether multiple instances of an operation can be performed in parallel, and whether certain operations should be executed in separate threads. In addition, the stream compiler may generate executable modules in a way that causes the safe runtime environment to allocate memory or otherwise operate efficiently.

Owner:CLOUD SOFTWARE GRP INC
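Running multiple instances of an operation in parallel raises the ordering question the abstract mentions: replicas may finish out of arrival order. The minimal sketch below (an illustration, not the patented compiler's output) tags each stream item with a sequence number, runs several copies of a stateless operator in a thread pool, and restores input order from the sequence numbers. `parallel_operator` and `instances` are hypothetical names; stateful operators cannot be replicated this way.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")

def parallel_operator(op: Callable[[T], R], stream: Iterable[T], instances: int = 4) -> List[R]:
    """Run `instances` copies of a stateless operator, then restore input order."""
    with ThreadPoolExecutor(max_workers=instances) as pool:
        # remember each item's arrival position (sequence number)
        futures = {pool.submit(op, item): seq for seq, item in enumerate(stream)}
        done: Dict[int, R] = {}
        for fut in as_completed(futures):  # completion order may differ from arrival order
            done[futures[fut]] = fut.result()
    return [done[seq] for seq in range(len(done))]
```

This version materializes the whole stream for simplicity; a real stream processor would reorder within a bounded window instead.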

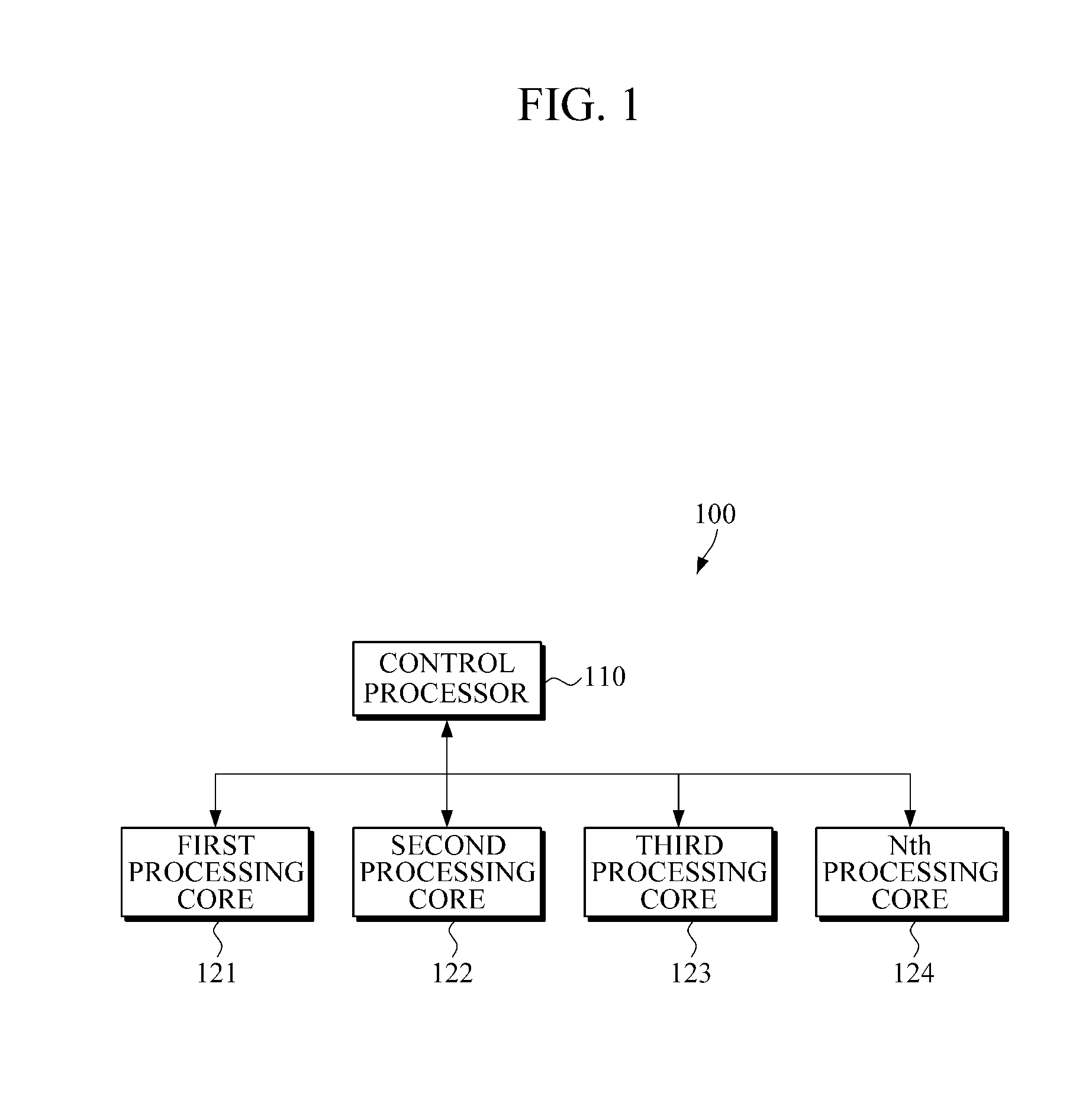

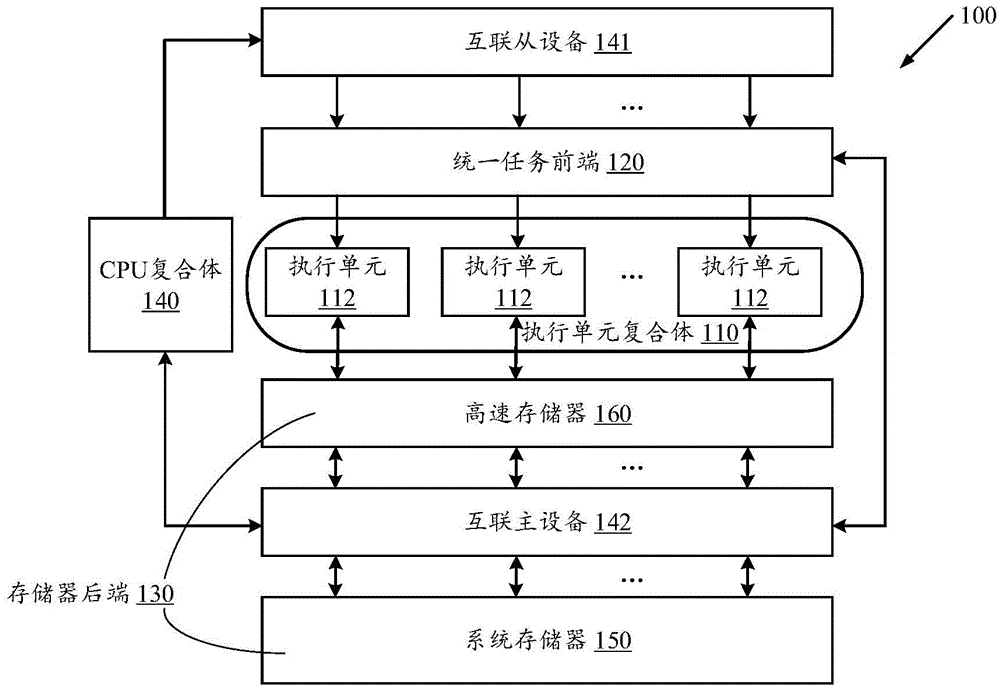

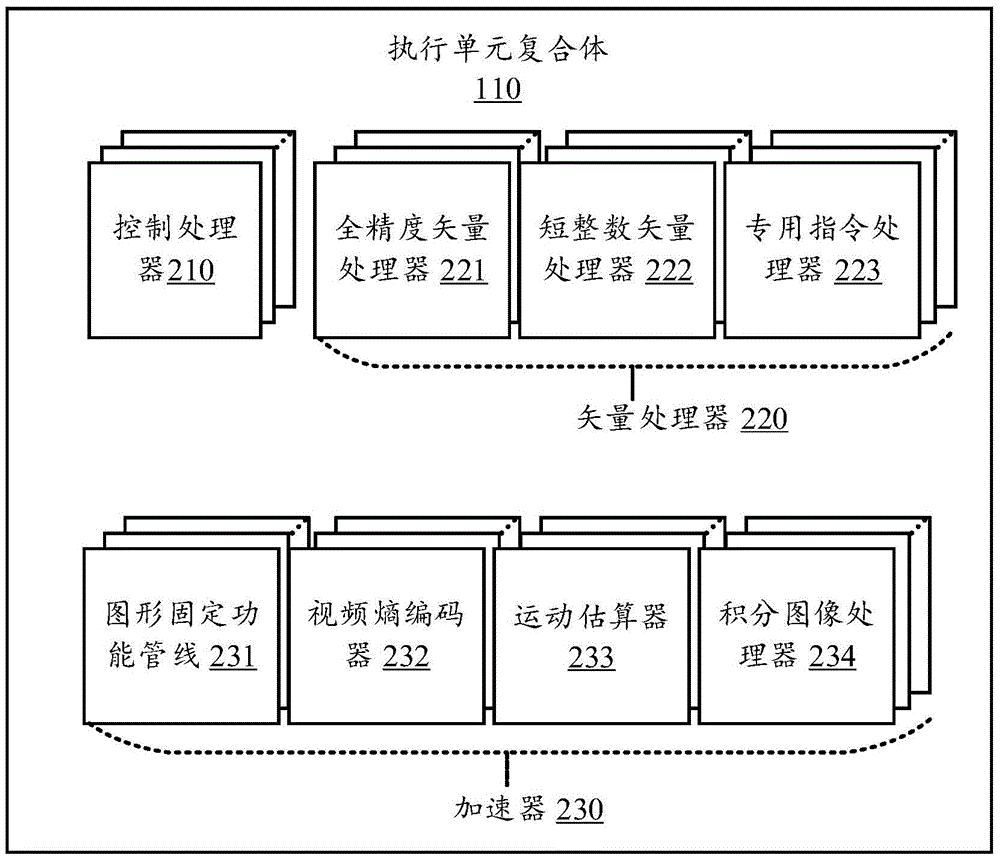

Heterogeneous computing system and method thereof

Inactive · CN106502782A · Improve energy efficiency · Improve computing efficiency · Resource allocation · Energy efficient computing · Computerized system · Multimedia signal processing

A heterogeneous computing system described herein has an energy-efficient architecture that exploits producer-consumer locality, task parallelism and data parallelism. The system includes a task frontend that dispatches tasks and updated tasks from queues for execution based on properties associated with the queues, and execution units comprising a first subset that acts as producers, executing the tasks and generating the updated tasks, and a second subset that acts as consumers, executing the updated tasks. The execution units include one or more control processors to perform control operations, vector processors to perform vector operations, and accelerators to perform multimedia signal processing operations. The system also includes a memory backend containing the queues that store the tasks and the updated tasks for execution by the execution units.

Owner:MEDIATEK INC
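The producer/consumer split can be sketched with two software queues standing in for the memory-backend queues. In this simplified model (an assumption; the patent's frontend dispatches by per-queue properties, which is not modeled), producer threads drain a task queue and push updated tasks, and consumer threads execute the updated tasks. `run_pipeline`, `produce` and `consume` are hypothetical names.

```python
import queue
import threading
from typing import Callable, Iterable, List

_STOP = object()  # private sentinel telling a consumer to exit

def run_pipeline(tasks: Iterable, produce: Callable, consume: Callable,
                 n_producers: int = 2, n_consumers: int = 2) -> List:
    task_q: queue.Queue = queue.Queue()     # tasks waiting for producers
    updated_q: queue.Queue = queue.Queue()  # updated tasks waiting for consumers
    results: List = []
    lock = threading.Lock()
    for t in tasks:
        task_q.put(t)  # all tasks are queued before any worker starts

    def producer() -> None:
        while True:
            try:
                t = task_q.get_nowait()
            except queue.Empty:
                return  # no tasks left
            updated_q.put(produce(t))  # generate an updated task

    def consumer() -> None:
        while True:
            item = updated_q.get()
            if item is _STOP:
                return
            r = consume(item)
            with lock:
                results.append(r)

    producers = [threading.Thread(target=producer) for _ in range(n_producers)]
    consumers = [threading.Thread(target=consumer) for _ in range(n_consumers)]
    for th in producers + consumers:
        th.start()
    for th in producers:
        th.join()
    for _ in consumers:
        updated_q.put(_STOP)  # FIFO: sentinels follow every updated task
    for th in consumers:
        th.join()
    return results
```

Because consumers finish in arbitrary order, the returned list is unordered; `sorted(run_pipeline(range(5), lambda x: x + 1, lambda y: y * 10))` gives `[10, 20, 30, 40, 50]`.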

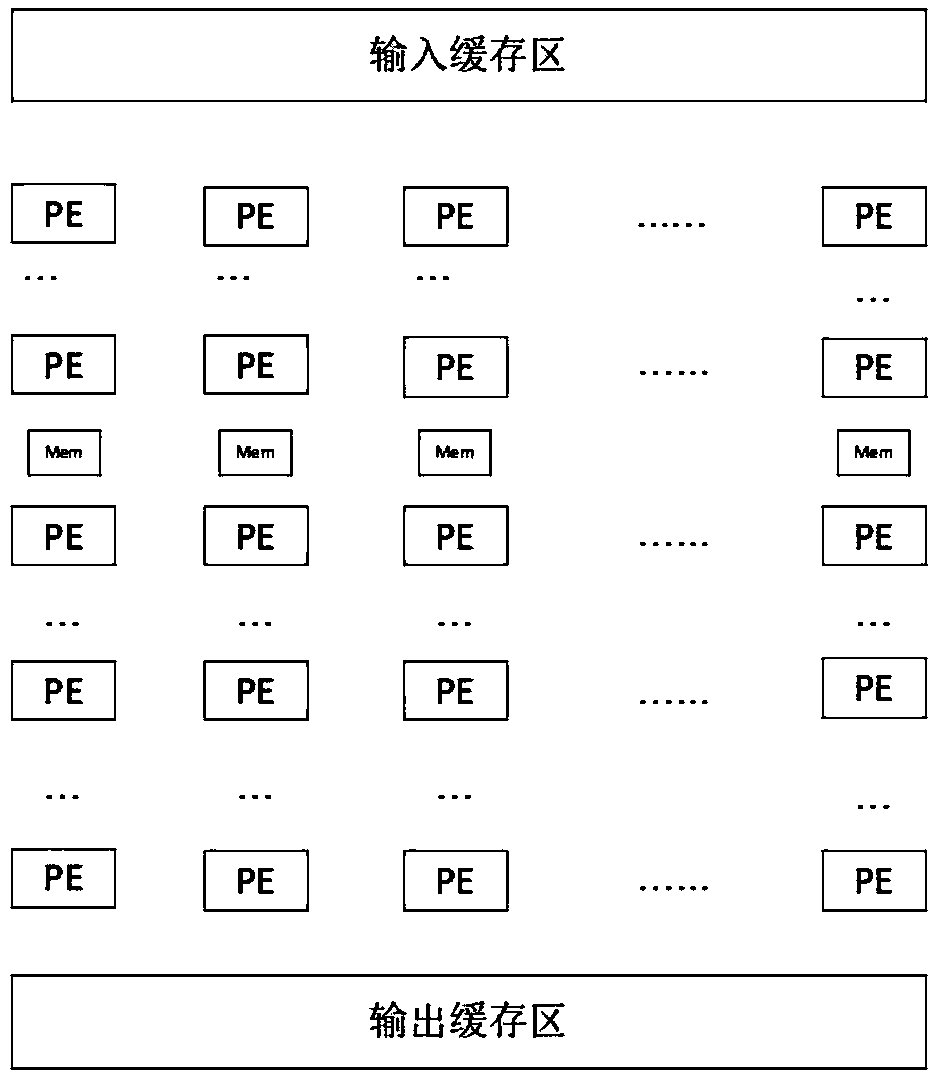

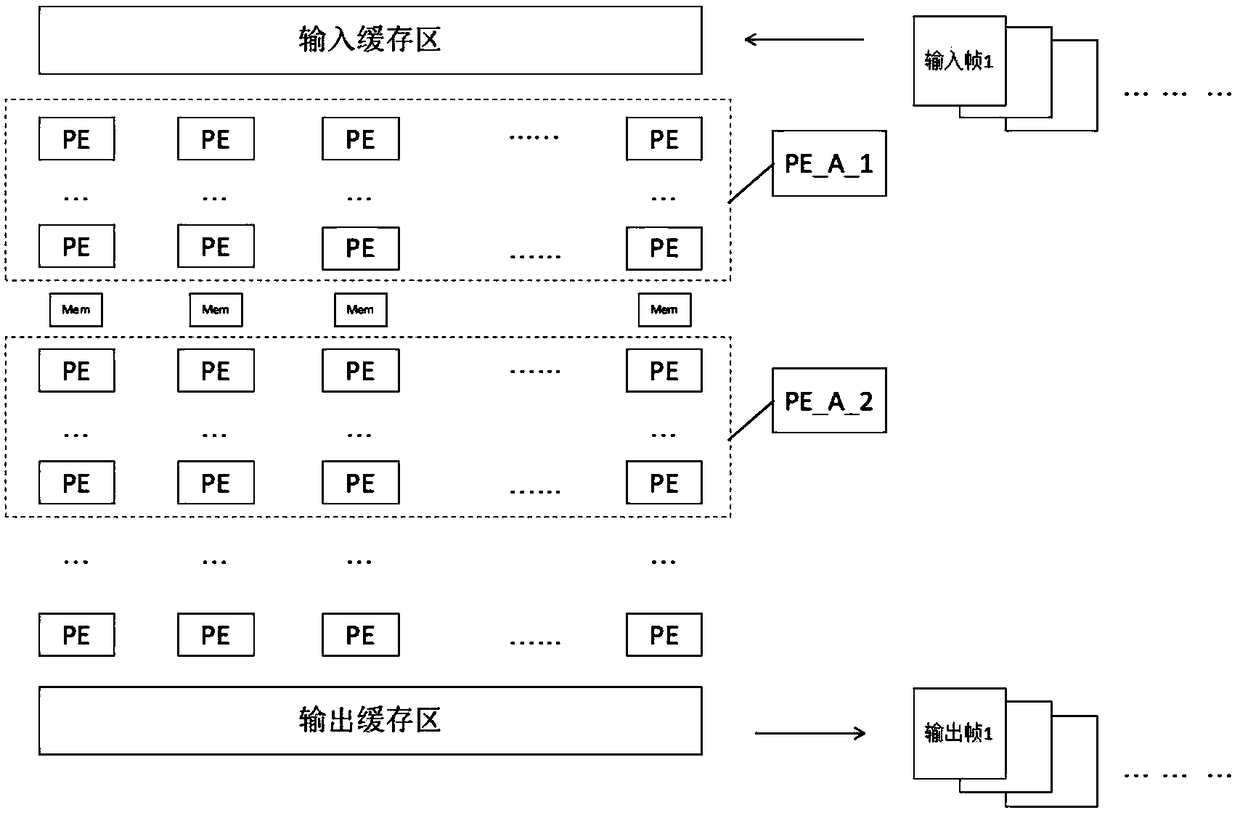

Data parallelism-based deep learning processor architecture and method

Active · CN108334474A · Increase profit · Increase usage · Resource allocation · Architecture with single central processing unit · Neural network · Network data

The invention relates to a data parallelism-based deep learning processor architecture and method. The architecture comprises an input buffer (In_Buf), PE arrays, multiple on-chip buffers and an output buffer (Out_Buf), with a group of on-chip buffers placed between each pair of adjacent N*N PE arrays. By configuring the N*N PE arrays, data can be passed along on chip, minimizing data transfers in both directions between the chip and off-chip memory. This reduces the energy consumed by on-chip and off-chip transfers of conventional neural network data and offers a new approach to reducing the energy consumption of neural networks.

Owner:SHANDONG LINGNENG ELECTRONIC TECH CO LTD
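The energy argument rests on keeping intermediate results in the on-chip buffers between adjacent PE arrays. The sketch below is a hypothetical traffic model, not the patent's design: each layer runs on one N*N PE array as a matrix-vector product (PE (i, j) forms one product, which is the data-parallel part, although Python computes them sequentially), and the model counts words crossing the chip boundary with and without on-chip forwarding.

```python
from typing import List, Tuple

Matrix = List[List[float]]

def pe_array_layer(weights: Matrix, x: List[float]) -> List[float]:
    """One N*N PE array: PE (i, j) computes weights[i][j] * x[j]; row i sums to output i."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def run_chain(layers: List[Matrix], x: List[float], keep_on_chip: bool = True) -> Tuple[List[float], int]:
    """Return (output, off-chip words) under a simplified, hypothetical traffic model."""
    off_chip = len(x)  # In_Buf loads the input once
    for k, w in enumerate(layers):
        x = pe_array_layer(w, x)
        is_last = k == len(layers) - 1
        if not keep_on_chip and not is_last:
            off_chip += 2 * len(x)  # intermediate written off chip, then read back
        # with keep_on_chip, x stays in the buffer between adjacent arrays
    off_chip += len(x)  # Out_Buf writes the final result
    return x, off_chip
```

For two 2×2 layers (identity, then doubling) on input `[1, 2]`, both modes produce `[2, 4]`, but forwarding on chip moves 4 words off chip versus 8 when the intermediate result round-trips.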

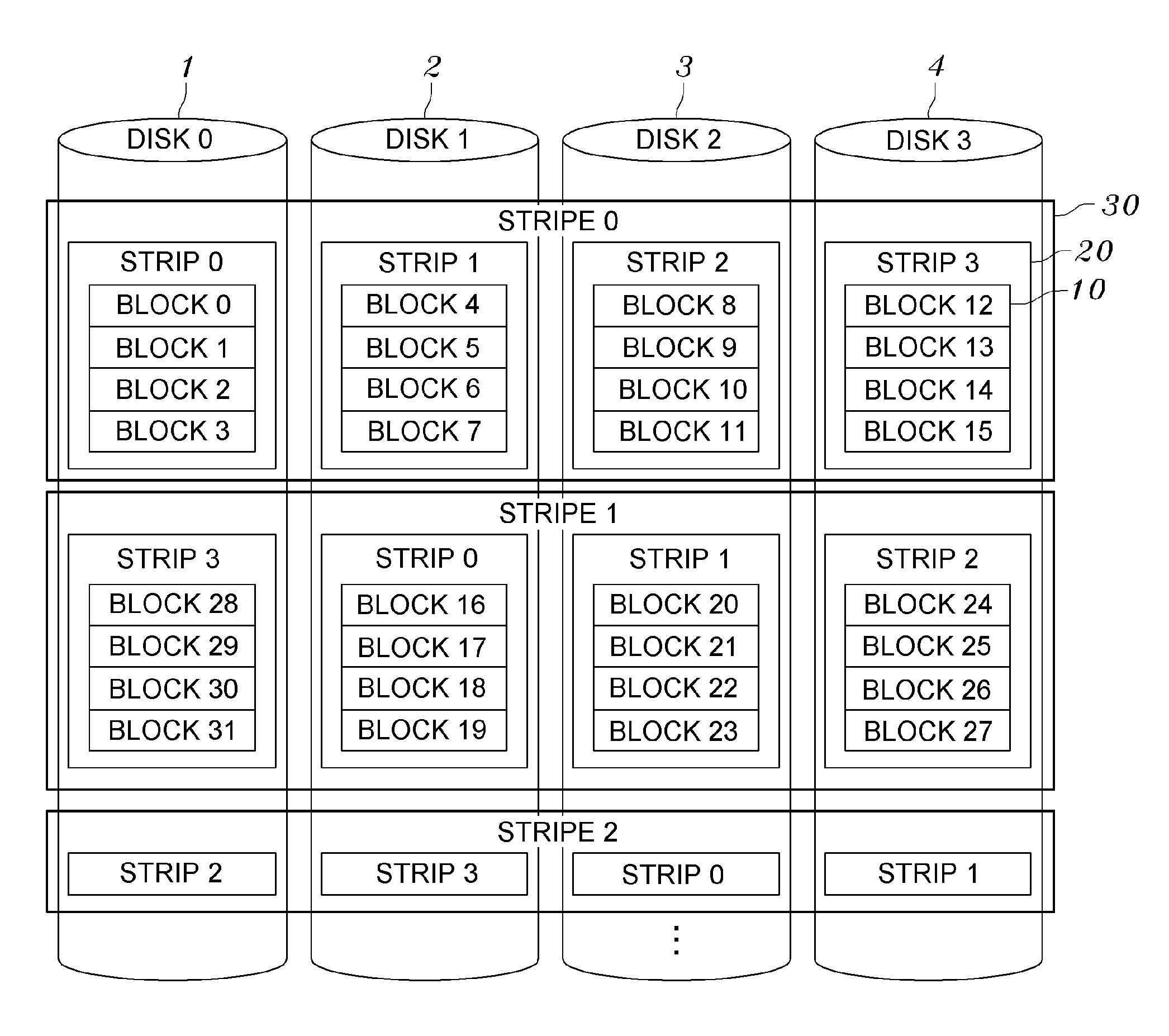

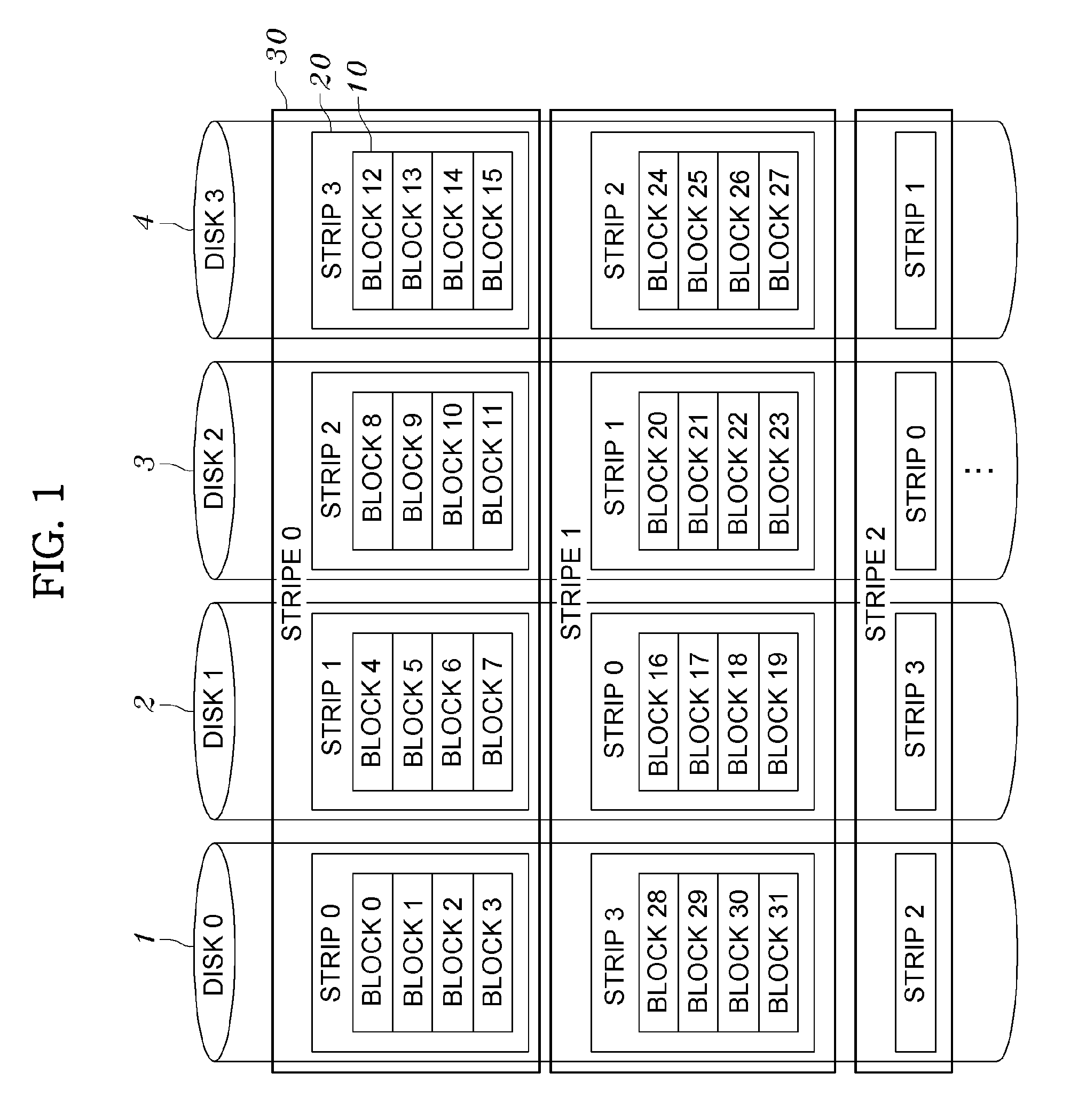

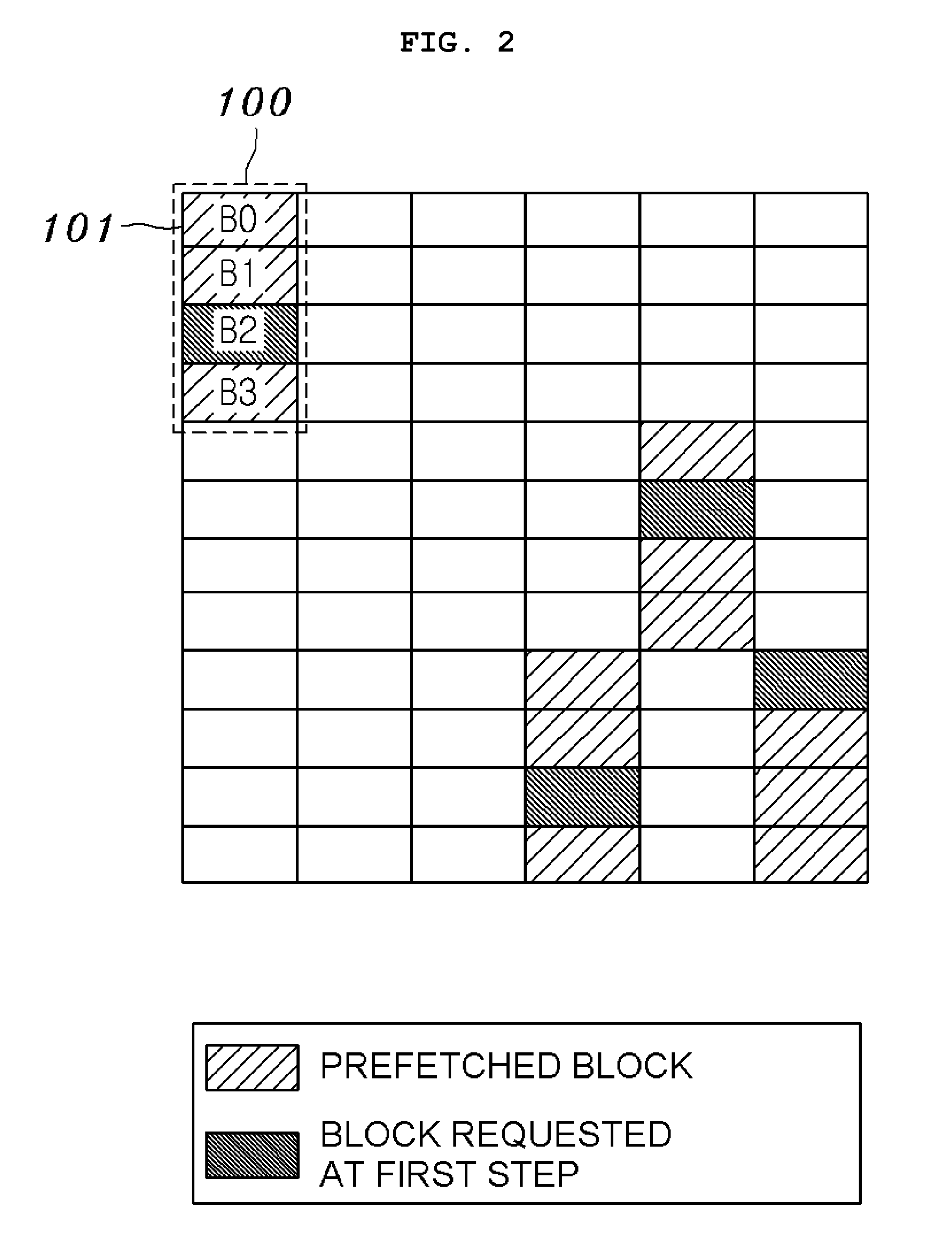

Mass prefetching method for disk array

Inactive · US7822920B2 · Memory architecture accessing/allocation · Multiple digital computer combinations · Independence loss · Disk array

Owner:KOREA ADVANCED INST OF SCI & TECH

© 2025 PatSnap. All rights reserved.