97 patent results for "GPU cluster"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

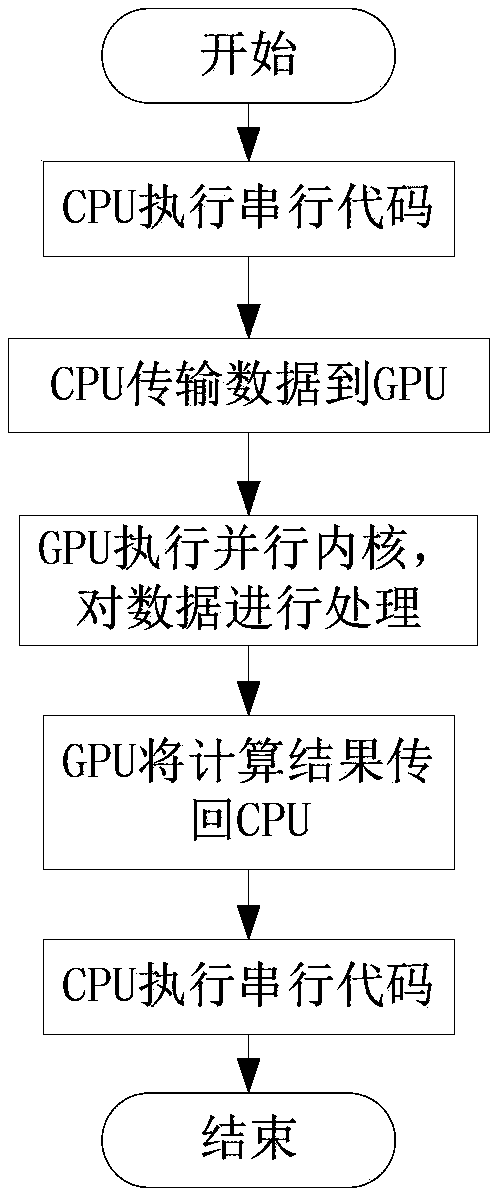

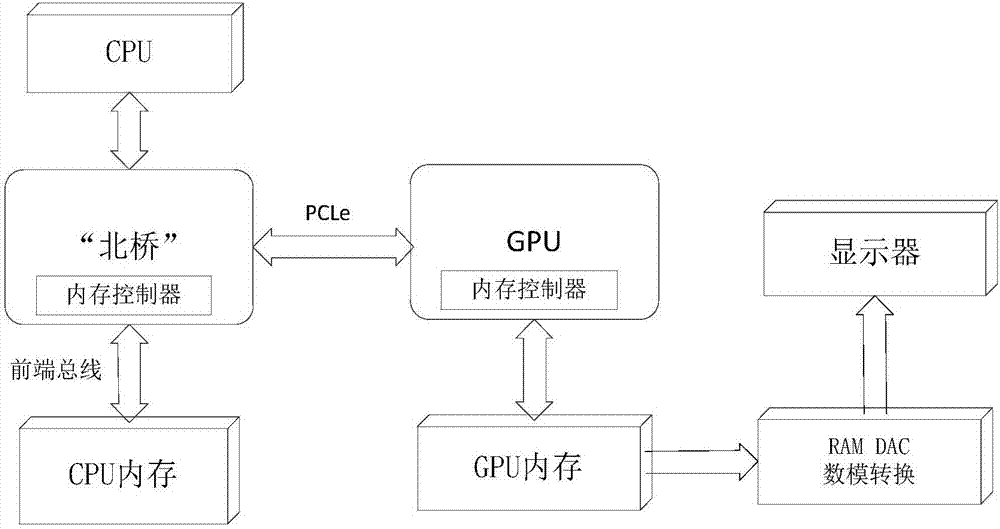

A GPU cluster is a computer cluster in which each node is equipped with a Graphics Processing Unit (GPU). By harnessing the computational power of modern GPUs via General-Purpose Computing on Graphics Processing Units (GPGPU), very fast calculations can be performed with a GPU cluster.

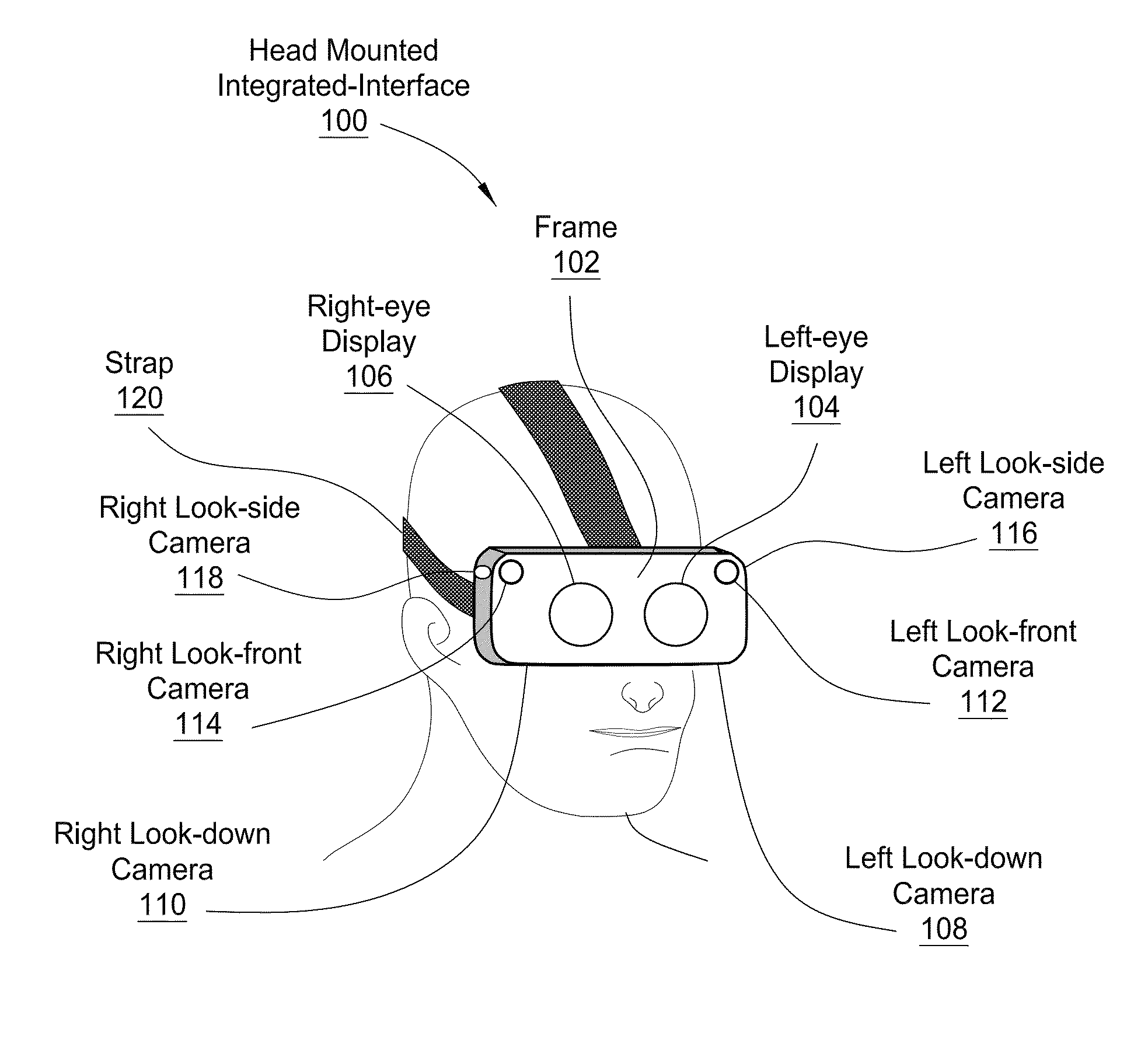

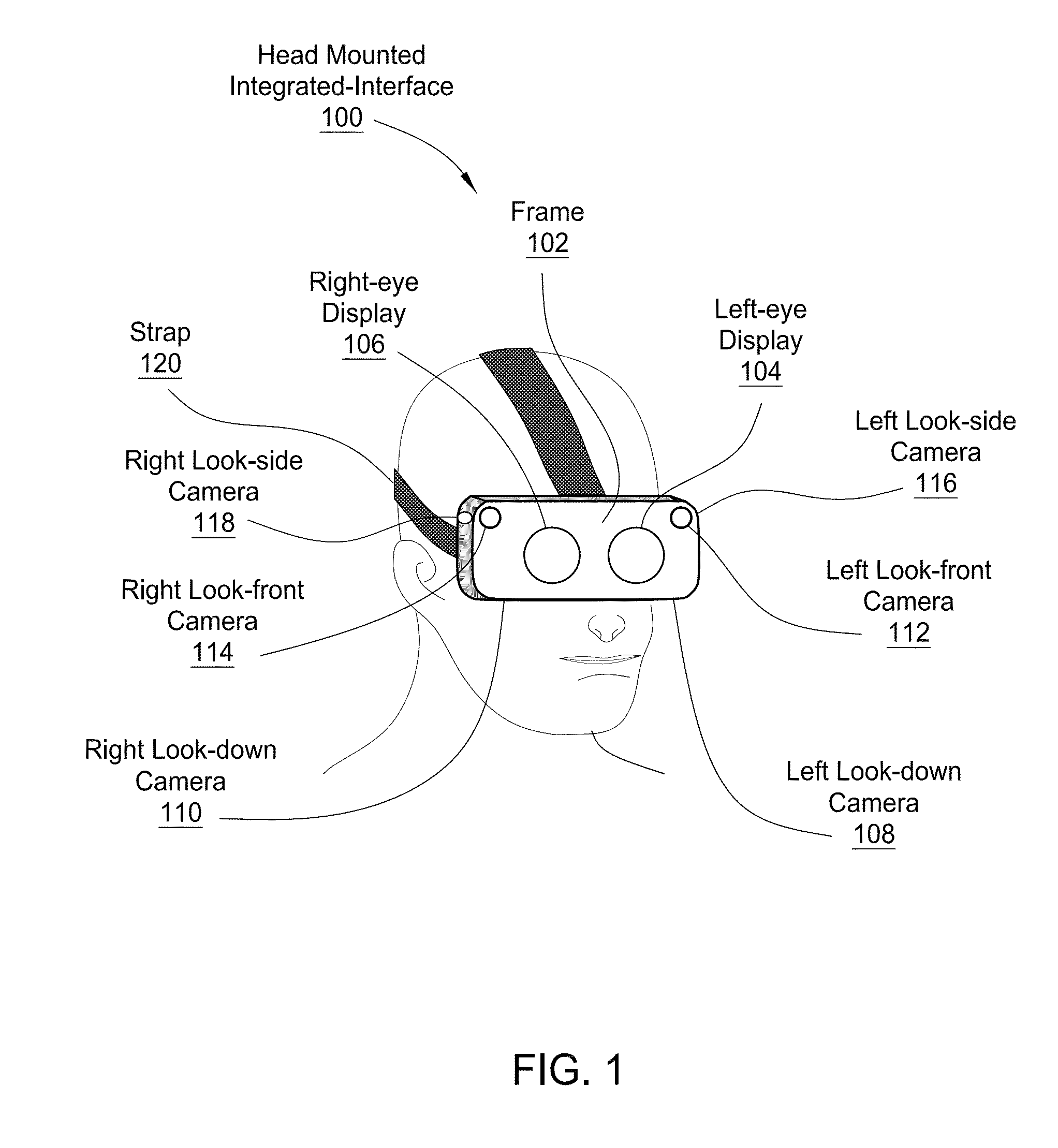

Head-mounted integrated interface

Inactive | US20150138065A1 | Improve versatility; Improve portability; Input/output for user-computer interaction; Cathode-ray tube indicators; Graphics; Large screen

A head-mounted integrated interface (HMII) is presented that may include a wearable head-mounted display unit supporting two compact high-resolution screens that output right-eye and left-eye images for stereoscopic viewing, wireless communication circuits, three-dimensional positioning and motion sensors, and a processing system capable of independent software processing and/or processing output streamed from a remote server. The HMII may also include a graphics processing unit that can also function as a general-purpose parallel processing system, and cameras positioned to track hand gestures. The HMII may function as an independent computing system or as an interface to remote computer systems, external GPU clusters, or subscription computational services. The HMII is also capable of linking and streaming to a remote display such as a large-screen monitor.

Owner:NVIDIA CORP

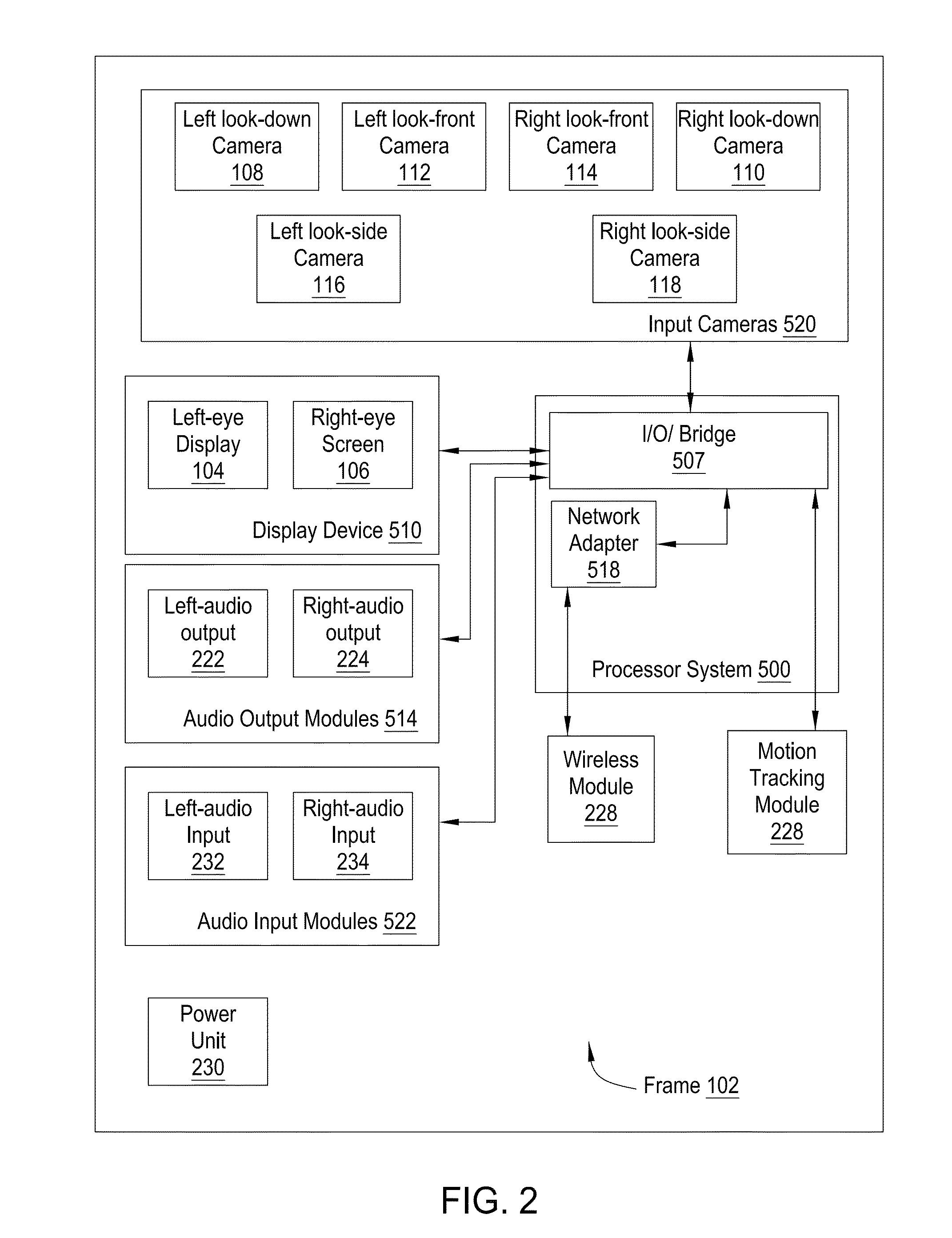

Model training method, server and computer readable storage medium

Active | CN110134636A | Improve training speedup; Reduce communication needs; Digital computer details; Energy efficient computing; Model parameters; GPU cluster

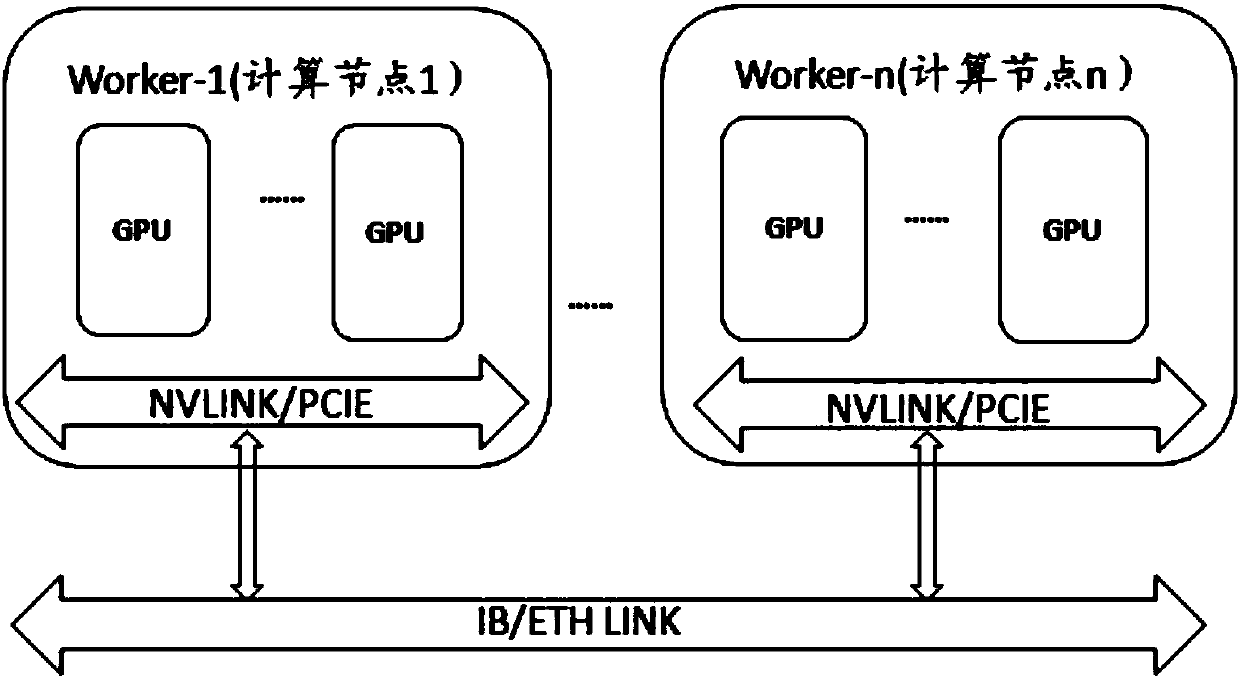

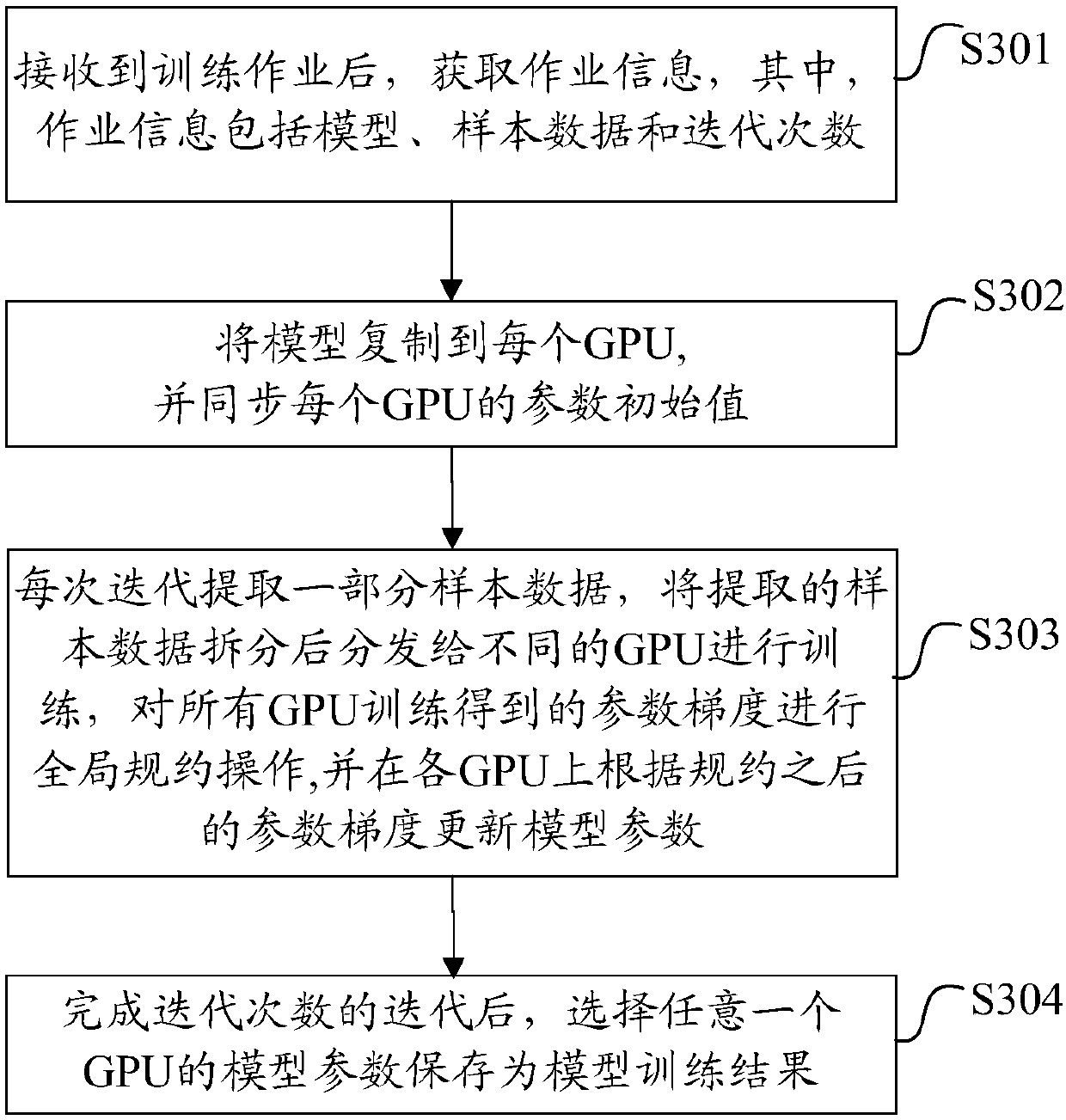

The invention discloses a model training method, a server and a computer-readable storage medium, and belongs to the field of artificial intelligence computing. The model training method comprises the following steps: after a training job is received, acquiring the job information; copying the model to each GPU and synchronizing the initial values of the model parameters on every GPU; in each iteration, extracting a portion of the sample data, splitting it, and distributing the splits to different GPUs for training; performing a global reduction (all-reduce) operation on the parameter gradients obtained by all the GPUs, and updating the model parameters on each GPU with the reduced gradients; and, after the specified number of iterations is completed, selecting the model parameters of any one GPU and storing them as the model training result. By fully utilizing the high-speed GPU-to-GPU data transfer bandwidth, the method eliminates the bandwidth and computing-power bottlenecks between computing nodes, improving the synchronous training efficiency and the speed-up ratio of the model on the GPU cluster.

Owner:ZTE CORP
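
The data-parallel loop in the model training abstract above (replicate the model, split each batch across GPUs, combine the gradients globally, apply the same update everywhere) can be sketched as a single-process Python simulation. This is a minimal illustration under stated assumptions, not ZTE's implementation: the toy linear model, the function names and the simulated `all_reduce_mean` are hypothetical, and a real cluster would run the reduction over GPU-to-GPU links with a collective communication library. The sketch assumes `batch_size` is a multiple of `num_gpus`, so averaging the per-GPU mean gradients equals the full-batch mean gradient.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for a toy 1-D linear model y ~ w*x + b


def local_gradient(params: List[float], shard: List[Sample]) -> List[float]:
    """Mean-squared-error gradient of (w, b) over one GPU's shard of the batch."""
    w, b = params
    gw = gb = 0.0
    for x, y in shard:
        err = (w * x + b) - y
        gw += 2.0 * err * x
        gb += 2.0 * err
    return [gw / len(shard), gb / len(shard)]


def all_reduce_mean(grads: List[List[float]]) -> List[float]:
    """Stand-in for the global reduction: element-wise mean over all workers."""
    return [sum(g[i] for g in grads) / len(grads) for i in range(len(grads[0]))]


def train_data_parallel(data: List[Sample], num_gpus: int, batch_size: int,
                        iterations: int, lr: float = 0.01) -> List[float]:
    # Copy the model to every simulated GPU with identical initial parameters.
    replicas = [[0.0, 0.0] for _ in range(num_gpus)]
    for it in range(iterations):
        # Take the next mini-batch (wrapping around) and split it across GPUs.
        start = (it * batch_size) % len(data)
        batch = [data[(start + j) % len(data)] for j in range(batch_size)]
        shards = [batch[g::num_gpus] for g in range(num_gpus)]
        grads = [local_gradient(replicas[g], shards[g]) for g in range(num_gpus)]
        # Reduce once, then every replica applies the same averaged update.
        avg = all_reduce_mean(grads)
        replicas = [[p - lr * g for p, g in zip(rep, avg)] for rep in replicas]
    # All replicas hold identical parameters, so any one can be saved.
    return replicas[0]
```

Because every replica starts from the same parameters and applies the same averaged gradient, the replicas never diverge, which is why saving the parameters of any single GPU is sufficient.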

Real-time ray tracing and rendering method based on a GPU (Graphics Processing Unit) cluster

Active | CN103049927A | Intersection calculation speed increased; Improve parallelism; 3D-image rendering; Dynamic balance; GPU cluster

The invention discloses a real-time ray tracing and rendering method based on a GPU (Graphics Processing Unit) cluster. The method comprises the following steps: pre-dividing each frame to be rendered into a plurality of sub-tasks in screen space; distributing the sub-tasks to the rendering nodes in the cluster using a dynamic load-balancing mechanism; on each rendering node, using the GPU to perform ray tracing in parallel for every pixel in each sub-task's screen region; and, after a rendering node finishes a sub-task, sending the intermediate image to a management node, which, once it has received the rendered images of all sub-tasks, stitches them into the final image. Because rays are traced in a highly parallel fashion on the GPU, and intersection tests against points and triangle facets use a polar-coordinate representation, the method produces photorealistic images with very good rendering performance, meeting the application's real-time requirements.

Owner:ZHEJIANG UNIV
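
The frame-splitting, dynamic balancing and stitching steps in the ray tracing abstract above can be illustrated with a small scheduling sketch. It assumes square screen tiles, a per-tile cost estimate supplied by the caller, and a greedy "give the next tile to whichever node becomes idle first" rule as the balancing mechanism. The patent does not specify these details, and the `shade` callback is a hypothetical stand-in for per-pixel ray tracing.

```python
import heapq
from typing import Callable, Dict, List, Tuple

Tile = Tuple[int, int, int, int]  # (x0, y0, x1, y1), half-open screen rectangle


def split_screen(width: int, height: int, tile: int) -> List[Tile]:
    """Pre-divide one frame into screen-space sub-tasks (tiles)."""
    return [(x, y, min(x + tile, width), min(y + tile, height))
            for y in range(0, height, tile) for x in range(0, width, tile)]


def dynamic_schedule(tiles: List[Tile], cost: Callable[[Tile], float],
                     num_nodes: int) -> Dict[int, List[Tile]]:
    """Give each tile to whichever render node becomes idle first."""
    idle_at = [(0.0, n) for n in range(num_nodes)]  # (time node is free, node id)
    heapq.heapify(idle_at)
    plan: Dict[int, List[Tile]] = {n: [] for n in range(num_nodes)}
    for t in tiles:
        free_time, node = heapq.heappop(idle_at)
        plan[node].append(t)
        heapq.heappush(idle_at, (free_time + cost(t), node))
    return plan


def render_tile(t: Tile, shade: Callable[[int, int], int]) -> Dict[Tuple[int, int], int]:
    """Per-node work: compute every pixel of the tile with a stand-in shader."""
    x0, y0, x1, y1 = t
    return {(x, y): shade(x, y) for y in range(y0, y1) for x in range(x0, x1)}


def stitch(width: int, height: int,
           partials: List[Dict[Tuple[int, int], int]]) -> List[List[int]]:
    """Management node: paste every intermediate result into the final frame."""
    frame = [[0] * width for _ in range(height)]
    for part in partials:
        for (x, y), value in part.items():
            frame[y][x] = value
    return frame
```

With this greedy rule, the busiest node finishes at most one tile's cost after the least busy one, which is the kind of balance a dynamic mechanism aims for.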

Budget power guidance-based high-energy-efficiency GPU (Graphics Processing Unit) cluster system scheduling algorithm

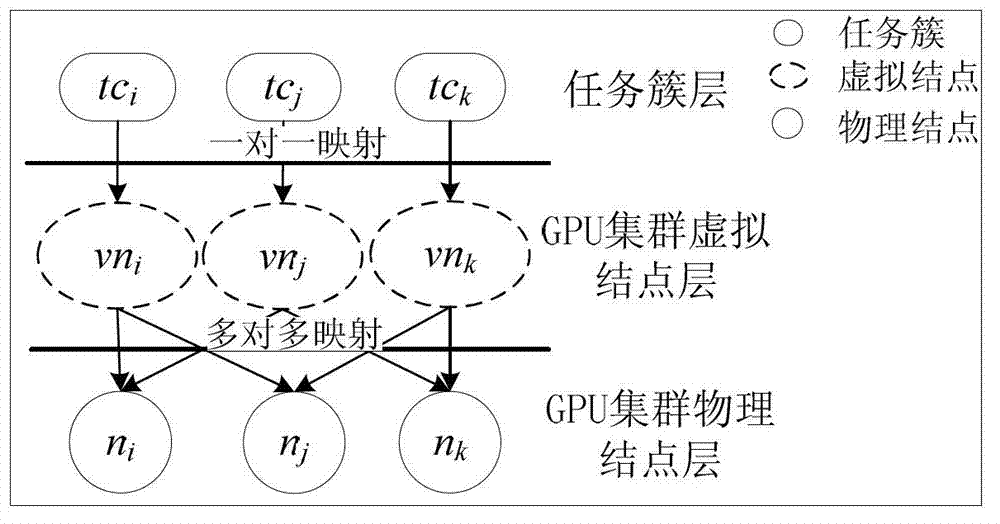

Active | CN102819460A | Continuous energy saving effect; Good energy saving effect; Energy efficient ICT; Resource allocation; High energy; Cluster systems

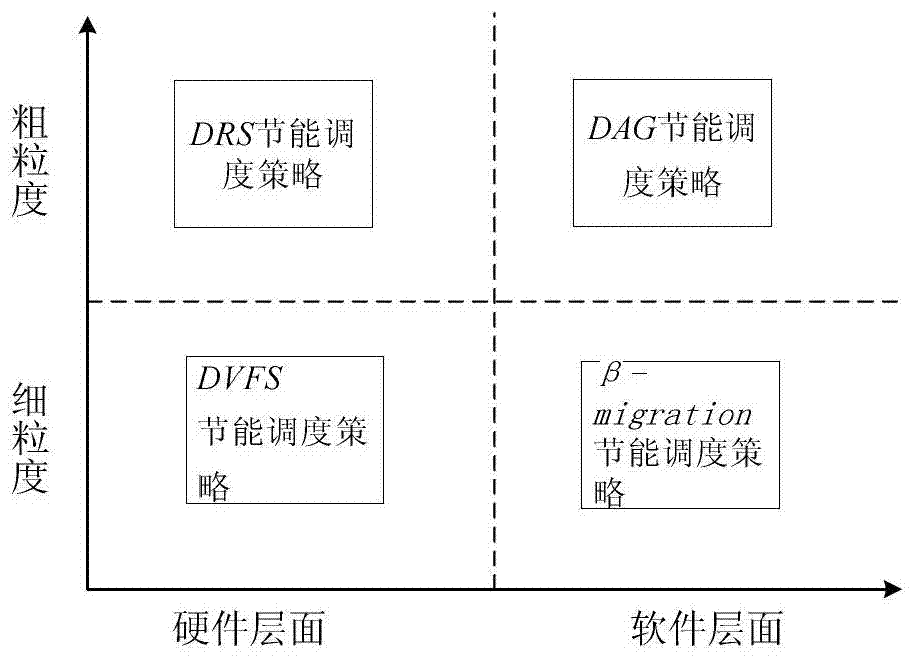

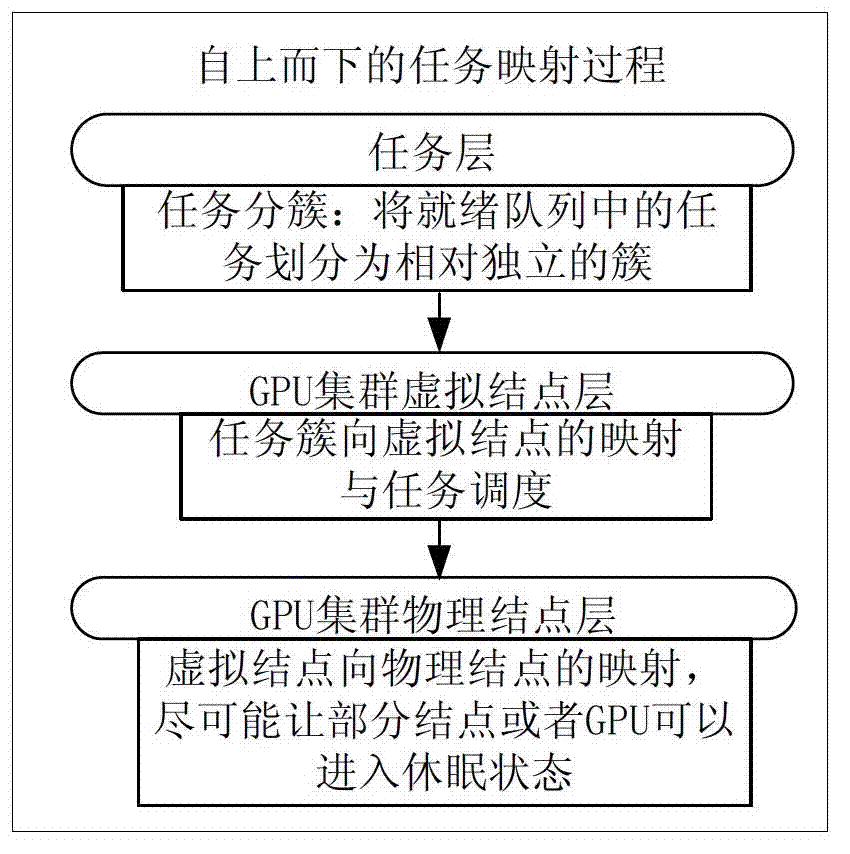

The invention discloses a budget-power-guided, energy-efficient scheduling algorithm for GPU (Graphics Processing Unit) cluster systems, comprising the following steps: decomposing all tasks to be scheduled into basic tasks; dividing the basic tasks into a plurality of independent task clusters; generating virtual nodes from the task clusters so that task clusters and virtual nodes map one-to-one; and finally performing a many-to-many mapping from virtual nodes to physical nodes, so that a suitable processor can be allocated dynamically to execute the tasks in each task cluster. The algorithm can effectively increase the energy efficiency of a GPU cluster system and thus achieve a noticeable energy saving over the long term.

Owner:TSINGHUA UNIV
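
One plausible reading of the first steps in the scheduling abstract above, grouping basic tasks into independent clusters and giving each cluster its own virtual node, is a connected-components pass over task dependencies. The sketch below assumes that "independent" means no dependency edge crosses two clusters and uses union-find to compute them. The function names are hypothetical, and the power-budget-guided mapping from virtual to physical nodes is left out because the abstract does not detail it.

```python
from typing import Dict, List, Tuple


def task_clusters(tasks: List[str], deps: List[Tuple[str, str]]) -> List[List[str]]:
    """Group basic tasks so that no dependency edge crosses two groups."""
    parent = {t: t for t in tasks}

    def find(t: str) -> str:
        while parent[t] != t:
            parent[t] = parent[parent[t]]  # path halving keeps trees shallow
            t = parent[t]
        return t

    for a, b in deps:  # each dependency merges the two tasks' groups
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    groups: Dict[str, List[str]] = {}
    for t in tasks:  # keep tasks in their original order within each group
        groups.setdefault(find(t), []).append(t)
    return list(groups.values())


def virtual_nodes(clusters: List[List[str]]) -> Dict[str, List[str]]:
    """One virtual node per task cluster (the one-to-one mapping)."""
    return {f"vnode{i}": cluster for i, cluster in enumerate(clusters)}
```

For example, tasks `a` to `f` with dependencies `a-b`, `b-c` and `d-e` form three clusters, `[a, b, c]`, `[d, e]` and `[f]`, and therefore three virtual nodes.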

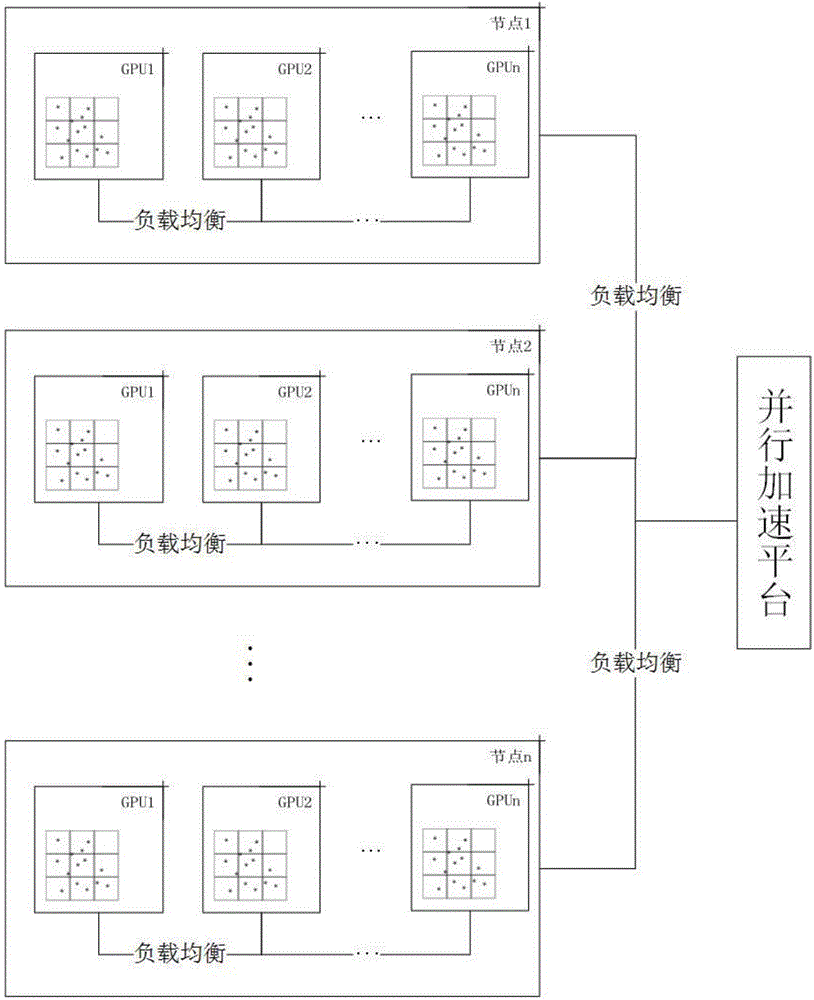

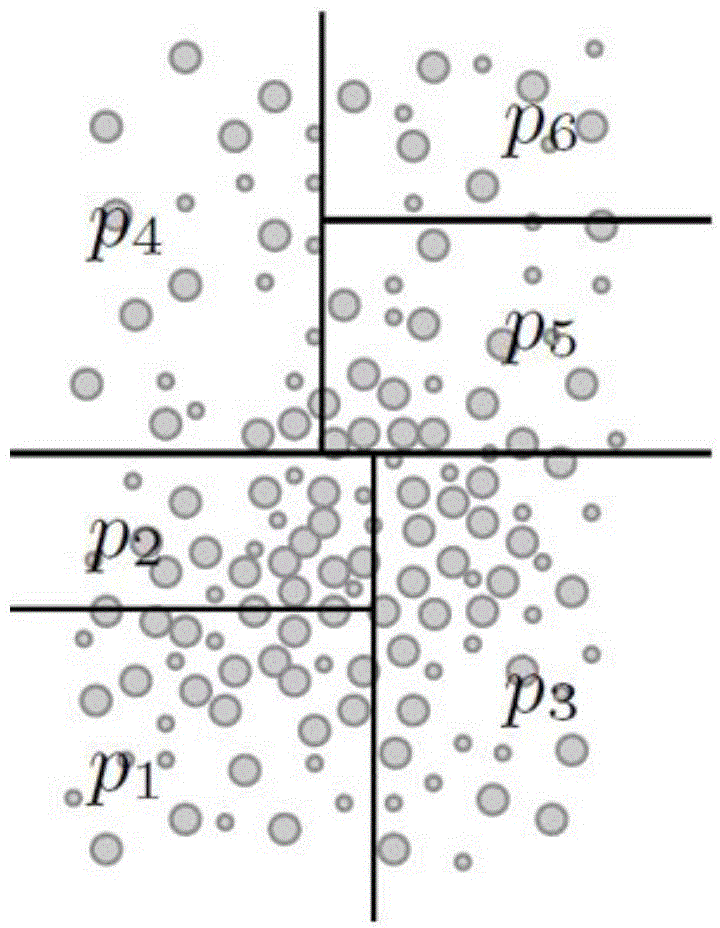

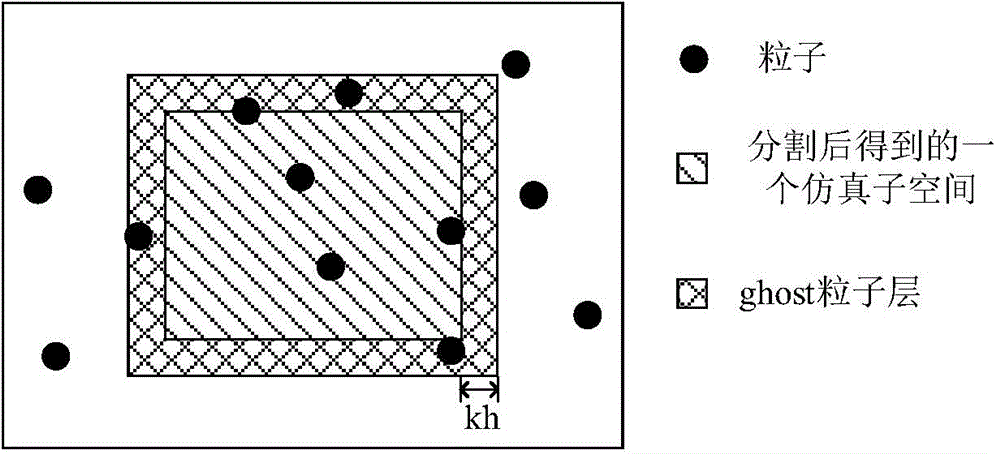

Parallel fluid simulation acceleration method based on GPU (Graphics Processing Unit) cluster

Inactive | CN104360896A | Load balancing; Narrow down the neighborhood search space; Resource allocation; Software simulation/interpretation/emulation; GPU cluster; Graphics processing unit

The invention relates to a parallel fluid simulation acceleration method based on a GPU (Graphics Processing Unit) cluster. Because fluid simulation involves a large amount of computation and a high degree of parallelism, an automatic parallel acceleration method is designed for fluid simulation and algorithm research. The invention provides and implements a load-balancing algorithm both between multiple nodes and between multiple GPUs within the same node. Within a given space, a position-based fluid simulation algorithm simulates the physical behavior of the fluid, and the algorithm is accelerated by reducing branching and narrowing the neighbor-search range. The space occupied by the fluid is partitioned so that each node processes one sub-space, and each node further divides its sub-space according to its number of GPUs, thereby parallelizing the fluid simulation across the GPU cluster.

Owner:BEIHANG UNIV
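
The two-level space division in the fluid simulation abstract above (one sub-space per node, then one per GPU inside each node) can be sketched as follows. The sketch assumes slabs cut along the x axis and balances by particle count. Both choices and all names are hypothetical rather than taken from the patent.

```python
from typing import Dict, List, Sequence, Tuple

Particle = Tuple[float, float, float]  # (x, y, z) position


def split_even(seq: Sequence, parts: int) -> List[Sequence]:
    """Cut seq into `parts` contiguous chunks whose sizes differ by at most one."""
    n = len(seq)
    return [seq[(i * n) // parts:((i + 1) * n) // parts] for i in range(parts)]


def decompose(particles: List[Particle], num_nodes: int,
              gpus_per_node: int) -> Dict[Tuple[int, int], List[Particle]]:
    """Split along x: one slab per node, then one sub-slab per GPU in that node."""
    ordered = sorted(particles, key=lambda p: p[0])
    result: Dict[Tuple[int, int], List[Particle]] = {}
    for node, slab in enumerate(split_even(ordered, num_nodes)):
        for gpu, sub in enumerate(split_even(slab, gpus_per_node)):
            result[(node, gpu)] = list(sub)
    return result
```

Splitting by sorted particle order rather than by equal-width slabs keeps per-GPU particle counts within one of each other, even when the fluid is unevenly distributed in space.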

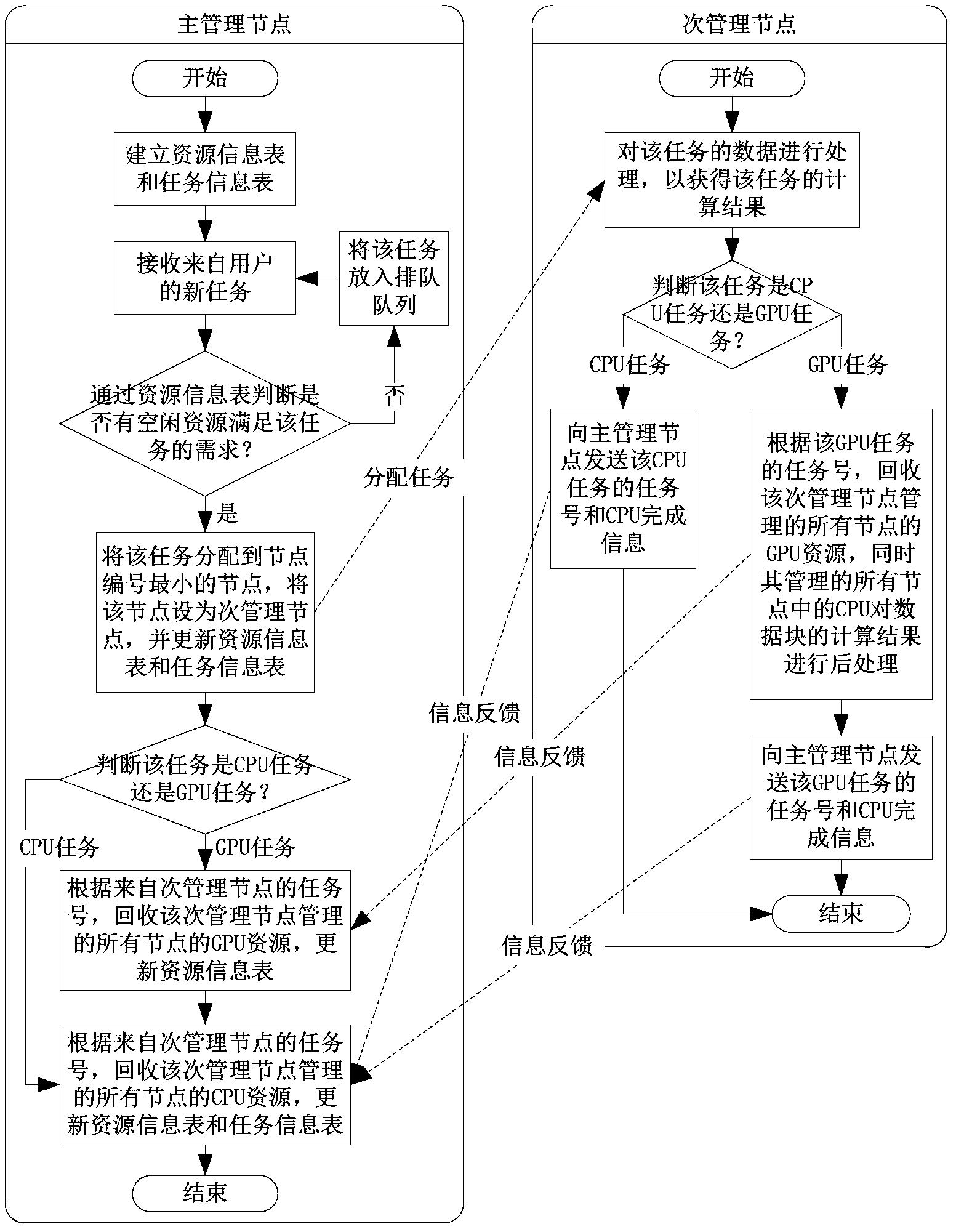

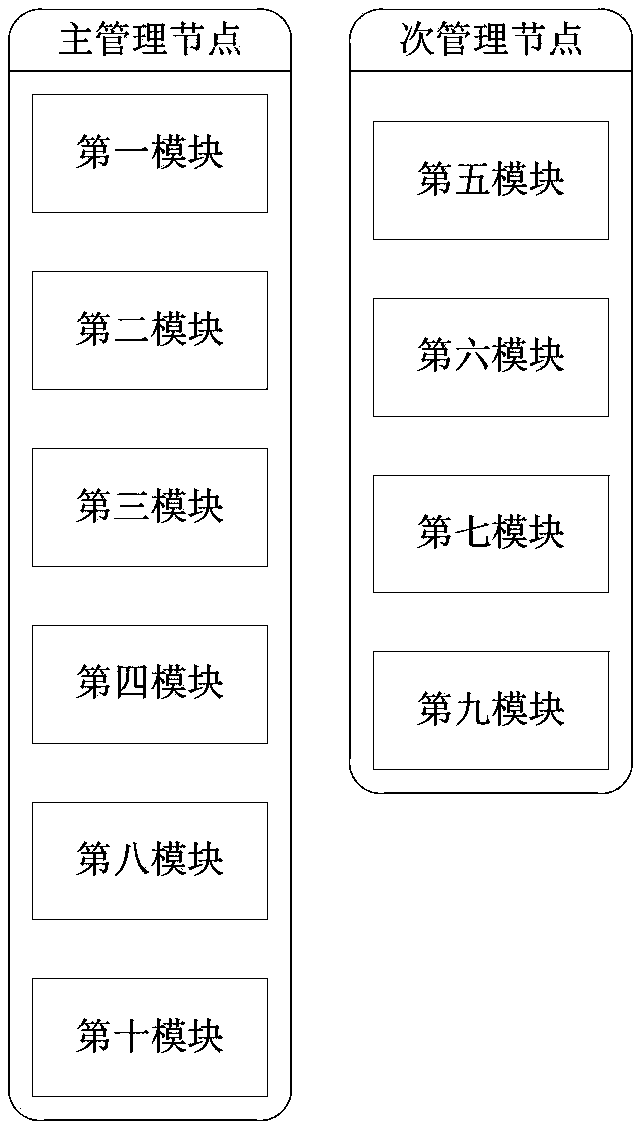

Resource management method and system for a GPU (Graphic Processing Unit) cluster

Active | CN103365726A | Reduce load; Efficient use of; Resource allocation; Resource information; Resource management

The invention discloses a resource management method for a GPU (Graphic Processing Unit) cluster, comprising the following steps: a main management node creates two tables, a resource information table and a task information table; the main management node receives a new task, determines whether it is a CPU (Central Processing Unit) task or a GPU task, and looks for free resources that meet the task's requirements. If the task is a CPU task, a secondary management node preprocesses the task's data and distributes pieces of it to all the nodes it manages for computation; after the computation, the main management node reclaims the CPU resources of those nodes according to the task number. If the task is a GPU task, the main management node reclaims the GPU resources of all the nodes managed by the secondary management node, according to the task number, as soon as it detects that the GPU computation has finished; meanwhile, the CPUs of those nodes post-process the results until post-processing is complete. The invention treats CPU resources and GPU resources differently, and by monitoring task progress it can reclaim idle GPU resources quickly.

Owner:HUAZHONG UNIV OF SCI & TECH

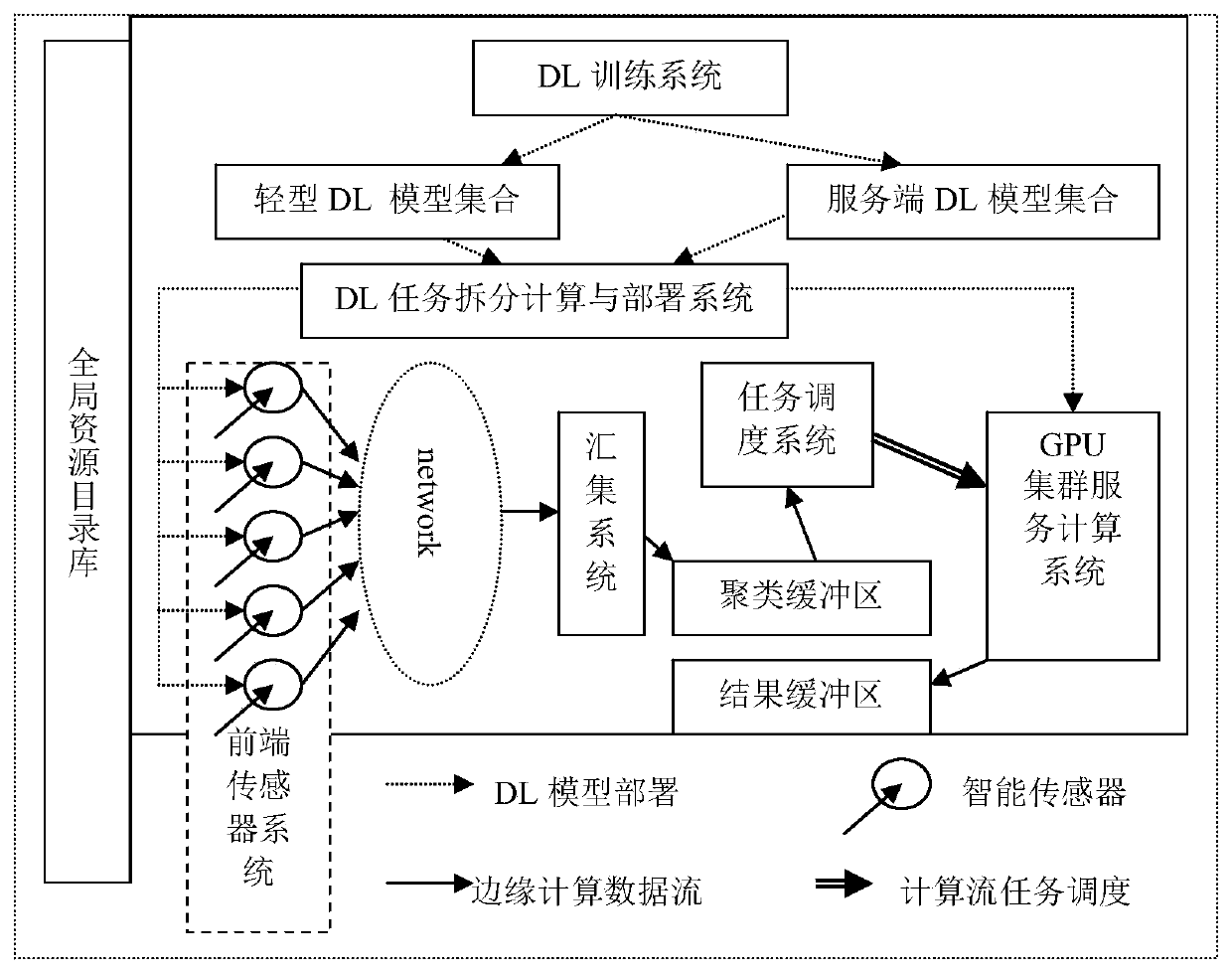

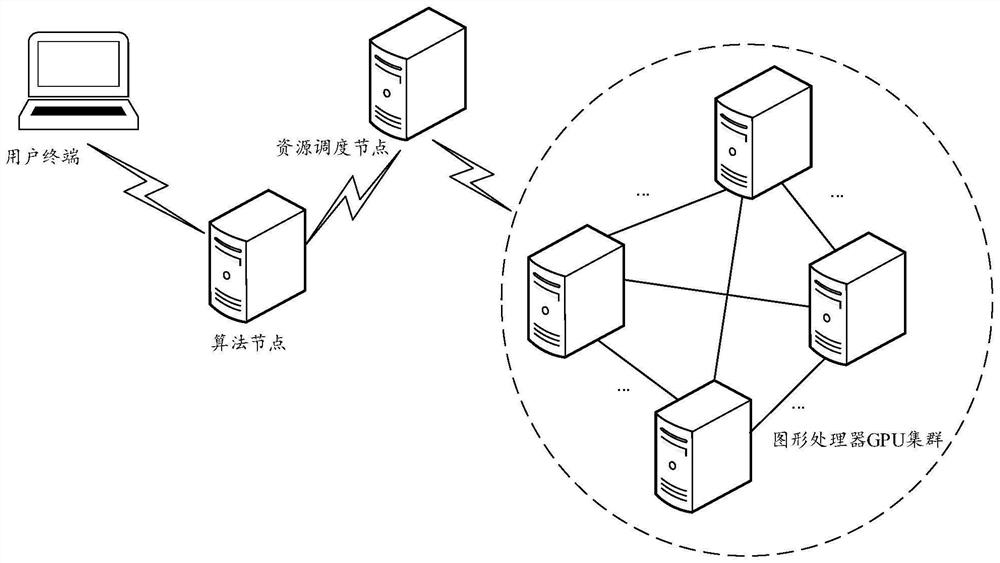

A GPU cluster deep learning edge computing system oriented to sensing information processing

Active | CN109948428A | Improve Parallel Computing Efficiency; Reduce cost pressure; Character and pattern recognition; Transmission; Information processing; Release consistency

The invention relates to a GPU cluster deep learning edge computing system oriented to sensing information processing. Preliminary feature extraction is performed on the sensed information using the limited computing power of front-end intelligent sensing devices, which greatly compresses the volume of raw data; the remaining processing tasks are then sent to a GPU cluster for large-scale clustering of sensing-data features. Splitting the processing tasks lets the system adapt dynamically to the computing power of each front-end intelligent sensing device and reduces the cost of requiring consistent front-end sensing equipment and hardware versions. The communication load on the edge computing network is reduced, which greatly lowers the cost of building that network, and transmitting extracted data features rather than raw data over the network protects user privacy. Clustering based on the data transmitted over the network and the core features of the stored data exploits the GPU's SPMD strengths and improves the parallel computing efficiency of edge computing, while making effective use of the GPU cluster's large-scale parallel computing capacity, low cost and high reliability.

Owner:UNIV OF SHANGHAI FOR SCI & TECH

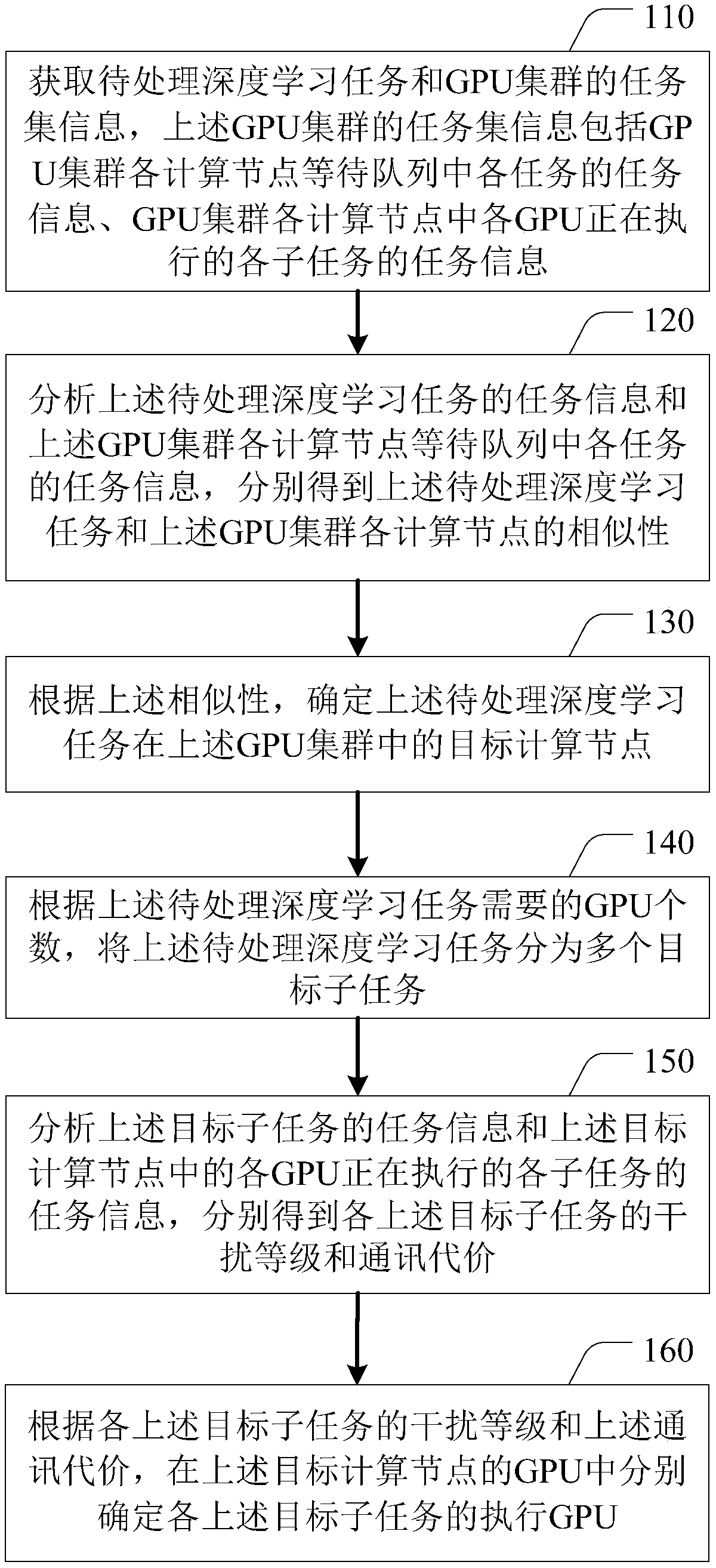

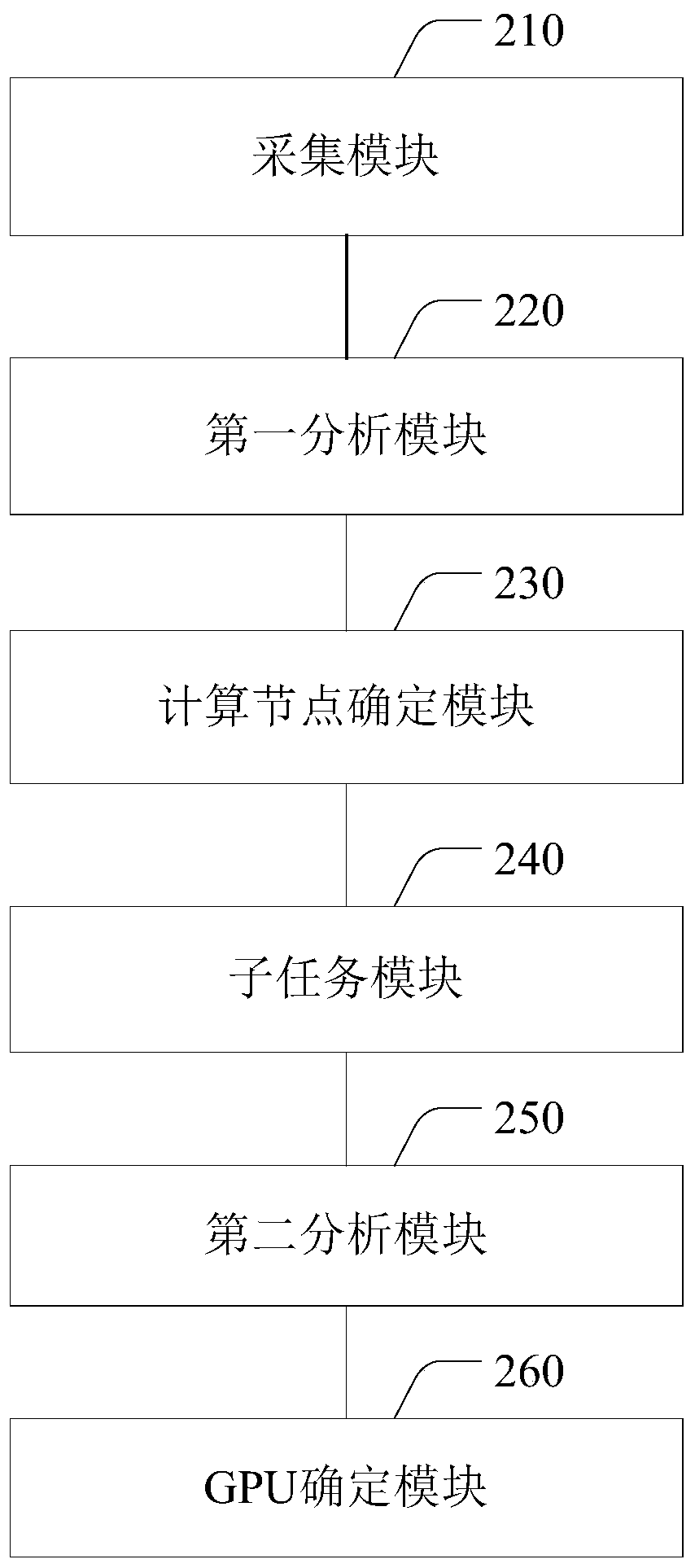

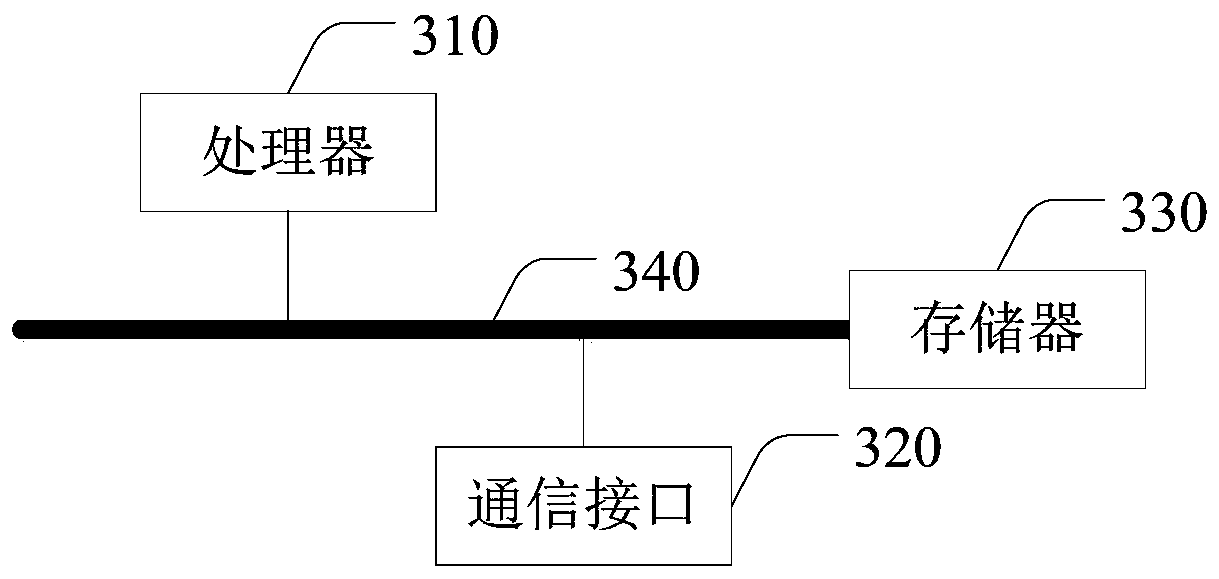

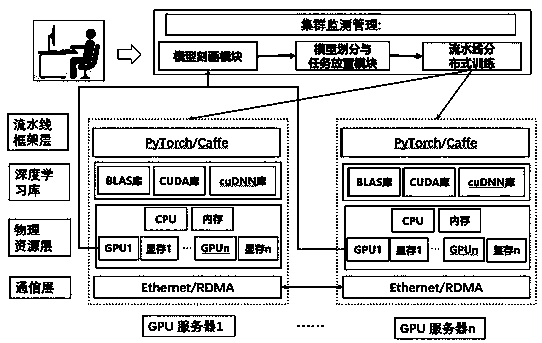

GPU cluster deep learning task parallelization method, device and electronic equipment

Active | CN110399222A | Achieve a high degree of parallelism; Improve resource utilization; Resource allocation; Artificial life; Resource utilization; The Internet

The embodiments of the invention provide a GPU cluster deep learning task parallelization method, device and electronic equipment, relating to the field of Internet technology. The method comprises the following steps: first, analyzing the similarity between a deep learning task to be processed and each computing node of the GPU cluster, and determining the target computing node for the task, which reduces the likelihood of resource contention on computing nodes and improves system resource utilization and the execution efficiency of deep learning tasks; then, according to the number of GPUs the task requires, dividing the task into a plurality of target sub-tasks and analyzing the interference level and communication cost of each sub-task; and finally, determining the target GPU for each sub-task within the target computing node, which avoids unbalanced resource allocation among the GPUs of a node. The method achieves a high degree of parallelism for deep learning tasks, improves the resource utilization of the GPU cluster, and improves the execution efficiency of deep learning tasks.

Owner:BEIJING UNIV OF POSTS & TELECOMM

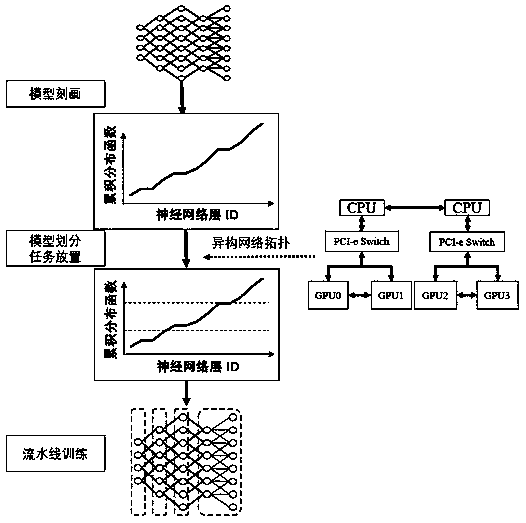

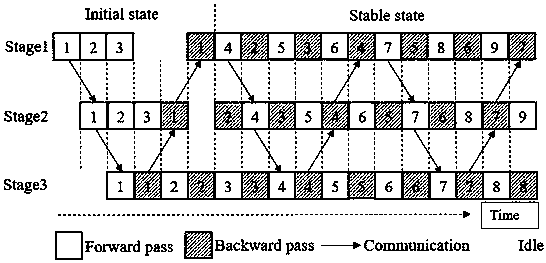

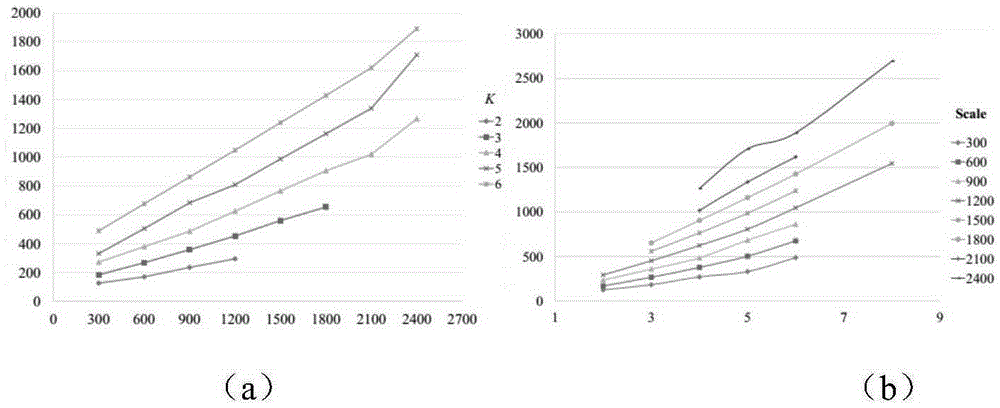

Heterogeneous network perception model division and task placement method in pipelined distributed deep learning

Active | CN110533183A | Increase training speed; Solve the load problem; Neural architectures; Physical realisation; Model description; Perception model

The invention provides a heterogeneous-network-aware model partitioning and task placement method for pipelined distributed deep learning, consisting mainly of three parts: deep learning model description, model partitioning and task placement, and pipelined distributed training. First, to capture the resource requirements of a deep learning application during GPU training, the method describes metrics of the training execution such as computation time, intermediate-result communication volume and parameter synchronization volume, and uses them as input to model partitioning and task placement. Then, using these metrics from the model description together with the heterogeneous network topology of the GPU cluster, a min-max dynamic programming algorithm performs model partitioning and task placement, with the goal of minimizing the maximum execution time of any stage after partitioning so as to balance the load. Finally, based on the partitioning and placement result, distributed training is performed on top of model parallelism by injecting data into the pipeline in a time-shared manner, which preserves both training speed and accuracy.

Owner:SOUTHEAST UNIV
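
The min-max objective in the pipelined training abstract above, minimizing the slowest pipeline stage, matches the classic linear-partition dynamic program. The sketch below is a simplified version under stated assumptions. Layers are split into contiguous stages, `compute[i]` is layer i's time, and `comm[i]` is a hypothetical cost charged only when a stage boundary falls after layer i. It ignores the heterogeneous network topology and the placement of stages onto specific GPUs, both of which the patent also handles.

```python
from typing import List, Sequence, Tuple


def partition_min_max(compute: Sequence[float], comm: Sequence[float],
                      stages: int) -> Tuple[float, List[int]]:
    """Split layers 0..n-1 into `stages` contiguous stages (stages <= n),
    minimizing the time of the slowest stage. Returns that time and the
    exclusive end index of each stage."""
    n = len(compute)
    prefix = [0.0]
    for c in compute:
        prefix.append(prefix[-1] + c)

    def stage_cost(lo: int, hi: int) -> float:
        # Layers lo..hi-1, plus sending layer hi-1's output if a stage follows.
        cost = prefix[hi] - prefix[lo]
        return cost + comm[hi - 1] if hi < n else cost

    inf = float("inf")
    # best[k][i]: smallest achievable slowest-stage time for the first i layers in k stages.
    best = [[inf] * (n + 1) for _ in range(stages + 1)]
    cut = [[0] * (n + 1) for _ in range(stages + 1)]
    best[0][0] = 0.0
    for k in range(1, stages + 1):
        for i in range(k, n + 1):
            for m in range(k - 1, i):  # stage k covers layers m..i-1
                cand = max(best[k - 1][m], stage_cost(m, i))
                if cand < best[k][i]:
                    best[k][i], cut[k][i] = cand, m
    ends, i = [], n
    for k in range(stages, 0, -1):  # walk the cut table back to recover boundaries
        ends.append(i)
        i = cut[k][i]
    return best[stages][n], ends[::-1]
```

For example, with `compute=[1, 1, 1, 1]` split into two stages, the compute-only result is `(2.0, [2, 4])`. Setting `comm=[0, 5, 0, 0]` makes the link after layer 1 expensive, and the result becomes `(3.0, [1, 4])`: communication volume can move the best cut away from the compute midpoint.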

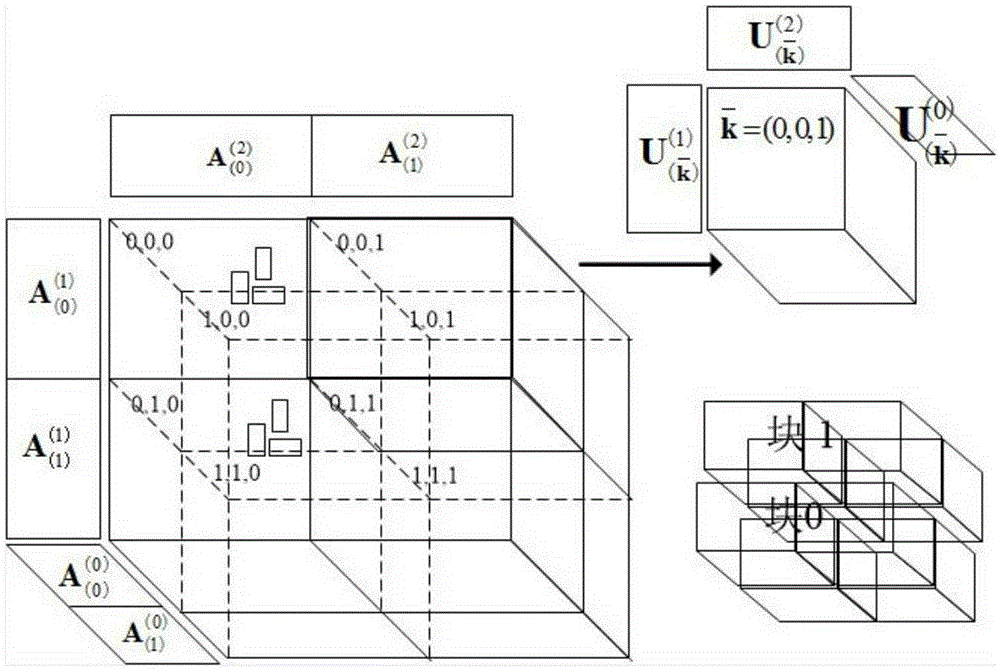

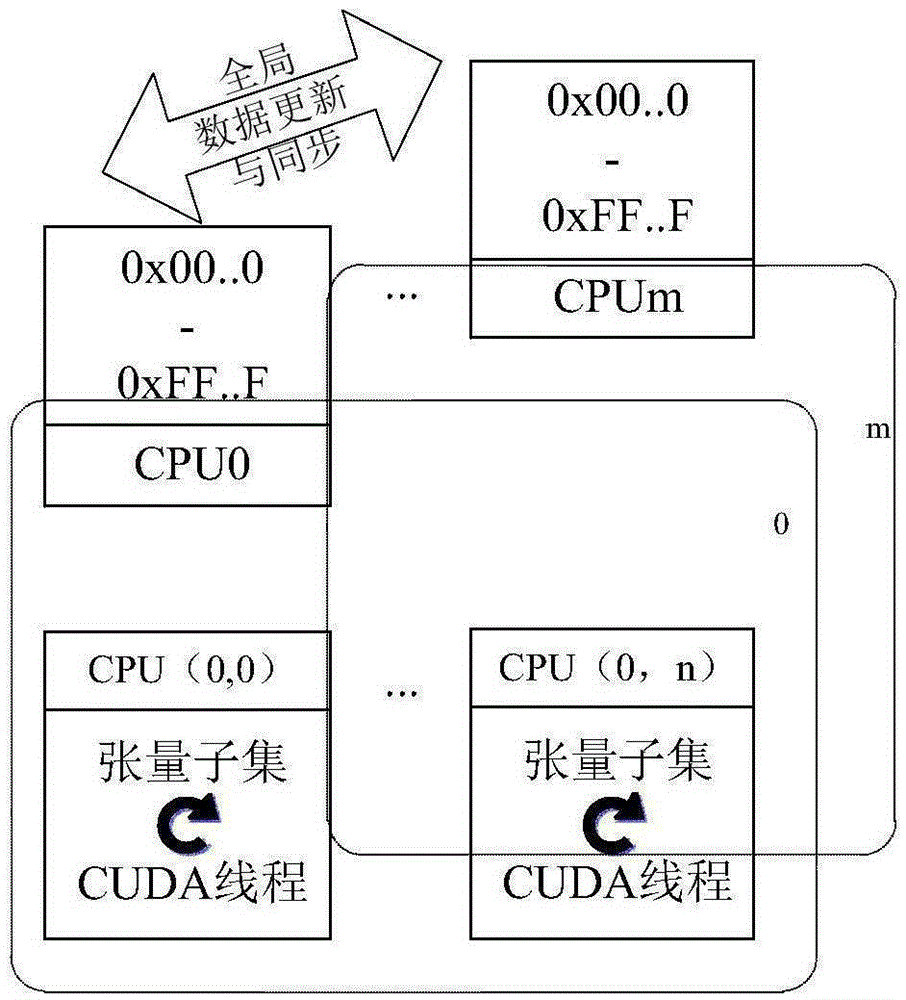

GPU cluster-based multidimensional big data factorization method

The invention discloses a GPU cluster-based factorization method for multidimensional big data. It addresses the inability of the conventional grid parallel factor analysis model to handle large-scale, high-dimensional multidimensional data, and provides an effective GPU (graphics processing unit)-based multi-mode decomposition method for multidimensional big data, namely a hierarchical parallel factor analysis framework. The framework builds on the conventional grid parallel factor analysis model. It comprises a coarse-grained stage that integrates tensor subsets and a fine-grained stage that computes all the tensor subsets and fuses the factor subsets, and it runs on a cluster of multiple nodes, each containing multiple GPUs. Performing the tensor decomposition on GPU devices makes full use of their powerful parallel computing capability and of the parallelism inherent in tensor decomposition. Experimental results show that the method greatly shortens the execution time for obtaining the tensor factors, improves large-scale data processing capability, and effectively alleviates insufficient computing resources.

Owner:WUHAN UNIV

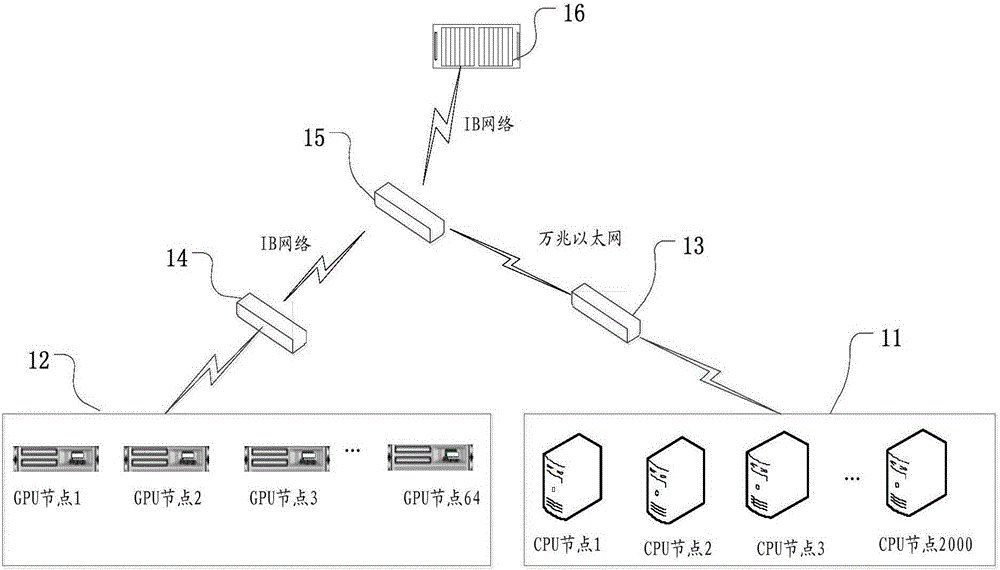

CPU and GPU hybrid cluster architecture system for deep learning

Inactive | CN105227669A | Extended processing time; Improve computing efficiency; Transmission; GPU cluster; Image processing

The invention discloses a CPU and GPU hybrid cluster architecture system for deep learning. The system comprises: a central processing unit (CPU) cluster for running logic-intensive deep learning applications; a graphics processing unit (GPU) cluster for running compute-intensive deep learning applications; a first switch connected to the CPU cluster; a second switch connected to the GPU cluster; a third switch connected to both the first and the second switch; and a parallel storage device, connected to the third switch, that provides shared data to the CPU cluster and the GPU cluster. The system reduces power consumption, improves deep learning processing efficiency and lowers cost.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

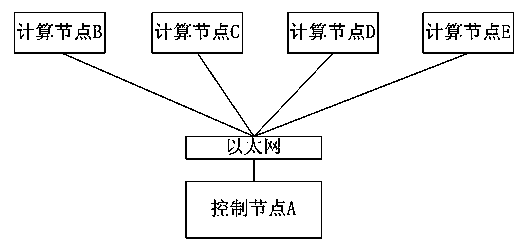

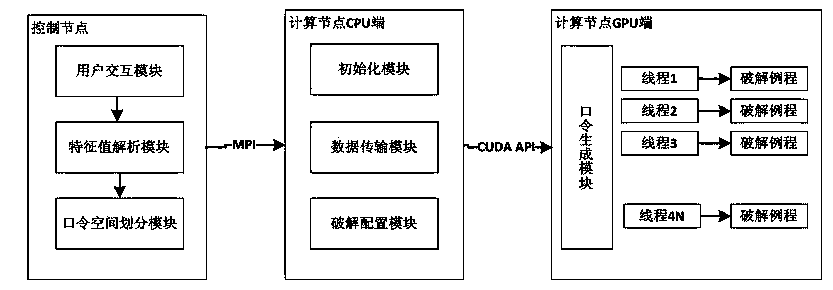

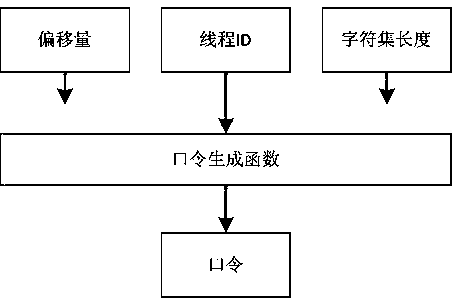

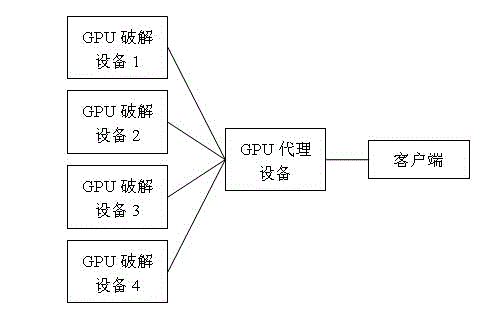

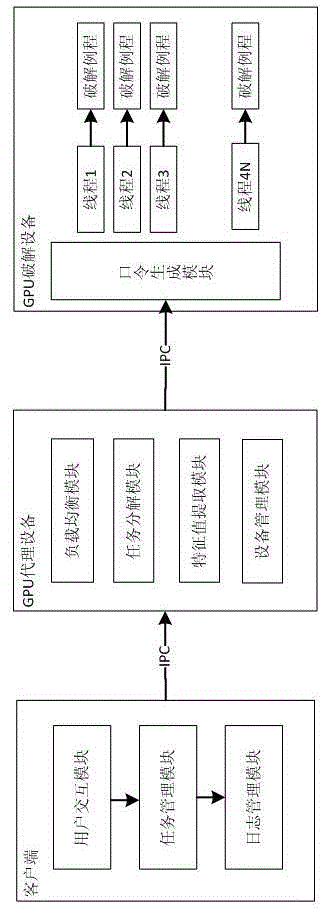

WPA shared key cracking system based on GPU cluster

Active | CN103684754A | Good cracking effect; Reliable Task Scheduling System; Key distribution for secure communication; Passphrase; Computer architecture

The invention relates to the field of password cracking and discloses a WPA shared key cracking system based on a GPU cluster, comprising a control node and a plurality of GPU computing nodes. The control node captures WPA data packets, a characteristic-value extraction module extracts the values needed for cracking, and the control node's user interaction module receives a user-defined password search range. A password space partition module divides the range into sections and sends each section to its GPU computing node. Each GPU computing node computes the temporary verification parameter MIC_TMP for candidate passphrases; when MIC_TMP matches the captured verification parameter MIC, that passphrase is taken as the shared key and cracking is complete. The system uses the GPU cluster to crack WPA/WPA2-PSK passwords, supports multi-node GPU clusters that can be expanded as needed, and substantially improves cracking performance. In addition, to account for the heterogeneity of GPU clusters, a reliable task dispatching system is designed that achieves load balancing and increases cracking speed.

Owner:NO 30 INST OF CHINA ELECTRONIC TECH GRP CORP
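
The password space partition and load-balancing steps in the WPA abstract above amount to splitting a range of candidate indices among nodes of different speeds. The sketch below covers only that partitioning arithmetic, with no MIC computation. It assumes each node's relative throughput is known and allocates contiguous index ranges in proportion to it, using largest-remainder rounding. The function name and the proportional policy are hypothetical.

```python
from typing import List, Tuple


def partition_keyspace(total: int, speeds: List[float]) -> List[Tuple[int, int]]:
    """Split candidate indices [0, total) into one contiguous [start, end) range
    per node, sized in proportion to each node's relative speed."""
    scale = sum(speeds)
    exact = [total * s / scale for s in speeds]
    sizes = [int(e) for e in exact]  # floor of each node's exact share
    leftover = total - sum(sizes)
    # Hand the remaining indices to the nodes with the largest fractional parts.
    by_fraction = sorted(range(len(speeds)), key=lambda i: exact[i] - sizes[i], reverse=True)
    for i in by_fraction[:leftover]:
        sizes[i] += 1
    ranges, start = [], 0
    for size in sizes:
        ranges.append((start, start + size))
        start += size
    return ranges
```

For example, `partition_keyspace(1000, [1.0, 1.0, 2.0])` gives the node that is twice as fast the range `(500, 1000)`, while the other two nodes get `(0, 250)` and `(250, 500)`.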

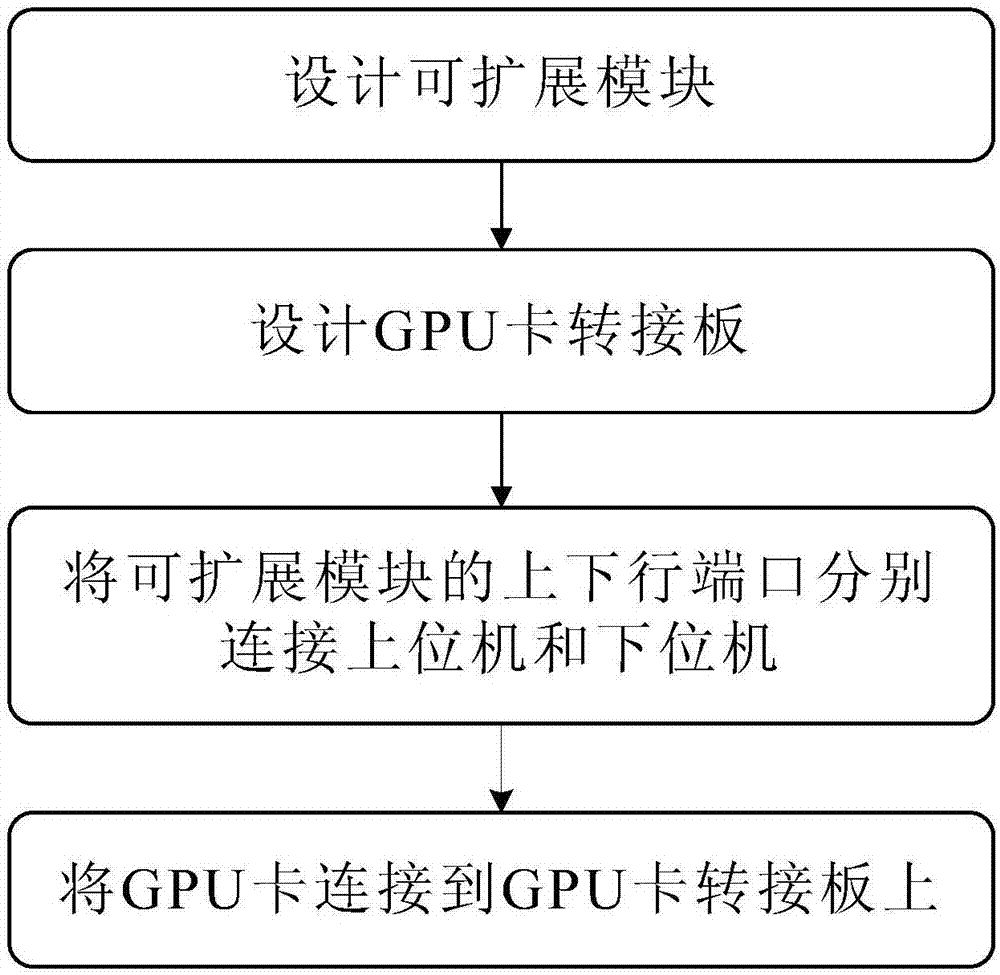

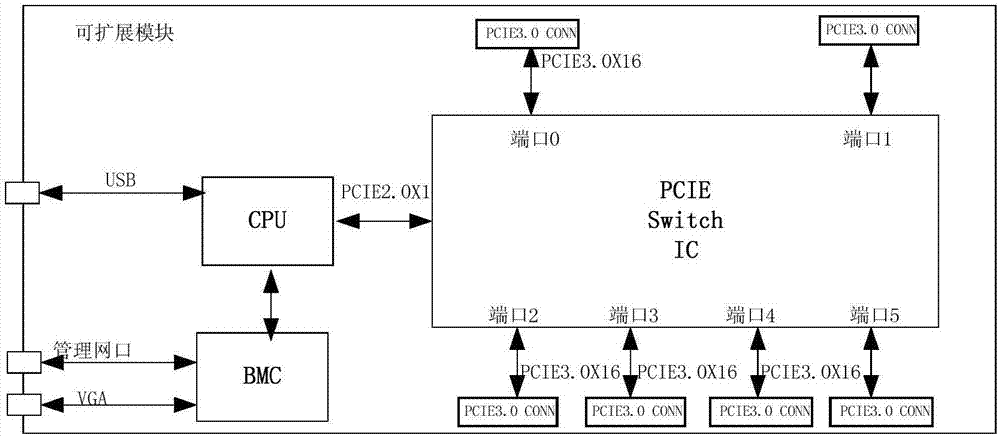

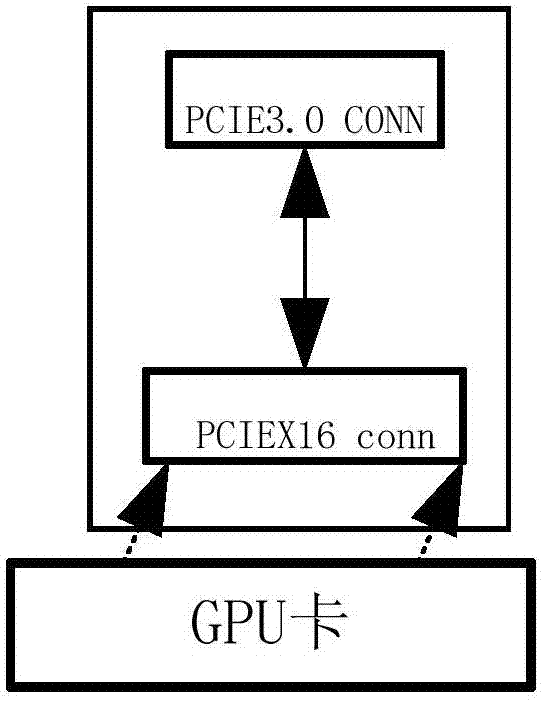

Method for expanding a GPU cluster using a high-speed connector

Inactive | CN107102964A | Facilitate understanding of the application; Basic structure is simple; Multiple digital computer combinations; Processor architectures/configuration; Interconnection; GPU cluster

The invention discloses a method for expanding a GPU cluster using a high-speed connector: GPU cards are connected to a server through expandable modules to build GPU clusters of different sizes. The method comprises the following steps: (1) designing the expandable module; (2) designing a GPU card adapter board; (3) connecting the upstream port of the expandable module to an upstream device and its downstream port to a downstream device, where the upstream device is a server or the preceding expandable module and the downstream device is a GPU card adapter board or the next expandable module; (4) connecting the GPU card to the GPU card adapter board. Built on a basic expandable module, the method allows flexible, free combination through the high-speed connector, so that GPU clusters suited to different kinds of workloads can be built by interconnecting modules; the basic structure is simple and development costs are reduced.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

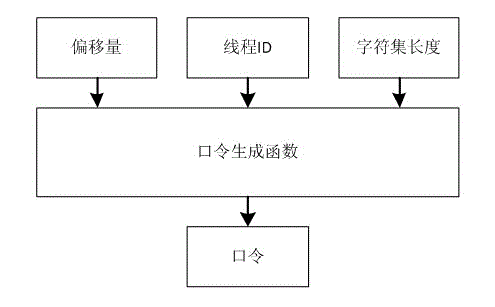

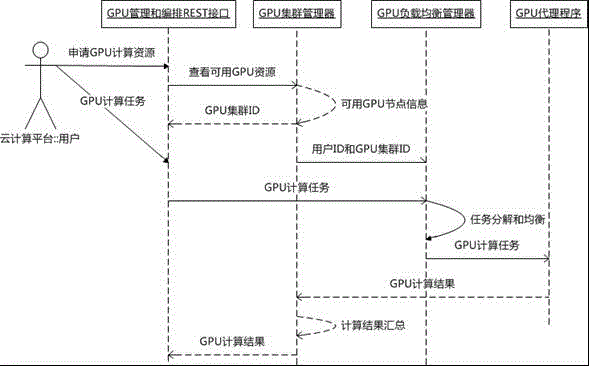

Password cracking method and system based on multiple GPU cracking devices

Inactive | CN104615945A | Speed up cracking; Good cracking effect; Digital data protection; Client-side; GPU cluster

The invention relates to the field of password cracking and discloses a password cracking method based on multiple GPU cracking devices, comprising the following steps: first, a client builds a cracking task according to the user's requirements and sends it to a GPU proxy device, which divides the task into a plurality of sub-tasks and distributes them to different GPU cracking devices; second, each GPU cracking device executes its assigned sub-task and returns the result to the client through the GPU proxy device. The invention further discloses a password cracking system based on multiple GPU cracking devices. The method and system support multi-node GPU clusters, allow the number of GPU cracking devices to be expanded as needed, and increase cracking speed and performance.

Owner:NO 30 INST OF CHINA ELECTRONIC TECH GRP CORP

Cluster resource scheduling method and device, electronic equipment and storage medium

Pending | CN113377540A | Avoid consumption; Reduce development costs; Resource allocation; Interprogram communication; Resource assignment; Parallel computing

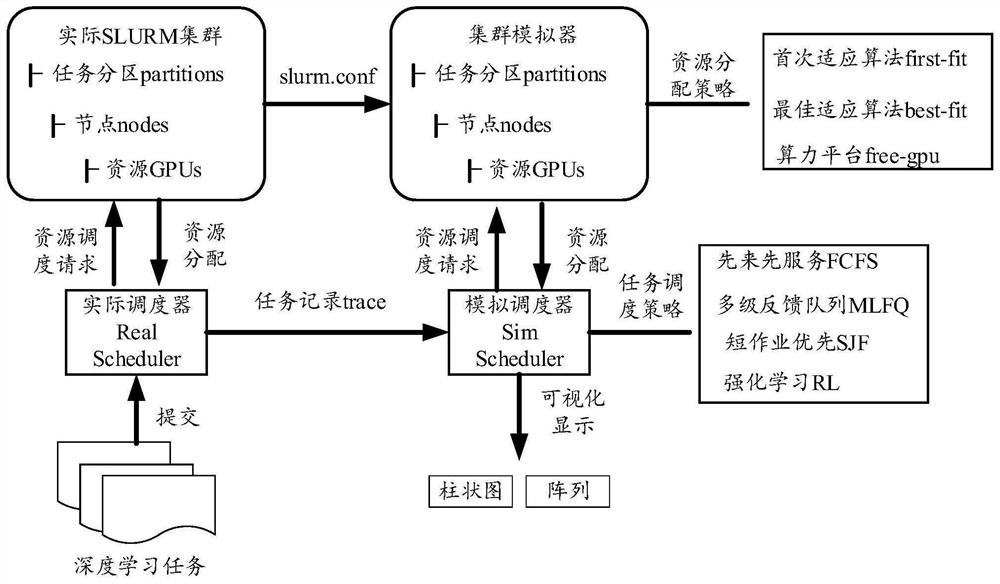

The embodiments of the invention disclose a cluster resource scheduling method and device, electronic equipment and a storage medium. The method comprises: obtaining, in a first runtime environment, a resource scheduling request for GPUs in a GPU cluster; executing a task scheduling strategy according to the request parameters to add the deep learning task to a task queue, and executing a preset resource allocation strategy to select at least one target GPU from the GPU cluster; scheduling the deep learning task onto the target GPU(s) for processing; and adjusting the task scheduling strategy and the preset resource allocation strategy, then deploying the adjusted strategies in a second runtime environment. The invention helps reduce the development cost of resource scheduling algorithms.

Owner:SHANGHAI SENSETIME TECH DEV CO LTD

GPU-based cluster resource allocation method and device

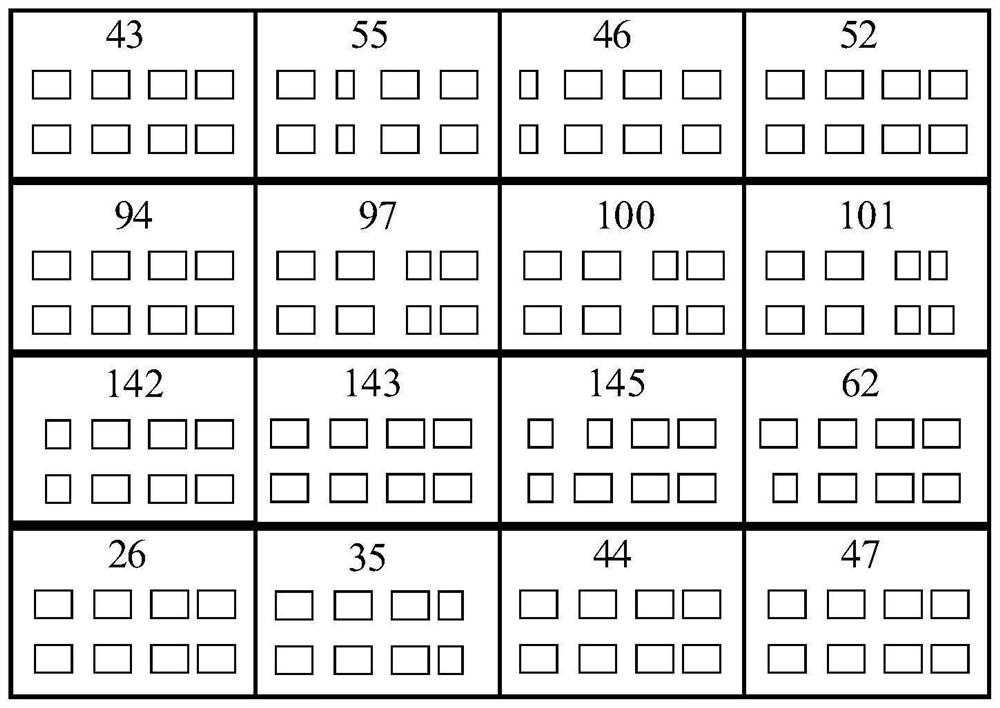

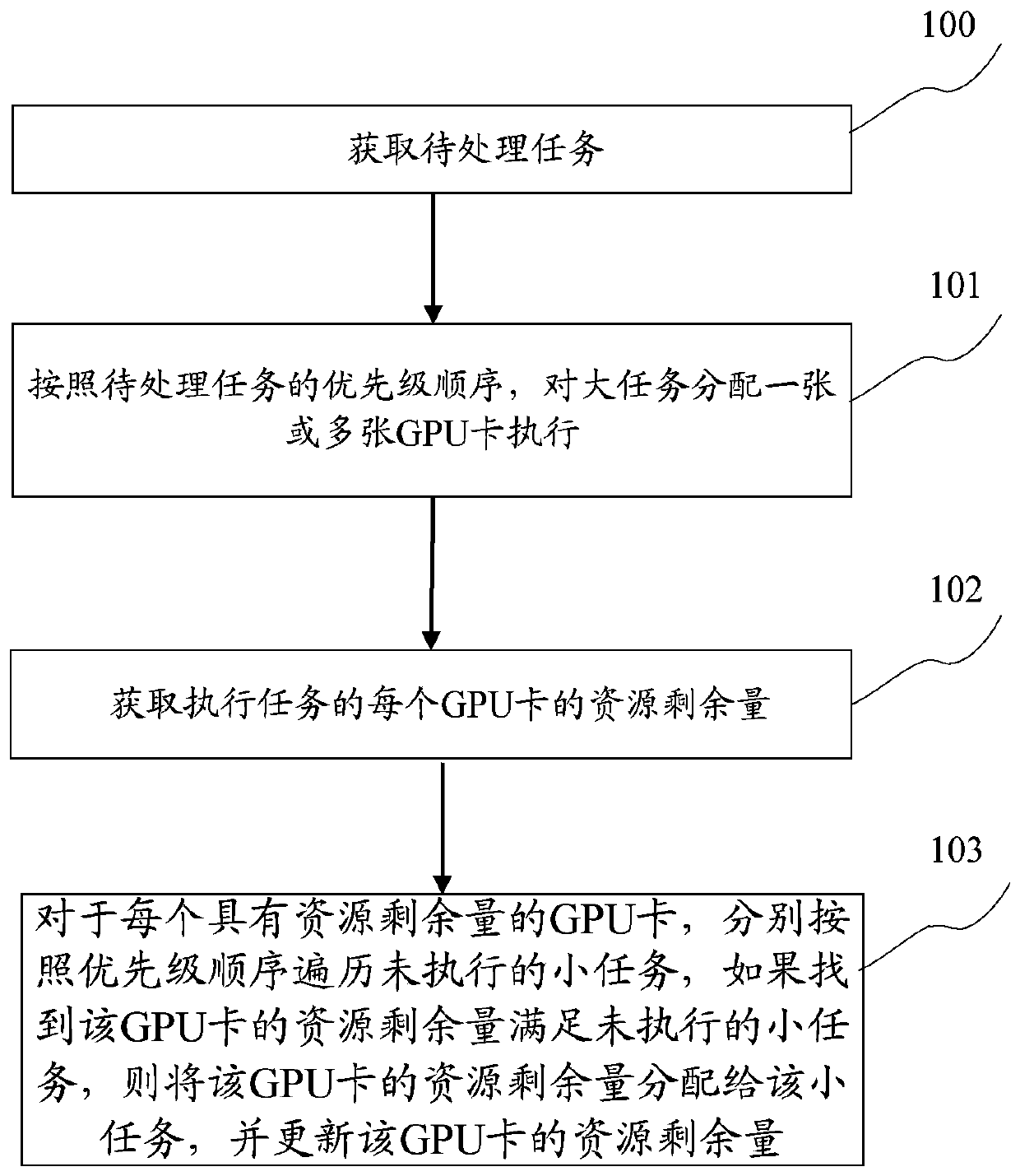

The invention discloses a GPU-based cluster resource allocation method for a GPU cluster comprising a plurality of GPU cards. The method comprises: obtaining the tasks to be processed, which include large tasks and small tasks, where a large task requires resources equal to or greater than one GPU card and a small task requires less than one GPU card; allocating one or more GPU cards to each large task in priority order, with at most one large task per GPU card; obtaining the remaining resources of each GPU card that is executing a task; and, for each GPU card with remaining resources, traversing the not-yet-executed small tasks in priority order and, whenever the card's remaining resources can accommodate a small task, allocating them to that task and updating the card's remaining resources. The scheme improves the resource utilization of the GPU cluster.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD
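
The large-task/small-task allocation in the abstract above is concrete enough to sketch directly. Several details are assumptions, not taken from the patent: demands are expressed as fractions of one card; a large task that needs a non-integer number of cards leaves its surplus on its last card; cards running no large task count as fully free; and a large task that cannot get enough whole cards is simply left pending. `Task` and `allocate` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

EPS = 1e-9  # tolerance for floating-point comparisons of card fractions


@dataclass
class Task:
    name: str
    demand: float  # resource need in units of one GPU card (1.0 = a whole card)
    priority: int  # larger value = scheduled earlier


def allocate(tasks: List[Task], num_cards: int) -> Tuple[Dict[str, List[int]], List[str]]:
    surplus = [1.0] * num_cards  # unused fraction of each card
    placement: Dict[str, List[int]] = {}
    ordered = sorted(tasks, key=lambda t: -t.priority)
    large = [t for t in ordered if t.demand >= 1.0]
    small = [t for t in ordered if t.demand < 1.0]

    # Large tasks get whole cards; a card never hosts more than one large task.
    next_card = 0
    for t in large:
        cards_needed = math.ceil(t.demand - EPS)
        if next_card + cards_needed > num_cards:
            continue  # not enough whole cards left; the task stays pending
        cards = list(range(next_card, next_card + cards_needed))
        next_card += cards_needed
        placement[t.name] = cards
        for c in cards:
            surplus[c] = 0.0
        surplus[cards[-1]] = cards_needed - t.demand  # assumption: only the last card is partly used

    # Pack small tasks into leftover capacity, card by card, in priority order.
    for c in range(num_cards):
        for t in small:
            if t.name not in placement and t.demand <= surplus[c] + EPS:
                placement[t.name] = [c]
                surplus[c] -= t.demand
    pending = [t.name for t in ordered if t.name not in placement]
    return placement, pending
```

For example, on three cards, a 1.5-card task occupies cards 0 and 1 and leaves half of card 1 free, so a 0.5-card small task lands on card 1. Smaller tasks that no longer fit anywhere stay pending until capacity is released.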

CPU (central processing unit)+GPU (graphic processing unit) cluster management method, device and equipment for implementing target detection

Inactive | CN108108248A | Improve resource utilization; Improve work efficiency; Resource allocation; Version control; Main processing unit; Resource utilization

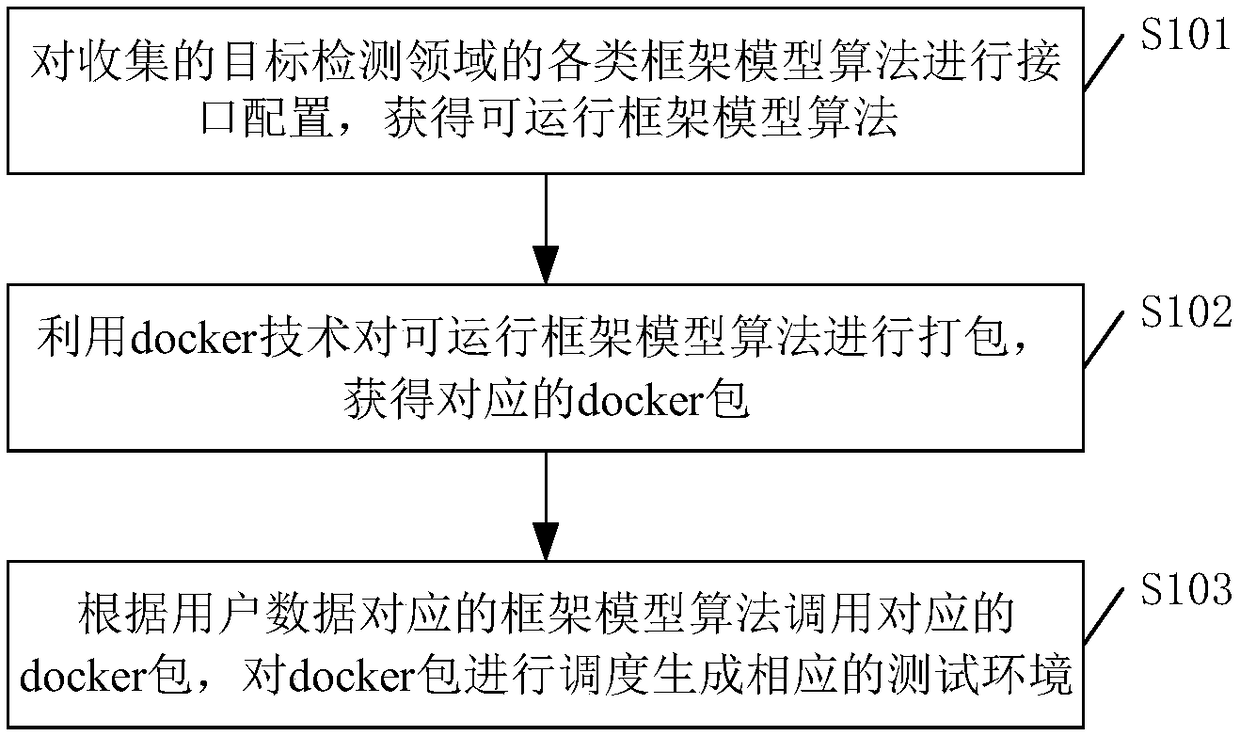

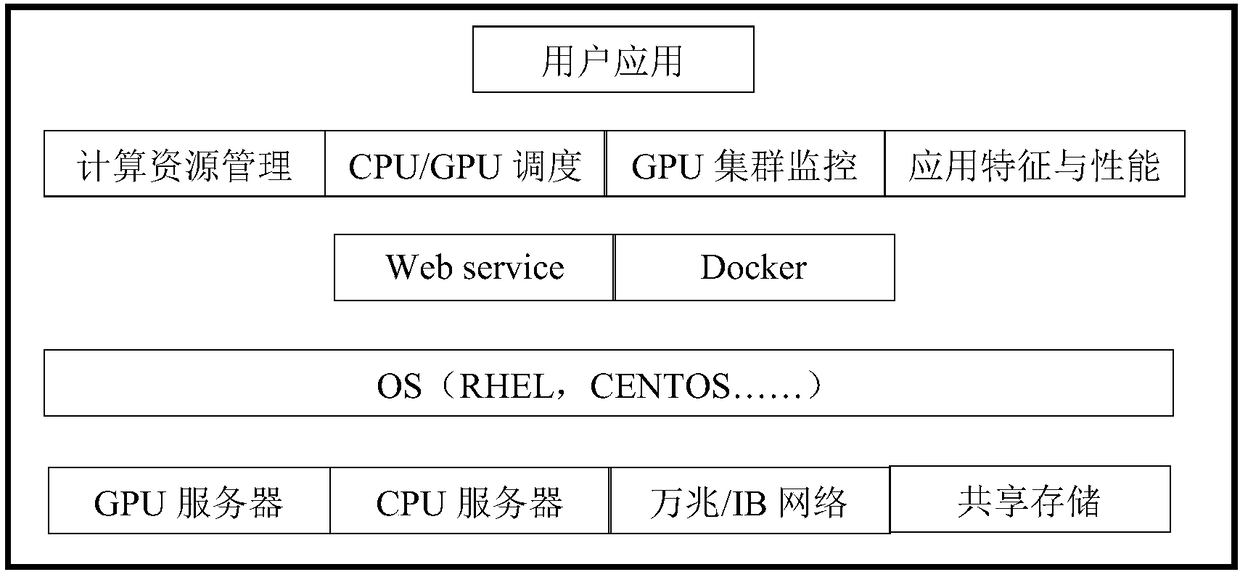

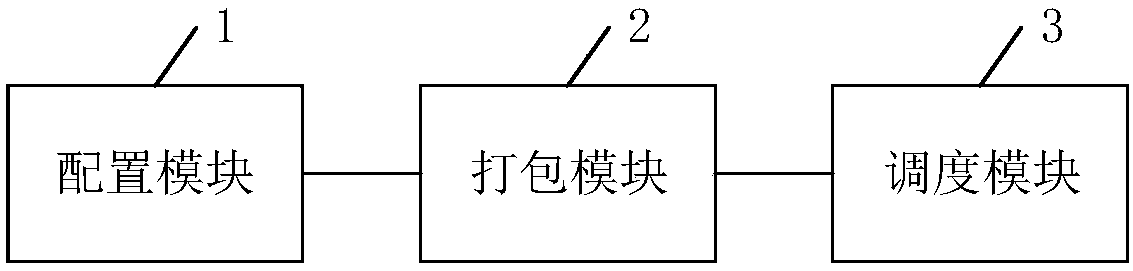

The invention discloses a CPU (central processing unit) + GPU (graphic processing unit) cluster management method for implementing target detection. The method comprises: configuring interfaces for the various collected framework model algorithms in the target detection field to obtain runnable framework model algorithms; packaging each runnable framework model algorithm with Docker to obtain a corresponding Docker package; and calling the Docker package for the runnable framework model algorithm that matches the user's data, then scheduling it to create the corresponding test environment. The method allows resources to be managed and reallocated dynamically, greatly increasing resource utilization and work efficiency, reducing usage cost and shortening research and development time. The invention further discloses a CPU+GPU cluster management device and equipment for implementing target detection, and a computer-readable storage medium, which offer the same advantages.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

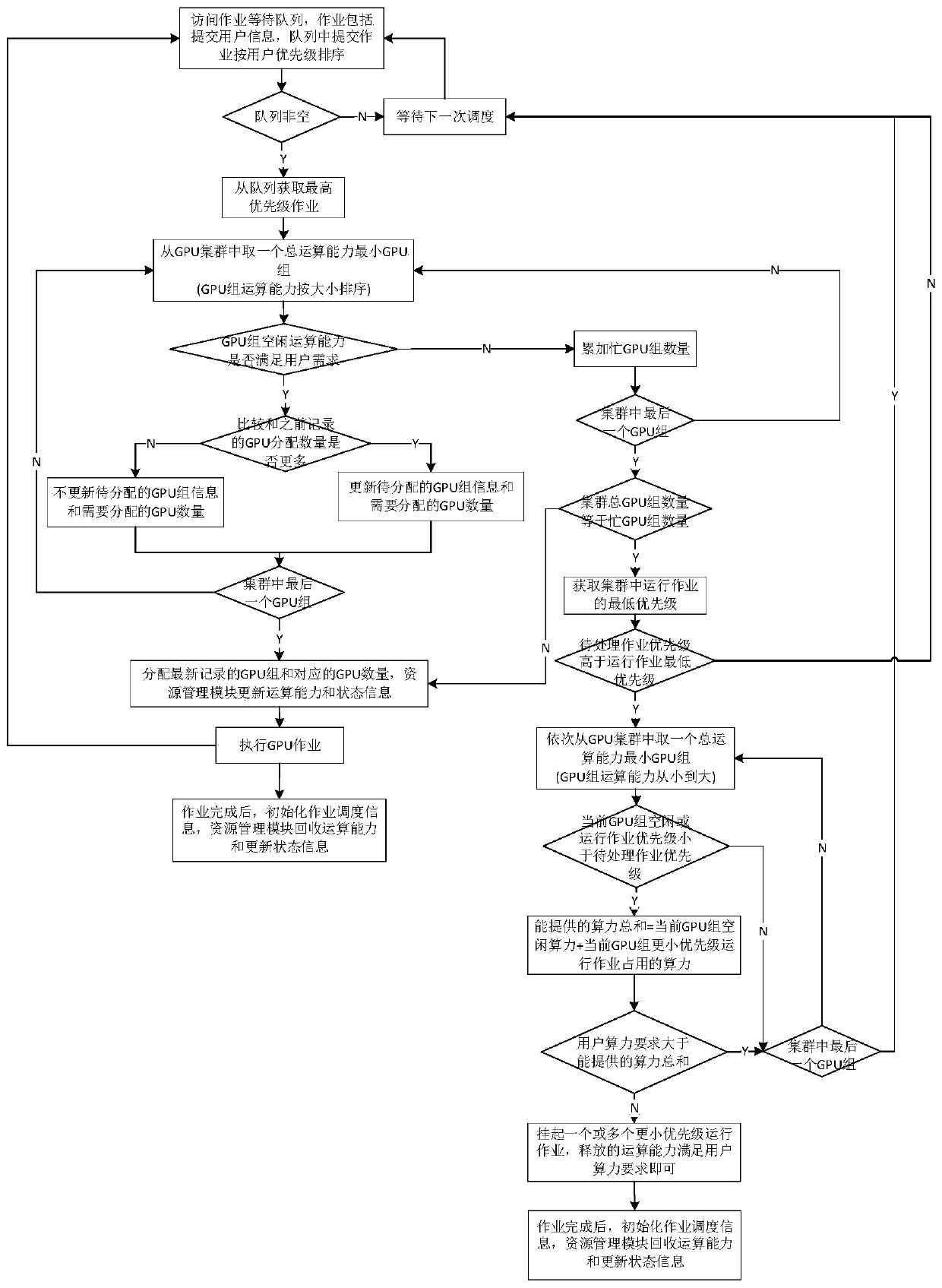

Deep learning-oriented multi-type GPU cluster resource management scheduling method and system

Active | CN110442451A | Reduce in quantity; Simplify Management Complexity; Resource allocation; Energy efficient computing; GPU cluster; Distributed computing

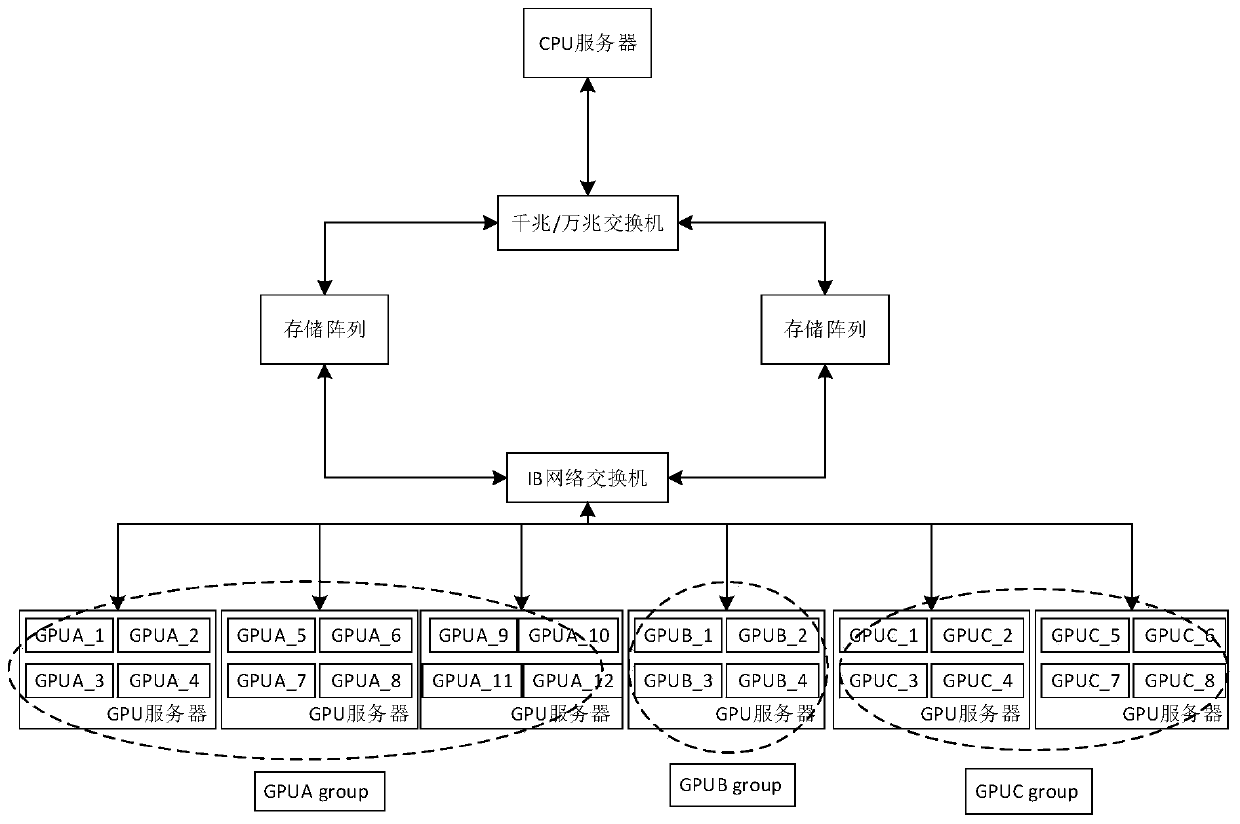

The invention discloses a deep learning-oriented resource management and scheduling method and system for multi-type GPU clusters. The method comprises: dividing the GPU cluster into a plurality of GPU groups by GPU model and counting the idle computing capability of each group; obtaining all users who access the GPU cluster and recording each user's minimum computing capability requirement; and periodically polling the job queue, taking the pending job with the highest priority, and scheduling GPU cluster resources for it. GPUs of different brands and models are managed uniformly as a single deep learning cluster, which reduces the number of GPU clusters to maintain and simplifies cluster management, while still meeting the differing deep learning needs of different users. User attributes are set according to user requirements, so users need not be familiar with or concerned about the GPU cluster environment: resources are scheduled according to each user's computing capability requirement and priority, suitable resources are allocated automatically, and the resource utilization of the different GPU-type groups increases.

Owner:HANGZHOU EBOYLAMP ELECTRONICS CO LTD
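
The multi-type scheduling loop in the abstract above can be sketched as follows. Several details are hypothetical: a user's "minimum computing capability" is treated as a per-card score, and every card of a given model shares the same score. A job waits at the head of the queue until some group can satisfy it, so lower-priority jobs wait behind it. Among eligible groups, the weakest adequate model is chosen so that stronger cards stay free.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Gpu:
    gpu_id: str
    model: str
    capability: float  # hypothetical per-card score; same for every card of a model
    busy: bool = False


@dataclass
class Job:
    name: str
    user: str
    priority: int  # larger value = more urgent
    gpus_needed: int


class GroupScheduler:
    def __init__(self, gpus: List[Gpu], user_min_capability: Dict[str, float]):
        self.groups: Dict[str, List[Gpu]] = {}
        for g in gpus:  # one group per GPU model
            self.groups.setdefault(g.model, []).append(g)
        self.user_min = user_min_capability
        self.queue: List[Tuple[int, int, Job]] = []
        self.seq = 0  # tie-breaker keeps FIFO order among equal priorities

    def submit(self, job: Job) -> None:
        heapq.heappush(self.queue, (-job.priority, self.seq, job))
        self.seq += 1

    def idle_capability(self, model: str) -> float:
        return sum(g.capability for g in self.groups[model] if not g.busy)

    def schedule_next(self) -> Optional[Tuple[str, List[str]]]:
        """Called periodically: try to place the highest-priority pending job."""
        if not self.queue:
            return None
        job = self.queue[0][2]
        need = self.user_min[job.user]
        eligible = []
        for gpus in self.groups.values():
            idle = [g for g in gpus if not g.busy]
            if gpus[0].capability >= need and len(idle) >= job.gpus_needed:
                eligible.append((gpus[0].capability, idle))
        if not eligible:
            return None  # job stays queued until resources free up
        _, idle = min(eligible, key=lambda e: e[0])  # weakest adequate model
        chosen = idle[: job.gpus_needed]
        for g in chosen:
            g.busy = True
        heapq.heappop(self.queue)
        return job.name, [g.gpu_id for g in chosen]
```

In this sketch, a low-requirement user is steered to the older model group even when newer cards are idle, which keeps the newer cards available for users whose minimum requirement only they can meet.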

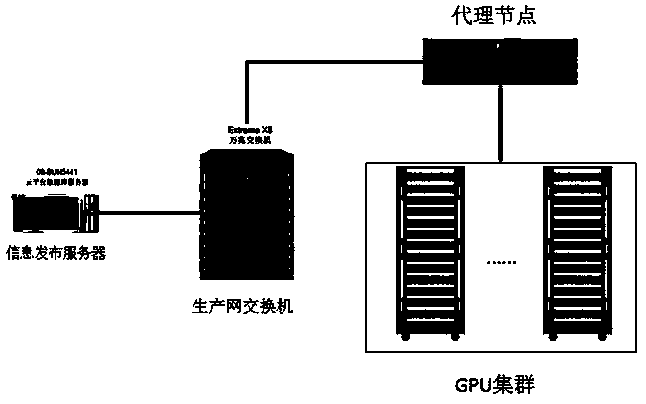

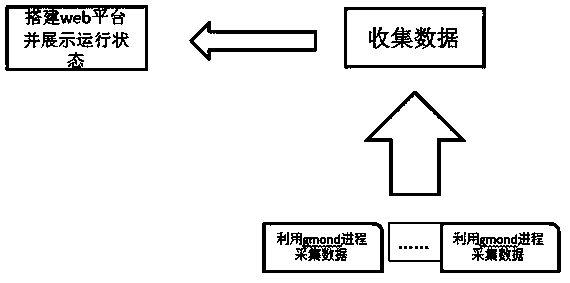

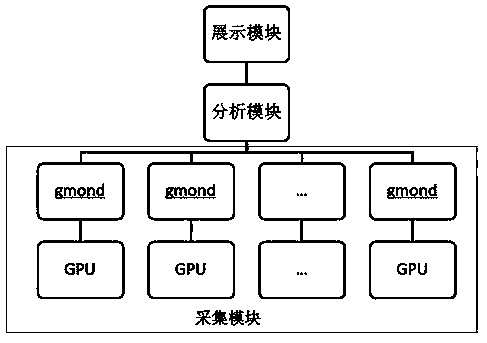

GPU cluster monitoring system and method for issuing monitoring alarm

The invention provides a GPU cluster monitoring system and a method for issuing monitoring alarms, in the field of information technology. The monitoring system comprises data acquisition modules, an analysis module and a display module. Each computing node in the GPU cluster has a data acquisition module that collects the node's data, namely the utilization rate of its GPU cards. The analysis module, deployed on an agent node, collects the data gathered by the data acquisition modules, performs statistical analysis on it and generates a simplified data sheet. The display module, deployed on an information publishing server, receives the simplified data sheet from the analysis module, hosts a web platform and presents the sheet graphically, so that operations and maintenance staff can monitor the GPU cluster in real time.

Owner:CHINA PETROLEUM & CHEM CORP +1
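
The analysis step in the GPU cluster monitoring abstract above reduces per-card utilization samples into a simplified data sheet. The sketch below shows one way to do that. The columns (card count, mean and maximum utilization) and the `alarm_threshold` of 95% are hypothetical choices: the abstract names the alarm but does not define when it fires.

```python
from statistics import mean
from typing import Dict, List


def utilization_sheet(samples: Dict[str, List[float]],
                      alarm_threshold: float = 95.0) -> List[dict]:
    """Reduce per-card GPU utilization (%) reported by each computing node
    into one summary row per node, flagging nodes with a card over the threshold."""
    rows = []
    for node in sorted(samples):
        utils = samples[node]
        rows.append({
            "node": node,
            "cards": len(utils),
            "mean_util": round(mean(utils), 1),
            "max_util": max(utils),
            "alarm": max(utils) >= alarm_threshold,
        })
    return rows
```

The resulting list of rows is small enough to send to the display server on every collection cycle, which is the point of producing a simplified sheet rather than forwarding raw samples.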

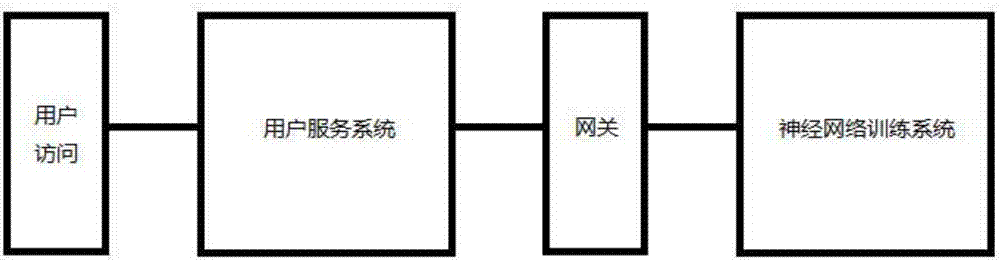

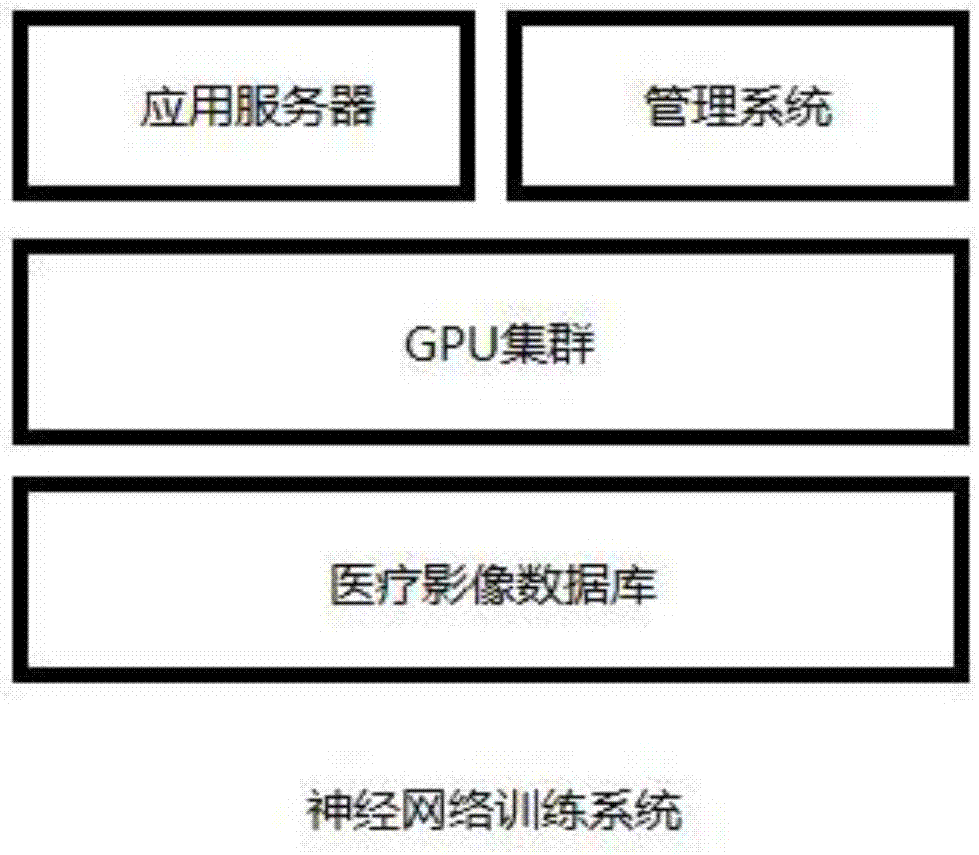

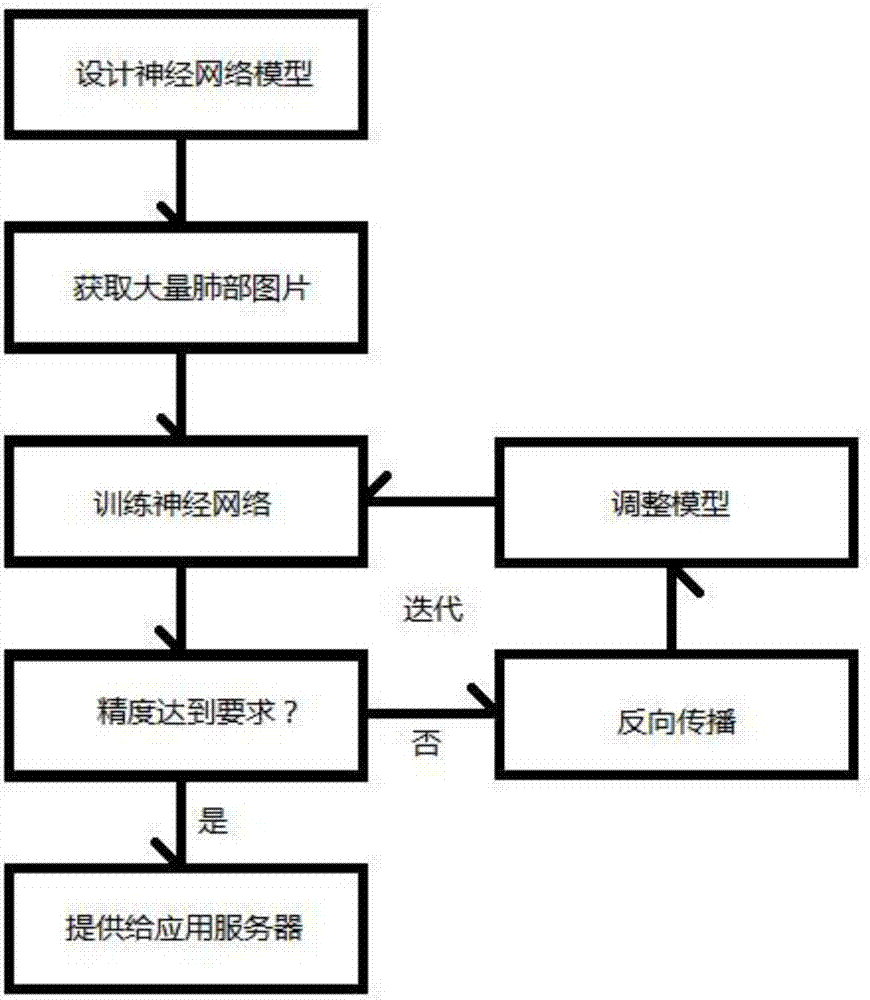

Deep neural network based lung cancer recognition system

Inactive | CN107423576A | Lower quality requirements; Early detection; Character and pattern recognition; Special data processing applications; Data set; GPU cluster

The invention provides a lung cancer recognition system based on a deep neural network, in the field of medical image processing. The system comprises a user service system and a neural network training system, isolated from each other by a gateway. The neural network training system comprises an application server, a management system, a GPU cluster and a medical image database; the user service system provides the service interface for users and comprises a web page, a service logic processing module and a database. Because deep learning learns features automatically, the system avoids the complex image processing and manual feature extraction of conventional methods and lowers the quality requirements for the dataset. It buys time for the early detection and treatment of patients, assists doctors in diagnosis, helps avoid misdiagnosis caused by human oversight, and allows accuracy and training time to be optimized.

Owner:厦门市厦之医生物科技有限公司

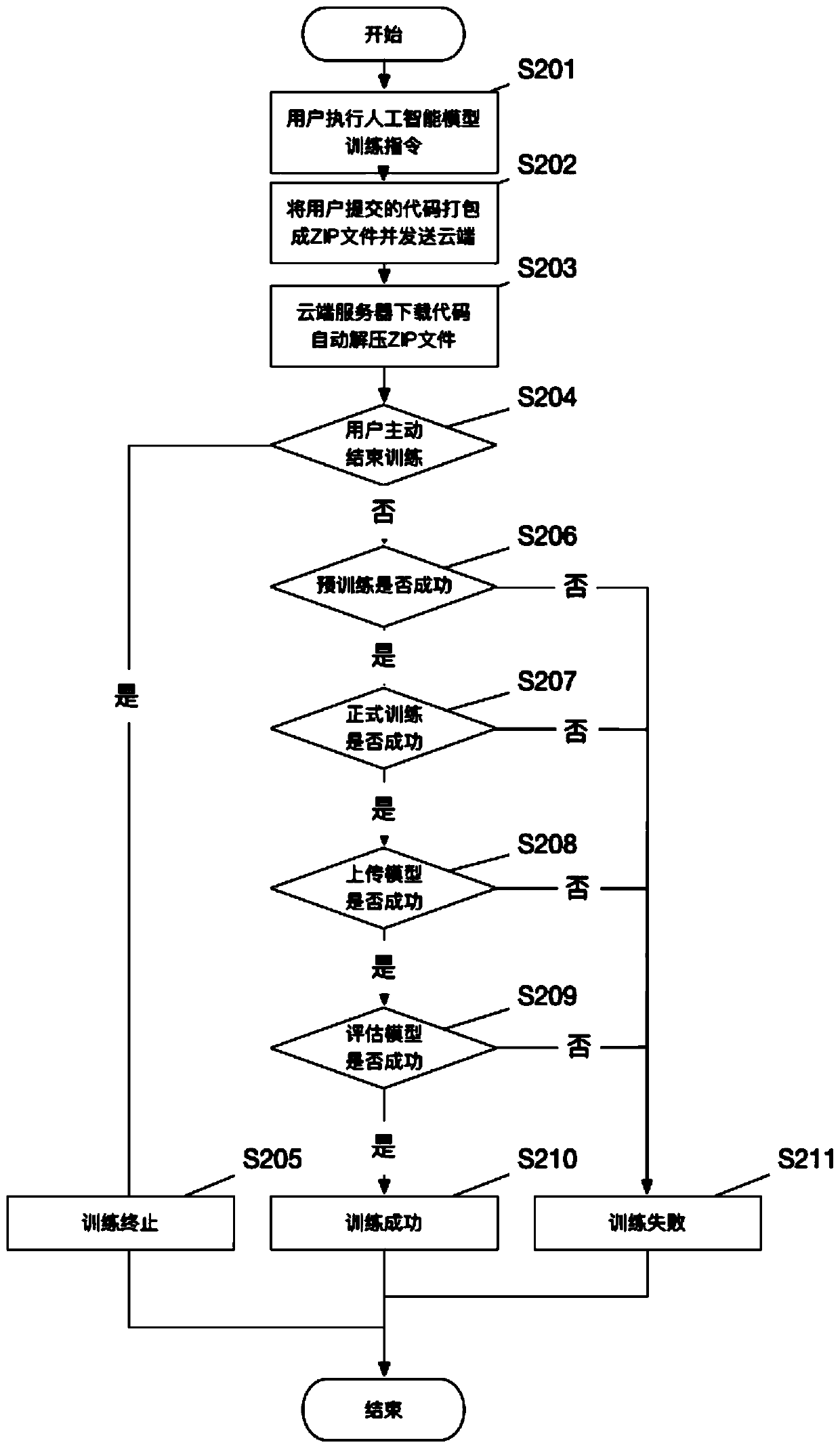

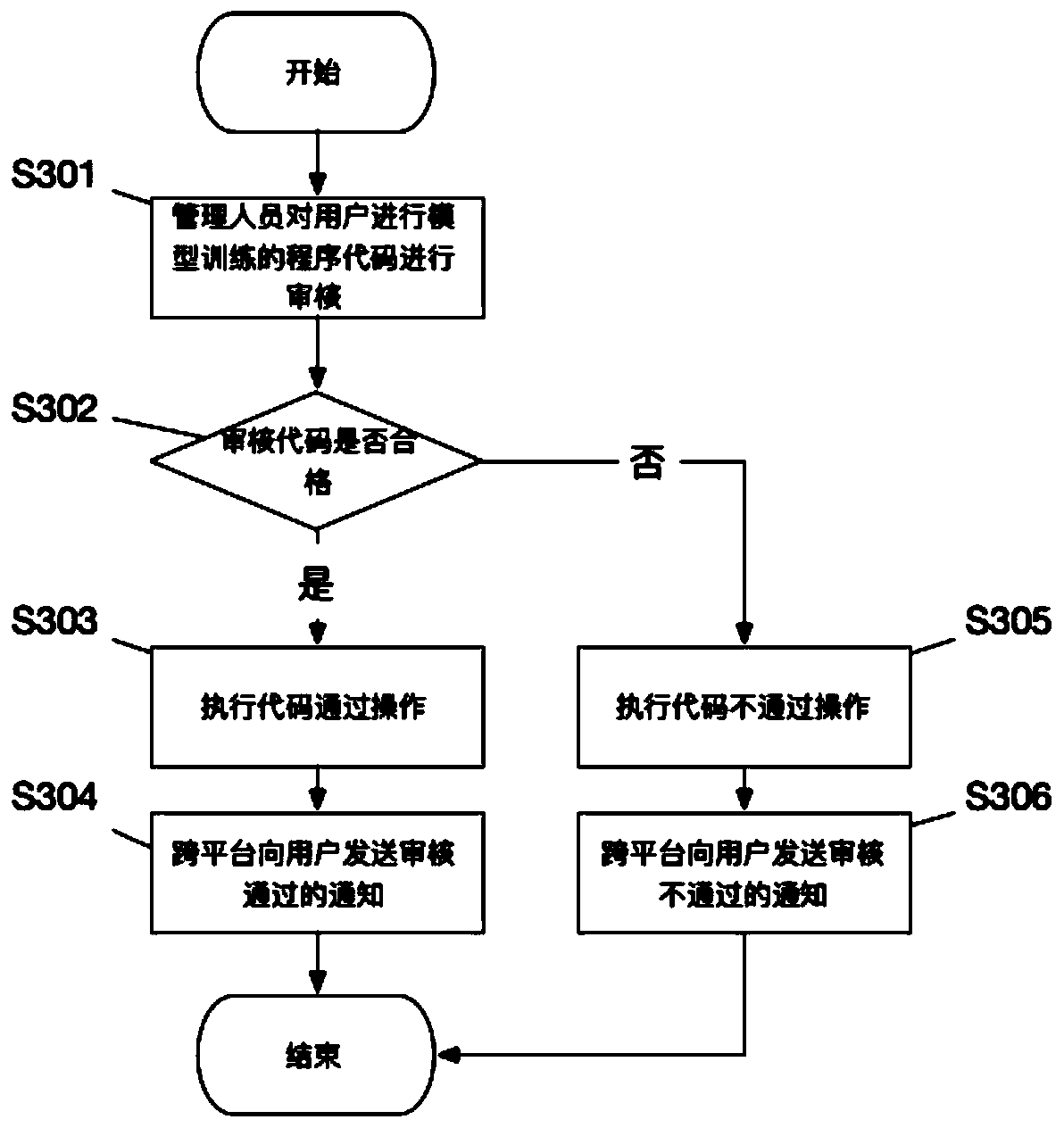

Method for checking model training notifications and training logs at mobile terminal

Active | CN111143207A | Improve iteration efficiency; Timely message feedback; Character and pattern recognition; Software testing/debugging; HTML5; Technology development

The invention provides a method for dynamically receiving artificial intelligence model training notifications on a mobile terminal in a cross-platform manner. In artificial intelligence development, many deep learning model training tasks run on a GPU cluster in the cloud. Being able to check deep learning training status notifications in real time on a mobile device greatly improves the user's experience of monitoring training status and feedback, and makes taking part in competitions more convenient and efficient. Under this scheme, a competition training task instruction can be sent to a GPU server for training from a device such as an HTML5 page on a mobile terminal or a local PC. Because training notifications and progress can be checked on the mobile device at any time, the user is no longer tied to a single setting for checking training progress and notifications during competition training. Users can follow the competition training process and result notifications in real time from a mobile device in any setting, which improves the user experience and the time efficiency of competition training.

Owner:北京智能工场科技有限公司

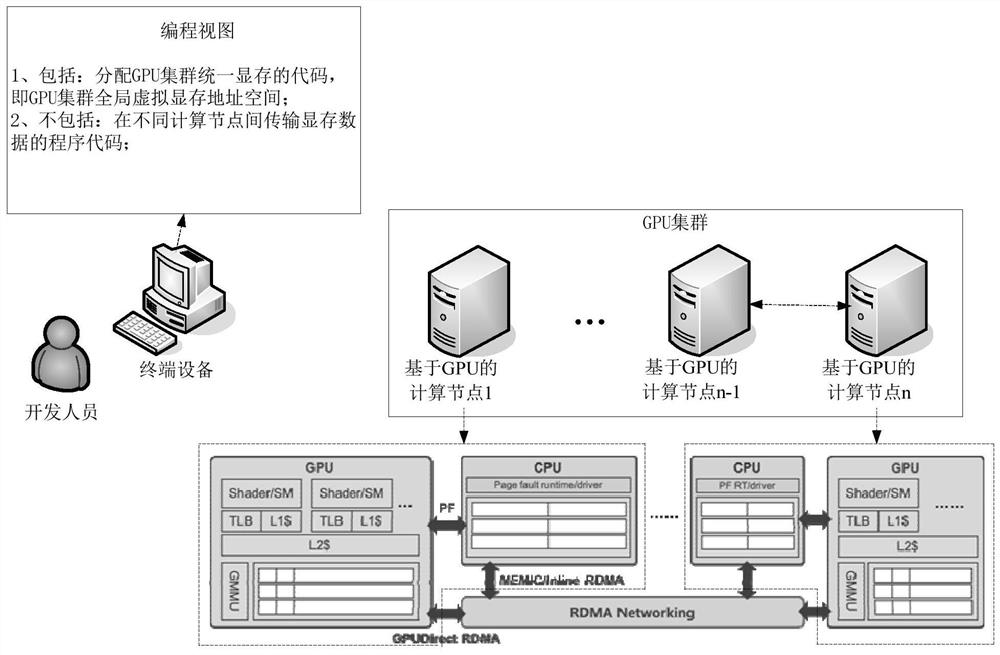

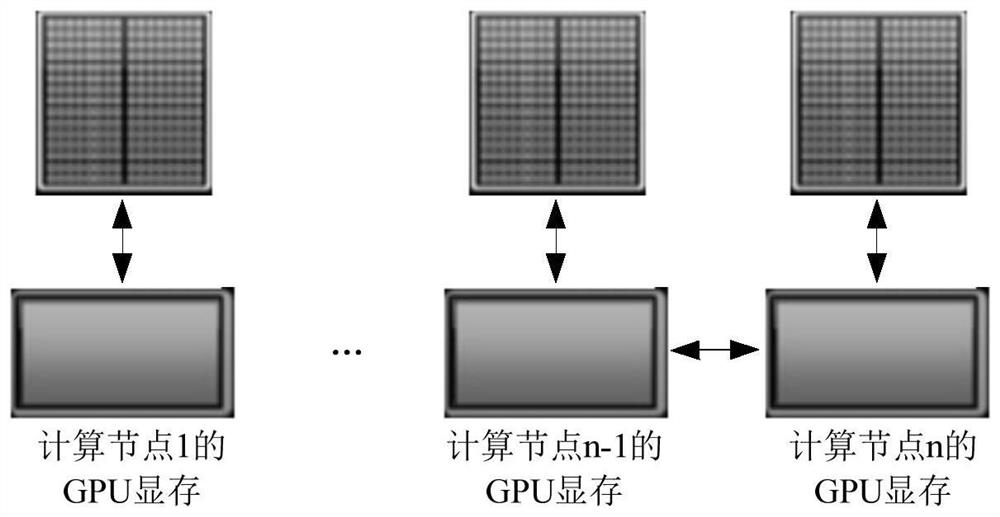

GPU cluster shared video memory system, method, device and equipment

Pending | CN113674133A | Improve the performance of shared video memory; Help with integration; Resource allocation; Processor architectures/configuration; Video memory; Computer architecture

The invention discloses a GPU cluster video memory sharing method, device, system and equipment. The method comprises: determining the GPU cluster global video memory address mapping information of a target application, running on a first computing node, from the application's GPU cluster global virtual video memory address space; when a page fault occurs as the target application accesses GPU video memory, determining from this mapping information the second computing node that holds the target page data; and loading the target page data from the second computing node into the GPU video memory of the first computing node, from which the target application then reads it. In this way video memory is aggregated at the GPU cluster system level: for large workloads with high video memory demands, a distributed GPU cluster presents a unified GPU video memory address space and a single programming view, explicit management of data migration and communication is avoided, and GPU cluster programming is simplified.

Owner:ALIBABA SINGAPORE HLDG PTE LTD
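The page-fault path in the abstract above (consult the global mapping, fetch the page from the node that holds it, then read it locally) can be illustrated with a minimal Python sketch. Everything here is hypothetical: `ComputeNode`, `GlobalVideoMemory`, the 4 KiB `PAGE_SIZE`, and the choice to migrate rather than copy pages are assumptions for illustration, and GPU video memory is simulated with Python dicts rather than real device allocations.

```python
from dataclasses import dataclass, field

PAGE_SIZE = 4096  # hypothetical page size in bytes


@dataclass
class ComputeNode:
    """A computing node whose GPU video memory is modeled as page number -> bytes."""
    name: str
    gpu_pages: dict = field(default_factory=dict)


class GlobalVideoMemory:
    """Hypothetical cluster-wide virtual video memory space for one application."""

    def __init__(self, nodes):
        self.nodes = {node.name: node for node in nodes}
        self.page_owner = {}  # global mapping: page number -> name of node holding it

    def allocate(self, page, node_name, data):
        """Place a page of data in one node's GPU memory and record the mapping."""
        self.nodes[node_name].gpu_pages[page] = data
        self.page_owner[page] = node_name

    def read_byte(self, local_name, address):
        """Read one byte at a global address on behalf of the app on `local_name`."""
        page, offset = divmod(address, PAGE_SIZE)
        local = self.nodes[local_name]
        if page not in local.gpu_pages:
            # Page fault: find the remote node via the global mapping,
            # then migrate the page into this node's GPU memory.
            remote = self.nodes[self.page_owner[page]]
            local.gpu_pages[page] = remote.gpu_pages.pop(page)
            self.page_owner[page] = local_name
        return local.gpu_pages[page][offset]
```

Migrating the page keeps exactly one owner per page, so no coherence protocol between copies is needed; the abstract does not say whether the patented method migrates or replicates pages.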

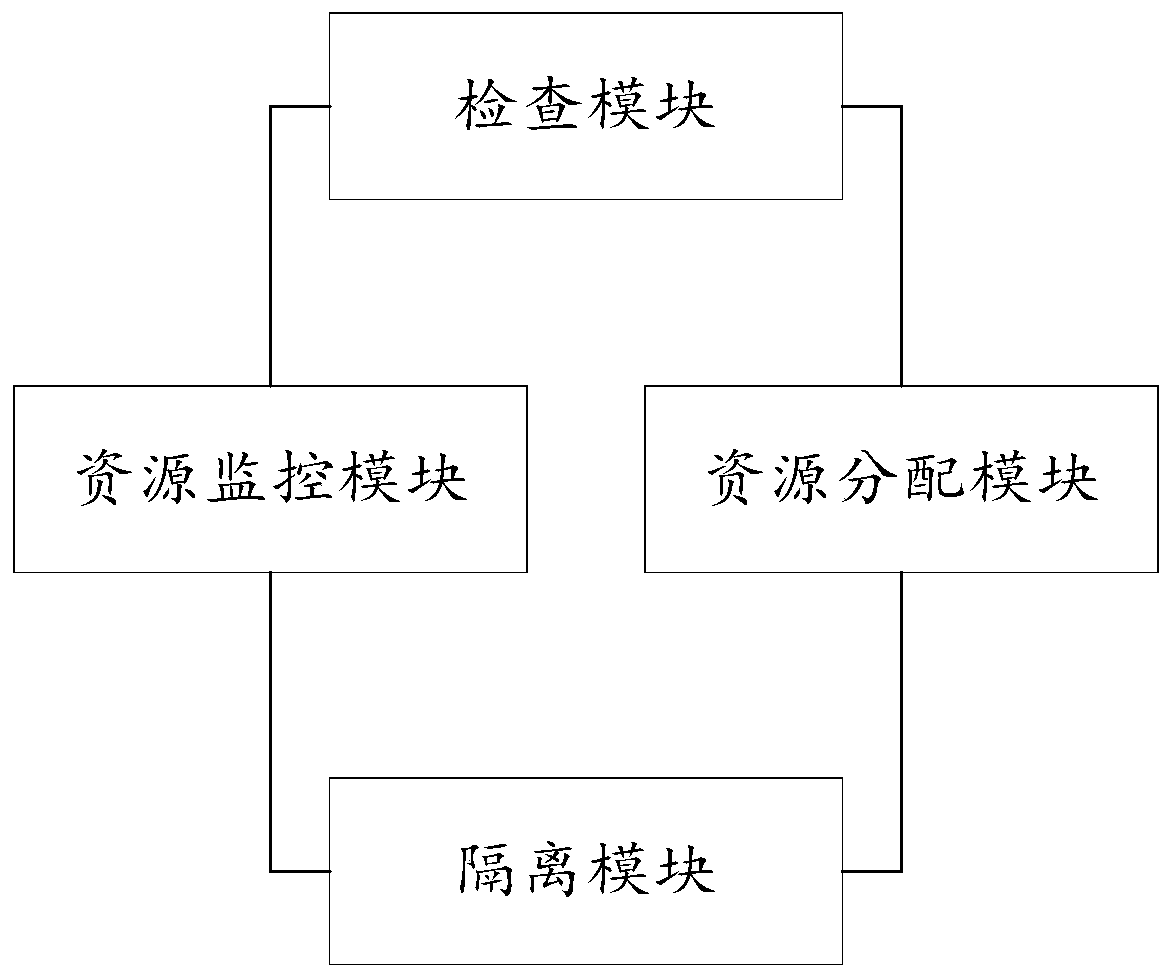

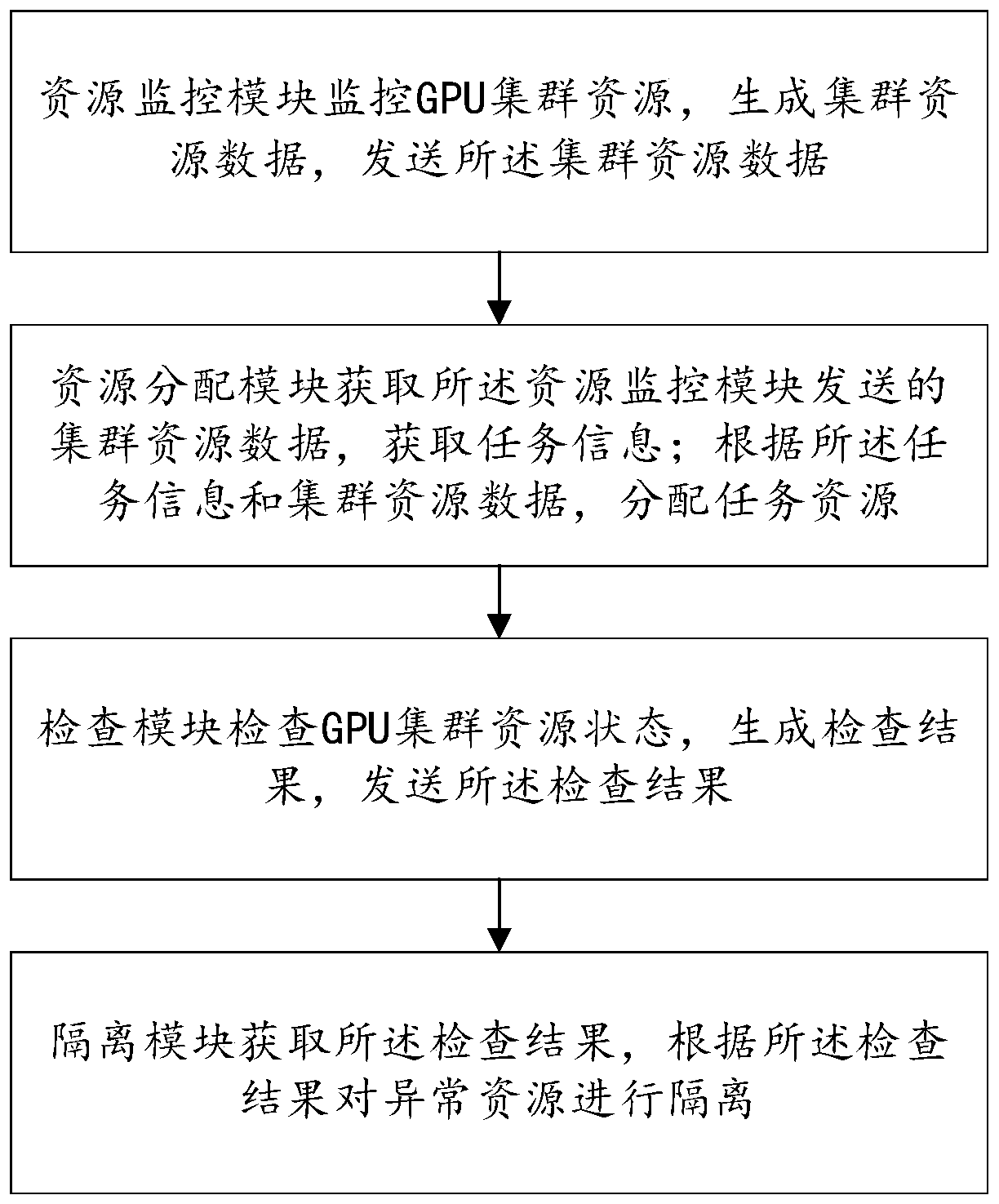

GPU cluster service management system and method

Active · CN111552556A · Guaranteed uptime · Improve processing efficiency · Resource allocation · Hardware monitoring · Parallel computing · GPU cluster

The invention belongs to the field of computer management, and specifically relates to a GPU cluster service management system and method. The system comprises: a resource monitoring module that monitors GPU cluster resources, generates cluster resource data, and sends it on; a resource allocation module that acquires task information and the cluster resource data and allocates resources to tasks accordingly; a checking module that obtains the cluster resource data from the resource monitoring module, checks the state of the GPU cluster resources, and generates and sends a checking result; and an isolation module that receives the checking result and isolates abnormal resources accordingly. The system and method monitor the state of all resources in the GPU cluster in real time so that resources are used efficiently, and automatically detect and isolate abnormal resources, which keeps the GPU cluster operating normally and improves its processing efficiency.

Owner:北京中科云脑智能技术有限公司 +1
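The checking, isolation, and allocation modules described above can be sketched as small Python functions over a snapshot of per-GPU status. This is an illustration under assumptions, not the patented design: the `GpuStatus` fields, the 85 °C `MAX_TEMP_C` threshold, the "unresponsive or overheating" abnormality rule, and the most-free-memory allocation policy are all hypothetical, and the monitoring module is represented only by the status list it would produce.

```python
from dataclasses import dataclass

MAX_TEMP_C = 85.0  # hypothetical health threshold


@dataclass
class GpuStatus:
    """One GPU's state as reported by the (hypothetical) monitoring module."""
    node: str
    gpu_id: int
    free_mem_gb: float
    temperature_c: float
    responsive: bool


def check(statuses):
    """Checking module: return the (node, gpu_id) pairs considered abnormal."""
    return {(s.node, s.gpu_id) for s in statuses
            if not s.responsive or s.temperature_c > MAX_TEMP_C}


def isolate(statuses, abnormal):
    """Isolation module: remove abnormal GPUs from the schedulable pool."""
    return [s for s in statuses if (s.node, s.gpu_id) not in abnormal]


def allocate(task_mem_gb, healthy):
    """Allocation module: pick the healthy GPU with the most free memory that fits."""
    candidates = [s for s in healthy if s.free_mem_gb >= task_mem_gb]
    if not candidates:
        return None
    best = max(candidates, key=lambda s: s.free_mem_gb)
    return (best.node, best.gpu_id)
```

Running the checker before the allocator means a task is never placed on a GPU that the latest snapshot flagged as abnormal.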

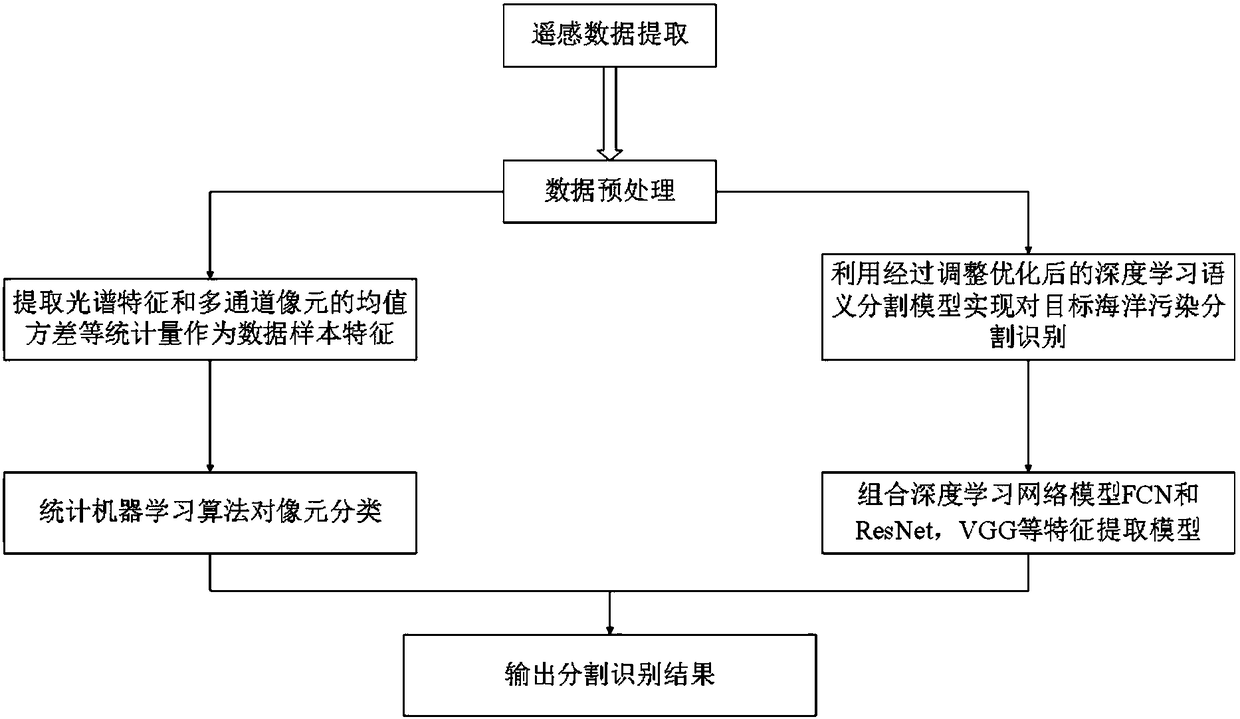

Deep semantic segmentation-based ocean oil spill detection system and method

Inactive · CN108596065A · Fast and accurate monitoring of contamination · Monitoring pollution · Character and pattern recognition · Neural architectures · Network model · Oil spill

The invention provides a deep semantic segmentation-based ocean oil spill detection system. The system comprises a computing server, a GPU cluster, a remote sensing image database of ocean pollution, and a database of normal, pollution-free images. The computing server is deployed on the GPU cluster, stores a trained neural network model, and uses it to identify input images. The invention further provides a deep semantic segmentation-based ocean oil spill detection method. The system and method allow ocean oil spill pollution to be monitored quickly and accurately.

Owner:SHENZHEN POLYTECHNIC

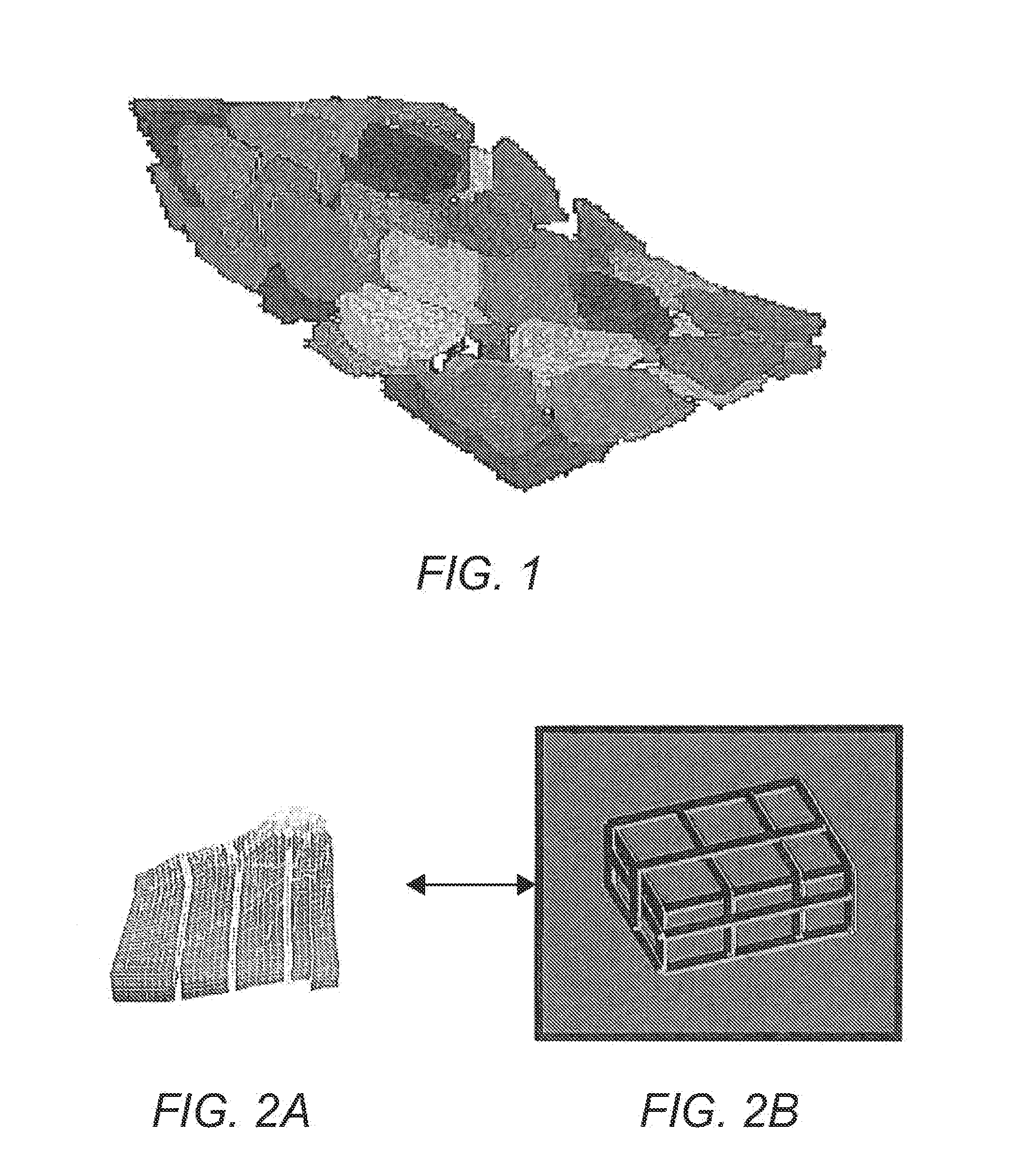

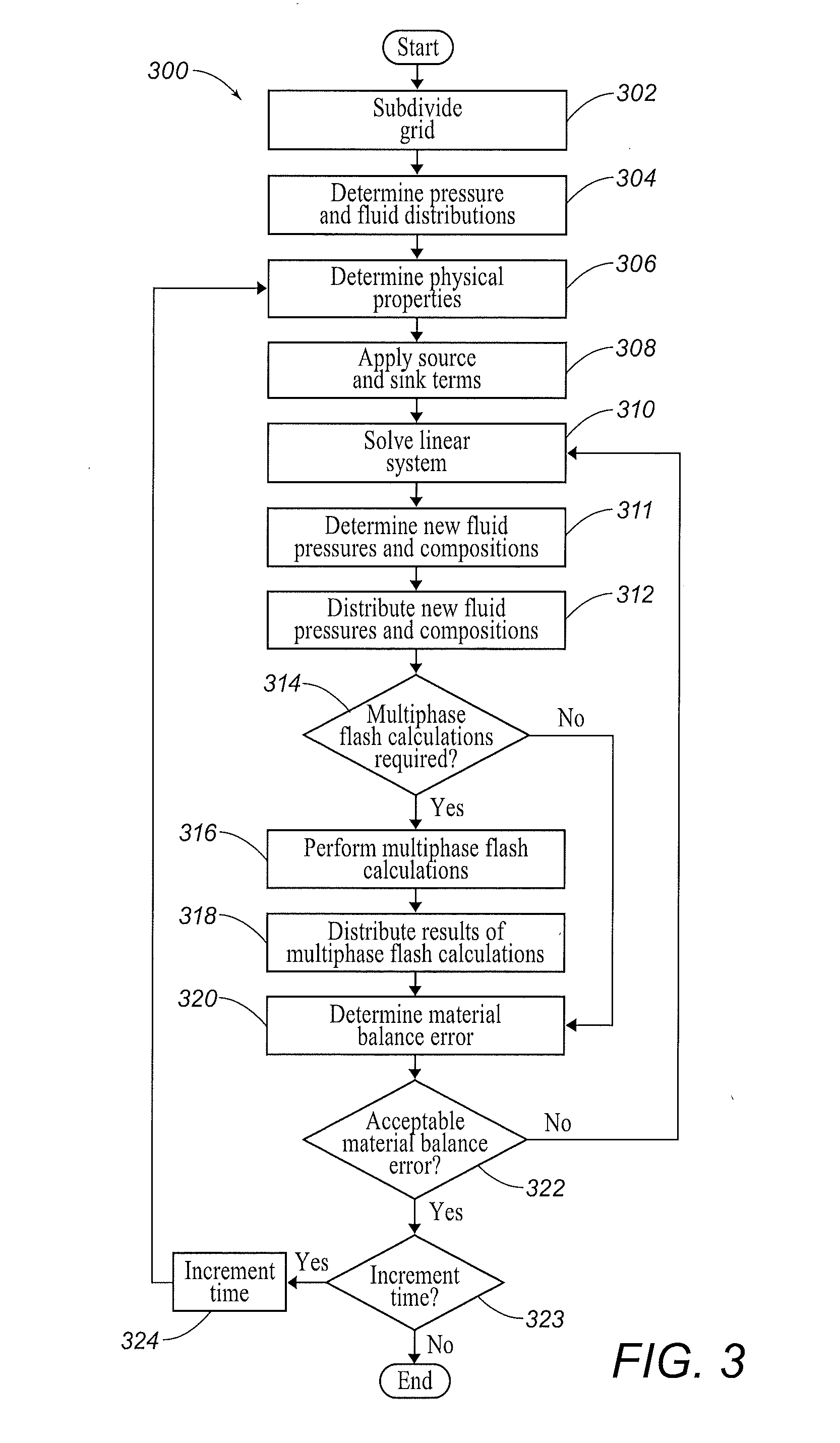

Systems and Methods for Reservoir Simulation

Inactive · US20140015841A1 · Balance computing load · Overcome deficiencies · Digital computer details · Seismology · GPU cluster · Parallel processing

Systems and methods for structured and unstructured reservoir simulation using parallel processing on GPU clusters to balance the computational load.

Owner:LANDMARK GRAPHICS
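The abstract gives no partitioning algorithm, so the following sketch uses a standard greedy heuristic (longest processing time first) to show what balancing the computational load across GPUs can look like. The function name `balance_blocks` and the idea of assigning grid blocks with per-block cost estimates (for example, active cell counts from a structured or unstructured grid) are assumptions, not Landmark Graphics' method.

```python
import heapq


def balance_blocks(block_costs, num_gpus):
    """Greedy LPT: assign each grid block, largest first, to the least-loaded GPU.

    block_costs: dict mapping a block id to its estimated compute cost.
    Returns (assignment: block id -> GPU index, per-GPU total loads).
    """
    heap = [(0.0, gpu) for gpu in range(num_gpus)]  # (current load, gpu index)
    heapq.heapify(heap)
    assignment = {}
    for block, cost in sorted(block_costs.items(), key=lambda kv: kv[1], reverse=True):
        load, gpu = heapq.heappop(heap)  # least-loaded GPU so far
        assignment[block] = gpu
        heapq.heappush(heap, (load + cost, gpu))
    loads = [0.0] * num_gpus
    for block, gpu in assignment.items():
        loads[gpu] += block_costs[block]
    return assignment, loads
```

LPT is a heuristic, not an optimal partitioner, and it ignores halo-exchange communication between neighboring blocks, which real reservoir simulators typically also weigh.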

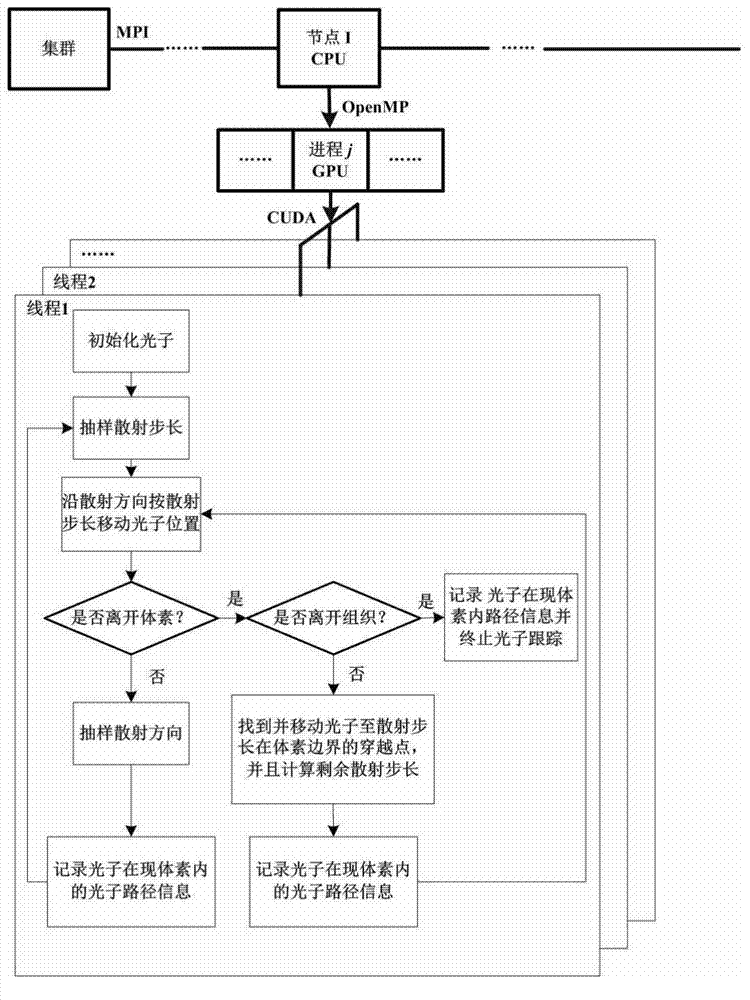

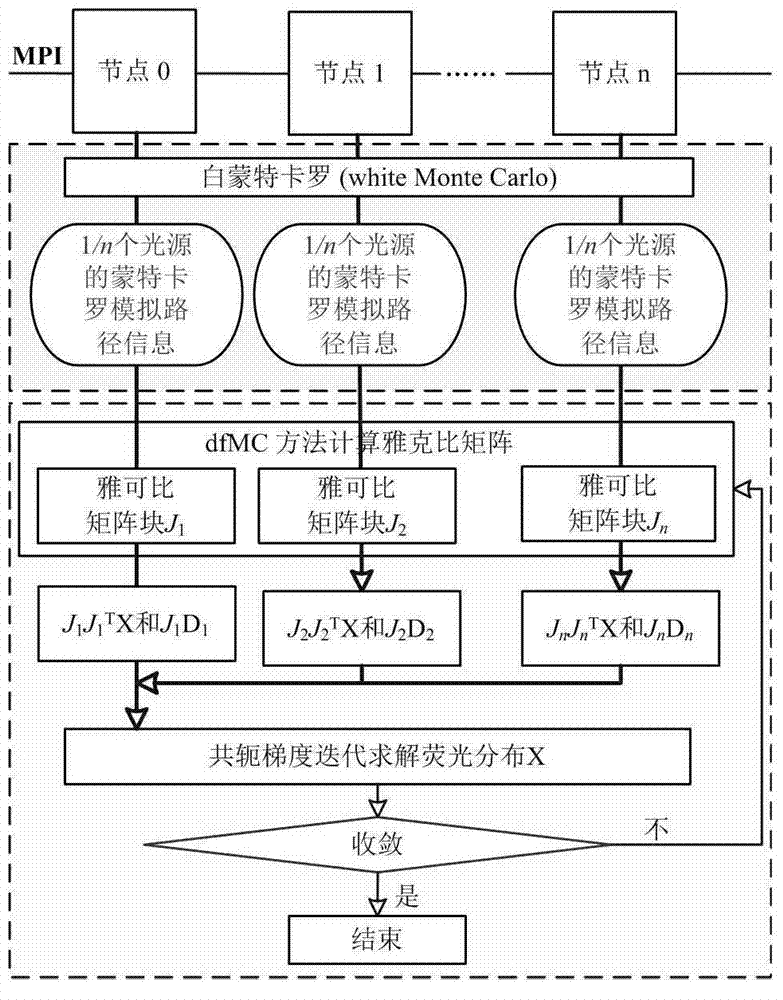

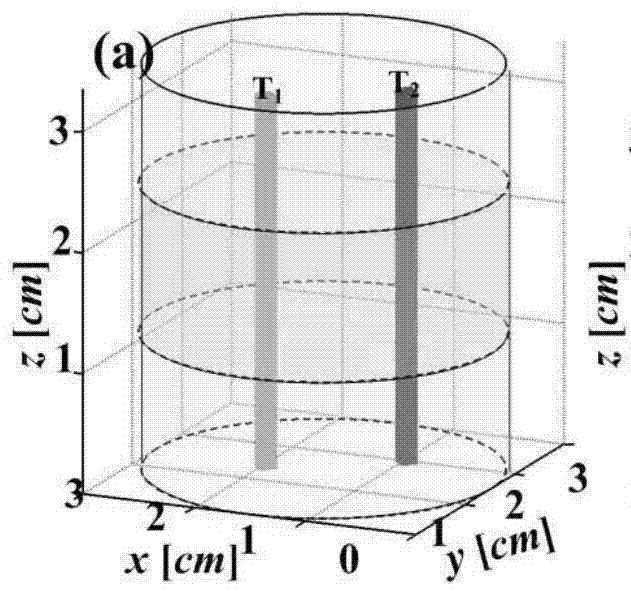

Fluorescence diffuse optical tomography image reconstruction method based on a dfMC model

Active · CN104706320A · Achieve reconstruction · Improve reconstruction accuracy · Diagnostics using fluorescence emission · Sensors · Diffusion · Spatial structure

The invention discloses a fluorescence diffuse optical tomography image reconstruction method based on a decoupling fluorescence Monte Carlo (dfMC) model, in the technical field of biomedical engineering. The method comprises the following steps: first, determining a detection area, selecting a number of scanning points within it, and obtaining the fluorescence intensity distribution on the detector; second, building a three-dimensional digital model that describes the spatial structure of the tissue's optical parameters, running a forward white Monte Carlo simulation of the excitation photons according to the scanning positions and directions of the light source, tracking the excitation photons, and recording the relevant physical quantities along the paths of photons that reach the detector; third, computing the weights of fluorescence photons with the dfMC method and computing the fluorescence Jacobian matrix; and fourth, computing the positions and absorption coefficients of the fluorophores in the tissue by iterative reconstruction on GPU clusters. By combining the high-precision dfMC model with GPU cluster-accelerated iterative reconstruction, the method provides accurate and fast reconstruction for three-dimensional fluorescence tomography systems.

Owner:HUAZHONG UNIV OF SCI & TECH
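Step four recovers fluorophore properties from measurements using the fluorescence Jacobian. The abstract does not name the iterative solver, so this sketch assumes a common choice, the algebraic reconstruction technique (ART, i.e. Kaczmarz iteration) with a non-negativity projection, solving J x ≈ y for a non-negative vector x of absorption coefficients. The function name and its `sweeps`/`relax` parameters are hypothetical, and the sketch runs on the CPU in pure Python.

```python
def art_reconstruct(jacobian, measurements, sweeps=200, relax=1.0):
    """Projected ART (Kaczmarz): iteratively solve J x ~= y with x >= 0.

    jacobian: list of rows (one per measurement), each a list of floats.
    measurements: list of measured fluorescence values y.
    """
    n = len(jacobian[0])
    x = [0.0] * n
    for _ in range(sweeps):
        for row, y_i in zip(jacobian, measurements):
            norm_sq = sum(a * a for a in row)
            if norm_sq == 0.0:
                continue  # a row with no sensitivity carries no information
            residual = y_i - sum(a * xj for a, xj in zip(row, x))
            step = relax * residual / norm_sq
            # Project onto this row's hyperplane, then clip to x >= 0
            # (absorption coefficients cannot be negative).
            x = [max(0.0, xj + step * a) for xj, a in zip(x, row)]
    return x
```

ART updates one measurement row at a time, which is inherently sequential; GPU implementations often favor simultaneous variants such as SIRT, whose per-row work can run in parallel.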

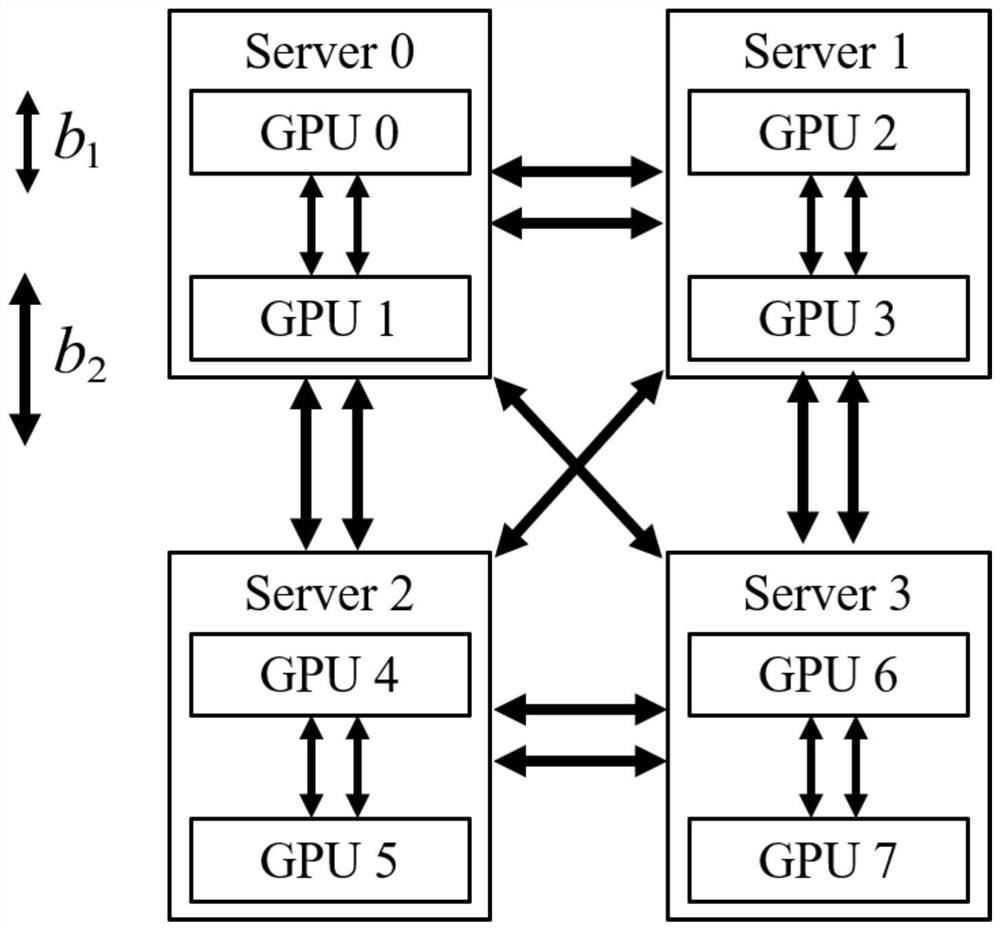

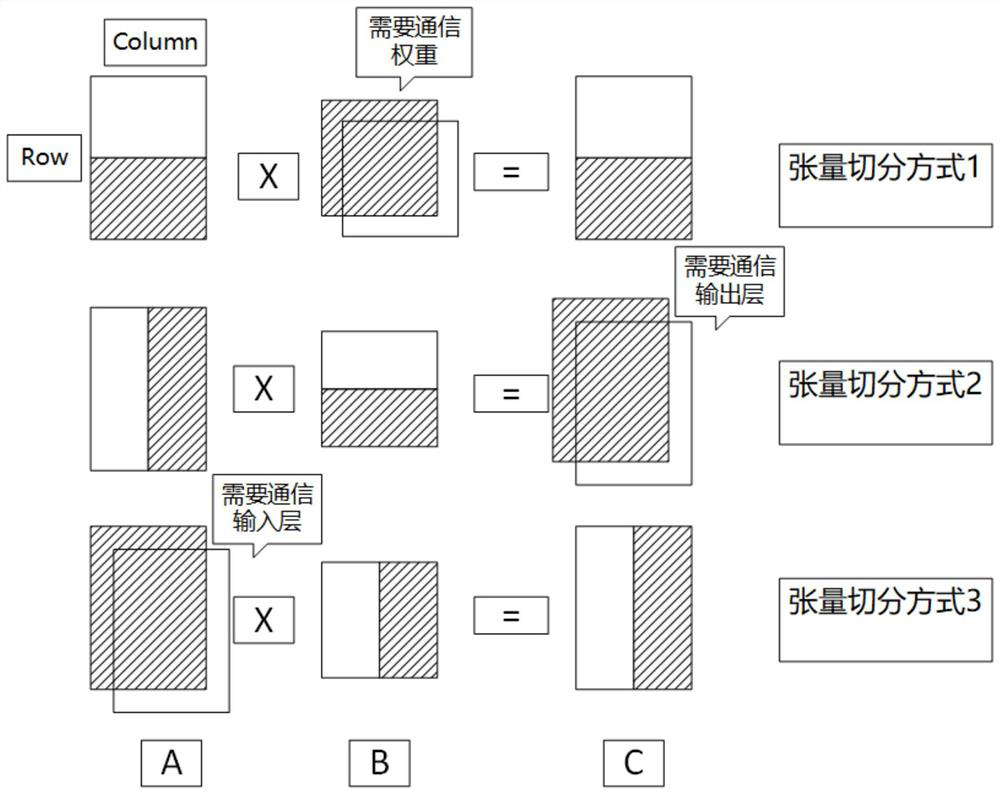

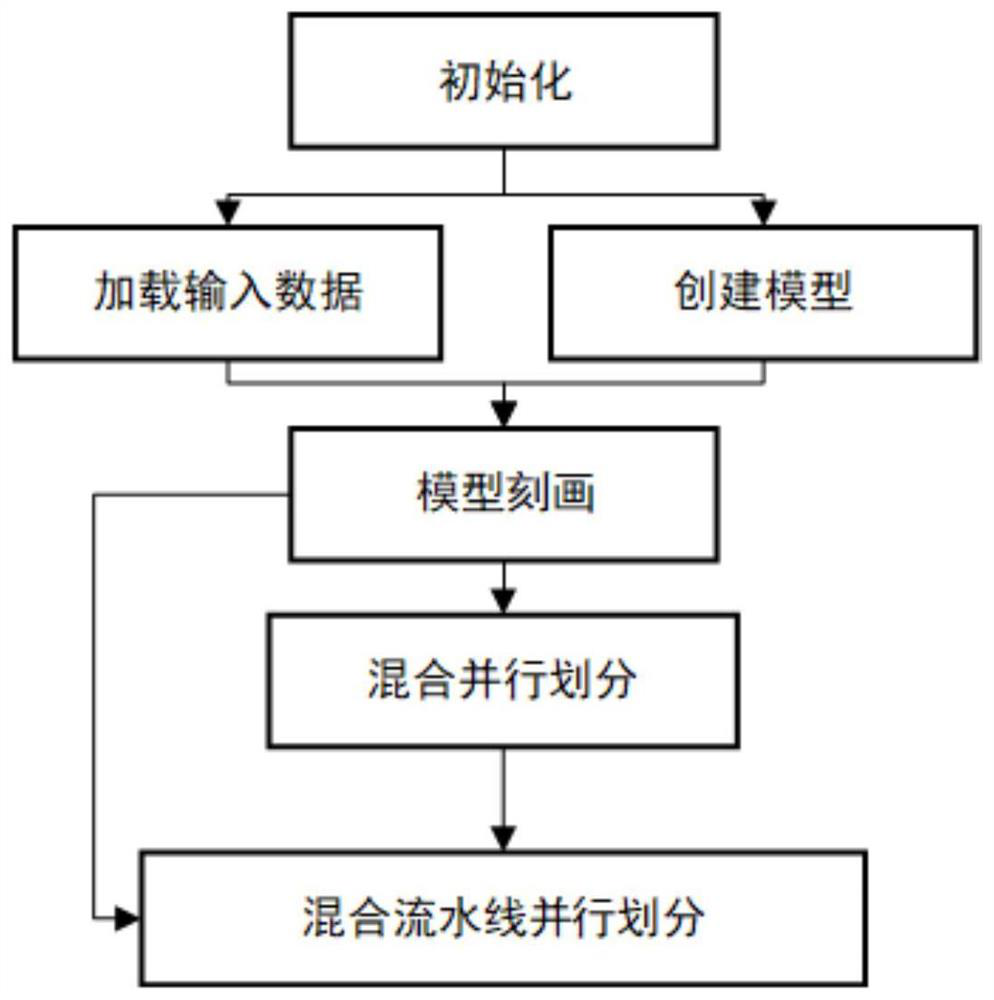

Hybrid pipeline parallel method for accelerating distributed deep neural network training

Pending · CN112784968A · Extended Parallel Mode · Raise the possibility · Resource allocation · Neural architectures · Theoretical computer science · Division algorithm

The invention provides a hybrid pipeline parallel method for accelerating distributed deep neural network training. It mainly addresses two problems of conventional distributed training on GPU clusters: resources are underutilized, and distributed training is not efficient. The core mechanism consists of three parts: deep learning model description, model hybrid division, and hybrid pipeline parallel division. First, based on the resource requirements of a deep learning application during GPU training, the method describes indicators such as the computation amount, the volume of intermediate results communicated, and the parameter synchronization volume, and uses them as inputs to model hybrid division and task placement. Then, according to the model description and the GPU cluster environment, two dynamic programming-based division algorithms perform model hybrid division and hybrid pipeline parallel division so as to minimize the maximum per-stage task execution time after division. This ensures load balance and enables efficient distributed training of deep neural networks.

Owner:SOUTHEAST UNIV
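The objective stated above, minimizing the largest per-stage execution time, is the classic linear-partition problem, which dynamic programming solves exactly when stages are contiguous runs of layers. The sketch covers only that core: it assumes per-layer compute times are already known and ignores the communication and parameter-synchronization terms the patent also models. `partition_layers` is a hypothetical name.

```python
from functools import lru_cache


def partition_layers(layer_times, num_stages):
    """Split layers into contiguous pipeline stages minimizing the slowest stage.

    Requires 1 <= num_stages <= len(layer_times).
    Returns (bottleneck time, list of stages as lists of layer indices).
    """
    n = len(layer_times)
    prefix = [0.0]
    for t in layer_times:
        prefix.append(prefix[-1] + t)  # prefix[j] = total time of layers[:j]

    @lru_cache(maxsize=None)
    def best(i, k):
        # Minimal bottleneck for layers[i:] using exactly k stages,
        # plus the end index of each stage.
        if k == 1:
            return prefix[n] - prefix[i], (n,)
        result = (float("inf"), ())
        # First stage is layers[i:j]; leave at least k - 1 layers for the rest.
        for j in range(i + 1, n - k + 2):
            rest, cuts = best(j, k - 1)
            candidate = max(prefix[j] - prefix[i], rest)
            if candidate < result[0]:
                result = (candidate, (j,) + cuts)
        return result

    bottleneck, cuts = best(0, num_stages)
    stages, start = [], 0
    for end in cuts:
        stages.append(list(range(start, end)))
        start = end
    return bottleneck, stages
```

For example, layer times `[4, 2, 3, 5, 1, 2]` split into three stages give a bottleneck of 8, with stages `[[0], [1, 2], [3, 4, 5]]`.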

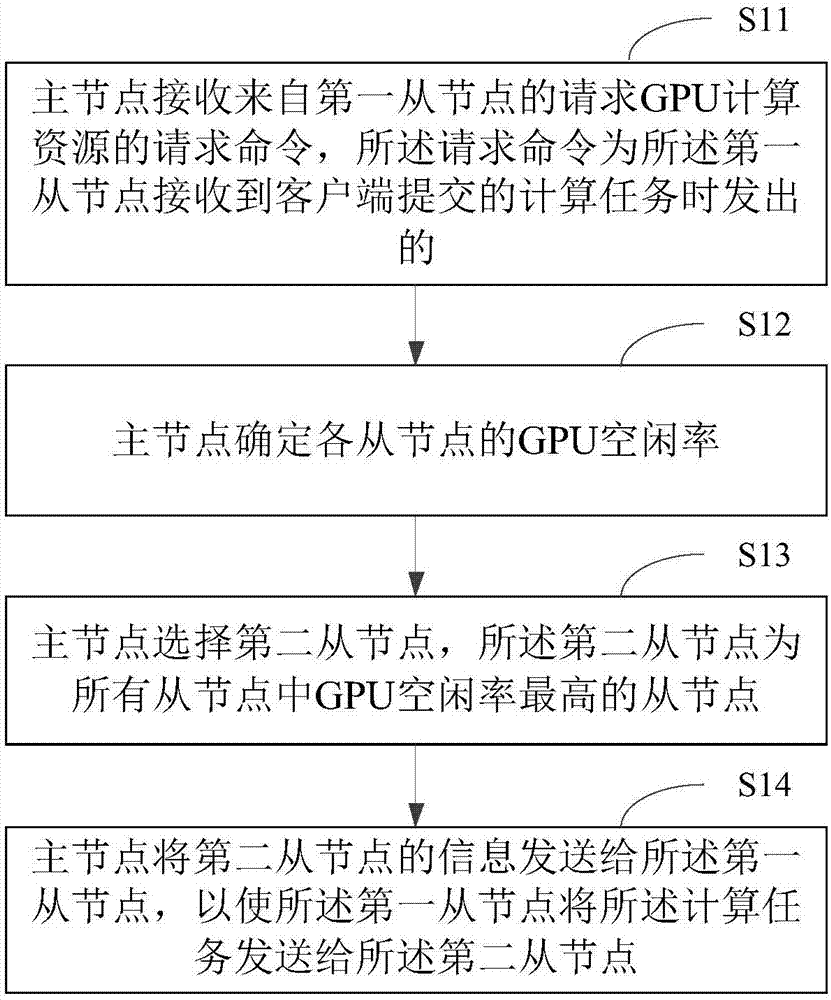

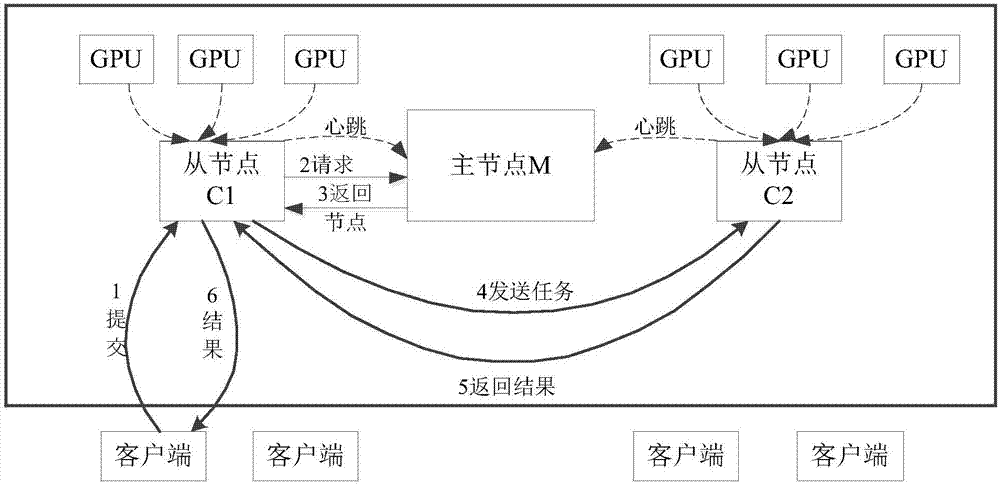

GPU resource scheduling method and apparatus

Active · CN107544845A · Improve computing power · Scheduling balance · Program initiation/switching · Resource allocation · GPU cluster · Distributed computing

The invention relates to a GPU resource scheduling method and apparatus. The method comprises the following steps: a master node receives, from a first slave node, a request command for GPU computing resources, which the first slave node sends when it receives a computing task submitted by a client; the master node determines the GPU vacancy (idle) rates of the slave nodes; the master node selects a second slave node, namely the slave node with the highest GPU vacancy rate among all slave nodes; and the master node sends the information of the second slave node to the first slave node, which then sends the computing task to the second slave node. With this method and apparatus, the computing resources of a GPU cluster can be scheduled in a balanced way, the computing performance of the GPU cluster is improved, and users do not need to manage GPU resources manually, so scheduling stays simple.

Owner:NEW H3C BIG DATA TECH CO LTD
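The master node's selection rule reduces to an arg-max over slave nodes. In this sketch the GPU vacancy rate is assumed to be idle GPUs divided by total GPUs (the abstract does not define it), `SlaveNode` and `select_target` are hypothetical names, and message passing between nodes is omitted.

```python
from dataclasses import dataclass


@dataclass
class SlaveNode:
    """A slave node's GPU inventory as reported to the master node."""
    name: str
    total_gpus: int
    busy_gpus: int

    @property
    def idle_rate(self) -> float:
        """Assumed GPU vacancy rate: idle GPUs / total GPUs (0.0 if no GPUs)."""
        if self.total_gpus == 0:
            return 0.0
        return (self.total_gpus - self.busy_gpus) / self.total_gpus


def select_target(slaves):
    """Master-node rule: return the name of the slave with the highest idle rate."""
    return max(slaves, key=lambda s: s.idle_rate).name
```

Ties go to the first slave in the list, because `max` returns the first maximal element.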

GPU cluster environment-oriented method for avoiding GPU resource contention

Active · CN107943592A · Avoid resource contention · Guarantee the effect of implementation · Resource allocation · Feature extraction · GPU cluster

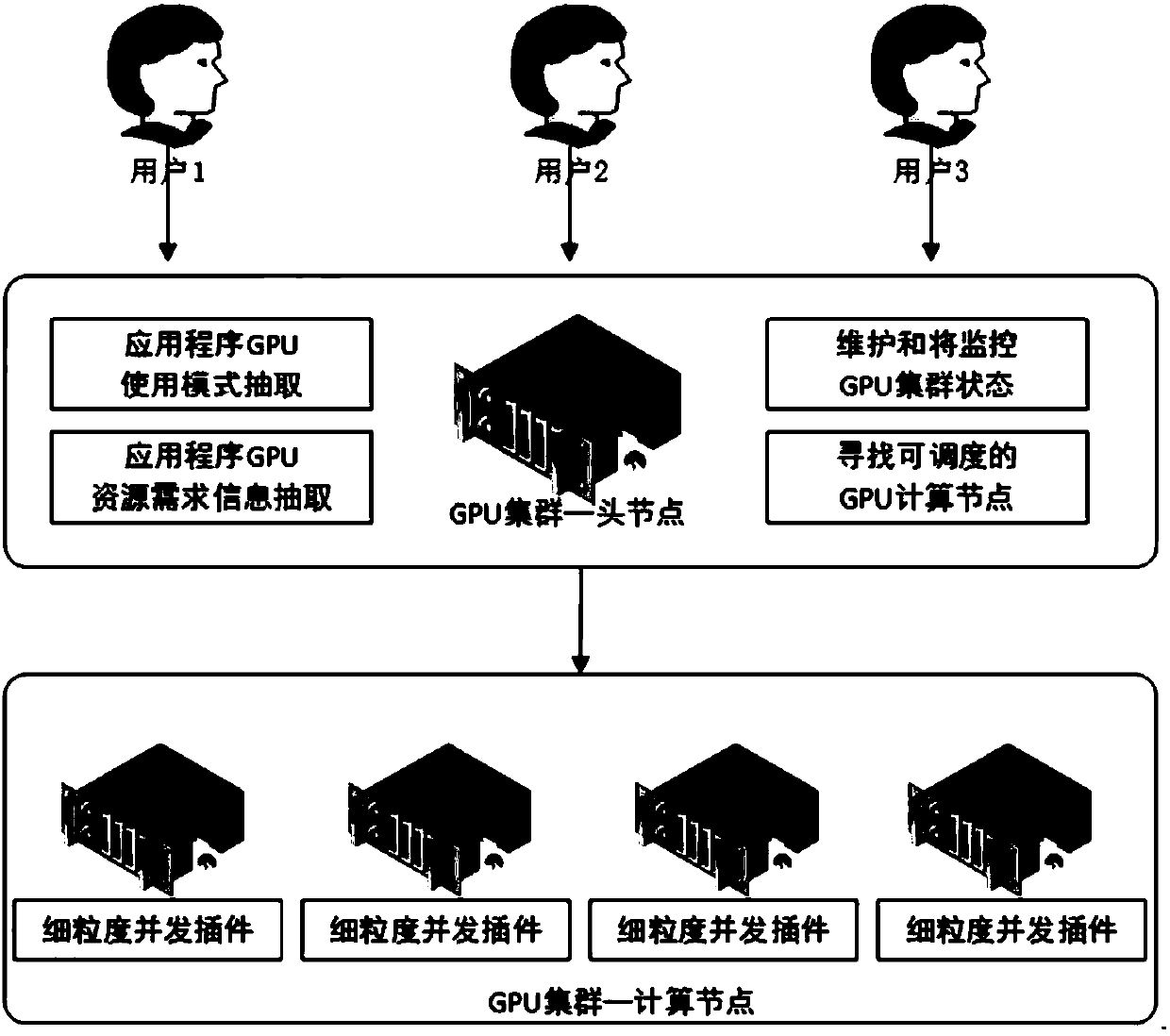

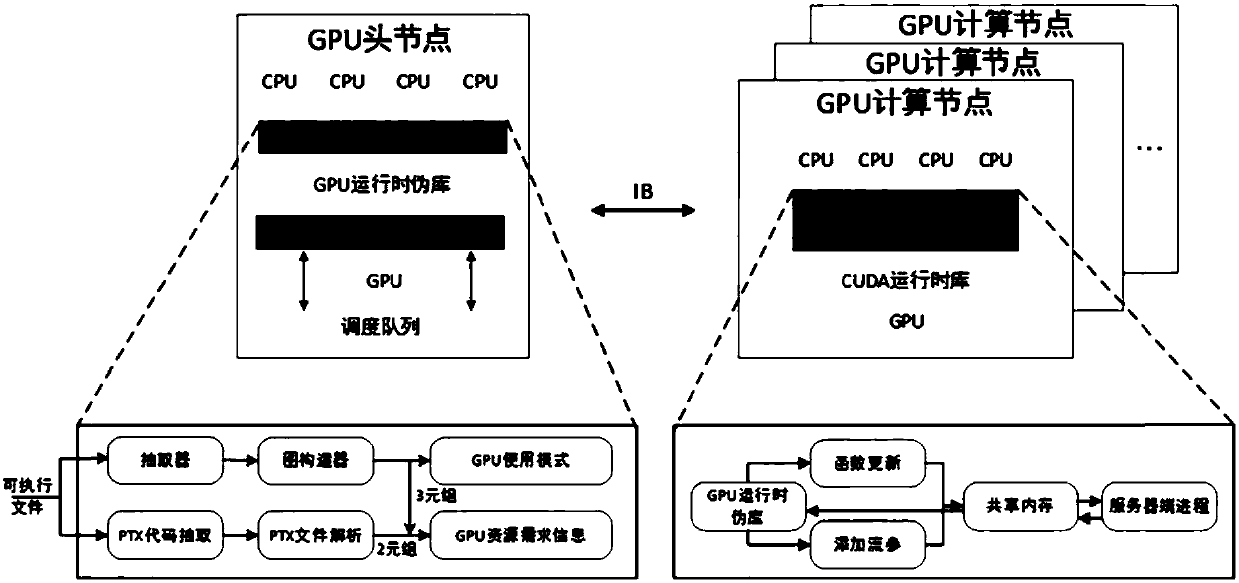

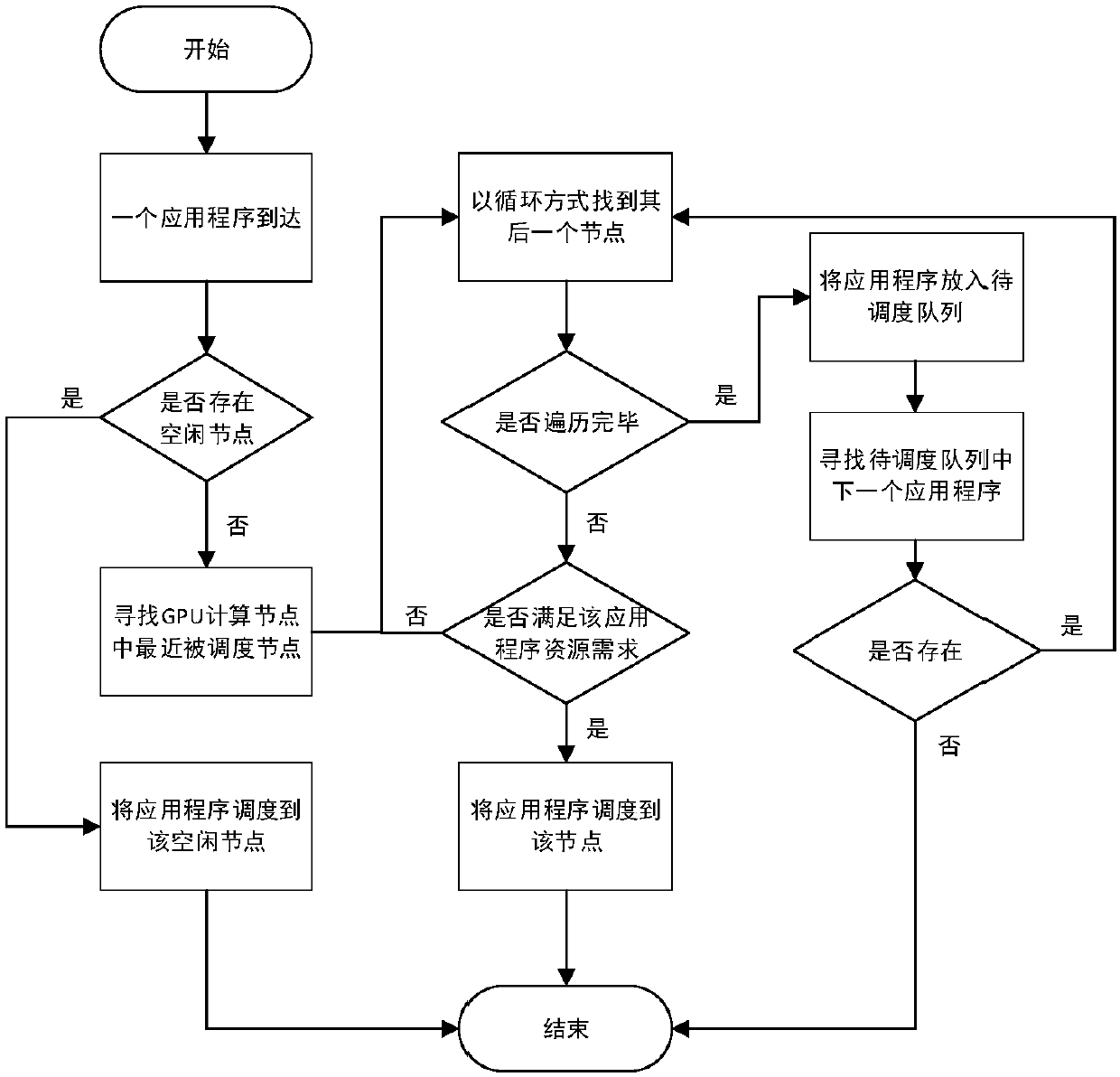

The invention discloses a GPU cluster environment-oriented method for avoiding GPU resource contention. The method comprises building a plug-in that supports fine-grained concurrent execution of multiple applications, extracting application behavior features, and scheduling application tasks. To address the GPU resource contention that can arise when multiple applications run on the same NVIDIA GPU node, a platform supporting fine-grained concurrent execution of multiple applications is built, so that multiple applications can run concurrently on the same GPU node as much as possible. The GPU behavior features of each application, including its GPU usage pattern and GPU resource requirements, are extracted. Based on these features and the current resource usage of each GPU node in the GPU cluster, each application is dispatched to a suitable GPU node, minimizing resource contention among independent applications sharing the same GPU node.

Owner:CHINA INFOMRAITON CONSULTING & DESIGNING INST CO LTD +1
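A contention-aware dispatcher can be sketched by scoring each GPU node against an application's extracted features. The profile fields (usage-pattern label, SM share, memory footprint) and the scoring rule, which adds SM oversubscription to the number of co-located applications with the same usage pattern, are hypothetical stand-ins for the behavior features the abstract mentions.

```python
from dataclasses import dataclass, field


@dataclass
class AppProfile:
    """Extracted GPU behavior features of one application (hypothetical fields)."""
    name: str
    pattern: str      # usage-pattern label, e.g. "compute" or "memory"
    sm_share: float   # fraction of the GPU's SMs the app tends to occupy
    mem_gb: float     # GPU memory requirement


@dataclass
class GpuNode:
    """A GPU node and the applications currently placed on it."""
    name: str
    mem_gb: float
    apps: list = field(default_factory=list)

    def used_mem(self) -> float:
        return sum(a.mem_gb for a in self.apps)

    def sm_load(self) -> float:
        return sum(a.sm_share for a in self.apps)


def contention_score(node: GpuNode, app: AppProfile) -> float:
    """Hypothetical score: SM oversubscription plus same-pattern co-runners."""
    oversubscription = max(0.0, node.sm_load() + app.sm_share - 1.0)
    same_pattern = sum(1 for a in node.apps if a.pattern == app.pattern)
    return oversubscription + same_pattern


def dispatch(app: AppProfile, nodes: list):
    """Place app on the lowest-scoring node that has enough free memory."""
    candidates = [n for n in nodes if n.used_mem() + app.mem_gb <= n.mem_gb]
    if not candidates:
        return None
    best = min(candidates, key=lambda n: contention_score(n, app))
    best.apps.append(app)
    return best.name
```

Pairing applications with different usage patterns on one GPU reflects the intuition that a compute-bound and a memory-bound kernel compete less than two of the same kind.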

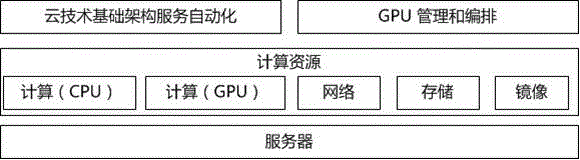

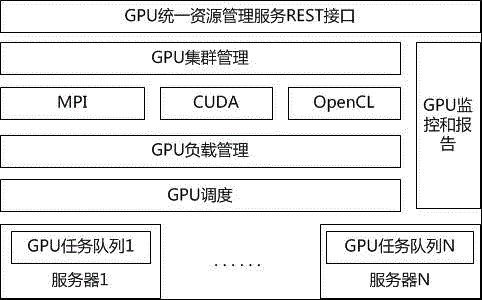

Method and system for unified management service of GPU cloud computing resources

Inactive · CN106155804A · Flexible combination · To achieve the goal of green energy saving · Resource allocation · Energy efficient computing · Computing center · GPU cluster

The invention discloses a method for providing a unified management service for GPU cloud computing resources. The method comprises the following steps: the GPUs of all servers in a computing center form a software-defined GPU computing cluster; after CPU resources have been allocated, an application sends a request for computing nodes to a GPU cluster manager to obtain a list of available GPU computing nodes; and once the request succeeds, a two-level framework of a GPU workload manager and GPU agents horizontally partitions the GPU data and dispatches the GPU computing tasks, performing distributed GPU computation. The method improves hardware utilization and reduces energy consumption.

Owner:BEIJING DIANZAN TECH CO LTD
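The request-then-partition flow above can be modeled in-process: a cluster manager grants a list of free GPU nodes, and a workload manager splits the input rows horizontally into contiguous shards and hands one to each GPU agent. `GpuClusterManager`, `GpuWorkloadManager`, and `GpuAgent` are hypothetical classes, threads stand in for remote GPU agents, the "kernel" is an ordinary Python function, and the preceding CPU resource allocation step is not modeled.

```python
from concurrent.futures import ThreadPoolExecutor


class GpuClusterManager:
    """Grants and reclaims GPU computing nodes from a shared free list."""

    def __init__(self, nodes):
        self.free = list(nodes)

    def request_nodes(self, count):
        if count < 1 or count > len(self.free):
            raise RuntimeError(f"cannot grant {count} GPU node(s); {len(self.free)} free")
        granted, self.free = self.free[:count], self.free[count:]
        return granted

    def release(self, nodes):
        self.free.extend(nodes)


class GpuAgent:
    """Stand-in for the per-node agent that runs a kernel on its data shard."""

    def __init__(self, node):
        self.node = node

    def run(self, kernel, rows):
        return [kernel(row) for row in rows]


class GpuWorkloadManager:
    """Splits data horizontally across granted nodes and gathers the results."""

    def __init__(self, cluster_manager):
        self.cluster_manager = cluster_manager

    def run(self, kernel, rows, num_nodes):
        nodes = self.cluster_manager.request_nodes(num_nodes)
        try:
            agents = [GpuAgent(node) for node in nodes]
            chunk = -(-len(rows) // len(agents))  # ceiling division
            shards = [rows[i * chunk:(i + 1) * chunk] for i in range(len(agents))]
            # Threads stand in for agents running on separate GPU nodes.
            with ThreadPoolExecutor(max_workers=len(agents)) as pool:
                parts = list(pool.map(lambda agent, shard: agent.run(kernel, shard),
                                      agents, shards))
            return [value for part in parts for value in part]  # keep row order
        finally:
            self.cluster_manager.release(nodes)  # return nodes even on failure
```

Contiguous shards keep the gathered output in input order, and releasing nodes in `finally` returns them to the pool even if a kernel raises.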


© 2025 PatSnap. All rights reserved.