62 results about how to "Improve Parallel Computing Efficiency": patented technology

Method for parallel execution of the particle swarm optimization algorithm on multiple computers

Status: Inactive | Publication: CN101819651A | Tags: Solve the problem of long calculation time; Reduce computing time; Resource allocation; Biological models; Multi core programming; Computer programming

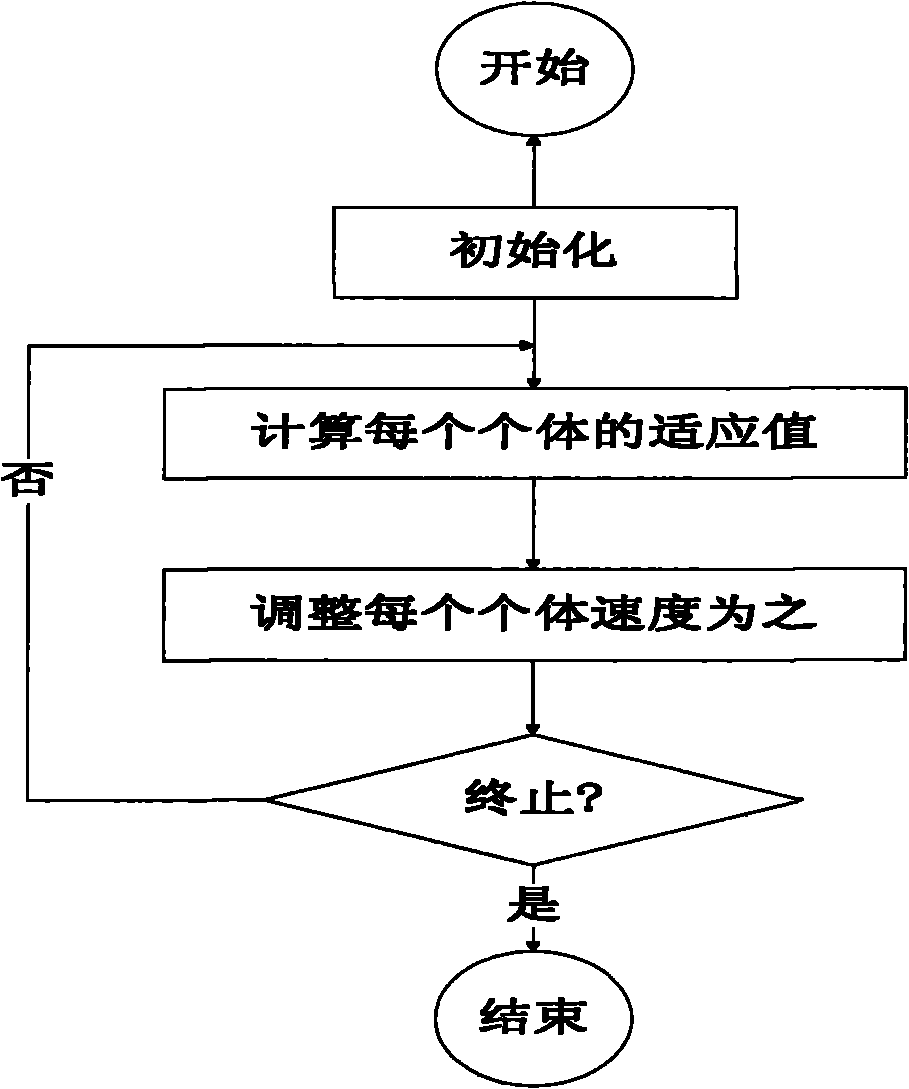

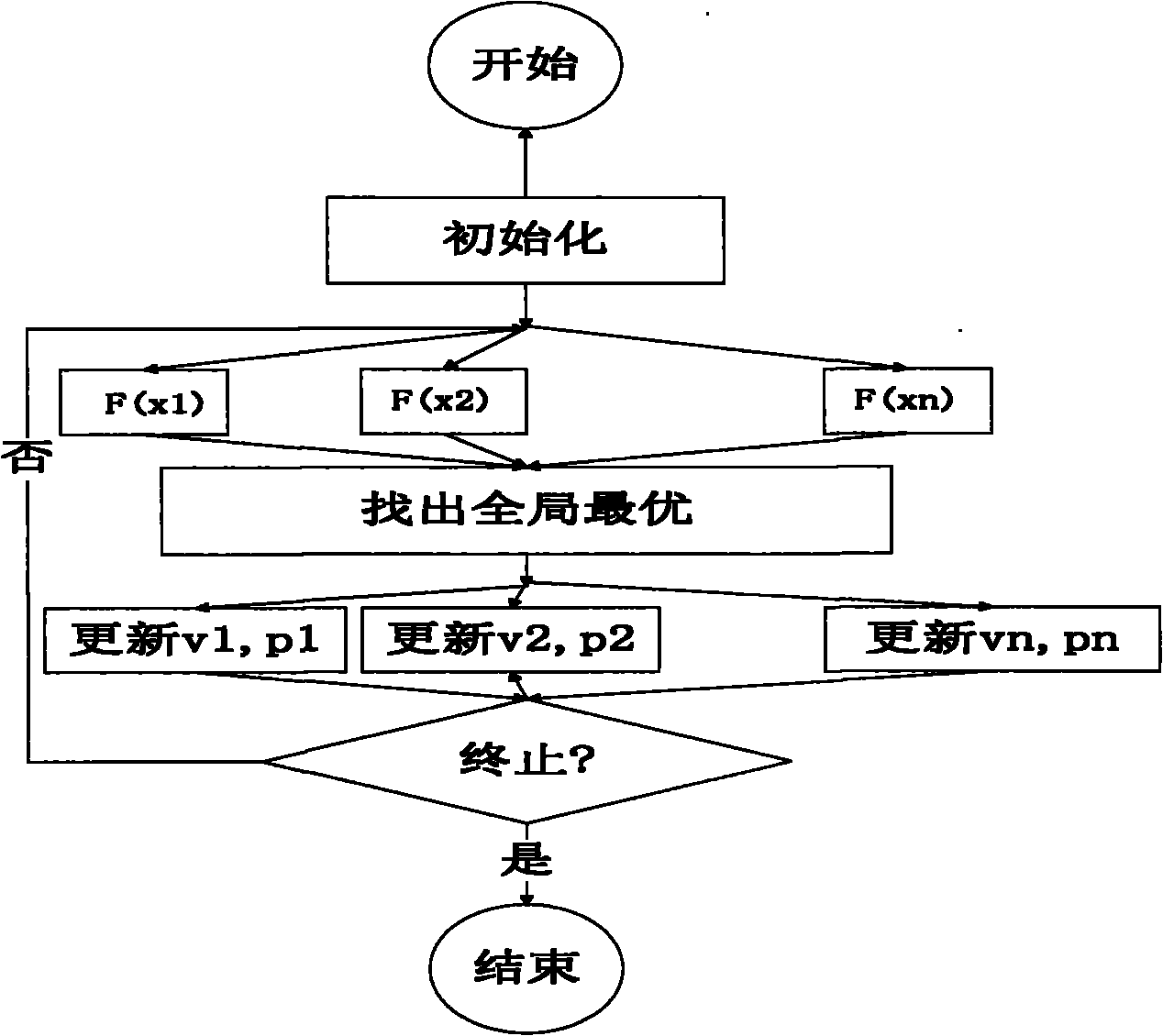

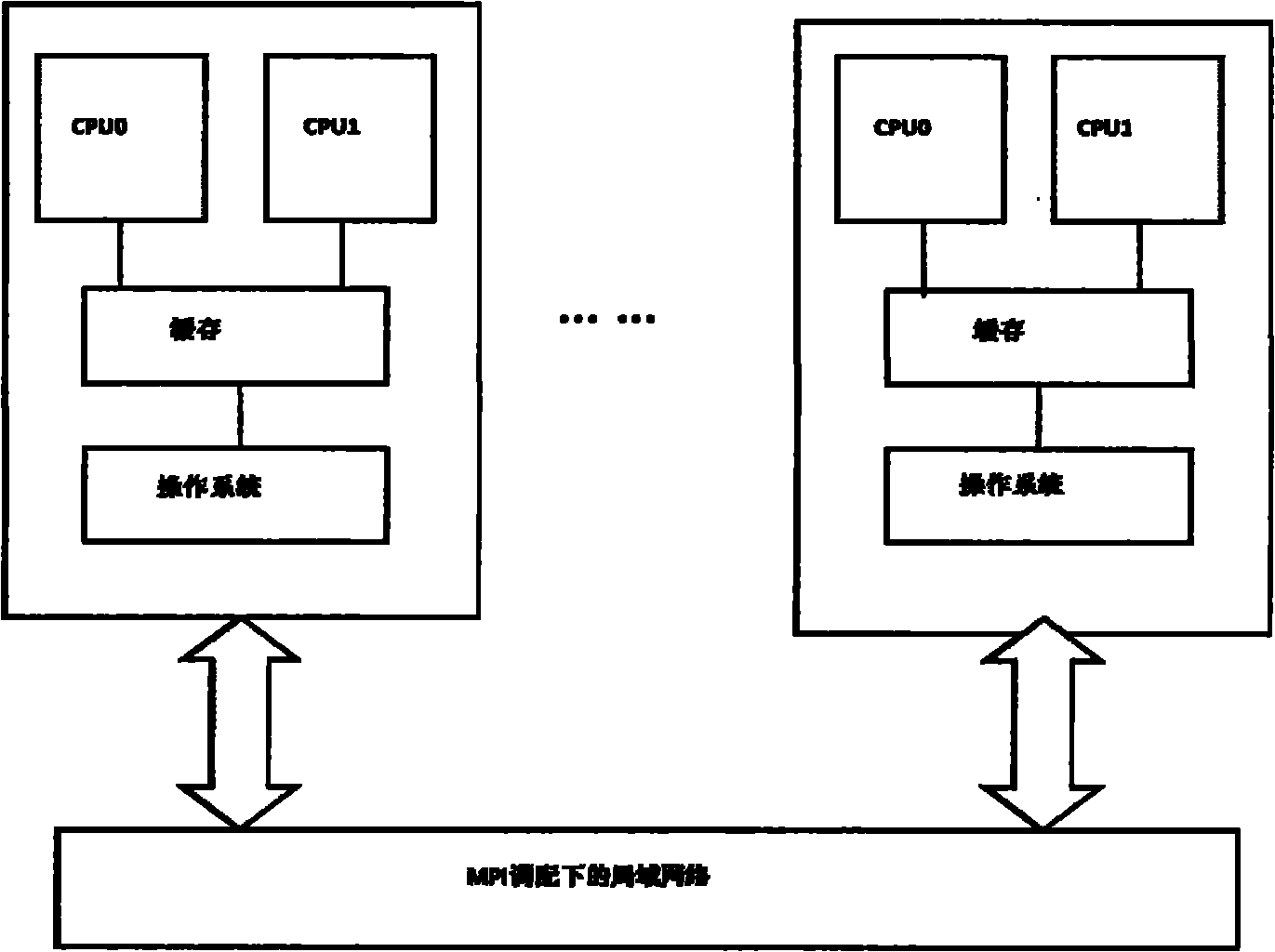

The invention discloses a method for executing the particle swarm optimization (PSO) algorithm in parallel on multiple computers. The method comprises an initialization step, an evaluation-and-adjustment step, a termination-condition check, and a termination-and-output step; the evaluation-and-adjustment step is where parallel computation is realized, using hybrid MPI + OpenMP parallel programming. Because particles are independent of one another before and after each update, the method parallelizes particle updating and particle evaluation with the existing MPI + OpenMP multi-core programming approach. The invention adopts a master-slave parallel programming model to overcome the slow speed of running PSO on a single computer, accelerating the algorithm and thereby greatly broadening its practical value and range of applications.

Owner:ZHEJIANG UNIV
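The master-slave evaluation scheme described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: a Python `ThreadPoolExecutor` stands in for the MPI processes and OpenMP threads, and the objective `sphere`, the function name `parallel_pso` and the coefficients `w`, `c1`, `c2` are hypothetical choices for the example.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def sphere(x):
    """Hypothetical test objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def parallel_pso(objective, dim=3, n_particles=20, iters=100, workers=4,
                 seed=0, w=0.7, c1=1.5, c2=1.5, bound=5.0):
    """Master-slave PSO: the master updates particles, workers evaluate them."""
    rng = random.Random(seed)  # only the master draws random numbers
    pos = [[rng.uniform(-bound, bound) for _ in range(dim)]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Evaluation step: particles are independent, so score them concurrently.
        fit = list(pool.map(objective, pos))
        pbest = [p[:] for p in pos]
        pbest_fit = fit[:]
        g = min(range(n_particles), key=lambda i: pbest_fit[i])
        gbest, gbest_fit = pbest[g][:], pbest_fit[g]
        for _ in range(iters):
            # Adjustment step on the master: inertia-weight velocity update.
            for i in range(n_particles):
                for d in range(dim):
                    r1, r2 = rng.random(), rng.random()
                    vel[i][d] = (w * vel[i][d]
                                 + c1 * r1 * (pbest[i][d] - pos[i][d])
                                 + c2 * r2 * (gbest[d] - pos[i][d]))
                    pos[i][d] += vel[i][d]
            fit = list(pool.map(objective, pos))  # parallel evaluation again
            for i, f in enumerate(fit):
                if f < pbest_fit[i]:
                    pbest[i], pbest_fit[i] = pos[i][:], f
                    if f < gbest_fit:
                        gbest, gbest_fit = pos[i][:], f
    return gbest, gbest_fit
```

For a CPU-bound objective in CPython, real speedup would need a process pool or actual MPI ranks; the thread pool keeps the sketch short and deterministic.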

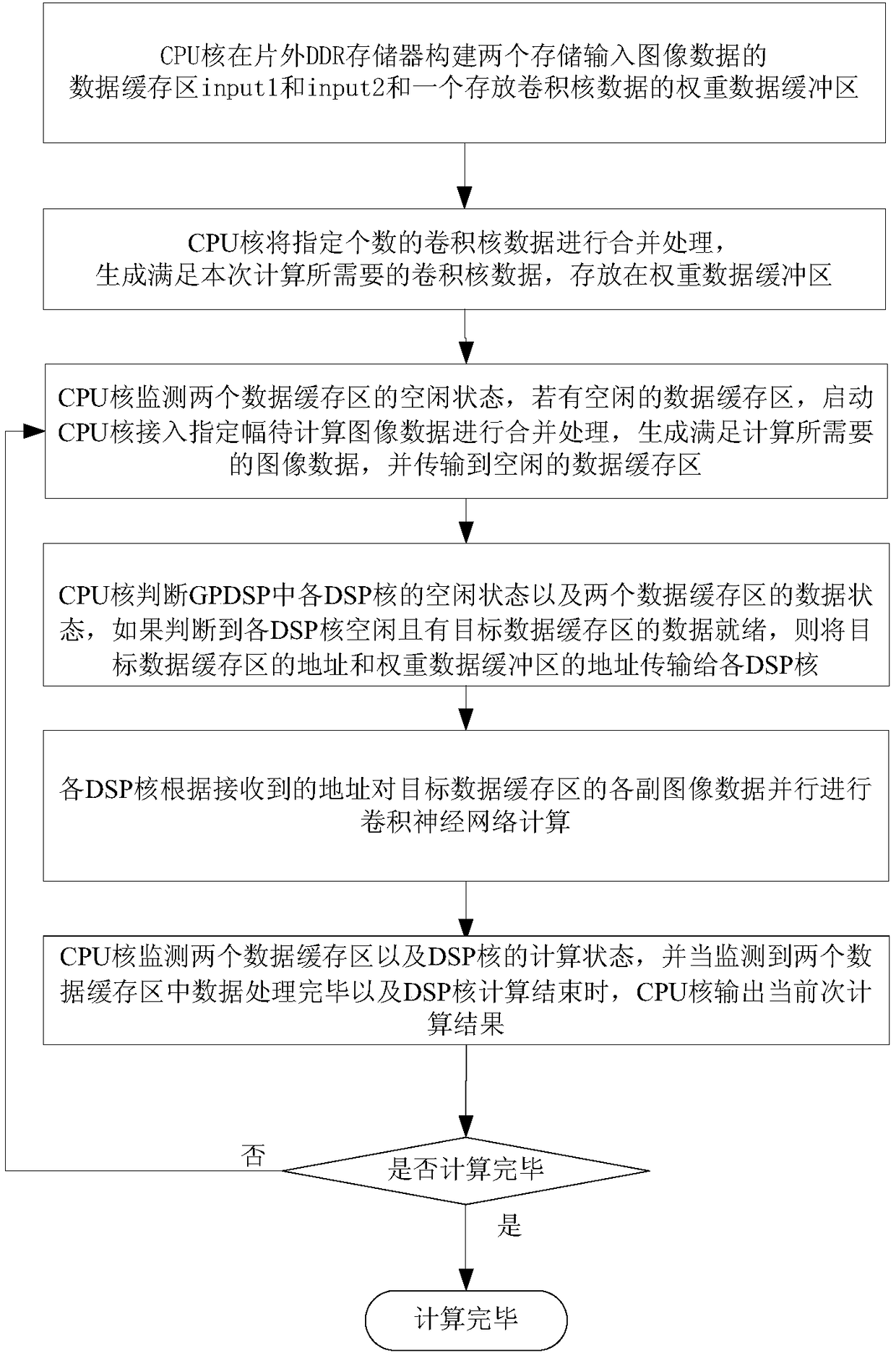

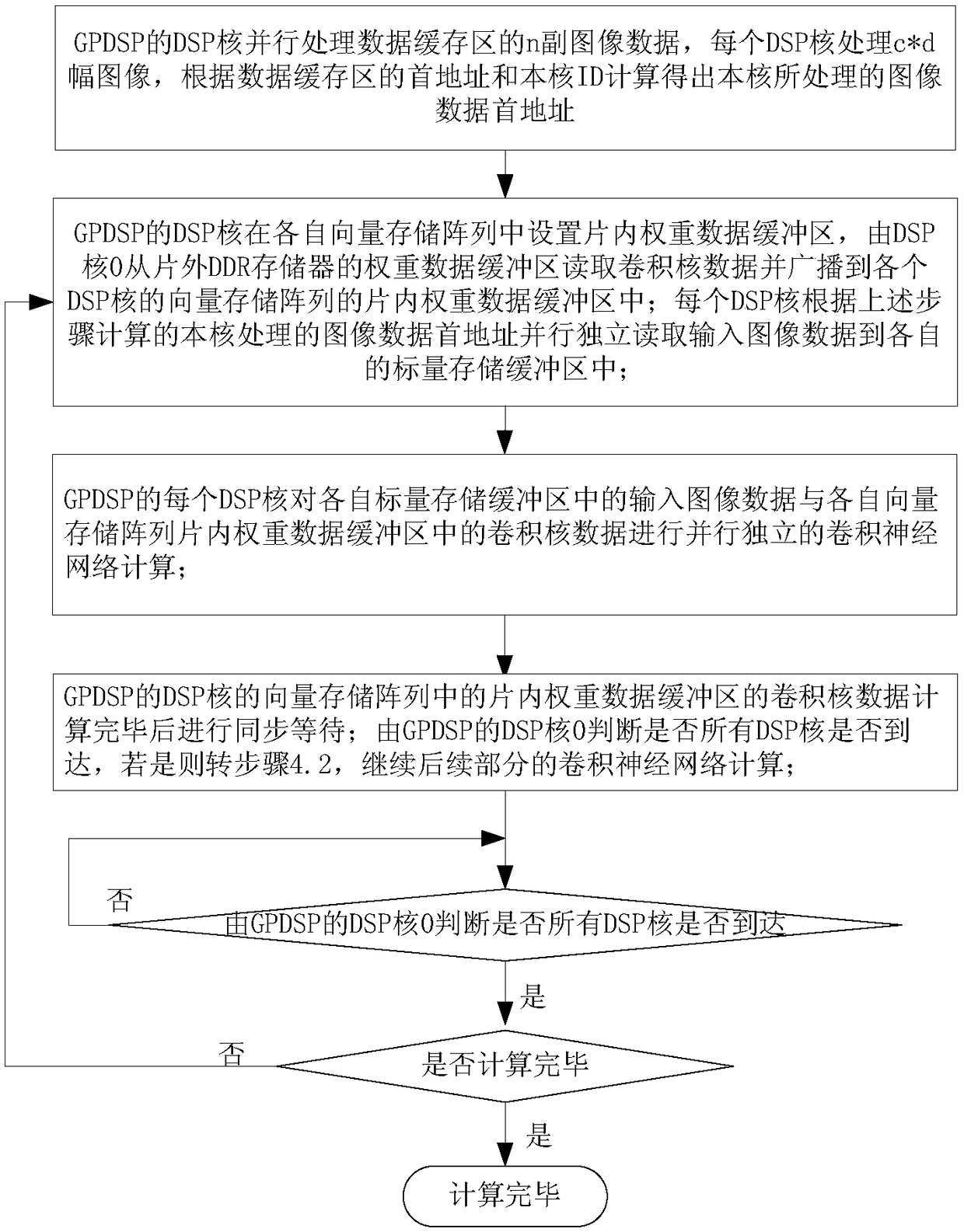

Multi-core parallel computing method for convolutional neural networks on GPDSP

Status: Active | Publication: CN108920413A | Tags: Cooperate closely; Give full play to the powerful vectorized computing capabilities; Neural architectures; Physical realisation; Parallel computing; Convolution

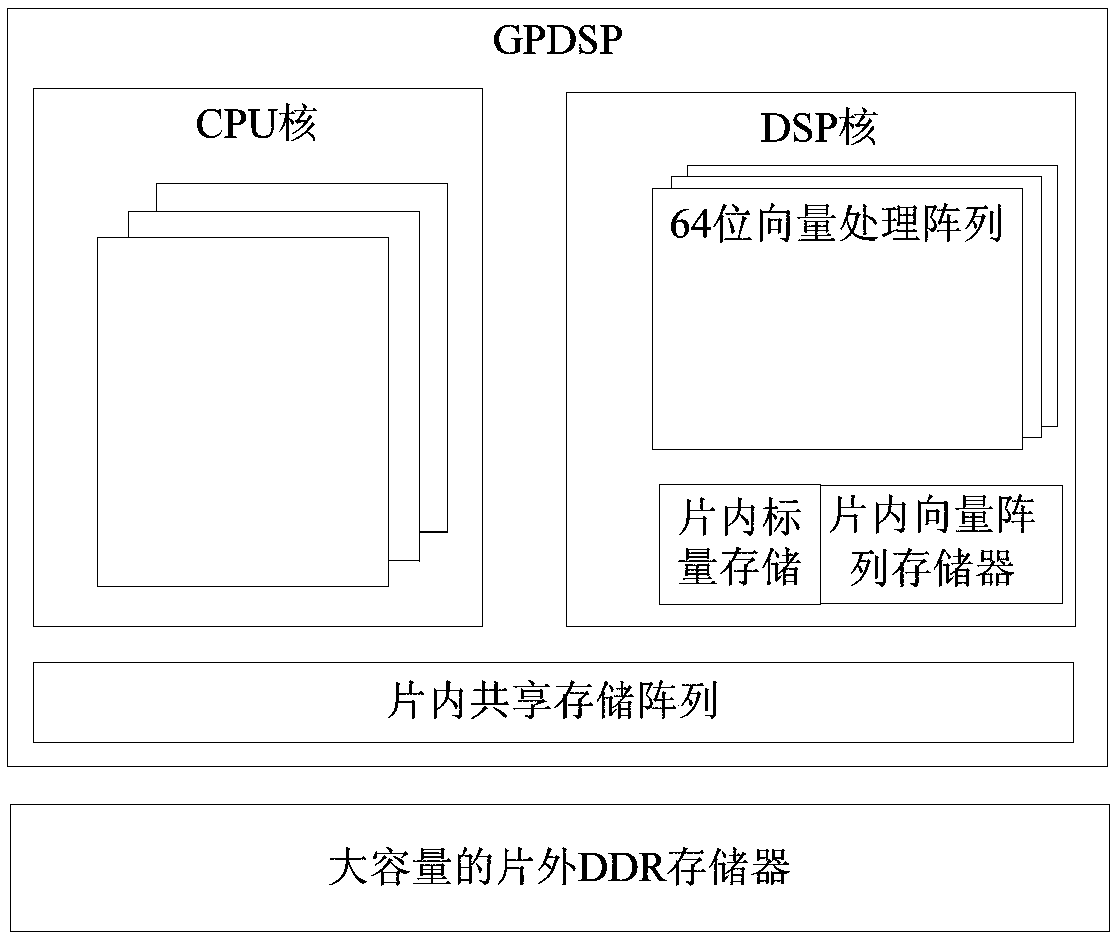

The invention discloses a multi-core parallel computing method for convolutional neural networks on a GPDSP. The method comprises the following steps: S1, the CPU core builds two data cache regions and one weight data cache region in off-chip memory; S2, the CPU core merges a designated number of convolution kernels and stores them in the weight data cache region; S3, the CPU core reads a designated number of images to be computed, merges them, and transfers the data to a free data cache region; S4, if the DSP cores are idle and the data in a cache region is ready, the region's address is passed to the DSP cores; S5, all DSP cores perform the convolutional neural network computation; S6, the result of the current pass is output; S7, steps S3 to S6 are repeated until all computations are complete. The method fully exploits the performance and multi-level parallelism of the CPU core and the DSP cores in the GPDSP, achieving efficient convolutional neural network computation.

Owner:NAT UNIV OF DEFENSE TECH
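Steps S3 to S6 amount to a double-buffered producer/consumer pipeline: while the DSP side computes on one data cache region, the CPU side can fill the other. The sketch below shows only that buffering pattern, under simplifying assumptions: one thread plays the CPU core, a single thread plays the DSP cores, a 1-D correlation stands in for the CNN layer, and weight staging (S2) is omitted. All names are hypothetical.

```python
import queue
import threading


def conv1d_valid(signal, kernel):
    """'Valid' 1-D cross-correlation, standing in for one CNN layer's work."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def double_buffered_run(tiles, kernel, n_buffers=2):
    """CPU thread stages tiles into free buffers; a DSP thread computes on ready ones."""
    buffers = [None] * n_buffers       # S1: the data cache regions
    free, ready = queue.Queue(), queue.Queue()
    for b in range(n_buffers):
        free.put(b)
    results = {}

    def cpu_core():
        for tile_id, tile in enumerate(tiles):
            b = free.get()              # S3: wait for a free buffer, then fill it
            buffers[b] = list(tile)
            ready.put((tile_id, b))     # S4: hand the buffer index ("address") over
        ready.put(None)                 # sentinel: no more tiles

    def dsp_core():
        while (item := ready.get()) is not None:
            tile_id, b = item
            results[tile_id] = conv1d_valid(buffers[b], kernel)  # S5/S6
            free.put(b)                 # release the buffer for the next tile

    threads = [threading.Thread(target=cpu_core), threading.Thread(target=dsp_core)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return [results[i] for i in range(len(tiles))]
```

A buffer index is returned to the `free` queue only after the consumer has finished with it, so the producer never overwrites data that is still being computed on.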

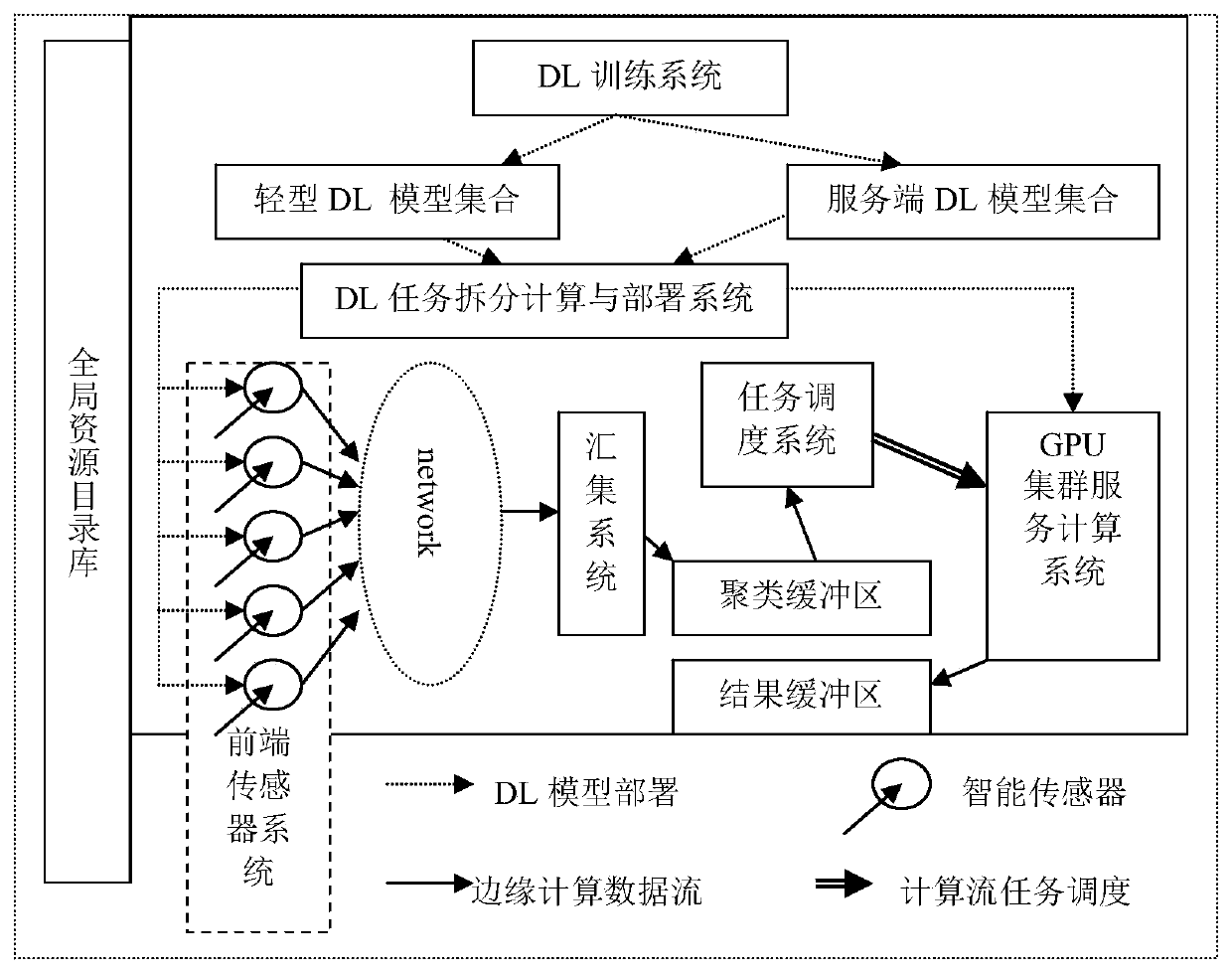

A GPU cluster deep learning edge computing system oriented to sensing information processing

Status: Active | Publication: CN109948428A | Tags: Improve Parallel Computing Efficiency; Reduce cost pressure; Character and pattern recognition; Transmission; Information processing; Release consistency

The invention relates to a GPU-cluster deep learning edge computing system for sensing information processing. Pre-feature extraction is performed on the sensing information using the limited computing power of front-end intelligent sensing devices, which greatly compresses the volume of raw data; the remaining processing tasks are then sent to a GPU cluster for large-scale clustering of sensing-data features. Task splitting lets the system adapt dynamically to the computing power of the front-end devices, reducing the cost pressure of requiring consistent front-end devices and hardware versions. It also reduces the communication load on the edge computing network, greatly lowering the cost of building that network, and transmitting features rather than raw data protects user privacy. Clustering based on the data transmitted over the network and the core characteristics of the stored data brings the SPMD advantages of the GPU into play, improving the parallel computing efficiency of edge computing while effectively exploiting the large-scale parallel computing capacity, low cost and high reliability of the GPU cluster.

Owner:UNIV OF SHANGHAI FOR SCI & TECH

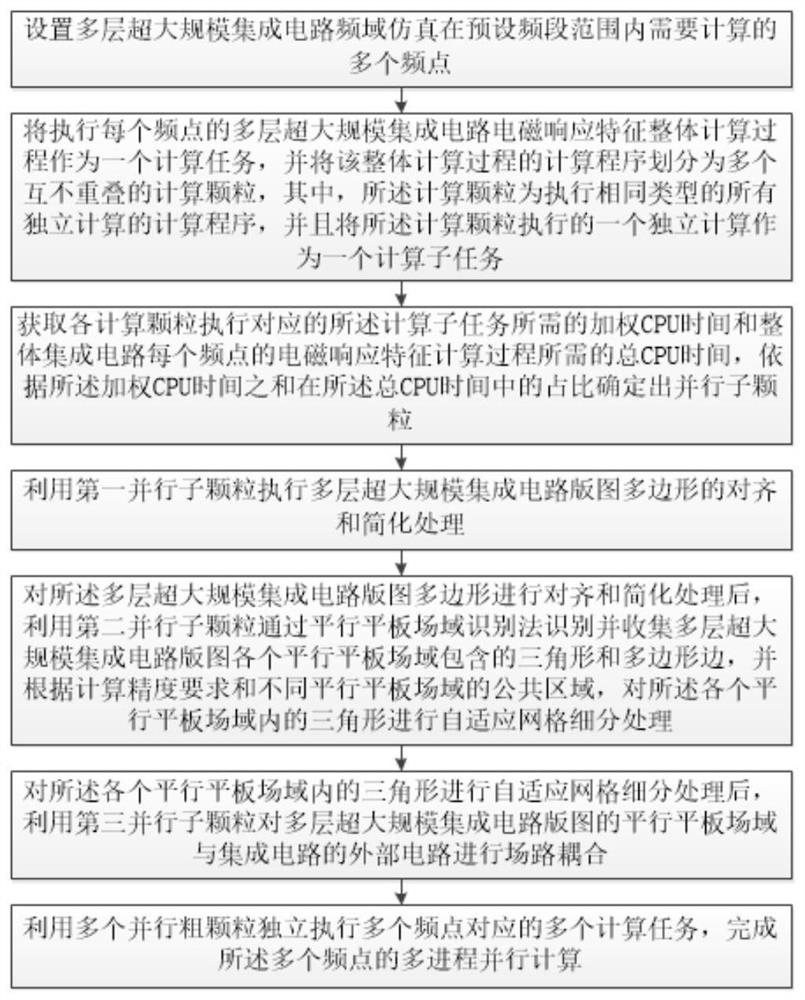

Integrated circuit electromagnetic response calculation method and device based on multi-level parallel strategy

Status: Active | Publication: CN111898330A | Tags: Improve Parallel Computing Efficiency; Improve computing efficiency; CAD circuit design; Special data processing applications; Integrated circuit; Frequency domain

The embodiment of the invention discloses a method and device for calculating the electromagnetic response of integrated circuits based on a multi-level parallel strategy. The method divides the calculation of the electromagnetic response characteristics at each frequency point required in the frequency-domain simulation of a multi-layer very-large-scale integrated circuit into multiple calculation subtasks. Each subtask is computed in parallel by multiple fine-grained parallel units, while multiple coarse-grained parallel units independently execute the calculation tasks of the different frequency points, completing multi-process parallel computation over all frequency points. The method improves the efficiency of each part of the electromagnetic response calculation at a single frequency point, and also the parallel efficiency of computing the electromagnetic response of integrated circuits over a large number of different frequency points, meeting the requirement for efficient calculation.

Owner:Beijing Zhixin Simulation Technology Co., Ltd. (北京智芯仿真科技有限公司)
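The two-level strategy (coarse-grained parallelism over frequency points, fine-grained parallelism over the subtasks of one frequency point) can be sketched with nested worker pools. This is an illustration under assumptions only: thread pools stand in for processes, and `solve_subtask` is a hypothetical placeholder for the real electromagnetic solve.

```python
from concurrent.futures import ThreadPoolExecutor


def solve_subtask(freq, part):
    """Hypothetical fine-grained work item: one slice of the response at one frequency."""
    return freq * (part + 1)


def solve_frequency(freq, n_parts=4):
    """Fine-grained level: the subtasks of a single frequency point run concurrently."""
    with ThreadPoolExecutor(max_workers=n_parts) as inner:
        return sum(inner.map(lambda p: solve_subtask(freq, p), range(n_parts)))


def frequency_sweep(freqs, n_coarse=2, n_parts=4):
    """Coarse-grained level: independent frequency points are processed concurrently."""
    with ThreadPoolExecutor(max_workers=n_coarse) as outer:
        return list(outer.map(lambda f: solve_frequency(f, n_parts), freqs))
```

The outer pool never waits on its own workers, only on separate inner pools, so the nesting cannot deadlock; `n_coarse * n_parts` bounds the total number of concurrent workers.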

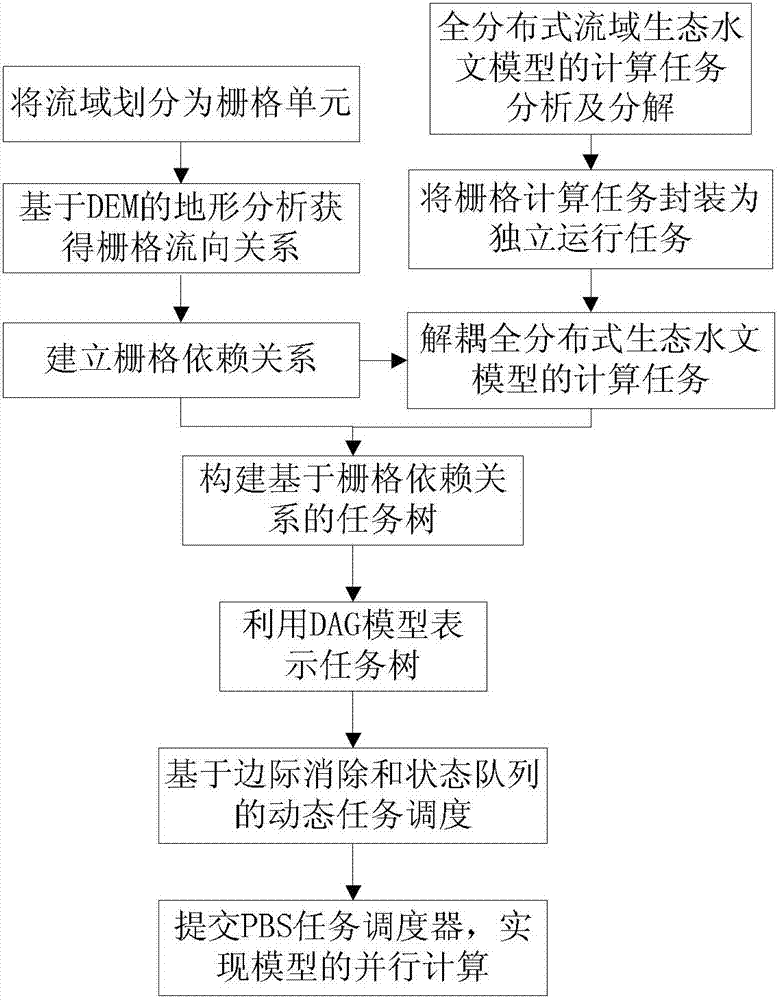

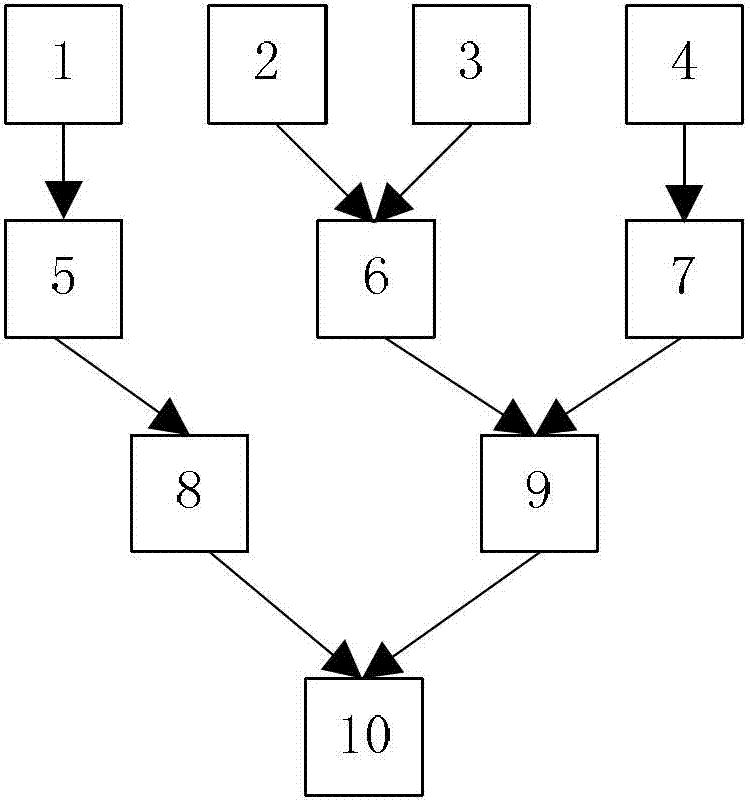

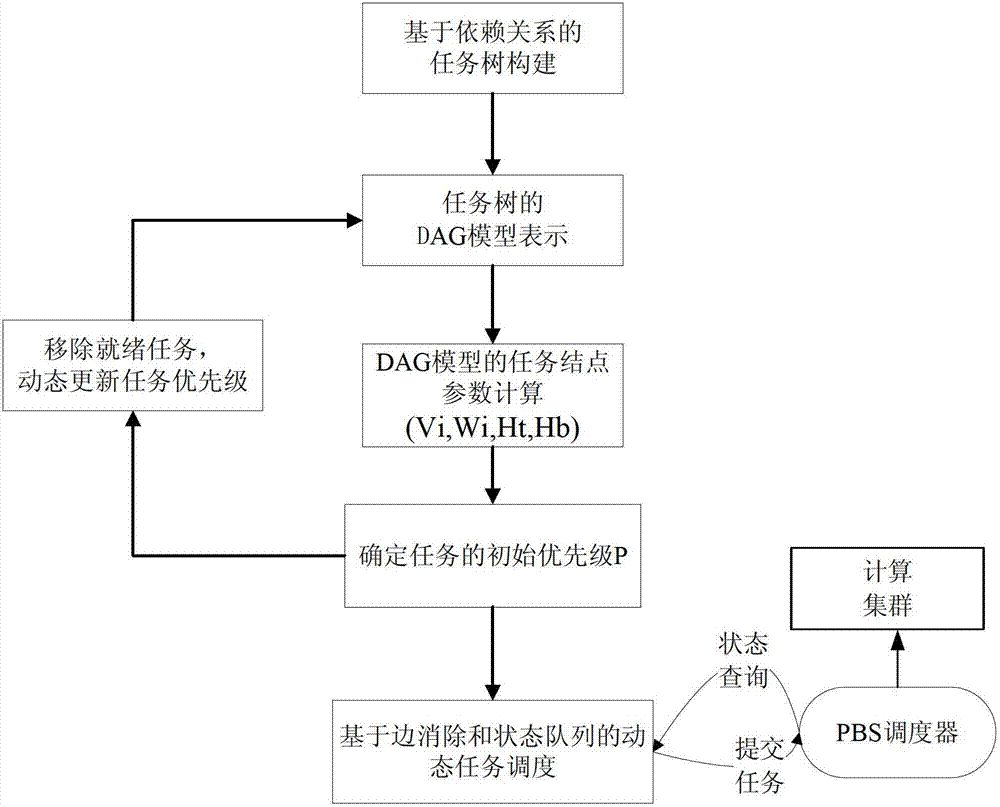

Rapid parallelization method for a fully distributed watershed eco-hydrology model

Status: Active | Publication: CN103164190A | Tags: Break through singleness; Reduce parallel granularity; Concurrent instruction execution; Terrain analysis; Landform

The invention relates to a rapid parallelization method for a fully distributed watershed eco-hydrology model. Grid cells serve as the basic computing units. Digital elevation model (DEM) terrain analysis yields a watershed grid flow-direction map, from which the computational dependencies between grid cells are established. The vertical eco-hydrological process simulation of each grid cell is treated as an independent computing task; these tasks are decoupled according to the inter-cell dependencies and organized into a task tree, which is represented as a directed acyclic graph (DAG). A task scheduling sequence is generated dynamically from the DAG with an edge-elimination dynamic scheduling algorithm, and the grid computing tasks are distributed to different nodes through the Portable Batch System (PBS) dynamic scheduler, achieving parallelization of the fully distributed model. The method is easy to operate and highly practical: it greatly simplifies the parallel logic control of the parallelization algorithm, effectively improves parallel computing efficiency, and improves parallel stability.

Owner:CENT FOR EARTH OBSERVATION & DIGITAL EARTH CHINESE ACADEMY OF SCI
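One way to turn the grid dependency DAG into a schedule is to repeatedly peel off the cells whose upstream cells are all finished, deleting their outgoing edges as they complete. The sketch below uses Kahn's topological sort grouped by level; treating this as the patent's "edge-elimination" algorithm is our assumption, and `schedule_waves` and the single-downstream `flows_to` encoding (as a D8-style flow-direction grid would give) are hypothetical.

```python
def schedule_waves(n_cells, flows_to):
    """Group grid cells into waves of mutually independent tasks.

    flows_to[c] is the downstream cell receiving c's outflow (None at the outlet).
    A cell is ready once every upstream cell is done, so we repeatedly take the
    cells whose remaining in-degree is zero and delete their outgoing edges.
    """
    indeg = [0] * n_cells
    for c in range(n_cells):
        d = flows_to[c]
        if d is not None:
            indeg[d] += 1
    wave = [c for c in range(n_cells) if indeg[c] == 0]  # headwater cells
    waves = []
    while wave:
        waves.append(wave)           # every cell in a wave can run in parallel
        nxt = []
        for c in wave:
            d = flows_to[c]
            if d is not None:
                indeg[d] -= 1        # eliminate the edge c -> d
                if indeg[d] == 0:
                    nxt.append(d)
        wave = sorted(nxt)
    if sum(len(w) for w in waves) != n_cells:
        raise ValueError("flow graph contains a cycle")
    return waves
```

Each wave could be submitted to a batch scheduler as one set of independent jobs; a dynamic scheduler can go further and release a cell as soon as its own upstream cells finish, rather than waiting for the whole previous wave.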

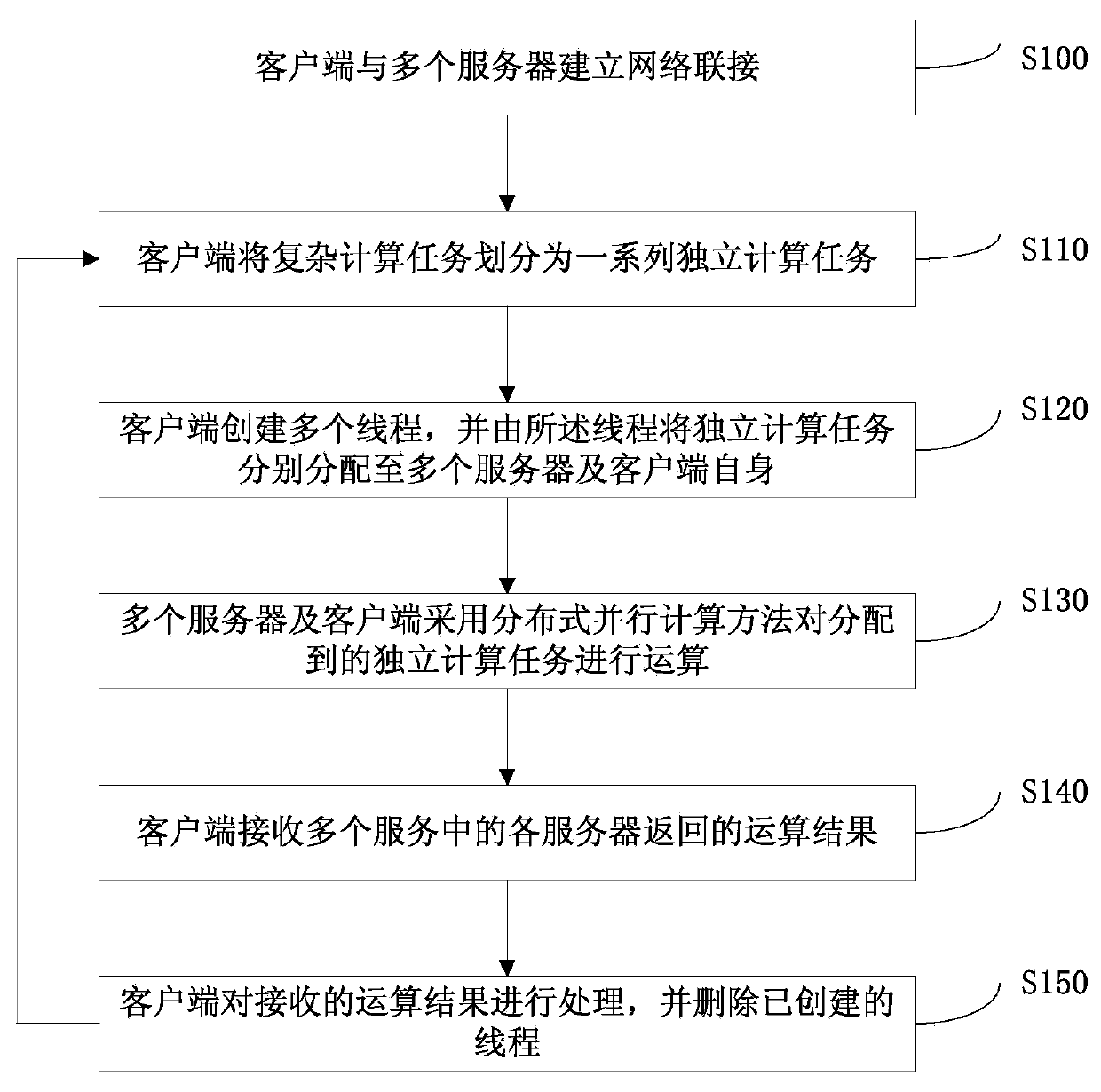

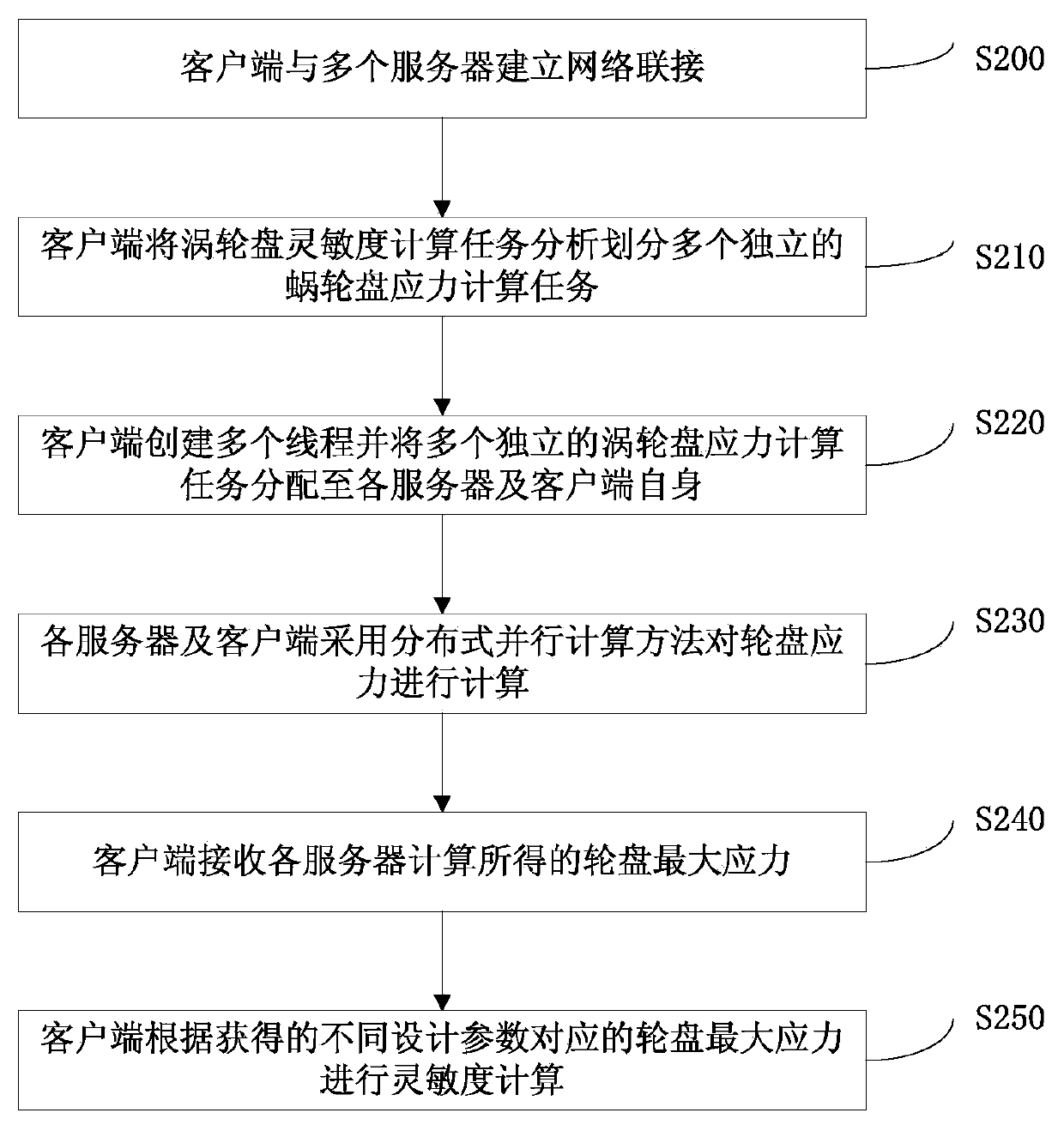

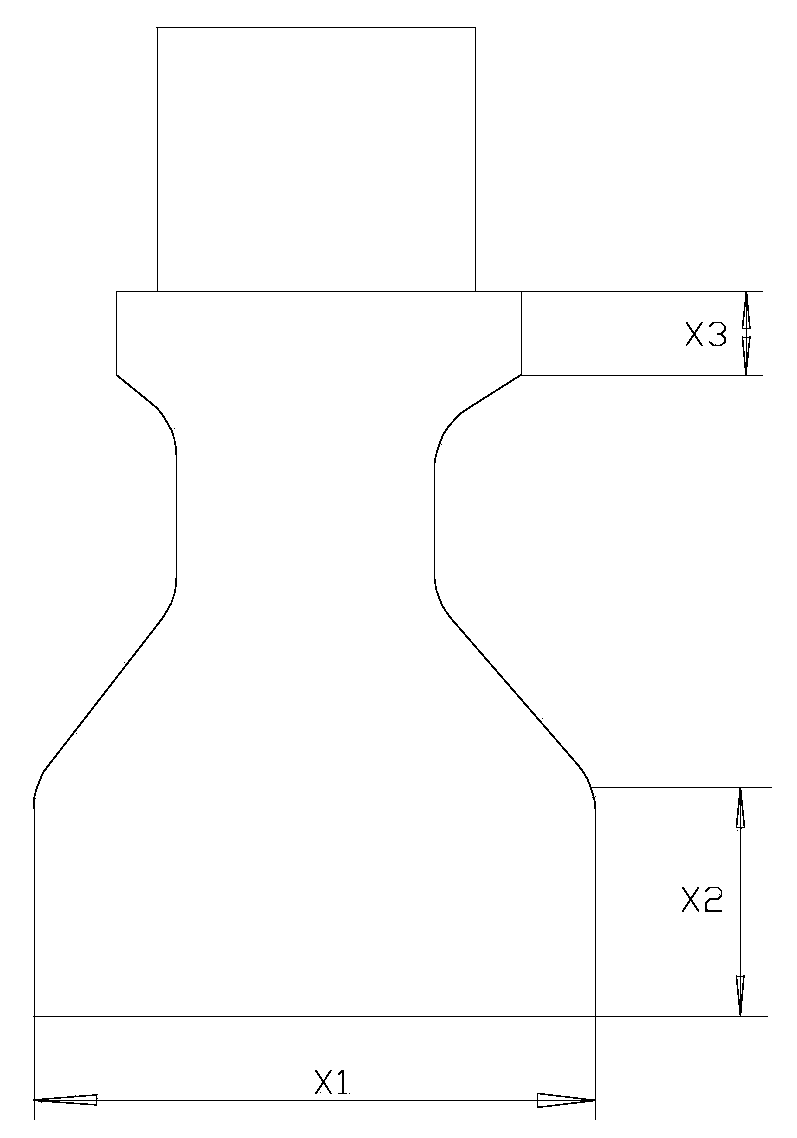

Method and system for dispatching distributed parallel computing jobs

Status: Active | Publication: CN103428217A | Tags: Improve job execution efficiency; Simplified installation procedure; Concurrent instruction execution; Transmission; Engineering; Server-side

The invention discloses a method and system for dispatching distributed parallel computing jobs. The method comprises: establishing network connections between a client and multiple servers, and dividing a complex computing task into a series of independent computing tasks; creating multiple threads on the client and using them to dispatch the independent tasks to the servers and to the client itself; having the servers and the client run their dispatched tasks with a shared-memory parallel process; and having the client receive the results returned by each server once computation is complete. Because the overall task set is divided into independent tasks that are computed in parallel on the servers and the client with shared-memory processes, the server-side installation procedure is simplified, the time spent transmitting large volumes of data over the network is saved, and parallel computing efficiency is improved.

Owner:CHINA AVIATION POWER MACHINE INST

Dynamic load balancing method based on Linux parallel computing platform

Status: Inactive | Publication: CN103399800A | Tags: Break performance bottlenecks; Improve Parallel Computing Efficiency; Resource allocation; Concurrent instruction execution; Software development; Multiple stages

The invention provides a dynamic load balancing method for a Linux parallel computing platform and belongs to the field of parallel computing. The hardware consists of multiple computers participating in the computation, each running a Linux operating system with an MPI software development kit installed. During parallel computation, the overall computing task is divided into multiple stages of equal execution time. Using the system's routine job scheduling facility, the current resource utilization of each node is read before each stage begins; combining this with each node's computing performance and the computational complexity of the tasks, the computing tasks are allocated dynamically so that all nodes take essentially the same time in every stage, reducing synchronization waiting delay. With this dynamic adjustment strategy the overall task is completed at higher resource utilization, the efficiency bottleneck caused by low-specification nodes is removed, computing time is reduced beyond what parallelization alone achieves, and computational efficiency is improved.

Owner:SHANDONG UNIV
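The per-stage allocation can be illustrated as a proportional split: each node receives work in proportion to its spare capacity, so that stage times come out roughly equal. The sketch assumes that spare capacity is `perf * (1 - utilization)` and uses the largest-remainder method to round to whole work units; both the formula and the name `allocate_stage` are hypothetical, not taken from the patent.

```python
def allocate_stage(total_units, perf, utilization):
    """Split total_units among nodes so each node's stage time is roughly equal.

    perf[i]: relative compute speed of node i (e.g. a benchmark score).
    utilization[i]: fraction of node i already busy (0..1), read before the stage.
    Units are allocated in proportion to the effective speed perf * (1 - util),
    rounded with the largest-remainder method so the shares sum to total_units.
    """
    speed = [p * (1.0 - u) for p, u in zip(perf, utilization)]
    s = sum(speed)
    if s <= 0:
        raise ValueError("no available capacity")
    quotas = [total_units * v / s for v in speed]
    shares = [int(q) for q in quotas]
    leftover = total_units - sum(shares)
    # Hand the remaining units to the nodes with the largest fractional parts.
    order = sorted(range(len(quotas)), key=lambda i: quotas[i] - shares[i], reverse=True)
    for i in order[:leftover]:
        shares[i] += 1
    return shares
```

With `perf=[1, 1, 2]` and the second node half busy, effective speeds are 1, 0.5 and 2, so 100 units split as 29, 14 and 57; each node then needs about the same time for its share.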

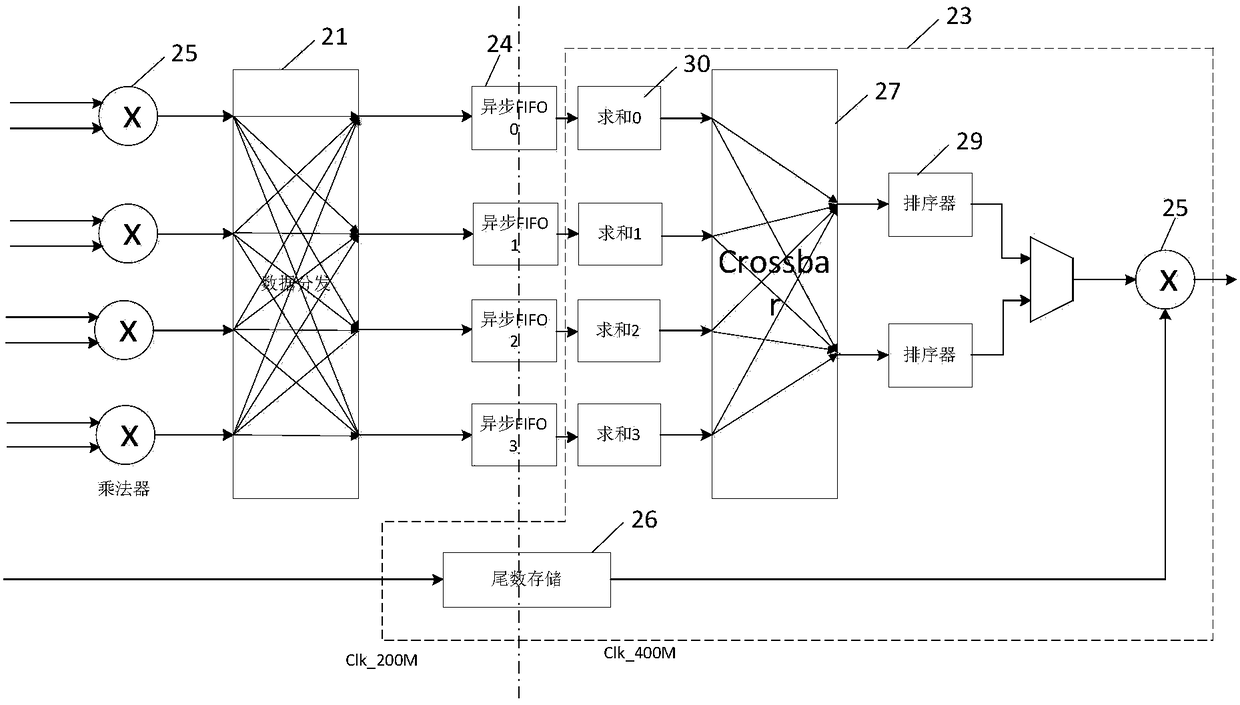

Neural network processor, convolutional neural network data reuse method and related apparatus

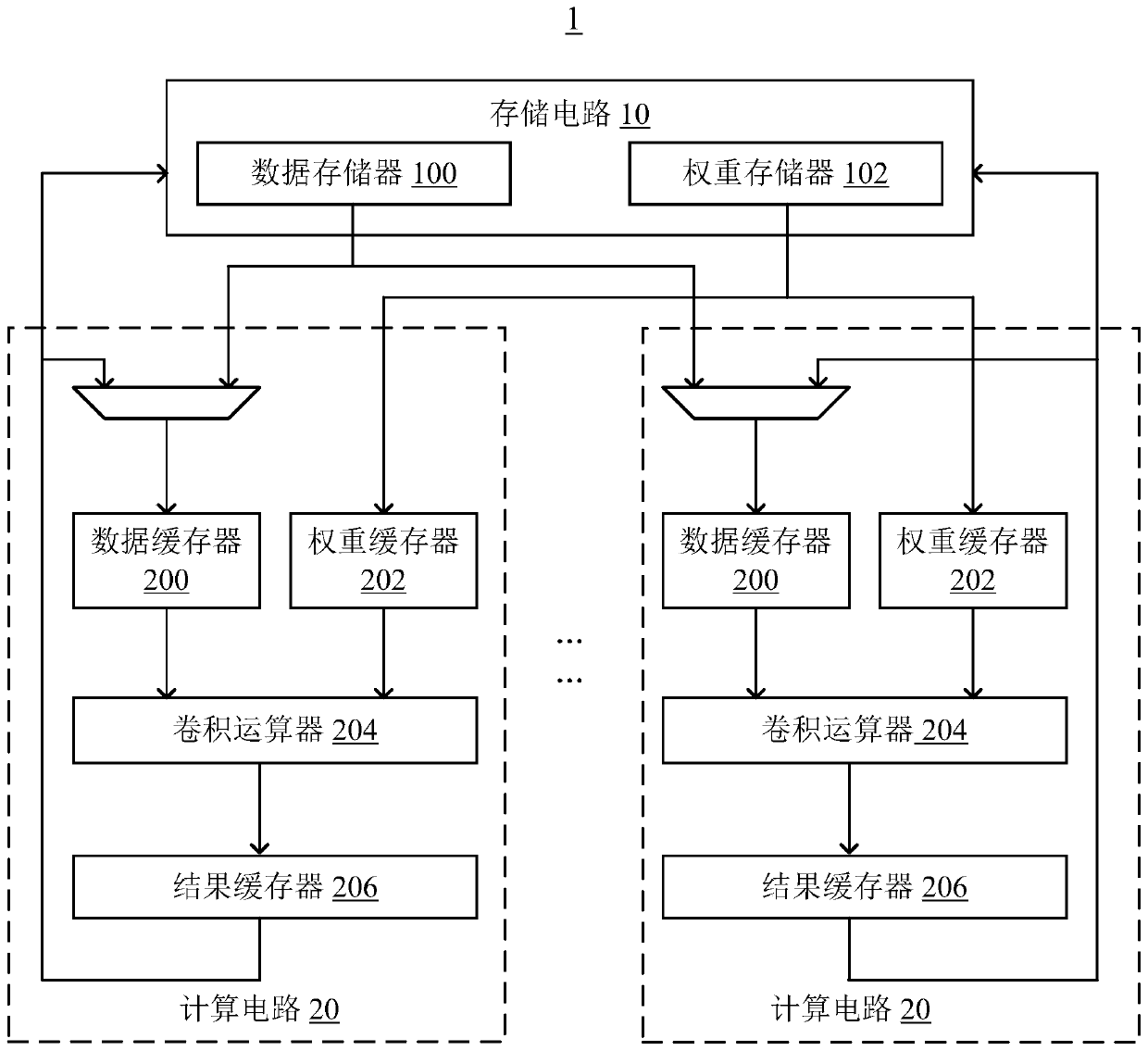

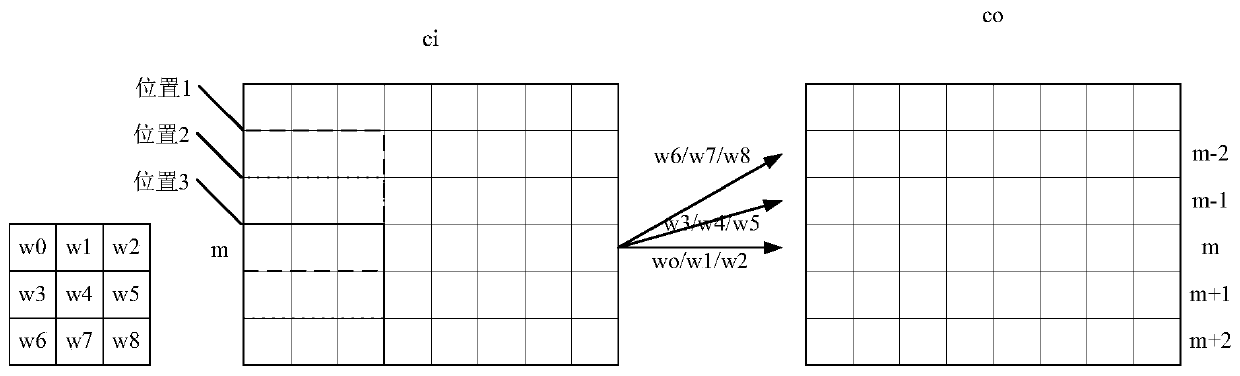

Status: Active | Publication: CN109740732A | Tags: Increase profit; Reduce the number of times; Neural architectures; Physical realisation; Data buffer; Multi level data

A neural network processor includes a storage circuit that stores the initial input data and the weights required for convolution, and at least one computing circuit comprising a data buffer that caches the initial input data, a weight buffer that caches the weights, a convolution unit, and a result buffer. The convolution unit performs the convolution of the current convolutional neural network layer on the initial input data and the weights to obtain multiple first convolution results, accumulates the corresponding first convolution results to obtain multiple second convolution results, and at the same time deletes the first convolution results. The result buffer caches the second convolution results as the initial input data of the next convolutional layer. The invention further provides a convolutional neural network data reuse method and device, electronic equipment and a storage medium. Through multi-level data reuse, the invention increases the operating speed of the neural network processor and reduces its power consumption.

Owner:SHENZHEN INTELLIFUSION TECHNOLOGIES CO LTD

Data waveform processing method in seismic exploration

Status: Inactive | Publication: CN103105623A | Tags: Programmable; Powerful floating-point computing capability; Seismic signal processing; Graphics; Prediction algorithms

The invention discloses a data waveform processing method for seismic exploration, based on a wave-equation three-dimensional free-surface multiple prediction method, for predicting 3-D surface multiples when processing seismic exploration data. A graphics processing unit (GPU) accelerates the fully three-dimensional surface multiple prediction algorithm, with the GPU and CPU computing cooperatively: computation-intensive operations are offloaded to the GPU, giving higher computational efficiency, so the algorithm can handle seismic data from complex subsurface media. The method accounts for the 3-D spatial effect whereby the reflection point, shot point and receiver point do not lie on the same line, and is therefore superior to conventional two-dimensional algorithms. No simplifying approximation of the subsurface medium is needed, so the wave-equation-based fully 3-D free-surface multiple prediction algorithm matches the true conditions of the subsurface, and the amplitude and phase of multiples in the seismic data can be predicted accurately.

Owner:NORTHEAST PETROLEUM UNIV

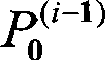

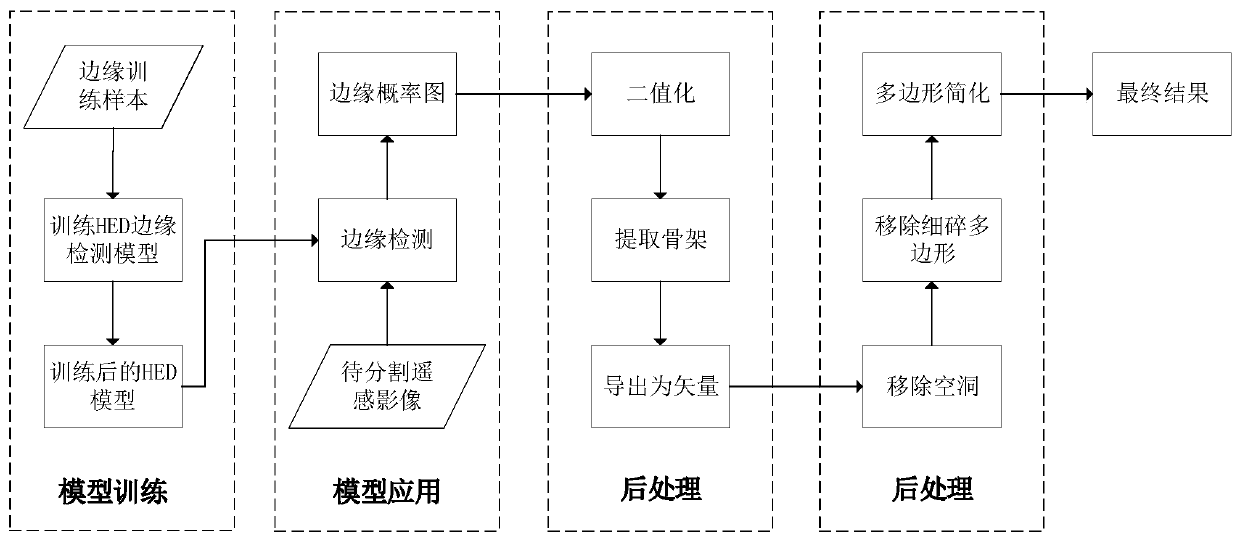

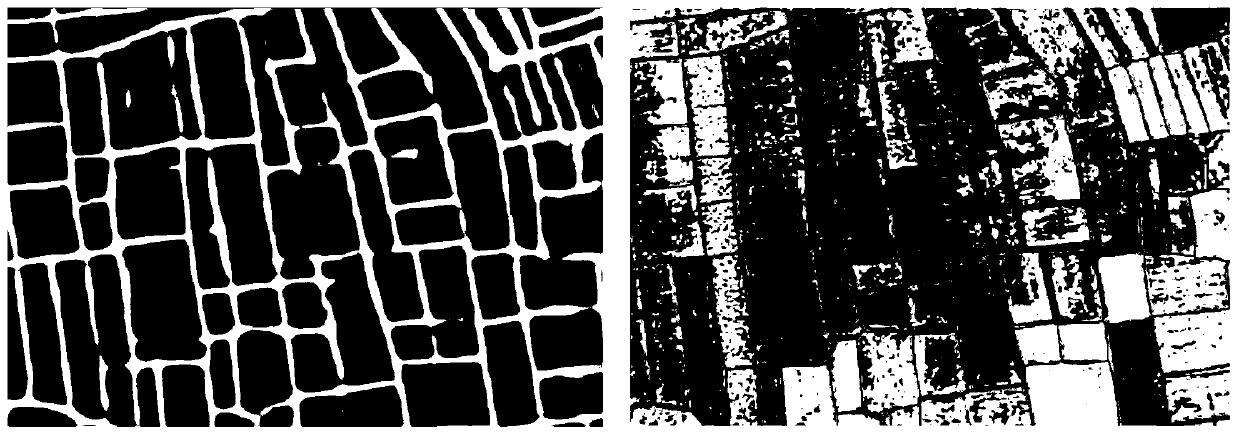

Image automatic segmentation method and device based on deep learning edge detection

Status: Pending | Publication: CN110570440A | Tags: Difficulty of Simplification; Good segmentation effect; Image enhancement; Image analysis; Automatic segmentation; Computer vision

The invention provides an automatic image segmentation method and device based on deep-learning edge detection. The method comprises: drawing a number of training samples from a high-resolution remote sensing image; training an HED (holistically-nested edge detection) model with the training samples; performing edge detection on the remote sensing image to be segmented with the trained HED model to generate an edge probability map; post-processing the edge probability map to generate vector polygons; and simplifying the vector polygons to obtain the segmentation result for the image. With this method no parameters need to be tuned, segmentation accuracy is higher, the process can run automatically, and segmentation is efficient.

Owner:Wuhan Jiahe Technology Co., Ltd. (武汉珈和科技有限公司)

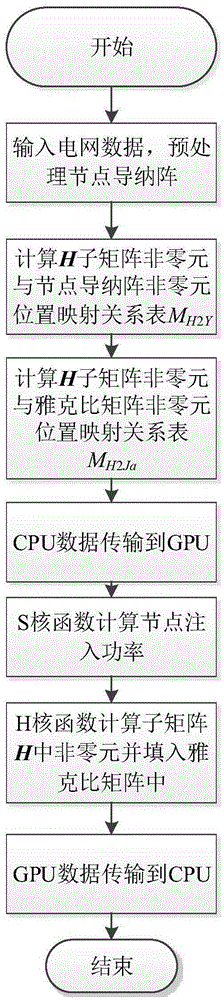

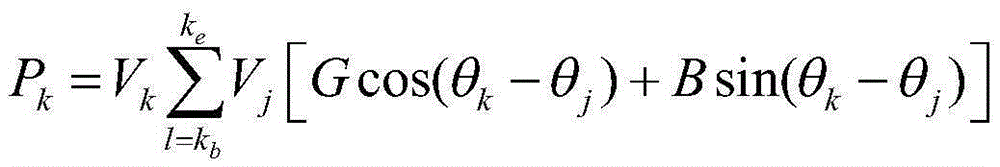

GPU thread design method for power flow Jacobian matrix calculation

Status: Inactive | Publication: CN105391057A | Tags: Improve branch execution efficiency; Solve load imbalance; Ac network circuit arrangements; NODAL; Power flow

The invention discloses a GPU thread design method for power flow Jacobian matrix calculation. The steps are: power grid data is input and the node admittance matrix Y is preprocessed; the CPU computes position-mapping tables between the non-zero elements of each sub-matrix and the non-zero elements of Y; the CPU likewise computes position-mapping tables between the non-zero elements of each sub-matrix and those of the Jacobian matrix; on the GPU, an injection power kernel function S computes the injected power of each node; and on the GPU, Jacobian sub-matrix kernel functions compute the non-zero elements of each sub-matrix and store them in the Jacobian matrix. Because the non-zero elements are computed sub-matrix by sub-matrix, the branch logic that a single kernel function would need to decide which sub-matrix region each element belongs to is avoided, improving execution efficiency. In addition, non-zero elements of a sub-matrix that share the same calculation formula are computed together, which resolves thread load imbalance and improves parallel calculation efficiency.

Owner:STATE GRID CORP OF CHINA +3
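The injection-power step uses the standard AC power-flow relation S_i = V_i · conj(Σ_j Y_ij V_j). Each node's value depends only on its own row of Y, which is why it maps naturally onto one GPU thread per node. The sketch below is plain Python rather than a GPU kernel and assumes Y is stored in CSR form (`indptr`, `indices`, `data`); the storage format and the function name are our assumptions.

```python
def injection_power(indptr, indices, data, V):
    """Complex power injected at each node: S_i = V_i * conj(sum_j Y_ij * V_j).

    Y is the node admittance matrix in CSR form: row i's non-zeros are
    data[indptr[i]:indptr[i + 1]] in columns indices[indptr[i]:indptr[i + 1]].
    Iterations over i are independent (one GPU thread per node in a kernel).
    """
    S = []
    for i in range(len(V)):
        current = sum(data[k] * V[indices[k]] for k in range(indptr[i], indptr[i + 1]))
        S.append(V[i] * current.conjugate())  # conjugate of the injected current
    return S
```

For a two-node example with Y = [[1, -1], [-1, 1]] and V = [1, 0.5], the injected currents are 0.5 and -0.5, giving S = [0.5, -0.25].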

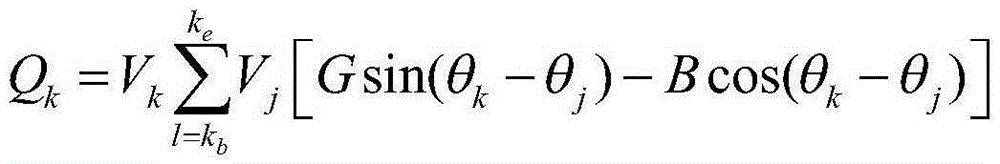

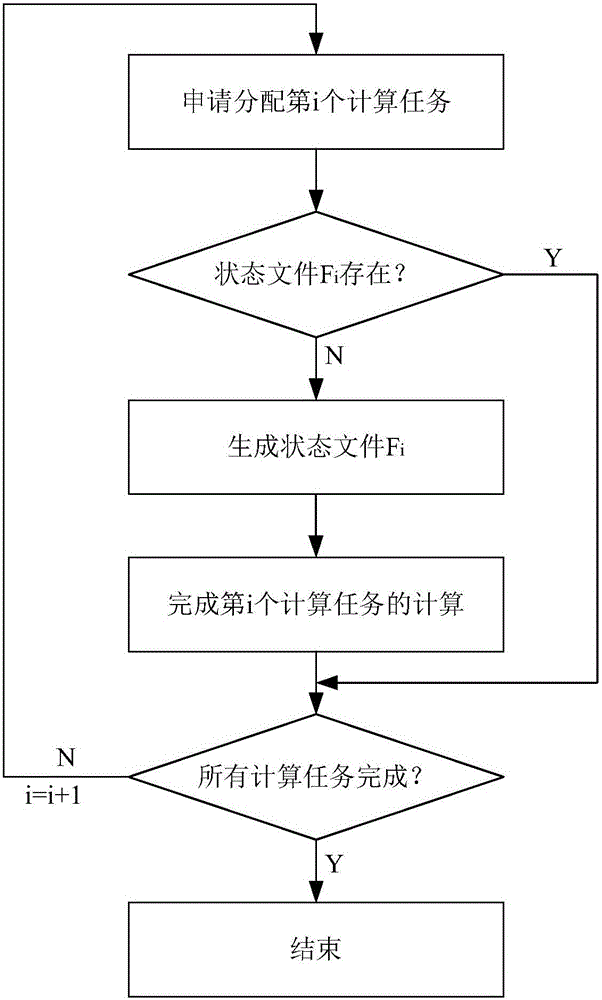

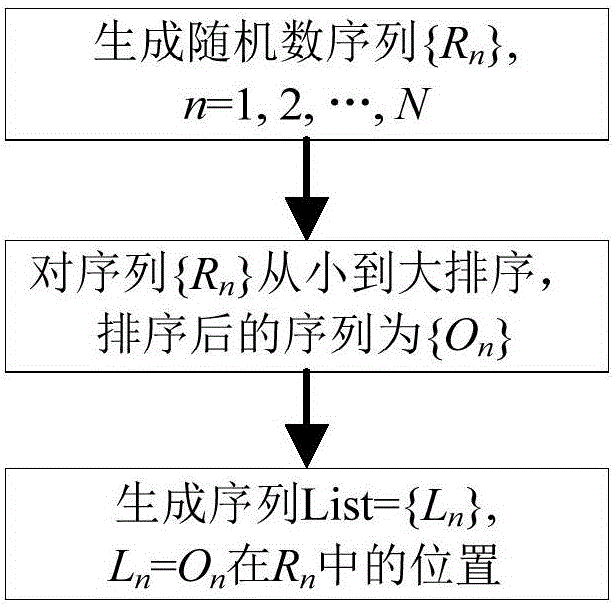

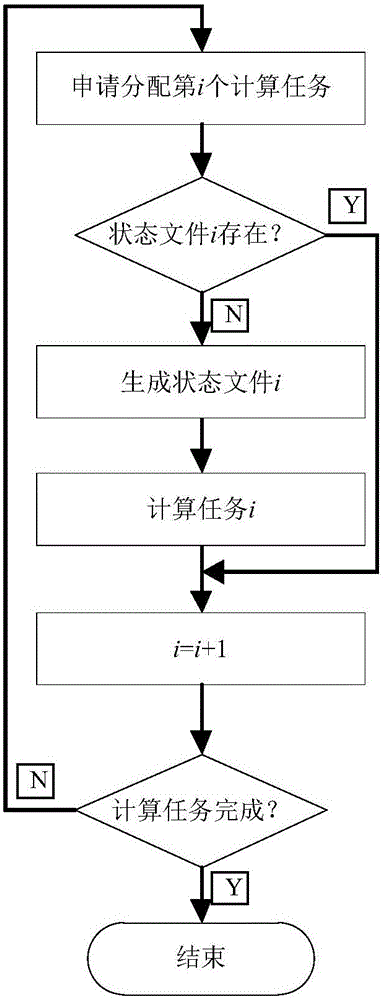

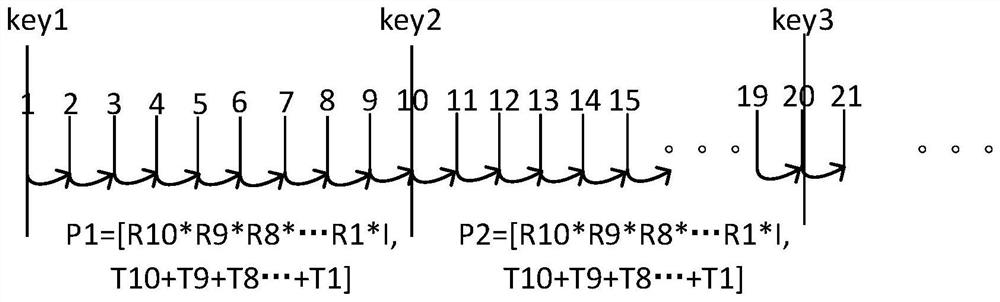

Automatic start-stop and dynamic computation-task allocation method for massively parallel coarse-grained computation

Status: Active | Publication: CN106055401A | Tags: Reduce communication; Avoid reading and writing bottlenecks; Program initiation/switching; Resource allocation; Round complexity; Parallel computing

The invention discloses an automatic start-stop and dynamic computation-task allocation method for massively parallel coarse-grained computation. The method comprises: defining coarse-grained parallel units according to the computational characteristics of the problem; having the master process dynamically allocate the computation tasks within those units, together with their input parameters, to all processes including itself, using a file-marking technique and a dynamic task-allocation strategy; dynamically allocating memory to the processes that hold computation tasks, based on an automatic start-stop technique; and, after all coarse-grained units have been computed in parallel, having the master process collect the output parameters of all processes and merge them into the final result of the complete run. The method minimizes inter-process communication, avoids the hard-disk read/write bottleneck that arises when peak memory exceeds the available physical memory during multi-process parallel computation, handles computation instances of unequal complexity, and greatly increases parallel computation efficiency.

Owner:Beijing Zhixin Simulation Technology Co., Ltd. (北京智芯仿真科技有限公司)

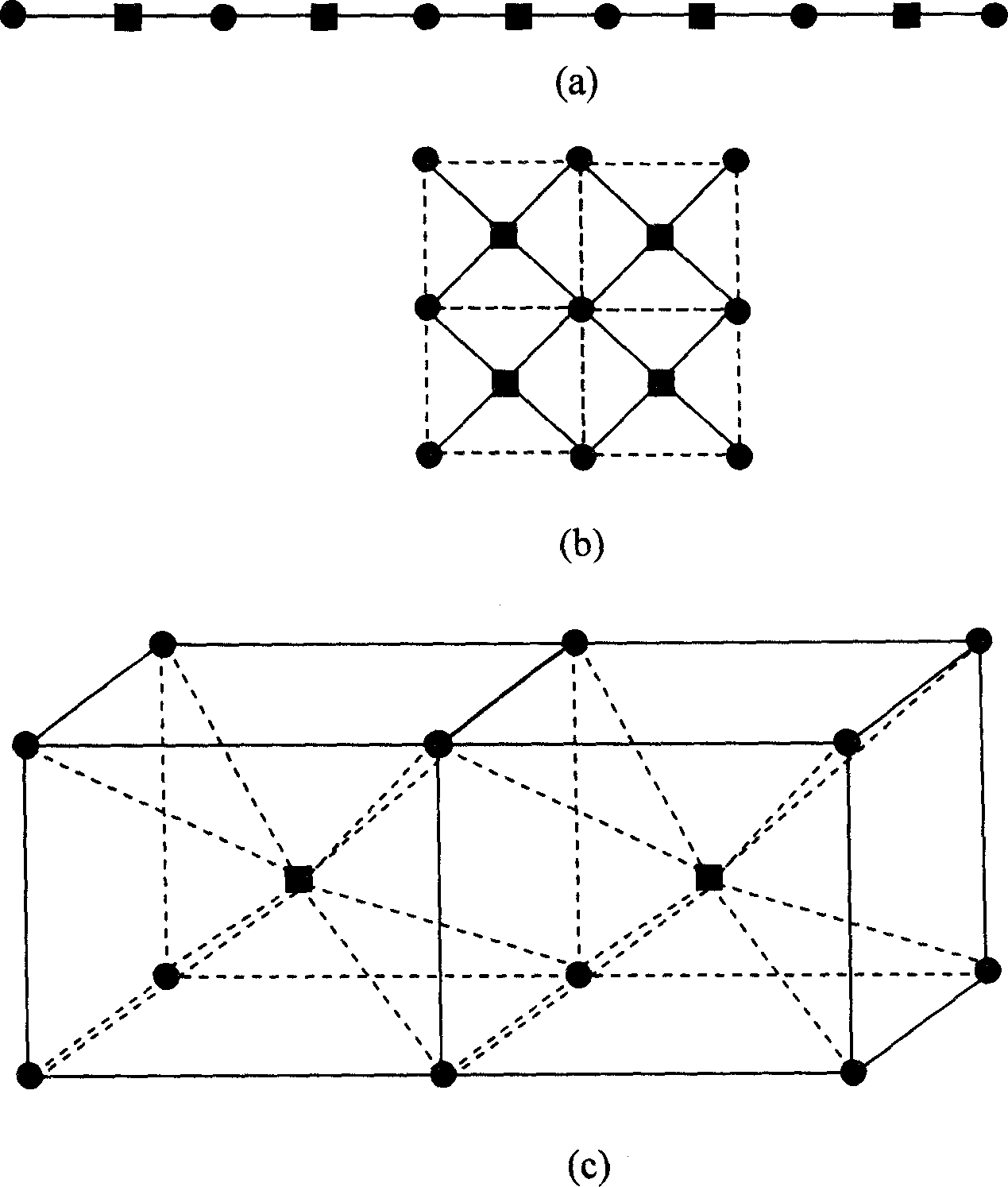

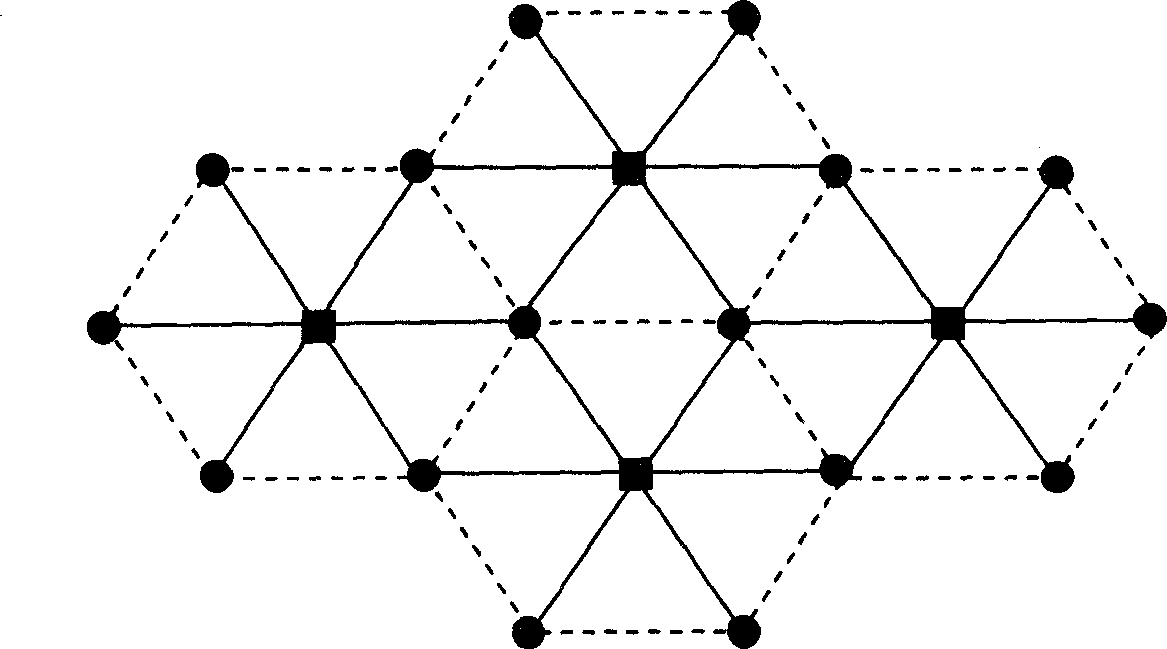

Parallel computing system for particle methods

Status: Inactive | Publication: CN1851683A | Tags: Improve execution efficiency; Reduce complexity; Digital computer details; Electric digital data processing; Parallel computing; Particle method

The present invention comprises multiple computing nodes and multiple storage nodes. The computing nodes are logically arranged in a periodically distributed computing-node array, and the storage nodes in a periodically distributed storage-node array; the two arrays alternate and together form a single periodically distributed array. Each storage node is connected to and shared by several neighboring computing nodes, and each computing node is connected to several neighboring storage nodes and processes the data held in them. The invention offers a broad range of applications, very high software execution efficiency, and greatly reduced complexity for massively parallel computation.

Owner:INST OF PROCESS ENG CHINESE ACAD OF SCI

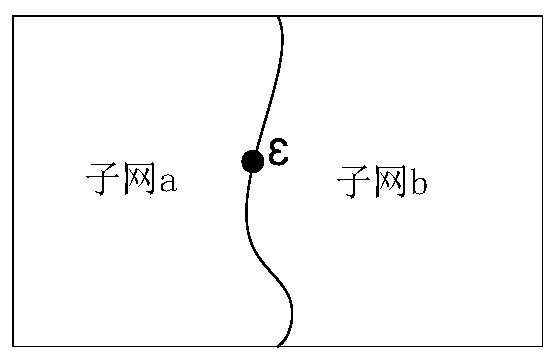

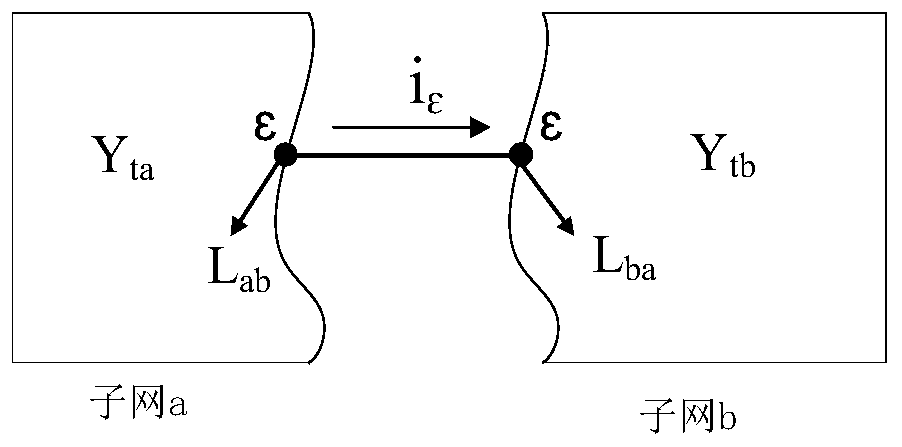

Load flow calculation method and device for large-scale power distribution network

Status: Active | Publication: CN109802392A | Tags: In line with actual operating conditions; Fast convergence; Ac networks with different sources same frequency; Constant power; Constant impedance

The embodiment of the invention provides a load flow calculation method and device for a large-scale power distribution network. The method comprises: decomposing the target distribution network into multiple sub-networks and obtaining an equation for solving the tie-branch currents between sub-networks; calculating the constant-impedance, constant-current and constant-power parameters of the ZIP load model of each sub-network; calculating the node admittance matrix of each sub-network from the constant-impedance parameters and modifying it; calculating the node injection currents of the current iteration from the current node voltages and the constant-current and constant-power parameters; obtaining the tie-branch currents of the current iteration from the modified node admittance matrix, the current node injection currents and the tie-branch current equation; and, if the difference between the tie-branch currents of successive iterations exceeds the convergence criterion, obtaining the node voltage phasors of the next iteration from the current tie-branch currents. The embodiment takes both the efficiency and the flexibility of parallel load flow computation into account.

Owner:CHINA AGRI UNIV

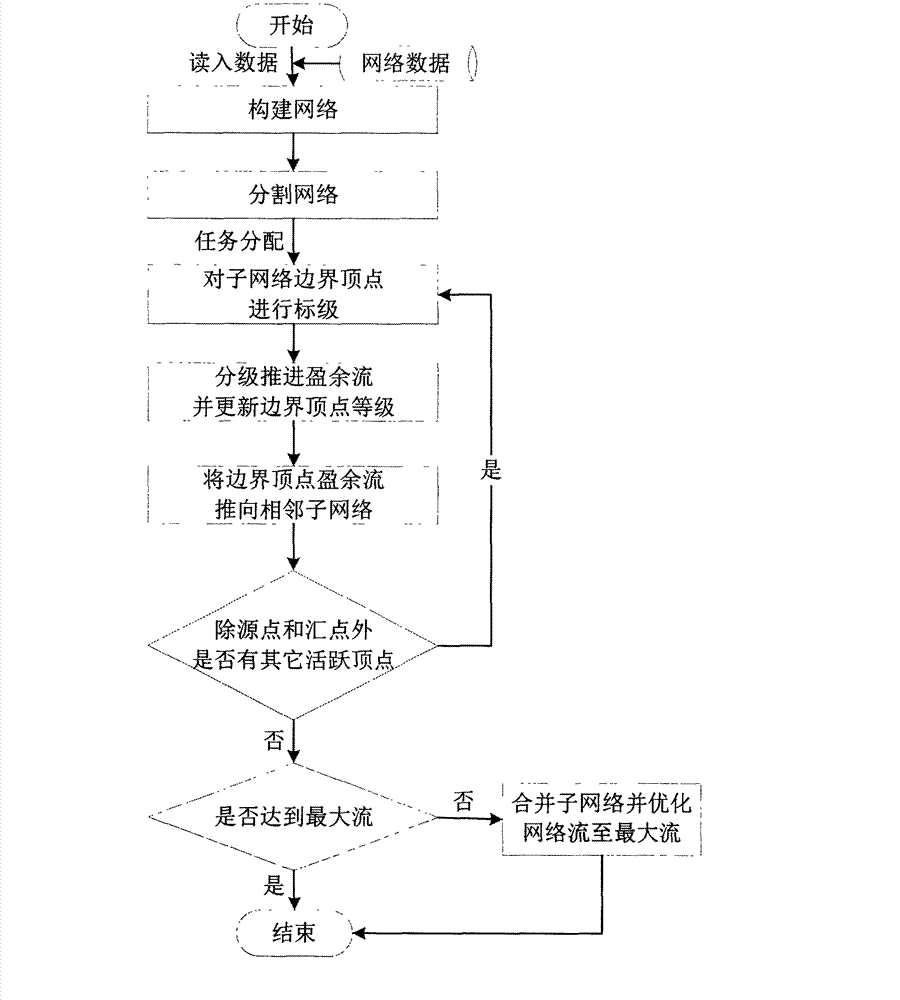

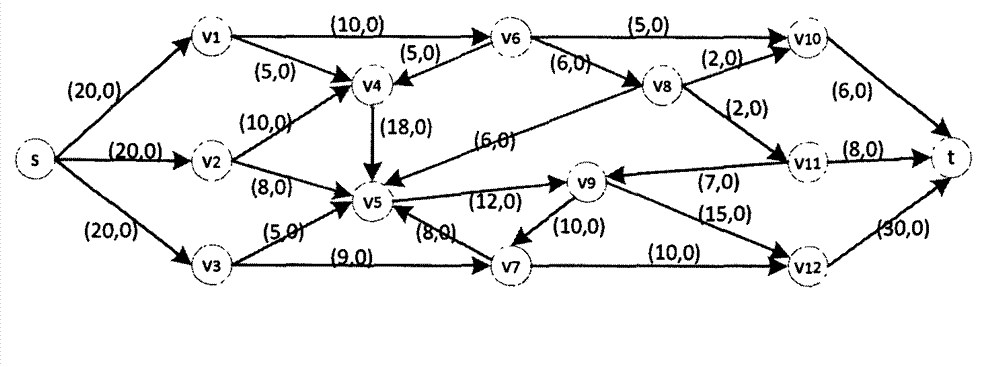

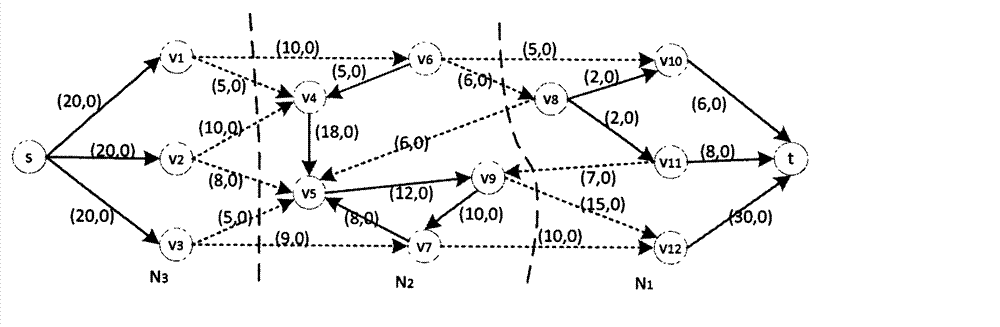

Network maximum flow parallel solving method

Status: Inactive | Publication: CN103093281A | Tags: Solve efficiency problems; Solve resource problems; Forecasting; Distributed computing; Communication problem

The invention discloses a parallel method for solving the network maximum flow problem, comprising two steps: 1) pushing the network's excess (surplus) flow by parallel iteration; and 2) optimizing the network flow by merging sub-networks. In step 1, the network is divided into sub-networks that are assigned to the computing units. According to the states of the border arcs and border vertices of its sub-network, each computing unit labels its border vertices as belonging to one of three levels, and vertices at different levels push excess flow in different ways. This keeps excess-flow pushing efficient, effectively addresses the communication problem and speeds up the parallel solution, while step 2 guarantees the correctness of the result.

Owner:Wu Lixin (吴立新) +1

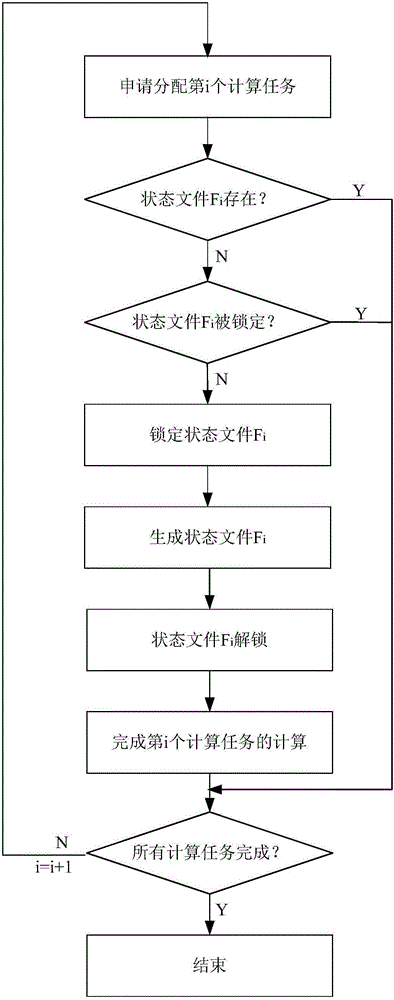

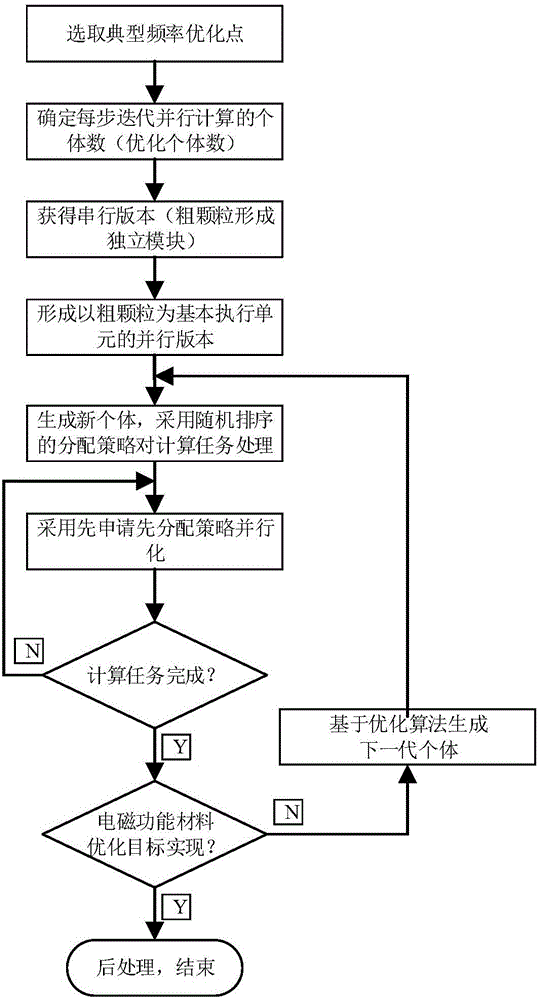

Coarse-grained parallel method and system for electromagnetic functional material optimization design

Status: Active | Publication: CN106126878A | Tags: Improve Parallel Computing Efficiency; Solve the low efficiency of parallel computing; Special data processing applications; Informatics; High performance computation; Material Design

The invention relates to functional material design and high-performance computing, and in particular to a coarse-grained parallel method and system for the optimization design of electromagnetic functional materials. The method first obtains a serial version by treating a coarse-grained unit as an independent execution module, and a parallel version by treating it as the basic execution unit. Multiple calculation tasks are then processed with a random-ordering allocation policy, which thoroughly shuffles the allocation order of all tasks to produce a new task sequence; each calculation process then requests tasks from this sequence under a first-request, first-allocated policy and computes until all tasks are finished. Finally, the calculation results are aggregated and processed against the optimization target, the outcome is compared with the expected optimization goal, and the method judges whether the goal has been reached. The method effectively addresses the low parallel computing efficiency of current electromagnetic functional material optimization design.

Owner:Beijing Zhixin Simulation Technology Co., Ltd. (北京智芯仿真科技有限公司)
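The allocation policy (shuffle the task order, then let each process take the next task when it becomes free) can be sketched with a shared queue. This is a minimal illustration under assumptions: threads stand in for MPI processes, `compute` is any caller-supplied function, and `run_shuffled_dynamic` is a hypothetical name.

```python
import queue
import random
import threading


def run_shuffled_dynamic(tasks, compute, n_workers=4, seed=None):
    """Shuffle the task order, then let workers pull tasks first-come, first-served.

    Shuffling breaks up runs of similarly expensive tasks (for example,
    neighbouring design parameters); pulling from a shared queue means a worker
    asks for a new task only when it is free, so slow tasks do not stall others.
    """
    order = list(range(len(tasks)))
    random.Random(seed).shuffle(order)
    q = queue.Queue()
    for idx in order:
        q.put(idx)
    results = [None] * len(tasks)  # results keep the original task order

    def worker():
        while True:
            try:
                idx = q.get_nowait()
            except queue.Empty:
                return
            results[idx] = compute(tasks[idx])

    threads = [threading.Thread(target=worker) for _ in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

All tasks are enqueued before any worker starts, so a worker that finds the queue empty can safely exit.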

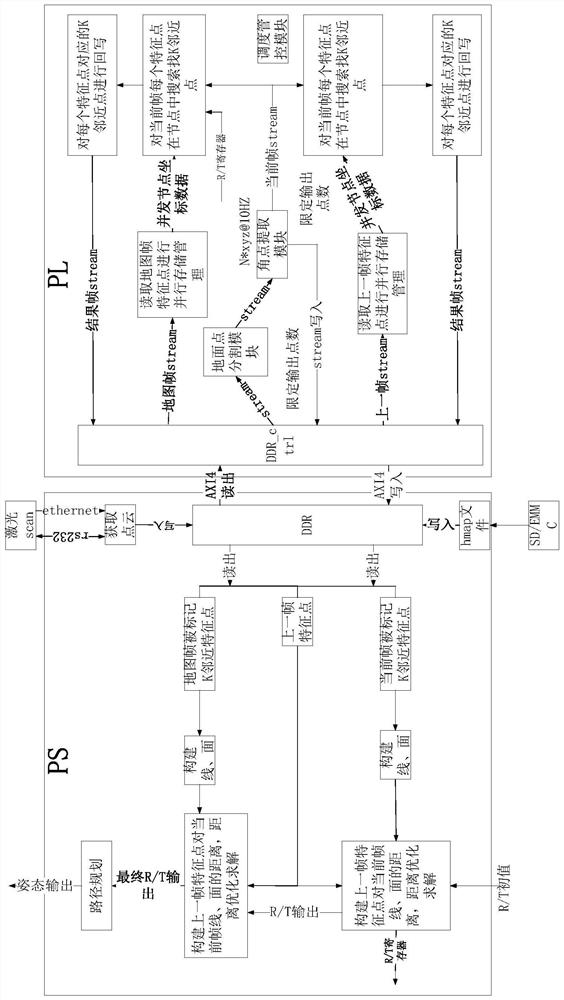

FPGA-based point cloud feature matching method and system and path planning system

Status: Pending | Publication: CN112182042A | Tags: Avoid and simplify the KNN process; Improve task execution efficiency; Image memory management; Character and pattern recognition; PathPing; Point cloud

The invention provides an FPGA-based point cloud feature matching method and system, and a path planning system. The system comprises a processing system part and a programmable logic part. The processing system part acquires point cloud data periodically, writes it into a specified address space in memory, and communicates with the programmable logic part through a register channel; the programmable logic part reads the point cloud data from that address space frame by frame and processes it in logic. This avoids and simplifies the KNN process used for feature point cloud matching on a conventional CPU.

Owner:Wuhan Zhihui Innovation Technology Co., Ltd. (武汉智会创新科技有限公司)

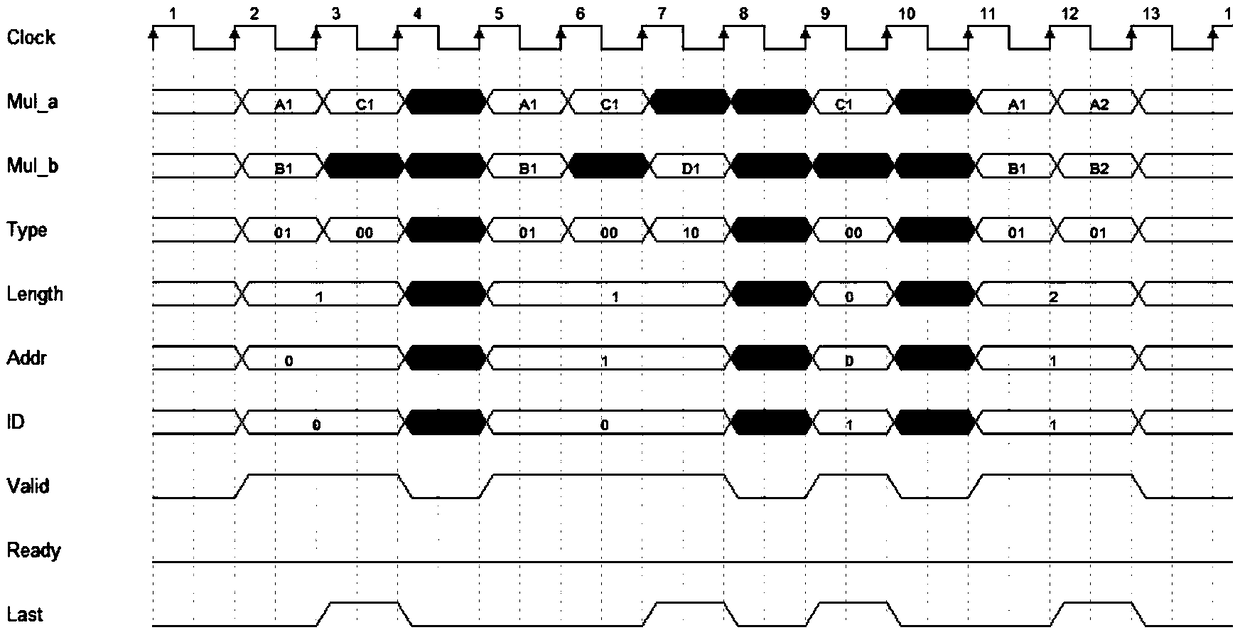

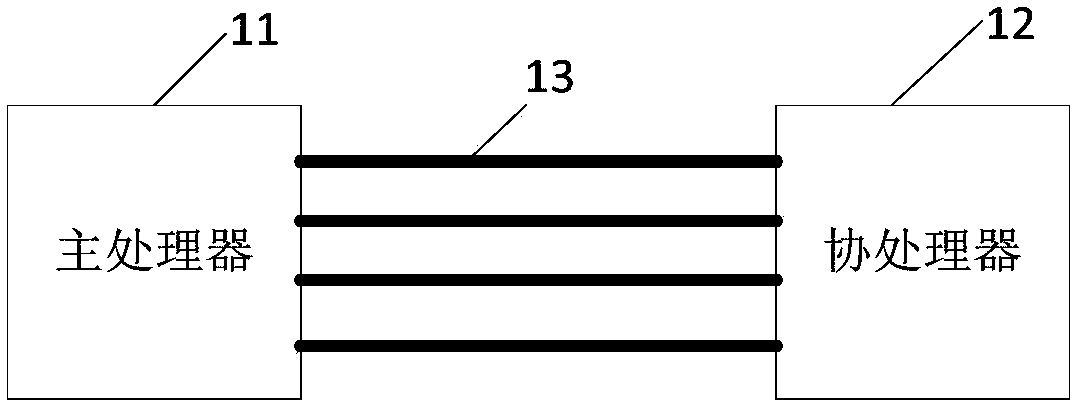

Arithmetic expression parallel computing device and method

Status: Active | Publication: CN108595369A | Tags: Implement parallel transfer; Improve Parallel Computing Efficiency; Concurrent instruction execution; Multiple digital computer combinations; Coprocessor; Time-sharing

The invention provides an arithmetic expression parallel computing device and method. In the device, a main processor is connected to a coprocessor through multiple AXI buses. The main processor determines the operand data and the additional expression information of each arithmetic expression to be processed; the operand data comprise a multiplication sub-expression (consisting of first data and second data), third data to be added to the product, and mantissa data by which the sum is multiplied. The operand data and the additional expression information are sent to the coprocessor in parallel over the AXI buses. The coprocessor multiplies the first and second data received over each AXI bus simultaneously, then, guided by the additional expression information, combines the product belonging to each expression with that expression's third data and mantissa data to obtain the result. This enables parallel processing of multiple sets of operand data and multi-task time-sharing parallel processing, improving parallel computing efficiency.

Owner:TIANJIN CHIP SEA INNOVATION TECH CO LTD +1
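Read literally, each expression has the form (first × second + third) × mantissa, and the additional information is what lets the coprocessor match each product with its own third and mantissa values. The sketch below models that two-stage evaluation in software only; the `Expr` record, the use of an integer id as the "additional information", and `evaluate_batch` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Expr:
    """One expression (a * b + c) * d, tagged with an id ("additional information")."""
    expr_id: int
    a: float  # first data
    b: float  # second data
    c: float  # third data, added to the product
    d: float  # mantissa data, multiplies the sum


def evaluate_batch(exprs):
    # Stage 1: every product a * b is independent, so all can be issued at once
    # (one multiplier per bus lane in hardware; a comprehension here).
    products = {e.expr_id: e.a * e.b for e in exprs}
    # Stage 2: the id pairs each product with its own c and d.
    return {e.expr_id: (products[e.expr_id] + e.c) * e.d for e in exprs}
```

For example, `Expr(0, 2, 3, 1, 4)` evaluates to (2 × 3 + 1) × 4 = 28.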

Uniform quantization algorithm for discrete data in mobile cloud computing

Status: Inactive | Publication: CN106341325A | Tags: Implement feature classification; Improve Parallel Computing Efficiency; Resource allocation; Data switching networks; Mobile cloud; Data acquisition

The invention discloses a uniform quantization algorithm for discrete data in mobile cloud computing. A mobile cloud computing data clustering model and a channel model are constructed and fluctuating discrete data are acquired; paths are selected according to a logistic model, the optimal probability density for uniform quantization of inter-cluster fluctuating discrete data is obtained, and a Bayesian rough-set objective function for uniform quantization optimization is constructed, thereby optimizing the algorithm. The algorithm effectively classifies the features of inter-cluster fluctuating discrete data in mobile cloud computing, quantizes such data well, and improves the parallel computing efficiency of cloud computing.

Owner:SICHUAN YONGLIAN INFORMATION TECH CO LTD

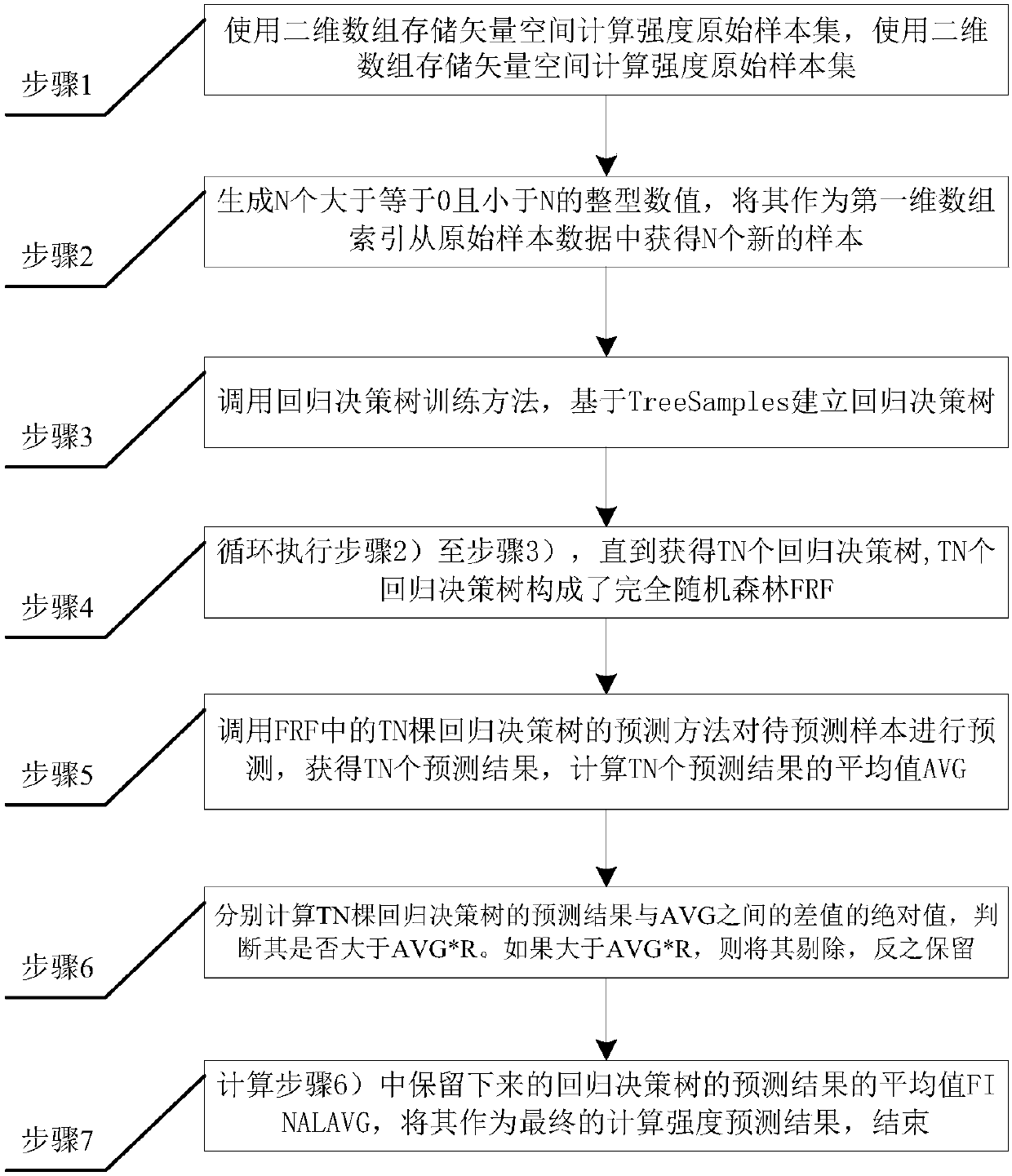

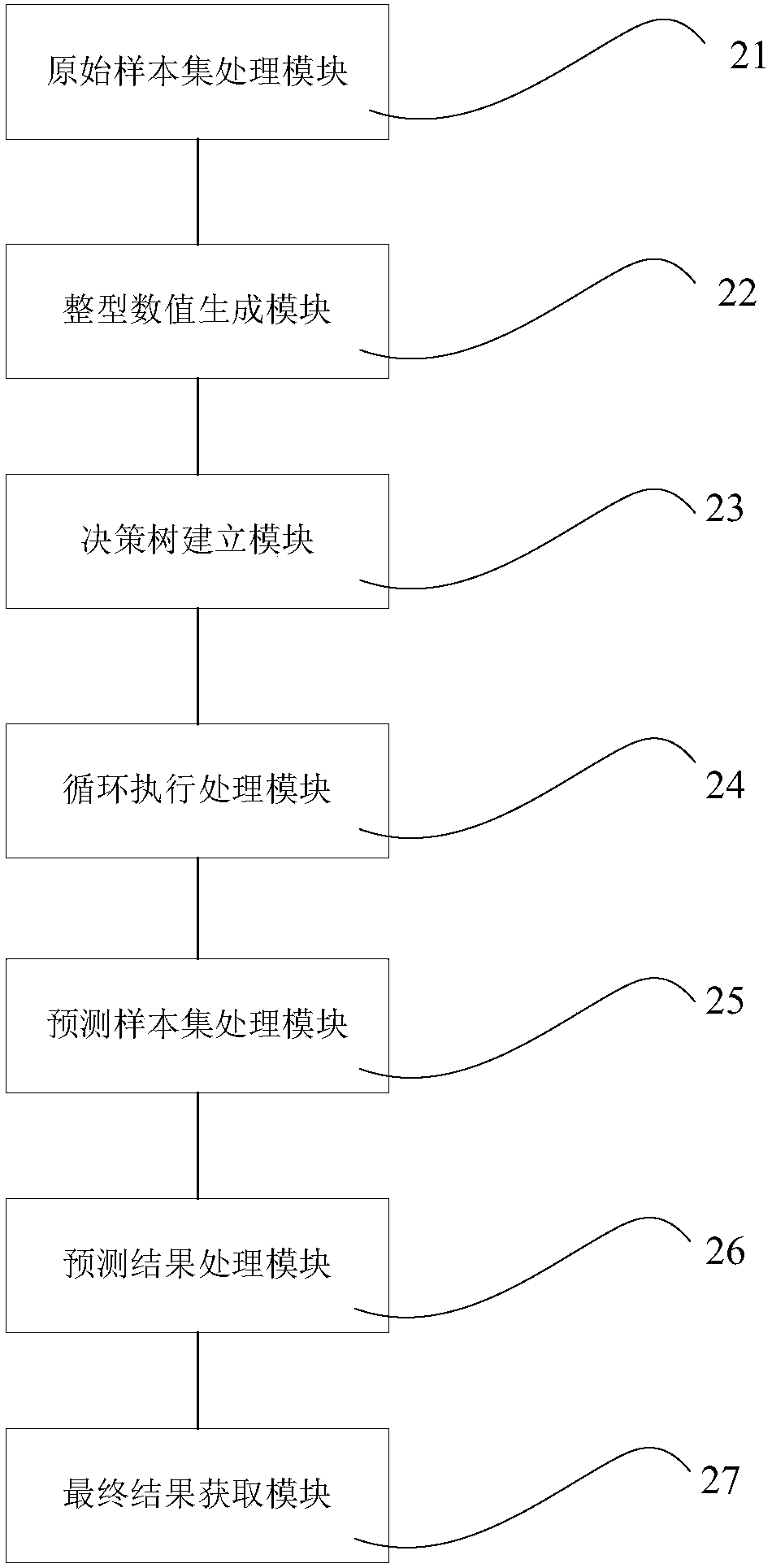

Vector-space computational intensity prediction method and system based on a fully random forest

Status: Inactive | Publication: CN108052755A | Tags: Improve forecast accuracy; Accurate prediction; Design optimisation/simulation; Vectoral format still image data; Algorithm; Vector space model

The invention discloses a method and system for predicting vector-space computational intensity based on a fully random forest. All features relevant to vector-space computational intensity are input and multiple full regression trees are trained, modeling computational intensity for vector-space computing domains with diverse features. The forest's prediction is then refined by removing tree predictions that differ markedly from the overall result, improving the forest's precision so that vector-space computational intensity is predicted accurately in a parallel computing environment. During training, the sample for each regression tree is drawn at random from the original samples, and the selected features include all features of the original samples, so the model adapts to predicting vector-space computational intensity when there are few important features and many redundant ones. This provides a basis for balanced scheduling and allocation of parallel computing resources and improves parallel computing efficiency.

Owner:China University of Geosciences (Wuhan) Asset Management Co., Ltd. (地大(武汉)资产经营有限公司)
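The refinement step (dropping tree predictions that differ markedly from the overall result, then averaging the rest) can be sketched as a trimmed mean. The abstract does not state the outlier criterion, so the k-standard-deviation rule below is an assumption, as is the name `trimmed_forest_prediction`.

```python
import statistics


def trimmed_forest_prediction(tree_predictions, k=1.5):
    """Average per-tree predictions after discarding outliers.

    A prediction farther than k population standard deviations from the mean
    is treated as "markedly different" and dropped (an assumed criterion).
    With k >= 1 at least one prediction always survives, because the smallest
    squared deviation cannot exceed the variance.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    mu = statistics.fmean(tree_predictions)
    sigma = statistics.pstdev(tree_predictions)
    if sigma == 0:
        return mu
    kept = [p for p in tree_predictions if abs(p - mu) <= k * sigma]
    return statistics.fmean(kept)
```

For tree outputs `[10, 11, 9, 10, 50]`, the mean is 18 and the standard deviation about 16, so 50 is discarded and the refined prediction is 10.0.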

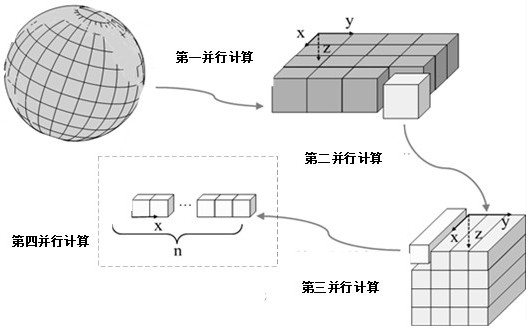

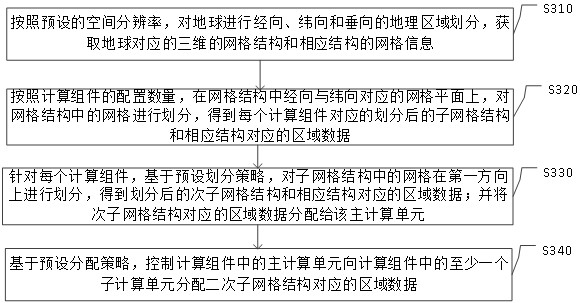

Data processing method and device and electronic device

Status: Active | Publication: CN113706706A | Tags: Improve computing efficiency; Improve Parallel Computing Efficiency; Design optimisation/simulation; CAD numerical modelling; Computational science; Ocean circulation model

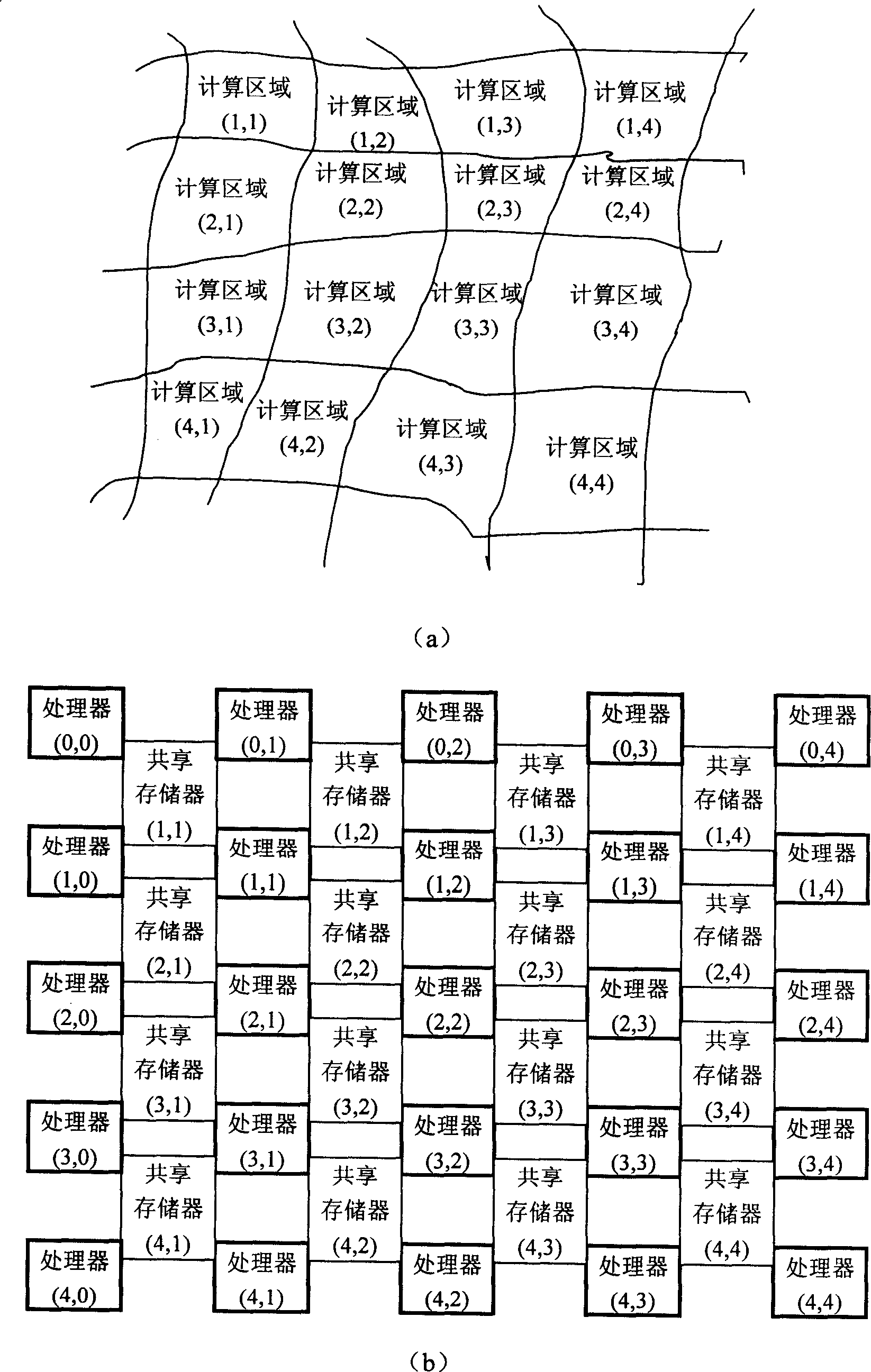

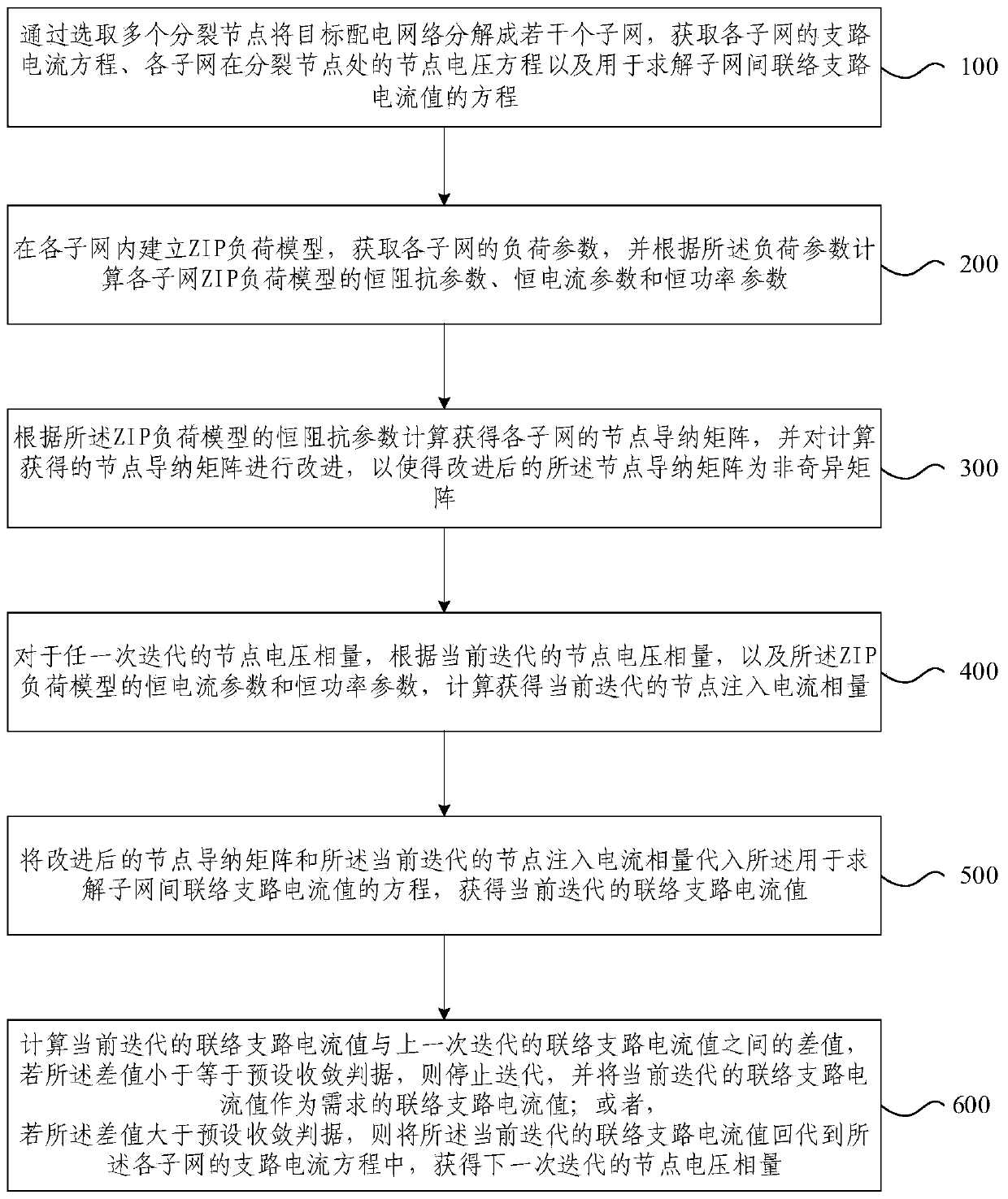

The invention provides a data processing method and device and an electronic device. The method comprises: dividing the Earth into geographic regions along the longitude, latitude and vertical directions at a preset spatial resolution to obtain a three-dimensional grid structure for Earth space; partitioning the grid on the longitude-latitude grid planes to obtain a sub-grid structure for each computing component; further dividing each sub-grid structure along the latitude direction or the vertical direction to obtain secondary sub-grid structures; distributing the regional data of each secondary sub-grid structure to a main computing unit; and having the main computing unit distribute that regional data to at least one sub-computing unit, so that the main computing unit and the sub-computing units compute the service data in the regional data. The method improves the computational efficiency of the ocean circulation model in the longitude, latitude and vertical directions.

Owner:THE FIRST INST OF OCEANOGRAPHY SOA
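The two-level decomposition can be illustrated with balanced contiguous splits: the horizontal (longitude x latitude) plane is cut into blocks, one per computing component, and each block's vertical levels are then split among the main unit and its sub-units. Choosing the vertical direction for the second split is one of the two options the abstract mentions; the function names and the returned layout are hypothetical.

```python
def split_range(n, parts):
    """Split range(n) into `parts` contiguous (start, stop) chunks differing in size by at most 1."""
    base, extra = divmod(n, parts)
    bounds, start = [], 0
    for p in range(parts):
        stop = start + base + (1 if p < extra else 0)
        bounds.append((start, stop))
        start = stop
    return bounds


def decompose(n_lon, n_lat, n_lev, p_lon, p_lat, n_sub):
    """Two-level domain decomposition of a lon x lat x level grid.

    Level 1: cut the horizontal plane into p_lon x p_lat blocks (one per component).
    Level 2: inside each block, split the vertical levels among n_sub units.
    Returns {(bi, bj): [(lon_range, lat_range, lev_range), ...]}.
    """
    layout = {}
    for bi, lon_r in enumerate(split_range(n_lon, p_lon)):
        for bj, lat_r in enumerate(split_range(n_lat, p_lat)):
            layout[(bi, bj)] = [(lon_r, lat_r, lev_r)
                                for lev_r in split_range(n_lev, n_sub)]
    return layout
```

For a 1-degree global grid (360 x 180) with 30 levels, `decompose(360, 180, 30, 4, 2, 3)` gives 8 horizontal blocks of 90 x 90 columns, each split into three 10-level slabs.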

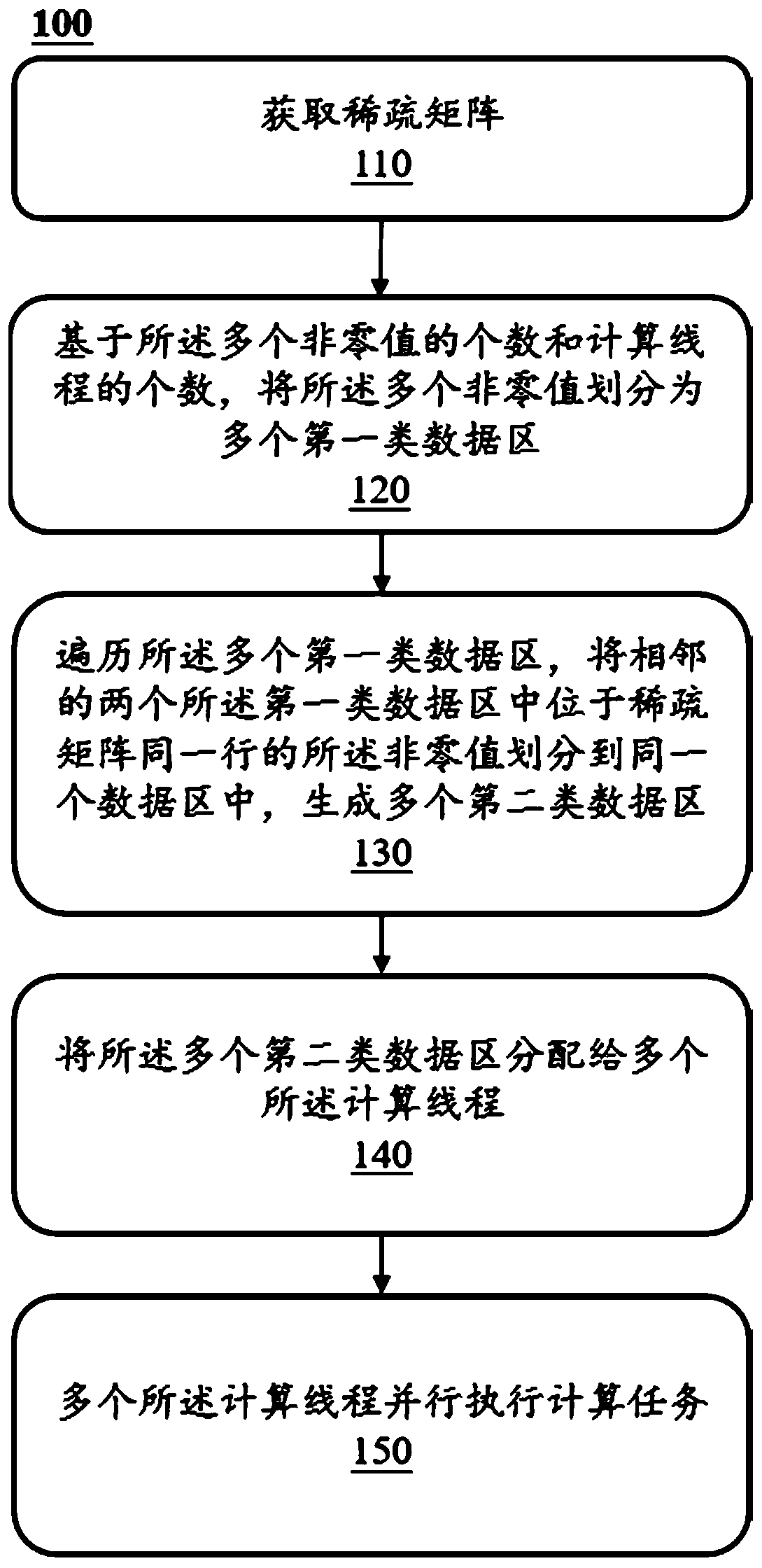

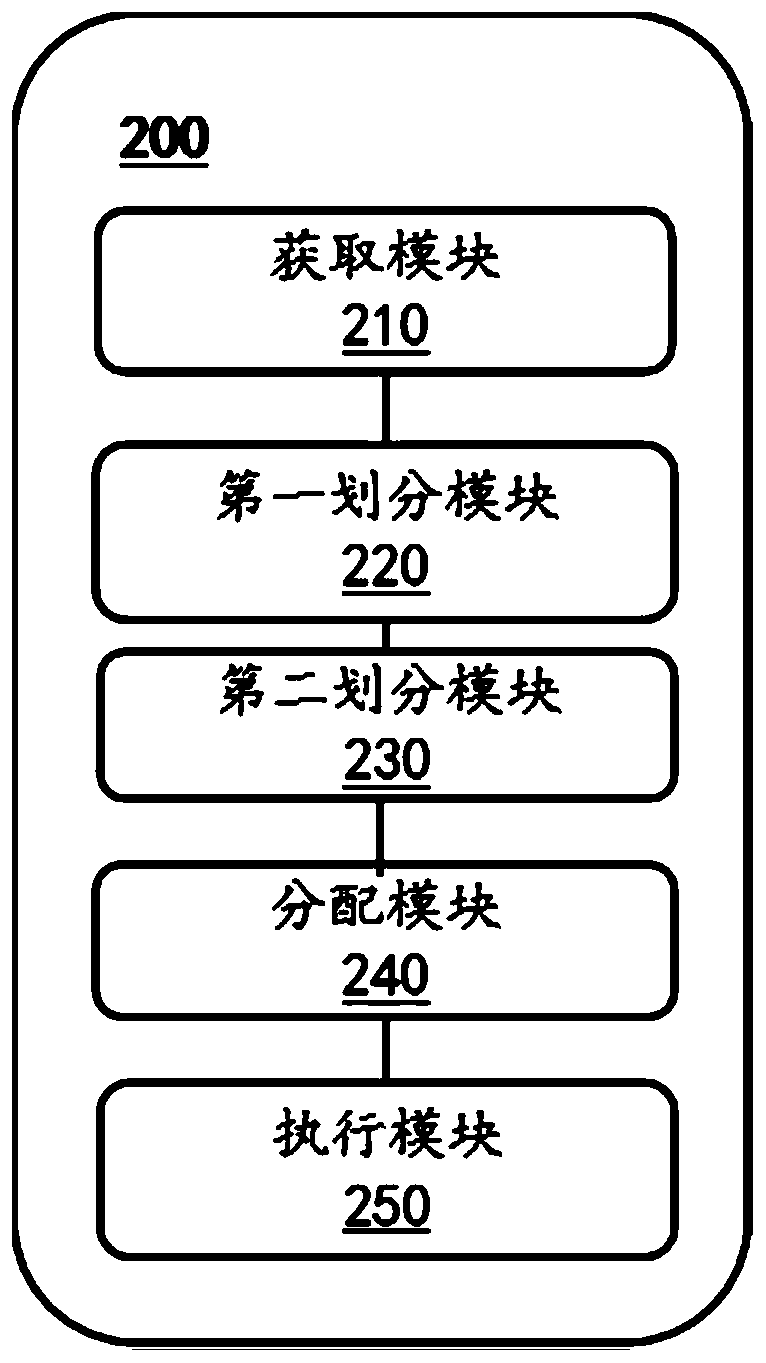

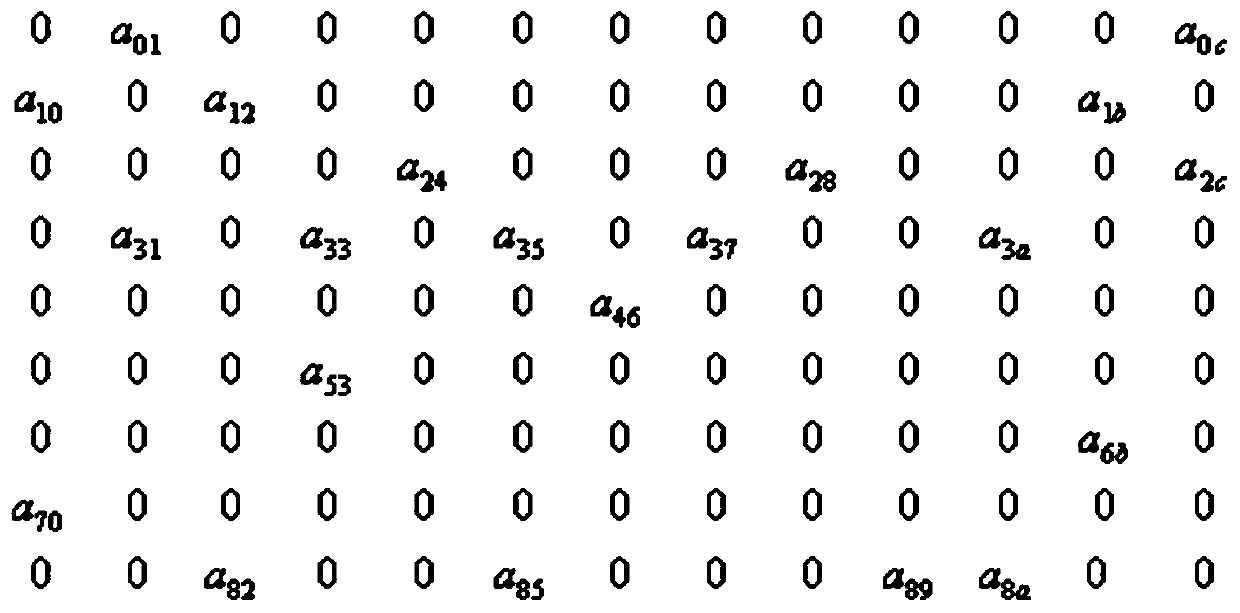

Method and system for improving parallel computing efficiency related to sparse matrix

Active | CN111240744A | Improve Parallel Computing Efficiency | Concurrent instruction execution | Computational science | Concurrent computation

The embodiment of the invention discloses a method and system for improving the efficiency of parallel computations involving a sparse matrix. The method comprises the following steps:

- acquiring a sparse matrix made up of non-zero values and their coordinates, where the coordinates give each value's position and include a row coordinate indicating its row;
- dividing the non-zero values into first-class data regions, based on the number of non-zero values and the number of computing threads;
- traversing the first-class data regions and, wherever non-zero values from the same matrix row fall in two adjacent regions, moving them into the same region, which yields second-class data regions;
- distributing the second-class data regions to the computing threads;
- having the computing threads execute the computing task in parallel.

Owner:ALIPAY (HANGZHOU) INFORMATION TECH CO LTD
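
Below is a minimal Python sketch of the partitioning idea, applied to a sparse matrix-vector product. It assumes the matrix is stored in COO form sorted by row. It also assumes that a row straddling two regions is merged by moving the boundary forward, which is one of several possible merge rules. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def first_class_regions(nnz, n_threads):
    """Boundaries of an equal-sized split of the non-zero index range."""
    step = -(-nnz // n_threads)  # ceiling division
    return [min(k * step, nnz) for k in range(n_threads + 1)]


def second_class_regions(rows, bounds):
    """Shift each interior boundary forward so no matrix row straddles two regions."""
    nnz = len(rows)
    adjusted = [0]
    for b in bounds[1:-1]:
        b = max(b, adjusted[-1])
        while 0 < b < nnz and rows[b] == rows[b - 1]:
            b += 1
        adjusted.append(b)
    adjusted.append(nnz)
    return [(s, e) for s, e in zip(adjusted, adjusted[1:]) if e > s]  # drop empty regions


def spmv_parallel(rows, cols, vals, x, n_rows, n_threads=4):
    """Compute y = A @ x for a row-sorted COO matrix, one region per task."""
    y = np.zeros(n_rows)
    regions = second_class_regions(rows, first_class_regions(len(vals), n_threads))

    def work(region):
        s, e = region
        # Each row lives in exactly one region, so threads write disjoint entries of y.
        np.add.at(y, rows[s:e], vals[s:e] * x[cols[s:e]])

    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        list(pool.map(work, regions))
    return y
```

The design payoff is that each output row is owned by a single thread, so the accumulation into `y` needs no atomics or locks. With row coordinates `[0, 0, 0, 1, 1, 2, 2, 2, 2, 3]` and three threads, the equal split `[0, 4, 8, 10]` becomes the regions `(0, 5)`, `(5, 9)` and `(9, 10)`.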

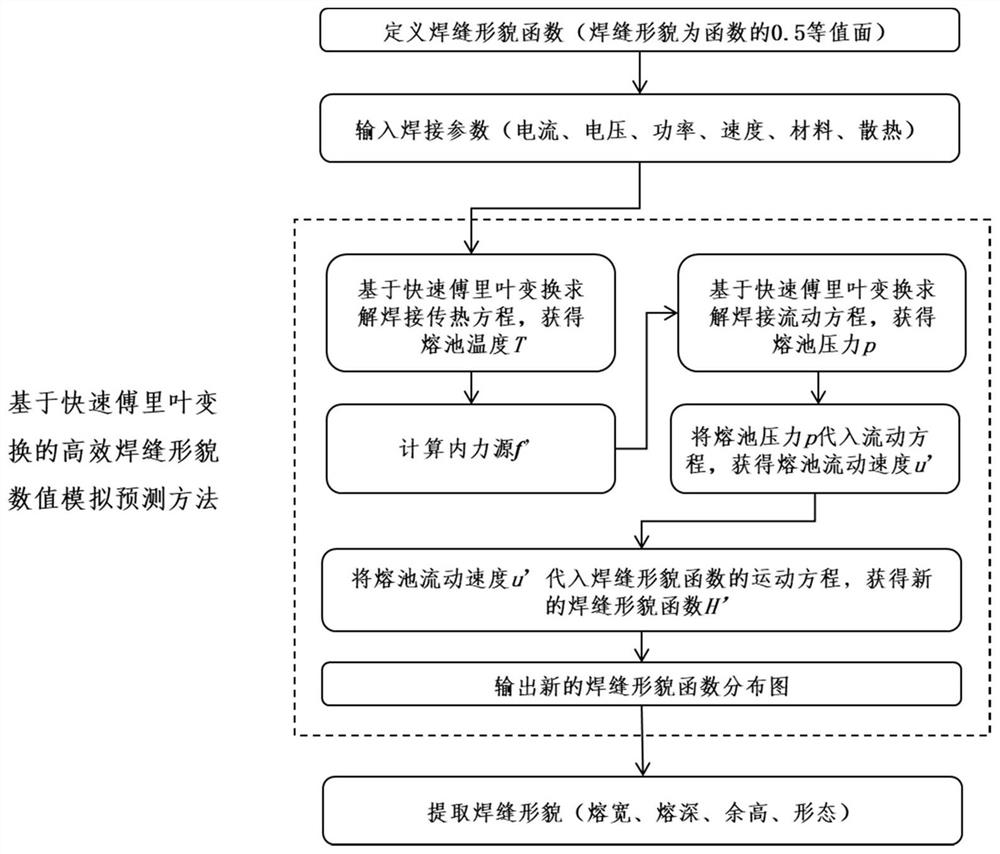

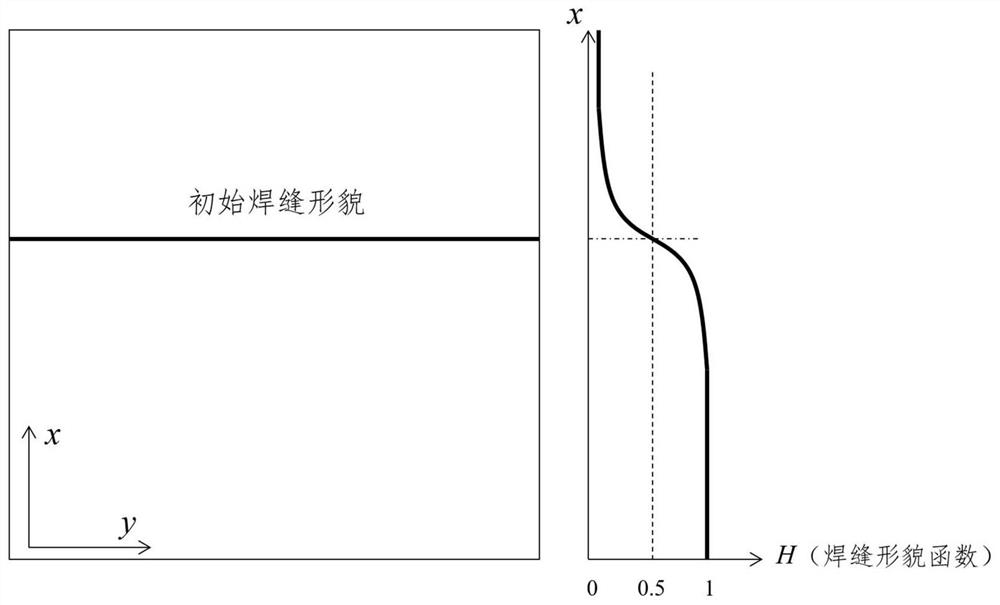

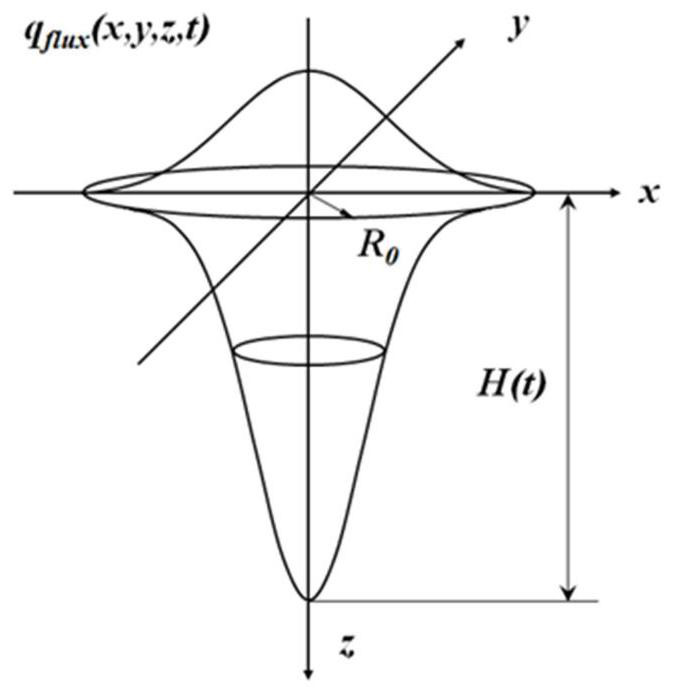

Efficient weld joint morphology numerical simulation prediction method based on fast Fourier transform

Active | CN111950207A | Improve forecasting efficiency | Improve Parallel Computing Efficiency | Design optimisation/simulation | CAD numerical modelling | Fast Fourier transform | Engineering

The invention discloses an efficient numerical-simulation method for predicting weld morphology based on the fast Fourier transform (FFT). Existing weld-morphology simulation methods predict slowly. To address this, the method solves the heat transfer and flow equations of the weld-forming process with the FFT, enabling efficient prediction of how the weld morphology evolves. The method comprises the following steps:

1. defining an initial weld morphology function and inputting the welding parameters;
2. solving the welding heat transfer and flow equations based on the FFT;
3. updating the equation of motion of the weld morphology function;
4. outputting the new weld morphology function and extracting the new weld morphology and its characteristic parameters.

Compared with existing weld-morphology simulation methods, the method is stated to improve prediction efficiency by a factor of tens, hundreds or even thousands and to support unlimited parallel speedup. Its computational efficiency and accuracy are stated to be far higher than those of existing methods.

Owner:CHANGSHU INSTITUTE OF TECHNOLOGY
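
The sketch below is a simplified illustration of why FFT-based solvers are fast. It advances only the linear heat-diffusion equation du/dt = α∇²u on a periodic square grid. In Fourier space each mode decays independently by exp(−α|k|²Δt), so one time step costs two FFTs. This is an assumed stand-in: the patented method also couples fluid flow and an evolving weld-morphology function, which are not modeled here, and the periodic boundary is an assumption.

```python
import numpy as np


def heat_step_fft(u, alpha, dt, length):
    """Advance du/dt = alpha * laplacian(u) by dt on a periodic n x n grid of side `length`.

    Every Fourier mode decays independently by exp(-alpha * |k|^2 * dt),
    so the step is exact in time and costs two 2D FFTs.
    """
    n = u.shape[0]
    if u.shape != (n, n):
        raise ValueError("this sketch assumes a square grid")
    k = 2.0 * np.pi * np.fft.fftfreq(n, d=length / n)  # angular wavenumbers
    kx, ky = np.meshgrid(k, k, indexing="ij")
    decay = np.exp(-alpha * (kx**2 + ky**2) * dt)
    return np.real(np.fft.ifft2(np.fft.fft2(u) * decay))
```

Two quick checks follow from the formula. A single mode such as u = sin(x) on a 2π-periodic domain simply decays by exp(−αΔt). The mean temperature (the k = 0 mode) is unchanged, reflecting conservation of heat.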

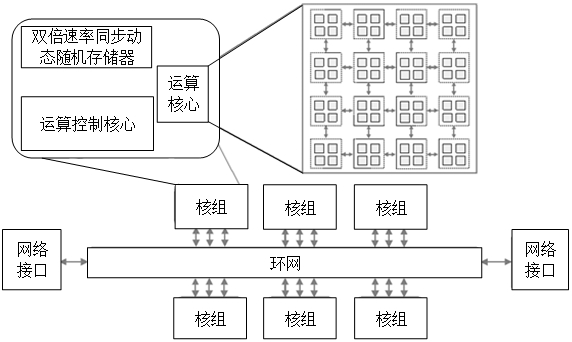

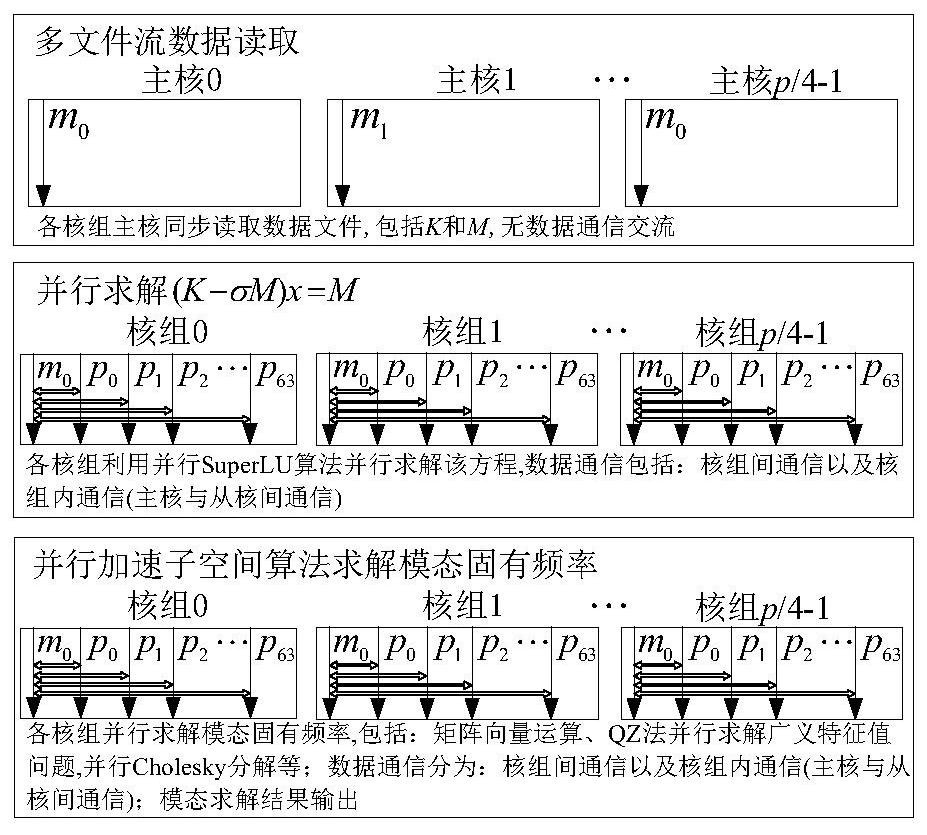

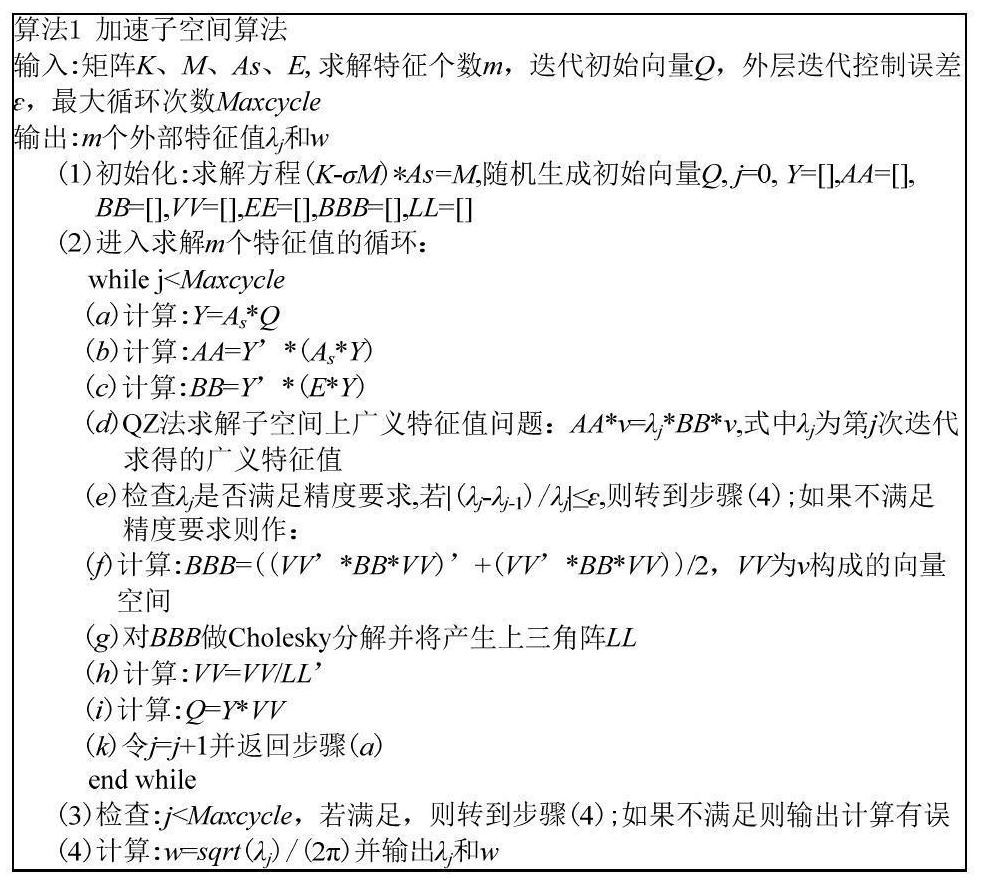

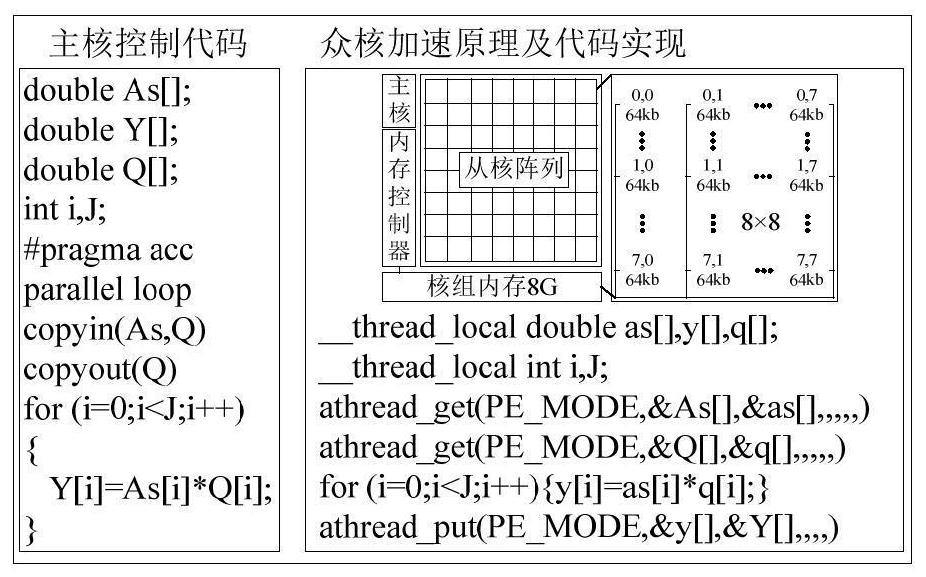

Modal parallel computing method and system for heterogeneous many-core parallel computer

Active | CN111651208A | Improve Parallel Computing Efficiency | Improve computing efficiency | Sustainable transportation | Multiple digital computer combinations | Computational science | Concurrent computation

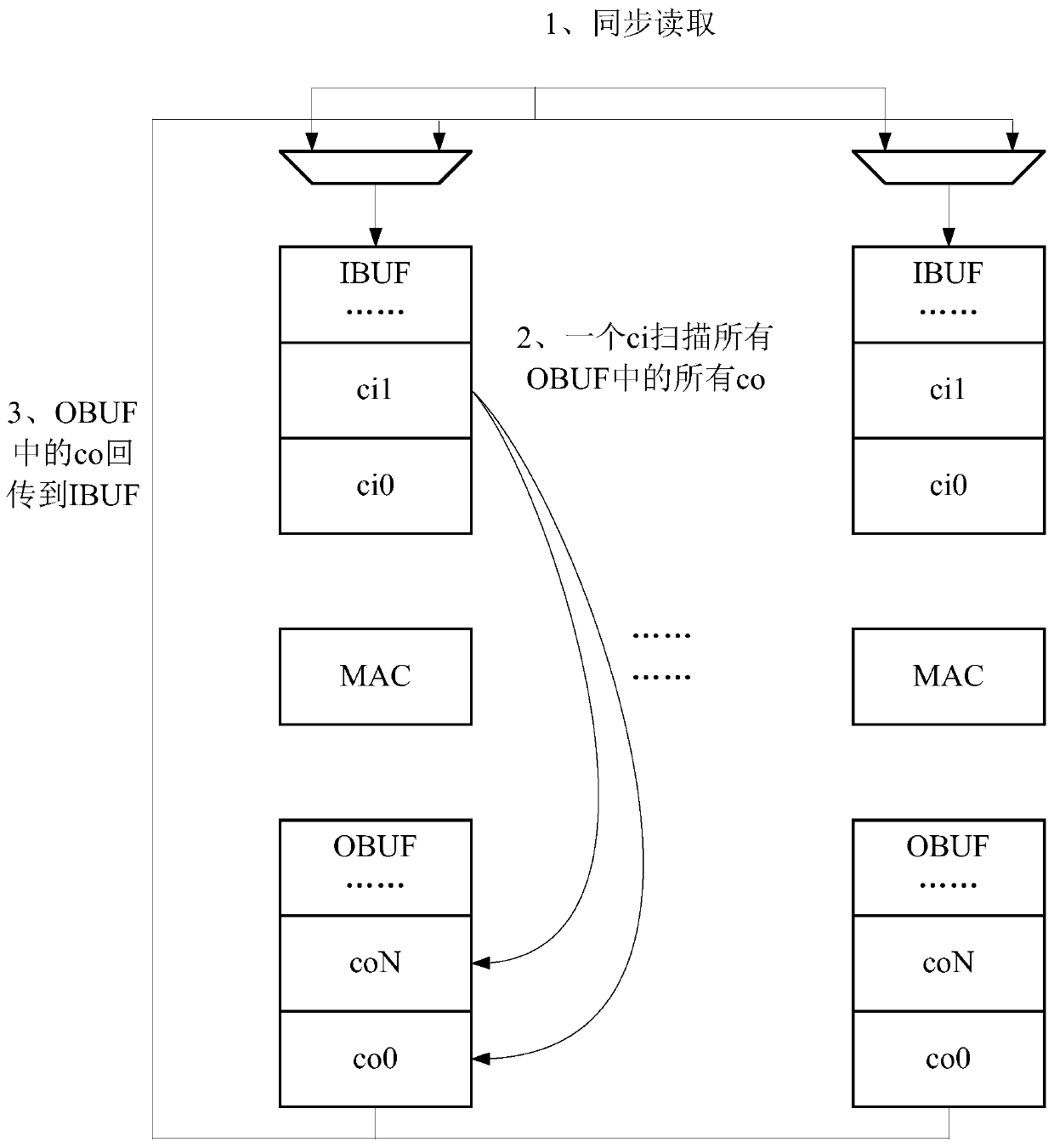

The invention provides a modal parallel computing method and system for a heterogeneous many-core parallel computer. The method comprises two steps:

- Step S1: a finite element program generates the stiffness-matrix and mass-matrix data of a finite element model. These data are divided into N sub-regions, and each sub-region's stiffness- and mass-matrix data are stored in a separate file, where N is an integer multiple of a single core group.
- Step S2: the master cores of the core groups taking part in the parallel computation synchronously read the stiffness- and mass-matrix data of their corresponding sub-regions. There is no data exchange between core groups, nor between the slave cores within the core groups.

A layering strategy separates the computation from the data communication and confines most data communication within each core group. This makes full use of the high intra-core-group communication rate of domestically produced heterogeneous many-core parallel computers.

Owner:SHANGHAI JIAO TONG UNIV
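
Steps S1 and S2 amount to a file-per-sub-region layout: each core group's master core loads its own data with no inter-group exchange. The Python sketch below mimics this with NumPy:

- the rows of dense stiffness (`K`) and mass (`M`) matrices are split into `n_regions` blocks;
- each block is written to its own `.npz` file;
- the files are read back concurrently, with threads standing in for core-group master cores.

Row-wise splitting, dense storage and the file names are assumptions made for illustration. A real finite element model would use sparse storage and a domain-based partition.

```python
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

import numpy as np


def write_subregions(K, M, n_regions, out_dir):
    """Step S1 (sketch): store each row block of K and M in its own file."""
    paths = []
    row_blocks = np.array_split(np.arange(K.shape[0]), n_regions)
    for r, rows in enumerate(row_blocks):
        path = Path(out_dir) / f"subregion_{r}.npz"  # hypothetical naming scheme
        np.savez(str(path), rows=rows, K=K[rows], M=M[rows])
        paths.append(path)
    return paths


def read_subregion(path):
    """Step S2 (sketch): one master core loads only its own sub-region file."""
    with np.load(str(path)) as data:
        return data["rows"], data["K"], data["M"]


def load_all(paths):
    """Read every sub-region at the same time; the readers never exchange data."""
    with ThreadPoolExecutor(max_workers=len(paths)) as pool:
        return list(pool.map(read_subregion, paths))


if __name__ == "__main__":
    K = np.arange(36.0).reshape(6, 6)
    M = np.diag(np.arange(1.0, 7.0))
    with tempfile.TemporaryDirectory() as tmp:
        parts = load_all(write_subregions(K, M, 3, tmp))
    print([p[0].tolist() for p in parts])  # row indices held by each "core group"
```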

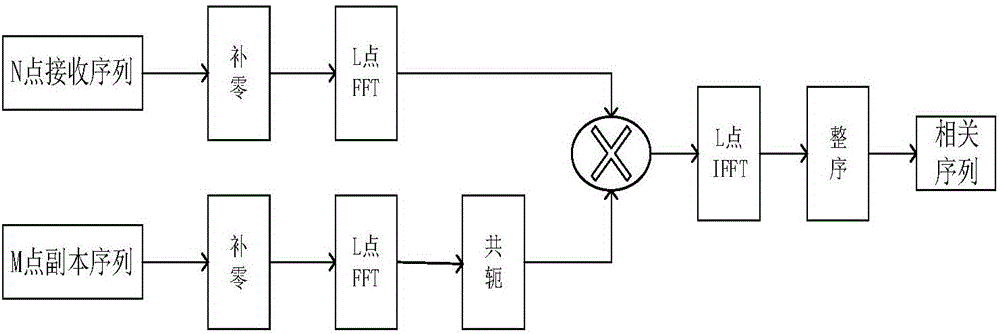

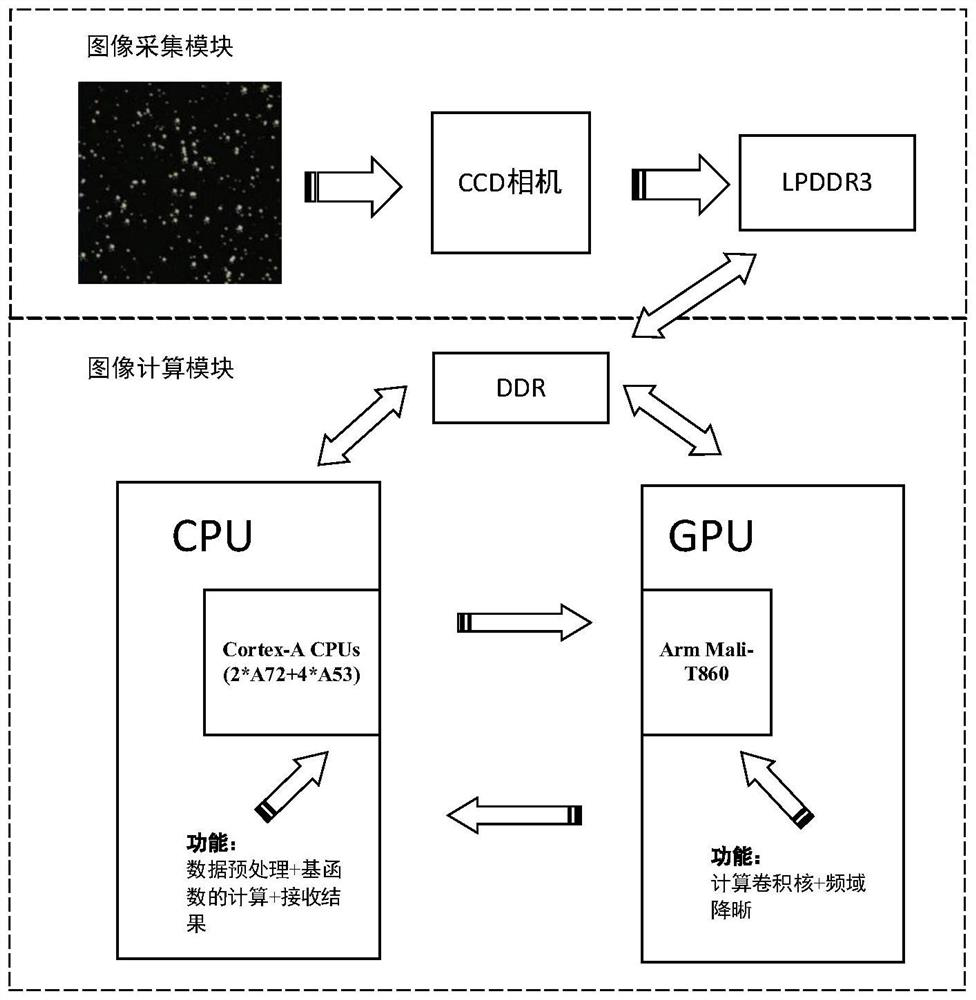

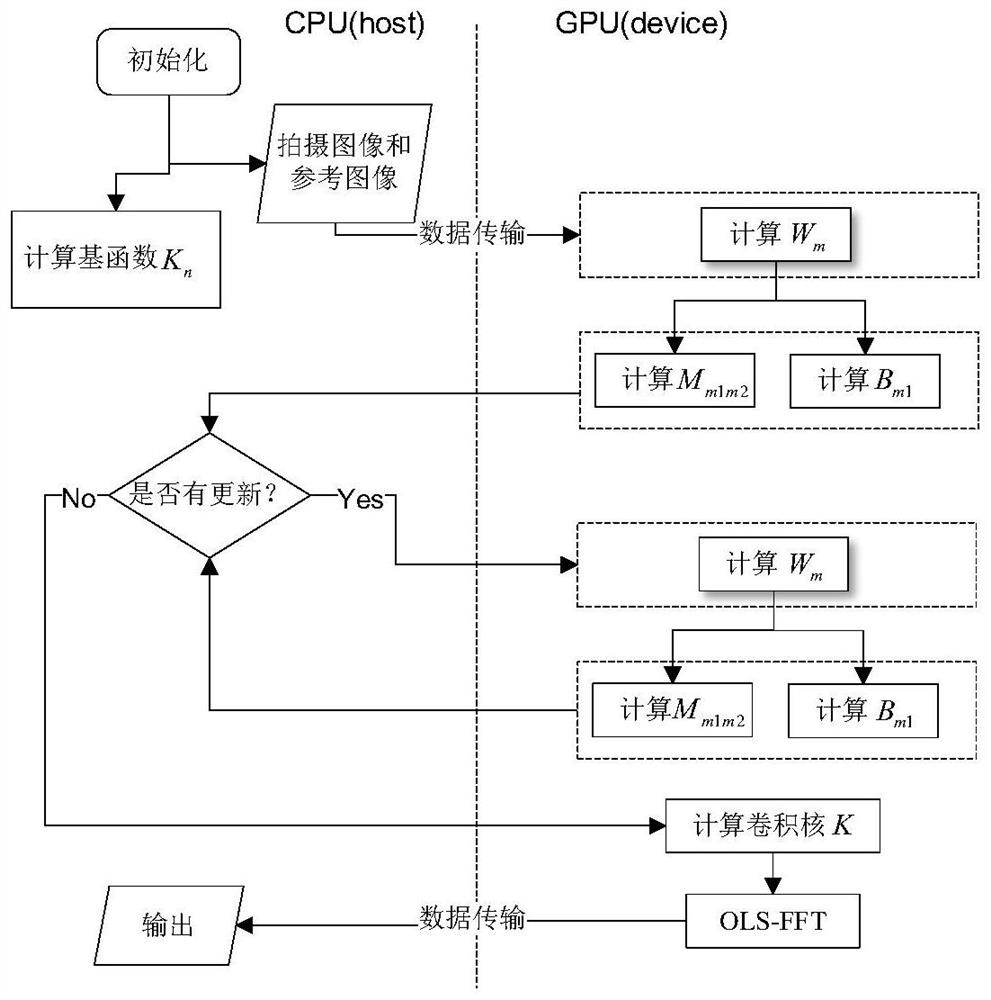

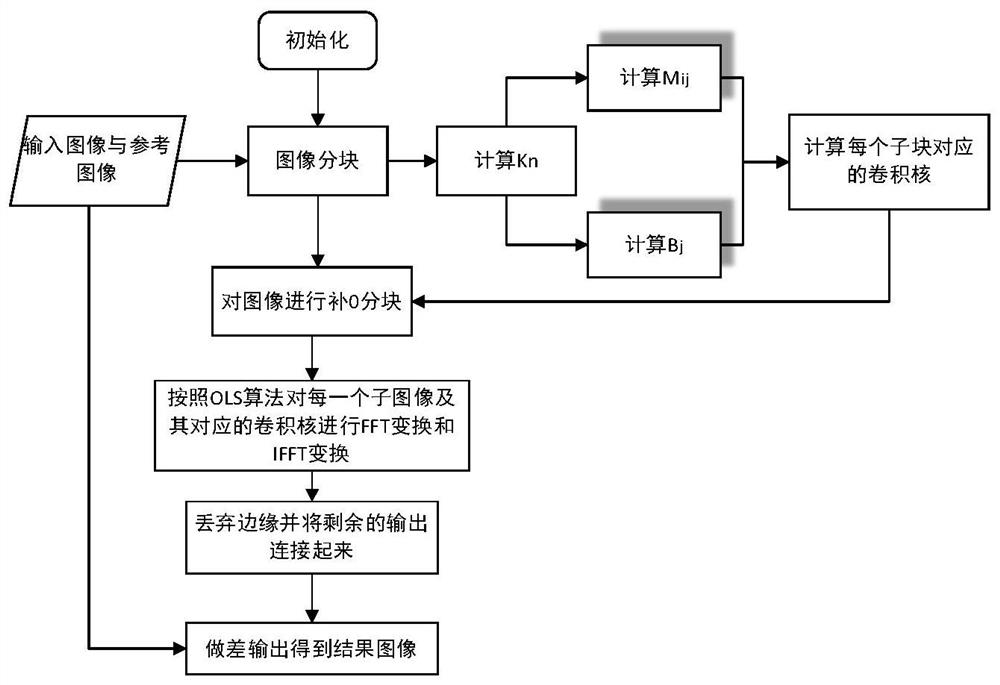

Frequency domain astronomical image target detection method and system

Pending | CN113344765A | Optimize performance bottlenecks | Reduce computational complexity | Image enhancement | Image analysis | Frequency domain | Convolution

The invention discloses a frequency-domain method and system for target detection in astronomical images, implemented on a CPU-GPU heterogeneous processor. The method works in four stages:

1. The CPU preprocesses the acquired raw astronomical image using a previously obtained reference image.
2. The reference image and the preprocessed astronomical image are each partitioned into sub-images with the overlap-save method. Gaussian basis functions are multiplied by polynomials to form n groups of convolution-kernel basis vectors, which are sent to the GPU.
3. From the reference and astronomical sub-images and the n groups of basis vectors, the GPU fits a convolution kernel for each reference sub-image. It applies each kernel to its reference sub-image as frequency-domain filtering (blurring) to obtain a template image, which is returned to the CPU.
4. The CPU discards the edges of the template image, stitches the remaining parts together and subtracts the result from the original astronomical image. The resulting difference image achieves target detection in the astronomical image.

Owner:NAT SPACE SCI CENT CAS
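
The kernel-fitting step resembles the well-known Alard-Lupton image-subtraction approach. The convolution kernel is modeled as a linear combination of Gaussian × polynomial basis functions, and least squares finds the coefficients that make the blurred reference match the science image. The NumPy sketch below works on a single tile and assumes circular FFT convolution. The patent's overlap-save tiling, CPU-GPU split, edge discarding and preprocessing are omitted. The Gaussian widths and polynomial degree are illustrative choices.

```python
import numpy as np


def gauss_poly_basis(size, sigmas, degree):
    """Kernel basis: Gaussians times monomials x^i * y^j with i + j <= degree (odd `size`)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    basis = []
    for s in sigmas:
        g = np.exp(-(x**2 + y**2) / (2.0 * s**2))
        for i in range(degree + 1):
            for j in range(degree + 1 - i):
                basis.append(g * x**i * y**j)
    return basis


def fft_convolve(image, kernel):
    """Circular 2D convolution in the frequency domain, kernel centre moved to the origin."""
    padded = np.zeros_like(image)
    kh, kw = kernel.shape
    padded[:kh, :kw] = kernel
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))


def fit_kernel(reference, science, basis):
    """Least-squares coefficients so that reference (*) sum(c_k * B_k) matches science."""
    columns = [fft_convolve(reference, b).ravel() for b in basis]
    A = np.stack(columns, axis=1)
    coeffs, *_ = np.linalg.lstsq(A, science.ravel(), rcond=None)
    kernel = sum(c * b for c, b in zip(coeffs, basis))
    return kernel, coeffs


def difference_image(reference, science, basis):
    """Science image minus the template (reference blurred by the fitted kernel)."""
    kernel, _ = fit_kernel(reference, science, basis)
    return science - fft_convolve(reference, kernel)
```

Because convolution is linear in the kernel, fitting reduces to one linear least-squares solve. If the true kernel lies in the span of the basis, the difference image is zero up to rounding, and any real transient remains as a residual.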

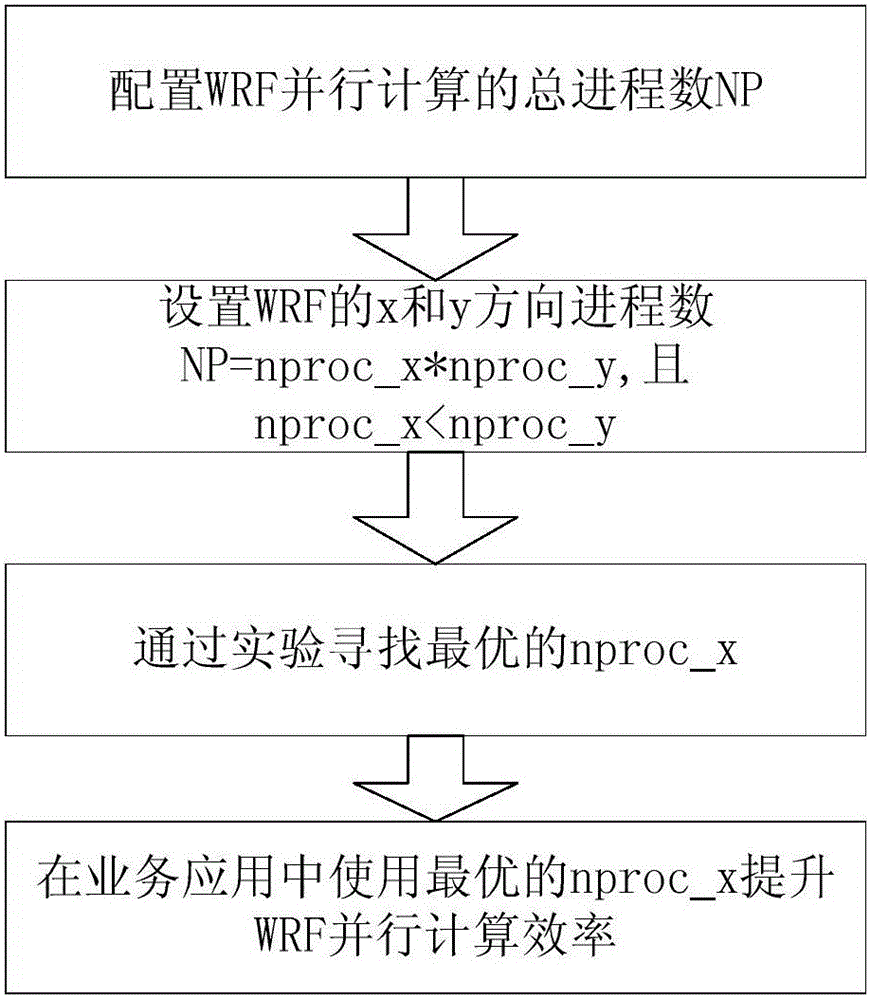

Method of improving WRF parallel computing efficiency

Inactive | CN105938427A | Improve Parallel Computing Efficiency | Reduce energy consumption | Concurrent instruction execution | Energy consumption | Parallel computing

The present invention relates to a method for improving the parallel computing efficiency of the Weather Research and Forecasting (WRF) model, in the technical field of weather forecasting and supercomputing applications. It does so by reducing the number of processes in the x direction. The method comprises the following steps:

- (S1) configuring the total number of processes NP for the WRF parallel run;
- (S2) setting the numbers of WRF processes in the x and y directions, nproc_x and nproc_y, with nproc_x < nproc_y;
- (S3) finding the optimal nproc_x experimentally;
- (S4) using the optimal nproc_x in operational runs.

The method effectively improves WRF parallel computing efficiency and reduces supercomputer energy consumption. It is also easy to use and has good application prospects.

Owner:CHONGQING INST OF GREEN & INTELLIGENT TECH CHINESE ACADEMY OF SCI
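
Steps S2 and S3 reduce to two tasks. First, enumerate the process layouts with nproc_x × nproc_y = NP and nproc_x < nproc_y. Second, time each layout. In WRF these values correspond to the `nproc_x` and `nproc_y` entries in the `&domains` section of `namelist.input`. The Python sketch below enumerates the candidate layouts and picks the fastest using a caller-supplied `measure` function. In practice `measure` would launch a short WRF benchmark run, which is not shown, so the timing function used when testing it is a hypothetical placeholder.

```python
from typing import Callable, Dict, List, Tuple

Layout = Tuple[int, int]


def candidate_layouts(total_procs: int) -> List[Layout]:
    """All (nproc_x, nproc_y) with nproc_x * nproc_y == total_procs and nproc_x < nproc_y."""
    layouts = []
    for nx in range(1, total_procs + 1):
        if total_procs % nx == 0 and nx < total_procs // nx:
            layouts.append((nx, total_procs // nx))
    return layouts


def best_layout(
    total_procs: int, measure: Callable[[int, int], float]
) -> Tuple[Layout, Dict[Layout, float]]:
    """Time every candidate layout with `measure` and return the fastest (step S3)."""
    layouts = candidate_layouts(total_procs)
    if not layouts:
        raise ValueError("no layout with nproc_x < nproc_y for this process count")
    timings = {layout: measure(*layout) for layout in layouts}
    return min(timings, key=timings.get), timings
```

For NP = 64 the candidates are (1, 64), (2, 32) and (4, 16). The square layout (8, 8) is excluded because nproc_x must be smaller than nproc_y.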

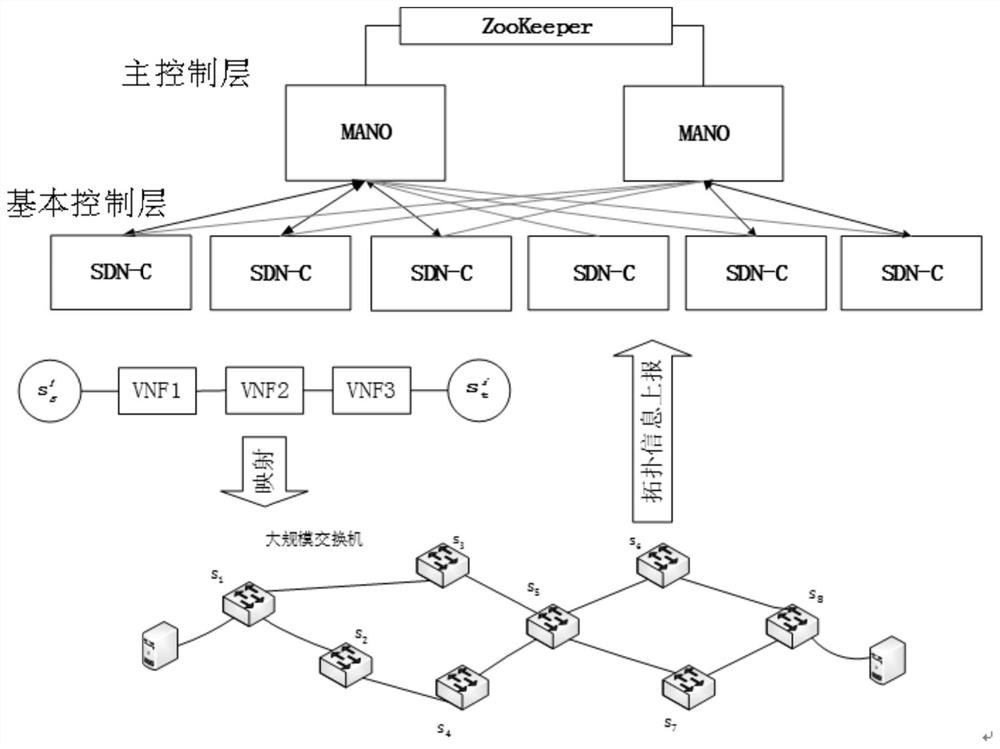

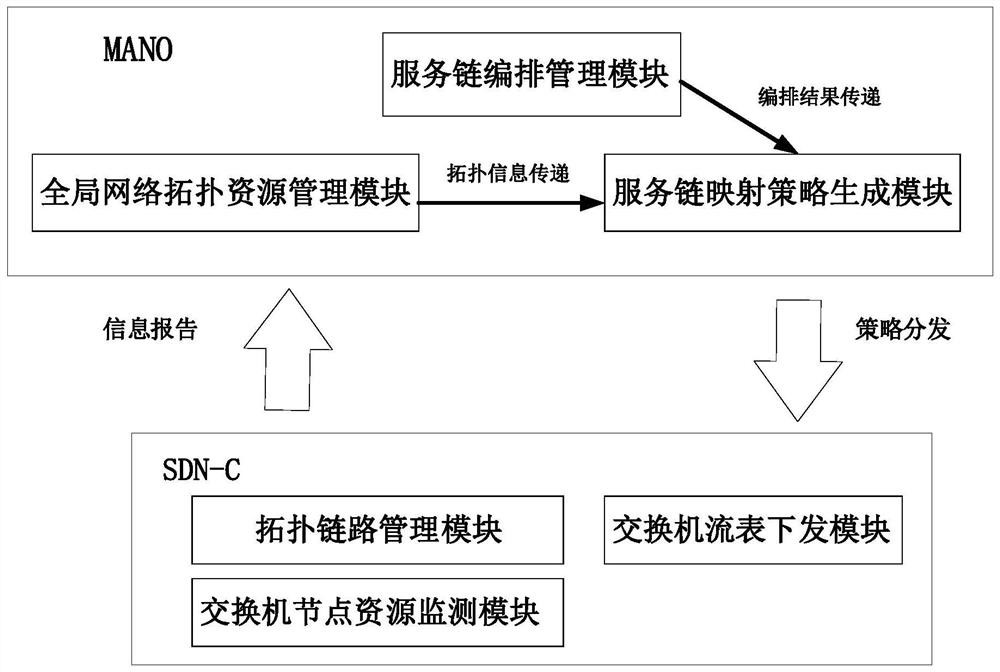

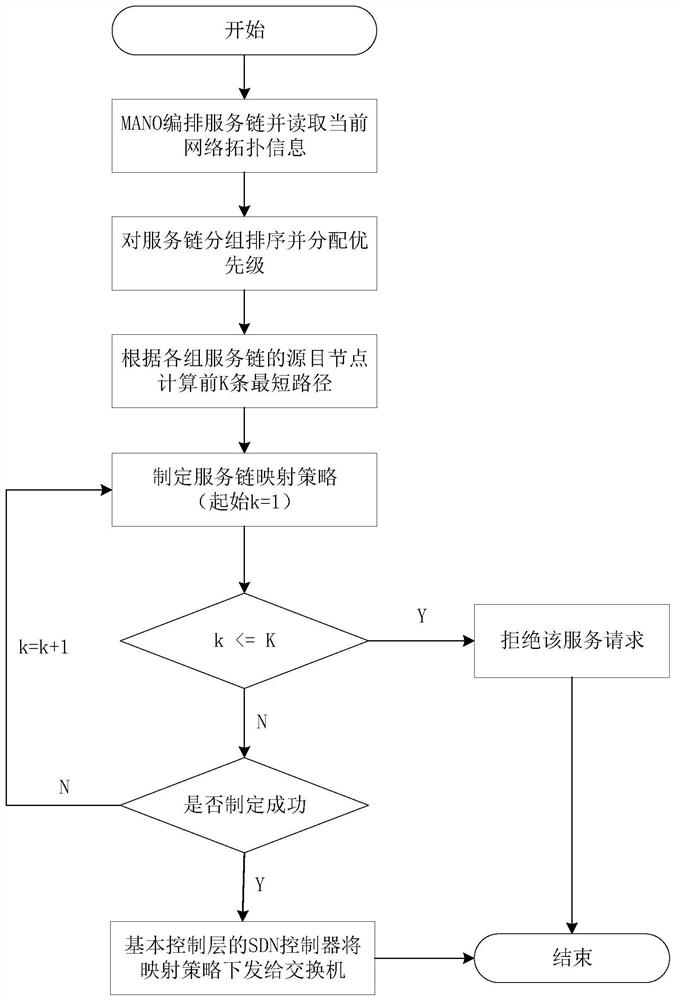

Layered data center resource optimization method and system based on SDN and NFV

The invention discloses a layered data-center resource optimization method and system based on SDN and NFV. The method comprises the following steps:

- the MANO controller orchestrates client requests into service chains, and chains sharing the same source and destination nodes are grouped into feature groups;
- grouped chains whose bandwidth requirement exceeds a set threshold get the highest priority, and the remaining chains are ranked in descending order of their delay requirements;
- for each group, the K shortest paths between the source and destination nodes are calculated;
- from these K shortest paths, the MANO controller formulates a mapping strategy for each service chain with a coevolutionary multi-population competitive genetic algorithm;
- the MANO controller sends the mapping strategy to the SDN controller of the basic control layer, which converts it into flow tables that switches can process and issues them to the switches for execution.

The result is low end-to-end service-chain delay and high network-resource utilization.

Owner:NANJING UNIV OF POSTS & TELECOMM
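
The Python sketch below covers two of the deterministic steps: grouping and prioritizing service chains, and computing K shortest paths. It makes the following assumptions:

- a "stricter" delay requirement means a smaller tolerated delay and should rank earlier;
- K shortest simple paths are found by best-first search over partial paths. This is correct for non-negative link weights and adequate for small topologies, though Yen's algorithm scales better.

The coevolutionary genetic algorithm, the MANO/SDN interaction and flow-table generation are not shown. Class and function names are hypothetical.

```python
import heapq
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ServiceChain:
    name: str
    src: str
    dst: str
    bandwidth: float  # requested bandwidth
    max_delay: float  # tolerated end-to-end delay; smaller means stricter


def group_chains(chains: List[ServiceChain]) -> Dict[Tuple[str, str], List[ServiceChain]]:
    """Feature groups: chains sharing the same (source, destination) pair."""
    groups = defaultdict(list)
    for c in chains:
        groups[(c.src, c.dst)].append(c)
    return dict(groups)


def prioritise(chains: List[ServiceChain], bw_threshold: float) -> List[ServiceChain]:
    """High-bandwidth chains first, then the rest by increasingly relaxed delay bound."""
    high = [c for c in chains if c.bandwidth > bw_threshold]
    rest = sorted((c for c in chains if c.bandwidth <= bw_threshold), key=lambda c: c.max_delay)
    return high + rest


def k_shortest_paths(graph: Dict[str, Dict[str, float]], src: str, dst: str, k: int):
    """Up to k simple src->dst paths in order of total weight (weights must be >= 0)."""
    heap = [(0.0, [src])]
    found = []
    while heap and len(found) < k:
        cost, path = heapq.heappop(heap)
        node = path[-1]
        if node == dst:
            found.append((cost, path))
            continue
        for nxt, w in graph.get(node, {}).items():
            if nxt not in path:  # keep paths simple (no repeated nodes)
                heapq.heappush(heap, (cost + w, path + [nxt]))
    return found
```

Every extension of a partial path costs at least as much as the path itself. Complete paths therefore leave the heap in nondecreasing order of cost, which is what makes the first k found the k shortest.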

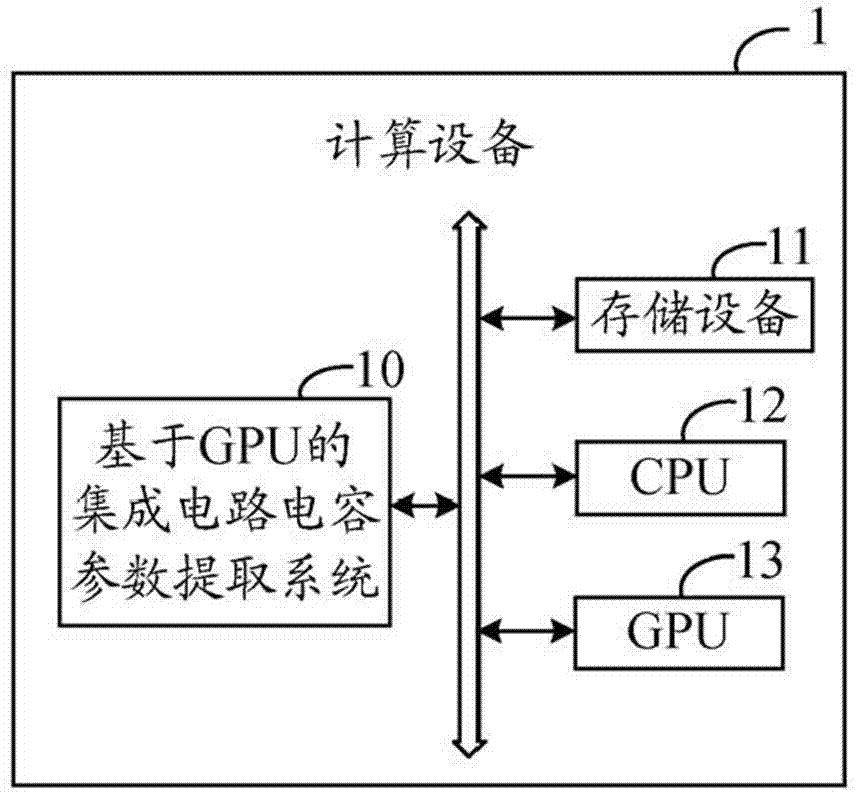

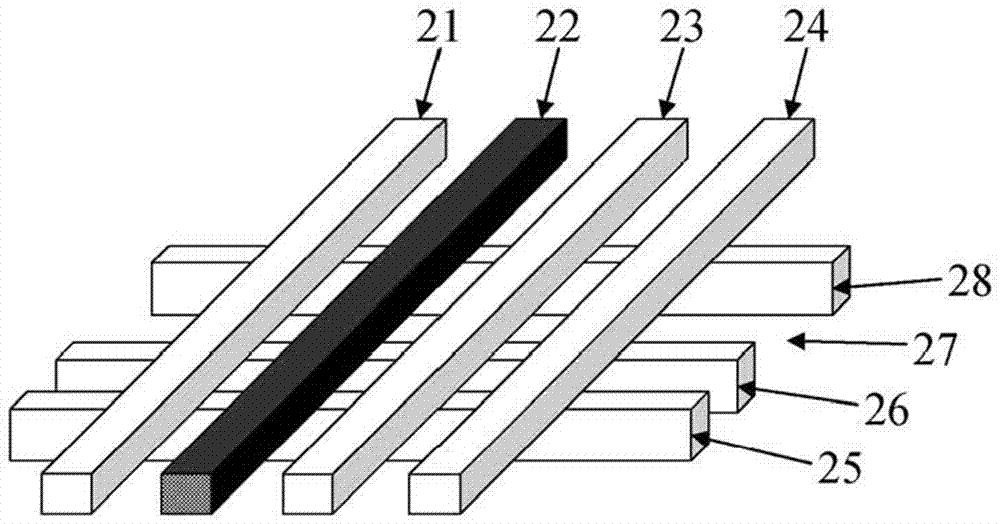

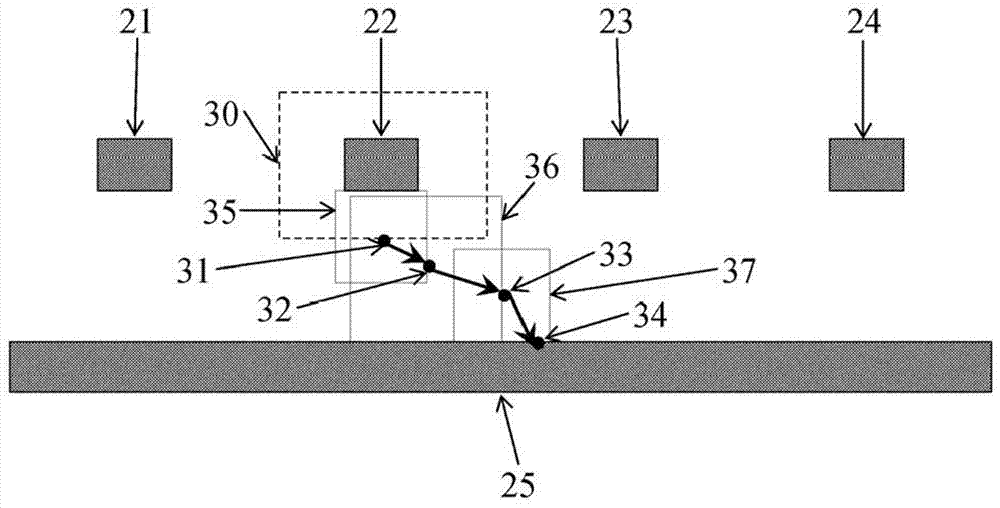

System and method for extracting capacitance parameters of integrated circuits based on GPU

Active | CN103198177B | Calculation speed | Reduce instruction divergence | Special data processing applications | Capacitance | Electrical conductor

Owner:北京超逸达科技有限公司

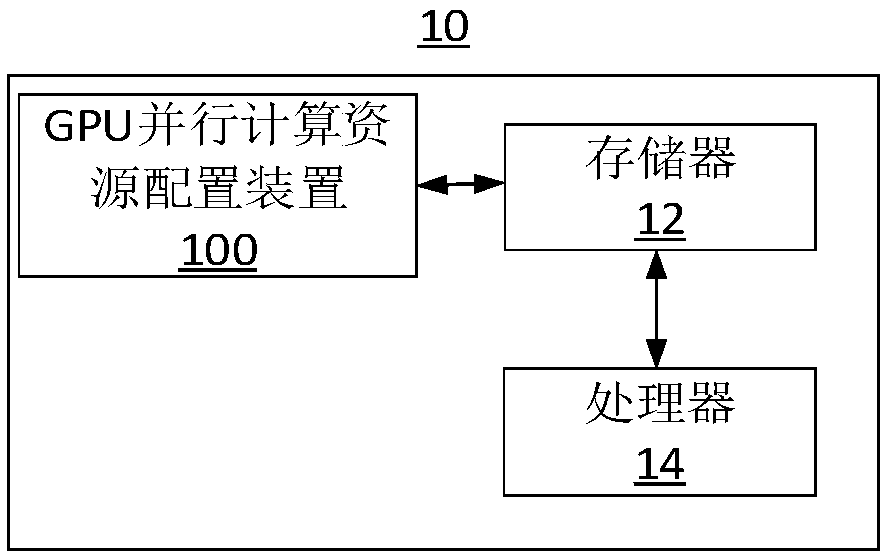

GPU parallel compute resource allocation method and device

Active | CN109086137A | Improve Parallel Computing Efficiency | Program initiation/switching | Resource allocation | Computational model | Computational resource

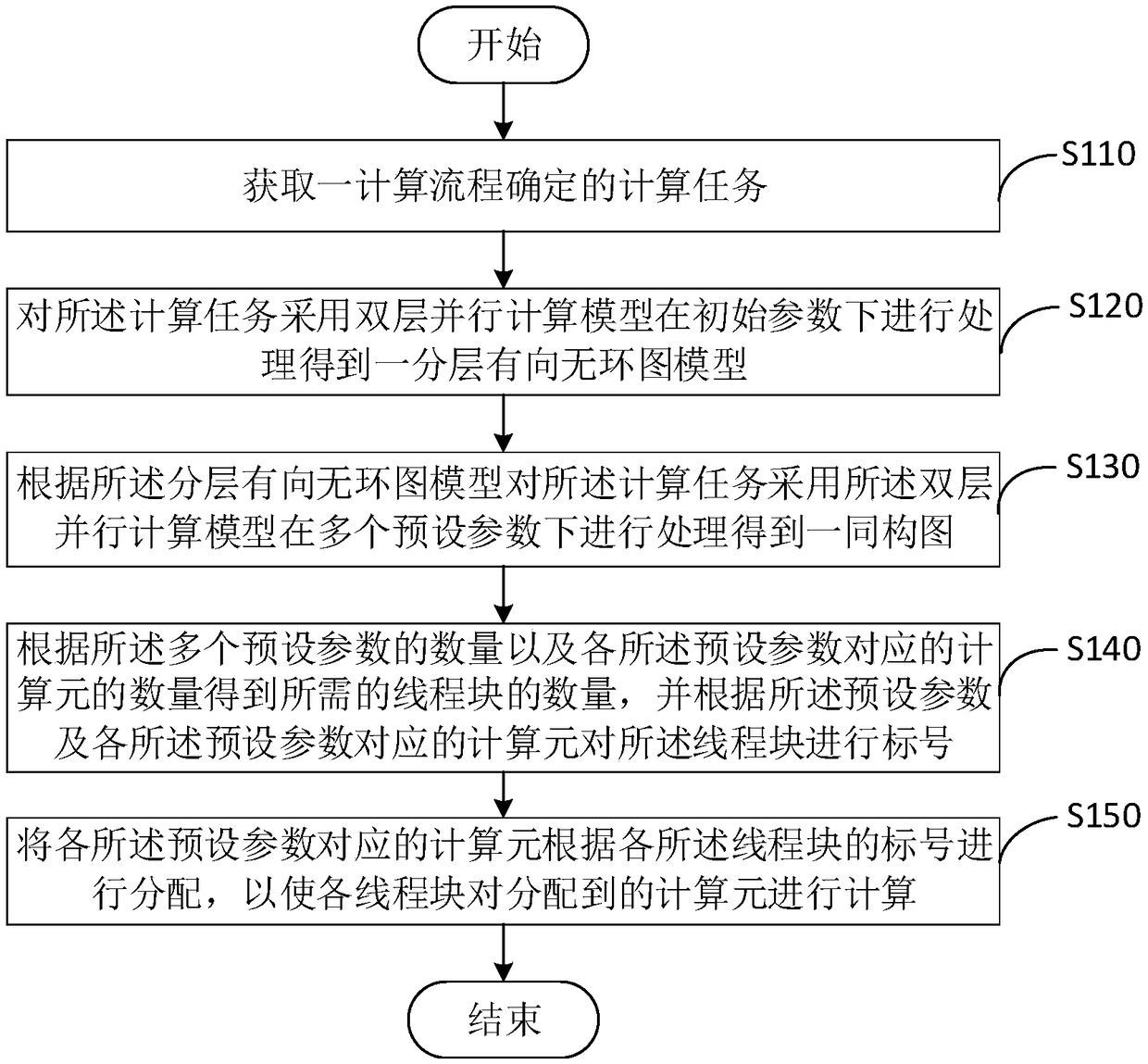

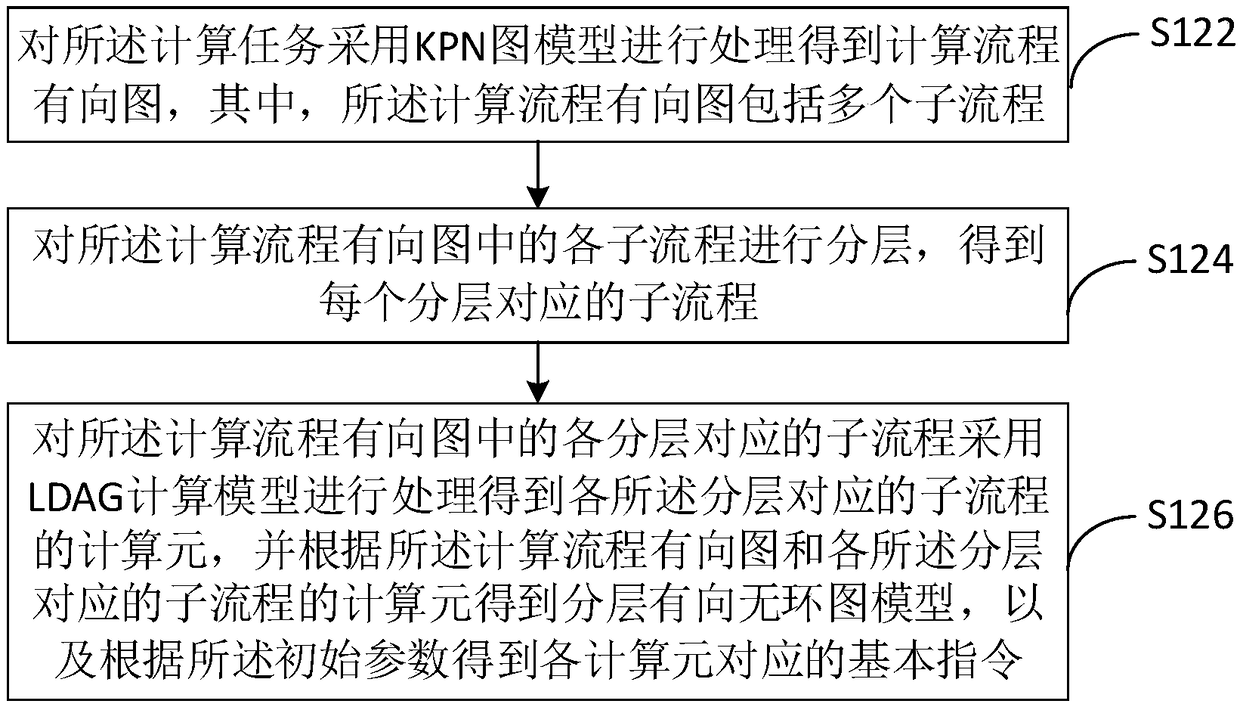

The invention relates to the field of computer technology, and in particular provides a GPU parallel computing resource allocation method and device. The method comprises the following steps:

- obtaining a computing task determined by a computing process;
- processing the task under initial parameters with a two-layer parallel computing model, giving a layered directed acyclic graph (DAG) model that is then used to compute the task with the same two-layer model;
- labeling the thread blocks by preset parameter and corresponding calculation elements, according to the number of preset parameters and the number of calculation elements for each parameter;
- allocating each preset parameter's calculation elements according to the thread-block labels, so that each thread block computes the elements allocated to it.

The method can effectively improve parallel computing efficiency.

Owner:SICHUAN ENERGY INTERNET RES INST TSINGHUA UNIV +1
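
The Python sketch below makes two mechanisms from the abstract concrete. The first splits a task DAG into layers whose nodes do not depend on each other, so each layer can run in parallel. The second labels thread blocks by (preset parameter, element range), so each block knows which calculation elements it owns. The layering rule (longest-path depth computed with Kahn's algorithm), the fixed `elems_per_block` and all names are assumptions for illustration, not the patent's exact two-layer model. On a GPU, the label list would be indexed by the block index.

```python
from collections import defaultdict, deque
from typing import Hashable, Iterable, List, Tuple


def dag_layers(nodes: Iterable[Hashable], edges: Iterable[Tuple[Hashable, Hashable]]) -> List[List[Hashable]]:
    """Group DAG nodes into layers; a node's layer is its longest-path depth from a source."""
    nodes = list(nodes)
    succ = defaultdict(list)
    indeg = {n: 0 for n in nodes}
    for u, v in edges:
        succ[u].append(v)
        indeg[v] += 1
    level = {n: 0 for n in nodes}
    queue = deque(n for n in nodes if indeg[n] == 0)
    seen = 0
    while queue:  # Kahn's algorithm: a node is dequeued once all predecessors are done
        u = queue.popleft()
        seen += 1
        for v in succ[u]:
            level[v] = max(level[v], level[u] + 1)
            indeg[v] -= 1
            if indeg[v] == 0:
                queue.append(v)
    if seen != len(nodes):
        raise ValueError("graph has a cycle")
    layers = defaultdict(list)
    for n in nodes:
        layers[level[n]].append(n)
    return [layers[d] for d in sorted(layers)]


def label_blocks(elements_per_param: List[int], elems_per_block: int) -> List[Tuple[int, int, int, int]]:
    """Return (block_id, param_index, first_element, end_element) for every thread block."""
    labels = []
    for p, count in enumerate(elements_per_param):
        for start in range(0, count, elems_per_block):
            labels.append((len(labels), p, start, min(start + elems_per_block, count)))
    return labels
```

Suppose two preset parameters have 5 and 3 elements and each block takes 2 elements. Then `label_blocks([5, 3], 2)` produces five blocks: three for parameter 0, covering elements 0-2, 2-4 and 4-5, and two for parameter 1, covering 0-2 and 2-3.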

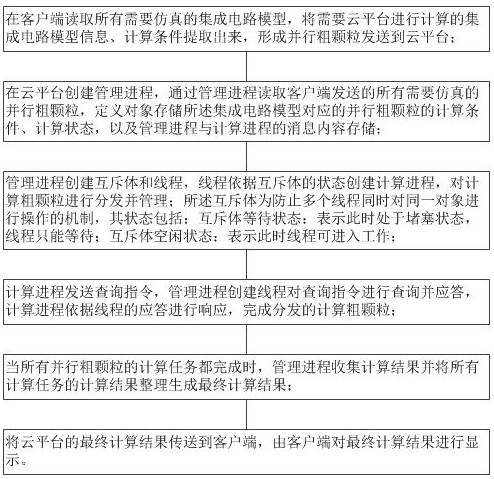

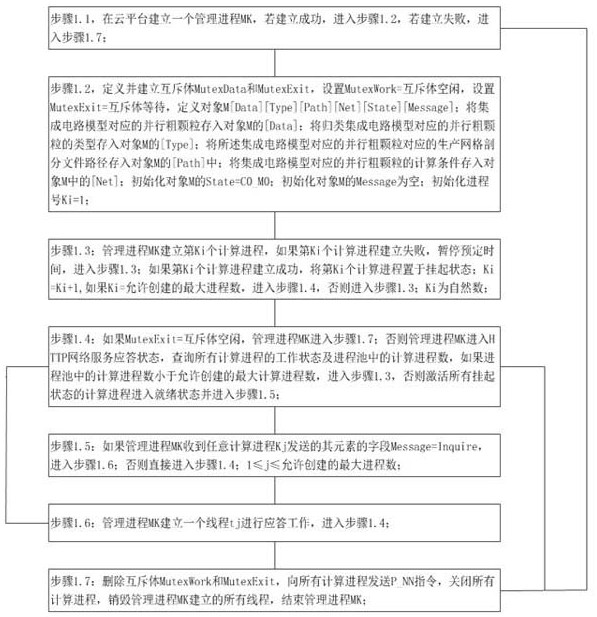

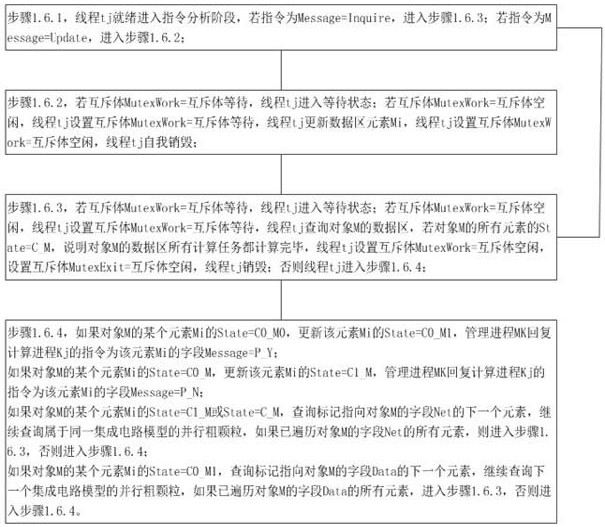

Integrated circuit simulation multi-thread management parallel method and device with secrecy function

Active | CN112988403A | Prevent leakage | Improve Parallel Computing Efficiency | Program synchronisation | Concurrent computation | Management process

The invention provides a multi-threaded parallel management method and device for integrated-circuit simulation with a confidentiality function. The electromagnetic simulation platform for integrated circuits is split into two parts: a cloud computing platform and a client. It works as follows:

- The client extracts the integrated-circuit model information and computing conditions to be calculated, packages them as coarse-grained parallel tasks and sends these to the cloud platform.
- On the cloud platform, a management process creates a mutex and threads. Each thread creates computing processes according to the state of the working mutex and distributes and manages the coarse-grained tasks.
- The computing processes and the threads created by the management process communicate by query and response to complete the distributed tasks.

Only the coarse-grained tasks extracted by the client are sent to the cloud platform, not the complete integrated-circuit model, so the model cannot leak over the Internet. Distributing and managing the tasks through a mutex and threads improves parallel computing efficiency.

Owner:北京智芯仿真科技有限公司
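
The mutex-guarded distribution of coarse-grained tasks follows a familiar work-queue pattern. The Python sketch below uses `threading.Lock` as the mutex and worker threads as stand-ins for the cloud platform's computing processes. Each worker repeatedly queries the manager for the next task and reports its result back. Using threads rather than separate processes, and the class and function names, are assumptions. Client-side extraction and network transfer are not shown.

```python
import threading
from typing import Any, Callable, List, Optional, Tuple


class CoarseTaskManager:
    """Hands out coarse-grained tasks one at a time under a mutex and collects results."""

    def __init__(self, tasks: List[Any]):
        self._tasks = list(tasks)
        self._next = 0
        self._lock = threading.Lock()  # the mutex guarding the task cursor and results
        self.results = {}

    def request_task(self) -> Optional[Tuple[int, Any]]:
        """Query step: return (index, task), or None when every task has been handed out."""
        with self._lock:
            if self._next >= len(self._tasks):
                return None
            idx = self._next
            self._next += 1
            return idx, self._tasks[idx]

    def report(self, idx: int, value: Any) -> None:
        """Response step: record the result of a finished task."""
        with self._lock:
            self.results[idx] = value


def run_coarse_tasks(tasks: List[Any], solve: Callable[[Any], Any], n_workers: int = 4) -> List[Any]:
    """Solve every task on n_workers threads and return results in task order."""
    manager = CoarseTaskManager(tasks)

    def worker():
        while (item := manager.request_task()) is not None:
            idx, task = item
            manager.report(idx, solve(task))

    threads = [threading.Thread(target=worker) for _ in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return [manager.results[i] for i in range(len(tasks))]
```

The lock makes handing out a task an atomic step. No task is given to two workers, and no task is skipped, however the threads interleave.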

© 2025 PatSnap. All rights reserved.