Neural network processor, convolutional neural network data multiplexing method and related apparatus

A neural network processor and convolutional neural network technology, applied in the fields of neural network processors, convolutional neural network data multiplexing devices, electronic equipment, and storage media. It addresses problems such as high power consumption and slow speed, and achieves improved efficiency, reduced power consumption, and fewer data accesses.

Examples

Embodiment 1

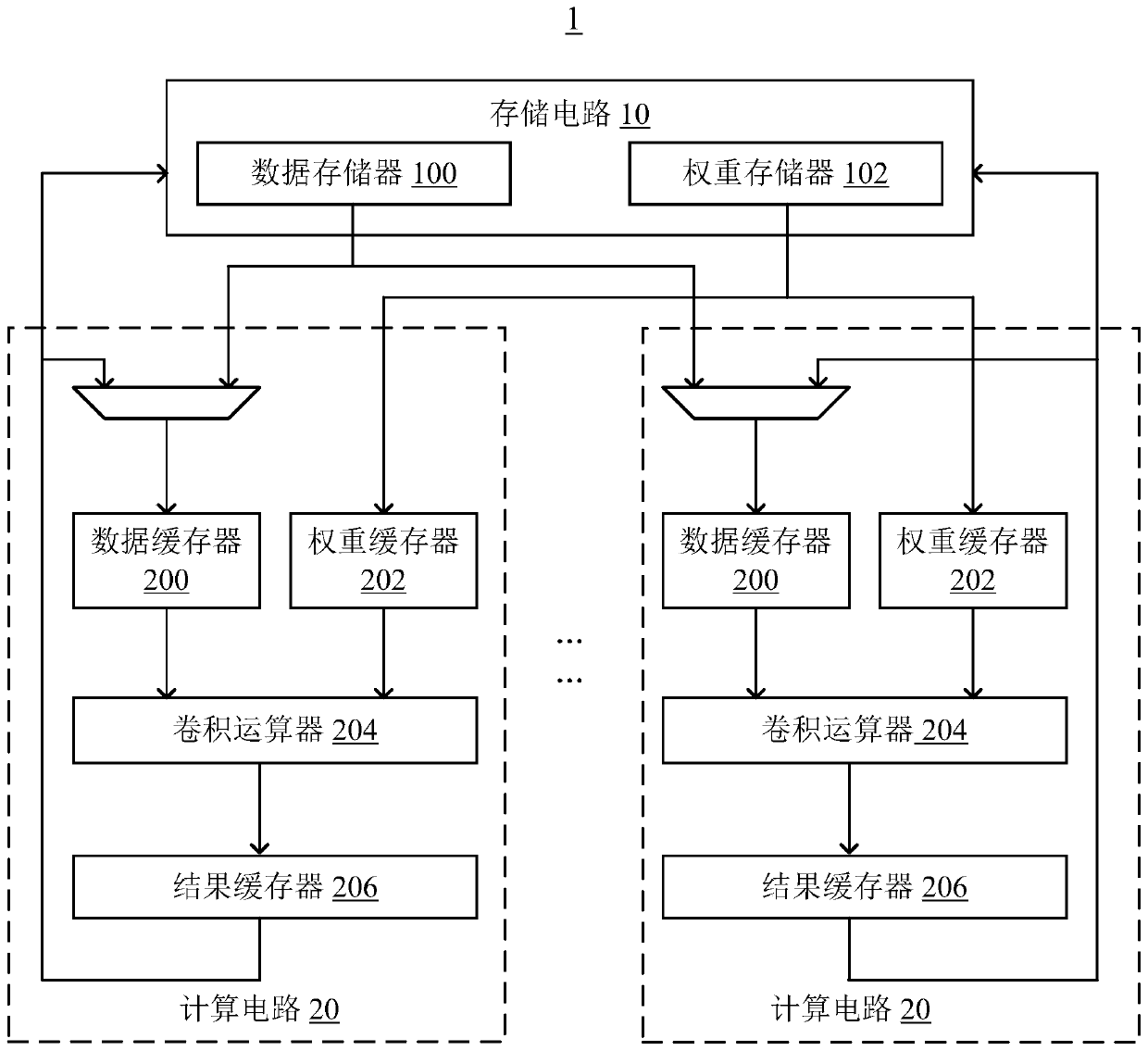

[0056] Refer to Figure 1 and Figure 2, which are schematic diagrams of a neural network processor provided by an embodiment of the present invention.

[0057] In this embodiment, the neural network processor 1 may include a storage circuit 10 and at least one calculation circuit 20, wherein the calculation circuit 20 is connected to the storage circuit 10. The neural network processor 1 may be a programmable logic device, such as a field-programmable gate array (FPGA), or a dedicated neural network processor implemented as an application-specific integrated circuit (ASIC).

[0058] The number of calculation circuits 20 can be set according to the actual situation. The number required can be determined by weighing the total amount of computation against the amount of computation that each calculation circuit can handle. For example, Figure 1 shows two calculation circuits 20 connected in parallel.
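The sizing consideration in [0058] can be illustrated with a small calculation. This is only a sketch under stated assumptions: the source does not give a formula, so the ceiling division below, the function name `calculation_circuits_needed`, and the parameters `total_ops` and `ops_per_circuit` are all hypothetical.

```python
def calculation_circuits_needed(total_ops: int, ops_per_circuit: int) -> int:
    """Estimate how many calculation circuits cover a workload.

    Assumption (not stated in the source): the workload divides evenly
    across circuits, so the count is a ceiling division. The processor
    has at least one calculation circuit, so the result is never below 1.
    """
    if total_ops < 0:
        raise ValueError("total_ops must be non-negative")
    if ops_per_circuit <= 0:
        raise ValueError("ops_per_circuit must be positive")
    # Integer ceiling division avoids floating-point rounding.
    needed = -(-total_ops // ops_per_circuit)
    return max(1, needed)


# Hypothetical workload: 1000 operations, 500 per circuit.
two_circuits = calculation_circuits_needed(1000, 500)
```

With these illustrative numbers the estimate is two circuits, which matches the two parallel calculation circuits 20 drawn in Figure 1, although the figure itself does not state a workload.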

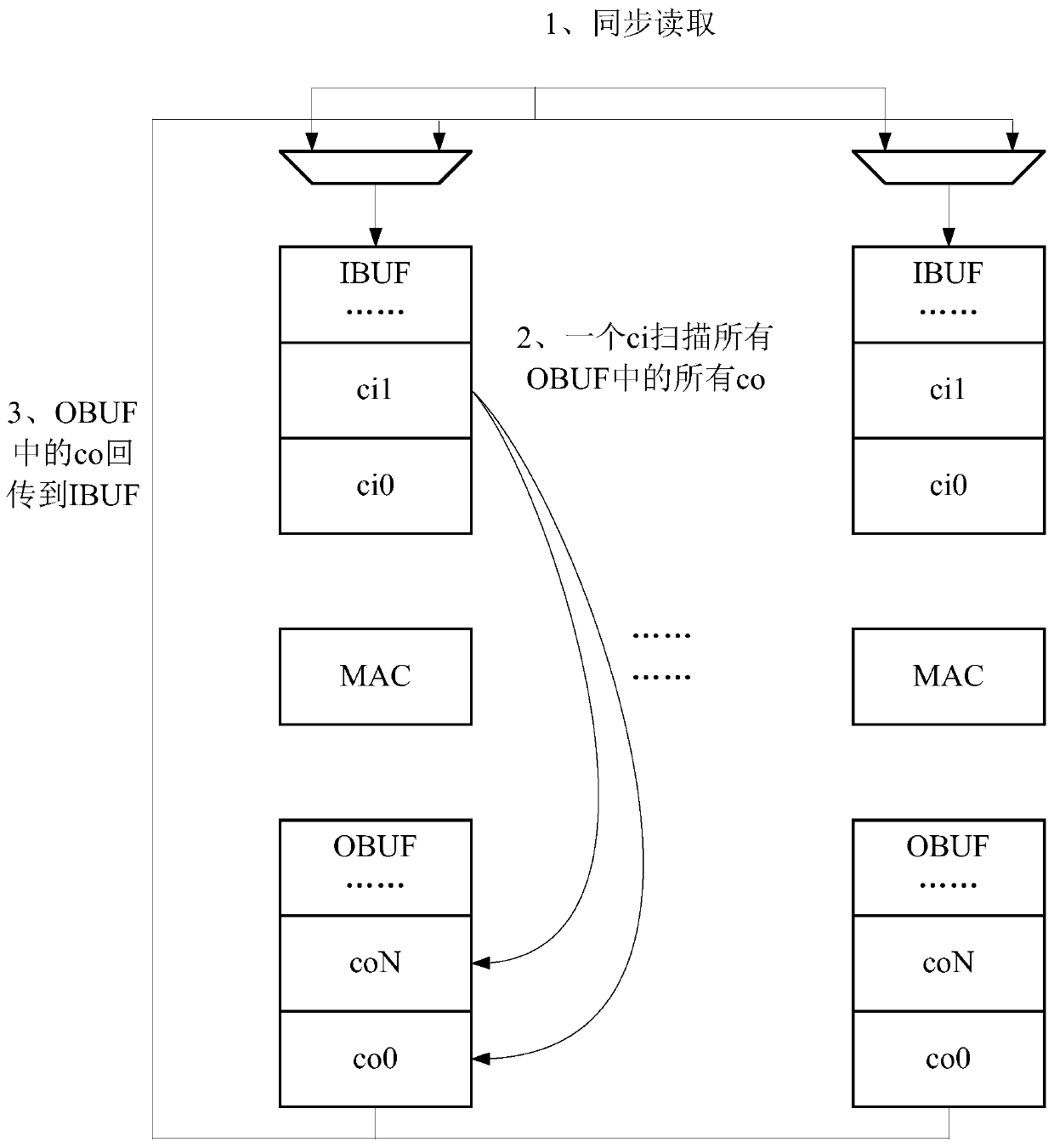

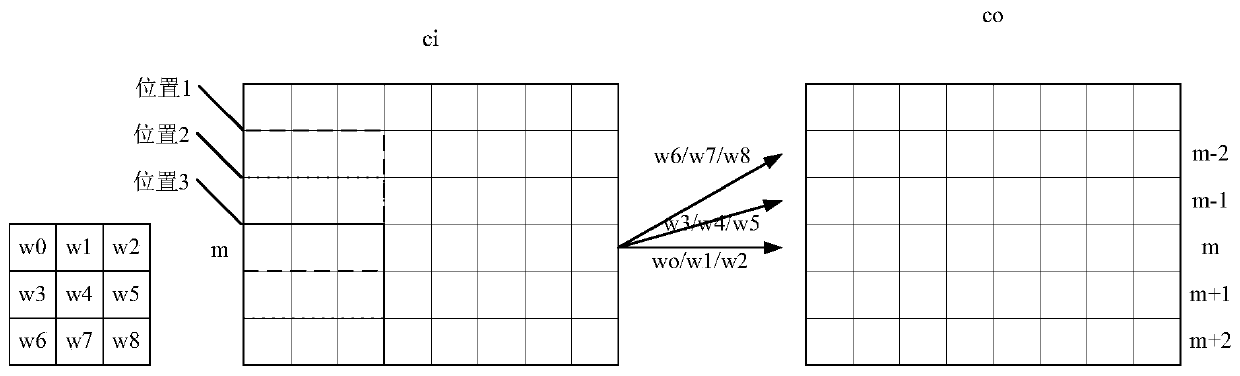

[0059] In this emb...

Embodiment 2

[0107] Figure 4 is a flow chart of the convolutional neural network data multiplexing method provided by Embodiment 2 of the present invention.

[0108] The convolutional neural network data multiplexing method can be applied to mobile or fixed electronic devices, including but not limited to personal computers, smartphones, tablet computers, and desktop or all-in-one computers equipped with cameras. The electronic device stores the initial input data and weight values that the user has configured for the convolution operation in the storage circuit 10. It then controls at least one calculation circuit 20 to read the initial input data and weight values from the storage circuit 10 and to perform the convolution operation based on them. Since the initial input data and weight values required for the convolution operation are uniformly stored in the storage circuit 10, when there are multi...
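The arrangement described in [0108], one storage circuit holding the input data and weights while calculation circuits read from it and convolve, can be modelled in software roughly as follows. This is a behavioural sketch only, not the patented hardware or its multiplexing scheme: the class names `StorageCircuit` and `CalculationCircuit`, the read counter, and the sample values are all hypothetical, and the convolution is a plain stride-1, no-padding 2D convolution in the CNN sense (no kernel flip).

```python
class StorageCircuit:
    """Hypothetical stand-in for storage circuit 10."""

    def __init__(self, input_data, weights):
        self._input = input_data
        self._weights = weights
        self.reads = 0  # counts accesses, to make data traffic visible

    def read_input(self):
        self.reads += 1
        return self._input

    def read_weights(self, index):
        self.reads += 1
        return self._weights[index]


def convolve2d(data, kernel):
    """Stride-1, no-padding 2D convolution as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(data) - kh + 1, len(data[0]) - kw + 1
    return [
        [
            sum(data[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


class CalculationCircuit:
    """Hypothetical stand-in for a calculation circuit 20."""

    def __init__(self, storage, weight_index):
        self.storage = storage
        self.weight_index = weight_index

    def run(self):
        # Both operands come from the single shared storage circuit.
        data = self.storage.read_input()
        kernel = self.storage.read_weights(self.weight_index)
        return convolve2d(data, kernel)


# Illustrative values only: one 3x3 input and two 2x2 kernels.
storage = StorageCircuit(
    input_data=[[1, 2, 3], [4, 5, 6], [7, 8, 9]],
    weights=[[[1, 0], [0, 1]], [[1, 1], [1, 1]]],
)
circuits = [CalculationCircuit(storage, i) for i in range(2)]
outputs = [circuit.run() for circuit in circuits]
```

In this model the same input data serves both circuits, and `storage.reads` shows how many accesses the run cost. A design that broadcasts a single input read to every circuit would lower that count, but whether the patent does so is not recoverable from the truncated text.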

Embodiment 3

[0167] Refer to Figure 5, which is a functional block diagram of a preferred embodiment of the convolutional neural network data multiplexing device of the present invention.

[0168] In some embodiments, the convolutional neural network data multiplexing device 50 runs in an electronic device. The convolutional neural network data multiplexing device 50 may include a plurality of functional modules composed of program code segments. The program code of each segment can be stored in the memory of the electronic device and executed by at least one processor to perform data multiplexing for convolutional neural networks (see the description of Figure 4 for details).

[0169] In this embodiment, the convolutional neural network data multiplexing device 50 can be divided into multiple functional modules according to the functions it performs. The functional modules may include: a storage module 501 , a convolut...
