464 results about how to "Reduce visits" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

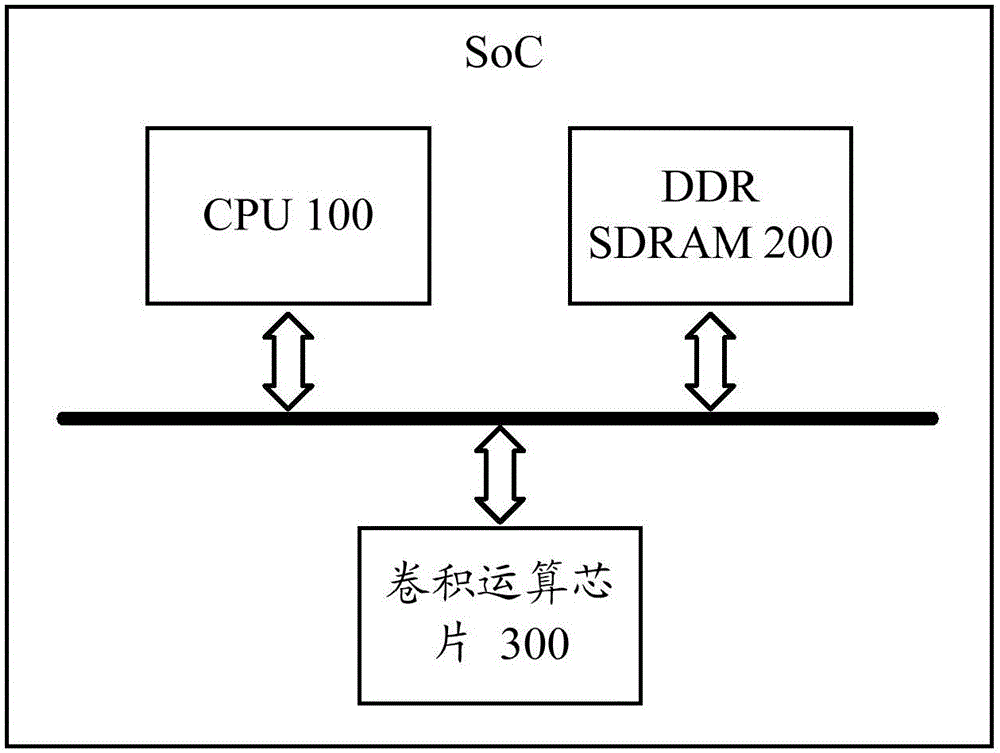

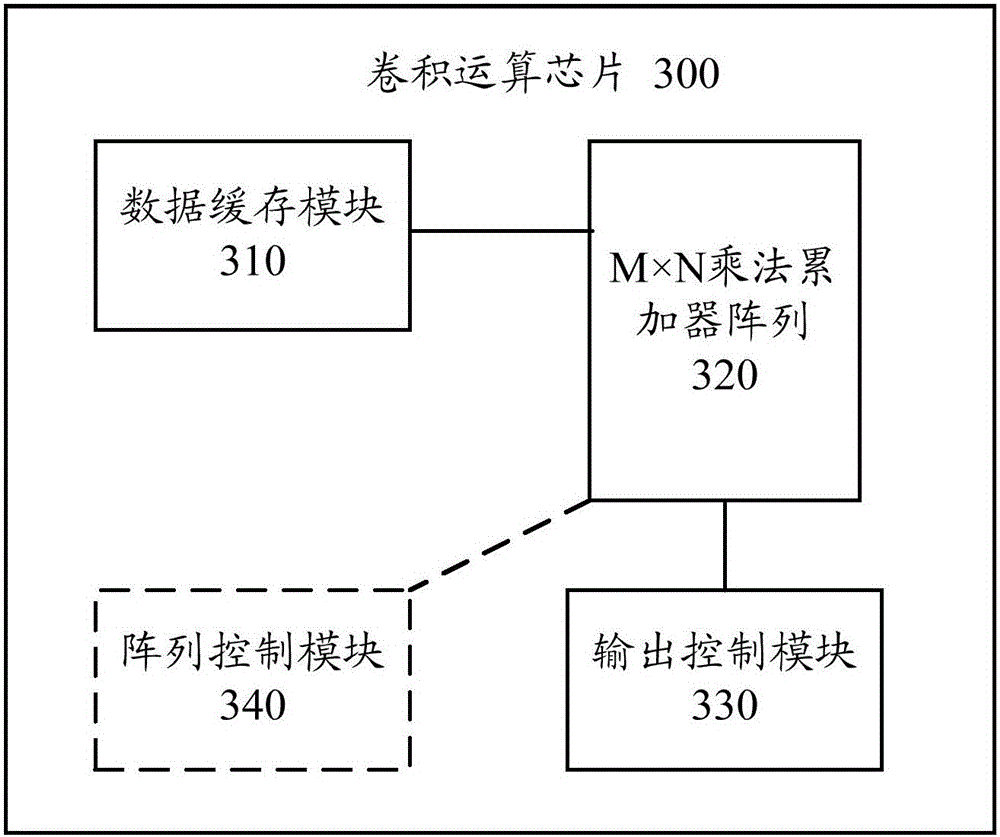

Convolution operation chip and communication equipment

Active | CN106844294A | Reduce visits, Reduce access pressure, Digital data processing details, Biological models, Resource utilization, Computer module

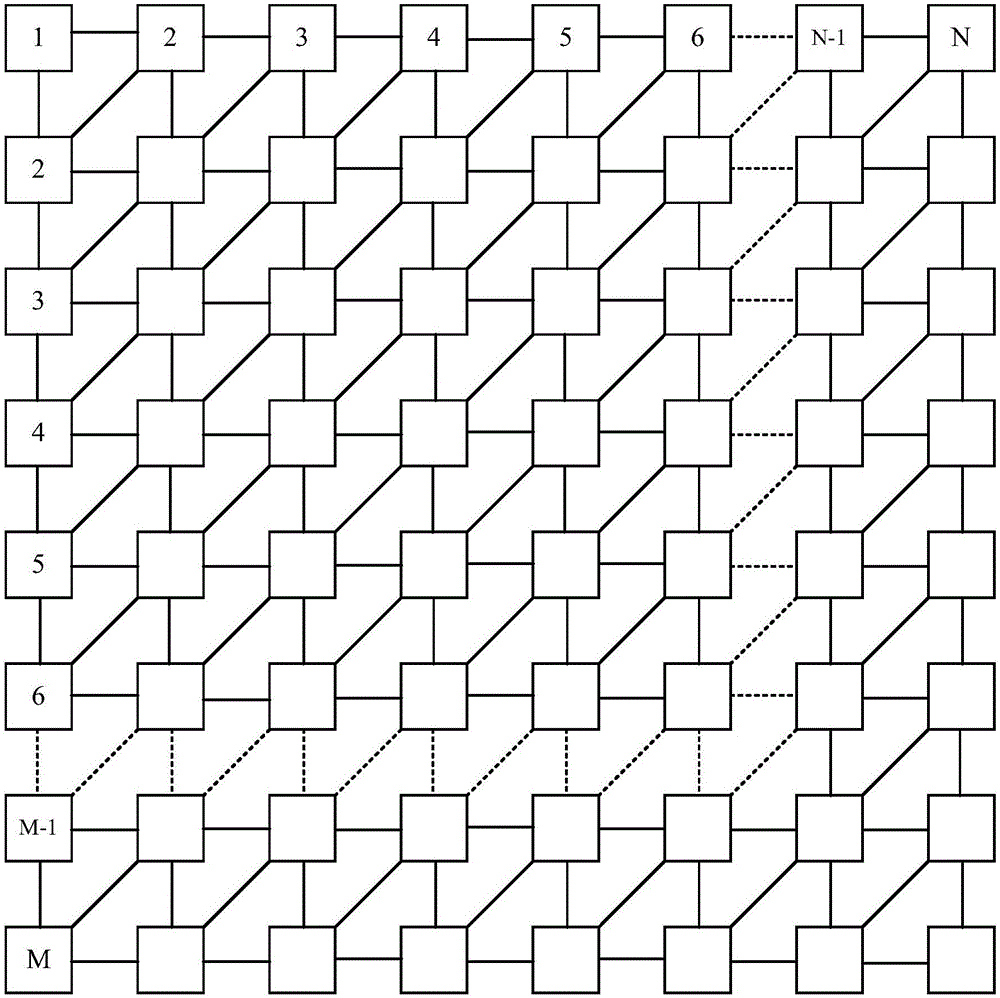

The invention provides a convolution operation chip and communication equipment. The chip comprises an M×N multiplier-accumulator (MAC) array, a data cache module and an output control module. The MAC array contains a first MAC window, in which each processing unit PE<X, Y> multiplies its own convolution data by its own convolution parameters, forwards those convolution parameters to PE<X, Y+1>, and forwards its convolution data to PE<X-1, Y+1>, where they serve as multiplication operands for those units. The data cache module supplies the convolution data and convolution parameters to the first MAC window, and the output control module outputs the convolution result. The chip reduces the number of RAM accesses and relieves RAM access pressure while increasing the utilization of the array's resources.

Owner: HUAWEI MACHINERY
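
One plausible reading of this dataflow, assumed here rather than stated in the abstract, is a row-stationary mapping: array row X holds kernel row X and array column Y produces output row Y, so PE<X, Y> needs image row X + Y. Forwarding parameters to PE<X, Y+1> and data to PE<X-1, Y+1> then lets each kernel row and each image row be fetched from RAM only once. The sketch below simulates that forwarding in software (all function names are hypothetical) and checks the result against a direct convolution.

```python
def conv1d_valid(row, kernel_row):
    """1-D 'valid' correlation: the work one PE does for one output row."""
    k = len(kernel_row)
    return [sum(row[i + j] * kernel_row[j] for j in range(k))
            for i in range(len(row) - k + 1)]


def systolic_conv2d(image, kernel):
    """Simulate the PE<X, Y> forwarding pattern and count RAM row reads.

    Assumed mapping: array row X holds kernel row X and array column Y
    produces output row Y, so PE<X, Y> needs image row X + Y.
    """
    k, h = len(kernel), len(image)
    out_rows = h - k + 1
    ram_reads = 0
    params, data = {}, {}

    # Convolution parameters: each kernel row is read from RAM once at
    # PE<X, 0> and then forwarded PE<X, Y> -> PE<X, Y+1>.
    for x in range(k):
        ram_reads += 1
        for y in range(out_rows):
            params[(x, y)] = kernel[x]

    # Convolution data: each image row r is read once and forwarded
    # PE<X, Y> -> PE<X-1, Y+1>, i.e. along the diagonal X + Y = r.
    for r in range(h):
        ram_reads += 1
        x = min(r, k - 1)
        y = r - x
        while x >= 0 and y < out_rows:
            data[(x, y)] = image[r]
            x, y = x - 1, y + 1

    # Each column sums its PEs' partial results into one output row.
    output = []
    for y in range(out_rows):
        partials = [conv1d_valid(data[(x, y)], params[(x, y)]) for x in range(k)]
        output.append([sum(col) for col in zip(*partials)])
    return output, ram_reads


def direct_conv2d(image, kernel):
    """Reference 2-D 'valid' correlation used to check the simulation."""
    k, kw = len(kernel), len(kernel[0])
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(k) for b in range(kw))
             for j in range(len(image[0]) - kw + 1)]
            for i in range(len(image) - k + 1)]


image = [[r * 5 + c for c in range(5)] for r in range(5)]
kernel = [[1, 0, -1], [2, 0, -2], [1, 0, -1]]
result, reads = systolic_conv2d(image, kernel)
```

For a 5×5 image and a 3×3 kernel the simulation performs 8 row reads, whereas having each of the 9 PEs fetch both of its operand rows itself would take 18.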

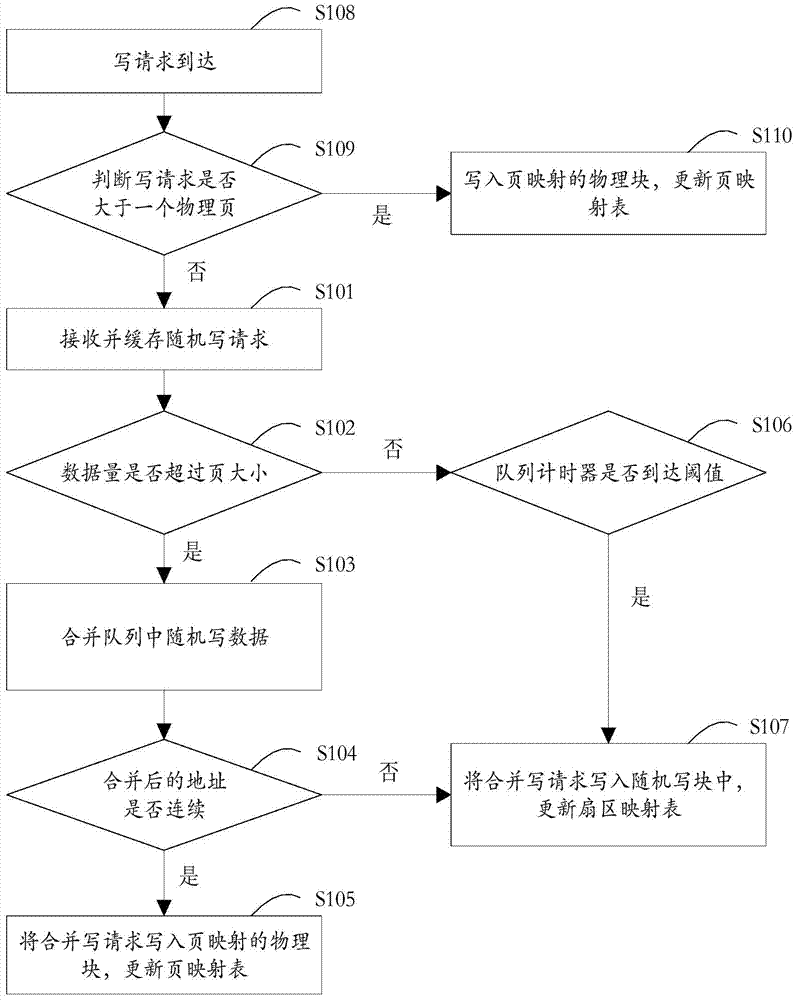

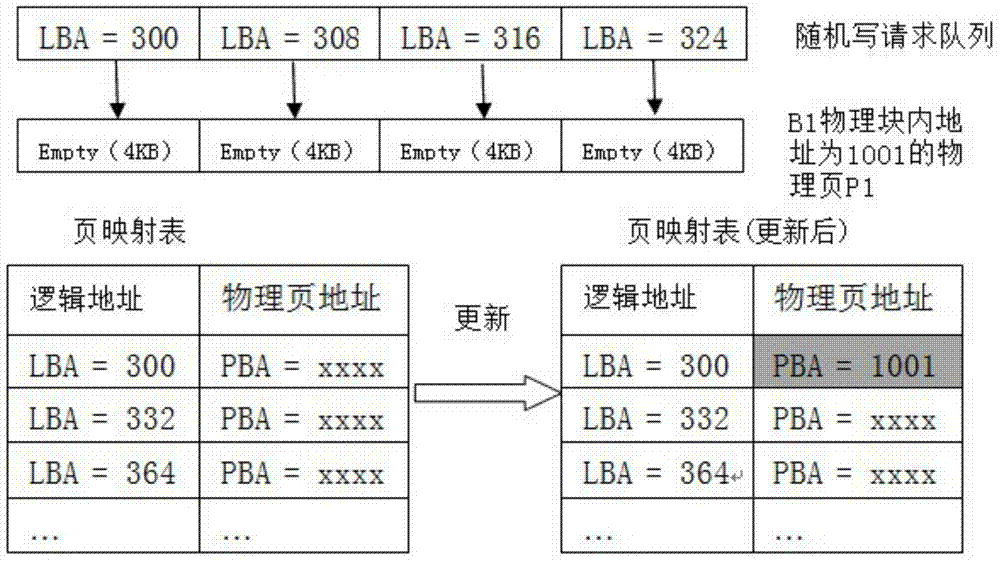

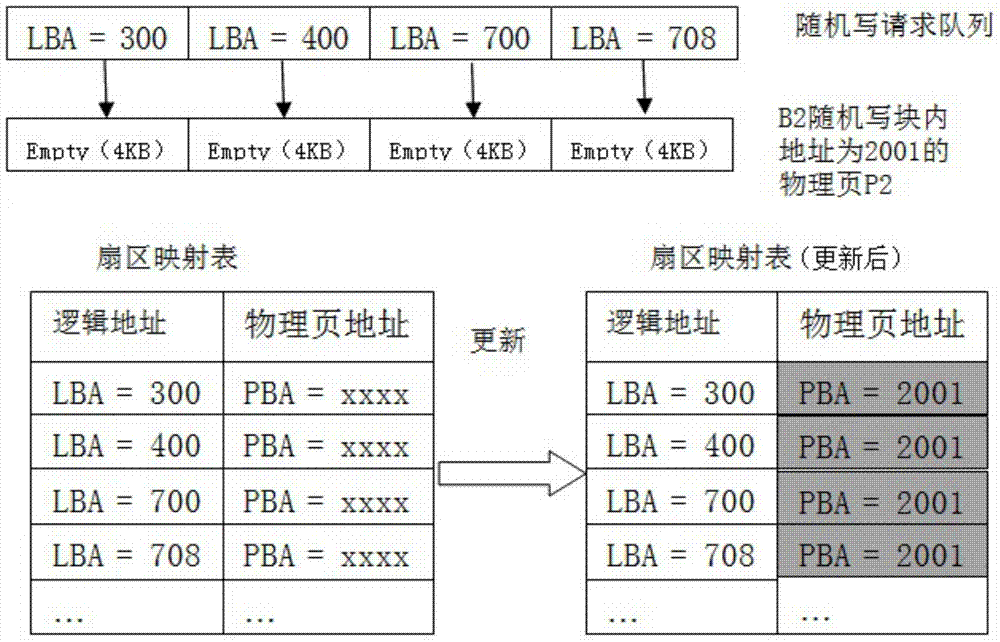

Method and device for increasing the write speed of NAND flash

Active | CN104503710A | Reduce visits, Improve writing efficiency, Input/output to record carriers, Memory addressing/allocation/relocation, Computer architecture, Flash memory

Owner: FUZHOU ROCKCHIP SEMICON

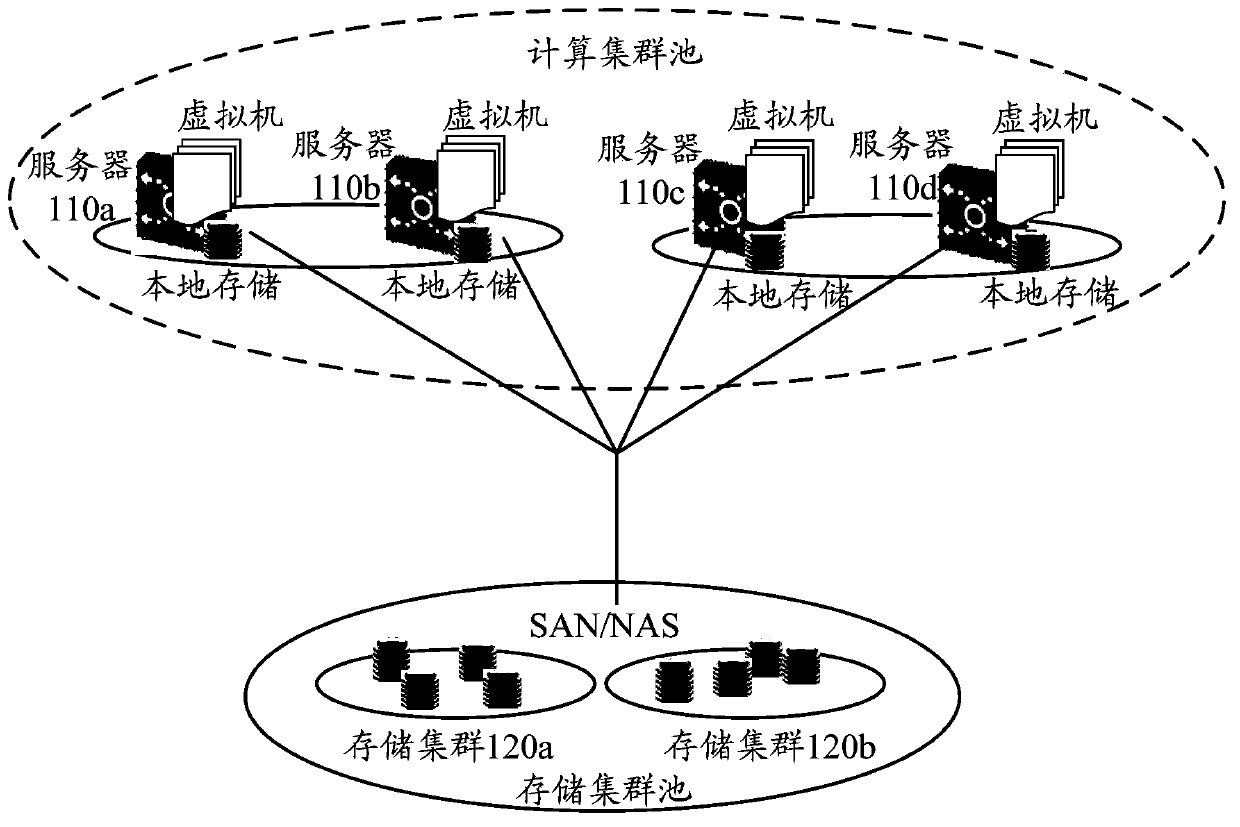

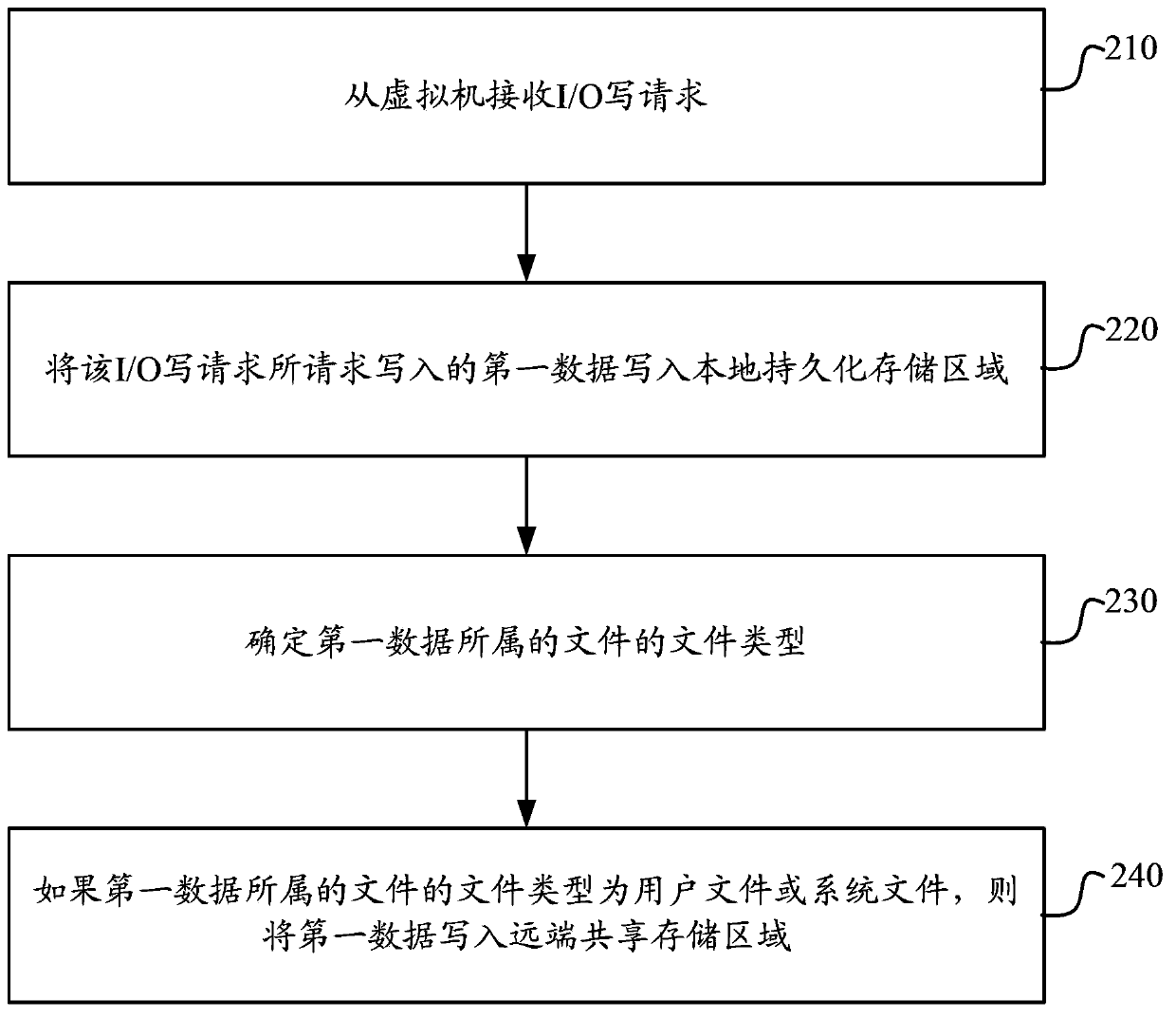

Method for processing input/output request, host, server and virtual machine

Inactive | CN103389884A | Improve experience, Reduce visits, Input/output to record carriers, Transmission, Virtual machine, Input/output

The embodiment of the invention provides a method for processing an input/output (I/O) request, and a server. The method comprises: receiving an I/O write request from a virtual machine; writing the first data requested by the write request into a local persistent storage area; determining the file type of the file to which the first data belong; and, if that file is a user file or a system file, writing the first data into a remote shared storage area. Because the first data stored in the local persistent storage area are written to the remote shared storage area only when they belong to a user file or a system file, the number of accesses to the remote shared storage area is reduced.

Owner: HUAWEI TECH CO LTD
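
A minimal sketch of the write path described above, assuming a caller-supplied classifier that maps a file path to its type. The `TEMPORARY` category and the path-prefix rule are hypothetical and exist only to show a write that stays local.

```python
from enum import Enum


class FileType(Enum):
    USER = "user"
    SYSTEM = "system"
    TEMPORARY = "temporary"  # hypothetical third category, e.g. swap or scratch files


REPLICATED_TYPES = {FileType.USER, FileType.SYSTEM}


class WritePath:
    """Local-first write path; only user and system files reach shared storage."""

    def __init__(self, classify):
        self.classify = classify  # hypothetical callback: path -> FileType
        self.local = {}           # local persistent storage area
        self.remote = {}          # remote (far-end) shared storage area
        self.remote_writes = 0

    def handle_write(self, path, offset, data):
        self.local[(path, offset)] = data          # always persist locally first
        if self.classify(path) in REPLICATED_TYPES:
            self.remote[(path, offset)] = data     # replicate only user/system data
            self.remote_writes += 1


def classify_by_prefix(path):
    # Hypothetical rule used only for this example.
    if path.startswith("/tmp/"):
        return FileType.TEMPORARY
    if path.startswith("/etc/"):
        return FileType.SYSTEM
    return FileType.USER


vm_io = WritePath(classify_by_prefix)
vm_io.handle_write("/home/alice/report.txt", 0, b"draft")
vm_io.handle_write("/tmp/build.o", 0, b"\x00" * 16)
vm_io.handle_write("/etc/hosts", 0, b"127.0.0.1 localhost")
```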

Secure data deduplication method and system for backup systems

Active | CN103530201A | Reduce the burden on, Improve performance, User identity/authority verification, Internal/peripheral component protection, Computer hardware, Data loss

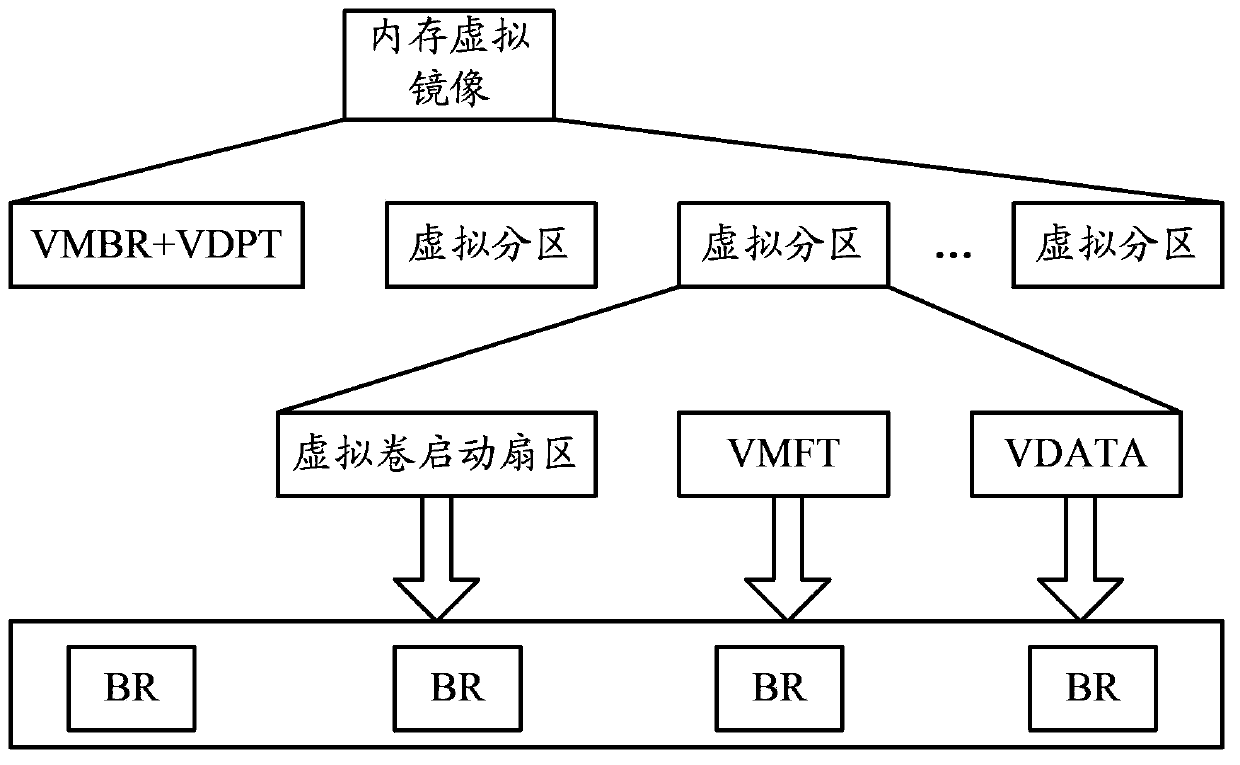

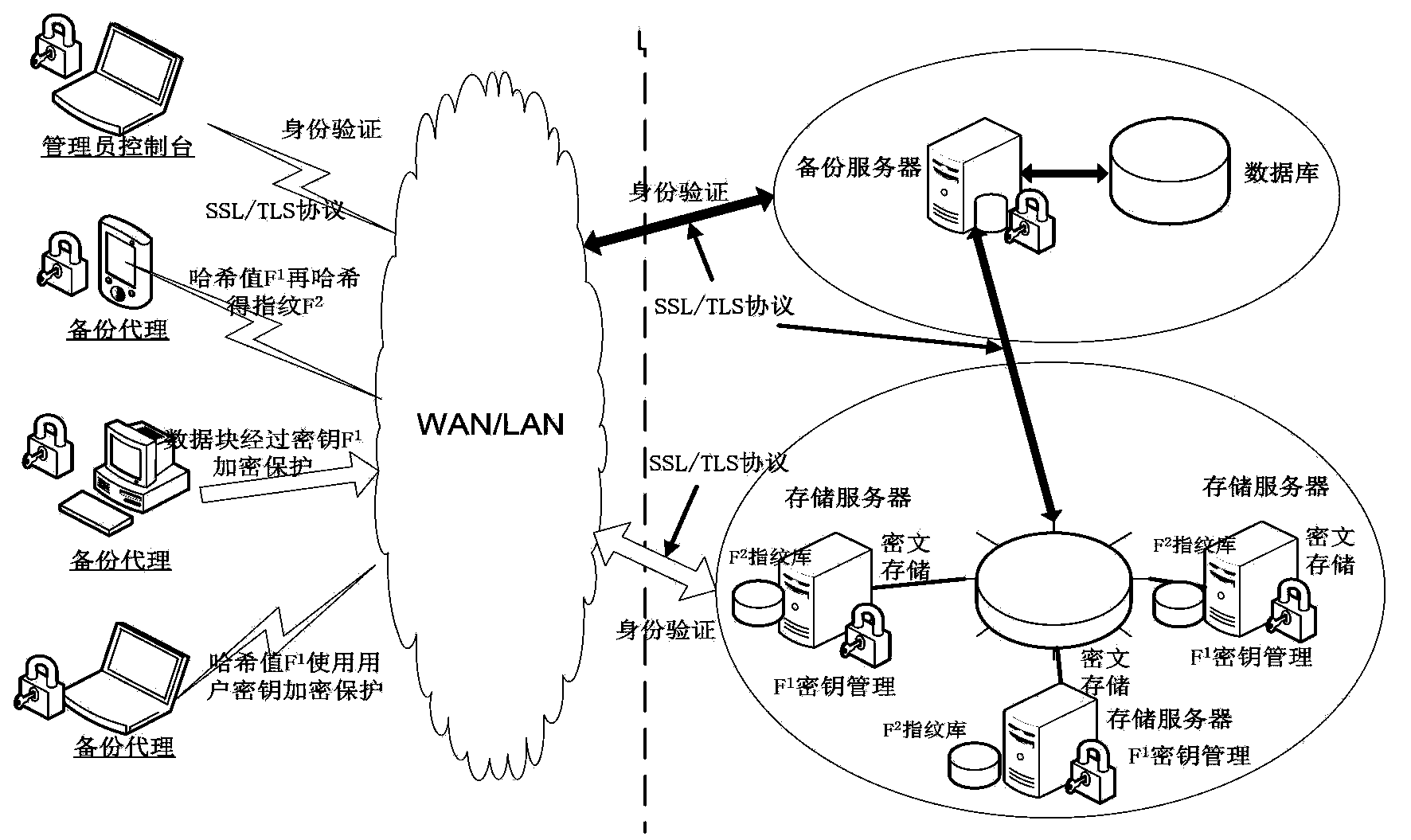

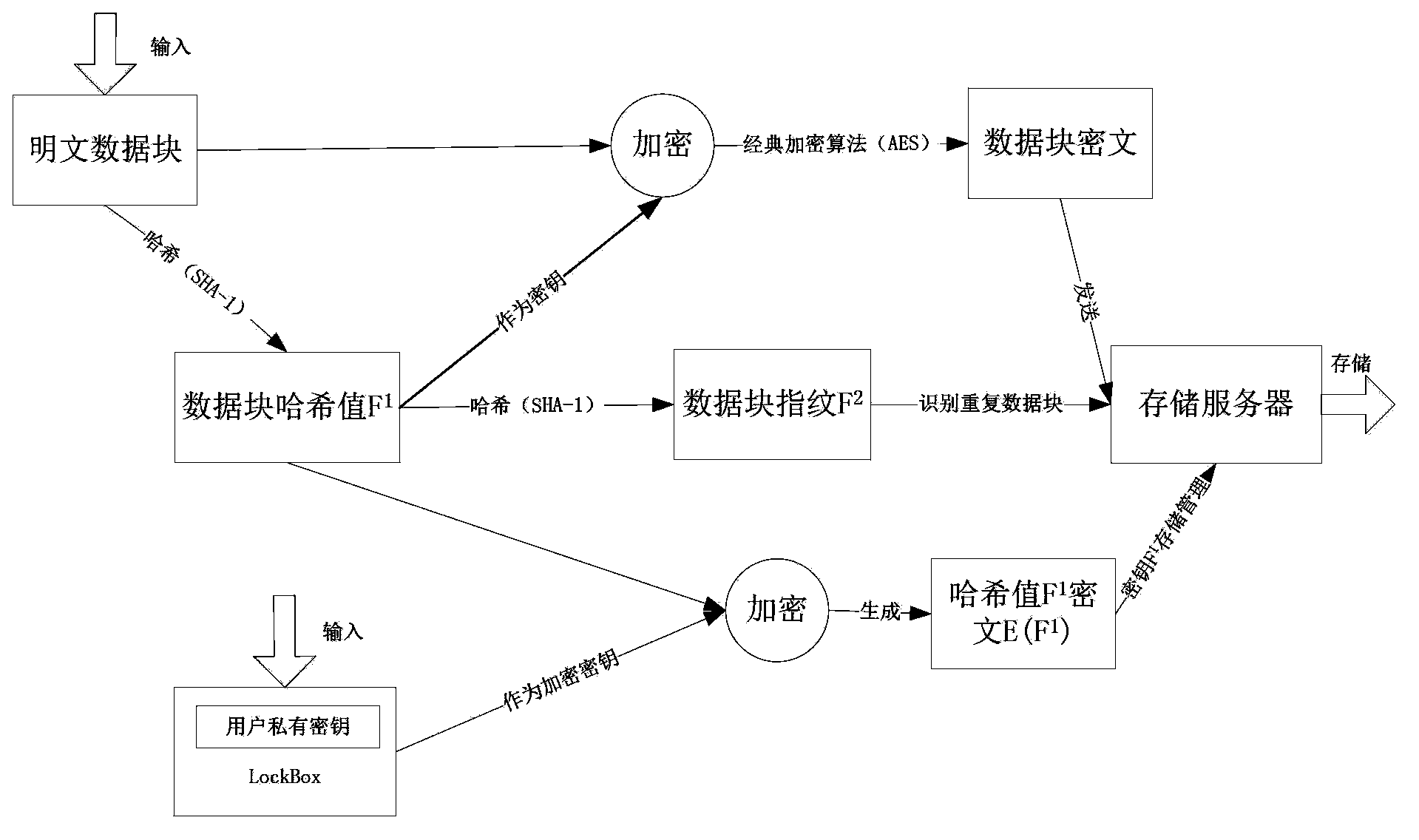

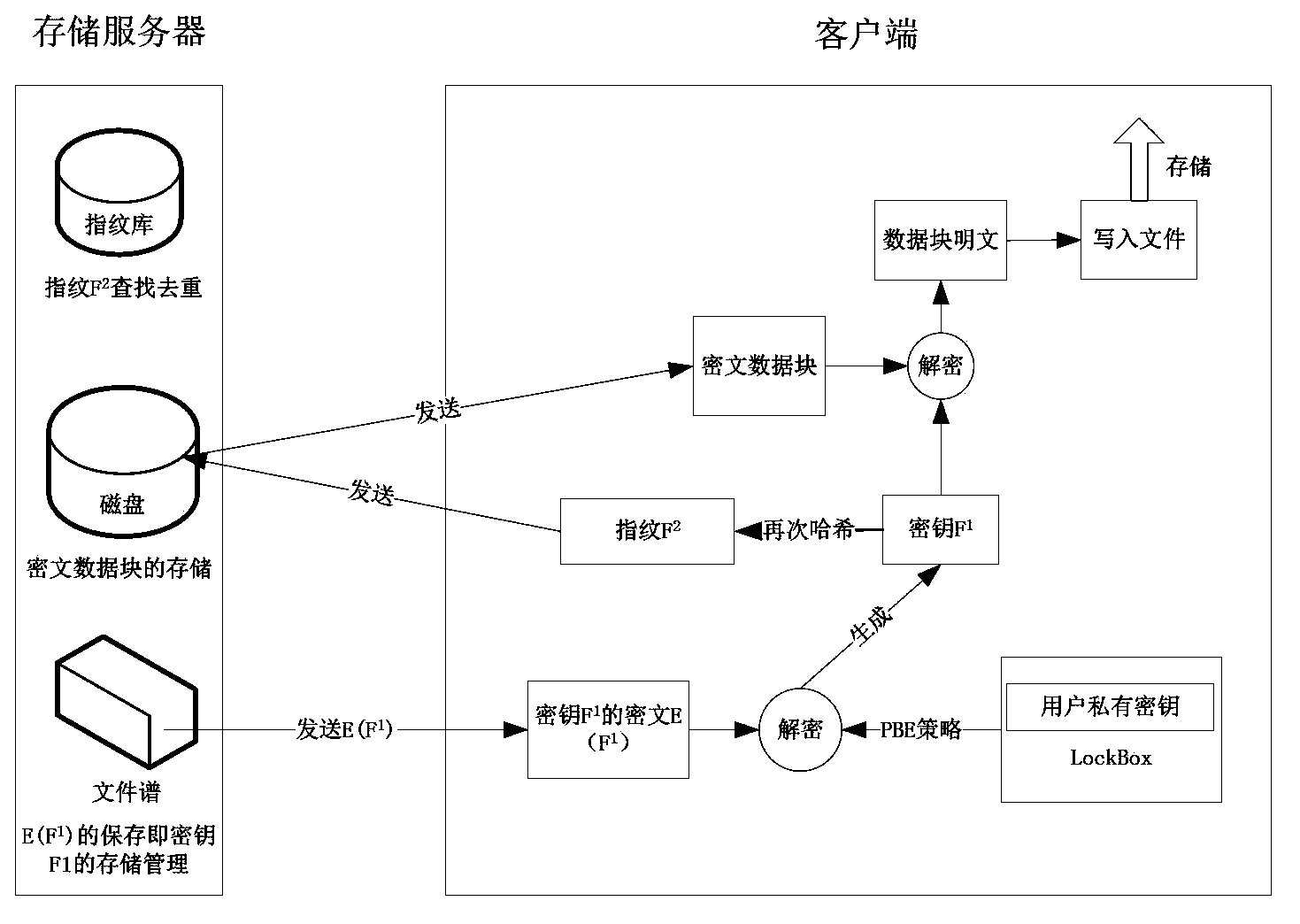

The invention discloses a secure data deduplication method for a backup system. The method comprises the following steps: a backup request submitted by a user is received; all files to be backed up are partitioned into data blocks of different sizes; a hash algorithm computes a hash value F1 for each data block, and F1 serves as that block's encryption key; the hash algorithm is applied again to F1 to obtain a hash value F2, which serves as the block's fingerprint for identifying duplicate blocks; and, to protect the block encryption keys, a classical encryption algorithm and the user's private key are used to encrypt each F1, producing the ciphertext E(F1). The fingerprints F2 of all data blocks, together with related information, are packed in order into fingerprint segments and transmitted to a storage server. All communication uses the SSL protocol. The method protects stored data against loss and tampering without changing the deduplication ratio.

Owner: HUAZHONG UNIV OF SCI & TECH
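
The key derivation above resembles convergent encryption: identical blocks yield identical F1 and F2, so duplicates can still be detected even though the stored data are encrypted. The sketch below assumes SHA-256 as the hash algorithm and fixed-size blocks, and uses an in-memory set as a stand-in for the storage server's fingerprint index; these choices are illustrative, not the patent's.

```python
import hashlib


def split_blocks(data, block_size=4096):
    # Fixed-size chunking keeps the sketch short; the patent uses variable-size blocks.
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def derive_keys(block):
    f1 = hashlib.sha256(block).digest()  # F1: content-derived encryption key
    f2 = hashlib.sha256(f1).digest()     # F2: fingerprint, the hash of F1
    return f1, f2


def plan_backup(data, known_fingerprints):
    """Return (F2, F1) for each block the server has not stored yet.

    A real client would also encrypt each new block with its F1 and
    encrypt F1 itself with the user's key to produce E(F1); both steps
    are omitted because the standard library has no block cipher.
    """
    new_blocks = []
    for block in split_blocks(data):
        f1, f2 = derive_keys(block)
        if f2 in known_fingerprints:
            continue  # duplicate content: only the fingerprint needs to be referenced
        known_fingerprints.add(f2)
        new_blocks.append((f2, f1))
    return new_blocks


server_index = set()  # stand-in for the storage server's fingerprint index
payload = b"A" * 4096 + b"B" * 4096 + b"A" * 4096
first = plan_backup(payload, server_index)
second = plan_backup(payload, server_index)
```

The repeated `A` block is detected within the first backup, and a second backup of the same payload uploads nothing.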

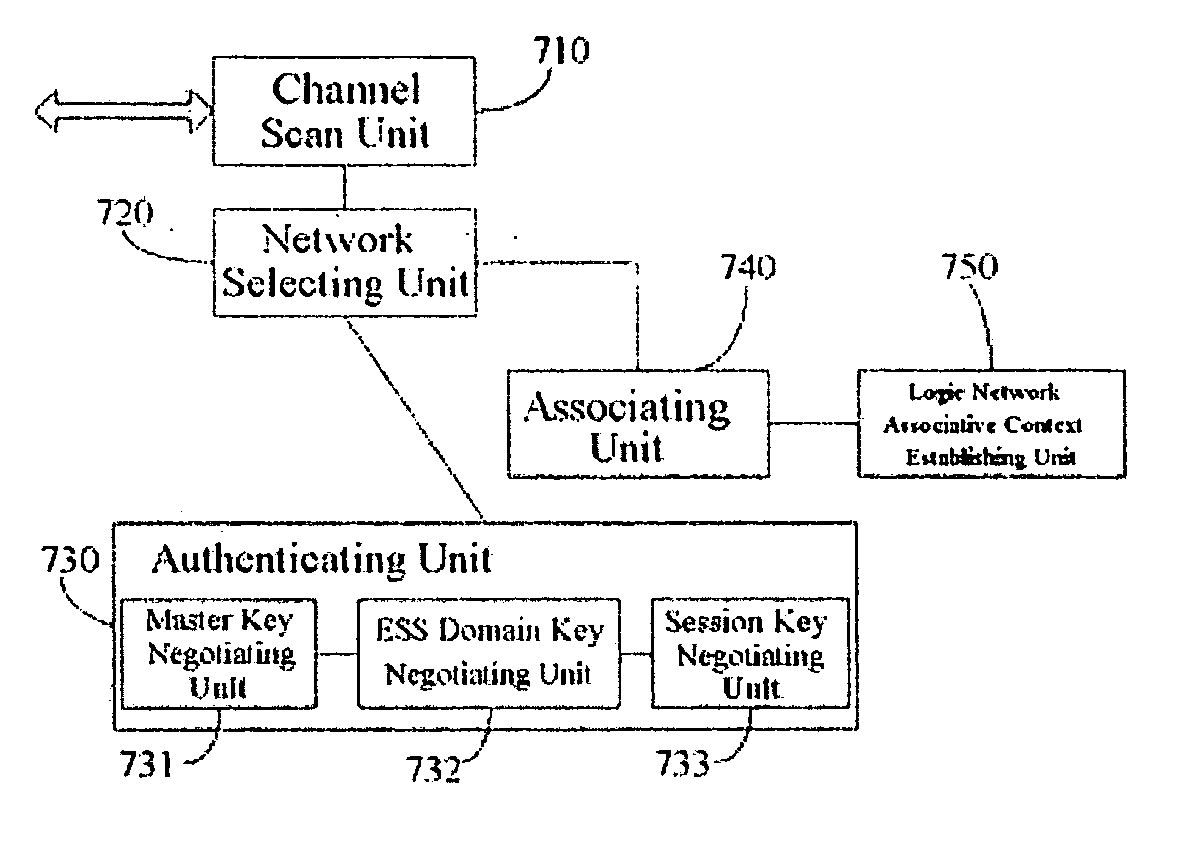

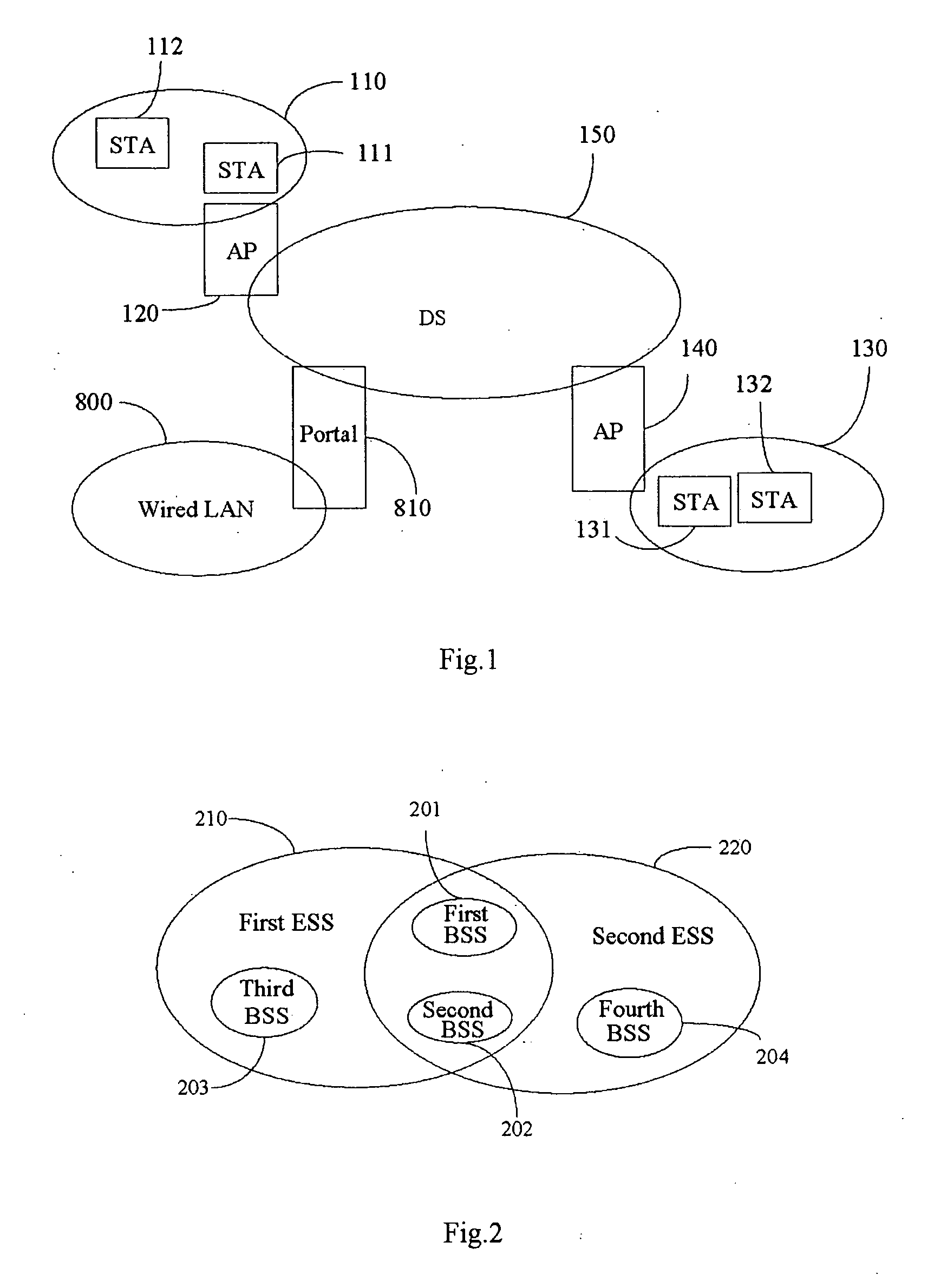

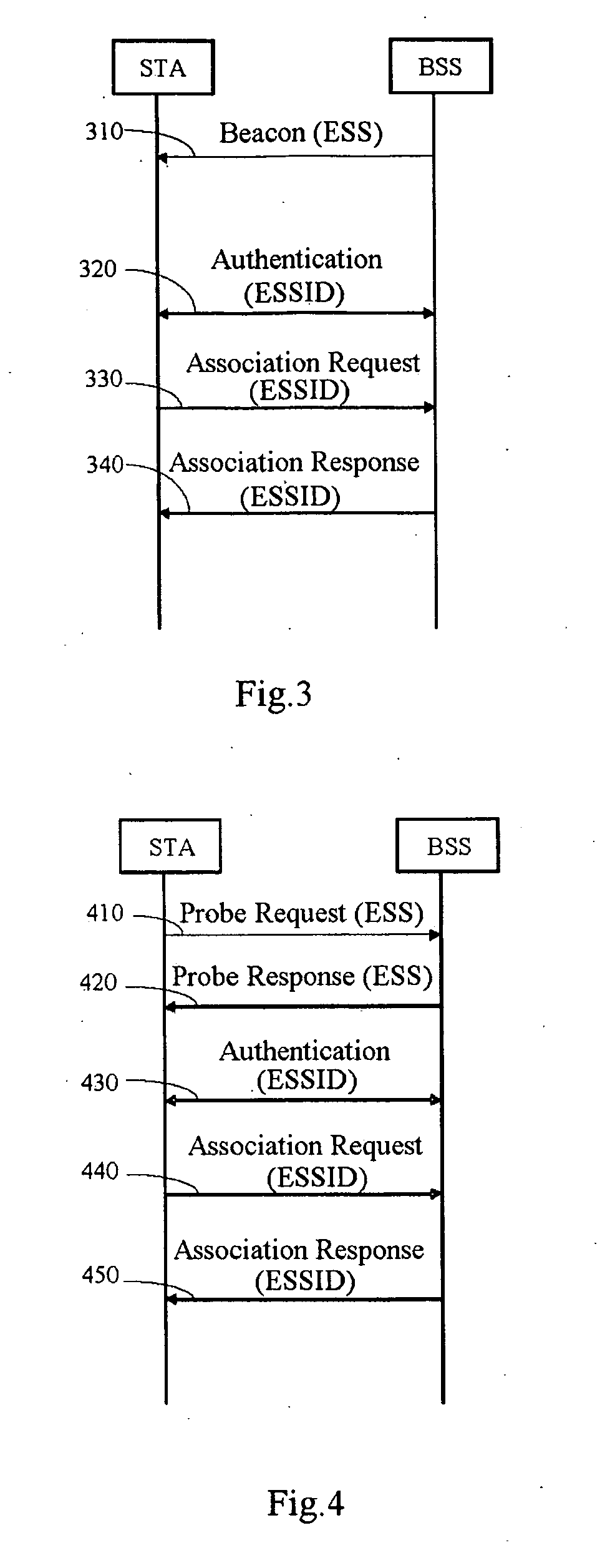

Method for a wireless local area network terminal to access a network, a system and a terminal

Inactive | US20070153732A1 | Reduce visits, Reduce in quantity, Access restriction, Network topologies, Terminal equipment, Basic service

The present invention discloses a method for a wireless local area network (WLAN) terminal to access a network, a local area network system, and a WLAN terminal. The WLAN includes at least one basic service set and at least one extended service set built from multiple terminal devices. Each extended service set has a uniquely identifying extended service set ID; this ID is added as a parameter during channel scanning, and network selection is performed based on it. The method also allows network sharing to be performed on the basis of an extended service set.

Owner: HUAWEI TECH CO LTD
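
The abstract does not say how the extended service set ID is carried in scan results, so the `ess_id` field below is hypothetical. The sketch shows why a unique ESS ID helps: two extended service sets advertise the same SSID, and only the ESS ID tells the terminal which network to join.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScanResult:
    bssid: str
    ssid: str
    ess_id: Optional[str]  # hypothetical field carrying the unique ESS ID
    signal_dbm: int


def select_network(scan_results, wanted_ess_id):
    """Pick the strongest BSS that belongs to the wanted extended service set."""
    members = [r for r in scan_results if r.ess_id == wanted_ess_id]
    return max(members, key=lambda r: r.signal_dbm, default=None)


scan = [
    ScanResult("00:11:22:33:44:01", "guest", "ESS-A", -40),
    ScanResult("00:11:22:33:44:02", "guest", "ESS-B", -70),
    ScanResult("00:11:22:33:44:03", "guest", "ESS-B", -55),
]
choice = select_network(scan, "ESS-B")
```

Selecting by SSID alone would pick the strongest `guest` access point, which belongs to the other extended service set.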

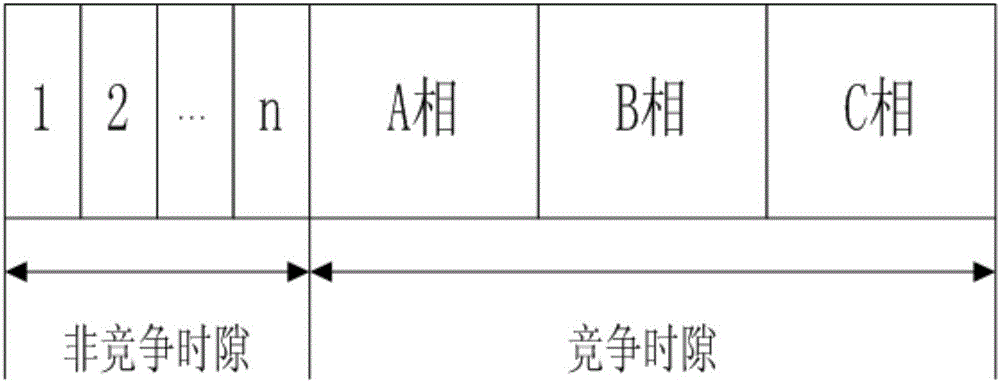

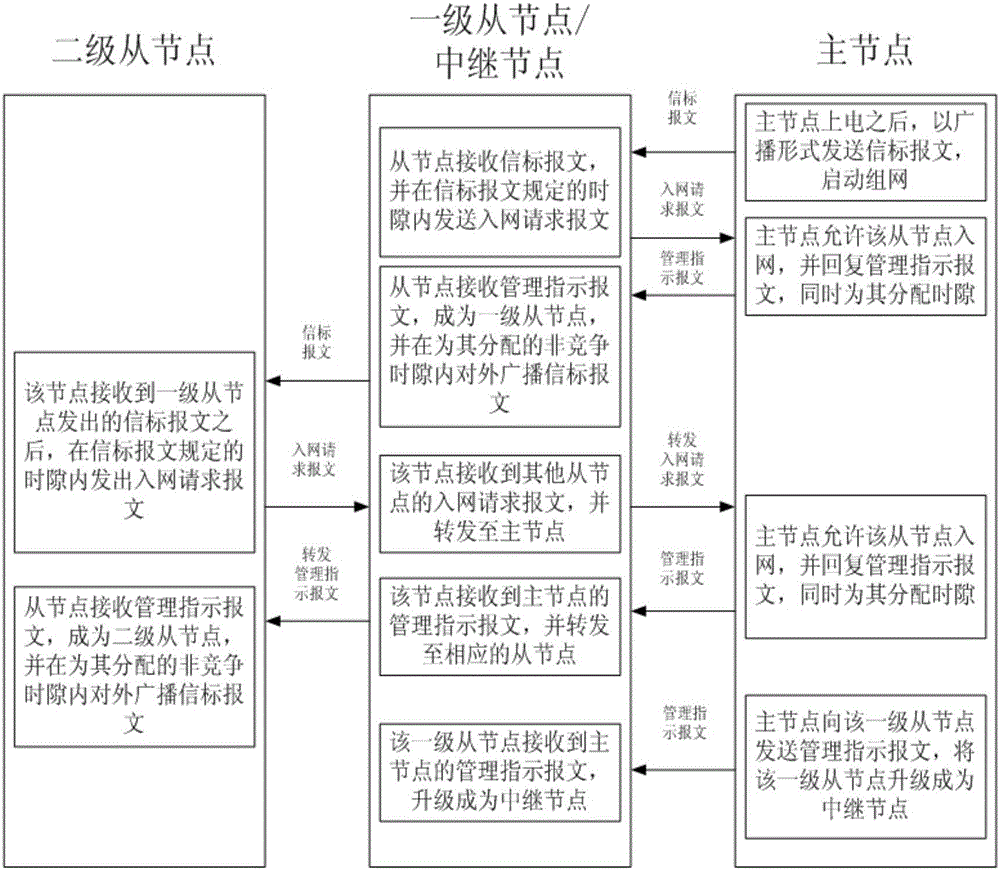

Networking method for power line carrier module applied to power utilization information acquisition

Active | CN106100698A | Reduce visits, The networking process is adjusted in an orderly manner, Electric signal transmission systems, Power distribution line transmission, Carrier signal, Access time

The invention discloses a networking method for power line carrier modules used in electricity consumption information acquisition. During network initialization, networking is led by the master node: the master node sends a beacon message; slave nodes that receive the beacon initiate network access requests, while slave nodes that do not receive it stay silent and do not access the channel. By redesigning the networking flow around master-node control and introducing a time-slot allocation mechanism that assigns a dedicated contention slot and a dedicated non-contention slot to networking, the method reduces the number of channel accesses by each node, makes the overall networking process more orderly, and can substantially shorten networking time.

Owner: NANJING NARI GROUP CORP +1

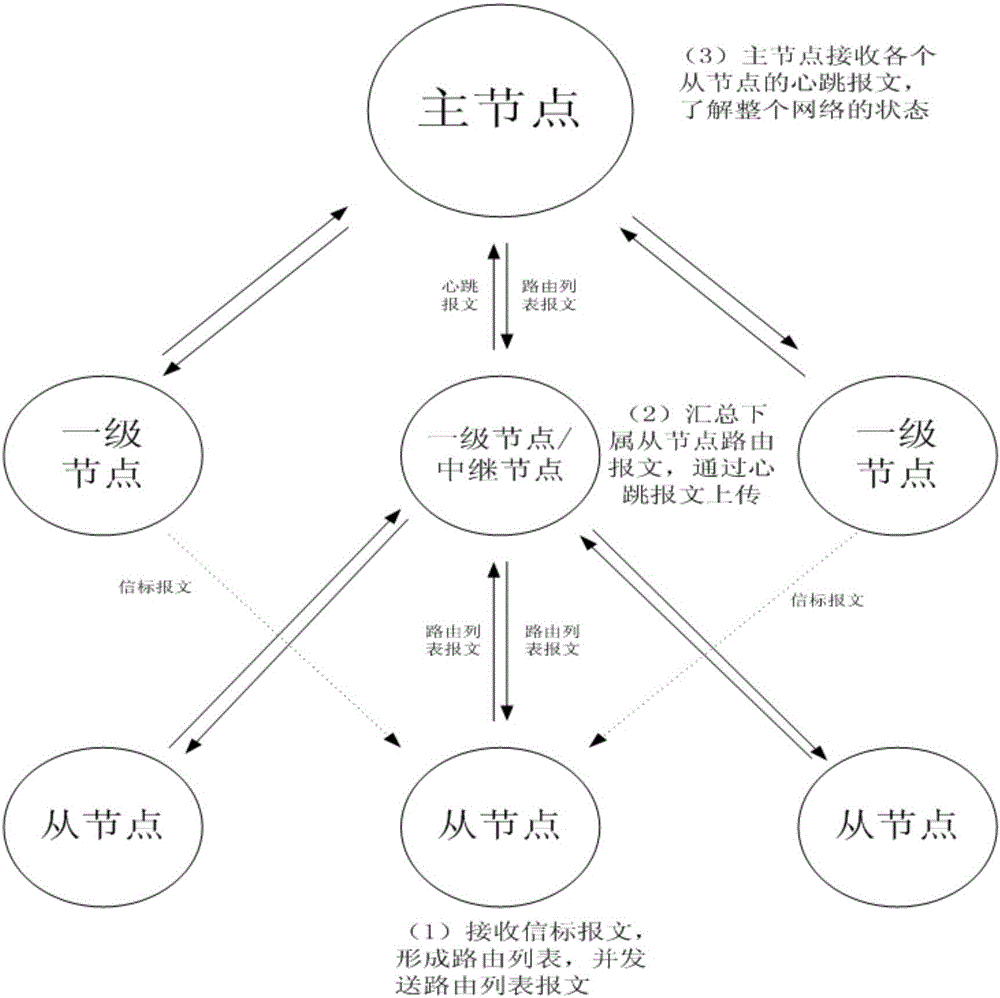

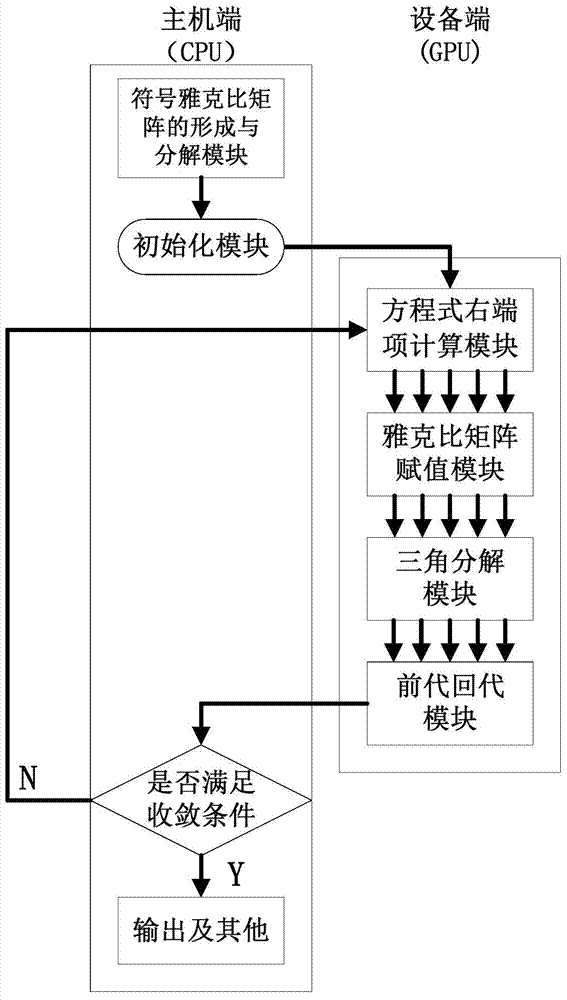

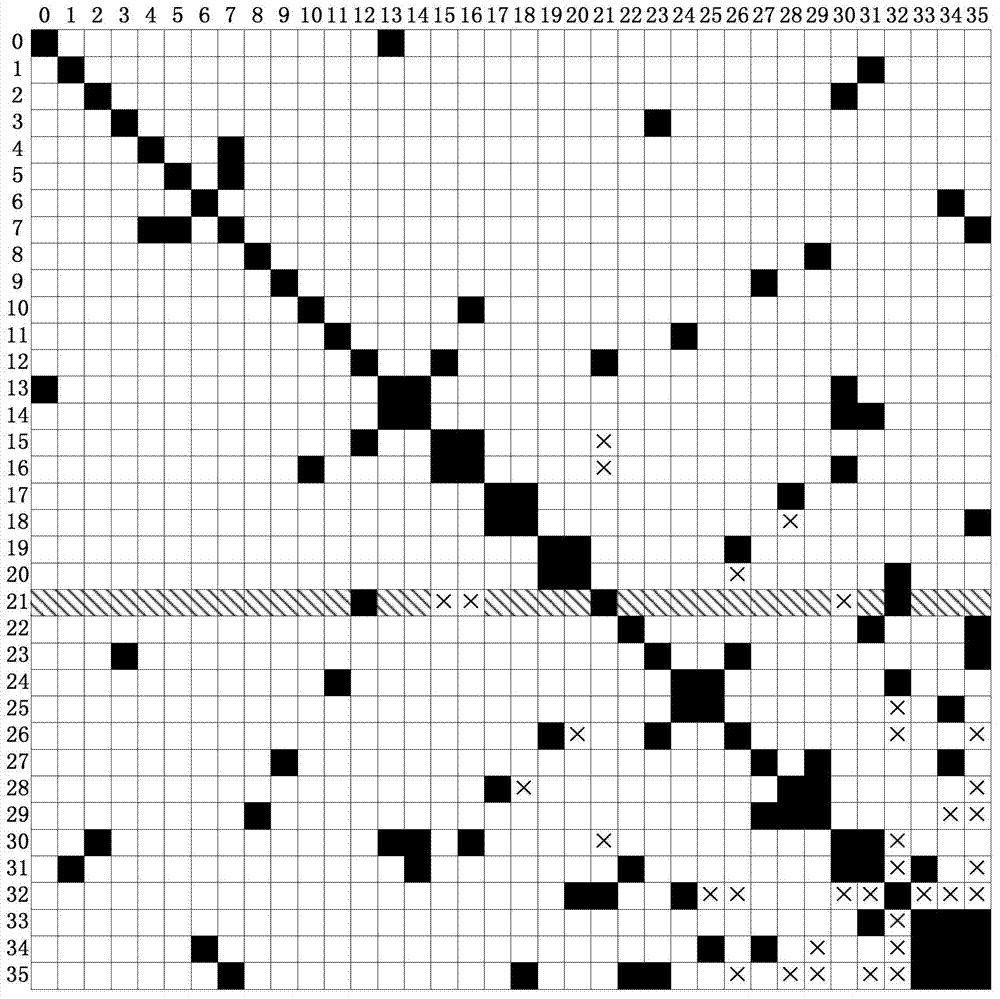

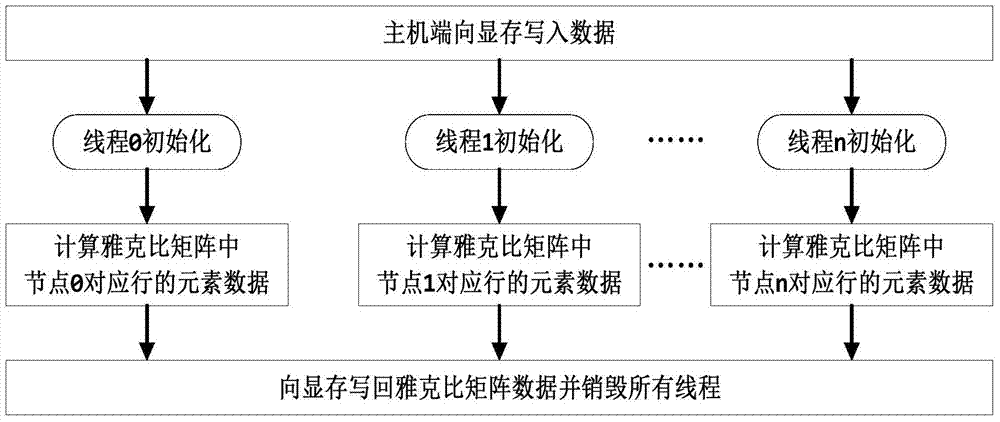

GPU (graphics processing unit)-based parallel power flow calculation system and method for large-scale power systems

Active | CN103617150A | Realize the loop iterative process, Implement parallel build methods, Complex mathematical operations, Main processing unit, Arithmetic processing unit

The invention relates to a GPU-based parallel power flow calculation system and method for large-scale power systems. The system comprises a symbolic Jacobian matrix formation and decomposition module, an initialization module, a power flow equation right-hand-side calculation module, a Jacobian matrix assignment module, an LU decomposition module, and a forward and backward substitution module. The symbolic Jacobian matrix formation and decomposition module runs on the host side, which transmits the calculation data to the device side; the right-hand-side calculation, Jacobian matrix assignment, LU decomposition, and forward and backward substitution modules are connected in sequence on the device side. The method comprises: (1) transmitting all data needed for the calculation to the host side; (2) generating the symbolic Jacobian matrix and performing symbolic decomposition on it; (3) transmitting the decomposition result from the host side to the device side; (4) computing the right-hand side of the power flow equations; (5) assigning the Jacobian matrix values; (6) performing LU decomposition; (7) performing forward and backward substitution.

Owner: STATE GRID CORP OF CHINA +1
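
Steps (4) to (7) are the inner loop of a Newton-Raphson power flow solve. The dense, pure-Python sketch below shows that loop on a toy two-equation system, assumed purely for illustration (it is not a power network). In the patented system the sparsity structure would be analysed symbolically once on the host in steps (1) to (3), and the numeric loop would run on the GPU; this sketch omits both.

```python
import math


def lu_decompose(a):
    """Doolittle LU factorization without pivoting (assumes non-zero pivots)."""
    n = len(a)
    lower = [[0.0] * n for _ in range(n)]
    upper = [[0.0] * n for _ in range(n)]
    for i in range(n):
        lower[i][i] = 1.0
        for j in range(i, n):
            upper[i][j] = a[i][j] - sum(lower[i][k] * upper[k][j] for k in range(i))
        for j in range(i + 1, n):
            lower[j][i] = (a[j][i] - sum(lower[j][k] * upper[k][i] for k in range(i))) / upper[i][i]
    return lower, upper


def forward_backward(lower, upper, b):
    """Solve L U x = b by forward then backward substitution."""
    n = len(b)
    y = [0.0] * n
    for i in range(n):
        y[i] = b[i] - sum(lower[i][k] * y[k] for k in range(i))
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(upper[i][k] * x[k] for k in range(i + 1, n))) / upper[i][i]
    return x


def newton_solve(f, jac, x0, tol=1e-12, max_iter=50):
    x = list(x0)
    for _ in range(max_iter):
        rhs = [-v for v in f(x)]             # step (4): right-hand side (mismatch)
        lower, upper = lu_decompose(jac(x))  # steps (5) and (6): assign J, factor it
        dx = forward_backward(lower, upper, rhs)  # step (7): substitution
        x = [xi + di for xi, di in zip(x, dx)]
        if max(abs(d) for d in dx) < tol:
            return x
    raise RuntimeError("Newton iteration did not converge")


def mismatch(v):
    x, y = v
    return [x * x + y * y - 4.0, x - y]  # toy system: circle radius 2, line y = x


def jacobian(v):
    x, y = v
    return [[2.0 * x, 2.0 * y], [1.0, -1.0]]


solution = newton_solve(mismatch, jacobian, [1.0, 0.5])
```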

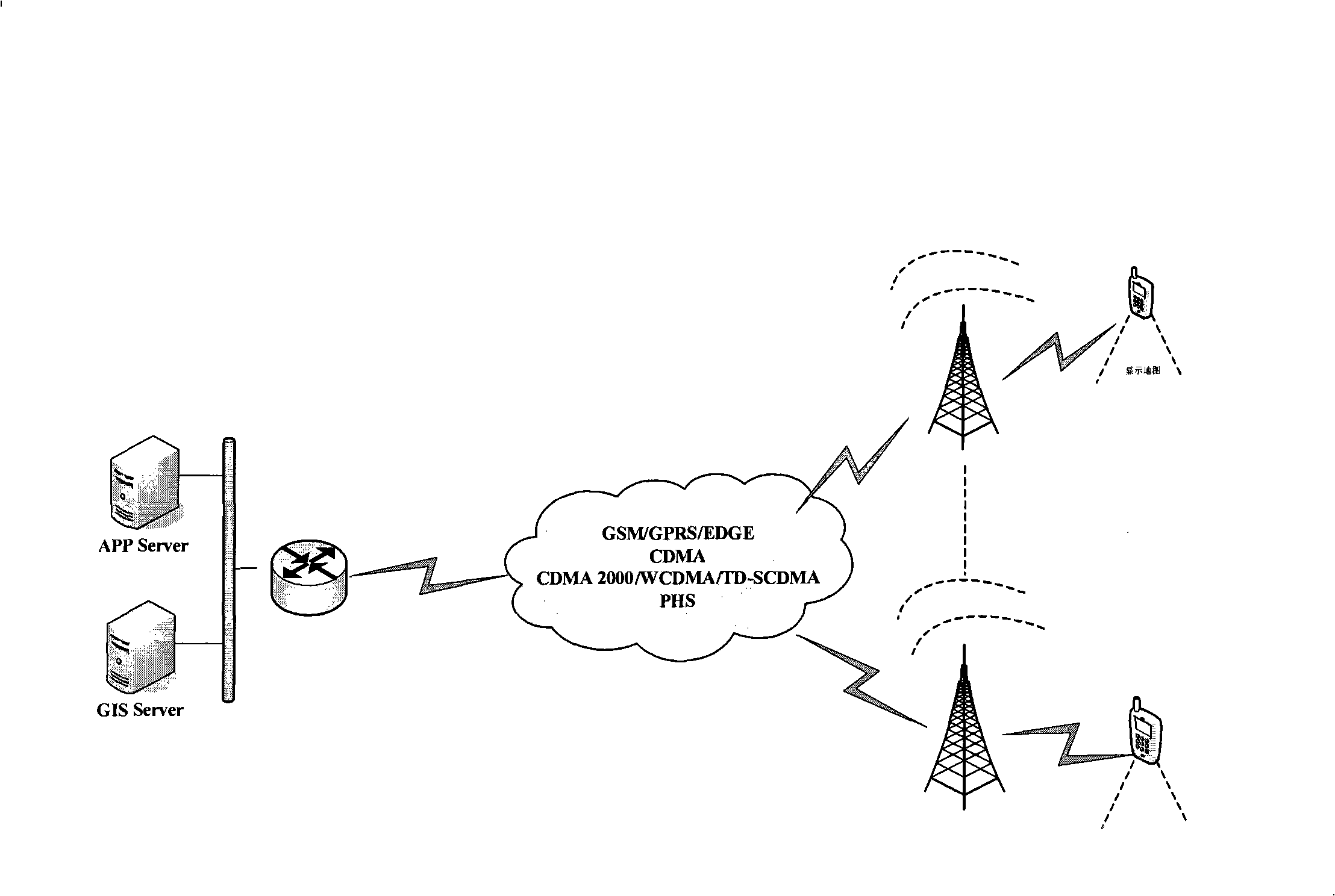

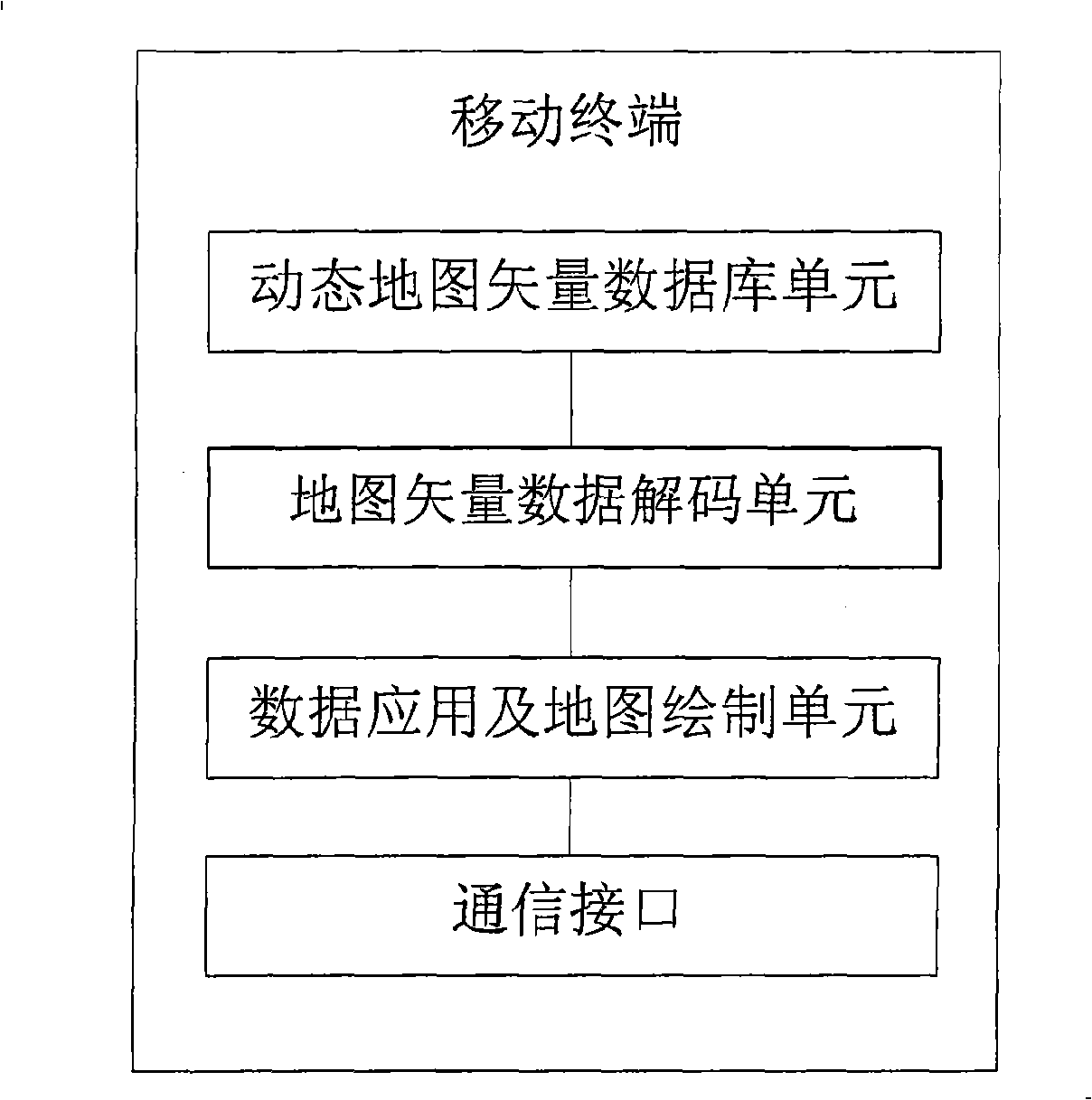

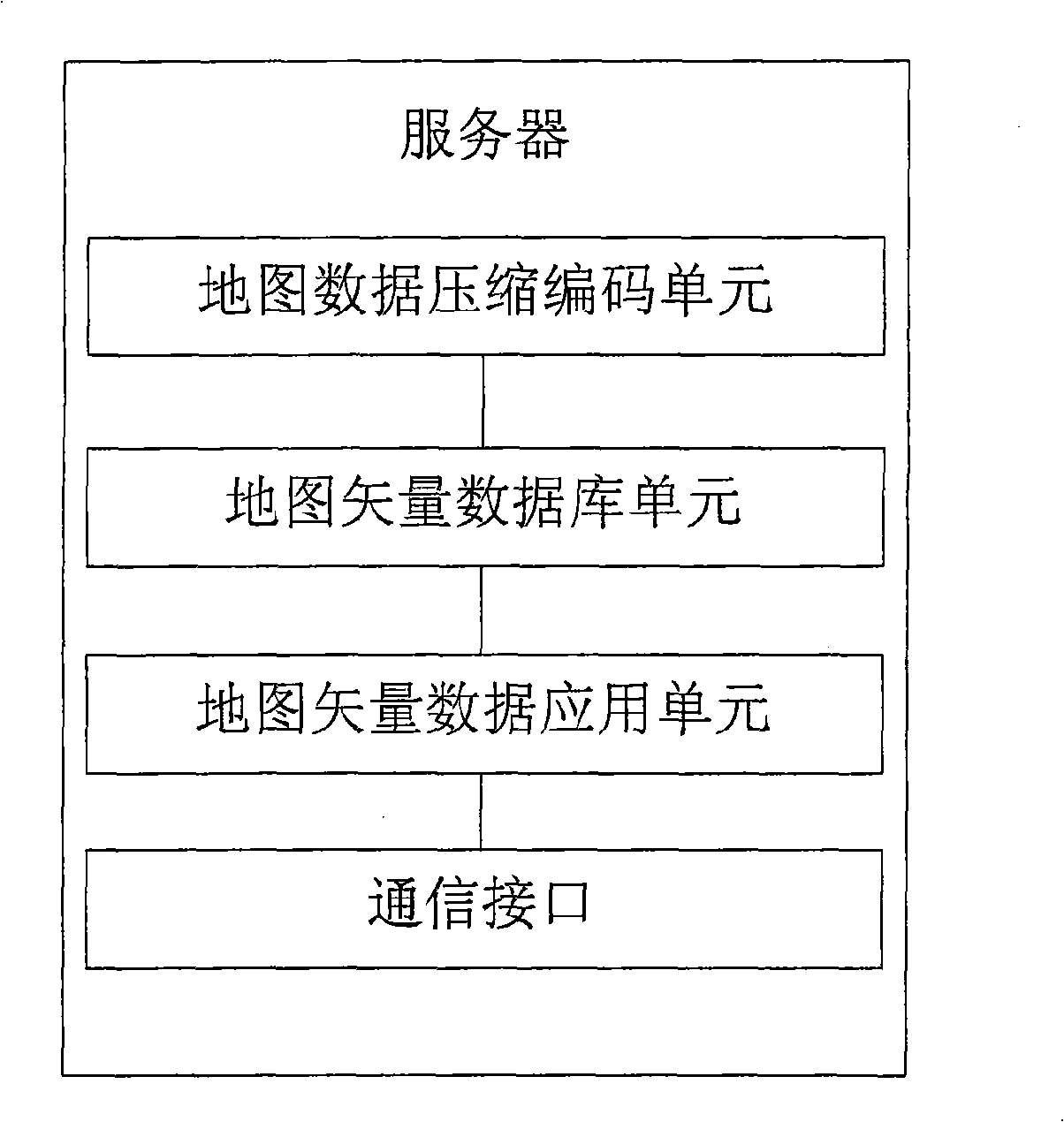

Mobile-terminal-based map data processing method and system, and mobile terminal

Active | CN101290228A | Small amount of vector data, Reduce data volume, Instruments for road network navigation, Special data processing applications, Display device, Computer terminal

The invention provides a mobile-terminal-based method and system for processing map data, and a mobile terminal. The method comprises the following steps: converting the format of the map data to generate map vector data; encoding the generated map vector data into a compressed format; building a dynamic vector database on the mobile terminal; downloading the compressed map vector data into the dynamic vector database; reading the compressed map vector data from the dynamic vector database and decoding it to recover the map vector data; and rendering the corresponding map on the mobile terminal's display according to the map vector data and the display's pixel data. The method, system and mobile terminal solve the problem of matching map data storage to the display, making real-time map browsing, voice navigation, real-time geographic information, and point-of-interest search genuinely practical on mobile terminals.

Owner: ALIBABA (CHINA) CO LTD
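
The abstract does not specify the compressed format. A common way to shrink vector coordinates, assumed here, is to quantize them to integers, store each point as a delta from the previous one, and write the deltas as zigzag-encoded variable-length integers. All function names and the scale factor are hypothetical.

```python
def zigzag_encode(n):
    # Map signed to unsigned so small negatives stay small: 0,-1,1,-2 -> 0,1,2,3
    return 2 * n if n >= 0 else -2 * n - 1


def zigzag_decode(z):
    return z >> 1 if z % 2 == 0 else -((z + 1) >> 1)


def write_varint(value, out):
    # 7 data bits per byte; the high bit marks "more bytes follow".
    while value >= 0x80:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    out.append(value)


def read_varint(data, pos):
    result, shift = 0, 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if byte < 0x80:
            return result, pos
        shift += 7


def encode_polyline(points, scale=100_000):
    out = bytearray()
    write_varint(len(points), out)
    prev_x = prev_y = 0
    for lon, lat in points:
        x, y = round(lon * scale), round(lat * scale)
        write_varint(zigzag_encode(x - prev_x), out)  # store deltas, not absolutes
        write_varint(zigzag_encode(y - prev_y), out)
        prev_x, prev_y = x, y
    return bytes(out)


def decode_polyline(data, scale=100_000):
    count, pos = read_varint(data, 0)
    points, x, y = [], 0, 0
    for _ in range(count):
        dx, pos = read_varint(data, pos)
        dy, pos = read_varint(data, pos)
        x += zigzag_decode(dx)
        y += zigzag_decode(dy)
        points.append((x / scale, y / scale))
    return points


pts = [(116.39748, 39.90882), (116.39801, 39.90910), (116.39855, 39.90897)]
blob = encode_polyline(pts)
back = decode_polyline(blob)
```

For the three sample points this yields 13 bytes, compared with 48 bytes for the same coordinates stored as raw 64-bit doubles.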

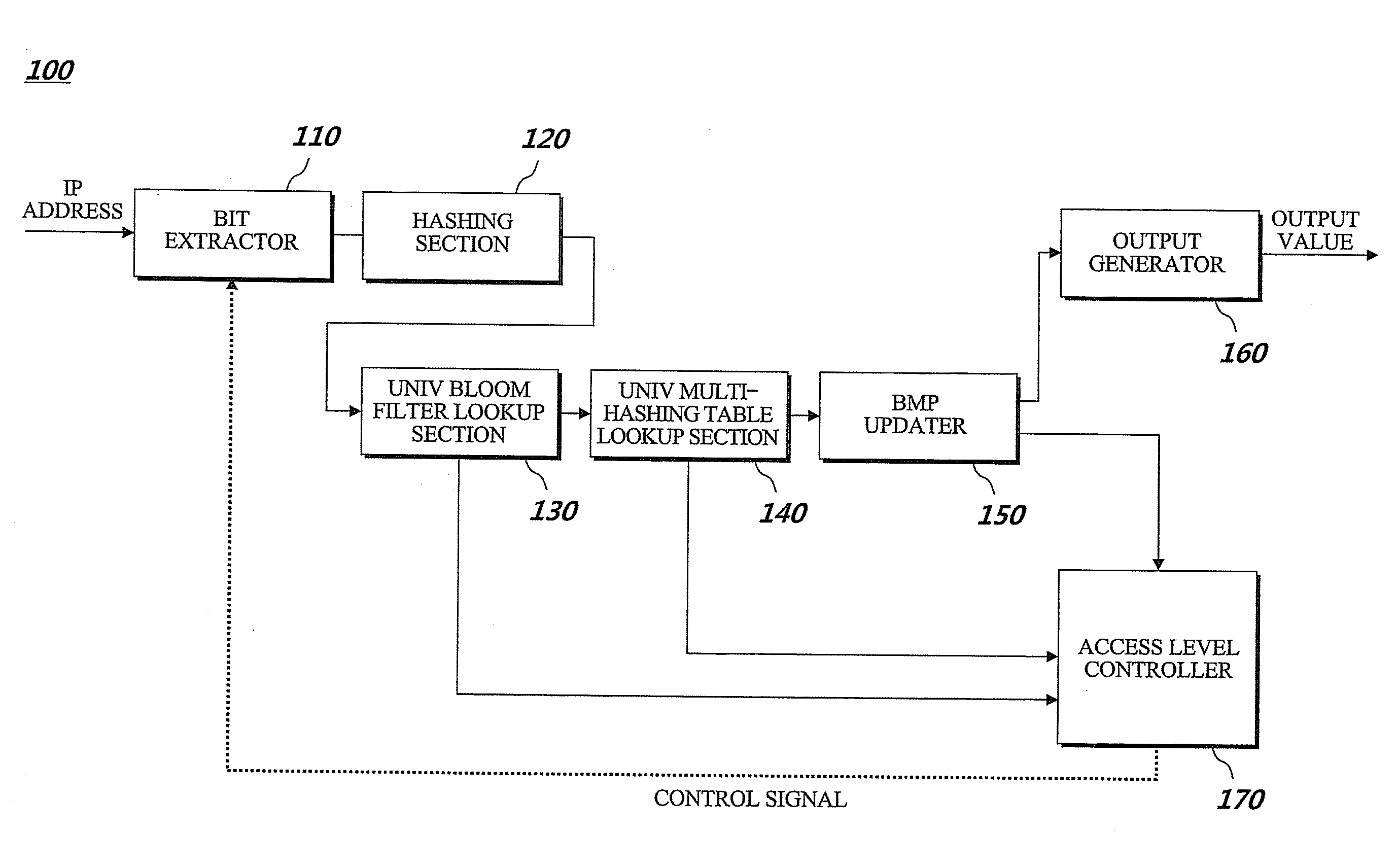

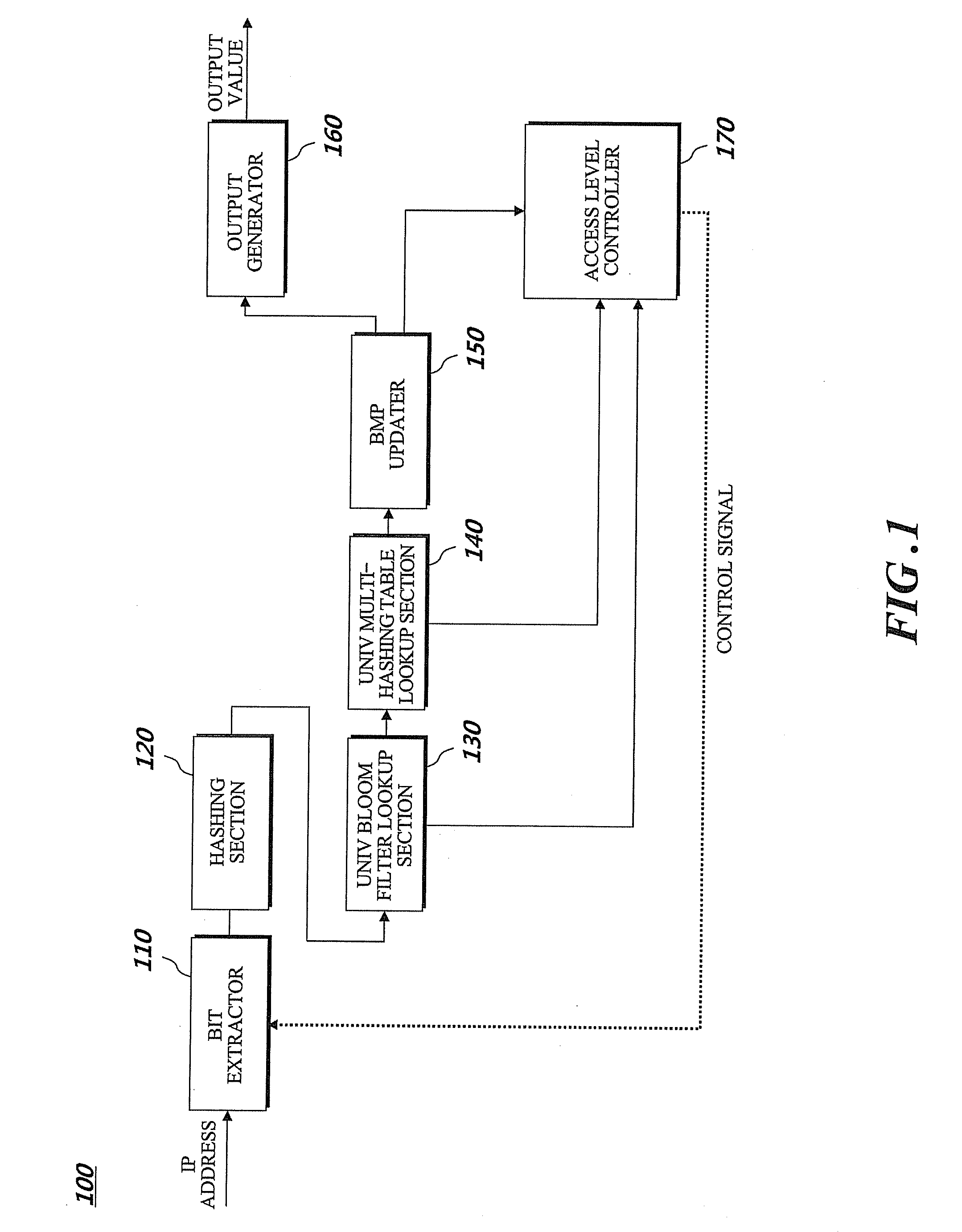

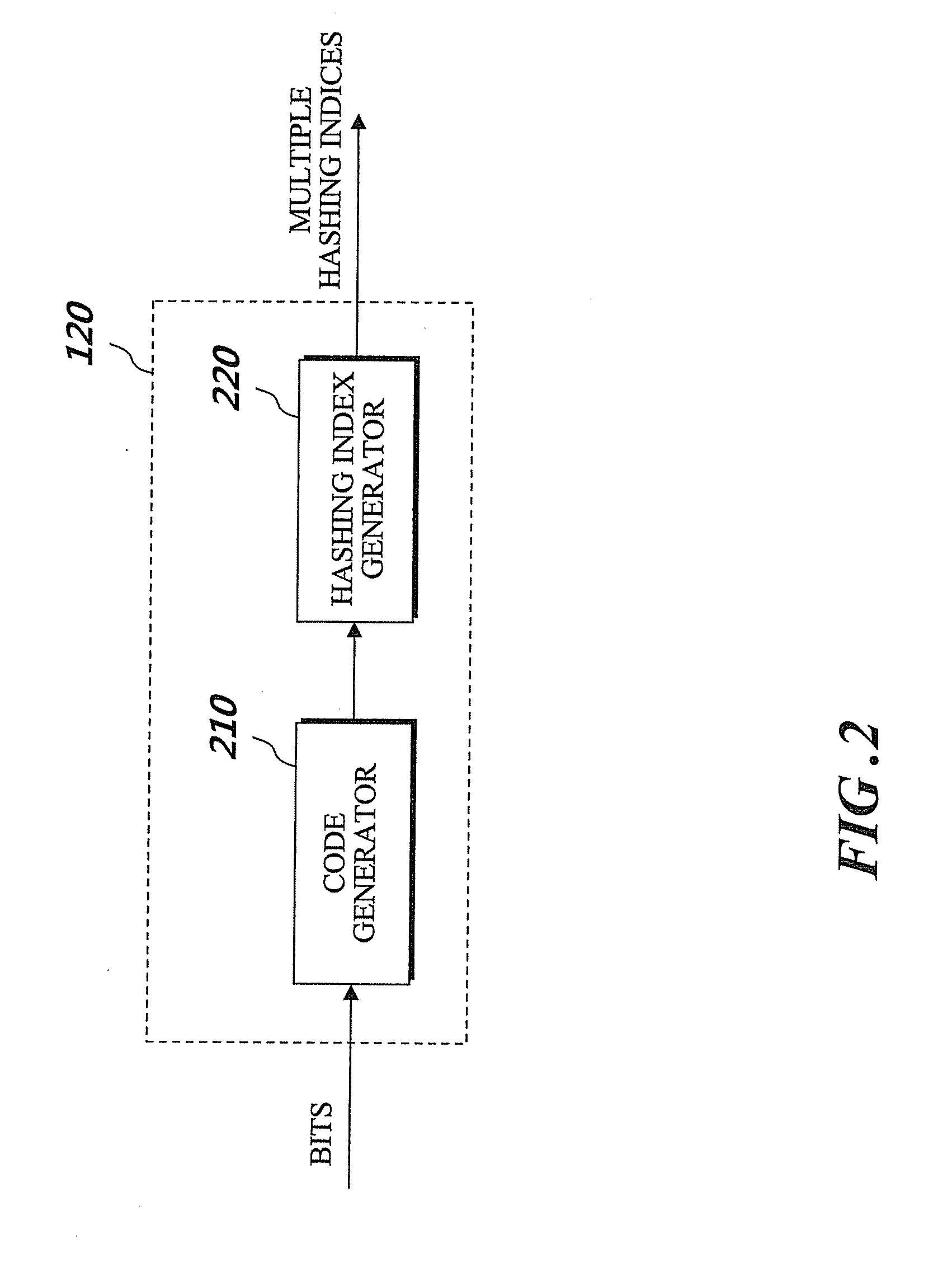

Method and apparatus for searching IP addresses

Active | US20100284405A1 | Reduce memory requirements, Efficient IP address lookup, Data switching by path configuration, IP address, Bloom filter

The present disclosure provides an IP address lookup method and apparatus. In one embodiment, the IP address lookup apparatus stores node information generated for a binary search-on-levels architecture in a universal multi-hashing table and, before searching, filters queries in advance with a universal Bloom filter, which minimizes the number of accesses to the universal multi-hashing table during IP address lookup.

Owner: EWHA UNIV IND COLLABORATION FOUND
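
A simplified sketch of Bloom-filtered prefix lookup, assuming SHA-256-derived bit positions. It probes prefix lengths from longest to shortest rather than performing the binary search on levels used in the patent, and a Python `dict` stands in for the universal multi-hashing table. What it illustrates is that a negative Bloom filter answer lets a lookup skip a table access entirely.

```python
import hashlib
import ipaddress


class BloomFilter:
    def __init__(self, size_bits=1024, num_hashes=3):
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, key):
        for i in range(self.num_hashes):
            digest = hashlib.sha256(bytes([i]) + key).digest()
            yield int.from_bytes(digest[:4], "big") % self.size_bits

    def add(self, key):
        for p in self._positions(key):
            self.bits[p // 8] |= 1 << (p % 8)

    def might_contain(self, key):
        # False means "definitely absent"; True may be a false positive.
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(key))


def prefix_key(length, prefix):
    return bytes([length]) + prefix.to_bytes(4, "big")


class FilteredPrefixTable:
    """Longest-prefix match over per-length hash entries, Bloom-filtered."""

    def __init__(self):
        self.table = {}            # stands in for the multi-hashing table
        self.bloom = BloomFilter()
        self.lengths = set()
        self.table_accesses = 0

    def insert(self, cidr, next_hop):
        net = ipaddress.IPv4Network(cidr)
        key = (net.prefixlen, int(net.network_address))
        self.table[key] = next_hop
        self.bloom.add(prefix_key(*key))
        self.lengths.add(net.prefixlen)

    def lookup(self, address):
        addr = int(ipaddress.IPv4Address(address))
        for length in sorted(self.lengths, reverse=True):
            prefix = (addr >> (32 - length)) << (32 - length)
            if not self.bloom.might_contain(prefix_key(length, prefix)):
                continue  # definitely absent: skip the table probe entirely
            self.table_accesses += 1
            hop = self.table.get((length, prefix))
            if hop is not None:
                return hop
        return None


routes = FilteredPrefixTable()
routes.insert("10.0.0.0/8", "A")
routes.insert("10.1.0.0/16", "B")
routes.insert("192.168.1.0/24", "C")
```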

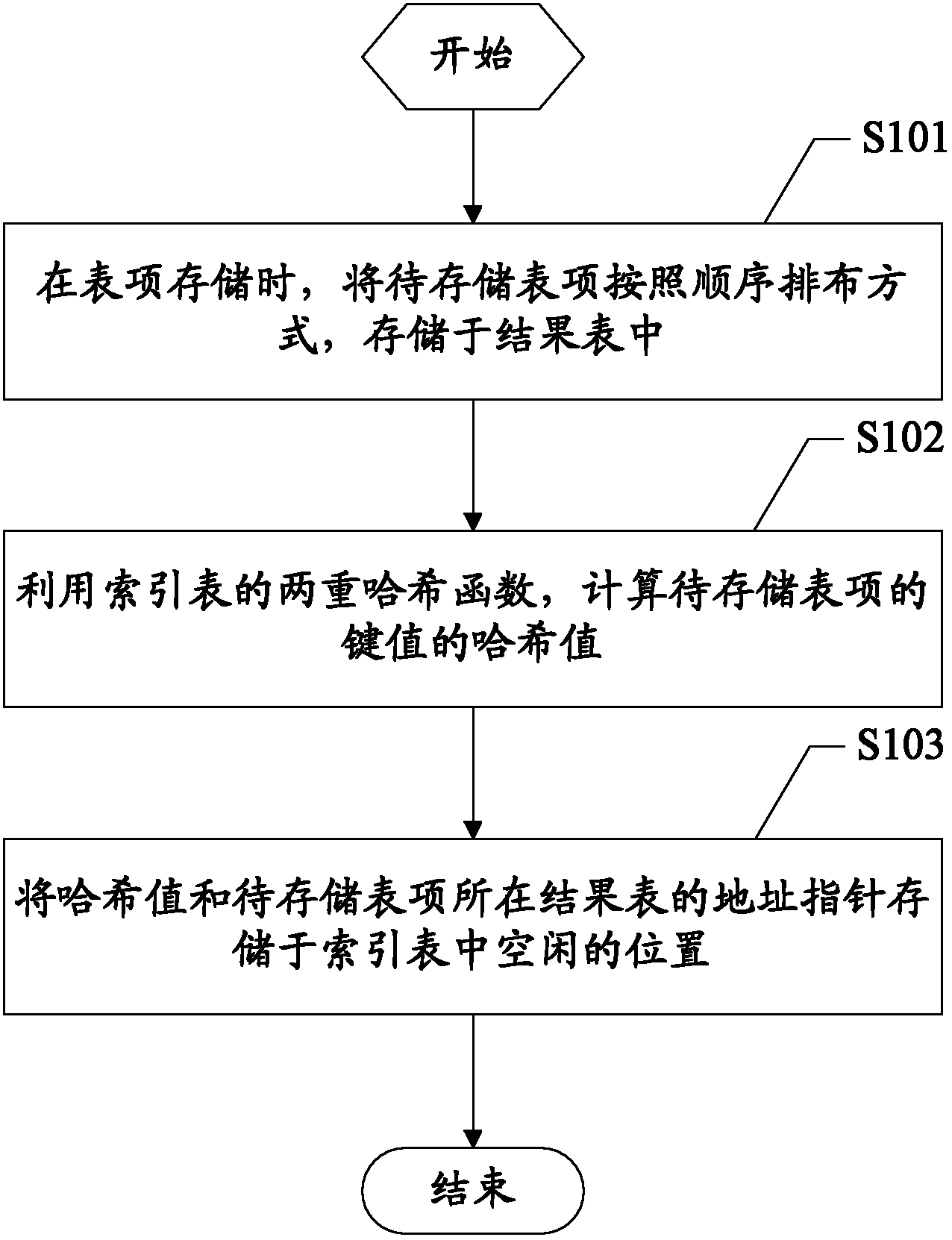

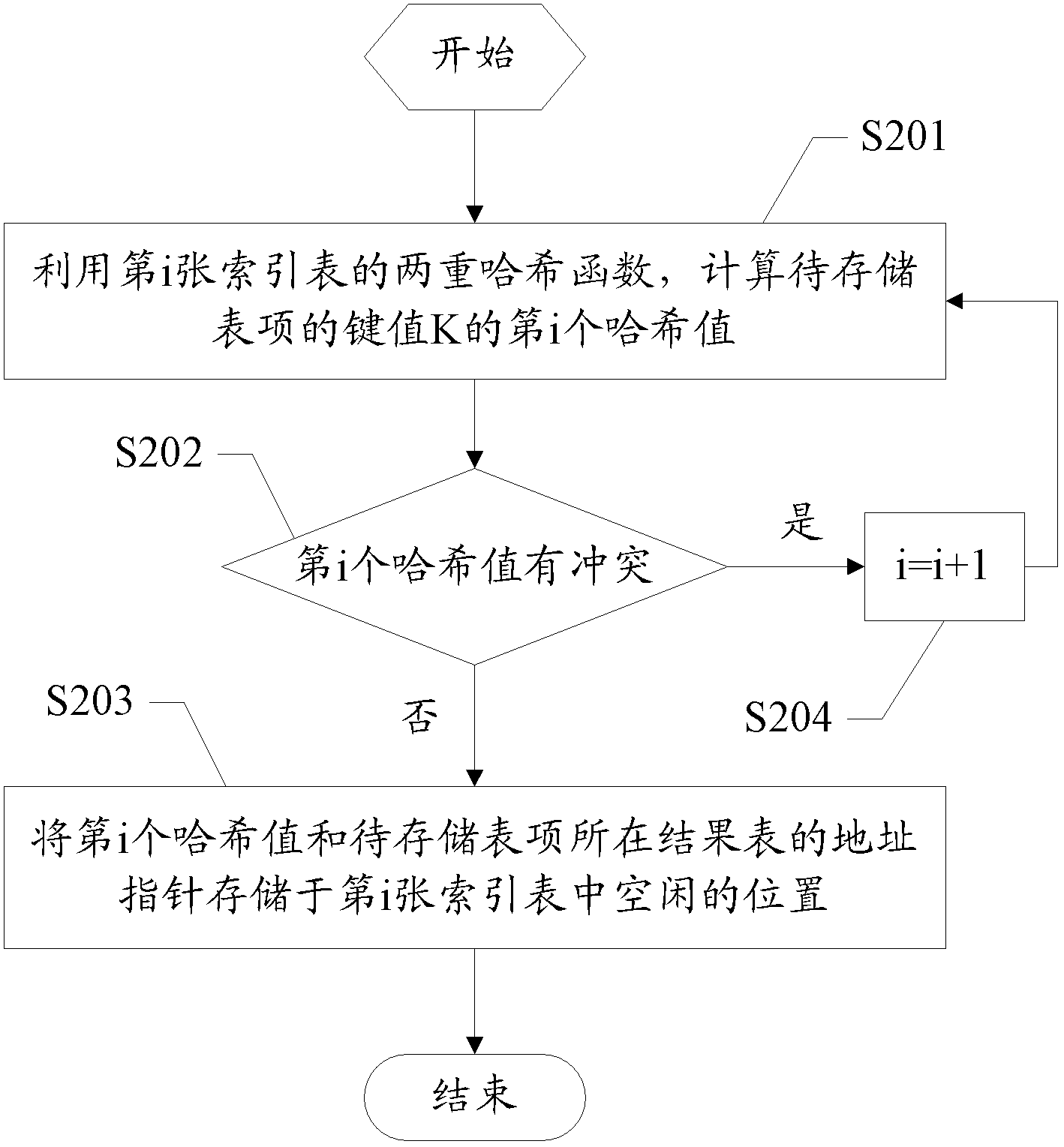

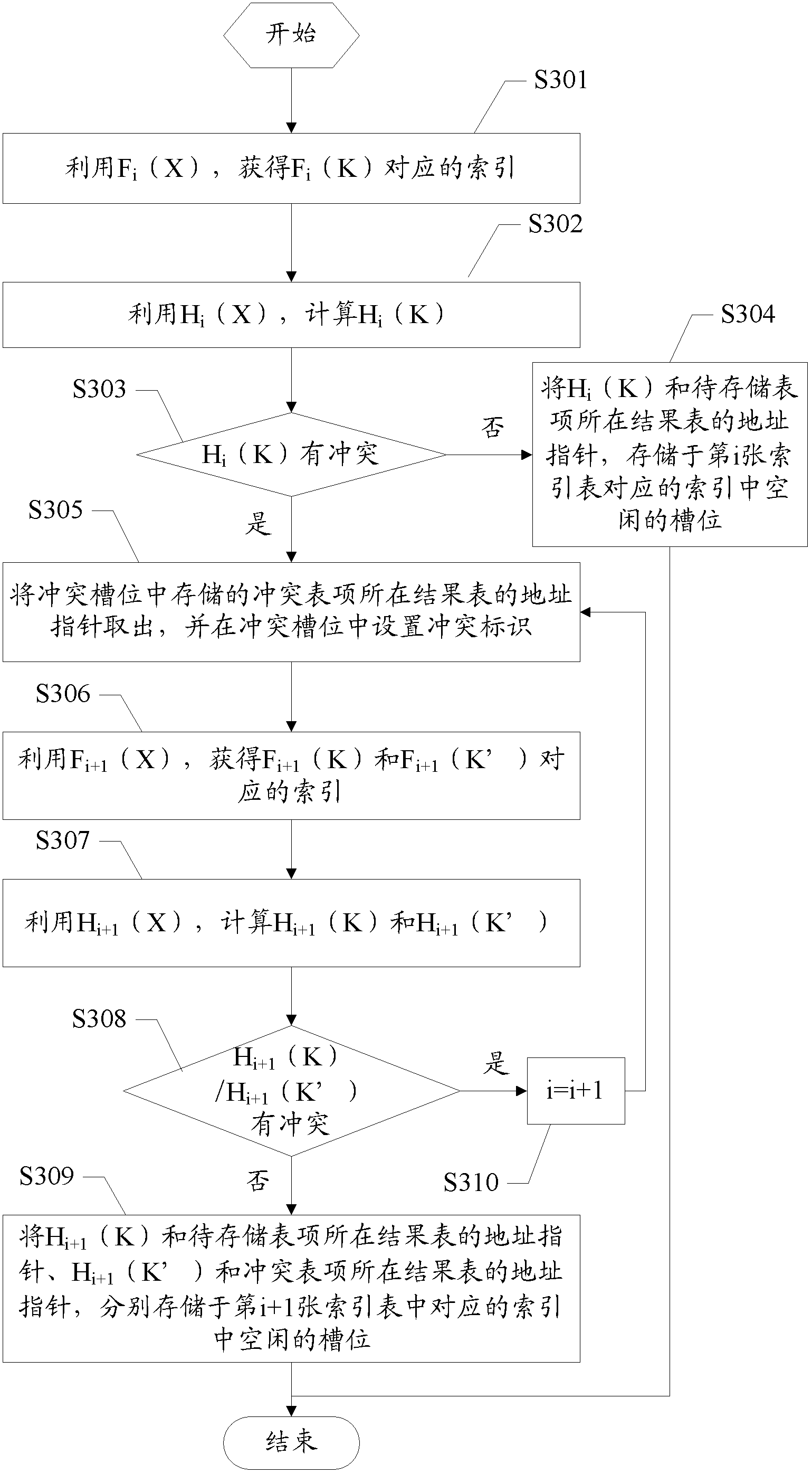

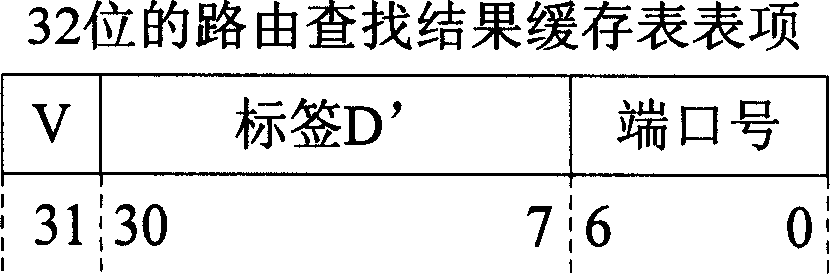

Method and device for processing table entries based on a hash table

Active | CN102682116A | Increase storage capacity, Improve space utilization, Special data processing applications, Theoretical computer science, Access frequency

The invention discloses a method and a device for processing table entries based on a hash table. When entries are stored, they are placed in a result table in sequential order; hash values of each entry's key are computed with the index table's two hash functions; and the hash value, together with a pointer to the entry's address in the result table, is stored at an idle position in the index table. While keeping access frequency low and query speed high, the invention effectively increases the storage capacity for service table entries and the hash table's capacity to support potential services, achieves high space utilization for the hash table, and reduces the probability of collisions, thereby balancing the performance and capacity of the hash table.

Owner: ZTE CORP
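
A minimal sketch of the result-table/index-table split, assuming a bucketed index table in which each key has two candidate buckets (one per hash function) and SHA-256 supplies both hashes; bucket count, slot count and names are hypothetical. The index table stores only a hash signature and a pointer, while full entries are appended to the result table in order.

```python
import hashlib


class DualHashTable:
    """Entries live in a sequential result table; a bucketed index table holds
    (hash signature, result-table pointer) pairs in one of two candidate buckets."""

    def __init__(self, num_buckets=64, slots_per_bucket=4):
        self.result_table = []
        self.index_table = [[None] * slots_per_bucket for _ in range(num_buckets)]

    def _candidates(self, key):
        digest = hashlib.sha256(key.encode()).digest()
        h1 = int.from_bytes(digest[:8], "big")    # first hash function
        h2 = int.from_bytes(digest[8:16], "big")  # second hash function
        n = len(self.index_table)
        return h1, (self.index_table[h1 % n], self.index_table[h2 % n])

    def insert(self, key, value):
        signature, buckets = self._candidates(key)
        for bucket in buckets:
            for i, slot in enumerate(bucket):
                if slot is None:  # first idle position in either candidate bucket
                    self.result_table.append((key, value))
                    bucket[i] = (signature, len(self.result_table) - 1)
                    return True
        return False  # both candidate buckets are full

    def lookup(self, key):
        signature, buckets = self._candidates(key)
        for bucket in buckets:
            for slot in bucket:
                if slot is not None and slot[0] == signature:
                    stored_key, value = self.result_table[slot[1]]
                    if stored_key == key:  # guard against signature collisions
                        return value
        return None


table = DualHashTable()
entries = [("vlan10", "port1"), ("vlan20", "port2"), ("vlan30", "port3"), ("vlan40", "port4")]
inserted = [table.insert(k, v) for k, v in entries]
```

Giving each key two candidate buckets is what lets the index table fill to a high load before an insert fails, which is the space-utilization benefit the abstract describes.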

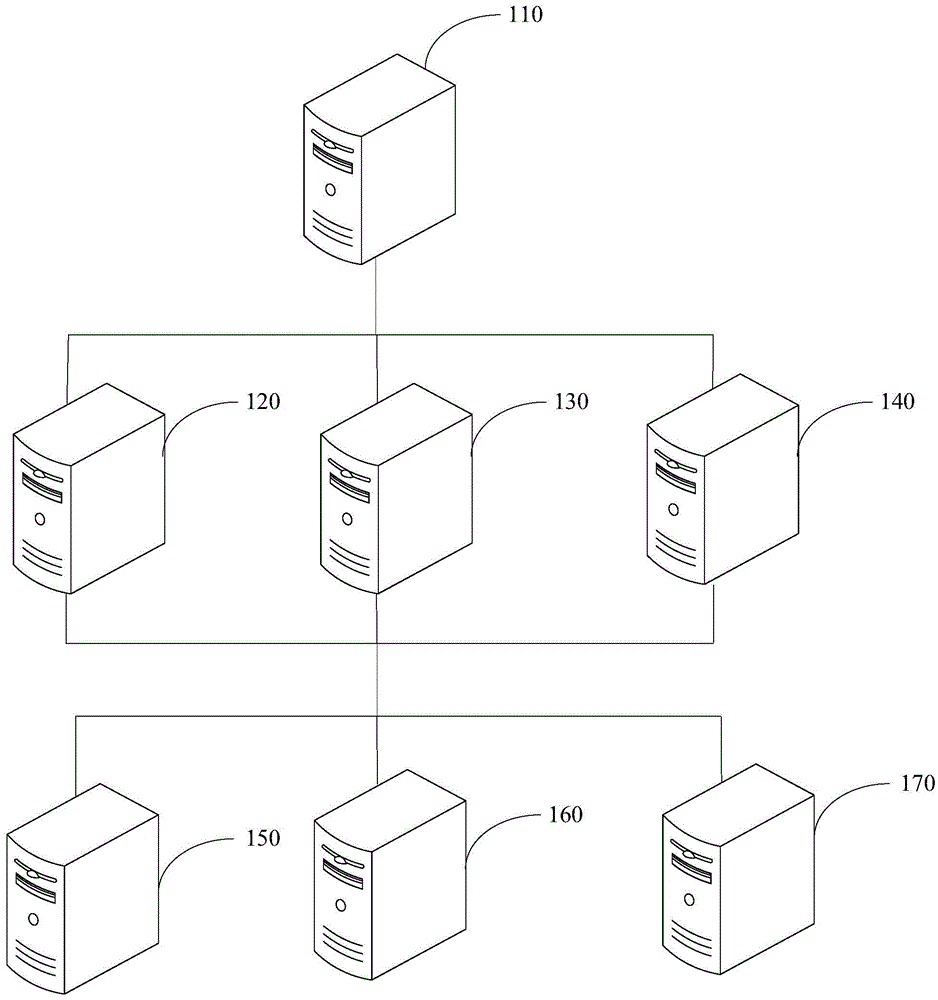

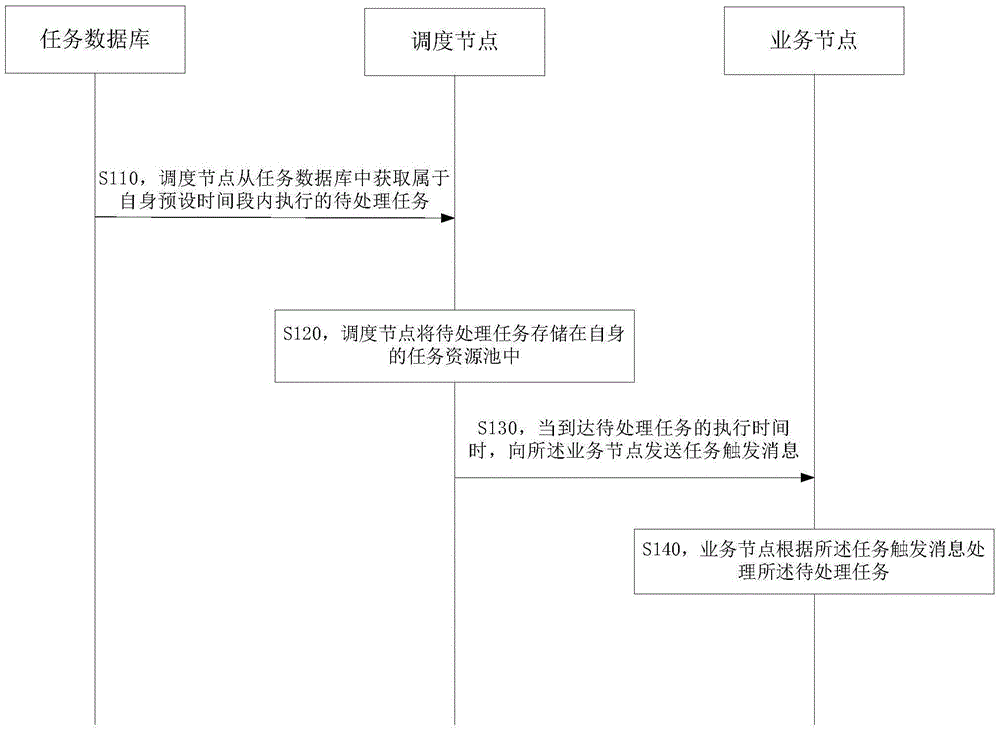

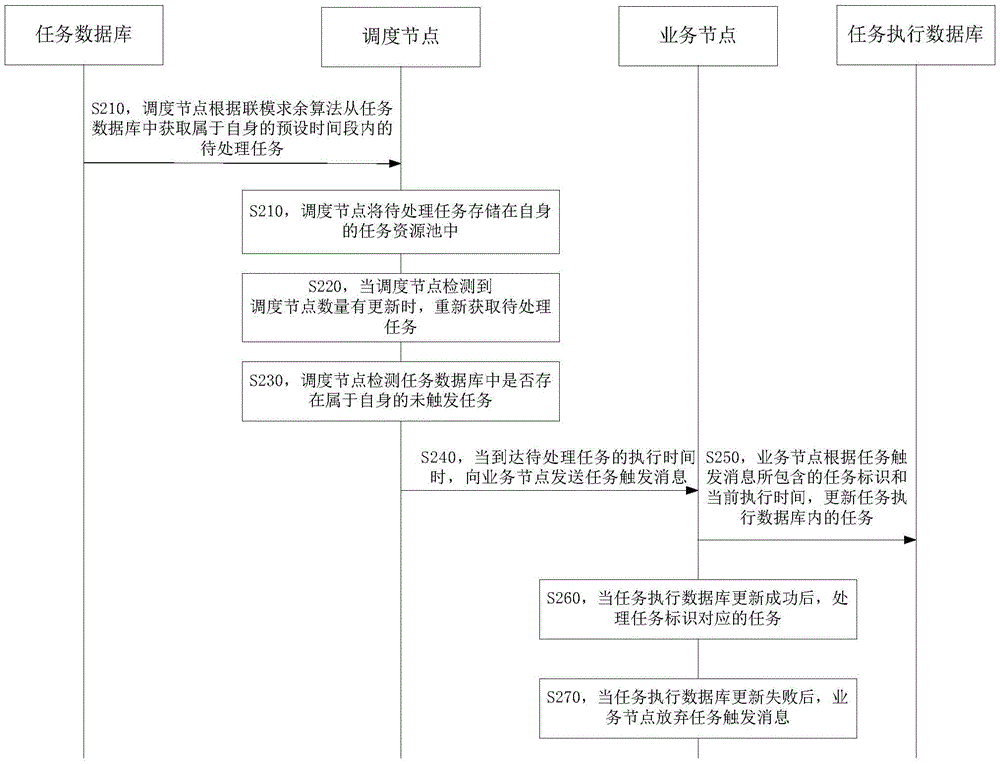

Task scheduling method and system

Active | CN105468450A | Reduce visits, Improve processing power, Program initiation/switching, Special data processing applications, Access time, Resource pool

The embodiment of the invention discloses a task scheduling method and system. Using a preset algorithm, a scheduling node retrieves from the task database the pending tasks that belong to it within a preset time period and stores them in its own task resource pool. When a task's execution time arrives, the scheduling node sends a task trigger message to a service node, which then processes the task. Because each scheduling node fetches all of its tasks for the period in one batch, the number of task database accesses is reduced, the processing capacity of a single scheduling node is increased, the database's shared lock mechanism is not needed during scheduling, resource waiting is reduced, and concurrent processing of large volumes of tasks is improved.

Owner: CHENGDU HUAWEI TECH
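
A sketch of the batch-fetch idea, assuming an in-memory `TaskDatabase` that counts queries (a hypothetical stand-in for the task database) and a min-heap as the task resource pool. The scheduling node loads one whole time window per query and then triggers due tasks from memory.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Task:
    run_at: float
    task_id: str = field(compare=False)
    owner: str = field(compare=False)


class TaskDatabase:
    """Hypothetical in-memory stand-in for the task database; counts queries."""

    def __init__(self, tasks):
        self.tasks = list(tasks)
        self.queries = 0

    def fetch_window(self, owner, start, end):
        self.queries += 1
        return [t for t in self.tasks if t.owner == owner and start <= t.run_at < end]


class SchedulingNode:
    def __init__(self, name, db, window):
        self.name, self.db, self.window = name, db, window
        self.pool = []           # task resource pool, a min-heap ordered by run_at
        self.loaded_until = 0.0  # tasks before this time are already in the pool

    def tick(self, now, trigger):
        # Pull whole time windows at once: one database query per window.
        while now >= self.loaded_until:
            start, end = self.loaded_until, self.loaded_until + self.window
            for task in self.db.fetch_window(self.name, start, end):
                heapq.heappush(self.pool, task)
            self.loaded_until = end
        # Send a trigger message for every task whose execution time has arrived.
        while self.pool and self.pool[0].run_at <= now:
            trigger(heapq.heappop(self.pool))


db = TaskDatabase([Task(1, "t1", "s1"), Task(2, "t2", "s1"), Task(3, "t3", "s1"),
                   Task(12, "t4", "s1"), Task(5, "x1", "s2")])
fired = []
node = SchedulingNode("s1", db, window=10)
for now in range(16):
    node.tick(now, lambda task: fired.append(task.task_id))
```

Sixteen one-unit ticks trigger the node's four tasks with only two database queries, one per ten-unit window.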

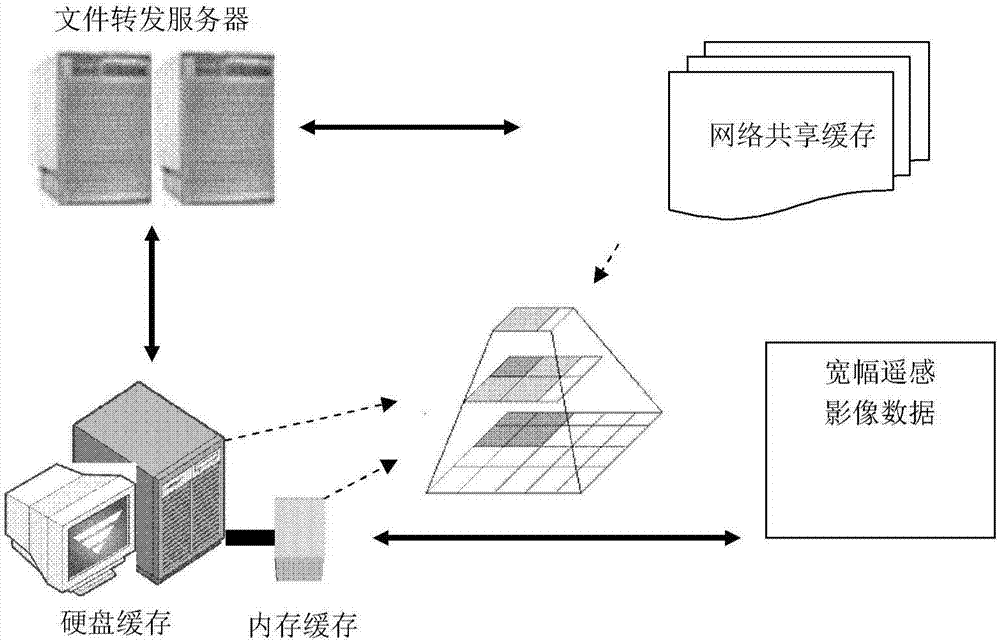

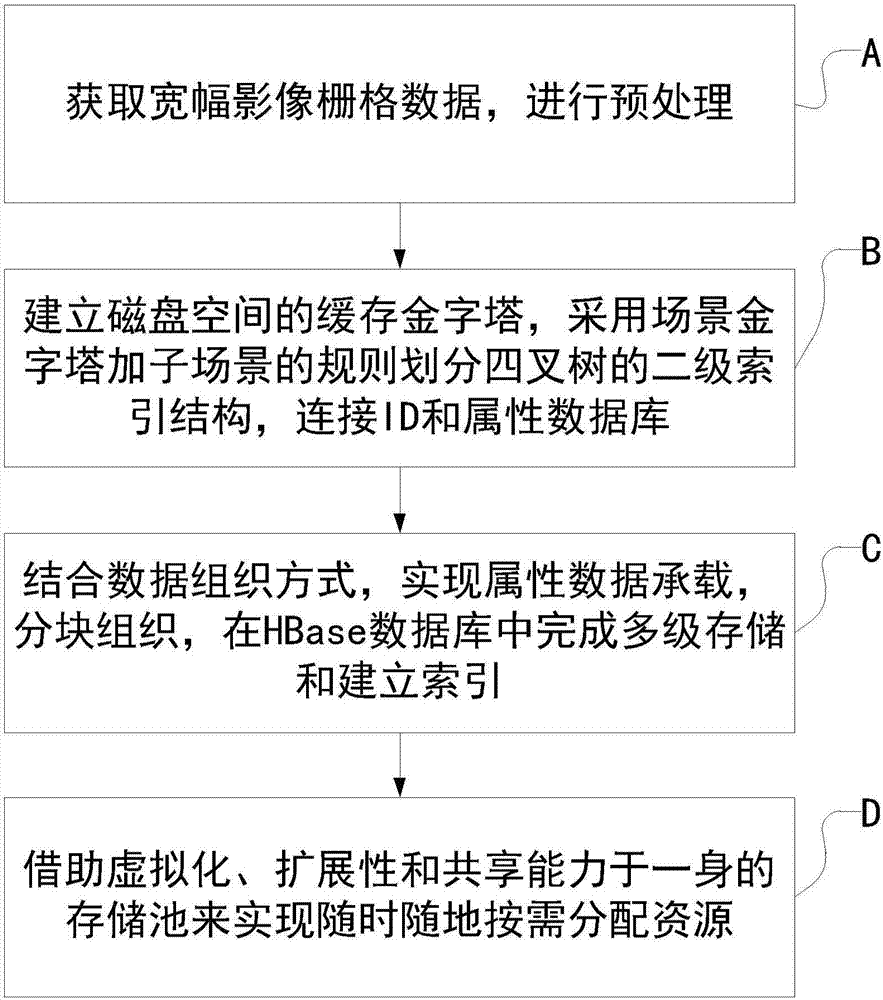

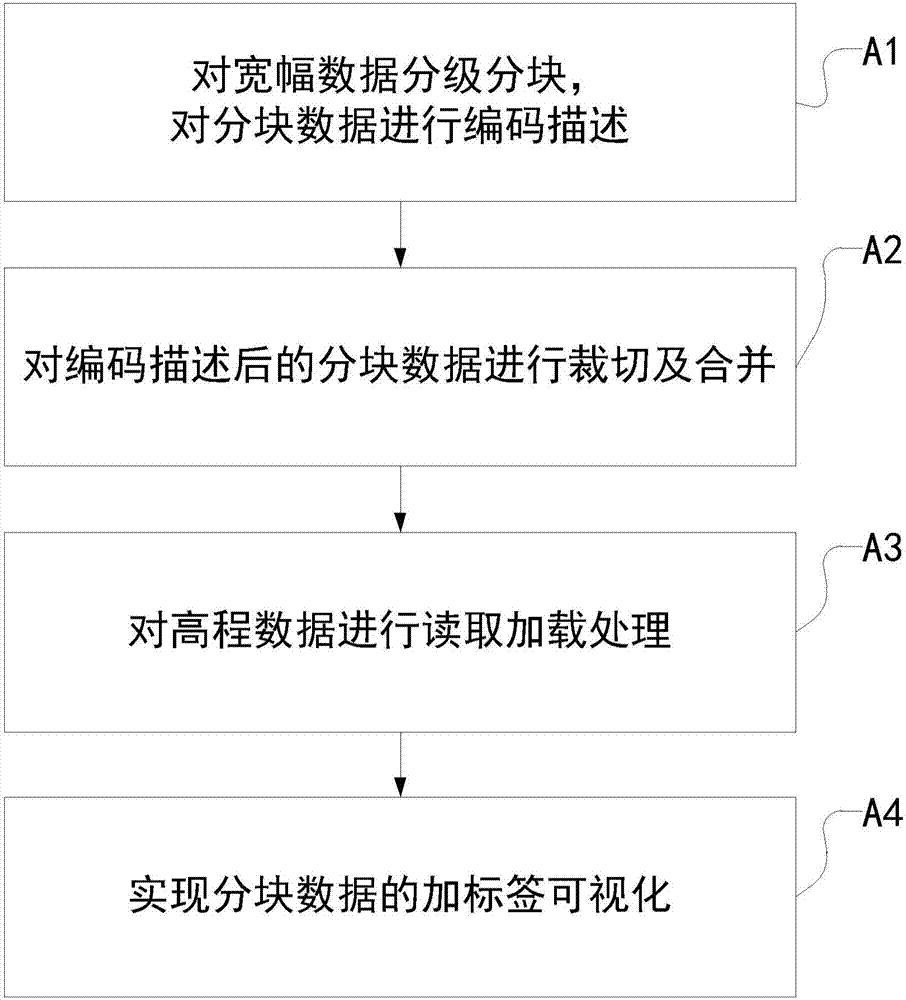

Multi-level organization and indexing method for massive remote sensing images

Active | CN106909644A | Strengthen local features, Improve access efficiency, Geographical information databases, Special data processing applications, Virtualization, Storage pool

A multi-level organization and indexing method for massive remote sensing images comprises the following steps: A, acquiring and preprocessing wide-swath image raster data; B, building a disk-based cache pyramid, dividing a two-level quadtree index structure according to a scene pyramid and sub-scene rules, and linking IDs to an attribute database; C, combining a data identification scheme to carry the attribute data, performing partitioned identification, and completing multi-level storage and index construction in an HBase database; D, using a storage pool that combines virtualization, scalability and sharing to allocate resources on demand, at any time and in any place.

Owner: Jigang Defense Technology Co., Ltd. (济钢防务技术有限公司)
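
The abstract does not give the key layout of the two-level quadtree index. One common scheme, assumed here, is a Bing-Maps-style quadkey that interleaves a tile's x and y bits into base-4 digits, prefixed with a scene identifier to form an HBase row key; `tile_row_key` and the key format are hypothetical.

```python
def quadkey(tile_x, tile_y, level):
    """Interleave tile_x/tile_y bits into a base-4 quadtree key, most significant first."""
    digits = []
    for i in range(level, 0, -1):
        mask = 1 << (i - 1)
        digit = (1 if tile_x & mask else 0) + (2 if tile_y & mask else 0)
        digits.append(str(digit))
    return "".join(digits)


def tile_row_key(scene_id, tile_x, tile_y, level):
    # Hypothetical two-level key: scene first, then the sub-scene quadtree path.
    return f"{scene_id}_{quadkey(tile_x, tile_y, level)}"
```

Because a parent tile's quadkey is a prefix of all its children's keys, and HBase stores rows sorted by row key, a prefix scan retrieves an entire sub-scene subtree.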

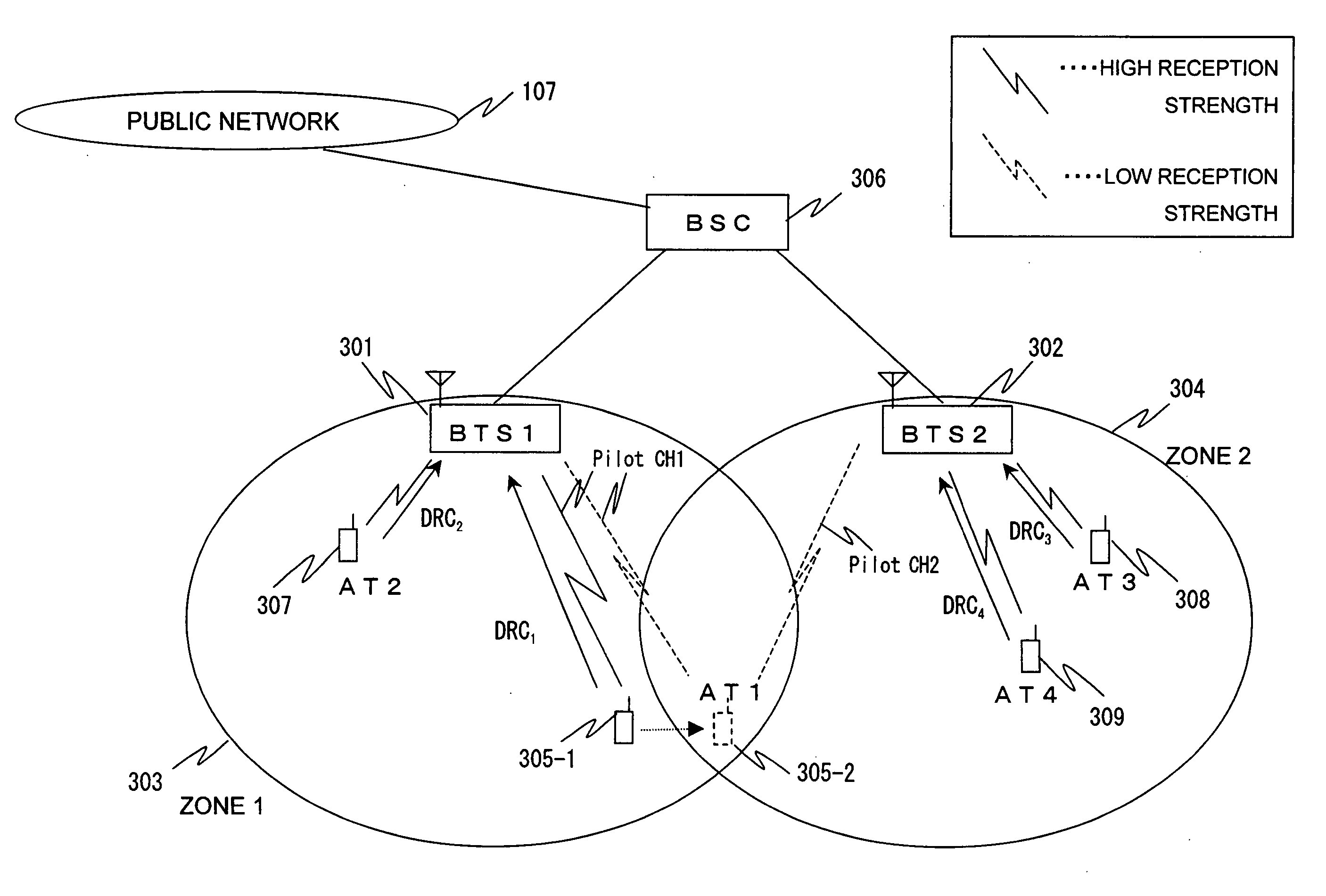

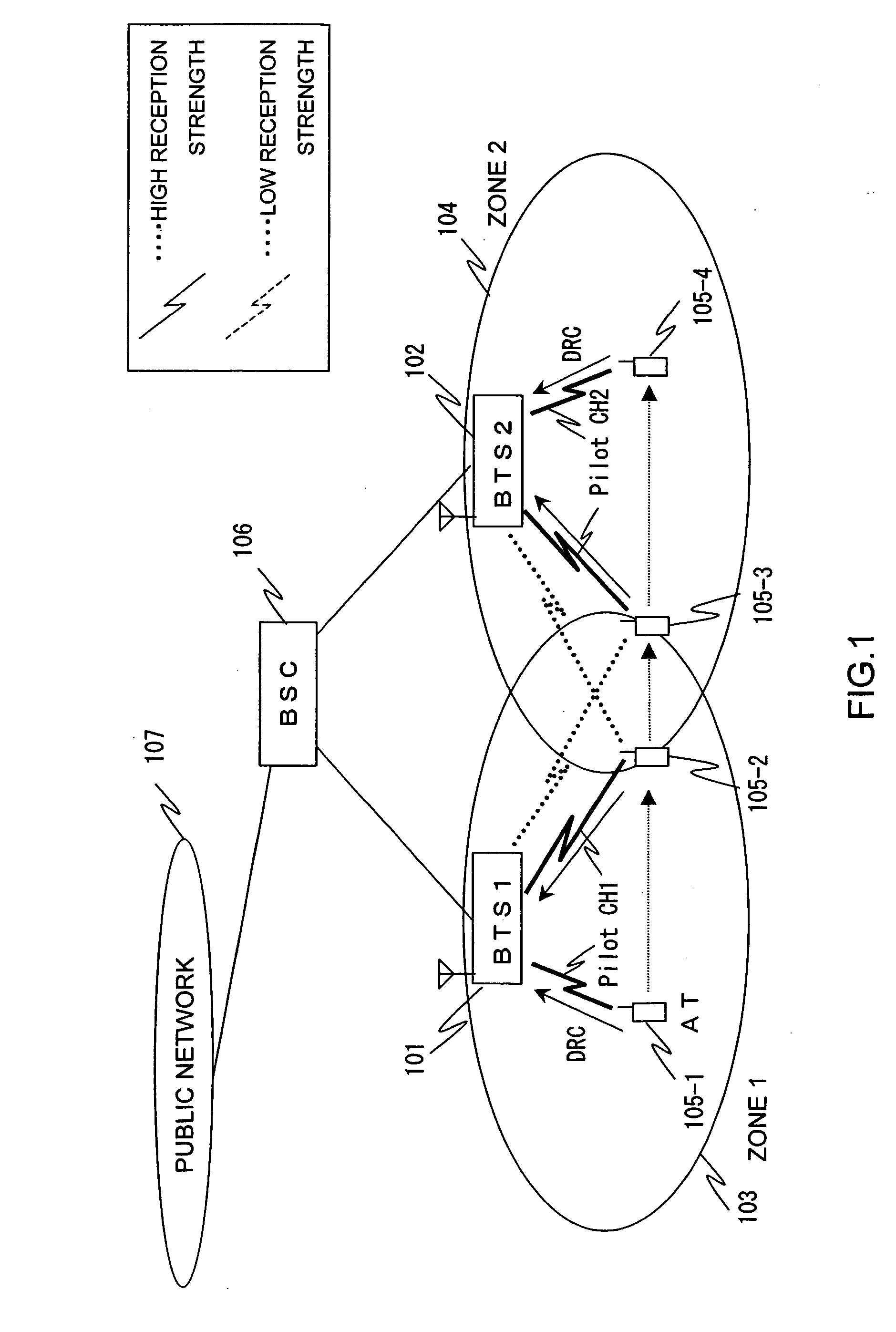

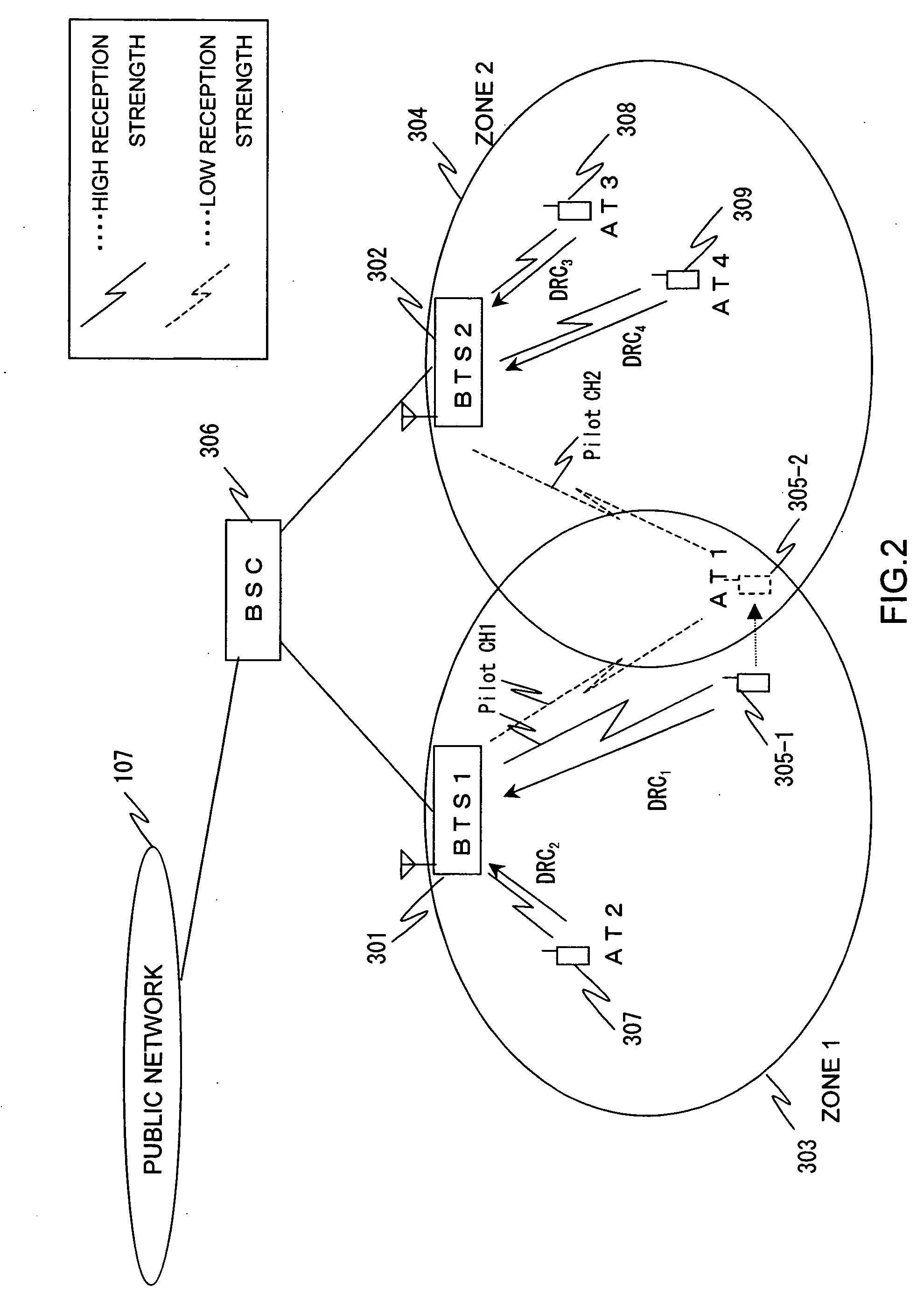

Handoff control method, base station controller, and base transceiver subsystem

Active | US20060029021A1 | Improve throughput, High estimated throughput ratio, Error prevention, Frequency-division multiplex details, Transceiver, Handoff control

Conventionally, handoff is performed according to radio reception conditions such as C/I, so an access terminal may be handed off to a congested station, which lowers throughput. In this method, when an access terminal is to be handed off, the following are obtained from the base transceiver subsystem: requested-data-rate information based on each access terminal's data rate control signal, each access terminal's average throughput before handoff, and a handoff request count. The post-handoff throughput of each access terminal is estimated as the ratio of its data rate information to the number of connected access terminals, and an estimated throughput ratio, expressing how the estimated throughput compares with the terminal's average throughput before handoff, is calculated. If the estimated throughput ratio is less than or equal to a given value, that access terminal is not handed off. If it is higher than the given value, the access terminal with the highest estimated throughput among those requesting handoff is handed off.

Owner: FIPA FROHWITTER INTPROP AG
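
The decision rule above can be sketched as follows. The units, the dataclass, and the use of the target station's connected-terminal count are assumptions; the handoff request count that the abstract also mentions is not used here.

```python
from dataclasses import dataclass


@dataclass
class HandoffRequest:
    terminal_id: str
    requested_rate_kbps: float  # from the terminal's data rate control signal
    avg_throughput_kbps: float  # average throughput before handoff


def pick_terminal_to_hand_off(requests, connected_terminals, min_ratio):
    """Return the id of the terminal to hand off, or None if no terminal qualifies."""
    best_id, best_estimate = None, float("-inf")
    for req in requests:
        # Estimated post-handoff throughput: requested rate shared among connected terminals.
        estimate = req.requested_rate_kbps / connected_terminals
        if req.avg_throughput_kbps > 0:
            ratio = estimate / req.avg_throughput_kbps
        else:
            ratio = float("inf")
        if ratio <= min_ratio:
            continue  # not enough improvement: keep the terminal where it is
        if estimate > best_estimate:
            best_id, best_estimate = req.terminal_id, estimate
    return best_id


requests = [
    HandoffRequest("AT-1", 2400.0, 300.0),
    HandoffRequest("AT-2", 1200.0, 400.0),
    HandoffRequest("AT-3", 600.0, 500.0),
]
```

With four connected terminals, AT-1's estimated throughput is 600 kbit/s (ratio 2.0), while AT-2 and AT-3 would lose throughput, so only AT-1 qualifies at a threshold of 1.0.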

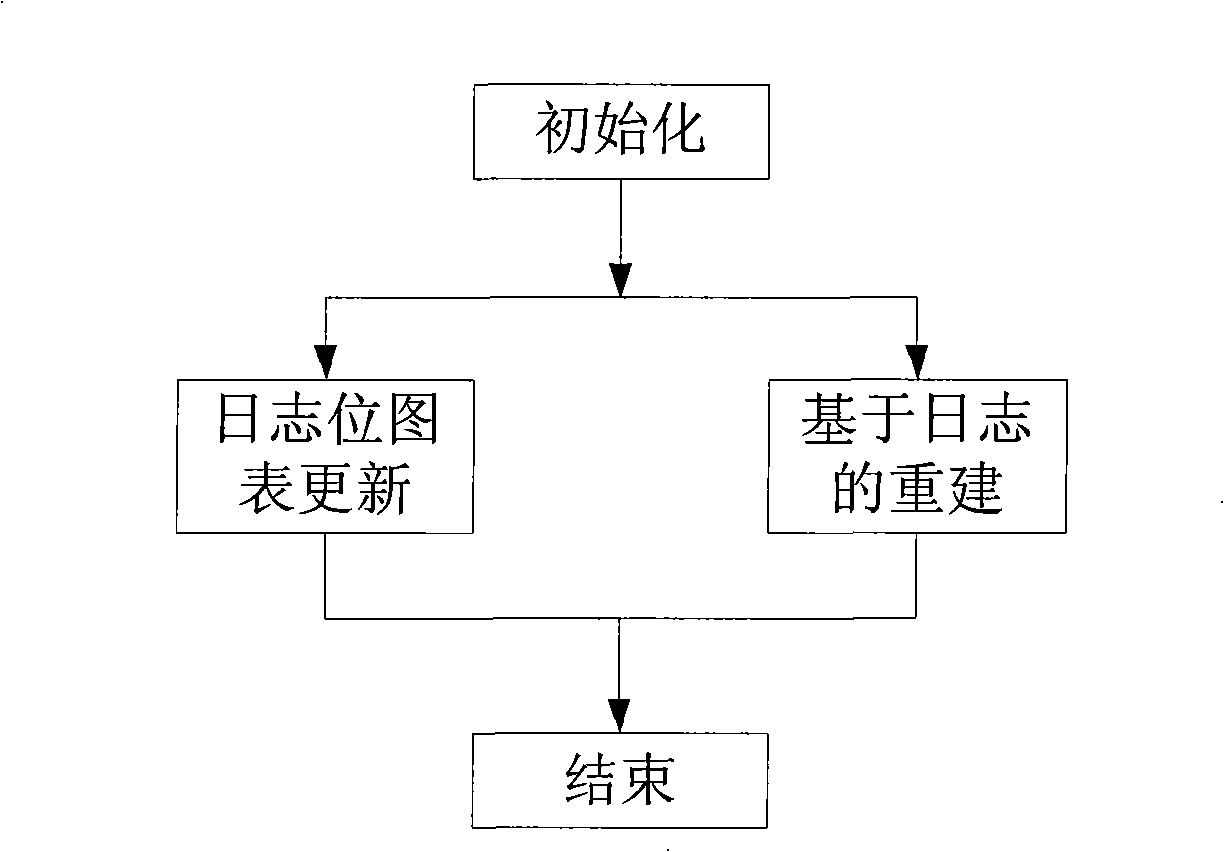

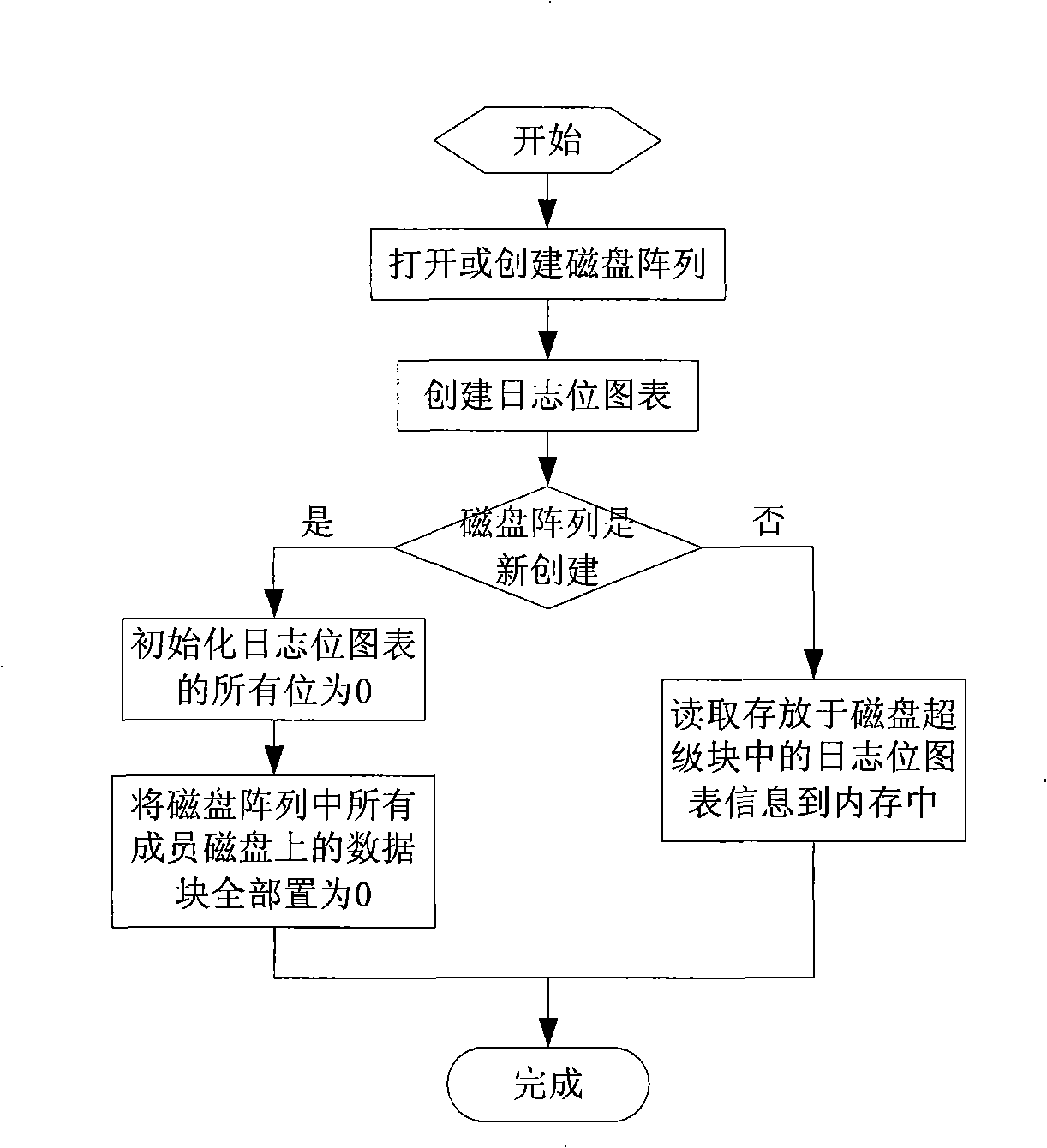

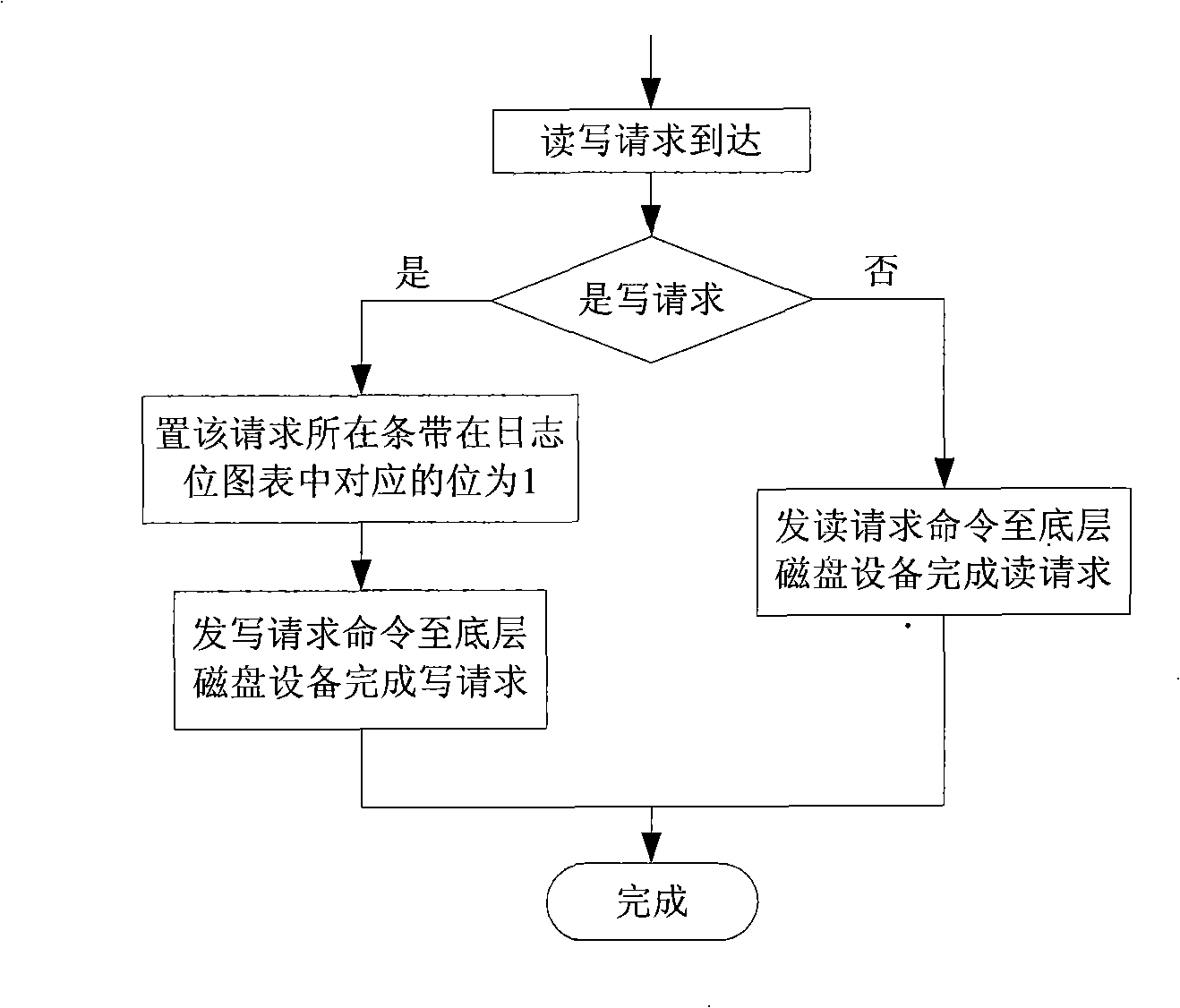

Method for rebuilding data in a disk array

Inactive | CN101329641A | Improve performance, Improve usability, Redundant operation error correction, Visit time, Reconstruction method

The invention relates to a disk array data reconstruction method. It belongs to computer data storage methods and addresses the problem that existing disk array reconstruction methods take too long and degrade the read/write performance and reliability of the storage system. The disk array is provided with a main control module, read/write processing modules and a reconstruction module, and the method comprises the steps of initialization, log bitmap update, log-based reconstruction, and completion. The reconstruction process is guided by real-time monitoring of disk space usage in the array: when a region that has never been accessed is reconstructed, it suffices to write zeros to all the corresponding data blocks on the newly added disk. This greatly reduces the physical disk access time caused by reconstruction, speeds up reconstruction, and shortens the response time of user requests. The method changes neither the reconstruction procedure nor the data layout of the disk array, can conveniently be used to optimize various traditional disk array reconstruction methods, and is suitable for building high-performance, highly available and highly reliable storage systems.

Owner: HUAZHONG UNIV OF SCI & TECH
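
A sketch of used-space-aware reconstruction for a single-parity array, assuming a bitmap that marks which stripes have ever been written and that never-written stripes are all zeros on every member (so their parity is already consistent). Written stripes are rebuilt by XOR of the surviving members; unwritten stripes are zero-filled without reading any disk. The function names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_failed_member(surviving_members, written_bitmap, block_size):
    """Rebuild the failed member of a single-parity array.

    surviving_members: per-disk lists of blocks (data and parity).
    written_bitmap: one flag per stripe; False means never written.
    Returns the rebuilt blocks and the number of blocks read.
    """
    rebuilt, blocks_read = [], 0
    for stripe, written in enumerate(written_bitmap):
        if not written:
            rebuilt.append(bytes(block_size))  # never written: just write zeros
            continue
        column = [member[stripe] for member in surviving_members]
        blocks_read += len(column)
        rebuilt.append(xor_blocks(column))     # XOR of survivors recovers the lost block
    return rebuilt, blocks_read


d0 = [b"\x01\x02\x03\x04", bytes(4), b"\x10\x20\x30\x40"]
d1 = [b"\xaa\xbb\xcc\xdd", bytes(4), b"\x0f\x0e\x0d\x0c"]  # the member that fails
d2 = [b"\x11\x11\x11\x11", bytes(4), b"\x22\x22\x22\x22"]
parity = [xor_blocks([a, b, c]) for a, b, c in zip(d0, d1, d2)]
rebuilt, reads = rebuild_failed_member([d0, d2, parity], [True, False, True], 4)
```

Skipping the unwritten middle stripe saves three of the nine block reads a full rebuild would need.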

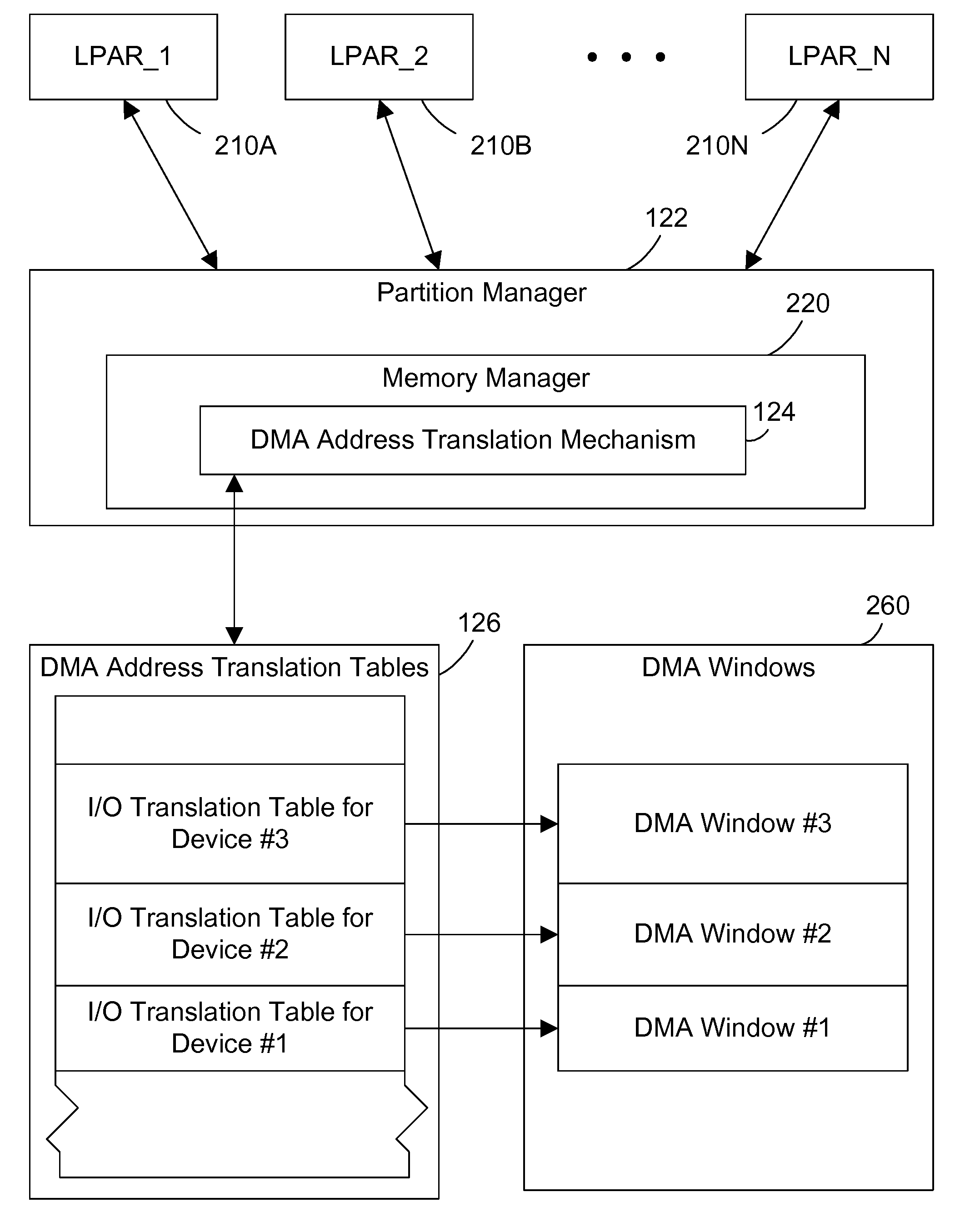

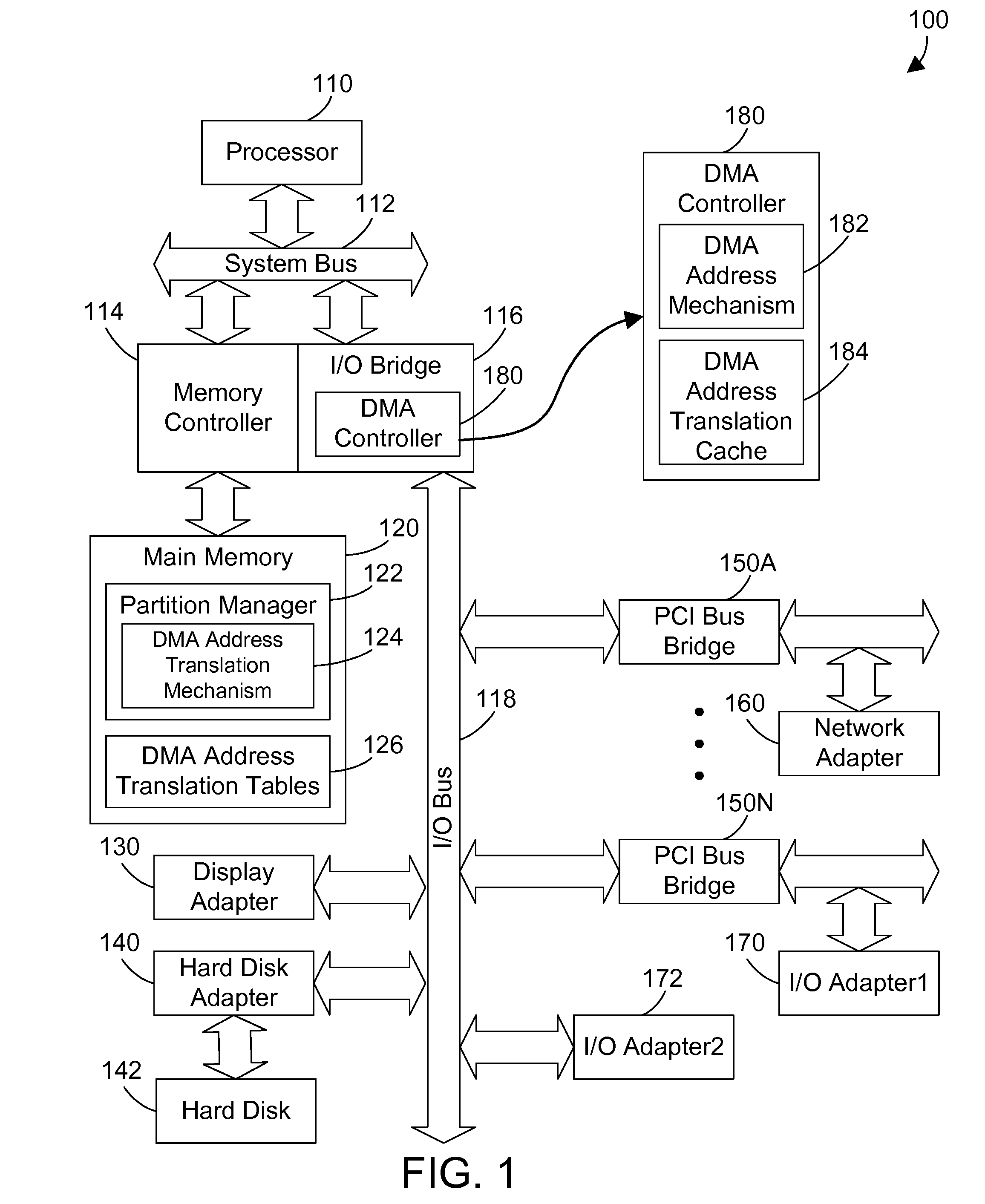

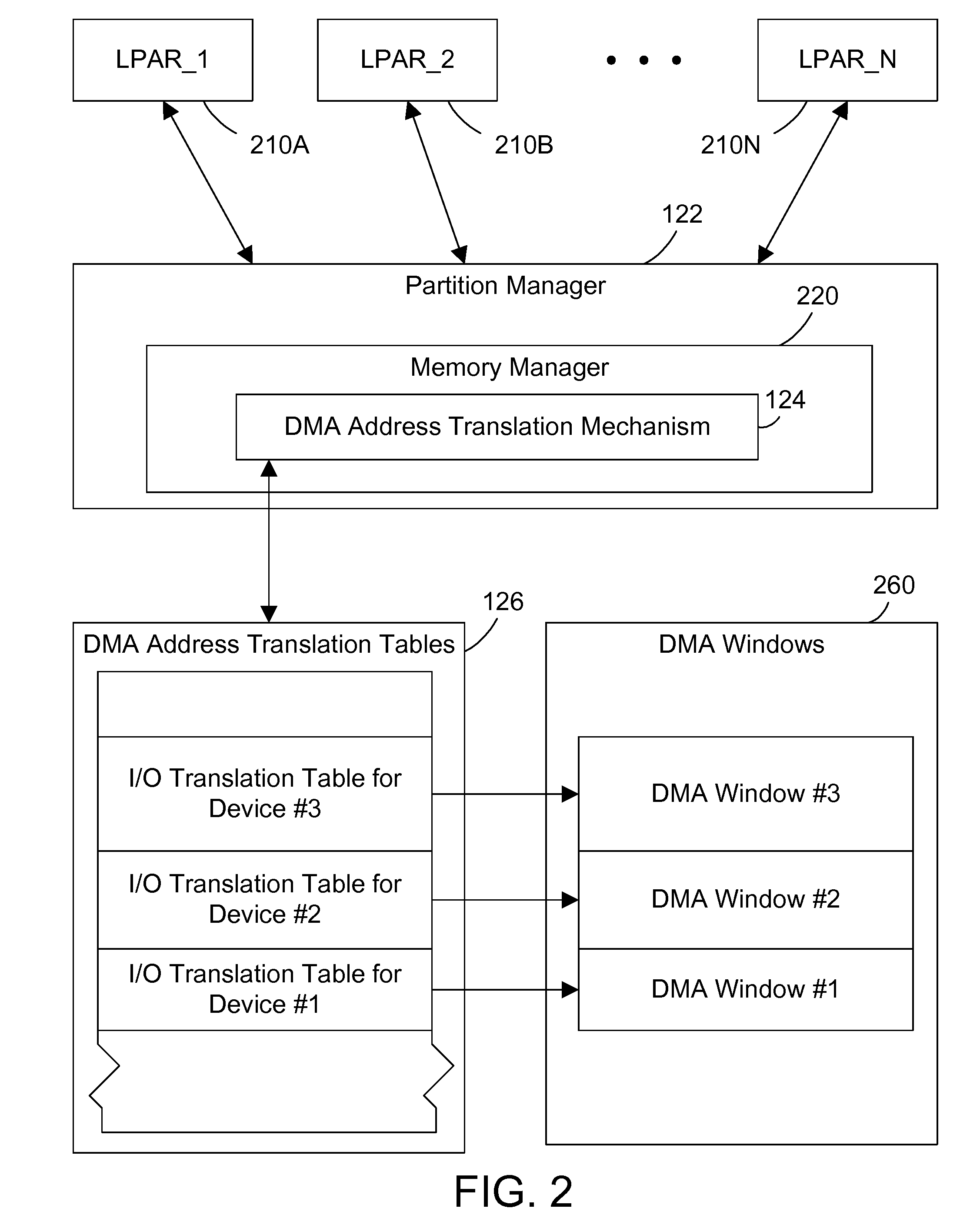

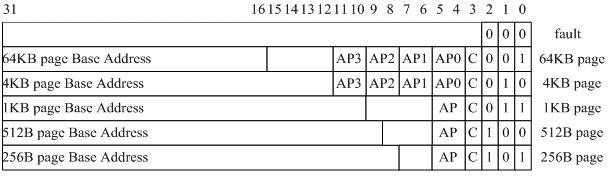

Direct memory access (DMA) address translation with a consecutive count field

Inactive | US20150067297A1 | Reduce visits, Improve system performance, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Direct memory access, Programming language

DMA translation table entries include a consecutive count (CC) field that indicates how many subsequent translation table entries point to successive real page numbers (RPNs). A DMA address translation mechanism stores a value in the CC field when a translation table entry is stored, and also updates the CC field in other affected translation table entries. When a translation table entry is read and its CC field is non-zero, the DMA controller can use multiple RPNs obtained from that single table access. For example, if a translation table entry holds a value of 2 in its CC field, the DMA address translation mechanism can use the RPN of that entry and also the two subsequent RPNs, without reading the next two translation table entries.

Owner: IBM CORP
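
A sketch of the consecutive count mechanism, assuming a flat table indexed by I/O page number and a hypothetical 4-bit CC field (`max_cc=15`). Storing an entry recomputes its CC and walks backwards to update earlier entries whose runs changed; translating a page range reads one entry per run instead of one per page.

```python
class TranslationTable:
    """DMA translation table whose entries carry a consecutive count (CC) field."""

    def __init__(self, num_entries, max_cc=15):
        self.rpn = [None] * num_entries  # real page number per I/O page
        self.cc = [0] * num_entries
        self.max_cc = max_cc             # hypothetical 4-bit CC field
        self.entry_reads = 0

    def _next_is_consecutive(self, i):
        return (i + 1 < len(self.rpn) and self.rpn[i] is not None
                and self.rpn[i + 1] is not None and self.rpn[i + 1] == self.rpn[i] + 1)

    def _cc_for(self, i):
        return min(self.cc[i + 1] + 1, self.max_cc) if self._next_is_consecutive(i) else 0

    def store(self, page, rpn):
        self.rpn[page] = rpn
        self.cc[page] = self._cc_for(page)
        # Update earlier entries; once one is unchanged, all before it are too.
        for j in range(page - 1, -1, -1):
            new_cc = self._cc_for(j)
            if new_cc == self.cc[j]:
                break
            self.cc[j] = new_cc

    def translate(self, first_page, num_pages):
        """Return RPNs for a page range, reading as few table entries as possible."""
        rpns, page, end = [], first_page, first_page + num_pages
        while page < end:
            self.entry_reads += 1
            base = self.rpn[page]
            if base is None:
                raise LookupError(f"page {page} is not mapped")
            run = min(self.cc[page] + 1, end - page)  # this entry plus CC successors
            rpns.extend(base + k for k in range(run))
            page += run
        return rpns


table = TranslationTable(8)
for page, rpn in enumerate([100, 101, 102, 103]):
    table.store(page, rpn)
table.store(4, 500)
```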

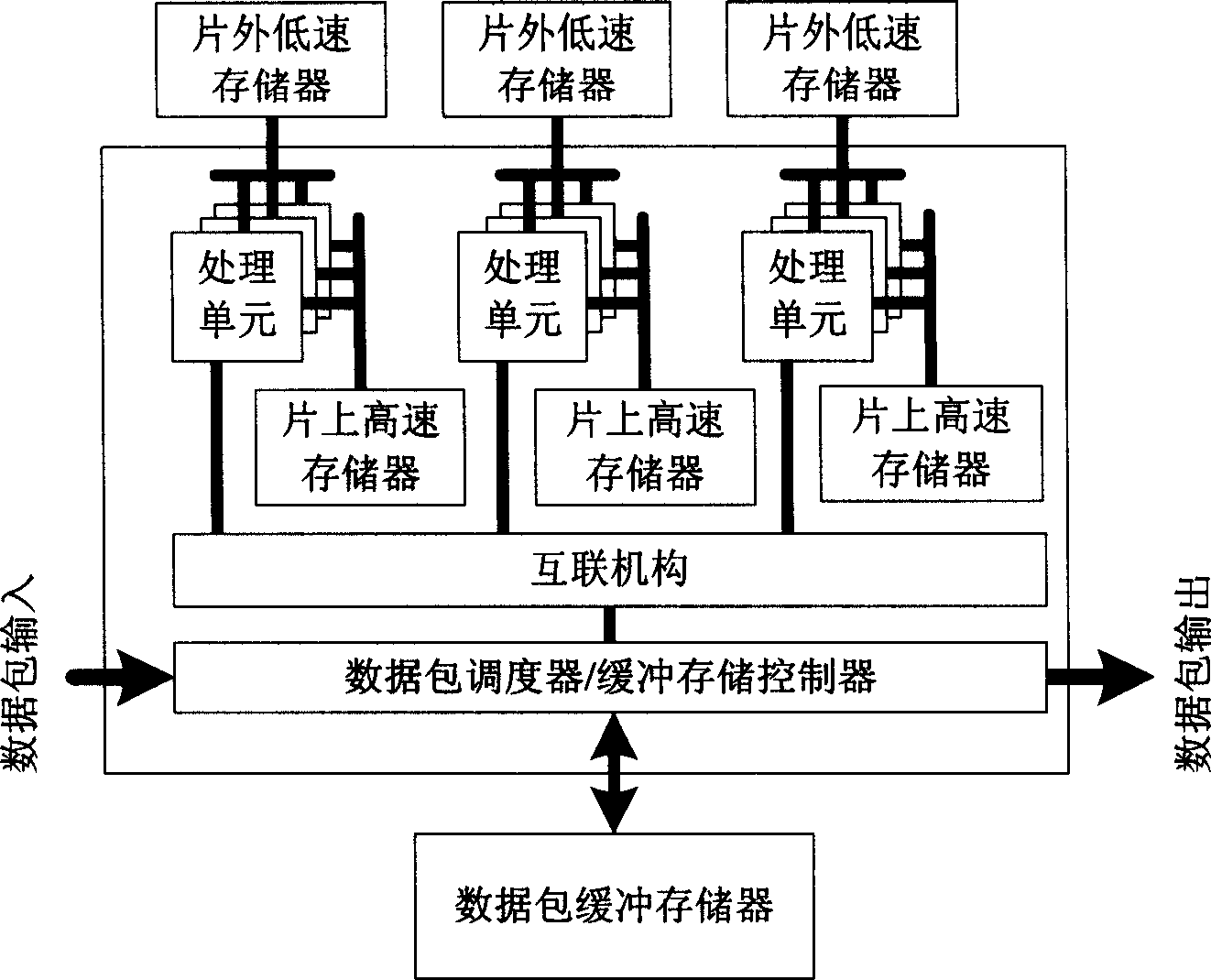

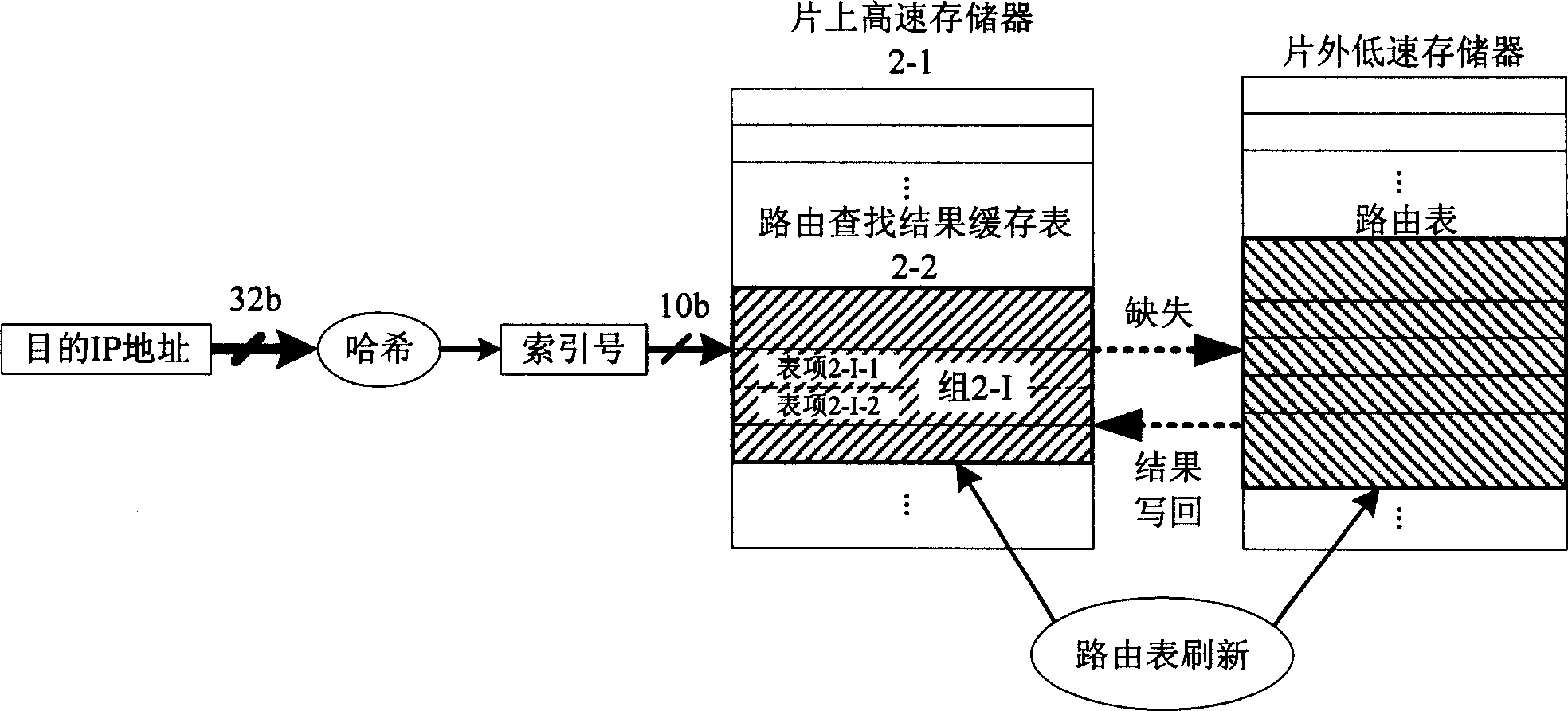

Network-processor-based route lookup result caching method

Inactive | CN1863169A | Improve search efficiency, Evenly distributed, Data switching networks, Low speed, Routing table

The invention provides a network-processor-based route lookup result caching method in the computer field. A route lookup result cache table is built and maintained in the network processor's on-chip high-speed memory. Each time the network processor receives a destination IP address to be looked up, it first searches the cache table quickly using a hash function. If the result is already in the cache table, the route lookup result is returned directly, and the order of entries in the cache table is adjusted so that recently used entries move toward the front, allowing later lookups to find results as quickly as possible. Otherwise, the routing table stored in the off-chip low-speed memory is searched, the result is returned to the application program, and the result is written back into the cache table. The invention reduces the number of off-chip low-speed memory accesses needed for route lookup and reduces memory bandwidth usage.

Owner: TSINGHUA UNIV
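
A sketch of the cache in front of the routing table, assuming Python's `OrderedDict` as the on-chip cache (its hashing stands in for the patent's hash function) and a linear longest-prefix scan as the off-chip routing table. Entries move to the front on every hit, and the least recently used entry is evicted when the cache is full; the class name and capacity are hypothetical.

```python
import ipaddress
from collections import OrderedDict


class CachedRouter:
    def __init__(self, routes, capacity=4):
        # Off-chip routing table, kept longest prefix first for a simple LPM scan.
        self.routes = sorted(
            ((ipaddress.IPv4Network(prefix), hop) for prefix, hop in routes.items()),
            key=lambda item: item[0].prefixlen,
            reverse=True,
        )
        self.cache = OrderedDict()  # on-chip cache: destination -> next hop, MRU first
        self.capacity = capacity
        self.slow_lookups = 0       # accesses to the off-chip table

    def lookup(self, destination):
        if destination in self.cache:
            self.cache.move_to_end(destination, last=False)  # hit: move to the front
            return self.cache[destination]
        self.slow_lookups += 1
        addr = ipaddress.IPv4Address(destination)
        hop = next((h for net, h in self.routes if addr in net), None)
        self.cache[destination] = hop                        # write the result back
        self.cache.move_to_end(destination, last=False)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=True)                    # evict least recently used
        return hop


table = {"10.0.0.0/8": "A", "10.1.0.0/16": "B"}
router = CachedRouter(table, capacity=2)
answers = [router.lookup(d) for d in ["10.1.2.3", "10.9.9.9", "10.1.2.3", "10.1.2.3"]]
```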

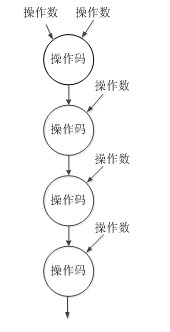

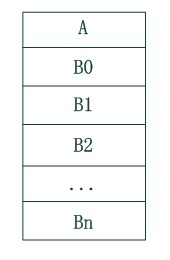

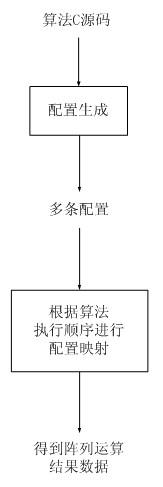

Configuration method for a coarse-grained reconfigurable array

Inactive | CN102508816A | Reduce the amount of information, Reduce visits, Program control, Architecture with single central processing unit, Configuration generation, Engineering

The invention discloses a configuration method for a coarse-grained reconfigurable array of a given scale. It comprises a configuration definition scheme that uses data links as the basic description objects, together with corresponding configuration generation and configuration mapping schemes. Under the configuration definition scheme, a program corresponds to multiple configurations, each configuration corresponds to one data link, and each data link consists of several reconfigurable cells (RCs) with data dependences between them. Compared with traditional schemes that use RCs as the basic description objects, this scheme hides the interconnection information among RCs and offers more room for compressing configuration information, which helps reduce both the total amount of configuration data and the time needed to switch configurations. In addition, the configuration describing one data link consists of a route configuration, a functional configuration, and one or more data configurations; the data configurations share one route and functional configuration, so switching a configuration involves switching the route and functional configuration once, followed by one or more switches of the data configuration.

Owner: SOUTHEAST UNIV

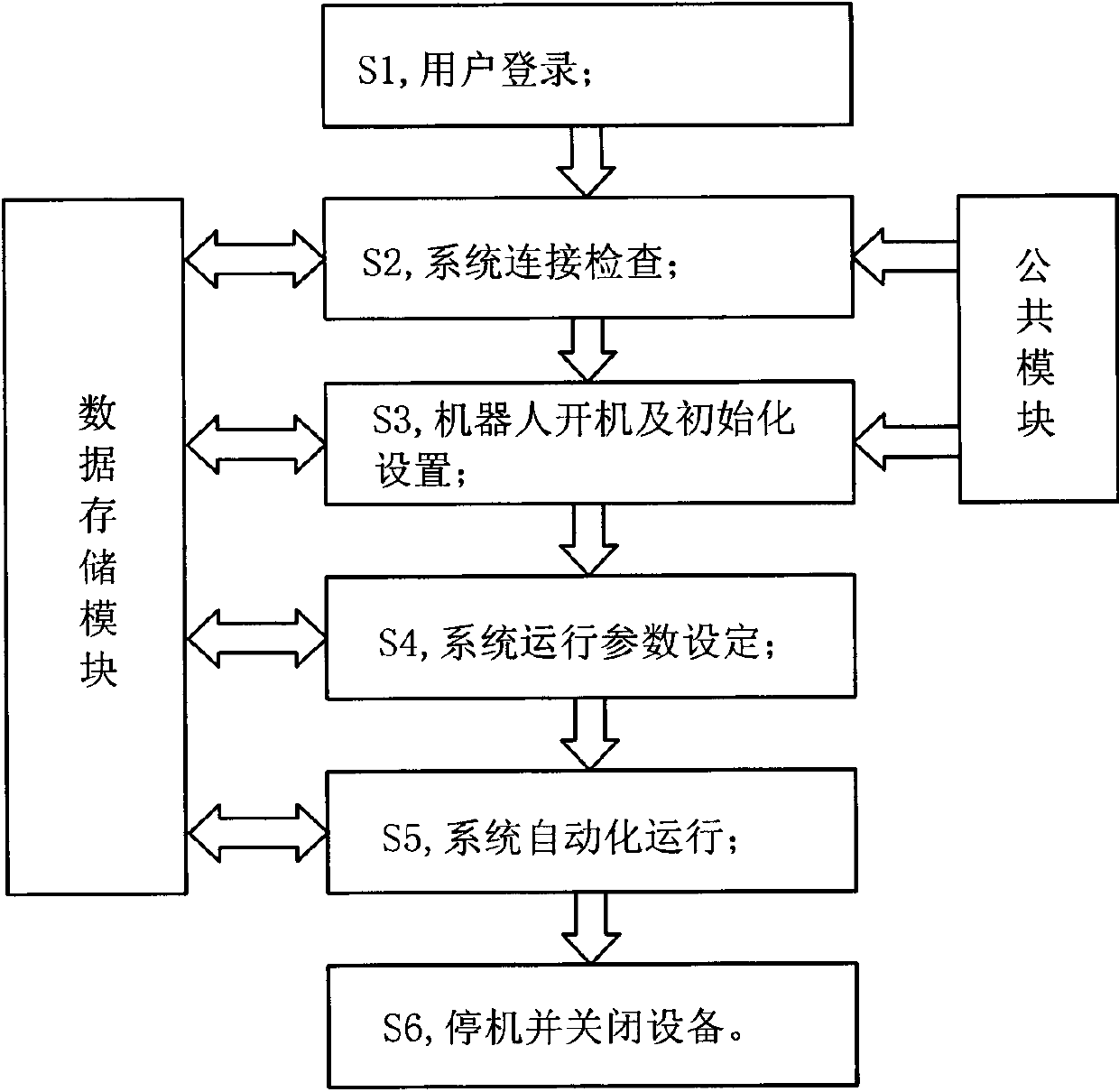

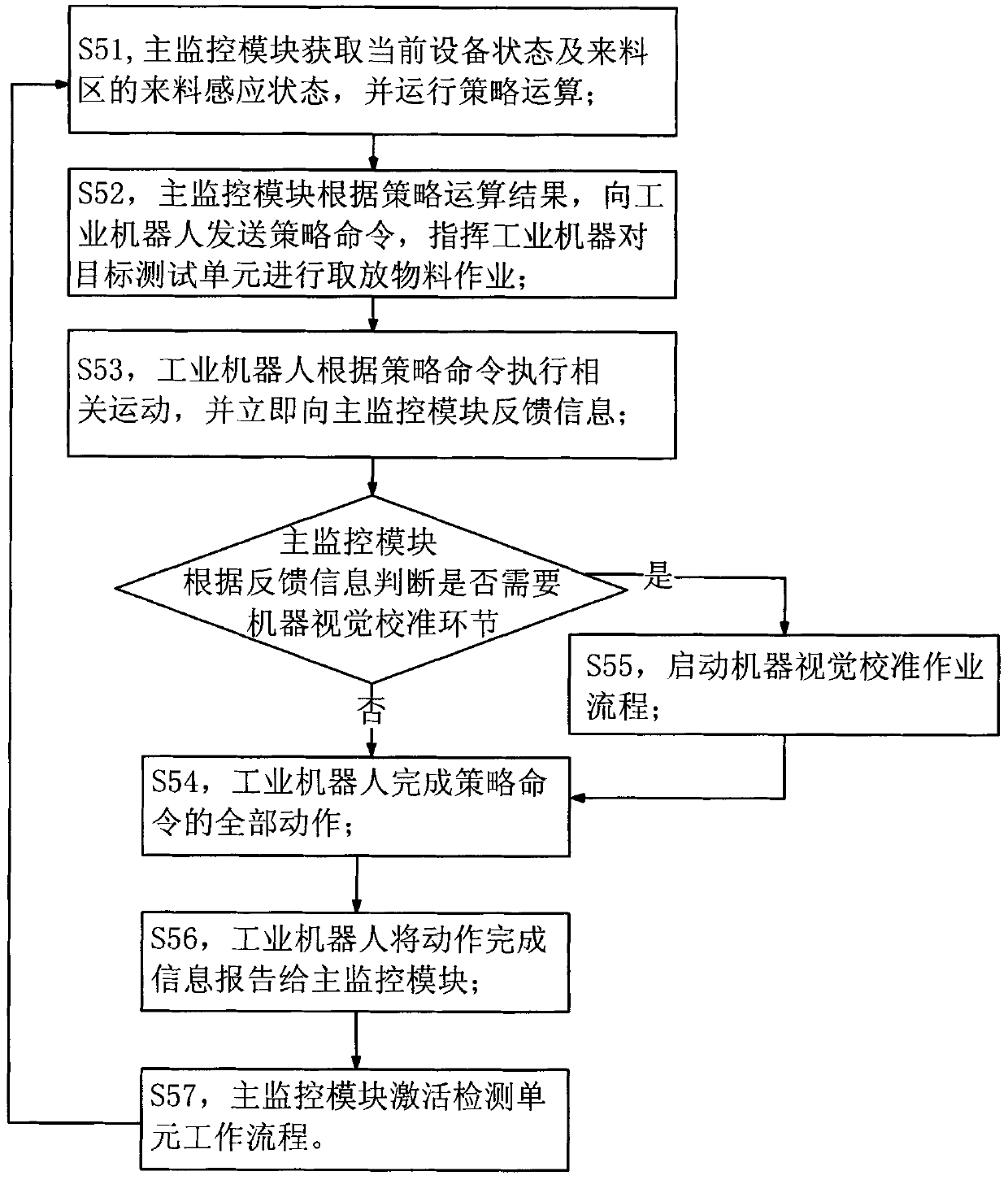

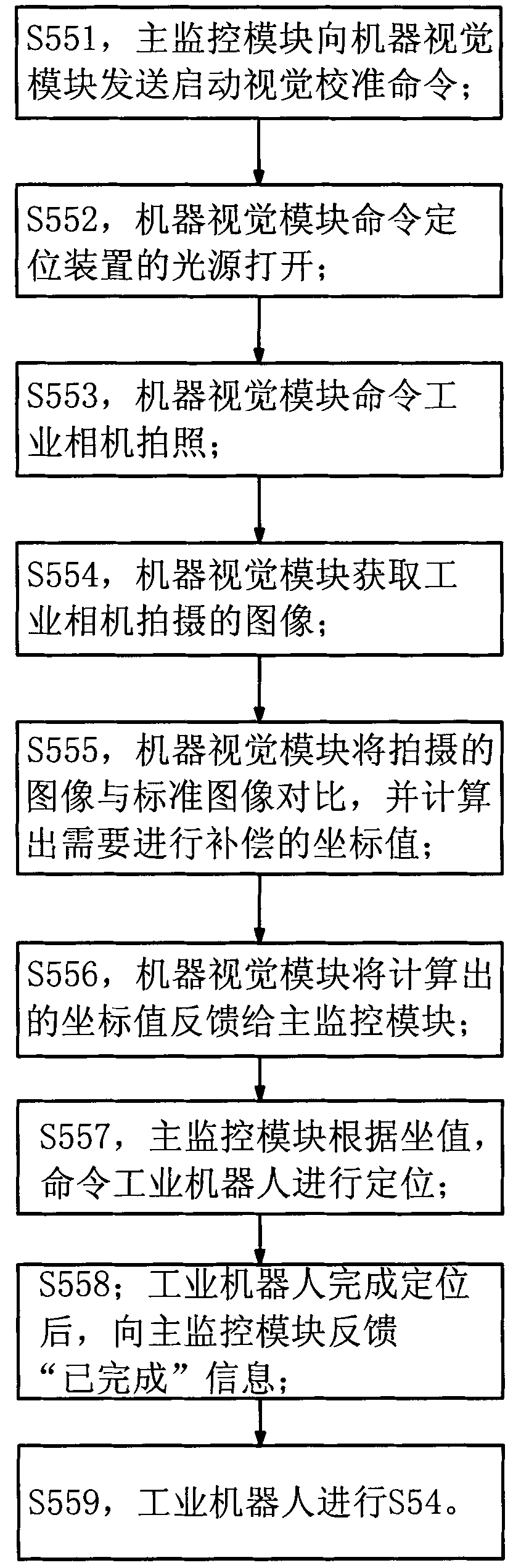

Automatic feeding, testing and sorting system and operation method thereof

Active | CN103368795A | Realize automatic feeding, Realize unmanned, Data switching networks, Conveyor parts, Programmable logic controller, Control engineering

The invention discloses an automatic feeding, testing and sorting system and an operation method for it. The operation method comprises the following steps: S1, user login; S2, system connection check; S3, robot start-up and initialization; S4, system operating parameter setting; S5, automatic system operation; S6, stopping and equipment shutdown. The method integrates control of the test units, an industrial robot, a PLC (programmable logic controller) electrical system, an image acquisition module and other peripheral equipment; it automates feeding, test start-up, and sorting of tested products into the corresponding areas, so the testing process can run unattended. In S2 and S3, the required programs can be called from a public module that stores global thread programs; in S2 through S5, key variables required during system operation are stored in a data storage file and can be read or rewritten, which greatly improves computational efficiency.

Owner: SHENZHEN JIACHEN TECH

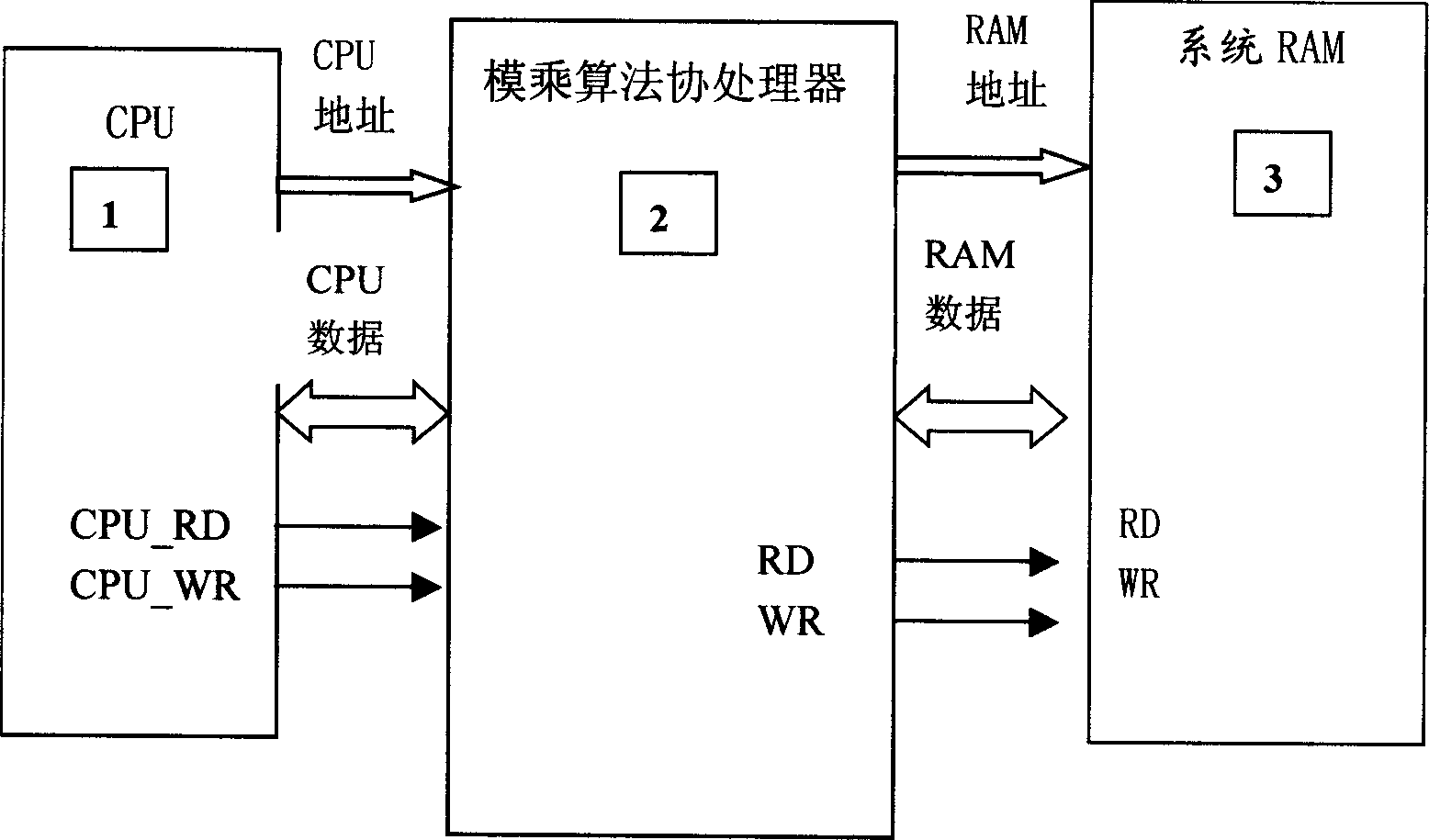

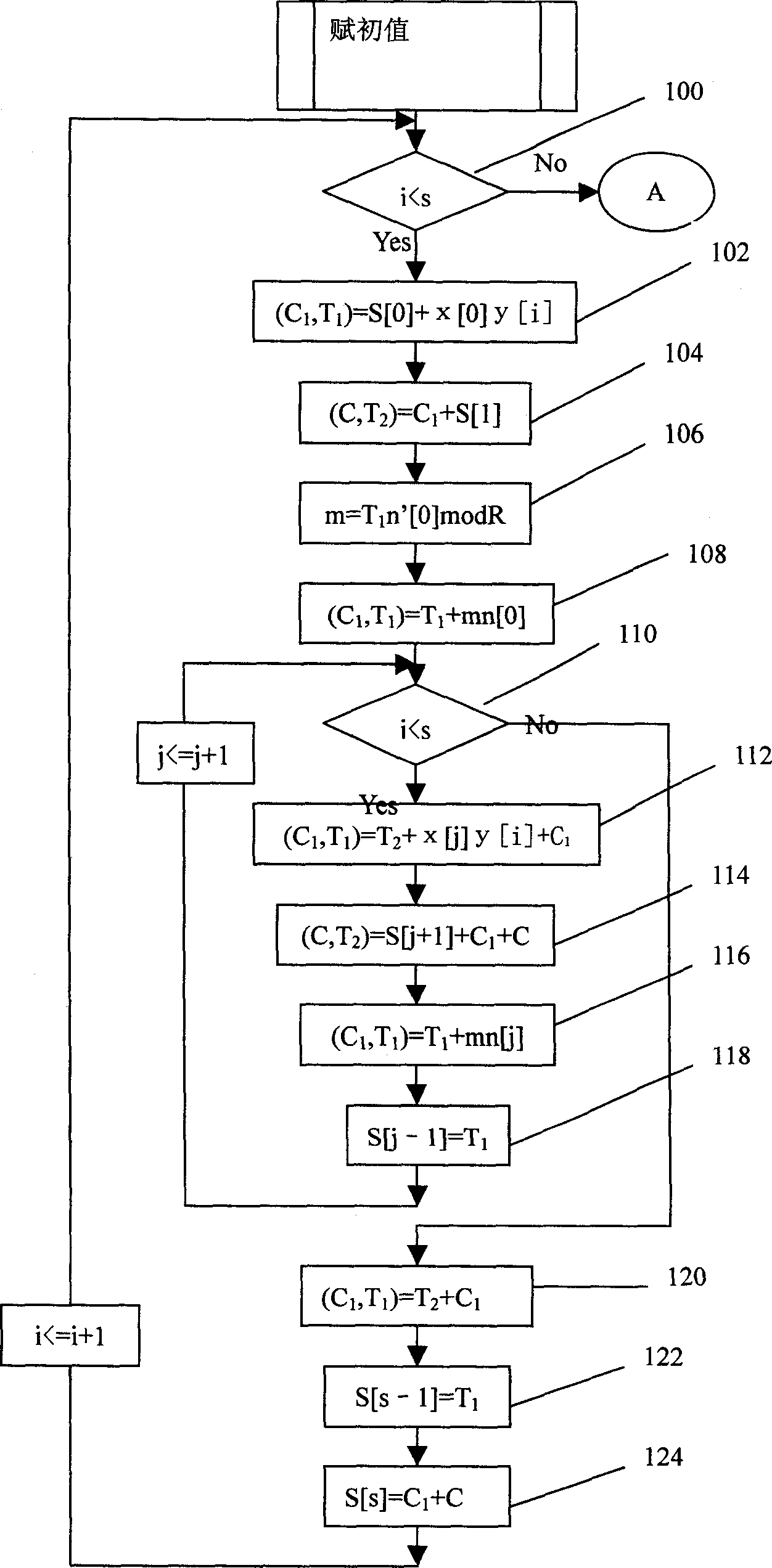

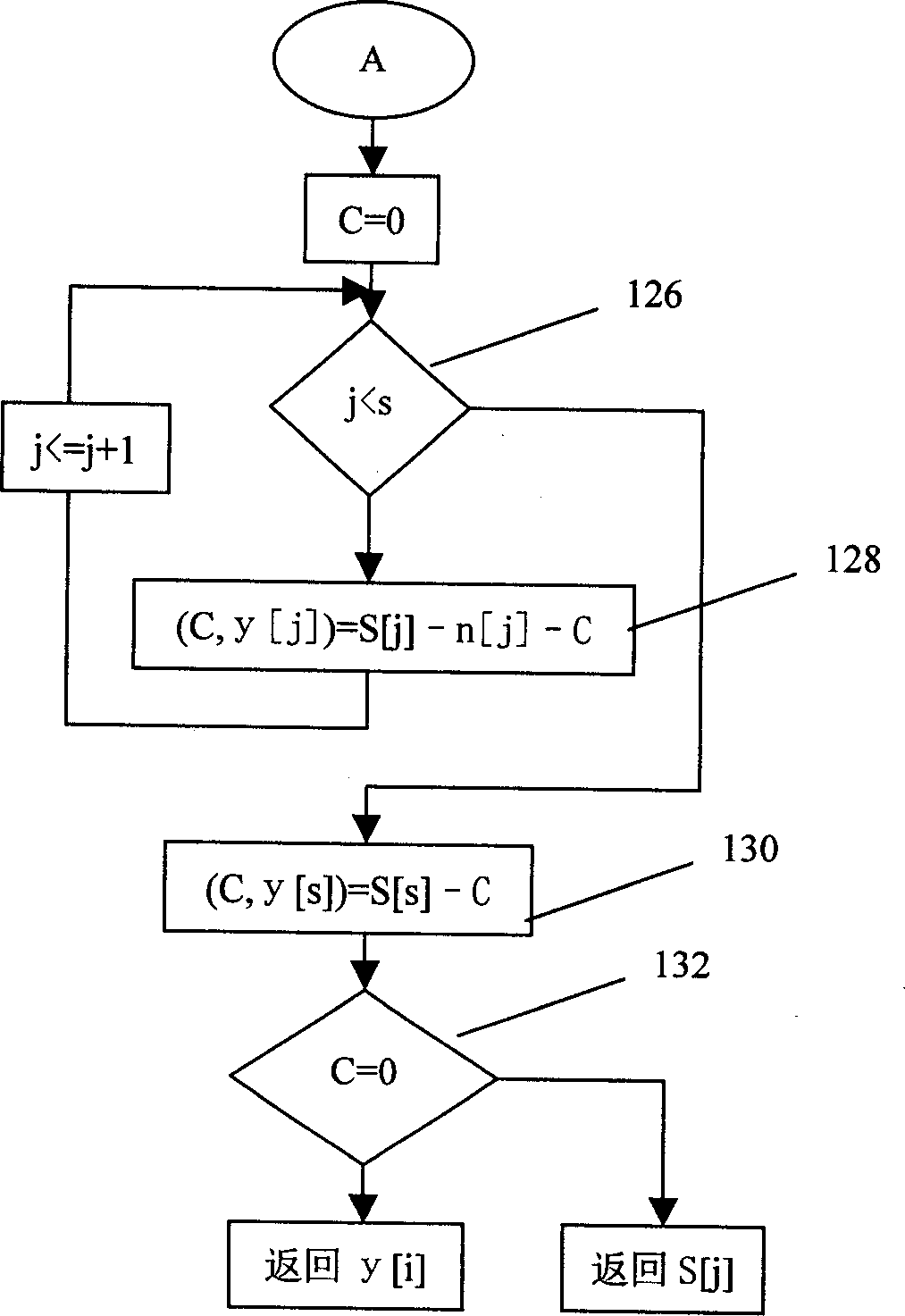

Montgomery modular multiplication algorithm and its modular multiplication and modular exponentiation circuits

Active | CN1492316A | Reduce visits, Fast operation, Computation using denominational number representation, Inner loop, Access time

The modular multiplication algorithm of the present invention improves on the existing multiple-precision CIOS algorithm by reducing the number of inner loops from two to one and reducing the number of accesses to external variables. The modular multiplication circuit consists of addition, multiplication, address and loop computation modules, data registers, a logic control module, internal circuitry, and several special-purpose functional modules; it needs fewer operation steps, runs faster, and supports a configurable data length. The modular exponentiation circuit consists of the modular multiplication circuit, a CPU and system RAM; under CPU control, it performs a sequence of modular multiplications. Between two successive modular multiplications, the base address is changed using a dynamic data address pointer technique, which greatly speeds up modular exponentiation.

Owner: DATANG MICROELECTRONICS TECH CO LTD
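
For reference, the sketch below shows textbook Montgomery multiplication (REDC) on whole Python integers and a left-to-right square-and-multiply exponentiation built on it. It is not the word-level CIOS algorithm or the patent's single-inner-loop variant; it only illustrates the arithmetic such circuits implement. It needs Python 3.8 or later for `pow(n, -1, r)`.

```python
def montgomery_params(n):
    """Return (r_bits, n_prime) with R = 2**r_bits > n and n * n_prime = -1 (mod R)."""
    if n <= 0 or n % 2 == 0:
        raise ValueError("modulus must be a positive odd integer")
    r_bits = n.bit_length()
    r = 1 << r_bits
    n_prime = (-pow(n, -1, r)) % r
    return r_bits, n_prime


def mont_mul(a, b, n, r_bits, n_prime):
    """Montgomery product a * b * R^-1 mod n for 0 <= a, b < n (REDC)."""
    mask = (1 << r_bits) - 1
    t = a * b
    m = ((t & mask) * n_prime) & mask  # chosen so that t + m*n is divisible by R
    u = (t + m * n) >> r_bits          # exact division by R
    return u - n if u >= n else u      # u < 2n, so one subtraction suffices


def mont_pow(base, exponent, n):
    """Modular exponentiation by left-to-right square-and-multiply in Montgomery form."""
    if exponent < 0:
        raise ValueError("exponent must be non-negative")
    r_bits, n_prime = montgomery_params(n)
    r = 1 << r_bits
    base_m = (base * r) % n  # base converted to Montgomery form
    acc = r % n              # 1 in Montgomery form
    for bit in bin(exponent)[2:]:
        acc = mont_mul(acc, acc, n, r_bits, n_prime)
        if bit == "1":
            acc = mont_mul(acc, base_m, n, r_bits, n_prime)
    return mont_mul(acc, 1, n, r_bits, n_prime)  # convert back out of Montgomery form
```

Every modular reduction inside the loop is a shift and a mask rather than a division by `n`, which is why hardware modular exponentiation is usually built on Montgomery multiplication.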

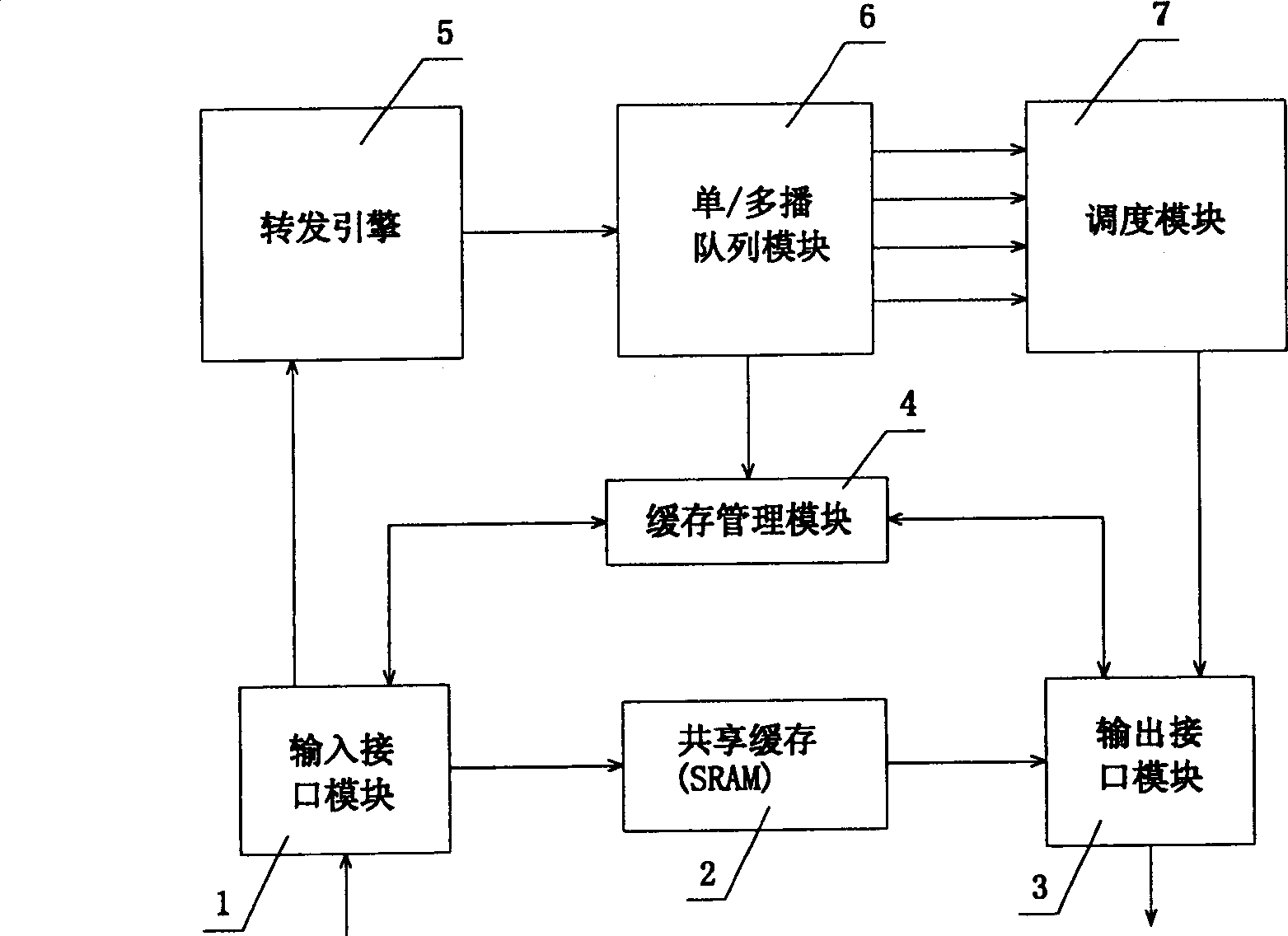

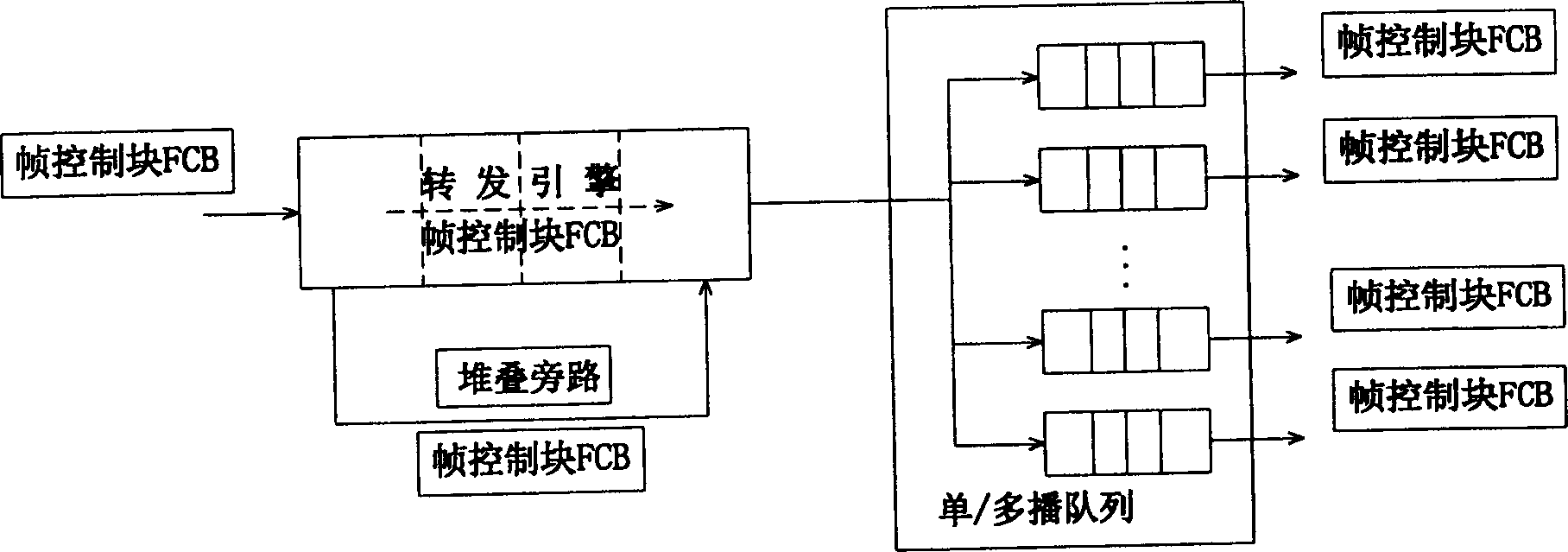

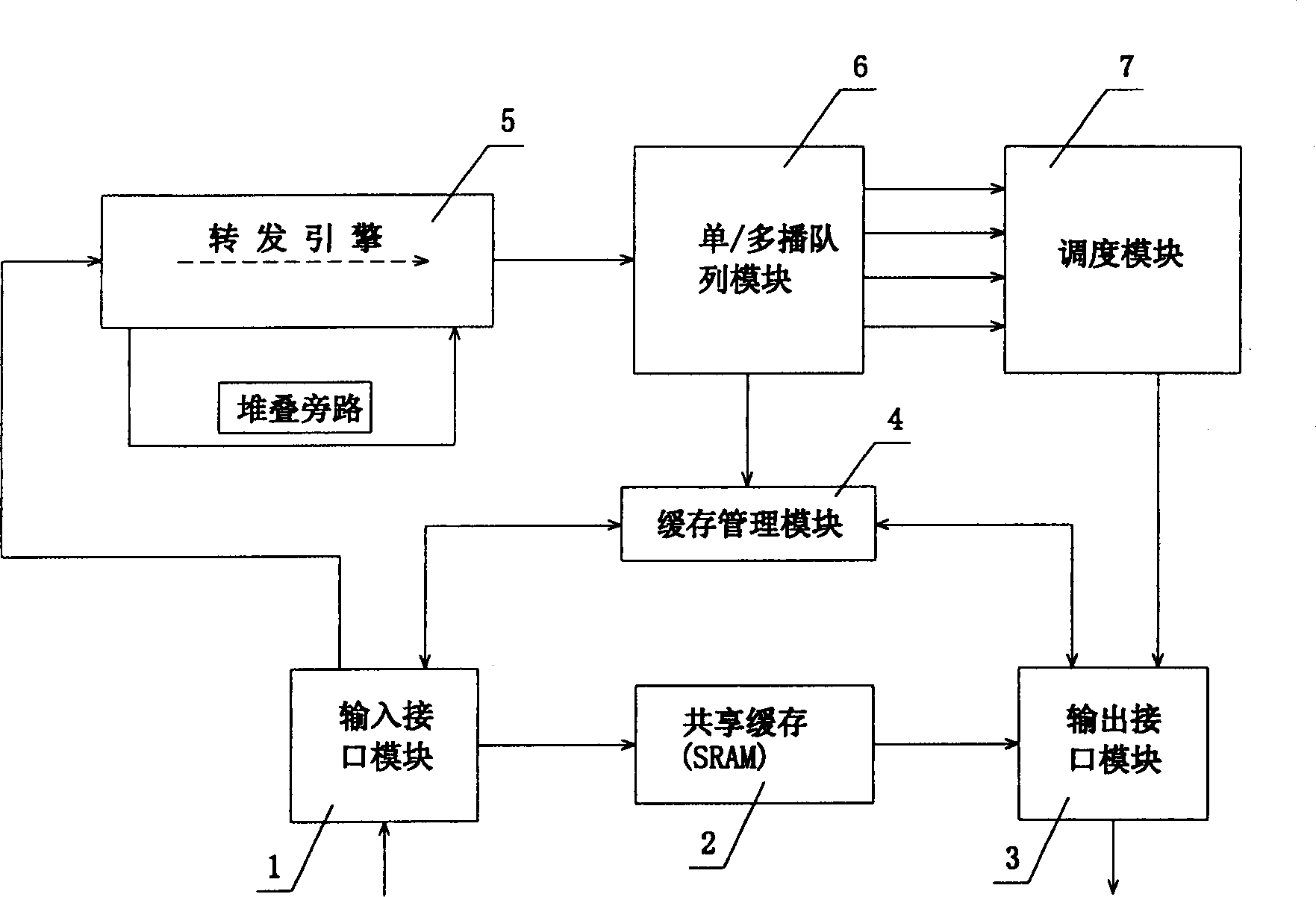

Method for managing and allocating buffer storage during data transmission in an Ethernet switch chip

Inactive | CN1452351A | Reduce visits, Easy to meet the requirements of high-speed processing, Data switching by path configuration, Data transmission, Ethernet

A method for managing and allocating buffers during data transmission in an Ethernet switch chip: the buffer management module allocates an address pointer to the input interface module, which extracts the frame's control information and combines it with that address. The buffer management module holds a release weight table in which each address pointer corresponds to one table entry. Different release weight values are assigned to different frame control blocks. Each time a frame control block is released, its release weight value is decremented; when the value reaches zero, all processing of the frame is complete.

Owner: HUAWEI TECH CO LTD
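
The release weight table behaves like a per-buffer reference count. The sketch assumes the weight is set to the number of consumers of a frame (for example, the output ports of a multicast frame) and that the buffer address returns to the free pool when the weight reaches zero; the class and method names are hypothetical.

```python
class BufferManager:
    """Free-pointer pool plus a release weight table indexed by buffer address."""

    def __init__(self, num_buffers):
        self.free_pointers = list(range(num_buffers))
        self.release_weight = [0] * num_buffers

    def allocate(self, num_consumers):
        if num_consumers < 1:
            raise ValueError("a frame needs at least one consumer")
        address = self.free_pointers.pop()
        self.release_weight[address] = num_consumers
        return address

    def release(self, address):
        """Called once per consumer; returns True when the buffer is freed."""
        if self.release_weight[address] == 0:
            raise ValueError(f"buffer {address} is not in use")
        self.release_weight[address] -= 1
        if self.release_weight[address] == 0:
            self.free_pointers.append(address)  # all consumers done: reuse the buffer
            return True
        return False


manager = BufferManager(4)
multicast = manager.allocate(3)  # e.g. a frame sent out of three ports
releases = [manager.release(multicast) for _ in range(3)]
```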

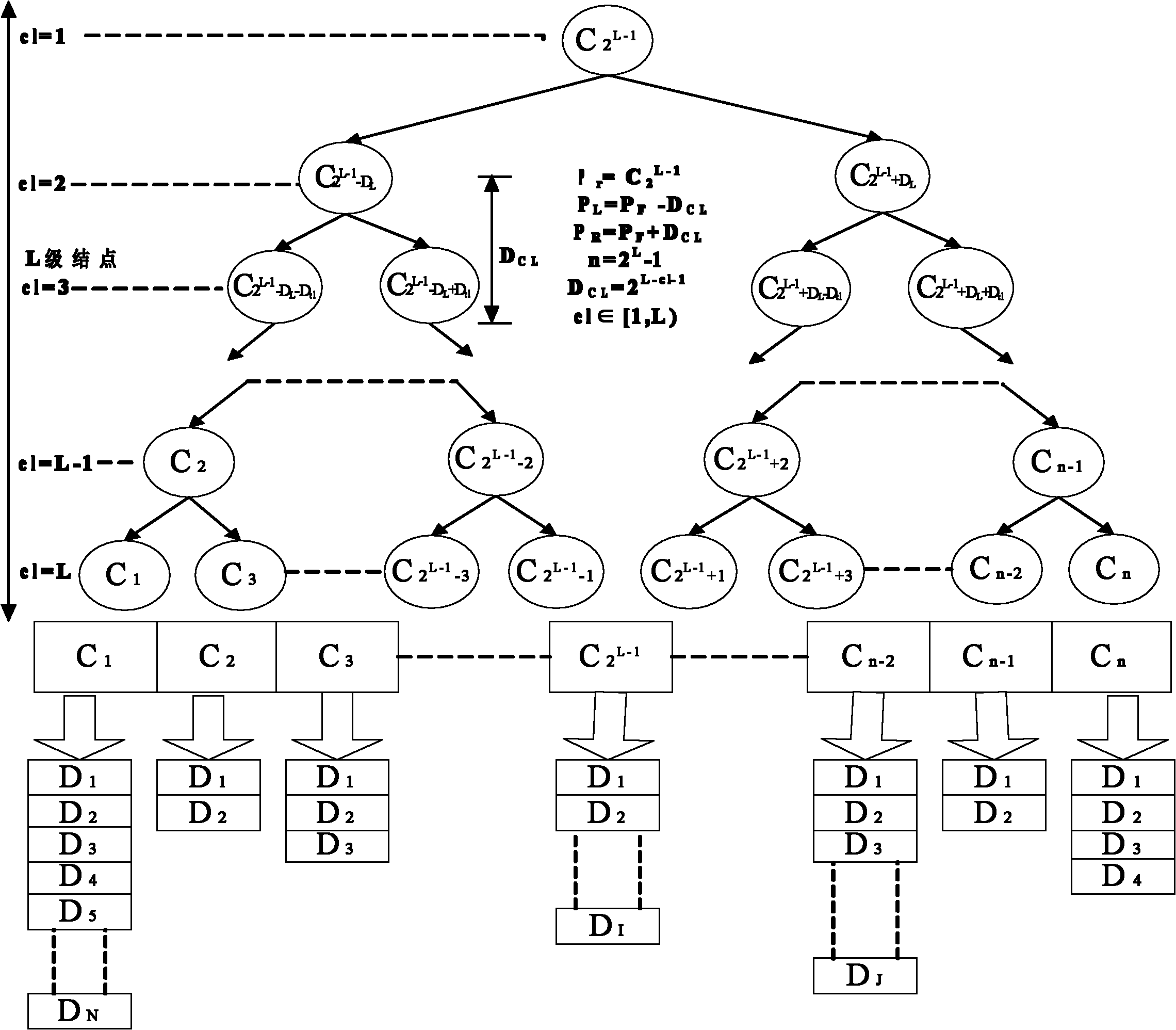

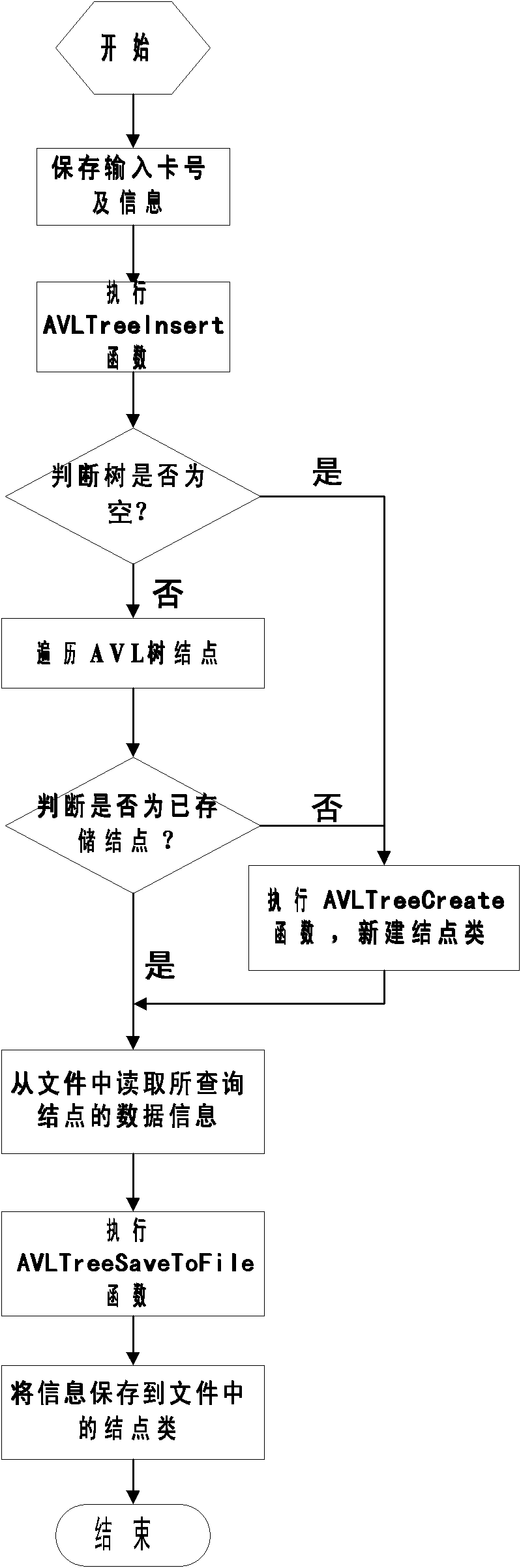

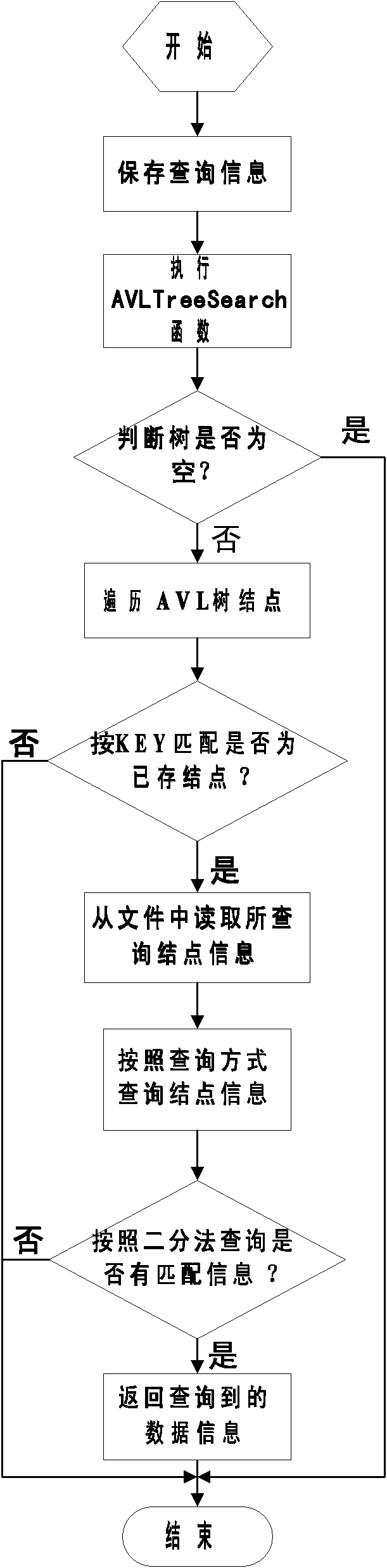

Data storage and query method based on classification characteristics and a balanced binary tree

Inactive | CN102521334A | Reduce complexity, Easy to implement, Energy efficient computing, Special data processing applications, Theoretical computer science, The Internet

The invention discloses a data storage and query method based on classification characteristics and a balanced binary tree. The method comprises the following steps: constructing a balanced binary tree and creating its nodes; dynamically classifying data and storing it in the corresponding nodes according to the in-order, pre-order and post-order traversal sequences; and, given a query, dynamically traversing the AVL tree to obtain the requested data. The method can reduce the time complexity of dynamic queries to that of static queries, greatly improving storage and query efficiency. It is fast, consumes little energy and memory, uses a simple algorithm, and can be implemented in many programming languages. It is broadly applicable to data management in the communications field, especially the storage and querying of massive data in Internet of Things communications.

Owner: GUANGDONG UNIV OF TECH
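
The abstract leaves the classification scheme open. One way to combine classification with an AVL tree, assumed here, is to key records by a `(category, record_id)` tuple, so records of the same class sit next to each other in in-order traversal while lookups stay logarithmic. The sketch implements AVL insertion with the four rotation cases and an iterative search.

```python
class Node:
    __slots__ = ("key", "value", "left", "right", "height")

    def __init__(self, key, value):
        self.key, self.value = key, value
        self.left = self.right = None
        self.height = 1


def _height(node):
    return node.height if node else 0


def _refresh(node):
    node.height = 1 + max(_height(node.left), _height(node.right))


def _rotate_right(y):
    x = y.left
    y.left, x.right = x.right, y
    _refresh(y)
    _refresh(x)
    return x


def _rotate_left(x):
    y = x.right
    x.right, y.left = y.left, x
    _refresh(x)
    _refresh(y)
    return y


def insert(root, key, value):
    """Insert or update key and return the new subtree root."""
    if root is None:
        return Node(key, value)
    if key < root.key:
        root.left = insert(root.left, key, value)
    elif key > root.key:
        root.right = insert(root.right, key, value)
    else:
        root.value = value  # existing key: update in place, shape unchanged
        return root
    _refresh(root)
    balance = _height(root.left) - _height(root.right)
    if balance > 1:                # left-heavy
        if key > root.left.key:    # left-right case
            root.left = _rotate_left(root.left)
        return _rotate_right(root)
    if balance < -1:               # right-heavy
        if key < root.right.key:   # right-left case
            root.right = _rotate_right(root.right)
        return _rotate_left(root)
    return root


def search(root, key):
    node = root
    while node is not None:
        if key == node.key:
            return node.value
        node = node.left if key < node.key else node.right
    return None


root = None
for i in range(100):
    root = insert(root, ("meter", i), f"reading-{i}")
```

Inserting keys in sorted order is the worst case for an unbalanced binary search tree (a 100-deep chain); the rotations keep this AVL tree's height at 9 or less.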

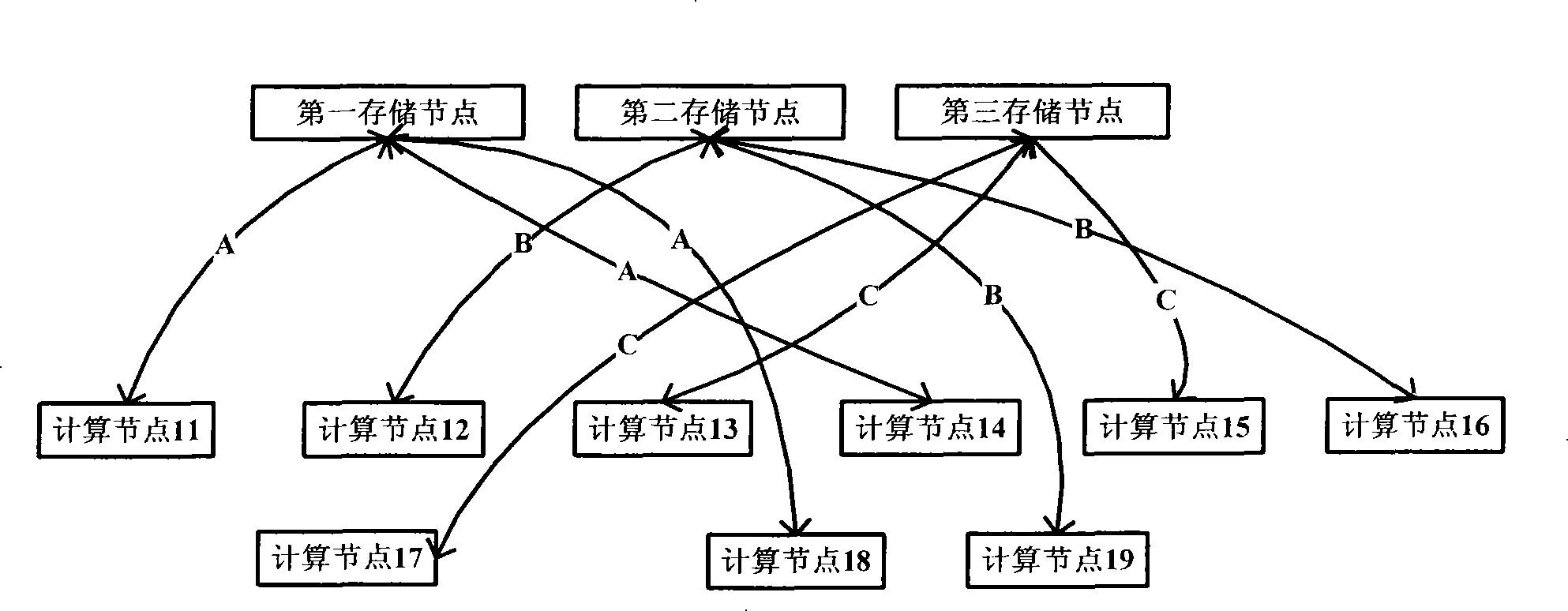

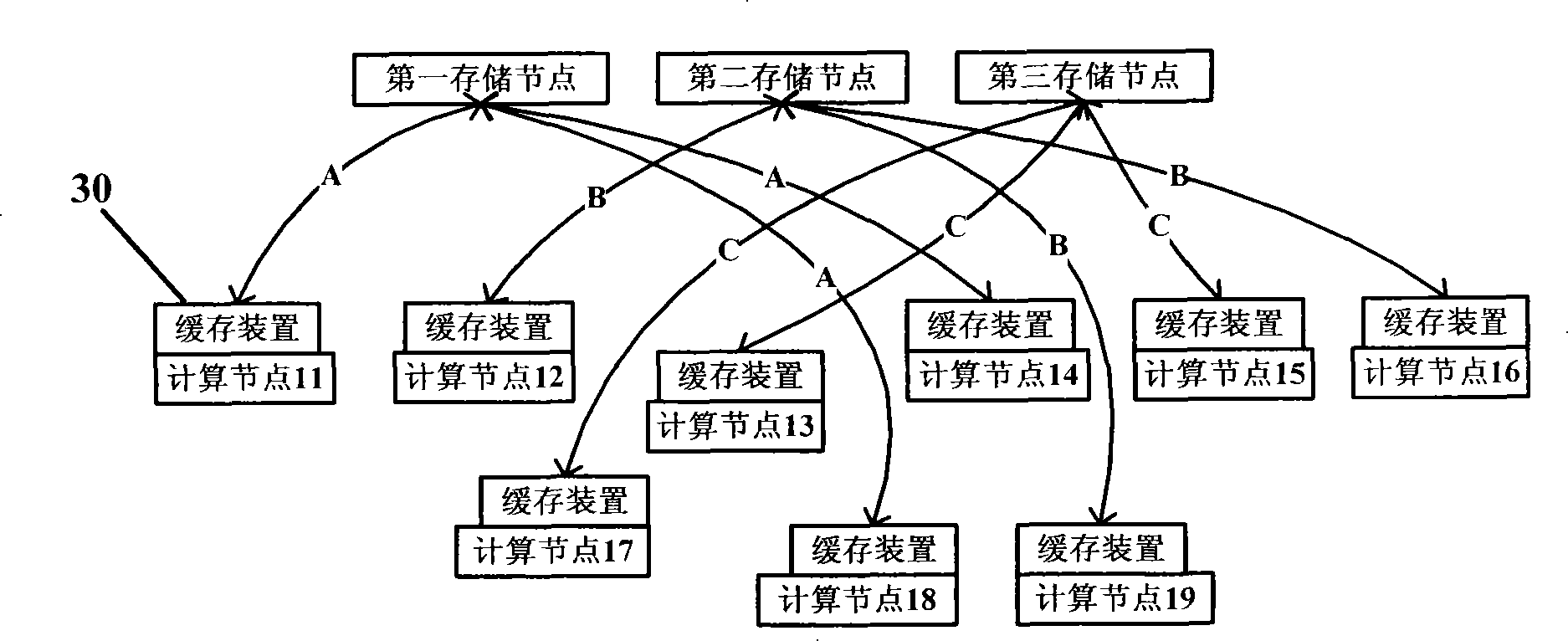

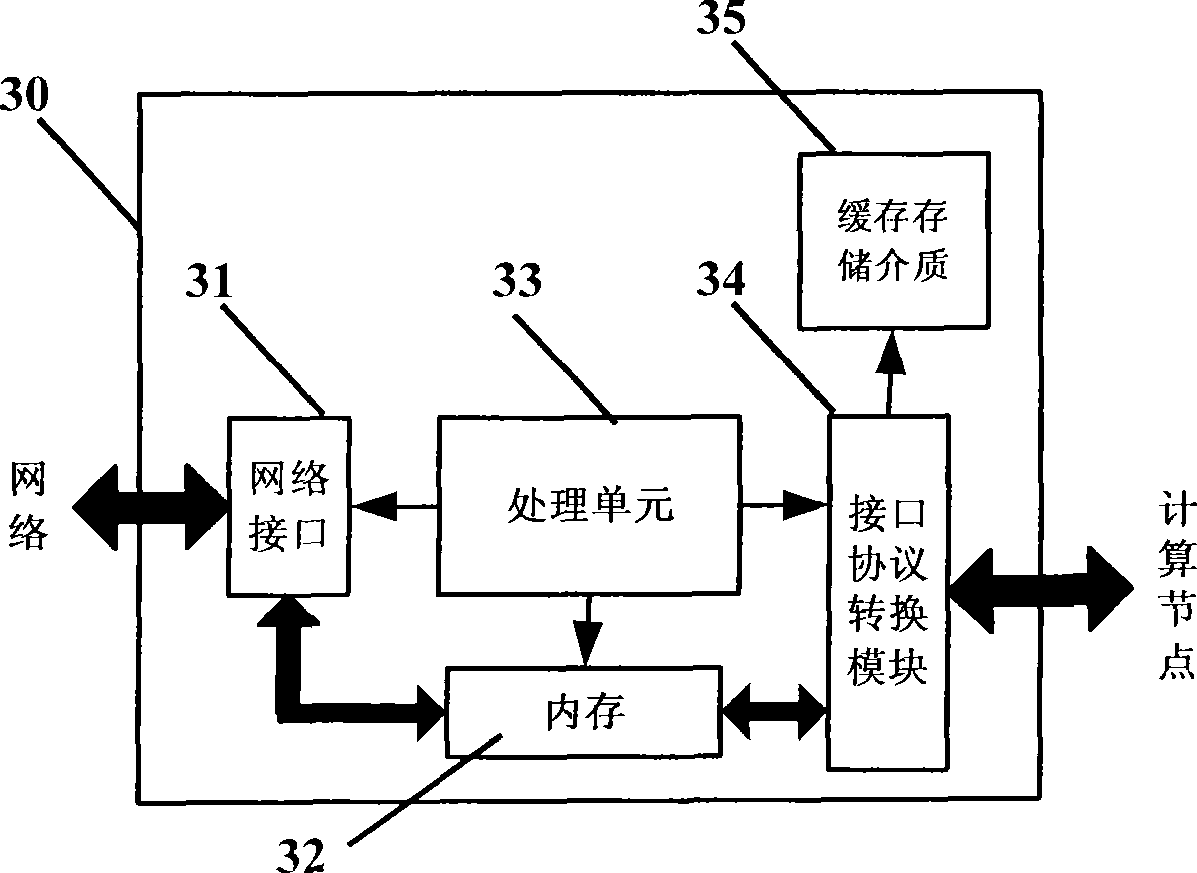

Data buffer apparatus and network storage system using the same and buffer method

Inactive | CN101252589A | Reduce dependence, Reduce load, Store-and-forward switching systems, Control data, Interface protocol

The invention provides a data buffering device, together with a network storage system and a buffering method that use it. The buffering device includes: a network interface; a memory connected to the network interface, which stores data requested by compute nodes; a compute-node interface protocol conversion module connected to the memory, which converts compute-node requests into peripheral-device requests, submits them to the processing unit, and transfers data to and from the buffer storage media; a processing unit connected to the protocol conversion module, the network interface and the memory, which controls data transfer and determines the buffering relationship of data; and buffer storage media connected to the protocol conversion module, which buffer data according to the buffering relationship determined by the processing unit. The invention reduces compute nodes' dependence on accessing storage nodes over the network, lowers network load, and reduces the network load pressure on the whole system at any given time.

Owner: INST OF COMPUTING TECH CHINESE ACAD OF SCI

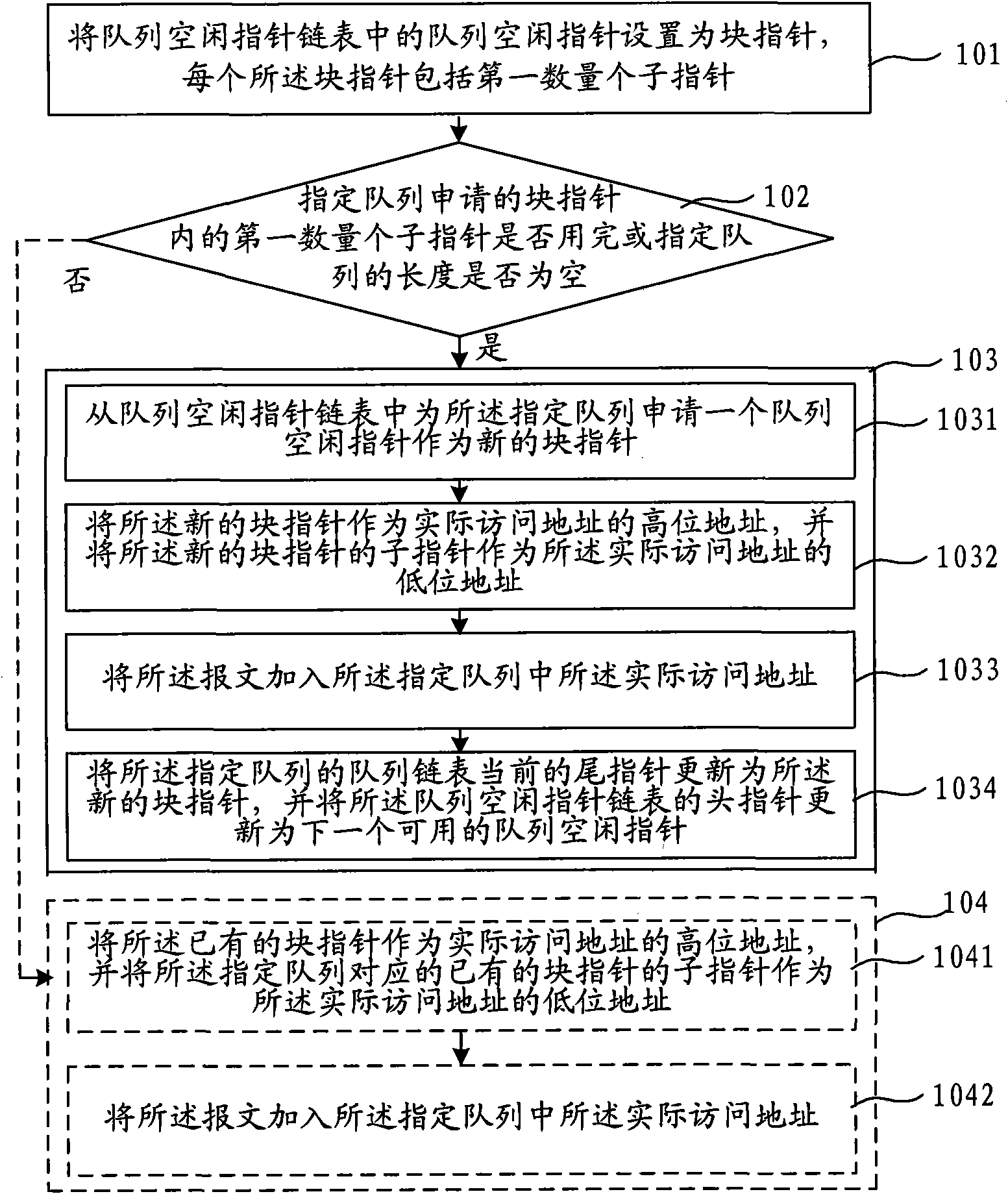

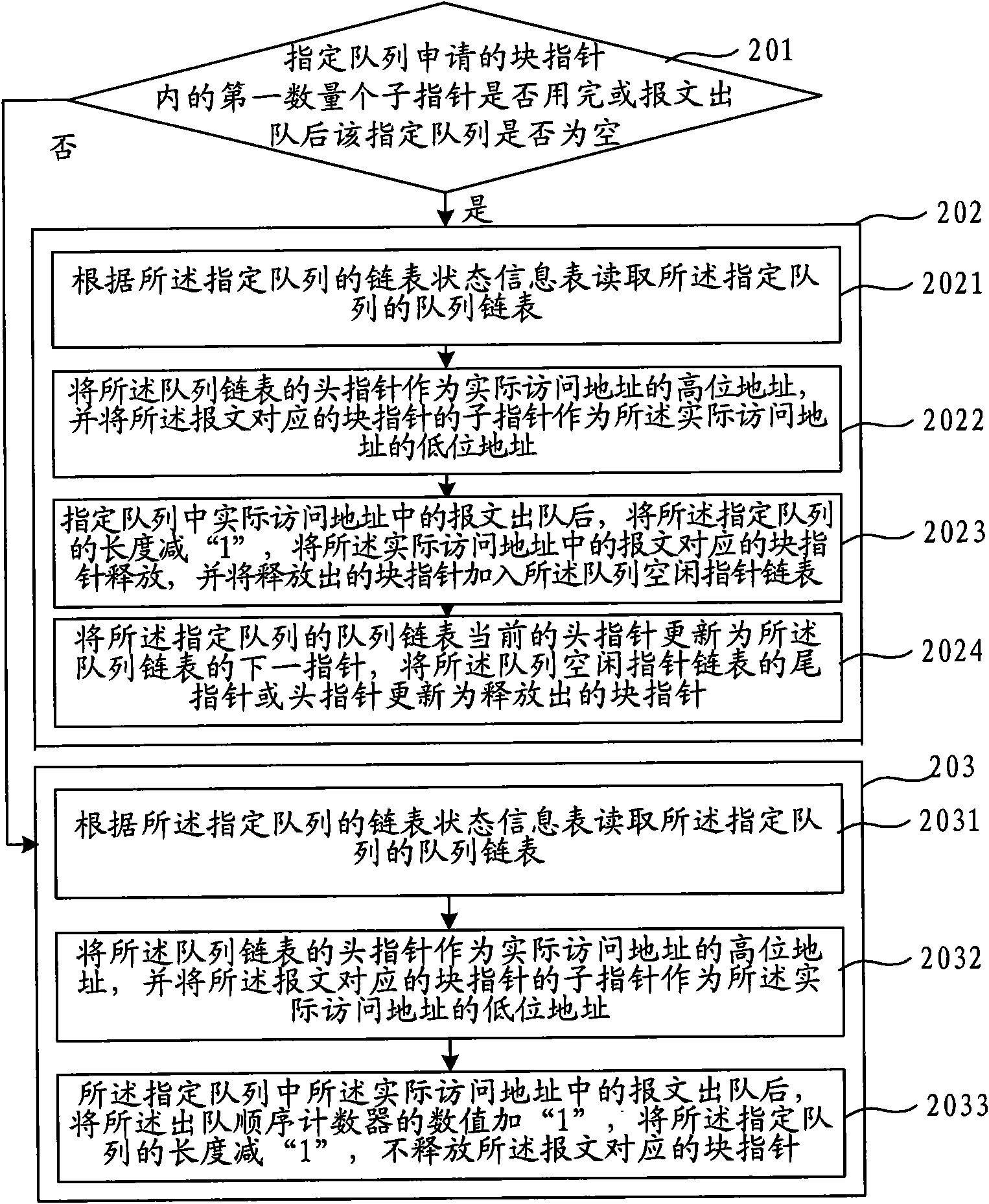

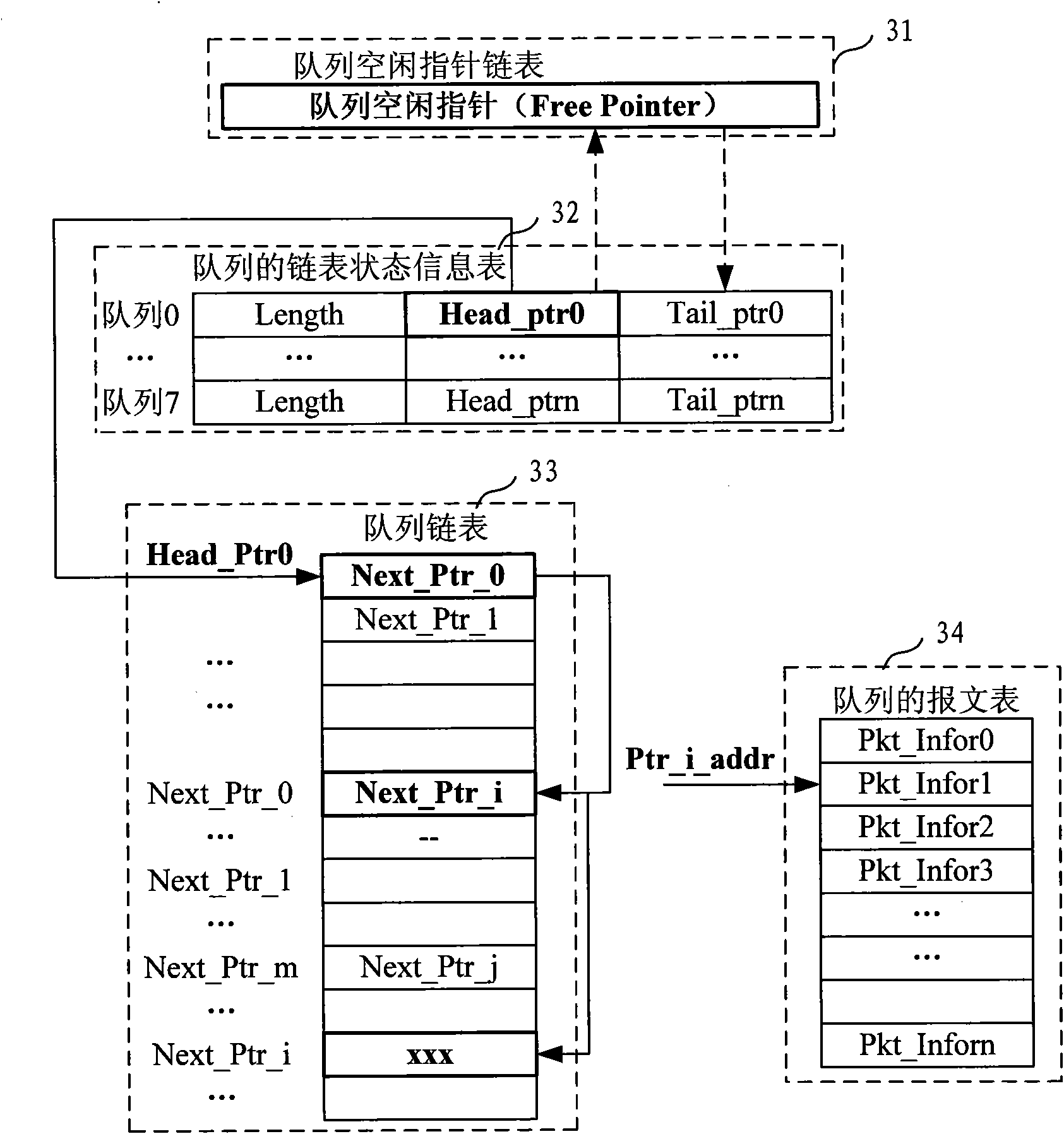

Method and apparatus for managing queue storage space

Inactive | CN101605100A | Improve management ability, Reduce visits, Memory addressing/allocation/relocation, Data switching networks, Distributed computing, Linked list

The embodiment of the invention relates to a method and an apparatus for managing queue storage space. Queue free pointers in the queue free-pointer list are set up as block pointers, each containing a first number of sub-pointers. When a packet is to be added to a designated queue, the method determines whether all the sub-pointers in the block pointer currently held by that queue have been used, or whether the length of the designated queue is zero. If either condition holds, a queue free pointer is requested from the queue free-pointer list as a new block pointer and added to the designated queue. By managing queue storage space with block pointers, the embodiment reduces the number of accesses to the queue free-pointer list and the queue list and reduces the RAM bandwidth needed for management, so queue management capacity is increased for a fixed RAM and cost is lowered.

Owner: HUAWEI TECH CO LTD
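
An enqueue-side sketch of block pointers, assuming each block holds `subs_per_block` consecutive cell addresses; dequeueing and returning blocks to the free list are omitted, and all names are hypothetical. The queue touches the shared free-pointer list only when it is empty or its current block is exhausted.

```python
from collections import deque


class BlockPointerQueues:
    """Queues take storage from a shared free-pointer list one block at a time."""

    def __init__(self, num_blocks, subs_per_block):
        self.free_list = deque(range(num_blocks))  # queue free-pointer list (blocks)
        self.subs_per_block = subs_per_block
        self.free_list_accesses = 0
        self.tail_block = {}  # queue id -> block pointer currently being filled
        self.used_subs = {}   # queue id -> sub-pointers already used in that block
        self.length = {}      # queue id -> number of packets
        self.storage = {}     # cell address -> packet

    def enqueue(self, qid, packet):
        used = self.used_subs.get(qid, 0)
        if self.length.get(qid, 0) == 0 or used == self.subs_per_block:
            # Only now touch the free-pointer list, taking a whole block at once.
            self.free_list_accesses += 1
            self.tail_block[qid] = self.free_list.popleft()
            used = 0
        address = self.tail_block[qid] * self.subs_per_block + used
        self.storage[address] = packet
        self.used_subs[qid] = used + 1
        self.length[qid] = self.length.get(qid, 0) + 1
        return address


mgr = BlockPointerQueues(num_blocks=8, subs_per_block=4)
addrs = [mgr.enqueue("q0", f"pkt{i}") for i in range(10)]
```

Ten packets with four sub-pointers per block need three free-list accesses instead of ten.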

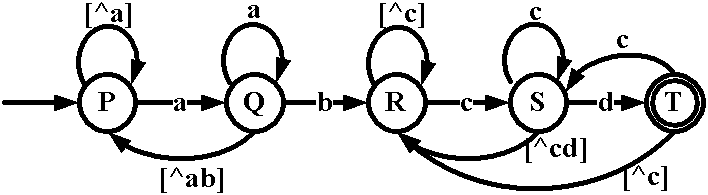

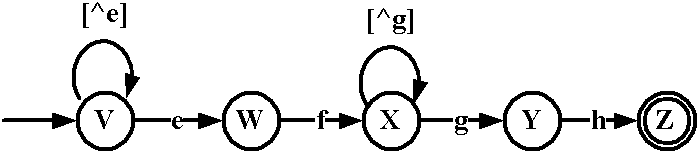

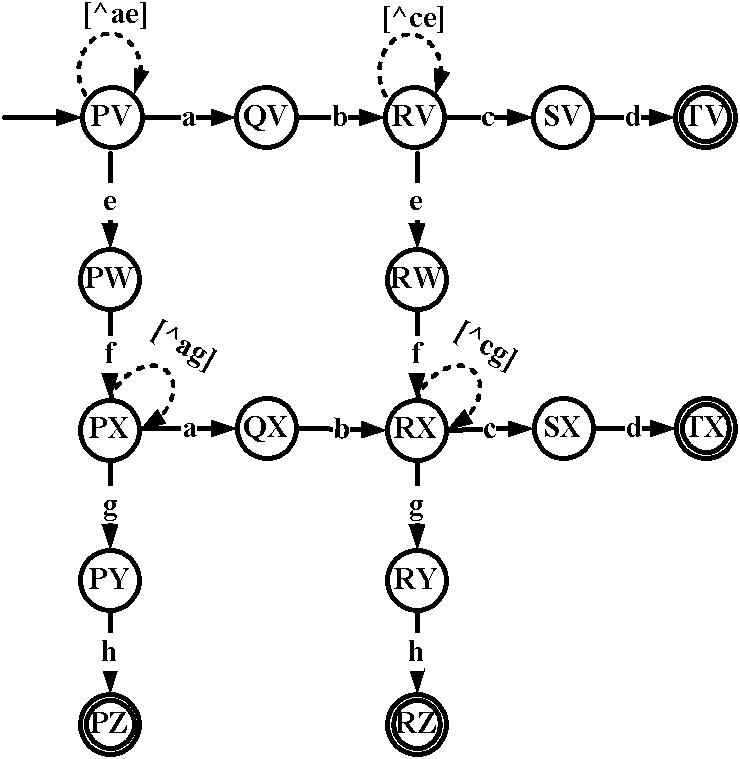

Regular expression matching method based on smart finite automaton (SFA)

Inactive | CN102184197A | Reduce visits, High space-time efficiency, Special data processing applications, Automaton, Test set

The invention discloses a regular expression matching method based on a smart finite automaton (SFA), comprising the steps of: selecting an appropriate regular expression rule set; constructing the SFA; matching each test set that is read in using the SFA matching method; and collecting statistics on the matching results. Experimental results show that, compared with the XFA (extended finite automaton), the SFA reduces storage space overhead by 44.1 percent and storage access frequency by 69.1 percent, improving the space-time efficiency of regular expression matching. The invention eliminates the redundant transition edges present in the XFA, effectively saving storage space and improving on the XFA's performance. As network bandwidth and traffic grow rapidly, the method offers an effective solution to the throughput and storage space requirements of line-rate packet processing.

Owner: HUNAN YIGU TECH DEV

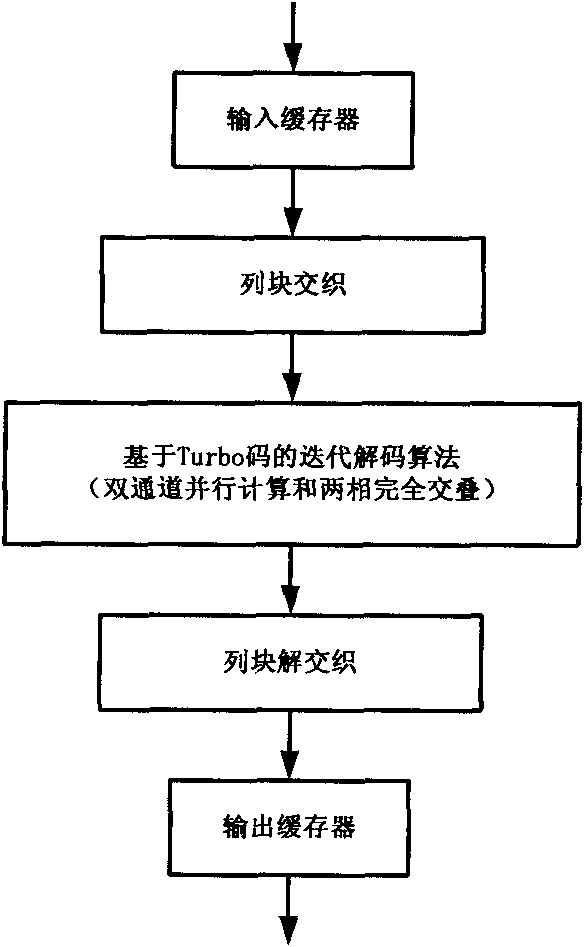

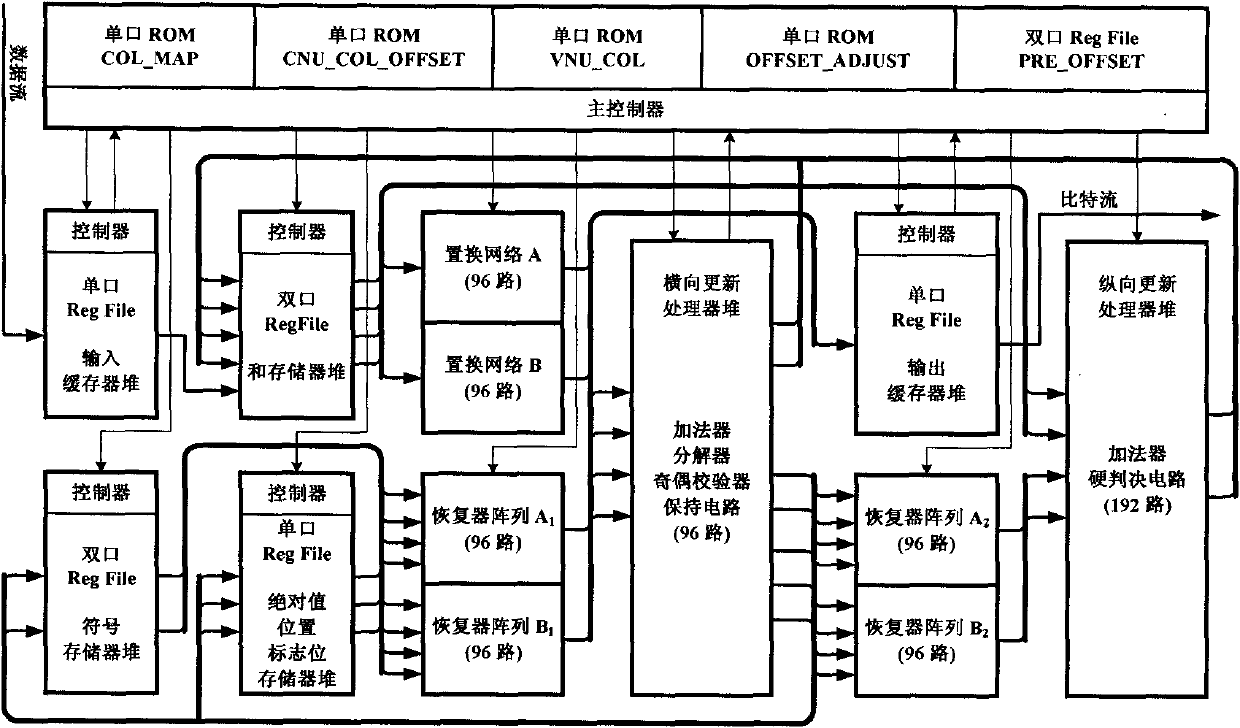

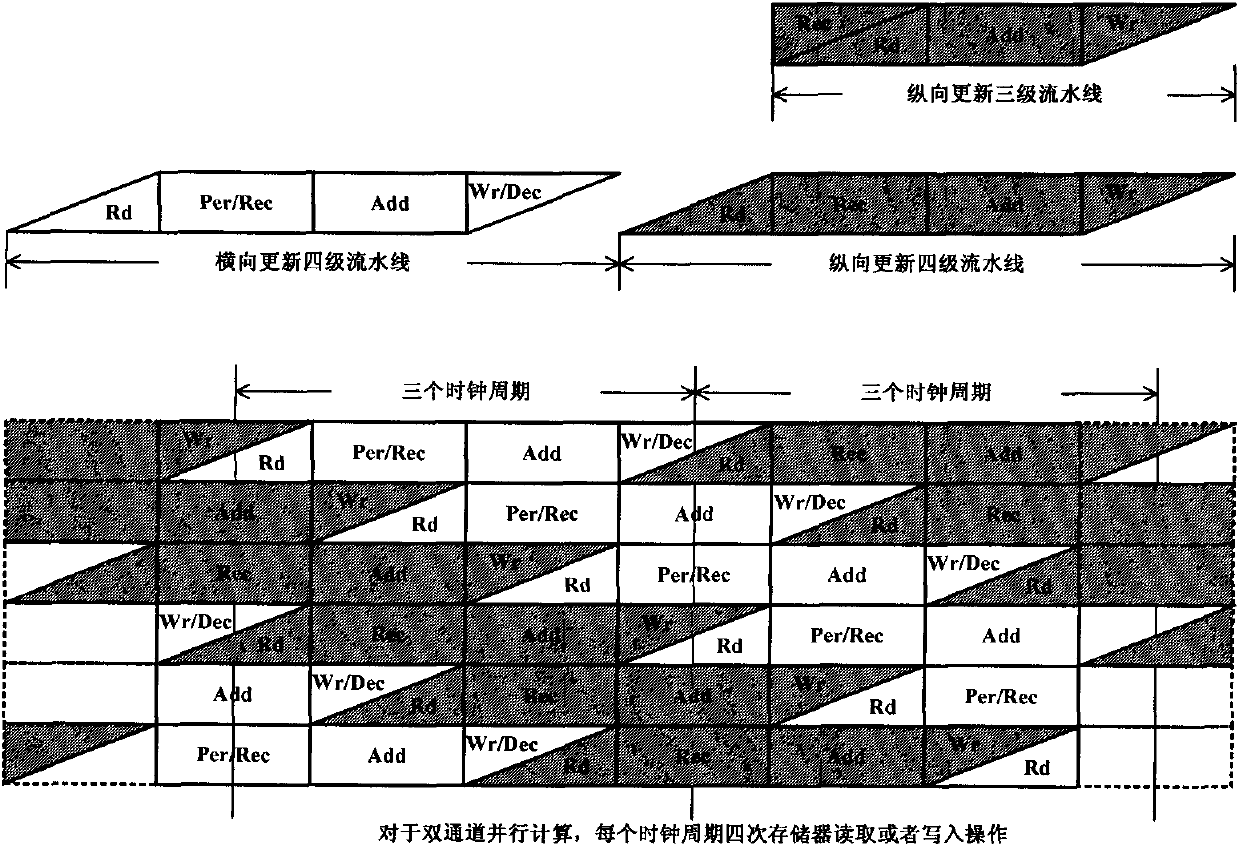

Ultrahigh-speed and low-power-consumption QC-LDPC code decoder based on TDMP

Inactive | CN101771421A | Reduce visits | Avoid access violations | Error correction/detection using multiple parity bits | Computer architecture | Parallel computing

The invention belongs to the technical field of wireless communication and microelectronics, and in particular relates to an ultrahigh-speed, low-power-consumption quasi-cyclic low-density parity-check (QC-LDPC) code decoder based on TDMP. Using a symmetric six-stage pipeline, interleaving of row blocks and column blocks, reordering of nonzero submatrices, four-quadrant partitioning of the sum-value register file, and read/write bypassing, the decoder scans serially in row order and processes two nonzero submatrices per clock cycle in both the horizontal and the vertical update, so the two updates fully overlap. In particular, the sum-value register file stores the sum values of the variable nodes and also serves as a FIFO for the transient extrinsic information passed between the two phases. The decoder architecture is highly configurable, can easily be ported to other regular or irregular QC-LDPC codes, and delivers excellent decoding performance: the peak clock frequency reaches 214 MHz, the throughput reaches about 1 Gbit/s, and the chip power consumption is only 397 mW.

Owner:FUDAN UNIV

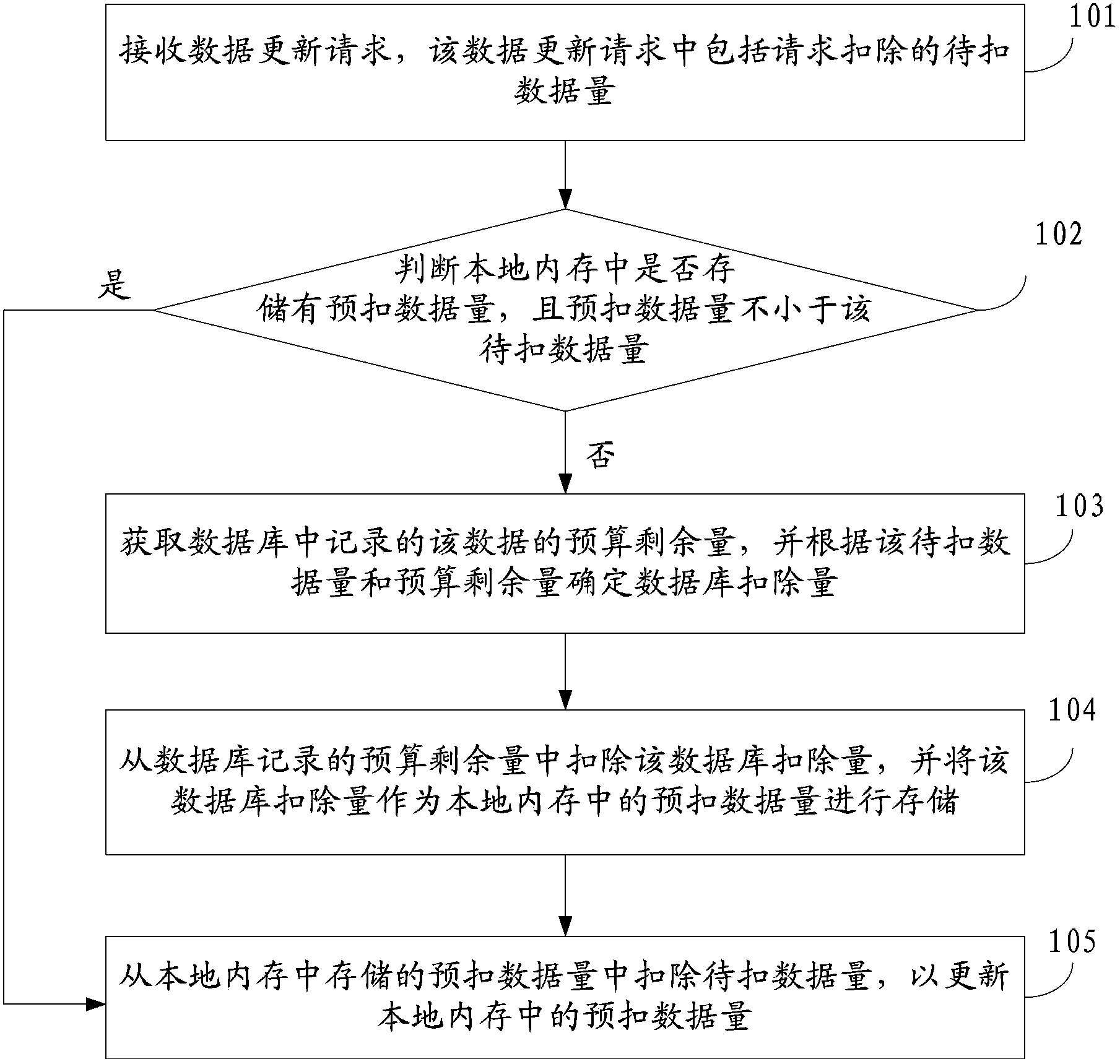

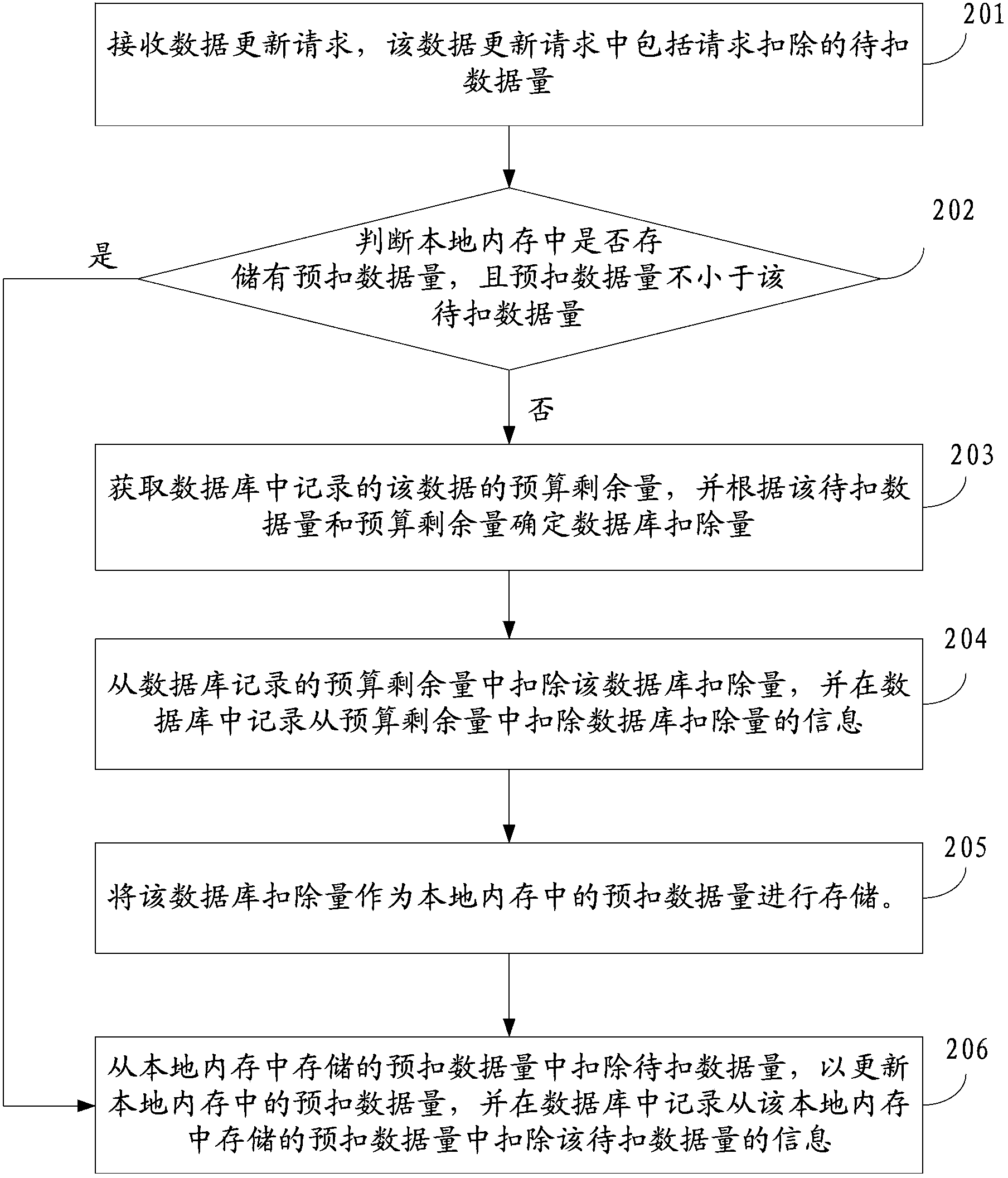

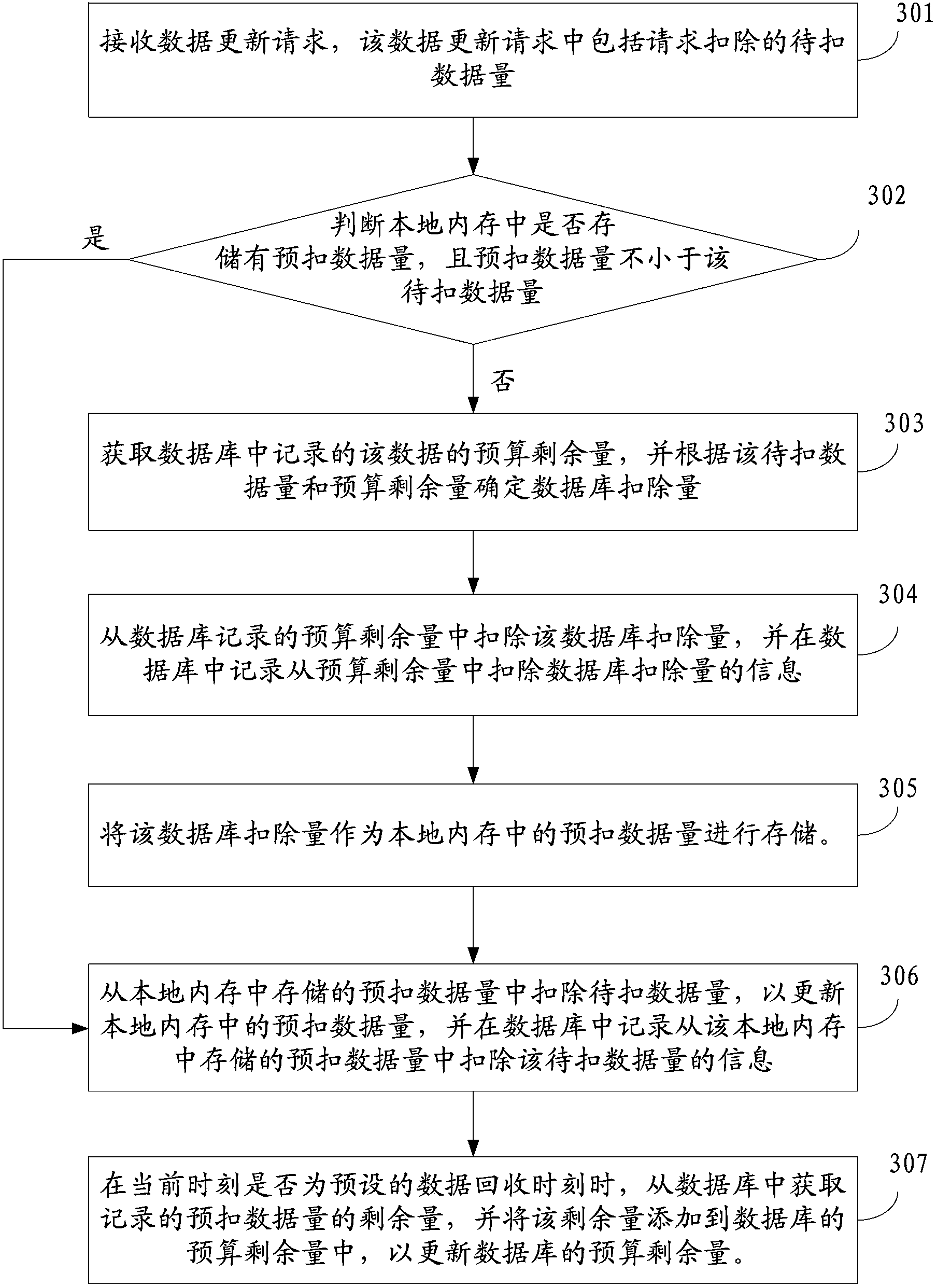

Data updating method and system based on database

Active | CN103544153A | Reduce visits | Reduce the number of write operations | Special data processing applications | Database access | Local memories

The invention provides a database-based data updating method and system. The method comprises: when a data updating request specifying a data size to be deleted is received, judging whether a pre-deleted data size equal to or larger than the data size to be deleted is stored in local memory; if so, deducting the data size to be deleted from the pre-deleted data size in local memory; only when no pre-deleted data size is stored in local memory, or the stored pre-deleted data size is smaller than the data size to be deleted, acquiring the predicted remaining data size from the database, determining a database deletion amount at the same time, storing that amount in local memory as the pre-deleted data size, and then deducting the data size to be deleted from it. The method reduces the number of database accesses and hence the time spent waiting for locks, increasing the number of data updating requests the system can process per unit time.

Owner:ADVANCED NEW TECH CO LTD
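
The pre-deduction logic can be sketched as follows, assuming a plain integer stands in for the database row and a hypothetical `batch` parameter sets the minimum amount reserved per database visit. How the patent actually sizes the database deletion amount is not stated in the abstract, and adding the new reservation to any leftover local amount is also an assumption.

```python
class PreDeductingCounter:
    """Sketch: serve deductions from a locally cached pre-deducted amount."""

    def __init__(self, db_remaining: int, batch: int) -> None:
        self.db_remaining = db_remaining  # stand-in for the value stored in the database
        self.batch = batch                # hypothetical minimum reservation per DB visit
        self.local_pre_deducted = 0       # "pre-deleted data size" held in local memory
        self.db_accesses = 0              # number of round trips to the database

    def deduct(self, amount: int) -> bool:
        """Deduct `amount`; return False if not enough remains anywhere."""
        if self.local_pre_deducted < amount:
            # Local reserve is missing or too small: visit the database once.
            self.db_accesses += 1
            shortfall = amount - self.local_pre_deducted
            if self.db_remaining < shortfall:
                return False  # reject without touching either store
            # Reserve at least `batch` (capped by what is left) in a single visit.
            take = min(self.db_remaining, max(self.batch, shortfall))
            self.db_remaining -= take
            self.local_pre_deducted += take
        # Common path: served entirely from local memory, no database lock taken.
        self.local_pre_deducted -= amount
        return True
```

With `db_remaining=100` and `batch=10`, ten deductions of 3 units visit the database only three times. In a real deployment the database-side deduction would itself need to be atomic (for example a conditional UPDATE), and any amount reserved locally is unavailable to other servers until it is consumed; the sketch ignores both concerns.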

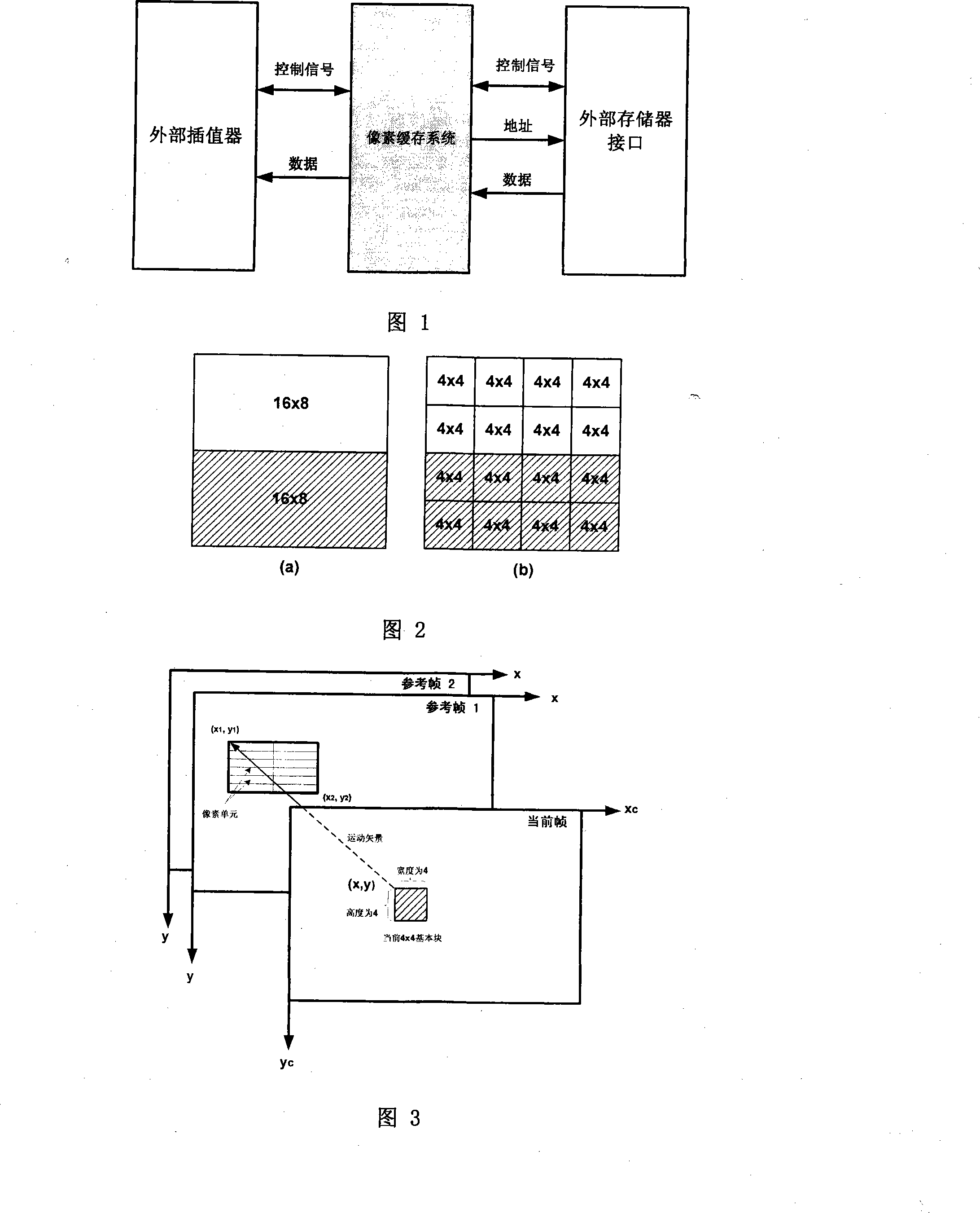

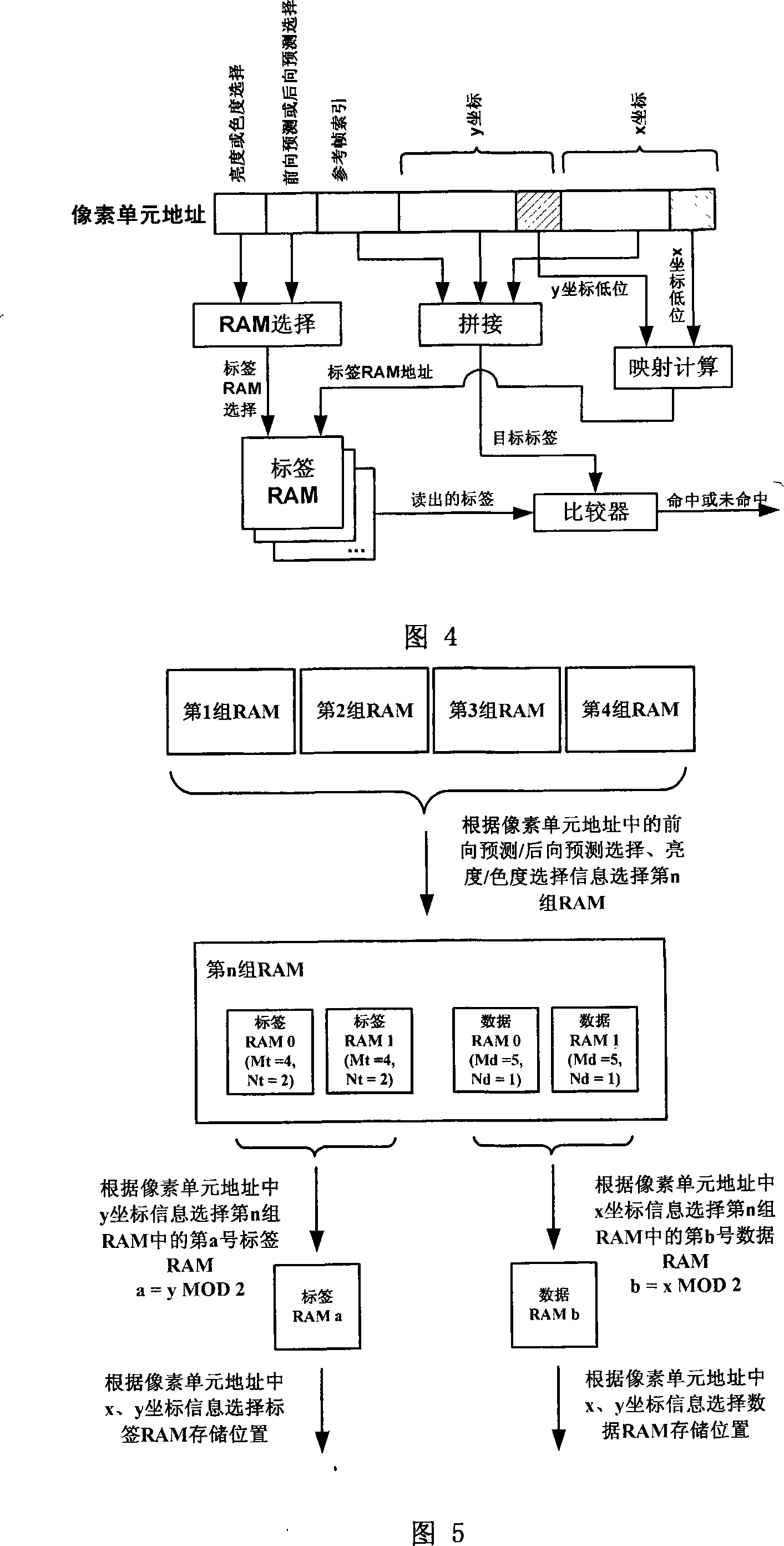

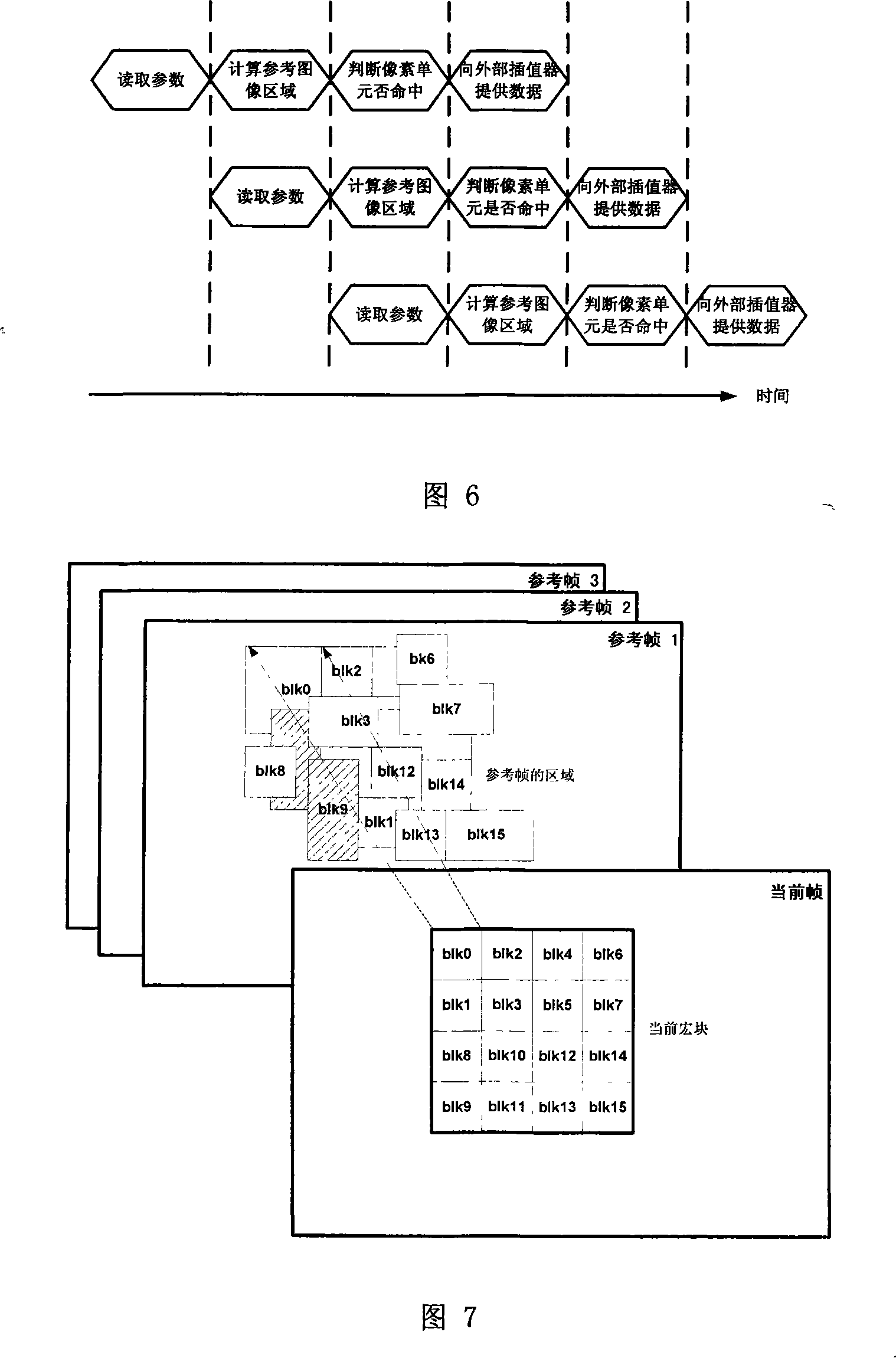

Picture element caching method and system in movement compensation process of video decoder

Inactive | CN101163244A | Reduce visits | Improve hit rate | Television systems | Digital video signal modification | Internal memory | External storage

A pixel caching method and system for motion compensation in a video decoder, in the field of video decoding. The method comprises: step 1, reading motion compensation parameter information from the parameter memory; step 2, determining the reference-frame region required by the current motion-compensation basic block; step 3, identifying the pixel units of that region that are not yet in the internal memory, reading them from the external memory, and storing them in the internal memory; step 4, reading the pixel units of the reference-frame region from the internal memory in turn and sending them, together with the motion vector of the current basic block, to an external interpolator. The system comprises an overall control module, a motion compensation parameter reading module, a module for determining the reference-frame region required by motion compensation, a pixel unit judging and replacing module, an interpolation data output control module, and a data and tag storage module. The invention reduces the bandwidth demanded of the external memory and resolves the external-memory bandwidth bottleneck in video decoder design.

Owner:SHANGHAI JIAO TONG UNIV
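
Steps 2 to 4 can be sketched as a small tag-checked cache, assuming square pixel units, an LRU replacement policy, and a reference region that lies entirely inside the frame (no edge padding). The unit size, capacity and replacement policy are assumptions, since the abstract does not specify them, and the interpolator itself is omitted.

```python
from collections import OrderedDict


class PixelUnitCache:
    """Sketch: cache unit x unit pixel blocks of a reference frame internally."""

    def __init__(self, frame, unit: int, capacity: int) -> None:
        self.frame = frame          # external memory: frame[y][x] samples
        self.unit = unit            # assumed pixel-unit size (unit x unit)
        self.capacity = capacity    # assumed number of units internal memory holds
        self.lines = OrderedDict()  # (ux, uy) tag -> unit pixels, kept in LRU order
        self.external_reads = 0     # unit fetches from external memory

    def _load(self, ux: int, uy: int):
        tag = (ux, uy)
        if tag in self.lines:                # hit: tag already in internal memory
            self.lines.move_to_end(tag)
            return self.lines[tag]
        self.external_reads += 1             # miss: read the unit from external memory
        u = self.unit
        data = [row[ux * u:(ux + 1) * u] for row in self.frame[uy * u:(uy + 1) * u]]
        self.lines[tag] = data
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)   # evict the least recently used unit
        return data

    def fetch_region(self, x0: int, y0: int, w: int, h: int):
        """Return the w x h reference region whose top-left corner is (x0, y0)."""
        u = self.unit
        # Step 2: tags of every pixel unit the region overlaps.
        tags = [(ux, uy)
                for uy in range(y0 // u, (y0 + h - 1) // u + 1)
                for ux in range(x0 // u, (x0 + w - 1) // u + 1)]
        # Step 3: bring any missing units into internal memory.
        units = {tag: self._load(*tag) for tag in tags}
        # Step 4: read the region out of the cached units, row by row.
        return [[units[(x // u, y // u)][y % u][x % u] for x in range(x0, x0 + w)]
                for y in range(y0, y0 + h)]
```

Because neighbouring basic blocks usually reference overlapping regions, a second fetch that falls inside the same units is served entirely from internal memory, which is where the external-bandwidth saving comes from.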

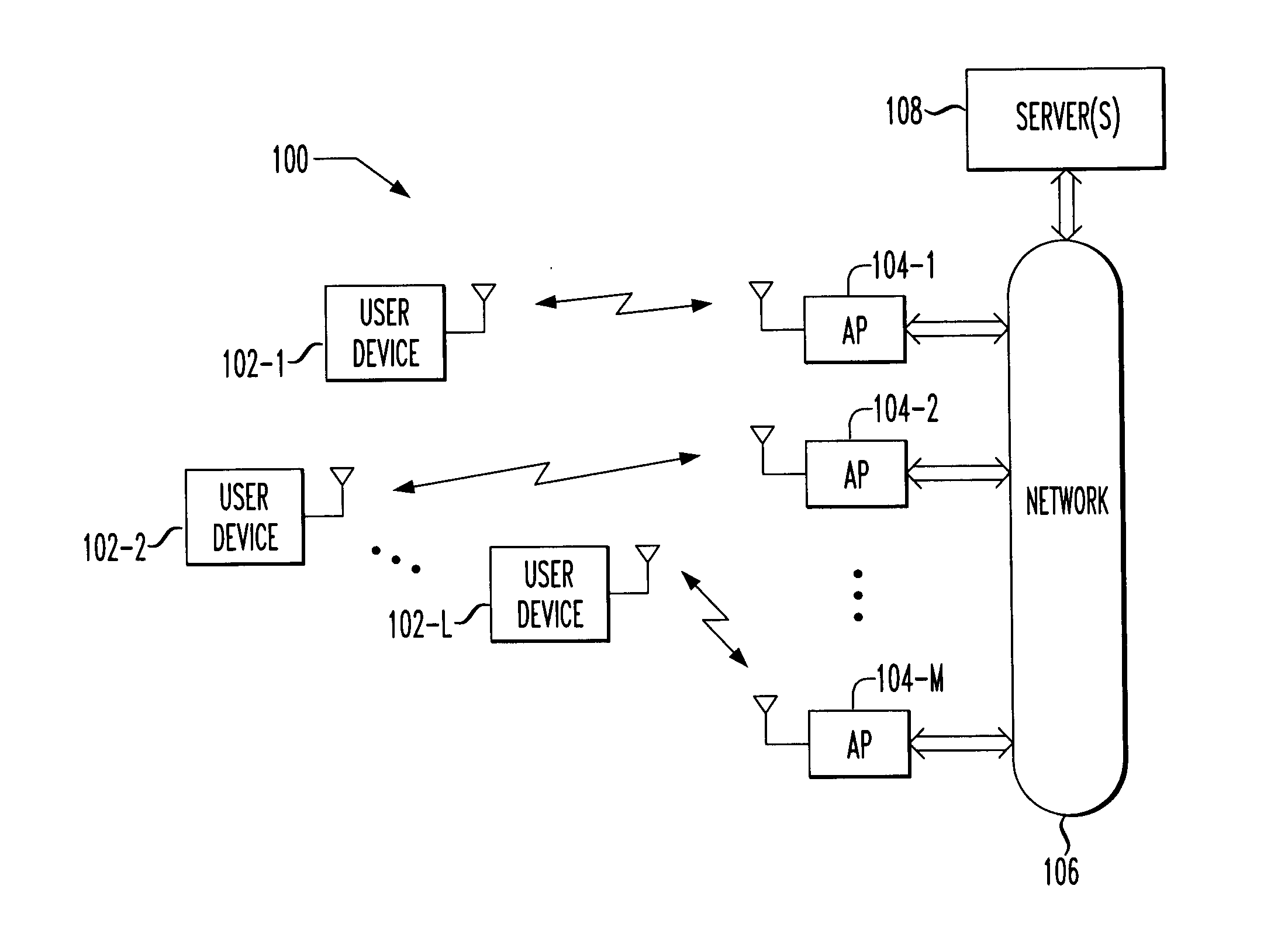

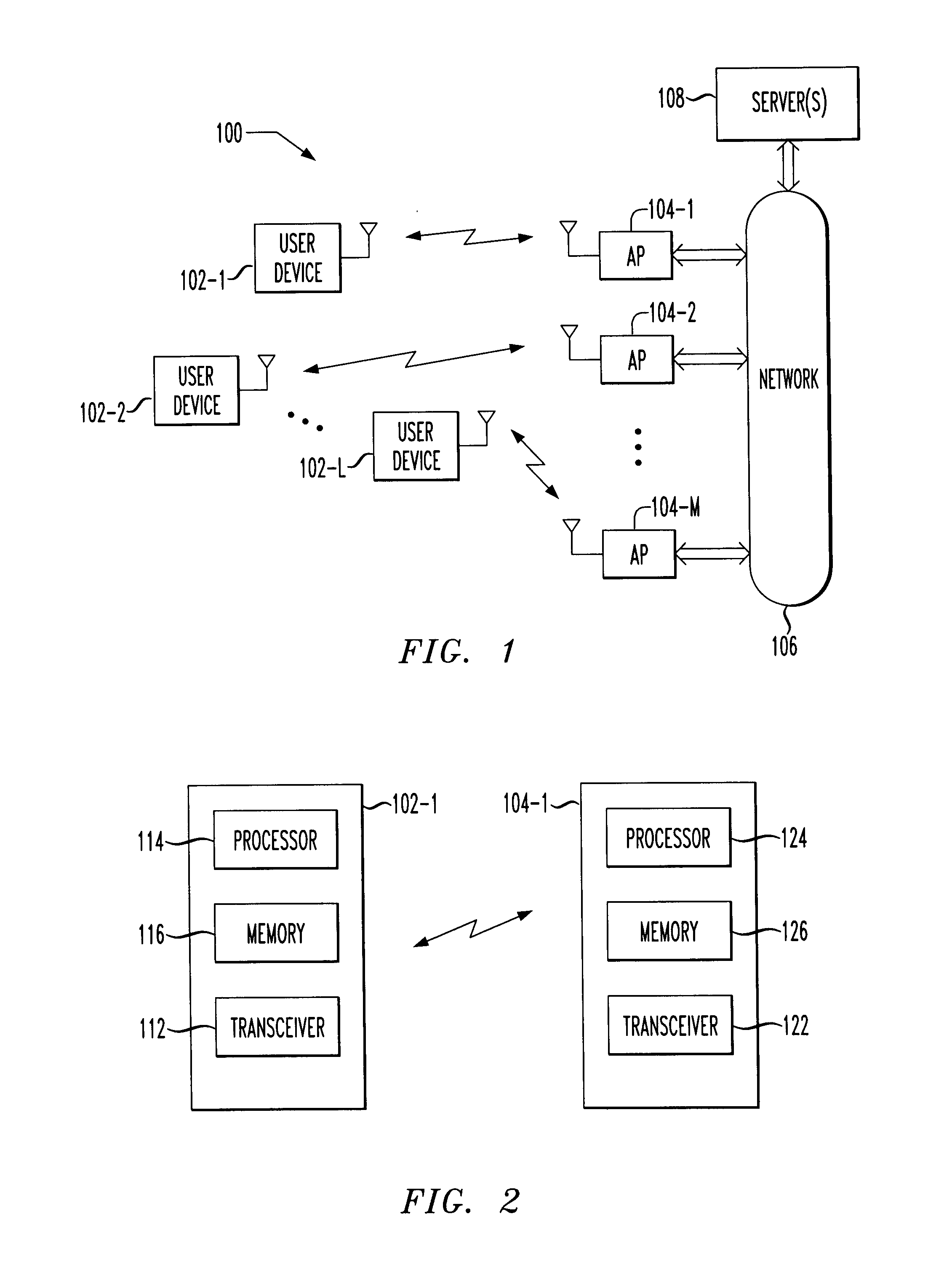

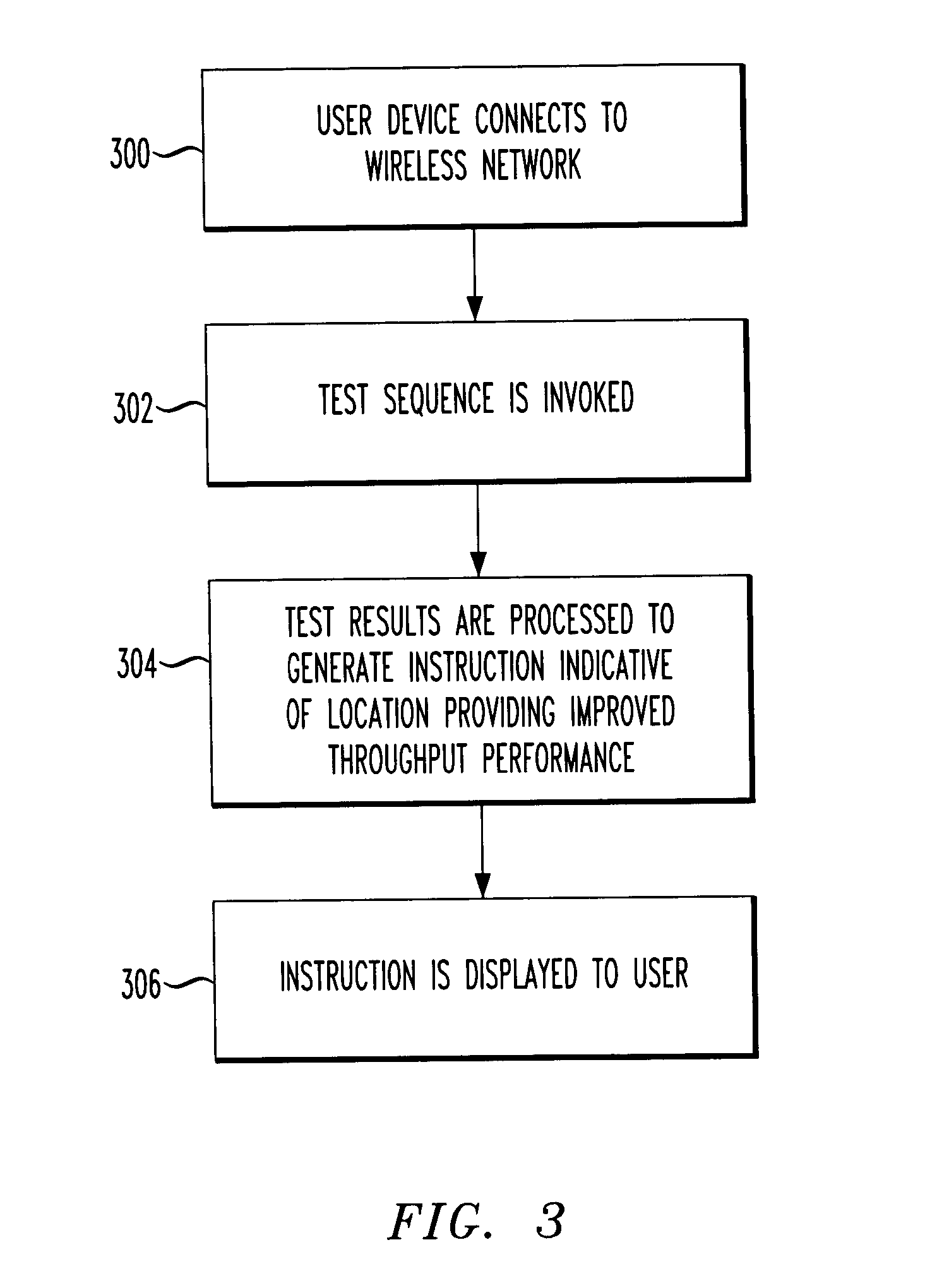

Method and apparatus for automatic determination of optimal user device location in a wireless network

Active | US20050064870A1 | Improve the level of | Reduce visits | Data switching by path configuration | Radio/inductive link selection arrangements | User device | Communications system

Techniques are disclosed for automatic generation of a location-indicative instruction displayable to one or more users in a communication system which includes a wireless network comprising a plurality of user devices adapted for communication with at least one access point device. A test of a communication link between at least one of the user devices and the access point device is initiated. Based at least in part on a result of the test, an instruction displayable to a user associated with a given one of the user devices is generated, the instruction being indicative of a location at which the given user device is expected to obtain a particular level of data throughput performance.

Owner:AVAGO TECH INT SALES PTE LTD

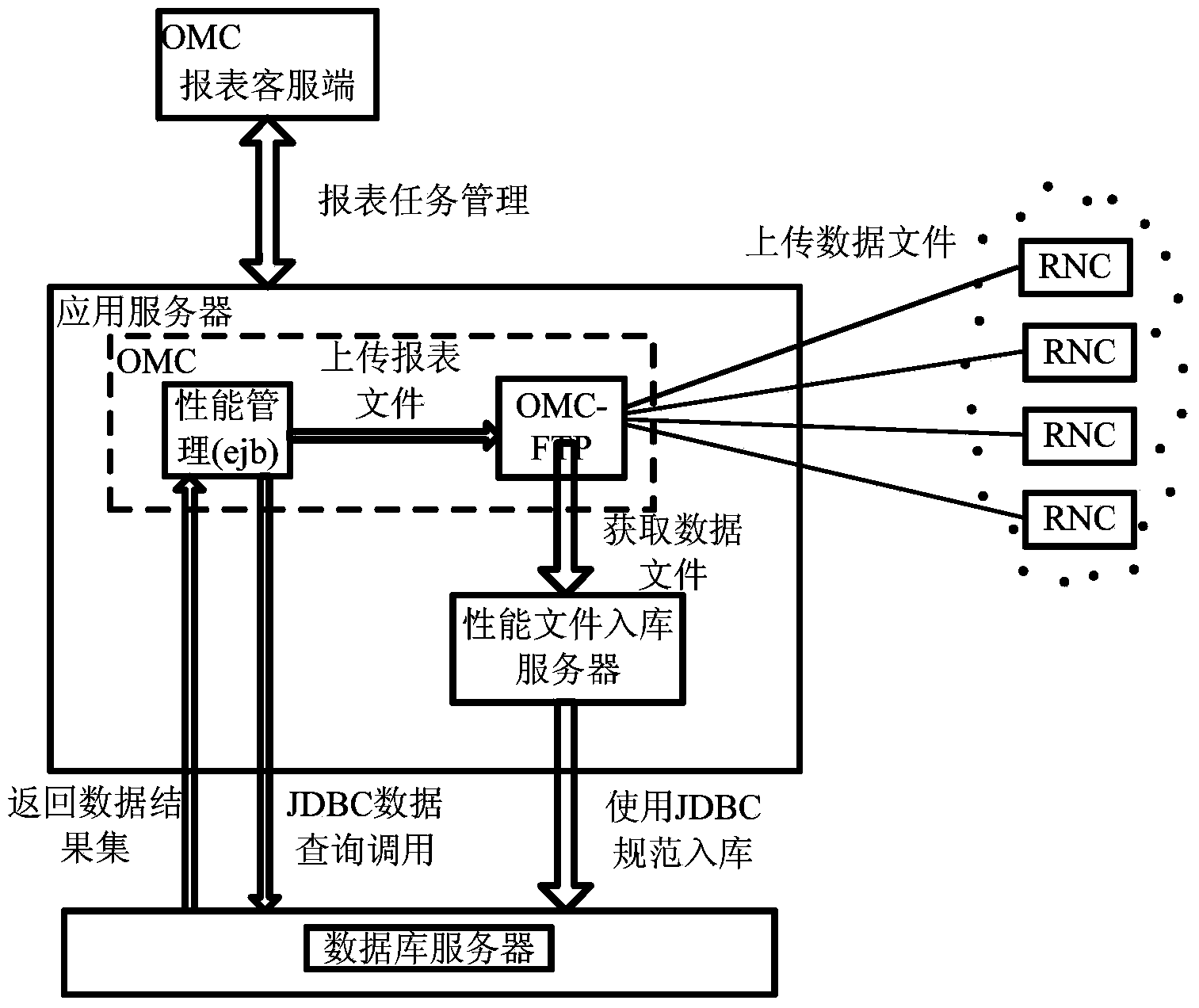

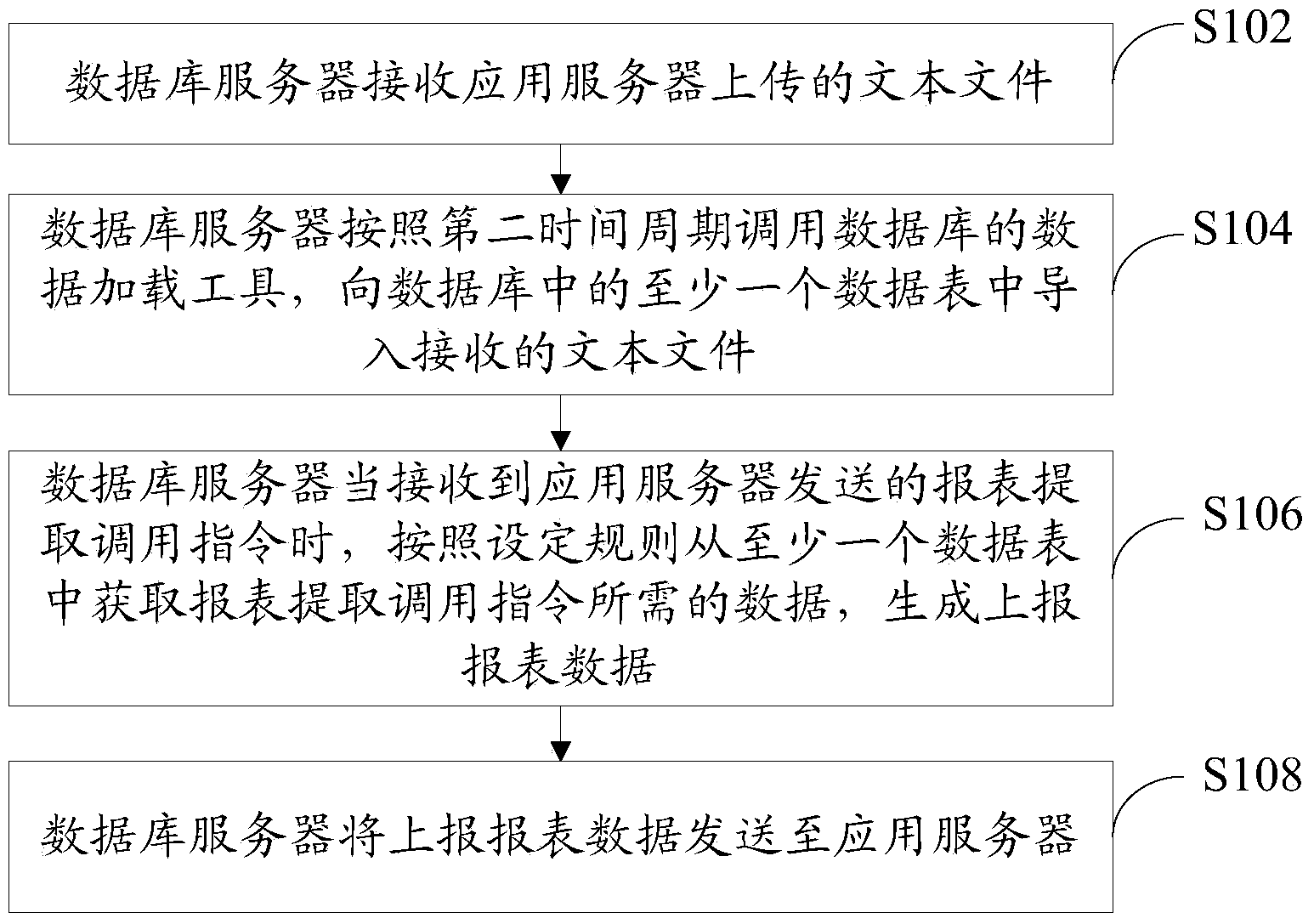

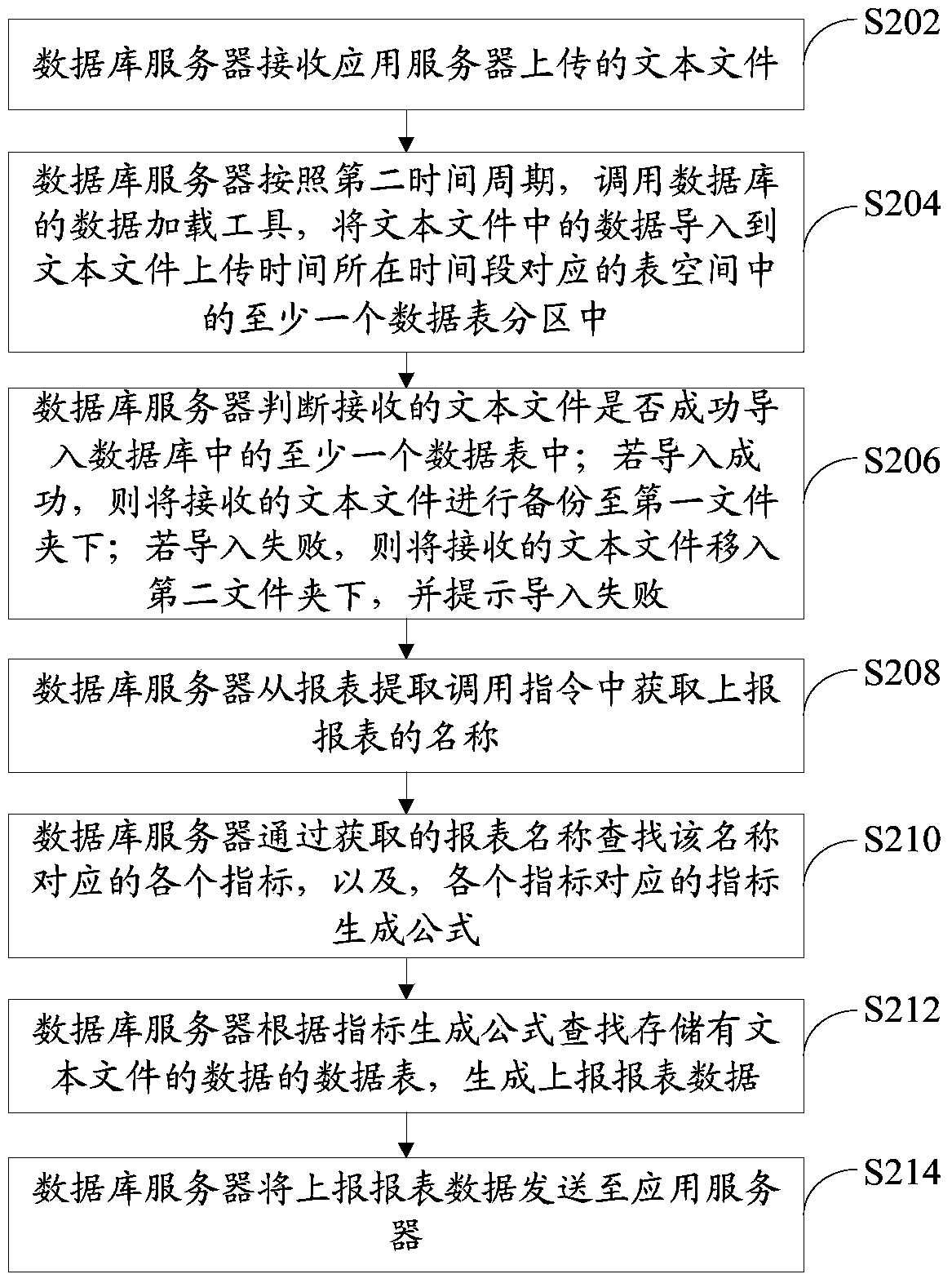

Mass data processing method, database server and application server

Active | CN103942287A | Save import time | Reduce visits | Special data processing applications | Application server | Database server

The invention provides a mass data processing method, a database server and an application server. The method comprises: receiving a text file uploaded by the application server, the text file being produced by the application server, once per first time period, by converting the performance files uploaded by network elements, and containing the data in the performance files uploaded by at least one network element; calling a data loading tool of the database once per second time period to import the received text files into at least one data table in the database; when a report extraction instruction sent by the application server is received, obtaining the data it requires from the at least one data table according to set rules and generating the report data; and sending the report data to the application server. The method, database server and application server effectively shorten the time needed to generate reports and also reduce the frequency of database access.

Owner:DATANG MOBILE COMM EQUIP CO LTD
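
The batch-import idea can be sketched with Python's built-in `sqlite3` module standing in for the real database and its loading tool. The comma-separated text format, the table layout and the function names are hypothetical, and the two scheduling periods are left out: the sketch only shows one period's text files being imported in a single transaction and then aggregated for a report.

```python
import sqlite3


def convert_performance_file(ne_id: str, counters: dict) -> str:
    """Application-server side: flatten one network element's counters to text."""
    return "\n".join(f"{ne_id},{name},{value}" for name, value in counters.items())


def bulk_load(conn: sqlite3.Connection, text_files: list) -> int:
    """Database-server side: import every pending text file in one batch."""
    rows = []
    for text in text_files:
        for line in text.splitlines():
            ne_id, counter, value = line.split(",")
            rows.append((ne_id, counter, float(value)))
    with conn:  # one transaction (commit on success) for the whole batch
        conn.executemany(
            "INSERT INTO perf(ne_id, counter, value) VALUES (?, ?, ?)", rows
        )
    return len(rows)


def extract_report(conn: sqlite3.Connection, counter: str) -> list:
    """Report extraction: aggregate one counter per network element."""
    cur = conn.execute(
        "SELECT ne_id, SUM(value) FROM perf WHERE counter = ? "
        "GROUP BY ne_id ORDER BY ne_id",
        (counter,),
    )
    return cur.fetchall()
```

Loading a whole period's files through one `executemany` call replaces one database round trip per record with one per batch, which mirrors the reduced access frequency the abstract claims.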

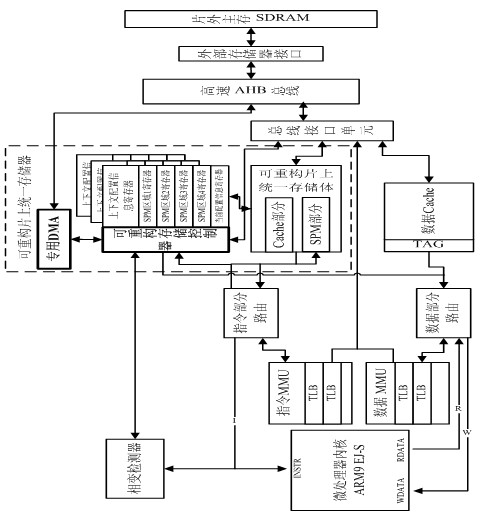

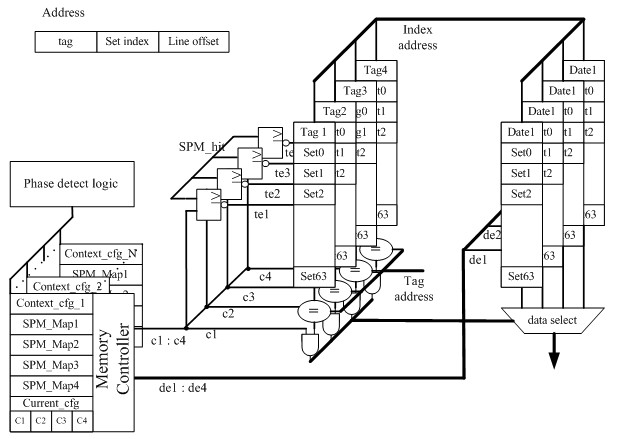

Method for managing reconfigurable on-chip unified memory aiming at instructions

Inactive | CN102073596A | Reduce system power consumption | Improve system performance | Energy efficient ICT | Memory addressing/allocation/relocation | Program instruction | Integer non linear programming

The invention discloses a method that uses a virtual memory mechanism to manage a reconfigurable on-chip unified memory for instructions. With this method, the parameters of the Cache part and the SPM (Scratch-Pad Memory) part of the reconfigurable unified memory can be adjusted dynamically while the program runs, adapting the memory architecture to the requirements of different program execution stages. The method analyzes memory access behavior in different program stages to obtain phase-change behavior graphs of the instruction parts and abstracts them mathematically; it then uses integer nonlinear programming (INLP), driven by an energy-consumption objective function and a performance objective function, to obtain the reconfigurable memory configuration for each program stage and to select the program instruction parts to be optimized; finally, through the virtual memory management mechanism, it maps as many as possible of the frequently accessed code segments that conflict severely in the Cache into the SPM part. This reduces the external-memory access energy caused by repeatedly refilling the Cache and the extra energy consumed by the Cache's compare logic, and improves system performance.

Owner:SOUTHEAST UNIV
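
The patent solves an integer nonlinear program with separate energy and performance objectives. As a much simpler stand-in, the selection step can be sketched as a 0/1 knapsack that picks code segments to place in the SPM so that an estimated saving is maximized without exceeding the SPM size. The segment names, sizes and saving figures are hypothetical inputs that a profiling pass would have to supply, and phase-by-phase reconfiguration is ignored.

```python
def select_segments_for_spm(segments, spm_bytes: int):
    """0/1 knapsack stand-in for the INLP selection step.

    segments: list of (name, size_bytes, estimated_saving) tuples (hypothetical inputs).
    Returns (total_saving, chosen_names) for an SPM of `spm_bytes` bytes.
    """
    # best[c] = (saving, names) achievable within capacity c using segments seen so far
    best = [(0.0, ())] * (spm_bytes + 1)
    for name, size, saving in segments:
        # Walk capacities downward so each segment is placed at most once.
        for c in range(spm_bytes, size - 1, -1):
            candidate = best[c - size][0] + saving
            if candidate > best[c][0]:
                best[c] = (candidate, best[c - size][1] + (name,))
    return best[spm_bytes]
```

For example, with a 5-byte SPM and segments of 4, 3 and 2 bytes saving 10, 7 and 5 units, the sketch picks the 3- and 2-byte segments (total saving 12) over the single larger one.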

© 2025 PatSnap. All rights reserved.