58 results about "Multiplier accumulator" patented technology

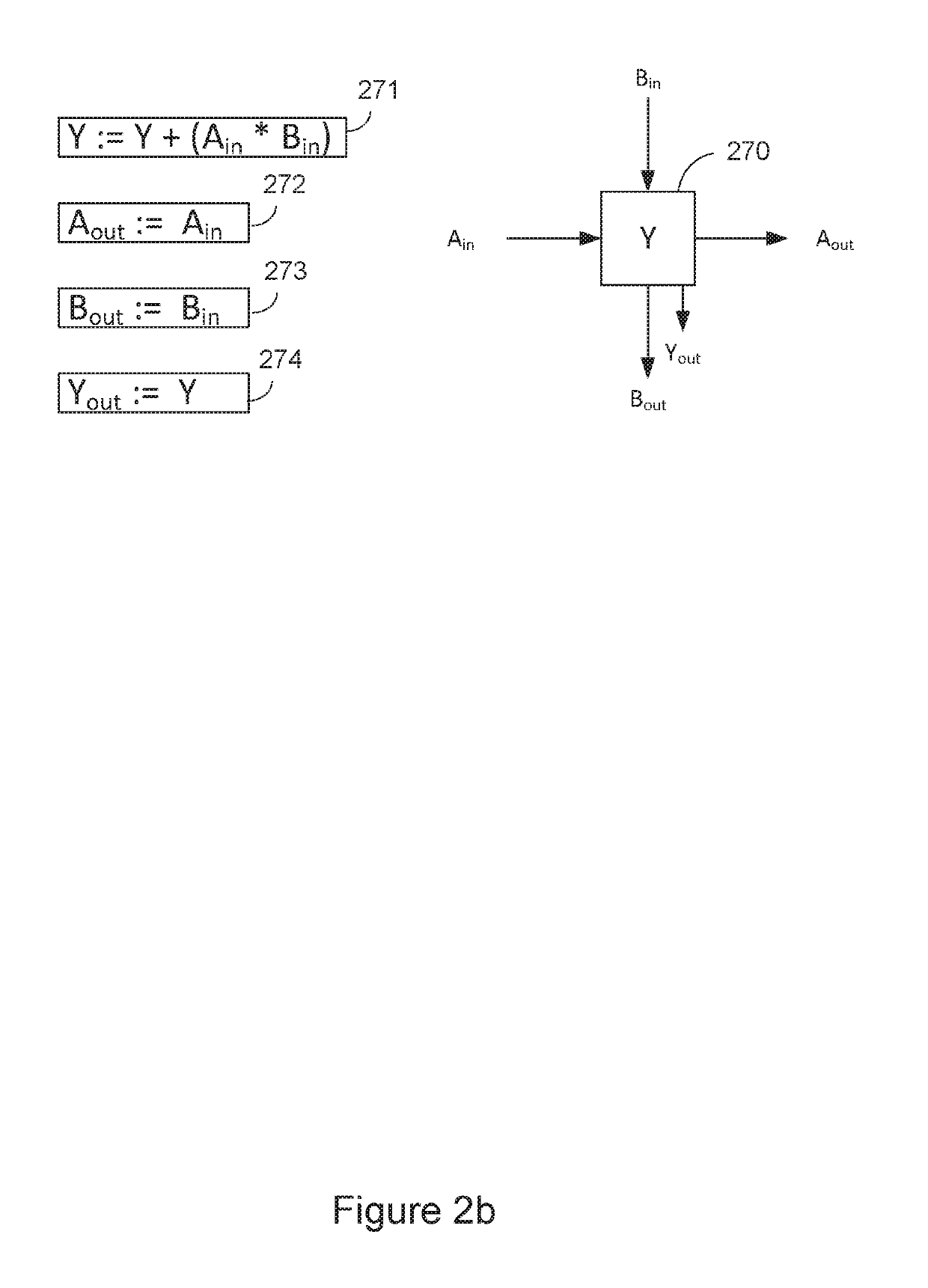

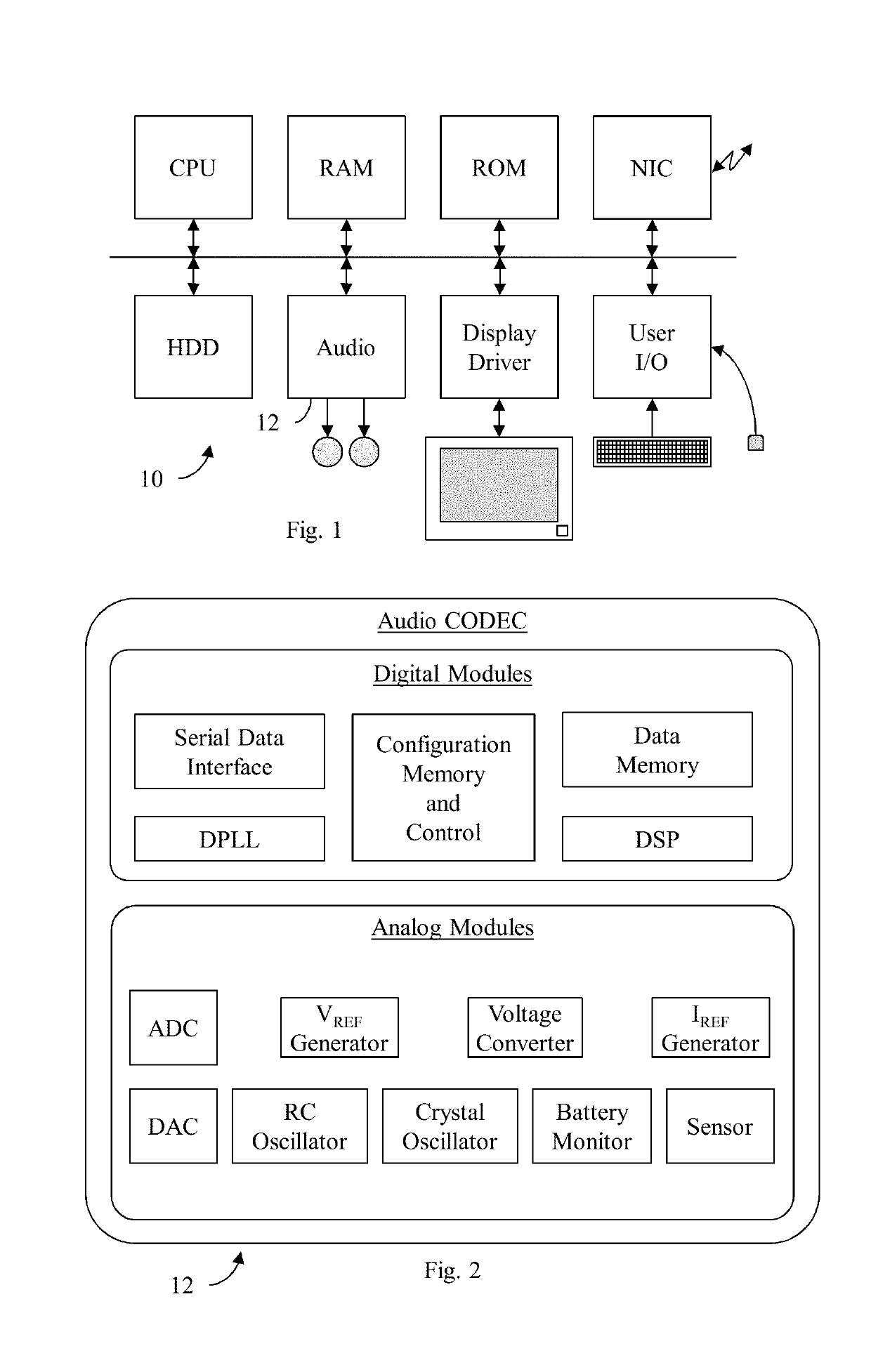

In computing, especially digital signal processing, the multiply–accumulate operation is a common step that computes the product of two numbers and adds that product to an accumulator. The hardware unit that performs the operation is known as a multiplier–accumulator (MAC, or MAC unit); the operation itself is also often called a MAC or a MAC operation.
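As a minimal sketch of the operation described above, a dot product can be written as a chain of MAC steps. The function names `mac` and `dot` are illustrative and are not taken from any patent listed on this page.

```python
def mac(acc, a, b):
    """One multiply-accumulate step: add the product a * b to the accumulator."""
    return acc + a * b


def dot(xs, ys):
    """Dot product expressed as repeated MAC steps on a single accumulator."""
    acc = 0
    for x, y in zip(xs, ys):
        acc = mac(acc, x, y)
    return acc
```

For example, `dot([1, 2, 3], [4, 5, 6])` accumulates 4, then 14, then 32.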

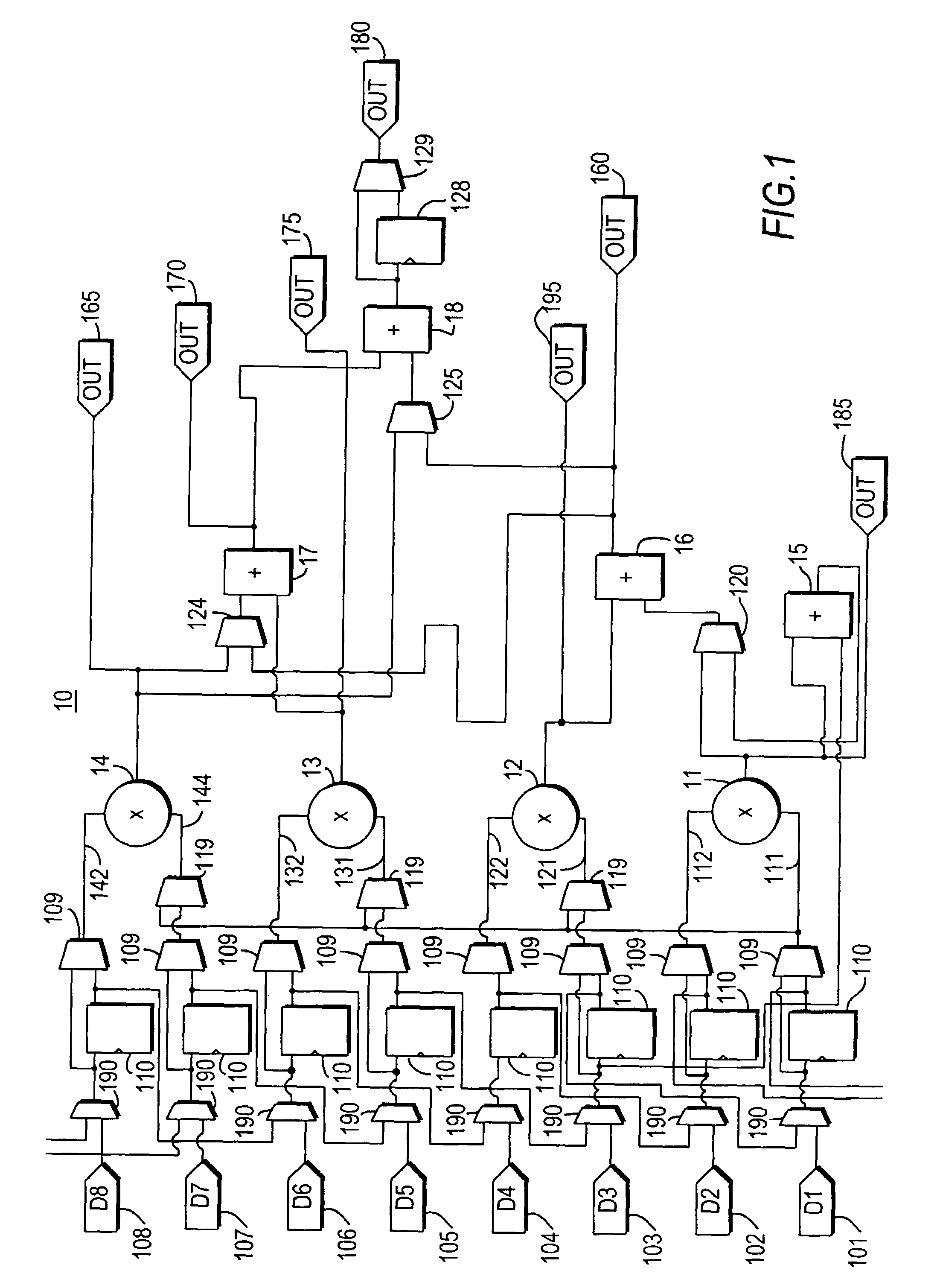

Multiplier accumulator circuits

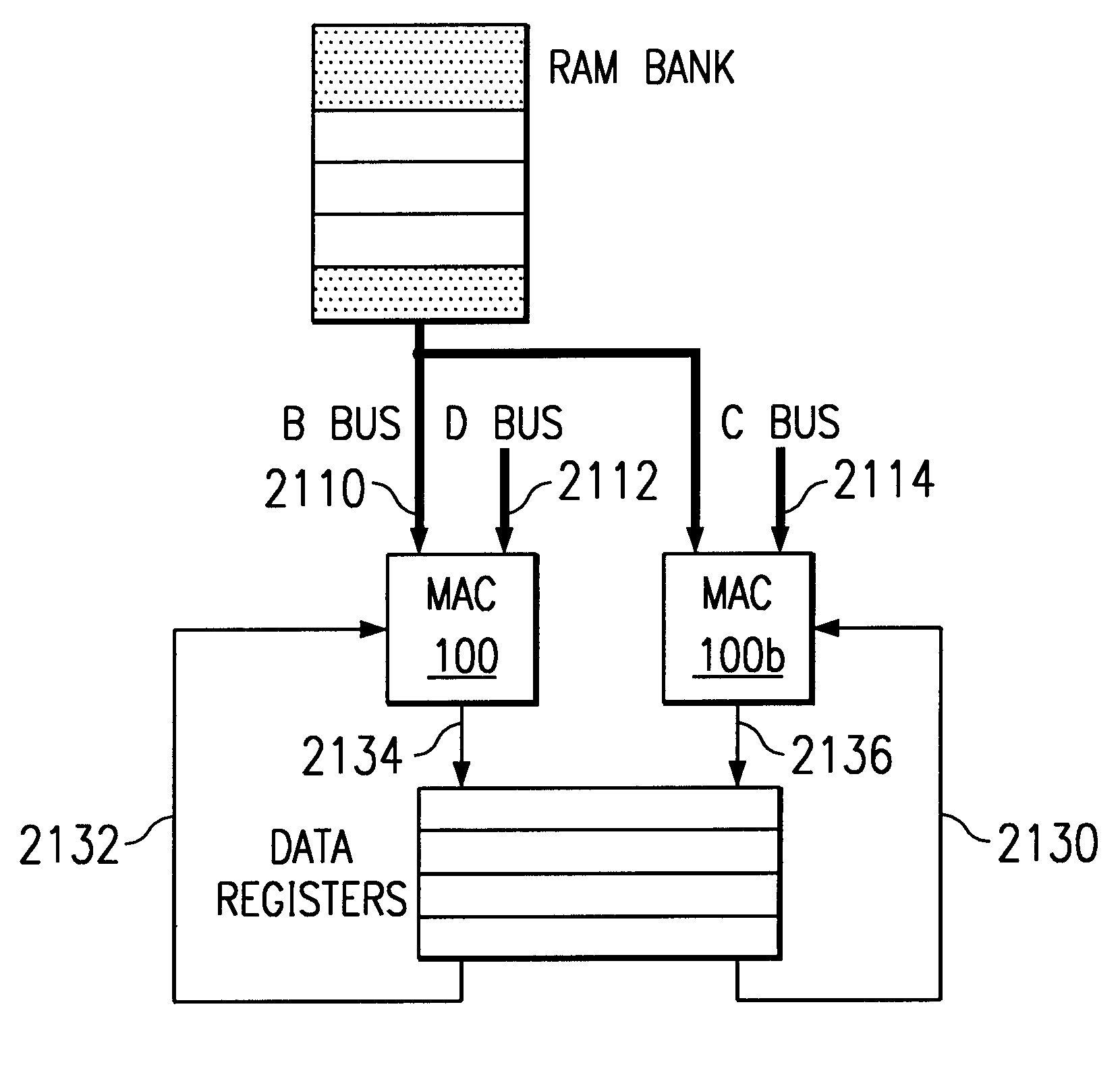

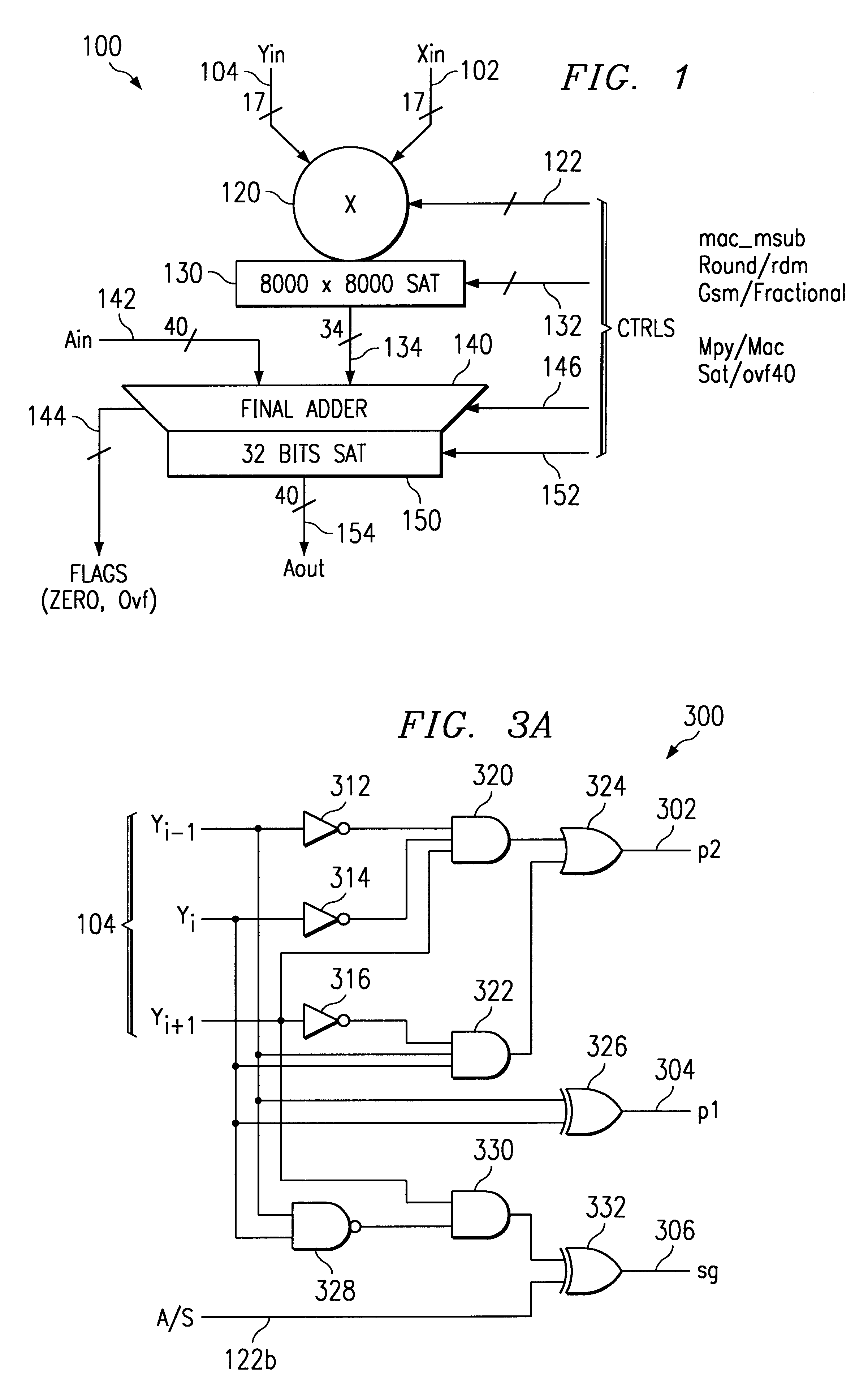

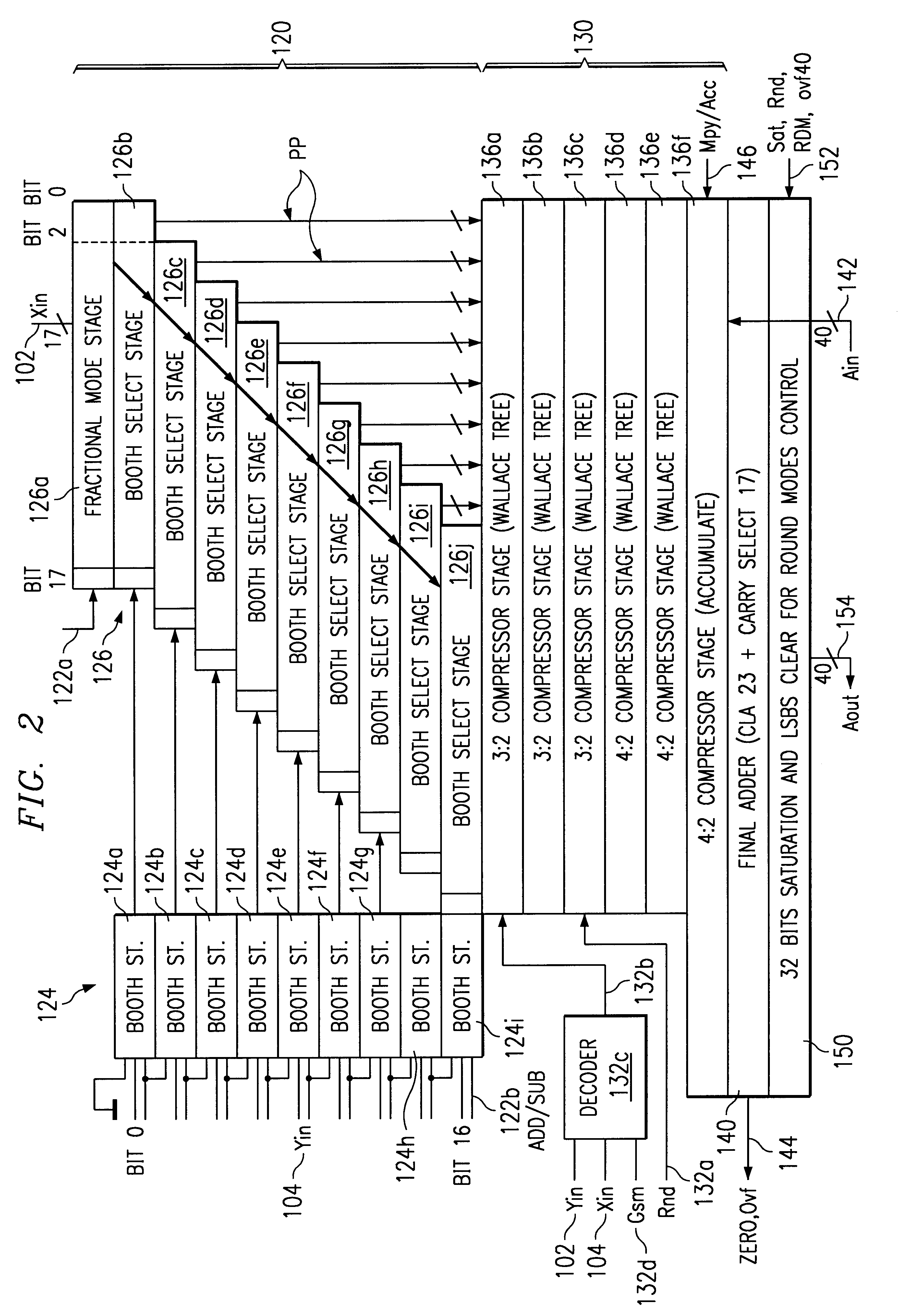

A multiply-accumulate (MAC) unit is provided, having a first binary operand X, a second binary operand Y, a third binary operand, Booth recode logic for generating a plurality of partial products from said first and second operands, a Wallace tree adder for reducing the partial products and for selectively arithmetically combining the reduced partial products with said third operand, a final adder for generating a final sum, and saturation circuitry for selectively rounding or saturating said final sum. A dual MAC unit is also provided.
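The data flow in this abstract can be modelled in software, as a rough sketch only. The abstract does not state the Booth radix or the word widths, so radix-4 recoding, 16-bit operands and a 40-bit accumulator are assumptions made here for illustration. The Wallace tree is modelled as a plain sum, and rounding is omitted.

```python
def booth_radix4_digits(y, bits):
    """Recode a signed `bits`-bit integer into radix-4 Booth digits, each in -2..2."""
    if bits % 2 or not -(1 << (bits - 1)) <= y < (1 << (bits - 1)):
        raise ValueError("y must fit in an even number of two's-complement bits")
    pattern = y & ((1 << bits) - 1)   # two's-complement bit pattern of y
    digits, prev = [], 0              # prev is the bit just below the current pair
    for i in range(0, bits, 2):
        low = (pattern >> i) & 1
        high = (pattern >> (i + 1)) & 1
        digits.append(low + prev - 2 * high)
        prev = high
    return digits


def saturate(value, bits):
    """Clamp value to the signed range of a `bits`-bit accumulator."""
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    return max(lo, min(hi, value))


def booth_mac(x, y, acc, bits=16, acc_bits=40):
    """Return saturate(acc + x * y), forming x * y from Booth partial products."""
    partials = [d * x * 4 ** i for i, d in enumerate(booth_radix4_digits(y, bits))]
    # Hardware would reduce the partial products and acc in a Wallace tree,
    # then resolve carries in a final adder; this model simply sums them.
    return saturate(sum(partials) + acc, acc_bits)
```

Radix-4 recoding halves the number of partial products compared with one partial product per multiplier bit, which is why it is commonly paired with a reduction tree.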

Owner:TEXAS INSTR INC

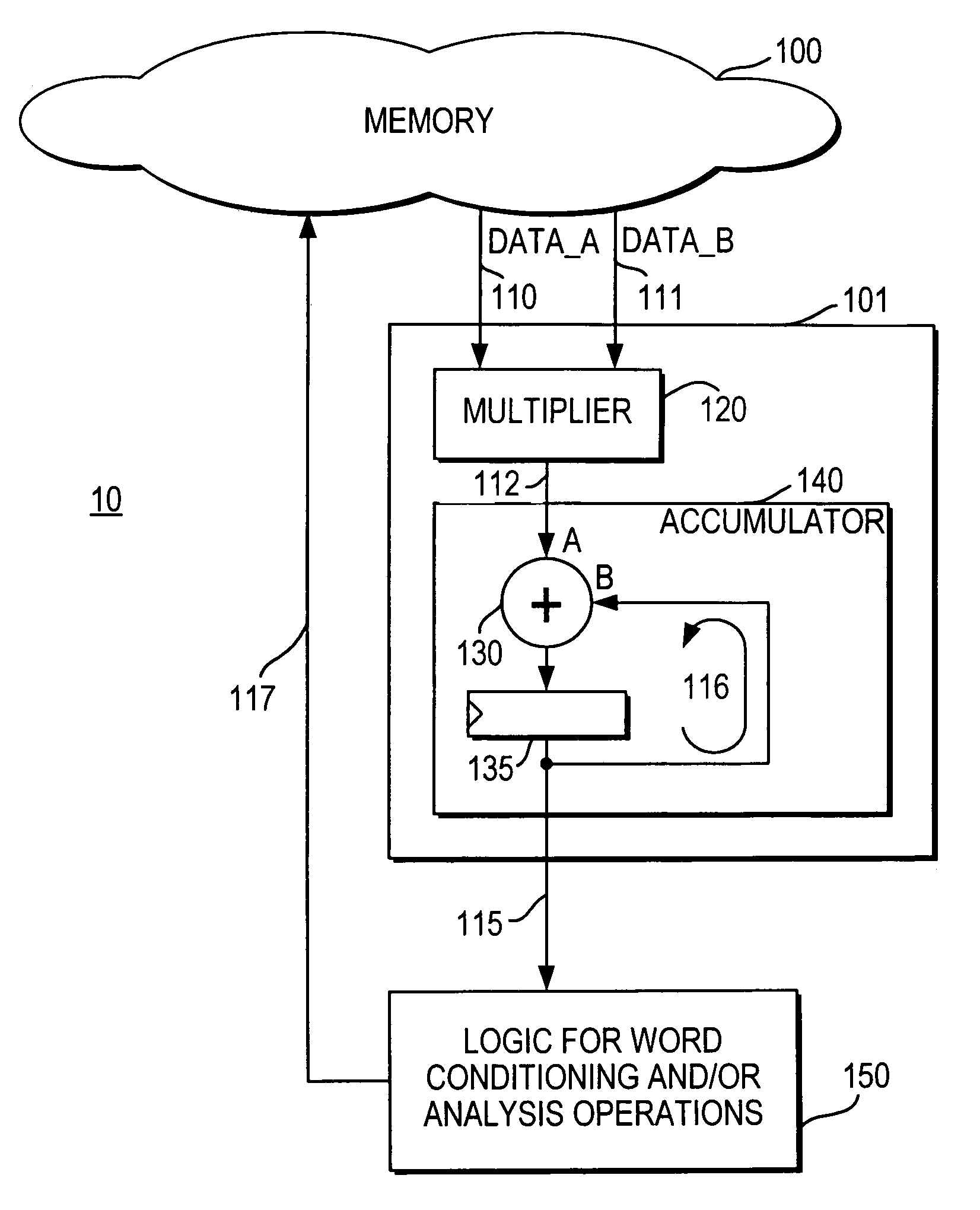

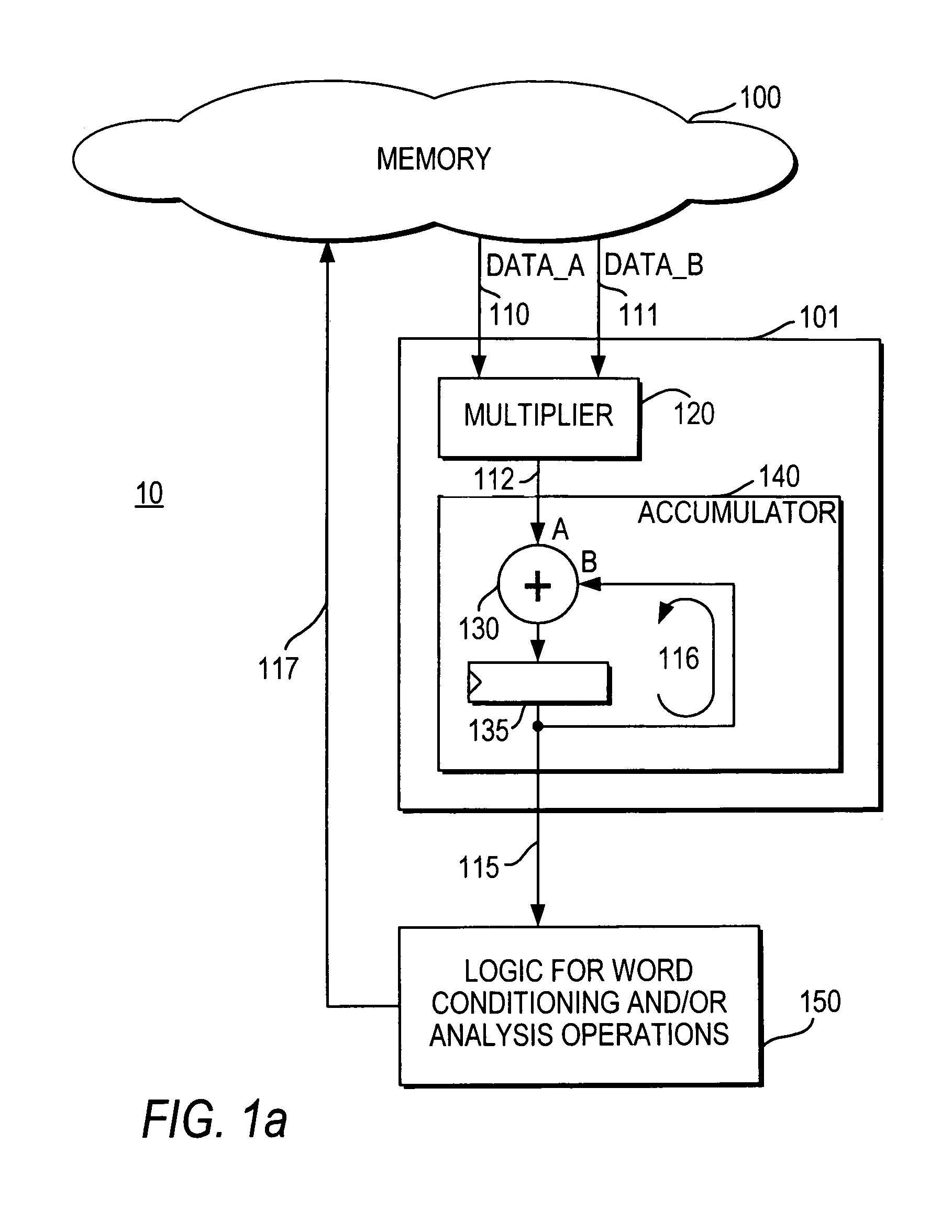

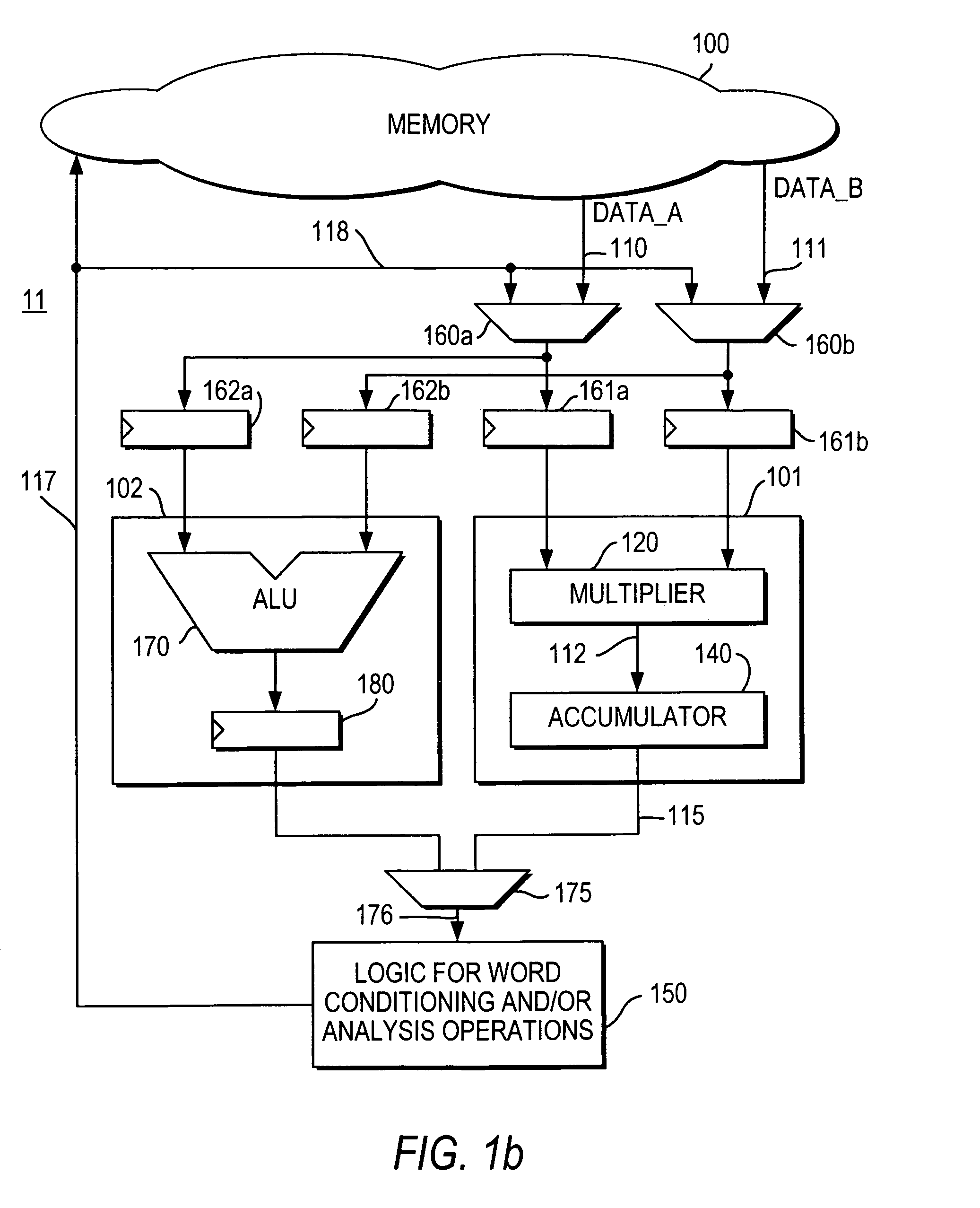

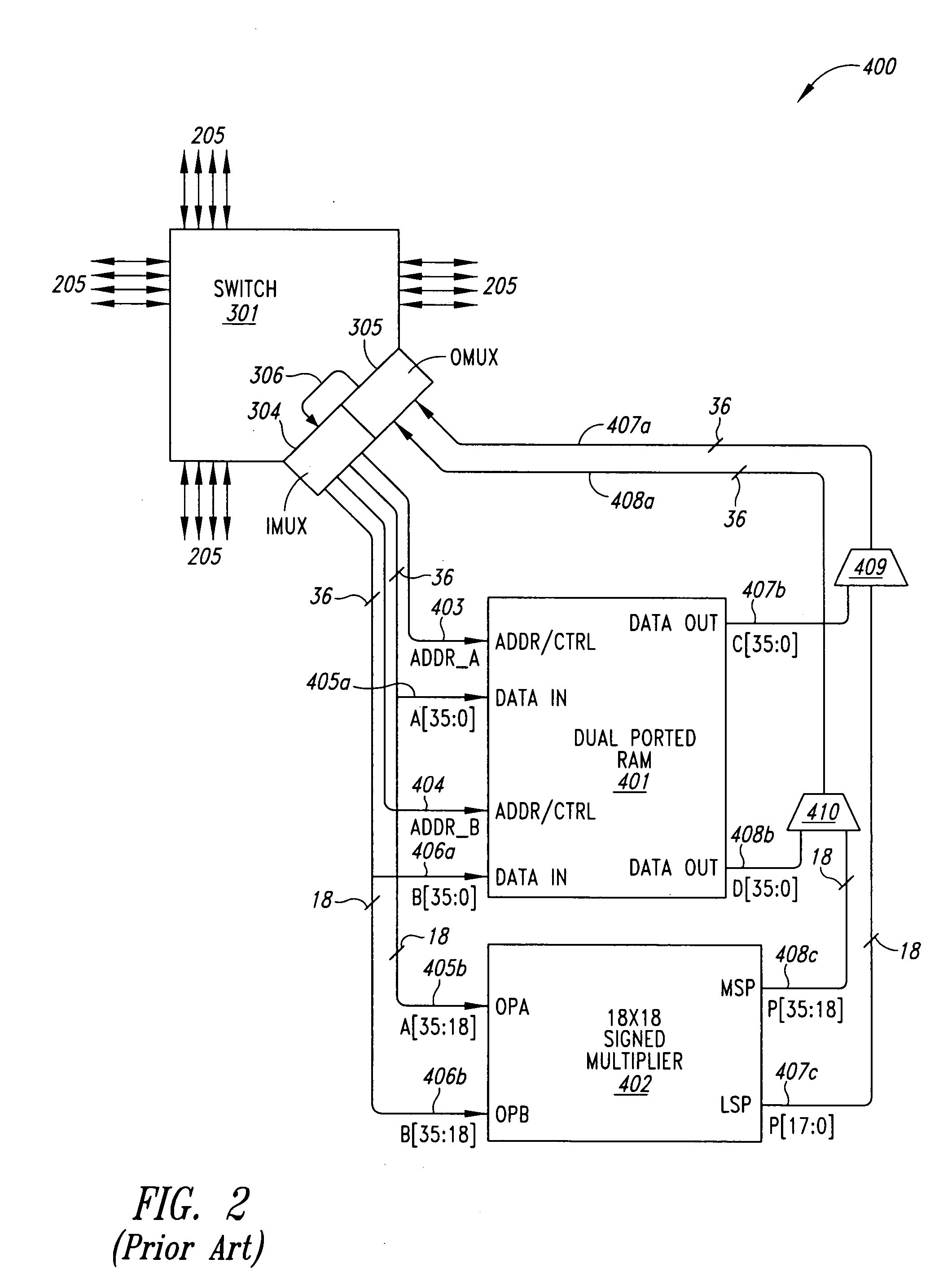

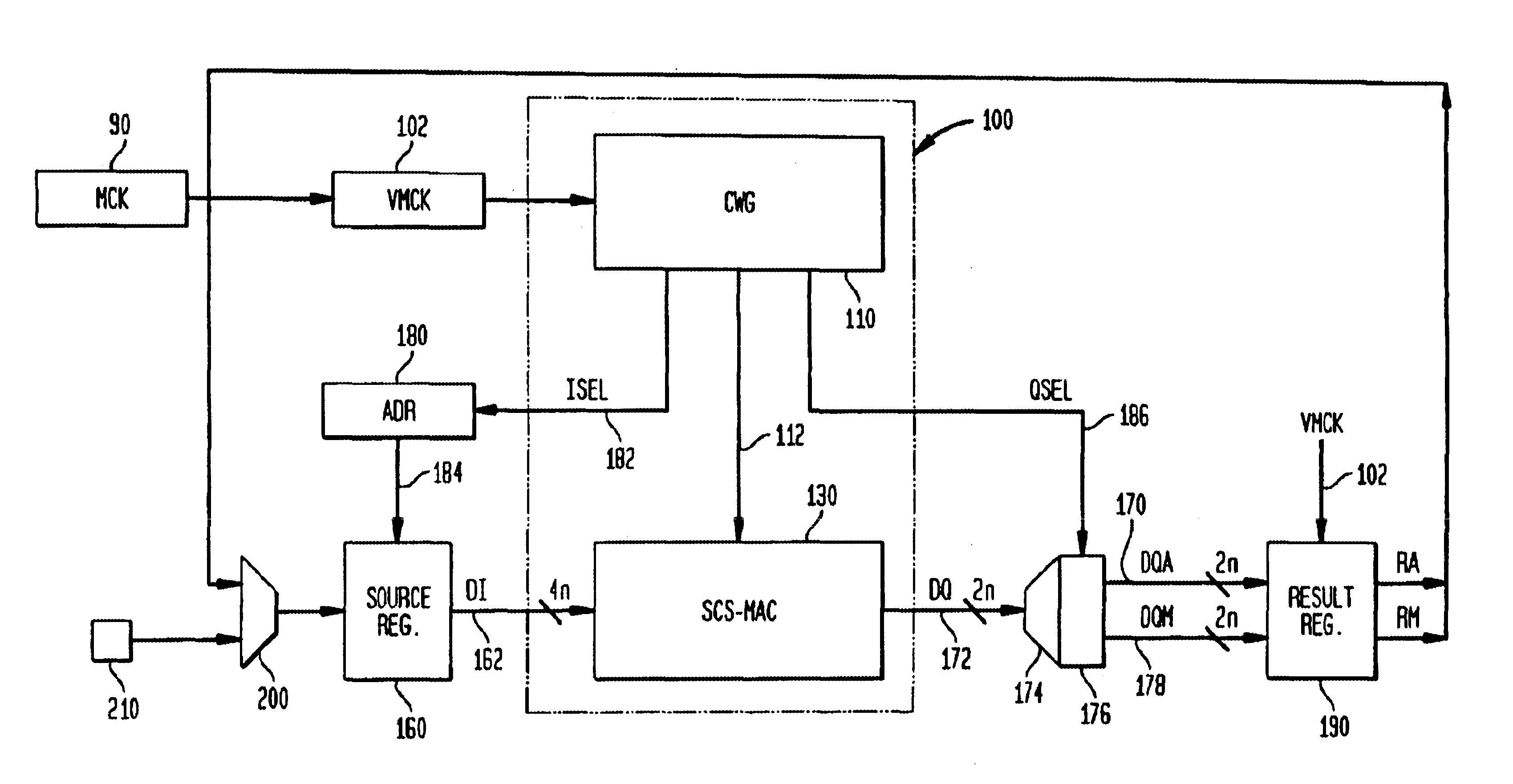

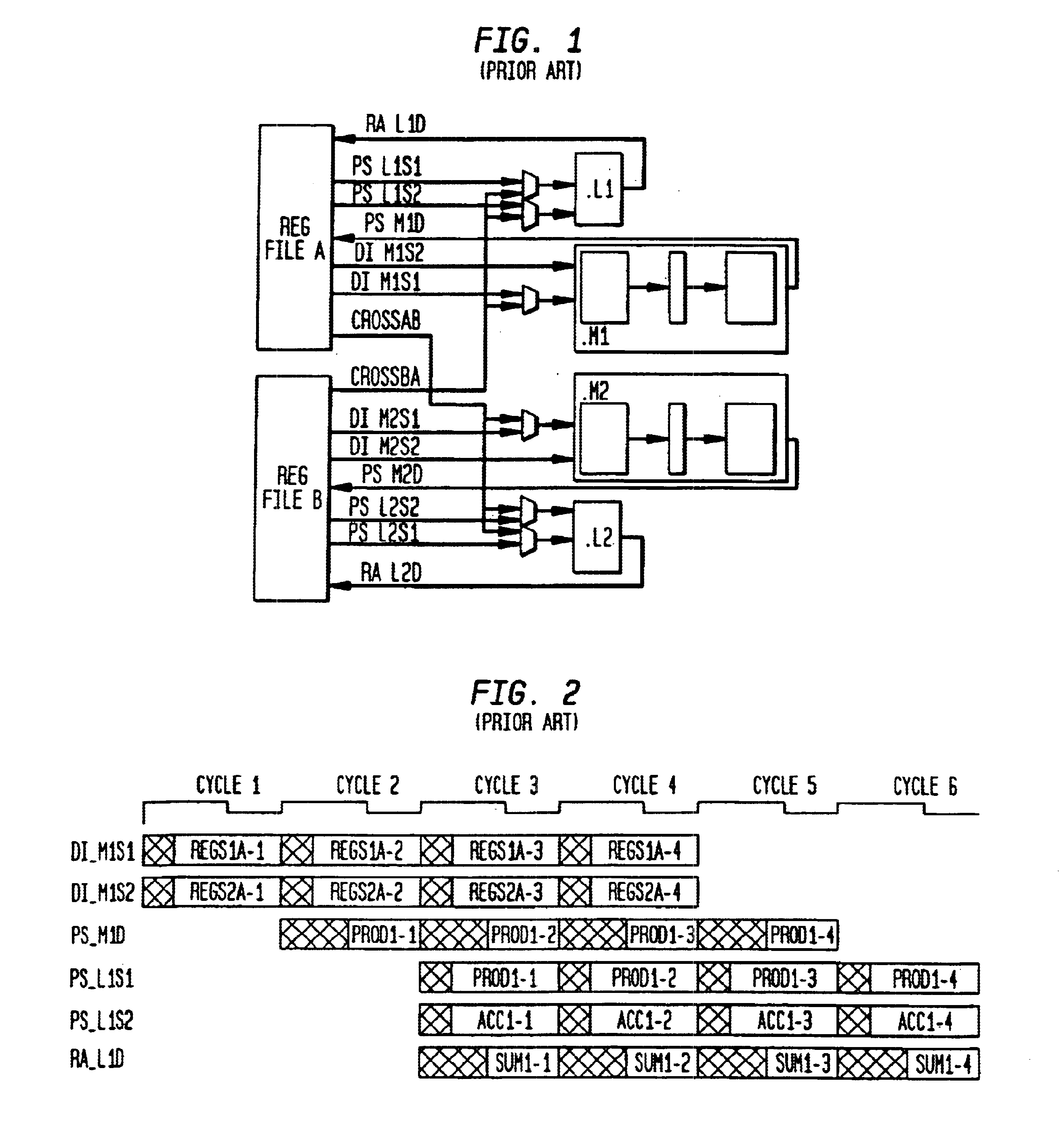

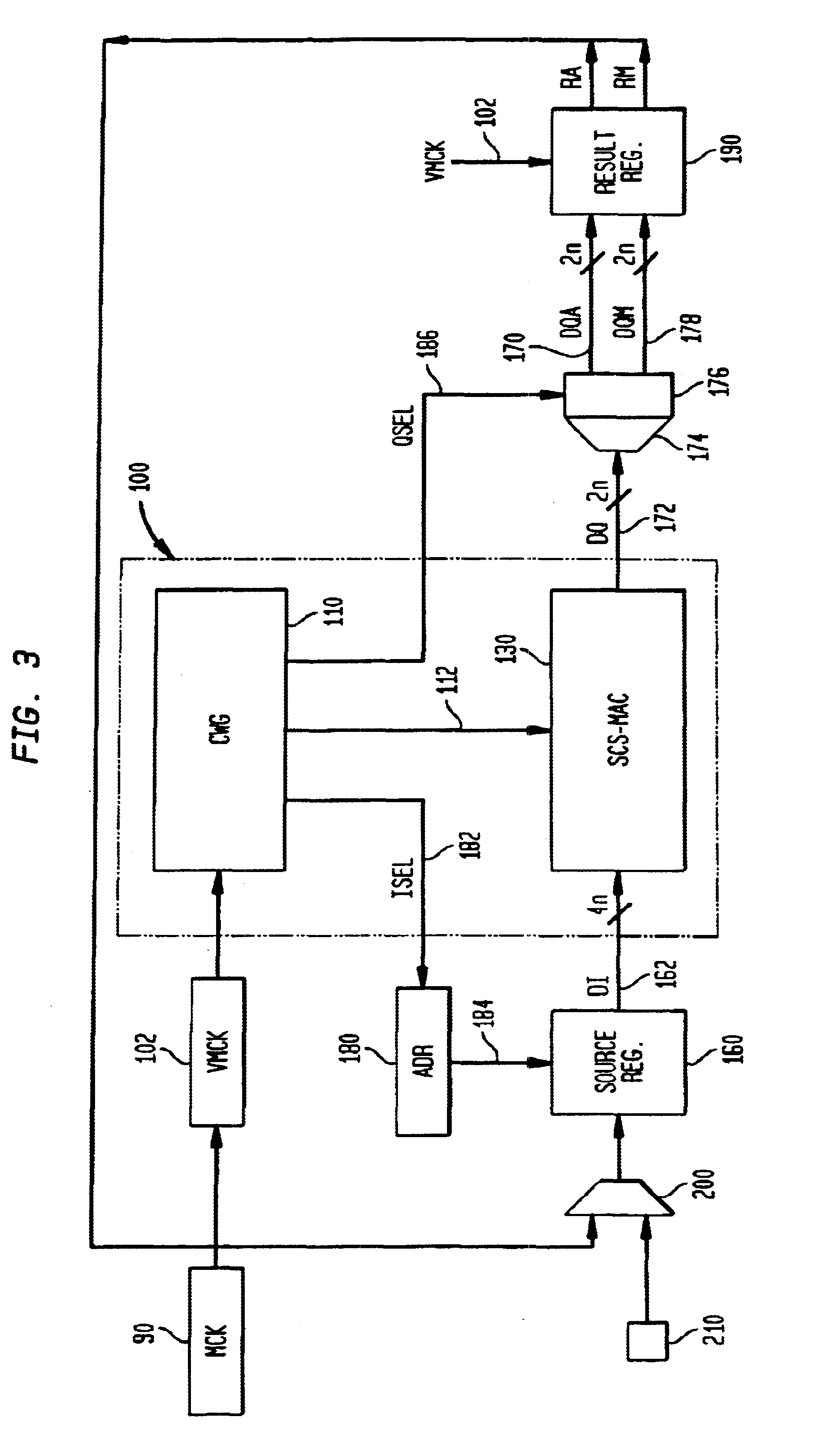

DSP processor architecture with write datapath word conditioning and analysis

Inactive | US6978287B1 | Improve throughput | Reduce delays | Digital computer details | Program control | Rounding | Block floating-point

Owner:ALTERA CORP

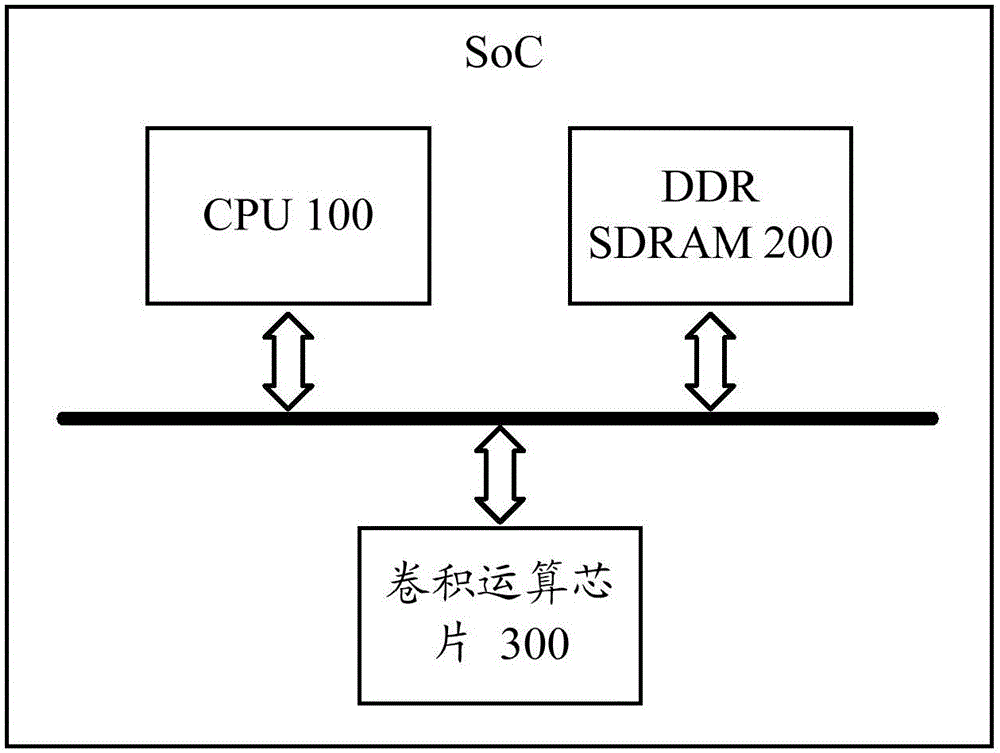

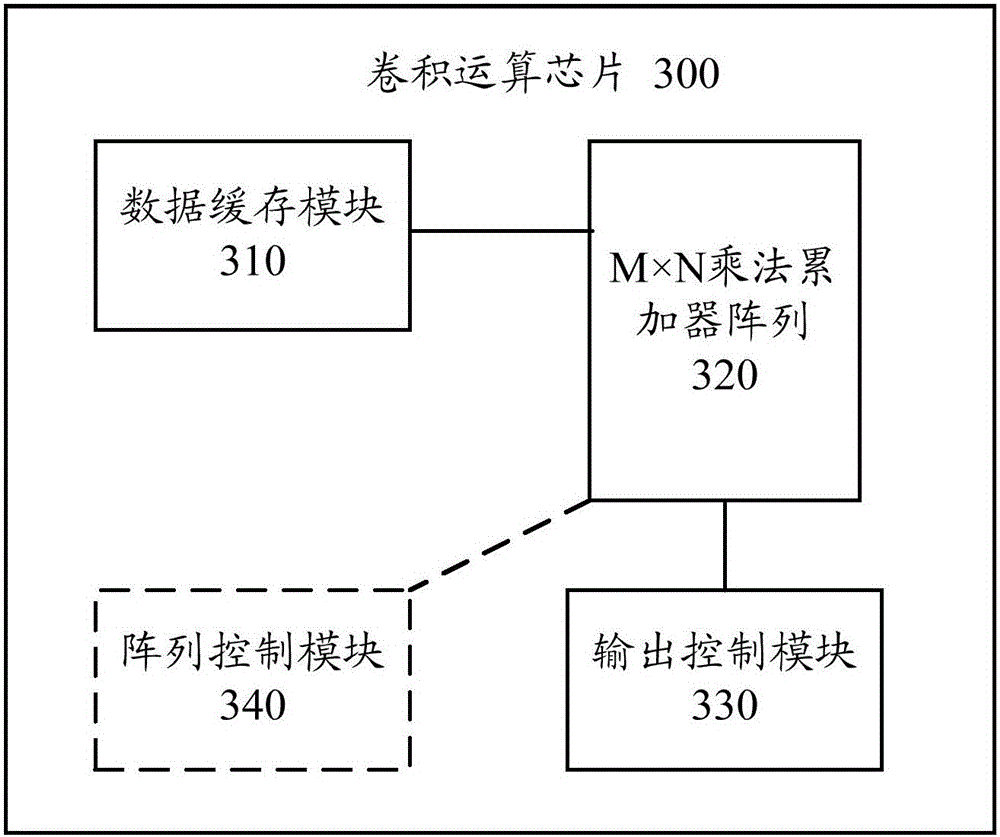

Convolution operation chip and communication equipment

Active | CN106844294A | Reduce visits | Reduce access pressure | Digital data processing details | Biological models | Resource utilization | Computer module

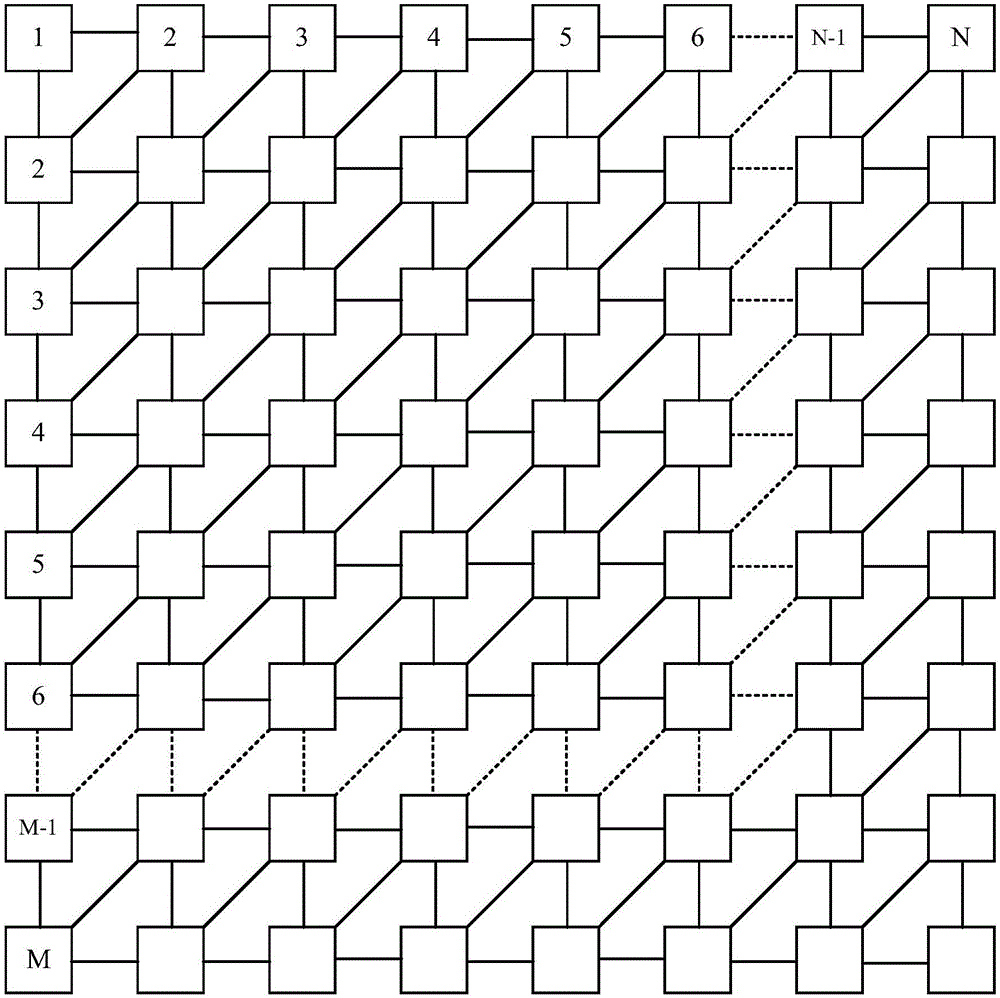

The invention provides a convolution operation chip and communication equipment. The convolution operation chip comprises an M*N multiplier accumulator array, a data cache module and an output control module; the M*N multiplier accumulator array comprises a first multiplier accumulator window, and a processing unit PE<X, Y> of the first multiplier accumulator window is used for conducting multiplication operation on convolution data of the PE<X, Y> and convolution parameters of the PE<X, Y> and transmitting the convolution parameters of the PE<X, Y> to PE<X, Y+1> and transmitting the convolution data of the PE<X, Y> to PE<X-1, Y+1> to serve as multipliers of multiplication operation between the PE<X, Y+1> and the PE<X-1, Y+1>; the data cache module is used for transmitting the convolution data and the convolution parameters to the first multiplier accumulator window; the output control module is used for outputting a convolution result. According to the convolution operation chip and the communication equipment, the RAM access frequency can be decreased and the RAM access stress can be relieved while the array resource utilization rate is increased.

Owner:HUAWEI MACHINERY

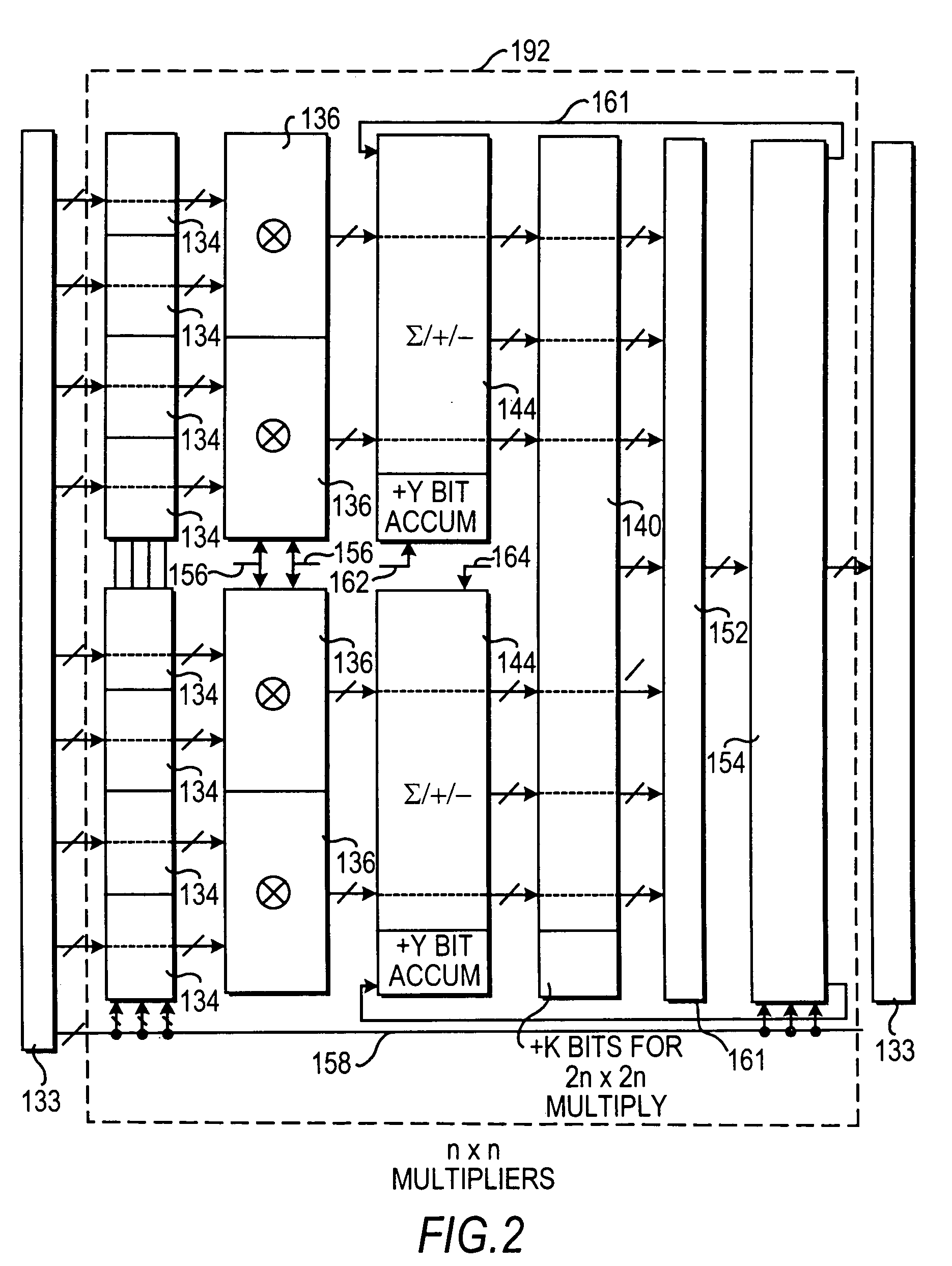

Flexible accumulator in digital signal processing circuitry

Inactive | US20050187997A1 | Computation using denominational number representation | Digital signal processing | Binary multiplier

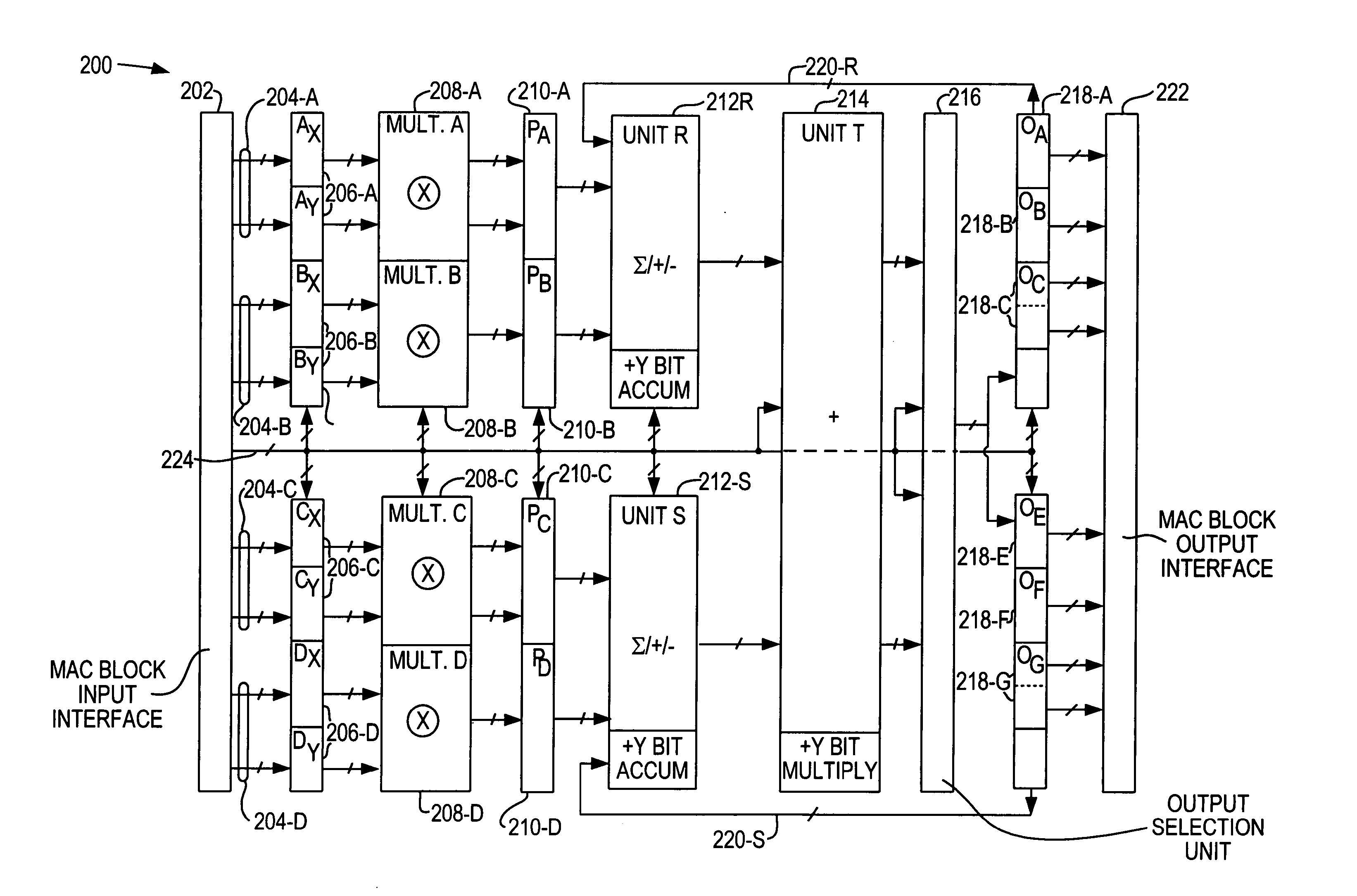

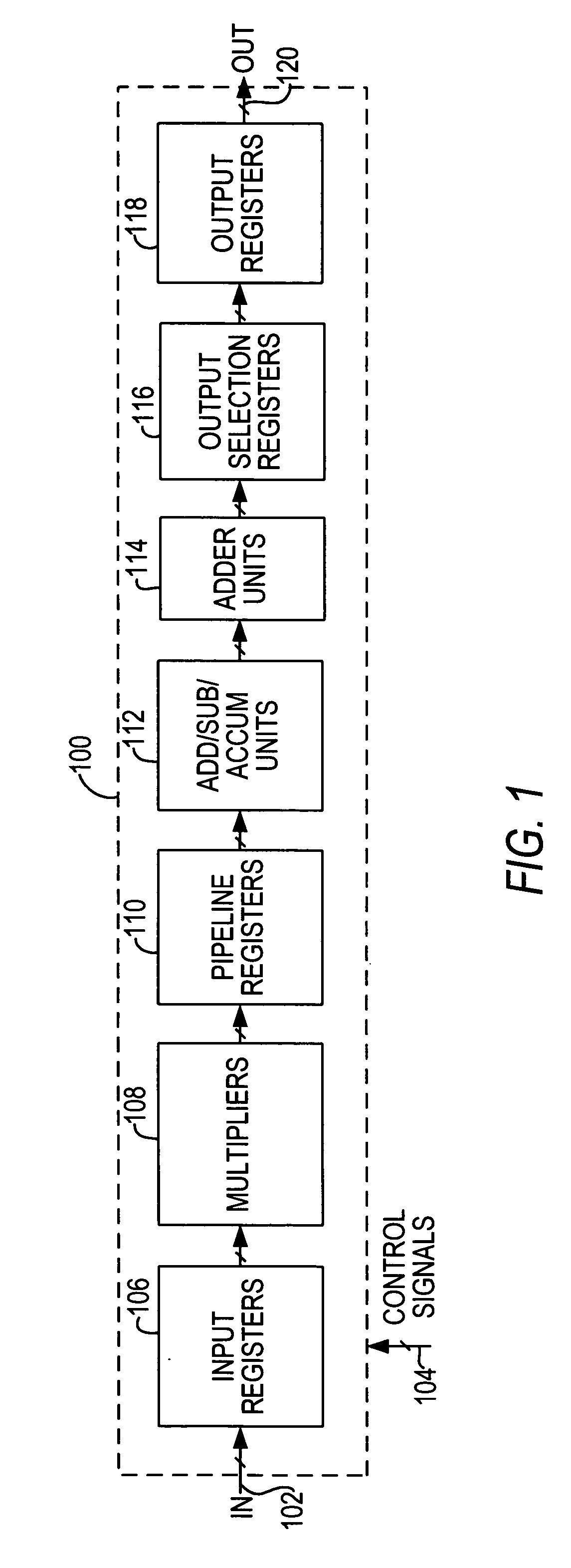

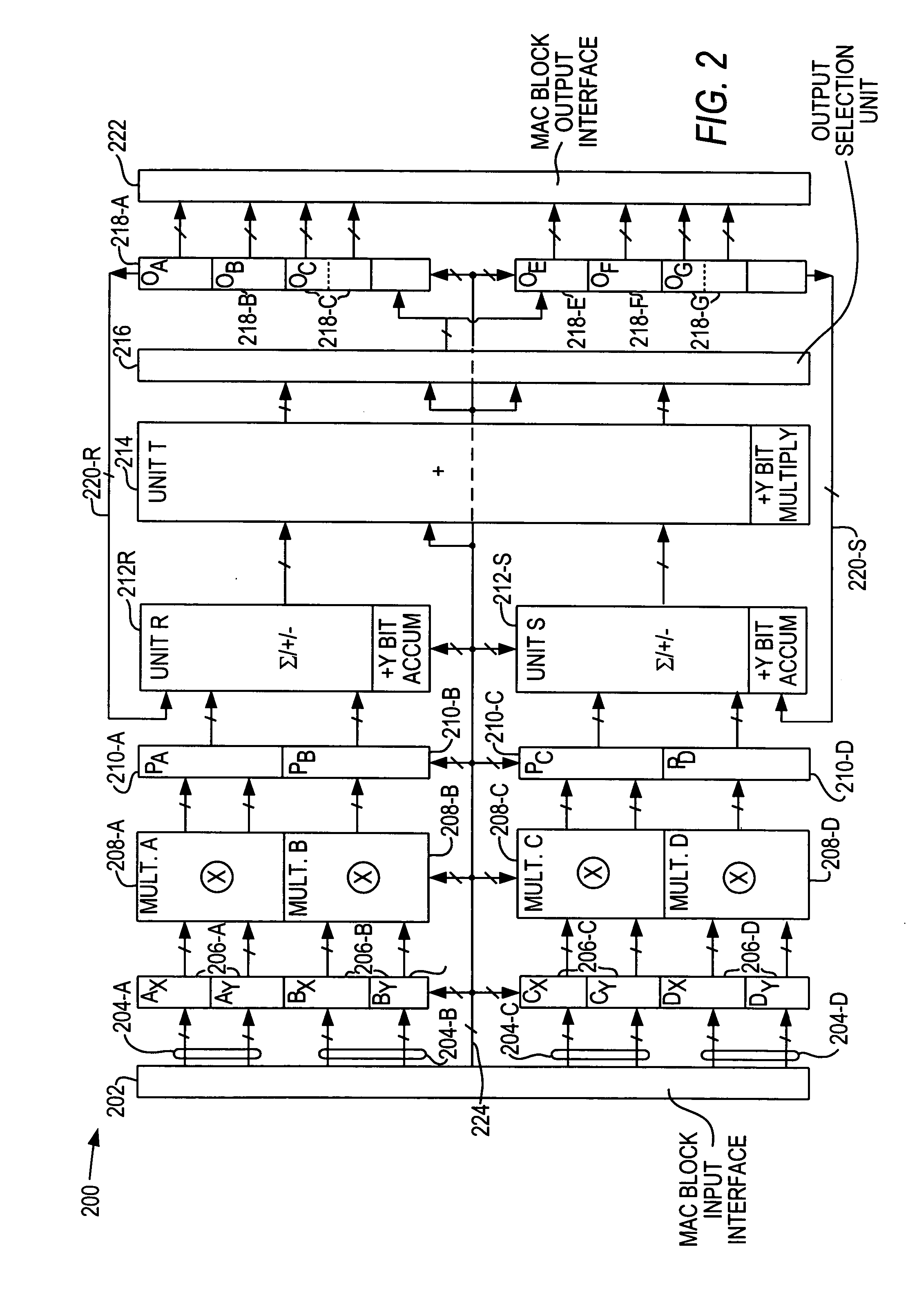

A multiplier-accumulator (MAC) block can be programmed to operate in one or more modes. When the MAC block implements at least one multiply-and-accumulate operation, the accumulator value can be zeroed without introducing clock latency or initialized in one clock cycle. To zero the accumulator value, the most significant bits (MSBs) of data representing zero can be input to the MAC block and sent directly to the add-subtract-accumulate unit. Alternatively, dedicated configuration bits can be set to clear the contents of a pipeline register for input to the add-subtract-accumulate unit. The least significant bits (LSBs) can be tied to ground and sent along the feedback path. To initialize the accumulator value, the MSBs of the initialization value can be input to the MAC block and sent directly to the add-subtract-accumulate unit. The LSBs can be sent to another multiplier that performs a multiply-by-one operation before being sent to the add-subtract-accumulate unit.

Owner:ALTERA CORP

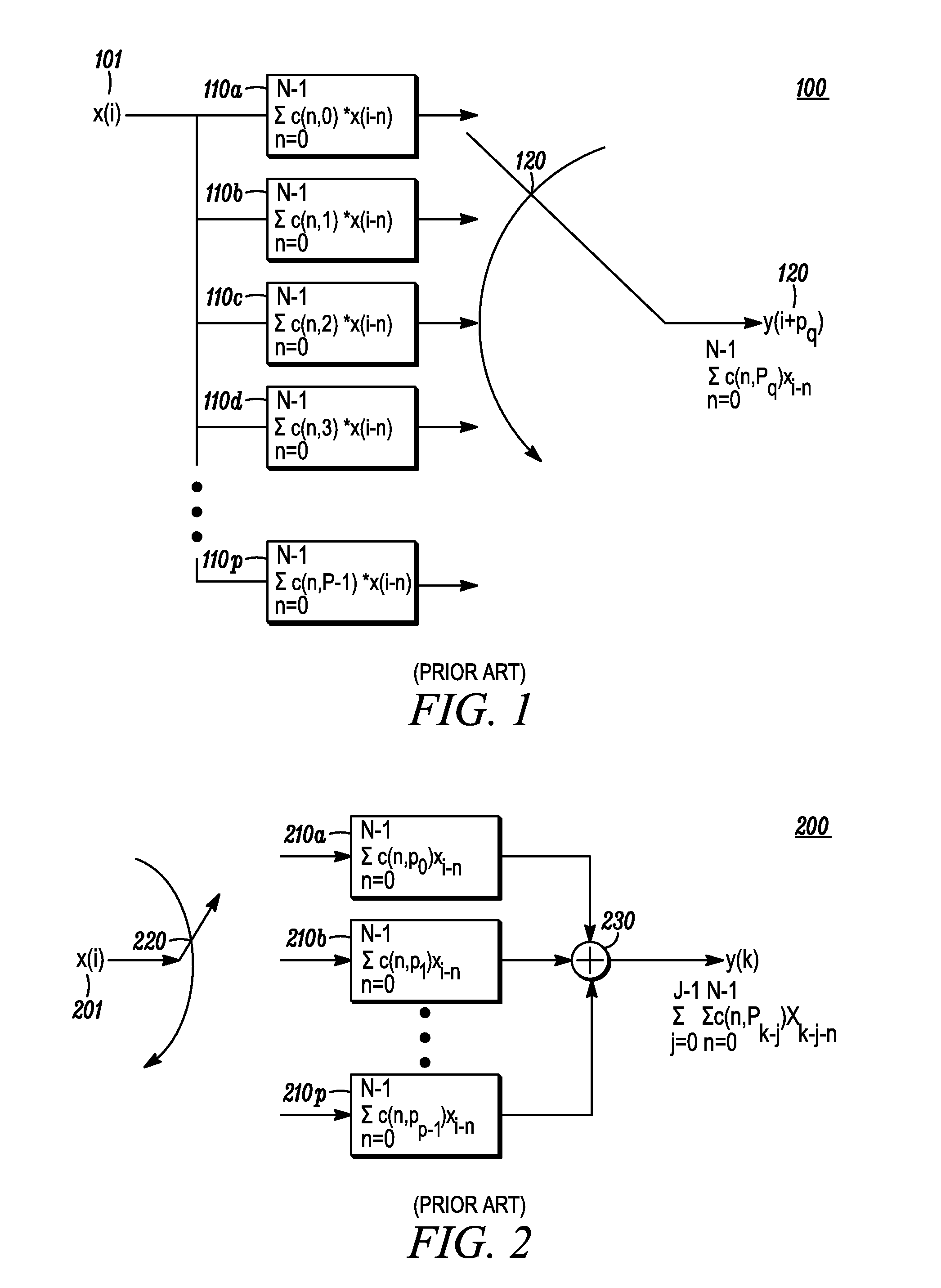

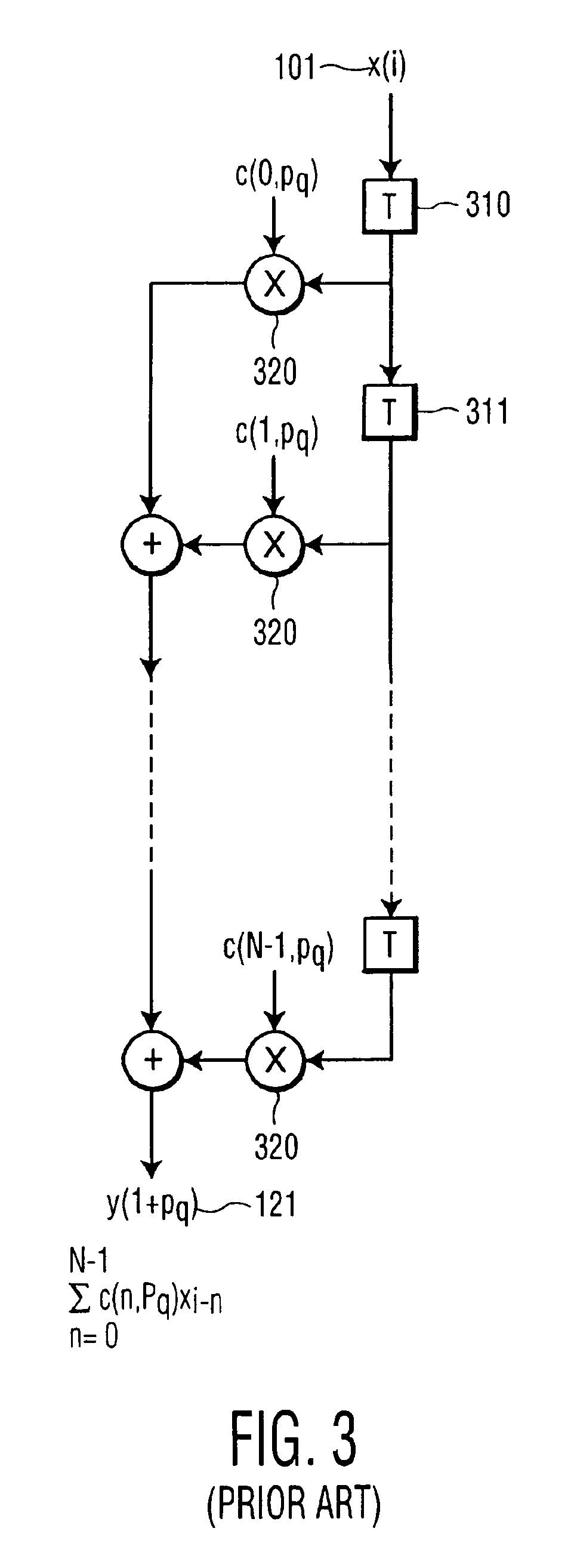

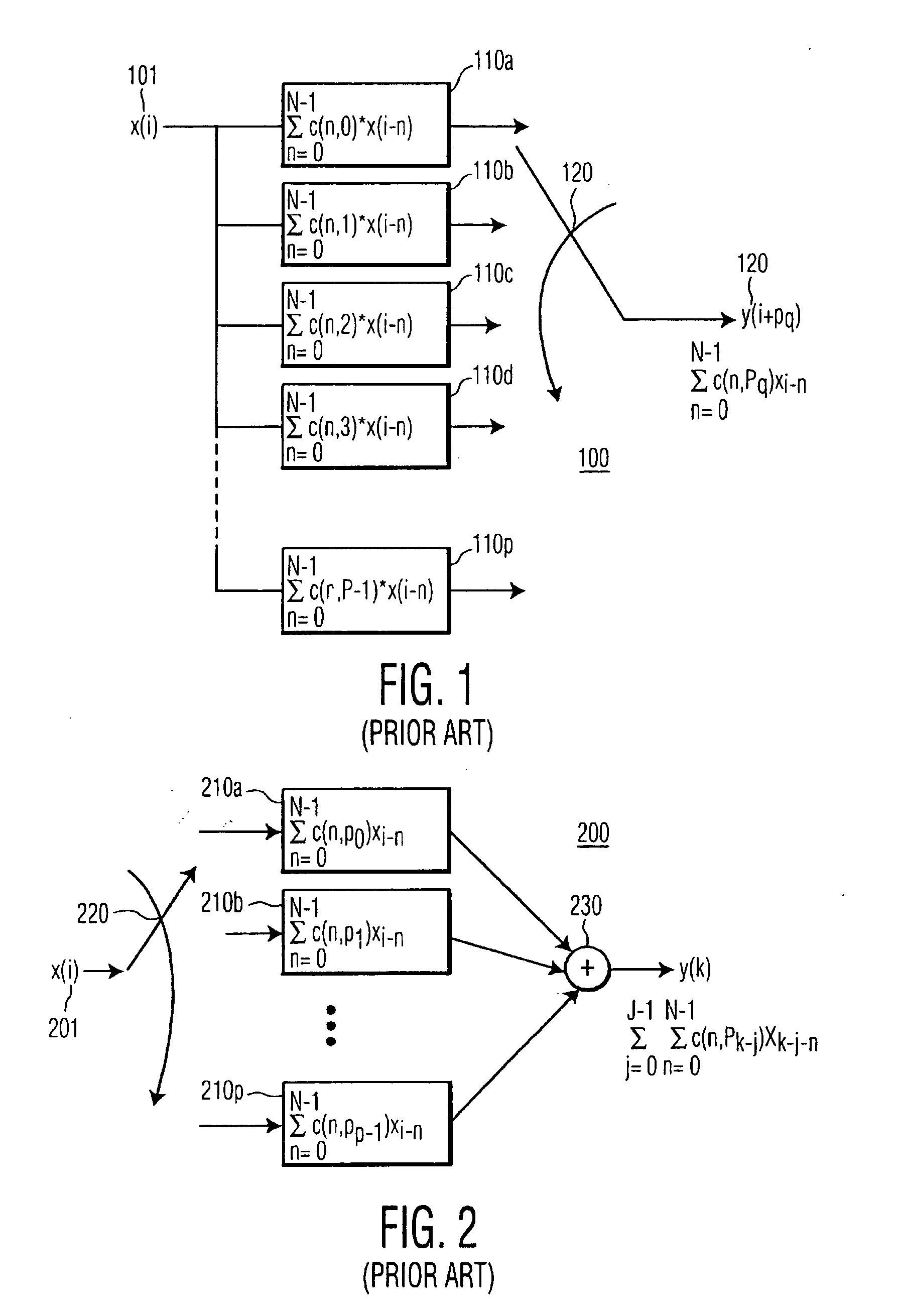

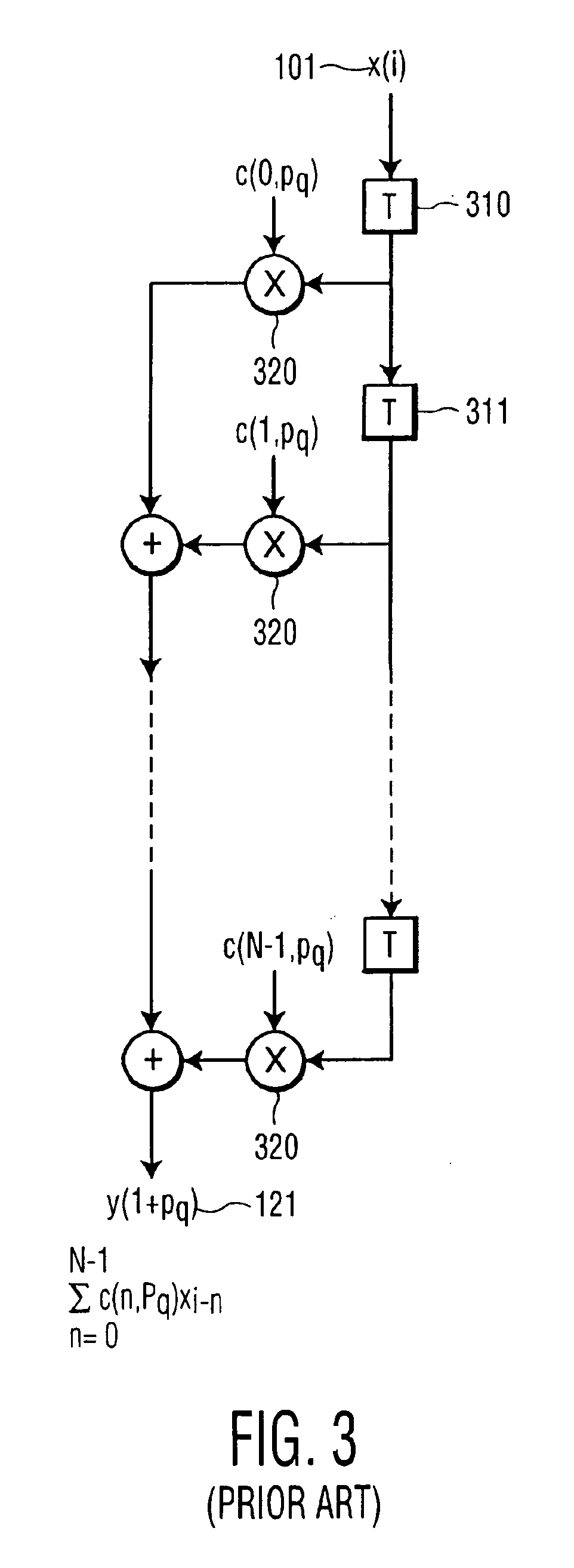

Polyphase filter with optimized silicon area

Inactive | US7409417B2 | Increase the number of | Digital technique network | Complex mathematical operations | Computer science | Silicon

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

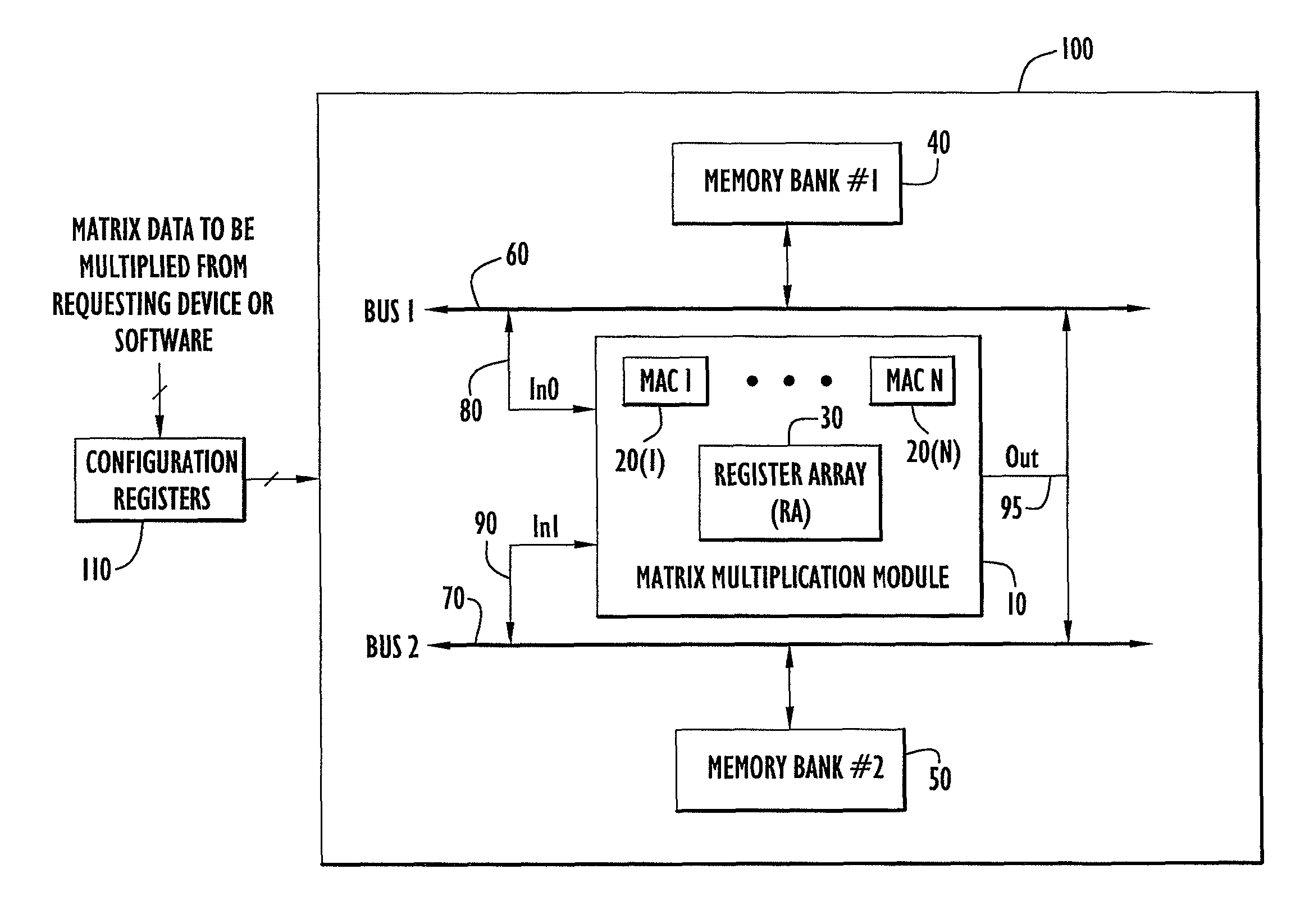

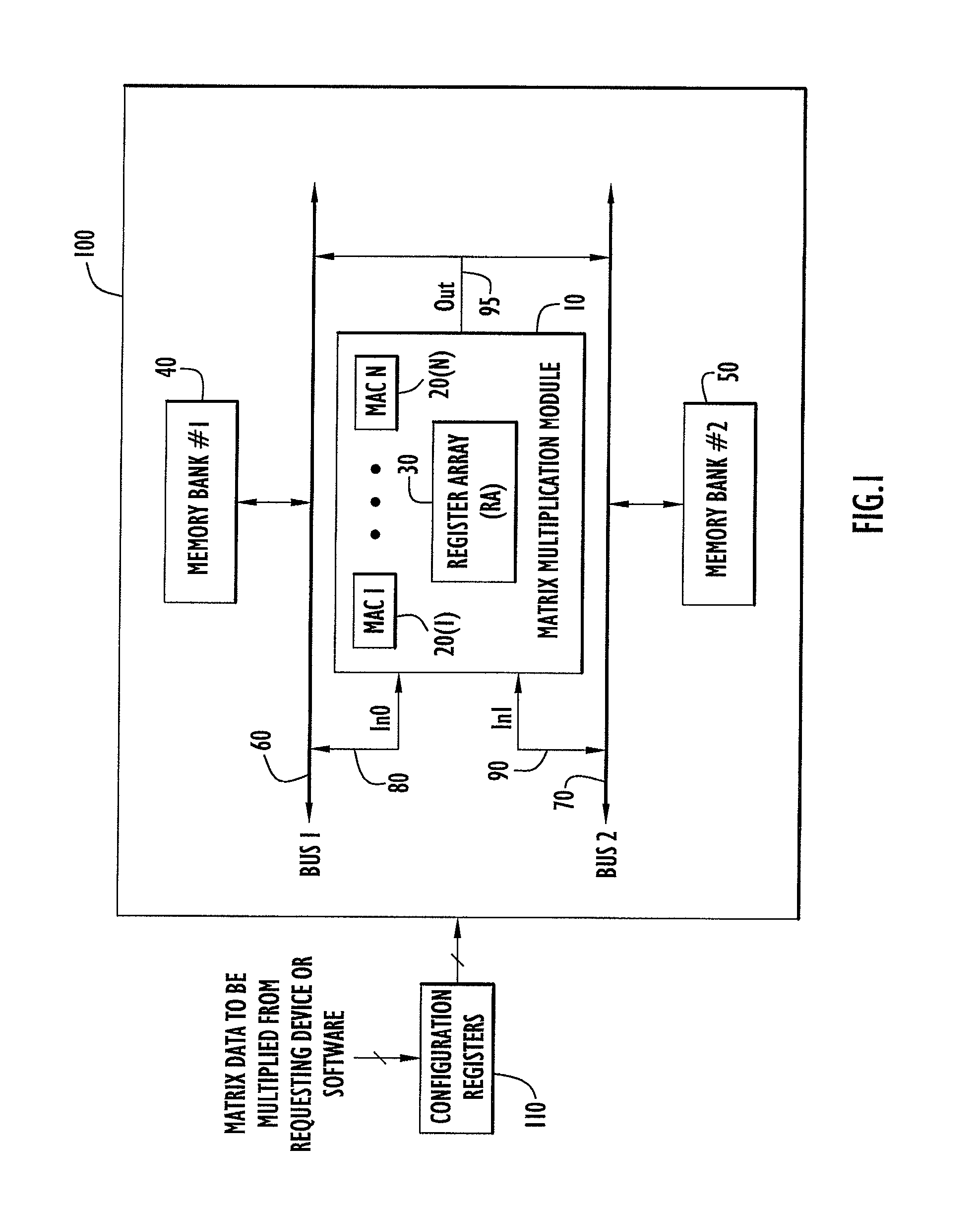

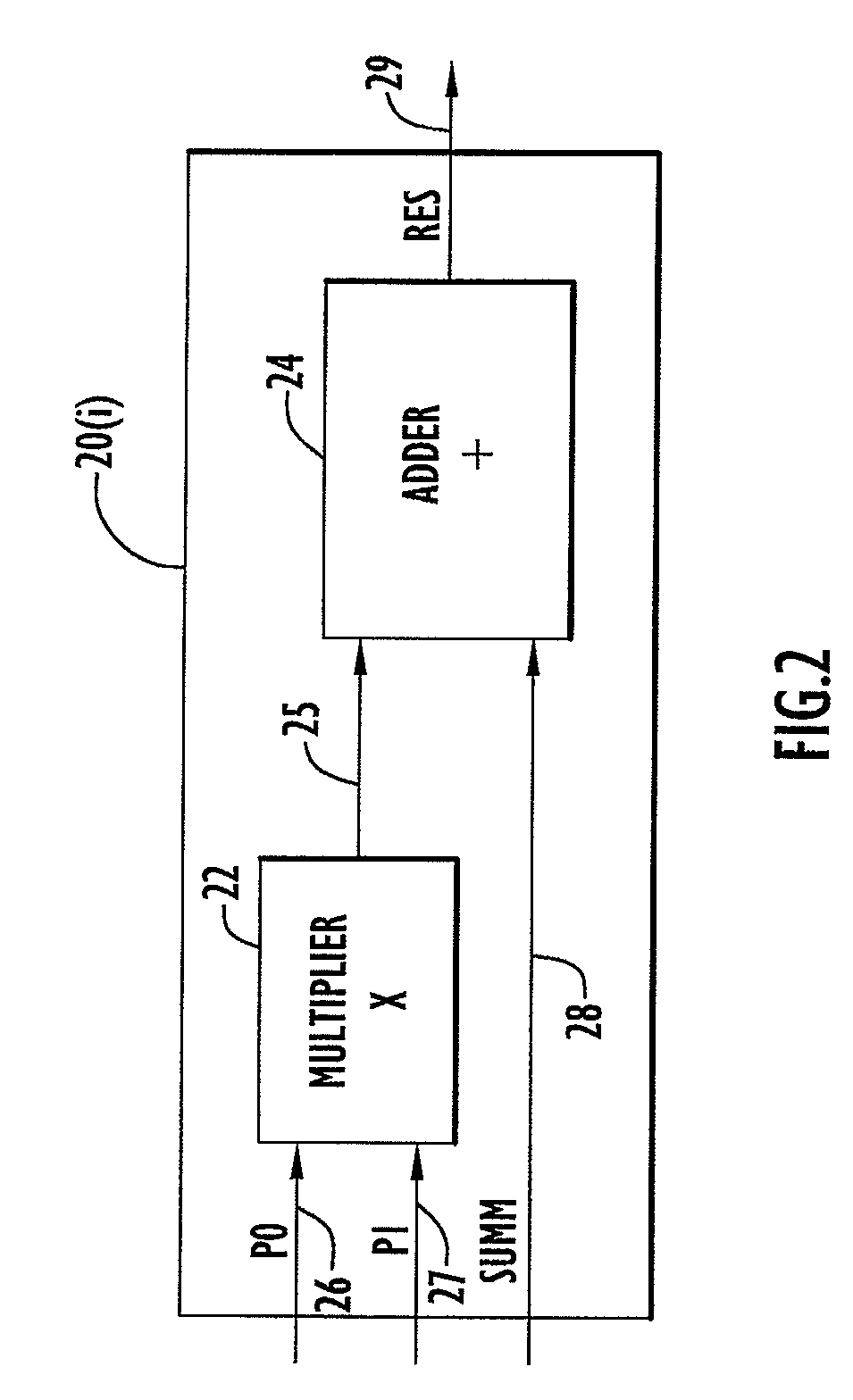

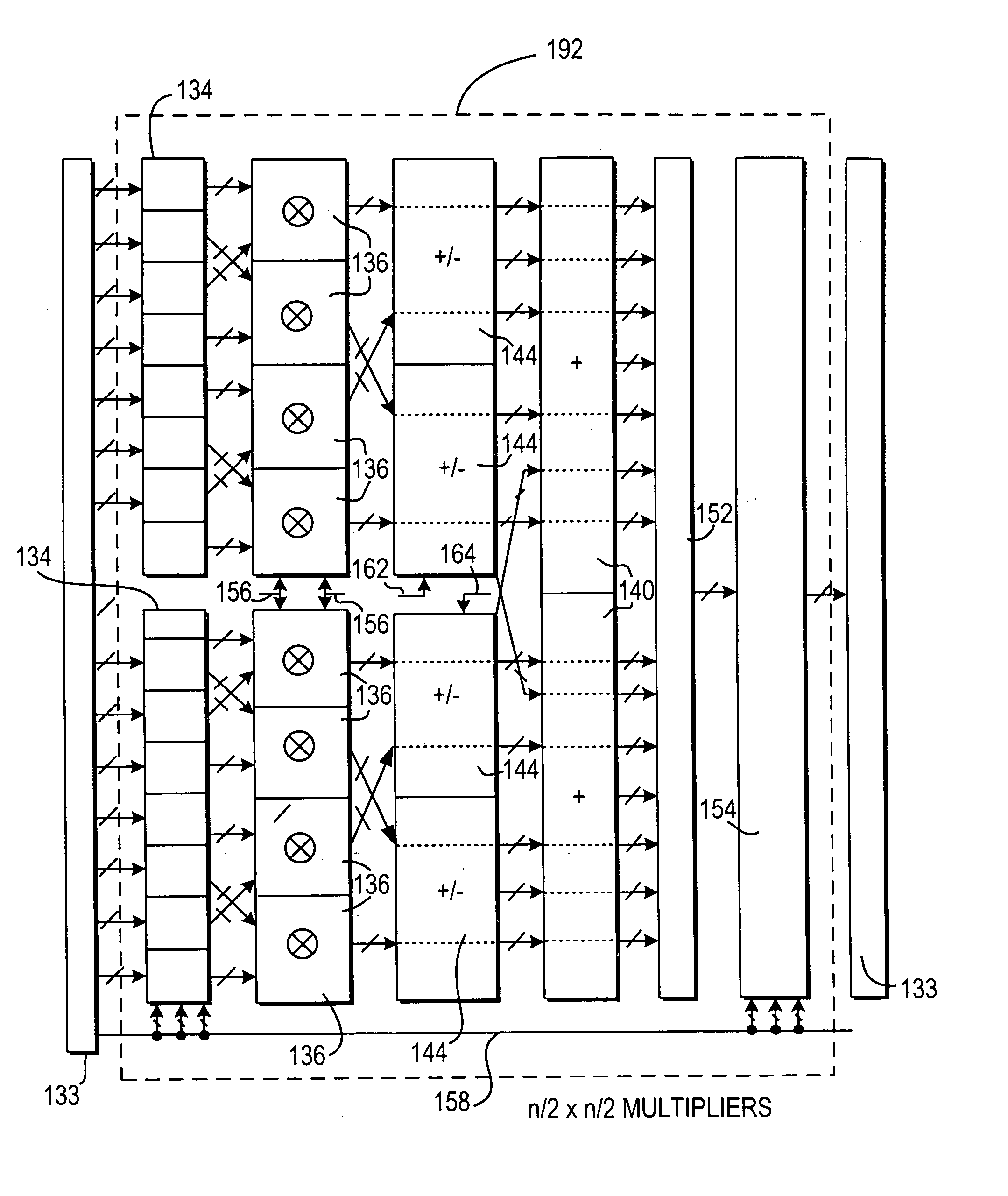

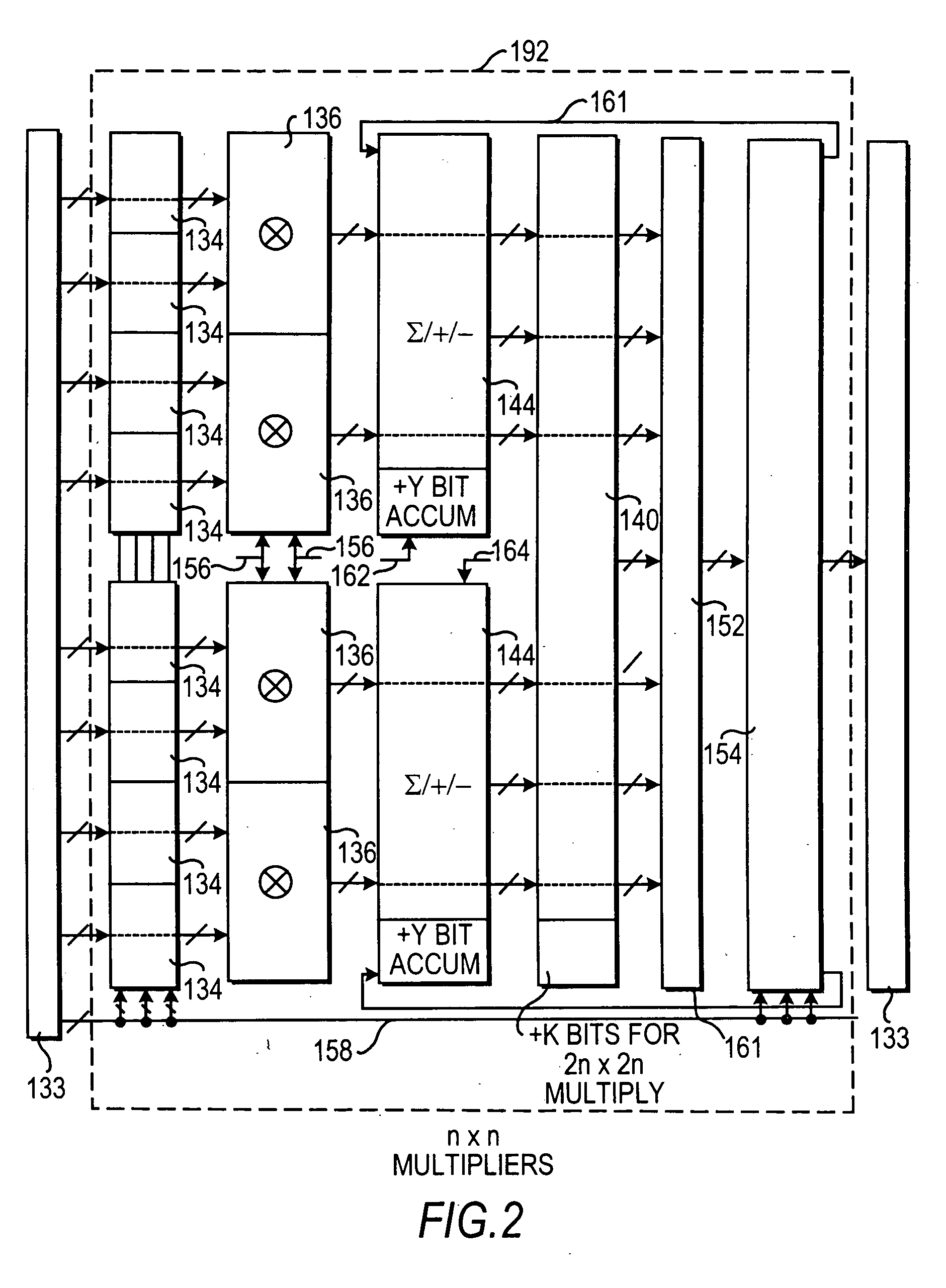

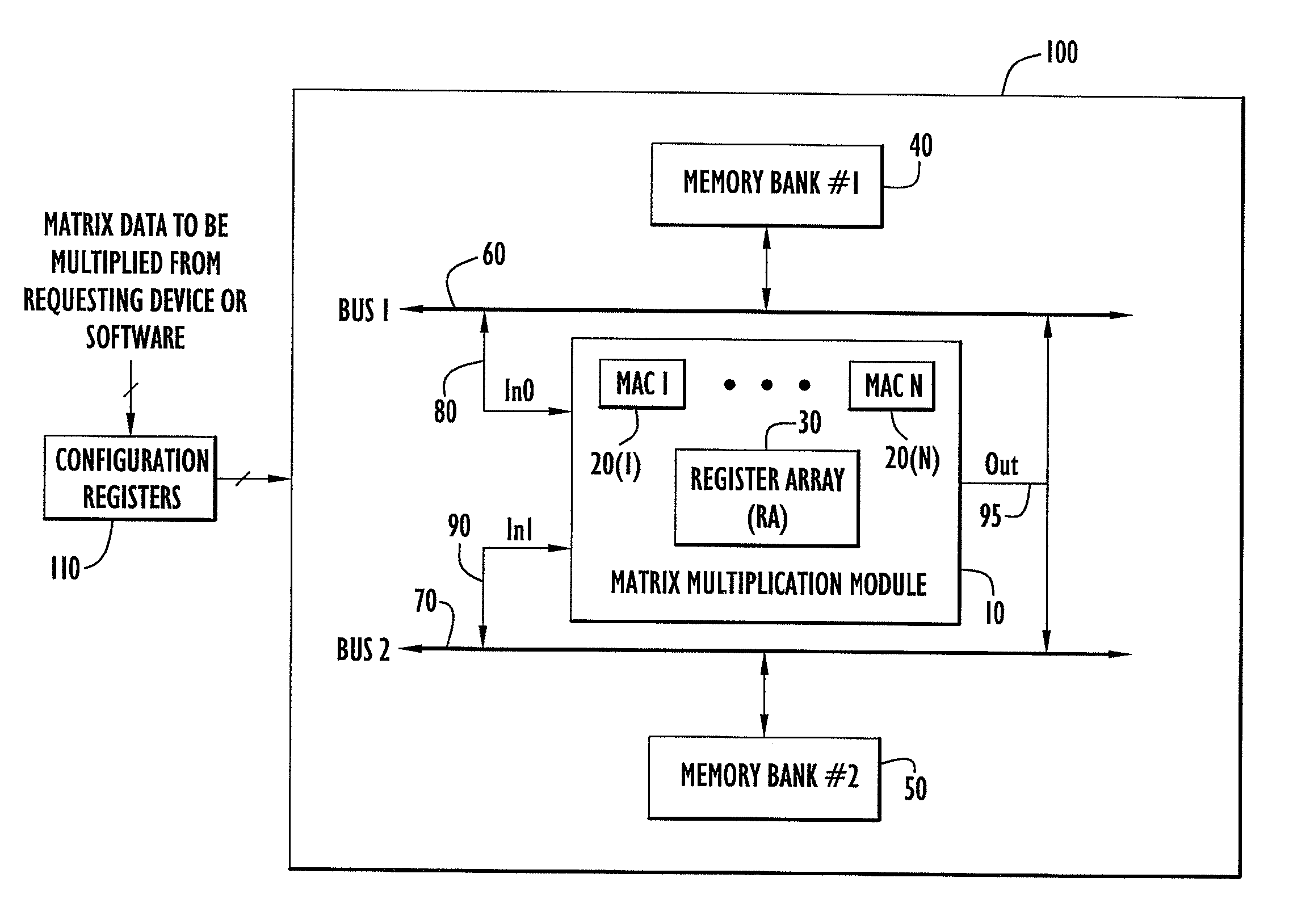

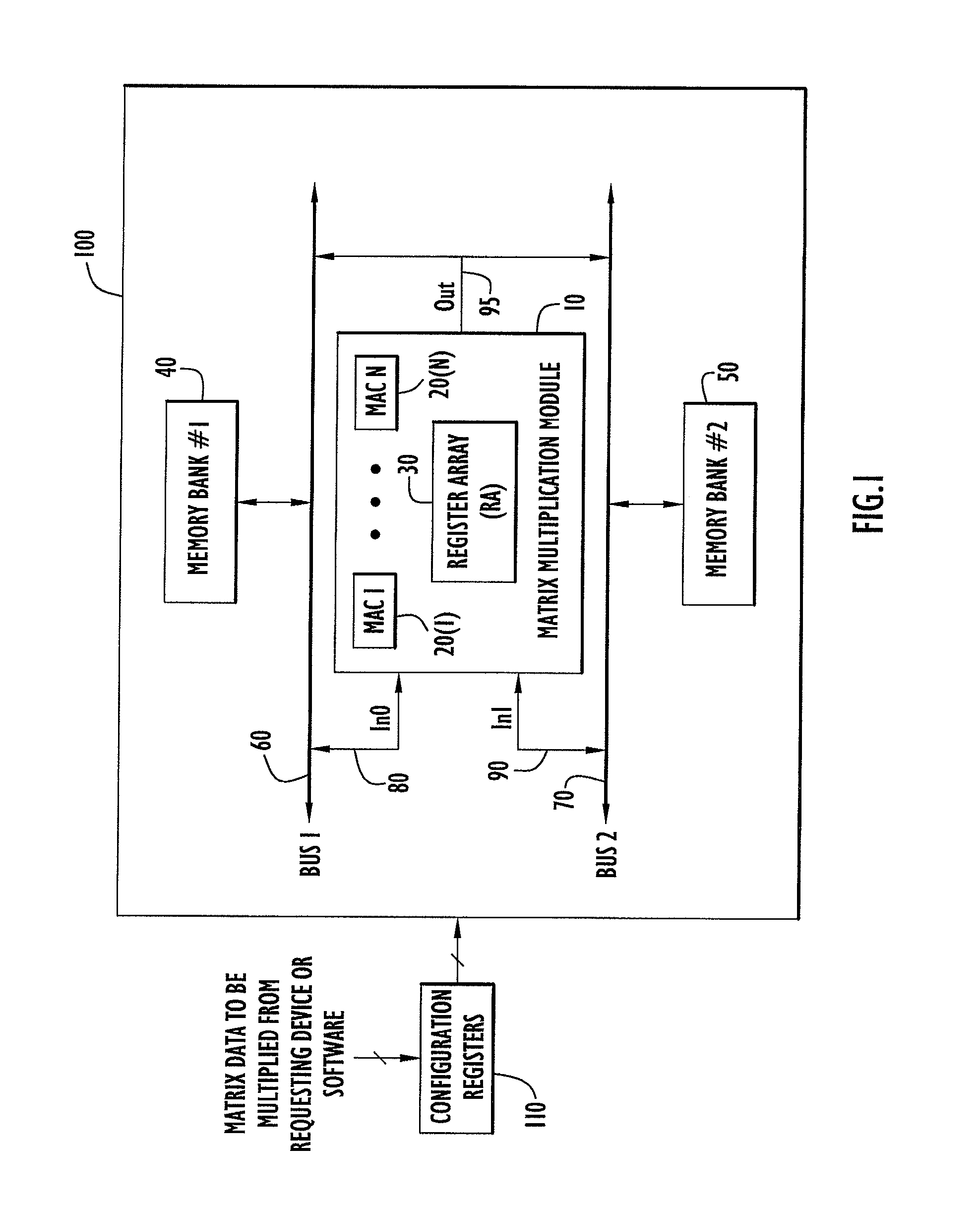

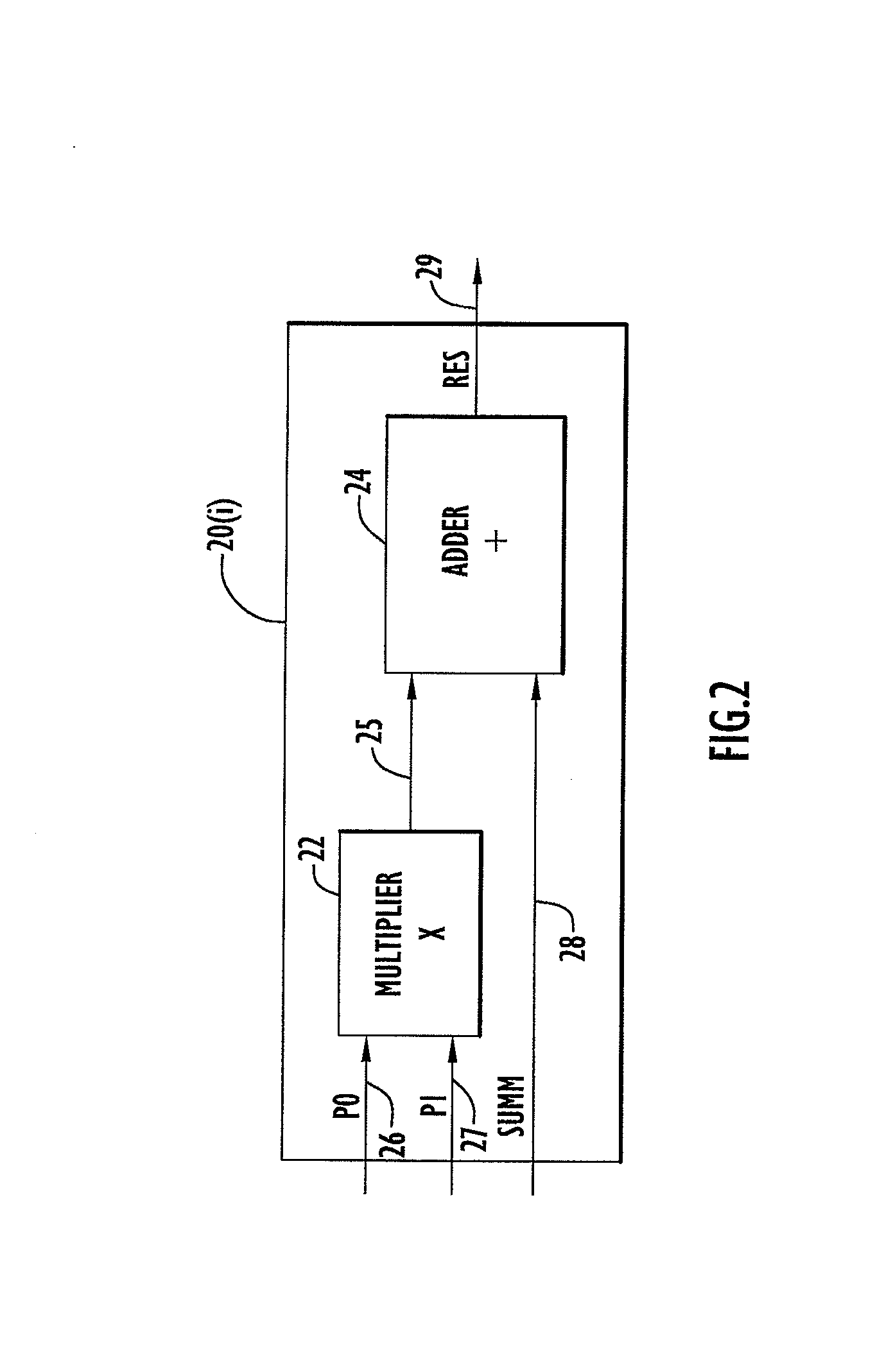

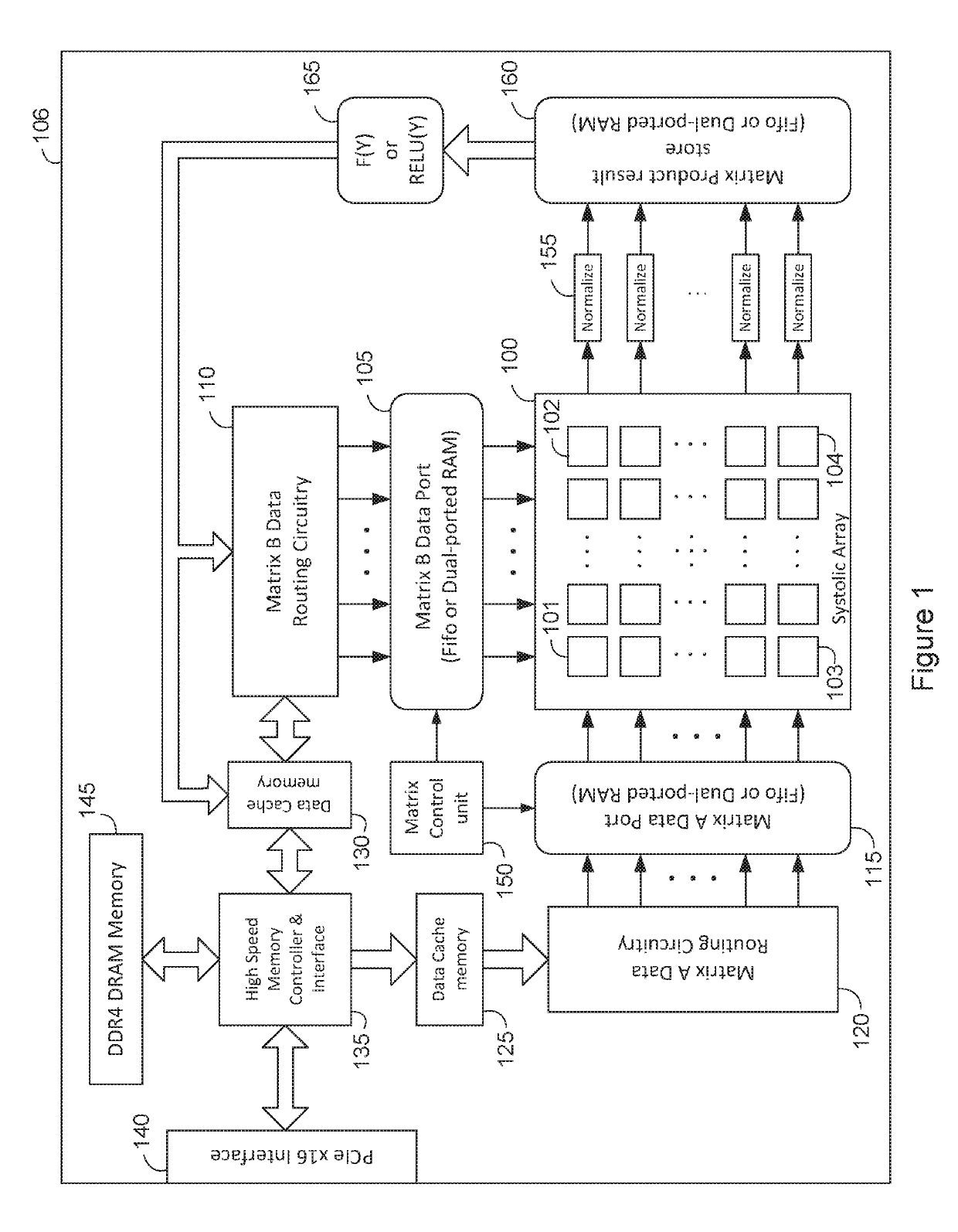

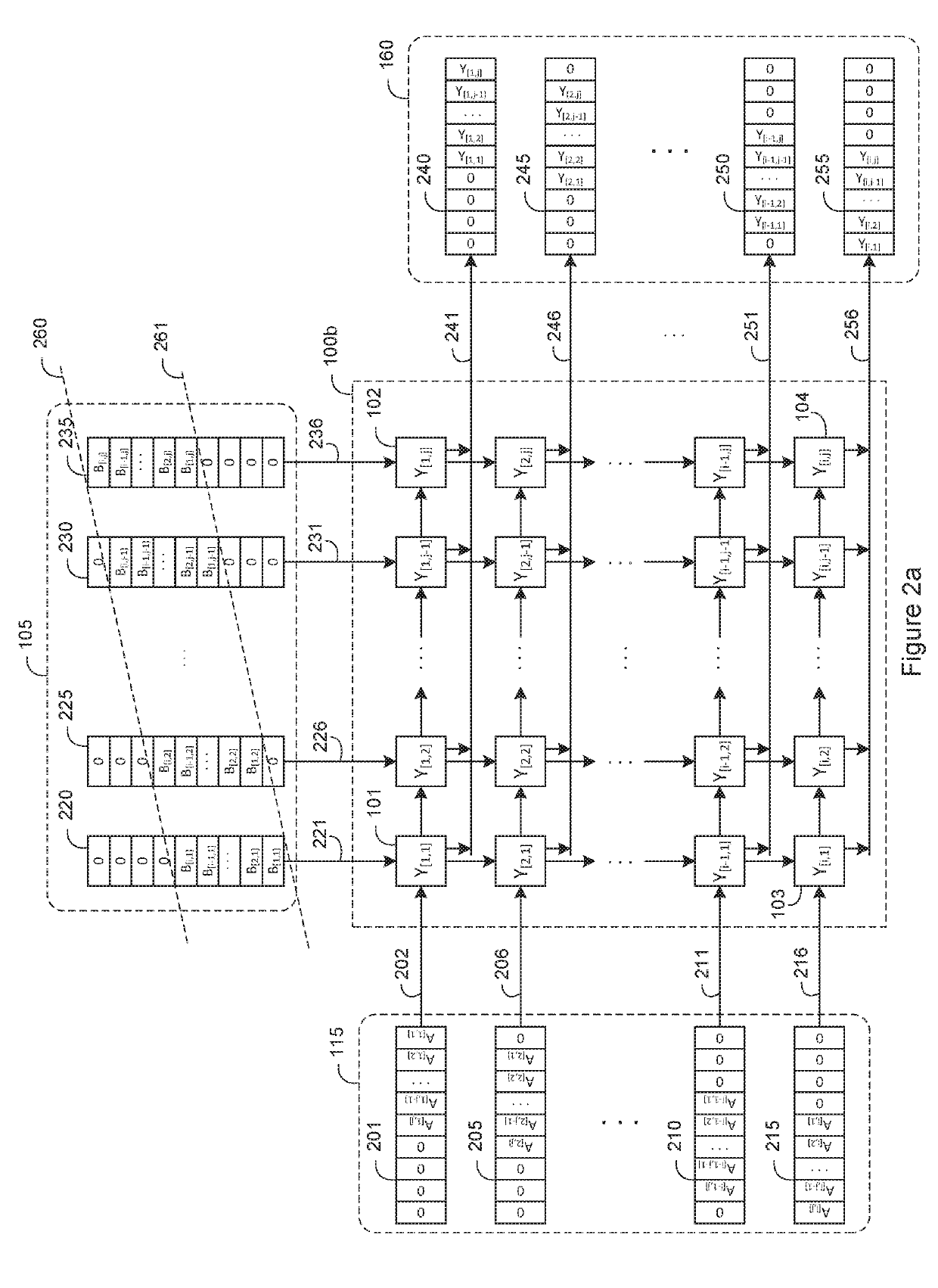

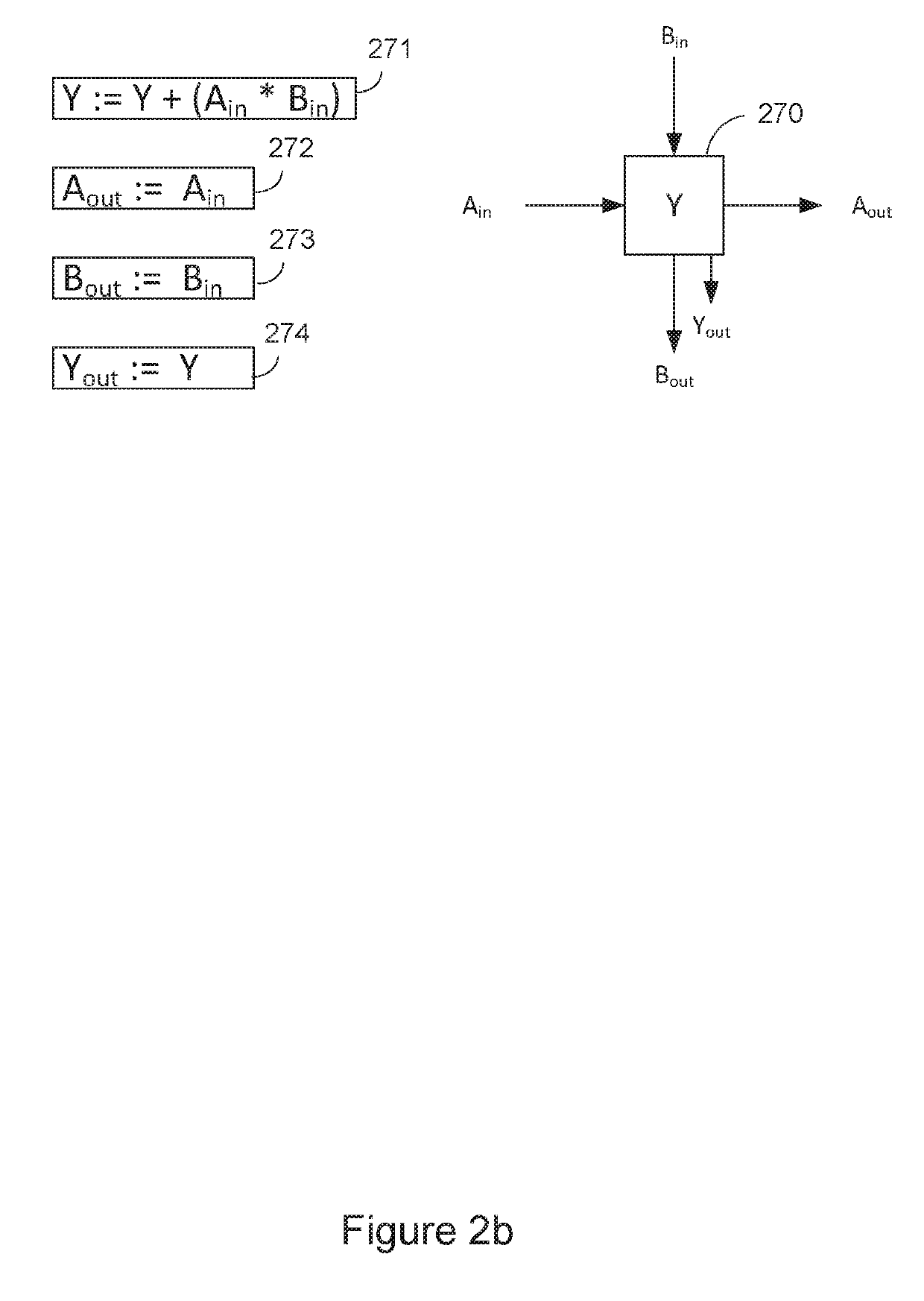

High speed and efficient matrix multiplication hardware module

Active | US8051124B2 | Computation using non-contact making devices | Program control | Clock rate | Computer module

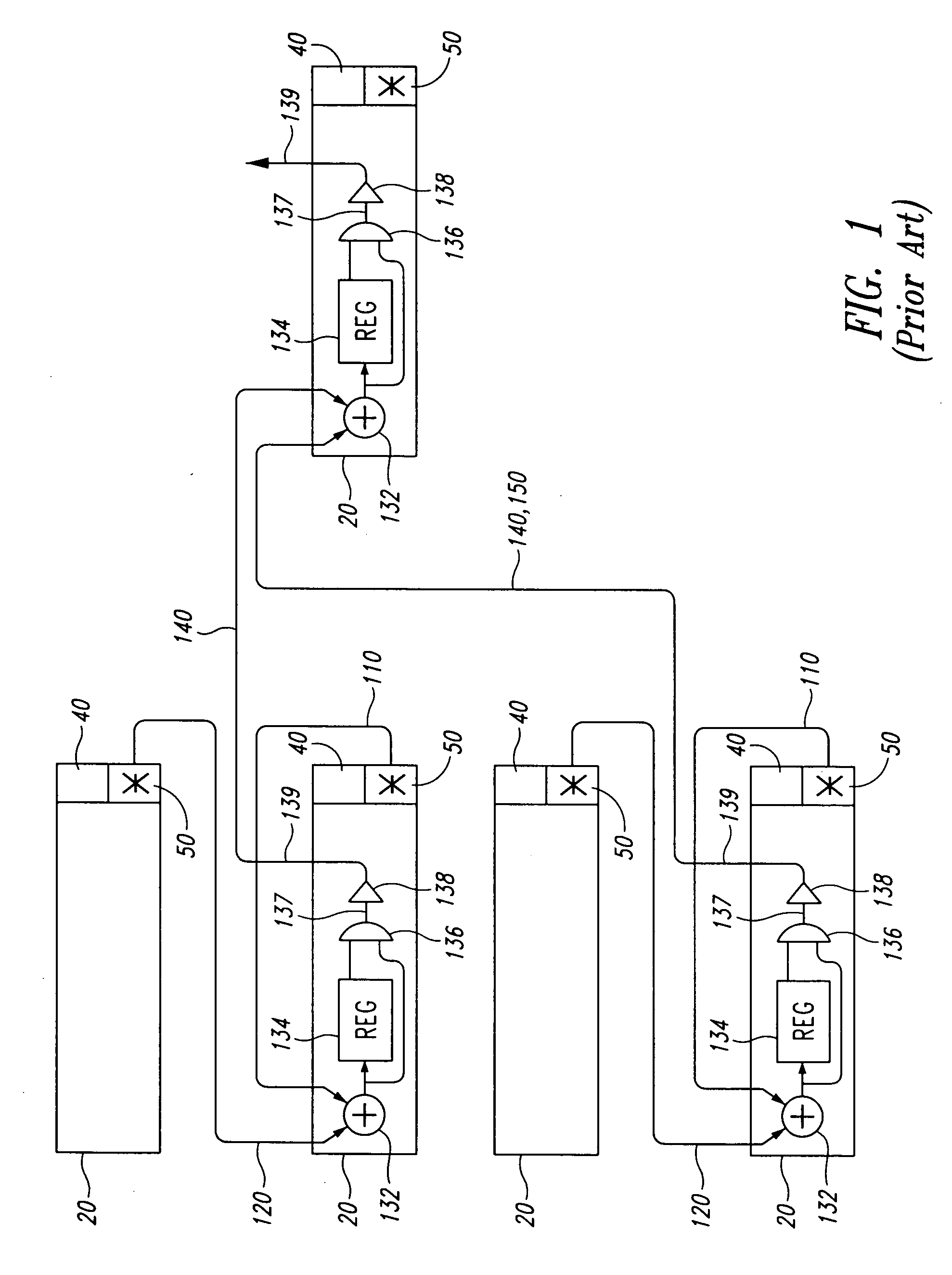

A matrix multiplication module and matrix multiplication method are provided that use a variable number of multiplier-accumulator units based on the number of data elements of the matrices that are available or needed for processing at a particular point or stage in the computation process. As more data elements become available or are needed, more multiplier-accumulator units are used to perform the necessary multiplication and addition operations. To multiply an N×M matrix by an M×N matrix, the total (maximum) number of used MAC units is “2*N−1”. The number of MAC units used starts with one (1) and increases by two at each computation stage, that is, at the beginning of reading of data elements for each new row of the first matrix. The sequence of the number of MAC units is {1, 3, 5, ..., 2*N−1} for computation stages, each of which corresponds to reading of data elements for a new row of the left-hand matrix, also called the first matrix. For the multiplication of two 8×8 matrices, the performance is 16 floating point operations per clock cycle. For an FPGA running at 100 MHz, the performance is 1.6 Giga floating point operations per second. The performance increases with the increase of the clock frequency and the use of larger matrices when FPGA resources permit. Very large matrices are partitioned into smaller blocks to fit in the FPGA resources. Results from the multiplication of sub-matrices are combined to form the final result of the large matrices.
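The figures in this abstract can be checked with a short sketch. It reproduces the stated MAC-unit sequence and the throughput arithmetic, and shows one simple way to combine sub-matrix products. The function names and the blocking scheme are illustrative assumptions and are not the patented architecture.

```python
def mac_unit_schedule(n):
    """Number of MAC units in use at each of the n stages: 1, 3, 5, ..., 2n - 1."""
    return [2 * stage - 1 for stage in range(1, n + 1)]


def flops_per_second(flops_per_cycle, clock_hz):
    """Throughput as operations per cycle times clock frequency."""
    return flops_per_cycle * clock_hz


def matmul_blocked(a, b, block):
    """Multiply a (n x m) by b (m x p) block by block, accumulating partial results."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0] * p for _ in range(n)]
    for i0 in range(0, n, block):
        for j0 in range(0, p, block):
            for k0 in range(0, m, block):
                # Each sub-matrix product is accumulated into the same output tile.
                for i in range(i0, min(i0 + block, n)):
                    for j in range(j0, min(j0 + block, p)):
                        acc = c[i][j]
                        for k in range(k0, min(k0 + block, m)):
                            acc += a[i][k] * b[k][j]   # one MAC step
                        c[i][j] = acc
    return c
```

With N = 8 the schedule ends at 15 units, and 16 operations per cycle at 100 MHz gives 1.6 × 10^9 operations per second, matching the abstract.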

Owner:HARRIS CORP

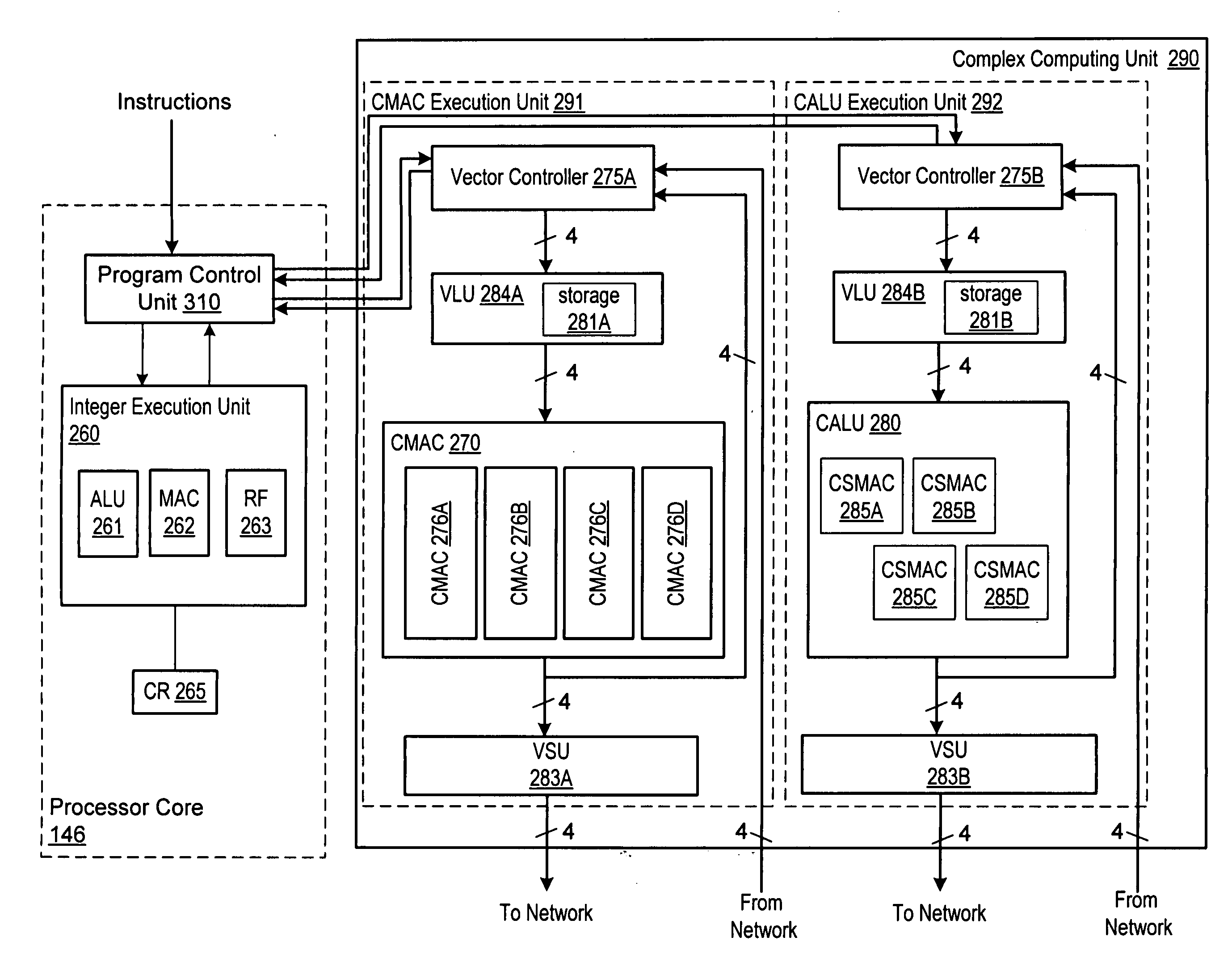

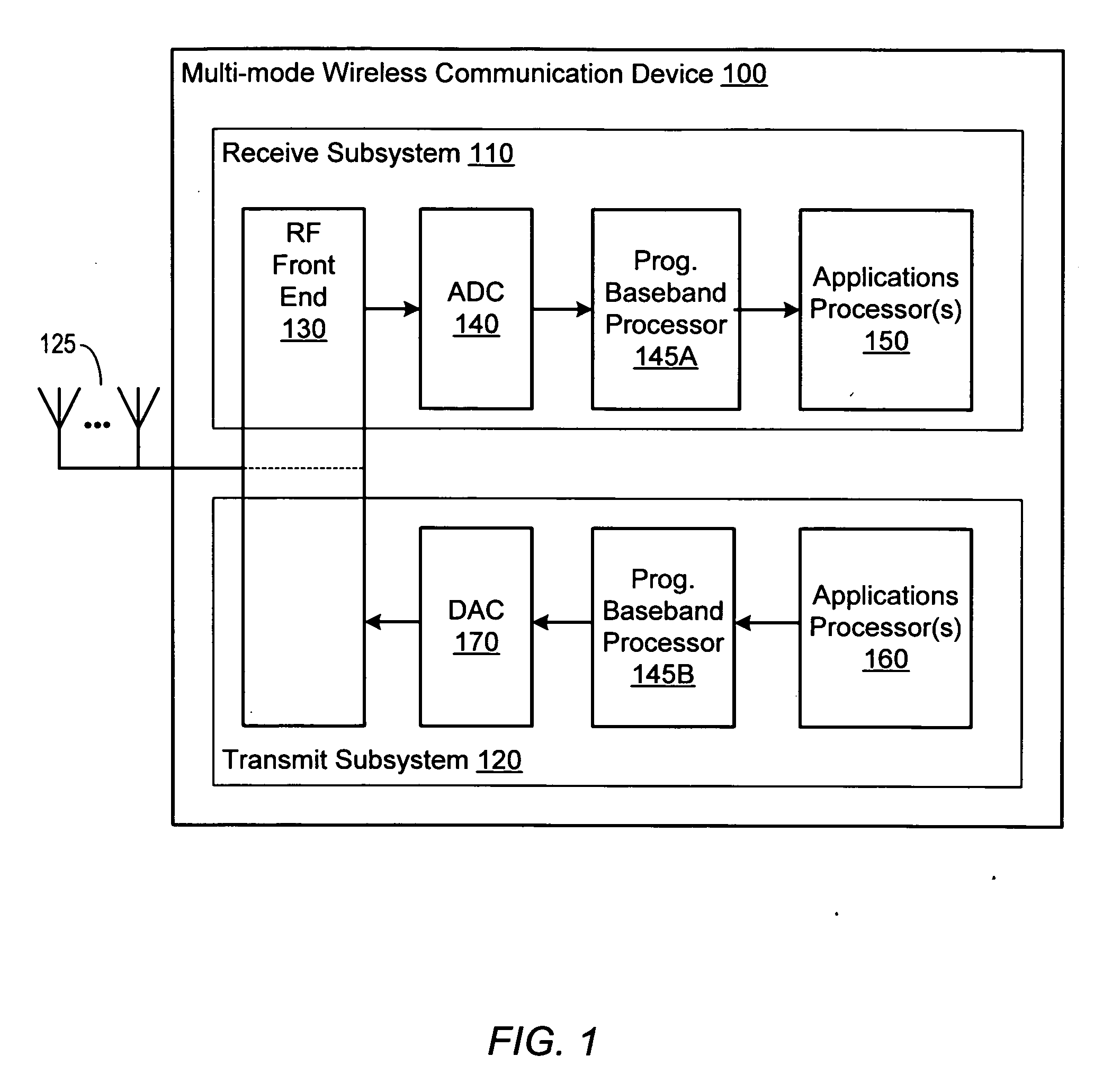

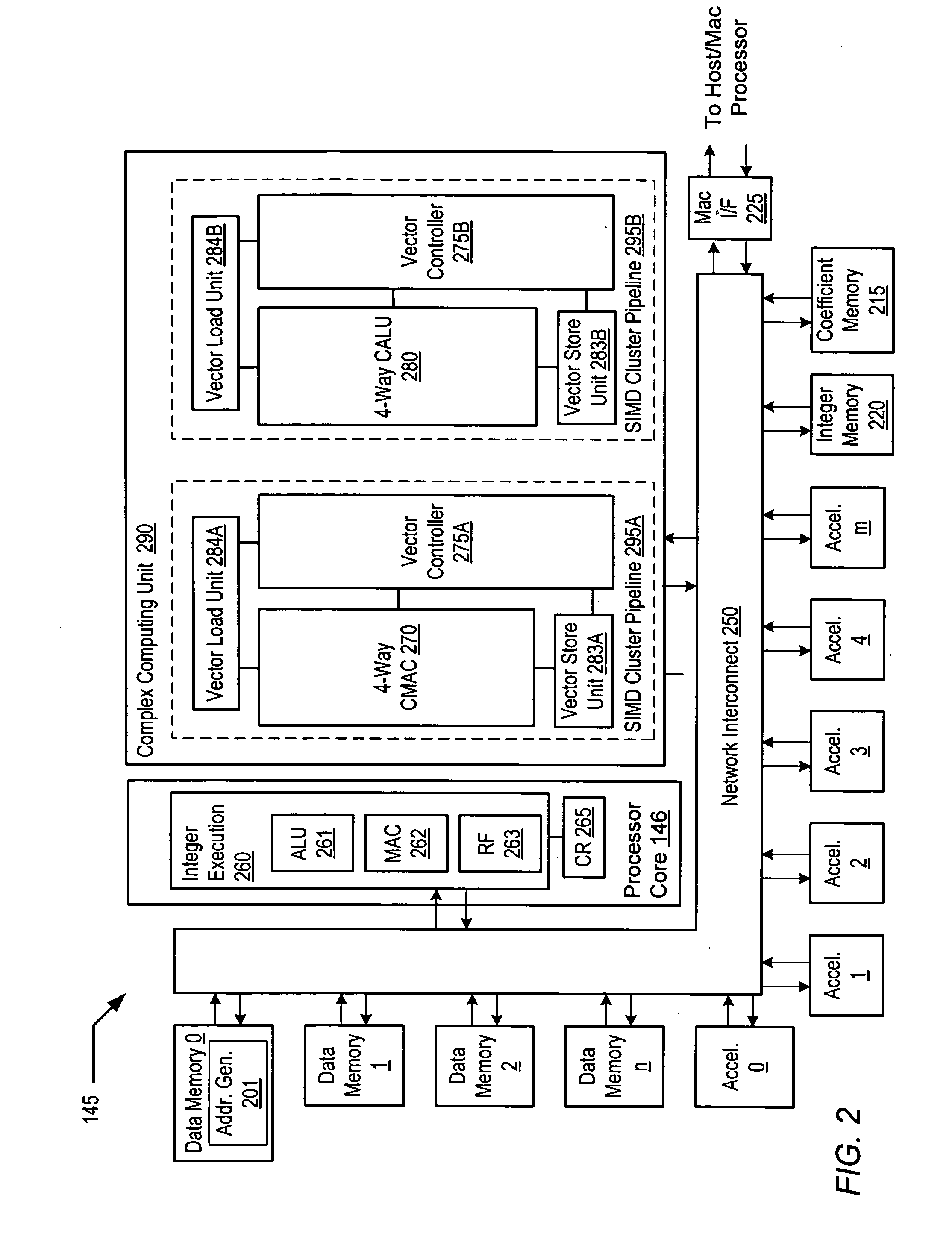

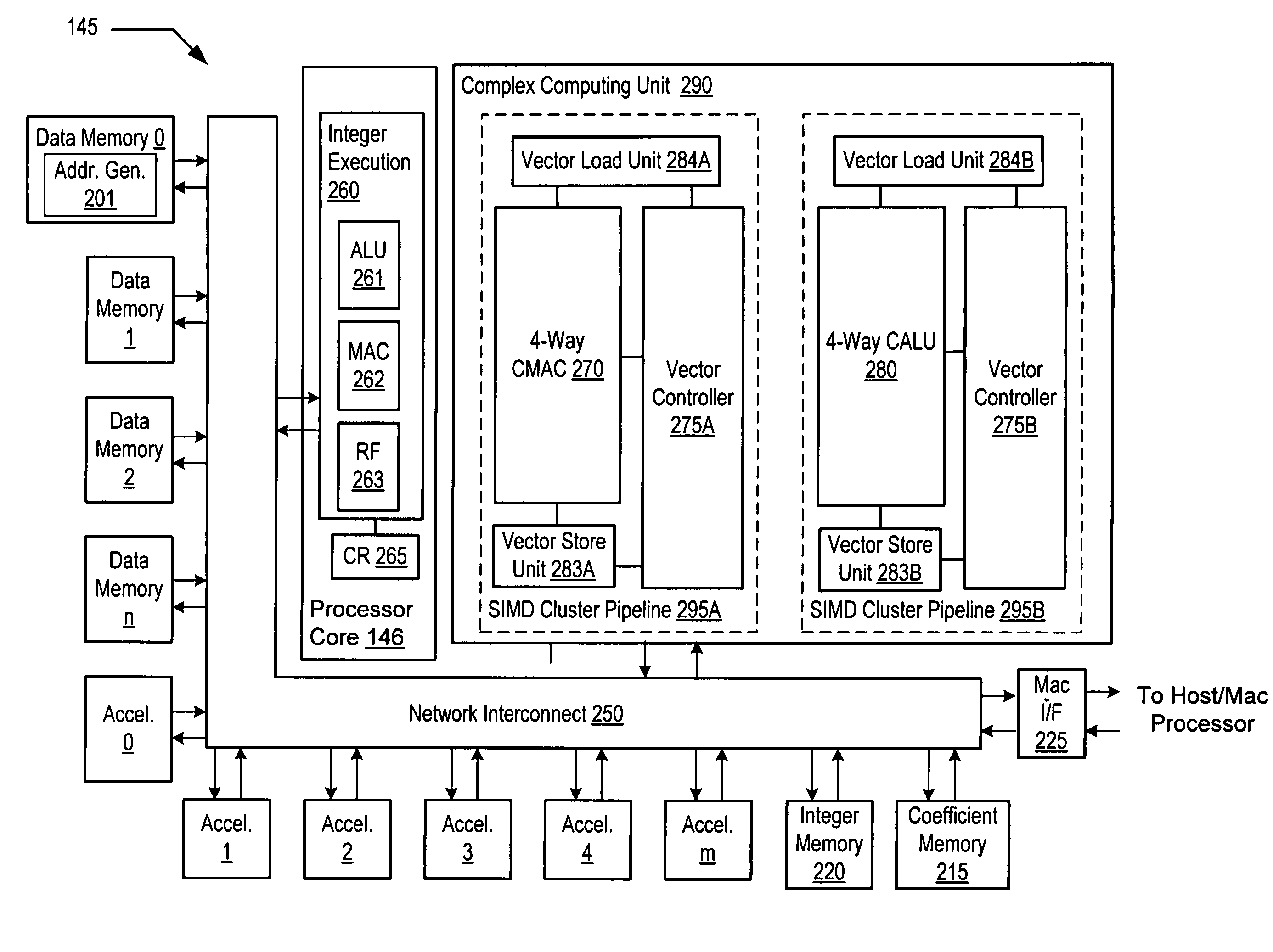

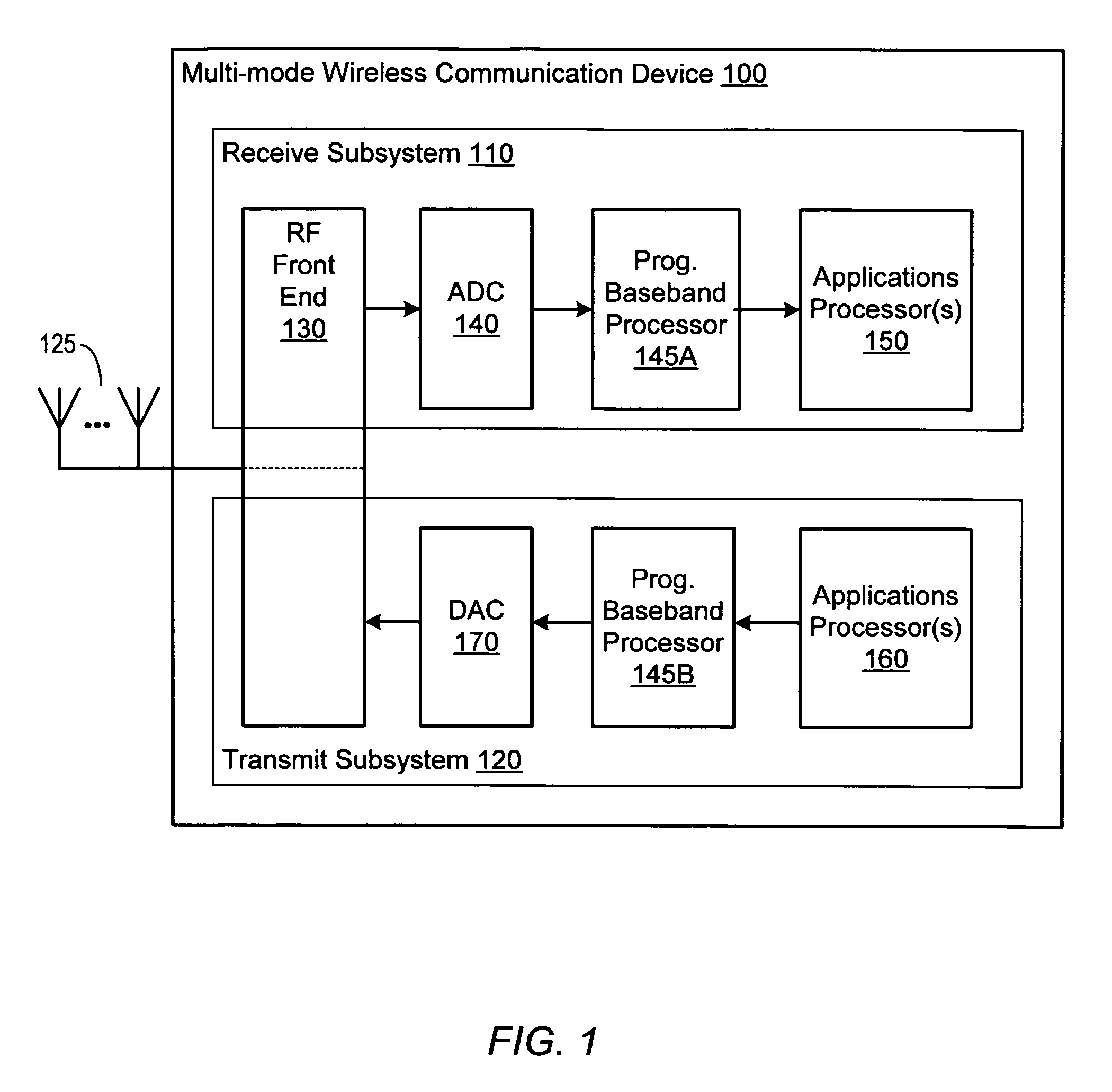

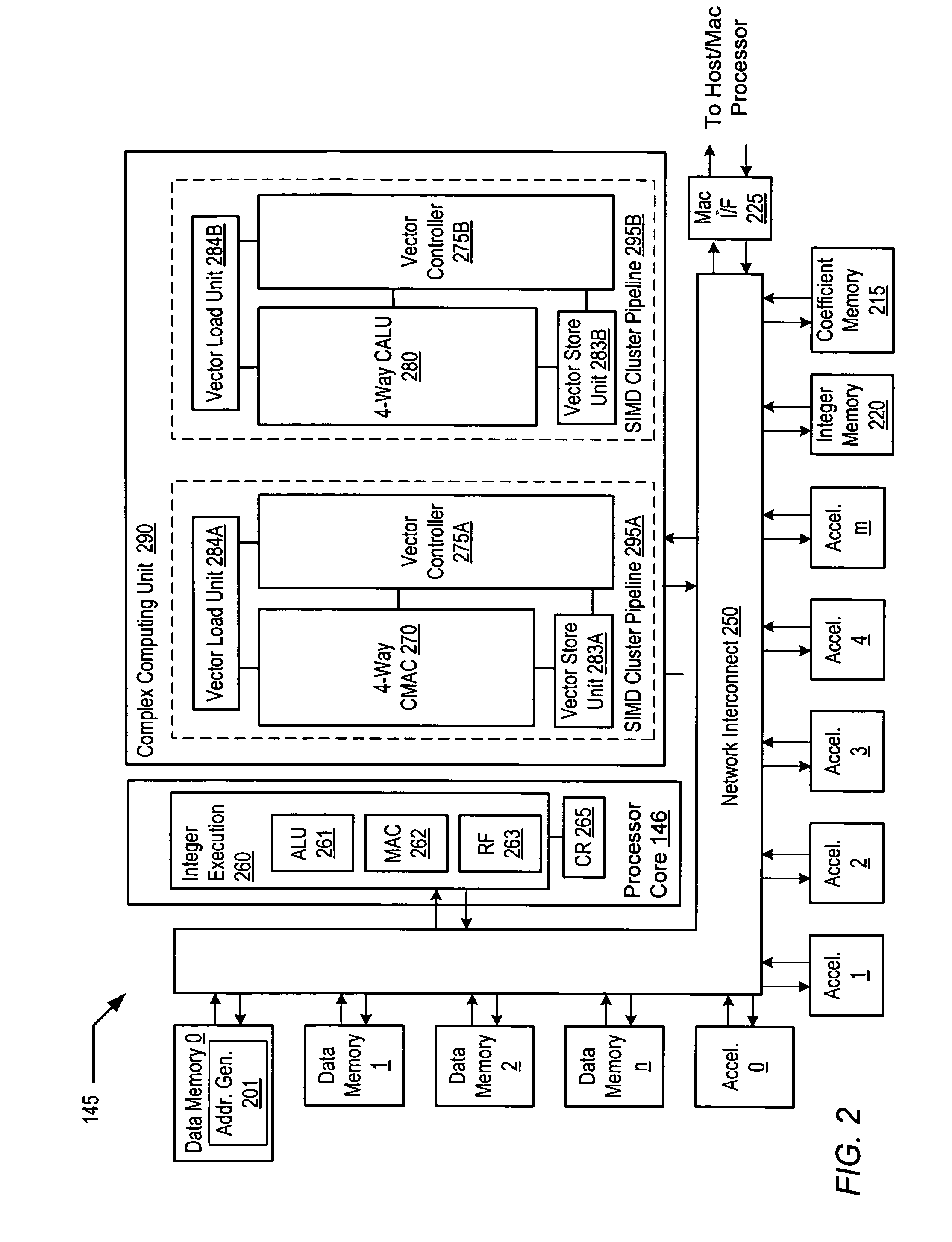

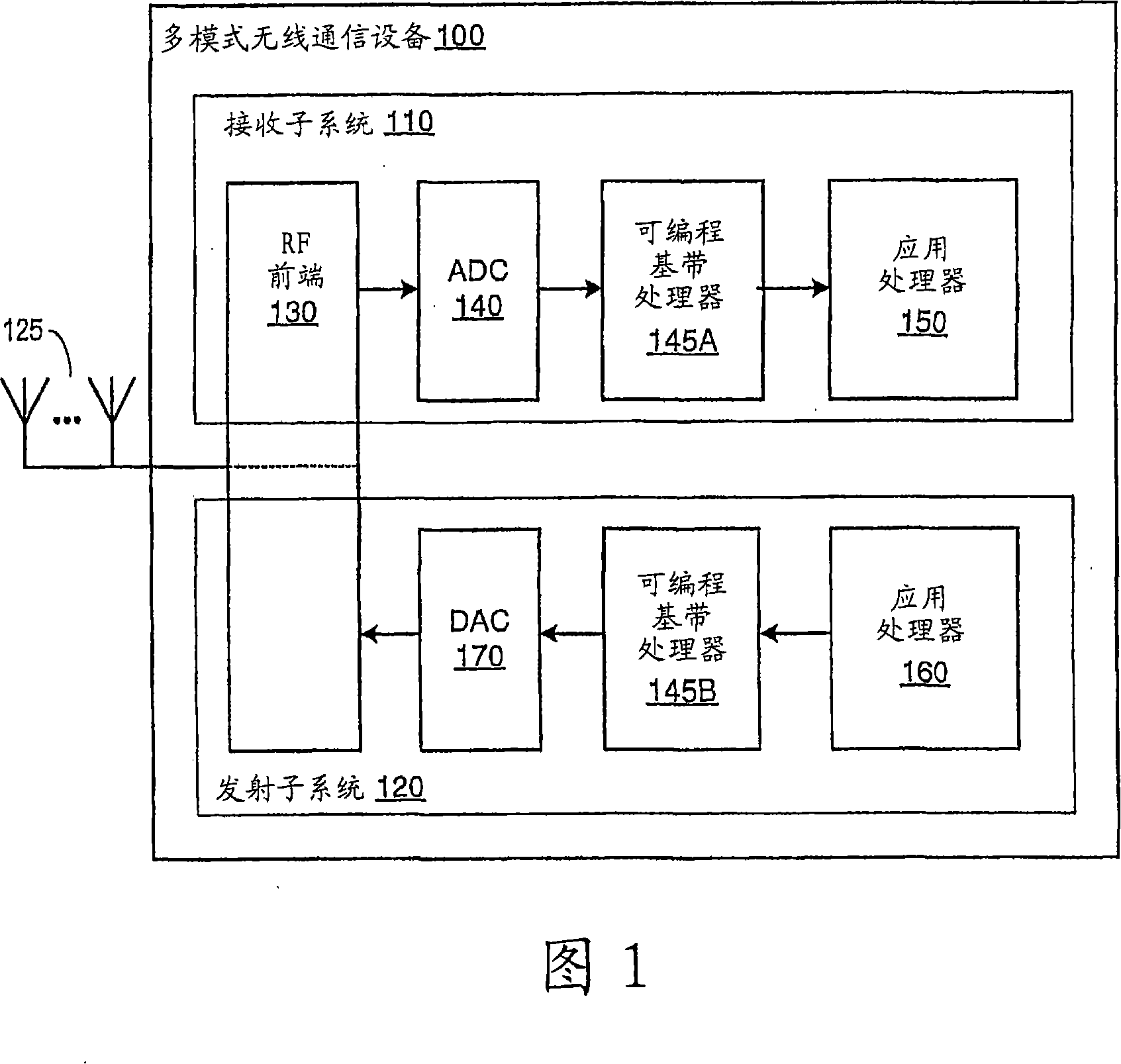

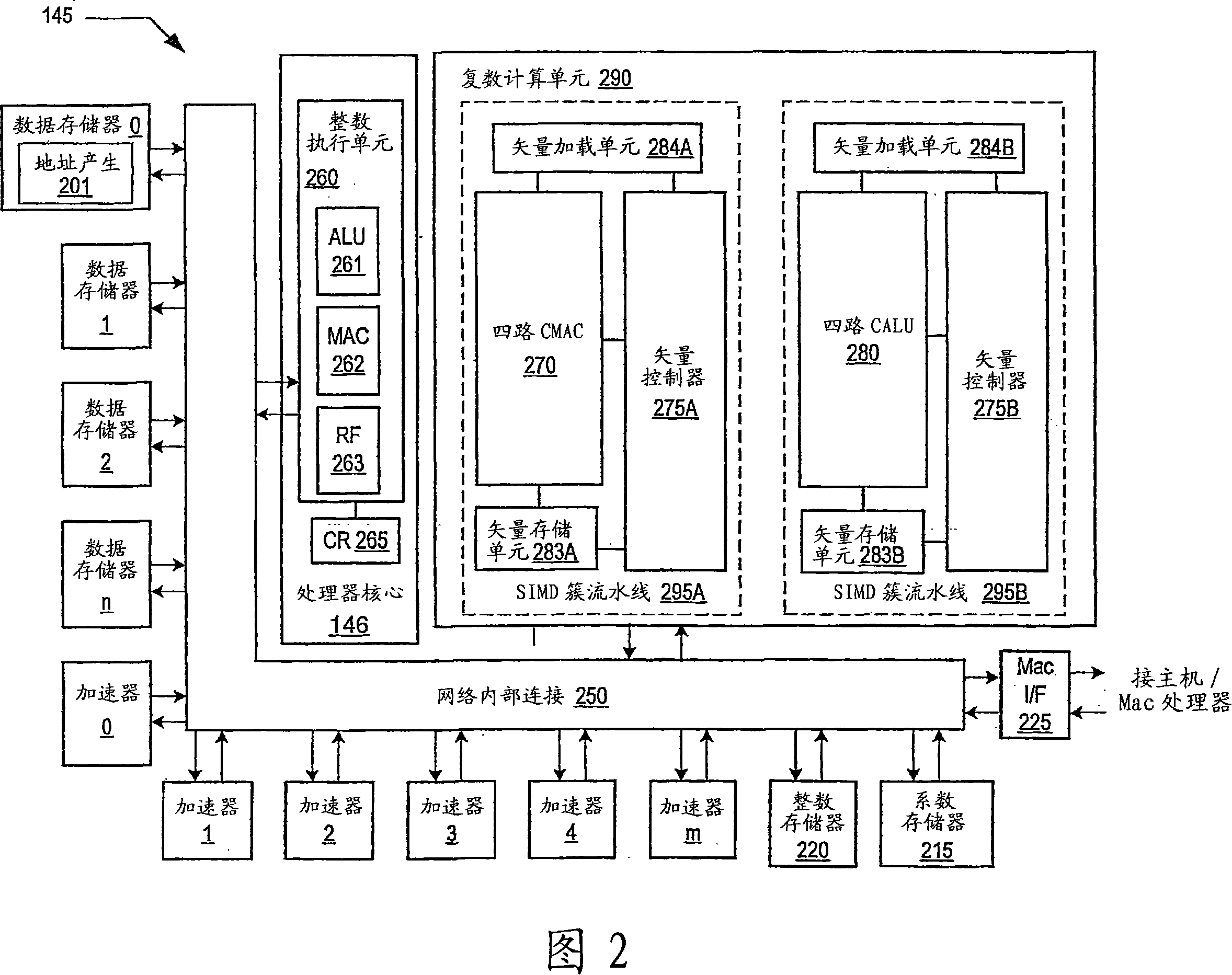

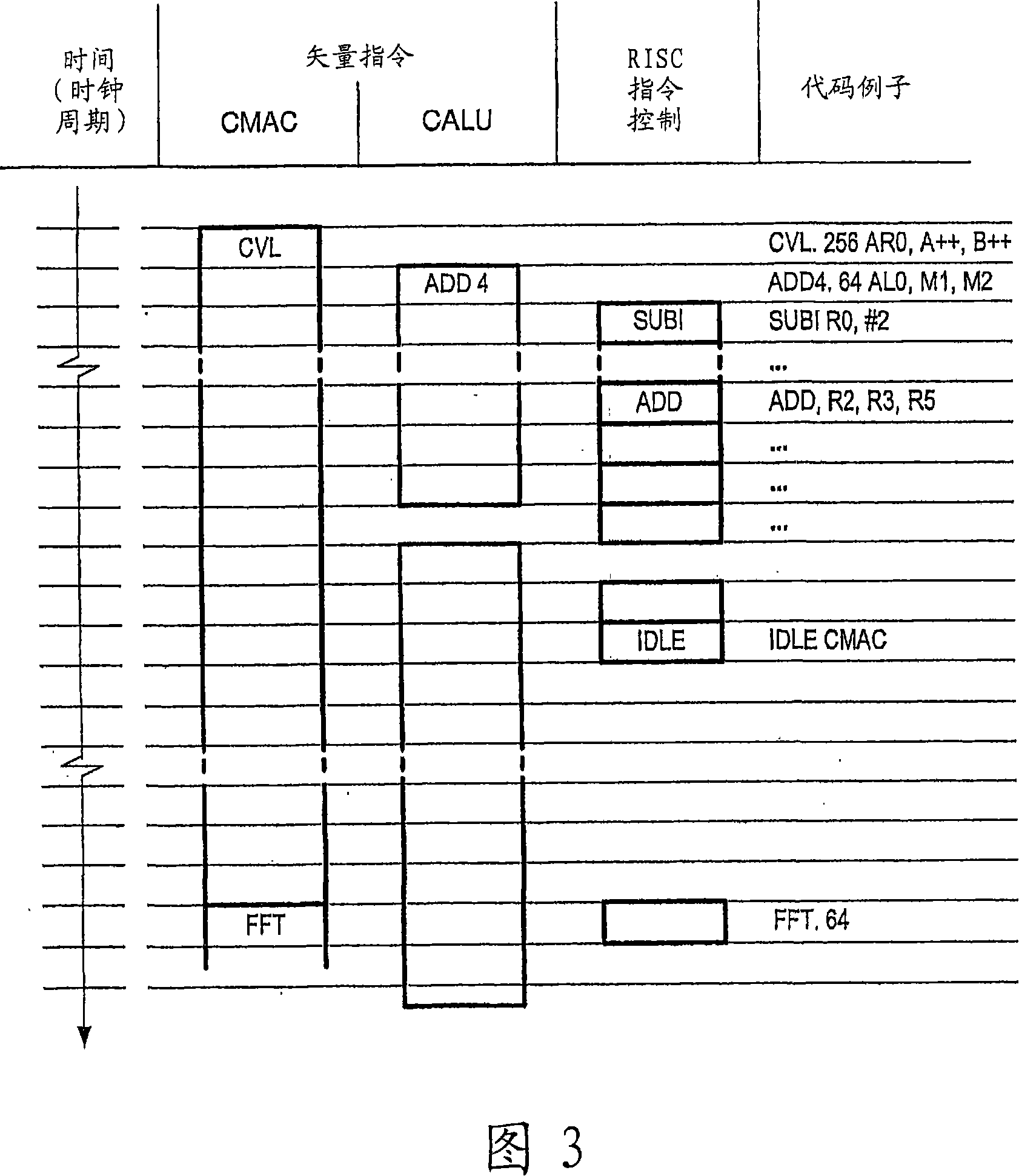

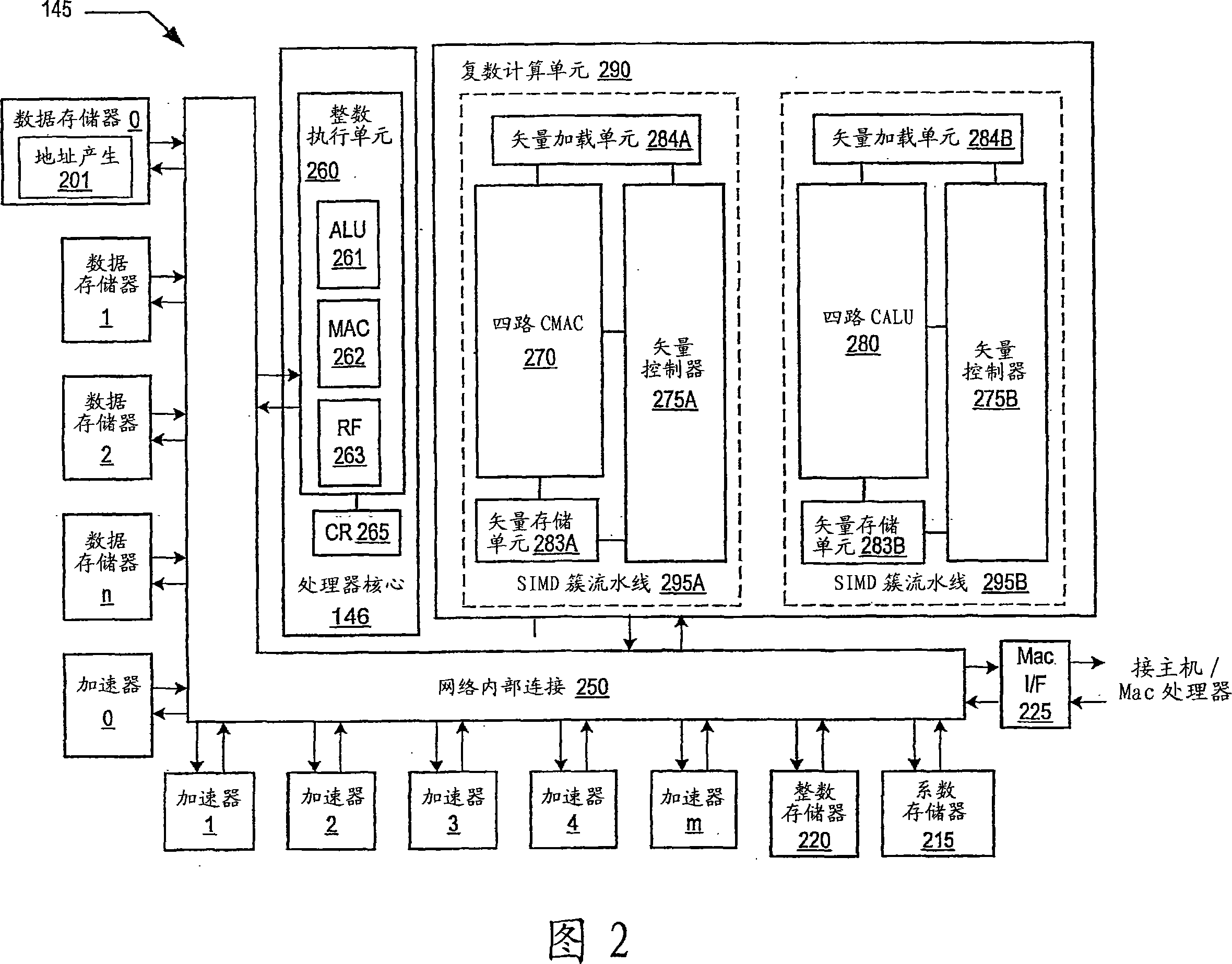

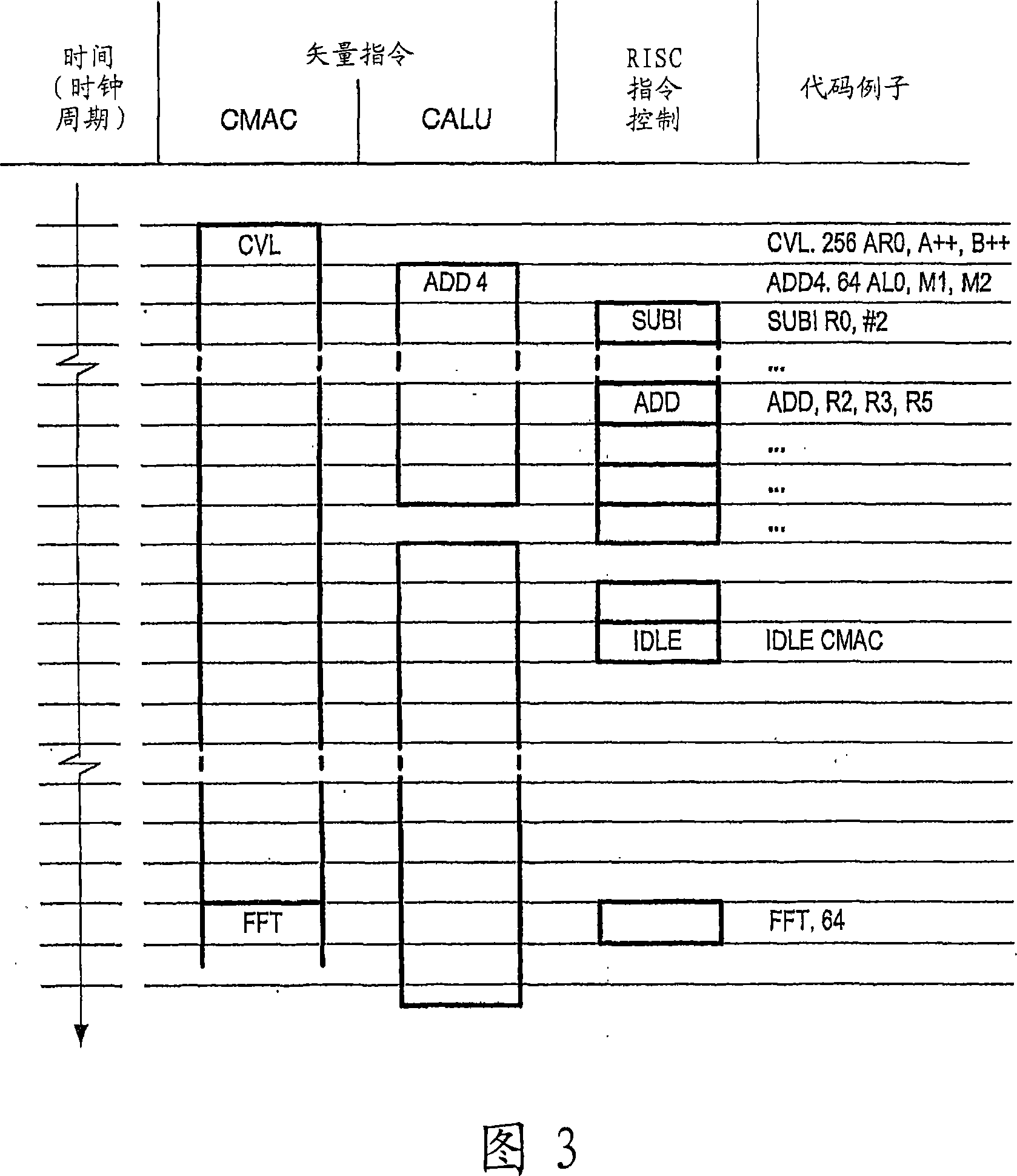

Programmable digital signal processor including a clustered SIMD microarchitecture configured to execute complex vector instructions

Active | US20060271764A1 | General purpose stored program computer | Specific program execution arrangements | Arithmetic logic unit | Line tubing

A programmable digital signal processor including a clustered SIMD microarchitecture includes a plurality of accelerator units, a processor core and a complex computing unit. Each of the accelerator units may be configured to perform one or more dedicated functions. The processor core includes an integer execution unit that may be configured to execute integer instructions. The complex computing unit may be configured to execute complex vector instructions. The complex computing unit may include a first and a second clustered execution pipeline. The first clustered execution pipeline may include one or more complex arithmetic logic unit datapaths configured to execute first complex vector instructions. The second clustered execution pipeline may include one or more complex multiplier accumulator datapaths configured to execute second complex vector instructions.

Owner:CORESONIC AB

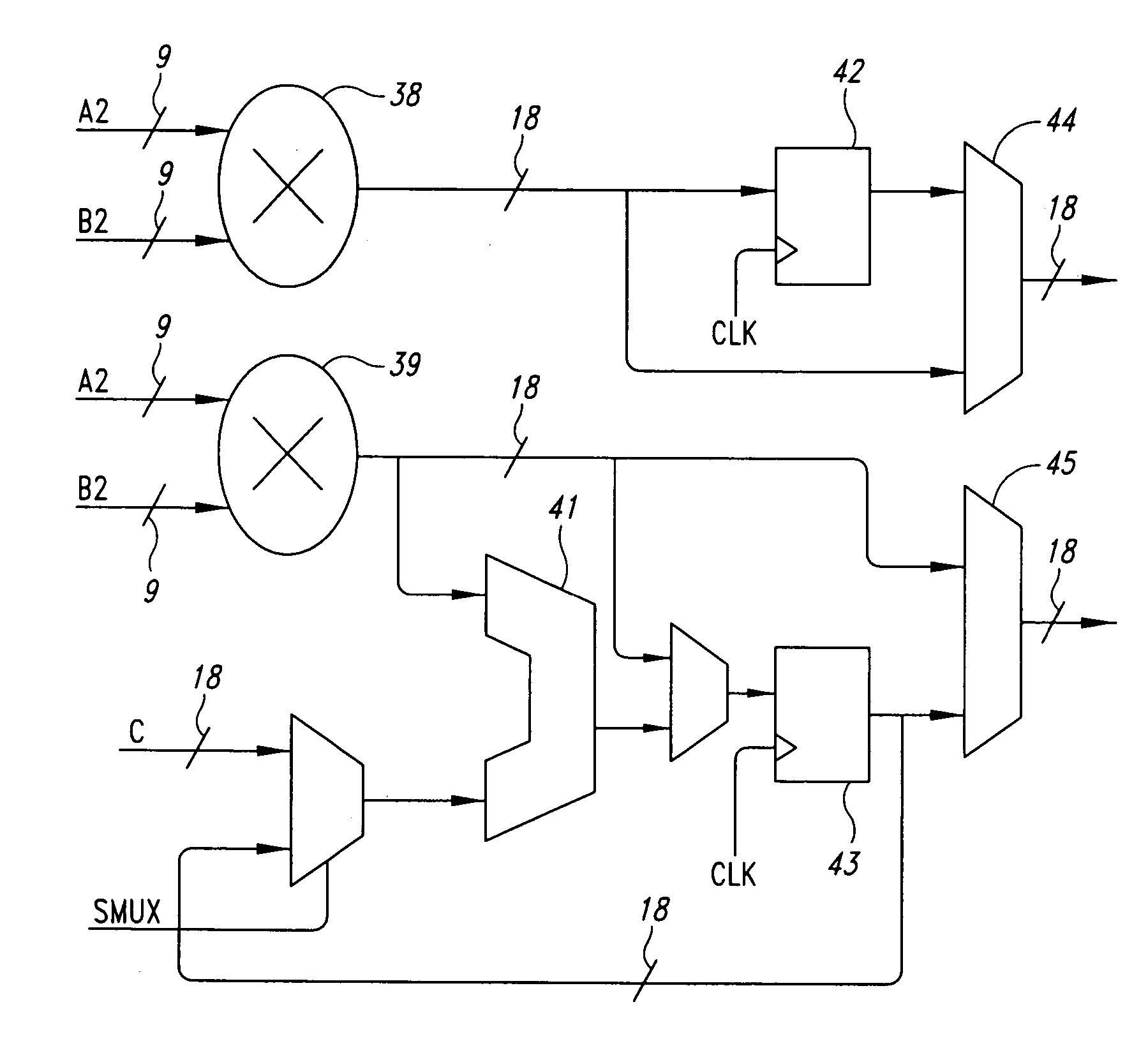

Multiplier-accumulator block mode splitting

Active | US20050187998A1 | Digital computer details | Logic circuits using elementary logic circuit components | Binary multiplier | Programmable logic device

A programmable logic device is provided that includes a MAC block having mode splitting capabilities. Different modes of operation may be implemented simultaneously whereby the multipliers and other DSP circuitry of the MAC block may be allocated among the different modes of operation. For example, one multiplier may be used to implement a multiply mode while another two multipliers may be used to implement a sum of two multipliers mode.

Owner:TAHOE RES LTD

Complex vector executing clustered SIMD micro-architecture DSP with accelerator coupled complex ALU paths each further including short multiplier/accumulator using two's complement

Active | US7299342B2 | General purpose stored program computer | Specific program execution arrangements | Arithmetic logic unit | Execution unit

A programmable digital signal processor including a clustered SIMD microarchitecture includes a plurality of accelerator units, a processor core and a complex computing unit. Each of the accelerator units may be configured to perform one or more dedicated functions. The processor core includes an integer execution unit that may be configured to execute integer instructions. The complex computing unit may be configured to execute complex vector instructions. The complex computing unit may include a first and a second clustered execution pipeline. The first clustered execution pipeline may include one or more complex arithmetic logic unit datapaths configured to execute first complex vector instructions. The second clustered execution pipeline may include one or more complex multiplier accumulator datapaths configured to execute second complex vector instructions.

Owner:CORESONIC AB

Programmable digital signal processor including a clustered SIMD microarchitecture configured to execute complex vector instructions

Inactive | CN101238455A | Program control | Architecture with single central processing unit | Arithmetic logic unit | Logic cell

Owner:CORESONIC AB

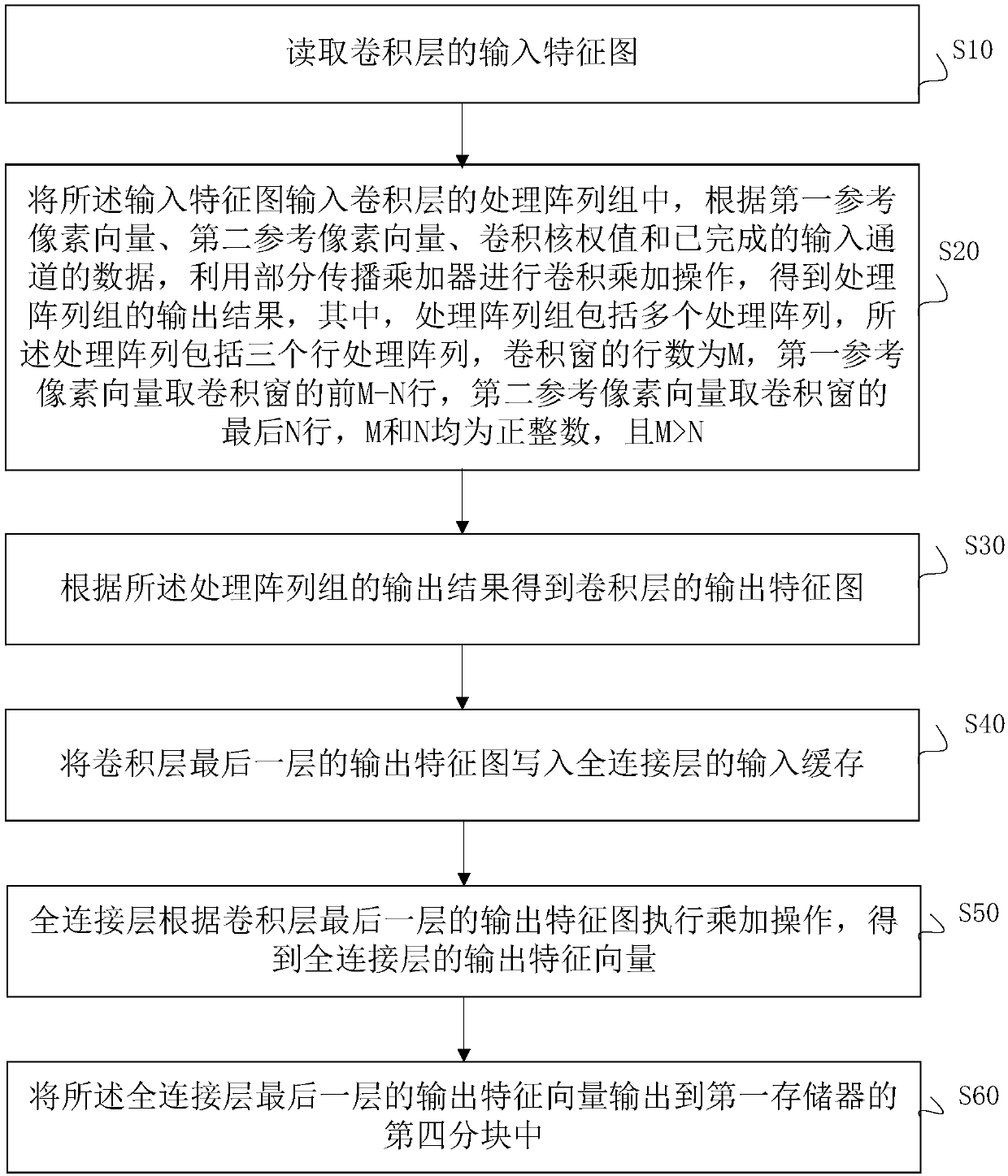

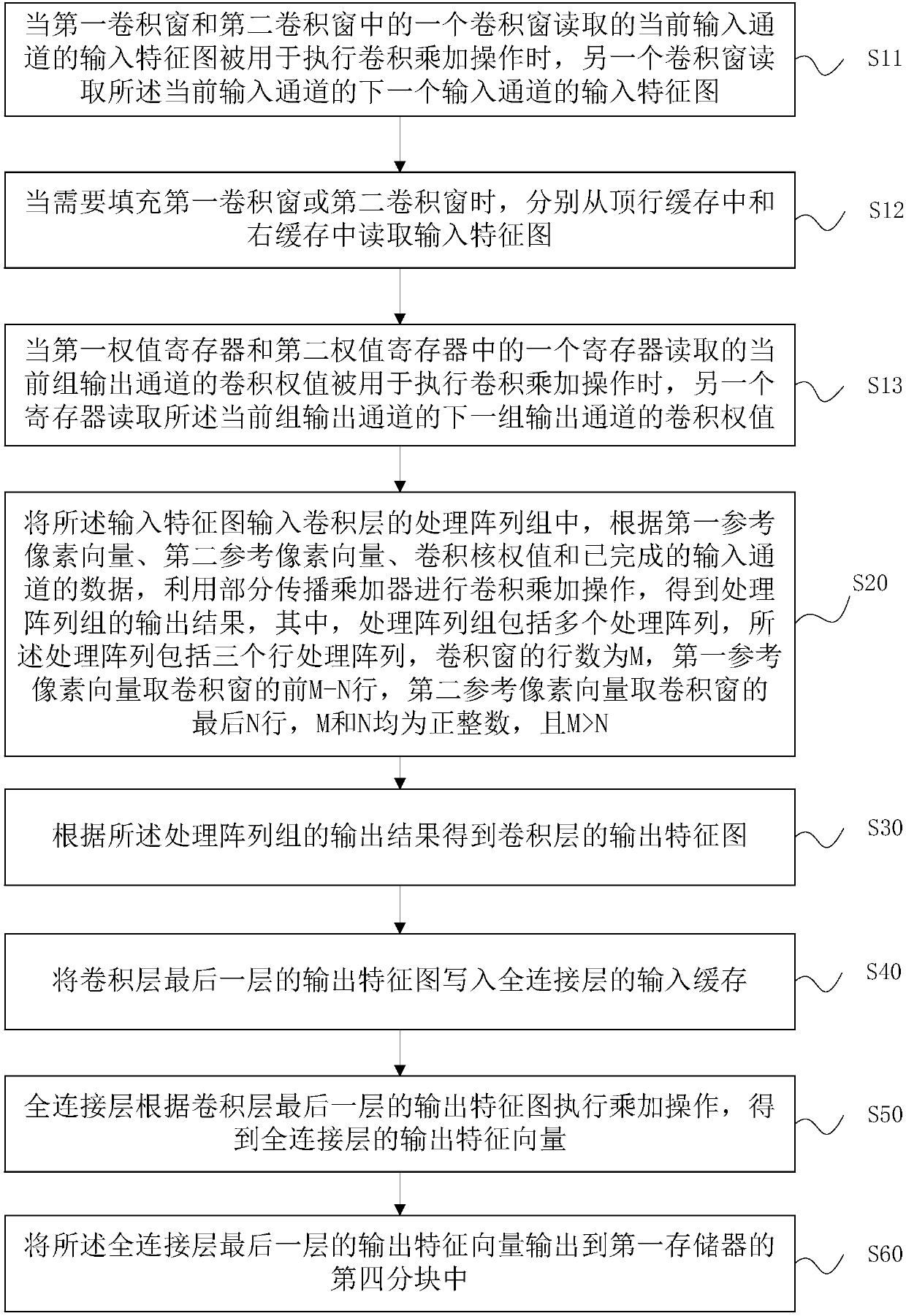

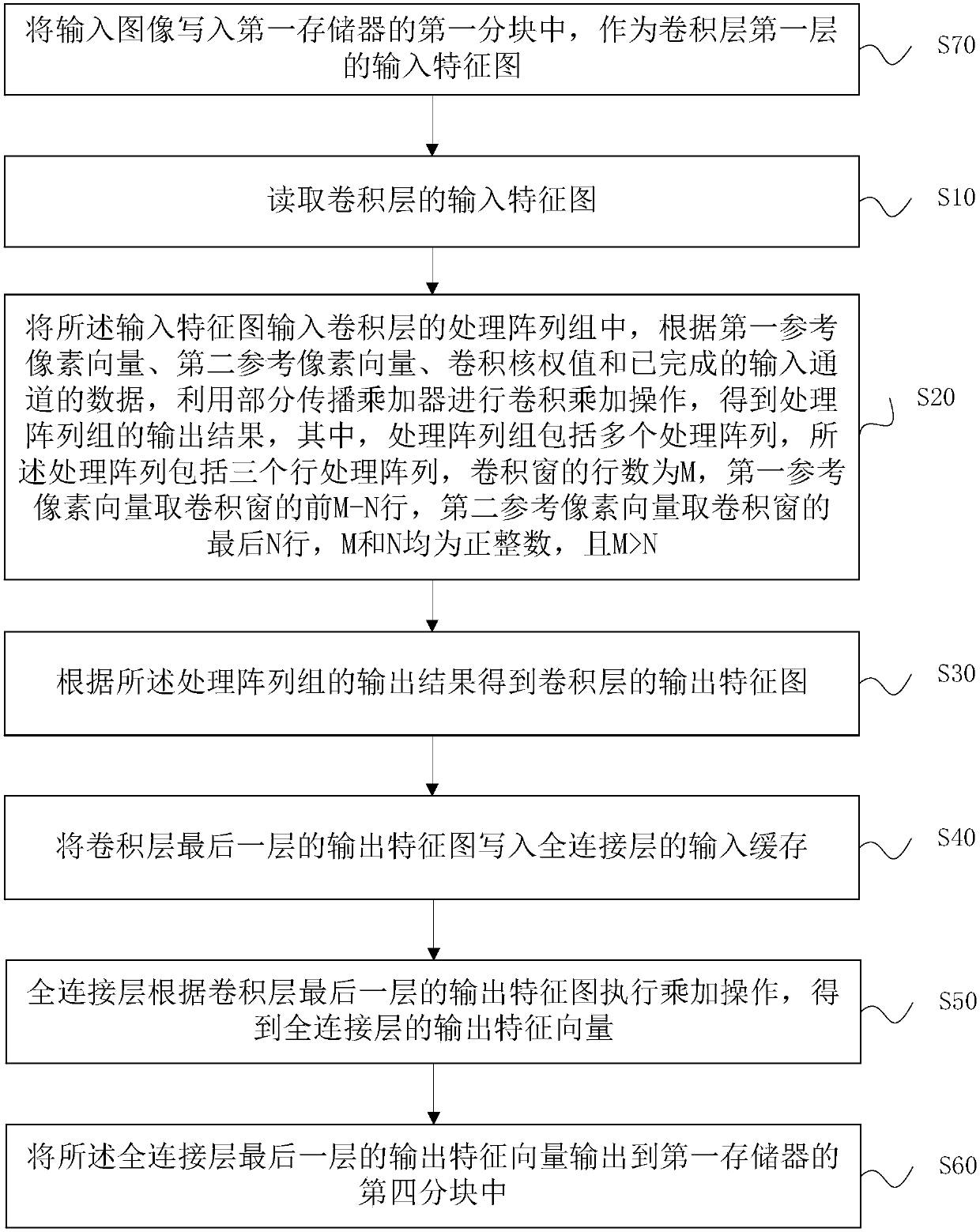

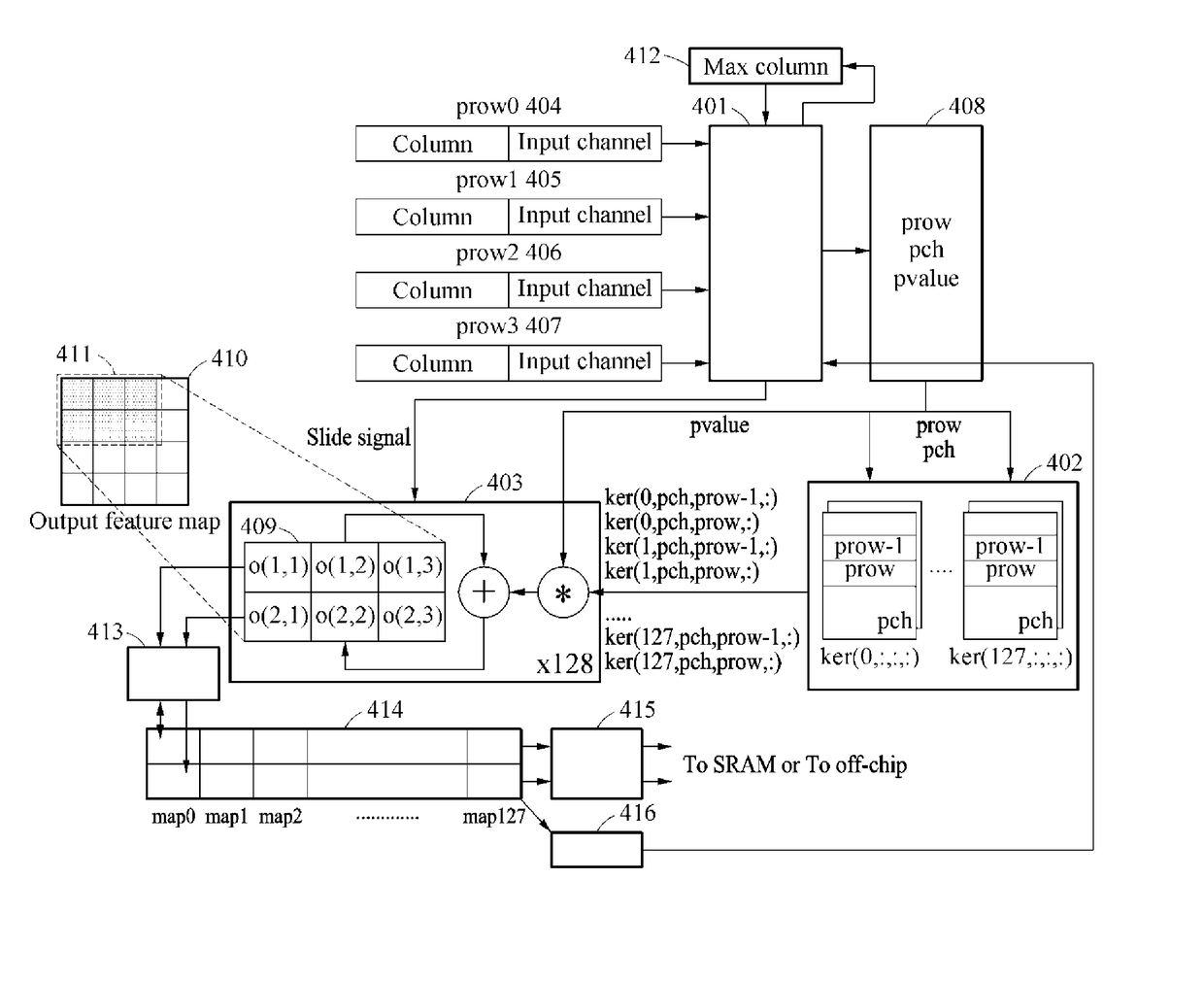

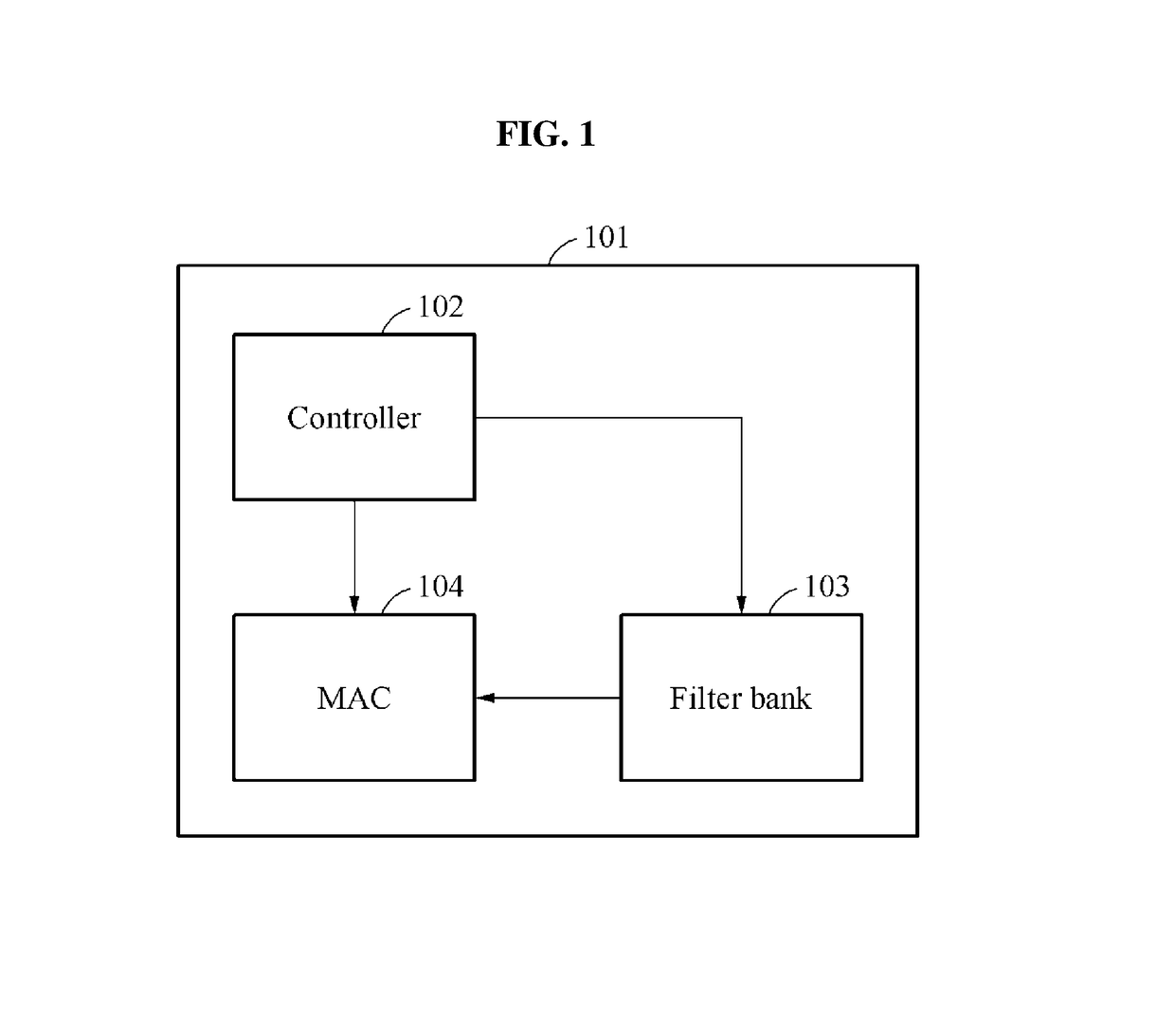

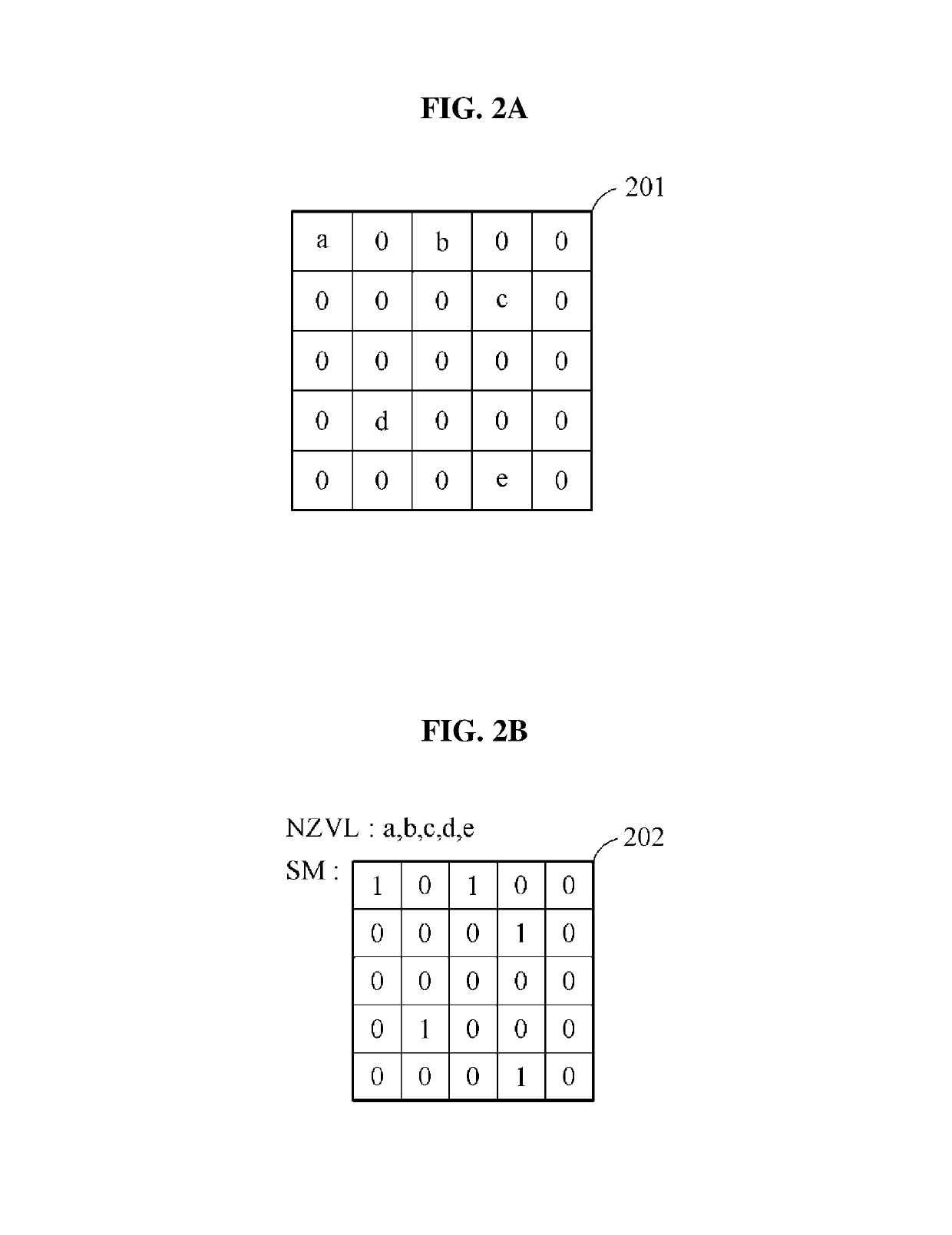

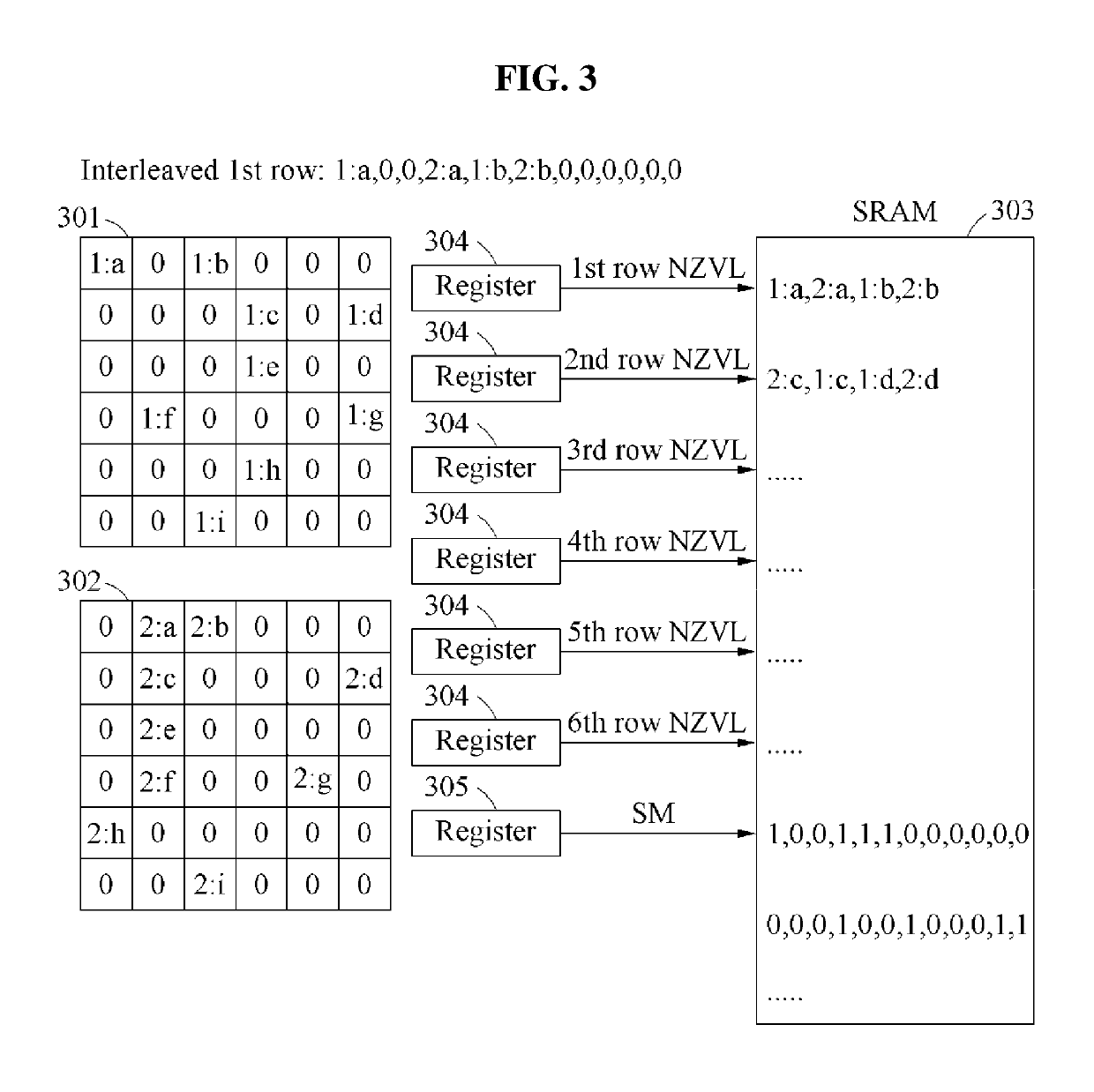

Convolutional neural network acceleration method and apparatus

Active | CN108133270A | Reduce resource requirements | Reduce power consumption | Neural architectures | Physical realisation | Feature vector | Algorithm

The invention relates to a convolutional neural network acceleration method and apparatus. The method comprises the steps of reading an input feature graph of a convolutional layer; inputting the input feature graph to a processing array group of the convolutional layer, and according to a first reference pixel vector, a second reference pixel vector, a convolution kernel weight and finished input channel data, performing convolution multiplication and addition operation by utilizing a propagate partial multiplier-accumulator to obtain an output result of the processing array group; according to the output result of the processing array group, obtaining an output feature graph of the convolutional layer; writing the output feature graph of the final layer of the convolutional layer in an input cache of a full connection layer; executing the multiplication and addition operation by the full connection layer according to the output feature graph of the final layer of the convolutional layer to obtain an output eigenvector of the full connection layer; and outputting the output eigenvector of the final layer of the full connection layer to a fourth block of a first memory. The hardware resources and the power consumption are effectively reduced, and the processing speed of a convolutional neural network is increased.

Owner:TSINGHUA UNIV

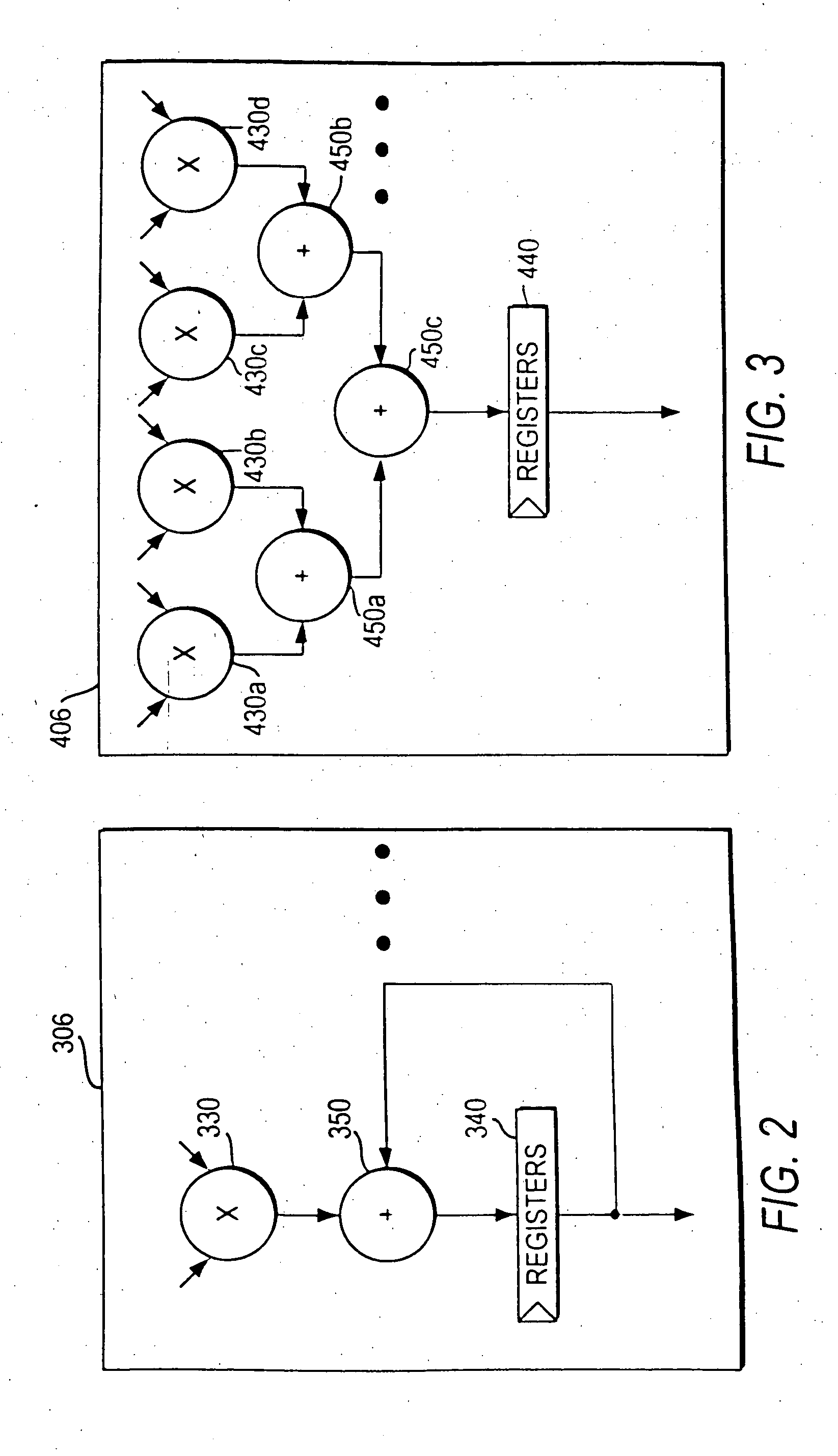

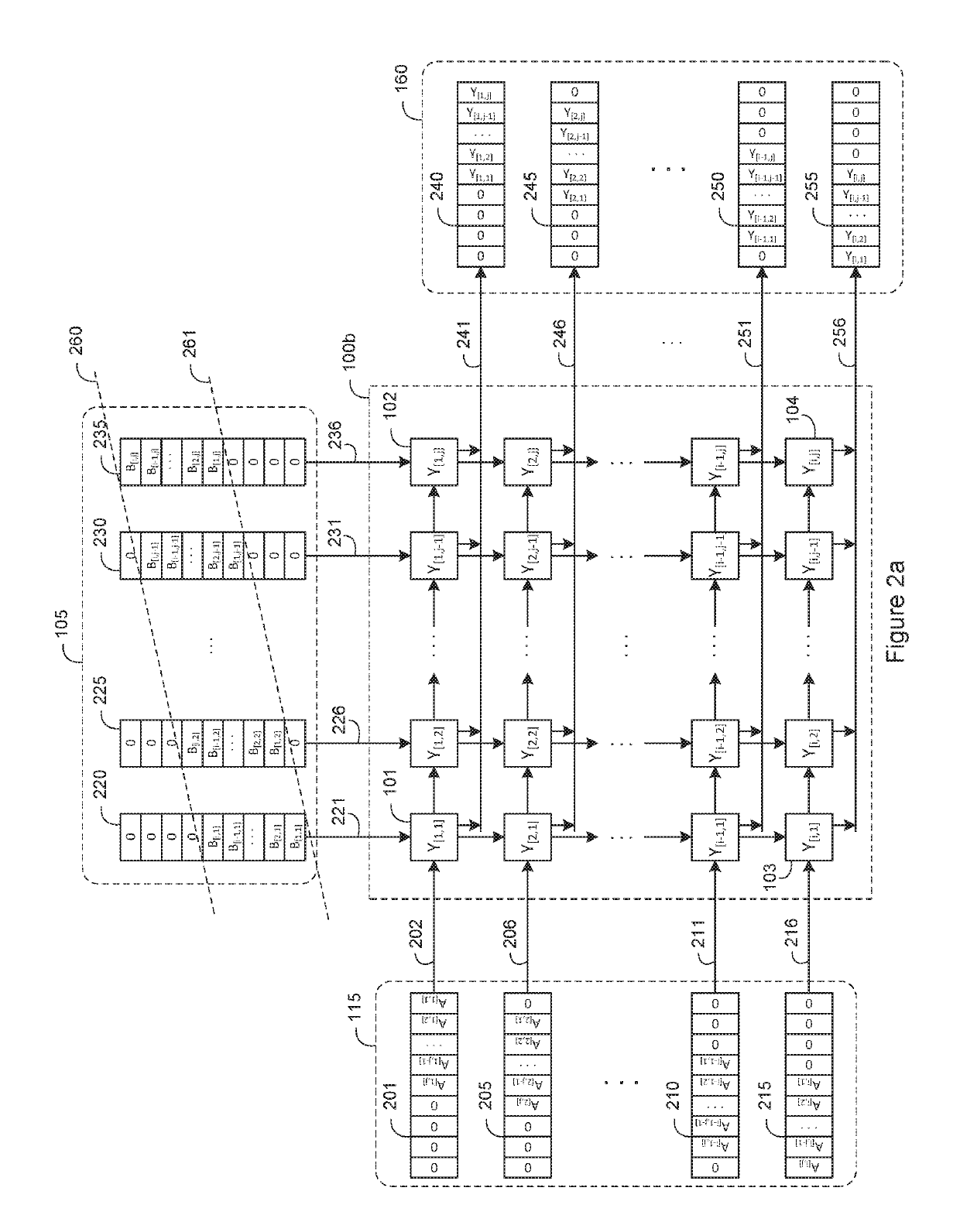

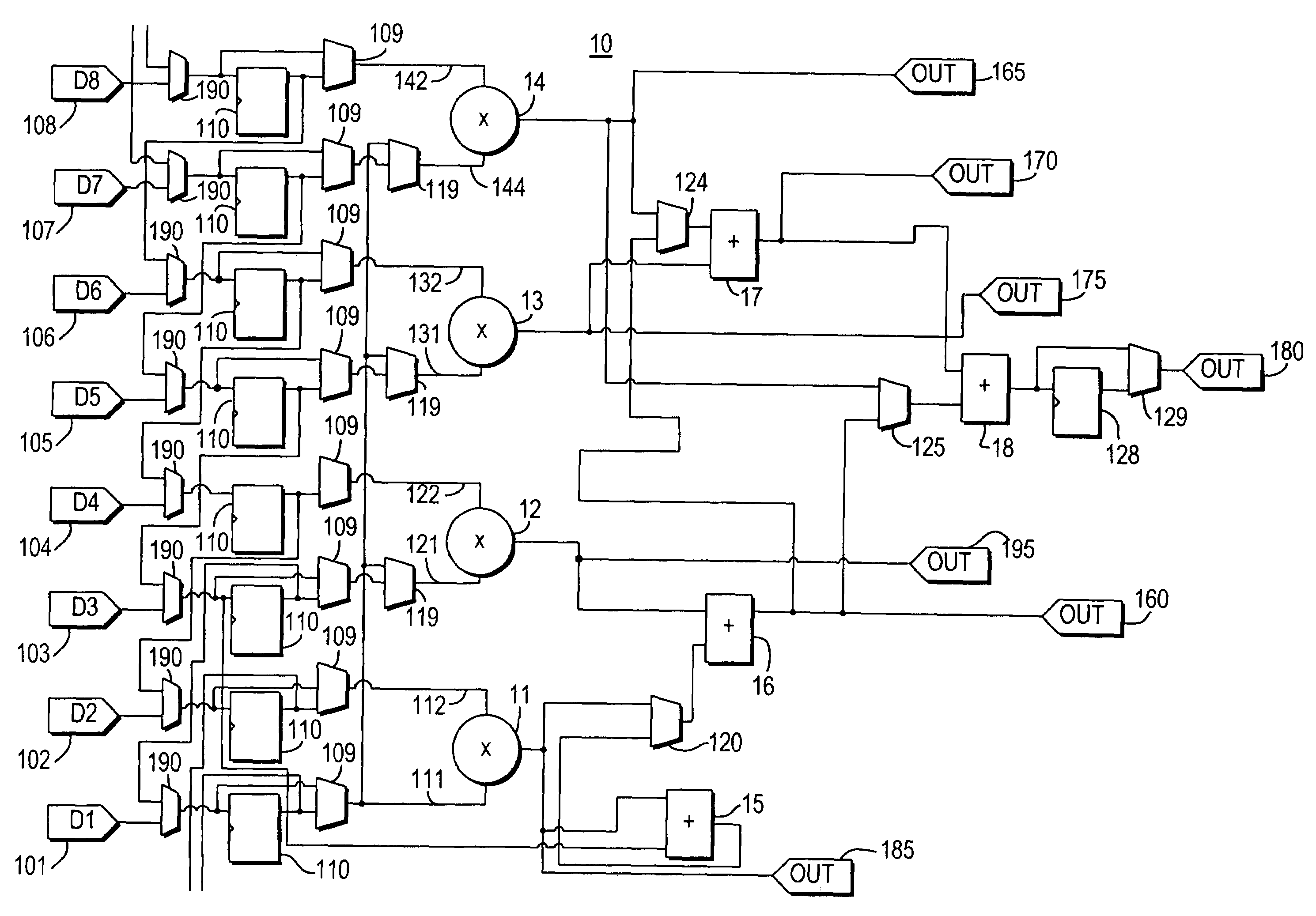

High Speed and Efficient Matrix Multiplication Hardware Module

Active | US20090024685A1 | Computation using non-contact making devices | Program control | Clock rate | Parallel computing

A matrix multiplication module and matrix multiplication method are provided that use a variable number of multiplier-accumulator units based on the number of data elements of the matrices that are available or needed for processing at a particular point or stage in the computation process. As more data elements become available or are needed, more multiplier-accumulator units are used to perform the necessary multiplication and addition operations. To multiply an N×M matrix by an M×N matrix, the total (maximum) number of used MAC units is “2*N−1”. The number of MAC units used starts with one (1) and increases by two at each computation stage, that is, at the beginning of reading of data elements for each new row of the first matrix. The sequence of the number of MAC units is {1, 3, 5, ..., 2*N−1} for computation stages, each of which corresponds to reading of data elements for a new row of the left-hand matrix, also called the first matrix. For the multiplication of two 8×8 matrices, the performance is 16 floating point operations per clock cycle. For an FPGA running at 100 MHz, the performance is 1.6 Giga floating point operations per second. The performance increases with the increase of the clock frequency and the use of larger matrices when FPGA resources permit. Very large matrices are partitioned into smaller blocks to fit in the FPGA resources. Results from the multiplication of sub-matrices are combined to form the final result of the large matrices.

Owner:HARRIS CORP

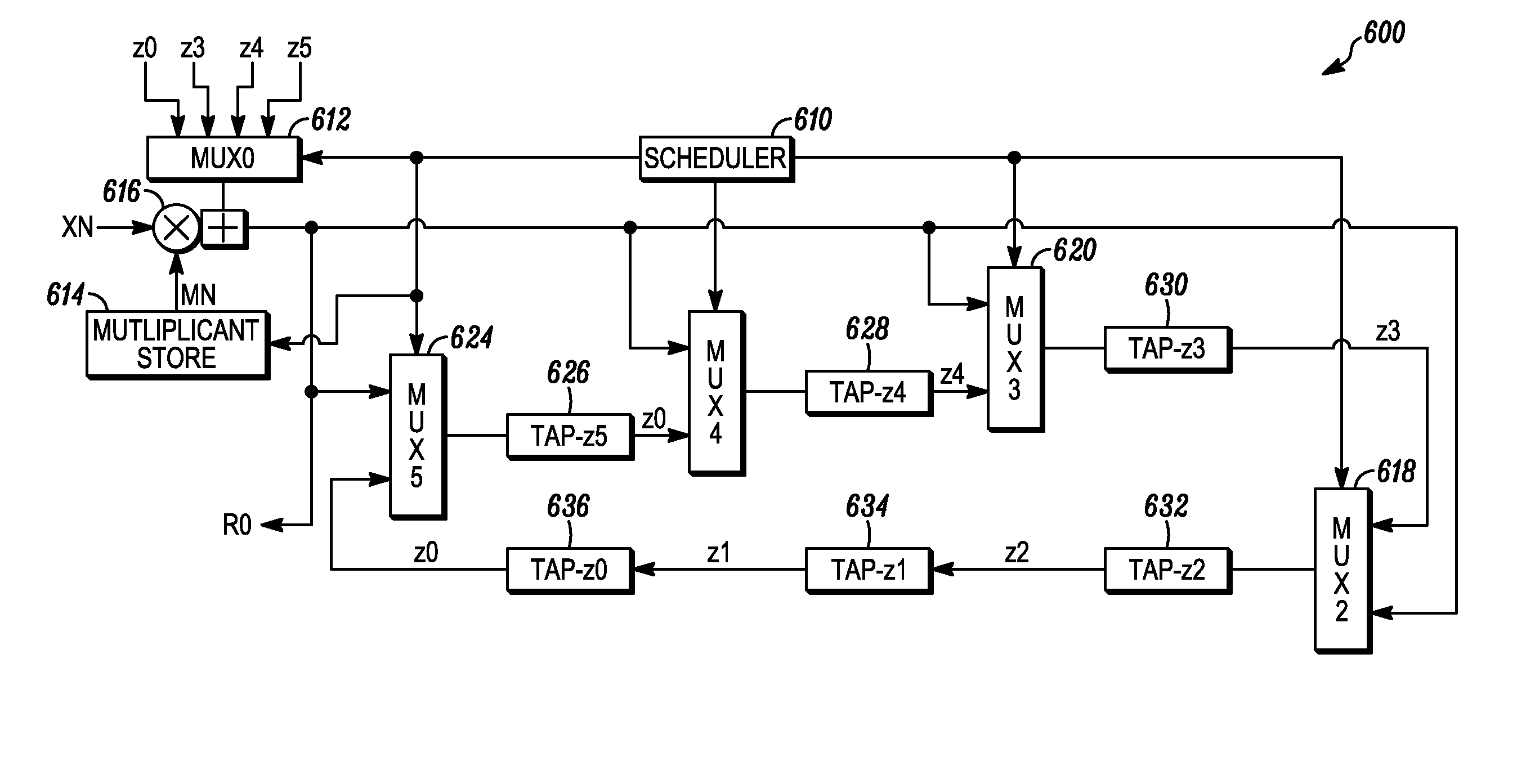

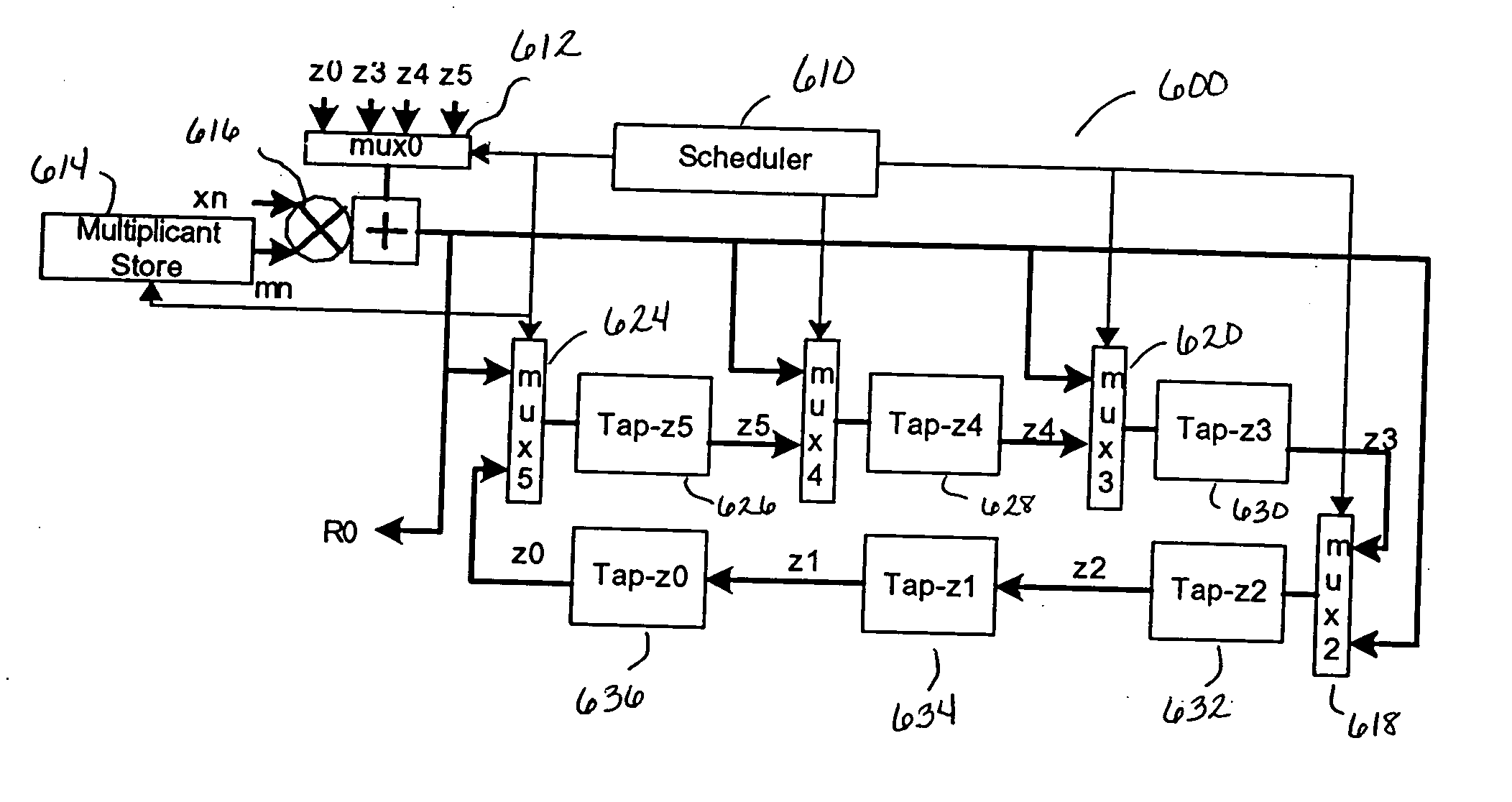

Efficient implementation of DSP functions in a field programmable gate array

Active | US20060075012A1 | Efficient cascading | Large section | Digital computer details | Computation using denominational number representation | Multiplexer | Parallel computing

An efficient implementation of DSP functions in a field programmable gate array (FPGA) using one or more computational blocks, each block having a multiplier, an accumulator, and multiplexers. The structure implements most common DSP equations in a fast and highly compact manner. A novel method for cascading these blocks with the help of dedicated DSP lines is provided, which leads to a very simple and proficient implementation of n-stage MAC operations.

Owner:MINERAL LASSEN

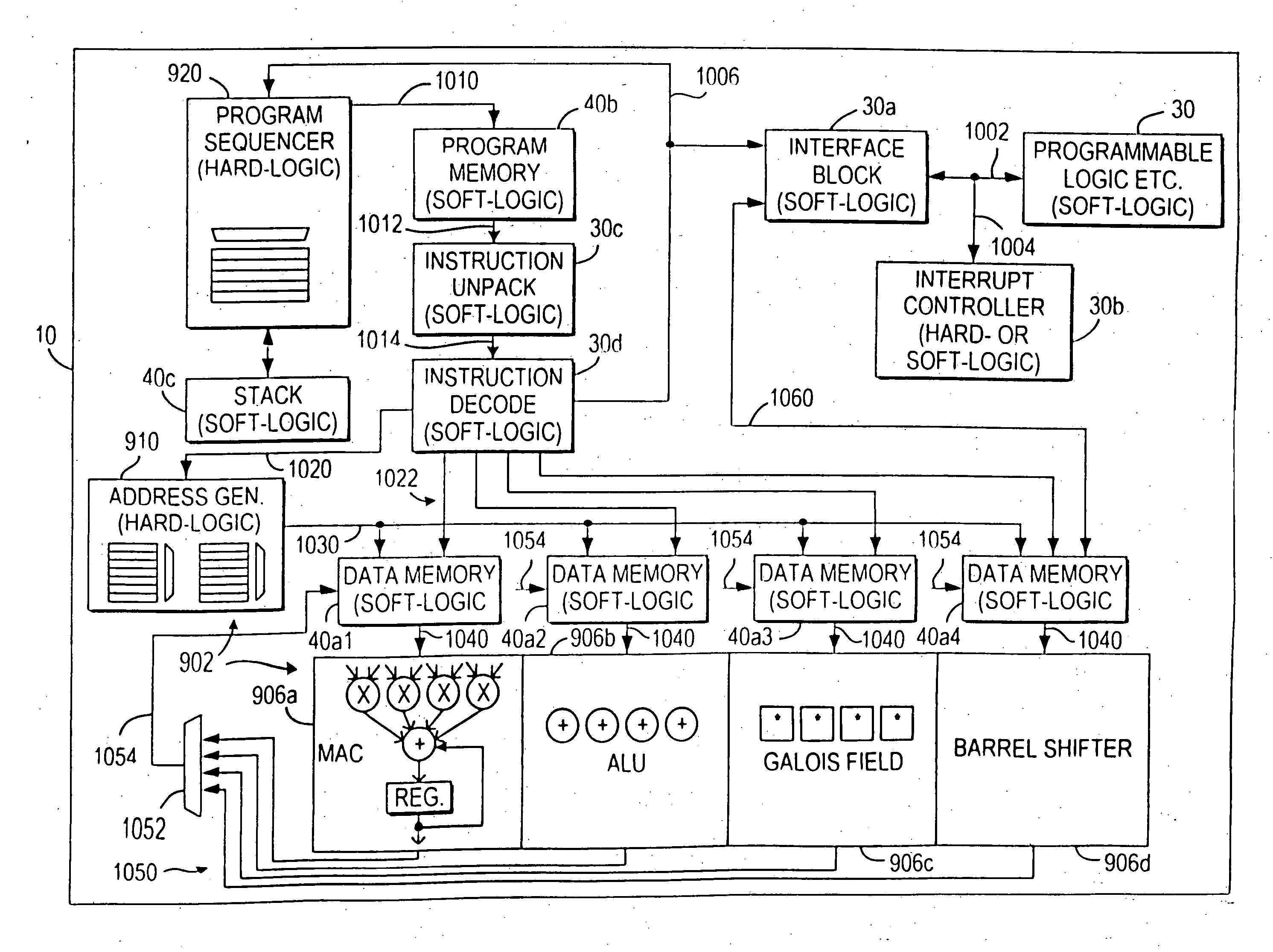

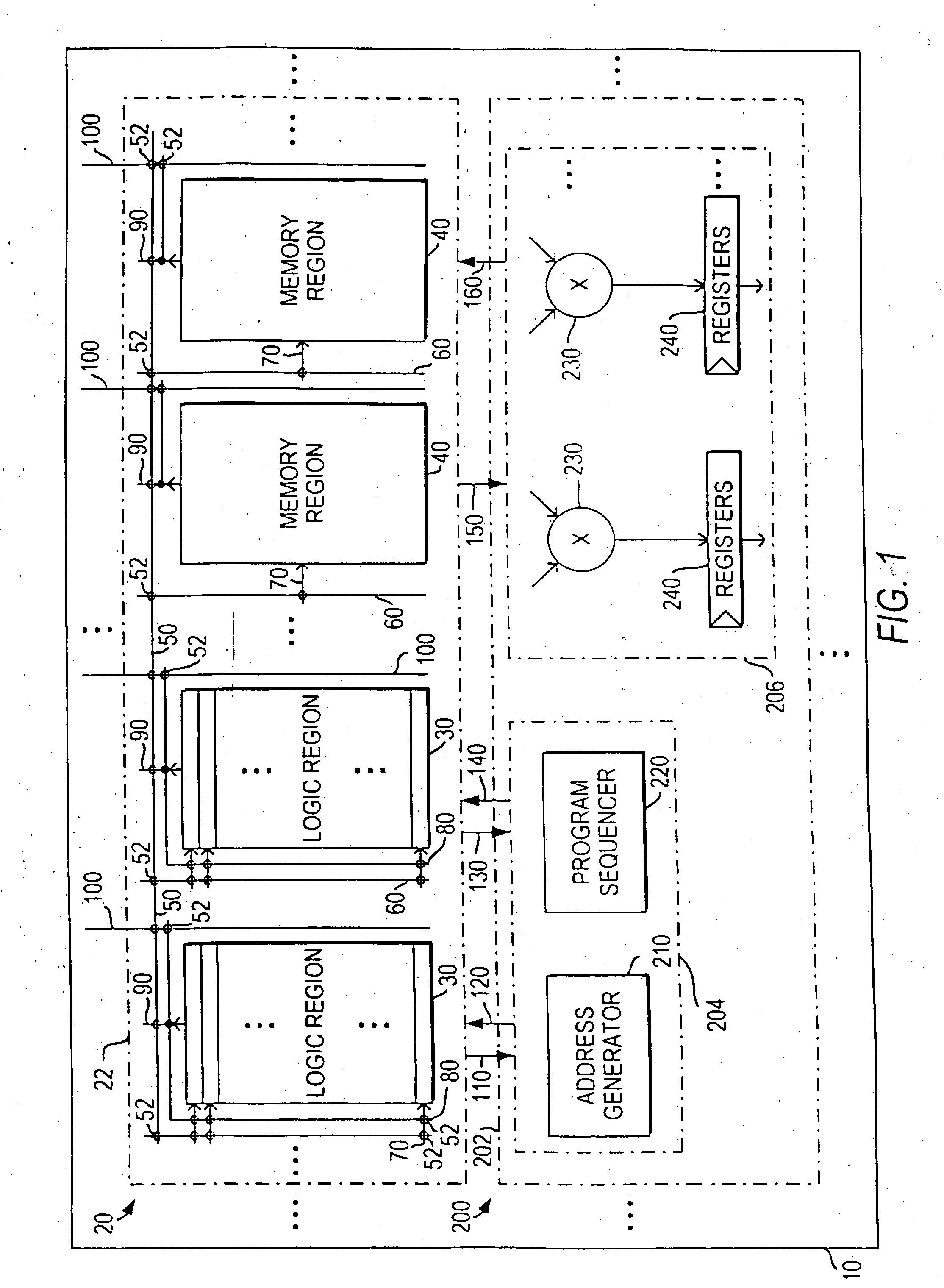

Programmable logic integrated circuit devices including dedicated processor components and hard-wired functional units

Inactive | US20050257030A1 | Function increase | Functional | Architecture with single central processing unit | Specific program execution arrangements | General purpose | Arithmetic logic unit

A programmable logic integrated circuit device (“PLD”) includes programmable logic and a dedicated (i.e., at least partly hard-wired) processor object (or at least a high-functionality functional unit) for performing or at least helping to perform tasks that it is unduly inefficient to implement in the more general-purpose programmable logic and/or that, if implemented in the programmable logic, would operate unacceptably or at least undesirably slowly. The processor object includes an operating portion and a program sequencer that retrieves or at least helps to retrieve instructions for controlling or at least partly controlling the operating portion. The processor object may also include an address generator and/or a multi-ported register file for generating or at least helping to generate addresses of data on which the operating portion is to operate and/or destinations of data output by the operating portions. Examples of typical operating portions include multiplier-accumulators, arithmetic logic units, barrel shifters, and DSP circuitry of these or other kinds. The PLD may be provided with the capability to allow programs to be written for the device using local or “relative” addresses, and to automatically convert these addresses to actual or “absolute” addresses when the programs are actually performed by the device.

Owner:ALTERA CORP

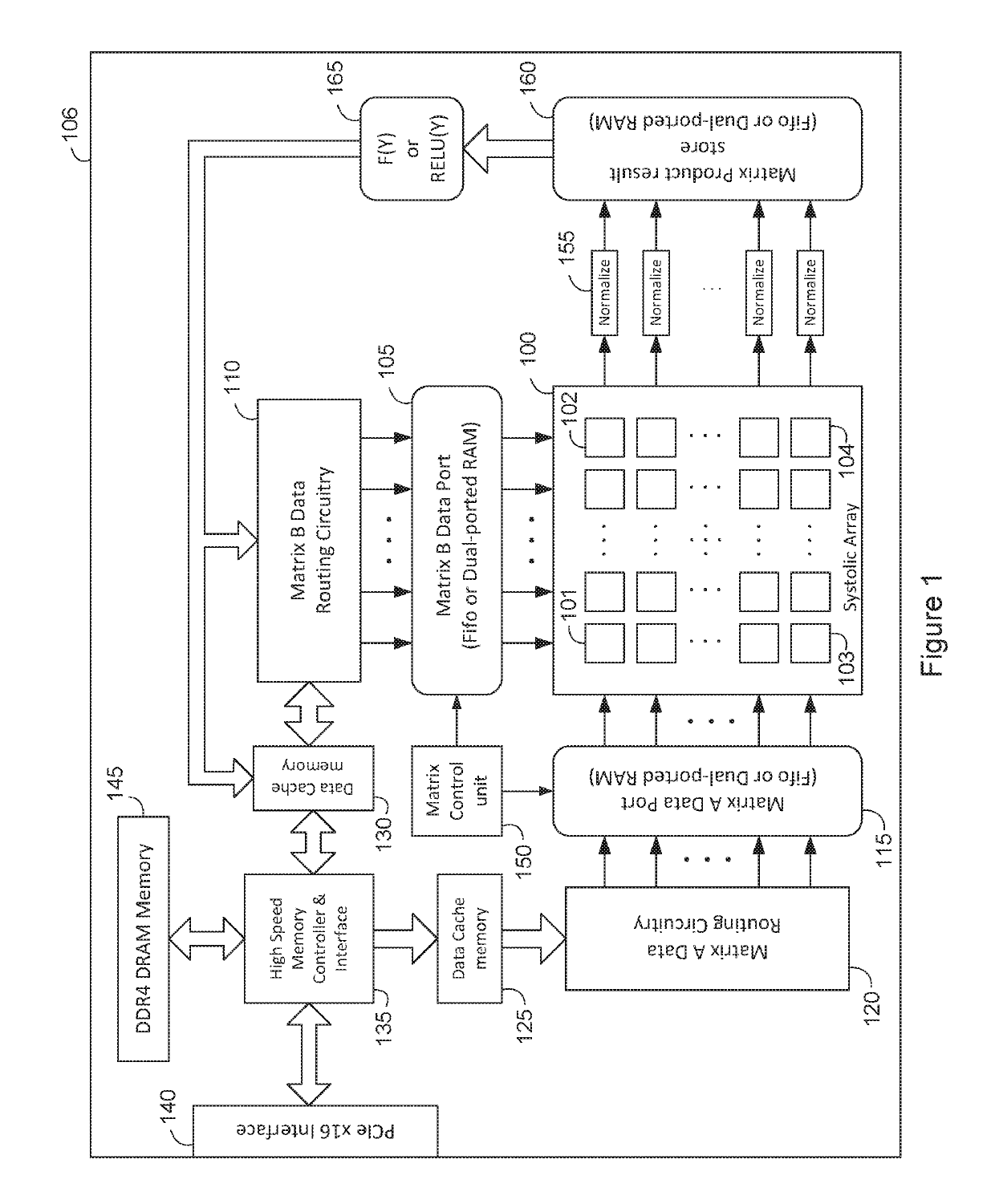

Residue number matrix multiplier

Active | US10387122B1 | Improve efficiency | Increase speed | Computations using residue arithmetic | Physical realisation | Formation matrix | Algorithm

Arithmetic circuits and methods that perform efficient matrix multiplication for hardware acceleration of neural networks, machine learning, web search and other applications are disclosed herein. Various arrays of multiplier-accumulators may be coupled to form a matrix multiplier which processes data using high precision, fixed point residue number arithmetic.
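To illustrate why residue number arithmetic suits MAC arrays, the sketch below performs each multiply-accumulate independently per modulus, with no carries between channels, and recovers the result with the Chinese remainder theorem. The moduli set and function names are assumptions chosen for illustration. The abstract does not give them, and this integer-only model ignores the fixed-point scaling the patent mentions.

```python
from math import prod

# Hypothetical pairwise-coprime moduli; the dynamic range is their product.
MODULI = (251, 253, 255, 256)


def to_rns(x):
    """Represent a non-negative integer by its residues modulo each modulus."""
    return tuple(x % m for m in MODULI)


def rns_mac(acc, a, b):
    """One MAC step carried out independently in every residue channel."""
    return tuple((r + p * q) % m for r, p, q, m in zip(acc, a, b, MODULI))


def from_rns(residues):
    """Reconstruct the integer (modulo the product of MODULI) via the CRT."""
    big_m = prod(MODULI)
    total = 0
    for r, m in zip(residues, MODULI):
        partial = big_m // m
        total += r * partial * pow(partial, -1, m)   # modular inverse, Python 3.8+
    return total % big_m
```

The result is exact as long as the true accumulated value stays below the product of the moduli (about 4.1 × 10^9 for the set above).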

Owner:OLSEN IP RESERVE

Virtual parallel multiplier-accumulator

Inactive | US6622153B1 | Computation using denominational number representation | Parallel computing | Time-sharing

Owner:LUCENT TECH INC +1

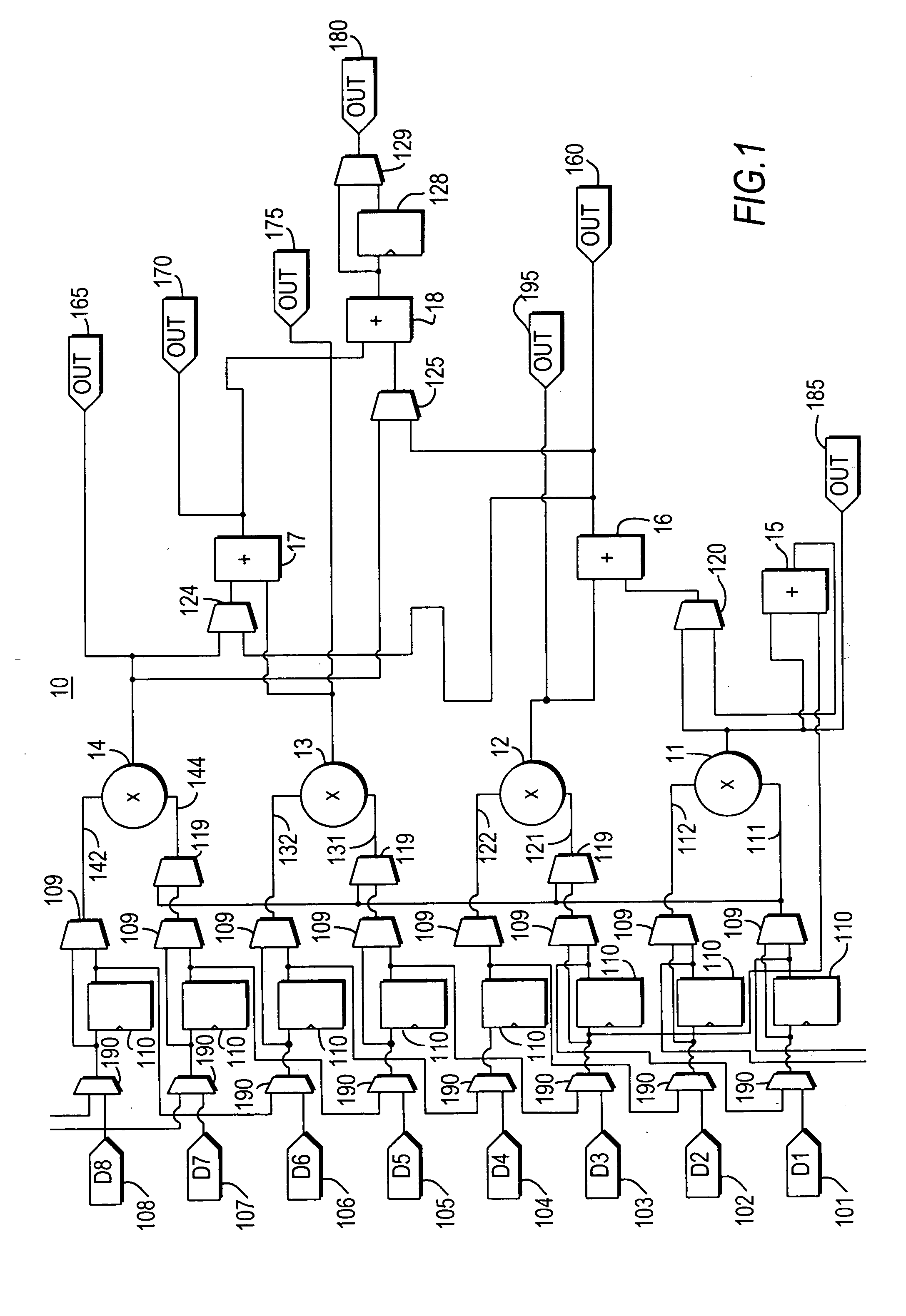

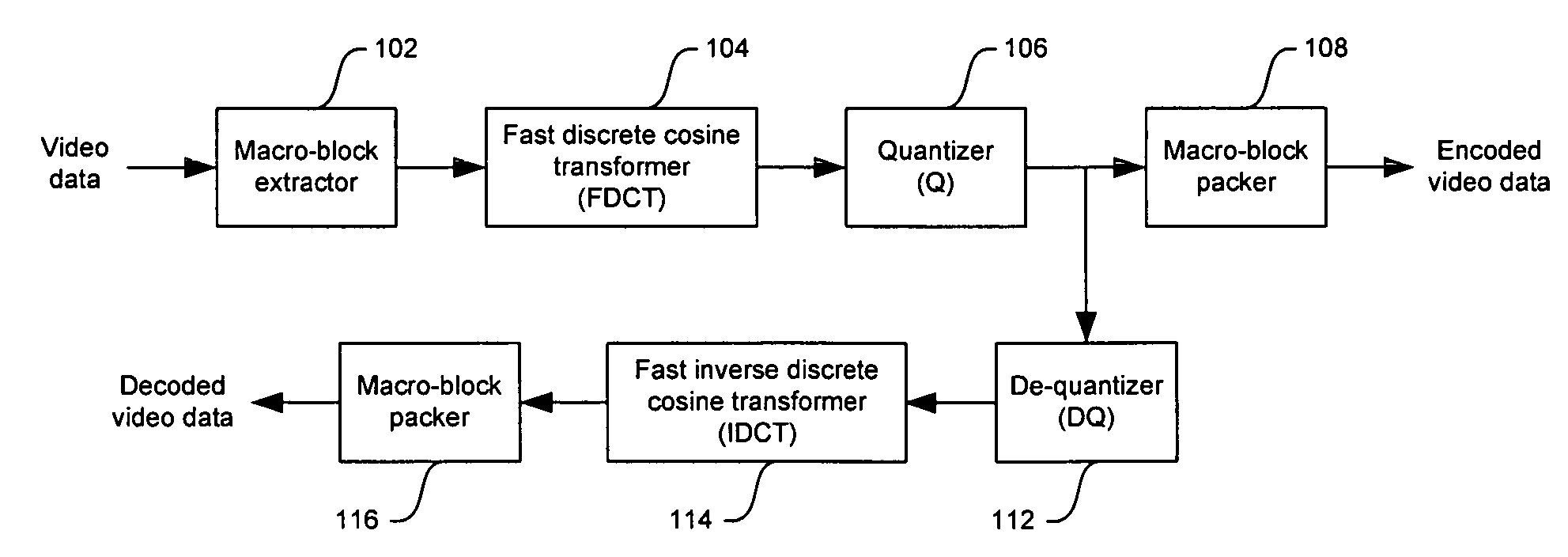

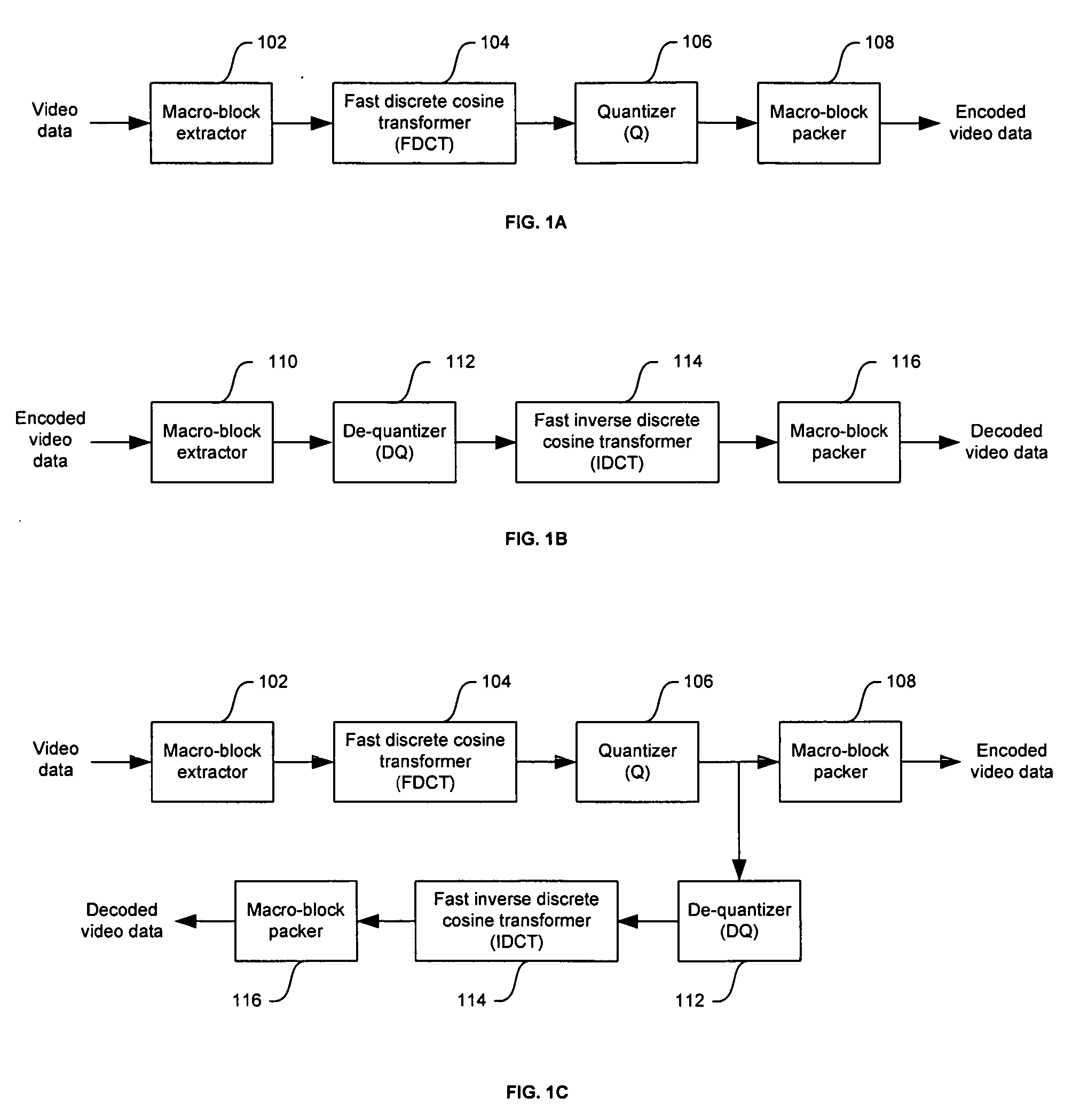

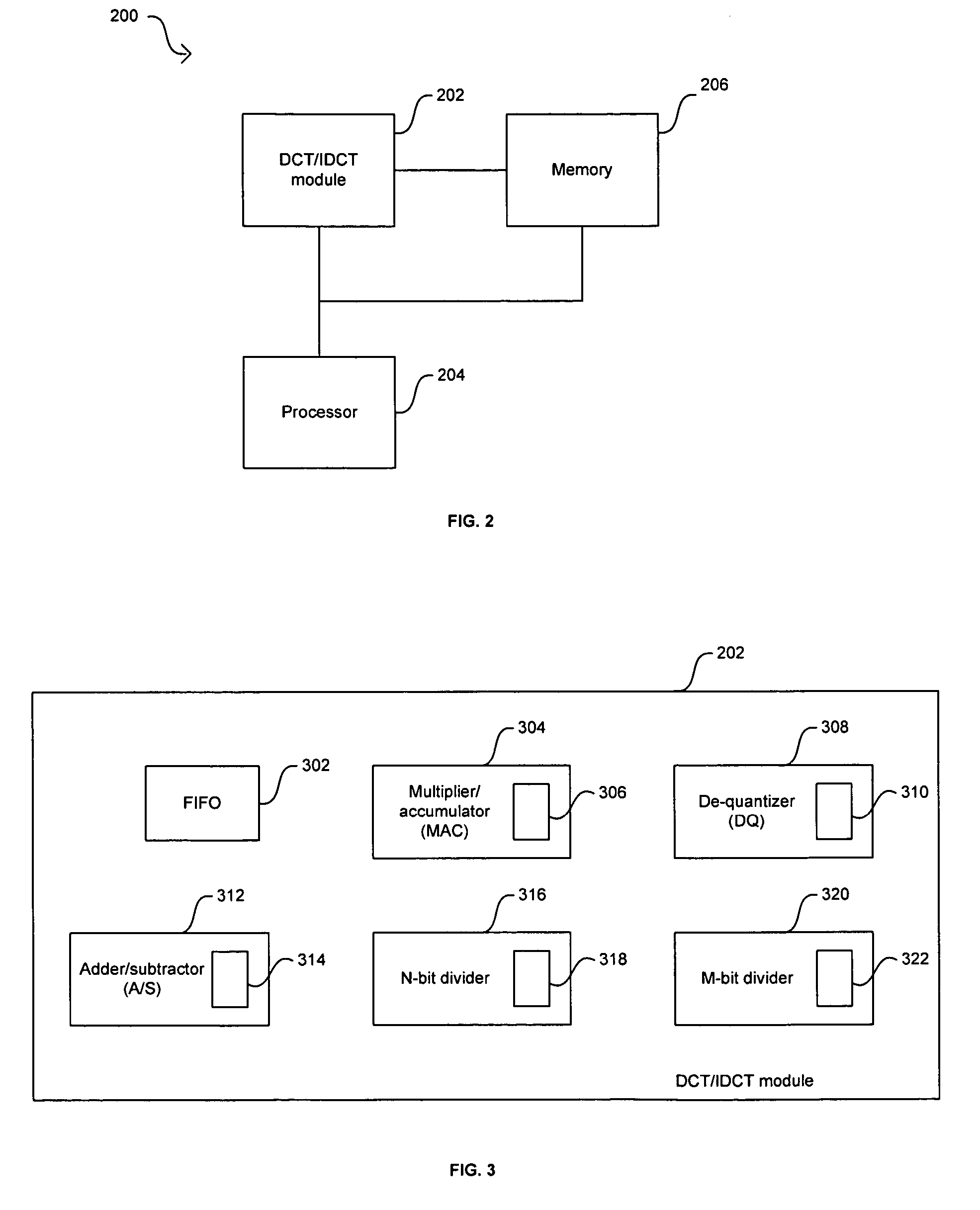

Method and system for dynamically configurable DCT/IDCT module in a wireless handset

Active | US20050281331A1 | Color television with pulse code modulation | Color television with bandwidth reduction | Computer architecture | Video processing

In a video processing system, a method and system for a dynamically configurable DCT/IDCT module in a wireless handset are provided. A processor may be used to configure a quantization scheme and video format mode of operation and to configure a processing network in a DCT/IDCT module. The mode of operation may depend on whether the received video signal is in JPEG, MPEG, and/or H.263 format. The processing network may be configured into a DCT processing network configuration or an IDCT processing network configuration based on whether the received video signal is to be encoded or decoded, respectively. The DCT/IDCT module may comprise a FIFO, an adder/subtractor, a multiplier/accumulator, a plurality of digital dividers, and a de-quantizer. The plurality of digital dividers may comprise a 12-bit divider and a 7-bit divider. The mode and configuration modifications may be dynamically performed during operation of the wireless handset.

Owner:AVAGO TECH INT SALES PTE LTD

Programmable digital signal processor having a clustered SIMD microarchitecture including a complex short multiplier and an independent vector load unit

A programmable digital signal processor with a clustered SIMD microarchitecture includes a plurality of accelerator units, a processor core, and a complex computing unit. Each of the accelerator units may perform one or more dedicated functions. The processor core includes an integer execution unit that may execute integer instructions. The complex computing unit may include a complex arithmetic logic unit execution pipeline that may include one or more datapaths configured to execute complex vector instructions, and a vector load unit. In addition, each datapath may include a complex short multiplier accumulator unit that may be configured to multiply a complex data value by values in the set of numbers including {0, ±1} + {0, ±i}. The vector load unit may cause the complex data items to be fetched each clock cycle for use by any datapath in the complex arithmetic logic unit execution pipeline.
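A short sketch shows why multiplying by the coefficient set named in this abstract needs no real multiplier. Writing the coefficient as a + ib with a and b each in {-1, 0, 1}, the product reduces to selecting, negating and adding the input's real and imaginary parts. The function names are illustrative assumptions.

```python
def select(value, sign):
    """Multiply value by a sign in {-1, 0, 1} using only negation and selection."""
    if sign == 1:
        return value
    if sign == -1:
        return -value
    return 0


def short_cmul(re, im, a, b):
    """Return (re + i*im) * (a + i*b) for a, b in {-1, 0, 1} as a (real, imag) pair."""
    return select(re, a) - select(im, b), select(im, a) + select(re, b)


def short_cmac(acc, value, coeff):
    """Accumulate value * coeff, with acc, value and coeff given as (real, imag) pairs."""
    p_re, p_im = short_cmul(value[0], value[1], coeff[0], coeff[1])
    return acc[0] + p_re, acc[1] + p_im
```

Only nine coefficient values are possible, so the datapath can be much smaller than a full complex multiplier, which needs several real multiplications per product.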

Owner:扩你科公司

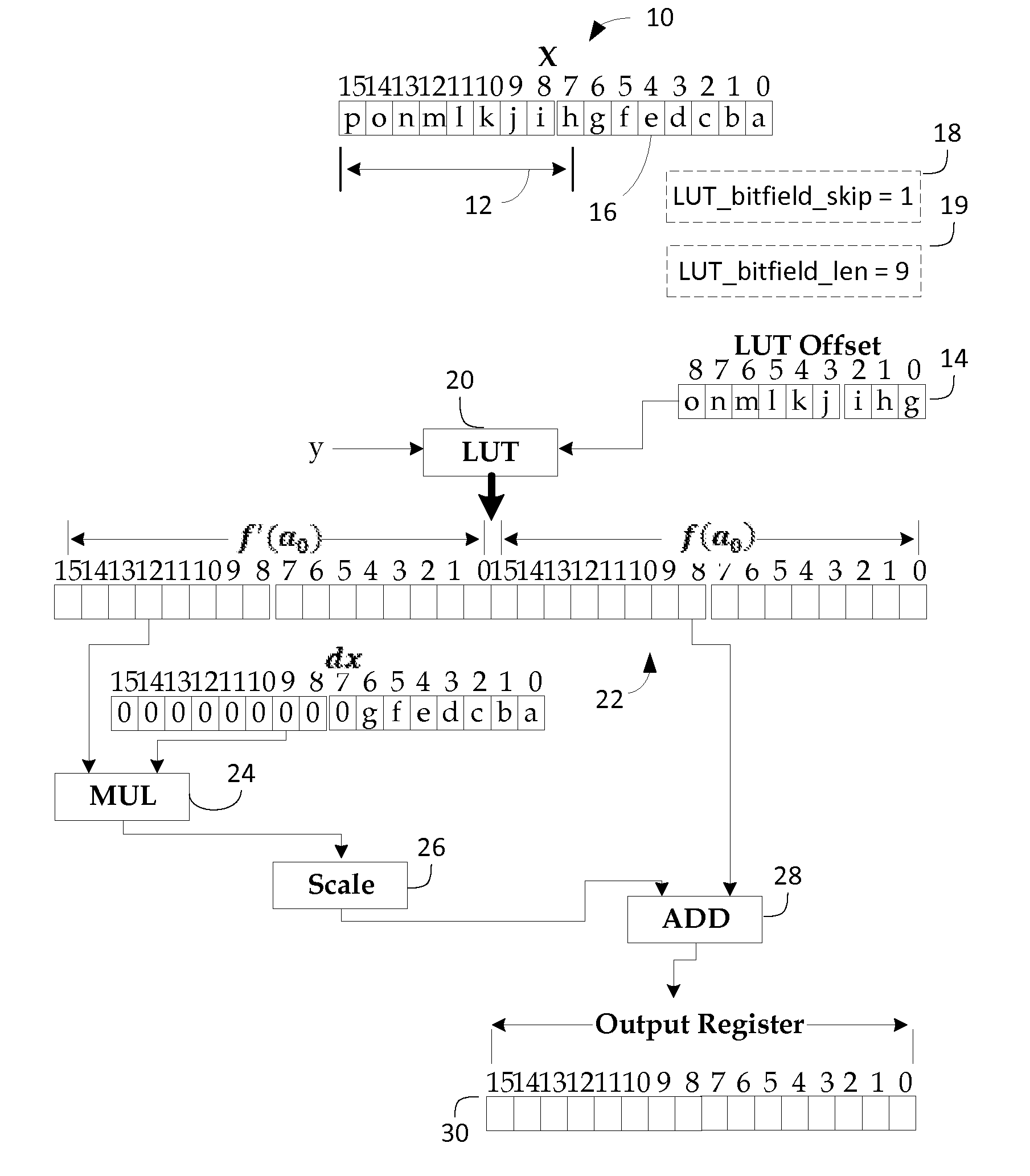

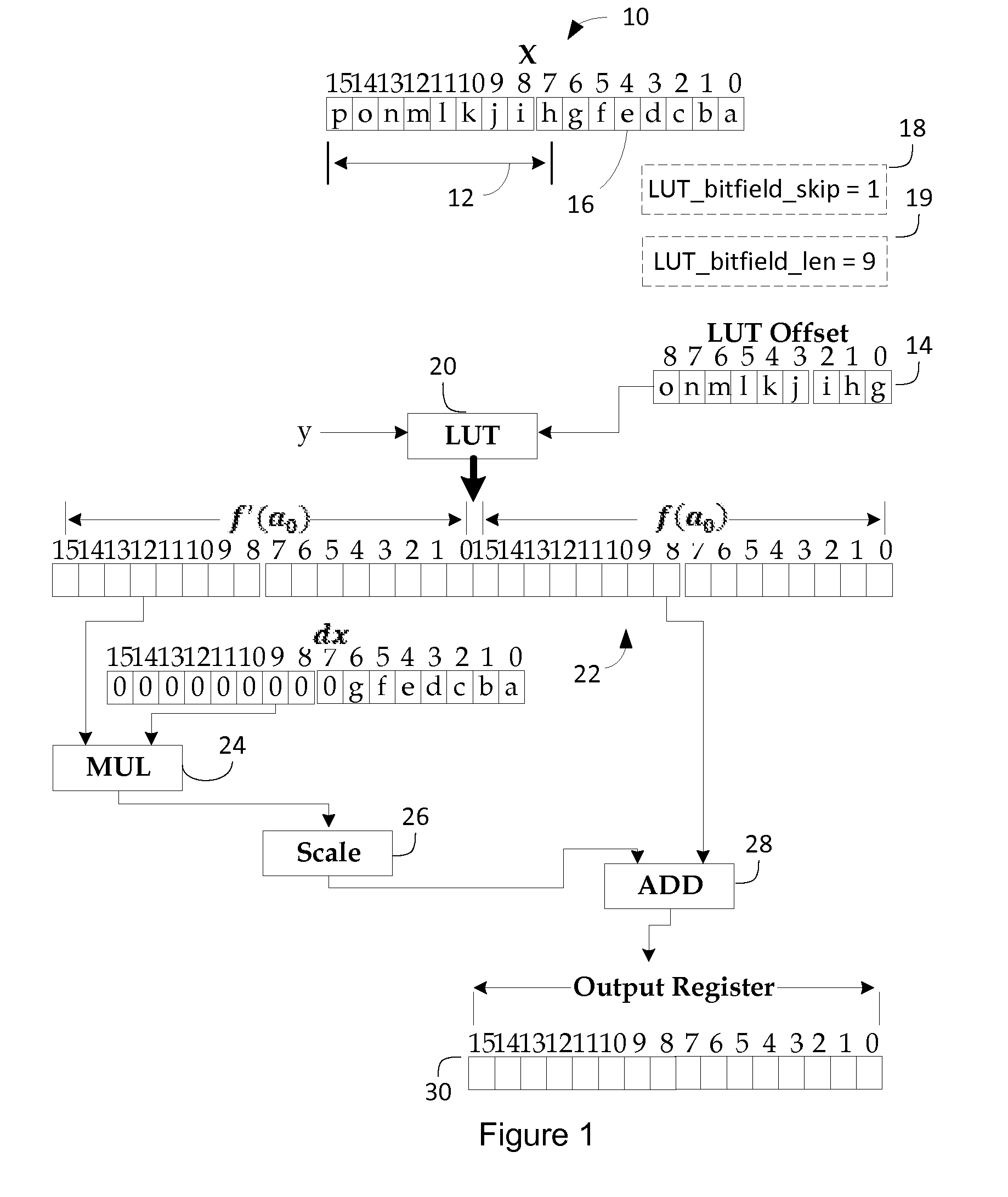

Algebraic processor

Inactive | US20130185345A1 | Computation using non-contact making devices | Digital function generators | Computer science | String interpolation

Owner:QUALCOMM INC

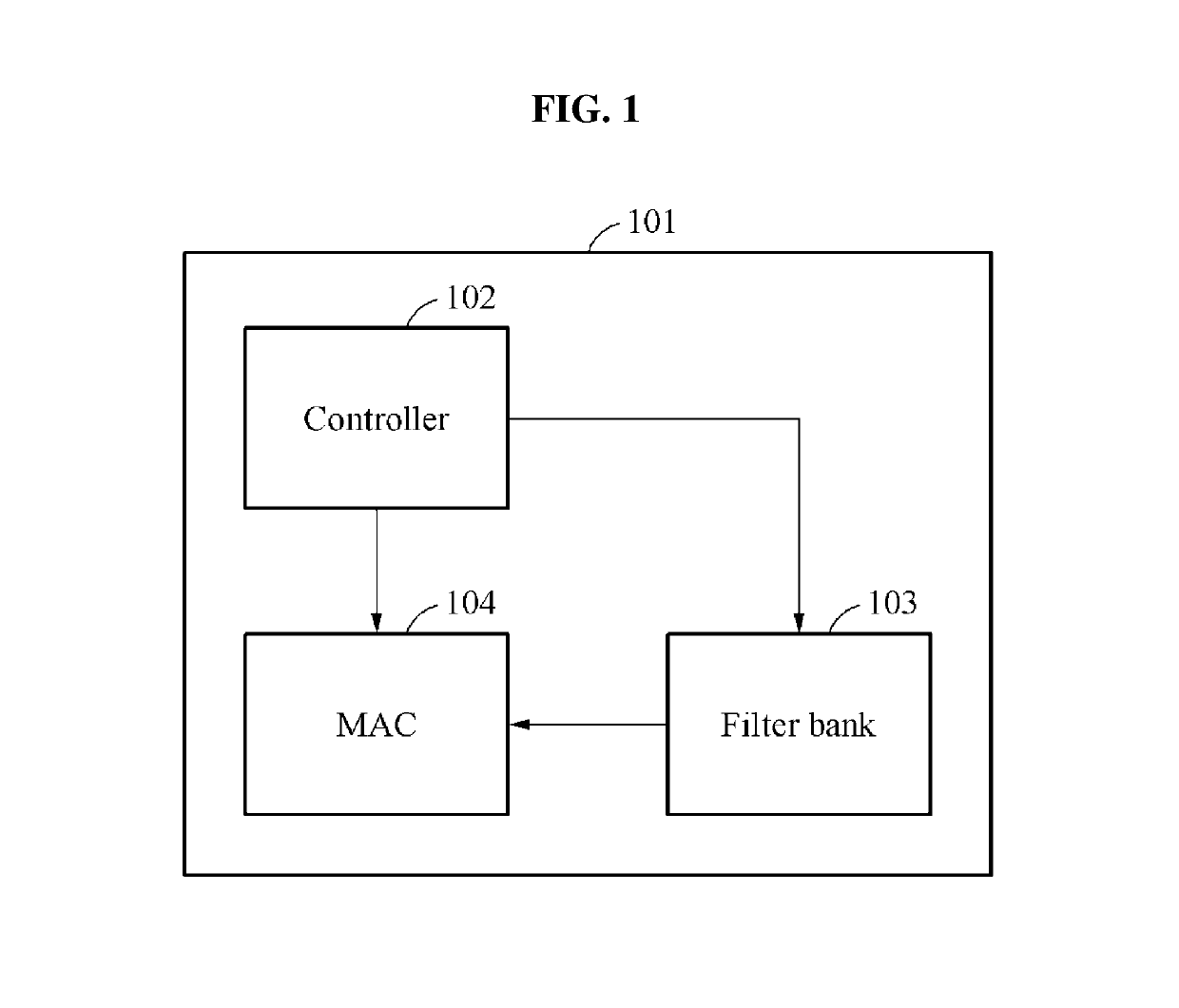

Convolution processing apparatus and method

Active | US20180150721A1 | Character and pattern recognition | Neural architectures | Computer science | Filter bank

A convolution processing apparatus and method are disclosed. The convolution processing apparatus may include a controller configured to load a pixel of an input image and skip a process associated with the pixel in response to a value of the loaded pixel being 0, a filter bank including at least one filter and configured to extract at least one kernel element corresponding to the pixel from the at least one filter based on an index of the pixel and an input channel of the pixel, and a multiplier-accumulator (MAC) configured to perform a convolution operation based on the value of the pixel and a value of the at least one kernel element and accumulatively store an operation result of the convolution operation, the operation result corresponding to an output image.
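The zero-skipping idea can be sketched for a single input channel and a single filter. Each loaded pixel is tested, zero pixels are skipped entirely, and each non-zero pixel is multiplied by every kernel element it overlaps and accumulated into the matching output position. The function name, the "valid" output size and the returned skip counter are assumptions made for illustration. The multi-channel filter bank in the abstract is not modelled.

```python
def conv2d_zero_skip(image, kernel):
    """Sliding-window MAC (cross-correlation form) that skips zero-valued pixels.

    Returns (output, skipped), where skipped counts the pixels not processed.
    """
    rows, cols = len(image), len(image[0])
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows, out_cols = rows - k_rows + 1, cols - k_cols + 1
    out = [[0] * out_cols for _ in range(out_rows)]
    skipped = 0
    for r in range(rows):
        for c in range(cols):
            pixel = image[r][c]
            if pixel == 0:
                skipped += 1          # no MAC work is issued for this pixel
                continue
            for i in range(k_rows):
                for j in range(k_cols):
                    o_r, o_c = r - i, c - j   # output position this pair feeds
                    if 0 <= o_r < out_rows and 0 <= o_c < out_cols:
                        out[o_r][o_c] += pixel * kernel[i][j]   # MAC step
    return out, skipped
```

The saving grows with input sparsity, which is common after ReLU-style activations in convolutional networks.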

Owner:SAMSUNG ELECTRONICS CO LTD +1

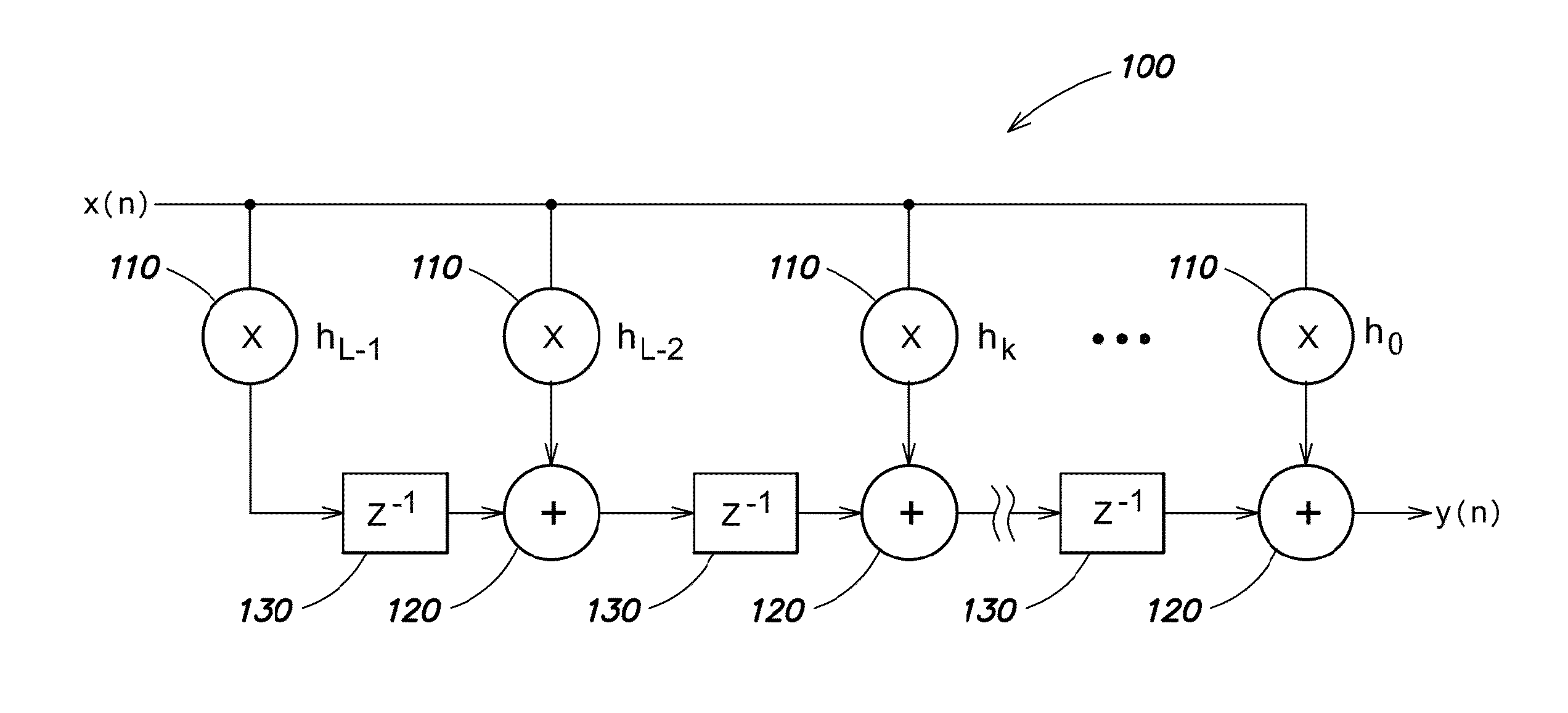

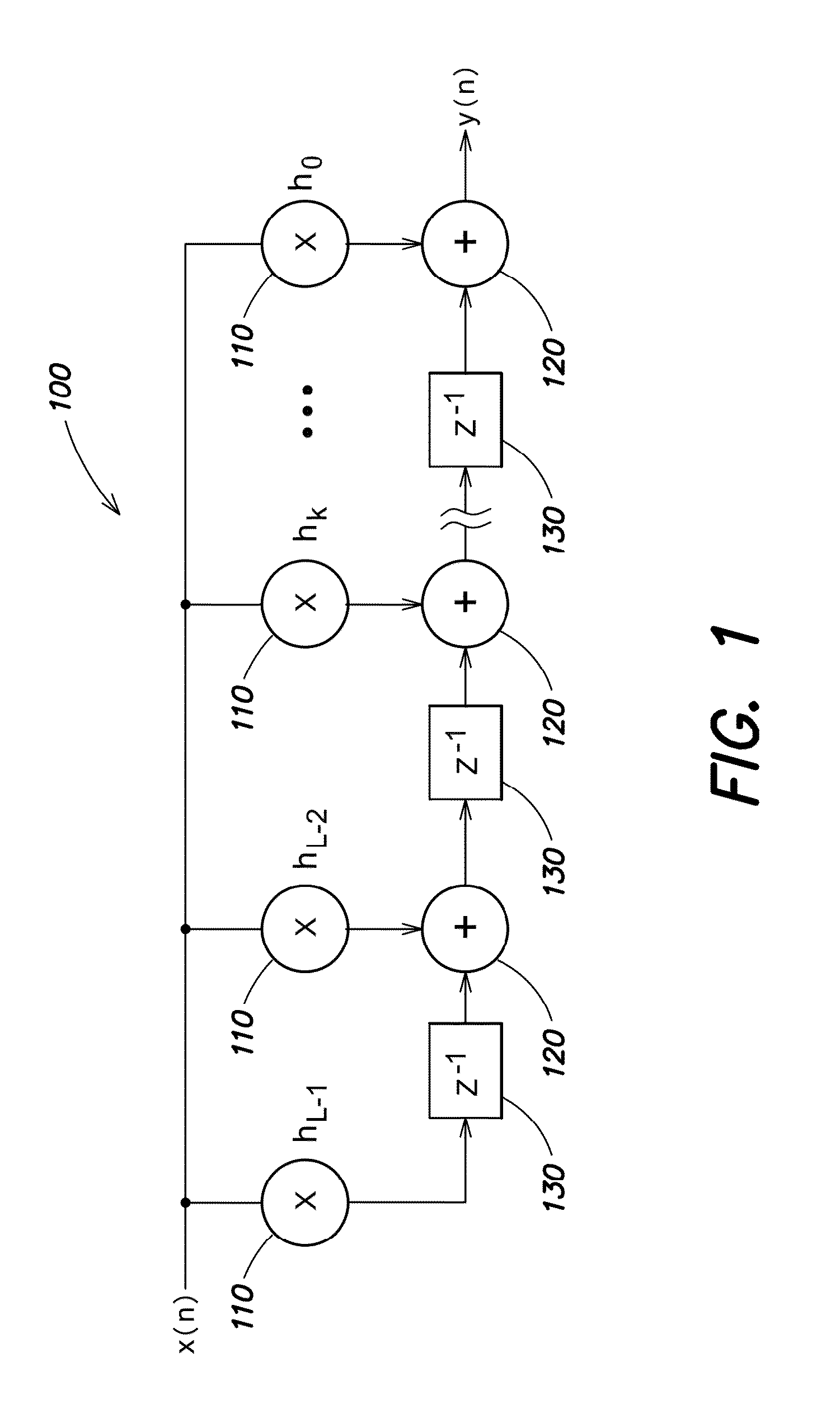

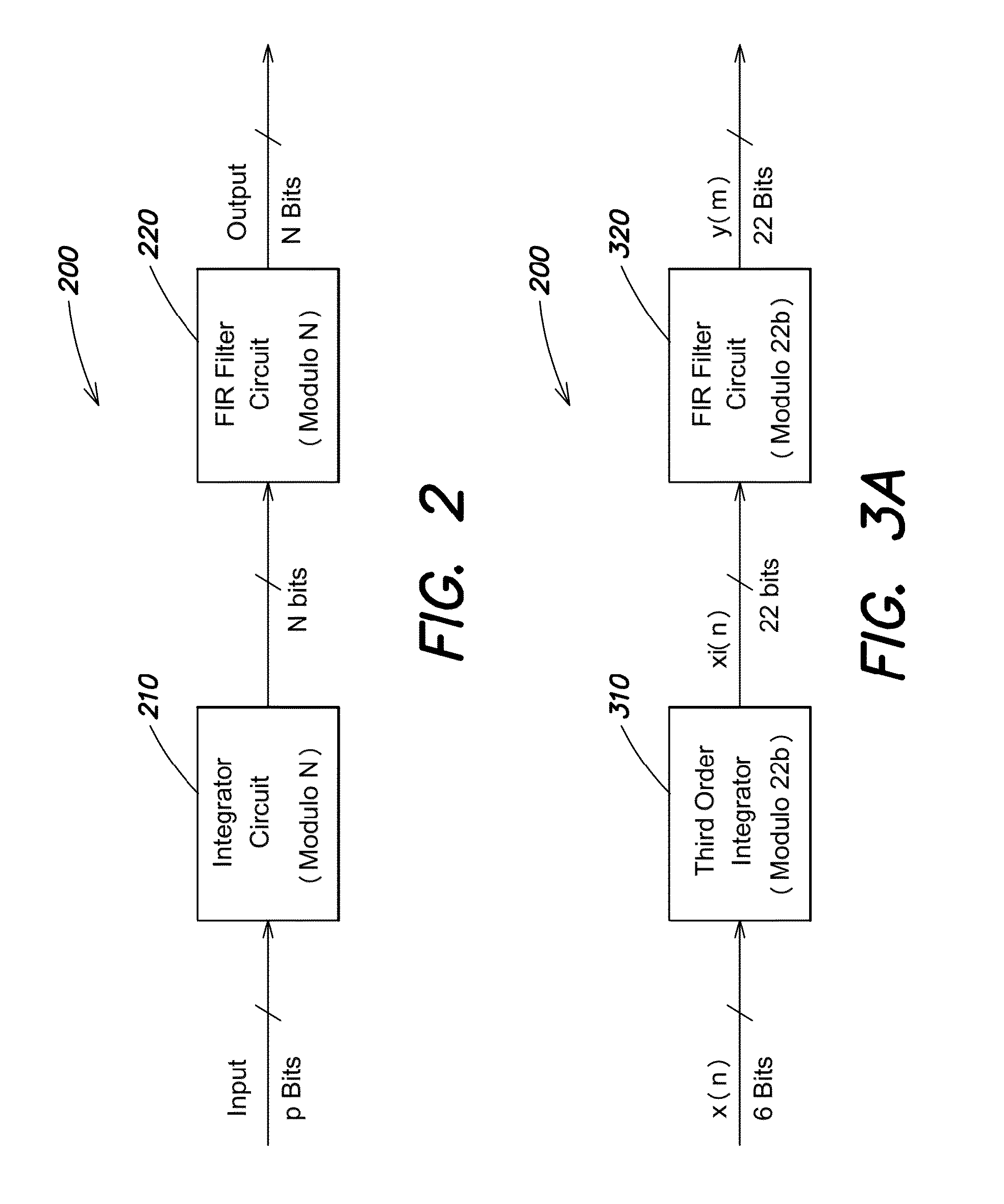

Polyphase decimation fir filters and methods

Active | US20160182014A1 | Accurate output | Digital technique network | Complex mathematical operations | Integrator | Integrated circuit

A polyphase decimation FIR filter apparatus including a modulo integrator circuit configured to integrate input samples and to provide integrated input samples; and a polyphase FIR filter circuit configured to process the integrated input samples, the polyphase FIR filter circuit including a plurality of multiplier accumulator circuits, each configured to accumulate products of coefficients and respective integrated signal samples, wherein each of the multiplier accumulator circuits receives a subset of FIR filter coefficients, wherein the FIR filter coefficients are derived as the nth difference of original filter coefficients, where n is a number of integrators in the integrator circuit, and wherein the FIR filter circuit is configured to perform computation operations with modulo arithmetic.
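The identity behind this arrangement can be checked numerically for the simplest case of one integrator (n = 1). Filtering the integrated input with the first difference of the original coefficients gives the same output as filtering the raw input with the original coefficients, and it stays correct under wrap-around arithmetic as long as the true output fits the word. The 16-bit word, the function names and the loop structure below are illustrative assumptions and are not the patented circuit.

```python
MOD = 1 << 16   # assumed word size; all arithmetic wraps modulo 2**16


def integrate_mod(x):
    """Running sum of the input with wrap-around (a modulo integrator)."""
    acc, out = 0, []
    for v in x:
        acc = (acc + v) % MOD
        out.append(acc)
    return out


def first_difference(h):
    """g[k] = h[k] - h[k-1], treating h as zero outside its support."""
    padded = [0] + list(h) + [0]
    return [padded[k + 1] - padded[k] for k in range(len(h) + 1)]


def polyphase_decimate_mod(s, g, m):
    """Decimate by m: each phase p accumulates products for taps p, p+m, p+2m, ..."""
    outputs = []
    for n in range(0, len(s), m):
        total = 0
        for p in range(m):                      # one MAC circuit per phase
            acc = 0
            for k in range(p, len(g), m):       # this phase's coefficient subset
                if n - k >= 0:
                    acc = (acc + g[k] * s[n - k]) % MOD
            total = (total + acc) % MOD
        outputs.append(total)
    return outputs


def to_signed(v):
    """Interpret a wrapped value as a signed 16-bit number."""
    return v - MOD if v >= MOD // 2 else v
```

The integrator may overflow many times. Because every step is exact modulo 2^16, the wrapped intermediate values still combine to the correct final sample.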

Owner:STMICROELECTRONICS INT NV

Multiplier-accumulator block mode splitting

Active | US7437401B2 | Computation using non-contact making devices | Digital computer details | Binary multiplier | Programmable logic device

A programmable logic device is provided that includes a MAC block having mode splitting capabilities. Different modes of operation may be implemented simultaneously whereby the multipliers and other DSP circuitry of the MAC block may be allocated among the different modes of operation. For example, one multiplier may be used to implement a multiply mode while another two multipliers may be used to implement a sum of two multipliers mode.

Owner:TAHOE RES LTD

Convolution processing apparatus and method

Owner:SAMSUNG ELECTRONICS CO LTD +1

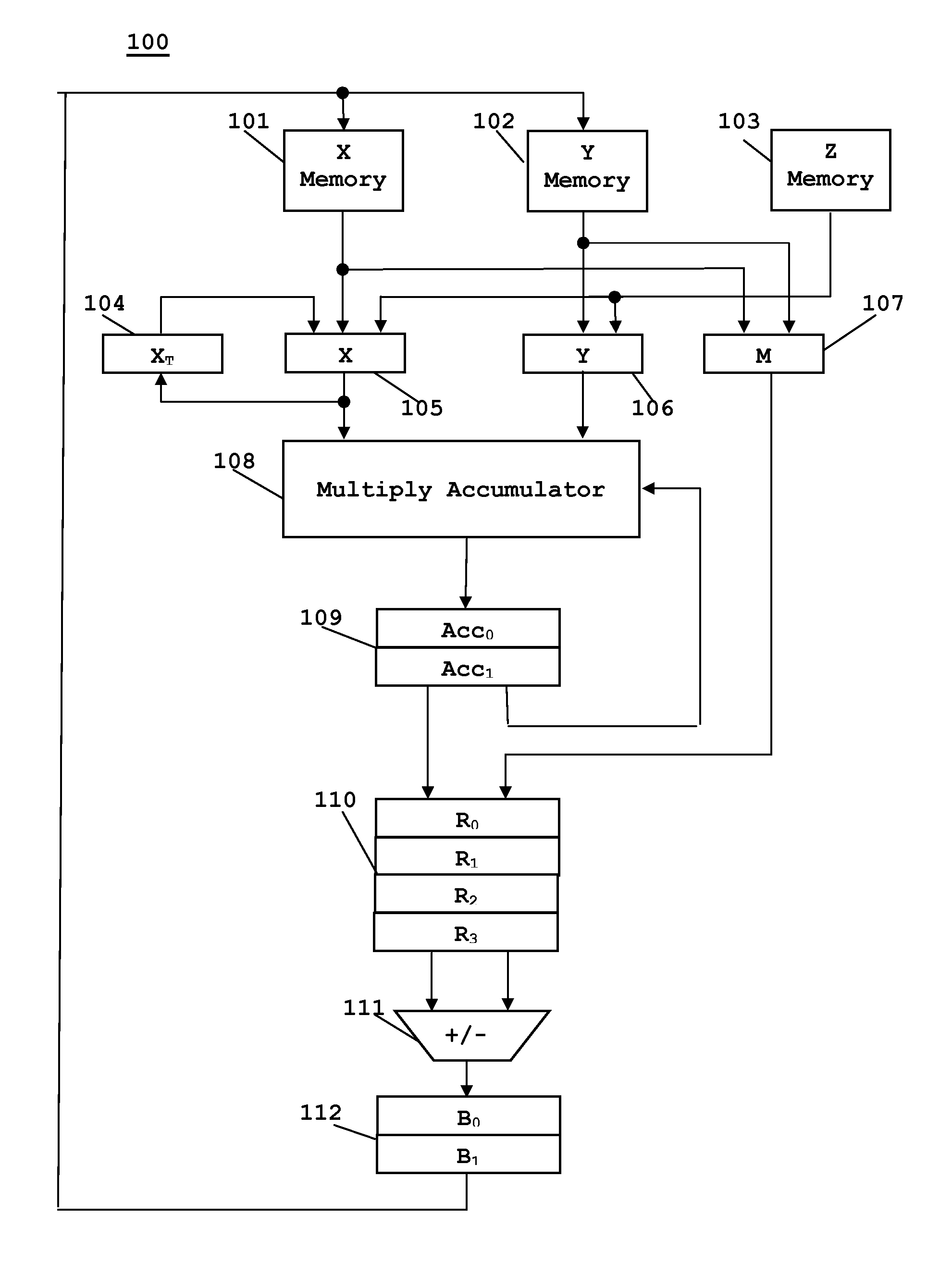

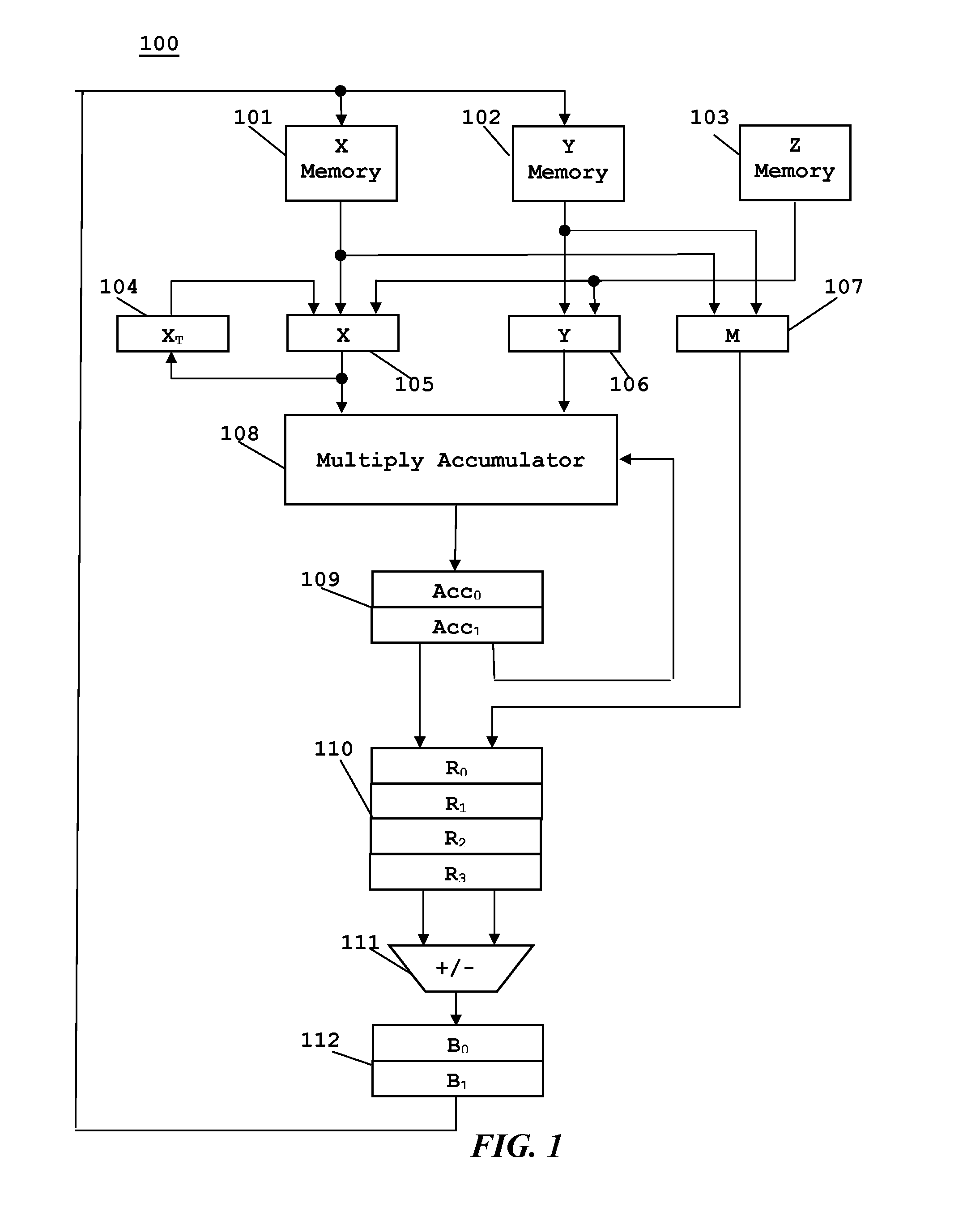

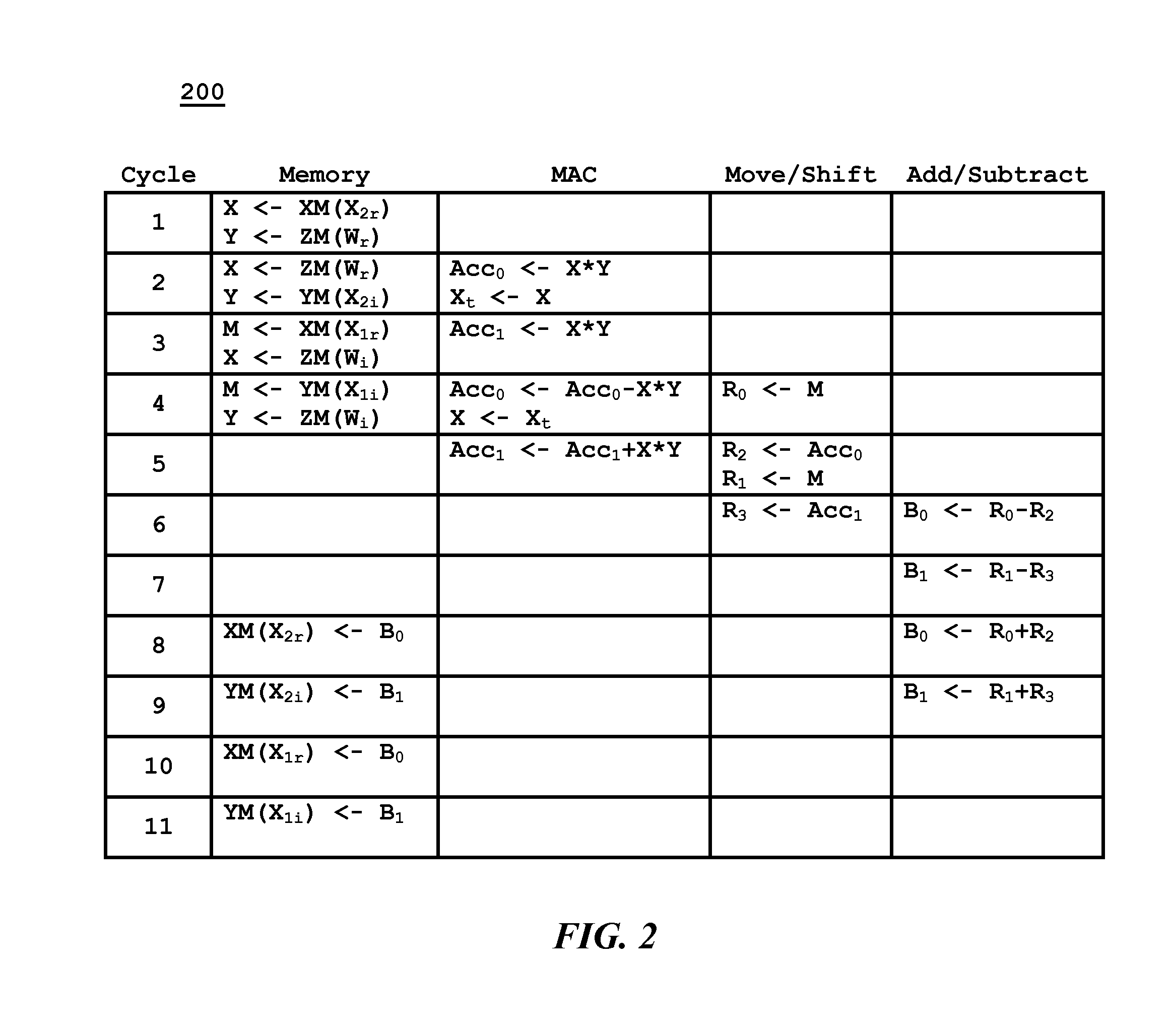

High performance implementation of the FFT butterfly computation

Active | US20160132461A1 | Digital computer details | Complex mathematical operations | Write buffer | Processor register

This invention is an FFT butterfly circuit. This circuit includes four temporary data registers connected to three memories. The three memories include read/write X and Y memories and a read-only twiddle coefficient memory. A multiplier-accumulator forms a product and accumulates the product with one of two accumulator registers. A register file with plural registers is loaded from one of the accumulator registers or the fourth temporary data register. An adder/subtracter forms a selected one of a sum of registers or a difference of registers. A write buffer with two buffers temporarily stores data from the adder/subtracter before storage in the first or second memory. The X and Y memories must be read/write but the twiddle memory may be read-only.
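The arithmetic such a circuit performs can be sketched as follows. The abstract does not state the FFT radix or ordering, so a radix-2 decimation-in-time butterfly is assumed here. The complex twiddle product is formed from four real MAC steps, and an add and a subtract produce the two outputs. The register and memory organisation of the patent is not modelled.

```python
import cmath


def butterfly(a, b, w):
    """Radix-2 butterfly: return (a + w*b, a - w*b), forming w*b by real MAC steps."""
    t_re = 0.0
    t_re += w.real * b.real     # MAC
    t_re -= w.imag * b.imag     # MAC with negated product
    t_im = 0.0
    t_im += w.real * b.imag     # MAC
    t_im += w.imag * b.real     # MAC
    t = complex(t_re, t_im)
    return a + t, a - t         # adder/subtracter stage


def fft(x):
    """Recursive radix-2 FFT built from the butterfly; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        w = cmath.exp(-2j * cmath.pi * k / n)   # twiddle coefficient
        out[k], out[k + n // 2] = butterfly(even[k], odd[k], w)
    return out
```

In hardware the twiddle values would be read from the coefficient memory instead of being computed with `cmath.exp`.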

Owner:TEXAS INSTR INC

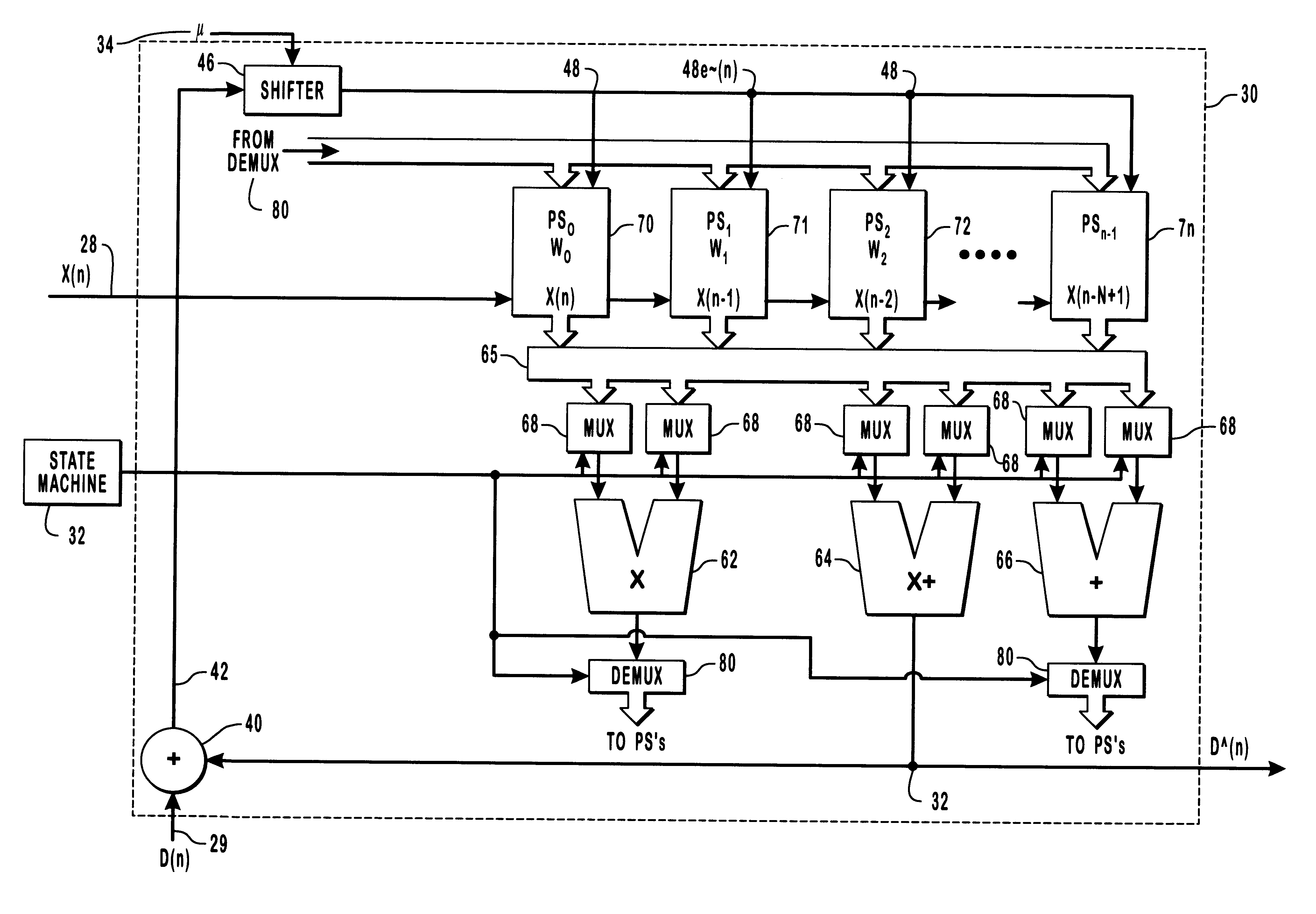

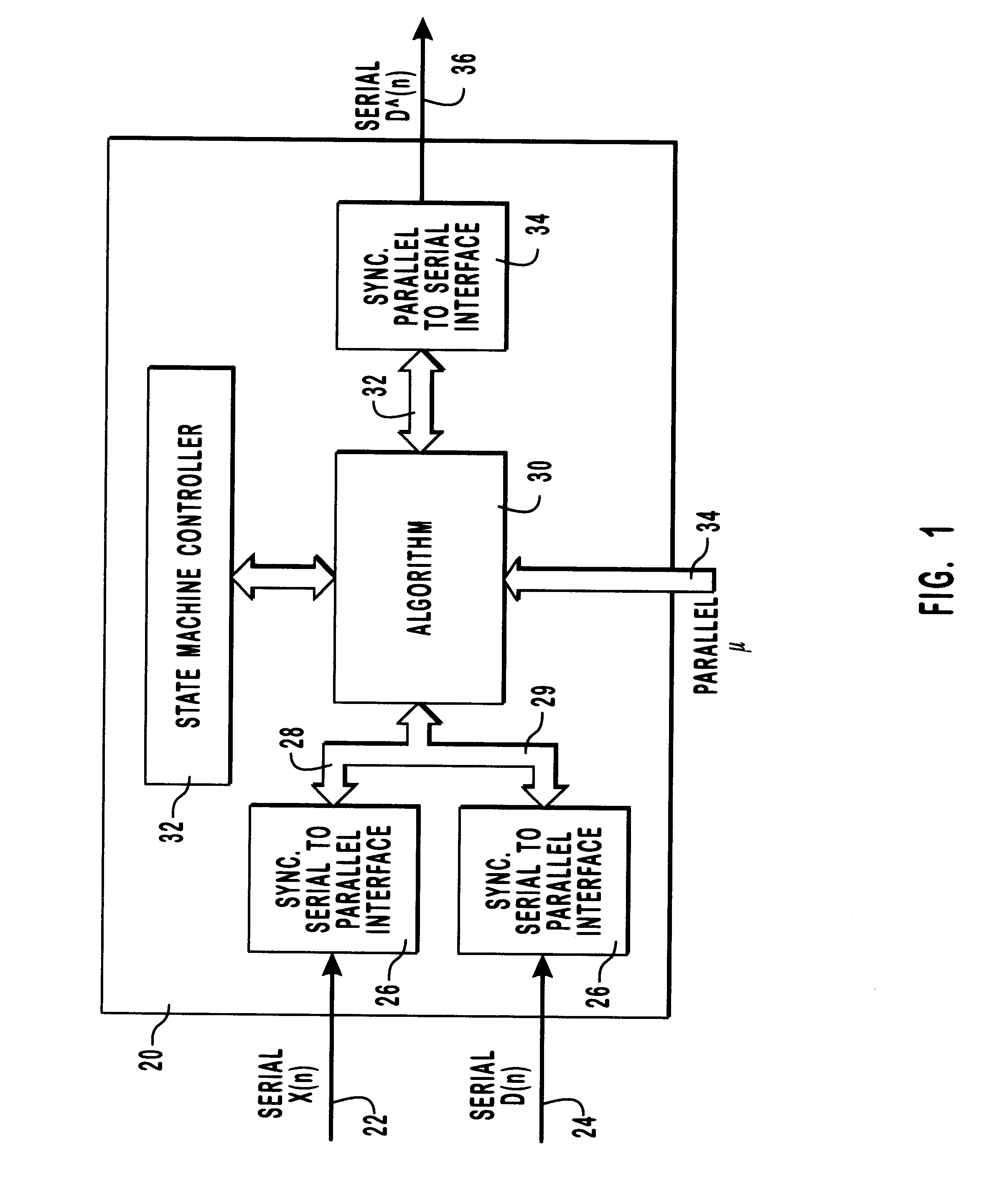

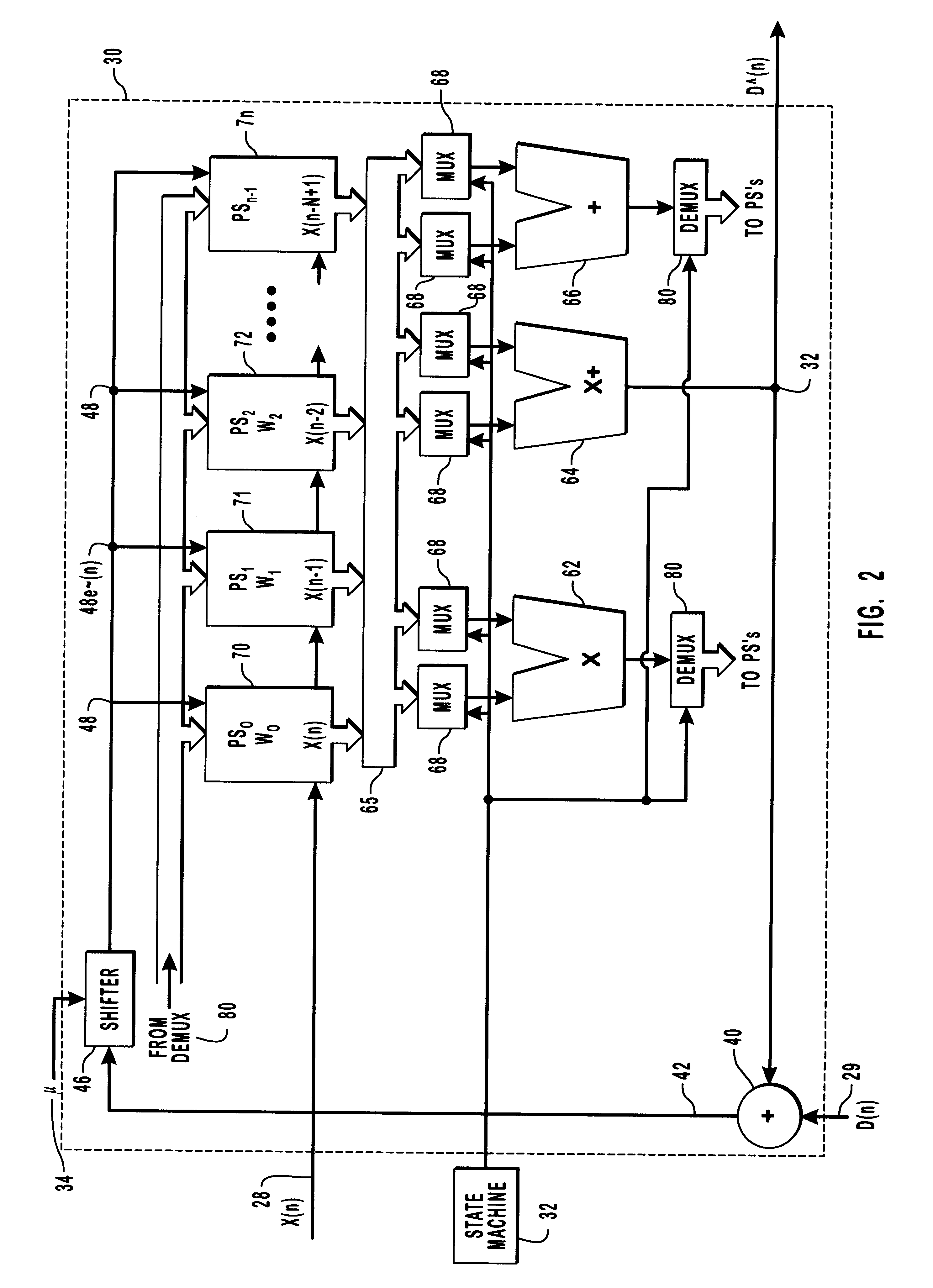

Methods and apparatus for adaptive filters

Inactive | US6308192B1 | Shorten the time | Save physical space | Adaptive network | Complex mathematical operations | Binary multiplier | Low Pin Count

The present invention relates to improved adaptive filtering techniques and architectures. Preferably, this filtering is performed as part of the digital processing that occurs with a digital signal processor. It is a feature of this invention that the adaptive filtering taught herein provides the advantages of both serial and parallel architectures, without the accompanying disadvantages thereof. In particular, an adaptive filter is taught that possesses low pin counts, fast processing times suitable for high-speed applications and reduced numbers of filter elements. In a preferred embodiment, the inputs and outputs of the adaptive filter are supplied to and from the adaptive filter in a serial manner while the processing is performed internally within the adaptive filter in a parallel manner. The parallel processing is preferably effected by a delayed least-mean-squares algorithm implemented using a single adder, a single multiplier and a single multiplier-accumulator instead of by numerous such adders, multipliers and multiplier-accumulators.

Owner:HEWLETT PACKARD DEV CO LP

FIR (finite impulse response) filter structure for low-power fault-tolerant circuit

Active | CN103955585A | Reduce area | Reduce power consumption | Special data processing applications | Digital signal processing | Wallace tree

The invention belongs to the technical field of low-power integrated circuits, and particularly relates to an FIR (finite impulse response) filter structure for a low-power fault-tolerant circuit. The FIR filter structure mainly includes transcoding logical units, multiplier-accumulators (MACs), and a residual bit transmission chain, wherein the transcoding logical units are used for conversion between the two's complement and sign-magnitude binary numbers; each MAC mainly includes a sign processing unit, a Wallace tree, a last-stage accumulator and a magnitude computing logical unit; a residual bit is produced by each MAC and transmitted individually, and all the residual bits are added up at the last stage, so as to shorten the critical path. The FIR filter structure for the low-power fault-tolerant circuit has the characteristics of simple structure, small area overhead, low critical path activation probability and the like, and is particularly suitable for a low-power fault-tolerant digital signal processing system based on the VOS (voltage over-scaling) technology.
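The transcoding step mentioned in this abstract, conversion between two's complement and sign-magnitude, can be sketched on fixed-width words. The word layout (sign in the top bit, magnitude in the remaining bits) and the function names are assumptions. The most negative two's-complement value has no sign-magnitude form of the same width, so it is rejected here.

```python
def twos_to_sign_mag(word, bits):
    """Convert a `bits`-wide two's-complement word to sign-magnitude form."""
    mask = (1 << bits) - 1
    sign_bit = 1 << (bits - 1)
    word &= mask
    if word == sign_bit:
        raise ValueError("most negative value has no sign-magnitude encoding")
    if word & sign_bit:
        magnitude = (-word) & mask        # negate to obtain the magnitude
        return sign_bit | magnitude
    return word


def sign_mag_to_twos(word, bits):
    """Convert a `bits`-wide sign-magnitude word to two's-complement form."""
    mask = (1 << bits) - 1
    sign_bit = 1 << (bits - 1)
    magnitude = word & (sign_bit - 1)
    if word & sign_bit:
        return (-magnitude) & mask        # negative zero maps to plain zero
    return magnitude
```

For example, with 8-bit words the value -5 is `0xFB` in two's complement and `0x85` in sign-magnitude.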

Owner:FUDAN UNIV

Polyphase filter with optimized silicon area

Inactive | US20050262176A1 | Increase the number of | Digital technique network | Complex mathematical operations | Computer science | Silicon

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

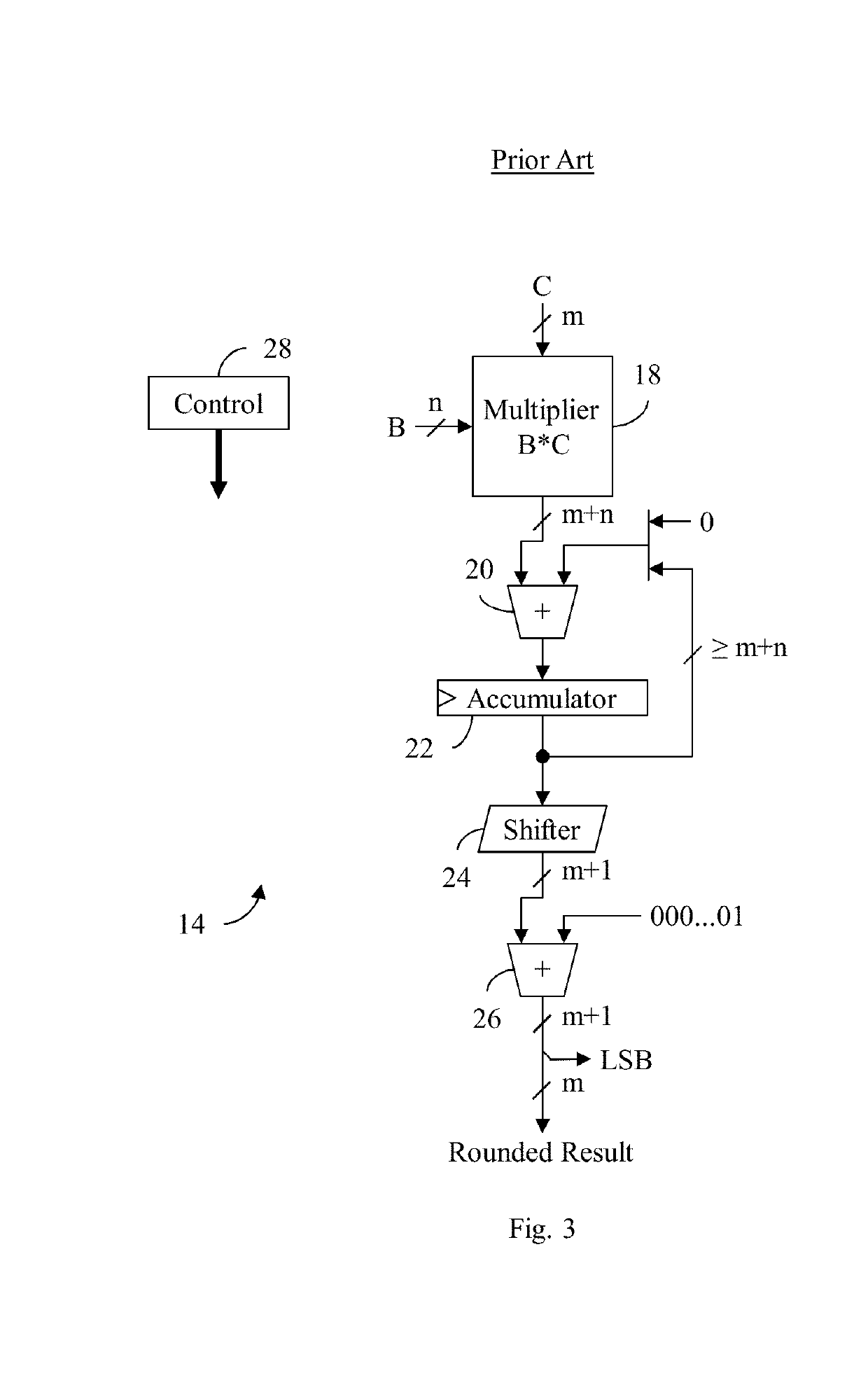

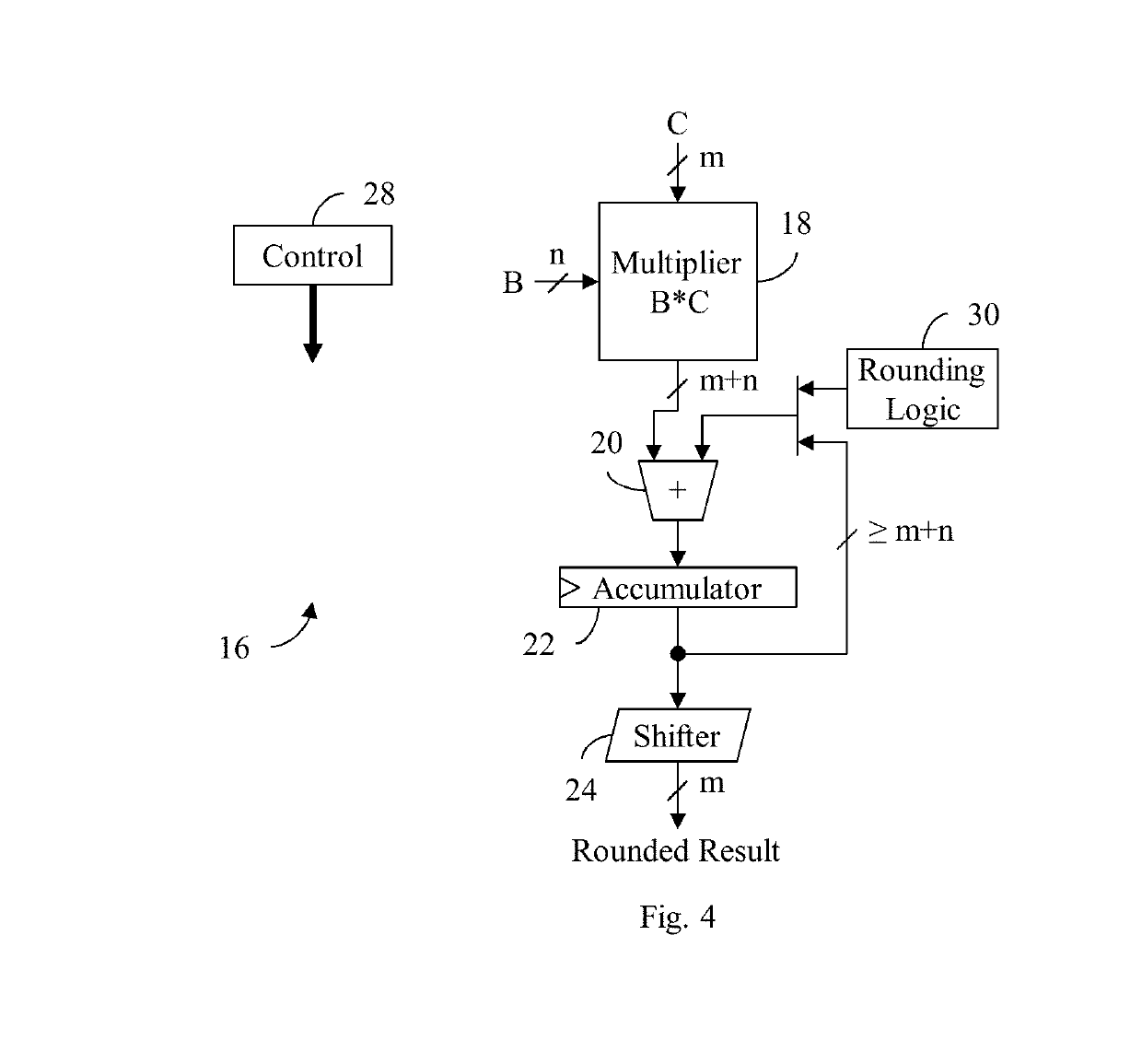

Method and apparatus for pre-rounding in a multiplier-accumulator

Owner:TEMPO SEMICON

Residue number matrix multiplier

Active | US20190339942A1 | Improve efficiency | Increase speed | Computations using residue arithmetic | Physical realisation | Formation matrix | Algorithm

Arithmetic circuits and methods that perform efficient matrix multiplication for hardware acceleration of neural networks, machine learning, web search and other applications are disclosed herein. Various arrays of multiplier-accumulators may be coupled to form a matrix multiplier which processes data using high precision, fixed point residue number arithmetic.

Owner:OLSEN IP RESERVE

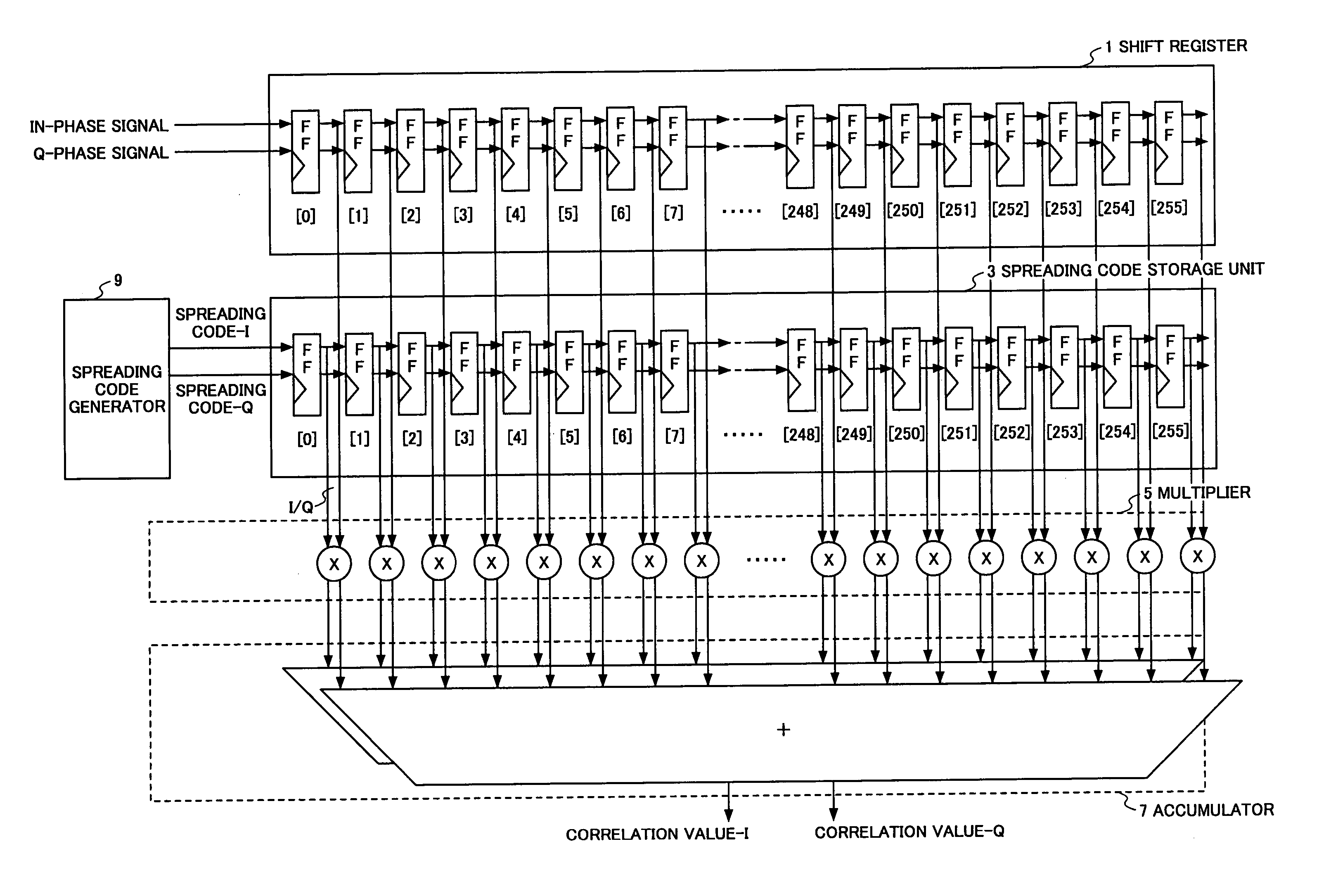

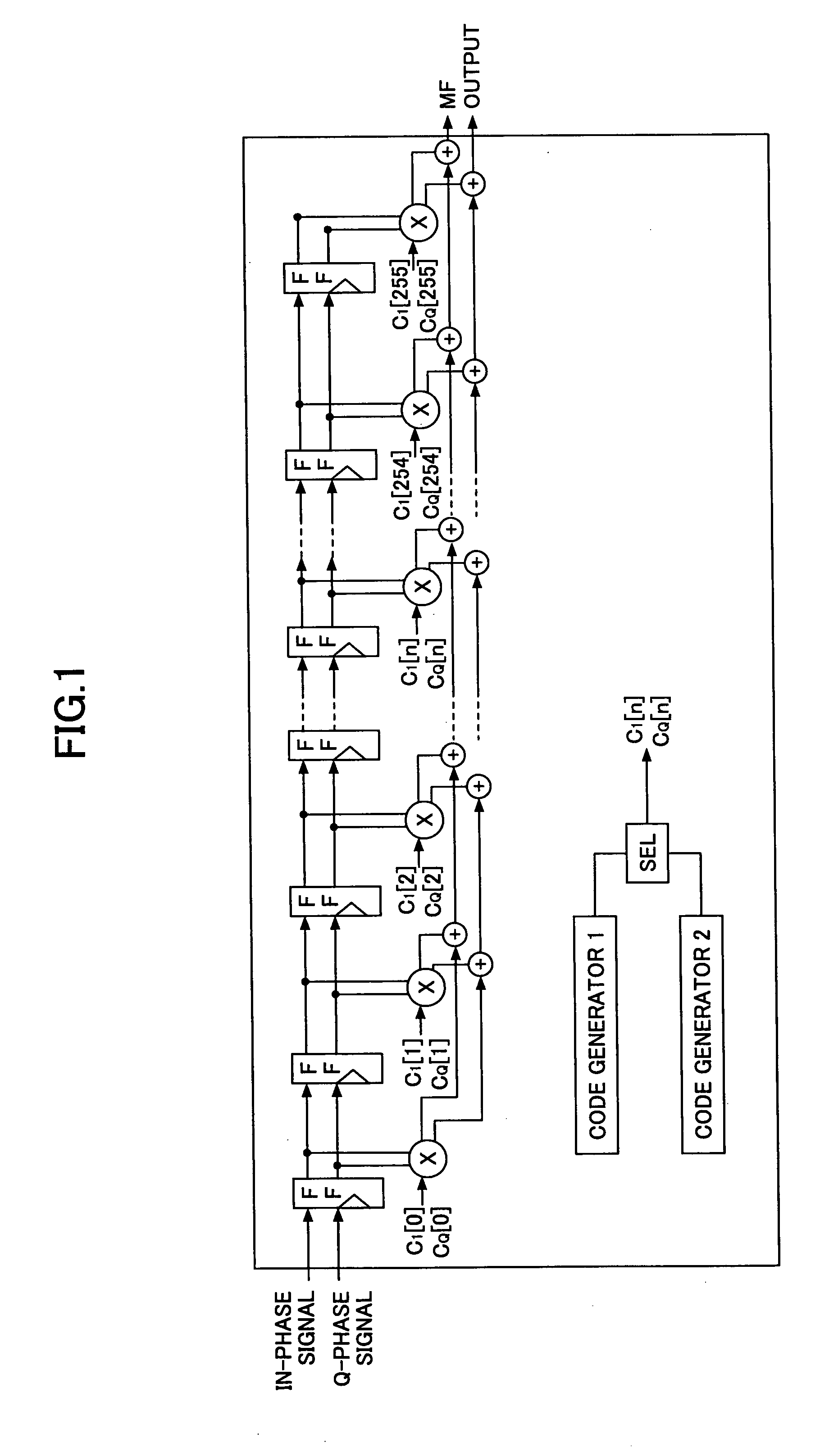

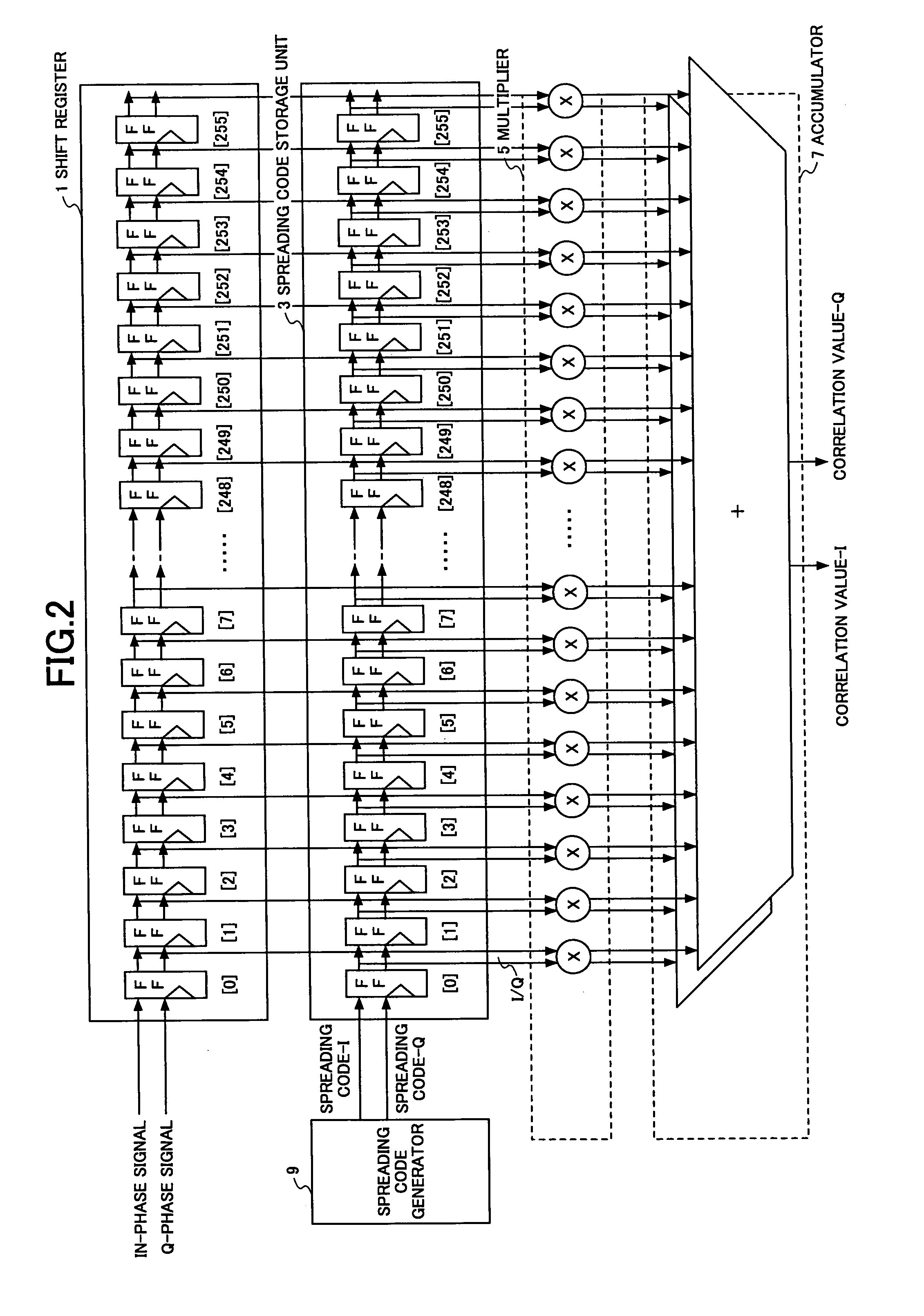

Matched filter system

A matched filter that reduces circuit size is disclosed. It includes a shift register, a spreading code storage unit, a multiplier, an accumulator, a spreading code generator, and an accumulation and storage unit. The shift register has sixteen taps, which equals the square root of the spreading factor 256, and holds and bit-shifts received data. The spreading code generator generates spreading codes divided by the number of the taps at a calculation rate roughly sixteen times higher than the 3.84 MHz data rate of the received data. The spreading code storage unit stores the divided spreading codes generated in the spreading code generator sequentially at the calculation rate. The multiplier multiplies the received data stored in the shift register with the divided spreading codes stored in the spreading code storage unit at the calculation rate. The accumulator sums the products from the multiplier at the calculation rate. The accumulation and storage unit accumulates correlation values related to processing sequences of the same calculation phase, and stores the accumulation result.
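The core idea, computing a 256-chip correlation with only sixteen taps by processing the spreading code in sixteen segments and accumulating the partial results, can be sketched as below. This is a simplified model for a single code phase. The function name is an assumption, and the shift-register timing and per-phase storage described in the abstract are not modelled.

```python
def correlate_segmented(received, code, taps=16):
    """Correlate received chips with a spreading code, `taps` chips at a time.

    Each segment yields a partial correlation from a short MAC loop; the
    partial results are then accumulated into the full correlation value.
    """
    if len(received) != len(code) or len(code) % taps:
        raise ValueError("lengths must match and be a multiple of taps")
    total = 0
    for start in range(0, len(code), taps):
        window = received[start:start + taps]    # contents of the 16-tap register
        segment = code[start:start + taps]       # divided spreading code
        partial = 0
        for r, c in zip(window, segment):
            partial += r * c                     # multiplier feeding the accumulator
        total += partial                         # accumulation and storage unit
    return total
```

With a spreading factor of 256 and sixteen taps, sixteen segment passes are needed per output, which is consistent with the calculation rate being roughly sixteen times the data rate.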

Owner:FUJITSU LTD
