Patents

Literature

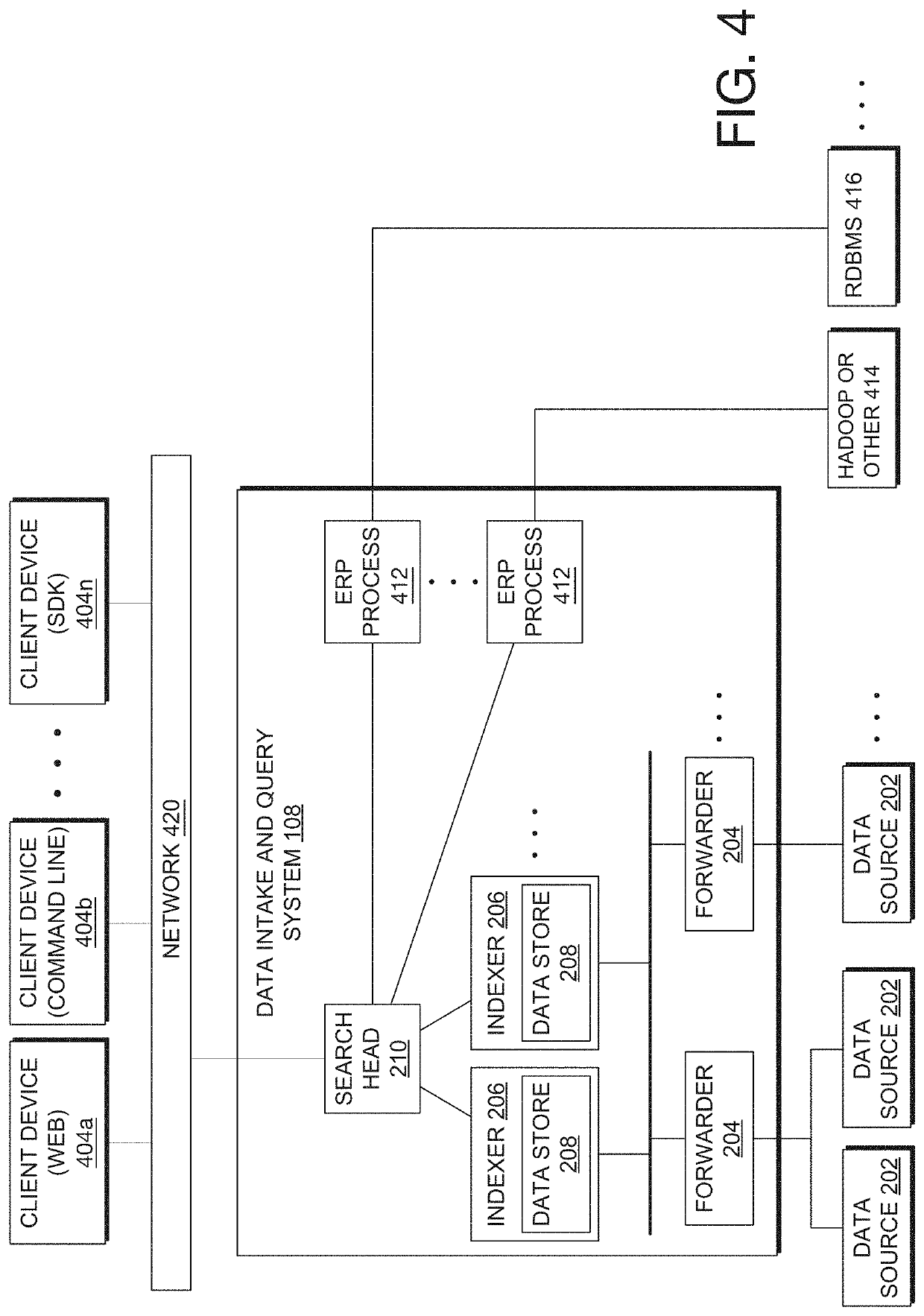

456 results about "Data dependence" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

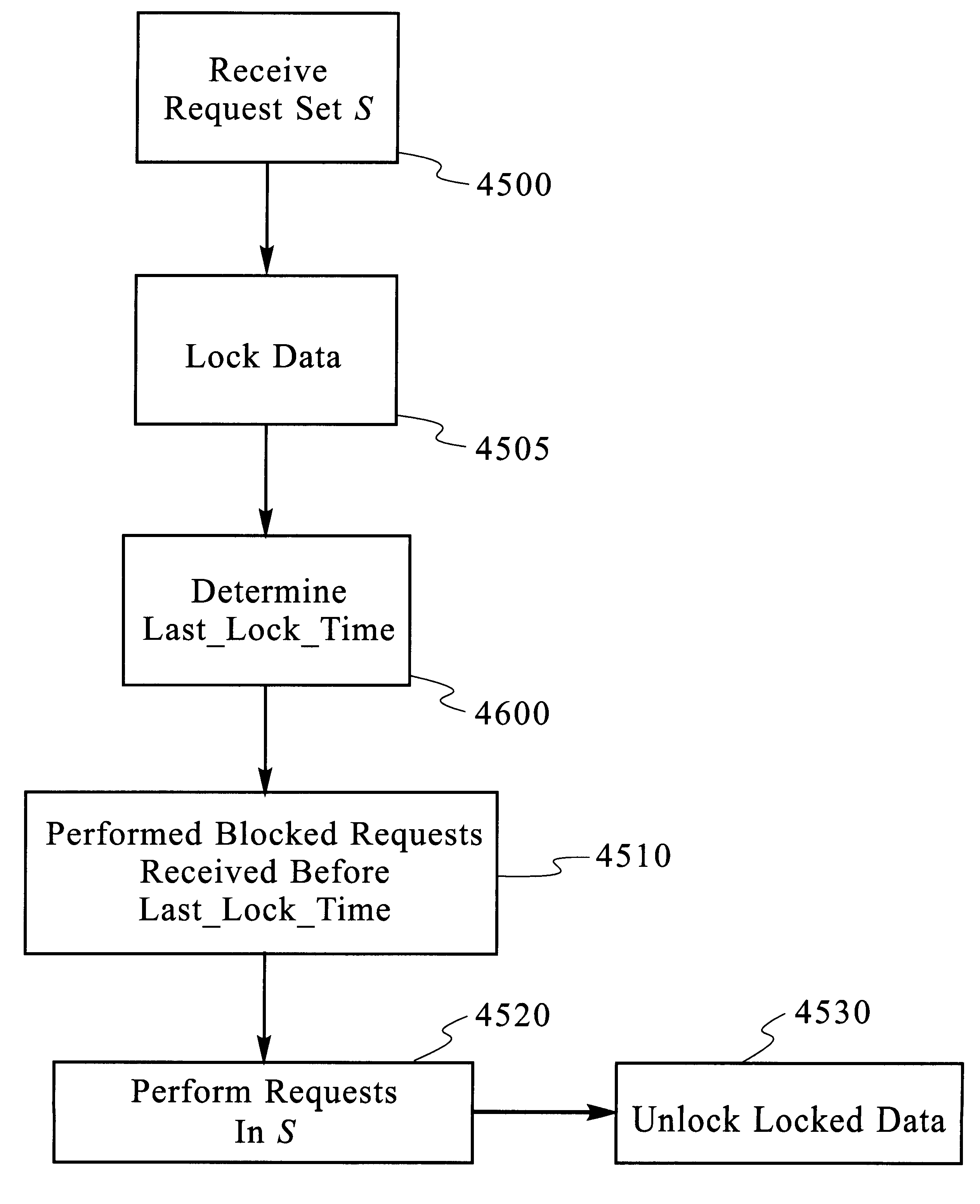

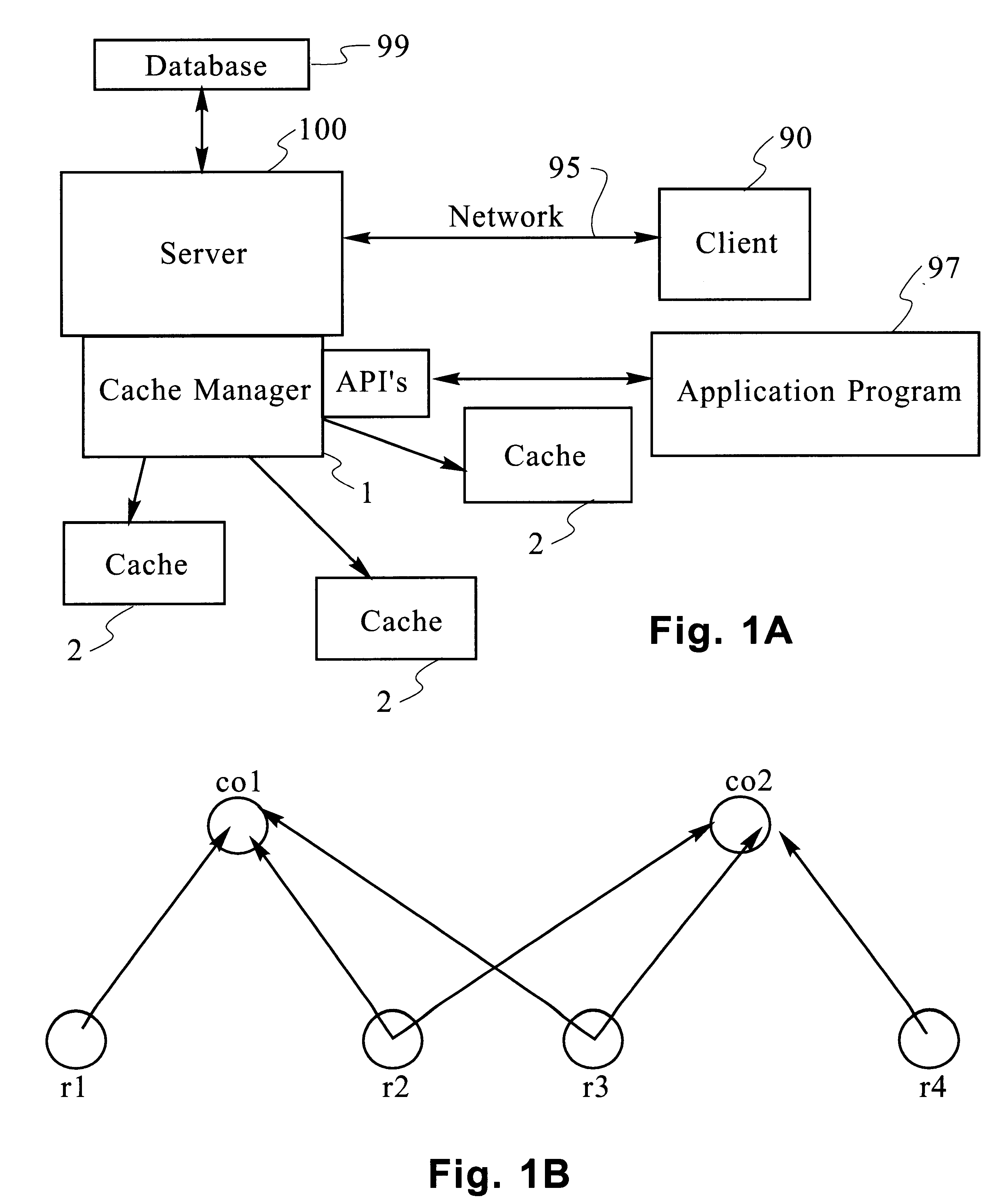

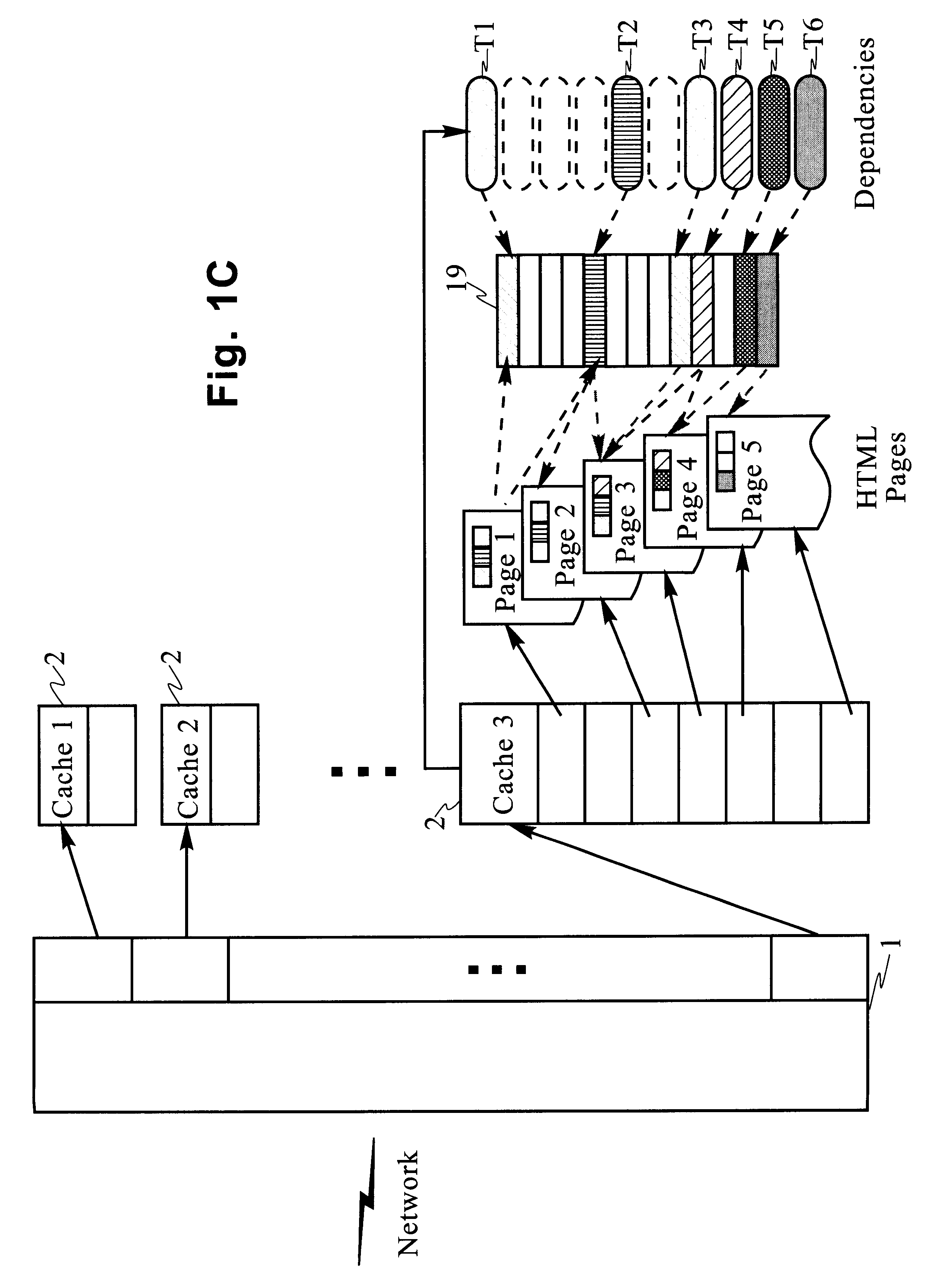

Scaleable method for maintaining and making consistent updates to caches

Inactive | US6216212B1 | High degree; Data processing applications; Digital data information retrieval; Data synchronization; Theoretical computer science

A determination can be made of how changes to underlying data affect the value of objects. Examples of applications are: caching dynamic Web pages; client-server applications in which a server sending objects (which change all the time) to multiple clients can track which versions are sent to which clients and how obsolete those versions are; and any situation where it is necessary to maintain and uniquely identify several versions of objects, update obsolete objects, quantitatively assess how different two versions of the same object are, and/or maintain consistency among a set of objects. A directed graph, called an object dependence graph, may be used to represent the data dependencies between objects. Another aspect is constructing and maintaining objects so as to associate changes in remote data with cached objects. If data in a remote data source changes, database change notifications are used to "trigger" a dynamic rebuild of the associated objects, so obsolete objects can be dynamically replaced with fresh ones. The objects can be complex objects, such as dynamic Web pages or compound-complex objects, and the data can be underlying data in a database. The update can consist of either storing a new version of the object in the cache or deleting the object from the cache. Caches on multiple servers can also be synchronized with the data in a single common database. Updated information, whether new pages or delete orders, can be broadcast to a set of server nodes, allowing many systems to benefit simultaneously from prefetching and providing a high degree of scalability.

Owner:IBM CORP
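
The object dependence graph idea can be illustrated with a small sketch. This is not IBM's implementation: it assumes nodes are plain strings, that an edge `a -> b` means "the value of b depends on a", and the names `ObjectDependenceGraph` and `on_change_notification` are hypothetical. A change notification walks the graph and either rebuilds or deletes every affected cached object.

```python
from collections import defaultdict, deque


class ObjectDependenceGraph:
    """Edge a -> b means the value of object b depends on a (hypothetical model)."""

    def __init__(self):
        self.dependents = defaultdict(set)

    def add_dependency(self, source, dependent):
        self.dependents[source].add(dependent)

    def affected_by(self, changed):
        """Every object transitively reachable from the changed data items."""
        seen, queue = set(), deque(changed)
        while queue:
            node = queue.popleft()
            for dep in self.dependents.get(node, ()):
                if dep not in seen:
                    seen.add(dep)
                    queue.append(dep)
        return seen


def on_change_notification(graph, cache, changed, rebuild=None):
    """Handle a database change notification for the given data items.

    With rebuild=None, affected cached objects are deleted (a "delete order");
    otherwise each affected object is replaced by rebuild(obj), a fresh version.
    """
    for obj in graph.affected_by(changed):
        if obj in cache:
            if rebuild is None:
                del cache[obj]
            else:
                cache[obj] = rebuild(obj)
```

Objects are rebuilt here in arbitrary order, so a compound page could be regenerated before a fragment it embeds; a fuller version would rebuild in topological order of the graph.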

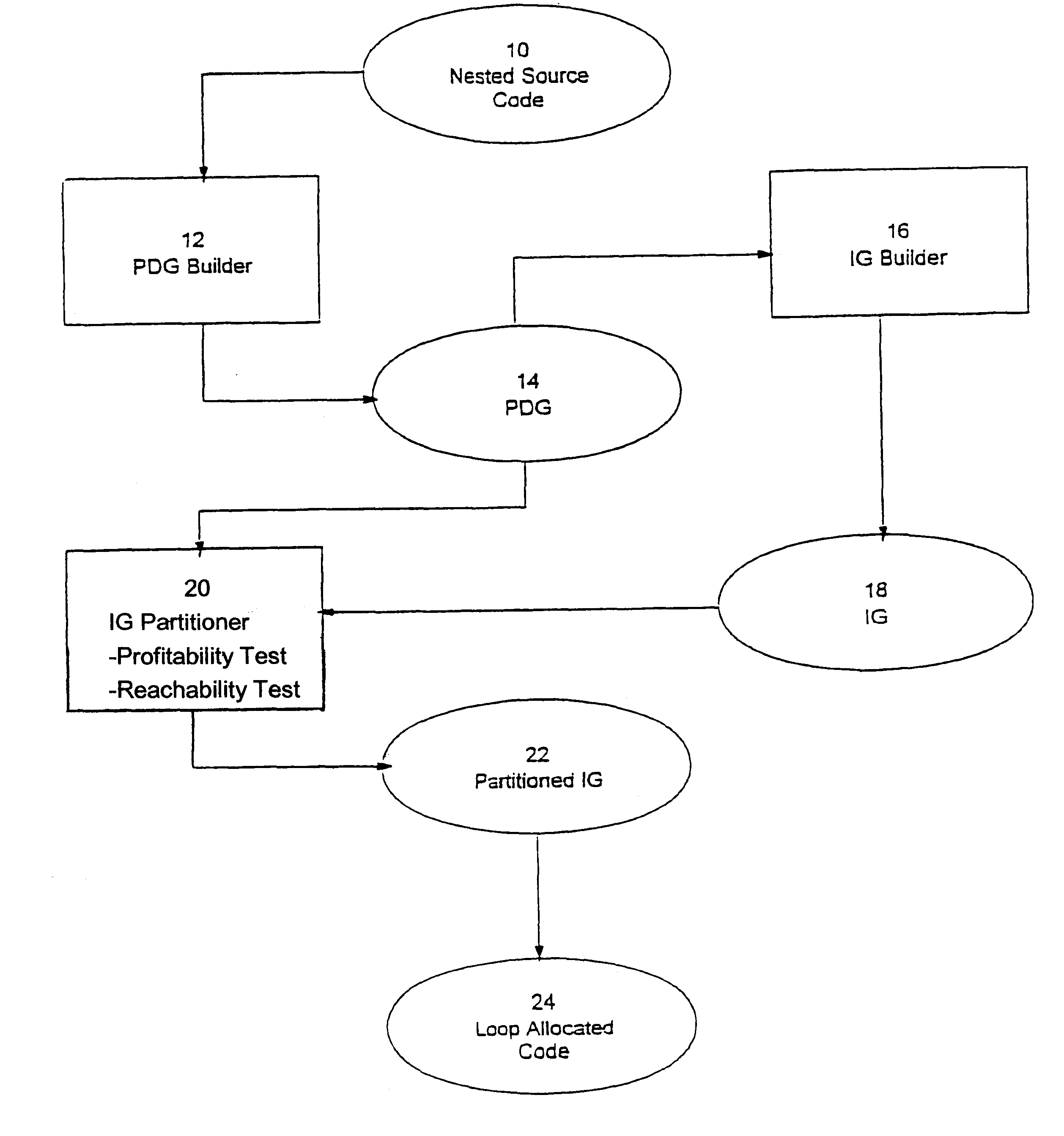

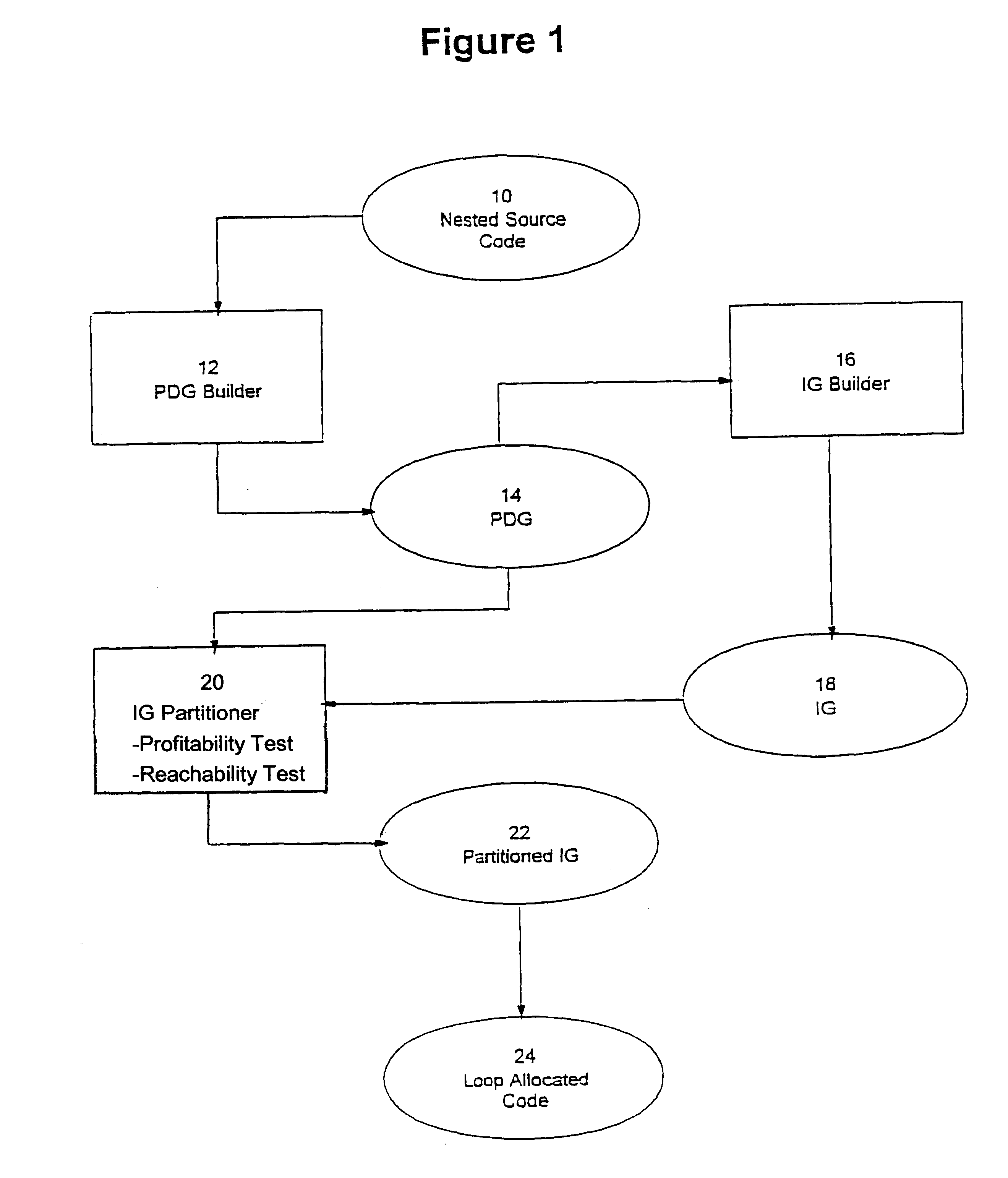

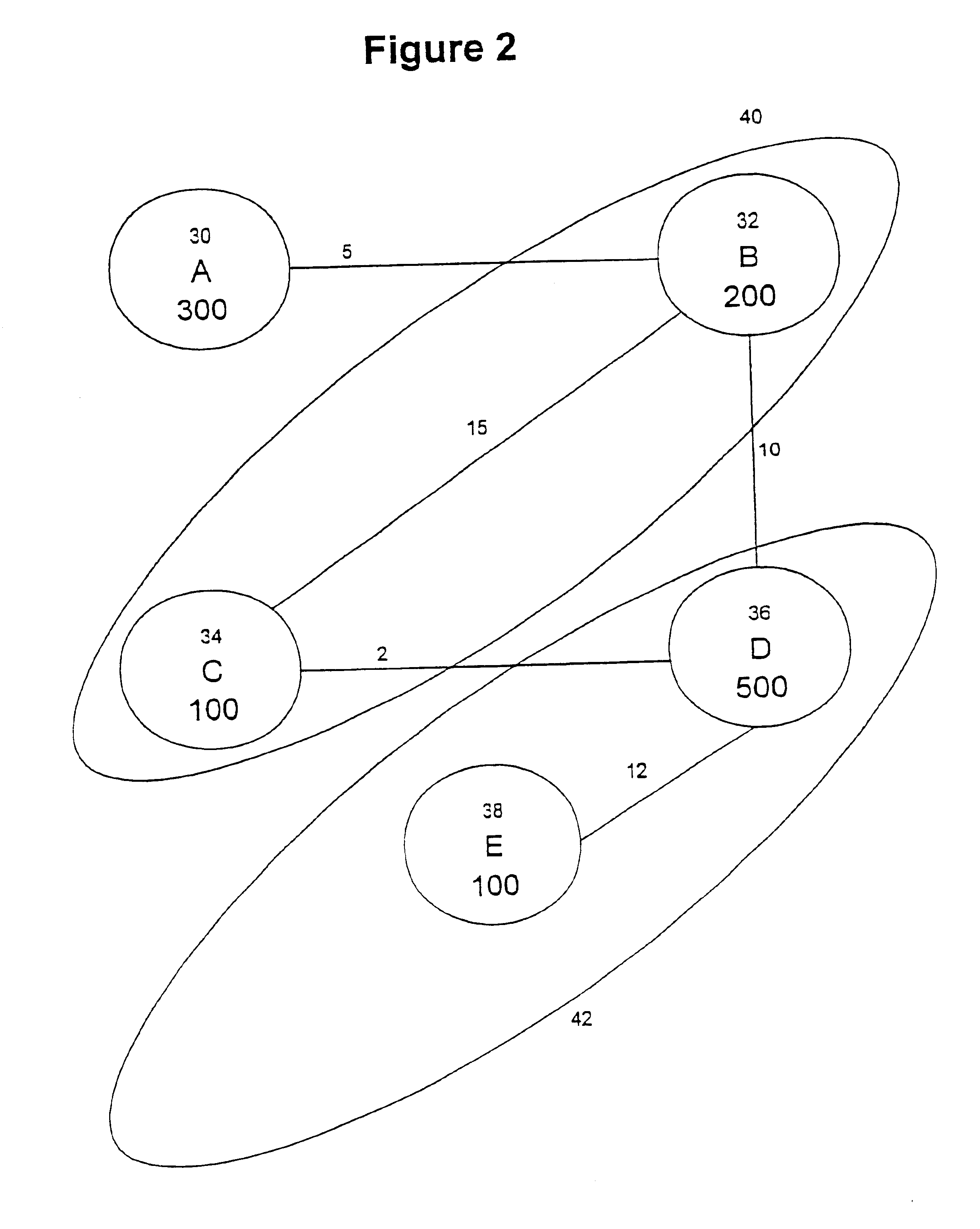

Loop allocation for optimizing compilers

Loop allocation for optimizing compilers includes generating a program dependence graph for a source code segment. Control dependence graph representations of the nested loops are generated from innermost to outermost, and data dependence graph representations are generated for each loop nesting level as constrained by the control dependence graph. An interference graph is generated whose nodes are the nodes of the data dependence graph. Each edge of the interference graph is weighted to reflect the affinity between the statements represented by the nodes it joins, and each node is weighted to reflect the resource usage of its associated statement. The interference graph is partitioned using a profitability test based on the edge and node weights and a correctness test based on the reachability of nodes in the data dependence graph. Code is emitted based on the partitioned interference graph.

Owner:IBM CORP
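
The partitioning step (profitability from weights, correctness from reachability) can be sketched as a greedy merge. This is an illustrative reading, not the patented algorithm: the per-loop `capacity` budget, the highest-affinity-first order, and all function names are assumptions. Statements on a dependence cycle are kept together, and two partitions are fused only if no third partition lies on a dependence path between them, since fusing them would otherwise create a cycle.

```python
def reach_from(succ, sources):
    """Nodes reachable from `sources` by following one or more edges."""
    seen, stack = set(), list(sources)
    while stack:
        node = stack.pop()
        for nxt in succ.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def partition_statements(resources, affinity, ddg_edges, capacity):
    """Greedily partition one loop level's statements into loops.

    resources: {stmt: resource usage}        (node weights)
    affinity:  {(stmt_a, stmt_b): weight}    (interference-graph edges)
    ddg_edges: [(src, dst), ...]             (data dependence graph)
    capacity:  hypothetical resource budget per emitted loop
    Returns the partitions (lists of statements) in a legal emission order.
    """
    ddg_edges = list(ddg_edges)
    stmt_succ = {s: set() for s in resources}
    for a, b in ddg_edges:
        stmt_succ[a].add(b)

    # Statements on a common dependence cycle must stay in one loop, so seed
    # the partitions with strongly connected components (mutual reachability).
    part = {}
    for s in resources:
        if s not in part:
            from_s = reach_from(stmt_succ, [s])
            for t in resources:
                if t == s or (t in from_s and s in reach_from(stmt_succ, [t])):
                    part[t] = s

    def quotient_succ():
        succ = {p: set() for p in set(part.values())}
        for a, b in ddg_edges:
            if part[a] != part[b]:
                succ[part[a]].add(part[b])
        return succ

    def legal(p, q):
        # Correctness test: fusing p and q is illegal if some other partition
        # lies on a dependence path between them (fusion would form a cycle).
        succ = quotient_succ()
        pair = {p, q}
        downstream = reach_from(succ, pair) - pair
        return not any(reach_from(succ, [x]) & pair for x in downstream)

    def load(p):
        return sum(w for s, w in resources.items() if part[s] == p)

    # Profitability test: highest-affinity edges first, and only if the fused
    # loop stays within the resource budget.
    for (a, b), weight in sorted(affinity.items(), key=lambda kv: -kv[1]):
        p, q = part[a], part[b]
        if weight > 0 and p != q and load(p) + load(q) <= capacity and legal(p, q):
            for s in resources:
                if part[s] == q:
                    part[s] = p

    # Emit partitions in topological order of the (acyclic) quotient graph.
    succ = quotient_succ()
    indegree = {p: 0 for p in succ}
    for p in succ:
        for q in succ[p]:
            indegree[q] += 1
    ready = [p for p in succ if indegree[p] == 0]
    order = []
    while ready:
        p = ready.pop()
        order.append([s for s in resources if part[s] == p])
        for q in succ[p]:
            indegree[q] -= 1
            if indegree[q] == 0:
                ready.append(q)
    return order
```

For example, with dependences `S1 -> S2 -> S3`, fusing `S1` with `S3` is rejected even though they have the highest affinity, because `S2` would have to run both after and before the fused loop.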

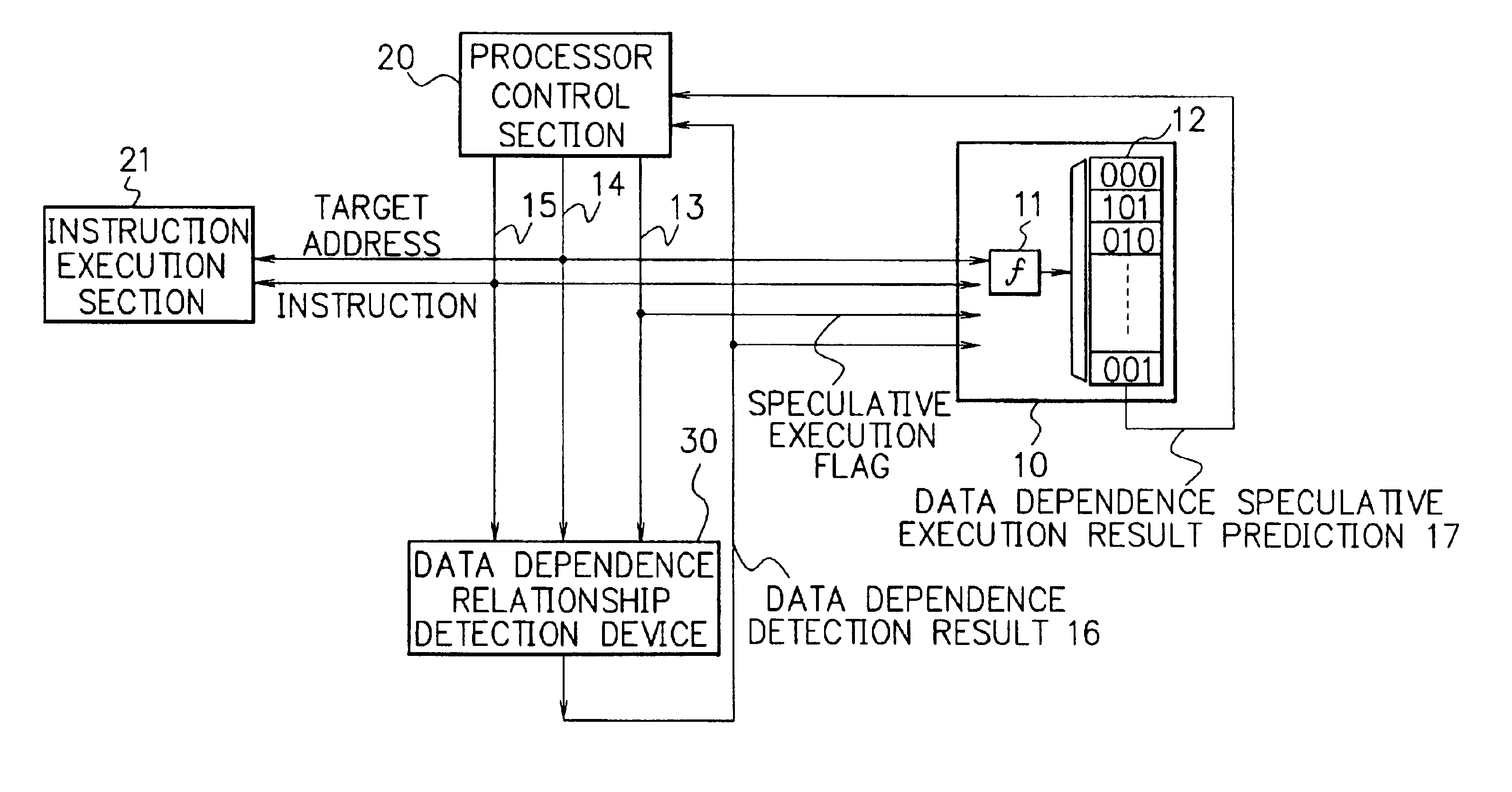

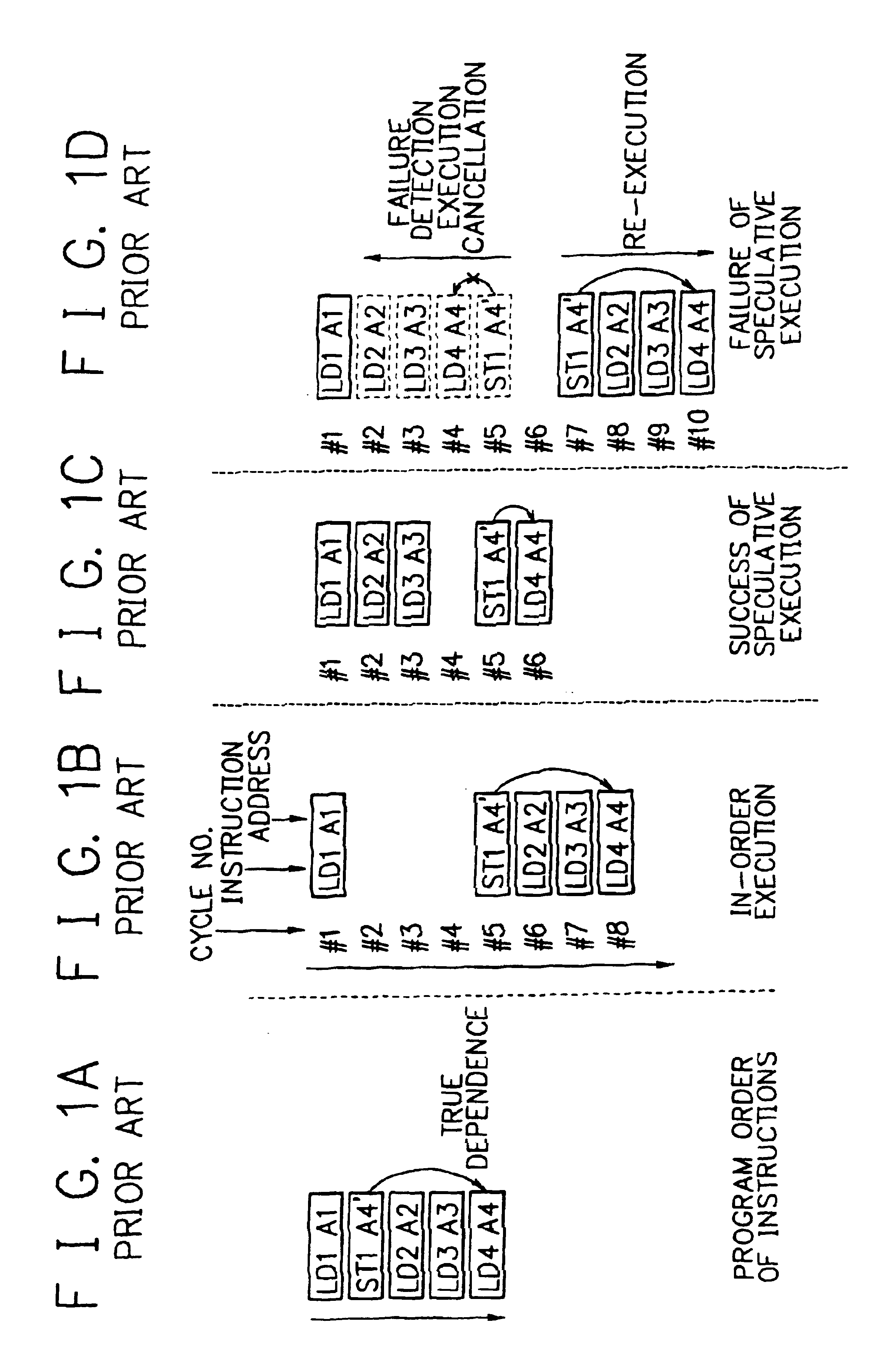

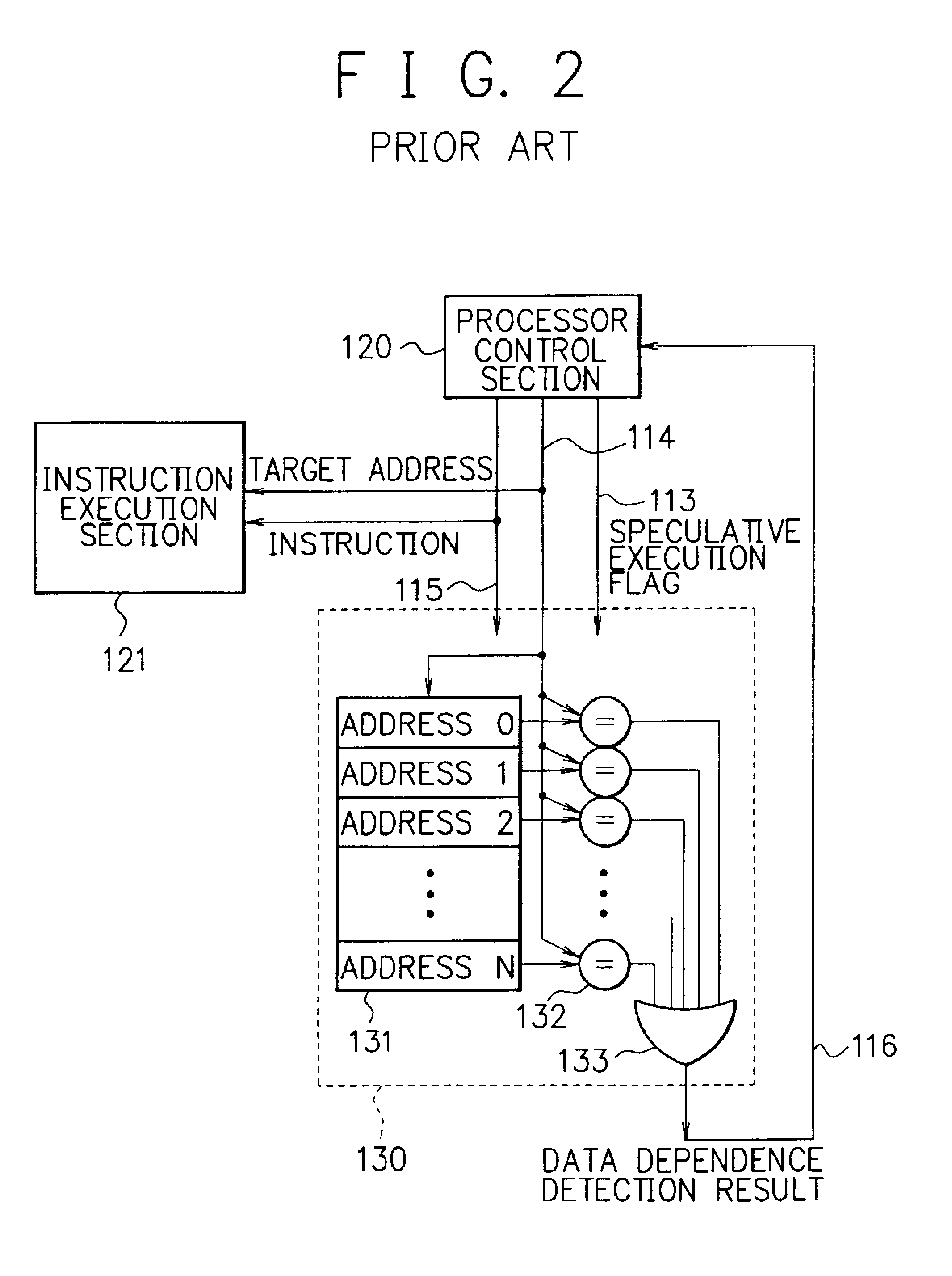

Processor, multiprocessor system and method for speculatively executing memory operations using memory target addresses of the memory operations to index into a speculative execution result history storage means to predict the outcome of the memory operation

Inactive | US6970997B2 | Low failure rate; Improve execution performance; Digital computer details; Concurrent instruction execution; Memory address; Speculative execution

When a processor executes a memory operation instruction by data dependence speculative execution, it consults a speculative execution result history table, which stores history information on the success/failure results of past speculative executions of memory operation instructions, to predict whether the speculative execution will succeed or fail. For the prediction, a hash function circuit converts the target address of the memory operation instruction into an entry number of the speculative execution result history table (aliases are allowed), and the entry designated by that number is consulted. If the prediction is “success”, the memory operation instruction is executed speculatively out of order (with respect to the data dependence relationships between instructions). If the prediction is “failure”, speculative execution is canceled and the memory operation instruction is executed later, non-speculatively and in program order. Whether the speculative execution of a memory operation instruction succeeded or failed is judged by detecting the data dependence relationships between memory operation instructions, and the speculative execution result history table is updated accordingly.

Owner:NEC CORP
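
The address-indexed history table can be modeled in a few lines. The abstract only says each entry holds past success/failure history; using a 2-bit saturating counter per entry, the XOR-fold hash, the 1024-entry size, and the names below are assumptions made for illustration.

```python
class SpeculationHistoryTable:
    """Speculative execution result history table (hypothetical model).

    Each entry is a 2-bit saturating counter: 2-3 predict "success",
    0-1 predict "failure". Entries start at 2 (weakly "success").
    """

    def __init__(self, index_bits=10):
        self.index_bits = index_bits
        self.counters = [2] * (1 << index_bits)

    def entry_number(self, address):
        # Hash the target address to an entry number. Distinct addresses may
        # map to the same entry (aliasing is tolerated, not prevented).
        word = address >> 2
        return (word ^ (word >> self.index_bits)) & ((1 << self.index_bits) - 1)

    def predict_success(self, address):
        return self.counters[self.entry_number(address)] >= 2

    def update(self, address, succeeded):
        """Record whether speculation for this address actually succeeded."""
        i = self.entry_number(address)
        if succeeded:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def issue_mode(table, address):
    """How the memory operation would be issued under the prediction."""
    if table.predict_success(address):
        return "speculative, out of order"
    return "non-speculative, in program order"
```

A saturating counter keeps one unlucky failure from immediately disabling speculation for an address that usually succeeds; a single history bit per entry would be the simpler alternative.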

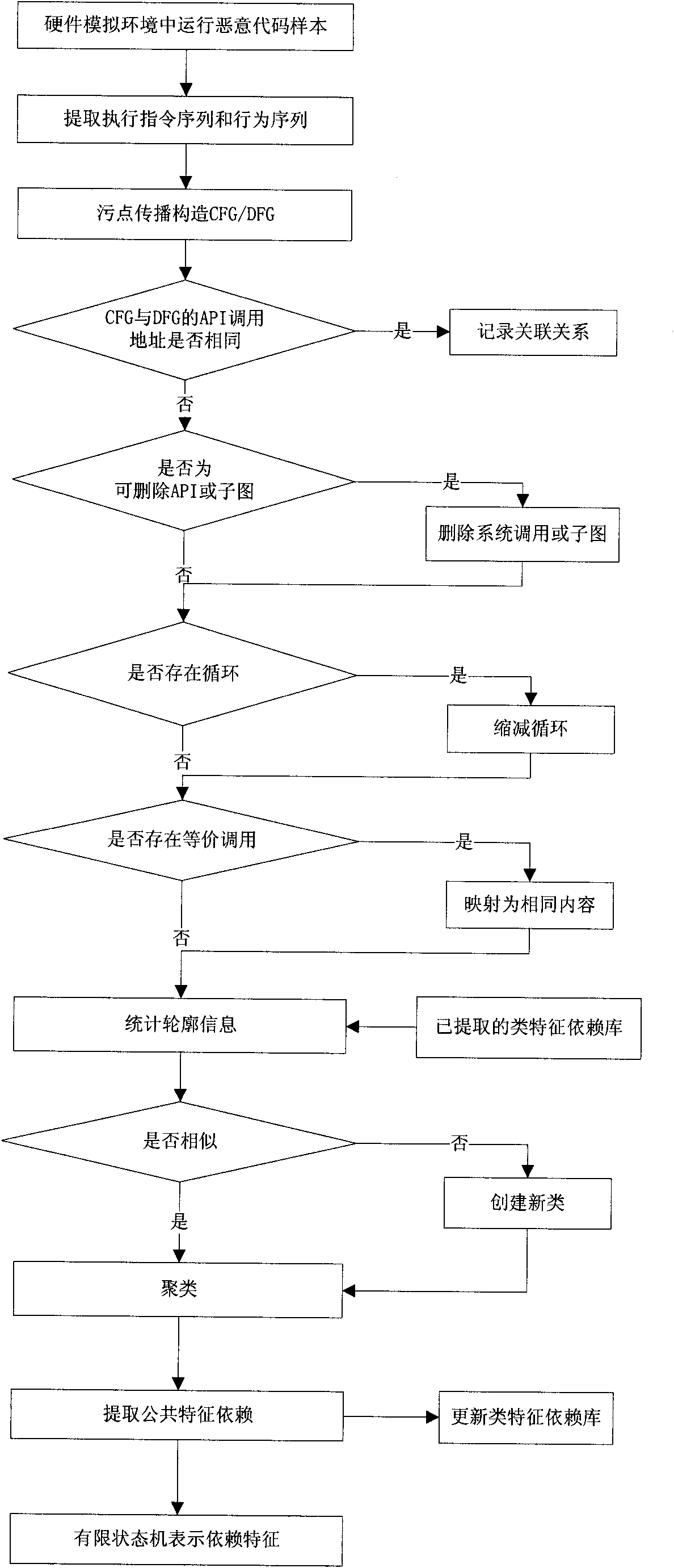

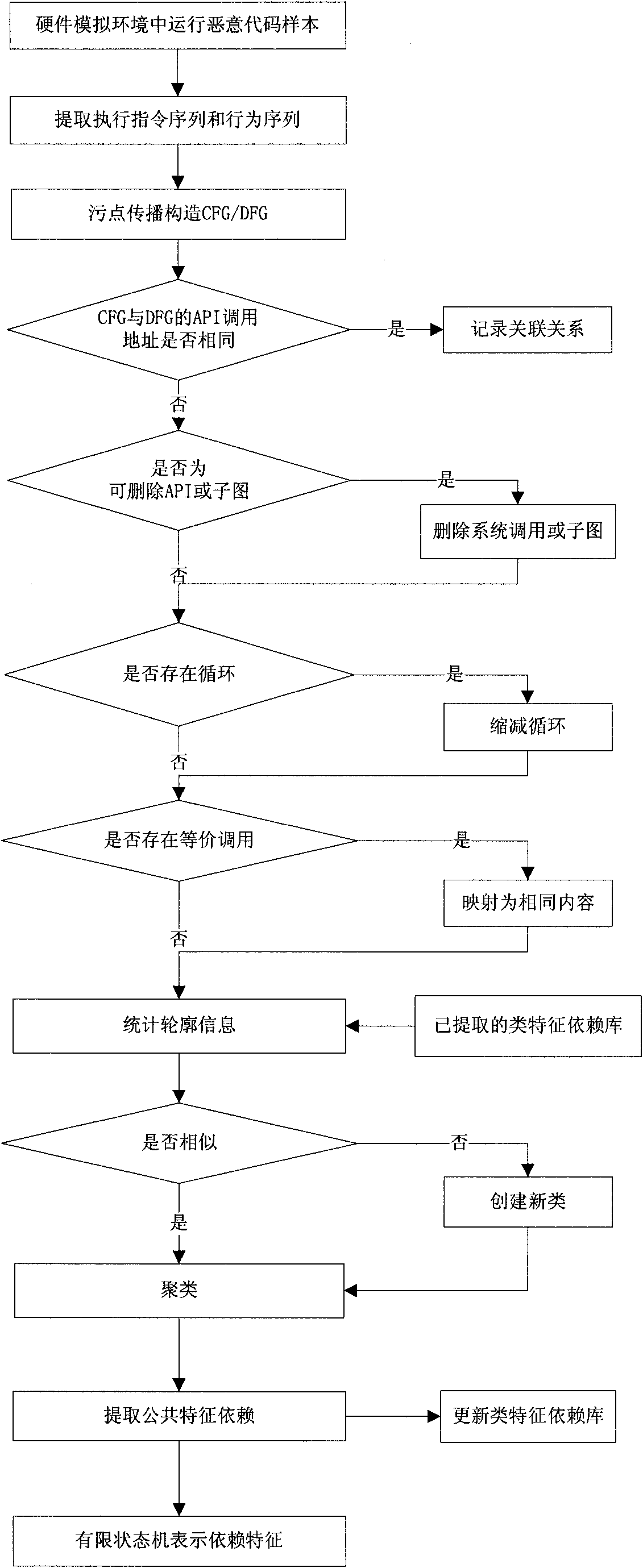

Method for extracting malicious code behavior characteristic

Inactive | CN102054149A | Comprehensive information extraction; Improve anti-interference ability; Platform integrity maintenance; Data dependency graph; Single sample

The invention discloses a method for extracting a malicious code behavior characteristic, which belongs to the technical field of network security. The method comprises the following steps: 1) running a malicious code and extracting its execution information, which comprises the executed instruction sequence and the behavior sequence of the malicious code; 2) constructing a control dependence graph and a data dependence graph of the executed code according to the execution information; 3) comparing the relevance of the control dependence graph and the data dependence graph and recording the relevance information; and 4) comparing the control dependence graphs and data dependence graphs of different malicious codes and extracting the characteristic dependencies of each type of sample by similarity clustering. Compared with the prior art, the method offers complete information extraction, strong anti-interference performance, some applicability of a single sample's characteristic to its variants, a small characteristic library, and a wide application range.

Owner:GRADUATE SCHOOL OF THE CHINESE ACAD OF SCI GSCAS

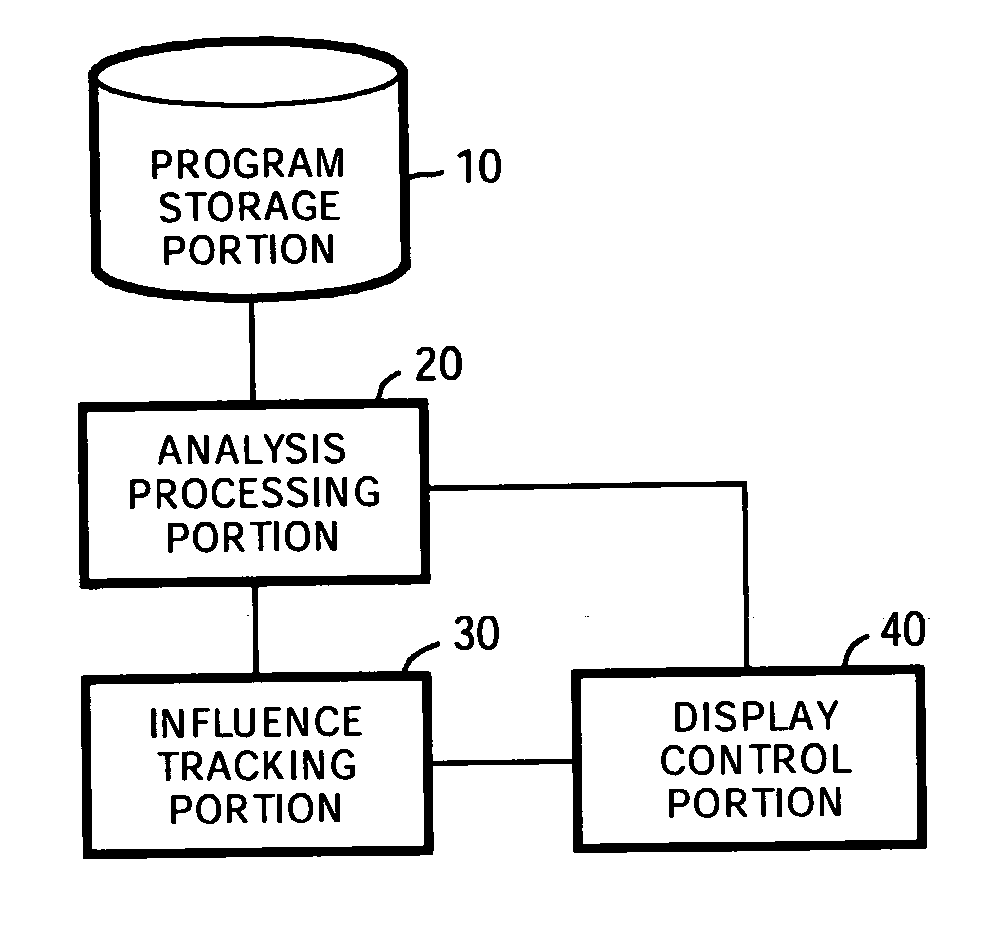

Program analysis device, analysis method and program of same

Inactive | US20050204344A1 | Easy to track; Improve efficiency; Software engineering; Specific program execution arrangements; Program analysis; Analysis method

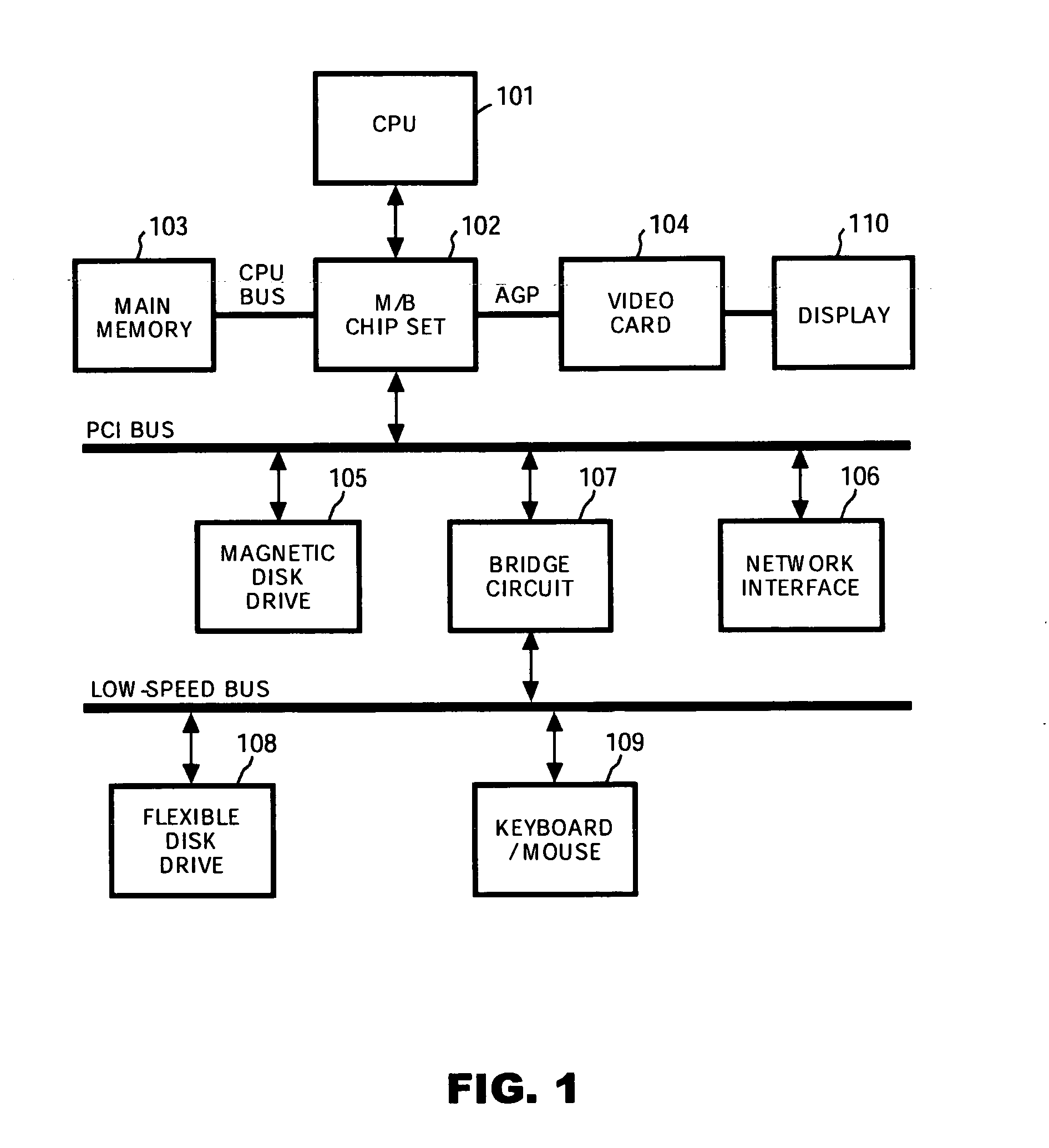

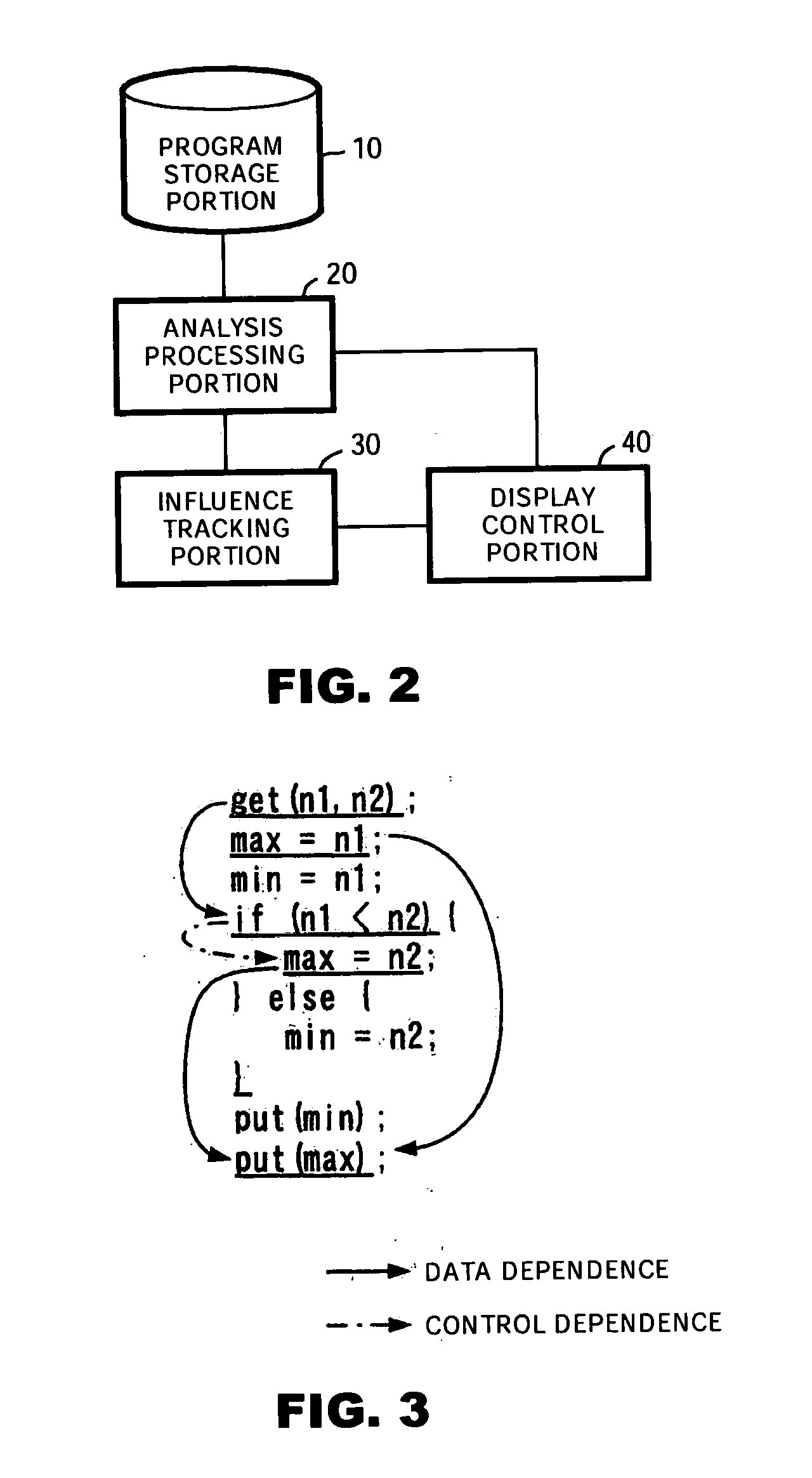

A method for analyzing an original program to find the parts affected by weaving an aspect and presenting the analysis result. An analysis device has an analysis processing portion that takes as input a program based on aspect-oriented programming and acquires data dependence information and control dependence information for the input program. An influence tracking portion tracks the data dependences and control dependences acquired by the analysis processing portion, starting from the position in the program where an aspect is woven, and searches for the propagation paths of the influence caused by the aspect weaving. A display control portion detects and displays the parts affected by the aspect weaving, based on the parsing result of the analysis processing portion and the propagation path information obtained by the influence tracking portion.

Owner:IBM CORP
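
Influence tracking of this kind amounts to a graph search from the weaving points along both kinds of dependence edge. A minimal sketch, assuming statements are string labels and each dependence map sends a statement to the statements that depend on it; the function names are hypothetical.

```python
from collections import deque


def track_influence(data_deps, control_deps, weave_points):
    """Breadth-first search from the aspect weaving points along data and
    control dependence edges (src -> statements that depend on src).

    Returns {statement: predecessor on one propagation path}; the weaving
    points map to None.
    """
    edges = {}
    for graph in (data_deps, control_deps):
        for src, dsts in graph.items():
            edges.setdefault(src, set()).update(dsts)
    parent = {p: None for p in weave_points}
    queue = deque(weave_points)
    while queue:
        node = queue.popleft()
        for nxt in sorted(edges.get(node, ())):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return parent


def propagation_path(parent, stmt):
    """Reconstruct the path from a weaving point to `stmt`."""
    path = []
    while stmt is not None:
        path.append(stmt)
        stmt = parent[stmt]
    return path[::-1]
```

The keys of the returned map are the affected parts a display component would highlight, and `propagation_path` recovers one shortest chain of dependences explaining why each part is affected.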

Parallel transaction execution method based on blockchain

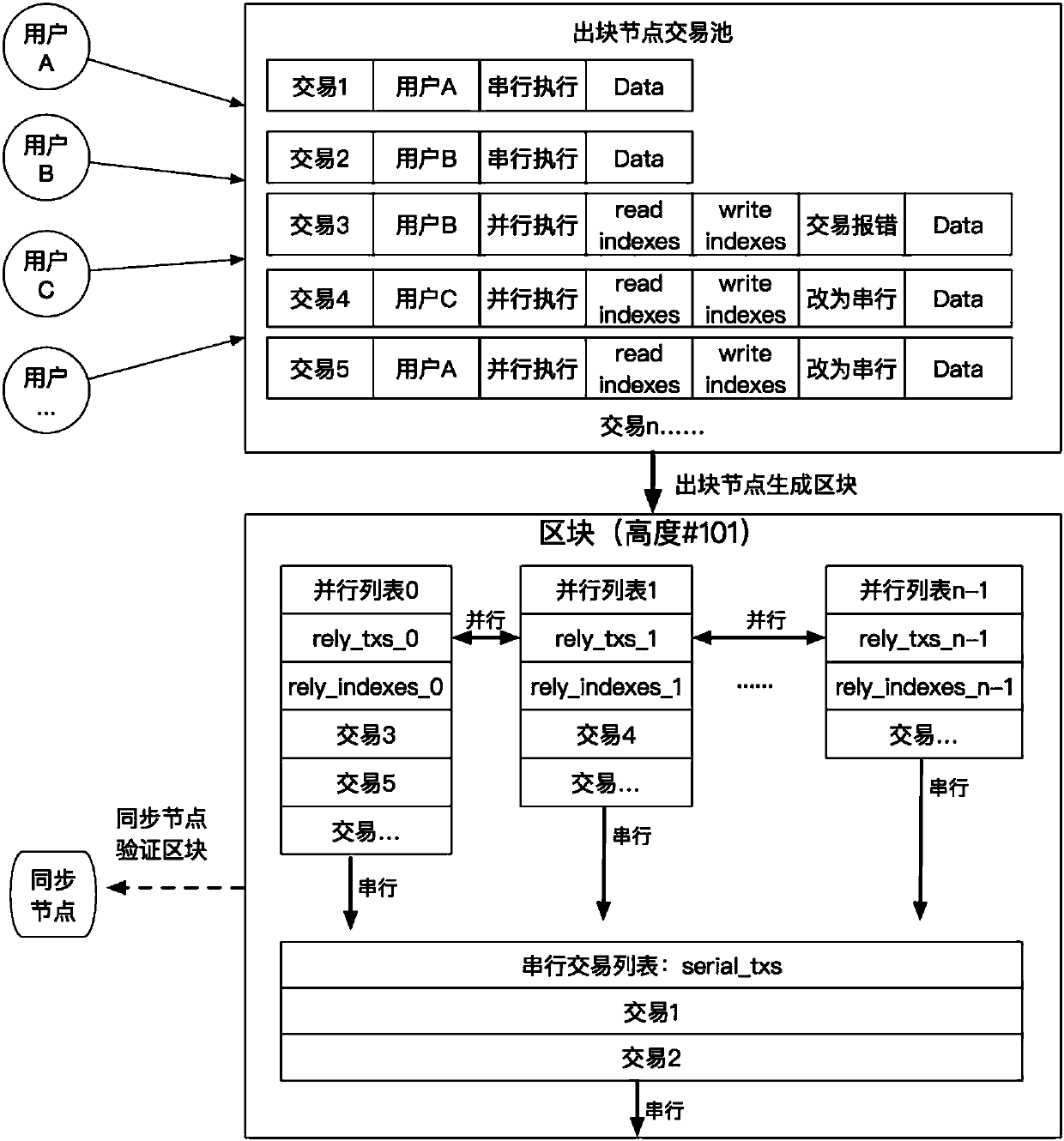

Active | CN107688999A | Simplify concurrency difficulty; Improve transaction throughput; Finance; Payment protocols; User needs; Parallel processing

The invention discloses a parallel transaction execution method based on a blockchain. First, each data unit on the chain is assigned an index number. In addition to the basic transaction contents, a user's parallel transaction must provide the indices of the data it reads and writes during execution, whereas a user's serial transaction needs to provide only the basic transaction contents. A node arranges parallel processing according to the data dependency relationships among the parallel transactions; transactions that cannot run concurrently, as well as serial transactions, are executed in sequence.

Owner:HANGZHOU RIVTOWER TECH CO LTD
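
Scheduling from declared read/write indices can be sketched as greedy batching. This is an assumed policy, not necessarily the patentee's: two transactions conflict on write/write, write/read, or read/write overlap; each parallel transaction joins the earliest batch after every conflicting batch; and a serial transaction (declared as `None` here) runs alone as a barrier. The function name is hypothetical.

```python
def schedule_transactions(txs):
    """Group a block's transactions into batches that can run concurrently.

    txs: list in block order of (tx_id, reads, writes); reads/writes are sets
    of data-unit indices, or None for a serial transaction that declared none.
    Returns a list of batches (lists of tx_ids) to execute one after another.
    """
    batches = []  # each entry: [tx_ids, reads, writes, is_serial]
    for tx_id, reads, writes in txs:
        if reads is None or writes is None:
            # Serial transaction: runs alone and acts as a barrier.
            batches.append([[tx_id], set(), set(), True])
            continue
        # Earliest batch after every conflicting batch and every barrier.
        earliest = 0
        for i, (_, b_reads, b_writes, serial) in enumerate(batches):
            if serial or writes & (b_reads | b_writes) or reads & b_writes:
                earliest = i + 1
        if earliest == len(batches):
            batches.append([[], set(), set(), False])
        ids, b_reads, b_writes, _ = batches[earliest]
        ids.append(tx_id)
        b_reads |= reads    # updates the batch's sets in place
        b_writes |= writes
    return [ids for ids, _, _, _ in batches]
```

Because a transaction is always placed after every earlier transaction it conflicts with, executing the batches in order gives the same result as executing the block serially.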

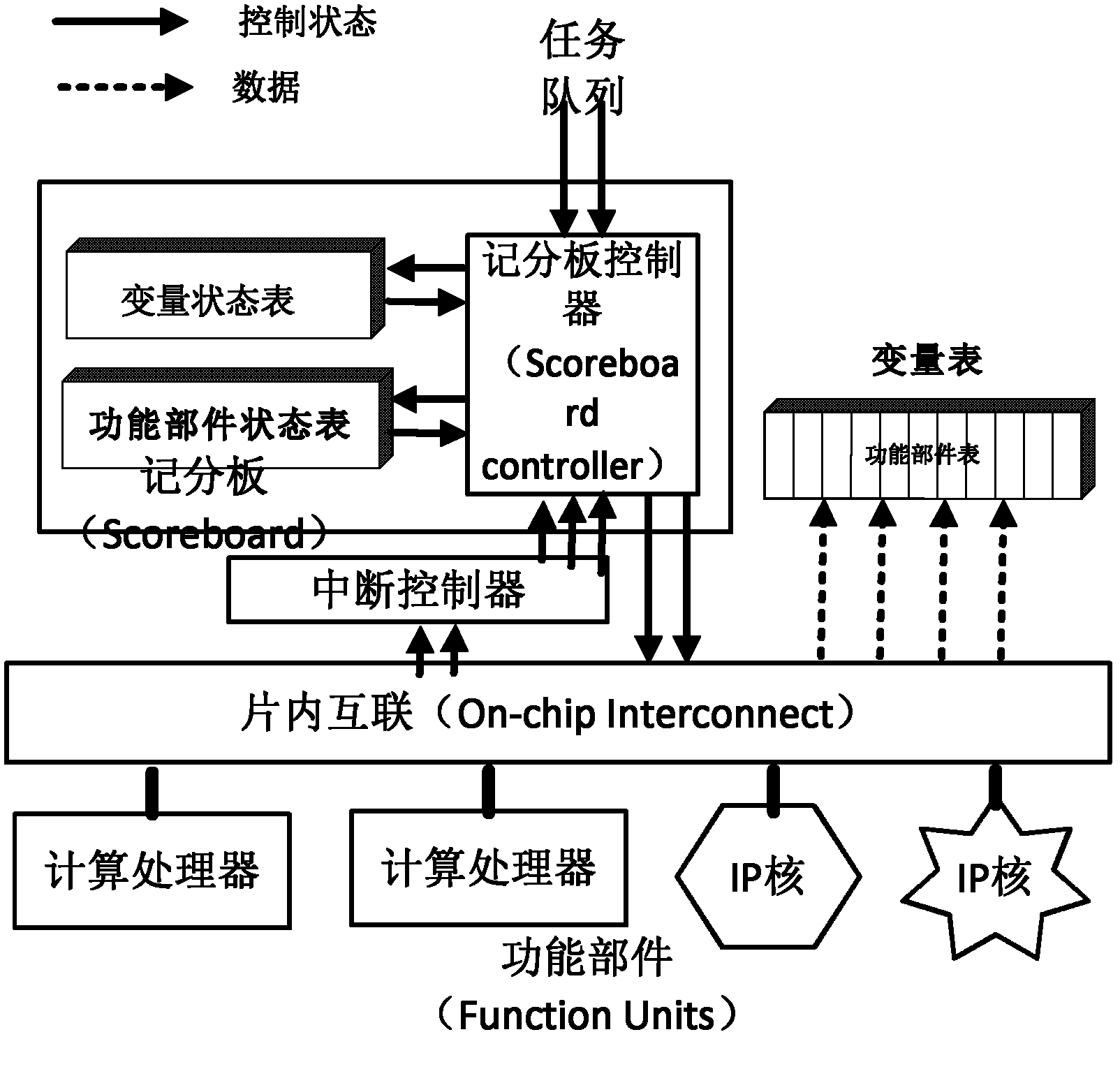

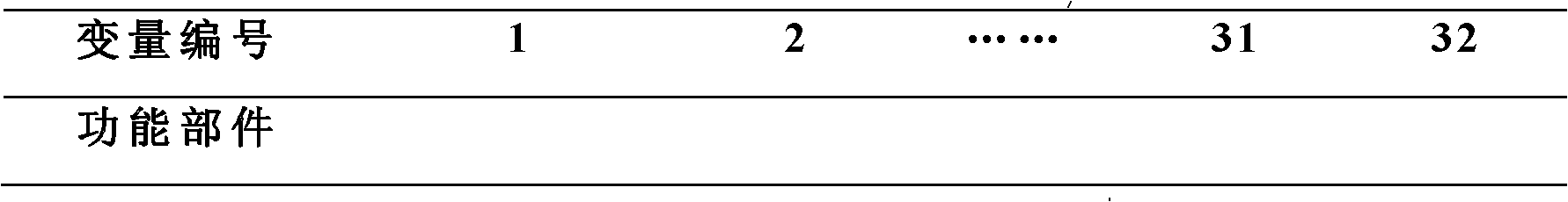

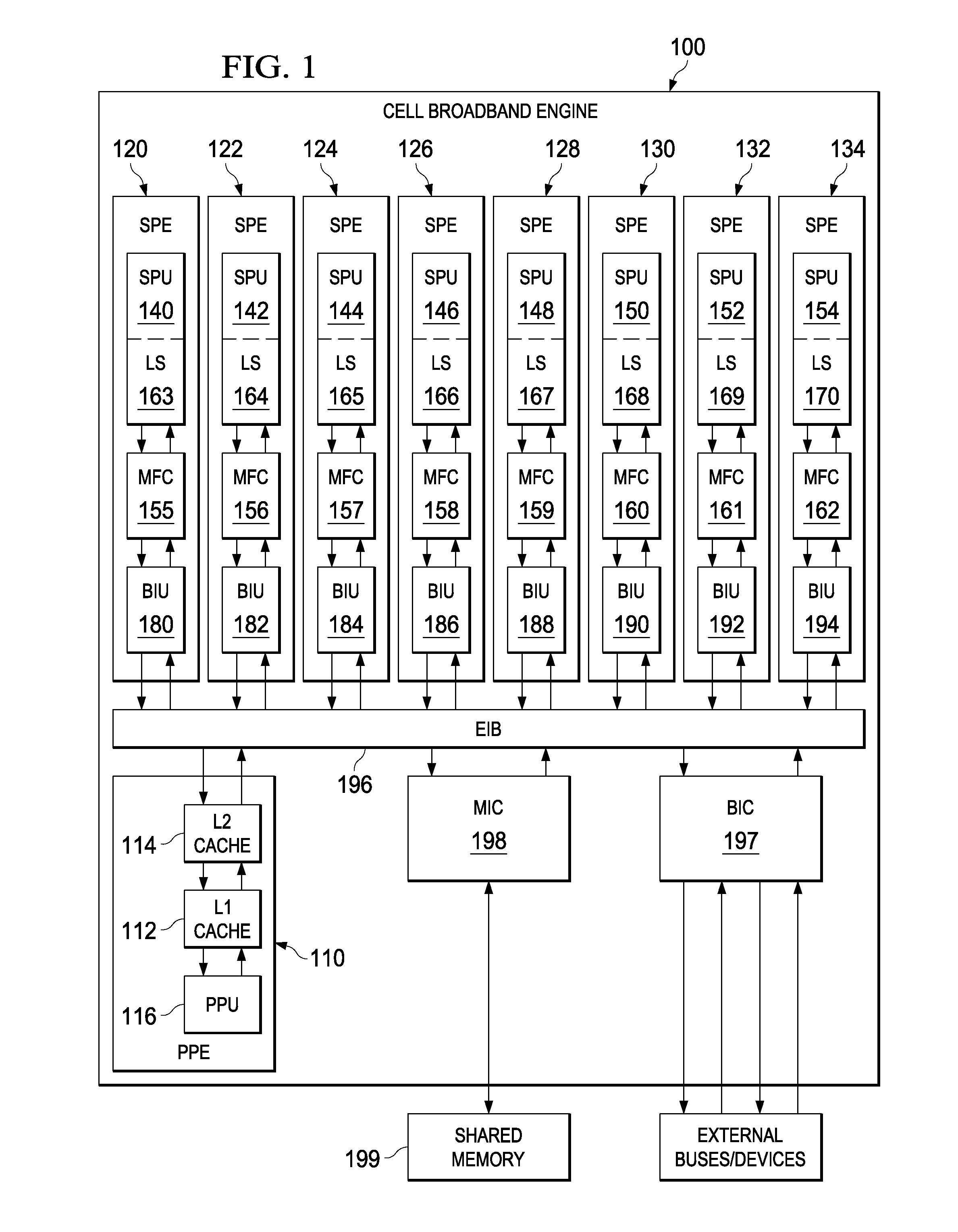

Scheduling system and scheduling execution method of multi-core heterogeneous system on chip

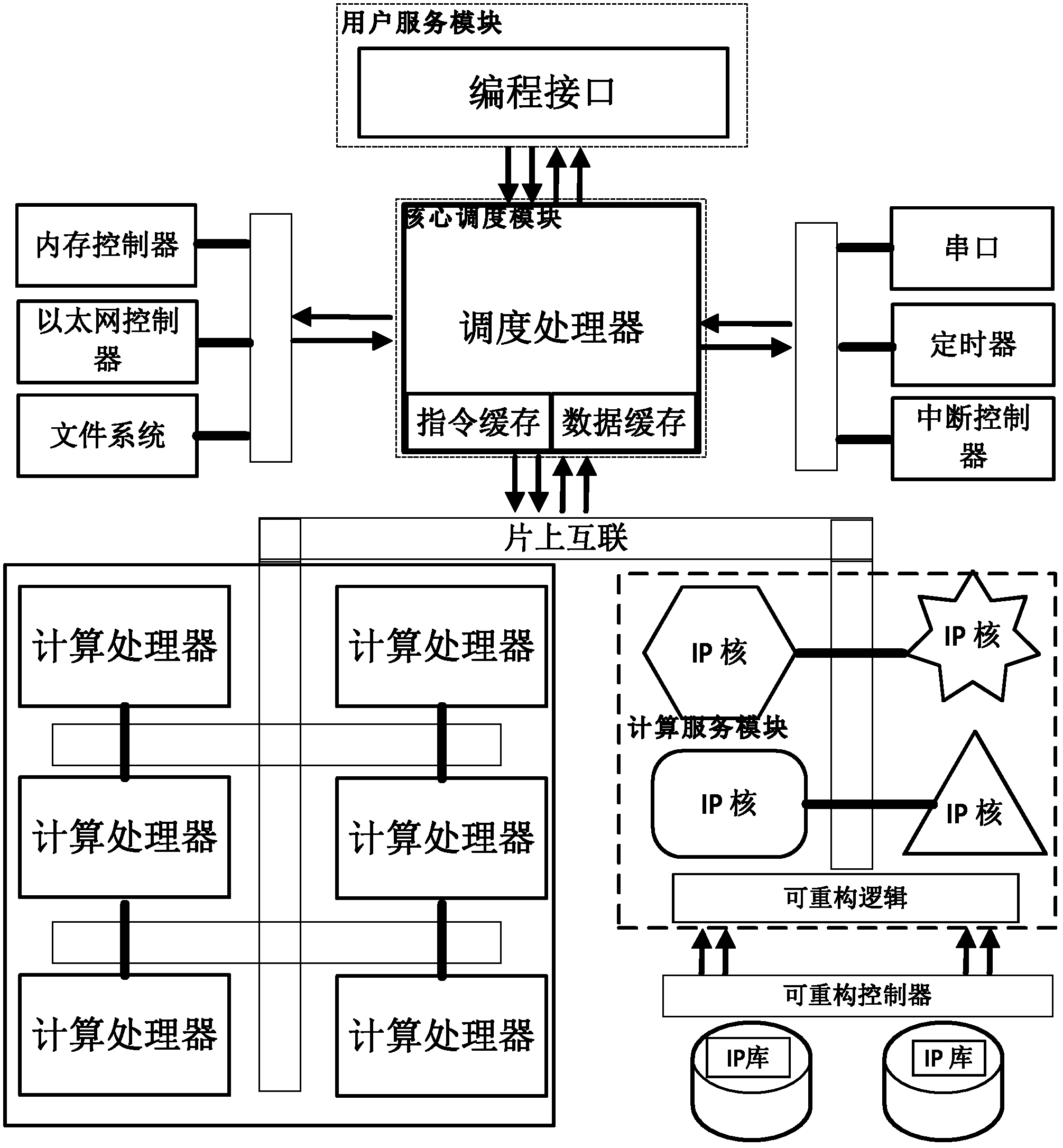

Active | CN102360309A | Eliminate spurious correlation; Improve throughput; Resource allocation; Data dependence; Multicore computing

The invention discloses a scheduling system and a scheduling execution method for a multi-core heterogeneous system on chip. The scheduling system comprises a user service module, which provides the tasks to be executed and is suitable for a variety of heterogeneous software and hardware, and a plurality of computing service modules that execute tasks on a multi-core on-chip computing platform. A core scheduling module is arranged between the user service module and the computing service modules; it accepts task requests from the user service module, records and judges the data dependence relations among different tasks, and schedules the task requests to different computing service modules for parallel execution. The computing service modules are packaged as IP (intellectual property) cores and are dynamically loaded via a reconfigurable controller; they are interconnected on chip with the computing processors of the multi-core heterogeneous system on chip and accept instructions from the core scheduling module to execute different types of computing tasks. By monitoring the dependences between tasks and parallelizing automatically at run time, the scheduling system improves platform throughput and system performance.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC
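
Recording data dependence relations among submitted tasks can be illustrated by grouping tasks into dispatch waves. The sketch assumes each task names the buffers it reads and writes, and that every write goes to a fresh (renamed) buffer, so only read-after-write dependences constrain order; that renaming is one plausible reading of "eliminate spurious correlation", not something the abstract states. The function name is hypothetical.

```python
def dispatch_waves(tasks):
    """Group tasks into waves for parallel dispatch.

    tasks: list in submission order of (name, inputs, outputs).
    Each write is assumed to go to a fresh (renamed) copy of its buffer, so
    only true read-after-write dependences delay a task. Tasks in the same
    wave may be sent to different computing service modules at once.
    """
    last_writer = {}  # buffer -> most recent task that produced it
    wave_of = {}
    waves = []
    for name, inputs, outputs in tasks:
        producers = {last_writer[b] for b in inputs if b in last_writer}
        wave = 1 + max((wave_of[p] for p in producers), default=-1)
        wave_of[name] = wave
        if wave == len(waves):
            waves.append([])
        waves[wave].append(name)
        for b in outputs:
            last_writer[b] = name
    return waves
```

For instance, an FFT and a histogram that both read the same input land in wave 0, while a filter consuming the FFT's output waits for wave 1.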

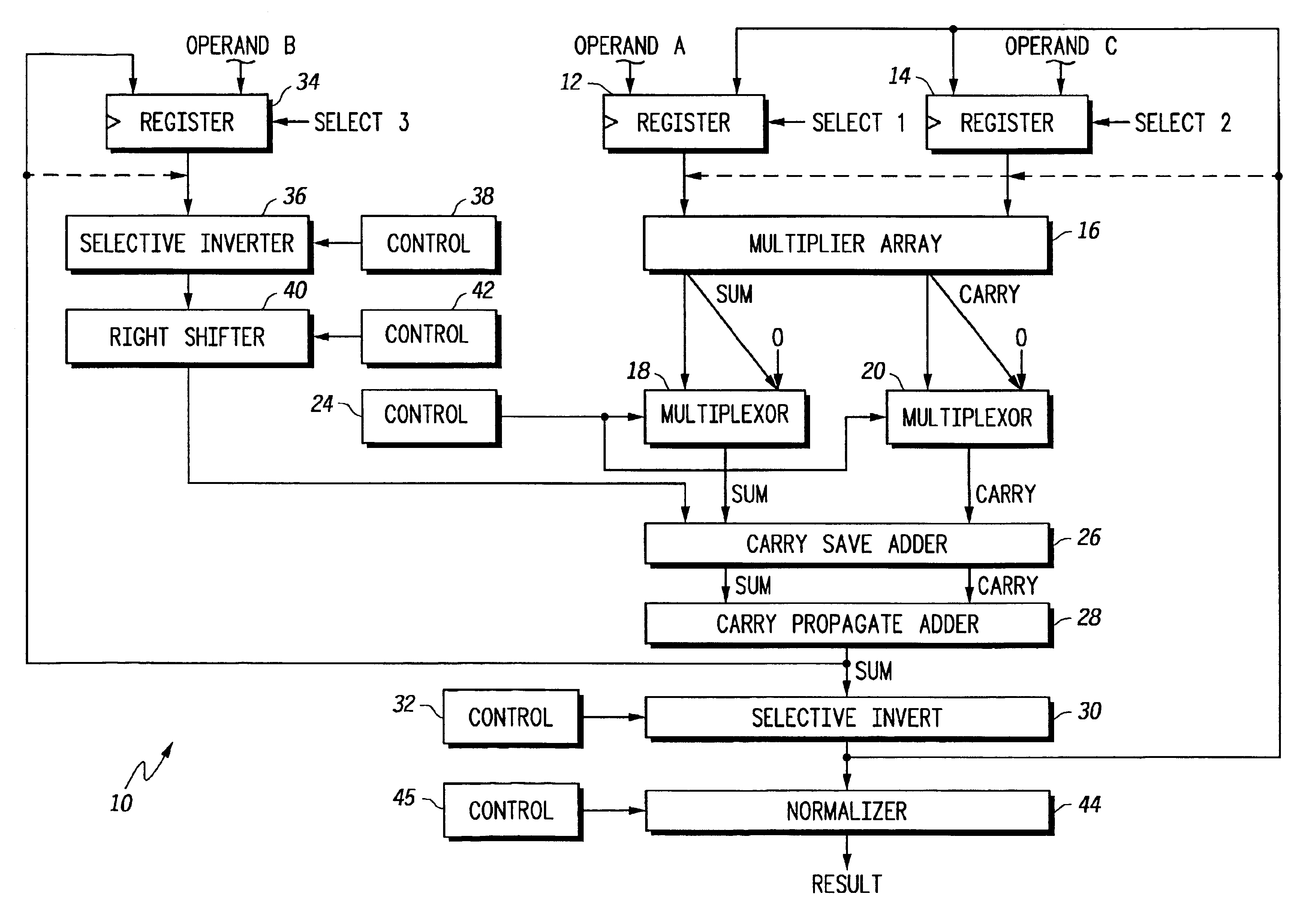

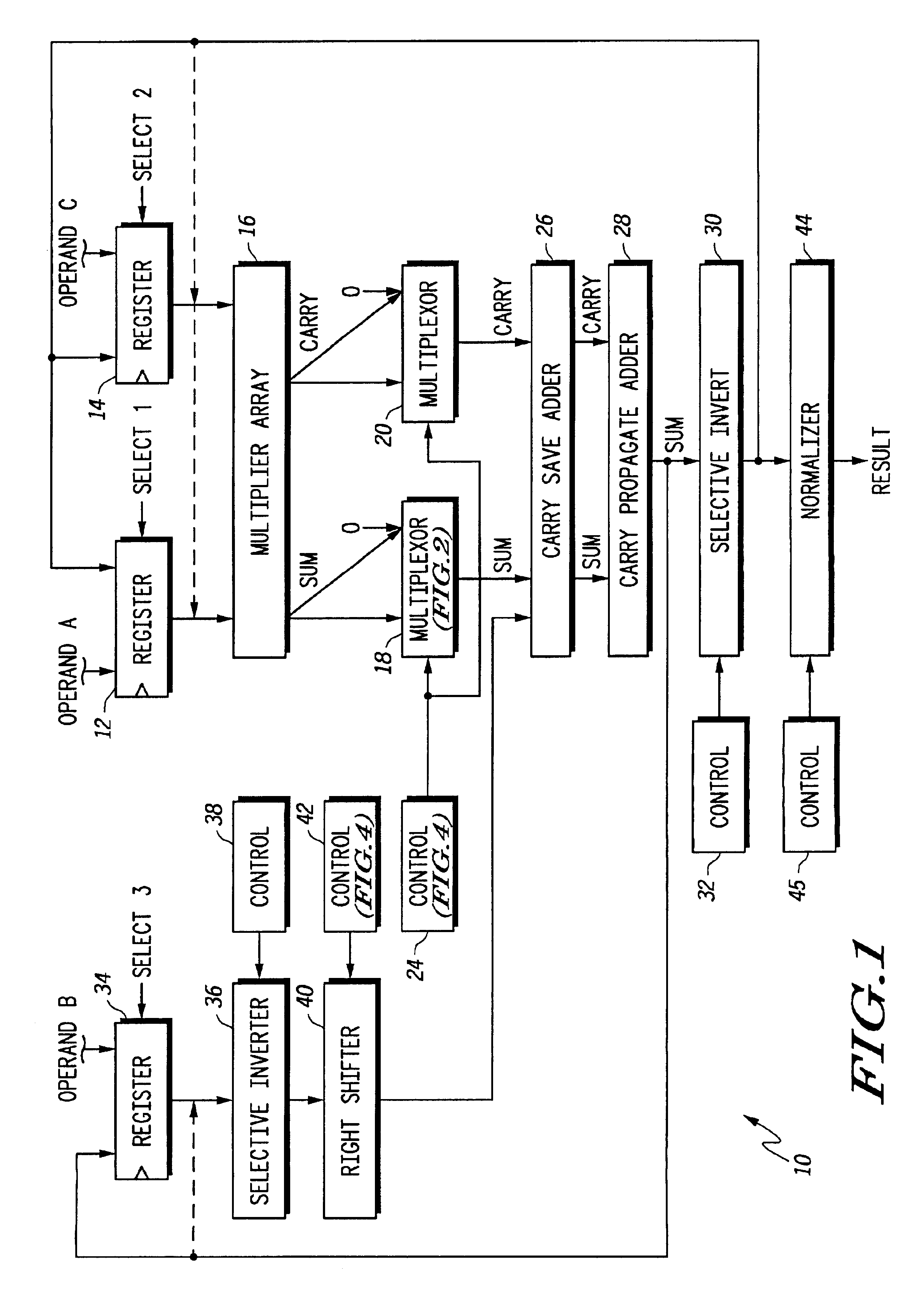

Floating point multiplier/accumulator with reduced latency and method thereof

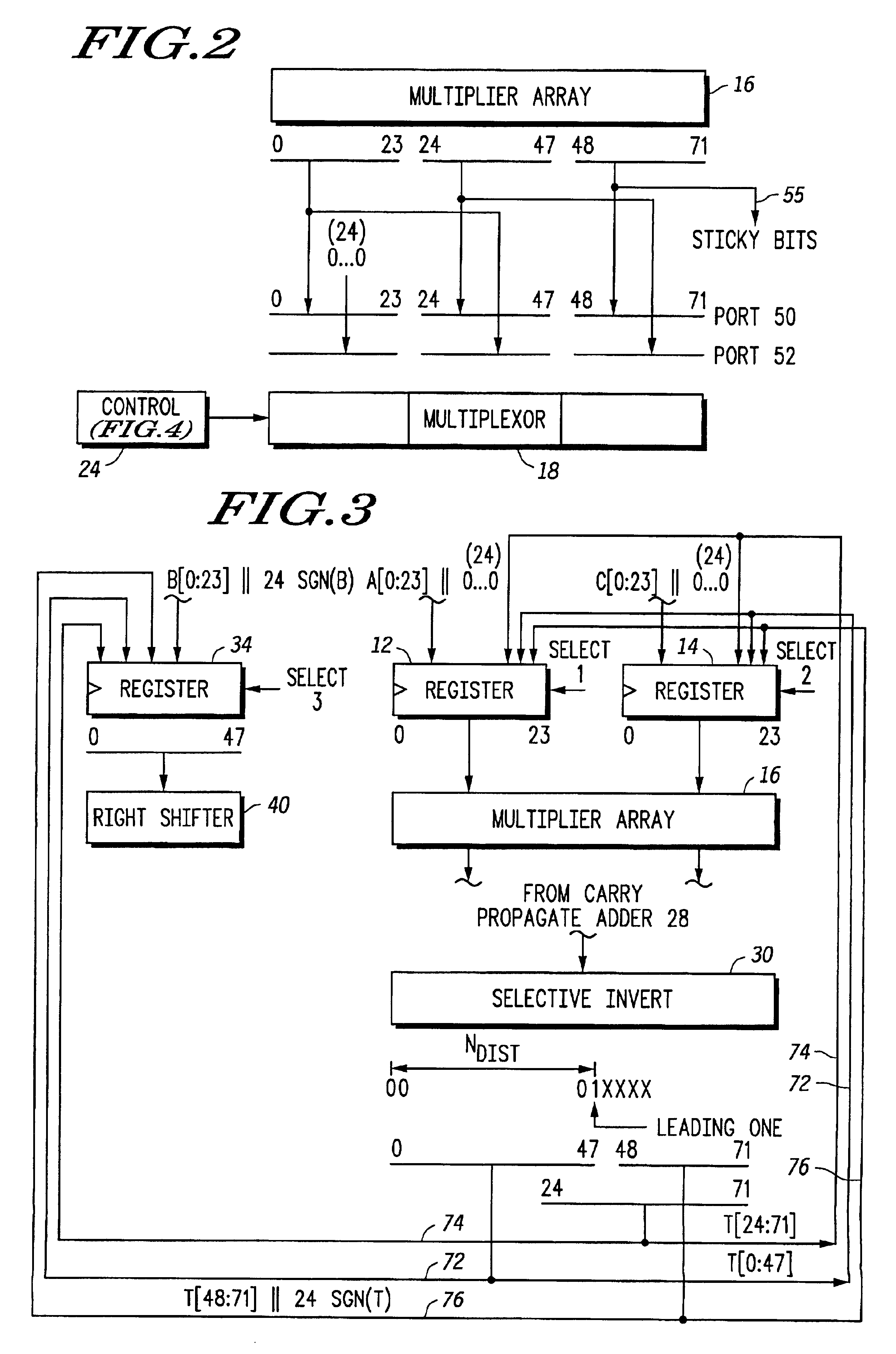

A circuit (10) for multiplying two floating point operands (A and C) while adding or subtracting a third floating point operand (B) removes latency associated with normalization and rounding from a critical speed path for dependent calculations. An intermediate representation of a product and a third operand are selectively shifted to facilitate use of prior unnormalized dependent resultants. Logic circuitry (24, 42) implements a truth table for determining when and how much shifting should be made to intermediate values based upon a resultant of a previous calculation, upon exponents of current operands and an exponent of a previous resultant operand. Normalization and rounding may be subsequently implemented, but at a time when a new cycle operation is not dependent on such operations even if data dependencies exist.

Owner:NORTH STAR INNOVATIONS

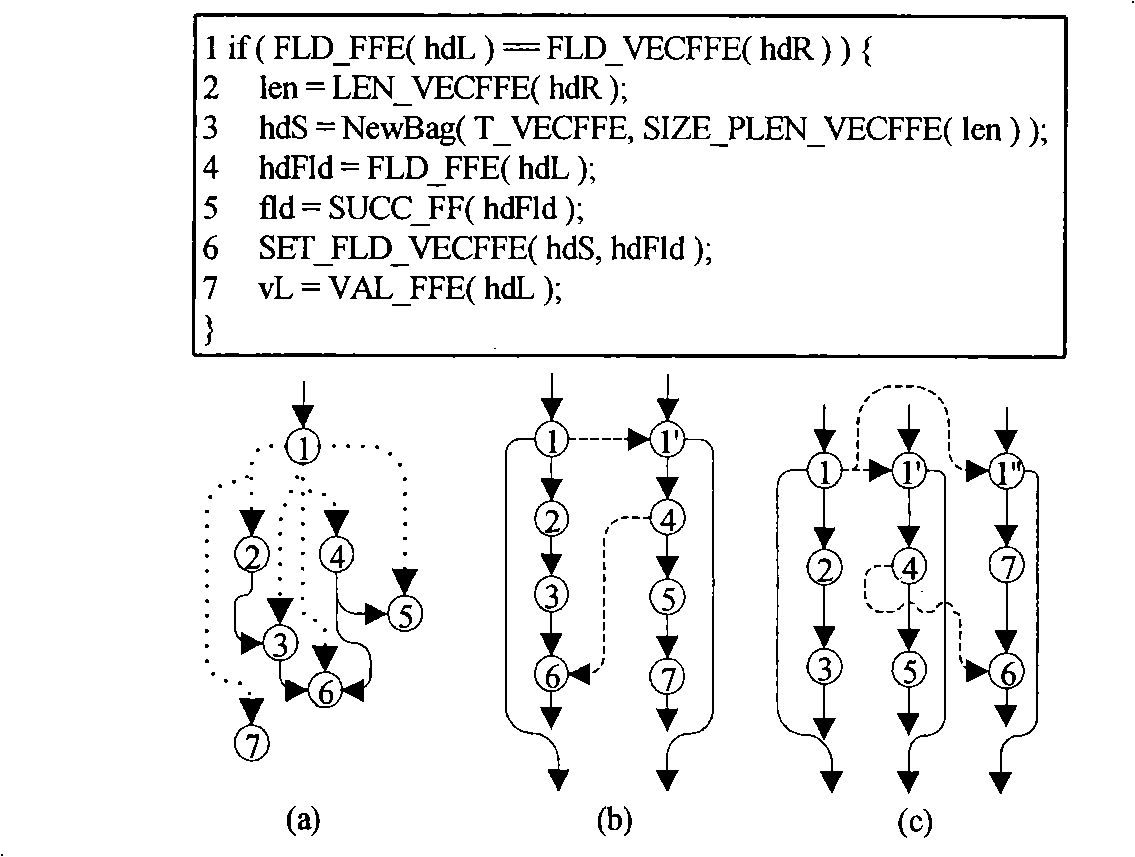

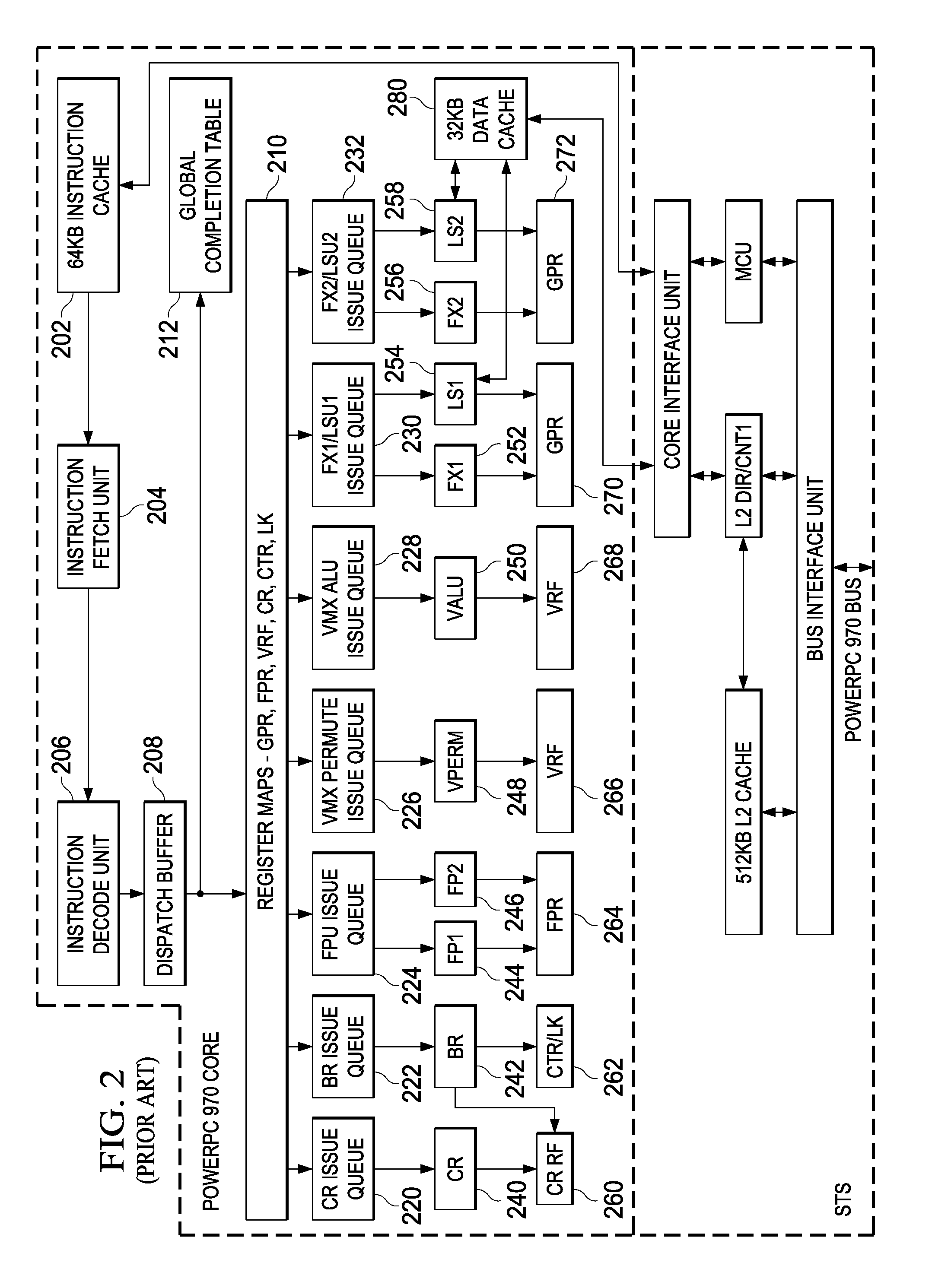

Branch instruction conversion to multi-threaded parallel instructions

Inactive | US7010787B2 | Process is performed; Improve performance; Program initiation/switching; Program synchronisation; Register allocation; Processor register

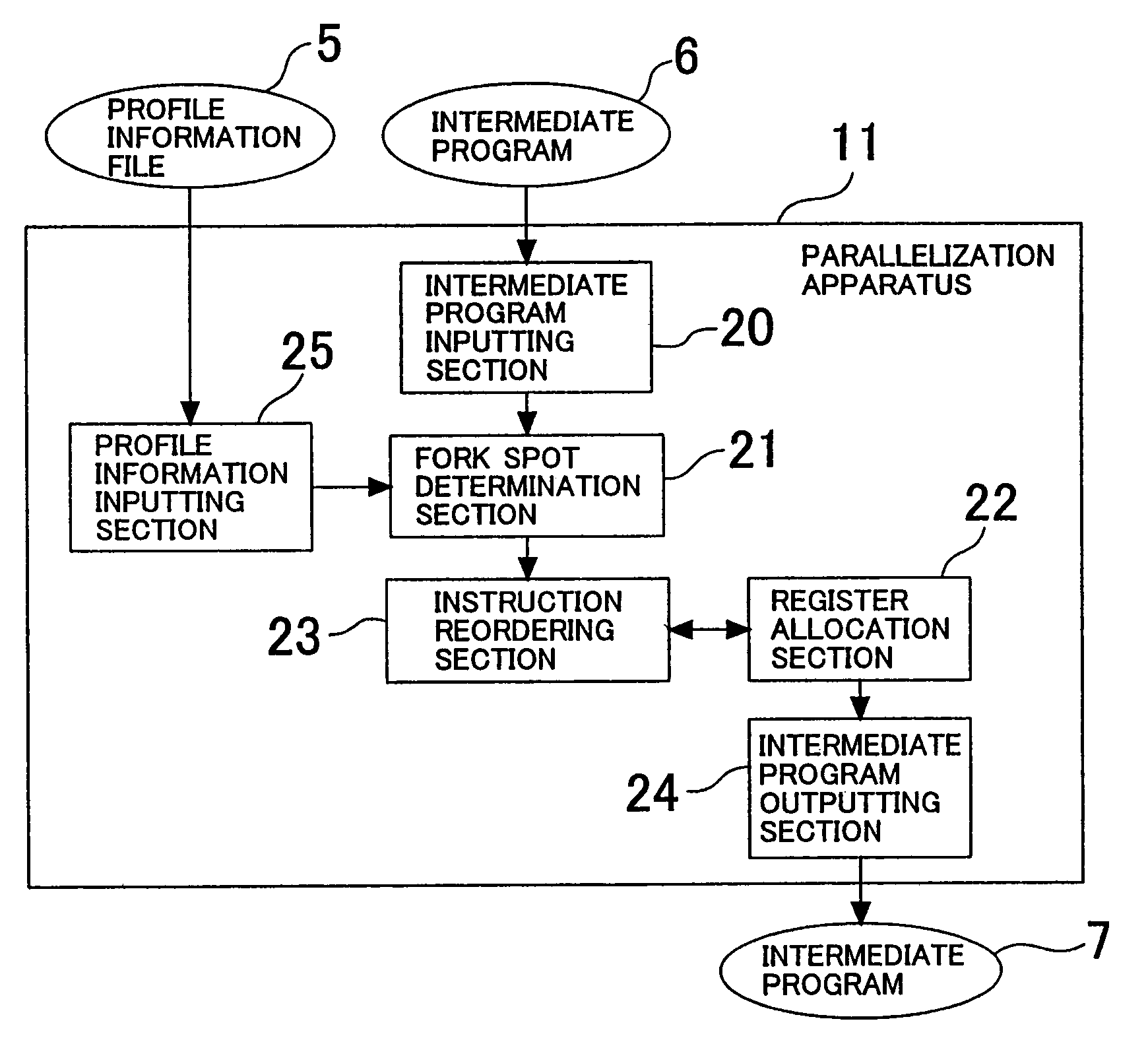

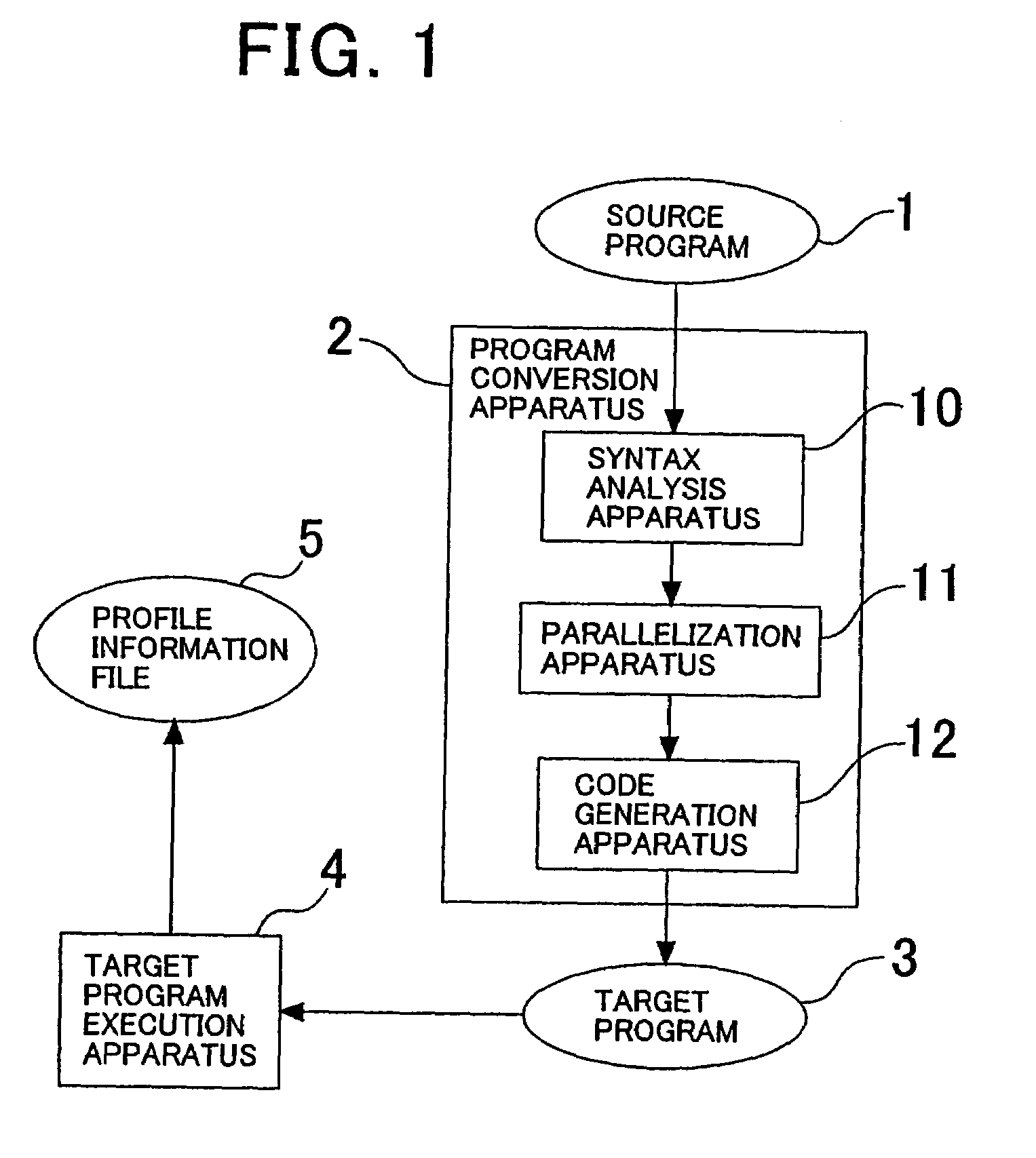

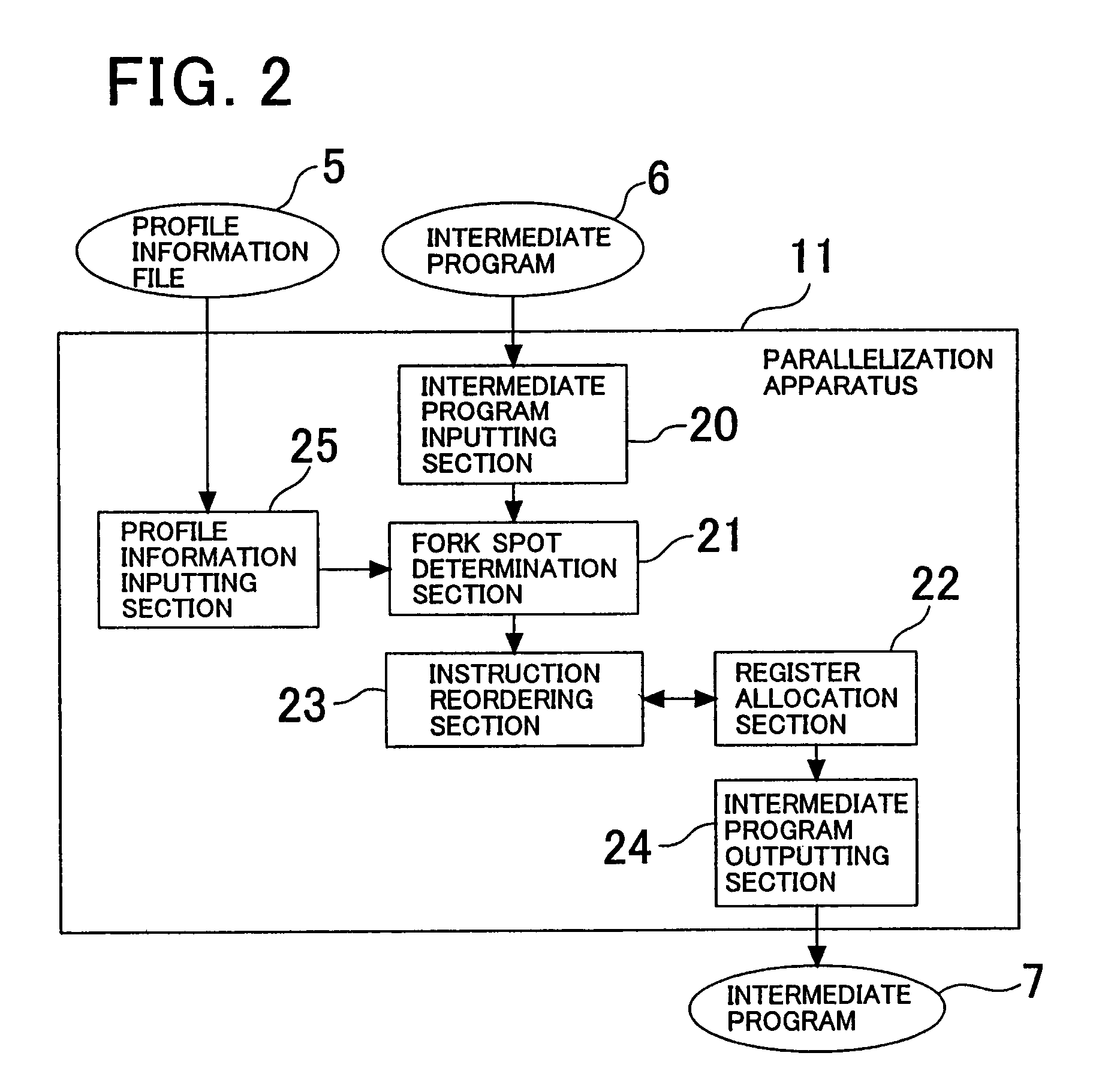

The invention provides a program conversion apparatus that performs parallelization for a multithreaded microprocessor at the intermediate program level. A parallelization apparatus of the program conversion apparatus includes a fork spot determination section, a register allocation section and an instruction reordering section. The fork spot determination section determines a fork spot and a fork system based on the result of a register allocation trial performed by the register allocation section, the number of spots at which memory data dependence is present, and the branching probabilities and data dependence occurrence frequencies obtained from a profile information file. The instruction reordering section reorders the instructions preceding and succeeding the FORK instruction in accordance with this determination.

Owner:NEC CORP

Controlling multi-pass rendering sequences in a cache tiling architecture

Active | US10535114B2 | Optimize arbitrarily complex; Improve flow; Image memory management; Cathode-ray tube indicators; Graphics; Computer architecture

In one embodiment of the present invention, a driver configures a graphics pipeline implemented in a cache tiling architecture to perform dynamically-defined multi-pass rendering sequences. In operation, based on sequence-specific configuration data, the driver determines an optimized tile size and, for each pixel in each pass, the set of pixels in each previous pass that influence the processing of the pixel. The driver then configures the graphics pipeline to perform per-tile rendering operations in a region that is translated by a pass-specific offset backward (vertically and/or horizontally) along a tiled caching traversal line. Notably, the offset ensures that the required pixel data from previous passes is available. The driver further configures the graphics pipeline to store the rendered data in cache lines. Advantageously, the disclosed approach exploits the efficiencies inherent in cache tiling architecture while honoring highly configurable data dependencies between passes in multi-pass rendering sequences.

Owner:NVIDIA CORP
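
The abstract does not give a formula for the pass-specific offset, but one consistent way to derive it is shown below, under stated assumptions: offsets are measured in pixels per axis, and pass p samples pass q's output at most (rx, ry) pixels ahead of its own pixel along the traversal. Since pass q has only finished pixels up to offset[q] behind the tile edge, pass p must trail by offset[q] + r for every pass q it reads, so offset[p] = max over q of (offset[q] + r). The function name and input format are hypothetical.

```python
def pass_offsets(reads):
    """Backward offsets of each pass's render region (hypothetical model).

    reads[p] maps each earlier pass q to (rx, ry): how far right/down of its
    own pixel pass p samples pass q's output. Pass 0 reads nothing.
    Returns [(ox, oy), ...]: how far each pass trails the tile traversal.
    """
    offsets = []
    for deps in reads:
        # Trail every input pass by its own offset plus the sampling reach.
        ox = max((offsets[q][0] + rx for q, (rx, _) in deps.items()), default=0)
        oy = max((offsets[q][1] + ry for q, (_, ry) in deps.items()), default=0)
        offsets.append((ox, oy))
    return offsets
```

For example, a blur pass reading 2 pixels ahead of pass 0 trails by (2, 2), and a third pass that reads that blur 1 pixel ahead horizontally and pass 0 four pixels ahead ends up trailing by (4, 2).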

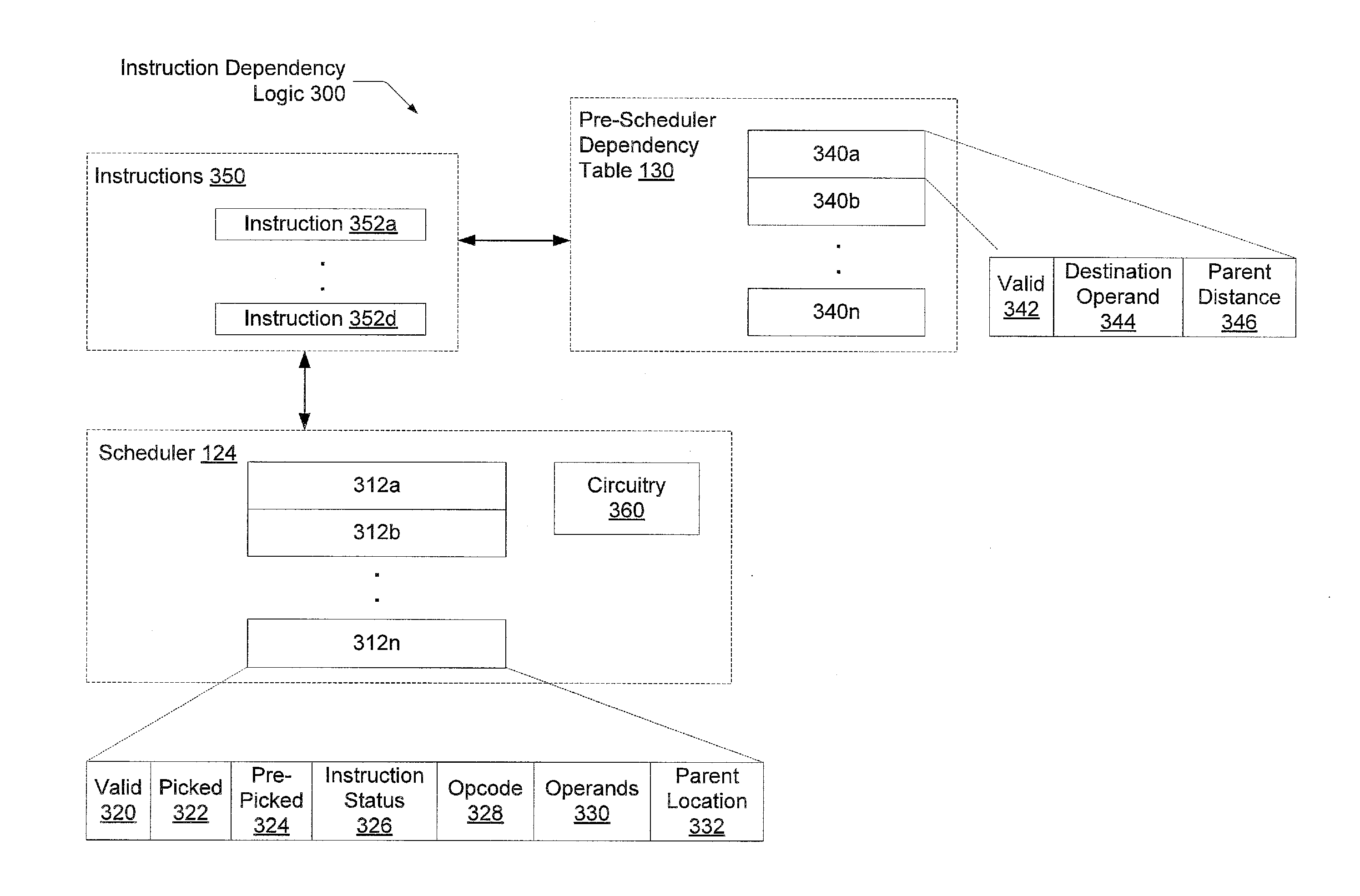

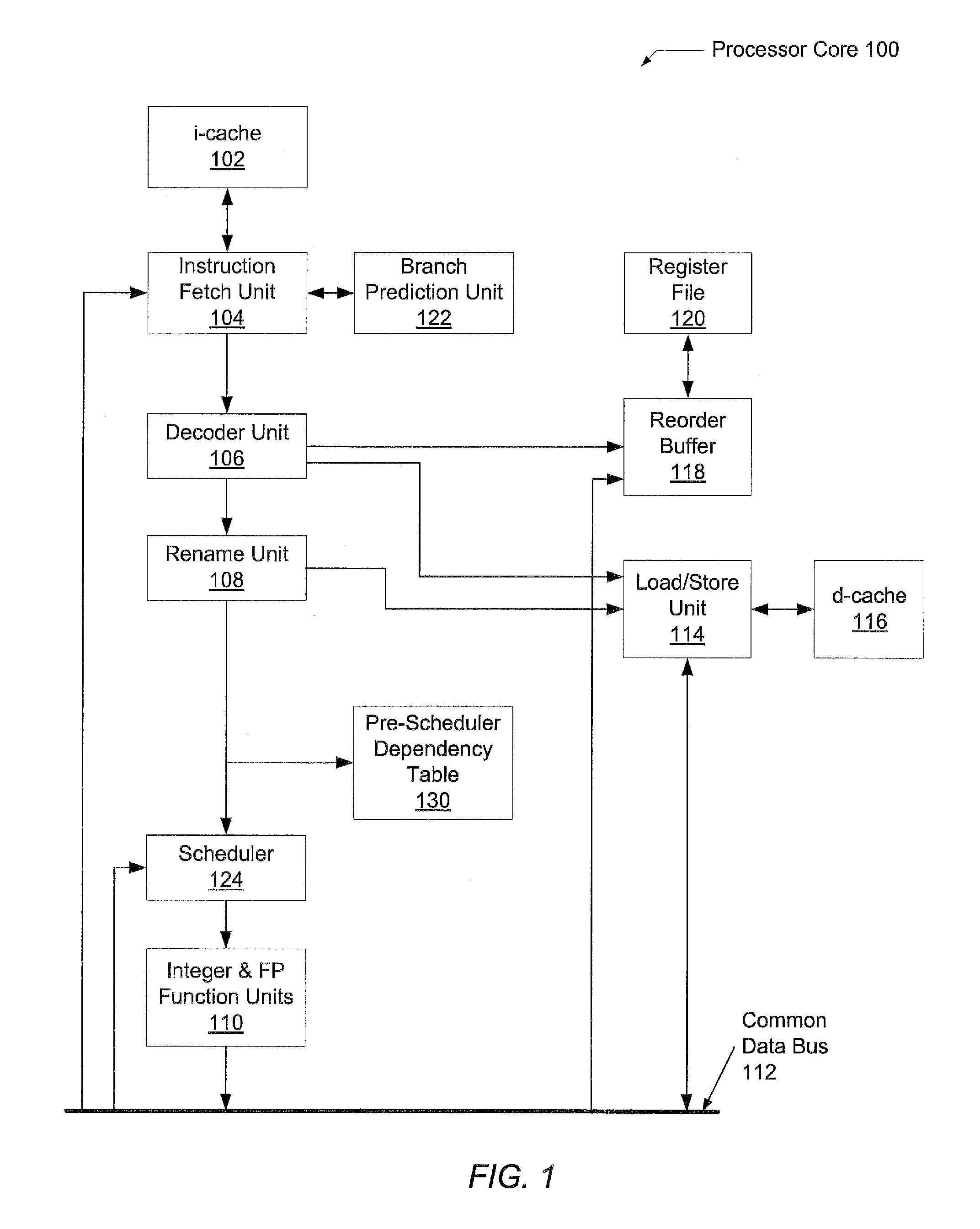

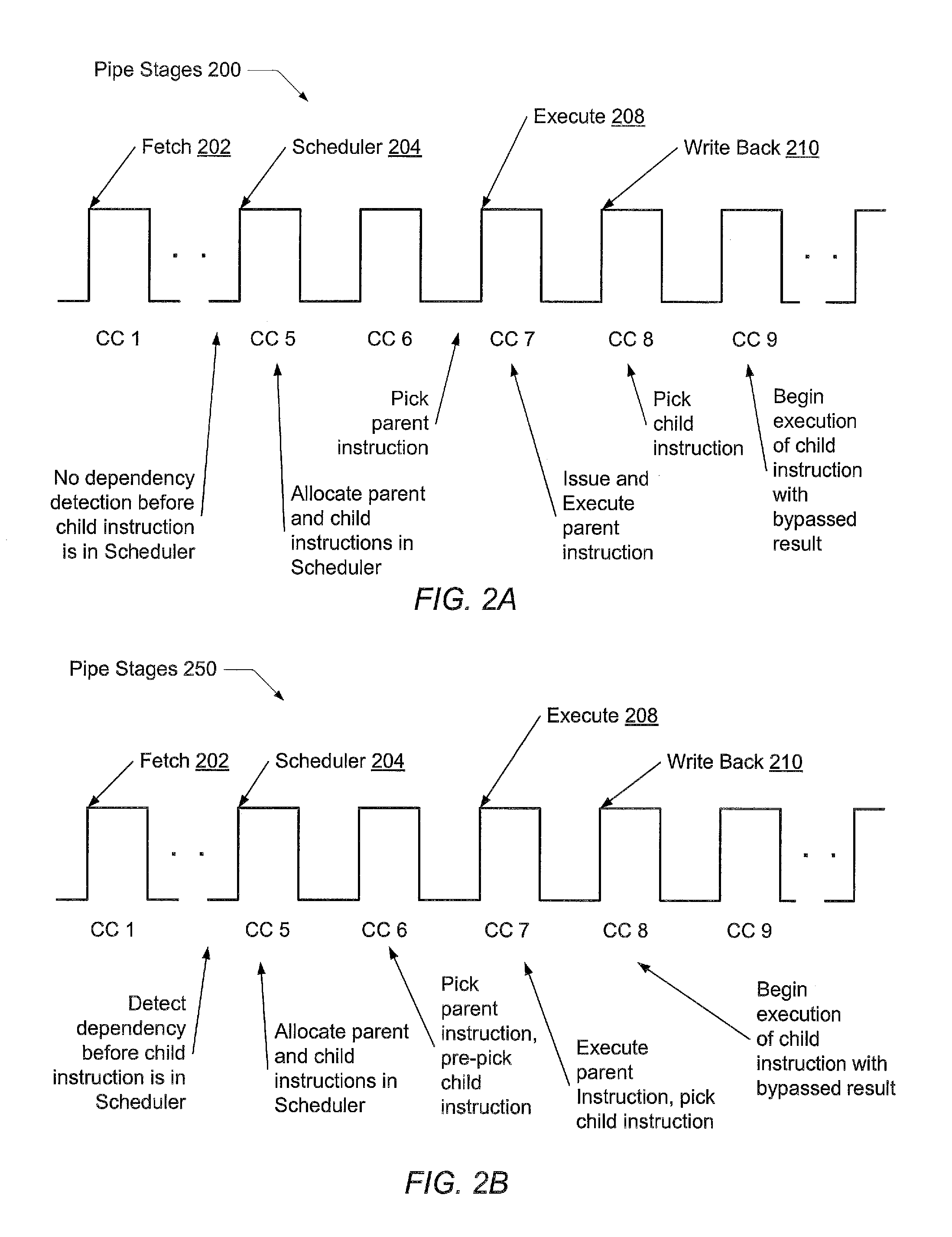

Paired execution scheduling of dependent micro-operations

Inactive | US20120023314A1 | Lower latency; Simple logic; Digital computer details; Concurrent instruction execution; Micro-operation; Data dependence

A method and mechanism for reducing latency of a multi-cycle scheduler within a processor. A processor comprises a front end pipeline that determines data dependencies between instructions prior to a scheduling pipe stage. For each data dependency, a distance value is determined based on a number of instructions a younger dependent instruction is located from a corresponding older (in program order) instruction. When the younger dependent instruction is allocated an entry in a multi-cycle scheduler, this distance value may be used to locate an entry storing the older instruction in the scheduler. When the older instruction is picked for issue, the younger dependent instruction is marked as pre-picked. In an immediately subsequent clock cycle, the younger dependent instruction may be picked for issue, thereby reducing the latency of the multi-cycle scheduler.

Owner:ADVANCED MICRO DEVICES INC
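
The distance value can be computed in the front end with a last-writer table, as in the sketch below. It assumes scheduler entries are allocated in program order without wraparound, so the older producer sits exactly `distance` entries before its consumer; register names and function names are hypothetical.

```python
def dependency_distances(instructions):
    """Distance from each source operand back to its youngest older producer.

    instructions: list in program order of (dest_reg or None, [src_regs]).
    Returns one list per instruction with a distance per source operand, or
    None when no producer of that register is in the window.
    """
    last_def = {}  # register -> index of the most recent writer
    result = []
    for i, (dest, srcs) in enumerate(instructions):
        result.append([i - last_def[s] if s in last_def else None for s in srcs])
        if dest is not None:
            last_def[dest] = i
    return result


def prepicked_after(picked_index, distances):
    """Younger instructions marked pre-picked when instruction
    `picked_index` is picked for issue (their distance points back to it)."""
    return [j for j, ds in enumerate(distances)
            if any(d is not None and j - d == picked_index for d in ds)]
```

When the producer issues, its marked dependents become eligible to be picked in the very next cycle instead of waiting for the full multi-cycle wakeup.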

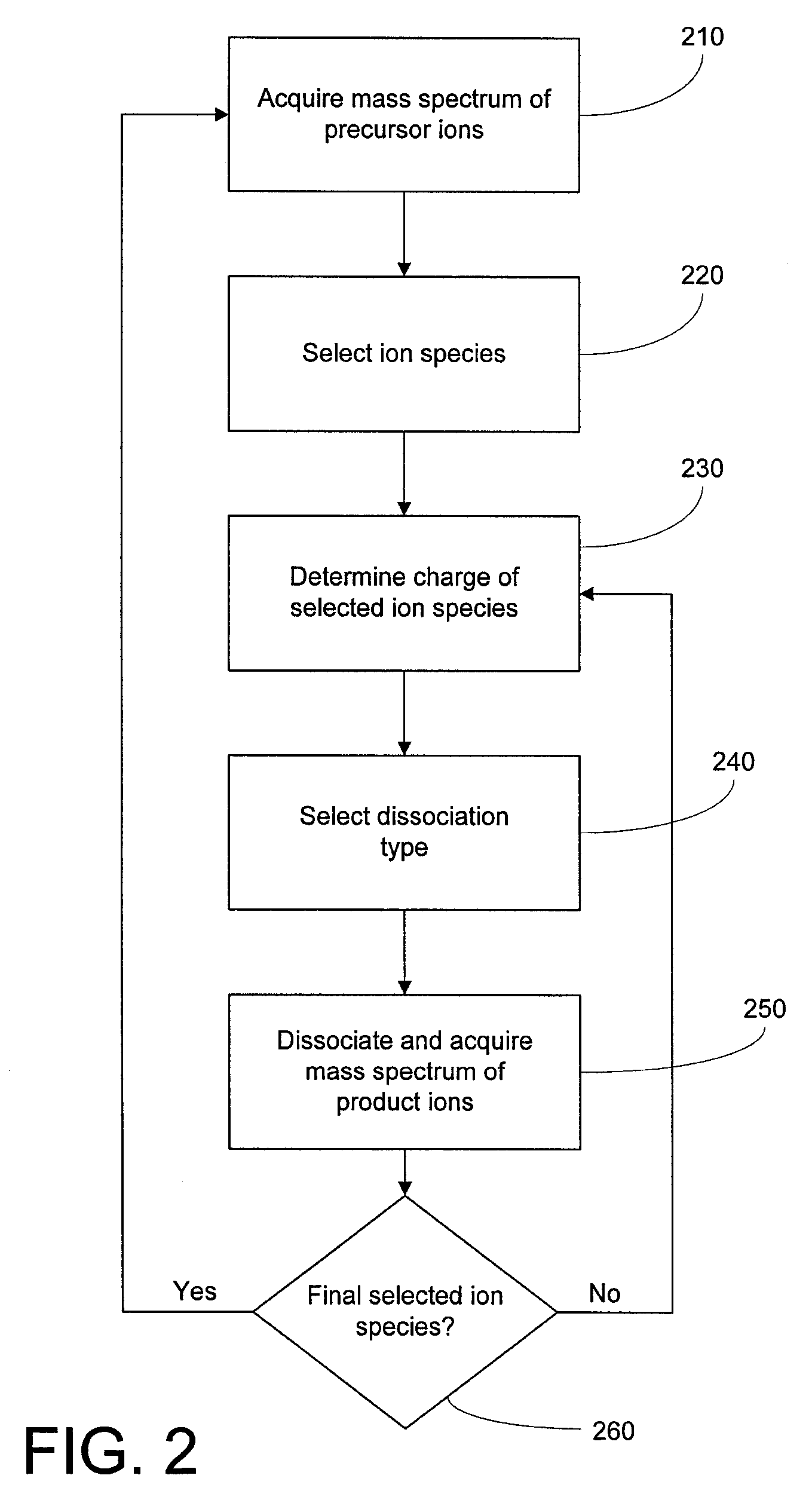

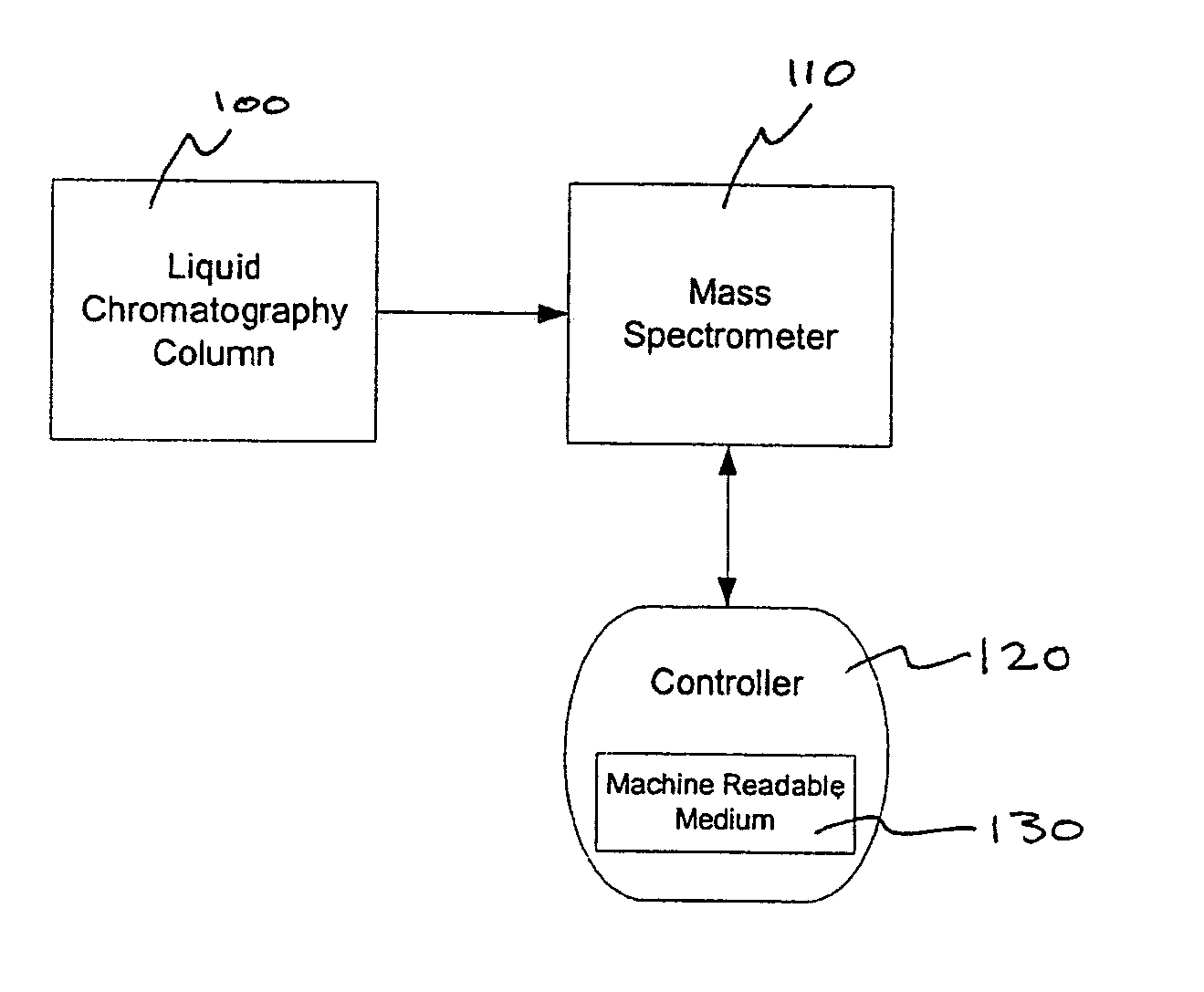

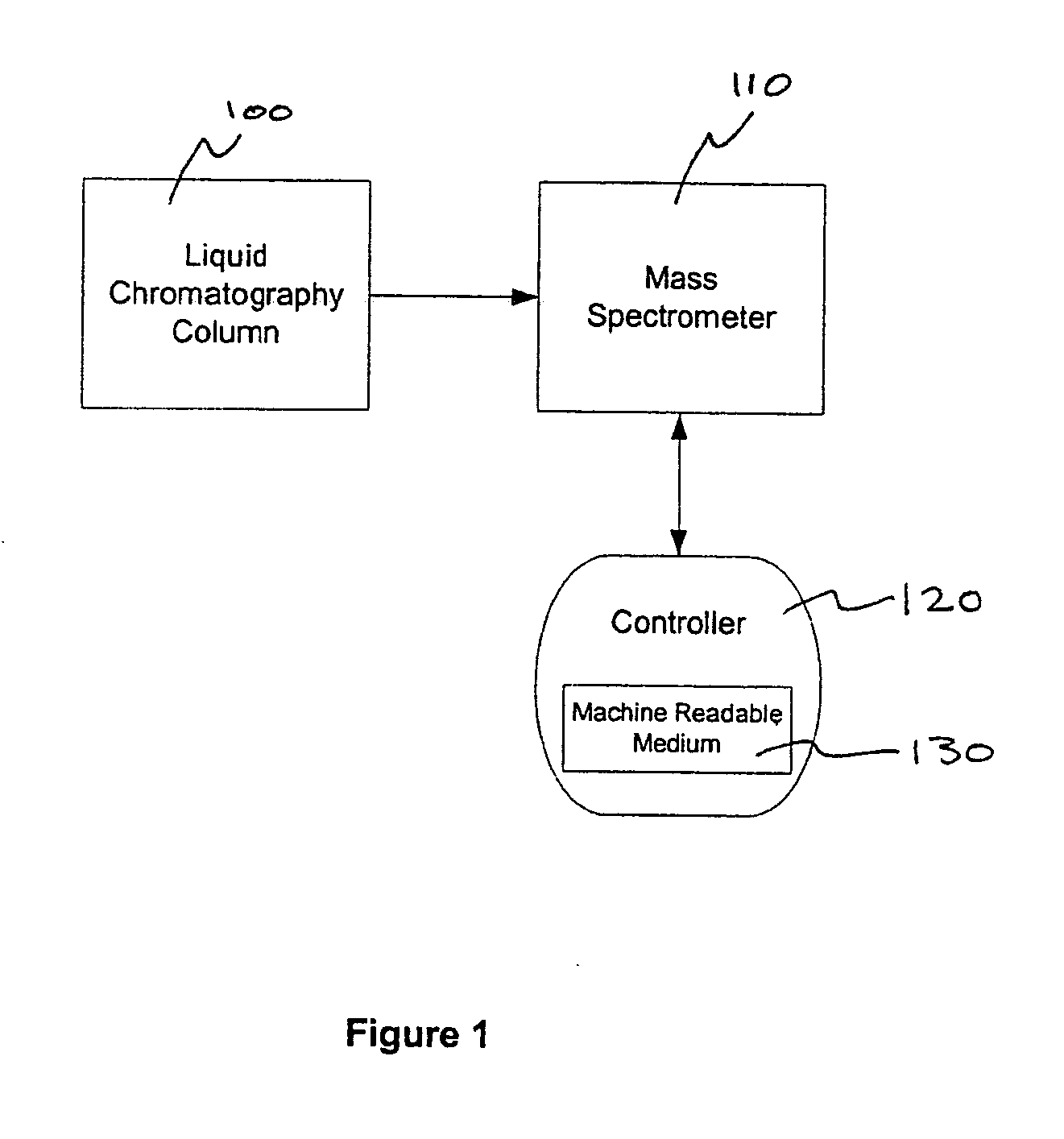

Data-dependent selection of dissociation type in a mass spectrometer

Active | US20080048109A1 | Easy to produce; Strong dependence; Isotope separation; Mass spectrometers; Biological activation; Data dependent

Methods and apparatus for data-dependent mass spectrometric MS/MS or MSn analysis are disclosed. The methods may include determination of the charge state of an ion species of interest, followed by automated selection of a dissociation type (e.g., CAD, ETD, or ETD followed by a non-dissociative charge reduction or collisional activation) based at least partially on the determined charge state. The ion species of interest is then dissociated in accordance with the selected dissociation type, and an MS/MS or MSn spectrum of the resultant product ions may be acquired.

Owner:THERMO FINNIGAN

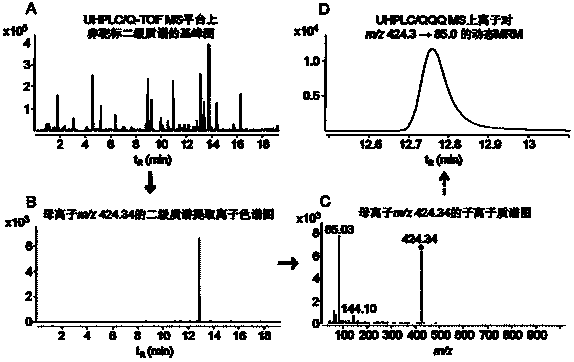

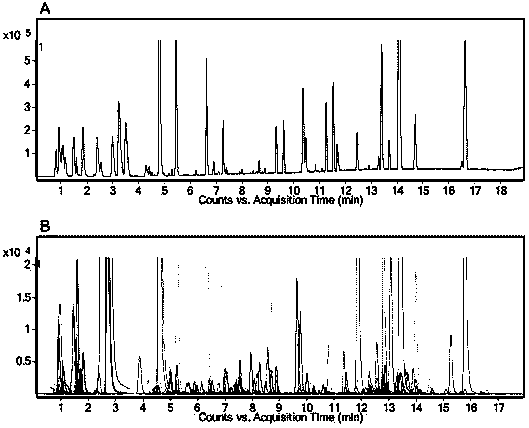

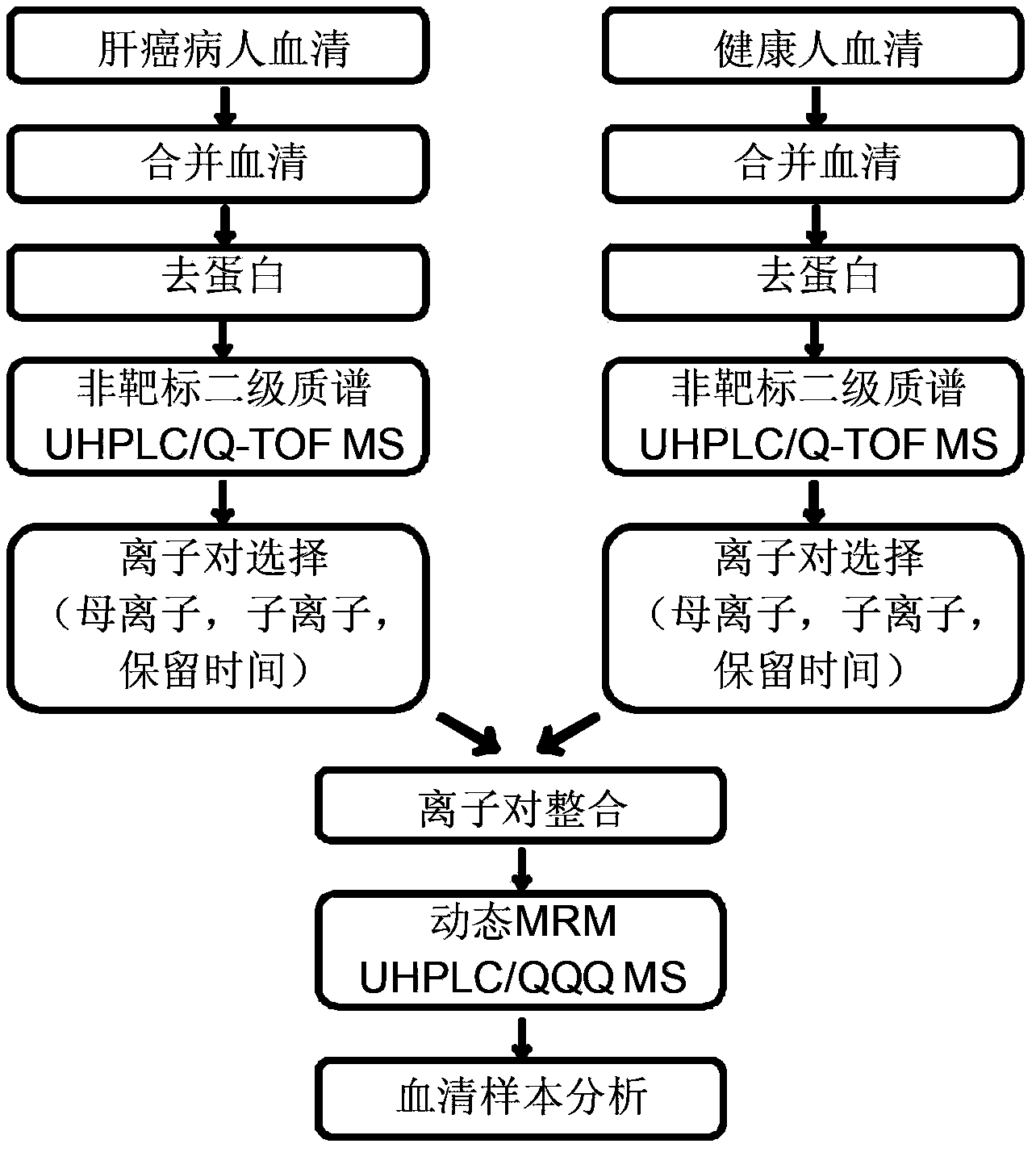

Pseudo-targeted metabolomics analysis method based on liquid chromatography combined with mass spectrometry

Active | CN104297355A | Wide detection linear range; Meet detection; Component separation; Metabolite; Retention time

The invention provides a pseudo-targeted metabolomics analysis method based on liquid chromatography combined with mass spectrometry. The method includes the following steps: pooling the samples to be analyzed into merged samples by group; automatically acquiring the MS/MS (secondary mass) spectra of the metabolites in each merged sample by UHPLC/Q-TOF MS in data-dependent acquisition mode; extracting the retention times and the parent-ion and daughter-ion information of the metabolites with qualitative analysis software; screening out characteristic ion pairs of the metabolites according to daughter-ion response intensity; combining the characteristic ion pair information of all merged samples; scanning the actual samples for the obtained characteristic ion pairs by UHPLC/QQQ MS in dynamic multiple reaction monitoring mode to obtain the corresponding spectra; and integrating with quantitative analysis software to obtain the metabolites in the samples and their content information.

Owner:DALIAN INST OF CHEM PHYSICS CHINESE ACAD OF SCI

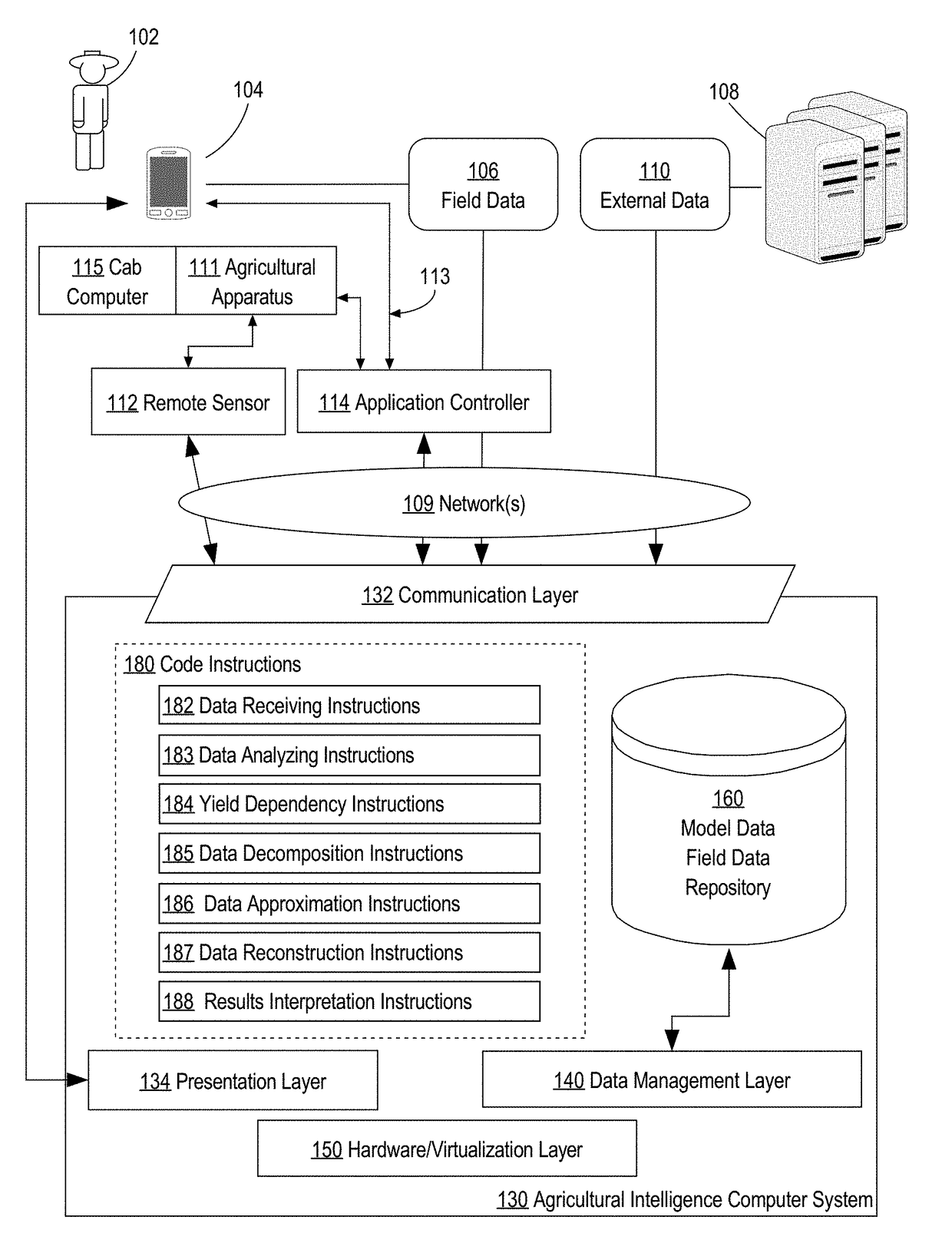

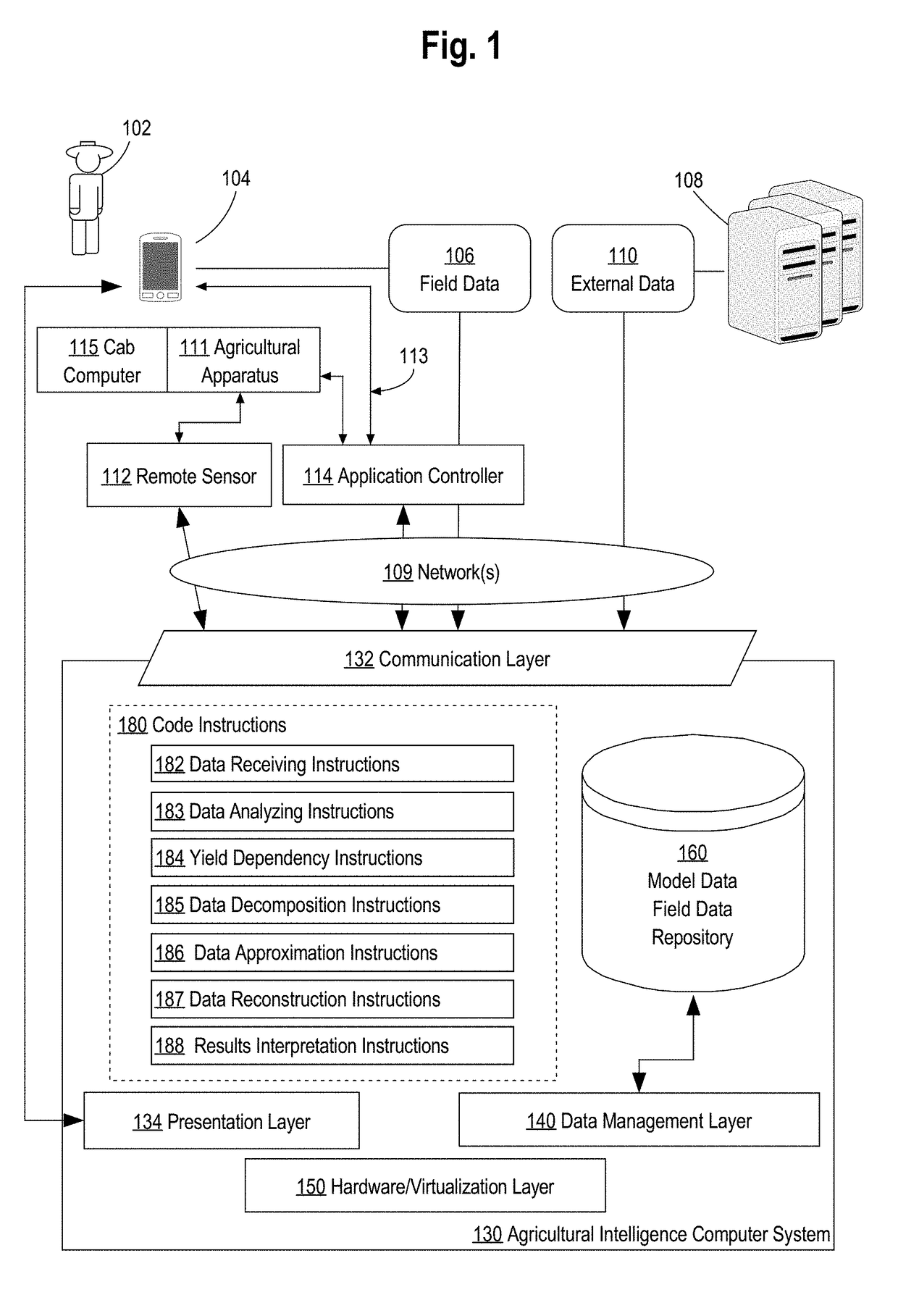

Modeling trends in crop yields

Active | US20170228475A1 | Character and pattern recognition; Design optimisation/simulation; Digital data; Crop yield

A method and system for modeling trends in crop yields is provided. In an embodiment, the method comprises receiving, over a computer network, electronic digital data comprising yield data representing crop yields harvested from a plurality of agricultural fields and at a plurality of time points; in response to receiving input specifying a request to generate one or more particular yield data: determining one or more factors that impact yields of crops that were harvested from the plurality of agricultural fields; decomposing the yield data into decomposed yield data that identifies one or more data dependencies according to the one or more factors; generating, based on the decomposed yield data, the one or more particular yield data; generating forecasted yield data or reconstructing the yield data by incorporating the one or more particular yield data into the yield data.

Owner:THE CLIMATE CORP

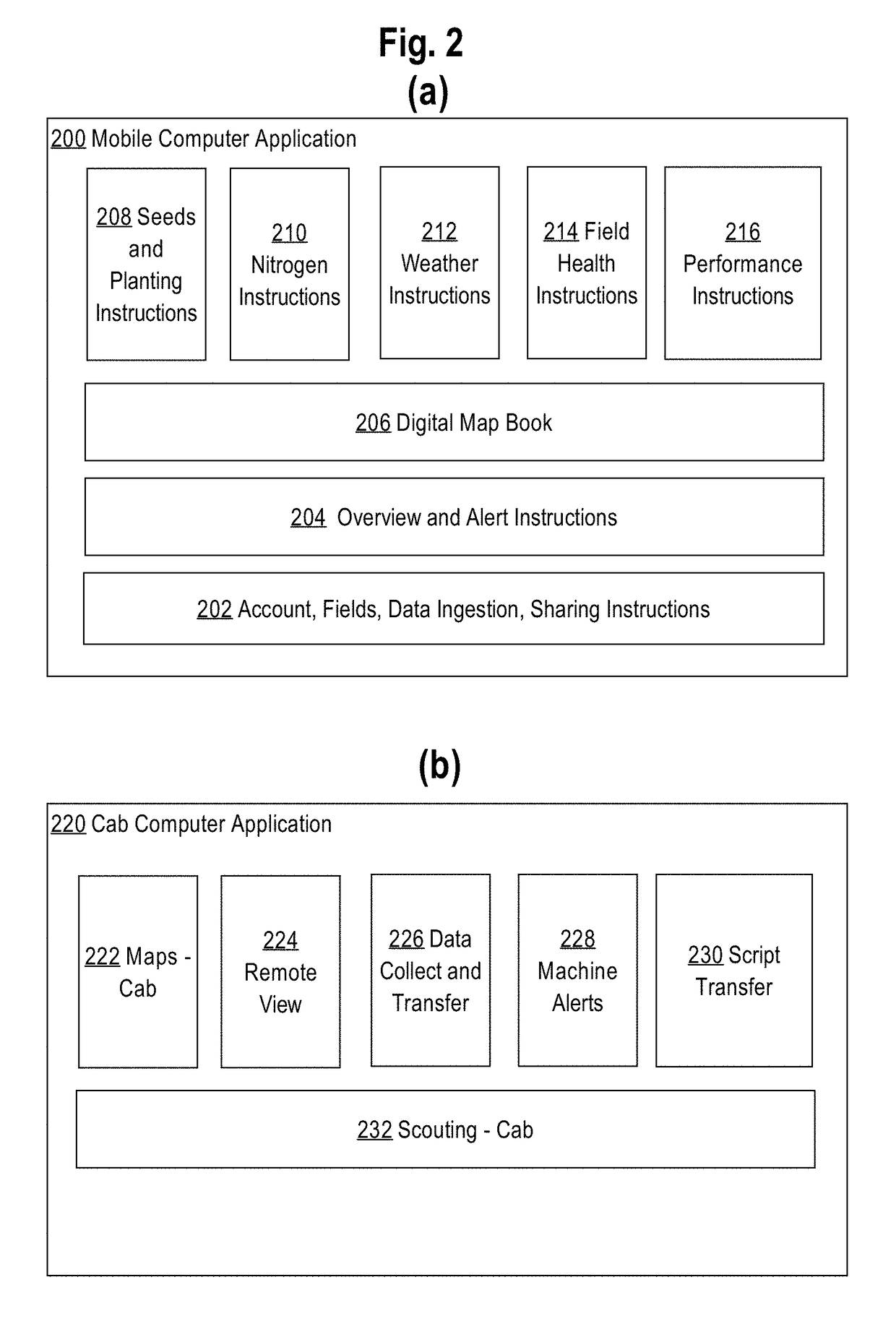

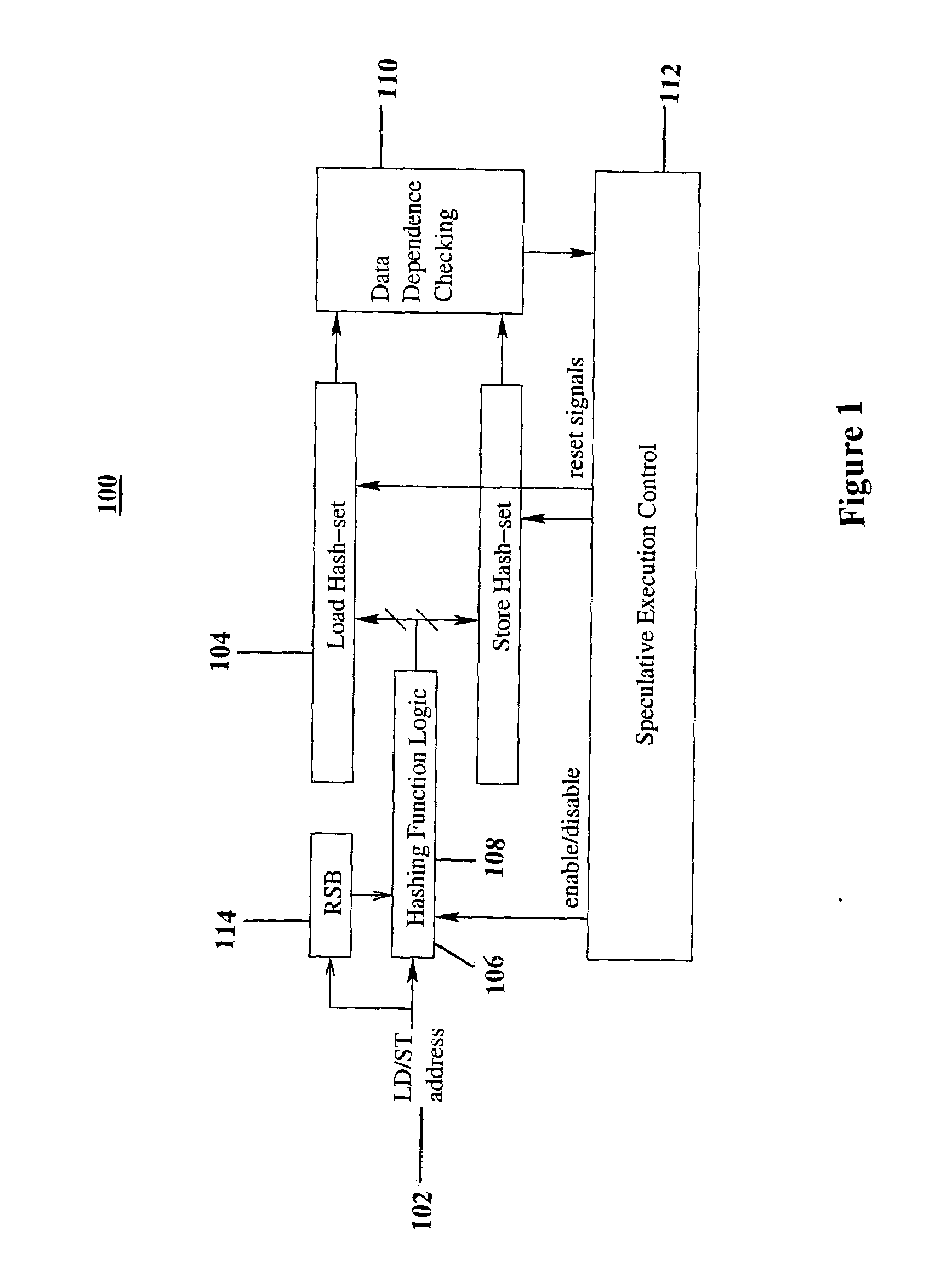

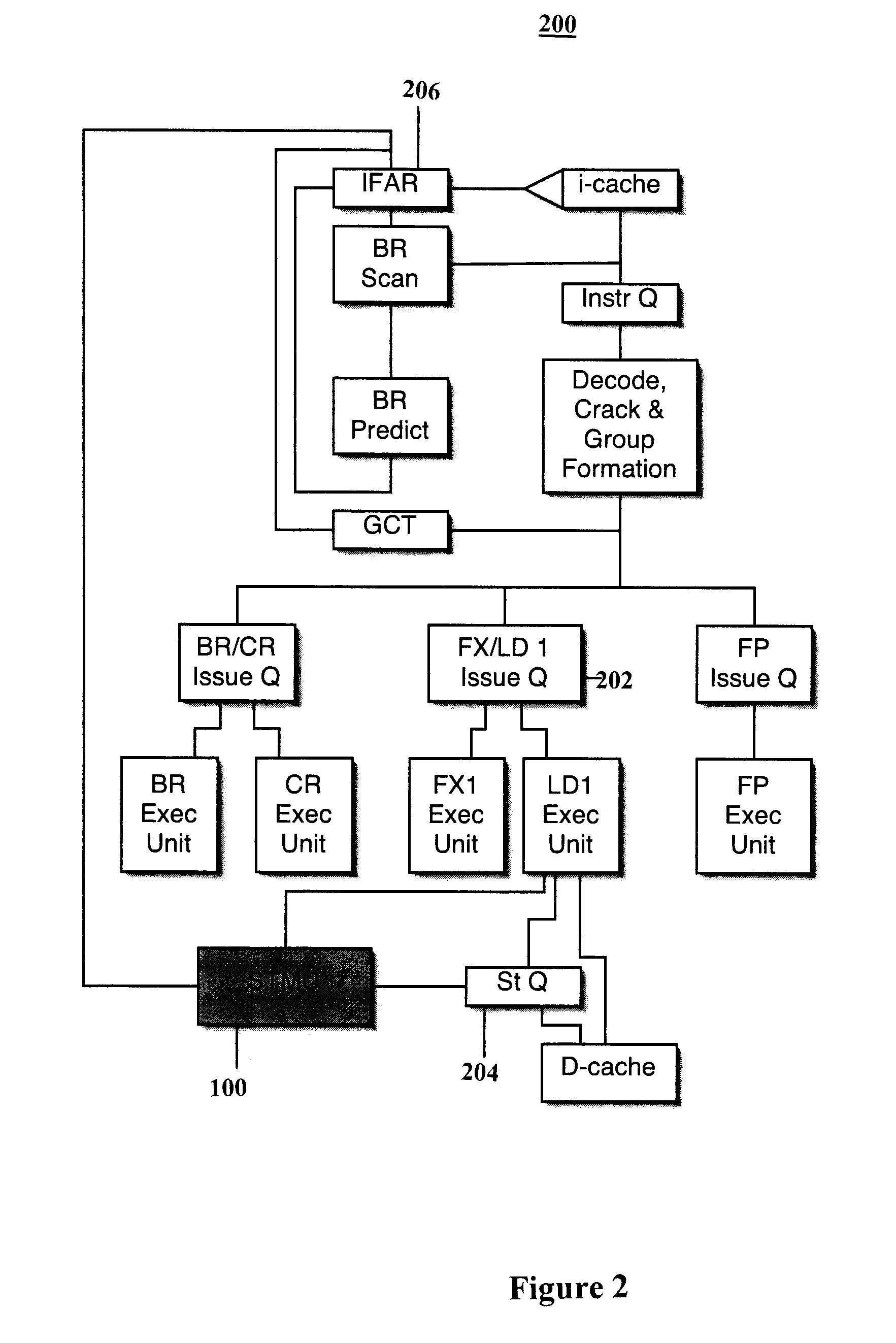

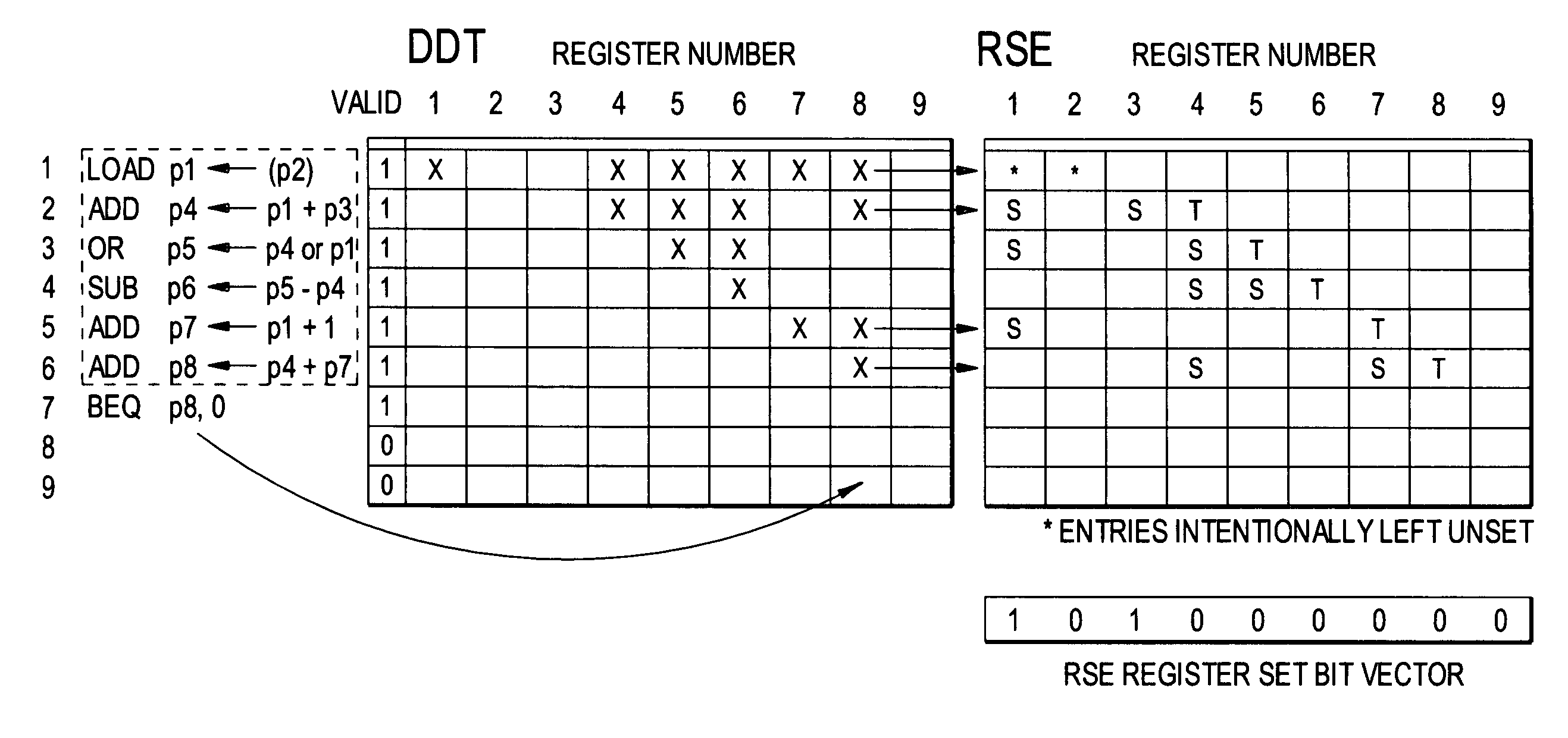

Method and apparatus for implementing efficient data dependence tracking for multiprocessor architectures

Inactive | US20080162889A1 | Simple and efficient; Easy to control; Digital computer details; Memory systems; Data dependence; Multiprocessor architecture

A system for tracking memory dependencies includes a speculative thread management unit, which uses a bit vector to record and encode addresses of memory access. The speculative thread management unit includes a hashing unit that partitions the addresses into a load hash set and a store hash set, a load hash set unit for storing the load hash set, a store hash set unit for storing the store hash set, and a data dependence checking unit that checks data dependence when a thread completes, by comparing a load hash set of the thread to a store hash set of other threads.

Owner:IBM CORP
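
The load/store hash sets can be modeled as integer bit vectors. The sketch assumes a 64-bit vector per set, 64-byte cache-line granularity, and an XOR-fold hash; none of these parameters come from the abstract, and the class and function names are hypothetical. Hash collisions can only add false positives (spurious conflicts), never hide a real one.

```python
class ThreadSignature:
    """Bit-vector load and store hash sets for one speculative thread."""

    def __init__(self, bits=64):
        self.bits = bits
        self.load_set = 0   # bit i set => some load hashed to bucket i
        self.store_set = 0  # bit i set => some store hashed to bucket i

    def _bit(self, address):
        line = address >> 6  # assume 64-byte cache-line granularity
        return 1 << ((line ^ (line >> 6)) % self.bits)

    def record_load(self, address):
        self.load_set |= self._bit(address)

    def record_store(self, address):
        self.store_set |= self._bit(address)


def has_dependence(finished, others):
    """Check run when `finished` completes: does its load set overlap any
    other thread's store set?"""
    return any(finished.load_set & other.store_set for other in others)
```

The check is a single AND per thread pair, which is what makes a fixed-size signature attractive compared with comparing full address lists.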

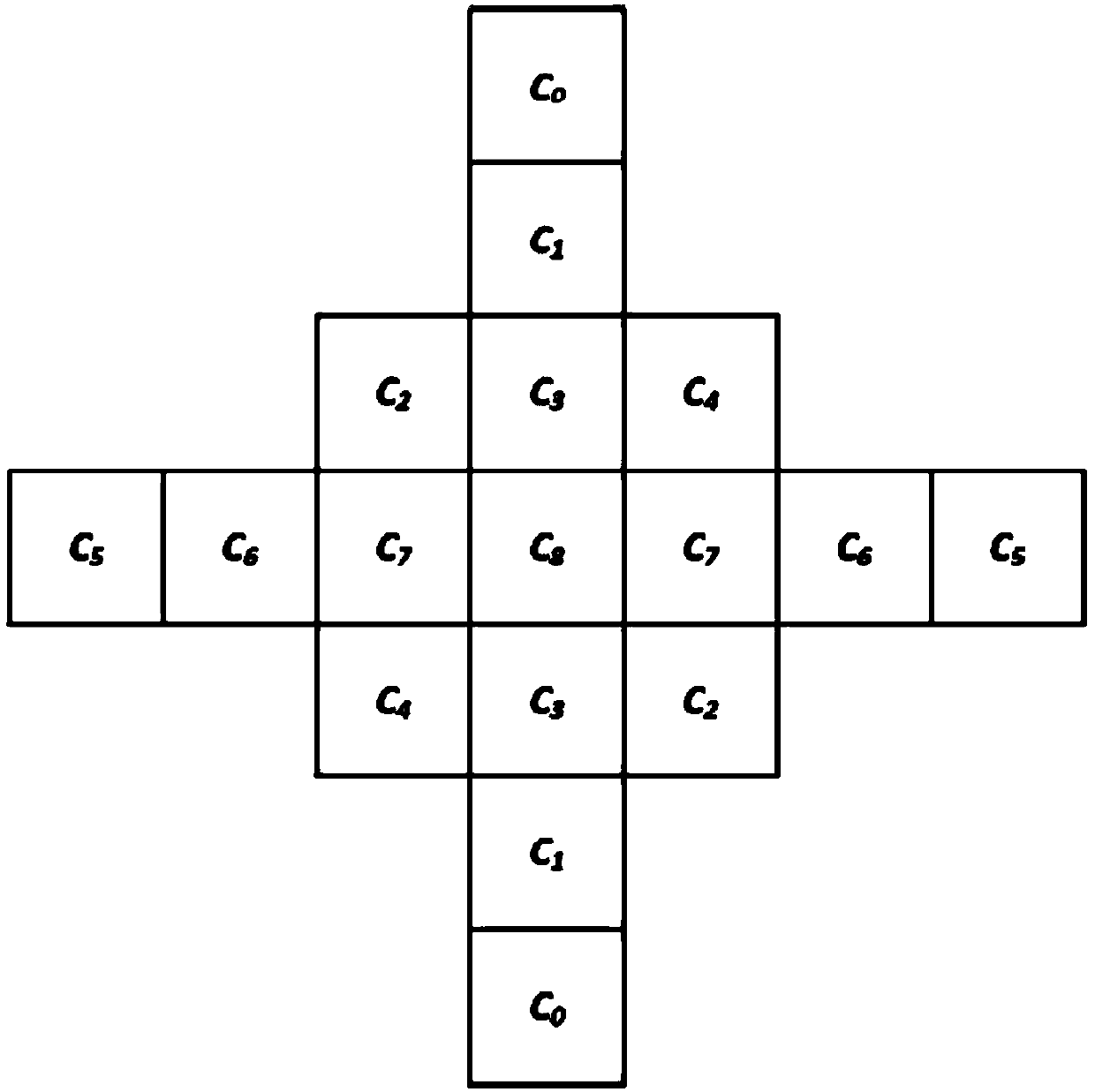

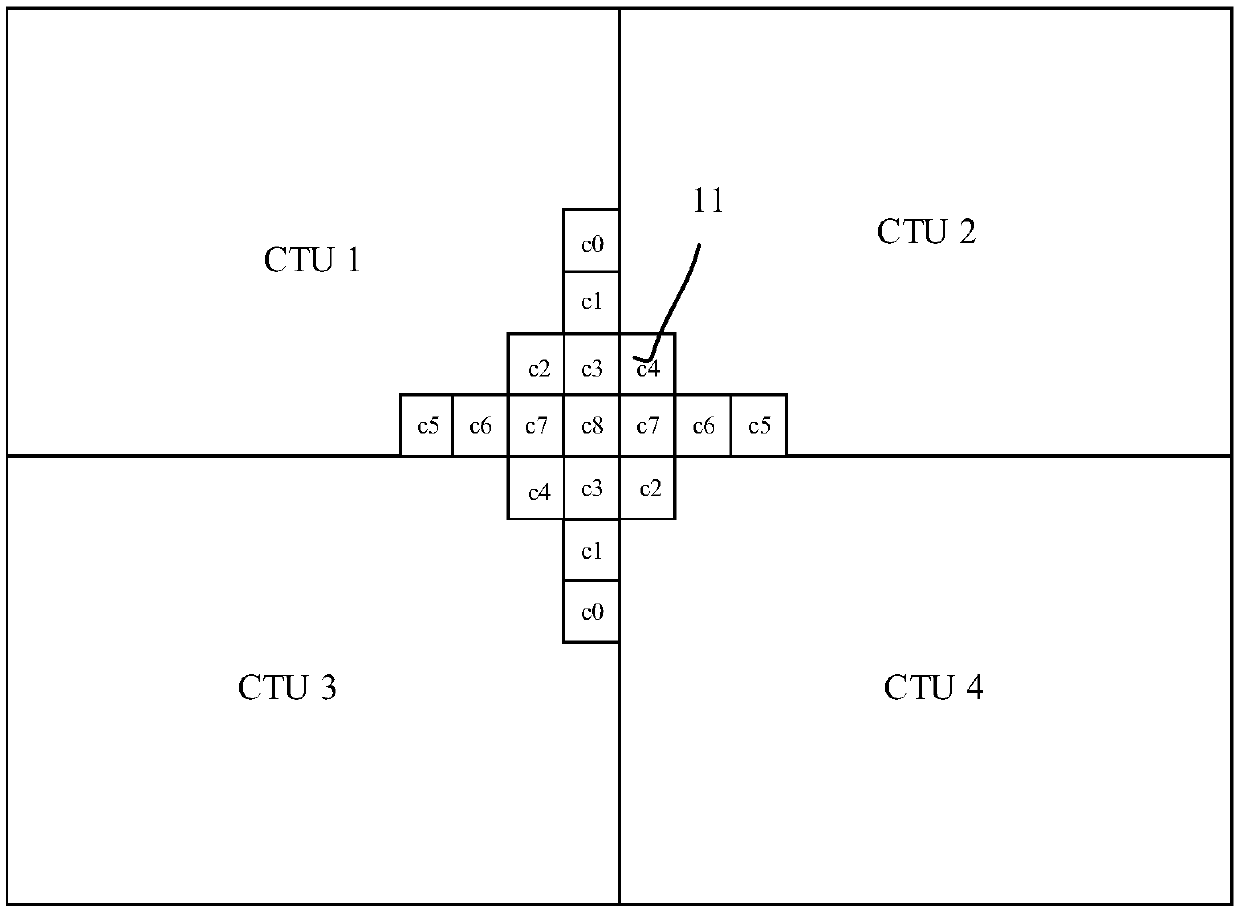

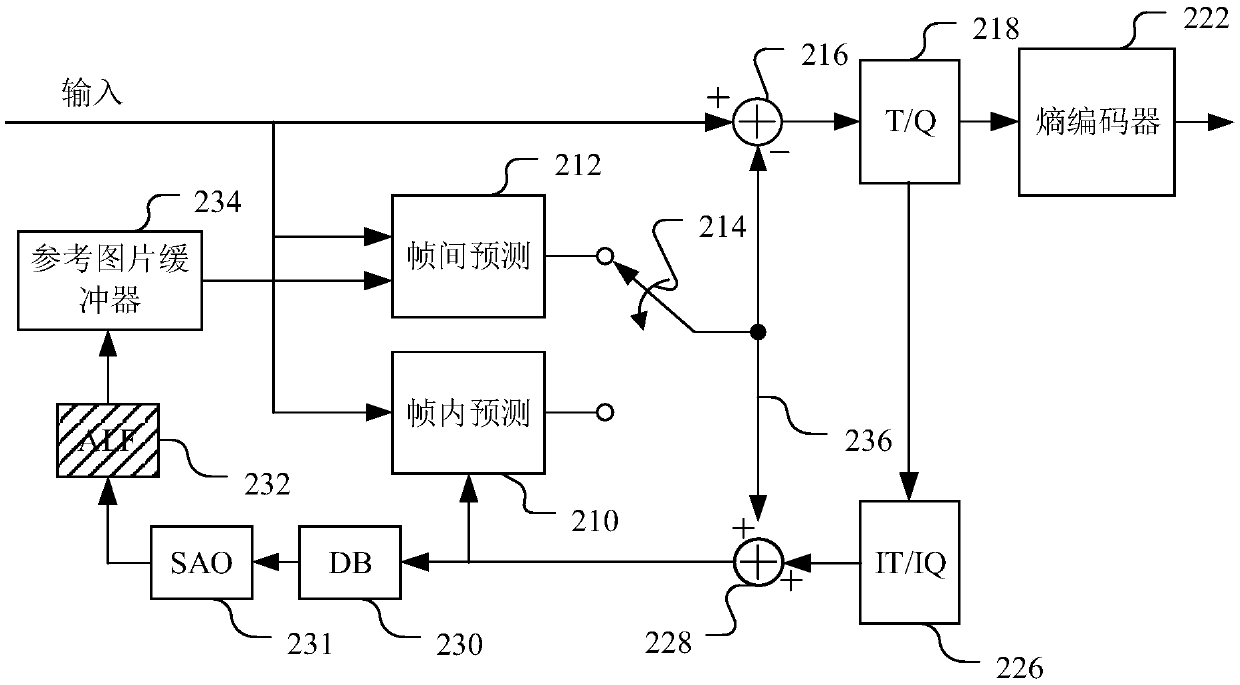

Loop filtering method, loop filtering device, electronic equipment and readable medium

Active | CN109600611A | Resolve data dependencies; Digital video signal modification; Coding tree unit; Virtual pixel

The application relates to a loop filtering method, a loop filtering device, a piece of electronic equipment and a readable medium. The loop filtering method includes the following steps: setting a virtual boundary outside the actual boundary of a coding tree unit according to the size and shape of a filter; filling the gap between the actual boundary and the virtual boundary with virtual pixel samples; and using the virtual pixel samples instead of the pixels beyond the actual boundary of the coding tree unit to carry out loop filtering on the multiple pixels in the coding tree unit. According to the method, a virtual boundary is set outside the actual boundary, and virtual pixel samples are set between the virtual boundary and the actual boundary, so that virtual pixel samples can be used in adaptive loop filtering, and the problem of data dependence between coding tree units is solved.

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD
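
Filtering one coding tree unit against a virtual boundary can be sketched as padding followed by filtering. Two assumptions are made for illustration: virtual pixel samples are produced by replicating the nearest edge sample (the abstract does not say how they are generated), and a plain square kernel stands in for the actual adaptive loop filter shape. Function names are hypothetical.

```python
def pad_ctu(block, radius):
    """Surround a CTU (2-D list of samples) with `radius` rings of virtual
    pixels, here made by replicating the nearest edge sample."""
    h, w = len(block), len(block[0])

    def sample(y, x):
        return block[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    return [[sample(y, x) for x in range(-radius, w + radius)]
            for y in range(-radius, h + radius)]


def filter_ctu(block, kernel):
    """Apply a square (2r+1)x(2r+1) kernel to every sample of the CTU using
    only the CTU's own samples plus virtual padding, so no neighbouring CTU
    has to be decoded first."""
    r = len(kernel) // 2
    padded = pad_ctu(block, r)
    h, w = len(block), len(block[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = 0
            for ky in range(2 * r + 1):
                for kx in range(2 * r + 1):
                    acc += kernel[ky][kx] * padded[y + ky][x + kx]
            row.append(acc)
        out.append(row)
    return out
```

Because every read stays inside the padded copy, each CTU can be filtered independently, which is the data dependence the method removes.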

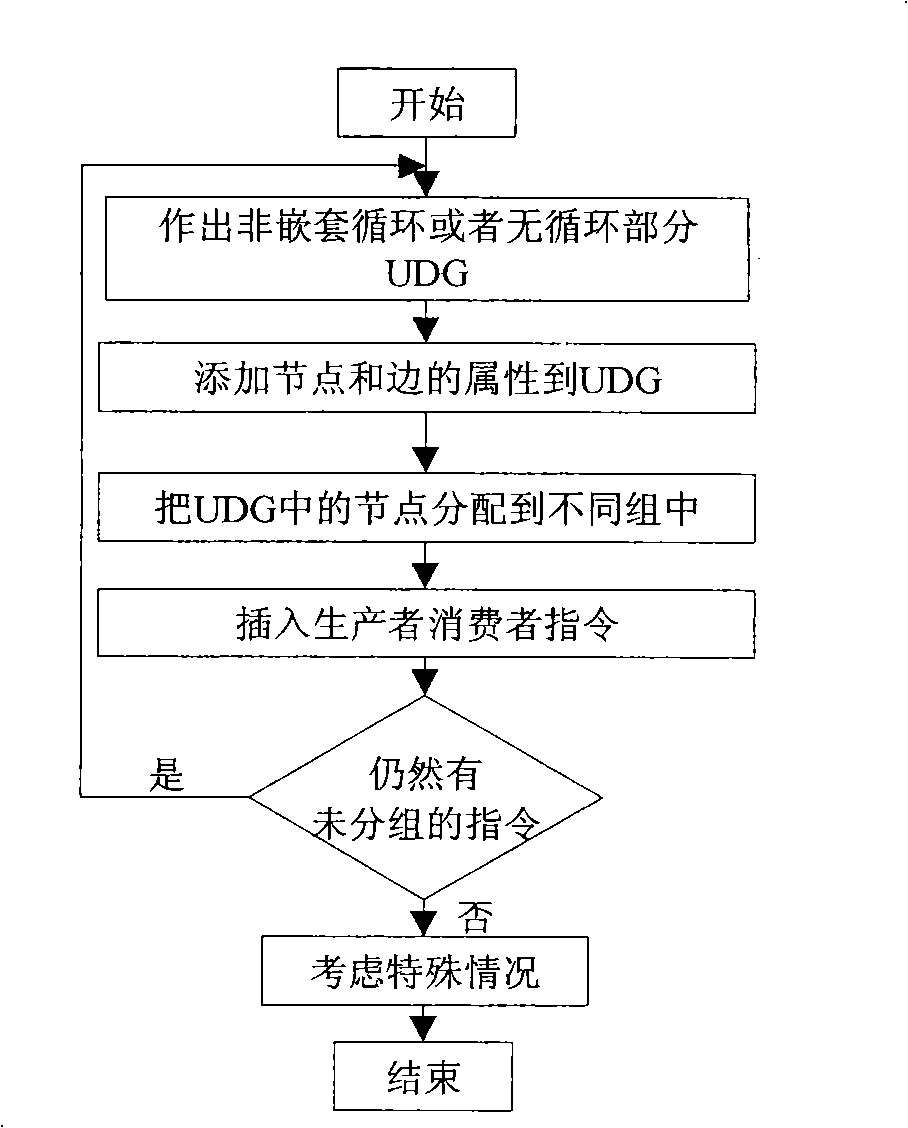

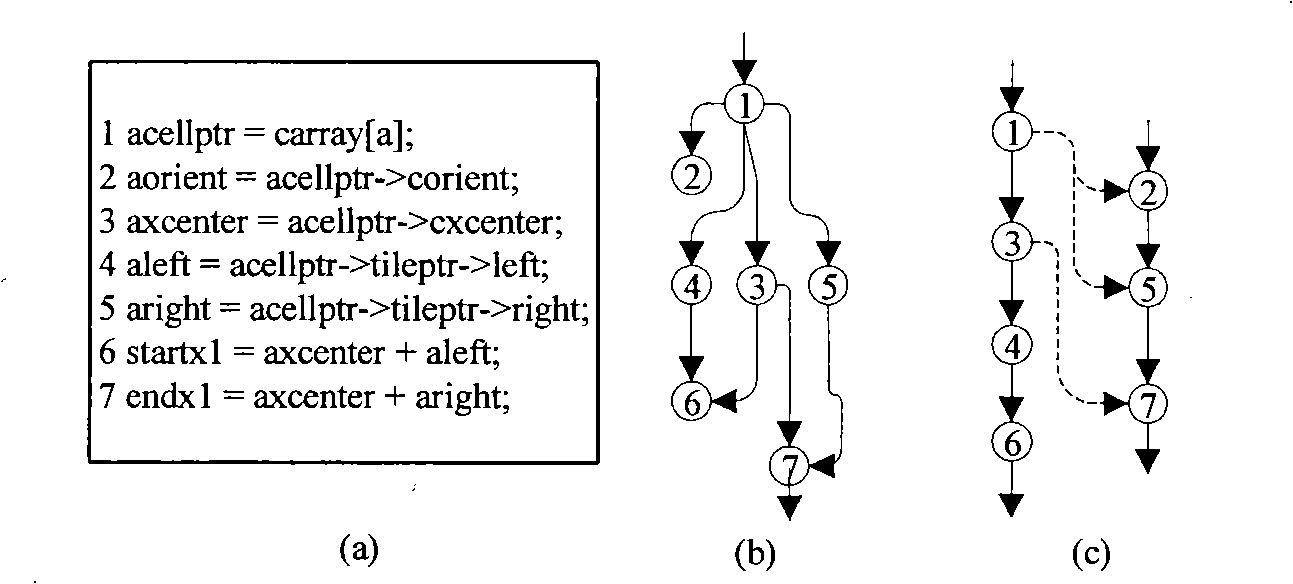

Method for parallelizing single-threaded programs based on data flow analysis

Inactive | CN101515231A | Improve execution efficiency; Improve utilization efficiency; Concurrent instruction execution; Program instruction; Data dependence

The invention discloses a method for parallelizing a single-threaded program based on data flow analysis. By analyzing the dependences among the instructions of a single-threaded program, the method transforms it into a multithreaded program. These dependences include data dependences and control dependences, where a control dependence is a dependence on the value of a control condition and is treated as a special kind of data dependence. During thread partitioning, the method takes into account both inter-thread communication overhead and the balance of the resulting threads. Because different parts of the single-threaded program execute in parallel, program execution time is reduced and execution efficiency is improved. The method is particularly suitable for current multi-core architectures.

Owner:ZHEJIANG UNIV

Memory access anomaly detection method and memory access anomaly detection device

Active | CN104636256A | Implement detection operations; Realize the detection operation of memory access out of bounds; Software testing/debugging; Platform integrity maintenance; Data dependency graph; Lexical analysis

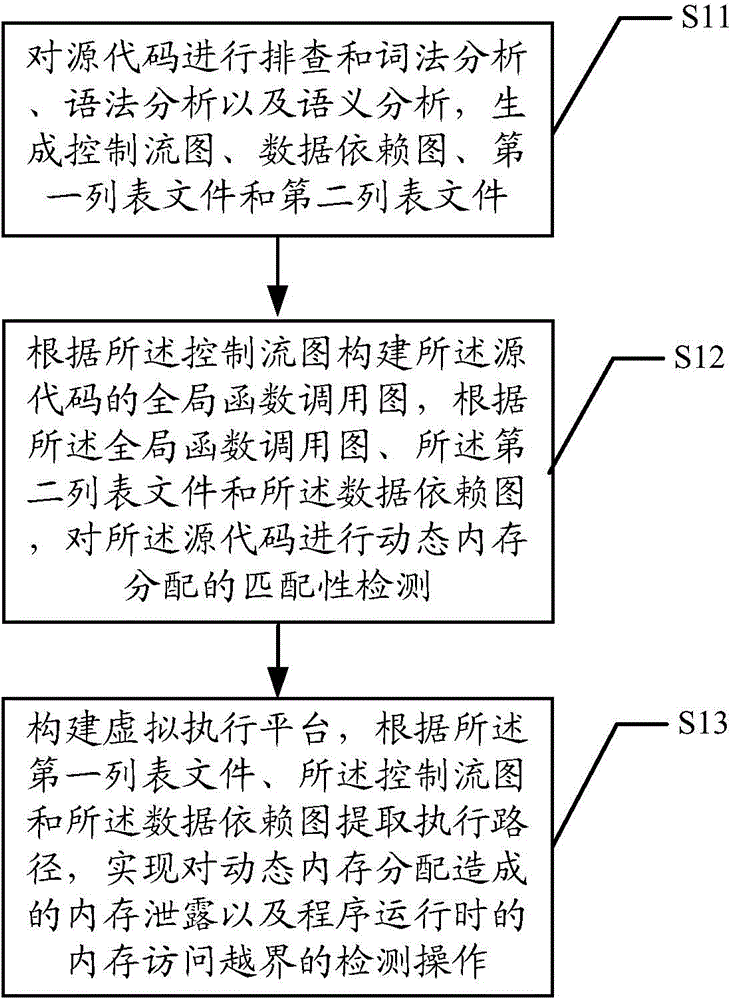

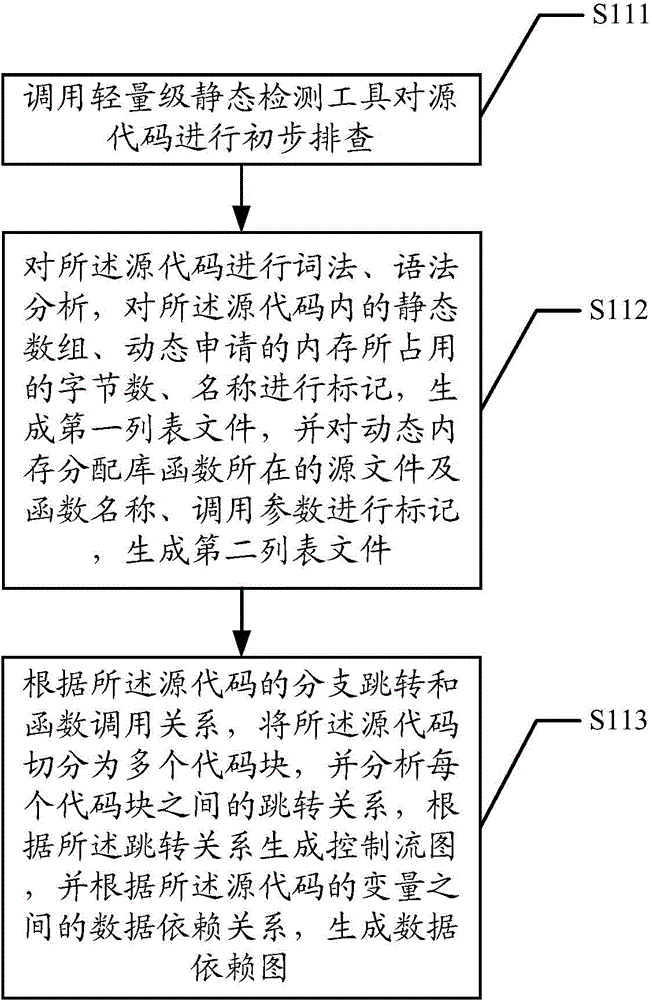

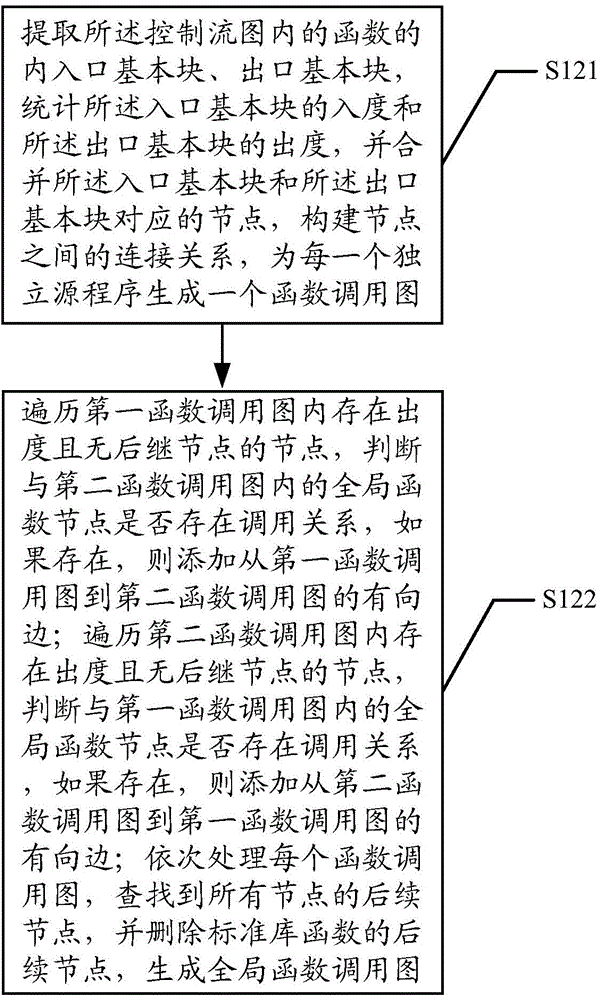

The invention discloses a memory access anomaly detection method and device. The method comprises the following steps: checking source code and performing lexical, syntactic and semantic analysis on it to generate a control flow graph, a data dependence graph, a first list file and a second list file; building a global function call graph of the source code from the control flow graph; performing matching detection on the dynamic memory allocations in the source code according to the global function call graph, the second list file and the data dependence graph; and building a virtual execution platform and extracting execution paths according to the first list file, the control flow graph and the data dependence graph, so as to detect memory leaks caused by dynamic memory allocation and memory access violations while the program runs. By analyzing the first and second list files, the control flow graph, the data dependence graph and the global function call graph, building the virtual execution platform and extracting execution paths, memory access anomalies in the source code can be thoroughly uncovered and efficiently detected.

Owner:AGRICULTURAL BANK OF CHINA

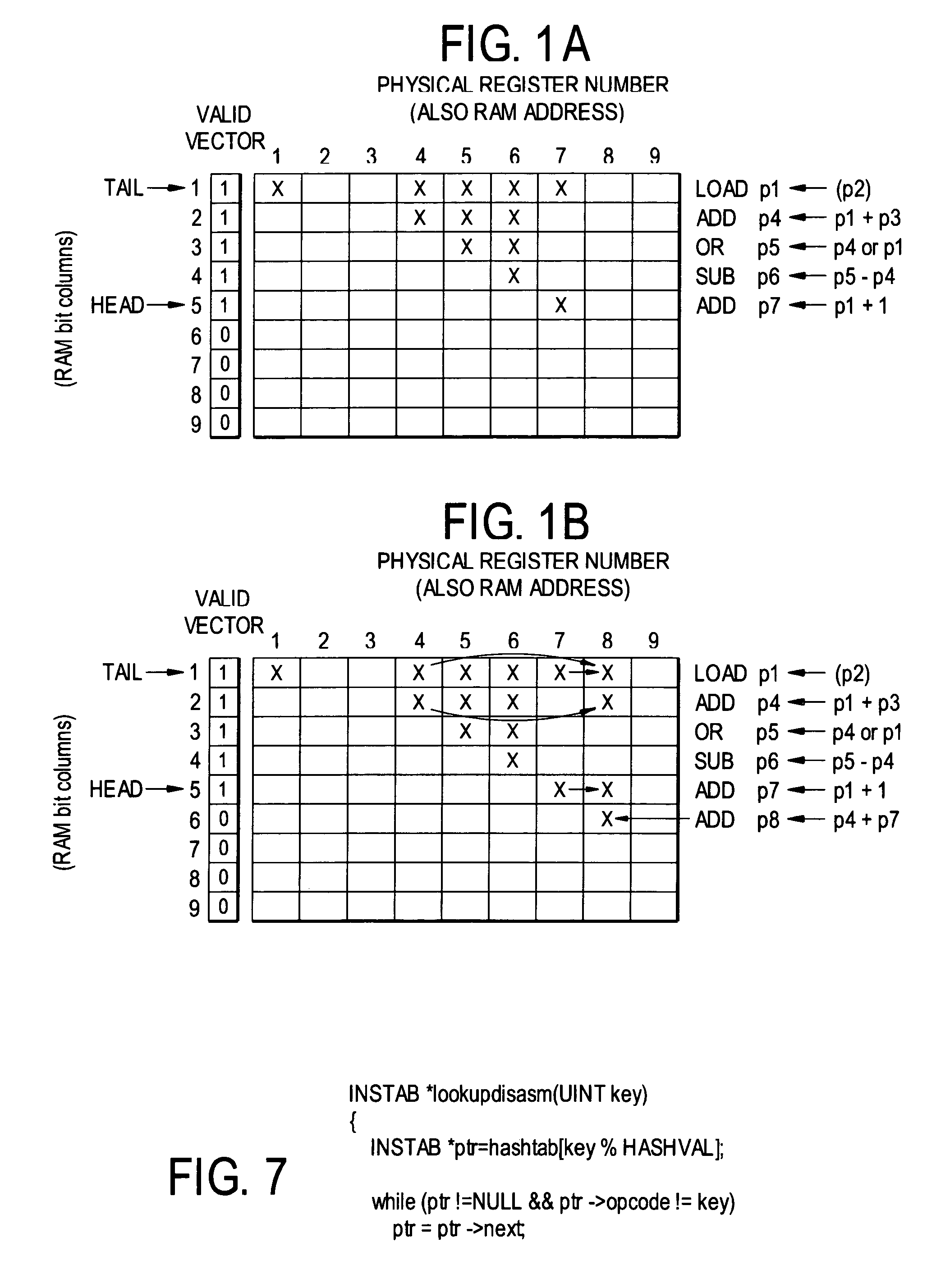

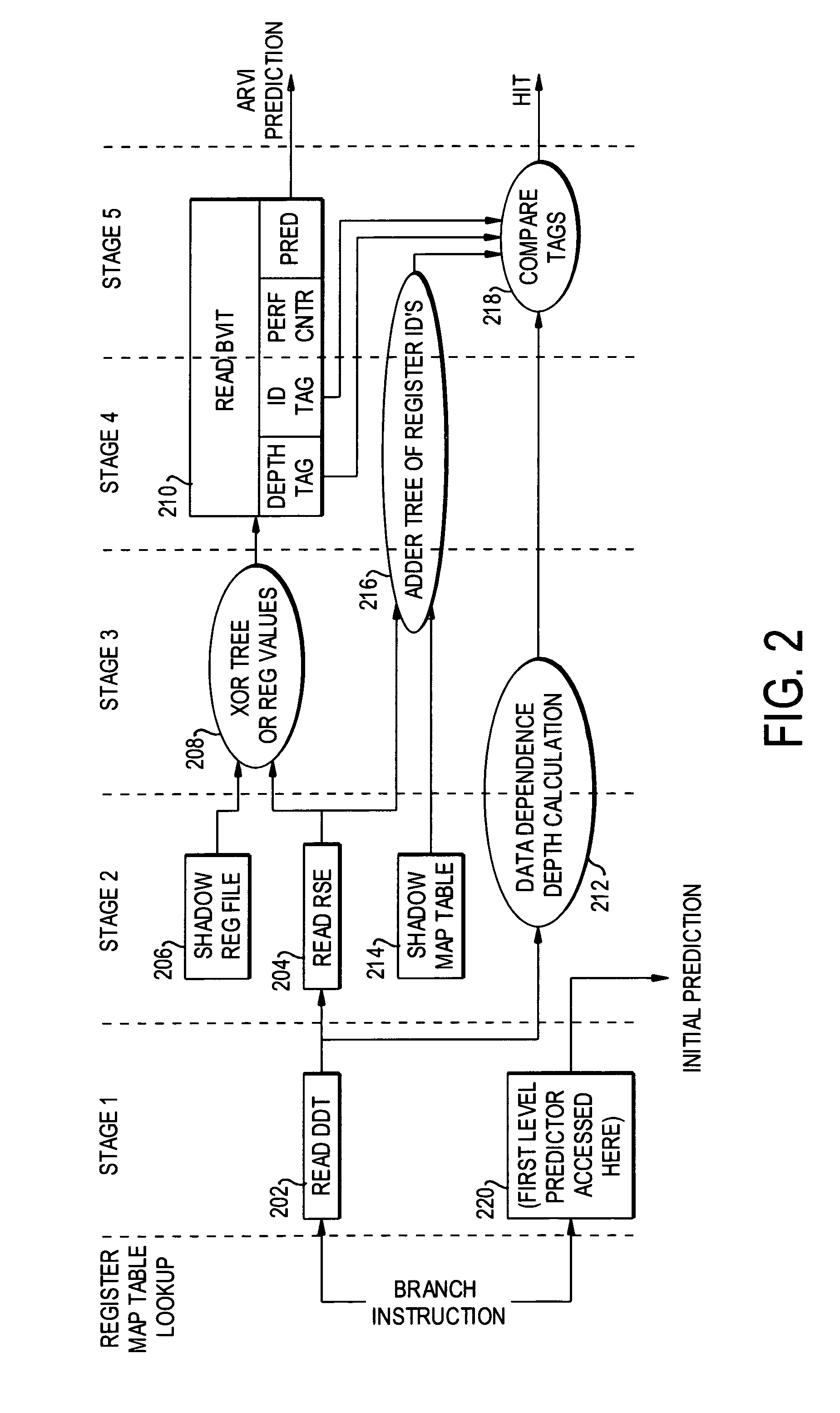

Dynamic data dependence tracking and its application to branch prediction

Active | US7571302B1 | Accurate measurement; Improving the accuracy of criticality measures; Digital computer details; Memory systems; Processor register; Data dependence

A data dependence table in RAM relates physical register addresses to instructions such that for each instruction, the registers on whose data the instruction depends are identified. The table is updated for each instruction added to the pipeline. For a branch instruction, the table identifies the registers relevant to the branch instruction for branch prediction.

Owner:UNIVERSITY OF ROCHESTER
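
A software model of the data dependence table is sketched below. The abstract does not say whether dependences are tracked directly or transitively; this sketch assumes transitive tracking over renamed physical registers, and the class name and register labels are hypothetical.

```python
class DataDependenceTable:
    """For each physical register, the set of registers whose data its value
    was (transitively) computed from. Hypothetical software model."""

    def __init__(self):
        self.deps = {}

    def add_instruction(self, dest, srcs):
        """Update the table as an instruction enters the pipeline and return
        the registers the instruction depends on. Use dest=None for
        instructions without a destination, such as branches."""
        needed = set()
        for s in srcs:
            needed.add(s)
            needed |= self.deps.get(s, set())
        if dest is not None:
            self.deps[dest] = needed
        return needed
```

For a branch, the returned set identifies the registers relevant to that branch, which is the information the abstract feeds into branch prediction.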

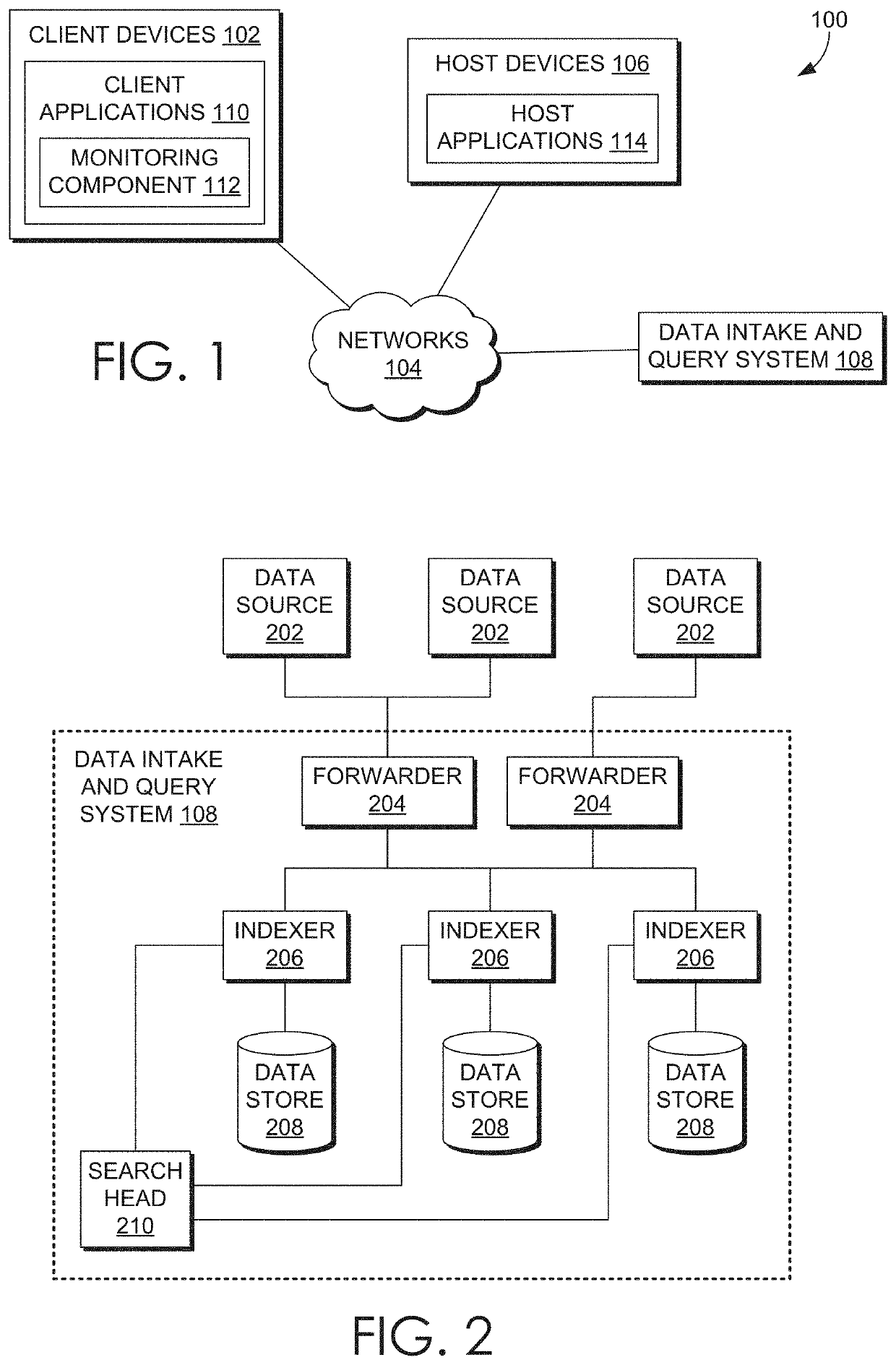

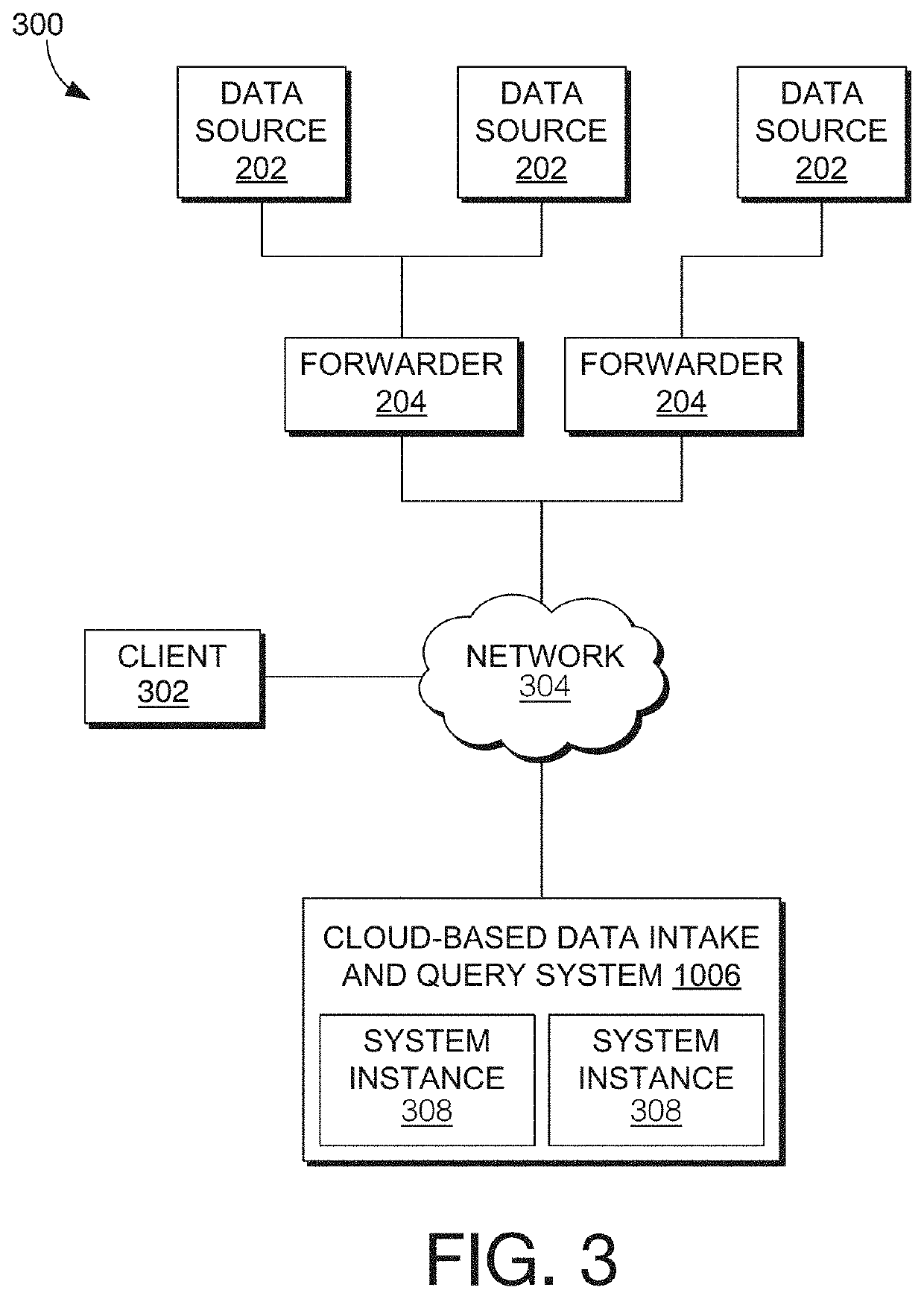

Predicting follow-on requests to a natural language request received by a natural language processing system

In various embodiments, a natural language (NL) application receives a partial NL request associated with a first context and determines that the partial NL request corresponds to at least a portion of a first next NL request prediction included in one or more next NL request predictions. These predictions are generated based on a first NL request, the first context associated with the first NL request, and a first sequence prediction model, where the first sequence prediction model is generated via a machine learning algorithm applied to a first data dependency model and a first request prediction model. In response to determining that the partial NL request corresponds to at least the portion of the first next NL request prediction, the NL application generates a complete NL request based on the first NL request and the partial NL request, and causes the complete NL request to be applied to a data storage system.

Owner:SPLUNK INC

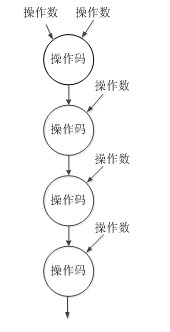

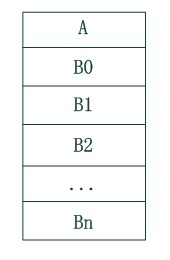

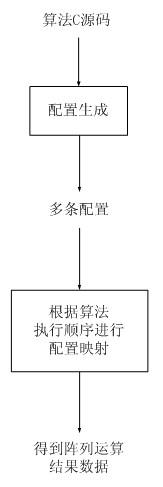

Configuration method applied to coarse-grained reconfigurable array

Inactive | CN102508816A | Reduce the amount of information; Reduce visits; Program control; Architecture with single central processing unit; Configuration generation; Engineering

The invention discloses a configuration method for a coarse-grained reconfigurable array of a given scale. It comprises a configuration definition scheme that takes data links as the basic description objects, together with a corresponding configuration generation scheme and configuration mapping scheme. Under the configuration definition scheme, a program corresponds to a plurality of configurations, each configuration corresponds to one data link, and each data link consists of a plurality of reconfigurable cells (RCs) with data dependence relations. Compared with traditional schemes that take RCs as the basic description objects, this scheme hides the interconnection information among RCs and provides more room for compressing configuration information, which helps reduce the total amount of configuration data and the time needed to switch configurations. In addition, the configuration describing one data link consists of a route, a functional configuration and one or more data configurations. The data configurations share one route and functional configuration, so switching one configuration consists of switching the route and functional configuration once, followed by one or more switches of the data configuration.

Owner:SOUTHEAST UNIV

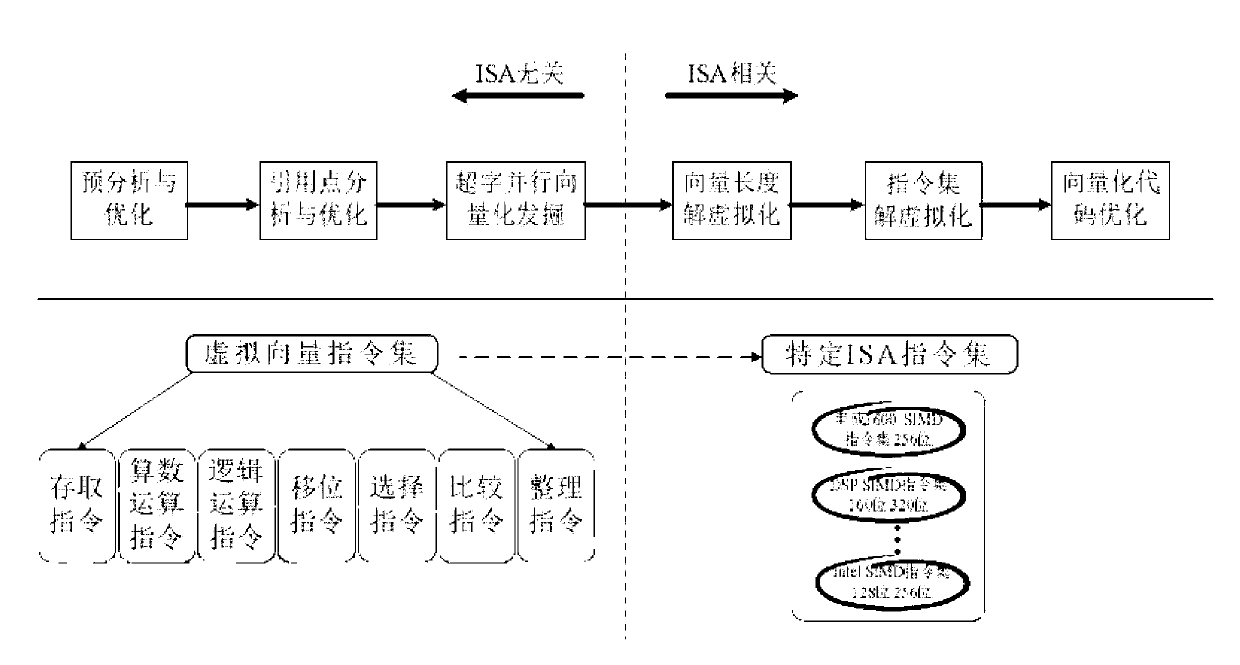

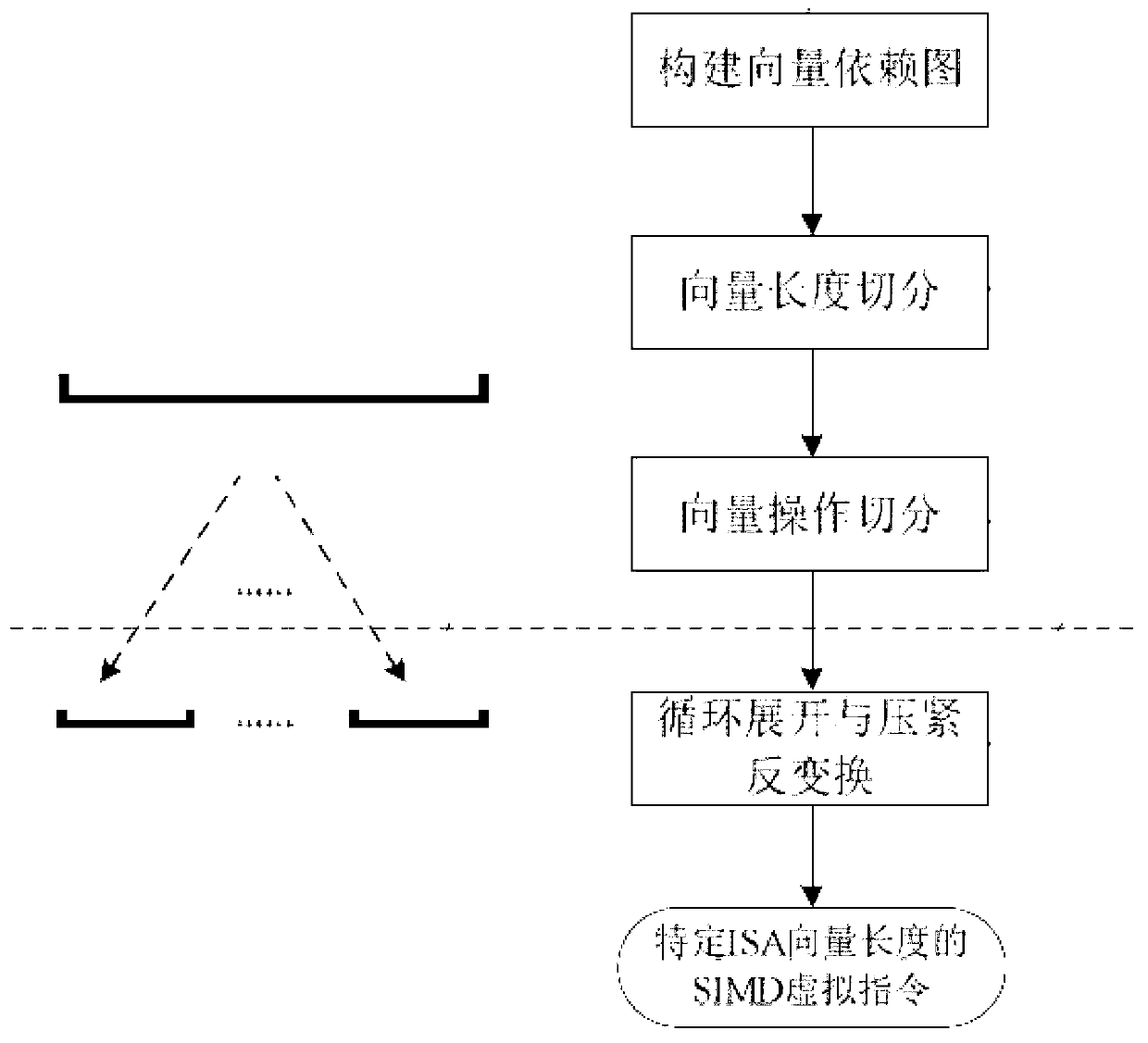

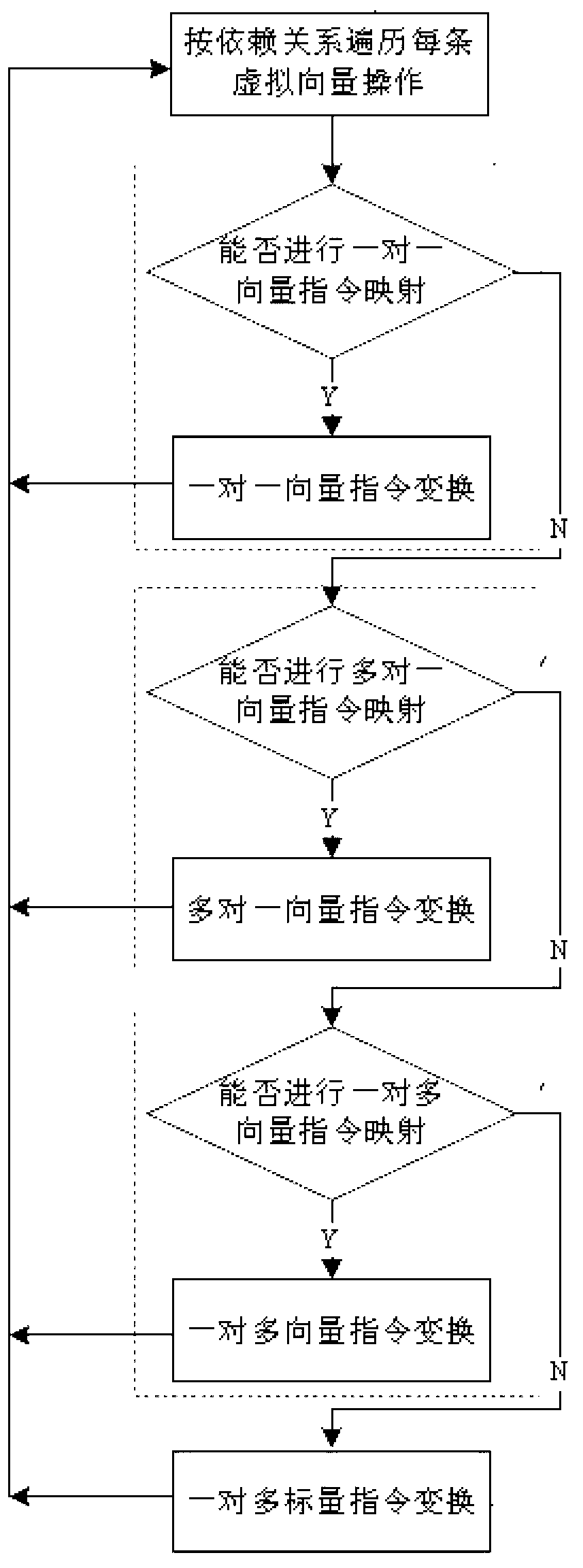

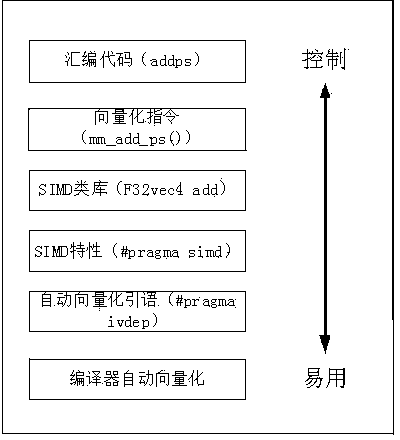

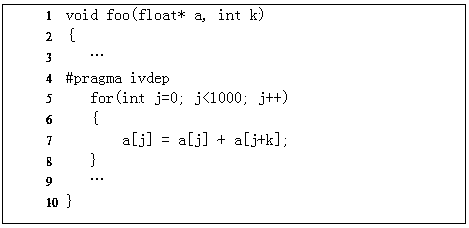

Automatic vectorization method for heterogeneous SIMD extension components

Inactive | CN103279327A | Improve execution efficiency; Program control; Memory systems; Virtualization; Performance computing

The invention relates to automatic parallelization in high-performance computing, in particular to an automatic vectorization method for heterogeneous SIMD extension components. The method is suitable for heterogeneous SIMD extension components with different vector lengths and different vector instruction sets. A virtual instruction set is designed, and input C and Fortran programs are converted into an intermediate representation of virtual instructions under a unified automatic vectorization framework. By resolving the virtualization of vector length and of the instruction set, the virtual instructions are automatically converted into vectorized code for the heterogeneous SIMD extension components, freeing programmers from complex manual vectorization. The method is combined with related optimization methods: vectorization opportunities are recognized at different granularities, mixed loop-level and basic-block-level parallelism is exploited as fully as possible through conventional optimizations and call-site optimization, and redundancy in the generated code is removed through analysis of data dependences across basic blocks, effectively improving program execution efficiency.

Owner:THE PLA INFORMATION ENG UNIV

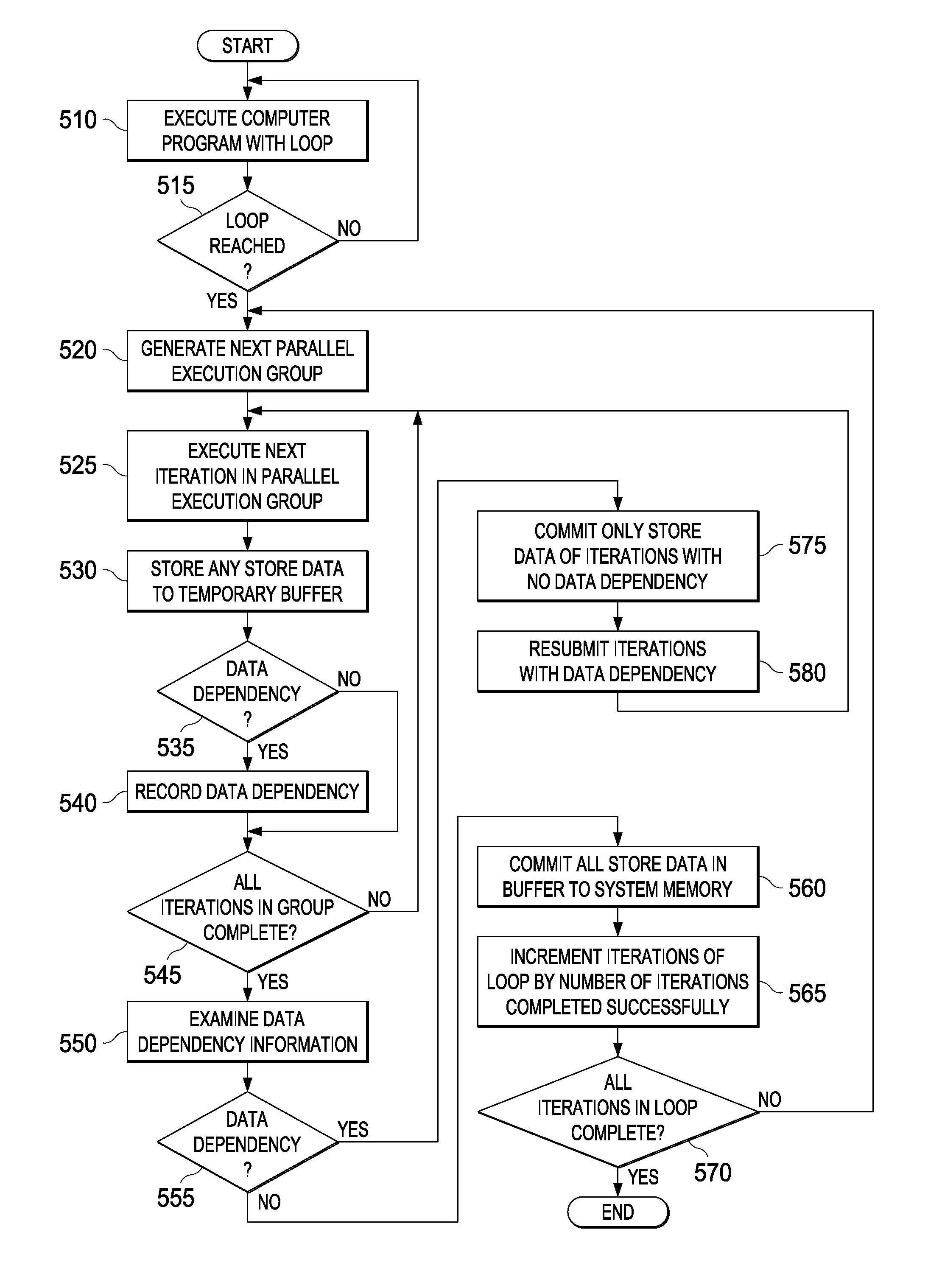

Runtime Extraction of Data Parallelism

Mechanisms for extracting data dependencies during runtime are provided. The mechanisms execute a portion of code having a loop and generate, for the loop, a first parallel execution group comprising a subset of iterations of the loop less than the total number of iterations of the loop. The mechanisms further execute the first parallel execution group and determine, for each iteration in the subset of iterations, whether the iteration has a data dependence. Moreover, the mechanisms commit store data to system memory only for stores performed by iterations in the subset for which no data dependence is determined. Store data of stores performed by iterations in the subset for which a data dependence is determined is not committed to system memory.

Owner:IBM CORP
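
Speculative execution of an iteration group with buffered stores can be sketched in software. To keep the sketch correct without modeling re-execution ordering, it takes a conservative policy that may differ from IBM's: an iteration is treated as dependent if it reads a location written by an earlier iteration in the group, and once one dependent iteration is found, it and all later iterations are left uncommitted and returned for re-execution. Memory is a plain dict, and the function name and `body(i, load, store)` convention are hypothetical.

```python
def run_speculative_group(memory, body, iterations):
    """Run a group of loop iterations speculatively with buffered stores.

    body(i, load, store) performs one iteration using the given callbacks.
    Stores of iterations before the first detected dependence are committed
    to `memory` in iteration order; the remaining iteration indices are
    returned so the caller can re-execute them.
    """
    logs = []
    for i in iterations:
        reads, writes = set(), {}

        def load(addr, reads=reads, writes=writes):
            if addr in writes:       # this iteration's own earlier store
                return writes[addr]
            reads.add(addr)
            return memory[addr]      # otherwise, memory as of group start

        def store(addr, value, writes=writes):
            writes[addr] = value     # buffered, not yet committed

        body(i, load, store)
        logs.append((i, reads, writes))

    written_before = set()
    first_dependent = len(logs)
    for k, (_, reads, writes) in enumerate(logs):
        # Reading a location an earlier iteration wrote means this iteration
        # saw a stale value: a cross-iteration data dependence.
        if reads & written_before:
            first_dependent = k
            break
        written_before.update(writes)

    for _, _, writes in logs[:first_dependent]:
        memory.update(writes)        # commit in iteration order
    return [i for i, _, _ in logs[first_dependent:]]
```

A loop such as `a[i + 1] = a[i] + 1` is detected as dependent from its second iteration on, whereas `b[i] = 2 * a[i]` commits the whole group.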

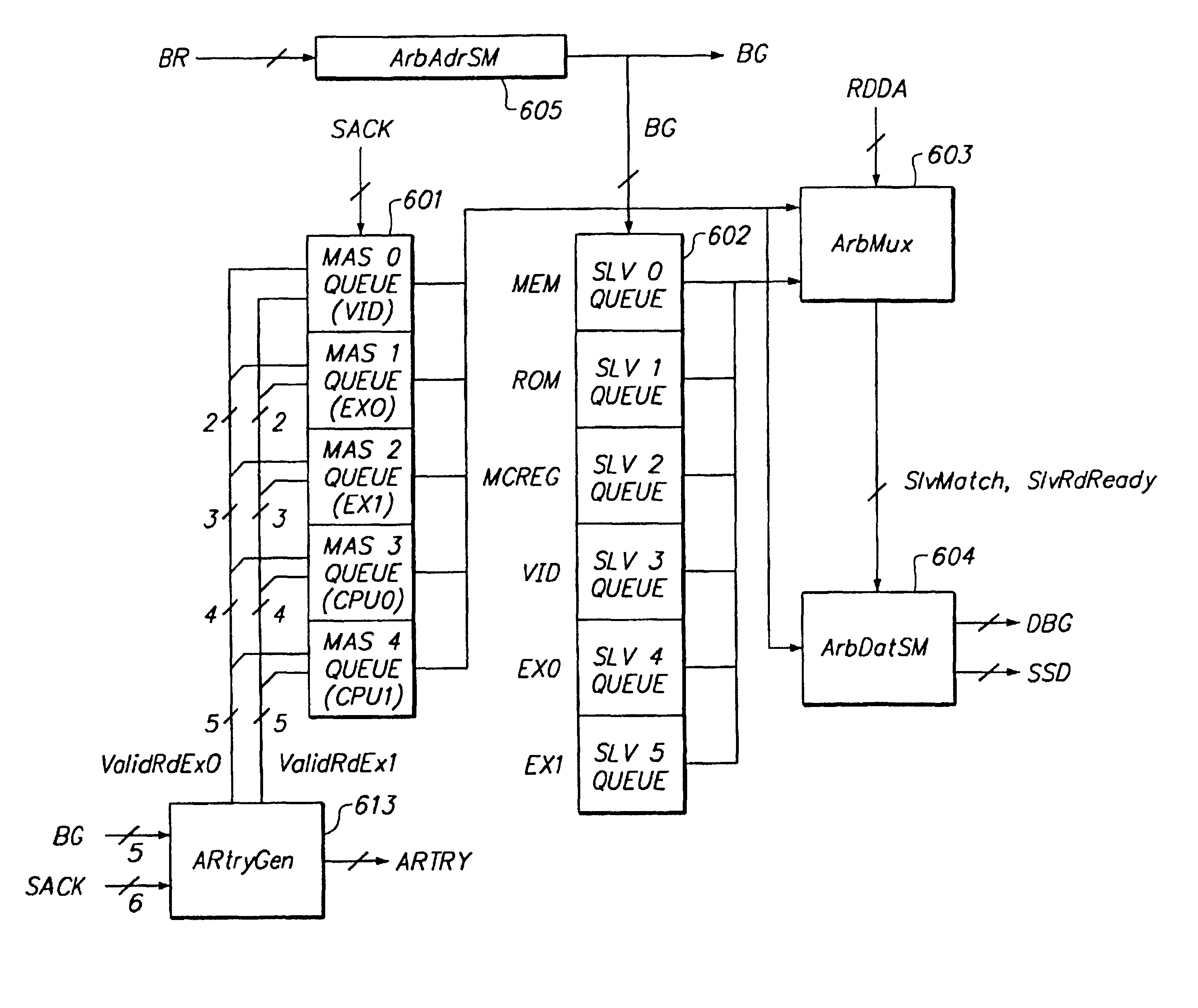

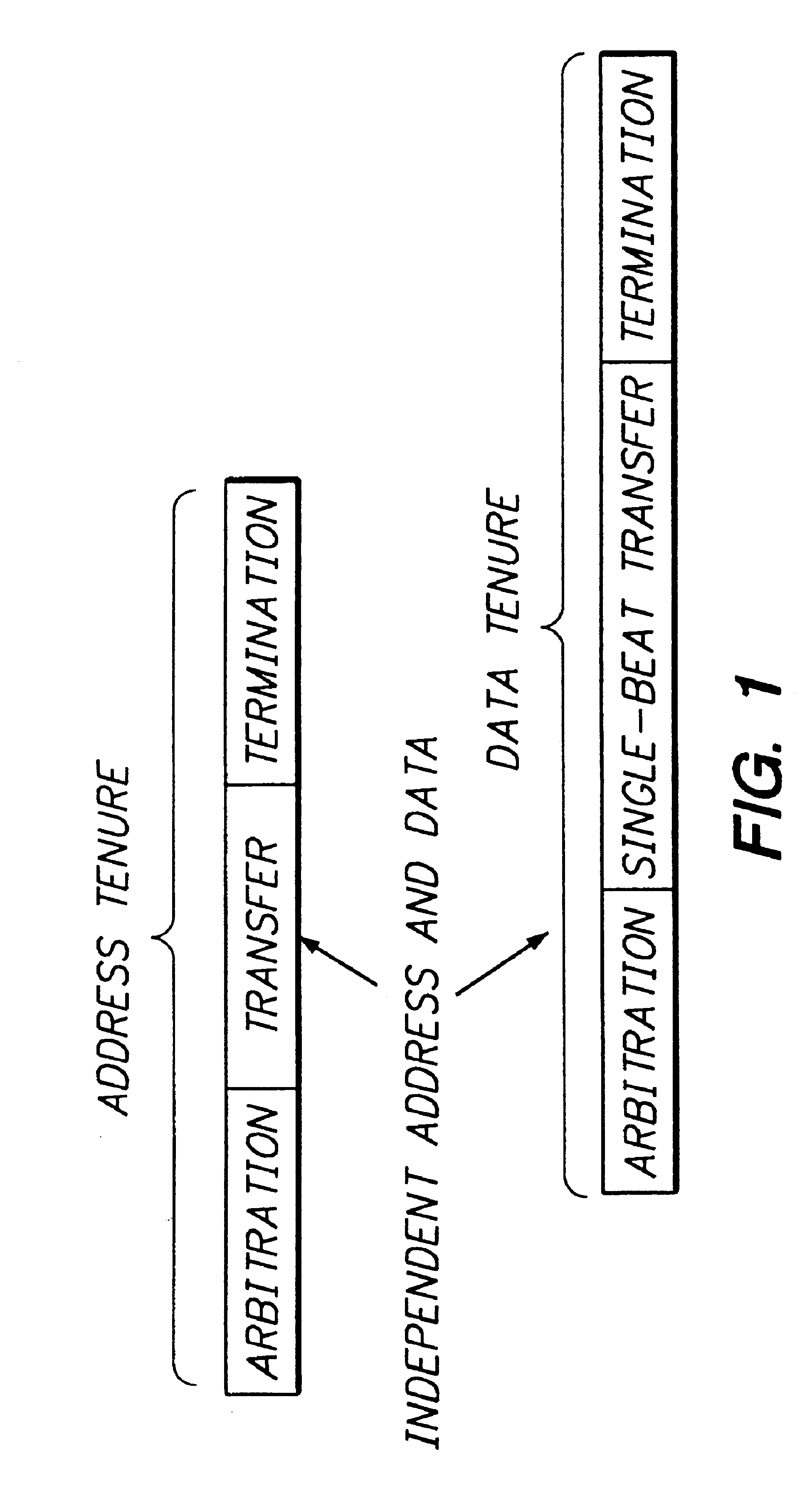

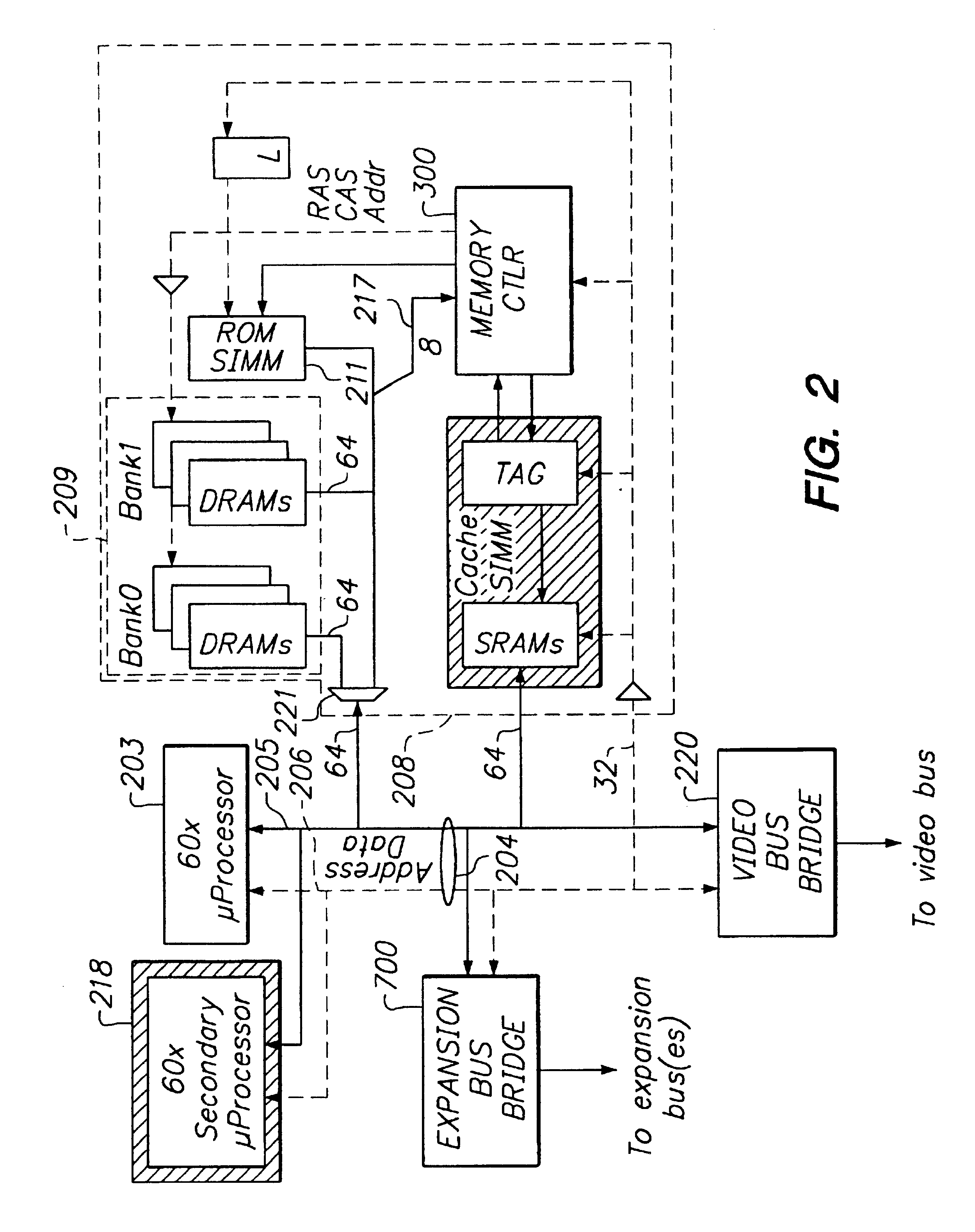

Bus transaction reordering in a computer system having unordered slaves

Inactive | USRE38428E1 | Improve bus utilization; Easy to implement; Multiprogramming arrangements; Data switching by path configuration; Computerized system; Coupling system

A mechanism is provided for reordering bus transactions to increase bus utilization in a computer system in which a split-transaction bus is bridged to a single-envelope bus. In one embodiment, both masters and slaves are ordered, simplifying implementation. In another embodiment, the system is more loosely coupled, with only masters being ordered, thereby achieving greater bus utilization. To avoid deadlock, transactions begun on the split-transaction bus are monitored, and a combination of transactions that would result in deadlock if a predetermined further transaction were to begin is detected. In the more tightly coupled system, that further transaction is refused if it is requested, thereby avoiding deadlock. In the more loosely coupled system, the flexibility afforded by unordered slaves is exploited to reorder the transactions in the typical case and avoid deadlock without killing any transaction. Where a data dependency exists that would prevent such reordering, the further transaction is killed, as in the more tightly coupled embodiment. Data dependencies are detected from address-coincidence signals generated by slave devices on a cache-line basis. As a further optimization, at least one slave device (e.g., DRAM) generates page-coincidence bits. When two transactions to the slave device are to the same address page, they are reordered if necessary so that they execute one after another without any intervening transaction, thereby reducing the latency of the slave.

Owner:APPLE INC

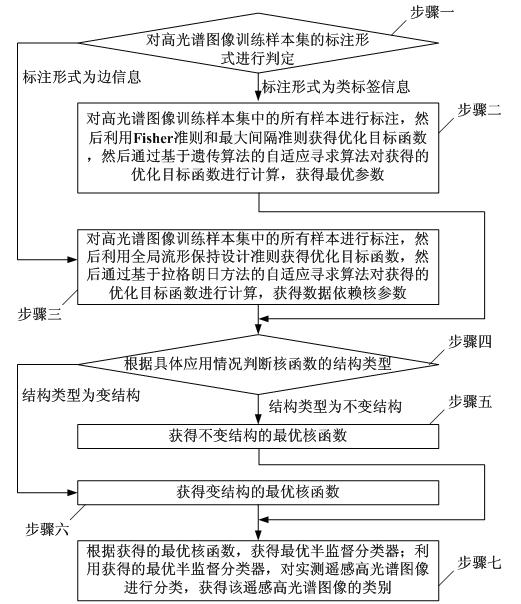

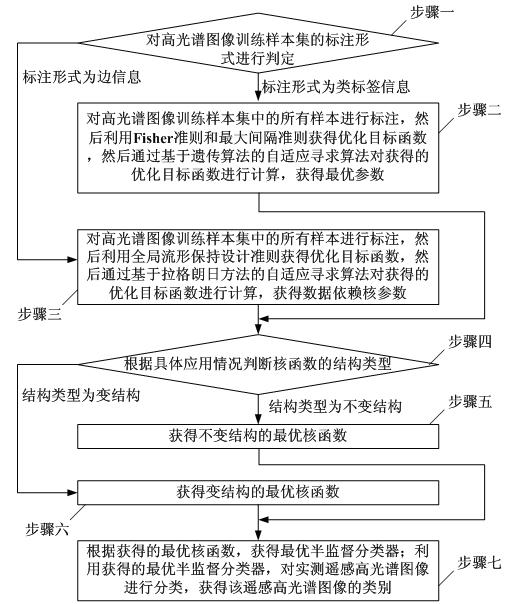

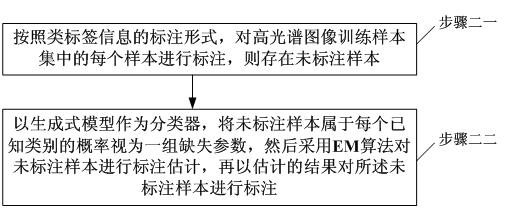

Remote sensing hyperspectral image classification method based on semi-supervised kernel adaptive learning

Inactive | CN101814148A | Accurate classification | High-resolution | Character and pattern recognition | Terrain | Adaptive learning

The invention discloses a remote sensing hyperspectral image classification method based on semi-supervised kernel adaptive learning. It addresses the low resolution of traditional remote sensing hyperspectral image classification methods. The method comprises the following steps:

1. Determine the labeling form of the hyperspectral image training sample set.
2. Obtain an optimized objective function and, from it, an optimal parameter or a data-dependent kernel parameter.
3. Obtain an optimal kernel function with an invariant or variable structure according to the acquired parameter.
4. Derive an optimal semi-supervised classifier from that kernel.
5. Classify the remote sensing hyperspectral images with the classifier.

The invention can accurately classify the endmembers of remote sensing hyperspectral images and improves their resolution. It can be applied to military terrain and target reconnaissance, efficient strike-effect assessment, real-time maritime environment monitoring for naval submarines, and emergency response to sudden natural disasters.

Owner:霍振国
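
The abstract does not state which objective function is optimized to obtain the kernel parameter. As a stand-in, the sketch below picks an RBF kernel width by maximizing kernel-target alignment on the labeled samples only. Kernel-target alignment is a common supervised criterion; it is not the patent's semi-supervised objective. The function names and the sample data are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

def rbf_kernel(X: Sequence[Sequence[float]], gamma: float) -> List[List[float]]:
    """Gram matrix K[i][j] = exp(-gamma * ||x_i - x_j||^2)."""
    return [[math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(xi, xj))) for xj in X] for xi in X]

def alignment(K: List[List[float]], y: Sequence[int]) -> float:
    """Kernel-target alignment <K, y y^T>_F / (||K||_F * ||y y^T||_F) for labels in {-1, +1}."""
    n = len(y)
    num = sum(K[i][j] * y[i] * y[j] for i in range(n) for j in range(n))
    k_norm = math.sqrt(sum(K[i][j] ** 2 for i in range(n) for j in range(n)))
    return num / (k_norm * n)  # ||y y^T||_F = n when every label is +/-1

def select_gamma(X, y, candidates: Sequence[float]) -> Tuple[float, Dict[float, float]]:
    """Return the candidate width with the highest alignment, plus all scores."""
    scores = {g: alignment(rbf_kernel(X, g), y) for g in candidates}
    best = max(scores, key=scores.get)
    return best, scores

X = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0)]
y = [1, 1, -1, -1]
best, scores = select_gamma(X, y, [0.01, 0.1, 1.0, 10.0])
print(best)  # 0.1
```

A very wide kernel (small gamma) blurs the two classes together. A very narrow one (large gamma) approaches the identity matrix, which caps alignment near 0.5 here. The intermediate width scores highest.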

Vectorizing optimization method based on MIC (Many Integrated Core) architecture processor

Active | CN103440229A | Improve computing efficiency | Improve performance | Complex mathematical operations | Data dependency analysis | Software development

The invention provides a vectorization optimization method for processors based on the MIC architecture. It comprises three main steps: data dependence analysis of the algorithm, vectorization tuning and optimization of the algorithm, and vectorized compilation. Specifically, it covers data dependence analysis of the algorithm, vectorization tuning of the algorithm, the compiler's automatic vectorization, and user-directed vectorization optimization. The method applies to software optimization on MIC architecture processor platforms. It can guide software developers to vectorize existing software, particularly core algorithms, quickly and efficiently, with short development cycles and low cost. As a result, software can make full use of the computing resources of vector processors. Its running time can be minimized, hardware resource utilization can be markedly increased, and both computing efficiency and overall performance can be improved.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
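
One classic form of the data dependence analysis step is the GCD test. The sketch below applies it to a single statement that reads `a[c2*i + k2]` and then writes `a[c1*i + k1]`. It ignores loop bounds. The vector-width rule for the equal-stride case is a standard simplification, not the patent's procedure: a read-after-write distance d with 0 < d < vector width blocks straightforward vectorization. The function names are hypothetical.

```python
from math import gcd

def may_alias(c1: int, k1: int, c2: int, k2: int) -> bool:
    """GCD test: can c1*i + k1 == c2*j + k2 have an integer solution (loop bounds ignored)?"""
    g = gcd(c1, c2)
    if g == 0:                 # both subscripts are loop-invariant constants
        return k1 == k2
    return (k2 - k1) % g == 0

def safe_to_vectorize(c1: int, k1: int, c2: int, k2: int, vector_width: int) -> bool:
    """Statement a[c1*i + k1] = f(a[c2*i + k2]), with the read performed before the write."""
    if not may_alias(c1, k1, c2, k2):
        return True            # provably independent
    if c1 == c2 and c1 != 0:
        # The read in iteration i + d hits the element written in iteration i.
        d = (k1 - k2) // c1    # exact: the GCD test guaranteed divisibility
        return not (0 < d < vector_width)
    return False               # unequal strides: stay conservative

print(safe_to_vectorize(1, 0, 1, -1, 8))   # a[i] = a[i-1] + ...  -> False
print(safe_to_vectorize(1, 0, 1, -16, 8))  # a[i] = a[i-16] + ... -> True
print(safe_to_vectorize(2, 0, 2, 1, 8))    # a[2i] = a[2i+1] + ... -> True
```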

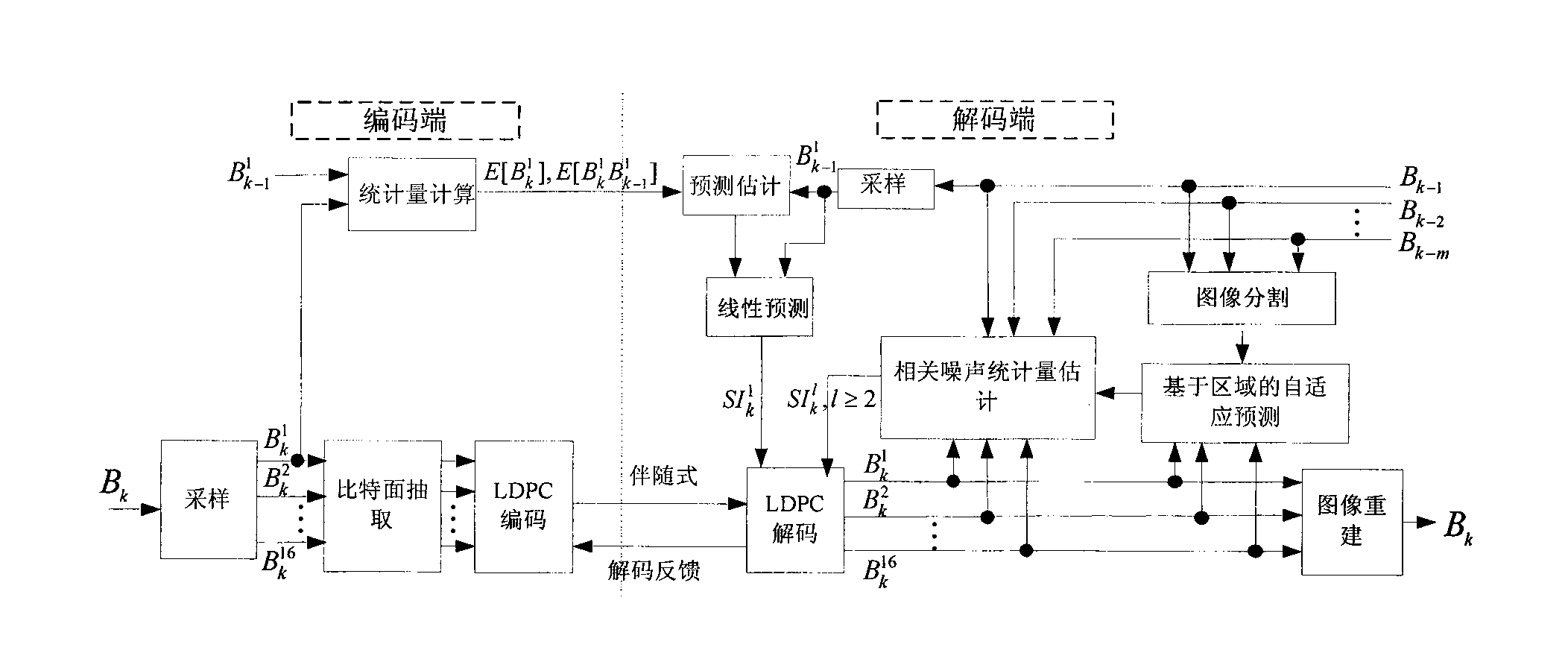

Progressive distributed encoding and decoding method and device for multispectral images

Inactive | CN101640803A | Progressive transfer | Improve compression efficiency | Television systems | Digital video signal modification | Decoding methods | Multispectral image

The invention discloses a progressive distributed encoding and decoding method for multispectral images. In this method, each band image is encoded directly and independently at the encoding end, and data dependence is exploited only at the decoding end. Computational complexity is thereby shifted from the resource-constrained encoding end to the resource-rich decoding end. This makes optimal use of system resources and suits the method to remote sensing satellites. The invention also discloses a distributed encoding and decoding device for multispectral images. In this device, the module that exploits the data dependence is placed at the decoding end, so the encoding end is kept as simple as possible and the overall system is better balanced. The invention offers low encoding complexity, high compression efficiency, strong error resilience, and progressive transmission. It is suitable for lossless or near-lossless compression of multispectral images, particularly for on-board image compression on satellites.

Owner:UNIV OF SCI & TECH OF CHINA

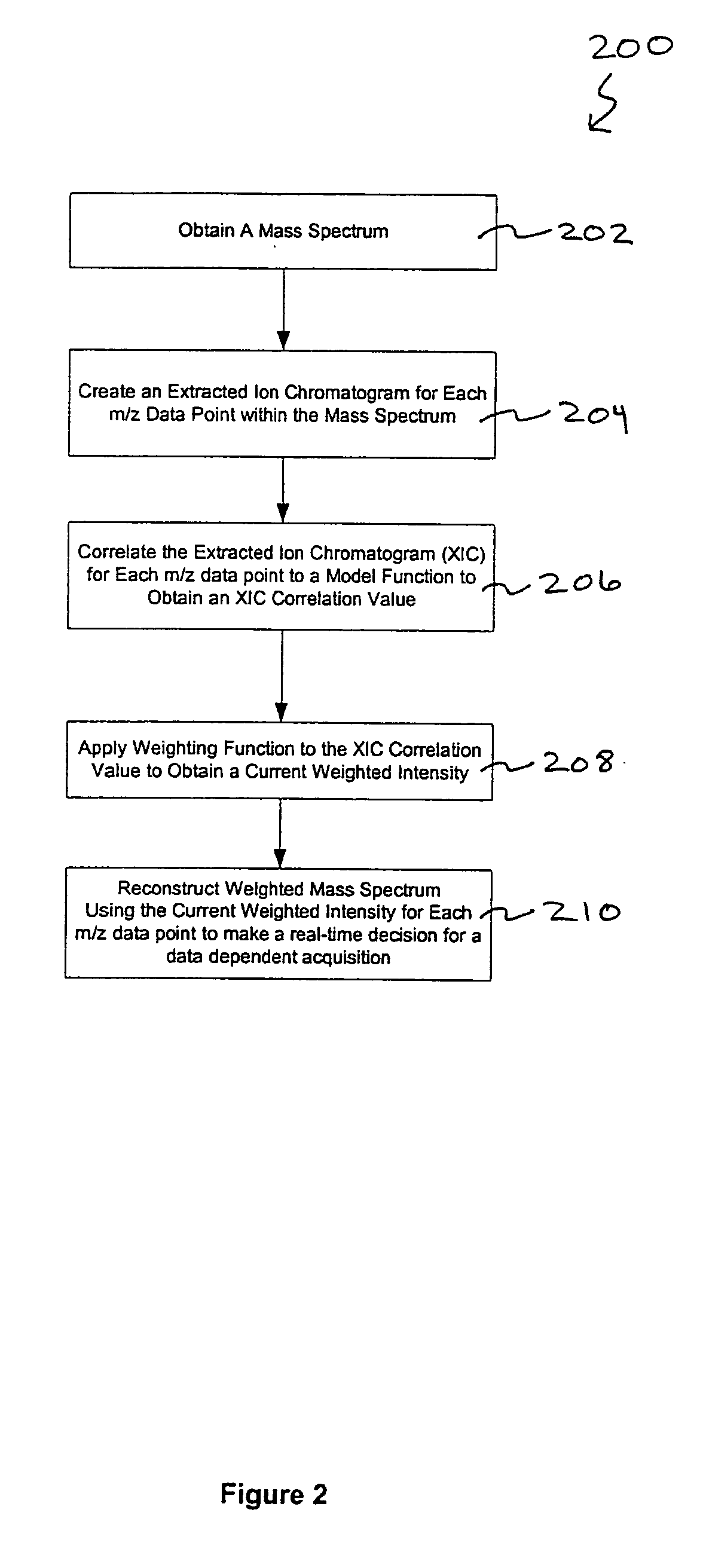

Methods for improved data dependent acquisition

A method of analyzing data from a mass spectrometer for data-dependent acquisition is described. In one embodiment, mass spectral scans are taken of a sample eluted from a liquid chromatography column. An extracted ion chromatogram (XIC) is then created for each m/z data point of the mass spectral scans. The XIC for each m/z data point is correlated with a model function, such as a monotonically increasing function or the first half of a Gaussian function, to obtain an XIC correlation value. A weighting function is then applied to the XIC correlation value to obtain a current weighted intensity. The current weighted intensities of all m/z points are used to reconstruct a weighted mass spectrum, which is then used to make a real-time decision for the data-dependent acquisition. In one embodiment, the data-dependent acquisition is the performance of tandem mass spectrometry. Embodiments of the invention also describe a sample processing apparatus for the data-dependent acquisition and a computer-readable medium that provides instructions for the sample processing apparatus to perform the method described above.

Owner:THERMO FINNIGAN
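
Below is a minimal sketch of the weighting step. It assumes that each m/z bin's XIC is a short list of intensities from the most recent scans (oldest first). It also assumes a linear ramp as the monotonically increasing model function and Pearson correlation as the correlation measure. The weighting function, max(r, 0) multiplied by the latest intensity, is hypothetical. Ions whose signal is still rising score highest, so they would be picked for MS/MS first.

```python
from math import sqrt
from typing import Dict, List, Sequence

def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0  # a flat trace carries no trend information
    return sxy / sqrt(sxx * syy)

def weighted_spectrum(xics: Dict[float, List[float]]) -> Dict[float, float]:
    """Map each m/z to a weighted intensity: correlation with a rising ramp times latest intensity."""
    spectrum = {}
    for mz, trace in xics.items():
        ramp = list(range(len(trace)))              # monotonically increasing model function
        r = pearson(ramp, trace)
        spectrum[mz] = max(r, 0.0) * trace[-1]      # hypothetical weighting function
    return spectrum

def pick_precursors(xics: Dict[float, List[float]], top_n: int) -> List[float]:
    """Real-time decision: the top_n m/z values of the weighted spectrum."""
    spec = weighted_spectrum(xics)
    return sorted(spec, key=spec.get, reverse=True)[:top_n]

xics = {
    500.2: [10, 40, 90, 160],    # eluting peak, still rising
    623.4: [400, 300, 200, 100], # past its apex, falling
    750.1: [50, 50, 50, 50],     # flat background
}
print(pick_precursors(xics, 1))  # [500.2]
```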

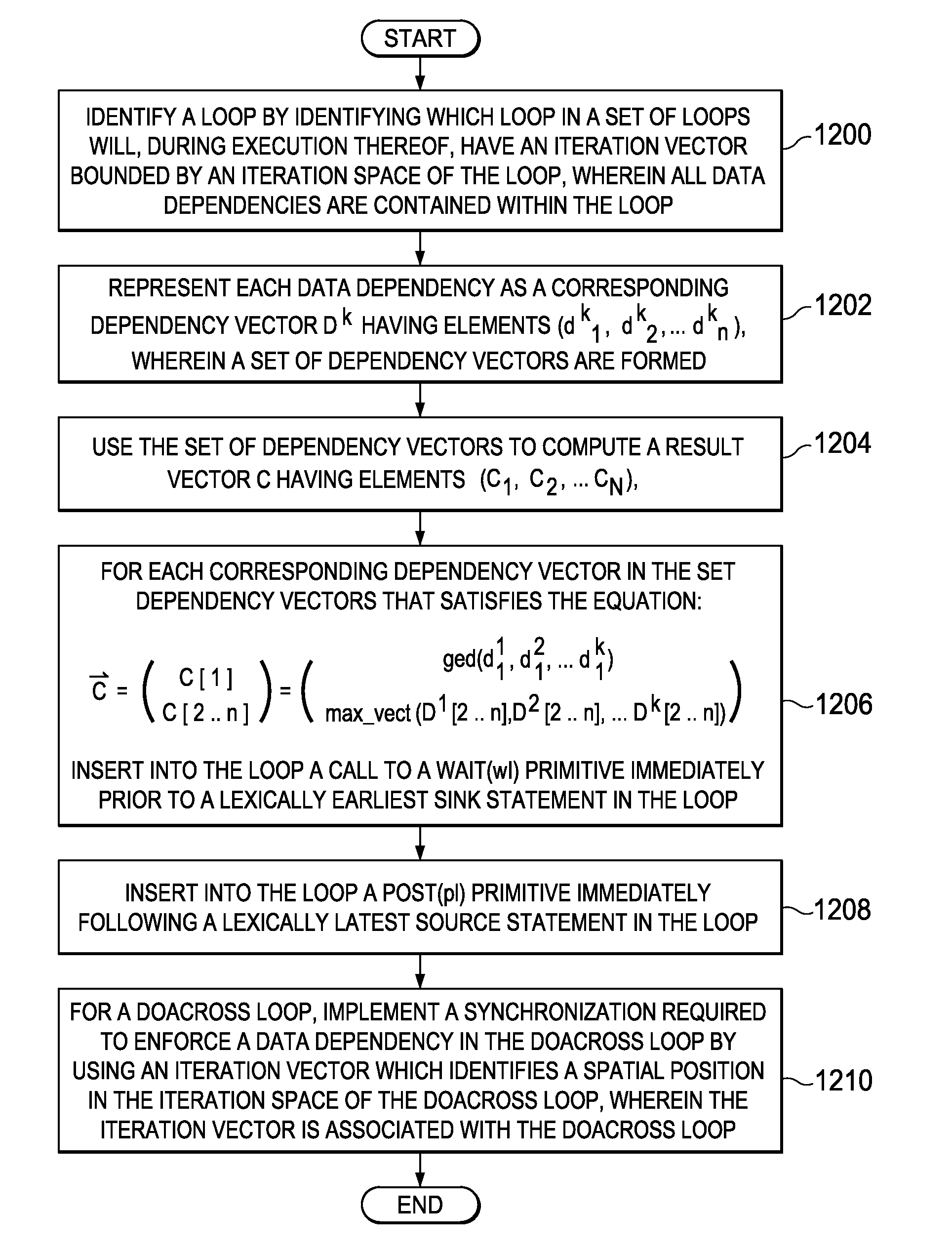

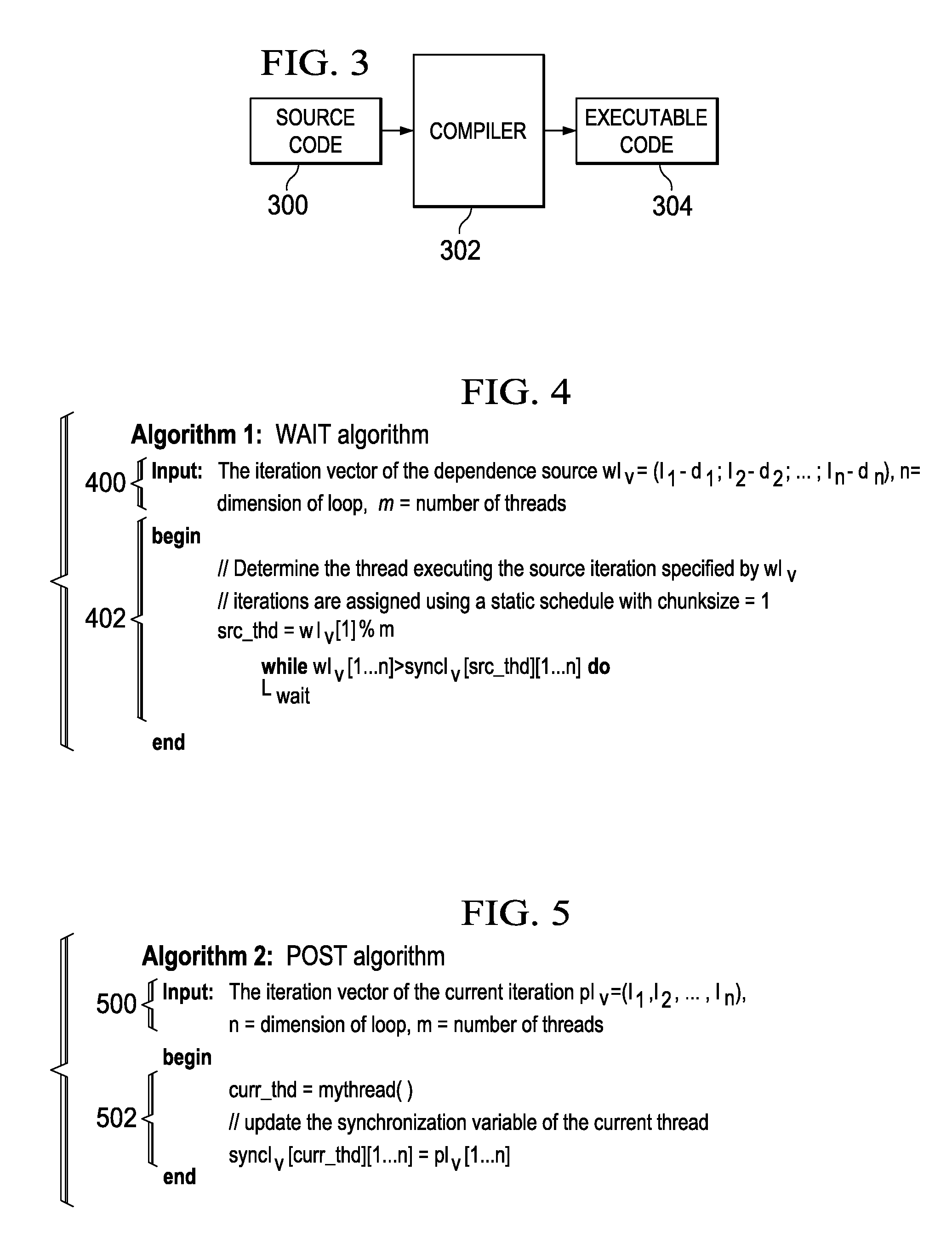

Pipelined parallelization of multi-dimensional loops with multiple data dependencies

A mechanism is described for folding all the data dependencies in a loop into a single, conservative dependence. This leads to one pair of synchronization primitives per loop. The mechanism does not require complicated, multi-stage compile-time analysis and considers only the data dependence information in the loop. The low synchronization cost offsets the loss in parallelism caused by the reduced overlap between iterations. Additionally, a novel scheme is presented for implementing the synchronization required to enforce data dependences in a DOACROSS loop. The synchronization is based on an iteration vector, which identifies a spatial position in the iteration space of the loop. Multiple iterations executing in parallel each have their own iteration vector, in which they record their position in the iteration space. Because there are no sequential updates to the synchronization variable, this method exploits a greater degree of parallelism.

Owner:IBM CORP
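
Below is a minimal one-dimensional sketch of the folding idea. It assumes that every dependence distance is a positive constant. The distances are folded into the single conservative distance d = min(distances), and iteration i waits until iterations 0 through i - d have all completed. This also covers every longer distance, since each of their sources is at or below i - d. For simplicity, the sketch uses one shared completion counter guarded by a `threading.Condition`. That counter is exactly the kind of sequentially updated synchronization variable the patent's per-iteration iteration vectors avoid. The name `doacross` is hypothetical.

```python
import threading
from typing import Callable, List

def doacross(n: int, distances: List[int], body: Callable[[int], None]) -> None:
    """Run iterations 0..n-1 concurrently, honoring all constant positive dependence
    distances through one folded (conservative) distance."""
    assert distances and all(d > 0 for d in distances)
    d = min(distances)       # waiting on i - min(d) also covers every longer distance
    done = [False] * n
    prefix = 0               # every iteration below `prefix` has finished
    cond = threading.Condition()

    def worker(i: int) -> None:
        nonlocal prefix
        with cond:
            # Iteration i may start once iterations 0 .. i - d are complete.
            cond.wait_for(lambda: prefix > i - d)
        body(i)
        with cond:
            done[i] = True
            while prefix < n and done[prefix]:
                prefix += 1
            cond.notify_all()

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

# A loop with two dependences (distances 2 and 3): a[i] = a[i-2] + a[i-3] + 1
a = [1, 1, 1] + [0] * 17

def body(i: int) -> None:
    if i >= 3:
        a[i] = a[i - 2] + a[i - 3] + 1

doacross(len(a), [2, 3], body)
```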

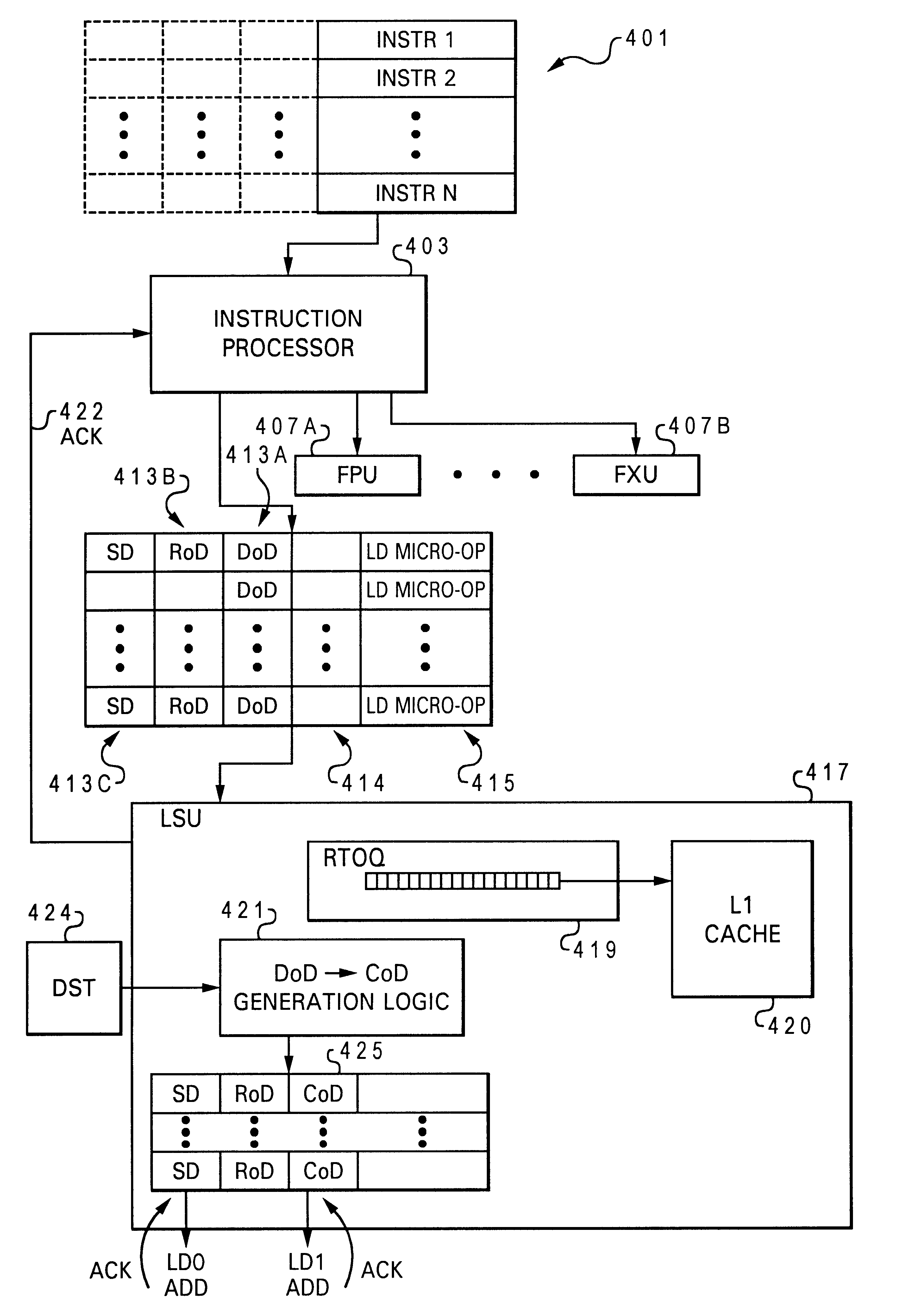

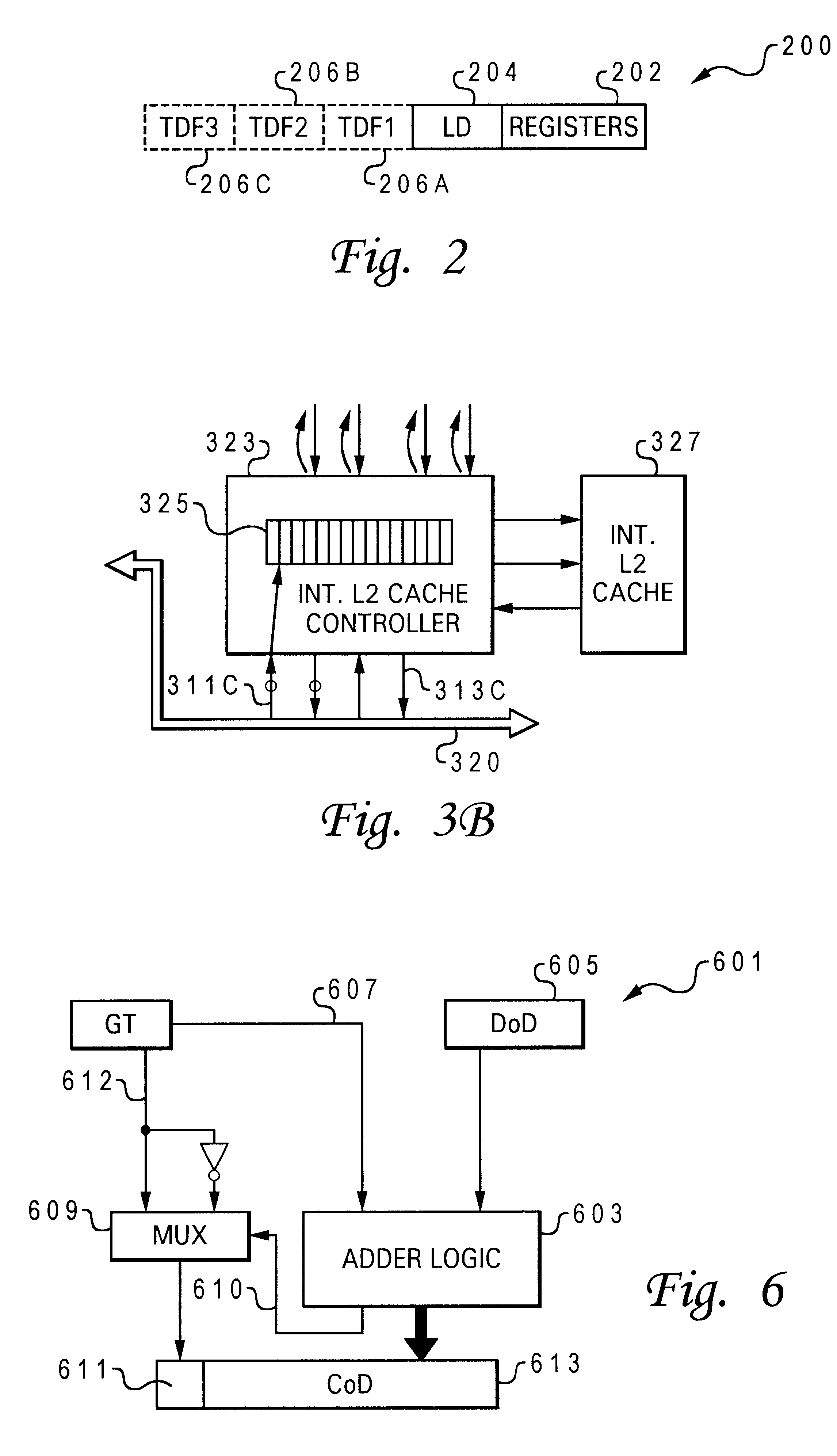

Method for alternate preferred time delivery of load data

Inactive | US6389529B1 | Efficient management | Digital computer details | Concurrent instruction execution | Load instruction | Data dependence

A system for time-ordered execution of load instructions is described. More specifically, the system enables just-in-time delivery of the data requested by a load instruction. The system consists of a processor, an L1 data cache with a corresponding L1 cache controller, and an instruction processor. The instruction processor manipulates a plurality of architected time-dependency fields of a load instruction to create a plurality of dependency fields. Each dependency field holds a relative dependency value, which is used to order the load instruction in a Relative Time-Ordered Queue (RTOQ) of the L1 cache controller. The load instruction is sent from the RTOQ to the L1 data cache at a particular time, so that the requested data is loaded from the L1 data cache at the time specified by one of the dependency fields. The dependency fields are prioritized so that the cycle corresponding to the highest-priority available field is used.

Owner:IBM CORP
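
Below is a minimal sketch of the queueing behavior, under stated assumptions. Each load carries a list of dependency fields ordered from highest to lowest priority. Each field is a relative cycle offset, or `None` when unavailable. The queue issues a load to the L1 early enough that its data arrives at the chosen offset, assuming a fixed L1 hit latency. The latency value and all names (`RelativeTimeOrderedQueue`, `pick_offset`) are hypothetical and are not taken from the patent.

```python
import heapq
from typing import List, Optional, Sequence, Tuple

L1_LATENCY = 3  # assumed L1 hit latency in cycles (hypothetical)

def pick_offset(fields: Sequence[Optional[int]]) -> Optional[int]:
    """Return the highest-priority available field (fields listed highest priority first)."""
    for f in fields:
        if f is not None:
            return f
    return None

class RelativeTimeOrderedQueue:
    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._seq = 0  # tie-breaker: equal issue cycles leave in arrival order

    def enqueue(self, load_id: str, fields: Sequence[Optional[int]], now: int) -> None:
        offset = pick_offset(fields)
        if offset is None:
            issue = now  # no usable timing hint: send to the L1 as soon as possible
        else:
            # Send early enough that the data arrives `offset` cycles after `now`.
            issue = max(now, now + offset - L1_LATENCY)
        heapq.heappush(self._heap, (issue, self._seq, load_id))
        self._seq += 1

    def issue_ready(self, now: int) -> List[str]:
        """Pop every load whose issue cycle has been reached."""
        ready = []
        while self._heap and self._heap[0][0] <= now:
            ready.append(heapq.heappop(self._heap)[2])
        return ready

q = RelativeTimeOrderedQueue()
q.enqueue("ld_a", [None, 10], now=0)    # first field unavailable: use 10, issue at cycle 7
q.enqueue("ld_b", [5, 20], now=0)       # highest-priority field 5: issue at cycle 2
q.enqueue("ld_c", [None, None], now=0)  # no field: issue immediately
print(q.issue_ready(0))  # ['ld_c']
print(q.issue_ready(2))  # ['ld_b']
print(q.issue_ready(7))  # ['ld_a']
```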

© 2025 PatSnap. All rights reserved.