59 patent results for "Automatic parallelization"

Automatic parallelization (also called auto parallelization, autoparallelization, or simply parallelization, the last of which implies automation when used in context) refers to converting sequential code into multi-threaded code, vectorized code, or both, in order to use multiple processors simultaneously in a shared-memory multiprocessor (SMP) machine. The goal of automatic parallelization is to relieve programmers of the tedious and error-prone process of parallelizing code by hand. Although its quality has improved over the past several decades, fully automatic parallelization of sequential programs by compilers remains a grand challenge: it requires complex program analysis, and some factors that affect it (such as the range of input data) are unknown at compile time.
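
As an illustration of the kind of analysis involved (a minimal Python sketch, not how any particular compiler implements it), the code below shows a loop whose iterations are independent, so they can be handed to a thread pool without changing the result, and the classic GCD dependence test, which checks at compile time whether a write to `A[a1*i + b1]` and a read of `A[a2*j + b2]` could ever touch the same element. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from math import gcd


def scale_sequential(xs, k):
    # Iteration i reads xs[i] and writes out[i] only: no loop-carried dependence.
    out = [0] * len(xs)
    for i in range(len(xs)):
        out[i] = xs[i] * k
    return out


def scale_parallel(xs, k, workers=4):
    # Independent iterations may run concurrently; map() still returns results in order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda x: x * k, xs))


def gcd_test_may_depend(a1, b1, a2, b2):
    """GCD test for a write to A[a1*i + b1] and a read of A[a2*j + b2].

    A common element requires an integer solution of a1*i - a2*j = b2 - b1,
    which exists only if gcd(a1, a2) divides b2 - b1. Loop bounds are ignored,
    so True means "a dependence is possible", not "a dependence exists".
    """
    g = gcd(a1, a2)
    if g == 0:  # both subscripts are constants
        return b1 == b2
    return (b2 - b1) % g == 0


data = list(range(10))
assert scale_parallel(data, 3) == scale_sequential(data, 3)
print(gcd_test_may_depend(2, 0, 2, 1))   # A[2i] vs A[2j+1]: False, provably independent
print(gcd_test_may_depend(1, 0, 1, -1))  # A[i] vs A[j-1]: True, a dependence is possible
```

Because the GCD test ignores loop bounds, it can only prove independence, never dependence; real compilers combine it with more precise tests.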

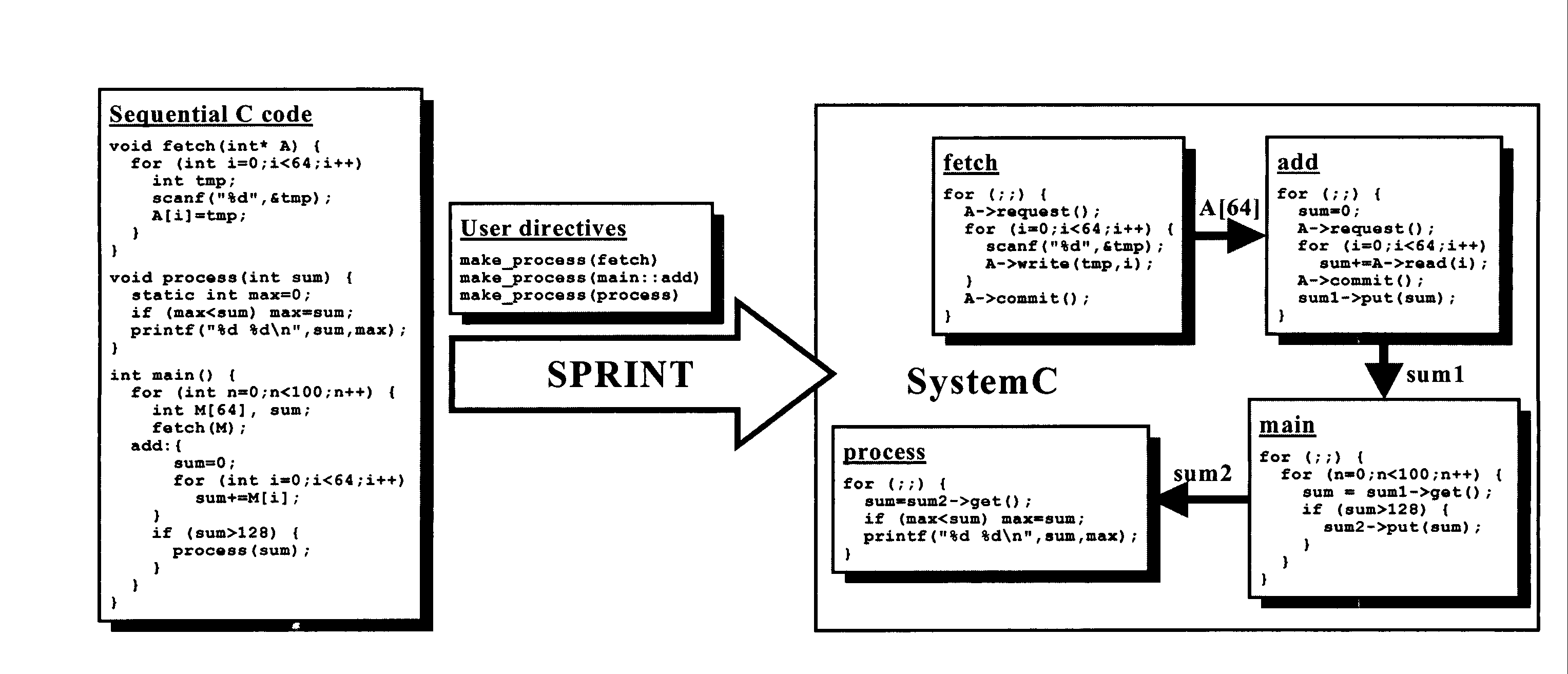

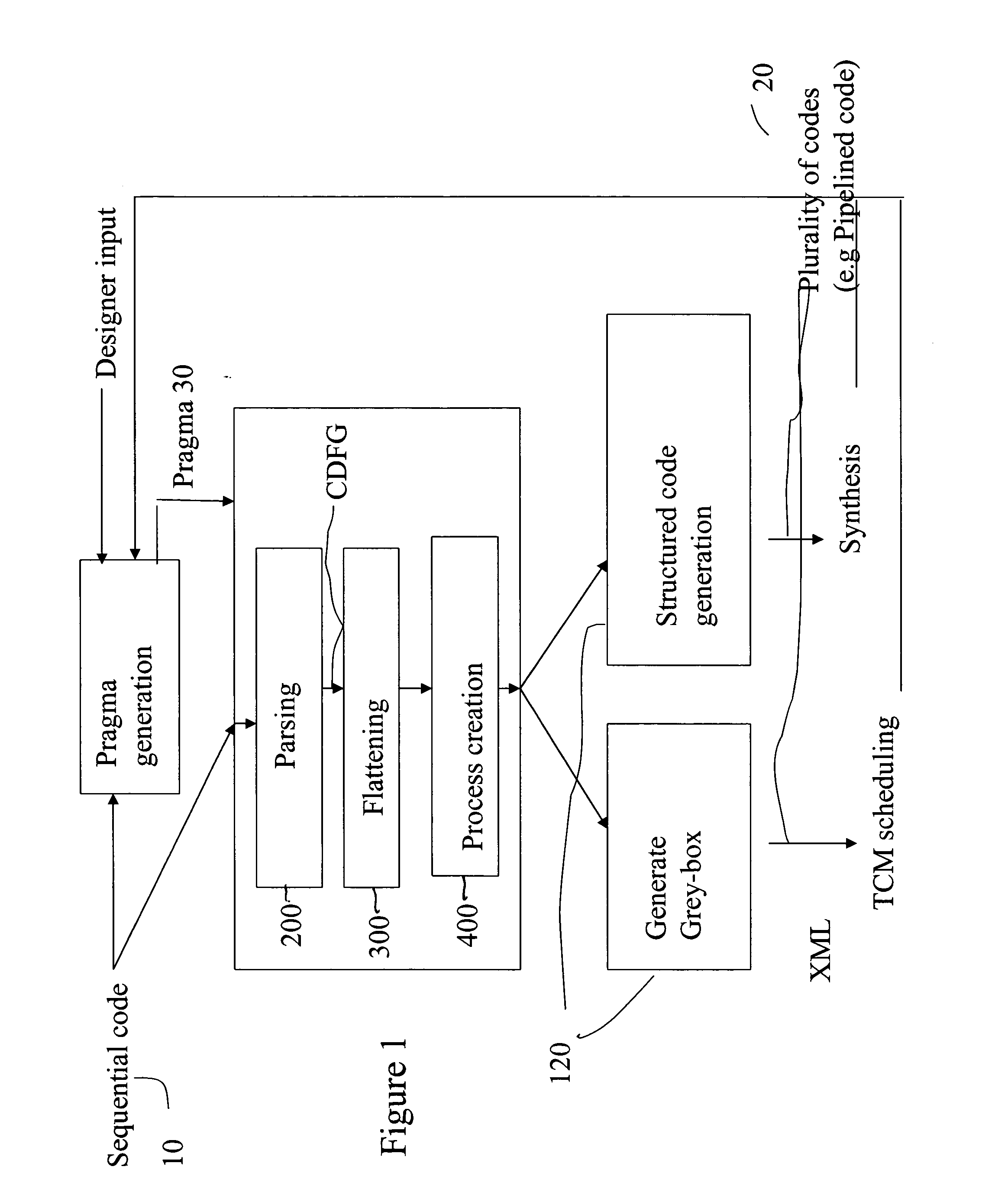

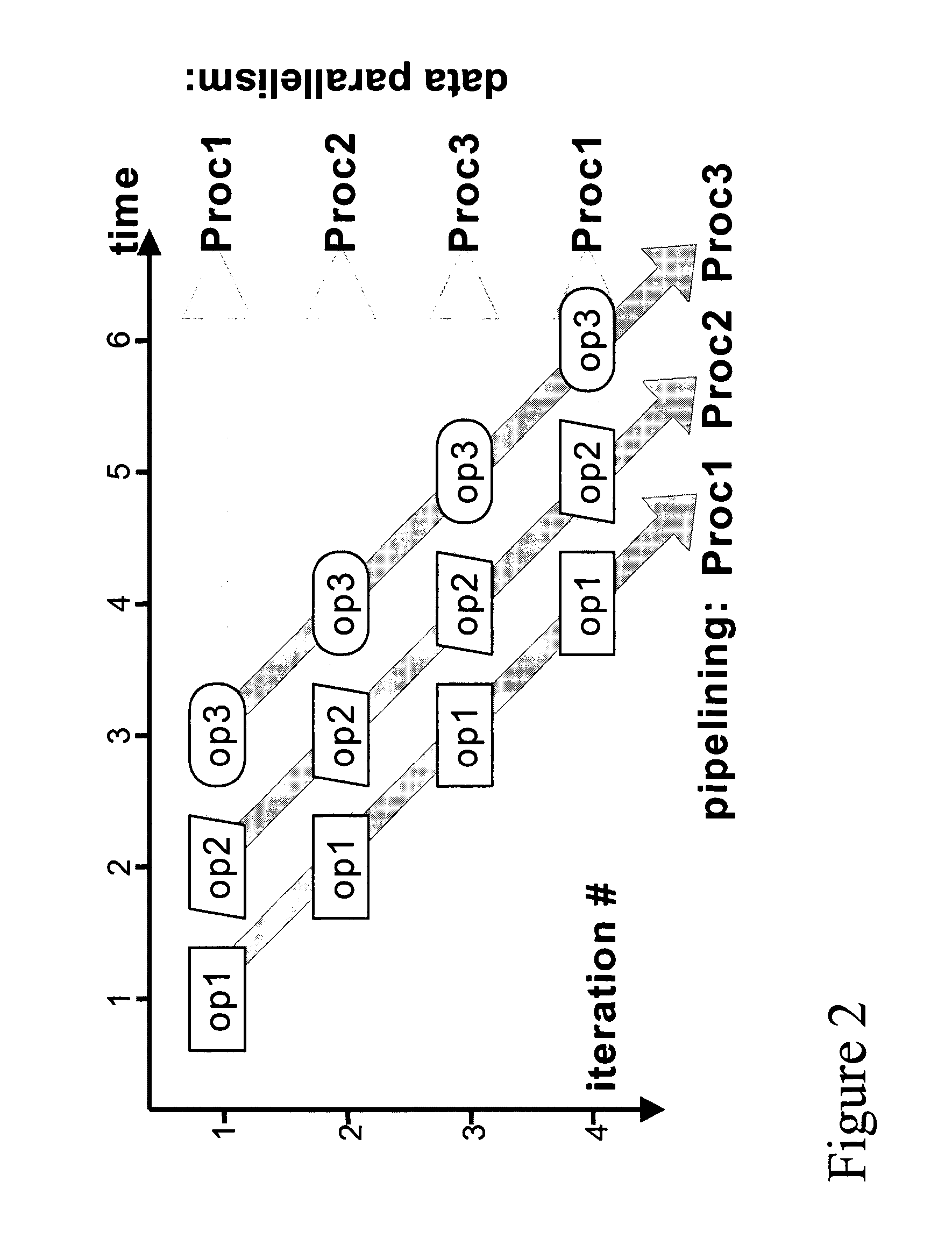

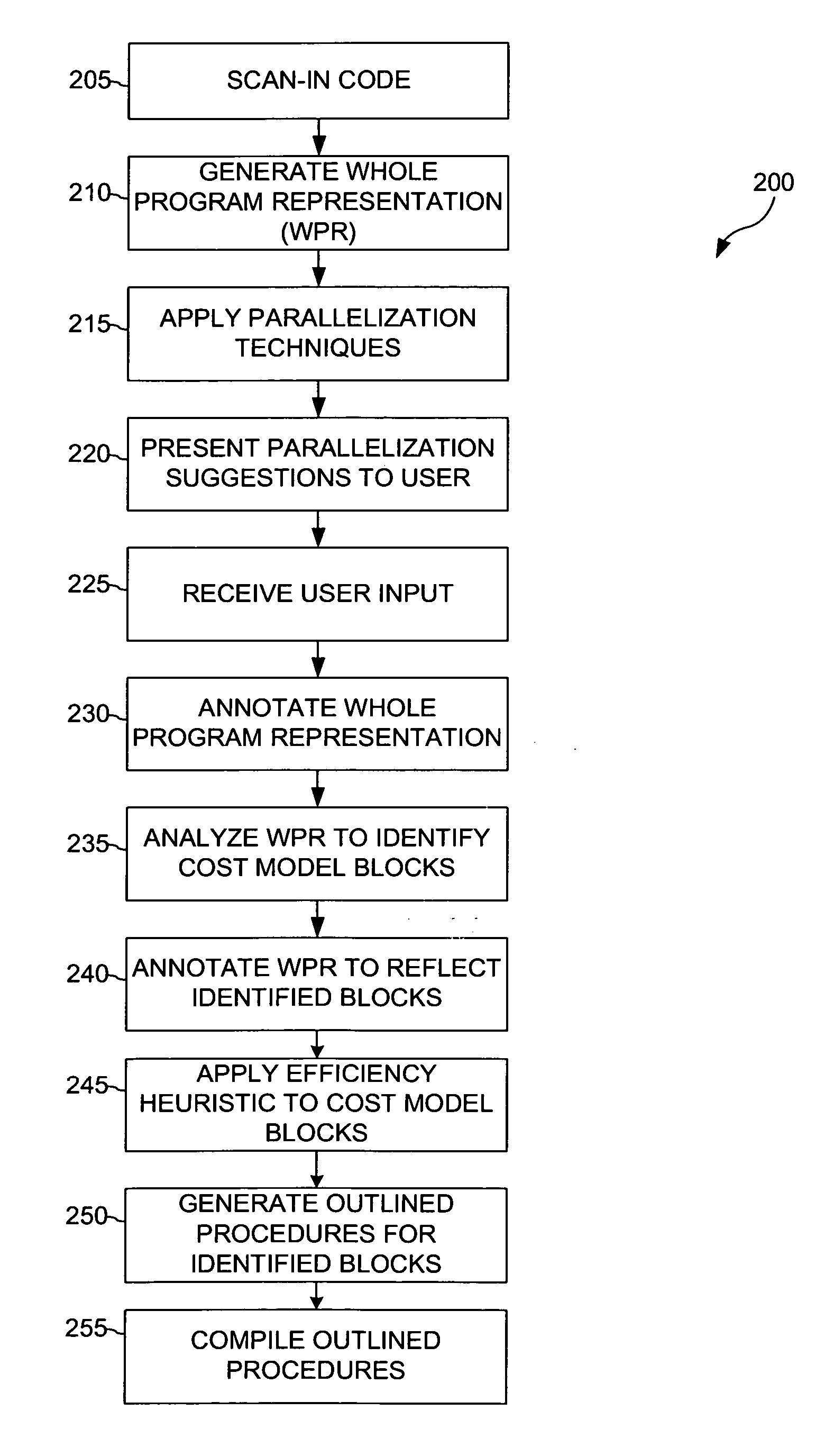

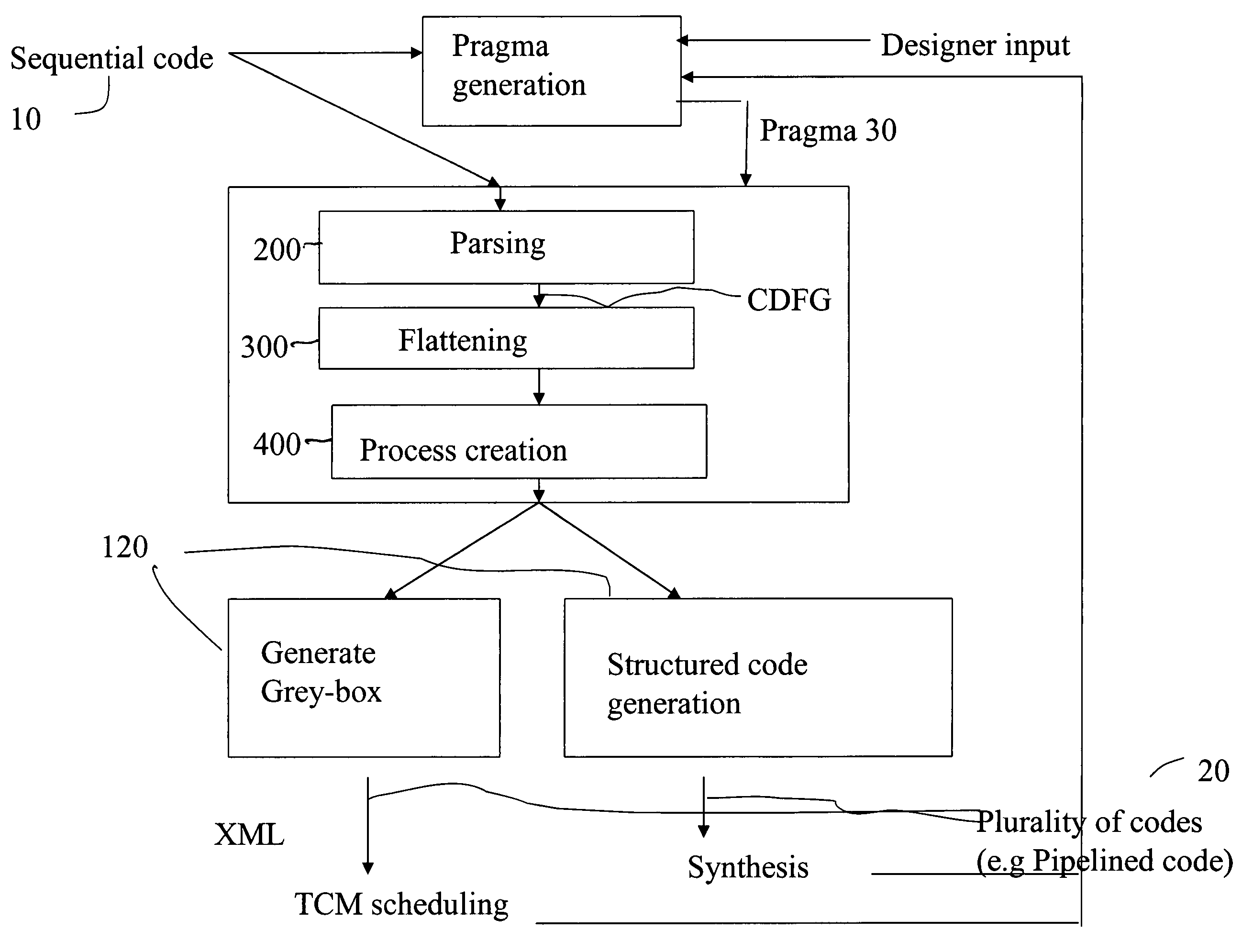

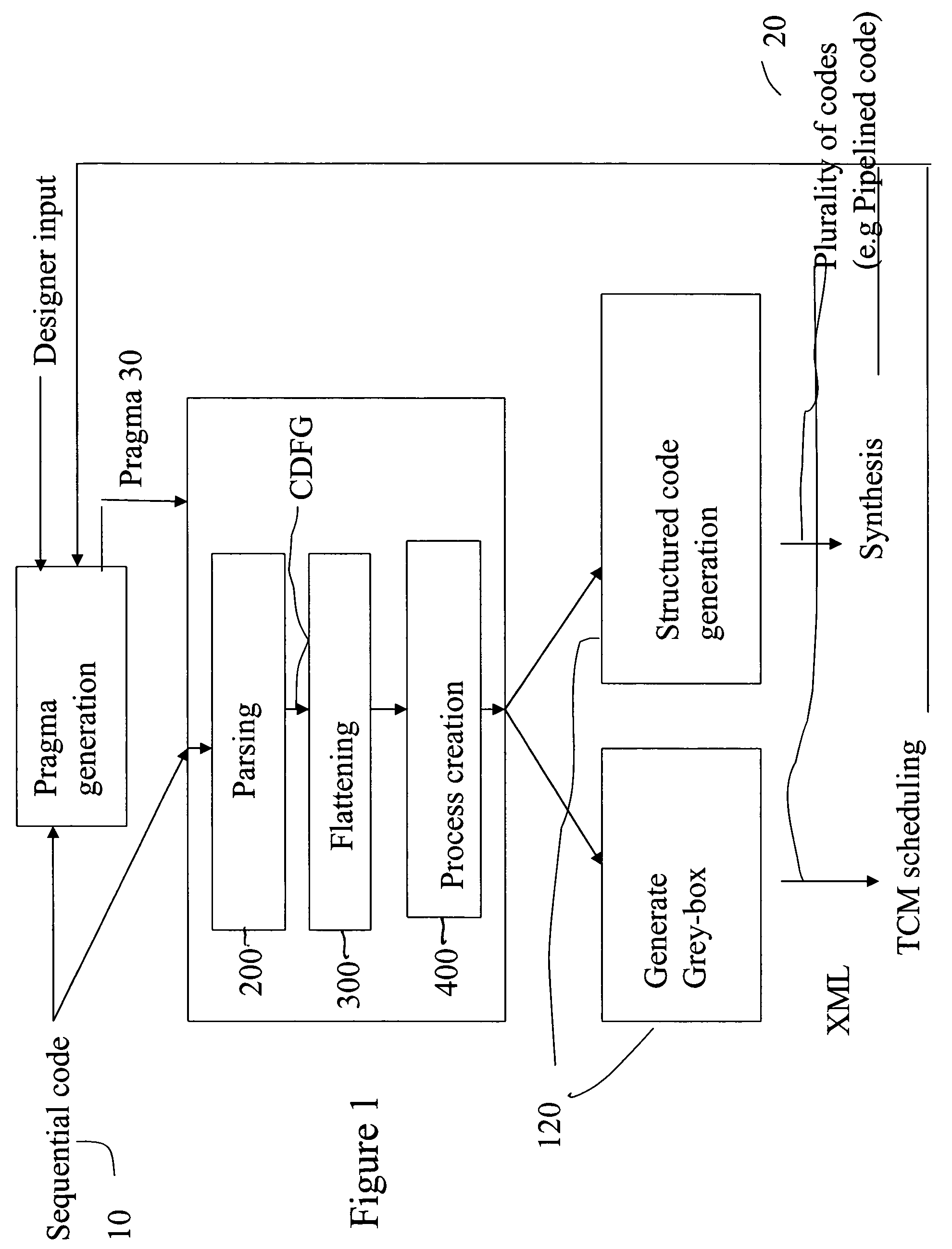

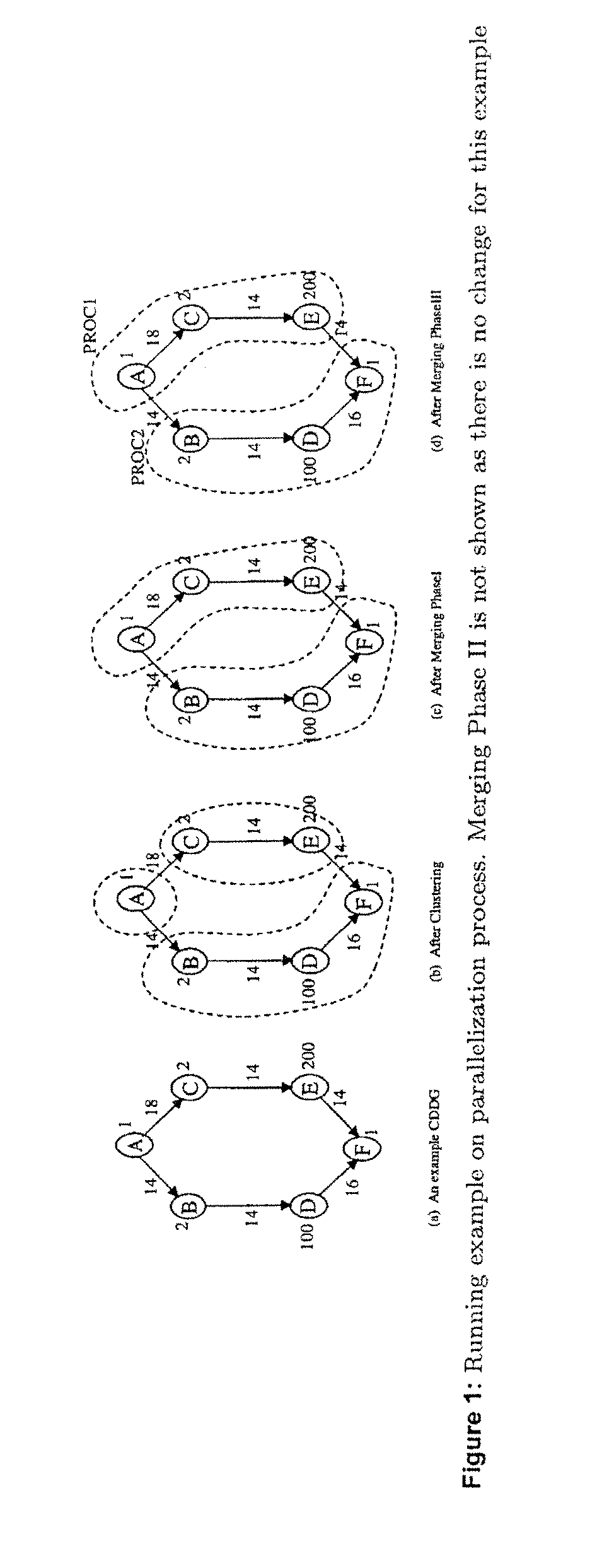

System and method for automatic parallelization of sequential code

Owner:INTERUNIVERSITAIR MICRO ELECTRONICS CENT (IMEC VZW)

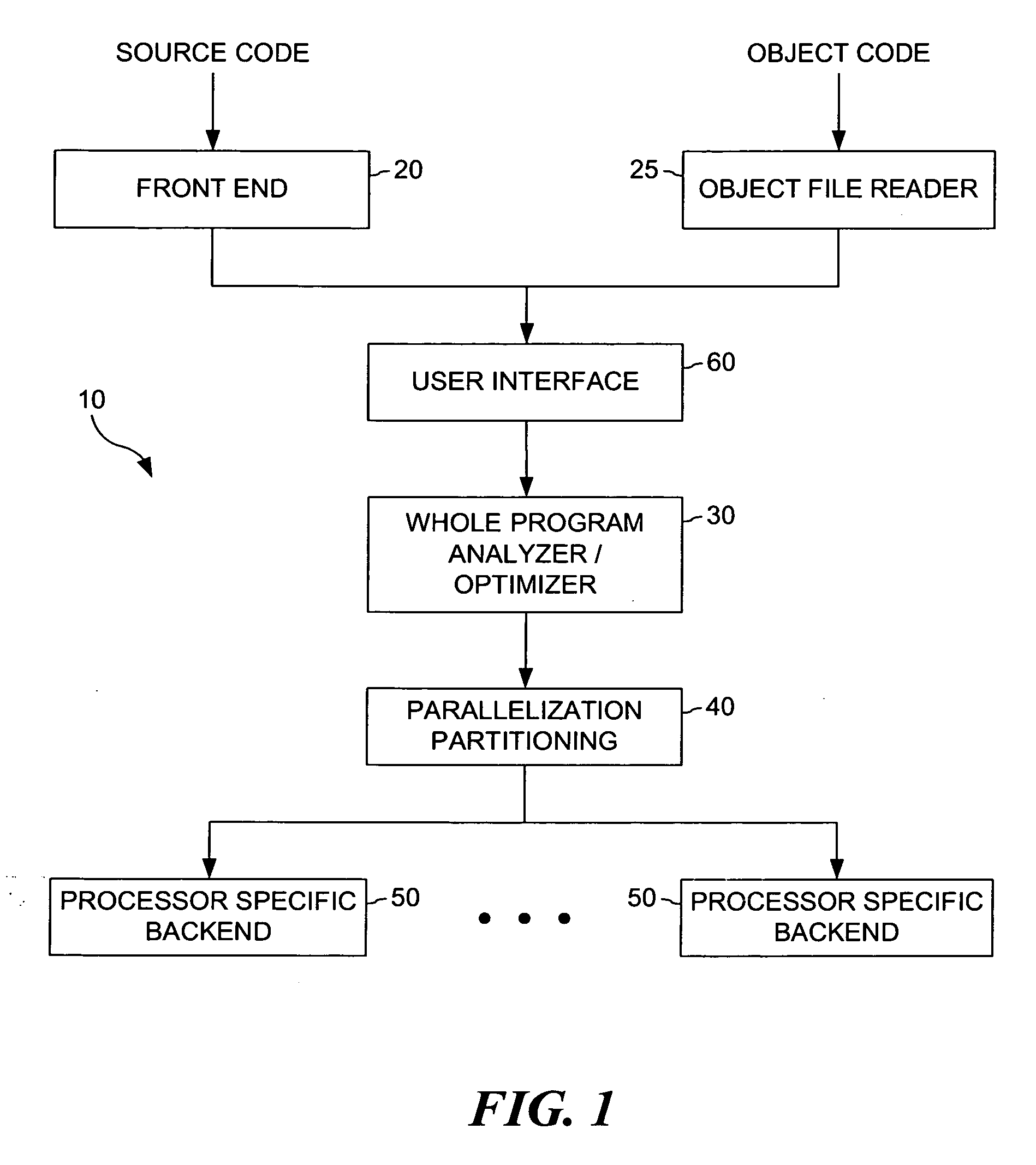

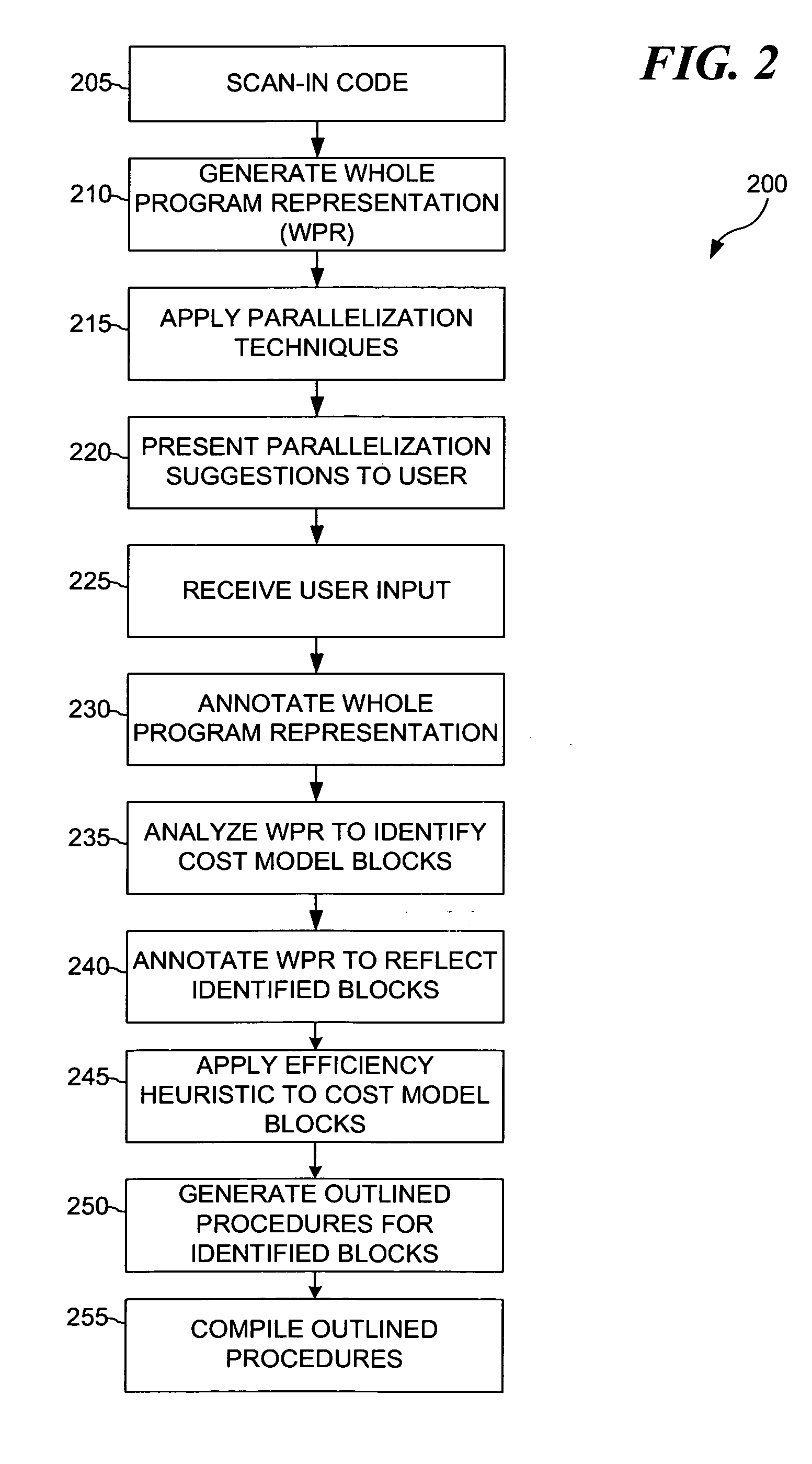

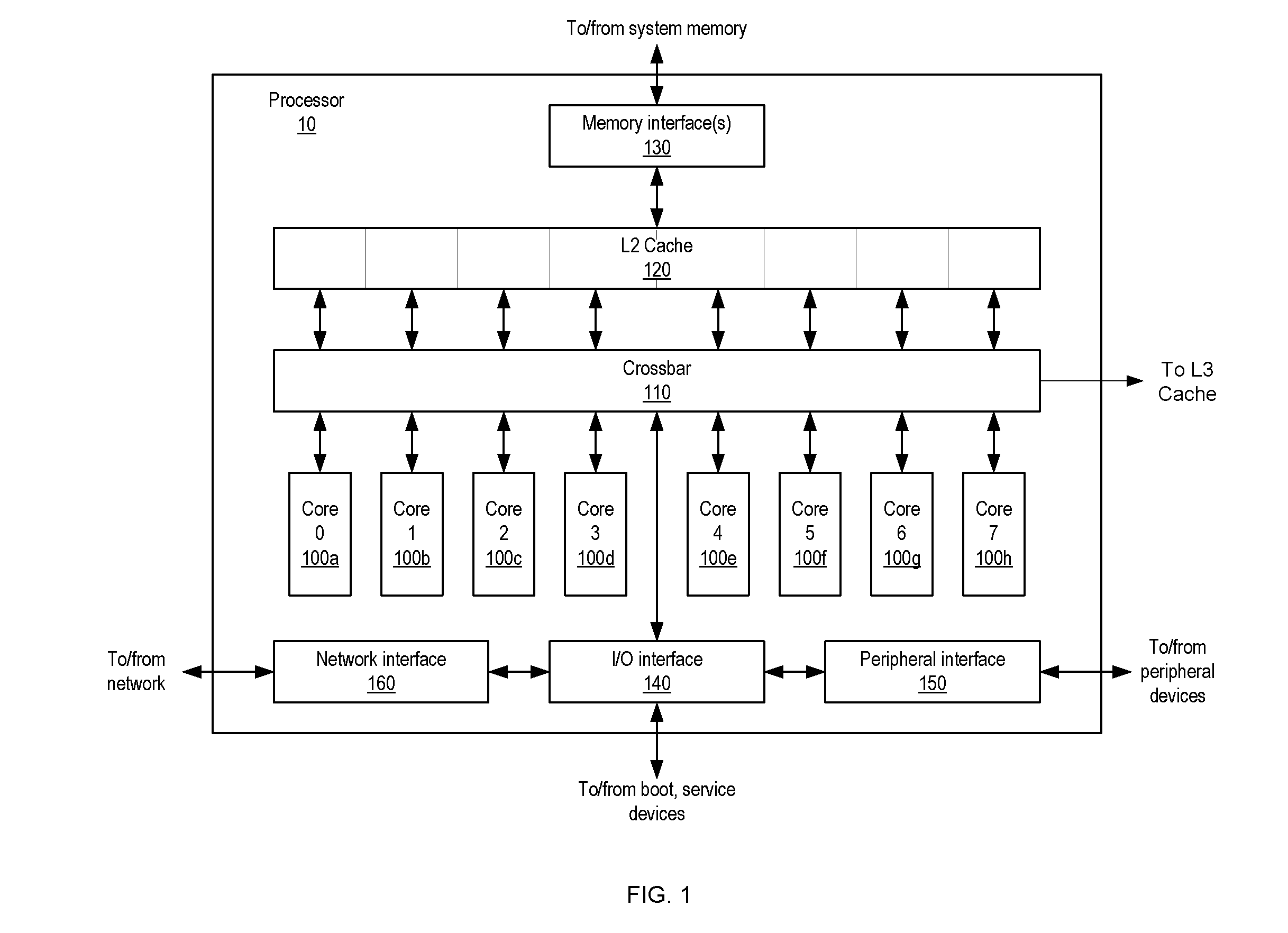

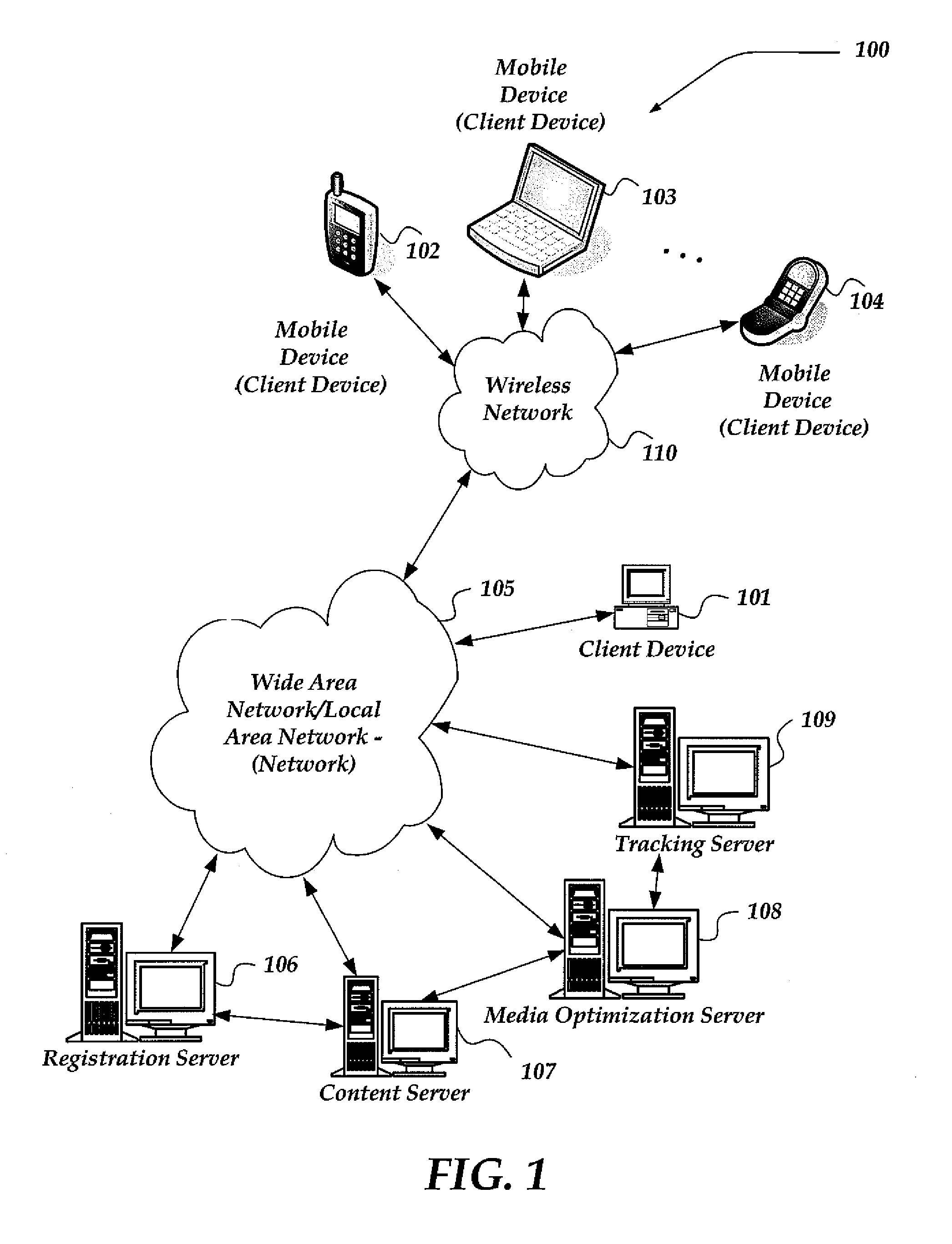

Method and system for exploiting parallelism on a heterogeneous multiprocessor computer system

Status: Inactive | Publication: US20060123401A1 | Tags: Frees the application programmer; Specific program execution arrangements; Memory systems; Programming complexity; Multi processor

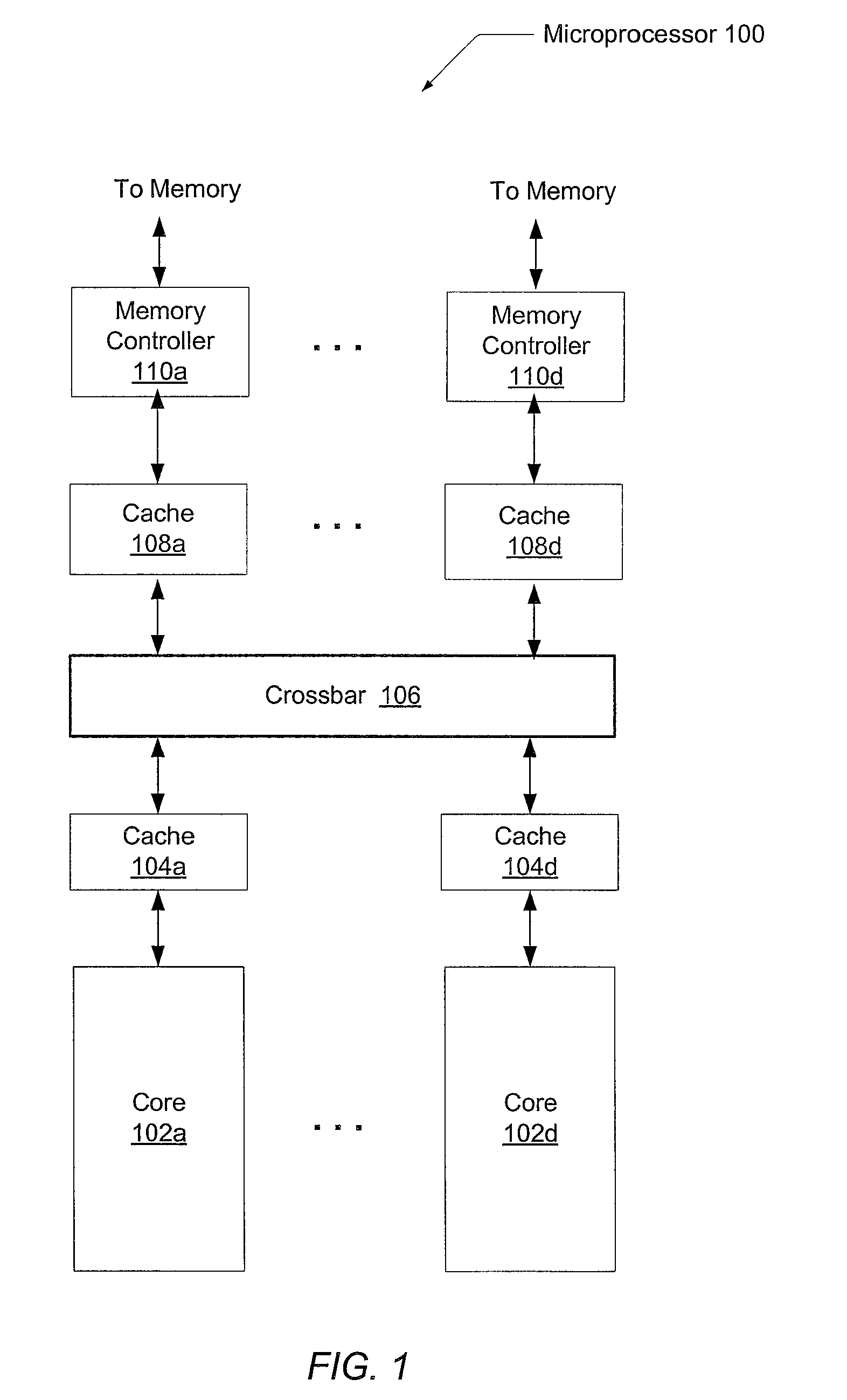

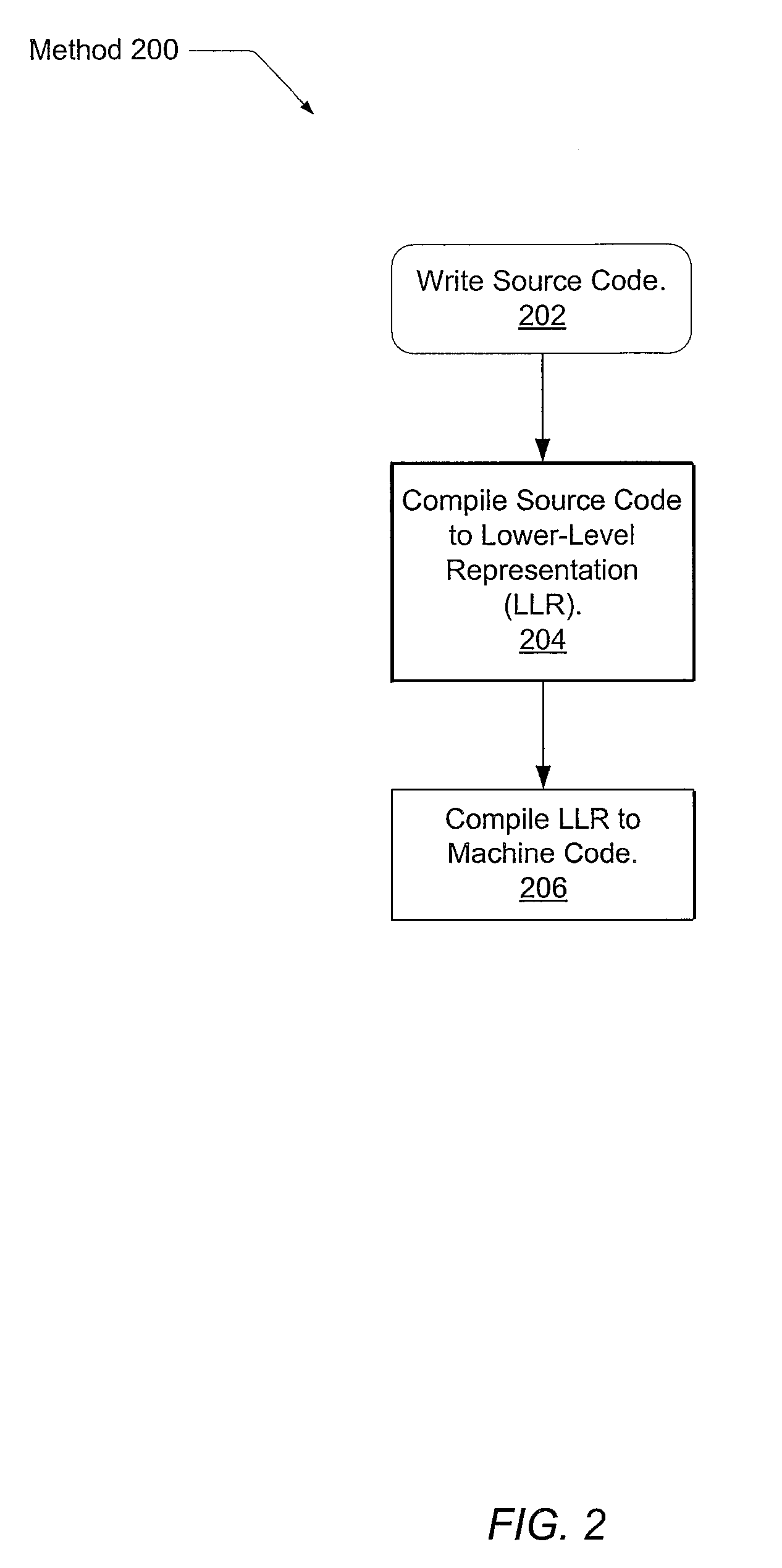

In a multiprocessor system it is generally assumed that peak or near-peak performance will be achieved by splitting computation across all the nodes of the system. There is a broad spectrum of techniques for performing this splitting, or parallelization, ranging from careful handcrafting by an expert programmer at one end to automatic parallelization by a sophisticated compiler at the other. The latter approach is becoming more prevalent as automatic parallelization techniques mature. In a multiprocessor system comprising multiple heterogeneous processing elements, however, these techniques are not readily applicable, and programming complexity again becomes a very significant factor. The present invention provides a method for parallelizing and partitioning computer program code for such a heterogeneous multiprocessor system. A single source file targeting a generic multiprocessing environment is received. Parallelization analysis techniques are applied to this file, and parallelizable regions of it are identified based on the results. The data reference patterns, code characteristics, and memory transfer requirements are analyzed to generate an optimum partition of the program. The partitioned regions are compiled to the appropriate instruction set architectures, and a single bound executable is produced.

Owner:IBM CORP

System and method for automatic parallelization of sequential code

Owner:INTERUNIVERSITAIR MICRO ELECTRONICS CENT (IMEC VZW)

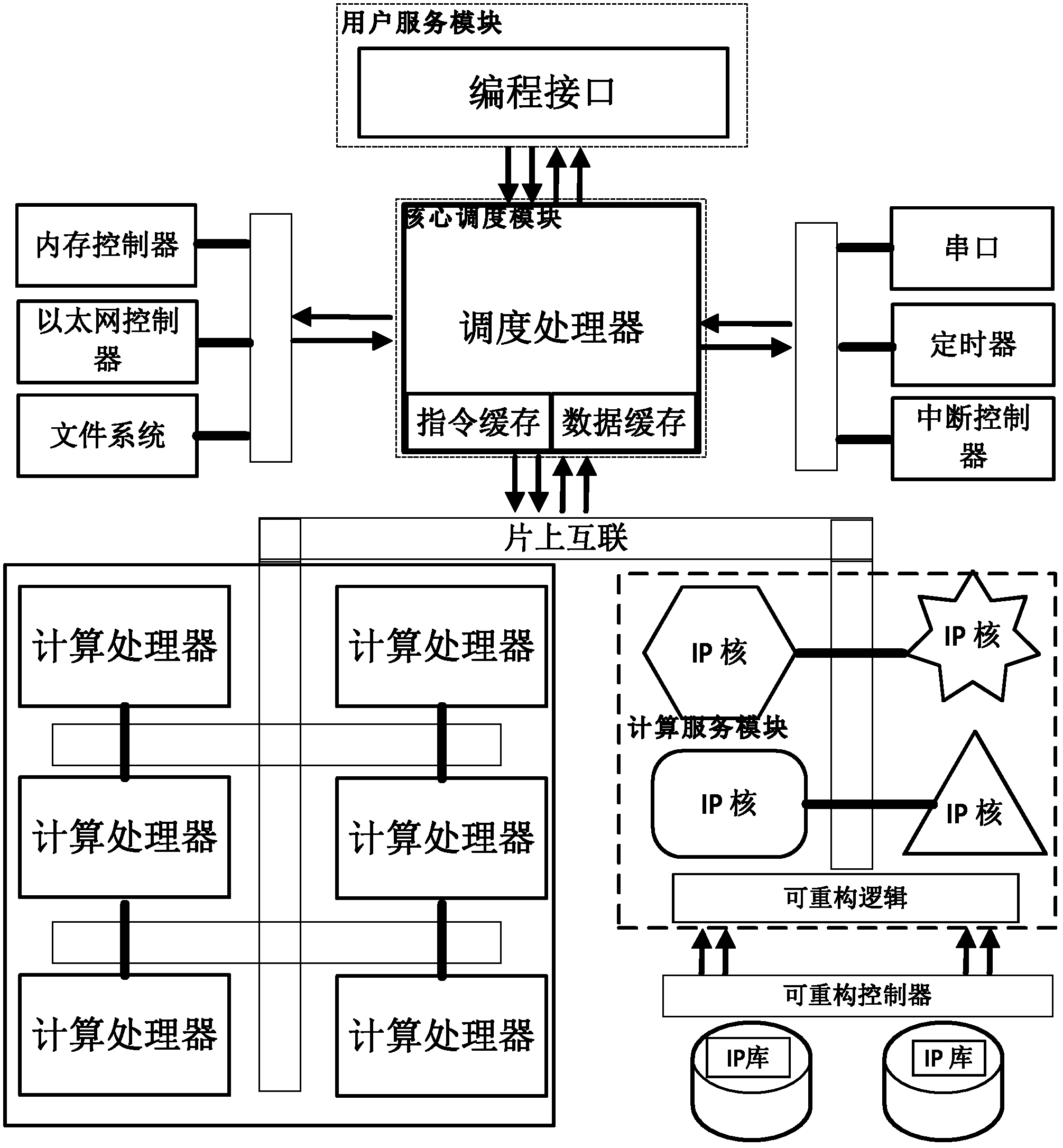

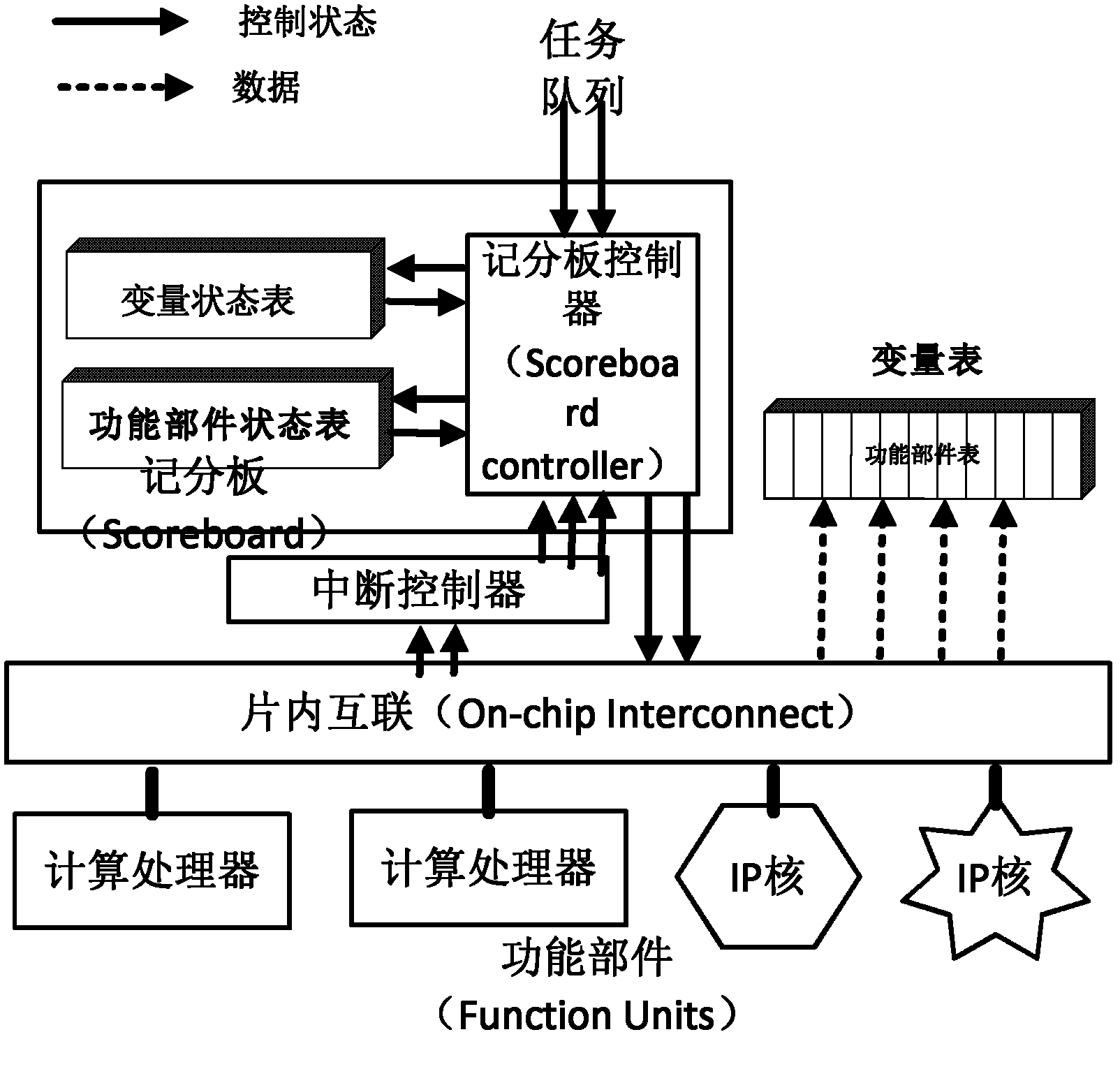

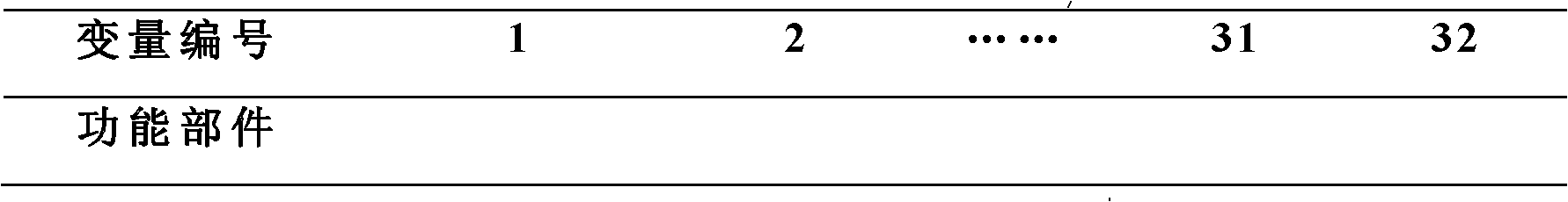

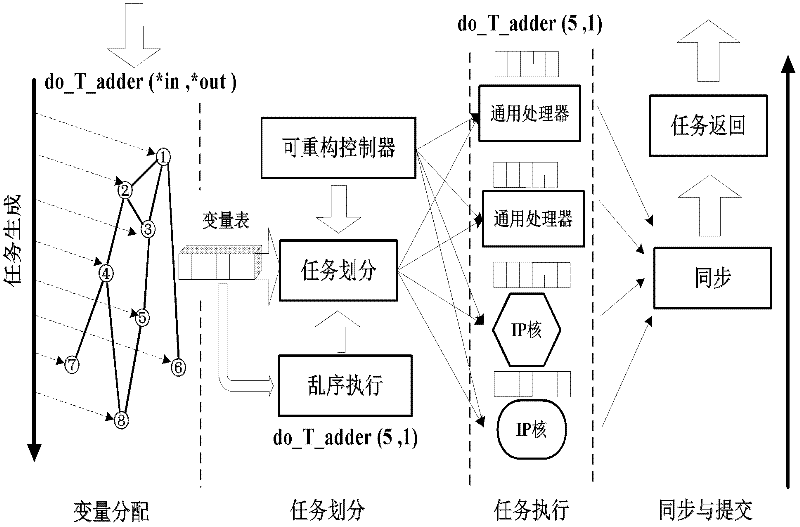

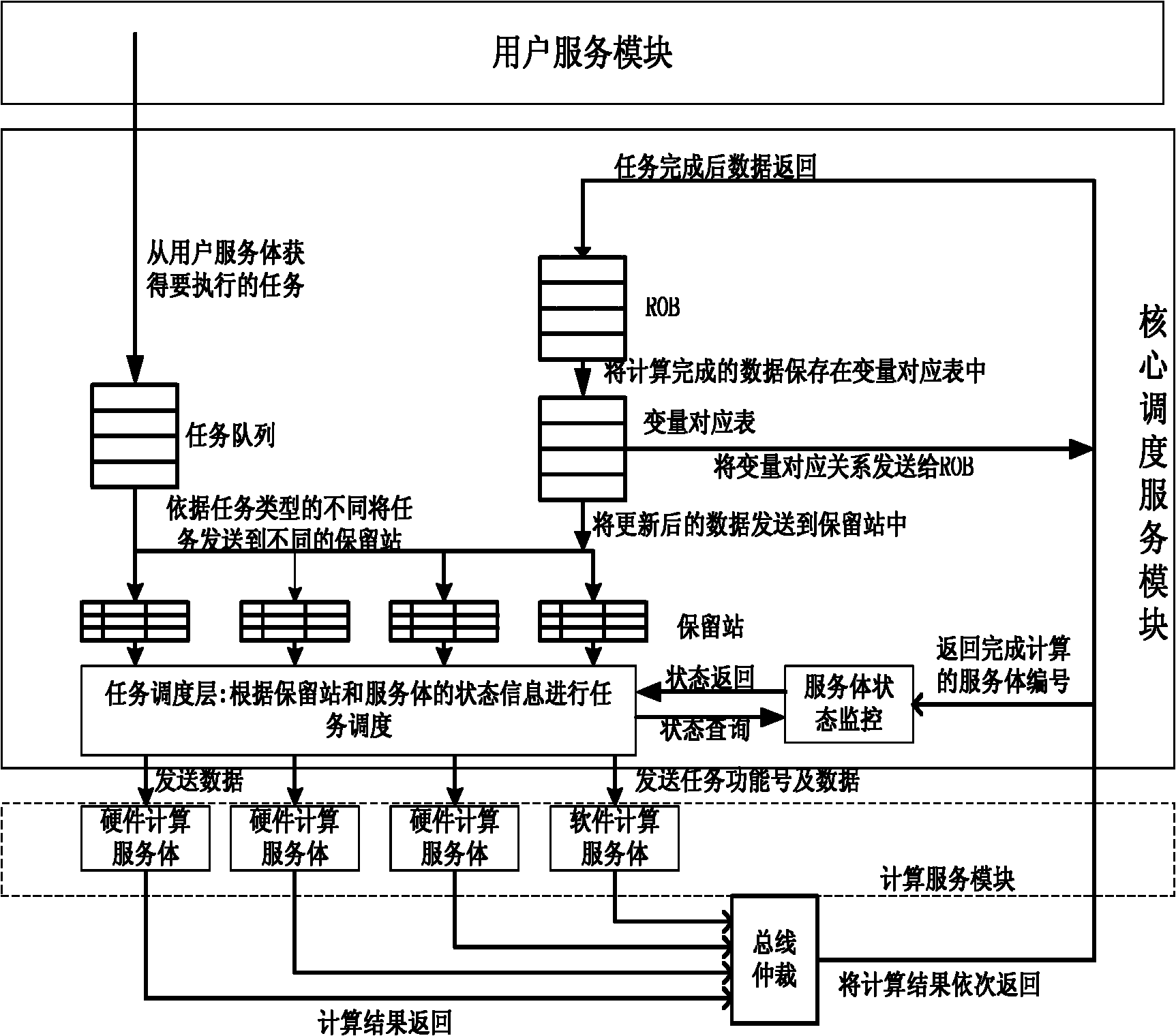

Scheduling system and scheduling execution method of multi-core heterogeneous system on chip

Status: Active | Publication: CN102360309A | Tags: Eliminate spurious correlation; Improve throughput; Resource allocation; Data dependence; Multicore computing

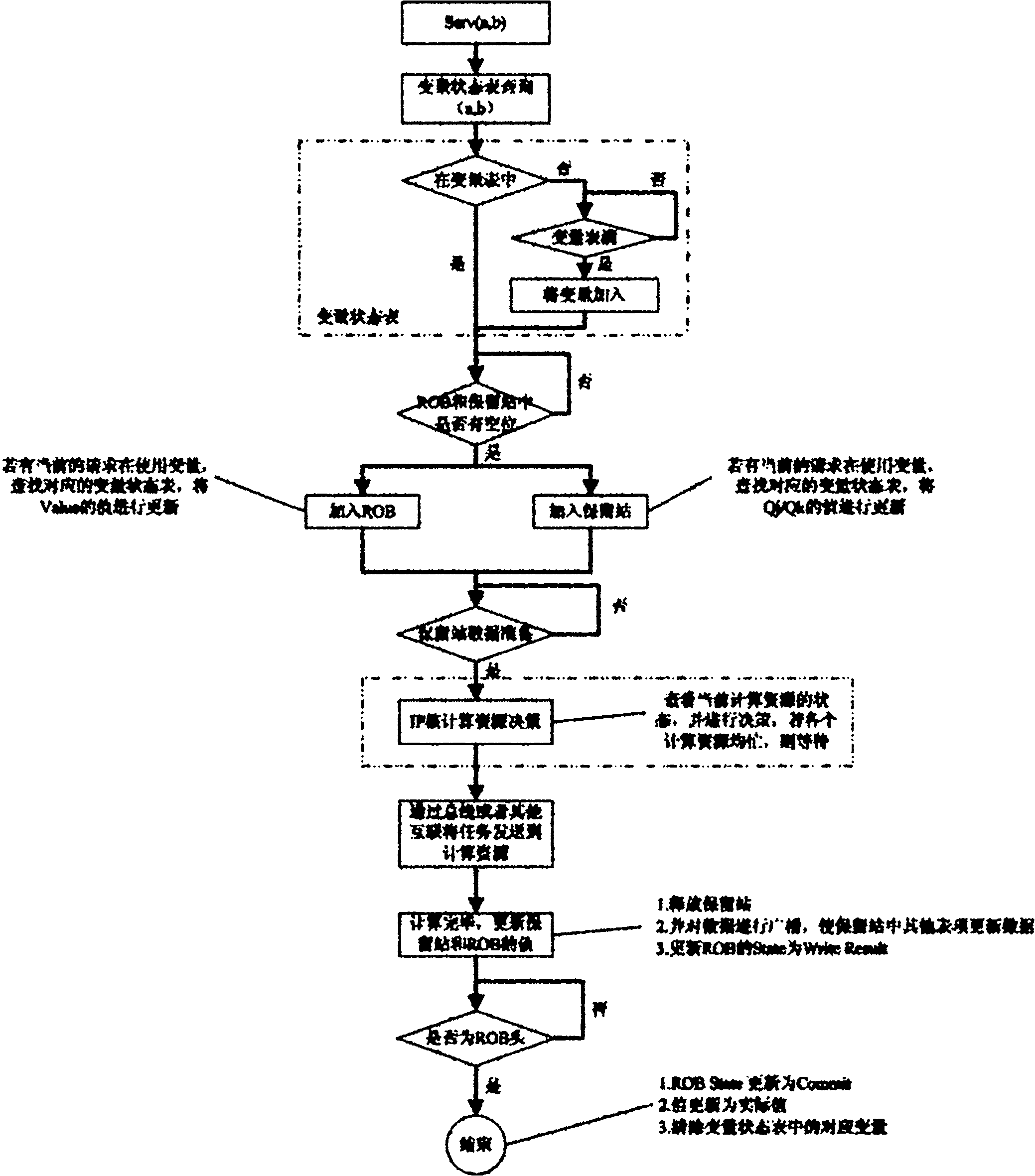

The invention discloses a scheduling system and a scheduling execution method for a multi-core heterogeneous system on chip. The scheduling system comprises a user service module, which provides the tasks to be executed and supports a variety of heterogeneous software and hardware, and a plurality of computing service modules, which execute the tasks on the on-chip multi-core computing platform. The scheduling system is characterized in that a core scheduling module is placed between the user service module and the computing service modules. The core scheduling module accepts task requests from the user service module, records and evaluates the data dependences among different tasks, and schedules the task requests to different computing service modules for parallel execution. The computing service modules are packaged as IP (intellectual property) cores and are loaded dynamically through a reconfigurable controller; they are interconnected on chip with the computing processors of the multi-core heterogeneous system on chip and execute different types of computing tasks on instruction from the core scheduling module. By monitoring dependences among tasks and performing automatic parallelization at run time, the scheduling system improves platform throughput and system performance.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC
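
The core scheduling module's job (record the data dependences between submitted tasks, then dispatch independent ones in parallel) can be sketched in Python as follows. This is a minimal illustration under assumptions: each task is described only by hypothetical `reads`/`writes` sets, a thread pool stands in for the hardware computing service modules, and tasks are dispatched in dependence-ordered waves. The abstract does not describe the scheduler's actual data structures or dispatch policy.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    reads: set = field(default_factory=set)
    writes: set = field(default_factory=set)


def depends_on(later, earlier):
    """True if `later` must wait for `earlier` (RAW, WAR, or WAW conflict)."""
    return bool(
        later.reads & earlier.writes      # read-after-write
        or later.writes & earlier.reads   # write-after-read
        or later.writes & earlier.writes  # write-after-write
    )


def schedule_waves(tasks):
    """Group tasks, given in submission order, into waves of mutually independent tasks.

    A task's wave is one past the latest wave of any earlier task it depends on,
    so every dependence points from an earlier wave to a later one.
    """
    wave_of = []
    for i, task in enumerate(tasks):
        preds = [wave_of[j] for j in range(i) if depends_on(task, tasks[j])]
        wave_of.append(1 + max(preds, default=-1))
    waves = [[] for _ in range(max(wave_of, default=-1) + 1)]
    for task, w in zip(tasks, wave_of):
        waves[w].append(task)
    return waves


def run(tasks, execute, workers=4):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for wave in schedule_waves(tasks):
            # Tasks in one wave have no mutual dependences, so they run concurrently;
            # list() waits for the whole wave before the next one starts.
            list(pool.map(execute, wave))


tasks = [
    Task("load_a", writes={"a"}),
    Task("load_b", writes={"b"}),
    Task("add", reads={"a", "b"}, writes={"c"}),
    Task("scale_a", reads={"a"}, writes={"d"}),
]
print([[t.name for t in w] for w in schedule_waves(tasks)])
# [['load_a', 'load_b'], ['add', 'scale_a']]
```

Waves are a simple, conservative policy: a dispatcher that starts each task as soon as its own predecessors finish would expose more parallelism at the cost of more bookkeeping.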

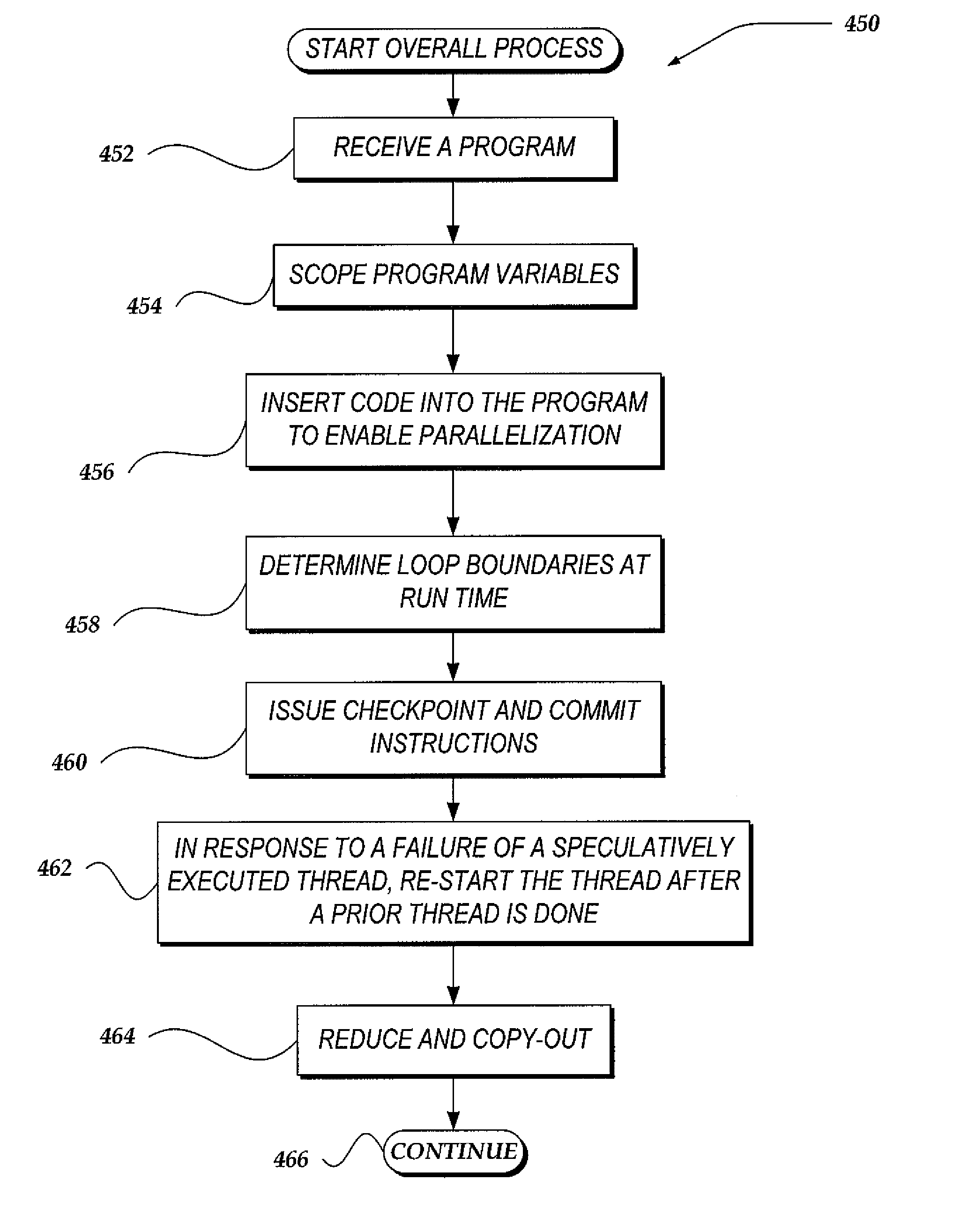

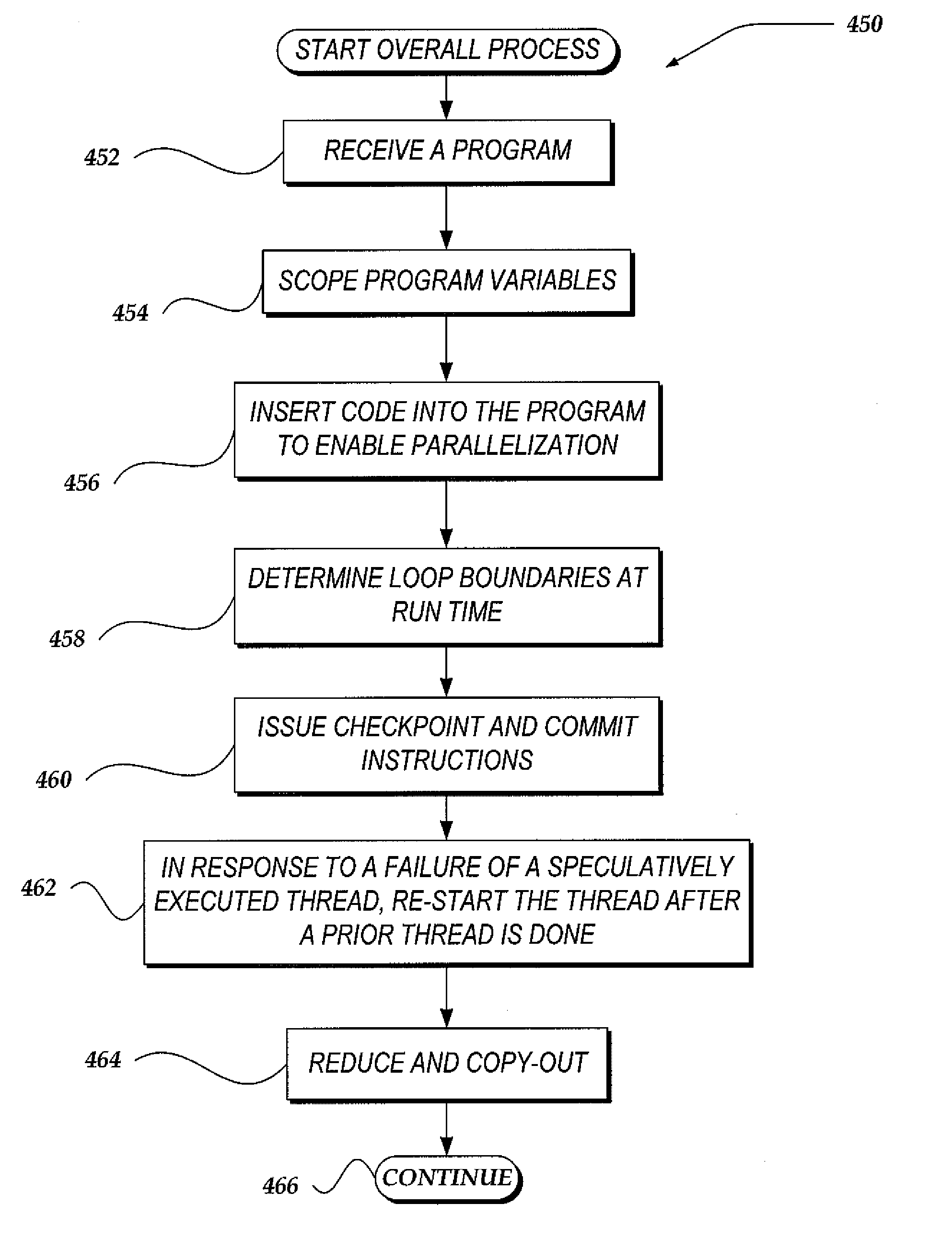

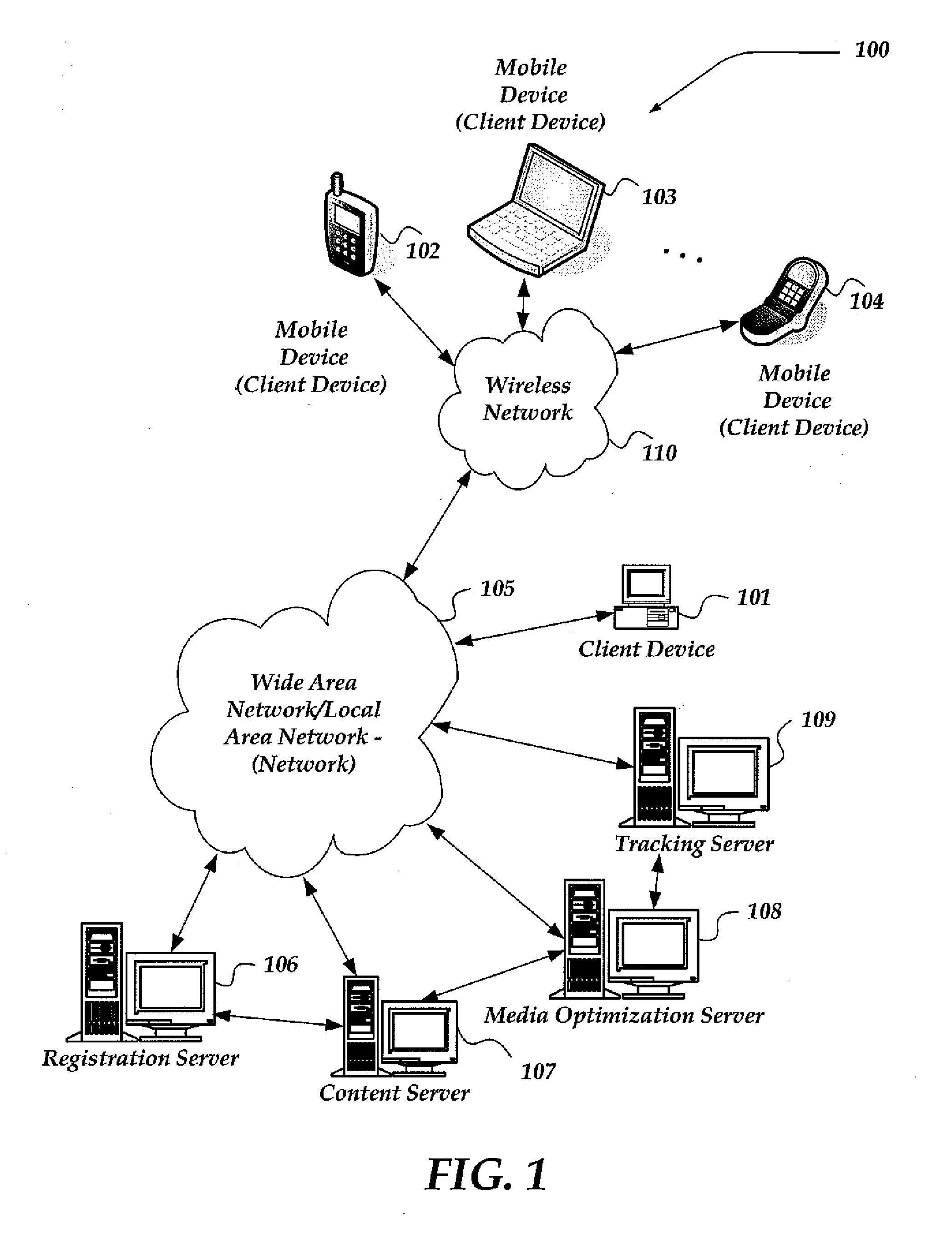

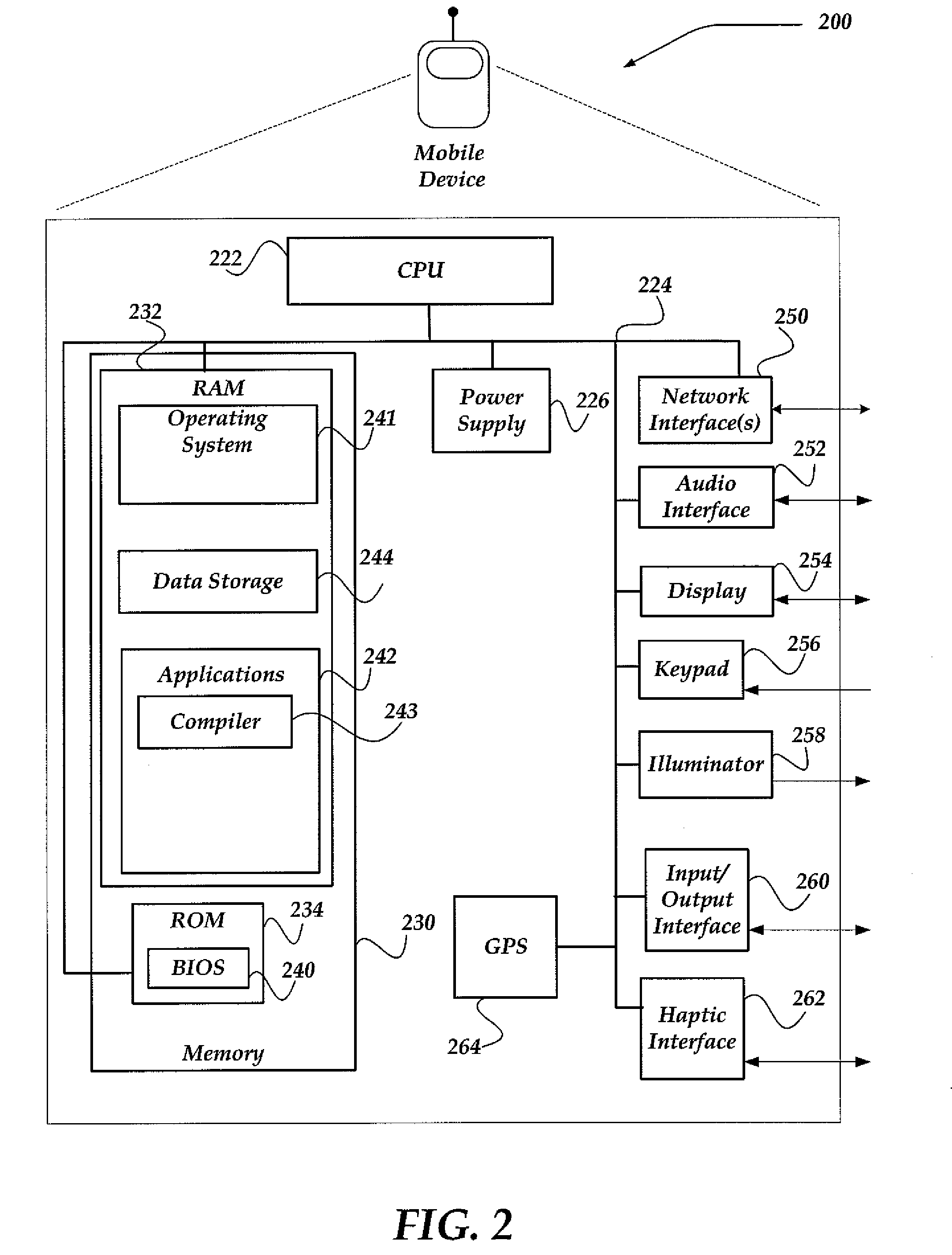

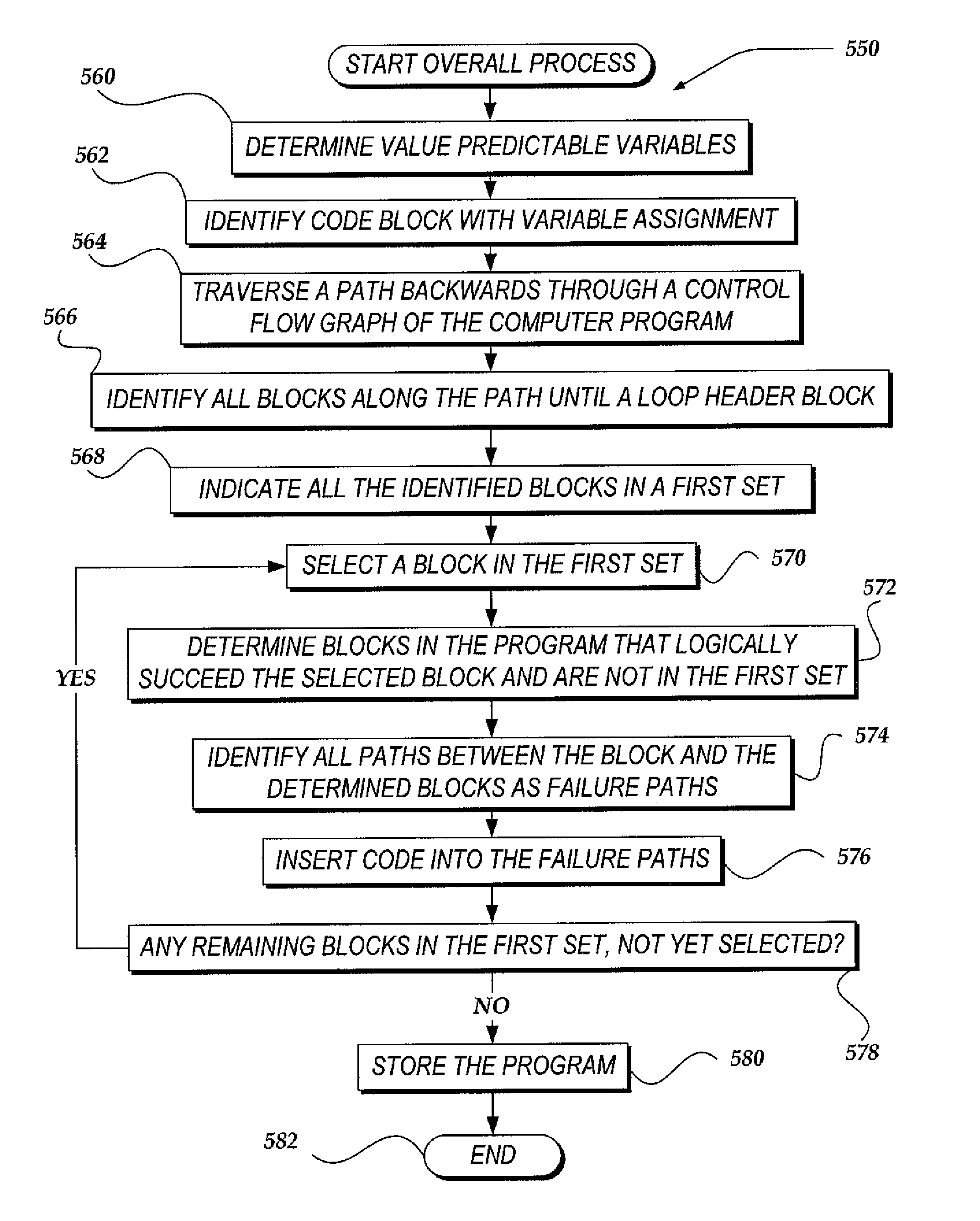

Value predictable variable scoping for speculative automatic parallelization with transactional memory

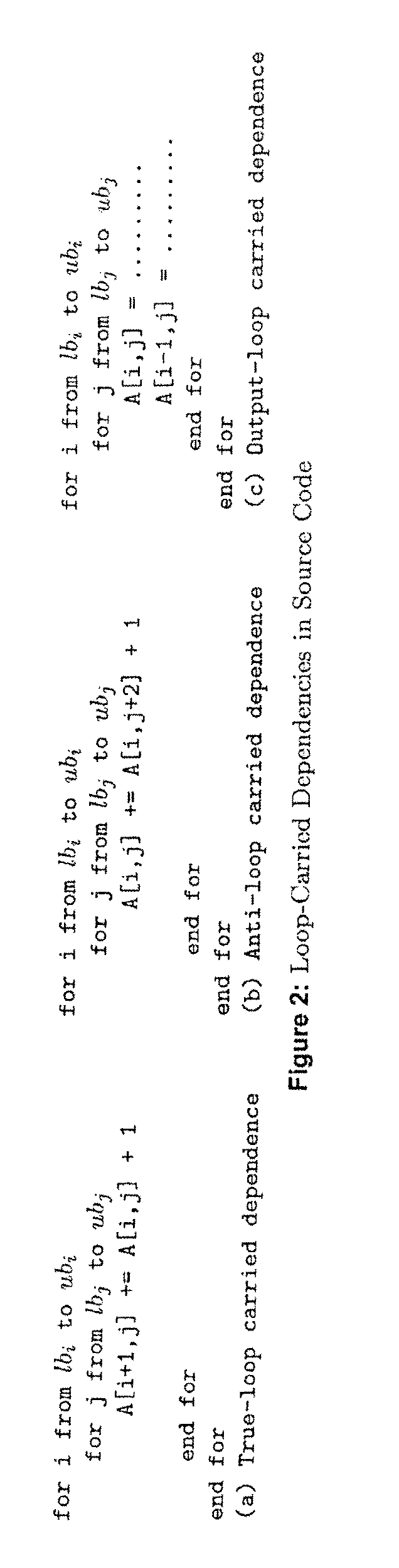

Status: Active | Publication: US20090235237A1 | Tags: Software engineering; Specific program execution arrangements; Coding block; Inner loop

Parallelize a computer program by scoping program variables at compile time and inserting code into the program. Identify as value-predictable variables those variables that are defined only once in a loop of the program, are not defined in any inner loop of that loop, and are used in the loop. Optionally, also identify a code block in the program that contains a variable assignment, and traverse a path backward from that block through the program's control flow graph. Collect in a first set all blocks along the path up to the loop header block. For each block in the set, determine the program blocks that logically succeed it and are not in the first set. Identify all paths between the block and those succeeding blocks as failure paths, and insert code into the failure paths. When executed at run time, the inserted code fails the corresponding path.

Owner:ORACLE INT CORP
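
The first step of this abstract, identifying value-predictable variables, is a straightforward filter over def/use information. The Python sketch below assumes a hypothetical flattened loop representation (`Stmt`, with definition and use sets and an inner-loop depth) rather than a real compiler IR, and it covers only the identification step, not the control-flow traversal or the insertion of failure-path code.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Stmt:
    defs: frozenset       # variables assigned by this statement
    uses: frozenset       # variables read by this statement
    inner_depth: int = 0  # 0 = directly in the loop body, > 0 = inside an inner loop


def value_predictable_vars(loop_body):
    """Variables defined exactly once in the loop, not defined in any inner loop, and used in the loop."""
    def_count = Counter(v for s in loop_body for v in s.defs)
    defined_in_inner = {v for s in loop_body if s.inner_depth > 0 for v in s.defs}
    used = {v for s in loop_body for v in s.uses}
    return {v for v, n in def_count.items()
            if n == 1 and v not in defined_in_inner and v in used}


def stmt(defs, uses, depth=0):
    return Stmt(frozenset(defs), frozenset(uses), depth)


# for i ...:                 (the loop being scoped)
#     x = a[i] + 1
#     y = x * 2
#     for j ...: s = s + x   (inner loop)
#     t = 0
#     t = t + y
body = [
    stmt({"x"}, {"a", "i"}),
    stmt({"y"}, {"x"}),
    stmt({"s"}, {"s", "x"}, depth=1),
    stmt({"t"}, set()),
    stmt({"t"}, {"t", "y"}),
]
print(sorted(value_predictable_vars(body)))  # ['x', 'y']
```

Here `s` is excluded because it is defined in an inner loop, and `t` because it is defined twice.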

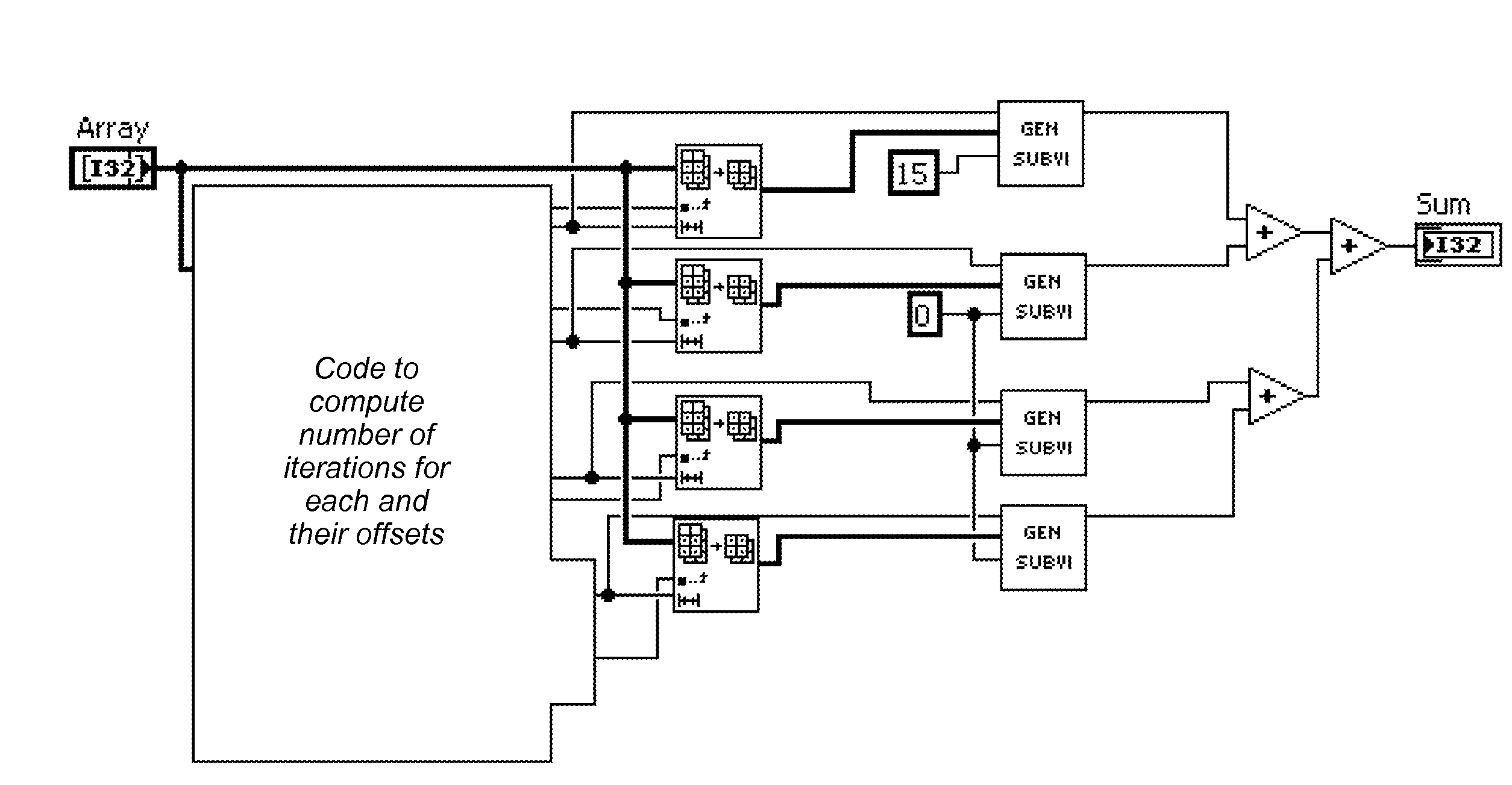

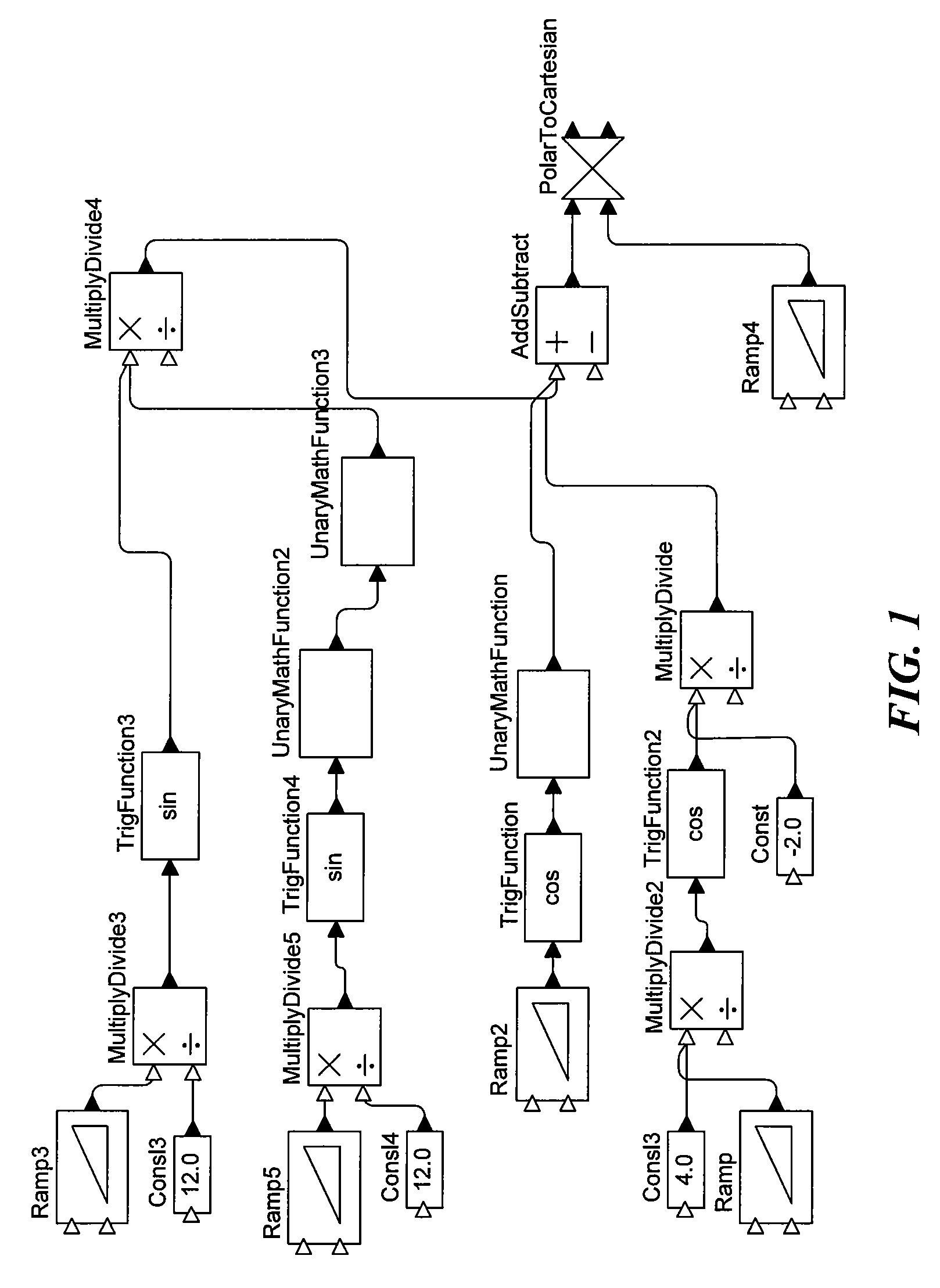

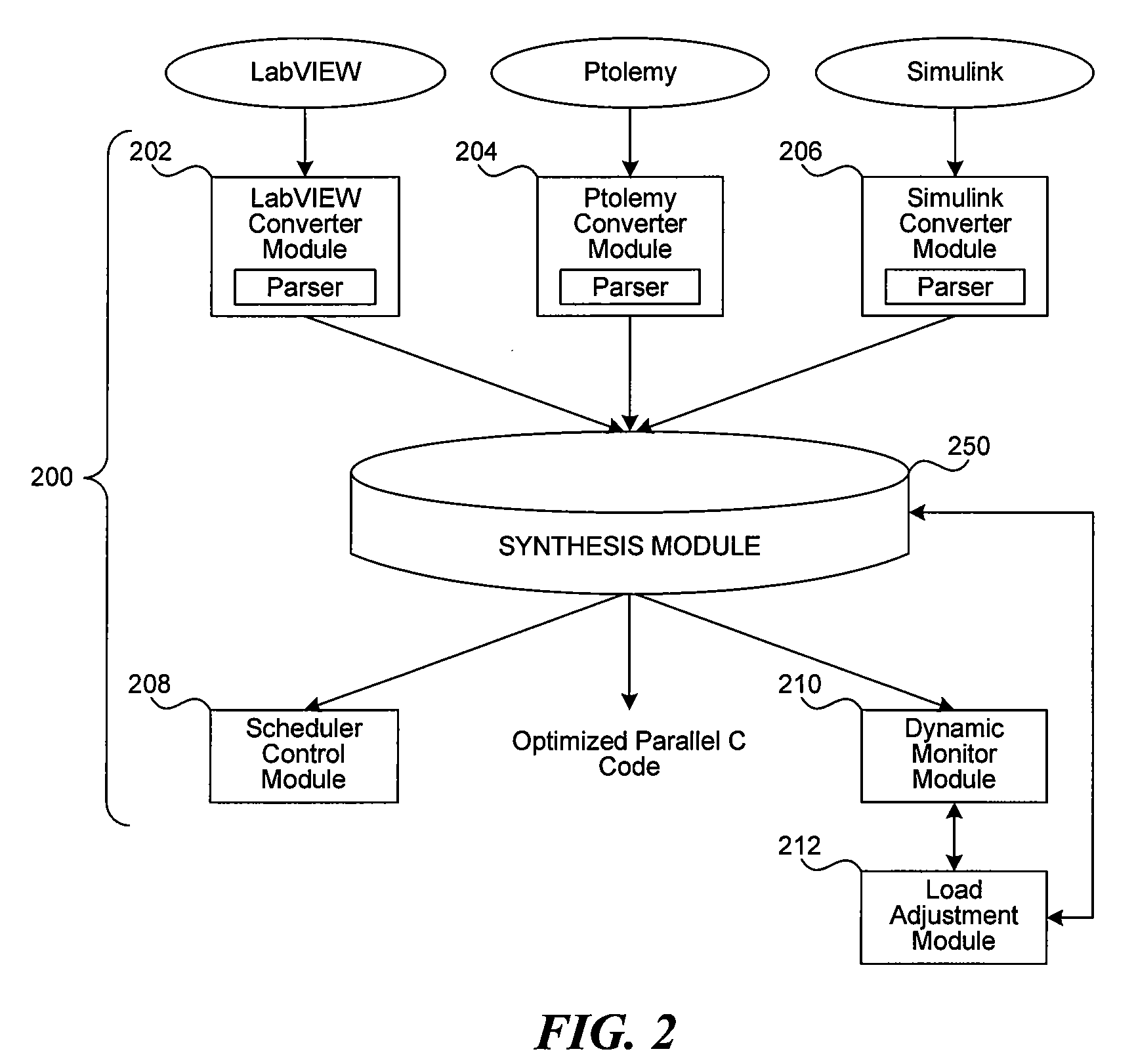

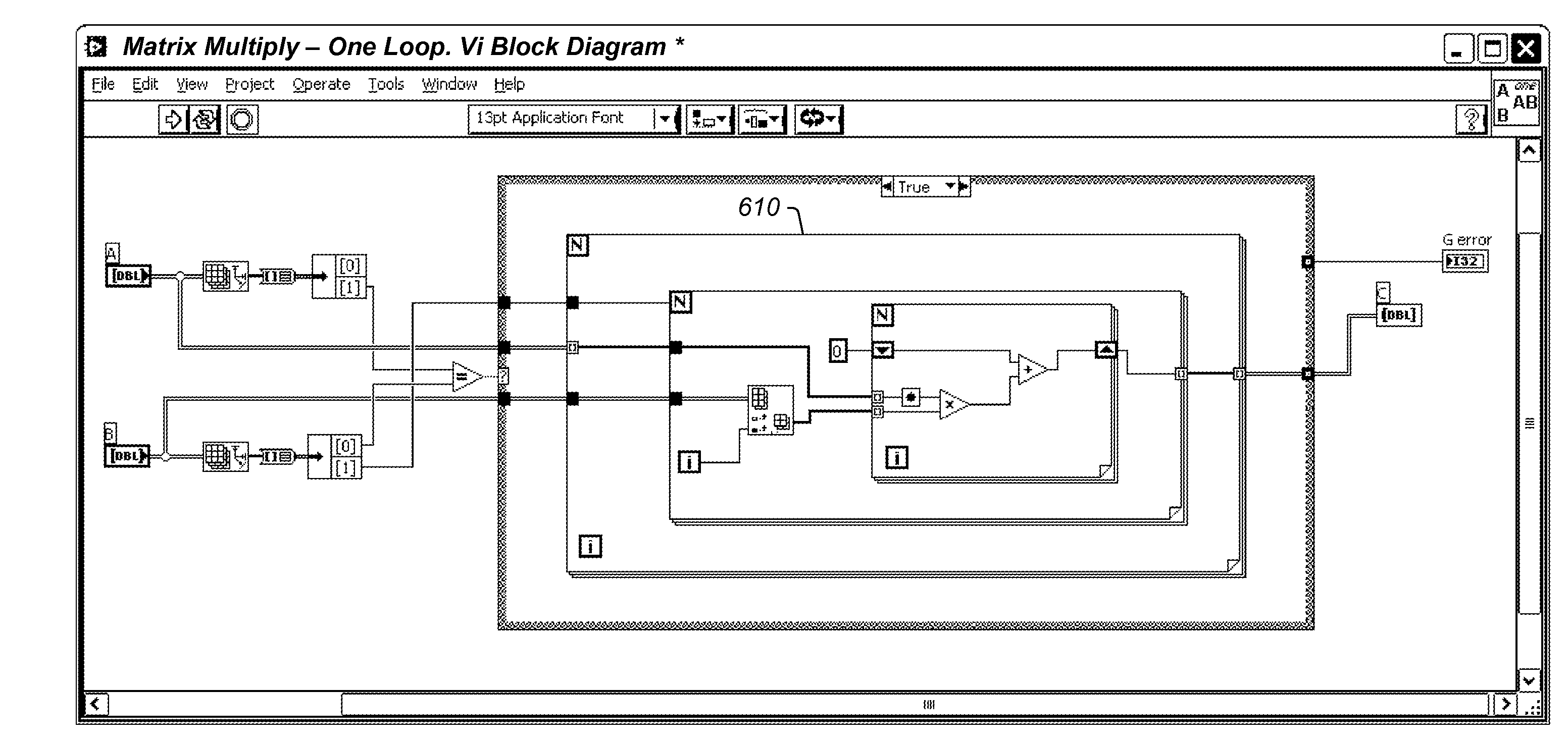

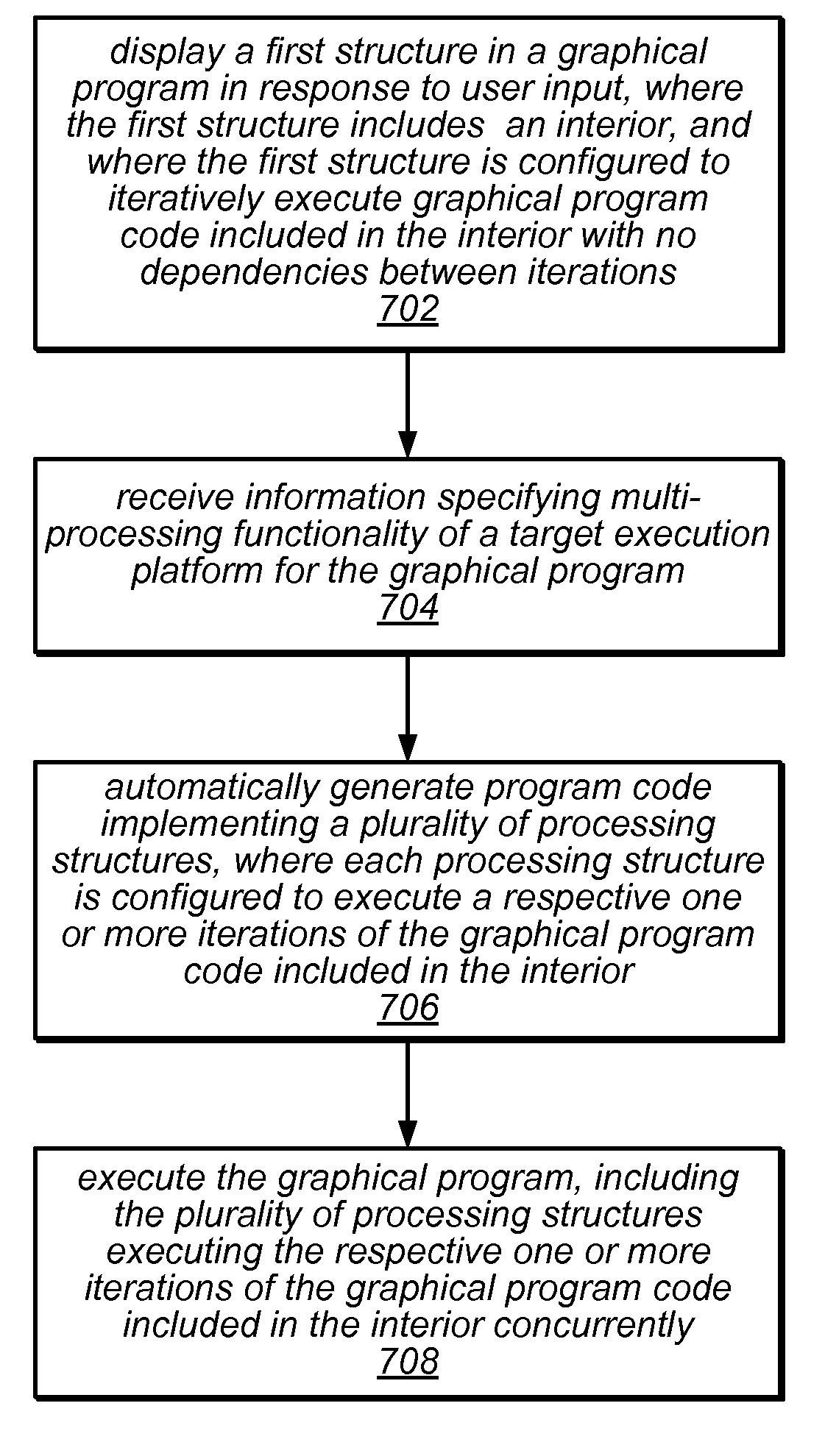

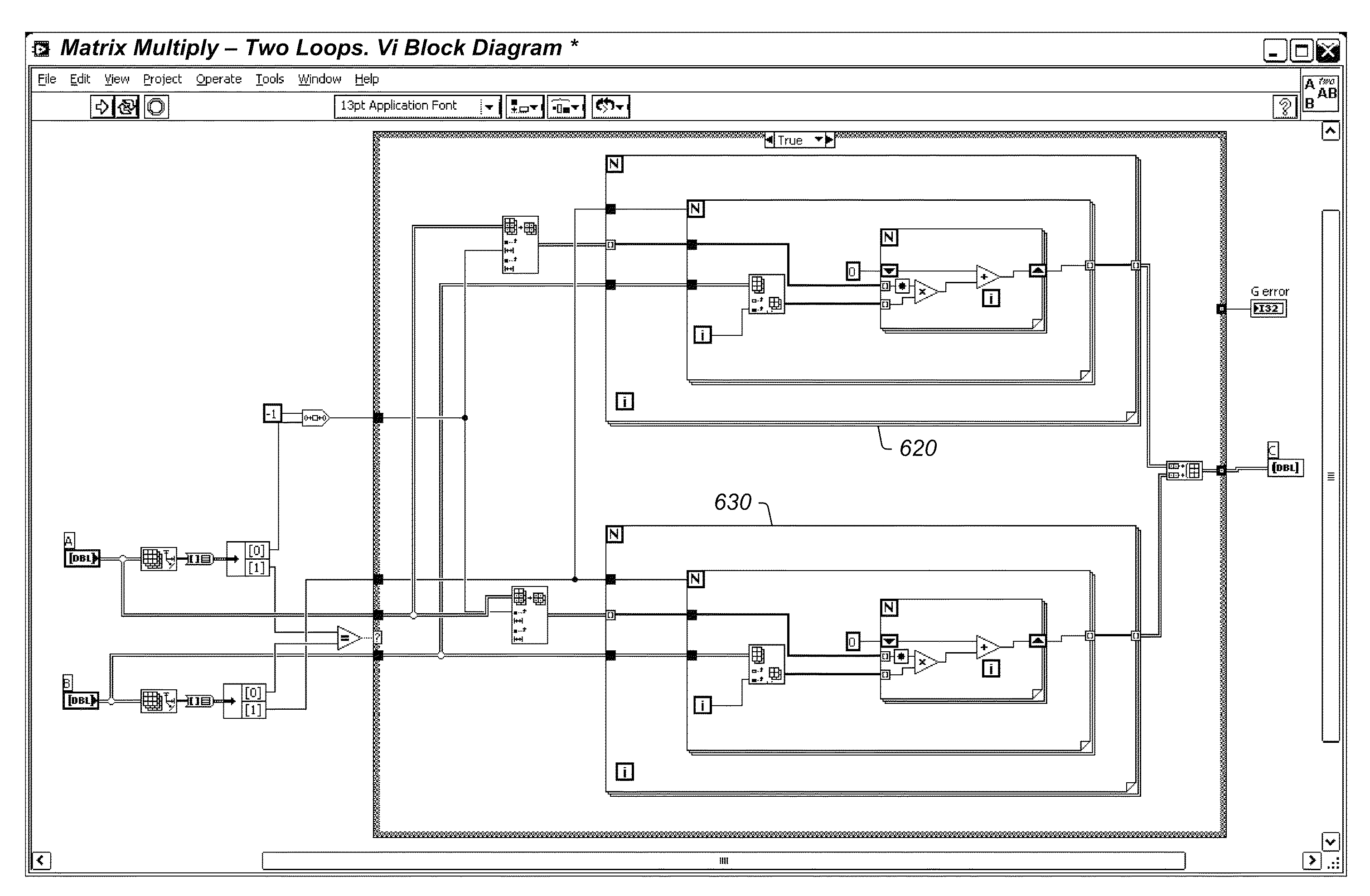

Automatically creating parallel iterative program code in a data flow program

System and method for automatically parallelizing iterative functionality in a data flow program. A data flow program is stored that includes a first data flow program portion, where the first data flow program portion is iterative. Program code implementing a plurality of second data flow program portions is automatically generated based on the first data flow program portion, where each of the second data flow program portions is configured to execute a respective one or more iterations. The plurality of second data flow program portions are configured to execute at least a portion of iterations concurrently during execution of the data flow program. Execution of the plurality of second data flow program portions is functionally equivalent to sequential execution of the iterations of the first data flow program portion.

Owner:NATIONAL INSTRUMENTS
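
The transformation described above, in which one iterative portion is replaced by several portions that each run a subset of the iterations and whose combined effect matches sequential execution, can be sketched in text form. This Python sketch assumes contiguous chunking of the iteration space and assumes the iterations are independent (the abstract's equivalence requirement implies this, but the sketch does not verify it). The names `chunk_ranges` and `parallel_for` are hypothetical, and the actual products operate on graphical data flow diagrams.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_ranges(n_iters, n_portions):
    """Split iterations 0..n_iters-1 into at most n_portions contiguous ranges (n_portions >= 1)."""
    base, extra = divmod(n_iters, n_portions)
    ranges, start = [], 0
    for p in range(n_portions):
        size = base + (1 if p < extra else 0)
        if size:
            ranges.append(range(start, start + size))
        start += size
    return ranges


def parallel_for(body, n_iters, n_portions=4):
    """Run body(i) for every i, each portion executing its own block of iterations.

    Valid only if the iterations are independent (assumed here, not checked).
    """
    def run_portion(r):
        return [body(i) for i in r]

    with ThreadPoolExecutor(max_workers=n_portions) as pool:
        parts = list(pool.map(run_portion, chunk_ranges(n_iters, n_portions)))
    # Concatenating the portions in order reproduces the sequential output order.
    return [y for part in parts for y in part]


print(parallel_for(lambda i: i * i, 10, n_portions=3))
# [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```

Contiguous chunks keep each portion's output in order, so reassembling the result is a simple concatenation; interleaved (round-robin) assignment would balance uneven iterations better but needs a reordering step.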

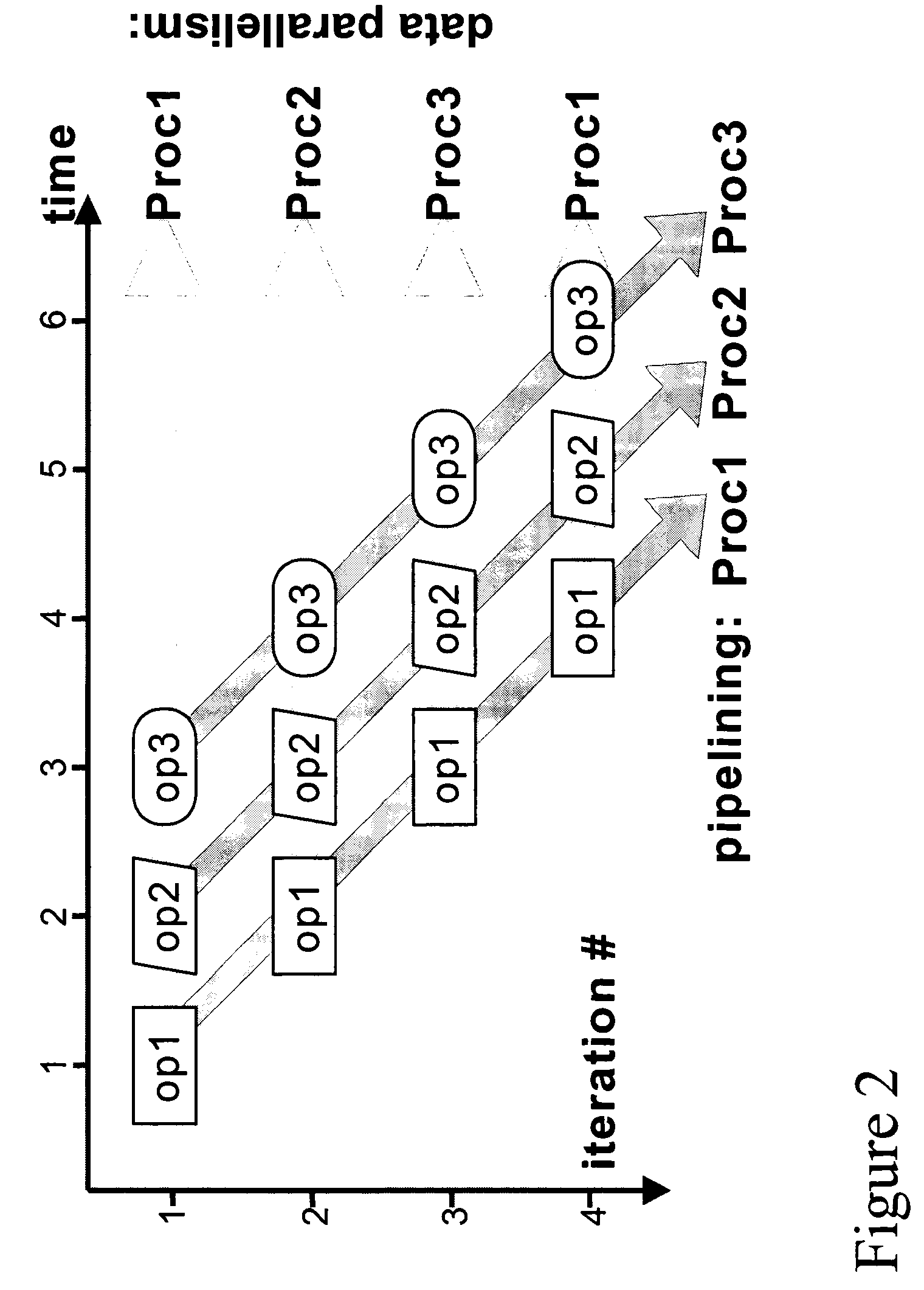

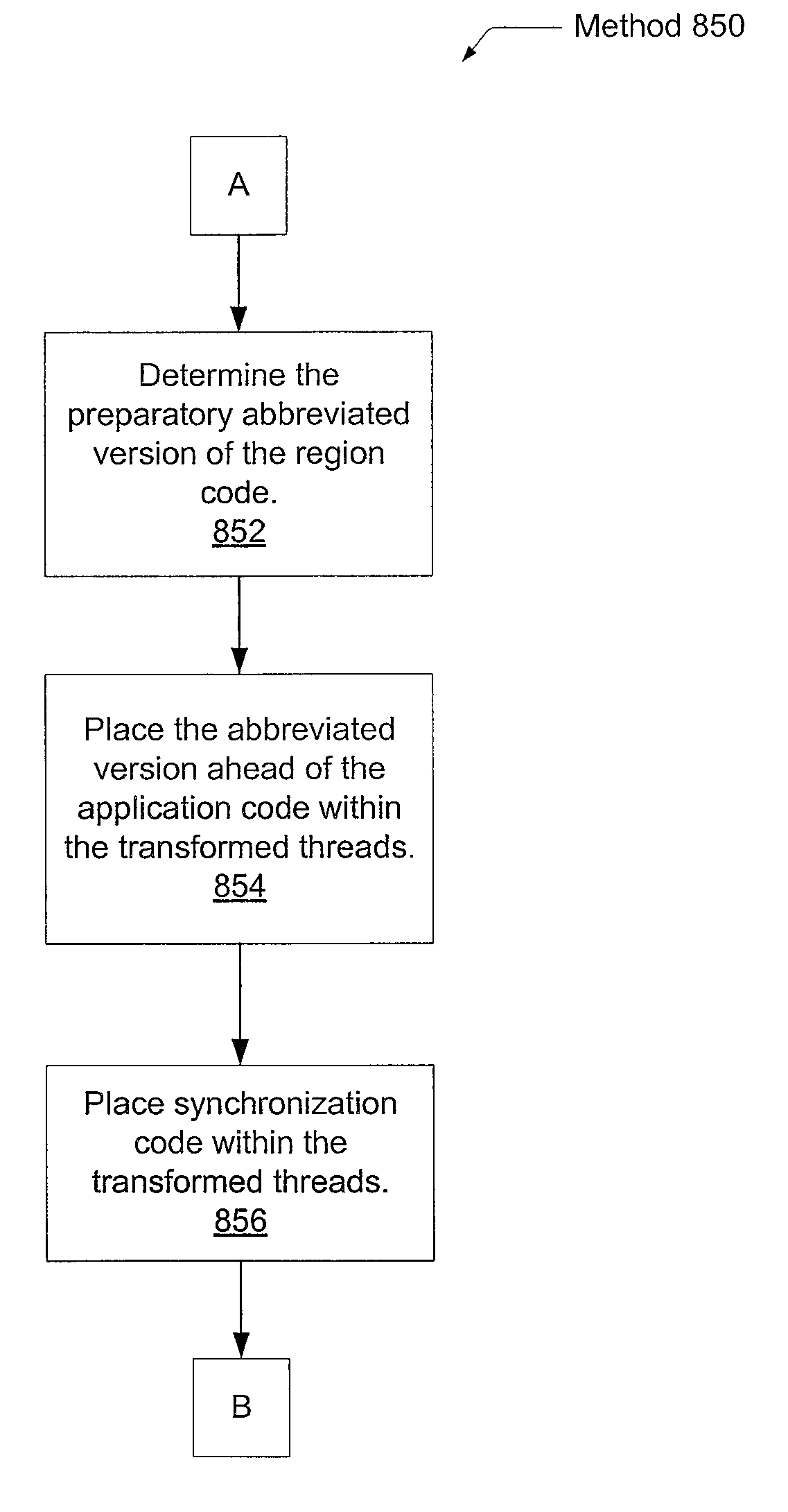

Pipelined parallelization with localized self-helper threading

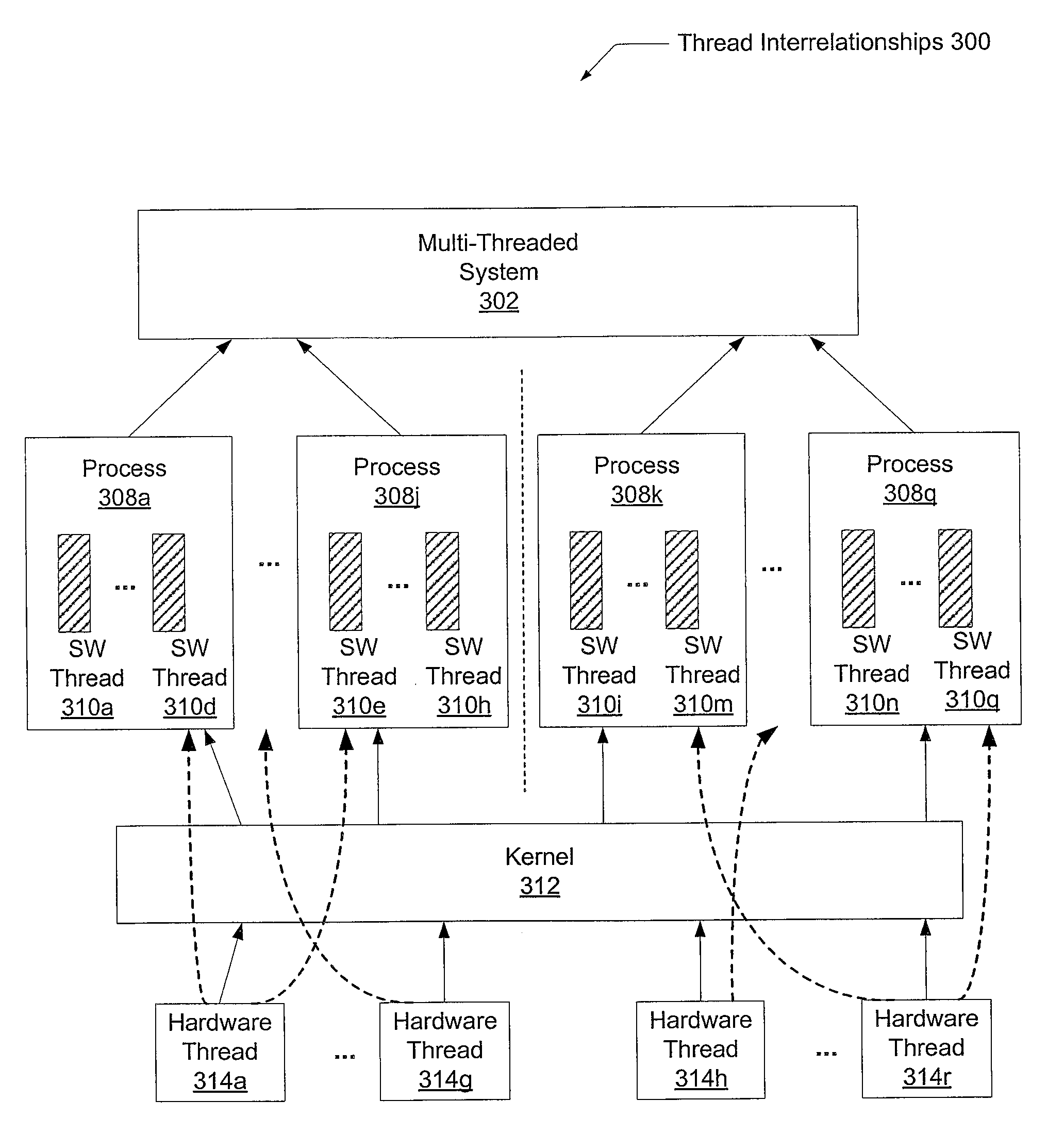

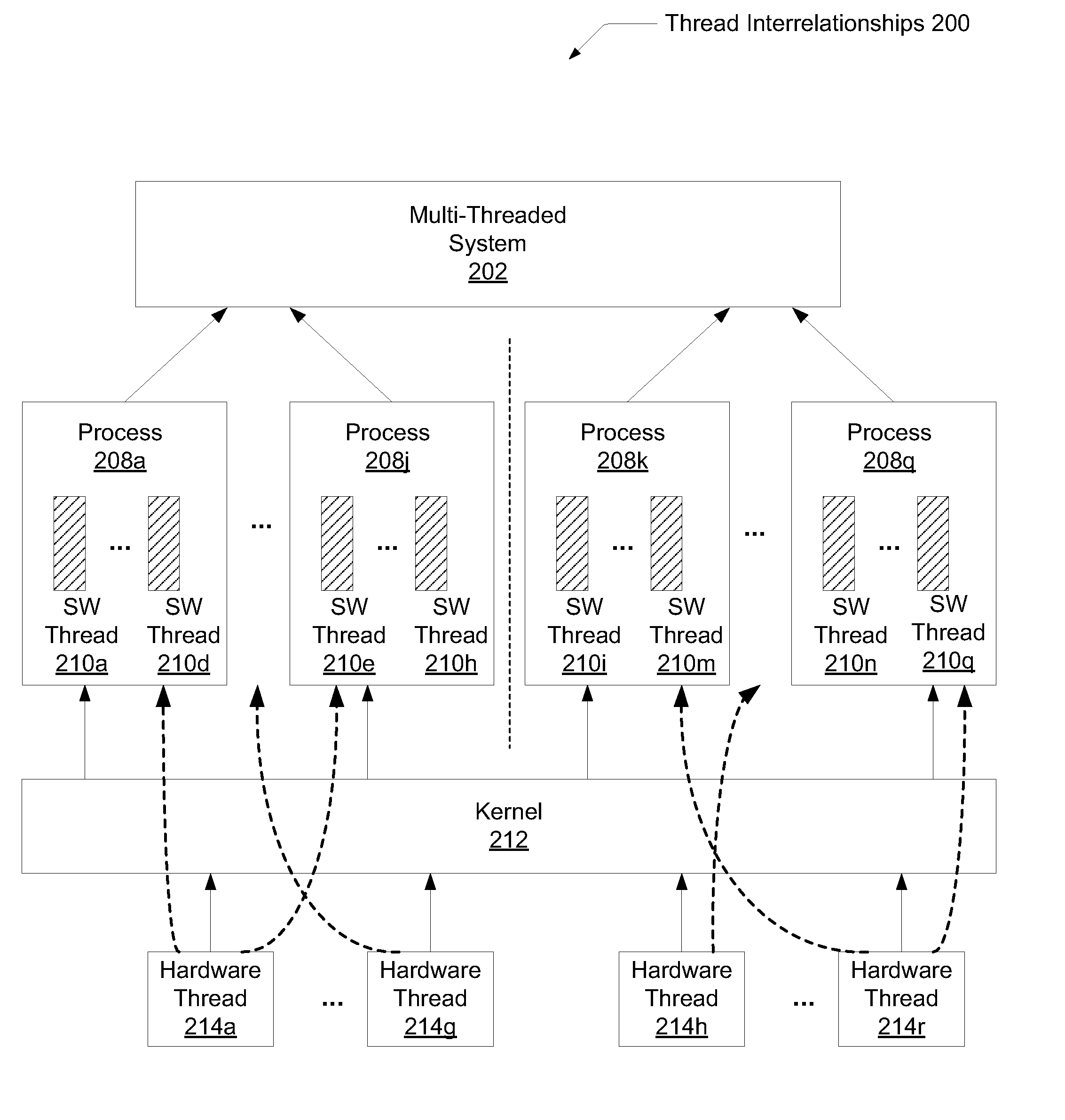

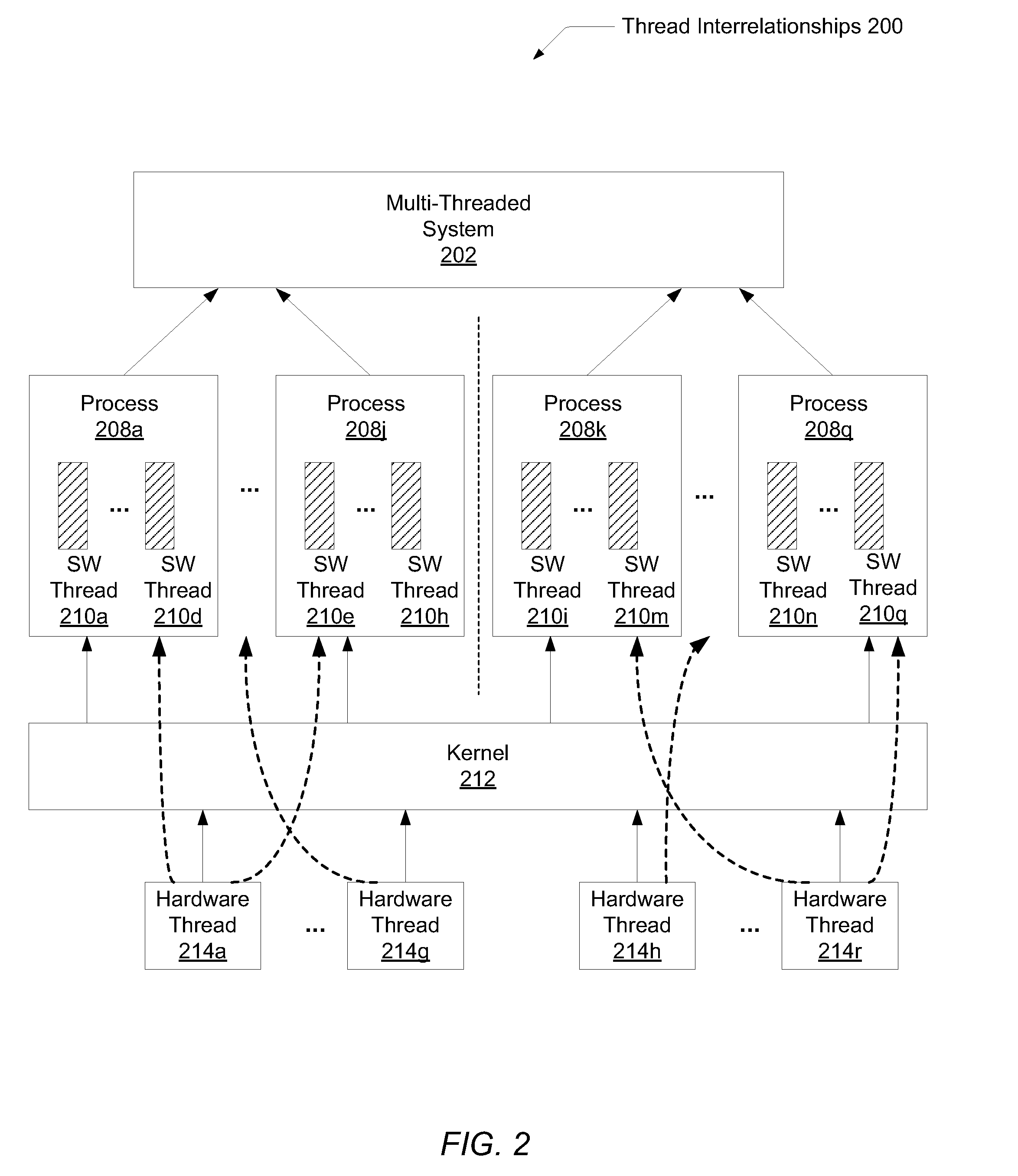

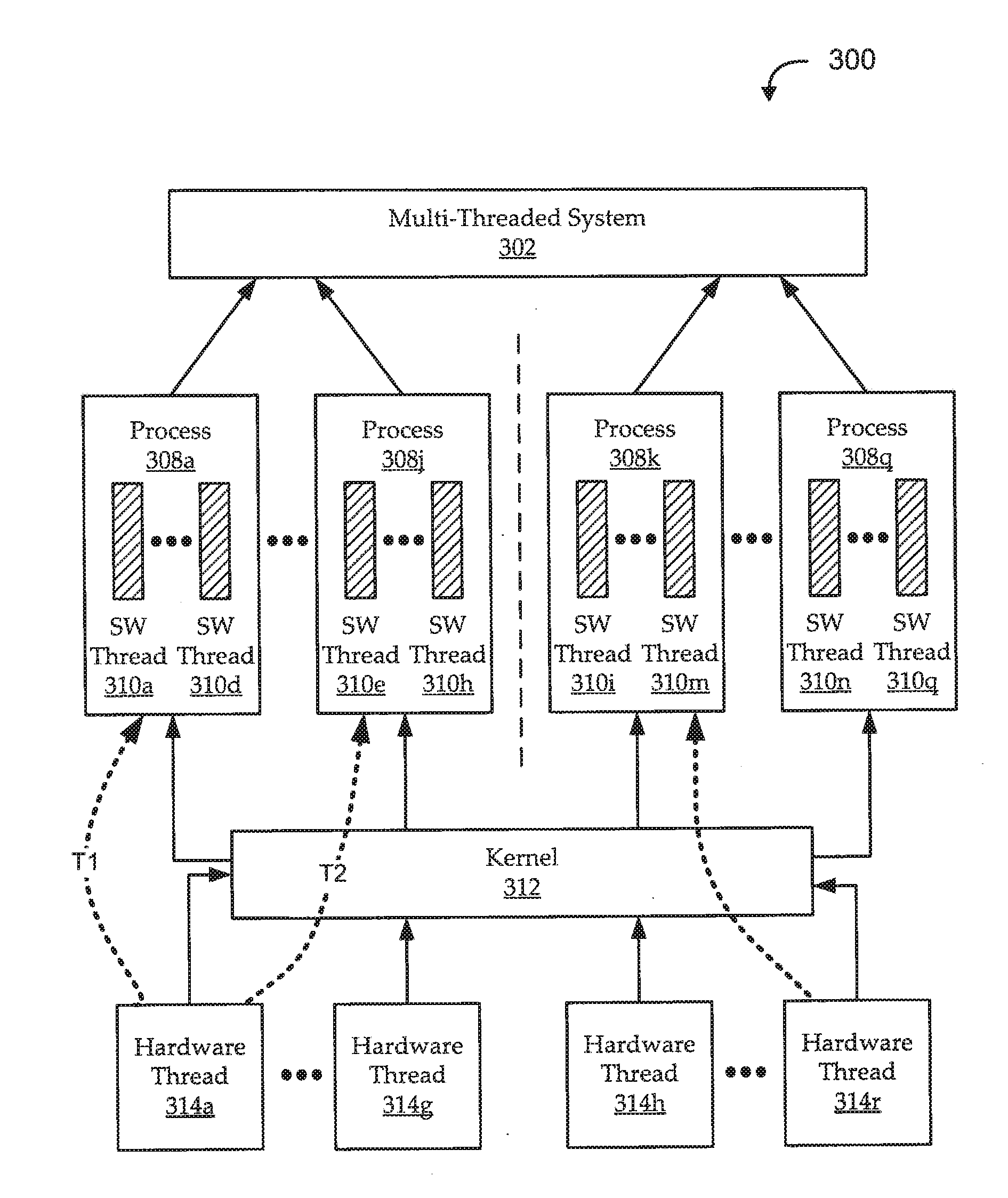

Status: Active | Publication: US20110067014A1 | Tags: Reduced execution time; Fast execution; Software engineering; Program control; Parallel computing; Super-threading

A system and method for automatically parallelizing a computer program for multi-threaded execution. A compiler identifies and parallelizes non-DOALL parallel regions, such as loops, within a computer program. The compiler determines enhanced helper thread instructions based upon the main body instructions of the non-DOALL region. These helper thread instructions are inserted ahead of the main body instructions within each of the plurality of threads, rather than within a single main thread. Next, synchronization instructions are inserted in one or more threads such that the main body of work of each thread is performed in a pipelined manner. The helper thread instructions within each thread may reduce the total execution time of each thread.

Owner:ORACLE INT CORP
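
A rough Python sketch of the pipelined structure: iterations are dealt round-robin to threads. Each thread first does the part of an iteration that does not depend on earlier iterations (standing in here for the helper-thread work), then waits for its turn to perform the loop-carried update in iteration order. The split into `precompute` and `combine` is an assumption made for illustration, and the helper work is modeled as pre-computation rather than the prefetching a real compiler might generate. Because of CPython's global interpreter lock, the sketch shows the synchronization pattern rather than a real speedup.

```python
import threading


def pipelined_loop(n, n_threads, precompute, combine, init):
    """Iteration i = independent precompute(i), then acc = combine(acc, value).

    Threads take iterations round-robin; the dependent update runs in iteration order.
    """
    state = {"acc": init, "next": 0}
    cond = threading.Condition()

    def worker(tid):
        for i in range(tid, n, n_threads):
            value = precompute(i)  # helper-style work: needs no synchronization
            with cond:
                cond.wait_for(lambda: state["next"] == i)  # wait for iteration i - 1
                state["acc"] = combine(state["acc"], value)
                state["next"] += 1
                cond.notify_all()  # let the owner of iteration i + 1 proceed

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["acc"]


# A deliberately order-sensitive combine step, checked against the sequential loop.
seq = 0
for i in range(20):
    seq = seq * 31 + i * i
par = pipelined_loop(20, 4, precompute=lambda i: i * i,
                     combine=lambda acc, v: acc * 31 + v, init=0)
assert par == seq
```

Each thread processes its iterations in increasing order, so the owner of iteration `i - 1` always makes progress and the chain of waits cannot deadlock.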

Graphical Indicator which Specifies Parallelization of Iterative Program Code in a Graphical Data Flow Program

System and method for automatically parallelizing iterative functionality in a data flow program. A data flow program is stored that includes a first data flow program portion, where the first data flow program portion is iterative. Program code implementing a plurality of second data flow program portions is automatically generated based on the first data flow program portion, where each of the second data flow program portions is configured to execute a respective one or more iterations. The plurality of second data flow program portions are configured to execute at least a portion of iterations concurrently during execution of the data flow program. Execution of the plurality of second data flow program portions is functionally equivalent to sequential execution of the iterations of the first data flow program portion.

Owner:NATIONAL INSTRUMENTS

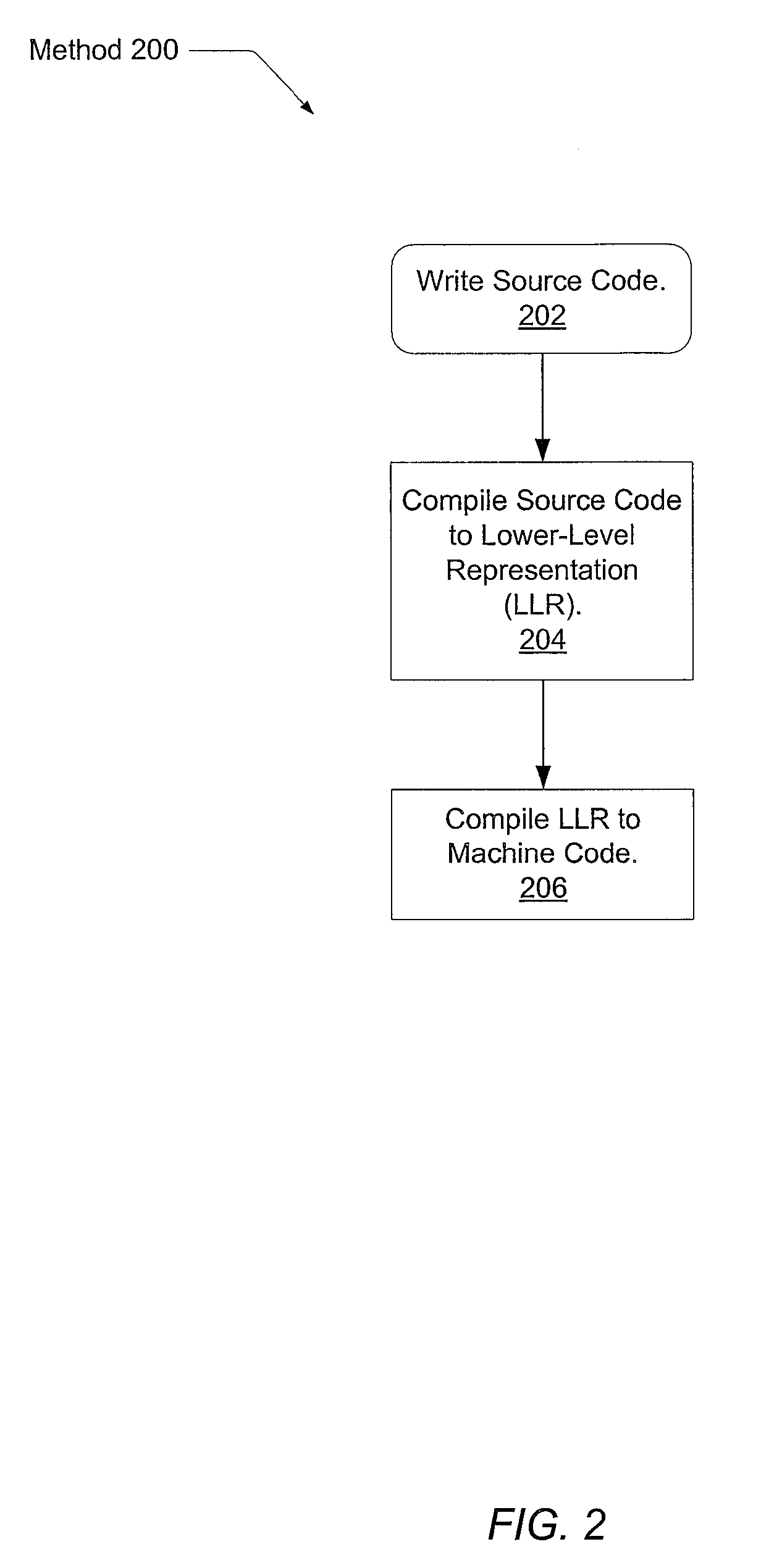

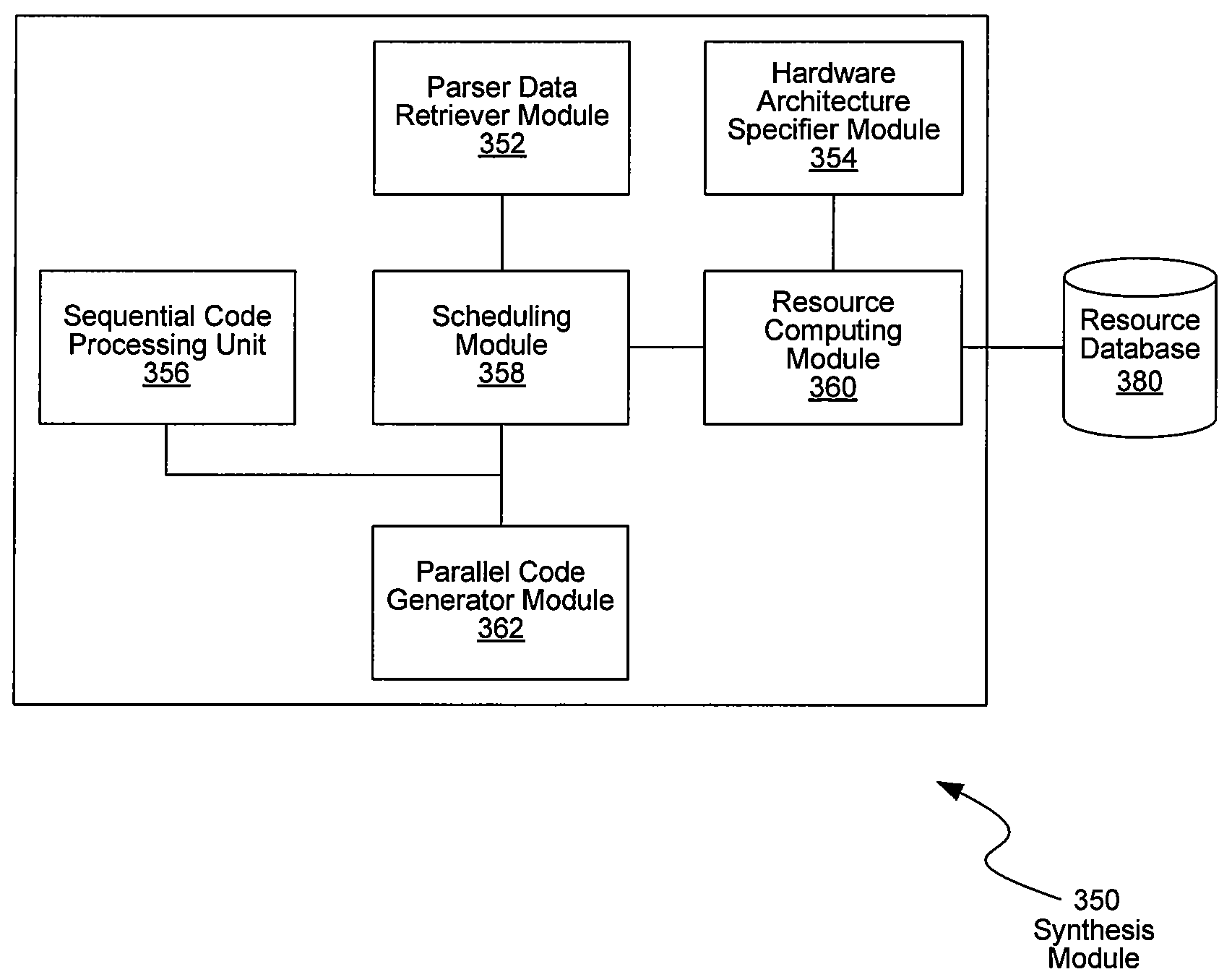

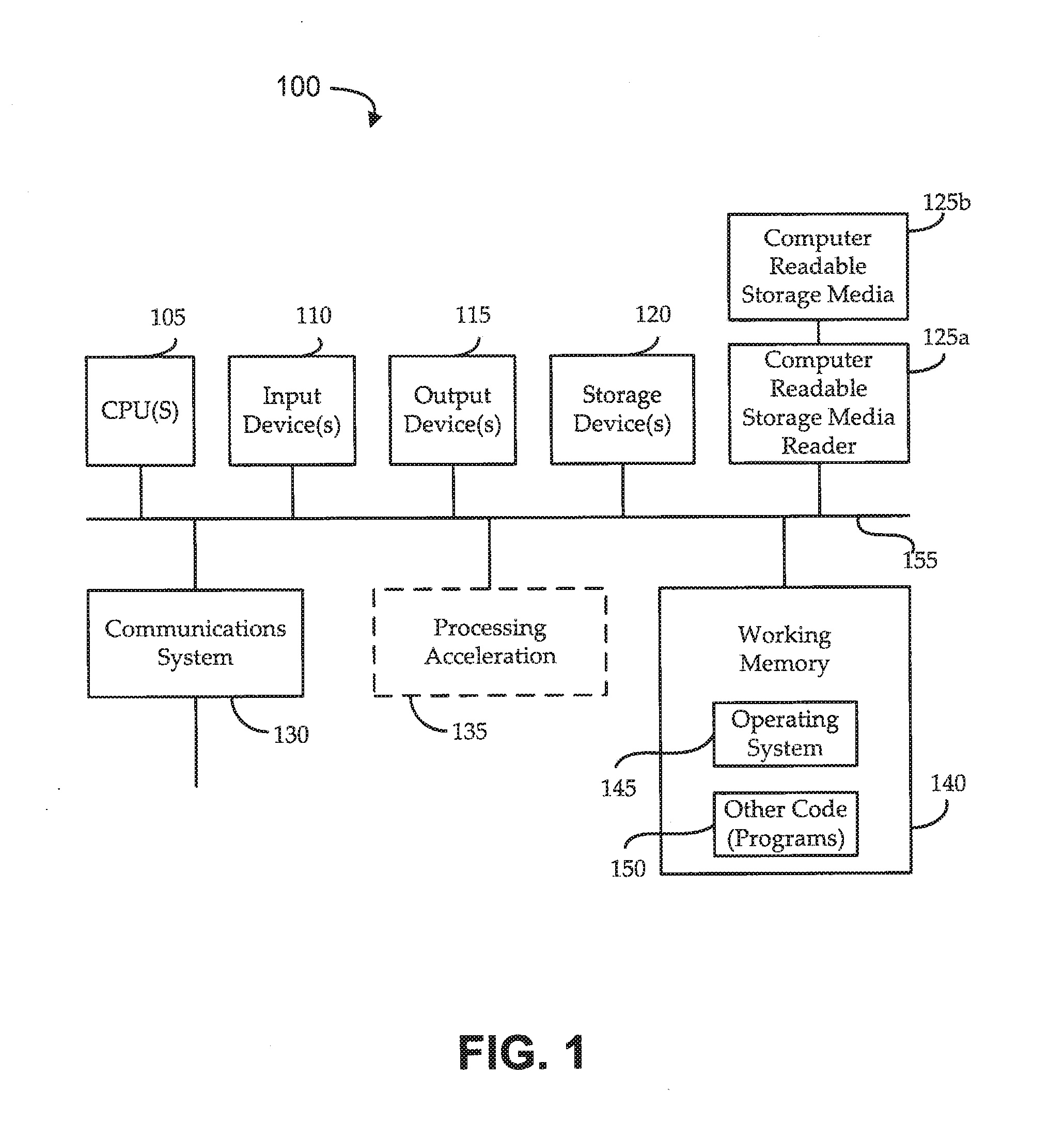

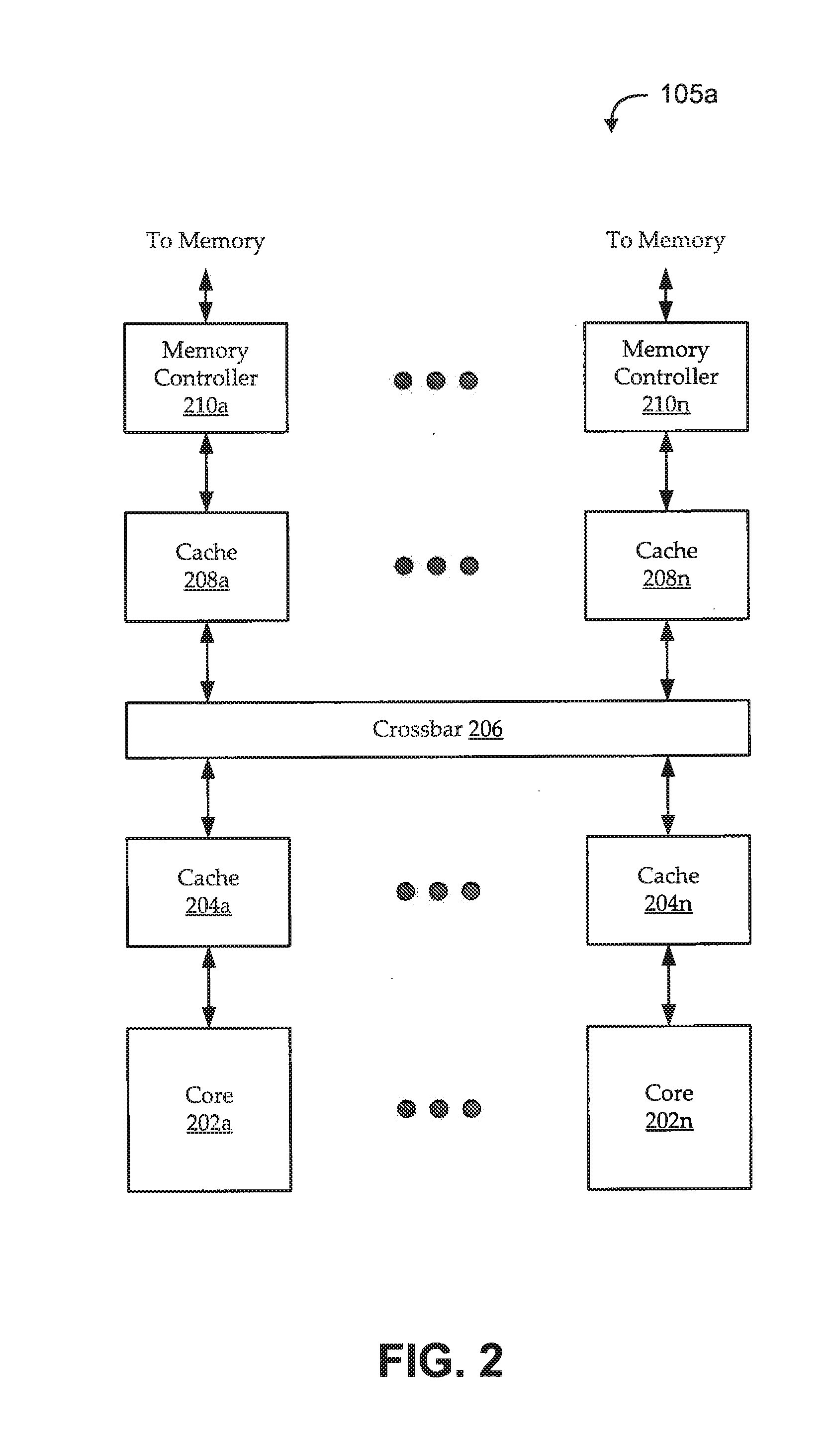

System and method for architecture-adaptable automatic parallelization of computing code

Status: Inactive | Publication: US20090172353A1 | Tags: Program control using stored programs; Software engineering; Multi processor; Running time

Systems and methods for architecture-adaptable automatic parallelization of computing code are described herein. In one aspect, embodiments of the present disclosure include a method, which may be implemented on a system, of generating a plurality of instruction sets from a sequential program for parallel execution in a multiprocessor environment. The method includes identifying the architecture of the multiprocessor environment in which the instruction sets are to be executed, determining the running time of each of a set of functional blocks of the sequential program based on the identified architecture, determining the communication delay between a first computing unit and a second computing unit in the multiprocessor environment, and/or assigning each functional block to the first or the second computing unit based on the running times and the communication delay.

Owner:OPTILLEL SOLUTIONS
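
The final step, assigning functional blocks to computing units from their running times and the inter-unit communication delay, can be illustrated with an exhaustive search over a deliberately simple cost model. Everything about the model is an assumption for this Python sketch: blocks are independent, inputs start on unit A so each block placed on unit B pays a fixed `comm_delay`, and the goal is to minimize the makespan. Exhaustive search is exponential in the number of blocks, so a practical tool would likely use a heuristic instead.

```python
from itertools import product


def assign_blocks(run_a, run_b, comm_delay):
    """Assign each independent block to unit A (0) or unit B (1), minimizing makespan.

    run_a[k], run_b[k]: running time of block k on unit A and unit B.
    Returns (makespan, assignment tuple).
    """
    best = None
    for choice in product((0, 1), repeat=len(run_a)):
        load_a = sum(t for t, c in zip(run_a, choice) if c == 0)
        # Assumed model: every block moved to B pays the A-to-B communication delay.
        load_b = sum(t + comm_delay for t, c in zip(run_b, choice) if c == 1)
        makespan = max(load_a, load_b)
        if best is None or makespan < best[0]:
            best = (makespan, choice)
    return best


print(assign_blocks([4, 4, 4, 4], [2, 2, 2, 2], comm_delay=1))  # (8, (0, 0, 1, 1))
```

With a large enough `comm_delay`, the search keeps every block on unit A even though unit B runs each block faster, which is the trade-off the abstract describes.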

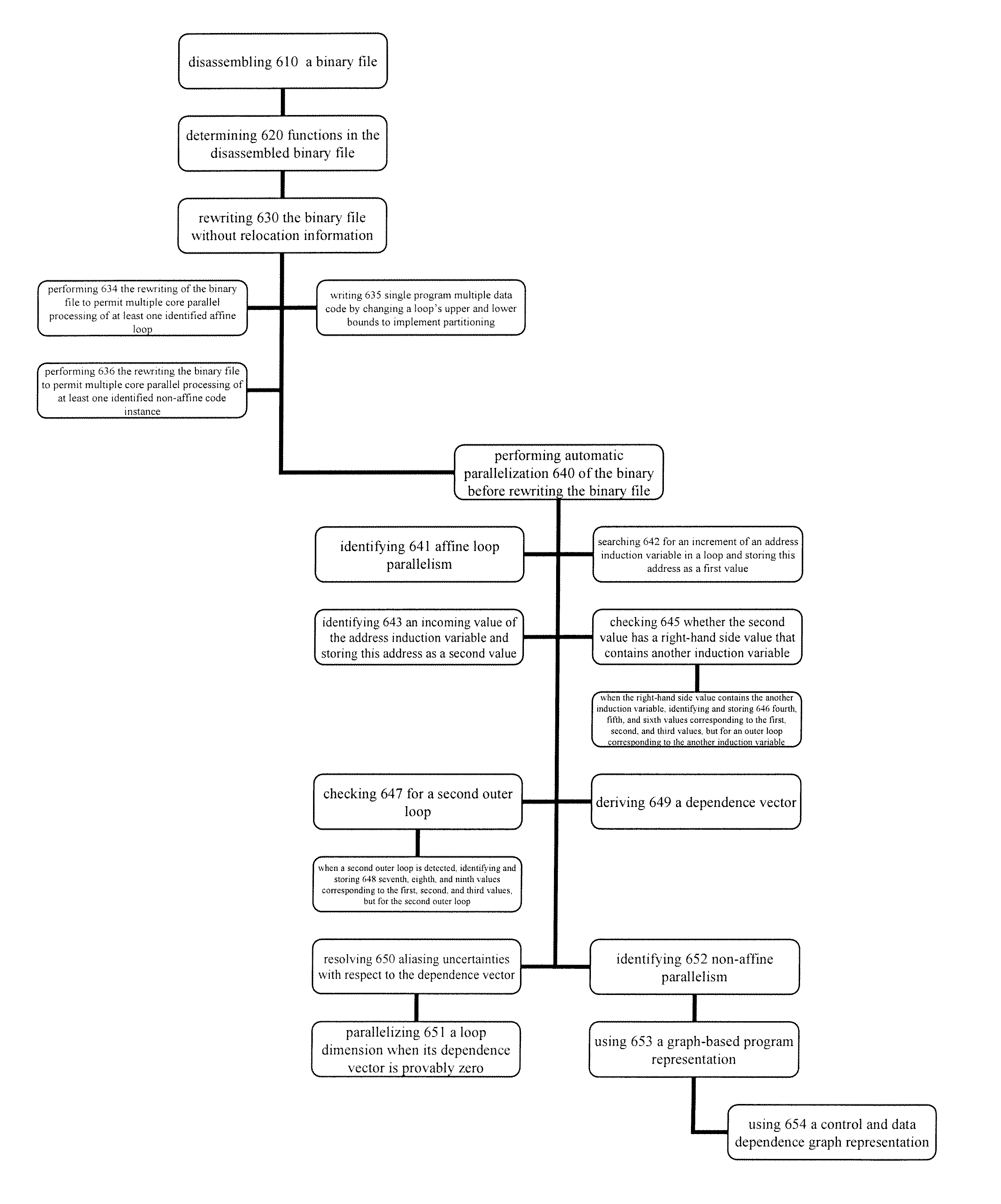

Automatic parallelization using binary rewriting

Binary rewriters that do not require relocation information, and automatic parallelizers within binary rewriters, are provided, as well as methods for performing binary rewriting and automatic parallelization. In certain embodiments, the method includes disassembling a binary file and determining the functions in the disassembled binary. The method can further include rewriting the binary file without relying on relocation information or object files. Optionally, the method can also include automatically parallelizing the binary before rewriting it.

Owner:UNIV OF MARYLAND

Automatically Creating Parallel Iterative Program Code in a Data Flow Program

Status: Active | Publication: US20100306733A1 | Tags: Software engineering; General purpose stored program computer; Data stream; Parallel computing

System and method for automatically parallelizing iterative functionality in a data flow program. A data flow program is stored that includes a first data flow program portion, where the first data flow program portion is iterative. Program code implementing a plurality of second data flow program portions is automatically generated based on the first data flow program portion, where each of the second data flow program portions is configured to execute a respective one or more iterations. The plurality of second data flow program portions are configured to execute at least a portion of iterations concurrently during execution of the data flow program. Execution of the plurality of second data flow program portions is functionally equivalent to sequential execution of the iterations of the first data flow program portion.

Owner:NATIONAL INSTRUMENTS

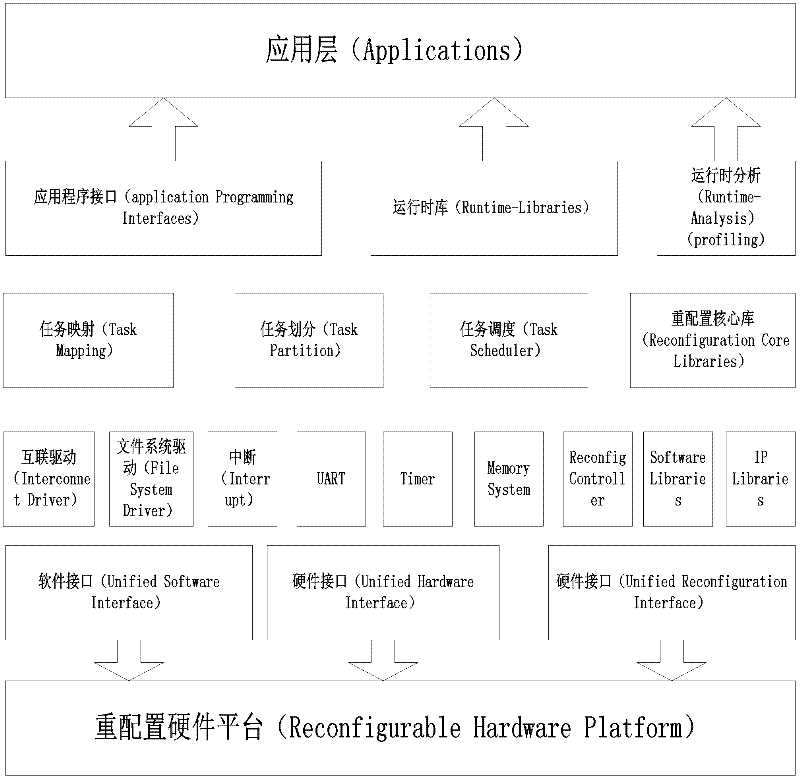

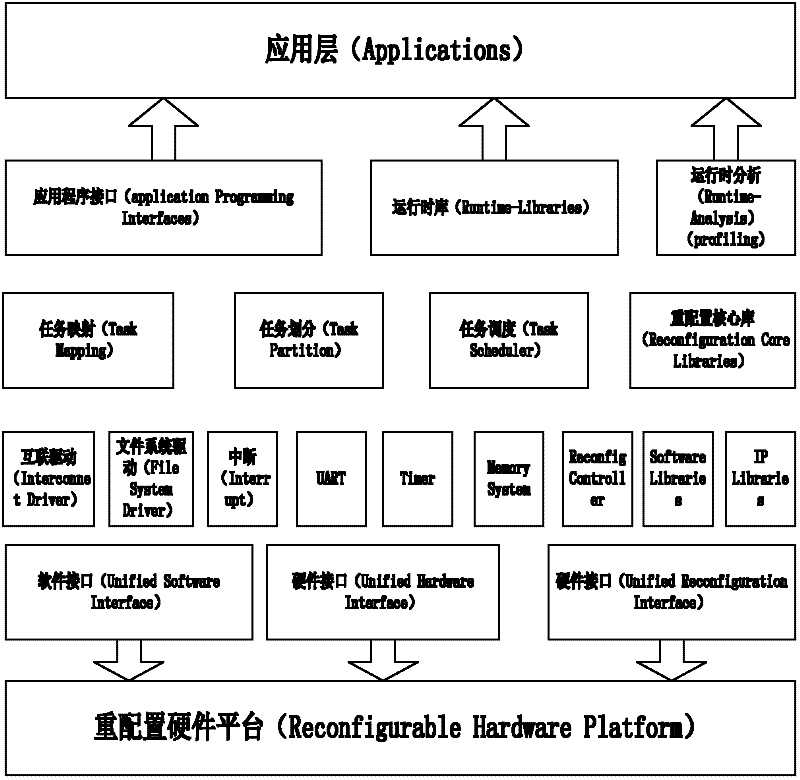

Middleware system of heterogeneous multi-core reconfigurable hybrid system and task execution method thereof

Status: Active | Publication: CN102508712A | Tags: Realize automatic division; Implement dynamic scheduling; Program initiation/switching; Program management; Middleware

Owner:SUZHOU INST FOR ADVANCED STUDY USTC

Automatically creating parallel iterative program code in a graphical data flow program

System and method for automatically parallelizing iterative functionality in a data flow program. A data flow program is stored that includes a first data flow program portion, where the first data flow program portion is iterative. Program code implementing a plurality of second data flow program portions is automatically generated based on the first data flow program portion, where each of the second data flow program portions is configured to execute a respective one or more iterations. The plurality of second data flow program portions are configured to execute at least a portion of iterations concurrently during execution of the data flow program. Execution of the plurality of second data flow program portions is functionally equivalent to sequential execution of the iterations of the first data flow program portion.

Owner:NATIONAL INSTRUMENTS

Task scheduling system of on-chip multi-core computing platform and method for task parallelization

Status: Active | Publication: CN102129390A | Tags: Judging relevance; Improve parallelism; Multiprogramming arrangements; Multiple digital computer combinations; Multi core computing; Service module

The invention discloses a task scheduling system for an on-chip multi-core computing platform and a method for task parallelization. The system comprises user service modules, which provide the tasks to be executed, and computation service modules, which execute tasks on the on-chip multi-core computing platform. It is characterized in that core scheduling service modules are placed between the user service modules and the computation service modules. The core scheduling service modules take task requests from the user service modules as input, determine the data dependences among different tasks from recorded information, and schedule the task requests in parallel to different computation service modules for execution. By monitoring dependences among tasks and performing automatic parallelization at run time, the system enhances platform throughput and system performance.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC

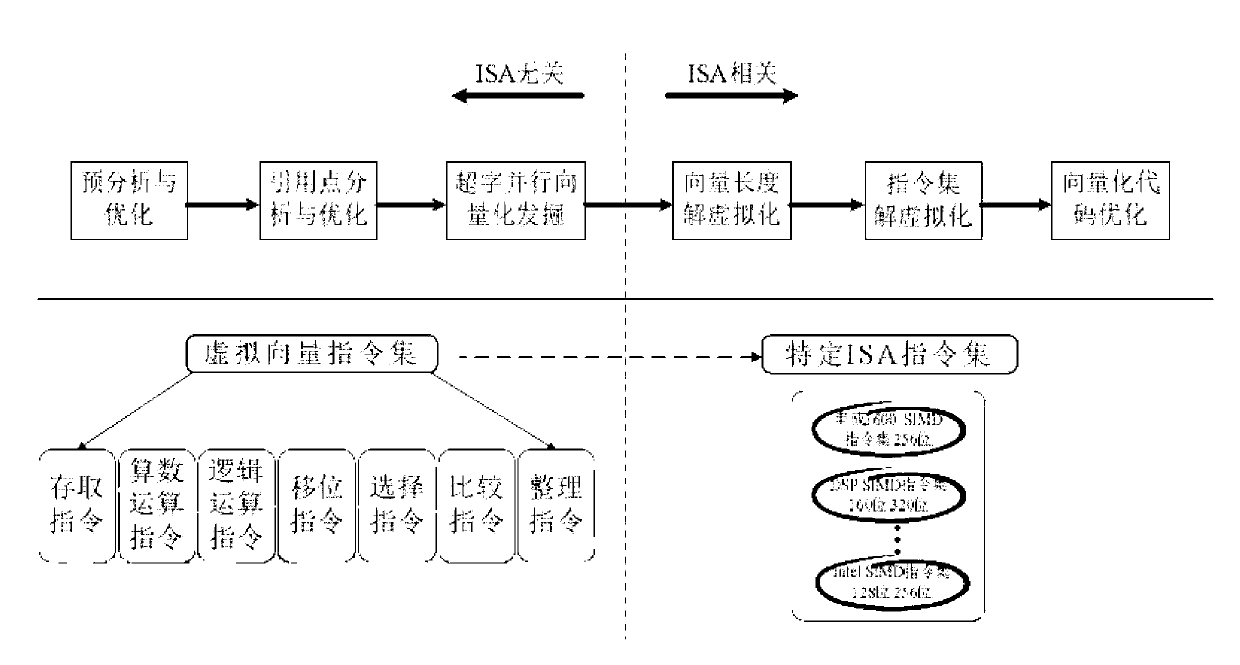

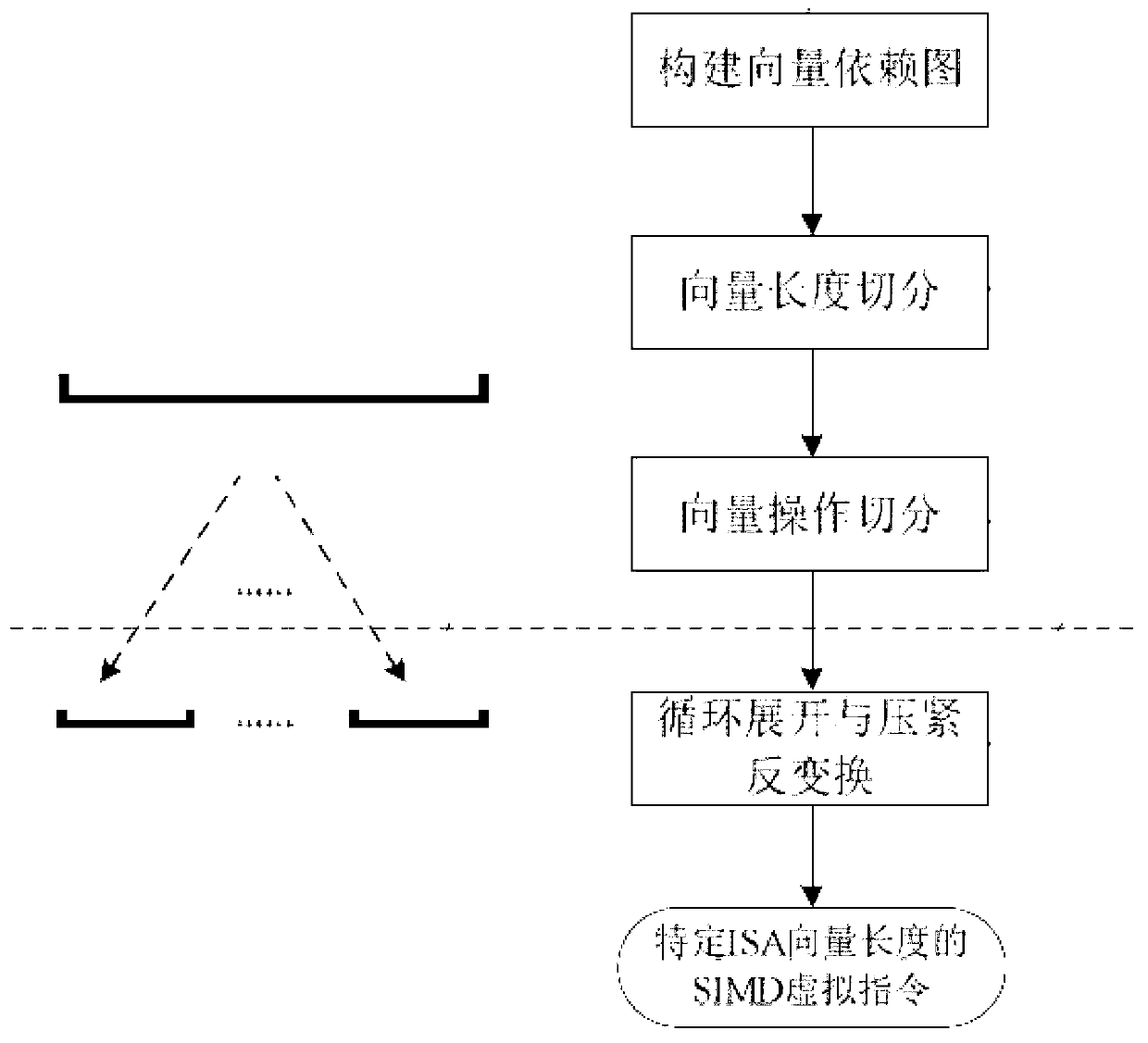

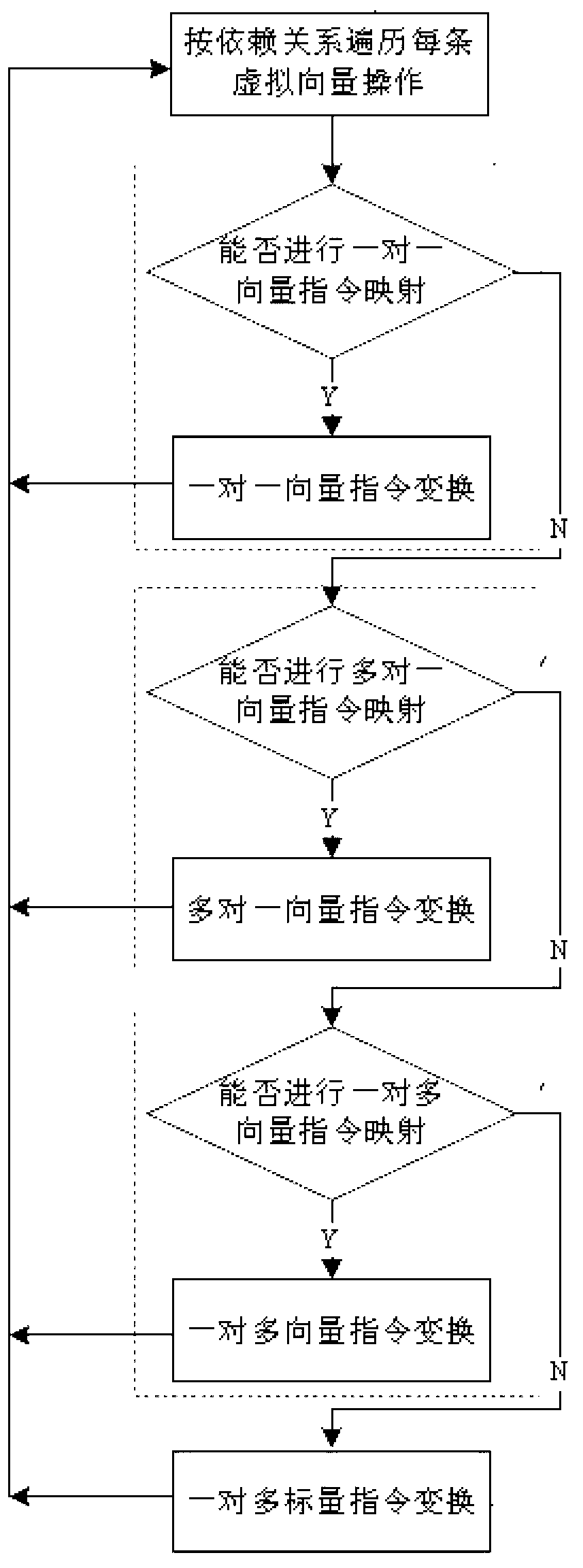

Automatic vectorizing method for heterogeneous SIMD expansion components

Status: Inactive | Publication: CN103279327A | Tags: Improve execution efficiency; Program control; Memory systems; Virtualization; Performance computing

The invention relates to automatic parallelization for high-performance computing, and in particular to an automatic vectorization method for heterogeneous SIMD extension components. The method is suited to heterogeneous SIMD extension components that differ in vector length and in vector instruction set. A set of virtual instructions is designed, and input C and Fortran programs are converted into an intermediate representation of virtual instructions within a unified automatic vectorization framework. By virtualizing both the vector length and the instruction set, the virtual instructions are automatically converted into vectorized code for each heterogeneous SIMD extension component, freeing programmers from complex manual vectorization. The method is combined with related optimizations: vectorization opportunities are recognized at different granularities, mixed loop-level and basic-block-level parallelism is exploited as far as possible through conventional optimizations and call-site optimizations, and redundancy in the generated code is removed by analyzing data dependences that cross basic blocks. As a result, program execution efficiency is effectively improved.

Owner:THE PLA INFORMATION ENG UNIV
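
The vector-length virtualization step can be pictured as strip-mining: a virtual vector operation of arbitrary length is lowered into as many native SIMD operations as the target's width allows, followed by a scalar remainder loop. The Python sketch below simulates that lowering for two hypothetical targets. The `TARGETS` table (SSE `addps` on four 32-bit floats, AVX `vaddps` on eight) is illustrative only and is not the patent's virtual instruction set.

```python
# Hypothetical target table: native packed-add mnemonic and lanes per instruction
# for 32-bit floats.
TARGETS = {
    "sse": ("addps", 4),
    "avx": ("vaddps", 8),
}


def lower_virtual_add(a, b, target):
    """Lower one virtual vector add of any length to native-width chunks plus a scalar tail.

    Returns the computed result and the list of 'instructions' that would be emitted.
    """
    if len(a) != len(b):
        raise ValueError("operands must have the same length")
    mnemonic, width = TARGETS[target]
    n = len(a)
    main = n - n % width  # elements covered by full-width vector operations
    out = [0.0] * n
    emitted = []
    for i in range(0, main, width):
        out[i:i + width] = [x + y for x, y in zip(a[i:i + width], b[i:i + width])]
        emitted.append(f"{mnemonic} [{i}:{i + width}]")
    for i in range(main, n):  # scalar epilogue for the leftover elements
        out[i] = a[i] + b[i]
        emitted.append(f"scalar_add [{i}]")
    return out, emitted


a = [float(i) for i in range(10)]
b = [1.0] * 10
for t in TARGETS:
    result, ops = lower_virtual_add(a, b, t)
    print(t, ops)
# sse ['addps [0:4]', 'addps [4:8]', 'scalar_add [8]', 'scalar_add [9]']
# avx ['vaddps [0:8]', 'scalar_add [8]', 'scalar_add [9]']
```

The same virtual operation yields different instruction sequences per target, while the computed result is identical; that separation is the point of virtualizing the vector length.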

Loop Parallelization Analyzer for Data Flow Programs

System and method for automatically parallelizing iterative functionality in a data flow program. A data flow program is stored that includes a first data flow program portion, where the first data flow program portion is iterative. Program code implementing a plurality of second data flow program portions is automatically generated based on the first data flow program portion, where each of the second data flow program portions is configured to execute a respective one or more iterations. The plurality of second data flow program portions are configured to execute at least a portion of iterations concurrently during execution of the data flow program. Execution of the plurality of second data flow program portions is functionally equivalent to sequential execution of the iterations of the first data flow program portion.

Owner:NATIONAL INSTRUMENTS

Controlling and dynamically varying automatic parallelization

A system and method for automatically controlling run-time parallelization of a software application. A buffer is allocated during execution of the application's program code. When execution reaches a point near a parallelized region, demand information is stored in the buffer in response to reaching a predetermined first checkpoint. Subsequently, the demand information is read from the buffer in response to reaching a predetermined second checkpoint. Allocation information corresponding to the demand information is computed and stored in the buffer for the application to access later. The allocation information is read from the buffer in response to reaching a predetermined third checkpoint, and the parallelized region of code is executed in accordance with the allocation information.

Owner:ORACLE INT CORP
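
The three-checkpoint protocol can be sketched with a shared buffer object. In this Python sketch, the buffer layout, the proportional allocation policy, and all names are assumptions for illustration; the abstract does not specify what the demand and allocation information contain, so here they are simply requested and granted thread counts.

```python
from concurrent.futures import ThreadPoolExecutor


class ParallelizationBuffer:
    """Hypothetical buffer shared by the application and a run-time controller."""

    def __init__(self):
        self.demand = {}      # region id -> requested number of threads
        self.allocation = {}  # region id -> granted number of threads


def checkpoint1_store_demand(buf, region, wanted_threads):
    # Application, near a parallelized region: publish how many threads it would like.
    buf.demand[region] = wanted_threads


def checkpoint2_allocate(buf, available_cores):
    # Controller: read all demands and store allocations (assumed policy: grant
    # requests in full if they fit, otherwise scale them down proportionally).
    total = sum(buf.demand.values())
    for region, wanted in buf.demand.items():
        if total <= available_cores:
            buf.allocation[region] = wanted
        else:
            buf.allocation[region] = max(1, wanted * available_cores // total)


def checkpoint3_execute(buf, region, body, n_iters):
    # Application: read the allocation and run the region with that many workers.
    workers = buf.allocation.get(region, 1)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(body, range(n_iters)))


buf = ParallelizationBuffer()
checkpoint1_store_demand(buf, "loop_a", 8)
checkpoint1_store_demand(buf, "loop_b", 8)
checkpoint2_allocate(buf, available_cores=8)
print(buf.allocation)  # {'loop_a': 4, 'loop_b': 4}
print(checkpoint3_execute(buf, "loop_a", lambda i: 2 * i, 5))  # [0, 2, 4, 6, 8]
```

Separating the demand and allocation steps lets a controller see all competing regions before granting threads, instead of each region grabbing cores on its own.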

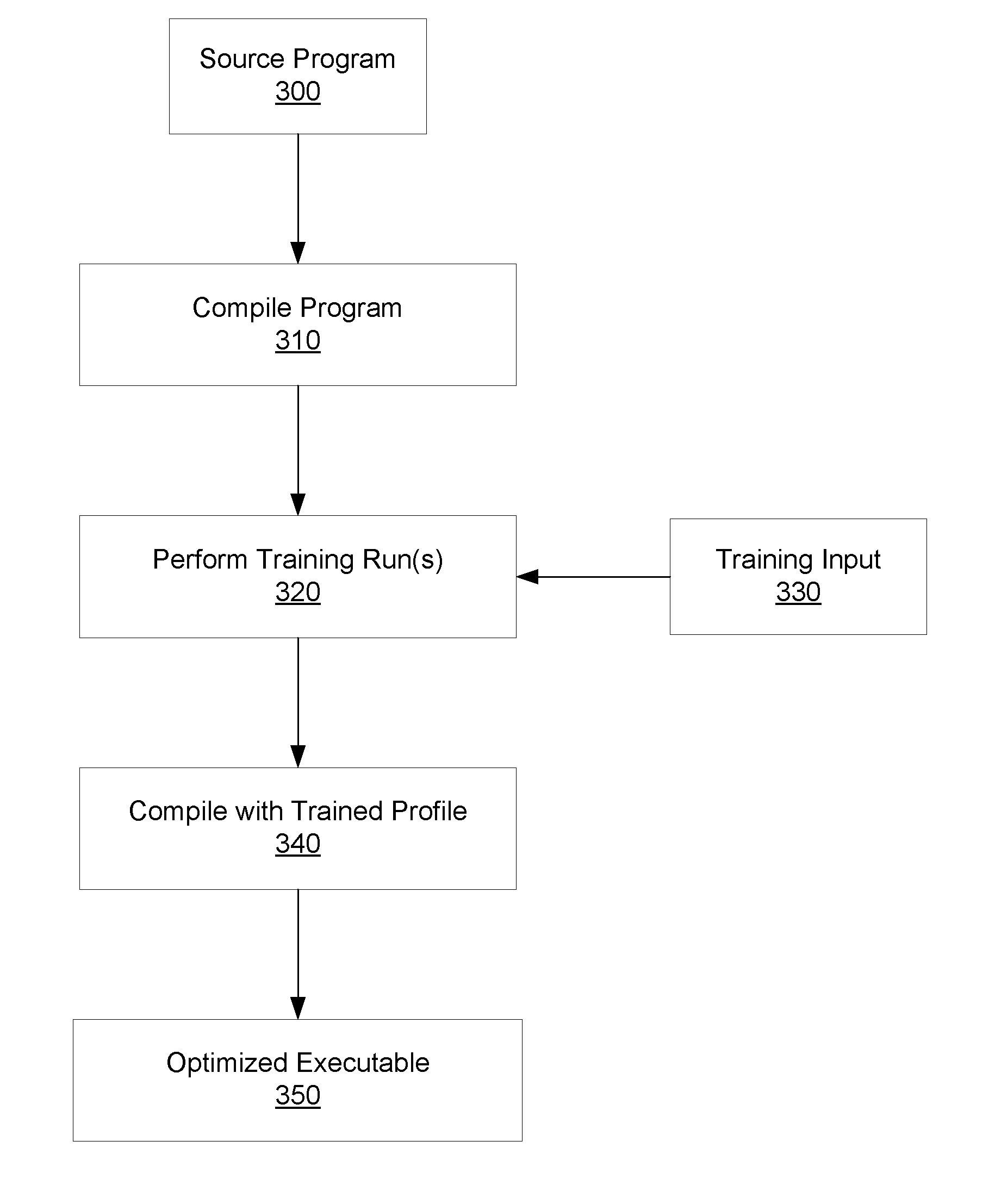

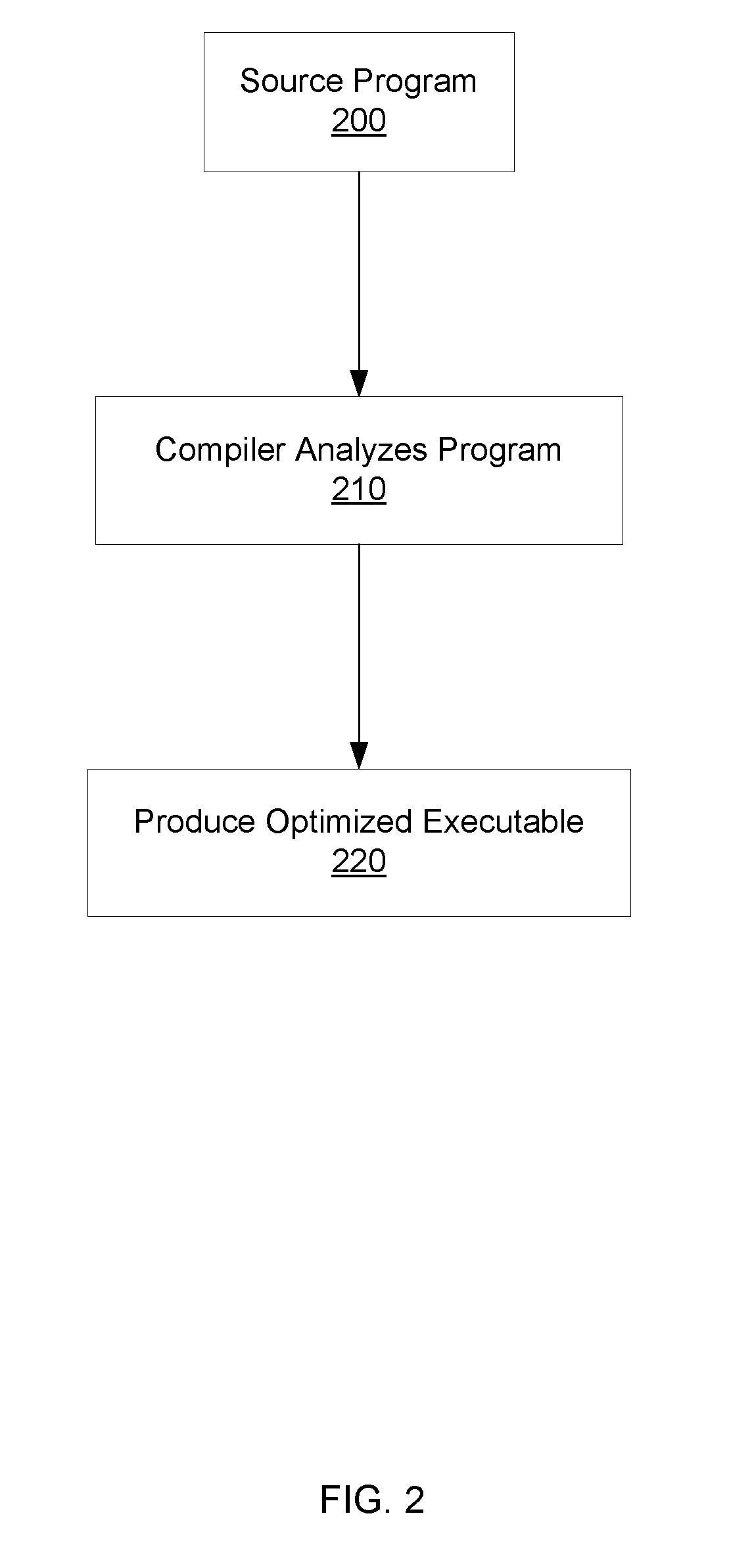

Runtime profitability control for speculative automatic parallelization

A compilation method and mechanism for parallelizing program code. A method for compilation includes analyzing source code and identifying candidate code for parallelization. The method includes determining the profitability of parallelizing the candidate code, parallelizing the candidate code in response to determining that the profitability meets predetermined criteria, and generating object code corresponding to the source code. The generated object code includes both a non-parallelized version and a parallelized version of the candidate code. During execution of the object code, a dynamic selection is made between executing the non-parallelized version and the parallelized version. Execution may be changed from the parallelized version to the non-parallelized version in response to determining that a transaction failure count meets a predetermined threshold. Additionally, changing execution from one version to the other may further depend on determining that the execution time of the parallelized version is greater than that of the non-parallelized version.

Owner:ORACLE INT CORP
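
The run-time half of this scheme, dynamically choosing between the two compiled versions and falling back to the sequential one after repeated transaction failures, might look like the Python sketch below. It is a simplification under stated assumptions: the parallel version is modeled as a hypothetical function returning `(committed, result)`, only the failure-count criterion is implemented, and the additional execution-time comparison mentioned in the abstract is omitted.

```python
class VersionSelector:
    """Chooses at run time between the parallelized and non-parallelized versions of a region."""

    def __init__(self, parallel_fn, sequential_fn, max_failures=3):
        self.parallel_fn = parallel_fn      # assumed to return (committed, result)
        self.sequential_fn = sequential_fn
        self.max_failures = max_failures
        self.failures = 0
        self.use_parallel = True

    def run(self, *args):
        if self.use_parallel:
            committed, result = self.parallel_fn(*args)
            if committed:
                return result
            # Speculation failed: count it, and stop speculating once the threshold is met.
            self.failures += 1
            if self.failures >= self.max_failures:
                self.use_parallel = False
        # Non-parallelized version: always correct, used as the fallback.
        return self.sequential_fn(*args)


calls = []


def always_conflicting(x):
    calls.append(x)
    return False, None  # simulate a transaction that never commits


selector = VersionSelector(always_conflicting, lambda x: x + 1, max_failures=2)
print([selector.run(1) for _ in range(4)])  # [2, 2, 2, 2]
print(len(calls), selector.use_parallel)    # 2 False
```

After the threshold is reached the parallel version is never attempted again, so its failure cost stops accumulating.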

Graphical indicator which specifies parallelization of iterative program code in a graphical data flow program

System and method for automatically parallelizing iterative functionality in a data flow program. A data flow program is stored that includes a first data flow program portion, where the first data flow program portion is iterative. Program code implementing a plurality of second data flow program portions is automatically generated based on the first data flow program portion, where each of the second data flow program portions is configured to execute a respective one or more iterations. The plurality of second data flow program portions are configured to execute at least a portion of iterations concurrently during execution of the data flow program. Execution of the plurality of second data flow program portions is functionally equivalent to sequential execution of the iterations of the first data flow program portion.

Owner:NATIONAL INSTRUMENTS

Pipelined loop parallelization with pre-computations

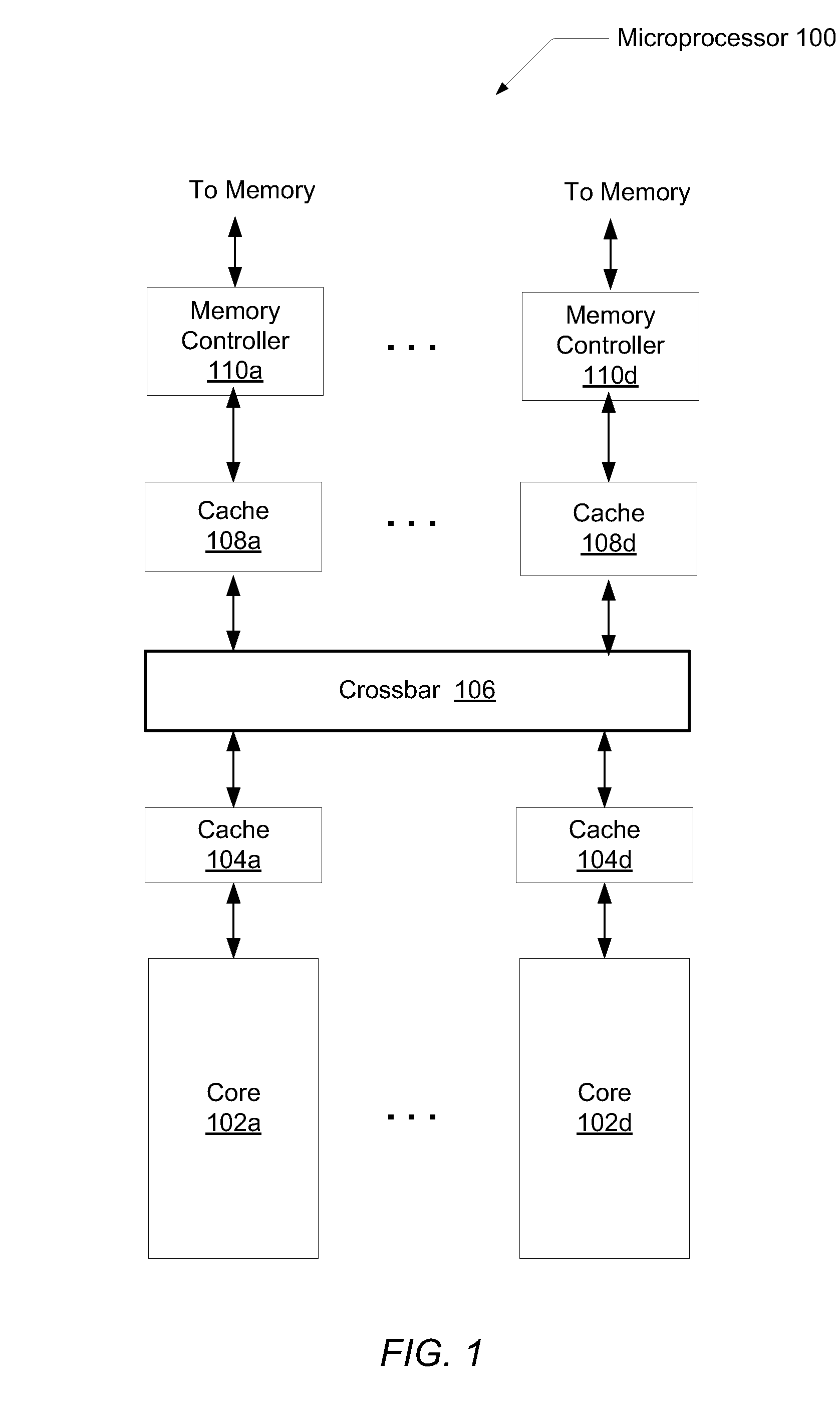

Status: Active | Publication: US20120254888A1 | Tags: Easy to use; Multiprogramming arrangements; Memory systems; Computational science; Hardware thread

Owner:ORACLE INT CORP

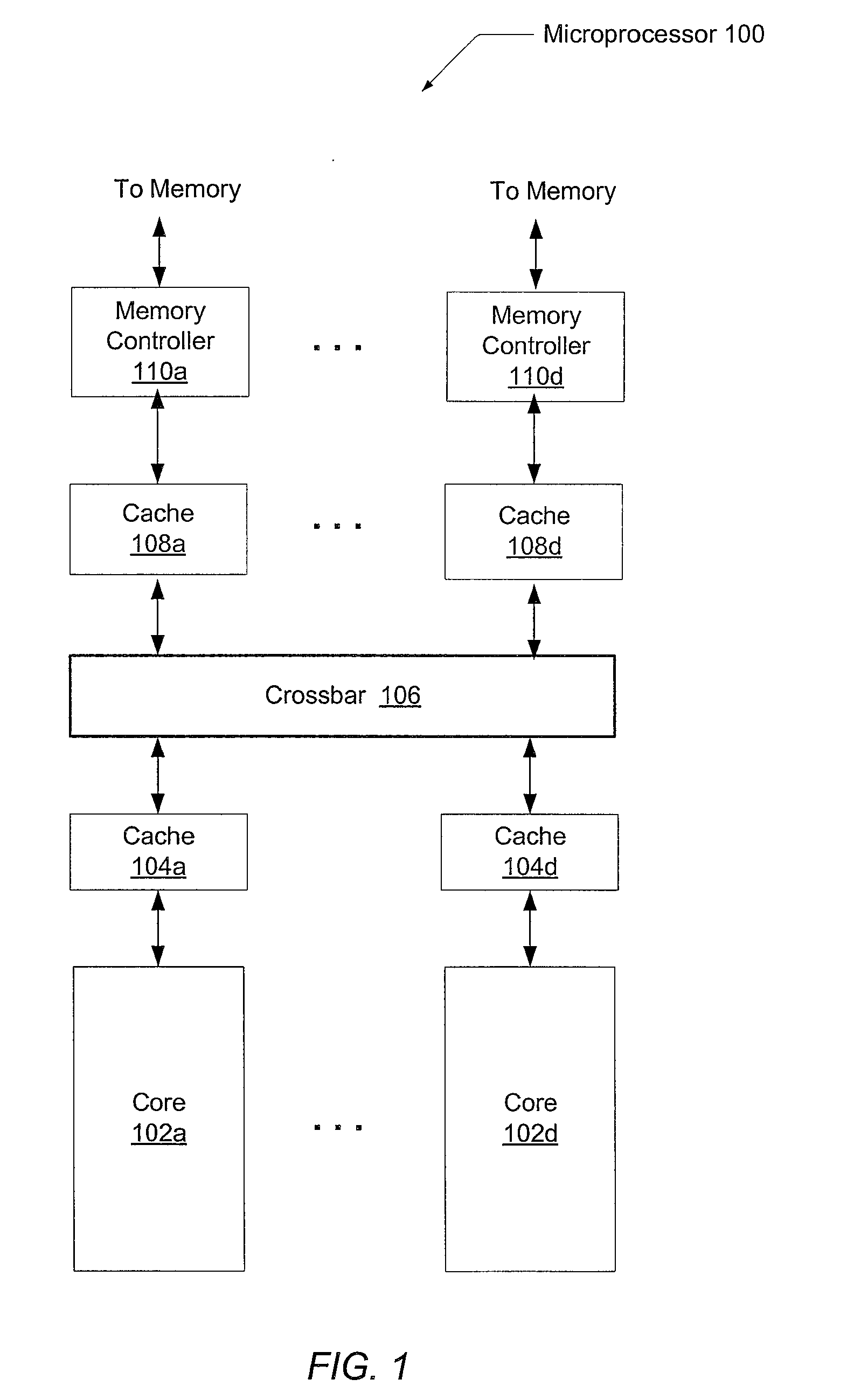

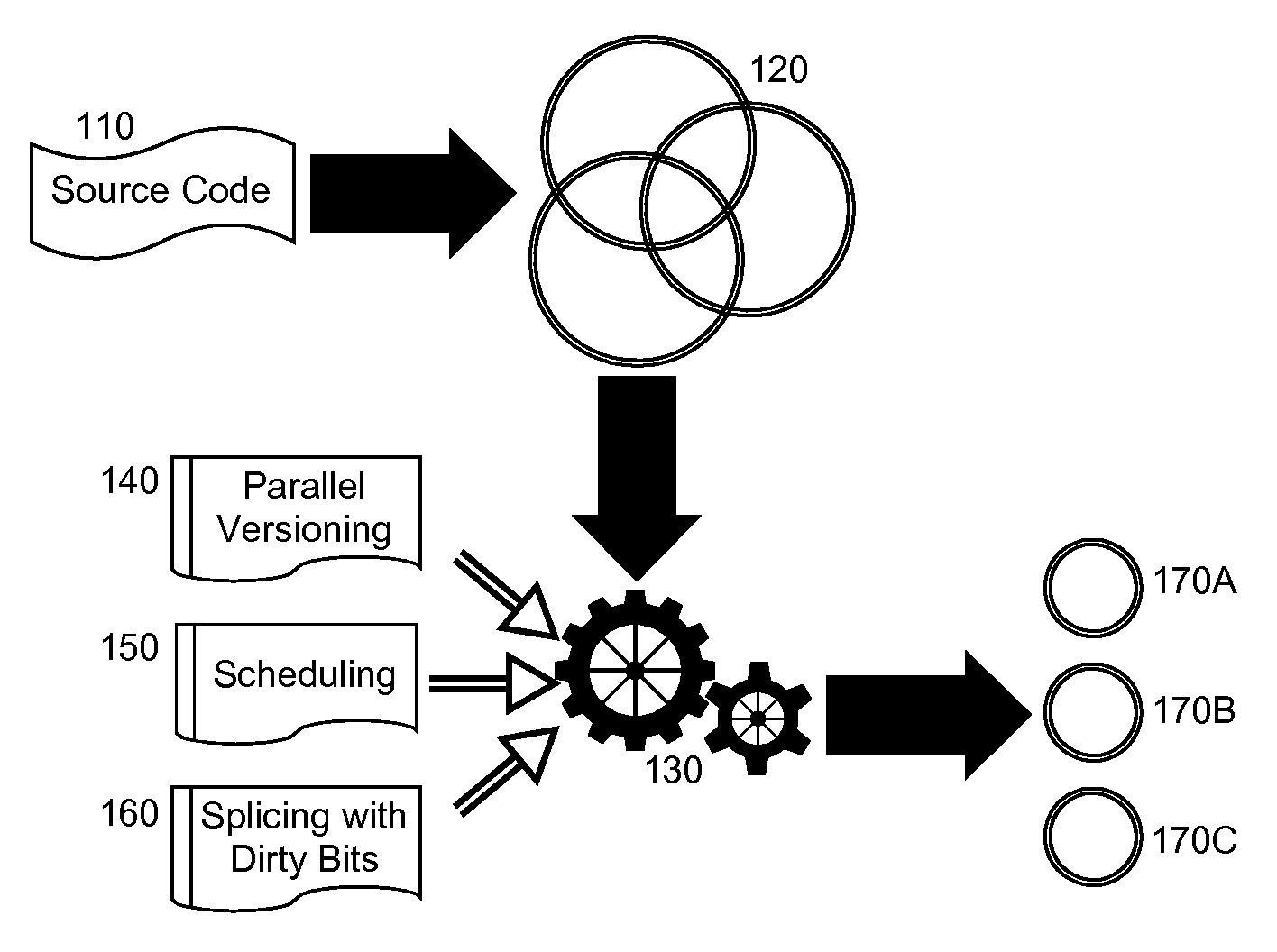

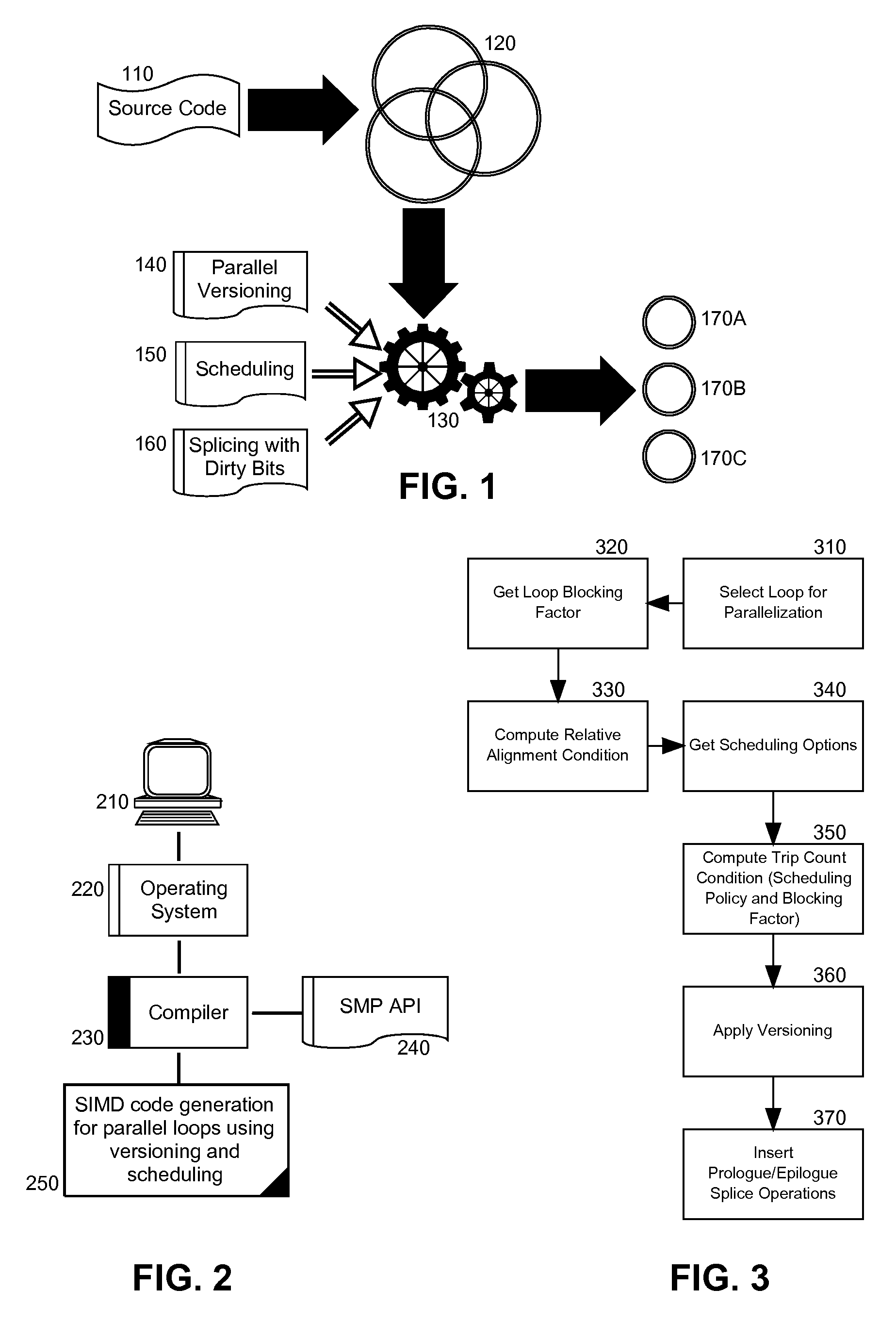

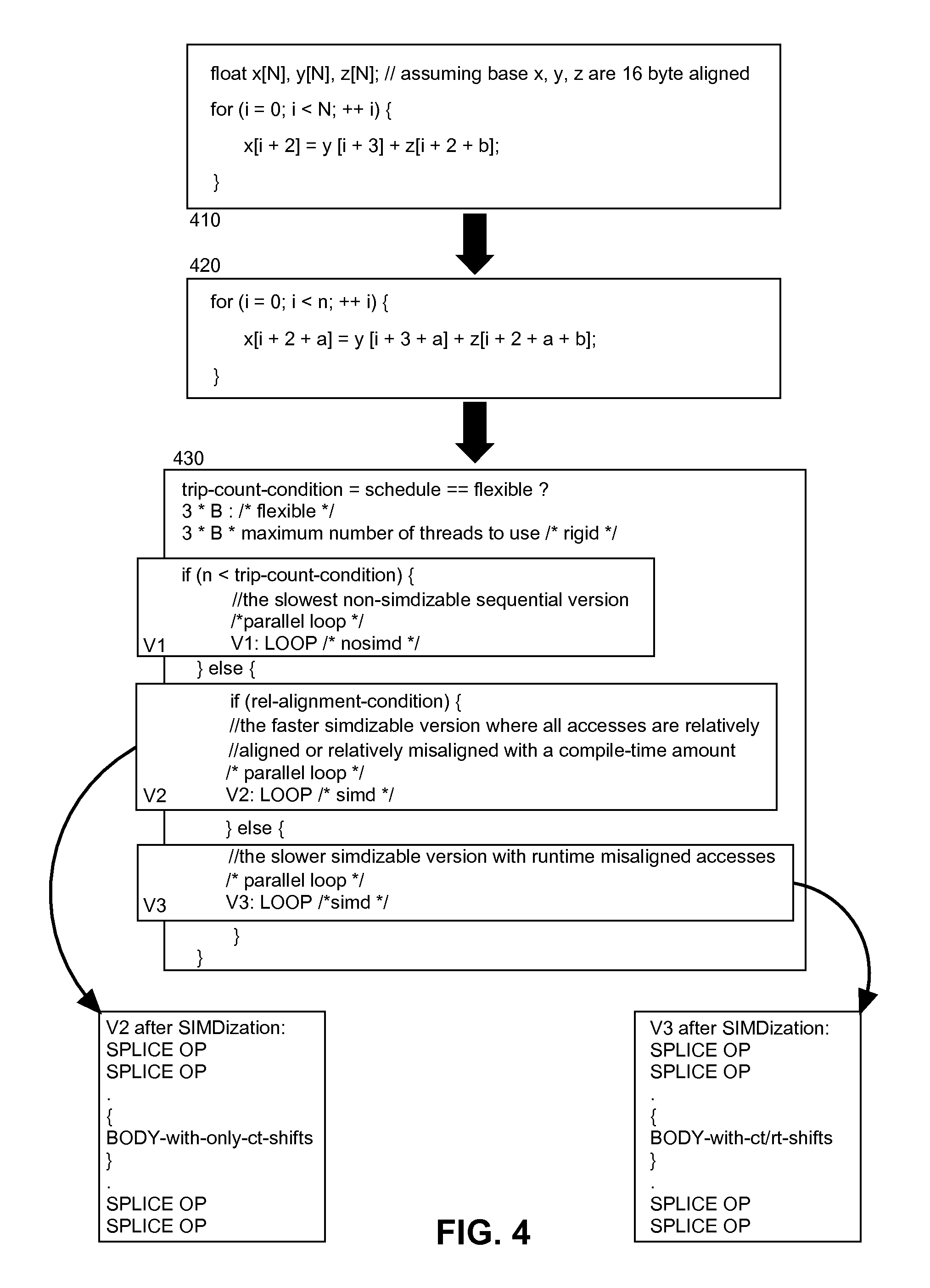

Single instruction multiple data (SIMD) code generation for parallel loops using versioning and scheduling

Status: Inactive | Publication: US20100011339A1 | Tags: Software engineering; Multiprogramming arrangements; Data processing system; Source code

Embodiments of the present invention address deficiencies of the art with respect to loop parallelization for a target architecture implementing a shared memory model, and provide a novel and non-obvious method, system, and computer program product for SIMD code generation for parallel loops using versioning and scheduling. In an embodiment of the invention, within a code compilation data processing system, a parallel SIMD loop code generation method can include identifying a loop in a representation of source code as a parallel loop candidate, either through a user directive or through auto-parallelization. The method also can include selecting a trip count condition based on the scheduling policy set for the code compilation data processing system and on a minimal simdizable threshold, determining a trip count and an alignment constraint for the selected loop, and generating a version of the parallel loop in the source code according to the alignment constraint and a comparison of the trip count with the trip count condition.

Owner:IBM CORP
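
At run time, versioning of this kind amounts to a dispatch among the generated loop versions. The Python sketch below is a hypothetical policy, not IBM's. It assumes static scheduling (each thread receives `ceil(trip_count / num_threads)` iterations), compares that per-thread chunk with the trip count condition, and judges alignment only by whether the loop's base address is a multiple of the vector size in bytes.

```python
def choose_loop_version(trip_count, num_threads, simd_width, min_simd_trip,
                        base_addr, vector_bytes=16):
    """Return which generated version of a parallel loop to run (hypothetical names)."""
    chunk = -(-trip_count // num_threads)       # ceil: iterations per thread, static schedule
    threshold = max(min_simd_trip, simd_width)  # trip count condition
    if chunk < threshold:
        return "scalar"       # too few iterations per thread for SIMD to pay off
    if base_addr % vector_bytes == 0:
        return "simd"         # aligned: vector loop without a prologue
    return "simd_peeled"      # peel scalar iterations until the data is aligned


print(choose_loop_version(1000, 4, 4, 8, base_addr=0x1000))  # simd
print(choose_loop_version(1000, 4, 4, 8, base_addr=0x1004))  # simd_peeled
print(choose_loop_version(20, 4, 4, 8, base_addr=0x1000))    # scalar
```

Tying the condition to the scheduling policy matters because the same total trip count can leave each thread with too few iterations to vectorize once the loop is split across many threads.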

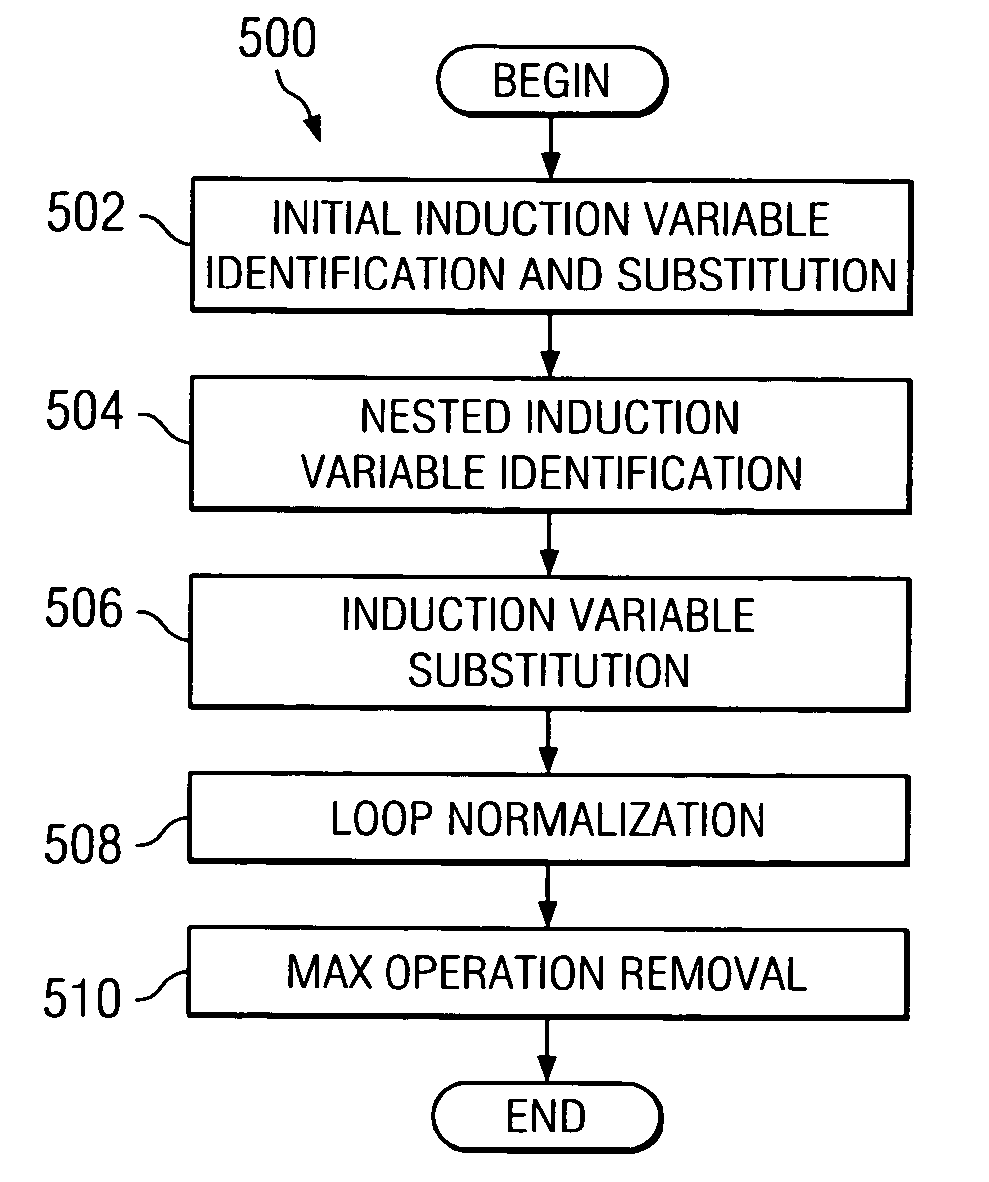

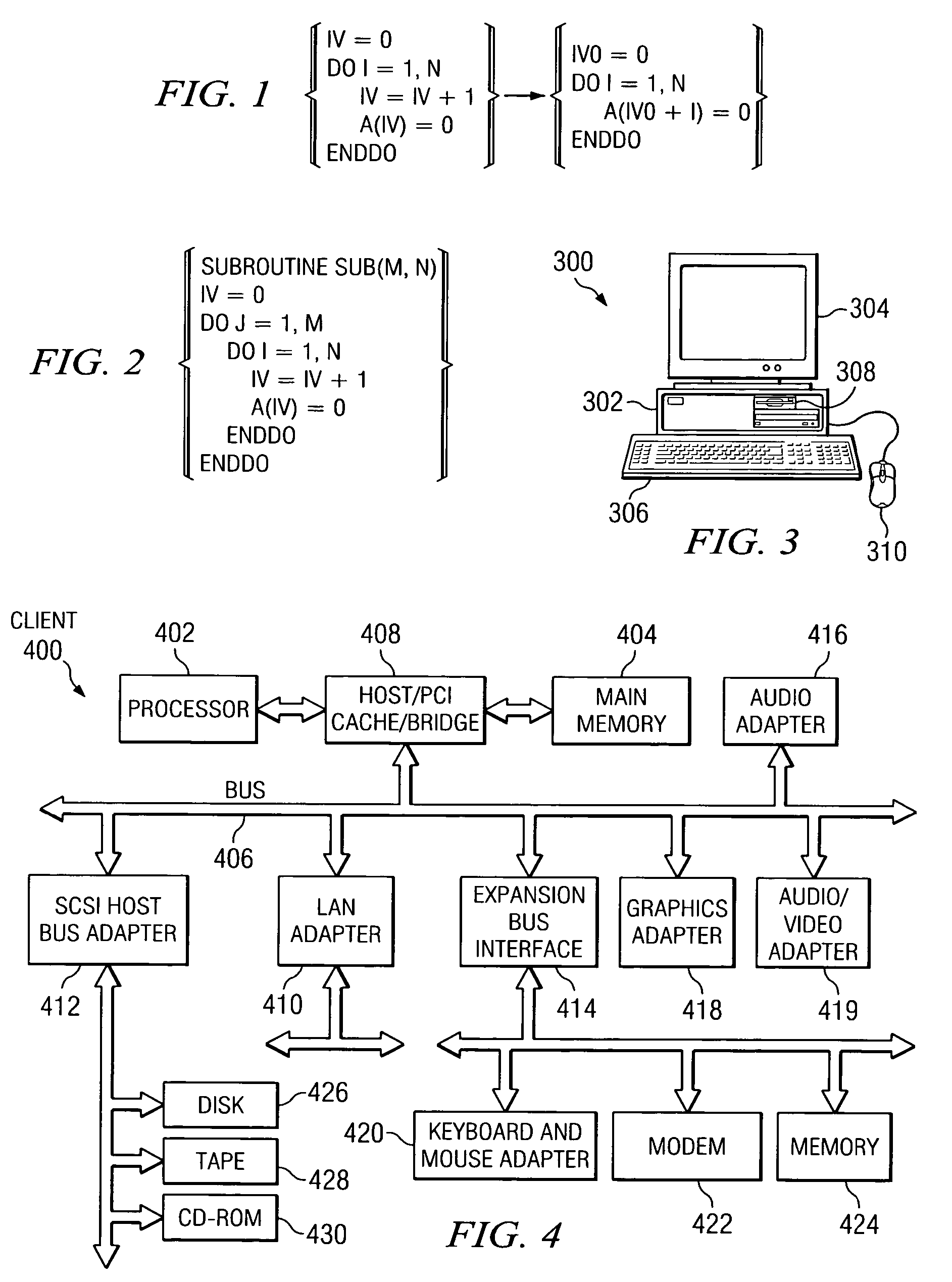

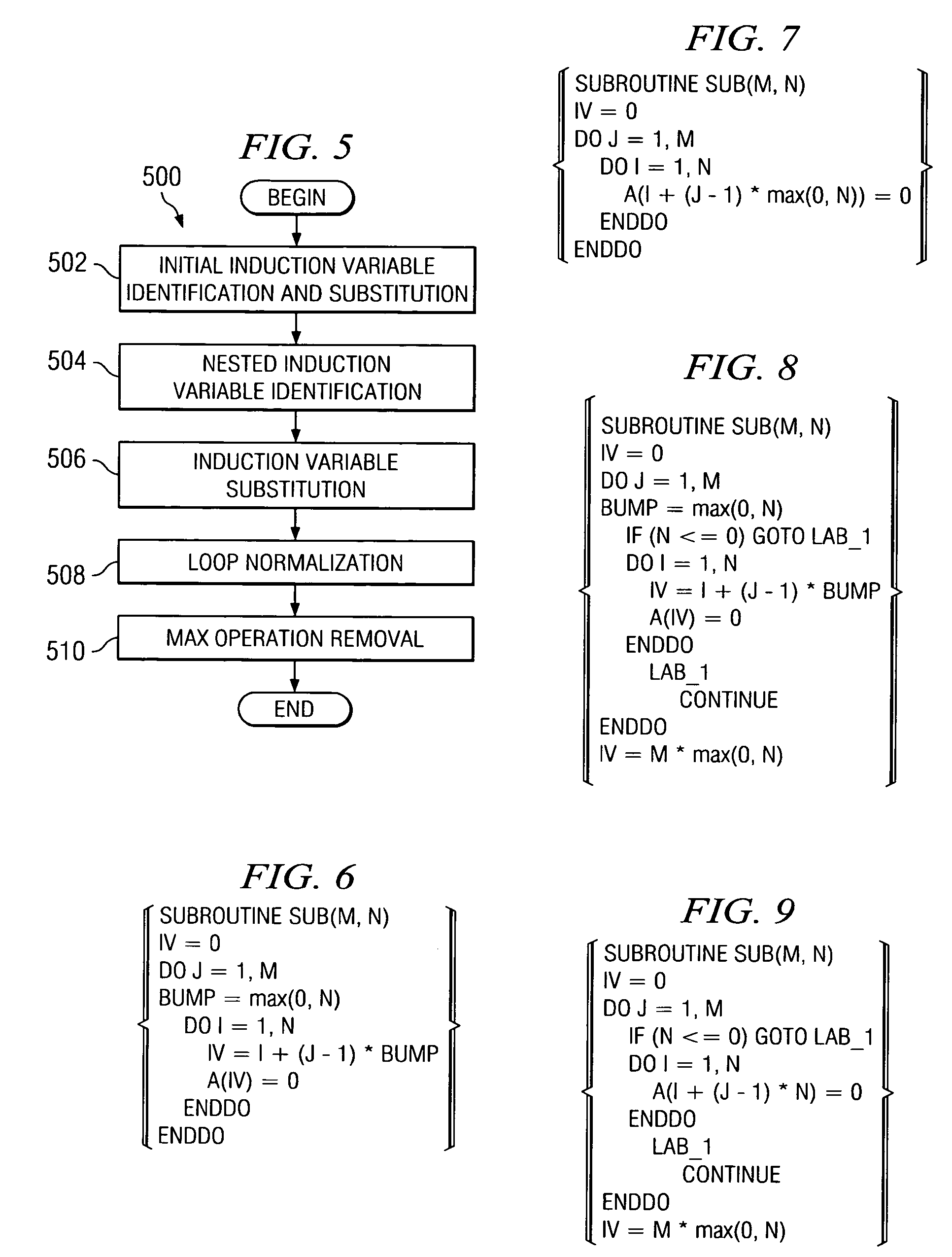

Method and system for auto parallelization of zero-trip loops through induction variable substitution

Status: Inactive | Publication: US20060048119A1 | Tags: Eliminates loop dependency on the induction variable; Software engineering; Program control; Copy propagation; Engineering

A method and system for auto-parallelization of zero-trip loops, which substitutes nested basic linear induction variables using a parallelizing compiler, is provided. For a typical loop iterating from 1 to N, where N is loop-invariant, max{0, N} is used as the number of loop iterations when nothing is known about the value of N. For nested basic induction variables, induction variable substitution is applied to the nested loops, starting from the innermost loop and working outward to the outermost one. The max operator is afterwards removed through a copy propagation pass of the IBM compiler. In doing so, the loop's dependence on the induction variable is eliminated, giving a parallelizing compiler the opportunity to parallelize the outermost loop.

Owner:IBM CORP
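
The effect of induction variable substitution with a max{0, ·} guard can be checked on a small example. In the Python sketch below (function names are hypothetical), the original doubly nested loop increments `k` in every inner iteration, which makes each outer iteration depend on the previous one. After substitution, `k` is computed directly as `(i - 1) * max(0, M) + j`, and its final value as `max(0, N) * max(0, M)`. Without the `max`, `N = M = -1` would give a final value of 1 instead of 0, which is why the guard matters for zero-trip loops.

```python
def sequential_k(n, m):
    # Original loop nest: k is a basic induction variable carried across outer iterations.
    k, seen = 0, []
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            k += 1
            seen.append((i, j, k))
    return seen, k


def substituted_k(n, m):
    # After substitution, k is a closed-form function of (i, j), so no outer
    # iteration needs the previous one's k and the outer loop could run in parallel.
    seen = [(i, j, (i - 1) * max(0, m) + j)
            for i in range(1, n + 1) for j in range(1, m + 1)]
    final_k = max(0, n) * max(0, m)  # max{0, .} keeps zero-trip loops correct
    return seen, final_k


print(substituted_k(2, 3))
# ([(1, 1, 1), (1, 2, 2), (1, 3, 3), (2, 1, 4), (2, 2, 5), (2, 3, 6)], 6)
print(substituted_k(-1, -1))  # ([], 0)
assert all(sequential_k(n, m) == substituted_k(n, m)
           for n in range(-2, 4) for m in range(-2, 4))
```

Once the compiler can prove `M >= 0` (for example, through copy propagation of known values), the `max` becomes redundant and can be removed, as the abstract describes.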

Compiler framework for speculative automatic parallelization with transactional memory

A computer program is speculatively parallelized with transactional memory by scoping program variables at compile time, and inserting code into the program at compile time. Determinations of the scoping can be based on whether scalar variables being scoped are involved in inter-loop non-reduction data dependencies, are used outside loops in which they were defined, and at what point in a loop a scalar variable is defined. The inserted code can include instructions for execution at a run time of the program to determine loop boundaries of the program, and issue checkpoint instructions and commit instructions that encompass transaction regions in the program. A transaction region can include an original function of the program and a spin-waiting loop with a non-transactional load, wherein the spin-waiting loop is configured to wait for a previous thread to commit before the current transaction commits.

Owner:ORACLE INT CORP
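
The ordering requirement, in which a speculative iteration waits in a spin loop until the previous iteration has committed before committing itself, can be sketched in software. This Python sketch is an assumption-laden stand-in for hardware transactional memory: conflicts are detected by validating version numbers of the values an iteration read, a failed iteration is simply re-executed once its predecessors have committed, and one thread is started per iteration, which is only reasonable for a small illustration. The names `speculative_loop` and `accumulate` are hypothetical.

```python
import threading
import time


def speculative_loop(n, body, store):
    """Run body(i, read, write) for i in range(n) speculatively, committing in order.

    store: dict of shared variables, updated in place as if the loop ran sequentially.
    """
    versions = {}         # key -> number of commits that wrote it
    next_to_commit = [0]  # index of the iteration allowed to commit next
    lock = threading.Lock()

    def attempt(i):
        read_set, write_buf = {}, {}

        def read(key):
            if key in write_buf:  # read-your-own-writes within the transaction
                return write_buf[key]
            with lock:
                read_set.setdefault(key, versions.get(key, 0))
                return store.get(key)

        def write(key, value):
            write_buf[key] = value  # buffered until commit

        body(i, read, write)
        return read_set, write_buf

    def worker(i):
        read_set, write_buf = attempt(i)
        while next_to_commit[0] != i:  # spin-wait until iteration i - 1 has committed
            time.sleep(0)
        with lock:
            valid = all(versions.get(k, 0) == v for k, v in read_set.items())
        if not valid:
            # Conflict: an earlier iteration wrote something we read. All earlier
            # iterations have now committed, so re-executing sees final values.
            read_set, write_buf = attempt(i)
        with lock:
            for key, value in write_buf.items():
                store[key] = value
                versions[key] = versions.get(key, 0) + 1
            next_to_commit[0] += 1

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return store


def accumulate(i, read, write):
    # Loop body: acc += i carries a dependence; out<i> is independent.
    write("acc", read("acc") + i)
    write(f"out{i}", i * i)


result = speculative_loop(10, accumulate, {"acc": 0})
print(result["acc"], result["out3"])  # 45 9
```

Later iterations cannot commit before iteration `i`, so once `i` passes the spin-wait the shared state is stable, and a single re-execution is enough to make its result match sequential execution.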

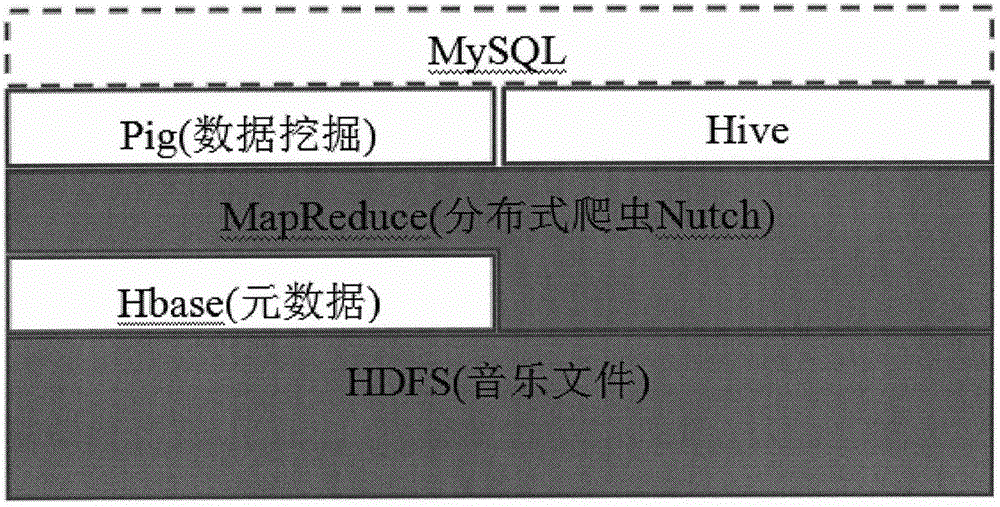

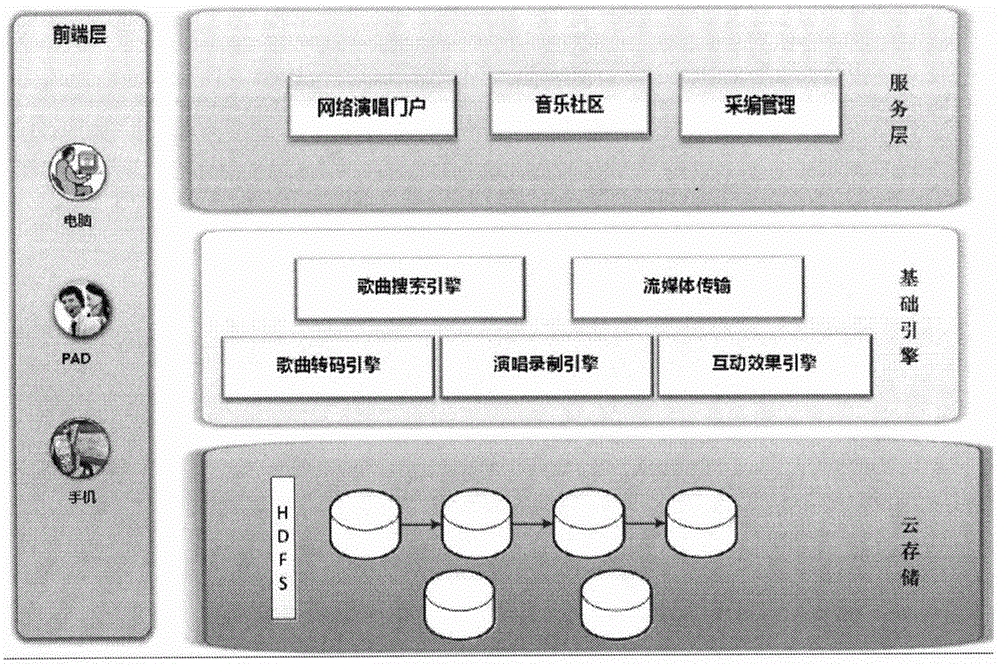

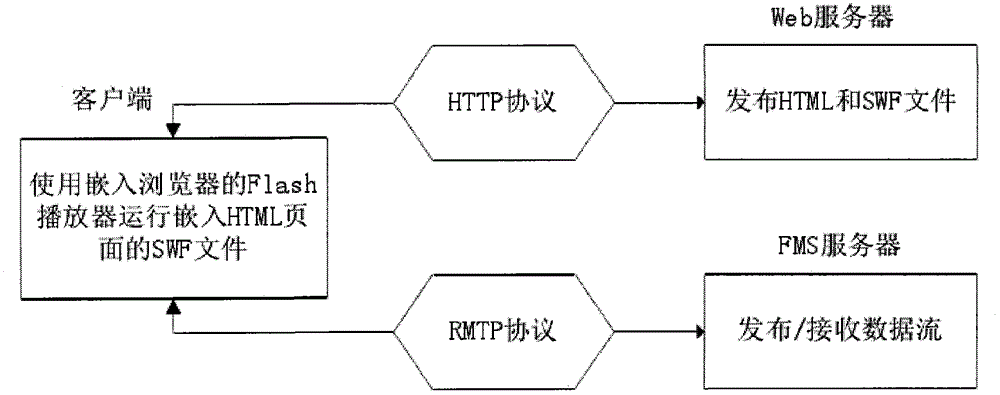

Online singing platform construction method based on cloud technology

Status: Active | Publication: CN104462226A | Tags: Guaranteed real-time; Efficient storage; Website content management; Transmission; Fault tolerance; File system

The invention discloses a method for constructing an online singing platform for music lovers based on cloud technology. Large numbers of music resources are stored and managed efficiently with HDFS, which offers high data-access throughput, high transparency, and high fault tolerance. Petabyte-scale data are processed reliably with the MapReduce model, which provides automatic parallelization, load balancing, and high flexibility. Streaming media are transmitted by combining an FMS streaming media server with RTMP, which effectively addresses transmission delay and guarantees the real-time performance of the singing system. The environmental background is displayed with virtual reality technology to give users an immersive experience. The platform also provides song selection, singing, scoring, saving of recordings, and uploading and sharing of recordings, so its functionality is comprehensive.

Owner:COMMUNICATION UNIVERSITY OF CHINA

Pipelined parallelization with localized self-helper threading

Status: Active | Publication: US8561046B2 | Tags: Reduced execution time; Software engineering; Program control; Parallel computing; Super-threading

A system and method for automatically parallelizing a computer program for multi-threaded execution. A compiler identifies and parallelizes non-DOALL parallel regions, such as loops, within a computer program. The compiler determines enhanced helper thread instructions based upon the main body instructions of the non-DOALL region. These helper thread instructions are inserted ahead of the main body instructions within each of the plurality of threads, rather than within a single main thread. Next, synchronization instructions are inserted in one or more threads such that the main body of work of each thread is performed in a pipelined manner. The helper thread instructions within each thread may reduce the total execution time of each thread.

Owner:ORACLE INT CORP

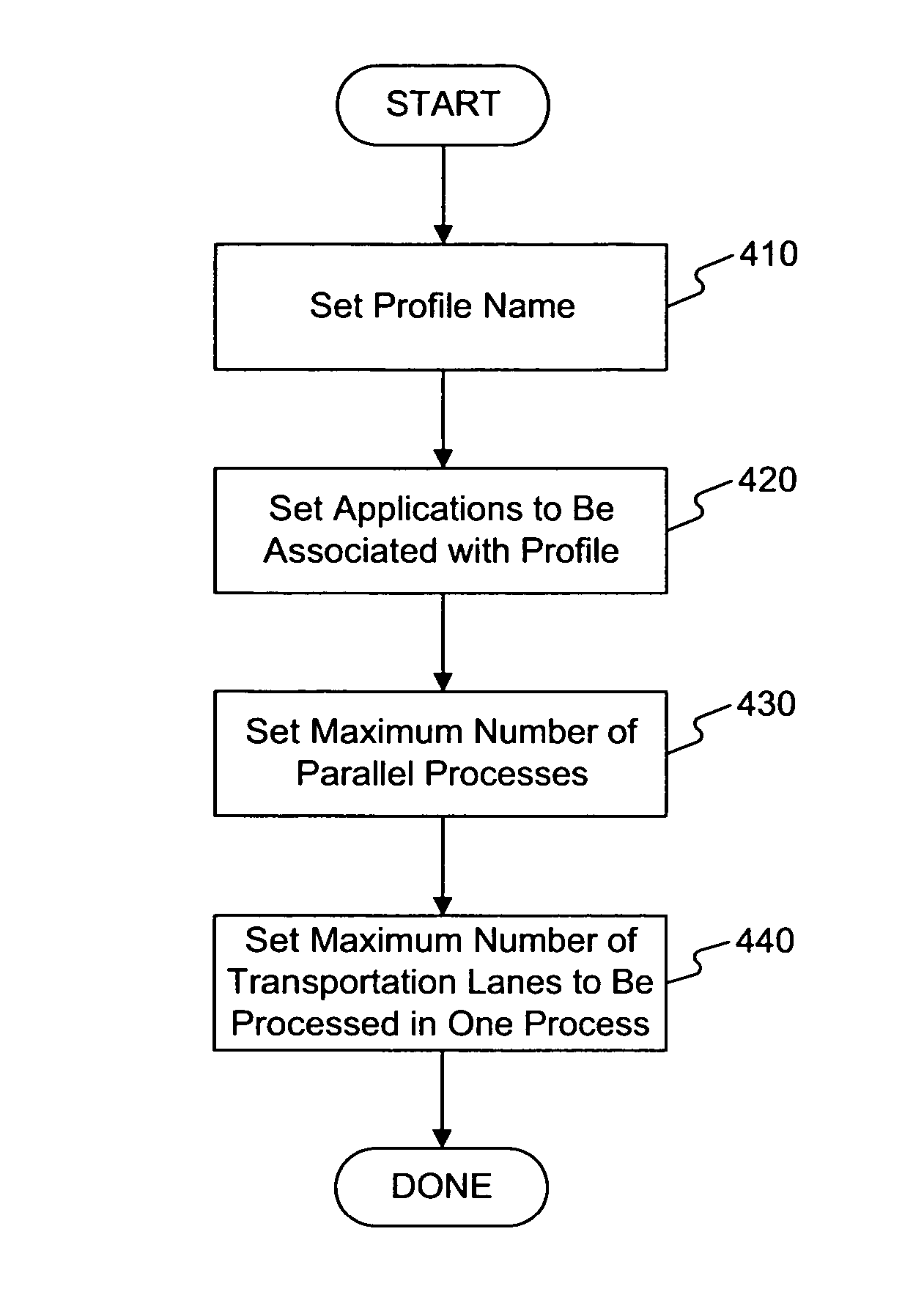

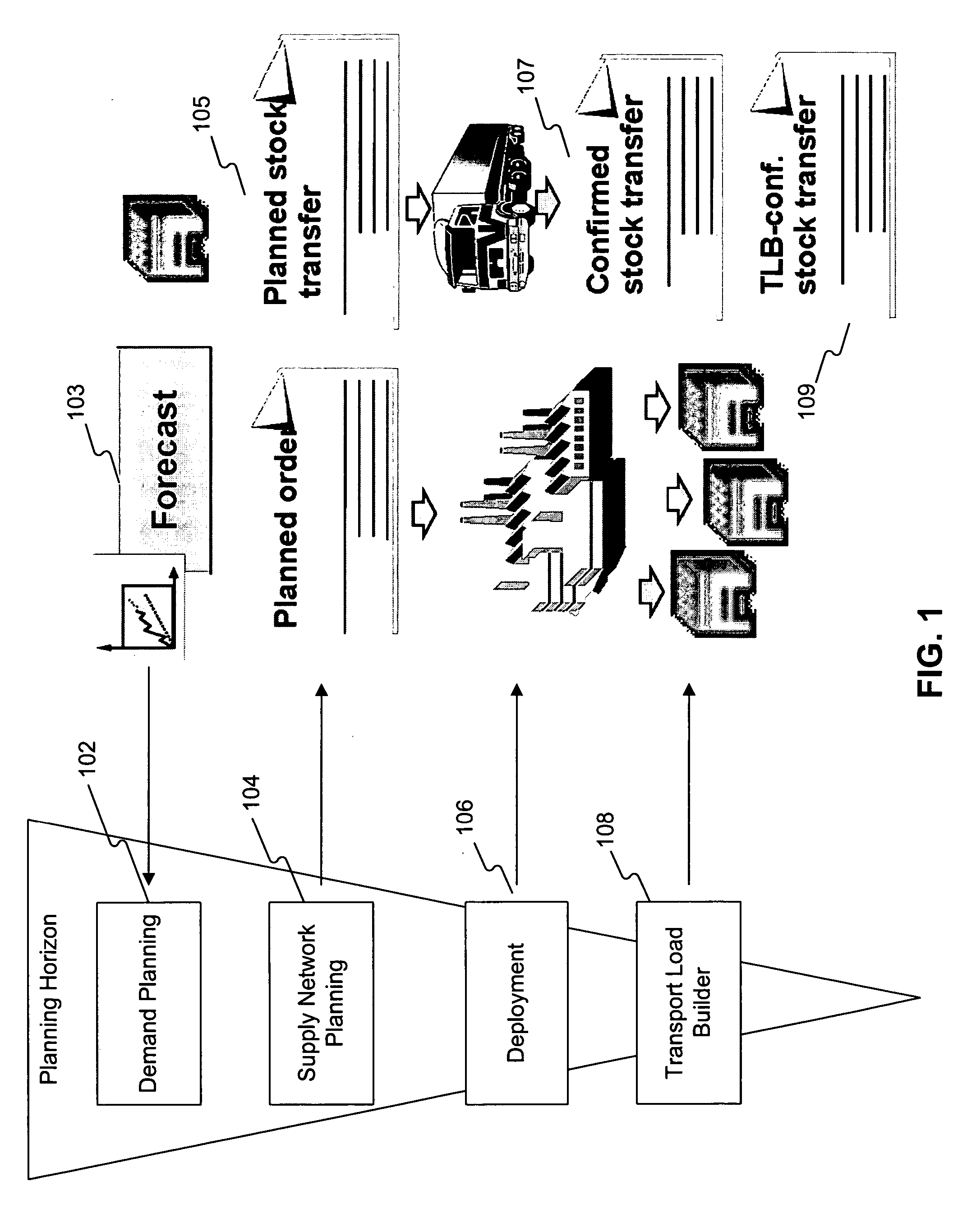

Systems and methods for automated parallelization of transport load builder

Status: Active | Publication: US8423391B2 | Tags: Facilitate automated parallelization; Digital data processing details; Forecasting; Program planning; Parallel processing

Systems and methods are provided for creating a transport load for one or more stock transfers. In one exemplary embodiment, the systems and methods may include providing a parallel processing profile to be associated with the distribution plan. The method may also include building one or more packages of one or more transportation lanes, wherein building one or more packages comprises providing a lanes table of the one or more transportation lanes. The lanes table may comprise information associated with each of the one or more transportation lanes. The method may further include selecting the one or more transportation lanes for each package based on the information and the parallel processing profile, and generating the transport load for each package.

Owner:SAP AG

Automatically Creating Parallel Iterative Program Code in a Graphical Data Flow Program

System and method for automatically parallelizing iterative functionality in a data flow program. A data flow program is stored that includes a first data flow program portion, where the first data flow program portion is iterative. Program code implementing a plurality of second data flow program portions is automatically generated based on the first data flow program portion, where each of the second data flow program portions is configured to execute a respective one or more iterations. The plurality of second data flow program portions are configured to execute at least a portion of iterations concurrently during execution of the data flow program. Execution of the plurality of second data flow program portions is functionally equivalent to sequential execution of the iterations of the first data flow program portion.

Owner:NATIONAL INSTRUMENTS

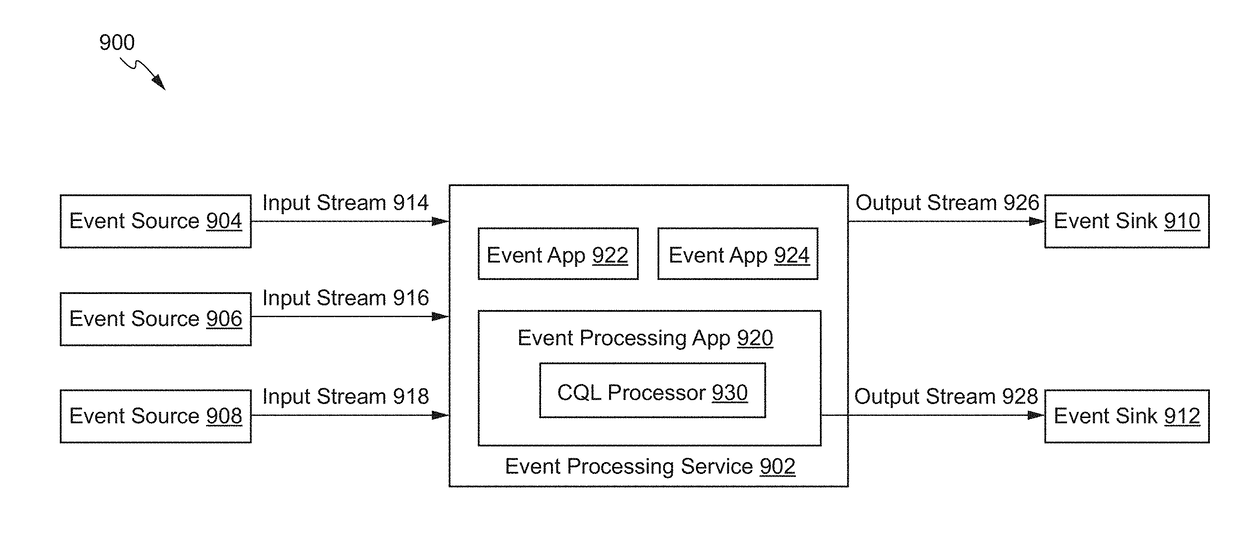

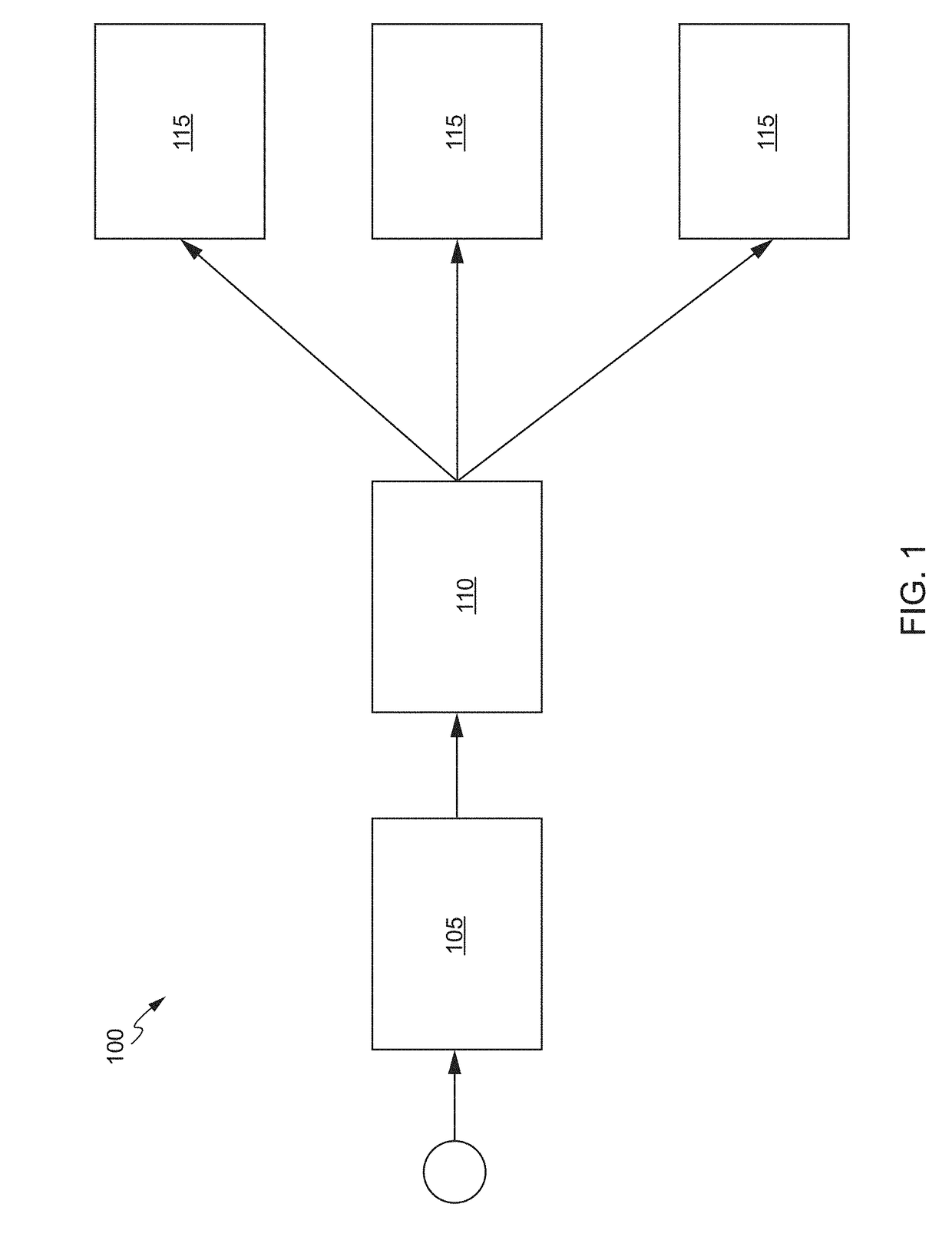

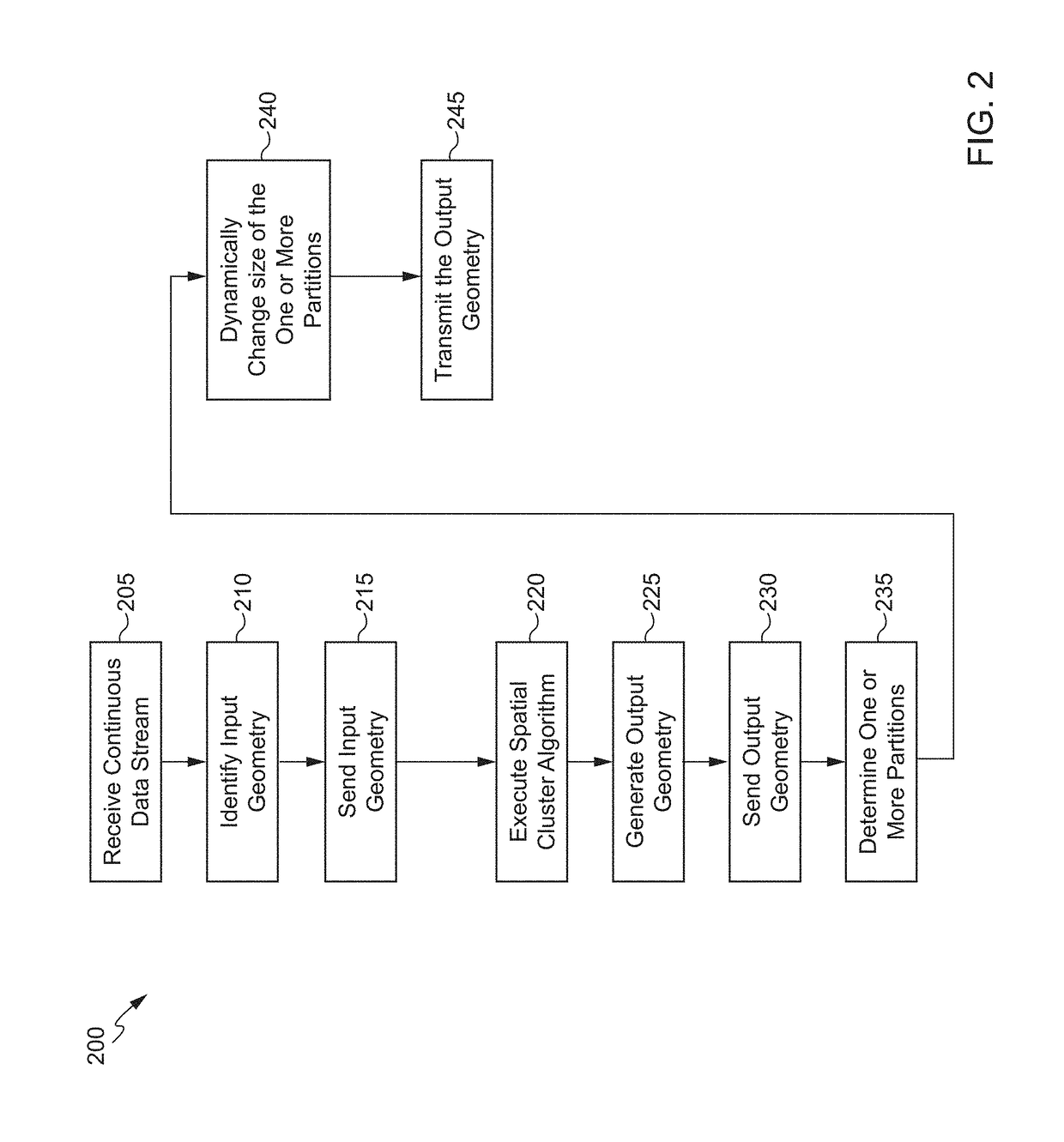

Automatic parallelization for geofence applications

Status: Active | Publication: US20180075108A1 | Tags: Geographical information databases; Location information based service; Graphics; Parallel computing

An event processing system for processing events in an event stream is disclosed. The system can execute instructions to receive a continuous data stream related to an application; identify an input geometry associated with the continuous data stream; generate a cluster of geometries based at least in part on the input geometry; generate an output geometry based at least in part on the cluster of geometries and the number of geometries in each cluster; determine one or more partitions for the output geometry based on the cluster of geometries and the number of geometries in each cluster; dynamically change the size of the one or more partitions; and transmit the output geometry associated with the continuous data stream.

Owner:ORACLE INT CORP
