Task scheduling system of on-chip multi-core computing platform and method for task parallelization

A computing-platform and task-scheduling technology, applicable to combinations of digital computers, multiprogramming arrangements, and the like. It addresses problems such as limited platform performance and the inability to execute tasks automatically in parallel, with the effect of expanding parallelism and increasing throughput.


Embodiment

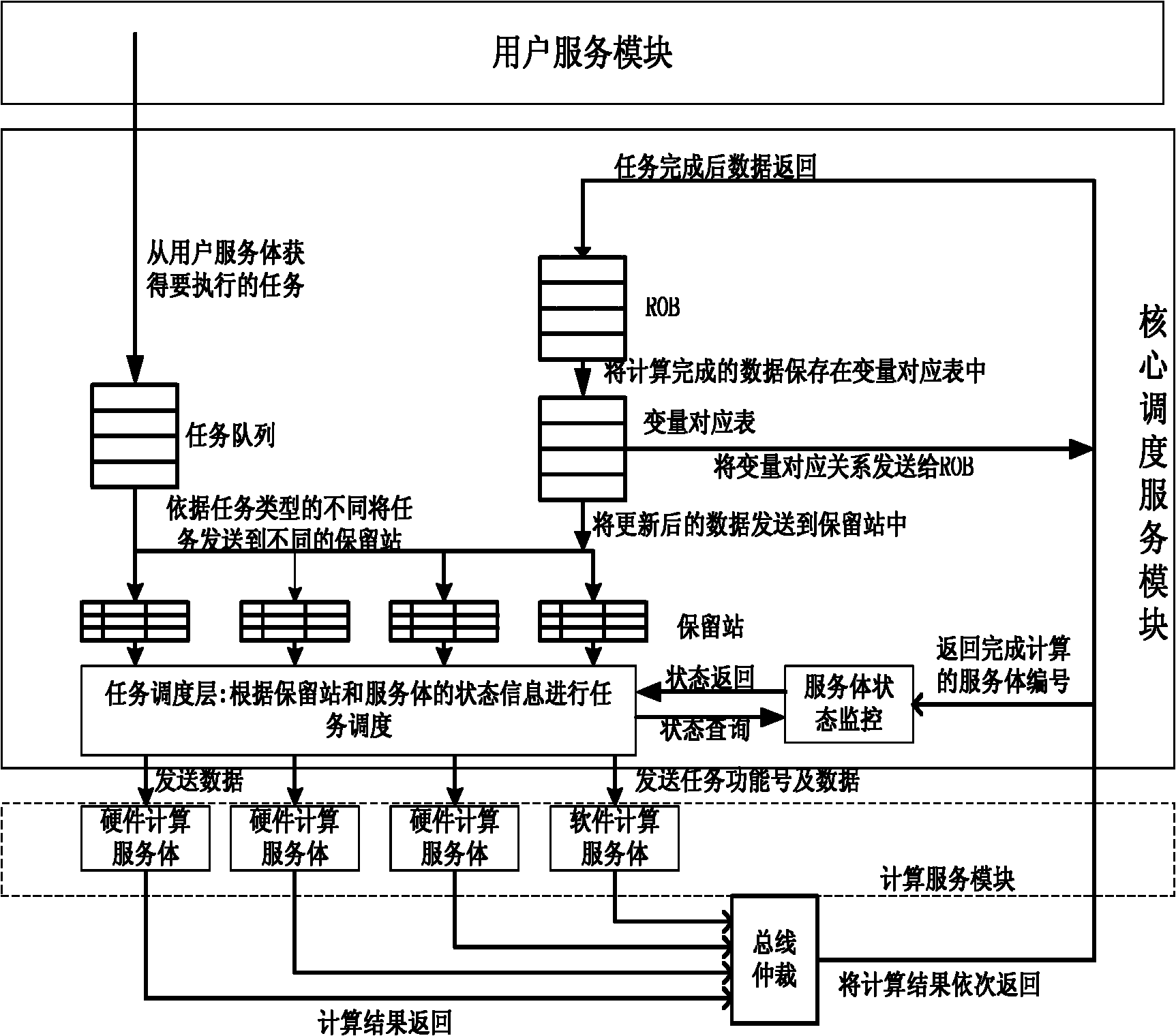

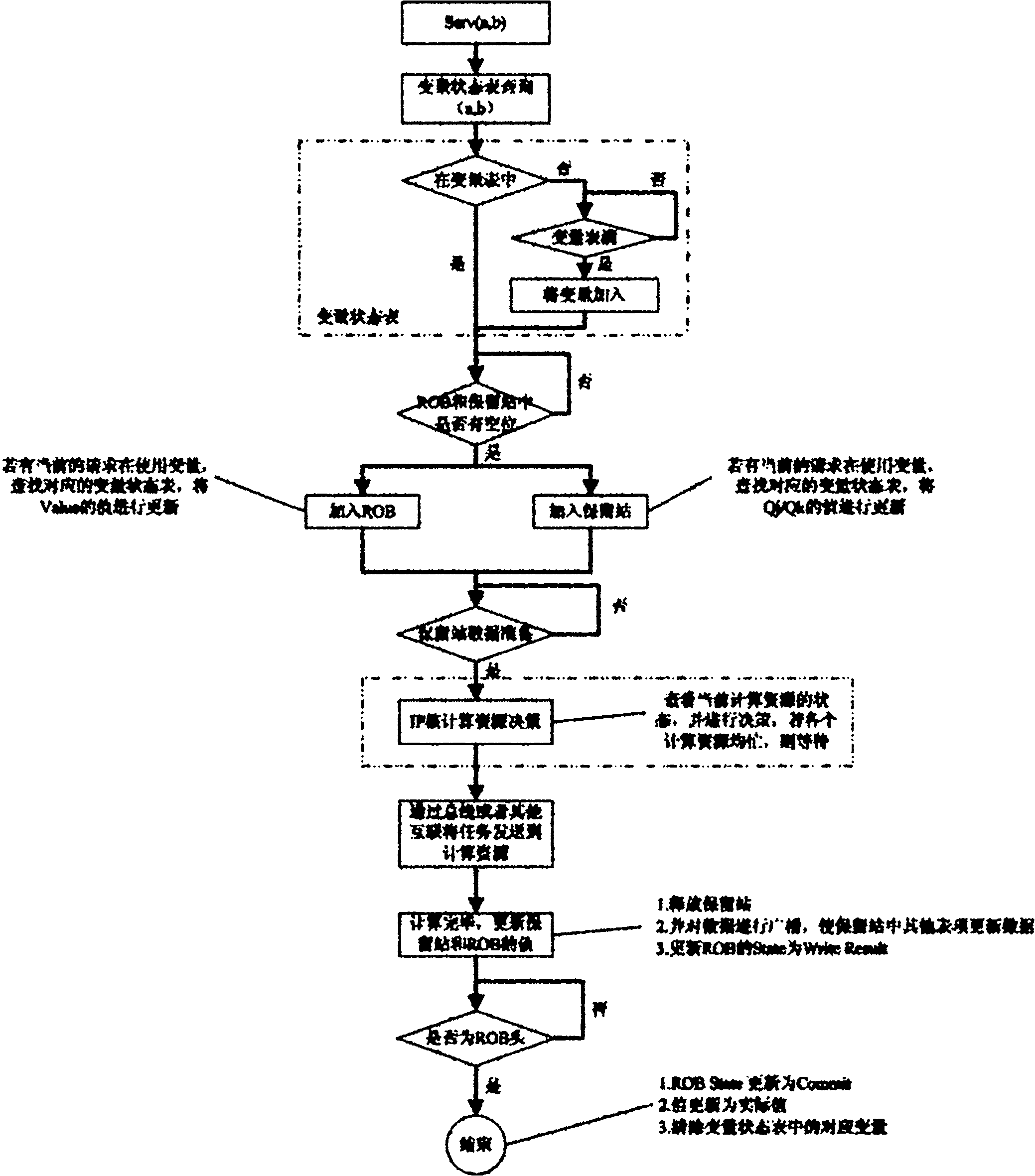

[0038] As shown in Figure 1 and Figure 2, the task scheduling system of the on-chip multi-core computing platform includes a user service module, which provides the tasks to be executed, and computing service modules, which execute multiple tasks on the on-chip multi-core computing platform. A core scheduling service module sits between the user service module and the computing service modules. The core scheduling service module takes task requests from the user service module as input, determines the data dependencies between different tasks from its records, and dispatches the task requests in parallel to different computing service modules for execution.
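Paragraph [0038] says only that dependencies are judged "through records". The following minimal Python sketch assumes that each record lists the variables a task reads and writes. The `Task` type, its `reads`/`writes` fields, and the wave-based grouping are hypothetical illustrations, not the patent's method. It shows how such records could expose read-after-write (RAW), write-after-read (WAR) and write-after-write (WAW) conflicts, and how independent tasks could be grouped for parallel dispatch to different computing service modules:

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Task:
    # Hypothetical task record: the variables a task reads and writes.
    name: str
    reads: FrozenSet[str] = frozenset()
    writes: FrozenSet[str] = frozenset()


def depends_on(later: Task, earlier: Task) -> bool:
    """True if `later` must wait for `earlier` (RAW, WAR or WAW conflict)."""
    raw = later.reads & earlier.writes   # read-after-write
    war = later.writes & earlier.reads   # write-after-read
    waw = later.writes & earlier.writes  # write-after-write
    return bool(raw or war or waw)


def schedule_waves(tasks: List[Task]) -> List[List[str]]:
    """Group tasks (given in submission order) into waves. Each task lands one
    wave after the highest wave of any conflicting earlier task, so tasks in
    the same wave are mutually independent and can run on different modules."""
    wave_of: Dict[int, int] = {}
    for i, task in enumerate(tasks):
        deps = [wave_of[j] for j in range(i) if depends_on(task, tasks[j])]
        wave_of[i] = max(deps) + 1 if deps else 0
    waves: List[List[str]] = [[] for _ in range(max(wave_of.values(), default=-1) + 1)]
    for i, task in enumerate(tasks):
        waves[wave_of[i]].append(task.name)
    return waves


tasks = [
    Task("load_a", writes=frozenset({"a"})),
    Task("load_b", writes=frozenset({"b"})),
    Task("add", reads=frozenset({"a", "b"}), writes=frozenset({"c"})),
    Task("scale_a", reads=frozenset({"a"}), writes=frozenset({"d"})),
    Task("store", reads=frozenset({"c", "d"})),
]
waves = schedule_waves(tasks)
print(waves)  # [['load_a', 'load_b'], ['add', 'scale_a'], ['store']]
```

This grouping is conservative. It serializes WAR and WAW pairs as well as true read-after-write dependences, and renaming schemes are designed to remove exactly that cost.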

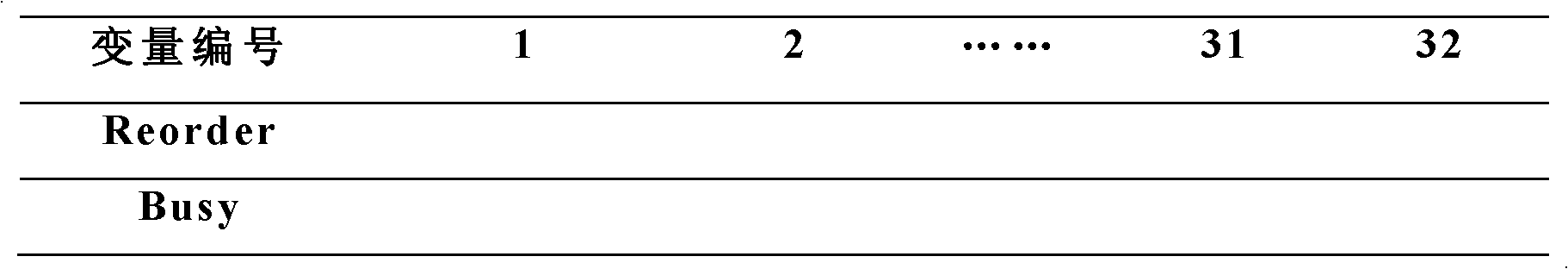

[0039] Figure 1 shows the system architecture of the task scheduling system of the on-chip multi-core computing platform. Its modules include a task queue, a variable state table, a group of reservation stations, and a ROB (reorder buffer) table. The modules are as follows:

[0040] 1) Task queue

[0041] ...
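A task queue, a variable state table, reservation stations and a ROB together closely resemble Tomasulo's algorithm with a reorder buffer, applied to whole tasks rather than individual instructions. The module details from paragraph [0041] onward are not available here. The following minimal Python model therefore rests on that assumption only. The class names, the `srcs`/`dst` fields, the tag-based variable state table, and the "one round per parallel batch" execution model are all hypothetical and not taken from the patent:

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Optional, Set

@dataclass
class TaskRequest:
    name: str
    srcs: List[str]             # variables the task reads (hypothetical field)
    dst: Optional[str] = None   # variable the task writes, if any (hypothetical)

@dataclass
class RobEntry:
    tag: int
    name: str
    done: bool = False

@dataclass
class Station:
    tag: int                                        # ROB entry this task writes
    task: TaskRequest
    waiting: Set[int] = field(default_factory=set)  # producer tags still pending

class TaskScheduler:
    """Tomasulo-style task scheduler sketch (assumed design, not the patent's)."""

    def __init__(self, num_stations: int, rob_size: int) -> None:
        self.queue: Deque[TaskRequest] = deque()  # task queue, submission order
        self.var_state: Dict[str, int] = {}       # variable -> unfinished producer tag
        self.stations: List[Station] = []         # occupied reservation stations
        self.num_stations, self.rob_size = num_stations, rob_size
        self.rob: Deque[RobEntry] = deque()       # reorder buffer, issue order
        self.next_tag = 0
        self.committed: List[str] = []

    def issue(self) -> bool:
        """Move the head of the task queue into a free station and ROB slot."""
        if (not self.queue or len(self.stations) >= self.num_stations
                or len(self.rob) >= self.rob_size):
            return False
        task = self.queue.popleft()
        tag, self.next_tag = self.next_tag, self.next_tag + 1
        # Wait only on producers that have not finished (true RAW dependences);
        # a finished but uncommitted result would be read from its ROB entry.
        waiting = {self.var_state[v] for v in task.srcs if v in self.var_state}
        if task.dst is not None:
            self.var_state[task.dst] = tag  # rename: later readers wait on this tag
        self.stations.append(Station(tag, task, waiting))
        self.rob.append(RobEntry(tag, task.name))
        return True

    def complete(self, station: Station) -> None:
        """A computing module finished this task: broadcast its tag."""
        self.stations.remove(station)
        for s in self.stations:
            s.waiting.discard(station.tag)
        self.var_state = {v: t for v, t in self.var_state.items() if t != station.tag}
        for entry in self.rob:
            if entry.tag == station.tag:
                entry.done = True

    def commit(self) -> None:
        """Retire finished tasks strictly in issue order from the ROB head."""
        while self.rob and self.rob[0].done:
            self.committed.append(self.rob.popleft().name)

    def run(self) -> List[List[str]]:
        """Each round dispatches every ready task in parallel, then commits."""
        rounds: List[List[str]] = []
        while self.queue or self.stations:
            while self.issue():
                pass
            batch = [s for s in self.stations if not s.waiting]
            rounds.append([s.task.name for s in batch])
            for s in batch:
                self.complete(s)
            self.commit()
        return rounds

sched = TaskScheduler(num_stations=4, rob_size=8)
sched.queue.extend([
    TaskRequest("produce_x", [], "x"),
    TaskRequest("use_x", ["x"], "y"),
    TaskRequest("overwrite_x", [], "x"),  # WAW with produce_x, WAR with use_x
    TaskRequest("use_new_x", ["x"], "z"),
])
rounds = sched.run()
print(rounds)           # [['produce_x', 'overwrite_x'], ['use_x', 'use_new_x']]
print(sched.committed)  # ['produce_x', 'use_x', 'overwrite_x', 'use_new_x']
```

Renaming through the variable state table lets `overwrite_x` execute in the same round as `produce_x`, even though it overwrites a variable that `use_x` still reads. The ROB holds results until commit, so all four tasks still retire in submission order.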
