294 results about "Task parallelism" patented technology

Task parallelism (also known as function parallelism or control parallelism) is a form of parallelization of computer code across multiple processors in parallel computing environments. It focuses on distributing tasks (concurrently performed by processes or threads) across different processors. In contrast to data parallelism, which involves running the same task on different components of data, task parallelism is distinguished by running many different tasks at the same time on the same data. A common type of task parallelism is pipelining, which consists of moving a single set of data through a series of separate tasks where each task can execute independently of the others.
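The contrast between the two forms can be sketched in a few lines of Python. This is only an illustration: the function names `run_task_parallel` and `run_data_parallel` are hypothetical, and the choice of `min`, `max`, and `mean` as the "different tasks" is an assumption made for the example.

```python
from concurrent.futures import ThreadPoolExecutor
import statistics


def run_task_parallel(data):
    """Task parallelism: run three *different* tasks at once on the same data."""
    tasks = {"min": min, "max": max, "mean": statistics.mean}
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = {name: pool.submit(fn, data) for name, fn in tasks.items()}
        return {name: fut.result() for name, fut in futures.items()}


def run_data_parallel(data, chunks=2):
    """Data parallelism: run the *same* task (sum) on different slices of the data."""
    size = max(1, (len(data) + chunks - 1) // chunks)
    parts = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=chunks) as pool:
        return sum(pool.map(sum, parts))
```

In CPython, threads mainly help with I/O-bound tasks; a process pool would be the usual substitute for CPU-bound work.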

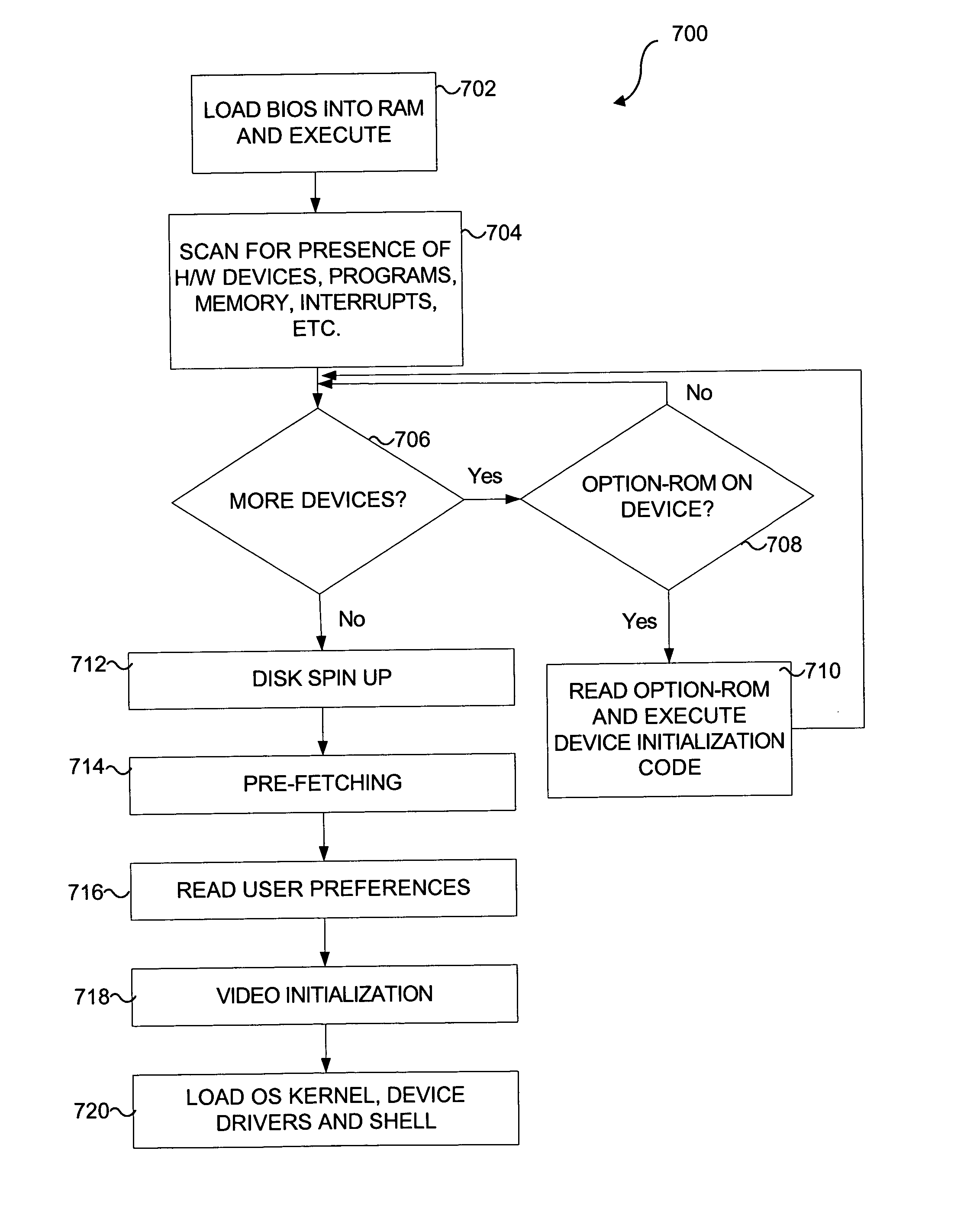

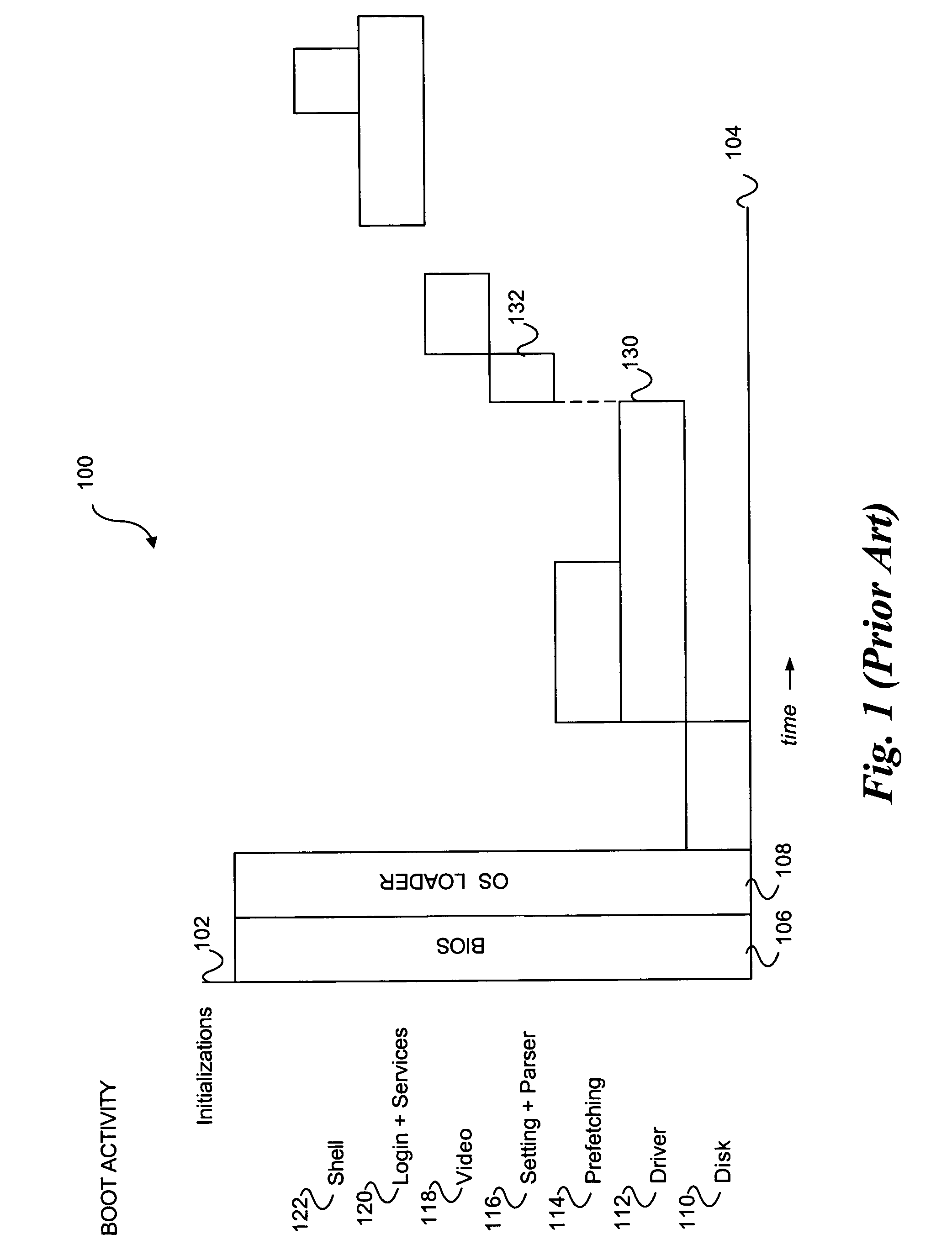

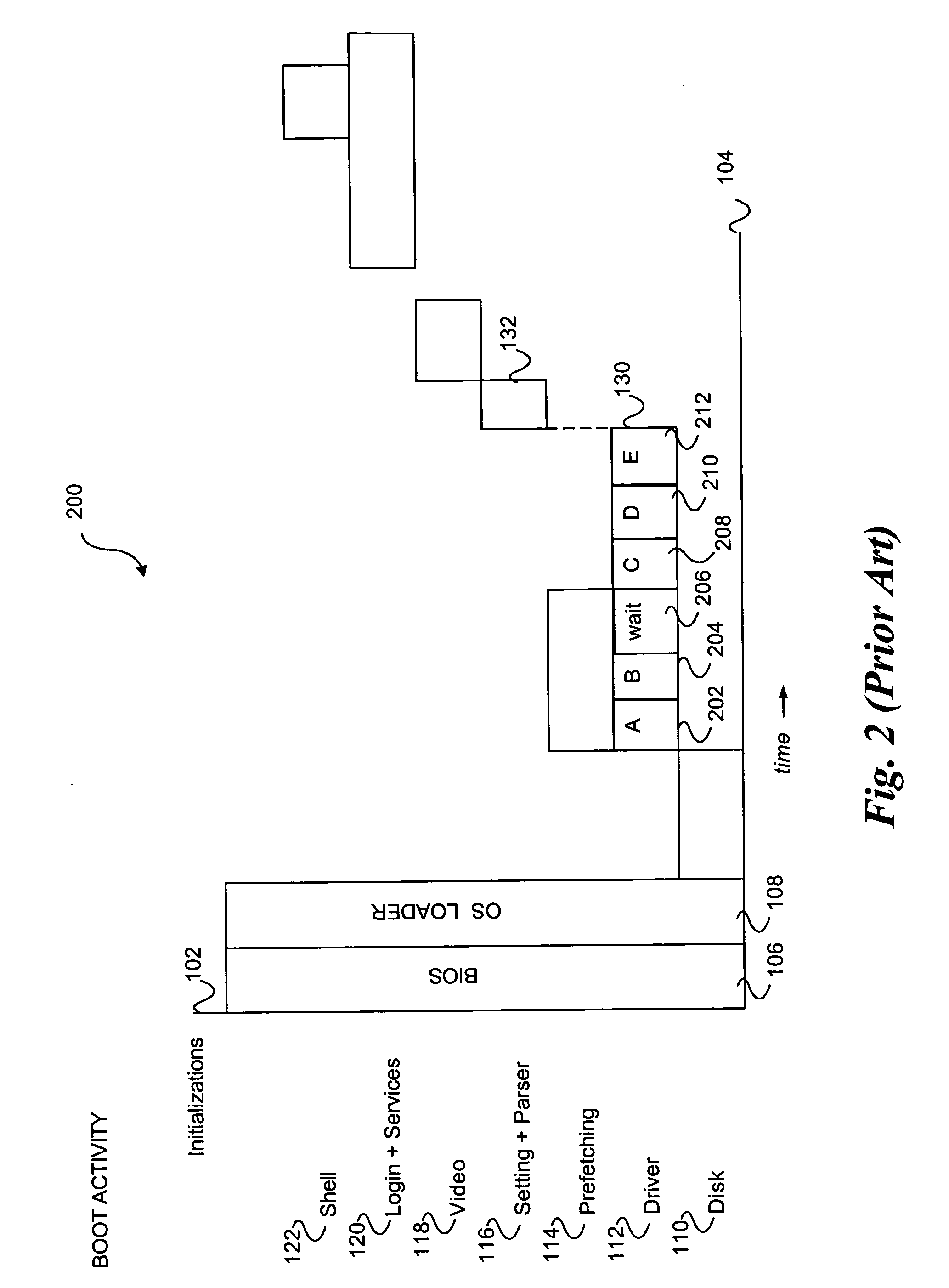

System and method for accelerated device initialization

Inactive | US20050038981A1 | Digital computer details | Program loading/initiating | Option ROM | Operational system

A system and method for initialization of a computer system is described. Faster initialization of a computer system is possible by allowing certain device driver initialization tasks to overlap with other initialization and operating system tasks. Option-ROMs resident on hardware device drivers define the initialization tasks to be performed prior to device driver initialization. Initial computer code for booting the computing device, such as a BIOS, is executed. As option-ROMs for hardware devices are scanned and executed, specific device initialization information is accessed from the devices and placed in pre-defined buffer areas. These accesses occur in parallel with other startup tasks. When device drivers are loaded, some of their initialization has already completed, thereby shortening the time necessary to boot the system.

Owner:INTEL CORP

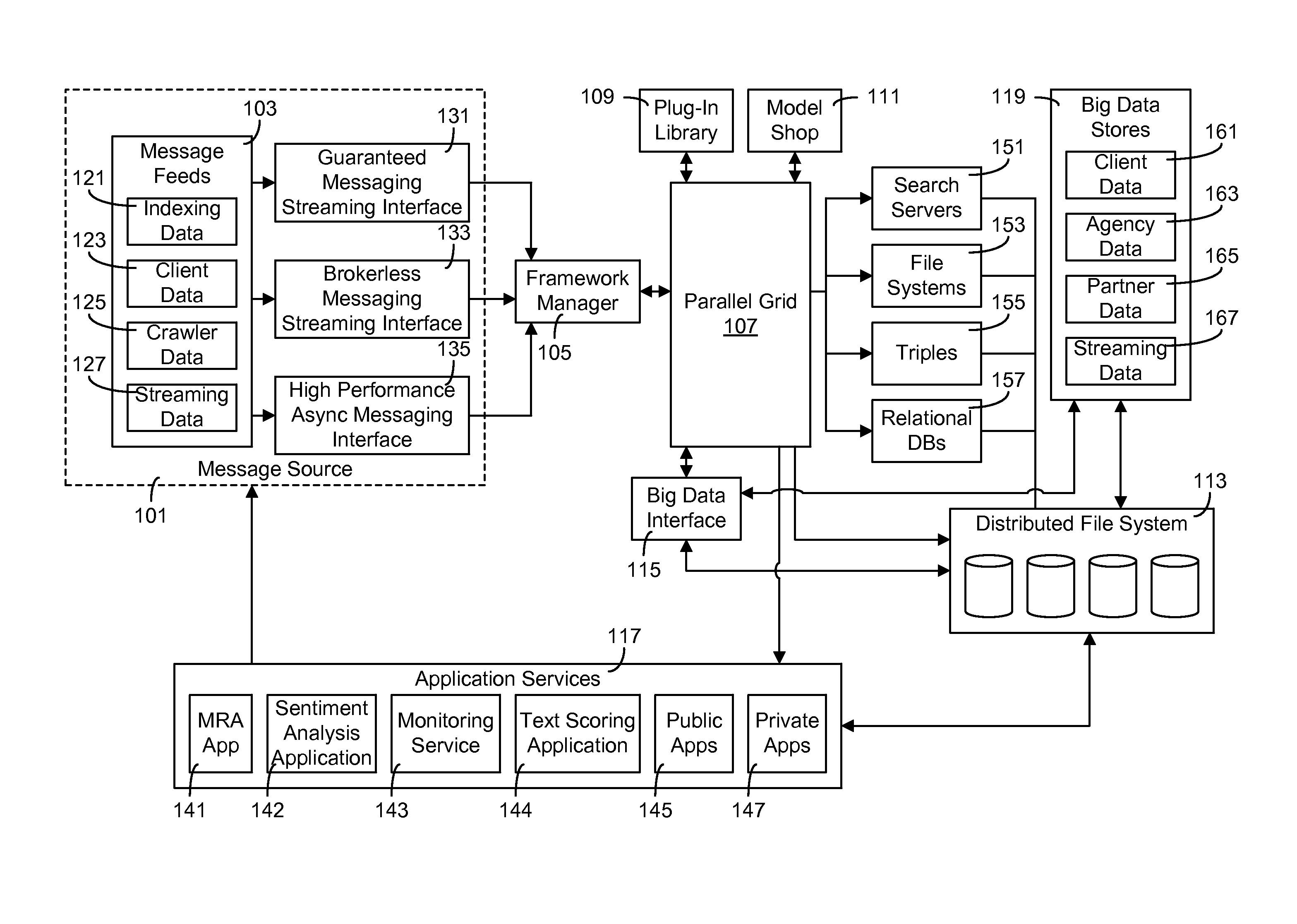

Emotion processing systems and methods

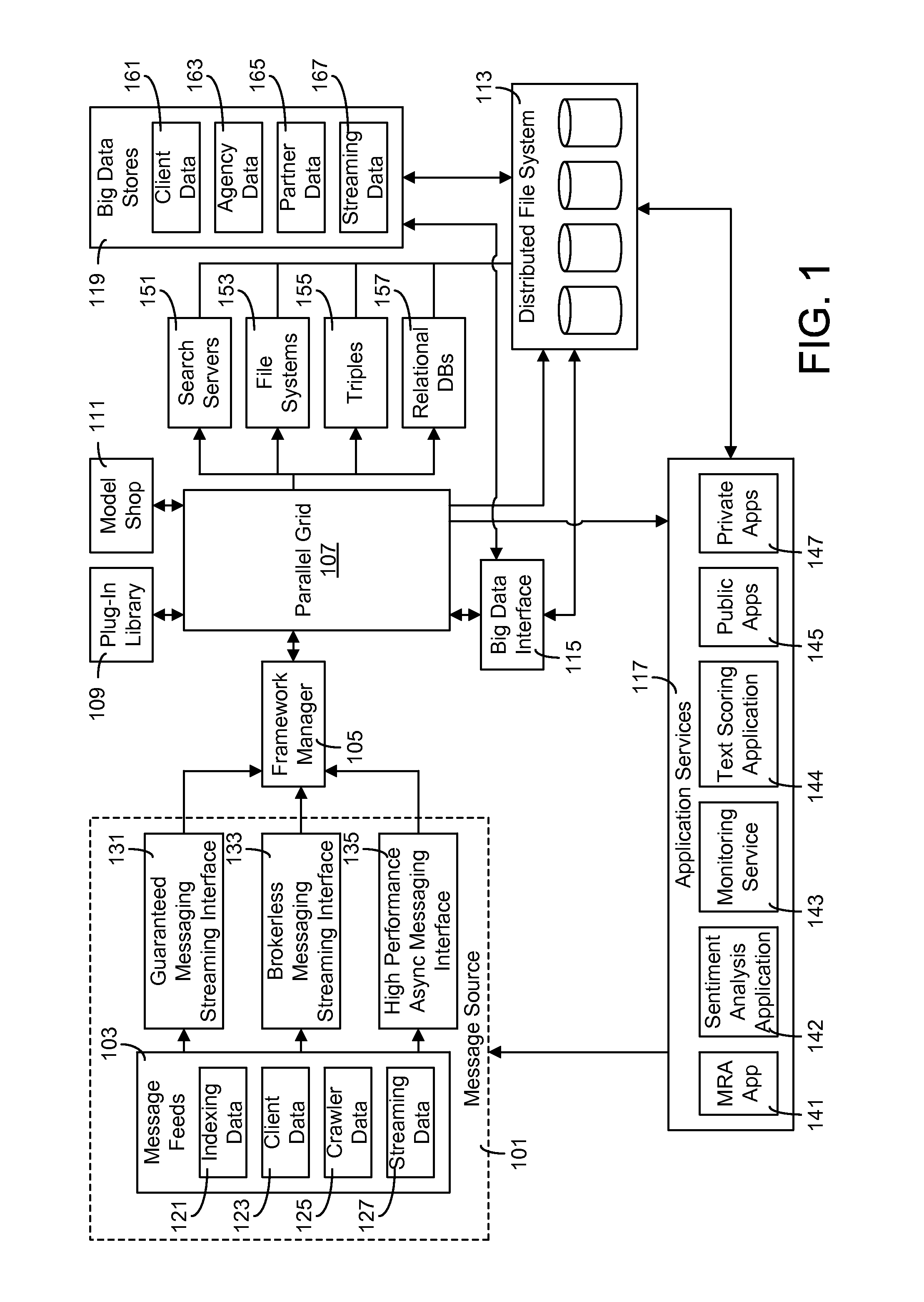

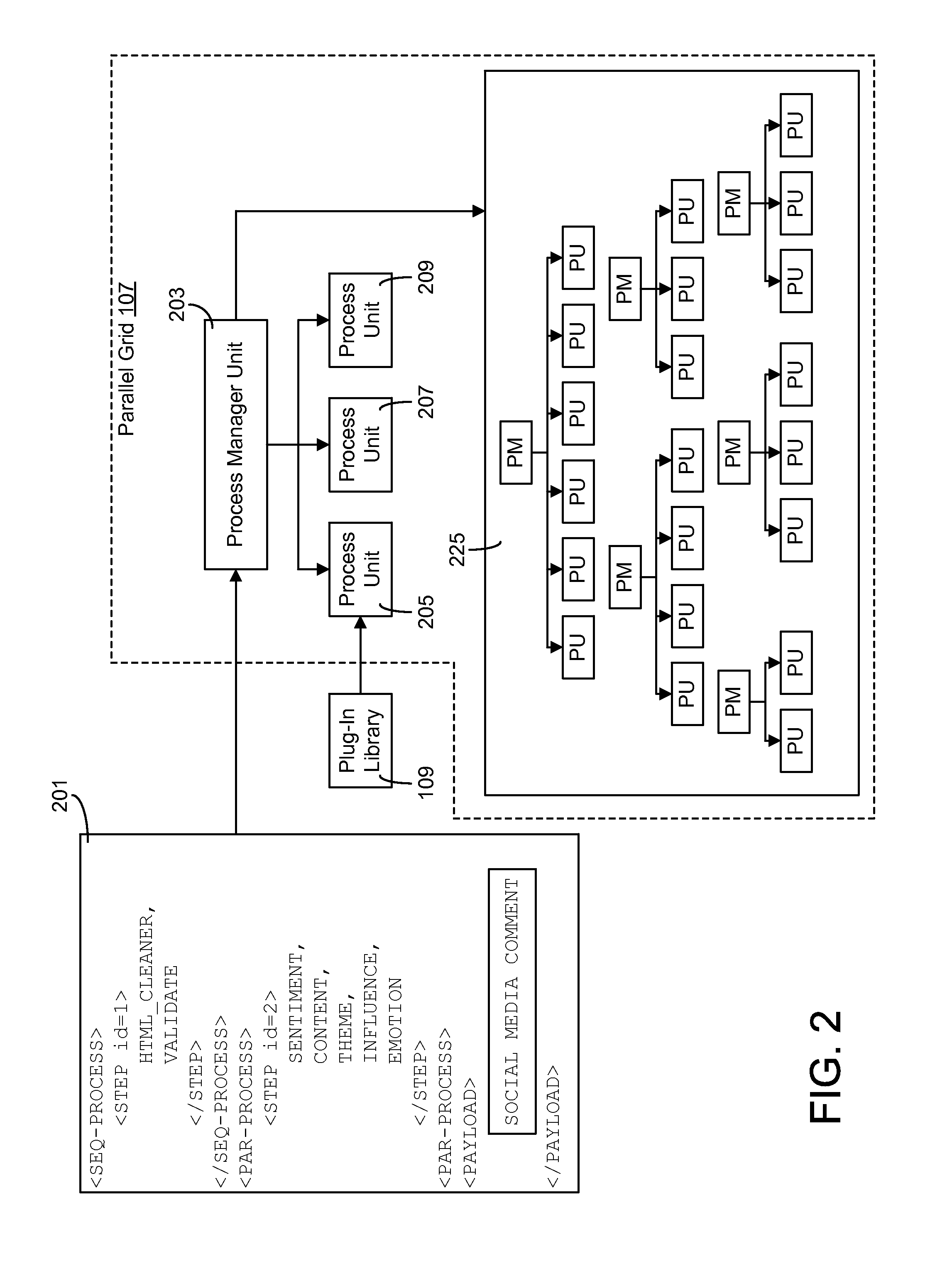

A system for conducting parallelization of tasks is disclosed. The system includes an interface for receiving messages comprising a representation of logic describing two tasks to be executed in parallel, the message further comprising a content payload for use in the tasks. The system further includes a parallel processing grid comprising devices running on independent machines, each device comprising a processing manager unit and at least two processing units. The processing manager is configured to parse the received messages and to distribute the at least two tasks to the at least two processing units for independent and parallel processing relative to the content payload.

Owner:TNHC INVESTMENTS LLC

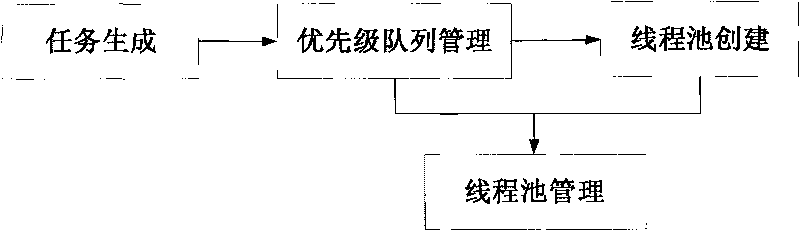

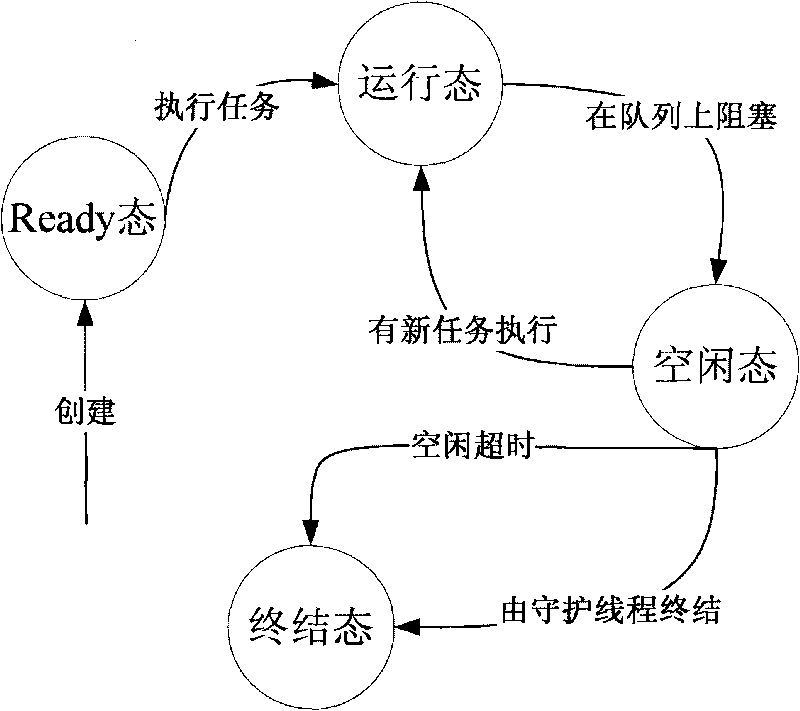

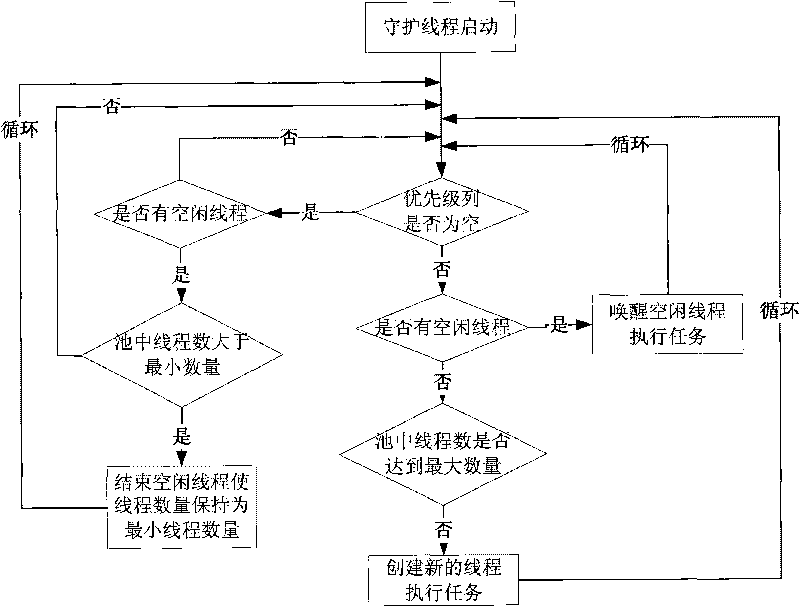

Method for scheduling satellite data product production tasks in parallel based on multithread

Active | CN101739293A | Solve scheduling problems | Increase the number of tasks | Resource allocation | Satellite data | Idle time

The invention discloses a multithread-based method for scheduling satellite data product production tasks in parallel. The method comprises the following steps: setting priorities for the tasks to be scheduled and implementing a unified interface; adding the tasks to a priority queue in order of priority from high to low; setting the maximum and minimum number of tasks in a thread pool and the longest idle time of a thread; and starting a daemon thread and a plurality of task threads in the thread pool to execute the tasks, wherein the daemon thread dynamically adjusts the number of task threads according to the task load. The method uses system resources efficiently, targets data product production in a satellite ground application system, and addresses the difficulties of complicated production processes, long task execution times, large task volumes, and the high real-time and parallelism requirements of task scheduling.
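The queueing step can be sketched as follows. This is a simplified illustration rather than the patented method: the function name `run_by_priority` is hypothetical, a lower number is assumed to mean a higher priority, and the number of worker threads is fixed instead of being adjusted dynamically by a daemon thread.

```python
import queue
import threading


def run_by_priority(tasks, num_workers=2):
    """Run (priority, name, callable) tasks; lower number = higher priority (assumption)."""
    pending = queue.PriorityQueue()
    for seq, (priority, name, fn) in enumerate(tasks):
        # seq breaks ties so that callables are never compared with each other.
        pending.put((priority, seq, name, fn))

    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            try:
                _, _, name, fn = pending.get_nowait()
            except queue.Empty:
                return  # queue drained, this task thread ends
            value = fn()
            with results_lock:
                results.append((name, value))

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

With a single worker the tasks complete strictly in priority order; with several workers they are started in priority order but may finish in any order.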

Owner:SPACE STAR TECH CO LTD

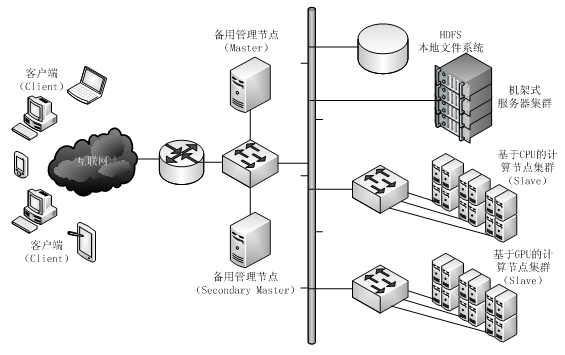

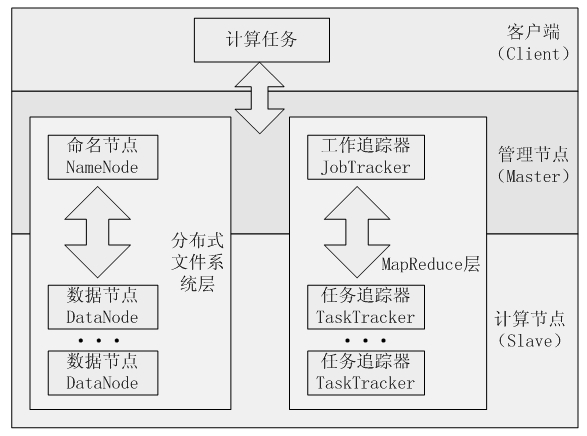

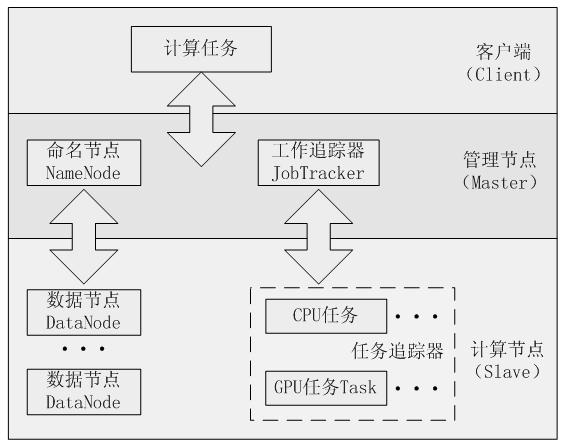

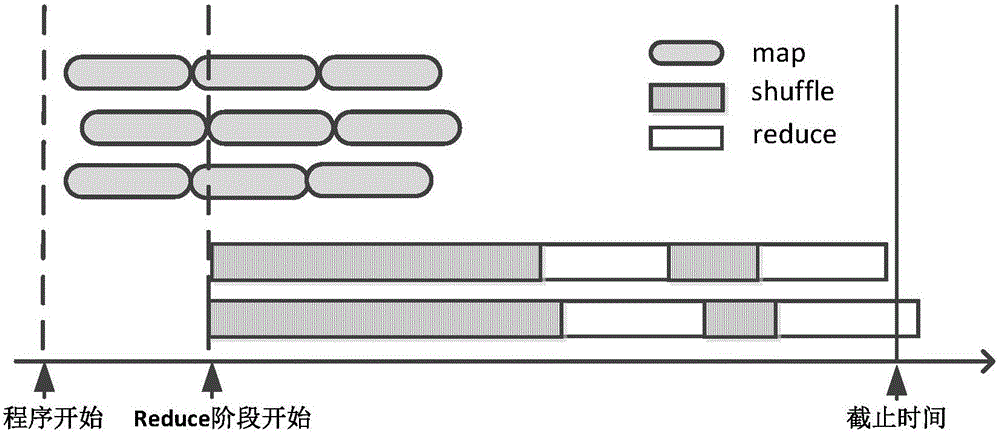

Mapreduce-based multi-GPU (Graphic Processing Unit) cooperative computing method

Inactive | CN102662639A | Reduce communication | Improve general performance | Concurrent instruction execution | Computational science | Concurrent computation

The invention discloses a MapReduce-based multi-GPU (Graphics Processing Unit) cooperative computing method, which belongs to the application field of computer software. In contrast to the single-layer parallel architectures of common high-performance GPU computing and MapReduce parallel computing, the programming model adopts a double-layer GPU and MapReduce parallel architecture. By combining the structural characteristics of a heterogeneous GPU plus CPU (Central Processing Unit) system with the MapReduce programming model used for concurrent computation in cloud computing, it helps developers simplify the programming model and reuse existing concurrent code, thus reducing programming complexity, providing a degree of system disaster tolerance, and reducing dependency on equipment. With the computing method provided by the invention, the GPU plus MapReduce double concurrent mode can be used on a cloud computing platform or a common distributed computing system to realize concurrent processing of MapReduce tasks on a plurality of GPU cards.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

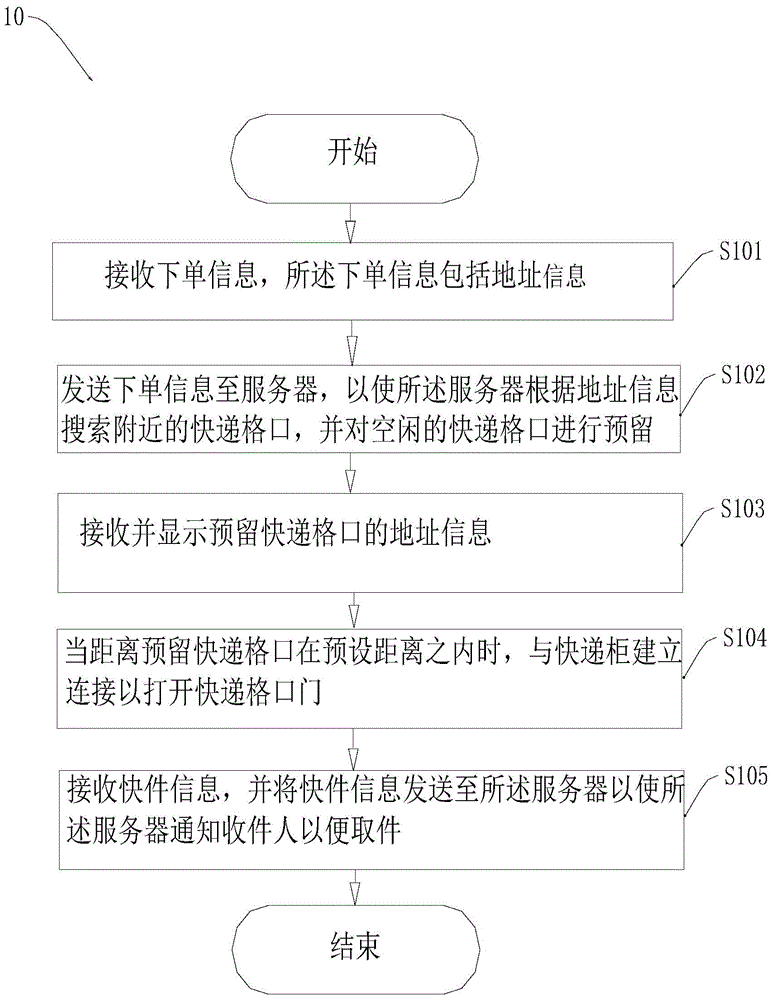

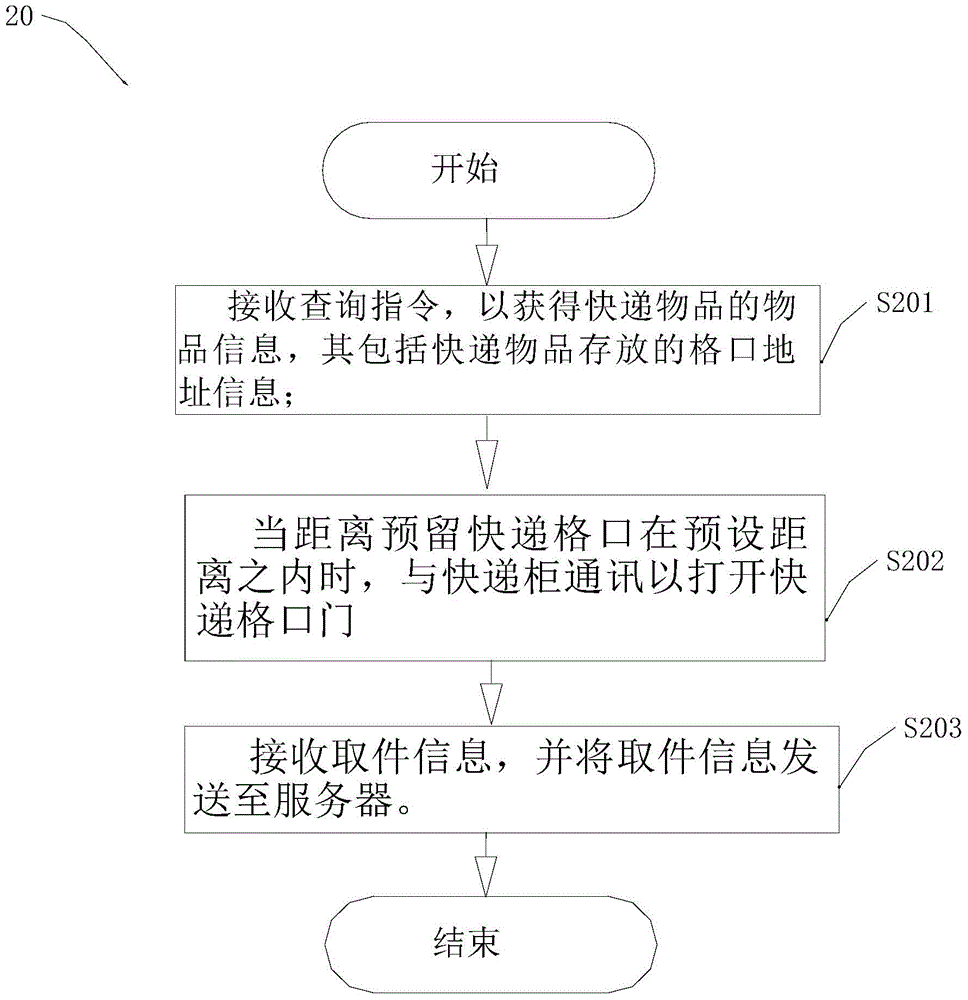

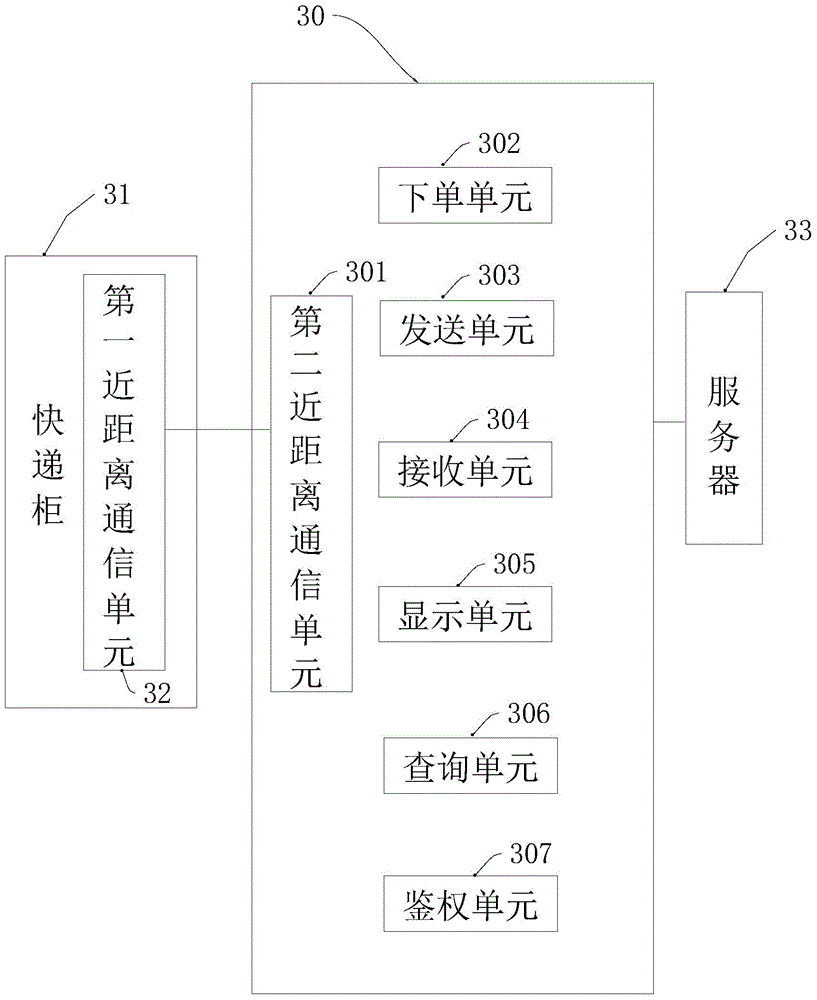

Express cabinet delivery control method, express cabinet pickup control method and control device

Active | CN105139537A | The number can be increased without limit | Meet the needs of different applications | Apparatus for meter-controlled dispensing | Special data processing applications | Information searching | Computer science

The invention provides an express cabinet delivery control method, an express cabinet pickup control method and a control device. The express cabinet delivery control method comprises the steps of receiving order information, sending the order information to a server to enable the server to search for nearby express bins according to address information and reserve an empty express bin, receiving and displaying the address information of the reserved express bin, establishing connection with an express cabinet to open the express bin when the distance to the reserved express bin is within a preset range, and receiving express item information and sending the express item information to the server to enable the server to inform a receiver for pickup. By the adoption of the express cabinet delivery control method, the express cabinet pickup control method and the control device, connection with multiple senders / receivers can be achieved at the same time, so that correlation set between a main cabinet and an auxiliary cabinet and human-computer interaction of the main cabinet are not needed and the multi-task execution access mode is realized; users do not need to wait in line, and the number of express cabinet bins can be increased without limitation so as to meet the requirements of different application occasions.

Owner:SF TECH

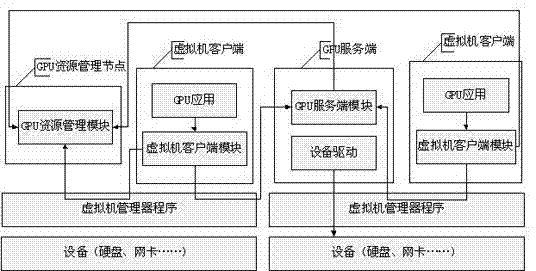

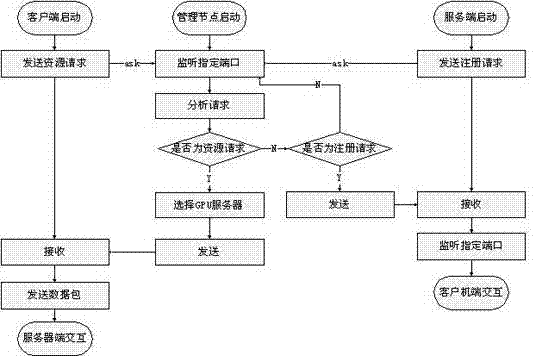

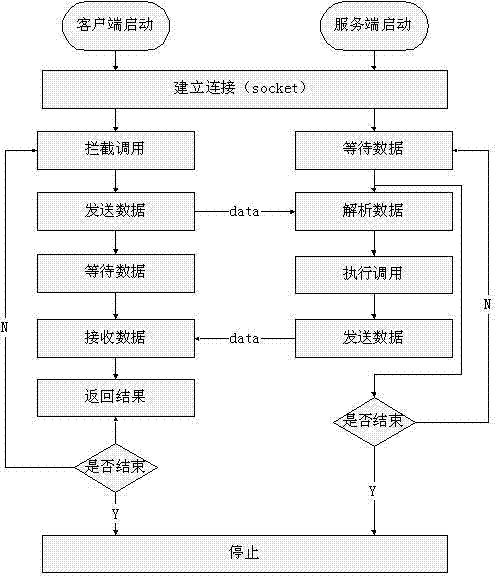

Platform architecture supporting multi-GPU (Graphics Processing Unit) virtualization and work method of platform architecture

Active | CN102650950A | Easy to handle | Increase usage | Resource allocation | Energy efficient computing | Virtualization | Computer architecture

The invention provides a platform architecture supporting multi-GPU (Graphics Processing Unit) virtualization and a work method of the platform architecture. Middleware is deployed at the GPU server end and at the virtual machine end, and a transport such as sockets or InfiniBand is used as the transmission medium, making up for the inability of the original virtual machine platform to use GPU acceleration. The platform architecture manages the GPU resources through one or more centrally controlled management nodes, divides the GPU resources at fine granularity, and supports multitask execution. The virtual machine requests GPU resources from the management nodes through the middleware and uses them for acceleration. The GPU server registers its GPU resources with the management nodes through the middleware and provides service using those resources. With the platform architecture disclosed by the invention, the parallel processing capability of the GPU is introduced into the virtual machine, and GPU utilization is maximized in combination with a management mechanism. The platform architecture can effectively reduce energy consumption and increase calculation efficiency.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

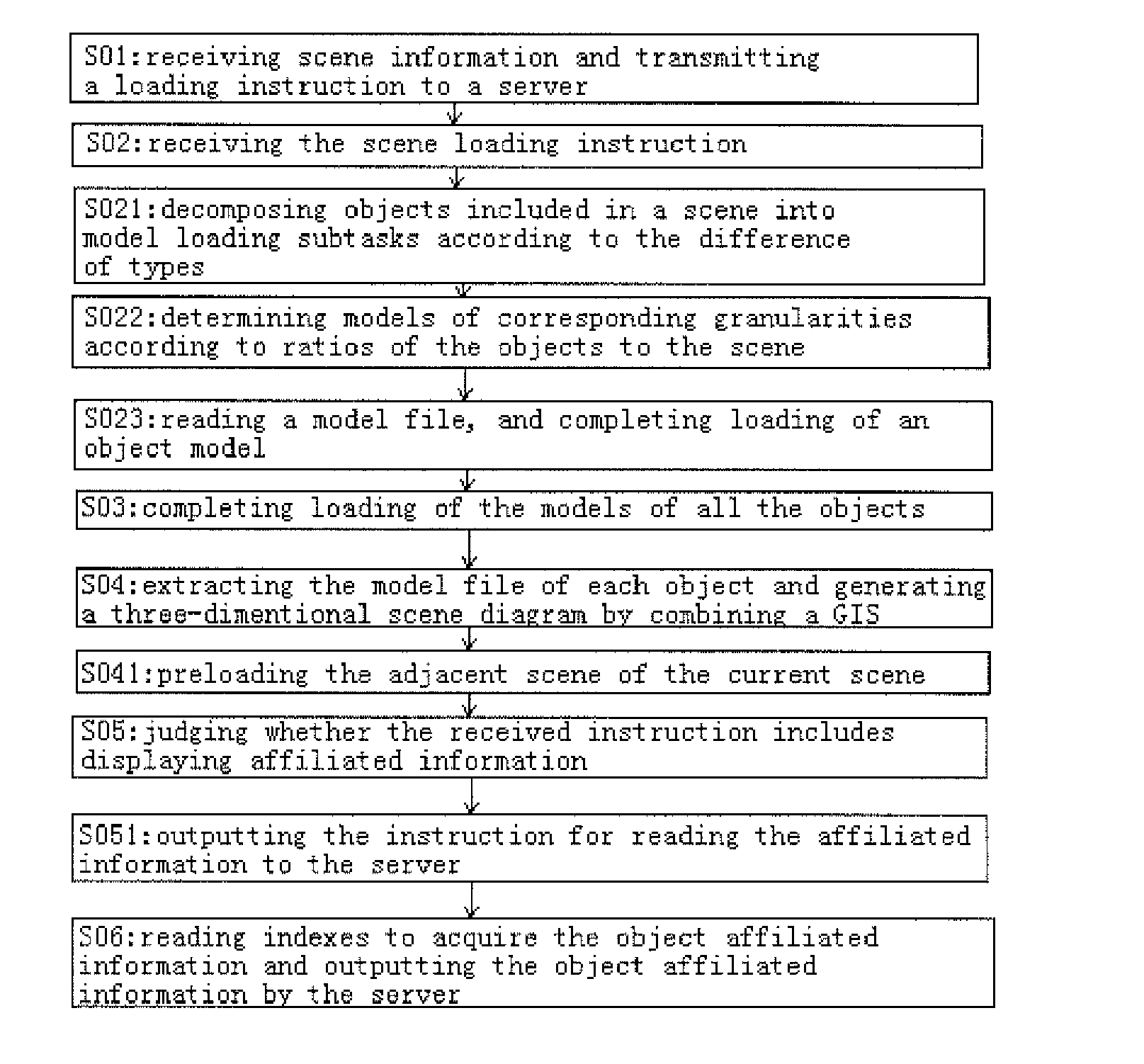

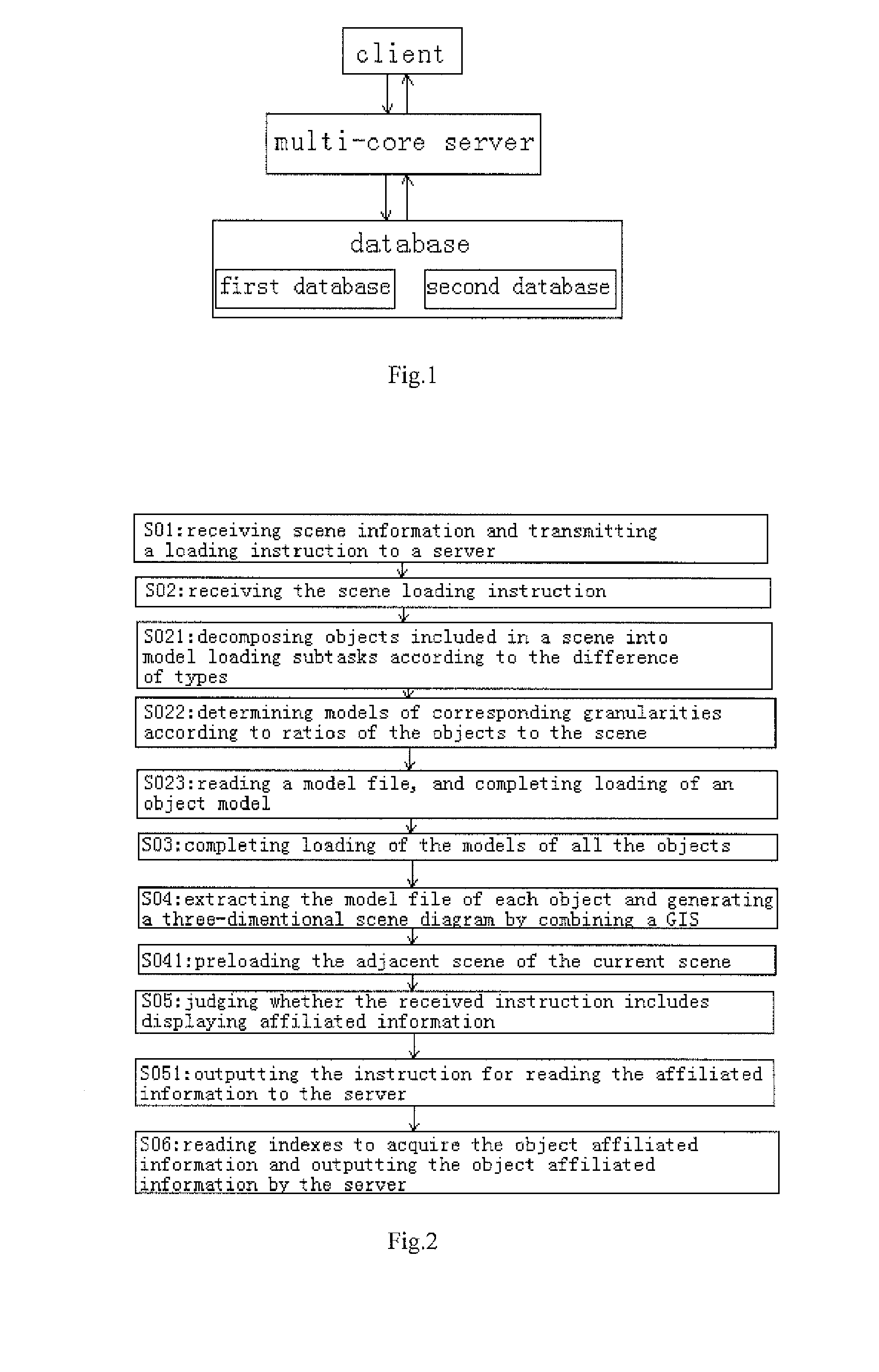

Power grid visualization system and method based on three-dimensional gis technology

Inactive | US20130326388A1 | Improve loading speed | Improve immediacy | Computer control | Simulator control | Power grid | Power equipment

The invention relates to a power grid visualization system and method based on three-dimensional geographic information system (GIS) technology. The models to be loaded are divided into model loading subtasks according to the object types included in the scene, and the model files are loaded in parallel by the subtasks in multi-thread mode. Because loading tasks are divided by object type, the model files for each object type are read only once. Reuse of the model files is not limited to a single client loading task: different clients can reuse model files that have already been read. The approach takes full advantage of the limited number of types and relatively consistent specifications of power equipment, so that rereading model files of the same type is avoided.
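A minimal sketch of the "one subtask per object type, read each model once" idea follows. The names `load_scene` and `read_model` are hypothetical, and the sketch covers only a single loading task; sharing already-read models across clients is not modelled.

```python
from concurrent.futures import ThreadPoolExecutor


def load_scene(objects, read_model, max_workers=4):
    """Load a scene given (object_id, object_type) pairs.

    read_model(object_type) is a caller-supplied function (an assumption of this
    sketch) that reads the model file for one object type.
    """
    # One loading subtask per distinct object type, so each model is read once.
    types = sorted({obj_type for _, obj_type in objects})
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        models = dict(zip(types, pool.map(read_model, types)))
    # Every object of the same type reuses the single model that was read.
    return {obj_id: models[obj_type] for obj_id, obj_type in objects}
```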

Owner:STATE GRID SHANDONG ELECTRIC POWER +1

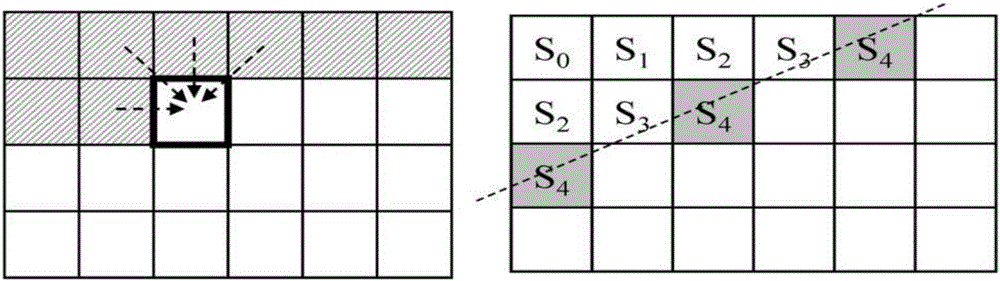

Display method and display terminal

Inactive | CN102193719A | Implement parallel processing | Improve the efficiency of operation switching | Input/output processes for data processing | Parallel processing | Computer science

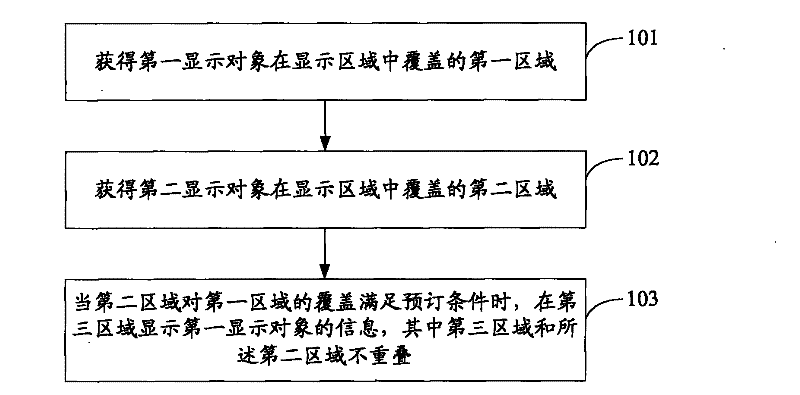

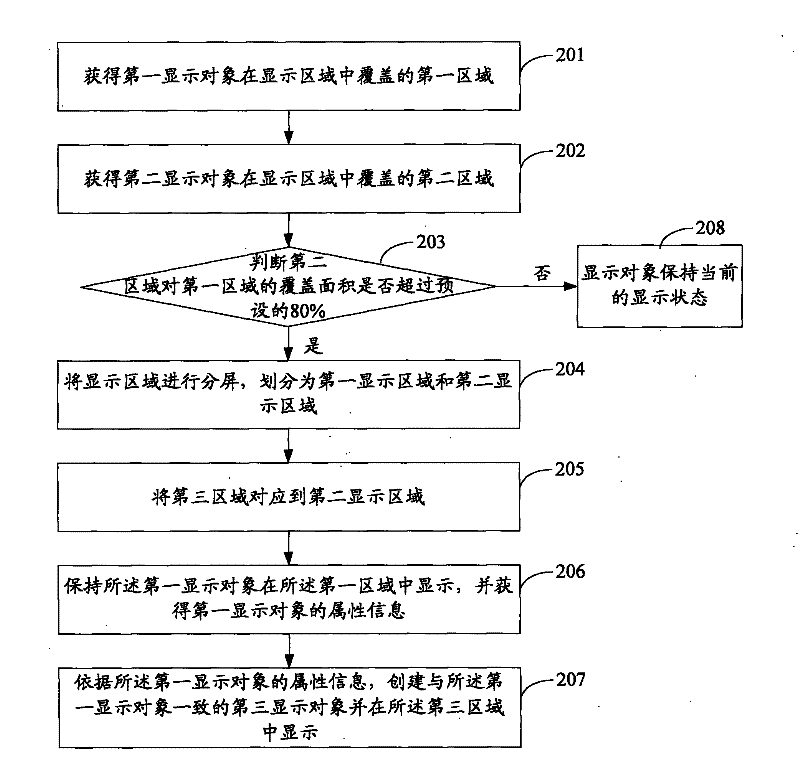

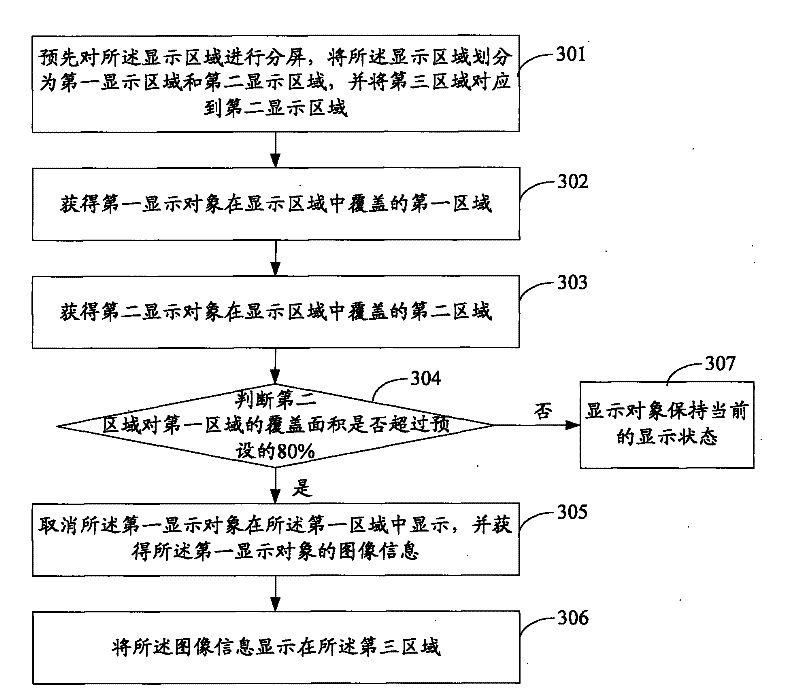

The embodiment of the invention discloses a display method and a display terminal. The display method comprises the following steps of: acquiring a first area covered by a first display object in a display area; acquiring a second area covered by a second display object in the display area; and when the condition of coverage of the second area on the first area meets a preset condition, displaying the information of the first display object in a third area, wherein the third area is not overlapped with the second area. When the method provided by the embodiment of the invention is used for displaying a plurality of objects in the terminal, the other objects which are covered by the fully displayed object can be displayed in the area which is not overlapped with the area of the fully displayed object, thereby ensuring that a user can monitor the states of all objects in real time. The covered object can be operated without task switching, thereby realizing multi-task parallel processing of the terminal and increasing the efficiency of switching operation among tasks.

Owner:LENOVO (BEIJING) LTD

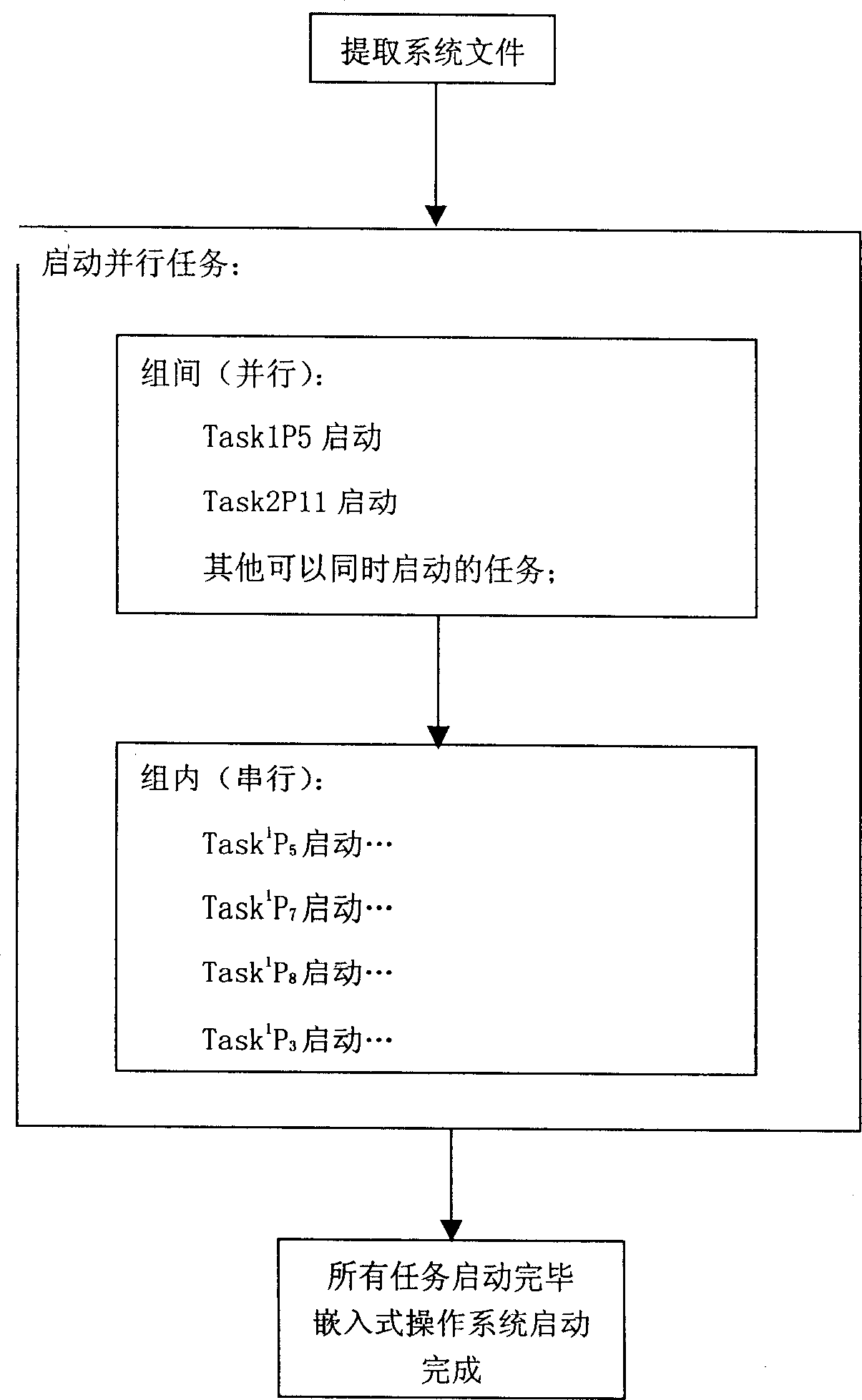

Multi-task parallel starting optimization of built-in operation system

Inactive | CN1818868A | Take advantage of time-sharing processing capabilities | Powerful time-sharing processing ability | Multiprogramming arrangements | Program loading/initiating | Operational system | Time-sharing

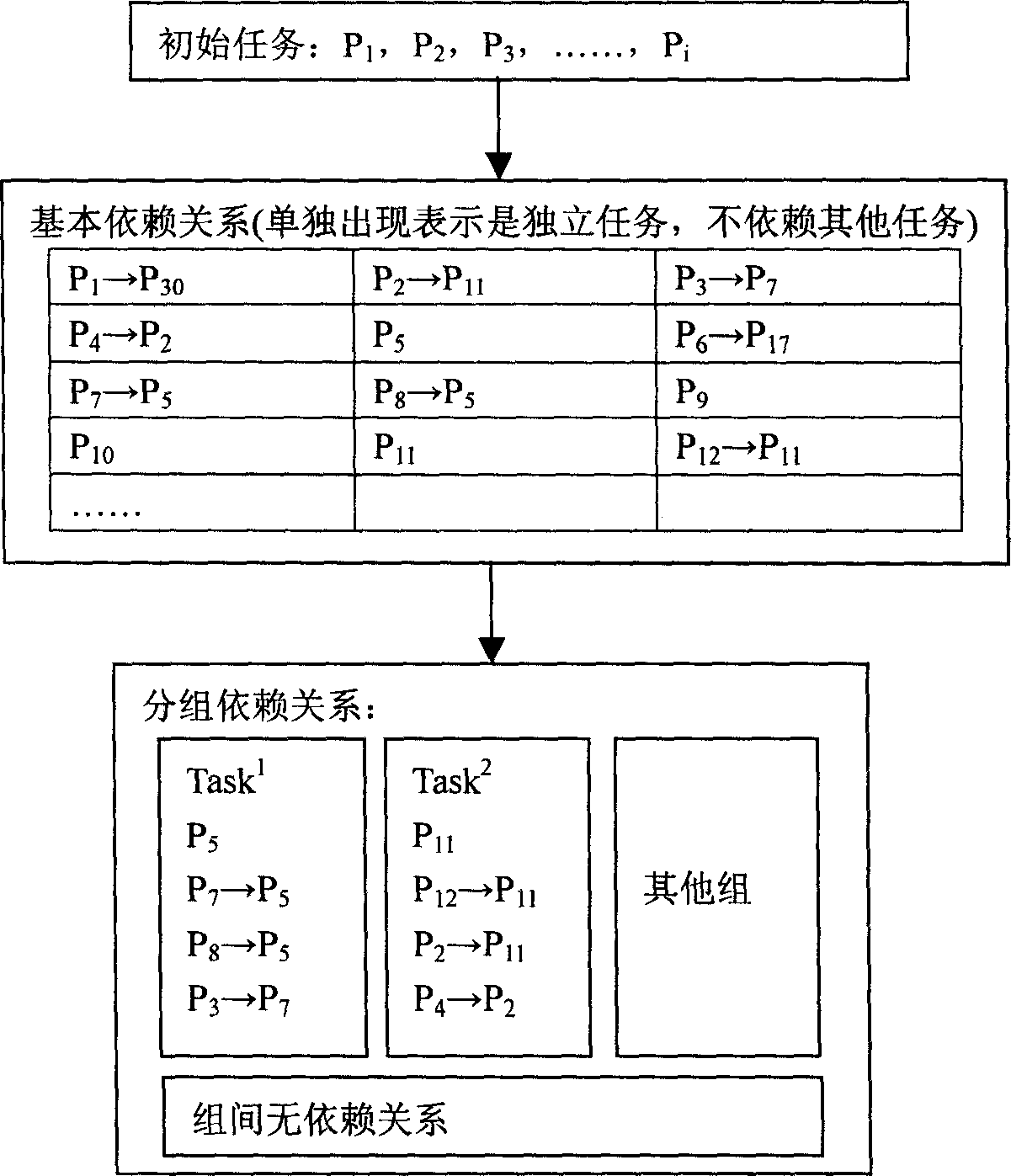

The invention provides a multi-task parallel startup optimization method for an embedded operating system. It exploits the powerful time-sharing processing ability of the mobile embedded CPU: when the embedded operating system starts, tasks that have no dependencies on one another are started in parallel. After the core boot completes, the saved system file is read and the startup tasks are started synchronously in groups; tasks are started in the order of their task signs until all tasks in the same group have finished starting. Each system task can start tasks in parallel according to the guiding relations among the tasks, which saves both system resources and the total startup time of the embedded operating system.

Owner:ZHEJIANG UNIV

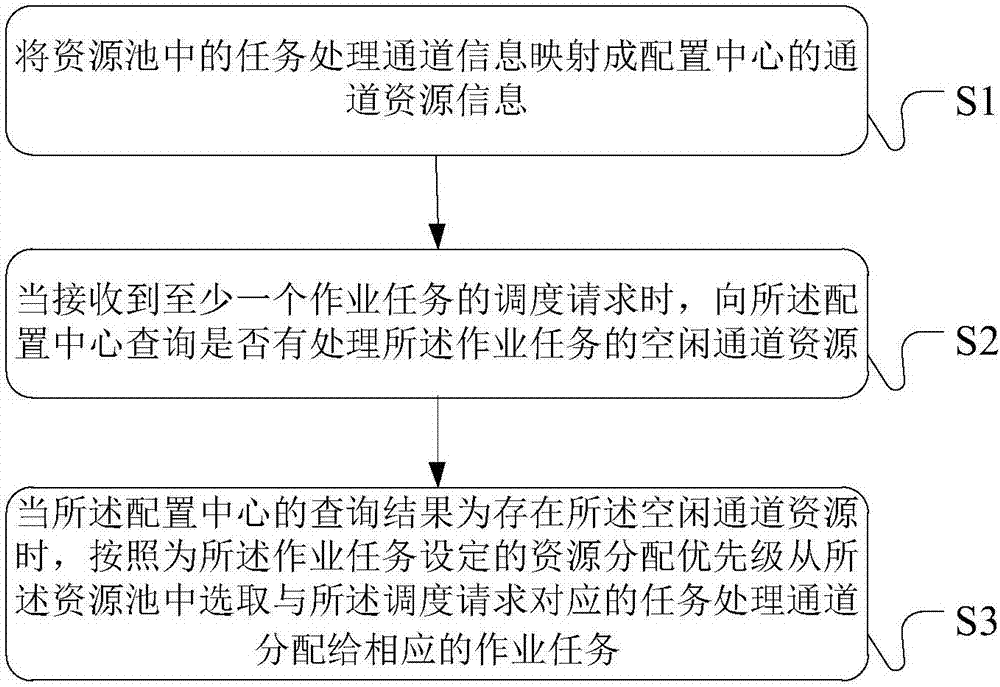

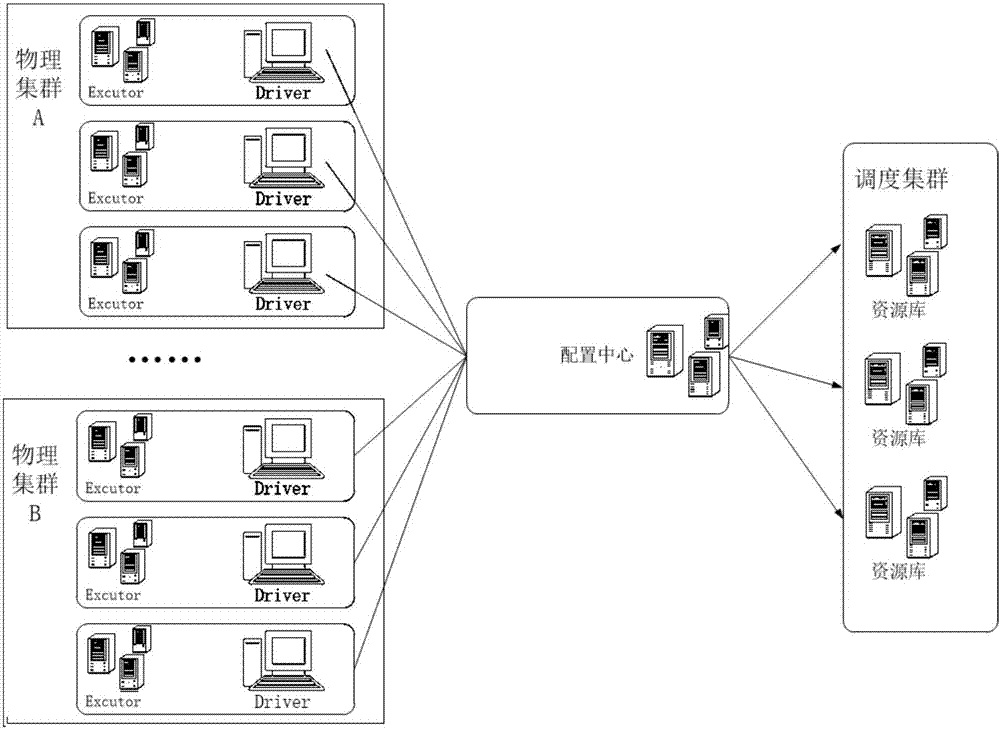

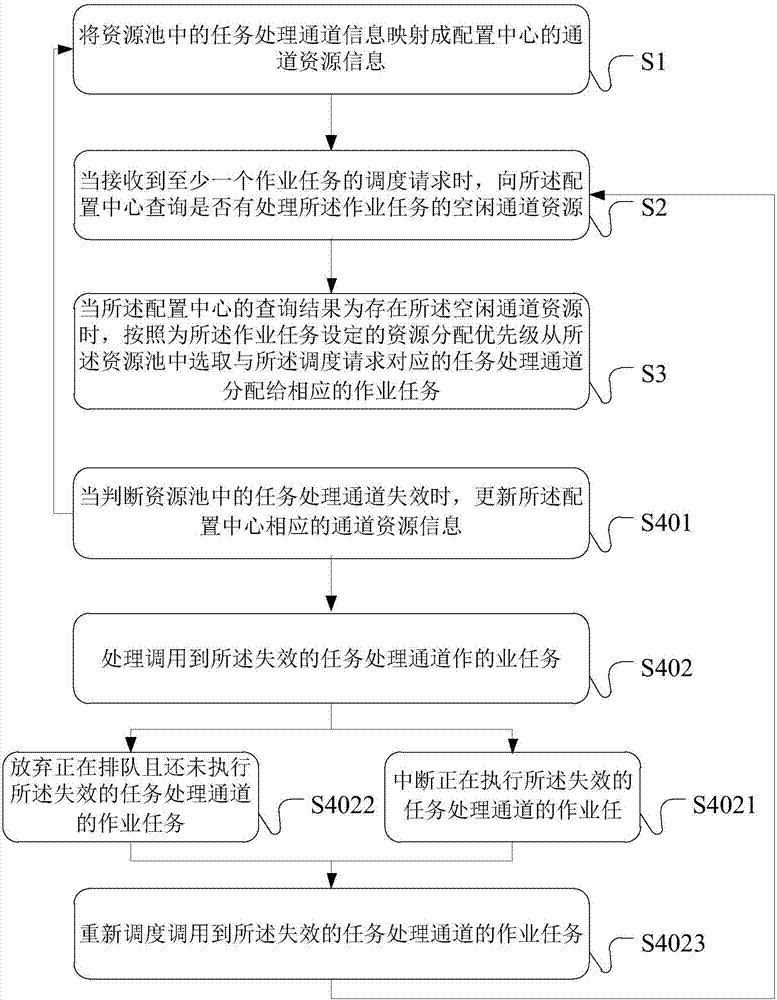

Task scheduling processing method, device and system

Active | CN107291547A | Fix non-executable issues | Improve efficiency | Resource allocation | Resource pool | Resource utilization

Owner:ADVANCED NEW TECH CO LTD

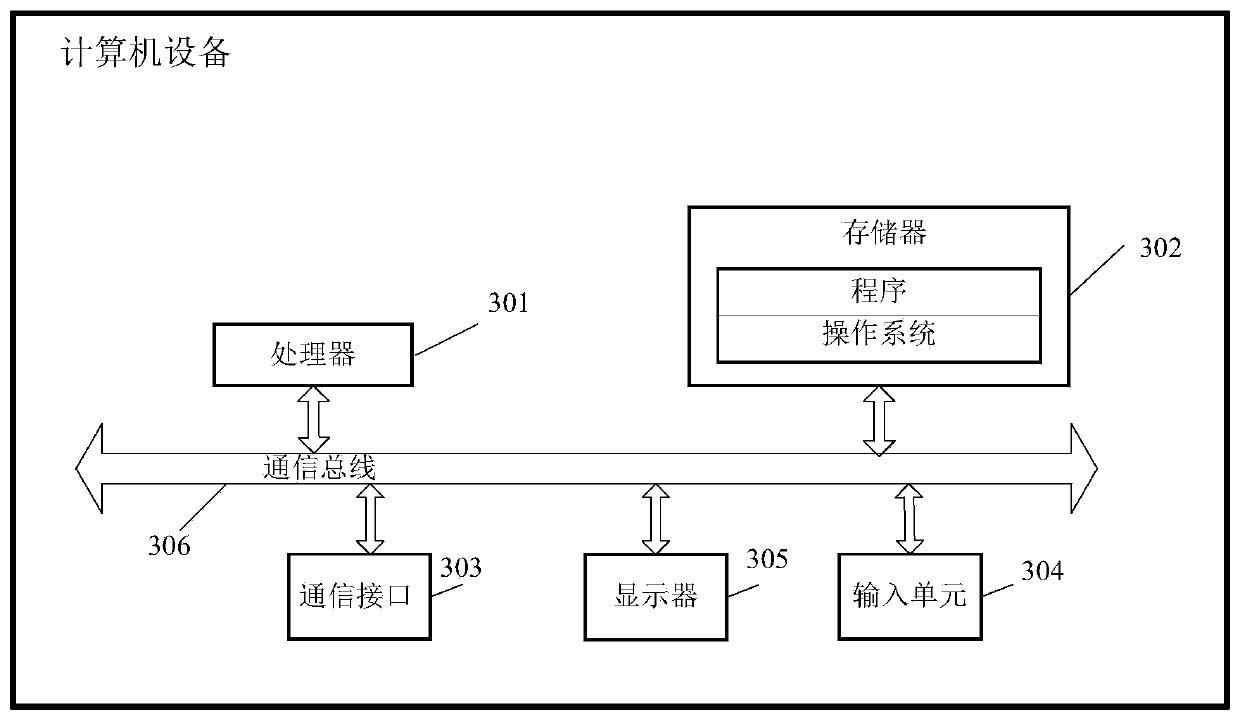

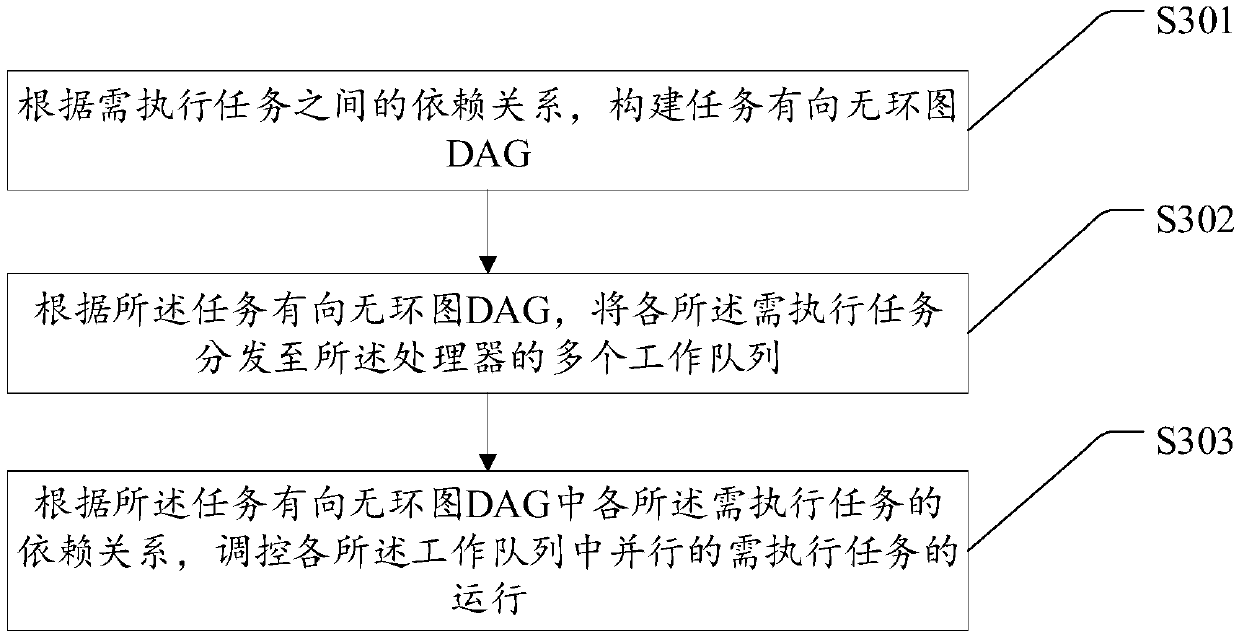

Task scheduling processing method and device and computer equipment

Inactive | CN110554909A | Improve computing efficiency | Improve execution efficiency | Program initiation/switching | Resource allocation | Task dependency | Computer science

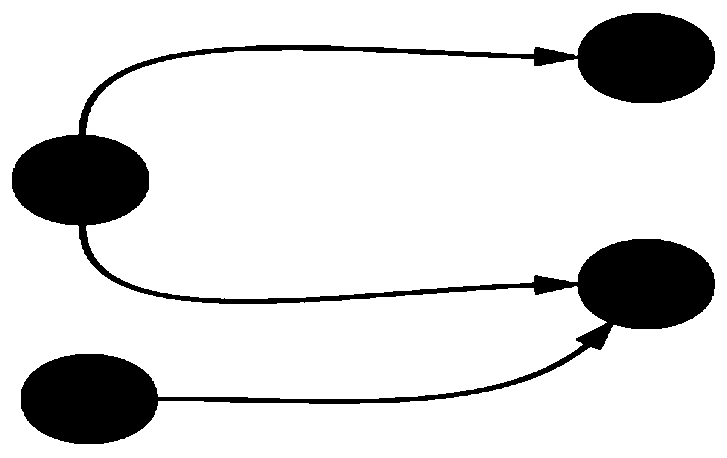

The invention relates to a task scheduling processing method and device and computer equipment. The method comprises constructing a directed acyclic graph based on the dependency relationships between tasks, constructing a task scheduling queue by performing a depth-first traversal of the graph, and controlling the scheduling and execution of the tasks based on the task scheduling queue and the dependency relationships between the tasks. Tasks that depend on one another are executed in series according to their dependency relationships, and at least some of the tasks that have no dependency relationship are executed in parallel. Because independent tasks run in parallel, the utilization of computing resources and the computing efficiency of the tasks can be increased to a certain extent. In addition, because dependent tasks are executed in series in dependency order, repeated execution of prerequisite tasks is avoided, further improving task execution efficiency.
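Dependency-driven scheduling of this kind can be sketched with Python's standard library. Note the substitution: instead of the traversal-built scheduling queue the abstract describes, this sketch uses `graphlib.TopologicalSorter` (Python 3.9+) to decide which tasks are ready. The function name `run_dag` and the shape of its arguments are assumptions made for the example.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter


def run_dag(tasks, deps, max_workers=4):
    """Run tasks (name -> callable) respecting deps (name -> set of prerequisites).

    Returns the task names in the order in which they finished.
    """
    sorter = TopologicalSorter({name: deps.get(name, ()) for name in tasks})
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    finished = []
    running = {}  # future -> task name
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            # Every task whose prerequisites are all done may start in parallel.
            for name in sorter.get_ready():
                running[pool.submit(tasks[name])] = name
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                future.result()  # re-raise any exception from the task
                name = running.pop(future)
                finished.append(name)
                sorter.done(name)  # may unlock dependent tasks
    return finished
```

Tasks on the same dependency chain run one after another, while tasks with no path between them in the graph can overlap.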

Owner:TENCENT TECH (SHENZHEN) CO LTD

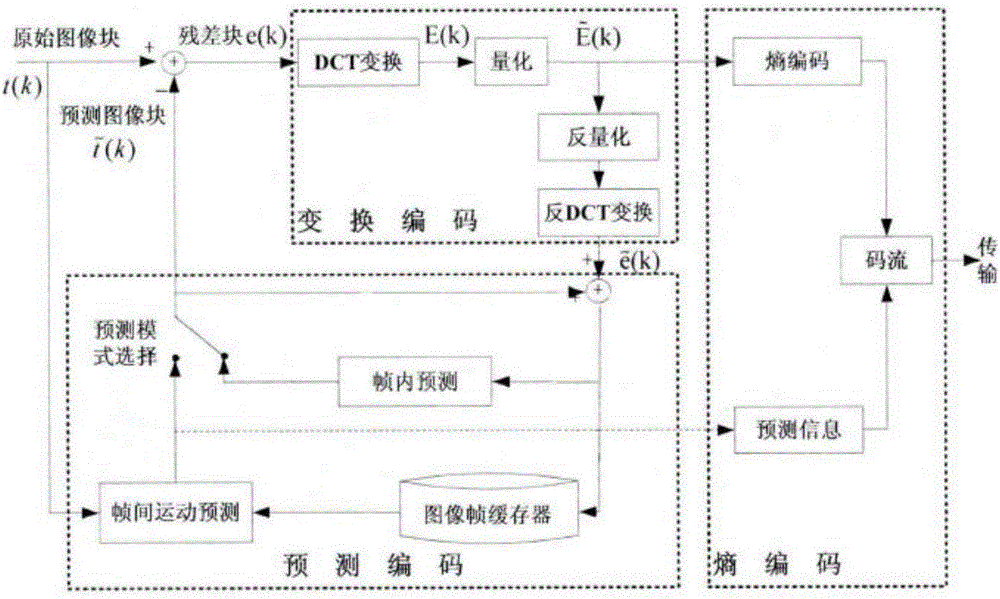

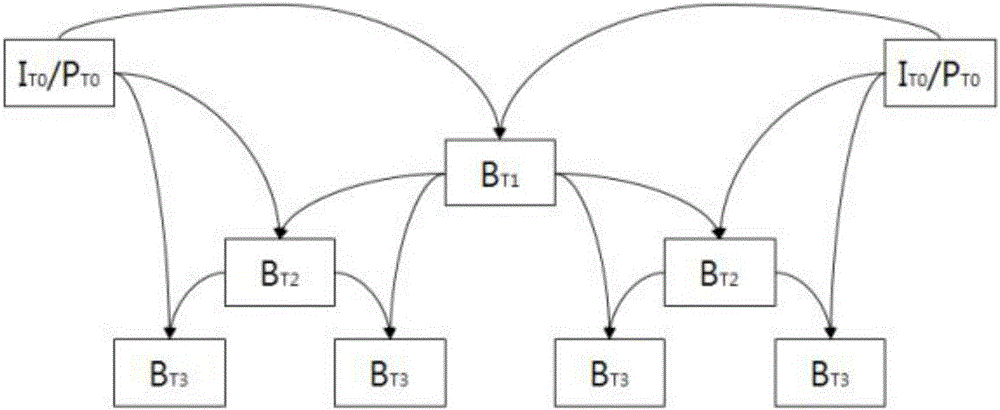

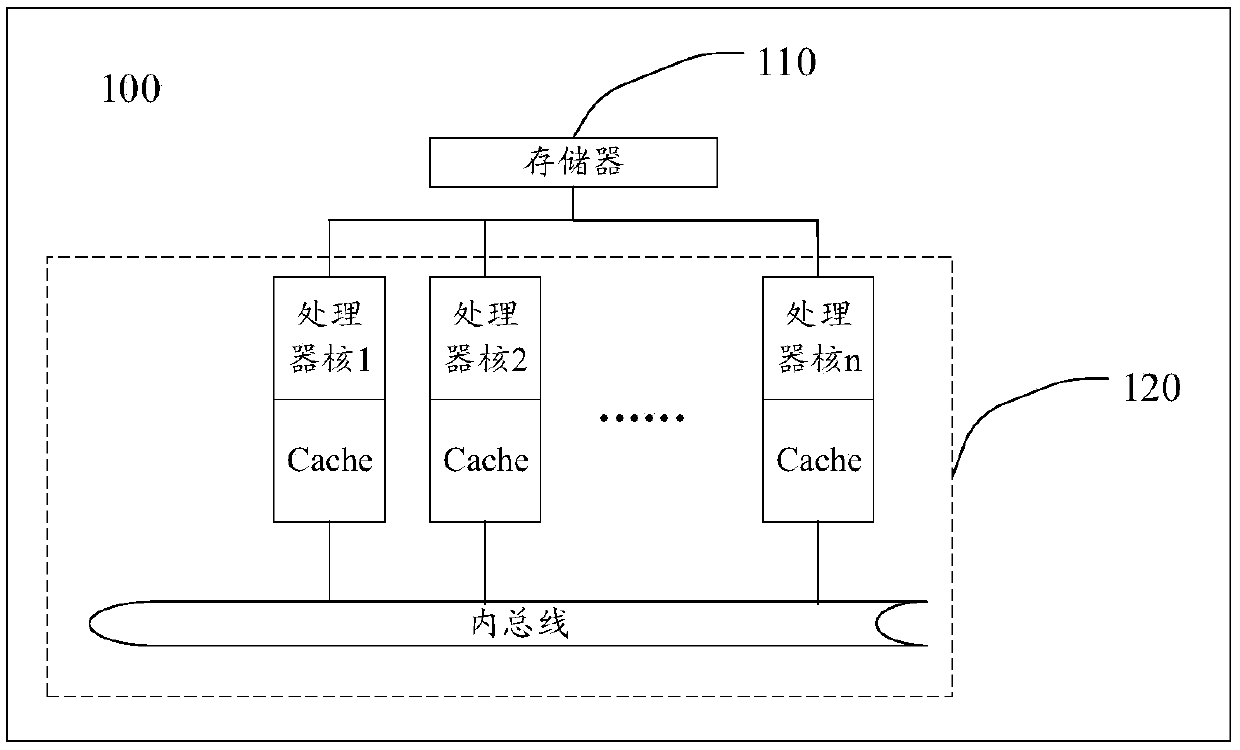

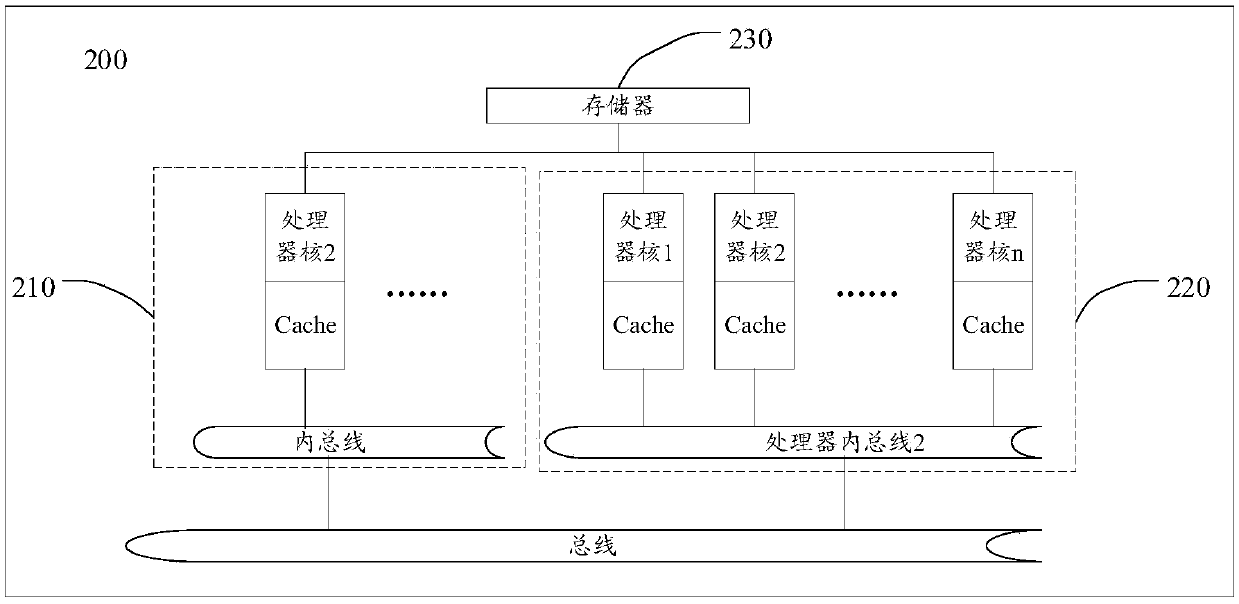

Multilevel multitask parallel decoding algorithm on multicore processor platform

Active | CN105992008A | Optimal Design Structure | Improve the design structure to more effectively play the function of the processor | Digital video signal modification | Round complexity | Imaging quality

The invention discloses a multilevel multitask parallel decoding algorithm on a multicore processor platform, and provides the multilevel multitask parallel decoding algorithm for effective combination of tasks and data on the multicore processor platform by utilizing the dependency of HEVC data by aiming at the problems of mass data volume of high-definition videos and ultrahigh processing complexity of HEVC decoding. HEVC decoding is divided into two tasks of frame layer entropy decoding and CTU layer data decoding which are processed in parallel by using different granularity; the entropy decoding task is processed in parallel in a frame level mode; the CTU data decoding task is processed in parallel in a CTU data line mode; and each task is performed by an independent thread and bound to an independent core to operate so that the parallel computing performance of a multicore processor can be fully utilized, and real-time parallel decoding of HEVC full high-definition single code stream using no parallel coding technology can be realized. Compared with serial decoding, the decoding parallel acceleration rate can be greatly enhanced and the decoding image quality can be guaranteed by using the multicore parallel algorithm.

Owner:NANJING UNIV OF POSTS & TELECOMM

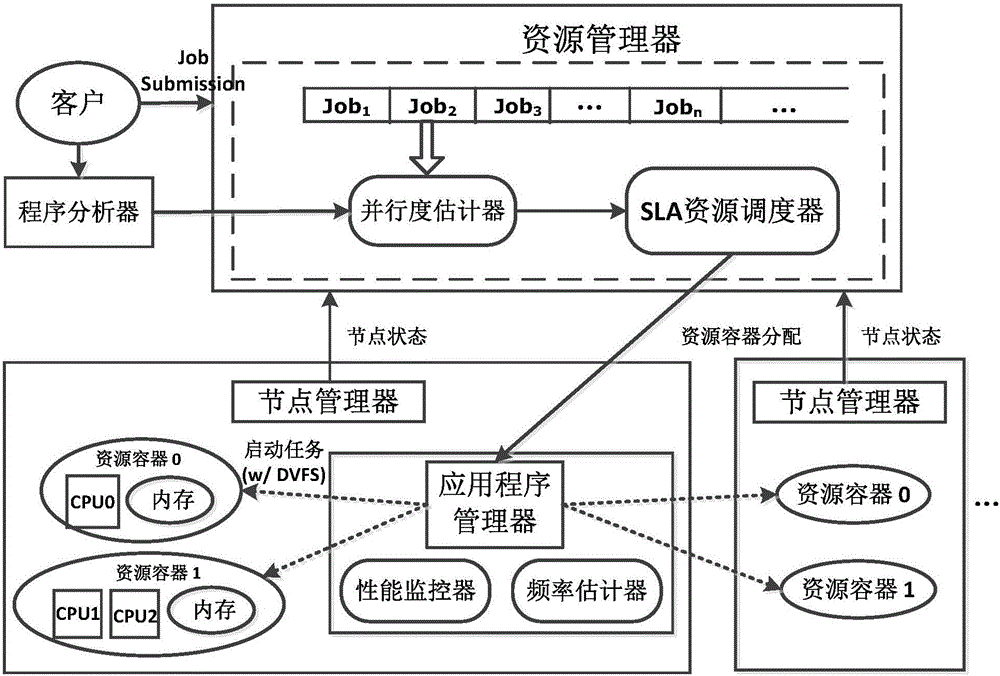

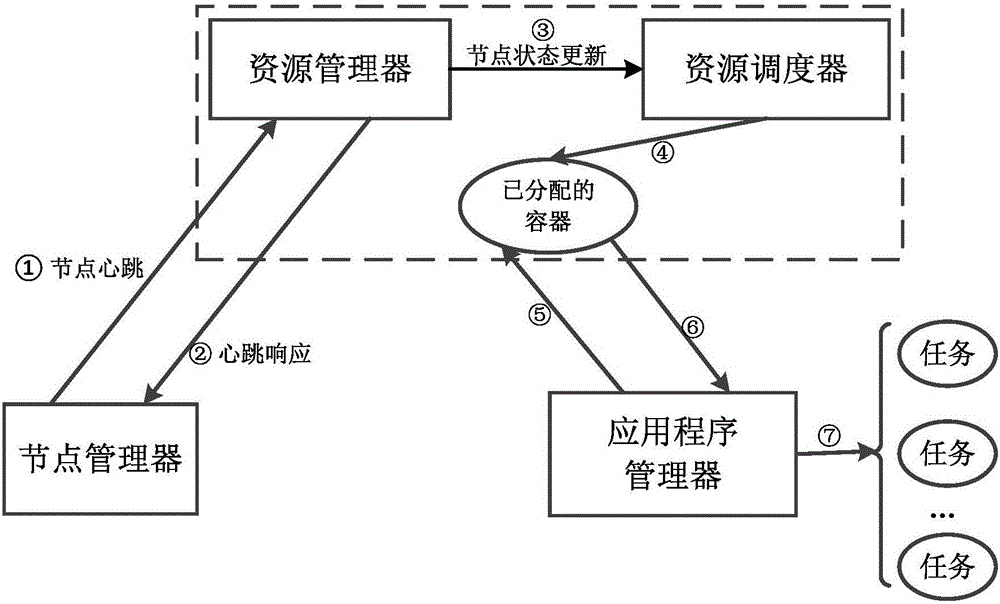

YARN resource allocation and energy-saving scheduling method and system based on service level agreement

Active | CN104991830A | Reduce energy consumption | Resource allocation | Power supply for data processing | Yarn | Task completion

The present invention discloses a YARN resource allocation and energy-saving scheduling method and system based on a service level agreement (SLA). The method comprises the following steps: before MapReduce programs are submitted, pre-analyzing them and extracting the required performance indexes from the logs of their previous runs; after the MapReduce programs are submitted, calculating the minimum degree of task parallelism for each program from its performance indexes and its completion time upper limit; allocating a fixed quantity of resources to each MapReduce program through an SLA resource scheduler according to the program's degree of parallelism; monitoring the task completion status of each MapReduce program to obtain the ideal execution time and frequency for the remaining tasks; and, according to the expected execution frequency of the remaining tasks, dynamically adjusting the voltage and frequency of the CPU through the CPUfreq subsystem to save energy. While guaranteeing the service level agreement of each MapReduce program, the invention allocates a fixed quantity of resources to the program and combines this with dynamic voltage and frequency scaling to reduce energy consumption in a cloud computing platform as far as possible.

Owner:SHANDONG UNIV

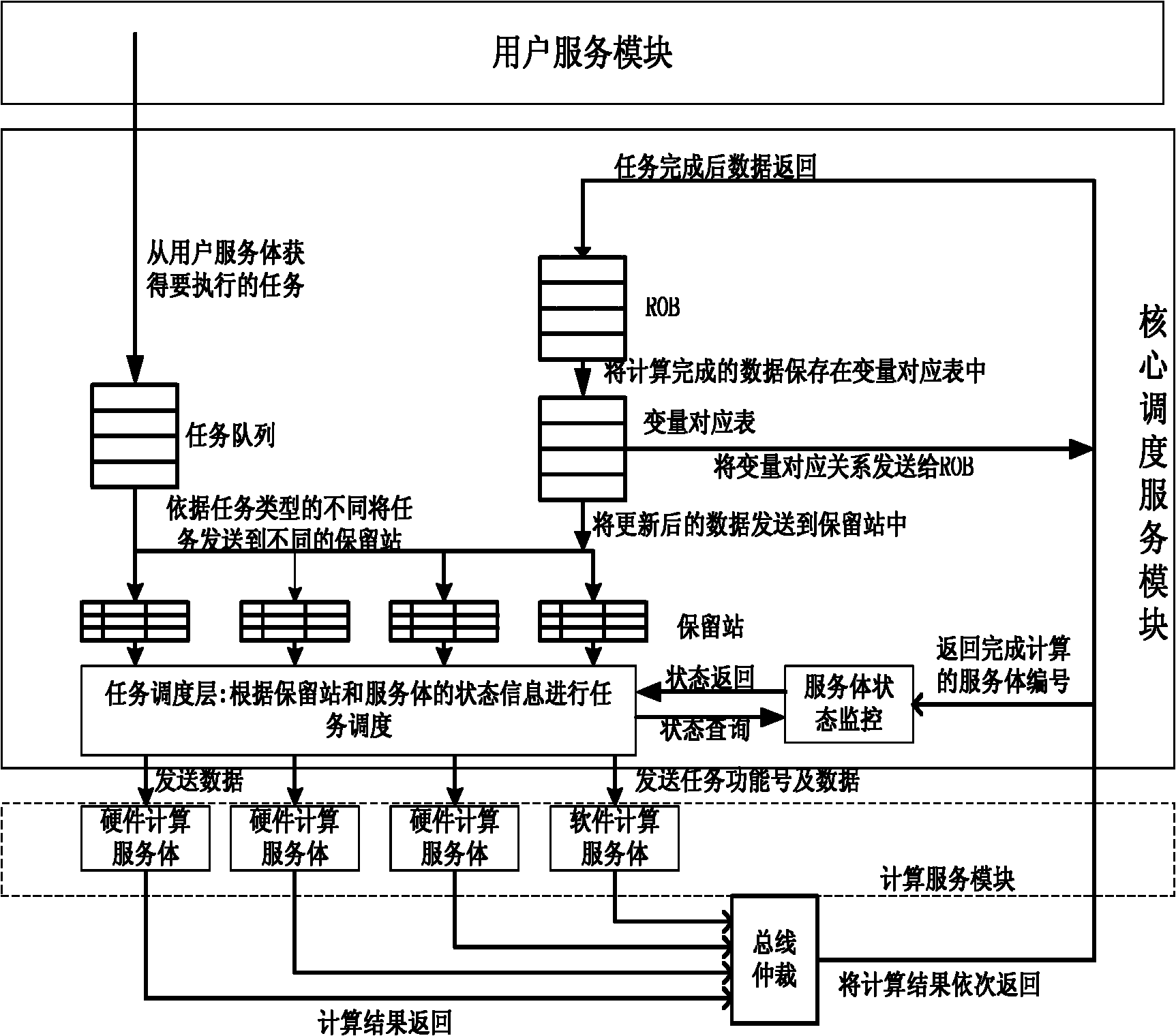

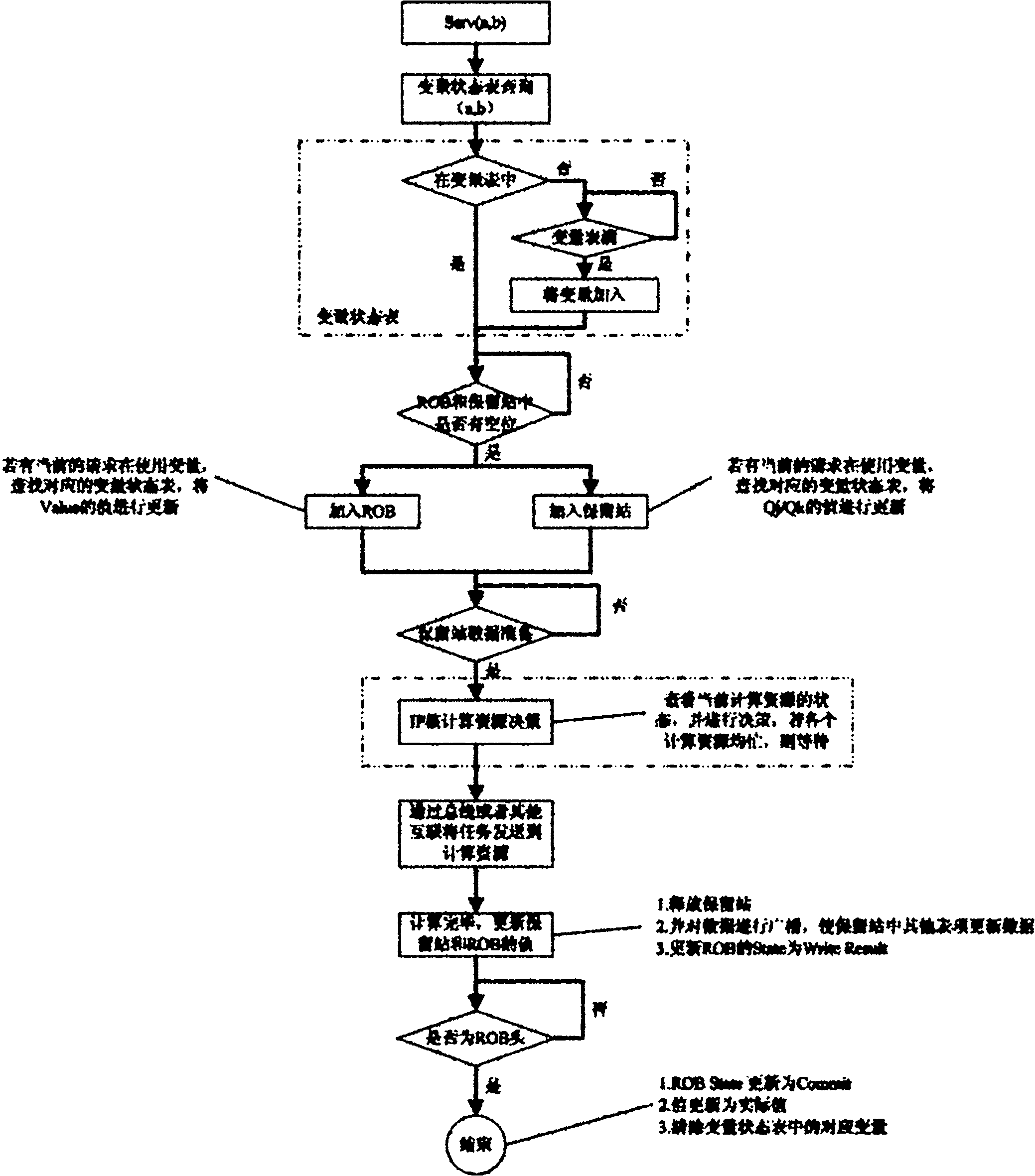

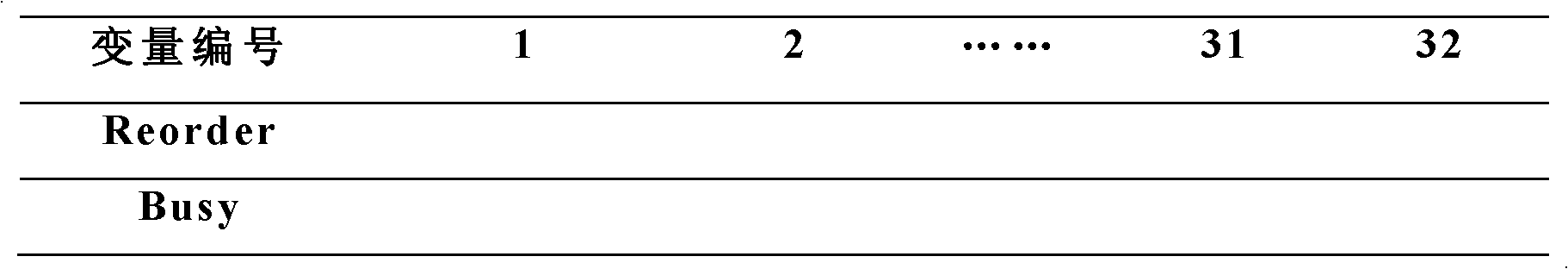

Task scheduling system of on-chip multi-core computing platform and method for task parallelization

Active | CN102129390A | Judging relevance | Improve parallelism | Multiprogramming arrangements | Multiple digital computer combinations | Multi core computing | Service module

The invention discloses a task scheduling system of an on-chip multi-core computing platform and a method for task parallelization, wherein the system comprises user service modules for providing tasks which are needed to be executed, and computation service modules for executing a plurality of tasks on the on-chip multi-core computing platform, and the system is characterized in that core scheduling service modules are arranged between the user service modules and the computation service modules, the core scheduling service modules receive task requests of the user service modules as input, judge the data dependency relations among different tasks through records, and schedule the task requests in parallel to different computation service modules for being executed. The system enhances platform throughput and system performance by performing correlation monitoring and automatic parallelization on the tasks during running.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC

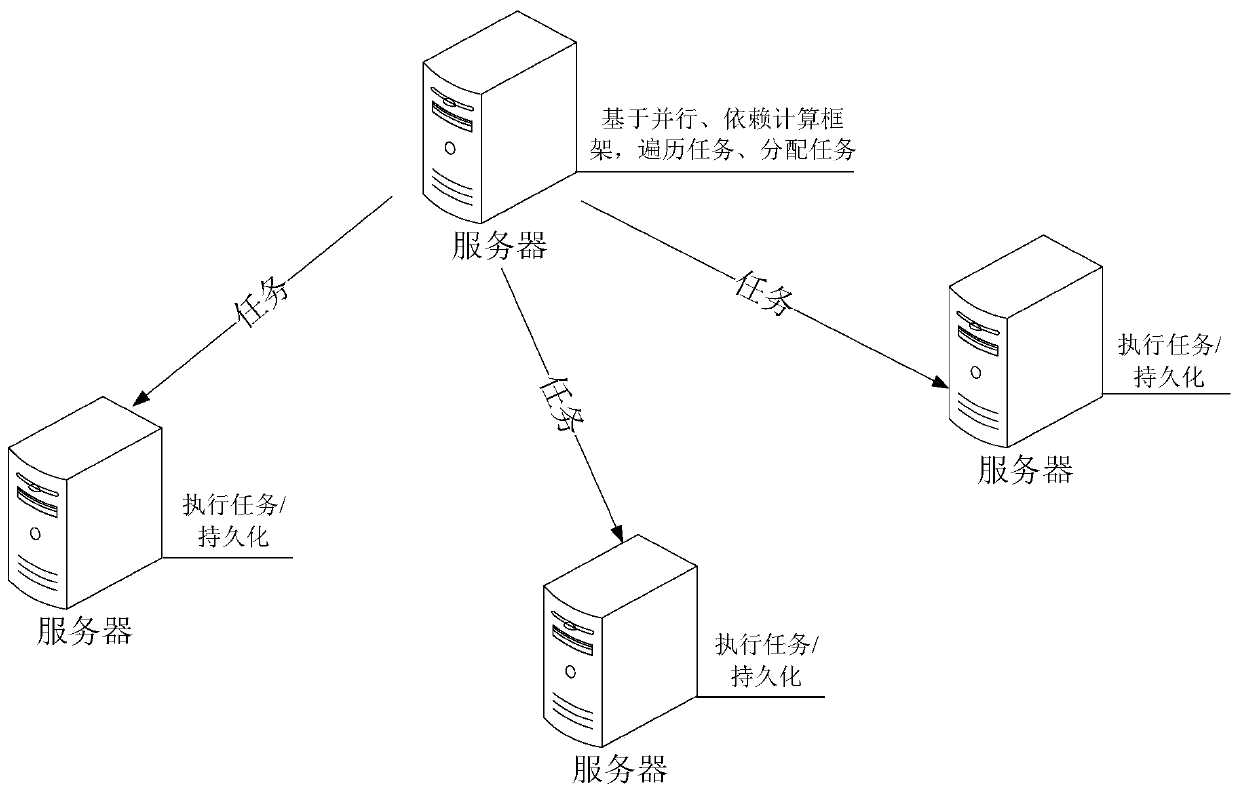

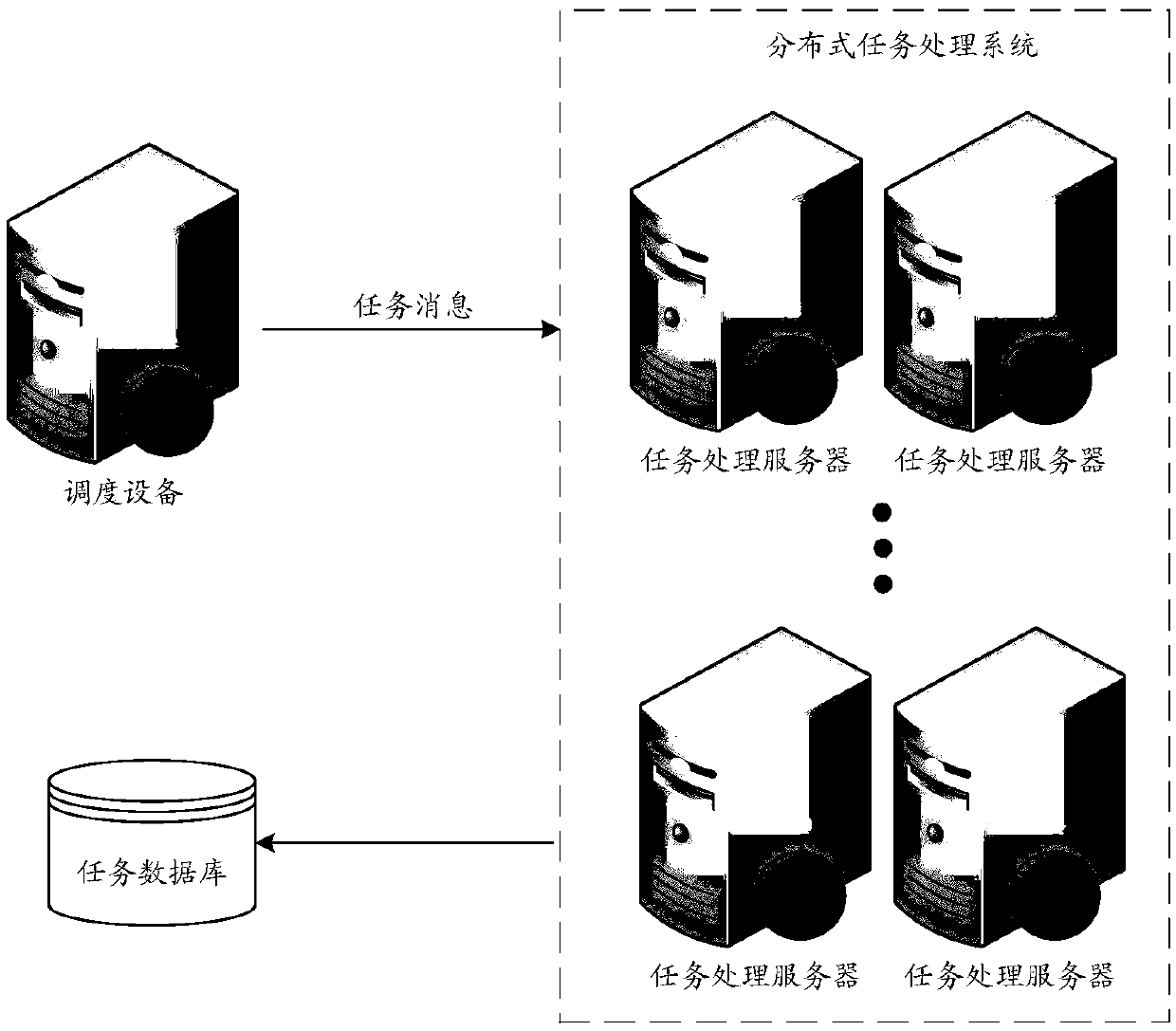

Distributed task processing method, device, system and set

Active | CN107688500A | Implement synchronous mutual exclusion processing | Distributed task processing method is simple and efficient | Program synchronisation | Distributed computing | Central management

Embodiments of the description disclose a distributed task processing method, device, set and system. Arranging a central management server in the distributed task processing architecture is not required; instead, task messages are distributed to distributed task processing sets through corresponding task distribution sets, so that the task processing sets that receive the task messages "compete" for a task lock. Only the task processing set that acquires the task lock can process the task messages. A processing mode includes dividing a large task into a certain number of sub-tasks and performing parallel processing on the sub-tasks.
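The "compete for a task lock" step can be sketched in a single process. This is an illustration under stated assumptions: `TaskLockTable` and `handle_message` are hypothetical names, the in-memory table stands in for whatever shared lock store a real distributed deployment would use, and summing numbers stands in for the actual work.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class TaskLockTable:
    """In-process stand-in (assumption) for a lock store shared by all processing sets."""

    def __init__(self):
        self._owners = {}
        self._guard = threading.Lock()

    def try_acquire(self, task_id, owner):
        """Return True only for the first owner that asks for this task's lock."""
        with self._guard:
            return self._owners.setdefault(task_id, owner) == owner


def handle_message(locks, worker, task_id, items, subtasks=2):
    """Process a task message if this worker wins the lock; otherwise return None."""
    if not locks.try_acquire(task_id, worker):
        return None  # another processing set already owns the task
    # Divide the large task into sub-tasks and process them in parallel.
    size = max(1, -(-len(items) // subtasks))  # ceiling division
    chunks = [items[i:i + size] for i in range(0, len(items), size)]
    with ThreadPoolExecutor(max_workers=subtasks) as pool:
        return sum(pool.map(sum, chunks))
```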

Owner:ADVANCED NEW TECH CO LTD

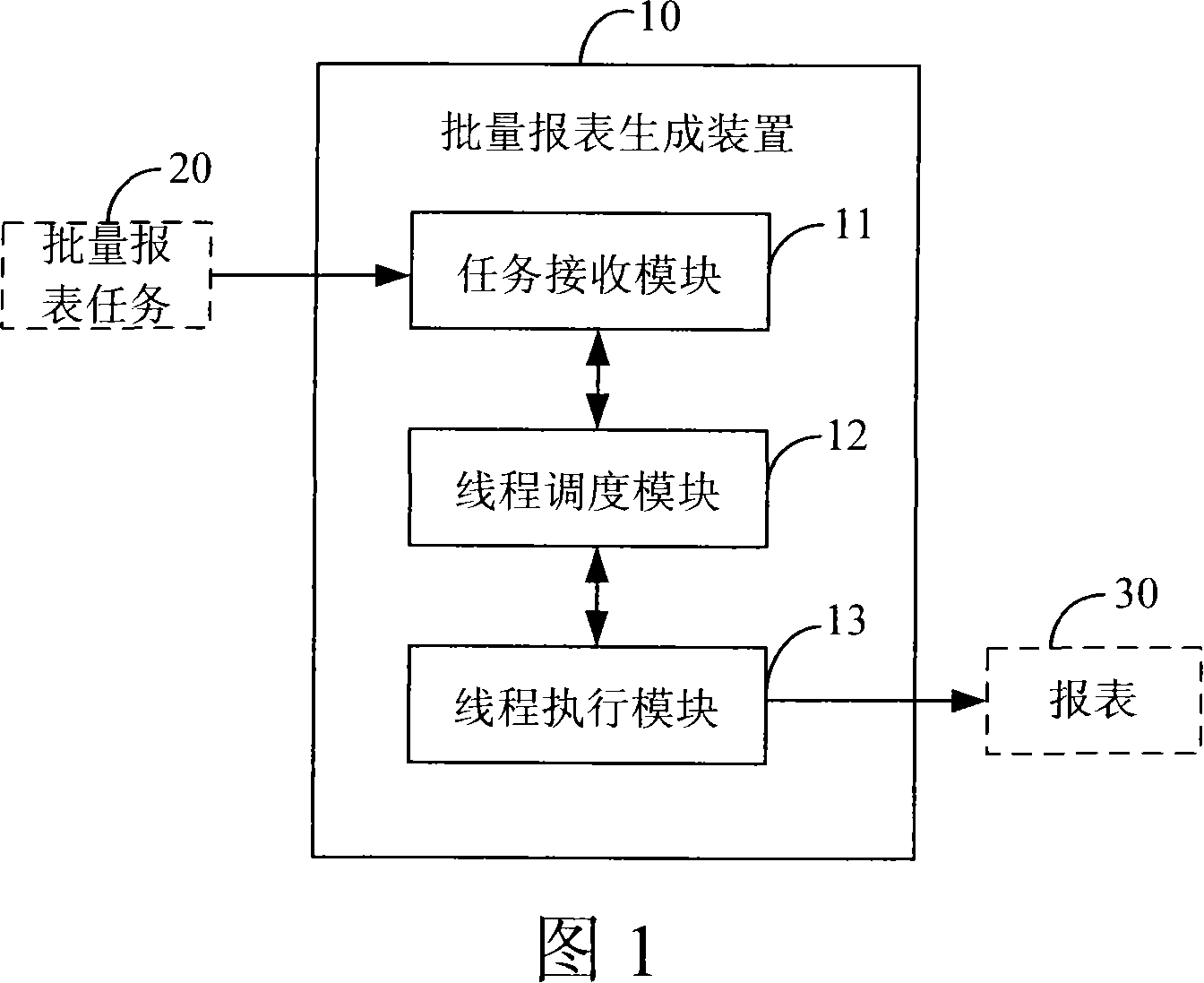

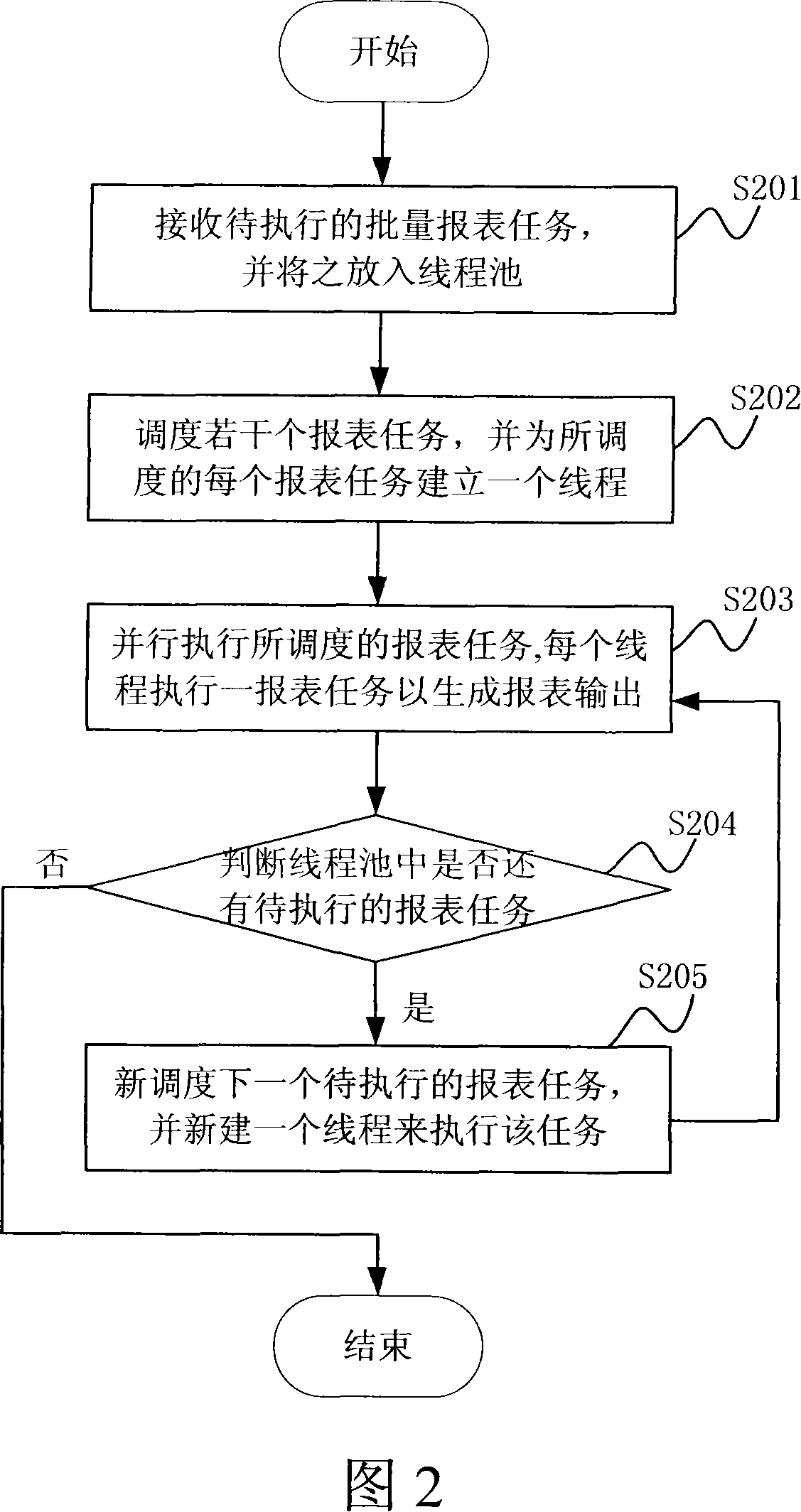

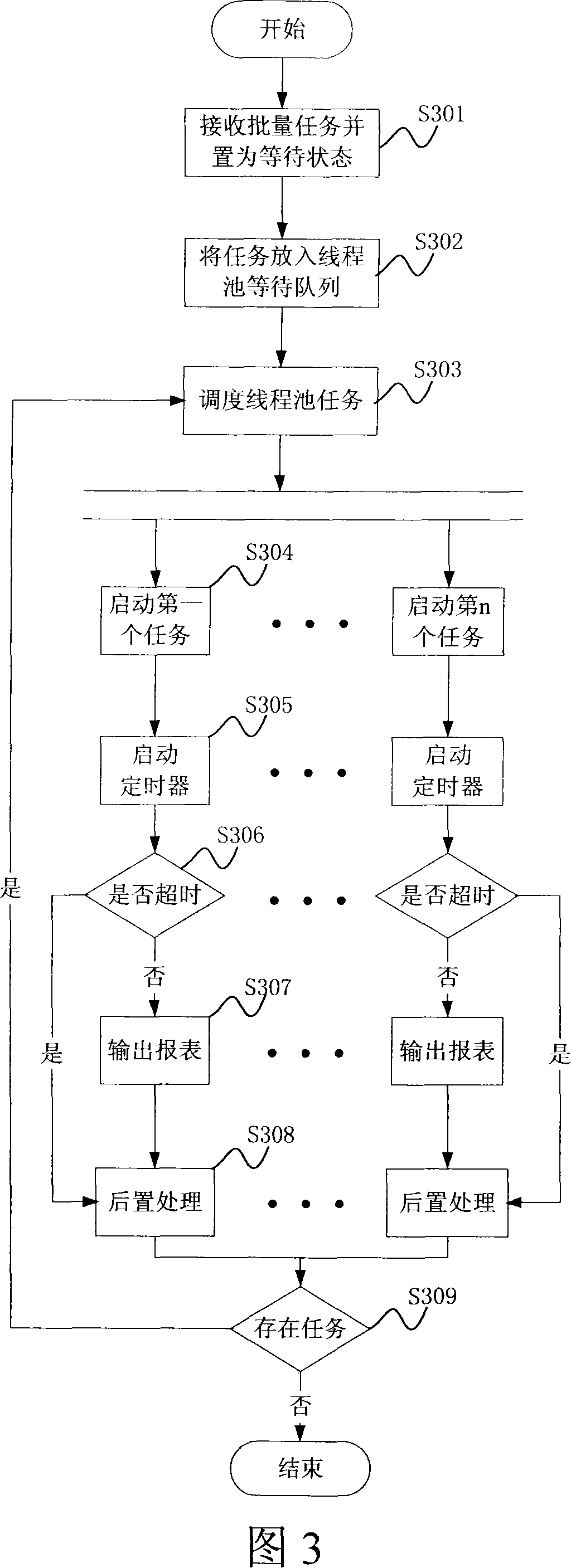

Method and device for realizing batch report generation

Inactive | CN101135981A | Improve output efficiency | Output will not affect | Multiprogramming arrangements | Office automation | Computer science | Thread pool

The method comprises: receiving batch report tasks awaiting execution and putting them into a thread pool; scheduling several report tasks from the batch in the thread pool, creating a thread for each scheduled report task, executing it, and generating a report output after each thread finishes its task; after a thread completes a report, deciding whether report tasks awaiting execution remain in the thread pool; if so, scheduling the next waiting report task and creating a new thread to execute it; otherwise, ending the thread. The invention also provides a corresponding apparatus.

Owner:ZTE CORP

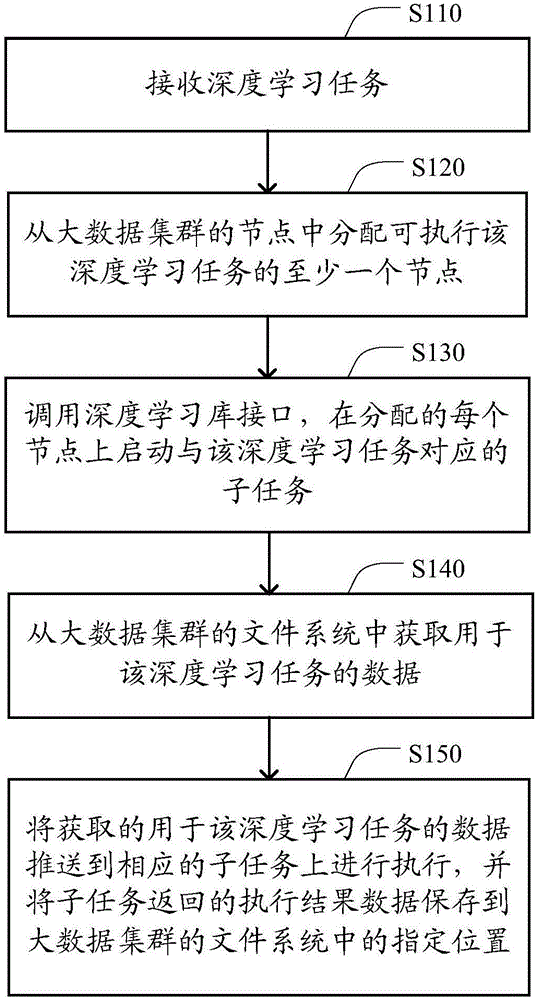

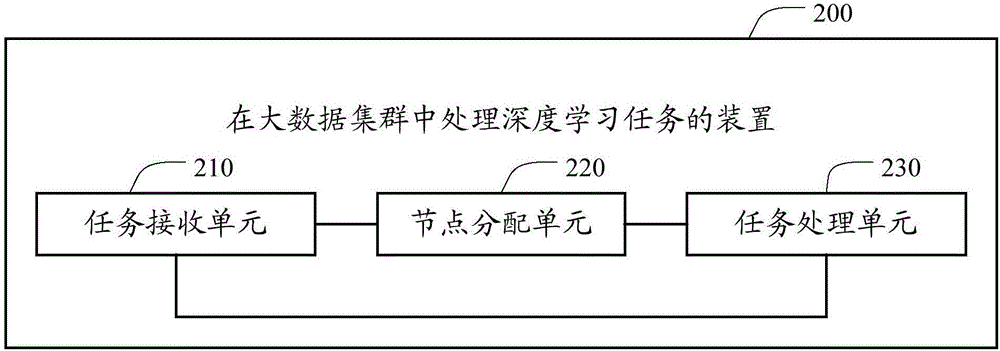

Method and apparatus for processing deep learning task in big-data cluster

Inactive | CN106529682A | Easy to handle | Improve execution efficiency | Machine learning | Database interface | File system

The invention discloses a method and apparatus for processing a deep learning task in a big-data cluster. The method comprises the following steps: receiving the deep learning task; distributing at least one node capable of executing the deep learning task from nodes of the big-data cluster; invoking a deep learning database interface, and starting a subtask corresponding to the deep learning task on each distributed node; obtaining data used for the deep learning task from a file system of the big-data cluster; and pushing the obtained data used for the deep learning task to the corresponding subtask for execution, and storing an execution result returned by the subtask at a specific position in the file system of the big-data cluster. According to the technical scheme, the deep learning task can be effectively processed in the big-data cluster, the advantages of parallel execution of big-data cluster tasks and large data storage quantity are utilized, deep learning can be organically combined with big-data calculation, and the execution efficiency of the deep learning task is greatly improved.

Owner:BEIJING QIHOO TECH CO LTD +1

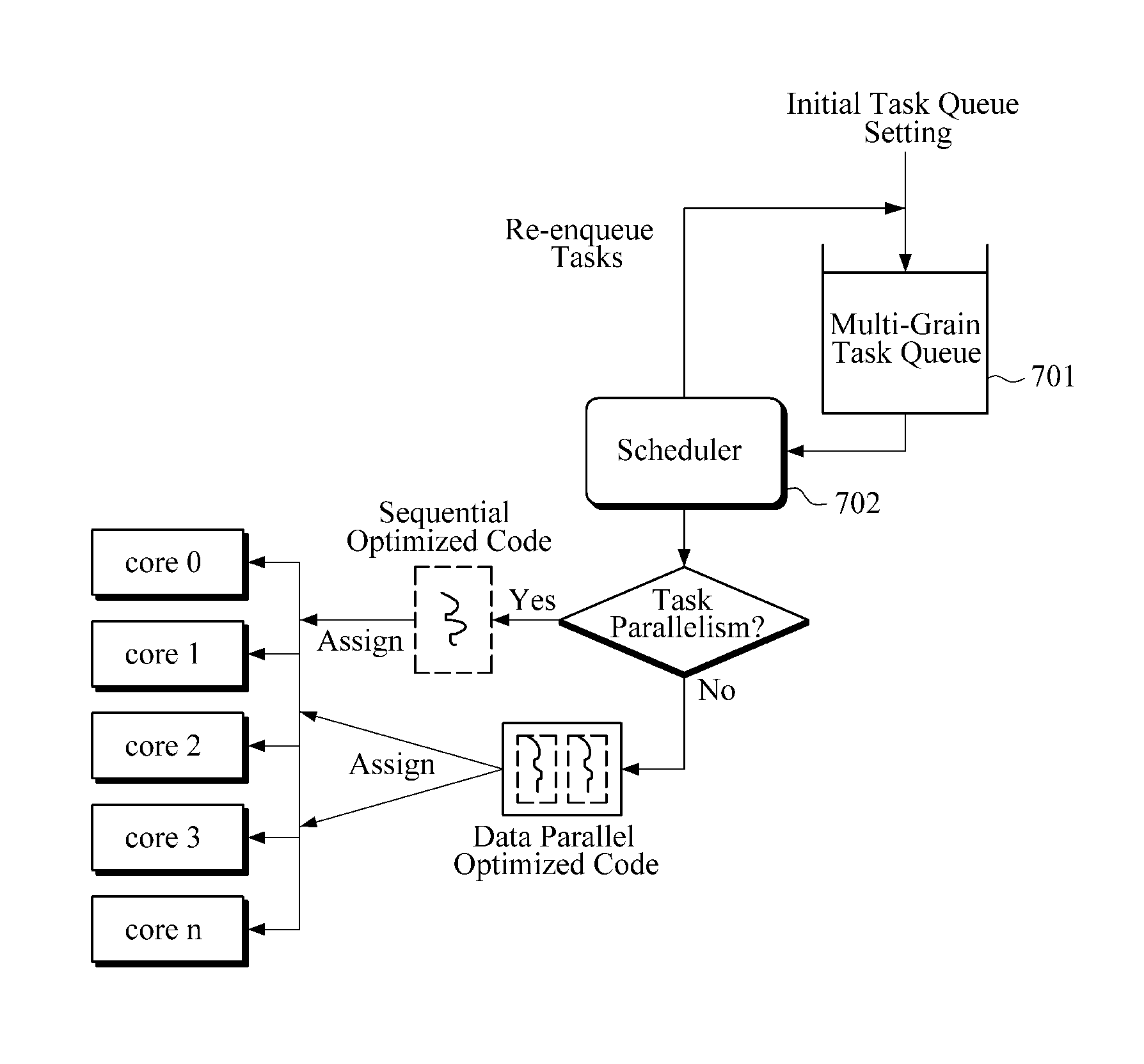

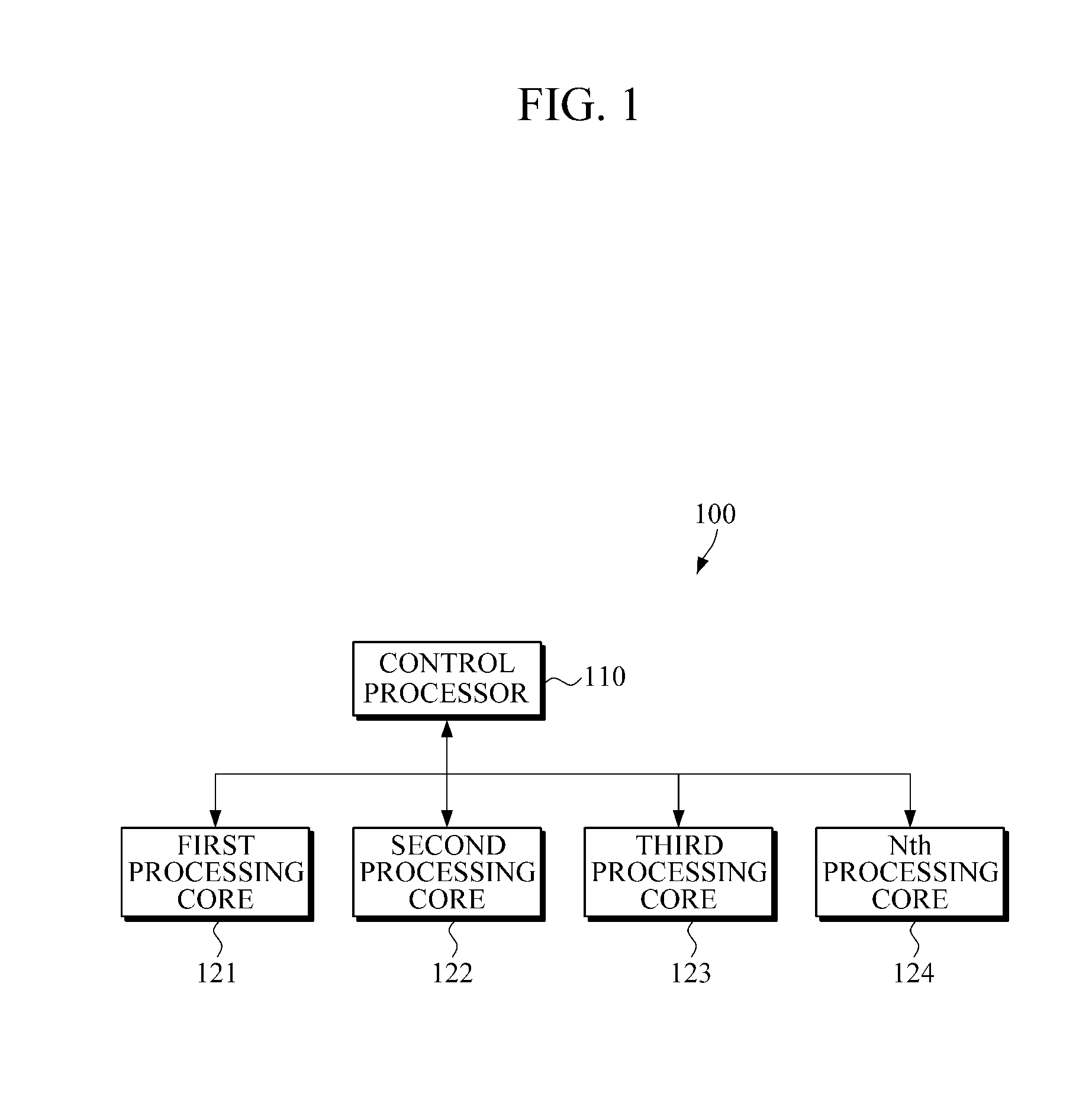

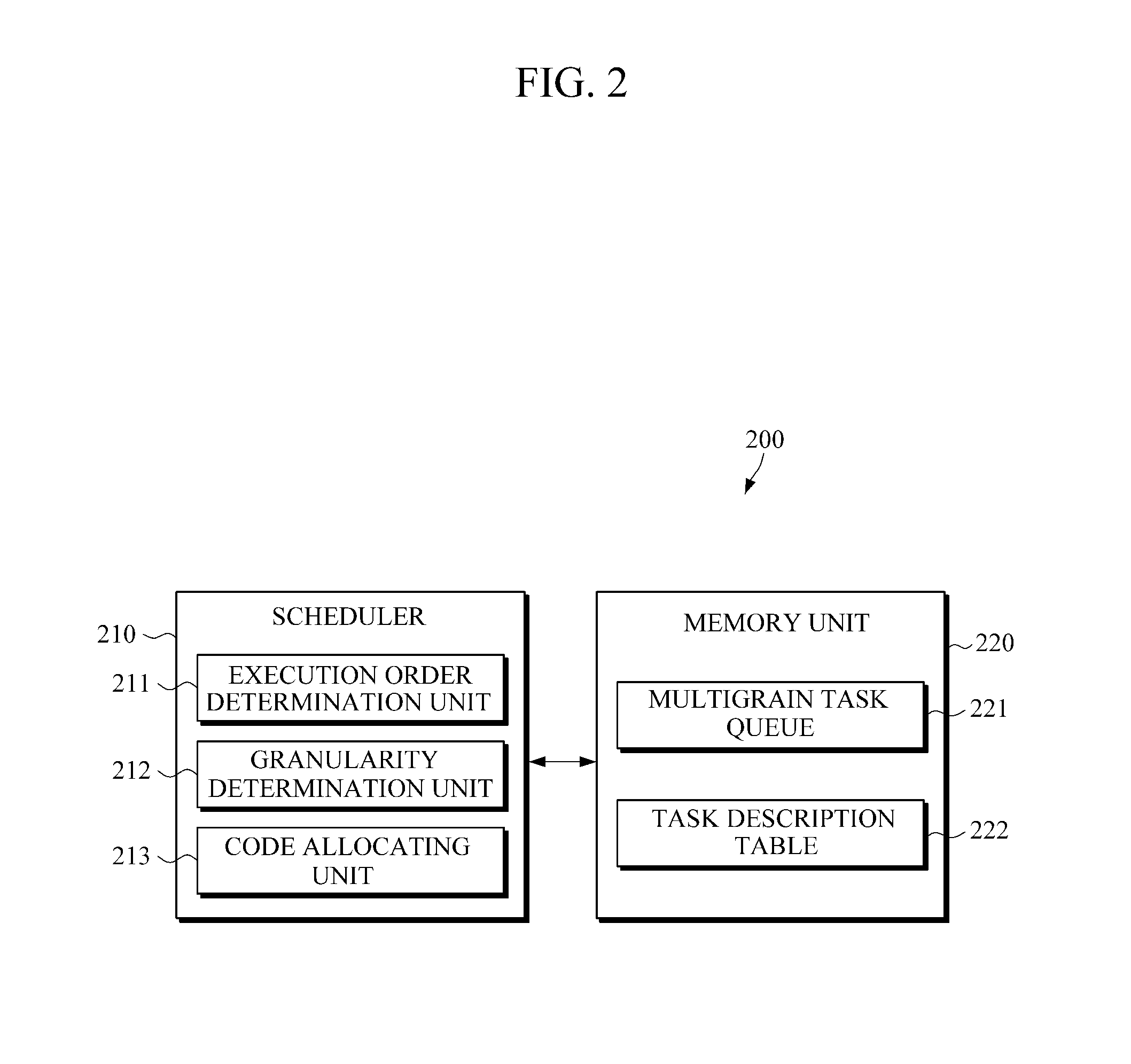

Apparatus and method for parallel processing

Inactive | US20110161637A1 | Operational speed enhancement | Digital computer details | Parallel processing | Degree of parallelism

An apparatus and method for parallel processing in consideration of degree of parallelism are provided. One of task parallelism and data parallelism is dynamically selected while a job is processed. In response to task parallelism being selected, a sequential version code is allocated to a core or processor for processing a job. In response to data parallelism being selected, a parallel version code is allocated to a core or processor for processing a job.

Owner:SAMSUNG ELECTRONICS CO LTD

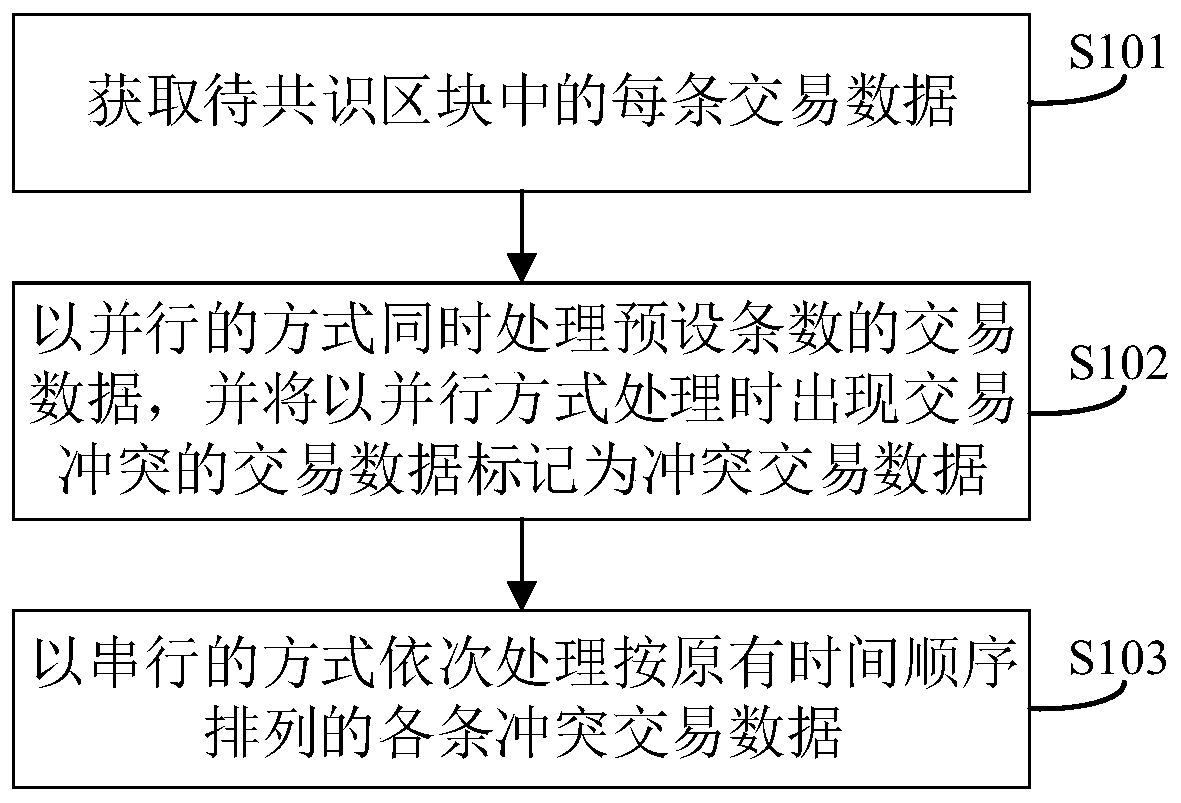

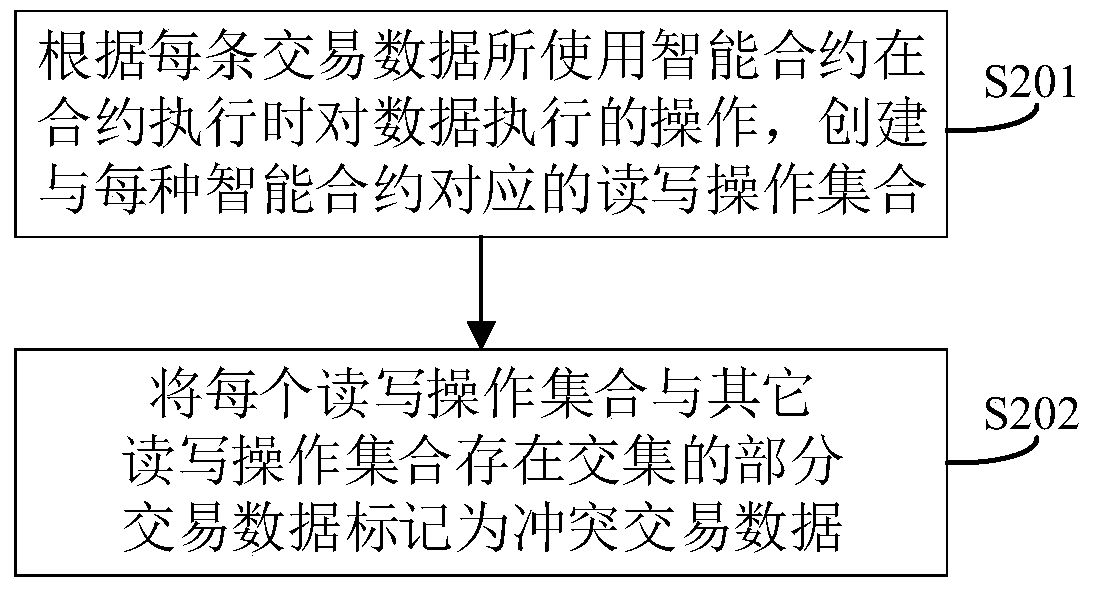

Block chain transaction data processing method and device, electronic device and medium

Active | CN109784930A | Reduce processing | Improve processing efficiency | Payment protocols | Energy efficient computing | Transaction data | High effectiveness

The invention discloses a blockchain transaction data processing method. The method first attempts to process all transaction data of a block awaiting consensus in parallel. Because transaction conflicts occur in only some of the transaction data during parallel processing, all transaction data without conflicts can be handled efficiently in parallel, and only the conflicting transaction data needs to be reprocessed serially. Compared with the prior art, in which transactions are executed serially regardless of whether conflicts exist, the technical scheme provided by the invention makes maximum use of the multi-task parallel processing capacity of the equipment without affecting the processing result, reducing processing time and improving processing efficiency. The invention further discloses a blockchain transaction data processing device, an electronic device and a computer readable storage medium which have the same beneficial effects.
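A minimal sketch of the "parallel where safe, serial where conflicting" split follows. It differs from the abstract in one respect that should be read as an assumption: conflicts are detected up front from the accounts each transaction touches, rather than by attempting parallel execution first. The names `apply_block` and `_transfer`, and the simple balance-transfer model, are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def _transfer(state, tx):
    """Return the balance changes for tx, or [] if the sender lacks funds."""
    sender, receiver, amount = tx
    if state.get(sender, 0) < amount:
        return []
    return [(sender, -amount), (receiver, amount)]


def apply_block(balances, txs, max_workers=4):
    """Apply (sender, receiver, amount) transactions and return the new balances."""
    # A transaction conflicts if any account it touches is used by another one.
    touched = Counter(acct for s, r, _ in txs for acct in {s, r})
    conflict_free, conflicting = [], []
    for tx in txs:
        alone = touched[tx[0]] == 1 and touched[tx[1]] == 1
        (conflict_free if alone else conflicting).append(tx)

    result = dict(balances)
    # Conflict-free transactions only read the initial snapshot, so they can
    # be evaluated in parallel; the changes are merged in the main thread.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for changes in pool.map(lambda tx: _transfer(balances, tx), conflict_free):
            for acct, change in changes:
                result[acct] = result.get(acct, 0) + change
    # Conflicting transactions are applied serially, in their original order.
    for tx in conflicting:
        for acct, change in _transfer(result, tx):
            result[acct] = result.get(acct, 0) + change
    return result
```

Because a conflict-free transaction shares no account with any other transaction, the outcome matches fully serial execution in block order.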

Owner:SHENZHEN THUNDER NETWORK TECH +1

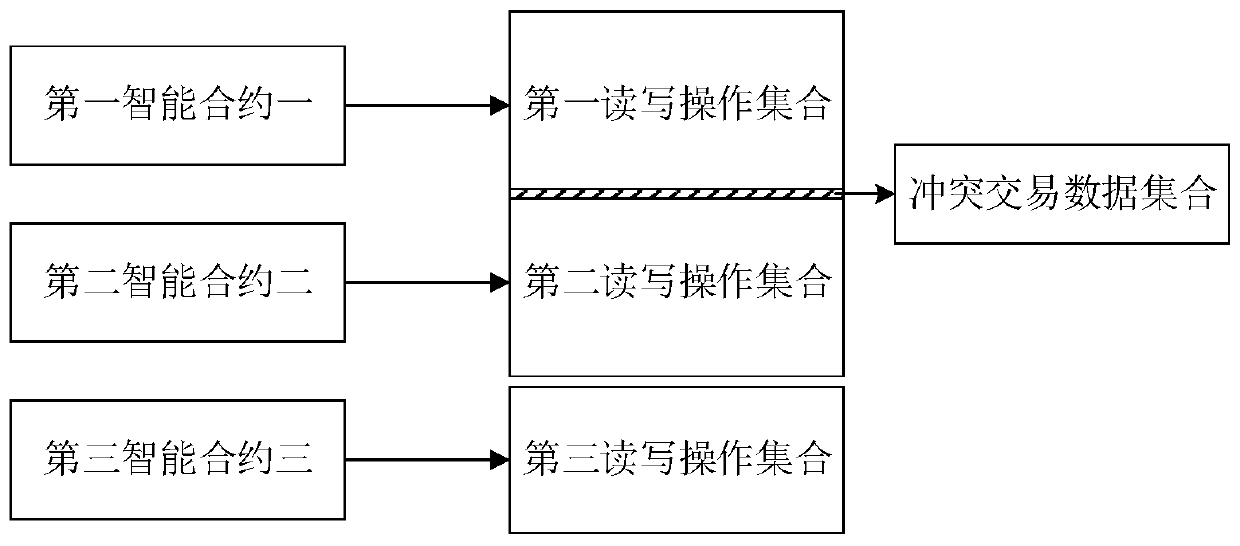

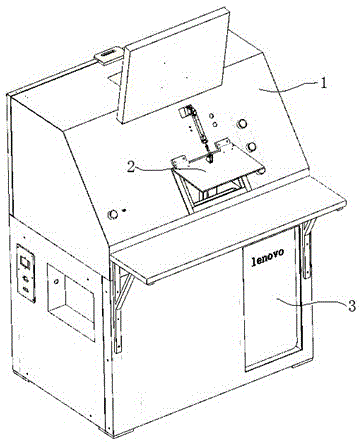

Mobile phone multi-station parallel testing device and realization method thereof

Inactive | CN105007357A | Independent | No mutual interference | Substation equipment | Test flow | Time-division multiplexing

The present invention discloses a mobile phone multi-station parallel testing device and a realization method thereof, which solve the complexity and low efficiency of existing mobile phone testing equipment. The device comprises a sound insulation box body with a cabinet door, a multi-station parallel testing mechanism arranged in the sound insulation box body, an industrial control machine and a control circuit board. The multi-station parallel testing mechanism comprises a multi-station mobile phone carrier for holding multiple mobile phones, and testing stations fixed in the sound insulation box body at positions corresponding to the phone positions on the carrier, at least two of which test different items. The multi-station parallel testing mechanism also comprises a central rotation mechanism for rotating the multi-station mobile phone carrier so that the mobile phones placed on it move from the current testing station to another station. Through time division multiplexing and multi-task parallel testing, the working efficiency is four times that of a traditional testing process without adding hardware resources.

Owner:CHENGDU BOTOVISION TECH CO LTD

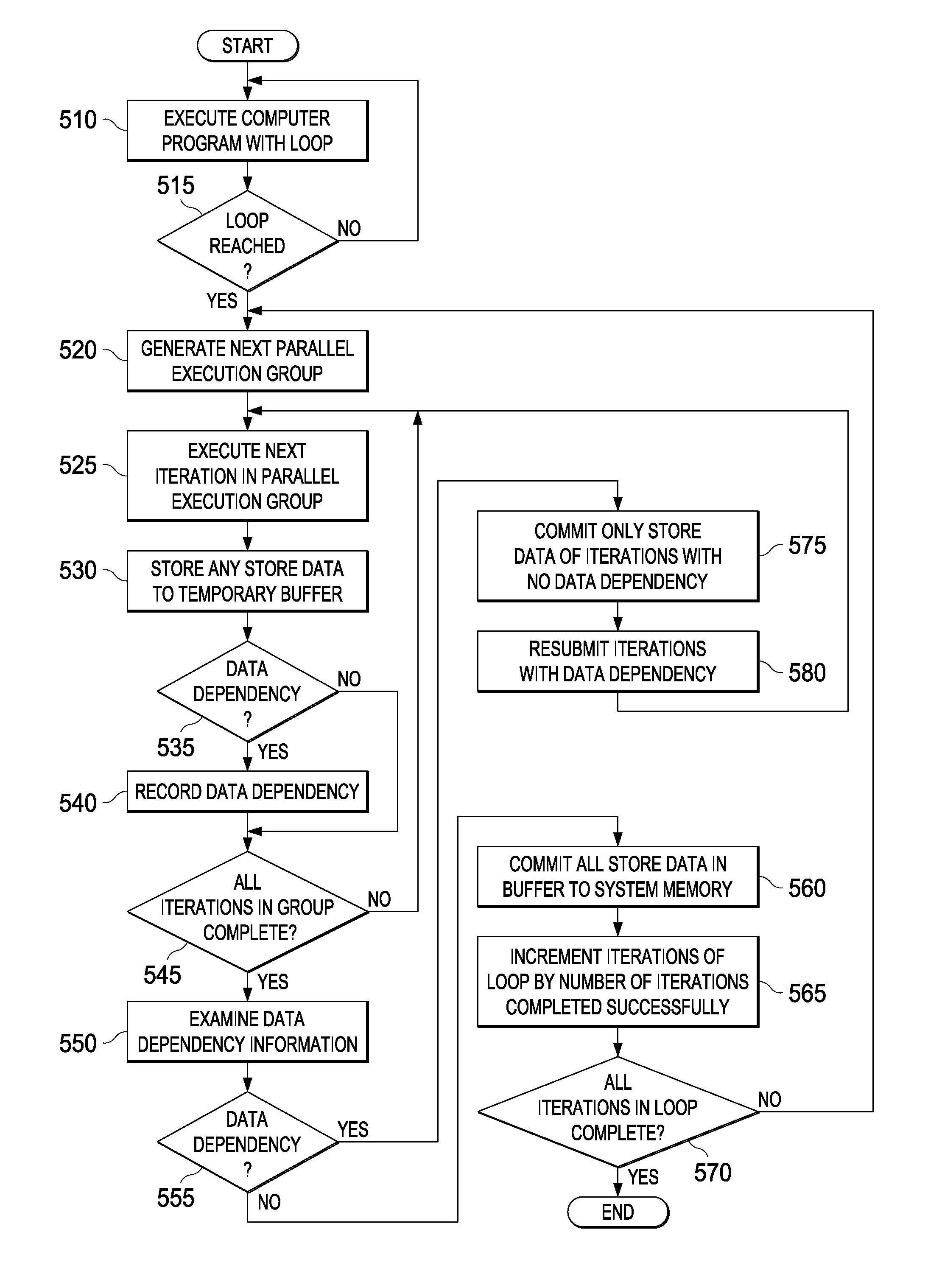

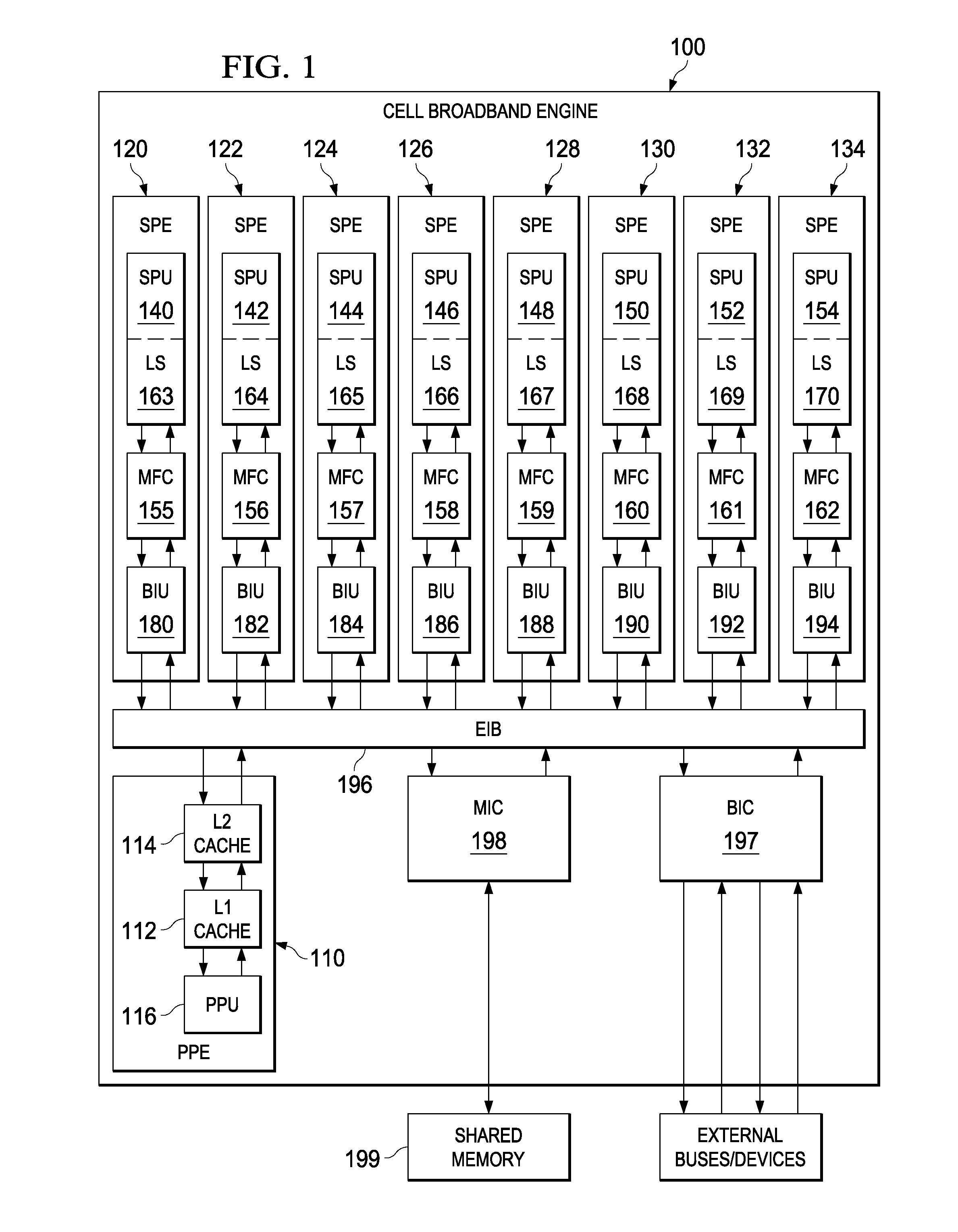

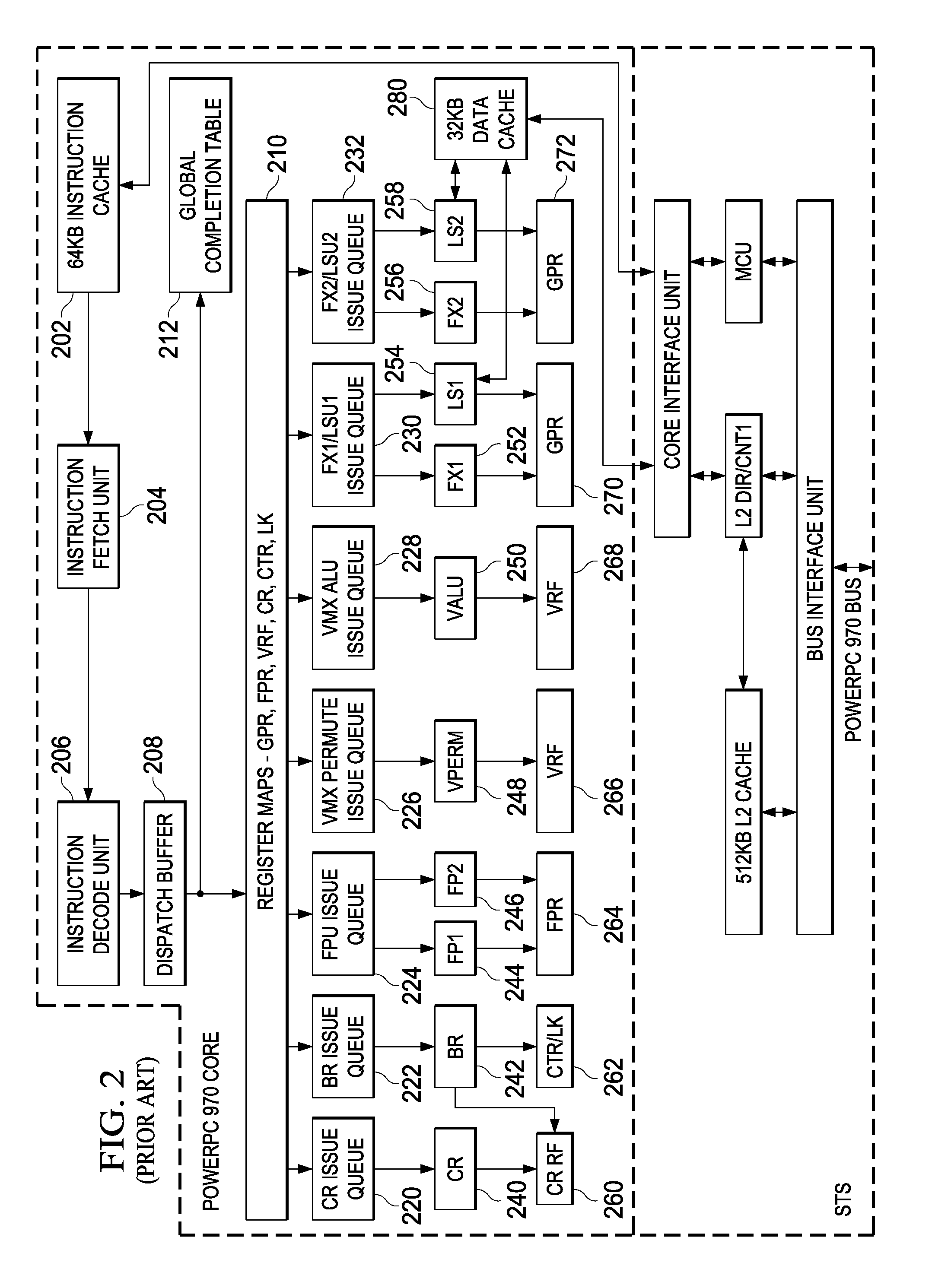

Runtime Extraction of Data Parallelism

Mechanisms for extracting data dependencies during runtime are provided. The mechanisms execute a portion of code having a loop and generate, for the loop, a first parallel execution group comprising a subset of iterations of the loop less than a total number of iterations of the loop. The mechanisms further execute the first parallel execution group and determine, for each iteration in the subset of iterations, whether the iteration has a data dependence. Moreover, the mechanisms commit store data to system memory only for stores performed by iterations in the subset of iterations for which no data dependence is determined. Store data of stores performed by iterations in the subset of iterations for which a data dependence is determined is not committed to the system memory.

Owner:IBM CORP

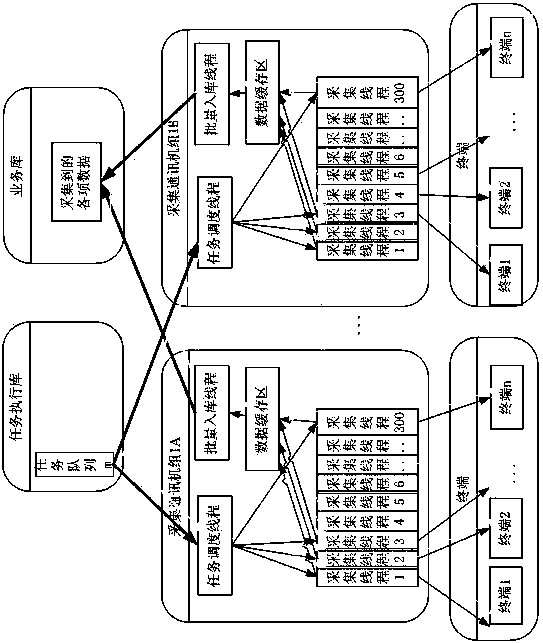

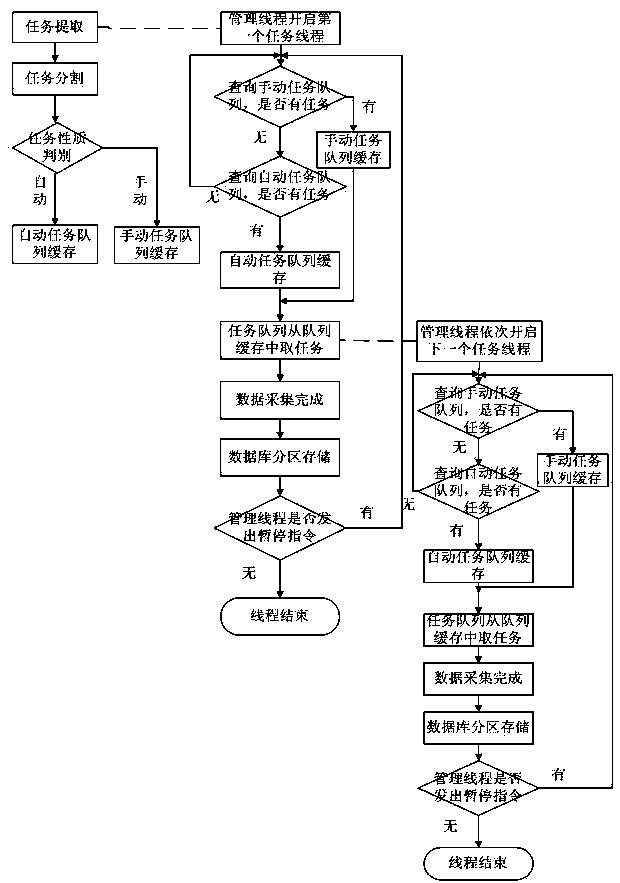

Task parallel processing method for electricity utilization information collection system

Active | CN103514277A | Scalable | Solve the cumbersome | Database management systems | Special data processing applications | Electricity | Communication unit

The invention discloses a task parallel processing method for an electricity utilization information collection system. Distributed collection communication units are established, parallel collection threads of the collection communication units are correspondingly distributed on terminals of various cities to execute collection tasks, and collected tasks are decomposed according to different types of the terminals; operating states of all the collection threads of current collection communication units are inquired through management of the threads, and when vacant collection threads exist, tasks needing to be executed in a task queue are fetched; parallel processing is carried out on the collection tasks of the cities; data items collected through multiple collection threads are stored at the same time, and the threads are partitioned through a data caching area and are simultaneously read and written. Through the distributed parallel processing method, timeliness of task data of different users is guaranteed, and execution efficiency of the collection system is improved.

Owner:STATE GRID CORP OF CHINA +3

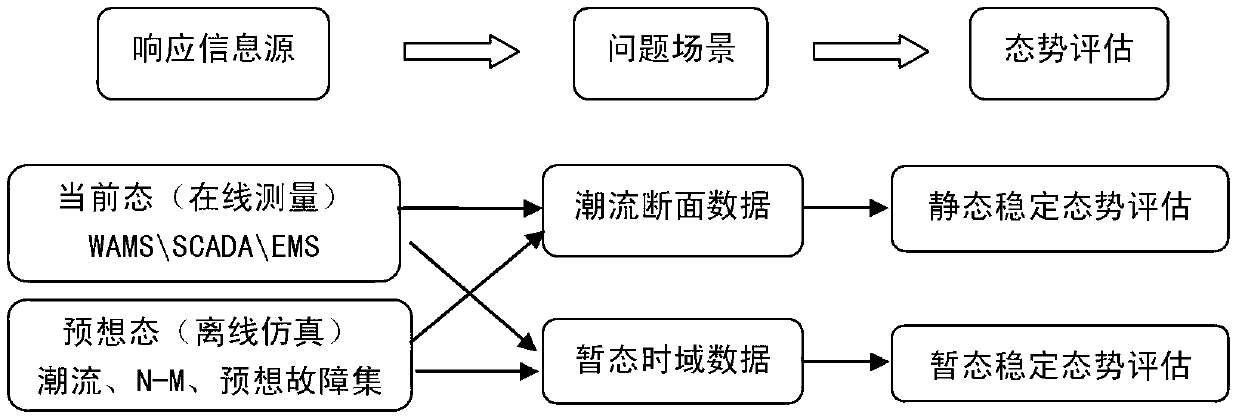

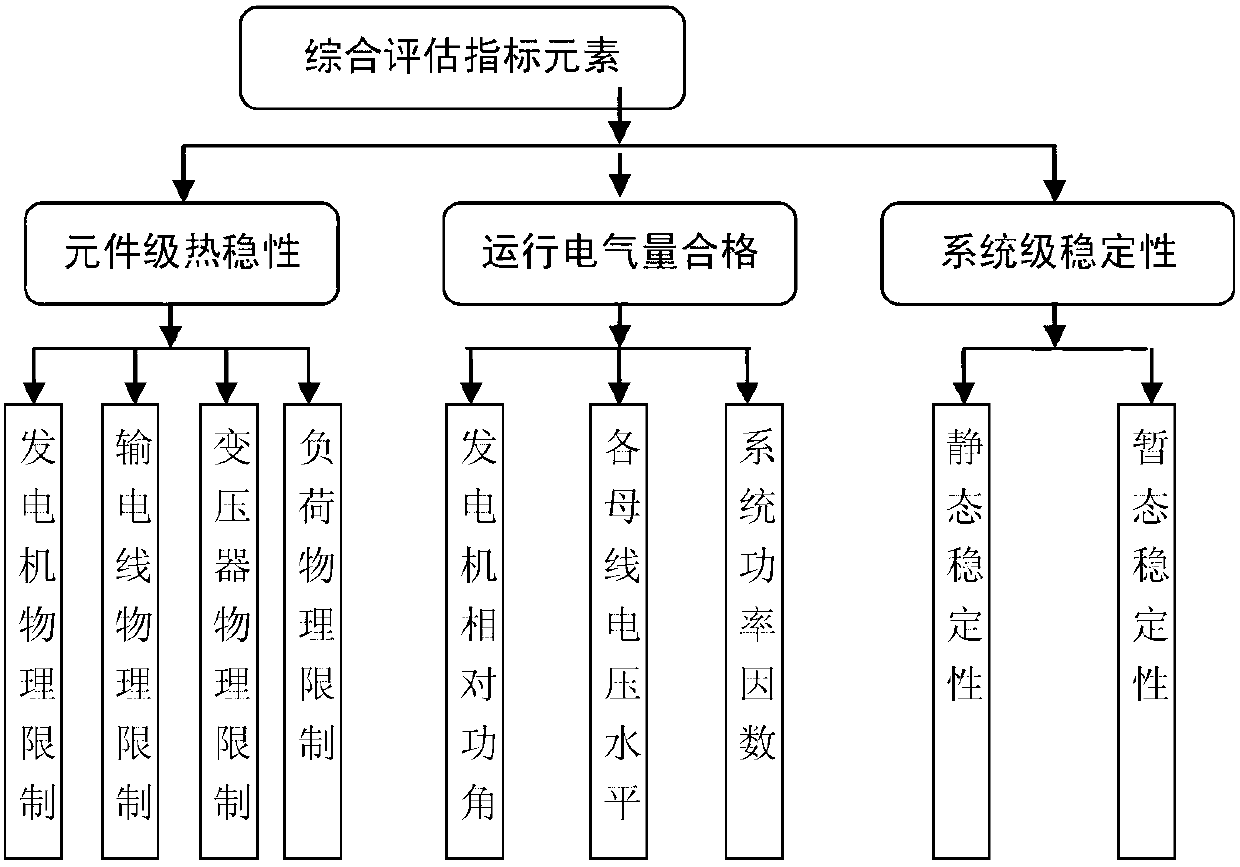

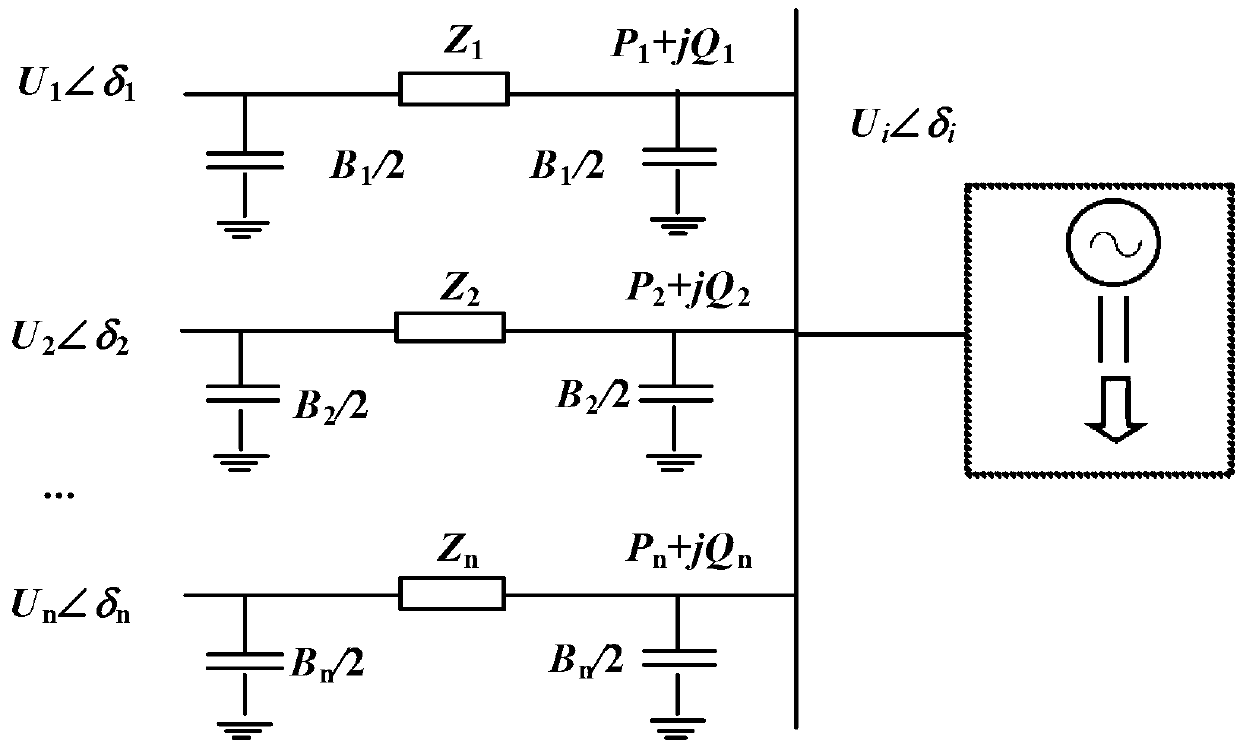

Large power grid overall situation on-line integrated quantitative evaluation method based on response

Active | CN103279638A | Raise the level of early warning | Reduce workload | Data processing applications | Systems integrating technologies | Time domain | Stable state

The invention provides a large power grid overall situation on-line integrated quantitative evaluation method based on a response. The method comprises a first step of acquiring power grid topology structural information from an SCADA system and an EMS system and establishing a corresponding relation between the power grid topology structural information and a WAMS system power grid component, a second step of acquiring present power grid operation method trend data from the SCADA system or the EMS system or a WAMS system or acquiring various preconceived trends or transient state fault time domain data from a DSA system, and a third step of dividing responding data into two operation scenes including a steady state (or a quasi-stable state) and a transient state (or a dynamic state) in a macroscopic mode, wherein static state stabilization situation assessment is carried out on a power grid through an on-line node-facing method, and transient state stabilization situation assessment is carried out on the power grid through an on-line unit-facing method. Static state and transient state comprehensive assessment indicators are constructed based on component class thermostabilization, electric parameter acceptable range and system class stabilization, the comprehensiveness and reasonability of the overall situation assessment indicators are improved, and the efficiency of overall situation integrated assessment is improved through the method that tasks are carried out at the same time.

Owner:STATE GRID CORP OF CHINA +1

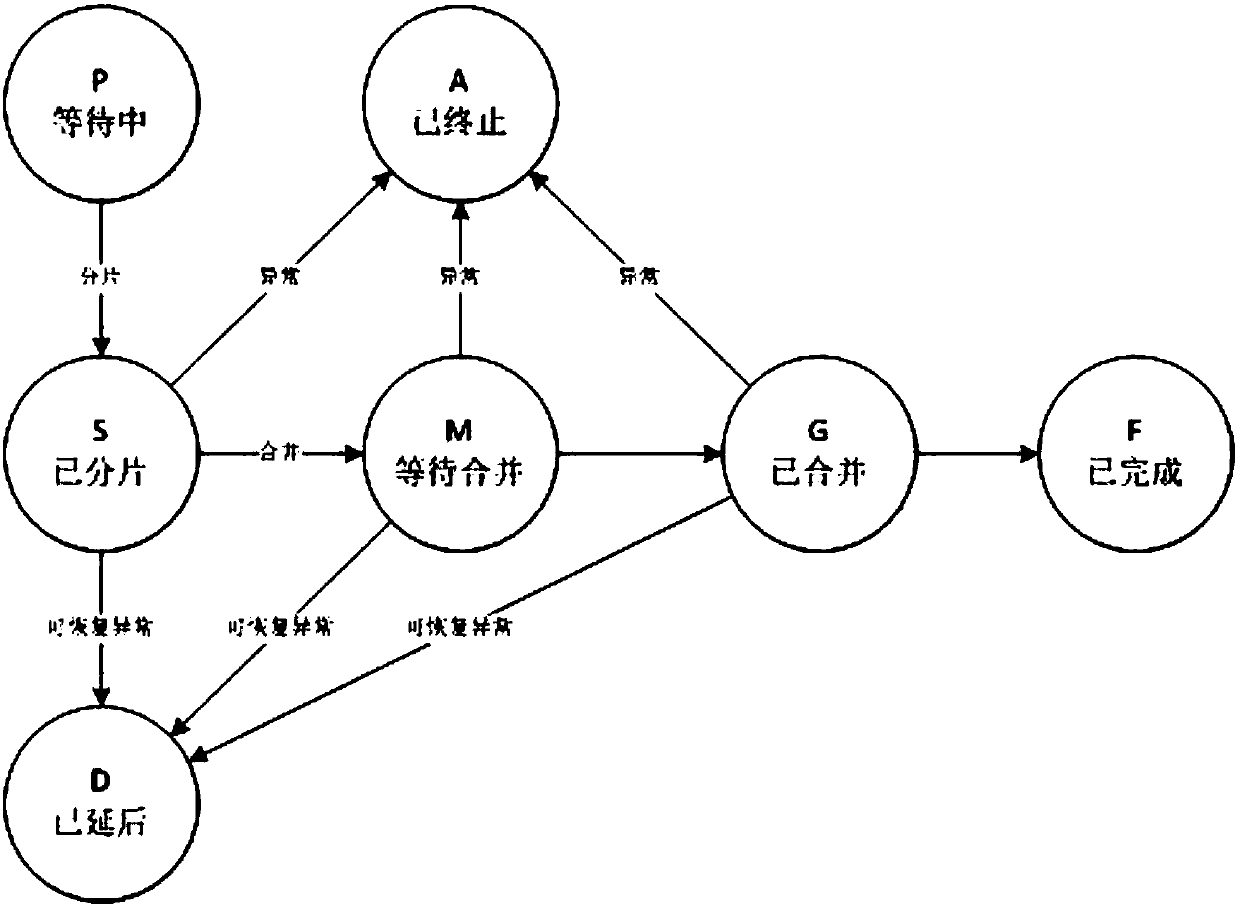

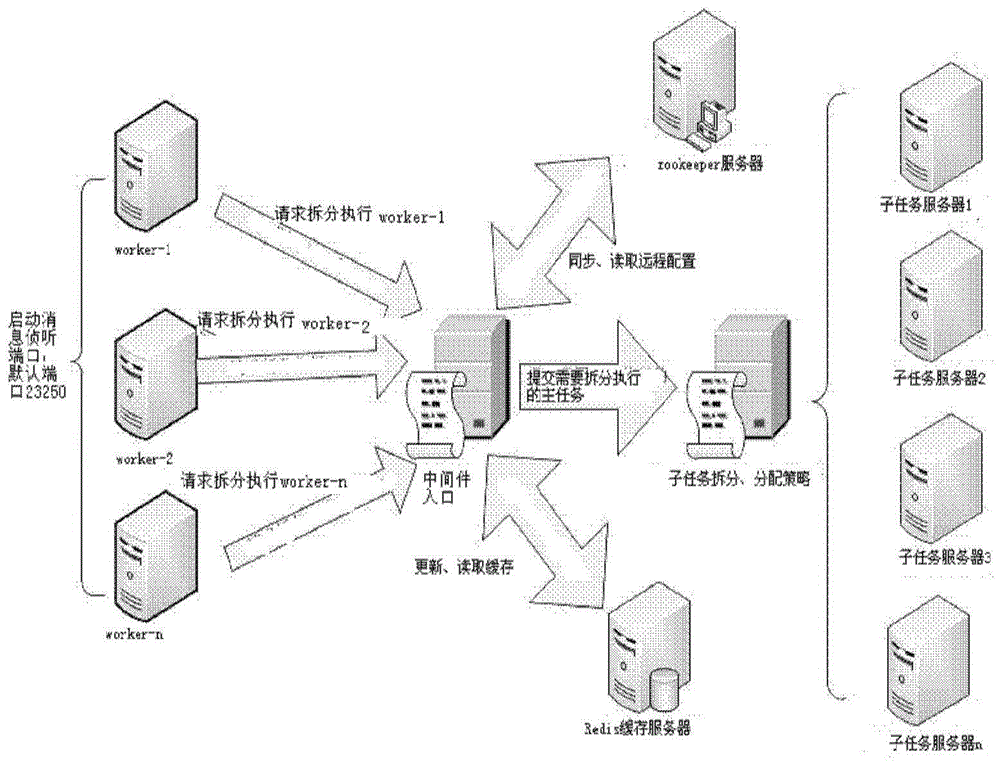

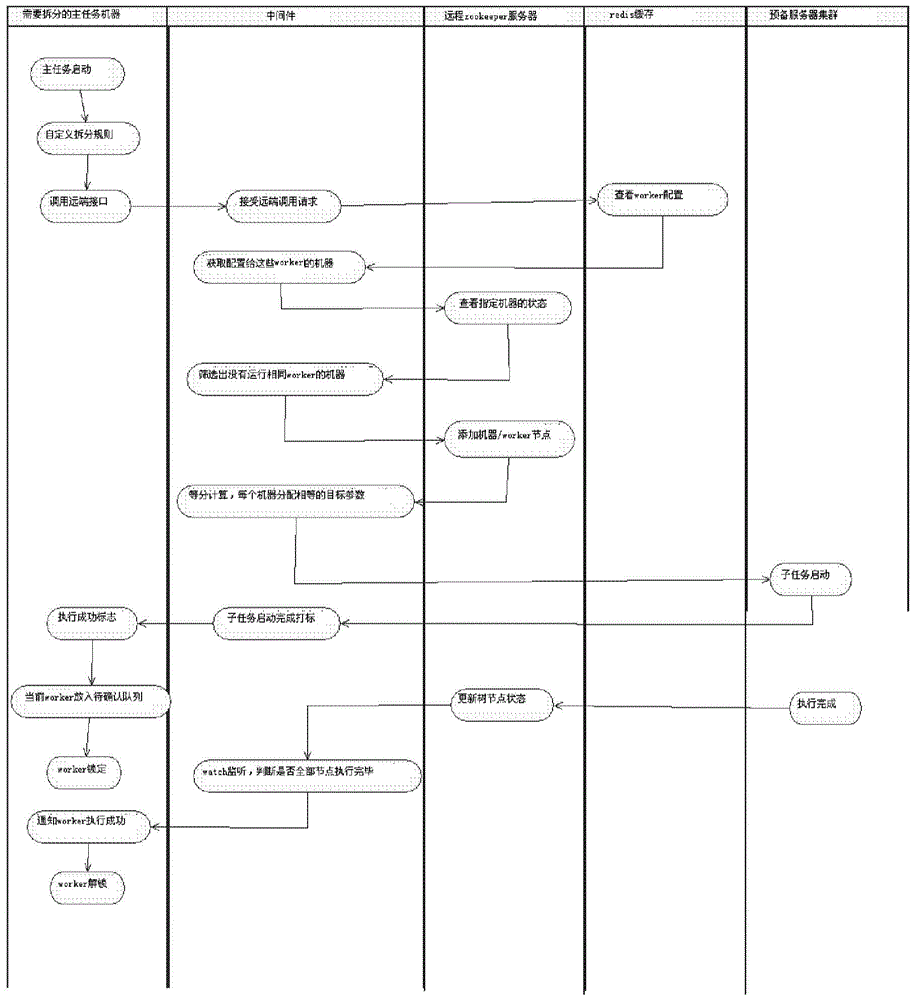

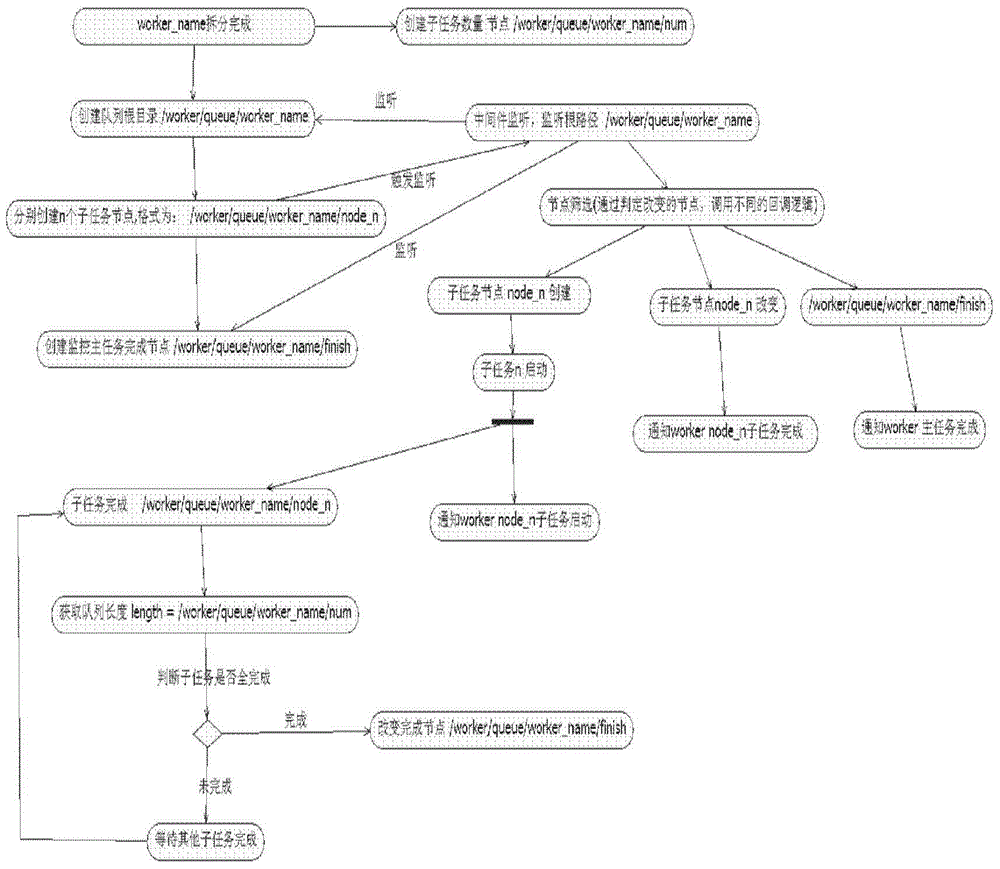

Method and system for automatic splitting of task and parallel execution of sub-task

The present invention provides a method and system for automatic splitting of a task and parallel execution of a sub-task. The method comprises: splitting a main task that needs to be processed into a plurality of sub-tasks; acquiring a unit that can be allocated to each sub-task, checking a state of the unit that can be allocated to each task, and screening out units that do not run a corresponding sub-task; allocating an equivalent parameter to each of the units that are screened out, creating a tree node, and starting the sub-tasks in parallel; after successfully starting all the sub-tasks, locking the corresponding main task; completing parallel execution of the sub-tasks, updating a state of the tree node; determining whether execution of all the sub-tasks is completed; and when it is determined that execution of all the sub-tasks is completed, sending out a message of an execution success, and unlocking the corresponding main task.

Owner:京东益世商服科技有限公司

Task parallel processing method, storage medium, computer equipment, device and system

ActiveCN109814986AImprove processing efficiencyThere is no need to consider issues such as compatibilityProgram initiation/switchingParallel processingDirected acyclic graph

The invention provides a task parallel processing method, storage medium, computer equipment, device and system. A task directed acyclic graph (DAG) is constructed according to the dependency relationships between the tasks that need to be executed, and those tasks are then distributed and controlled according to the DAG. Task parallelism on a multi-core processor is achieved by relying on the reschedulability of the work queue, and data processing efficiency is improved. The implementation of the task parallel processing method provided by the embodiment does not depend on TensorFlow, Caffe, or other framework programs, so problems such as interface compatibility do not need to be considered during program design.
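As a rough illustration of DAG-driven scheduling, the sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) with a thread pool. It is not the method claimed in the patent: `run_dag`, the `deps` and `actions` dictionaries, and the use of threads in place of a multi-core work queue are all assumptions made for the example.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter


def run_dag(deps, actions, workers=4):
    """Run tasks in dependency order, executing independent tasks concurrently.

    deps maps each task name to the set of task names it depends on.
    actions maps each task name to a zero-argument callable.
    Returns the task names in the order they finished.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if deps is not a DAG
    finished = []
    pending = {}  # future -> task name
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while sorter.is_active():
            # Submit every task whose dependencies have all completed.
            for name in sorter.get_ready():
                pending[pool.submit(actions[name])] = name
            done, _ = wait(list(pending), return_when=FIRST_COMPLETED)
            for future in done:
                future.result()  # re-raise any exception from the task
                name = pending.pop(future)
                finished.append(name)
                sorter.done(name)  # may unlock dependent tasks
    return finished
```

For example, with `deps = {"load": set(), "a": {"load"}, "b": {"load"}, "merge": {"a", "b"}}`, the tasks `a` and `b` can run at the same time once `load` has finished, and `merge` runs last.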

Owner:SHANGHAI CAMBRICON INFORMATION TECH CO LTD

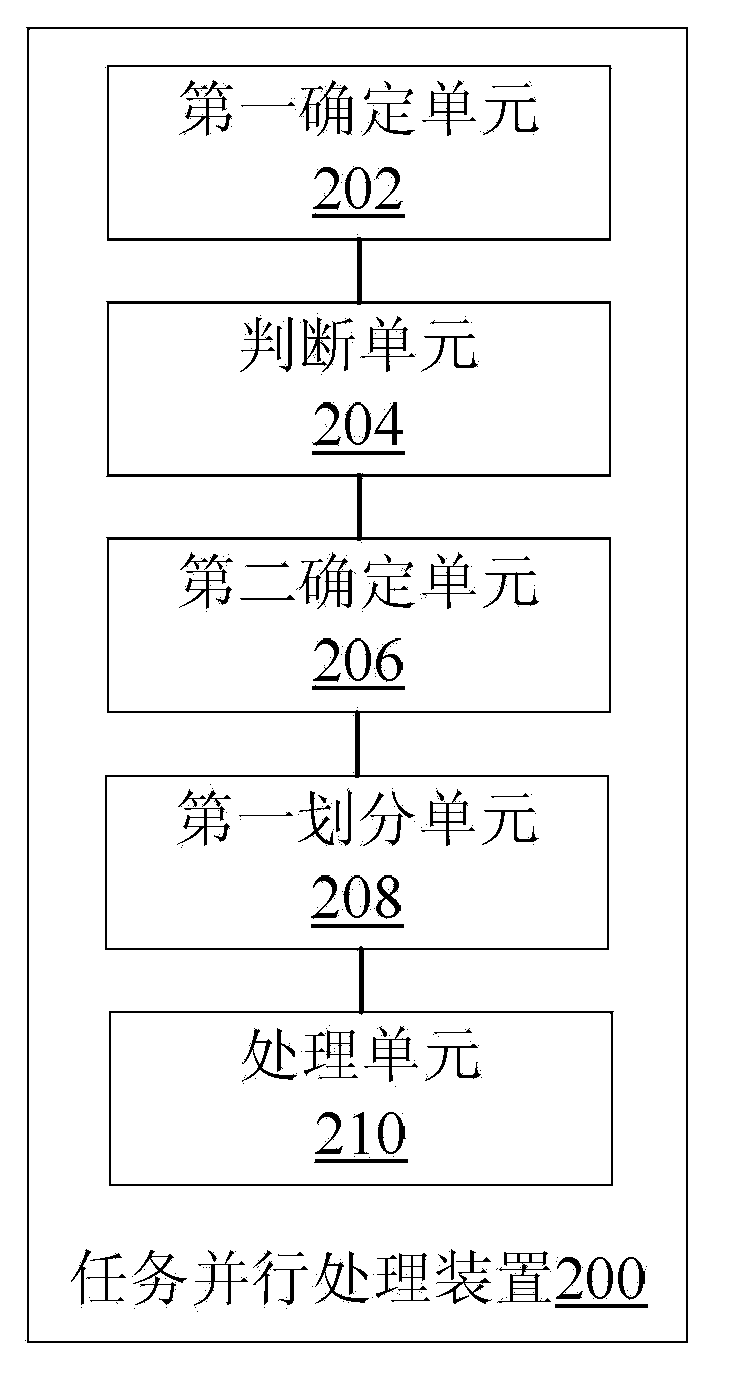

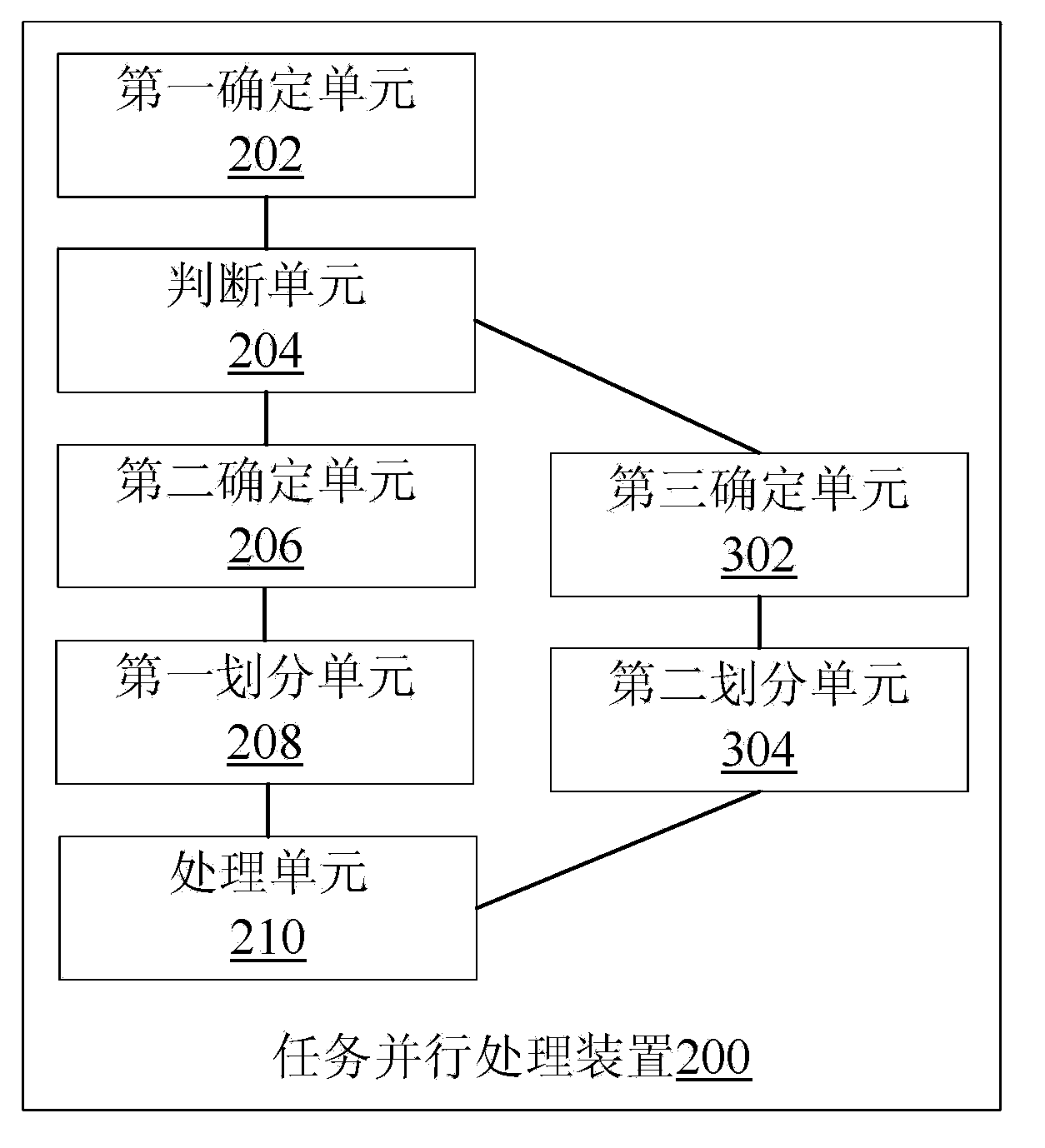

Task parallel processing method and device

ActiveCN103677751ASolve wasteSolve the speed problemConcurrent instruction executionParallel processingTask parallelism

The invention discloses a task parallel processing method and device. The method comprises: determining the number M of tasks to be processed, where M is a positive integer; judging from M whether the tasks can be divided into multiple groups, where each group contains multiple tasks and all groups contain the same number of tasks; determining from M the first numerical values, that is, the values by which the tasks can be divided into such groups; dividing the tasks into groups of M / N tasks each, where N is a positive integer and is one of the first numerical values; and processing the tasks in the groups in parallel. The method and device address the prior-art problem that an arbitrary integer is designated for splitting the tasks, so that tasks that could be split evenly are not, which wastes resources and lowers task execution speed.
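The even-split idea can be sketched as follows. This is one reading of the abstract rather than the patented procedure: N is treated as the number of groups, the "first numerical values" are taken to be the divisors of M strictly between 1 and M, and the function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def even_group_counts(m):
    """Return every group count n with 1 < n < m that divides m evenly."""
    return [n for n in range(2, m) if m % n == 0]


def split_evenly(tasks, n):
    """Split tasks into n groups of len(tasks) // n tasks each."""
    m = len(tasks)
    if n <= 0 or m == 0 or m % n != 0:
        raise ValueError(f"cannot split {m} tasks into {n} equal groups")
    size = m // n
    return [tasks[i:i + size] for i in range(0, m, size)]


def process_in_groups(tasks, n, work):
    """Process n equal groups in parallel; tasks inside a group run in order."""
    groups = split_evenly(tasks, n)
    with ThreadPoolExecutor(max_workers=n) as pool:
        return list(pool.map(lambda group: [work(t) for t in group], groups))
```

With 12 tasks, `even_group_counts(12)` yields the candidate group counts 2, 3, 4 and 6. A prime number of tasks yields no candidates, which corresponds to the case where the tasks cannot be divided evenly.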

Owner:ALIBABA GRP HLDG LTD

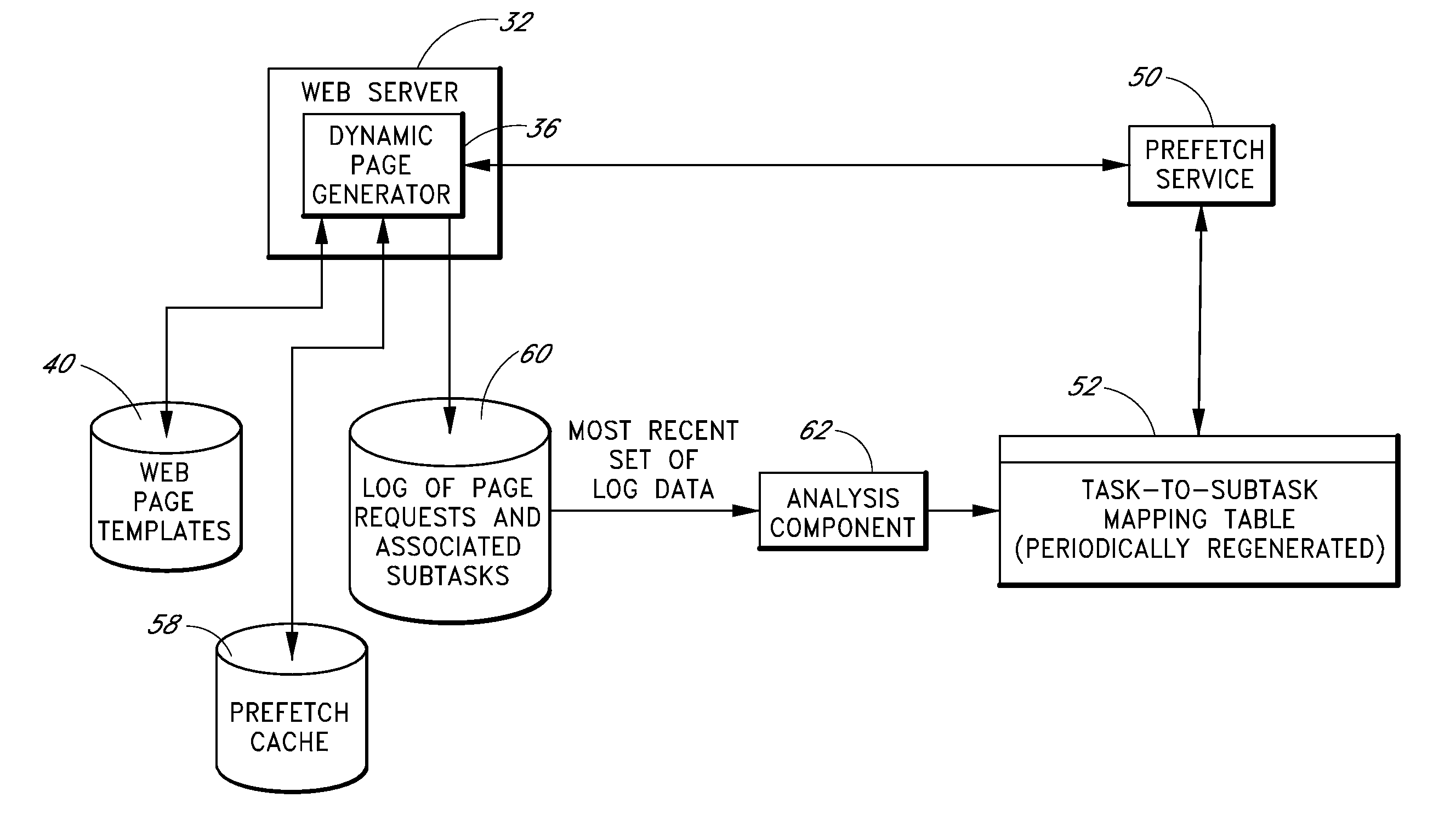

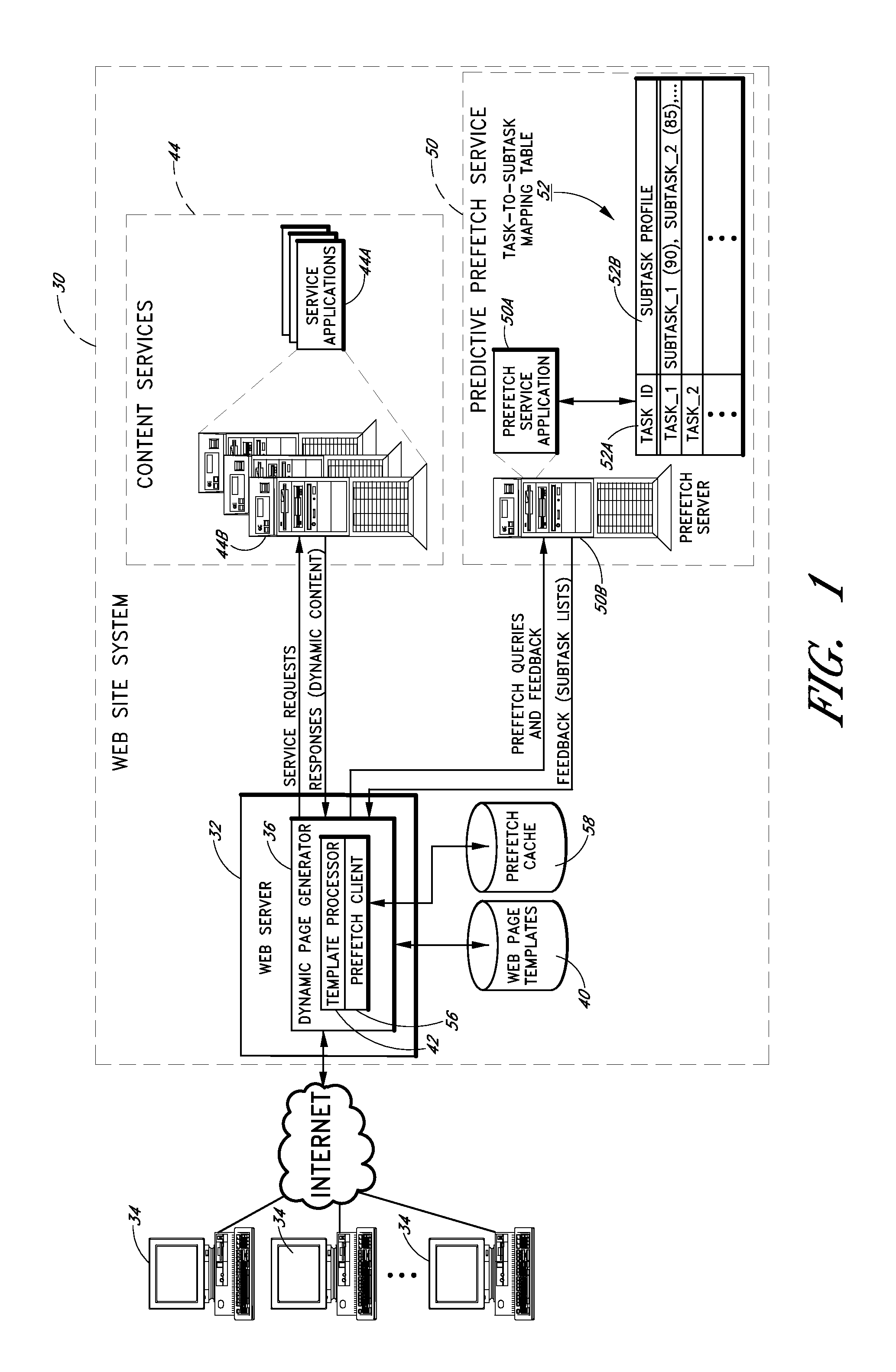

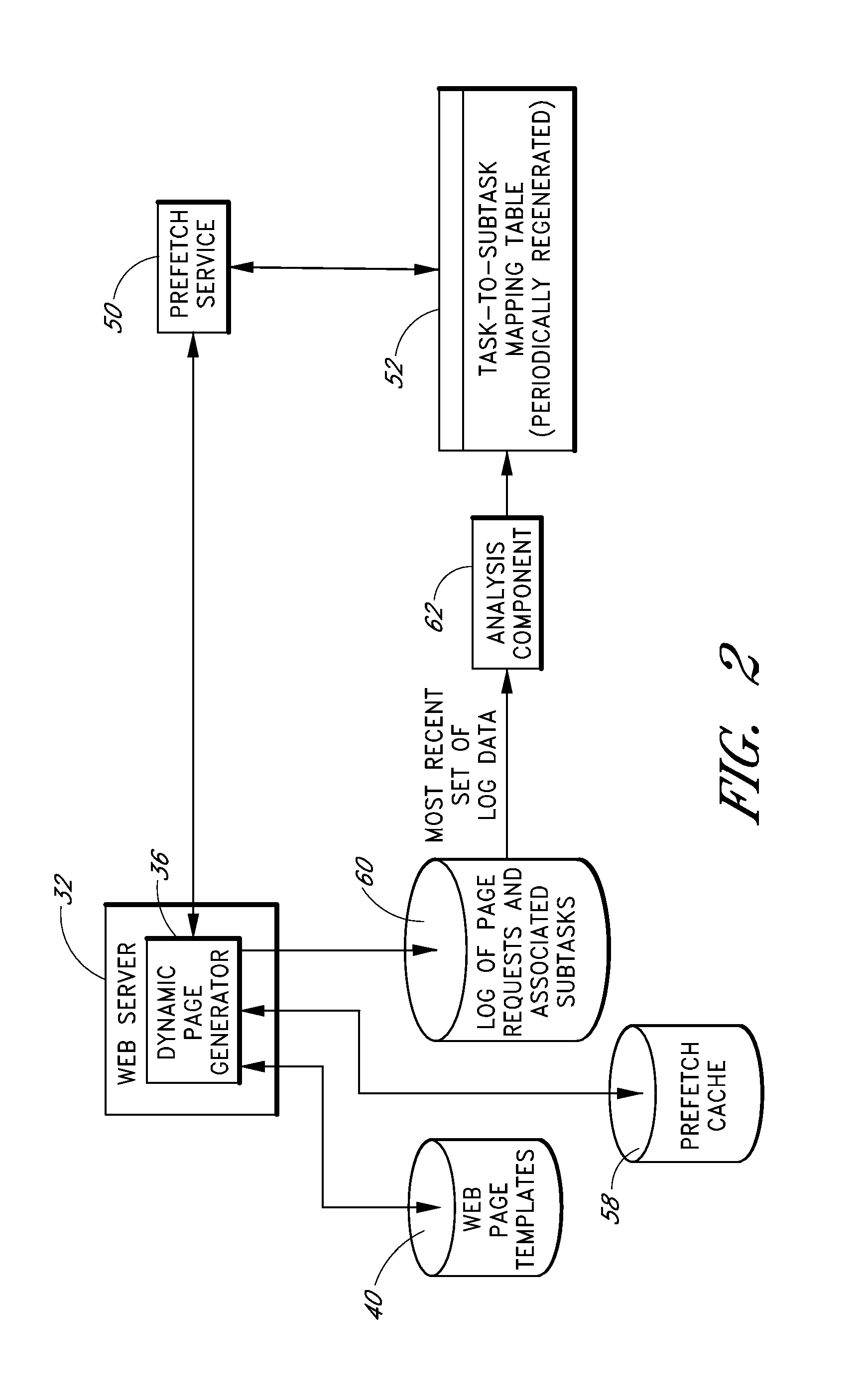

Predictive prefetching to improve parallelization of document generation subtasks

ActiveUS20080091711A1Reduce build timeDigital data processing detailsMultiple digital computer combinationsData retrievalDocument preparation

Owner:AMAZON TECH INC

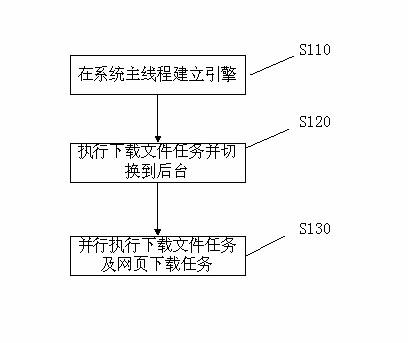

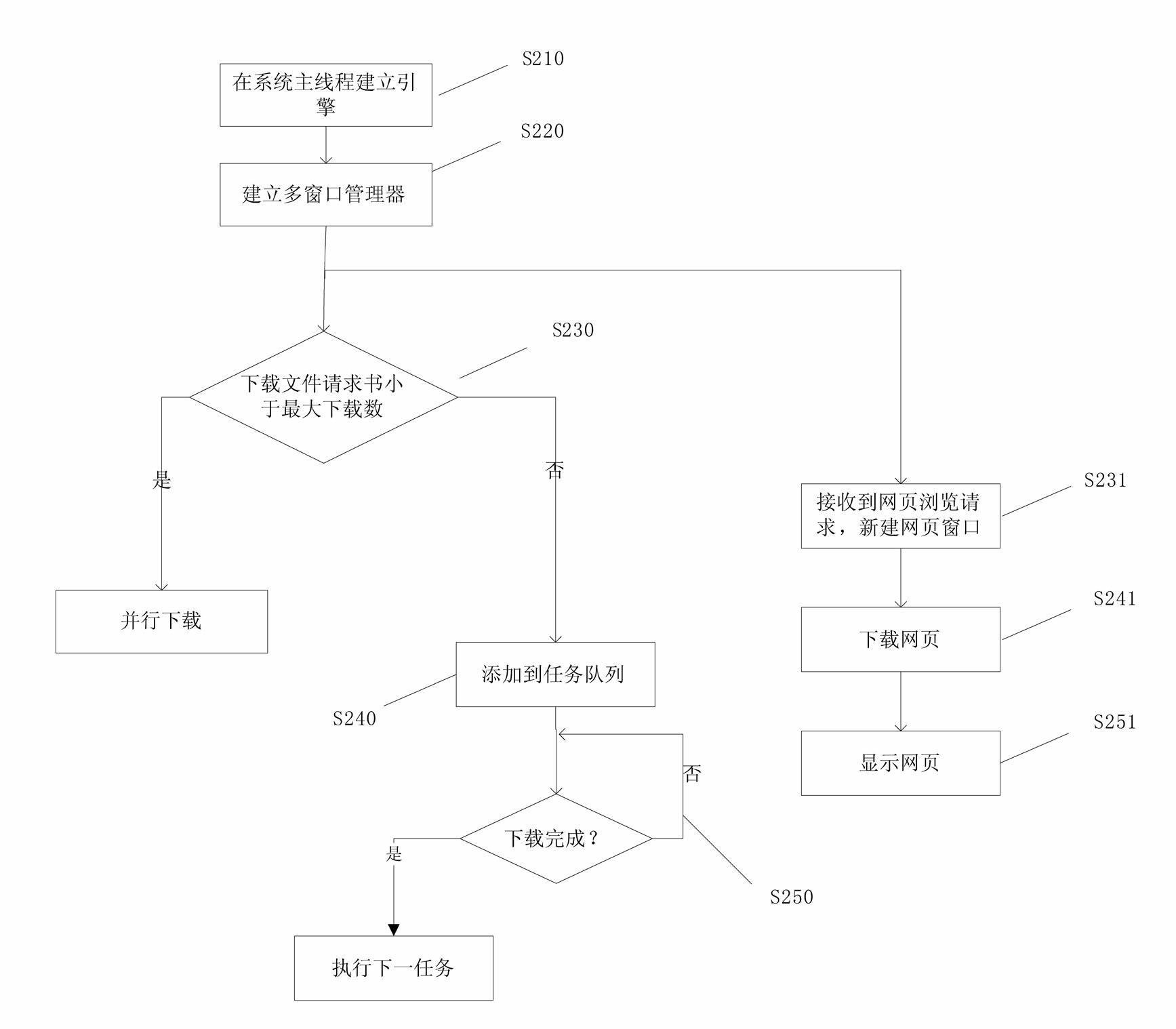

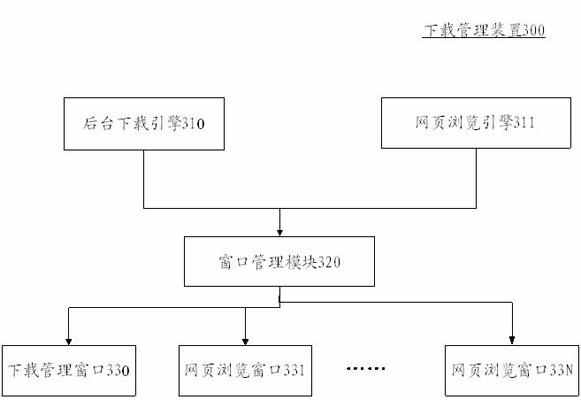

Method and device for managing download of mobile communication equipment terminal browser

ActiveCN102158853ATake advantage ofTransmissionSpecial data processing applicationsUser needsApproaches of management

The invention relates to file downloading technology for a mobile communication equipment terminal browser, and in particular to a method and device for managing downloads in such a browser. The method comprises the following steps: first, establishing a multi-task web browsing engine and a multi-task background download engine in a main thread of the mobile communication equipment terminal system; second, executing a file download task through the background download engine when the terminal receives a file download request, and executing a web browsing task through the web browsing engine when the terminal receives a web browsing request. The tasks of the background download engine and the tasks of the web browsing engine are executed in parallel, and the maximum number of download tasks of the background download engine can be preset according to user requirements. Because downloads run in the background, downloading and web browsing can be carried out at the same time and a plurality of files can be downloaded simultaneously, so that resources are fully utilized.
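A capped background download engine can be approximated with a fixed-size thread pool. The sketch below is an assumption-laden illustration, not the patented design: `DownloadEngine`, its `fetch` callable, and the use of Python threads in place of a mobile browser's engines are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


class DownloadEngine:
    """Hypothetical background engine that caps concurrent downloads."""

    def __init__(self, max_downloads, fetch):
        # max_downloads is the preset upper bound on simultaneous downloads.
        self._pool = ThreadPoolExecutor(max_workers=max_downloads)
        self._fetch = fetch  # callable that downloads one URL

    def request(self, url):
        """Queue a download and return at once; the caller is not blocked."""
        return self._pool.submit(self._fetch, url)

    def shutdown(self):
        """Wait for queued downloads to finish and release the threads."""
        self._pool.shutdown(wait=True)
```

The calling thread, standing in for the browsing engine, stays free to handle page requests while `request` hands each download to a background worker. Requests beyond the cap wait in the pool's internal queue until a worker is free.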

Owner:ALIBABA (CHINA) CO LTD

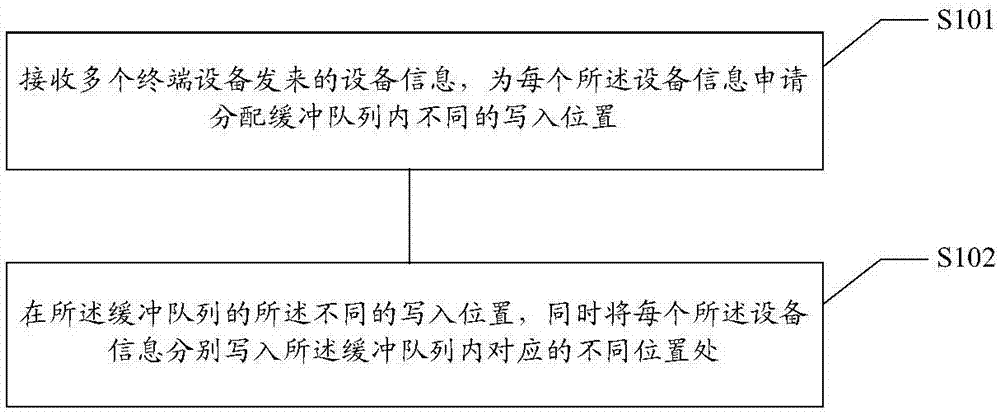

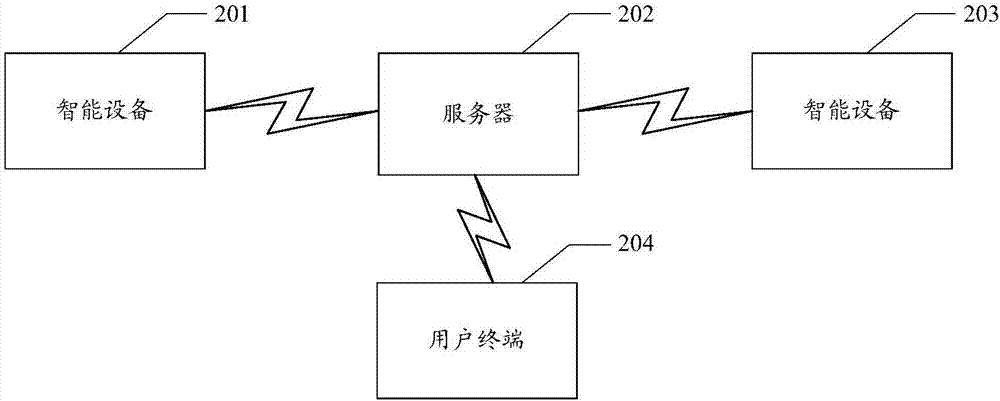

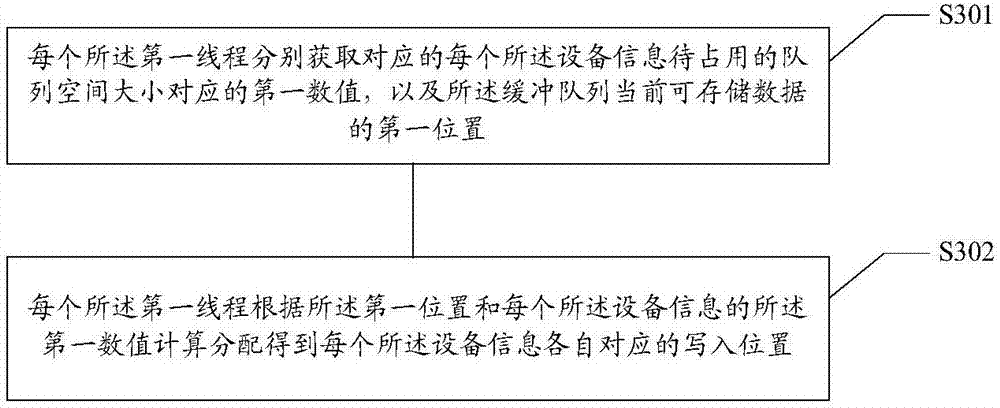

Multi-task parallel data processing method and device based on queue, medium and device

InactiveCN107515795AImprove concurrent executionIncrease throughputInterprogram communicationTerminal equipmentComputer terminal

The invention relates to a queue-based multi-task parallel data processing method and device, a storage medium and an electronic device. The method comprises: receiving device information sent by a plurality of terminal devices; requesting a different write position in the buffer queue for each piece of device information; and writing the pieces of device information simultaneously into their respective positions in the buffer queue. A plurality of producer threads can store data in the queue in parallel and in batches, which improves the parallelism of the producer threads, shortens the time the server takes to respond to data reported by the terminal devices, and increases the amount of terminal device information the server can process. Tasks can be submitted to the queue without blocking, which improves system performance.
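The claim-then-write pattern described here can be sketched as below. This is a simplified illustration under assumptions, not the patented queue: `SlotBuffer`, `claim`, `write`, and `report` are hypothetical names, the buffer is a fixed-size list rather than a ring, and a short lock stands in for whatever atomic position allocation the real system uses.

```python
import threading


class SlotBuffer:
    """Fixed-size buffer whose write positions are reserved before writing."""

    def __init__(self, capacity):
        self._slots = [None] * capacity
        self._next = 0
        self._lock = threading.Lock()

    def claim(self, count=1):
        """Reserve `count` consecutive positions; only this step is serialized."""
        with self._lock:
            start = self._next
            if start + count > len(self._slots):
                raise OverflowError("buffer full")
            self._next = start + count
        return range(start, start + count)

    def write(self, position, item):
        # A producer writes only to positions it claimed, so writes from
        # different producers never touch the same slot.
        self._slots[position] = item

    def snapshot(self):
        """Return the claimed portion of the buffer."""
        with self._lock:
            return self._slots[: self._next]


def report(buffer, device_infos):
    """Producer: claim a batch of positions, then fill them."""
    positions = buffer.claim(len(device_infos))
    for position, info in zip(positions, device_infos):
        buffer.write(position, info)
```

Because each producer holds the lock only long enough to advance a counter, the comparatively slow copy of device information into the buffer proceeds without producers waiting on one another.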

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

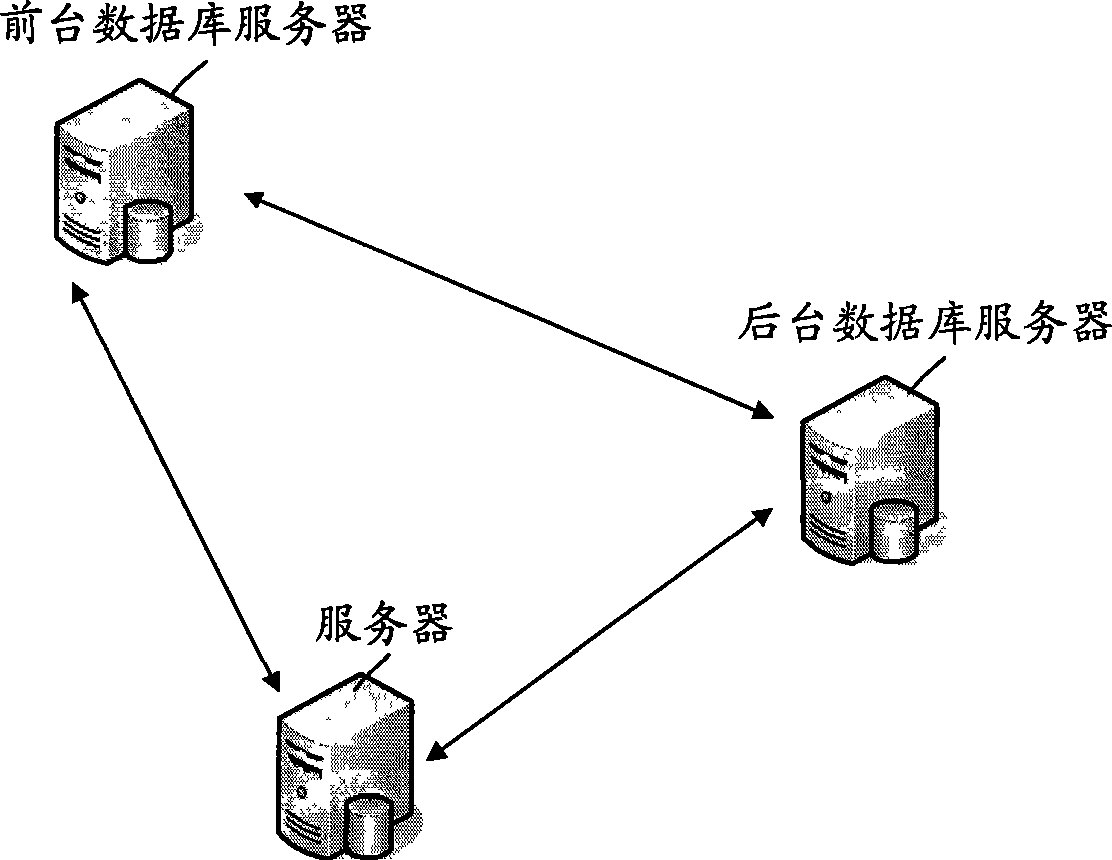

Method and apparatus for synchronizing foreground and background databases

InactiveCN101419615AImprove efficiencyReduce dependenceSpecial data processing applicationsDatabaseForeground-background

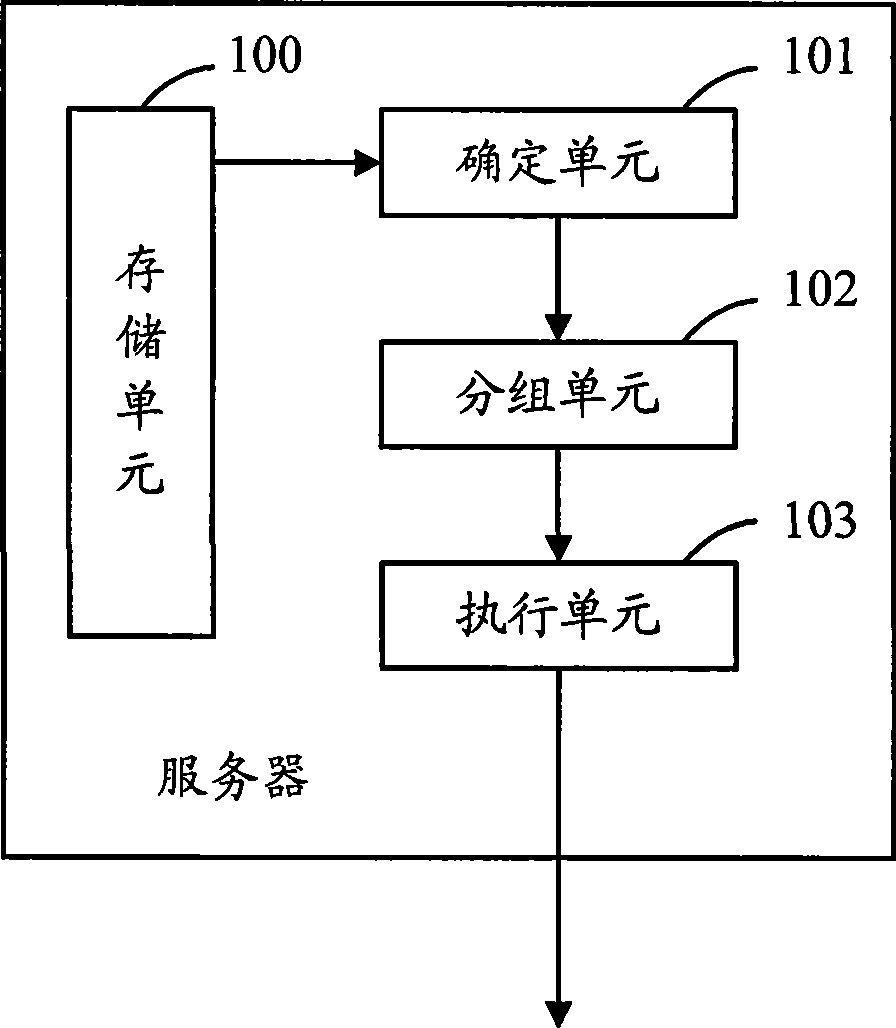

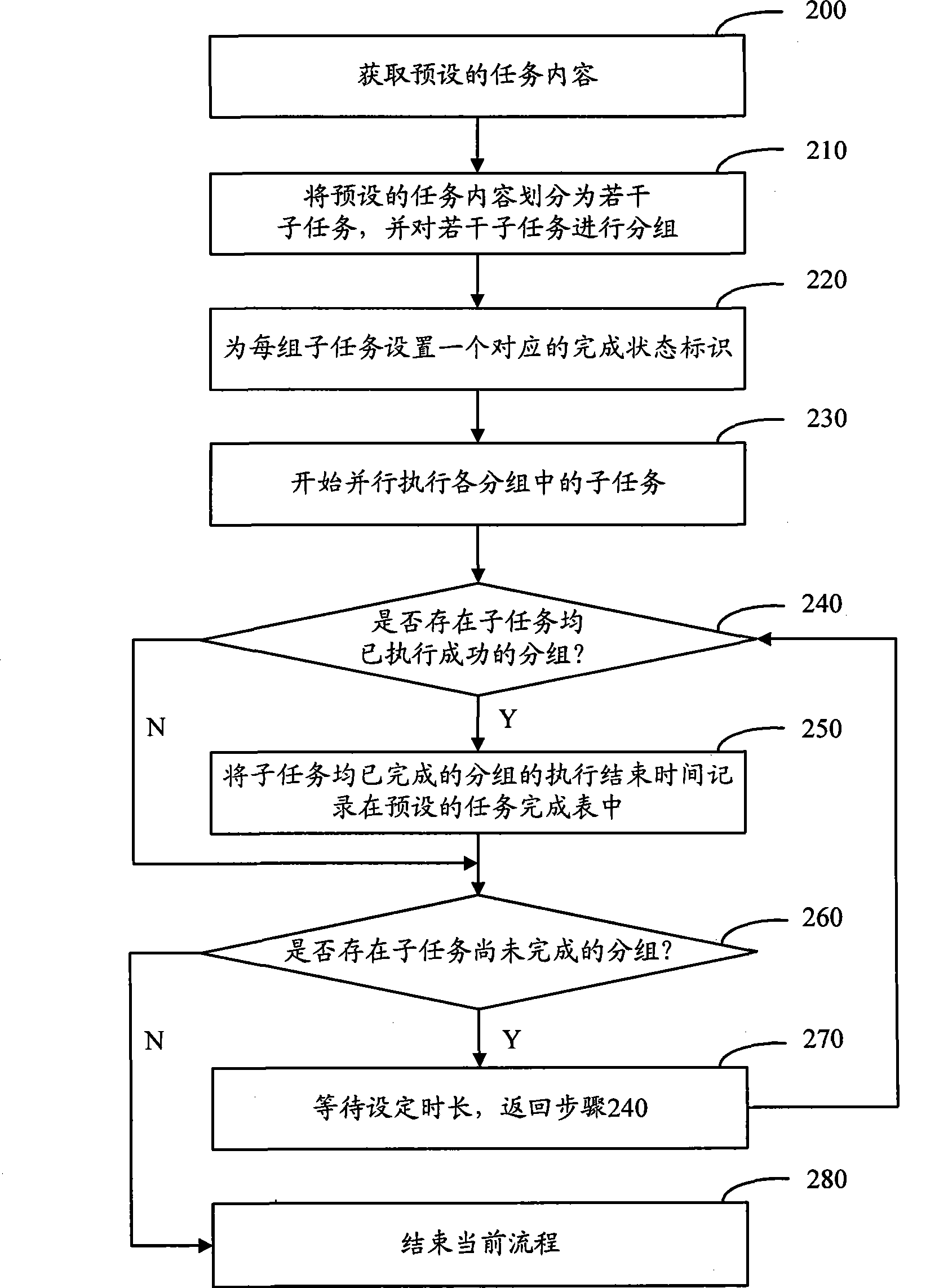

The invention discloses a method for synchronizing foreground and background databases. The method comprises: obtaining the content of a preset task used to synchronize the foreground and background databases, and dividing that content into a plurality of sub-tasks; grouping the sub-tasks, where each group contains at least one sub-task; and executing the sub-tasks in each group, where the groups are executed in parallel. Compared with the prior art, in which every sub-task is executed serially, executing sub-tasks that belong to different groups in parallel keeps the groups independent of one another, reduces the dependency among sub-tasks, and shortens the time needed to execute them, which effectively improves the efficiency of the foreground-background database synchronization flow. The invention also discloses a server.
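A minimal sketch of group-level parallelism follows, assuming that sub-tasks within a group must keep their order while different groups are independent. The names `run_group` and `synchronize` are hypothetical, and plain callables stand in for the actual database synchronization steps.

```python
from concurrent.futures import ThreadPoolExecutor


def run_group(subtasks):
    """Run one group's sub-tasks one after another, preserving their order."""
    return [task() for task in subtasks]


def synchronize(groups):
    """Run the groups in parallel and return one result list per group."""
    if not groups:
        return []
    with ThreadPoolExecutor(max_workers=len(groups)) as pool:
        return list(pool.map(run_group, groups))
```

Placing sub-tasks that depend on each other in the same group keeps their relative order intact, while unrelated groups no longer wait for one another.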

Owner:ALIBABA CLOUD COMPUTING LTD


© 2025 PatSnap. All rights reserved.