1747 patent results for "Concurrent computation"

Concurrent computing is a form of computing in which several computations are executed during overlapping time periods (concurrently) instead of sequentially, with one completing before the next starts. Concurrency is a property of a system, whether that system is an individual program, a computer, or a network, and each computation ("process") has its own execution point or "thread of control".

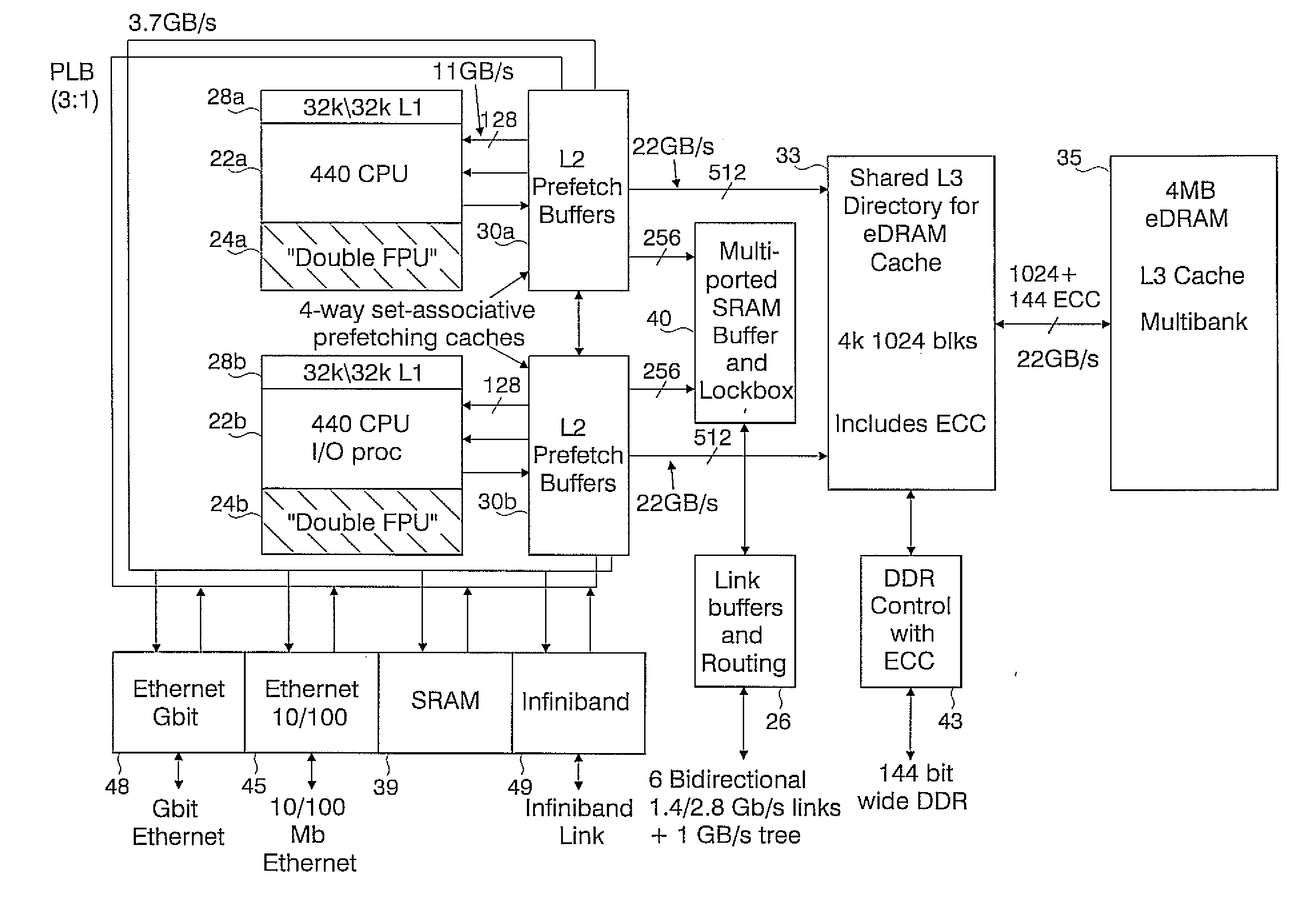

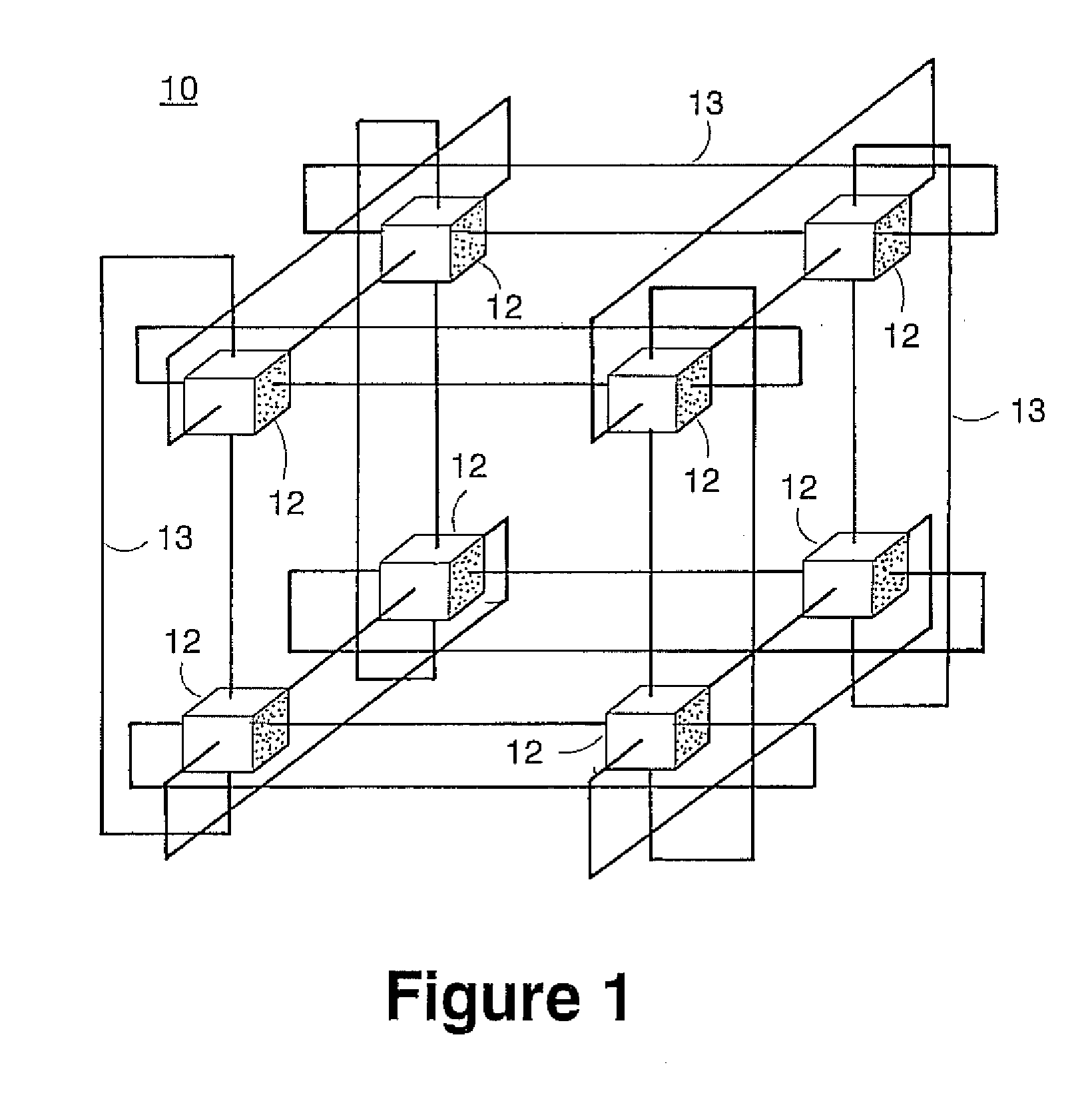

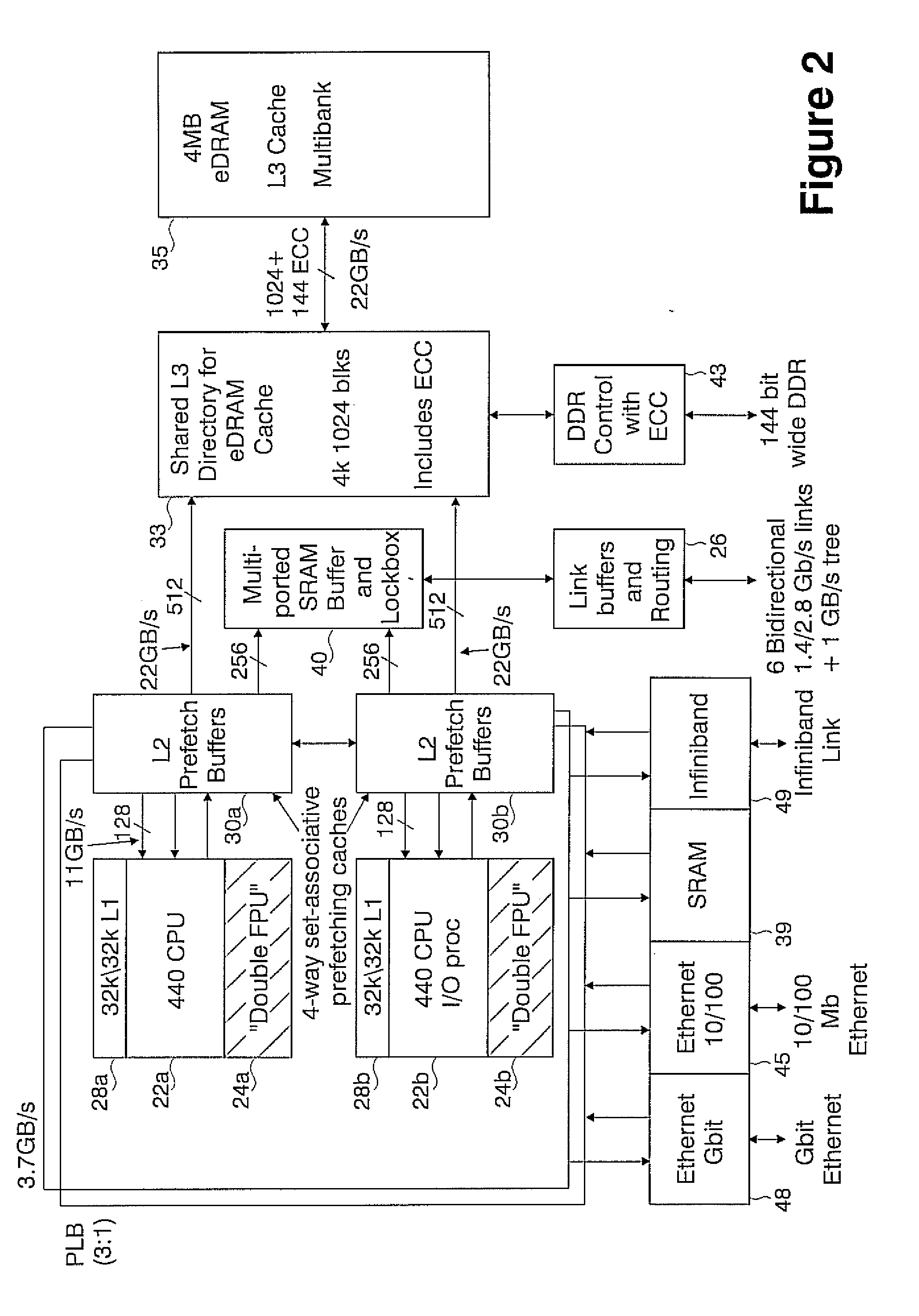

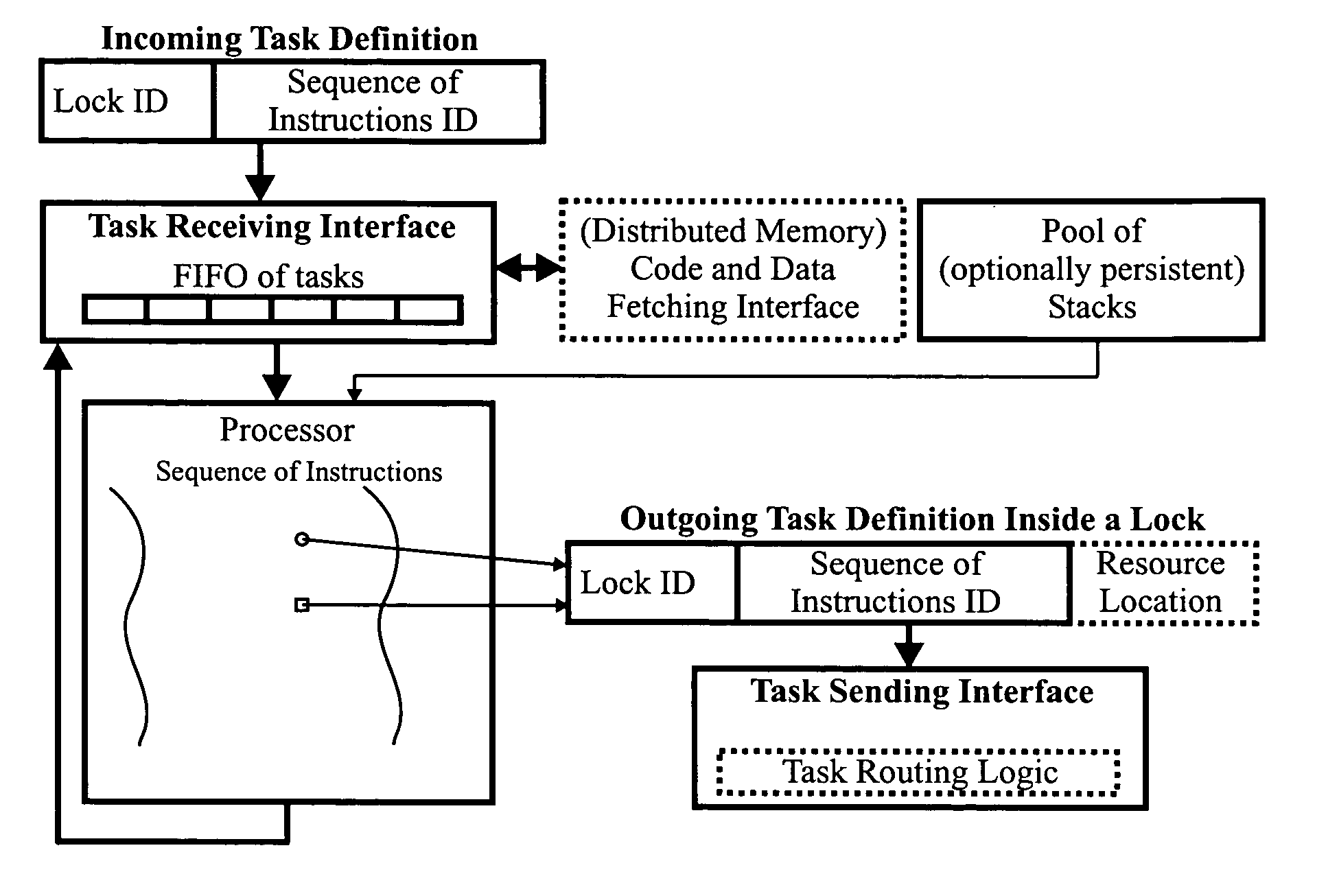

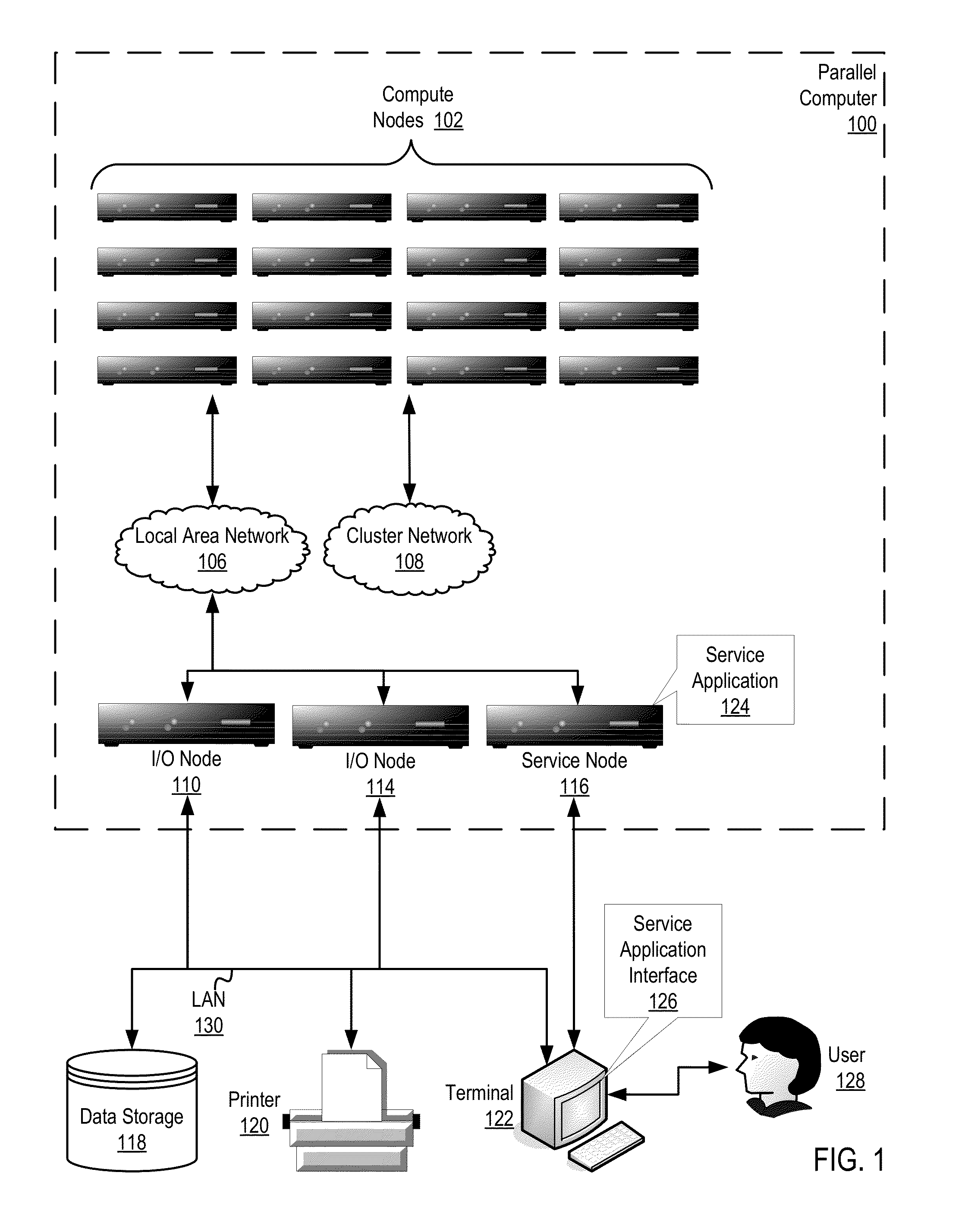

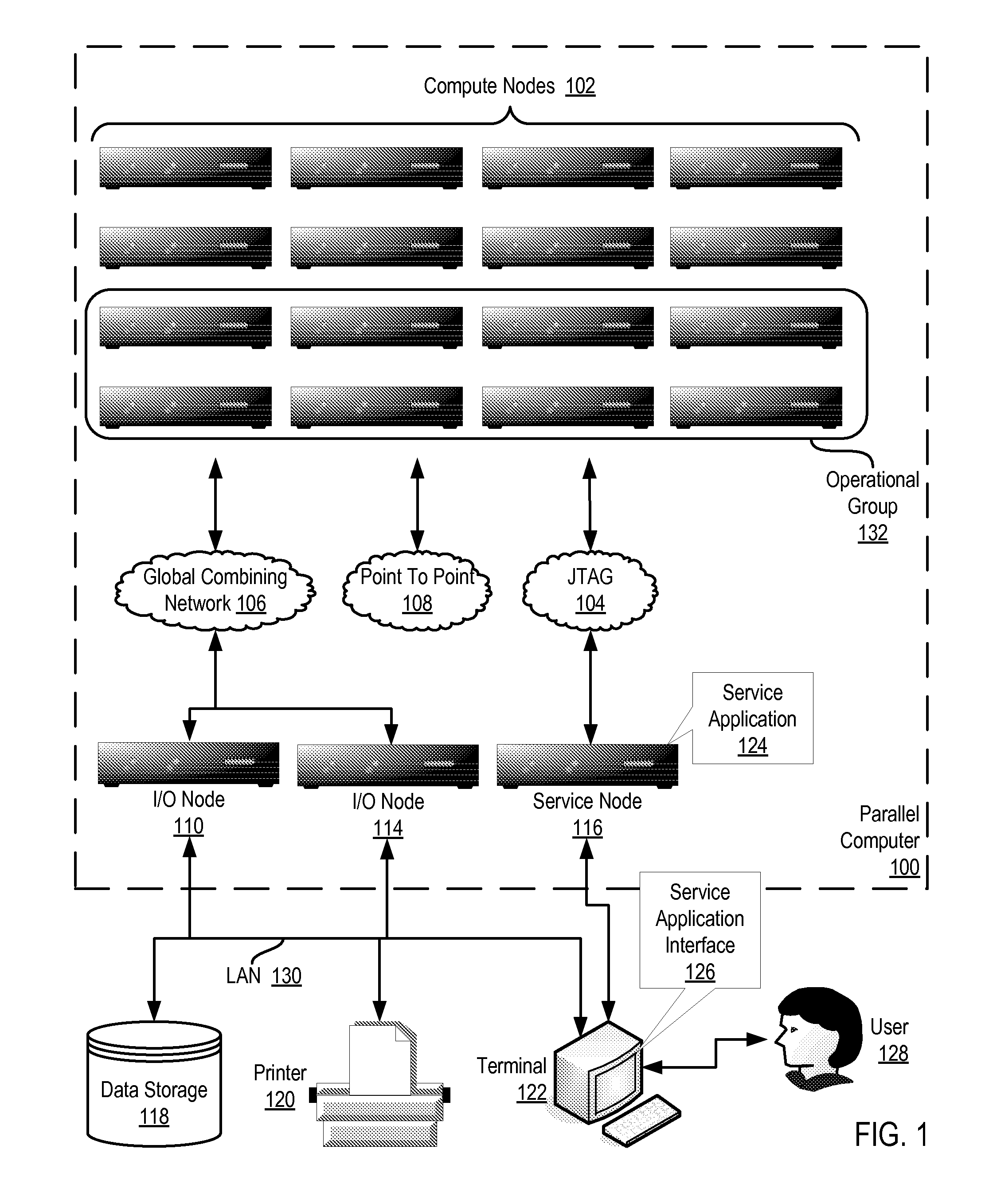

Novel massively parallel supercomputer

Inactive | US20090259713A1 | Low cost; Reduced footprint; Error prevention; Program synchronisation; Supercomputer; Packet communication

Owner: INT BUSINESS MASCH CORP

Object-oriented, parallel language, method of programming and multi-processor computer

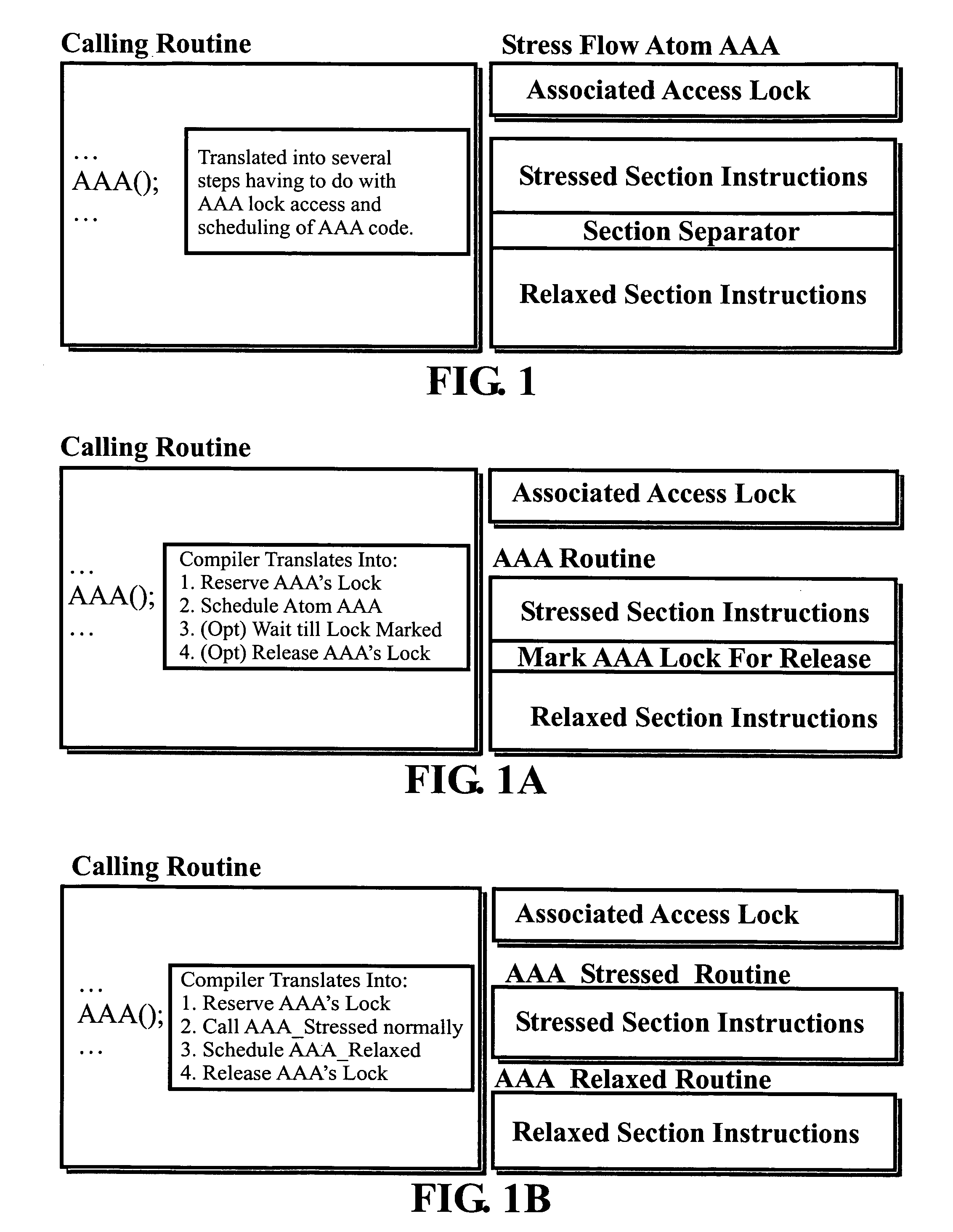

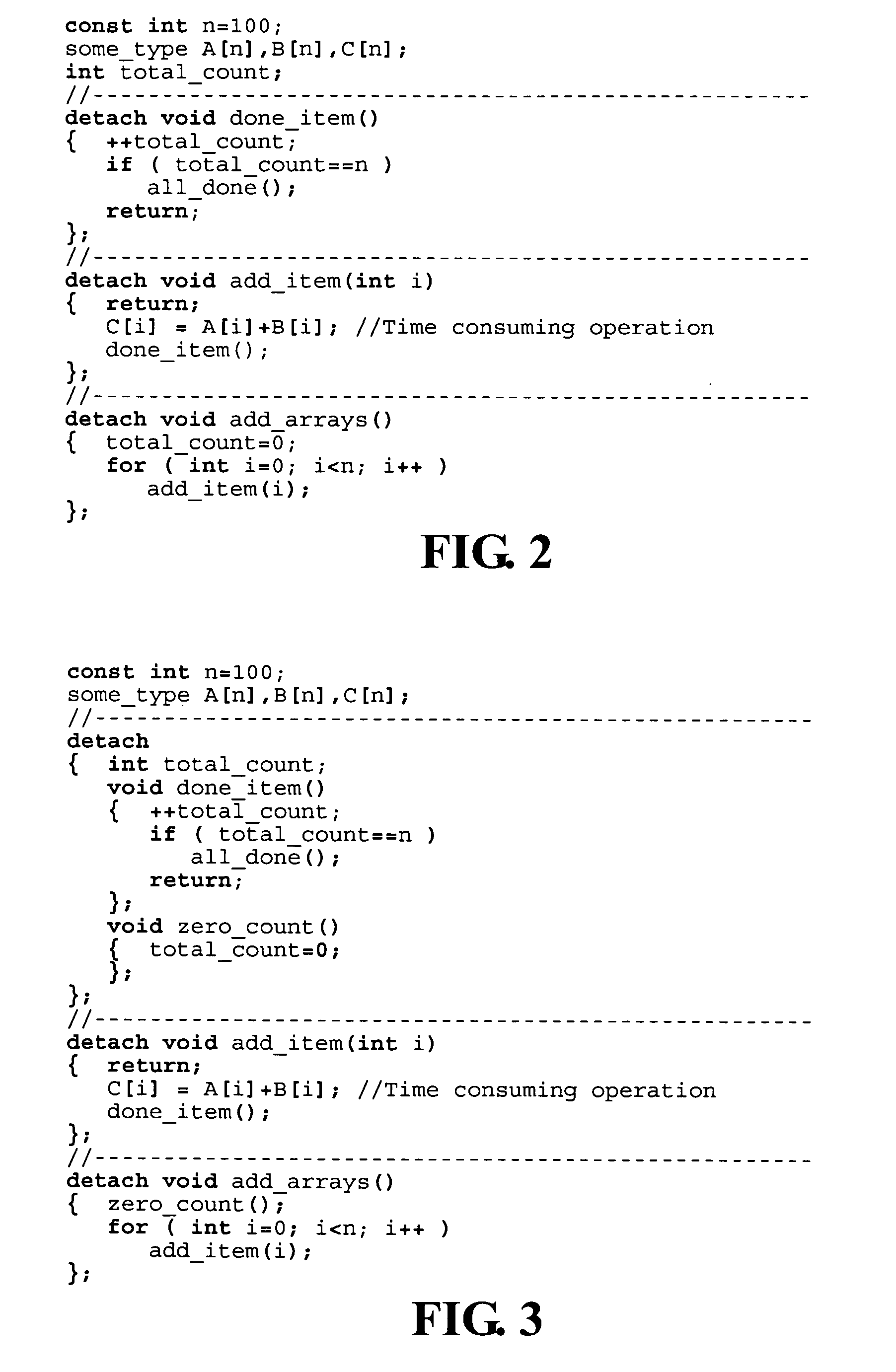

This invention relates to the architecture and synchronization of multi-processor computing hardware. It establishes a new method of programming, process synchronization, and computer construction, which the inventor names stress-flow, that combines the benefits of two opposing legacy programming concepts, data flow and control flow, within one cohesive object-oriented scheme. The invention also covers the construction of object-oriented parallel computer languages (both script and visual), the construction of their compilers, and a method of writing programs that execute on fully parallel (multi-processor) architectures, on virtually parallel systems, and on single-processor multitasking computer systems.

Owner: JANCZEWSKA NATALIA URSZULA +2

Parallel-aware, dedicated job co-scheduling method and system

Inactive | US20050131865A1 | Improve performance; Improve scalability; Program initiation/switching; Special data processing applications; Extensibility; Operational system

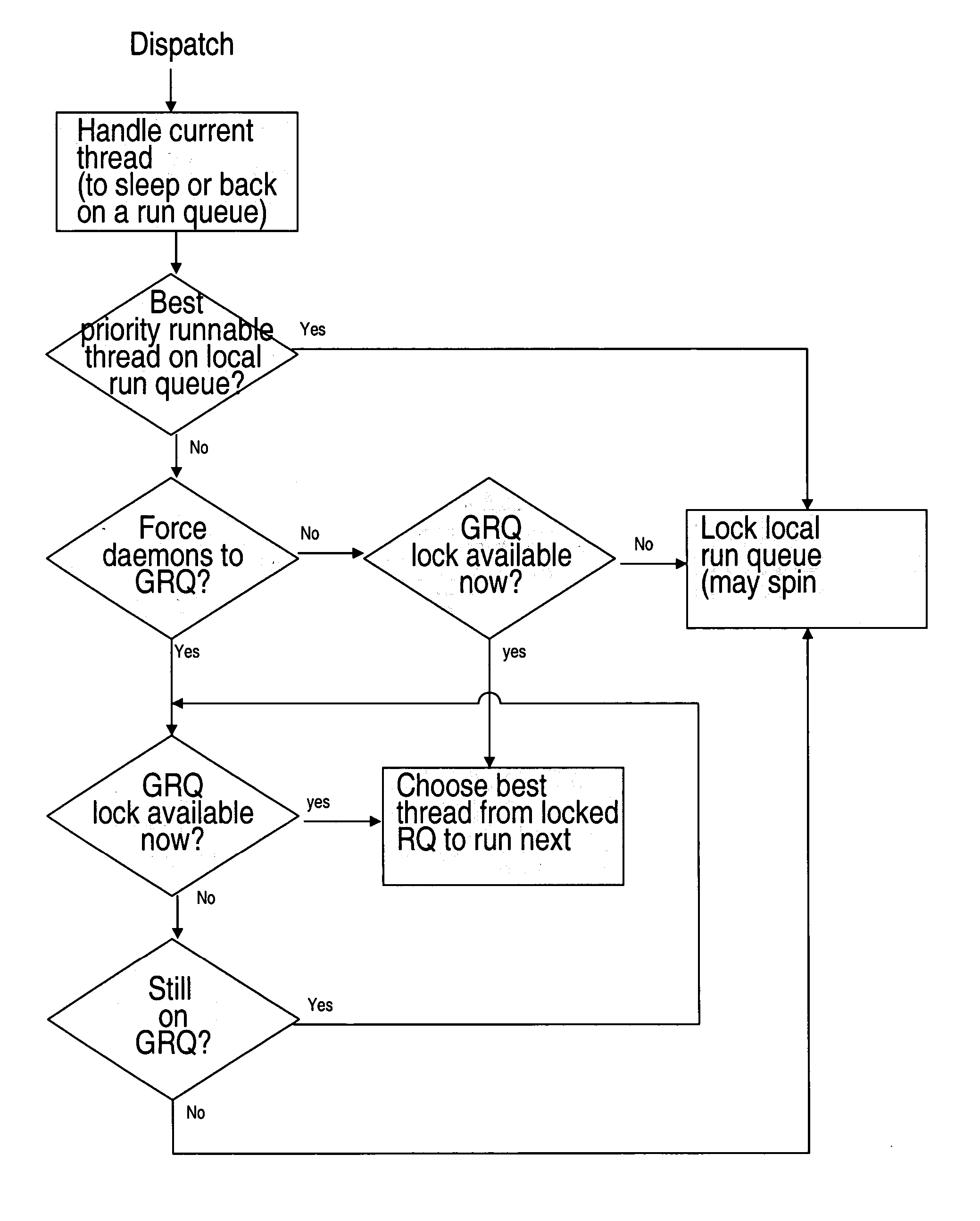

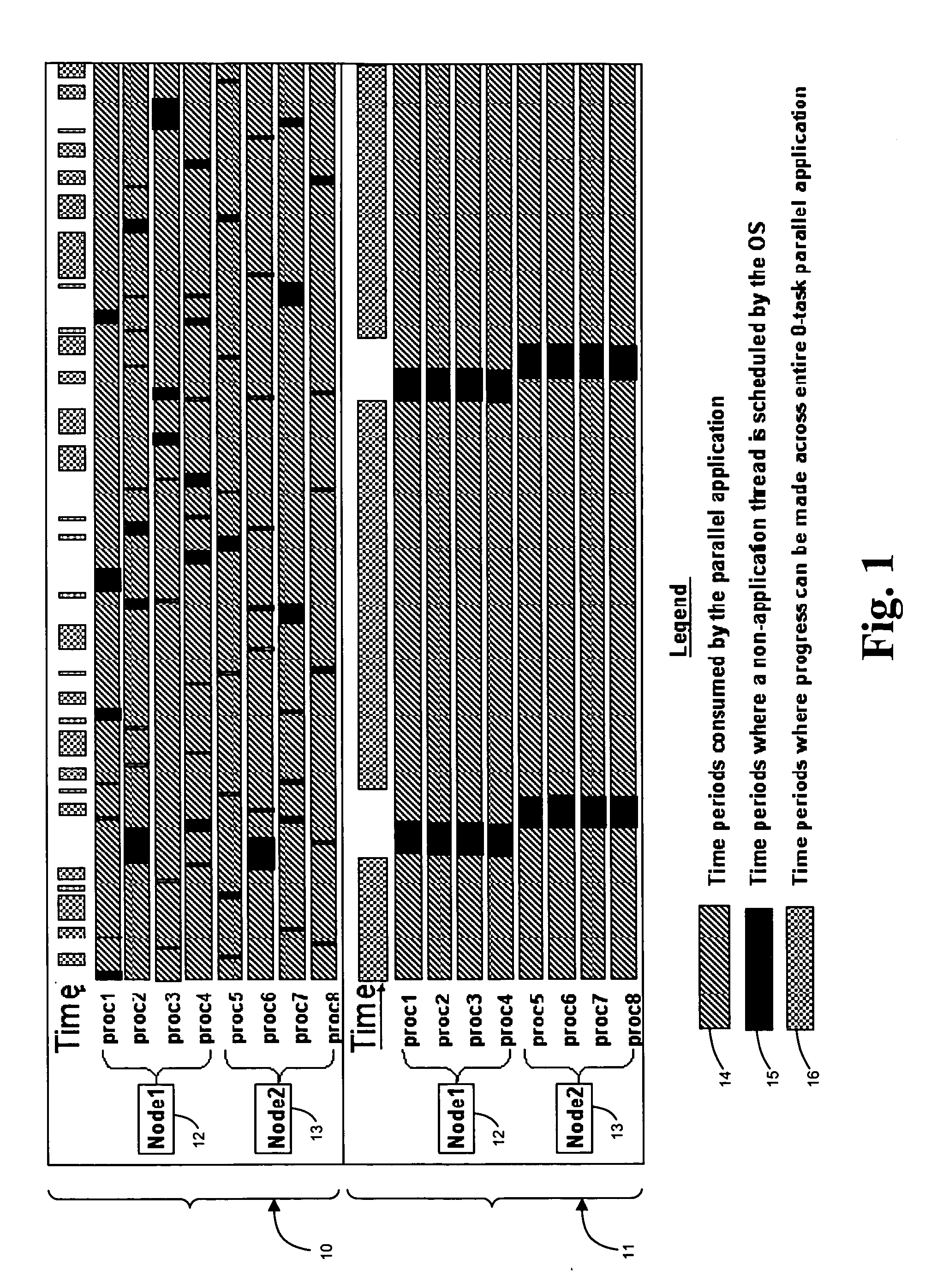

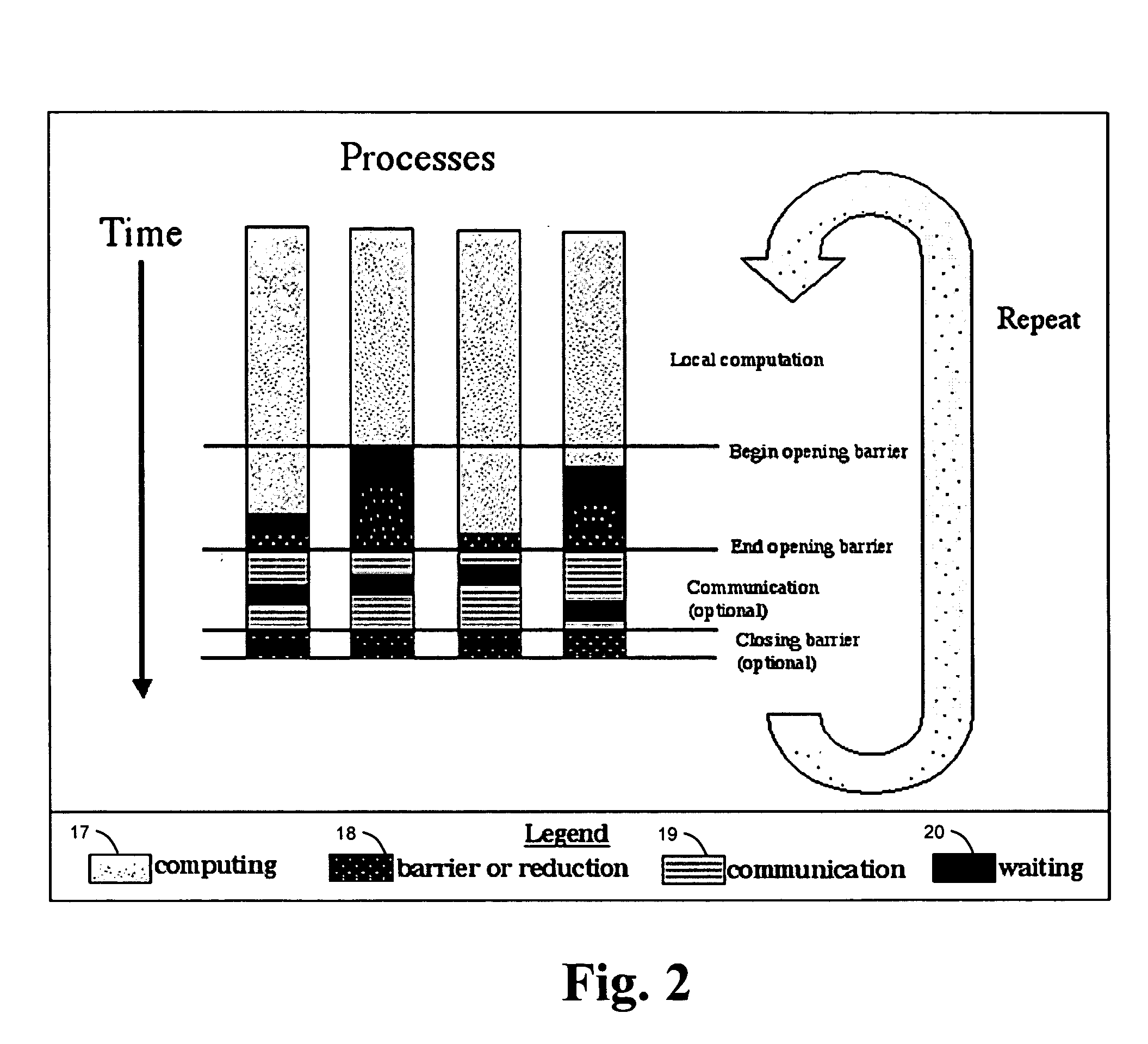

In a parallel computing environment comprising a network of SMP nodes, each having at least one processor, a parallel-aware co-scheduling method and system improve the performance and scalability of a dedicated parallel job that uses synchronizing collective operations. The method and system use a global co-scheduler and an operating system kernel dispatcher adapted to coordinate interfering system and daemon activities on a node and across nodes, so that these interfering activities overlap within and across nodes, as do the synchronizing collective operations themselves. In this manner, the impact of random short-lived interruptions, such as timer-decrement processing and periodic daemon activity, on synchronizing collective operations is minimized for bulk-synchronous SPMD programs running on large processor counts.

Owner: LAWRENCE LIVERMORE NAT SECURITY LLC

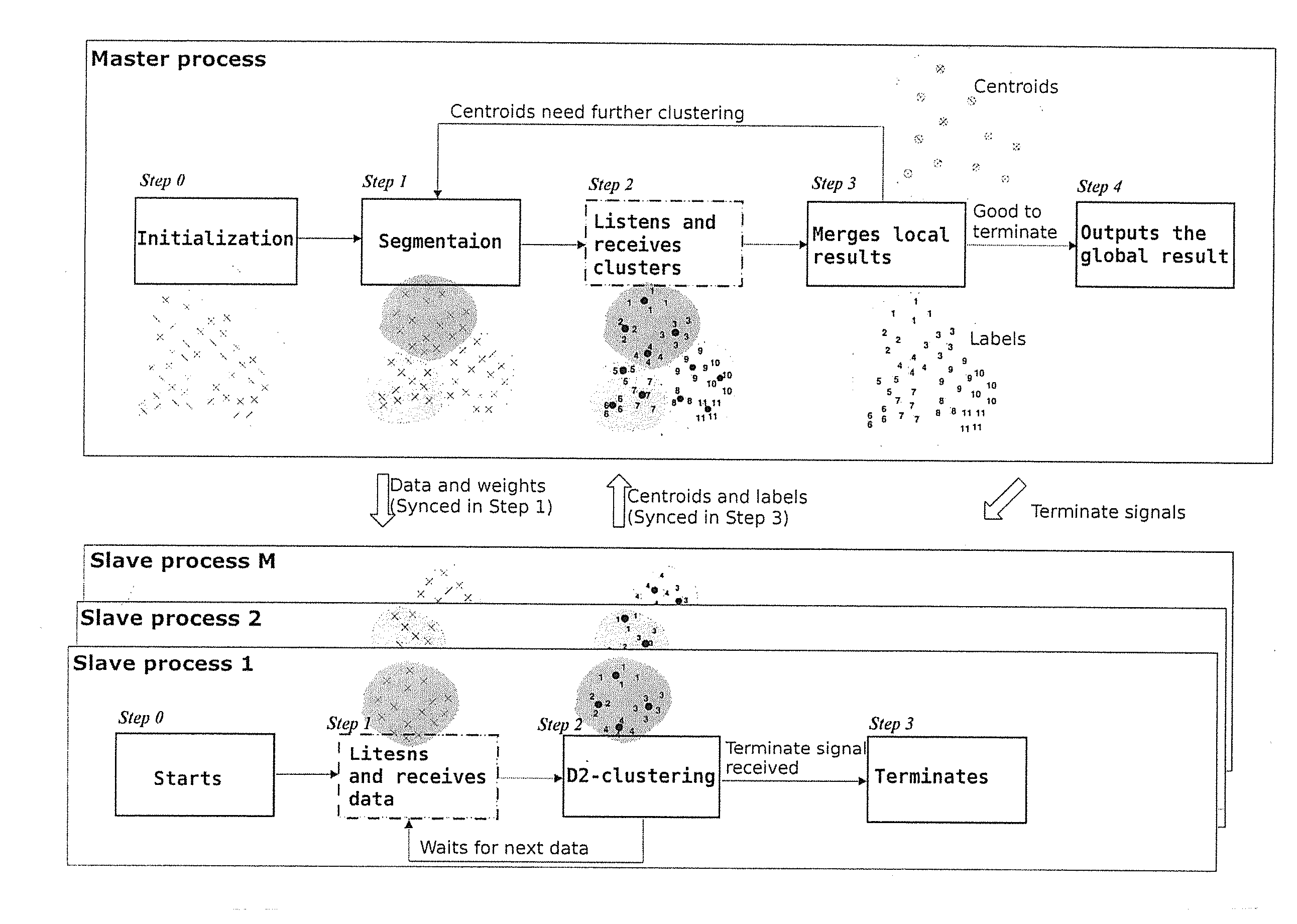

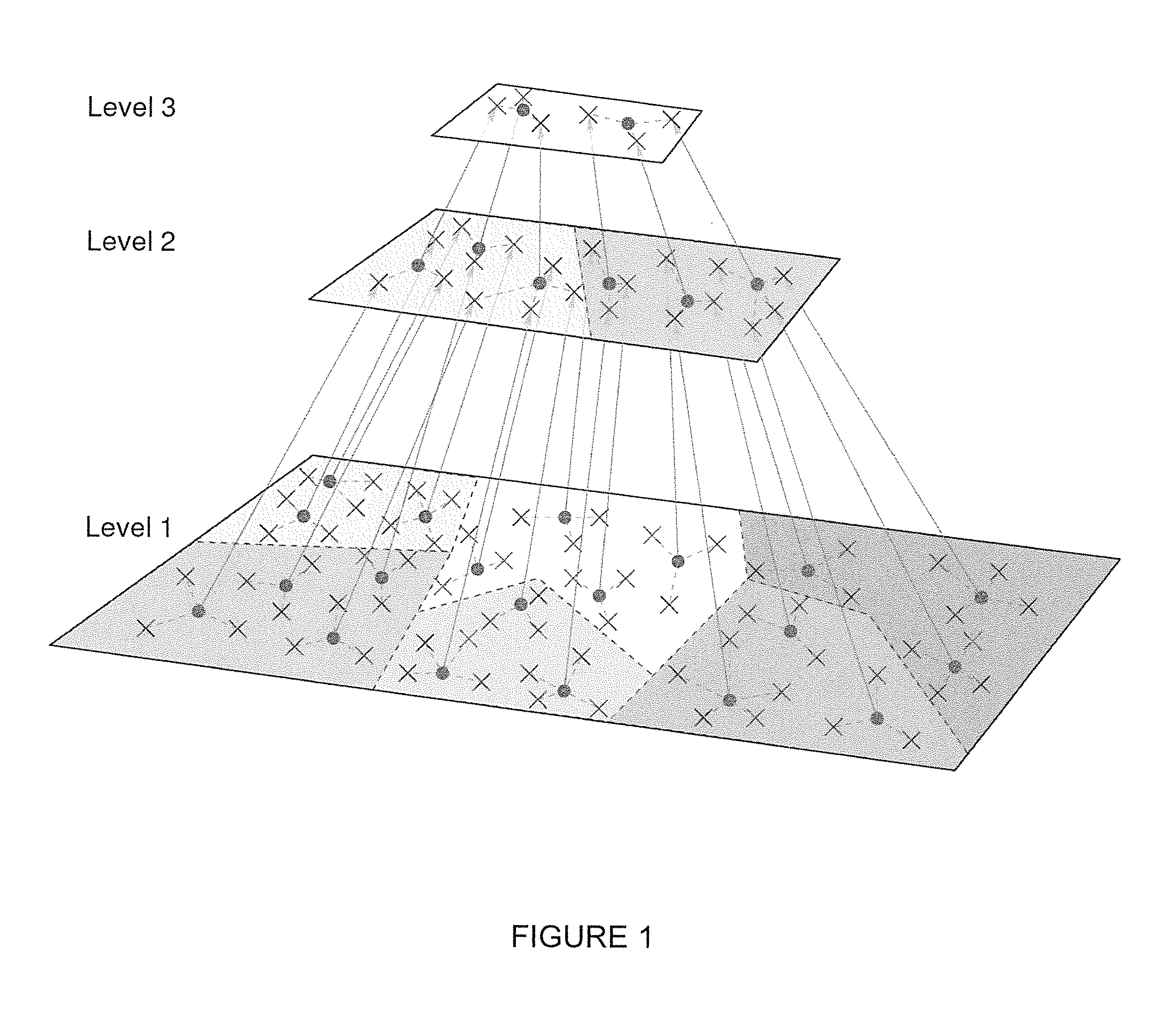

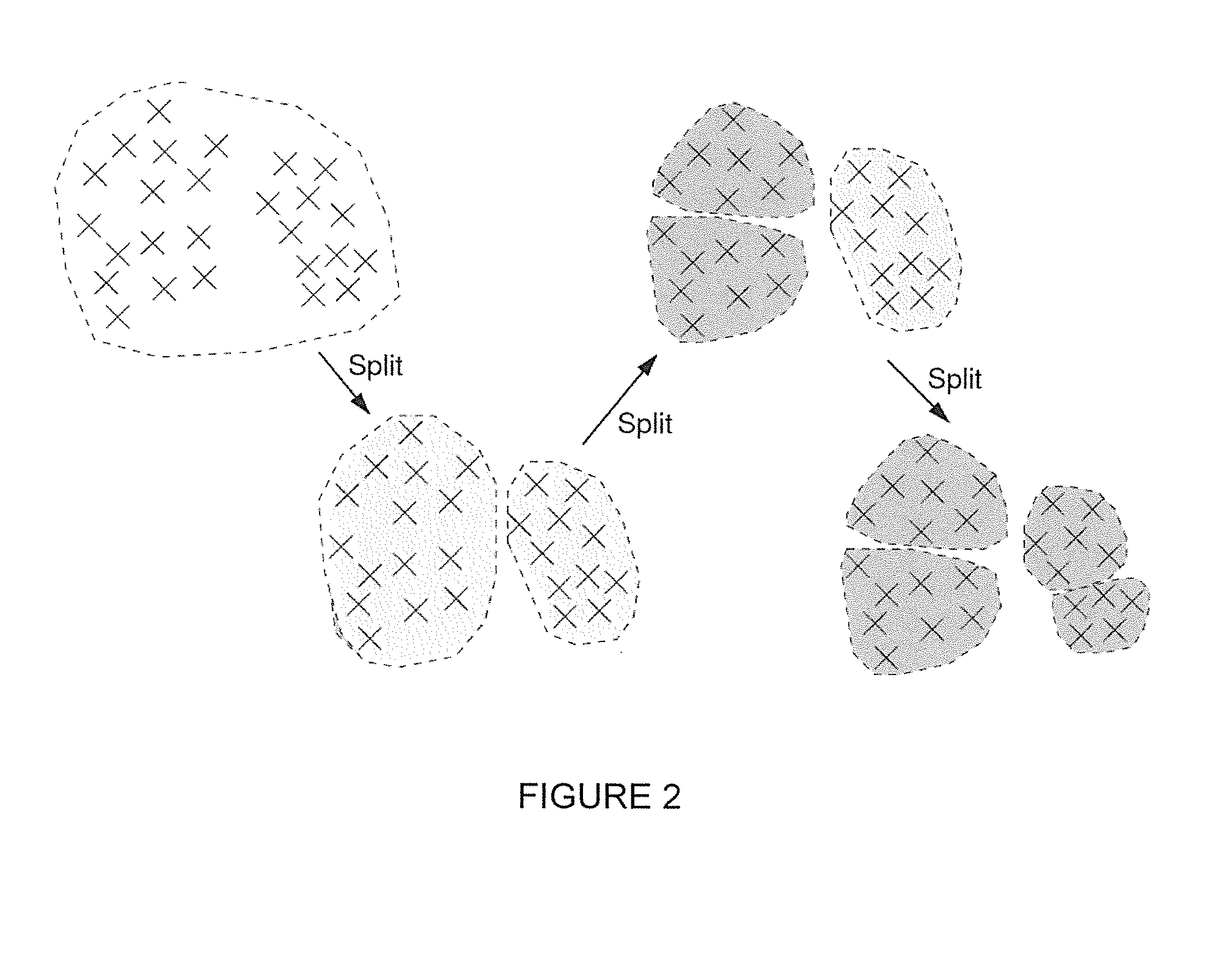

Massive clustering of discrete distributions

Active | US20140143251A1 | Perform data efficiently; Efficient execution; Digital data processing details; Relational databases; Canopy clustering algorithm; Cluster algorithm

The trend of analyzing big data in artificial intelligence requires more scalable machine learning algorithms, among which clustering is a fundamental and arguably the most widely applied method. To extend the applications of regular vector-based clustering algorithms, the Discrete Distribution (D2) clustering algorithm has been developed for clustering bags of weighted vectors, which are widely used in many emerging machine learning applications. The high computational complexity of D2-clustering limits its impact on massive learning problems. Here we present a parallel D2-clustering algorithm with substantially improved scalability. We develop a hierarchical structure for parallel computing that balances the computation on individual nodes against the integration process of the algorithm. The parallel algorithm achieves significant speed-up with minor accuracy loss.

Owner: PENN STATE RES FOUND

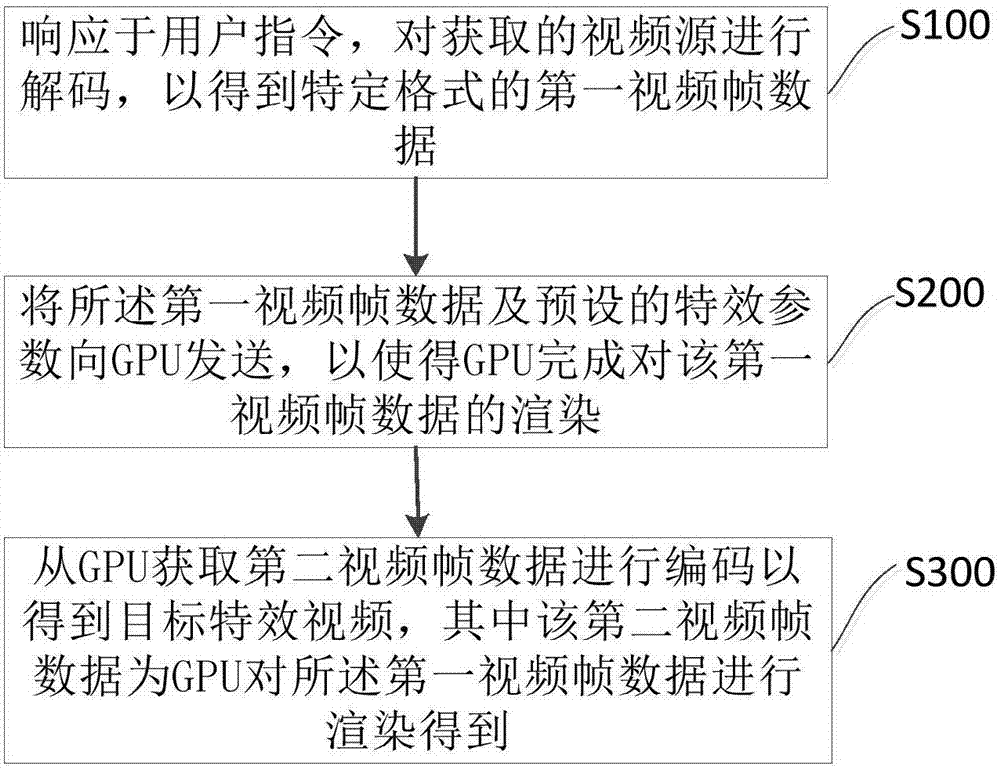

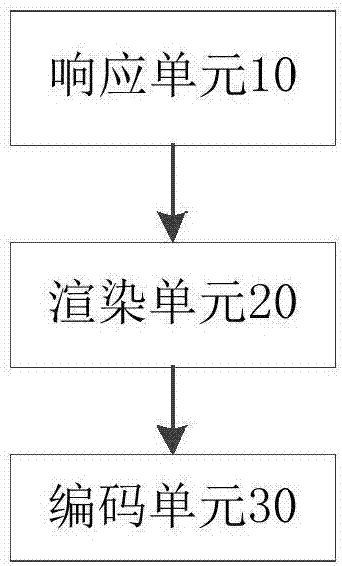

Video special effects rendering method, device and terminal

Inactive | CN107277616A | Reduce total time; Reduce occupancy; Selective content distribution; Graphics; Occupancy rate

The invention provides a video special effects rendering method, device and terminal. The method comprises the following steps: in response to a user instruction, decoding an obtained video source to obtain first video frame data in a particular format; sending the first video frame data and preset special effects parameters to the GPU so that the GPU renders the first video frame data; and obtaining second video frame data, which is the GPU-rendered first video frame data, from the GPU and encoding it to obtain the target special effects video. The method makes effective use of the GPU's graphics processing ability and uses its parallel computing capabilities to speed up the rendering of video effects. This shortens rendering time, so that rendered frames can be previewed quickly and played back smoothly, and it reduces CPU occupancy during rendering, which in turn lowers the heat generated by the mobile terminal.

Owner: 广州猎游信息科技有限公司 (Guangzhou Lieyou Information Technology Co., Ltd.)

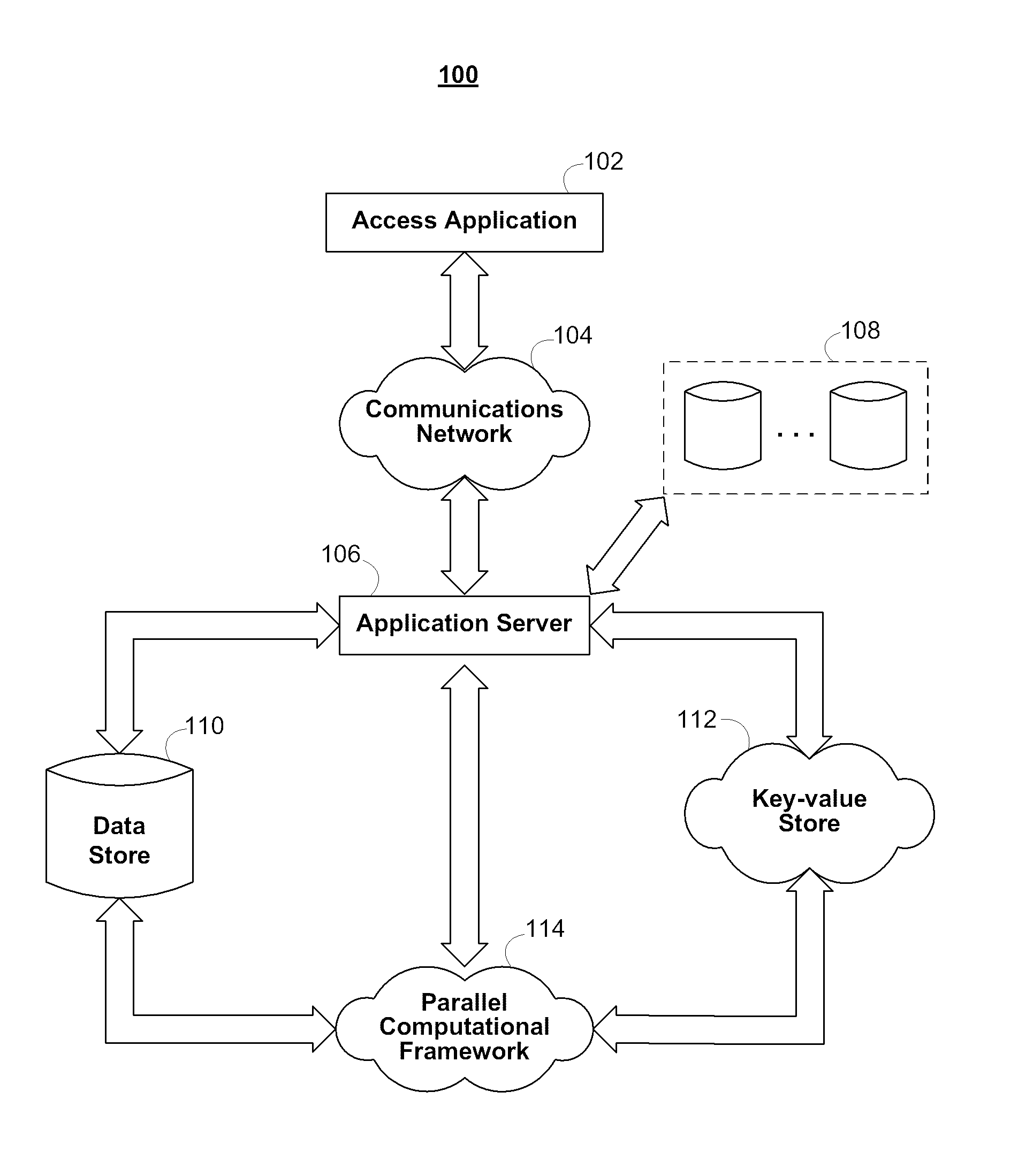

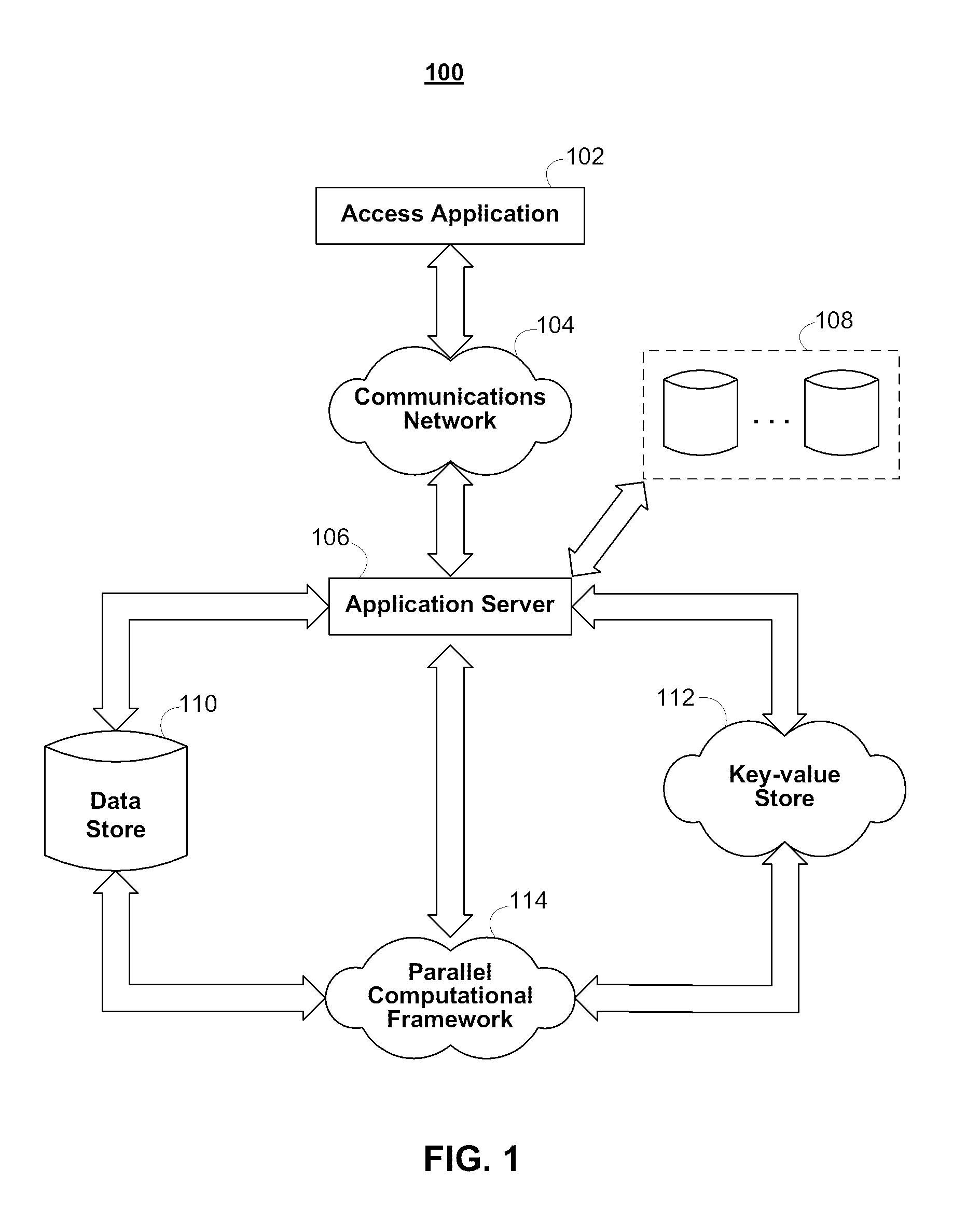

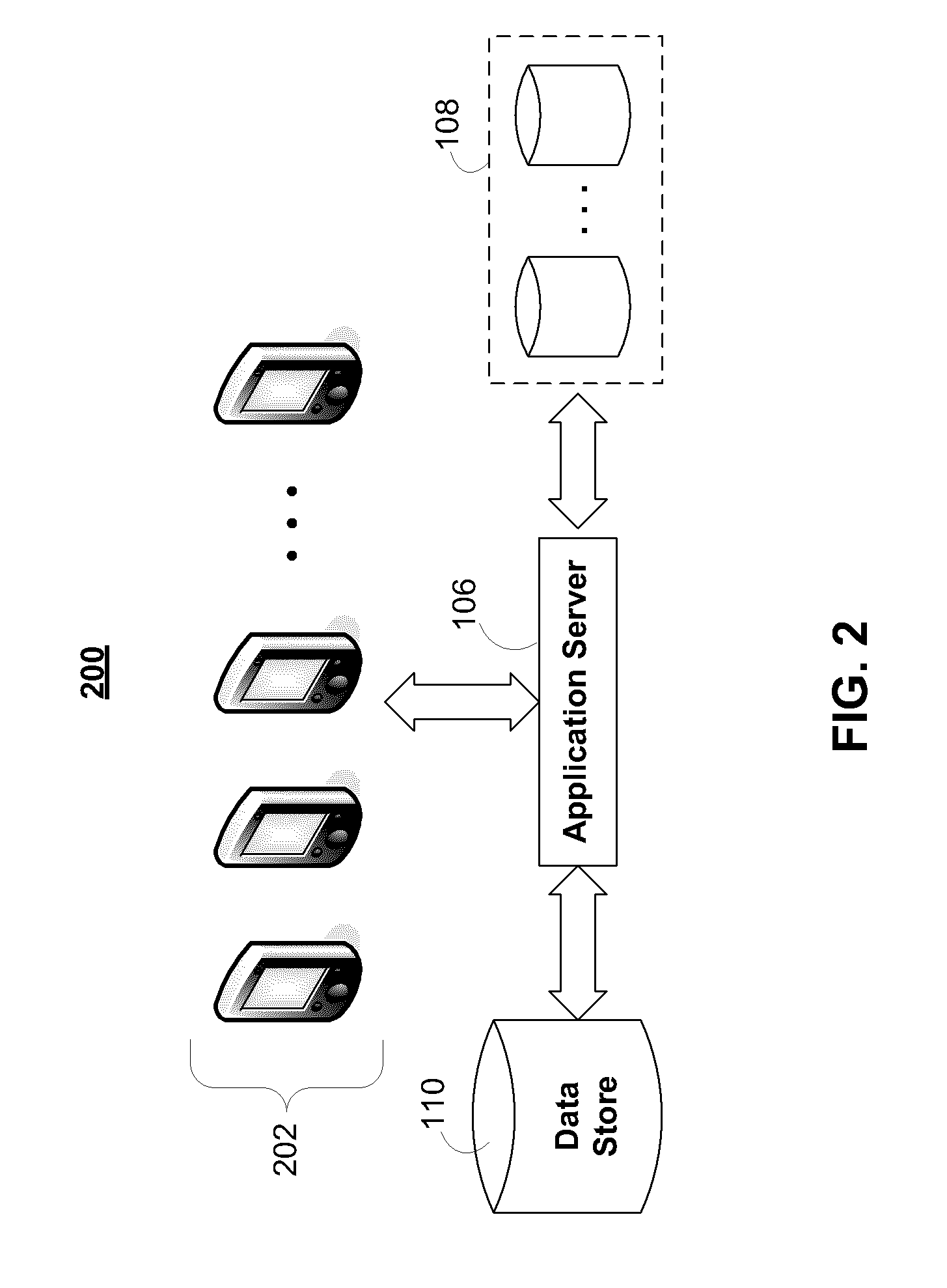

Systems and methods for social graph data analytics to determine connectivity within a community

Active | US20120182882A1 | Error prevention; Frequency-division multiplex details; Third party; Community system

Systems and methods for social graph data analytics to determine the connectivity between nodes within a community are provided. A user may assign user connectivity values to other members of the community, or connectivity values may be automatically harvested or assigned from third parties or based on the frequency of interactions between members of the community. Connectivity values may represent such factors as alignment, reputation, status, and/or influence within a social graph of a network community, or the degree of trust. The paths connecting a first node to a second node may be retrieved, and social graph data analytics may be performed on the retrieved paths. For example, a network connectivity value may be determined from all or a subset of all of the retrieved paths. A parallel computational framework may operate in connection with a key-value store to perform some or all of the computations related to the connectivity determinations. Network connectivity values and/or other social graph data may be outputted to third-party processes and services for use in initiating automatic transactions or making automated network-based or real-world decisions.

Owner: WWW TRUSTSCI COM INC
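
The path-based connectivity computation described in the social graph analytics entry can be sketched as follows. This is a minimal illustration, not the patented method: a Python dict stands in for the key-value store, a thread pool stands in for the parallel computational framework, and both scoring rules (a path's value is the product of its edge values; paths combine as a probabilistic OR) are hypothetical choices made only for this example.

```python
from concurrent.futures import ThreadPoolExecutor
from math import prod

# Hypothetical key-value store: (source, target) -> connectivity value in [0, 1].
EdgeStore = dict[tuple[str, str], float]


def retrieve_paths(store: EdgeStore, src: str, dst: str, max_hops: int = 4) -> list[list[str]]:
    """Depth-first enumeration of simple paths from src to dst with at most max_hops edges."""
    adjacency: dict[str, list[str]] = {}
    for a, b in store:
        adjacency.setdefault(a, []).append(b)
    paths, stack = [], [[src]]
    while stack:
        path = stack.pop()
        if path[-1] == dst:
            paths.append(path)
        elif len(path) - 1 < max_hops:
            for nxt in adjacency.get(path[-1], []):
                if nxt not in path:  # skip cycles
                    stack.append(path + [nxt])
    return paths


def path_value(store: EdgeStore, path: list[str]) -> float:
    # Hypothetical rule: a path's value is the product of its edge values.
    return prod(store[(a, b)] for a, b in zip(path, path[1:]))


def network_connectivity(store: EdgeStore, src: str, dst: str, workers: int = 4) -> float:
    paths = retrieve_paths(store, src, dst)
    # Each path is scored independently, which is the step a parallel framework would fan out.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(lambda p: path_value(store, p), paths))
    # Hypothetical rule: combine paths as a probabilistic OR; no paths gives 0.0.
    return 1.0 - prod(1.0 - v for v in values)
```

Because each path is scored independently, the per-path work is what a distributed framework would spread across workers; only the final combination step needs all values together.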

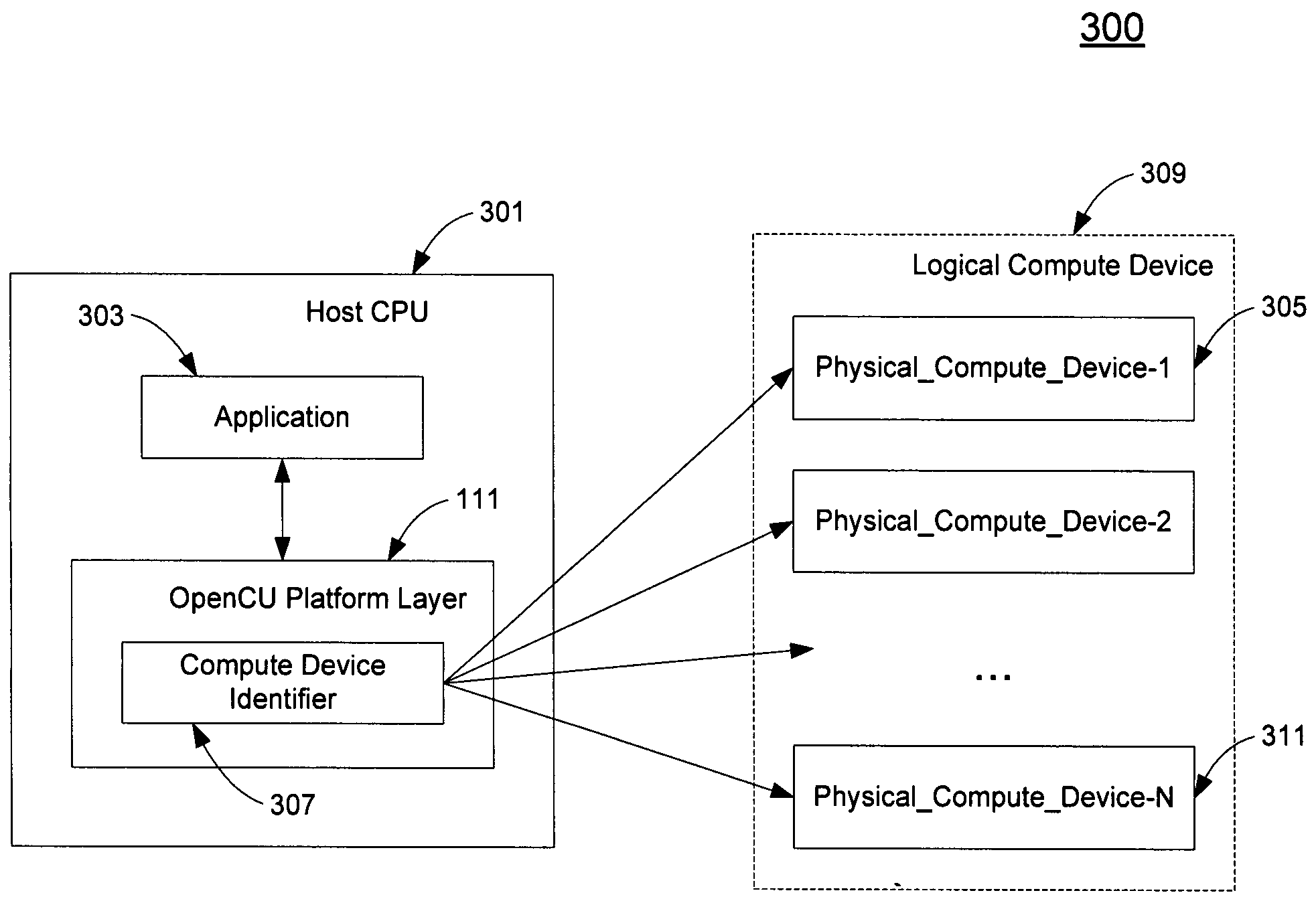

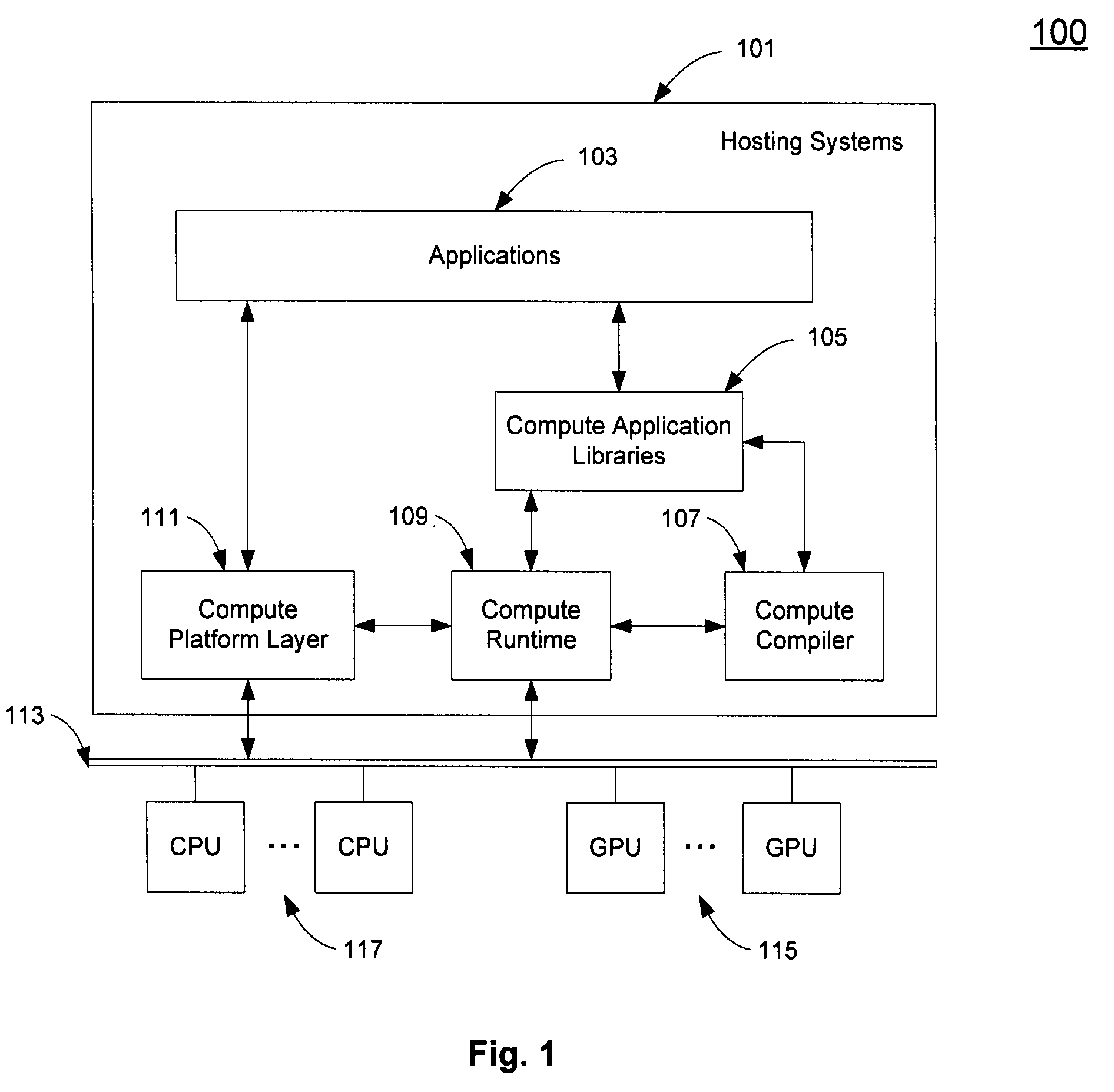

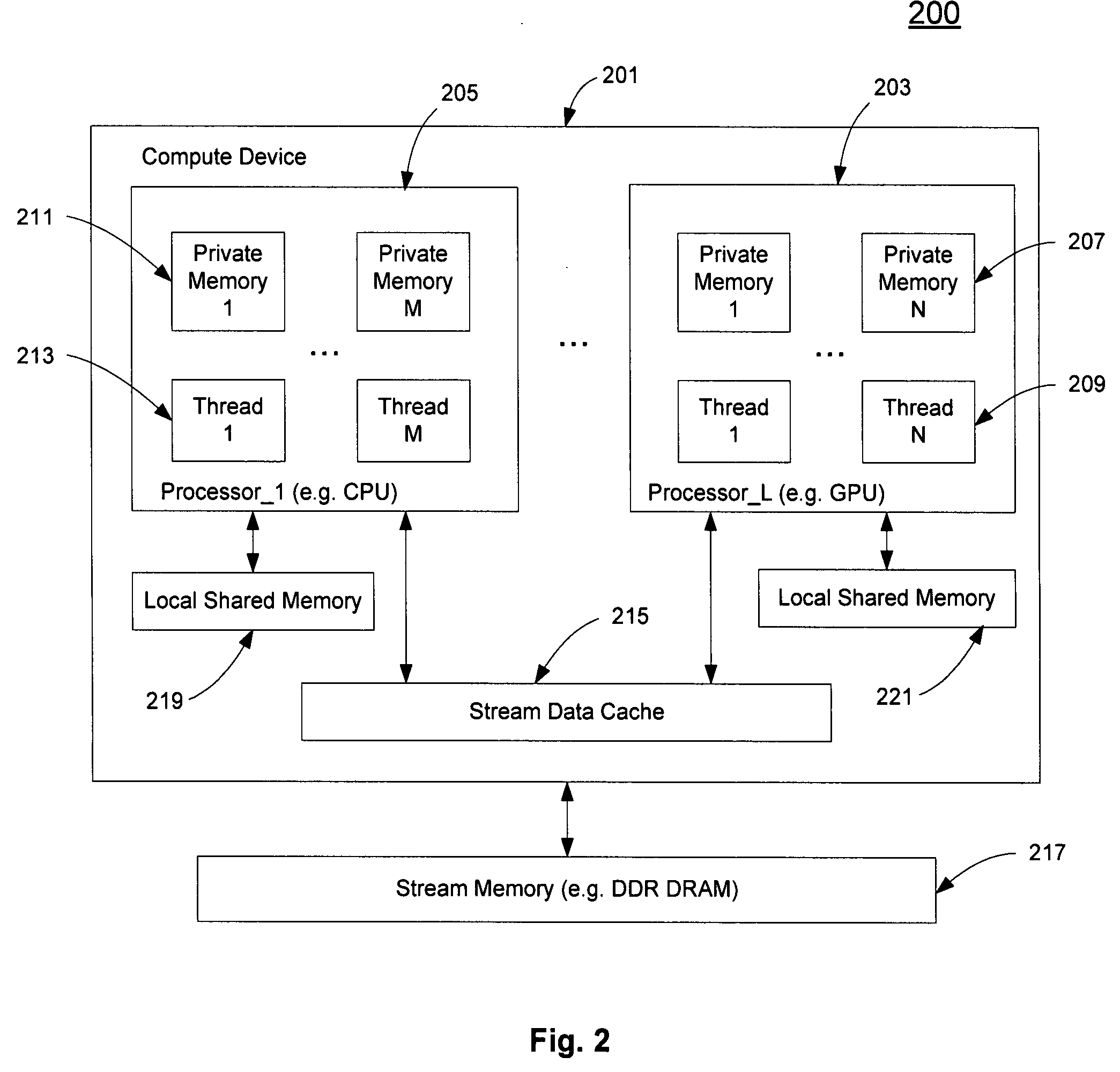

Data parallel computing on multiple processors

Active | US20080276261A1 | Multiprogramming arrangements; Software simulation/interpretation/emulation; Computer architecture; Concurrent computation

A method and an apparatus are described that allocate one or more physical compute devices, such as CPUs or GPUs, attached to a host processing unit running an application, to execute one or more threads of the application. The allocation may be based on data representing a processing capability requirement from the application for executing an executable in the one or more threads. A compute device identifier may be associated with the allocated physical compute devices to schedule and execute the executable in the one or more threads concurrently on one or more of the allocated physical compute devices.

Owner: APPLE INC
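
The capability-based allocation step in the data-parallel computing entry might look roughly like this. It is a hedged sketch, not Apple's API: `PhysicalDevice`, `CapabilityRequirement`, and `allocate` are hypothetical names, and the requirement is assumed to consist of a set of acceptable device kinds plus minimum compute-unit and memory figures.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class PhysicalDevice:
    name: str
    kind: str            # e.g. "CPU" or "GPU"
    compute_units: int
    memory_mb: int


@dataclass(frozen=True)
class CapabilityRequirement:
    # Hypothetical encoding of the application's processing capability requirement.
    kinds: frozenset[str]
    min_compute_units: int
    min_memory_mb: int


_device_ids = count(1)  # source of compute device identifiers


def allocate(devices: list[PhysicalDevice],
             req: CapabilityRequirement) -> tuple[int, list[PhysicalDevice]]:
    """Select the attached devices that satisfy the requirement and bind them to a
    new compute device identifier that a scheduler could use to dispatch threads."""
    chosen = [
        d for d in devices
        if d.kind in req.kinds
        and d.compute_units >= req.min_compute_units
        and d.memory_mb >= req.min_memory_mb
    ]
    if not chosen:
        raise RuntimeError("no attached device satisfies the requirement")
    chosen.sort(key=lambda d: d.compute_units, reverse=True)  # most capable first
    return next(_device_ids), chosen
```

Returning one identifier for a group of devices mirrors the abstract's idea that the scheduler addresses the allocation as a unit rather than picking a physical device per thread.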

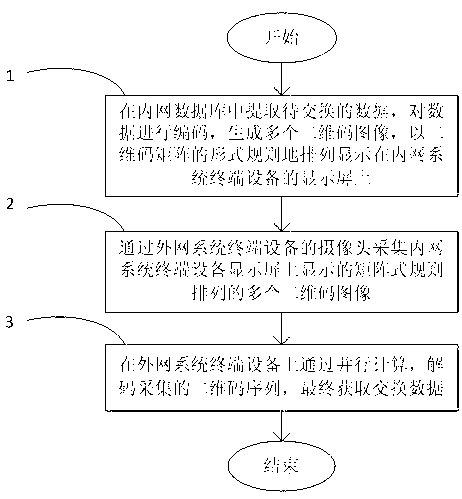

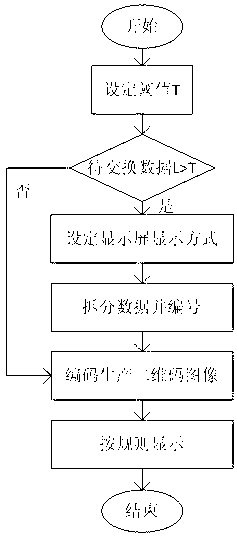

Intranet-extranet physical isolation data exchange method based on QR (quick response) code

Inactive | CN103268461A | Make up for the high price; Make up for defects such as labor required; Co-operative working arrangements; Internal/peripheral component protection; Parallel algorithm; Data exchange

The invention discloses an intranet-extranet physical isolation data exchange method based on QR (quick response) codes. Exchanging data from the intranet to the extranet includes: first, extracting the data to be exchanged from an intranet database, encoding it into a plurality of QR code images, and displaying the images as a regularly arranged QR code matrix on the display screen of the intranet system terminal; second, using a camera on the extranet system terminal to capture the matrix of QR code images shown on the intranet terminal's screen; and third, decoding the captured QR code sequence with a parallel algorithm on the extranet system terminal to recover the exchanged data. The method overcomes the high cost and labor requirements of traditional exchange methods such as gateway copying or USB (universal serial bus) flash drive copying; it is highly reliable and secure, has low operation and maintenance costs, shortens exchange time, and improves the efficiency of intranet-extranet data exchange.

Owner: 浙江成功软件开发有限公司 (Zhejiang Chenggong Software Development Co., Ltd.)

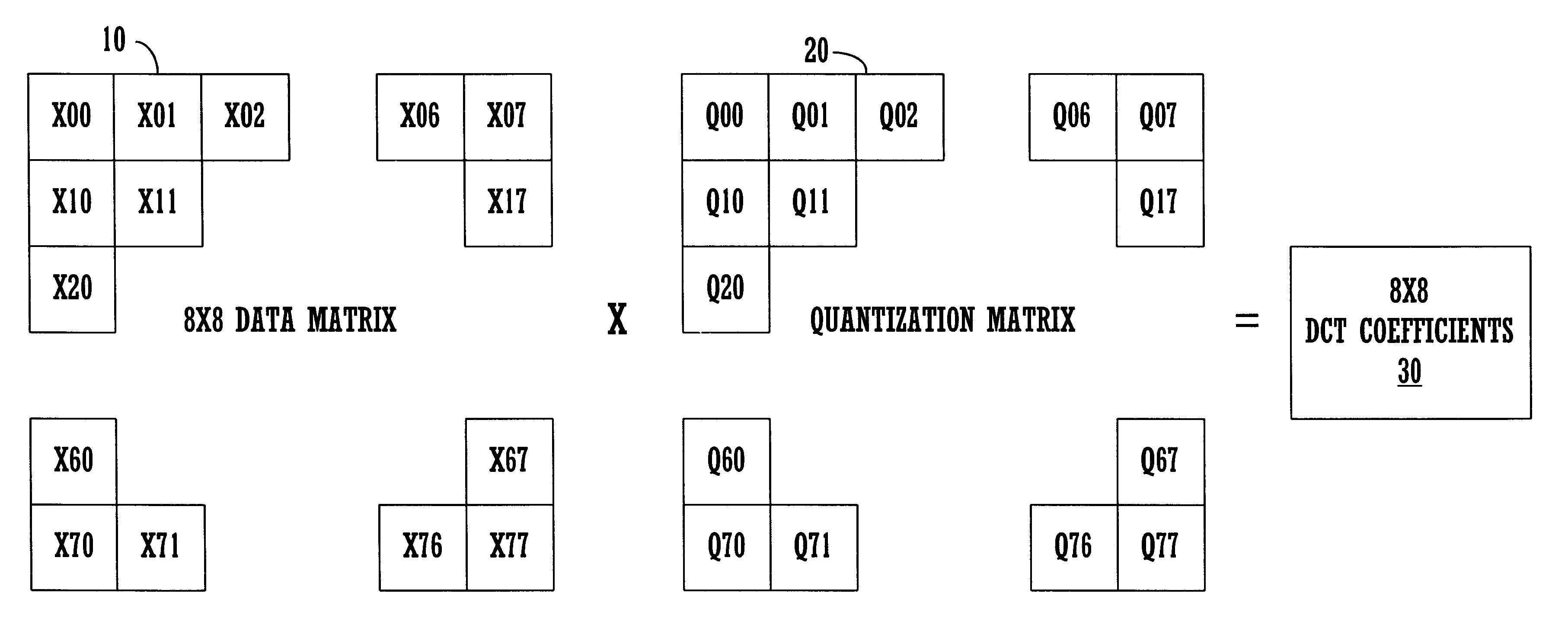

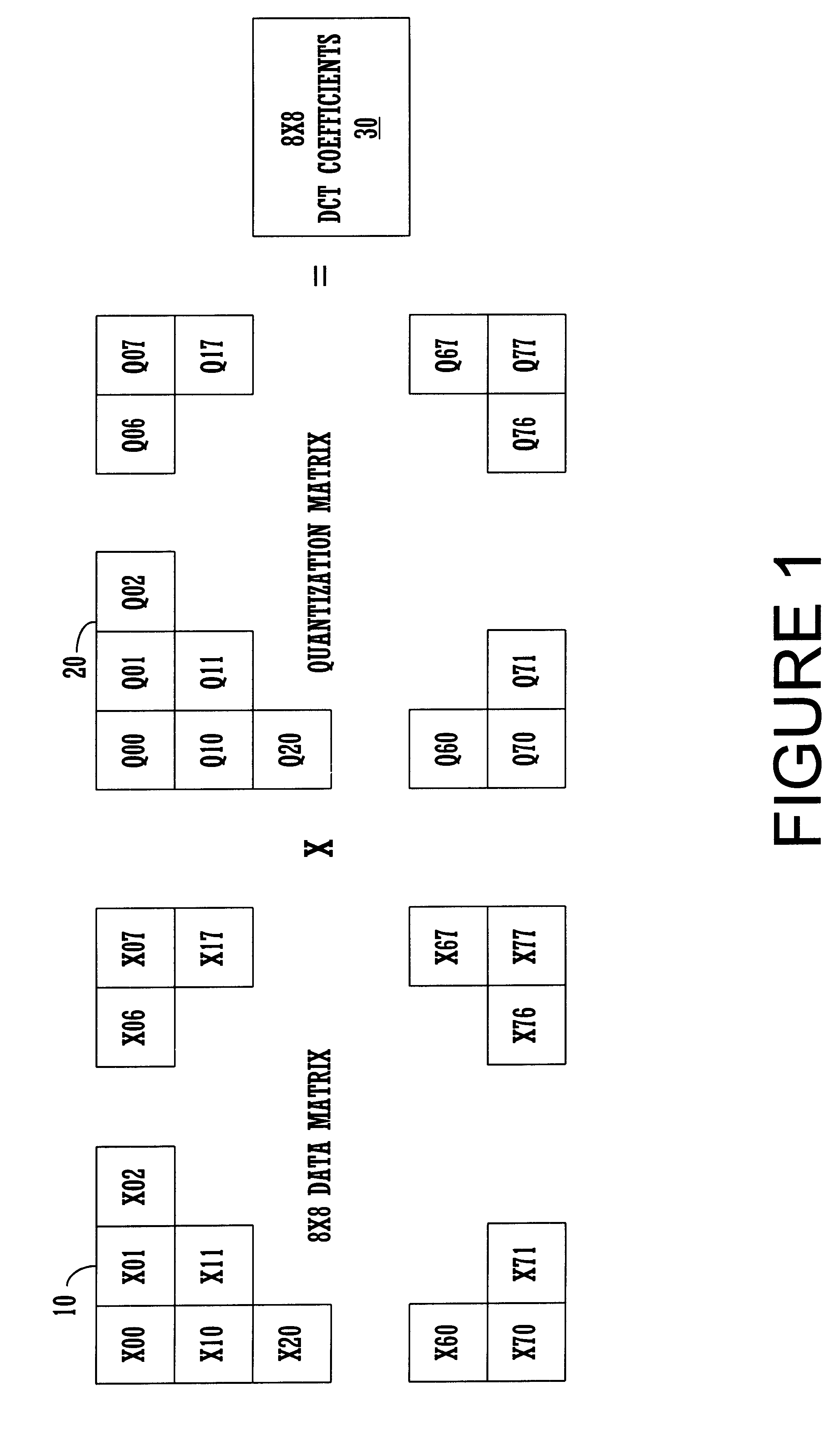

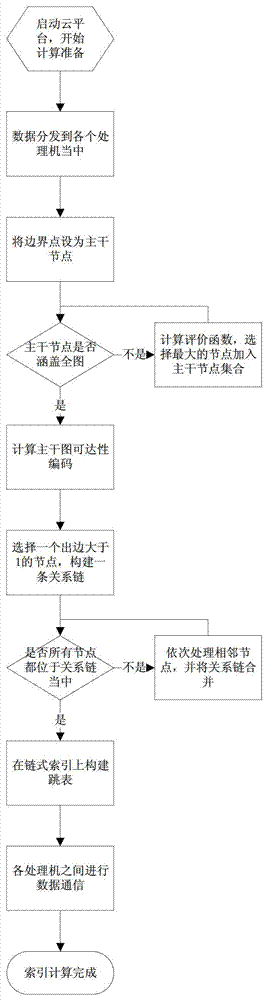

Efficient de-quantization in a digital video decoding process using a dynamic quantization matrix for parallel computations

Inactive | US6507614B1 | Efficient production; Picture reproducers using cathode ray tubes; Picture reproducers with optical-mechanical scanning; Digital video; Array data structure

An efficient digital video (DV) decoder process that utilizes a specially constructed quantization matrix allowing an inverse quantization subprocess to perform parallel computations, e.g., using SIMD processing, to efficiently produce a matrix of DCT coefficients. The present invention utilizes a first look-up table (for 8x8 DCT) which produces a 15-valued quantization scale based on class number information and a QNO number for an 8x8 data block ("data matrix") from an input encoded digital bit stream to be decoded. The 8x8 data block is produced from a deframing and variable length decoding subprocess. An individual 8-valued segment of the 15-value output array is multiplied by an individual 8-valued segment, e.g., "a row," of the 8x8 data matrix to produce an individual row of the 8x8 matrix of DCT coefficients ("DCT matrix"). The above eight multiplications can be performed in parallel using a SIMD architecture to simultaneously generate a row of eight DCT coefficients. In this way, eight passes through the 8x8 block are used to produce the entire 8x8 DCT matrix, in one embodiment consuming only 33 instructions per 8x8 block. After each pass, the 15-valued output array is shifted by one value position for proper alignment with its associated row of the data matrix. The DCT matrix is then processed by an inverse discrete cosine transform subprocess that generates decoded display data. A second lookup table can be used for 2x4x8 DCT processing.

Owner: SONY ELECTRONICS INC +1
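
The sliding-window de-quantization in the digital video decoding entry can be illustrated with plain Python lists standing in for SIMD registers. This sketch assumes that coefficient (r, c) uses scale value `scale15[r + c]`, which is one indexing consistent with "15 values, 8-value segments, shift by one per pass"; the DV lookup tables themselves are not reproduced, so the 15-value array is passed in directly.

```python
def dequantize_block(block: list[list[int]], scale15: list[int]) -> list[list[int]]:
    """Multiply each row of an 8x8 block by an 8-value window of a 15-value scale array.

    Assumption: coefficient (r, c) uses scale15[r + c], so row r uses the window
    starting at offset r and each pass slides the window by one position.
    """
    if len(block) != 8 or any(len(row) != 8 for row in block):
        raise ValueError("expected an 8x8 block")
    if len(scale15) != 15:
        raise ValueError("expected 15 scale values")
    out = []
    for r, row in enumerate(block):
        window = scale15[r:r + 8]  # the 8-value segment aligned with this row
        # On SIMD hardware these eight multiplies would be a single vector instruction.
        out.append([x * s for x, s in zip(row, window)])
    return out
```

Eight passes, each an elementwise multiply of one row by one shifted window, produce the full 8×8 coefficient matrix, which is why the abstract can count instructions per block rather than per coefficient.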

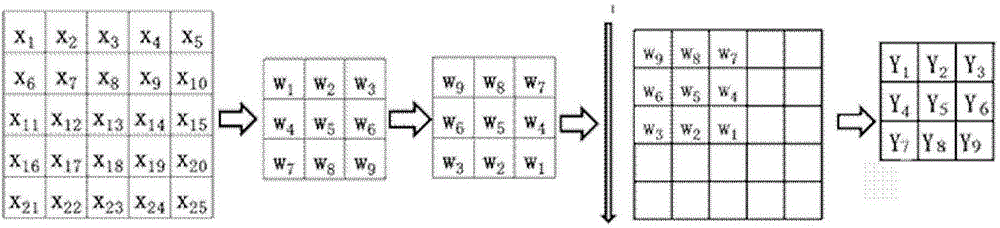

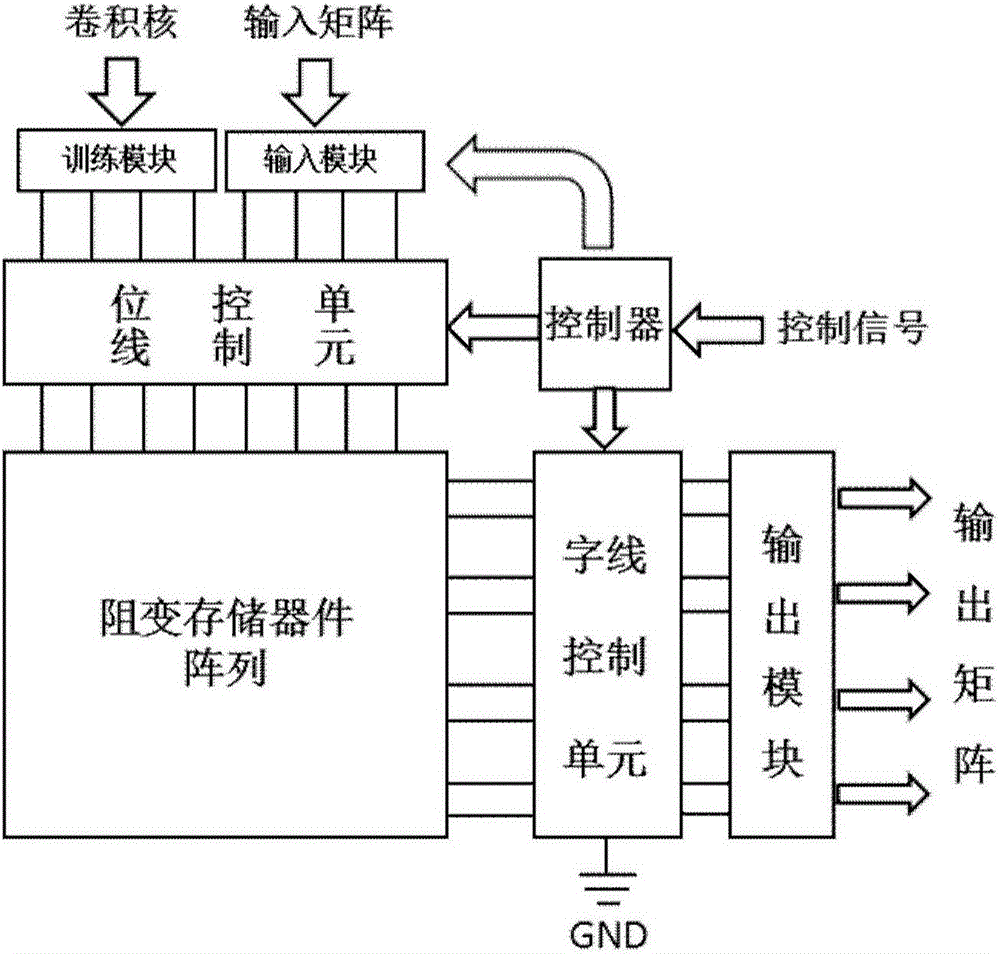

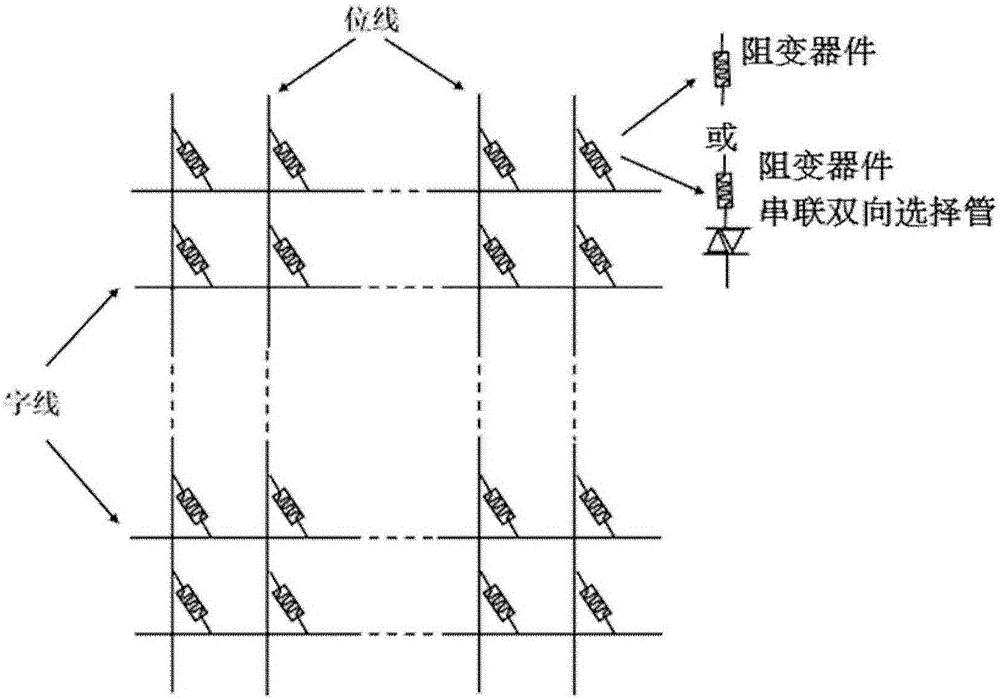

Equipment and method for realizing parallel convolution calculation based on resistive random access memory array

The invention discloses a device and a method for performing parallel convolution on a resistive random access memory (RRAM) array. The device comprises a resistive random access memory array, a training module, an input module, a bit line control unit, a word line control unit, an output module, and a controller. When a convolution is computed, the convolution kernel is written into the resistive cells at positions corresponding to the input, with the conductance of each cell representing a kernel value; the voltage levels applied to the bit lines represent the input matrix; each output module represents a convolution result; and the output signals of different output modules represent the results for different input regions or different convolution kernels, so that the convolution is computed in parallel.

Owner: PEKING UNIV
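
The crossbar convolution in the RRAM entry rests on Ohm's law and Kirchhoff's current law: each output line collects the sum of conductance × voltage over its cells, which is a dot product computed in one read. The sketch below models an ideal crossbar (it ignores device non-idealities and the fact that physical conductances cannot be negative); the function names are hypothetical, each kernel occupies one output column, and input regions are visited one after another rather than replicated in hardware.

```python
def crossbar_read(conductance: list[list[float]], voltages: list[float]) -> list[float]:
    """Ideal crossbar: output column j carries current I_j = sum_i G[i][j] * V[i]."""
    n_out = len(conductance[0])
    return [sum(conductance[i][j] * v for i, v in enumerate(voltages)) for j in range(n_out)]


def program_kernels(kernels: list[list[list[float]]]) -> list[list[float]]:
    """Write each flattened k x k kernel into one column of the conductance matrix."""
    flat = [[w for row in k for w in row] for k in kernels]  # one list per kernel
    return [list(col) for col in zip(*flat)]                 # rows = inputs, cols = kernels


def crossbar_conv2d(image: list[list[float]], kernels: list[list[list[float]]]):
    """'Valid' 2-D convolution (CNN-style, unflipped kernel) via repeated crossbar reads."""
    k = len(kernels[0])
    g = program_kernels(kernels)
    h, w = len(image), len(image[0])
    outputs = [[[0.0] * (w - k + 1) for _ in range(h - k + 1)] for _ in kernels]
    for y in range(h - k + 1):
        for x in range(w - k + 1):
            # The input patch is applied as voltages on the input lines.
            patch = [image[y + dy][x + dx] for dy in range(k) for dx in range(k)]
            for m, current in enumerate(crossbar_read(g, patch)):
                outputs[m][y][x] = current
    return outputs
```

All kernels are evaluated by the same read, since each occupies its own column; that is the sense in which different output signals correspond to different kernels.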

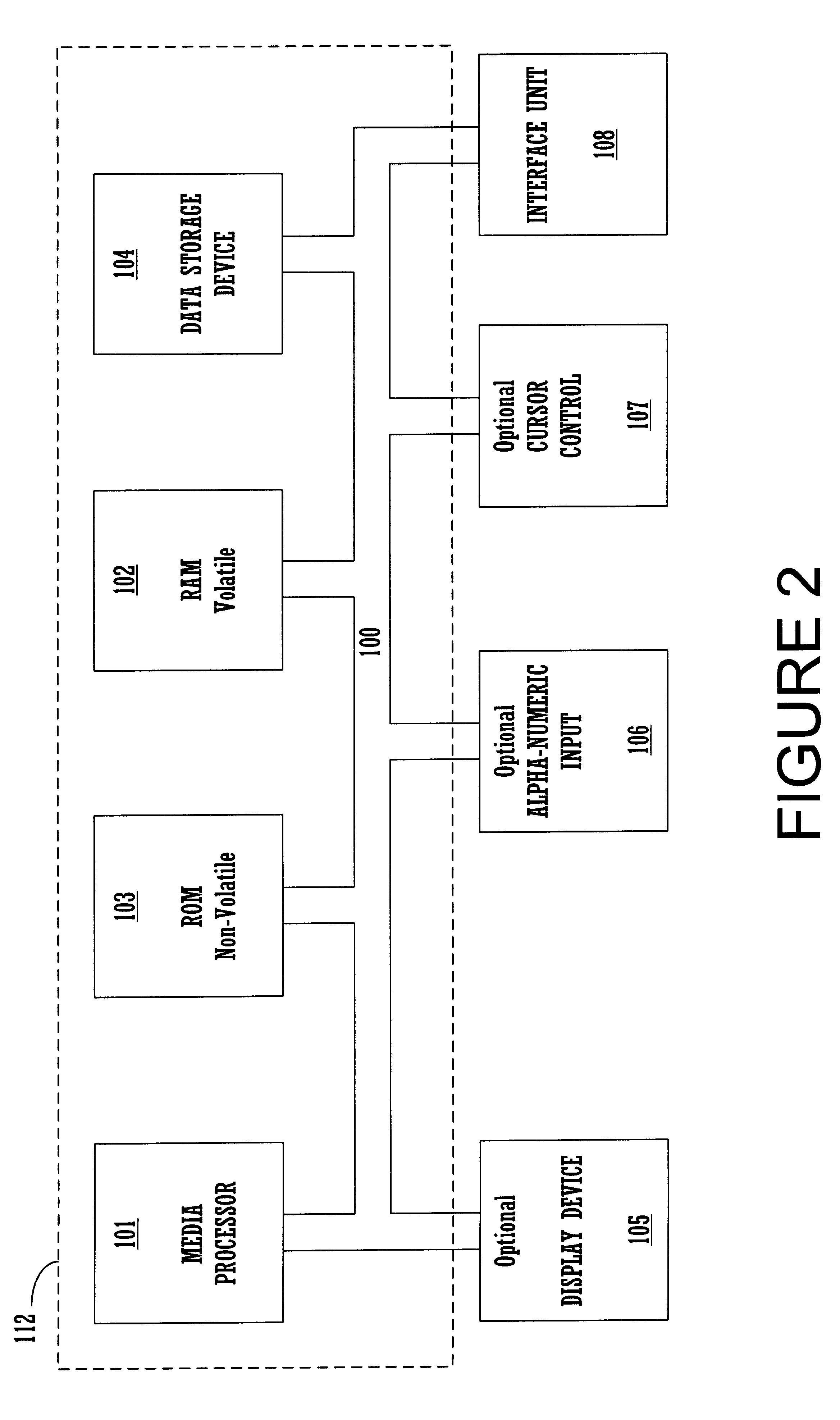

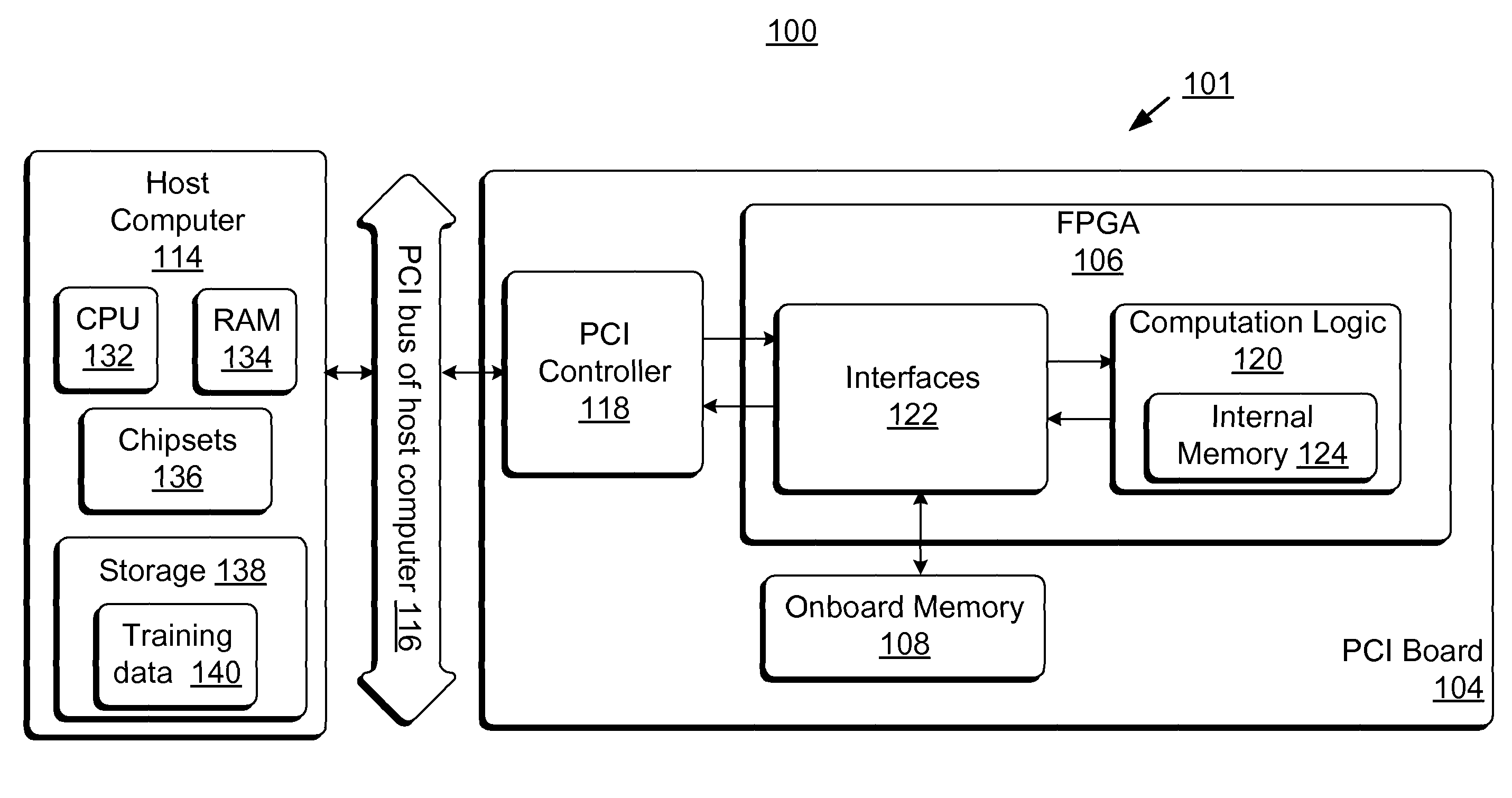

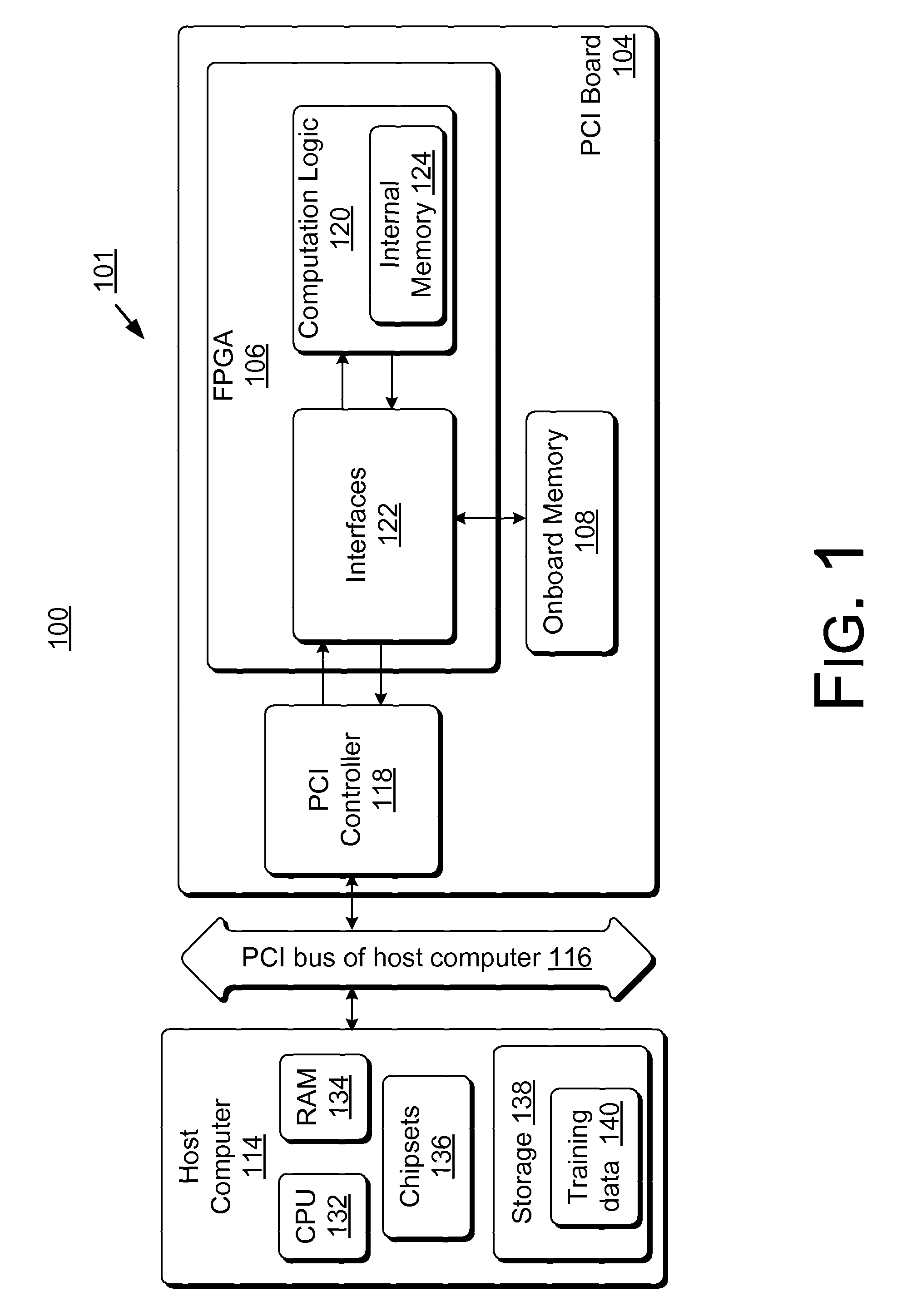

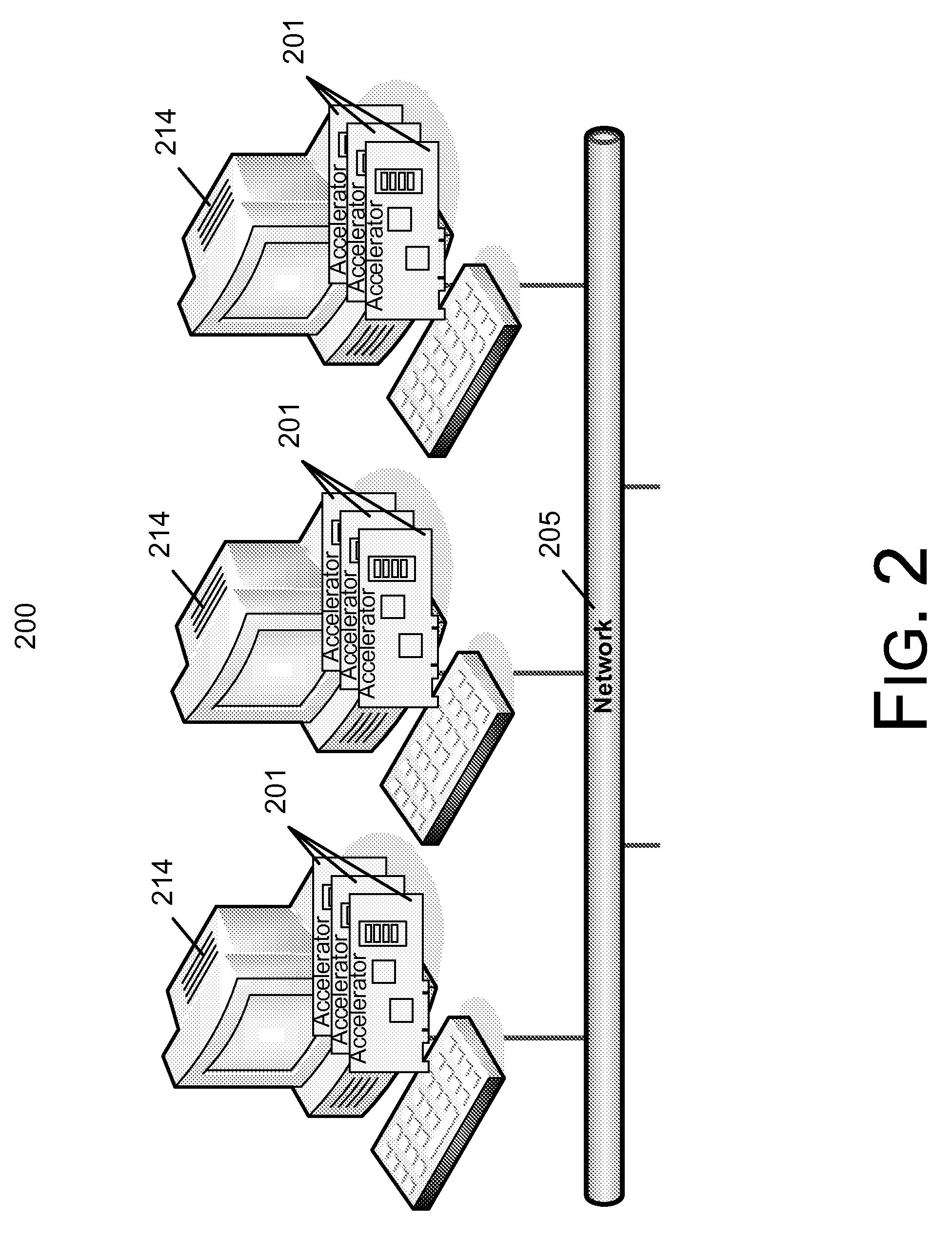

Field-programmable gate array based accelerator system

Active | US8131659B2 | Less time; Less cost; Digital computer details; Digital data; Hidden layer; Arithmetic logic unit

Accelerator systems and methods are disclosed that use FPGA technology to achieve better parallelism and processing speed. A field-programmable gate array (FPGA) is configured with hardware logic that performs computations associated with a neural network training algorithm, in particular a Web relevance ranking algorithm such as LambdaRank. The training data is first processed and organized by a host computing device and then streamed to the FPGA, which accesses it directly to perform high-bandwidth computation with increased training speed. Large data sets, such as those related to Web relevance ranking, can thus be processed. The FPGA may include a processing element that performs the computations of a hidden layer of the neural network training algorithm. Parallel computing may be realized using a single instruction, multiple data (SIMD) architecture with multiple arithmetic logic units in the FPGA.

Owner: MICROSOFT TECH LICENSING LLC

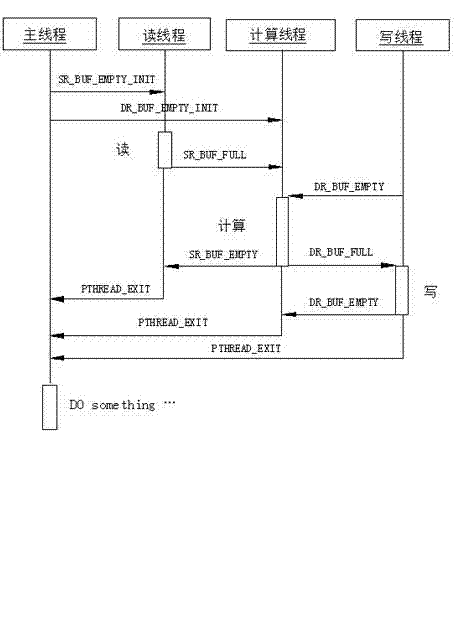

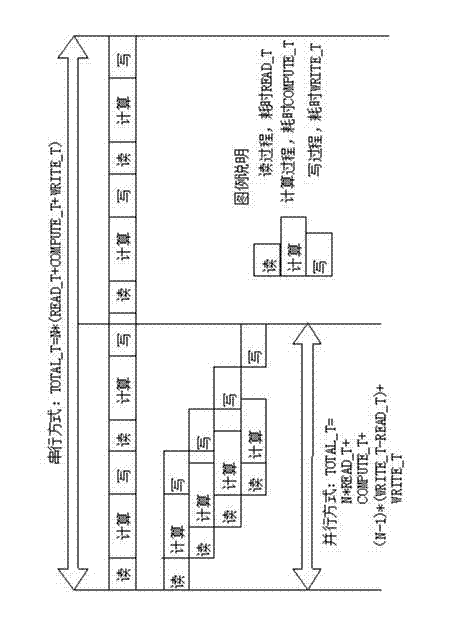

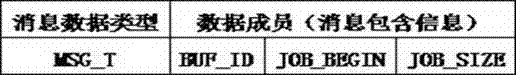

Multi-thread parallel processing method based on multi-thread programming and message queue

Active | CN102902512A | Fast and efficient multi-threaded transformation; Reduce running time; Concurrent instruction execution; Computer architecture; Concurrent computation

The invention provides a multi-thread parallel processing method based on multi-thread programming and message queues, in the field of high-performance computing. It parallelizes traditional single-threaded serial software by using modern multi-core CPU (central processing unit) hardware, pthread multi-threaded parallel computing, and message queues for inter-thread communication. The method comprises the following steps: within a single node, creating three types of pthread threads (reading threads, computing threads, and writing threads), where the number of threads of each type is flexible and configurable; employing multi-buffering and establishing four queues for inter-thread communication; and allocating computing tasks and managing buffer space. The method applies broadly to fields that require multi-threaded parallel processing and guides software developers in converting existing software to multi-threaded form so that system resources are used more effectively; hardware utilization rises noticeably, and both the computational efficiency and the overall performance of the software improve.

Owner: LANGCHAO ELECTRONIC INFORMATION IND CO LTD
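
The reading/computing/writing thread structure from the multi-thread parallel processing entry can be sketched with Python's `threading` and `queue` modules in place of pthreads. The abstract does not say what the four queues hold; this sketch assumes one plausible layout (free and full input buffers, free and full output buffers) so that a fixed pool of buffers is recycled, and `run_pipeline` is a hypothetical name.

```python
import queue
import threading


def run_pipeline(chunks, compute, n_readers=1, n_computers=2, n_writers=1, n_buffers=4):
    """Reading, computing, and writing threads connected by four queues.

    Assumed queue layout (hypothetical): free/full input buffers and free/full
    output buffers, so a bounded pool of buffers is recycled (multi-buffering).
    """
    free_in, full_in = queue.Queue(), queue.Queue()
    free_out, full_out = queue.Queue(), queue.Queue()
    for _ in range(n_buffers):
        free_in.put({})
        free_out.put({})
    tasks = queue.Queue()
    for item in enumerate(chunks):
        tasks.put(item)
    results, lock, stop = {}, threading.Lock(), object()

    def reader():
        while True:
            try:
                idx, chunk = tasks.get_nowait()
            except queue.Empty:
                return
            buf = free_in.get()          # block until an input buffer is free
            buf["idx"], buf["data"] = idx, chunk
            full_in.put(buf)

    def computer():
        while (buf := full_in.get()) is not stop:
            idx, value = buf["idx"], compute(buf["data"])
            free_in.put(buf)             # recycle the input buffer
            out = free_out.get()
            out["idx"], out["data"] = idx, value
            full_out.put(out)

    def writer():
        while (out := full_out.get()) is not stop:
            with lock:
                results[out["idx"]] = out["data"]
            free_out.put(out)            # recycle the output buffer

    def start(target, n):
        threads = [threading.Thread(target=target) for _ in range(n)]
        for t in threads:
            t.start()
        return threads

    readers = start(reader, n_readers)
    computers = start(computer, n_computers)
    writers = start(writer, n_writers)
    for t in readers:
        t.join()
    for _ in computers:
        full_in.put(stop)                # one shutdown marker per computing thread
    for t in computers:
        t.join()
    for _ in writers:
        full_out.put(stop)
    for t in writers:
        t.join()
    return [results[i] for i in range(len(chunks))]
```

Shutdown markers are queued only after every upstream thread has finished, so each stage drains all real work before exiting. In CPython the GIL limits speed-up for pure-Python `compute` functions; the structure, not the timing, is the point here.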

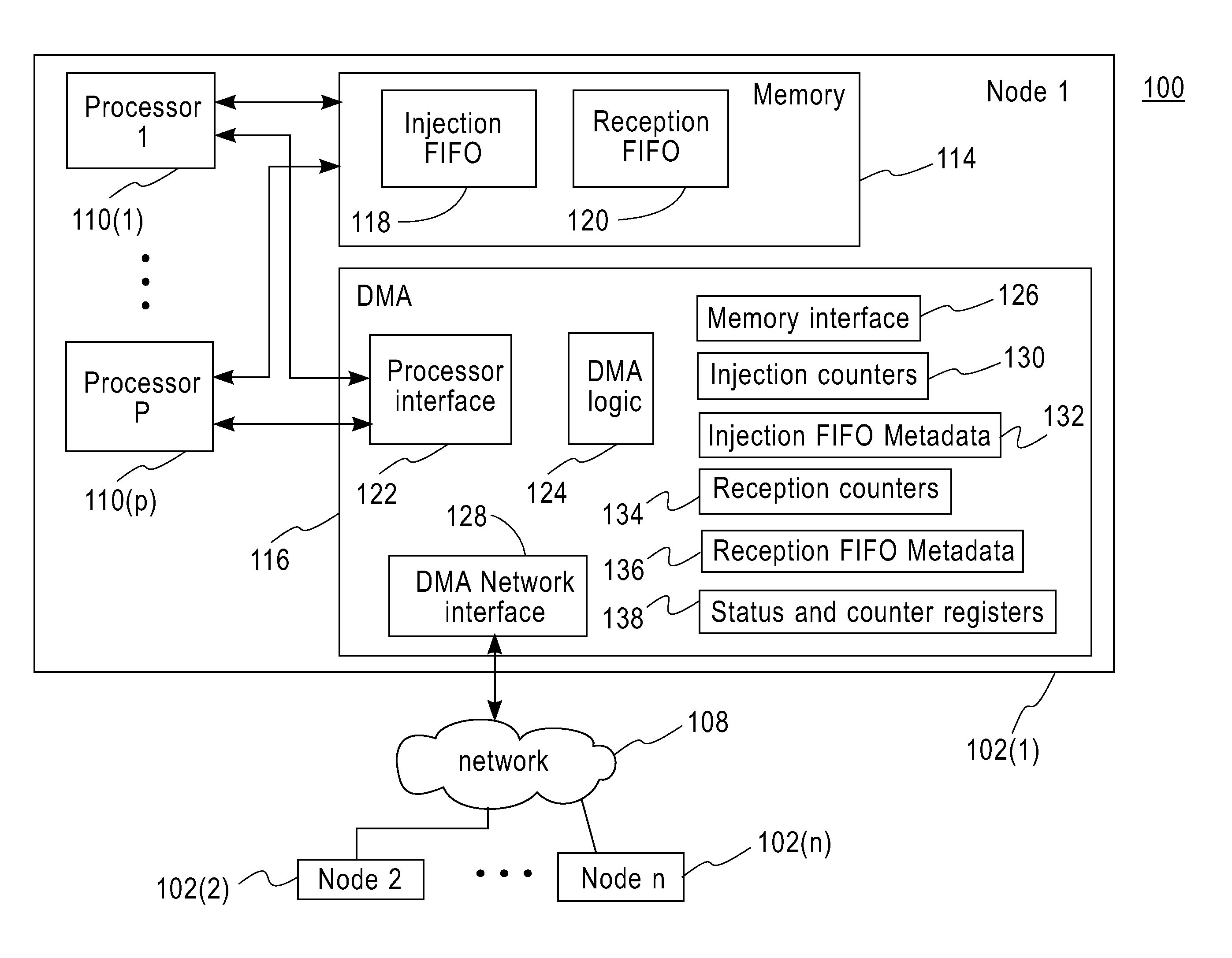

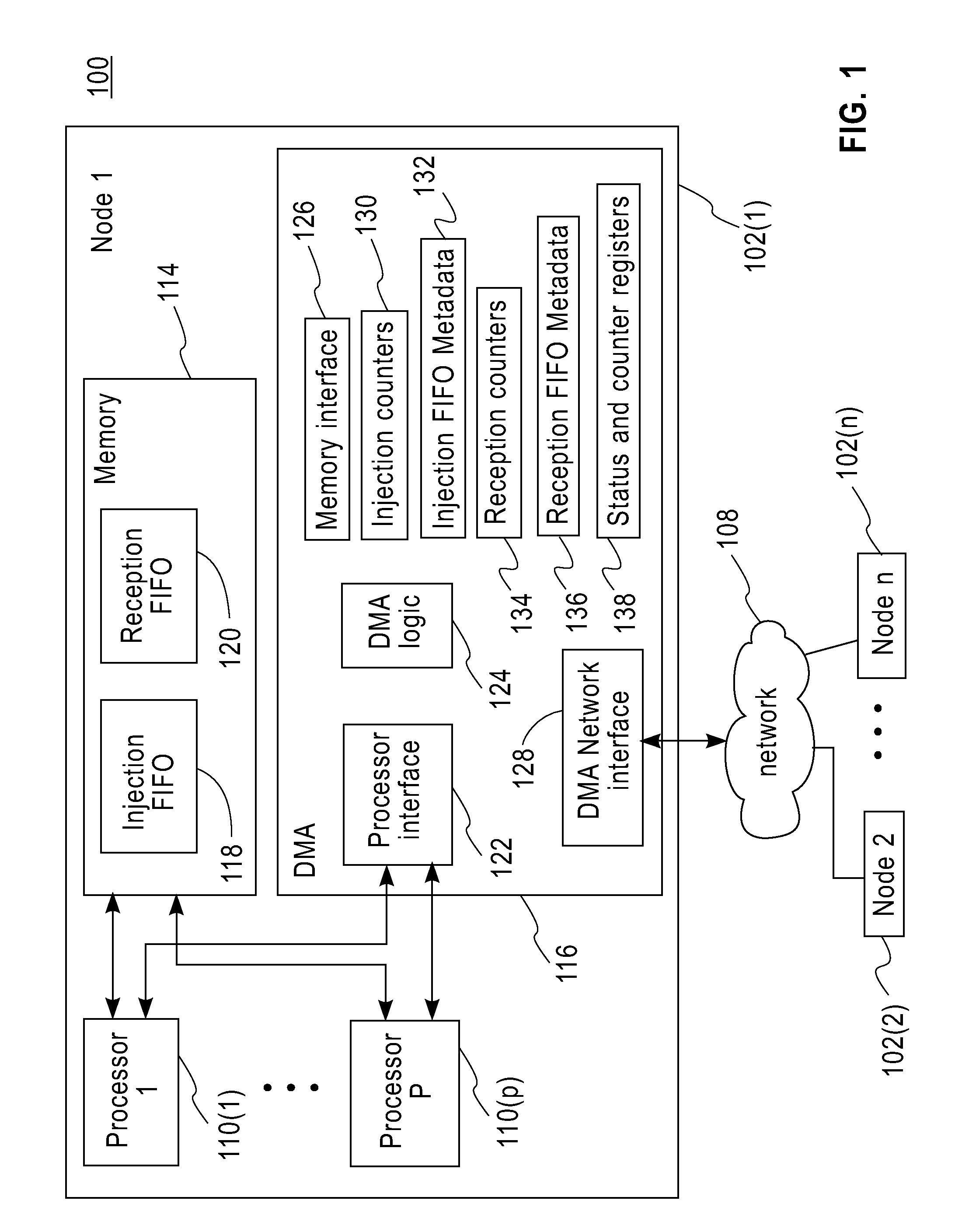

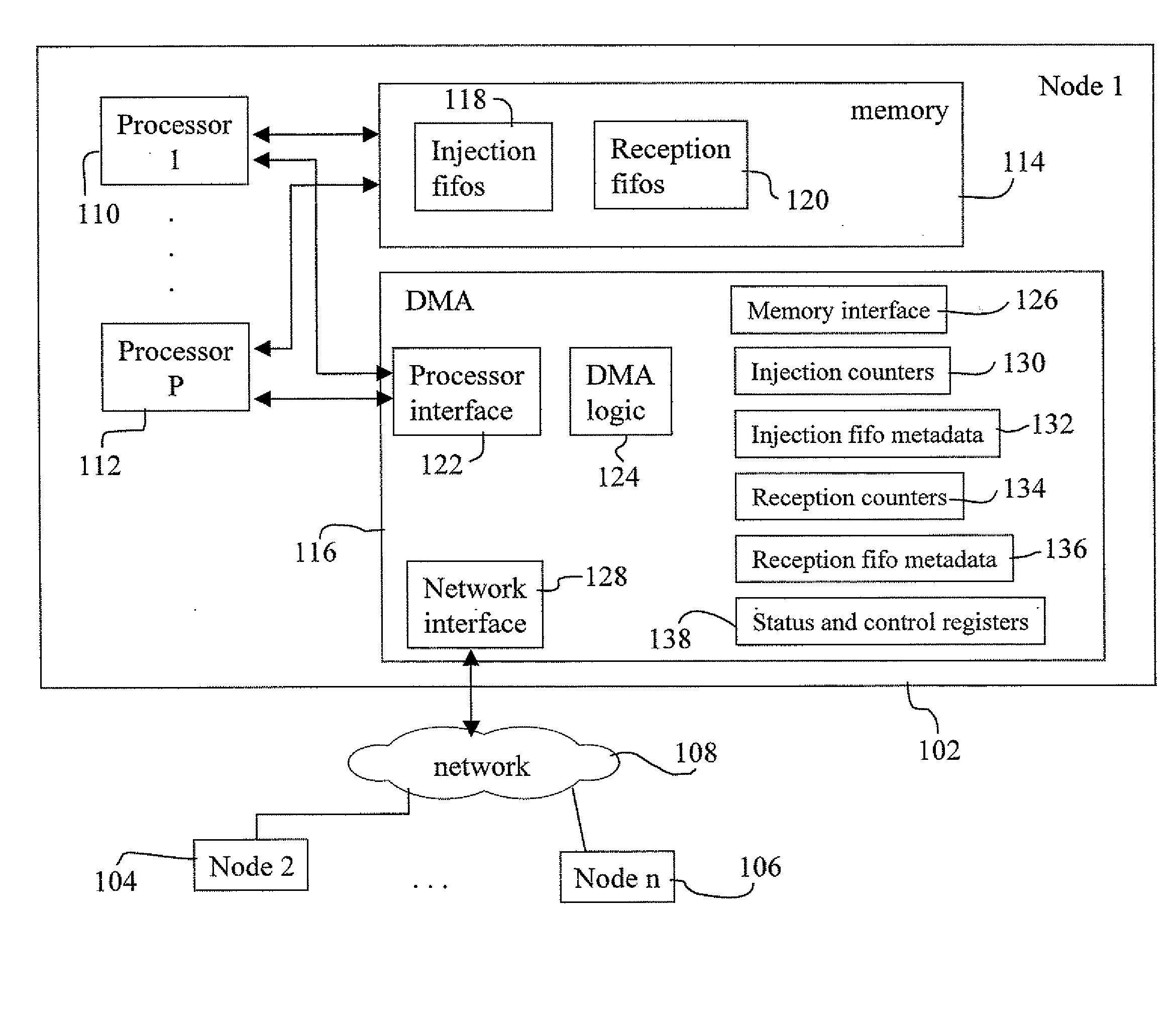

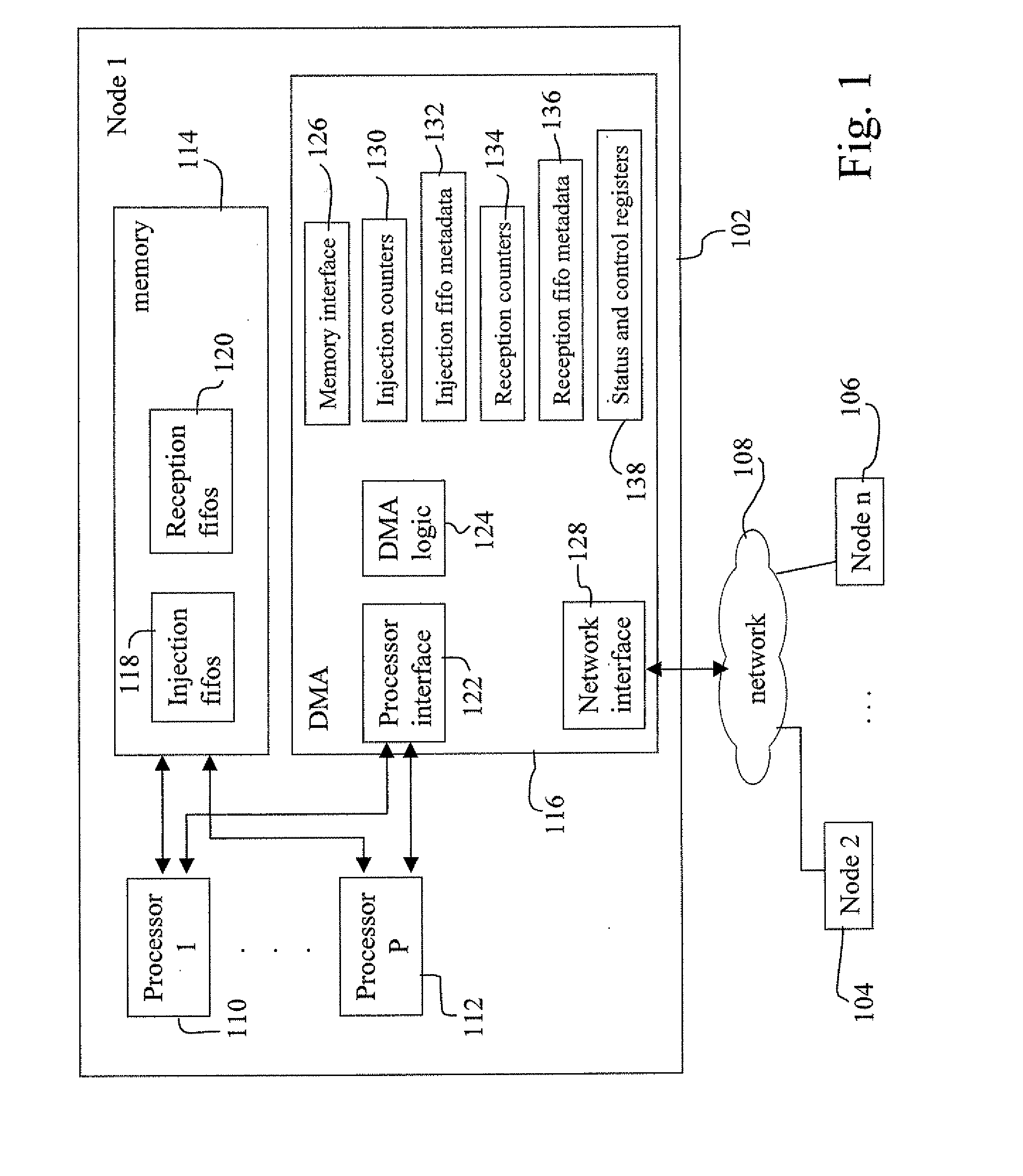

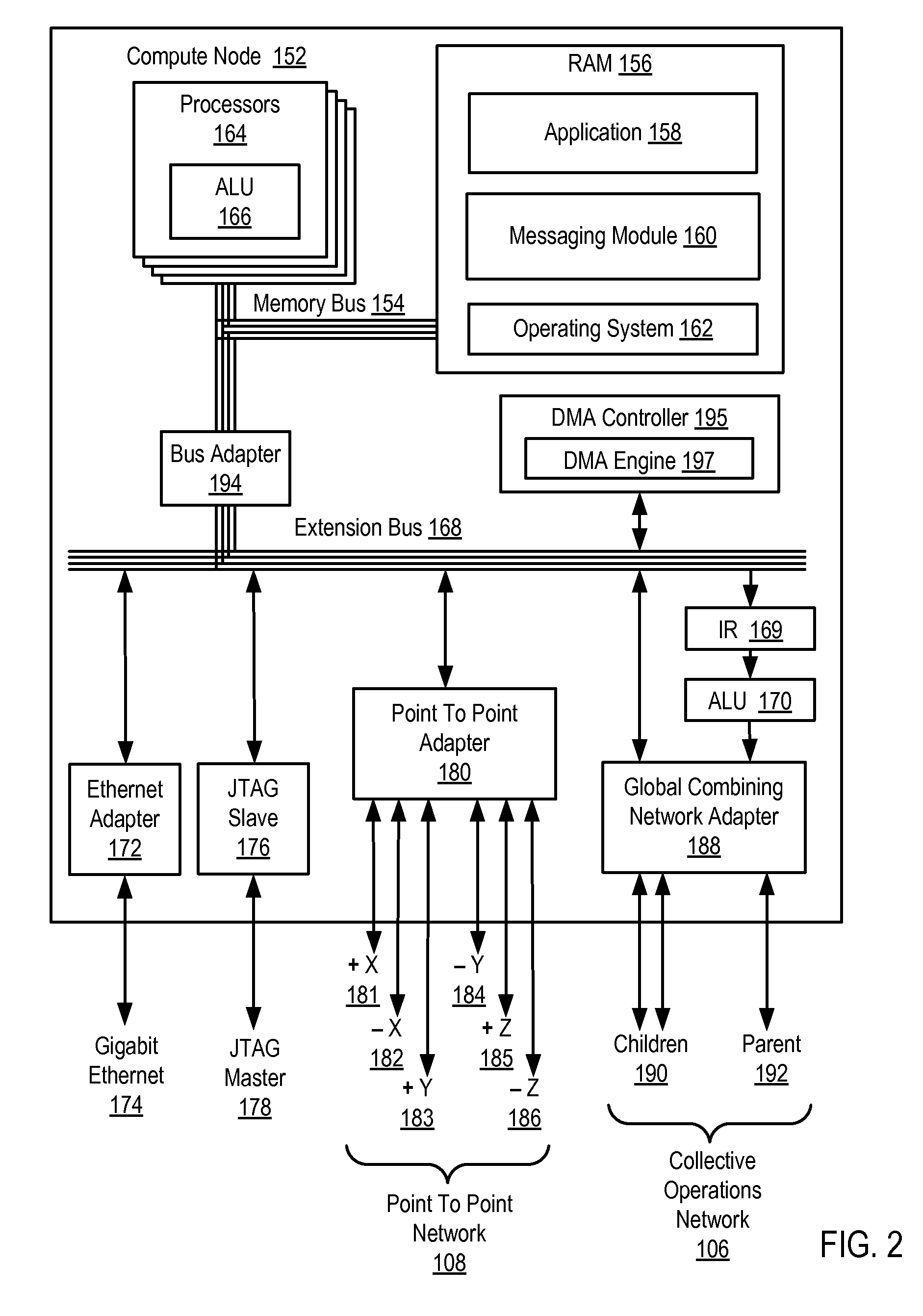

Message passing with a limited number of DMA byte counters

Inactive | US20090007141A1 | Digital computer details; Specific program execution arrangements; Message passing; Clear to send

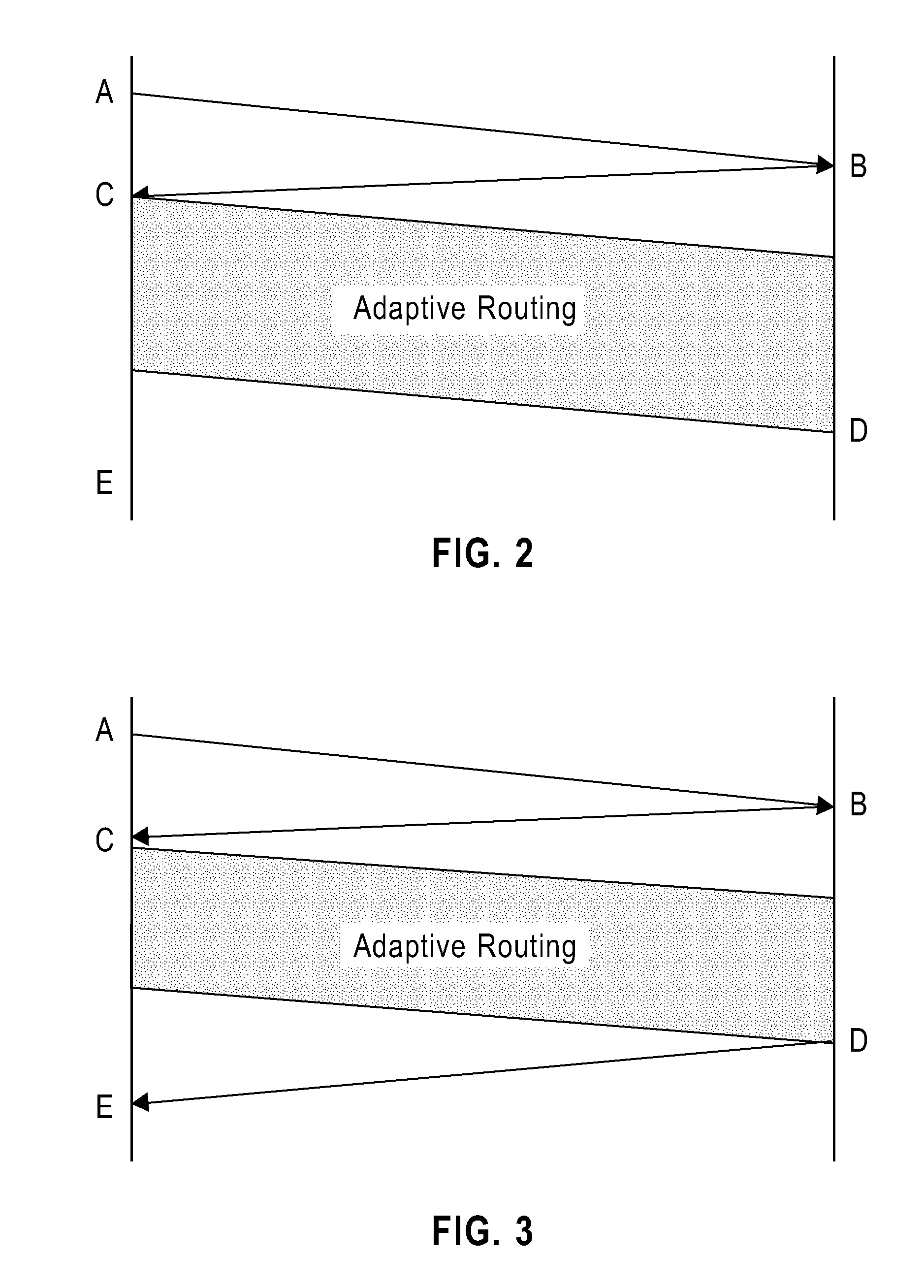

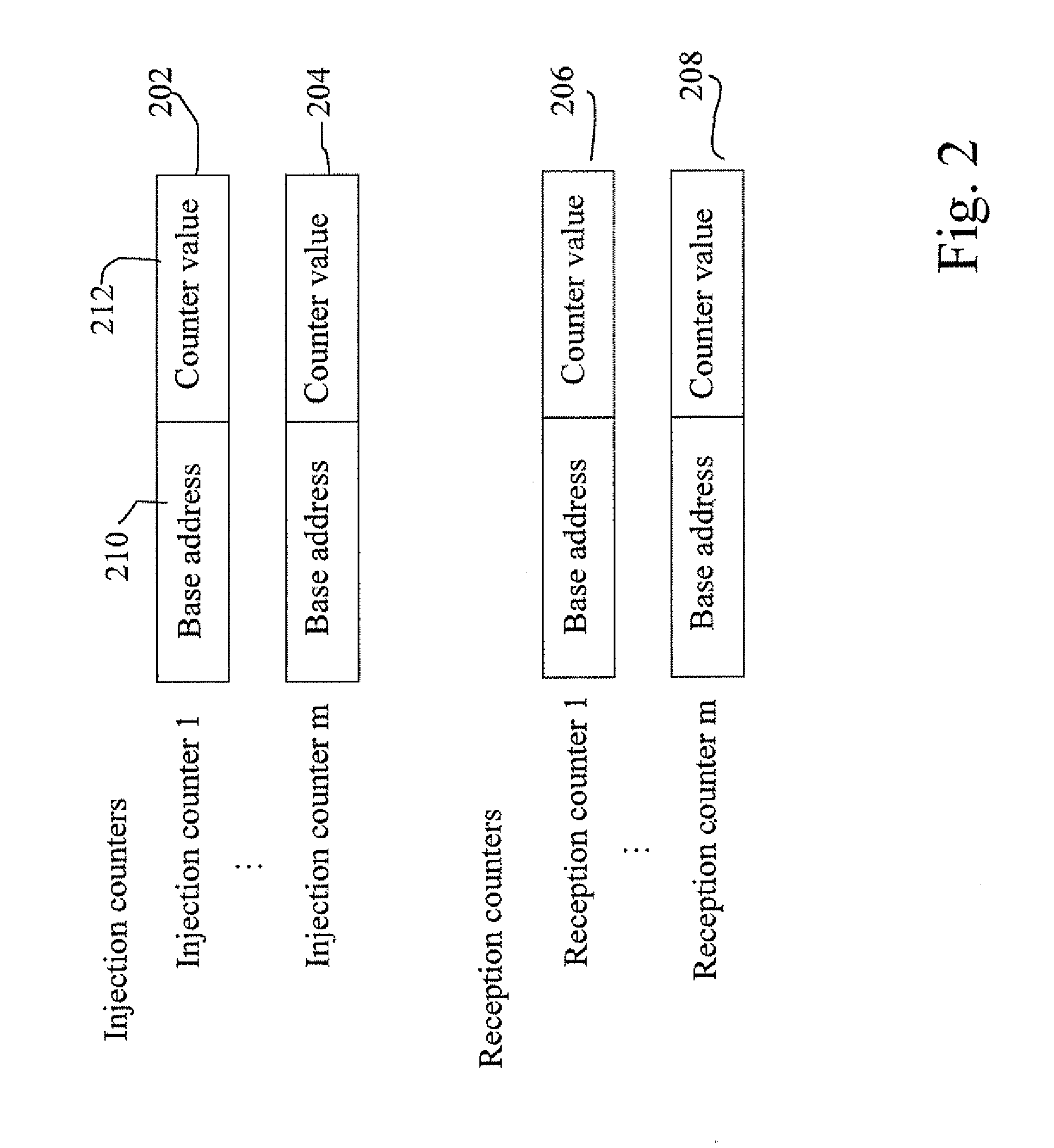

A method for passing messages in a parallel computer system built as a plurality of compute nodes interconnected as a network, where each compute node includes a DMA engine but only a limited number of byte counters for tracking the number of bytes sent or received by the DMA engine, and where the byte counters may be used in shared-counter or exclusive-counter modes of operation. Using a rendezvous protocol, a source compute node deterministically sends a request to send (RTS) message with a single RTS descriptor to a destination compute node, using an exclusive injection counter to track both the RTS message and the message data to be sent with it; the RTS descriptor indicates to the destination compute node that the message data will be adaptively routed to it. The source compute node keeps the RTS descriptors for rendezvous messages bound for the same destination in one DMA FIFO to ensure that the message data arrives there in the proper order. Using a reception counter in its DMA engine, the destination compute node tracks reception of the RTS and the associated message data and sends a clear to send (CTS) message to the source node in the form of a rendezvous-protocol remote get that accepts the RTS message and message data; the source compute node's DMA engine processes this remote get (CTS) to supply the message data to be sent.

Owner: IBM CORP

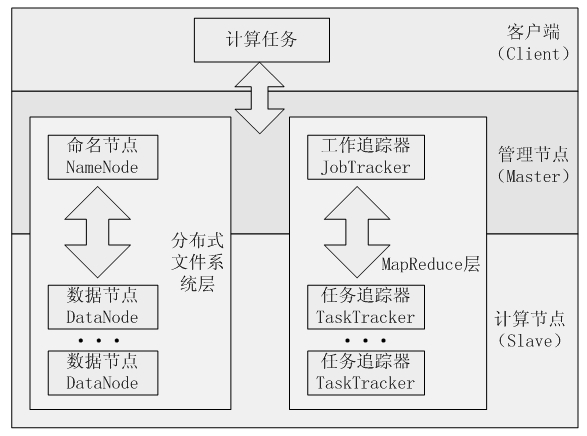

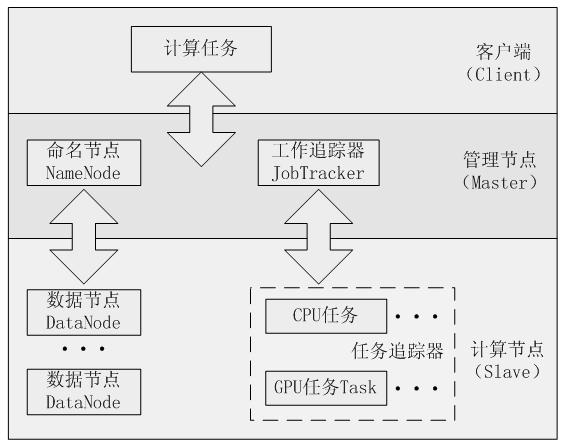

MapReduce-based multi-GPU (Graphics Processing Unit) cooperative computing method

Inactive | CN102662639A | Reduce communication; Improve general performance; Concurrent instruction execution; Computational science; Concurrent computation

The invention discloses a MapReduce-based multi-GPU (Graphics Processing Unit) cooperative computing method in the field of computer software applications. Whereas common high-performance GPU computing and MapReduce parallel computing each use a single-layer parallel architecture, this programming model adopts a two-layer GPU and MapReduce parallel architecture. By combining the MapReduce programming model used for concurrent computation in cloud computing with the structural characteristics of heterogeneous GPU+CPU (Central Processing Unit) systems, it helps developers simplify the programming model and reuse existing concurrent code, which reduces programming complexity, provides a degree of disaster tolerance, and reduces dependence on specific equipment. The combined GPU+MapReduce two-level concurrency mode can be used on a cloud computing platform or an ordinary distributed computing system to process MapReduce tasks concurrently on multiple GPU cards.

Owner: NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

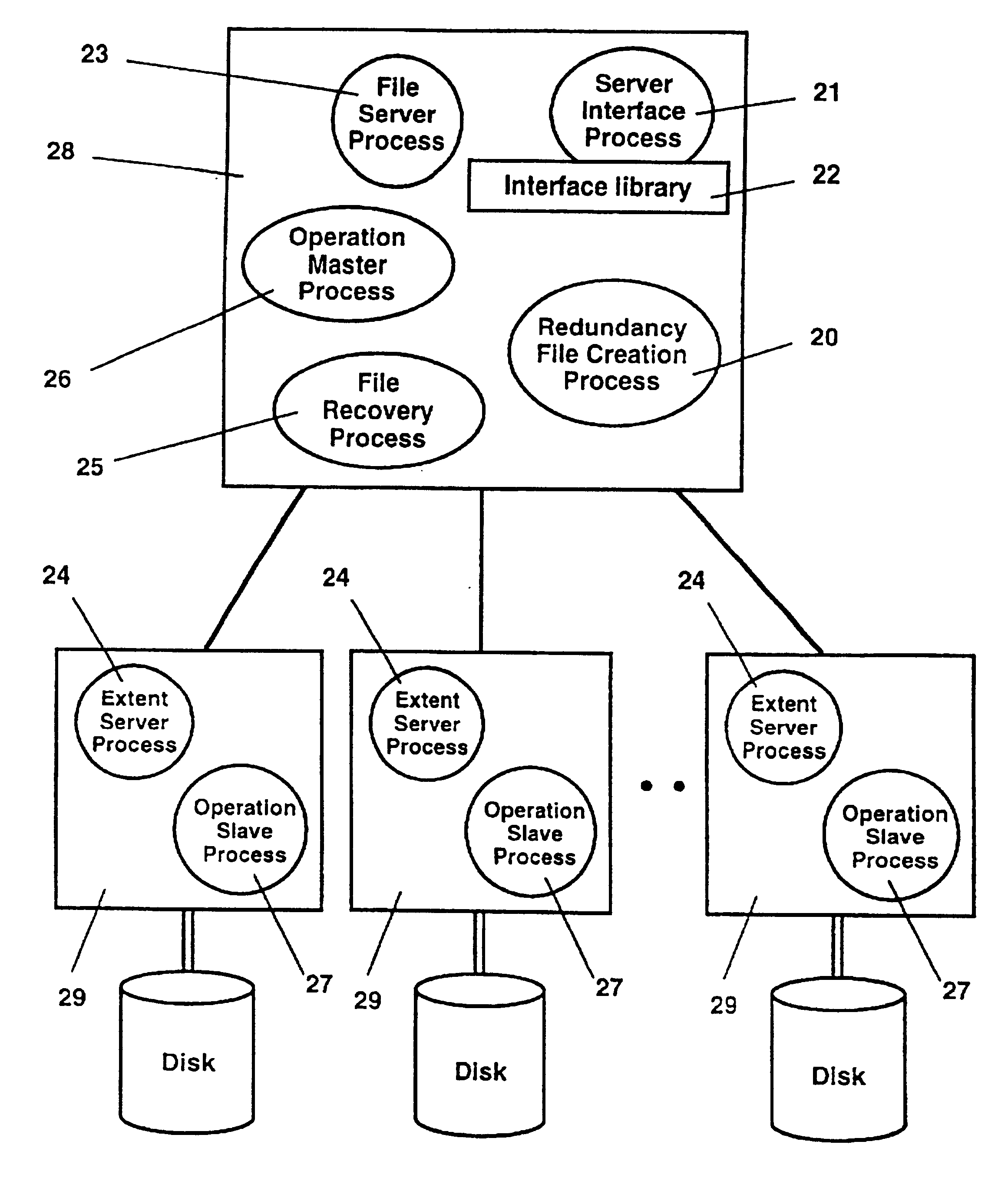

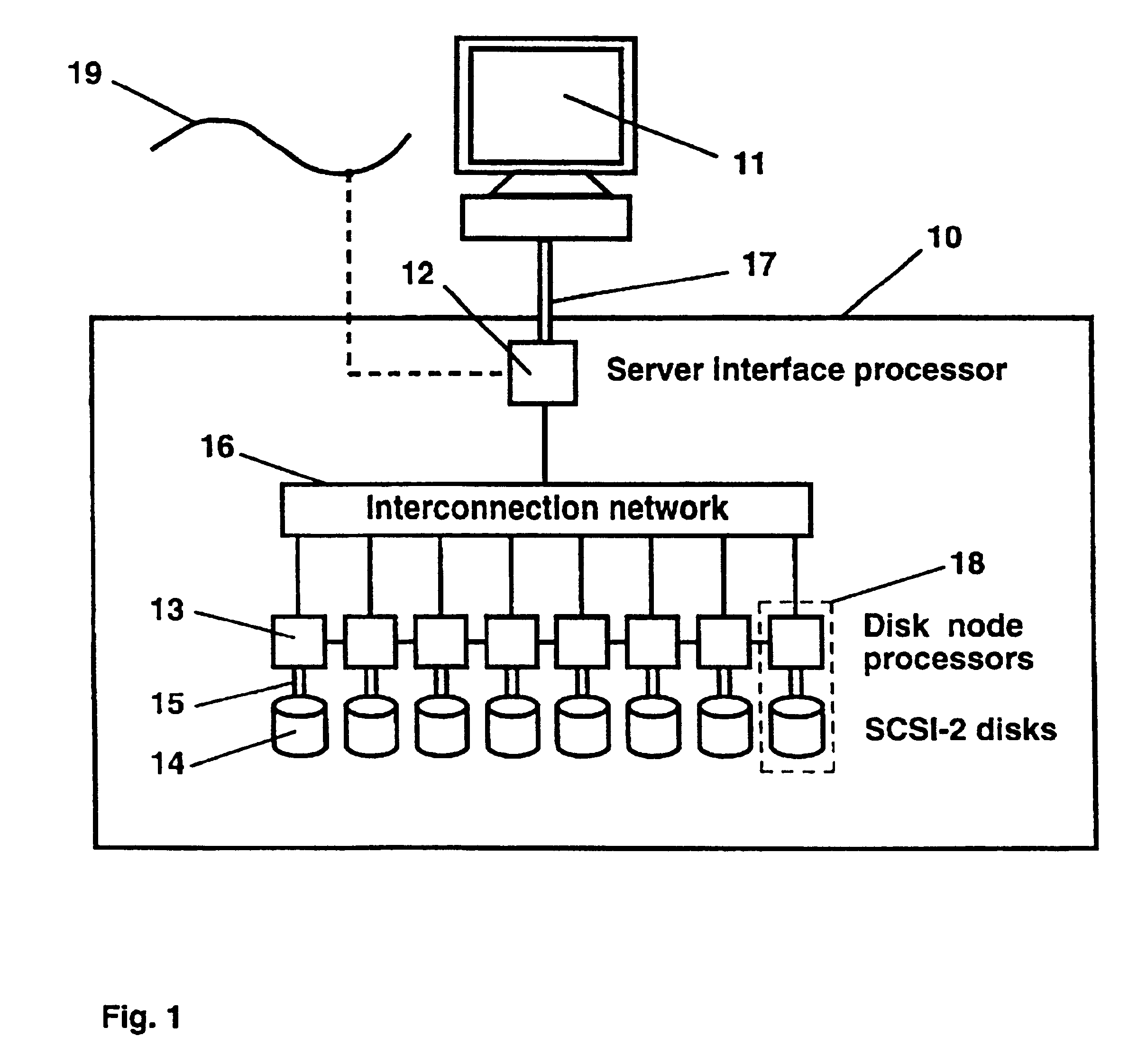

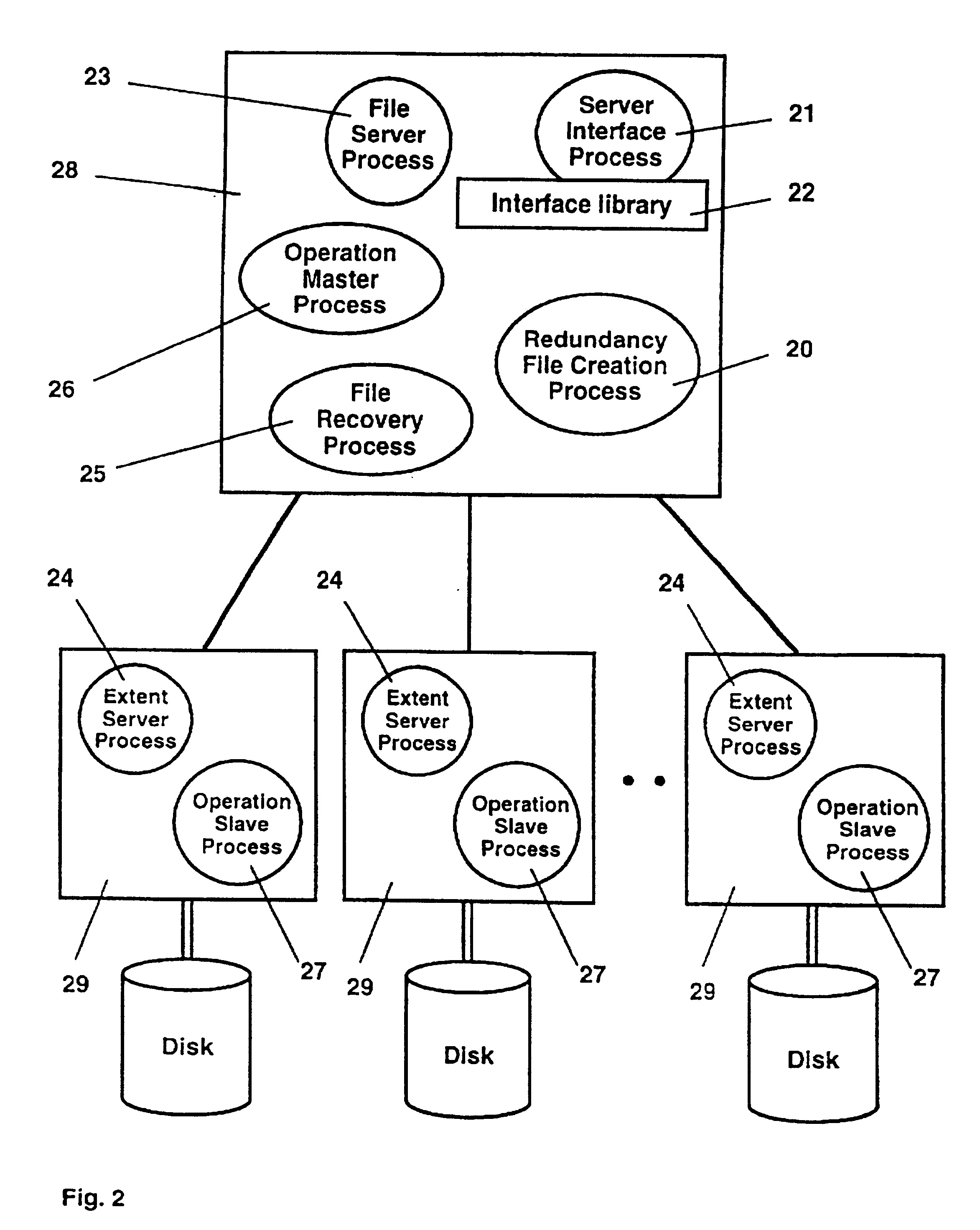

Method and apparatus for a parallel data storage and processing server

Inactive | USRE38410E1 | Lower latency; Improve throughput; Image memory management; Multiple digital computer combinations; Concurrent computation; Computer architecture

The present invention concerns a parallel multiprocessor-multidisk storage server that offers low delays and high throughput when accessing and processing one-dimensional and multi-dimensional file data such as pixmap images, text, sound, or graphics. The parallel multiprocessor-multidisk storage server may offer its services to a computer, to client stations residing on a network, or to a parallel host system to which it is connected. The parallel storage server comprises (a) a server interface processor interfacing the storage system with a host computer, a network, or a parallel computing system; (b) an array of disk nodes, each composed of one processor electrically connected to at least one disk; and (c) an interconnection network connecting the server interface processor with the array of disk nodes. Multi-dimensional data files such as 3-d images (for example, tomographic images) and 2-d images (for example, scanned aerial photographs) are segmented into 3-d and 2-d file extents respectively, and the extents are striped onto different disks. One-dimensional files are segmented into 1-d file extents. File extents of a given file may have a fixed or a variable size. The storage server is based on a parallel image and multiple media file storage system. This file storage system includes a file server process, which receives file creation, opening, closing, and deletion commands from the high-level storage server process. It further includes extent serving processes running on the disk node processors, which receive commands from the file server process to update directory entries and open existing files, and commands from the storage interface server process to read data from or write data into a file.
It also includes operation processes responsible for applying geometric transformations and image processing operations in parallel to data read from the disks, and a redundancy file creation process responsible for creating redundant parity extent files for selected data files.

Owner: AXS TECH
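
Striping 2-d file extents across disks, as in the parallel storage server entry, can be illustrated by mapping pixel windows to tiles and tiles to disk nodes. The tile size and the diagonal placement rule `(ey + ex) % n_disks` are assumptions for this sketch (chosen so that horizontally or vertically adjacent extents land on different disks); the abstract does not specify a placement function.

```python
from collections import defaultdict


def extent_of(y: int, x: int, tile_h: int, tile_w: int) -> tuple[int, int]:
    """Index (extent row, extent column) of the fixed-size 2-d extent holding pixel (y, x)."""
    return y // tile_h, x // tile_w


def disk_of(ey: int, ex: int, n_disks: int) -> int:
    # Assumed diagonal placement: adjacent extents in either direction use different disks.
    return (ey + ex) % n_disks


def plan_read(y0, x0, y1, x1, tile_h, tile_w, n_disks) -> dict[int, list[tuple[int, int]]]:
    """Group the extents overlapping the pixel window [y0, y1) x [x0, x1) by disk,
    so that each disk node can serve its share of the request in parallel."""
    ey0, ex0 = extent_of(y0, x0, tile_h, tile_w)
    ey1, ex1 = extent_of(y1 - 1, x1 - 1, tile_h, tile_w)
    plan = defaultdict(list)
    for ey in range(ey0, ey1 + 1):
        for ex in range(ex0, ex1 + 1):
            plan[disk_of(ey, ex, n_disks)].append((ey, ex))
    return dict(plan)
```

A request for a rectangular region of an aerial photograph then fans out to several disk nodes at once, which is where the low delay and high throughput come from.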

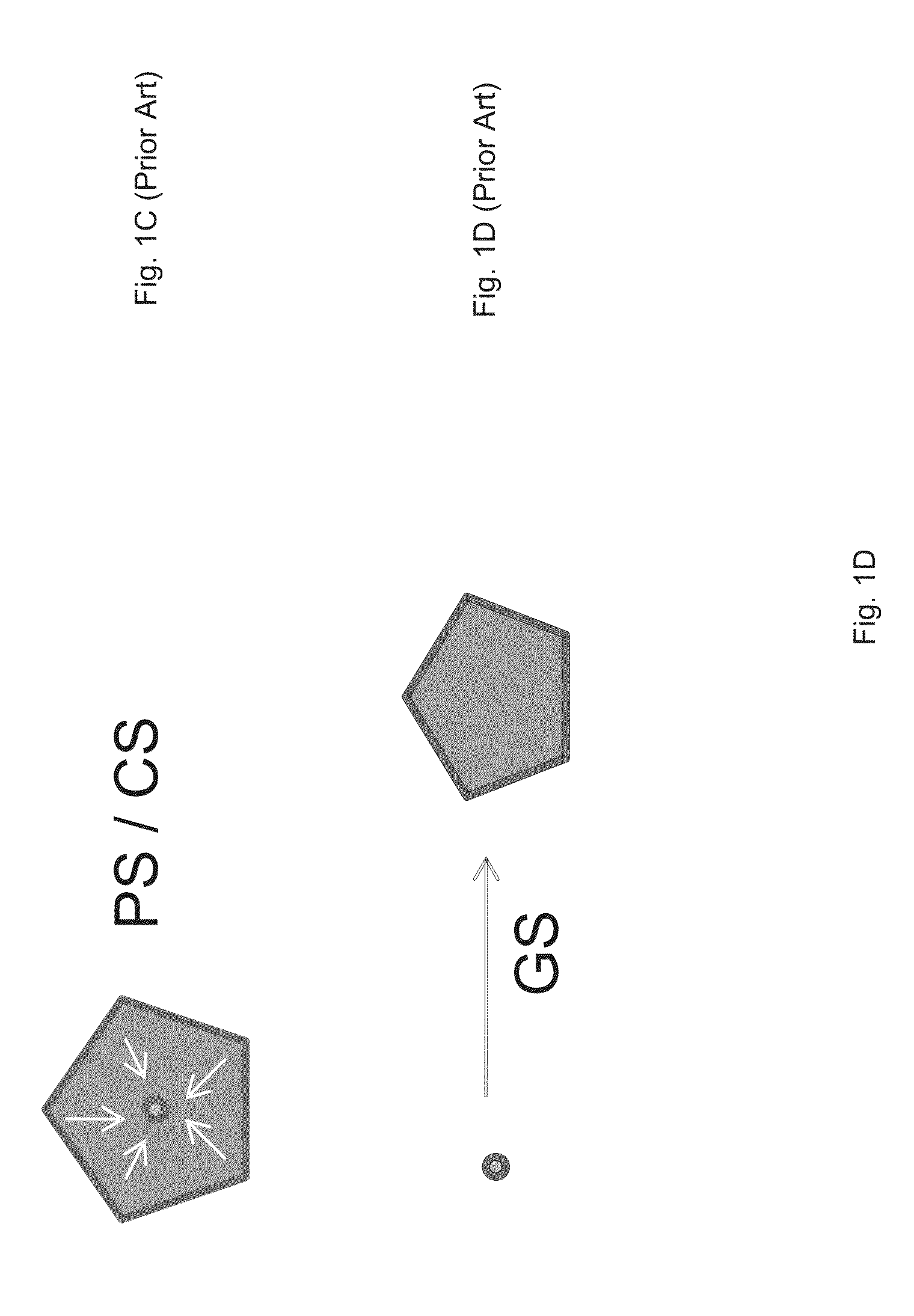

Method and system for rendering simulated depth-of-field visual effect

Active | US20140368494A1 | Quality improvement; High-fidelity depth-of-field visual effect; Image enhancement; Image analysis; Pattern recognition; Fast Fourier transform

Systems and methods for rendering depth-of-field visual effect on images with high computing efficiency and performance. A diffusion blurring process and a Fast Fourier Transform (FFT)-based convolution are combined to achieve high-fidelity depth-of-field visual effect with Bokeh spots in real-time applications. The brightest regions in the background of an original image are enhanced with Bokeh effect by virtue of FFT convolution with a convolution kernel. A diffusion solver can be used to blur the background of the original image. By blending the Bokeh spots with the image with gradually blurred background, a resultant image can present an enhanced depth-of-field visual effect. The FFT-based convolution can be computed with multi-threaded parallelism.

Owner: NVIDIA CORP
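
FFT-based convolution, the core of the Bokeh step in the depth-of-field entry, works by multiplying spectra instead of sliding the kernel. The sketch below is a 1-D, pure-Python illustration under stated assumptions: a textbook radix-2 FFT, a simple brightness threshold to pick "brightest regions", and a thread pool over independent rows as a stand-in for multi-threaded parallelism. It is not NVIDIA's implementation, and a real renderer would use a 2-D FFT and a disk-shaped kernel.

```python
import cmath
from concurrent.futures import ThreadPoolExecutor


def fft(a, invert=False):
    """Recursive radix-2 Cooley-Tukey FFT; len(a) must be a power of two.
    With invert=True it returns the unnormalized inverse transform."""
    n = len(a)
    if n == 1:
        return list(a)
    even, odd = fft(a[0::2], invert), fft(a[1::2], invert)
    sign = 1 if invert else -1
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k], out[k + n // 2] = even[k] + t, even[k] - t
    return out


def fft_convolve(signal, kernel):
    """Full linear convolution: zero-pad, multiply spectra, inverse FFT, rescale by 1/n."""
    m = len(signal) + len(kernel) - 1
    n = 1
    while n < m:
        n *= 2
    fa = fft([complex(v) for v in signal] + [0j] * (n - len(signal)))
    fb = fft([complex(v) for v in kernel] + [0j] * (n - len(kernel)))
    spectrum = [x * y for x, y in zip(fa, fb)]
    return [(v / n).real for v in fft(spectrum, invert=True)[:m]]


def bokeh_row(row, threshold, kernel):
    """Spread only pixels >= threshold with the kernel; output has the row's length.
    Assumes an odd-length kernel so the result stays centred."""
    bright = [v if v >= threshold else 0.0 for v in row]
    half = len(kernel) // 2
    return fft_convolve(bright, kernel)[half:half + len(row)]


def bokeh_rows(image, threshold, kernel, workers=4):
    # Rows are independent, so they can be convolved on separate threads.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda r: bokeh_row(r, threshold, kernel), image))
```

The payoff is asymptotic: direct convolution with a large Bokeh kernel costs O(N·K) per row, while the FFT route costs O(N log N) regardless of kernel size.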

Optimized collectives using a DMA on a parallel computer

Inactive | US20090006662A1 | Easy to operate; Electric digital data processing; Direct memory access; Concurrent computation

Optimizing collective operations using direct memory access controller on a parallel computer, in one aspect, may comprise establishing a byte counter associated with a direct memory access controller for each submessage in a message. The byte counter includes at least a base address of memory and a byte count associated with a submessage. A byte counter associated with a submessage is monitored to determine whether at least a block of data of the submessage has been received. The block of data has a predetermined size, for example, a number of bytes. The block is processed when the block has been fully received, for example, when the byte count indicates all bytes of the block have been received. The monitoring and processing may continue for all blocks in all submessages in the message.

Owner: IBM CORP
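
The block-by-block processing driven by a DMA byte counter in the optimized-collectives entry can be simulated in a few lines. This sketch assumes that the bytes of one submessage are deposited in order, so that a byte count alone tells the receiver which blocks are complete; `ByteCounter`, `SubmessageReceiver`, and `dma_deposit` are hypothetical names for a software model, not a DMA controller API.

```python
from dataclasses import dataclass, field


@dataclass
class ByteCounter:
    base_address: int   # where the submessage lands in memory
    expected: int       # total bytes in the submessage
    received: int = 0   # bytes the DMA engine has deposited so far


@dataclass
class SubmessageReceiver:
    counter: ByteCounter
    block_size: int
    buffer: bytearray = field(init=False)
    processed_blocks: int = 0

    def __post_init__(self) -> None:
        self.buffer = bytearray(self.counter.expected)

    def dma_deposit(self, data: bytes) -> None:
        # Simulated DMA engine; assumes bytes of one submessage arrive in order.
        off = self.counter.received
        self.buffer[off:off + len(data)] = data
        self.counter.received += len(data)

    def poll(self, process) -> None:
        """Process every block the byte counter shows as fully received."""
        n_blocks = -(-self.counter.expected // self.block_size)  # ceiling division
        while self.processed_blocks < n_blocks:
            start = self.processed_blocks * self.block_size
            end = min(start + self.block_size, self.counter.expected)
            if self.counter.received < end:
                return                                           # block still incomplete
            process(self.counter.base_address + start, bytes(self.buffer[start:end]))
            self.processed_blocks += 1
```

Processing each block as soon as its bytes are counted lets the reduction or forwarding work of a collective overlap with the rest of the transfer, instead of waiting for the whole message.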

Aircraft actuator fault detection and diagnosis method based on deep random forest algorithm

Active | CN108594788A | Accurately describe input and output characteristics; Quick check; Electric testing/monitoring; Data set; Feature extraction

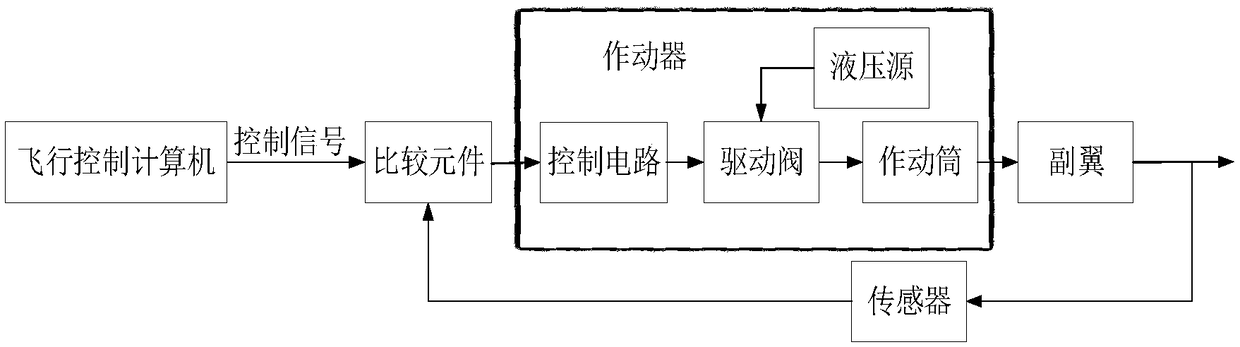

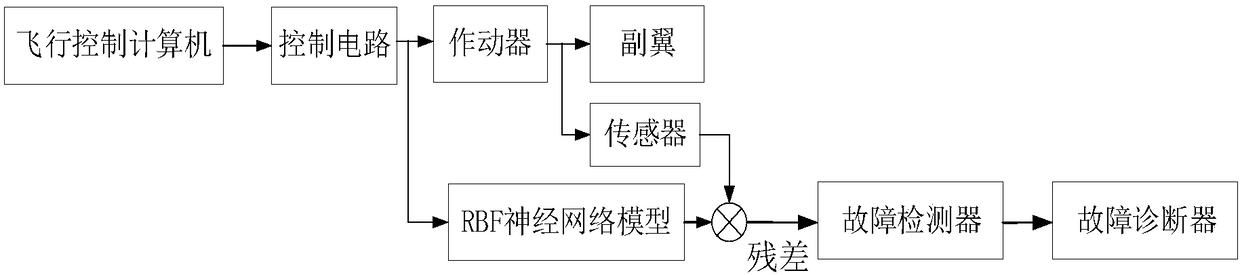

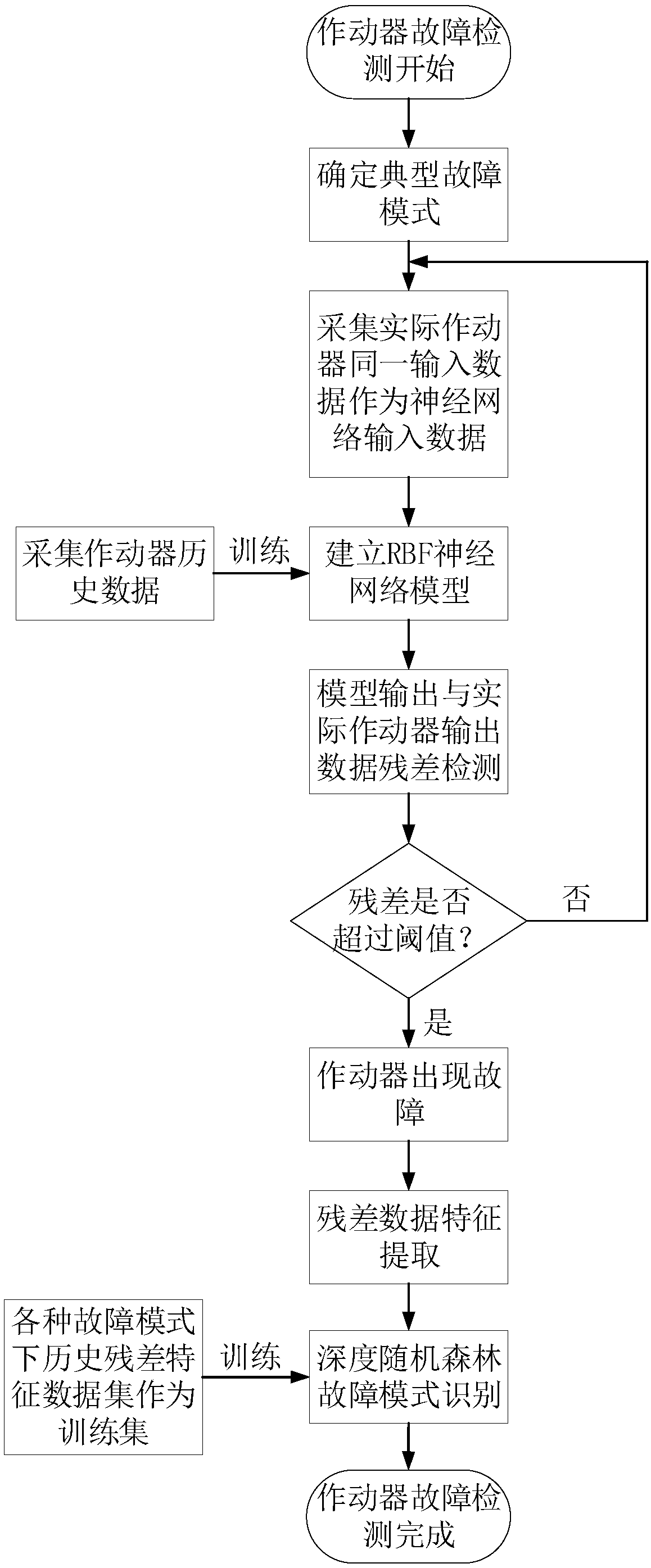

The invention discloses an aircraft actuator fault detection and diagnosis method based on a deep random forest algorithm. The method includes: first, summarizing the fault modes of the aircraft actuator; establishing an RBF neural network, collecting the input and output data of the aircraft actuator under normal working conditions as training data, and training the parameters of the neural network model to obtain analytical redundancy for the monitored actuator; then collecting the outputs of the actual actuator and of the neural network model, analyzing the residual between the output signals, and, after feature extraction, inputting the feature data set into a trained deep random forest multi-classifier for fault mode recognition. The neural network can accurately model the complex nonlinear input-output relationship of the aircraft actuator, and the deep random forest strong classifier accurately recognizes the fault mode. The method also supports parallel computation and runs fast, so it can be integrated into an aircraft's flight management computer for online real-time monitoring, improving the accuracy and efficiency of aircraft actuator fault diagnosis.

Owner: NORTHWESTERN POLYTECHNICAL UNIV

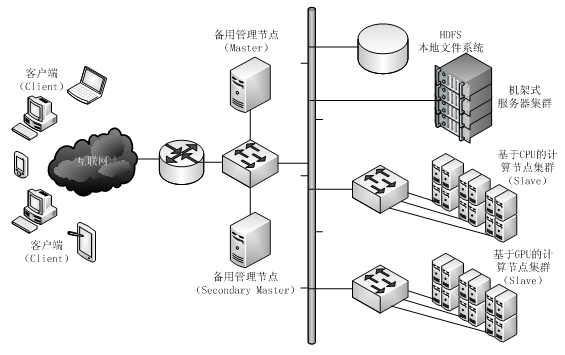

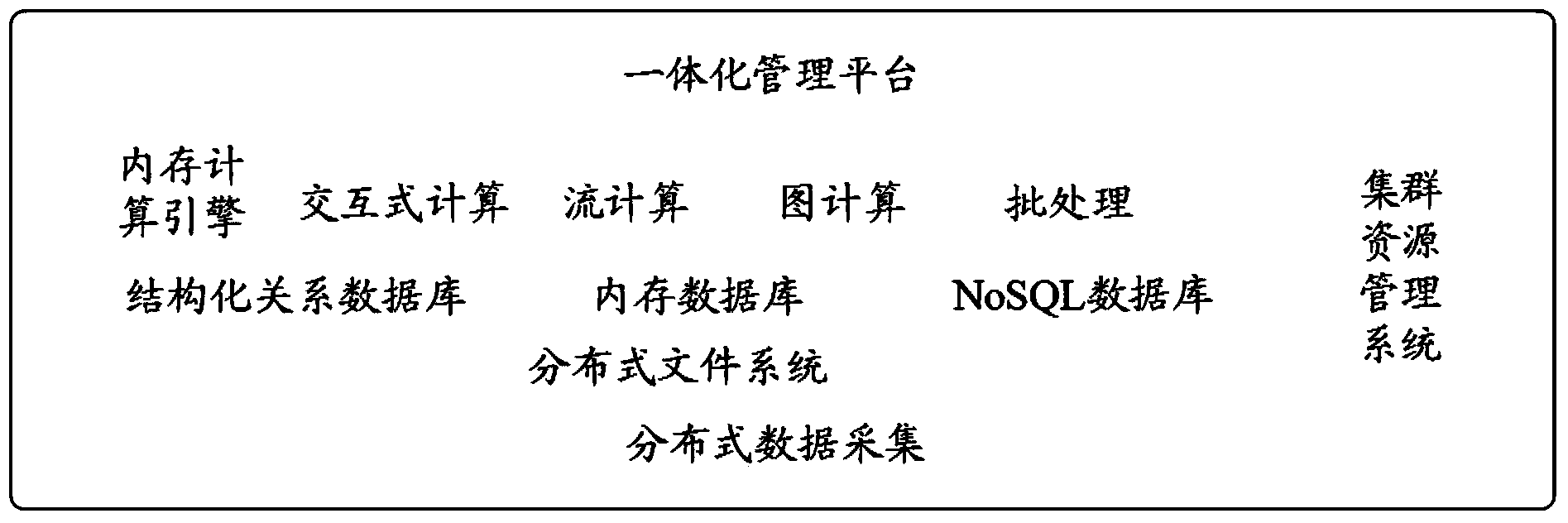

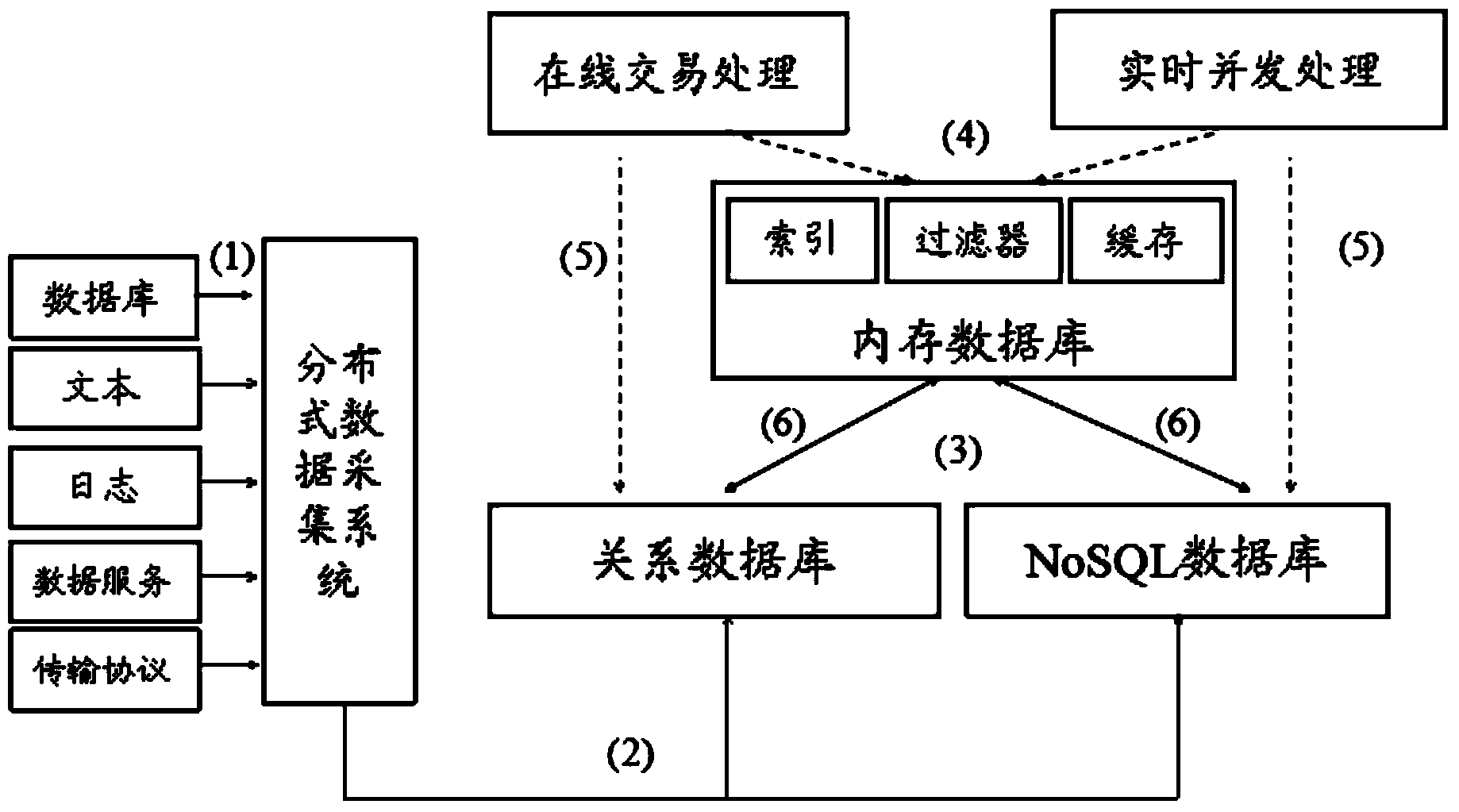

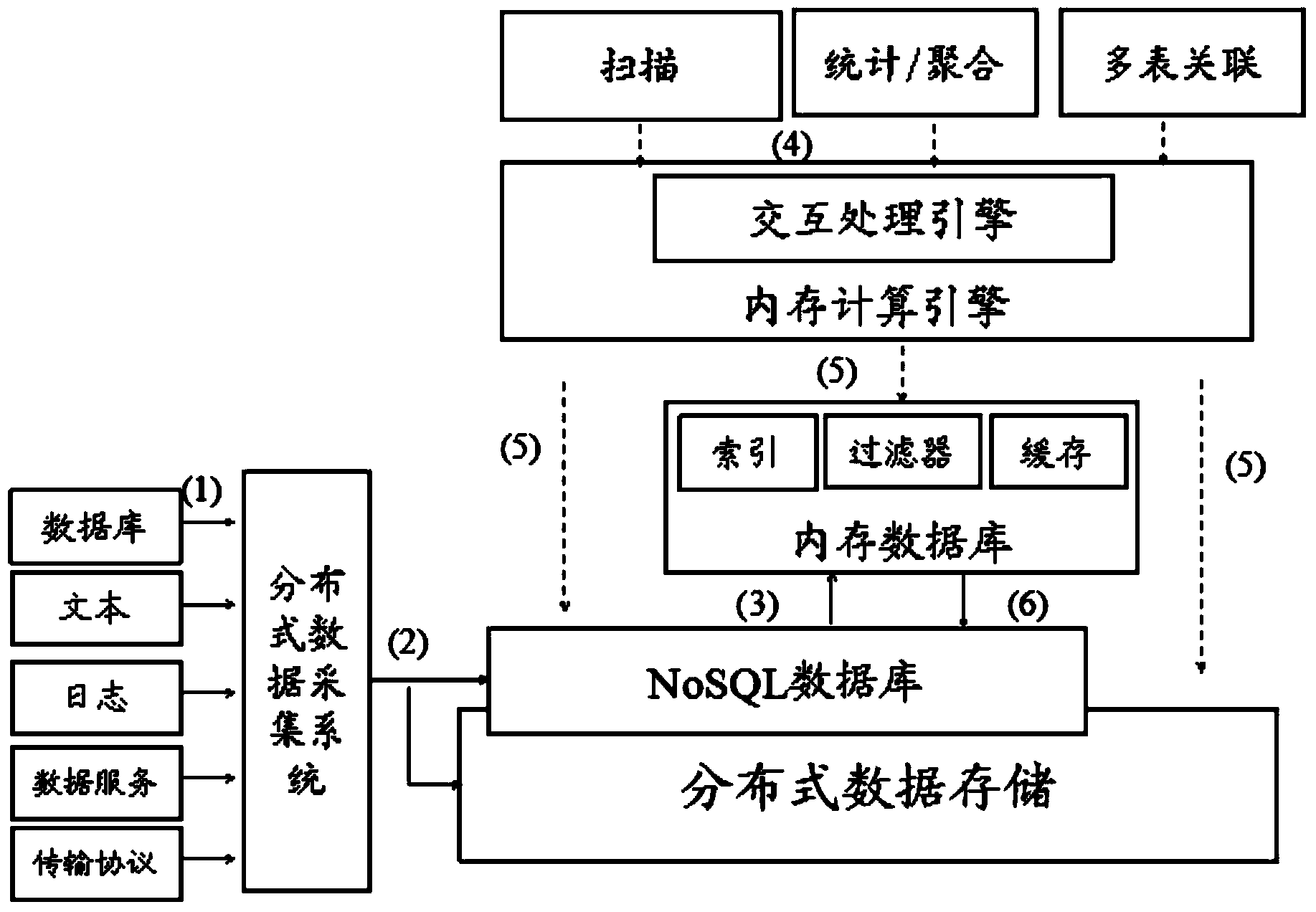

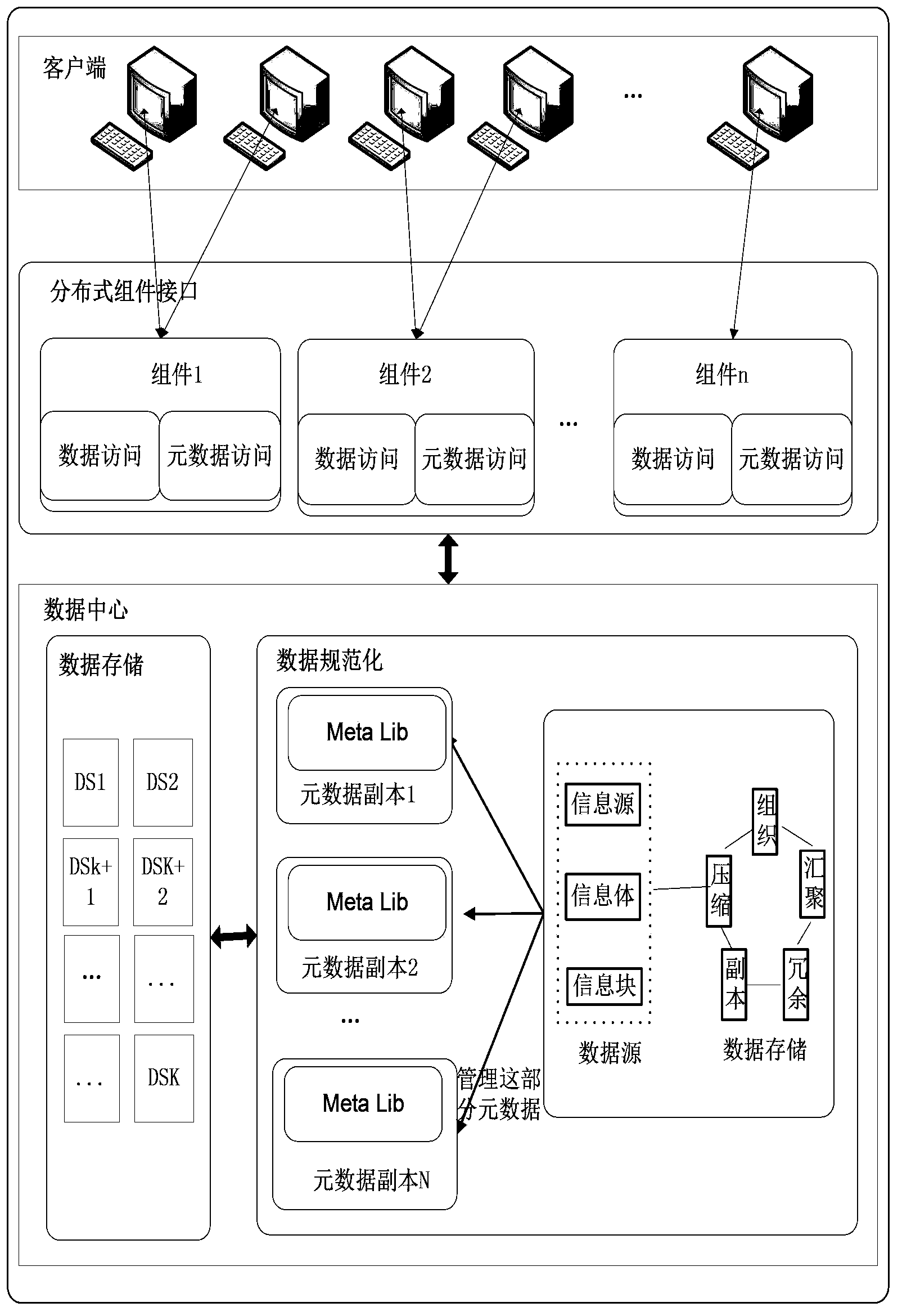

Hybrid processing system and method for diverse industrial big data applications

Inactive | CN104021194A | Satisfy handling; Meet interaction analysis; Other databases retrieval; Special data processing applications; Hybrid type; Data diversity

The invention discloses a hybrid processing system and method for diverse industrial big data applications. The system comprises a distributed data collection subsystem that collects data from external systems, a storage and parallel computing subsystem that stores and computes on the collected data, and an integrated resource and system management platform that manages the stored and computed data. The storage and parallel computing subsystem comprises a big data storage subsystem and a big data processing subsystem; the latter includes an in-memory computing engine that provides a distributed in-memory abstraction on a shared-nothing cluster and performs parallel, pipelined, lightweight-thread processing on the collected data. The system and method can meet the requirements of diverse industrial big data service applications; acceleration by the in-memory computing engine can improve big data processing performance by a factor of ten or more, and the integrated management platform ensures the usability, reliability, and scalability of the system.

Owner: INSPUR BEIJING ELECTRONICS INFORMATION IND

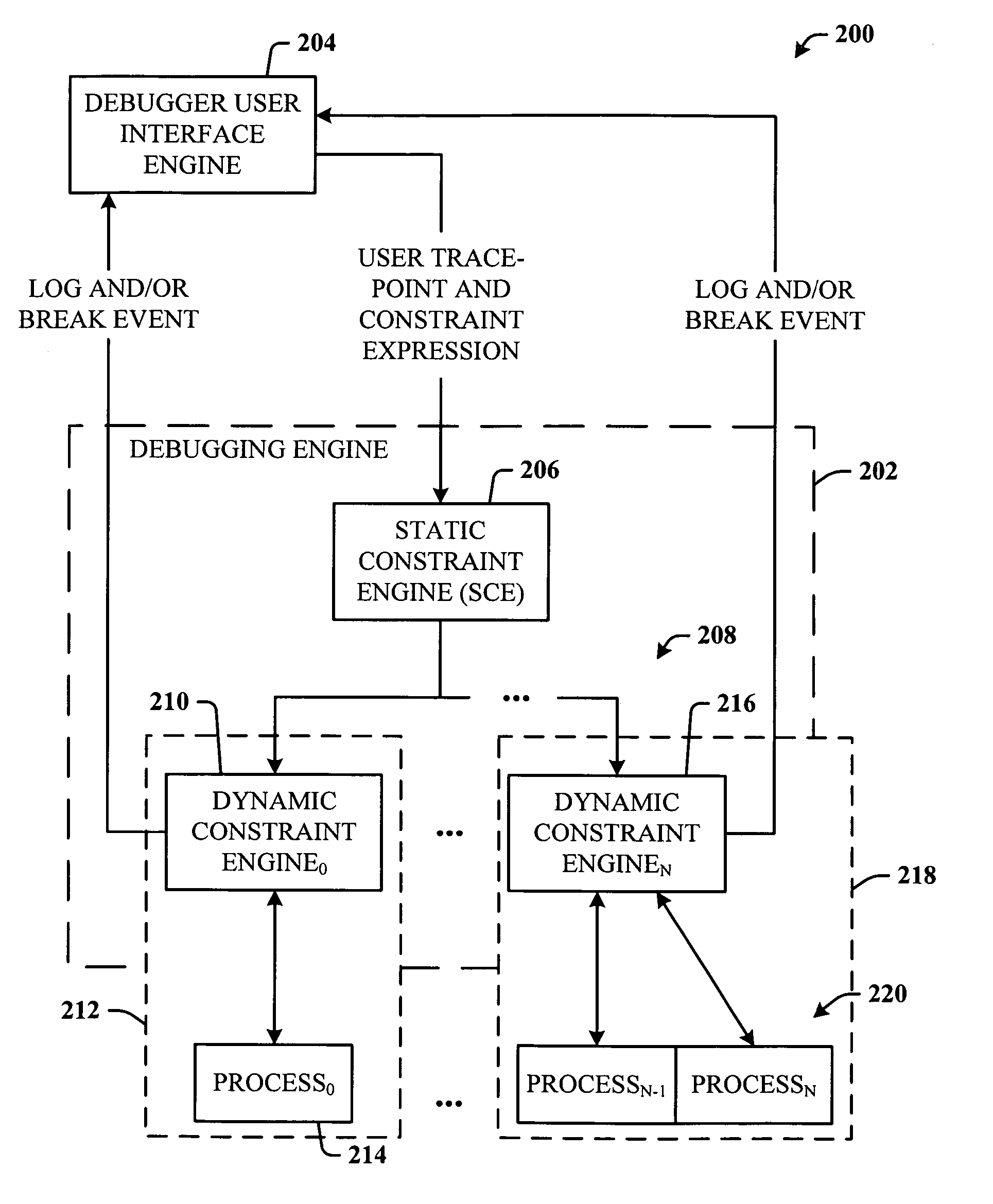

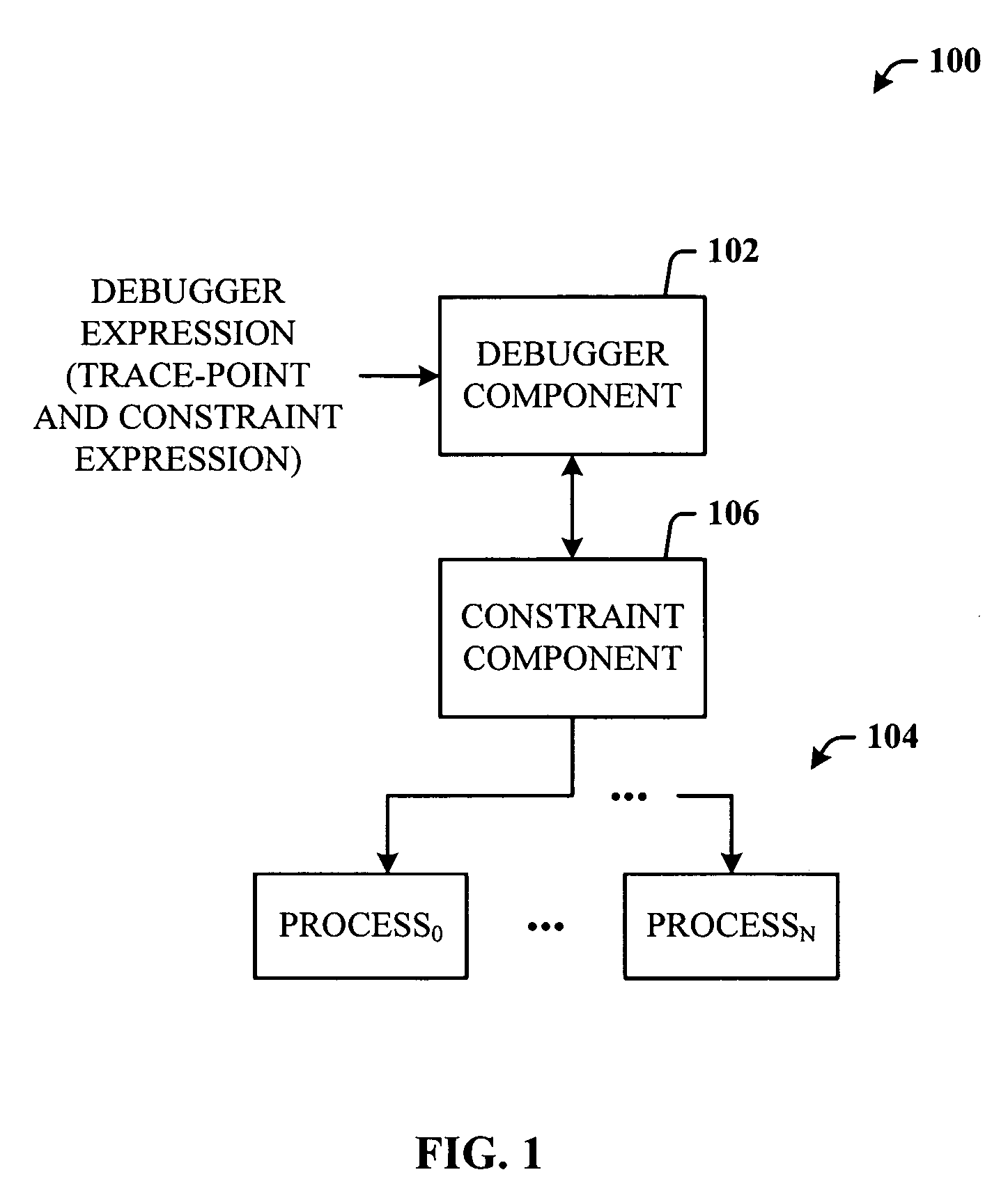

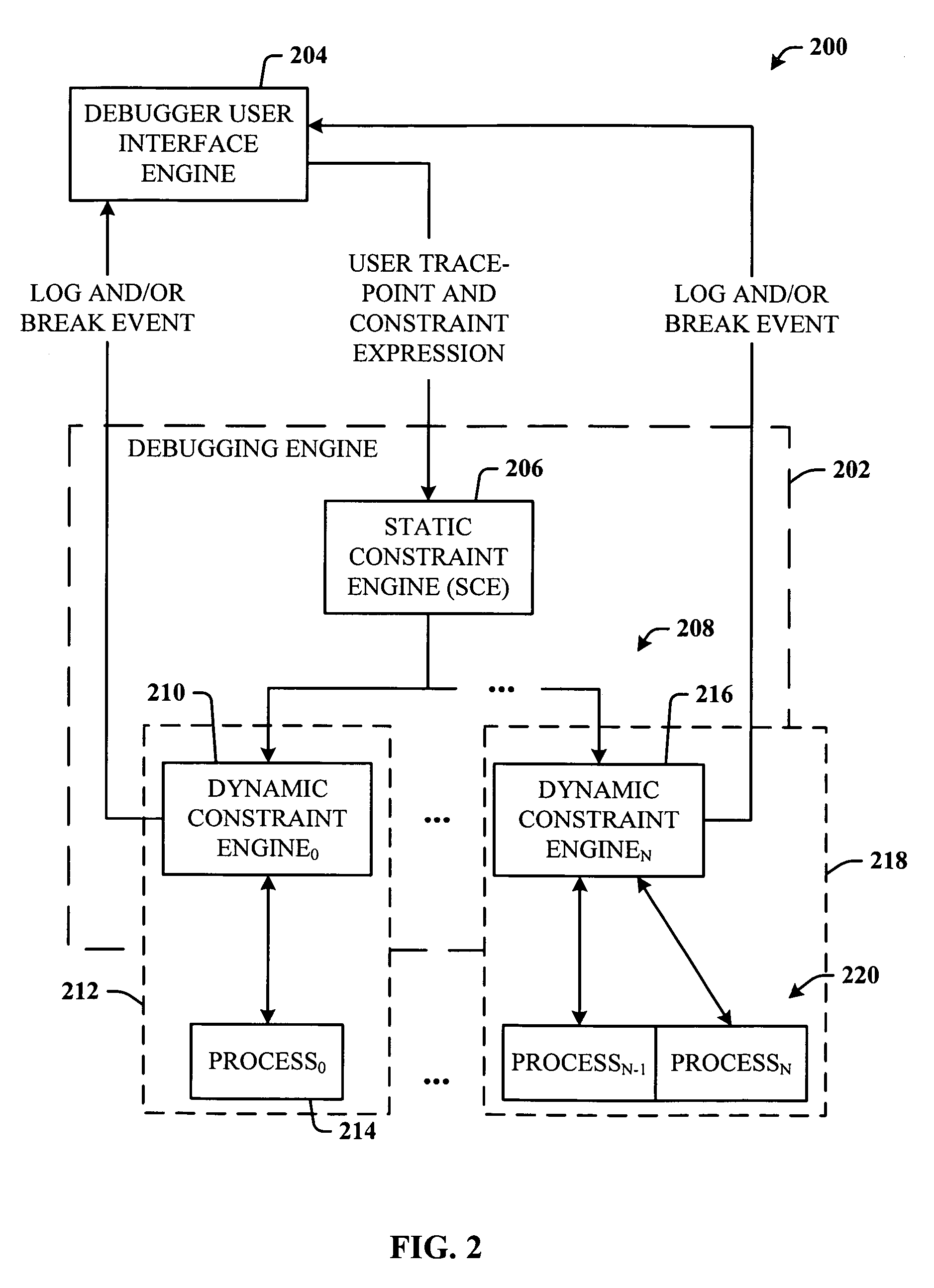

Breakpoint logging and constraint mechanisms for parallel computing systems

Inactive | US20060101405A1 | Error detection/correction; Specific program execution arrangements; Concurrent computation; Event data

A system that facilitates debugging of a computing cluster and/or distributed applications environment. A debugger component receives a debugging expression, and a constraint component, which includes both a static constraint engine (SCE) and a dynamic constraint engine (DCE), processes the debugging expression to automatically perform a debugging process on at least two of a plurality of processes. When the user creates a tracepoint or constraint breakpoint, the expression is sent directly to the SCE, which parses the constraint and tracepoint expressions, reduces the expression by evaluating the parts that depend on static values (such as process ID or filename), and passes the remainder on to each of the applicable DCEs. The DCEs register a breakpoint at the applicable location in the process and, upon receiving a breakpoint event, evaluate the remainder of the constraint expression on the dynamic data and send log and/or break event data back to the user for viewing.

Owner: MICROSOFT TECH LICENSING LLC
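
The static/dynamic split in the breakpoint-constraint entry is essentially partial evaluation: terms that depend only on static facts are resolved once per process, and only the residue travels to each process. The sketch assumes a hypothetical expression format (a conjunction of `variable op value` terms) and a hypothetical set of static variable names; it does not reflect the patent's actual expression language.

```python
import operator
from dataclasses import dataclass

OPS = {"==": operator.eq, "!=": operator.ne, "<": operator.lt, ">": operator.gt}
STATIC_VARS = {"process_id", "filename"}  # assumed set of statically known values


@dataclass(frozen=True)
class Term:
    var: str
    op: str
    value: object

    def holds(self, env: dict) -> bool:
        return OPS[self.op](env[self.var], self.value)


def sce_reduce(terms: list[Term], processes: list[dict]) -> dict[int, list[Term]]:
    """Static engine: evaluate static terms per process; for every process that can
    still match, return the residual dynamic terms to ship to its dynamic engine."""
    static = [t for t in terms if t.var in STATIC_VARS]
    dynamic = [t for t in terms if t.var not in STATIC_VARS]
    return {p["process_id"]: dynamic for p in processes if all(t.holds(p) for t in static)}


def dce_on_breakpoint(residual: list[Term], runtime_values: dict) -> bool:
    """Dynamic engine: on a breakpoint hit, decide whether to log/break using runtime data."""
    return all(t.holds(runtime_values) for t in residual)
```

Processes whose static facts already rule out a match never receive a breakpoint at all, which keeps the per-hit cost on the remaining processes to the dynamic residue only.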

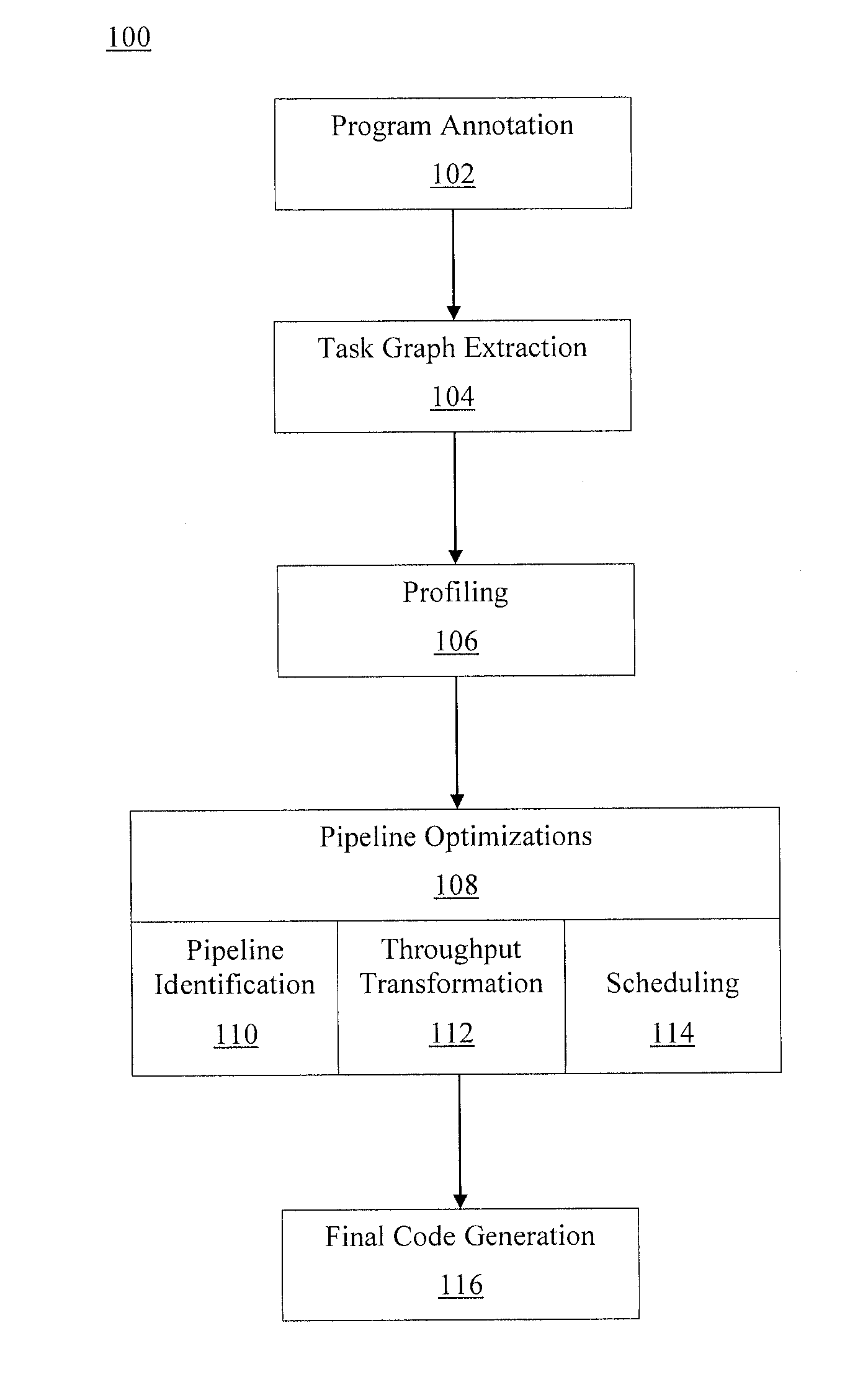

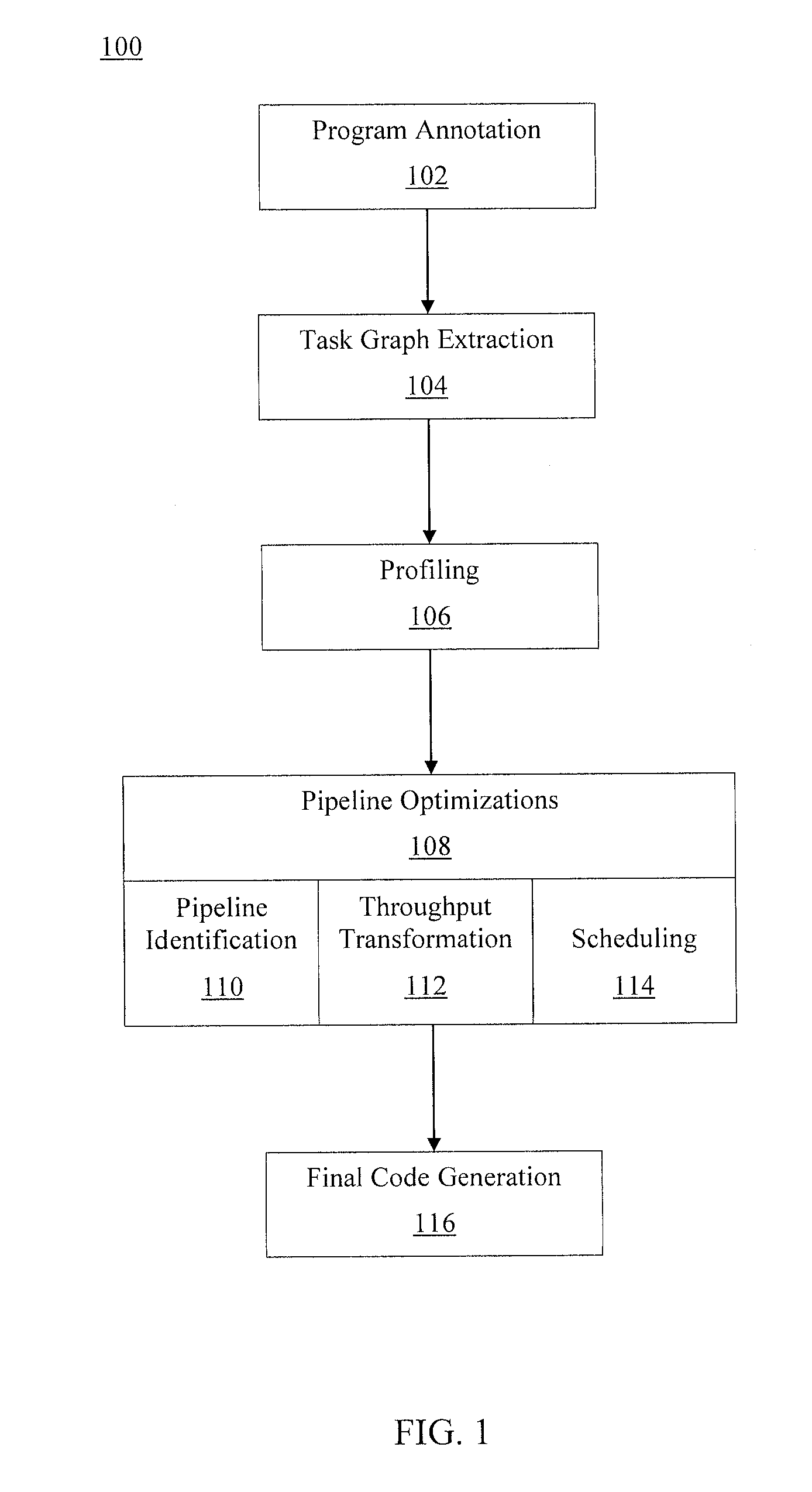

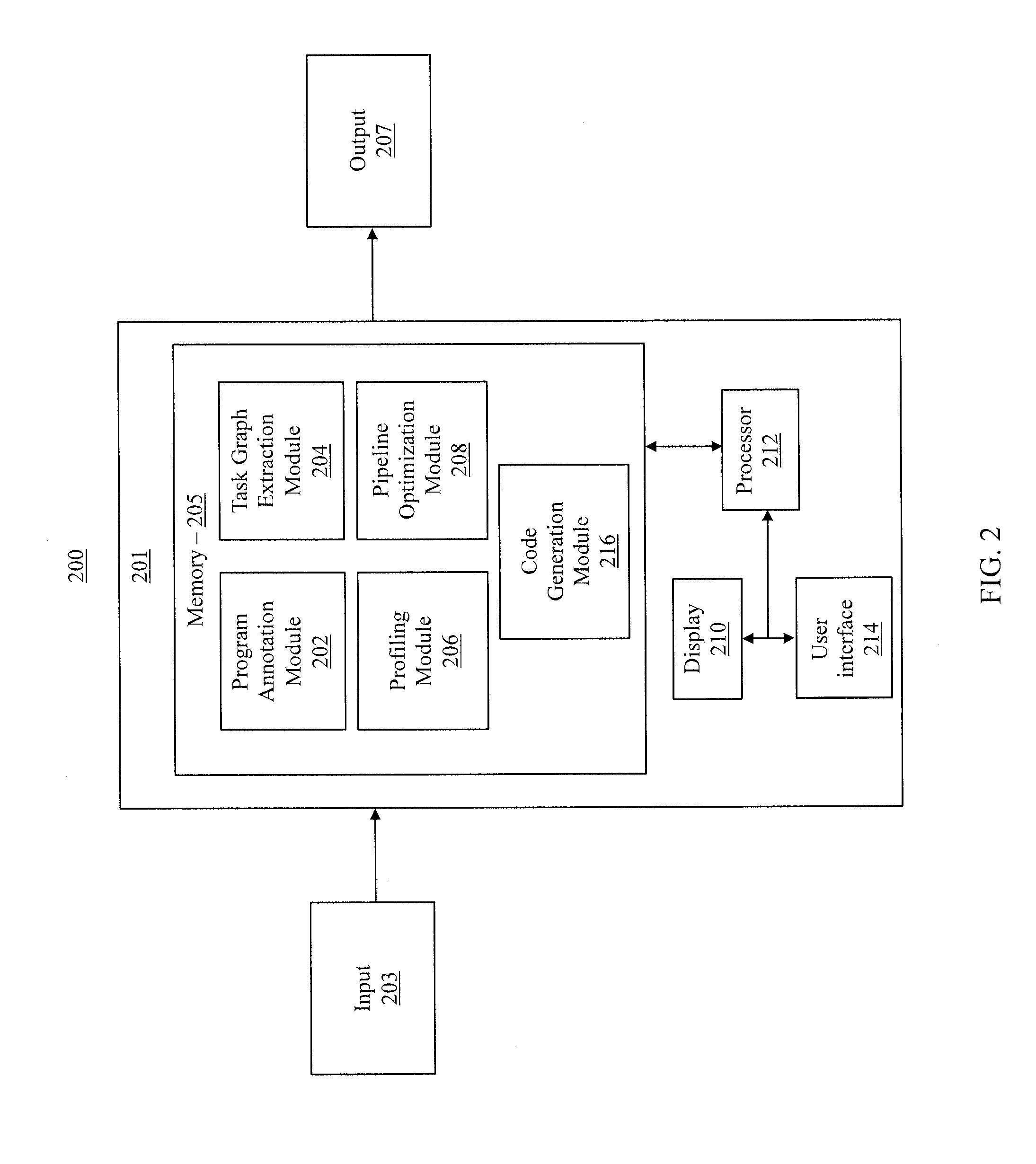

Automatic pipelining framework for heterogeneous parallel computing systems

Active | US20130298130A1 | Program initiation/switching; Software engineering; Computer architecture; Concurrent computation

Systems and methods for automatic generation of software pipelines for heterogeneous parallel systems (AHP) include pipelining a program with one or more tasks on a parallel computing platform with one or more processing units and partitioning the program into pipeline stages, wherein each pipeline stage contains one or more tasks. The one or more tasks in the pipeline stages are scheduled onto the one or more processing units, and execution times of the one or more tasks in the pipeline stages are estimated. The above steps are repeated until a specified termination criterion is reached.

Owner: NEC CORP
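
The partition, schedule, estimate, and repeat loop in the automatic pipelining entry can be illustrated with a deliberately simple heuristic. Under these assumptions (tasks run in a fixed order, each processing unit hosts exactly one contiguous stage, a stage's estimated time is its total task cost divided by the unit's relative speed), the sketch nudges stage boundaries while the bottleneck stage improves and stops at convergence or an iteration cap. This is a hypothetical local search, not NEC's AHP framework.

```python
def stage_times(tasks, bounds, speeds):
    """Estimated time per stage: total task cost in the stage / speed of its unit."""
    times, start = [], 0
    for stage, end in enumerate(bounds + [len(tasks)]):
        times.append(sum(tasks[start:end]) / speeds[stage])
        start = end
    return times


def partition_pipeline(tasks, speeds, max_iters=100):
    """Split an ordered task list into len(speeds) contiguous stages (one per unit)
    and nudge stage boundaries while the slowest stage keeps getting faster."""
    k = len(speeds)
    if not 1 <= k <= len(tasks):
        raise ValueError("need between 1 and len(tasks) stages")
    bounds = [i * len(tasks) // k for i in range(1, k)]   # initial even split
    best = max(stage_times(tasks, bounds, speeds))
    for _ in range(max_iters):                             # hard iteration cap
        improved = False
        for b in range(len(bounds)):
            for delta in (-1, 1):
                cand = bounds.copy()
                cand[b] += delta
                lo = cand[b - 1] if b > 0 else 0
                hi = cand[b + 1] if b + 1 < len(cand) else len(tasks)
                if lo < cand[b] < hi:                      # every stage stays non-empty
                    t = max(stage_times(tasks, cand, speeds))
                    if t < best:
                        bounds, best, improved = cand, t, True
        if not improved:                                   # converged: no better move
            break
    return bounds, best
```

Minimizing the slowest stage is the natural objective because a pipeline's steady-state throughput is set by its bottleneck; the returned `bounds` are the indices where each new stage begins.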

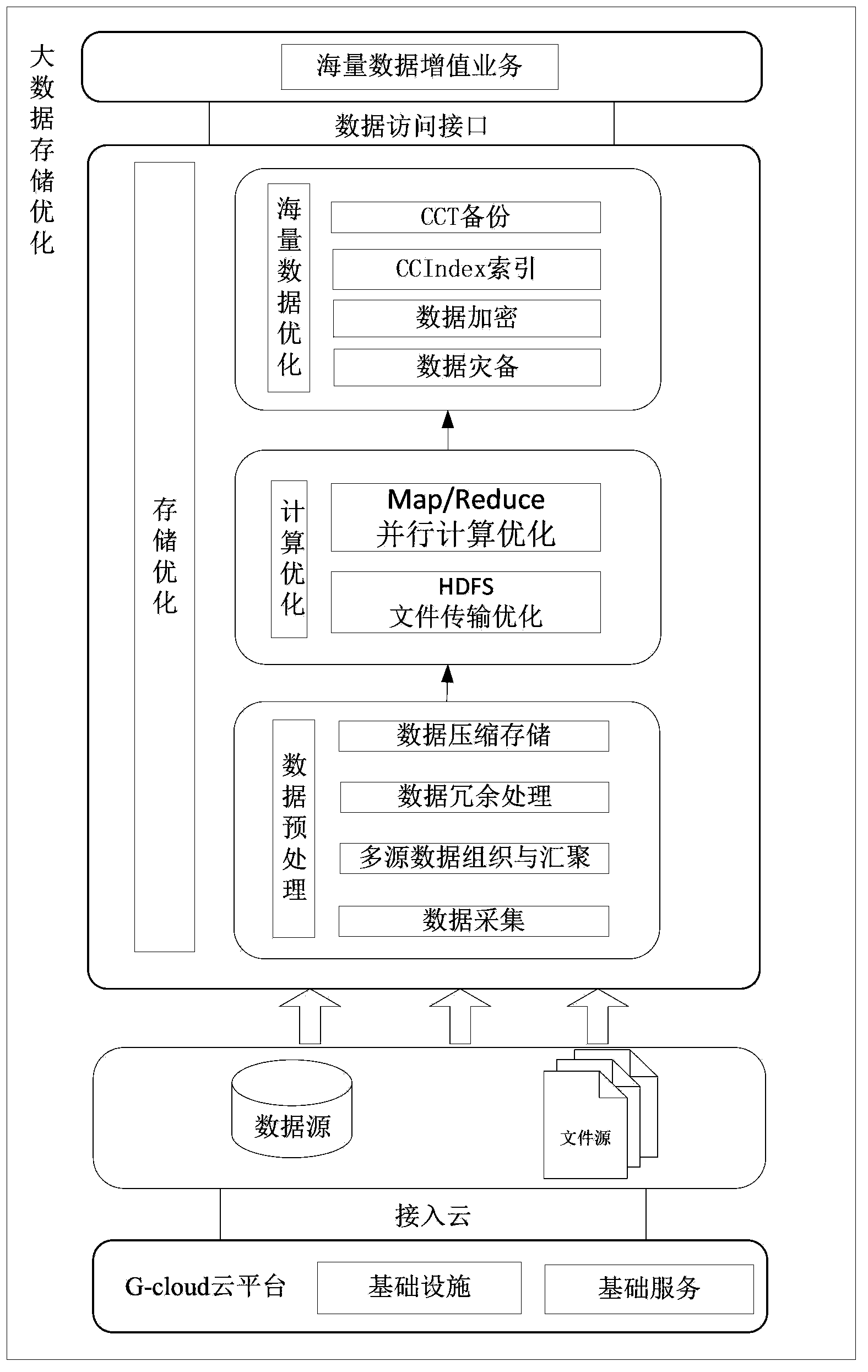

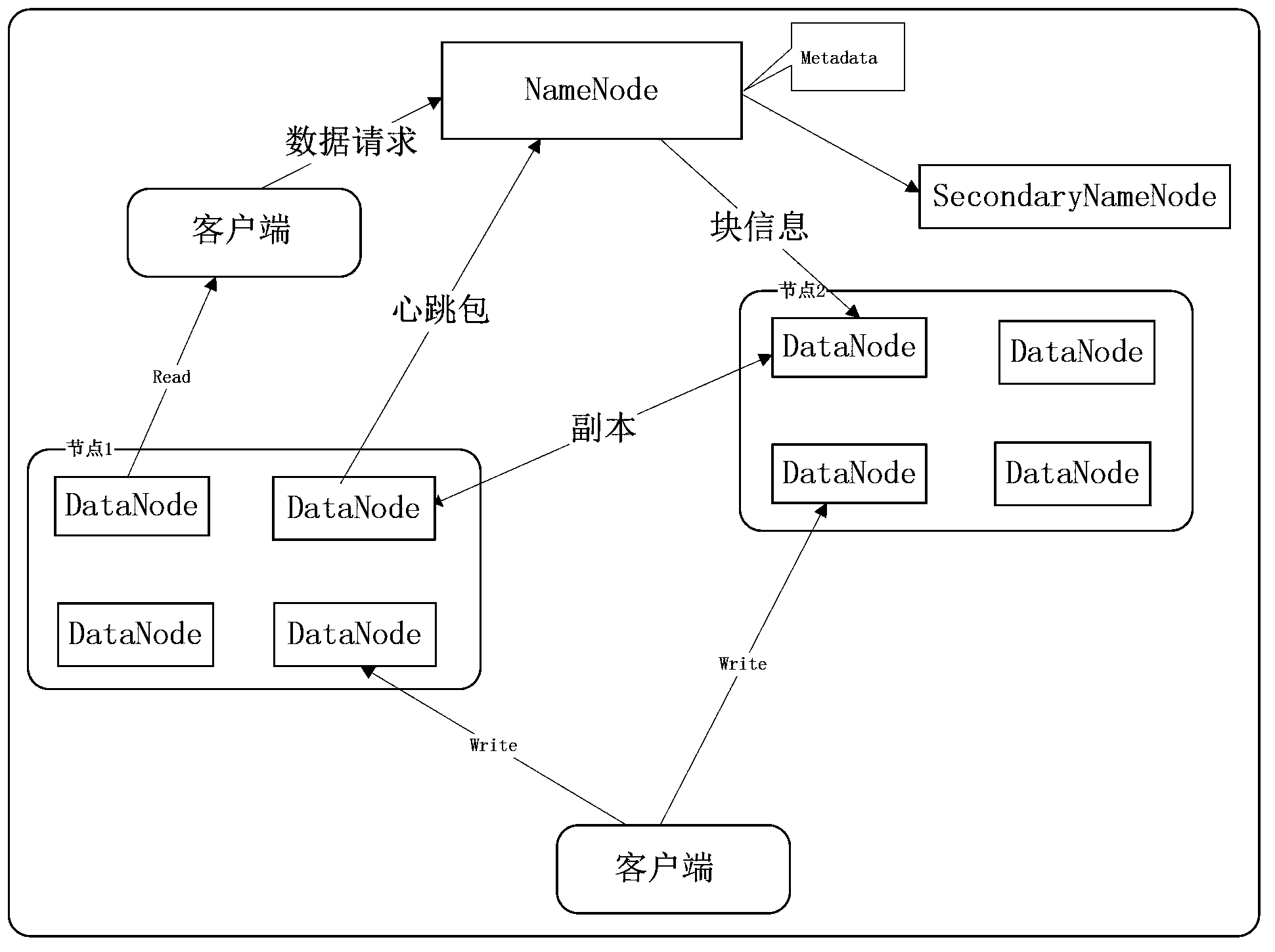

Big data storage and optimization method

Inactive | CN103440244A | Stable big data storage optimization method; Efficient big data storage optimization method; Special data processing applications; Redundant operation error correction; Data compression; Parallel computing

The invention relates to the technical field of data processing, and in particular to a big data storage and optimization method oriented to sea-cloud coordination. The method comprises three steps: data preprocessing, computation optimization, and mass data optimization. Data preprocessing comprises data collection, multi-source data organization and aggregation, redundant data processing, and compressed data storage; computation optimization comprises HDFS (Hadoop Distributed File System) file transfer and optimization and Map/Reduce parallel computation and optimization; and mass data optimization comprises data backup for disaster recovery, data encryption, CC indexing, and CCT backup. The disclosed method can be applied to big data storage on a cloud platform.

Owner: GUANGDONG ELECTRONICS IND INST

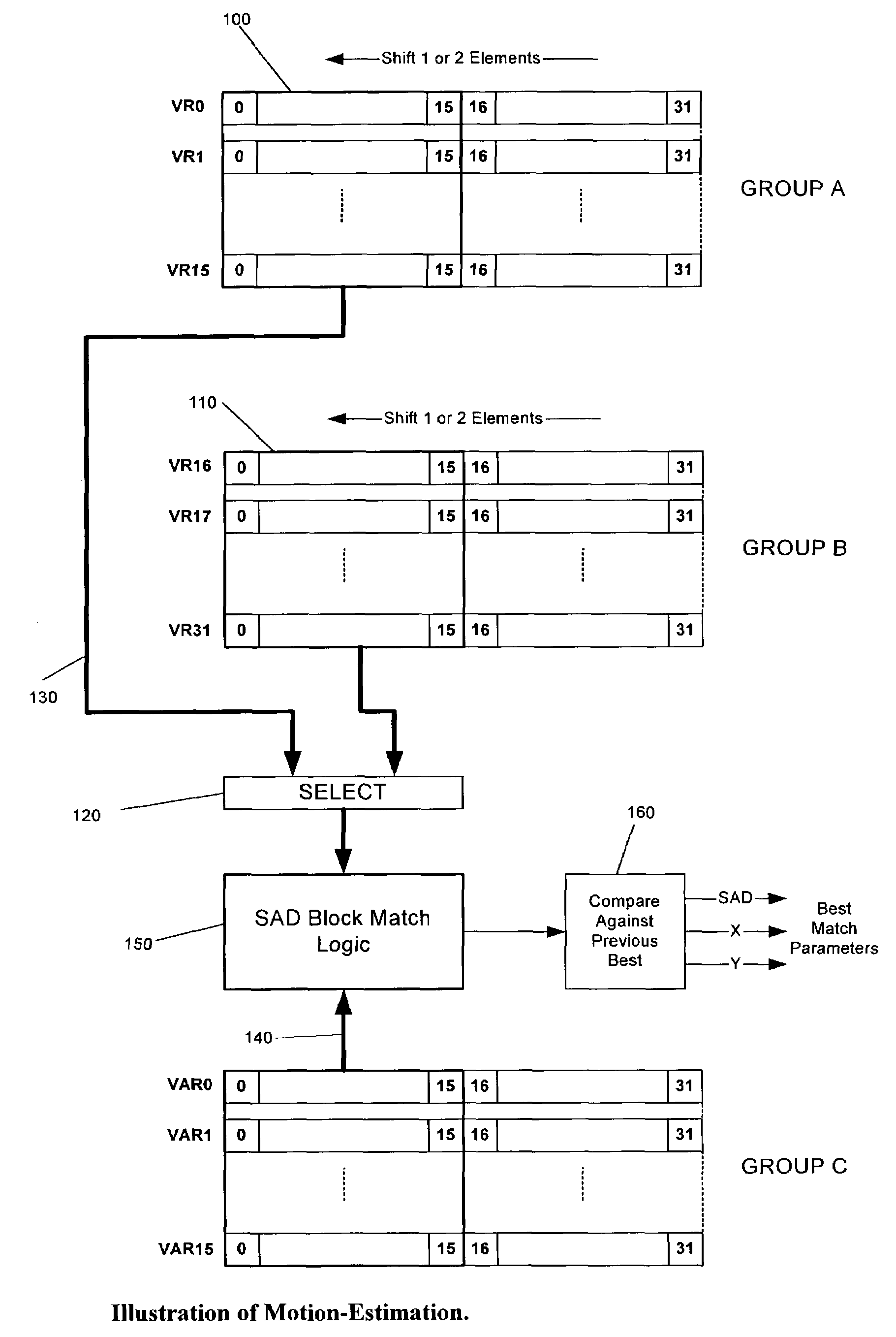

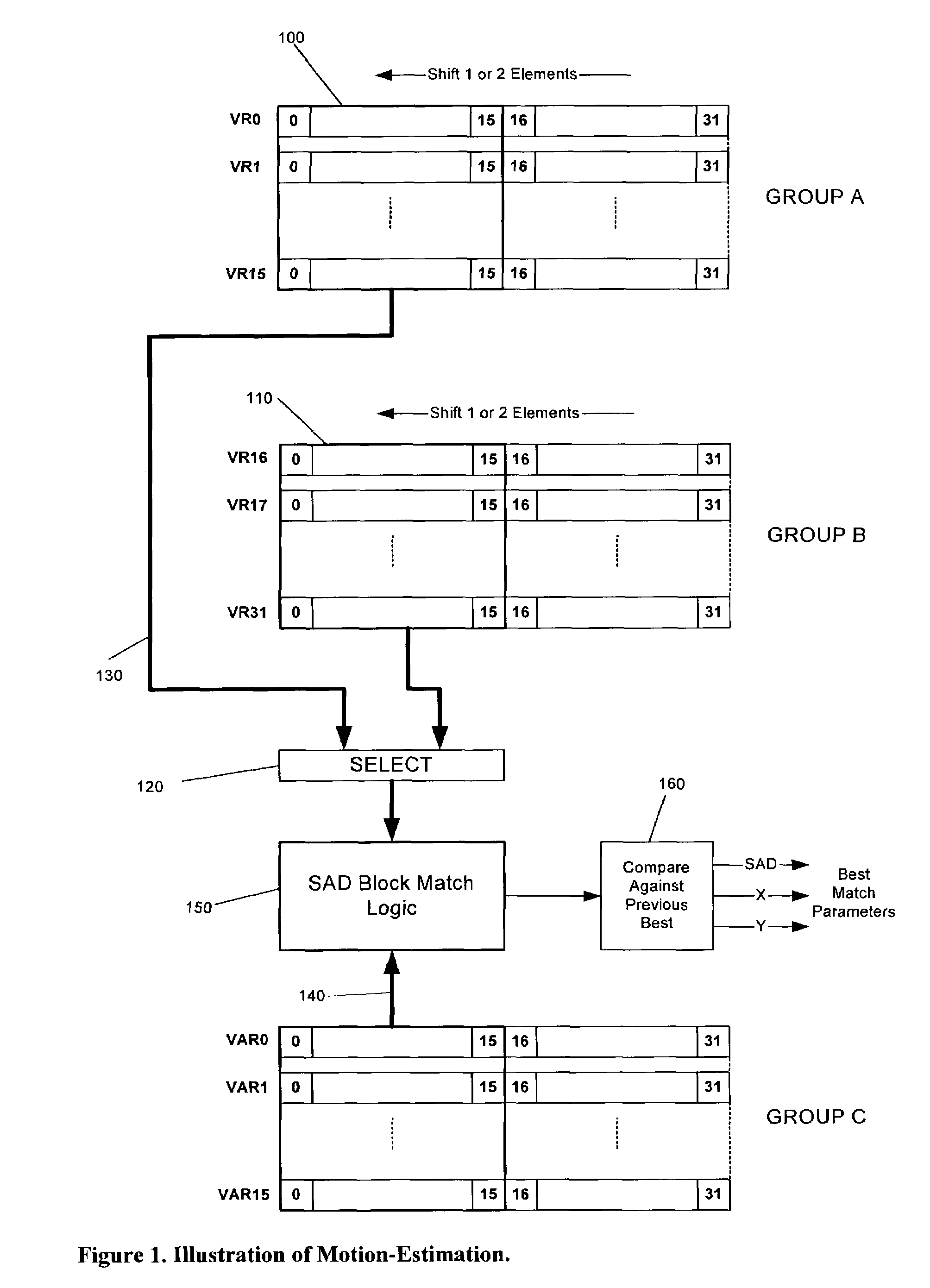

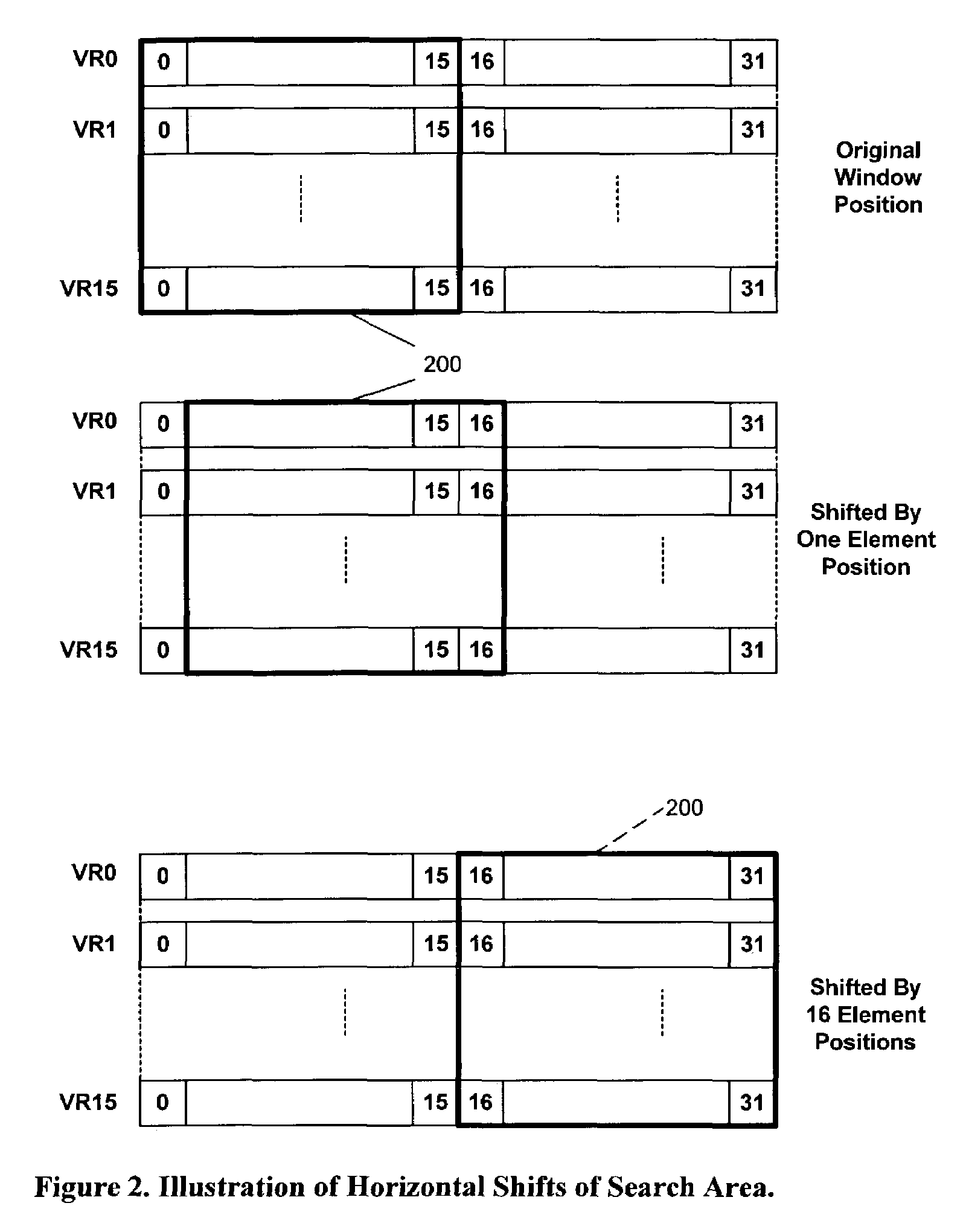

Method for programmable motion estimation in a SIMD processor

The present invention provides a 16×16 sliding window, built on a vector register file with zero overhead for horizontal or vertical shifts, to incorporate motion estimation into a SIMD vector processor architecture. It uses the SIMD processor's vector load mechanism, a vector register file whose elements can be shifted, and dedicated hardware and an instruction for parallel 16×16 SAD (sum of absolute differences) calculation. Vertical shifts of all sixteen vector registers occur in a ripple-through fashion when the end vector register is loaded. The parallel SAD hardware can calculate one 16×16 block match per clock cycle in a pipelined fashion. In addition, hardware that compares best-match SAD values and keeps track of their pixel locations reduces software overhead. Block matching for areas smaller than 16×16 is supported by using a mask register to mask selected elements, which reduces the search area to any block size below 16×16.

Owner: MIMAR TIBET
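
What the SAD hardware in the motion estimation entry computes can be stated as a plain scalar reference: for each candidate displacement, sum the absolute pixel differences between the current block and the displaced reference block, then keep the smallest sum and its location. The sketch below is a full-search reference with an optional mask for blocks smaller than the window; the function names and the search `radius` parameter are illustrative, and the hardware performs the inner double loop in one pipelined step.

```python
def sad(cur, ref, by, bx, ry, rx, n, mask=None):
    """Sum of absolute differences between the n x n block of `cur` at (by, bx)
    and the block of `ref` at (ry, rx); masked-out elements are skipped."""
    total = 0
    for i in range(n):
        for j in range(n):
            if mask is None or mask[i][j]:
                total += abs(cur[by + i][bx + j] - ref[ry + i][rx + j])
    return total


def full_search(cur, ref, by, bx, n=16, radius=4, mask=None):
    """Exhaustive block matching: return (best_sad, (dy, dx)) over in-bounds offsets."""
    h, w = len(ref), len(ref[0])
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            ry, rx = by + dy, bx + dx
            if 0 <= ry <= h - n and 0 <= rx <= w - n:
                cost = sad(cur, ref, by, bx, ry, rx, n, mask)
                if best is None or cost < best[0]:   # track best SAD and its location
                    best = (cost, (dy, dx))
    return best
```

The best-match bookkeeping in the last two lines is exactly the software overhead that the abstract's dedicated compare hardware removes.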

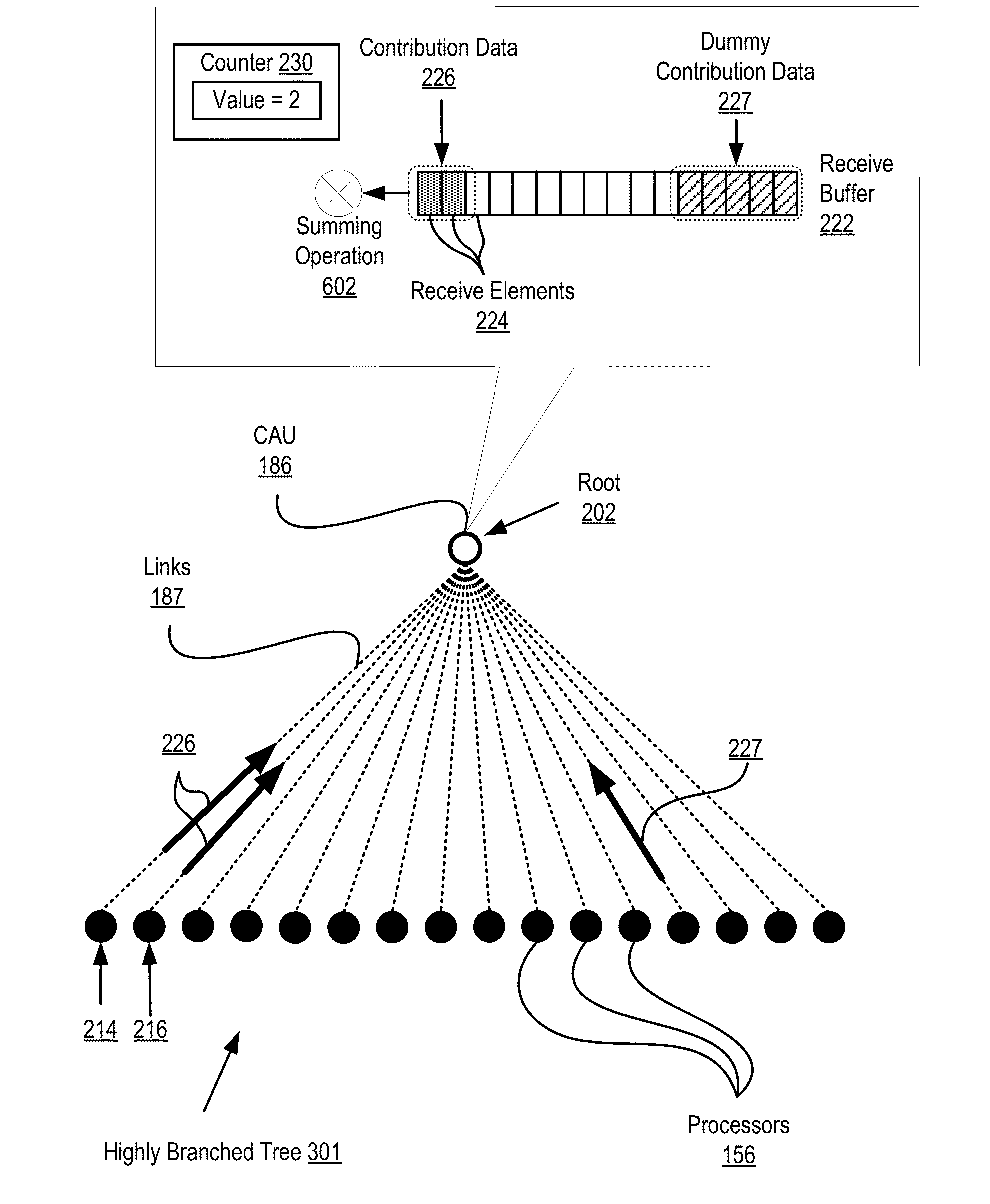

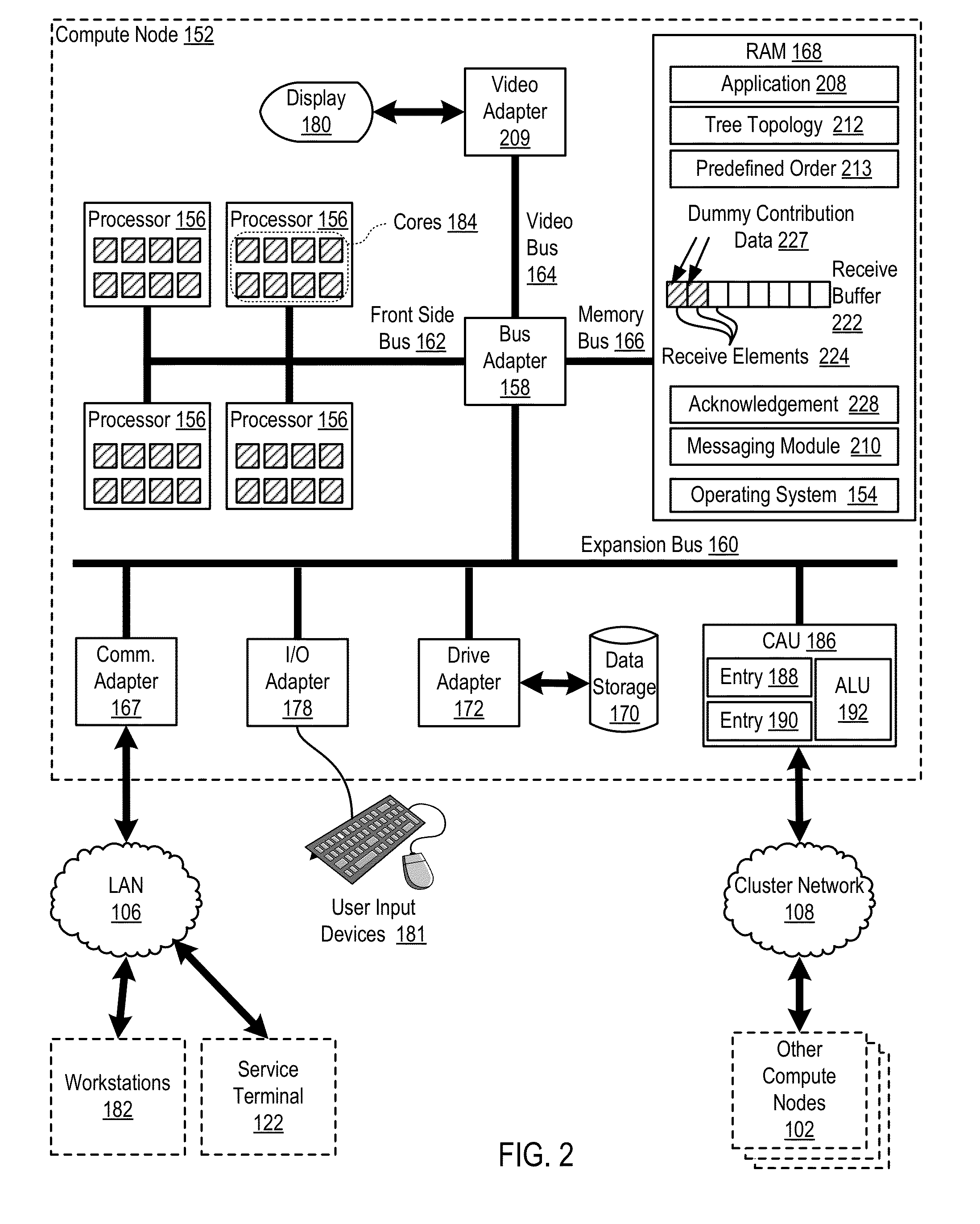

Performing a deterministic reduction operation in a parallel computer

Inactive | US20130290673A1 | General purpose stored program computer; Electric digital data processing; Concurrent computation; Parallel computing

Performing a deterministic reduction operation in a parallel computer that includes compute nodes, each of which includes computer processors and a CAU (Collectives Acceleration Unit) that couples computer processors to one another for data communications, including organizing processors and a CAU into a branched tree topology in which the CAU is a root and the processors are children; receiving, from each of the processors in any order, dummy contribution data, where each processor is restricted from sending any other data to the root CAU prior to receiving an acknowledgement of receipt from the root CAU; sending, by the root CAU to the processors in the branched tree topology, in a predefined order, acknowledgements of receipt of the dummy contribution data; receiving, by the root CAU from the processors in the predefined order, the processors' contribution data to the reduction operation; and reducing, by the root CAU, the processors' contribution data.

Owner:INT BUSINESS MASCH CORP
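
The point of the acknowledgement ordering above is that the root combines contributions in a fixed order no matter when processors become ready, which matters because floating-point addition is not associative. The sketch below is a sequential simulation under stated assumptions, not IBM's implementation: the predefined order is taken to be ascending processor rank, and `naive_reduce` and `root_cau_reduce` are hypothetical names.

```python
import itertools
import operator

def naive_reduce(contributions, arrival_order, op=operator.add):
    """Combine contributions in whatever order they happen to arrive."""
    result = None
    for rank in arrival_order:
        value = contributions[rank]
        result = value if result is None else op(result, value)
    return result

def root_cau_reduce(contributions, arrival_order, op=operator.add):
    """Simulated root CAU: dummy data may arrive in any order, but the
    acknowledgements, and therefore the real data, follow a fixed order
    (assumed here to be ascending processor rank)."""
    n = len(contributions)
    # Phase 1: record dummy contributions in arrival order. Each processor
    # is blocked from sending anything else until it is acknowledged.
    dummies = set()
    for rank in arrival_order:
        dummies.add(rank)
    if dummies != set(range(n)):
        raise ValueError("every processor must send a dummy contribution")
    # Phase 2: acknowledge processors in the predefined order; each one sends
    # its real contribution only after its own ack, so the root receives and
    # reduces the data in that same order.
    result = None
    for rank in range(n):
        value = contributions[rank]
        result = value if result is None else op(result, value)
    return result

# Floating-point addition is not associative, so arrival order can change the sum.
data = [1e16, 1.0, -1e16, 1.0]
orders = [list(p) for p in itertools.permutations(range(len(data)))]
print({naive_reduce(data, o) for o in orders})     # several distinct sums
print({root_cau_reduce(data, o) for o in orders})  # a single sum
```

In a real machine the dummy phase is what lets processors signal readiness in any order without sending data the root would have to combine out of order.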

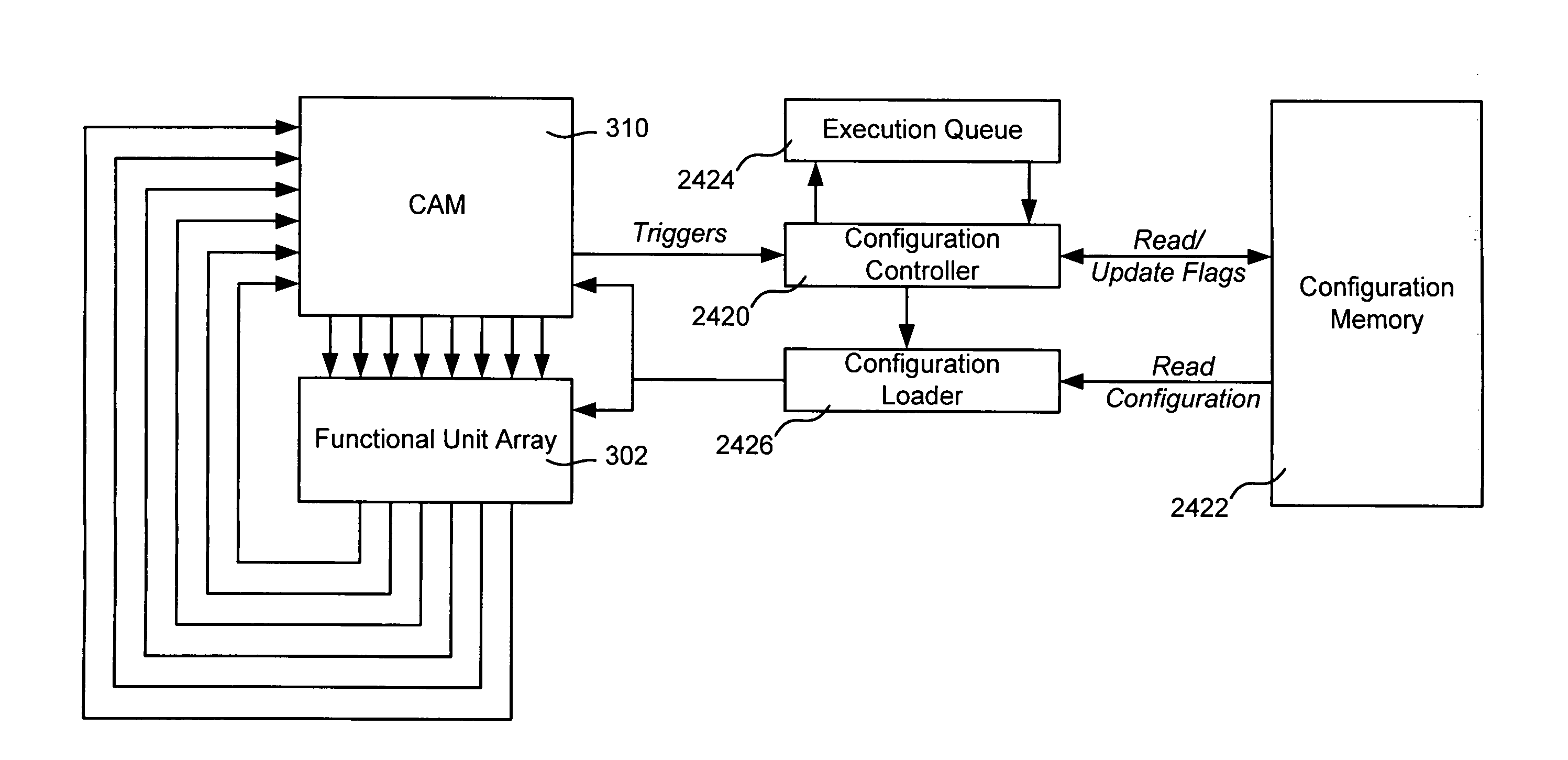

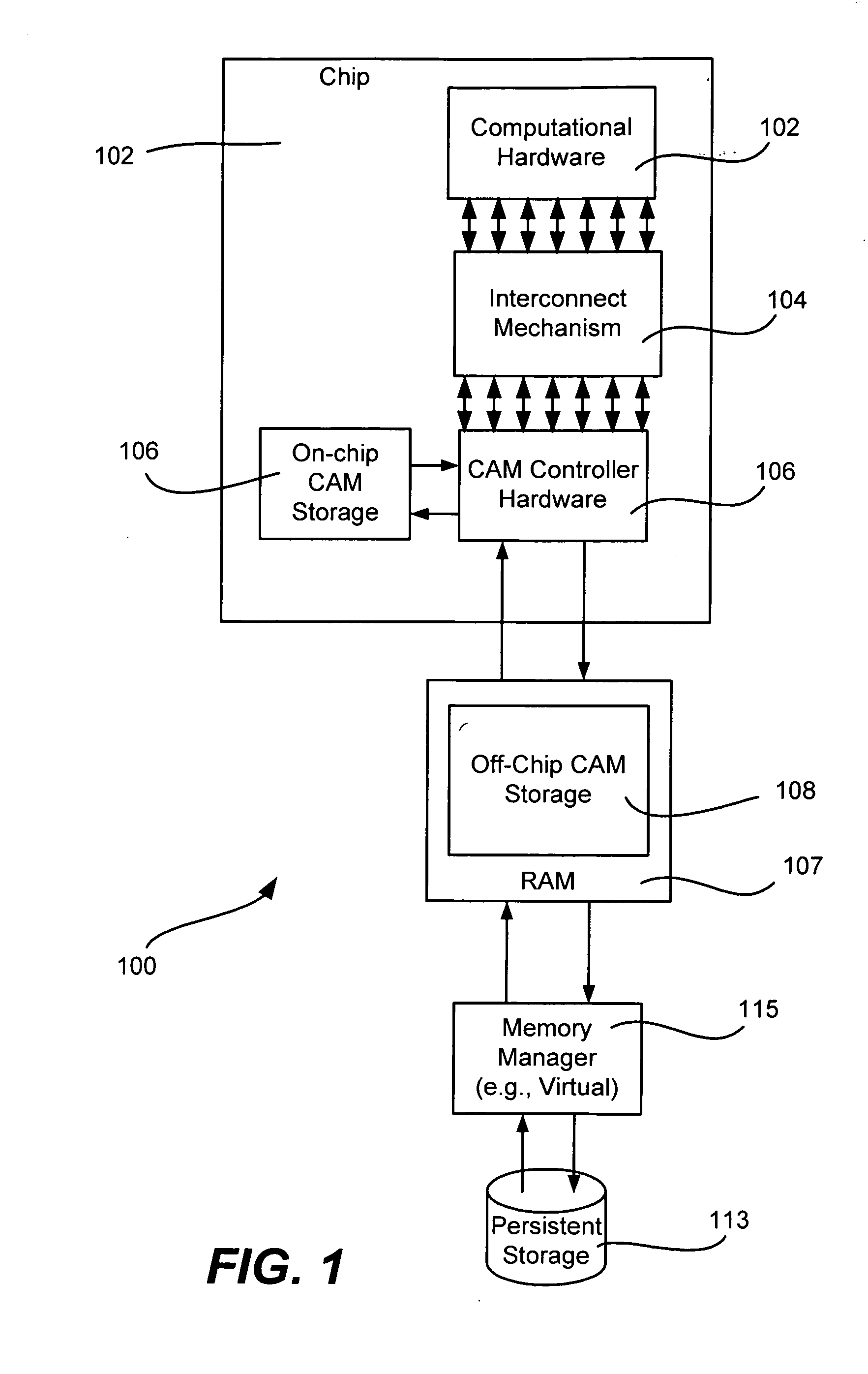

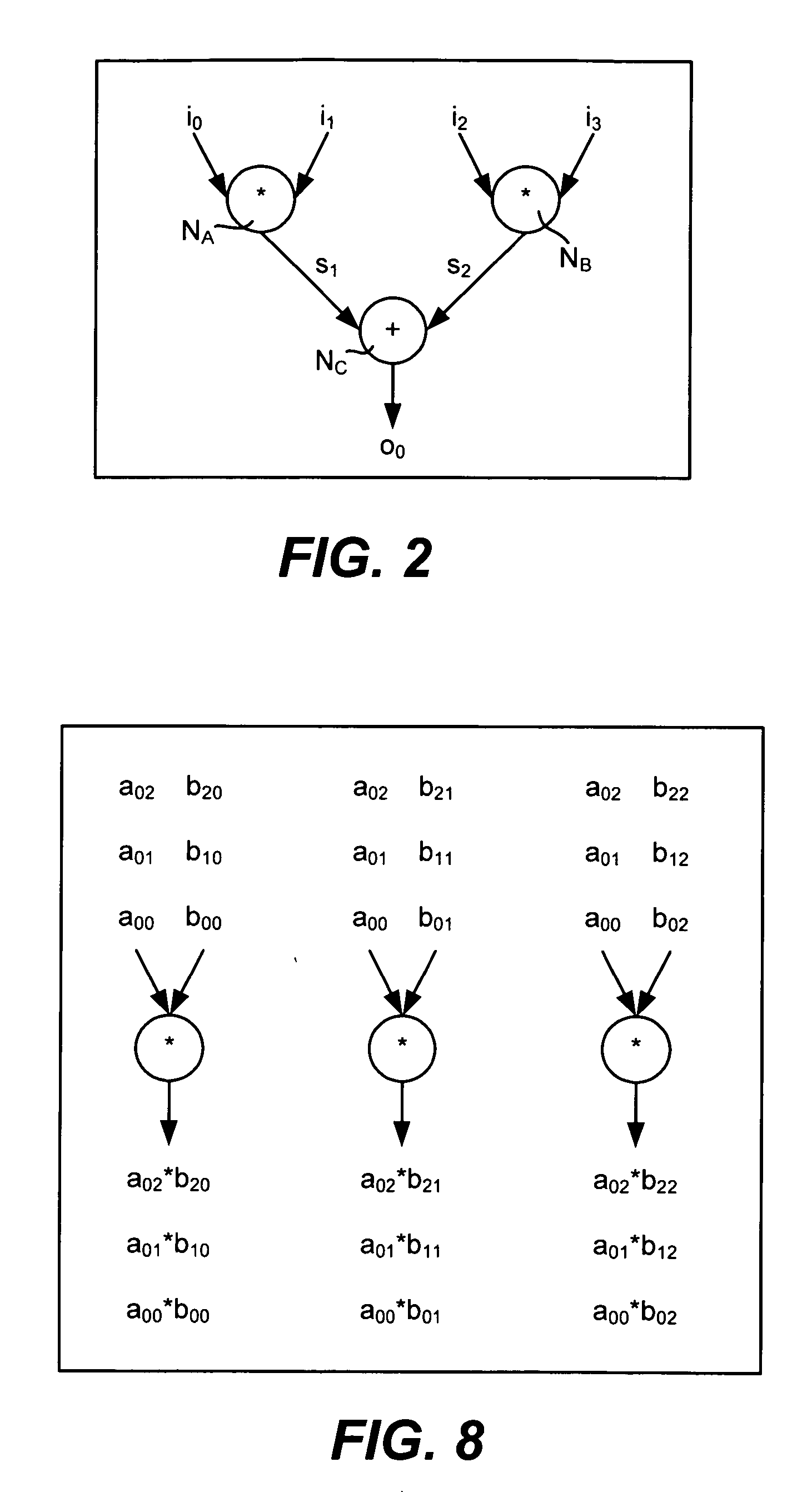

Conditional execution via content addressable memory and parallel computing execution model

Active | US20060277392A1 | Improve performance; Optimize calculation speed; Program control using stored programs; Digital data processing details; Concurrent computation; While loop

The use of a configuration-based execution model in conjunction with a content addressable memory (CAM) architecture provides a mechanism for implementing a number of computing concepts, including conditional execution (e.g., if-then statements and while loops), function calls, and recursion. If-then statements and while loops are implemented using a CAM feature that emits only complete operand sets for processing: different seed operands are generated for different conditional evaluation results, and the seed operand is matched with the computed data for an if-then branch or upon exiting a while loop. As a result, downstream operators retrieve only completed operand sets. Function calls and recursion are handled by passing a return tag as an operand, along with the function parameter data, into the input tag space of a function. A recursive function is split into two halves: a pre-recursive half and a post-recursive half that executes after the pre-recursive calls.

Owner:MICROSOFT TECH LICENSING LLC
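
A toy software model of the if-then mechanism described above, assuming a CAM that holds operands keyed by a tag and a position and emits a tuple only when the set is complete. `OperandCAM` and `if_then_else` are hypothetical names; the seed operand is modeled as the string "seed", and reclaiming operands stranded on the untaken branch is not modeled.

```python
class OperandCAM:
    """Toy model of a content-addressable operand store (hypothetical API).

    Each operand is written under a tag (the operator input it feeds) and a
    position. A set is emitted only when all operands for that tag are
    present, so downstream operators never see a partial set."""

    def __init__(self, arity):
        self.arity = arity   # tag -> number of operands the operator needs
        self.slots = {}      # tag -> {position: value} still waiting

    def write(self, tag, position, value):
        slot = self.slots.setdefault(tag, {})
        slot[position] = value
        if len(slot) < self.arity[tag]:
            return None                      # incomplete: nothing emitted
        del self.slots[tag]
        return tuple(slot[i] for i in range(self.arity[tag]))

def if_then_else(cond, x):
    """Route x to a 'then' or 'else' operator without a branch instruction.
    Both operators receive the data, but only the branch chosen by the
    condition also receives a seed operand, so only that one fires."""
    cam = OperandCAM({"then": 2, "else": 2})
    fired = []
    for tag in ("then", "else"):
        out = cam.write(tag, 1, x)           # data arrives at both branches
        if out is not None:
            fired.append((tag, out))
    branch = "then" if cond else "else"
    out = cam.write(branch, 0, "seed")       # seed only for the taken branch
    if out is not None:
        fired.append((branch, out))
    # The untaken branch still holds an unmatched operand; a real design
    # would need to reclaim it.
    return fired

print(if_then_else(True, 5))   # [('then', ('seed', 5))]
print(if_then_else(False, 5))  # [('else', ('seed', 5))]
```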

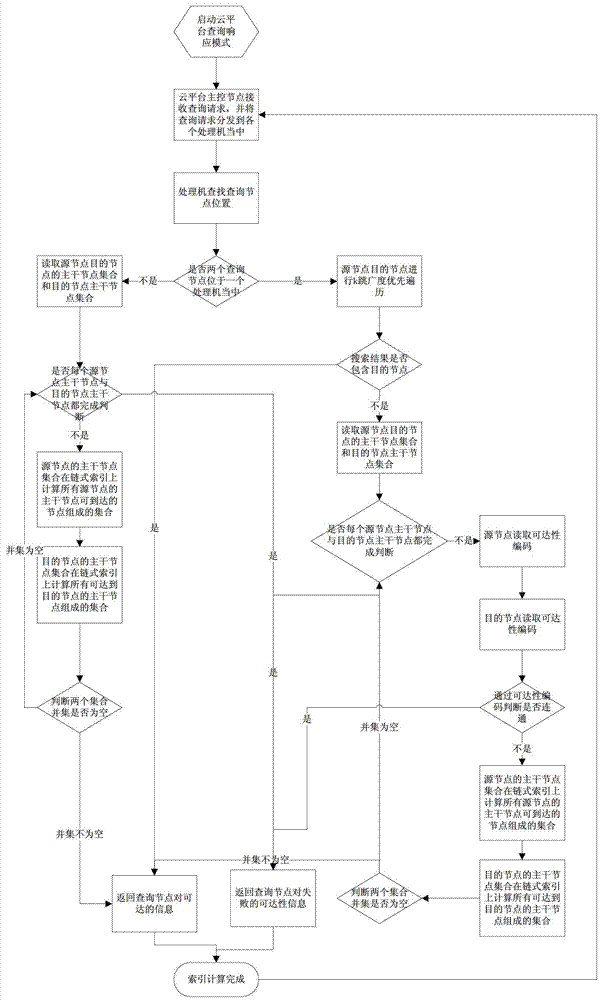

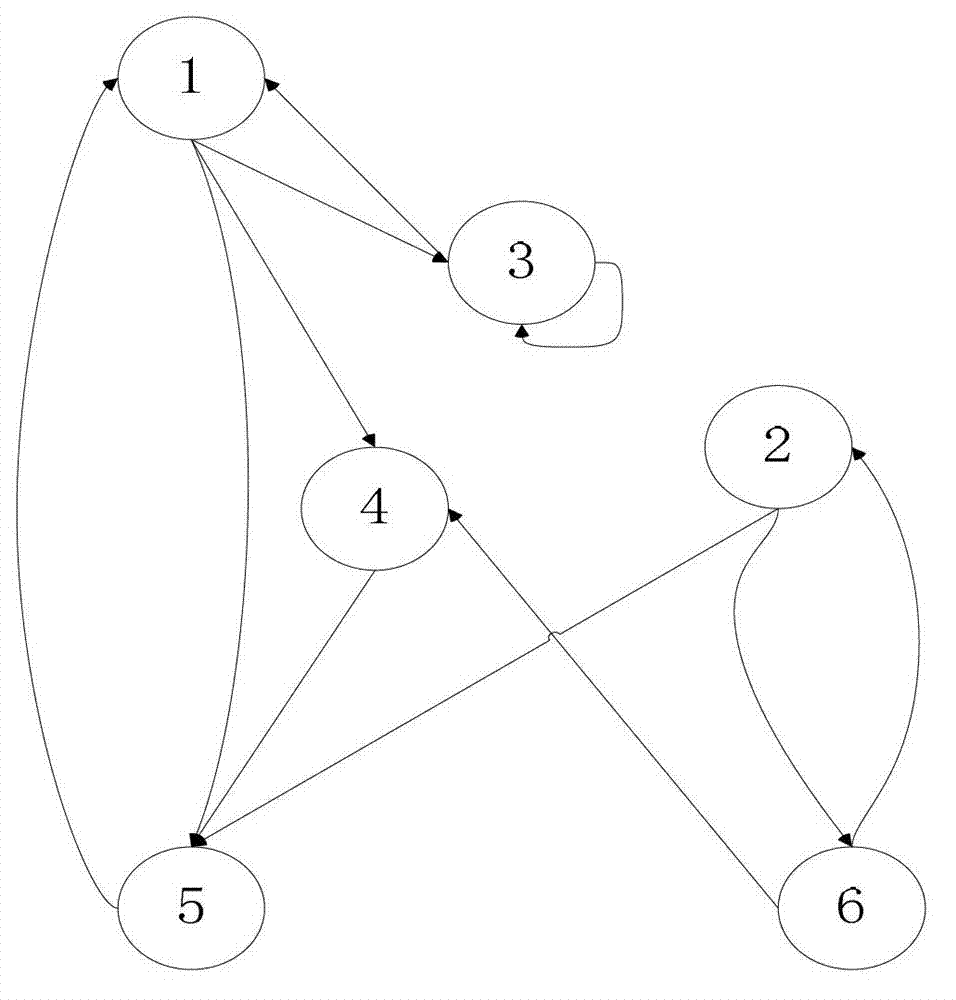

Generation and search method for reachability chain list of directed graph in parallel environment

Active | CN103399902A | Reduce size; Reduce computing load; Special data processing applications; Data compression; Skip list

The invention belongs to the field of large-graph data processing and relates to a method for generating and searching a reachability chain list of a directed graph in a parallel environment. The method includes: distributing the directed graph across the processors, each of which stores nodes of the graph and their corresponding sub-nodes; compressing the graph data assigned to each processor; calculating reachability codes for the backbone nodes of a backbone graph; building a chain index; building a skip list on the chain index; exchanging data among the processors, with each processor sending its skip-list information to the others and updating its own skip-list information; and building a reachability index for the whole graph. By applying graph reachability compression in a parallel environment, the method greatly reduces the size of the graph data and the system's computing load, allowing the system to process larger graphs. The stated advantages are faster reads from disk, which indirectly speeds up search; guaranteed accuracy of search results; and greatly reduced network communication cost and search time for the parallel computing system.

Owner:NORTHEASTERN UNIV
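
The abstract above does not spell out how the chain index is encoded. One common way to build a chain-based reachability index on a single machine is a chain cover: split a DAG into paths (chains), label each node with (chain, position), and record for every node the lowest position it can reach on each chain. The sketch below shows only that generic technique, assuming an acyclic input given with a topological order; the compression, skip list, and multi-processor exchange steps are omitted, and all function names are hypothetical.

```python
def chain_cover(order, succ):
    """Greedily split a DAG into chains (paths). `order` must be a
    topological order; succ maps a node to its successors."""
    chain_of, pos_of = {}, {}
    n_chains = 0
    for start in order:
        if start in chain_of:
            continue
        node, pos = start, 0
        while node is not None:
            chain_of[node], pos_of[node] = n_chains, pos
            pos += 1
            # Extend the chain along any edge to a node not yet on a chain.
            node = next((v for v in succ.get(node, ()) if v not in chain_of), None)
        n_chains += 1
    return chain_of, pos_of, n_chains

def build_index(order, succ):
    """reach[u][c] = lowest position on chain c reachable from u."""
    chain_of, pos_of, n_chains = chain_cover(order, succ)
    inf = float("inf")
    reach = {}
    for u in reversed(order):               # successors are processed first
        row = [inf] * n_chains
        row[chain_of[u]] = pos_of[u]
        for v in succ.get(u, ()):
            row = [min(a, b) for a, b in zip(row, reach[v])]
        reach[u] = row
    return chain_of, pos_of, reach

def reachable(index, u, v):
    """u reaches v iff u reaches some node on v's chain at or before v."""
    chain_of, pos_of, reach = index
    return reach[u][chain_of[v]] <= pos_of[v]

succ = {"a": ["b", "c"], "b": ["d"], "c": ["d"], "e": ["d"]}
index = build_index(["a", "e", "b", "c", "d"], succ)
print(reachable(index, "a", "d"), reachable(index, "b", "c"))  # True False
```

Each query is a single lookup and comparison; the index size grows with the number of chains, which is why reducing graph size before indexing pays off.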

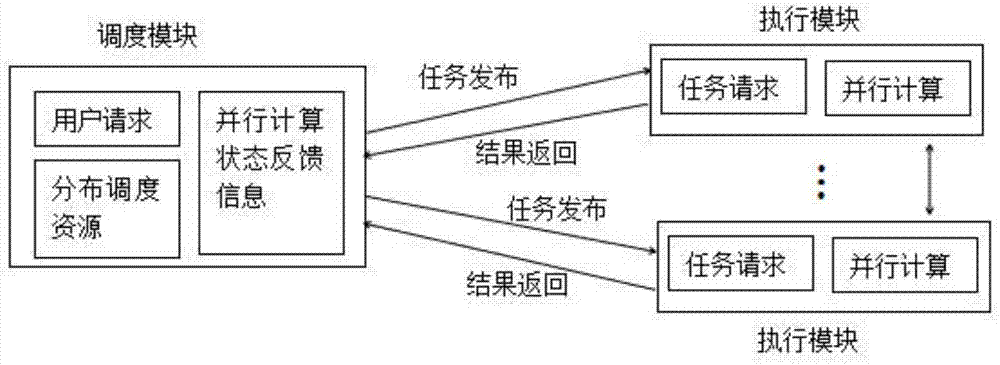

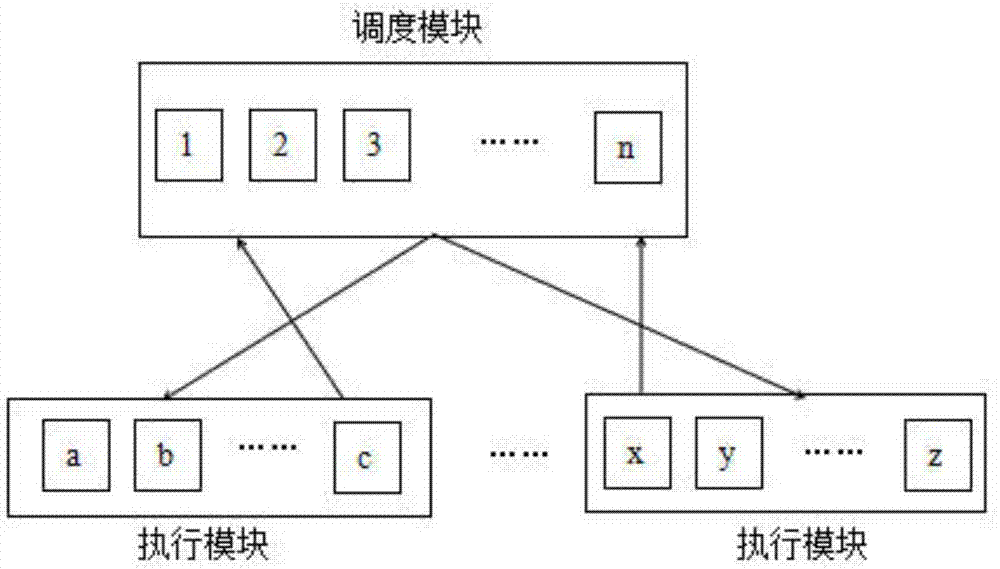

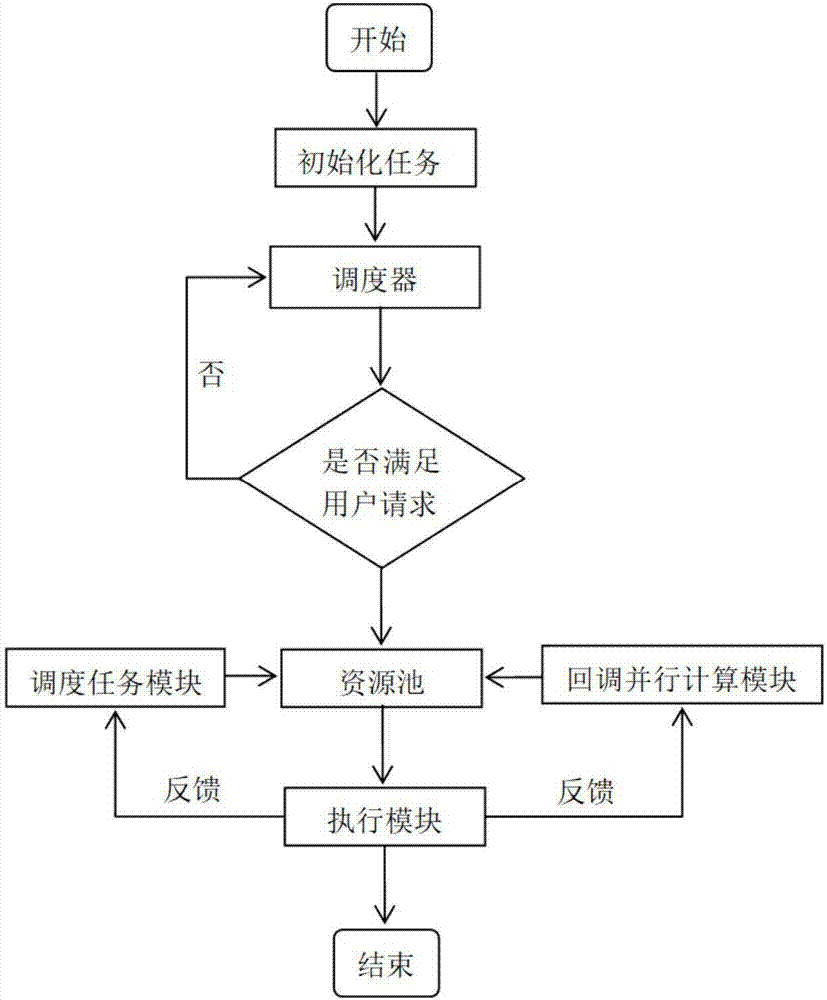

Large-scale resource scheduling system and large-scale resource scheduling method based on deep learning neural network

Active | CN107888669A | Improve training efficiency; Guaranteed stability; Resource allocation; Transmission; Task completion; Resource information

The invention claims a large-scale resource scheduling system and method based on a deep learning neural network. The system comprises at least one scheduling control module and at least two execution modules. The scheduling control module receives usage requests, allocates scheduling resources, and performs parallel computation of state feedback; the execution modules receive task requests sent by the scheduling control module, allocate memory space, and perform the computation. The system and method provide a user task request interface: the scheduler receives the submitted task request information, uses the deep learning neural network to predict whether the task will satisfy the user's expected task completion conditions, and accordingly determines the initialization parameters of the resource scheduling policy. The scheduler then segments the task according to the resource scheduling policy and allocates the segments to the execution modules for computation. While computing and organizing their parts of the task, the execution modules feed resource information back to the scheduling control module so that the user task is completed in a unified way.

Owner:WUHAN UNIV OF TECH
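
The split, dispatch, and feedback loop described above can be sketched with Python's `concurrent.futures`, with threads standing in for execution modules. This is an assumption-heavy sketch: the deep learning predictor is replaced by a hypothetical heuristic, `predict_segments`, that only decides how many segments to create, and the per-segment work (a sum of squares) and the feedback dictionary are placeholders.

```python
from concurrent.futures import ThreadPoolExecutor

def predict_segments(task_size, n_executors):
    """Hypothetical stand-in for the neural-network predictor: one segment
    per 1000 items, capped at the number of execution modules."""
    return max(1, min(n_executors, task_size // 1000))

def execute(seg_id, segment):
    """One execution module: compute its share and report resource info."""
    partial = sum(x * x for x in segment)
    return seg_id, partial, {"segment": seg_id, "items": len(segment)}

def schedule(data, n_executors=4, predictor=predict_segments):
    """Scheduler: pick a segmentation, dispatch segments, gather feedback."""
    if not data:
        return 0, []
    k = predictor(len(data), n_executors)
    step = -(-len(data) // k)               # ceiling division
    segments = [data[i:i + step] for i in range(0, len(data), step)]
    with ThreadPoolExecutor(max_workers=n_executors) as pool:
        results = list(pool.map(execute, range(len(segments)), segments))
    total = sum(partial for _, partial, _ in results)
    feedback = [info for _, _, info in results]  # reported back to the scheduler
    return total, feedback

total, feedback = schedule(list(range(10_000)))
print(total, feedback)
```

Swapping in a trained model only changes `predictor`; the dispatch and feedback path stays the same.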

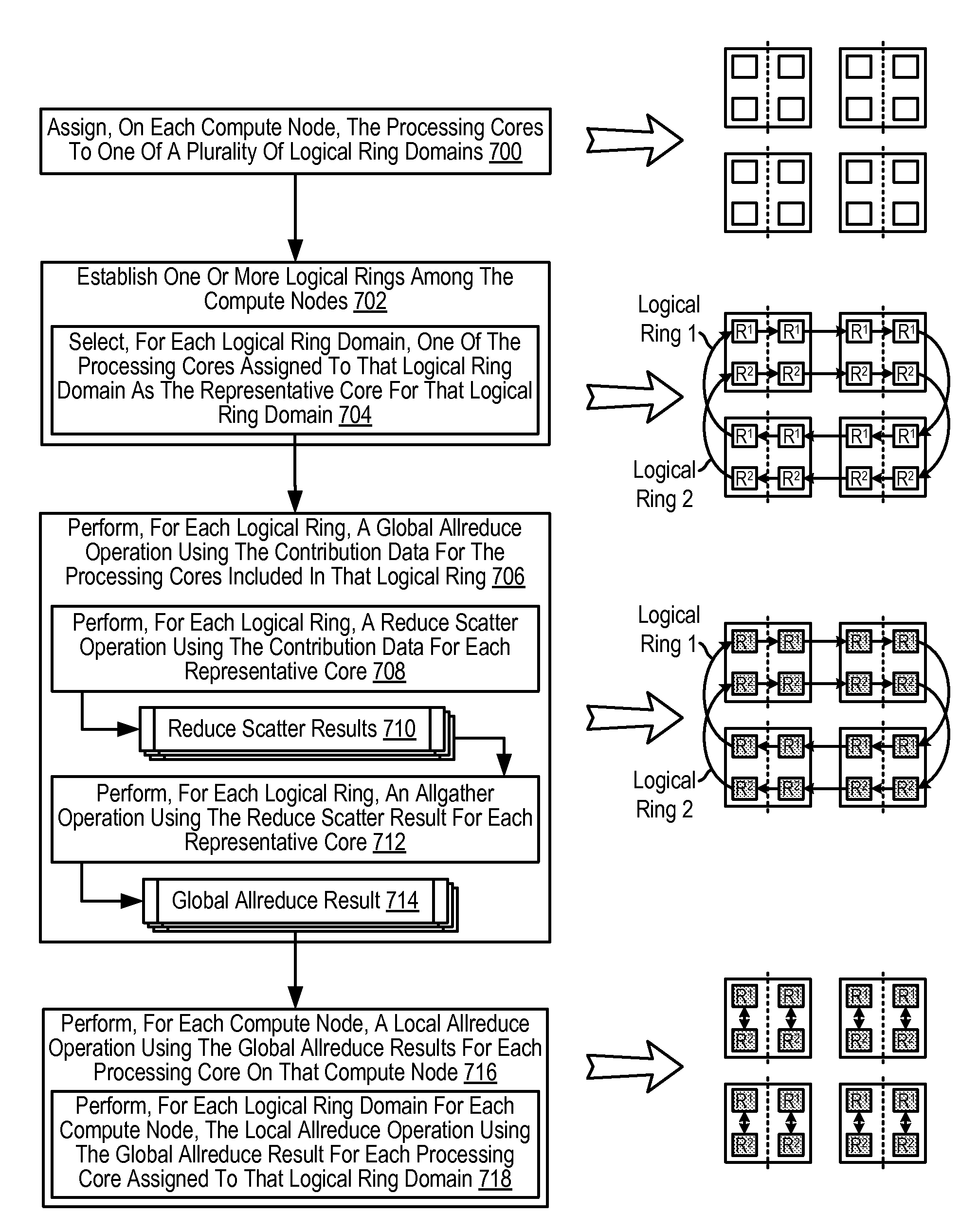

Performing an allreduce operation on a plurality of compute nodes of a parallel computer

Inactive | US8161268B2 | Interprogram communication; Specific program execution arrangements; Processing core; Concurrent computation

Methods, apparatus, and products are disclosed for performing an allreduce operation on a plurality of compute nodes of a parallel computer. Each compute node includes at least two processing cores. Each processing core has contribution data for the allreduce operation. Performing an allreduce operation on a plurality of compute nodes of a parallel computer includes: establishing one or more logical rings among the compute nodes, each logical ring including at least one processing core from each compute node; performing, for each logical ring, a global allreduce operation using the contribution data for the processing cores included in that logical ring, yielding a global allreduce result for each processing core included in that logical ring; and performing, for each compute node, a local allreduce operation using the global allreduce results for each processing core on that compute node.

Owner:INT BUSINESS MASCH CORP
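
A sequential simulation of the two-phase allreduce described above, assuming contributions are given as `contrib[node][core]`, that logical ring k links core k of every node, and that each ring uses a simple pass-the-token ring algorithm (n-1 steps). `ring_allreduce` and `allreduce` are hypothetical names; a real implementation would exchange messages between nodes rather than index into lists.

```python
import functools
import operator

def ring_allreduce(values, op):
    """Pass-the-token ring: in each of n-1 steps every member receives the
    token from its left neighbour and folds it into its accumulator.
    Assumes op is associative and commutative (e.g. add, max)."""
    n = len(values)
    acc, token = list(values), list(values)
    for _ in range(n - 1):
        token = [token[(i - 1) % n] for i in range(n)]
        acc = [op(a, t) for a, t in zip(acc, token)]
    return acc

def allreduce(contrib, op=operator.add):
    """contrib[node][core] is each core's contribution; returns the same
    shape with every entry holding the fully reduced value."""
    n_nodes, n_cores = len(contrib), len(contrib[0])
    global_res = [[None] * n_cores for _ in range(n_nodes)]
    # Phase 1: global allreduce on logical ring k, which holds core k of every node.
    for k in range(n_cores):
        ring = ring_allreduce([contrib[node][k] for node in range(n_nodes)], op)
        for node in range(n_nodes):
            global_res[node][k] = ring[node]
    # Phase 2: local allreduce across the cores of each node.
    return [[functools.reduce(op, row)] * n_cores for row in global_res]

print(allreduce([[1, 2], [3, 4], [5, 6]]))  # every entry is 21
```

Running one ring per core keeps every core busy with inter-node traffic, and the final intra-node step is cheap because it uses shared memory on the node.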

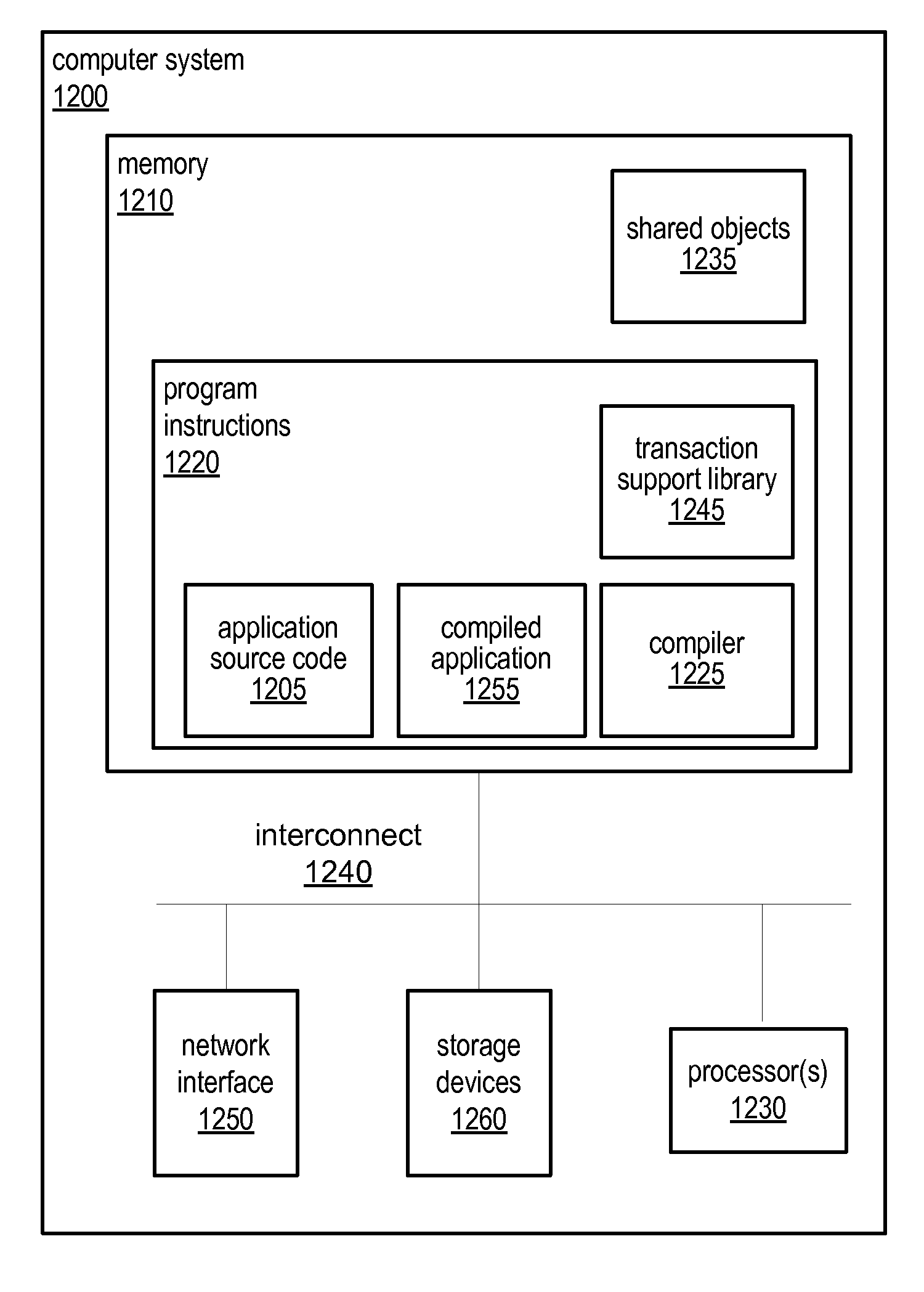

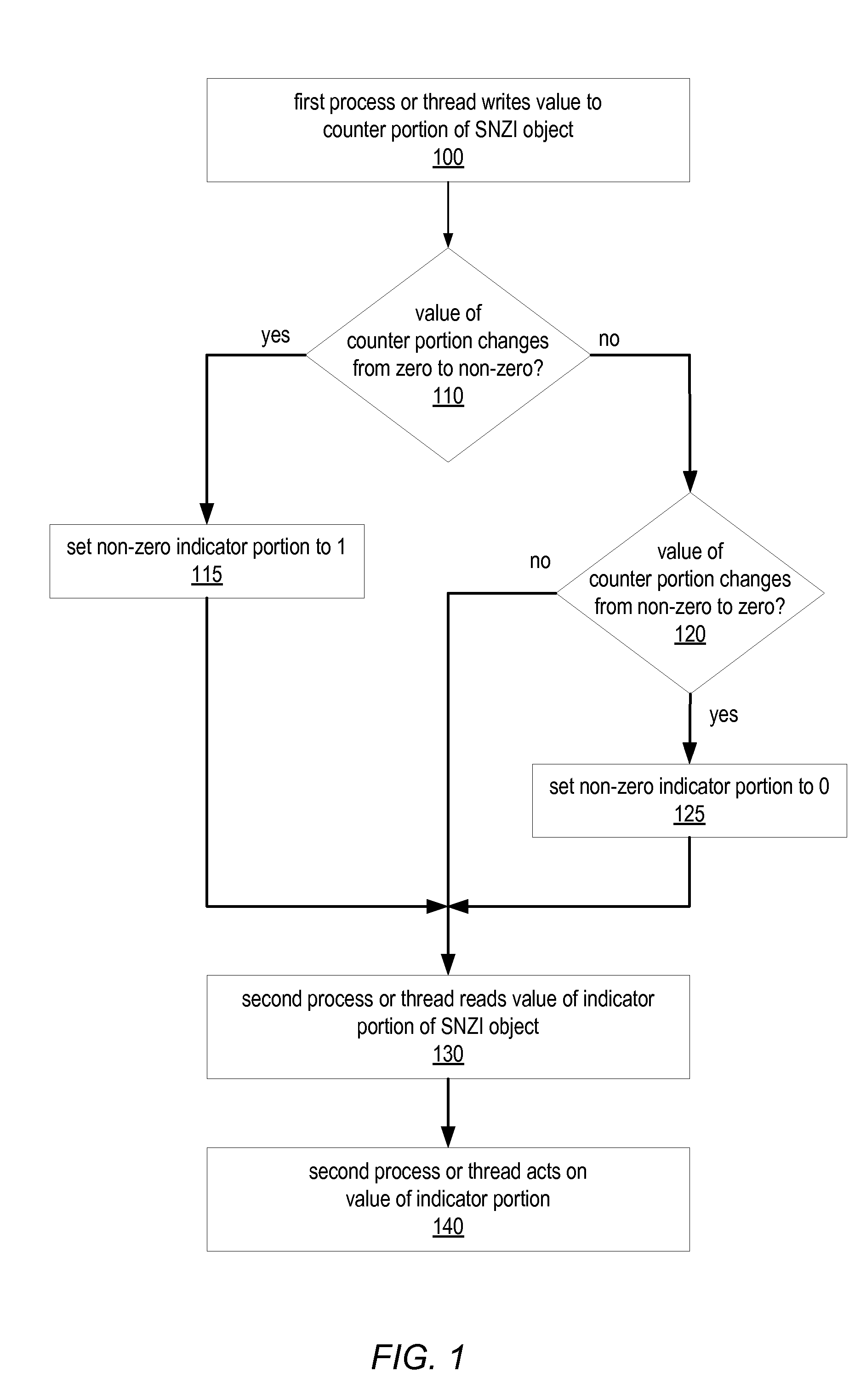

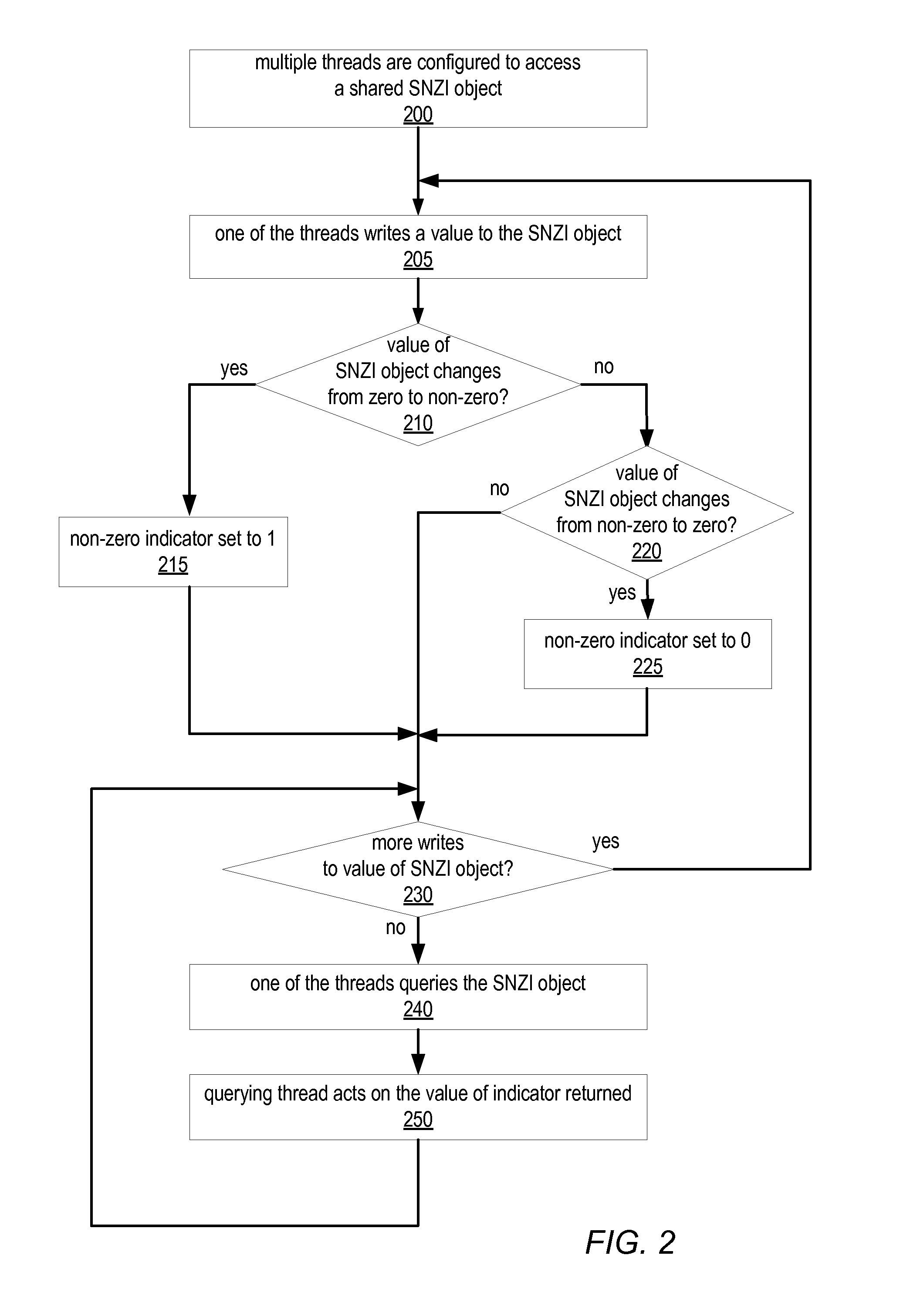

System and Method for Implementing Shared Scalable Nonzero Indicators

Active | US20090125548A1 | Program synchronisation; Special data processing applications; Concurrent computing; Concurrent computation

A Scalable NonZero Indicator (SNZI) object in a concurrent computing application may include a shared data portion (e.g., a counter portion) and a shared nonzero indicator portion, and/or may be an element in a hierarchy of SNZI objects that filters changes in non-root nodes to a root node. SNZI objects may be accessed by software applications through an API that includes a query operation to return the value of the nonzero indicator, and arrive (increment) and depart (decrement) operations. Modifications of the data portion and/or the indicator portion may be performed using atomic read-modify-write type operations. Some SNZI objects may support a reset operation. A shared data object may be set to an intermediate value, or an announce bit may be set, to indicate that a modification is in progress that affects its corresponding indicator value. Another process or thread seeing this indication may “help” complete the modification before proceeding.

Owner:SUN MICROSYSTEMS INC
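
A simplified, lock-based sketch of a two-level SNZI hierarchy. It illustrates the filtering idea from the abstract above (a child arrives at or departs from its parent only when its own count crosses zero) but is not the lock-free algorithm: Python has no compare-and-swap, so each node uses a `threading.Lock` instead of atomic read-modify-write operations, and the intermediate-value and announce-bit helping scheme is omitted. `SNZINode` is a hypothetical name.

```python
import threading

class SNZINode:
    """Lock-based stand-in for an SNZI node (not the lock-free original)."""

    def __init__(self, parent=None):
        self.parent = parent
        self.count = 0
        self.lock = threading.Lock()

    def arrive(self):
        # Locks are always taken child-then-parent, so there is no deadlock.
        with self.lock:
            if self.count == 0 and self.parent is not None:
                self.parent.arrive()   # only the 0 -> 1 transition propagates
            self.count += 1

    def depart(self):
        with self.lock:
            if self.count == 0:
                raise RuntimeError("depart without matching arrive")
            self.count -= 1
            if self.count == 0 and self.parent is not None:
                self.parent.depart()   # only the 1 -> 0 transition propagates

    def query(self):
        # Meaningful on the root: True while any arrive is unmatched.
        with self.lock:
            return self.count > 0

root = SNZINode()
leaves = [SNZINode(root) for _ in range(4)]

def worker(leaf):
    for _ in range(1000):
        leaf.arrive()
        leaf.depart()

threads = [threading.Thread(target=worker, args=(leaves[i % 4],)) for i in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(root.query())  # False: every arrive was matched by a depart
```

Because the root only sees zero crossings of its children, contention on the root stays bounded by the number of leaves rather than the number of threads.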

Efficient execution of parallel computer programs

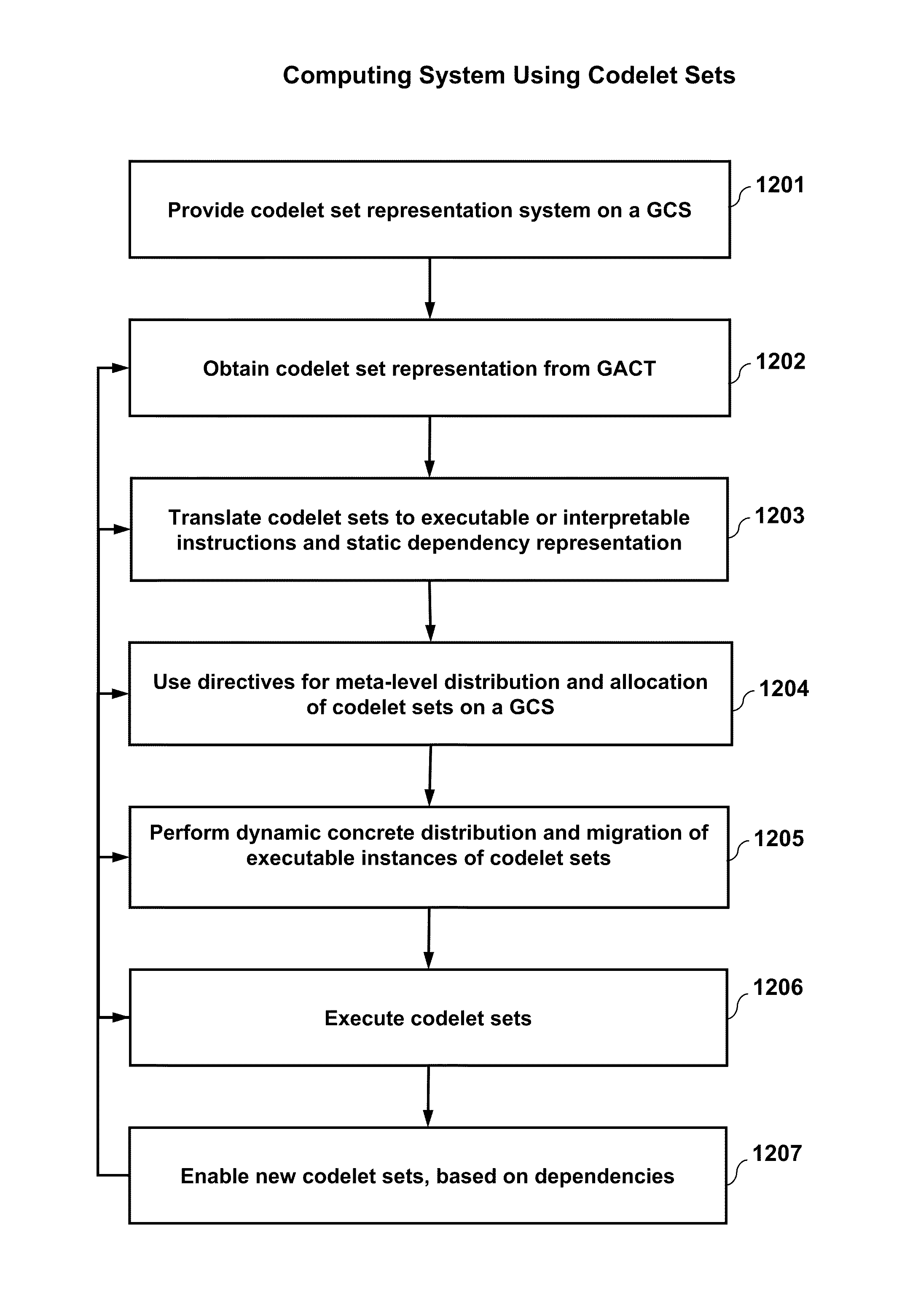

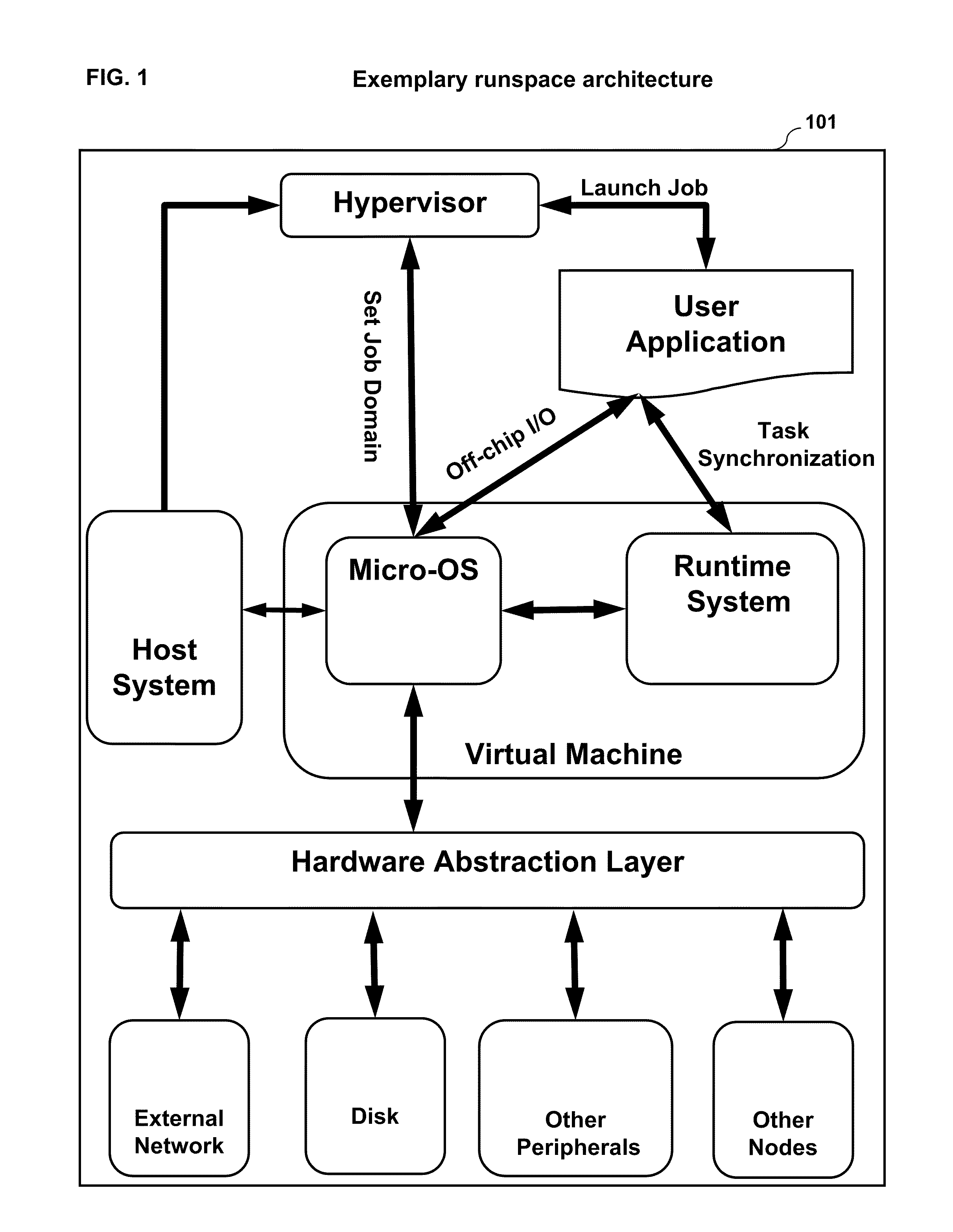

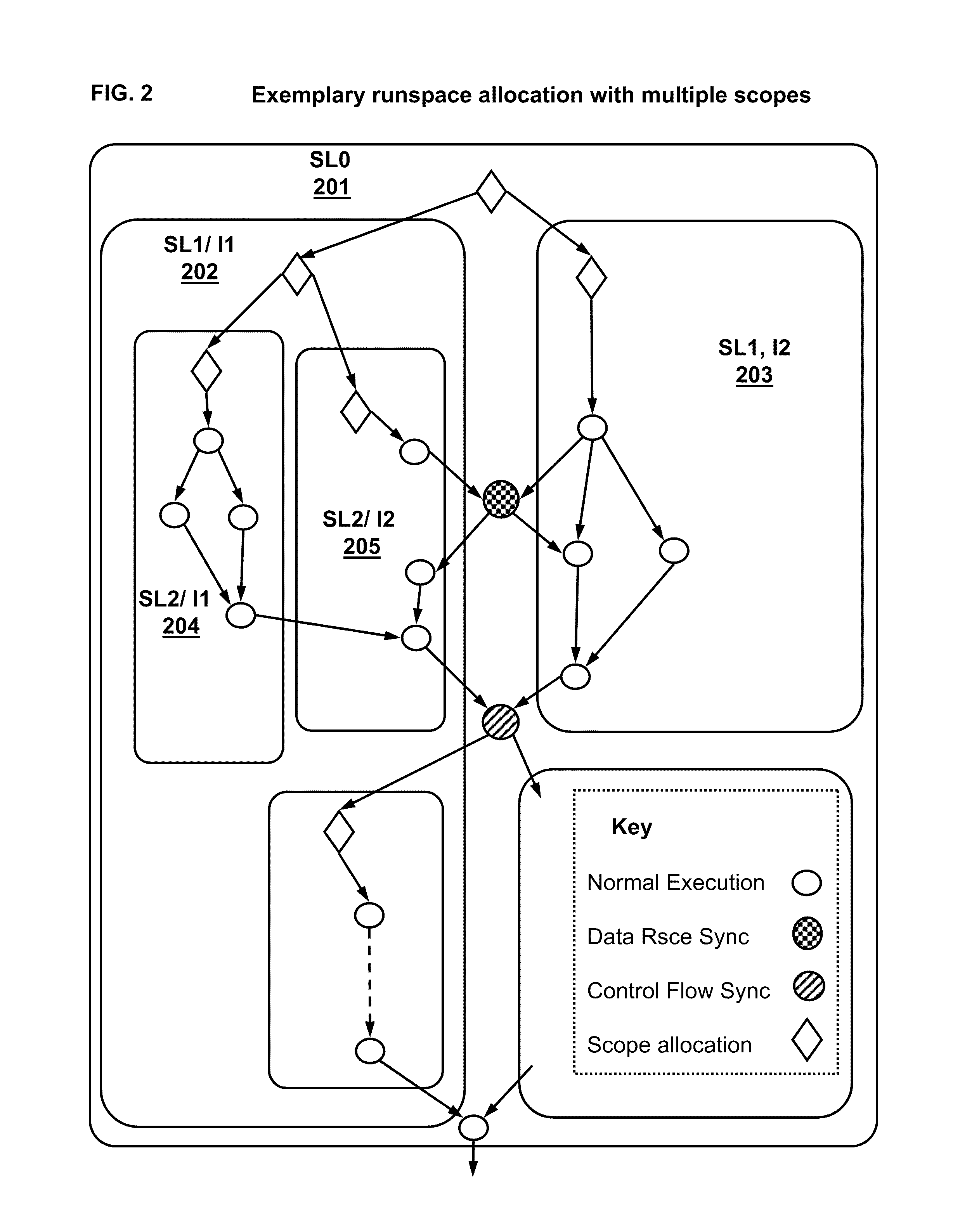

Active | US9542231B2 | Effective distribution; Energy efficient ICT; Resource allocation; Concurrent computation; Systems management

The present invention, known as runspace, relates to the field of computing system management, data processing and data communications, and specifically to synergistic methods and systems which provide resource-efficient computation, especially for decomposable many-component tasks executable on multiple processing elements, by using a metric space representation of code and data locality to direct allocation and migration of code and data, by performing analysis to mark code areas that provide opportunities for runtime improvement, and by providing a low-power, local, secure memory management system suitable for distributed invocation of compact sections of code accessing local memory. Runspace provides mechanisms supporting hierarchical allocation, optimization, monitoring and control, and supporting resilient, energy efficient large-scale computing.

Owner:ET INT
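
One way to read "using a metric space representation of code and data locality to direct allocation" in the abstract above is to place each task on the processing element closest, in some distance metric, to the data it touches. The sketch assumes processing elements are coordinates on a 2D mesh, uses size-weighted Manhattan distance as the metric, and caps tasks per element; `choose_element` and its parameters are hypothetical and not taken from the runspace specification.

```python
def manhattan(a, b):
    """Distance between two mesh coordinates (the assumed metric)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def choose_element(task_data, location, load, capacity):
    """task_data: {data_name: size}; location: {data_name: coordinate};
    load: {coordinate: tasks already placed}. Picks the element with the
    smallest size-weighted distance to the task's data that still has room."""
    best = None
    for pe in sorted(load):                 # sorted: deterministic tie-breaking
        if load[pe] >= capacity:
            continue
        cost = sum(size * manhattan(pe, location[d]) for d, size in task_data.items())
        if best is None or cost < best[0]:
            best = (cost, pe)
    if best is None:
        raise RuntimeError("no processing element has spare capacity")
    load[best[1]] += 1
    return best[1]

mesh = {(x, y): 0 for x in range(2) for y in range(2)}
location = {"A": (1, 1), "B": (1, 0)}
task = {"A": 10, "B": 1}
print(choose_element(task, location, mesh, capacity=1))  # (1, 1): next to A
print(choose_element(task, location, mesh, capacity=1))  # (1, 0): (1, 1) is full
```

Weighting by data size means a task gravitates toward its largest inputs, which is the locality effect the metric is meant to capture.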

© 2025 PatSnap. All rights reserved.