571 results about "Inverse quantization" patented technology

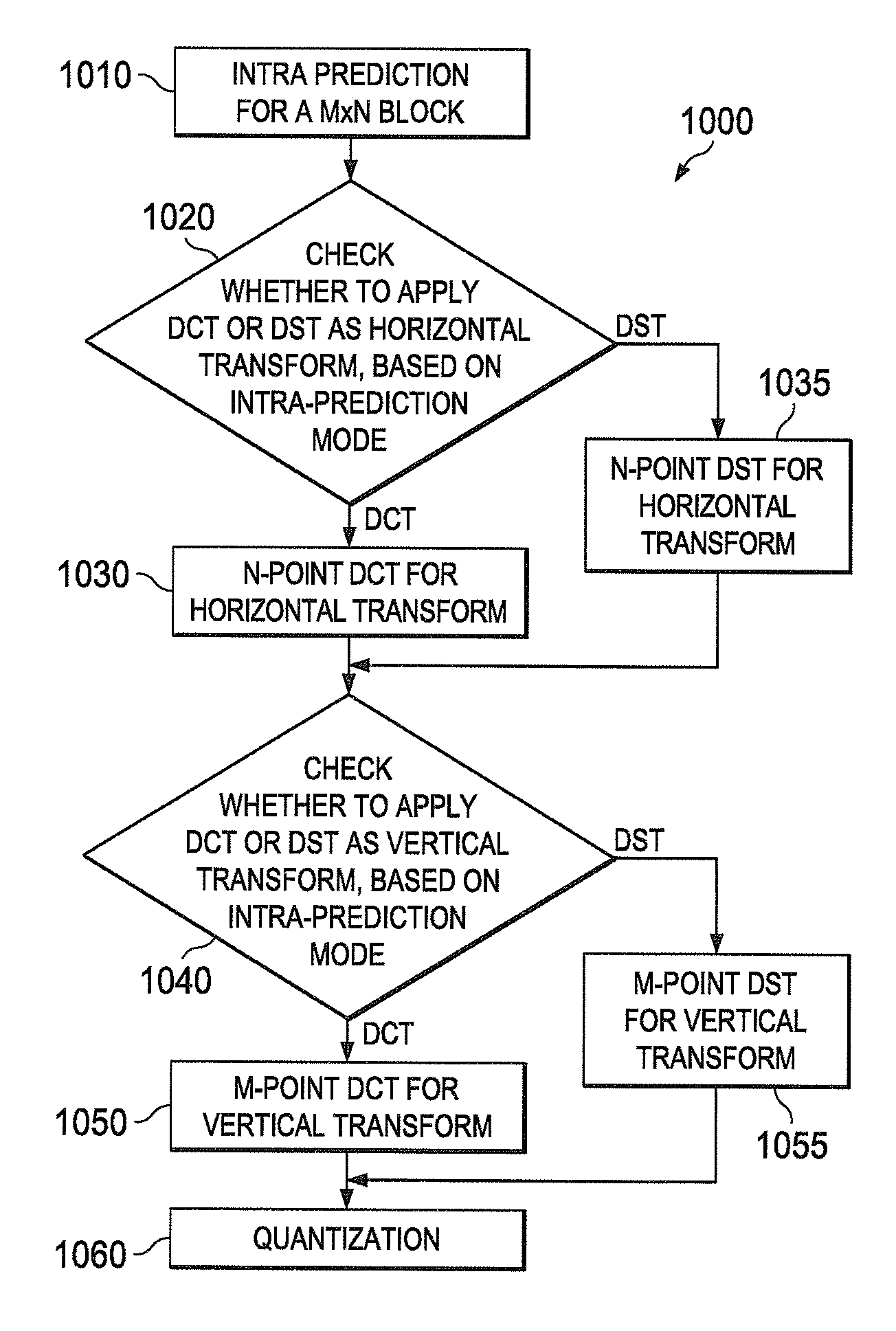

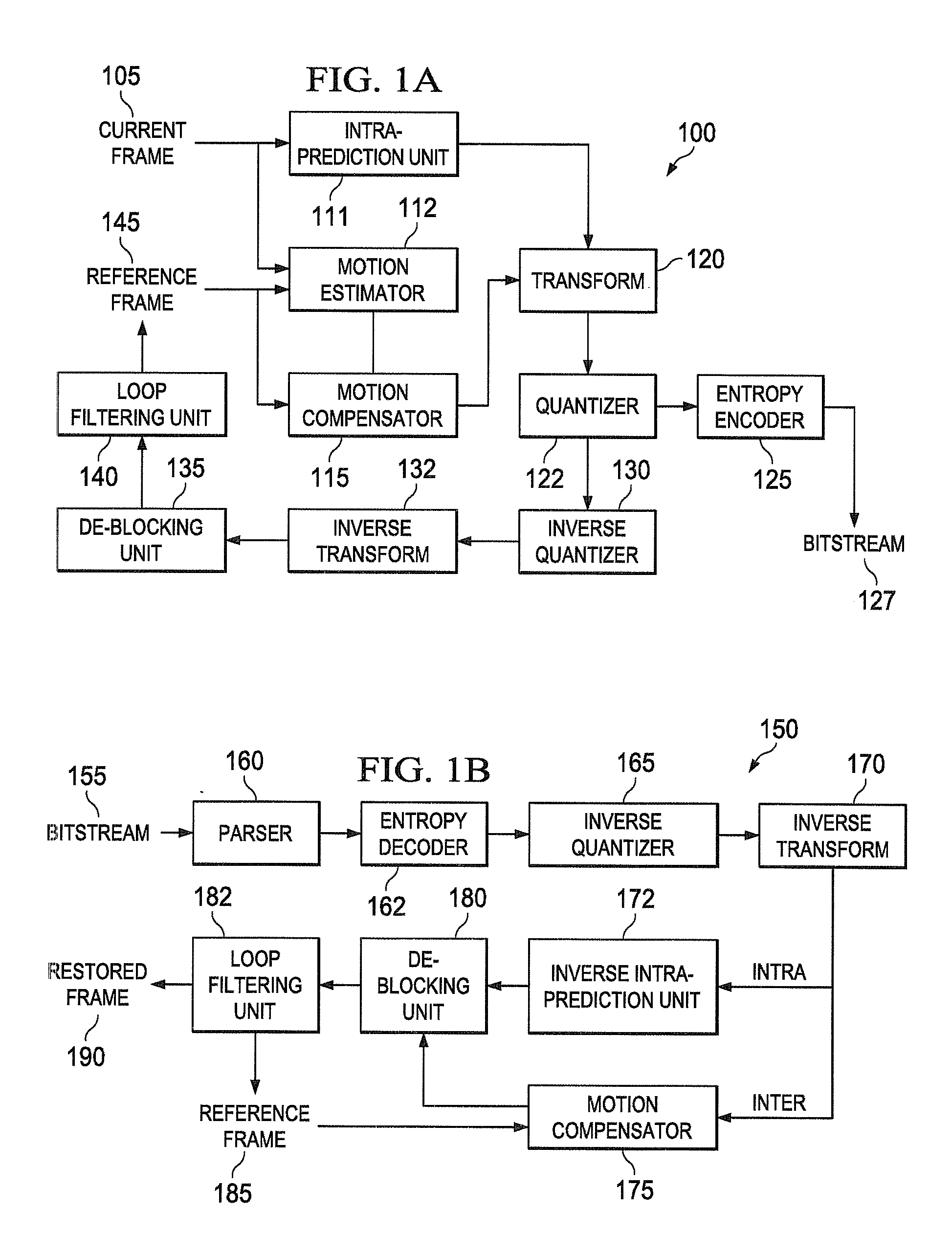

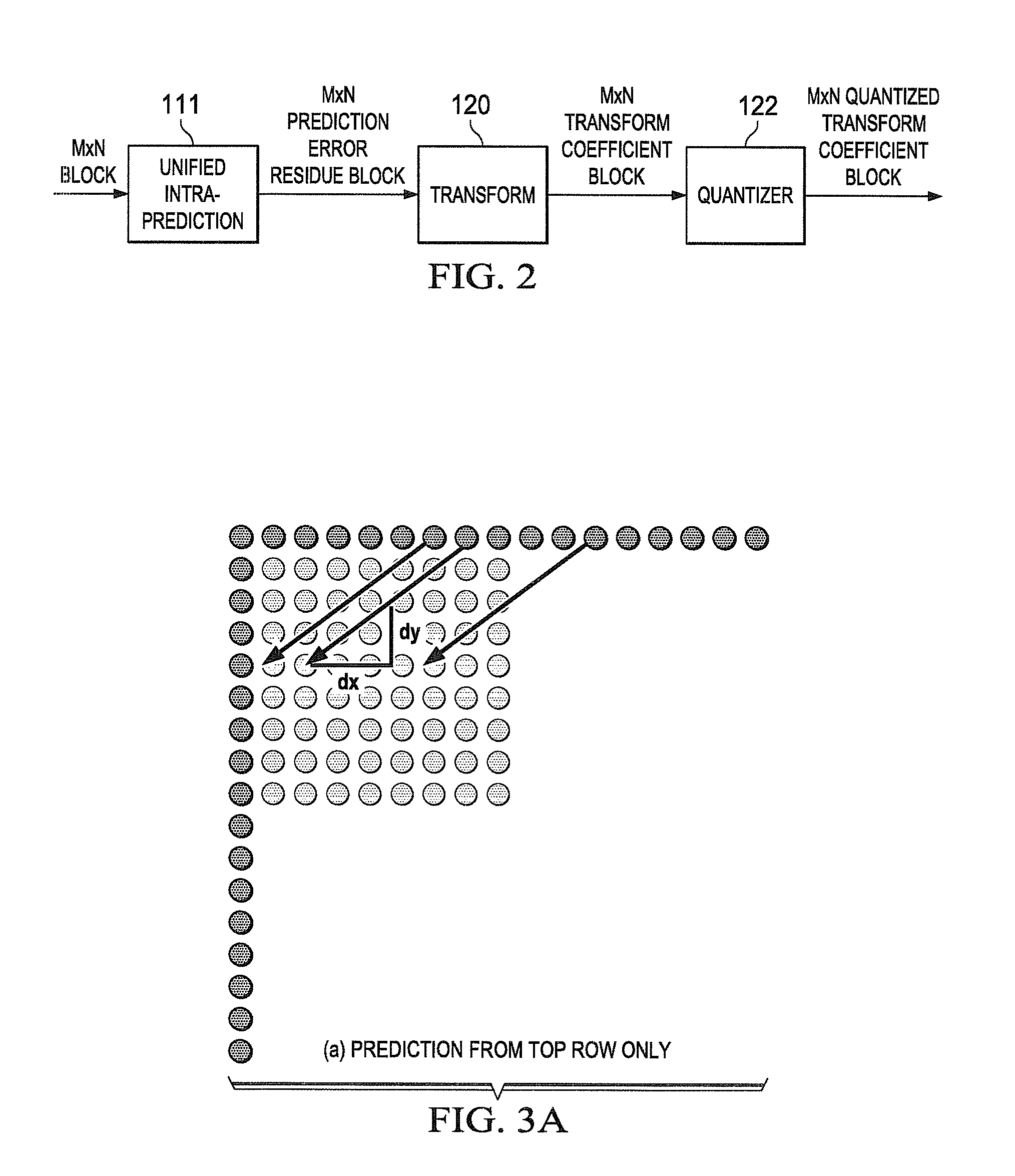

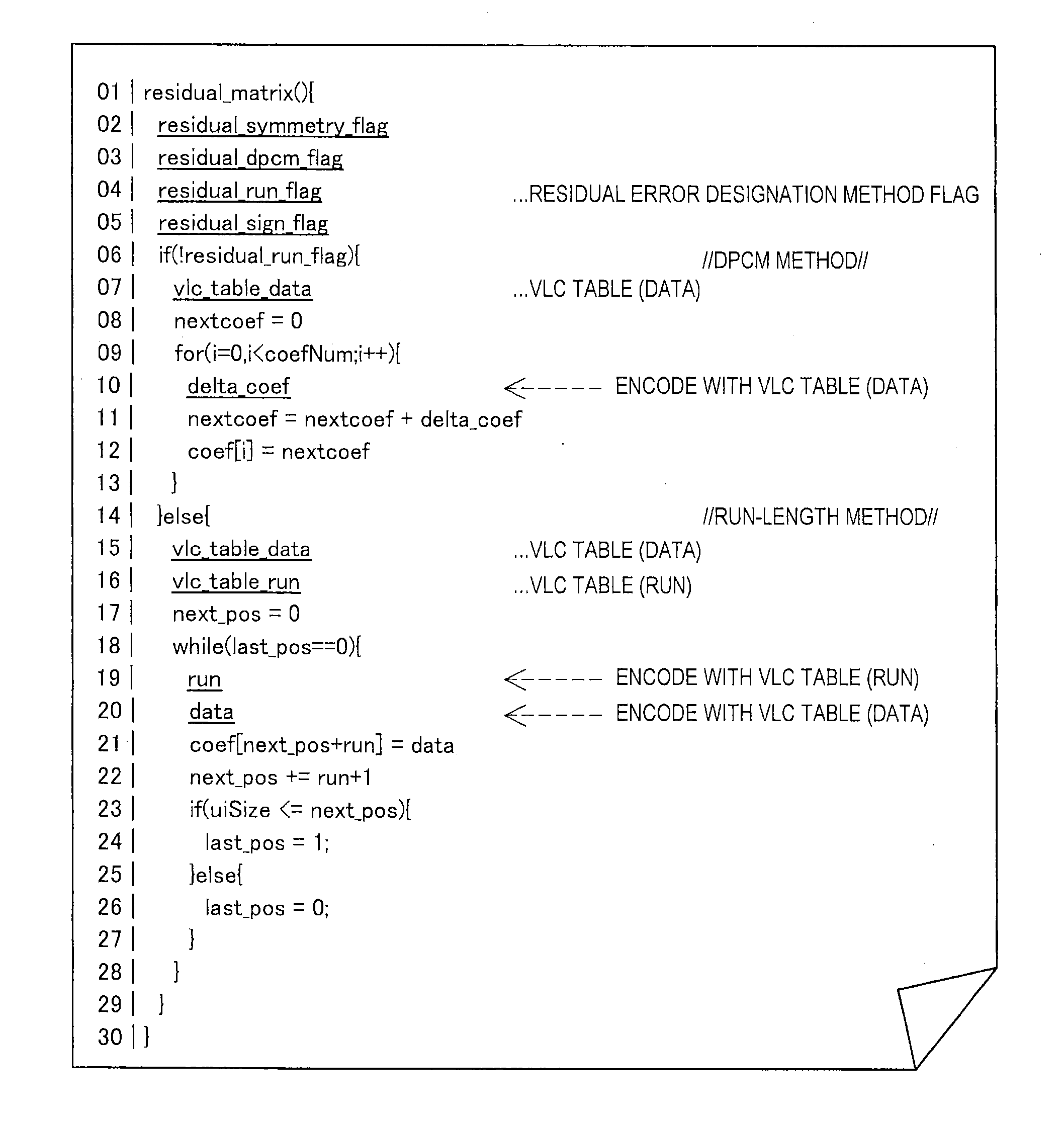

Low complexity transform coding using adaptive dct/dst for intra-prediction

Inactive | US20120057630A1 | Reduce complexity | Quick implementation | Color television with pulse code modulation | Color television with bandwidth reduction | Video bitstream | Self adaptive

A method and apparatus encode and decode video by determining whether to use the discrete cosine transform (DCT) or the discrete sine transform (DST) for each of the horizontal and vertical transforms. During encoding, intra-prediction is performed based on an intra-prediction mode determined for an M×N input image block to obtain an M×N intra-prediction residue matrix (E). Each of the horizontal transform and the vertical transform is then performed using one of DCT and DST, chosen according to the intra-prediction mode. During decoding, the intra-prediction mode is determined from an incoming video bitstream. The M×N transformed coefficient matrix of the error residue is obtained from the video bitstream using an inverse quantizer. Based on the intra-prediction mode, one of DCT and DST is selected for each of the inverse vertical transform and the inverse horizontal transform.

Owner:SAMSUNG ELECTRONICS CO LTD

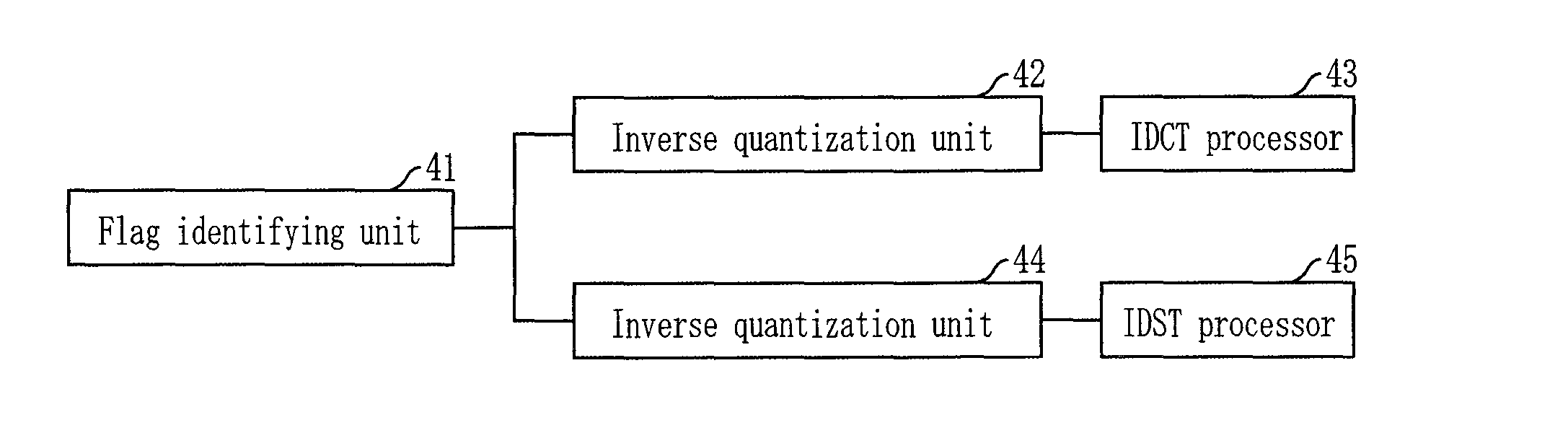

Apparatus and method for encoding and decoding using alternative converter according to the correlation of residual signal

Inactive | US20090238271A1 | Increase the compression ratio | Color television with pulse code modulation | Color television with bandwidth reduction | Computer hardware | Rate distortion

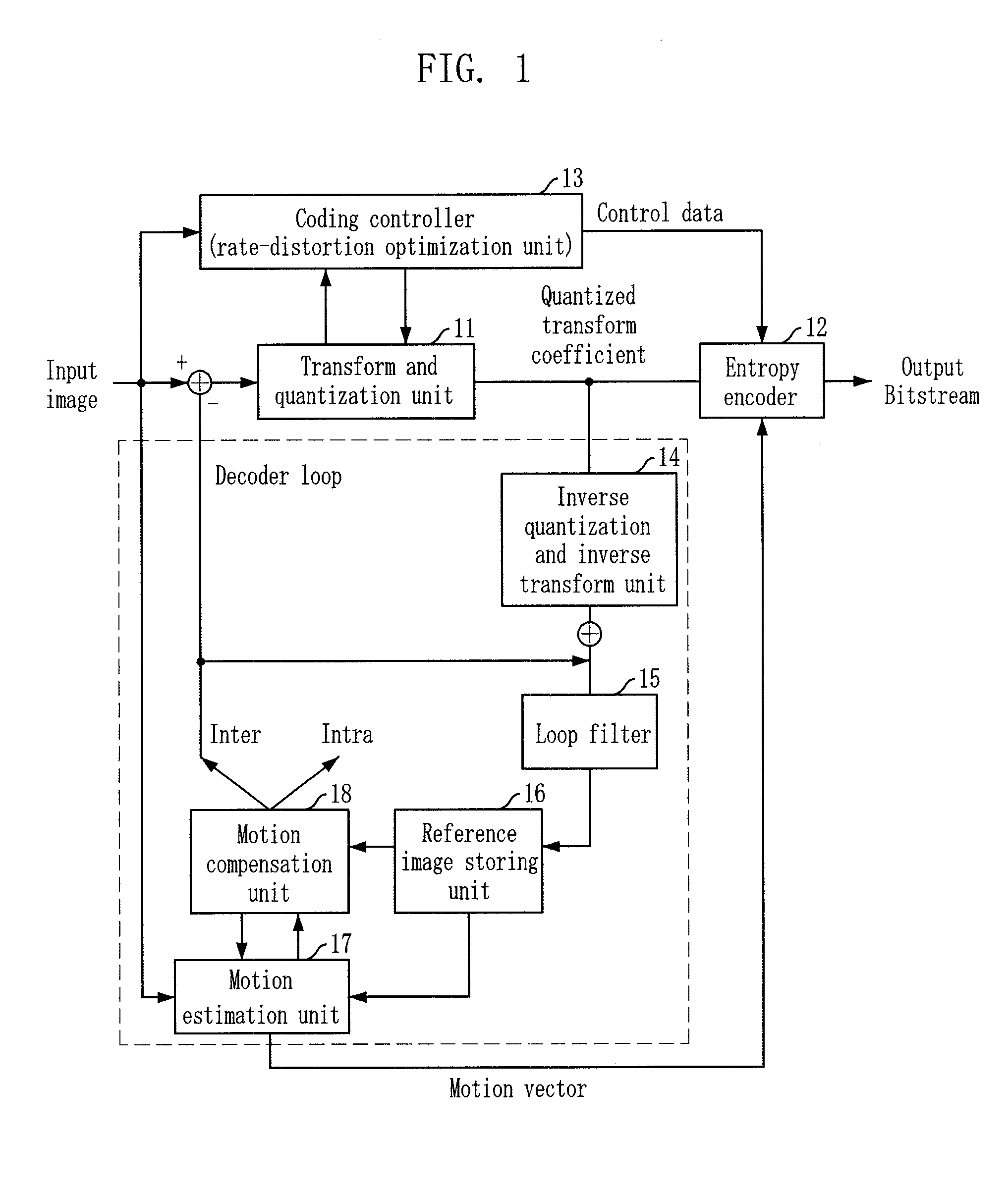

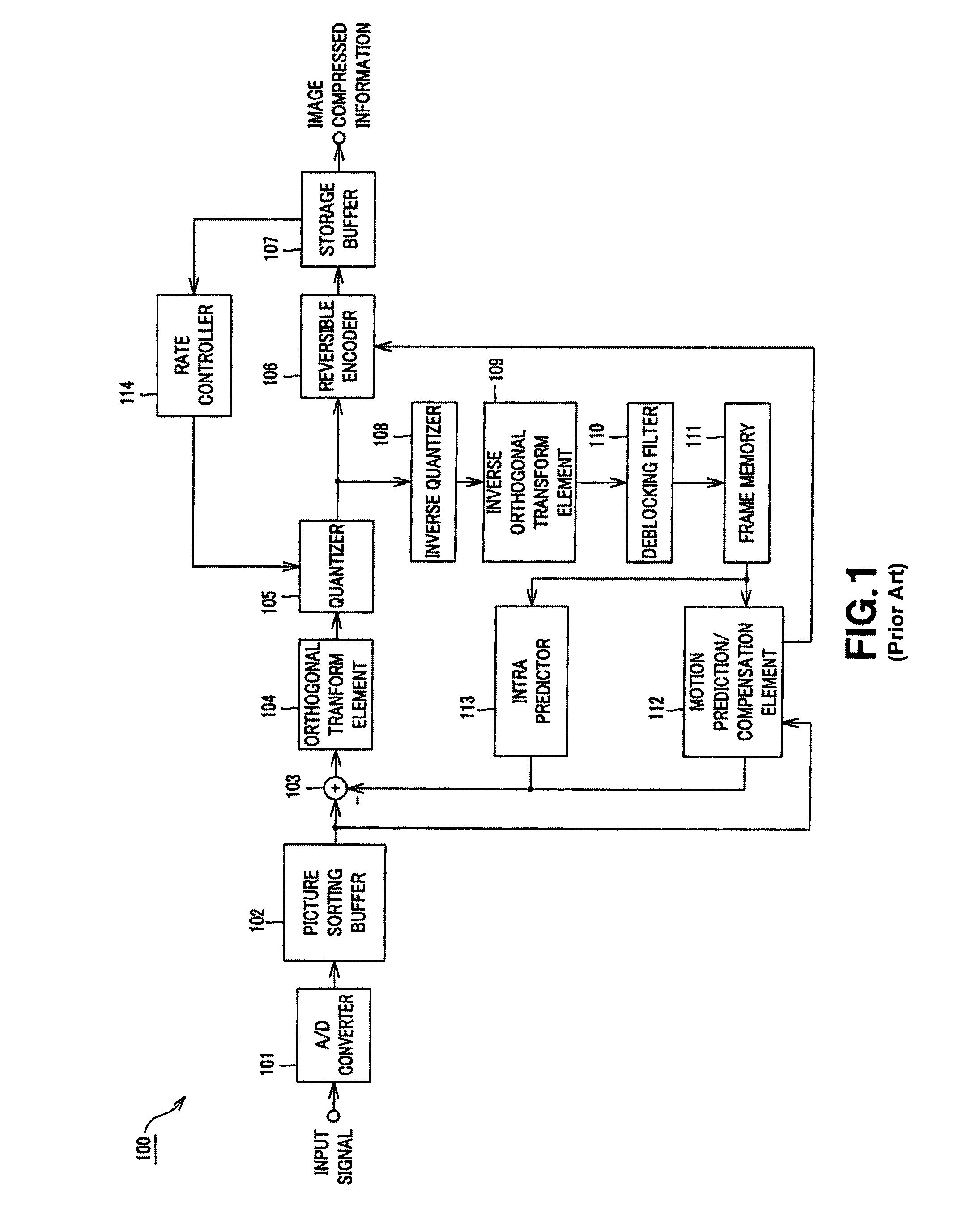

Provided is an apparatus and method for encoding and decoding using alternative transform units according to the correlation of residual signals. The video encoding apparatus includes a first transforming unit for performing discrete cosine transform (DCT), first quantization, first inverse quantization, and inverse DCT on a block basis on residual coefficients generated after intra-frame prediction or inter-frame prediction; a second transforming unit for performing discrete sine transform (DST), second quantization, second inverse quantization, and inverse DST on a block basis on the residual coefficients; a selecting unit for selecting, for each block, whichever of the first and second transforming units yields the higher compression rate, through rate-distortion optimization; and a flag marking unit for recording information about the selected transforming unit in a flag bit provided on a macroblock basis.

Owner:ELECTRONICS & TELECOMM RES INST +2

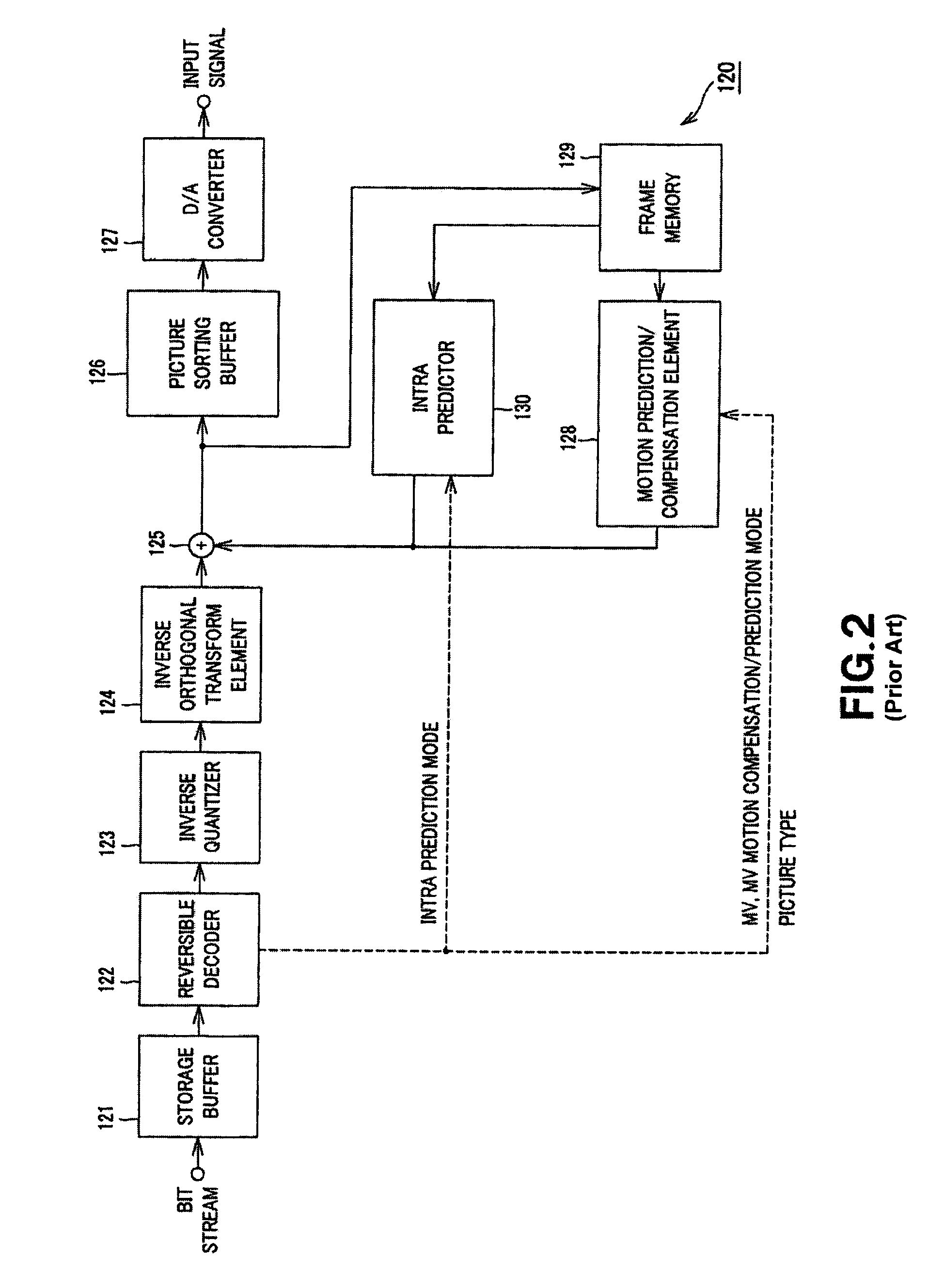

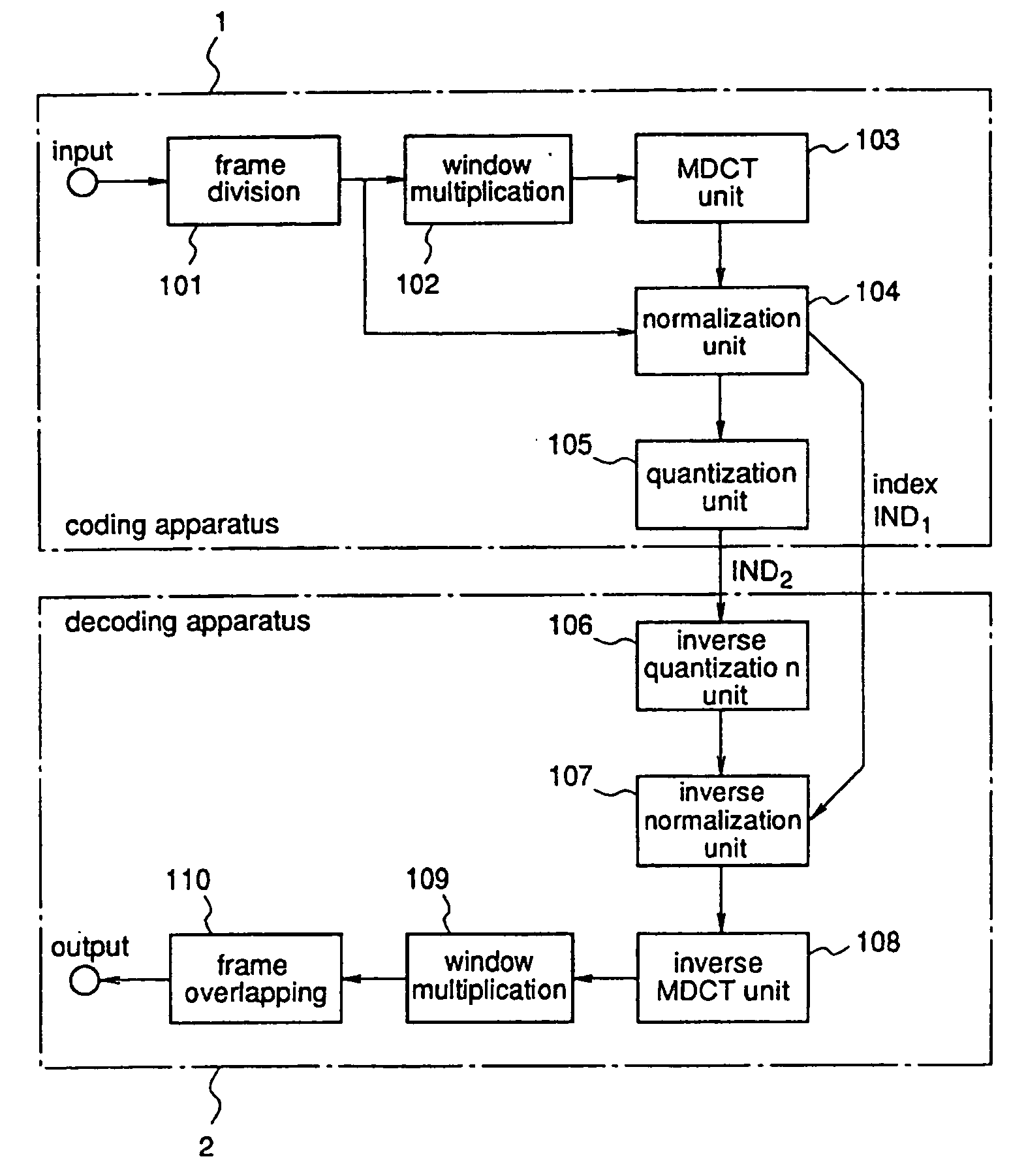

Image decoding device, image decoding method, and image decoding program

Inactive | US8249147B2 | Improve efficiency | Reduce computing cost | Color television with pulse code modulation | Color television with bandwidth reduction | Computer hardware | Image compression

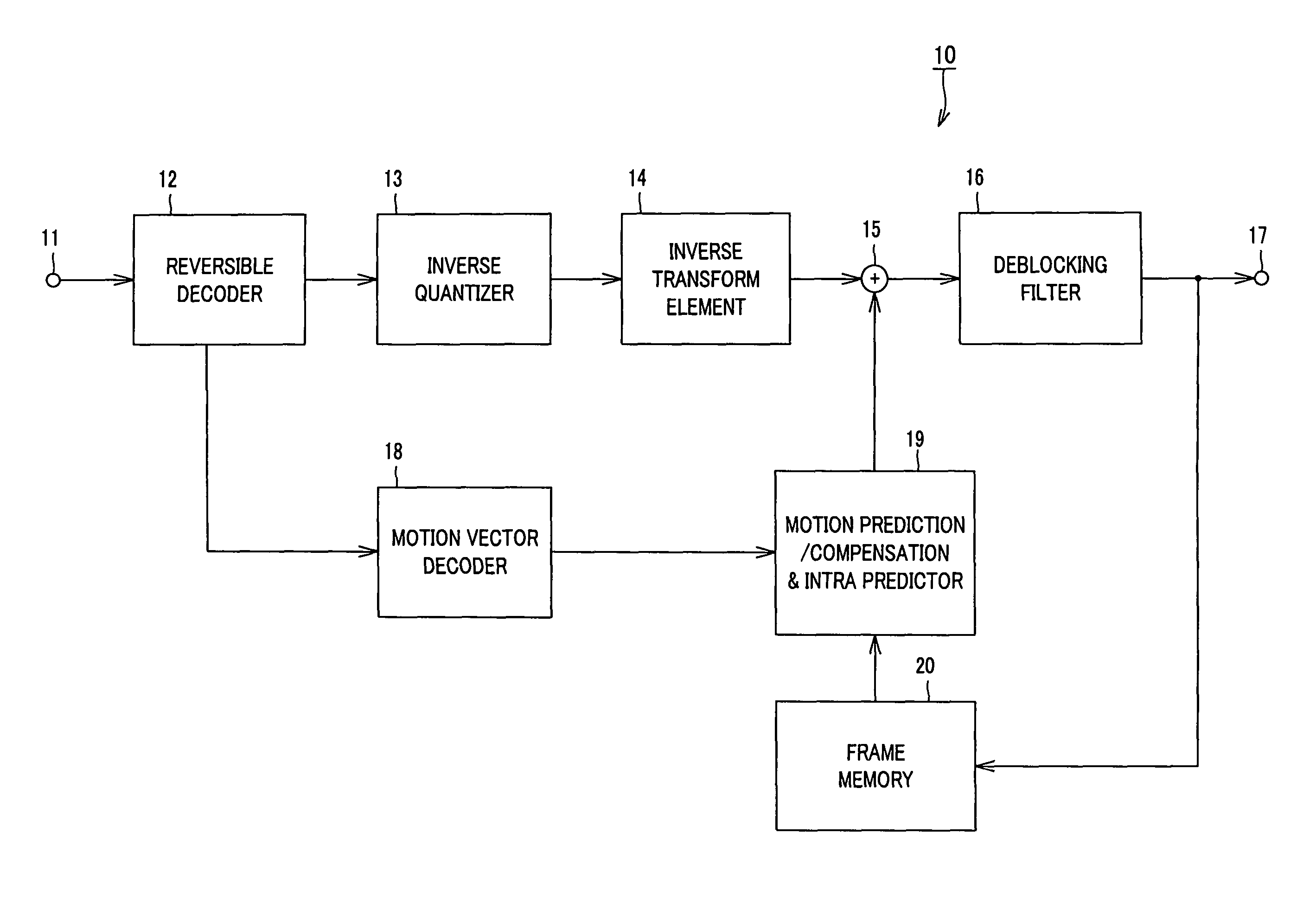

The present invention is directed to an image decoding apparatus that decodes image compressed information by applying inverse quantization and an inverse orthogonal transform. The image compressed information is produced by dividing an input image signal into blocks, applying an orthogonal transform on a block basis, and quantizing the result. The apparatus comprises a reversible decoder (12) for decoding quantized and encoded transform coefficients; an inverse quantizer (13) that, when inverse-quantizing the transform coefficients decoded by the reversible decoder (12), indicates as a flag the existence of each transform coefficient for every processing block of inverse quantization; and an inverse transform element (14) that changes the inverse transform processing applied to the inverse-quantized transform coefficients within a processing block by using the flag indicated by the inverse quantizer (13).

Owner:SONY CORP

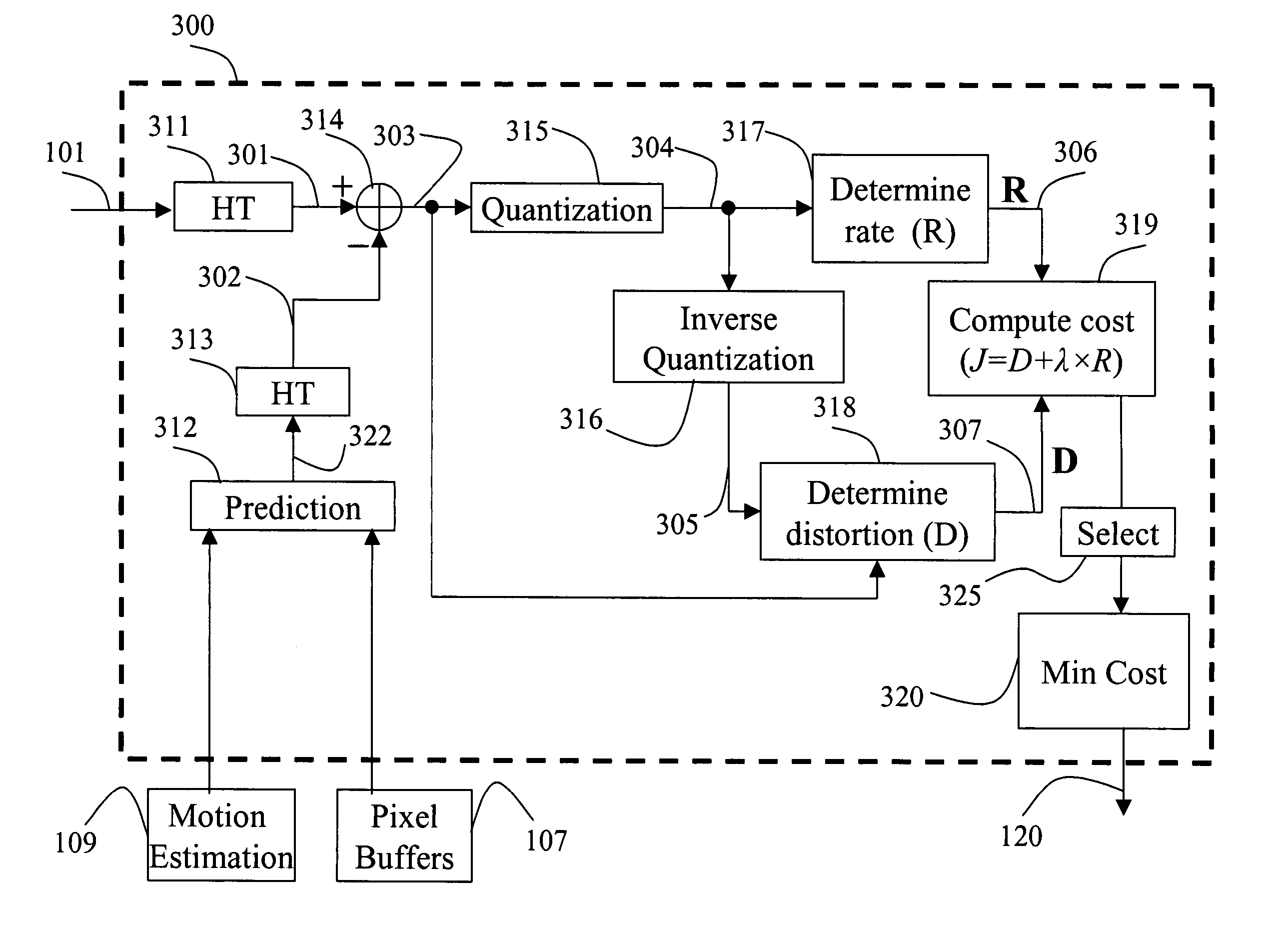

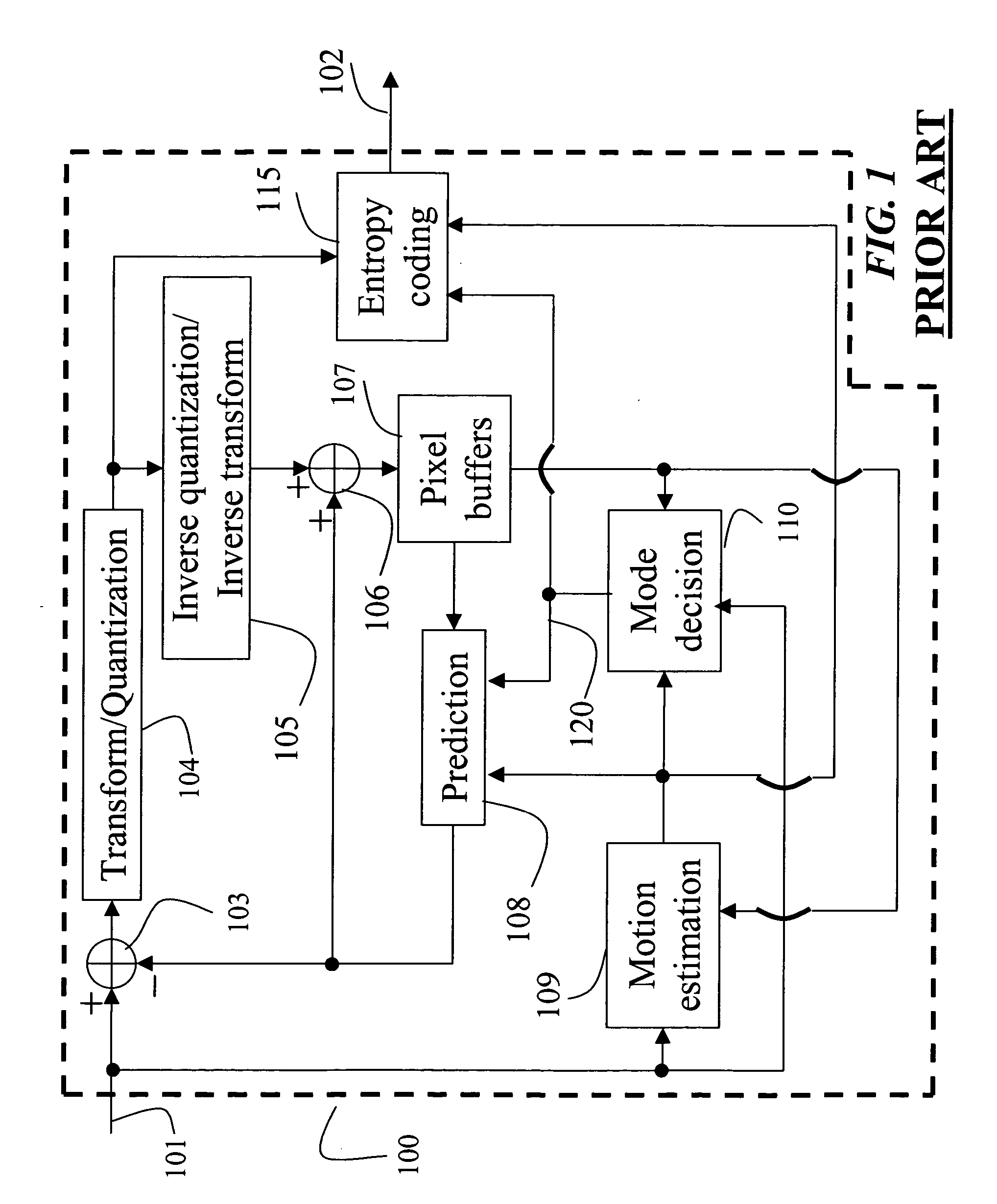

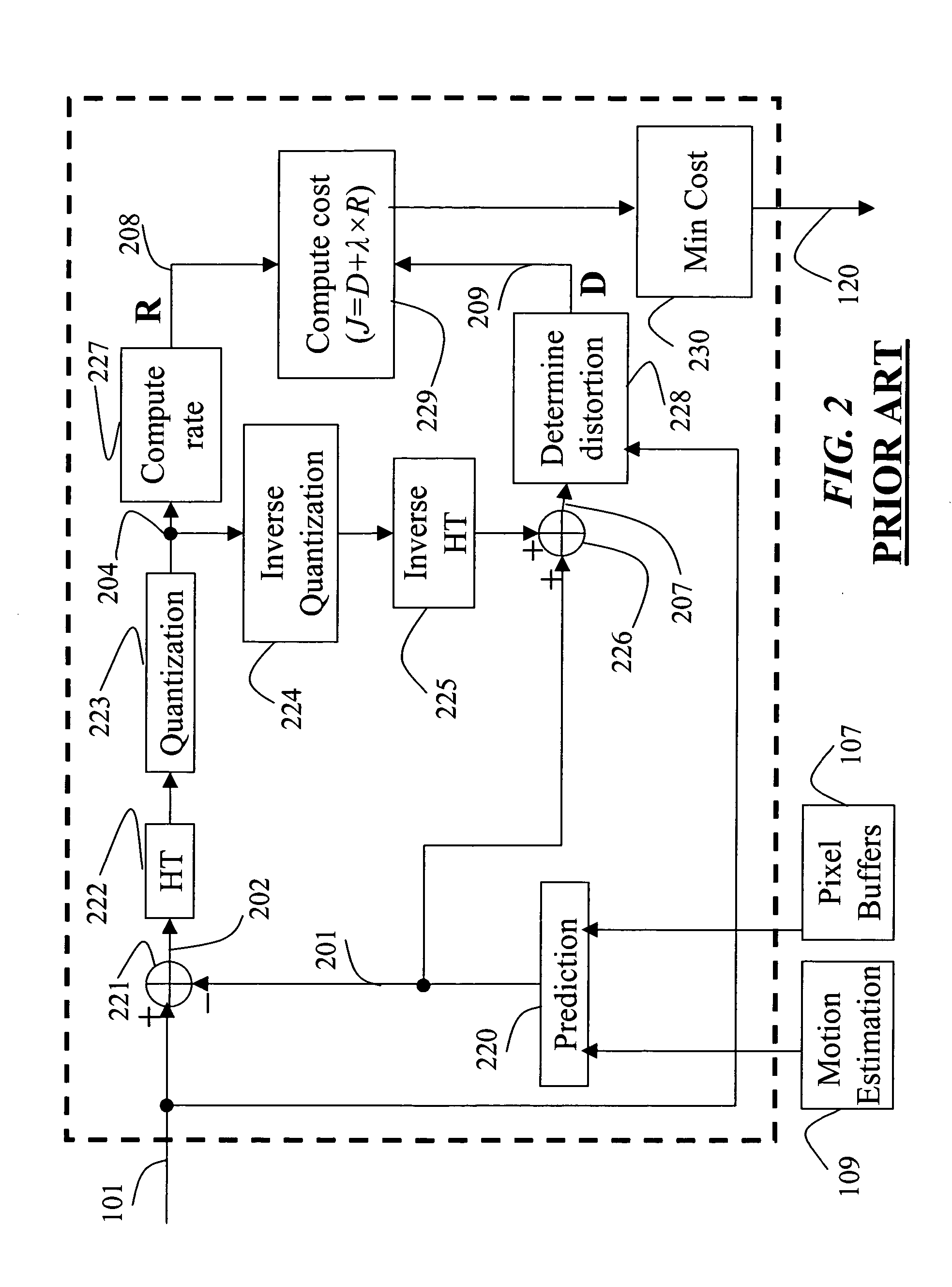

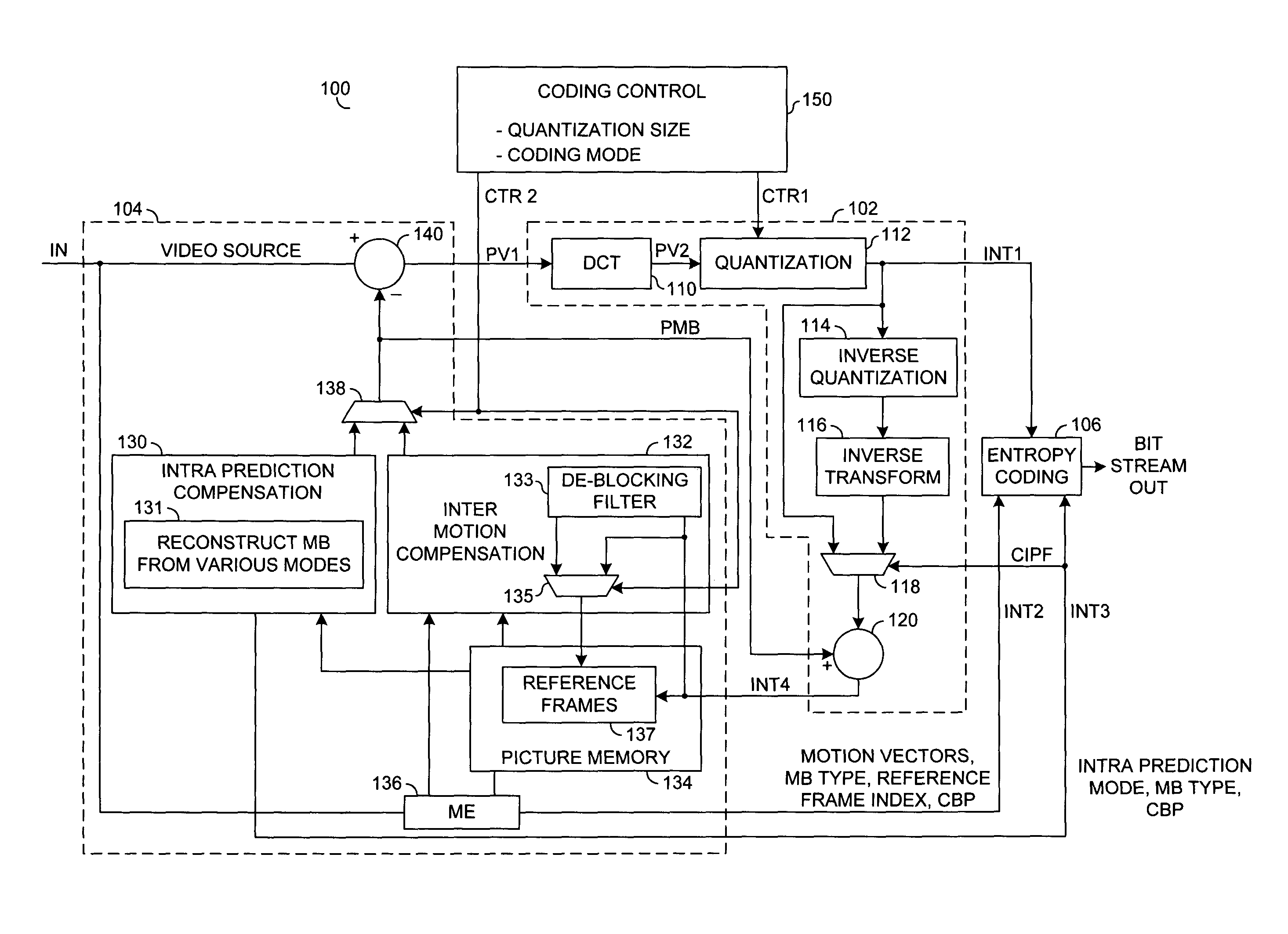

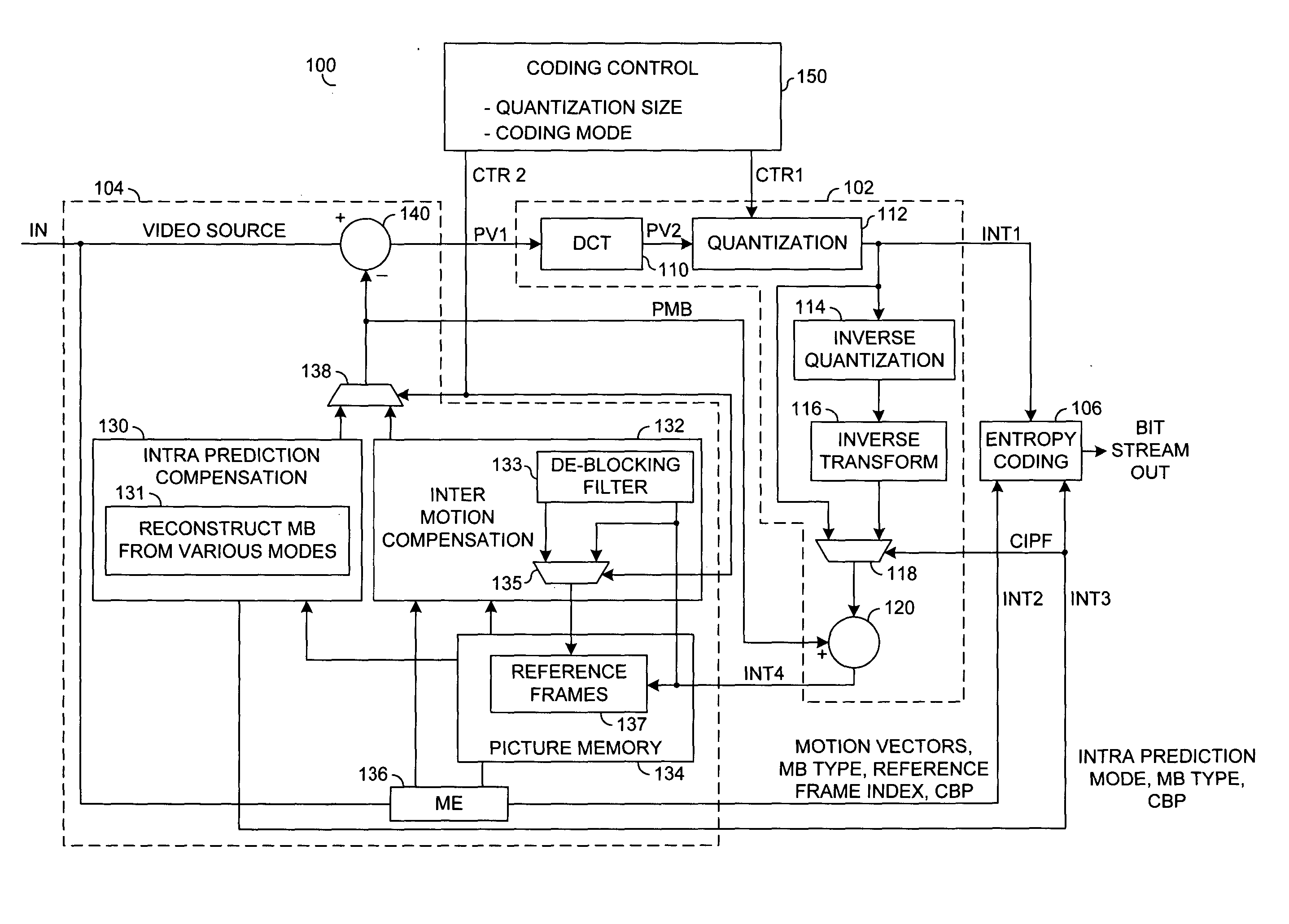

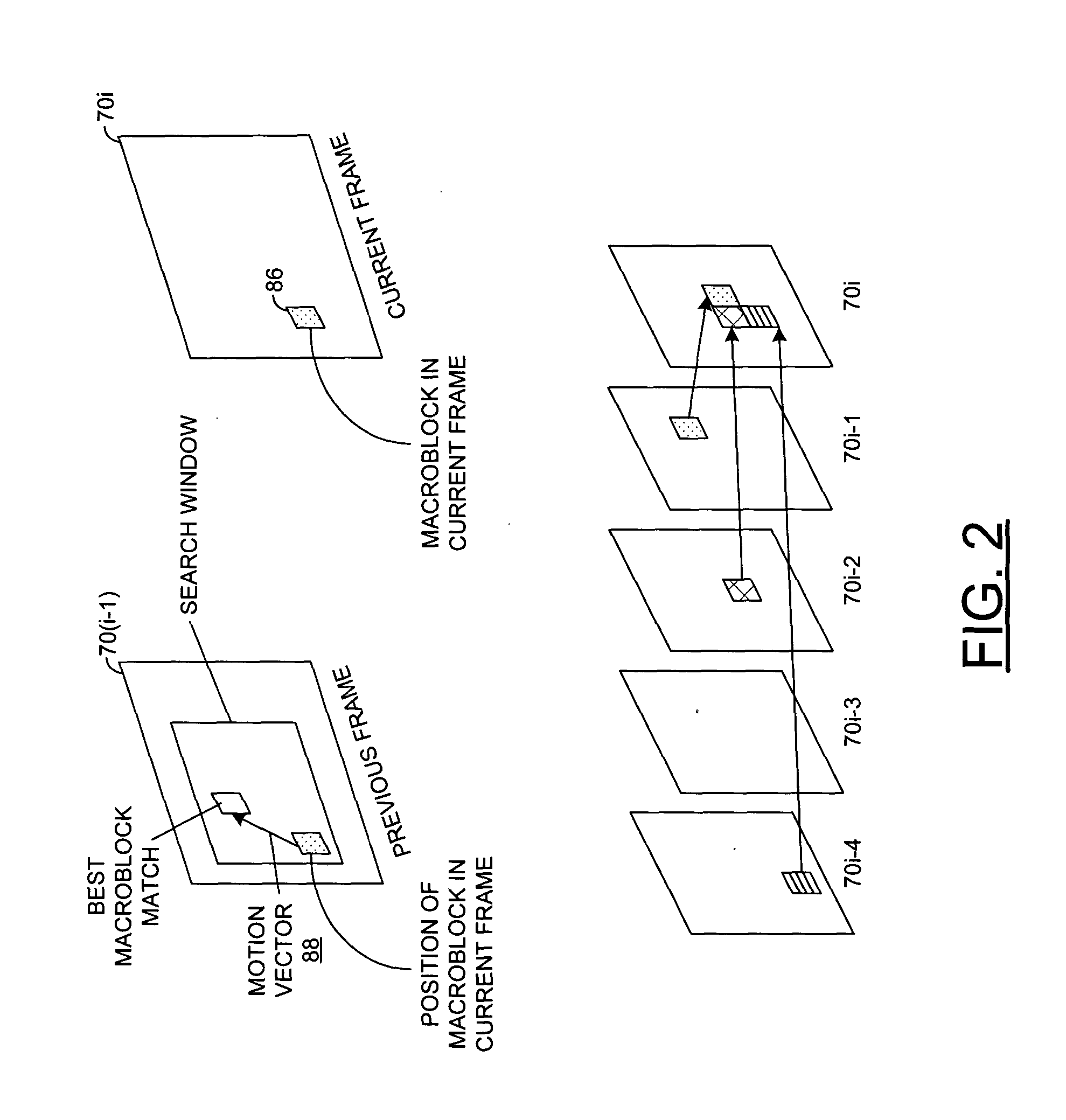

Selecting macroblock coding modes for video encoding

Inactive | US20050276493A1 | Minimal cost | Character and pattern recognition | Television systems | Algorithm | Video encoding

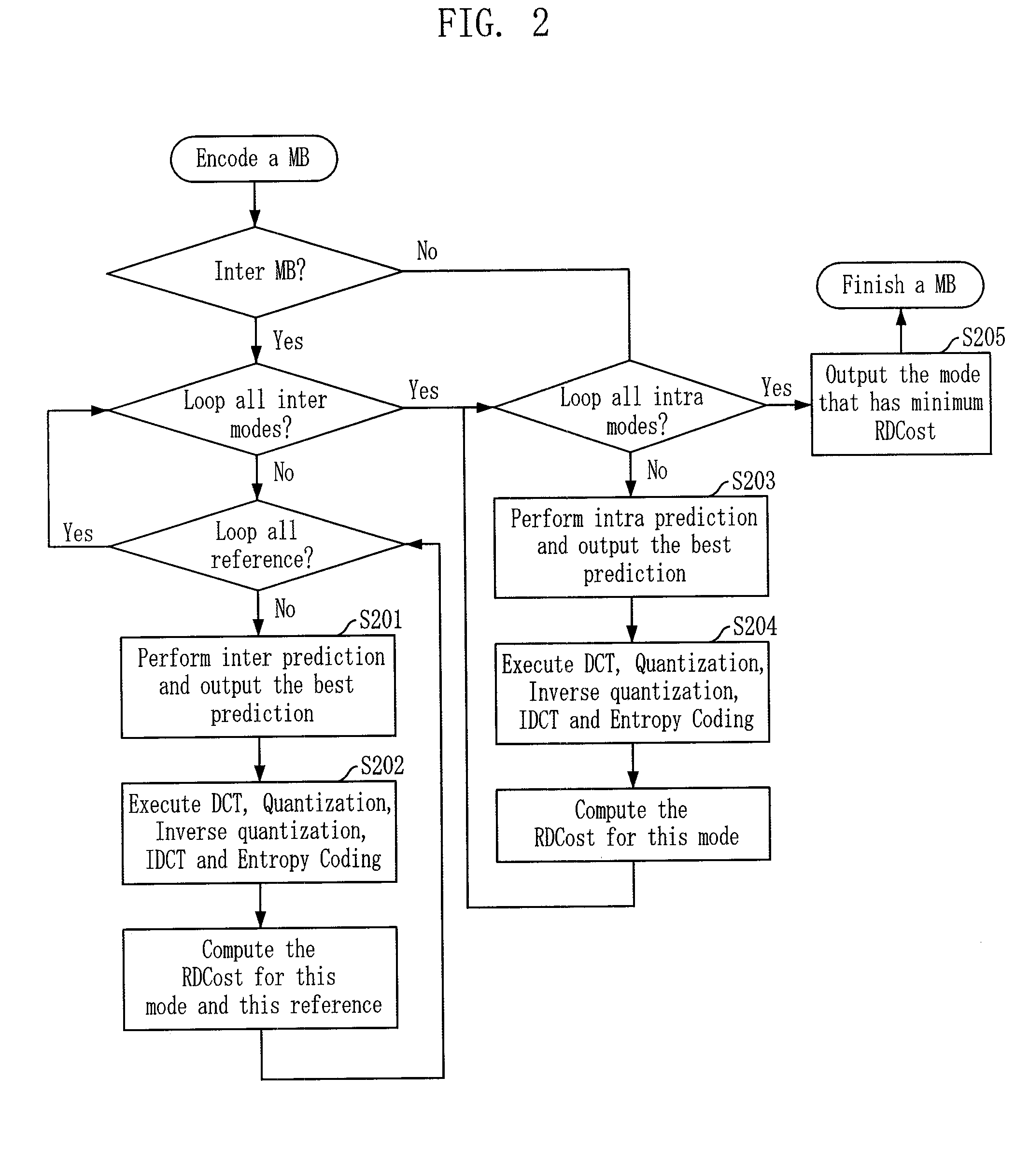

A method selects an optimal coding mode for each macroblock in a video. Each macroblock can be coded according to a number of candidate coding modes. A difference between an input macroblock and a predicted macroblock is determined in the transform domain. The difference is quantized to yield a quantized difference. An inverse quantization is performed on the quantized difference to yield a reconstructed difference. A rate required to code the quantized difference is determined. A distortion is determined according to the difference and the reconstructed difference. Then, a cost is determined for each candidate mode based on the rate and the distortion, and the candidate coding mode that yields the minimum cost is selected as the optimal coding mode for the macroblock.

Owner:MITSUBISHI ELECTRIC RES LAB INC
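
The mode-selection loop described in the abstract above can be sketched roughly as follows. This is a minimal illustration, not the patented method: the uniform quantizer, the `estimate_rate` bit-count proxy, the Lagrangian form `distortion + lam * rate`, and all function and parameter names are assumptions introduced here.

```python
def quantize(coeffs, step):
    # Uniform scalar quantization (assumed): nearest level for each coefficient.
    return [int(round(c / step)) for c in coeffs]


def dequantize(levels, step):
    # Inverse quantization: scale each level back by the step size.
    return [l * step for l in levels]


def estimate_rate(levels):
    # Hypothetical rate proxy: magnitude bits plus one flag/sign bit per level.
    return sum(abs(l).bit_length() + 1 for l in levels)


def select_mode(input_coeffs, predictions, step, lam):
    """Return (mode, cost) with the smallest cost over all candidate modes.

    `predictions` maps a mode name to its transform-domain prediction.
    """
    best_mode, best_cost = None, float("inf")
    for mode, pred in predictions.items():
        diff = [x - p for x, p in zip(input_coeffs, pred)]
        levels = quantize(diff, step)          # quantized difference
        recon = dequantize(levels, step)       # reconstructed difference
        rate = estimate_rate(levels)
        distortion = sum((d - r) ** 2 for d, r in zip(diff, recon))
        cost = distortion + lam * rate         # assumed cost form
        if cost < best_cost:
            best_mode, best_cost = mode, cost
    return best_mode, best_cost
```

Because the distortion is computed from the difference and its reconstruction, no inverse transform is needed inside the loop, which matches the transform-domain framing of the abstract.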

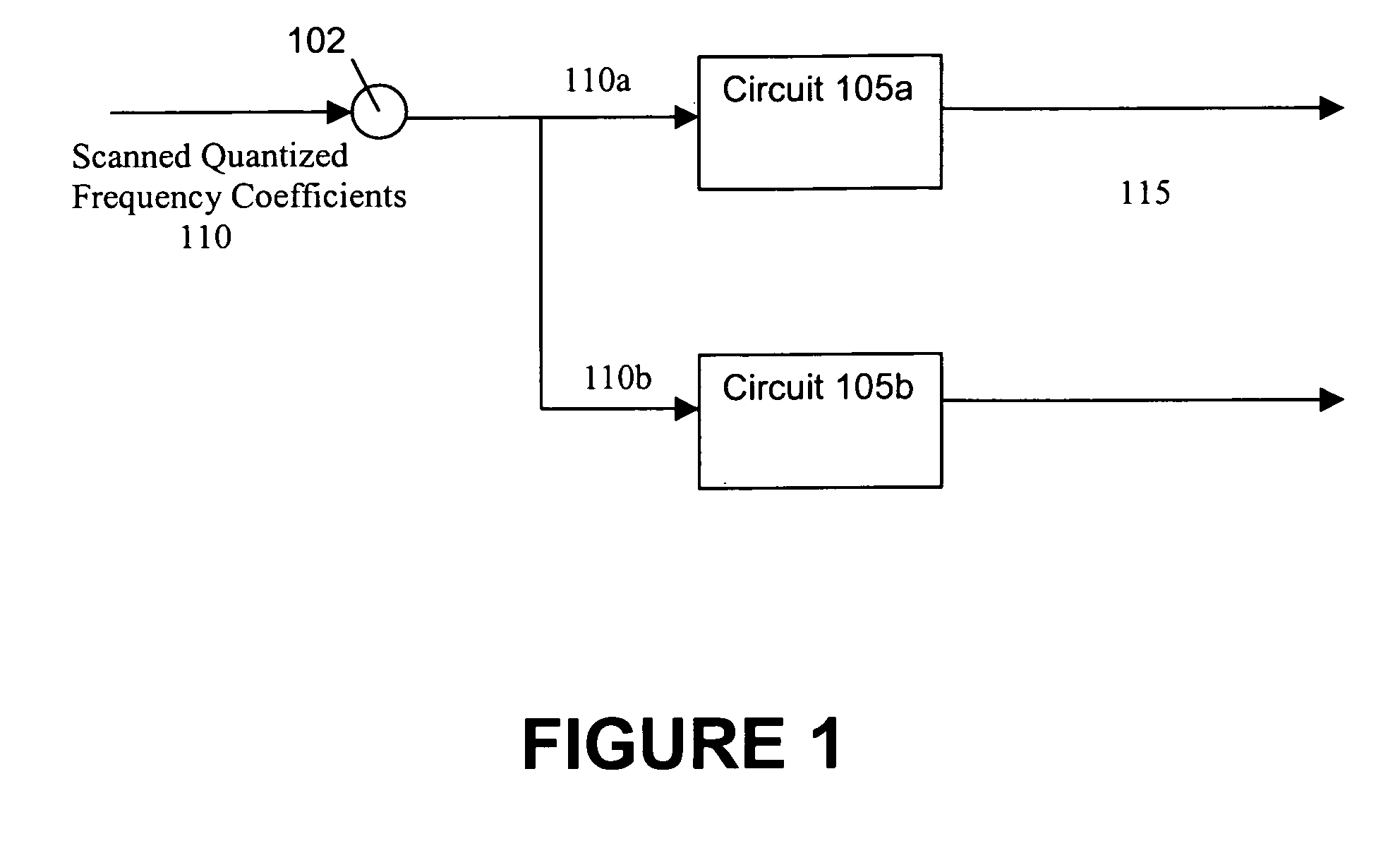

Inverse scan, coefficient, inverse quantization and inverse transform system and method

Inactive | US20070098069A1 | Color television with pulse code modulation | Color television with bandwidth reduction | Computer science | Inverse quantization

Presented herein are inverse scan, coefficient prediction, inverse quantization and inverse transform system(s) and method(s). In one embodiment, there is presented a system for converting scanned quantized frequency coefficients to pixel domain data. The system comprises a first circuit and a second circuit. The first circuit converts scanned quantized frequency coefficients to pixel domain data, wherein the scanned quantized frequency coefficients encode video data in accordance with a first encoding standard. The second circuit converts scanned quantized frequency coefficients to pixel domain data, wherein the scanned quantized frequency coefficients encode video data in accordance with a second encoding standard.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Method and/or apparatus for reducing the complexity of non-reference frame encoding using selective reconstruction

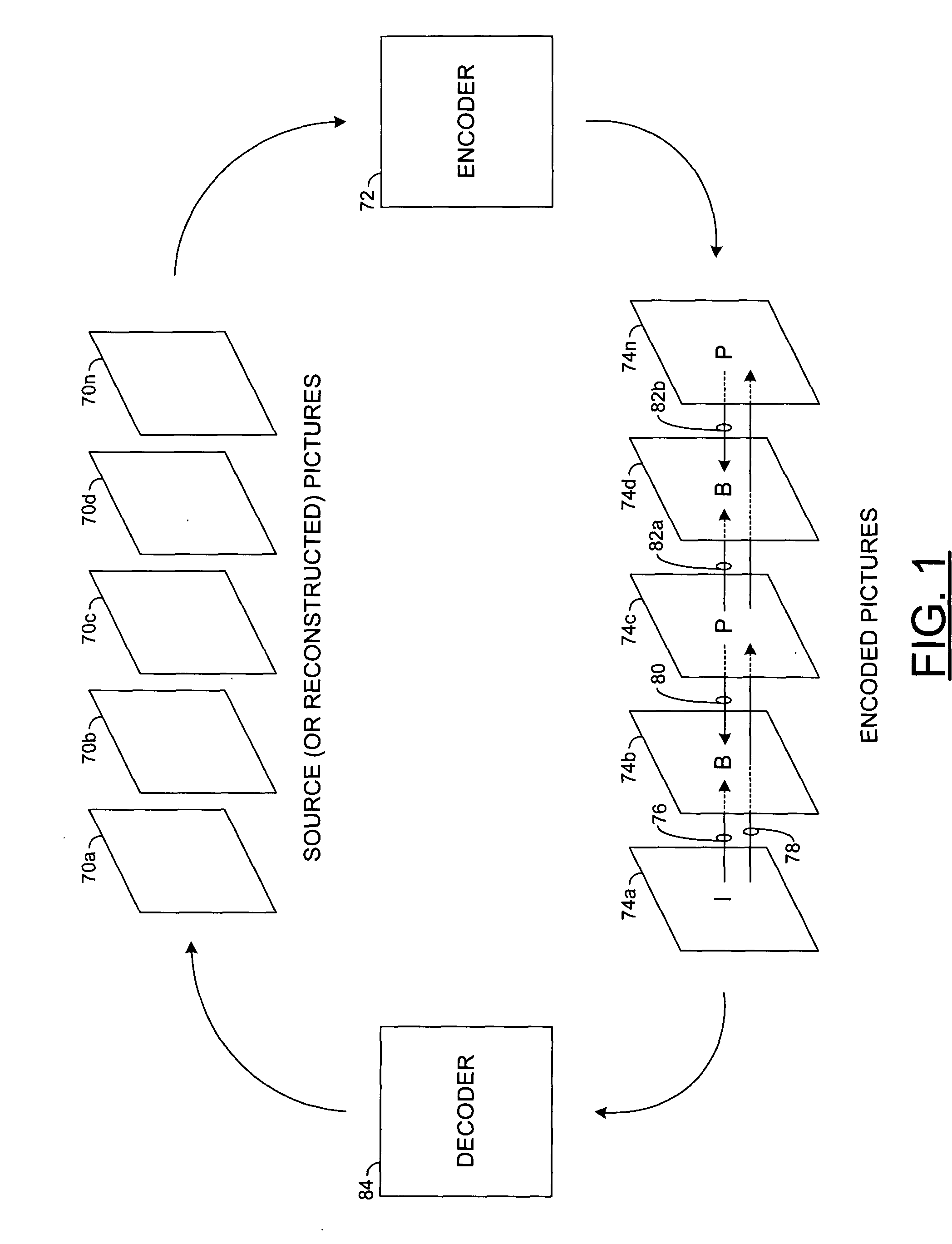

Inactive | US7324595B2 | Improve encoding performance | Reduce complexity | Color television with pulse code modulation | Color television with bandwidth reduction | Computer vision | Inverse quantization

A method for implementing non-reference frame prediction in video compression comprising the steps of (A) setting a prediction flag (i) “off” if non-reference frames are used for block prediction and (ii) “on” if non-reference frames are not used for block prediction, (B) if the prediction flag is off, generating an output video signal in response to an input video signal by performing an inverse quantization step and an inverse transform step in accordance with a predefined coding specification and (C) if the prediction flag is on, bypassing the inverse quantization step and the inverse transform step.

Owner:AVAGO TECH INT SALES PTE LTD

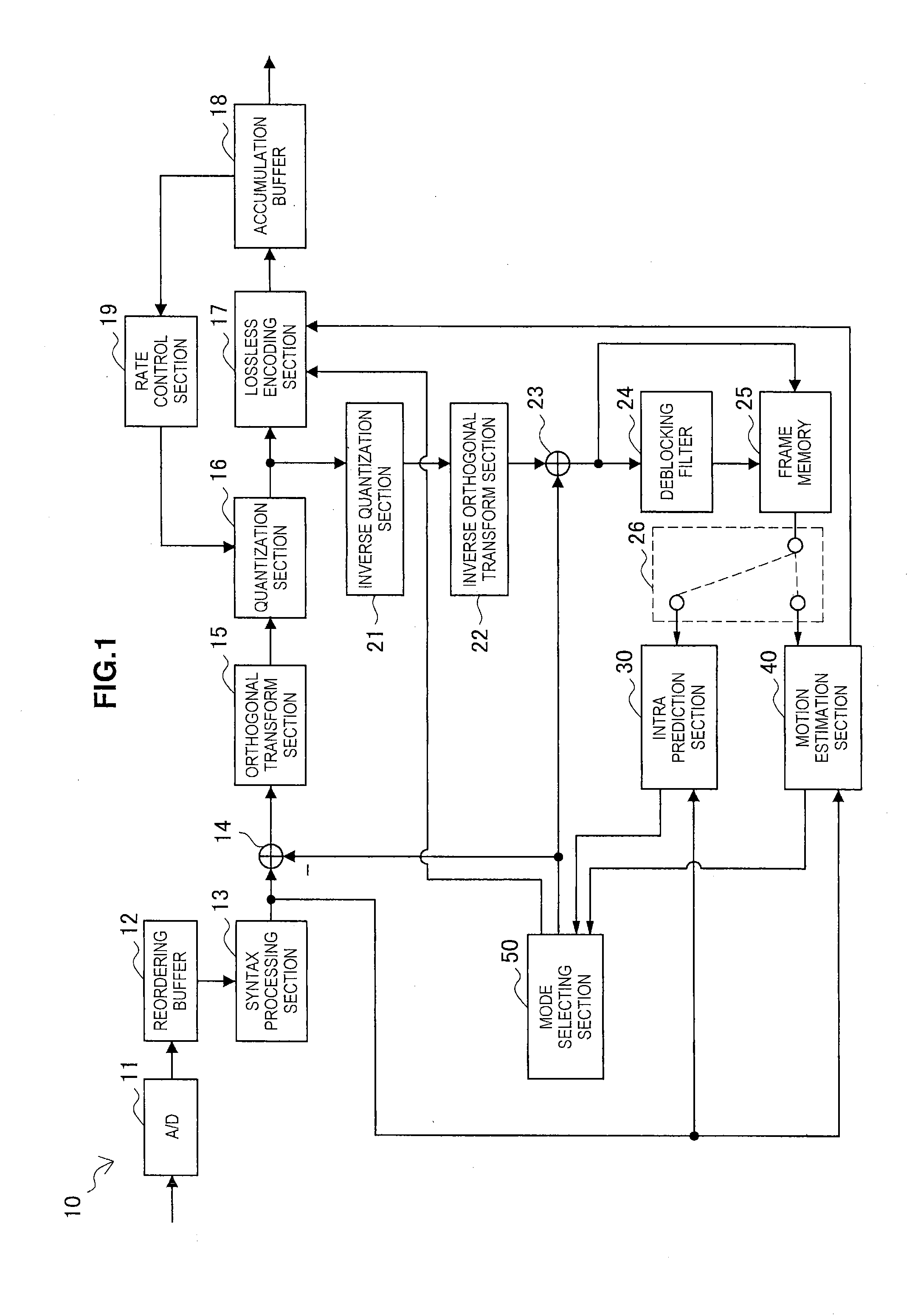

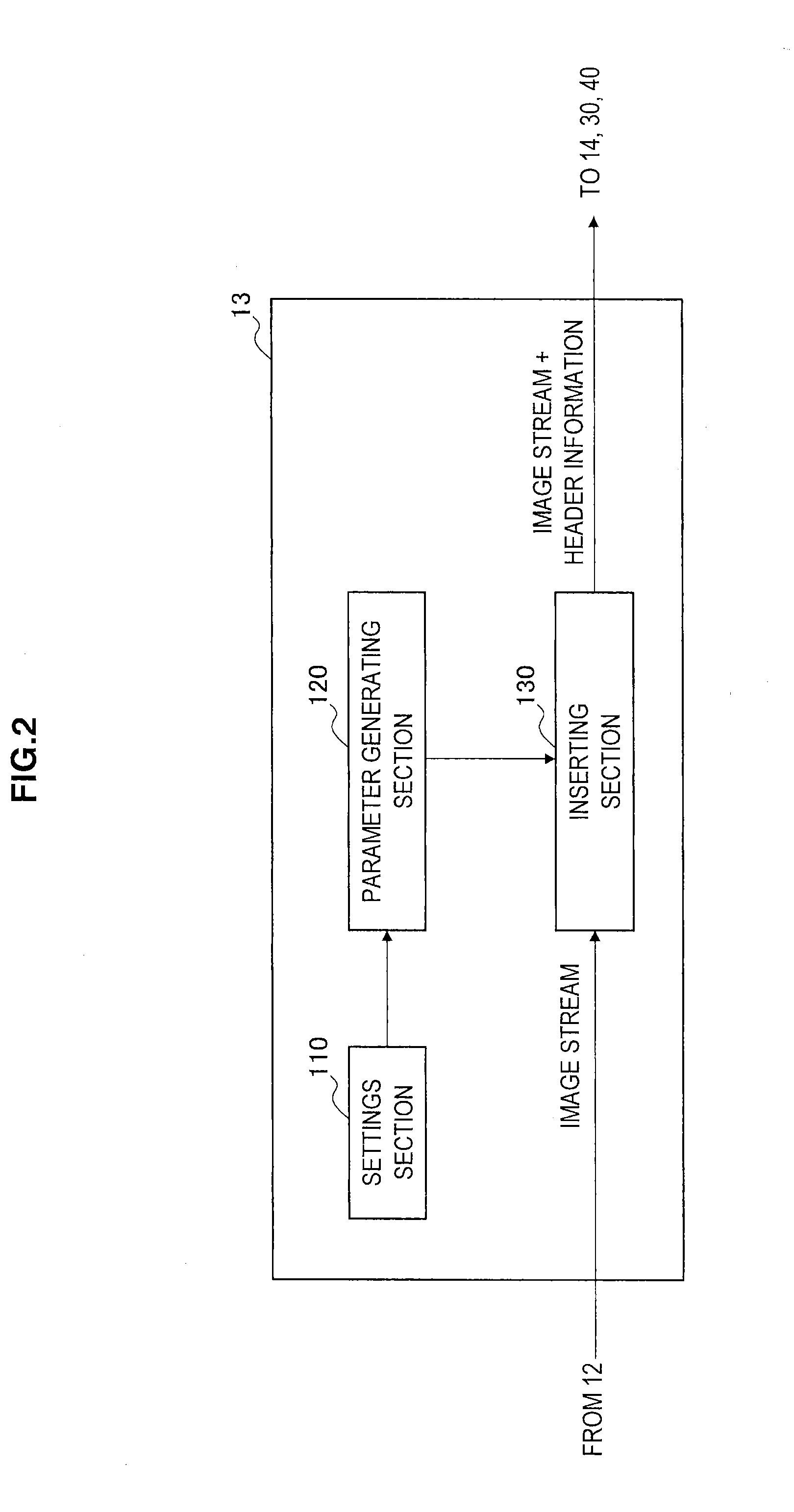

Image processing device and image processing method

Active | US20130251032A1 | Low efficiency | Color television with pulse code modulation | Color television with bandwidth reduction | Imaging processing | Algorithm

An image processing device including an acquiring section configured to acquire quantization matrix parameters from an encoded stream in which the quantization matrix parameters defining a quantization matrix are set within a parameter set which is different from a sequence parameter set and a picture parameter set, a setting section configured to set, based on the quantization matrix parameters acquired by the acquiring section, a quantization matrix which is used when inversely quantizing data decoded from the encoded stream, and an inverse quantization section configured to inversely quantize the data decoded from the encoded stream using the quantization matrix set by the setting section.

Owner:SONY CORP

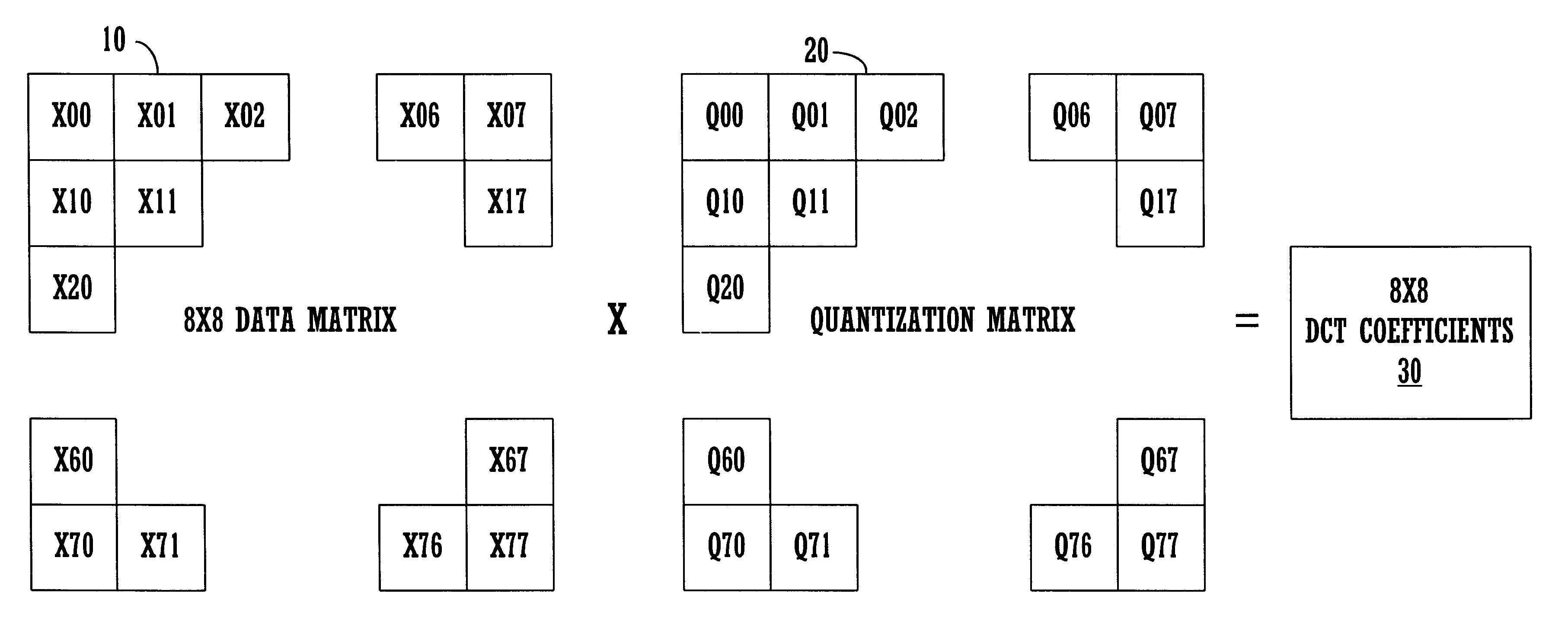

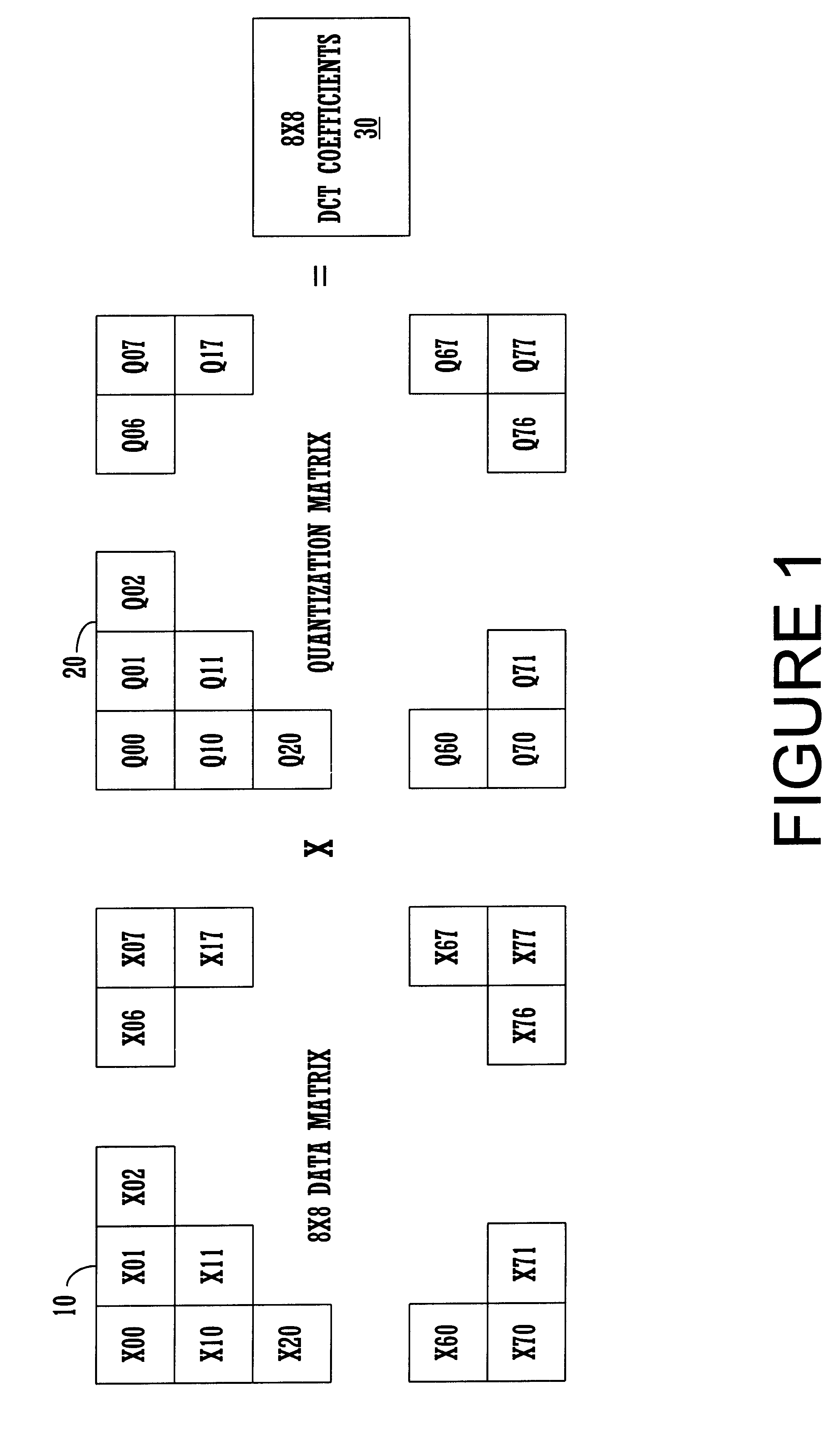

Efficient de-quantization in a digital video decoding process using a dynamic quantization matrix for parallel computations

Inactive | US6507614B1 | Efficient production | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Digital video | Array data structure

An efficient digital video (DV) decoder process that utilizes a specially constructed quantization matrix allowing an inverse quantization subprocess to perform parallel computations, e.g., using SIMD processing, to efficiently produce a matrix of DCT coefficients. The present invention utilizes a first look-up table (for 8x8 DCT) which produces a 15-valued quantization scale based on class number information and a QNO number for an 8x8 data block ("data matrix") from an input encoded digital bit stream to be decoded. The 8x8 data block is produced from a deframing and variable length decoding subprocess. An individual 8-valued segment of the 15-value output array is multiplied by an individual 8-valued segment, e.g., "a row," of the 8x8 data matrix to produce an individual row of the 8x8 matrix of DCT coefficients ("DCT matrix"). The above eight multiplications can be performed in parallel using a SIMD architecture to simultaneously generate a row of eight DCT coefficients. In this way, eight passes through the 8x8 block are used to produce the entire 8x8 DCT matrix, in one embodiment consuming only 33 instructions per 8x8 block. After each pass, the 15-valued output array is shifted by one value position for proper alignment with its associated row of the data matrix. The DCT matrix is then processed by an inverse discrete cosine transform subprocess that generates decoded display data. A second lookup table can be used for 2x4x8 DCT processing.

Owner:SONY ELECTRONICS INC +1
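
The sliding 15-valued scale array in the abstract above can be illustrated with a short sketch. It assumes that "shifted by one value position" means row `r` of the 8x8 block uses entries `r` through `r + 7` of the array; the look-up table that produces the array from the class number and QNO is not reproduced, and the function name is hypothetical. A plain list comprehension stands in for the eight parallel SIMD multiplications.

```python
def dequantize_dv_block(block, scale15):
    """Multiply each row of an 8x8 block by an 8-value window of scale15.

    Row r uses scale15[r : r + 8], so the window advances by one
    position per pass (an assumption about the alignment).
    """
    if len(scale15) != 15:
        raise ValueError("scale array must hold exactly 15 values")
    if len(block) != 8 or any(len(row) != 8 for row in block):
        raise ValueError("block must be 8x8")
    out = []
    for r, row in enumerate(block):
        window = scale15[r:r + 8]
        # These eight products are what a SIMD unit would compute at once.
        out.append([q * s for q, s in zip(row, window)])
    return out
```

Under this reading, the coefficient at row `r`, column `c` is scaled by `scale15[r + c]`, which is why 15 values suffice for all 64 positions.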

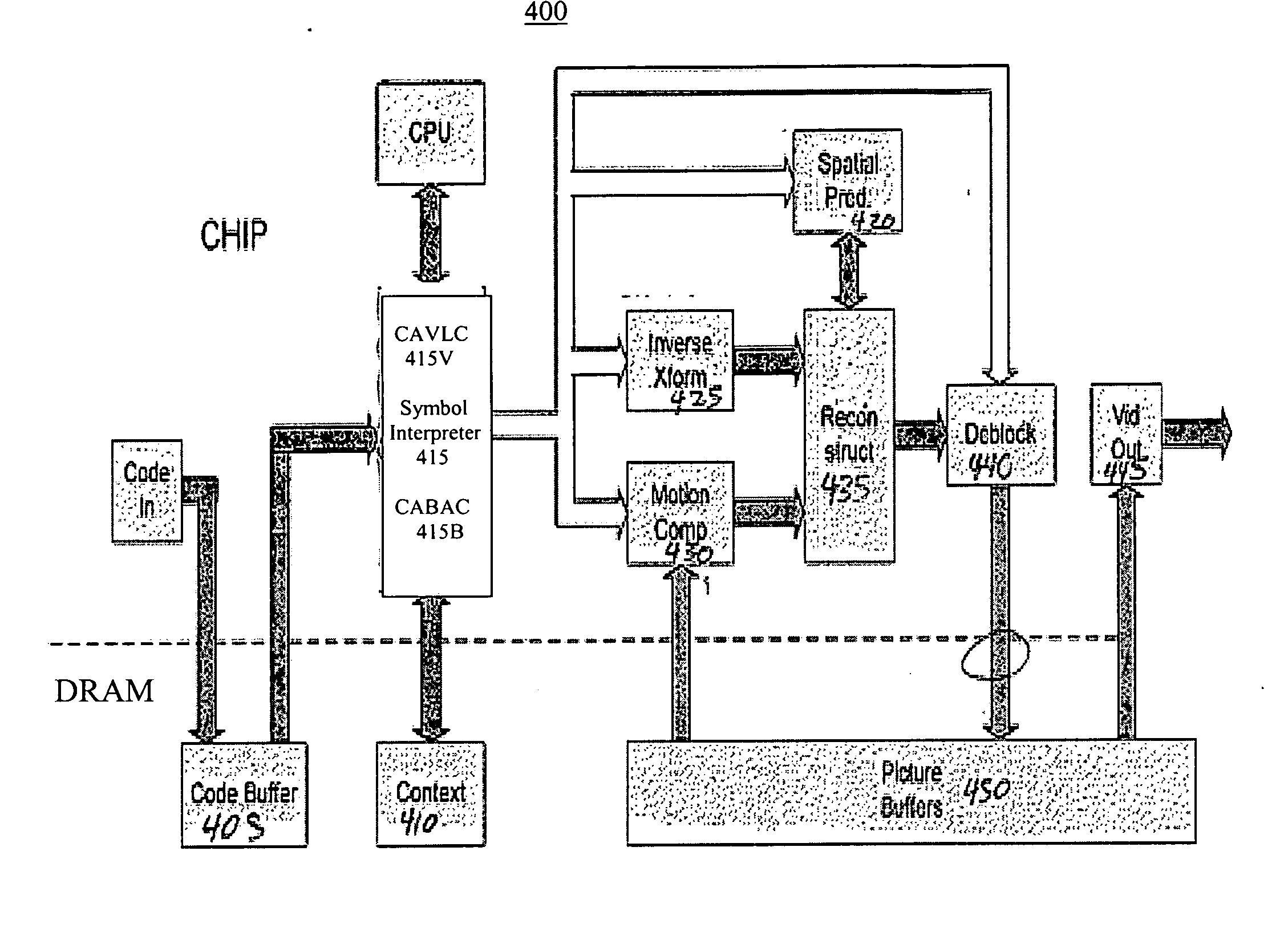

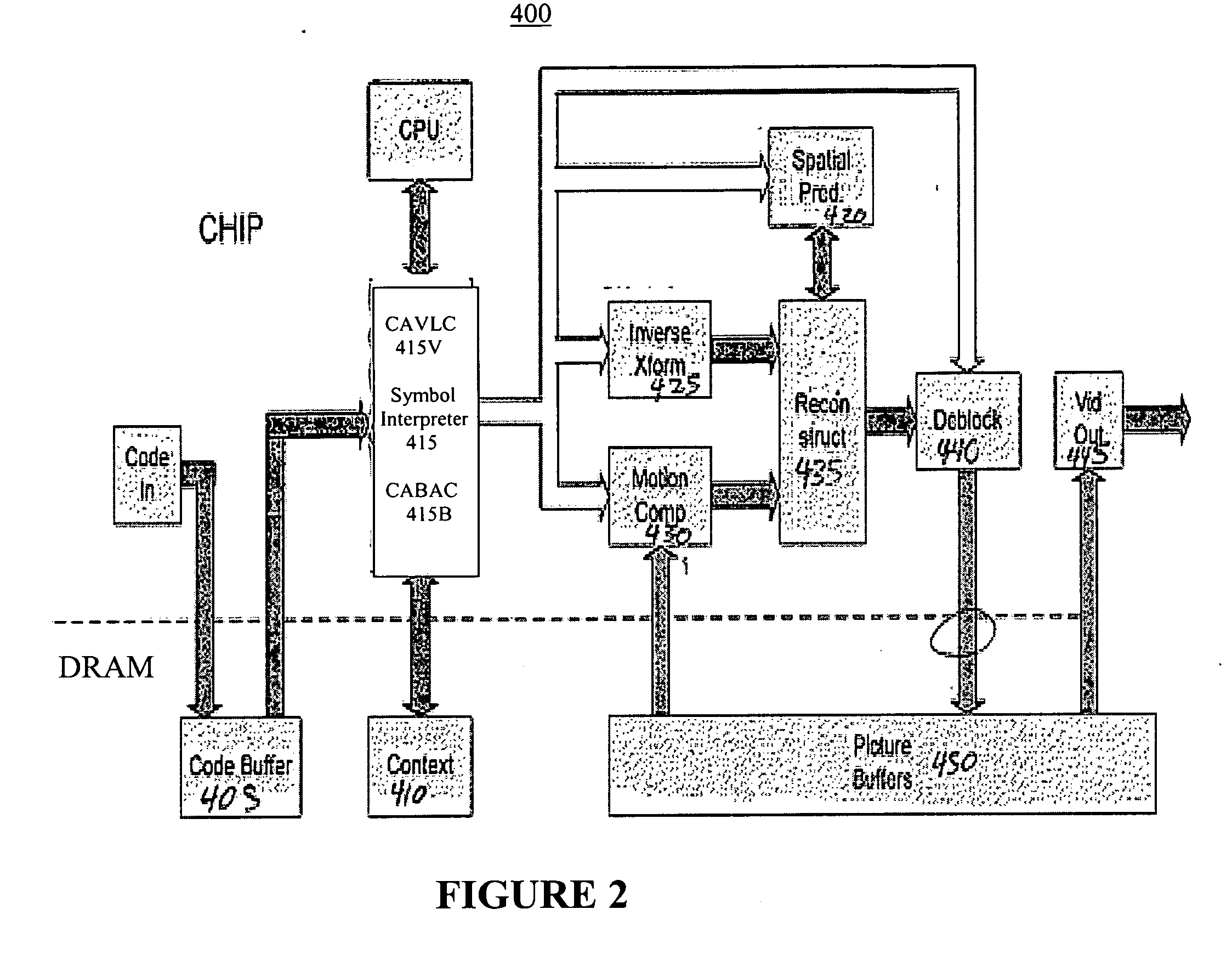

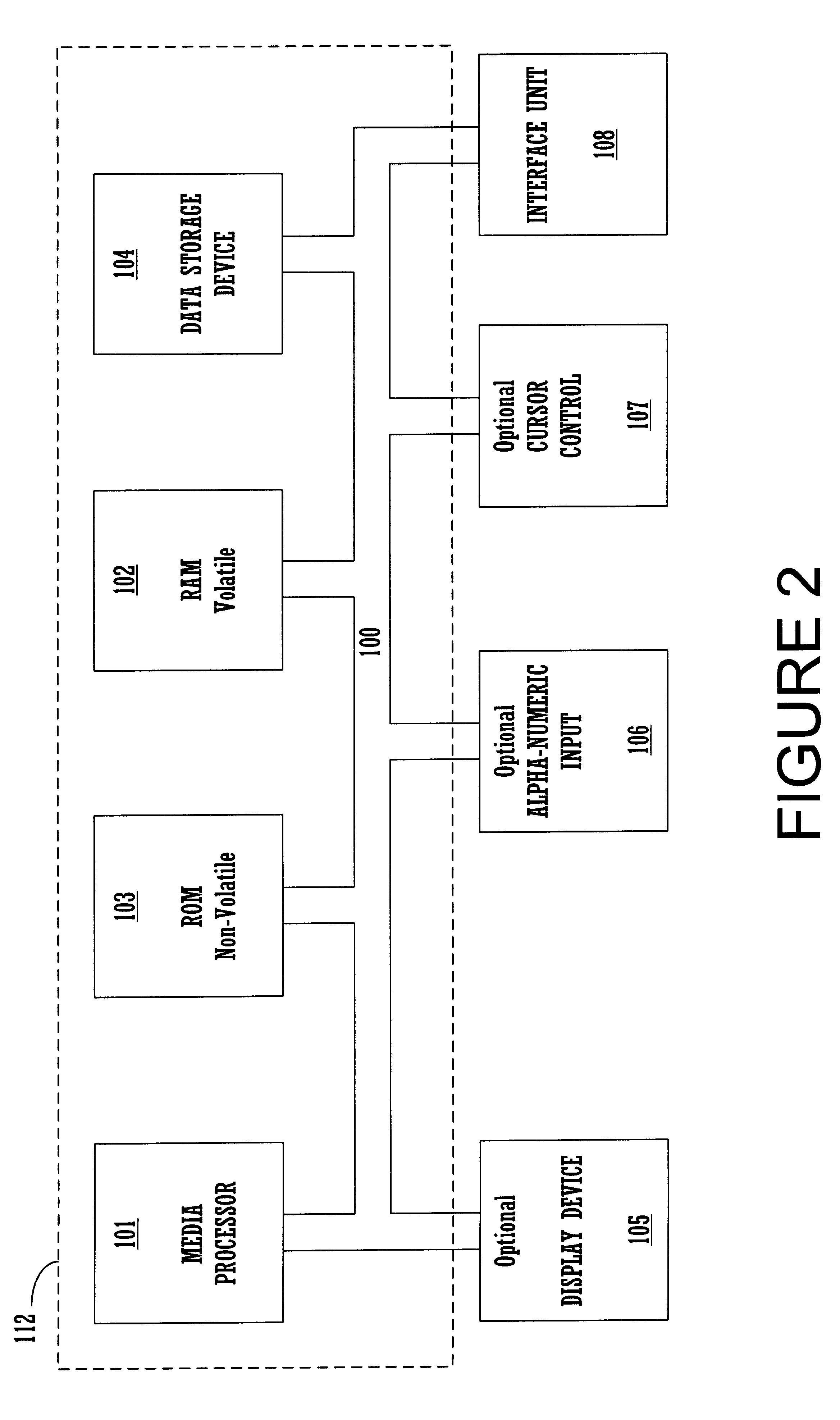

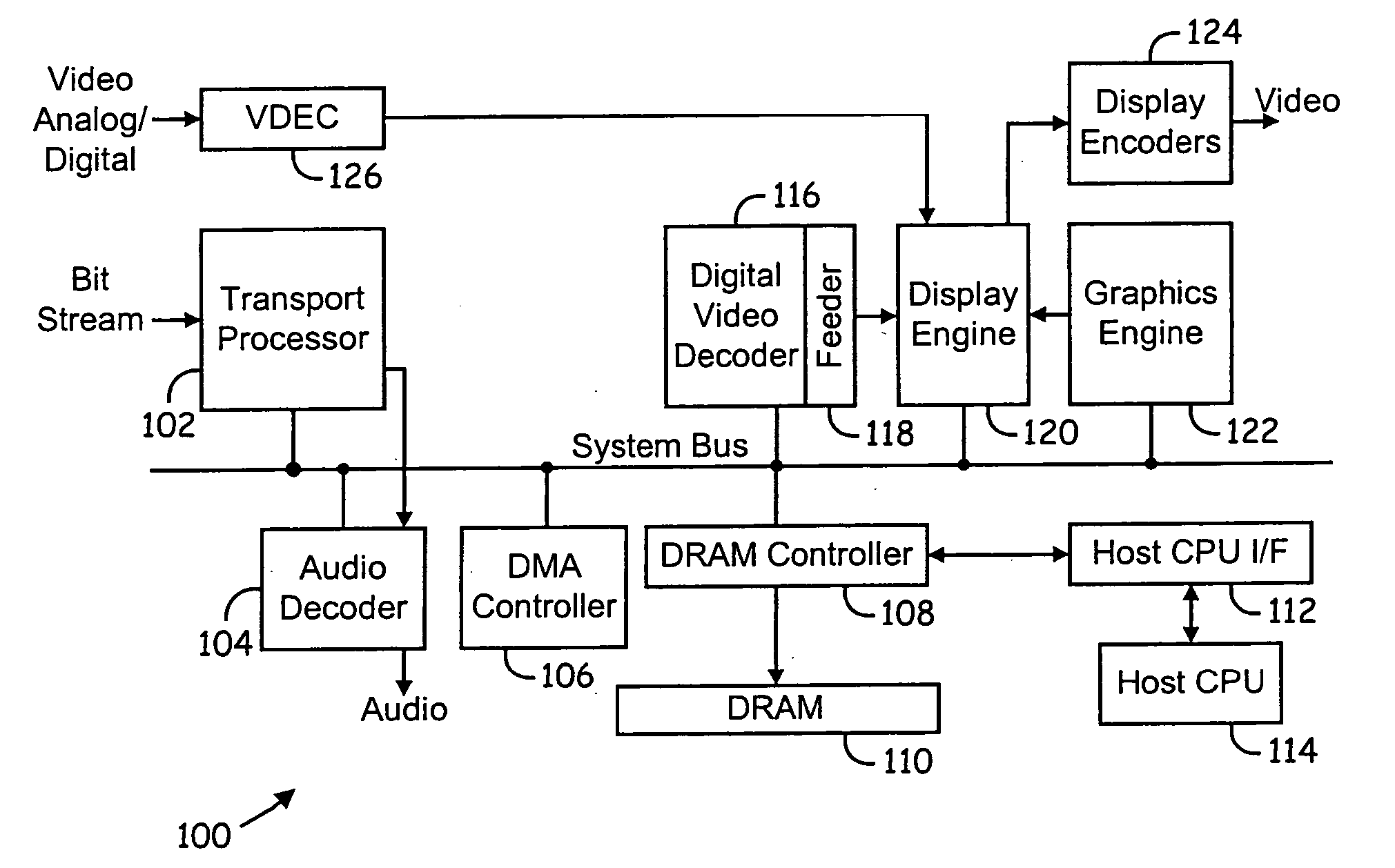

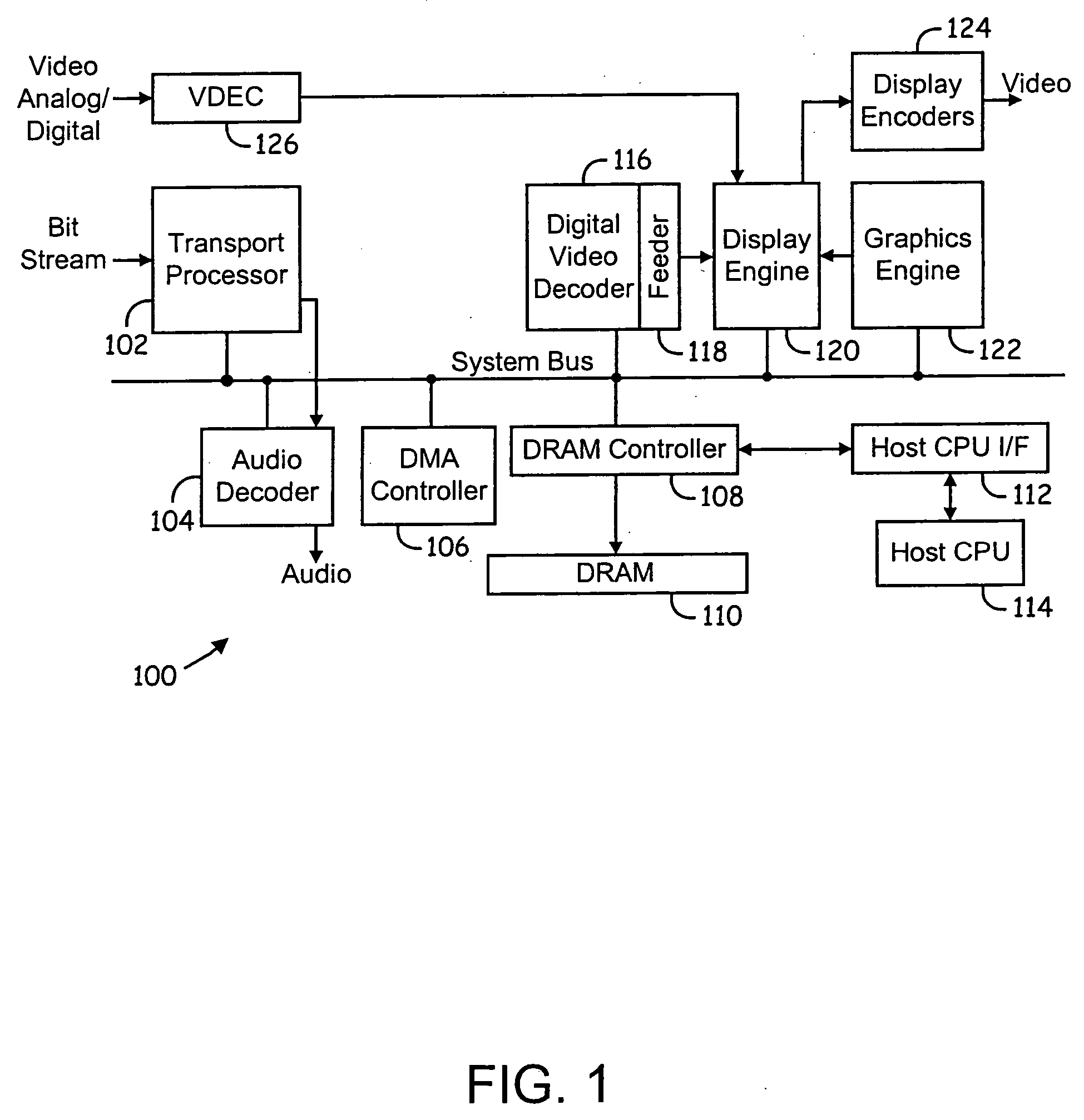

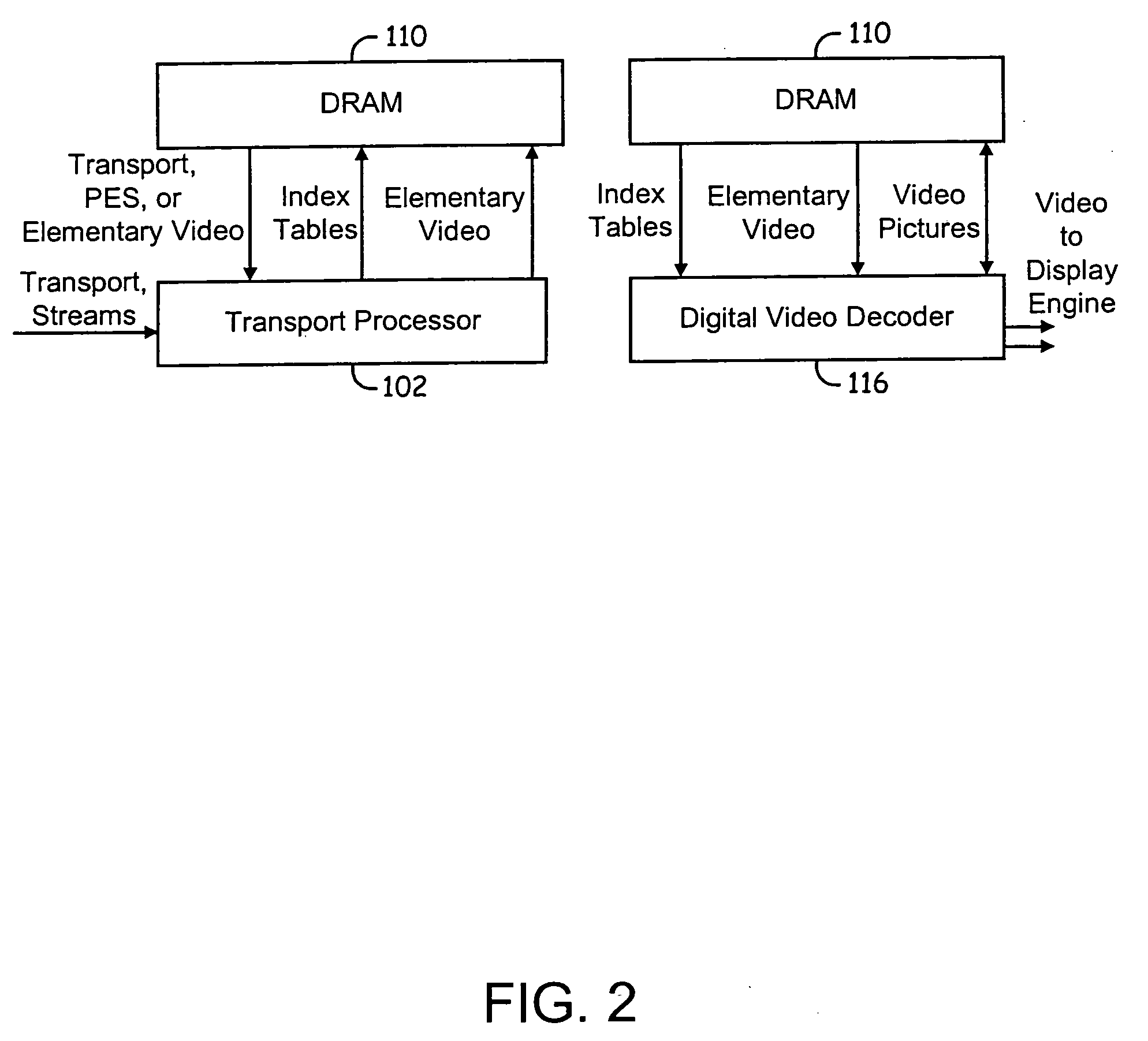

Video decoding system supporting multiple standards

Active | US20050123057A1 | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Digital video | Data stream

System and method for decoding digital video data. The decoding system employs hardware accelerators that assist a core processor in performing selected decoding tasks. The hardware accelerators are configurable to support a plurality of existing and future encoding/decoding formats. The accelerators are configurable to support substantially any existing or future encoding/decoding formats that fall into the general class of DCT-based, entropy decoded, block-motion-compensated compression algorithms. The hardware accelerators illustratively comprise a programmable entropy decoder, an inverse quantization module, an inverse discrete cosine transform module, a pixel filter, a motion compensation module and a de-blocking filter. The hardware accelerators function in a decoding pipeline wherein at any given stage in the pipeline, while a given function is being performed on a given macroblock, the next macroblock in the data stream is being worked on by the previous function in the pipeline.

Owner:BROADCOM CORP

Method and/or apparatus for reducing the complexity of non-reference frame encoding using selective reconstruction

Inactive | US20050063465A1 | Improve encoding performance | Reduce complexity | Color television with pulse code modulation | Color television with bandwidth reduction | Computer vision | Inverse quantization

A method for implementing non-reference frame prediction in video compression comprising the steps of (A) setting a prediction flag (i) “off” if non-reference frames are used for block prediction and (ii) “on” if non-reference frames are not used for block prediction, (B) if the prediction flag is off, generating an output video signal in response to an input video signal by performing an inverse quantization step and an inverse transform step in accordance with a predefined coding specification and (C) if the prediction flag is on, bypassing the inverse quantization step and the inverse transform step.

Owner:AVAGO TECH INT SALES PTE LTD

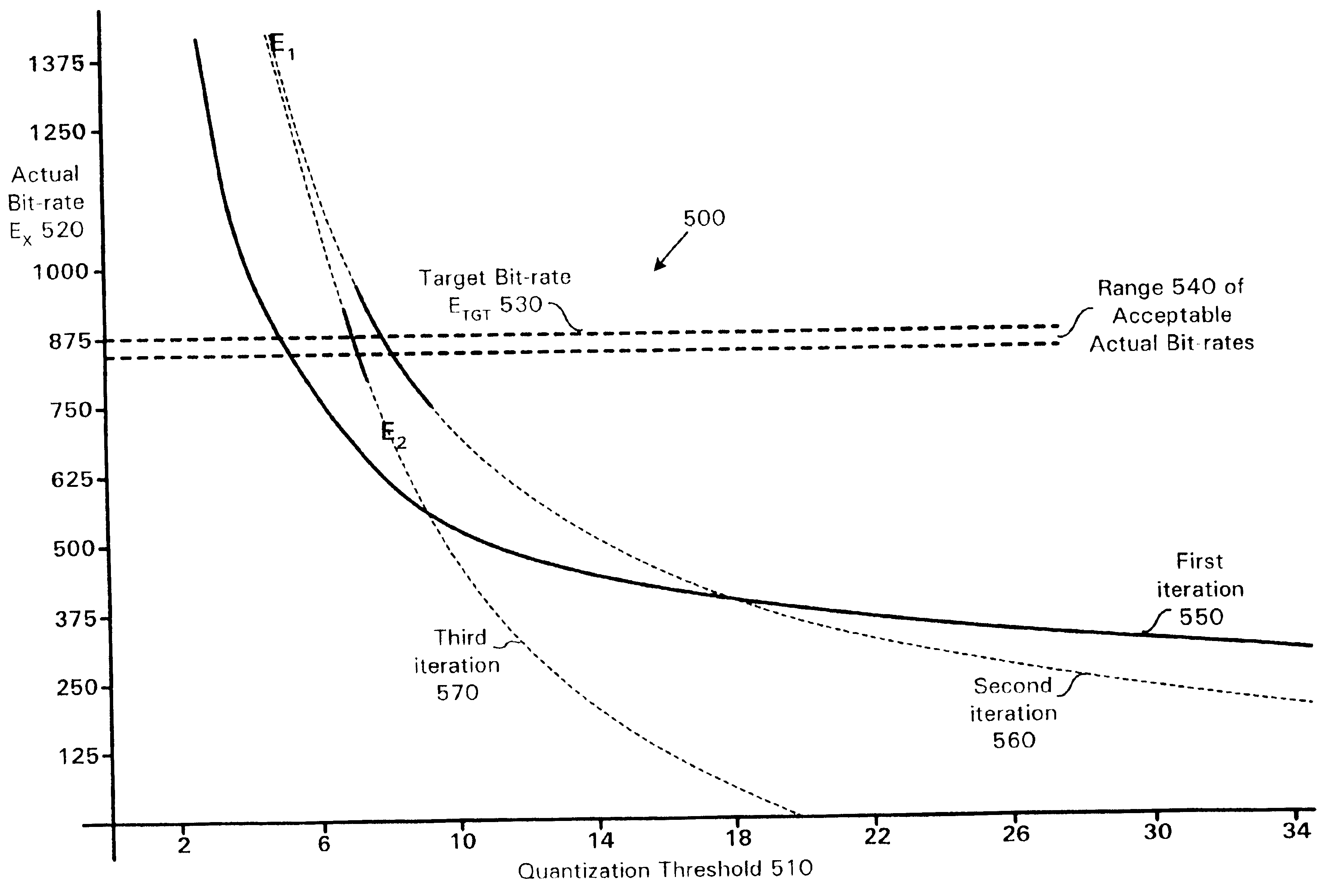

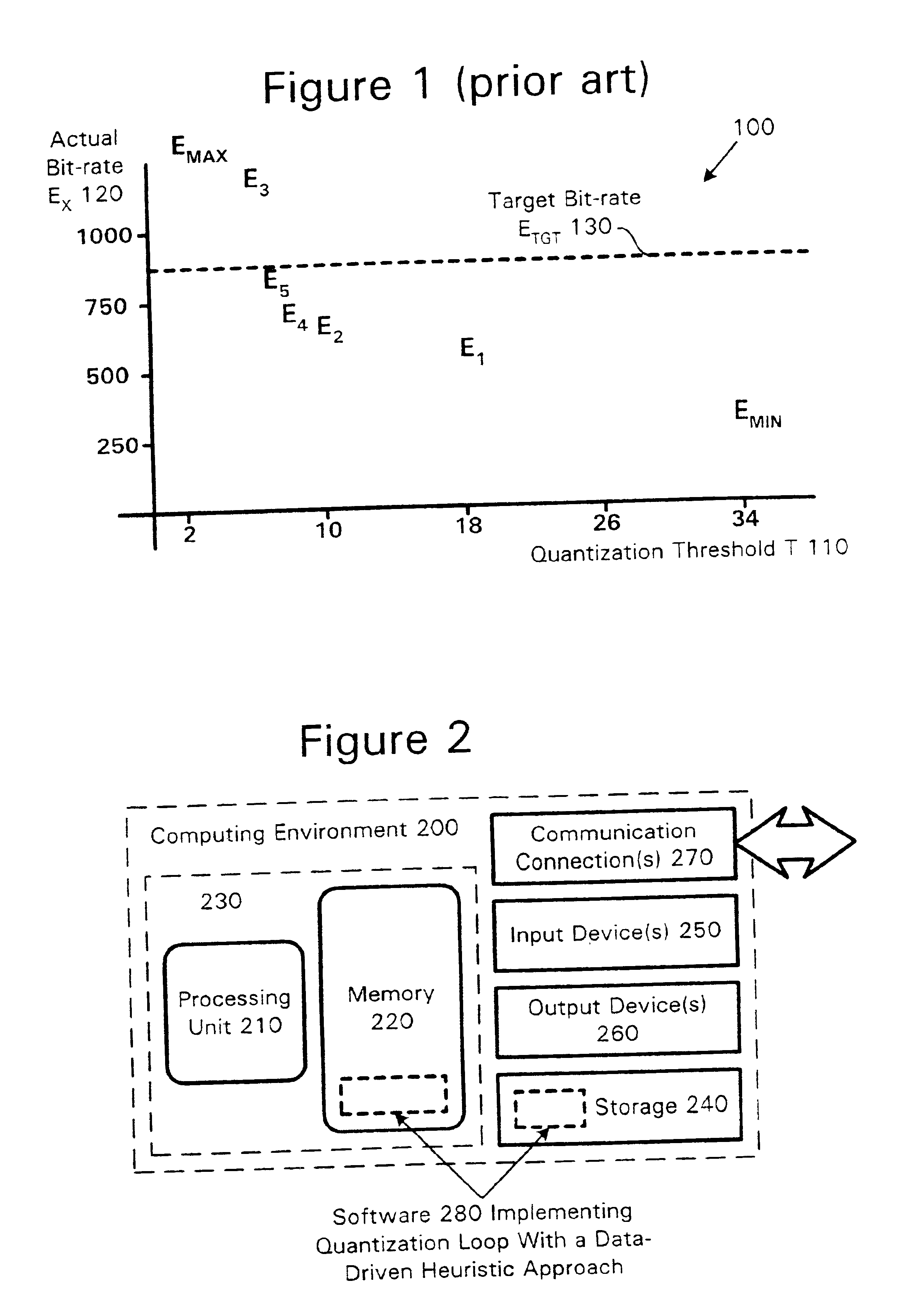

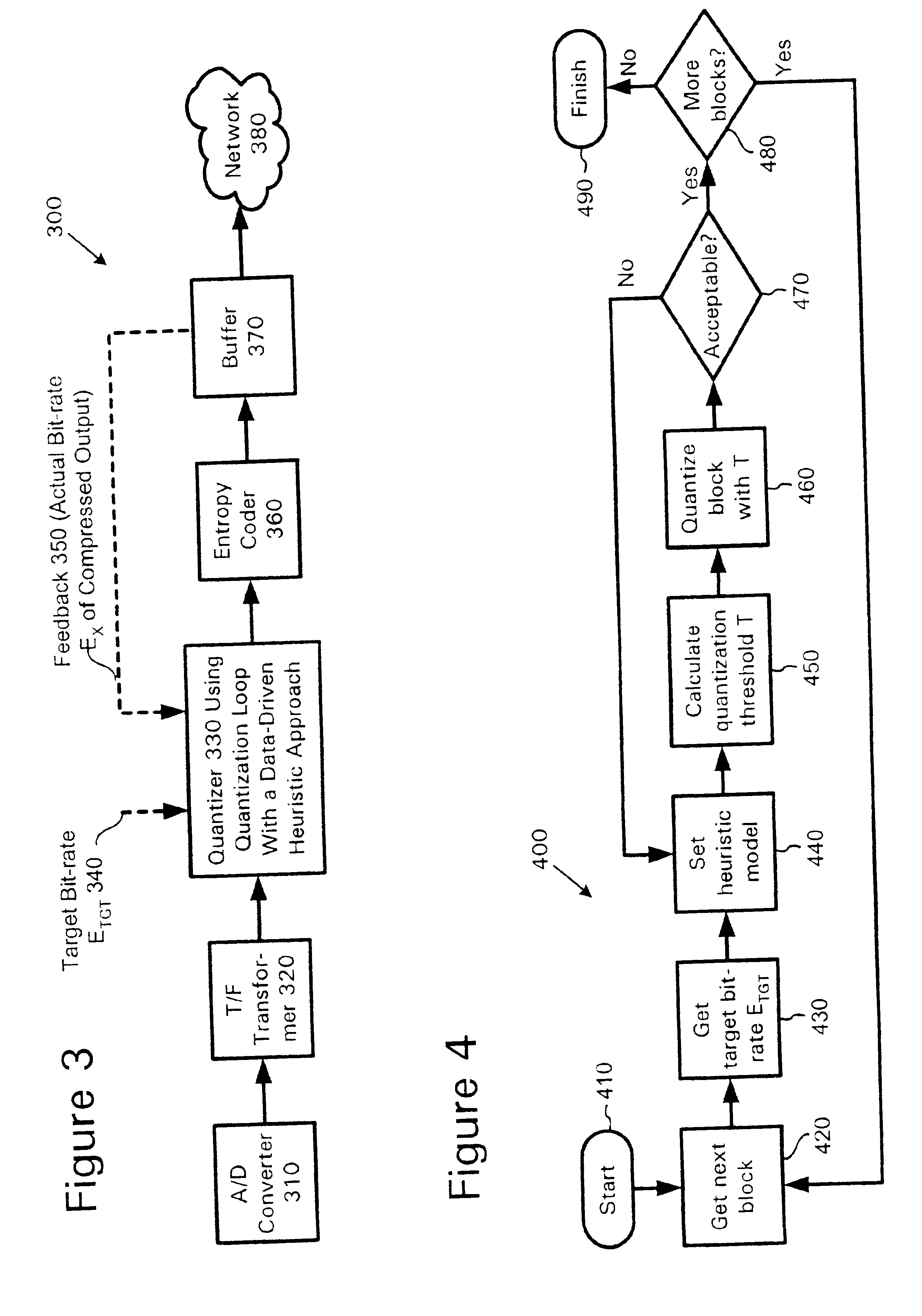

Quantization loop with heuristic approach

Inactive | US7062445B2 | Reduce the number of iterations | Less-expensive hardware | Color television with pulse code modulation | Color television with bandwidth reduction | Frequency spectrum | Algorithm

A quantizer finds a quantization threshold using a quantization loop with a heuristic approach. Following the heuristic approach reduces the number of iterations in the quantization loop required to find an acceptable quantization threshold, which instantly improves the performance of an encoder system by eliminating costly compression operations. A heuristic model relates actual bit-rate of output following compression to quantization threshold for a block of a particular type of data. The quantizer determines an initial approximation for the quantization threshold based upon the heuristic model. The quantizer evaluates actual bit-rate following compression of output quantized by the initial approximation. If the actual bit-rate satisfies a criterion such as proximity to a target bit-rate, the quantizer accepts the initial approximation as the quantization threshold. Otherwise, the quantizer adjusts the heuristic model and repeats the process with a new approximation of the quantization threshold. In an illustrative example, a quantizer finds a uniform, scalar quantization threshold using a quantization loop with a heuristic model adapted to spectral audio data. During decoding, a dequantizer applies the quantization threshold to decompressed output in an inverse quantization operation.

Owner:MICROSOFT TECH LICENSING LLC
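
A rough sketch of such a quantization loop follows. The abstract does not give the heuristic model, so the form `bits ≈ k / step`, the refit rule, the `coded_bits` stand-in for the real compression stage, and every name and default below are assumptions made purely for illustration.

```python
def coded_bits(samples, step):
    # Hypothetical stand-in for "actual bit-rate following compression":
    # magnitude bits plus one sign/flag bit per quantized sample.
    return sum(abs(int(round(s / step))).bit_length() + 1 for s in samples)


def find_step(samples, target_bits, k=None, tolerance=0.1, max_iters=20):
    """Search for a uniform scalar step whose coded size is near target_bits.

    Returns (step, bits, iterations_used).
    """
    if k is None:
        k = float(target_bits)          # makes the first guess step = 1.0
    step = k / target_bits              # initial approximation from the model
    for i in range(1, max_iters + 1):
        bits = coded_bits(samples, step)
        if abs(bits - target_bits) <= tolerance * target_bits:
            return step, bits, i        # criterion met: accept this step
        k = bits * step                 # adjust the model to the observation
        step = k / target_bits          # new approximation
    return step, coded_bits(samples, step), max_iters
```

A decoder would then multiply each decoded level by the accepted step, which is the inverse quantization operation the abstract mentions. The loop is capped by `max_iters` because this simple model is not guaranteed to satisfy the tolerance for every input.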

Image processing device and image processing method

Active | US20130322525A1 | Low efficiency | Color television with pulse code modulation | Color television with bandwidth reduction | Imaging processing | Algorithm

Provided is an image processing device including an acquiring section configured to acquire quantization matrix parameters from an encoded stream in which the quantization matrix parameters defining a quantization matrix are set within a parameter set which is different from a sequence parameter set and a picture parameter set, a setting section configured to set, based on the quantization matrix parameters acquired by the acquiring section, a quantization matrix which is used when inversely quantizing data decoded from the encoded stream, and an inverse quantization section configured to inversely quantize the data decoded from the encoded stream using the quantization matrix set by the setting section.

Owner:SONY CORP

Non-separable secondary transform for video coding

Techniques are described in which a decoder is configured to inversely quantize a first coefficient block and apply a first inverse transform to at least part of the inversely quantized first coefficient block to generate a second coefficient block. The first inverse transform is a non-separable transform. The decoder is further configured to apply a second inverse transform to the second coefficient block to generate a residual video block. The second inverse transform converts the second coefficient block from a frequency domain to a pixel domain. The decoder is further configured to form a decoded video block, wherein forming the decoded video block comprises summing the residual video block with one or more predictive blocks.

Owner:QUALCOMM INC

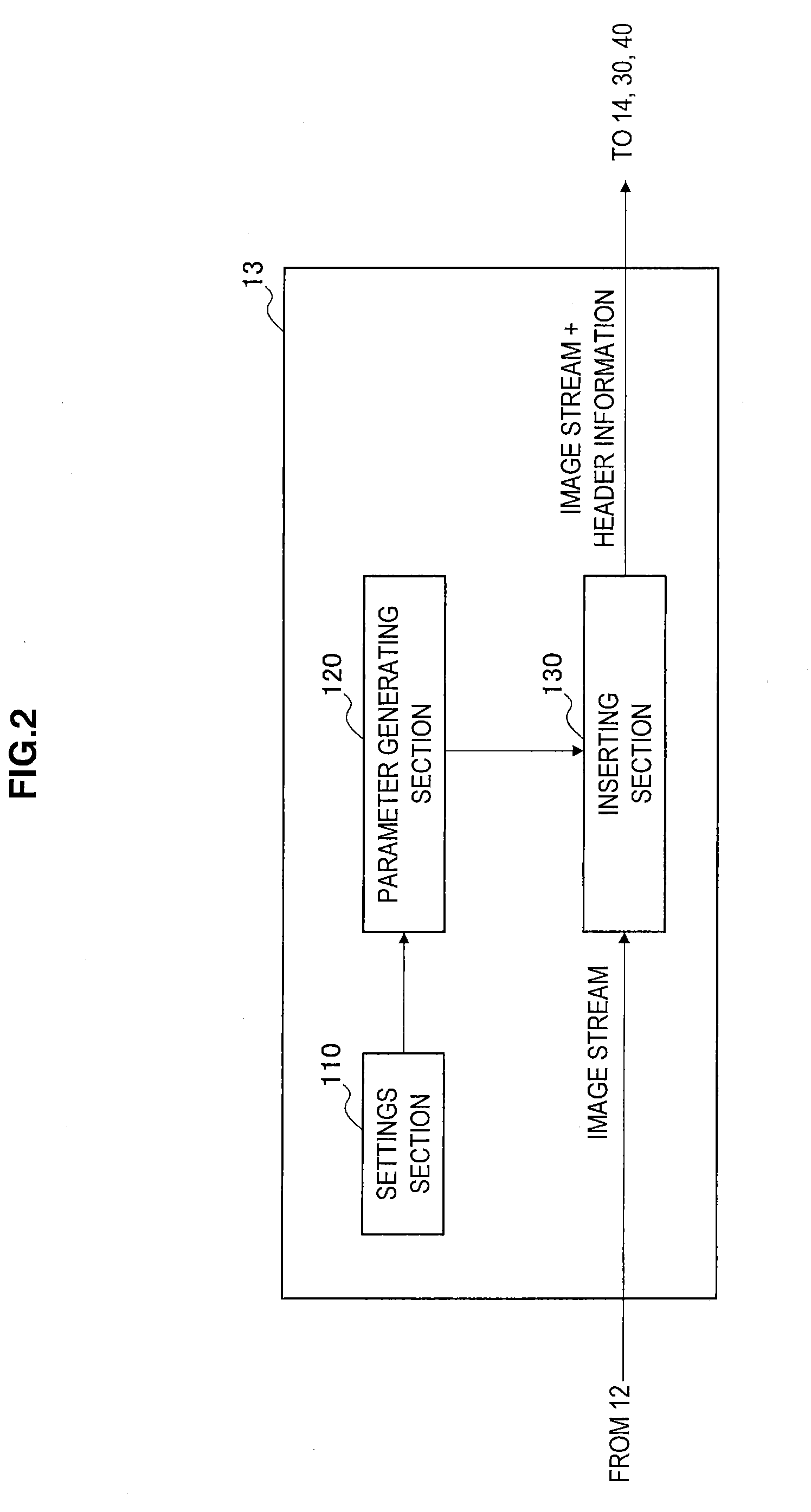

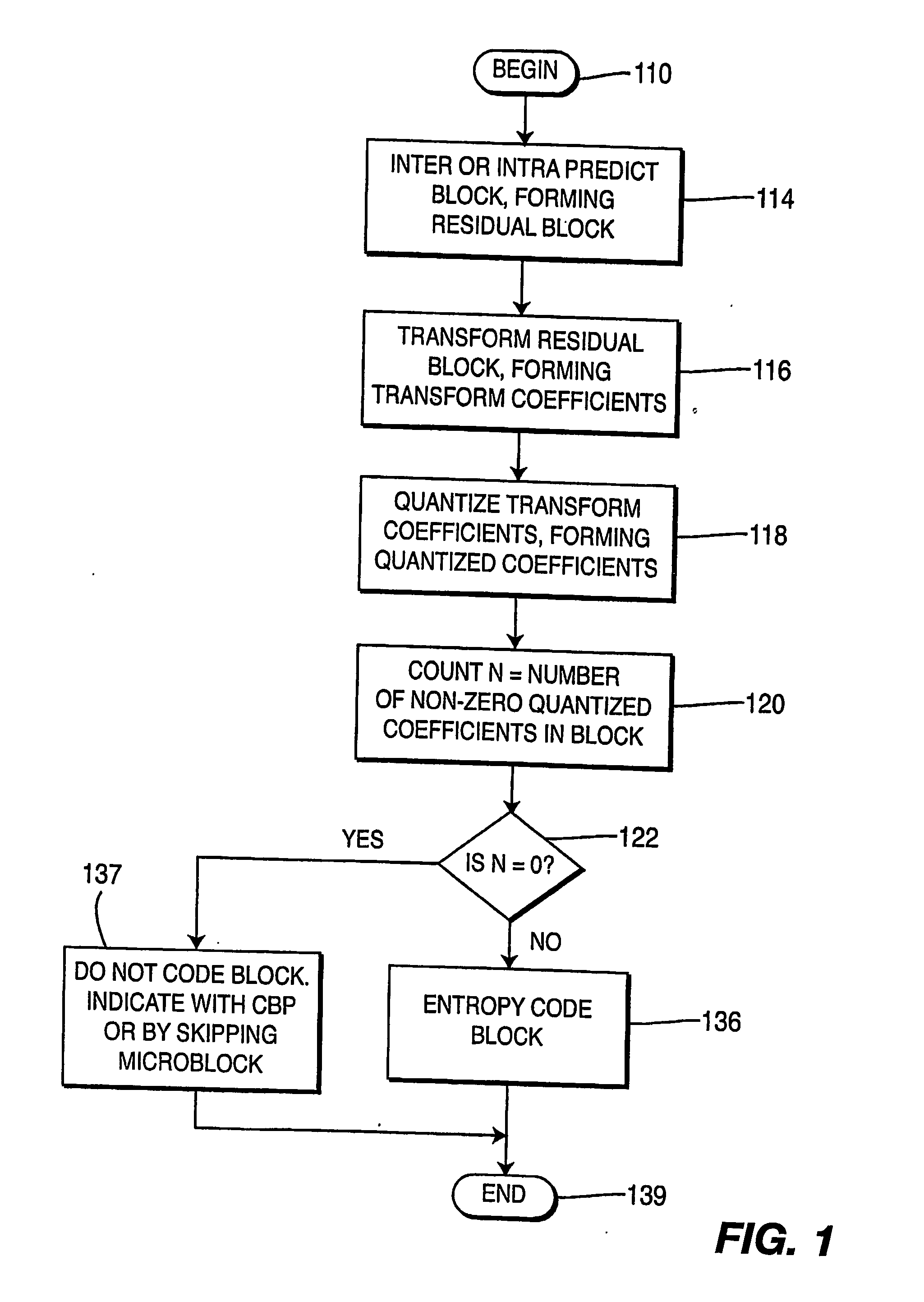

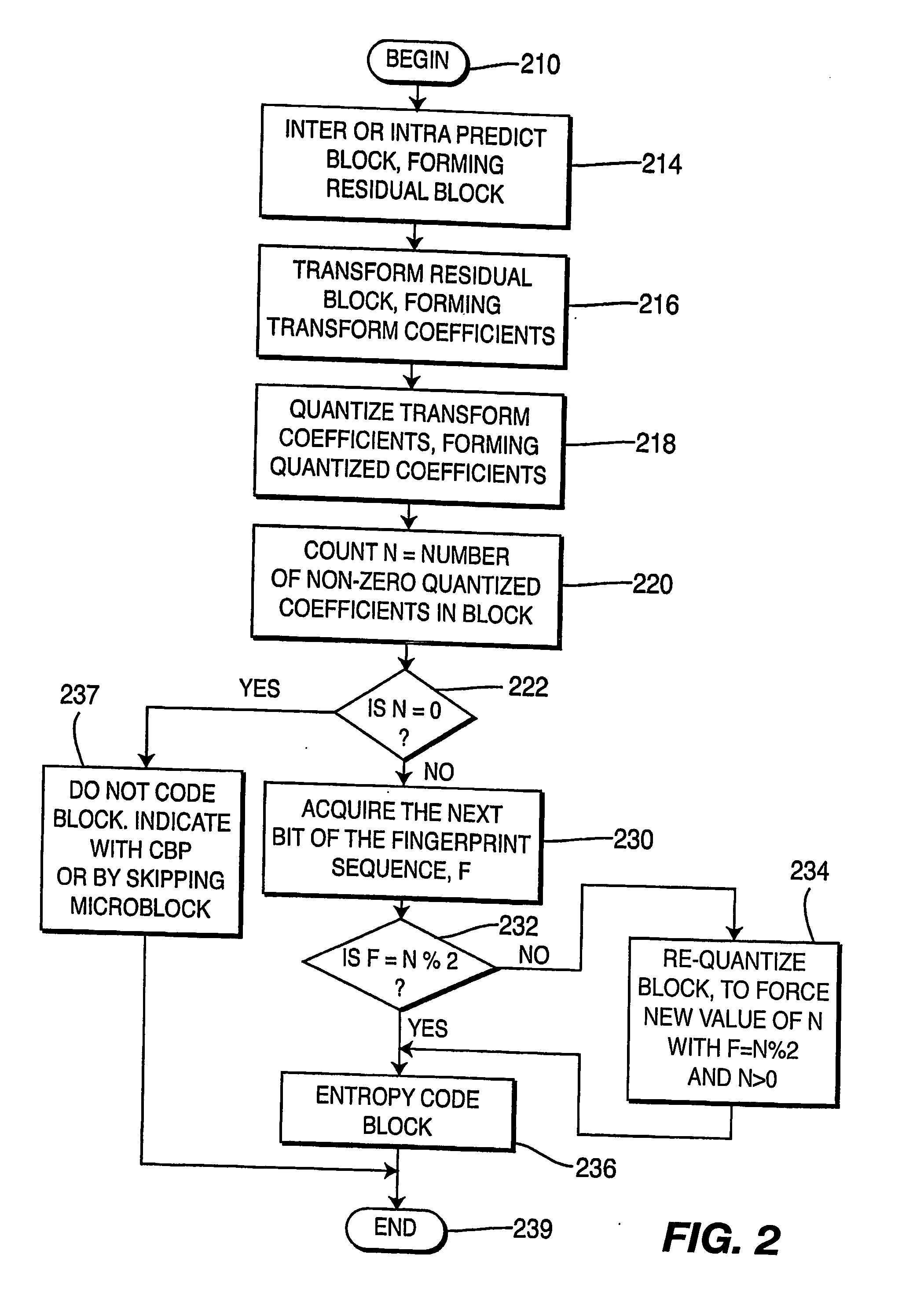

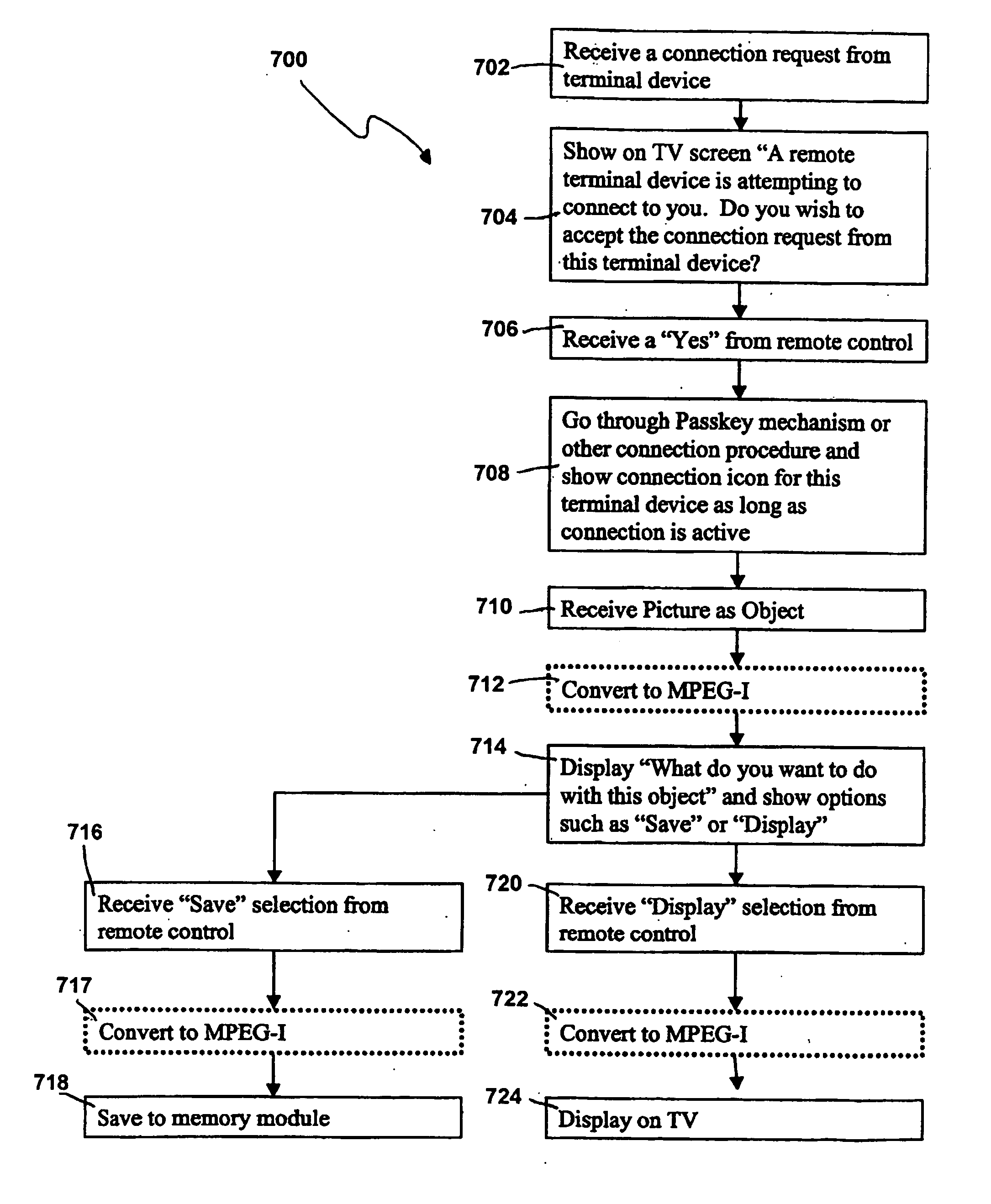

Encoding method and apparatus for insertion of watermarks in a compressed video bitstream

Inactive | US20070053438A1 | Color television with pulse code modulation | Color television with bandwidth reduction | Computer hardware | Video bitstream

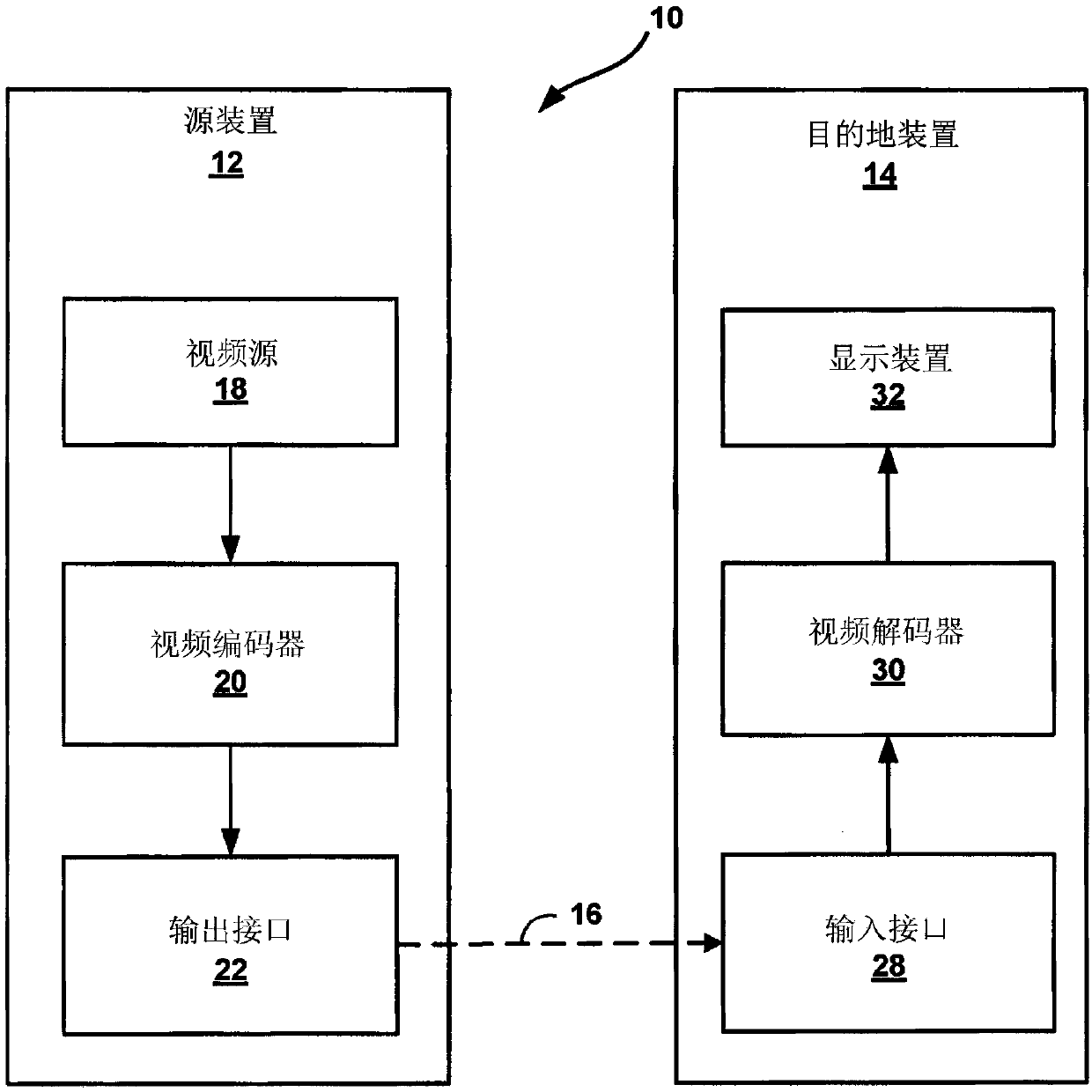

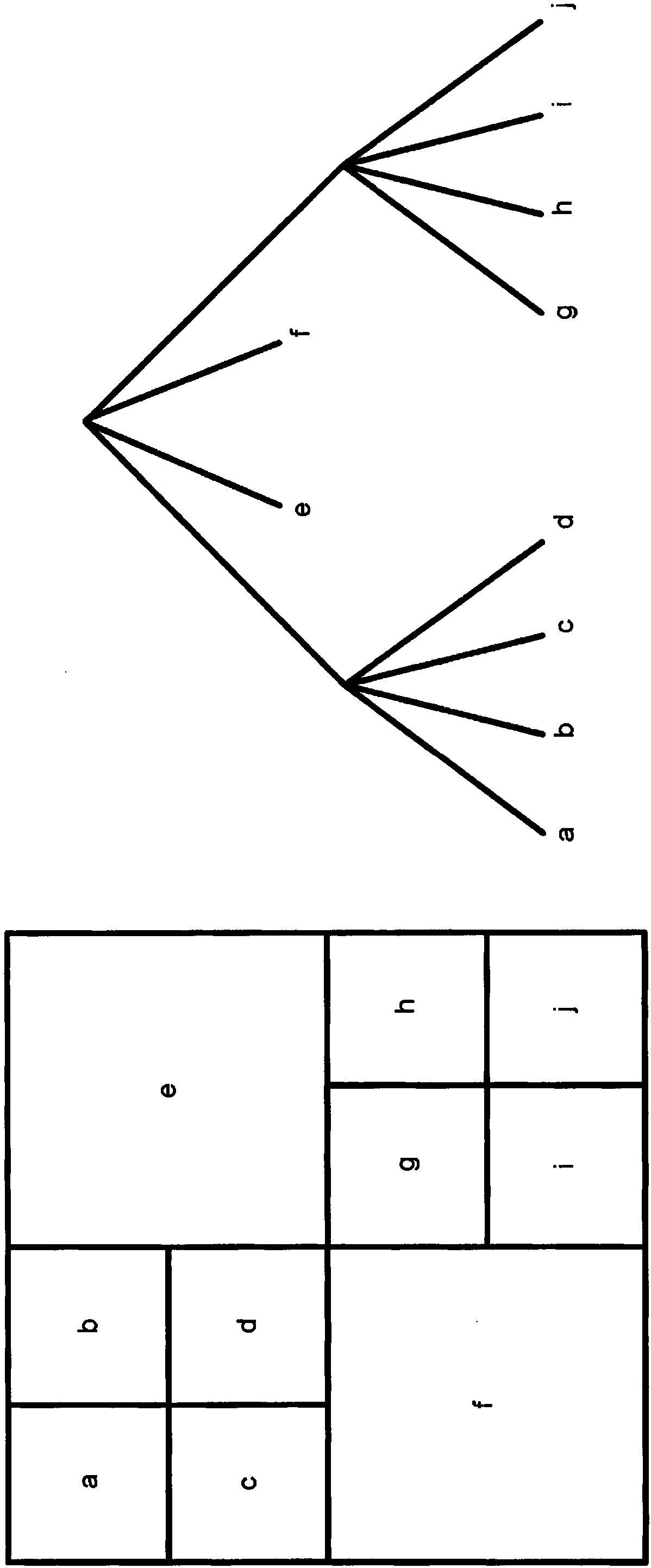

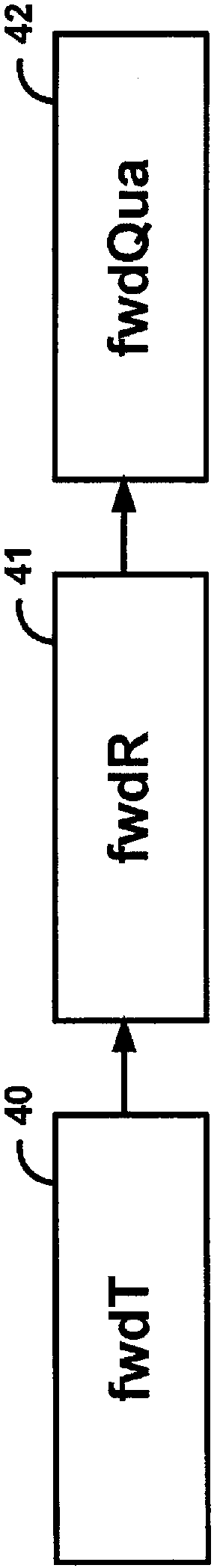

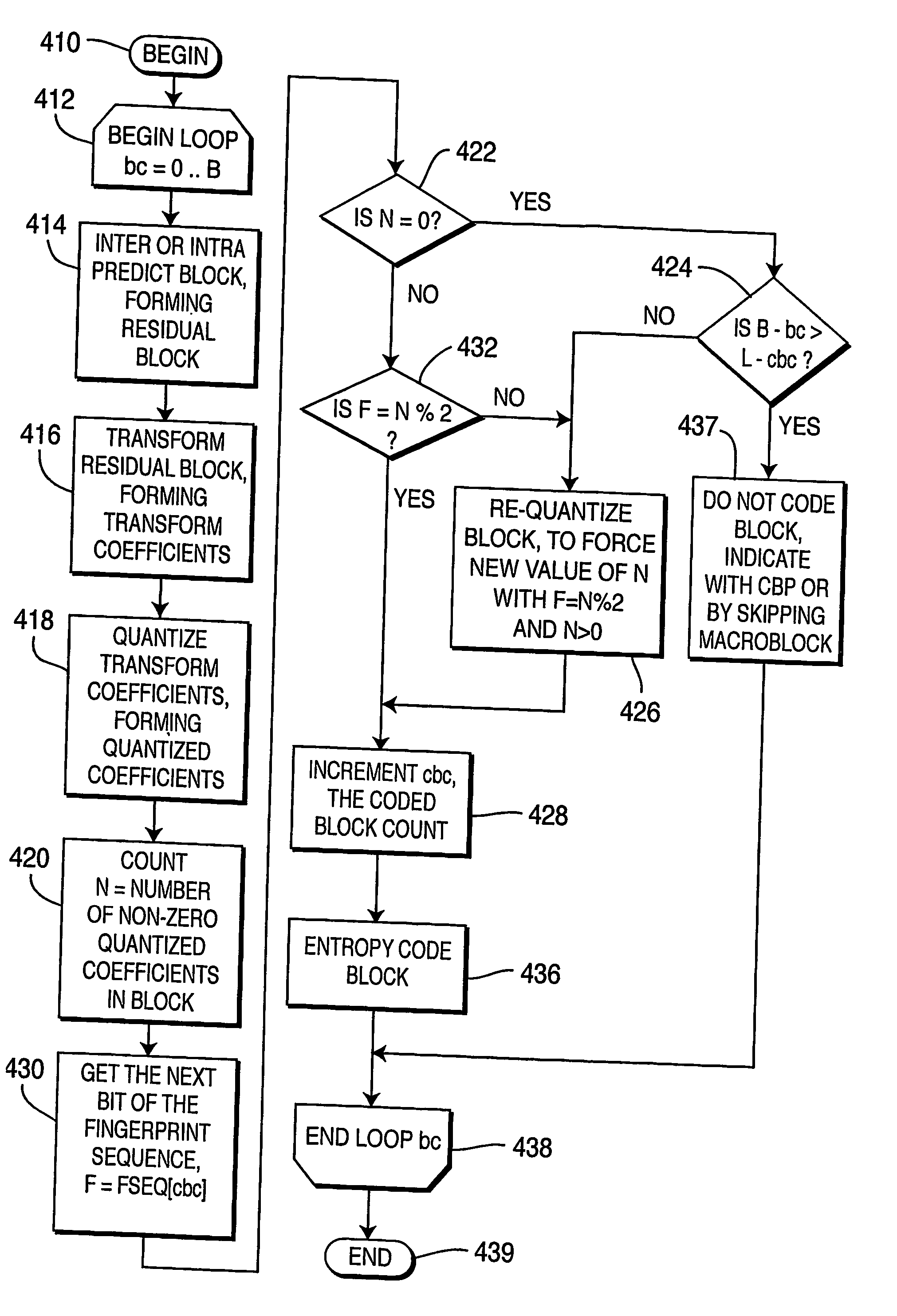

A video encoder, decoder, and methods, for watermarking video content are disclosed; the encoder including a quantization unit for quantizing coefficients of the video bitstream, and an embedding unit in signal communication with the quantization unit for embedding bits of the digital fingerprint in blocks of the video bitstream as a function of the parity of the number of coded coefficients in the block; and the decoder including a detection unit for detecting bits of the digital fingerprint in blocks of the video bitstream as a function of the parity of the number of coded coefficients in the block, and an inverse-quantization unit in signal communication with the detection unit for inverse-quantizing coefficients of the video bitstream.

Owner:THOMSON LICENSING SA
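
The parity idea in the abstract above can be sketched as follows. The abstract only says that a bit is carried by the parity of the number of coded coefficients in a block; the rule used here to fix a wrong parity (zero the last non-zero coefficient, or set the first coefficient to 1 in an empty block) is a hypothetical choice, as are the function names.

```python
def count_coded(block):
    # "Coded" coefficients are taken to be the non-zero quantized ones.
    return sum(1 for c in block if c != 0)


def embed_bit(block, bit):
    """Return a copy of `block` whose coded-coefficient count has parity `bit`."""
    block = list(block)
    if count_coded(block) % 2 != bit:
        nonzero = [i for i, c in enumerate(block) if c != 0]
        if nonzero:
            block[nonzero[-1]] = 0   # assumed rule: drop the last coded coefficient
        else:
            block[0] = 1             # assumed rule for an all-zero block
    return block


def detect_bit(block):
    # The decoder reads the bit back from the parity alone.
    return count_coded(block) % 2
```

For example, `detect_bit(embed_bit([7, 0, -2, 1, 0, 0], 0))` recovers the embedded `0` after the last non-zero coefficient is dropped.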

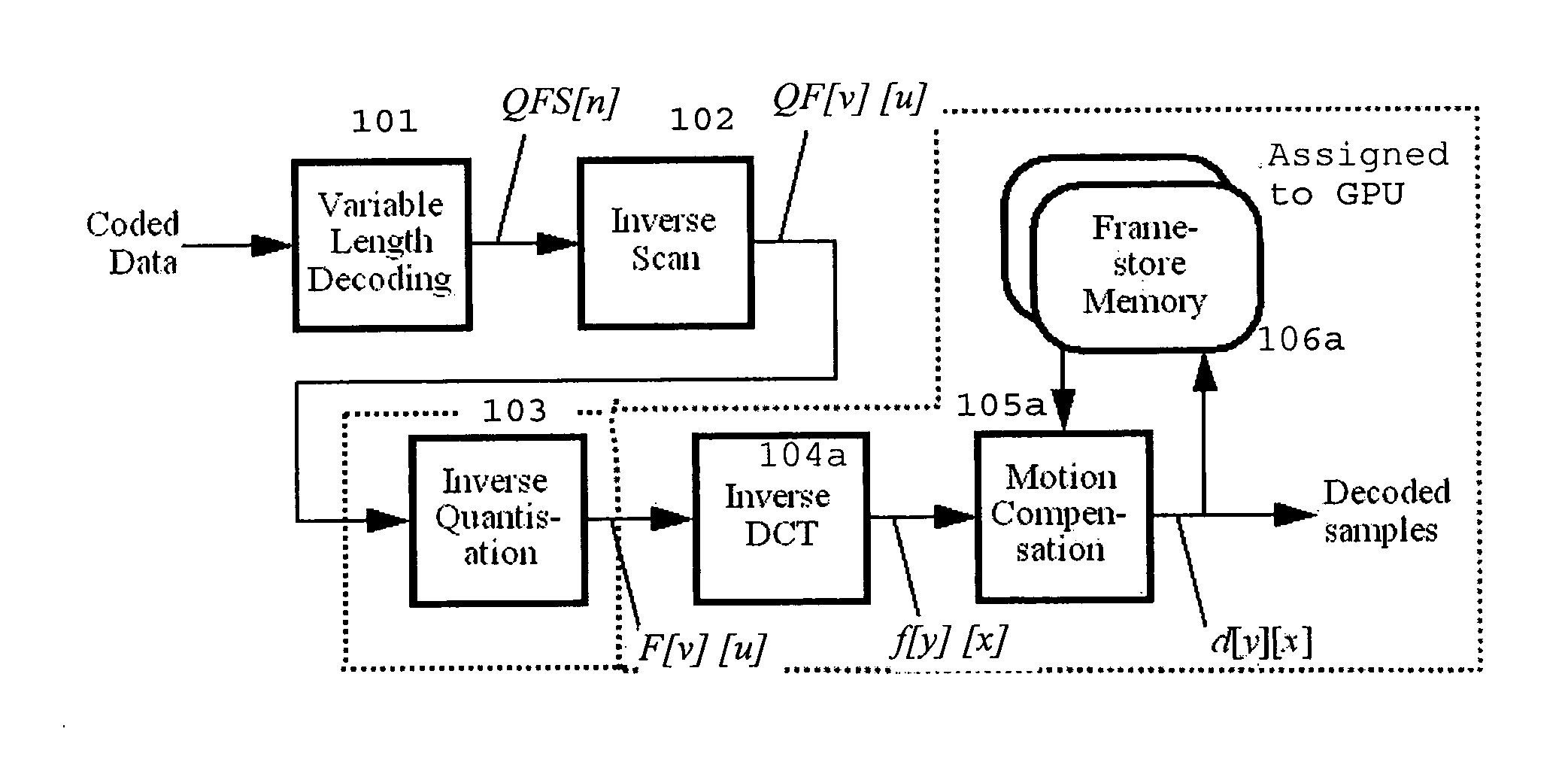

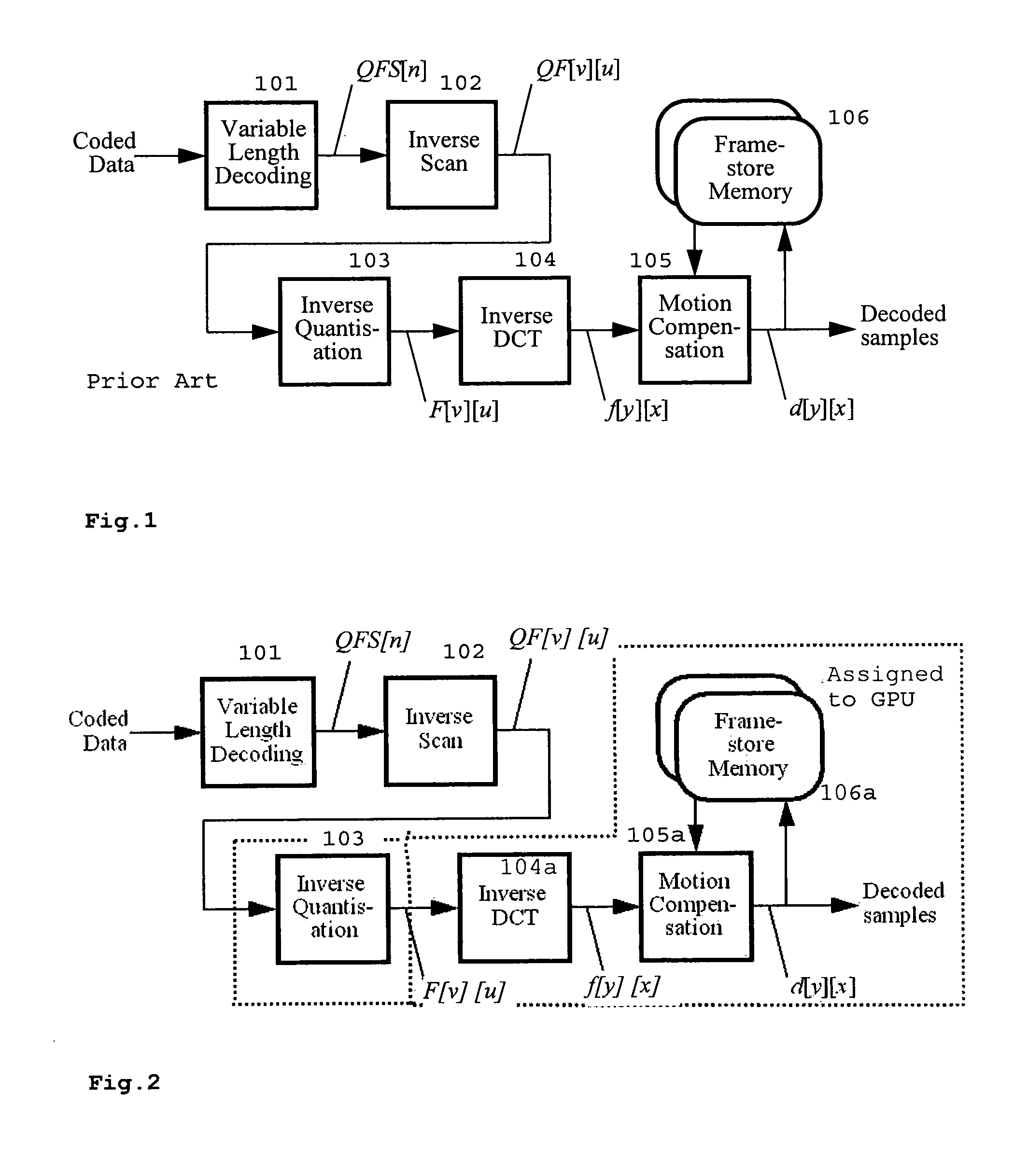

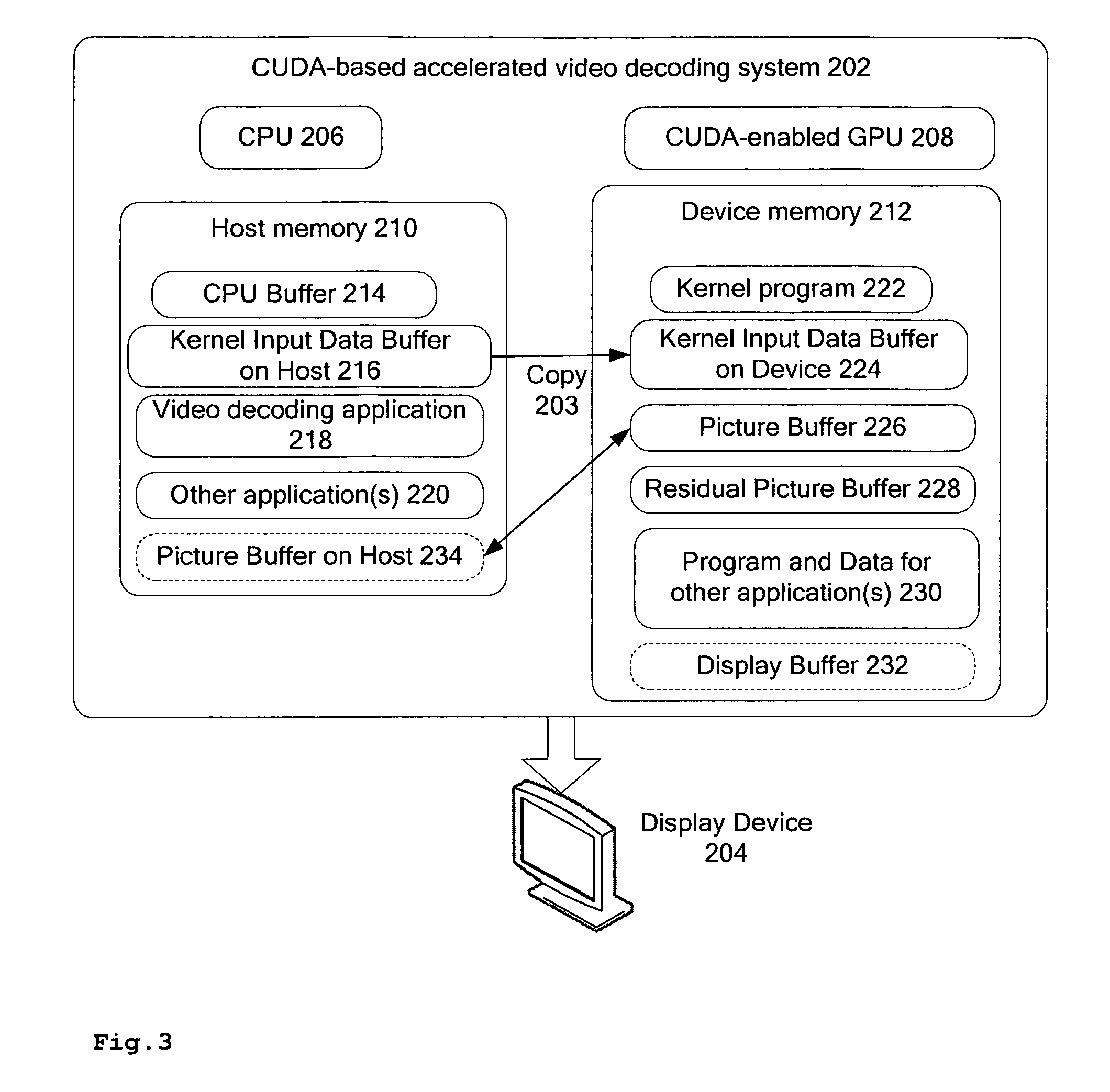

Method for video decoding supported by graphics processing unit

Inactive | US20100135418A1 | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Graphics | Data transport

A method for utilizing a CUDA based GPU to accelerate a complex, sequential task such as video decoding, comprises decoding on a CPU headers and macroblocks of encoded video, performing inverse quantization (on CPU or GPU), transferring the picture data to GPU, where it is stored in a global buffer, and then on the GPU performing inverse waveform transforming of the inverse quantized data, performing motion compensation, buffering the reconstructed picture data in a GPU global buffer, determining if the decoded picture data are used as reference for decoding a further picture, and if so, copying the decoded picture data from the GPU global buffer to a GPU texture buffer. Advantages are that the data communication between CPU and GPU is minimized, the workload of CPU and GPU is balanced and the modules off-loaded to GPU can be efficiently realized since they are data-parallel and compute-intensive.

Owner:INTERDIGITAL VC HLDG INC

Method and/or apparatus for reducing the complexity of H.264 B-frame encoding using selective reconstruction

Inactive | US20070263724A1 | Improve encoding performance | Reduce complexity | Color television with pulse code modulation | Color television with bandwidth reduction | Computer science | Inverse quantization

A method for implementing B-frame prediction in video compression comprising the steps of (A) setting a prediction flag (i) “off” if B-frames are used for block prediction and (ii) “on” if B-frames are not used for block prediction, (B) if the prediction flag is off, generating an output video signal in response to an input video signal by performing an inverse quantization step and an inverse transform step in accordance with a predefined coding specification and (C) if the prediction flag is on, bypassing the inverse quantization step and the inverse transform step.

Owner:AVAGO TECH INT SALES PTE LTD

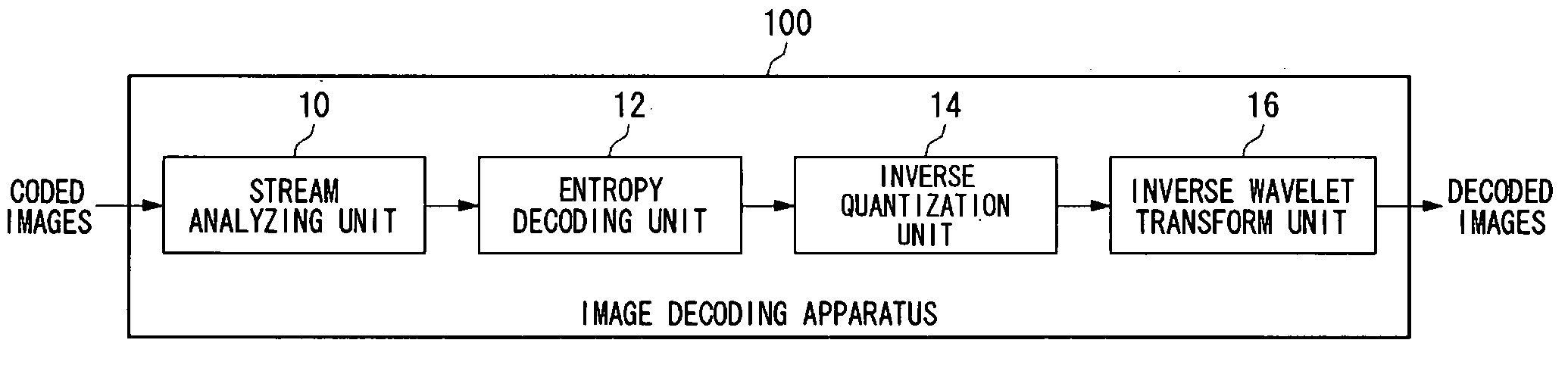

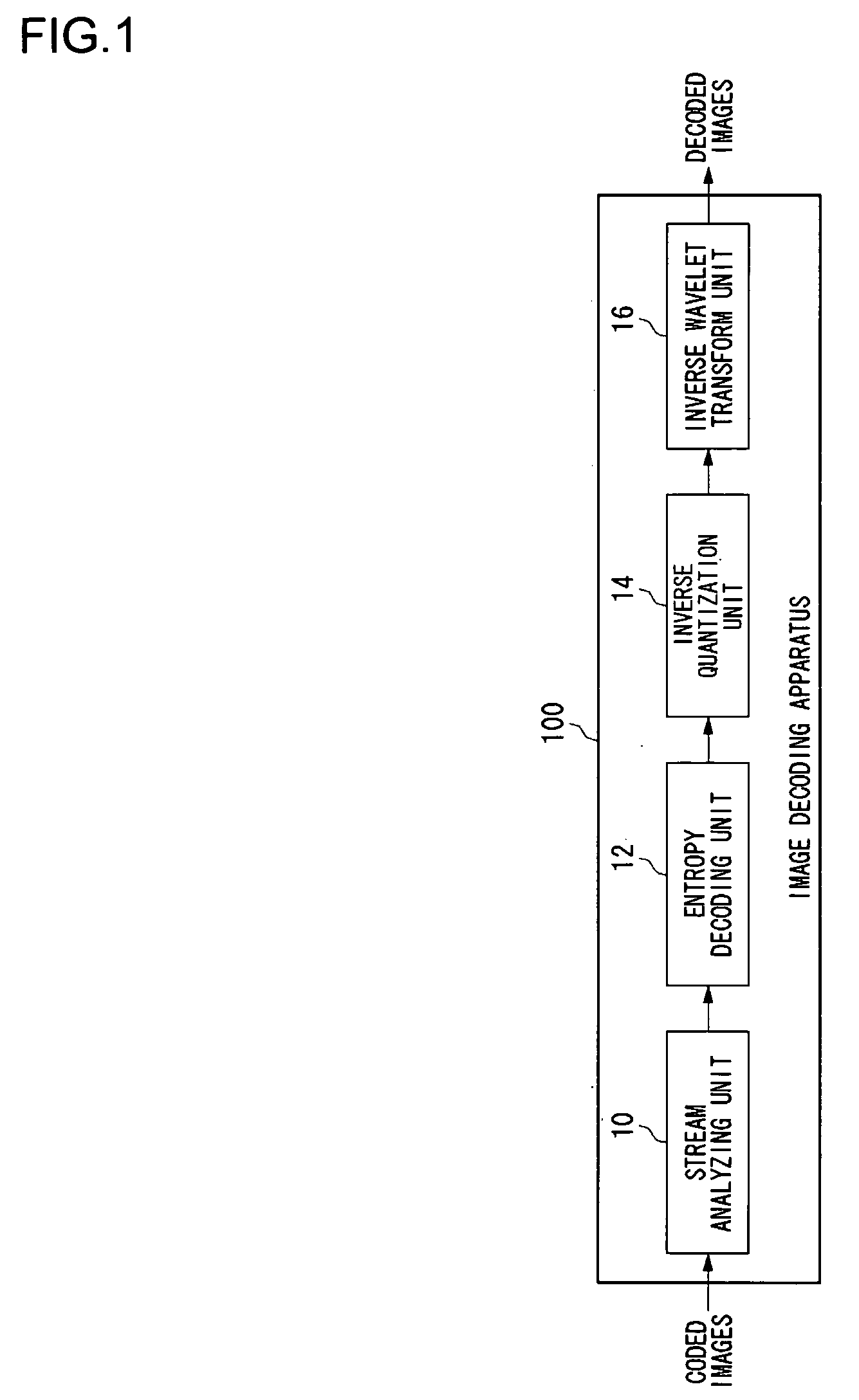

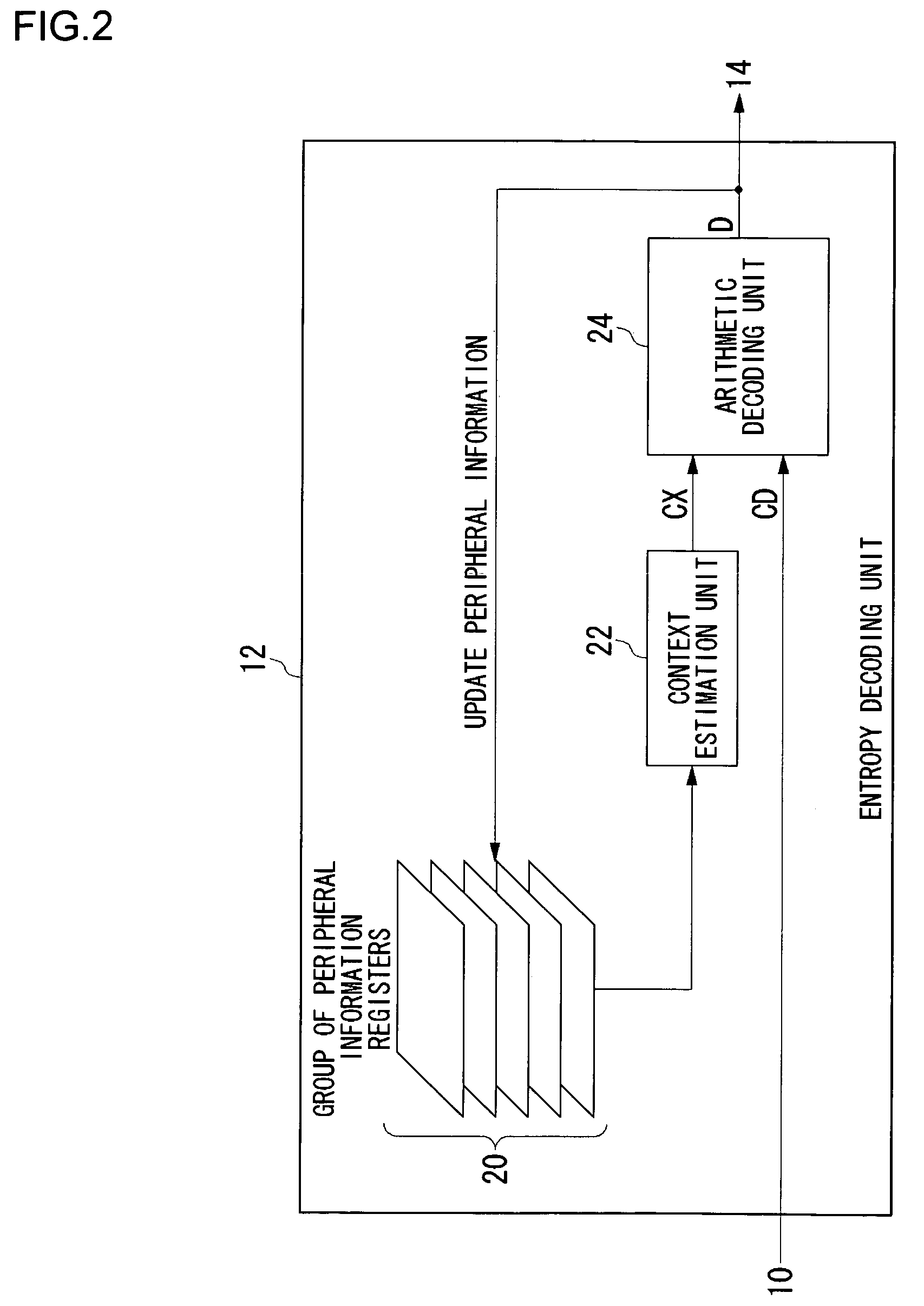

Image decoding apparatus

Inactive | US20050175250A1 | Easy to parallelize | Improve versatility | Code conversion | Character and pattern recognition | Computer science | Inverse quantization

Coded data are inputted from a stream analyzing unit to an entropy decoding unit. A group of peripheral information registers stores peripheral information on a target pixel for estimating a context used for arithmetic decoding of the coded data. Based on the peripheral information stored in the group of peripheral registers, a context estimation unit estimates a context and delivers the estimated context to an arithmetic decoding unit. The arithmetic decoding unit decodes the coded data based on a context label, then derives a decision and supplies the decision to an inverse quantization unit. The peripheral information stored in the group of peripheral registers is updated by a decoding result of the arithmetic decoding unit in the same cycle as the decoding.

Owner:SANYO ELECTRIC CO LTD

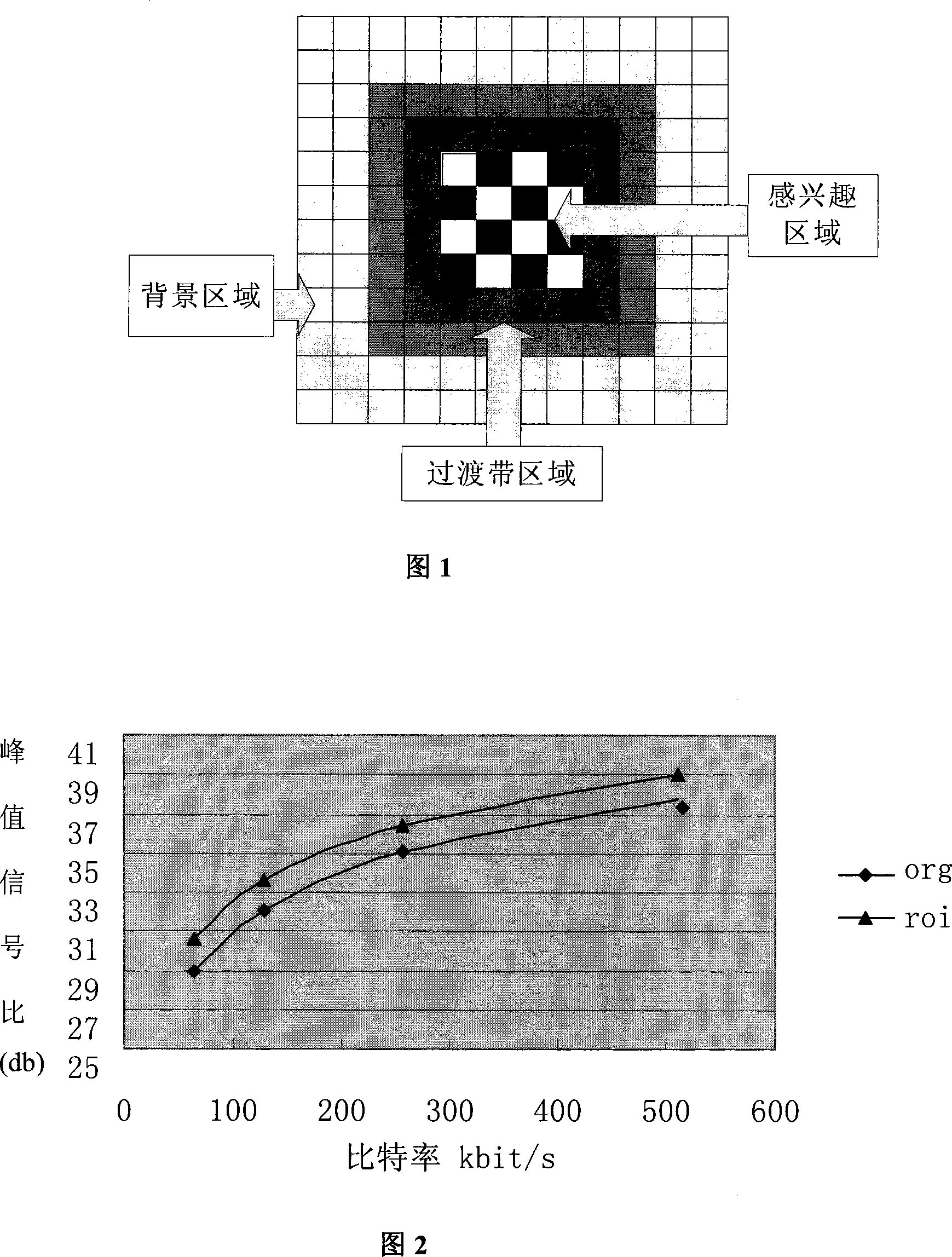

A video image decoding and encoding method and device based on area

Inactive | CN101102495A | Guaranteed image quality | Objective encoding quality improvement | Television systems | Digital video signal modification | Region selection | Computer graphics (images)

The method comprises: 1) an encoding step: providing the image data and selecting areas of the input digital image; classifying the input digital image by priority, performing a quantization operation on the transformed image data, and recording the priority data; 2) a decoding step: decoding the location information and priority information of the different areas, calculating the quantization value of each area, and using that quantization value to perform an inverse quantization operation on the data in each area. In the apparatus, an area selection, priority division and quantization unit is set in the decode device. The decode device comprises an area information decoding and inverse quantization unit.

Owner:WUHAN UNIV

Image coding apparatus and image decoding apparatus

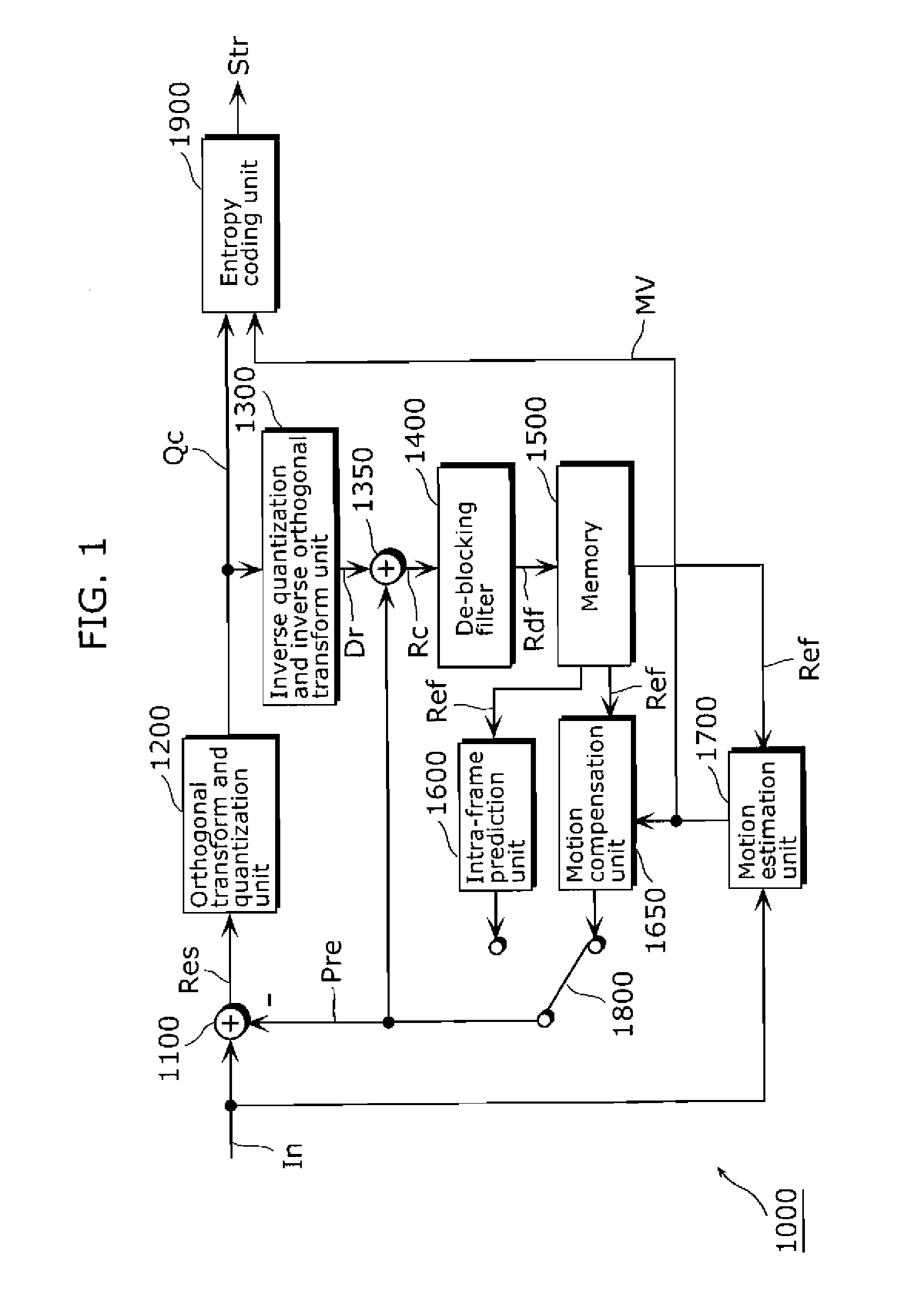

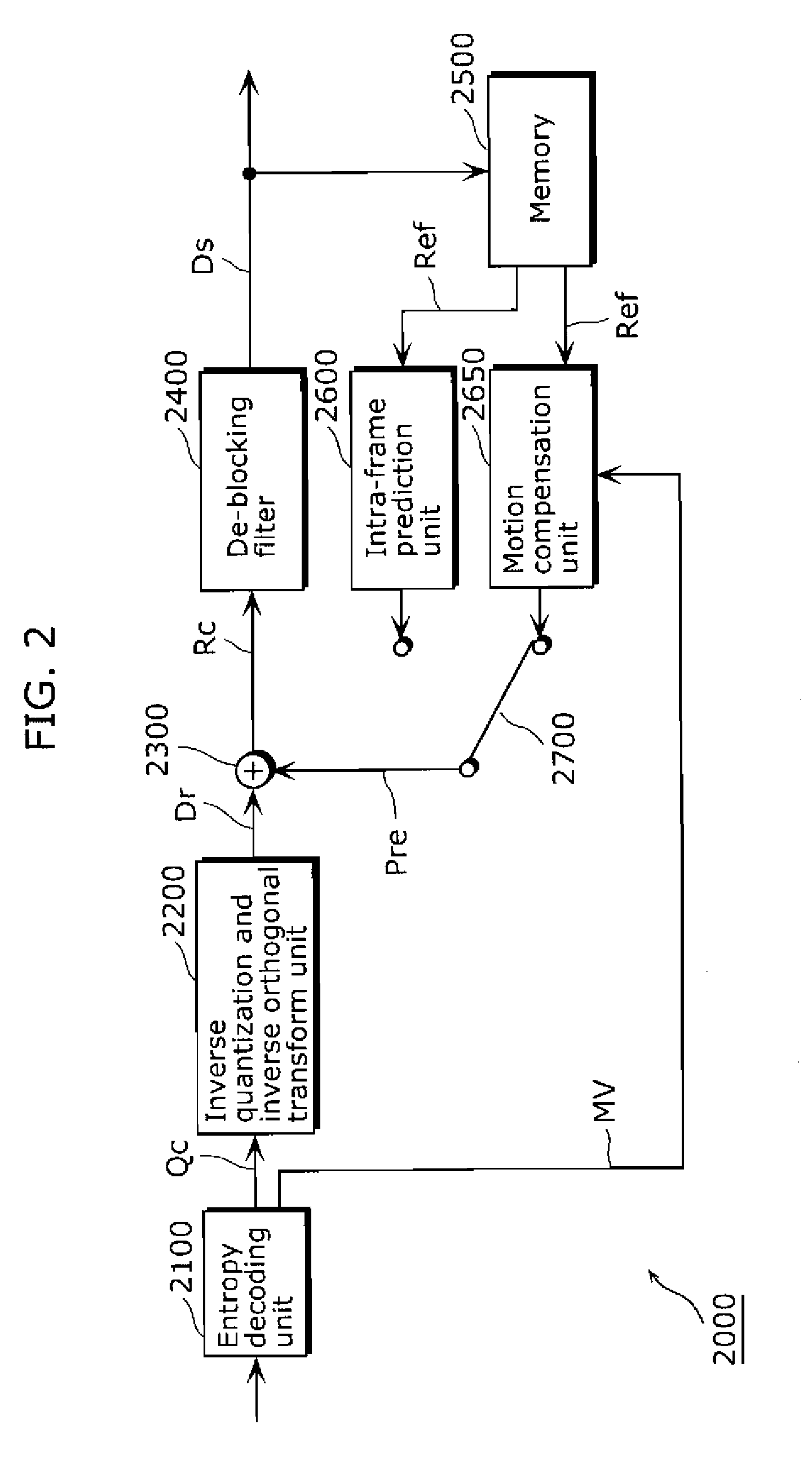

Active | US20100014763A1 | Deterioration of image quality | Inhibit deterioration | Character and pattern recognition | Digital video signal modification | Adaptive filter | Imaging quality

Provided is an image decoding apparatus which reliably prevents deterioration of the image quality of decoded images which have been previously coded. An image decoding apparatus (200) includes: an inverse quantization and inverse orthogonal transform unit (220) and an adder (230) which decode a coded image included in a coded stream (Str) to generate a decoded image (Rc); an entropy decoding unit (210) which extracts cross-correlation data (p) which indicates a cross-correlation between the decoded image (Rc) and an image which corresponds to the decoded image and has not yet been coded; and an adaptive filter (240) which computes a filter parameter (w) based on the extracted cross-correlation data (p), and performs a filtering operation on the decoded image (Rc) according to the filter parameter (w).

Owner:SUN PATENT TRUST

Picture Coding Method, Picture Decoding Method, Picture Coding Apparatus, Picture Decoding Apparatus, and Program Thereof

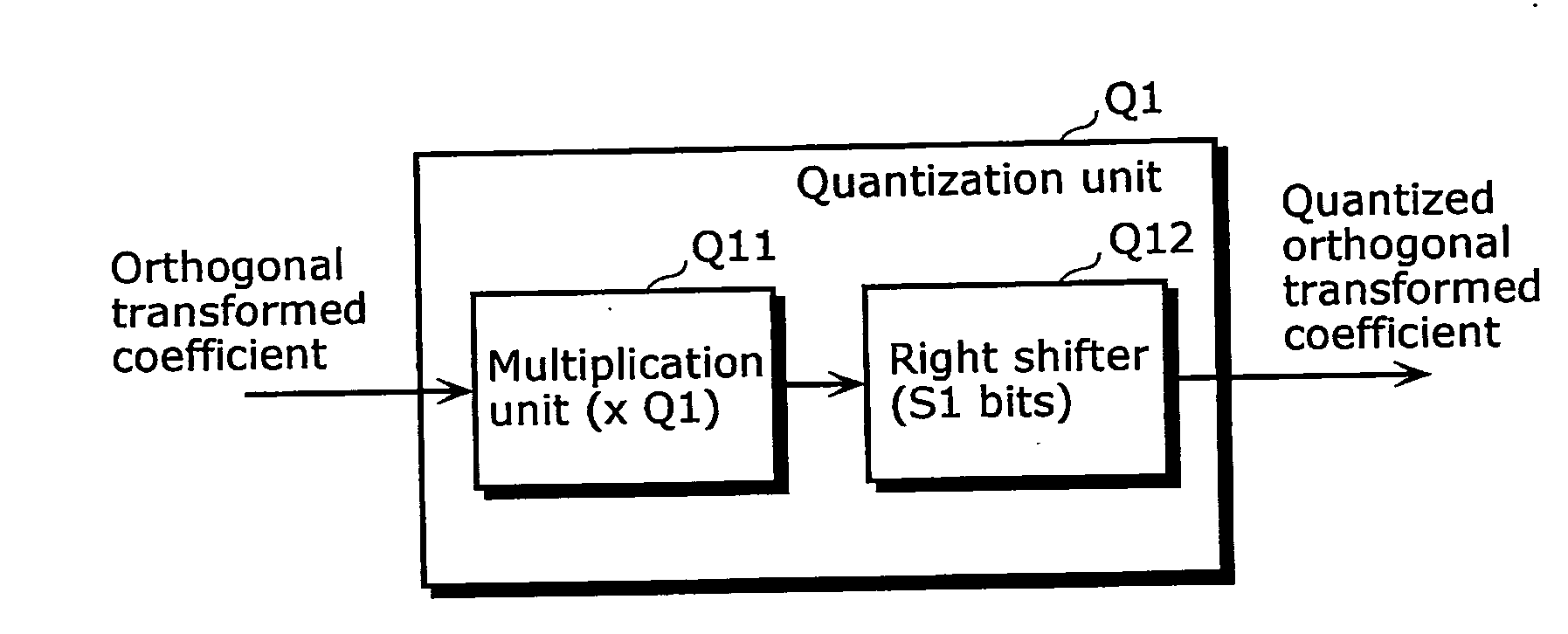

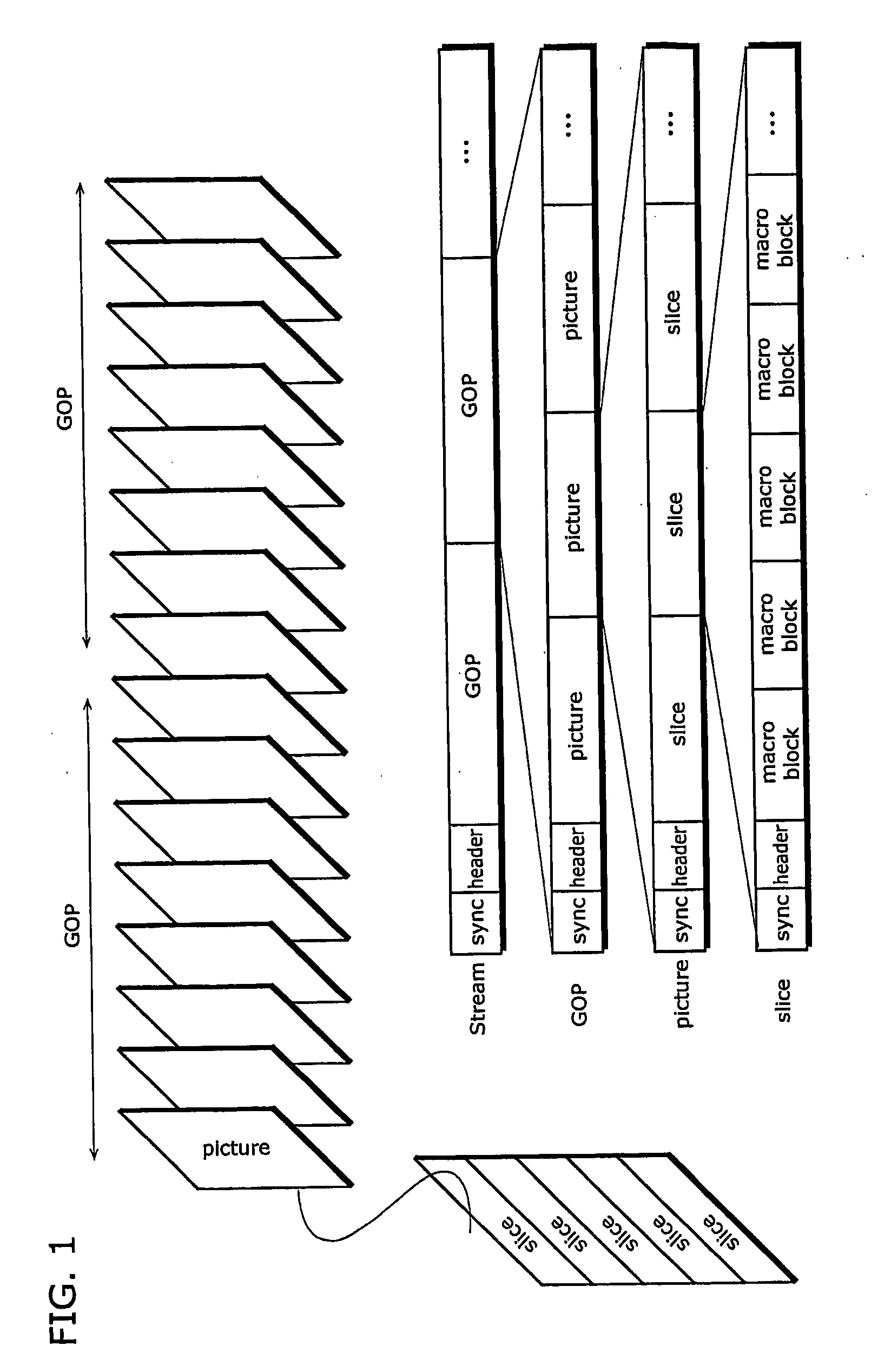

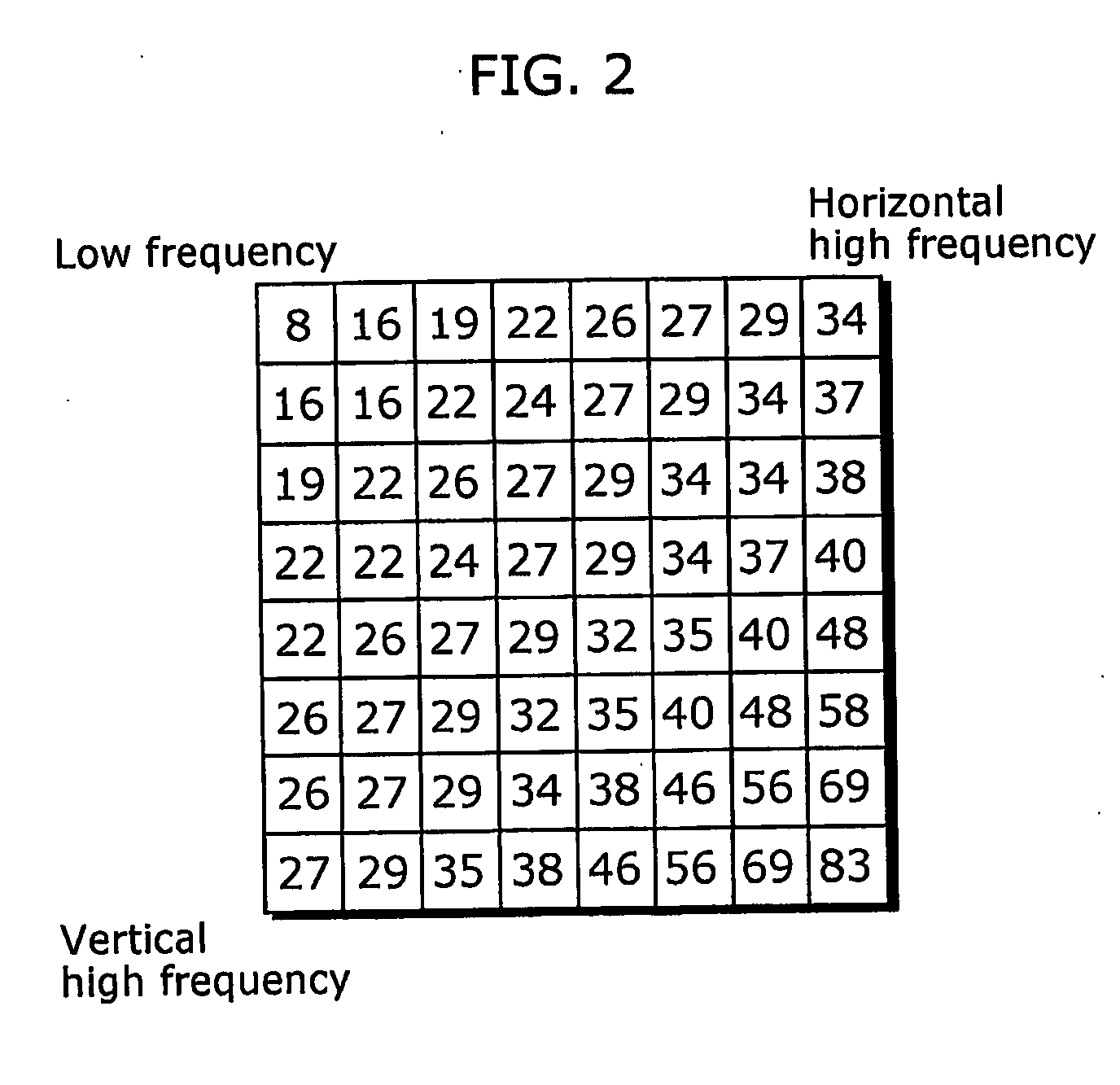

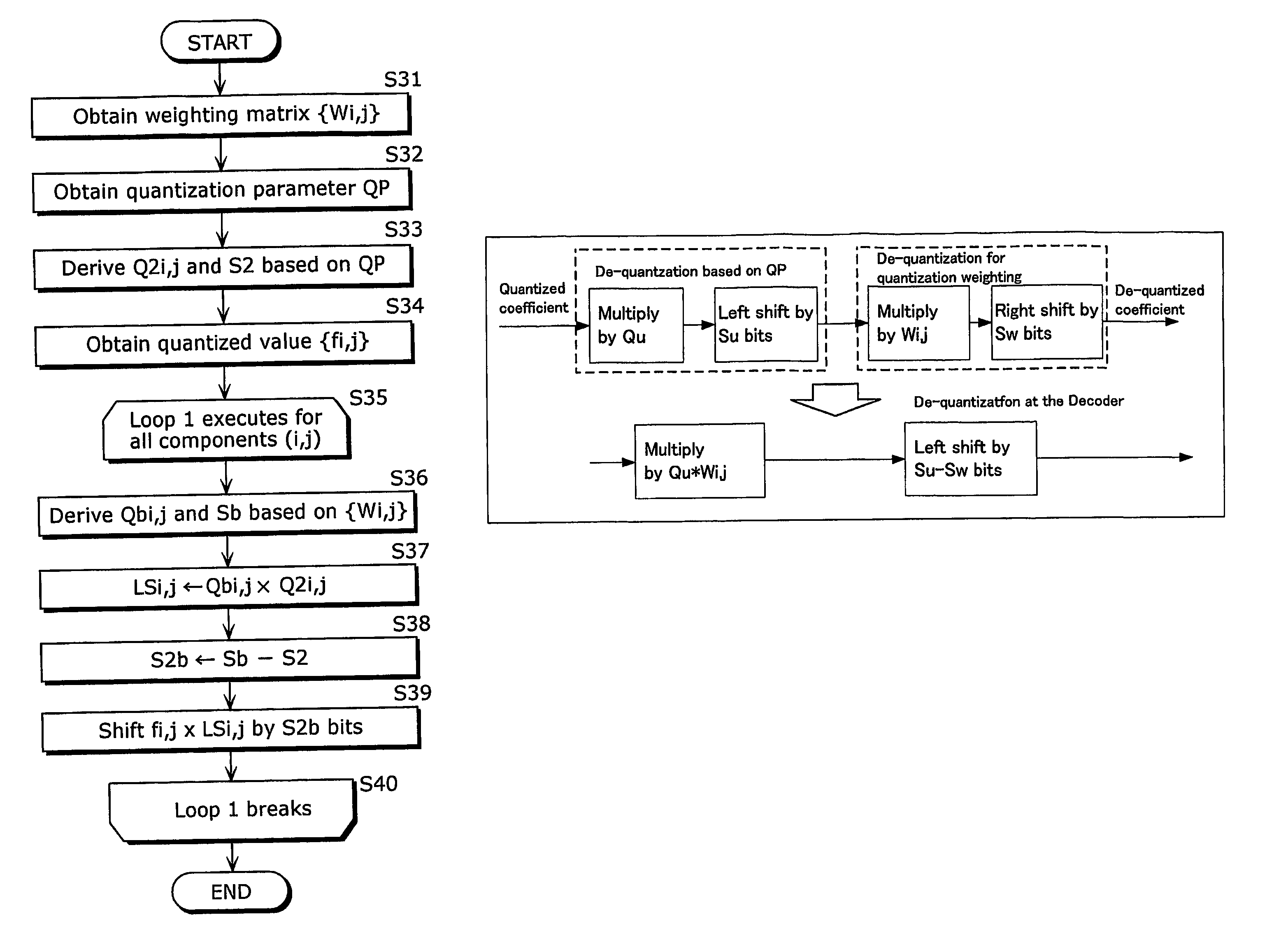

Active | US20080192838A1 | Reduce load | Degradation is multiplied | Color television with pulse code modulation | Color television with bandwidth reduction | Computer architecture | Quantization matrix

The picture decoding method according to the present invention is a decoding method for decoding coded pictures by inverse quantization and inverse orthogonal transformation, in which a quantization matrix which defines a scaling ratio of a quantization step for each component is multiplied by a multiplier, which is a coefficient for frequency transformation or a quantization step, and also, a result of the multiplication is multiplied by a quantized value, as a process of inverse quantization.

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA
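
The two multiplications named in the abstract above (quantization matrix times multiplier, then that product times the quantized value) can be sketched as below. Integer arithmetic and the function and parameter names are assumptions for illustration; the abstract does not specify bit depths, rounding, or shifts.

```python
def inverse_quantize(levels, qmatrix, multiplier):
    """Inverse-quantize a block of levels with a per-component matrix.

    Step 1: scale every quantization-matrix entry by the multiplier
            (a frequency-transform coefficient or a quantization step).
    Step 2: multiply each scaled entry by the matching quantized value.
    """
    scaled = [[w * multiplier for w in row] for row in qmatrix]
    return [
        [level * s for level, s in zip(level_row, scaled_row)]
        for level_row, scaled_row in zip(levels, scaled)
    ]
```

Since step 1 does not depend on the quantized values, the scaled matrix could be computed once and reused for every block that shares the same matrix and multiplier.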

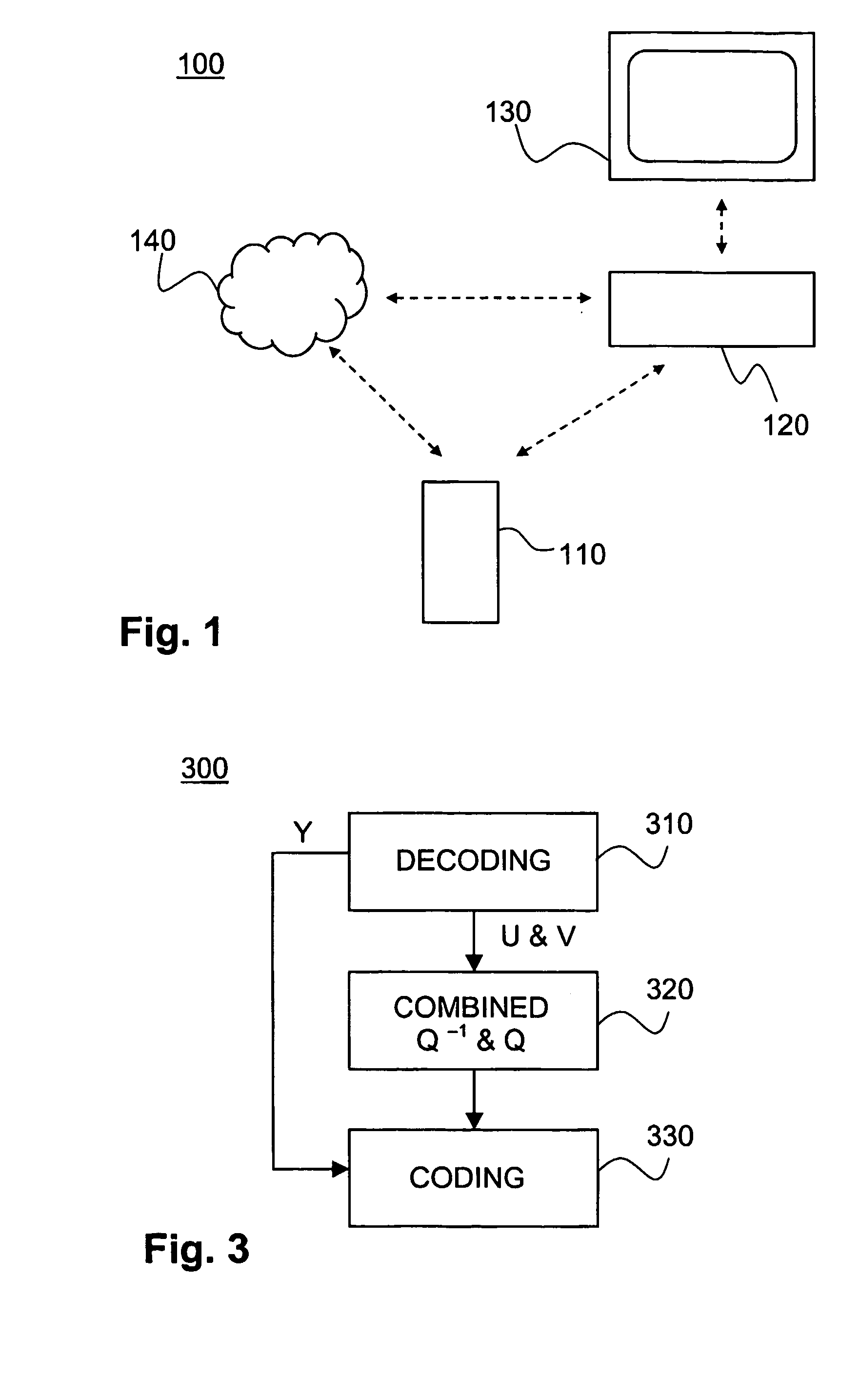

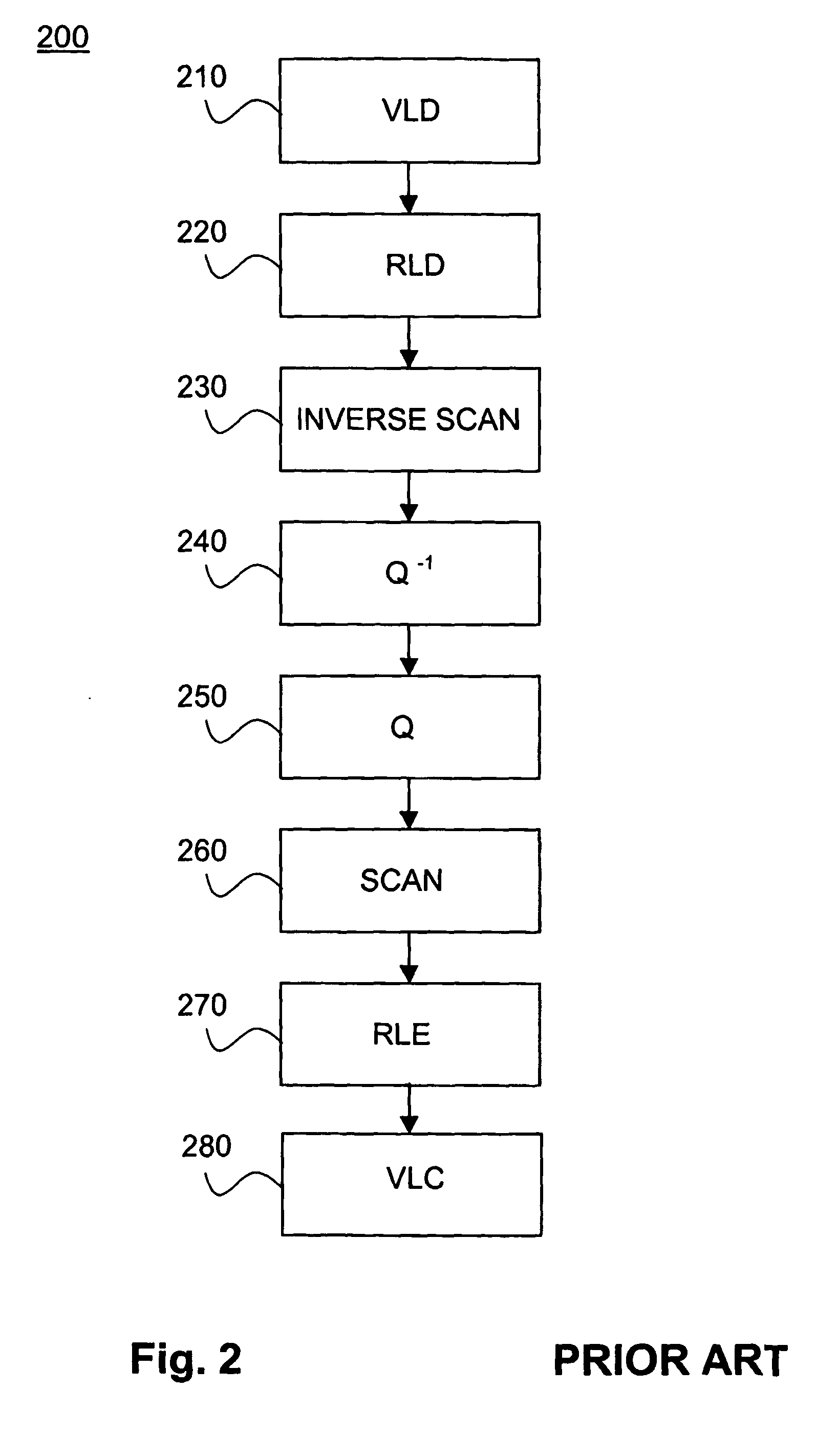

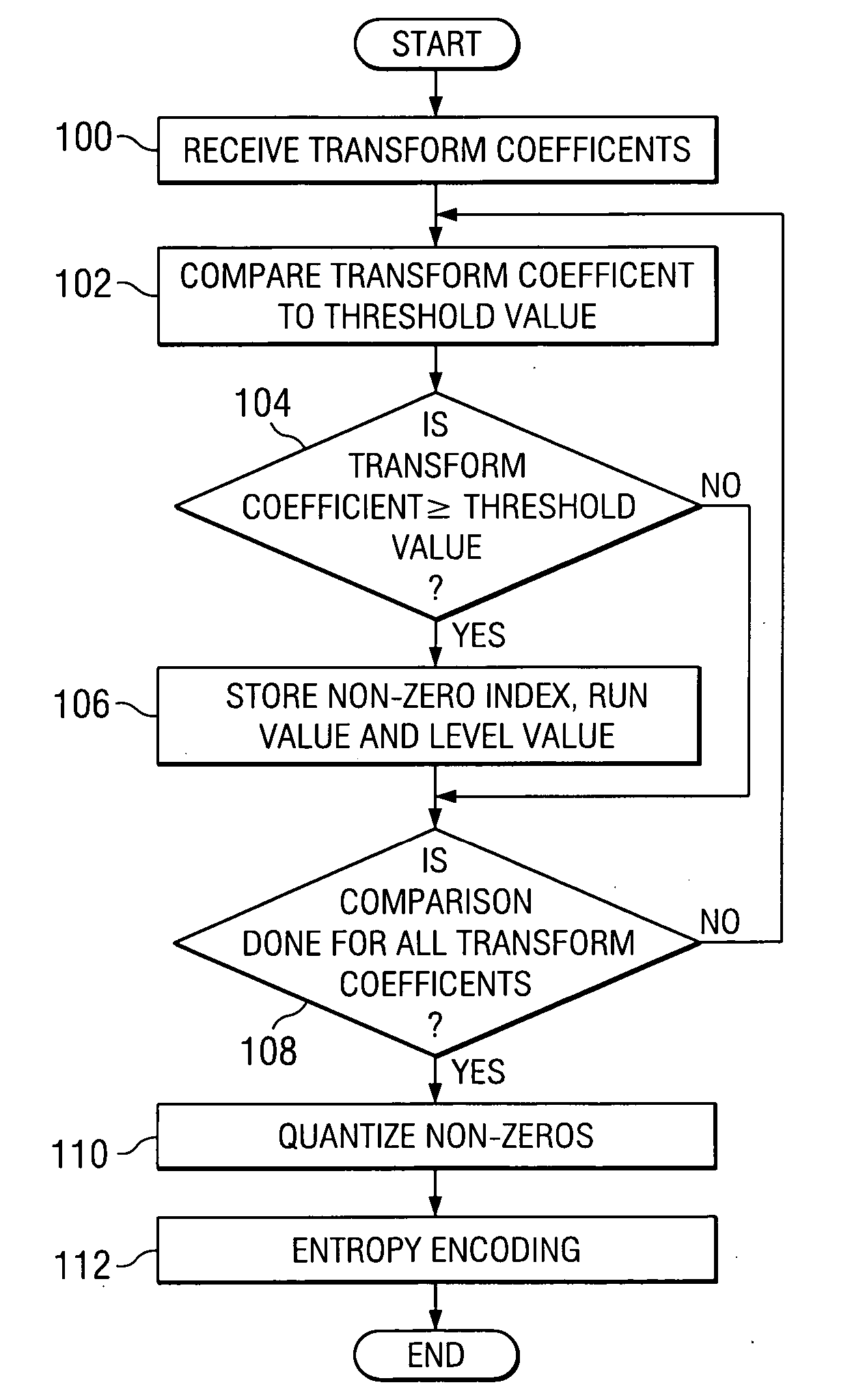

Method and device for transcoding images

Inactive | US20060050784A1 | Reduce complexity | Increase speed | Television system details | Picture reproducers using cathode ray tubes | JPEG | Digital image

A method and device for transcoding digital images where at least portions of a first image coded according to a first method is decoded for obtaining first coefficients of a luminance component and chrominance components of the first image, where the chrominance components are subjected to a combined inverse quantization according to the first method and quantization according to a second method. The combined inverse quantization and quantization respectively uses a chrominance quantization matrix and a luminance quantization matrix for obtaining second coefficients for chrominance components of the second image having the same chroma format as the JPEG image. Finally, the first coefficients of the luminance component of the first image and the second coefficients of the chrominance components of the second image are coded for obtaining the second image decodable according to the second method.

Owner:NOKIA CORP
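
One way to read "combined inverse quantization and quantization" is a single rescaling of each level by the ratio of the input and output quantizer entries, without materializing the dequantized coefficient. The sketch below shows only that generic idea; the per-position step lists, the rounding rule, and the names are assumptions and do not reproduce the matrix selection described in the abstract.

```python
def requantize(levels, q_in, q_out):
    """Map levels quantized with q_in to levels for a quantizer q_out.

    Equivalent to dequantizing with q_in and quantizing with q_out,
    folded into one multiply-divide per coefficient. Python's round()
    resolves exact ties to the nearest even integer.
    """
    return [int(round(l * qi / qo)) for l, qi, qo in zip(levels, q_in, q_out)]
```

For instance, a level of 4 at input step 10 becomes level 2 at output step 20, since both represent the coefficient value 40.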

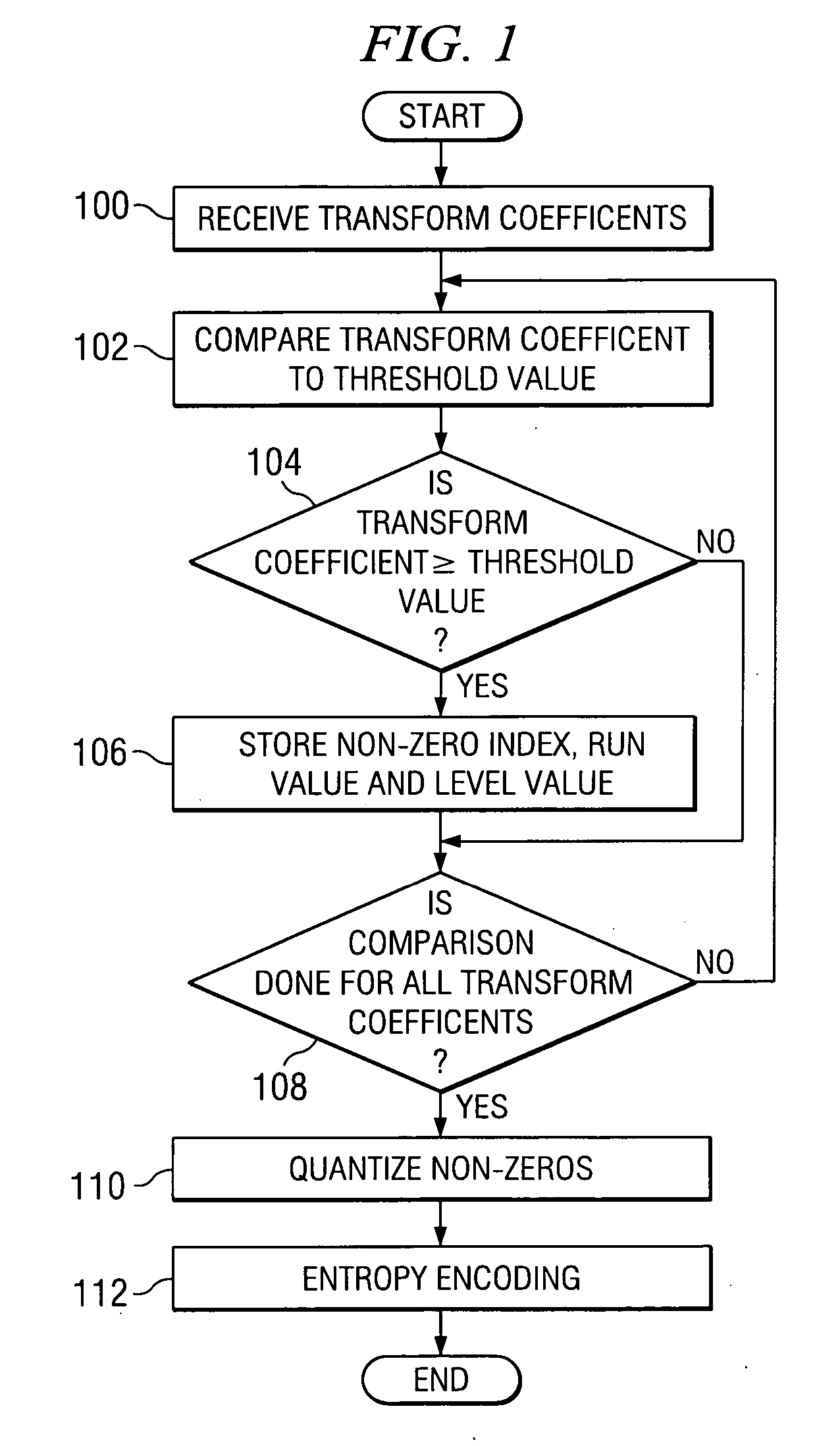

System and method of quantization

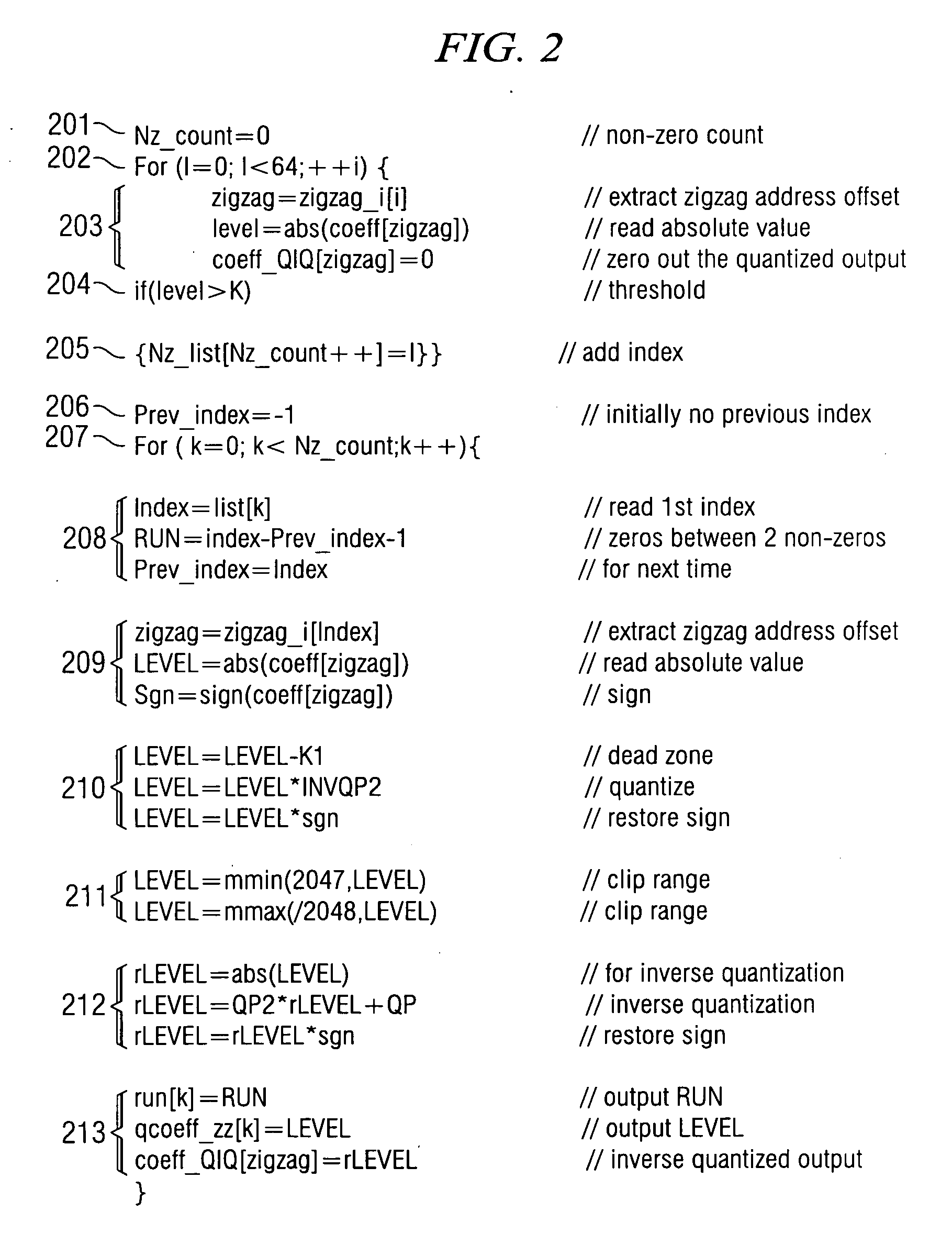

Inactive | US20070041653A1 | Not distinguish | Character and pattern recognition | Digital video signal modification | Nonzero coefficients | Computer science

Systems and methods for quantization are provided. Some embodiments provide a system and method for quantization comprising preprocessing the transform coefficients to predict one or more non-zero coefficients and one or more zero coefficients as well as predict the non-existence of non-zero and zero coefficients, storing indices representing the predicted non-zero coefficients, and performing a quantization process on the predicted non-zero coefficients, as well as the inverse quantization process of those non-zero quantized coefficients.

Owner:TEXAS INSTR INC

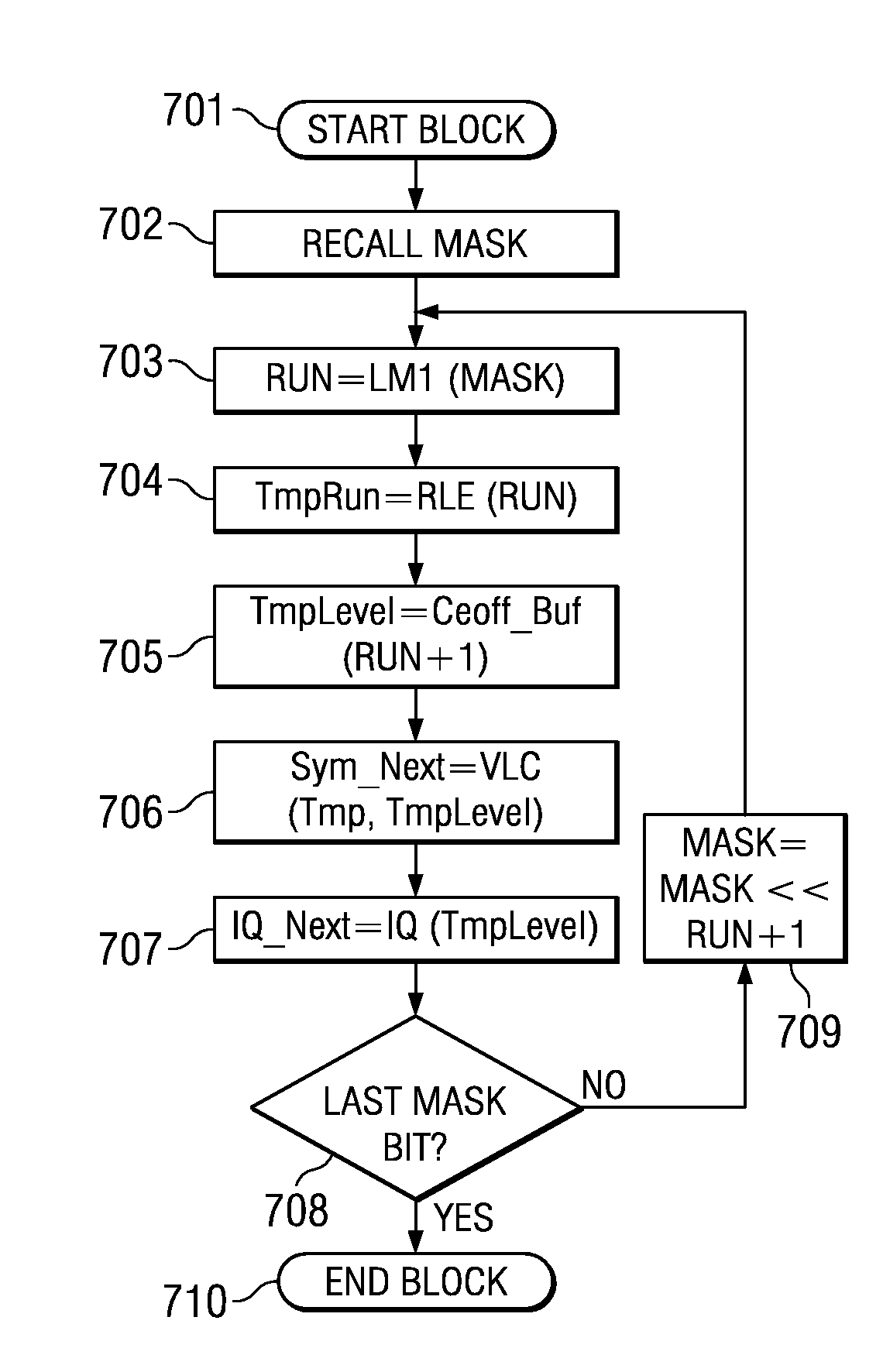

Run Length Encoding in VLIW Architecture

Active | US20080046698A1 | Reduce bitrate | Easy to harvest | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Variable-length code | Glyph

A computer implemented method of video data encoding generates a mask having one bit corresponding to each spatial frequency coefficient of a block during quantization. The bit state of the mask depends upon whether the corresponding quantized spatial frequency coefficient is zero or non-zero. The runs of zero quantized spatial frequency coefficients determined by a left most bit detect instruction are determined from the mask and run length encoded. The mask is generated using a look up table to map the scan order of quantization to the zig-zag order of run length encoding. Variable length coding and inverse quantization optionally take place within the run length encoding loop.

Owner:TEXAS INSTR INC
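
The mask-based run detection described above can be sketched in a few lines. Here `int.bit_length()` plays the role of the left-most bit detect instruction, the coefficients are assumed to be already in zig-zag order (the scan-order look-up table is omitted), and the `(run, level)` output format and function names are illustrative choices.

```python
def build_mask(coeffs):
    # One bit per coefficient, first coefficient in the most significant bit;
    # a bit is 1 when the quantized coefficient is non-zero.
    mask = 0
    for c in coeffs:
        mask = (mask << 1) | (1 if c != 0 else 0)
    return mask


def run_level_pairs(coeffs):
    """Return (zero_run, level) pairs found by scanning the mask."""
    n = len(coeffs)
    mask = build_mask(coeffs)
    pairs = []
    pos = 0                                  # next coefficient index to examine
    while mask:
        first = n - mask.bit_length()        # index of the left-most set bit
        pairs.append((first - pos, coeffs[first]))
        mask &= ~(1 << (n - 1 - first))      # clear the bit just consumed
        pos = first + 1
    return pairs
```

The loop runs once per non-zero coefficient rather than once per coefficient, which is the saving the mask is meant to provide on sparse blocks.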

Picture coding method, picture decoding method, picture coding apparatus, picture decoding apparatus, and program thereof

Active | US7630435B2 | Reduce load | Degradation is multiplied | Color television with pulse code modulation | Color television with bandwidth reduction | Computer architecture | Quantization matrix

The picture decoding method according to the present invention is a decoding method for decoding coded pictures by inverse quantization and inverse orthogonal transformation, in which a quantization matrix which defines a scaling ratio of a quantization step for each component is multiplied by a multiplier, which is a coefficient for frequency transformation or a quantization step, and also, a result of the multiplication is multiplied by a quantized value, as a process of inverse quantization.

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA

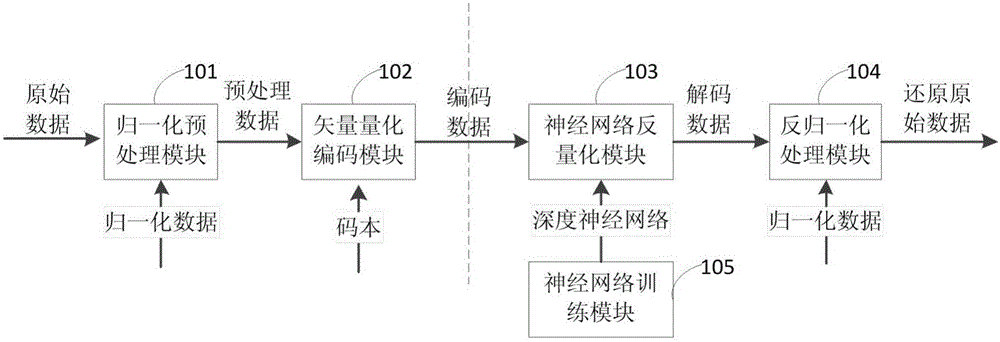

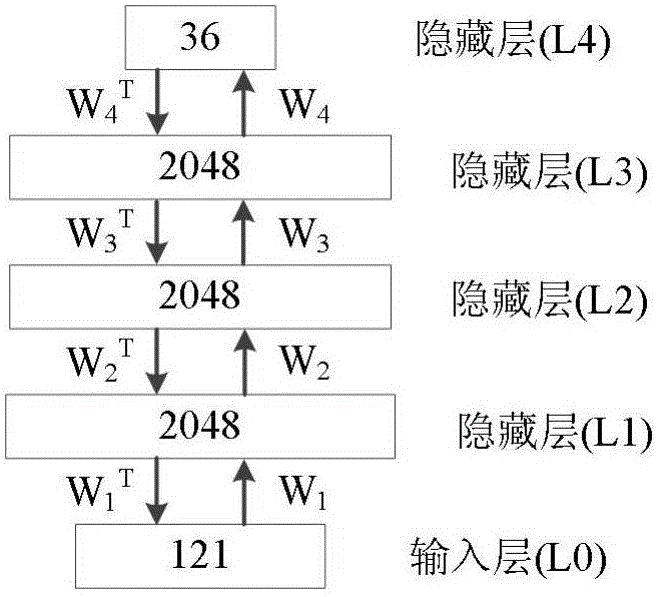

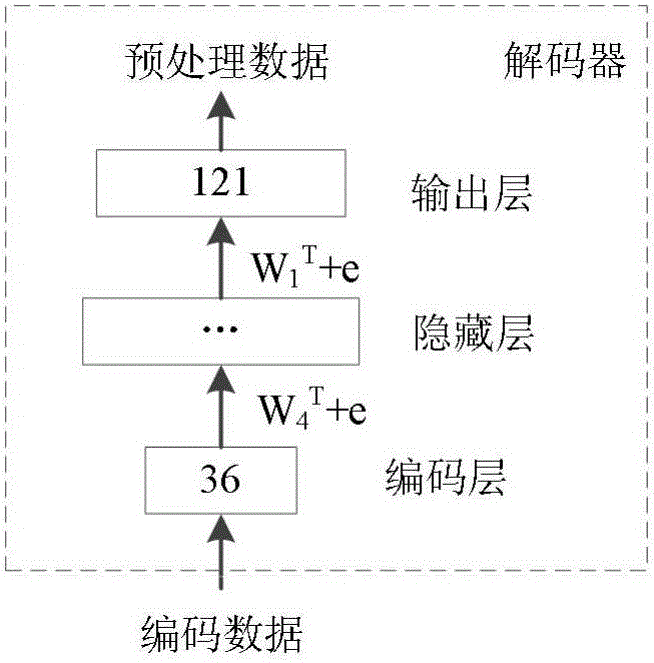

Deep neural network based vector quantization system and method

Active | CN106203624A | Effective dimensionality reduction | Data error is small | Neural learning methods | Code module | Pattern recognition

The invention provides a deep neural network based vector quantization system and method, comprising: a normalization preprocessing module for normalizing original data using normalization data and outputting the normalized, preprocessed data; a vector quantization and coding module for receiving the preprocessed data and a codebook, performing vector quantization coding on the preprocessed data using the codebook, and outputting the coded data; a neural network inverse quantization module for performing inverse quantization decoding on the coded data through a deep neural network and outputting the decoded data; an inverse normalization post-processing module for performing inverse normalization on the decoded data using the normalization data and outputting the restored original data; and a neural network training module for training the neural network with the normalized, preprocessed training data and the coded training data, and outputting the trained neural network to the neural network inverse quantization module. The system and the method of the invention can effectively solve the problem that the quantization error is large in vector quantization of high-dimensional signals.

Owner:SHANGHAI JIAO TONG UNIV

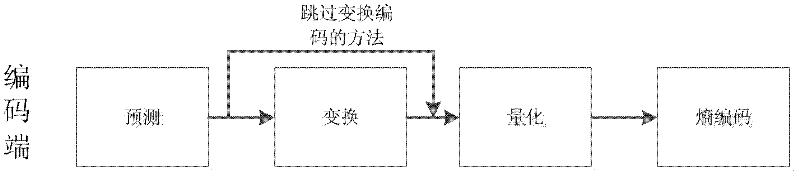

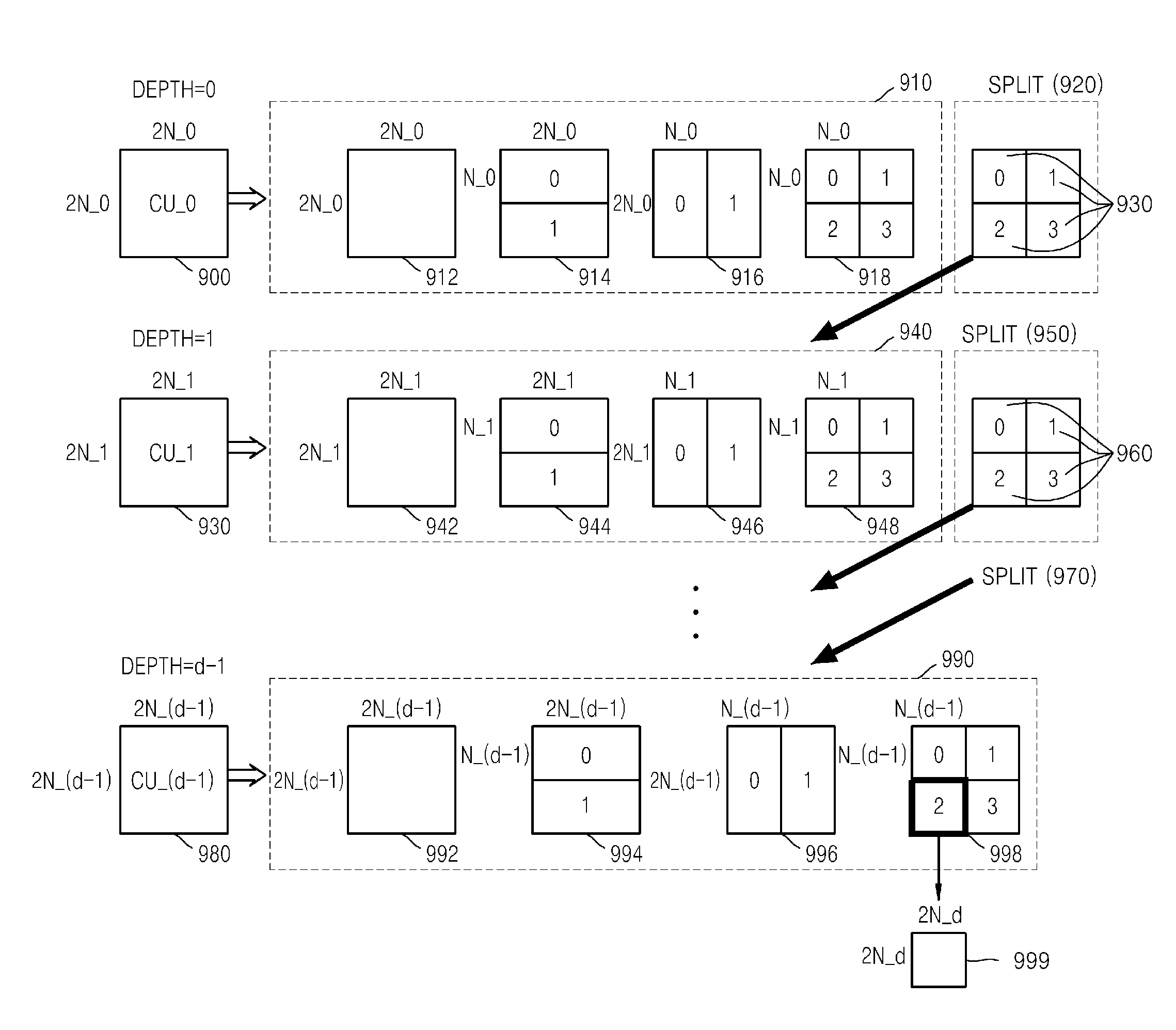

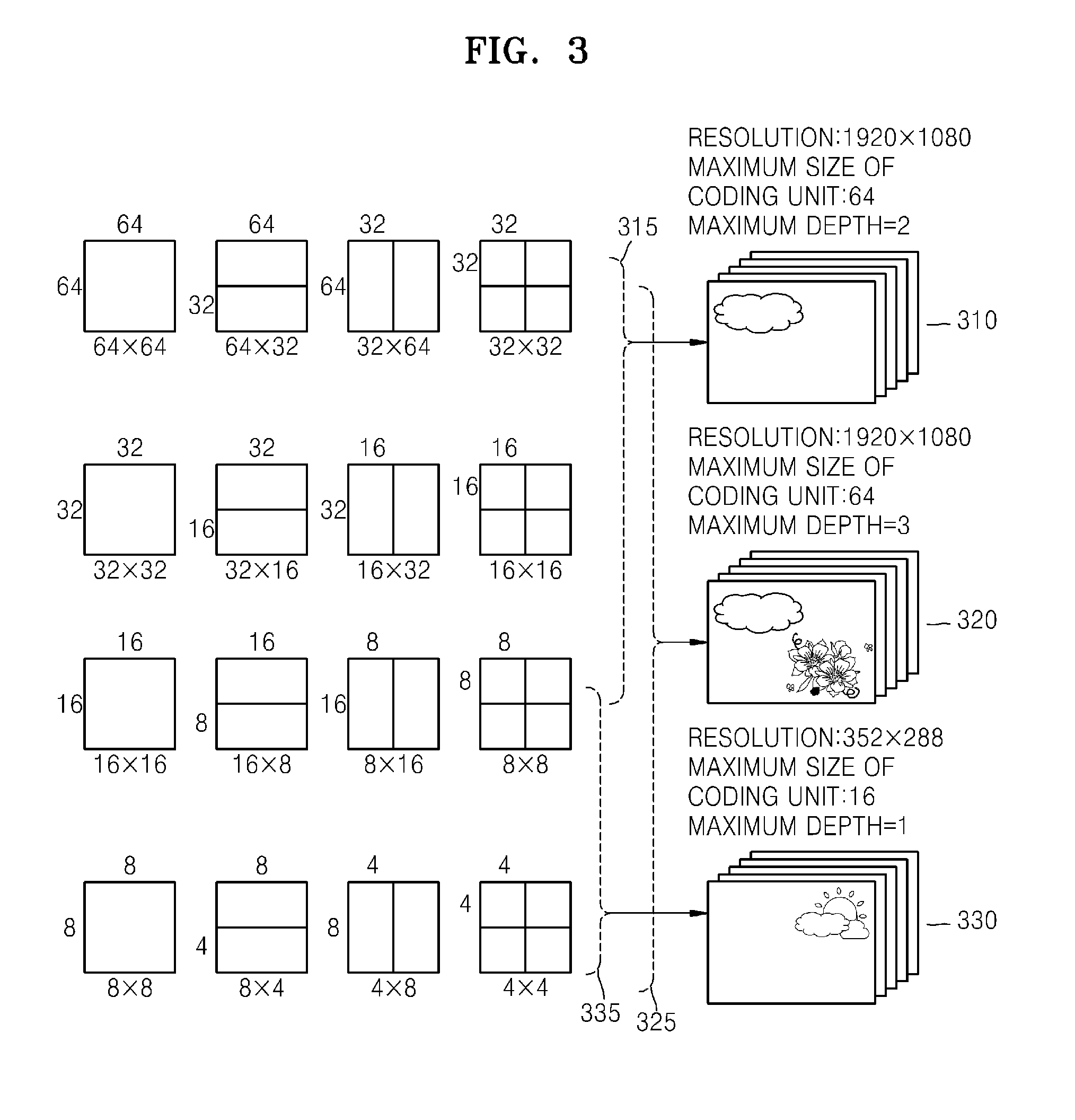

Video sequence coding method aiming at HEVC (High Efficiency Video Coding)

Inactive | CN102447907A | Easy to compress | Reduce complexity | Television systems | Digital video signal modification | Rate distortion | Video sequence

The invention relates to a video sequence coding method for HEVC (High Efficiency Video Coding) that compresses well, has low complexity, and is suitable for practical application. The method comprises the following steps: (1) obtaining a prediction block from the luminance component of a PU (Prediction Unit), taking the difference from the original values to obtain a residual, and dividing the residual based on rate-distortion cost to obtain TUs (Transform Units); (2) determining whether the current TU is transformed according to the minimum rate-distortion cost principle; (3) quantizing using the corresponding method, and transmitting the quantized coefficients and a flag marking transformation or non-transformation to the decoding end; (4) at the decoding end, reading the flag for each TU and judging whether inverse transformation is to be executed; (5) executing the corresponding inverse quantization for each TU according to the flag read; (6) deciding whether to execute the inverse transformation according to the flag read, and executing the corresponding operations; and (7) combining the TUs into the PU, and adding the PU to the prediction value of the current PU obtained through motion compensation to obtain a reconstructed value of the PU, so as to reconstruct the current CU (Coding Unit).

Owner:BEIJING UNIV OF TECH

Multistage inverse quantization having the plurality of frequency bands

Inactive | US20050060147A1 | Efficiently quantized | Less quantization error | Speech analysis | Payment architecture | Intermediate frequency | Intermediate band

With respect to audio signal coding and decoding apparatuses, there is provided a coding apparatus that enables a decoding apparatus to reproduce an audio signal even though it does not use all of the data from the coding apparatus, and a decoding apparatus corresponding to the coding apparatus. A quantization unit constituting a coding apparatus includes a first sub-quantization unit comprising sub-quantization units for low-band, intermediate-band, and high-band; a second sub-quantization unit for quantizing quantization errors from the first sub-quantization unit; and a third sub-quantization unit for quantizing quantization errors which have been processed by the first sub-quantization unit and the second sub-quantization unit.

Owner:PANASONIC CORP
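
The staged structure in the abstract above, where each later stage quantizes the error left by the earlier ones, can be sketched for a single value. This is a simplified scalar illustration: the split into low, intermediate, and high bands is omitted, and the step sizes and function names are assumptions.

```python
def quantize_stages(x, steps):
    """Quantize x in successive stages; each stage codes the remaining error."""
    indices, residual = [], x
    for step in steps:
        q = int(round(residual / step))
        indices.append(q)
        residual -= q * step          # error handed to the next stage
    return indices


def reconstruct(indices, steps, stages_used=None):
    """Inverse-quantize using only the first `stages_used` stages."""
    n = len(indices) if stages_used is None else stages_used
    return sum(q * s for q, s in zip(indices[:n], steps[:n]))
```

With `steps = [4, 1, 0.25]` and `x = 10.3`, a decoder that reads only the first stage already gets a coarse value, and each extra stage it reads reduces the error, which is the property that lets a decoder work without all of the coded data.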

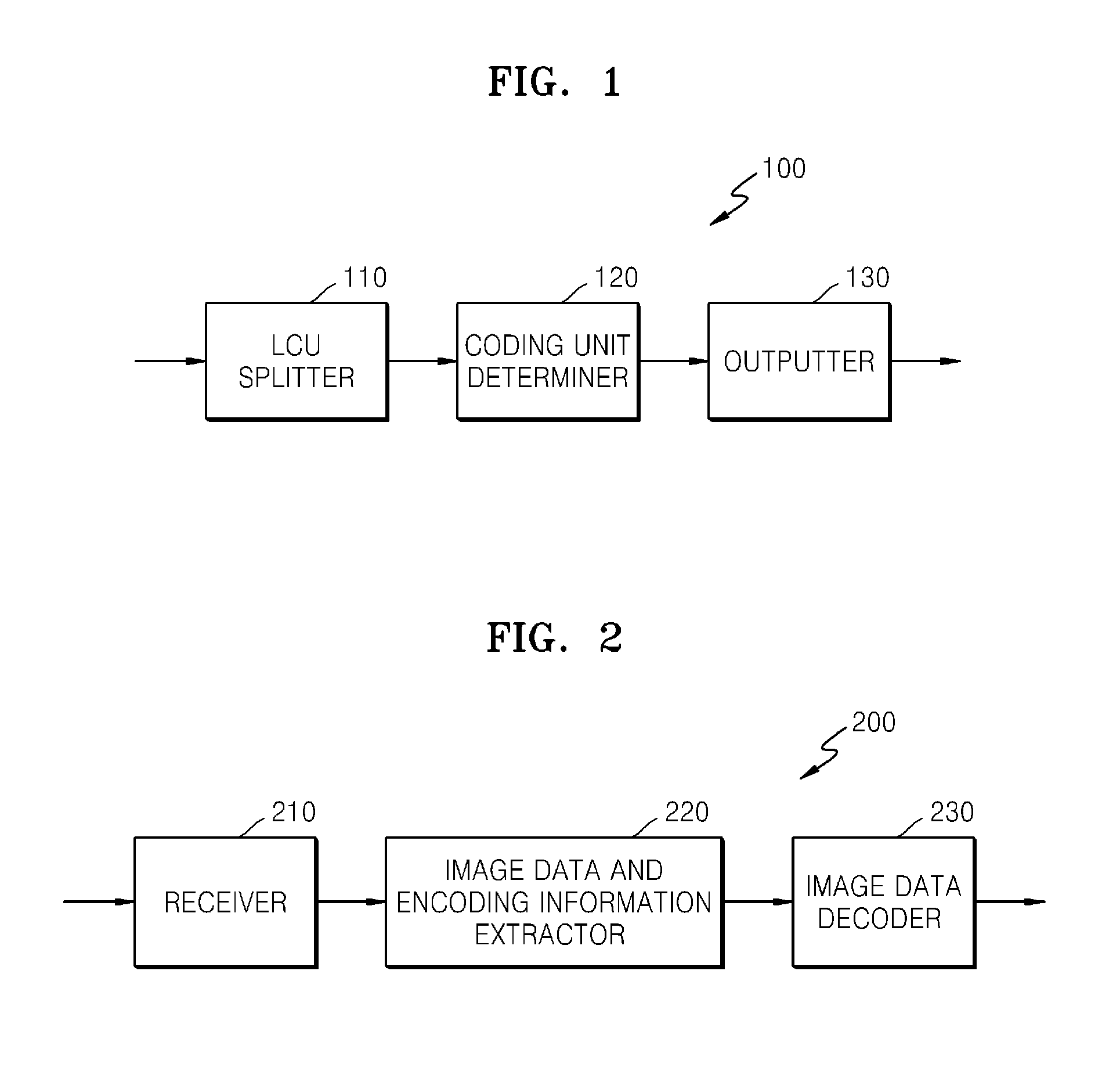

Method and apparatus for hierarchical data unit-based video encoding and decoding comprising quantization parameter prediction

Active | US20140341276A1 | Easy to handle | Speed up the process | Color television with pulse code modulation | Color television with bandwidth reduction | Video encoding | Computer science

A method of decoding a video includes determining an initial value of a quantization parameter (QP) used to perform inverse quantization on coding units included in a slice segment, based on syntax obtained from a bitstream; determining a slice-level initial QP for predicting the QP used to perform inverse quantization on the coding units included in the slice segment, based on the initial value of the QP; and determining a predicted QP of a first quantization group of a parallel-decodable data unit included in the slice segment, based on the slice-level initial QP.

Owner:SAMSUNG ELECTRONICS CO LTD

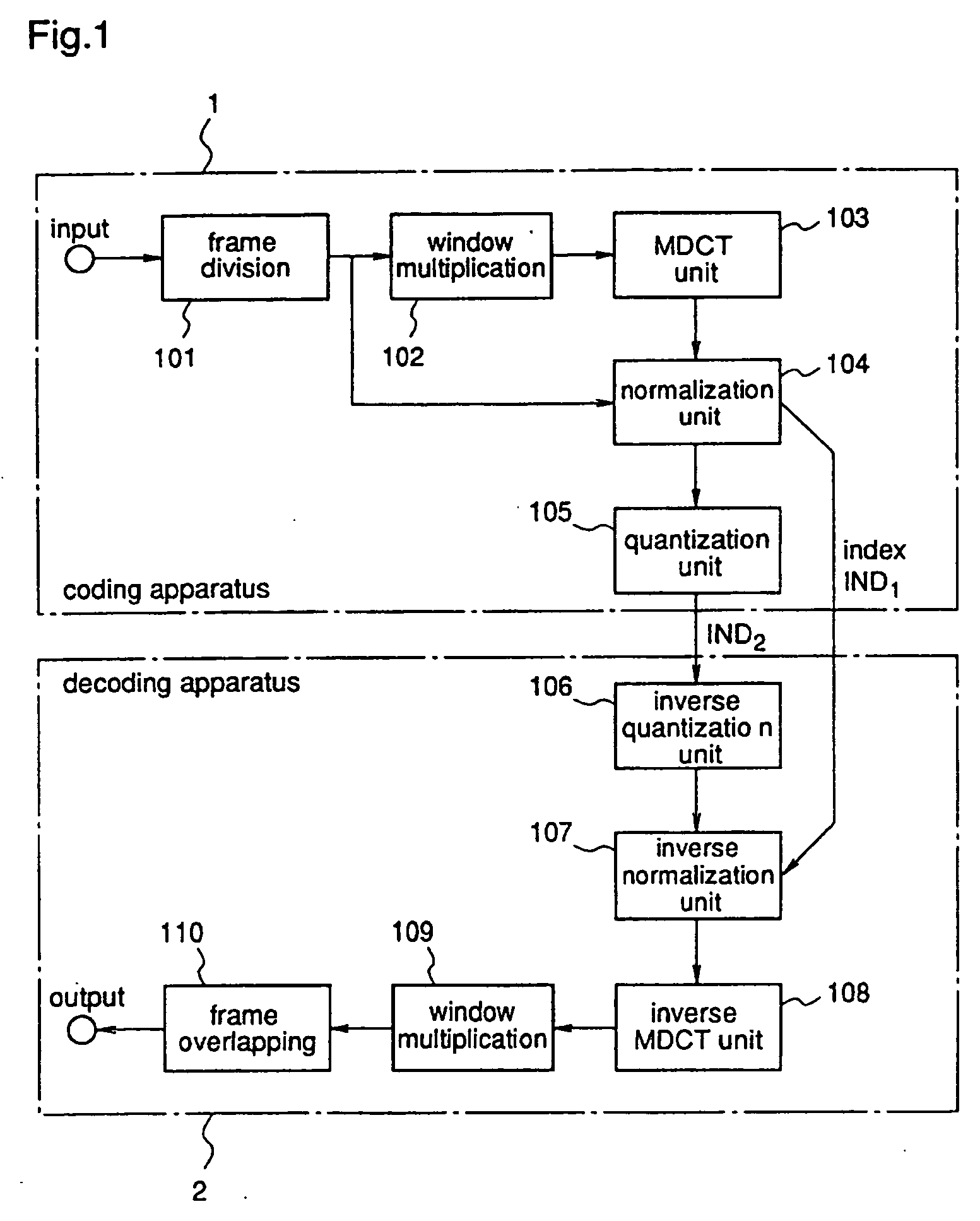

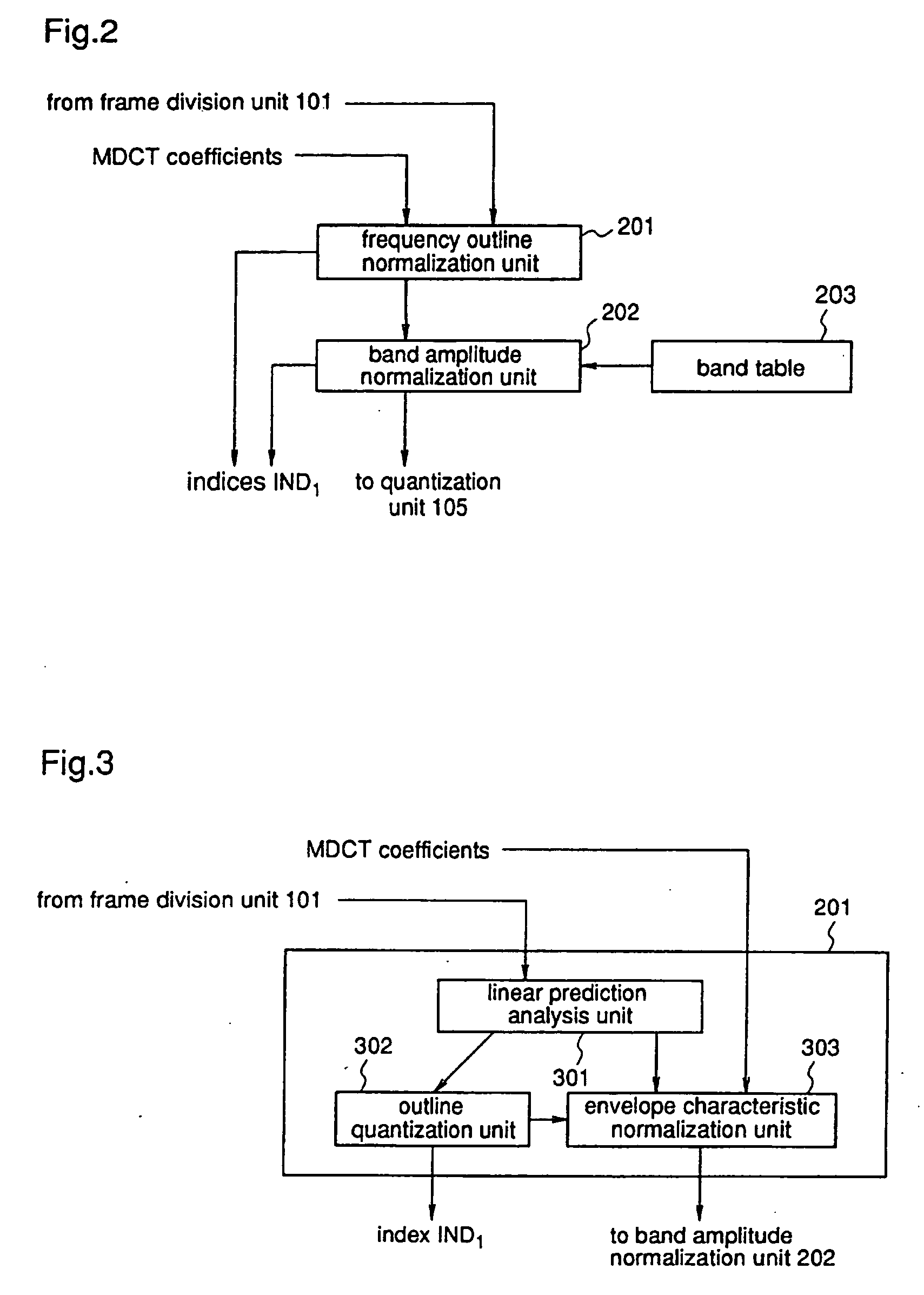

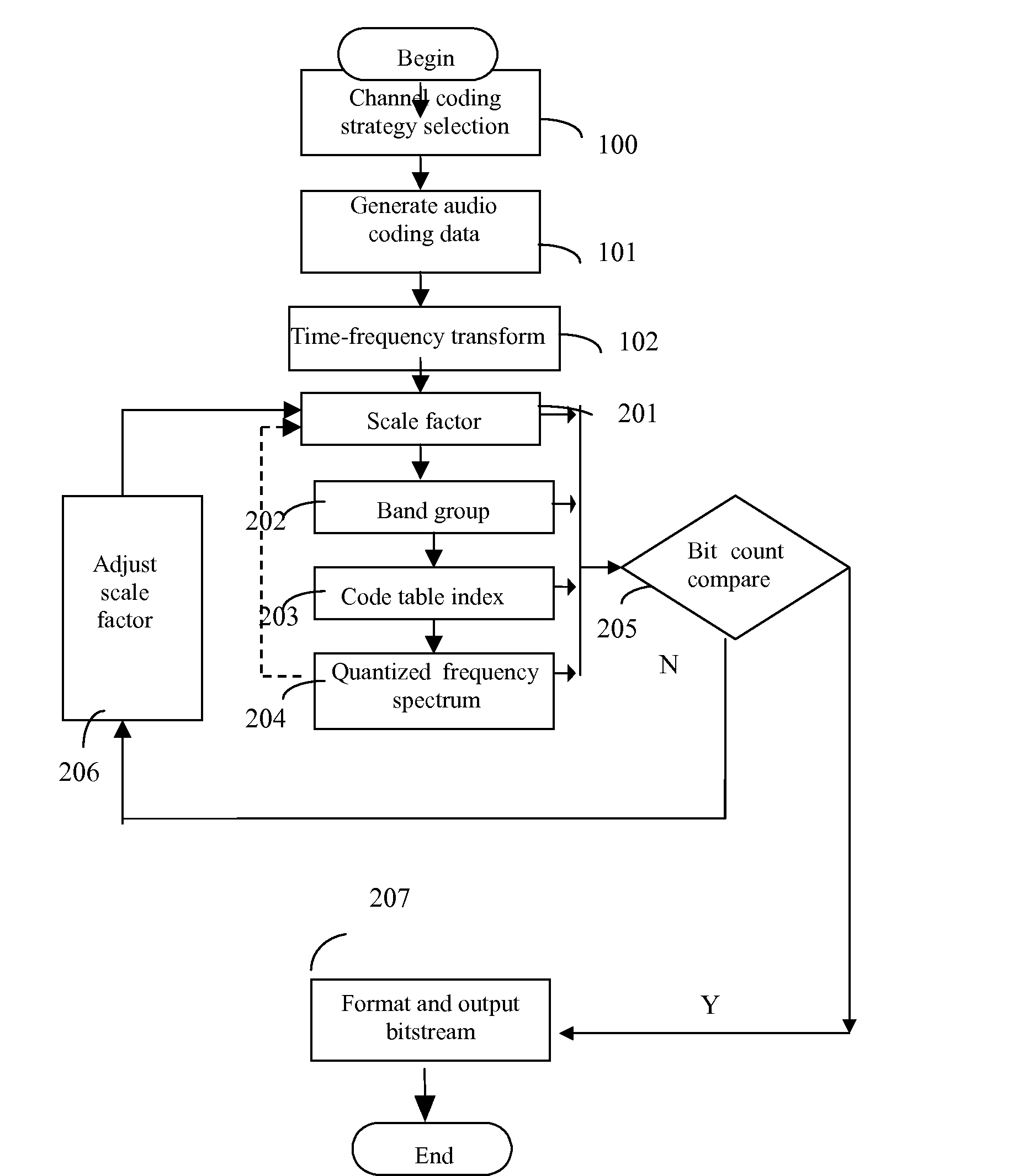

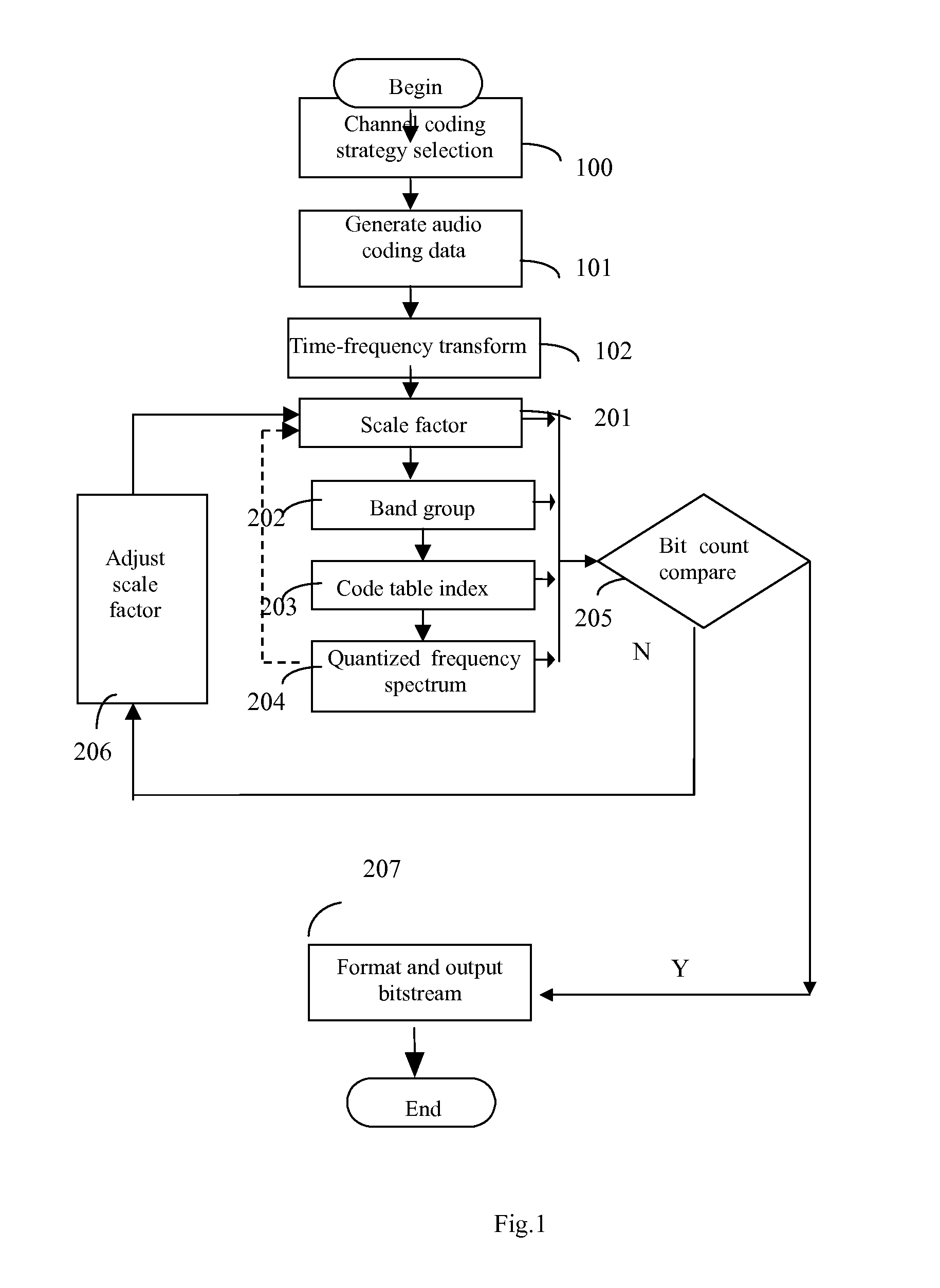

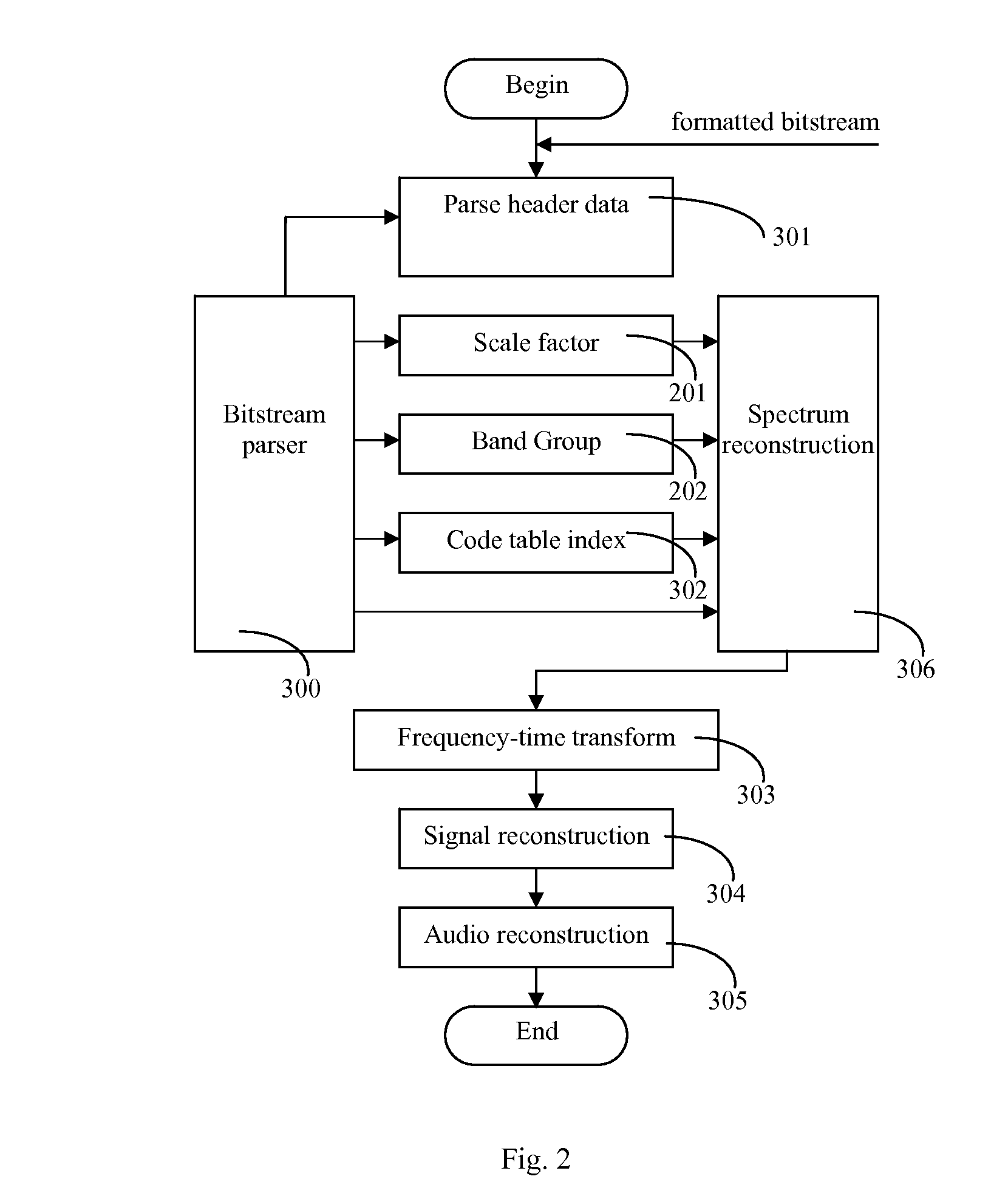

Method of implementation of audio codec

Inactive | US20070027677A1 | Easily identifiable | Reduce complexity | Speech analysis | Frequency spectrum | Computation complexity

This invention discloses an implementation of audio codec, which has low computational complexity, small memory footprint and high coding efficiency. It can be used in handheld devices, SoC or ASIC products and embedded systems. At the encoder side: first, apply time-to-frequency transform to audio signals, obtaining un-quantized spectrum data; second, based on the un-quantized spectrum data and target bit count, calculate the corresponding information of optimal scale factor, frequency band group, code table index and quantized spectrum by iteration; third, calculate and format bit-stream; fourth, output formatted bit-stream. At the decoder side: parse the formatted bit-stream, apply decoding and inverse quantization to the spectrum of each frame, reconstruct temporal audio data by frequency-to-time transform, and reconstruct the time-domain signals of each channel.

Owner:SHANGHAI JADE TECH

Method and apparatus for quantization, and method and apparatus for inverse quantization

Inactive | US20090147843A1 | Great deterioration of video quality | Reduce in quantity | Color television with pulse code modulation | Color television with bandwidth reduction | Video quality | Computer science

Provided are a quantization method and apparatus and an inverse-quantization method and apparatus for determining quantization steps using lengths of runs that are transform coefficients having consecutive zero values and modifying the transform coefficients. The quantization apparatus can modify quantization steps so that the quantization steps are proportional to lengths of previous runs to quantize significant transform coefficients. As a result, a number of bits generated during coding can be reduced without a great deterioration of video quality.

Owner:SAMSUNG ELECTRONICS CO LTD
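
A run-dependent quantization step of the kind described in the abstract above can be sketched as follows. The abstract says only that the step grows in proportion to the length of the preceding run of zeros; the specific rule `base_step * (1 + alpha * run)`, the run counter reset, and all names here are assumptions chosen so that the decoder can recompute the same steps from the levels alone.

```python
def _step(base_step, alpha, run):
    # Assumed rule: the step grows linearly with the preceding zero run.
    return base_step * (1 + alpha * run)


def quantize_run_adaptive(coeffs, base_step, alpha):
    levels, run = [], 0
    for c in coeffs:
        level = int(round(c / _step(base_step, alpha, run)))
        levels.append(level)
        run = run + 1 if level == 0 else 0   # track zeros in the quantized output
    return levels


def dequantize_run_adaptive(levels, base_step, alpha):
    out, run = [], 0
    for level in levels:
        out.append(level * _step(base_step, alpha, run))
        run = run + 1 if level == 0 else 0   # mirrors the encoder exactly
    return out
```

Because the run is counted on quantized levels, which the decoder also sees, no extra side information is needed to keep the two sides in step.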

© 2025 PatSnap. All rights reserved.