Selecting macroblock coding modes for video encoding

A coding-mode and macroblock technology, applied in the field of video coding, that addresses the very intensive computation required by a rate-distortion optimized coding mode decision.


Embodiment Construction

[0030] Our invention provides a method for determining a Lagrange cost, which leads to an efficient, rate-distortion optimized macroblock mode decision.

[0031] Method and System Overview

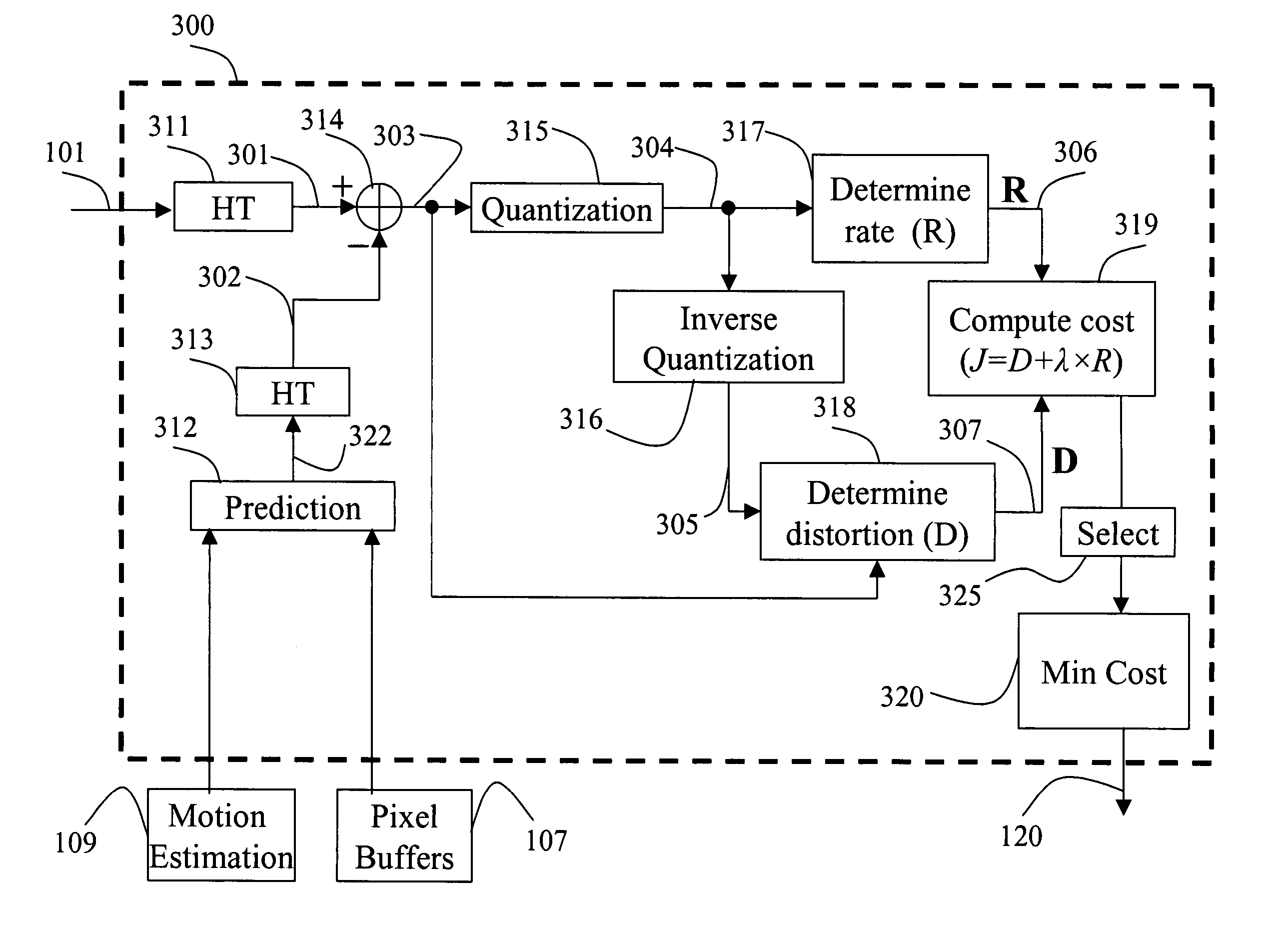

[0032] FIG. 3 shows the method and system 300, according to the invention, for selecting an optimal coding mode from multiple available candidate coding modes for each macroblock in a video. The selection is based on a Lagrange cost for a coding mode of a macroblock partition.
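As a minimal sketch of selection by Lagrange cost, the following assumes the usual rate-distortion form J = D + λR, where D is the distortion, R is the rate in bits, and λ is the Lagrange multiplier. The function names, mode names, and numbers are hypothetical illustrations and do not come from the patent text.

```python
from typing import Dict, Tuple


def lagrange_cost(distortion: float, rate: float, lam: float) -> float:
    """Return J = D + lambda * R (the usual rate-distortion Lagrange cost)."""
    return distortion + lam * rate


def select_mode(candidates: Dict[str, Tuple[float, float]], lam: float) -> str:
    """Return the candidate mode with the smallest Lagrange cost.

    `candidates` maps a mode name to a (distortion, rate_in_bits) pair.
    """
    return min(candidates, key=lambda m: lagrange_cost(*candidates[m], lam))


# Hypothetical (distortion, rate) measurements for three candidate modes.
modes = {
    "intra16x16": (400.0, 30.0),
    "intra4x4": (250.0, 90.0),
    "inter16x16": (300.0, 40.0),
}

best_high_lambda = select_mode(modes, lam=2.0)  # rate is weighted heavily
best_low_lambda = select_mode(modes, lam=0.5)   # distortion dominates
```

A larger λ penalizes rate more strongly, so the chosen mode can change with λ even when the per-mode distortion and rate measurements stay the same.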

[0033] Both an input macroblock partition 101 and a macroblock partition prediction 322, obtained by predicting 312, are subject to HT-transforms 311 and 313, respectively. The transforms produce input HT-coefficients 301 and predicted HT-coefficients 302, respectively. Then, a difference 303 between the input HT-coefficients 301 and the predicted HT-coefficients 302 is determined 314. The difference 303 is quantized 315 to produce a quantized difference 304, from which a coding rate R 306 is determined 317.
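The rate path described in paragraph [0033] can be sketched roughly as follows. Several pieces are assumptions, because the excerpt does not define them: the HT-transform is taken to be the 4x4 integer core transform of H.264/AVC, the quantizer is a plain uniform quantizer with a hypothetical step size, and `rate_proxy` is a made-up bit estimate standing in for the actual rate determination 317.

```python
# Assumption: "HT" is modeled as the 4x4 H.264/AVC forward core transform.
CF = [
    [1, 1, 1, 1],
    [2, 1, -1, -2],
    [1, -1, -1, 1],
    [1, -2, 2, -1],
]


def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transpose(m):
    return [list(row) for row in zip(*m)]


def forward_transform(block):
    """Apply Y = CF * X * CF^T to a 4x4 block."""
    return matmul(matmul(CF, block), transpose(CF))


def quantize(coeffs, step):
    """Hypothetical uniform quantizer, truncating toward zero."""
    return [[int(c / step) for c in row] for row in coeffs]


def rate_proxy(levels):
    """Hypothetical bit estimate: 1 bit per zero level,
    1 + 2 * bit_length(|level|) bits per nonzero level."""
    return sum(1 if v == 0 else 1 + 2 * abs(v).bit_length()
               for row in levels for v in row)


def partition_rate(input_block, predicted_block, step):
    """Follow the steps of [0033]; comments give the reference numerals."""
    input_coeffs = forward_transform(input_block)          # 311 -> 301
    predicted_coeffs = forward_transform(predicted_block)  # 313 -> 302
    diff = [[a - b for a, b in zip(ra, rb)]                # 314 -> 303
            for ra, rb in zip(input_coeffs, predicted_coeffs)]
    levels = quantize(diff, step)                          # 315 -> 304
    return rate_proxy(levels), levels                      # 317 -> 306


# Flat 4x4 blocks: only the DC coefficient of the difference is nonzero.
input_block = [[10] * 4 for _ in range(4)]
predicted_block = [[8] * 4 for _ in range(4)]
rate, levels = partition_rate(input_block, predicted_block, step=8)
```

Because the transform in this sketch is linear, taking the difference after transforming gives the same coefficients as transforming the pixel-domain residual; the sketch keeps the order of operations given in the text.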

[0034] The quantized difference HT-coefficients are also su...
