253 results about "Hardware thread" patented technology

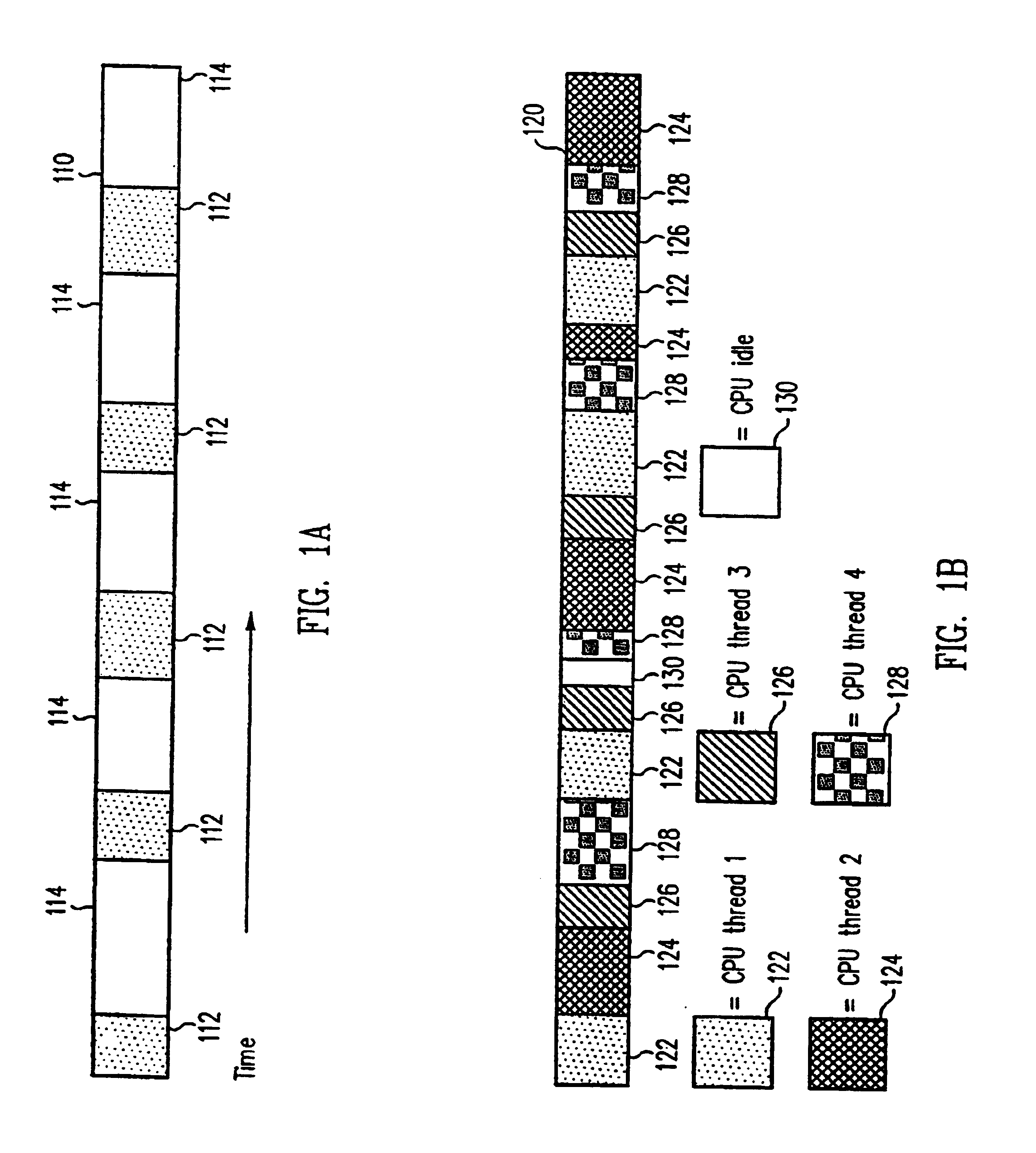

Hardware threads are a feature of some processors that allows better utilisation of the processor under some circumstances. The operating system may expose them as additional logical cores (as with "hyperthreading" on Intel processors). In Java, the threads you create maintain the software thread abstraction, with the JVM playing the role of the "operating system".
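The distinction between hardware and software threads can be seen from user code. The following is a minimal sketch in Python rather than Java, assuming a standard CPython build: `os.cpu_count()` reports the logical CPUs the operating system exposes (which, on an SMT machine, counts hardware threads rather than physical cores), while `threading.Thread` creates software threads whose number is not tied to that count. The `work` function and the factor of two are illustrative choices only.

```python
import os
import threading

# Logical CPUs visible to the OS. On an SMT machine this counts hardware
# threads, not physical cores. cpu_count() may return None, hence the fallback.
logical_cpus = os.cpu_count() or 1


def work(results, index):
    # Trivial task so that each software thread has something to do.
    results[index] = index * index


# Deliberately create more software threads than hardware threads; the OS
# scheduler multiplexes them onto the available logical CPUs.
n_software_threads = logical_cpus * 2
results = [None] * n_software_threads
threads = [
    threading.Thread(target=work, args=(results, i))
    for i in range(n_software_threads)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(f"{logical_cpus} logical CPUs ran {n_software_threads} software threads")
```

In standard CPython the global interpreter lock serialises bytecode execution, so this illustrates the relationship between the two thread counts, not a parallel speed-up.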

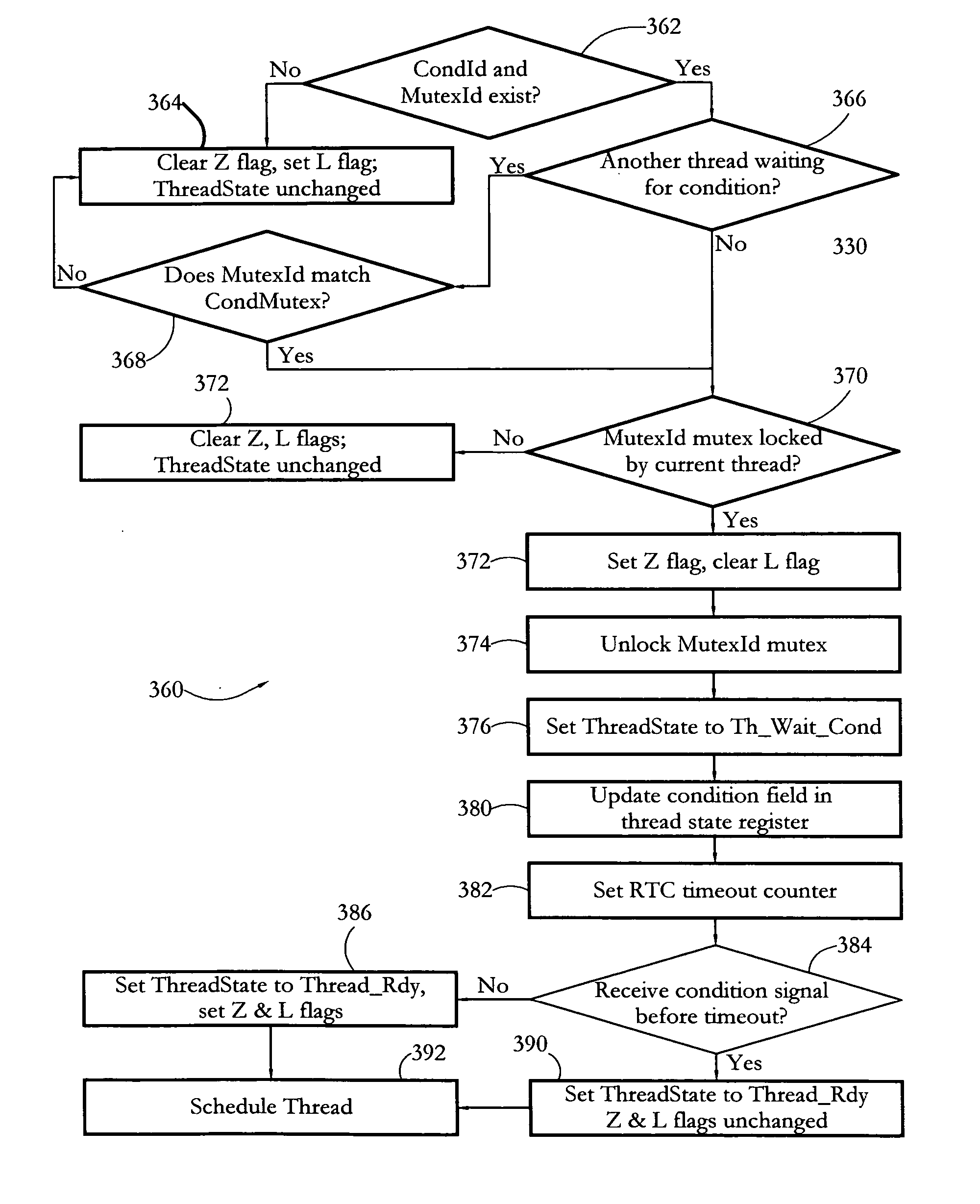

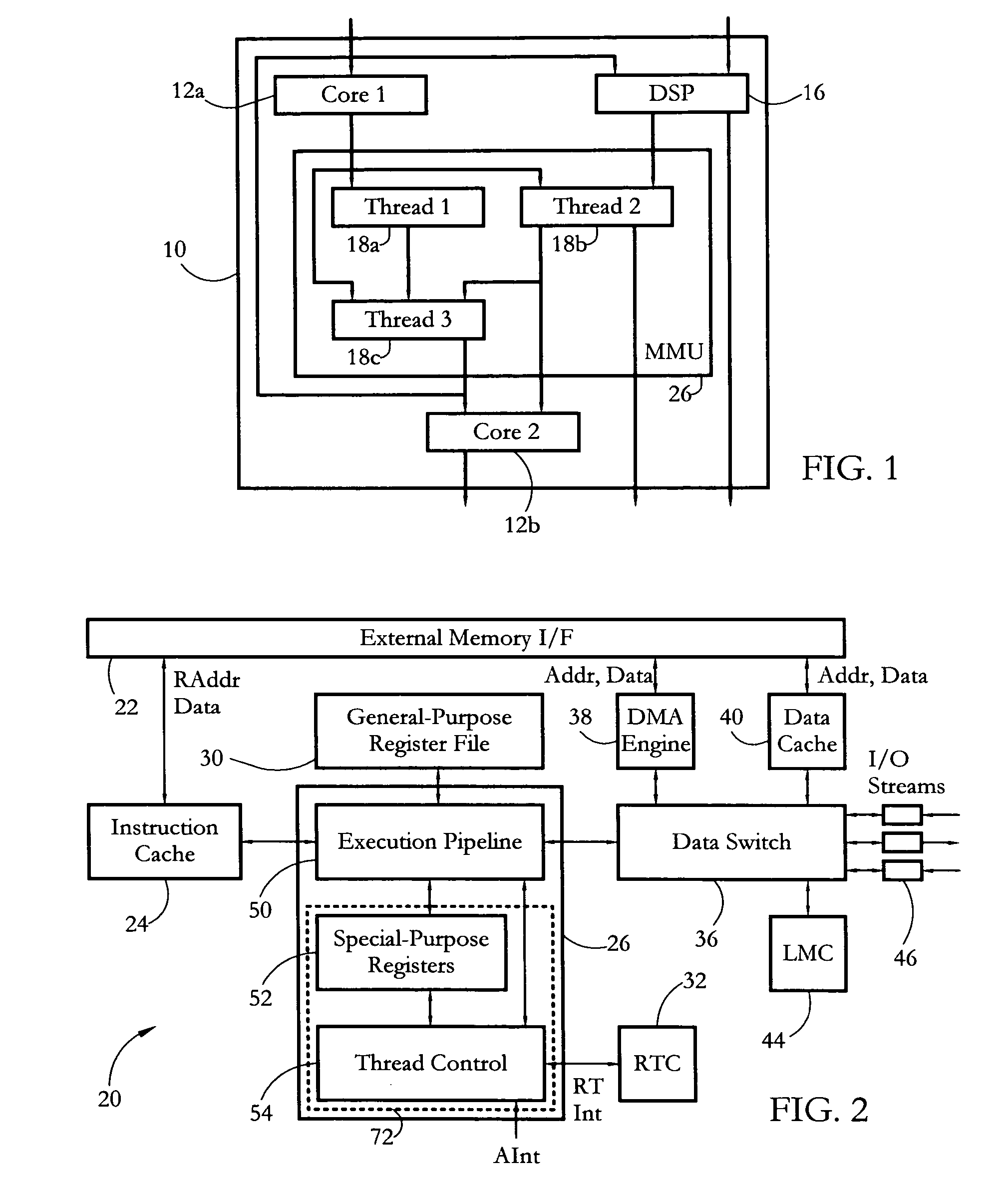

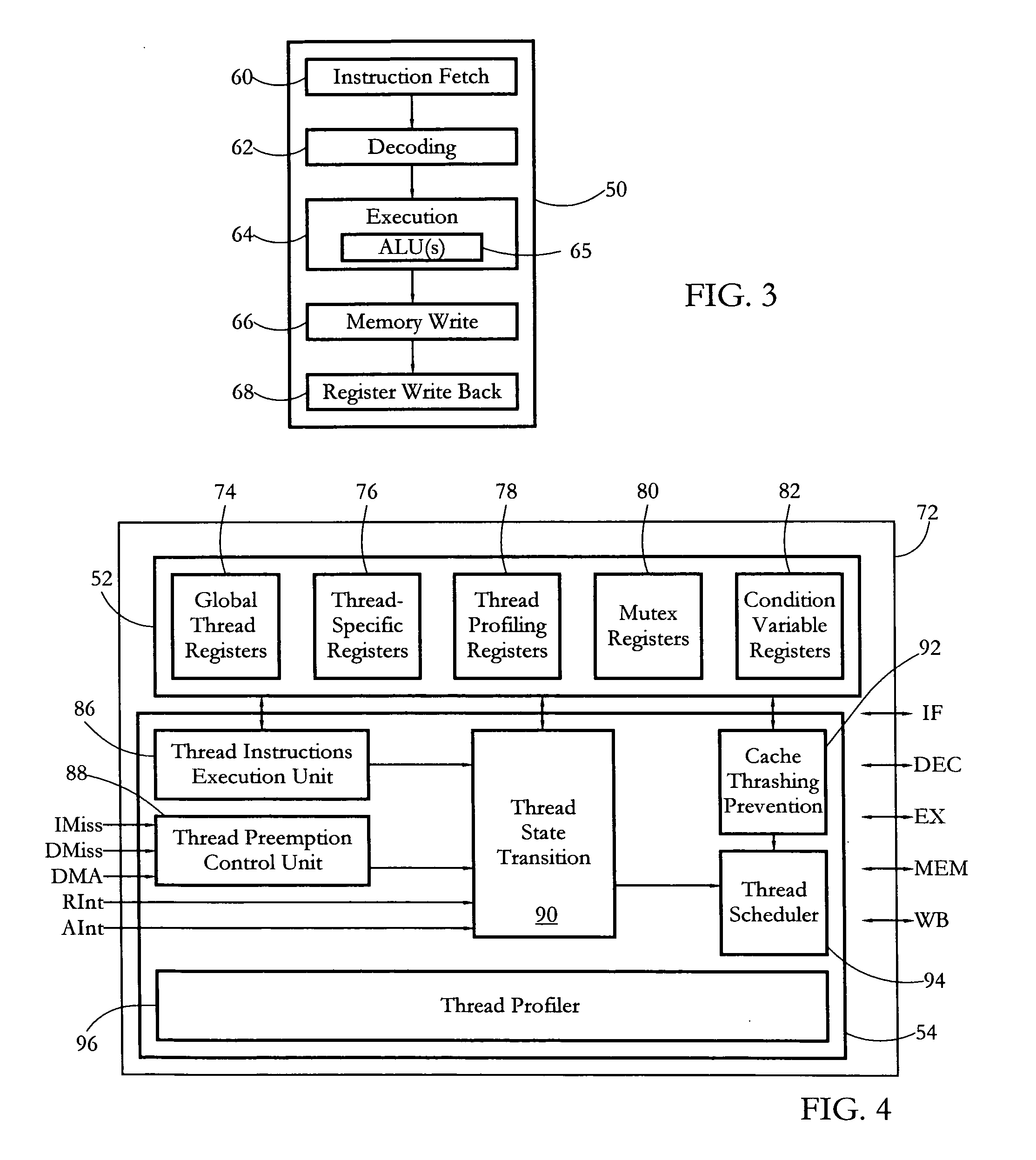

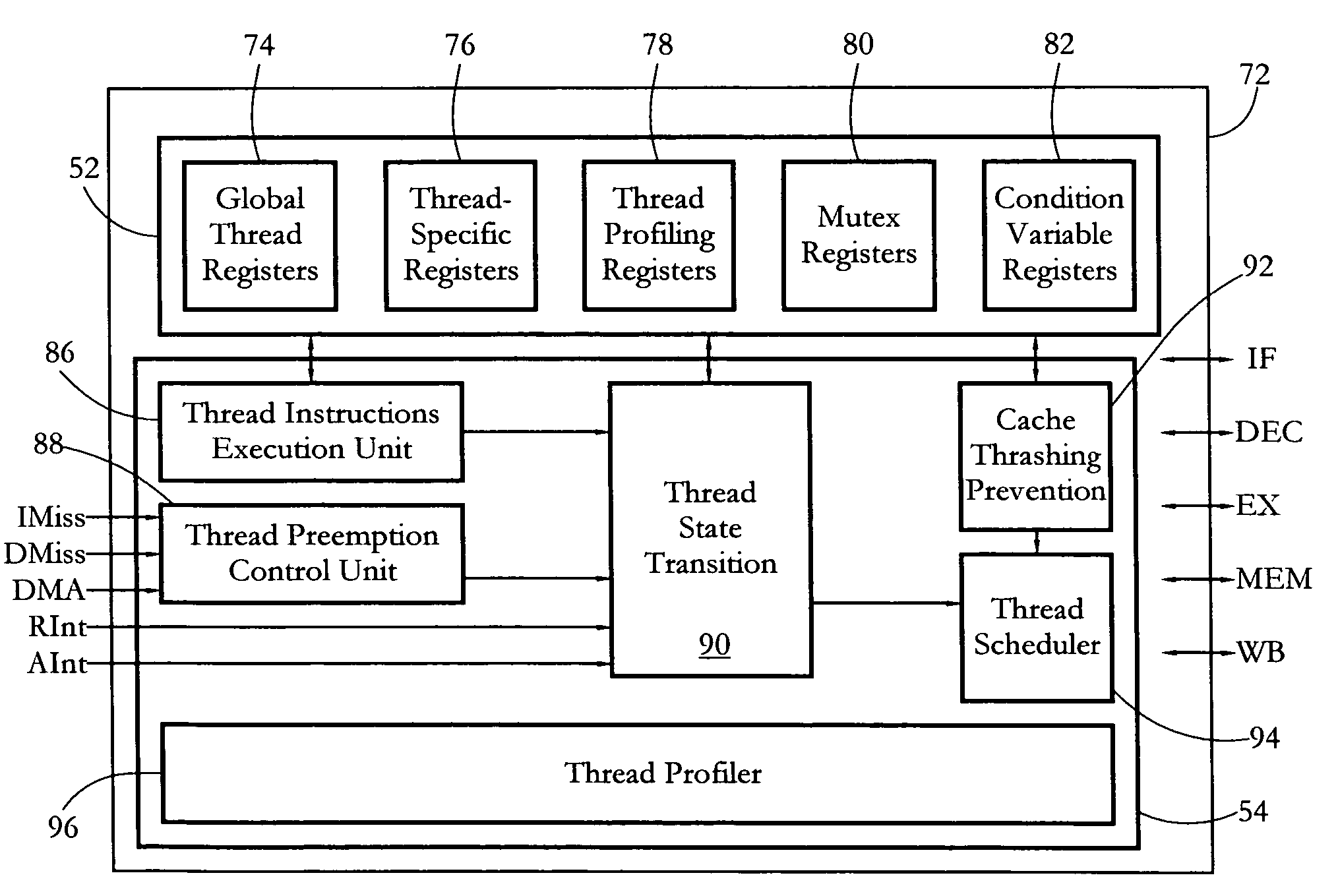

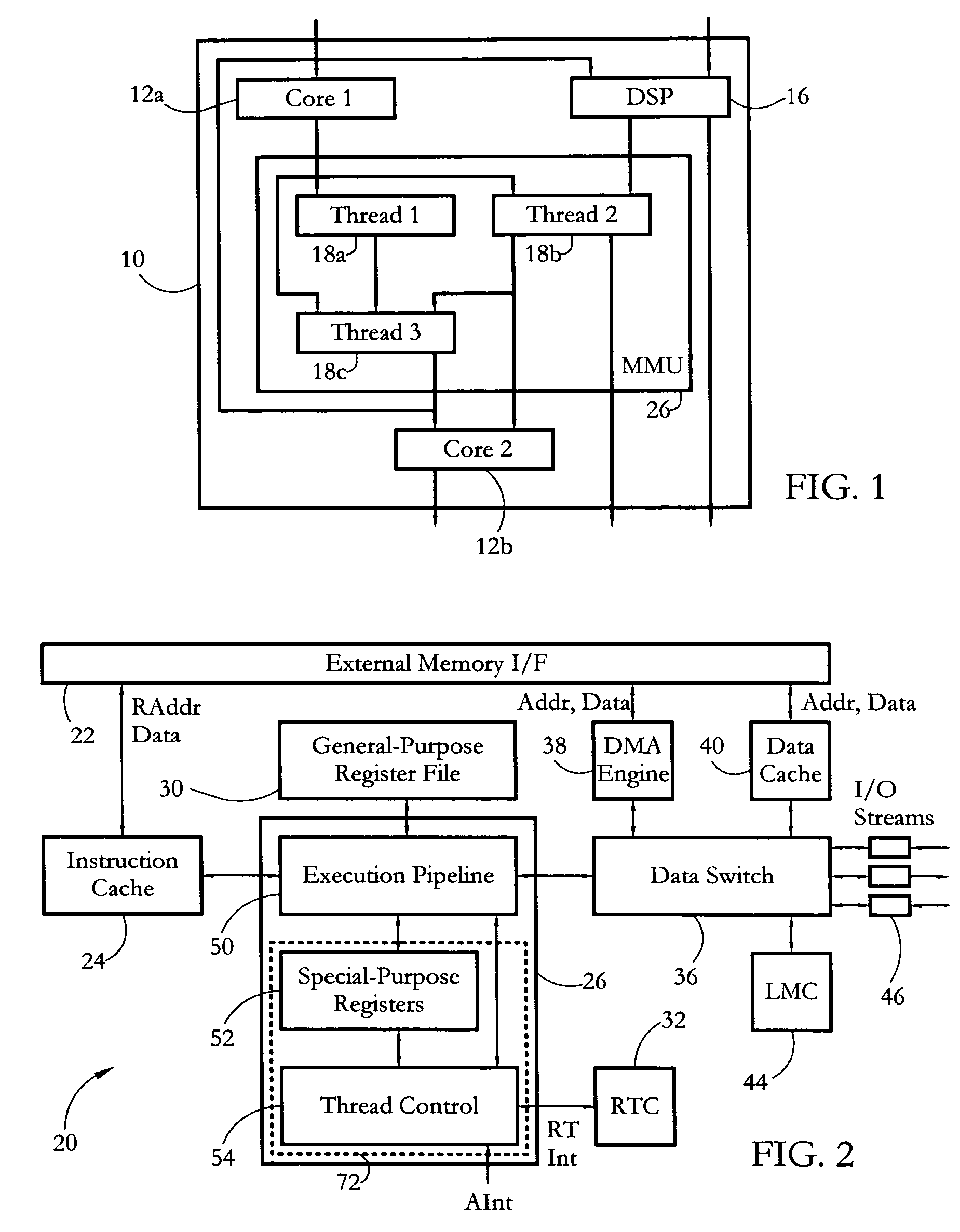

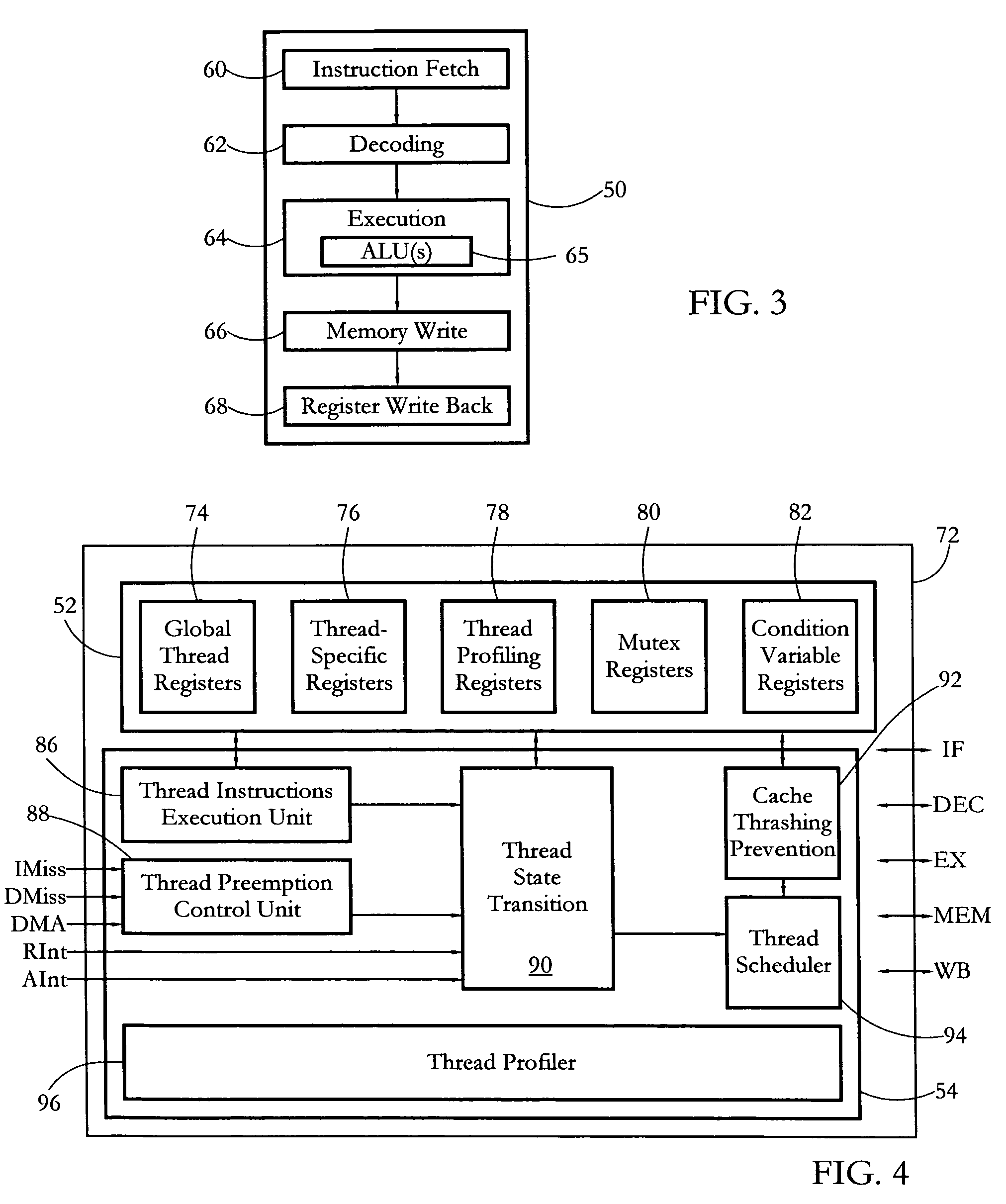

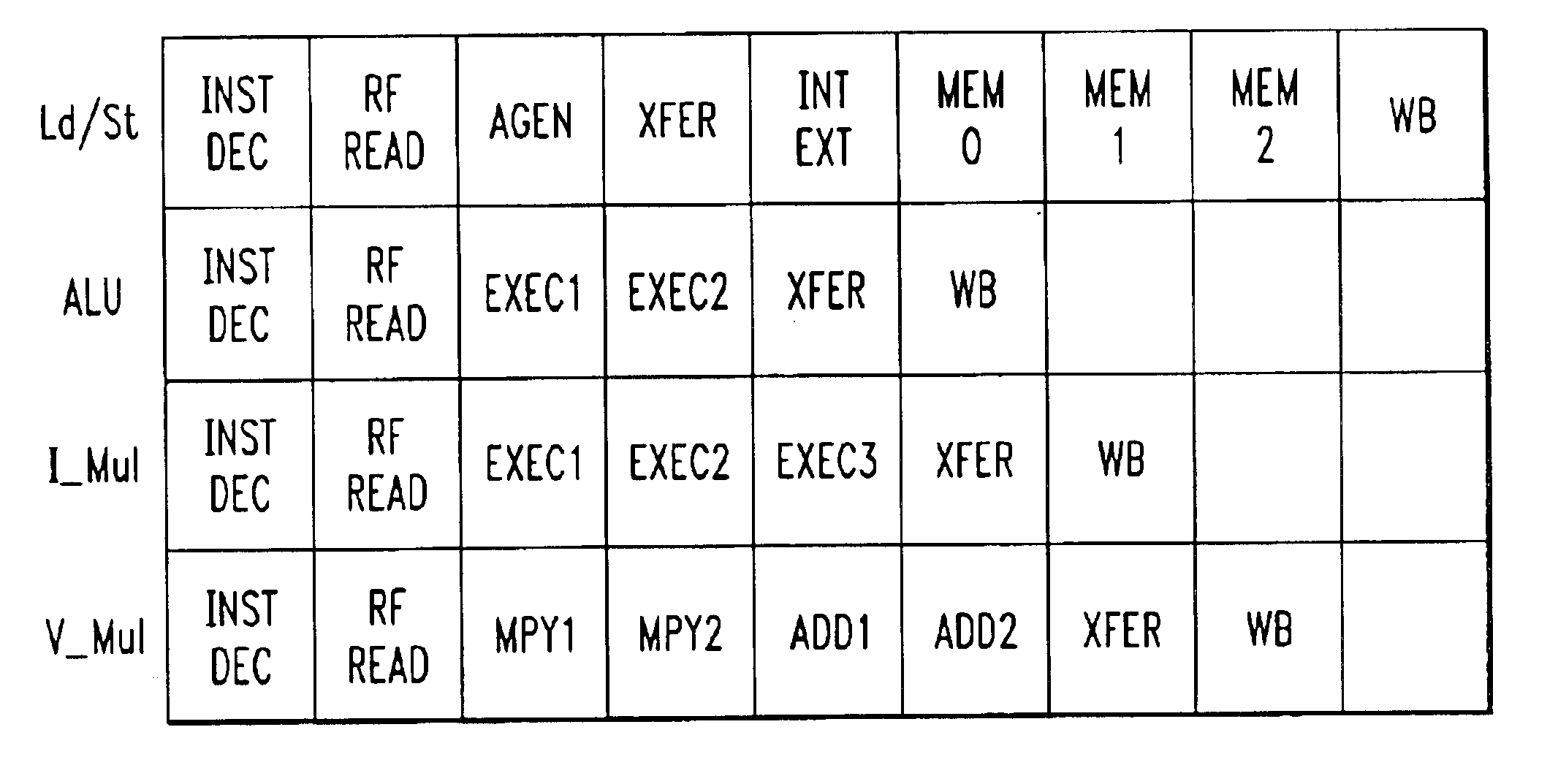

Hardware multithreading systems and methods

According to some embodiments, a multithreaded microcontroller includes a thread control unit comprising thread control hardware (logic) configured to perform a number of multithreading system calls essentially in real time, e.g. in one or a few clock cycles. System calls can include mutex lock, wait condition, and signal instructions. The thread controller includes a number of thread state, mutex, and condition variable registers used for executing the multithreading system calls. Threads can transition between several states including free, run, ready and wait. The wait state includes interrupt, condition, mutex, I-cache, and memory substates. A thread state transition controller controls thread states, while a thread instructions execution unit executes multithreading system calls and manages thread priorities to avoid priority inversion. A thread scheduler schedules threads according to their priorities. A hardware thread profiler including global, run and wait profiler registers is used to monitor thread performance to facilitate software development.

Owner:GEO SEMICONDUCTOR INC
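The thread states and wait substates named in the abstract above can be modelled as a small state machine. This is only a software sketch under stated assumptions: the abstract lists the states (free, run, ready, wait) and the wait substates, but not which transitions are legal, so the `ALLOWED` table, the `HwThread` class and the `pick_next` helper are hypothetical.

```python
from enum import Enum, auto


class State(Enum):
    FREE = auto()
    READY = auto()
    RUN = auto()
    WAIT = auto()


class WaitReason(Enum):
    INTERRUPT = auto()
    CONDITION = auto()
    MUTEX = auto()
    ICACHE = auto()
    MEMORY = auto()


# Assumed transition table; the abstract names the states but not the edges.
ALLOWED = {
    State.FREE: {State.READY},
    State.READY: {State.RUN},
    State.RUN: {State.READY, State.WAIT, State.FREE},
    State.WAIT: {State.READY},
}


class HwThread:
    def __init__(self, tid, priority):
        self.tid = tid
        self.priority = priority
        self.state = State.FREE
        self.wait_reason = None

    def transition(self, new_state, wait_reason=None):
        if new_state not in ALLOWED[self.state]:
            raise ValueError(
                f"illegal transition {self.state.name} -> {new_state.name}"
            )
        # A wait reason must accompany WAIT, and only WAIT.
        if (new_state is State.WAIT) != (wait_reason is not None):
            raise ValueError("wait reason required for WAIT and only for WAIT")
        self.state = new_state
        self.wait_reason = wait_reason


def pick_next(threads):
    """Return the highest-priority READY thread, or None if none is ready."""
    ready = [t for t in threads if t.state is State.READY]
    return max(ready, key=lambda t: t.priority, default=None)
```

In the patent these operations are performed by dedicated logic in one or a few clock cycles; the sketch only mirrors the bookkeeping, not the timing.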

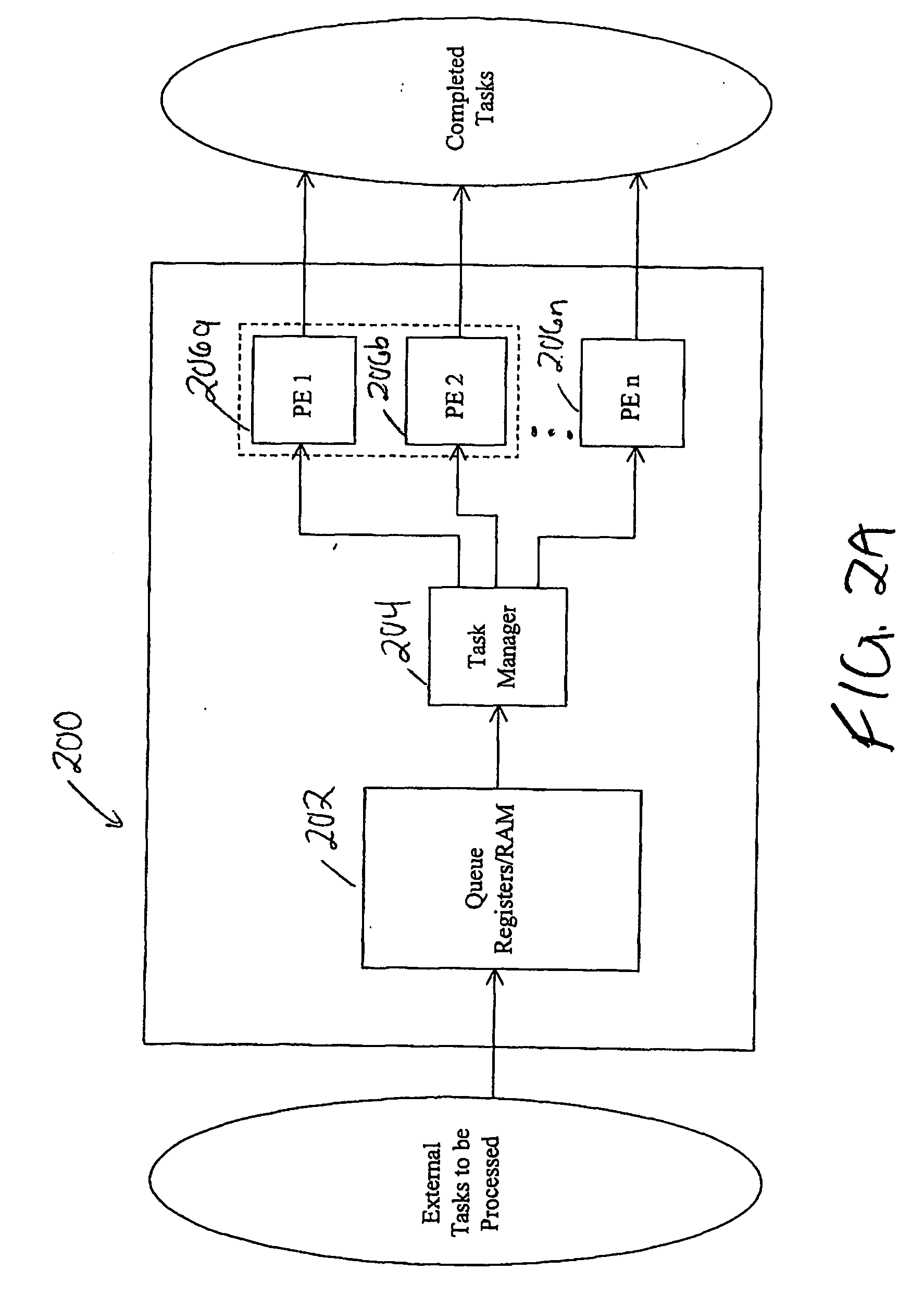

Multithreaded physics engine with predictive load balancing

Inactive | US20110321057A1 | Raise the possibility | Optimization | Multiprogramming arrangements | Memory systems | Hardware thread | Physics engine

A circuit arrangement and method utilize predictive load balancing to allocate the workload among hardware threads in a multithreaded physics engine. The predictive load balancing is based at least in part upon the detection of predicted future collisions between objects in a scene, such that the reallocation of respective loads of a plurality of hardware threads may be initiated prior to detection of the actual collisions, thereby increasing the likelihood that hardware threads will be optimally allocated when the actual collisions occur.

Owner:IBM CORP

Optimized thread scheduling via hardware performance monitoring

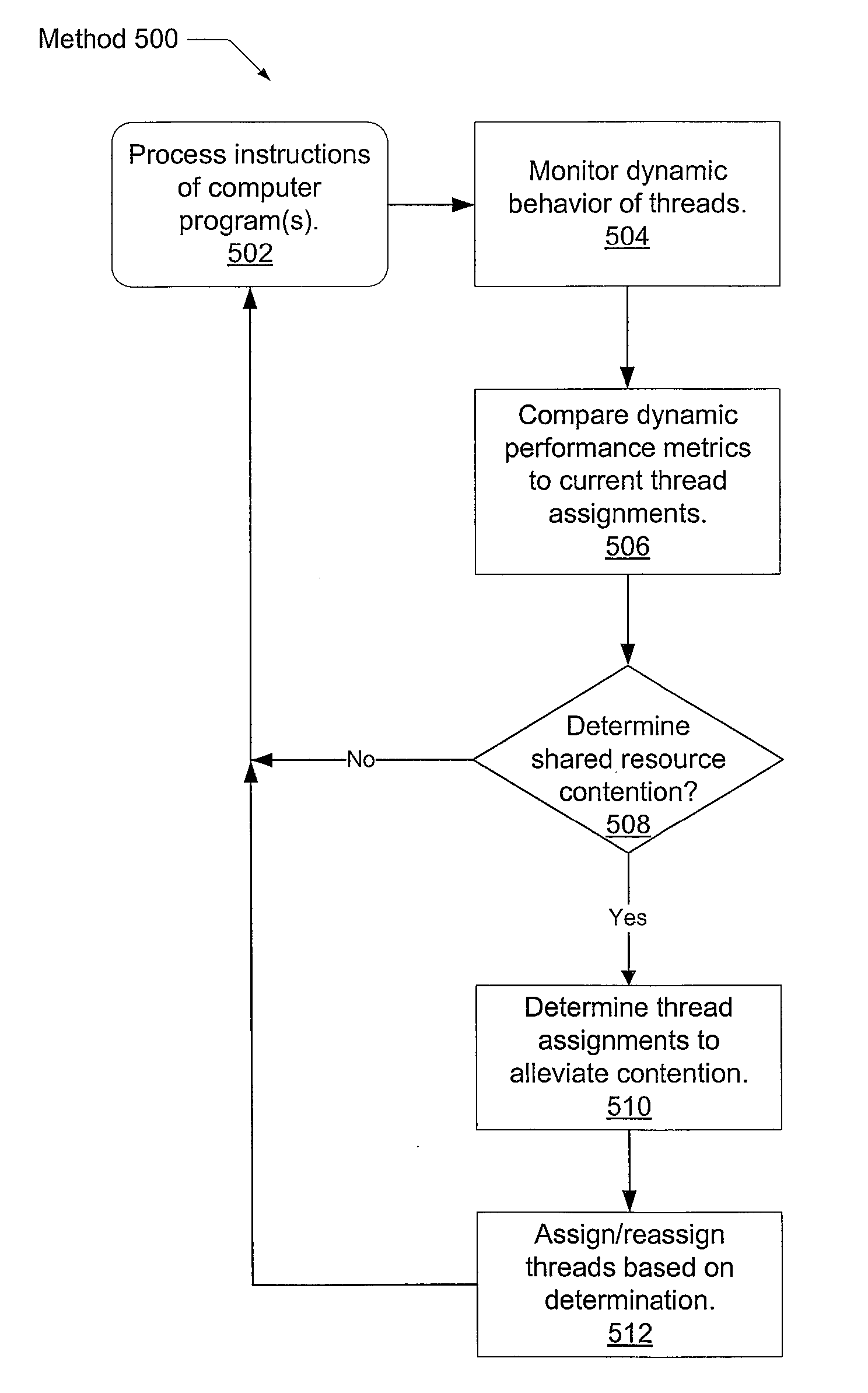

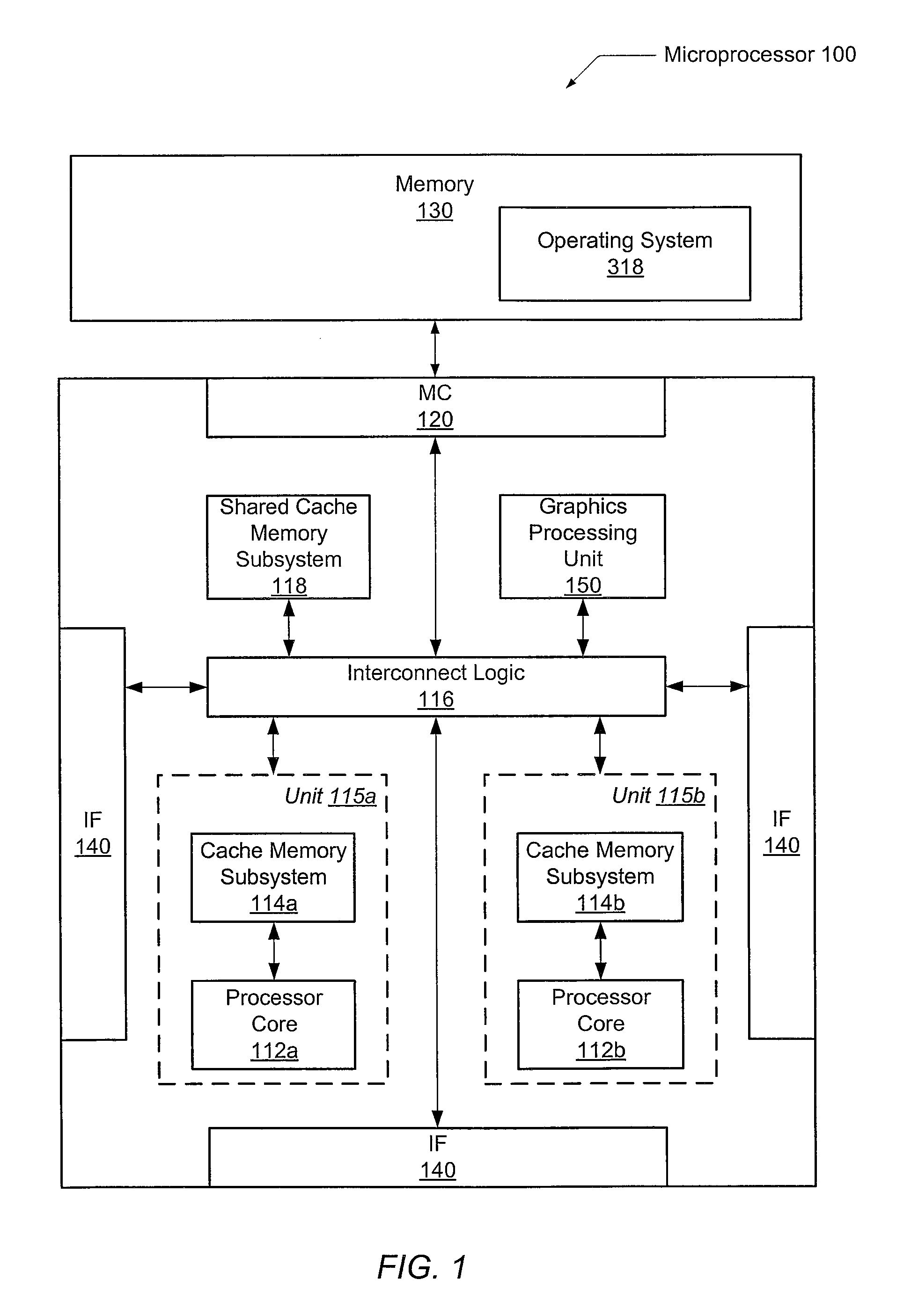

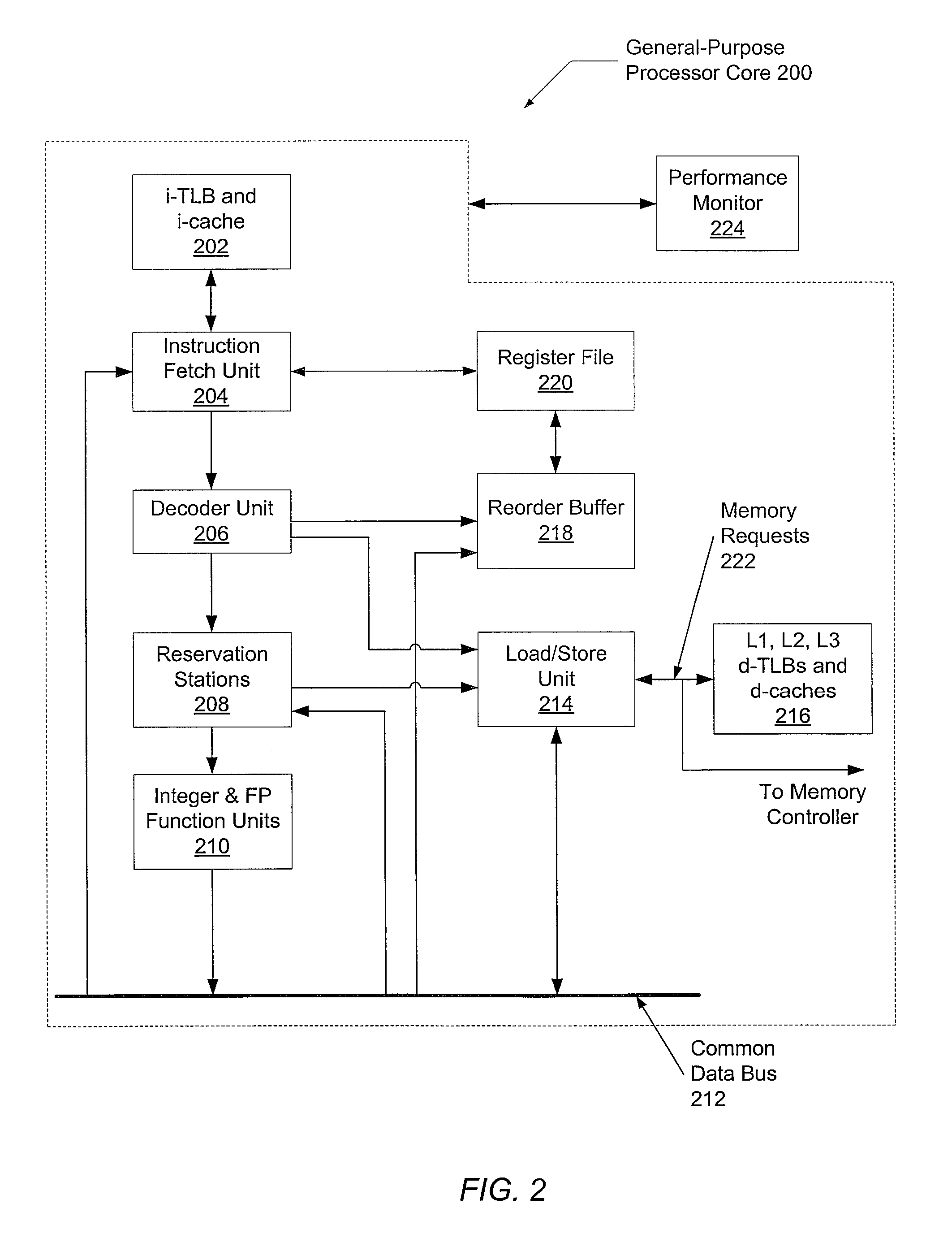

A system and method for efficient dynamic scheduling of tasks. A scheduler within an operating system assigns software threads of program code to computation units. A computation unit may be a microprocessor, a processor core, or a hardware thread in a multi-threaded core. The scheduler receives measured data values from performance monitoring hardware within a processor as the one or more processors execute the software threads. The scheduler may be configured to reassign a first thread, assigned to a first computation unit coupled to a first shared resource, to a second computation unit coupled to a second shared resource. The scheduler may perform this dynamic reassignment in response to determining from the measured data values that a first measured value corresponding to the utilization of the first shared resource exceeds a predetermined threshold and that a second measured value corresponding to the utilization of the second shared resource does not exceed the predetermined threshold.

Owner:ADVANCED MICRO DEVICES INC
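The reassignment rule in the abstract above reduces to a two-sided threshold test. A minimal sketch follows, assuming utilization is a fraction between 0 and 1 and that each computation unit is coupled to exactly one shared resource; the function name, the dictionaries and the unit and resource labels are hypothetical.

```python
def maybe_reassign(current_unit, unit_resource, utilization, threshold):
    """Return a computation unit to migrate the thread to, or None.

    current_unit  -- unit the thread runs on now
    unit_resource -- maps each unit to the shared resource it is coupled to
    utilization   -- measured utilization per shared resource (0.0 to 1.0)
    threshold     -- predetermined utilization threshold
    """
    current_resource = unit_resource[current_unit]
    # Only move if the current shared resource is over the threshold.
    if utilization[current_resource] <= threshold:
        return None
    # Look for a unit whose shared resource is not over the threshold.
    for unit, resource in unit_resource.items():
        if resource != current_resource and utilization[resource] <= threshold:
            return unit
    return None
```

For example, with two hardware threads sharing one cache and a third on another, `maybe_reassign("core0.t0", {"core0.t0": "L2_a", "core0.t1": "L2_a", "core1.t0": "L2_b"}, {"L2_a": 0.9, "L2_b": 0.3}, 0.75)` selects `"core1.t0"`.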

Performing escape actions in transactions

Active | US20100332807A1 | Memory addressing/allocation/relocation | Digital computer details | Hardware thread | Financial transaction

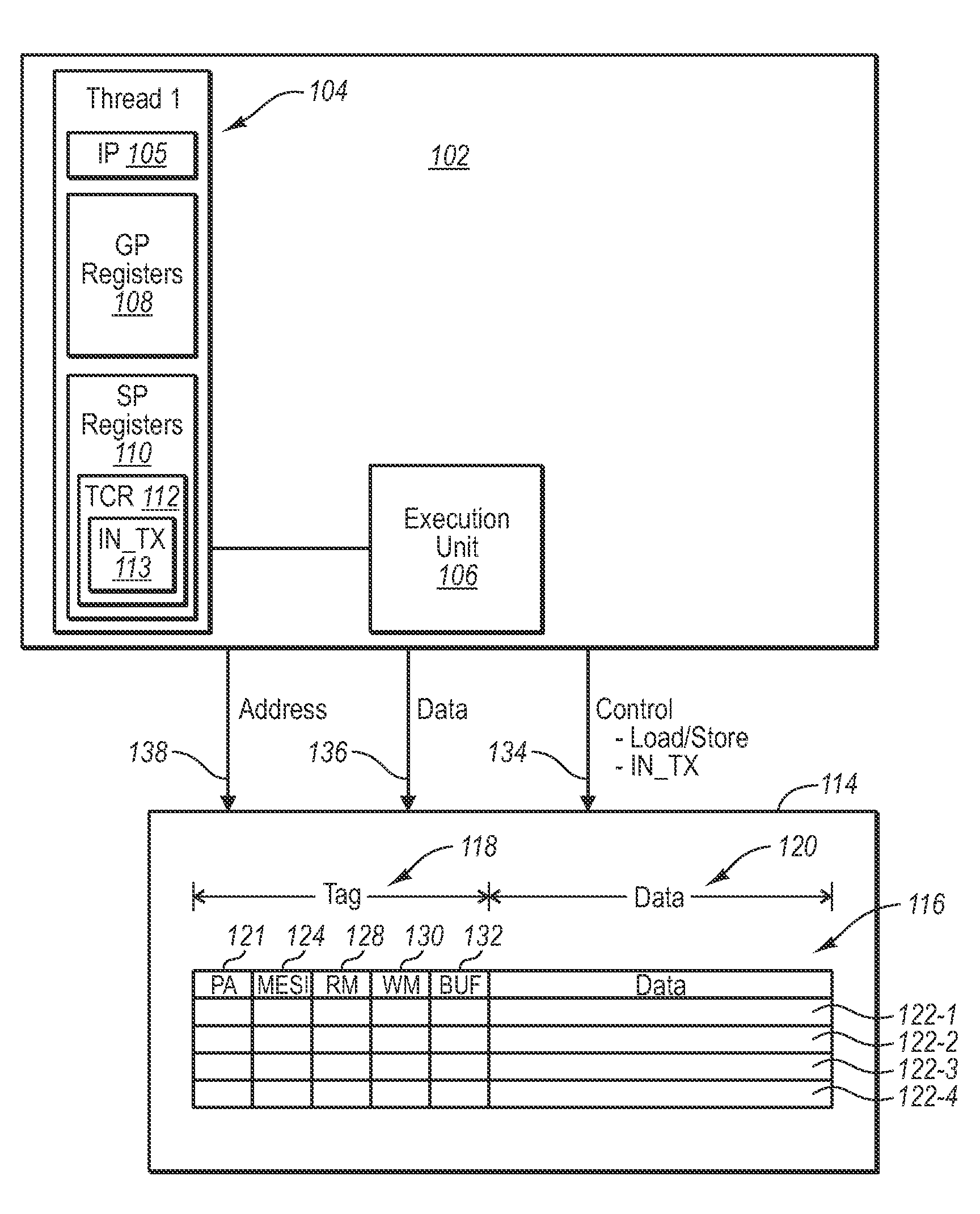

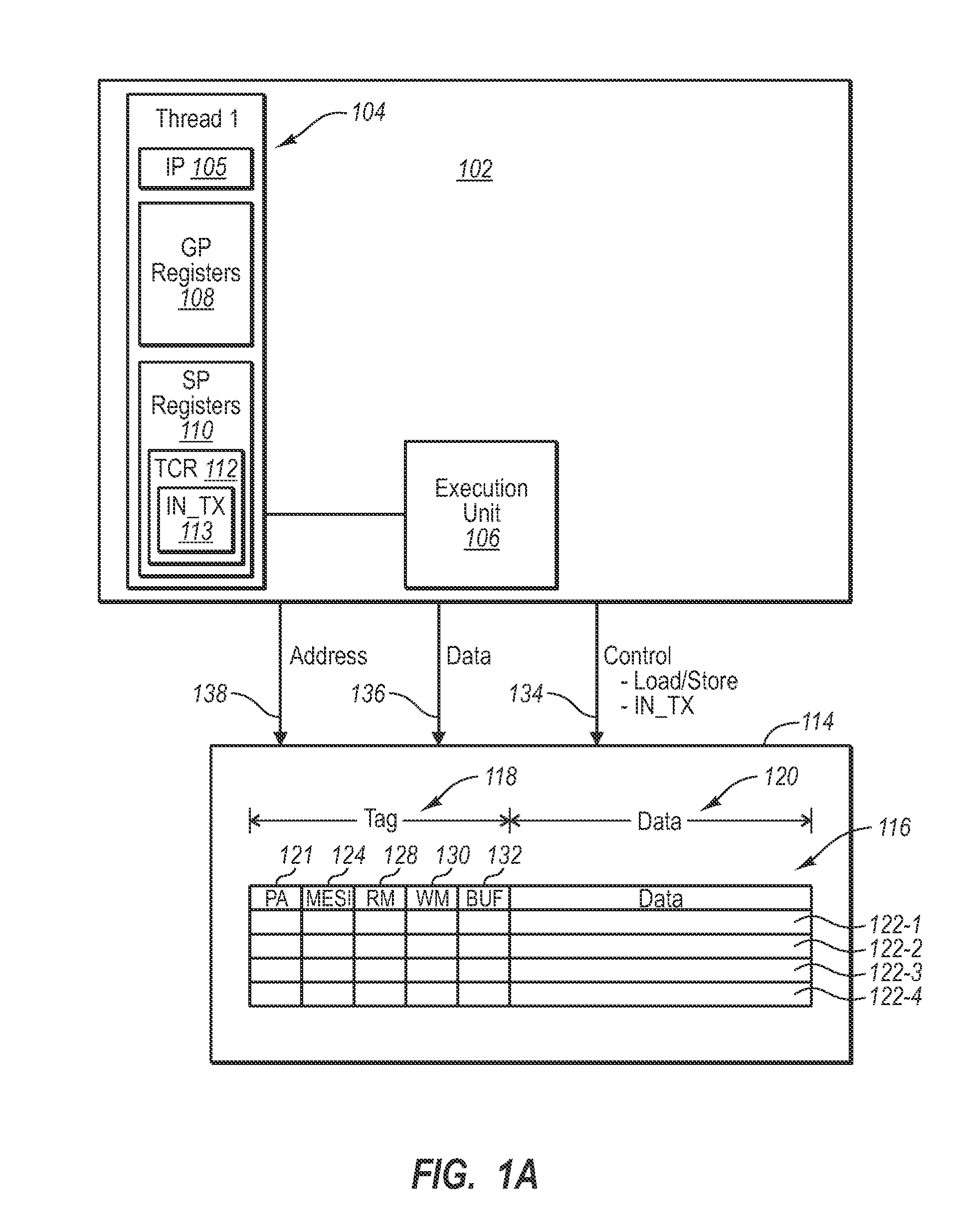

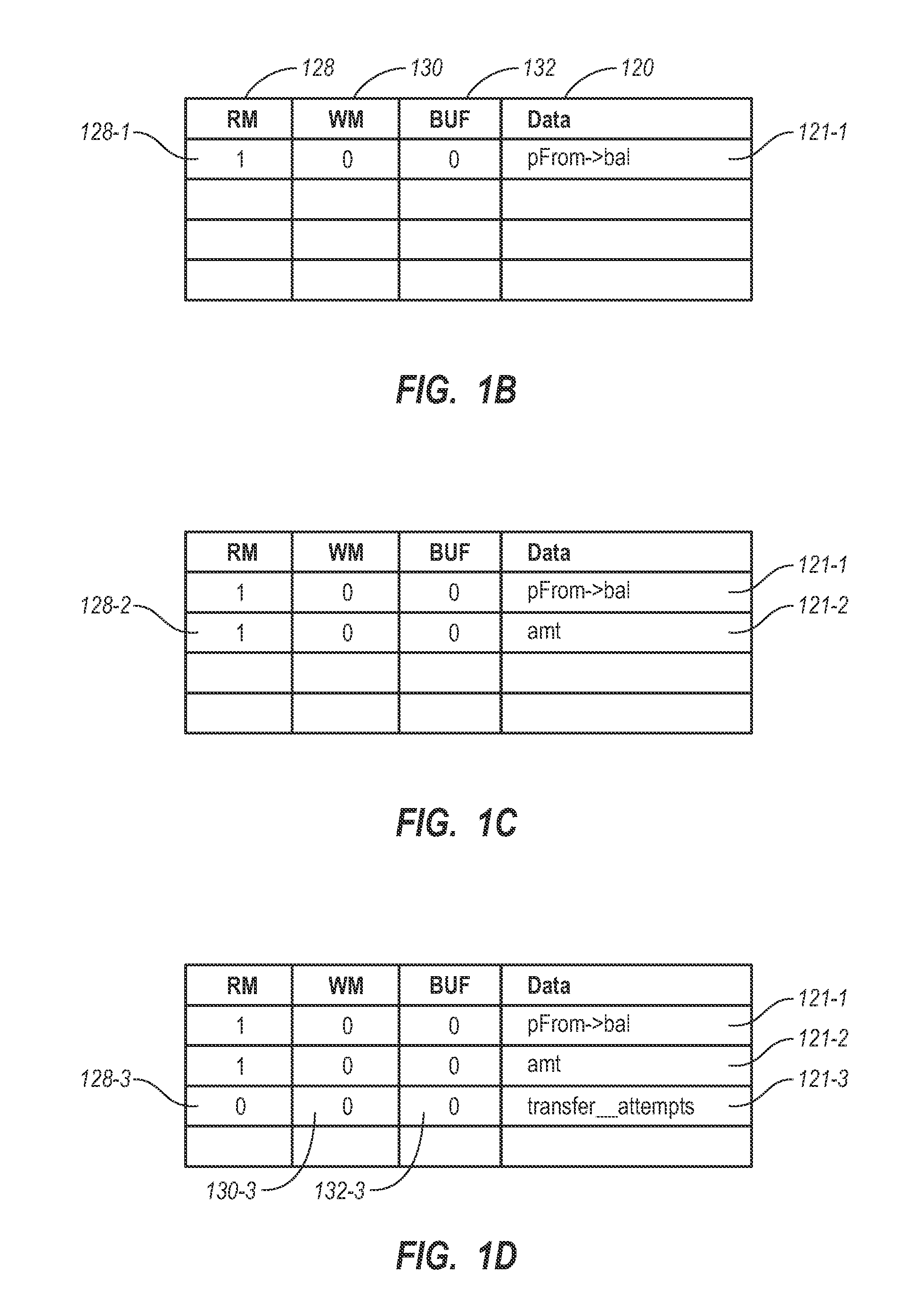

Performing non-transactional escape actions within a hardware-based transactional memory system. A method includes, at a hardware thread on a processor, beginning a hardware-based transaction for the thread. Without committing or aborting the transaction, the method further includes suspending the hardware-based transaction and performing one or more operations for the thread non-transactionally, unaffected by transaction monitoring and buffering for the transaction, an abort of the transaction, or a commit of the transaction. After performing the one or more operations non-transactionally, the method further includes resuming the transaction and performing additional operations transactionally. After performing the additional operations, the method further includes either committing or aborting the transaction.

Owner:MICROSOFT TECH LICENSING LLC
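The suspend and resume behaviour described in the abstract above can be imitated in software with a write buffer. This is a toy model, not the hardware mechanism: the `Transaction` class, its method names and the dictionary standing in for memory are all assumptions made for illustration.

```python
class Transaction:
    """Toy transaction with buffered writes and an escape (suspended) mode."""

    def __init__(self, memory):
        self.memory = memory      # dict standing in for shared memory
        self.buffered = {}        # transactional writes, invisible until commit
        self.suspended = False

    def suspend(self):
        self.suspended = True

    def resume(self):
        self.suspended = False

    def write(self, key, value):
        if self.suspended:
            # Escape action: takes effect immediately and survives an abort.
            self.memory[key] = value
        else:
            # Transactional write: held back until commit.
            self.buffered[key] = value

    def commit(self):
        self.memory.update(self.buffered)
        self.buffered.clear()

    def abort(self):
        self.buffered.clear()
```

A typical use is logging from inside a transaction: a write made between `suspend()` and `resume()` remains in memory even if the transaction later aborts.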

Hardware multithreading systems with state registers having thread profiling data

According to some embodiments, a multithreaded microcontroller includes a thread control unit comprising thread control hardware (logic) configured to perform a number of multithreading system calls essentially in real time, e.g. in one or a few clock cycles. System calls can include mutex lock, wait condition, and signal instructions. The thread controller includes a number of thread state, mutex, and condition variable registers used for executing the multithreading system calls. Threads can transition between several states including free, run, ready and wait. The wait state includes interrupt, condition, mutex, I-cache, and memory substates. A thread state transition controller controls thread states, while a thread instructions execution unit executes multithreading system calls and manages thread priorities to avoid priority inversion. A thread scheduler schedules threads according to their priorities. A hardware thread profiler including global, run and wait profiler registers is used to monitor thread performance to facilitate software development.

Owner:GEO SEMICONDUCTOR INC

Method and apparatus for token triggered multithreading

Inactive | US6842848B2 | Interprogram communication | Digital computer details | Hardware thread | Processor register

Techniques for token triggered multithreading in a multithreaded processor are disclosed. An instruction issuance sequence for a plurality of threads of the multithreaded processor is controlled by associating with each of the threads at least one register which stores a value identifying a next thread to be permitted to issue one or more instructions, and utilizing the stored value to control the instruction issuance sequence. For example, each of a plurality of hardware thread units of the multithreaded processor may include a corresponding local register updatable by that hardware thread unit, with the local register for a given one of the hardware thread units storing a value identifying the next thread to be permitted to issue one or more instructions after the given hardware thread unit has issued one or more instructions. A global register arrangement may also or alternatively be used. The processor may be configured so as to permit the instruction issuance sequence to correspond to an arbitrary alternating even-odd sequence of threads, without introducing blocking conditions leading to thread stalls.

Owner:QUALCOMM INC
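The per-thread "next thread" registers described in the abstract above amount to a linked sequence that the processor walks once per issue slot. A minimal sketch, assuming four hardware thread units numbered 0 to 3 and one register per unit; the dictionary representation and the function name are hypothetical.

```python
def issue_order(next_thread, start, cycles):
    """Return which thread may issue on each of `cycles` consecutive slots.

    next_thread[i] models the local register of hardware thread unit i:
    it names the thread permitted to issue after thread i has issued.
    """
    order = []
    current = start
    for _ in range(cycles):
        order.append(current)
        current = next_thread[current]
    return order


# Registers programmed for an alternating even-odd sequence: 0, 3, 2, 1, ...
next_thread = {0: 3, 3: 2, 2: 1, 1: 0}
print(issue_order(next_thread, start=0, cycles=8))
# prints [0, 3, 2, 1, 0, 3, 2, 1]
```

Because each unit can update its own register, the sequence can be changed at run time by rewriting a single entry.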

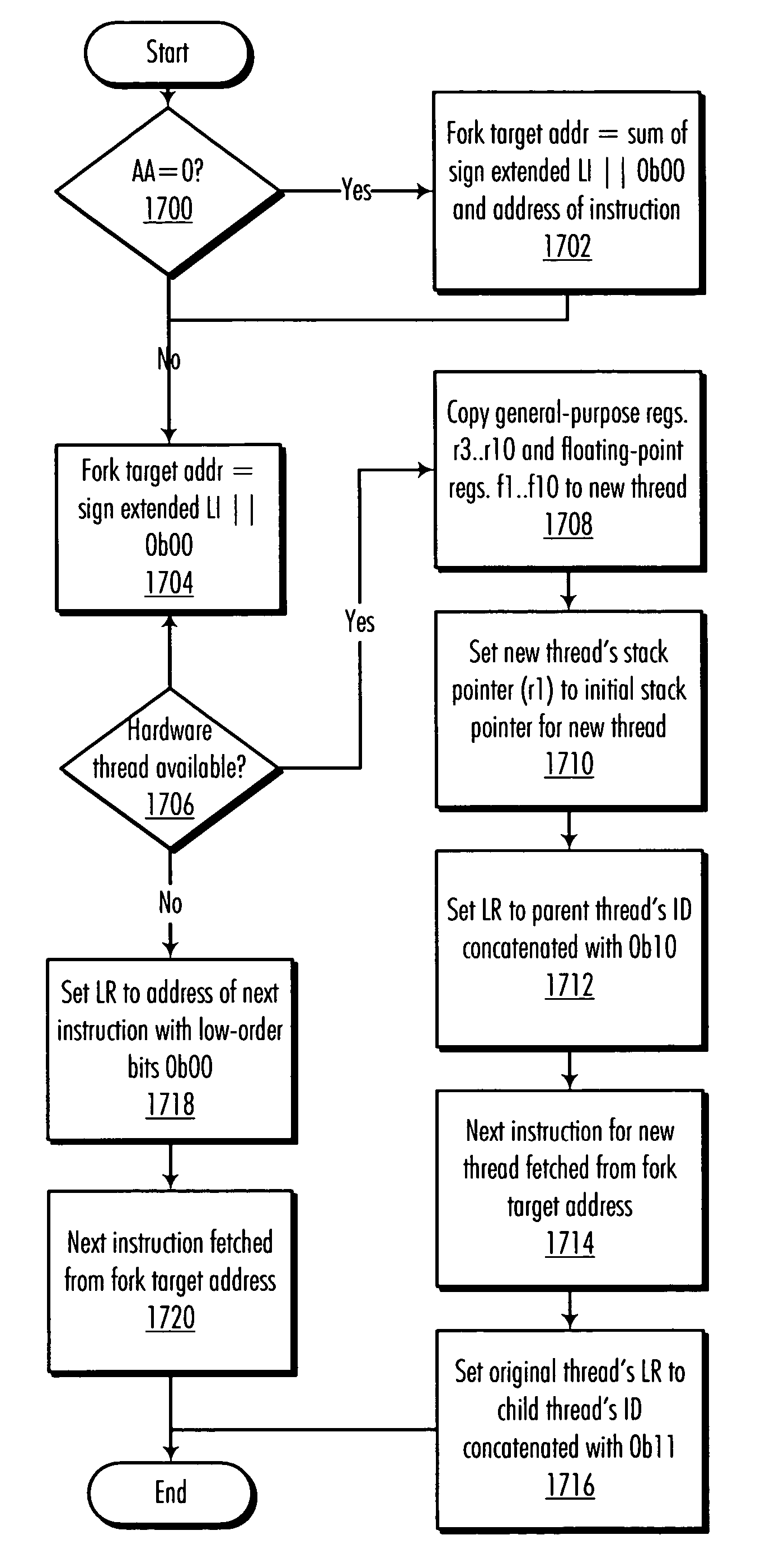

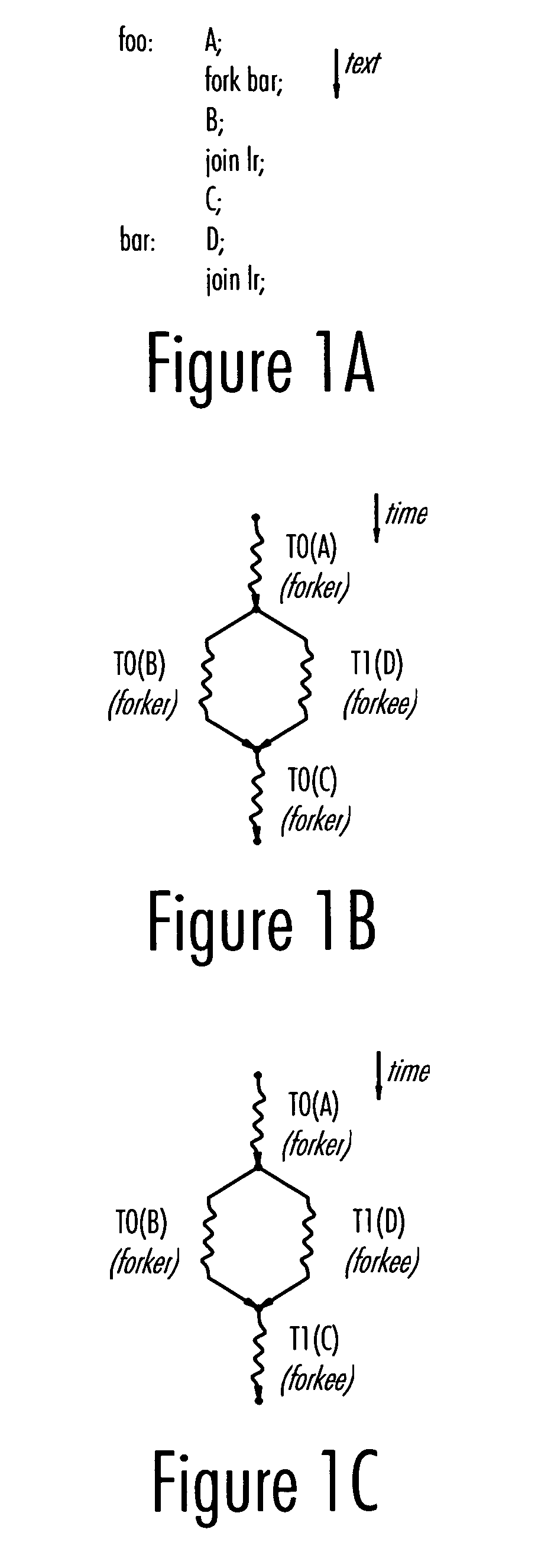

Multithreaded processor architecture with implicit granularity adaptation

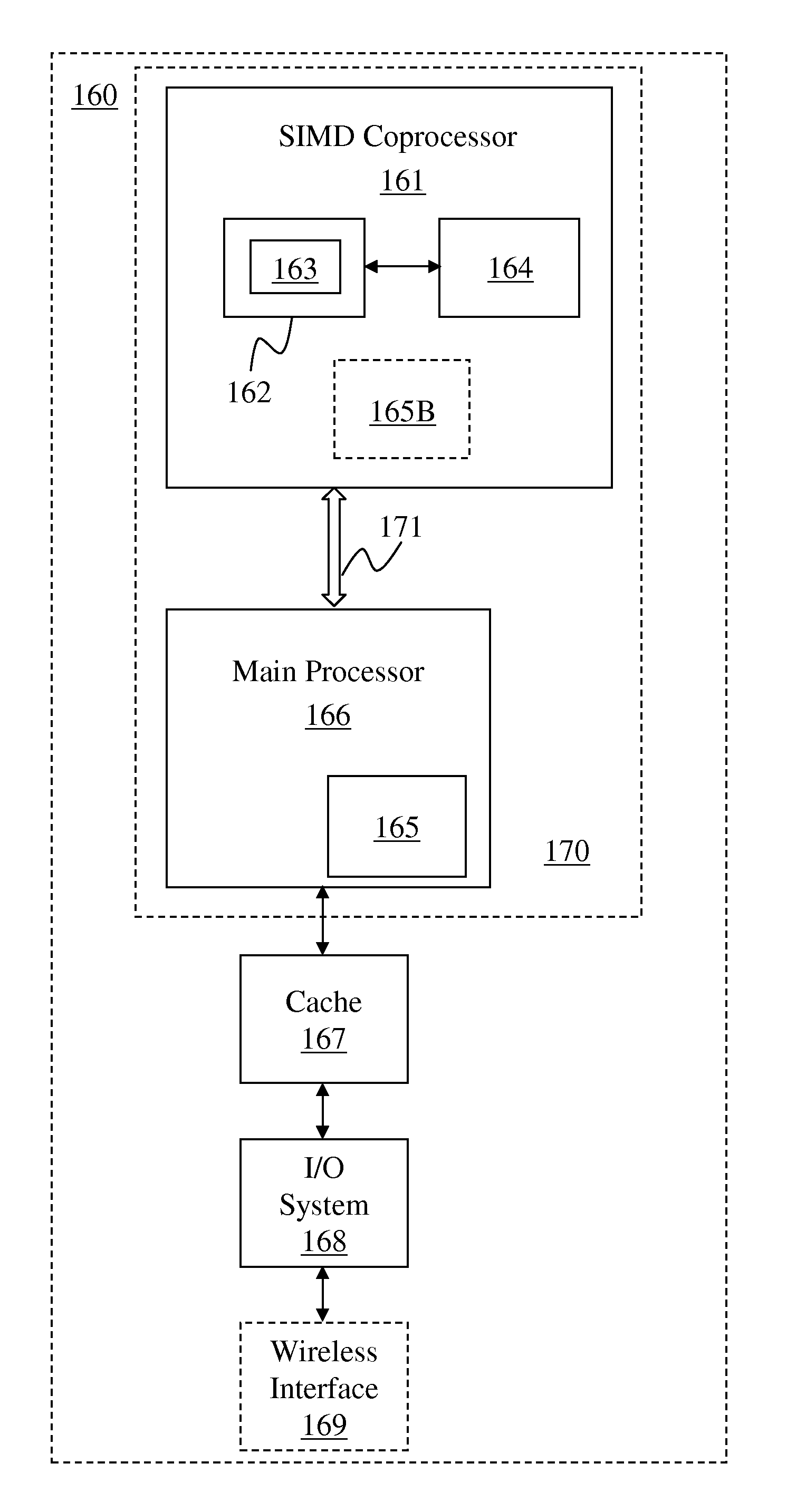

Inactive | US20060230409A1 | High of latency hiding | Reduce in quantity | Multiprogramming arrangements | Memory systems | Self adaptive | Hardware thread

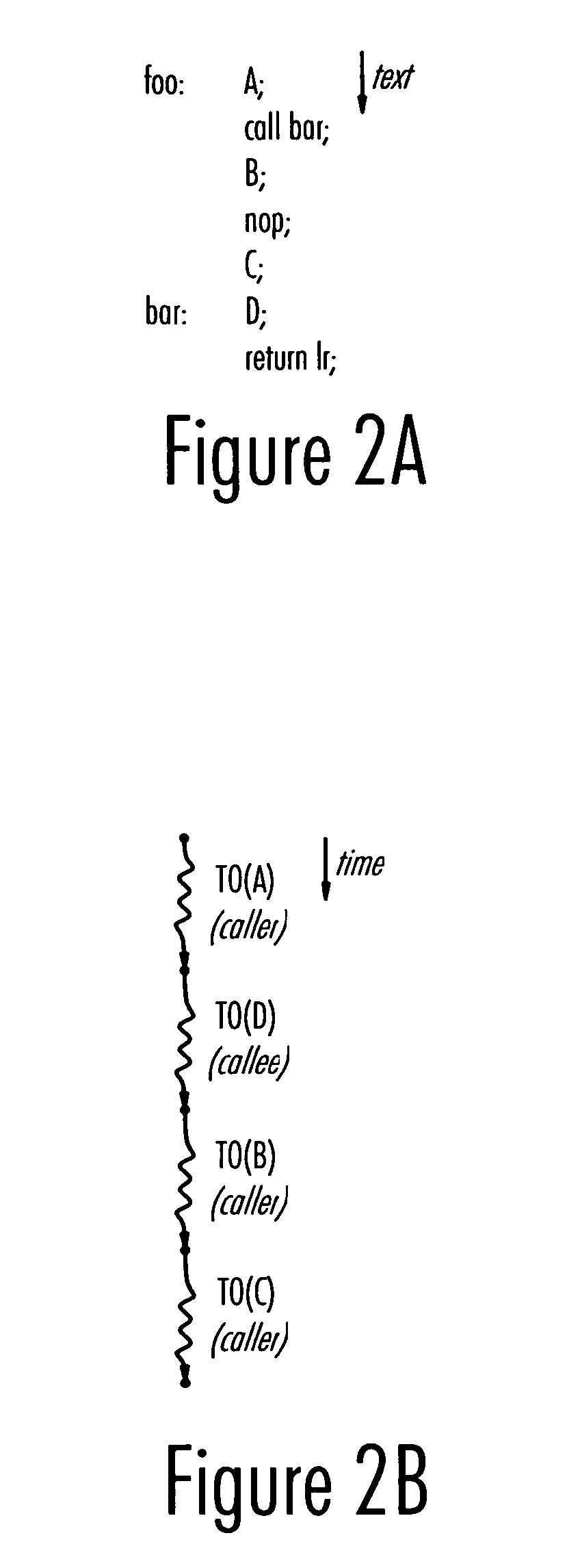

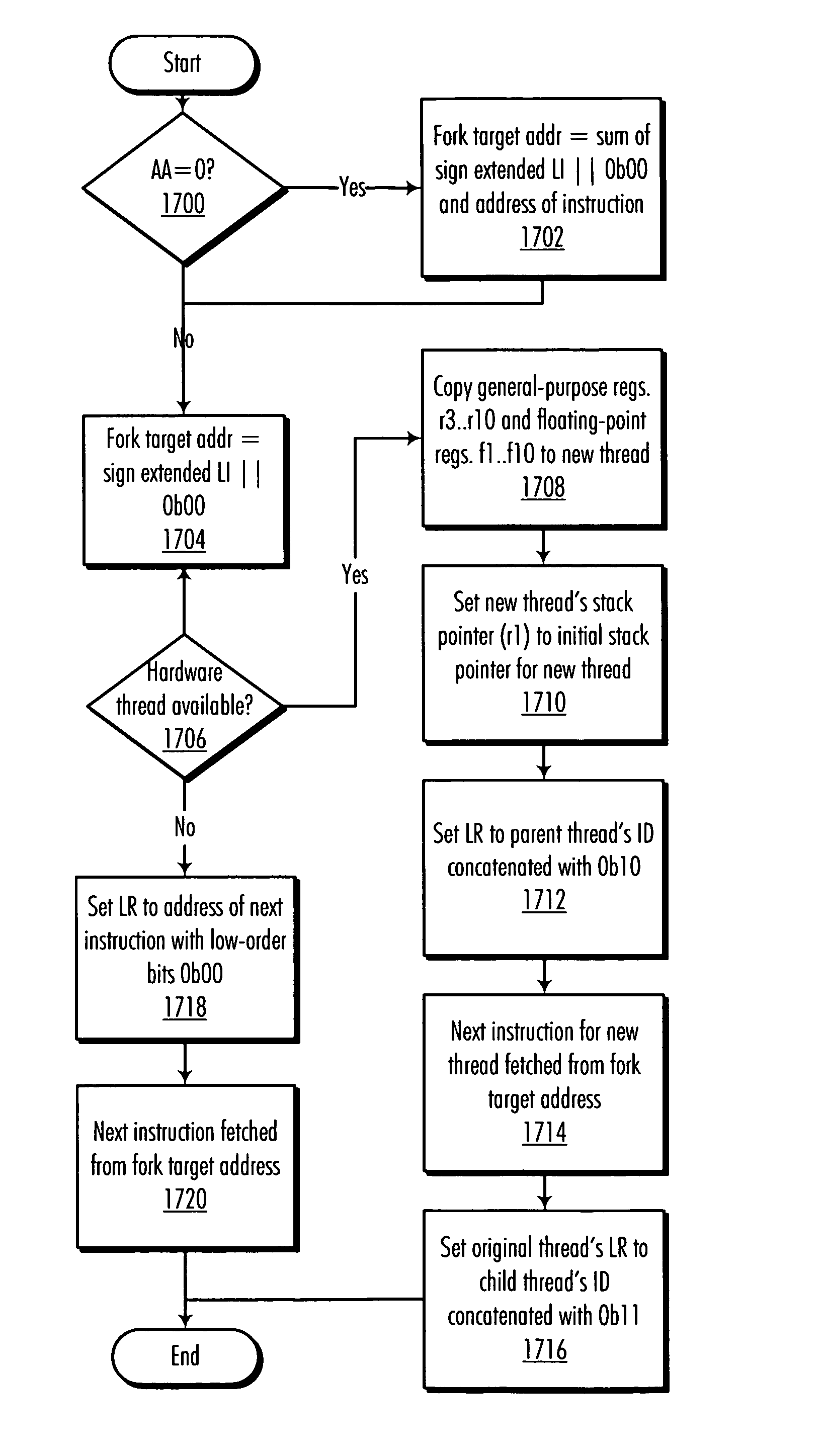

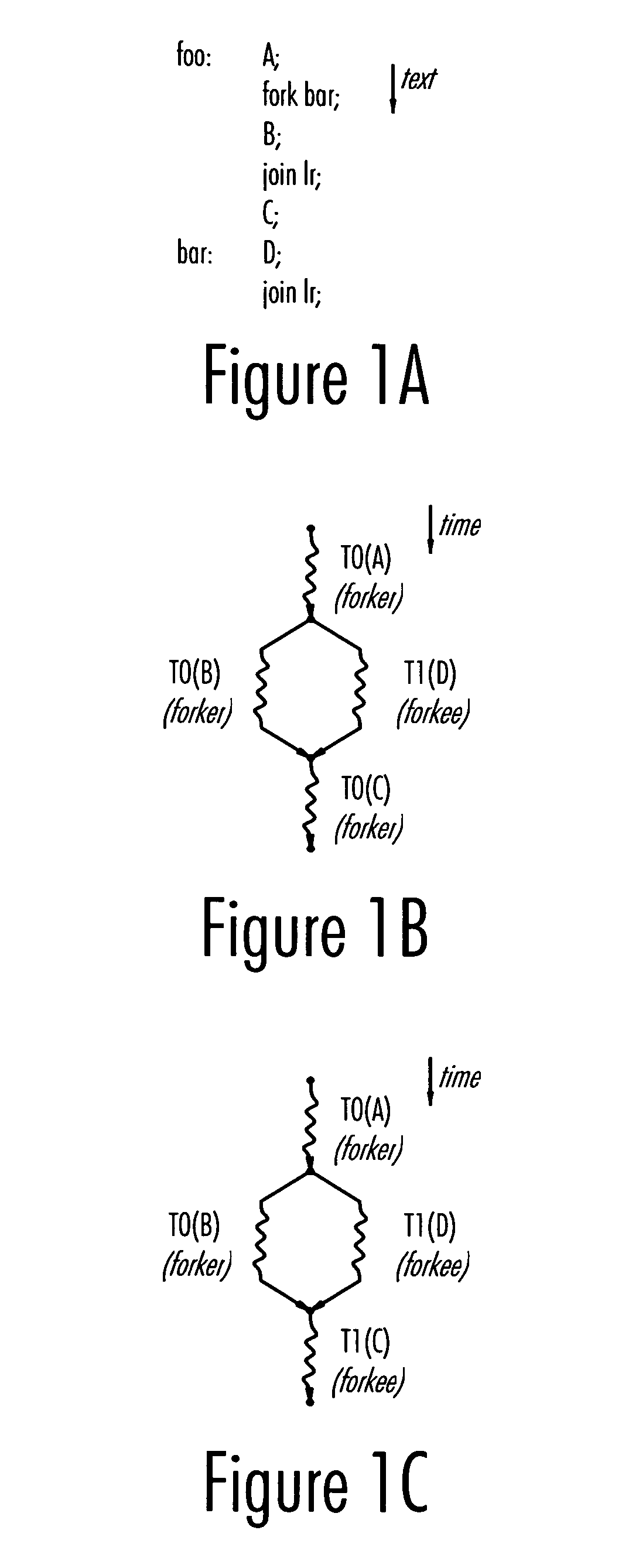

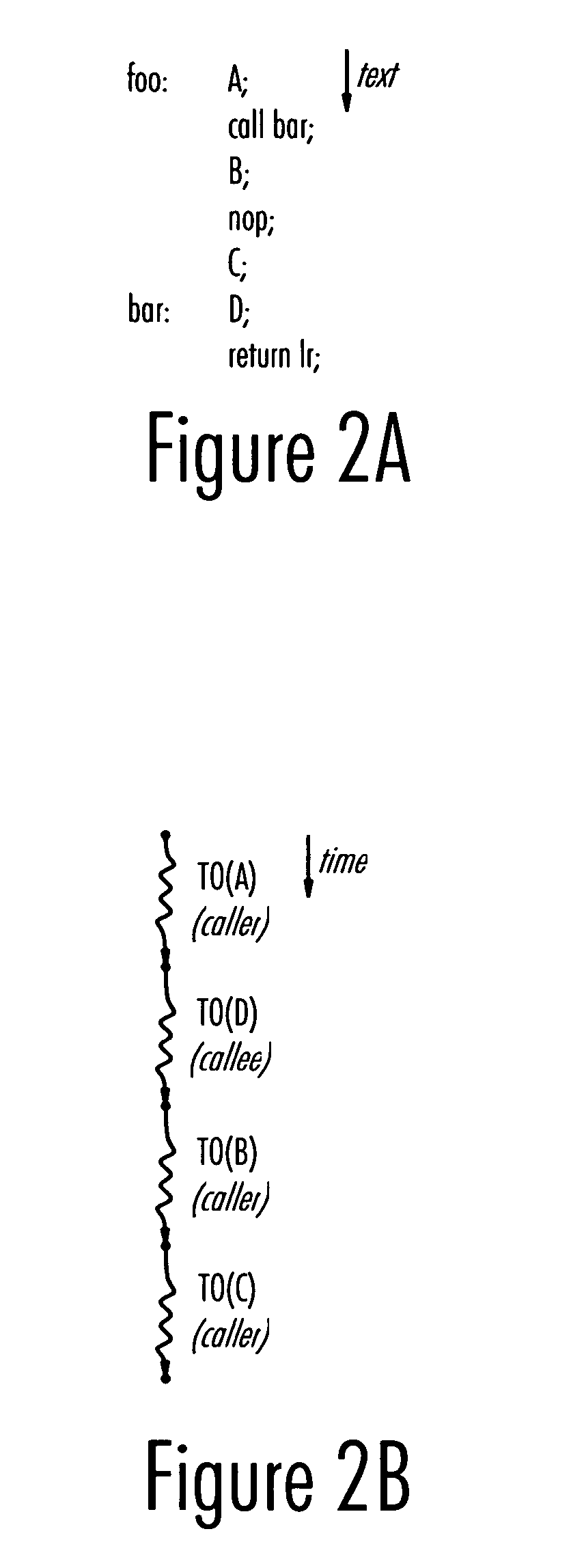

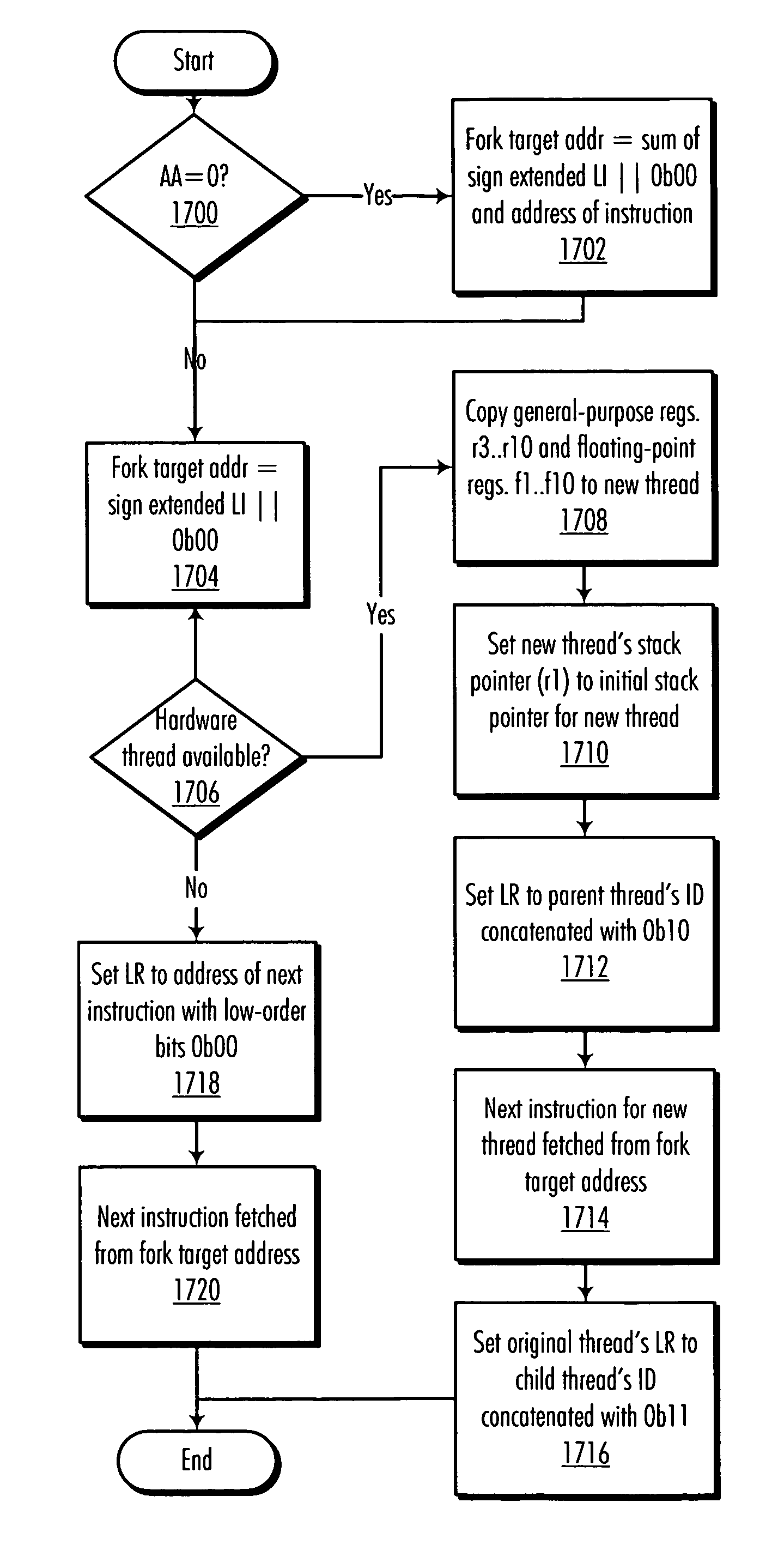

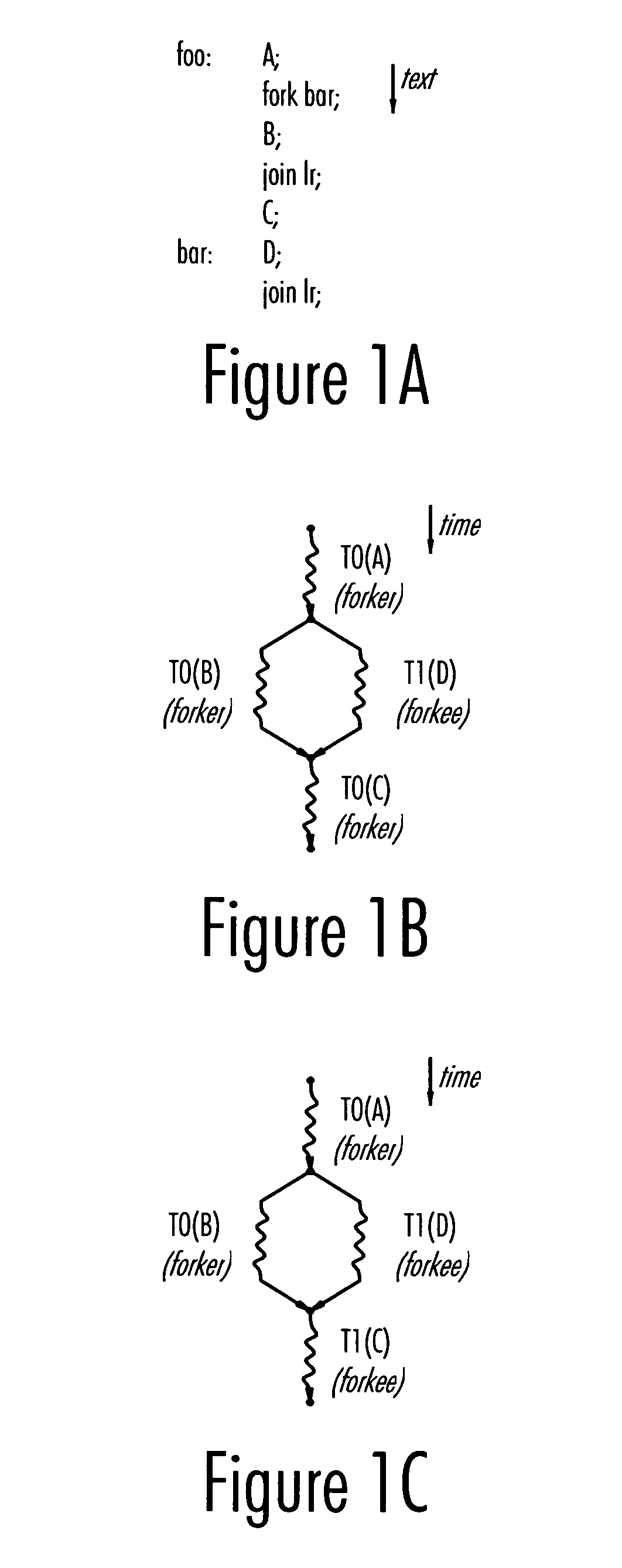

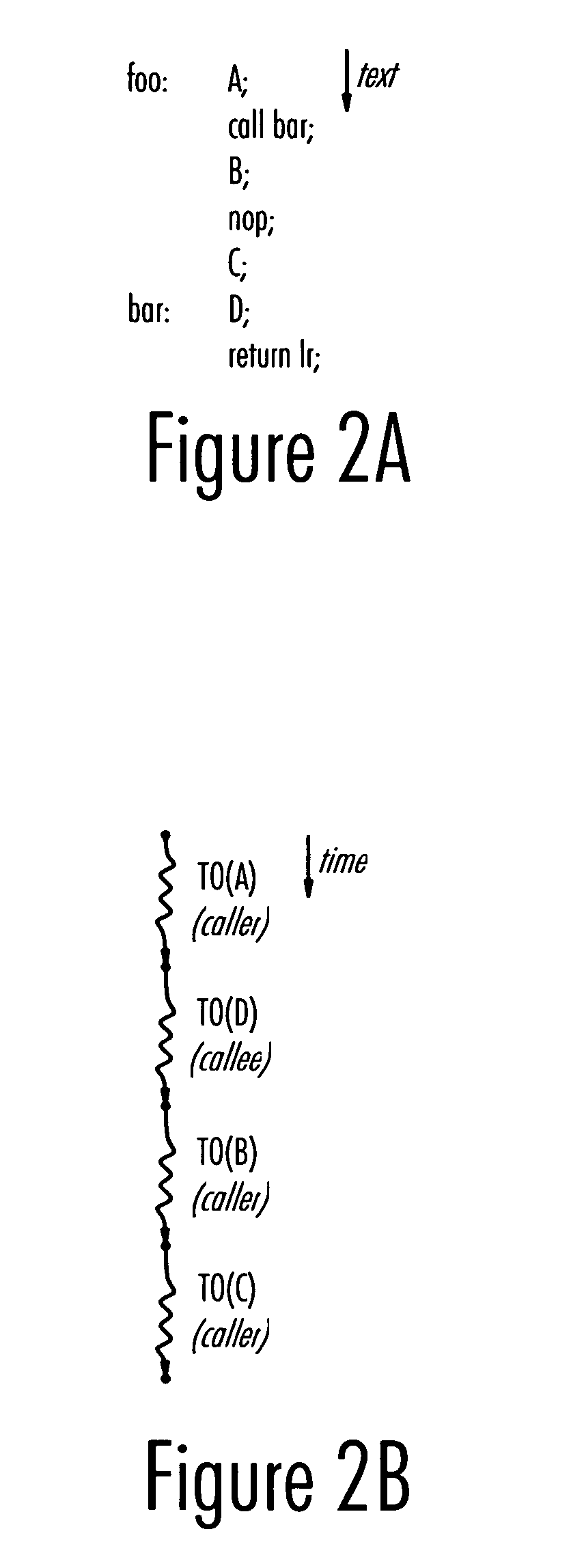

A method and processor architecture for achieving a high level of concurrency and latency hiding in an “infinite-thread processor architecture” with a limited number of hardware threads is disclosed. A preferred embodiment defines “fork” and “join” instructions for spawning new threads and having a novel operational semantics. If a hardware thread is available to shepherd a forked thread, the fork and join instructions have thread creation and termination / synchronization semantics, respectively. If no hardware thread is available, however, the fork and join instructions assume subroutine call and return semantics respectively. The link register of the processor is used to determine whether a given join instruction should be treated as a thread synchronization operation or as a return from subroutine operation.

Owner:IBM CORP
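The dual semantics of fork and join in the abstract above (thread creation when a hardware thread is free, subroutine call otherwise) can be approximated in software. In this sketch a semaphore counts free hardware thread slots and the handle's `thread` field plays the deciding role that the abstract assigns to the link register; the `ForkJoin` class and the handle layout are assumptions for illustration only.

```python
import threading


class ForkJoin:
    def __init__(self, hardware_threads):
        # Counts hardware thread slots that are currently free.
        self._slots = threading.Semaphore(hardware_threads)

    def fork(self, fn, *args):
        """Run fn on a free slot if there is one, otherwise call it inline."""
        handle = {"result": None, "thread": None}
        if self._slots.acquire(blocking=False):
            def run():
                try:
                    handle["result"] = fn(*args)
                finally:
                    self._slots.release()
            handle["thread"] = threading.Thread(target=run)
            handle["thread"].start()
        else:
            # No free slot: fork degrades to a plain subroutine call.
            handle["result"] = fn(*args)
        return handle

    def join(self, handle):
        """Synchronise with a forked thread, or simply return the result."""
        if handle["thread"] is not None:
            handle["thread"].join()
        return handle["result"]
```

With `ForkJoin(0)` every fork runs inline, which gives the same results as the threaded case and is the property that lets the program adapt its granularity to the hardware.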

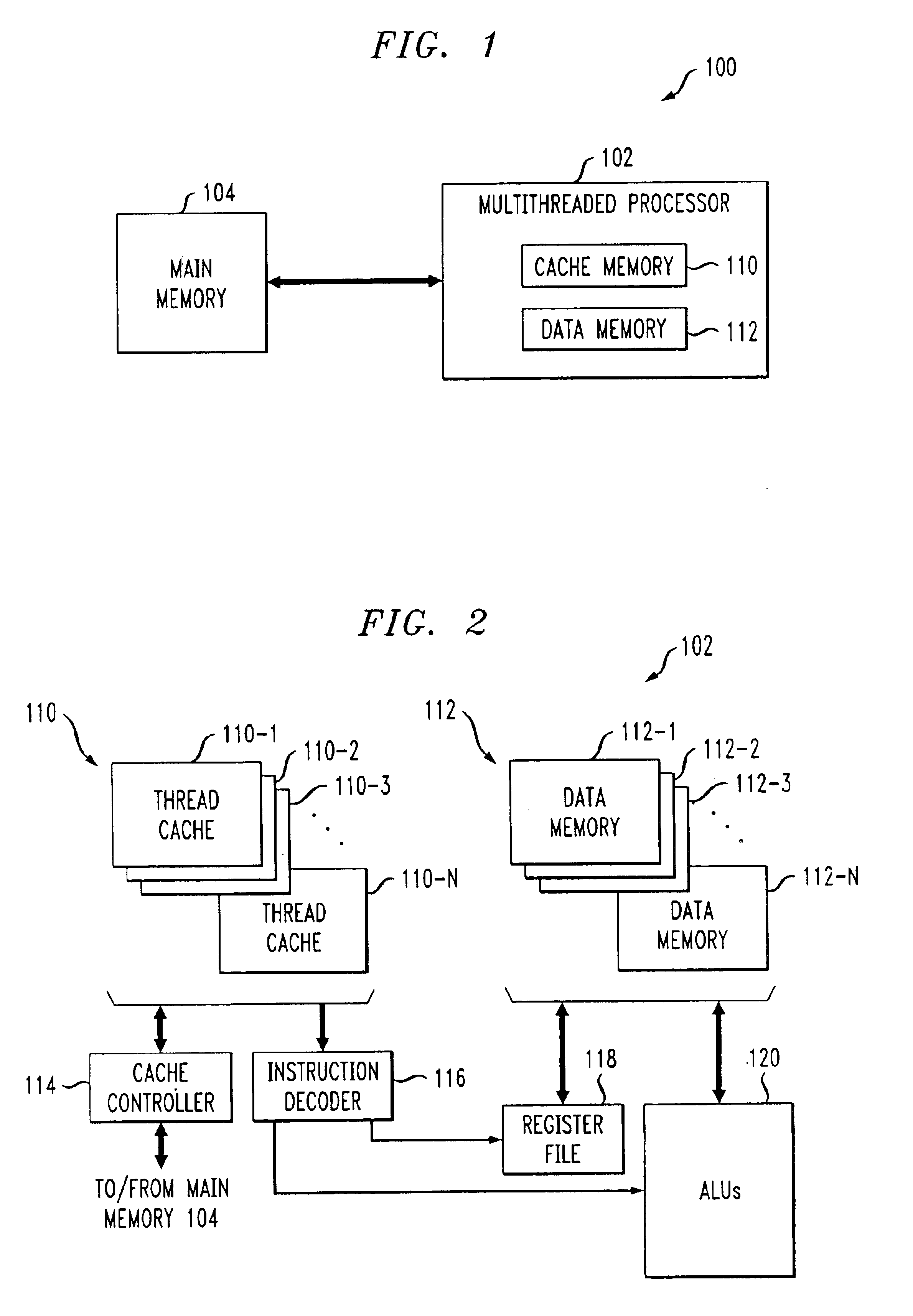

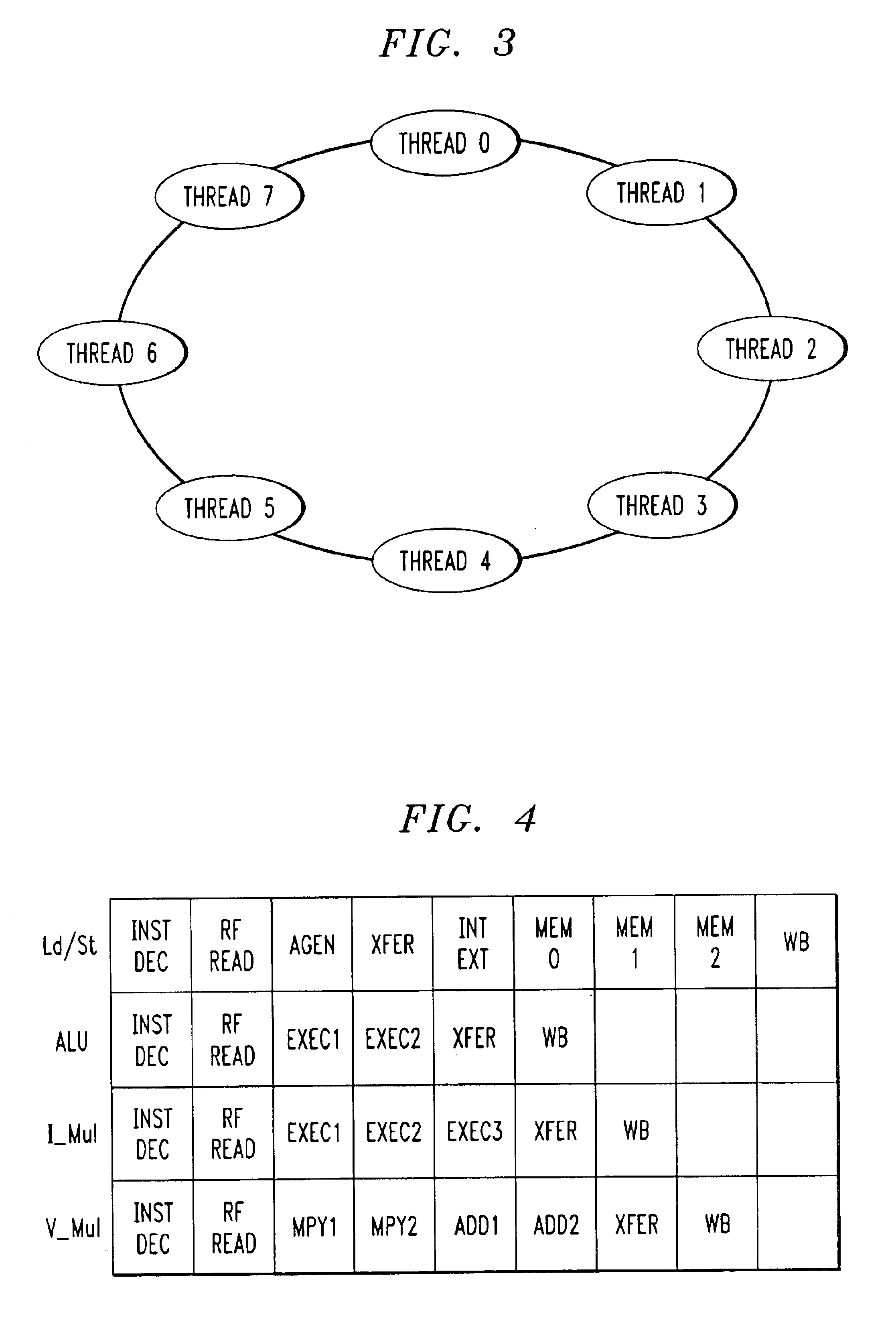

Computer Processors With Plural, Pipelined Hardware Threads Of Execution

Inactive | US20090260013A1 | Digital computer details | Multiprogramming arrangements | Hardware thread | Parallel computing

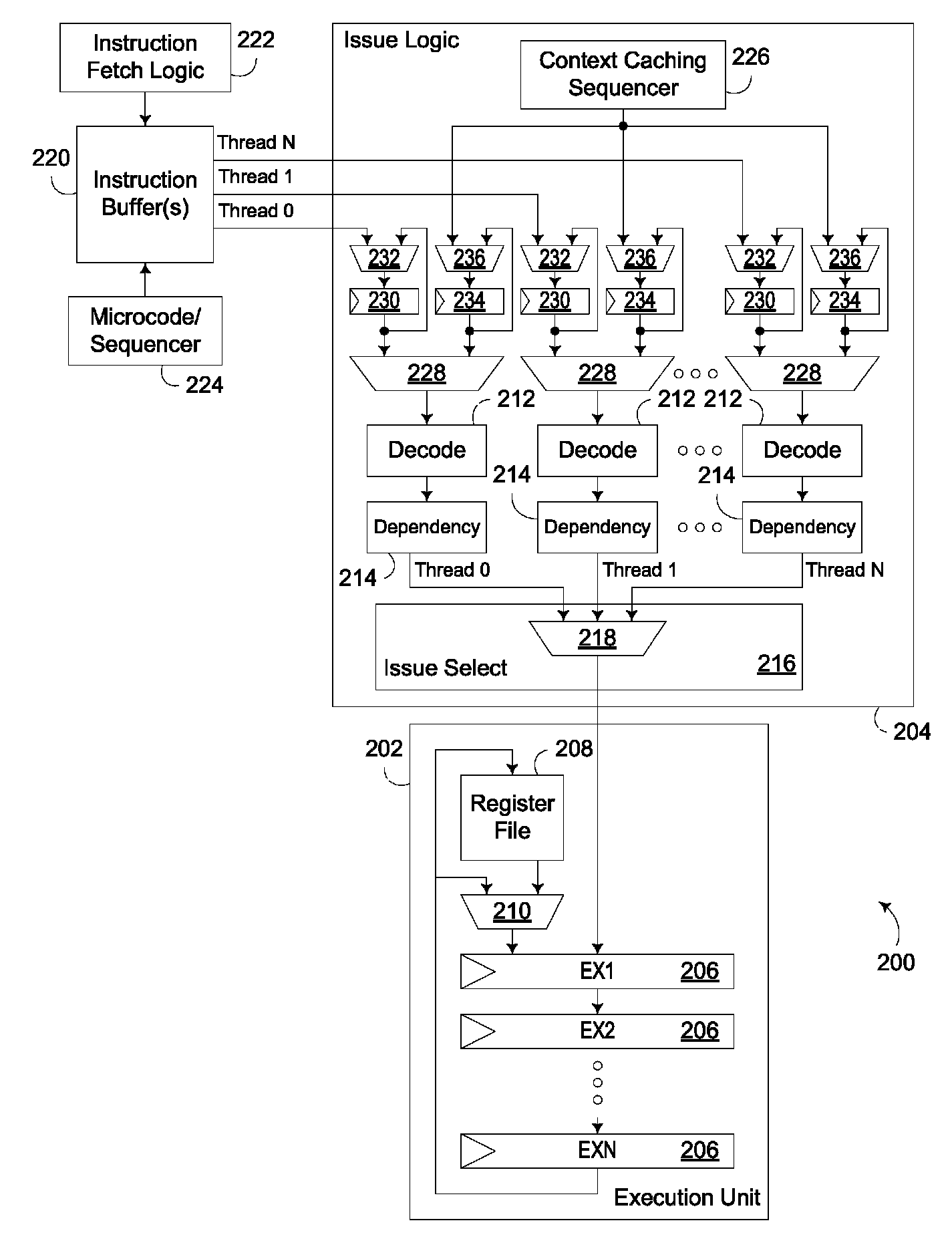

Computer processors and methods of operation of computer processors that include a plurality of pipelined hardware threads of execution, each thread including a plurality of computer program instructions; an instruction decoder that determines dependencies and latencies among instructions of a thread; and an instruction dispatcher that arbitrates, in the presence of resource contention and in accordance with the dependencies and latencies, priorities for dispatch of instructions from the plurality of threads of execution.

Owner:IBM CORP

Indirect inter-thread communication using a shared pool of inboxes

Inactive | US20130160026A1 | Facilitating data packet ordering | Multiprogramming arrangements | Input/output processes for data processing | Hardware thread | Network packet

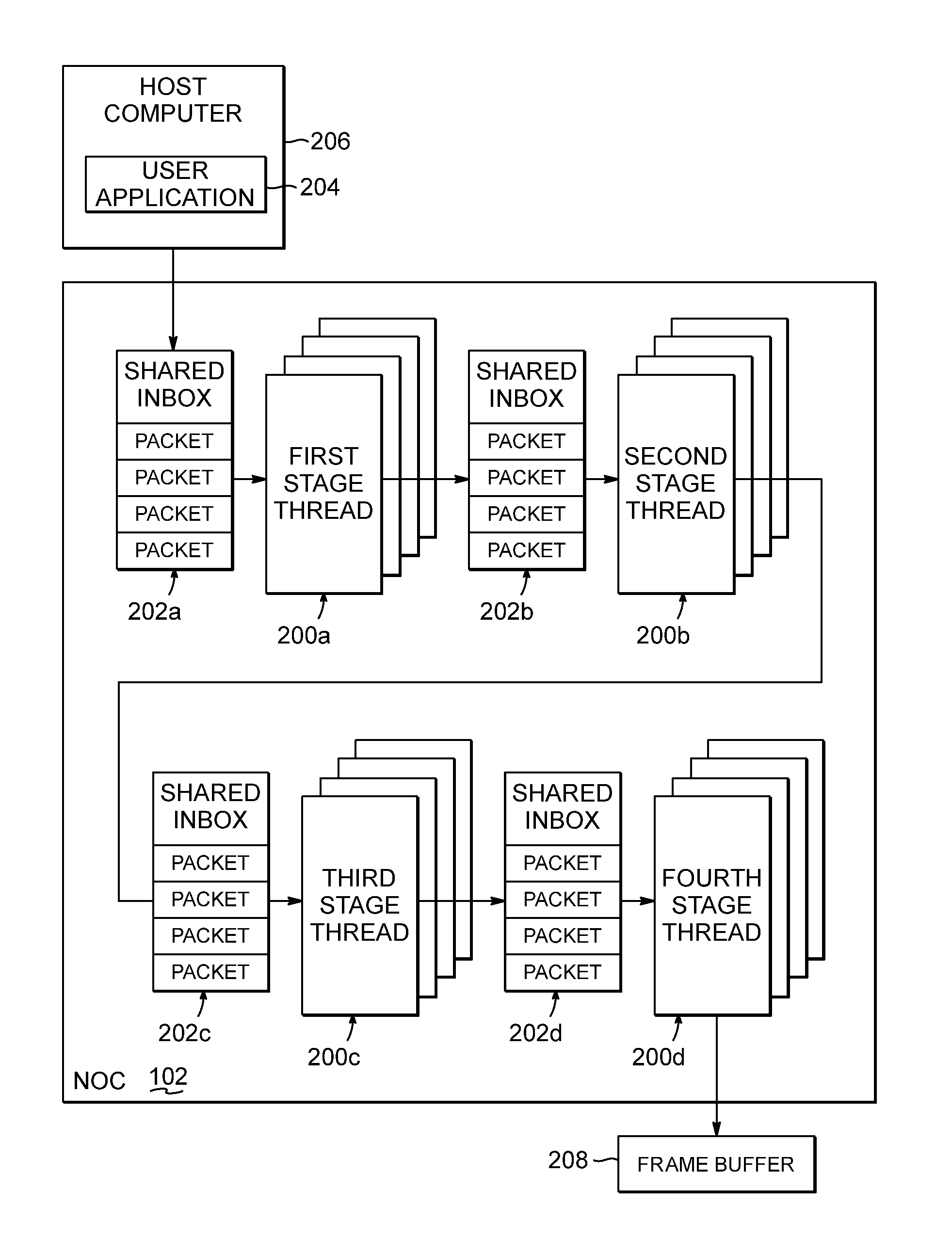

A circuit arrangement, method, and program product for communicating data between hardware threads of a network on a chip processing unit utilize shared inboxes to communicate data to pools of hardware threads. The associated hardware threads in the pools receive data packets from the shared inboxes in response to issuing work requests to an associated shared inbox. Data packets include a source identifier corresponding to the hardware thread from which the data packet was generated, and the shared inboxes may manage data packet distribution to associated hardware threads based on the source identifier of each data packet. A shared inbox may also manage workload distribution and uneven workload lengths by communicating data packets to hardware threads associated with the shared inbox in response to receiving work requests from associated hardware threads.

Owner:IBM CORP

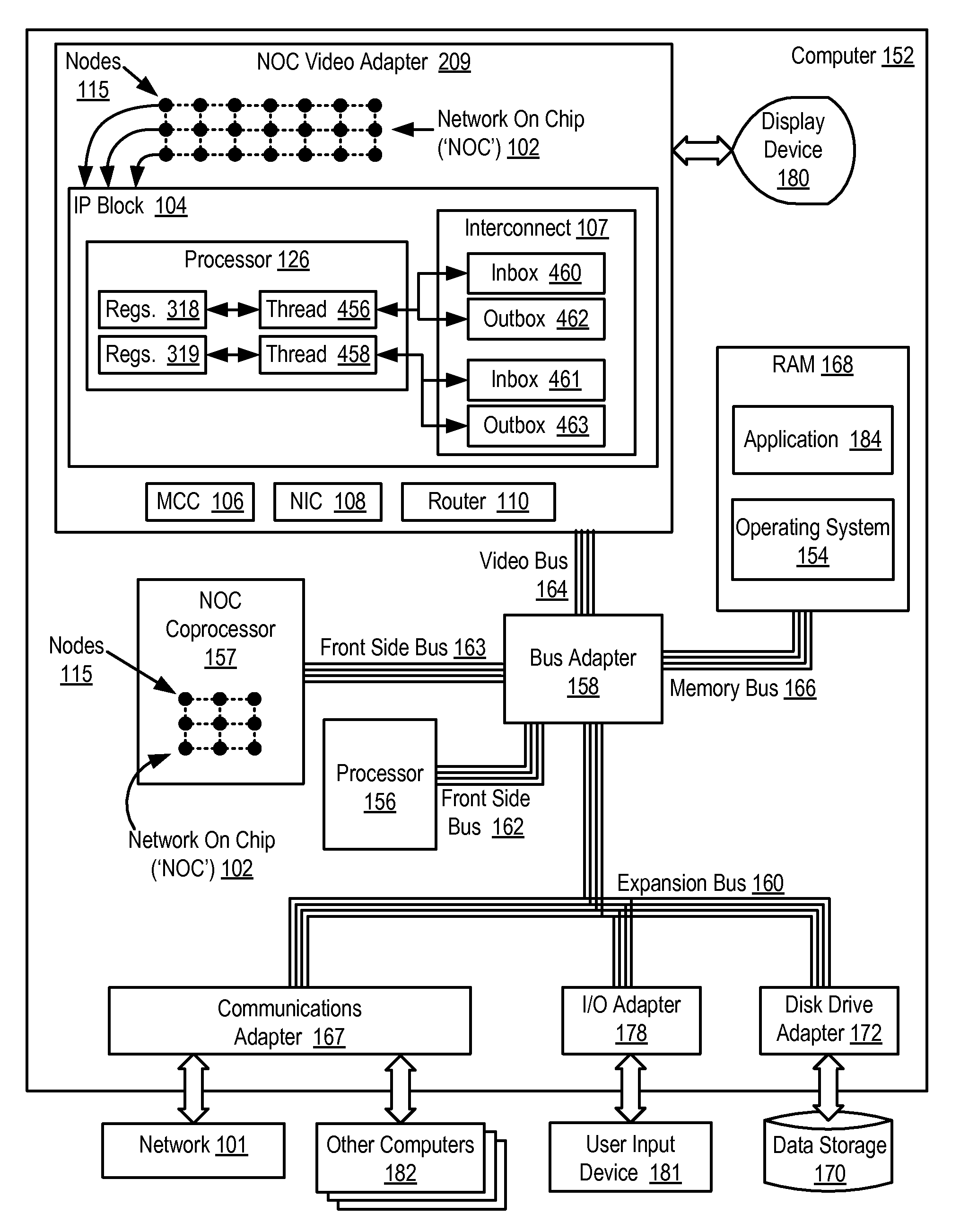

Network on chip with low latency, high bandwidth application messaging interconnects that abstract hardware inter-thread data communications into an architected state of a processor

Active | US7991978B2 | General purpose stored program computer | Concurrent instruction execution | Hardware thread | High bandwidth

Owner:INT BUSINESS MASCH CORP

Multithreaded processor architecture with operational latency hiding

Active | US8230423B2 | High of latency | High level | Error detection/correction | Runtime instruction translation | Hardware thread | Logical operations

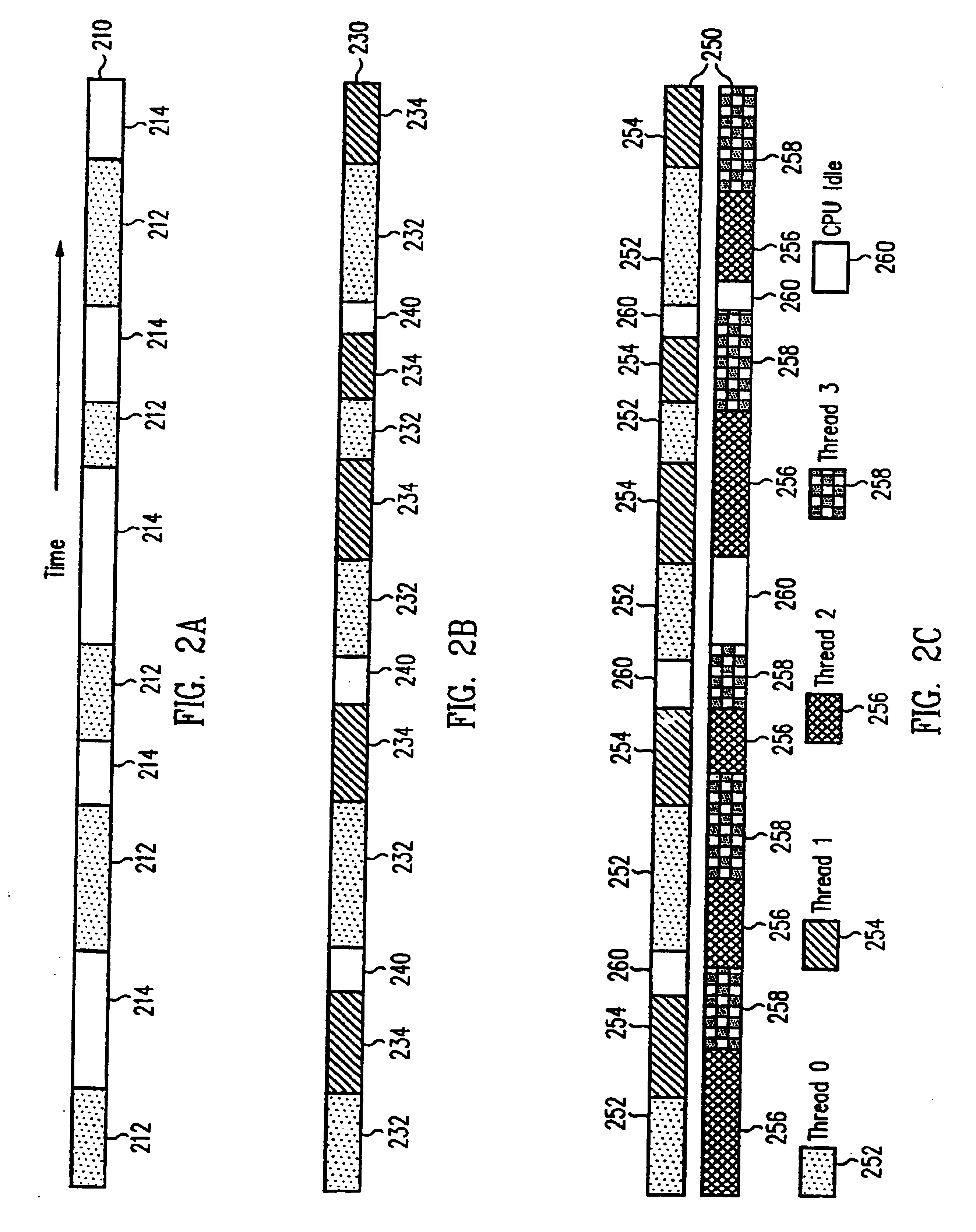

A method and processor architecture for achieving a high level of concurrency and latency hiding in an “infinite-thread processor architecture” with a limited number of hardware threads is disclosed. A preferred embodiment defines “fork” and “join” instructions for spawning new context-switched threads. Context switching is used to hide the latency of both memory-access operations (i.e., loads and stores) and arithmetic / logical operations. When an operation executing in a thread incurs a latency having the potential to delay the instruction pipeline, the latency is hidden by performing a context switch to a different thread. When the result of the operation becomes available, a context switch back to that thread is performed to allow the thread to continue.

Owner:IBM CORP

Processor with multiple-thread, vertically-threaded pipeline

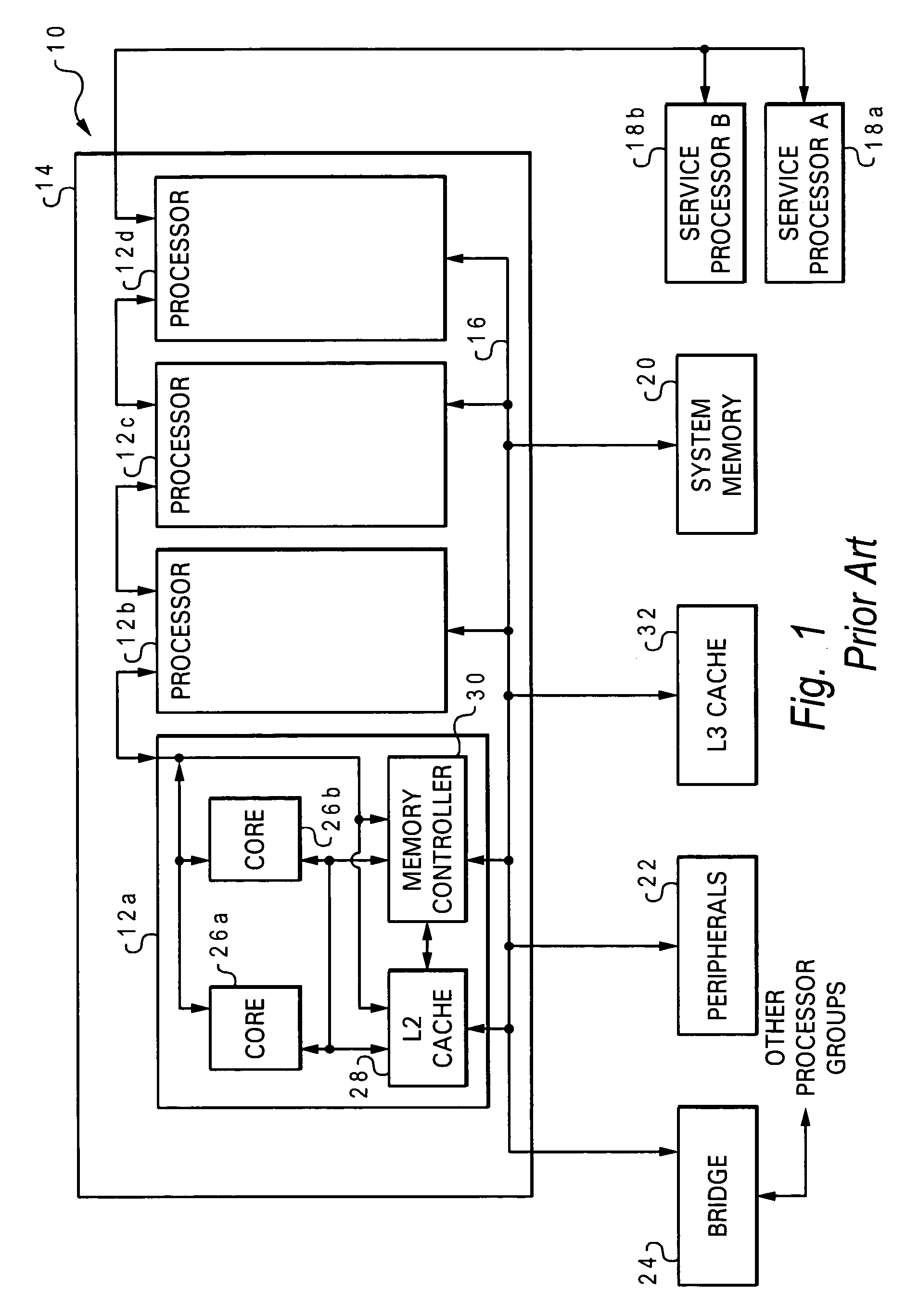

Inactive | US6938147B1 | Reduces wasted cycle time | Raise the ratio | Program initiation/switching | General purpose stored program computer | Hardware thread | Operational system

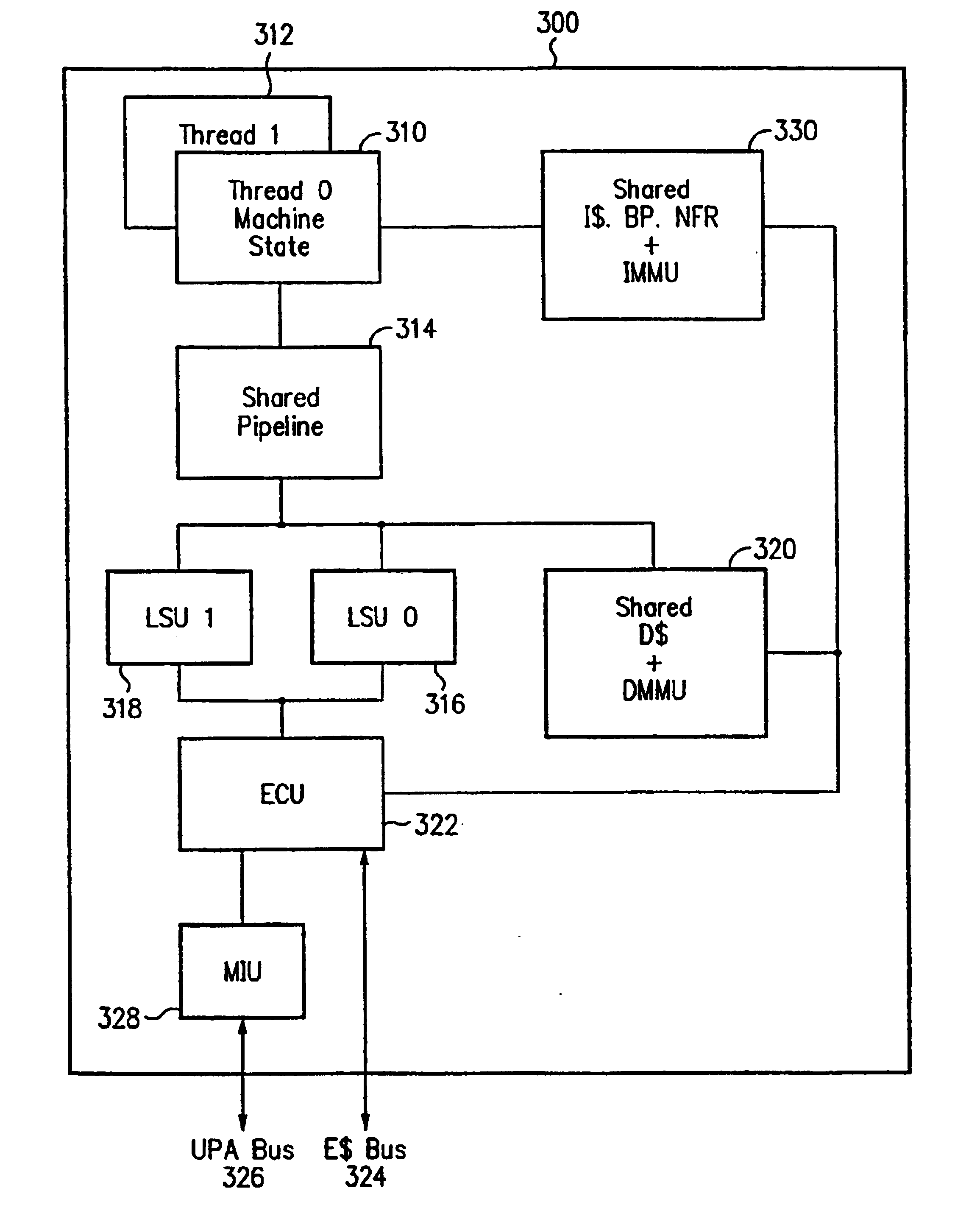

A processor reduces wasted cycle time resulting from stalling and idling, and increases the proportion of execution time, by supporting and implementing both vertical multithreading and horizontal multithreading. Vertical multithreading permits overlapping or “hiding” of cache miss wait times. In vertical multithreading, multiple hardware threads share the same processor pipeline. A hardware thread is typically a process, a lightweight process, a native thread, or the like in an operating system that supports multithreading. Horizontal multithreading increases parallelism within the processor circuit structure, for example within a single integrated circuit die that makes up a single-chip processor. To further increase system parallelism in some processor embodiments, multiple processor cores are formed in a single die. Advances in on-chip multiprocessor horizontal threading are gained as processor core sizes are reduced through technological advancements.

Owner:ORACLE INT CORP

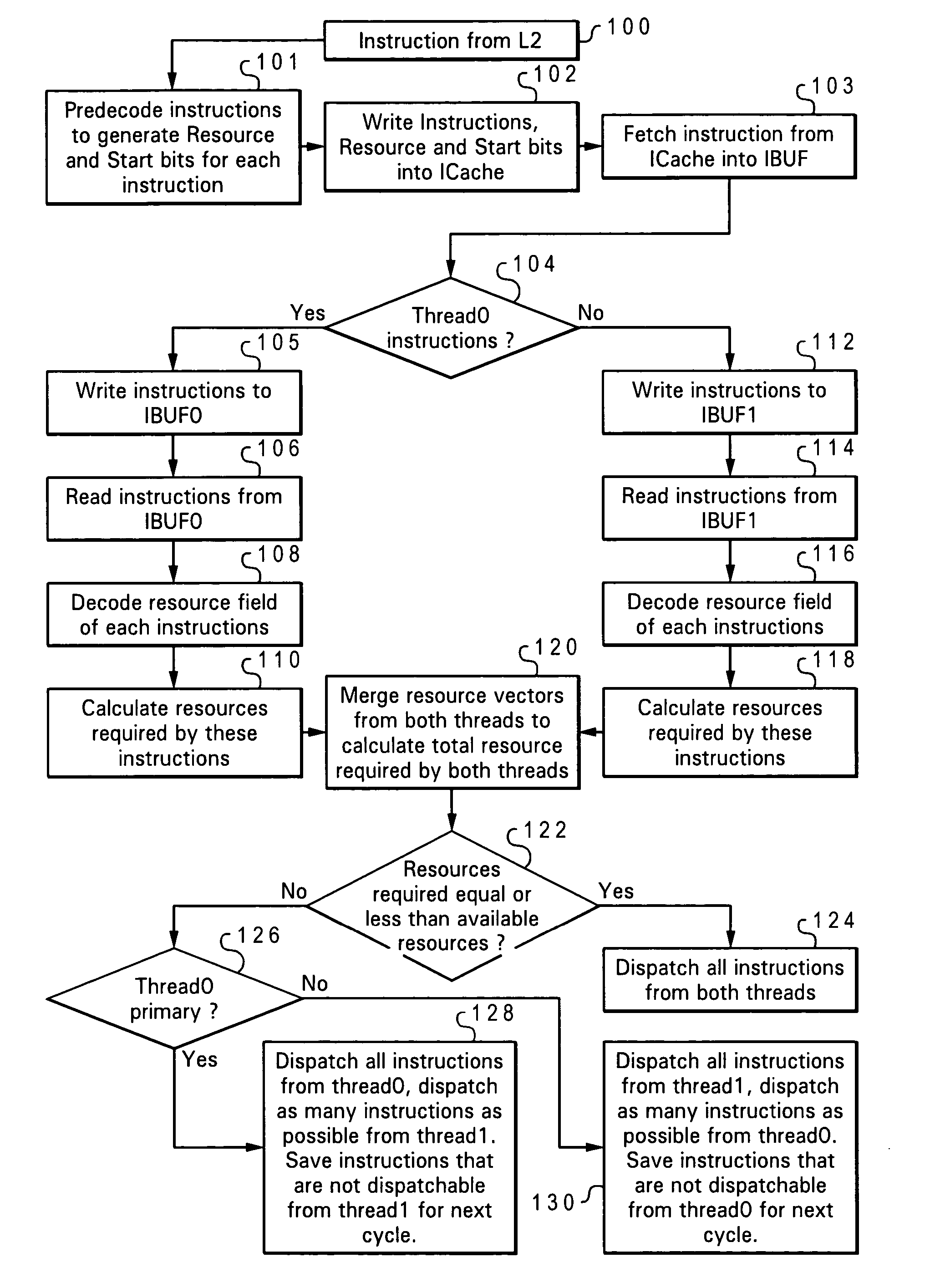

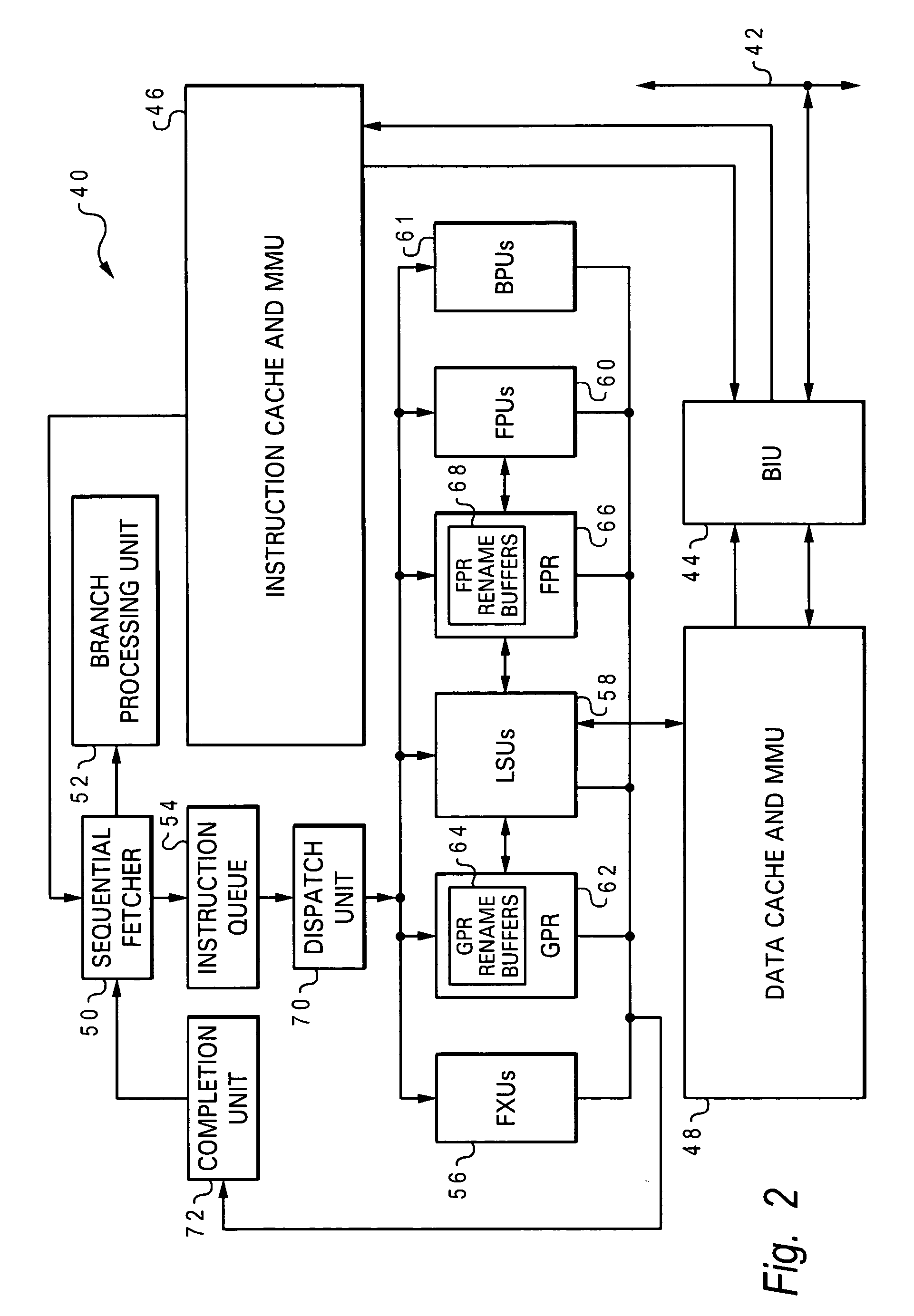

Instruction group formation and mechanism for SMT dispatch

Inactive | US20060101241A1 | Increase dispatching bandwidth | Easy construction | Instruction analysis | Digital computer details | Hardware thread | Program instruction

A more efficient method of handling instructions in a computer processor, by associating resource fields with respective program instructions wherein the resource fields indicate which of the processor hardware resources are required to carry out the program instructions, calculating resource requirements for merging two or more program instructions based on their resource fields, and determining resource availability for simultaneously executing the merged program instructions based on the calculated resource requirements. Resource vectors indicative of the required resource may be encoded into the resource fields, and the resource fields decoded at a later stage to derive the resource vectors. The resource fields can be stored in the instruction cache associated with the respective program instructions. The processor may operate in a simultaneous multithreading mode with different program instructions being part of different hardware threads. When the resource availability equals or exceeds the resource requirements for a group of instructions, those instructions can be dispatched simultaneously to the hardware resources. A start bit may be inserted in one of the program instructions to define the instruction group. The hardware resources may in particular be execution units such as a fixed-point unit, a load / store unit, a floating-point unit, or a branch processing unit.

Owner:IBM CORP
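The dispatch test in the abstract above (merge the resource requirements of a group, then compare against availability) can be sketched with per-unit counts. The encoding here is an assumption: each instruction's resource vector is a small dictionary of execution-unit counts, whereas the patent describes encoded resource fields stored with the instructions in the instruction cache. The unit names FXU, LSU, FPU and BRU abbreviate the fixed-point, load/store, floating-point and branch units named in the abstract.

```python
from collections import Counter


def merged_requirements(instructions):
    """Sum the resource vectors of a group of instructions."""
    total = Counter()
    for needs in instructions:
        total.update(needs)   # adds the per-unit counts
    return total


def can_dispatch_together(instructions, available):
    """True if availability equals or exceeds the merged requirements."""
    need = merged_requirements(instructions)
    return all(available.get(unit, 0) >= count for unit, count in need.items())


# Hypothetical core with two fixed-point units and one of each other unit.
available = {"FXU": 2, "LSU": 1, "FPU": 1, "BRU": 1}
group = [{"FXU": 1}, {"LSU": 1}, {"FXU": 1}]
print(can_dispatch_together(group, available))                 # prints True
print(can_dispatch_together(group + [{"LSU": 1}], available))  # prints False
```

In simultaneous multithreading mode the instructions in `group` could come from different hardware threads; the check itself does not depend on which thread an instruction belongs to.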

Message selection for inter-thread communication in a multithreaded processor

A method and circuit arrangement process a workload in a multithreaded processor that includes a plurality of hardware threads. Each thread receives at least one message carrying data to process the workload through a respective inbox from among a plurality of inboxes. A plurality of messages are received at a first inbox among the plurality of inboxes, wherein the first inbox is associated with a first thread among the plurality of hardware threads, and wherein each message is associated with a priority. From the plurality of received messages, a first message is selected to process in the first thread based on that first message being associated with the highest priority among the received messages. A second message is selected to process in the first thread based on that second message being associated with the earliest time stamp among the received messages and in response to processing the first message.

Owner:IBM CORP
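The two selection rules in the abstract above (highest priority first, then earliest time stamp) can be sketched over a plain list acting as one thread's inbox. The `Message` fields and the convention that a larger number means higher priority are assumptions; the abstract does not specify an encoding.

```python
from dataclasses import dataclass


@dataclass
class Message:
    payload: str
    priority: int    # assumed: larger value means higher priority
    timestamp: int   # arrival time; smaller value means earlier


def take_highest_priority(inbox):
    """Remove and return the message with the highest priority."""
    msg = max(inbox, key=lambda m: m.priority)
    inbox.remove(msg)
    return msg


def take_earliest(inbox):
    """Remove and return the message with the earliest time stamp."""
    msg = min(inbox, key=lambda m: m.timestamp)
    inbox.remove(msg)
    return msg


inbox = [Message("a", 1, 10), Message("b", 9, 30), Message("c", 5, 20)]
first = take_highest_priority(inbox)   # "b": highest priority
second = take_earliest(inbox)          # "a": oldest remaining message
```

Following a priority pick with a time-stamp pick keeps urgent work moving without letting old, low-priority messages wait indefinitely.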

Circuit having hardware threading

Active | US20060225002A1 | Dynamic adaptability | Easy to process | CAD circuit design | Software simulation/interpretation/emulation | Hardware thread | Workload

Hardware threading optimizes use of hardware resources in a dynamic workload environment. Unutilized hardware resources are dynamically borrowed to increase throughput performance and / or power savings by enabling parallel processing of application pipeline stages.

Owner:TUFTS UNIV

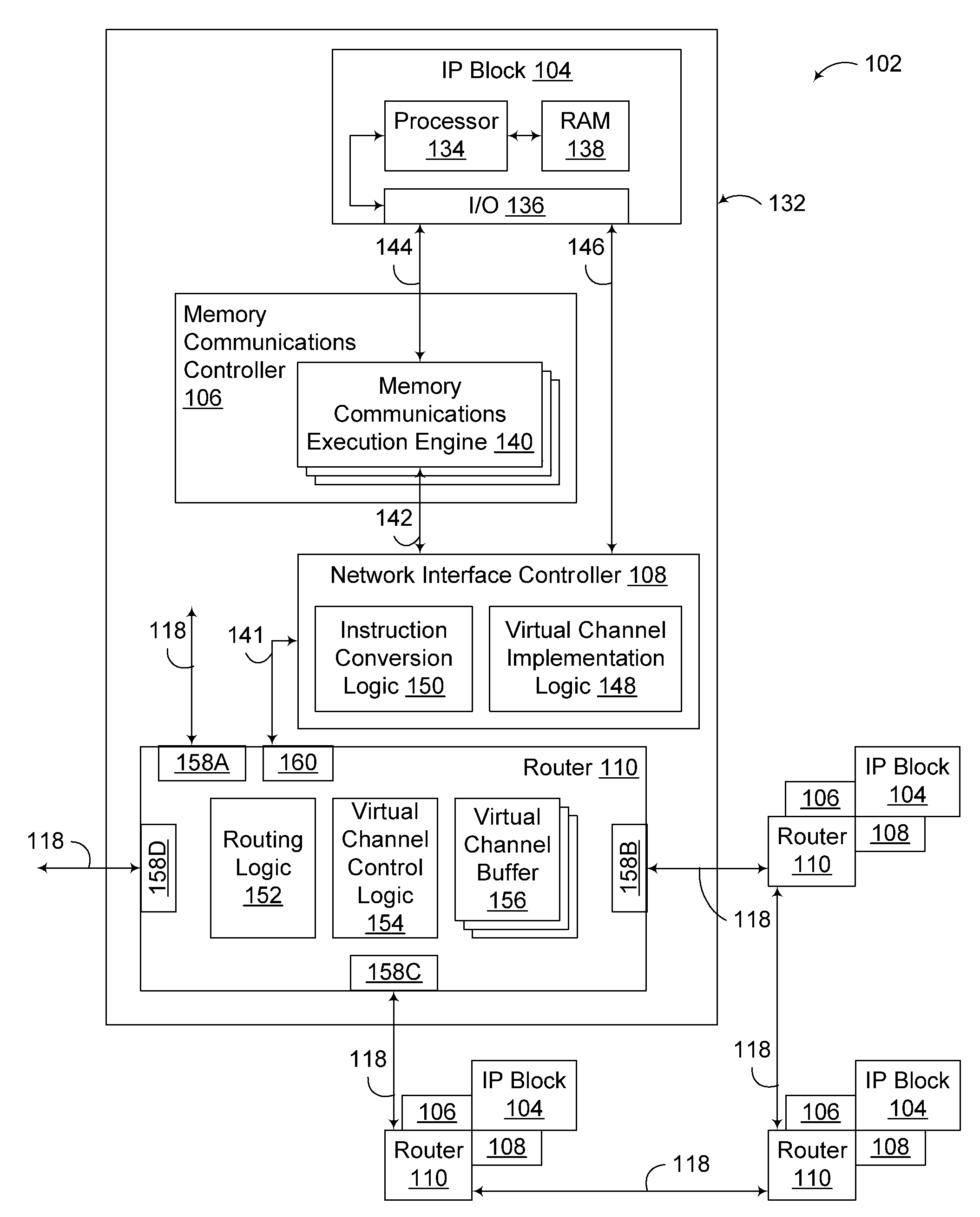

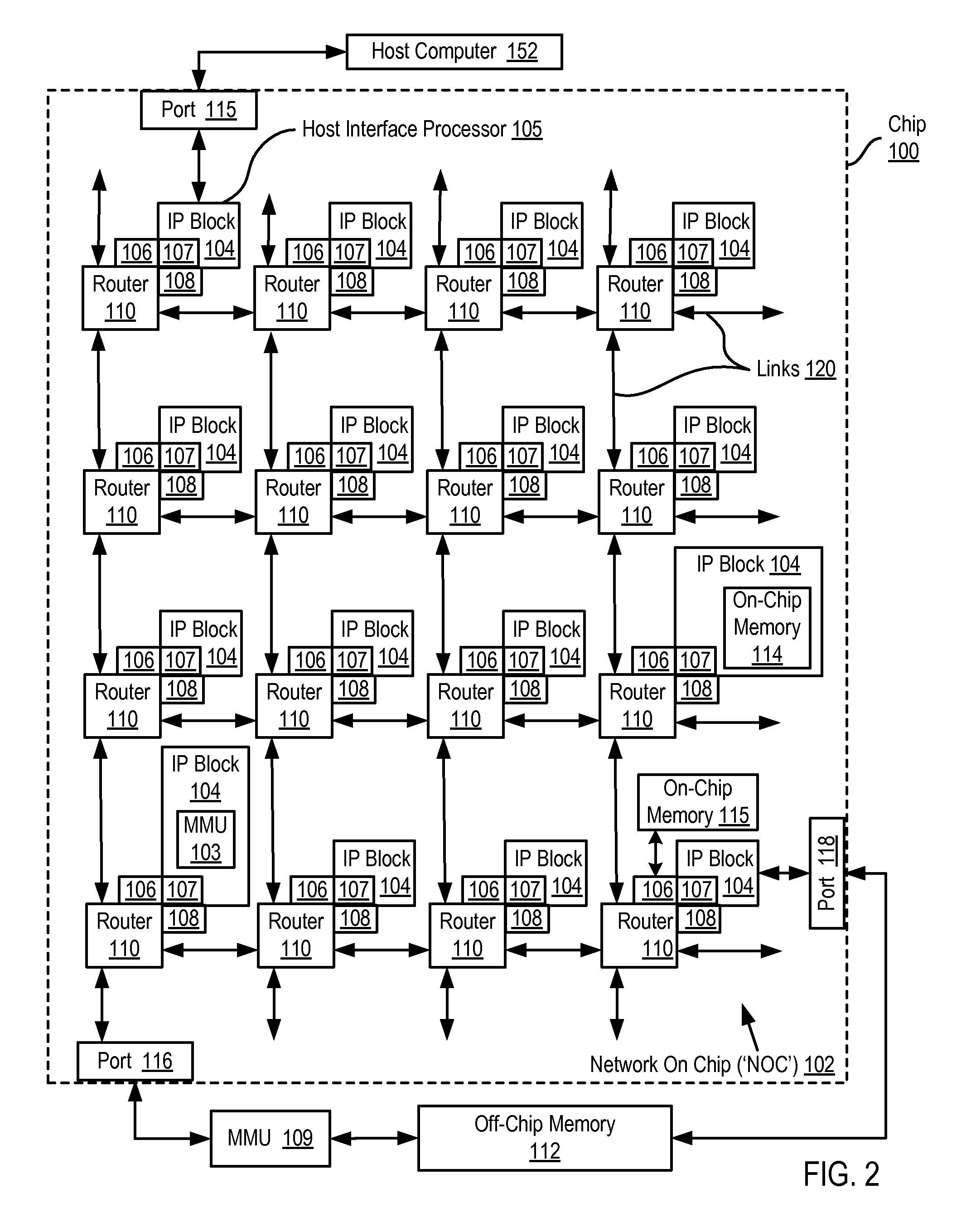

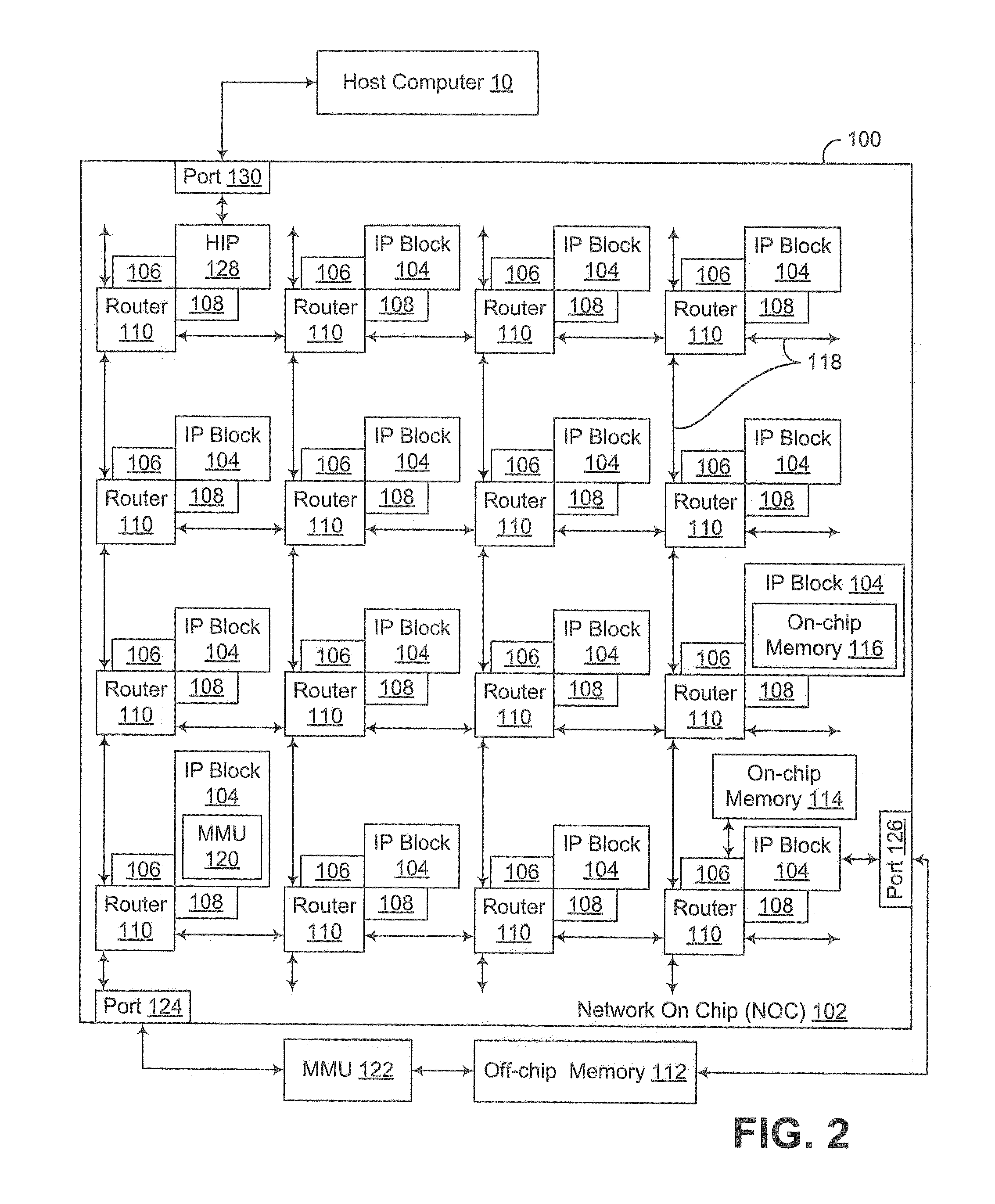

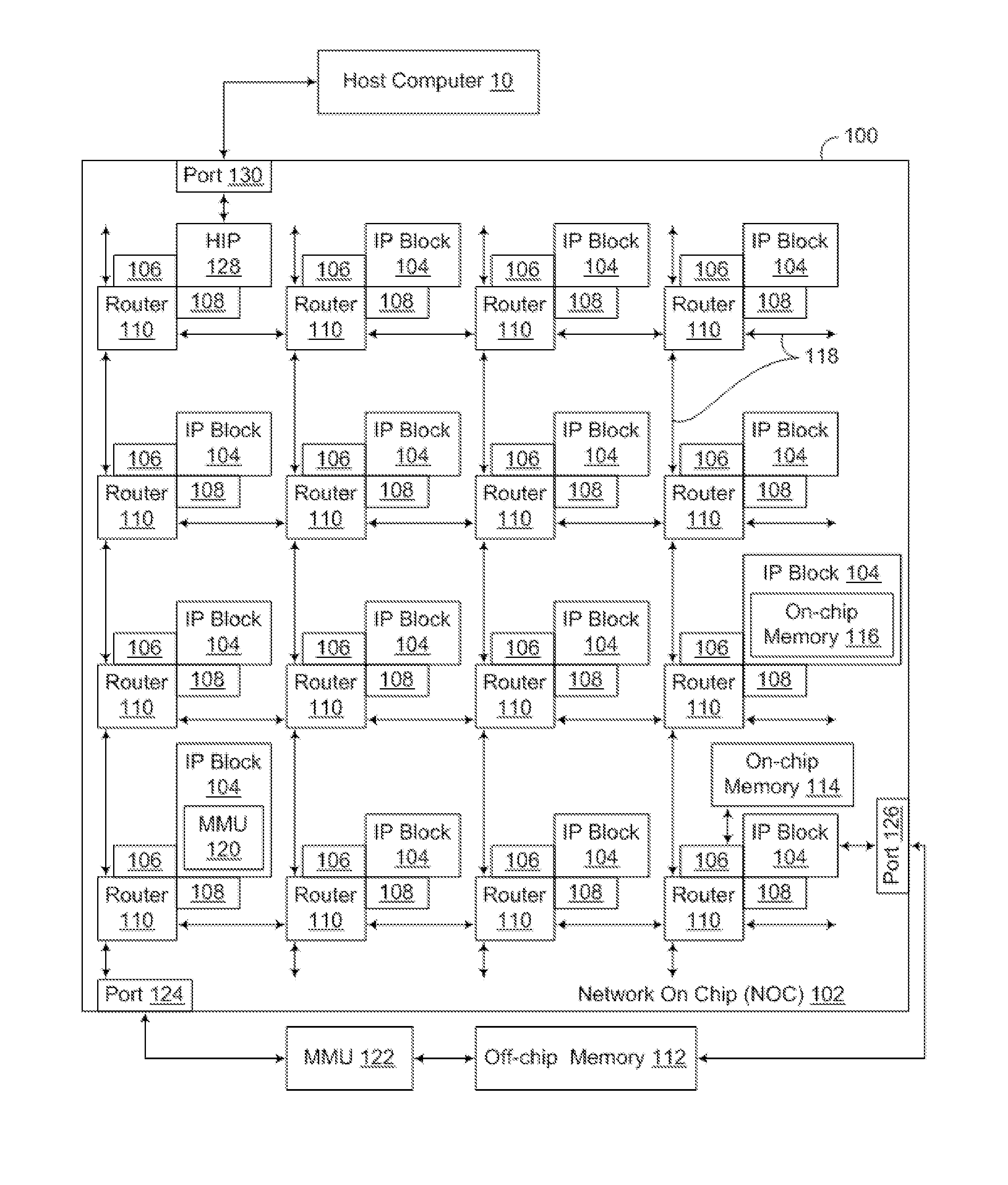

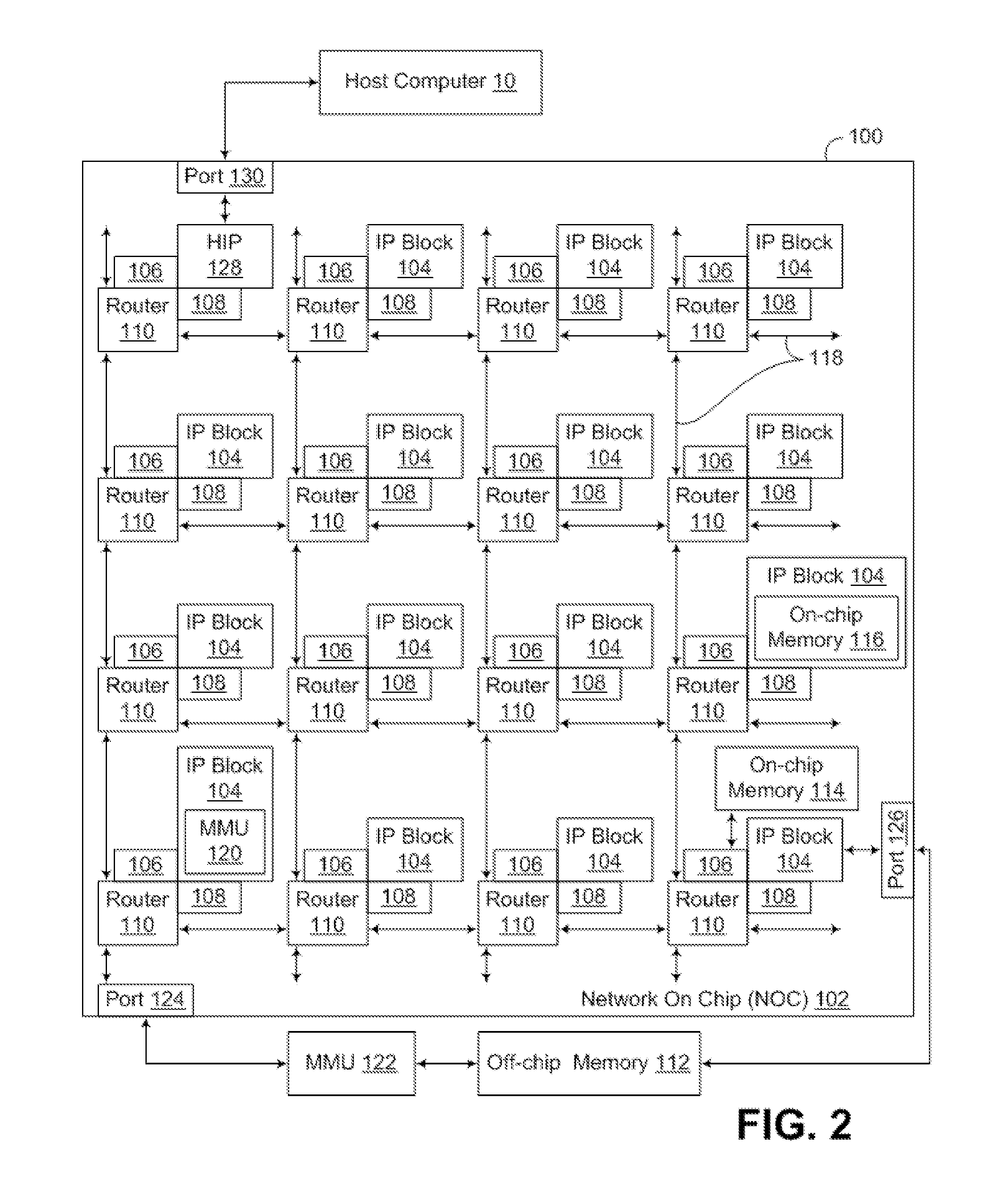

Network On Chip With Caching Restrictions For Pages Of Computer Memory

Inactive | US20100070714A1 | Memory addressing/allocation/relocation | Digital computer details | Hardware thread | Parallel computing

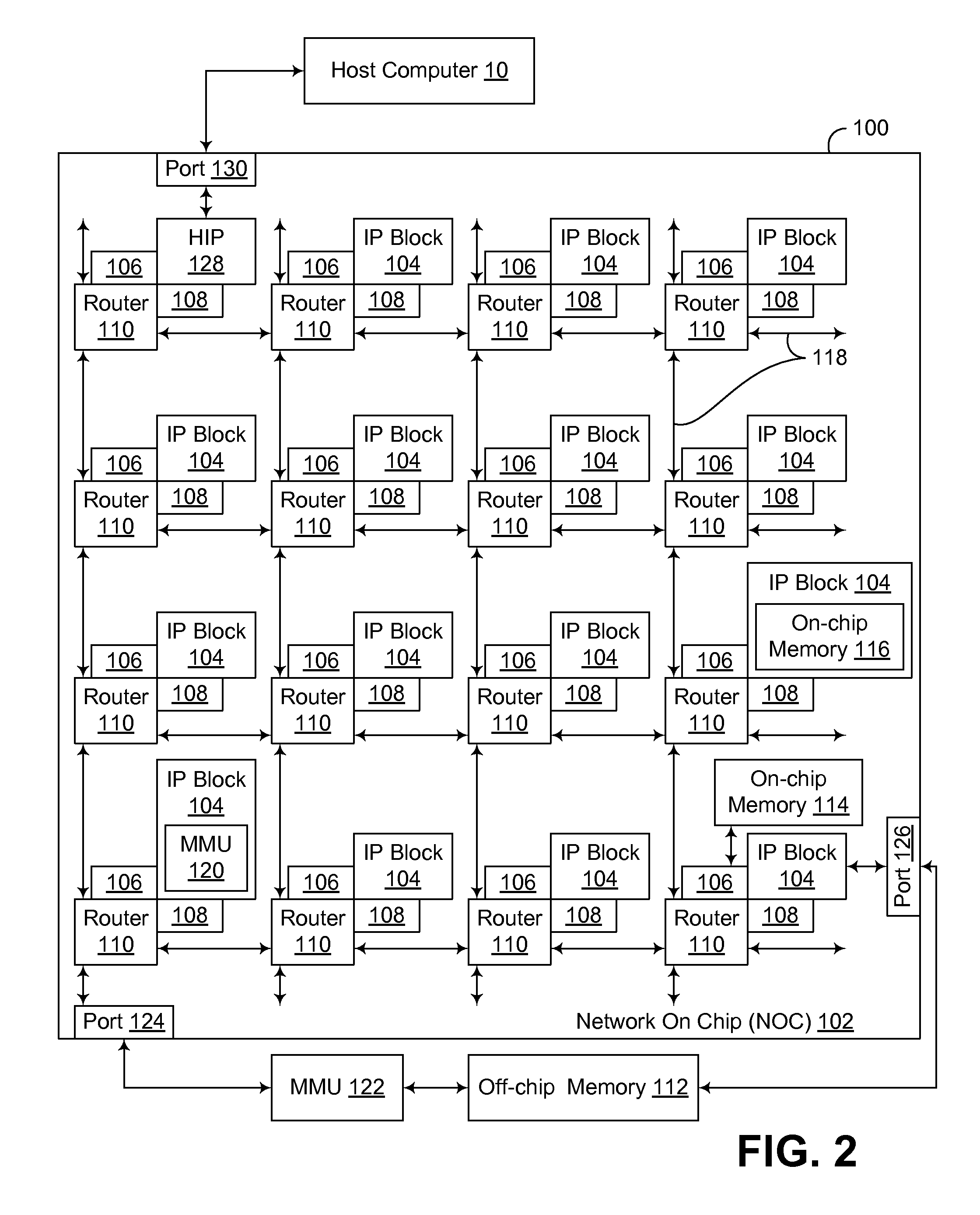

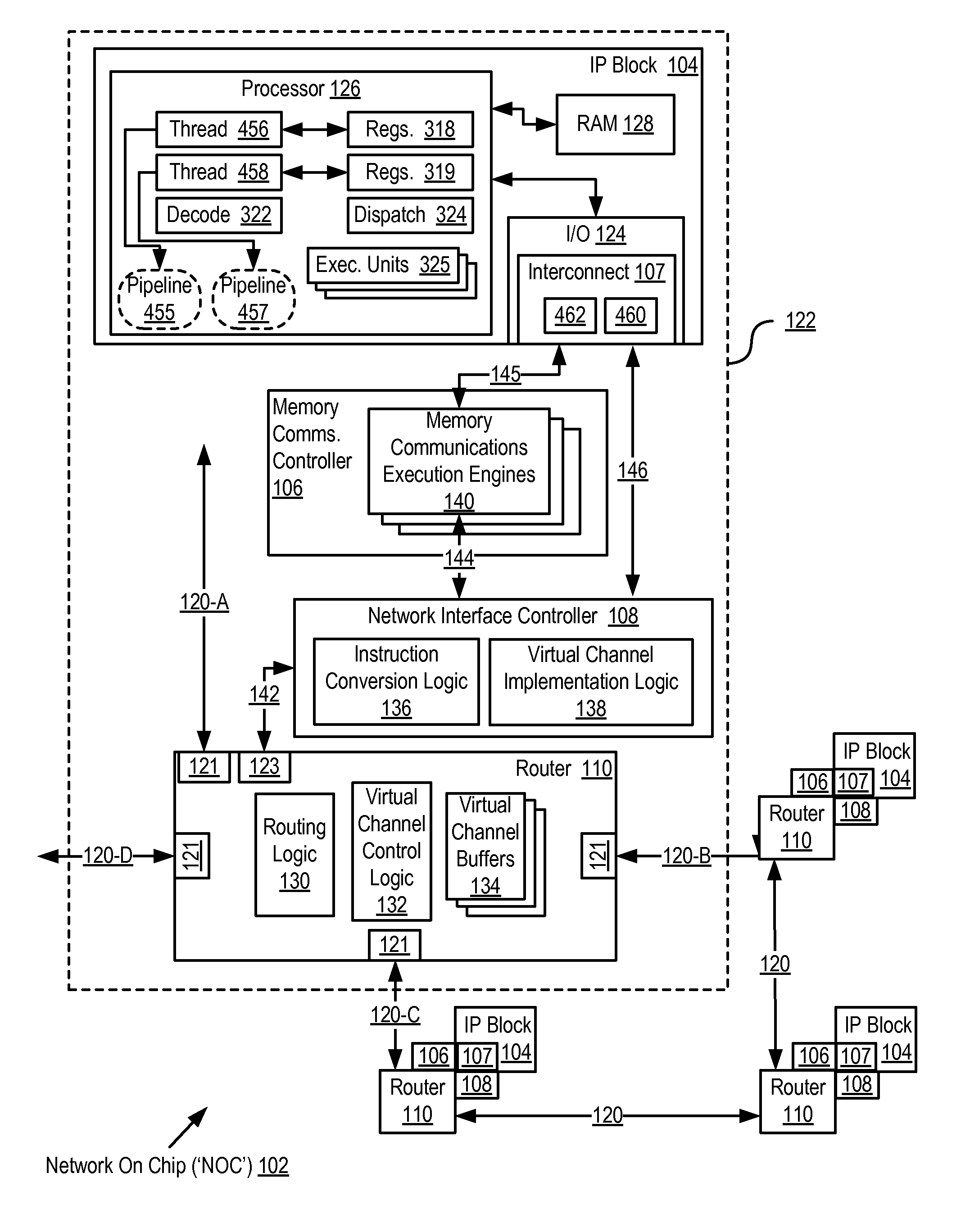

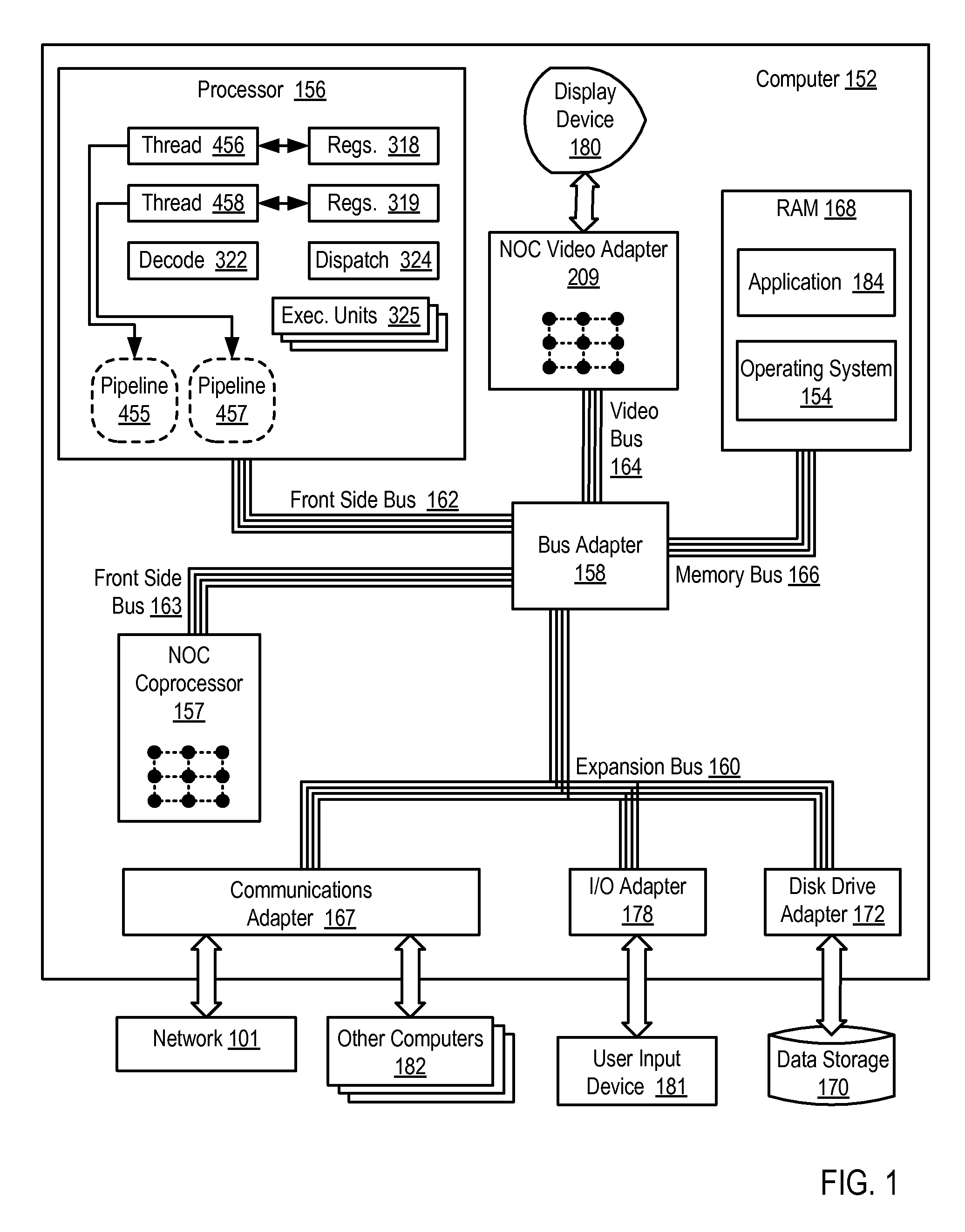

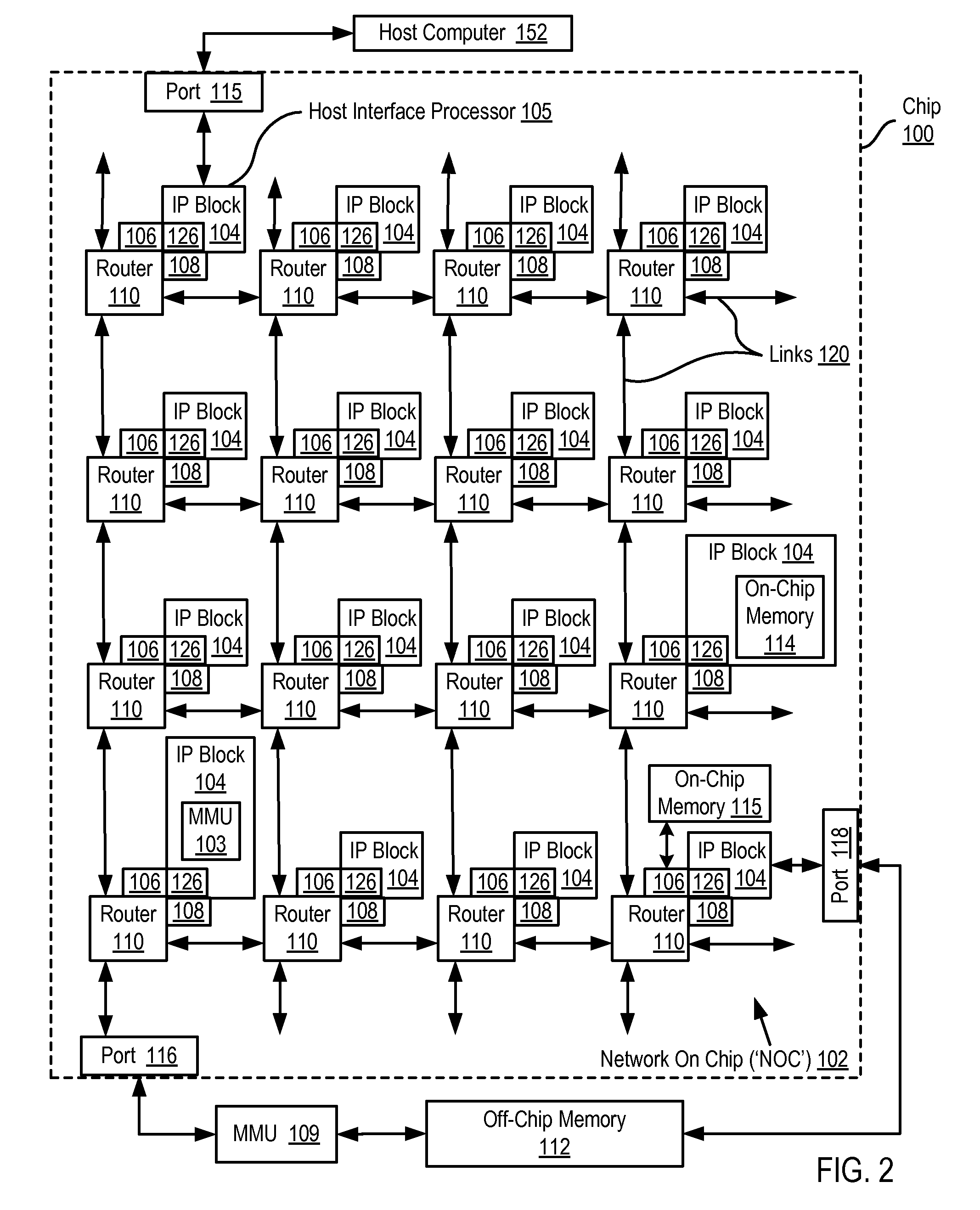

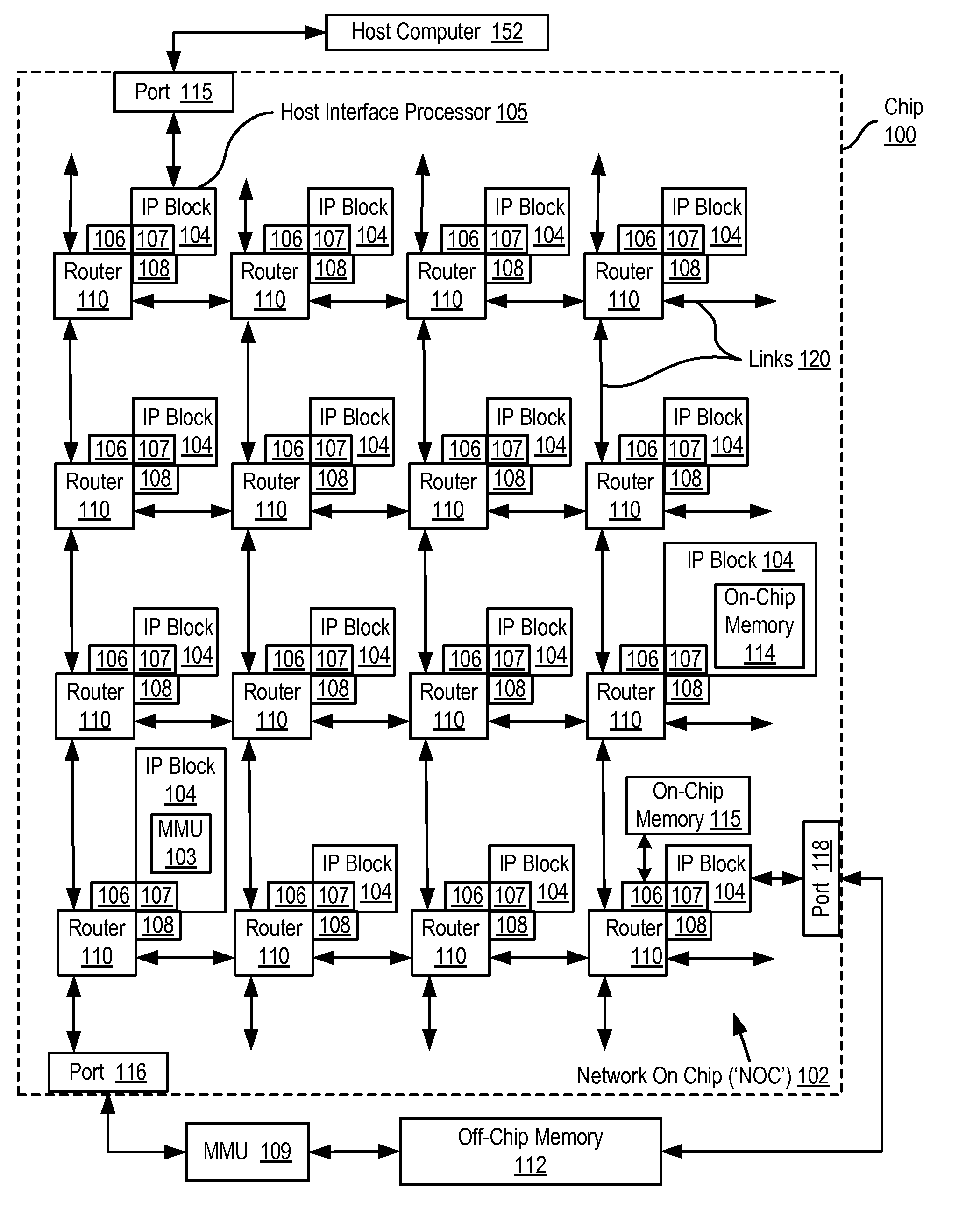

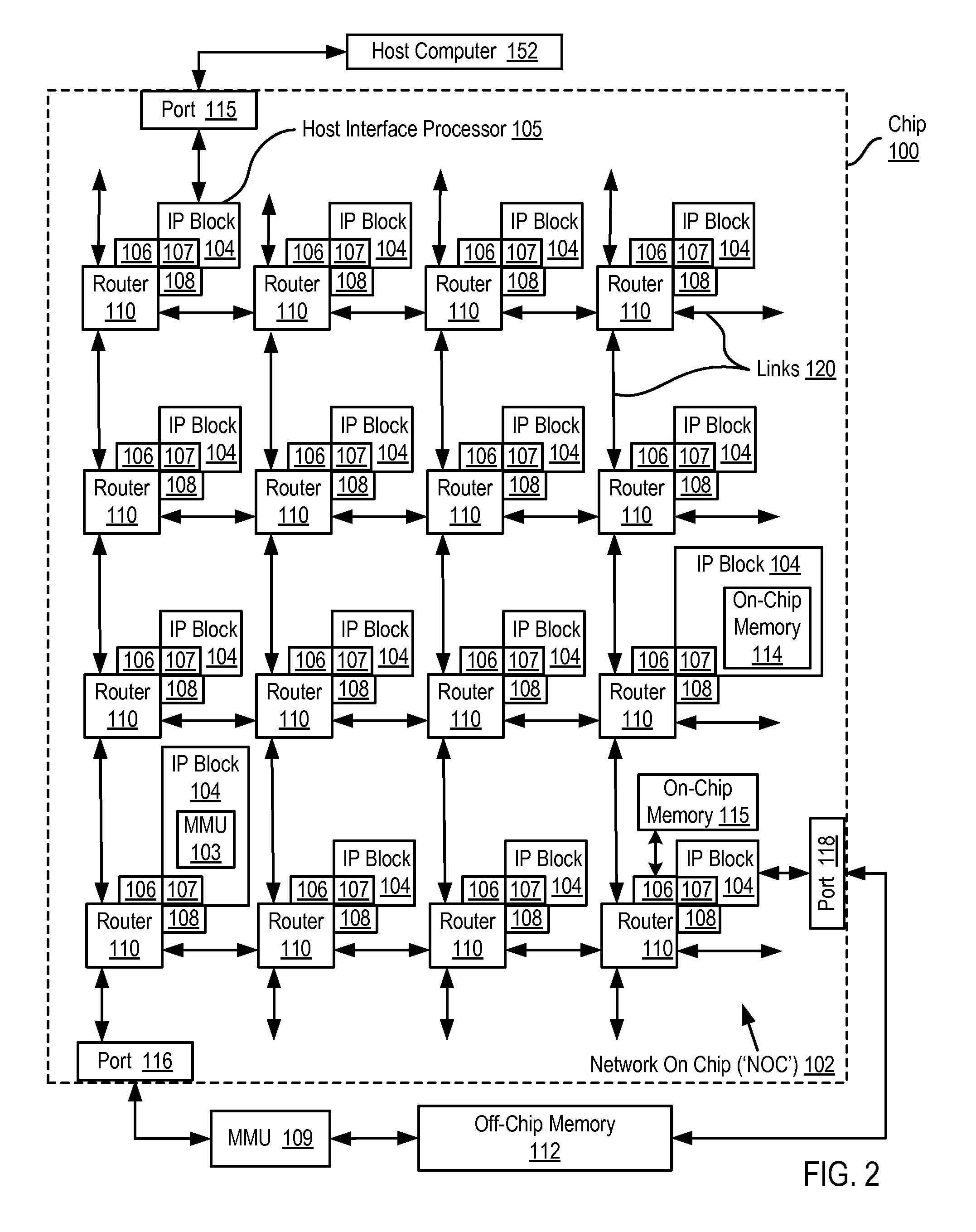

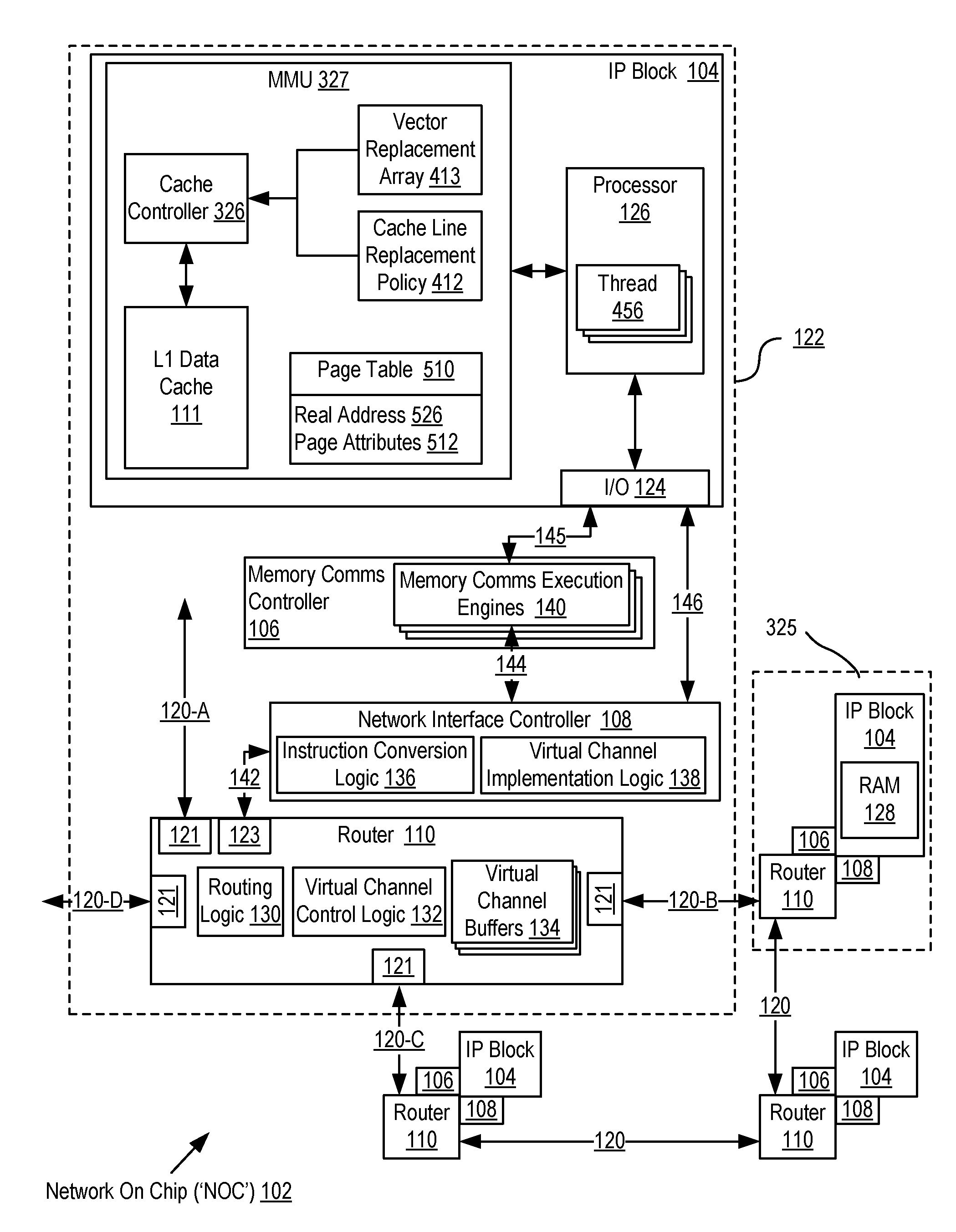

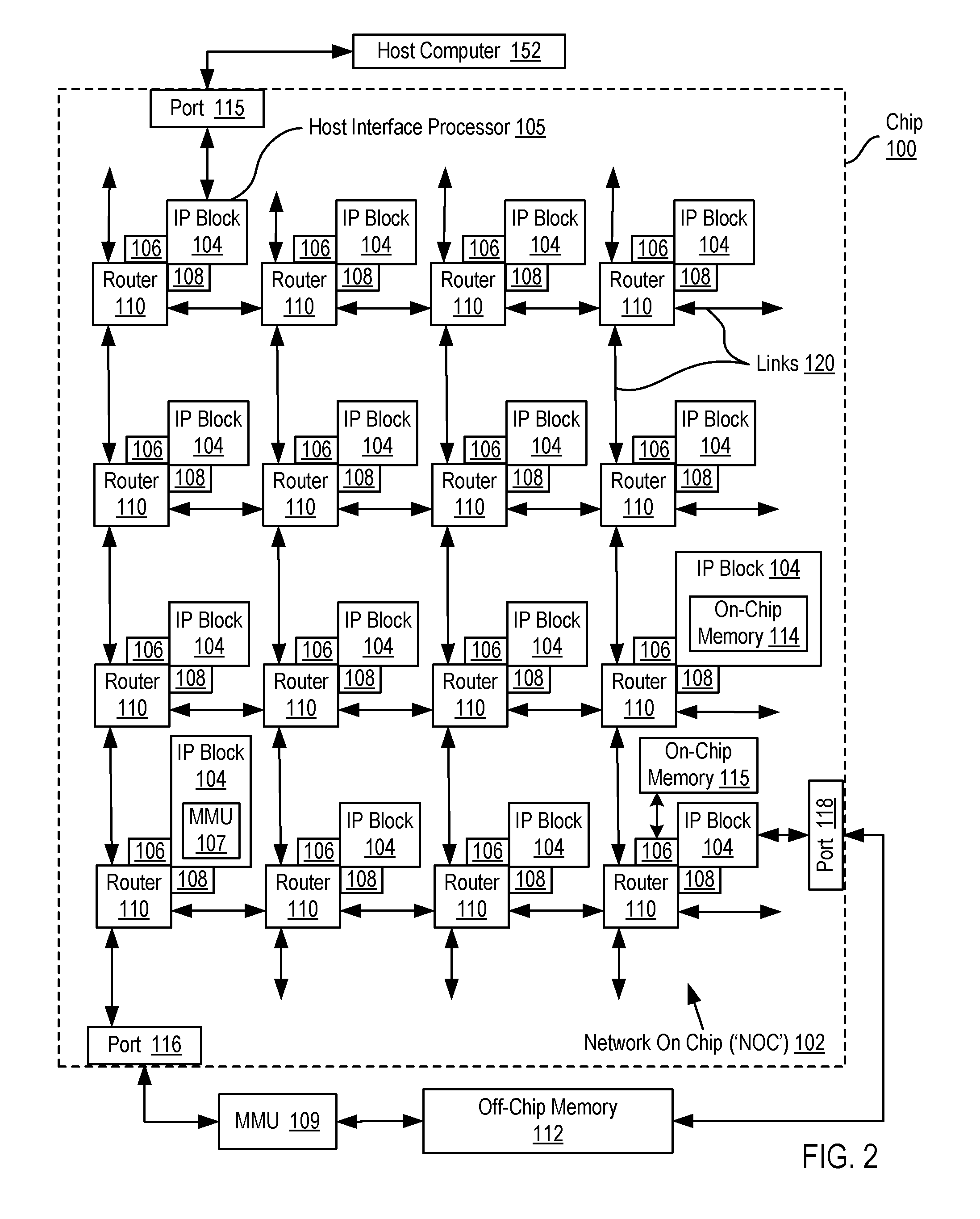

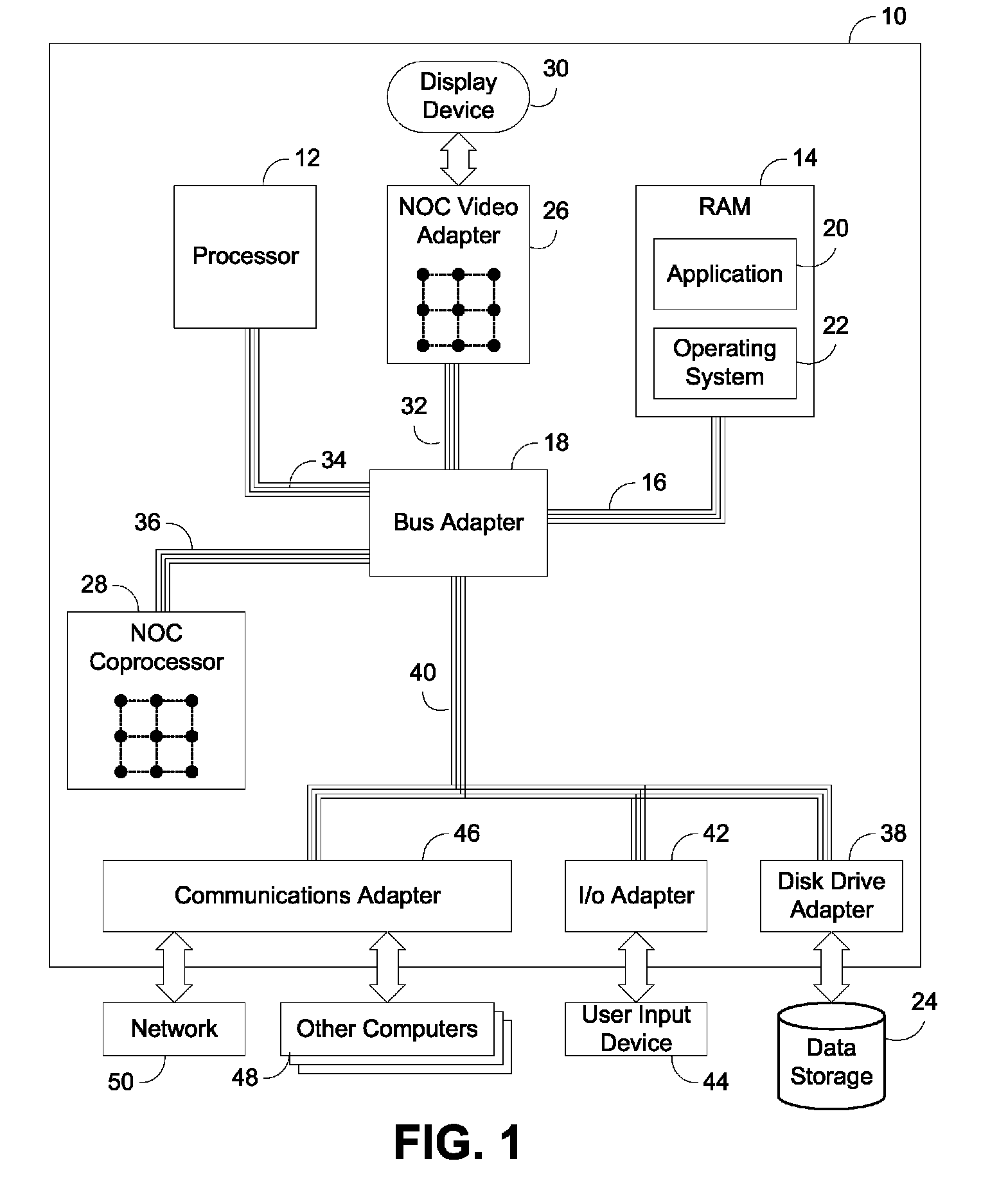

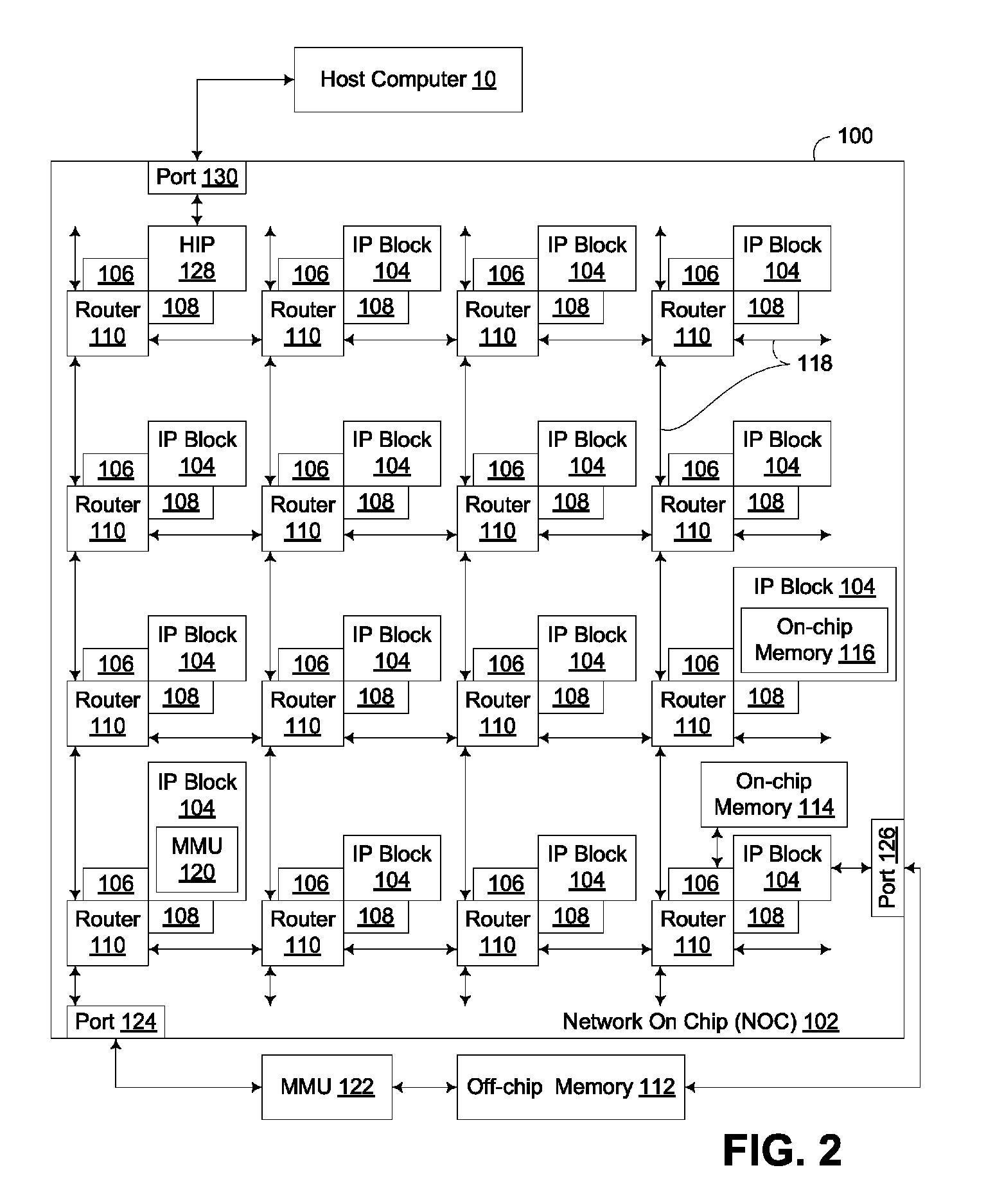

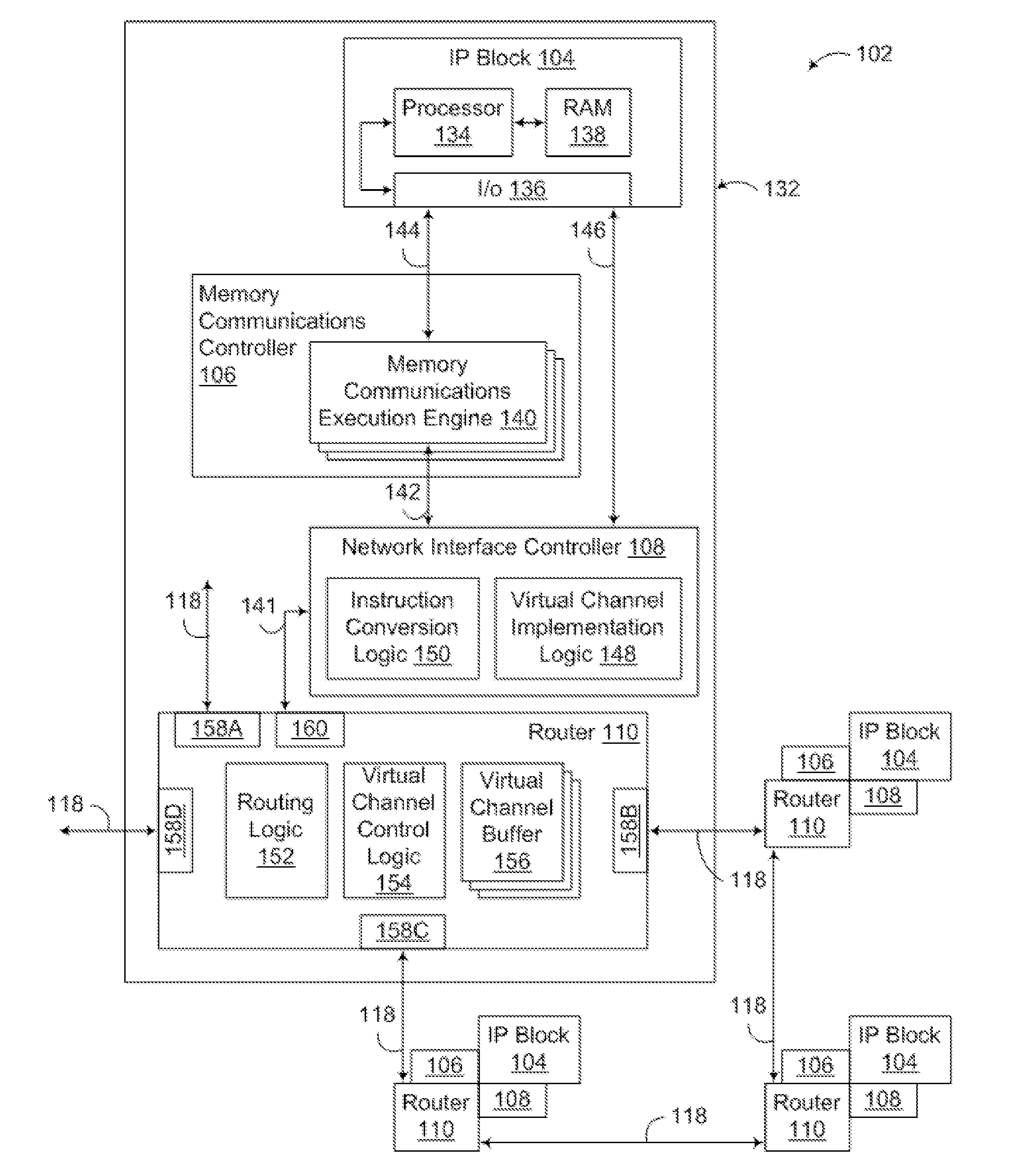

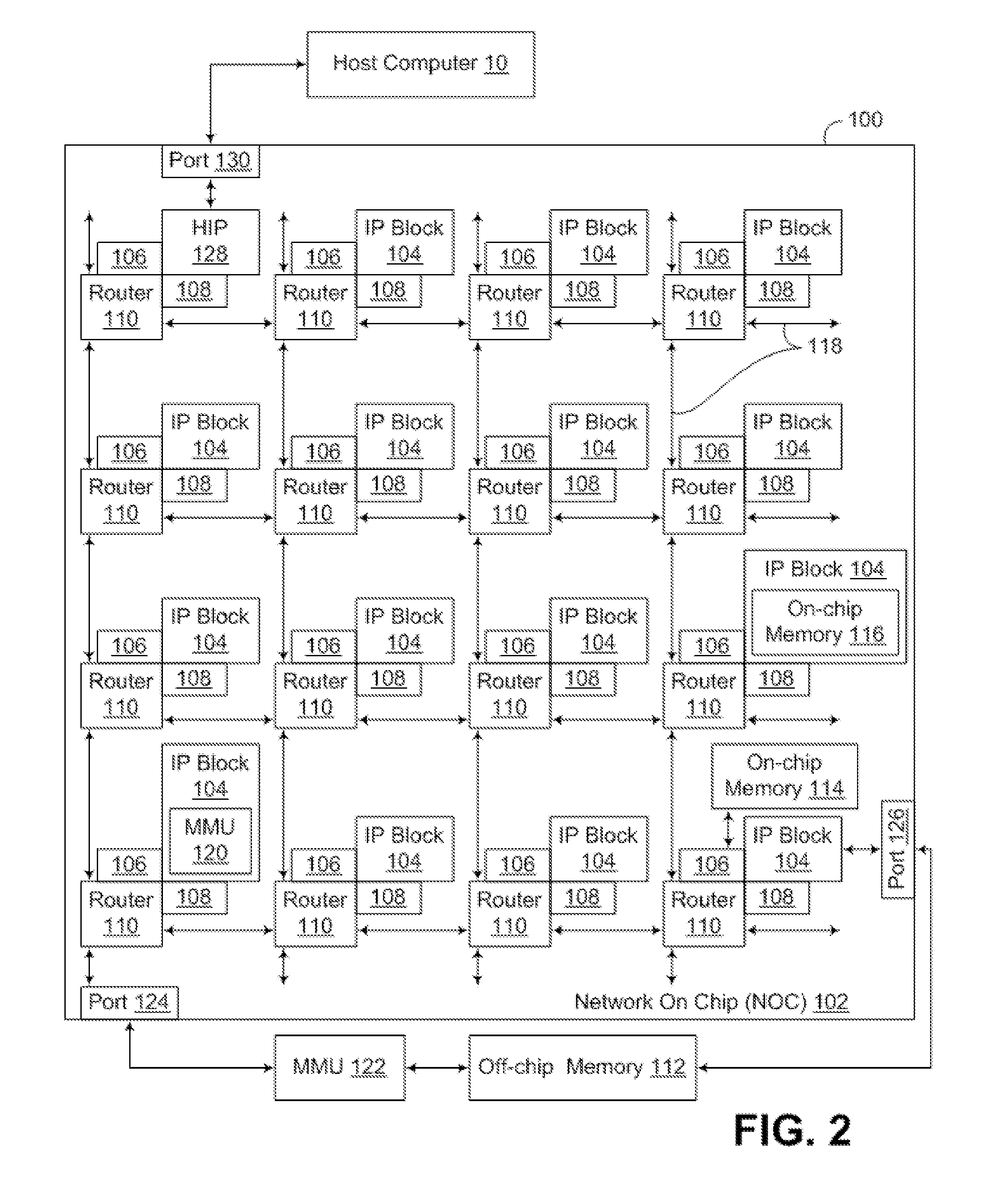

A network on chip (‘NOC’) that includes integrated processor (‘IP’) blocks, routers, memory communications controllers, and network interface controllers, each IP block adapted to a router through a memory communications controller and a network interface controller, a multiplicity of computer processors, each computer processor implementing a plurality of hardware threads of execution; and computer memory, the computer memory organized in pages and operatively coupled to one or more of the computer processors, the computer memory including a set associative cache, the cache comprising cache ways organized in sets, the cache being shared among the hardware threads of execution, each page of computer memory restricted for caching by one replacement vector of a class of replacement vectors to particular ways of the cache, each page of memory further restricted for caching by one or more bits of a replacement vector classification to particular sets of ways of the cache.

Owner:IBM CORP

Instructions and logic to provide advanced paging capabilities for secure enclave page caches

Inactive | US20140297962A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Hardware thread | Processing core

Instructions and logic provide advanced paging capabilities for secure enclave page caches. Embodiments include multiple hardware threads or processing cores, a cache to store secure data for a shared page address allocated to a secure enclave accessible by the hardware threads. A decode stage decodes a first instruction specifying said shared page address as an operand, and execution units mark an entry corresponding to an enclave page cache mapping for the shared page address to block creation of a new translation for either of said first or second hardware threads to access the shared page. A second instruction is decoded for execution, the second instruction specifying said secure enclave as an operand, and execution units record hardware threads currently accessing secure data in the enclave page cache corresponding to the secure enclave, and decrement the recorded number of hardware threads when any of the hardware threads exits the secure enclave.

Owner:INTEL CORP

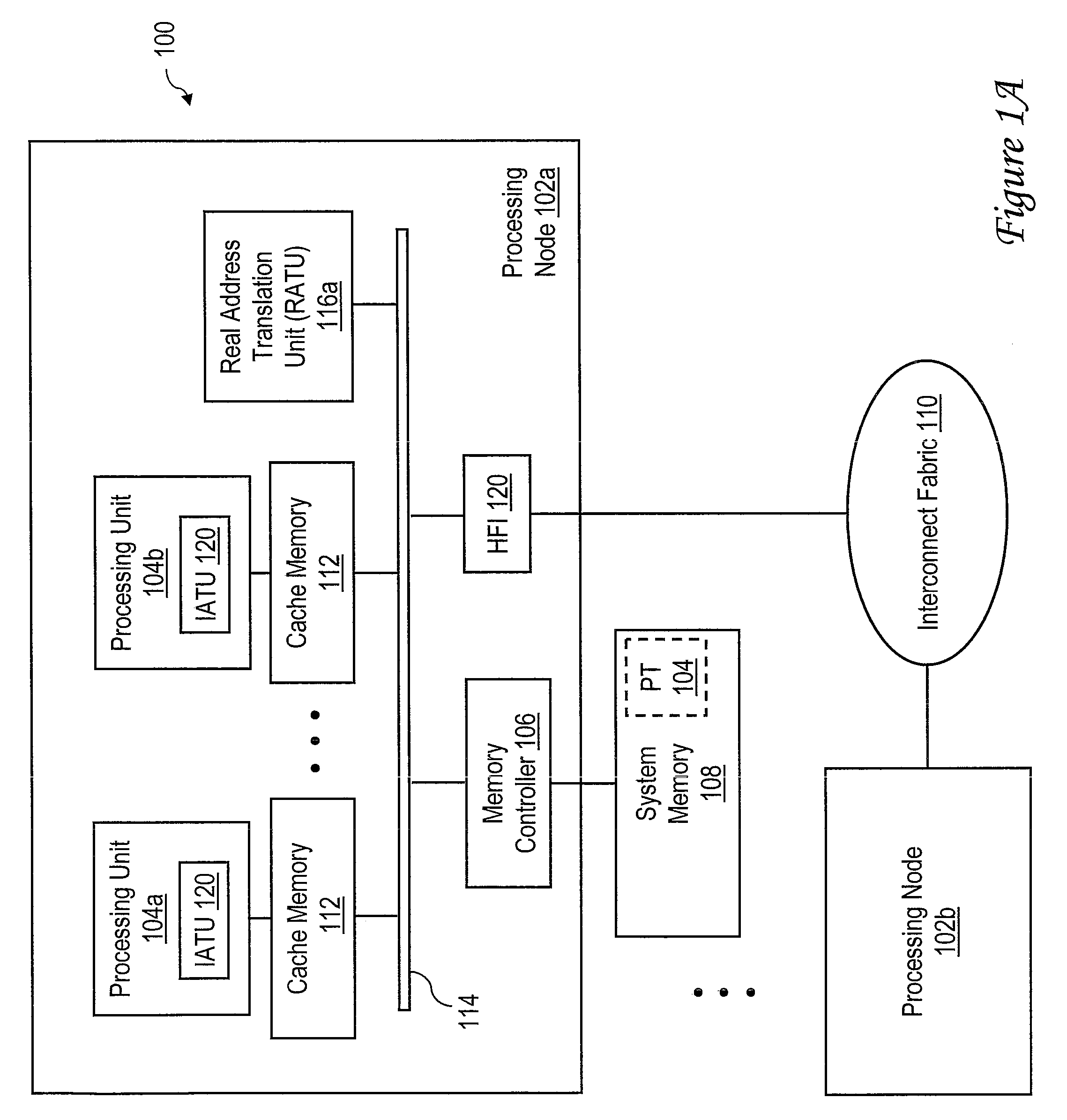

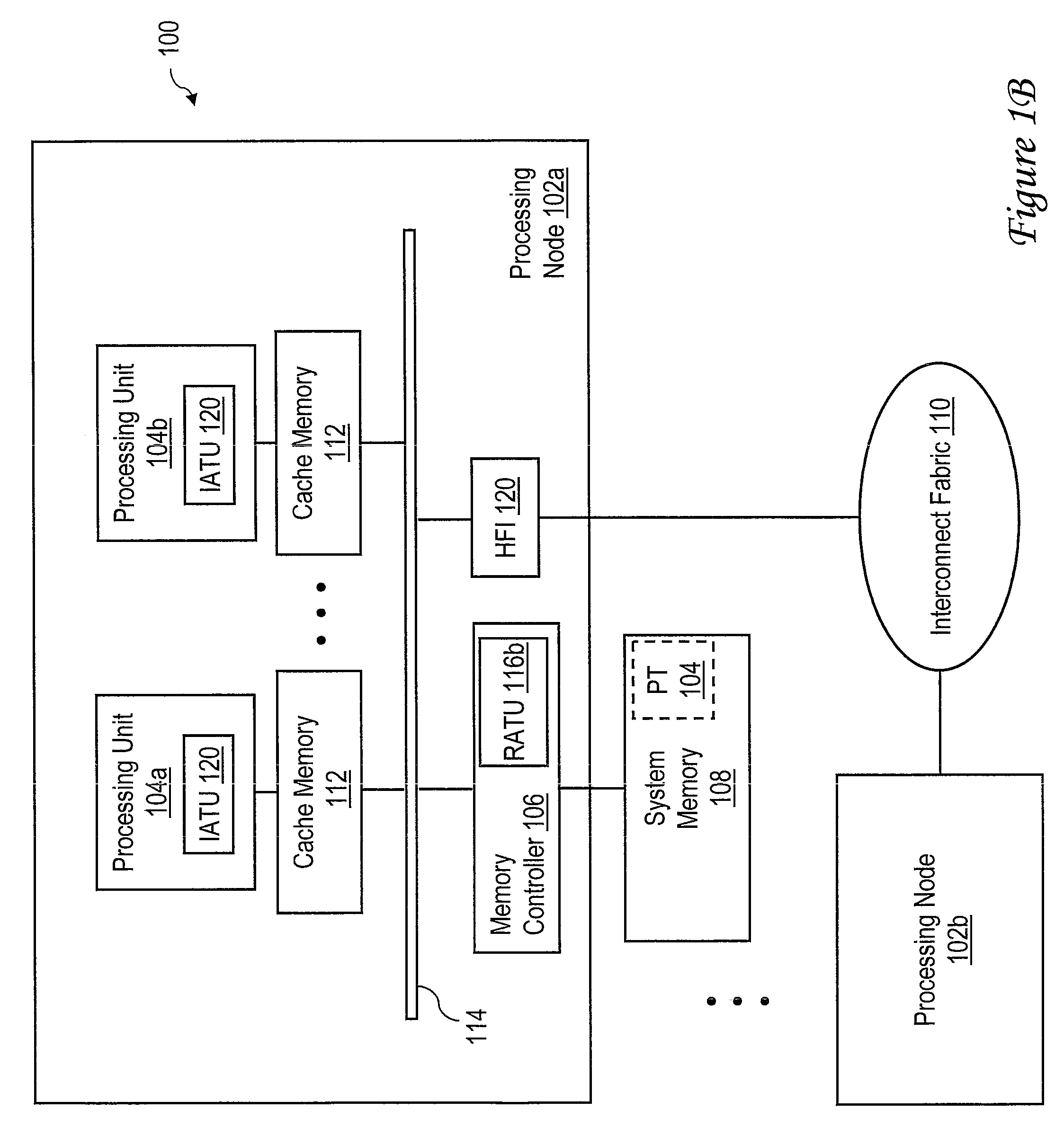

Method, System and Program Product for Address Translation Through an Intermediate Address Space

Inactive | US20090113164A1 | Memory architecture accessing/allocation | Memory systems | Address space | Hardware thread

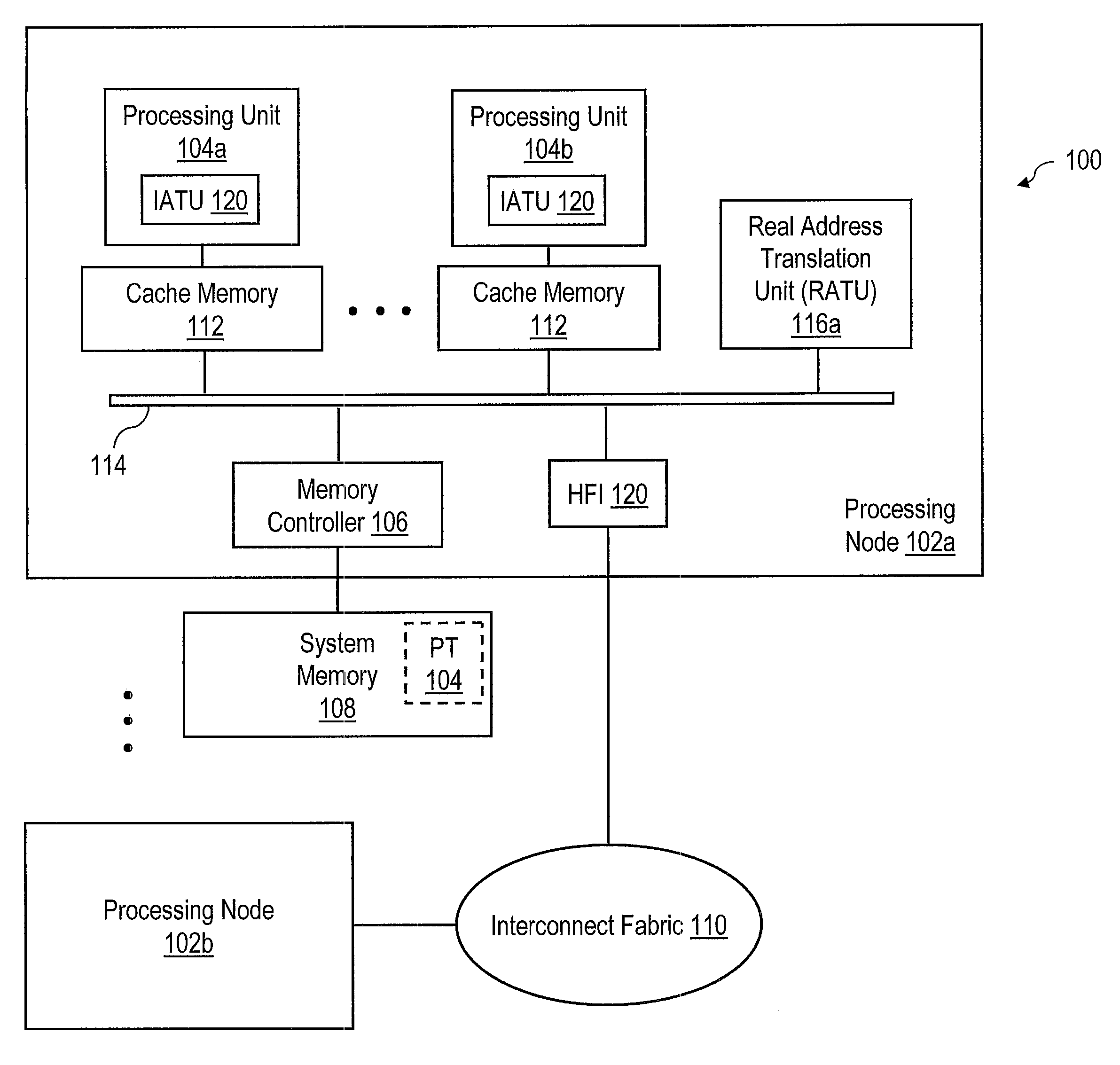

In a data processing system capable of concurrently executing multiple hardware threads of execution, an intermediate address translation unit in a processing unit translates an effective address for a memory access into an intermediate address. A cache memory is accessed utilizing the intermediate address. In response to a miss in cache memory, the intermediate address is translated into a real address by a real address translation unit that performs address translation for multiple hardware threads of execution. The system memory is accessed with the real address.

Owner:IBM CORP
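The two-stage translation in the abstract above (effective to intermediate address, then intermediate to real address only on a cache miss) can be sketched as follows. The 4 KiB page size, the dictionary page tables and the `Memory` class are assumptions made for illustration; the abstract does not give these details.

```python
PAGE_SIZE = 4096  # assumed page size


def translate(addr, page_table):
    """Map an address through a page table, keeping the page offset."""
    page, offset = divmod(addr, PAGE_SIZE)
    return page_table[page] * PAGE_SIZE + offset


class Memory:
    def __init__(self, ea_to_ia, ia_to_ra, ram):
        self.ea_to_ia = ea_to_ia      # effective page -> intermediate page
        self.ia_to_ra = ia_to_ra      # intermediate page -> real page
        self.ram = ram                # real address -> value
        self.cache = {}               # indexed by intermediate address
        self.real_translations = 0    # counts second-stage translations

    def load(self, effective_addr):
        ia = translate(effective_addr, self.ea_to_ia)
        if ia in self.cache:
            # Cache hit: no real-address translation is needed.
            return self.cache[ia]
        # Cache miss: translate to a real address and access system memory.
        ra = translate(ia, self.ia_to_ra)
        self.real_translations += 1
        value = self.ram[ra]
        self.cache[ia] = value
        return value
```

Because the cache is indexed by intermediate address, the second translation stage sits off the hit path and can be shared by multiple hardware threads, as the abstract describes.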

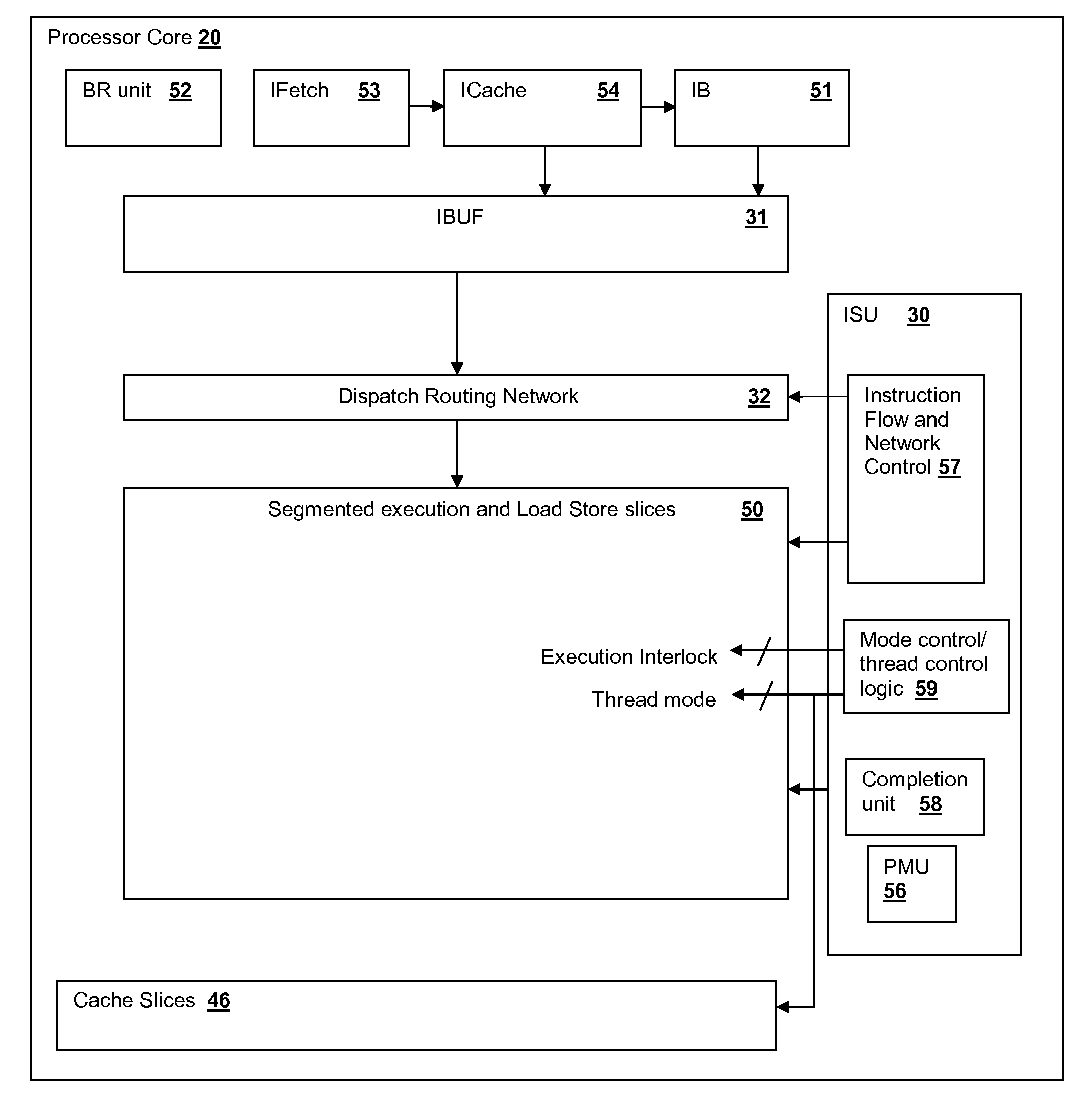

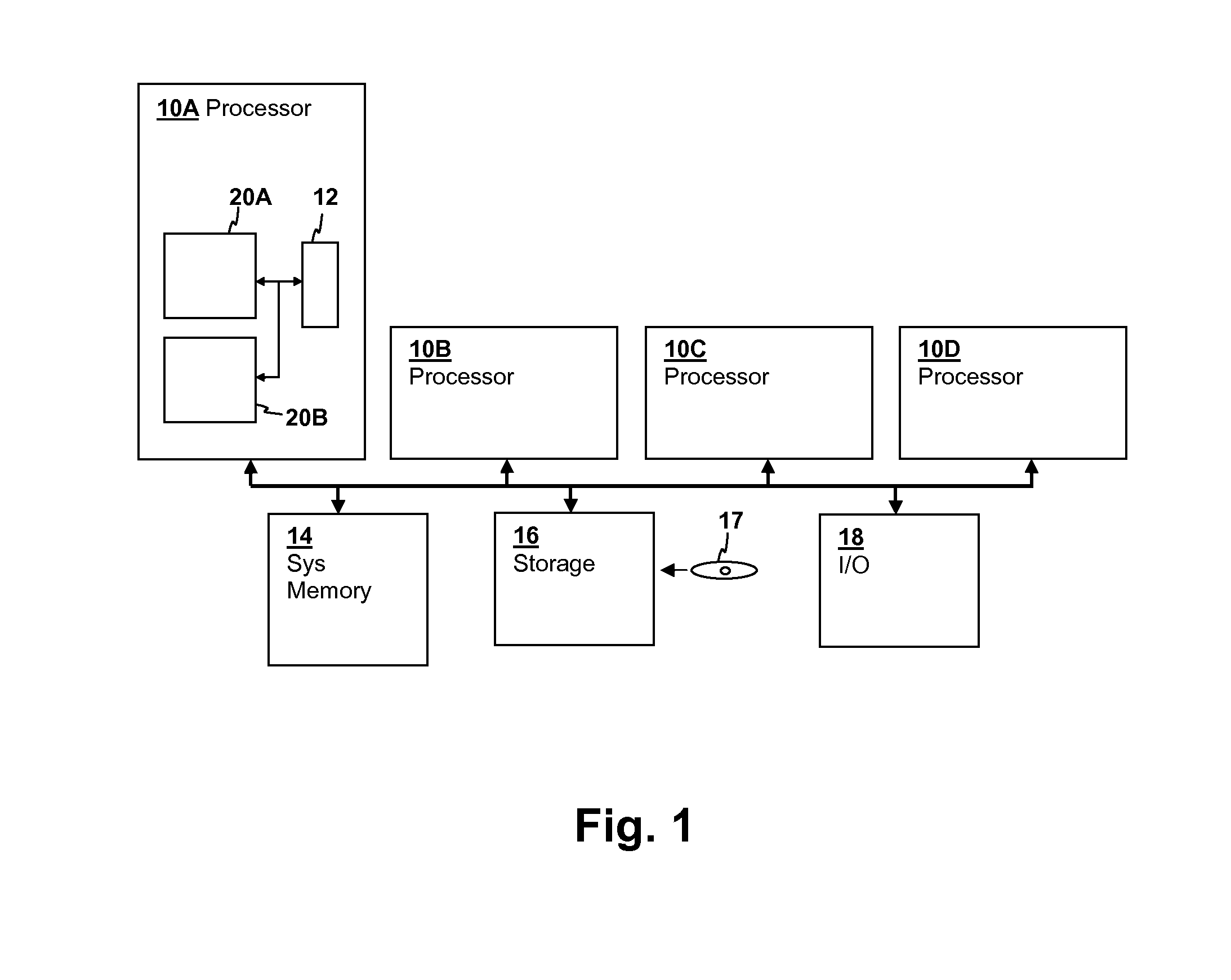

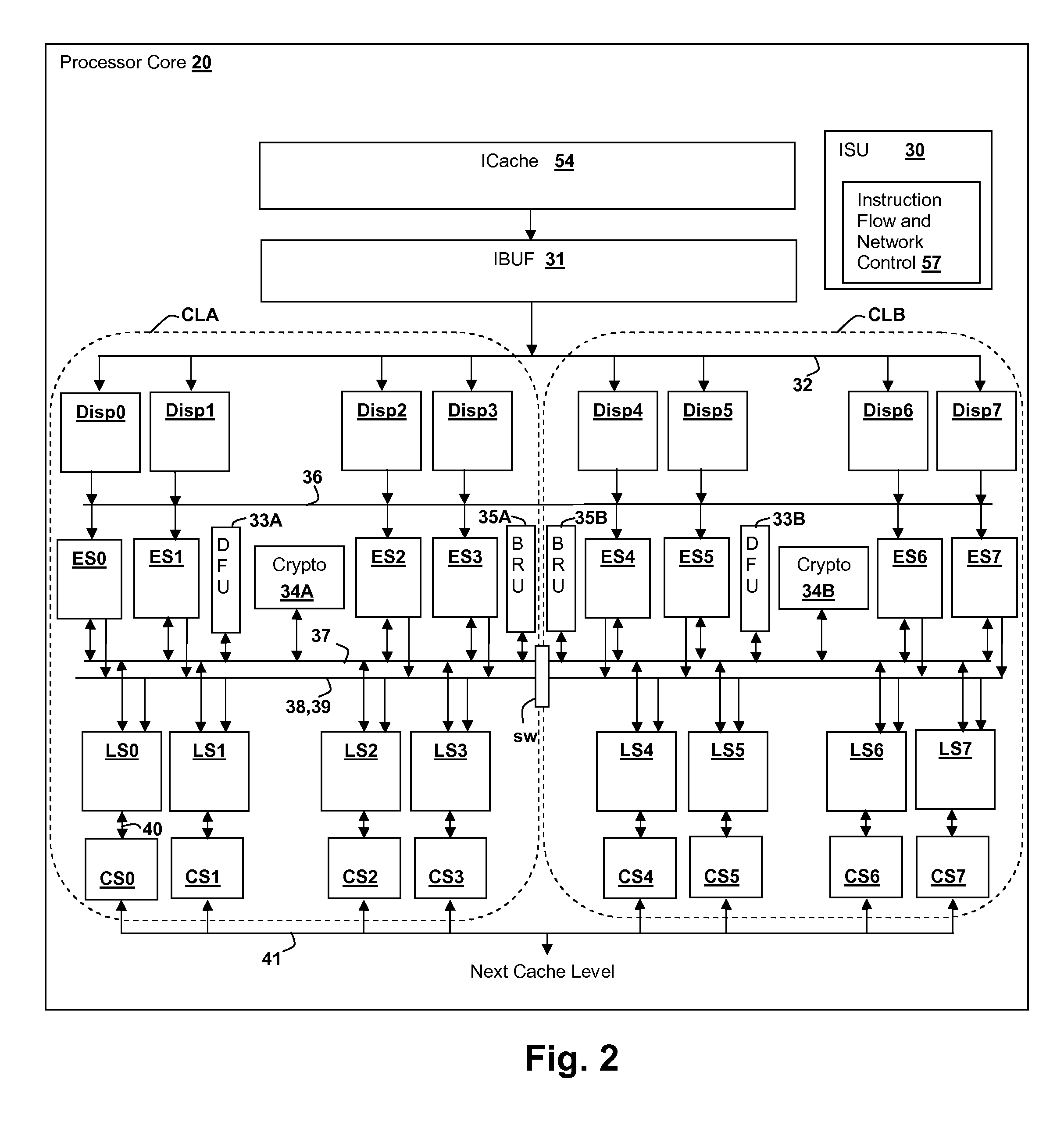

Reconfigurable parallel execution and load-store slice processing methods

Active | US20160202991A1 | Memory architecture accessing/allocation | Instruction analysis | Hardware thread | Control signal

A method of operating a processor core having multiple parallel instruction execution slices and coupled to multiple dispatch queues by a dispatch routing network provides flexible and efficient use of internal resources. The configuration of the execution slices is selectable so that capabilities of the processor core can be adjusted according to execution requirements for the instruction streams. Two or more execution slices can be combined as super-slices to handle wider data, wider operands and / or vector operations, according to one or more mode control signals that also serve as configuration control signals. The mode control signal is also used to partition clusters of the execution slices within the processor core according to whether single-threaded or multi-threaded operation is selected, and additionally according to the number of hardware threads that are active.

Owner:IBM CORP

Multithreaded processor architecture with operational latency hiding

Active | US20060230408A1 | High of latency | Hide latency | Error detection/correction | Runtime instruction translation | Hardware thread | Logical operations

A method and processor architecture for achieving a high level of concurrency and latency hiding in an “infinite-thread processor architecture” with a limited number of hardware threads is disclosed. A preferred embodiment defines “fork” and “join” instructions for spawning new context-switched threads. Context switching is used to hide the latency of both memory-access operations (i.e., loads and stores) and arithmetic / logical operations. When an operation executing in a thread incurs a latency having the potential to delay the instruction pipeline, the latency is hidden by performing a context switch to a different thread. When the result of the operation becomes available, a context switch back to that thread is performed to allow the thread to continue.

Owner:IBM CORP

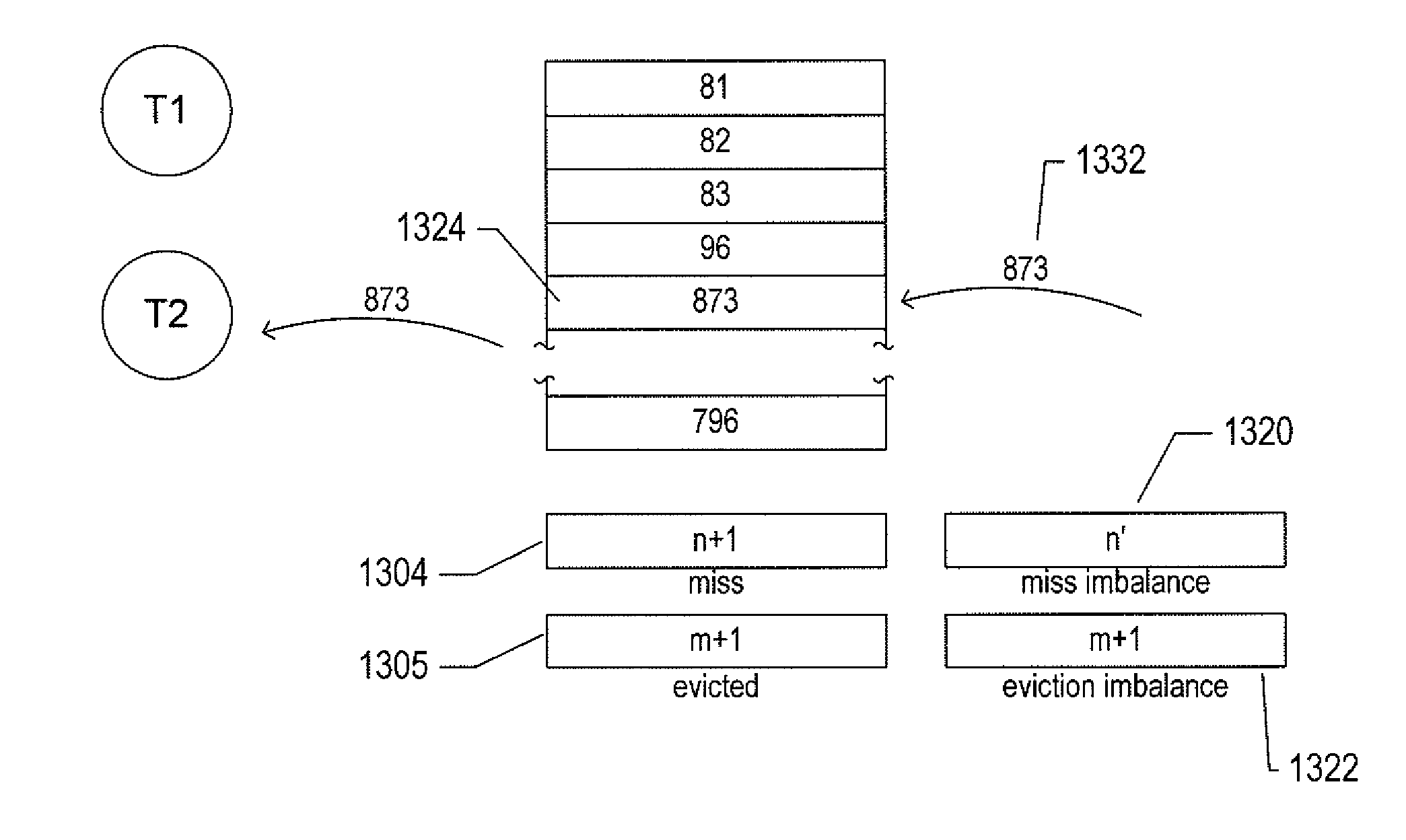

Performance-imbalance-monitoring processor features

The current application is directed to architected hardware support within computer processors for detecting and monitoring various types of potential performance imbalances with respect to simultaneously executing hardware threads in simultaneous multi-threading (“SMT”) processors and SMT-processor cores. The architected hardware support may include various types of performance-imbalance-monitoring registers that accumulate indications of performance imbalances and that can be used, by performance-monitoring software and by human analysts to detect performance-degrading conflicts between simultaneously executing hardware threads. Such conflicts can be ameliorated by changing the scheduling of virtual machines, tasks, and other computational entities, by redesigning and re-implementing all or portions of performance-limited and performance-degrading applications, by altering resource-allocation strategies, and by other means. In addition, performance imbalance detection and monitoring can be used to provide accurate, computational-throughput-based accounting in cloud-computing environments.

Owner:VMWARE INC

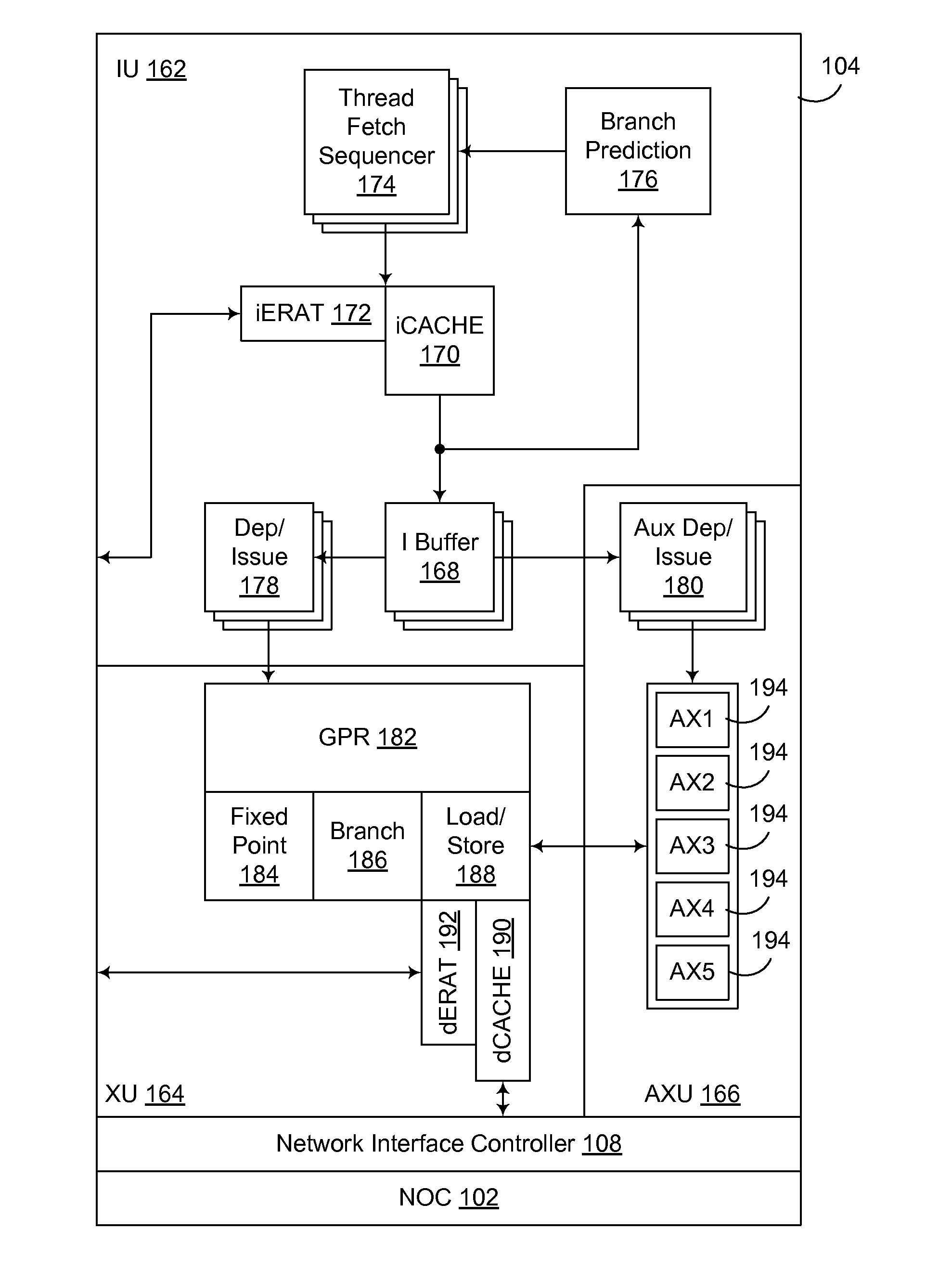

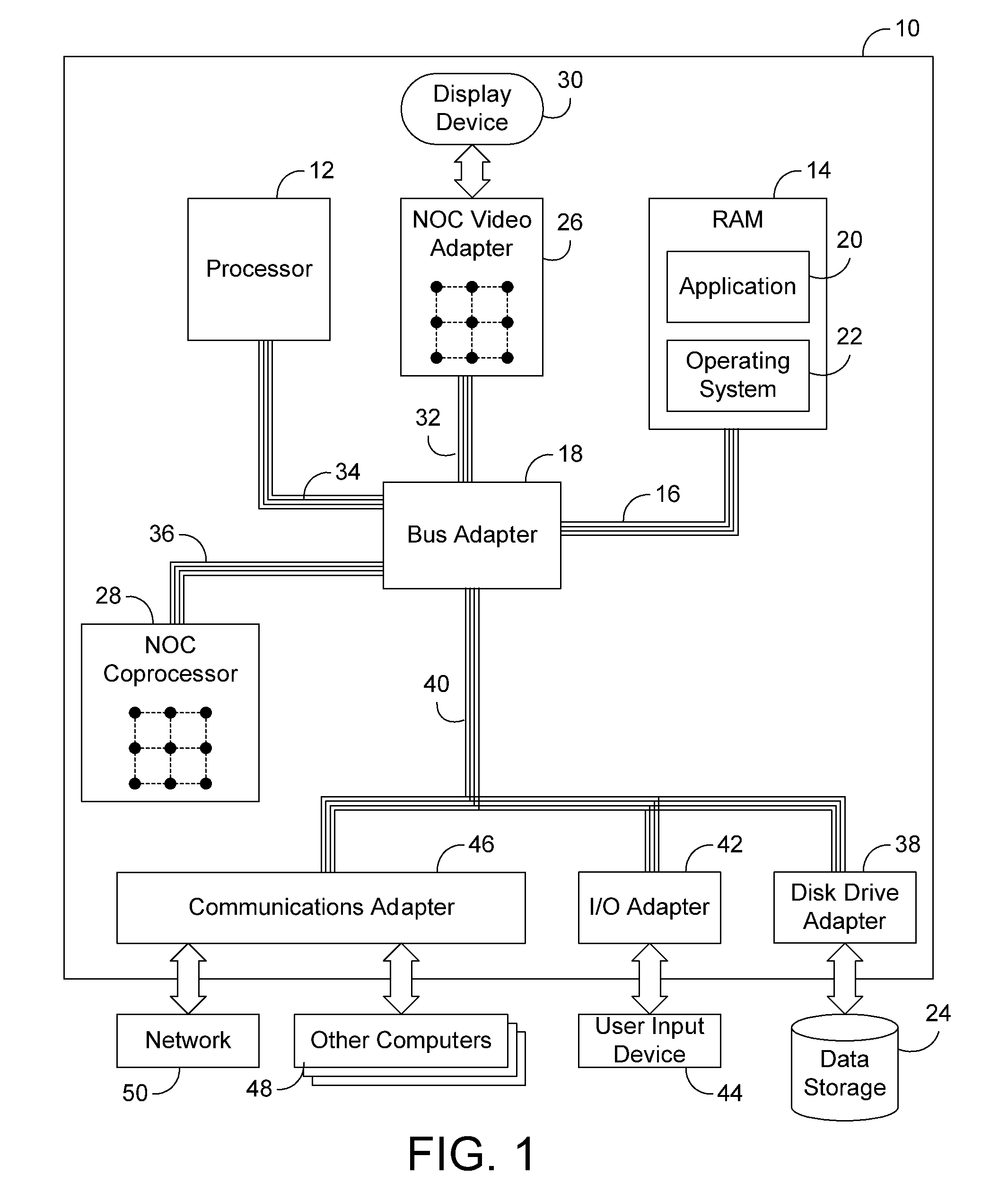

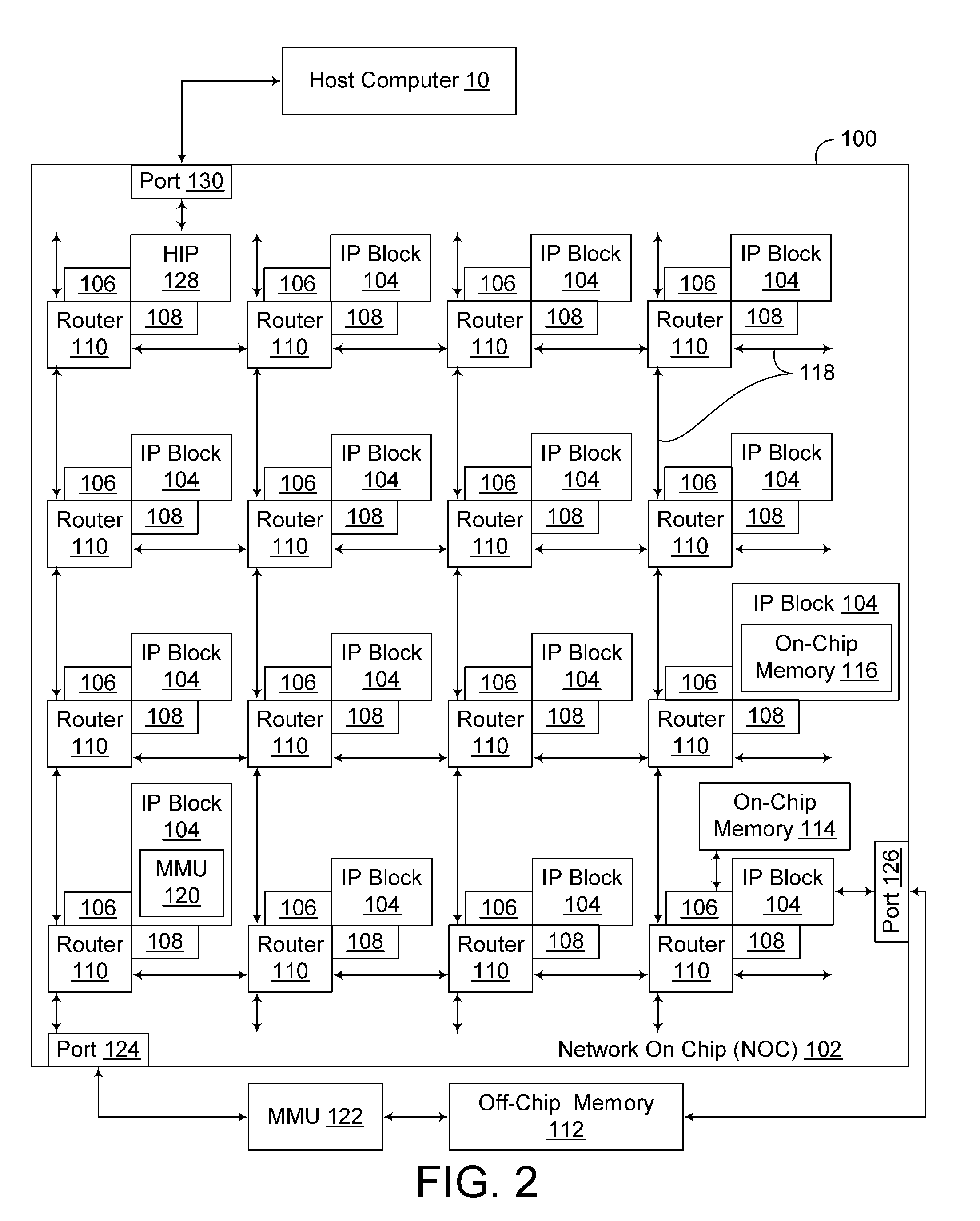

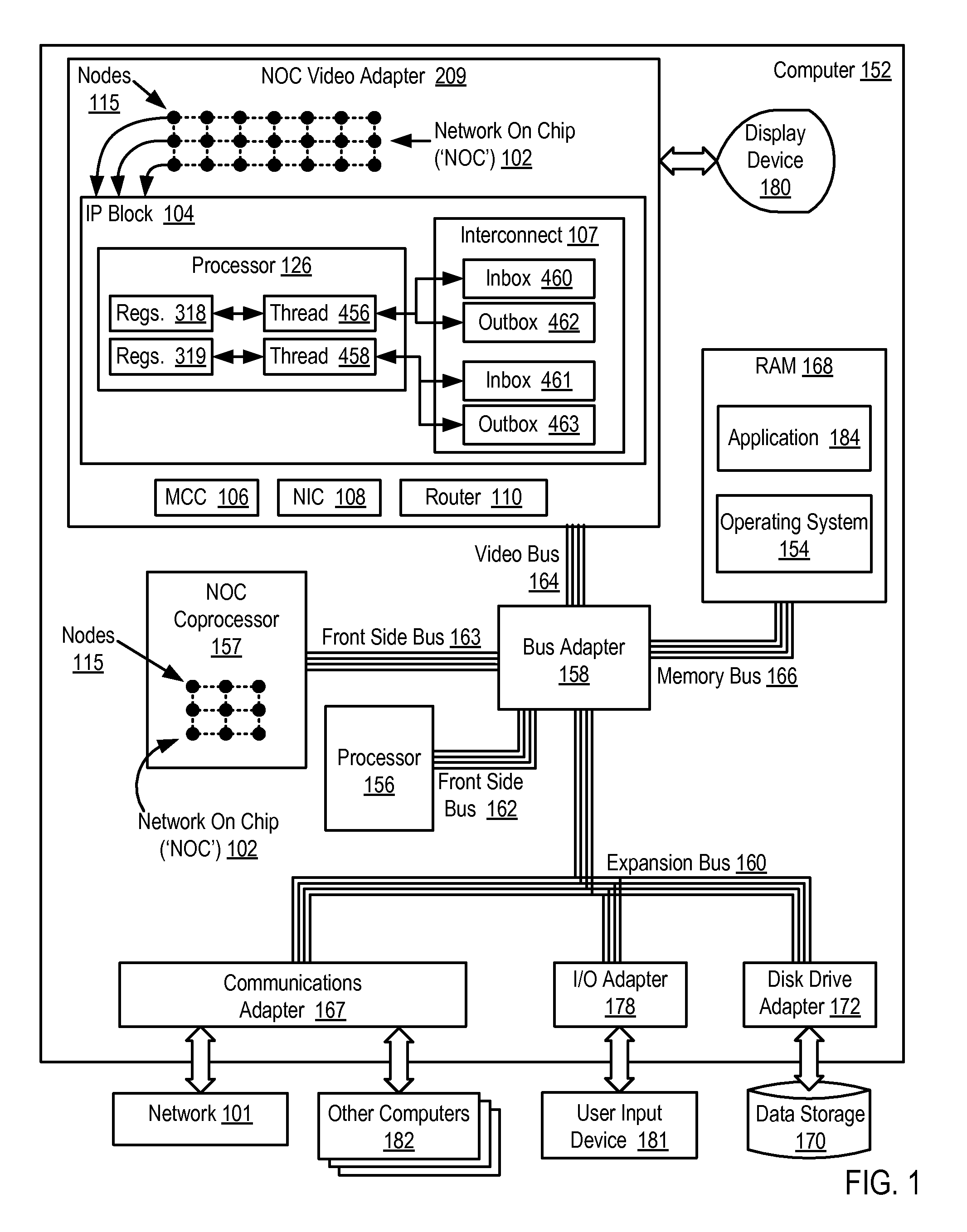

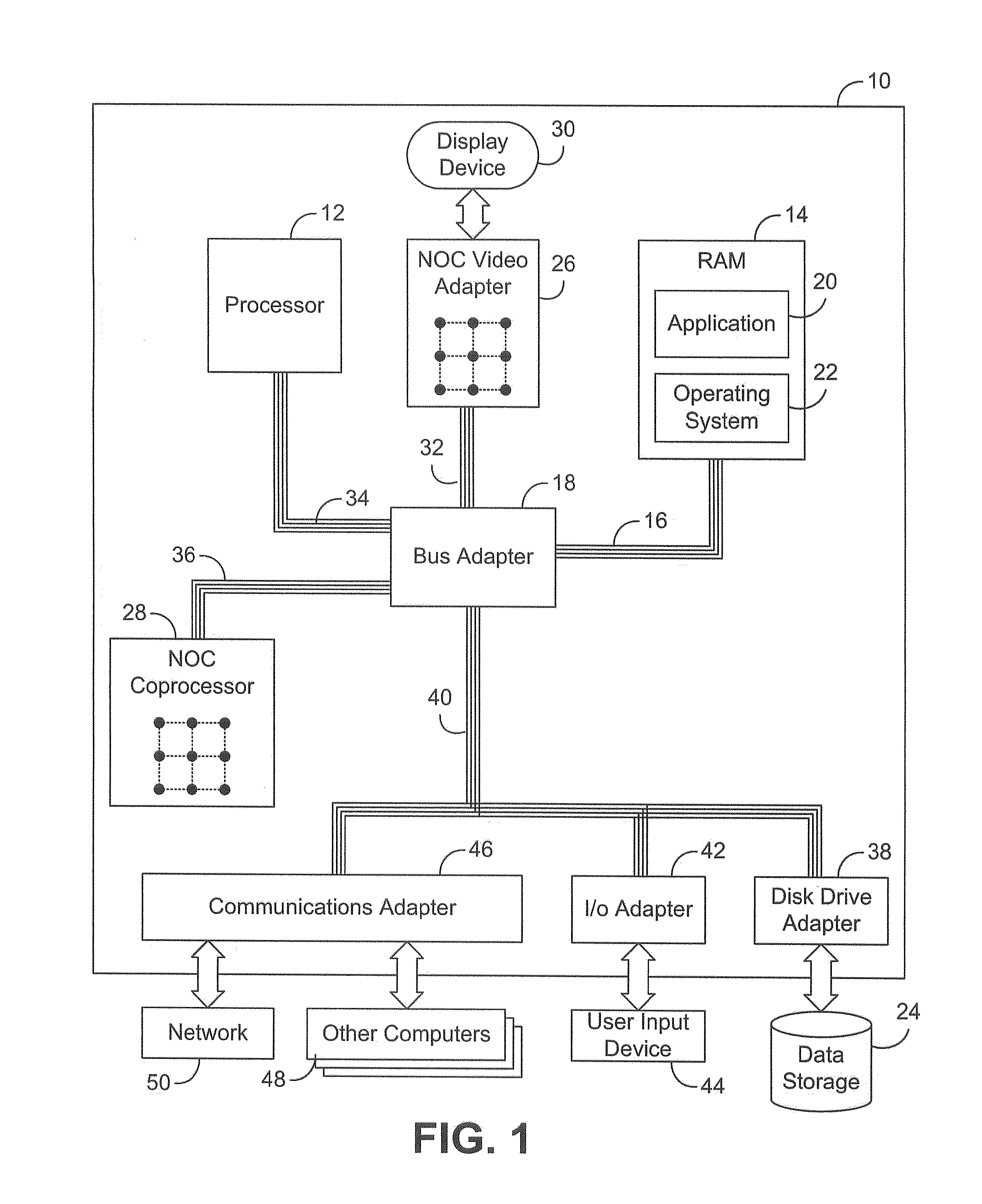

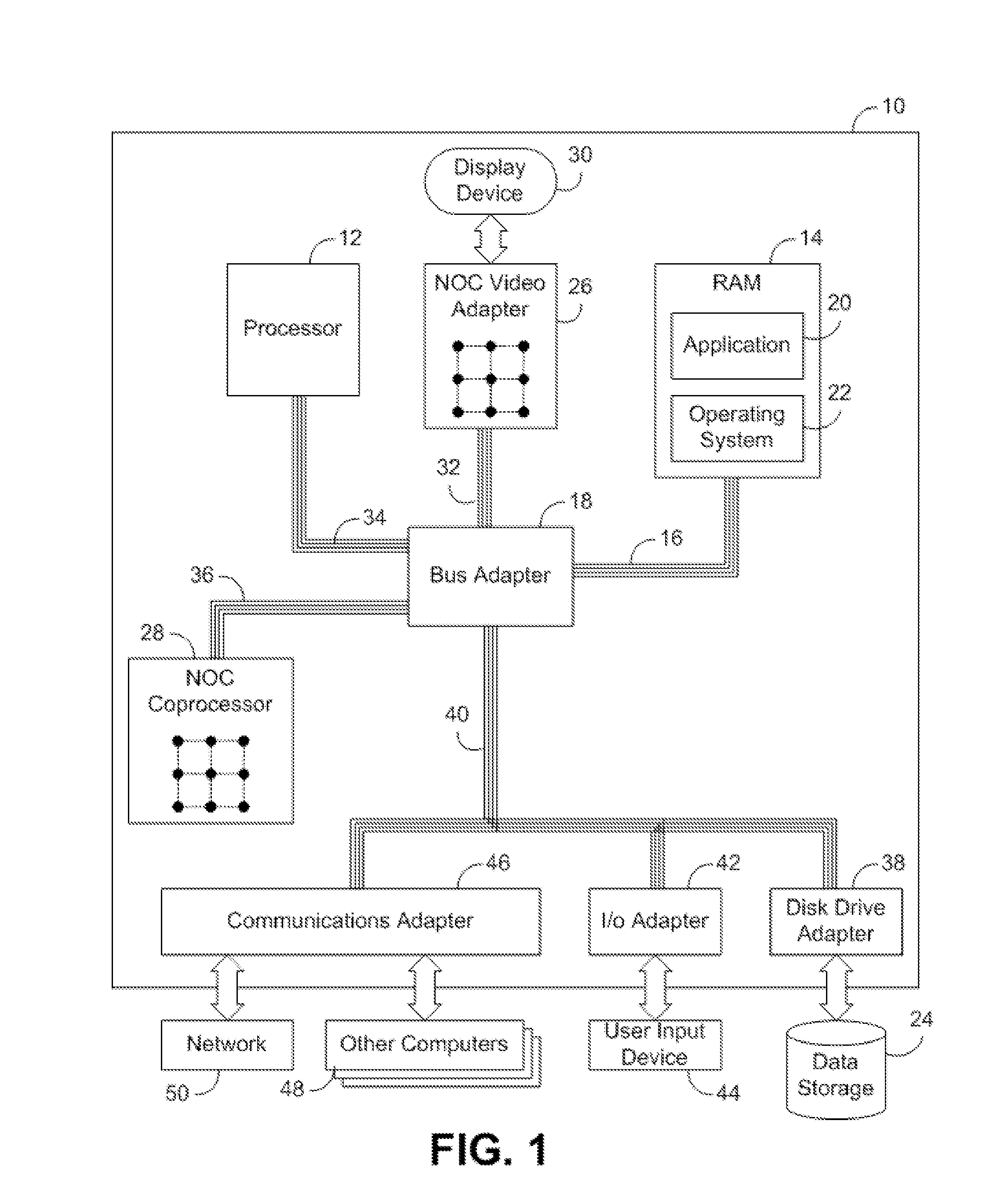

Network On Chip With Low Latency, High Bandwidth Application Messaging Interconnects That Abstract Hardware Inter-Thread Data Communications Into An Architected State of A Processor

Active | US20090282214A1 | Lower latency | High bandwidth | Program control using wired connections | General purpose stored program computer | Hardware thread | High bandwidth

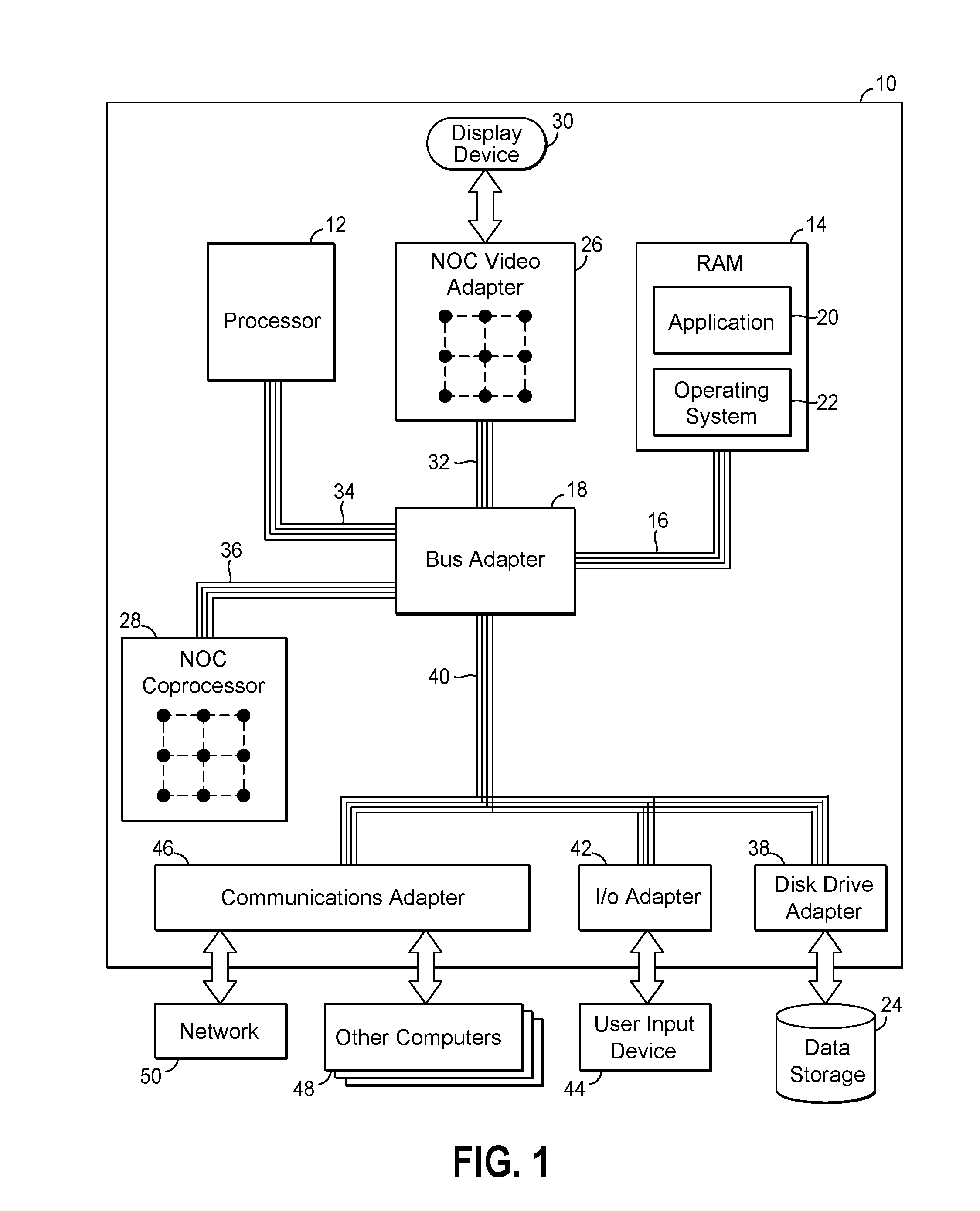

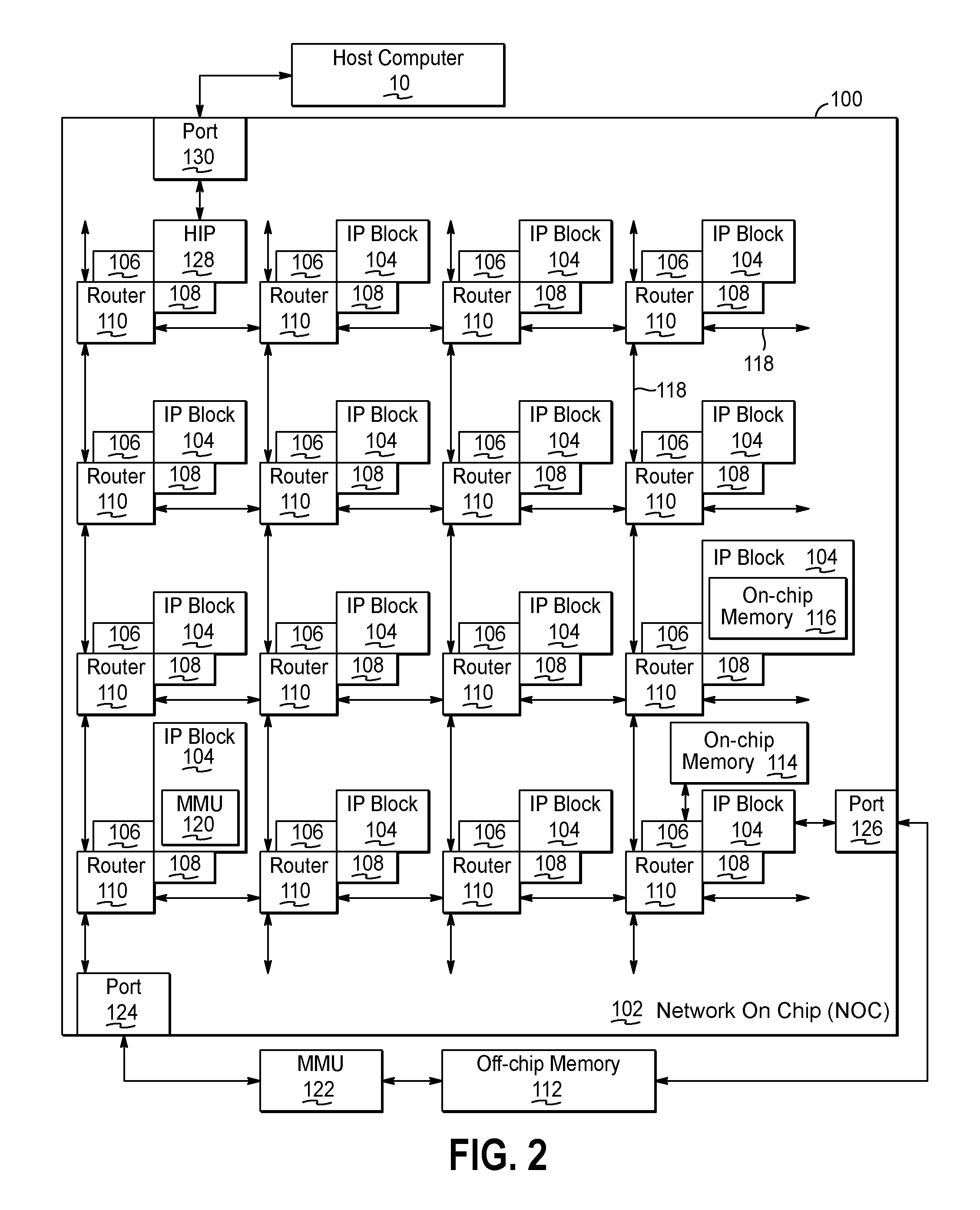

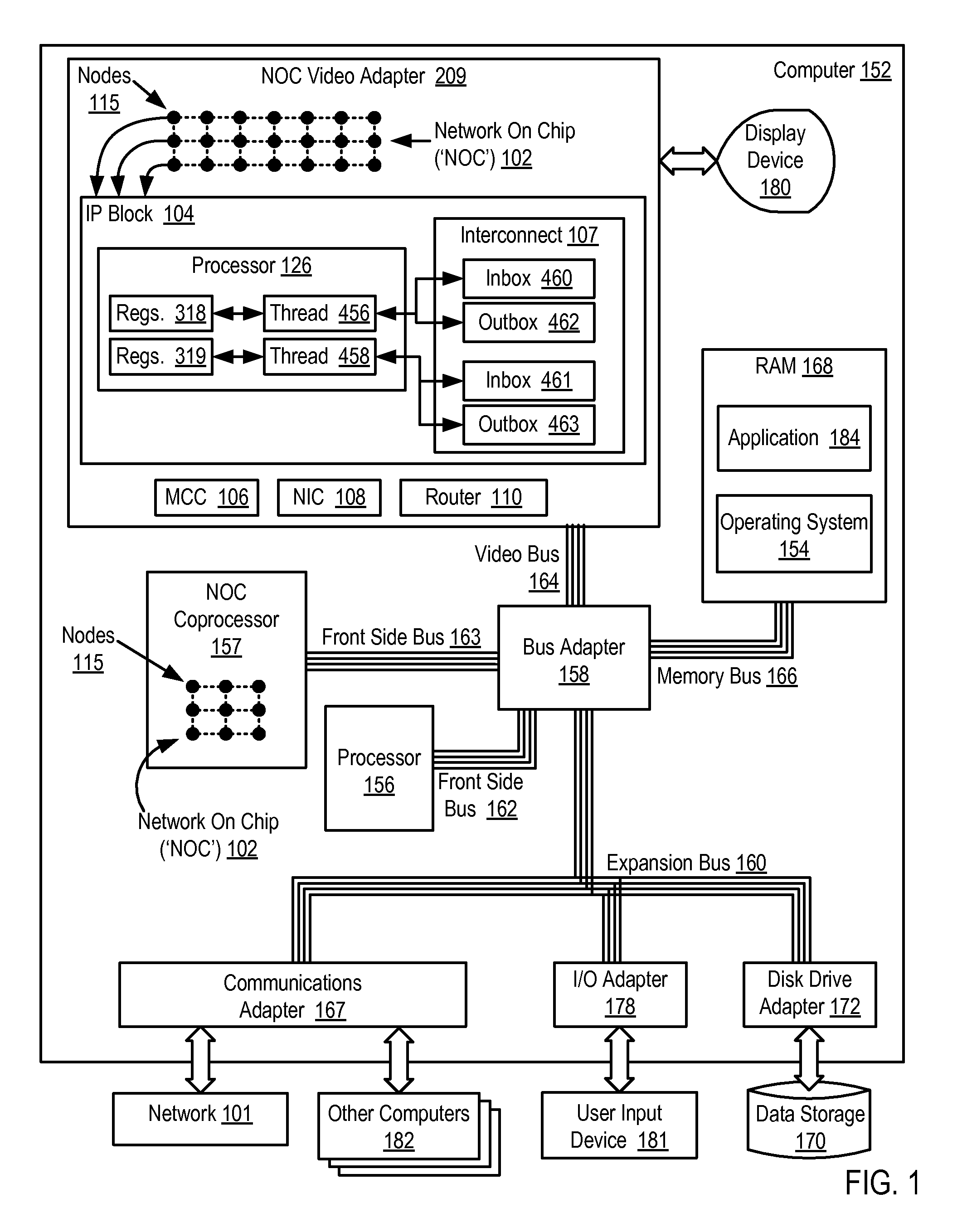

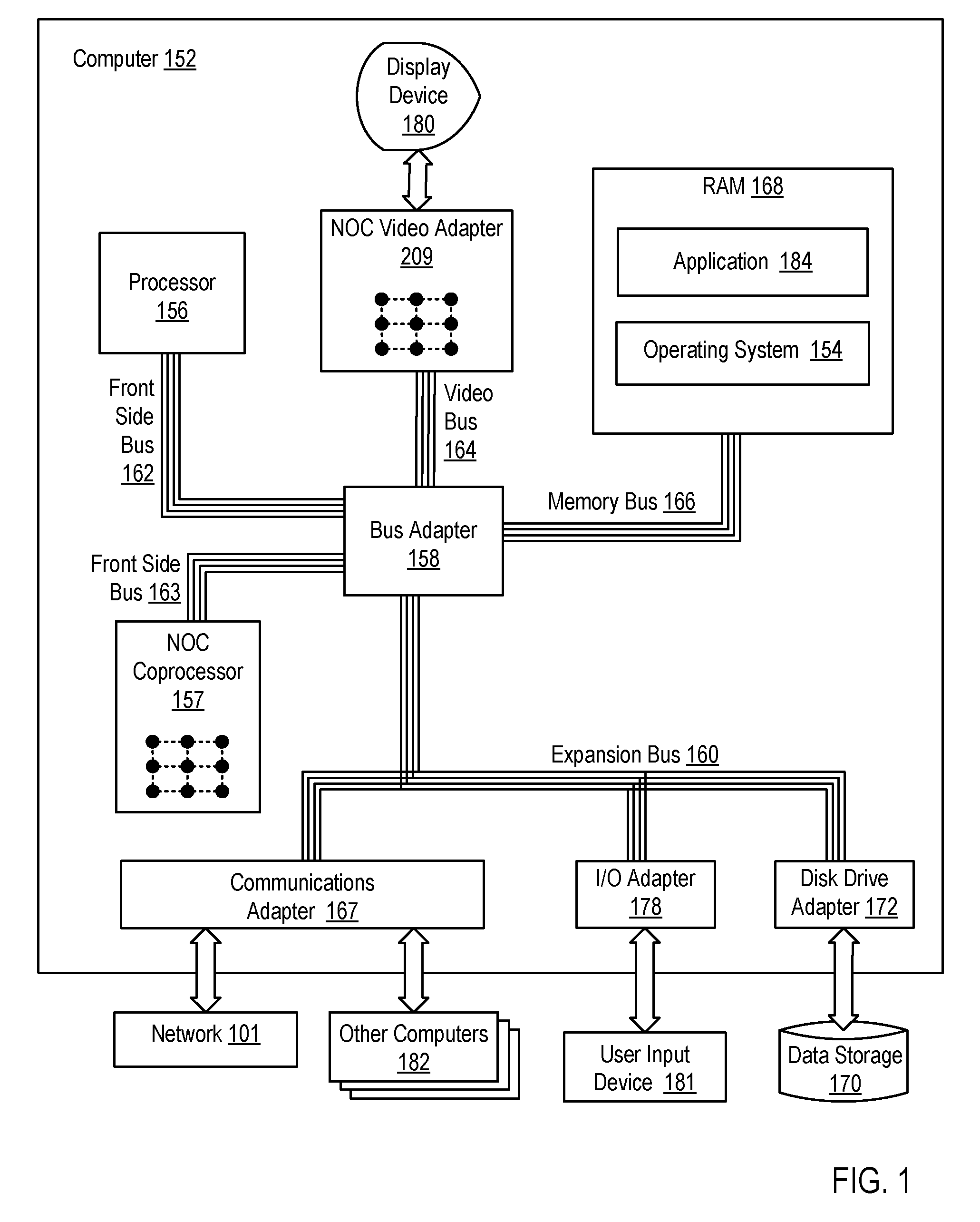

Data processing on a network on chip (‘NOC’) that includes integrated processor (‘IP’) blocks, each of a plurality of the IP blocks including at least one computer processor, each such computer processor implementing a plurality of hardware threads of execution; low latency, high bandwidth application messaging interconnects; memory communications controllers; network interface controllers; and routers; each of the IP blocks adapted to a router through a separate one of the low latency, high bandwidth application messaging interconnects, a separate one of the memory communications controllers, and a separate one of the network interface controllers; each application messaging interconnect abstracting into an architected state of each processor, for manipulation by computer programs executing on the processor, hardware inter-thread communications among the hardware threads of execution; each memory communications controller controlling communication between an IP block and memory; each network interface controller controlling inter-IP block communications through routers.

Owner:IBM CORP

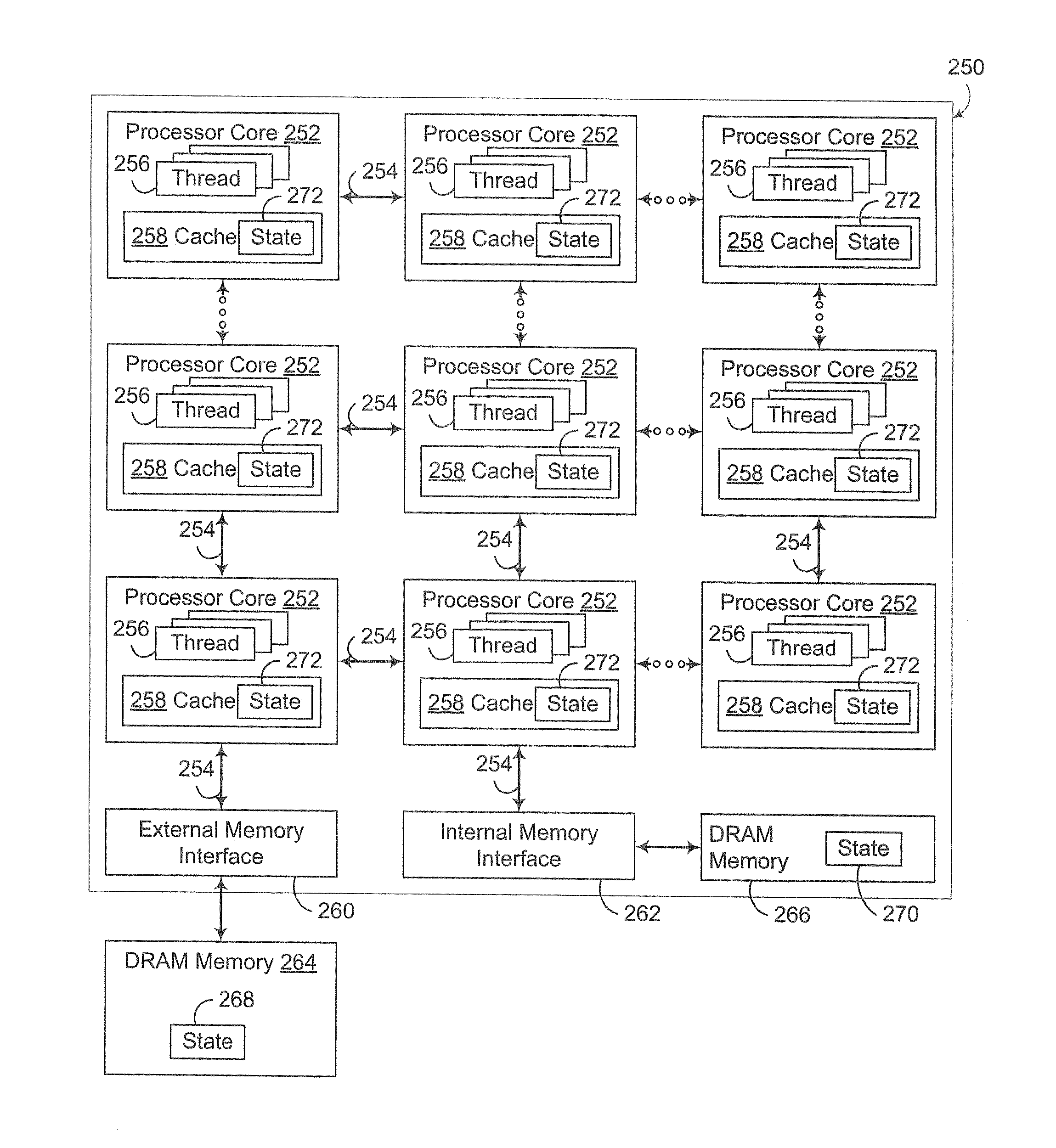

Propagating shared state changes to multiple threads within a multithreaded processing environment

Inactive | US20110320719A1 | Preserving memory bandwidth | Improve performance | Memory addressing/allocation/relocation | 3D-image rendering | Hardware thread | Memory bandwidth

A circuit arrangement and method make state changes to shared state data in a highly multithreaded environment by propagating or streaming the changes to multiple parallel hardware threads of execution in the multithreaded environment using an on-chip communications network and without attempting to access any copy of the shared state data in a shared memory to which the parallel threads of execution are also coupled. Through the use of an on-chip communications network, changes to the shared state data may be communicated quickly and efficiently to multiple threads of execution, enabling those threads to locally update their local copies of the shared state. Furthermore, by avoiding attempts to access a shared memory, the interface to the shared memory is not overloaded with concurrent access attempts, thus preserving memory bandwidth for other activities and reducing memory latency. Particularly for larger shared states, propagating the changes, rather than an entire shared state, further improves performance by reducing the amount of data communicated over the on-chip communications network.

Owner:IBM CORP

Low latency variable transfer network for fine grained parallelism of virtual threads across multiple hardware threads

Inactive | US20130159669A1 | Reduce transfer | Program control using wired connections | General purpose stored program computer | Hardware thread | Processing core

A method and circuit arrangement utilize a low latency variable transfer network between the register files of multiple processing cores in a multi-core processor chip to support fine grained parallelism of virtual threads across multiple hardware threads. The communication of a variable over the variable transfer network may be initiated by a move from a local register in a register file of a source processing core to a variable register that is allocated to a destination hardware thread in a destination processing core, so that the destination hardware thread can then move the variable from the variable register to a local register in the destination processing core.

Owner:IBM CORP

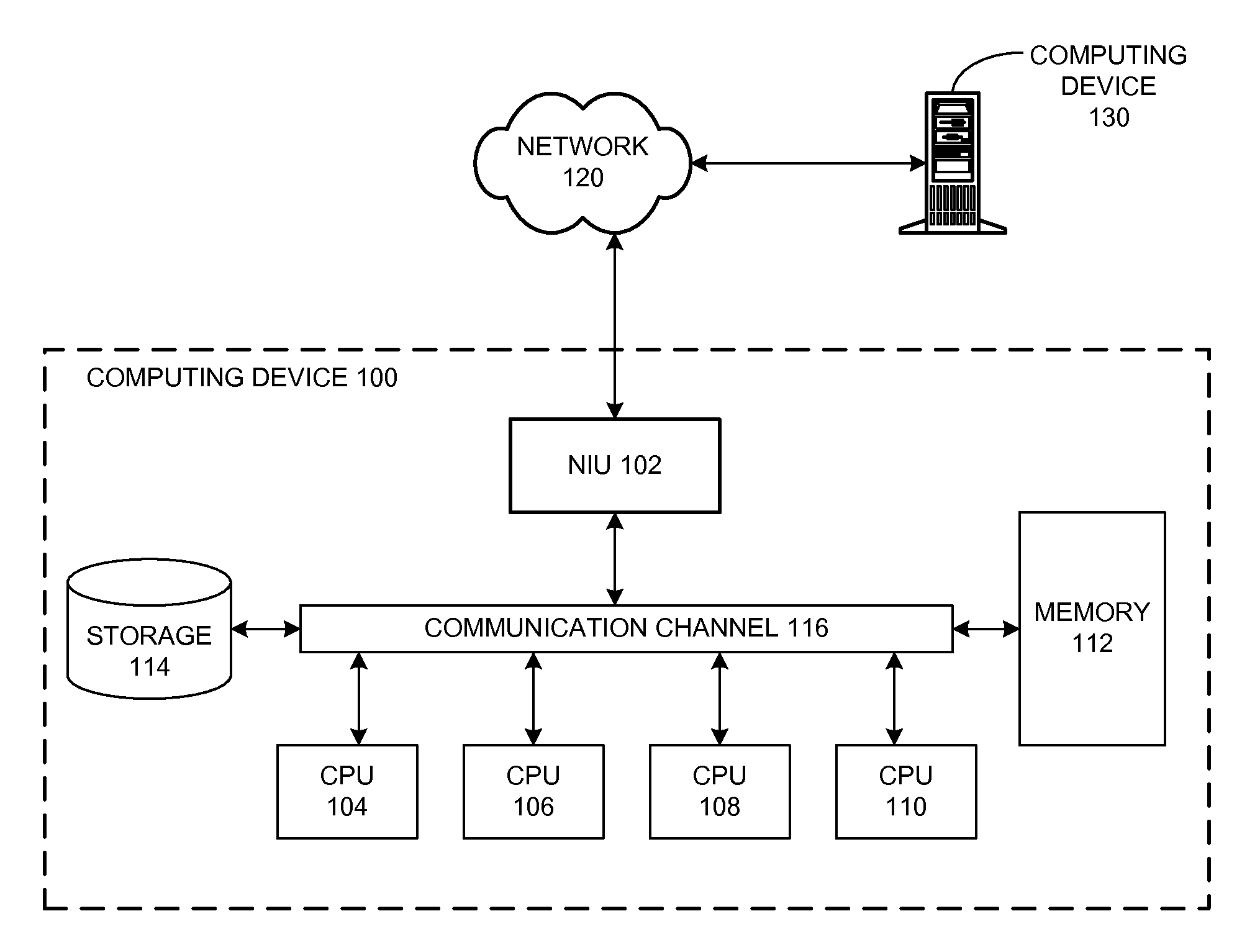

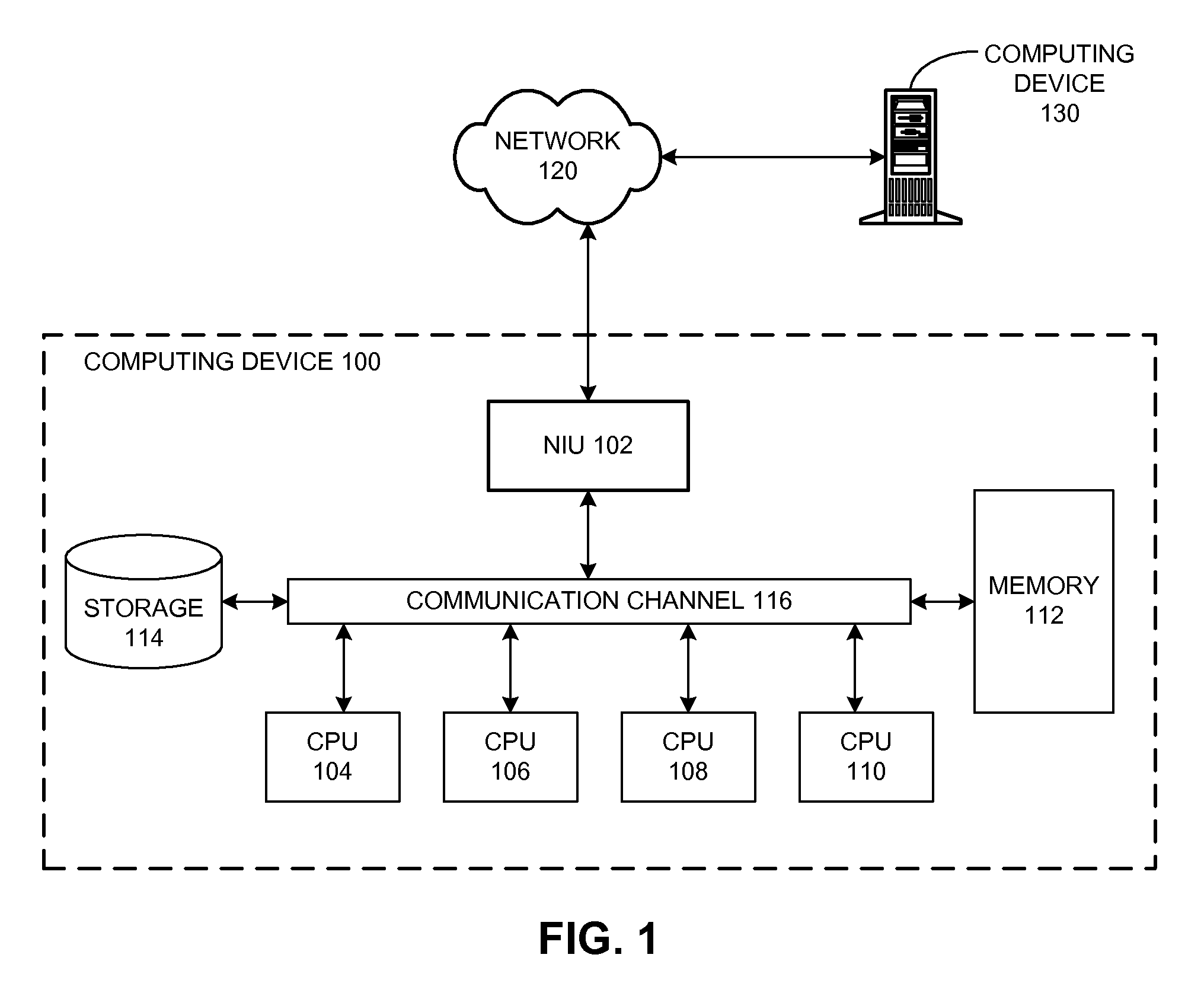

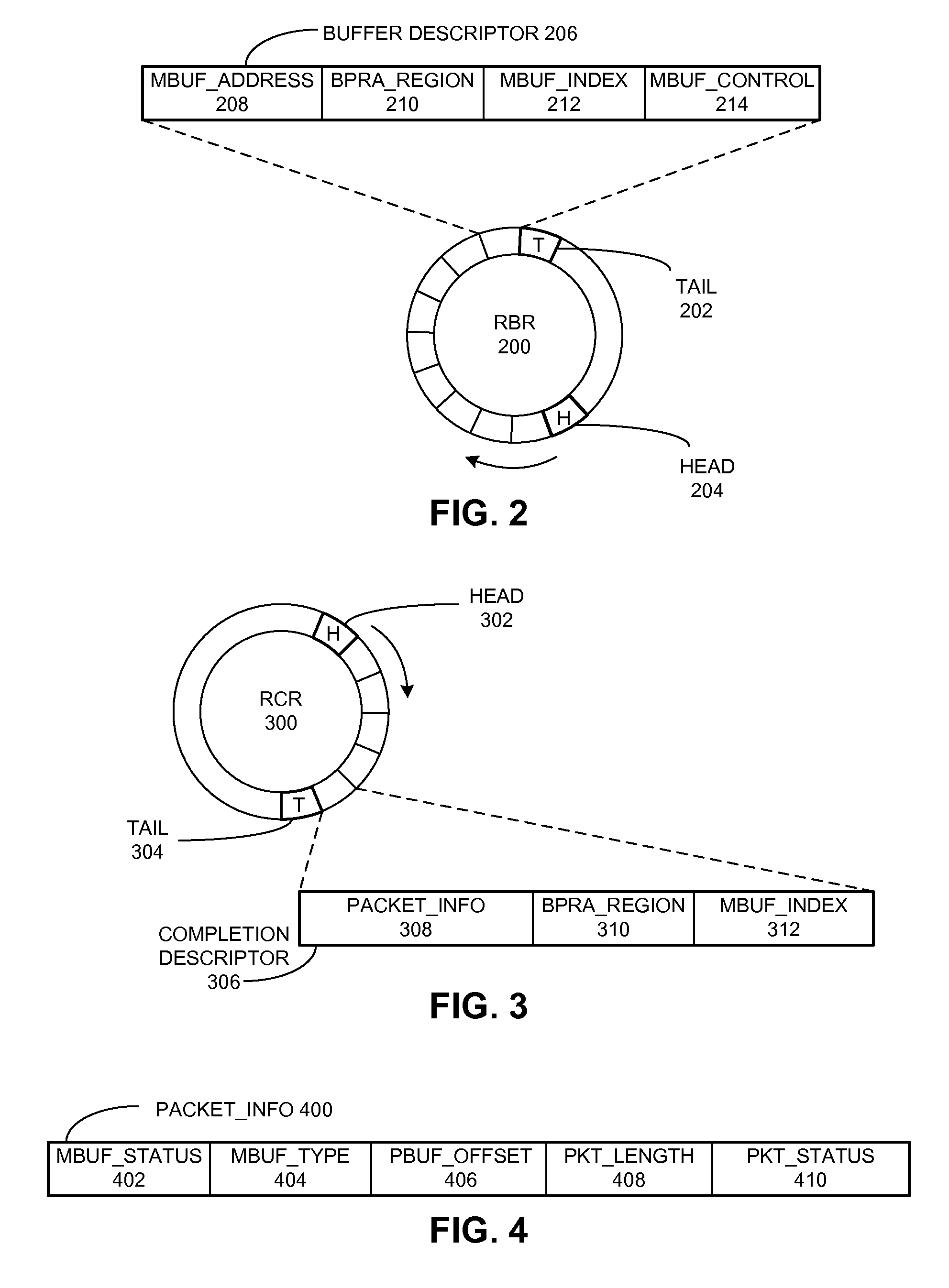

Efficient buffer management in a multi-threaded network interface

Active | US20100332698A1 | Efficiently share RBR | Efficient sharing | Transmission | Input/output processes for data processing | Hardware thread | System usage

Some embodiments of the present invention provide a system for receiving packets on a multi-threaded computing device which uses a memory-buffer-usage scorecard (MBUS) to enable multiple hardware threads to share a common pool of memory buffers. During operation, the system can identify a memory-descriptor location for posting a memory descriptor for a memory buffer. Next, the system can post the memory descriptor for the memory buffer at the memory-descriptor location. The system can then update the MBUS to indicate that the memory buffer is in use. Next, the system can store a packet in the memory buffer, and post a completion descriptor in a completion-descriptor location to indicate that the packet is ready to be processed. If the completion-descriptor indicates that the memory buffer is ready to be reclaimed, the system can reclaim the memory buffer, and update the MBUS to indicate that the memory buffer has been reclaimed.

Owner:ORACLE INT CORP
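The buffer lifecycle in the abstract above (post a descriptor, mark the buffer in use, store a packet, post a completion, reclaim) can be sketched with a list of flags acting as the scorecard. This is a simplified software model: the `BufferPool` class, its method names and the use of a lock to stand in for hardware arbitration between threads are all assumptions.

```python
import threading


class BufferPool:
    """Shared pool of receive buffers tracked by an in-use scorecard."""

    def __init__(self, n_buffers):
        self.in_use = [False] * n_buffers   # the scorecard, one flag per buffer
        self.buffers = [None] * n_buffers
        self.completions = []               # indices of buffers holding packets
        self._lock = threading.Lock()       # lets several threads share the pool

    def post(self):
        """Claim a free buffer and mark it in use; return its index or None."""
        with self._lock:
            for index, used in enumerate(self.in_use):
                if not used:
                    self.in_use[index] = True
                    return index
            return None

    def receive(self, index, packet):
        """Store a packet in a posted buffer and post a completion for it."""
        with self._lock:
            self.buffers[index] = packet
            self.completions.append(index)

    def process_next(self):
        """Consume the oldest completion, reclaim its buffer, return the packet."""
        with self._lock:
            if not self.completions:
                return None
            index = self.completions.pop(0)
            packet = self.buffers[index]
            self.buffers[index] = None
            self.in_use[index] = False      # scorecard: buffer reclaimed
            return packet
```

Once `process_next` clears the flag, the same buffer index becomes available to the next `post` call from any thread.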

Instruction and logic to provide pushing buffer copy and store functionality

Inactive | US20140149718A1 | Memory architecture accessing/allocation | Digital computer details | Memory address | Hardware thread

Instructions and logic provide pushing buffer copy and store functionality. Some embodiments include a first hardware thread or processing core, and a second hardware thread or processing core, a cache to store cache coherent data in a cache line for a shared memory address accessible by the second hardware thread or processing core. Responsive to decoding an instruction specifying a source data operand, said shared memory address as a destination operand, and one or more owner of said shared memory address, one or more execution units copy data from the source data operand to the cache coherent data in the cache line for said shared memory address accessible by said second hardware thread or processing core in the cache when said one or more owner includes said second hardware thread or processing core.

Owner:INTEL CORP

Multithreaded processor with multiple concurrent pipelines per thread

Active | US20060095729A1 | Improve concurrency | Control consumption | General purpose stored program computer | Concurrent instruction execution | Hardware thread | Execution unit

A multithreaded processor comprises a plurality of hardware thread units, an instruction decoder coupled to the thread units for decoding instructions received therefrom, and a plurality of execution units for executing the decoded instructions. The multithreaded processor is configured for controlling an instruction issuance sequence for threads associated with respective ones of the hardware thread units. On a given processor clock cycle, only a designated one of the threads is permitted to issue one or more instructions, but the designated thread that is permitted to issue instructions varies over a plurality of clock cycles in accordance with the instruction issuance sequence. The instructions are pipelined in a manner which permits at least a given one of the threads to support multiple concurrent instruction pipelines.

Owner:QUALCOMM INC

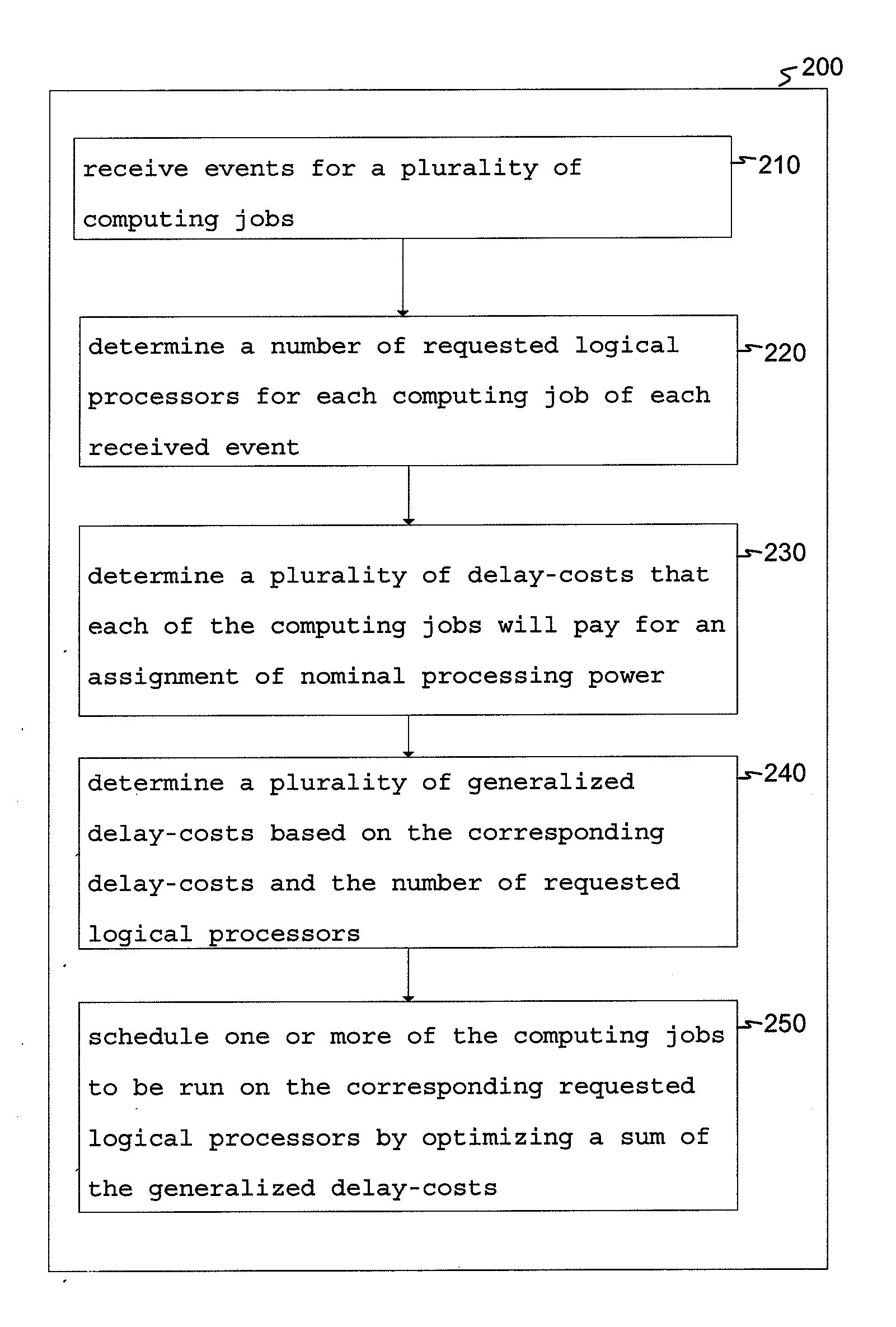

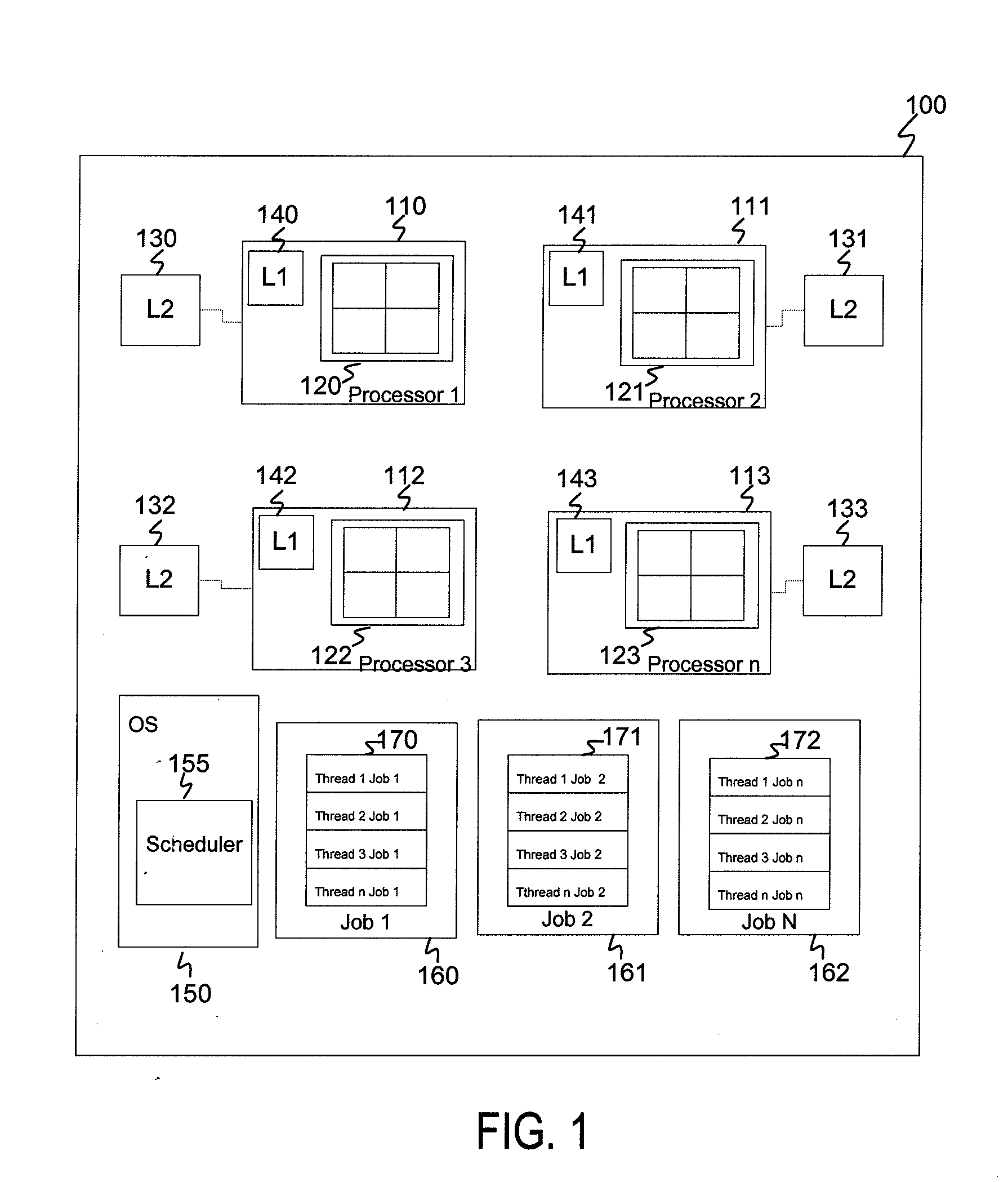

System and method for event-driven scheduling of computing jobs on a multi-threaded machine using delay-costs

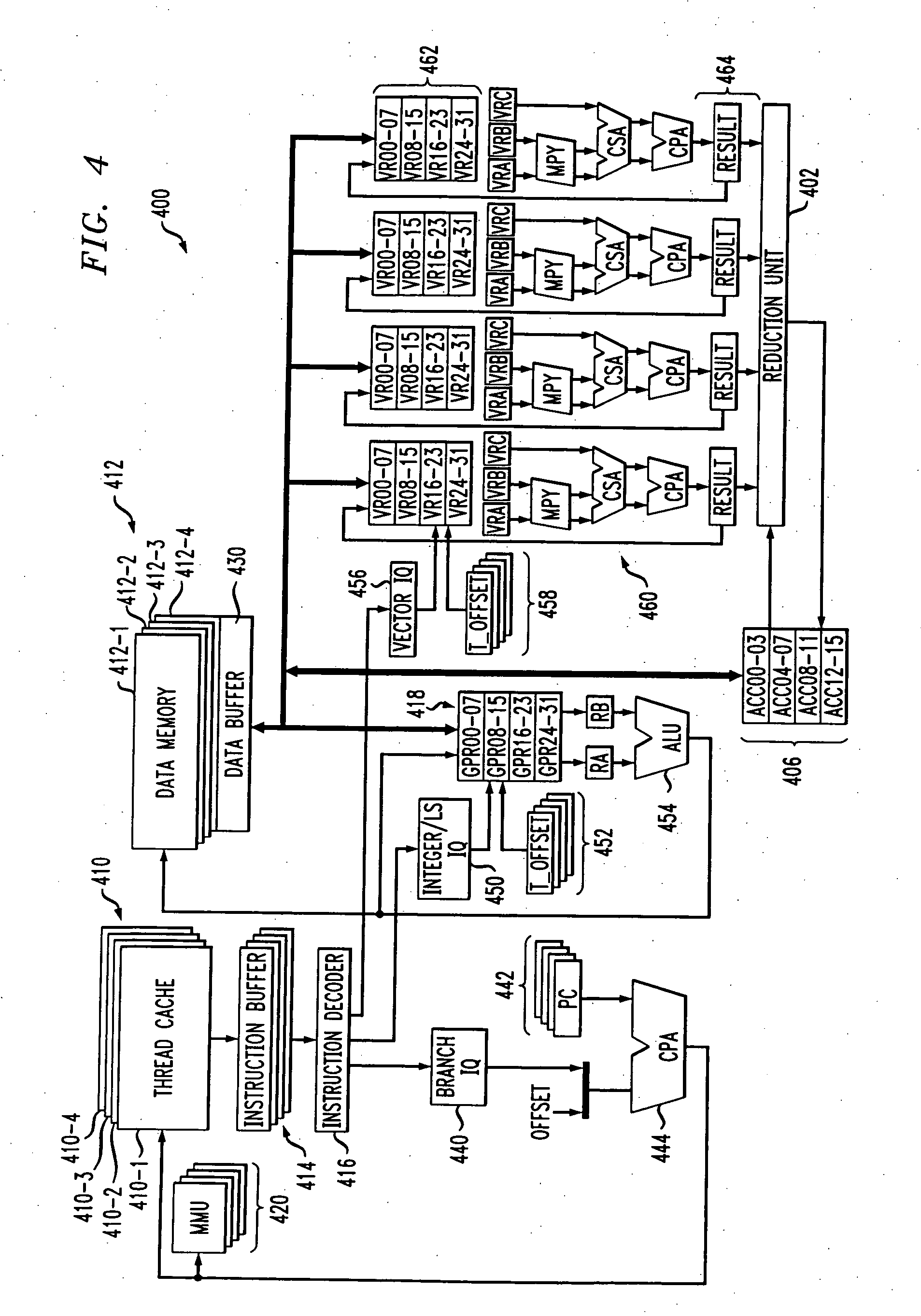

Inactive | US20090070762A1 | Balance computing load | Multiprogramming arrangements | Memory systems | Hardware thread | Operational system

A computer system includes N multi-threaded processors and an operating system. The N multi-threaded processors each have O hardware threads forming a pool of P hardware threads, where N, O, and P are positive integers and P is equal to N times O. The operating system includes a scheduler which receives events for one or more computing jobs. The scheduler receives one of the events and allocates R hardware threads of the pool of P hardware threads to one of the computing jobs by optimizing a sum of priorities of the computing jobs, where each priority is based in part on the number of logical processors requested by a corresponding computing job and R is an integer that is greater than or equal to 0.

Owner:IBM CORP
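The pool arithmetic in the abstract above is P = N × O, and each job receives some R ≥ 0 hardware threads from that pool. The sketch below uses a greedy pass in descending priority order as a stand-in for the optimisation the abstract mentions; the patent does not state that a greedy method is used, and the job tuples and the function name are hypothetical.

```python
def allocate(n_processors, threads_per_processor, jobs):
    """Greedily hand out hardware threads to jobs by descending priority.

    jobs is a list of (name, requested_threads, priority) tuples.
    Returns a dict mapping each job name to the threads granted (R >= 0).
    """
    pool = n_processors * threads_per_processor   # P = N * O
    allocation = {}
    for name, requested, priority in sorted(jobs, key=lambda job: -job[2]):
        granted = min(requested, pool)   # may be 0 once the pool is exhausted
        allocation[name] = granted
        pool -= granted
    return allocation


# Two processors with four hardware threads each give a pool of eight.
print(allocate(2, 4, [("a", 6, 1.0), ("b", 4, 2.0), ("c", 2, 0.5)]))
# prints {'b': 4, 'a': 4, 'c': 0}
```

A real scheduler in this style would rerun the allocation whenever an event arrives for one of the jobs, since the abstract describes the scheduling as event-driven.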

Pre-loading context states by inactive hardware thread in advance of context switch

Inactive | US7873816B2 | Latency of context switch | Lower latency | Digital computer details | Specific program execution arrangements | Hardware thread | Context switch

Owner:INT BUSINESS MASCH CORP

Inter-thread communication with software security

Inactive | US20130160114A1 | Communication security | Digital data processing details | Unauthorized memory use protection | Hardware thread | Computer science

A circuit arrangement and method utilize a process context translation data structure in connection with an on-chip network of a processor chip to implement secure inter-thread communication between hardware threads in the processor chip. The process context translation data structure maps processes to inter-thread communication hardware resources, e.g., the inbox and / or outbox buffers of a NOC processor, such that a user process is only allowed to access the inter-thread communication hardware resources to which it has been granted access, and typically only with certain authorized access types. Moreover, a hypervisor or supervisor may manage the process context translation data structure to grant or deny access rights to user processes such that, once those rights are established in the data structure, user processes are permitted to perform inter-thread communications without requiring context switches to a hypervisor or supervisor in order to handle the communications.

Owner:IBM CORP


© 2025 PatSnap. All rights reserved.