34 patent results for "Priority inversion"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

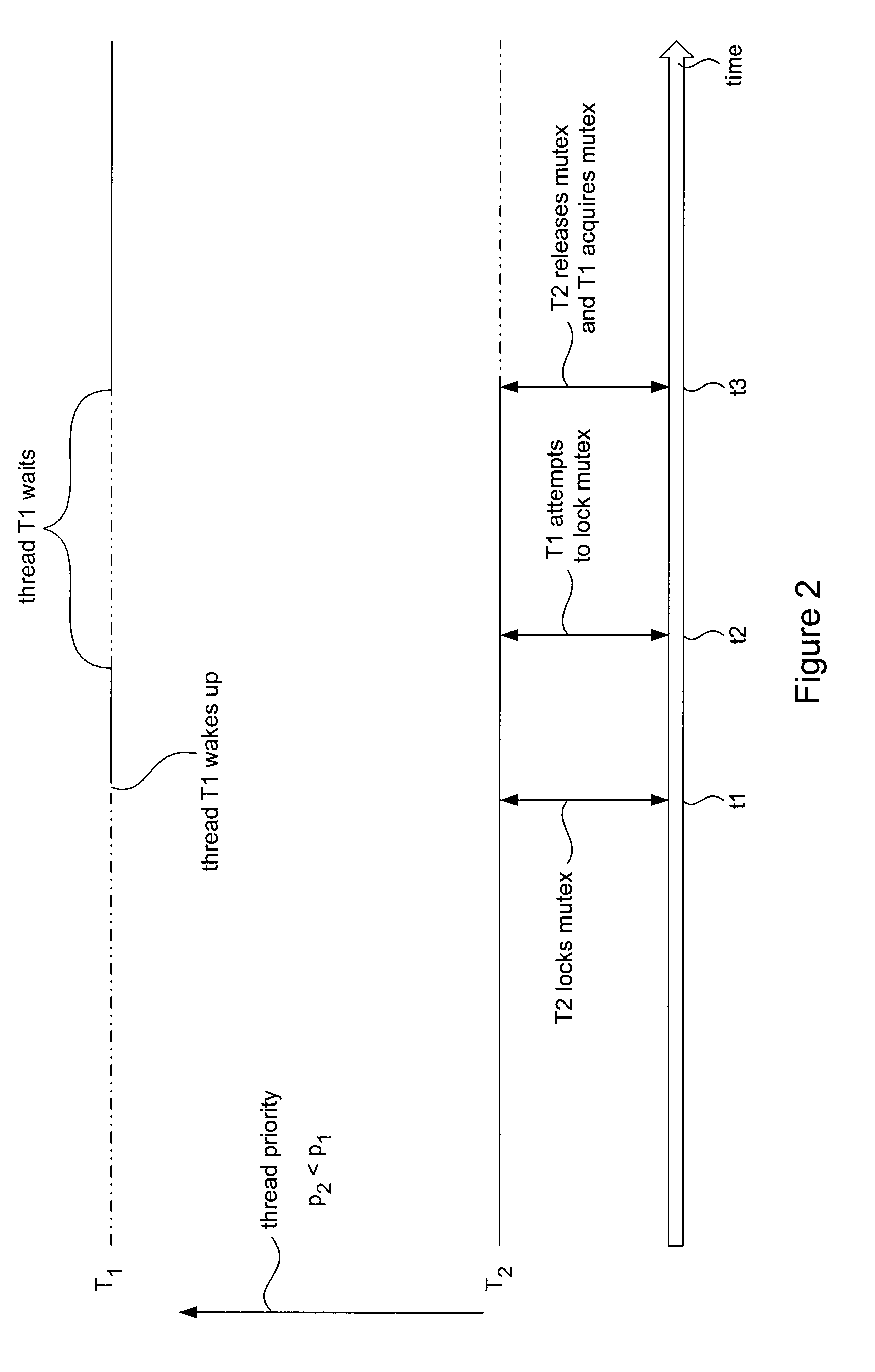

In computer science, priority inversion is a scheduling scenario in which a high-priority task is indirectly preempted by a lower-priority task, effectively inverting the relative priorities of the two tasks.

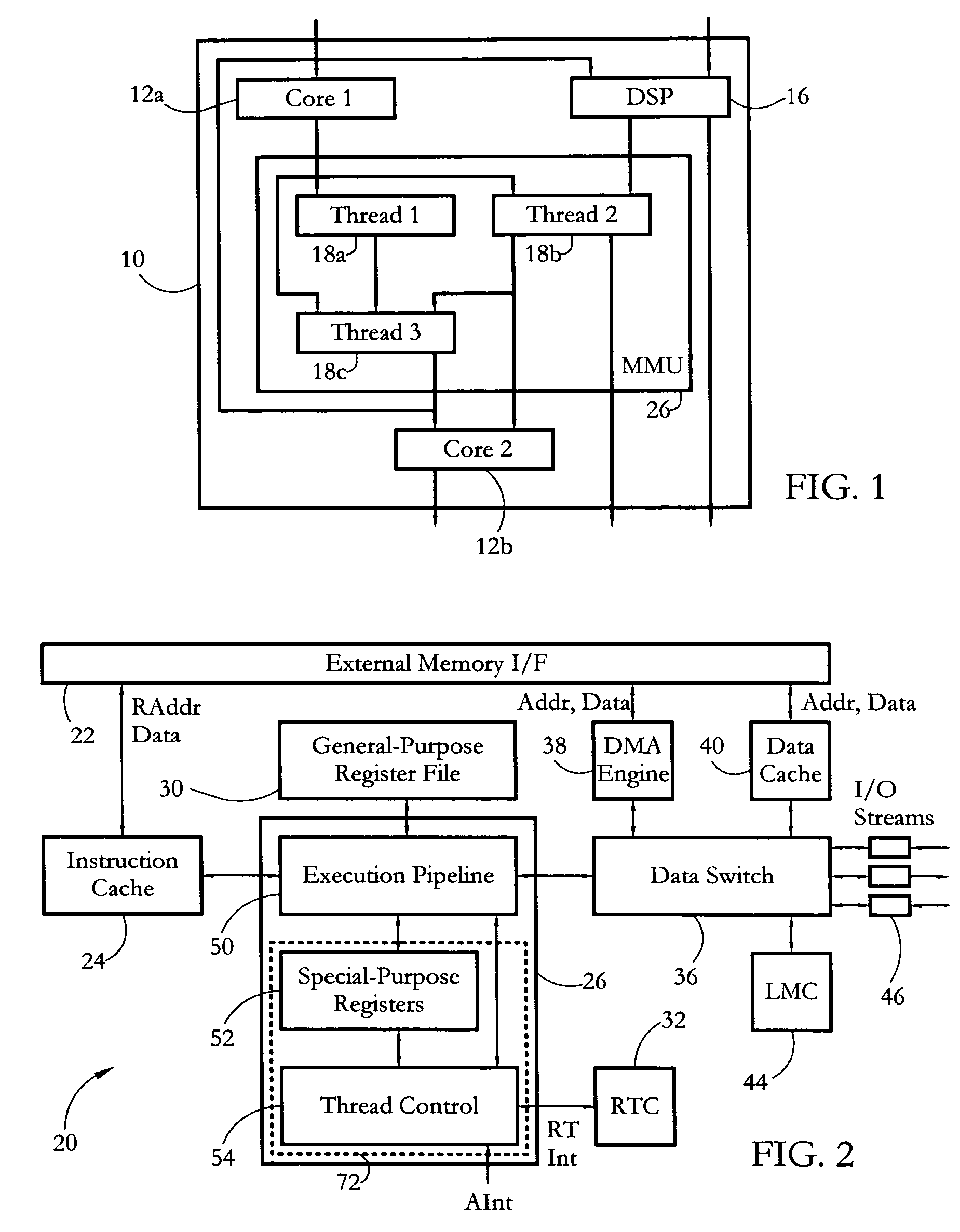

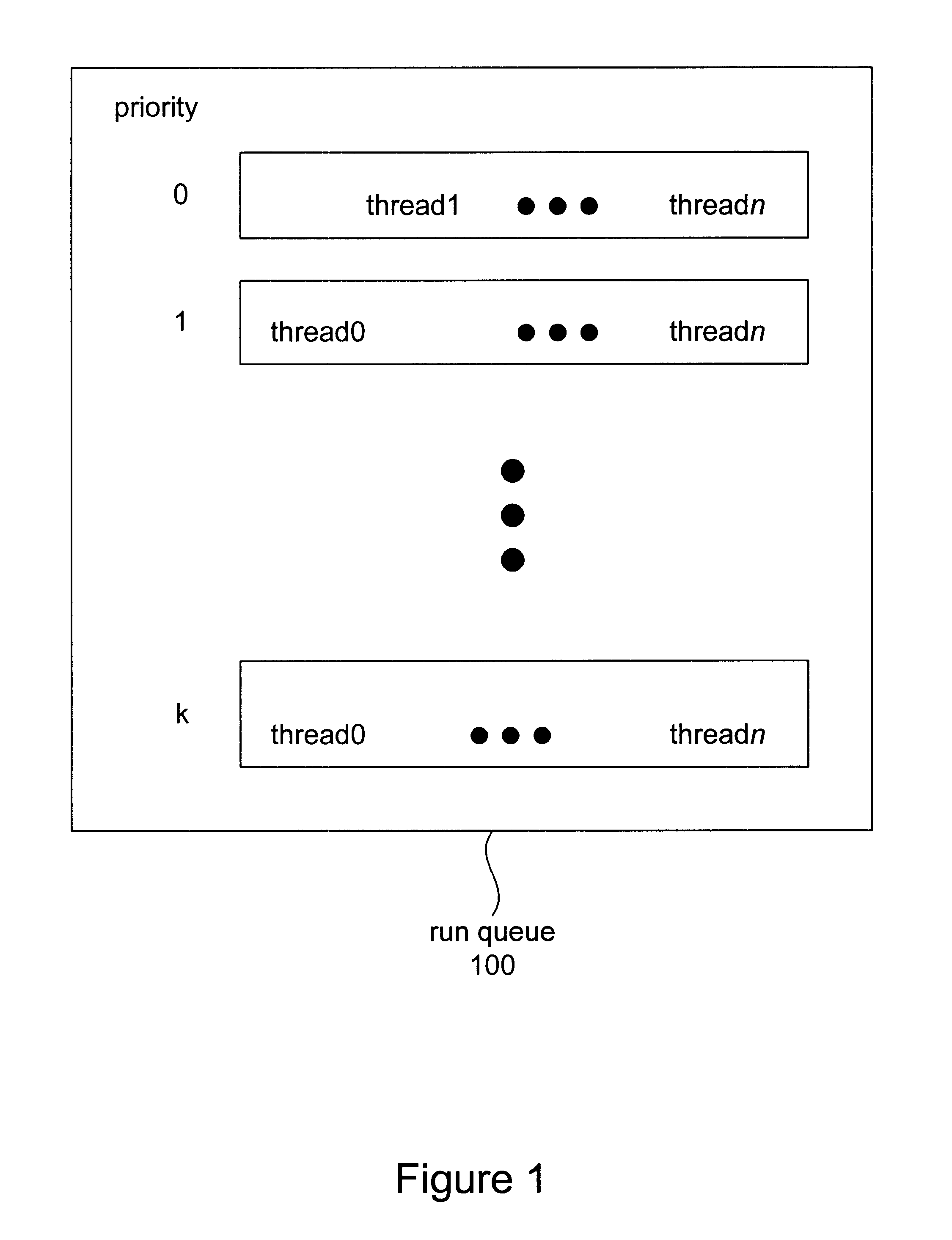

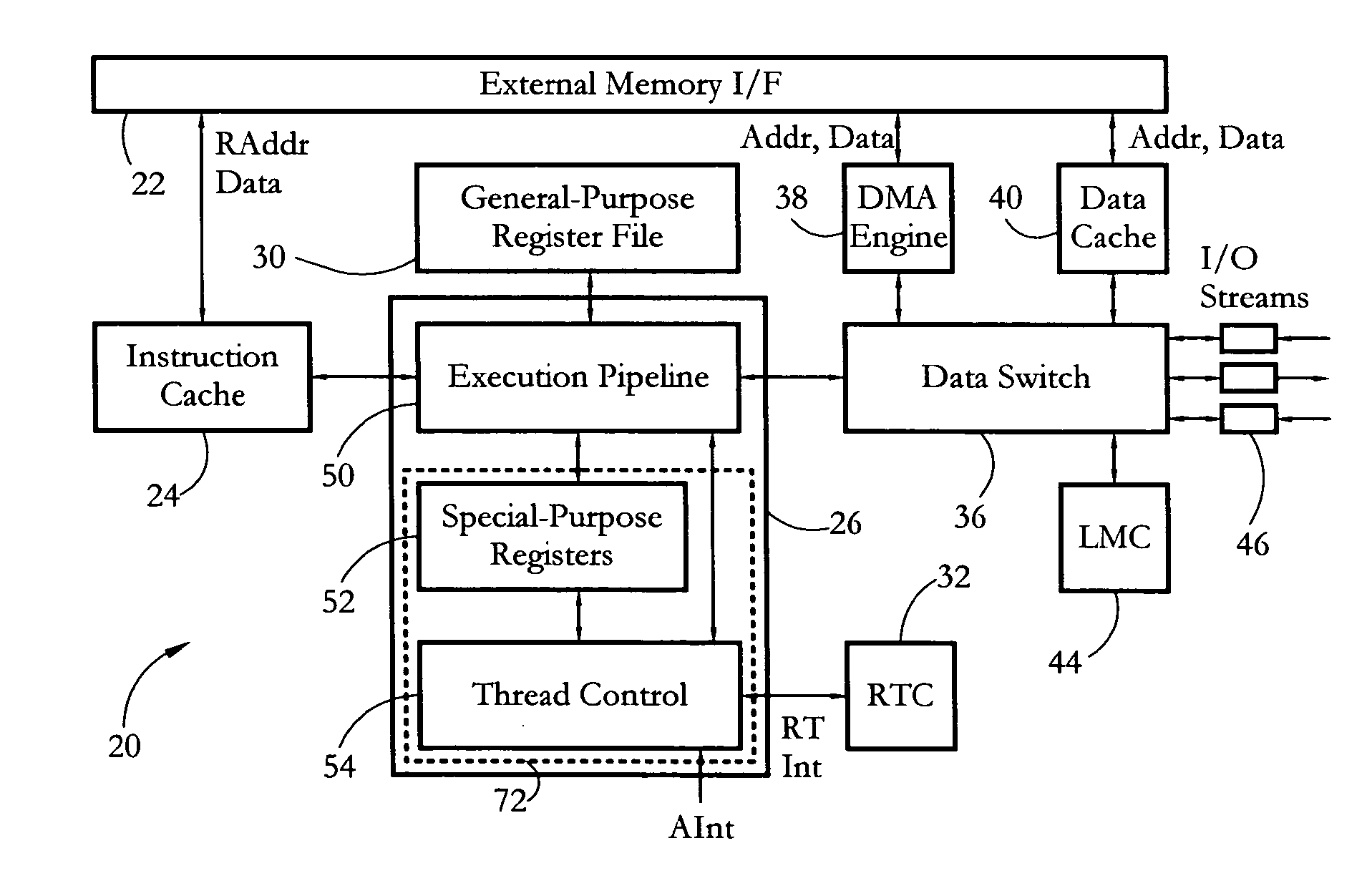

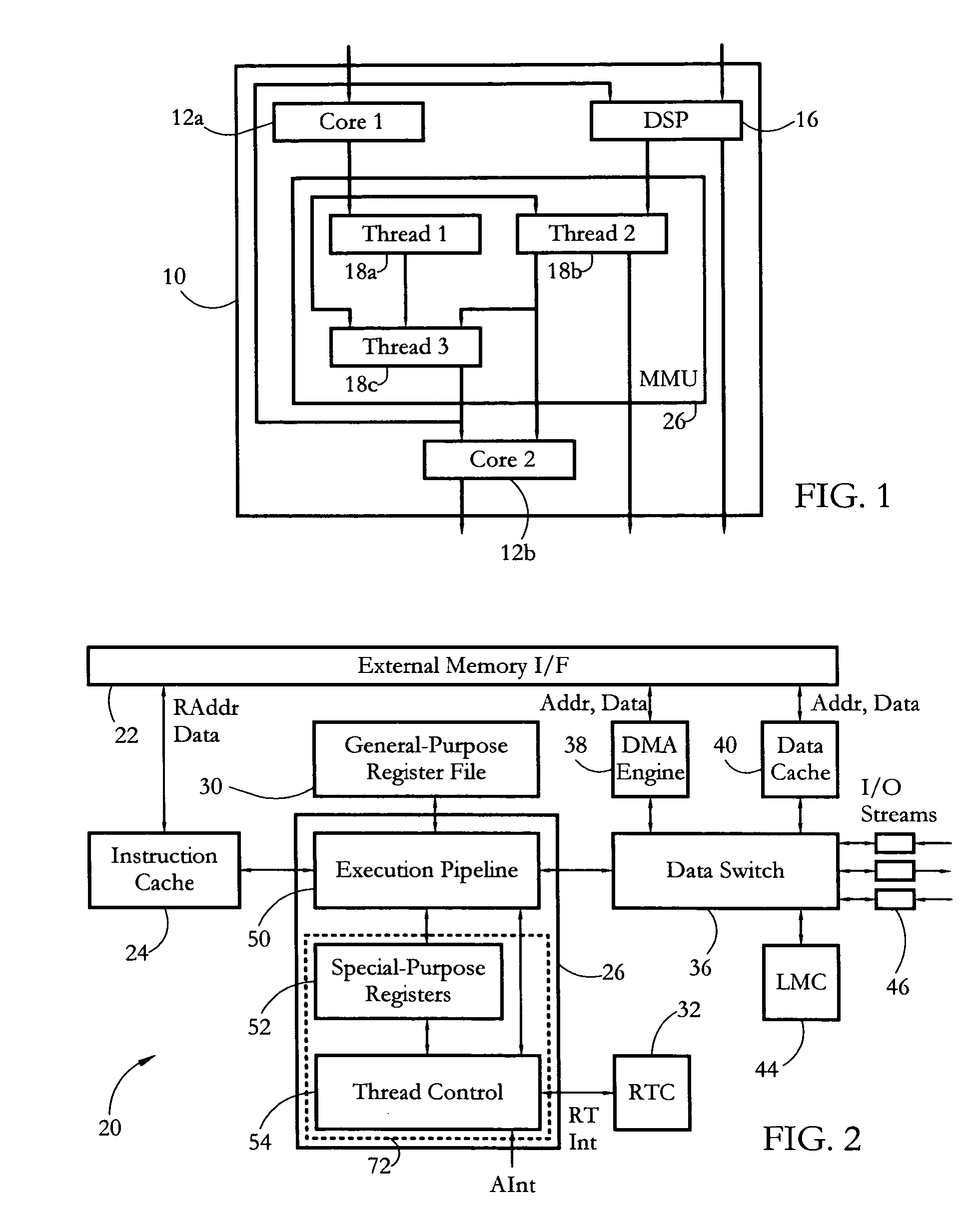

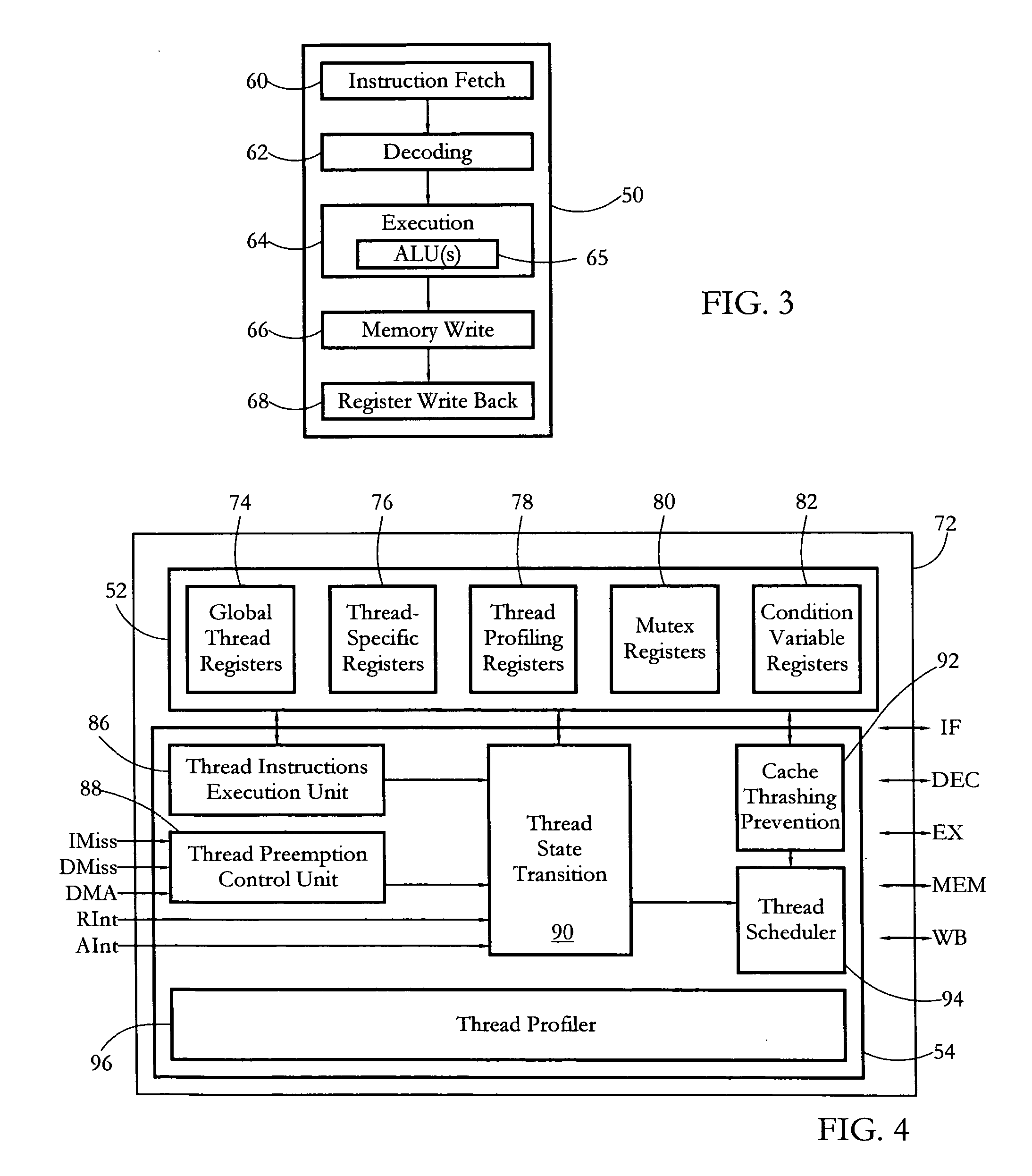

Hardware multithreading systems and methods

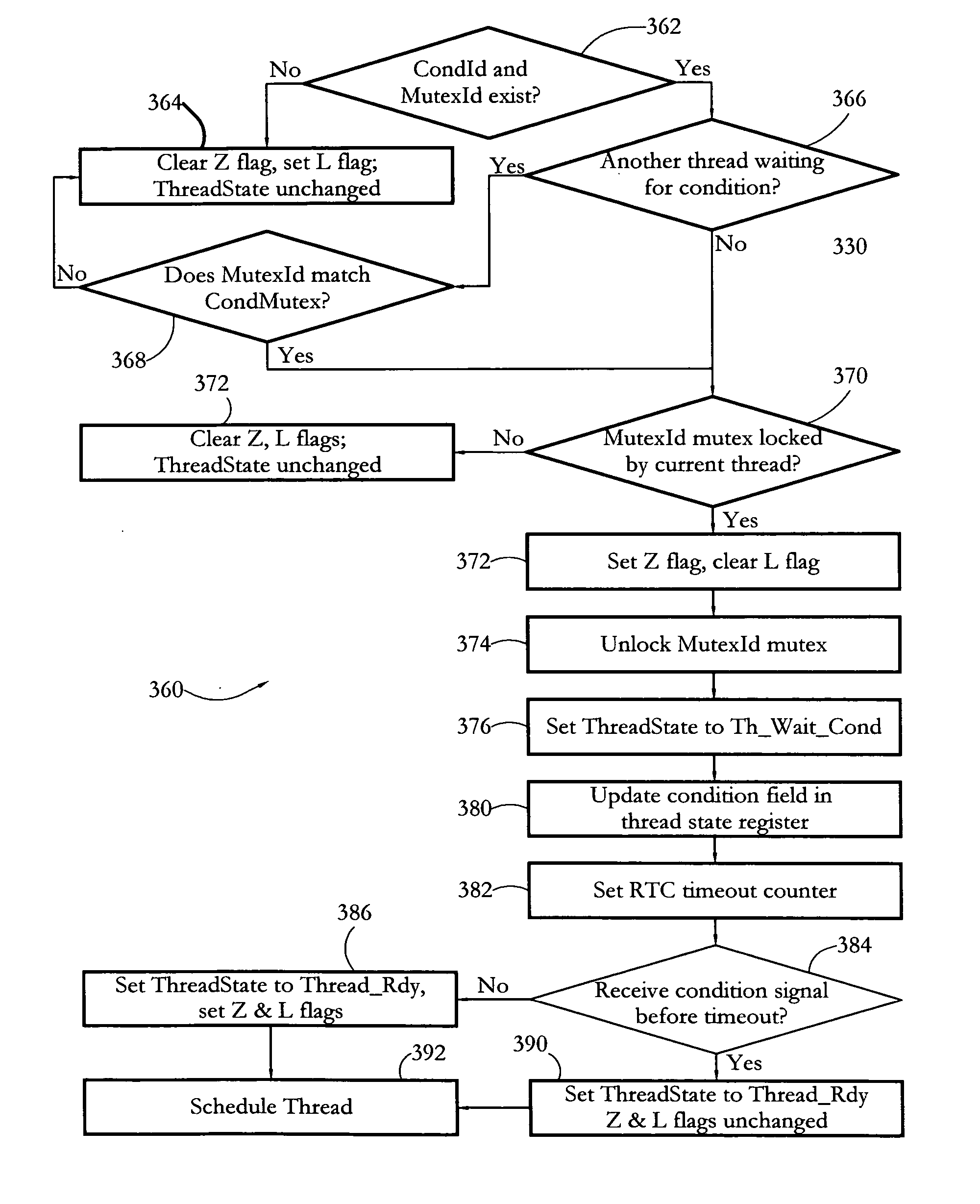

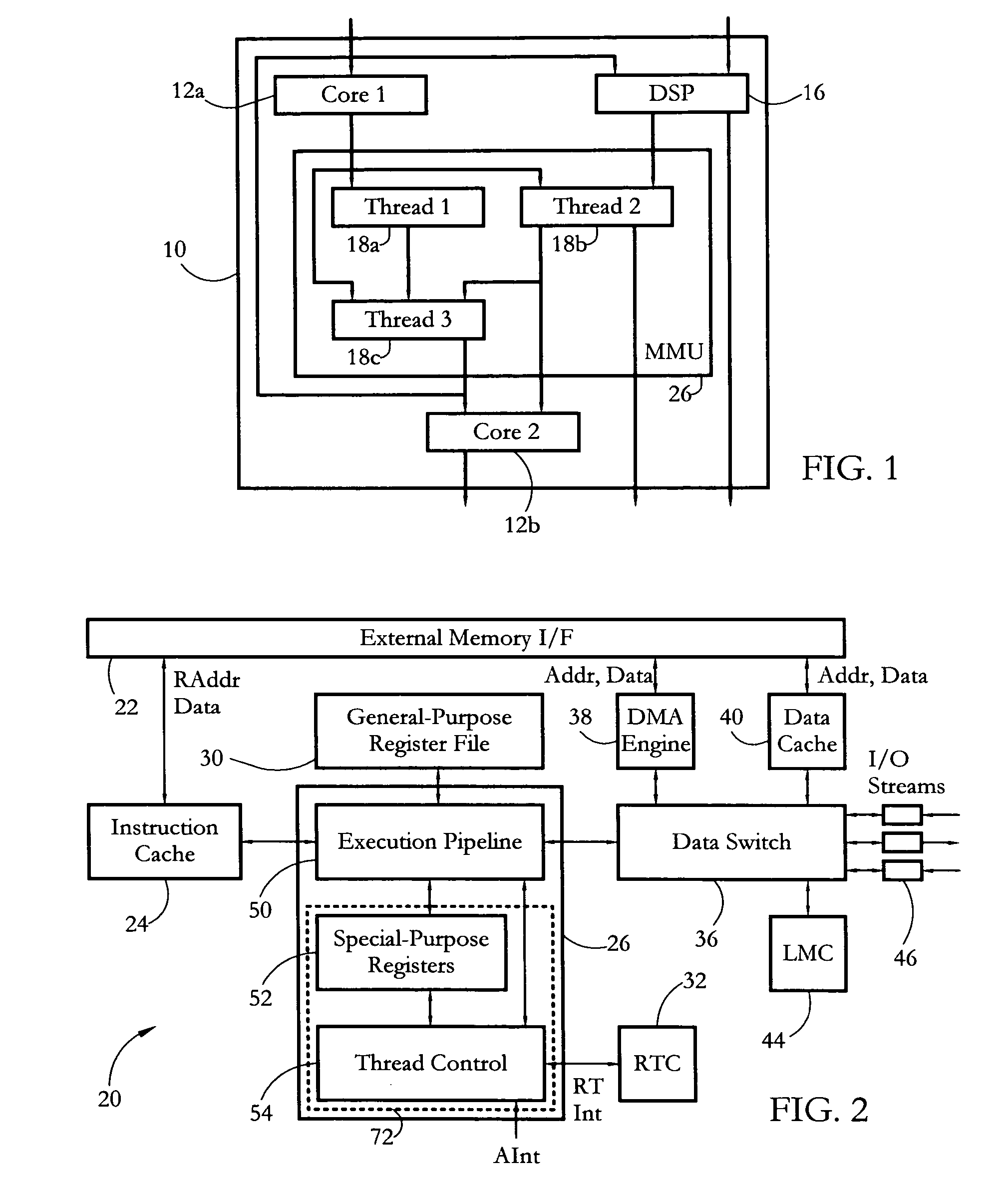

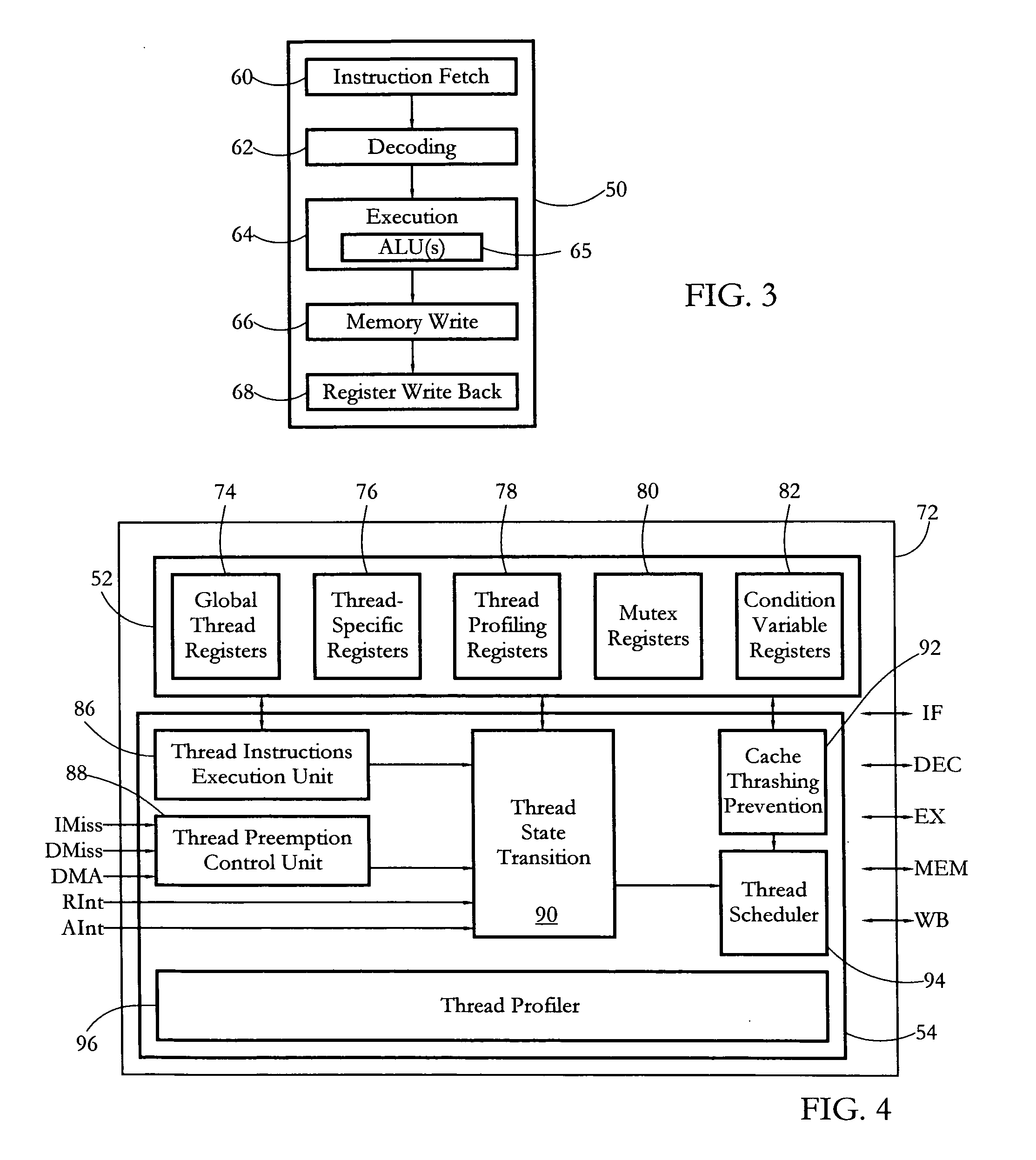

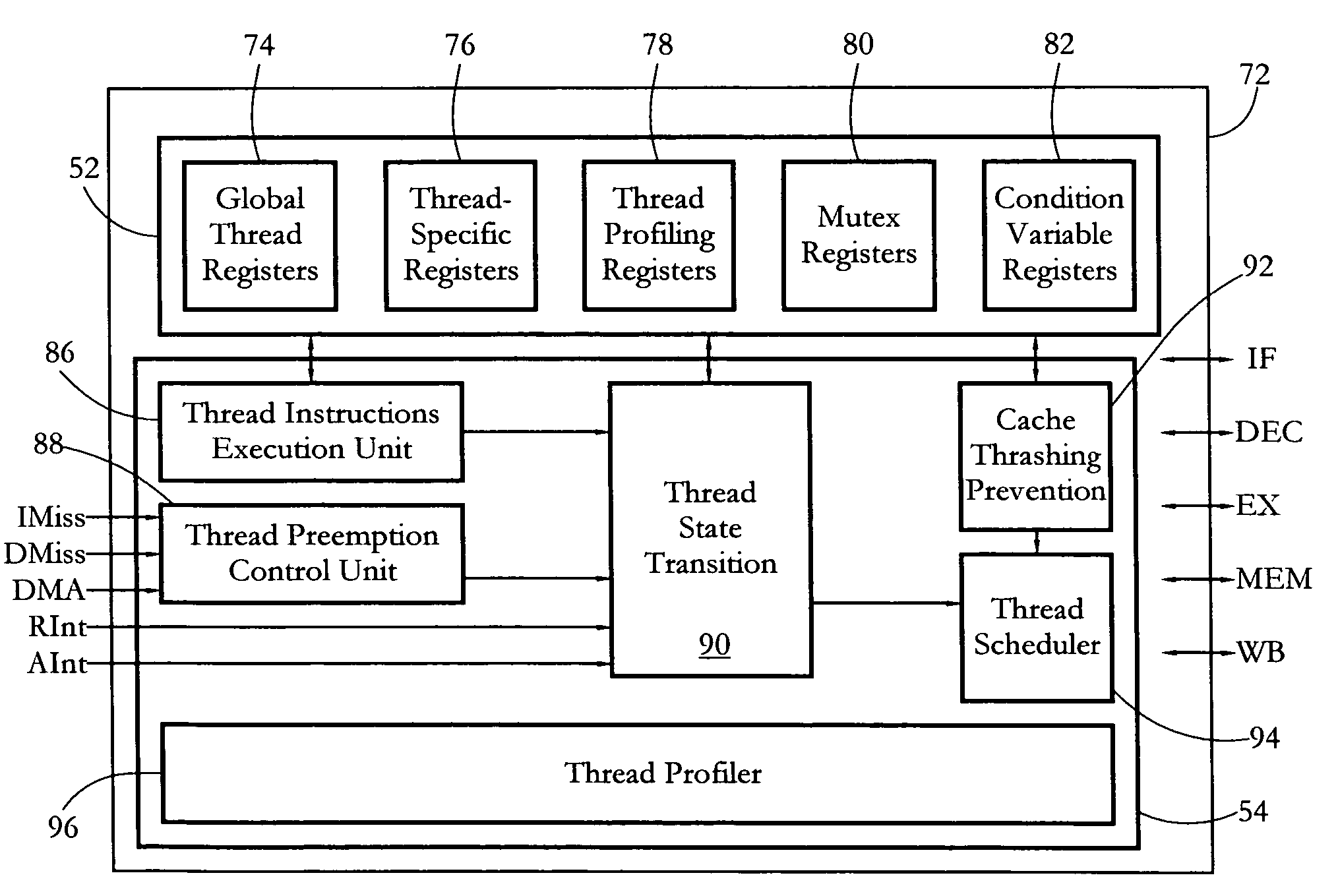

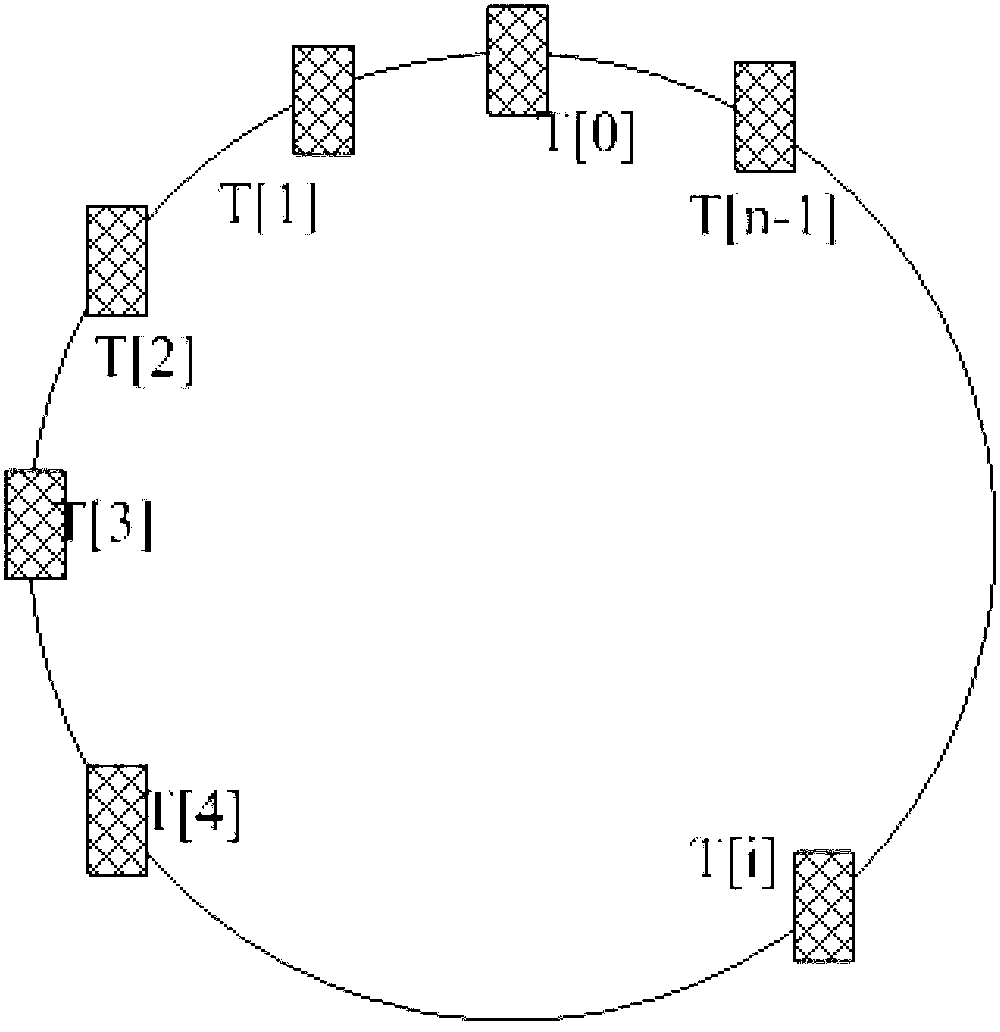

According to some embodiments, a multithreaded microcontroller includes a thread control unit comprising thread control hardware (logic) configured to perform a number of multithreading system calls essentially in real time, e.g. in one or a few clock cycles. System calls can include mutex lock, wait condition, and signal instructions. The thread controller includes a number of thread state, mutex, and condition variable registers used for executing the multithreading system calls. Threads can transition between several states including free, run, ready and wait. The wait state includes interrupt, condition, mutex, I-cache, and memory substates. A thread state transition controller controls thread states, while a thread instructions execution unit executes multithreading system calls and manages thread priorities to avoid priority inversion. A thread scheduler schedules threads according to their priorities. A hardware thread profiler including global, run and wait profiler registers is used to monitor thread performance to facilitate software development.

Owner:GEO SEMICONDUCTOR INC

Hardware multithreading systems with state registers having thread profiling data

According to some embodiments, a multithreaded microcontroller includes a thread control unit comprising thread control hardware (logic) configured to perform a number of multithreading system calls essentially in real time, e.g. in one or a few clock cycles. System calls can include mutex lock, wait condition, and signal instructions. The thread controller includes a number of thread state, mutex, and condition variable registers used for executing the multithreading system calls. Threads can transition between several states including free, run, ready and wait. The wait state includes interrupt, condition, mutex, I-cache, and memory substates. A thread state transition controller controls thread states, while a thread instructions execution unit executes multithreading system calls and manages thread priorities to avoid priority inversion. A thread scheduler schedules threads according to their priorities. A hardware thread profiler including global, run and wait profiler registers is used to monitor thread performance to facilitate software development.

Owner:GEO SEMICONDUCTOR INC

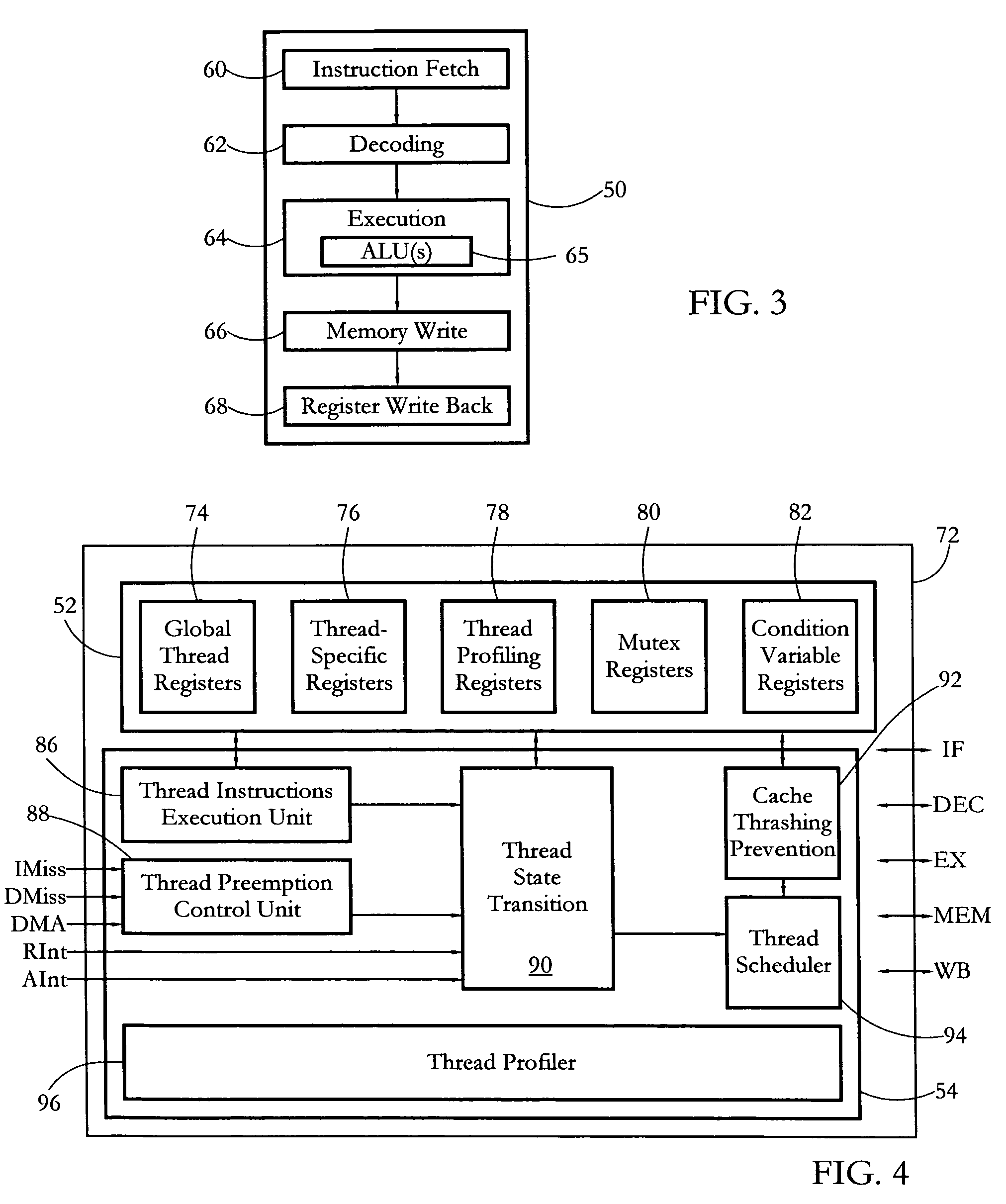

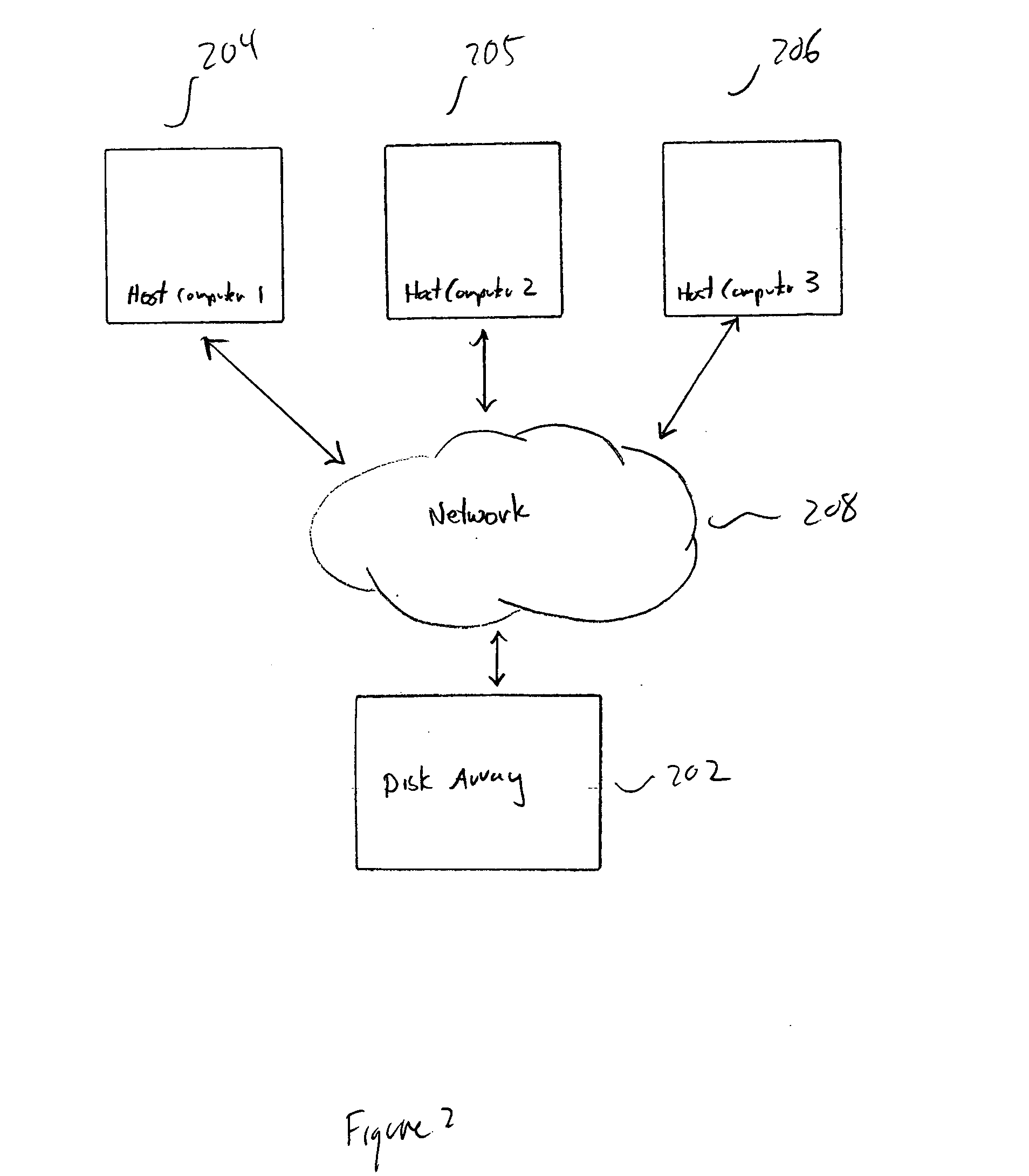

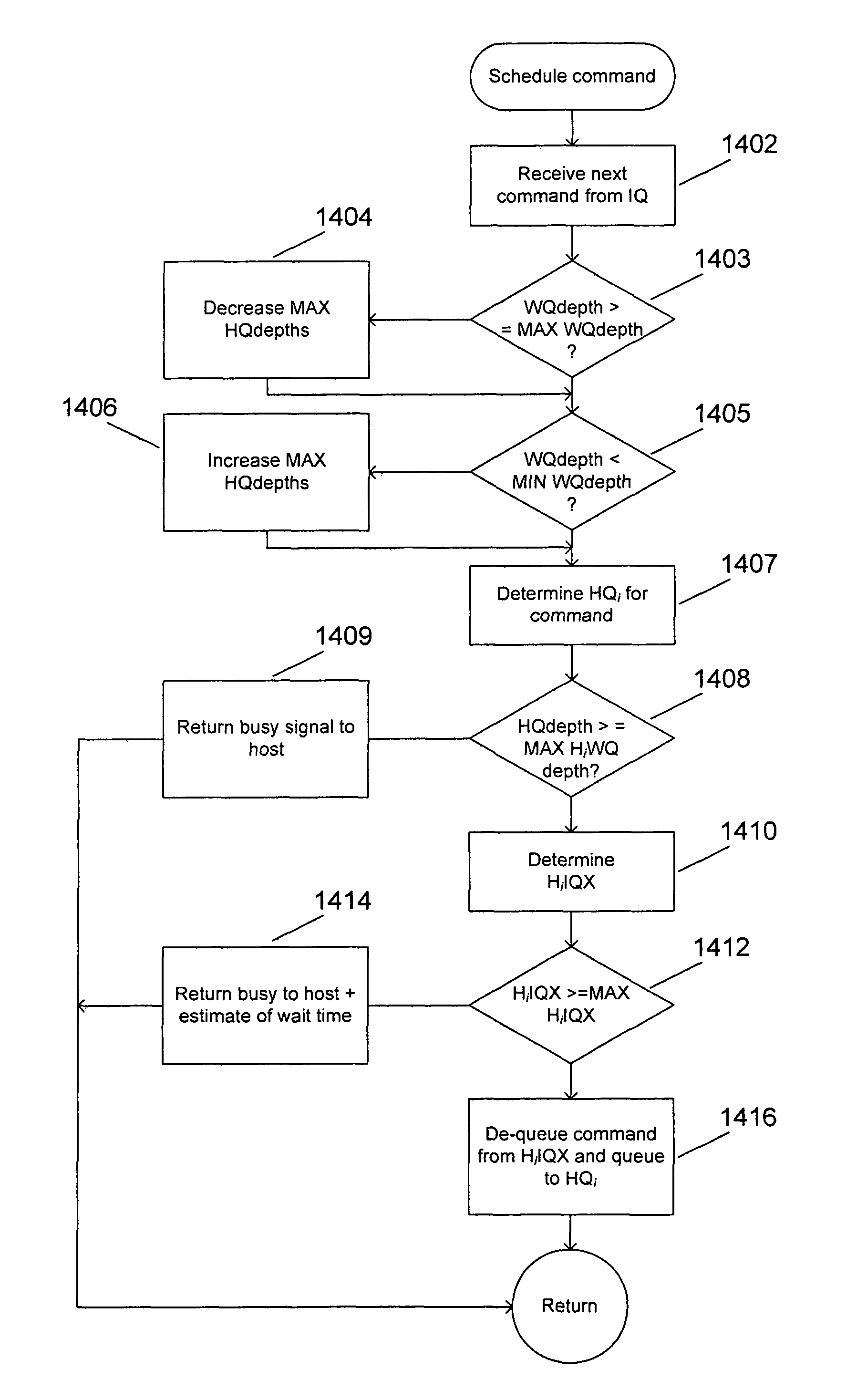

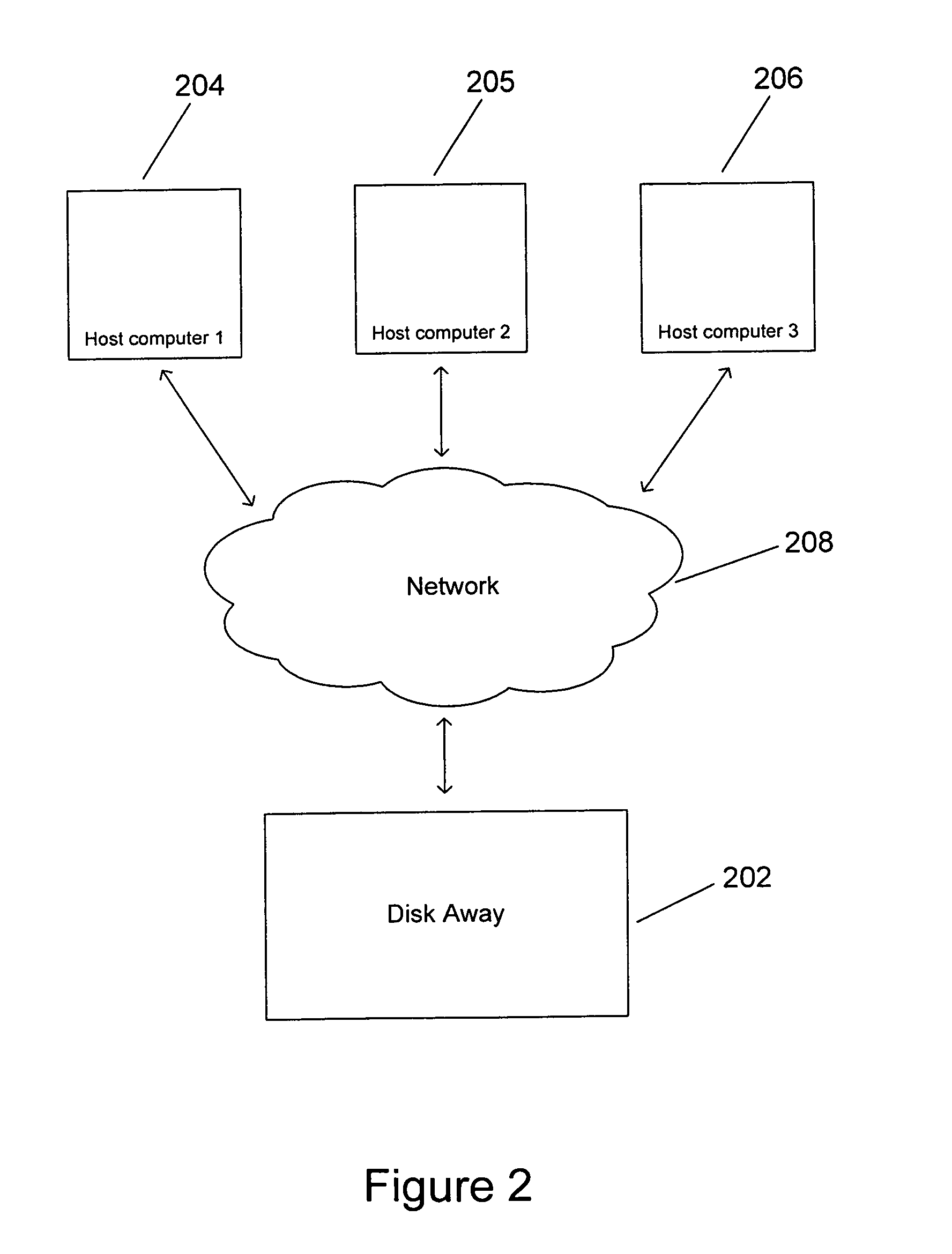

Method and system for achieving fair command processing in storage systems that implement command-associated priority queuing

Active | US20080104283A1 | Avoid hunger | Eliminating and minimizing of and priority-related deadlock | Transmission | Input/output processes for data processing | Computer access | Priority inversion

In certain, currently available data-storage systems, incoming commands from remote host computers are subject to several levels of command-queue-depth-fairness-related throttles to ensure that all host computers accessing the data-storage systems receive a reasonable fraction of data-storage-system command-processing bandwidth to avoid starvation of one or more host computers. Recently, certain host-computer-to-data-storage-system communication protocols have been enhanced to provide for association of priorities with commands. However, these new command-associated priorities may lead to starvation of priority levels and to a risk of deadlock due to priority-level starvation and priority inversion. In various embodiments of the present invention, at least one additional level of command-queue-depth-fairness-related throttling is introduced in order to avoid starvation of one or more priority levels, thereby eliminating or minimizing the risk of priority-level starvation and priority-related deadlock.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

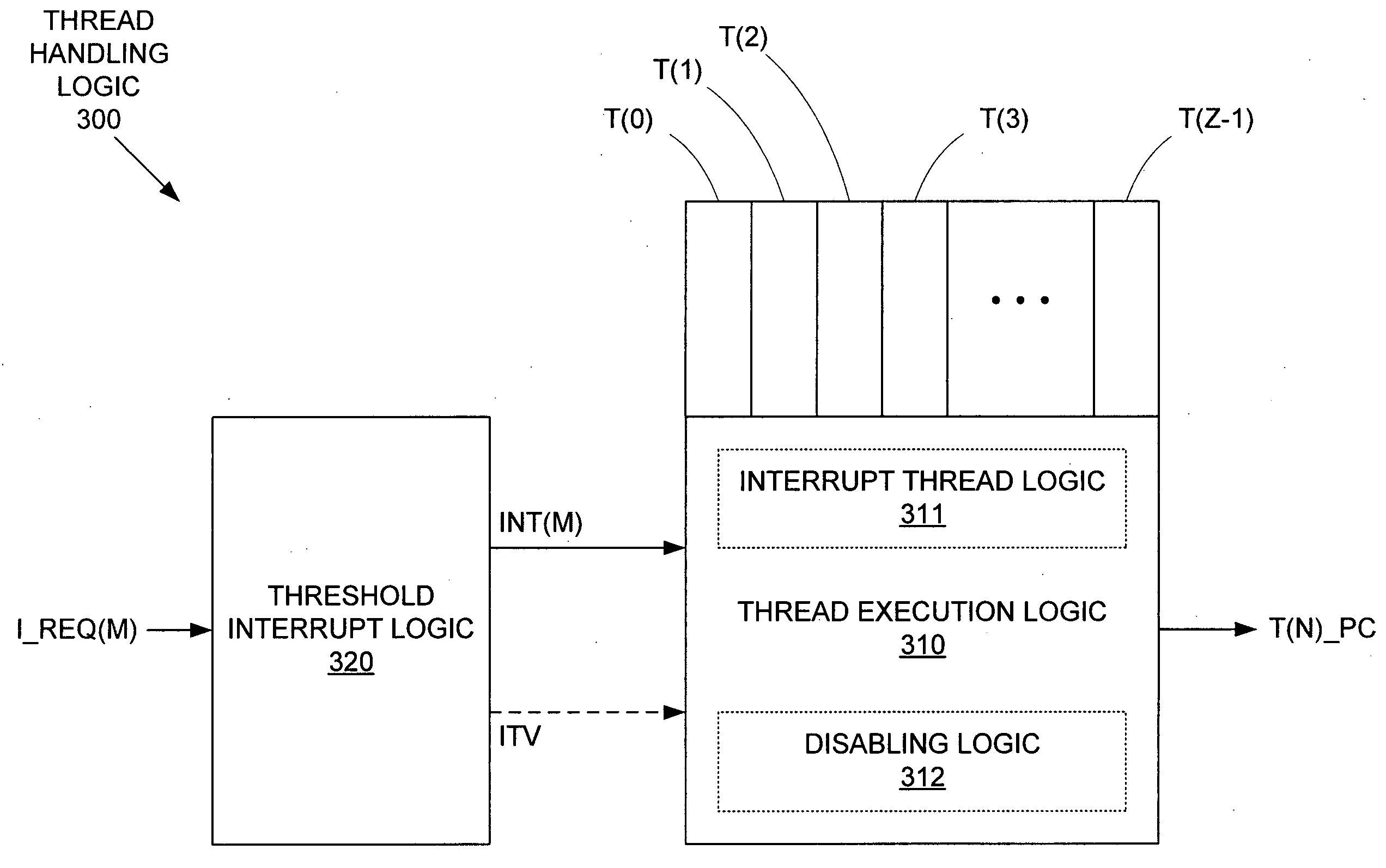

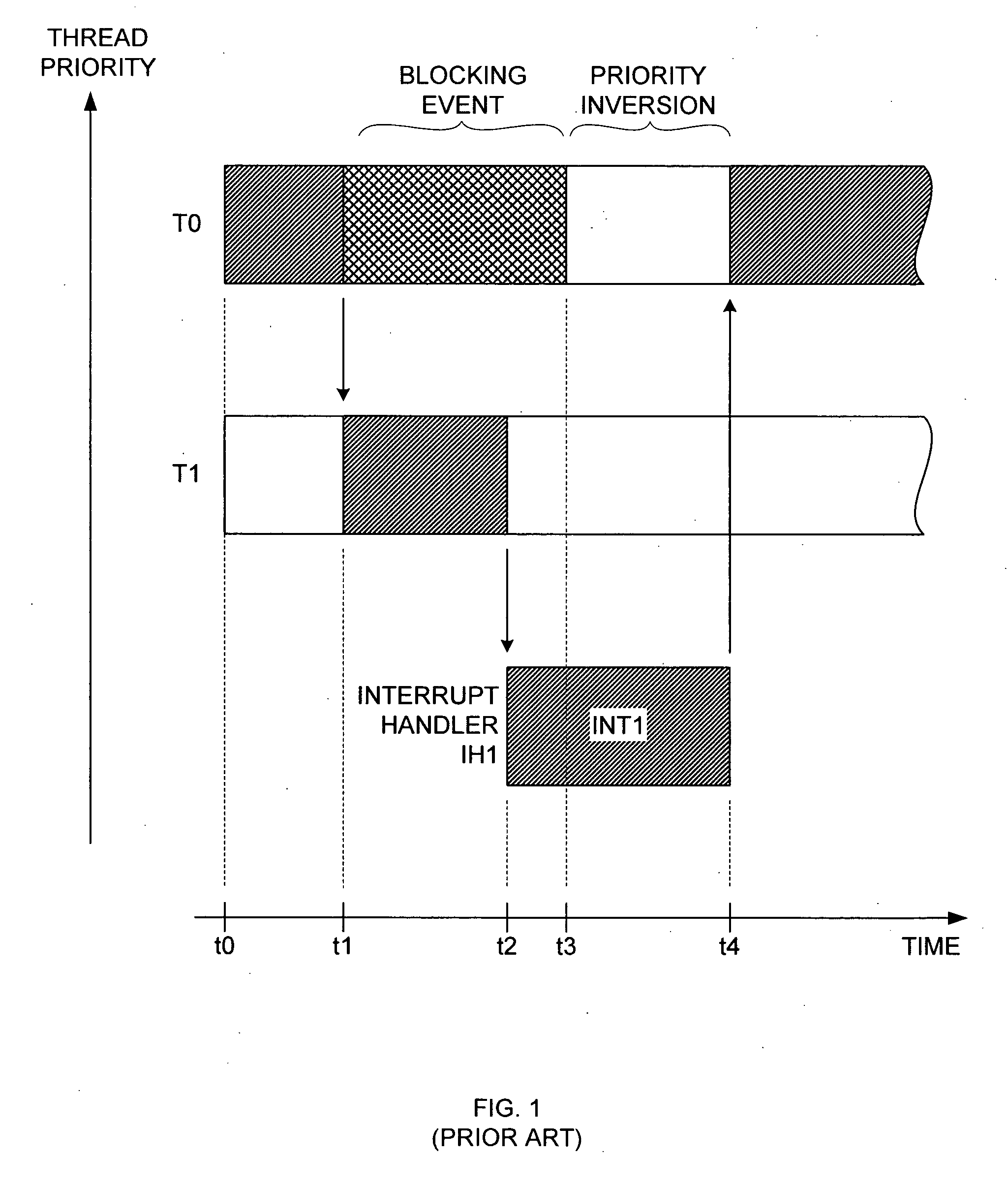

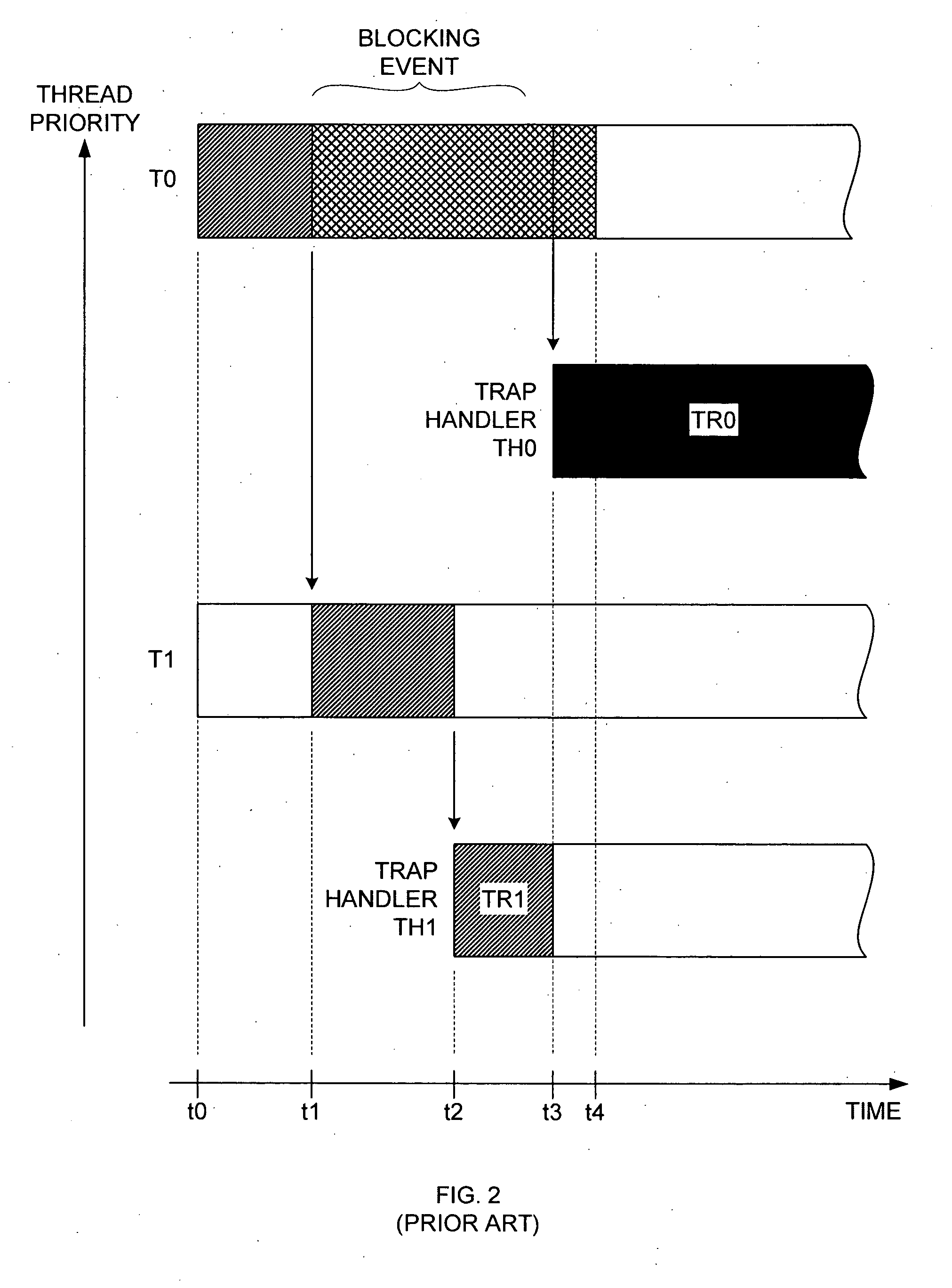

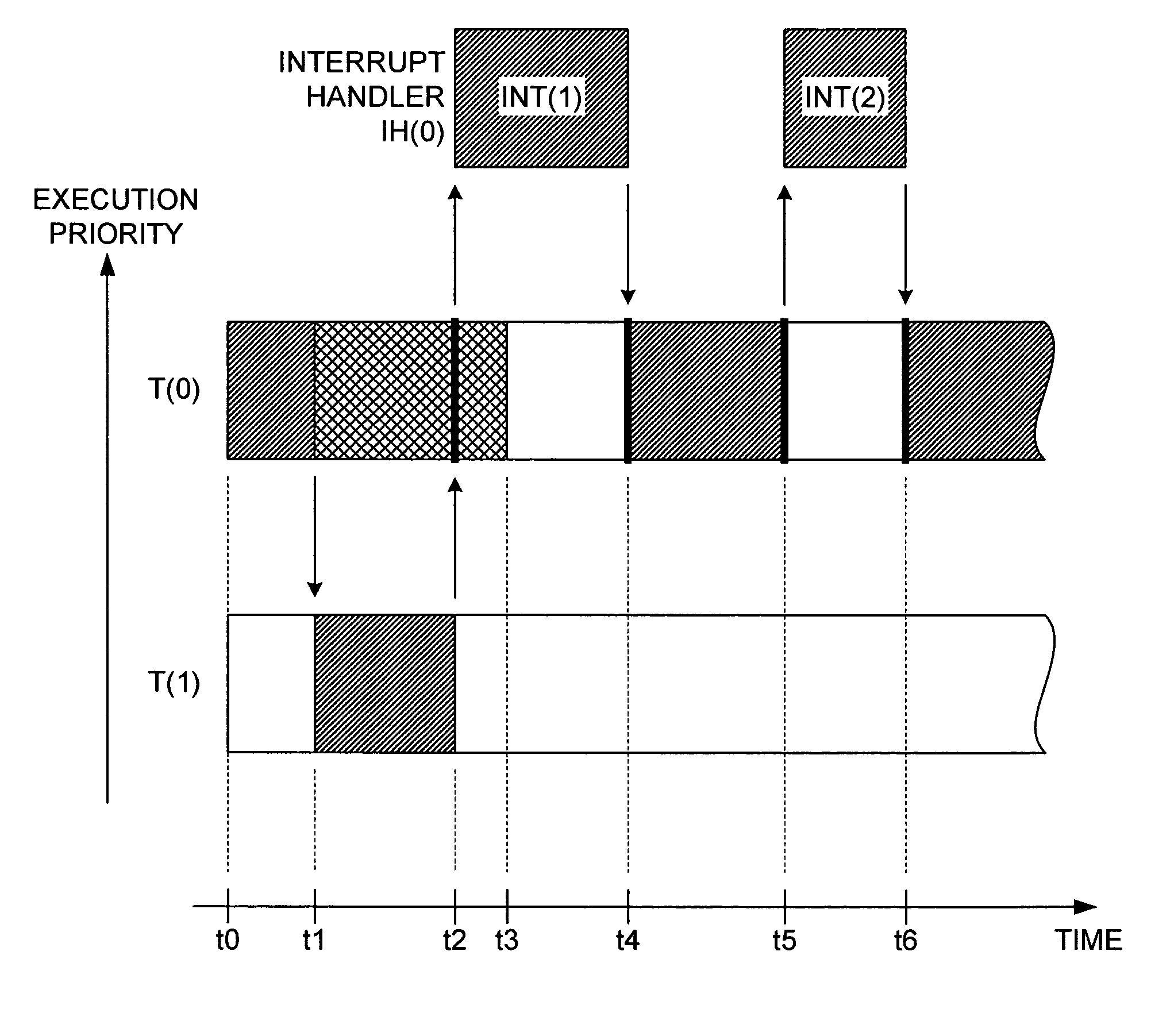

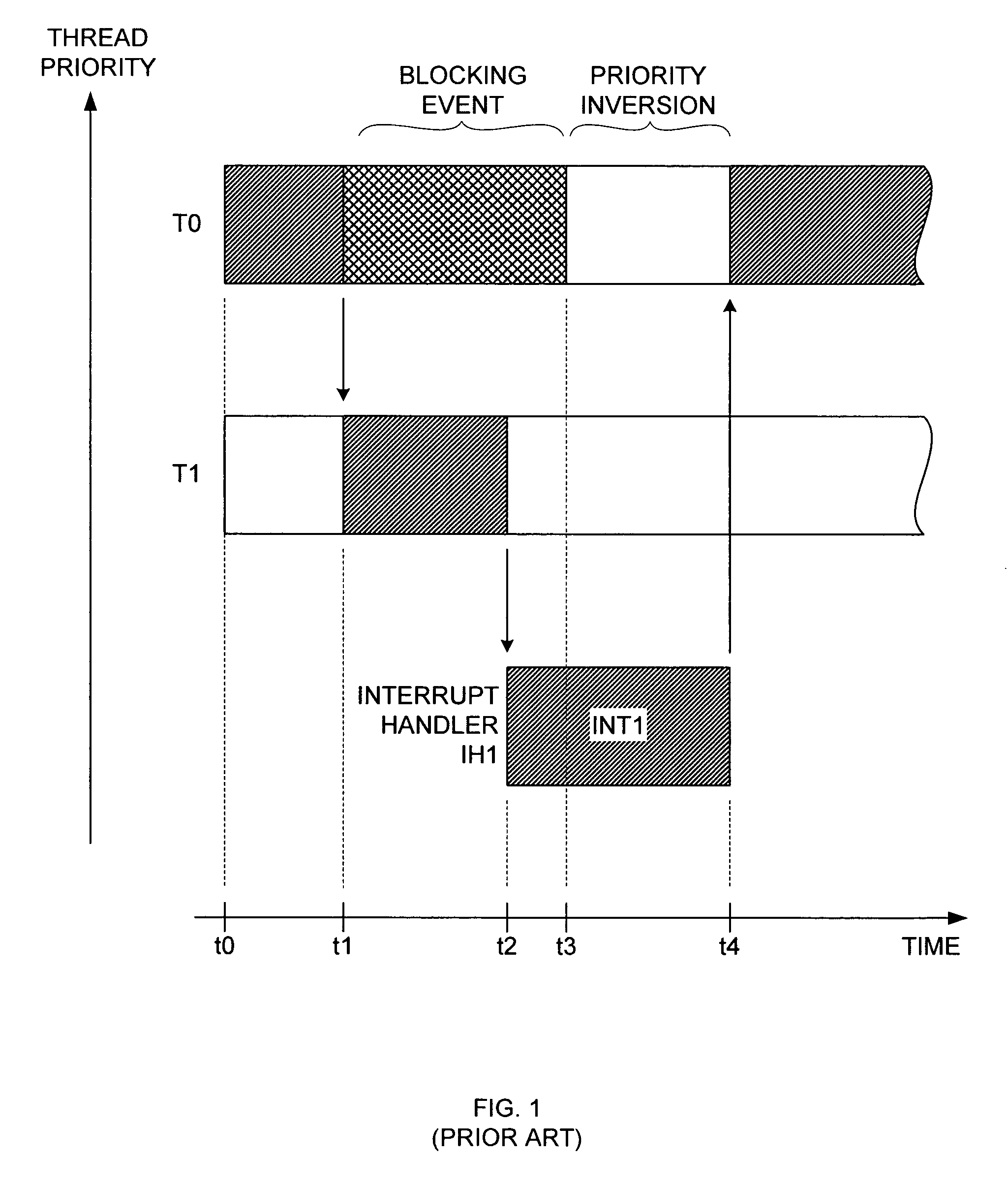

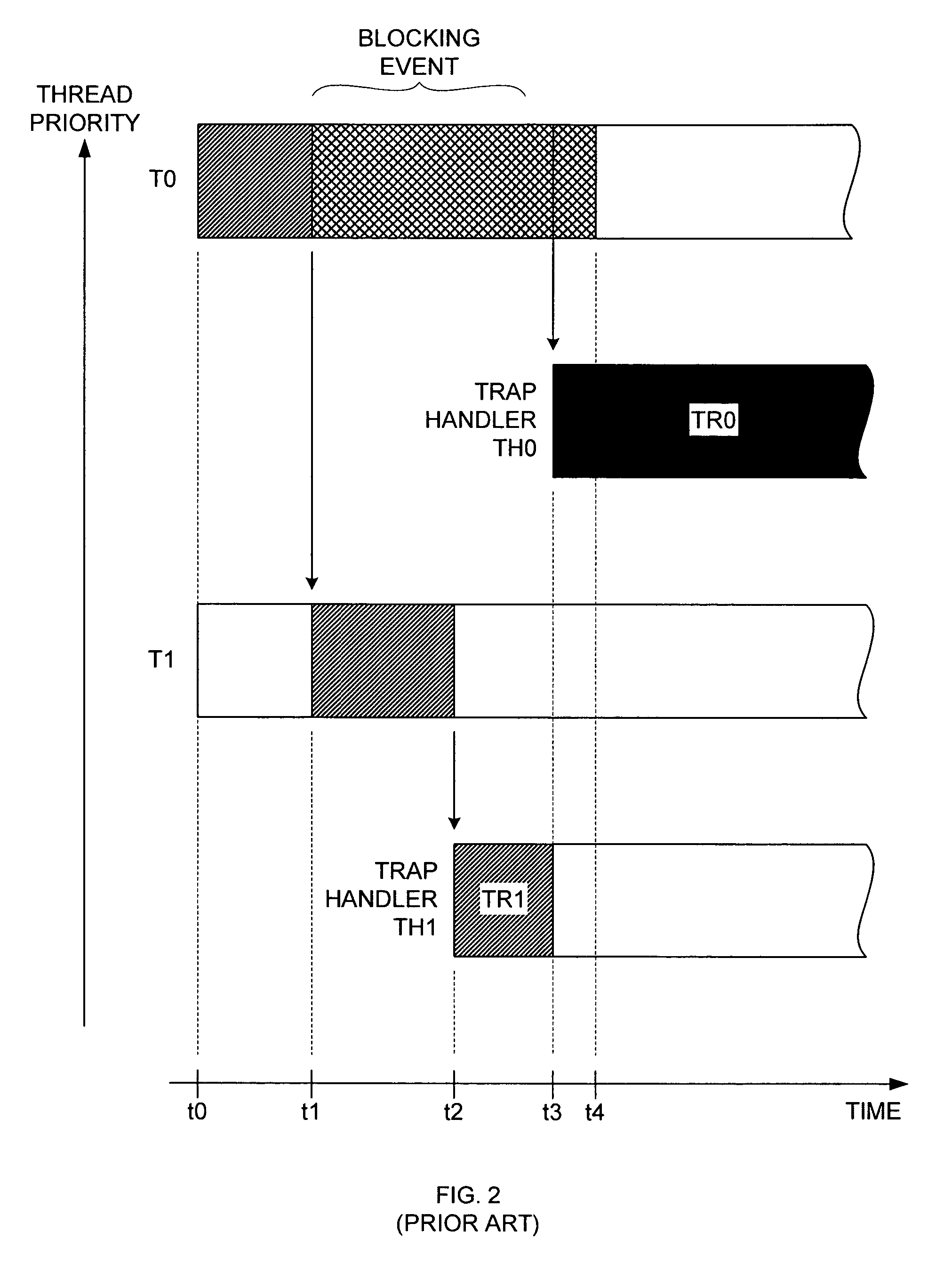

Interrupt and trap handling in an embedded multi-thread processor to avoid priority inversion and maintain real-time operation

Active | US20050102458A1 | Preventing unintended trap re-entrancy | Save on handling | Program initiation/switching | Digital computer details | Priority inversion | Embedded system

A real-time, multi-threaded embedded system includes rules for handling traps and interrupts to avoid problems such as priority inversion and re-entrancy. By defining a global interrupt priority value for all active threads and only accepting interrupts having a priority higher than the interrupt priority value, priority inversion can be avoided. Switching to the same thread before any interrupt servicing, and disabling interrupts and thread switching during interrupt servicing can simplify the interrupt handling logic. By storing trap background data for traps and servicing traps only in their originating threads, trap traceability can be preserved. By disabling interrupts and thread switching during trap servicing, unintended trap re-entrancy and servicing disruption can be prevented.
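The interrupt acceptance rule in this abstract can be sketched in a few lines. This is only an illustration, not the patented hardware logic: the function names are hypothetical, larger numbers are assumed to mean higher priority, and the global interrupt priority value is assumed to be the maximum priority among the active threads (the abstract does not say how it is derived).

```python
def global_interrupt_priority(active_thread_priorities):
    """Assumed definition: the highest priority among all active threads (0 if none)."""
    return max(active_thread_priorities, default=0)


def accept_interrupt(interrupt_priority, active_thread_priorities):
    """Accept an interrupt only if it outranks the global interrupt priority value."""
    return interrupt_priority > global_interrupt_priority(active_thread_priorities)


print(accept_interrupt(5, [1, 3]))  # True: 5 outranks every active thread
print(accept_interrupt(3, [1, 3]))  # False: it would interrupt an equally important thread
print(accept_interrupt(2, [1, 3]))  # False: accepting it would invert priorities
```

Because the threshold is shared by all active threads, a low-priority interrupt can never displace work that belongs to a higher-priority thread.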

Owner:INFINEON TECH AG

Real time synchronization in multi-threaded computer systems

Inactive | US6587955B1 | Program synchronisation | Emergency protective arrangements for automatic disconnection | Priority inversion | Database

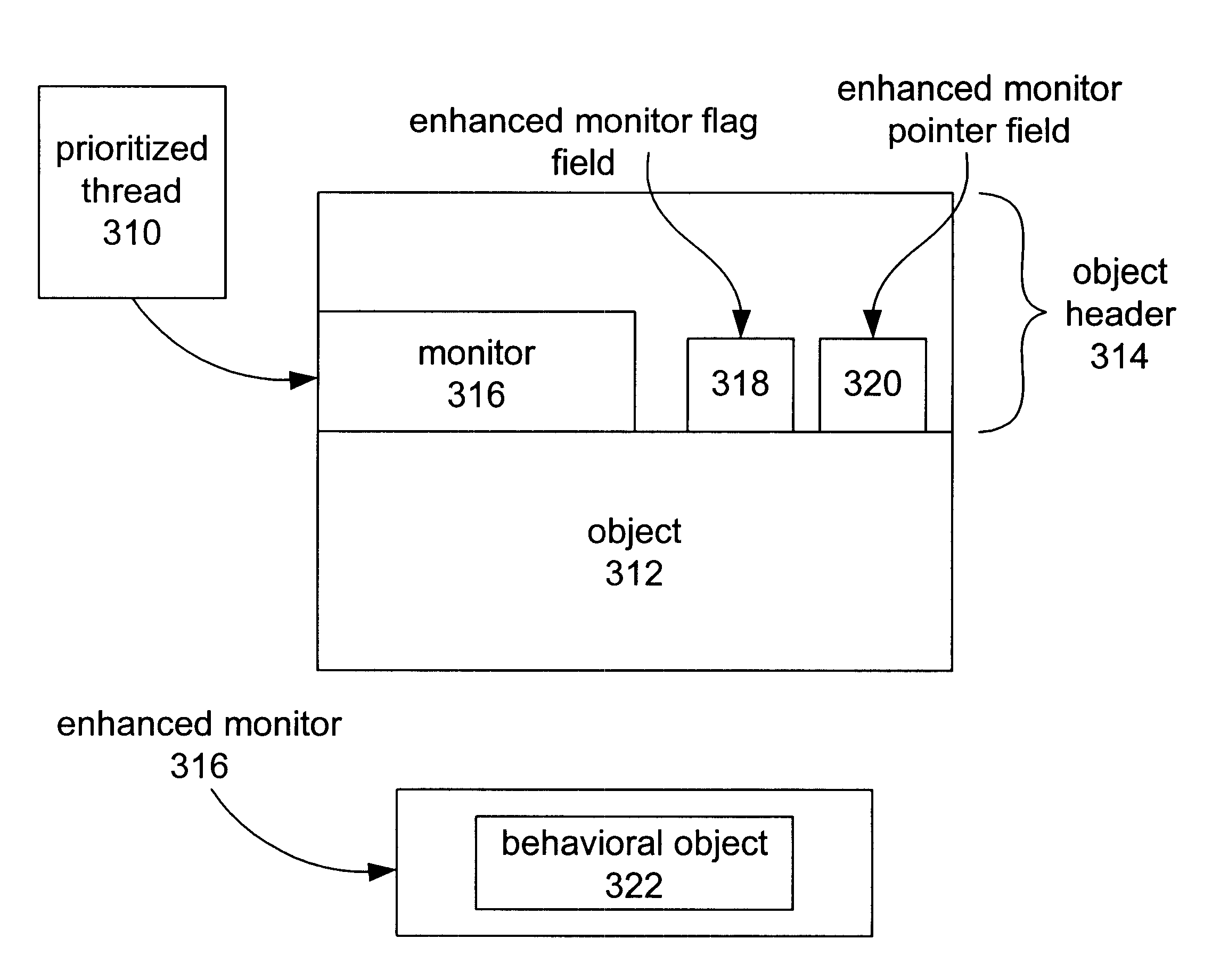

Methods and apparatus for implementing priority inversion avoidance protocols and deterministic locking where an API is used to select objects in a multi-threaded computer system are disclosed. In one aspect of the invention, an enhanced monitor is associated with one or more selected objects by way of an associated API. The enhanced monitor is arranged to set behavior for a lock associated with the selected objects as determined by a user defined behavior object included within the enhanced monitor. In this arrangement, only the selected one or more objects are associated with the enhanced monitor.

Owner:ORACLE INT CORP

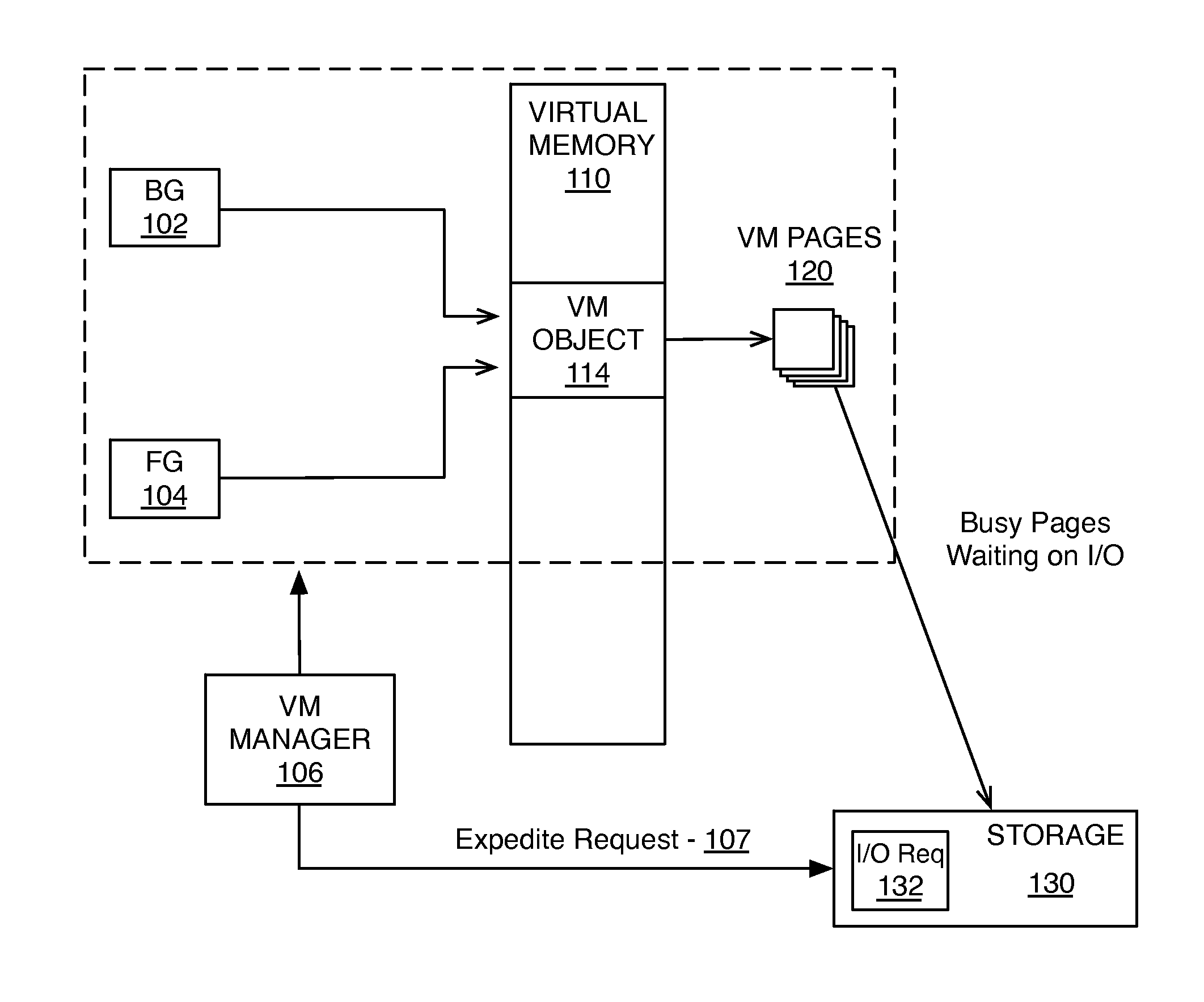

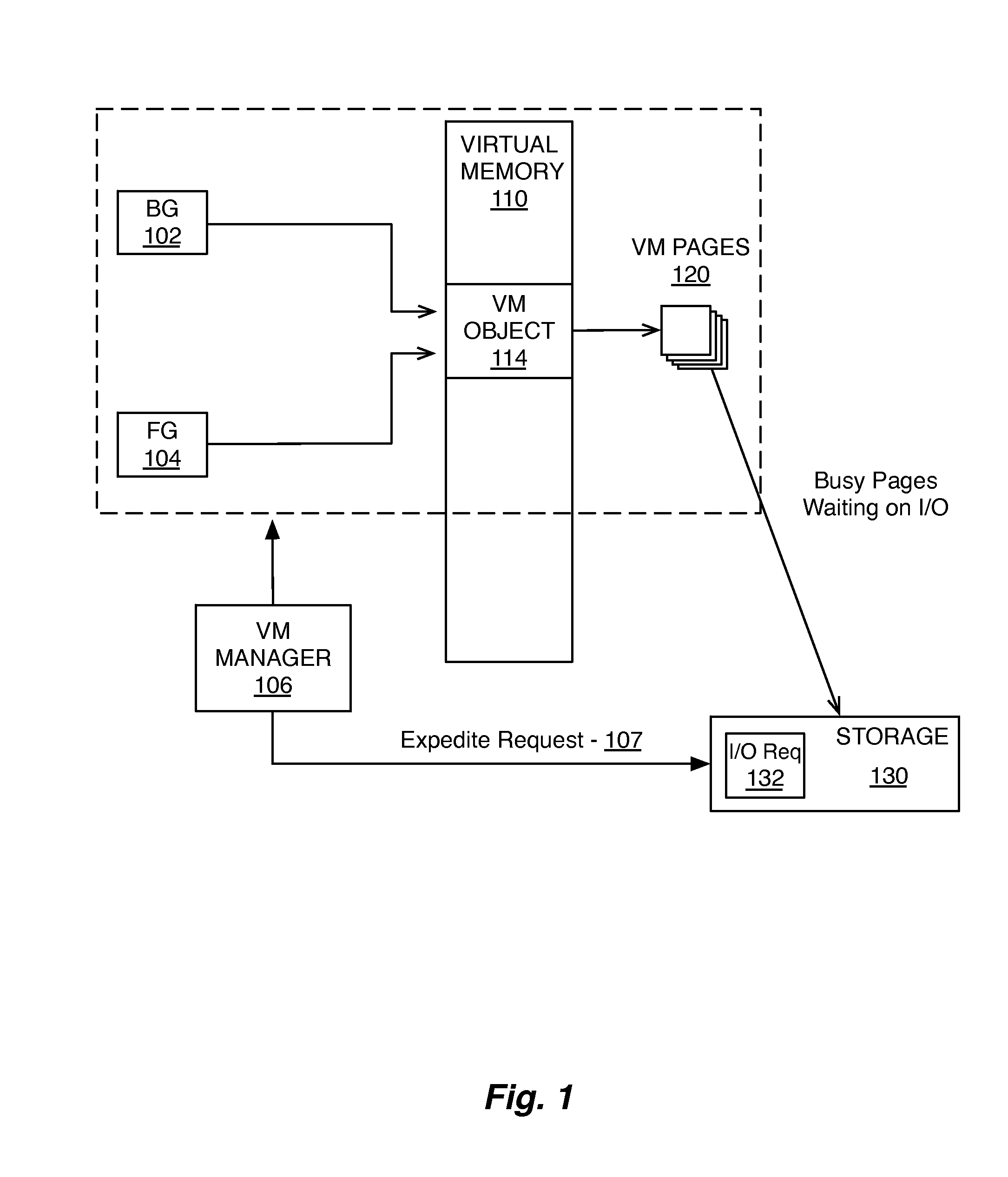

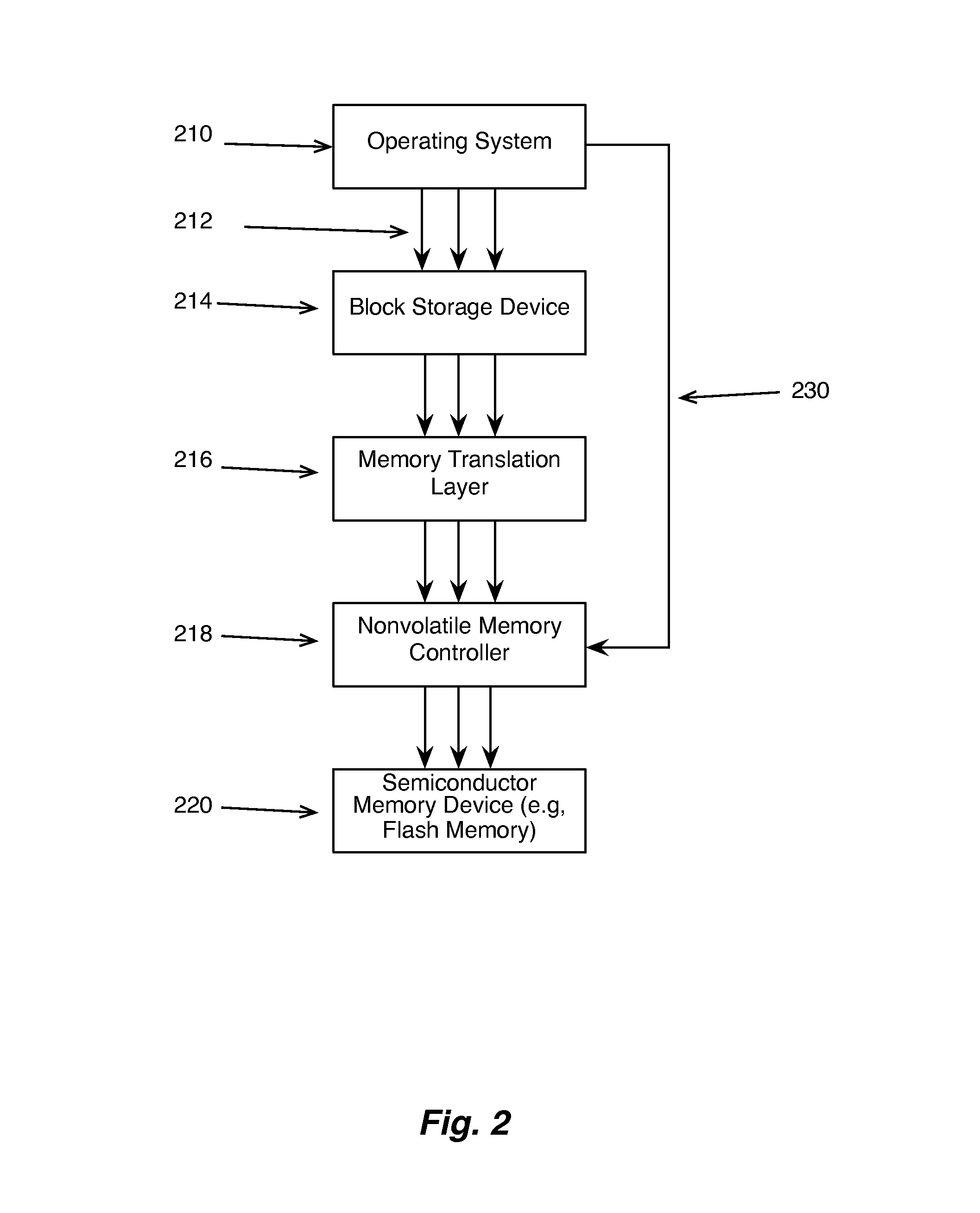

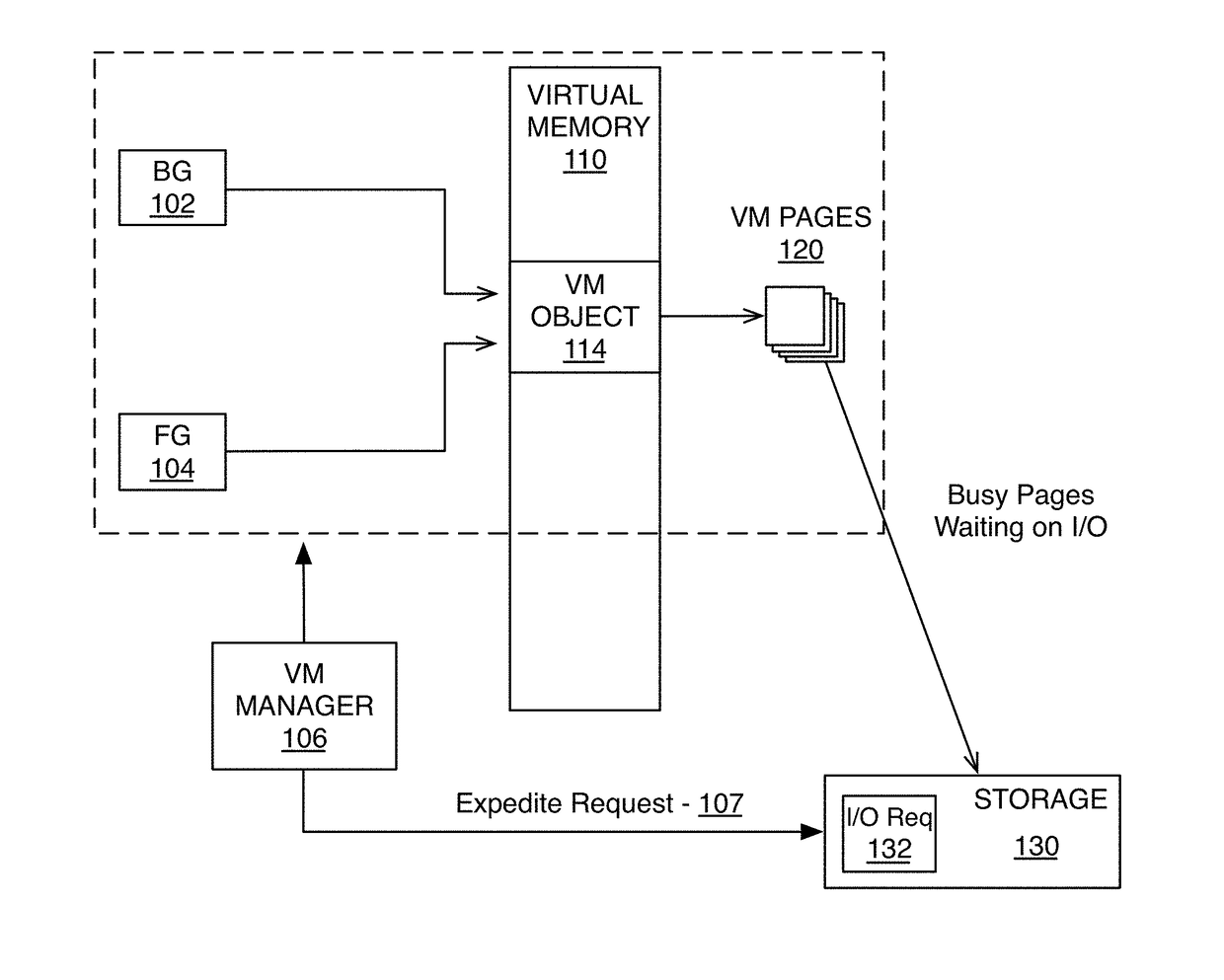

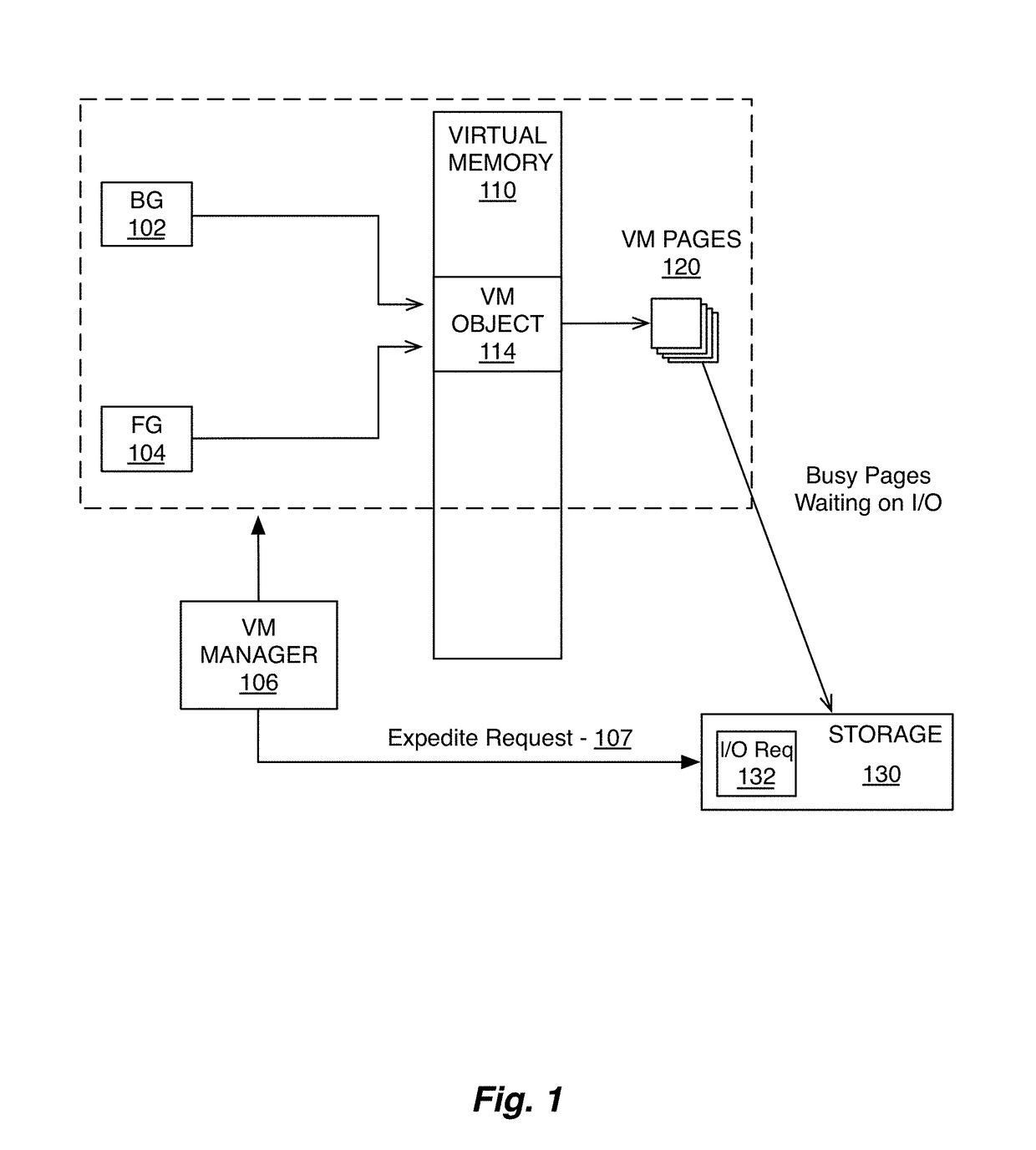

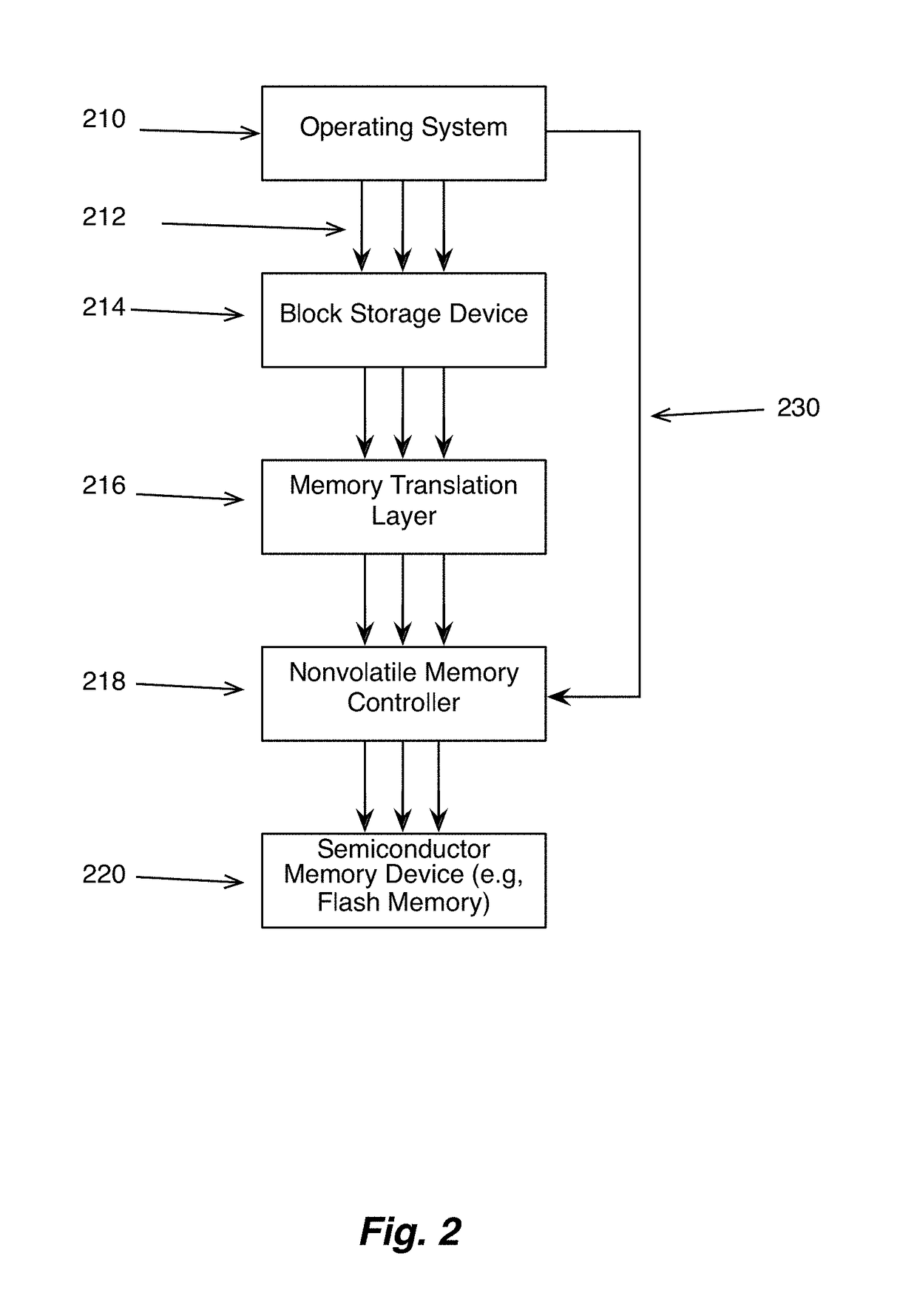

I/O scheduling

Active | US20150347327A1 | Input/output to record carriers | Program control | Computer hardware | Priority inversion

In one embodiment, an input/output (I/O) scheduling system detects and resolves priority inversions by expediting requests previously dispatched to an I/O subsystem. In response to detecting a priority inversion, the system can transmit a command to expedite completion of the blocking I/O request. The pending request can be located within the I/O subsystem and expedited to reduce the pendency period of the request.

Owner:APPLE INC

Method and system for achieving fair command processing in storage systems that implement command-associated priority queuing

Active | US7797468B2 | Avoid hunger | Risk minimization | Transmission | Memory systems | Priority inversion | Computer access

In certain, currently available data-storage systems, incoming commands from remote host computers are subject to several levels of command-queue-depth-fairness-related throttles to ensure that all host computers accessing the data-storage systems receive a reasonable fraction of data-storage-system command-processing bandwidth to avoid starvation of one or more host computers. Recently, certain host-computer-to-data-storage-system communication protocols have been enhanced to provide for association of priorities with commands. However, these new command-associated priorities may lead to starvation of priority levels and to a risk of deadlock due to priority-level starvation and priority inversion. In various embodiments of the present invention, at least one additional level of command-queue-depth-fairness-related throttling is introduced in order to avoid starvation of one or more priority levels, thereby eliminating or minimizing the risk of priority-level starvation and priority-related deadlock.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

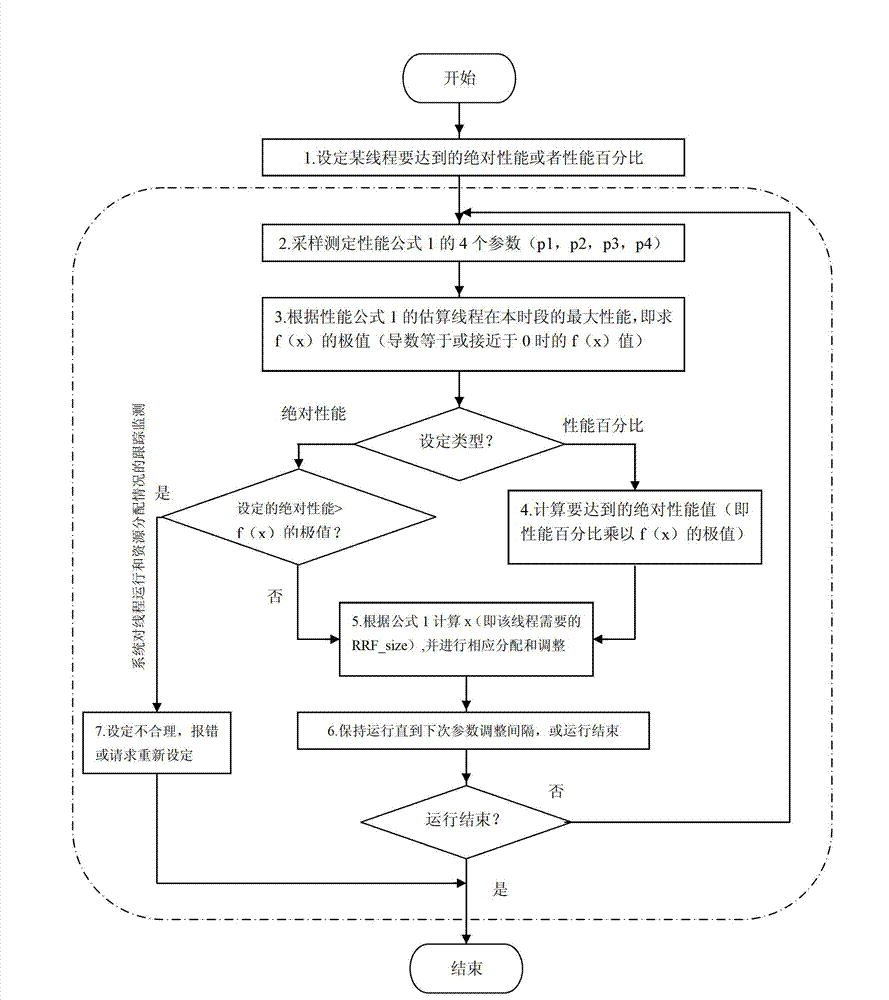

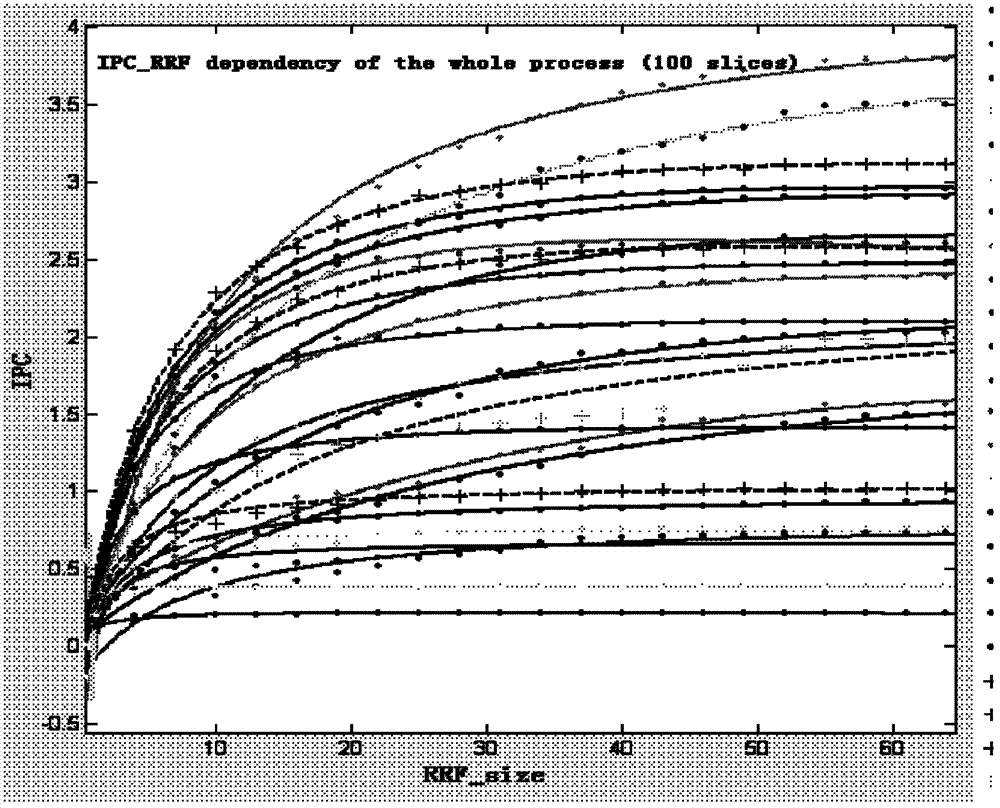

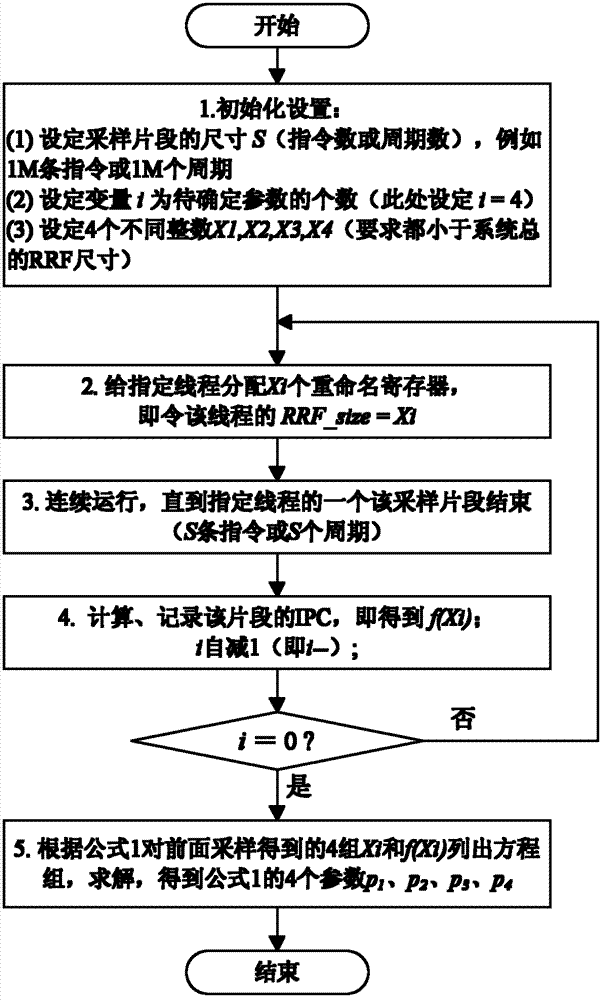

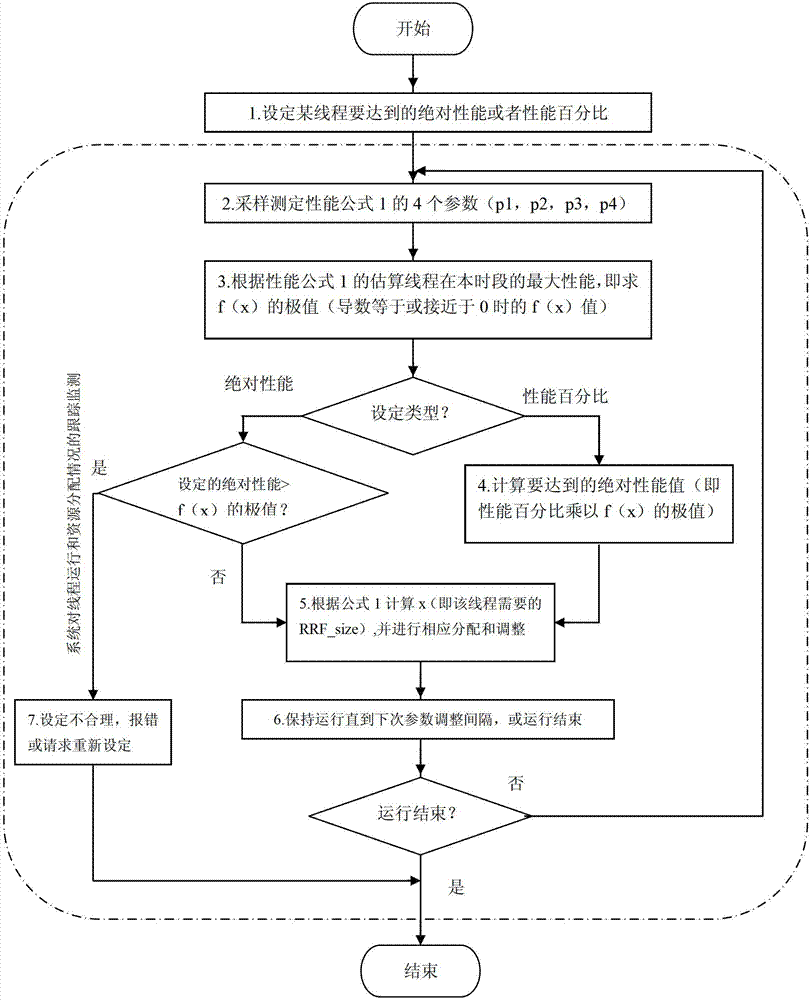

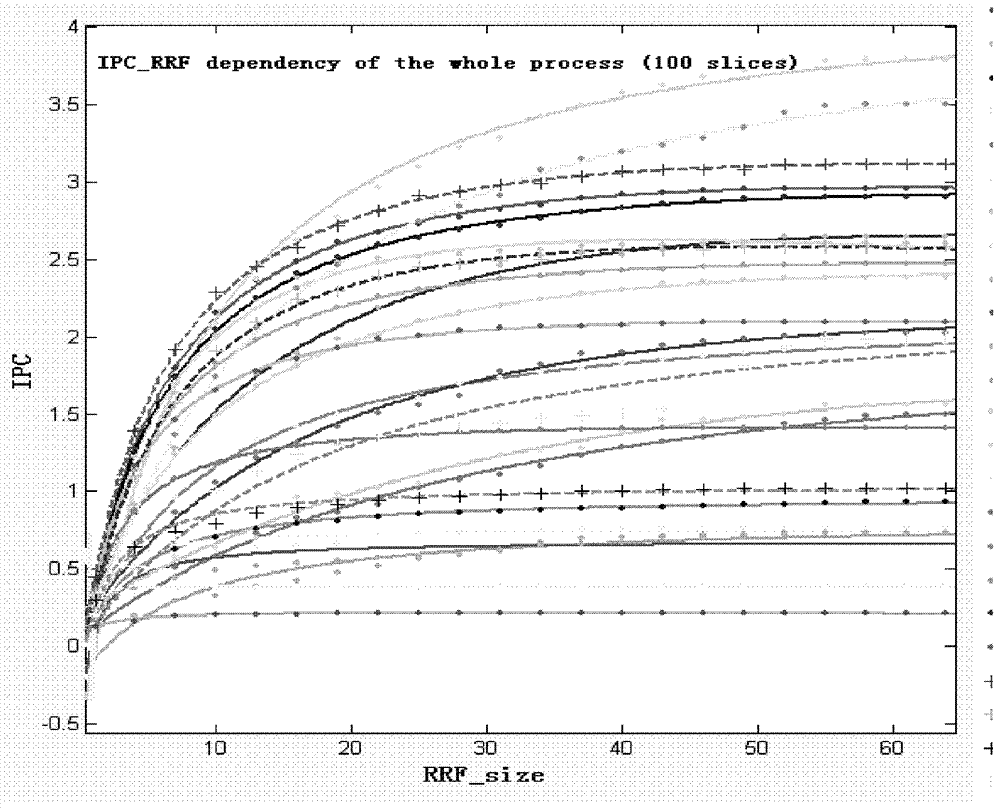

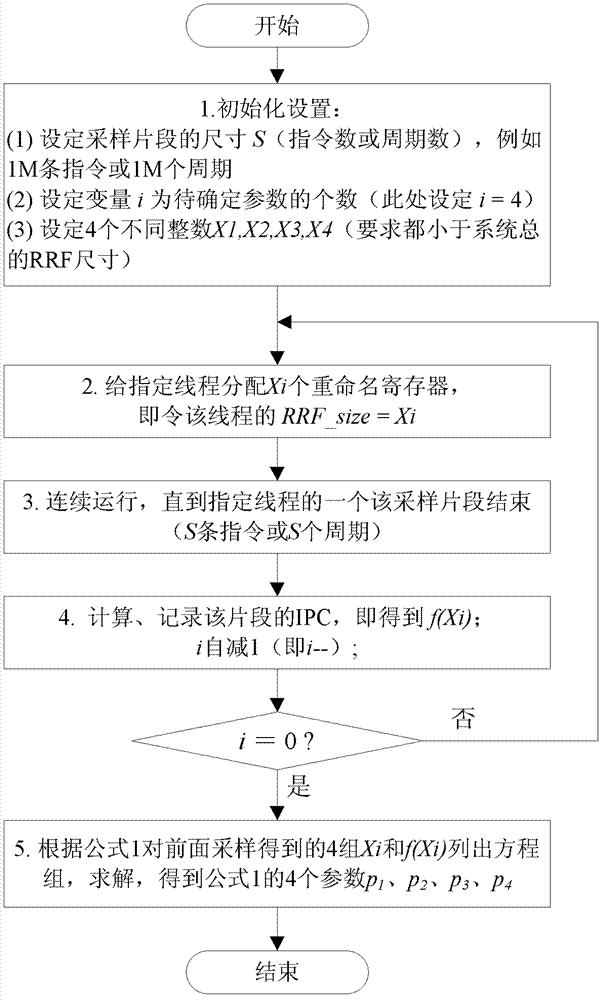

Thread performance prediction and control method of chip multi-threading (CMT) computer system

Inactive | CN102708007A | Addressing Unexpected Hunger | Resolve stagnation | Resource allocation | Priority inversion | Online study

The invention relates to a thread performance prediction and control method for a CMT computer system, designed to solve technical problems in existing CMT systems such as unexpected thread starvation and stalling, misuse of resources, and priority inversion. The method uses a performance-resource dependency model to guide the allocation of a key resource (the rename register file, RRF) in the CMT system, so as to predict and control thread performance; acquires and adjusts the model parameters through thread sampling and online learning, so as to track thread performance in real time and predict it accurately; and, given a performance requirement, uses a parameter determination model to calculate the amount of the key resource needed to achieve that performance and readjusts the resource allocation accordingly. The stated advantages are that the model is simple and accurately describes the dependency between performance and resources; that the method adapts well, giving accurate performance prediction and control for all kinds of threads; that it supports two control modes, absolute performance and performance percentage; and that it is cheap and easy to implement on the existing system architecture, enabling orderly allocation of multithreaded chip resources with predictable and controllable performance.

Owner:SHENYANG AEROSPACE UNIVERSITY

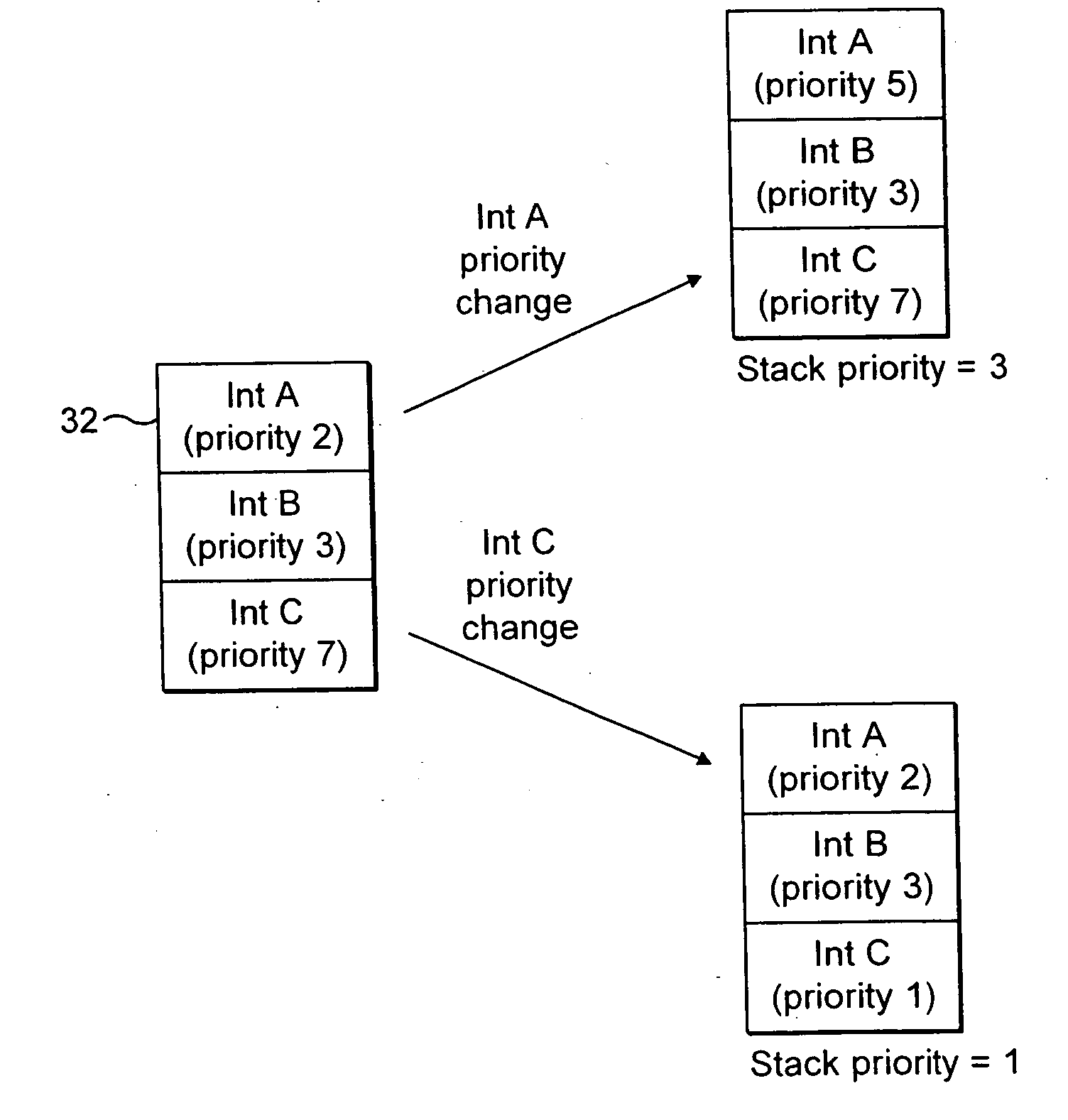

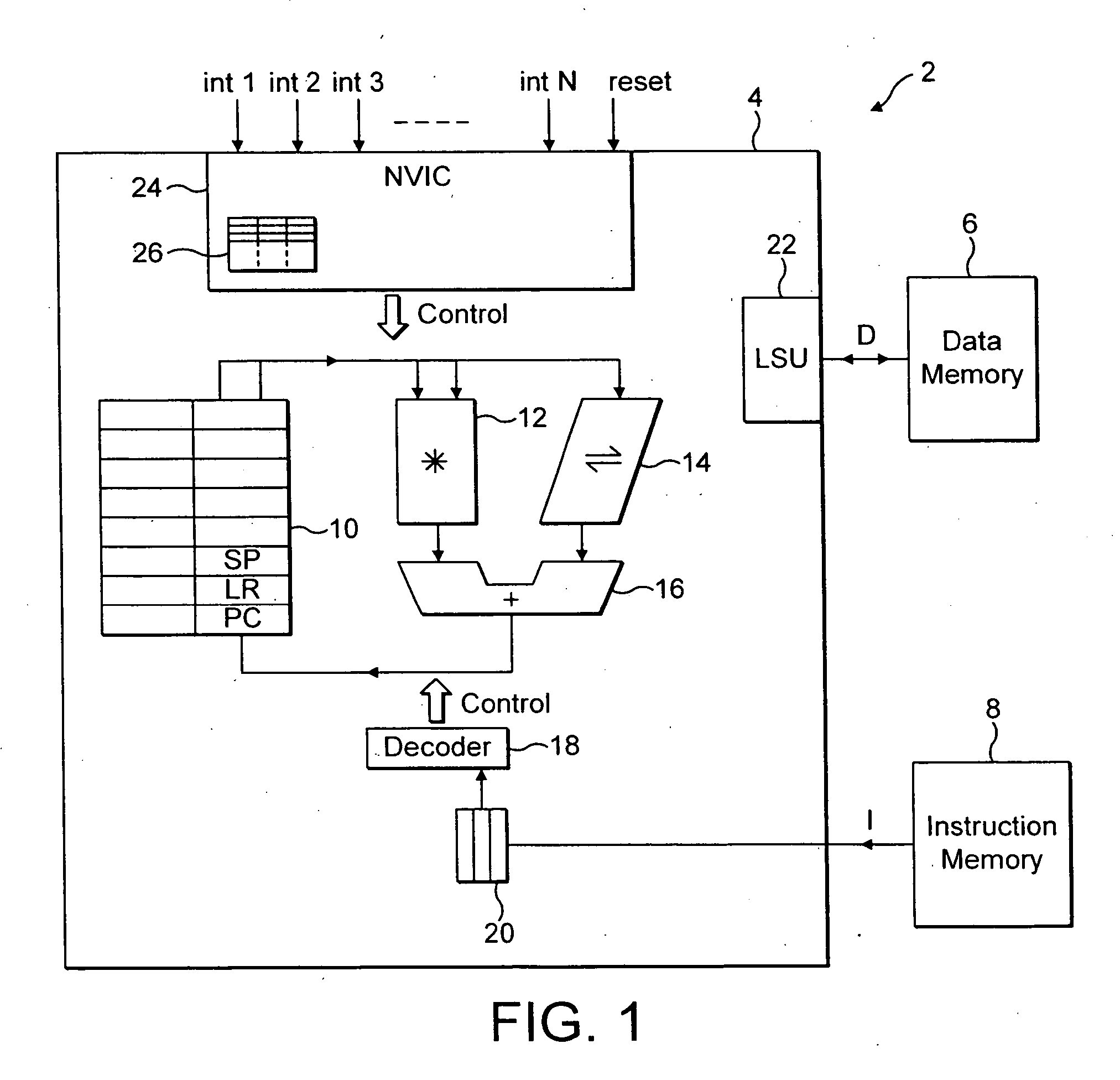

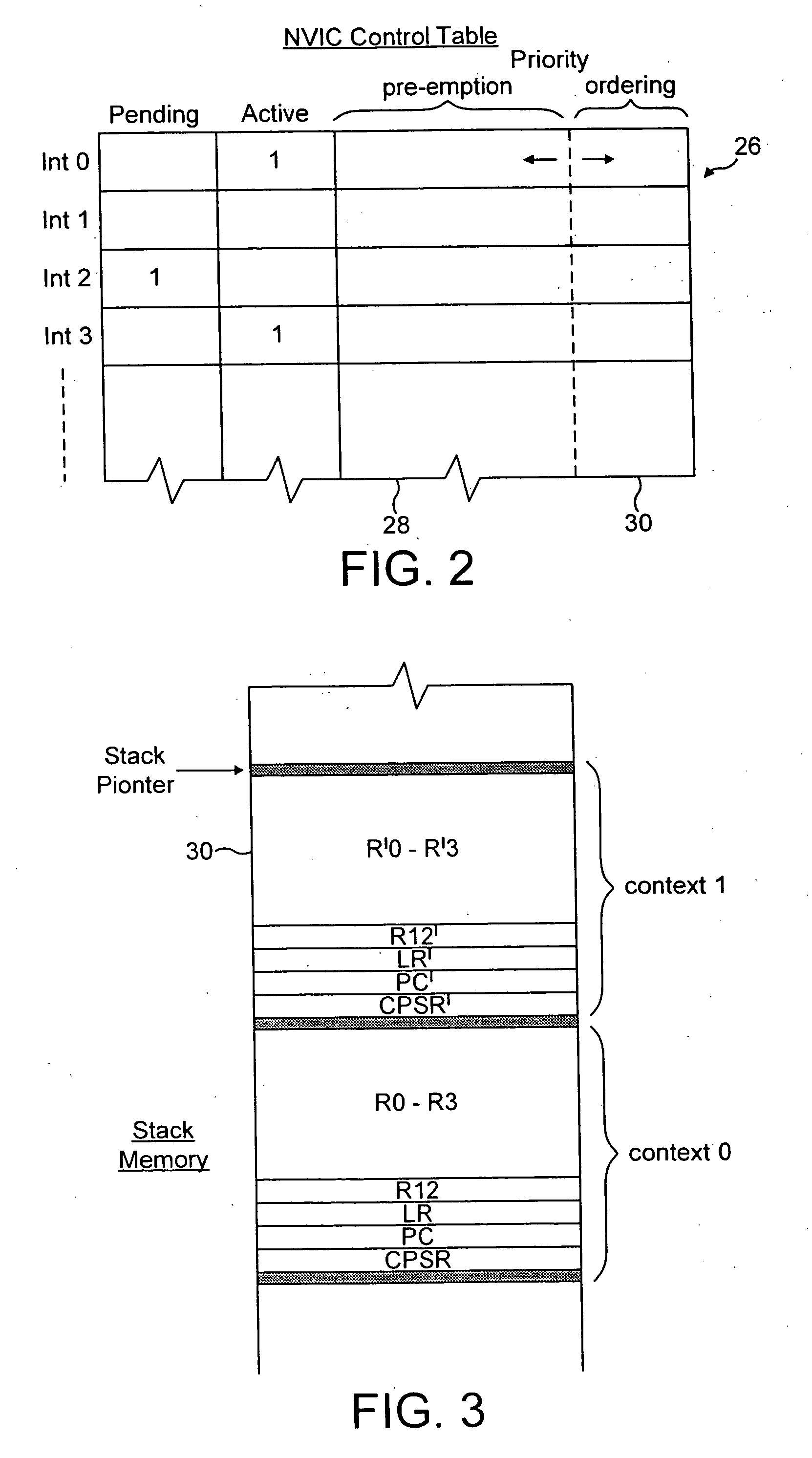

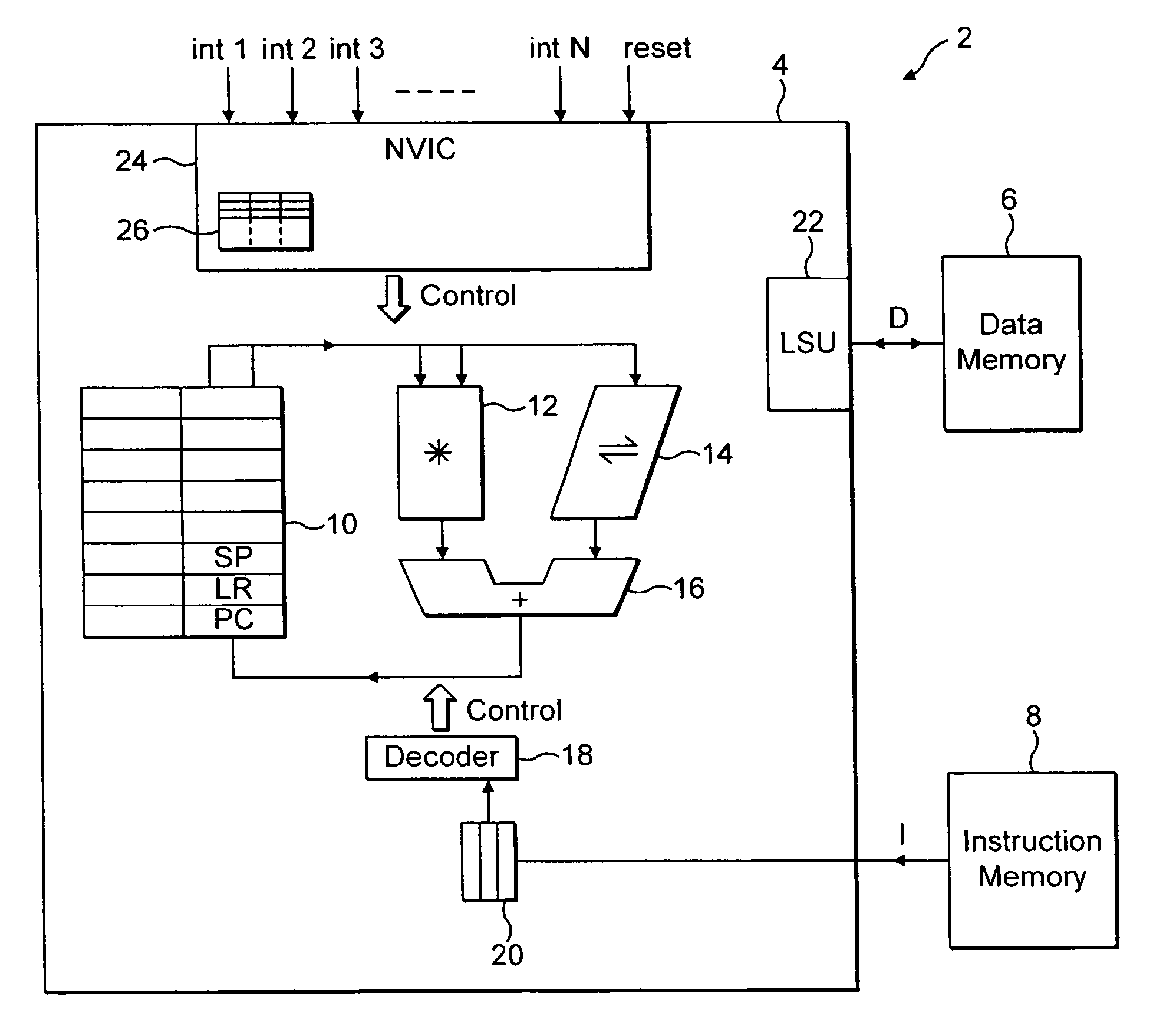

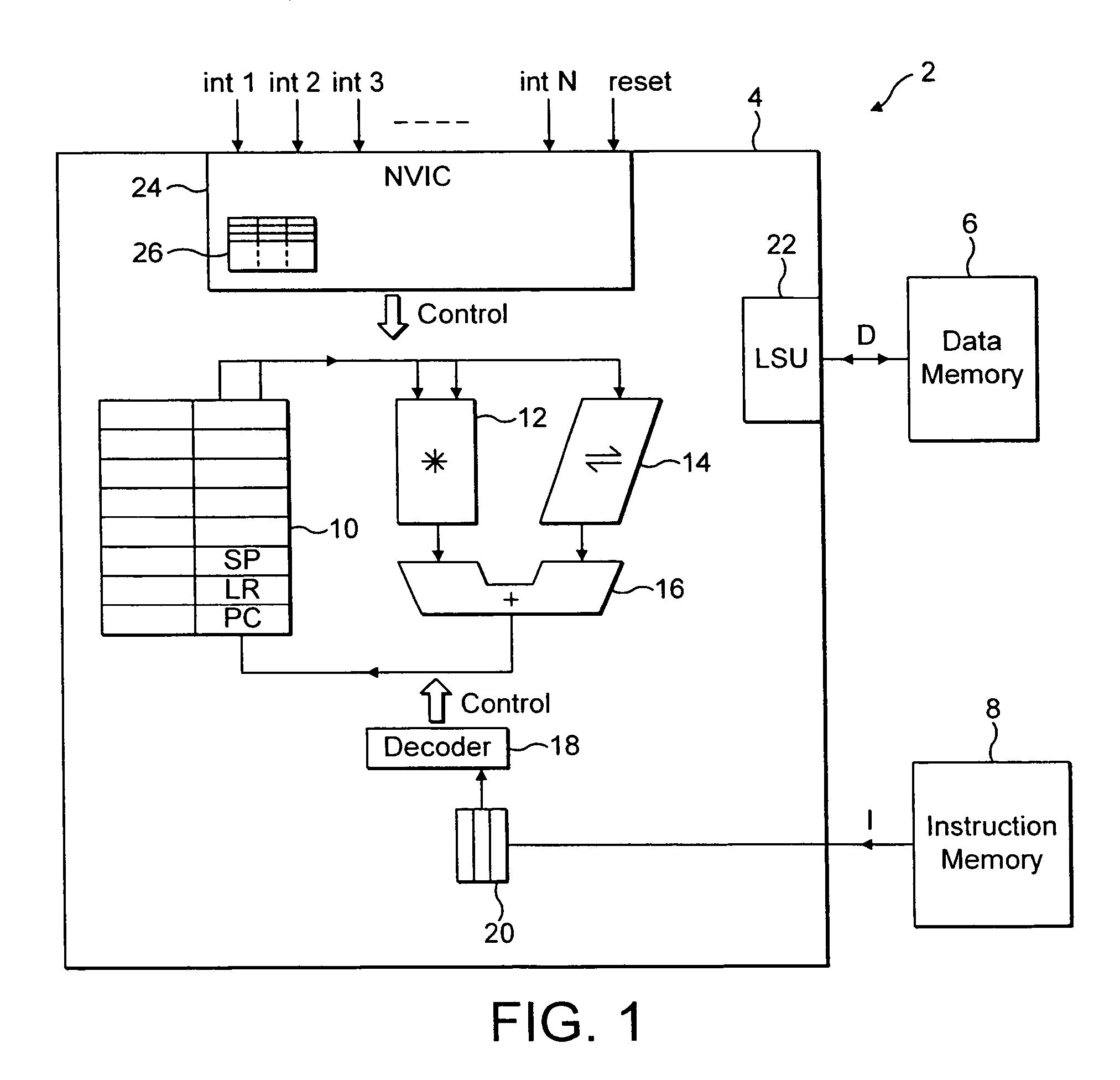

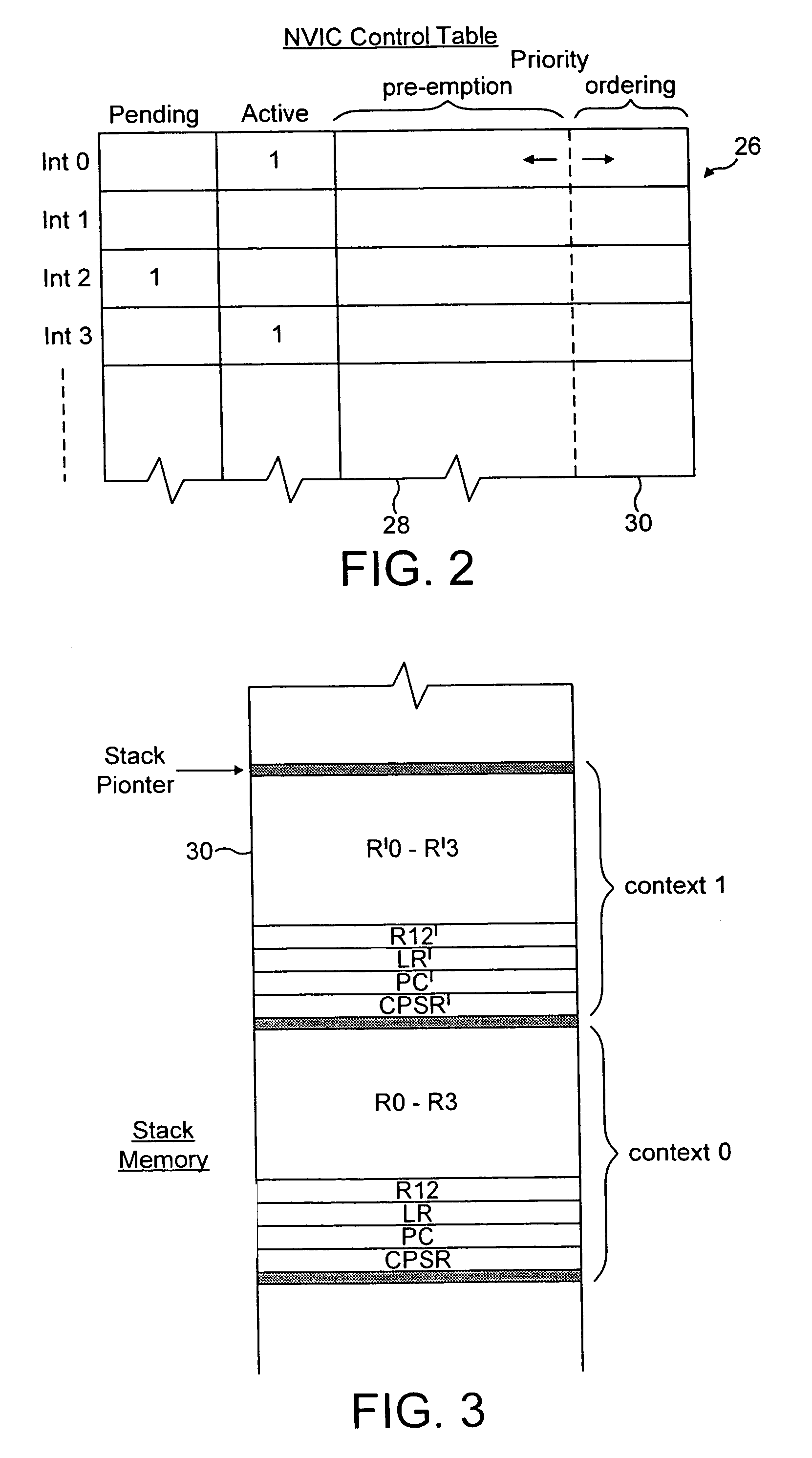

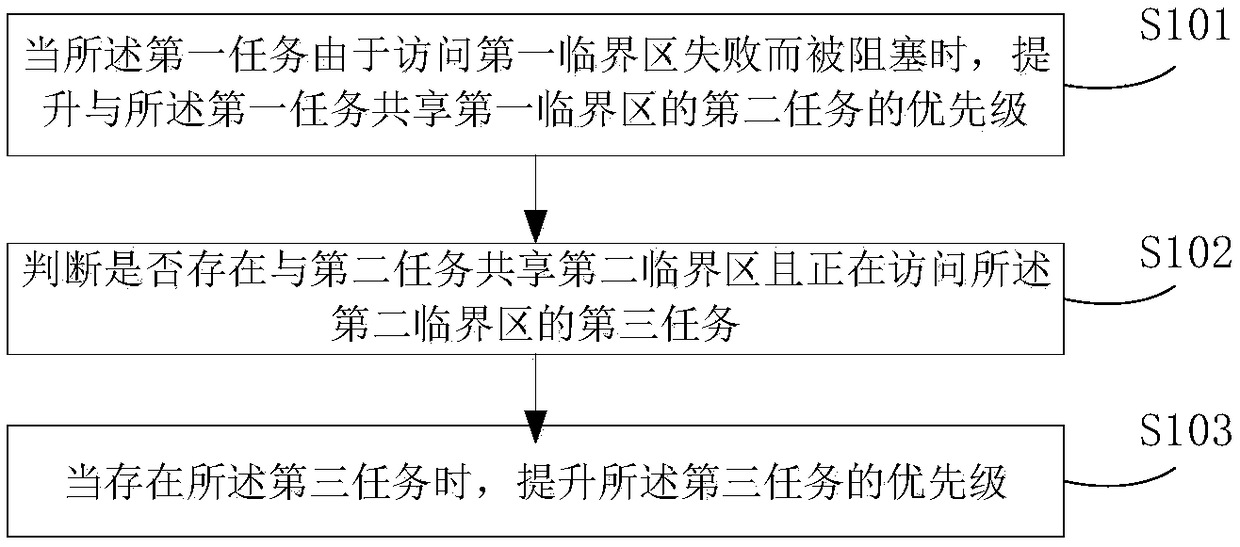

Interrupt priority control within a nested interrupt system

Active | US20050177667A1 | Data storage capacity is not exceeded | Raise priority | Program initiation/switching | Digital computer details | Data processing system | Priority inversion

A data processing system 2 having a nested interrupt controller 24 supports nested active interrupts. The priority levels associated with different interrupts are alterable (possibly programmable) whilst the system is running. In order to prevent problems associated with priority inversions within nested interrupts, the nested interrupt controller when considering whether a pending interrupt should pre-empt existing active interrupts, compares the priority of the pending interrupt with the highest priority of any of the currently active interrupts that are nested together.
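The comparison rule described here can be illustrated with a small sketch. It is not ARM's controller logic: the function names, the list-based stack of active interrupts, and the convention that a larger number means a higher priority are all assumptions made for illustration.

```python
def should_preempt(pending_priority, active_priorities):
    """Pre-empt only if the pending interrupt outranks every active nested interrupt.

    active_priorities holds the current priorities of all nested active
    interrupts, outermost first. Larger number = higher priority (assumption).
    """
    if not active_priorities:
        return True  # nothing is active, so the pending interrupt may run
    return pending_priority > max(active_priorities)


def naive_should_preempt(pending_priority, active_priorities):
    """Contrast case: compares only against the innermost active interrupt."""
    if not active_priorities:
        return True
    return pending_priority > active_priorities[-1]


# The innermost handler's priority was reprogrammed down to 2 while running,
# but an interrupt of priority 5 is still active beneath it.
active = [5, 2]
print(naive_should_preempt(3, active))  # True: priority 3 would run ahead of 5
print(should_preempt(3, active))        # False: blocked by the active priority 5
print(should_preempt(6, active))        # True: outranks everything active
```

The naive check shows how an inversion arises once priorities can change at run time: a priority-3 interrupt would delay the still-unfinished priority-5 handler.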

Owner:ARM LTD

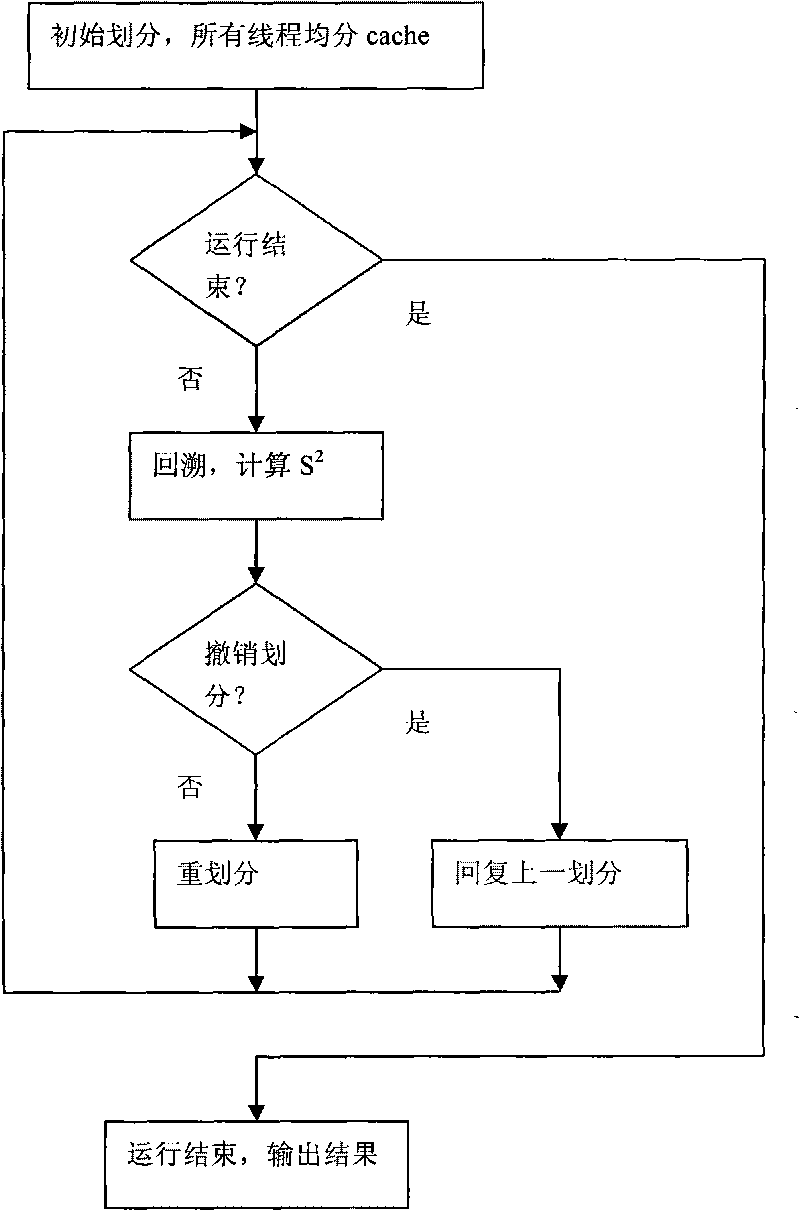

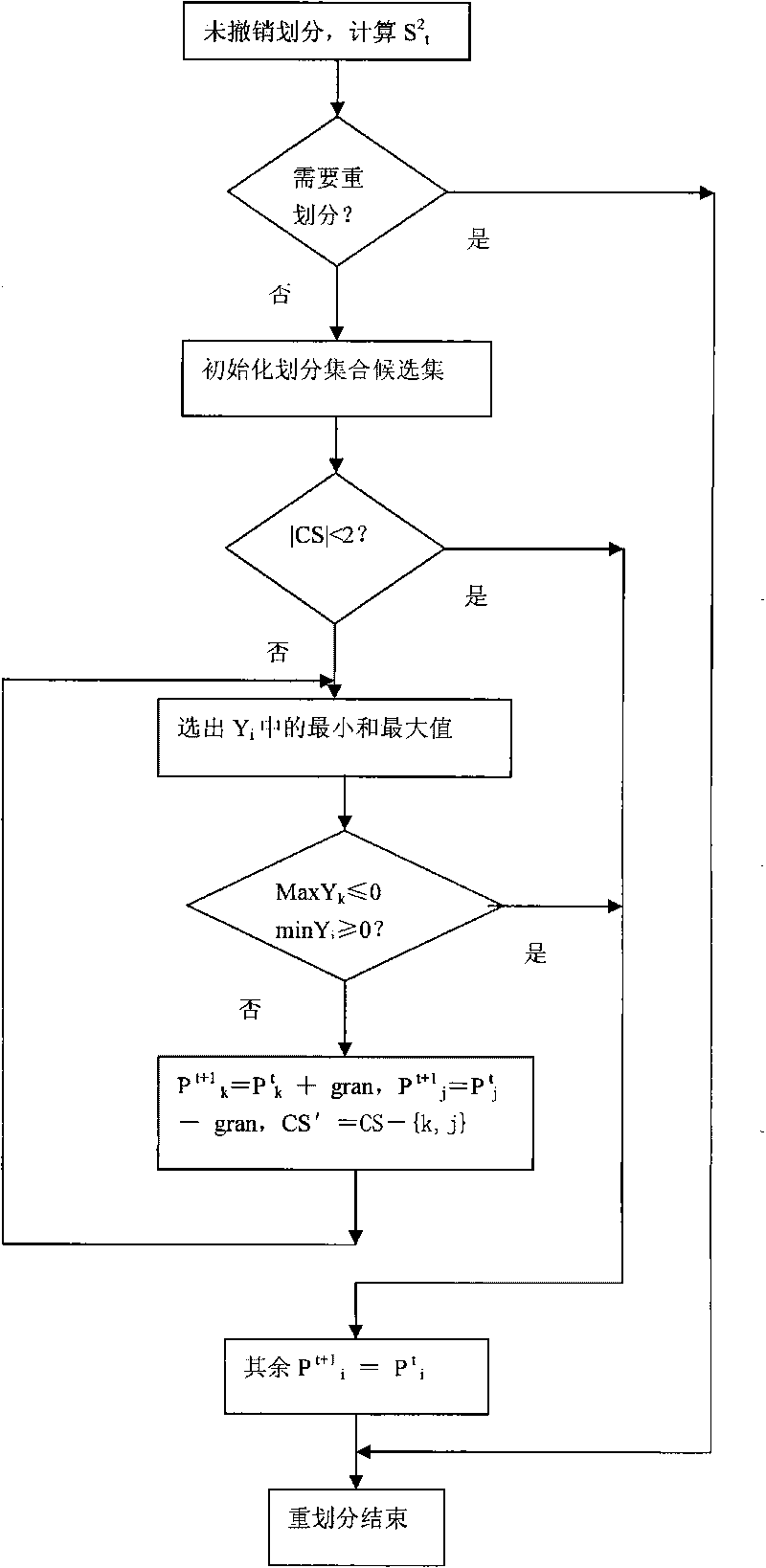

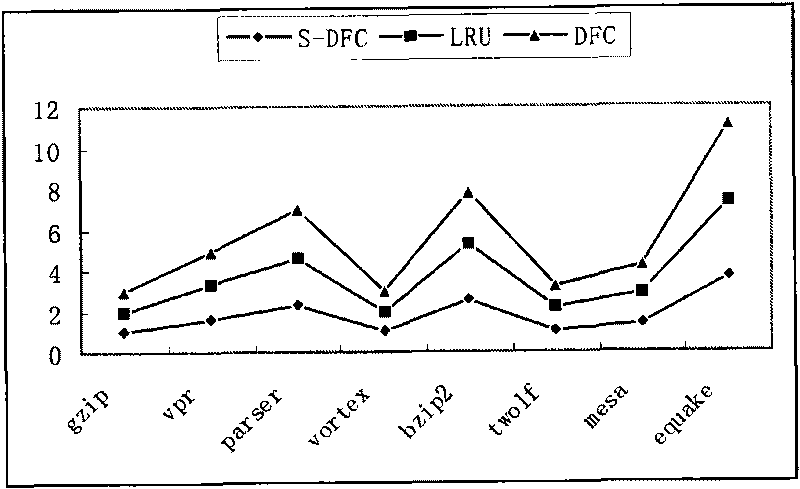

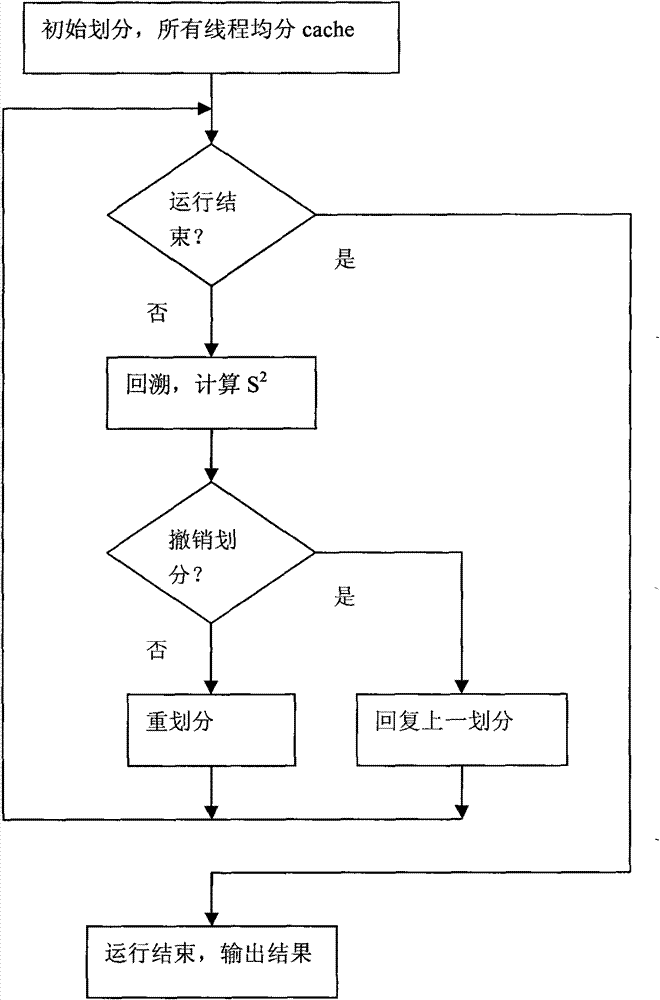

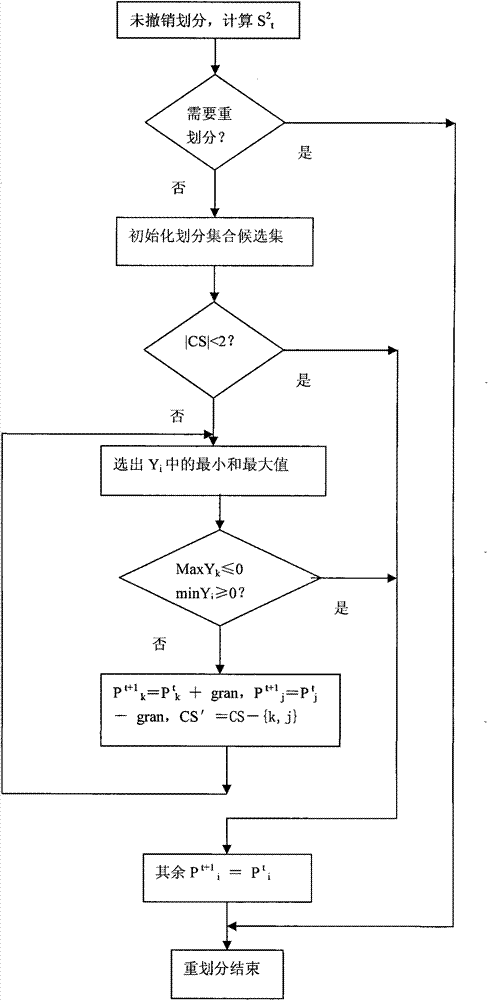

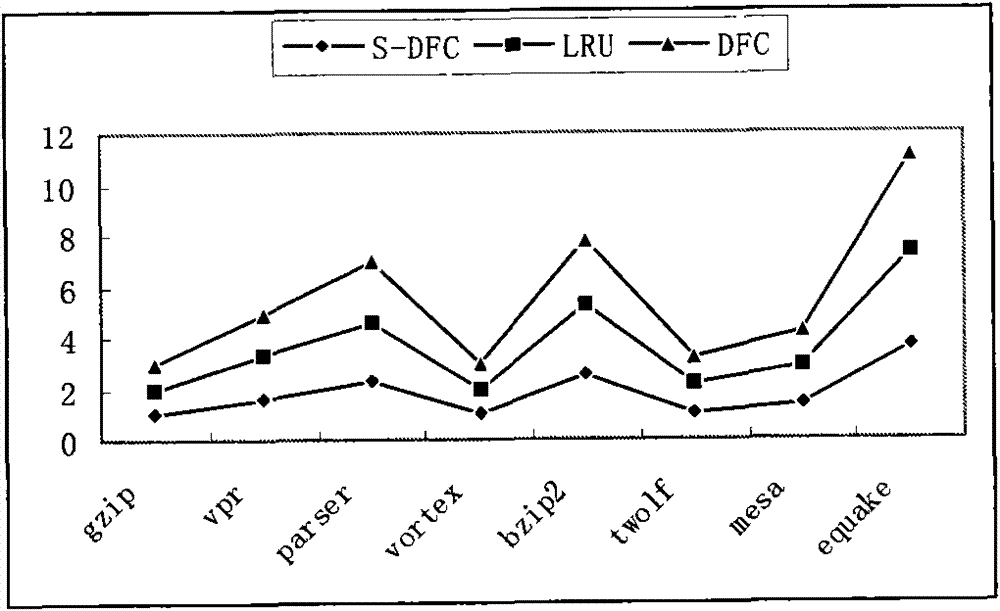

Method for dynamically and fairly partitioning shared cache based on chip multiprocessor

Inactive | CN101739299A | Simplify the division steps | Improve division efficiency | Resource allocation | Parallel computing | Priority inversion

The invention relates to a method for dynamically and fairly partitioning a shared cache on a chip multiprocessor (CMP), and belongs to the field of computer architecture. Fairness is a key optimization problem: when a system lacks fairness, problems such as thread starvation and priority inversion may occur. Current CMP designs, however, mainly aim to improve system throughput, so fairness is generally sacrificed. The invention provides a new fairness parameter and a partitioning method. The partitioning method comprises three stages: initialization, rollback, and repartitioning. In the initialization stage, the cache is partitioned equally before the threads run; in the rollback stage, a repartition that reduced fairness relative to the previous partition is cancelled; and in the repartitioning stage, the cache is repartitioned among all threads if no rollback occurred and the fairness parameter is greater than a repartition threshold. The shared-cache partitioning method provided by the invention remarkably improves the fairness of the system, and the throughput of the system is also improved slightly.

Owner:BEIJING UNIV OF TECH

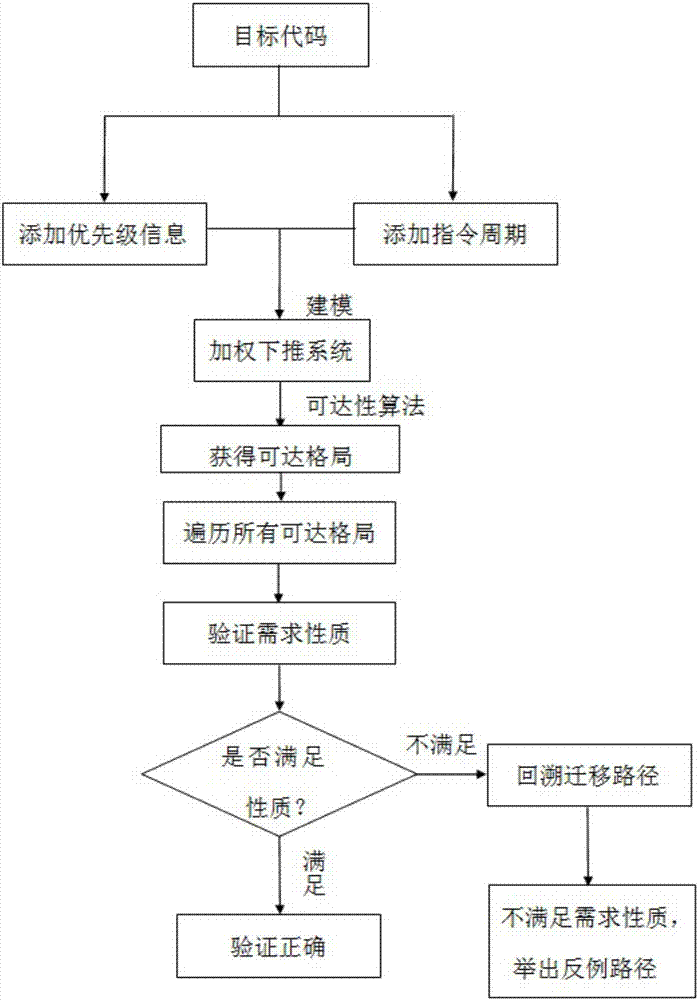

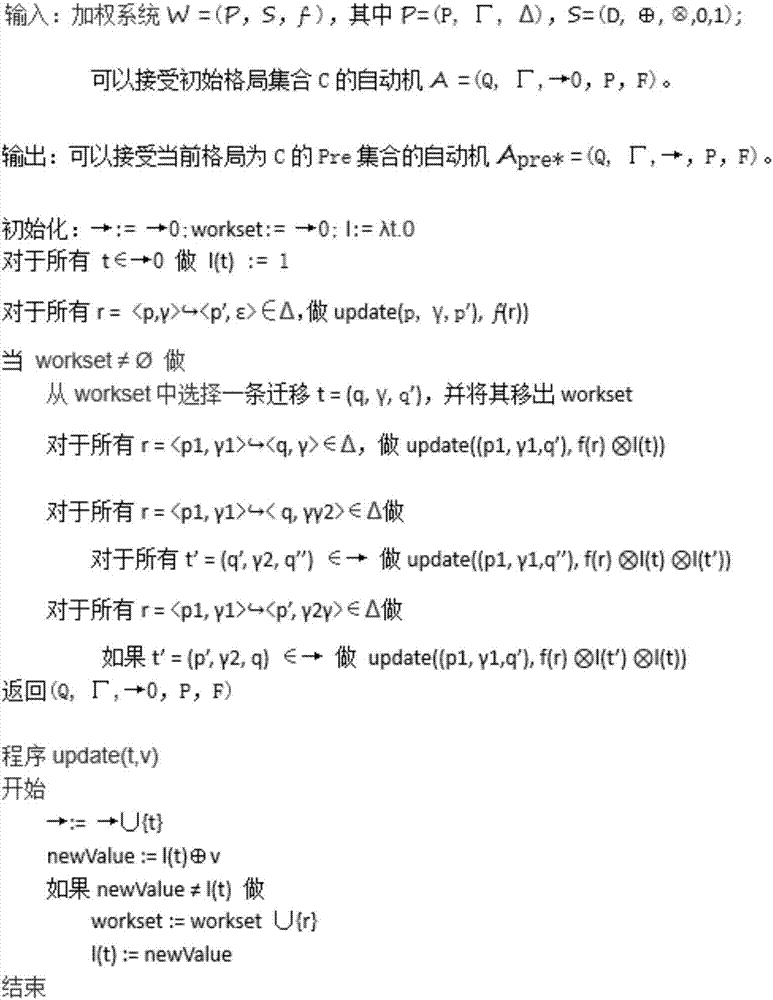

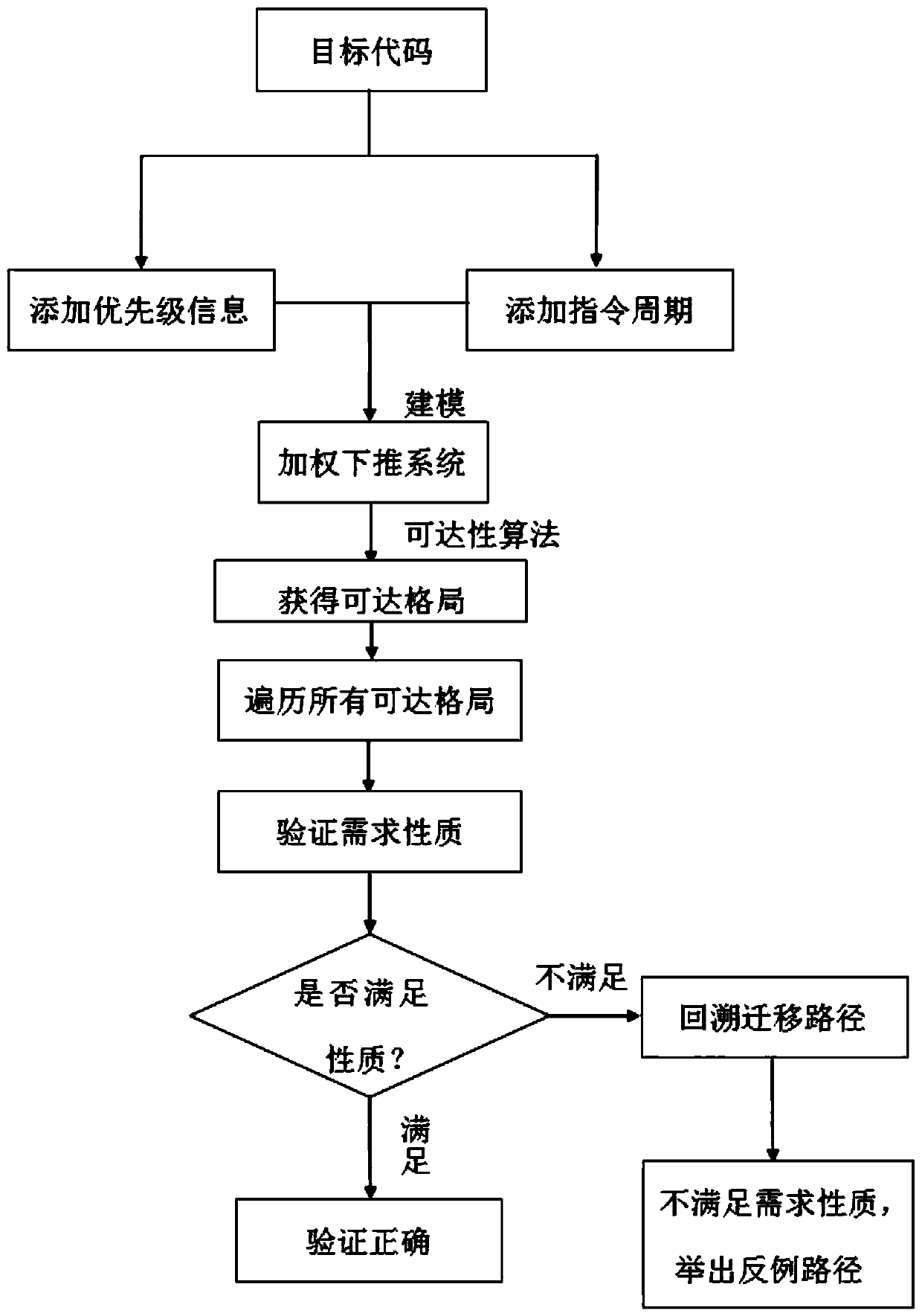

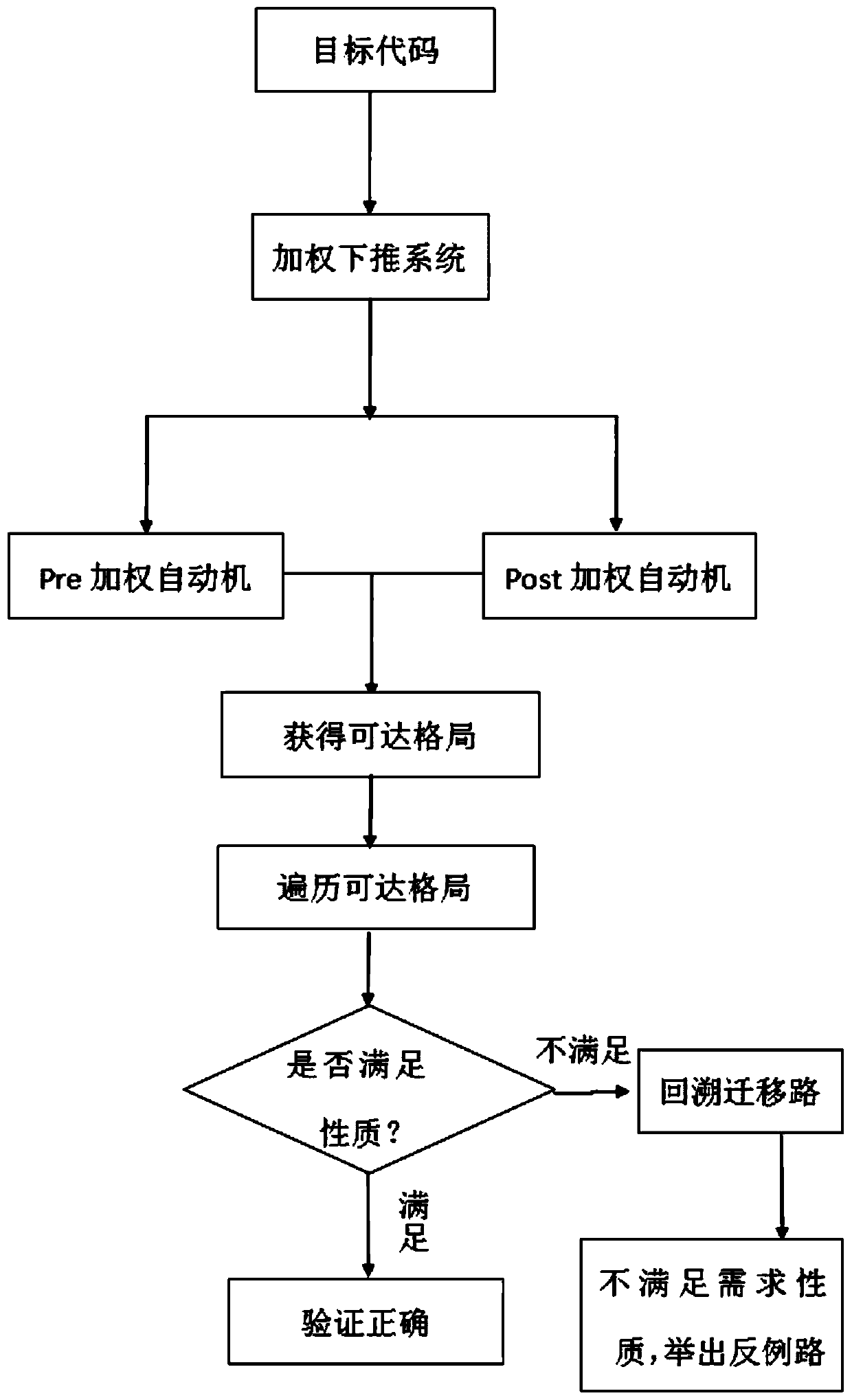

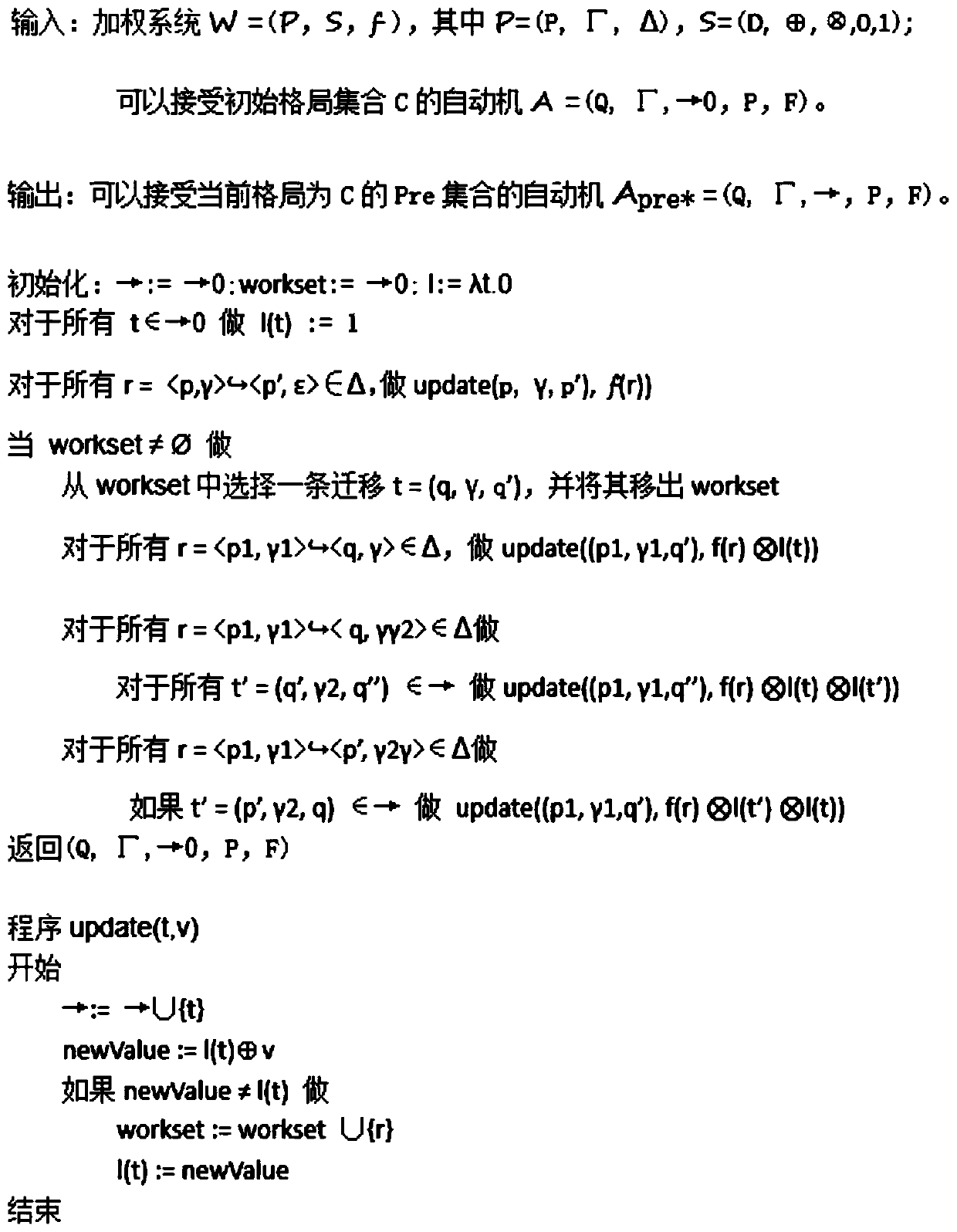

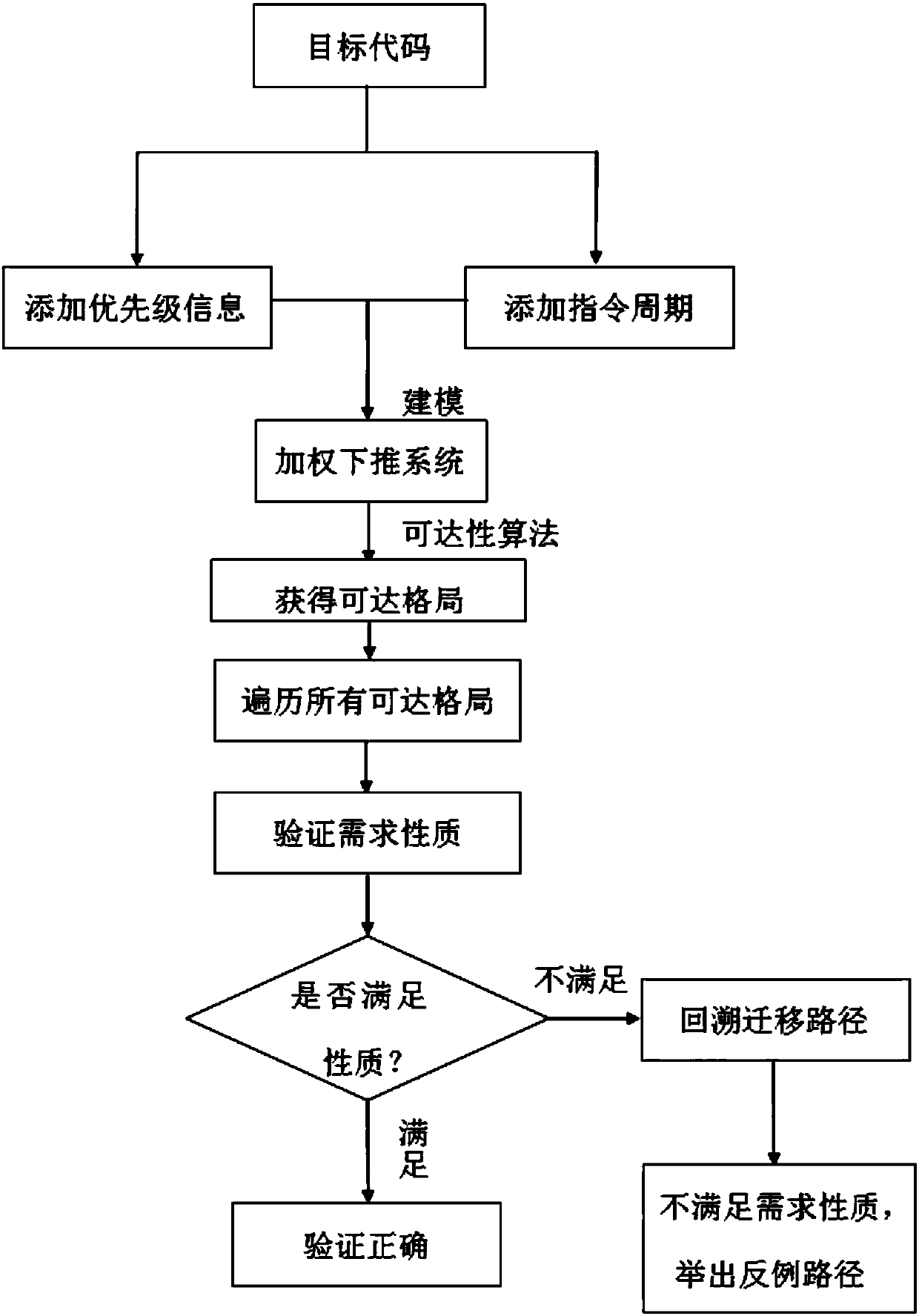

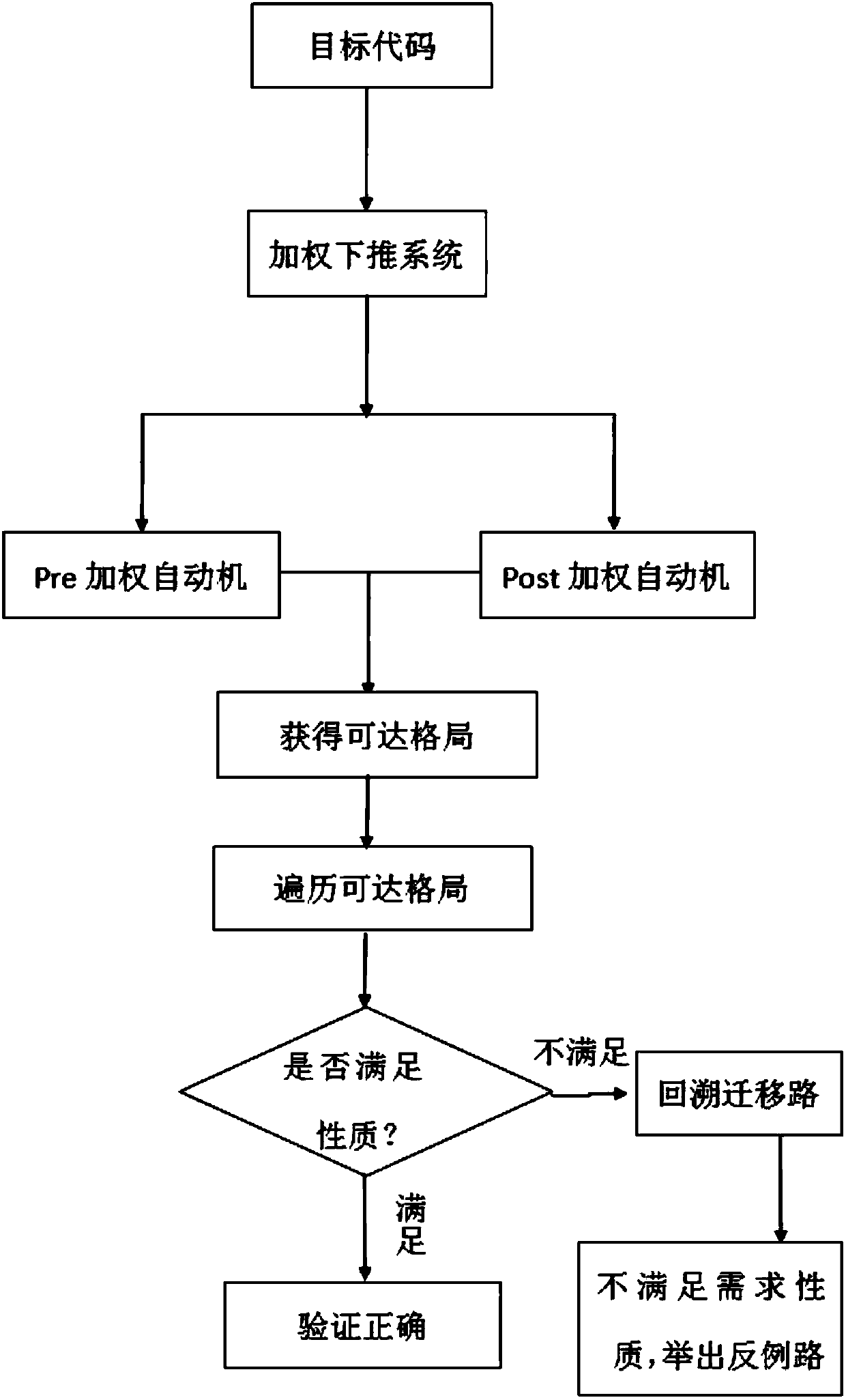

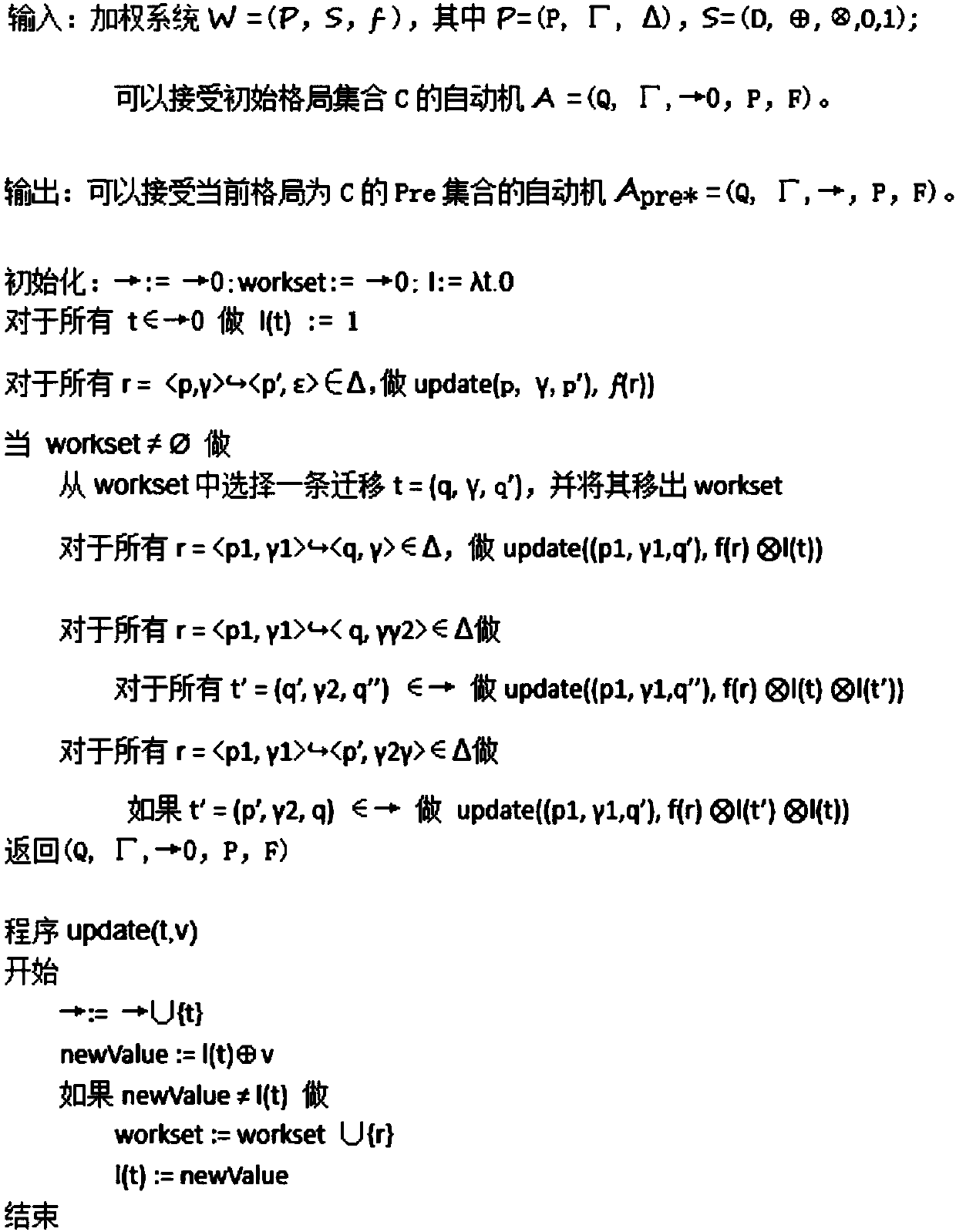

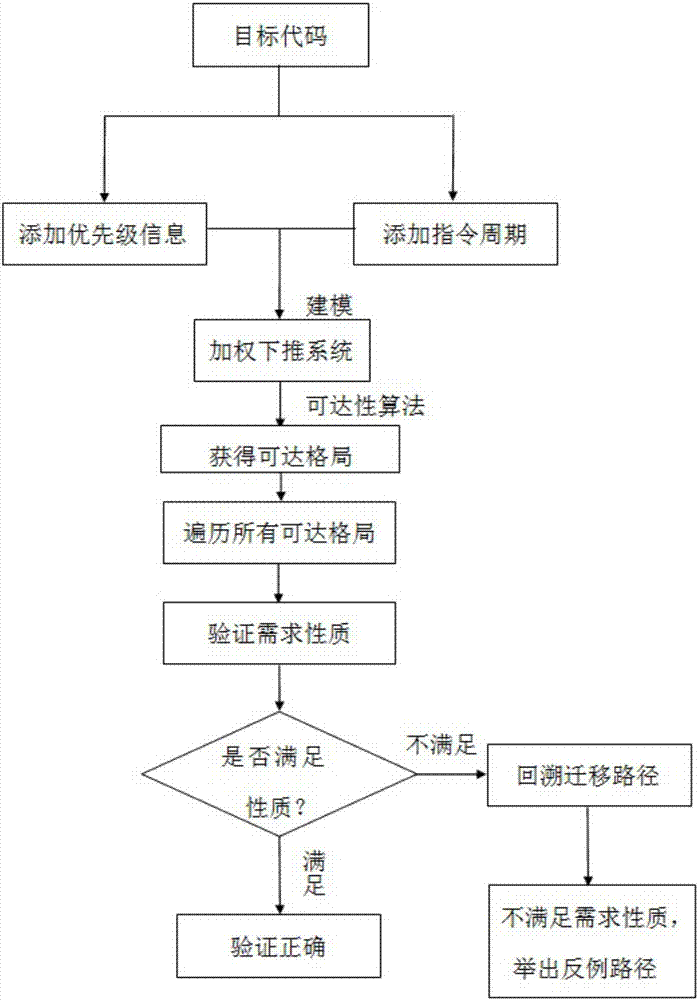

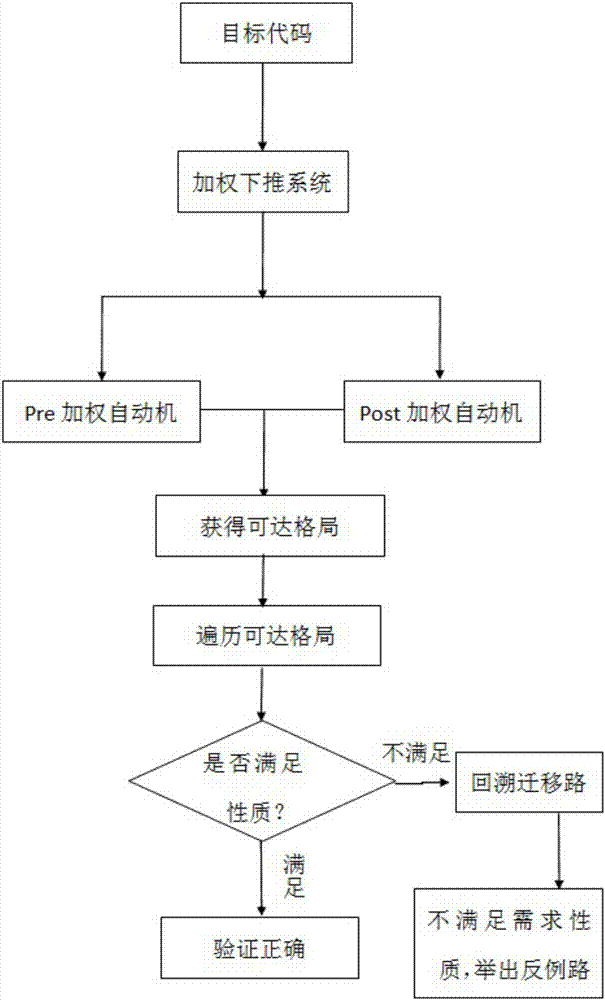

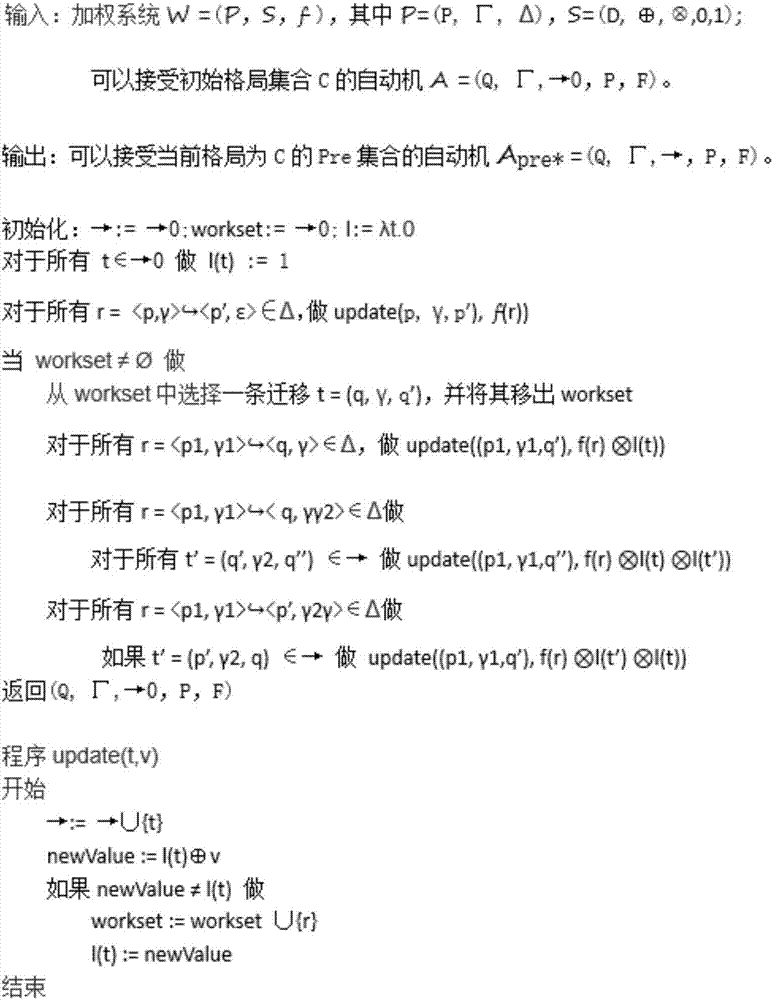

Interrupt verification method based on weighted pushdown system

Active | CN106940659A | Improve reliability | Improve robustness | Program initiation/switching | Object code | Validation methods

The invention discloses an interrupt verification method based on a weighted pushdown system. The method comprises the following steps of firstly, modeling the weighted pushdown system according to instruction jumping relationship in a program target code; then, obtaining all reachable layouts through a reachable algorithm of the weighted pushdown system; traversing all of the obtained reachable layouts; judging whether current layout information meets the requirement or not; if not, judging mistake types and returning mistake paths; if no mistake is discovered after all of the reachable layouts are traversed, returning correct. The method provided by the invention has the advantages that the interrupt verification of a real-time system is combined with the weighted pushdown system; a formalization mode is used for verifying the real-time system interrupt; the verification reliability and robustness are improved; sequential logic relevant to the interrupt, priority inversion, memory access conflict and the time-out problem can be verified at the same time under the same model; the efficiency is high; meanwhile, the cost is also reduced.

Owner:上海丰蕾信息科技有限公司

Interrupt and trap handling in an embedded multi-thread processor to avoid priority inversion and maintain real-time operation

Active | US7774585B2 | Preventing unintended trap re-entrancy | Save on handling | Program initiation/switching | Digital computer details | Bitwise operation | Priority inversion

A real-time, multi-threaded embedded system includes rules for handling traps and interrupts to avoid problems such as priority inversion and re-entrancy. By defining a global interrupt priority value for all active threads and only accepting interrupts having a priority higher than the interrupt priority value, priority inversion can be avoided. Switching to the same thread before any interrupt servicing, and disabling interrupts and thread switching during interrupt servicing can simplify the interrupt handling logic. By storing trap background data for traps and servicing traps only in their originating threads, trap traceability can be preserved. By disabling interrupts and thread switching during trap servicing, unintended trap re-entrancy and servicing disruption can be prevented.

Owner:INFINEON TECH AG

Interrupt priority control within a nested interrupt system

Active | US7206884B2 | Data storage capacity is not exceeded | Raise priority | Program initiation/switching | Digital computer details | Data processing system | Vectored Interrupt

A data processing system 2 having a nested interrupt controller 24 supports nested active interrupts. The priority levels associated with different interrupts are alterable (possibly programmable) whilst the system is running. In order to prevent problems associated with priority inversions within nested interrupts, the nested interrupt controller when considering whether a pending interrupt should pre-empt existing active interrupts, compares the priority of the pending interrupt with the highest priority of any of the currently active interrupts that are nested together.

Owner:ARM LTD

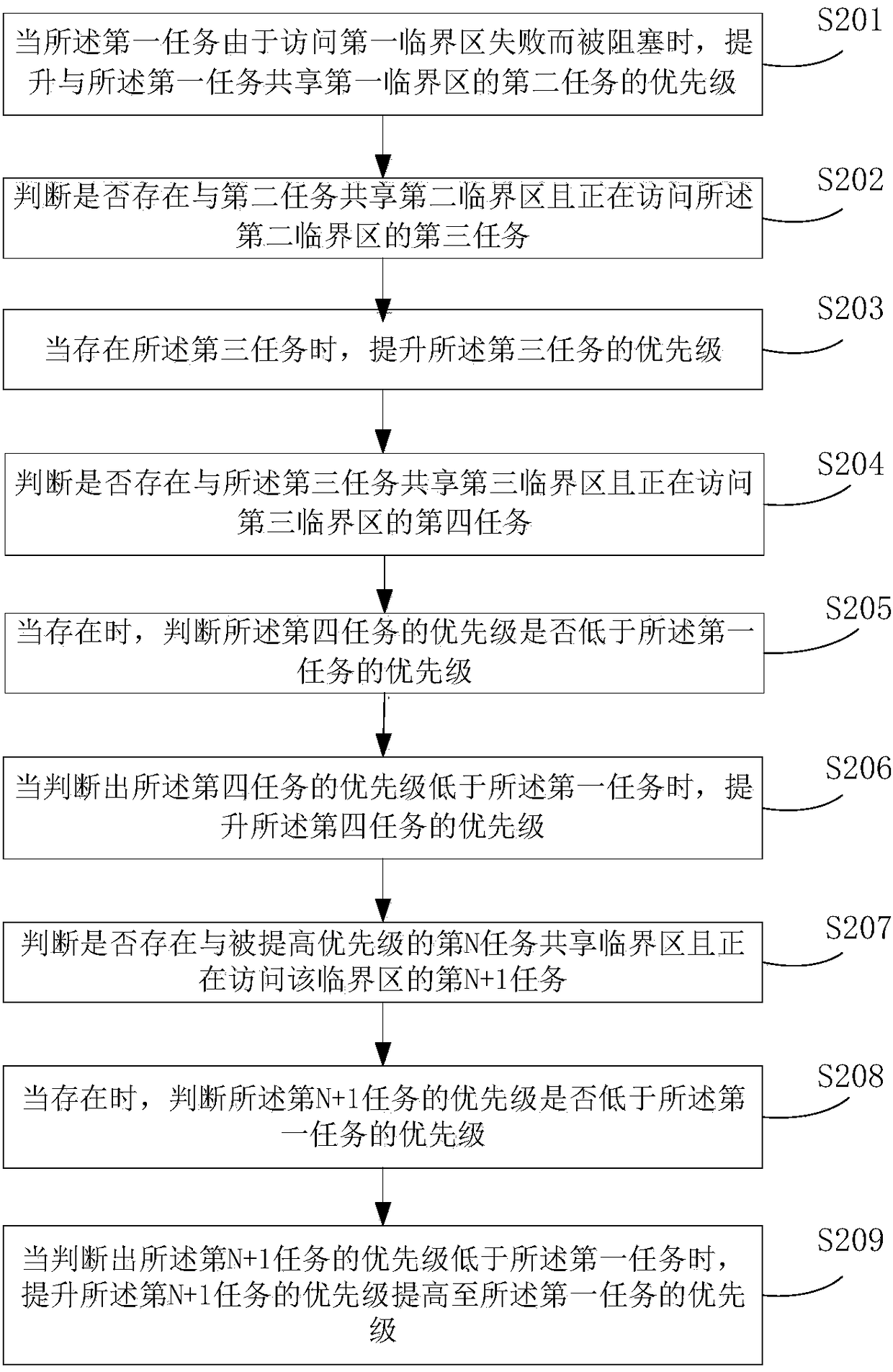

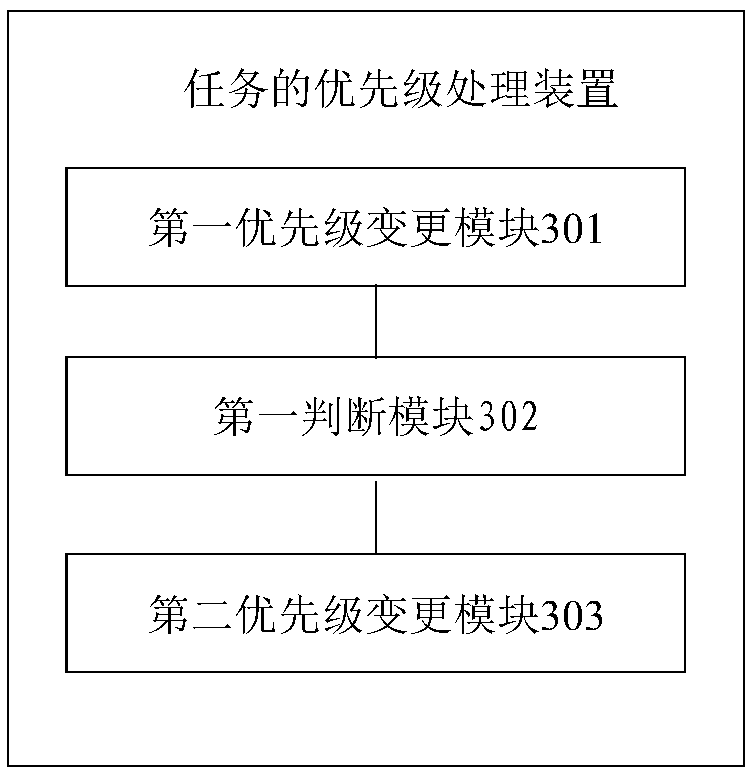

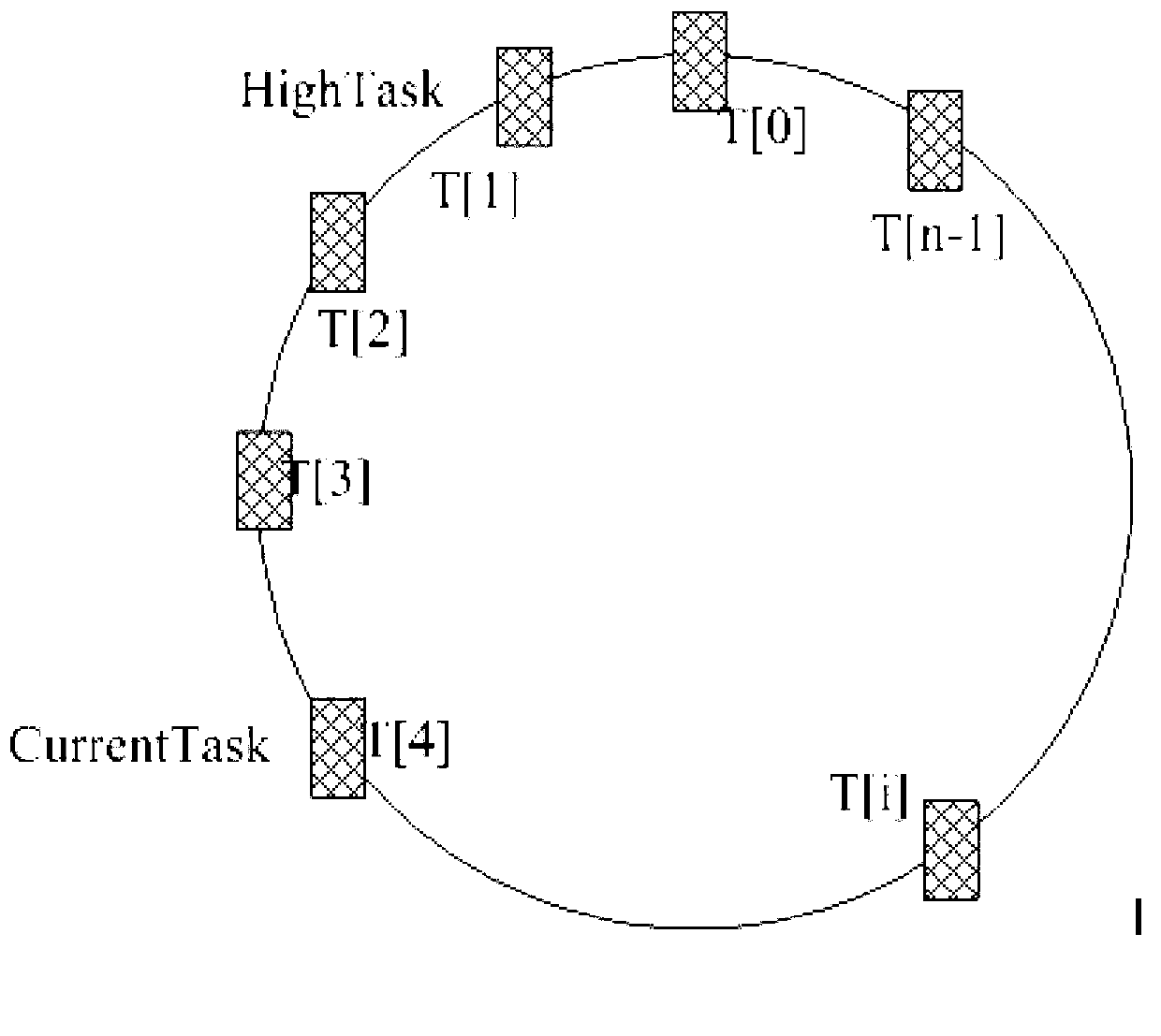

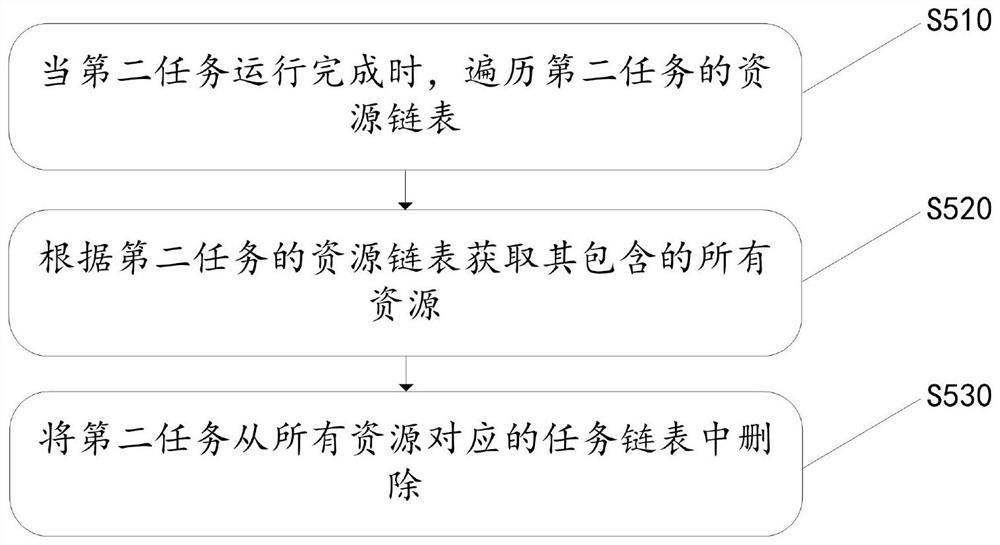

Priority processing method and processing apparatus for task

Inactive | CN109144682A | Delay in execution | Program initiation/switching | Program synchronisation | Priority inversion | Critical zone

A priority processing method and a processing apparatus for a task are disclosed. The tasks include a first task, a second task, and a third task, the first task having a higher priority than the second task and the third task. The method includes raising a priority of a second task that shares a first critical region with the first task and is accessing the first critical region when the first task is blocked due to a failure to access the first critical region; determining whether there is a third task that shares a second critical region with the second task and is accessing the second critical region; when the third task exists, the priority of the third task is raised. Embodiments of the present application provide a scheme that avoids delaying the execution of a second task by a third task having a low priority, thereby avoiding priority inversion caused by delaying the execution of a first task having a high priority.
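The chained priority raise described in this abstract can be sketched as follows. This is a hedged illustration, not the patented implementation: the `Task` and `Region` classes and the `block_on` function are hypothetical names, larger numbers are assumed to mean higher priority, and the holders are assumed to be raised to the blocked task's priority (the abstract only says their priority is raised).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    name: str
    priority: int                          # larger = more urgent (assumption)
    waiting_on: Optional["Region"] = None  # critical region this task is blocked on


@dataclass
class Region:
    name: str
    holder: Optional[Task] = None          # task currently inside the region


def block_on(task, region):
    """Record that `task` failed to enter `region`, then raise every holder
    along the chain of regions to at least the blocked task's priority."""
    task.waiting_on = region
    boost = task.priority
    seen = set()
    current = region
    while current is not None and current.holder is not None and id(current) not in seen:
        seen.add(id(current))              # guard against cyclic waits
        holder = current.holder
        if holder.priority < boost:
            holder.priority = boost
        current = holder.waiting_on        # follow the chain to the next region


# T2 is inside R1 but waits for R2, which low-priority T3 is accessing.
r1, r2 = Region("R1"), Region("R2")
t1, t2, t3 = Task("T1", 30), Task("T2", 20), Task("T3", 10)
r1.holder, r2.holder = t2, t3
t2.waiting_on = r2

block_on(t1, r1)
print(t2.priority, t3.priority)  # 30 30
```

Raising only the second task would not be enough in this example, since it could still be held up by the third task running at priority 10.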

Owner:ALIBABA GRP HLDG LTD

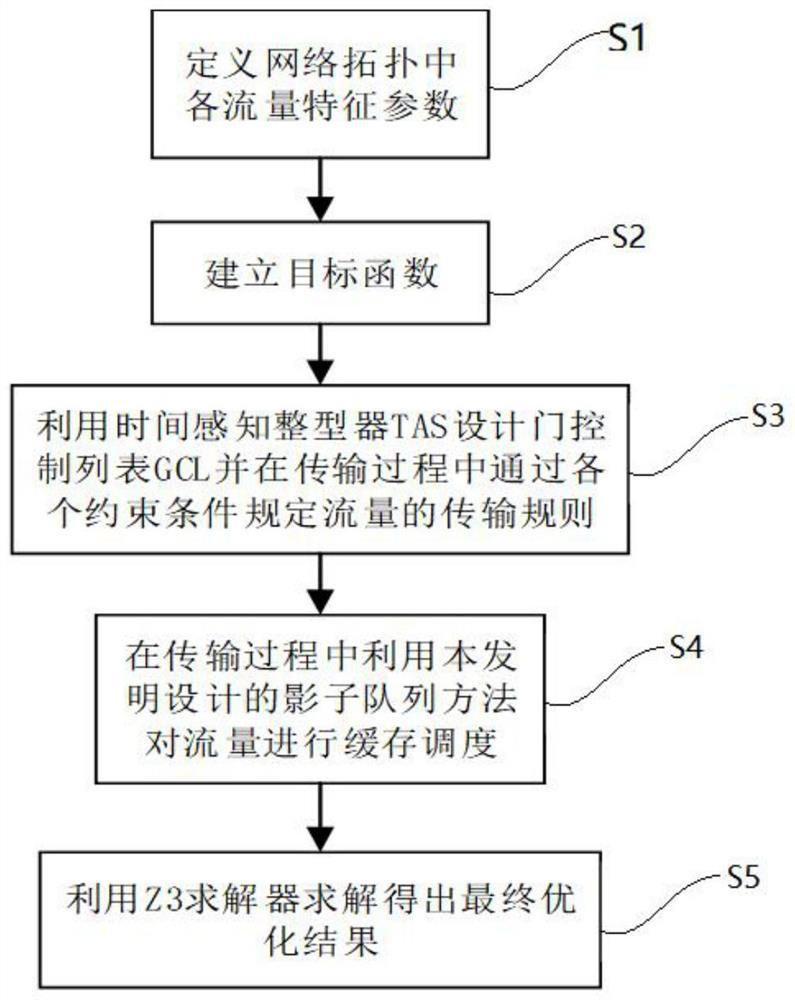

Time-sensitive network traffic hierarchical scheduling method for industrial site

Pending | CN114301851A | Guaranteed normal transmission | Real-time transmission | Design optimisation/simulation | Constraint-based CAD | Traffic characteristic | Packet loss

The invention relates to the technical field of time-sensitive networks for the industrial Internet of Things, in particular to an industrial-site-oriented hierarchical traffic scheduling method for time-sensitive networks (TSN), comprising the following steps: S1, defining each traffic characteristic parameter in the network topology; S2, establishing an objective function; S3, designing a gate control list (GCL) using a time-aware shaper (TAS), and specifying traffic transmission rules through constraint conditions on the transmission process; S4, performing cache scheduling on traffic during transmission using the shadow queue designed in the invention; S5, solving with the Z3 solver to obtain the final optimization result. The shadow queue designed by the invention reduces the queuing delay and packet loss of high-priority TT flows; a priority inversion method is designed so that the TSN can handle the transmission of emergency traffic more flexibly; and the throughput of the whole system is improved.

Owner:YANSHAN UNIV

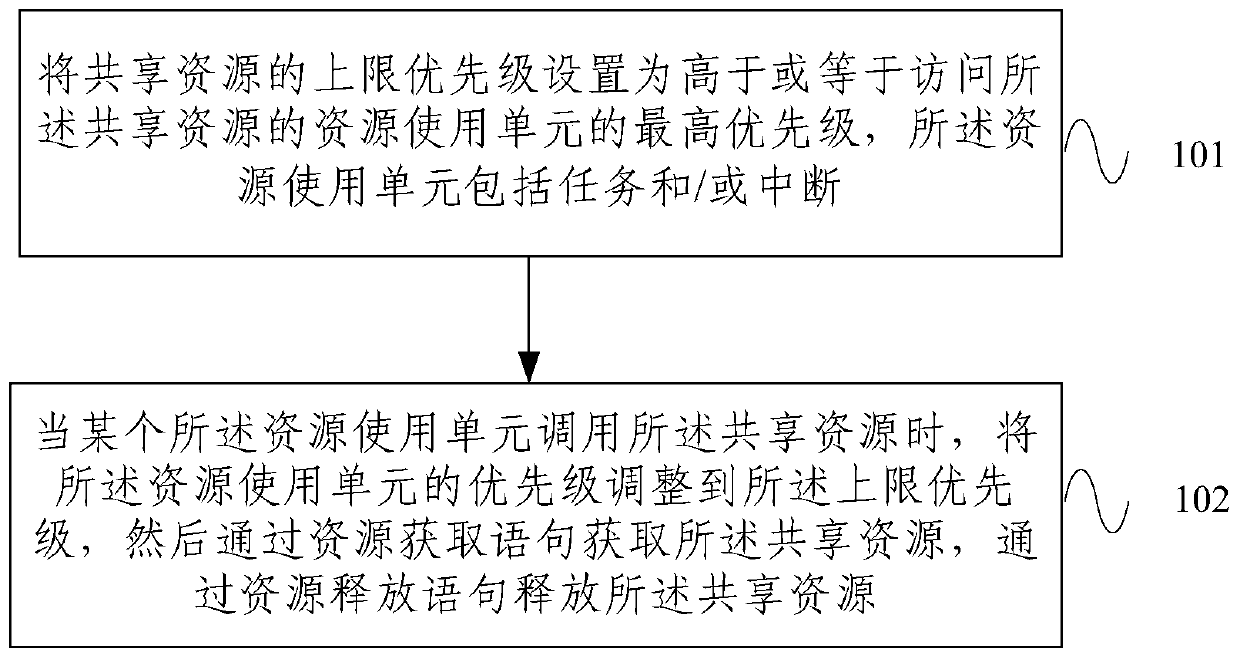

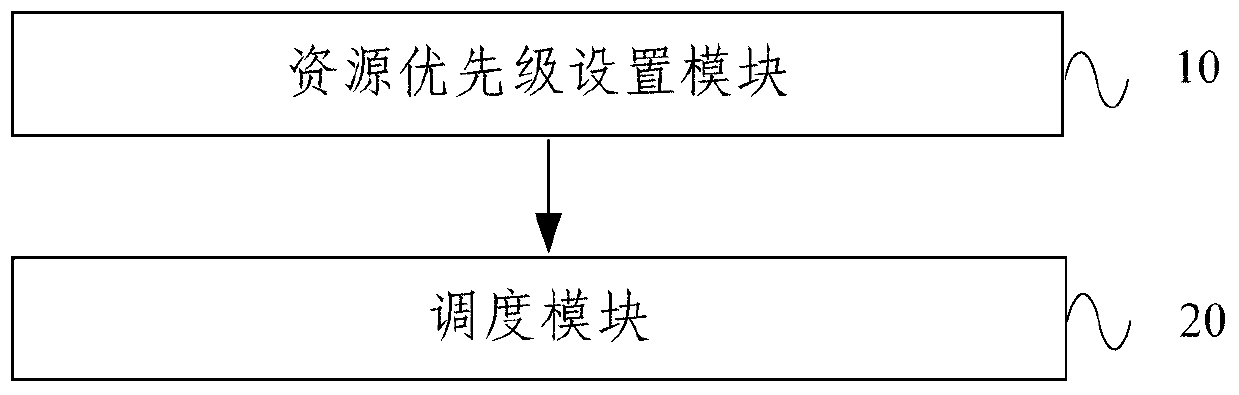

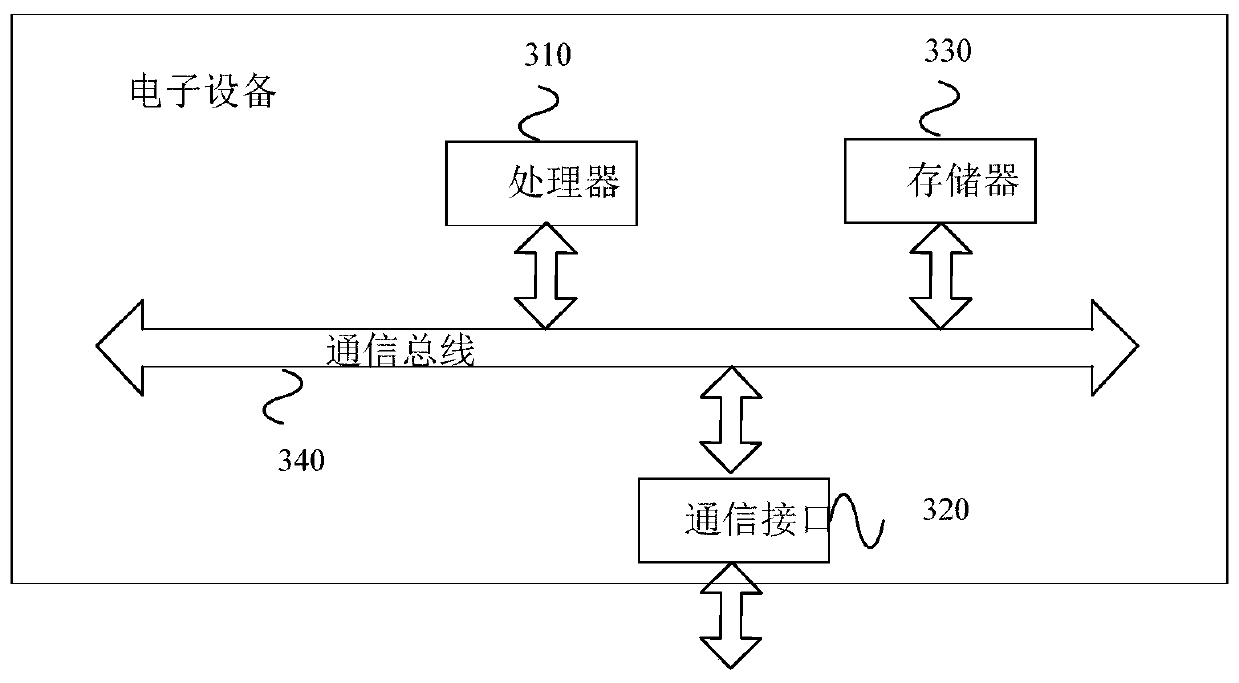

Shared resource access method and device

Pending | CN111506438A | Achieve inversion minimization | Avoid deadlock | Resource allocation | Program synchronisation | Access method | Priority inversion

The embodiment of the invention provides a shared resource access method and device. The method comprises: setting the upper limit priority of a shared resource to be higher than or equal to the highest priority of any resource-using unit that accesses the shared resource, wherein a resource-using unit is a task and/or an interrupt; and, when a resource-using unit calls the shared resource, raising the priority of that unit to the upper limit priority, then acquiring the shared resource through a resource acquisition statement and releasing it through a resource release statement. Because every unit holds the shared resource at a priority no lower than that of any other unit that accesses it, priority inversion is minimized and deadlock is avoided.
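The upper limit priority scheme above resembles what is commonly called a priority ceiling, and a minimal sketch may make the order of operations clearer. Everything named here (`Unit`, `SharedResource`, `use`) is hypothetical; Python threads have no real scheduling priority, so `priority` is plain bookkeeping, and restoring the original priority after release is an assumption the abstract does not state.

```python
import threading
from contextlib import contextmanager


class Unit:
    """A resource-using unit (task or interrupt) with a bookkeeping priority."""

    def __init__(self, name, priority):
        self.name = name
        self.priority = priority  # larger = more urgent (assumption)


class SharedResource:
    def __init__(self, users):
        # Upper limit priority: at least the highest priority of any user.
        self.ceiling = max(unit.priority for unit in users)
        self._lock = threading.Lock()

    @contextmanager
    def use(self, unit):
        saved = unit.priority
        unit.priority = self.ceiling      # 1. raise to the upper limit priority
        self._lock.acquire()              # 2. acquire the shared resource
        try:
            yield self
        finally:
            self._lock.release()          # 3. release the shared resource
            unit.priority = saved         # 4. restore (assumed, not in the abstract)


logger, control = Unit("logger", 1), Unit("control", 7)
bus = SharedResource([logger, control])

with bus.use(logger):
    print(logger.priority)  # 7: no mid-priority unit can preempt the holder
print(logger.priority)      # 1
```

Raising the priority before acquiring, rather than after a conflict is detected, is what distinguishes this approach from priority inheritance.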

Owner:CHINA VAGON AUTOMOTIVES HLDG CO LTD

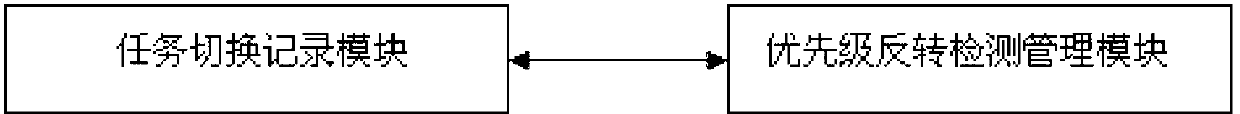

Test system and test method of task priority inversion of multiple task operating system

Active | CN103106111A | The detection method is simple | Rapid positioning | Program initiation/switching | Relevant information | Operational system

The invention relates to the field of computer multitasking operating systems and discloses a method for testing task priority inversion in a multitasking operating system that uses priority-based task scheduling, so that information about a priority inversion can be located quickly. The test method includes the steps of: a. when task priority inversion in the operating system needs to be tested, a priority inversion test management module registers a hook function with the operating system in response to a user interface command and notifies a task switching logging module; b. the task switching logging module records the operating system's task switching information, receives the notification that the hook function has been registered, and invokes the hook function whenever a task switch occurs; c. the hook function tests whether priority inversion has occurred for the scheduled task and collects the relevant information about the task whose priority was inverted. The invention further discloses a corresponding test system and is suitable for computer multitasking operating systems.

Owner:MAIPU COMM TECH CO LTD

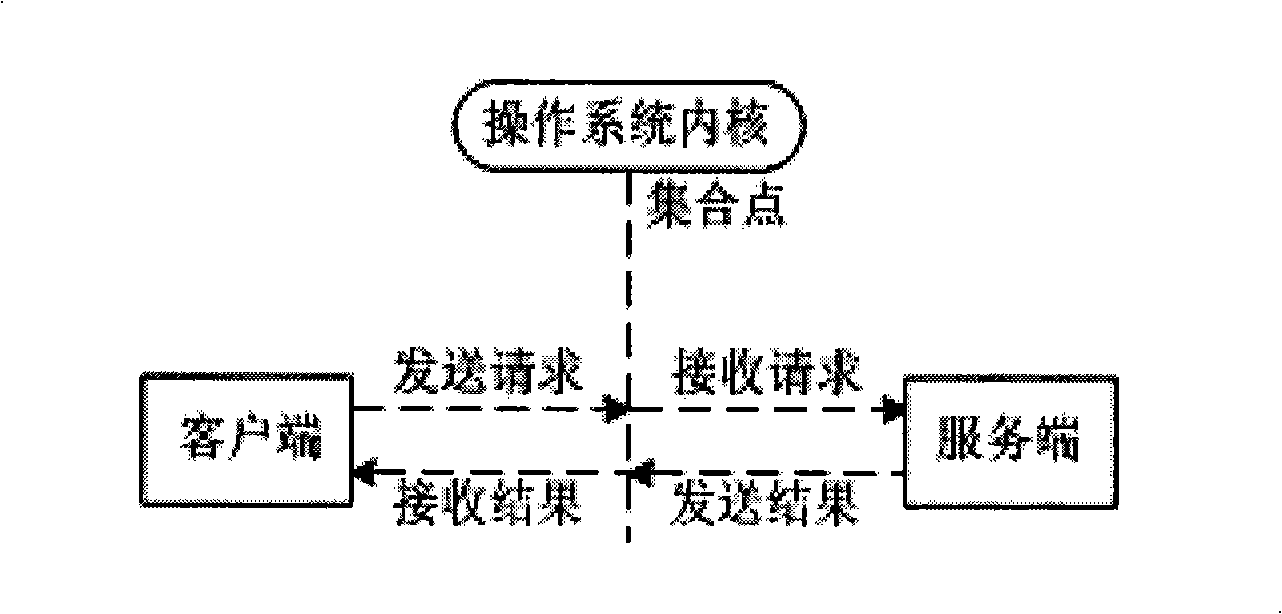

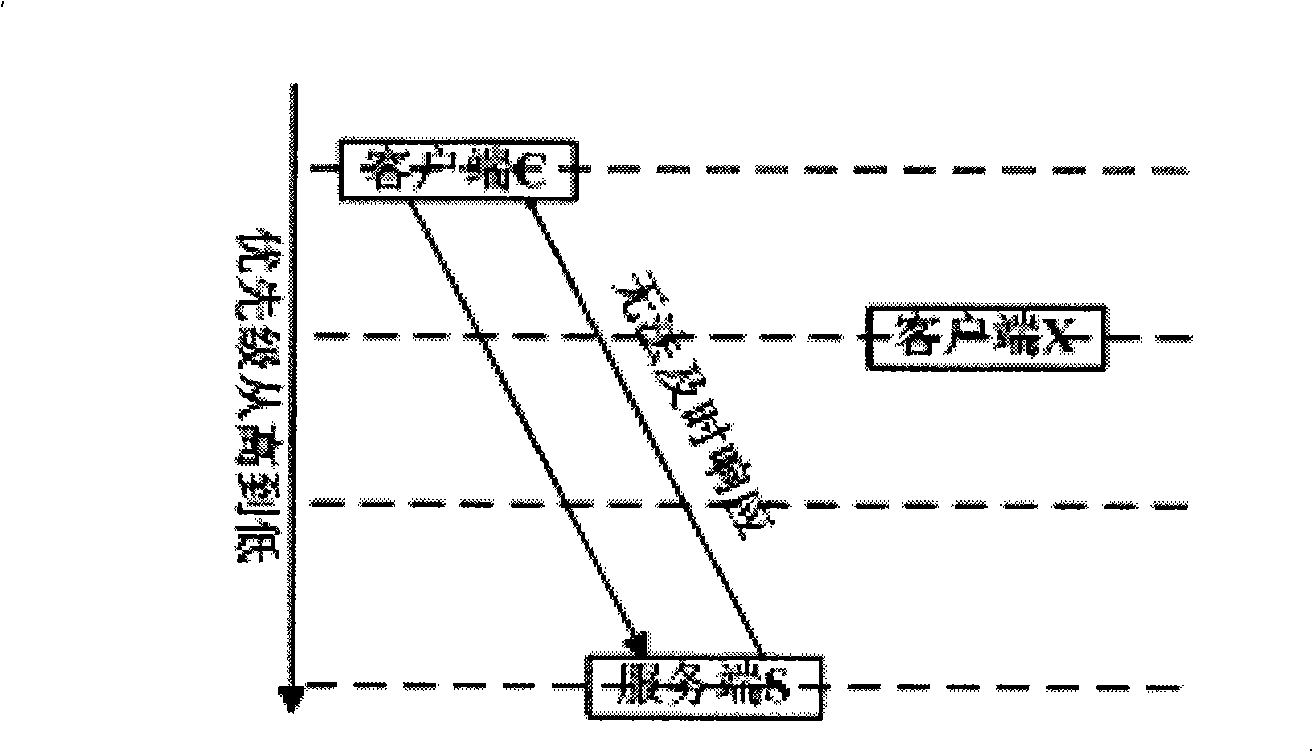

Component interactive synchronization method based on transaction

Inactive | CN101295269A | Solve the priority inversion problem | Support for remote method calls | Interprogram communication | Transmission | Operational system | Core component

The invention discloses a method for component interaction synchronization based on objects. The method realizes the data interaction communication between the client and the server of the component by a component-typed built-in operation system; when the server receives the request sent by the client, the server gains the event context of the interactive synchronization component based on the objects and determines the priority of the component interaction according to the event context of the component interaction synchronous mechanism based on the objects, and runs as the priority of the client. The invention also discloses a component-typed interaction model based on the event; the model is based on the event context and realizes the precise interaction between the components. The method of the invention solves the priority inversion problem between the independent components by introducing the event context of the interaction synchronous component based on the objects, is realized on a multi-core component-typed built-in operation system platform Pcanel and ensures the high efficiency and low expense of the interaction between the components.

Owner:ZHEJIANG UNIV

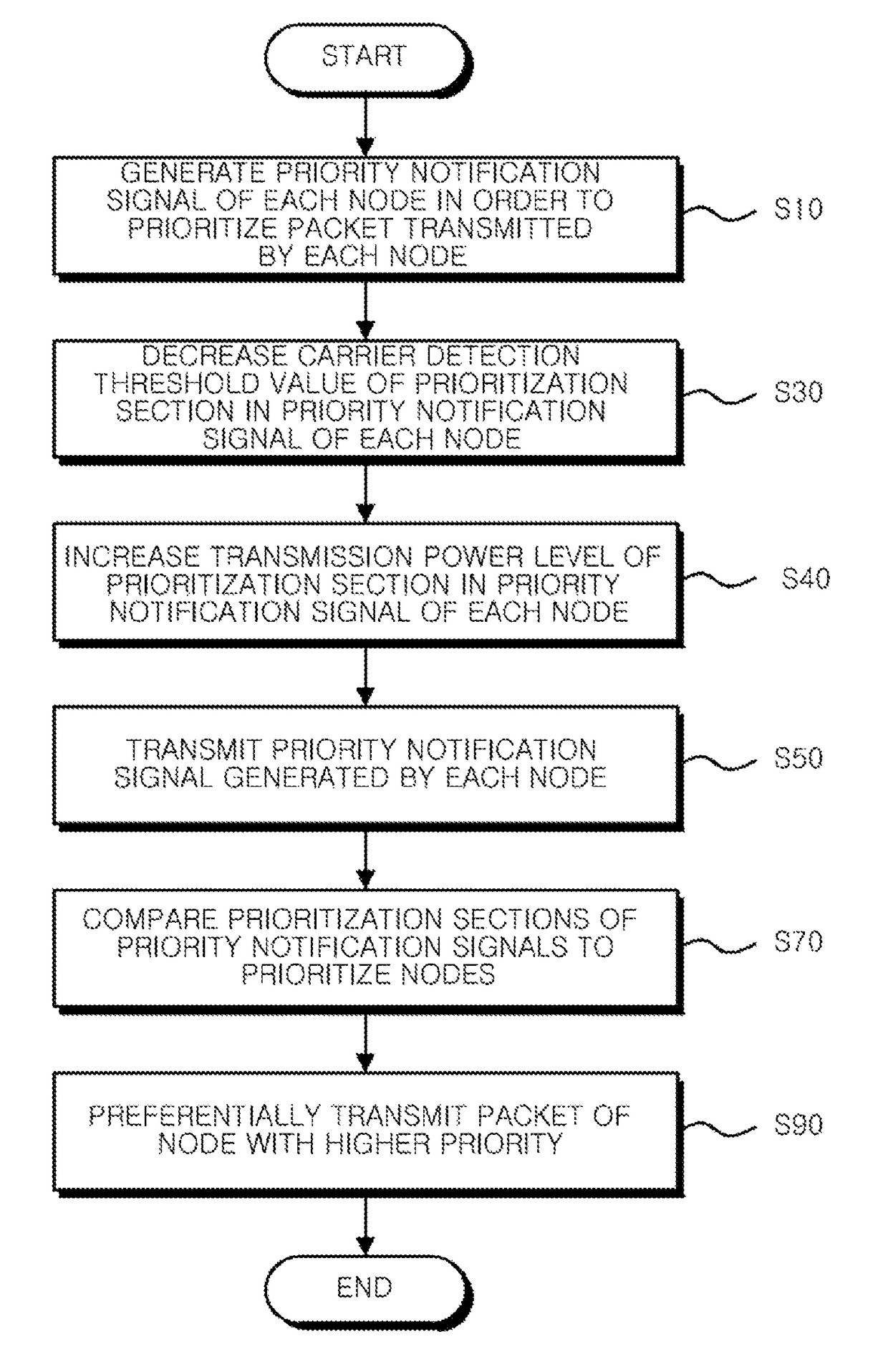

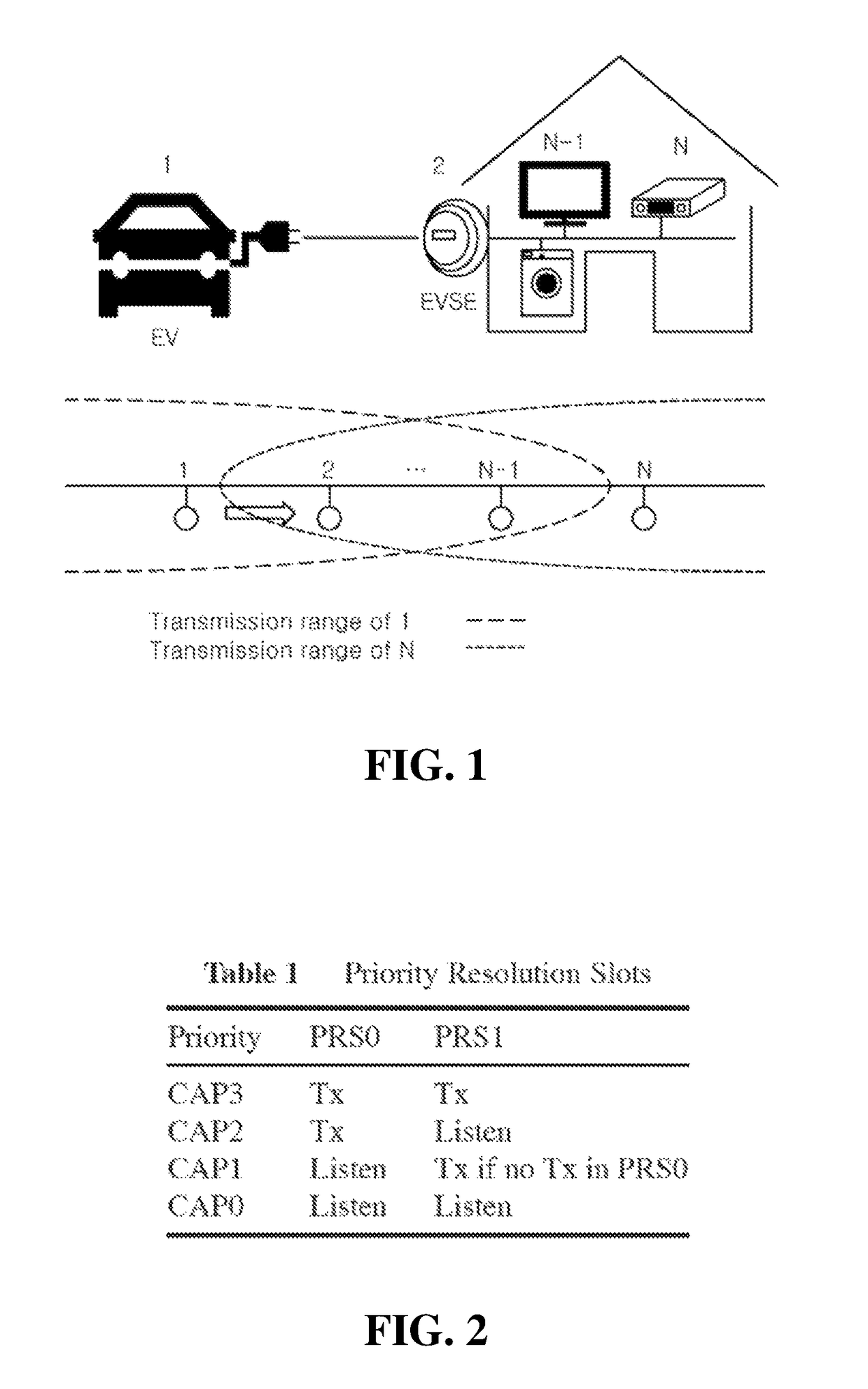

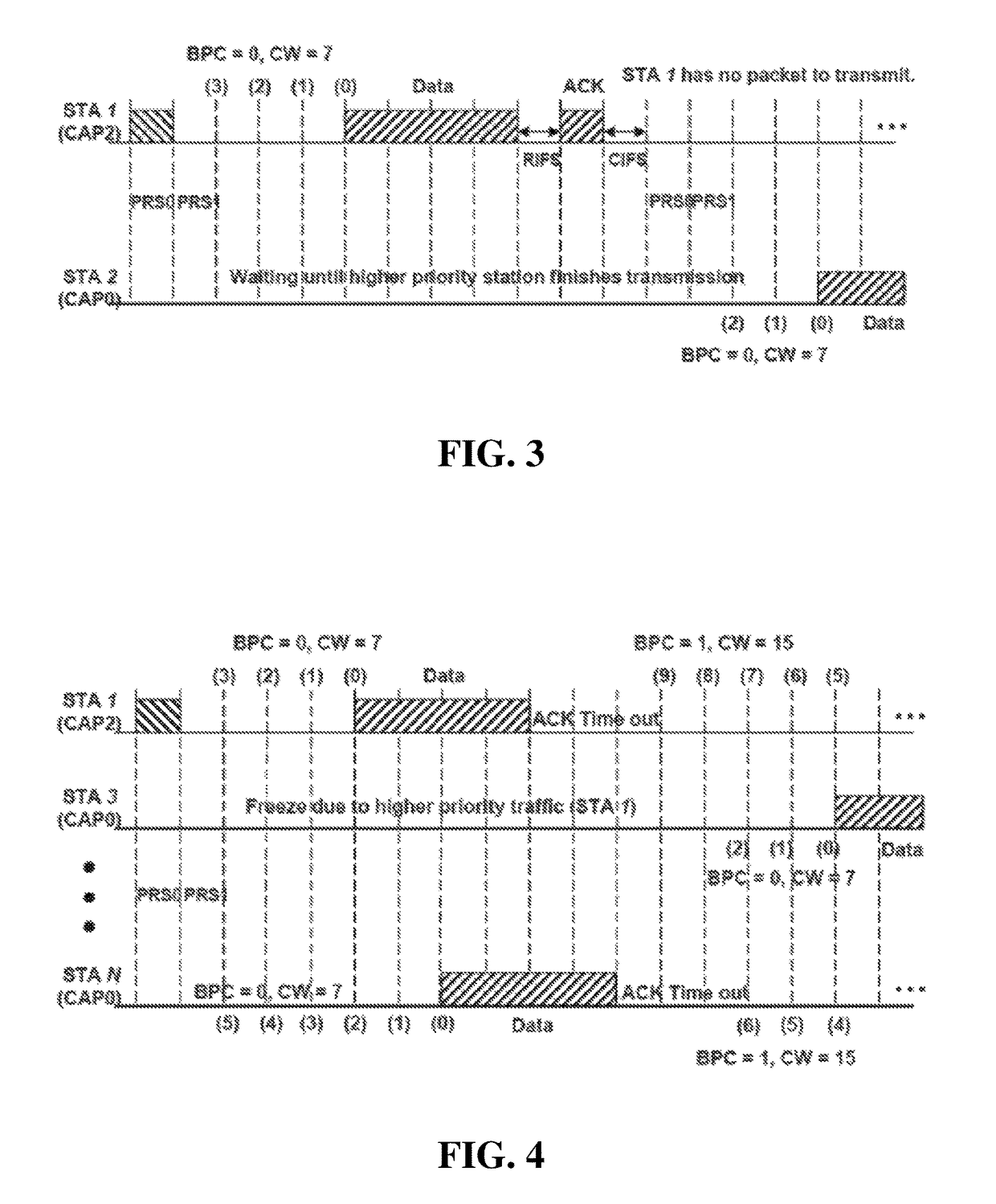

Method of preventing priority inversion in power line communication, recording medium and apparatus for performing the same

Active | US9935686B2 | Prevent inversion | Power distribution line transmission | Pilot signal allocation | Carrier signal | Priority inversion

A method of preventing priority inversion in power line communication includes generating a priority notification signal of each node in order to prioritize a packet transmitted by each of the nodes, decreasing carrier detection threshold value of a prioritization section in the priority notification signal of each of the nodes, increasing transmission power level of the prioritization section in the priority notification signal of each of the nodes, transmitting the priority notification signal generated by each of the nodes, prioritizing the nodes by comparing the prioritization sections of the priority notification signals, and preferentially transmitting a packet of a node with a higher priority. This can solve a priority inversion problem between signals in a network to safely transmit signals without collisions and latency.

Owner:FOUND OF SOONGSIL UNIV IND COOP

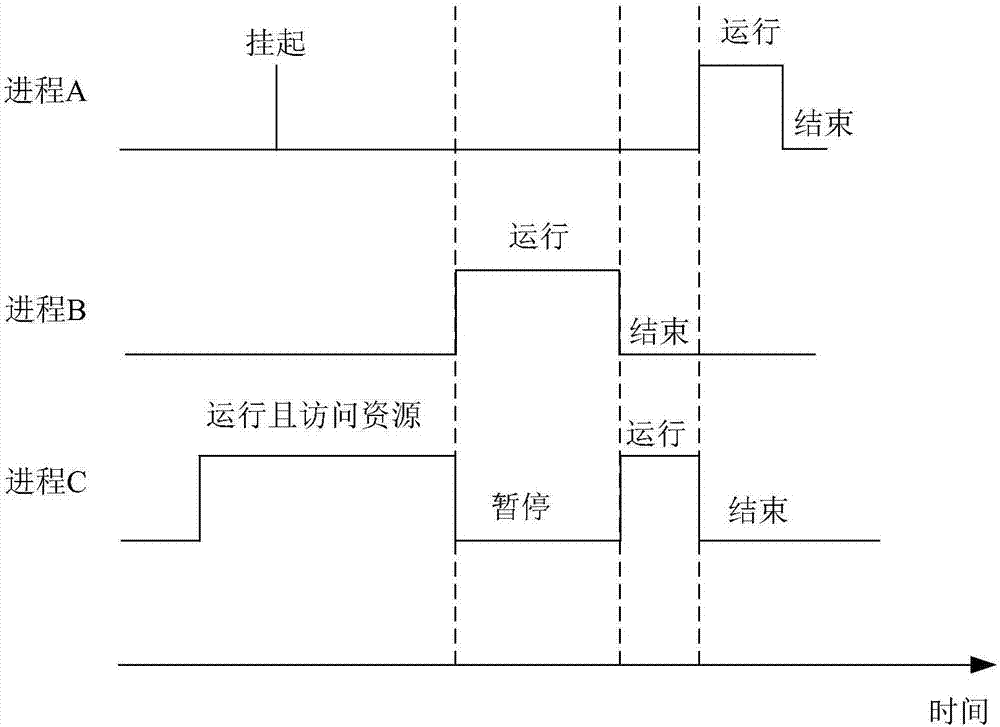

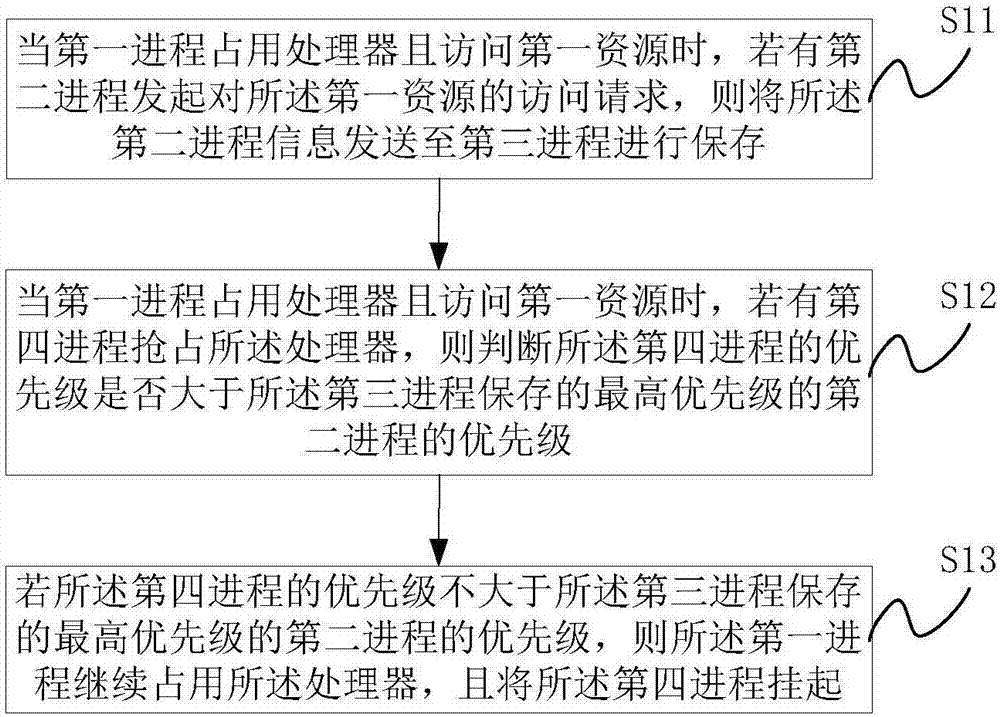

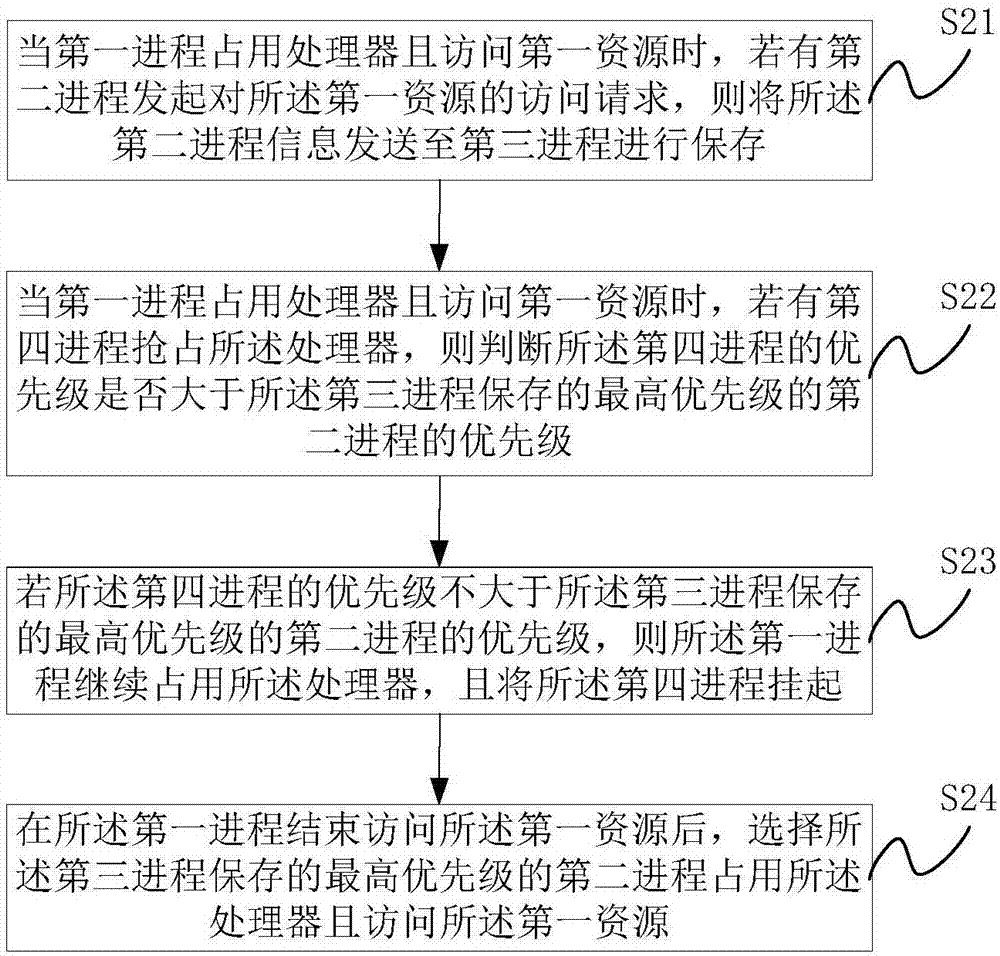

Data processing method and device

Inactive | CN107291552A | Reduce reversal | Avoid power consumption | Resource allocation | Energy efficient computing | Priority inversion | Computer science

The invention discloses a data processing method and device. Information of a second process requiring first resources is stored through a third process, and the process is not allowed to preempt a processor if the priority of the process preempting the processor is not greater than the priority of the process with the highest priority stored in the third process. Further, priority inversion is reduced, extra power consumption caused by frequent change of priority is avoided, and other problems caused by change of priority are avoided.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

I/O scheduling

In one embodiment, input-output (I / O) scheduling system detects and resolves priority inversions by expediting previously dispatched requests to an I / O subsystem. In response to detecting the priority inversion, the system can transmit a command to expedite completion of the blocking I / O request. The pending request can be located within the I / O subsystem and expedited to reduce the pendency period of the request.

Owner:APPLE INC

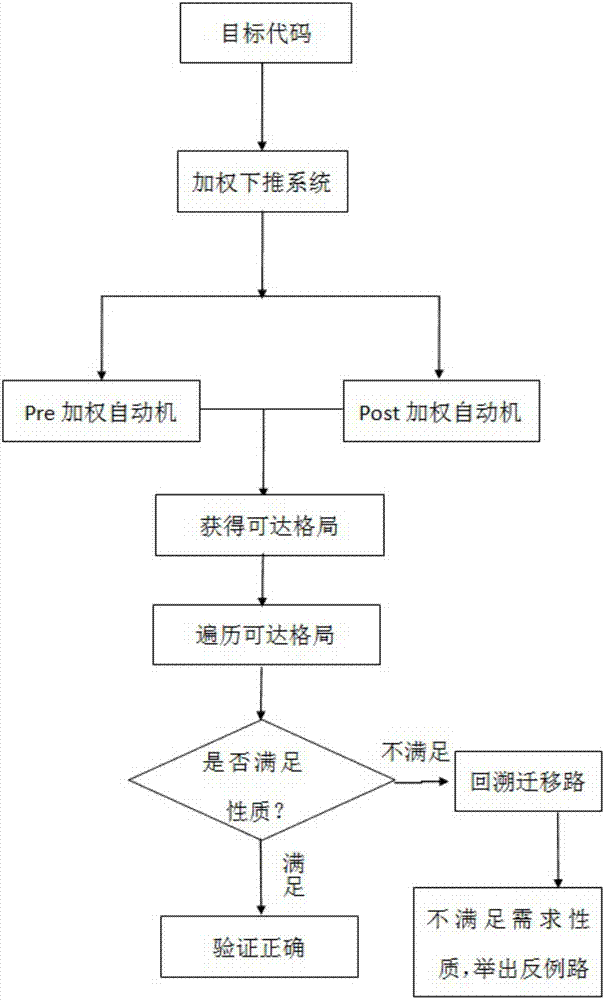

Interrupt Verification System Based on Weighted Pushdown System

Active | CN106959890B | Improve reliability | Improve robustness | Program initiation/switching | Computer module | Priority inversion

The invention discloses an interruption verification system based on a weighted pushdown system. The system comprises a target code modeling module, an accessible pattern acquisition module, an accessible pattern traversal module, a current pattern backtracking module and a result output module. According to the method provided by the invention, interruption verification of a real-time system is combined with the weighted pushdown system, interruption of the real-time system is verified in a formalized manner, and reliability and robustness of verification are improved; and sequential logic, priority inversion, memory access conflict and timeout problems related to interruption are simultaneously verified under a same model, so that the system is high in efficiency and meanwhile saving in cost.

Owner:上海丰蕾信息科技有限公司

Thread performance prediction and control method of chip multi-threading (CMT) computer system

Inactive | CN102708007B | Accurate prediction | Easy to control | Resource allocation | Control manner | Model parameters

The invention relates to a thread performance prediction and control method for a CMT computer system, designed to solve technical problems in existing CMT systems such as unexpected thread starvation and stalling, misuse of resources, and priority inversion. The method uses a performance-resource dependency model to guide the allocation of a key resource (the rename register file, RRF) in the CMT system, so as to predict and control thread performance; acquires and adjusts the model parameters through thread sampling and online learning, so as to track thread performance in real time and predict it accurately; and, given a performance requirement, uses a parameter determination model to calculate the amount of the key resource needed to achieve that performance and readjusts the resource allocation accordingly. The stated advantages are that the model is simple and accurately describes the dependency between performance and resources; that the method adapts well, giving accurate performance prediction and control for all kinds of threads; that it supports two control modes, absolute performance and performance percentage; and that it is cheap and easy to implement on the existing system architecture, enabling orderly allocation of multithreaded chip resources with predictable and controllable performance.

Owner:SHENYANG AEROSPACE UNIVERSITY

An Interrupt Verification Method Based on Weighted Pushdown System

Active | CN106940659B | Improve reliability | Improve robustness | Program initiation/switching | Object code | Validation methods

The invention discloses an interrupt verification method based on a weighted pushdown system. The method comprises the following steps of firstly, modeling the weighted pushdown system according to instruction jumping relationship in a program target code; then, obtaining all reachable layouts through a reachable algorithm of the weighted pushdown system; traversing all of the obtained reachable layouts; judging whether current layout information meets the requirement or not; if not, judging mistake types and returning mistake paths; if no mistake is discovered after all of the reachable layouts are traversed, returning correct. The method provided by the invention has the advantages that the interrupt verification of a real-time system is combined with the weighted pushdown system; a formalization mode is used for verifying the real-time system interrupt; the verification reliability and robustness are improved; sequential logic relevant to the interrupt, priority inversion, memory access conflict and the time-out problem can be verified at the same time under the same model; the efficiency is high; meanwhile, the cost is also reduced.

Owner:上海丰蕾信息科技有限公司

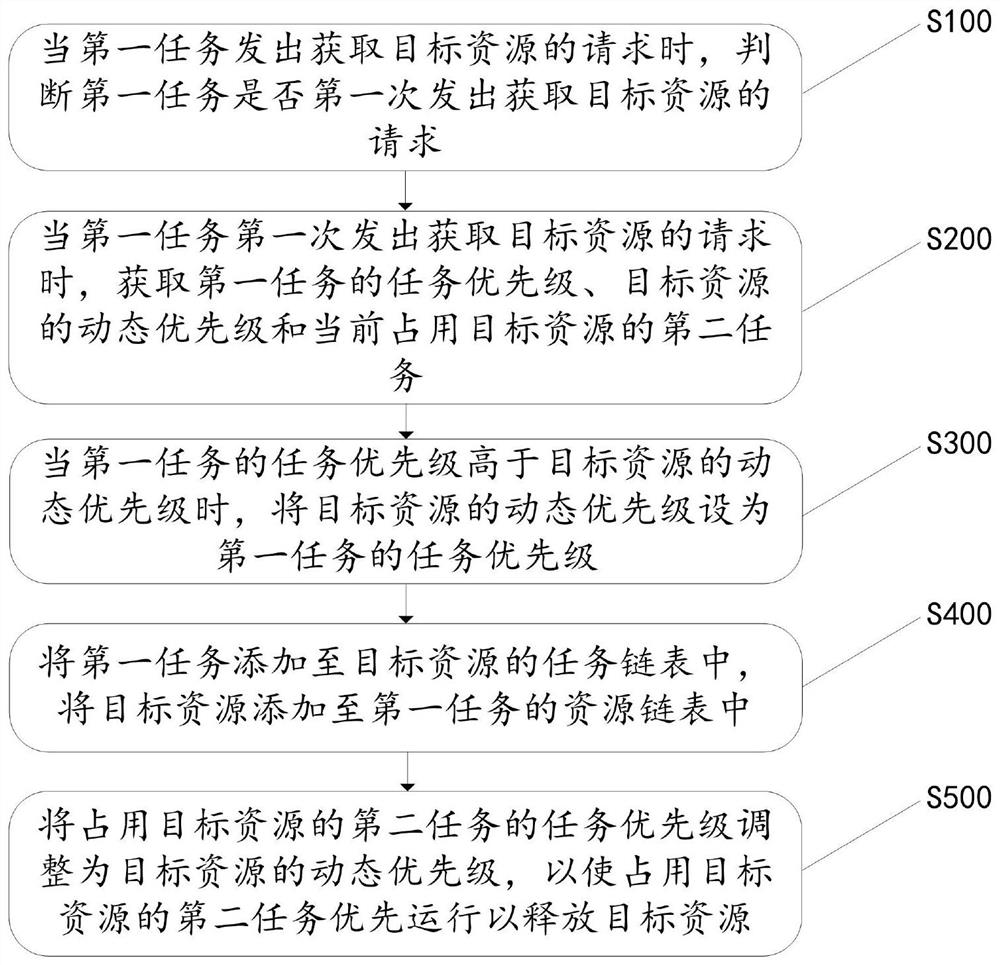

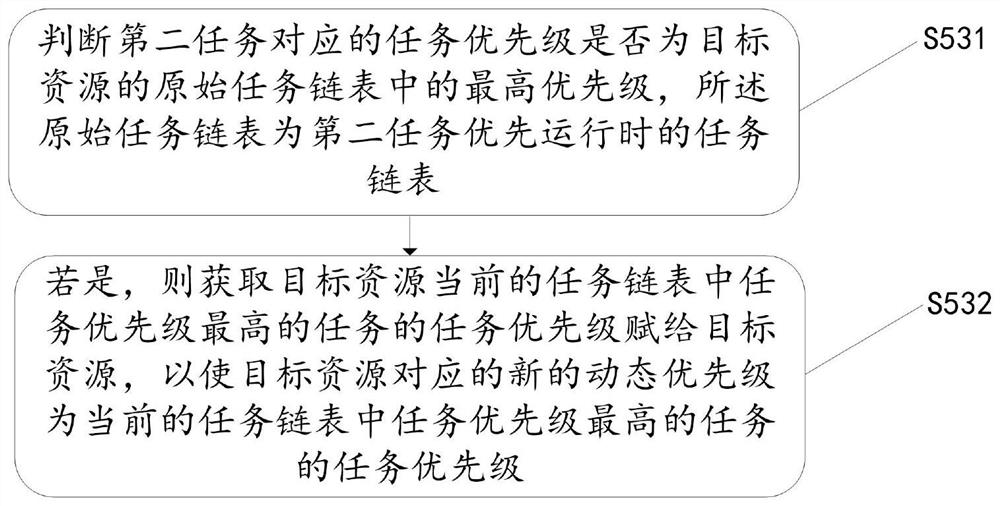

Method and device for dynamically adjusting priority

Pending | CN114510327A | Prevent reversal | Run fast | Program initiation/switching | Resource allocation | Priority inversion | Distributed computing

The invention relates to a priority dynamic adjustment method and device, and the method comprises the steps: judging whether a first task transmits a request for obtaining a target resource for the first time or not when the first task transmits the request for obtaining the target resource; if yes, obtaining the task priority of the first task, the dynamic priority of the target resource and a second task currently occupying the target resource; when the task priority of the first task is higher than the dynamic priority of the target resource, setting the dynamic priority of the target resource as the task priority of the first task; adding the first task to a task chain table of a target resource, and adding the target resource to a resource chain table of the first task; and adjusting the task priority of the second task occupying the target resource to the dynamic priority of the target resource. By temporarily improving the task priority of the task occupying the resource to the dynamic priority of the target resource, the task is ensured to have enough priority for preferential operation so as to release the target resource as soon as possible, and priority inversion is avoided.
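The request-handling steps in this abstract can be sketched as below. The class and function names are hypothetical, plain Python lists stand in for the task and resource chain tables, larger numbers are assumed to mean higher priority, and the holder is never lowered by the adjustment (an assumption consistent with the abstract's "temporarily improving" wording).

```python
class Task:
    def __init__(self, name, priority):
        self.name = name
        self.priority = priority   # larger = more urgent (assumption)
        self.resources = []        # resources this task has requested


class Resource:
    def __init__(self, holder=None, dynamic_priority=0):
        self.holder = holder                      # task currently occupying the resource
        self.dynamic_priority = dynamic_priority  # highest priority seen among requesters
        self.waiters = []                         # tasks that have requested the resource


def request(task, resource):
    """Handle a request for `resource`, following the steps in the abstract."""
    if resource in task.resources:
        return  # not the first request from this task: nothing to adjust
    if task.priority > resource.dynamic_priority:
        resource.dynamic_priority = task.priority
    resource.waiters.append(task)
    task.resources.append(resource)
    holder = resource.holder
    if holder is not None:
        # Lift the holder to the resource's dynamic priority so it can finish
        # and release the resource sooner; never lower it (assumption).
        holder.priority = max(holder.priority, resource.dynamic_priority)


low, high = Task("low", 1), Task("high", 9)
buffer = Resource(holder=low, dynamic_priority=low.priority)
request(high, buffer)
print(buffer.dynamic_priority, low.priority)  # 9 9
```

A complete implementation would also restore the holder's original priority when it releases the resource; that step is outside what the abstract describes and is omitted here.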

Owner:CHINA AUTOMOTIVE INNOVATION CORP

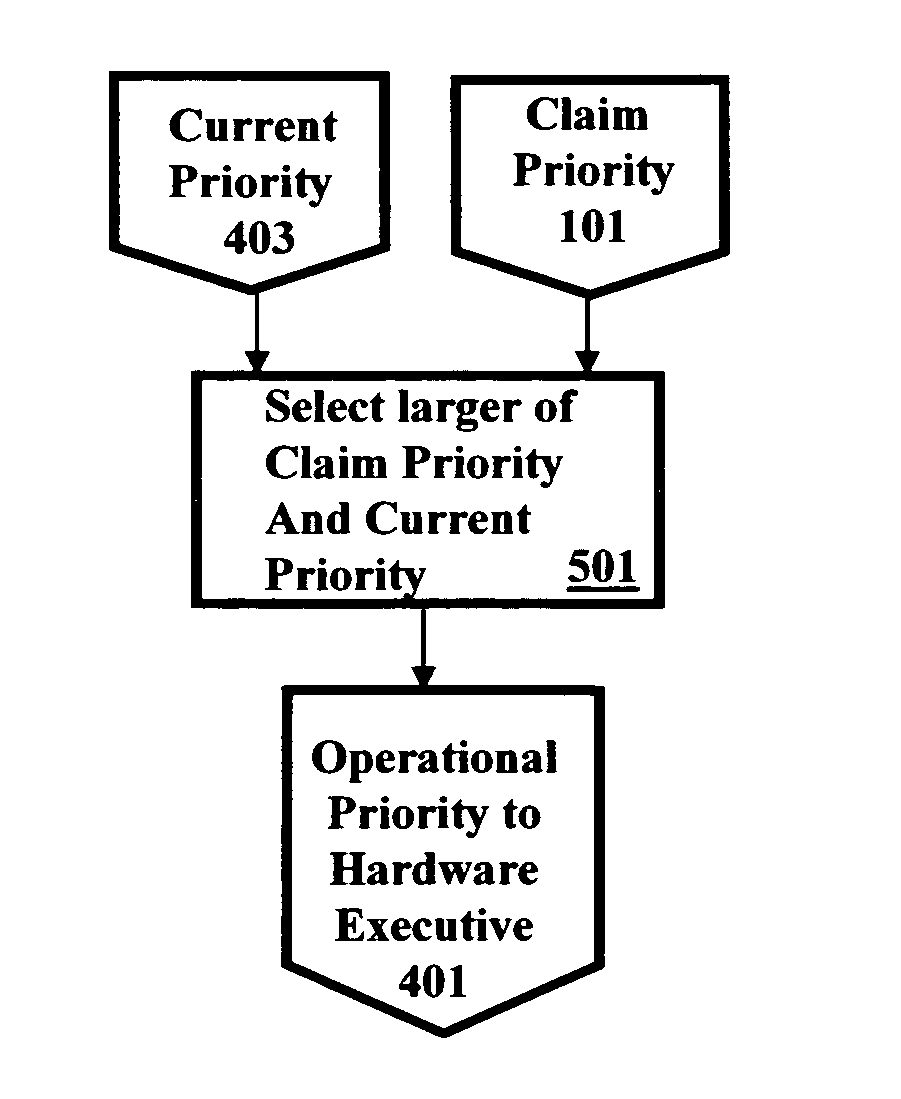

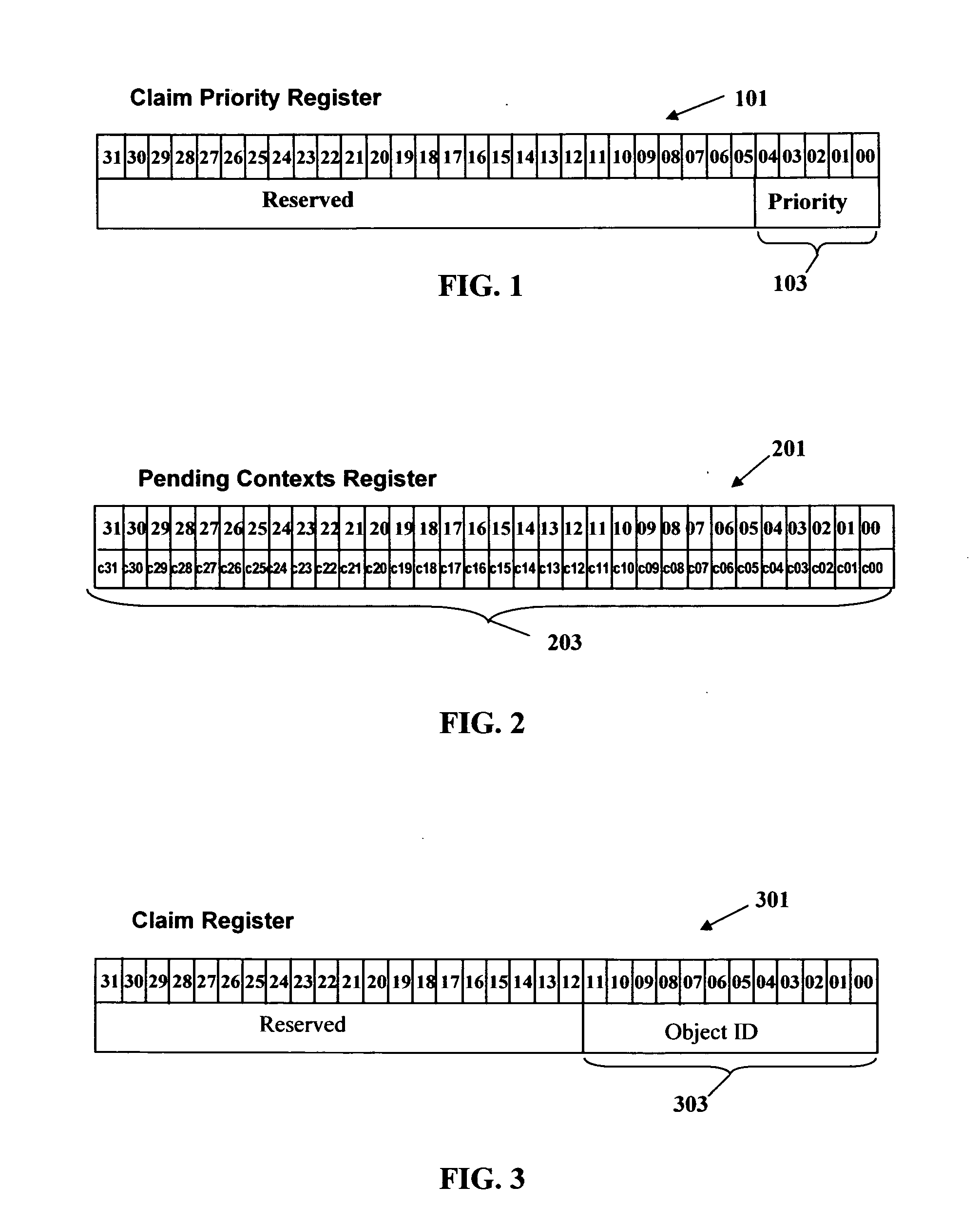

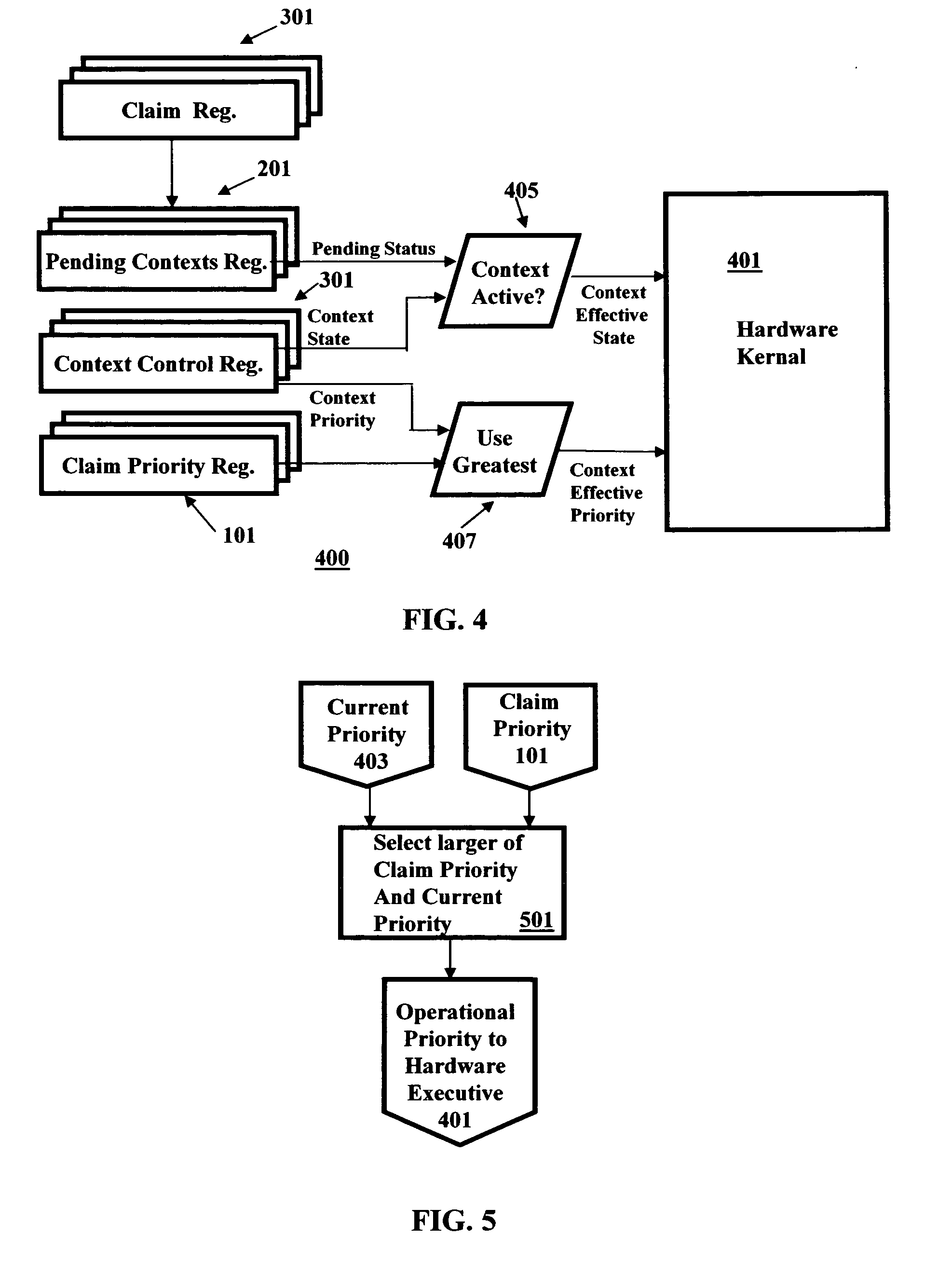

Processor with hardware solution for priority inversion

Active | US20080072227A1 | Prevent priority inversion | Prevent inversion | Memory systems | Program saving/restoring | Multiple context | Operational system

A method for preventing priority inversion in a processor system having an operating system operable in a plurality of contexts is provided. The method comprises: providing a plurality of context control registers, each context control register being associated with a corresponding one of the contexts for controlling execution of that context; providing a plurality of sets of hardware registers, each set corresponding to one context of the plurality of contexts; and utilizing the plurality of context control registers and the plurality of sets of hardware registers to prevent priority inversion.

Owner:INNOVASIC

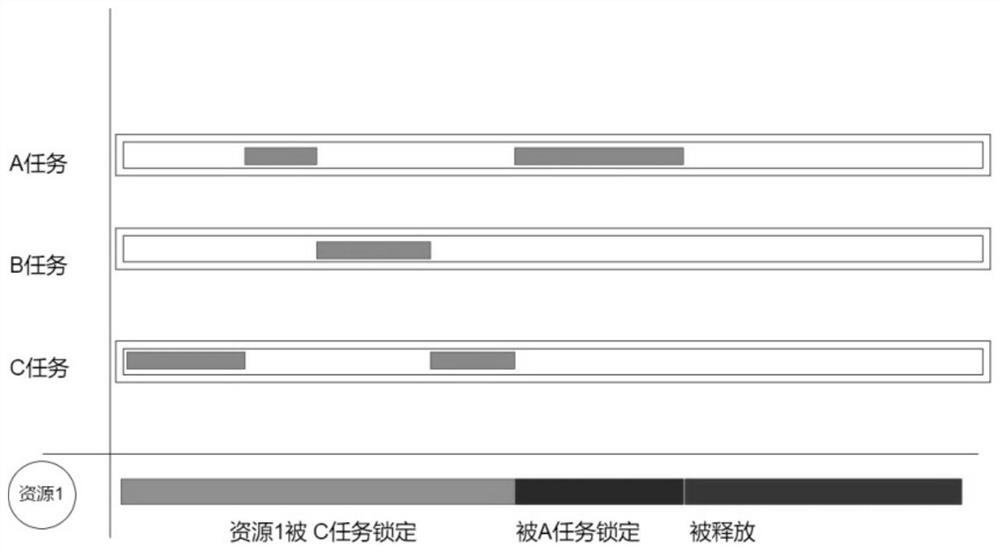

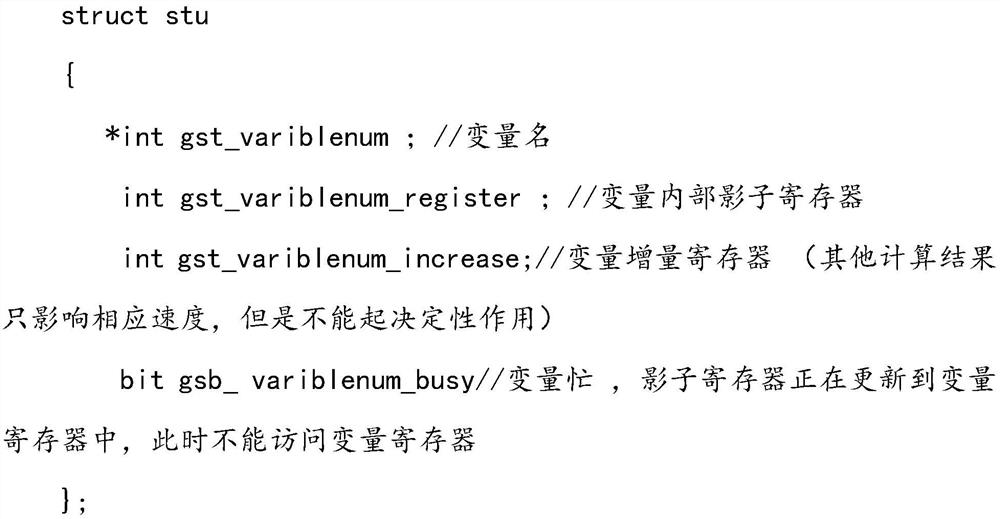

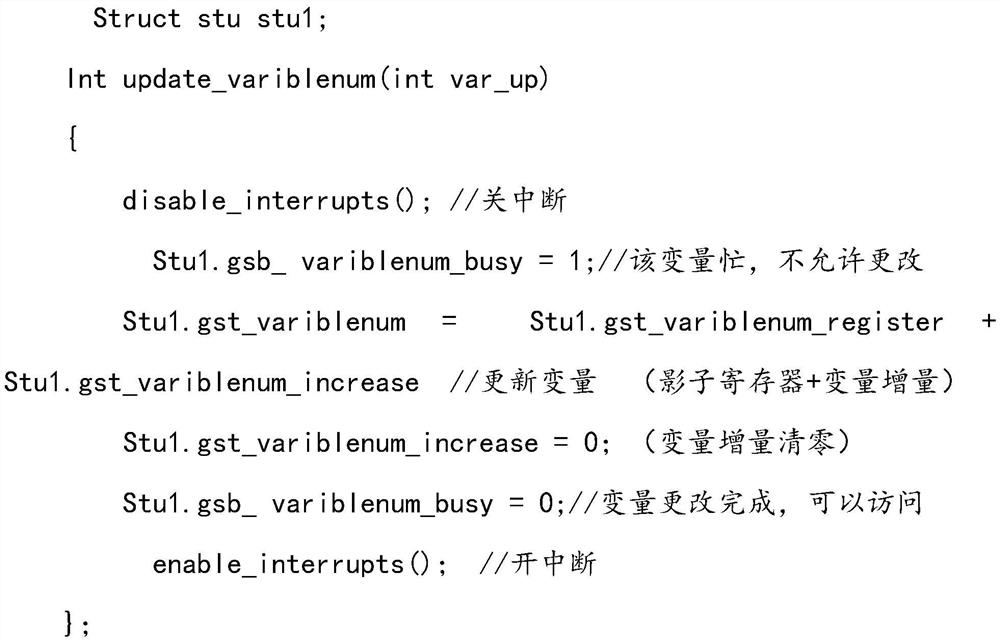

Priority inversion method in optimized embedded system

Pending | CN112068945A | Prevent reversal | Program initiation/switching | Information processing | Computer architecture

The invention relates to the technical field of computer information processing, in particular to a method for optimizing priority inversion in an embedded system. The method comprises the following step: an external register that is locked whenever it is accessed is changed into an external register that can be accessed repeatedly, so that the external register can be accessed by a plurality of tasks at the same time and is locked only during the specific time period in which the resource is updated. The method effectively avoids the situation in which the highest priority cannot be determined because the cores of a multi-core system use different priority mechanisms. It also avoids the defect that other tasks cannot run promptly because a task update occupies data resources for a long time. The method has wide market application prospects.

Owner:厦门势拓御能科技有限公司
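
The access policy described in the abstract above (reads never lock the register; it is locked only during the update window) can be modeled as a small state machine. This is a single-threaded sketch of the policy only, not a synchronization primitive and not the patented design; the class `SharedRegister` and its method names are hypothetical.

```python
class SharedRegister:
    """Models a register that is locked only while it is being updated."""

    def __init__(self, value=0):
        self._value = value
        self._updating = False  # True only inside the update window

    def try_read(self):
        """Return (ok, value). Reading does not lock the register."""
        if self._updating:
            return False, None
        return True, self._value

    def begin_update(self):
        """Open the update window; return False if one is already open."""
        if self._updating:
            return False
        self._updating = True
        return True

    def end_update(self, new_value):
        """Store the new value and close the update window."""
        self._value = new_value
        self._updating = False
```

Any number of readers can call `try_read` back to back without blocking one another; only a reader that arrives during the update window is turned away.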

Method for dynamically and fairly partitioning shared cache based on chip multiprocessor

Inactive | CN101739299B | Simplify the division steps | Improve division efficiency | Resource allocation | Parallel computing | Priority inversion

The invention relates to a method for dynamically and fairly partitioning a shared cache on a chip multiprocessor (CMP), and belongs to the field of computer architecture. Fairness is a key optimization problem: when a system lacks fairness, problems such as thread starvation and priority inversion may occur. Current CMP designs mainly aim to improve system throughput, so fairness is generally sacrificed. The invention provides a new fairness parameter and a partitioning method. The partitioning method comprises three stages: initialization, rollback and repartitioning. At the initialization stage, the cache is partitioned equally before the threads run. At the rollback stage, a repartition of the previous partition that reduced fairness is canceled. At the repartitioning stage, the cache is partitioned anew for all threads if no rollback occurred and the fairness parameter is greater than a repartition threshold. The shared cache partitioning method provided by the invention markedly improves the fairness of the system and also slightly improves its throughput.

Owner:BEIJING UNIV OF TECH
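
The three stages in the abstract above can be sketched as follows. The abstract does not define the fairness parameter or the repartitioning policy, so both are assumptions here: `unfairness` is taken as the spread between the largest and smallest per-thread slowdown (larger means less fair), and repartitioning moves one cache way from the least slowed thread to the most slowed one. All function names are hypothetical.

```python
def initial_partition(total_ways, n_threads):
    """Initialization: split the cache ways as equally as possible."""
    base, extra = divmod(total_ways, n_threads)
    return [base + (1 if i < extra else 0) for i in range(n_threads)]


def unfairness(slowdowns):
    """Assumed fairness parameter: spread of per-thread slowdowns."""
    return max(slowdowns) - min(slowdowns)


def step(current, previous, slowdowns, prev_unfairness, threshold):
    """Run one partitioning interval; return (partition, rolled_back)."""
    u = unfairness(slowdowns)
    # Rollback: the last repartition made things less fair, so undo it.
    if previous is not None and u > prev_unfairness:
        return list(previous), True
    # Repartition: no rollback happened and unfairness exceeds the threshold.
    if u > threshold:
        new = list(current)
        donor = slowdowns.index(min(slowdowns))
        receiver = slowdowns.index(max(slowdowns))
        if new[donor] > 1:  # always leave the donor at least one way
            new[donor] -= 1
            new[receiver] += 1
        return new, False
    # Otherwise keep the current partition.
    return list(current), False
```

A caller would keep the previous partition and the previous unfairness value between intervals and pass them back into `step` after measuring the new slowdowns.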

Interruption verification system based on weighted pushdown system

Active | CN106959890A | Improve reliability | Improve robustness | Program initiation/switching | Object code | Theoretical computer science

The invention discloses an interrupt verification system based on a weighted pushdown system. The system comprises an object code modeling module, a reachable configuration acquisition module, a reachable configuration traversal module, a current configuration backtracking module and a result output module. The system combines interrupt verification of a real-time system with the weighted pushdown system and verifies real-time system interrupts formally, which improves the reliability and robustness of verification. Interrupt-related sequential logic, priority inversion, memory access conflicts and time-out problems are verified at the same time under the same model, so the system is efficient and saves cost.

Owner:上海丰蕾信息科技有限公司

© 2025 PatSnap. All rights reserved.