480 results about "Priority scheduling" patented technology

Priority scheduling assigns a priority to every process. Processes with higher priorities run first, while processes with equal priority are served on a first-come-first-served (FCFS) or round-robin basis.
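
As a minimal sketch of the definition above, the following Python snippet orders tasks by priority and breaks ties in arrival order (FCFS). The class name `PriorityScheduler`, its methods, and the task names are illustrative assumptions, not taken from any patent listed here.

```python
import heapq
from itertools import count


class PriorityScheduler:
    """Hypothetical scheduler: higher priority first, FCFS among equals."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # increasing stamp records arrival order

    def submit(self, name, priority):
        # heapq is a min-heap, so the priority is negated to pop the
        # largest first; the arrival stamp breaks ties in FCFS order.
        heapq.heappush(self._heap, (-priority, next(self._arrival), name))

    def run_order(self):
        # Drain the queue and return task names in execution order.
        order = []
        while self._heap:
            _, _, name = heapq.heappop(self._heap)
            order.append(name)
        return order


sched = PriorityScheduler()
sched.submit("backup", 1)
sched.submit("ui-event", 5)
sched.submit("log-flush", 1)
sched.submit("interrupt", 9)
print(sched.run_order())
# → ['interrupt', 'ui-event', 'backup', 'log-flush']
```

Round-robin among equal priorities would need time slicing and re-queuing, which this sketch leaves out.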

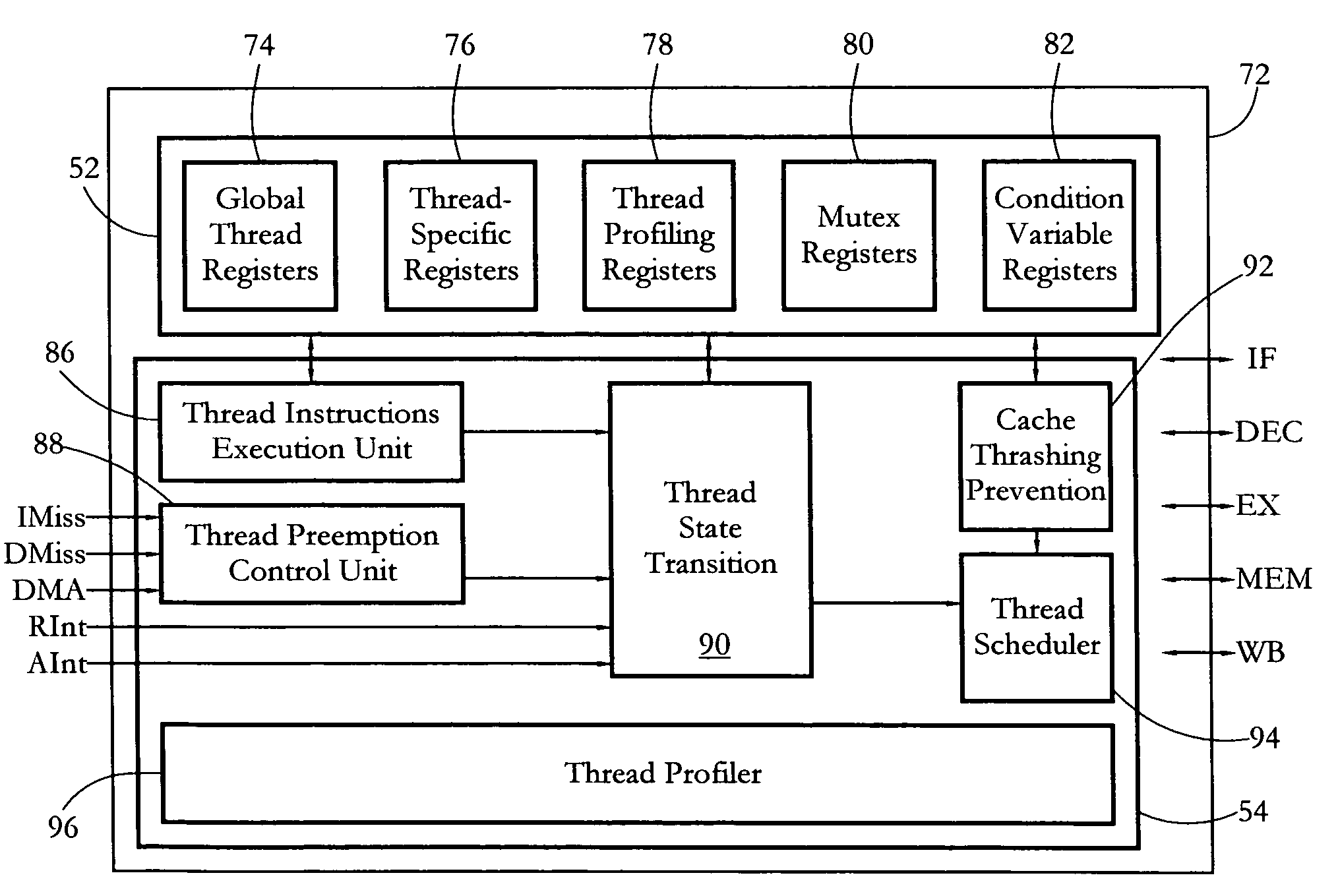

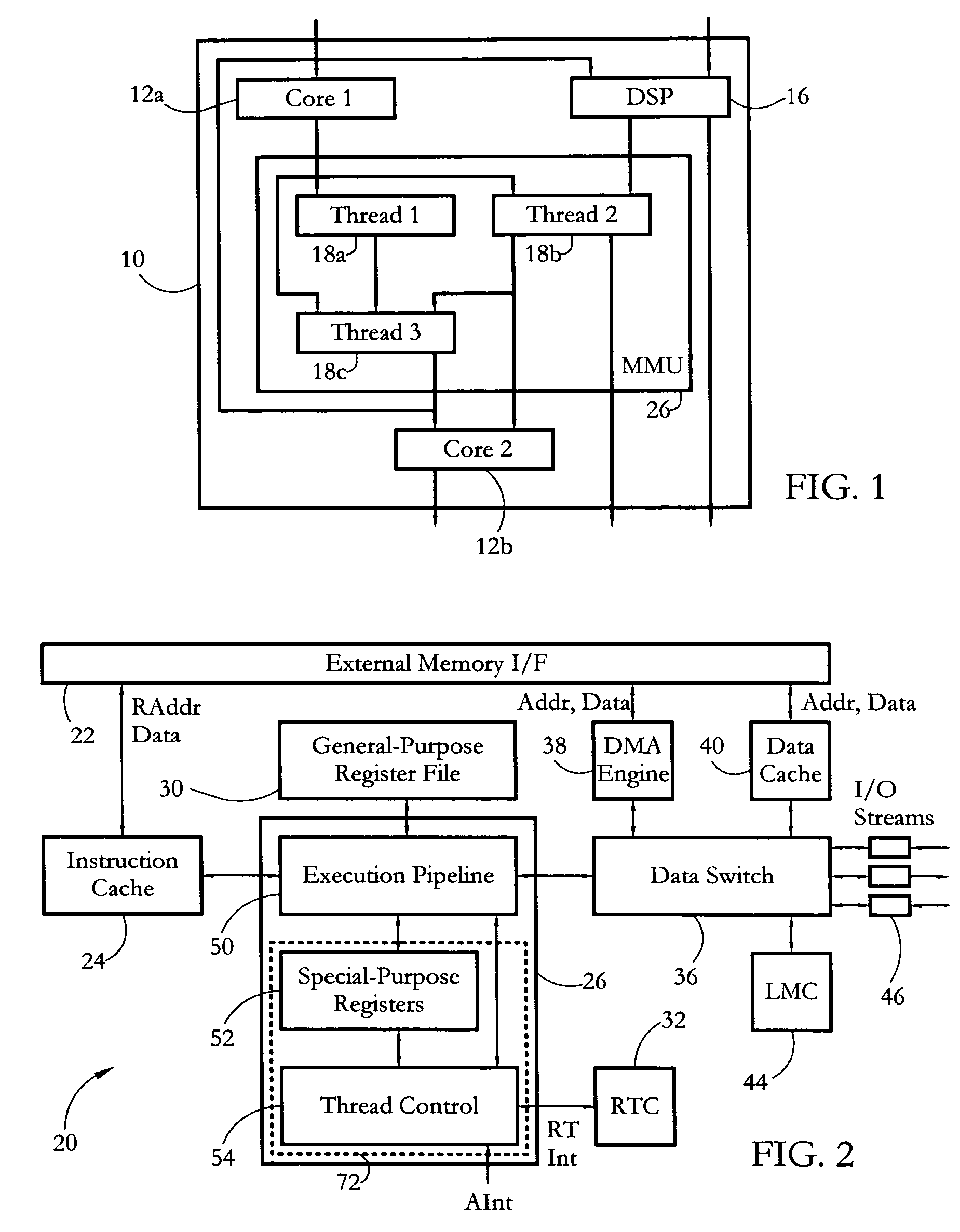

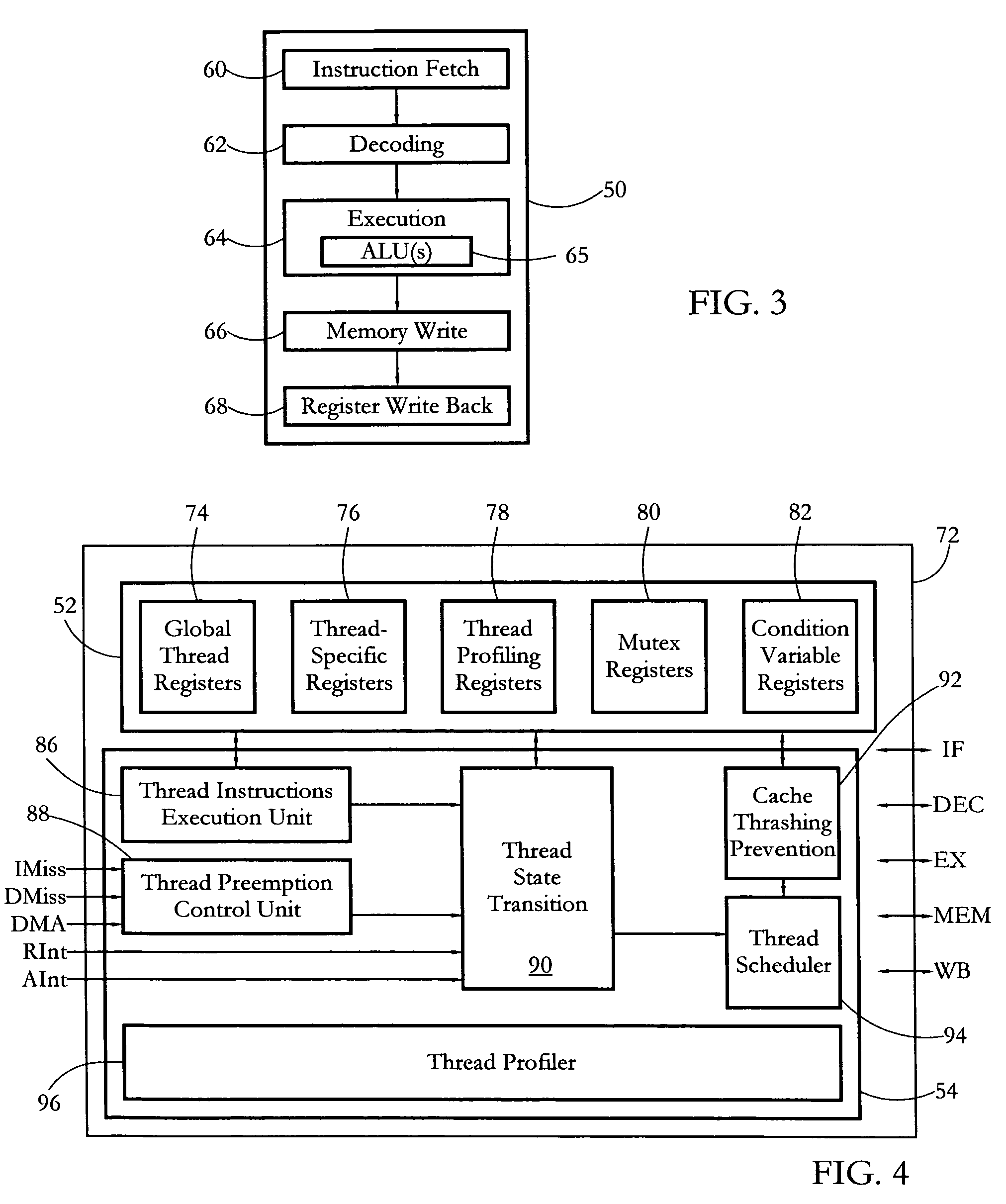

Hardware multithreading systems and methods

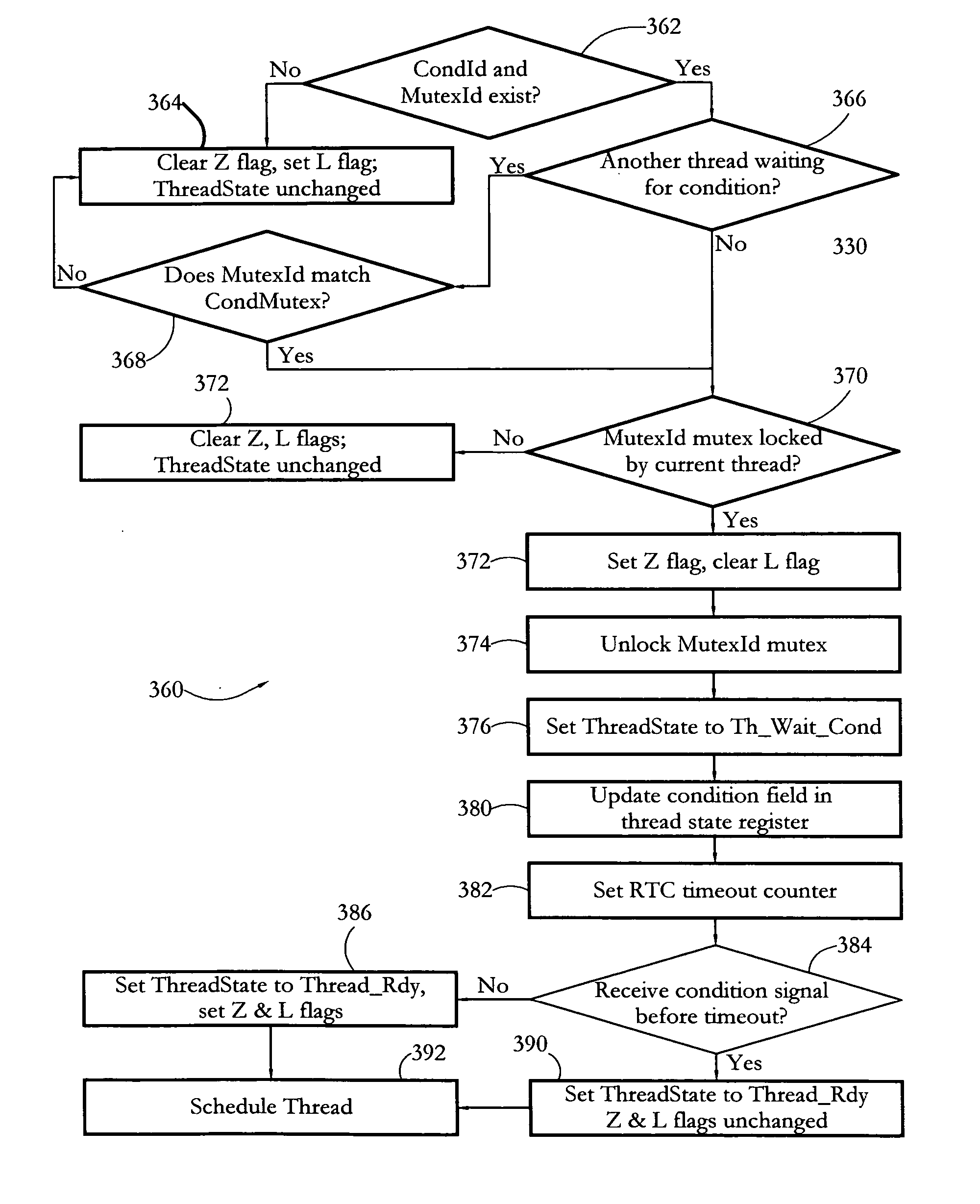

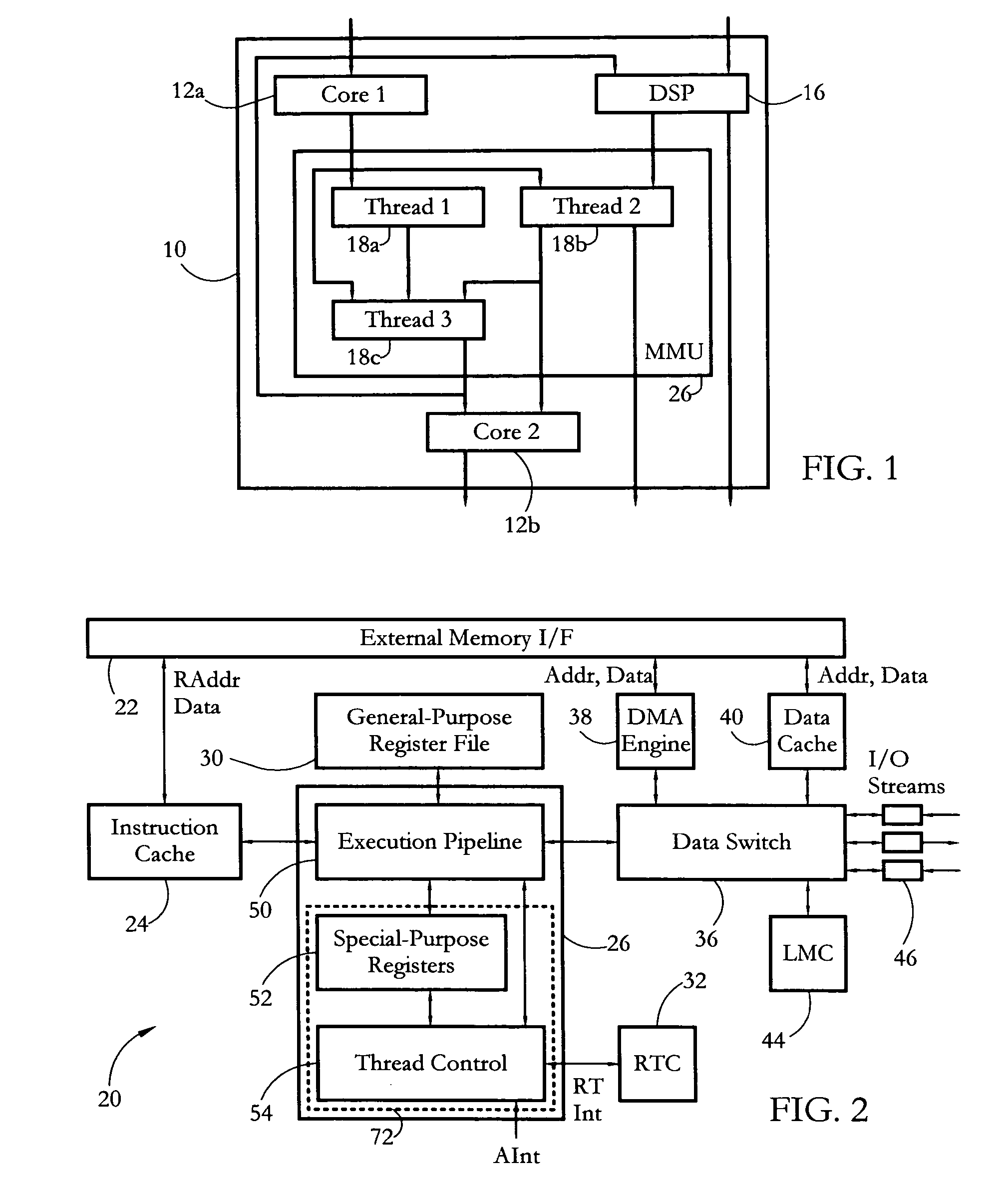

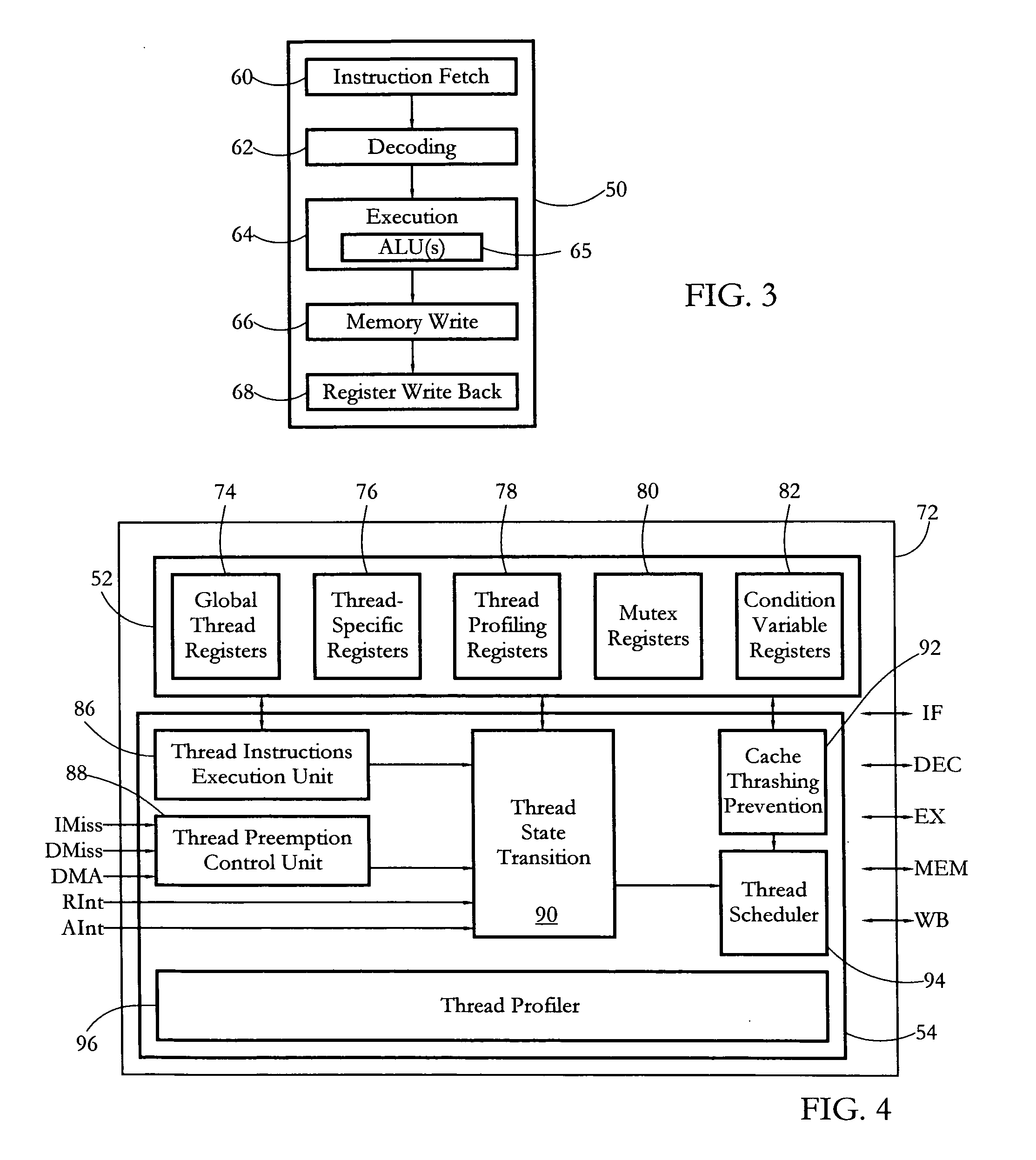

According to some embodiments, a multithreaded microcontroller includes a thread control unit comprising thread control hardware (logic) configured to perform a number of multithreading system calls essentially in real time, e.g. in one or a few clock cycles. System calls can include mutex lock, wait condition, and signal instructions. The thread controller includes a number of thread state, mutex, and condition variable registers used for executing the multithreading system calls. Threads can transition between several states including free, run, ready and wait. The wait state includes interrupt, condition, mutex, I-cache, and memory substates. A thread state transition controller controls thread states, while a thread instructions execution unit executes multithreading system calls and manages thread priorities to avoid priority inversion. A thread scheduler schedules threads according to their priorities. A hardware thread profiler including global, run and wait profiler registers is used to monitor thread performance to facilitate software development.

Owner:GEO SEMICONDUCTOR INC
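
The abstract above mentions thread states (free, run, ready, wait) and priority management that avoids priority inversion, but it does not say which mechanism is used. The sketch below shows priority inheritance, one common software technique for this, purely as an illustration. The `Thread` and `Mutex` classes are hypothetical and do not model the patented hardware.

```python
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    FREE = "free"
    RUN = "run"
    READY = "ready"
    WAIT = "wait"


@dataclass
class Thread:
    name: str
    base_priority: int
    state: State = State.READY
    priority: int = field(init=False, default=0)

    def __post_init__(self):
        # The effective priority starts at the base priority.
        self.priority = self.base_priority


class Mutex:
    """Hypothetical mutex with priority inheritance (assumed technique)."""

    def __init__(self):
        self.owner = None
        self.waiters = []

    def lock(self, thread):
        if self.owner is None:
            self.owner = thread
            return True
        # Contended: block the caller and lend its priority to the owner
        # so that a medium-priority thread cannot starve the owner.
        thread.state = State.WAIT
        self.waiters.append(thread)
        if thread.priority > self.owner.priority:
            self.owner.priority = thread.priority
        return False

    def unlock(self):
        # Simplification: the owner falls back to its base priority,
        # ignoring any other mutexes it might still hold.
        self.owner.priority = self.owner.base_priority
        if self.waiters:
            nxt = max(self.waiters, key=lambda t: t.priority)
            self.waiters.remove(nxt)
            nxt.state = State.READY
            self.owner = nxt
        else:
            self.owner = None
```

While a high-priority thread waits on the mutex, the low-priority owner runs at the waiter's priority and returns to its base priority on unlock.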

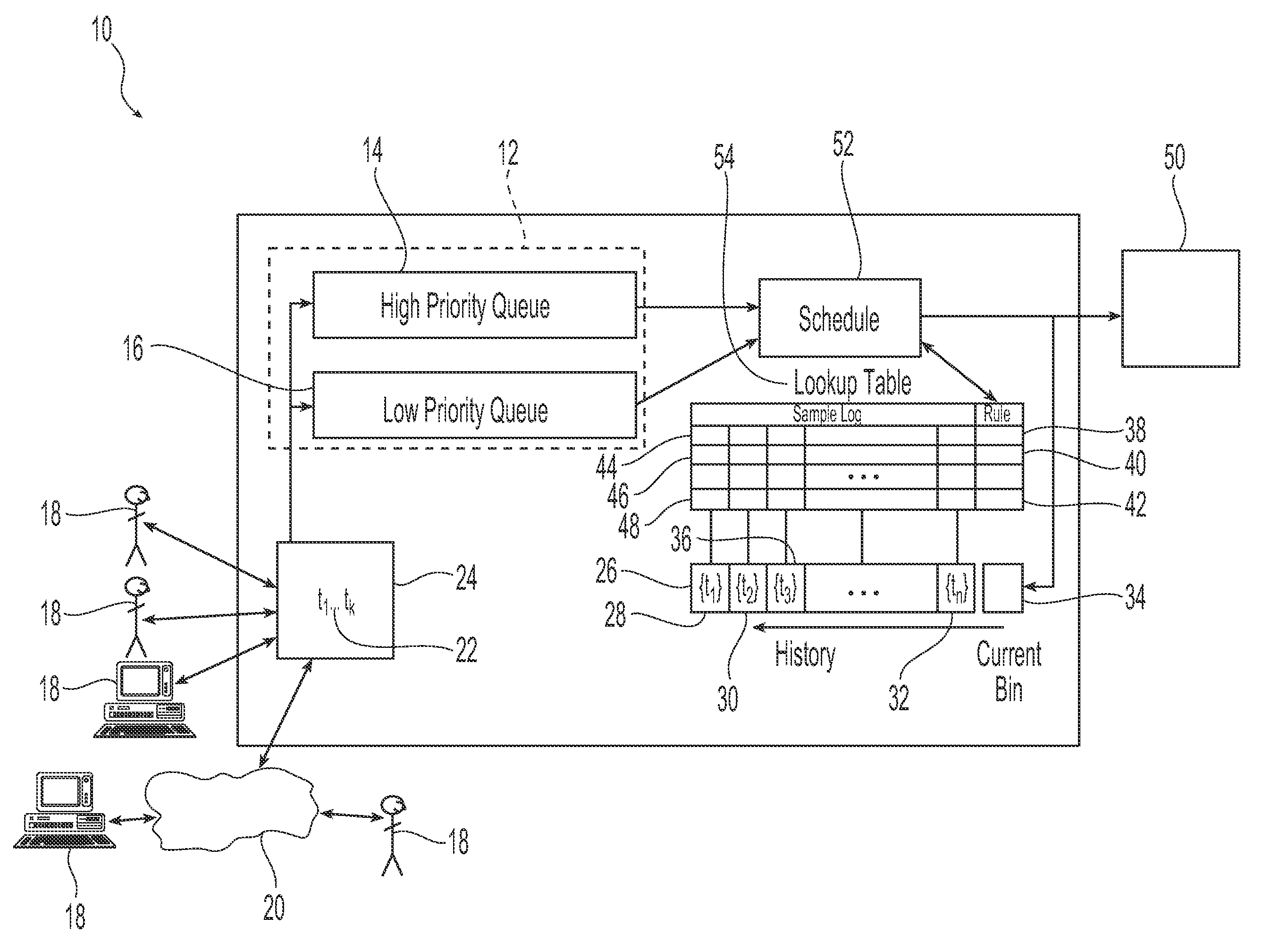

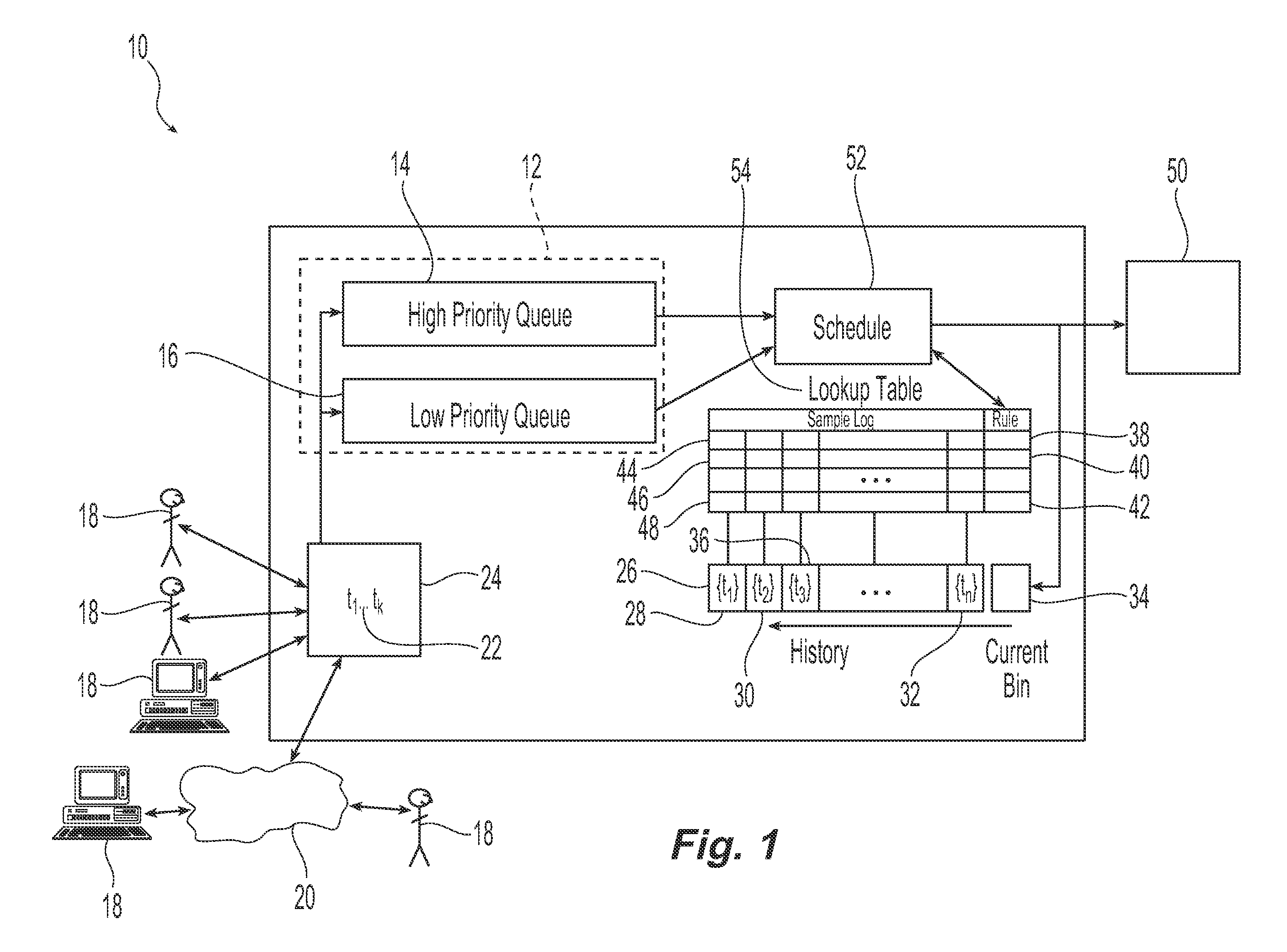

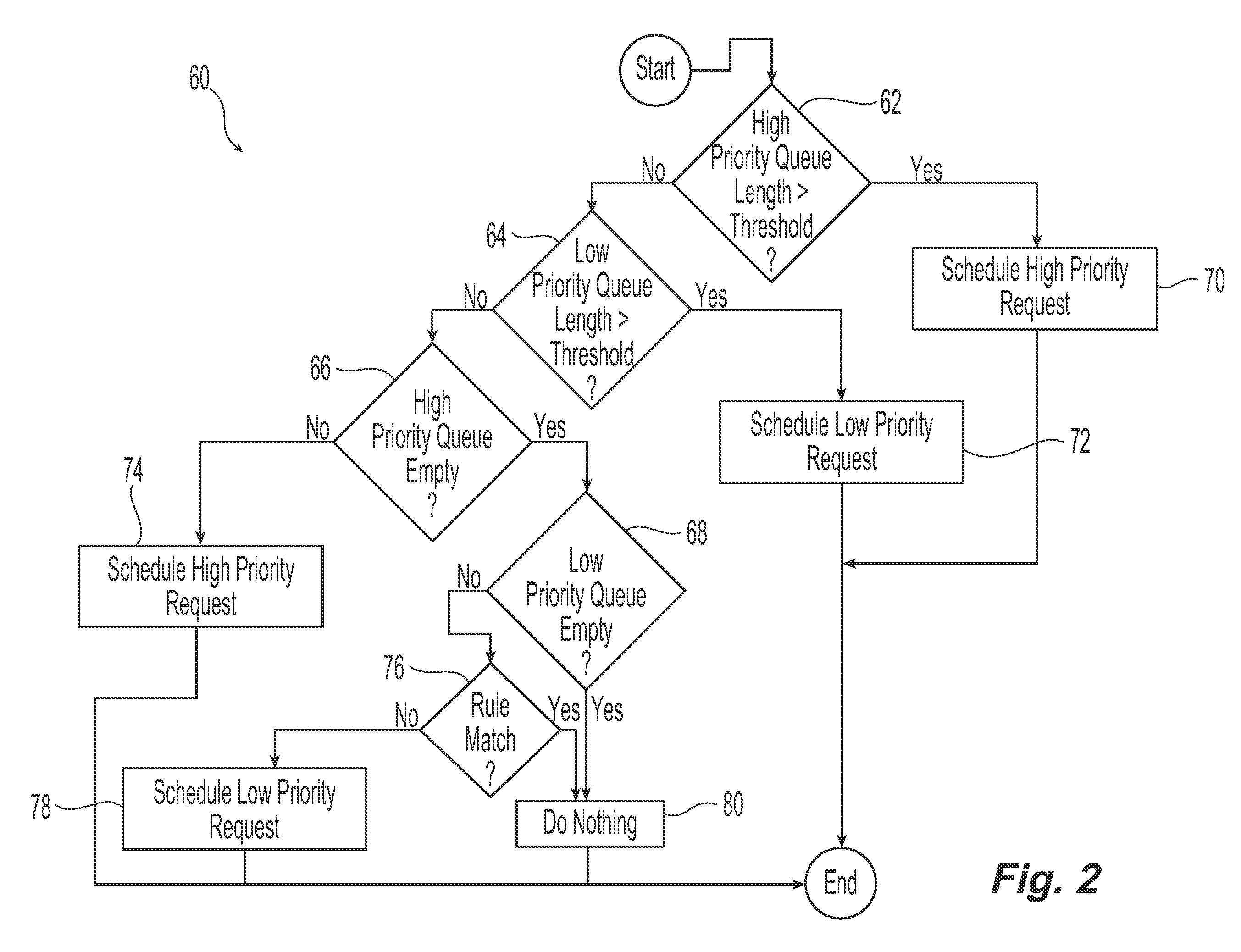

Prediction Based Priority Scheduling

Active; US20080222640A1; Interference minimization; Reduce queuing delay; Multiprogramming arrangements; Memory systems; Computerized system; Computing systems

Systems and methods are provided that schedule task requests within a computing system based upon the history of task requests. The history of task requests can be represented by a historical log that monitors the receipt of high priority task request submissions over time. This historical log in combination with other user defined scheduling rules is used to schedule the task requests. Task requests in the computer system are maintained in a list that can be divided into a hierarchy of queues differentiated by the level of priority associated with the task requests contained within that queue. The user-defined scheduling rules give scheduling priority to the higher priority task requests, and the historical log is used to predict subsequent submissions of high priority task requests so that lower priority task requests that would interfere with the higher priority task requests will be delayed or will not be scheduled for processing.

Owner:IBM CORP
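
The idea in the abstract above, using a historical log to predict high-priority submissions and holding back low-priority work that would collide with them, can be sketched as follows. The hour-of-day log format, the `min_count` threshold, and both function names are assumptions made for illustration; the abstract does not specify a prediction model.

```python
from collections import Counter


def busy_hours(history, min_count=2):
    """Hours of day that saw at least min_count high-priority submissions.

    history is an assumed log format: one hour-of-day (0-23) per past
    high-priority task request.
    """
    counts = Counter(history)
    return {hour for hour, n in counts.items() if n >= min_count}


def should_delay(start_hour, duration_hours, busy):
    """True if a low-priority task would overlap a predicted busy hour."""
    return any((start_hour + k) % 24 in busy for k in range(duration_hours))


history = [9, 9, 14, 9, 14, 3]
busy = busy_hours(history)            # hours 9 and 14 are predicted busy
print(should_delay(8, 2, busy))       # a 2-hour job at 08:00 touches 09:00
print(should_delay(10, 3, busy))      # a 3-hour job at 10:00 ends before 14:00
```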

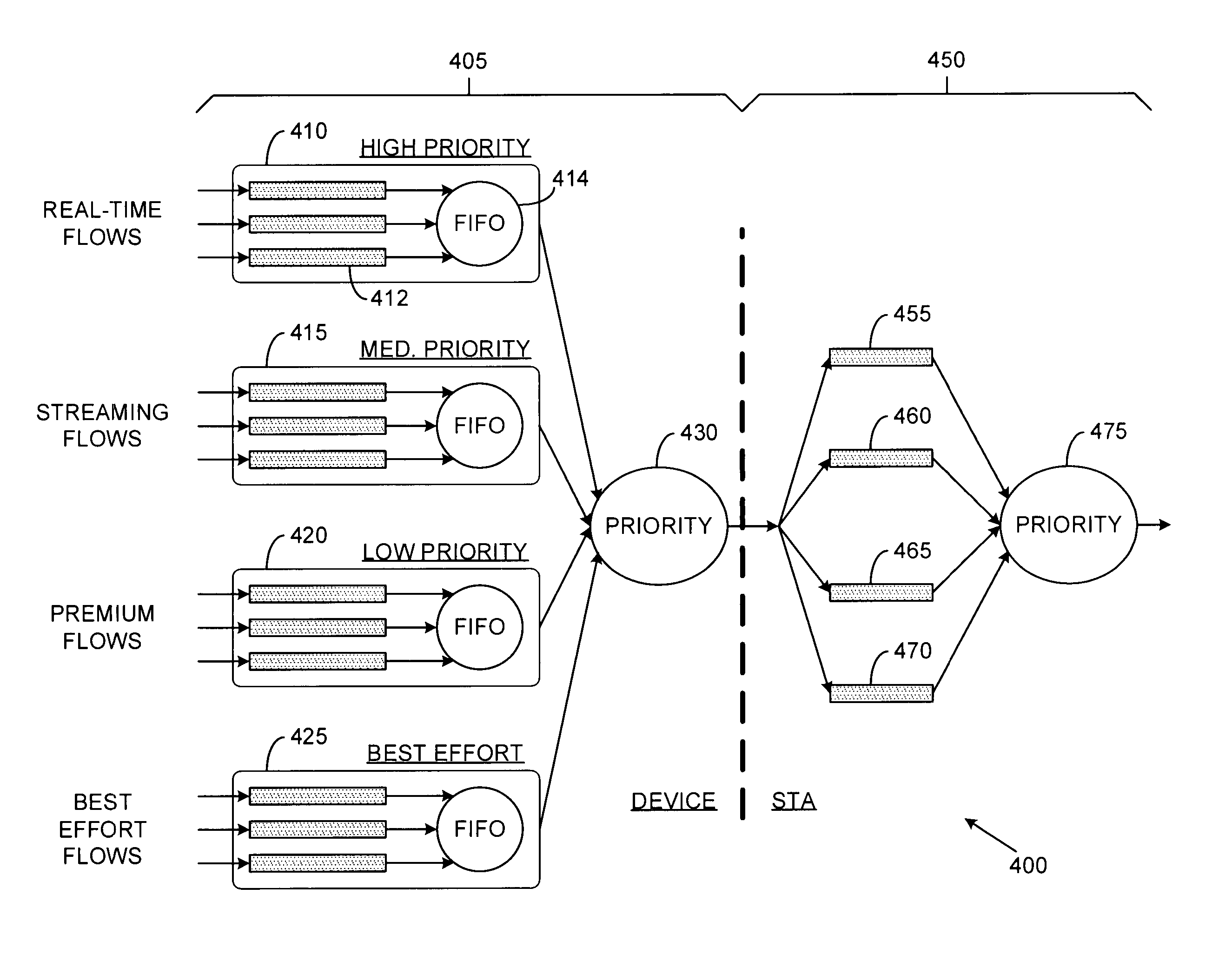

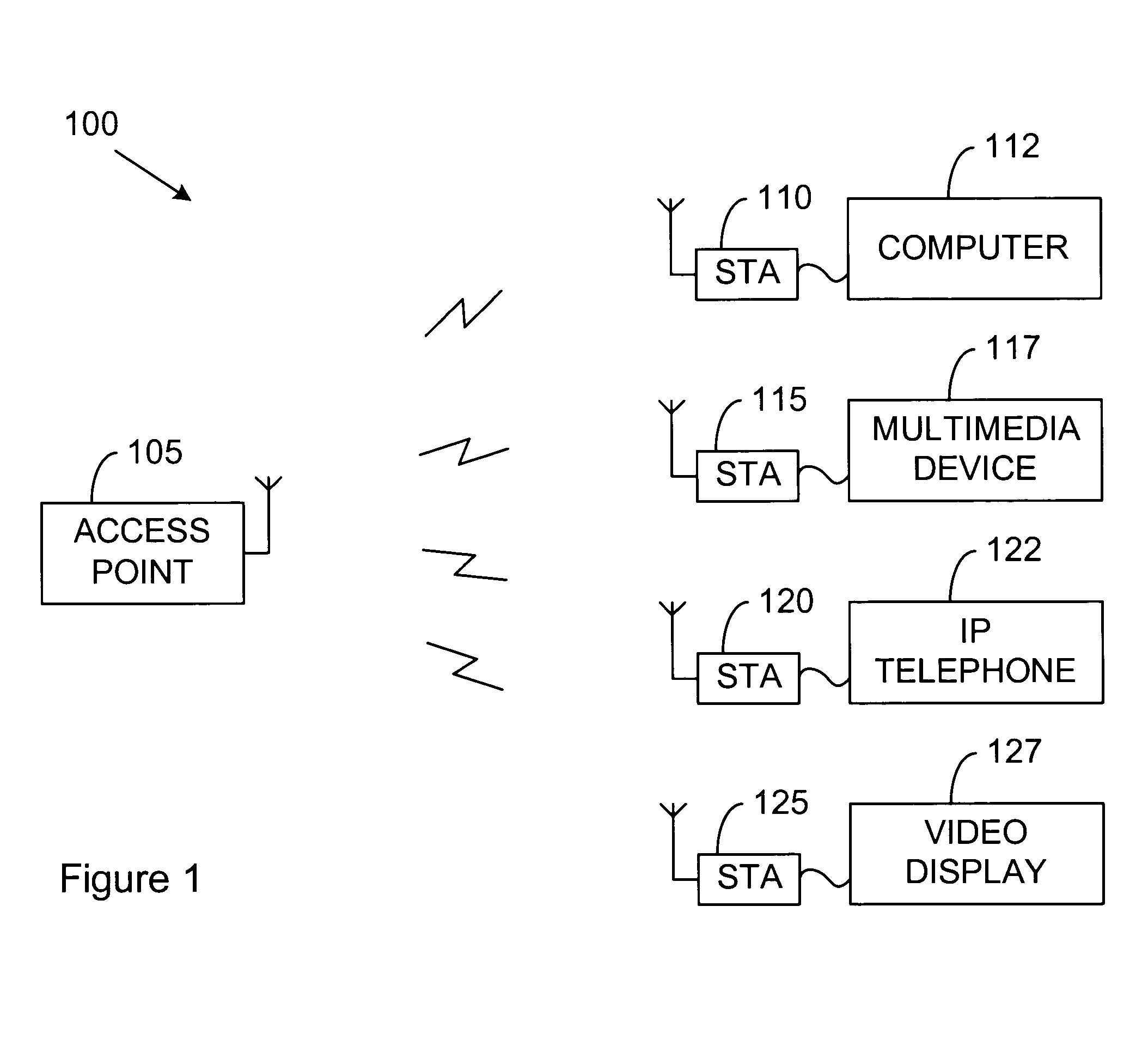

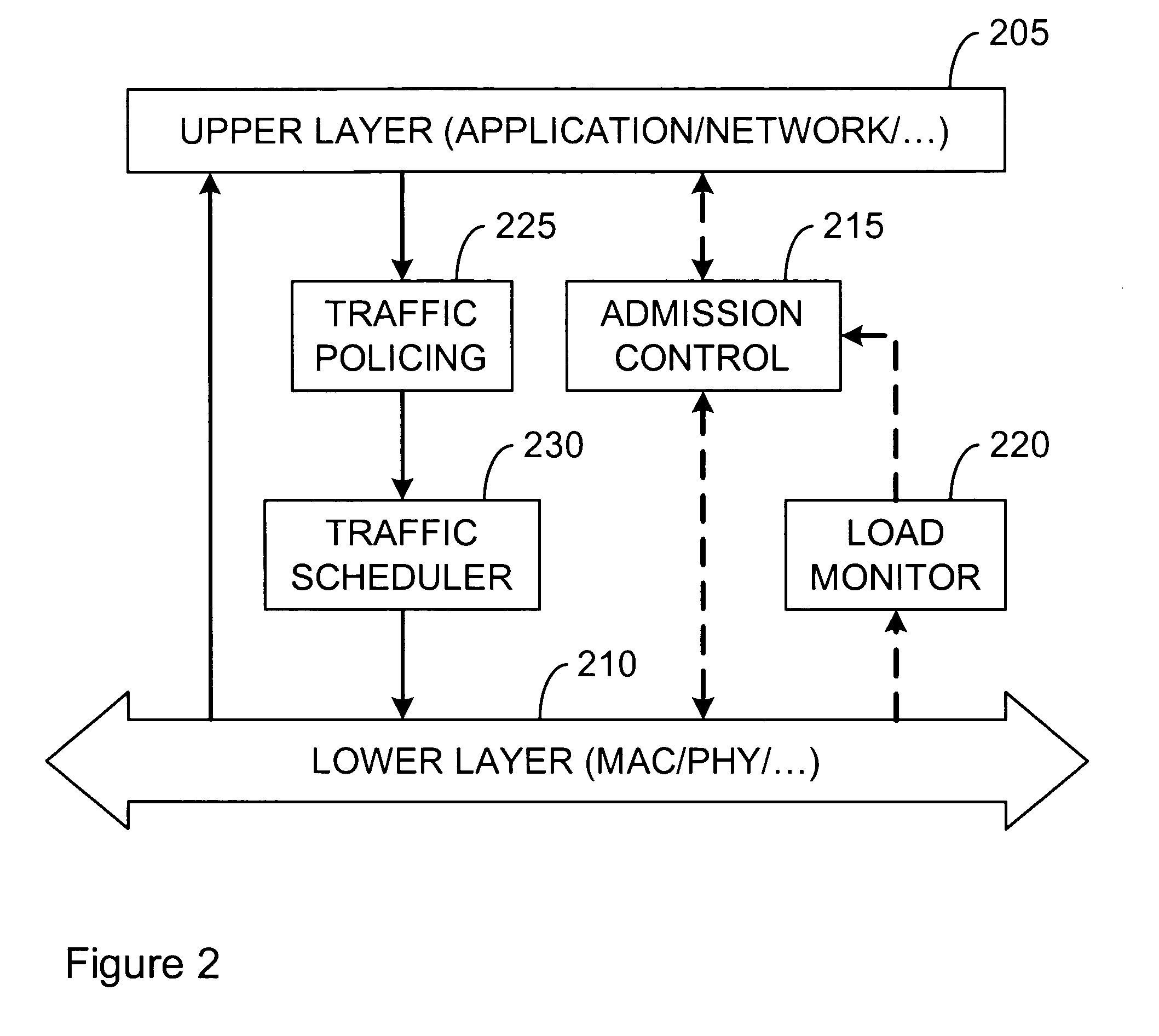

Hierarchical scheduling for communications systems

Inactive; US20050047425A1; Addressing slow performance; Easy to modify; Network traffic/resource management; Data switching by path configuration; Communications system; Distributed computing

A system and method for scheduling messages in a digital communications system with reduced system resource requirements. A preferred embodiment comprises a plurality of traffic queues (such as traffic queue 410) used to enqueue messages of differing traffic types and a first scheduler (such as priority scheduler 430). The first scheduler selects messages from the traffic queues and provides them to a plurality of priority queues (such as priority queue 455) used to enqueue messages of differing priorities. A second scheduler (such as priority scheduler 475) then selects messages for transmission based on message priority, transmission opportunity, and time to transmit.

Owner:TEXAS INSTR INC

Hardware multithreading systems with state registers having thread profiling data

According to some embodiments, a multithreaded microcontroller includes a thread control unit comprising thread control hardware (logic) configured to perform a number of multithreading system calls essentially in real time, e.g. in one or a few clock cycles. System calls can include mutex lock, wait condition, and signal instructions. The thread controller includes a number of thread state, mutex, and condition variable registers used for executing the multithreading system calls. Threads can transition between several states including free, run, ready and wait. The wait state includes interrupt, condition, mutex, I-cache, and memory substates. A thread state transition controller controls thread states, while a thread instructions execution unit executes multithreading system calls and manages thread priorities to avoid priority inversion. A thread scheduler schedules threads according to their priorities. A hardware thread profiler including global, run and wait profiler registers is used to monitor thread performance to facilitate software development.

Owner:GEO SEMICONDUCTOR INC

Apparatus and method for downlink spatial division multiple access scheduling in a wireless network

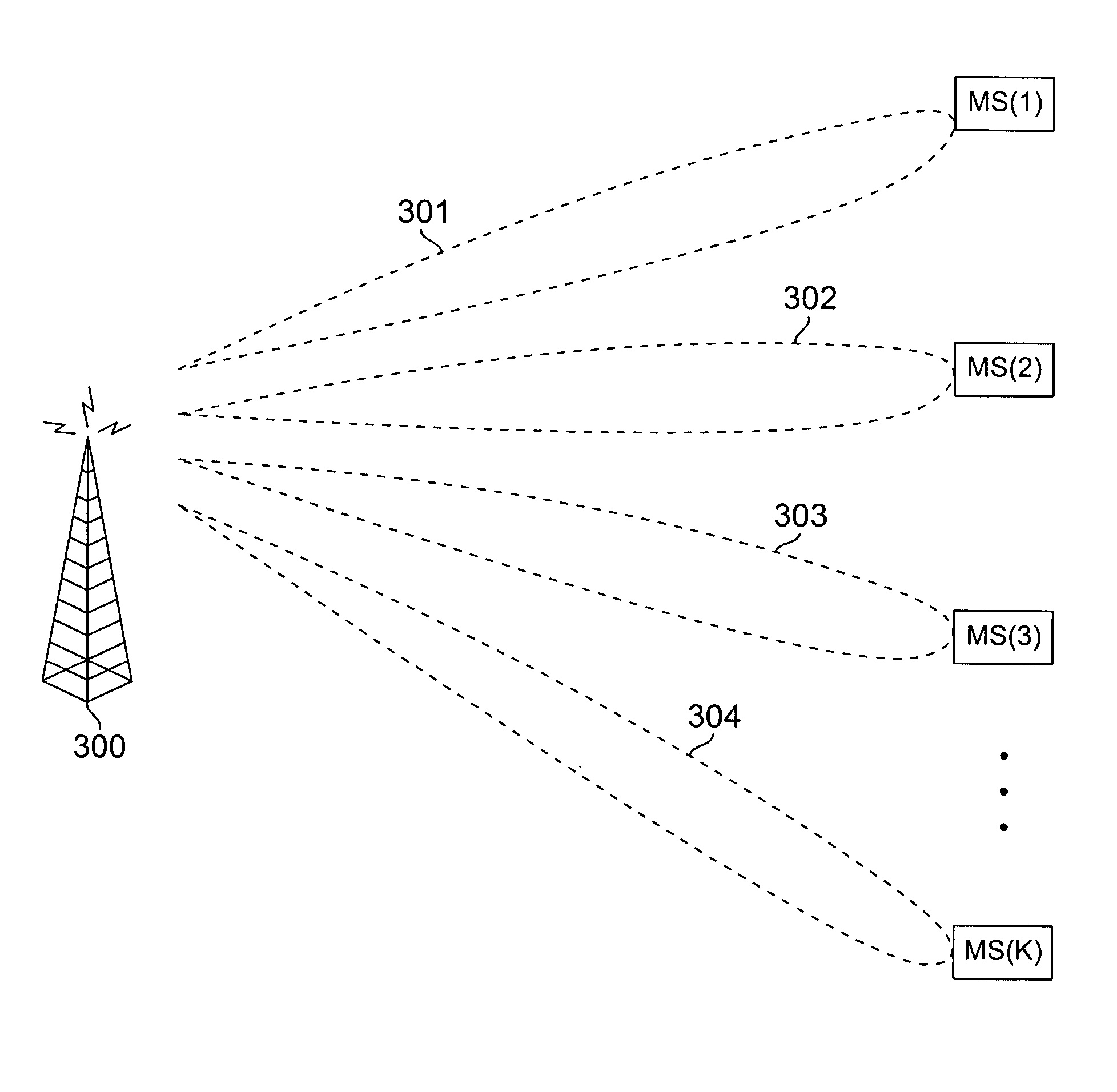

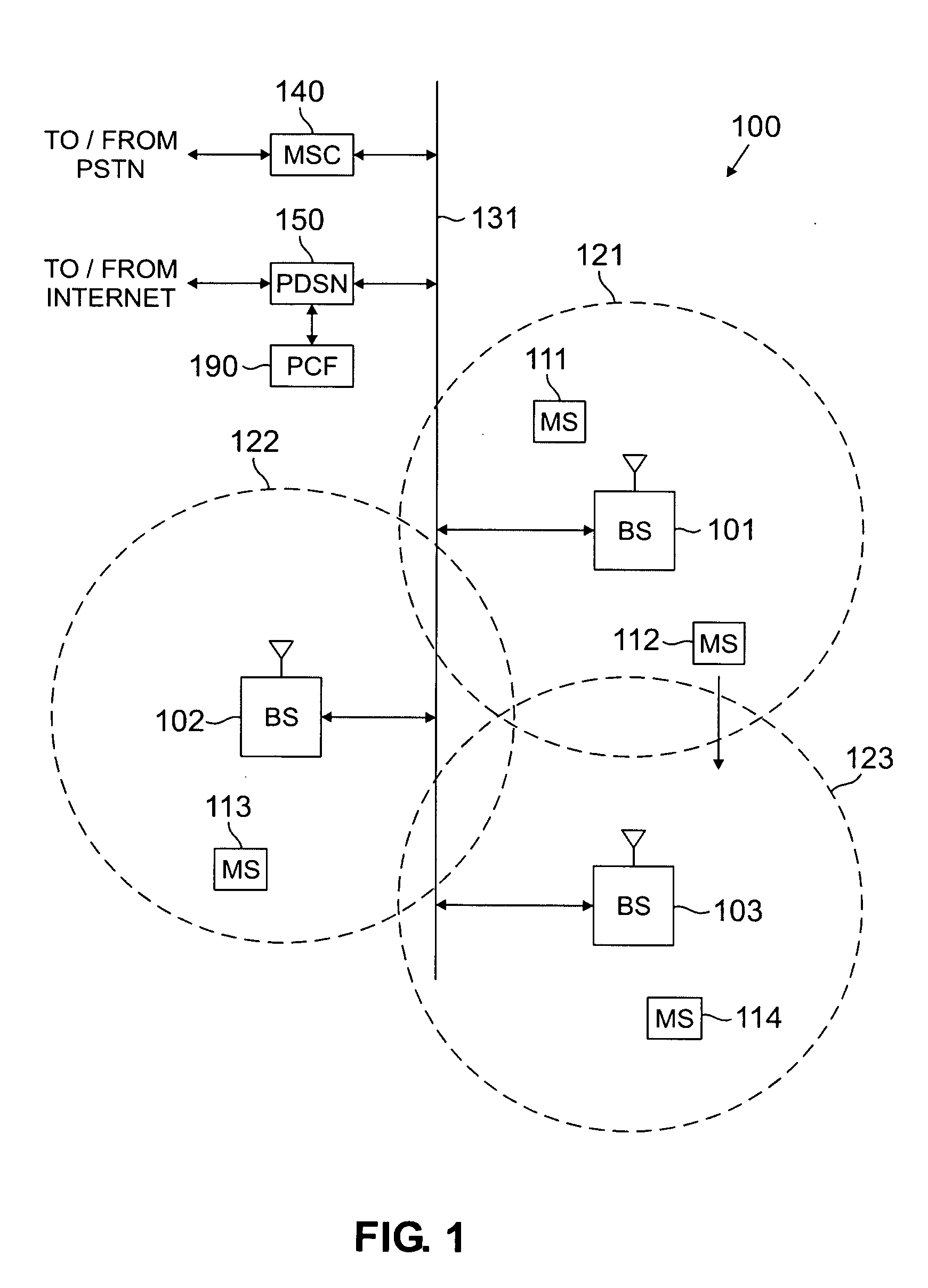

A base station for communicating with mobile stations in a coverage area of the wireless network. The base station comprises: 1) a transceiver for transmitting downlink OFDMA signals to each mobile station; 2) an antenna array for transmitting the downlink OFDMA signals to the mobile stations using spatially directed beams; and 3) an SDMA scheduling controller for scheduling downlink transmissions to the mobile stations. The SDMA scheduling controller determines a first mobile station having a highest priority and schedules the first mobile station for downlink transmission in a particular time-frequency slot. The SDMA scheduling controller then determines additional mobile stations that are spatially uncorrelated with the first mobile station, as well as each other, and schedules the additional mobile stations for downlink transmission in that particular time-frequency slot according to priority.

Owner:SAMSUNG ELECTRONICS CO LTD
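
The selection rule in the abstract above (take the highest-priority mobile station, then add stations that are spatially uncorrelated with every station already chosen, in priority order) reads as a greedy algorithm. A minimal sketch follows. The pairwise `correlated` set, the `max_beams` limit, and the function name are assumptions; how correlation is actually measured is not described in the abstract.

```python
def schedule_slot(stations, correlated, max_beams):
    """Pick stations to share one time-frequency slot.

    stations:   dict mapping station name -> priority (higher is served first)
    correlated: set of frozenset({a, b}) pairs assumed spatially correlated
    max_beams:  assumed cap on simultaneous beams in the slot
    """
    ranked = sorted(stations, key=lambda s: stations[s], reverse=True)
    selected = []
    for s in ranked:
        if len(selected) == max_beams:
            break
        # The first (highest-priority) station always passes this check;
        # later ones must be uncorrelated with every station chosen so far.
        if all(frozenset((s, t)) not in correlated for t in selected):
            selected.append(s)
    return selected


stations = {"A": 5, "B": 4, "C": 3, "D": 2}
correlated = {frozenset(("A", "B")), frozenset(("C", "D"))}
print(schedule_slot(stations, correlated, max_beams=3))
# → ['A', 'C']
```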

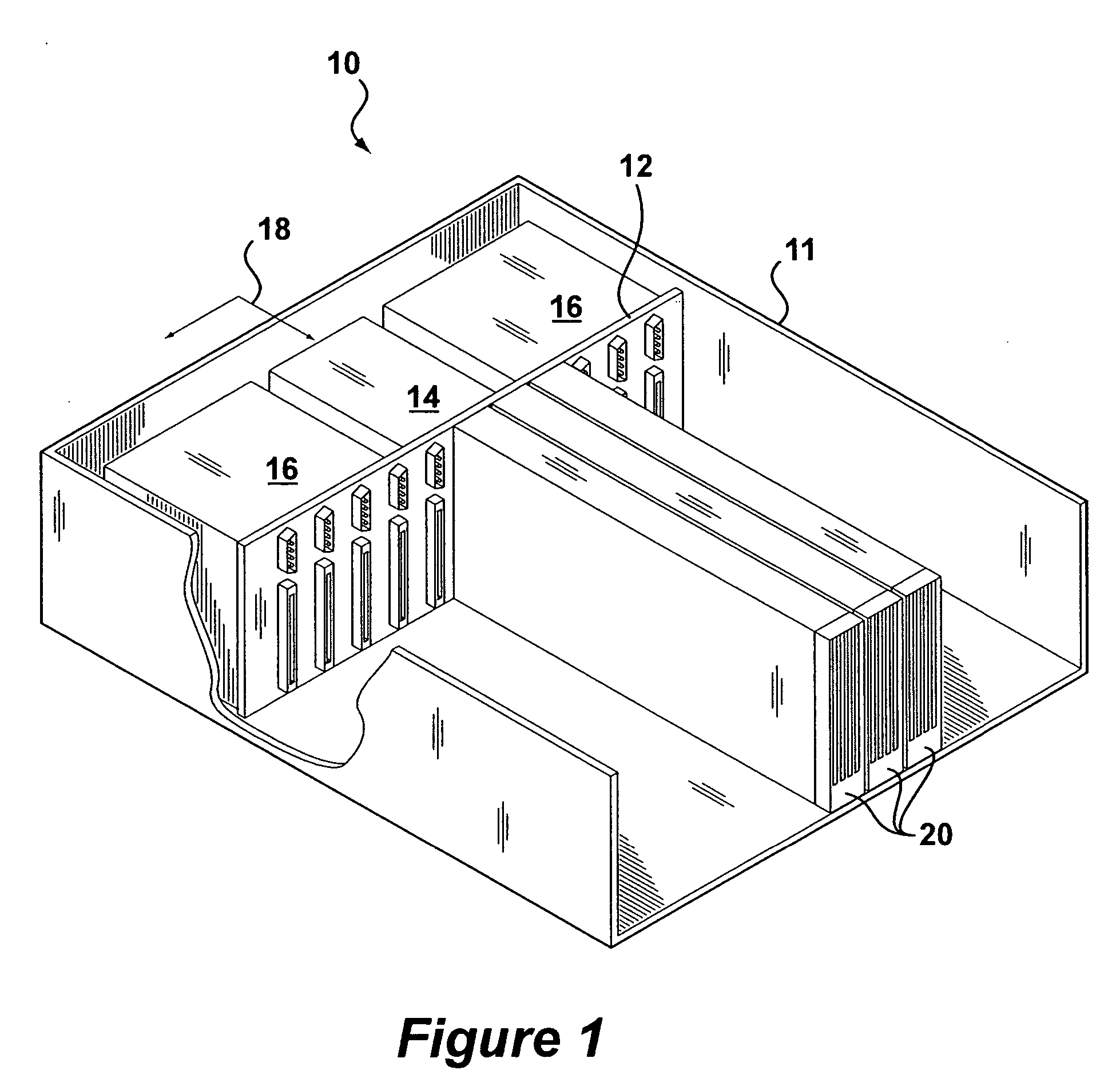

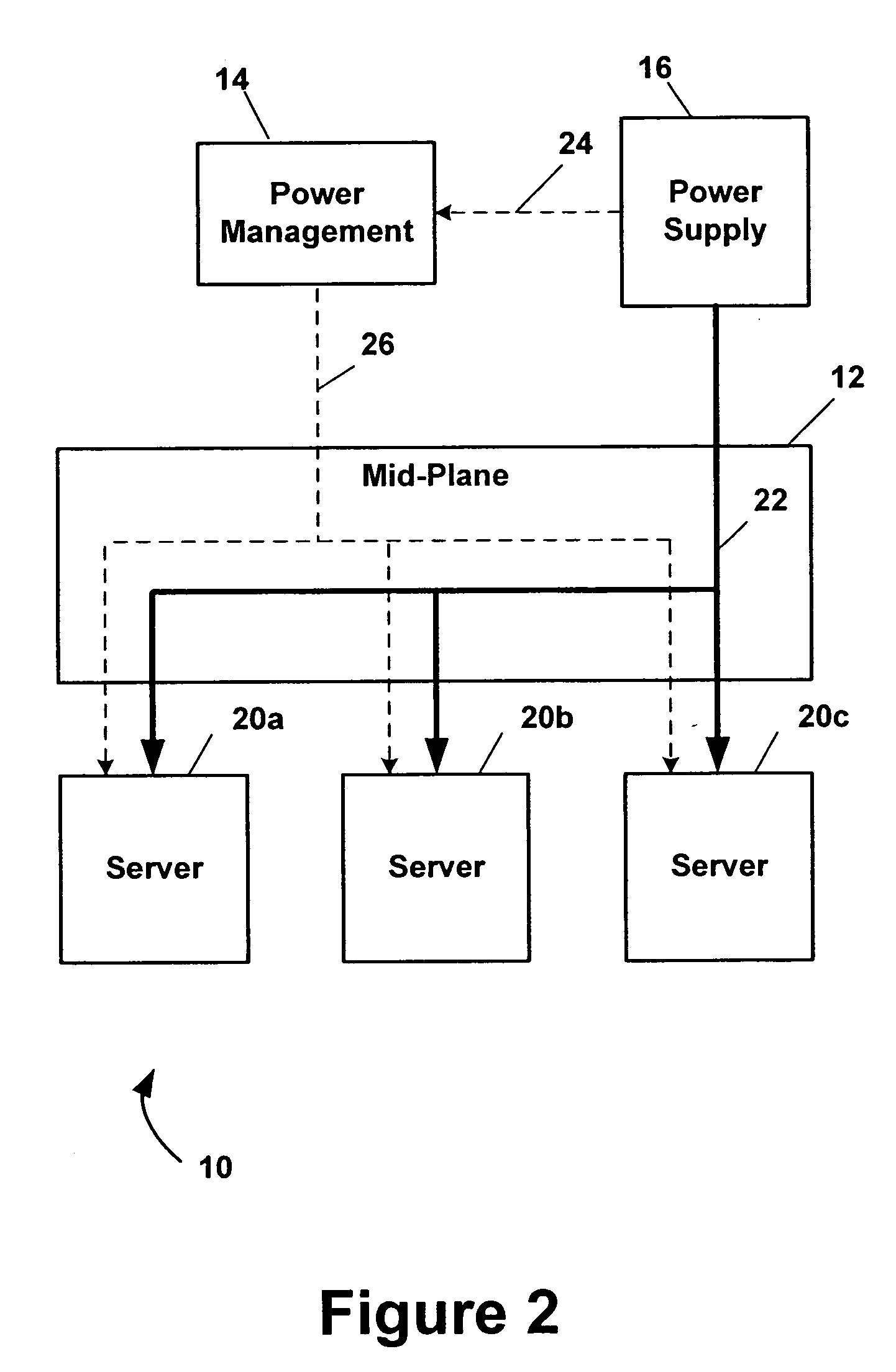

Prioritizing power throttling in an information handling system

Inactive; US20060161794A1; Reduce capacity; Configurable power throttling requests; Volume/mass flow measurement; Power supply for data processing; Information processing; Electric power system

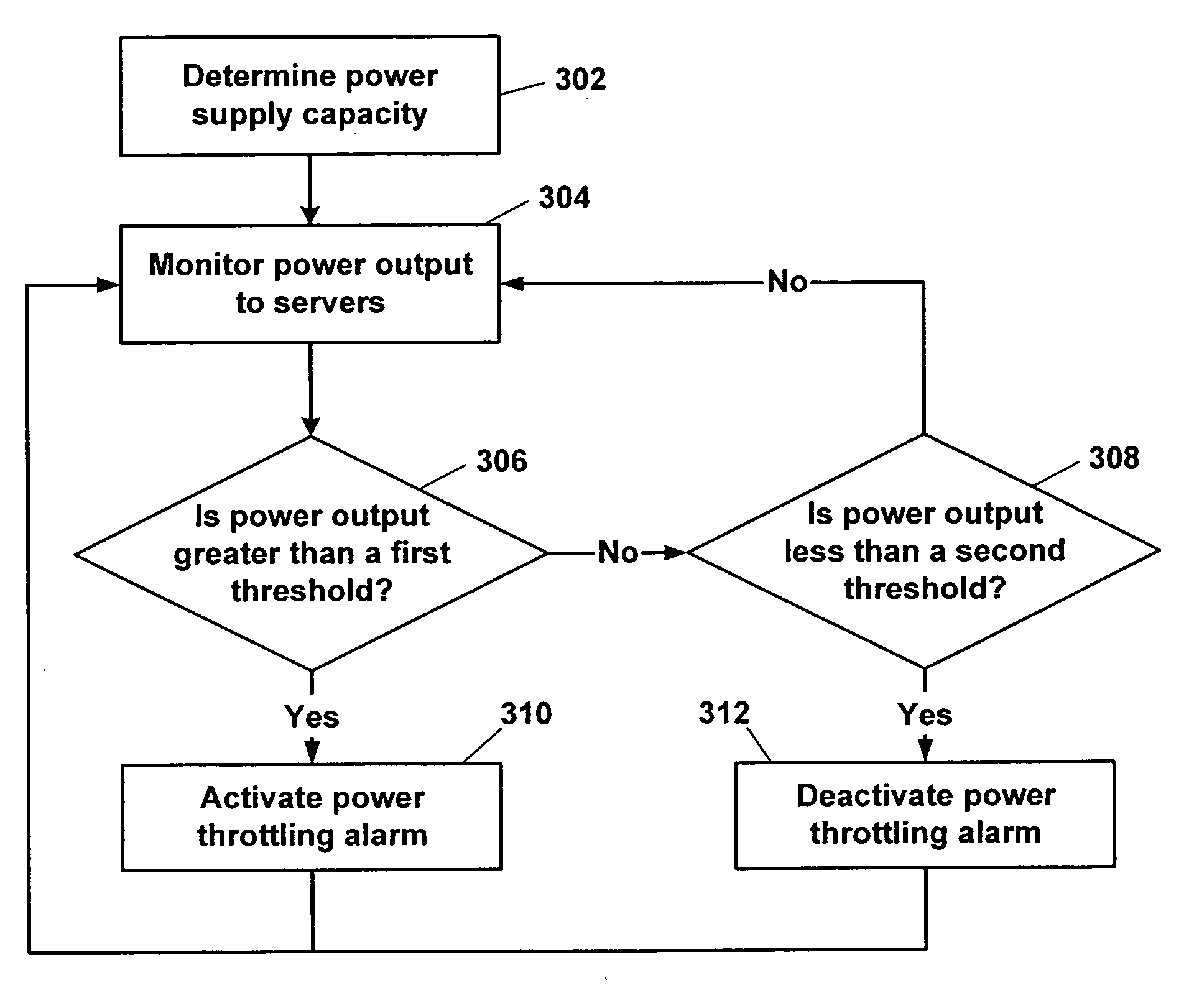

Prioritization of power throttling of servers in an information handling system may be based upon slot or location priority, subscription priority, and/or greatest power usage. A power system having a capacity of less than the maximum connected power load is monitored to determine the power being drawn therefrom. When the power drawn from the power supply system exceeds a first threshold, power throttling requests are issued to the appropriate servers until the power drawn from the power supply is less than a second threshold. The second threshold is less than the first threshold. The appropriate servers may be configurable, e.g., determined by a configurable prioritization schedule.

Owner:DELL PROD LP
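
The two-threshold behavior in the abstract above can be sketched as a small loop: throttling starts only when the total draw exceeds the first threshold and continues, in priority order, until the draw falls below the lower second threshold. The server record layout, the fixed `reduction` fraction per throttled server, and the rule that lower `priority` values are throttled first are all assumptions for illustration.

```python
def throttle(servers, first_threshold, second_threshold, reduction=0.25):
    """Return the names of servers throttled, in the order requests go out.

    servers: list of dicts with 'name', 'priority', and 'watts' keys
             (assumed layout; lower 'priority' is throttled first).
    reduction: assumed fraction of a server's draw saved by throttling.
    """
    total = sum(s["watts"] for s in servers)
    throttled = []
    if total <= first_threshold:
        return throttled  # below the trigger point: nothing to do
    for s in sorted(servers, key=lambda s: s["priority"]):
        if total < second_threshold:
            break  # the draw is back under the lower threshold
        saved = s["watts"] * reduction
        s["watts"] -= saved
        total -= saved
        throttled.append(s["name"])
    return throttled


servers = [
    {"name": "a", "priority": 1, "watts": 400},
    {"name": "b", "priority": 2, "watts": 400},
    {"name": "c", "priority": 3, "watts": 400},
]
print(throttle(servers, first_threshold=1100, second_threshold=1050))
# → ['a', 'b']
```

Keeping the second threshold below the first gives the loop hysteresis, so the system does not flip between throttled and unthrottled states around a single limit.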

Multidimensional resource scheduling system and method for cloud environment data center

Inactive; CN102932279A; Accurate abstraction; Increase weight; Data switching networks; Resource pool; Virtualization

The invention provides a multidimensional resource scheduling system and method for a cloud environment data center, belonging to the field of cloud computing. The system provided by the invention comprises a user application request submitting module, a resource status acquiring module, a resource scheduling module, an application request priority queuing module and a virtualized physical resource pool. The method provided by the invention comprises the following steps of: firstly detecting the status information of multidimensional resources in the virtualized physical resource pool and a current application request set in the user application request submitting module, which are acquired by the resource status acquiring module; then defining an application request priority queue which is suitable for the status balanced consumption of the current multidimensional resources in the virtualized physical resource pool by using a multiple-attribute-decision-making application priority scheduling algorithm; and finally submitting the application request which has the highest priority and meets resource constraints to the cloud environment data center for execution.

Owner:BEIJING UNIV OF POSTS & TELECOMM

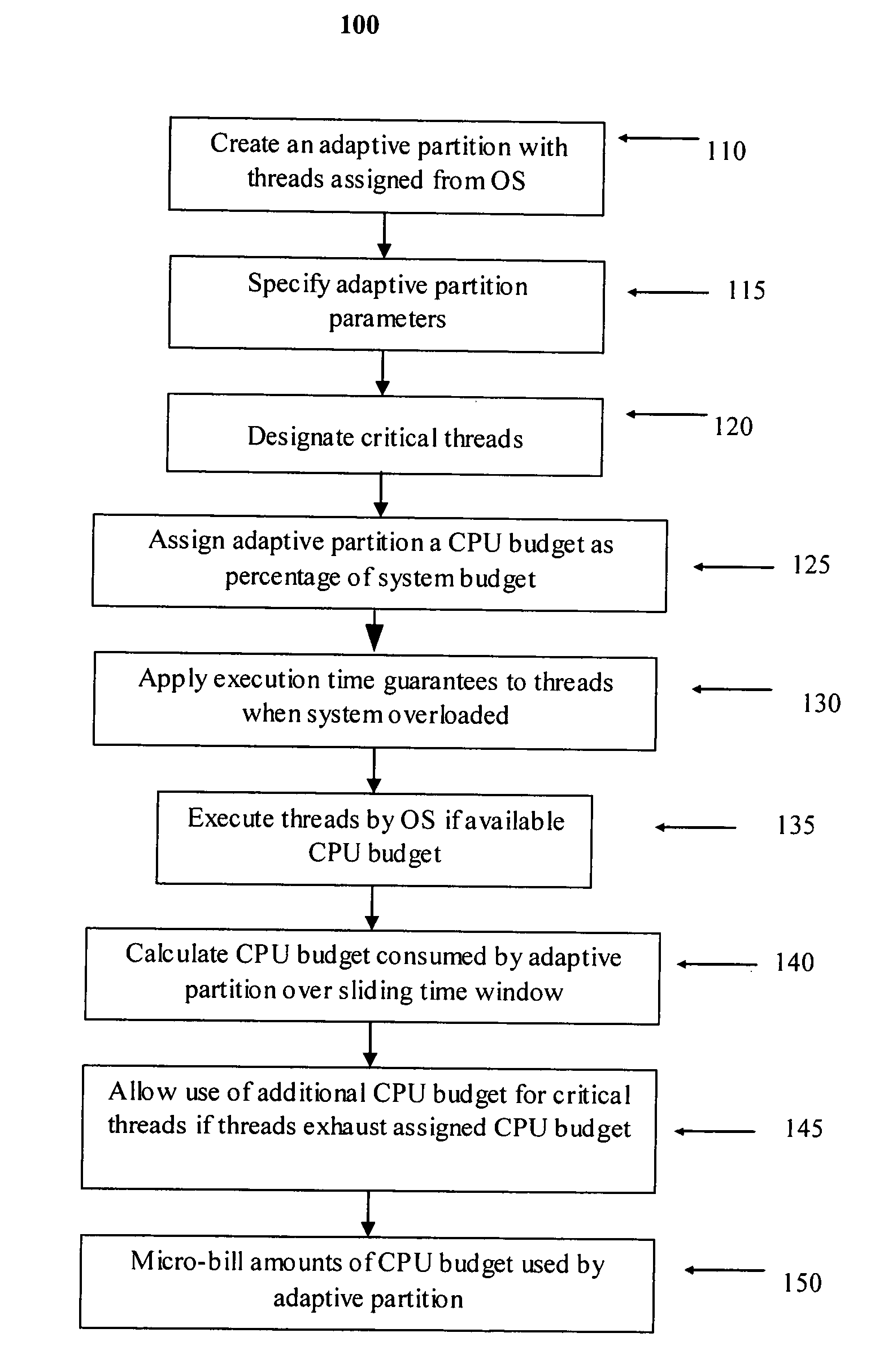

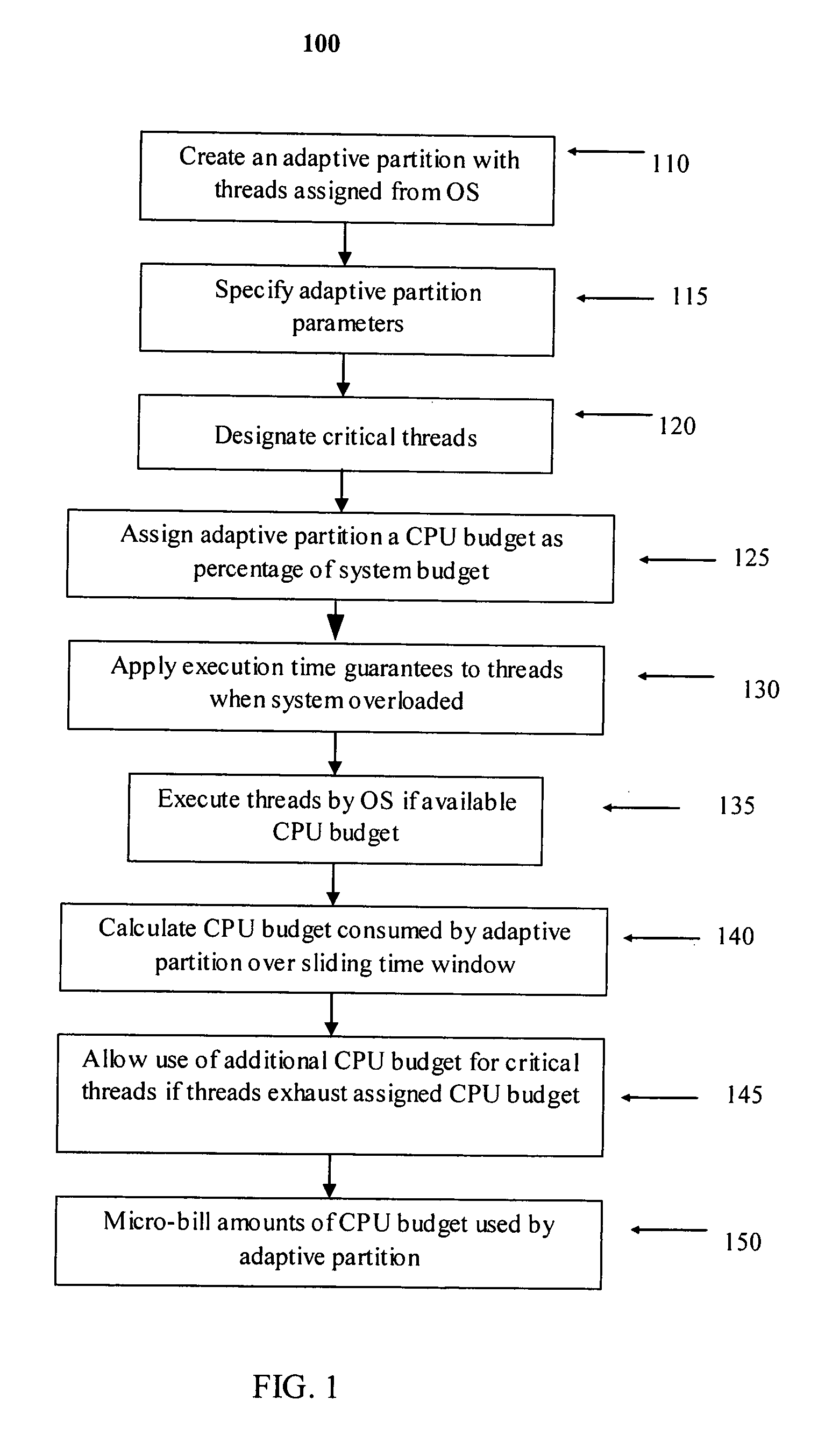

Adaptive partitioning for operating system

An adaptive partition scheduler is a priority-based scheduler that also provides execution time guarantees (fair-share). Execution time guarantees apply to threads or groups of threads when the system is overloaded. When the system is not overloaded, threads are scheduled based strictly on priority, maintaining strict real-time behavior. Even when overloaded, the scheduler provides real-time guarantees to a set of critical threads, as specified by the system architect.

Owner:MALIKIE INNOVATIONS LTD
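
A rough sketch of the behavior described above: strict priority when the system is not overloaded, and budget-aware selection when it is. The tuple layout, the `used` and `budget` dictionaries, and the fallback when every partition has exhausted its budget are assumptions; the abstract's handling of critical threads is not modeled.

```python
def pick_next(threads, used, budget, overloaded):
    """Choose the next thread to run.

    threads: list of (name, priority, partition) tuples (assumed layout)
    used:    dict partition -> CPU share consumed in the current window
    budget:  dict partition -> guaranteed CPU share for that window
    """
    candidates = threads
    if overloaded:
        # Under overload, prefer threads whose partition still has budget.
        within = [t for t in threads if used[t[2]] < budget[t[2]]]
        # Assumed fallback: if every partition is exhausted, use priority.
        candidates = within or threads
    return max(candidates, key=lambda t: t[1])[0]


threads = [("ui", 10, "hmi"), ("nav", 8, "nav"), ("log", 2, "sys")]
used = {"hmi": 0.6, "nav": 0.1, "sys": 0.05}
budget = {"hmi": 0.5, "nav": 0.3, "sys": 0.2}
print(pick_next(threads, used, budget, overloaded=False))  # → ui
print(pick_next(threads, used, budget, overloaded=True))   # → nav
```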

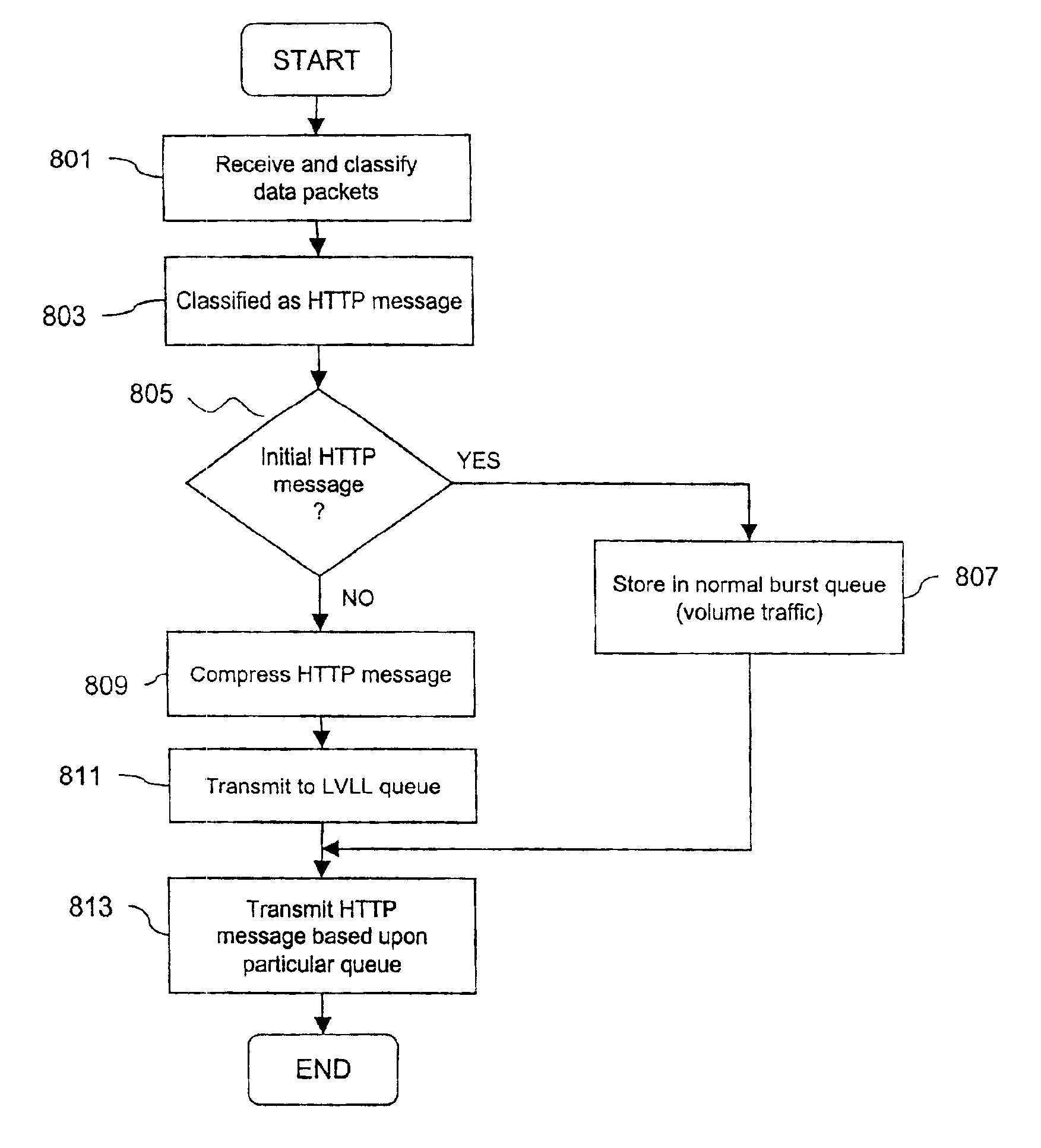

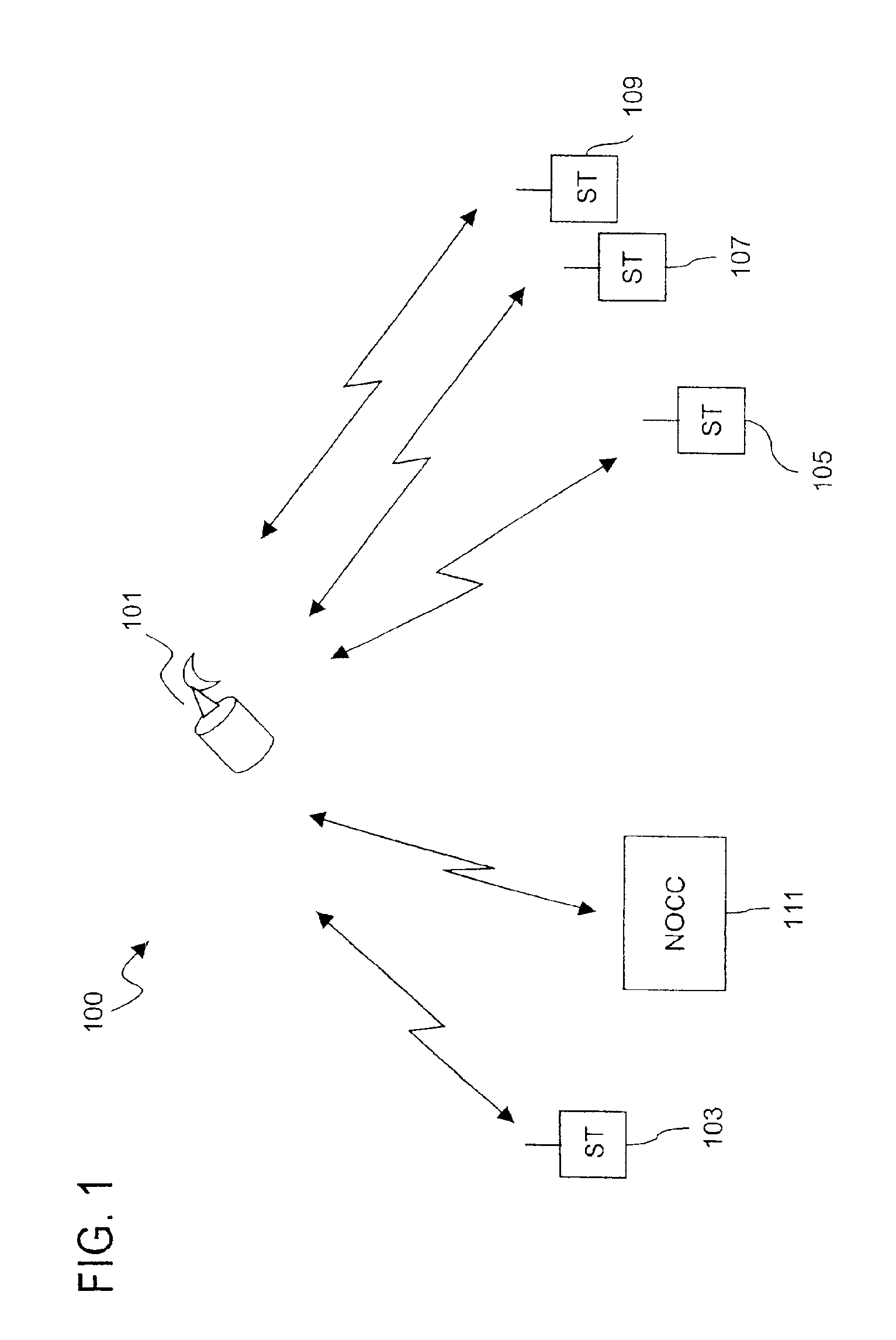

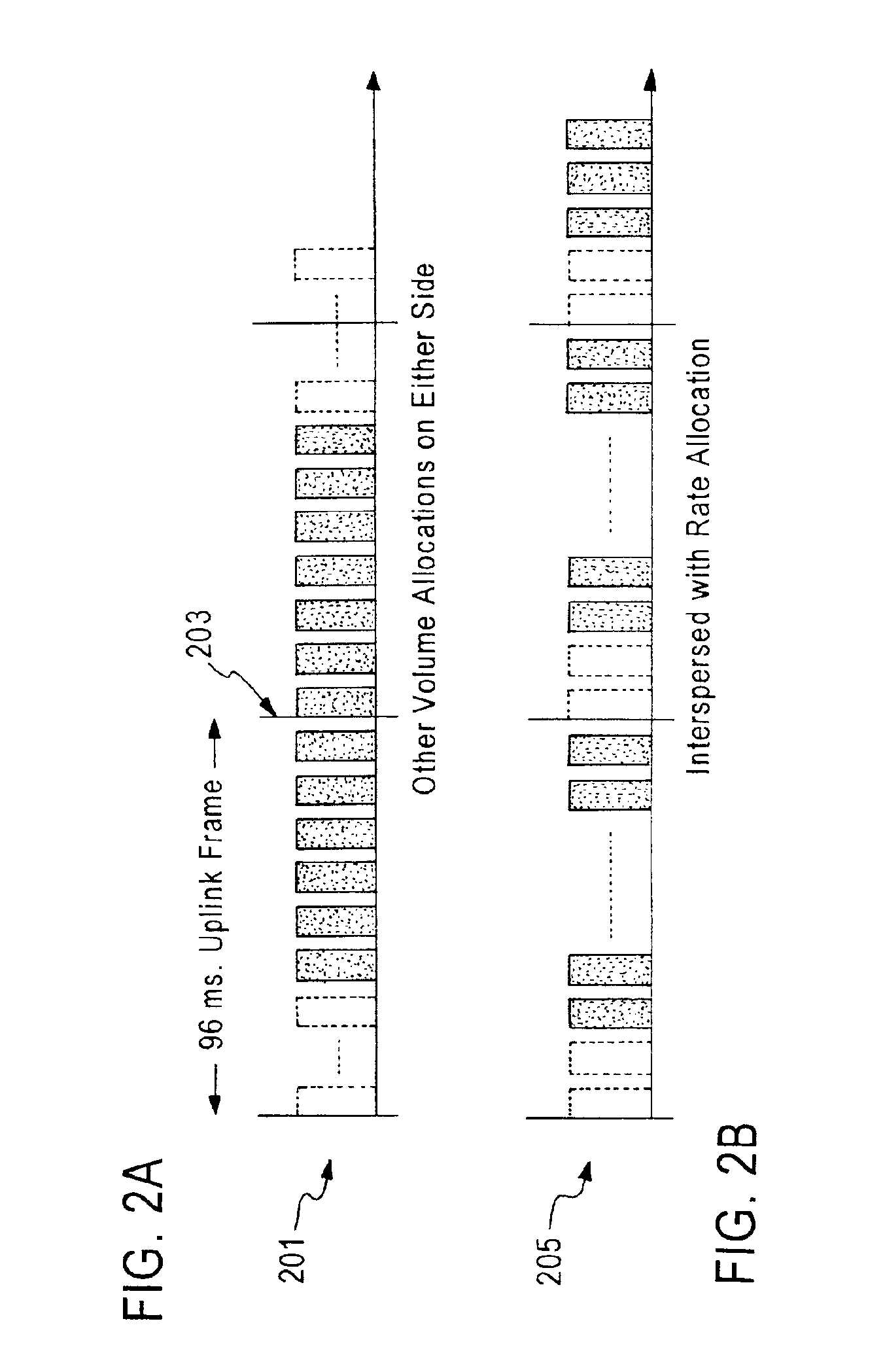

Low latency handling of transmission control protocol messages in a broadband satellite communications system

Inactive; US6961539B2; Minimize impact; Improve system throughput; Error prevention/detection by using return channel; Frequency-division multiplex details; Communications system; Latency (engineering)

An approach for transmitting packets conforming with the TCP (Transmission Control Protocol) over a satellite communications network comprises a plurality of prioritized queues that are configured to store the packets. The packets conform with a predetermined protocol. A classification logic classifies the packets based upon the predetermined protocol. The packet is selectively stored in one of the plurality of queues, wherein the one queue is of a relatively high priority. The packet is scheduled for transmission over the satellite communications network according to the relative priority of the one queue.

Owner:HUGHES NETWORK SYST

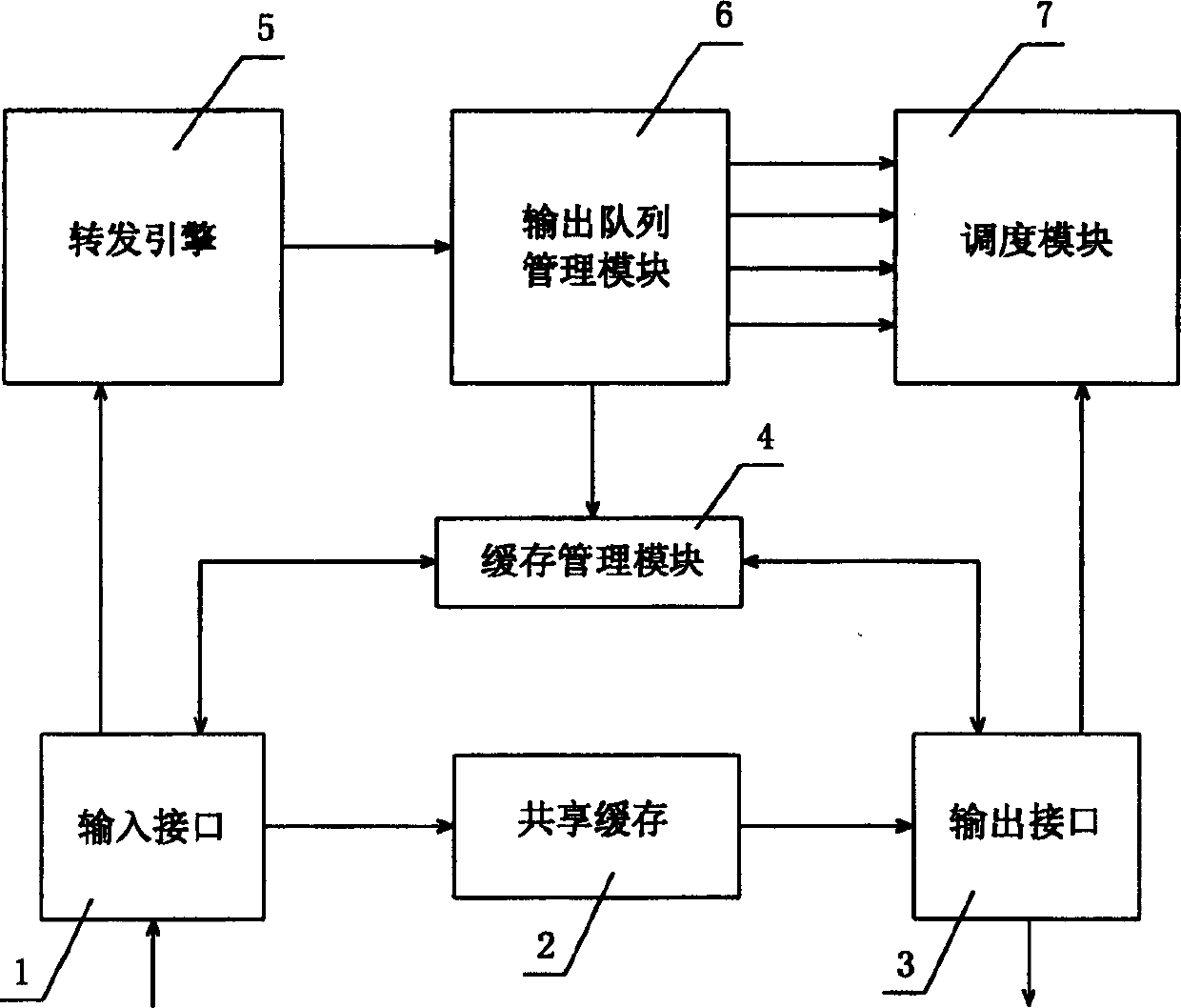

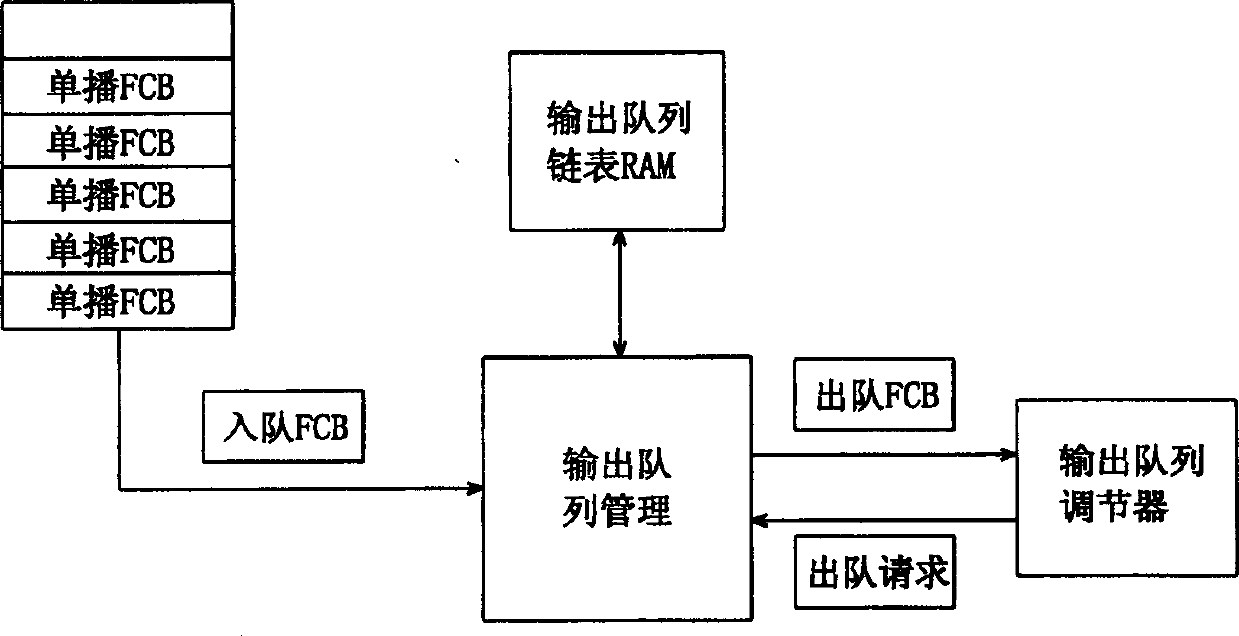

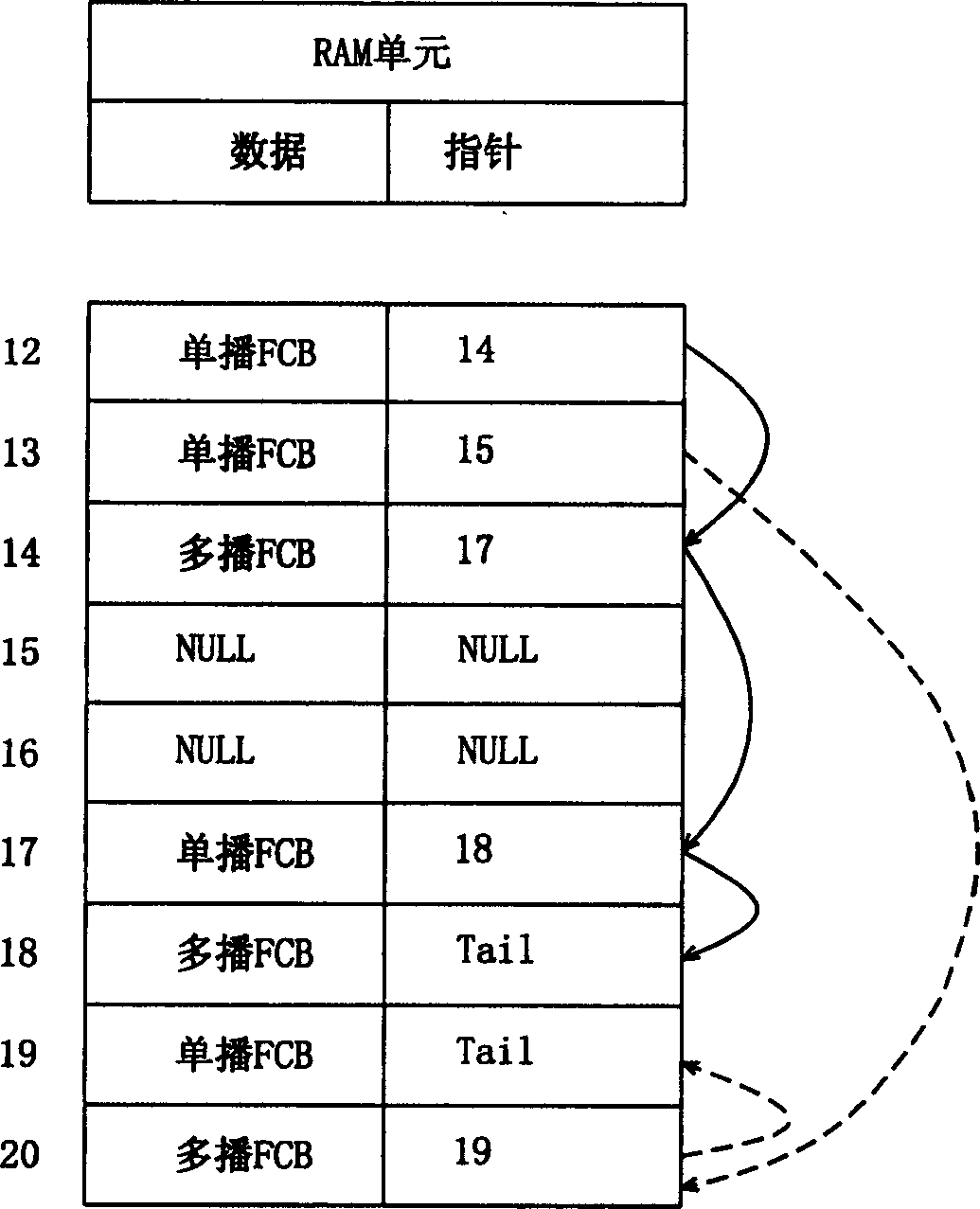

Ethernet exchange chip output queue management and dispatching method and device

Inactive; CN1411211A; Distribute quickly; Effective distribution; Data switching by path configuration; Message queue; Fifo queue

A method and device for managing and dispatching the output queues of an Ethernet switch chip. A single/multi-message control separation module separates the output queues from the frame control module into single-message and multi-message queues for each interface. The single-message frame control module organizes the single-message queue as multiple priority queues and maintains it with a congestion control algorithm. The multi-message queue is organized as a FIFO queue, and its congestion control algorithm discards directly from the queue tail. The single-message and multi-message priority queues are then matched. Output queue dispatch proceeds by dispatch between interfaces and then priority dispatch within an interface, if single / multiple in priority.

Owner:HISILICON TECH

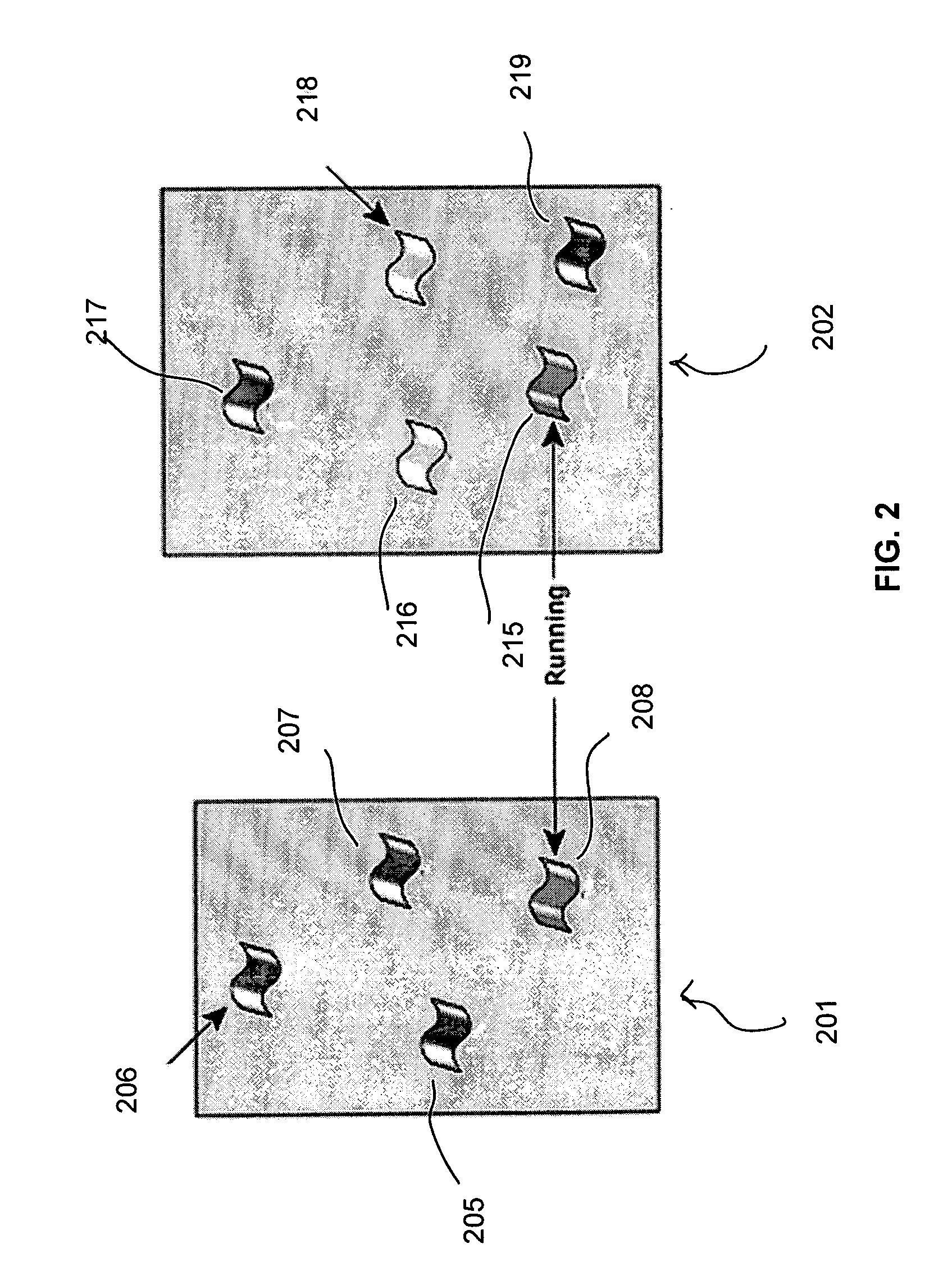

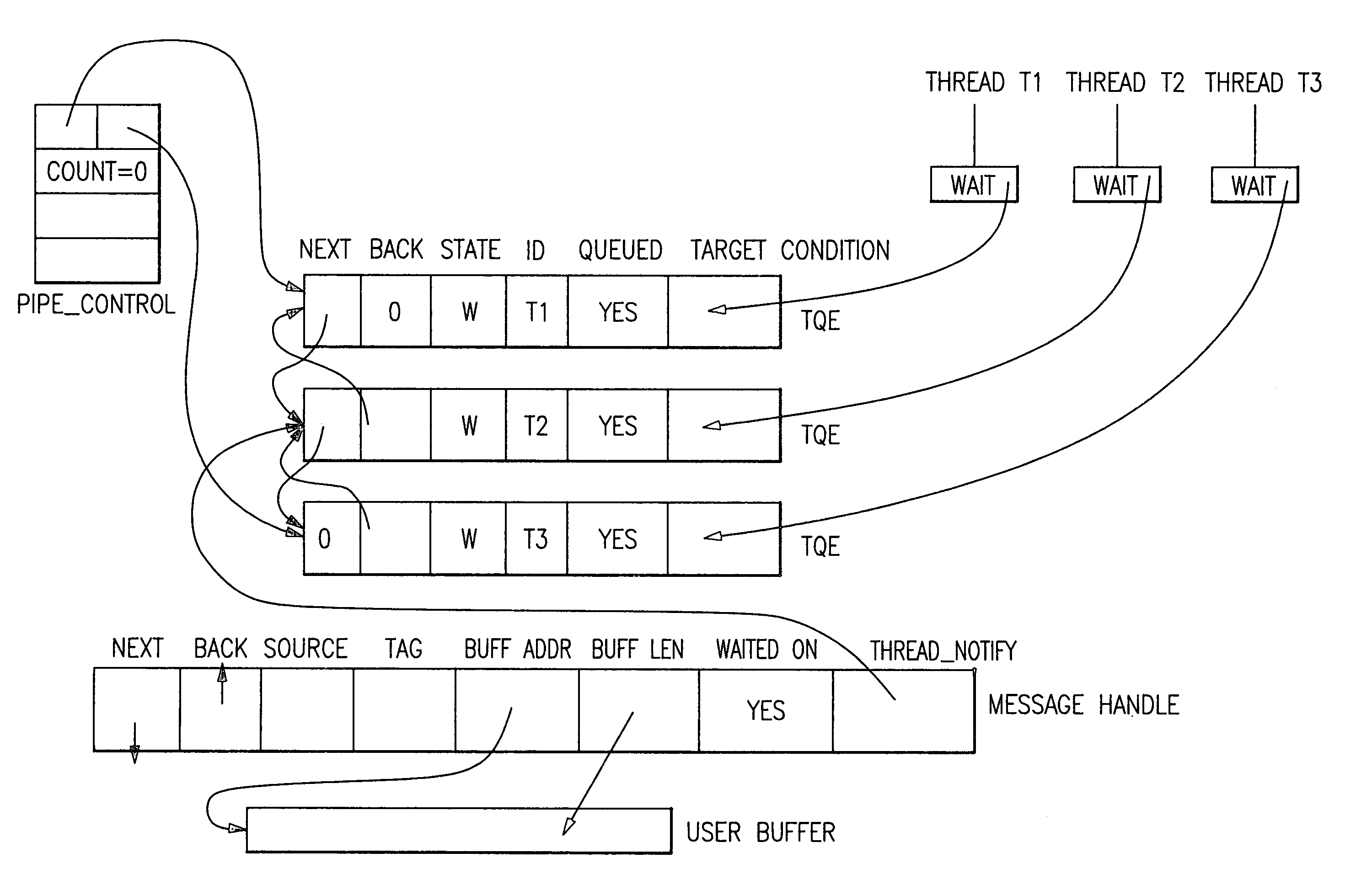

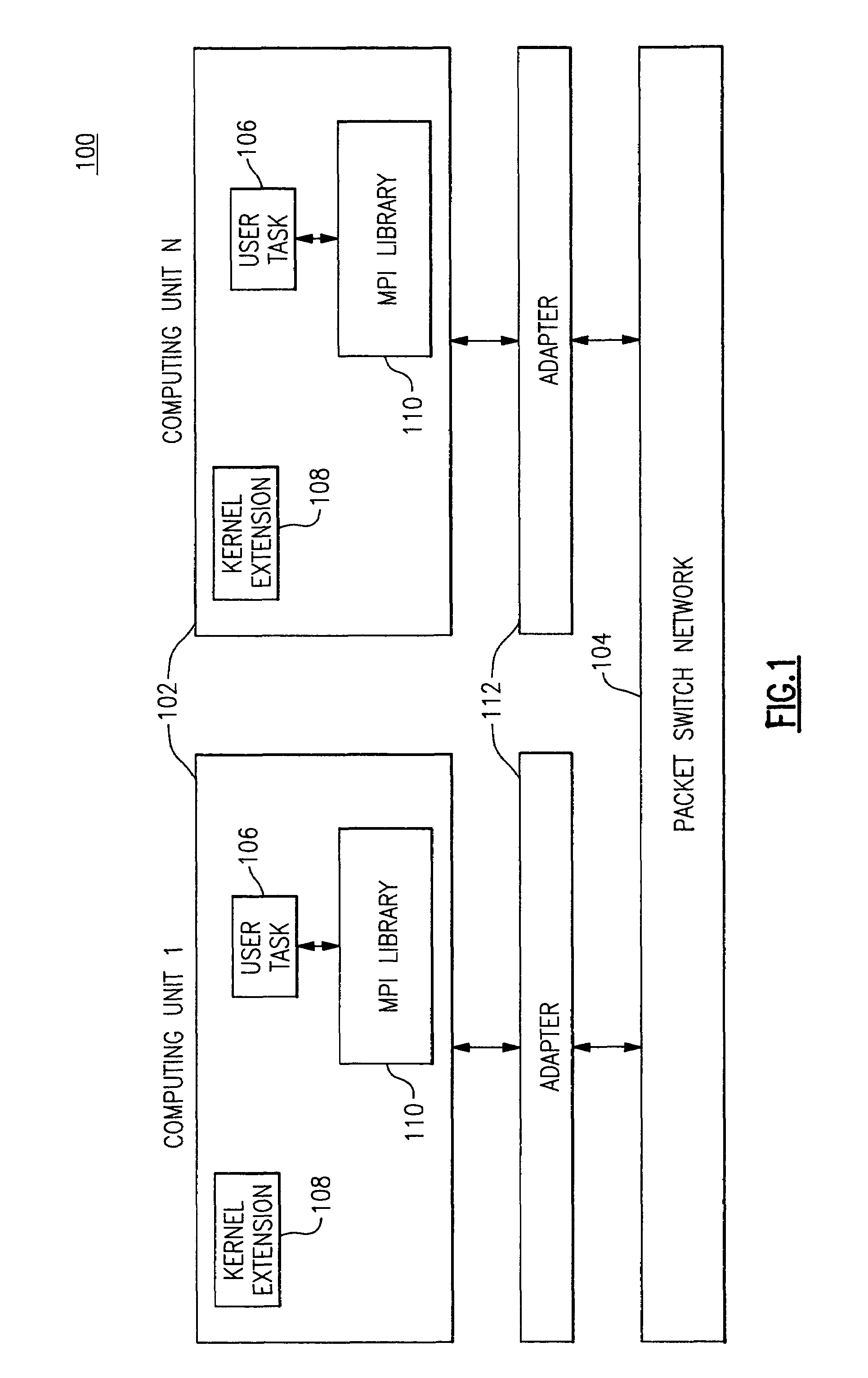

Thread dispatcher for multi-threaded communication library

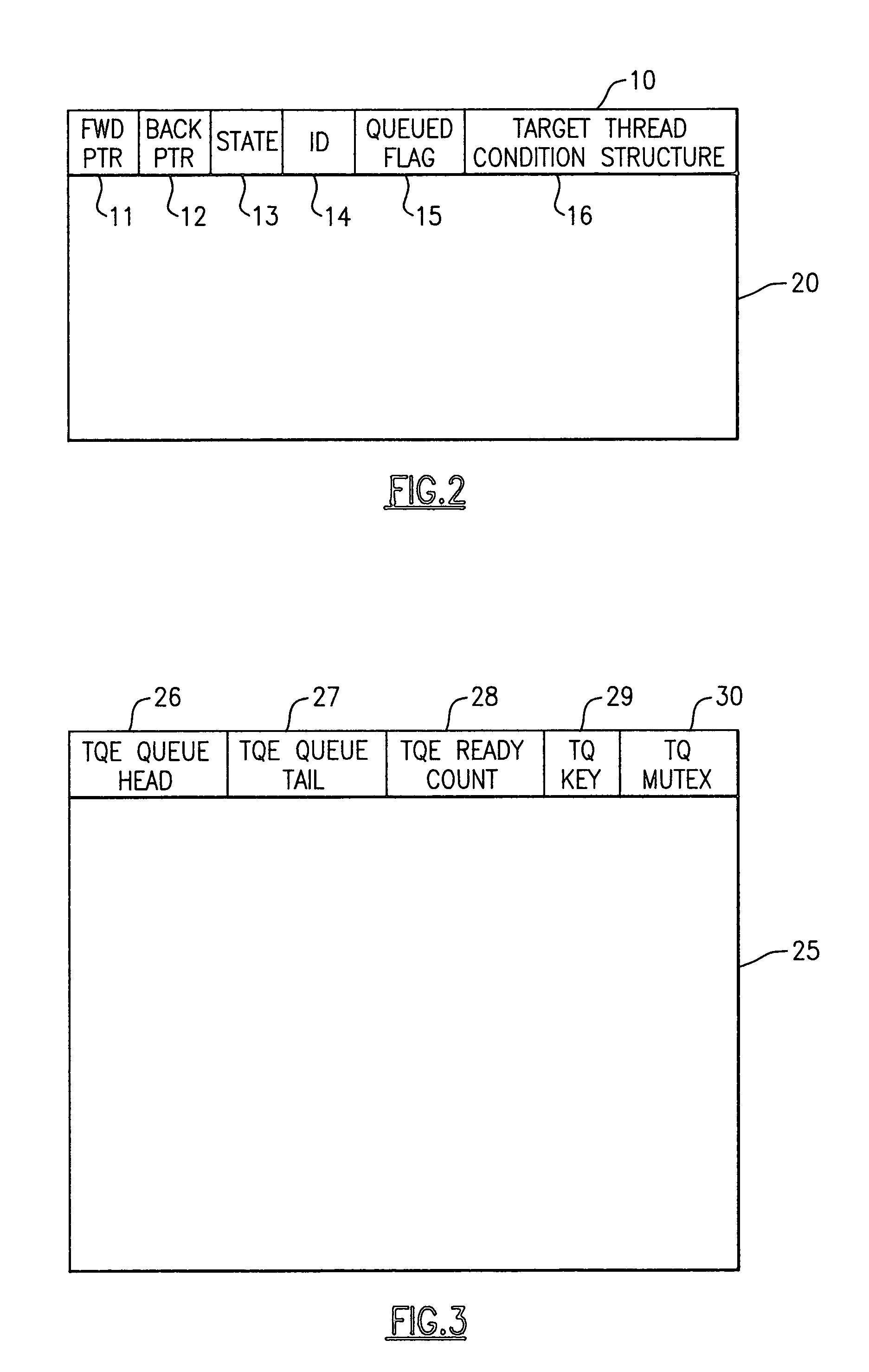

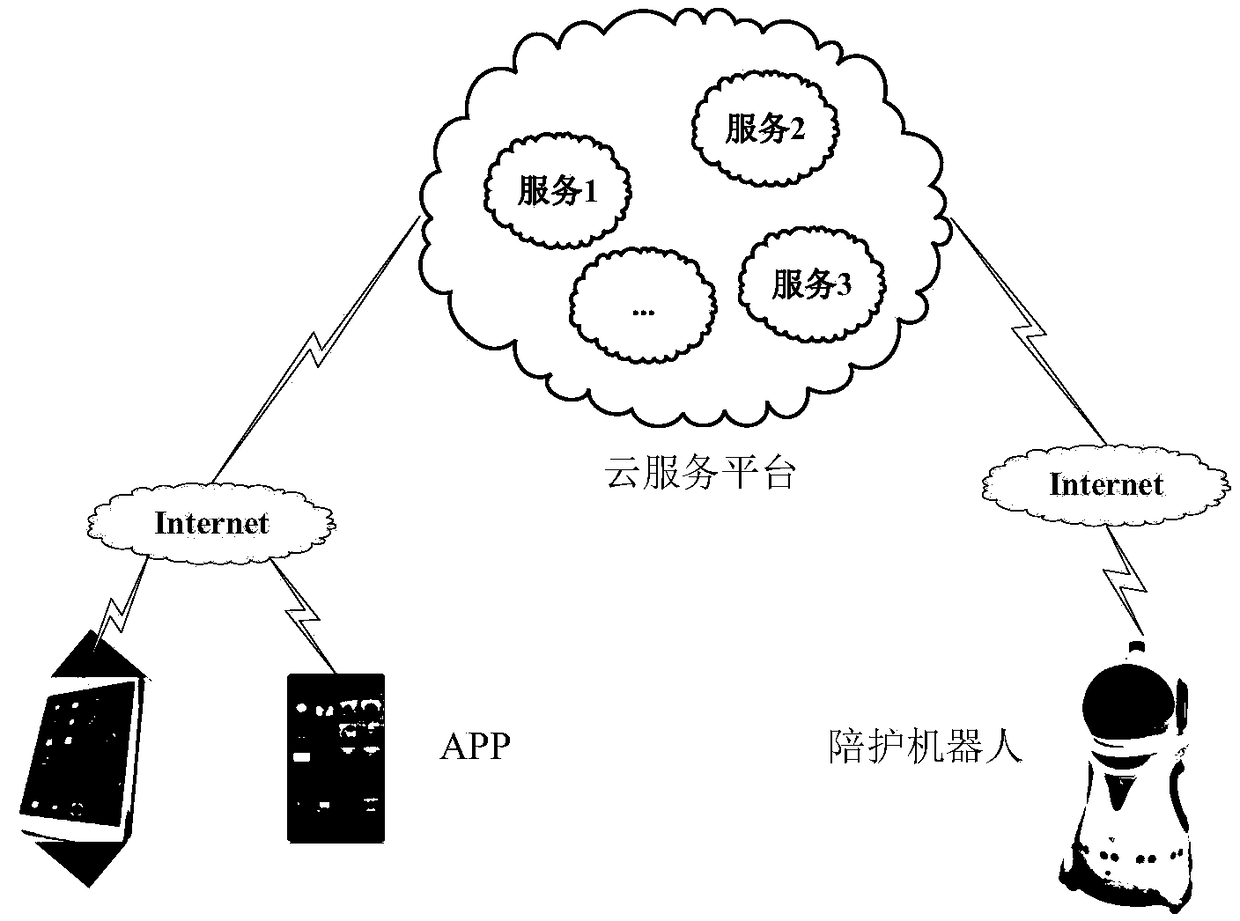

Inactive; US6934950B1; Improve efficiency; Raise priority; Multiprogramming arrangements; Memory systems; POSIX Threads; Thread scheduling

Method, computer program product, and apparatus for efficiently dispatching threads in a multi-threaded communication library which become runnable by completion of an event. Each thread has a thread-specific structure containing a “ready flag” and a POSIX thread condition variable unique to that thread. Each message is assigned a “handle”. When a thread waits for a message, its thread-specific structure is attached to the message handle being waited on, and the thread is enqueued, waiting for its condition variable to be signaled. When a message completes, the message matching logic sets the ready flag to READY and causes the queue to be examined. The queue manager scans the queue of waiting threads and sends a thread-awakening condition signal to one of the threads with its ready flag set to READY. The queue manager can implement any desired policy, including First-In-First-Out (FIFO), Last-In-First-Out (LIFO), or some other thread priority scheduling policy. This ensures that the thread which is awakened has the highest priority message to be processed, and enhances the efficiency of message delivery. The priority of the message to be processed is computed based on the overall state of the communication subsystem.

Owner:IBM CORP

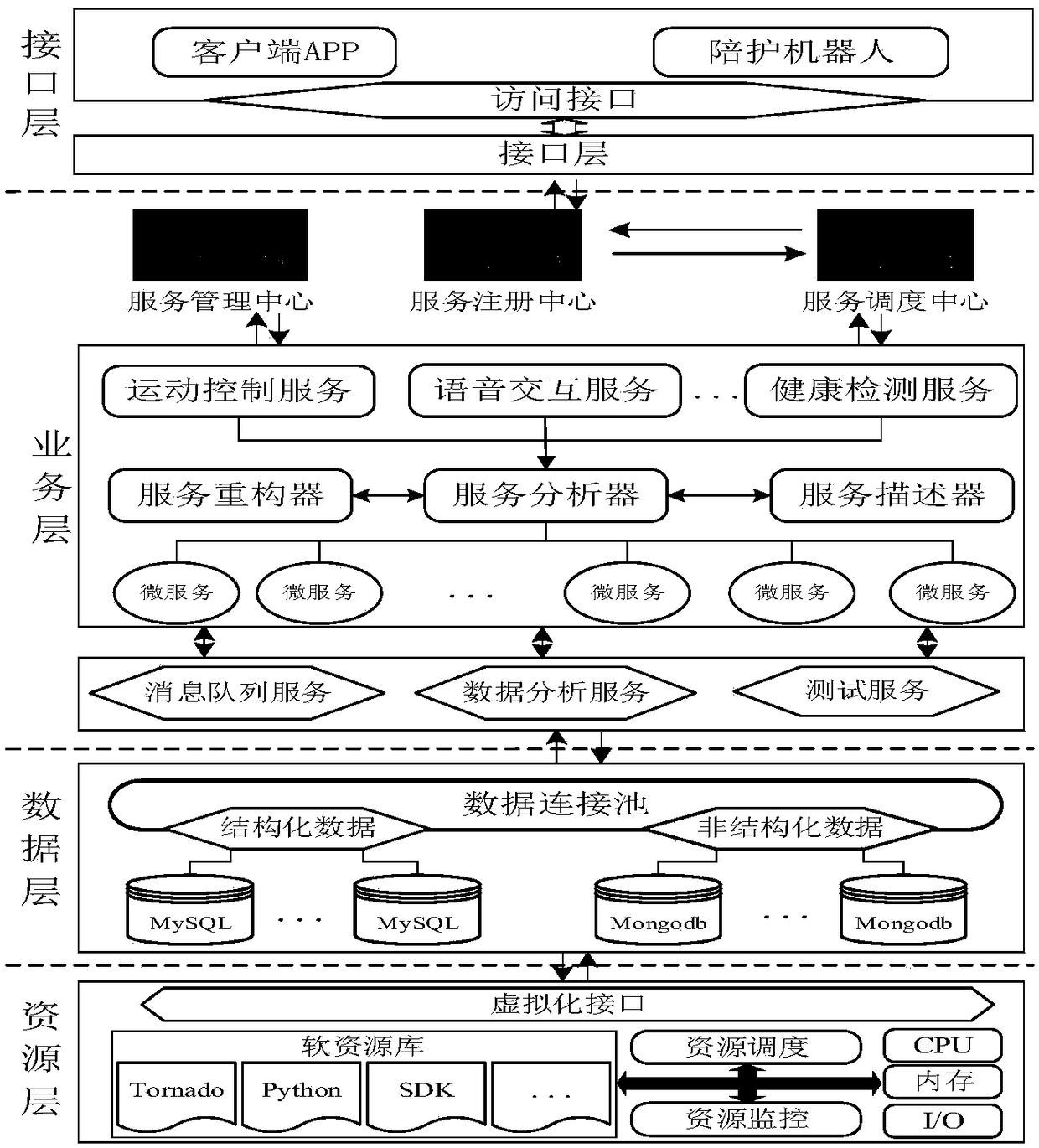

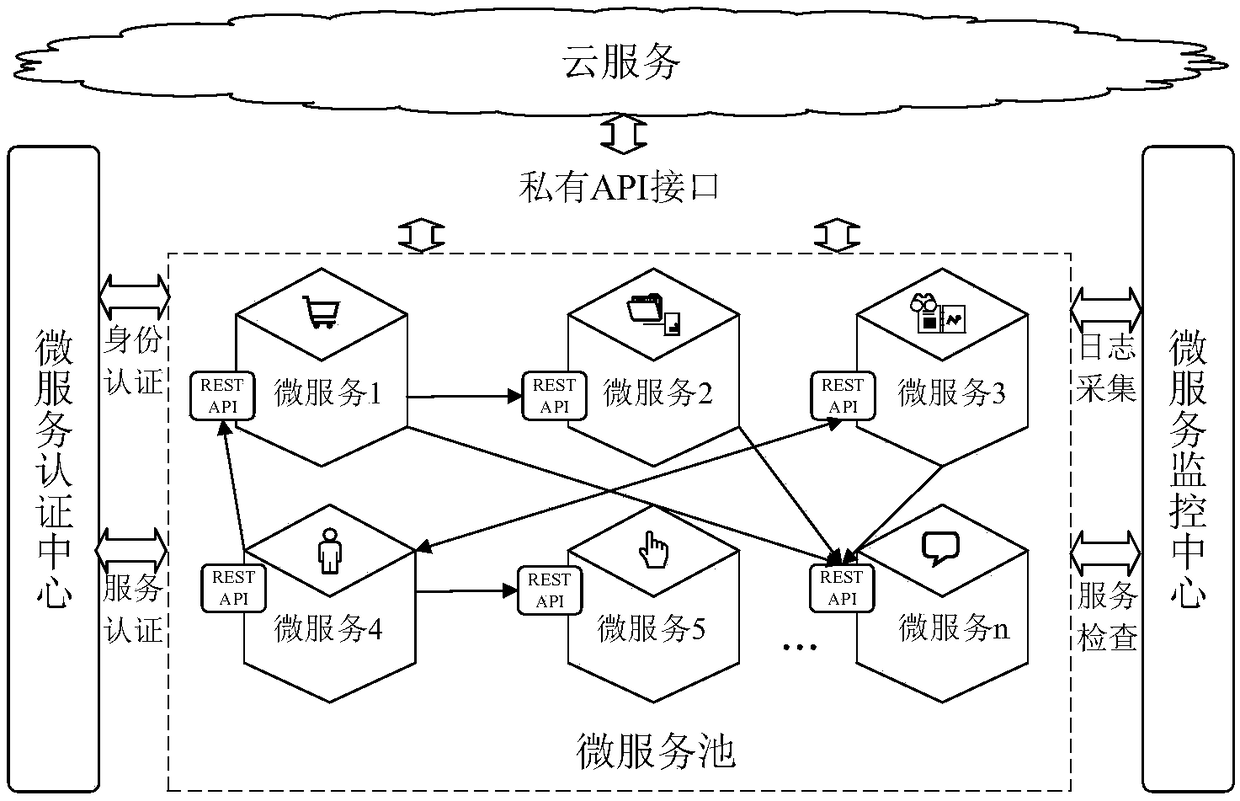

Accompanying robot cloud service system and method based on micro-service

Active; CN108880887A; Improve resource utilization; Improve concurrency; Data switching networks; Granularity; Interface layer

The invention discloses an accompanying robot cloud service system and method based on micro-services. The system comprises a terminal and a cloud service platform, wherein the terminal comprises a mobile terminal and an accompanying robot. The cloud service platform receives a connection request initiated by the terminal, extracts and stores the terminal protocol via a protocol extraction model, then analyzes the connection request and performs service processing, and finally feeds the processing result back to the terminal via a response interface. The service layer of the cloud service platform is implemented with micro-services and comprises multiple fine-grained bottom-layer micro-services; a protocol extraction mechanism is designed at the interface layer; and the data layer adopts a mixed MySQL+MongoDB storage mode. From the scheduling perspective, the system and method provide a multi-priority scheduling strategy based on resource matching, which improves the utilization of system resources; from the development perspective, a Mix-IO model of select+epoll is adopted to enhance system concurrency.

Owner:山东芯辰人工智能科技有限公司

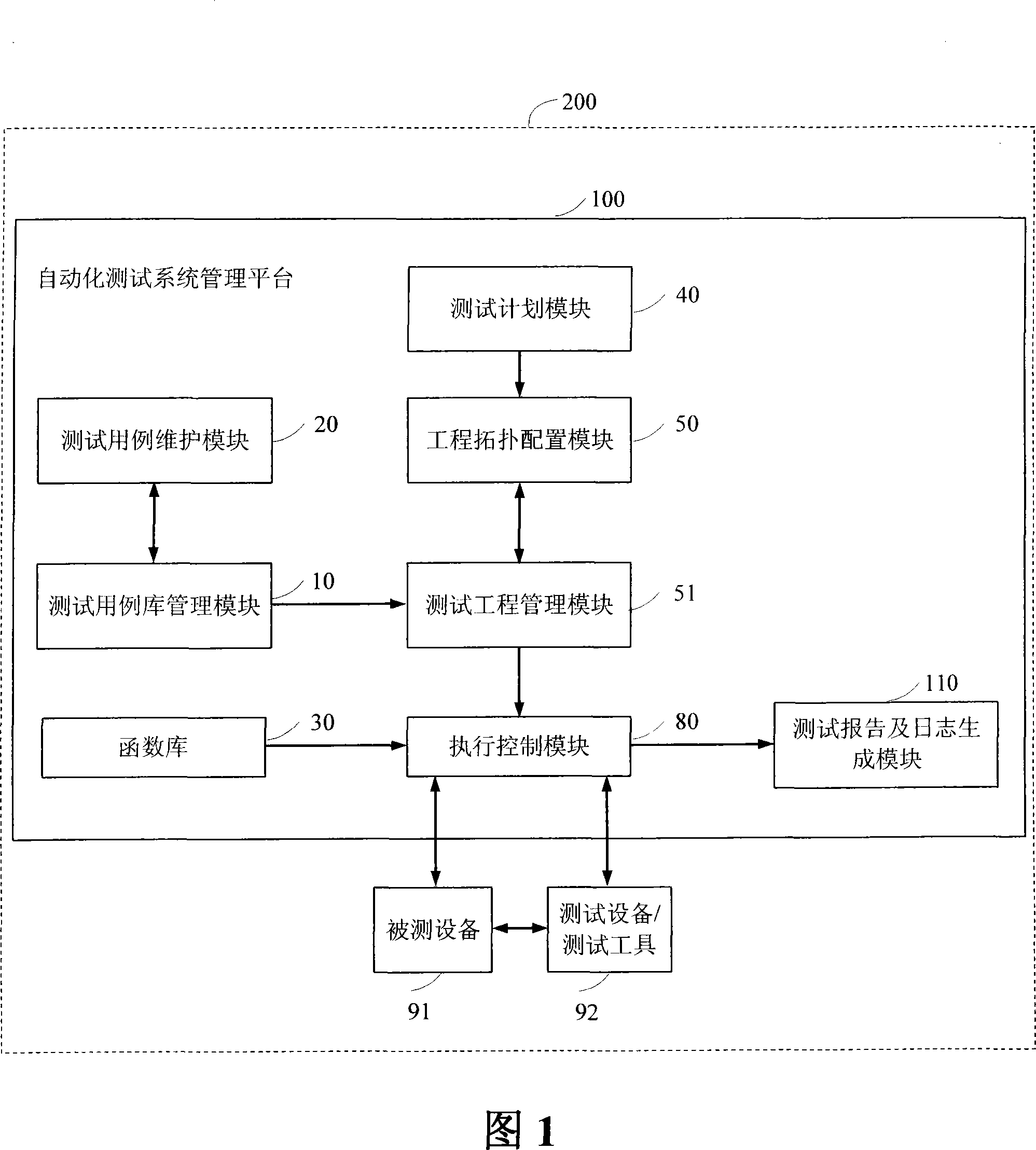

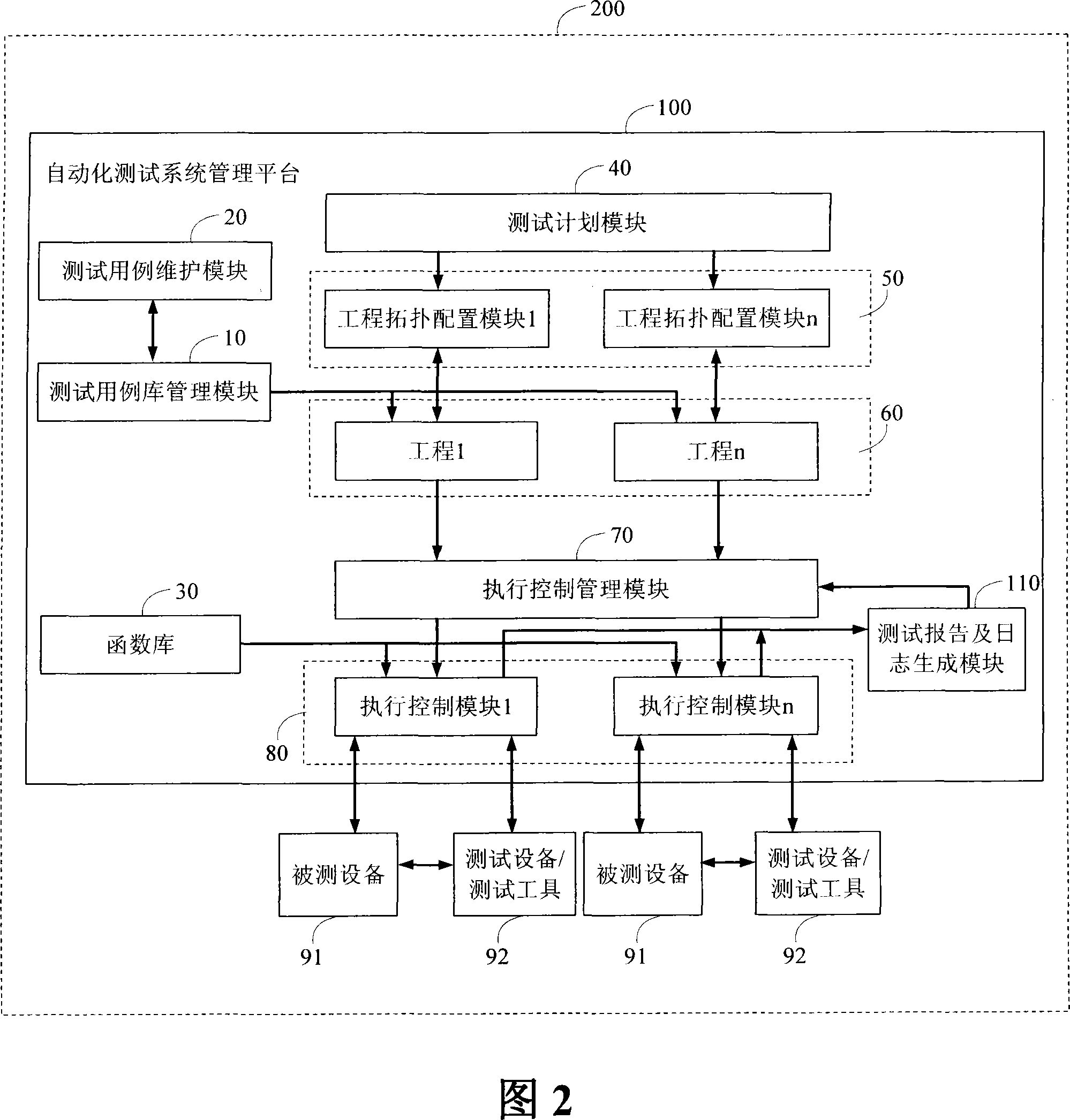

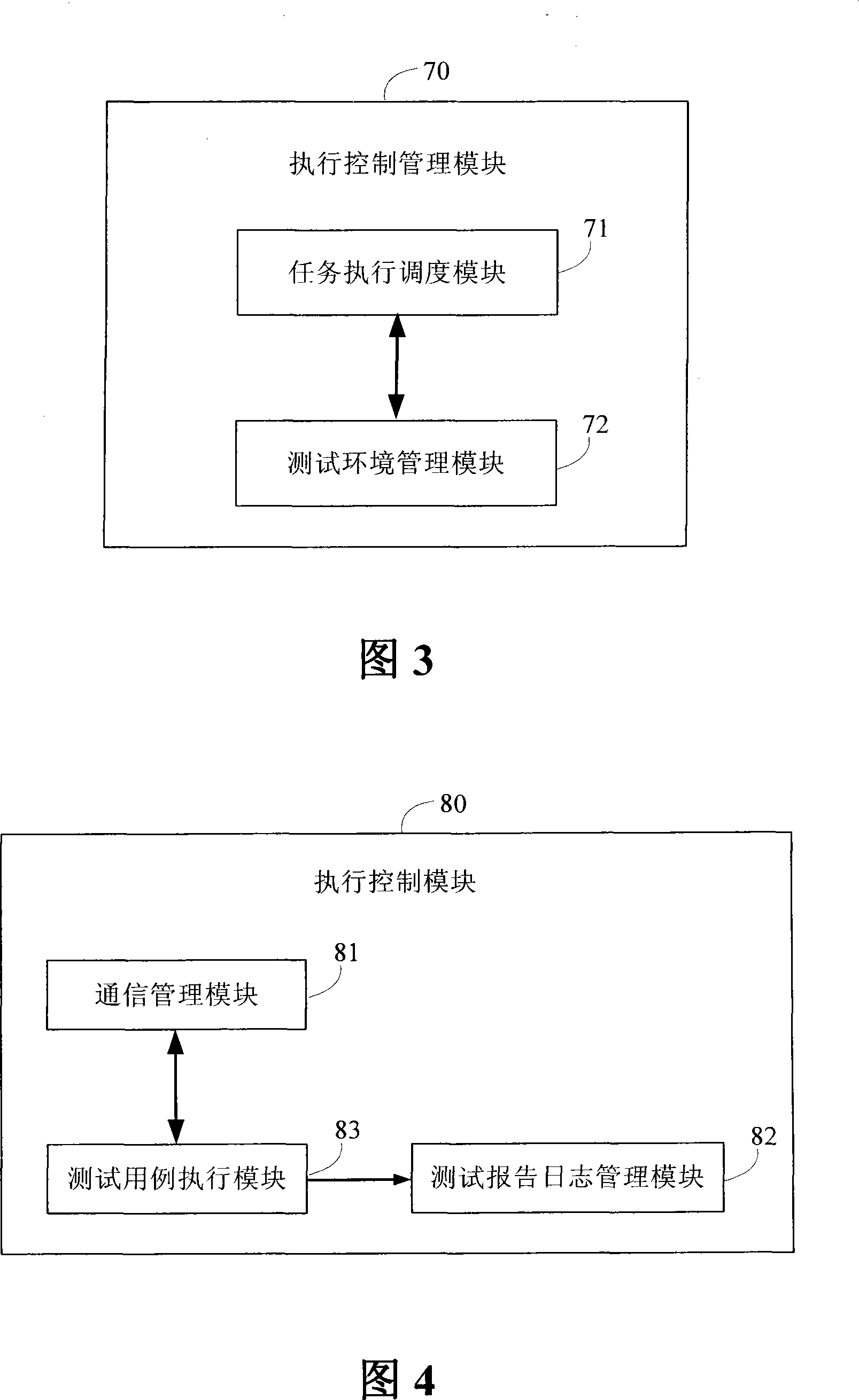

System for parallel executing automatization test based on priority level scheduling and method thereof

Inactive; CN101227350A; Take advantage of; Improve test efficiency; Data switching networks; Resource utilization; Execution control

The invention discloses a system and method for executing automated tests in parallel based on priority scheduling. The system comprises a test plan module for managing and drawing up test contents; an engineering topology configuring module for topologically configuring the test environments and managing their essential information; a test report and log generating module for generating test logs and/or test reports; and an execution control module responsible for execution in a plurality of test environments. The invention also comprises an execution control management module, connected to the execution control module, the engineering topology configuring module, and the test report and log generating module, which controls the execution control module so that test case groups with a higher priority level run first in the test environment; when priority levels are the same, the corresponding test case groups run in parallel. The invention greatly improves testing efficiency, improves resource utilization, avoids ineffective tests in a timely manner, and improves test availability.

Owner:ZTE CORP

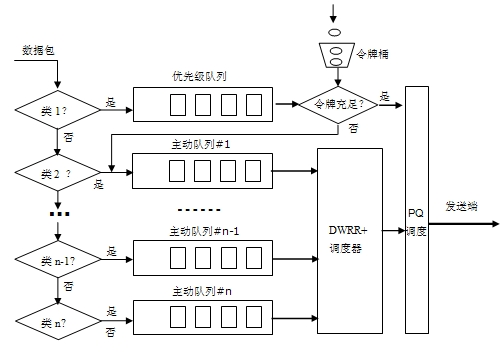

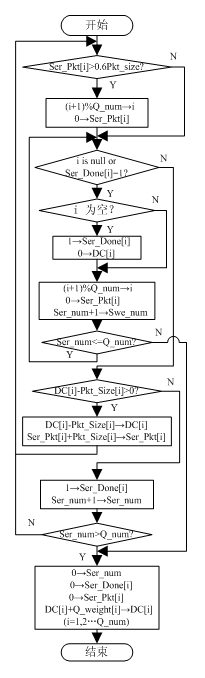

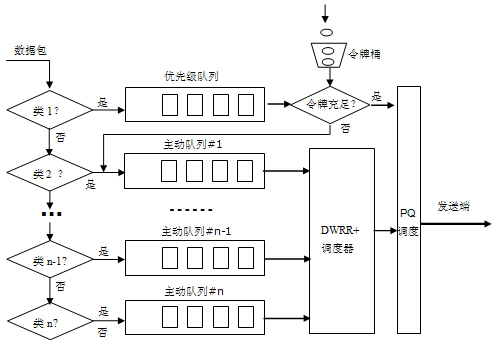

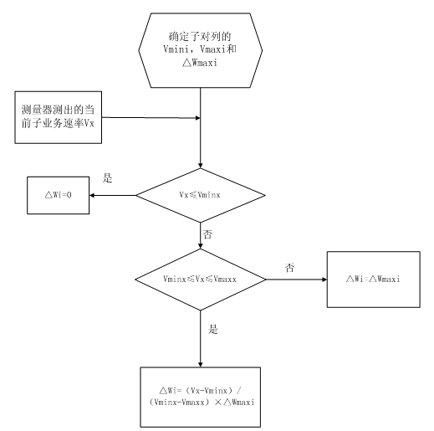

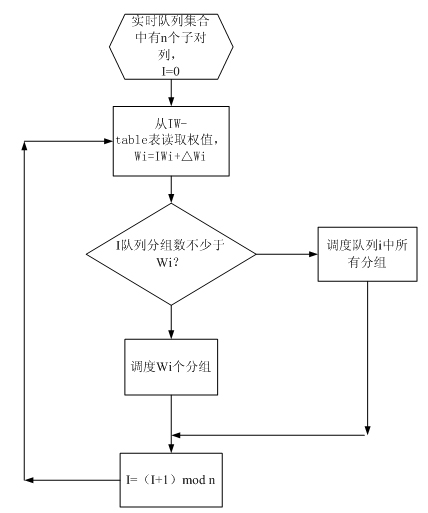

Differentiated service-based queue scheduling method

Inactive; CN101964758A; Guaranteed low latency requirements; Guaranteed Latency Requirements; Data switching networks; Differentiated services; Qos quality of service

The invention discloses a differentiated service-based queue scheduling method, Deficit Weighted Round Robin plus (DWRR+). In the method, the maximum byte count that a queue may send in one service round is set dynamically according to the length of the packets in the current queue. This ensures the delay characteristic of low-weight services and the relative fairness of bandwidth allocation, overcomes the defect that a low-priority queue may go unserved for a long time, and addresses the inability of the Deficit Weighted Round Robin (DWRR) algorithm to adequately meet service delay requirements. A priority queue is set up, with a token bucket algorithm as the flow regulator, so that the priority of real-time services is ensured. The DWRR+ algorithm and the priority scheduling algorithm PQ are combined as the scheduling strategy of a network node scheduler so that, while the priority of real-time services and the output bandwidth of other services are ensured, time delay is reduced and the service quality of different services can be ensured to a certain extent.

Owner:NANJING UNIV OF POSTS & TELECOMM
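
For orientation, the sketch below combines a strict priority queue (PQ) with classic deficit round robin over the remaining queues. It is the textbook baseline with fixed per-queue quanta, not the patented DWRR+ variant, whose dynamic quantum rule and token bucket regulator are not reproduced here. The function name `pq_dwrr` and the data layout are assumptions.

```python
from collections import deque


def pq_dwrr(priority_q, queues, quanta, rounds):
    """Serve a strict-priority queue first, then deficit round robin.

    priority_q: deque of packet sizes (bytes), always served first
    queues:     list of deques of packet sizes for the weighted queues
    quanta:     bytes of credit added to each weighted queue per round
    Returns a list of (queue_id, packet_size) in transmission order.
    """
    sent = []
    deficits = [0] * len(queues)
    for _ in range(rounds):
        while priority_q:  # real-time traffic goes out before anything else
            sent.append(("pq", priority_q.popleft()))
        for i, q in enumerate(queues):
            if not q:
                deficits[i] = 0  # idle queues do not accumulate credit
                continue
            deficits[i] += quanta[i]
            # Send head-of-line packets while the credit covers them.
            while q and q[0] <= deficits[i]:
                deficits[i] -= q[0]
                sent.append((i, q.popleft()))
            if not q:
                deficits[i] = 0
    return sent


result = pq_dwrr(
    deque([100]),
    [deque([300, 300]), deque([500])],
    quanta=[300, 250],
    rounds=2,
)
print(result)
# → [('pq', 100), (0, 300), (0, 300), (1, 500)]
```

Queue 1 cannot send its 500-byte packet in the first round because its credit is only 250 bytes; the carried-over deficit lets it send in the second round.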

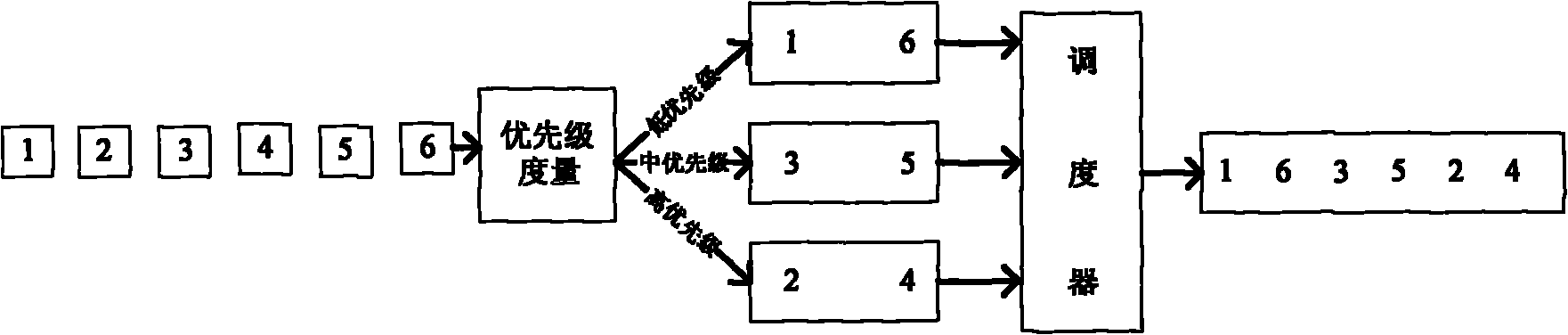

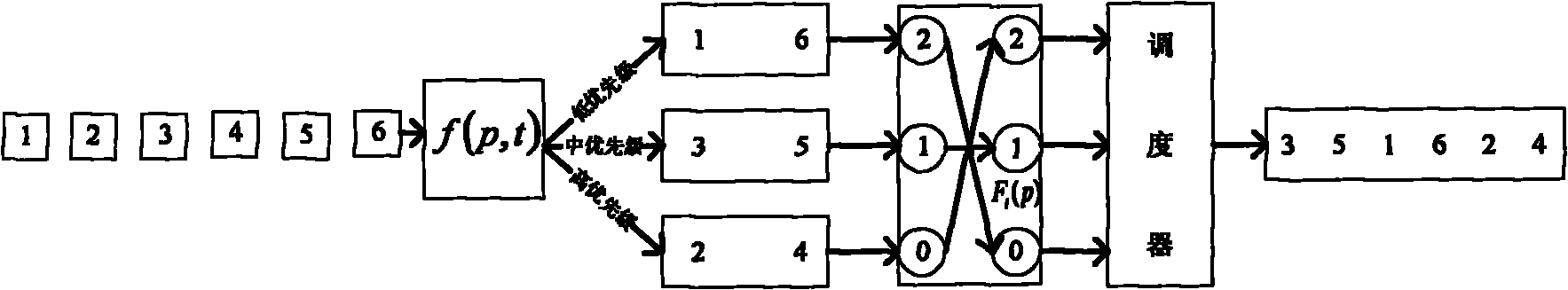

Virtual dynamic priority packet dispatching method

Inactive; CN102014052A; Address inequities; Network traffic/resource management; Data switching networks; Time delays; Network packet

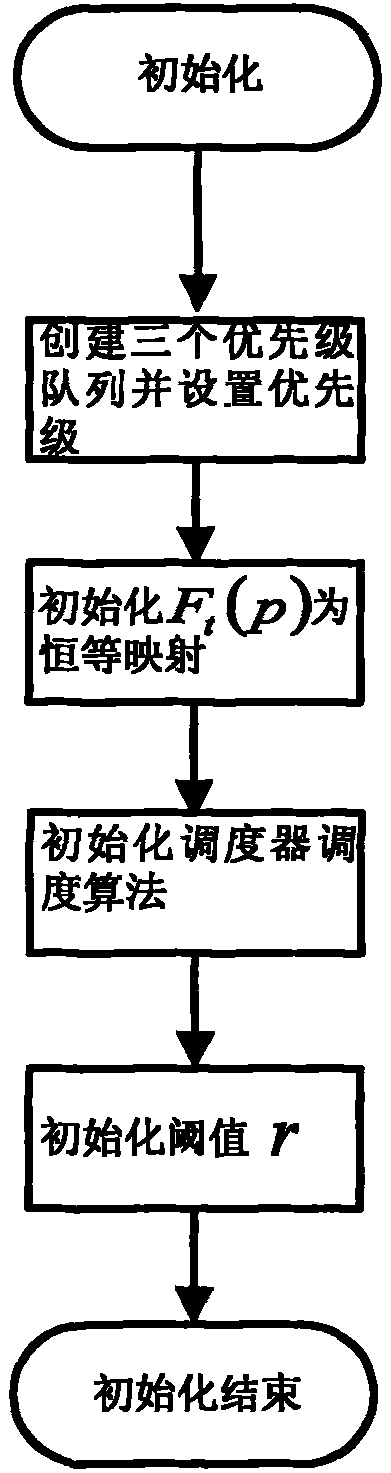

The invention relates to a virtual dynamic priority packet dispatching method. By introducing a random variable c(t), a threshold value r, and a mapping function Ft(p), the method solves the problems of dynamically measuring packet priority and the unfairness of packet priority dispatching algorithms. The method comprises the following steps: 1) initializing a priority queue, the mapping function Ft(p), the dispatcher's dispatching algorithm, and the threshold value r; 2) introducing the random variable c(t) and calculating the priority of each arriving data packet according to its processing time delay, service type, and value; 3) inserting n data packets into the priority queue according to the priority calculated in step 2); 4) solving for the virtual priority corresponding to the current priority queue; and 5) using the dispatcher to put the data packets into a sending queue and send them.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI

Method for managing combined wireless resource of self-adaption MIMO-OFDM system based on across layer

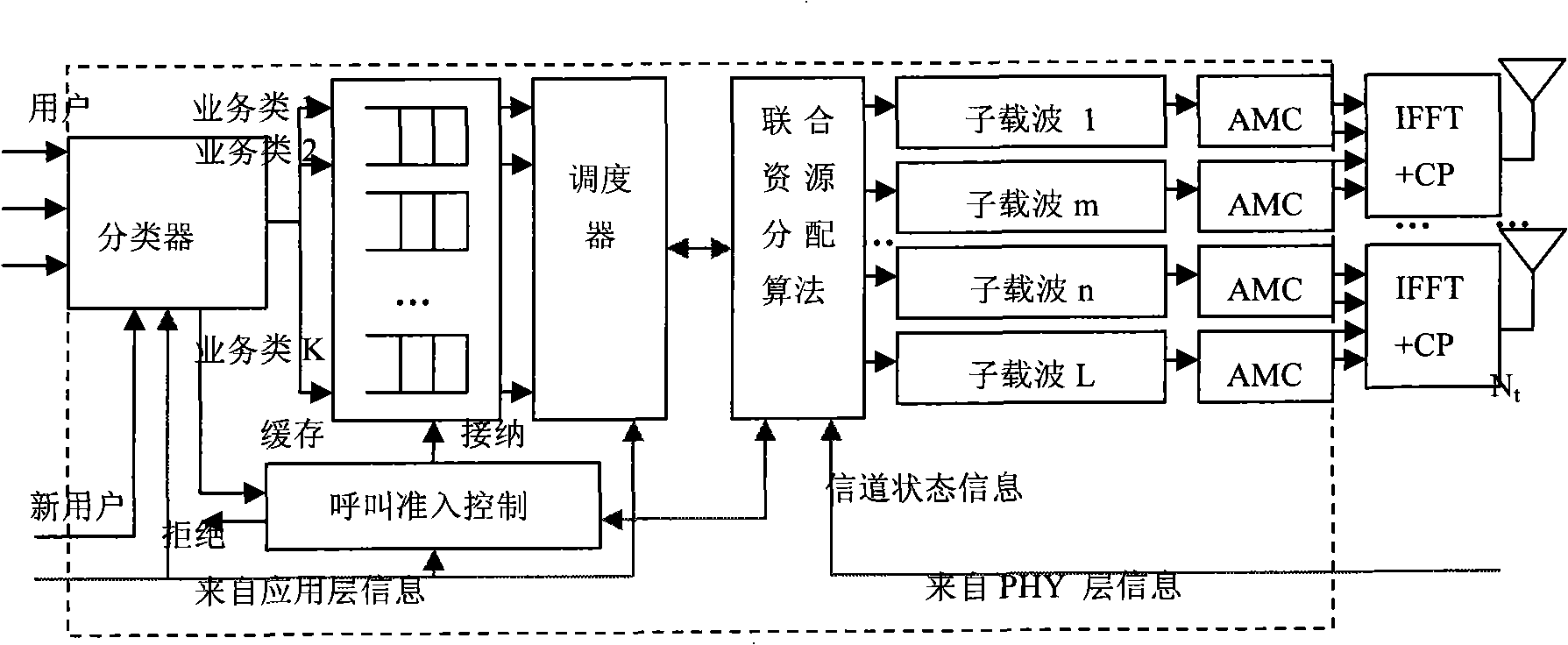

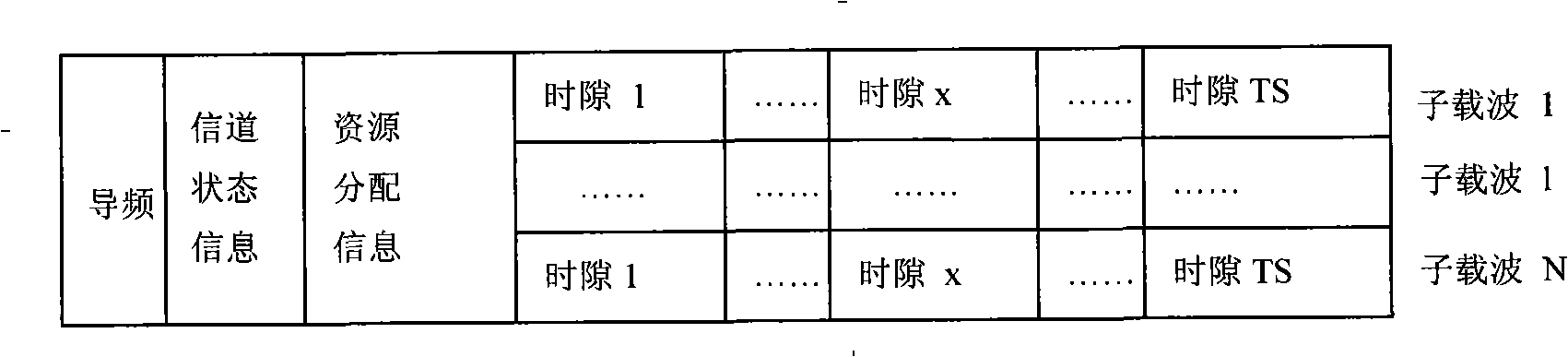

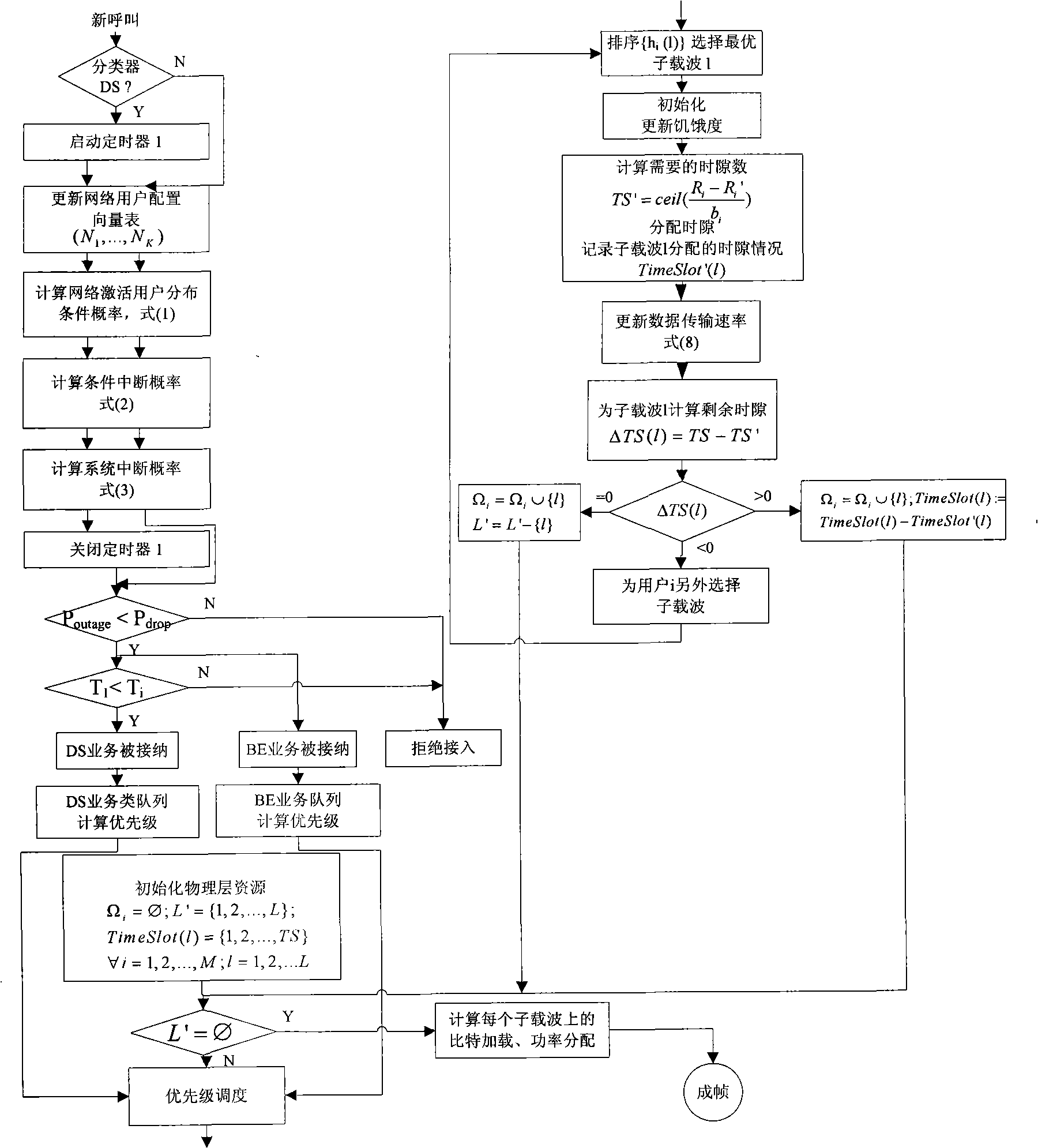

Inactive; CN101282324A; Breaking down assumptions of occupancy; Improve performance; Spatial transmit diversity; Multi-frequency code systems; Information transmission; Data link layer

The invention relates to a cross-layer joint radio resource management method for an adaptive MIMO-OFDM system. When a plurality of users initiate access requests, a classifier classifies the requests by service type, and a CAC module decides whether each request is admitted according to the different QoS requirements of the heterogeneous services and the resource conditions. A user-oriented priority scheduling mechanism schedules according to priority rules based on the heterogeneous service types, the different QoS requirements, and user preference information, and passes the result to a physical layer module so as to determine which users are eligible for resource allocation in each time slot. The physical layer uses a resource allocation algorithm to allocate sub-carriers, load bit streams, and allocate the corresponding transmission power according to data link layer information; it designs a net utility function for each class on the basis of game theory and transforms the goal of pursuing the net utility function into solving a non-cooperative game. Compared with traditional methods, the method performs better on outage probability, user satisfaction with the service, and system throughput.

Owner:BEIJING JIAOTONG UNIV

Scheduling method and device

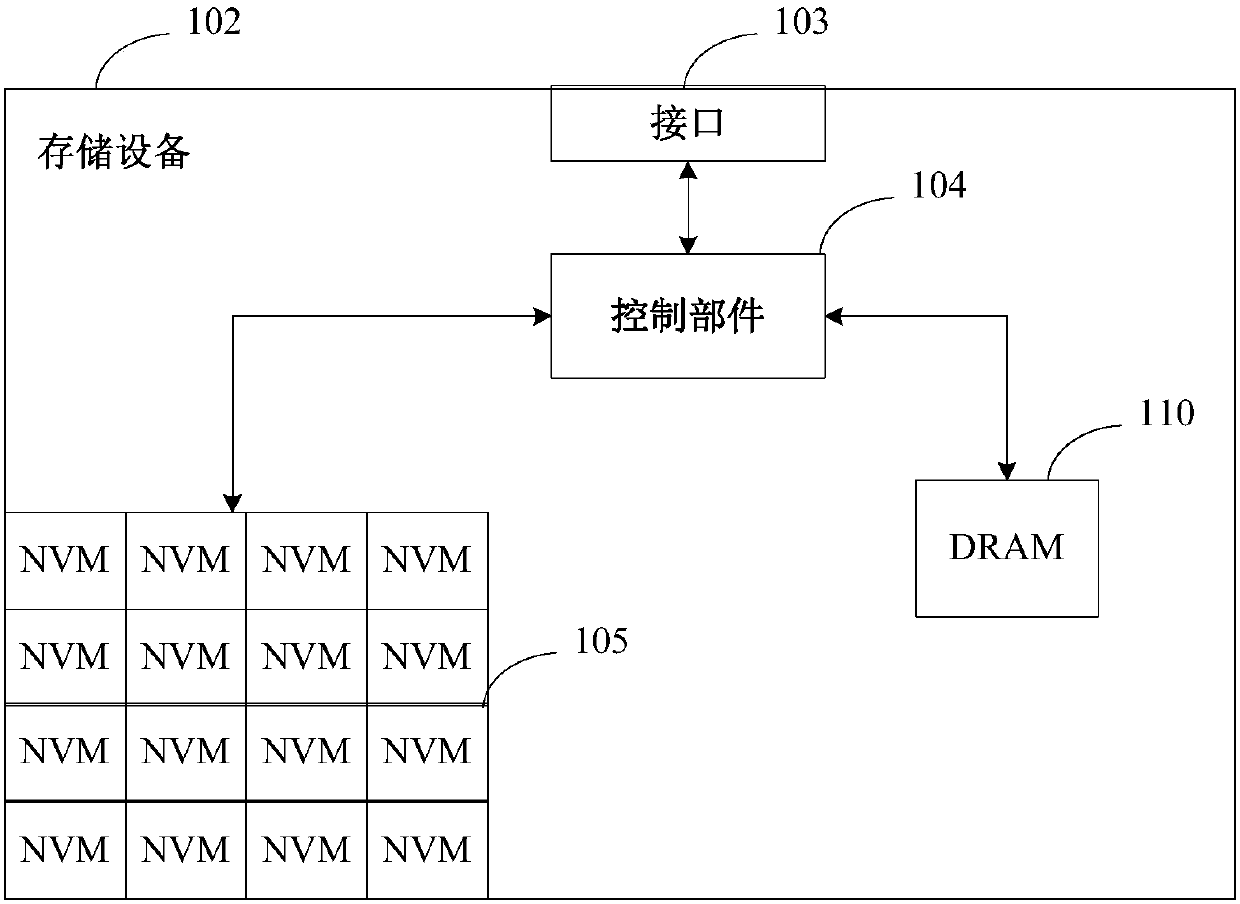

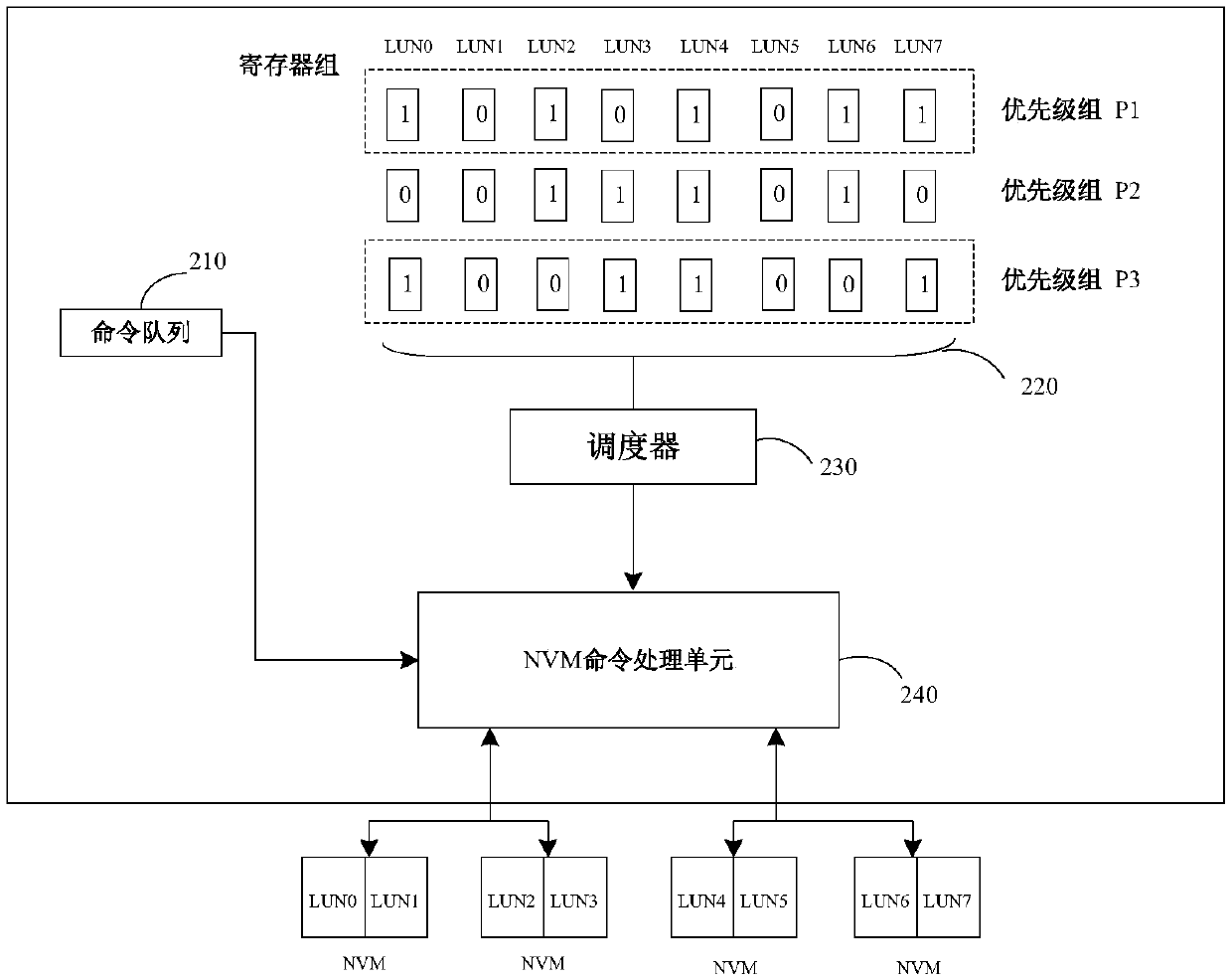

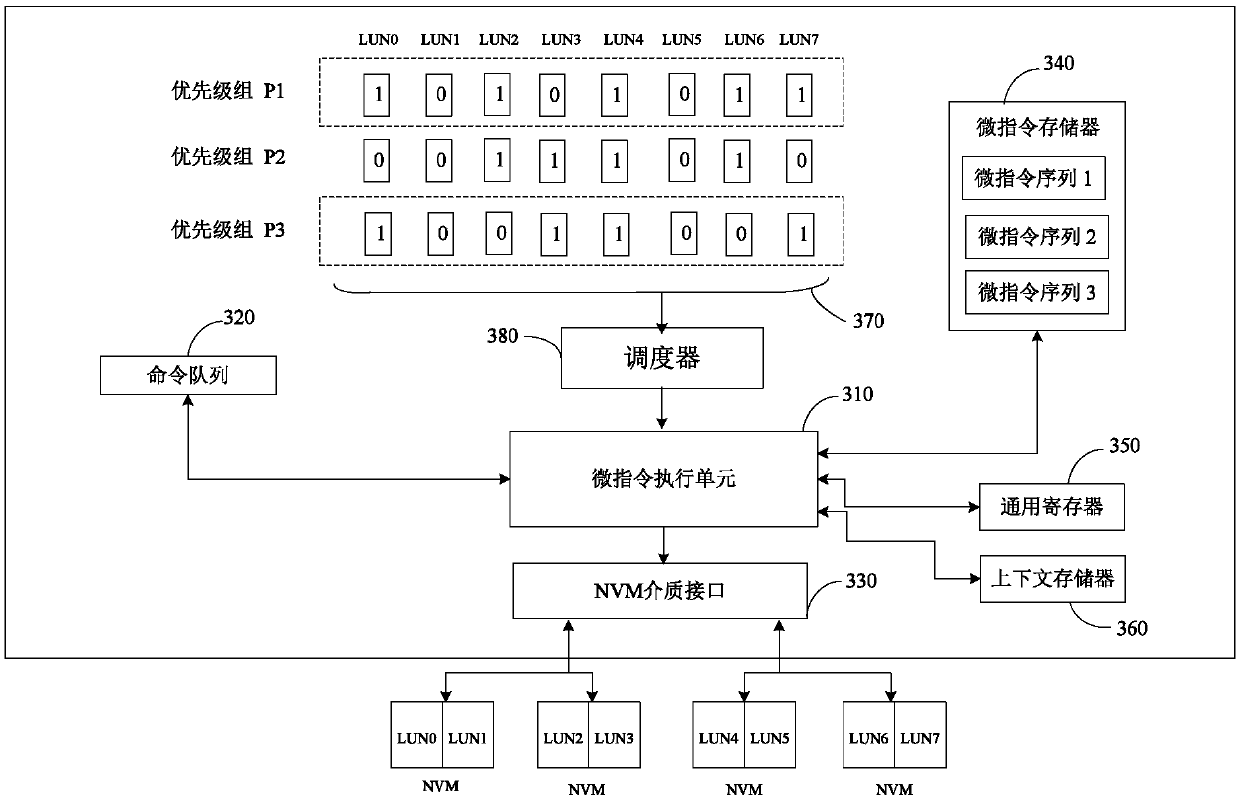

Pending; CN107870779A; Improve scheduling efficiency; Increase configuration flexibility; Register arrangements; Instruction memory; Computer architecture

The invention discloses a scheduling method and device. The scheduling method comprises the following steps of: selecting a first register with a first value from a high-priority group of a register group, wherein the first register corresponds to a first to-be-processed event; and scheduling a first thread corresponding to the first register to process the first to-be-processed event. The scheduling device comprises a command queue, a micro instruction memory, a micro instruction execution unit, the register group, a scheduler and an NVM medium interface, wherein the register group is used for indicating to-be-processed events and priorities of the to-be-processed events; the scheduler is used for scheduling threads according to registers in the register group; and the micro instruction execution unit is used for receiving the indication of the scheduler and executing the scheduled threads.

Owner:BEIJING STARBLAZE TECH CO LTD
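
The selection step in the abstract above (find a register holding a given value in the high-priority group, then schedule the thread for its event) can be modeled in software as a scan over priority groups. In this sketch each group is represented as an integer bitmask in which a set bit marks a pending event; that encoding, the lowest-bit-first tie-break, and the function name are assumptions, since the abstract does not define the register layout.

```python
def select_event(groups):
    """Return (group_index, bit_index) of the next event, or None.

    groups: list of ints, index 0 being the highest-priority group.
            Each set bit stands for a register flagged as pending
            (an assumed encoding of the register group).
    """
    for g, reg in enumerate(groups):
        if reg:
            # reg & -reg isolates the lowest set bit of the bitmask.
            bit = (reg & -reg).bit_length() - 1
            return g, bit
    return None


print(select_event([0b0000, 0b0110, 0b0001]))  # → (1, 1)
print(select_event([0, 0]))                    # → None
```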

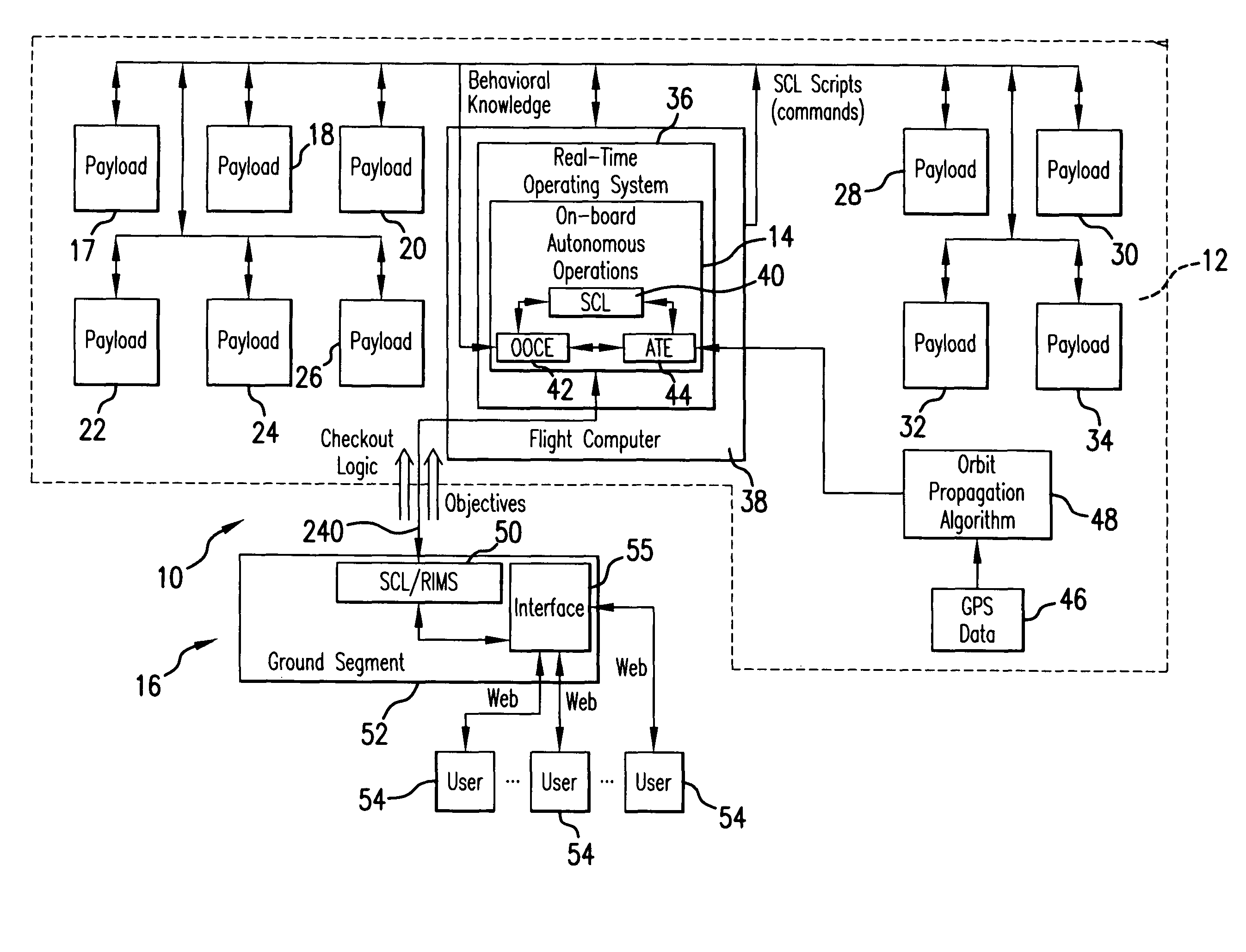

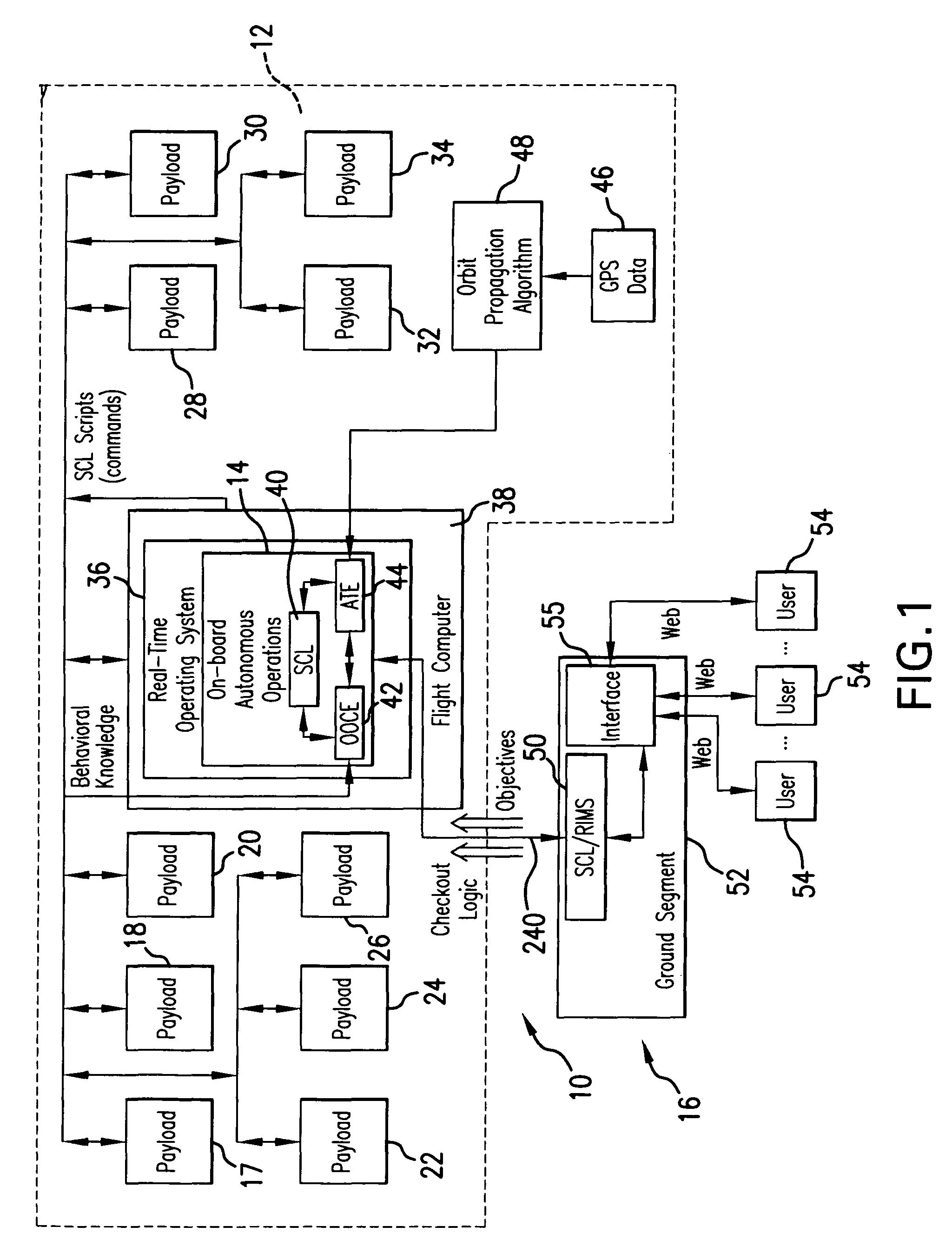

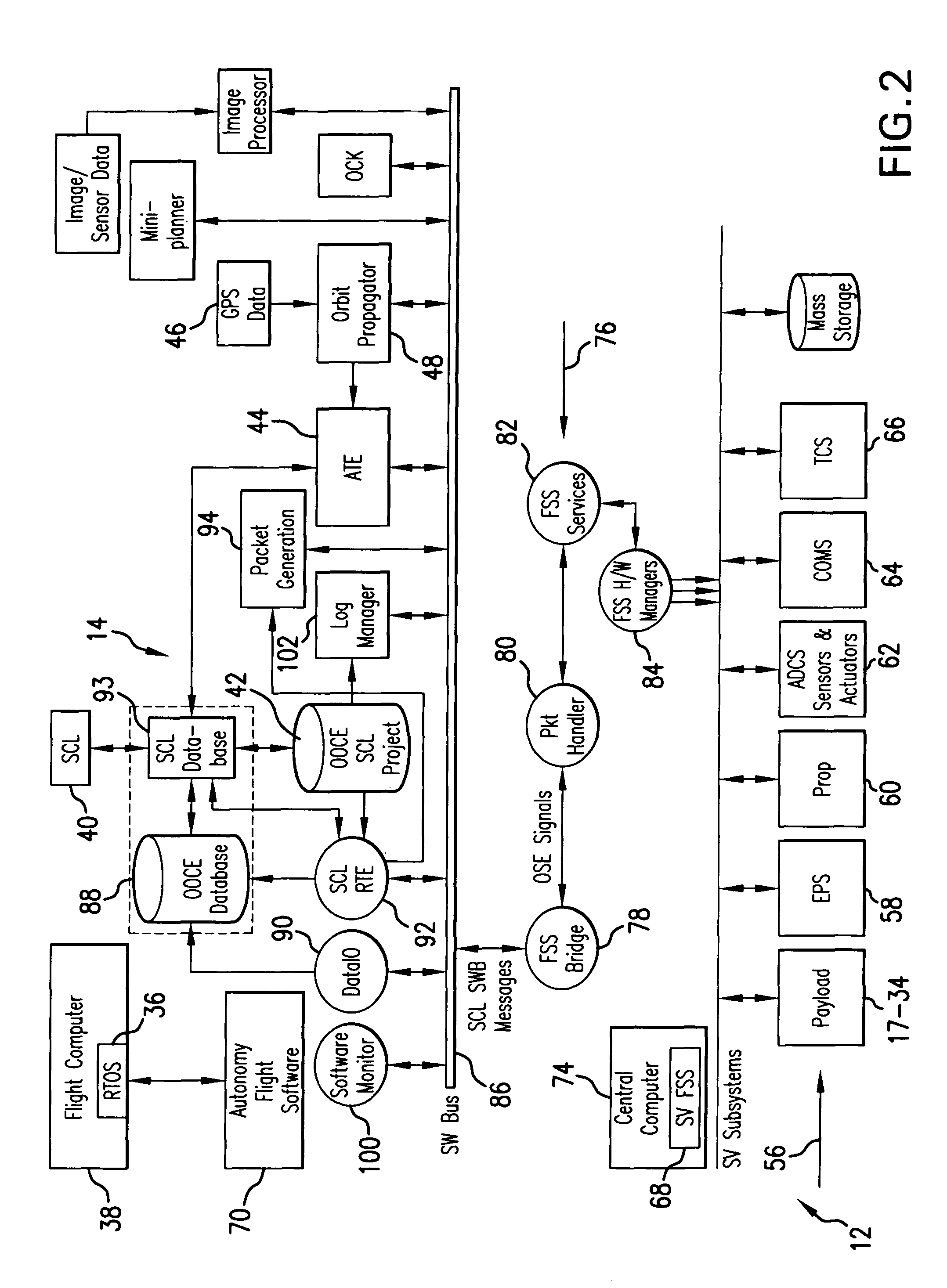

Intelligent system and method for spacecraft autonomous operations

Inactive; US7856294B2; Improve responsiveness; Reduce time delay; Vehicle testing; Registering/indicating working of vehicles; Command language; On board

The intelligent system for autonomous spacecraft operations includes an on-board Autonomous Operations subsystem integrated with an on-ground Web-based Remote Intelligent Monitor System (RIMS) that provides the interface between on-ground users and the autonomous operations intelligent system. The on-board Autonomous Operations subsystem includes an On-Orbit Checkout Engine (OOCE) unit and an Autonomous Tasking Engine (ATE) unit. A Spacecraft Command Language (SCL) engine underlies the operations of the OOCE, ATE and RIMS, and serves as an executor of command sequences. The OOCE uses the SCL engine to execute a series of SCL command scripts to perform a rapid on-orbit checkout of subsystems / components of the spacecraft in 1-3 days. The ATE is a planning and scheduling tool which receives requests for “activities”, uses the SCL scripts to verify the validity of each “activity” prior to execution, and schedules the “activity” for execution or re-schedules it depending on the verification results. An Automated Mission Planning System (AMPS) supports the ATE operations. The ATE uses a priority schedule if multiple “activities” are to be executed.

Owner:SRA INTERNATIONAL

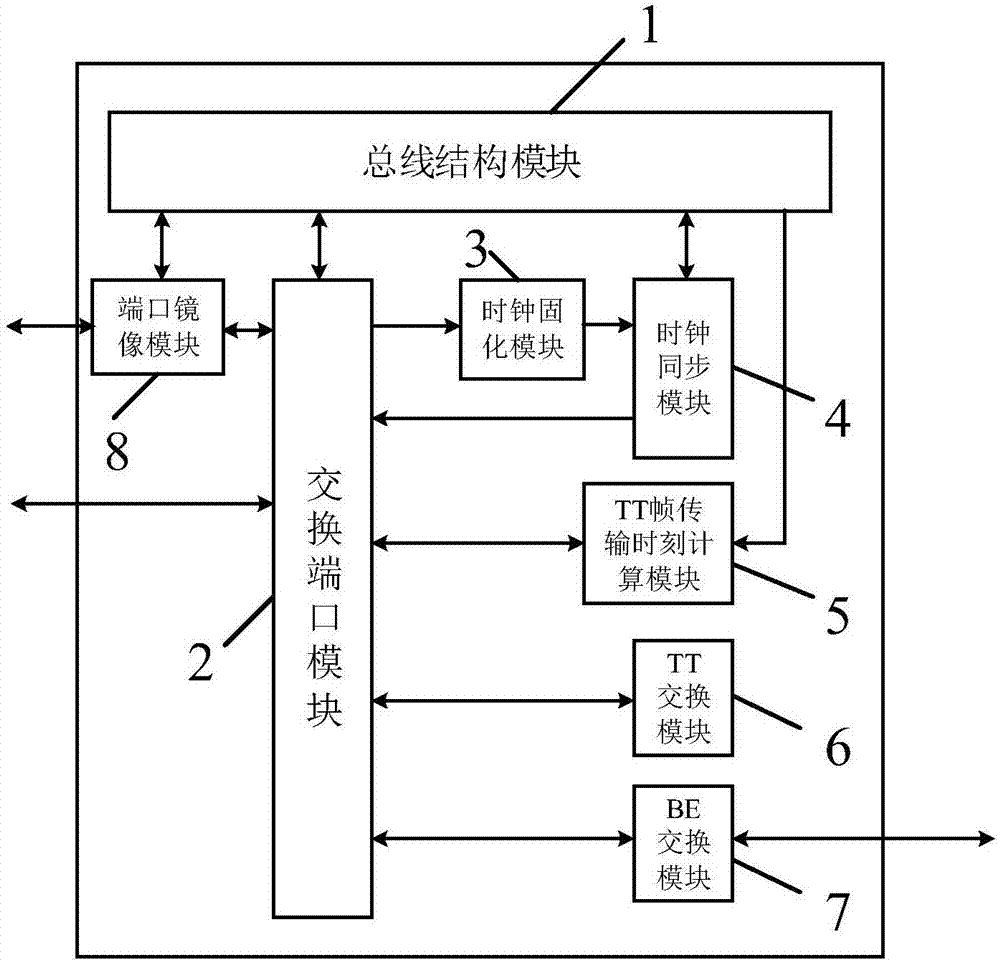

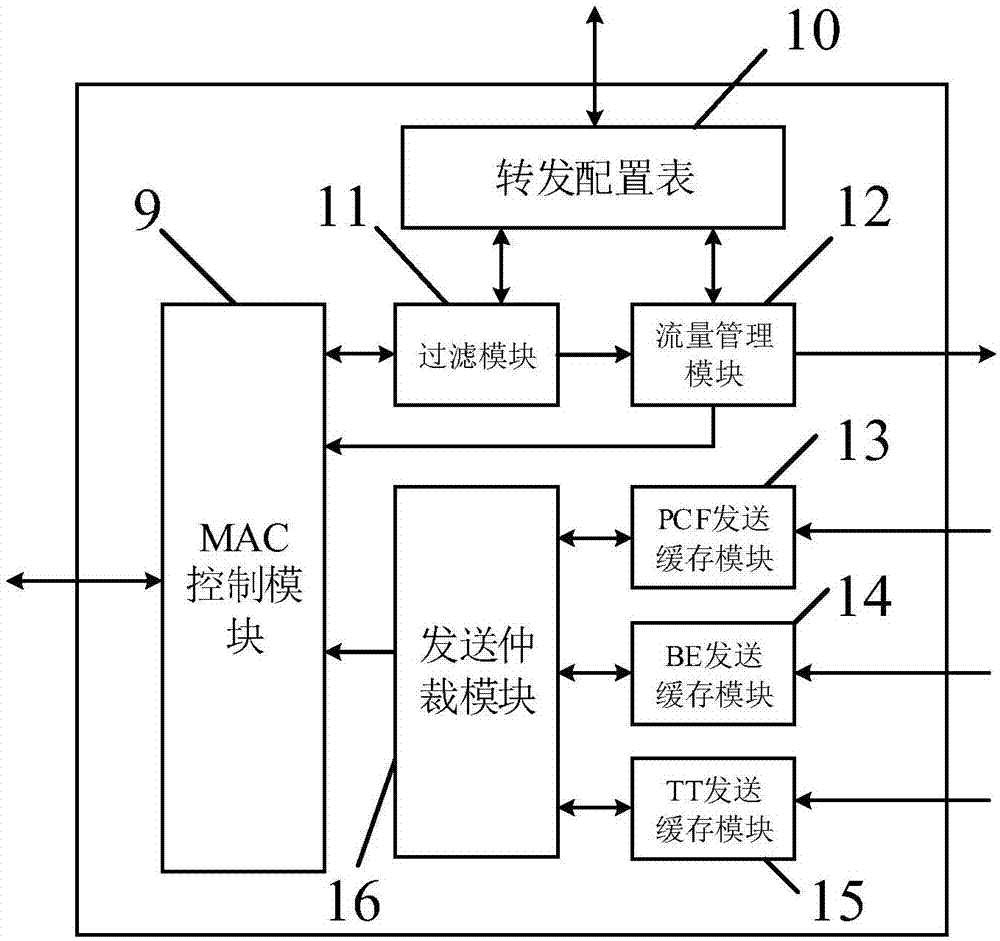

Time-triggered Ethernet exchange controller and control method thereof

Active; CN107342833A; Reliable transmission; Guaranteed priority real-time exchange; Time-division multiplex; Data switching networks; Bus interface; Embedded system

The invention discloses a time-triggered Ethernet exchange controller and a control method thereof. The exchange controller comprises a bus interface module which is used for realizing data exchange between an external bus and an on-chip bus; a port mirroring module which is used for carrying out data debugging; an exchange port module which is used for carrying out key data analysis on received data frames; and a clock solidifying module which is used for restoring synchronous data frame clock solidifying points. The exchange controller also comprises a TT frame transmission moment calculation module, a TT exchange control module, a BE exchange control module and a clock synchronization module connected with the clock solidifying module. According to the exchange controller and the method, legality judgment and traffic management are carried out on data through the exchange port module; the TT frame transmission moment calculation module finishes hardware real-time calculation of a TT frame transmission moment, and the data transmission moment configuration is simplified. Through combination of a virtual link and time slot division and through adoption of multi-priority scheduling and time slot locking technologies, the clock synchronization module realizes time compression processing in a pipeline mode, and fault isolation and recovery are supported.

Owner:XIAN MICROELECTRONICS TECH INST

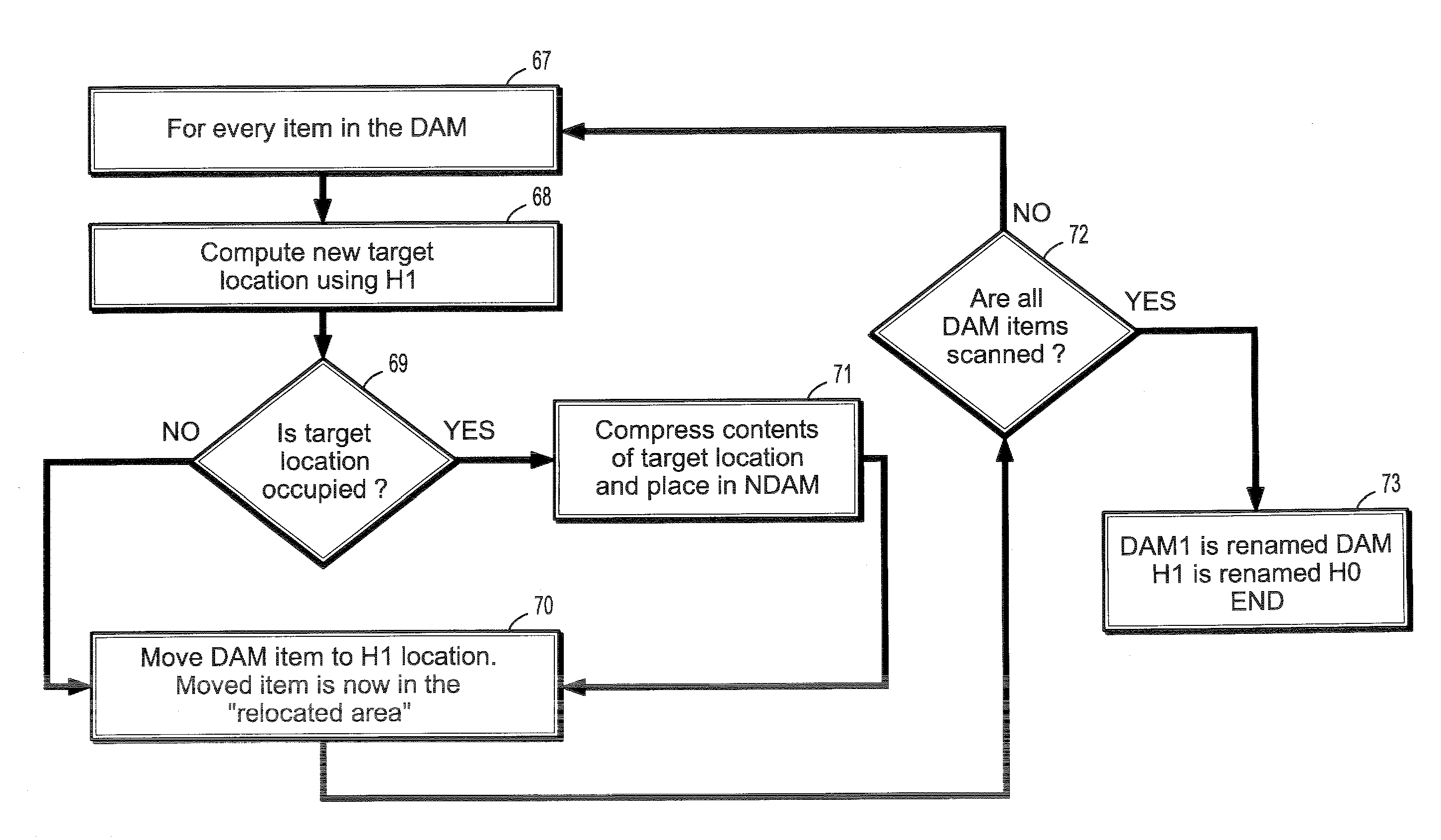

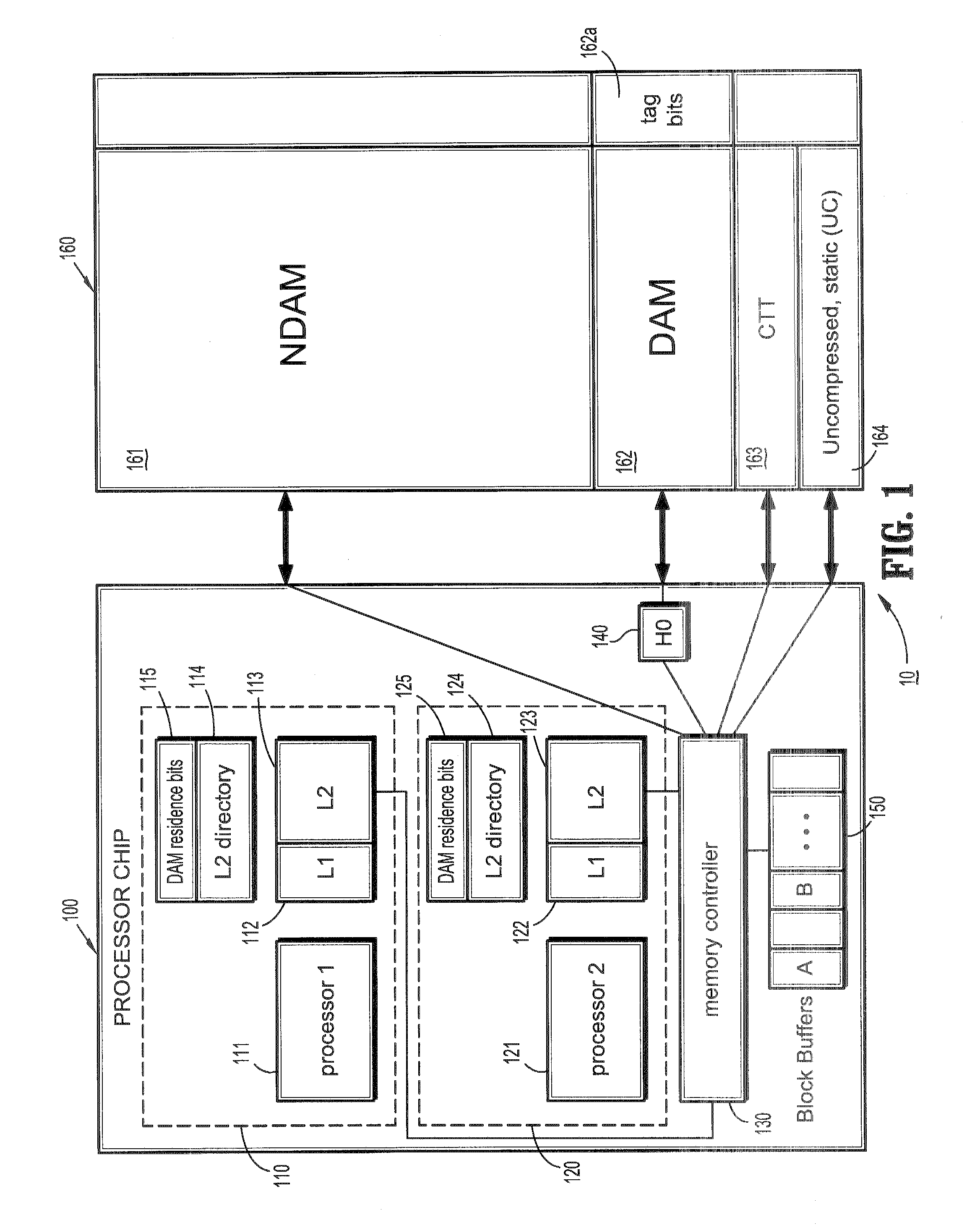

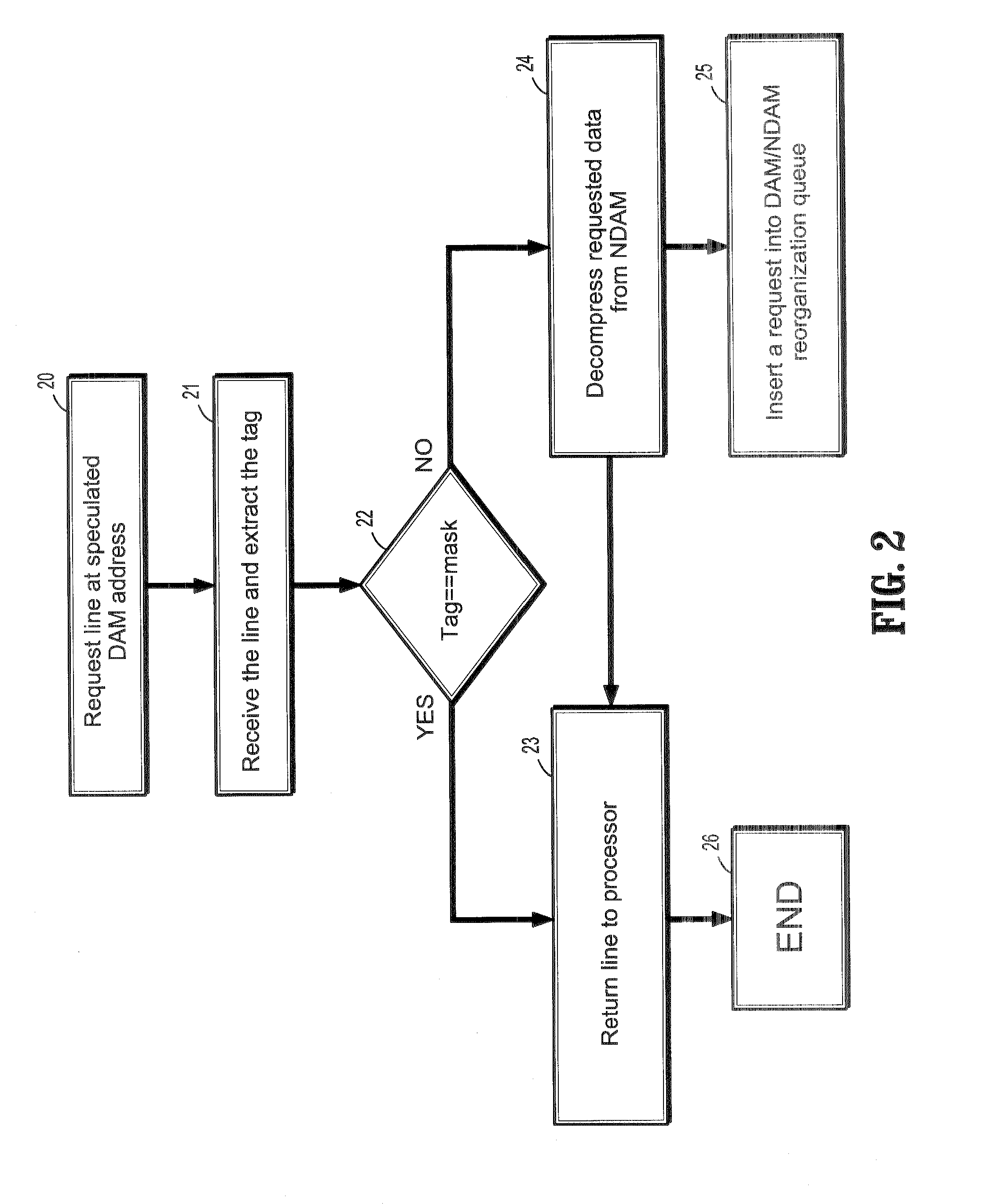

Systems and methods for masking latency of memory reorganization work in a compressed memory system

Inactive; US20080059728A1; Work less; Allow use; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Computer memory; Management system

Owner:IBM CORP

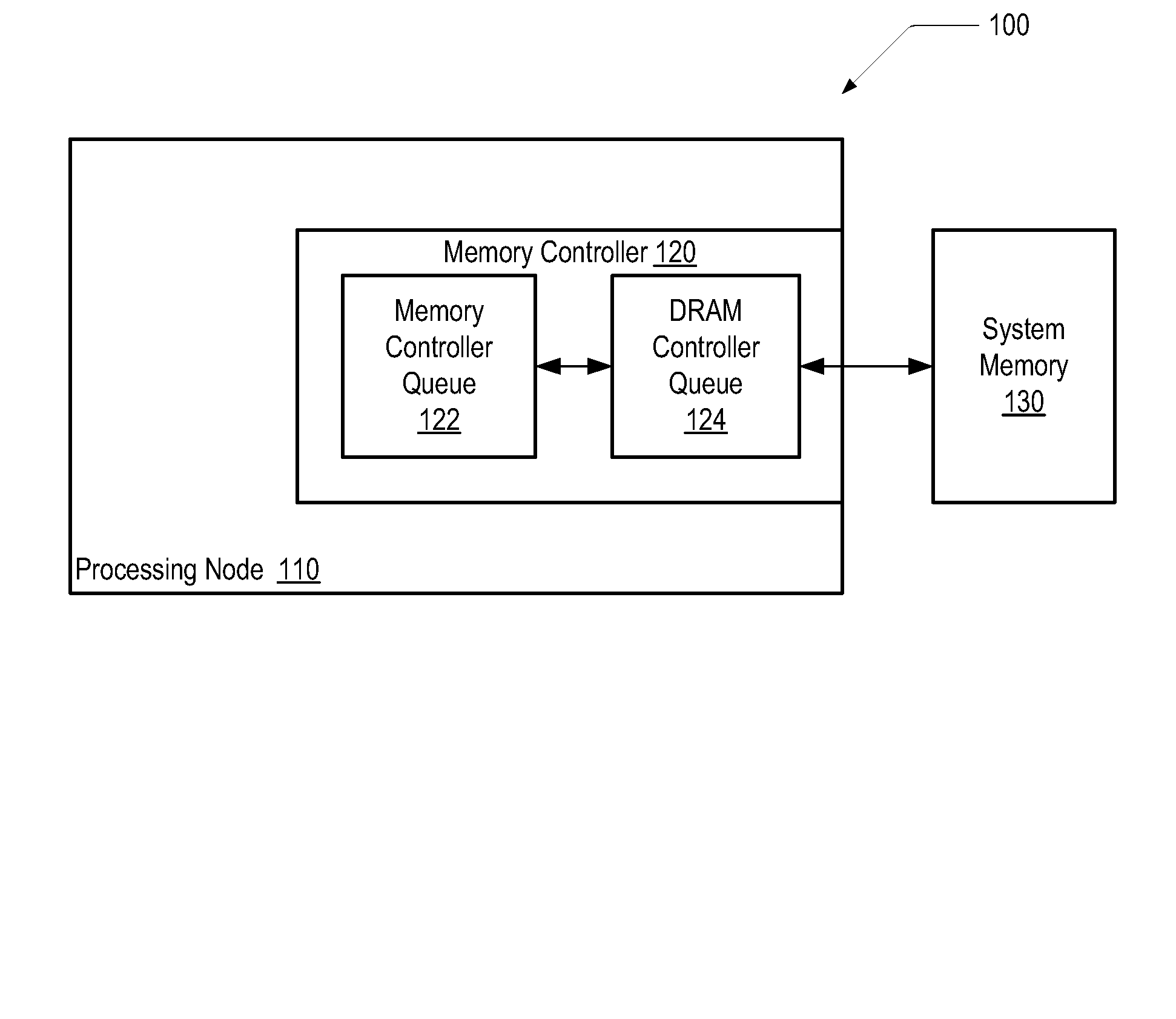

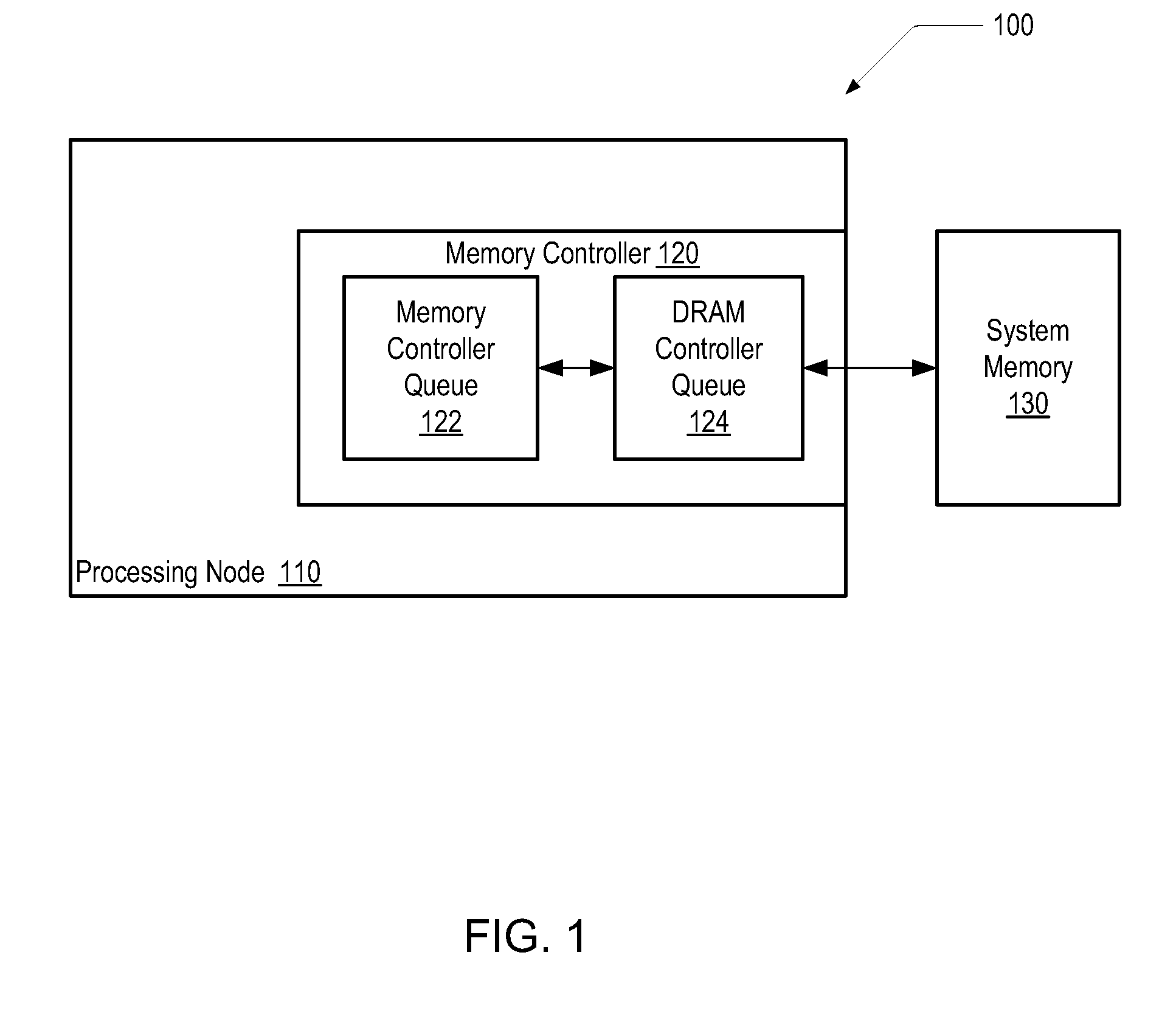

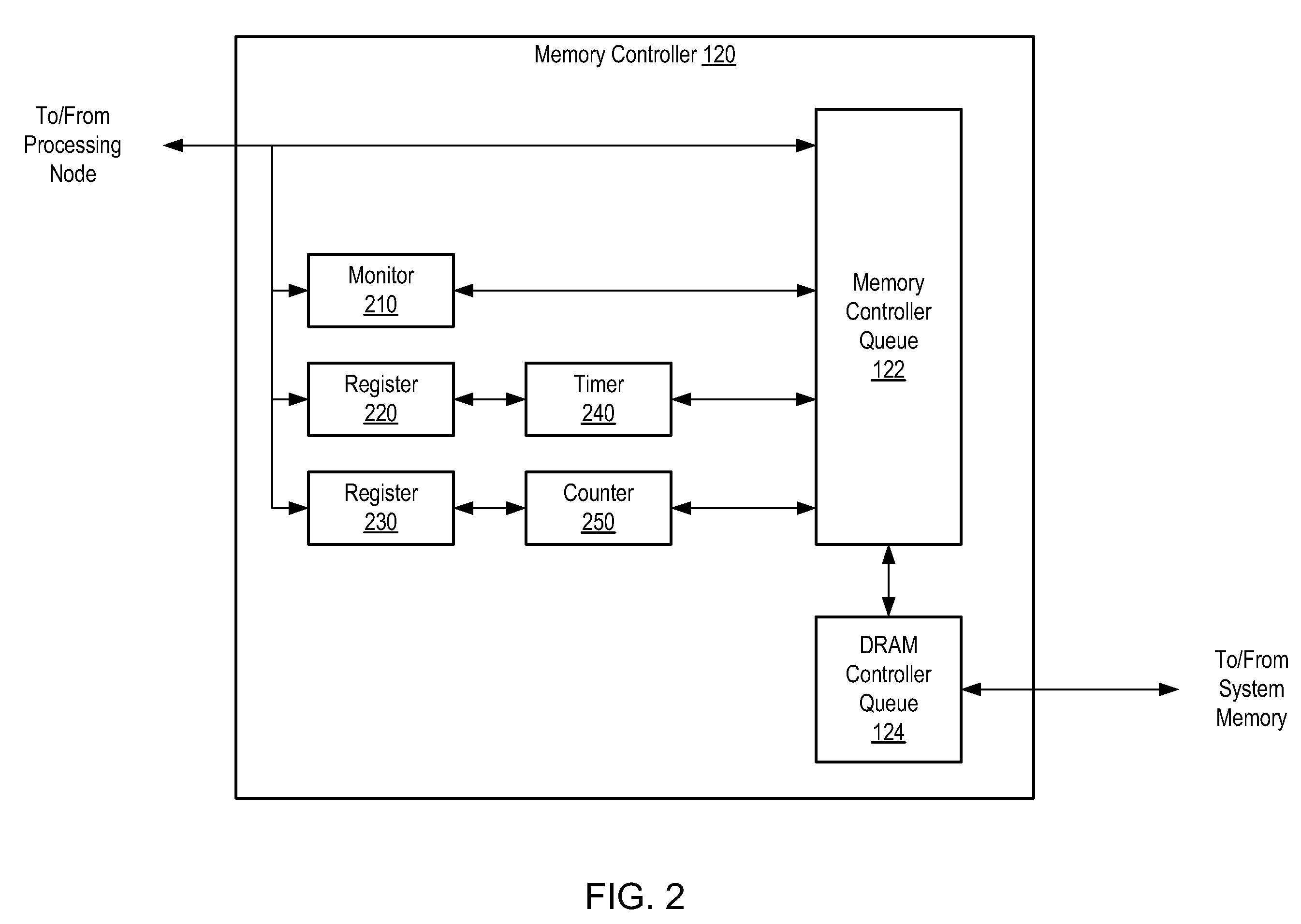

Memory controller prioritization scheme

Owner:ADVANCED MICRO DEVICES INC

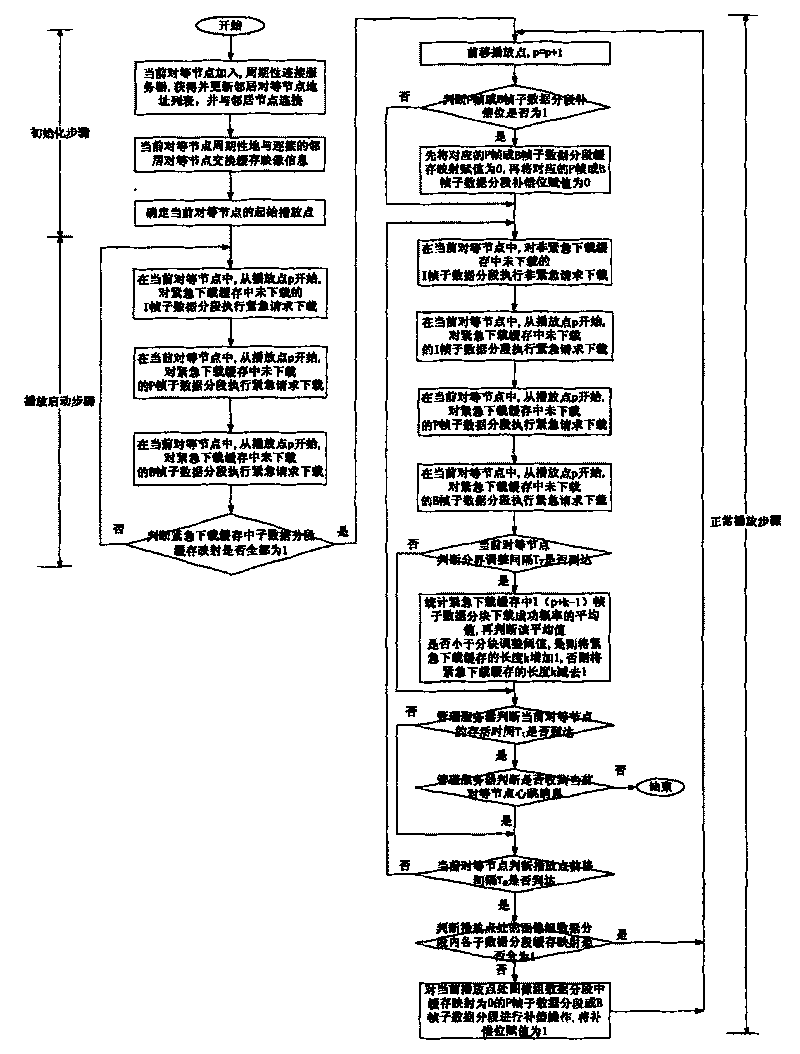

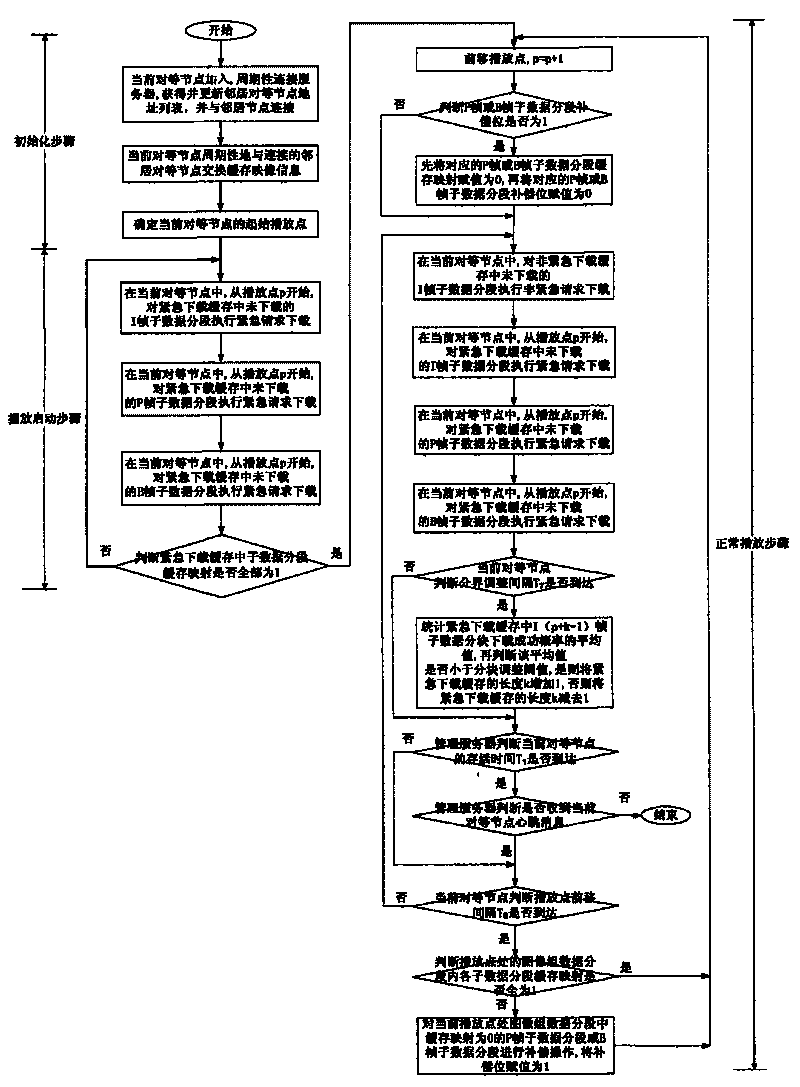

Method for scheduling P2P streaming media video data transmission

Inactive; CN101697554A; Prevent proliferation; Good playback continuity; Pulse modulation television signal transmission; Transmission; Packet loss; Video transmission

The invention relates to a method for scheduling P2P streaming media video data transmission, which belongs to methods for scheduling streaming media video data transmission and is used for transmitting streaming media video data on a network. The method solves the problem that prior methods for scheduling P2P streaming media video transmission do not consider that video frames and download cache partitions differ in importance, thereby effectively improving playback continuity and the quality of user experience. The method comprises the steps of initialization, startup playing, and normal playing. Under the same packet loss ratio, the method preferentially requests scheduling of I-frame sub-data segments, then P-frame sub-data segments, and finally B-frame sub-data segments, keeping playback as continuous as possible and effectively improving the quality of experience. In addition, by combining sequential scheduling for the emergency download cache with rare-priority scheduling for the non-emergency download cache, the method can reach a dynamic best compromise between reducing startup time and improving playback continuity and extendibility.

Owner:HUAZHONG UNIV OF SCI & TECH

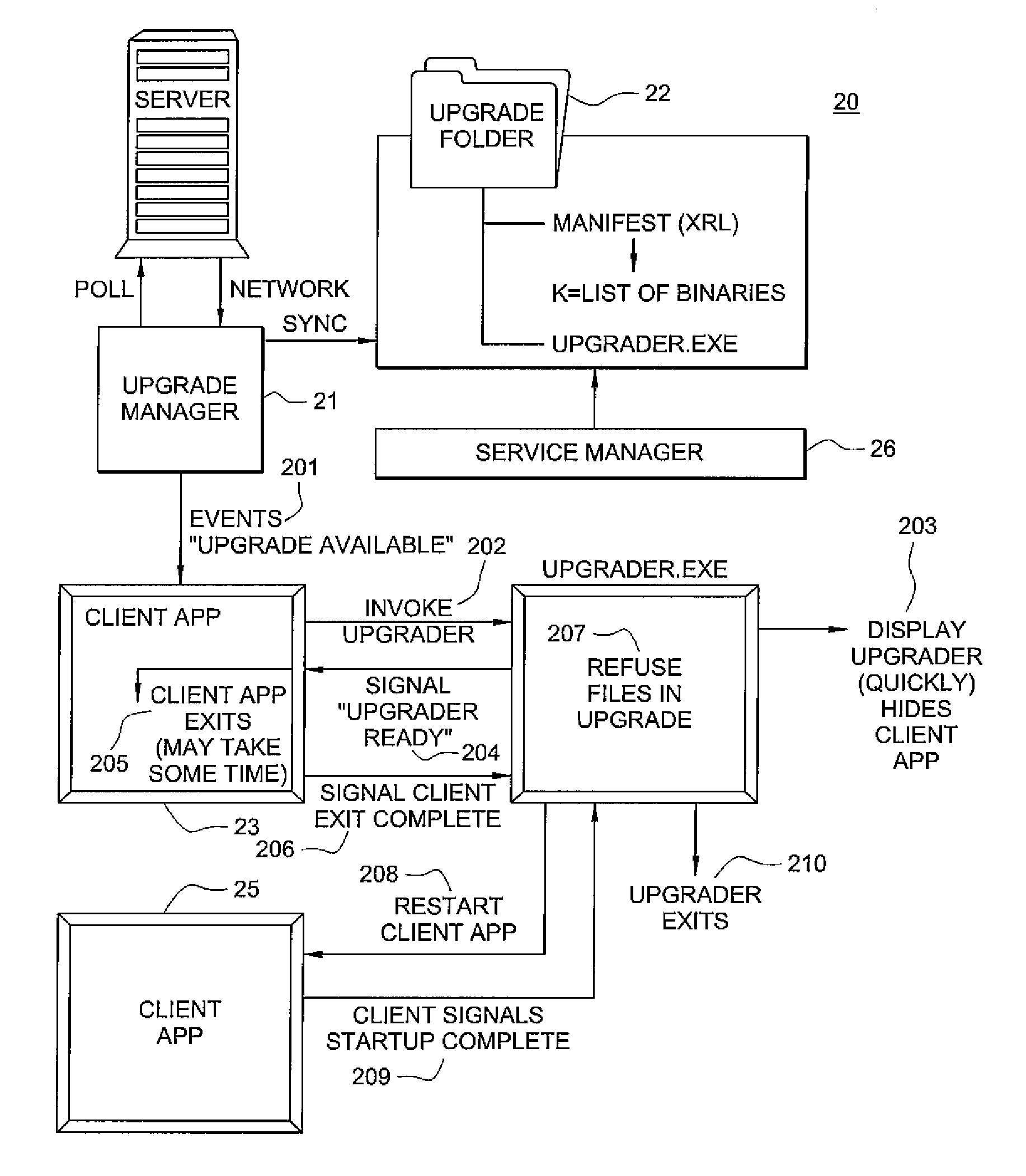

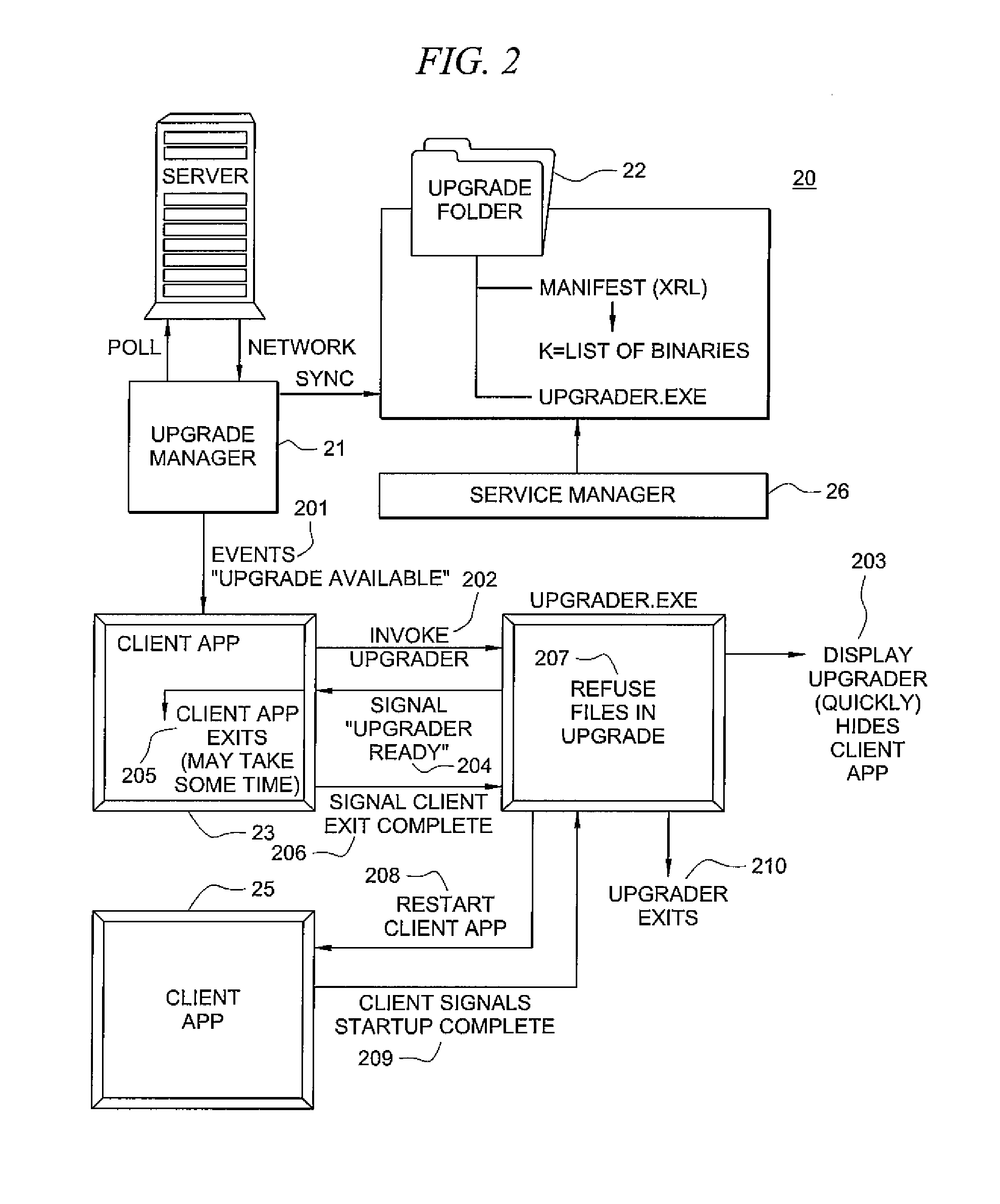

Systems and methods for coordinating the updating of applications on a computing device

Inactive; US20120210310A1; Specific program execution arrangements; Memory systems; Time schedule; Change factor

The present invention is directed to systems and methods which schedule the updating of applications and/or application data to occur according to a priority dependent upon a variety of dynamically changing factors. In one embodiment, a service manager schedules the update from the network server to occur when the device on which the updating application resides is not otherwise busy with functions that would cause a burden on network usage or with the user's current experience with the device or with battery life. The new data is transferred from the network server to the wireless device, upgrading on an irregular schedule based on at least some factors individual to the particular applications. In the embodiment shown, after the service manager has determined that new data has been transferred to the device for a particular application, that application is prompted to begin the data upgrade process only at a time when the impact on the user and on the battery level of the device is minimal.

Owner:APPLE INC

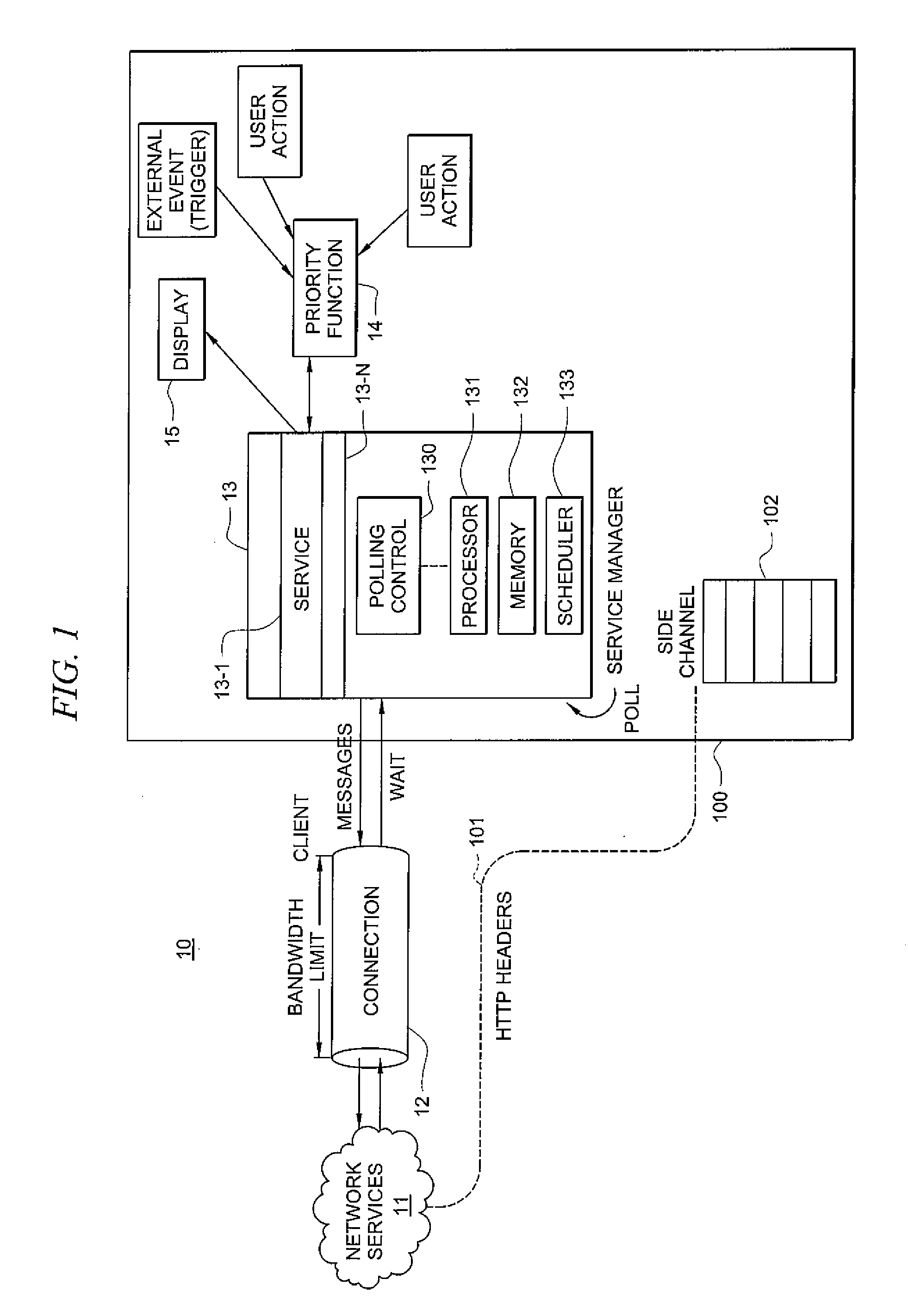

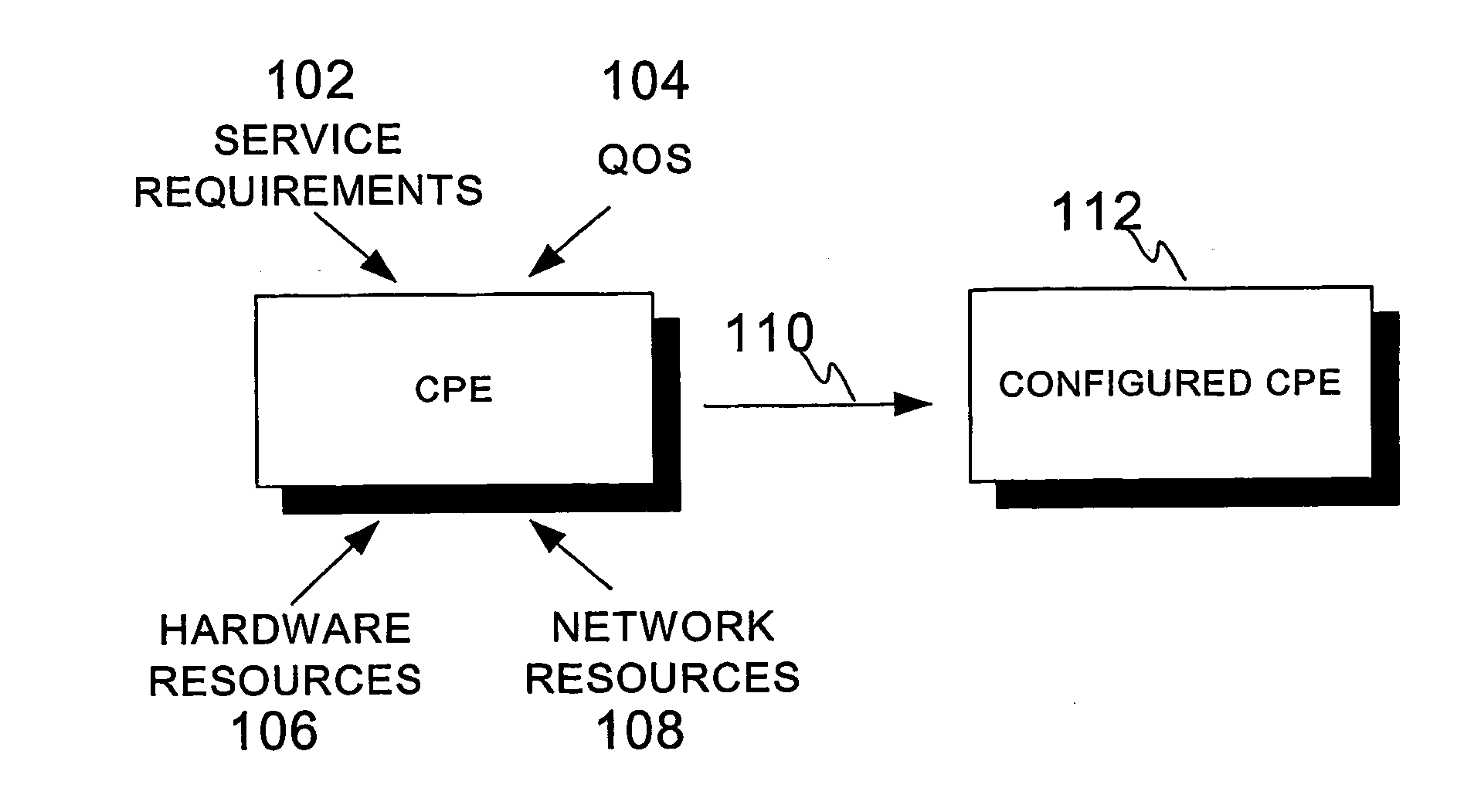

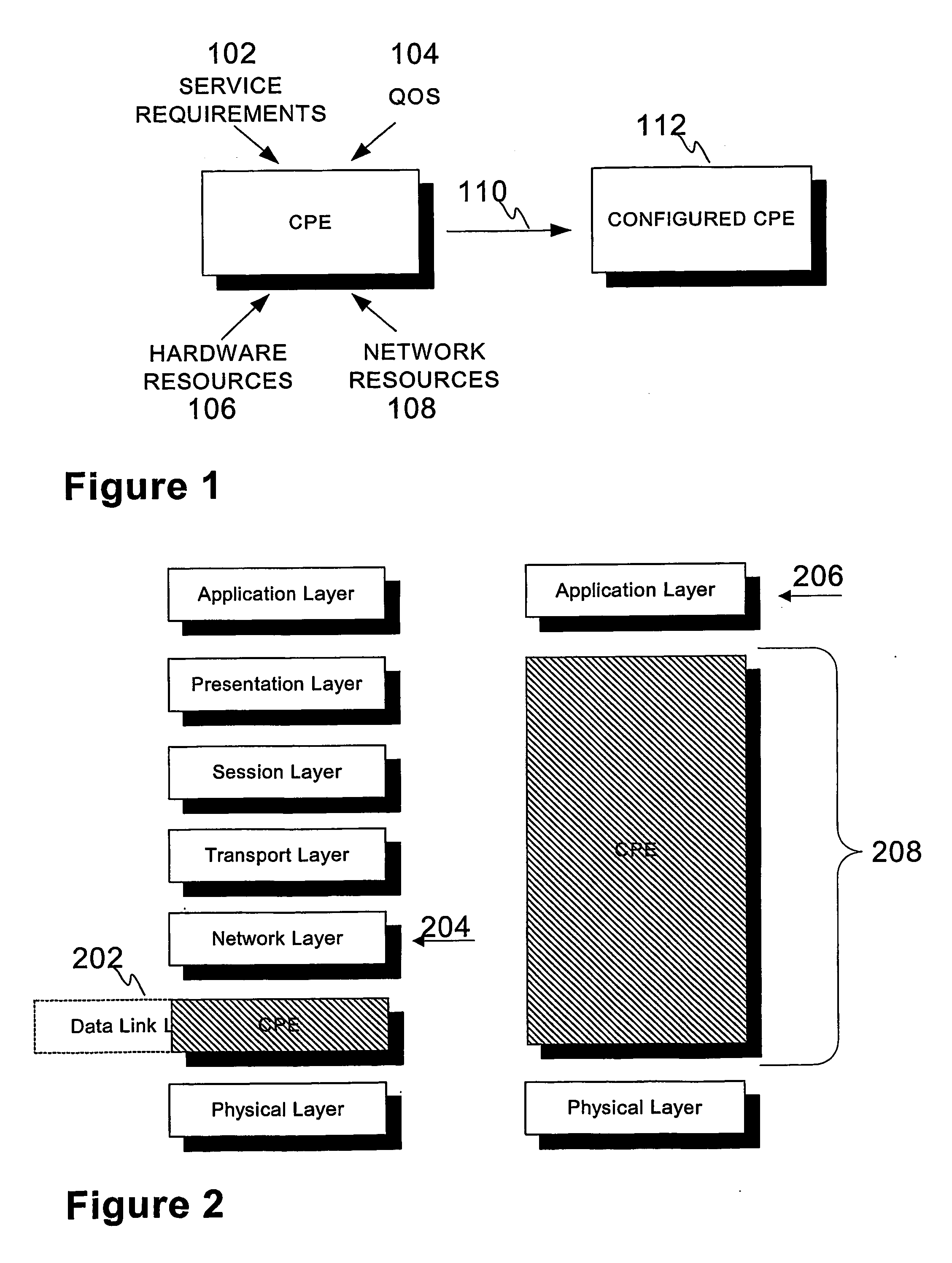

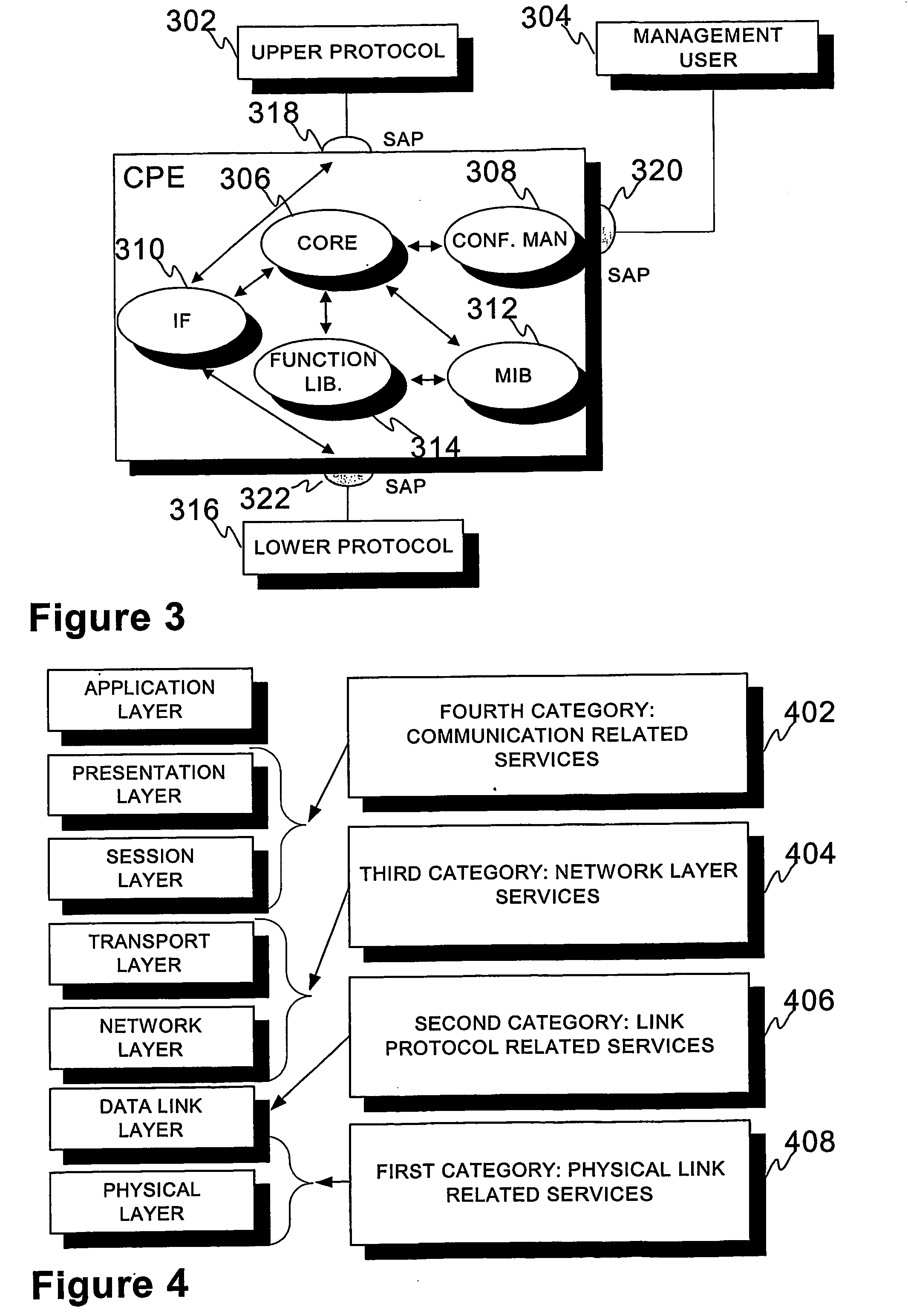

Configurable Protocol Engine

Active; US20080039055A1; Meet the requirements; Efficient scheduling; Special service for subscribers; Data switching by path configuration; Quality of service; Priority call

A configurable protocol engine (CPE) capable of constructing (110) a desired protocol structure (112) according to the received configuration information. In addition, the CPE schedules the processing of received service primitives according to the priority levels thereof. The configuration information may include service requirements (102), indications of hardware and software resources (106, 108), and the required QoS (Quality of Service, 104) level. The CPE may be implemented as software, hardware, or as a combination of both.

Owner:NOKIA TECHNOLOGIES OY

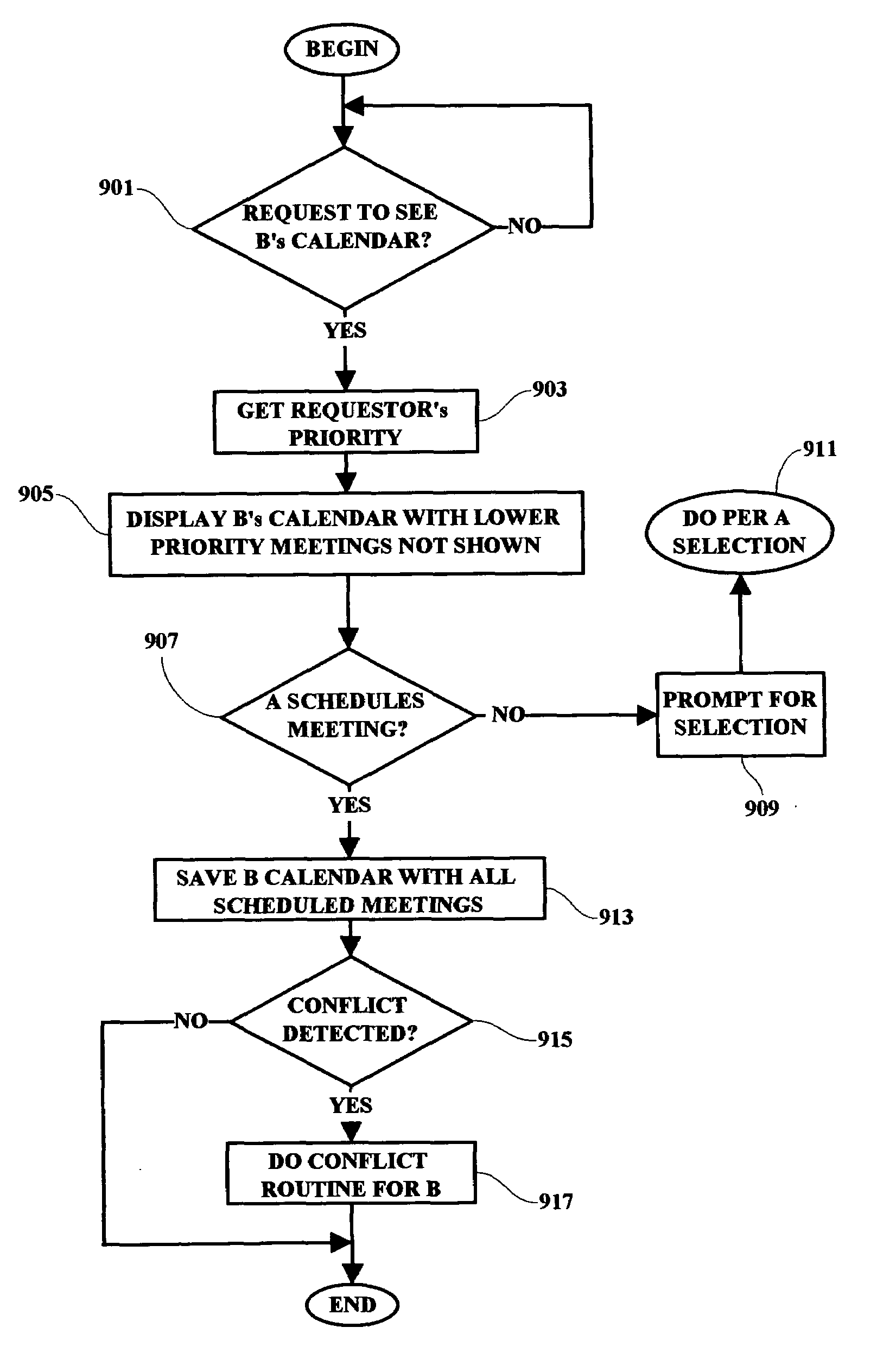

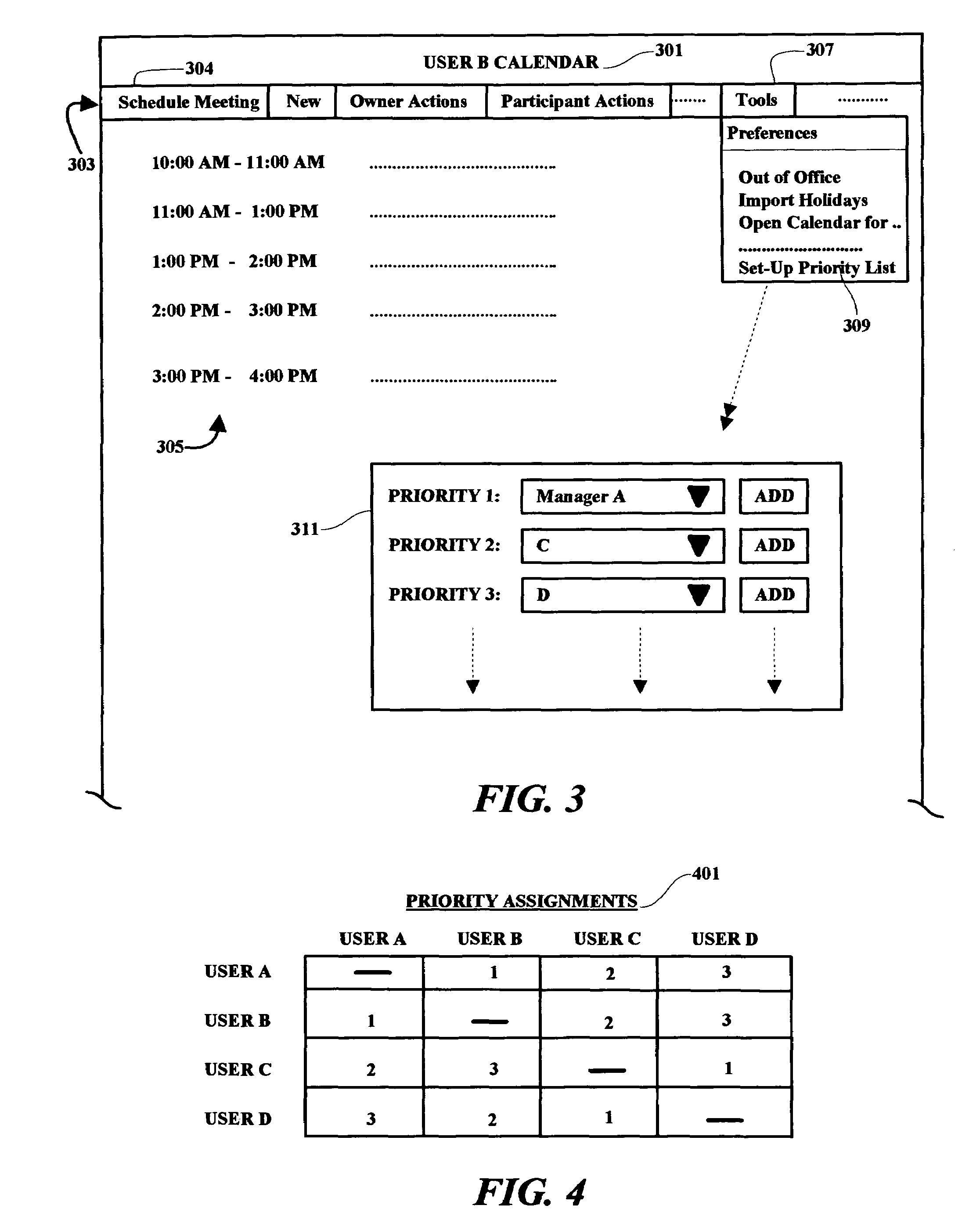

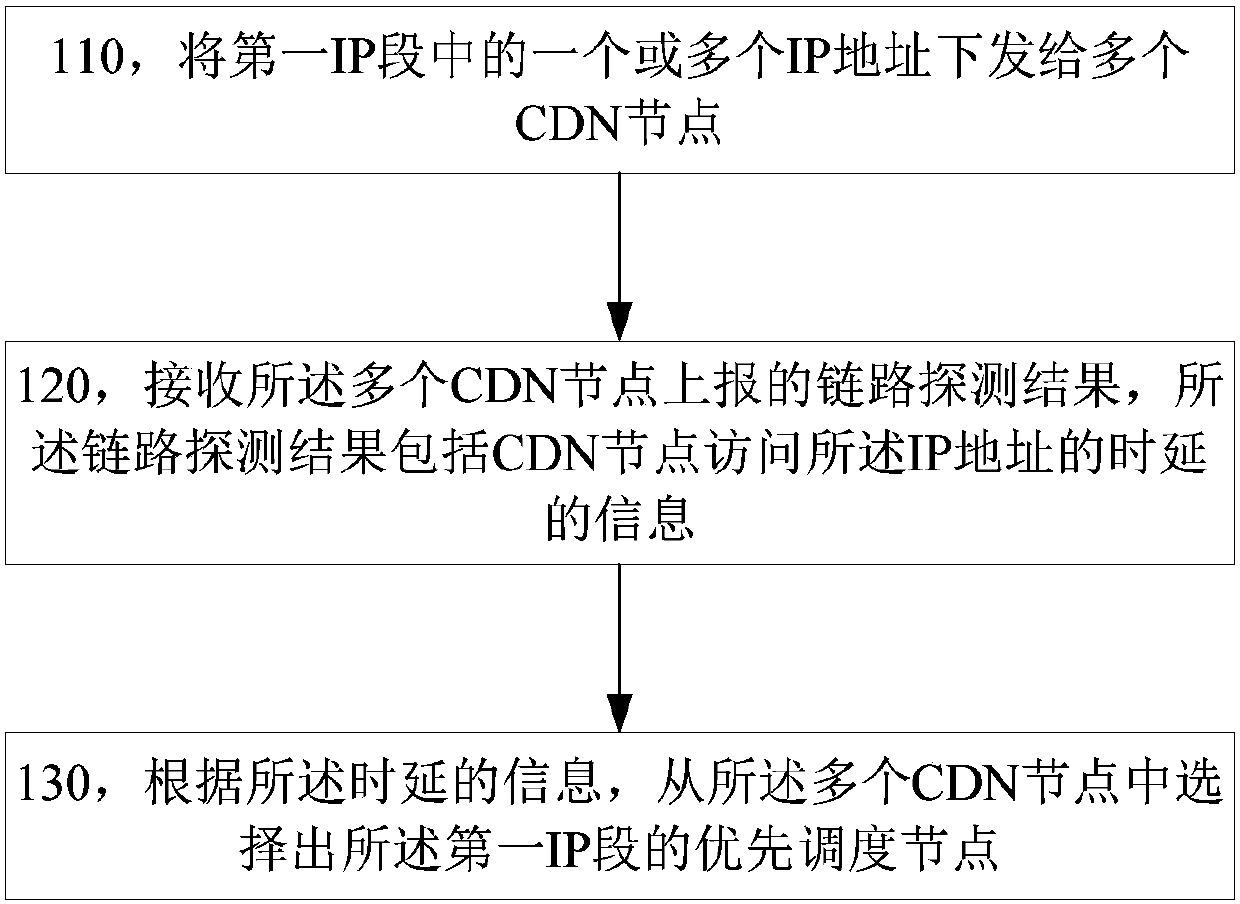

Meeting scheduling system

Inactive; US20090327019A1; Convenient Arrangement; Raise priority; Instruments; Priority call; Distributed computing

A method, programmed medium and system are provided in which a user has an option to prioritize meetings and individuals who have access to the user's calendar. The disclosed exemplary embodiments provide for prioritizing meetings and allowing a certain set of favorite people to see availability based on priority. Users are enabled to designate specific individuals and corresponding priority levels for the designated individuals who have access to the user's calendar. A high priority user is enabled to schedule a meeting on other users' calendars based on the priority level of the scheduling user. The system automatically determines the priority level of the scheduling user and displays calendars of other invited users by displaying only other scheduled meetings which have a higher priority level, thereby enabling automatic priority level scheduling for all users of the calendar system.

Owner:IBM CORP

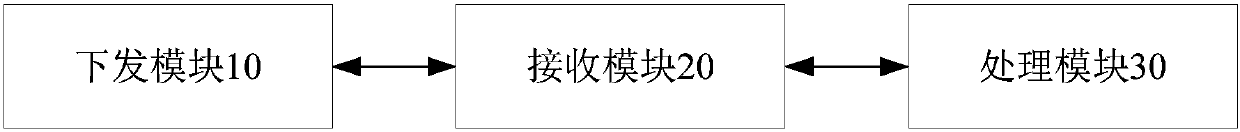

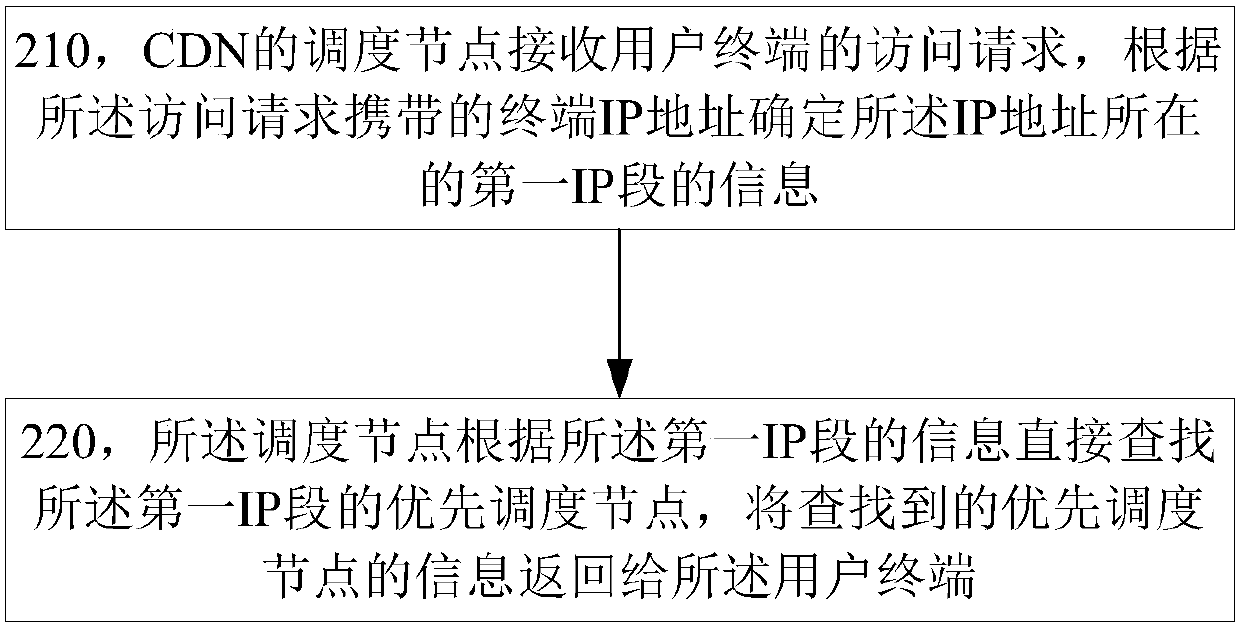

Detection processing and scheduling method of content distribution network, corresponding apparatus and nodes

Inactive; CN107645525A; Avoid the problem of insecure service quality; Transmission; Quality of service; Content distribution

A detection processing and scheduling method of a content distribution network, a corresponding apparatus and nodes are disclosed. A detection processing apparatus issues one or more IP addresses in a first IP segment to a plurality of CDN nodes and receives a link detection result reported by the plurality of CDN nodes. The link detection result comprises information on the time delay when the CDN nodes access the IP addresses. According to the time delay information, a priority scheduling node of the first IP segment is selected from the plurality of CDN nodes. The scheduling node directly searches for the priority scheduling node of the first IP segment according to information of the first IP segment, and the information of the found priority scheduling node is returned to a user terminal. The invention avoids the problem that service quality is not guaranteed when "near access" is performed on the CDN nodes.

Owner:ALIBABA GRP HLDG LTD
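
The node selection described above, choosing a priority scheduling node for an IP segment from the delays that CDN nodes report, might look like the sketch below. The report format, the use of the mean delay as the selection criterion, and the node names are assumptions; the abstract says only that the choice is made according to the time delay information.

```python
def pick_priority_node(reports):
    """Pick the CDN node with the lowest mean probe delay.

    reports: dict mapping node name -> list of delays in milliseconds,
             one per probed IP address in the segment (assumed format).
    """
    return min(reports, key=lambda node: sum(reports[node]) / len(reports[node]))


reports = {
    "node-bj": [30, 40],
    "node-sh": [20, 25],
    "node-gz": [50],
}
print(pick_priority_node(reports))  # → node-sh
```

A scheduler could cache this result per IP segment and return it directly on later lookups, as the abstract's scheduling node does.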

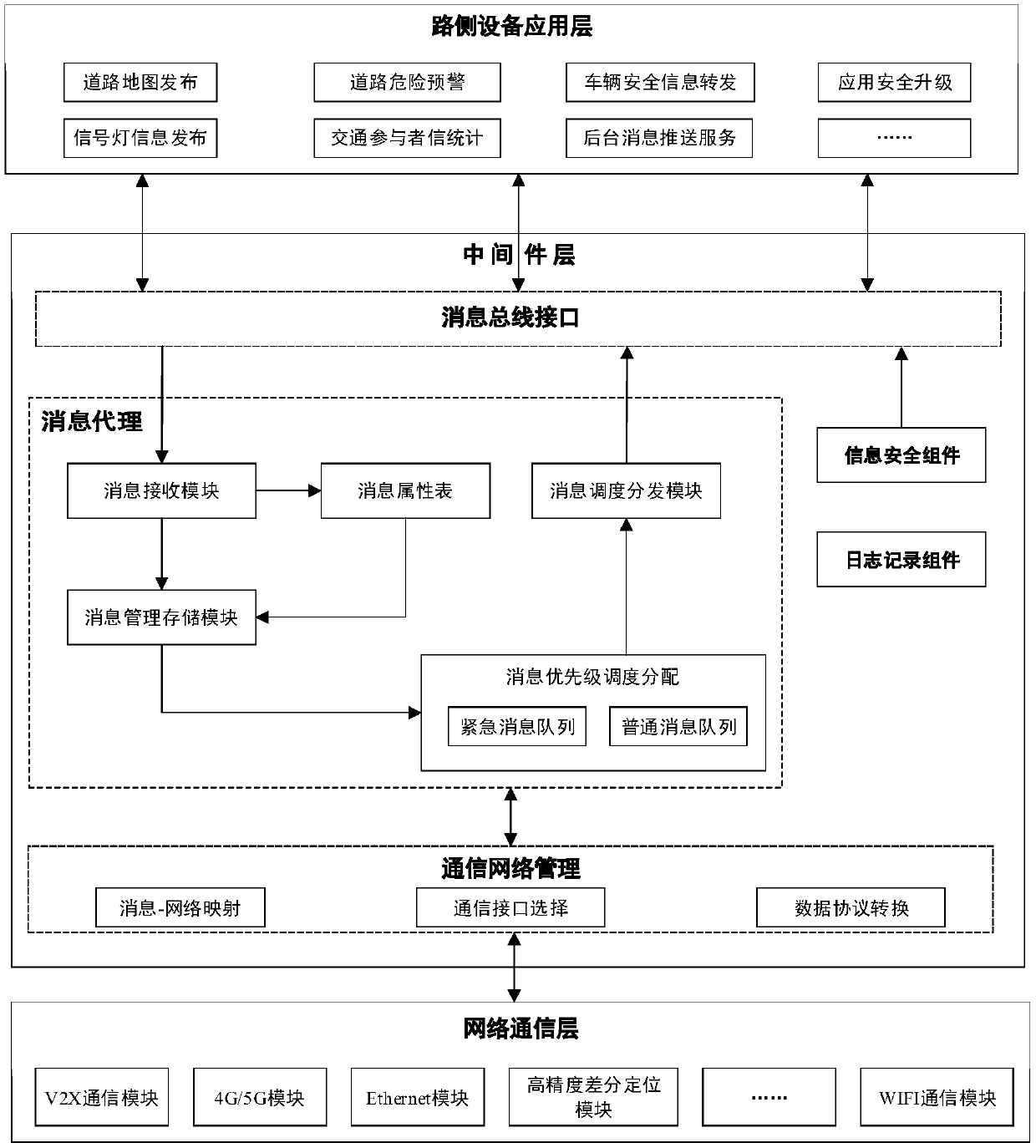

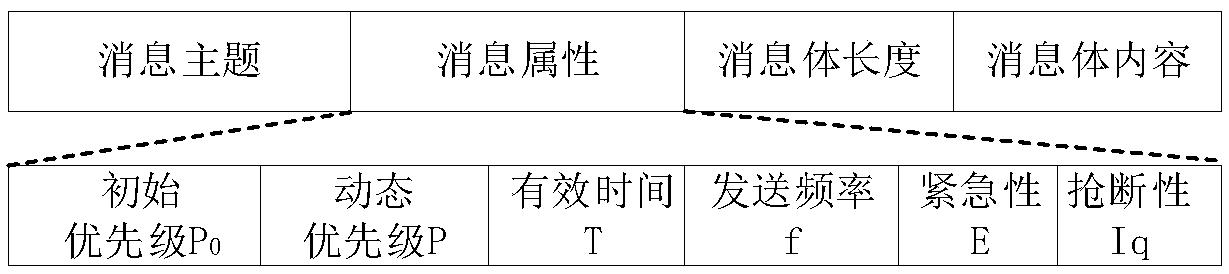

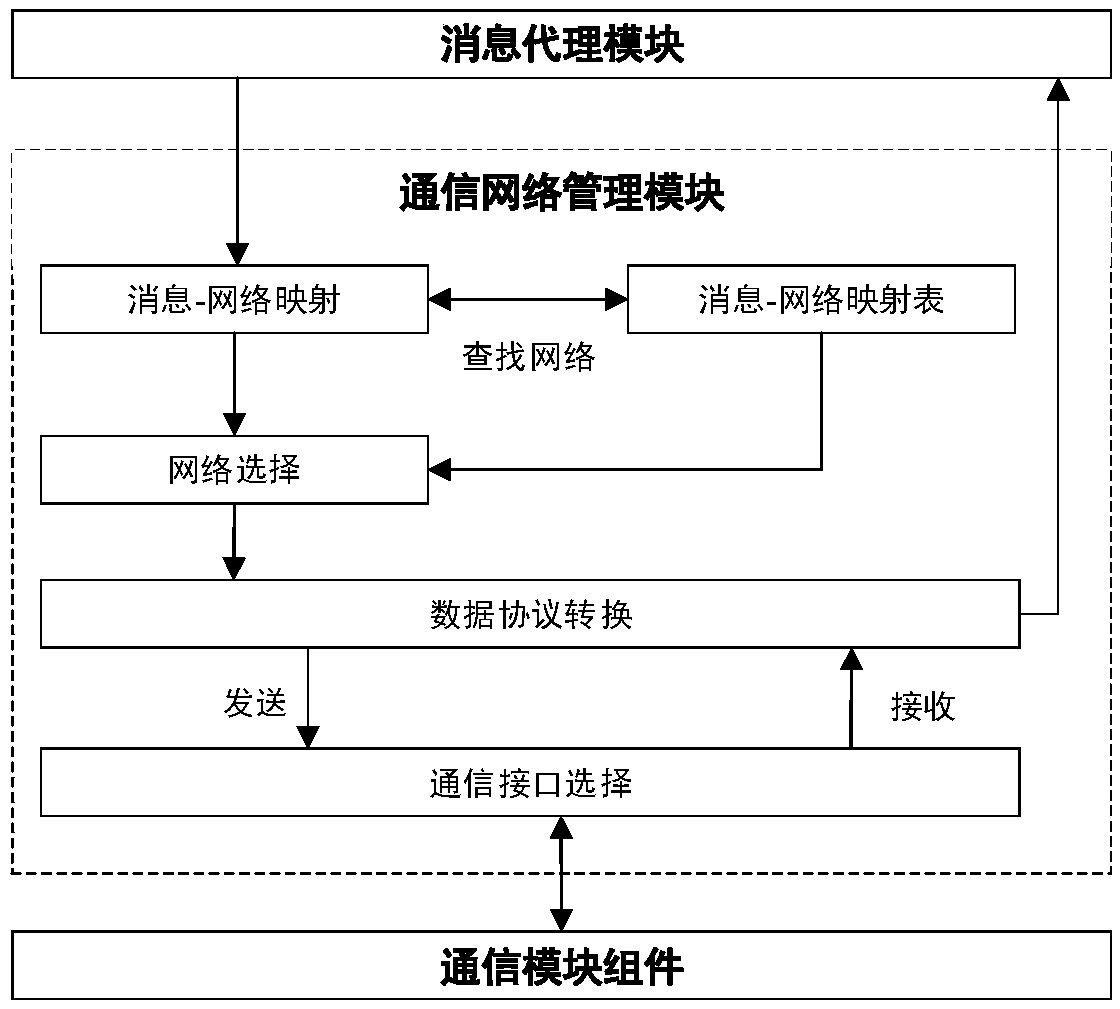

V2X-based roadside unit system and information distribution method thereof

Active; CN109672996A; Traffic scene functions are simple; Improve distribution efficiency; Particular environment based services; Vehicle infrastructure communication; Unit system; Heterogeneous network

The invention provides a V2X-based roadside unit system and an information distribution method thereof. The system mainly comprises a communication network management module, a message proxy module, an information security component module, a log management component module, a message bus interface module and the like. The RSU (roadside unit) message proxy module is responsible for parsing, priority scheduling and distribution of messages sent by an application layer through the message bus interface to a message bus. The RSU communication network management module is responsible for shielding protocol differences between the platform and the RSU auxiliary communication equipment, and provides a unified communication calling interface for the middleware message proxy. With the system and method of the invention, heterogeneous-network multi-source information from road sensors, vehicles, a cloud platform, differential positioning base stations and the like is managed in a unified way through the V2X RSU, a unified communication calling interface is provided for upper-layer applications, and application development efficiency and functional extensibility are improved; messages are scheduled on the basis of a priority policy to ensure that more urgent information is sent first.
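The priority-based dispatch performed by the message proxy can be illustrated with a small heap-backed queue. This is a sketch under assumptions, not the RSU's actual design: the `MessageProxy` class, the numeric priority levels (lower number = more urgent) and the first-in-first-out tie-break are all hypothetical.

```python
import heapq
from itertools import count


class MessageProxy:
    """Queue messages and release the most urgent one first."""

    def __init__(self):
        self._heap = []
        self._seq = count()  # arrival counter, keeps equal priorities FIFO

    def submit(self, priority, payload):
        # Assumption: a lower number means a more urgent message.
        heapq.heappush(self._heap, (priority, next(self._seq), payload))

    def dispatch(self):
        """Yield payloads in priority order until the queue is empty."""
        while self._heap:
            _, _, payload = heapq.heappop(self._heap)
            yield payload


proxy = MessageProxy()
proxy.submit(2, "traffic light phase")
proxy.submit(0, "collision warning")
proxy.submit(2, "road works notice")
proxy.submit(1, "speed advisory")

sent = list(proxy.dispatch())
```

The collision warning leaves first even though it arrived second, and the two priority-2 messages keep their arrival order.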

Owner:CHONGQING UNIV OF POSTS & TELECOMM

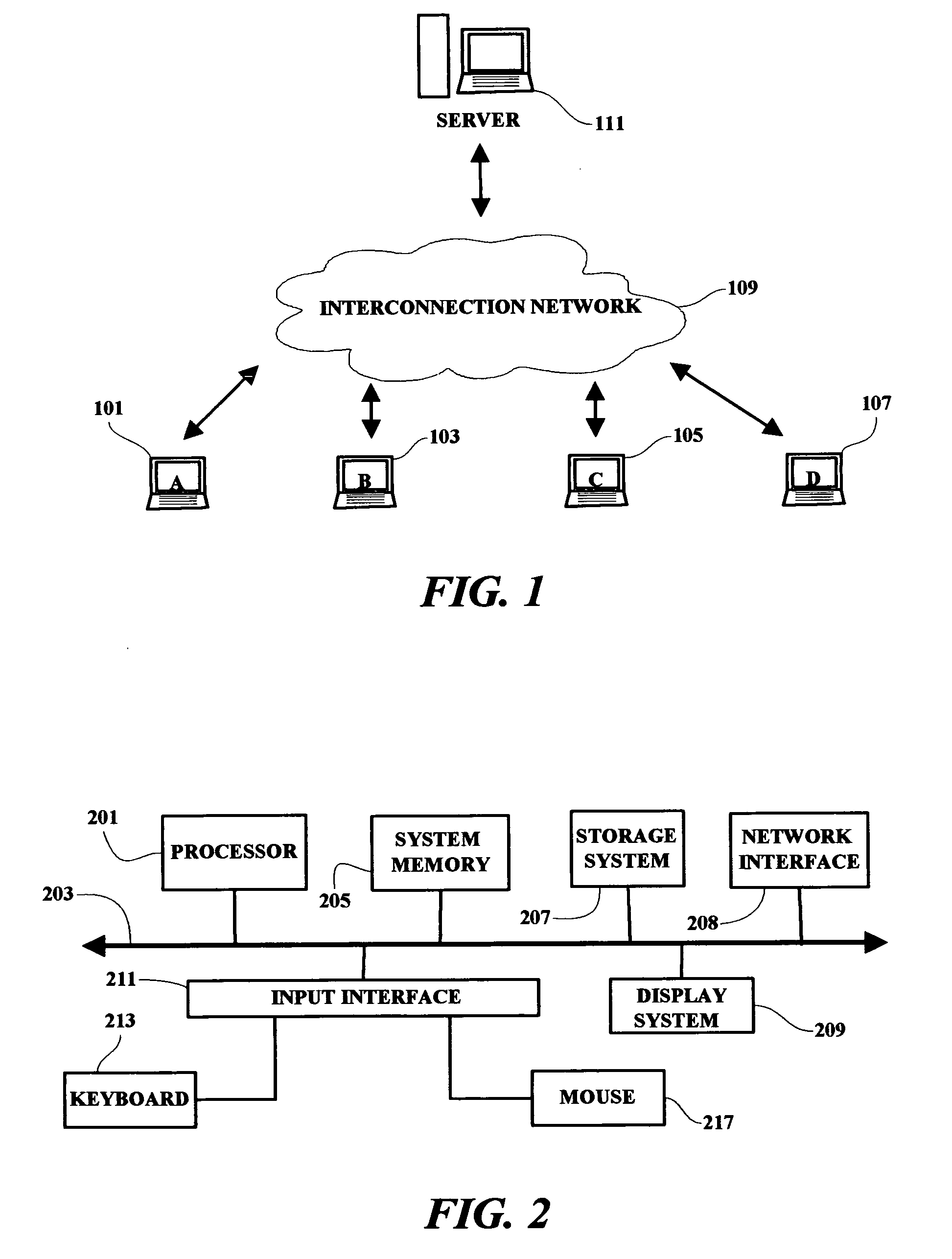

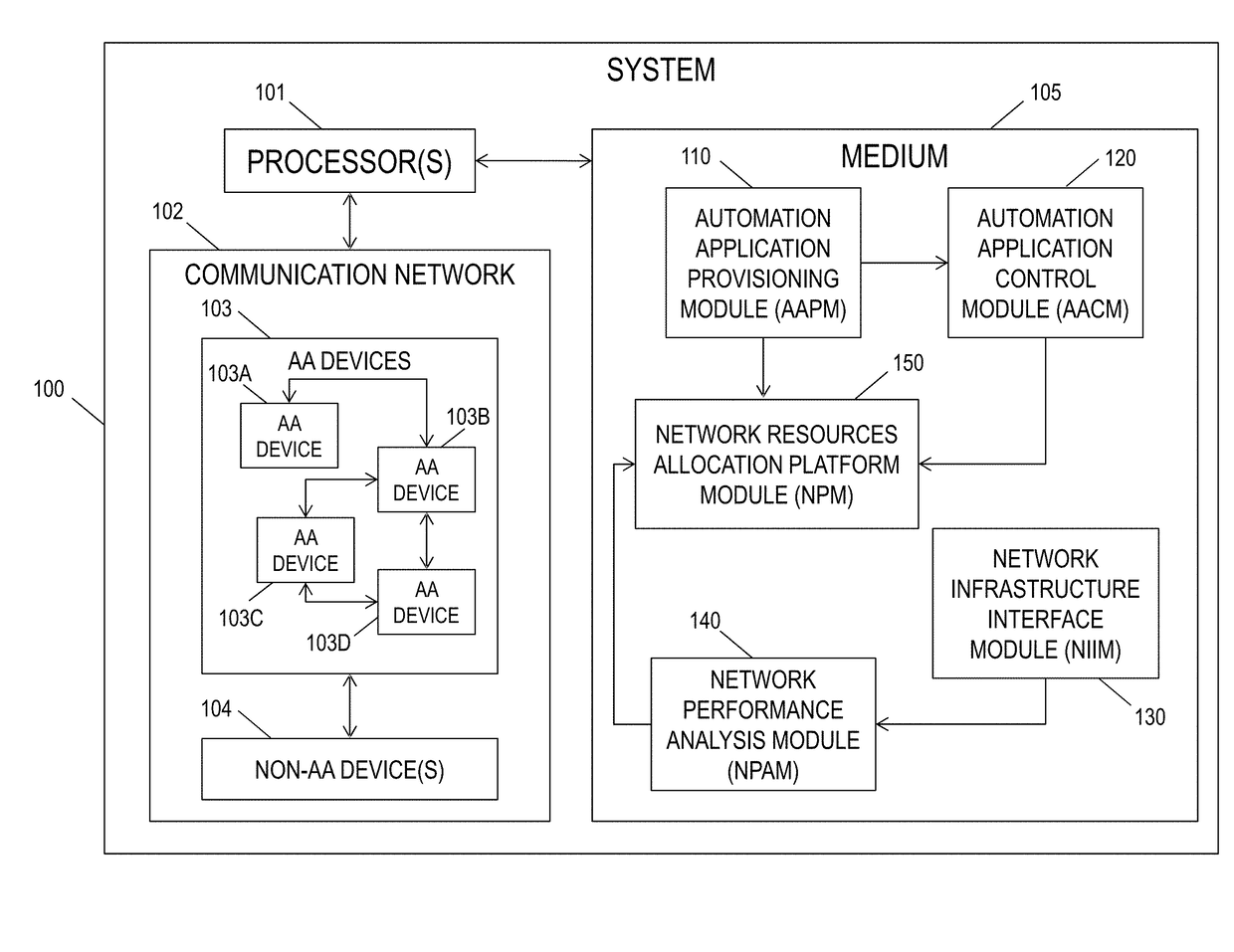

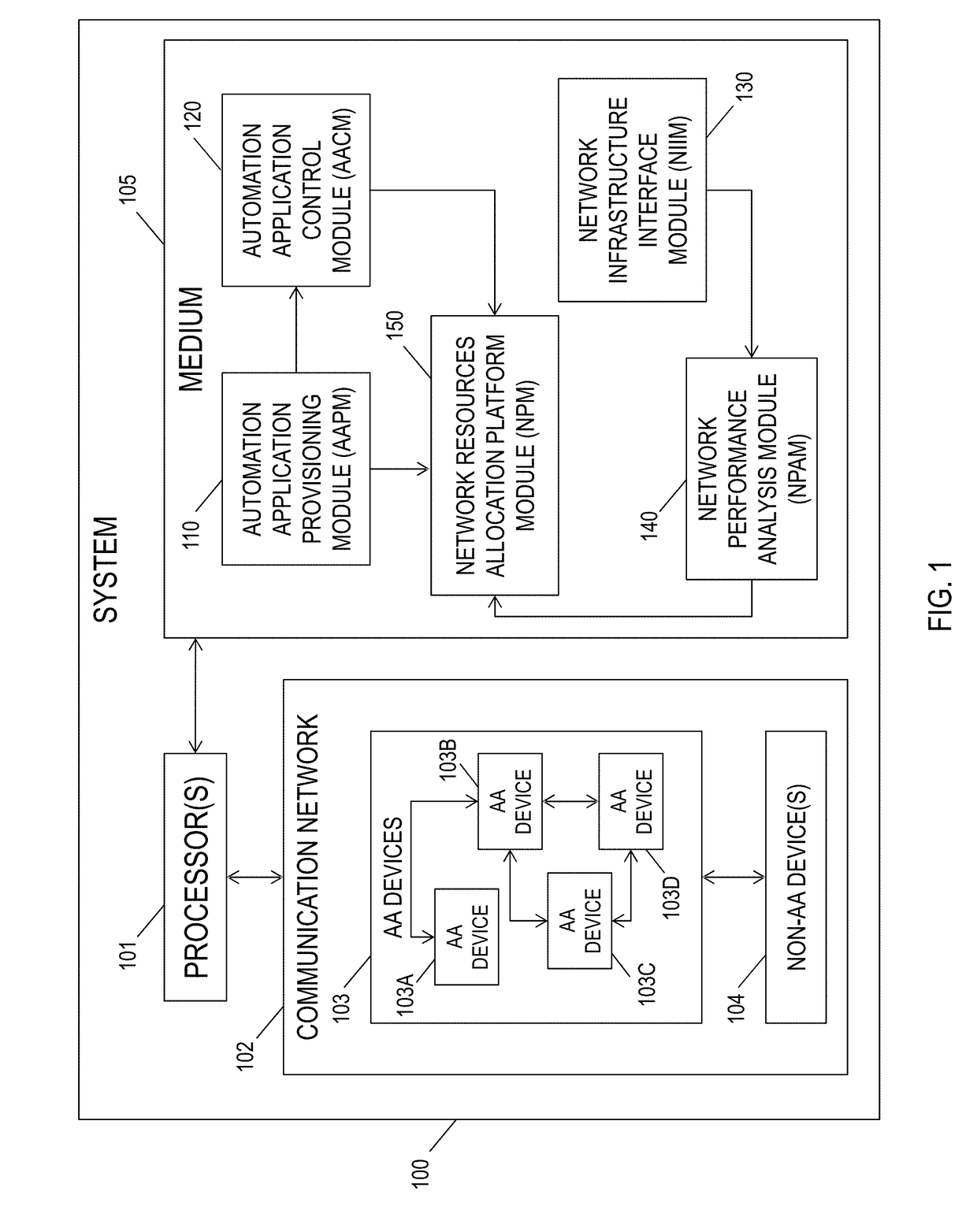

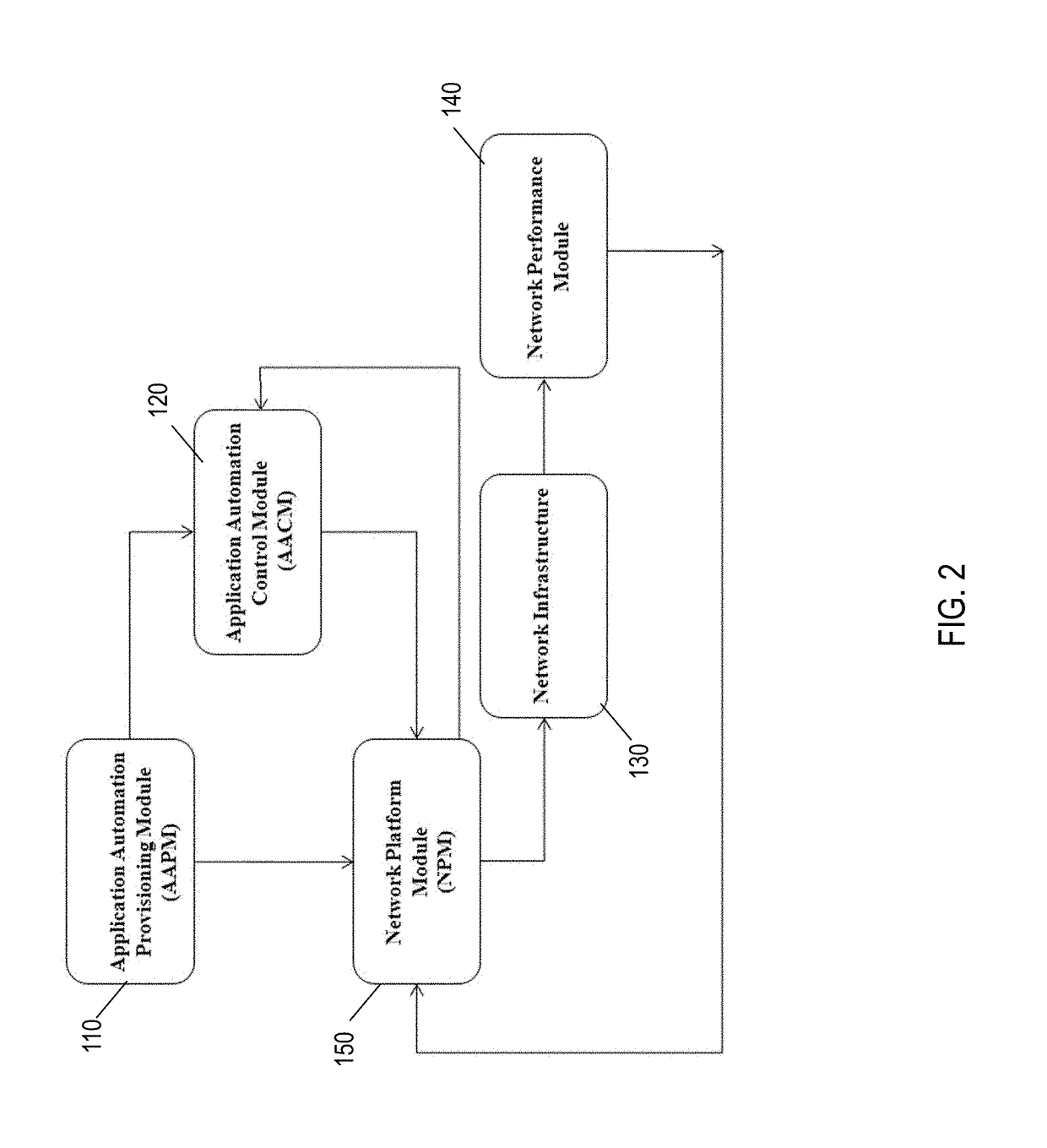

Systems, methods, and computer medium to provide adaptive priority scheduling of communications over a network and dynamic resources allocation among devices within the network

Active · US9722951B2 · Reduce in quantity · Function increase · Programme control · Data switching networks · Dynamic resource · Self adaptive

Systems, computer-implemented methods, and non-transitory computer-readable medium having computer program stored therein can provide adaptive priority scheduling of communications over a communication network and dynamic resources allocation among a plurality of devices positioned in the communication network. A system according to an embodiment can include an automation application provisioning module (AAPM) to configure and provision relationships among automation application (AA) devices and non-AA devices; an automation application control module (AACM) to control network resources allocation responsive to the AAPM; a network infrastructure interface module (NIIM) to interface with and measure performance of the devices; a network performance analysis module (NPAM) to analyze performance of the devices and identify optimal network topologies responsive to the NIIM; and a network resources allocation platform module (NPM) to control network resources allocation responsive to the AAPM, the AACM, and the NPAM thereby to enhance coexistence of the AA and non-AA devices within the network.

Owner:SAUDI ARABIAN OIL CO

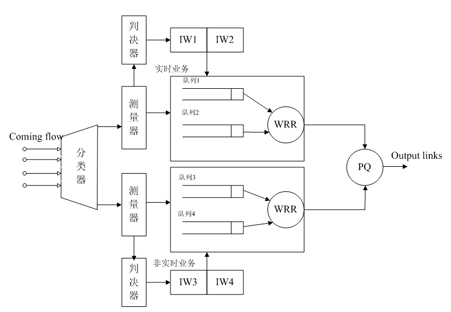

Active queue scheduling method based on QoS (Quality of Service) in differentiated service network

Inactive · CN101958844A · Guaranteed Fairness Features · Meet real-time characteristics · Data switching networks · Code point · Differentiated service

The invention discloses an active queue scheduling method based on QoS (Quality of Service) in a differentiated service network, comprising the following steps: a classifier classifies each arriving packet according to its DSCP (Differentiated Services Code Point) field, and all classified service classes are partitioned into a real-time class set and a non-real-time class set; the packets of the non-real-time class set and of the real-time class set are each scheduled by their own adaptive weighted round-robin scheduler; and the packets output by the two schedulers are scheduled by a strict PQ (Priority Queuing) scheduler. The invention not only provides dynamic bandwidth allocation according to the actual load of the nodes, but also effectively guarantees the delay requirements of real-time services.
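The two-level structure (one weighted round-robin scheduler per class set, with a strict priority queue on top) can be sketched as below. Several simplifications are assumptions of this sketch rather than details from the patent: the weights are fixed instead of adaptive, the class names `EF`, `AF41`, `AF11` and `BE` are sample DSCP classes, all packets are queued before scheduling starts, and the strict-priority check is made between rounds rather than per packet.

```python
from collections import deque


class WeightedRoundRobin:
    """Serve each class up to its weight per round (fixed weights here)."""

    def __init__(self, weights):
        self.weights = dict(weights)
        self.queues = {name: deque() for name in weights}

    def enqueue(self, cls, packet):
        self.queues[cls].append(packet)

    def has_packets(self):
        return any(self.queues.values())

    def next_round(self):
        """Return the packets sent in one round, in send order."""
        out = []
        for cls, weight in self.weights.items():
            queue = self.queues[cls]
            for _ in range(min(weight, len(queue))):
                out.append(queue.popleft())
        return out


def strict_priority(real_time, non_real_time):
    """Drain both schedulers; non-real-time runs only when real-time is empty."""
    sent = []
    while real_time.has_packets() or non_real_time.has_packets():
        source = real_time if real_time.has_packets() else non_real_time
        sent.extend(source.next_round())
    return sent


rt = WeightedRoundRobin({"EF": 2, "AF41": 1})
nrt = WeightedRoundRobin({"AF11": 2, "BE": 1})
for pkt in ("ef1", "ef2", "ef3"):
    rt.enqueue("EF", pkt)
rt.enqueue("AF41", "v1")
nrt.enqueue("AF11", "a1")
for pkt in ("b1", "b2"):
    nrt.enqueue("BE", pkt)

order = strict_priority(rt, nrt)
```

Within each set the weights share the bandwidth between classes, while the strict priority stage ensures that no non-real-time packet is sent while real-time packets are waiting.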

Owner:NANJING UNIV OF POSTS & TELECOMM

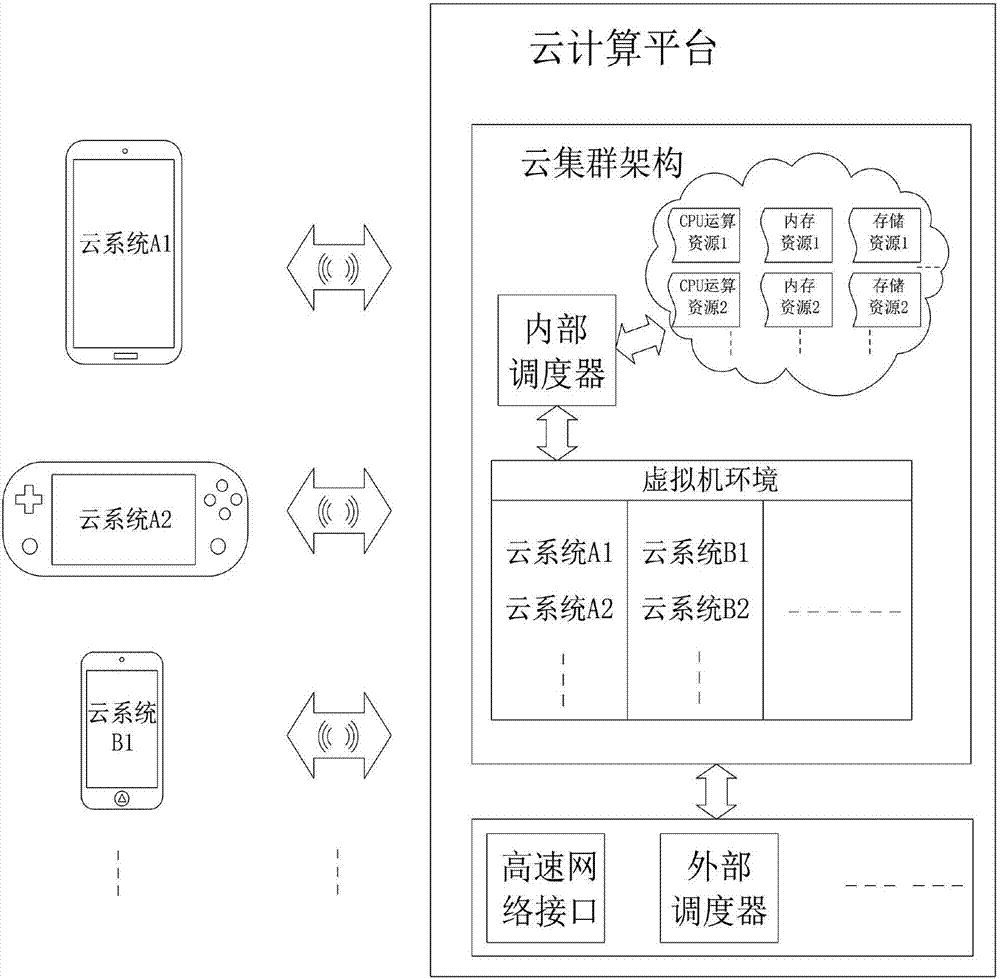

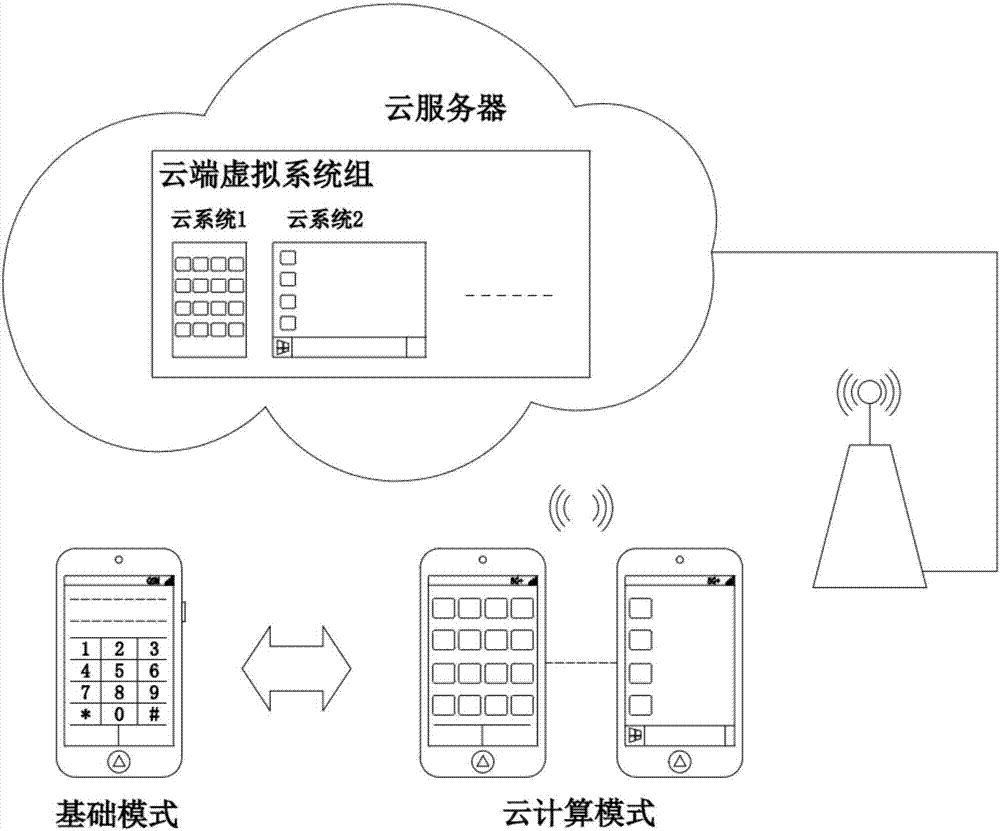

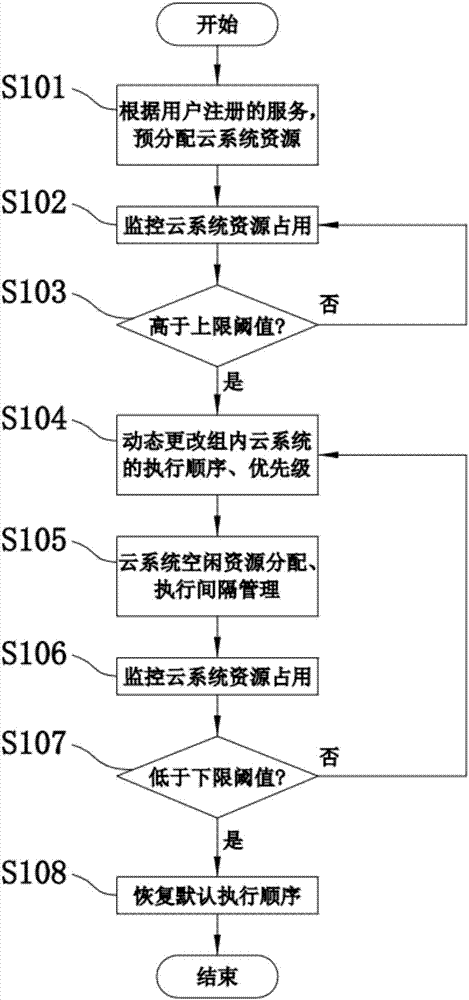

Scheduling method and device for mobile cloud computing platform

Active · CN107018175A · Optimize and rationally allocate · Optimize and rationalize resources · Transmission · Cloud systems · Low resource

The invention discloses a scheduling method for a mobile cloud computing platform. The method comprises the following steps: monitoring resource occupancy of cloud system programs while running a group of cloud systems; if current total resource occupancy is higher than a preset upper threshold, dynamically changing an execution sequence of the group of cloud system programs according to a resource request amount occupied by each of the plurality of cloud system programs, so that execution priorities of the cloud system programs with relatively low resource occupancy are higher than the execution priorities of the cloud system programs with relatively high resource occupancy; executing the cloud system programs with relatively high resource occupancy at idle periods of execution intervals of the plurality of cloud system programs with relatively low resource occupancy; and when the current total resource occupancy is lower than a preset lower threshold, restoring or maintaining an original execution sequence. The invention also discloses a scheduling device for the mobile cloud computing platform. The scheduling device comprises a monitoring module, an execution priority scheduling module, an idle work scheduling module and an execution priority restoring module.
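The threshold-driven reordering can be sketched as a single decision function. The function name `next_order`, the percentage units and the choice to leave the order unchanged between the two thresholds are assumptions of this sketch; the idle-period execution of high-occupancy programs described in the abstract is not modeled.

```python
def next_order(current_order, original_order, occupancy, upper, lower):
    """Decide the execution order for the next scheduling cycle.

    occupancy maps program name -> resource occupancy in percent.
    """
    total = sum(occupancy.values())
    if total > upper:
        # Overloaded: programs with lower occupancy run first.
        return sorted(current_order, key=lambda name: occupancy[name])
    if total < lower:
        # Load has dropped: restore the original sequence.
        return list(original_order)
    # Assumption: between the thresholds the current order is kept.
    return list(current_order)


original = ["render", "sync", "index"]

busy = {"render": 50, "sync": 10, "index": 30}   # total 90
reordered = next_order(original, original, busy, upper=80, lower=40)

calm = {"render": 10, "sync": 10, "index": 10}   # total 30
restored = next_order(reordered, original, calm, upper=80, lower=40)
```

Using two thresholds rather than one gives hysteresis, so the order does not flip back and forth when the total occupancy hovers around a single limit.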

Owner:杨立群
