101 results about how to "Avoid hunger" patented technology

Resource scheduling

Inactive | US7219347B1 | Avoid hunger | Raise priority | Program initiation/switching | Resource allocation | Public interface | Resource utilization

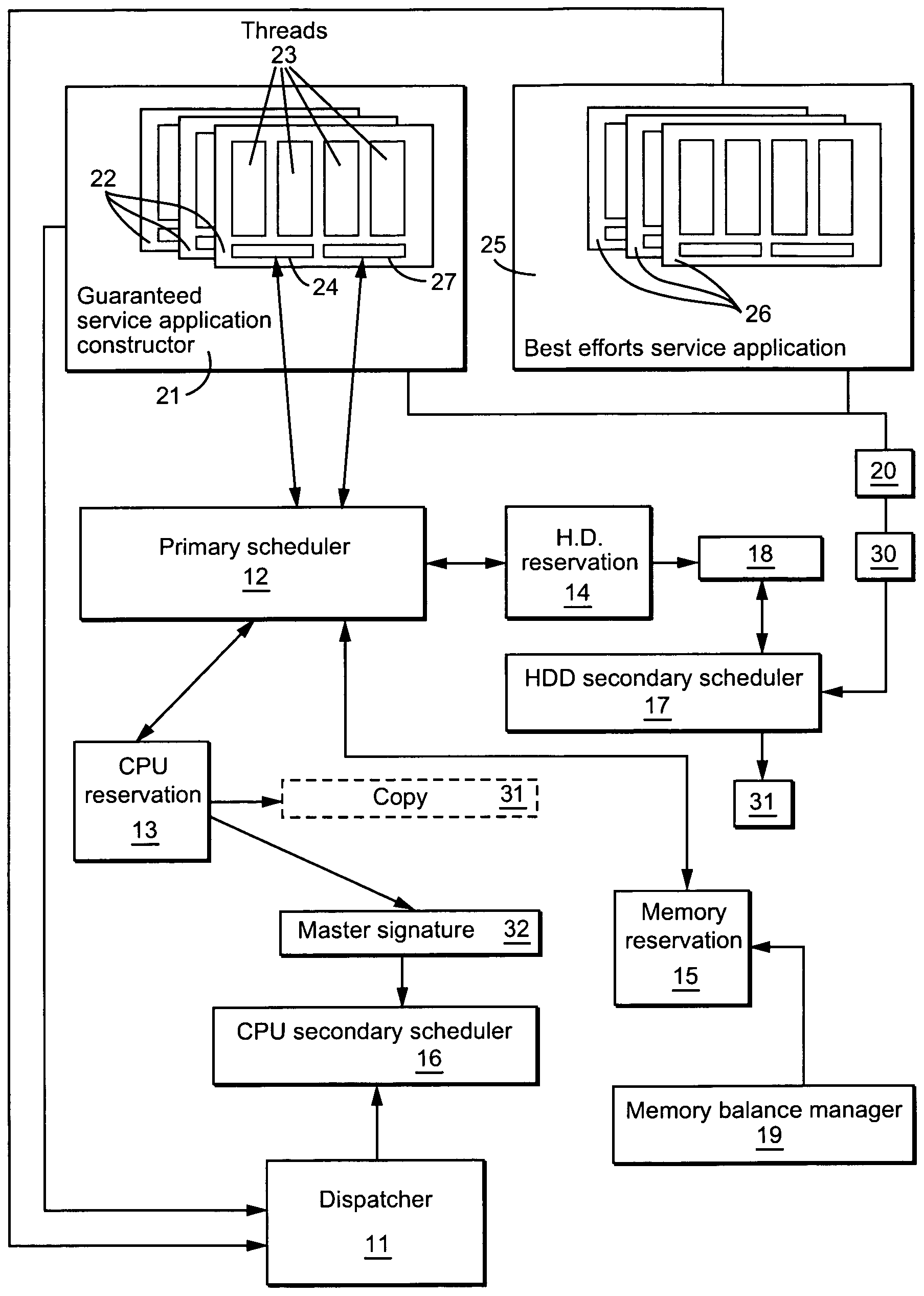

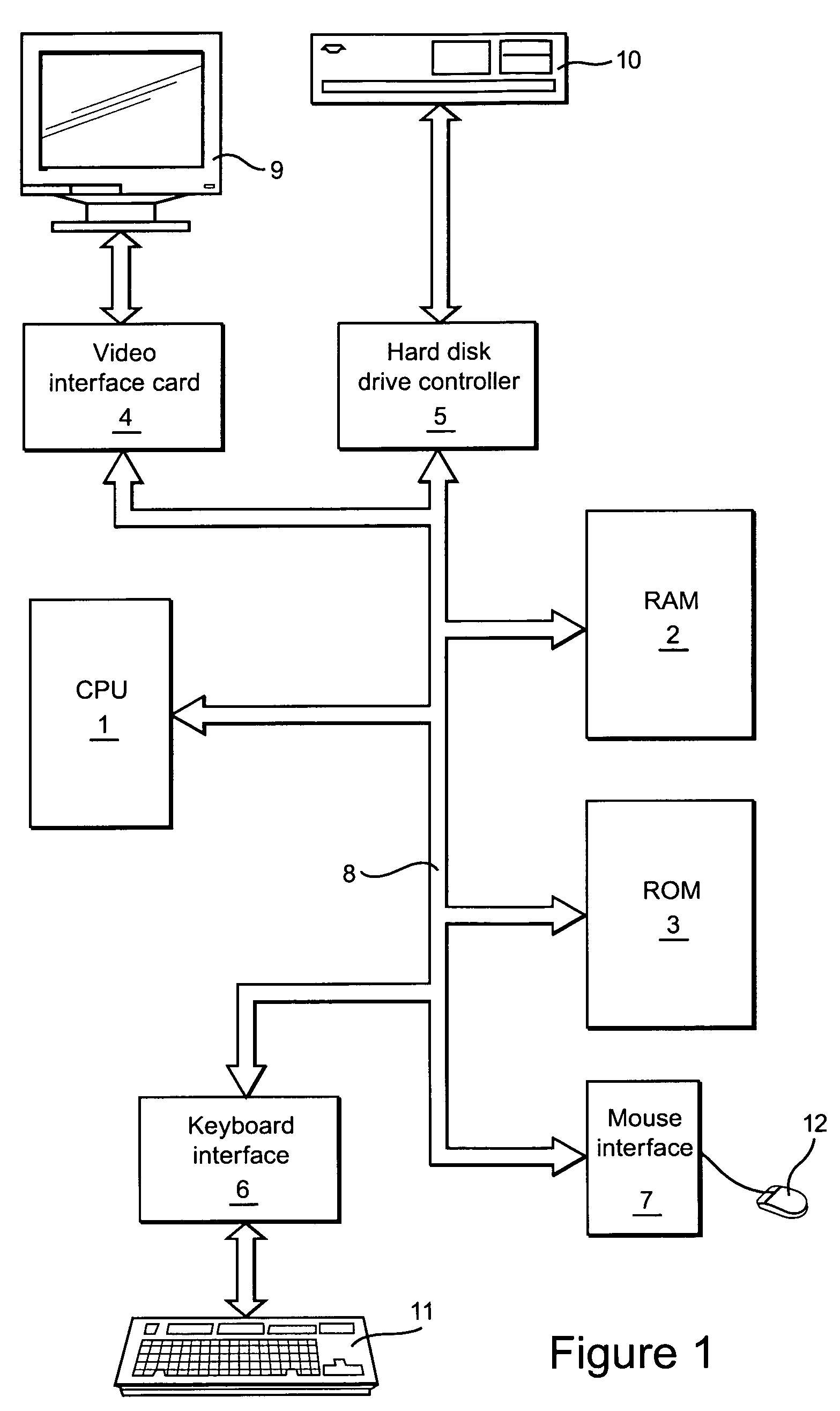

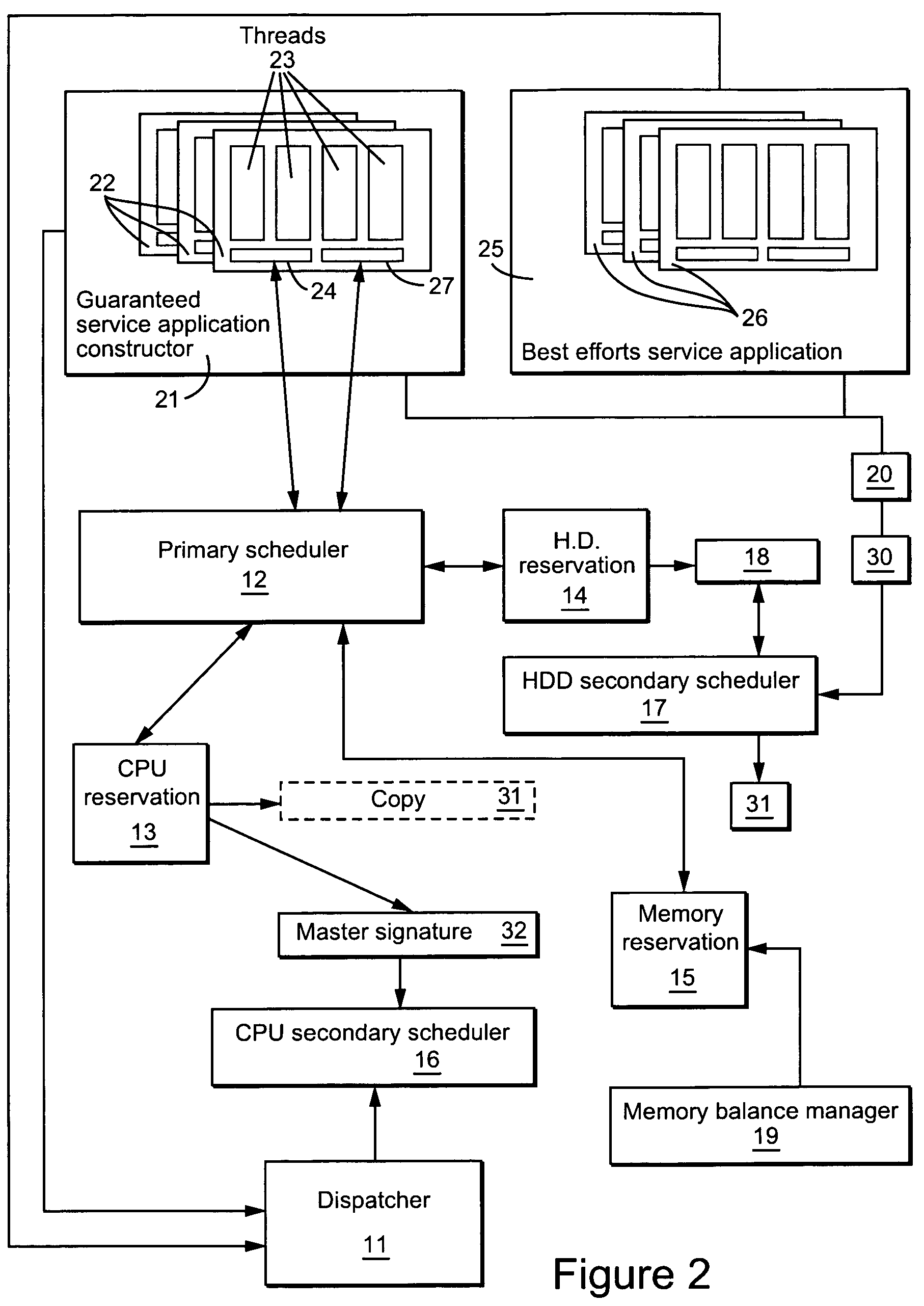

Resource utilization in a computer is achieved by running a first process to make a reservation for access to a resource in dependence on a resource requirement communication from an application and running a second process to grant requests for access to that resource from that application in dependence on the reservation. A further process provides a common interface between the first process for each resource and the application. The further process converts high-level abstract resource requirement definitions into formats applicable to the first process for the resource in question. The processes are preferably implemented as methods of software objects.

Owner:BRITISH TELECOMM PLC

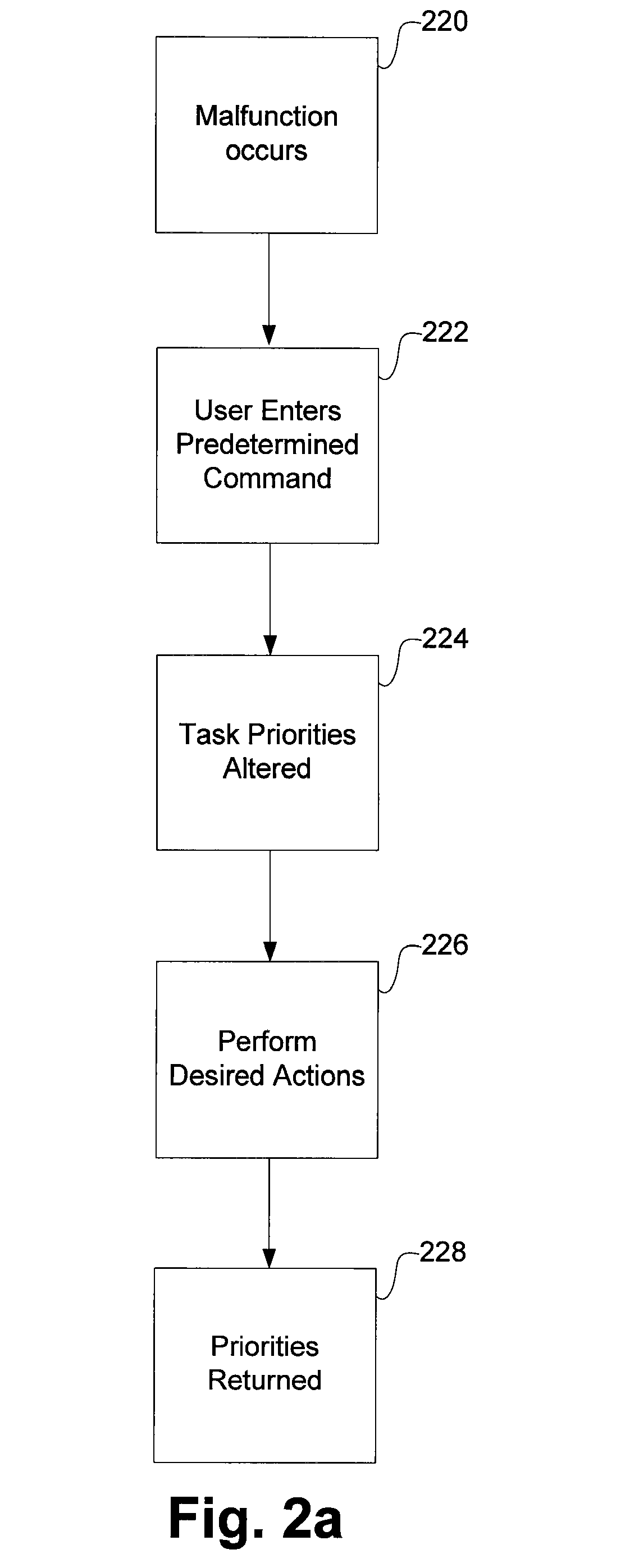

User control of task priority

Active | US7562362B1 | Sufficient flexibility | Good solve | Multiprogramming arrangements | Non-redundant fault processing | Computer science | Lower priority

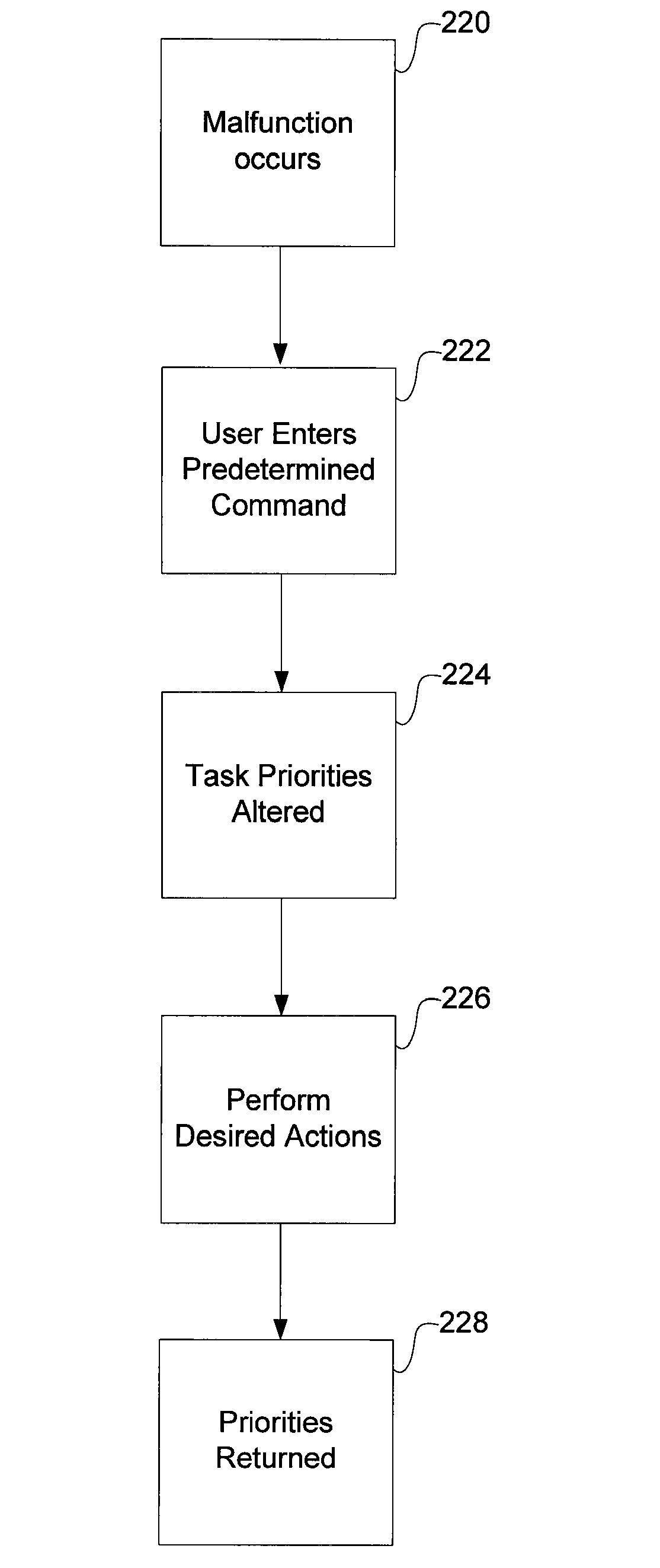

In response to user action, lower priority tasks are scheduled as high priority tasks. This allows lower priority tasks to function even if a higher priority task has malfunctioned and starved the lower priority tasks of instructions. This advantageously provides the user with increased abilities to solve or work around malfunctioning tasks.

Owner:APPLE INC

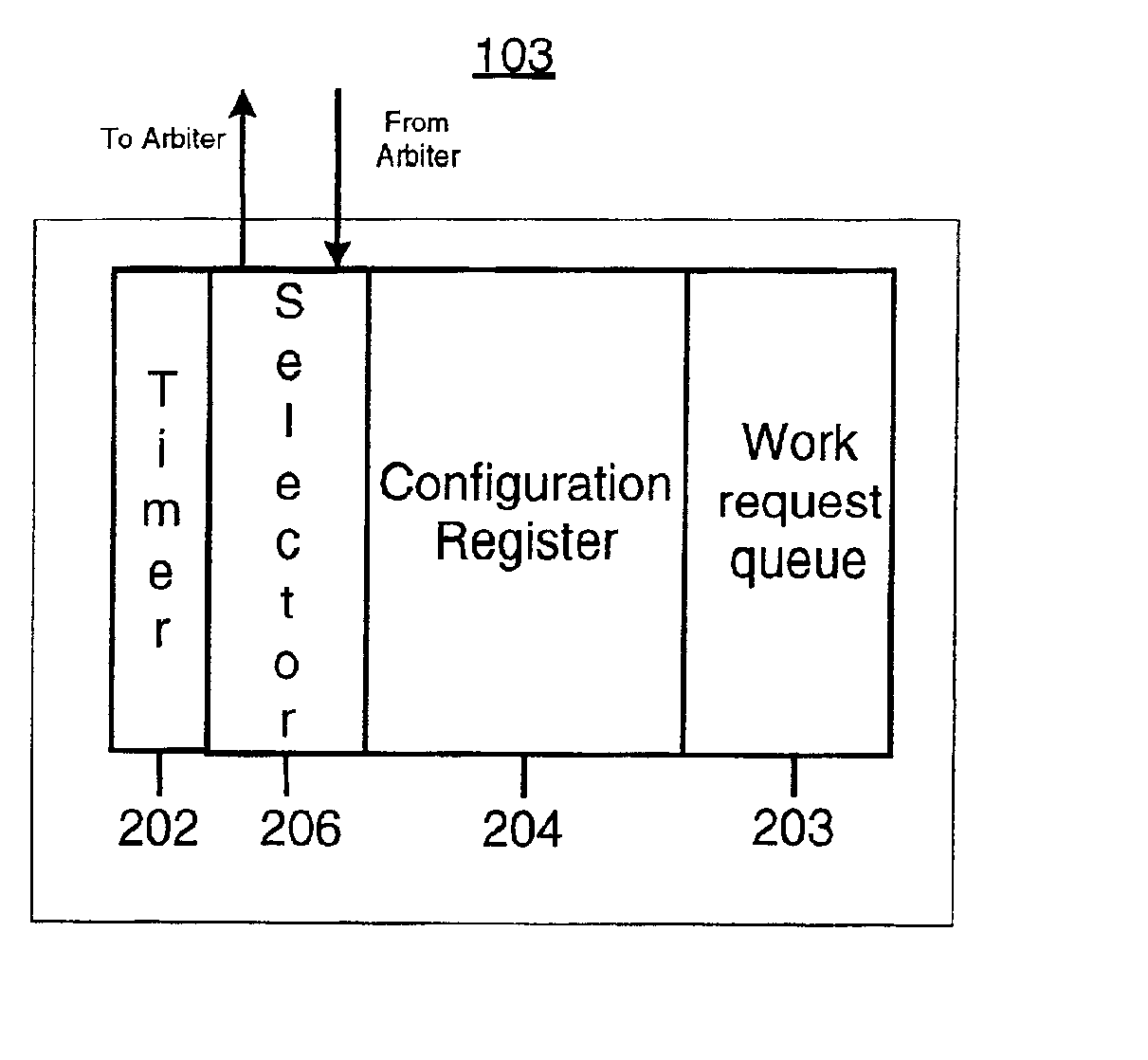

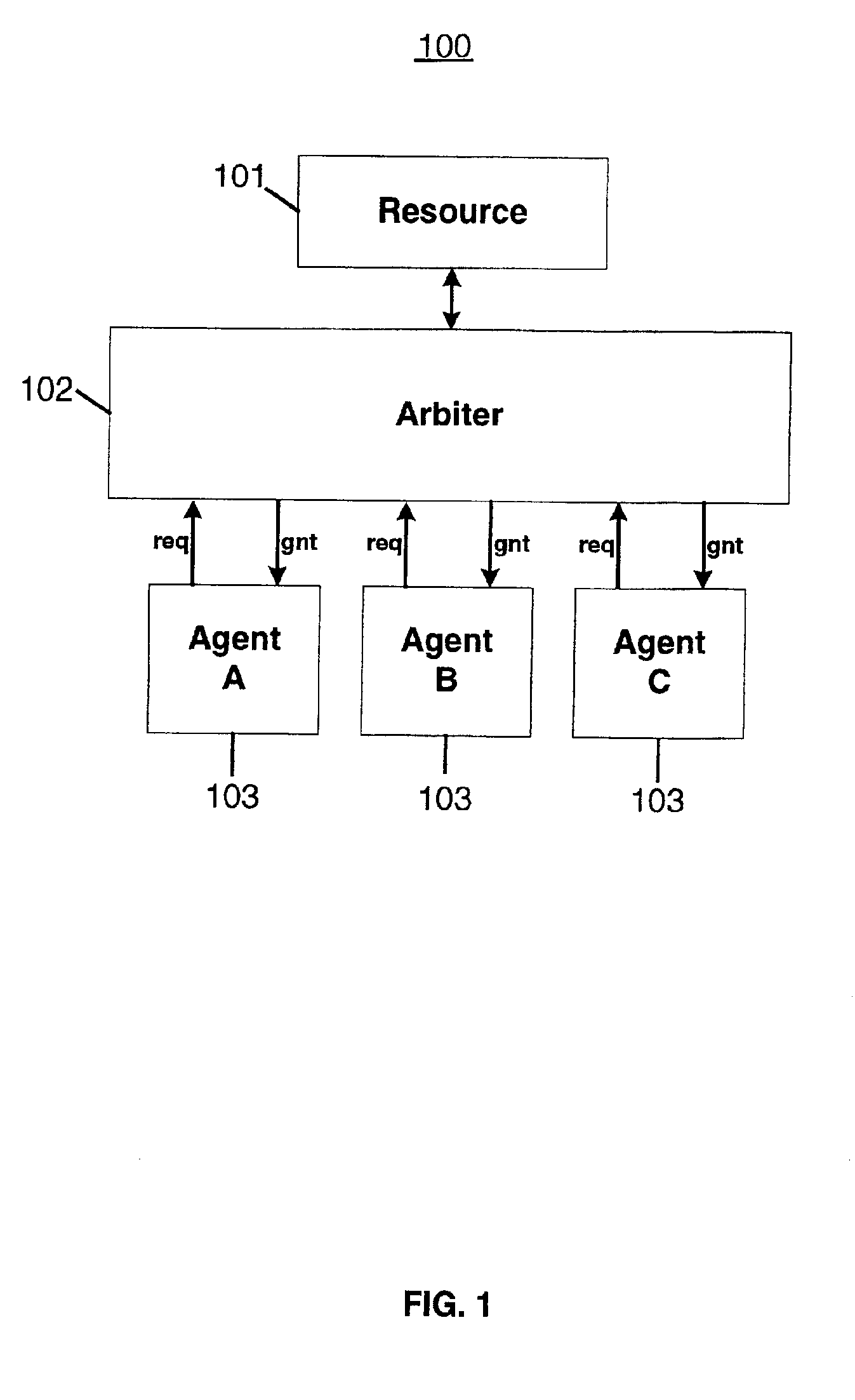

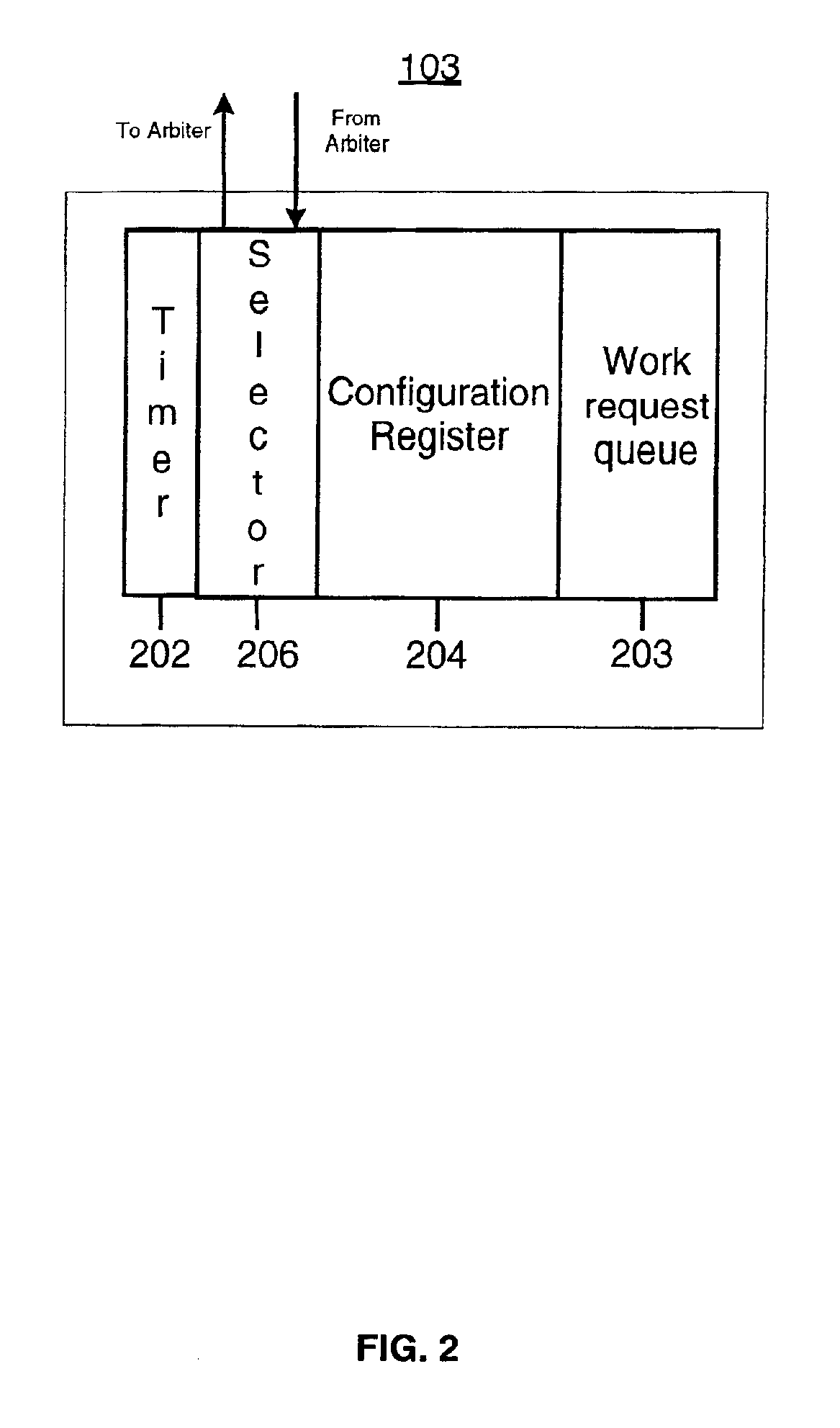

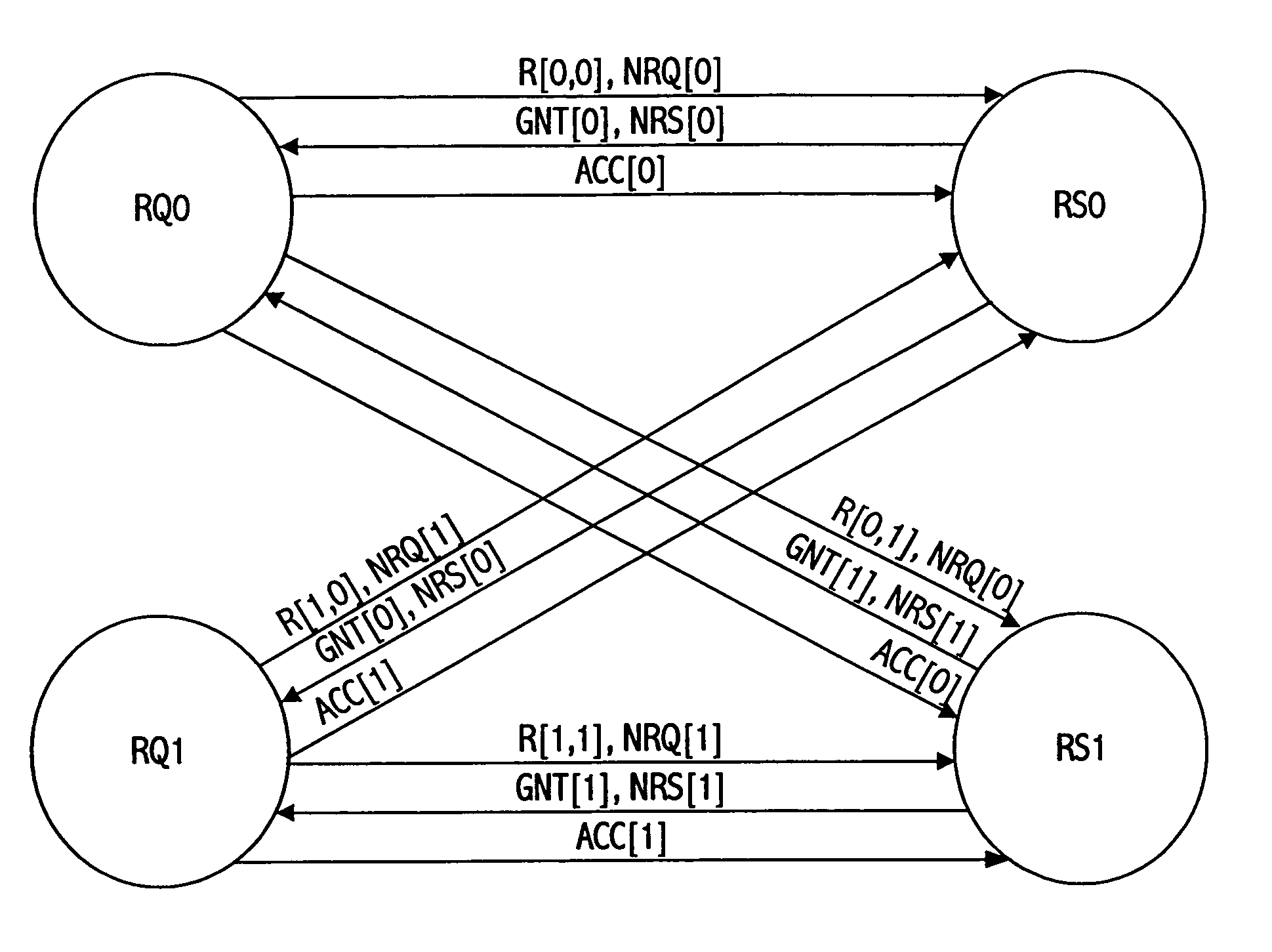

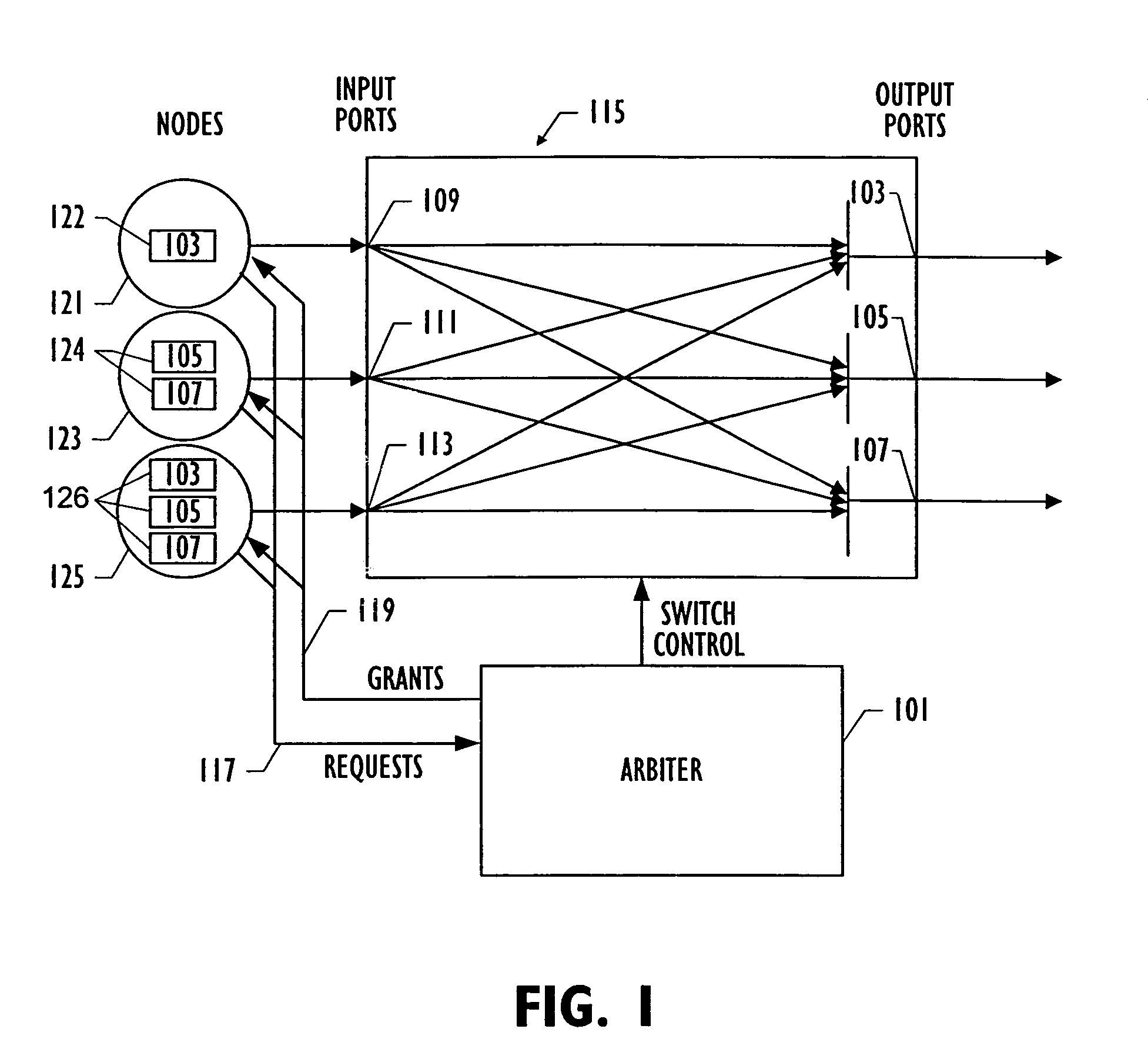

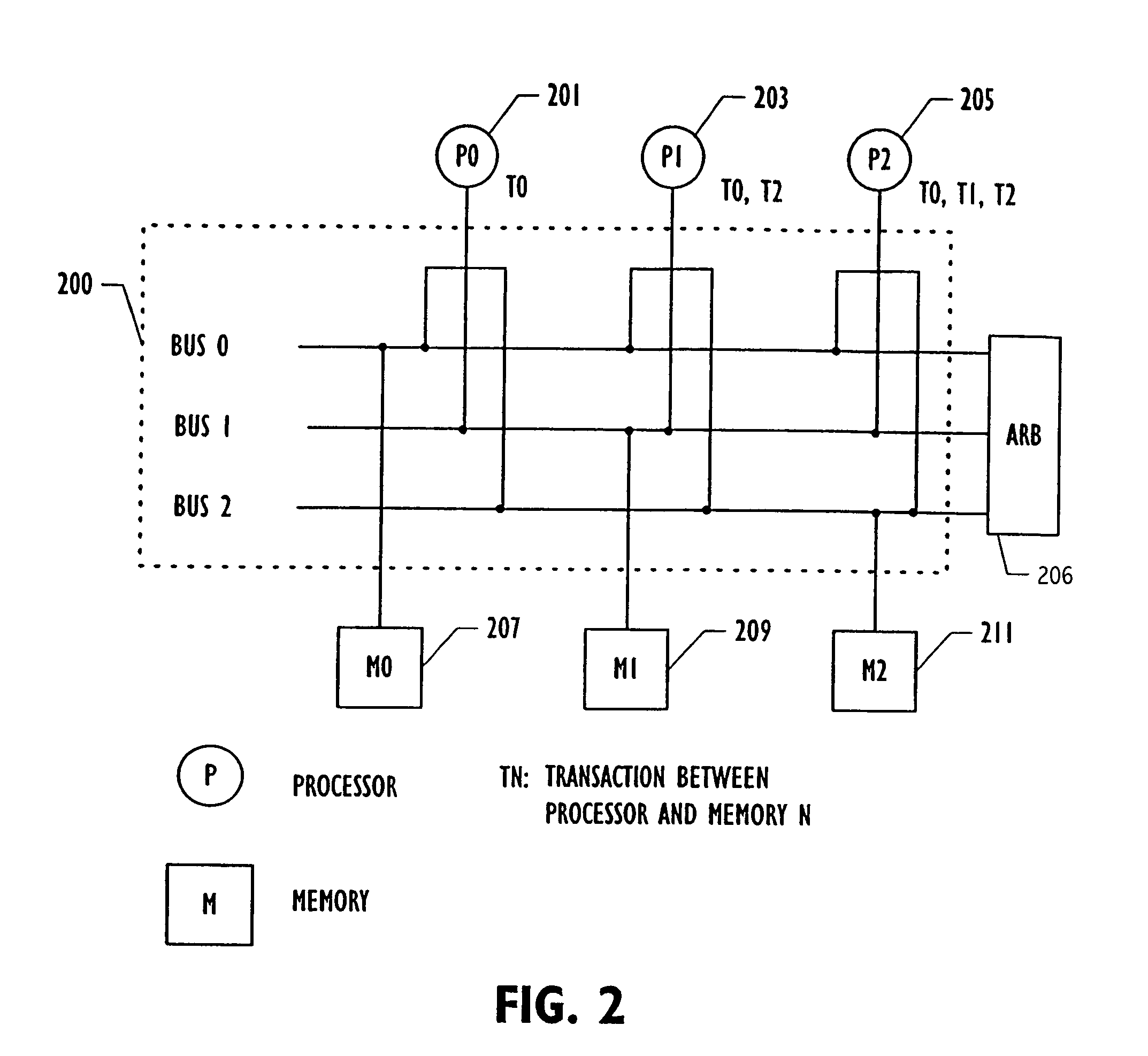

Dynamic request priority arbitration

Owner:ORACLE INT CORP

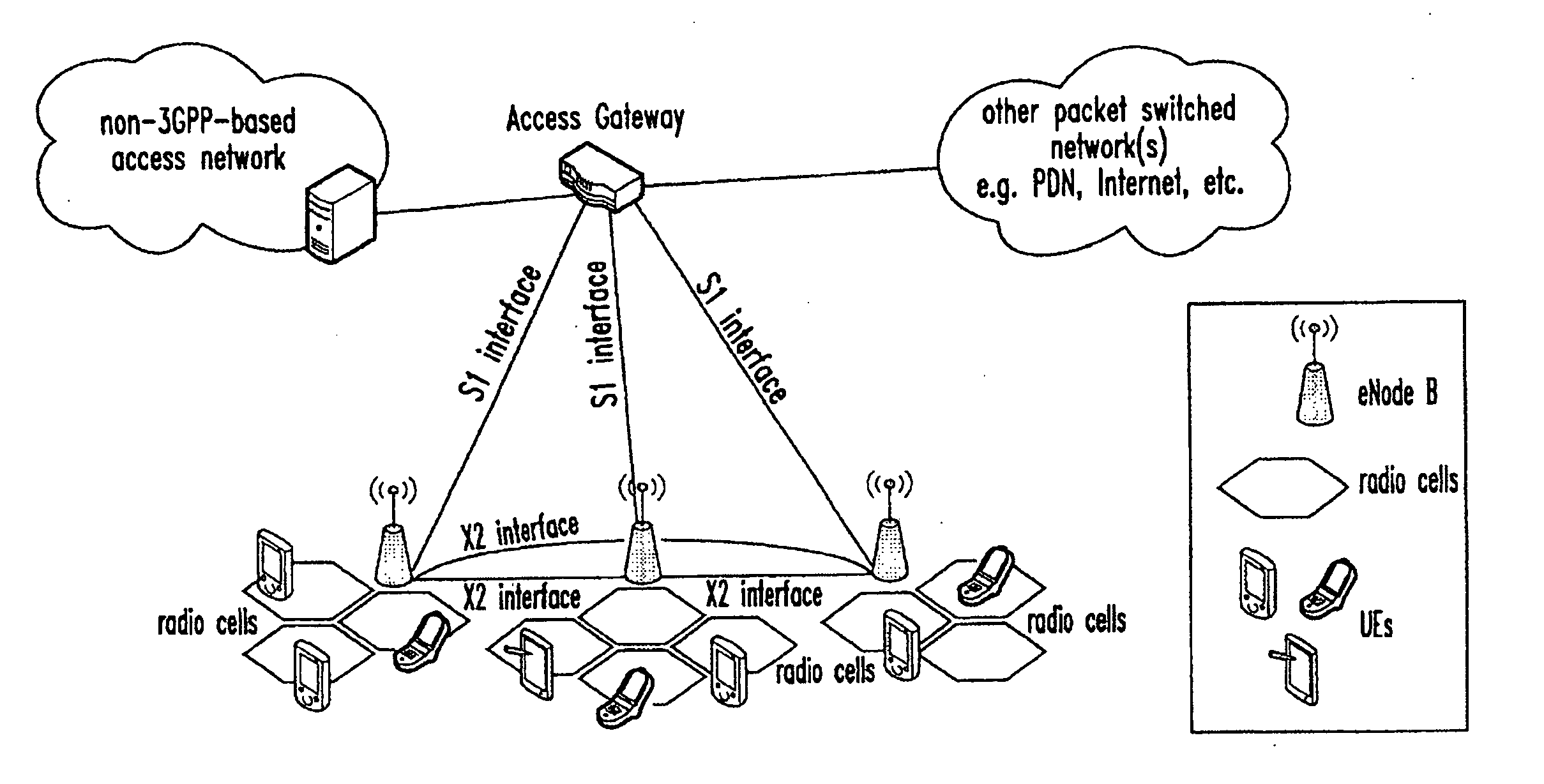

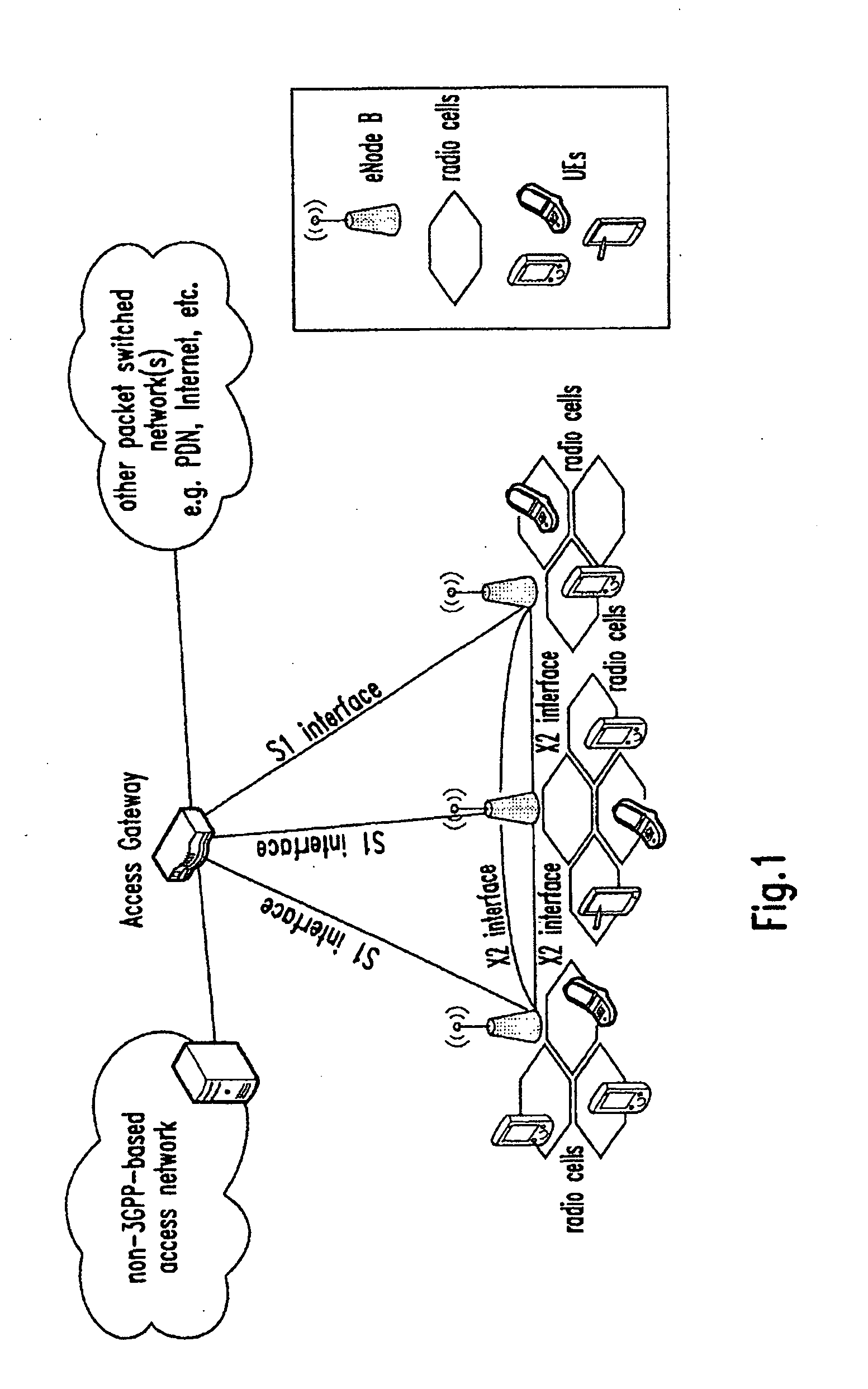

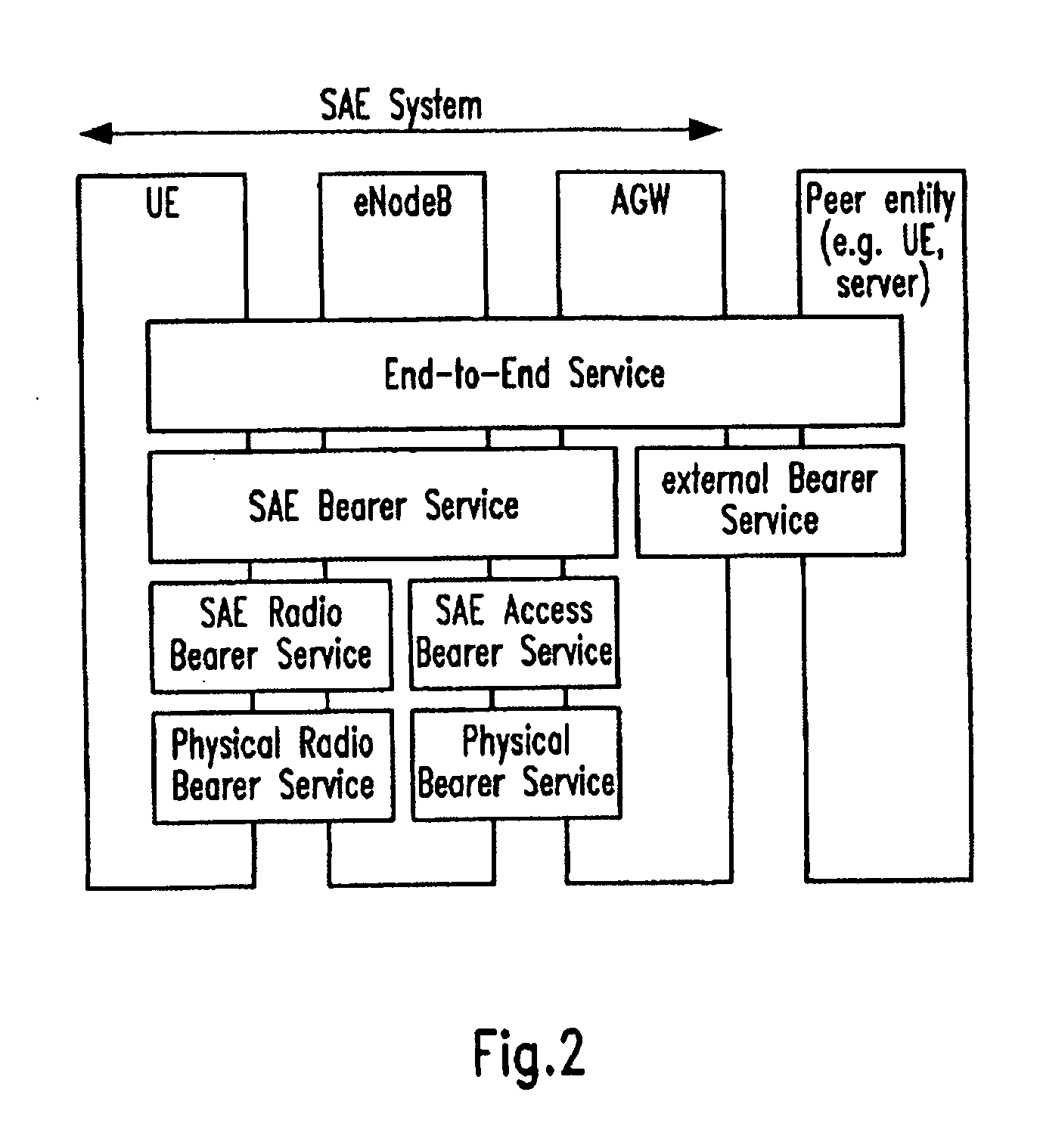

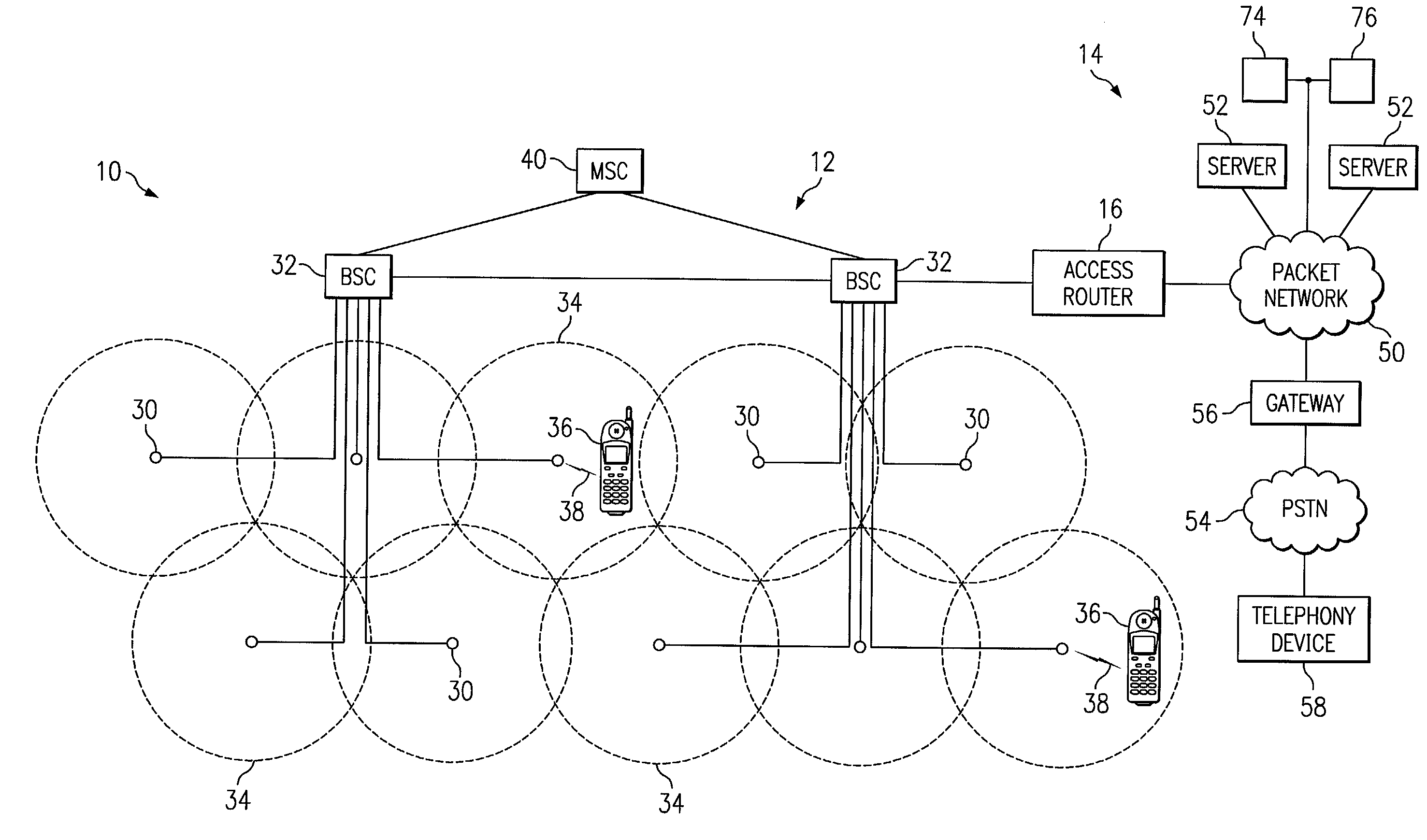

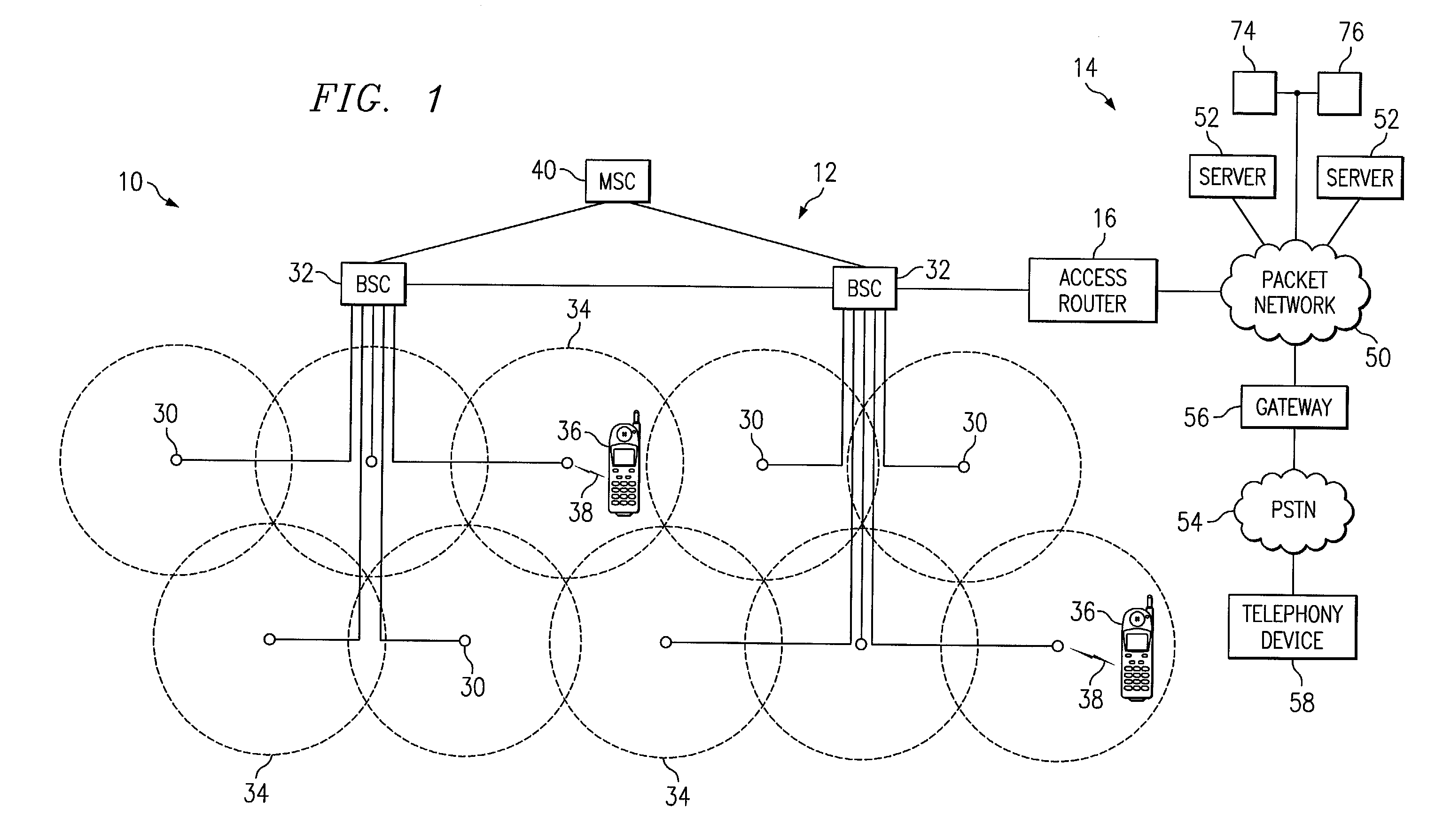

Method for supporting quality of service over a connection lifetime

Inactive | US20100240385A1 | Avoid hunger | Raise priority | Wireless communication | Quality of service | Wireless resource allocation

The invention relates to a method for determining Quality of Service information of a radio bearer configured in a mobile node and distributing scheduled radio resources to the radio bearer. Further, the invention also provides a base station and mobile node performing these methods, respectively. The invention suggests a scheme that allows for supporting an efficient Quality of Service over the lifetime of a connection, even upon handover of the mobile node between two radio cells. This is achieved by providing Quality of Service measurements upon handover, such as a provided bit rate of radio bearers configured for the mobile node, to the base station controlling the target radio cell, either by the source base station in the source radio cell or the mobile node.

Owner:PANASONIC CORP

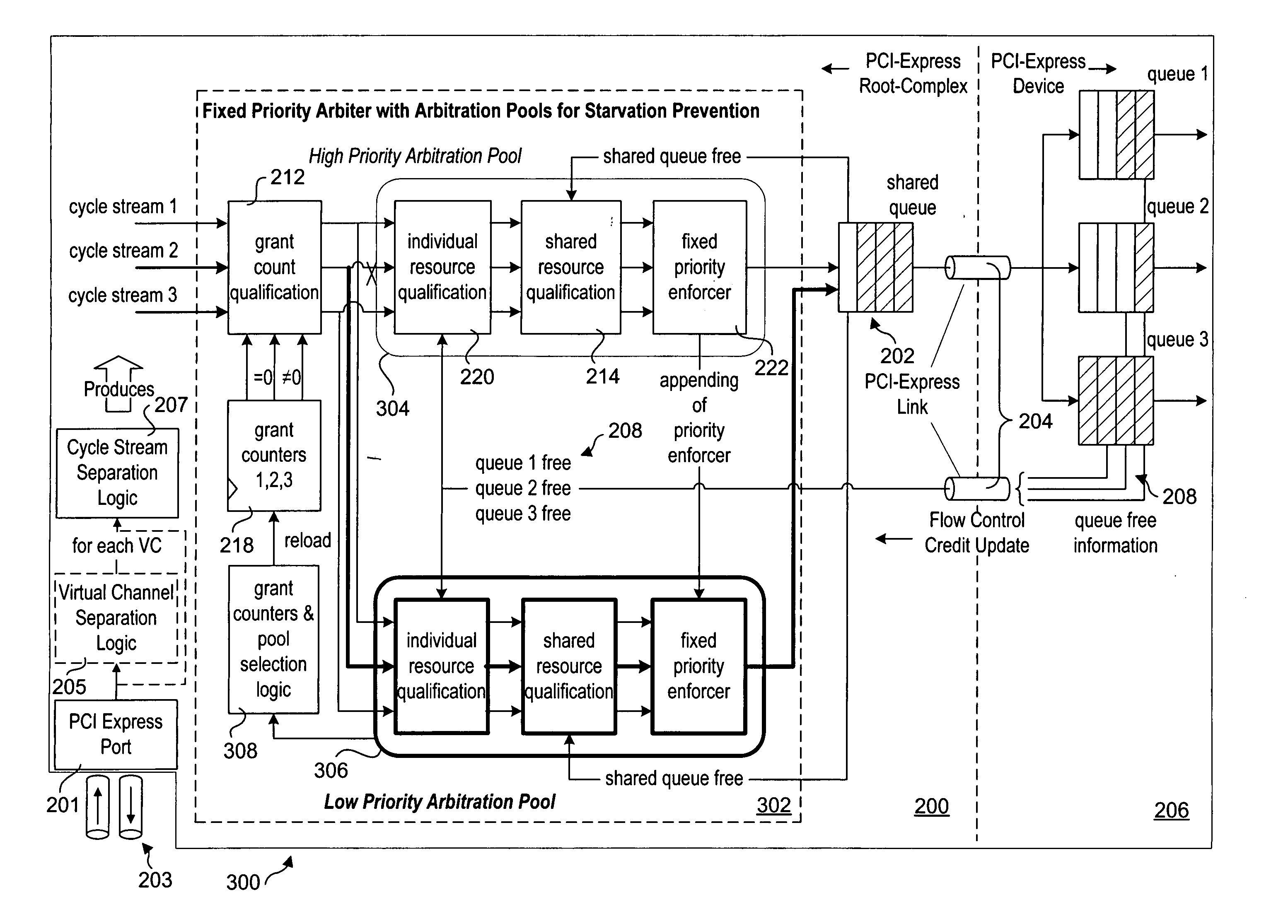

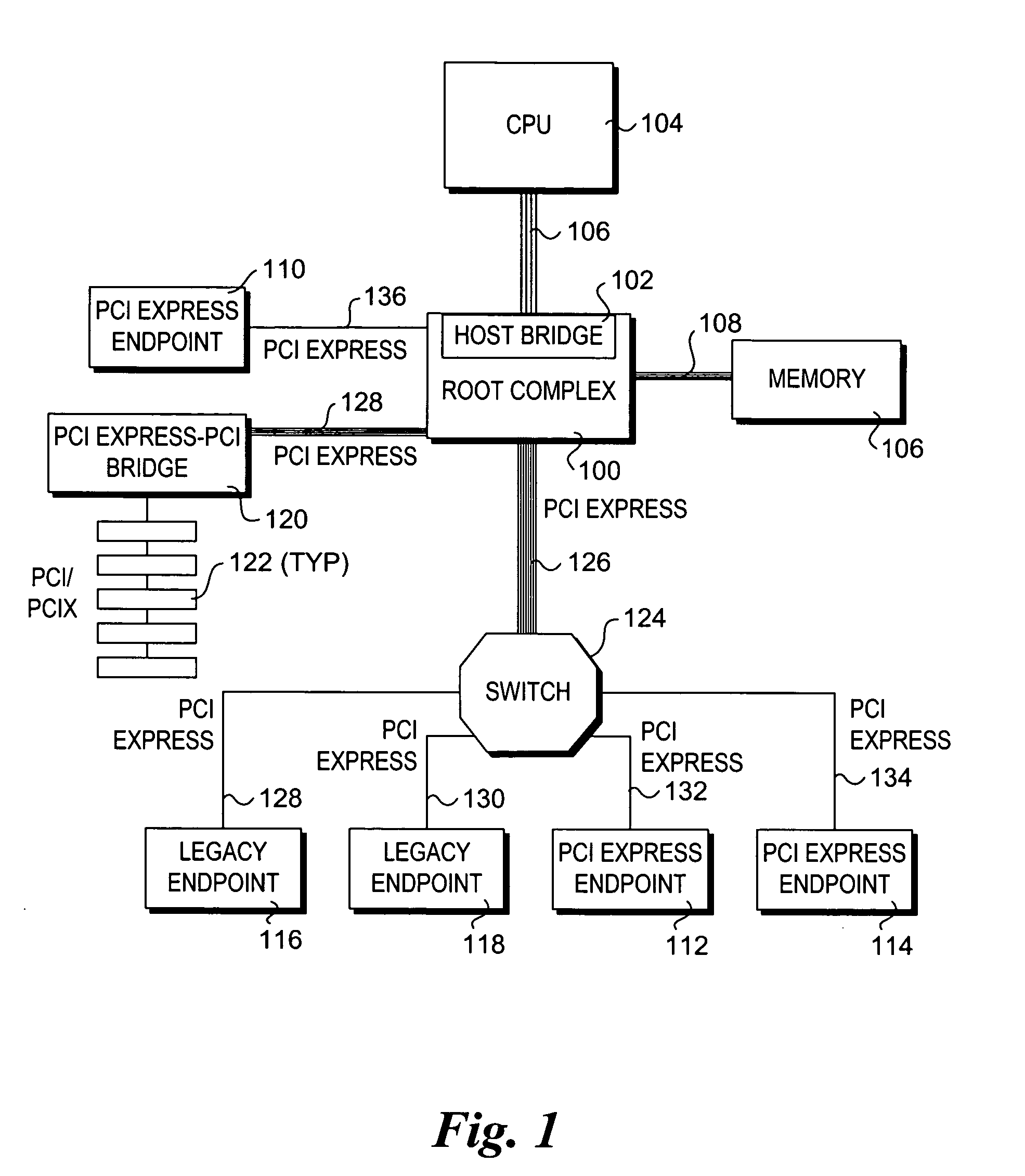

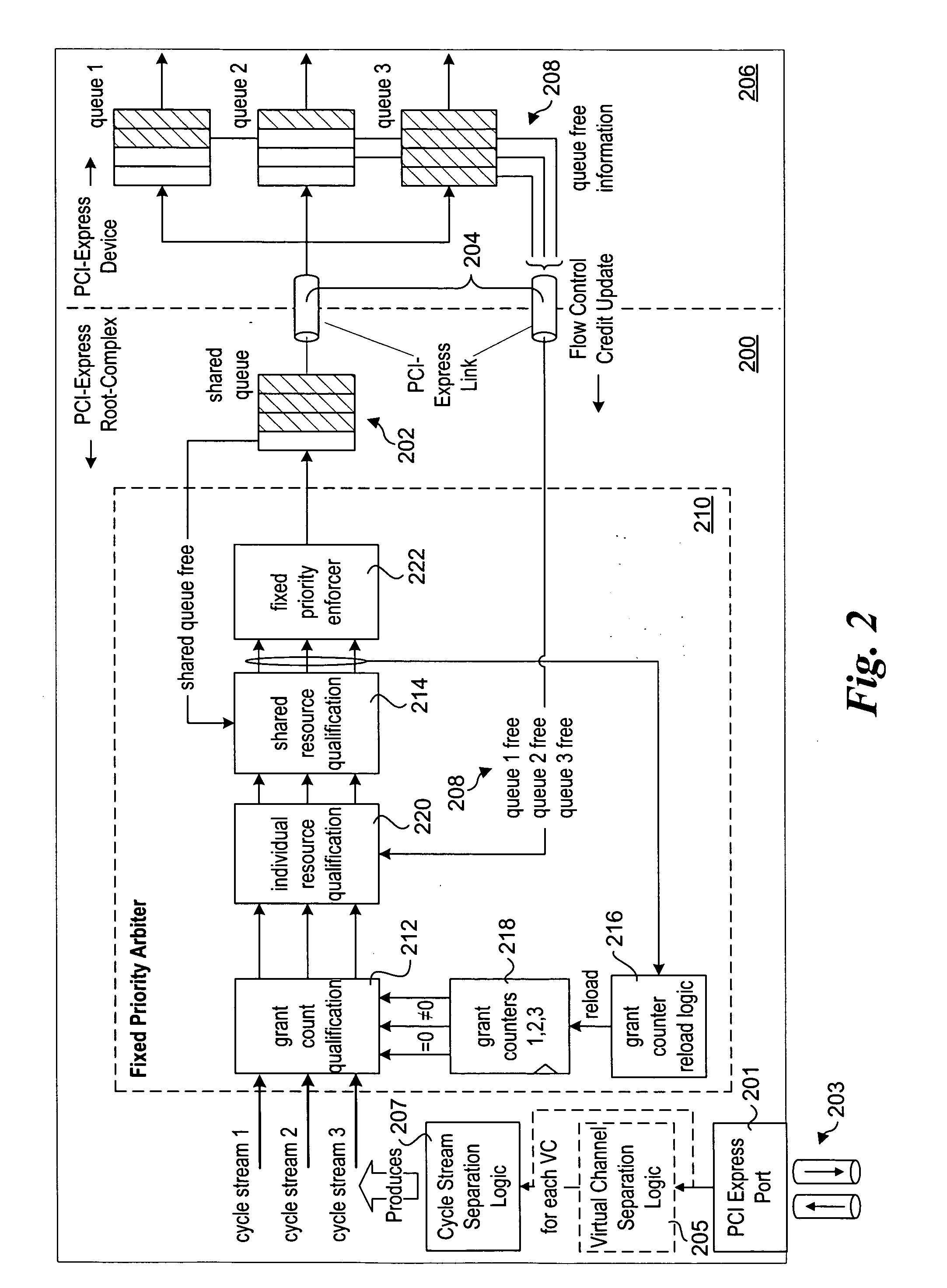

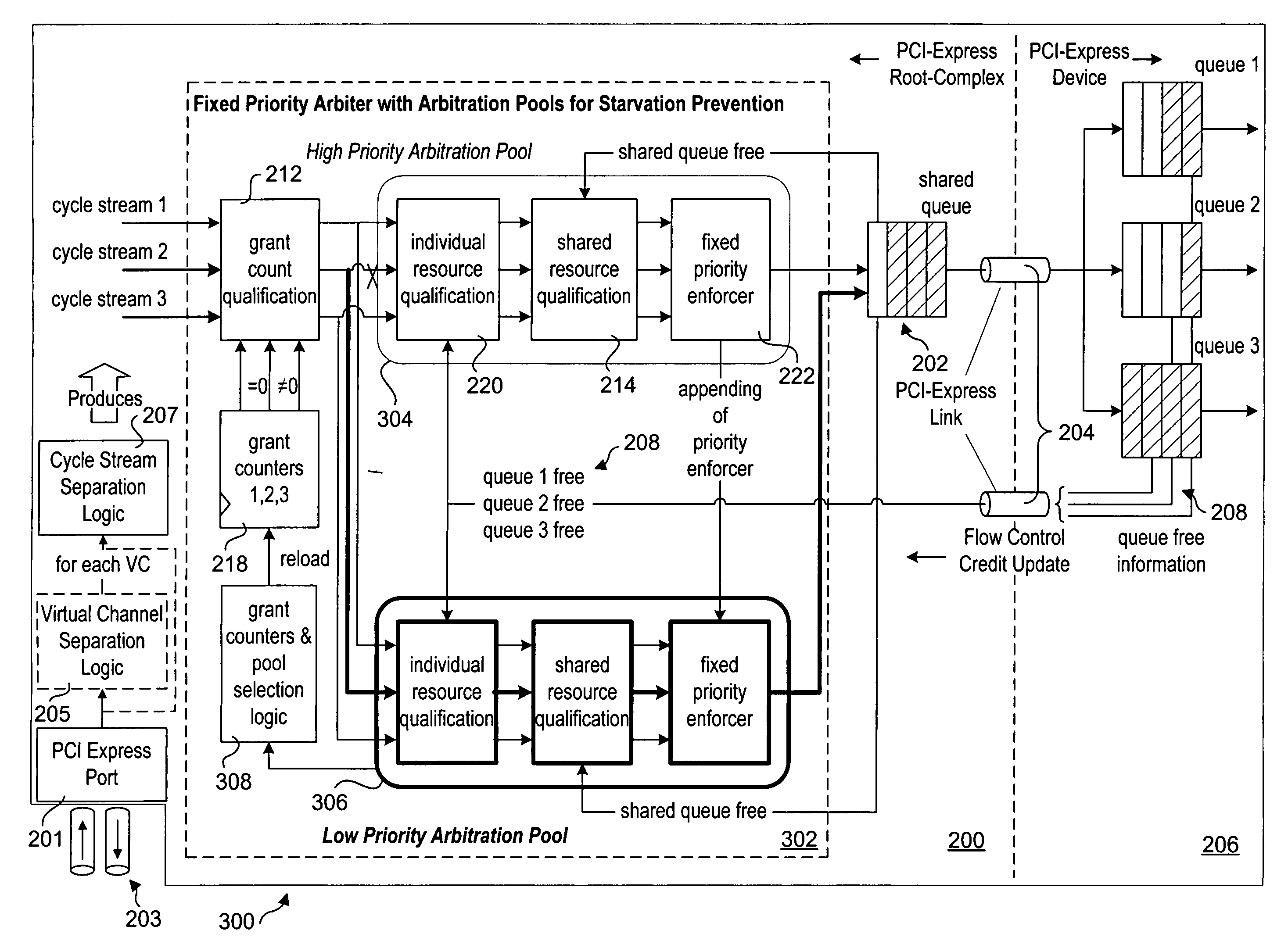

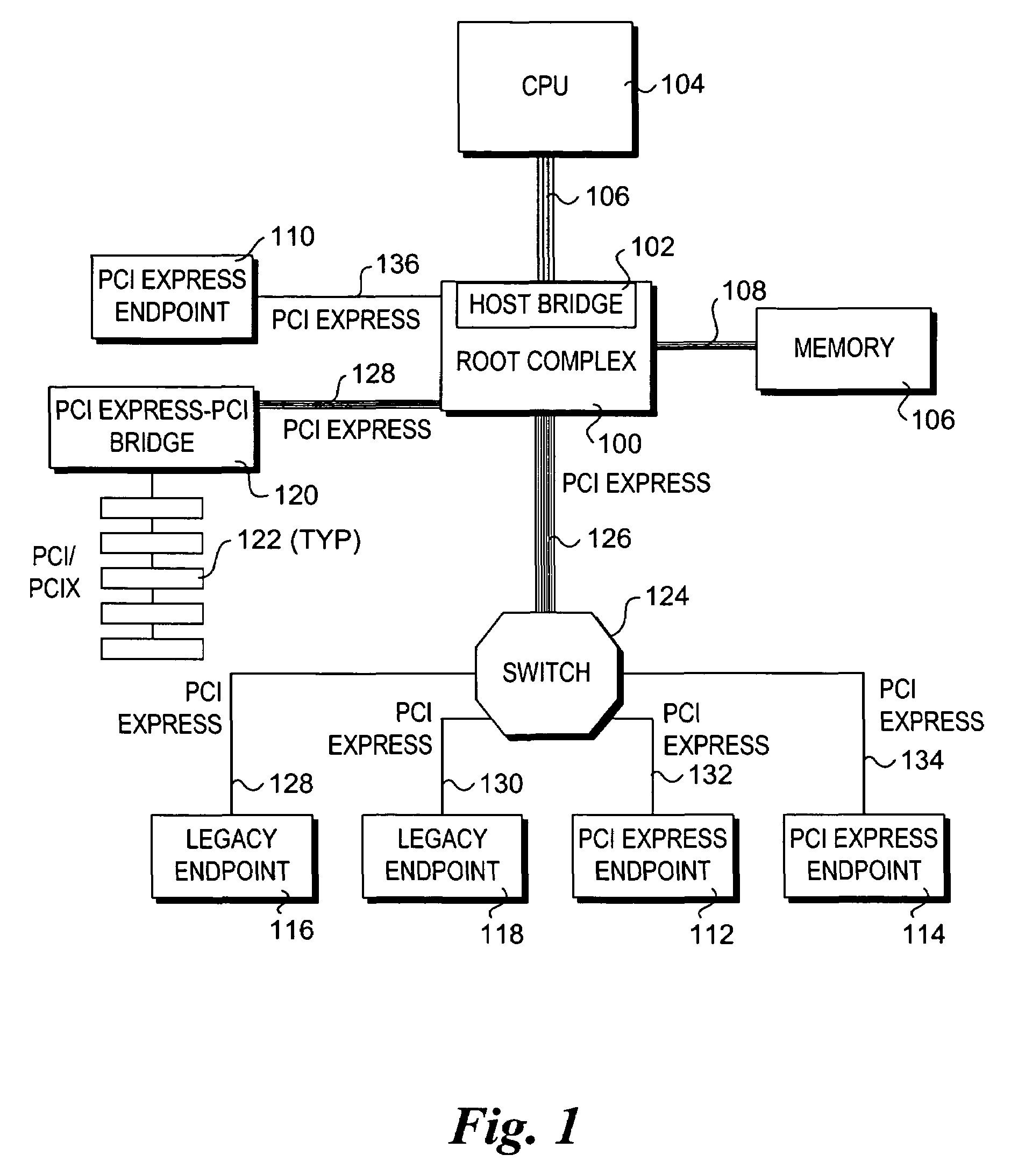

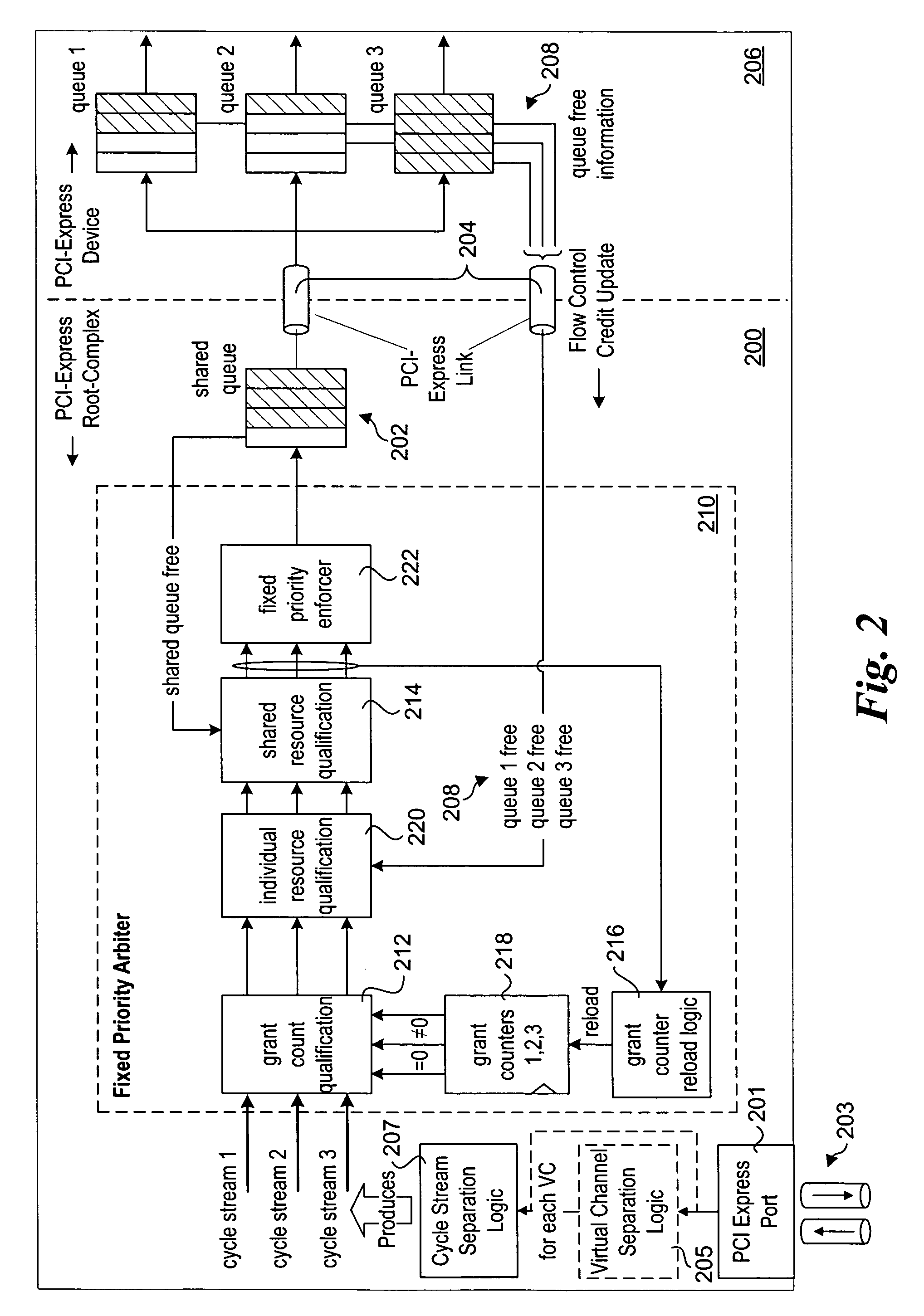

Starvation prevention scheme for a fixed priority PCI-Express arbiter with grant counters using arbitration pools

Method and apparatus for arbitrating prioritized cycle streams in a manner that prevents starvation. High priority and low priority arbitration pools are employed for arbitrating multiple input cycle streams. Each cycle stream contains a stream of requests of a given type and associated priority. Under normal circumstances in which resource buffer availability for a destination device is not an issue, higher priority streams are provided grants over lower priority streams, with all streams receiving grants. However, when a resource buffer is not available for a lower priority stream, arbitration of high priority streams with available buffer resources is redirected to the low priority arbitration pool, resulting in generation of grant counts for both the higher and lower priority streams. When the resource buffer for the low priority stream becomes available and a corresponding request is arbitrated in the high priority arbitration pool, a grant for the request can be immediately made since grant counts for the stream already exist.

Owner:INTEL CORP

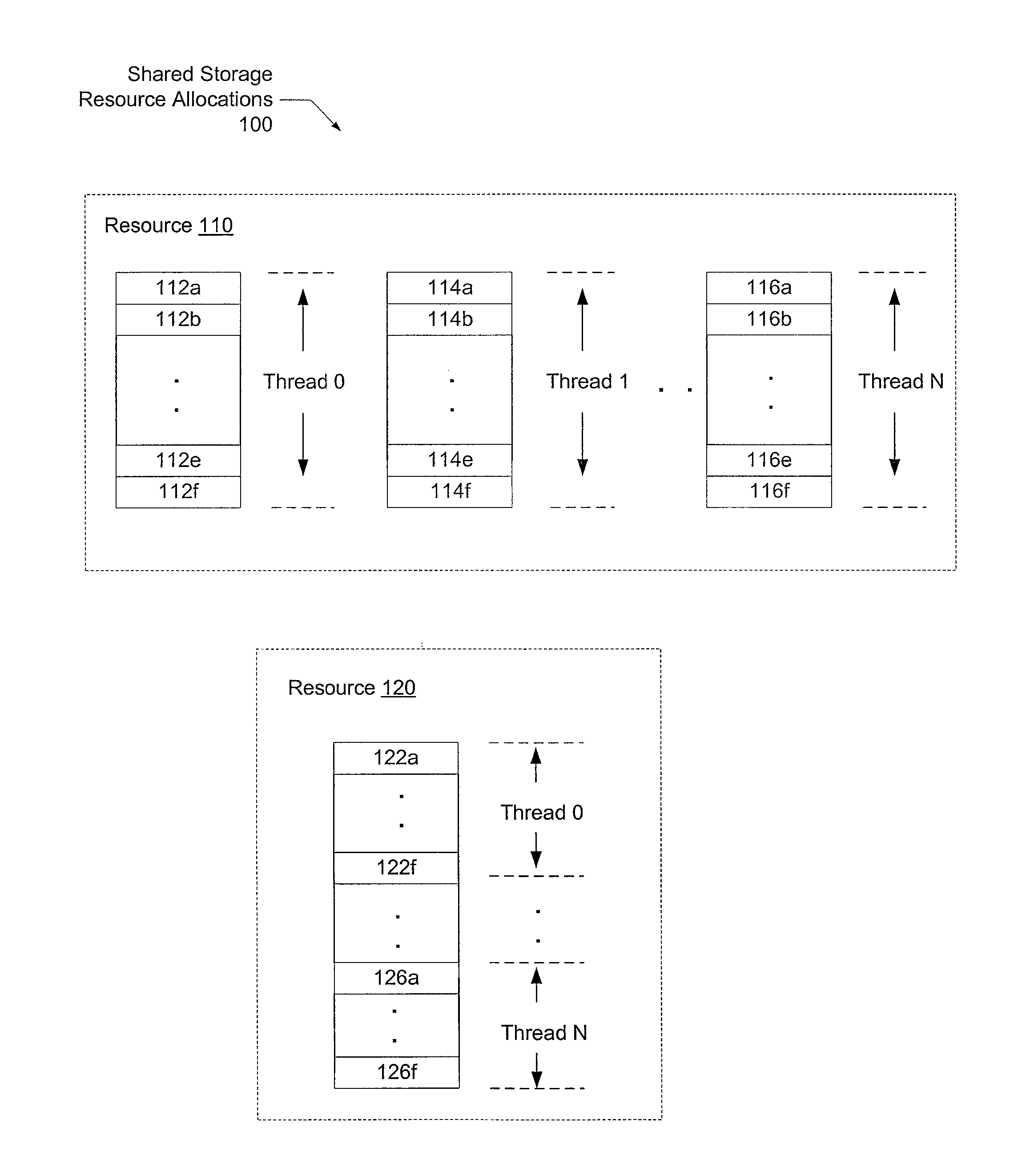

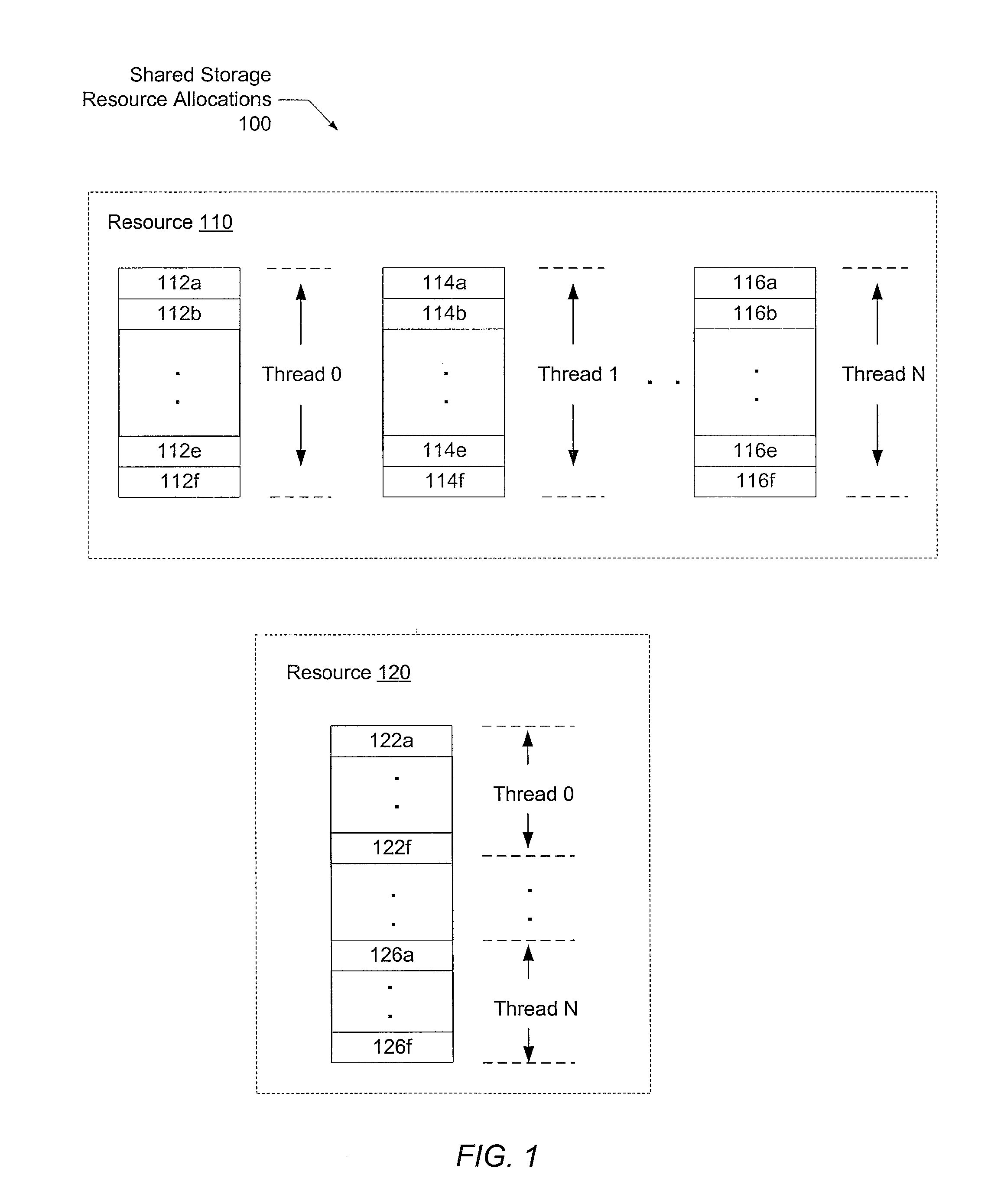

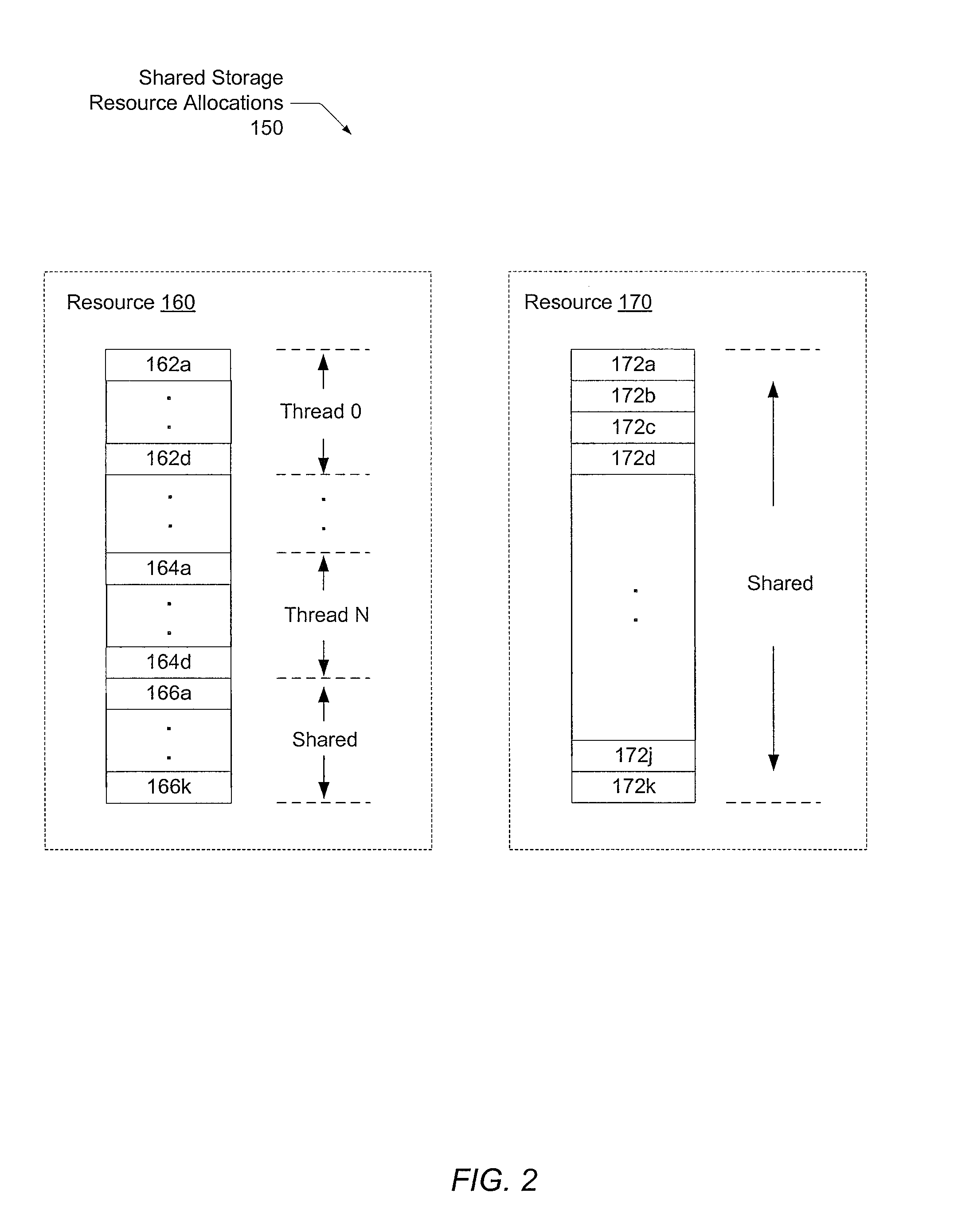

Dynamic allocation of resources in a threaded, heterogeneous processor

Active | US20100299499A1 | Efficient use of | Increase profit | Digital computer details | Concurrent instruction execution | Parallel computing | Biological activation

Systems and methods for efficient dynamic utilization of shared resources in a processor. A processor comprises a front end pipeline, an execution pipeline, and a commit pipeline, wherein each pipeline comprises a shared resource with entries configured to be allocated for use in each clock cycle by each of a plurality of threads supported by the processor. To avoid starvation of any active thread, the processor further comprises circuitry configured to ensure each active thread is able to allocate at least a predetermined quota of entries of each shared resource. Each pipe stage of a total pipeline for the processor may include at least one dynamically allocated shared resource configured not to starve any active thread. Dynamic allocation of shared resources between a plurality of threads may yield higher performance over static allocation. In addition, dynamic allocation may require relatively little overhead for activation / deactivation of threads.

Owner:ORACLE INT CORP
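
A minimal sketch of the quota guarantee described in the abstract above, written in Python as a software model rather than as hardware. The class `SharedResource`, its `quota` parameter, and the method names are hypothetical and not taken from the patent. The sketch assumes one fixed quota for every thread and keeps enough free entries in reserve that any thread below its quota can always allocate.

```python
class SharedResource:
    """Toy model of a shared structure with a guaranteed per-thread quota.

    Hypothetical illustration: the names and the single fixed quota are
    assumptions, not details from the patent.
    """

    def __init__(self, total_entries, quota, threads):
        if quota * len(threads) > total_entries:
            raise ValueError("quotas exceed capacity")
        self.total_entries = total_entries
        self.quota = quota
        self.used = {t: 0 for t in threads}

    def _reserved_for_others(self, requester):
        # Entries that must stay free so other threads can reach their quota.
        return sum(max(0, self.quota - n)
                   for t, n in self.used.items() if t != requester)

    def allocate(self, thread):
        """Try to take one entry for `thread`; return True on success."""
        free = self.total_entries - sum(self.used.values())
        if free == 0:
            return False
        if self.used[thread] < self.quota:
            ok = True  # within its own quota: always allowed
        else:
            # Beyond quota: only use entries nobody else is entitled to.
            ok = free > self._reserved_for_others(thread)
        if ok:
            self.used[thread] += 1
        return ok

    def release(self, thread):
        """Return one entry held by `thread` to the pool."""
        if self.used[thread] > 0:
            self.used[thread] -= 1
```

With four entries and a quota of one, a busy thread can take three entries dynamically, but the fourth stays reserved so the other thread is never starved.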

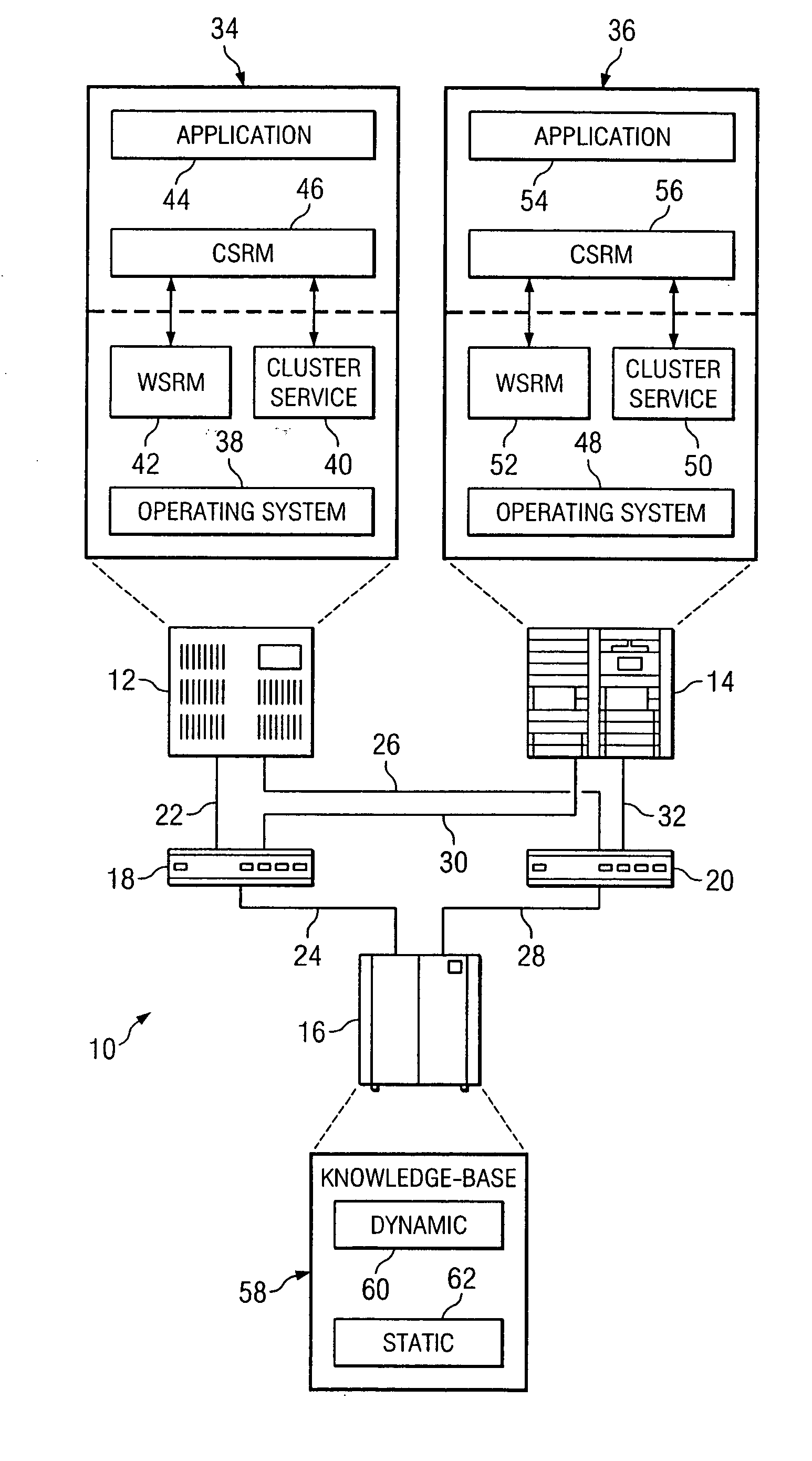

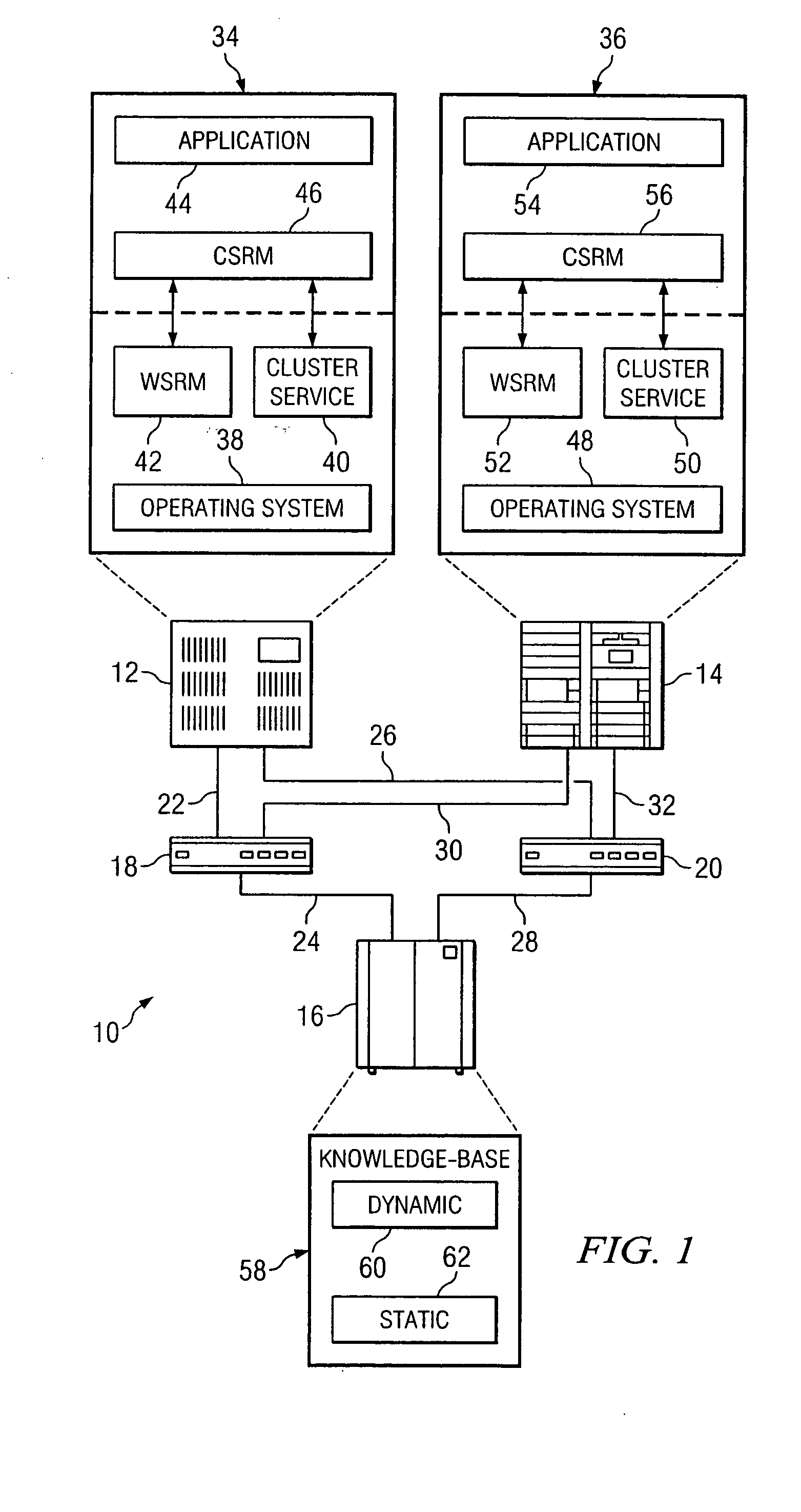

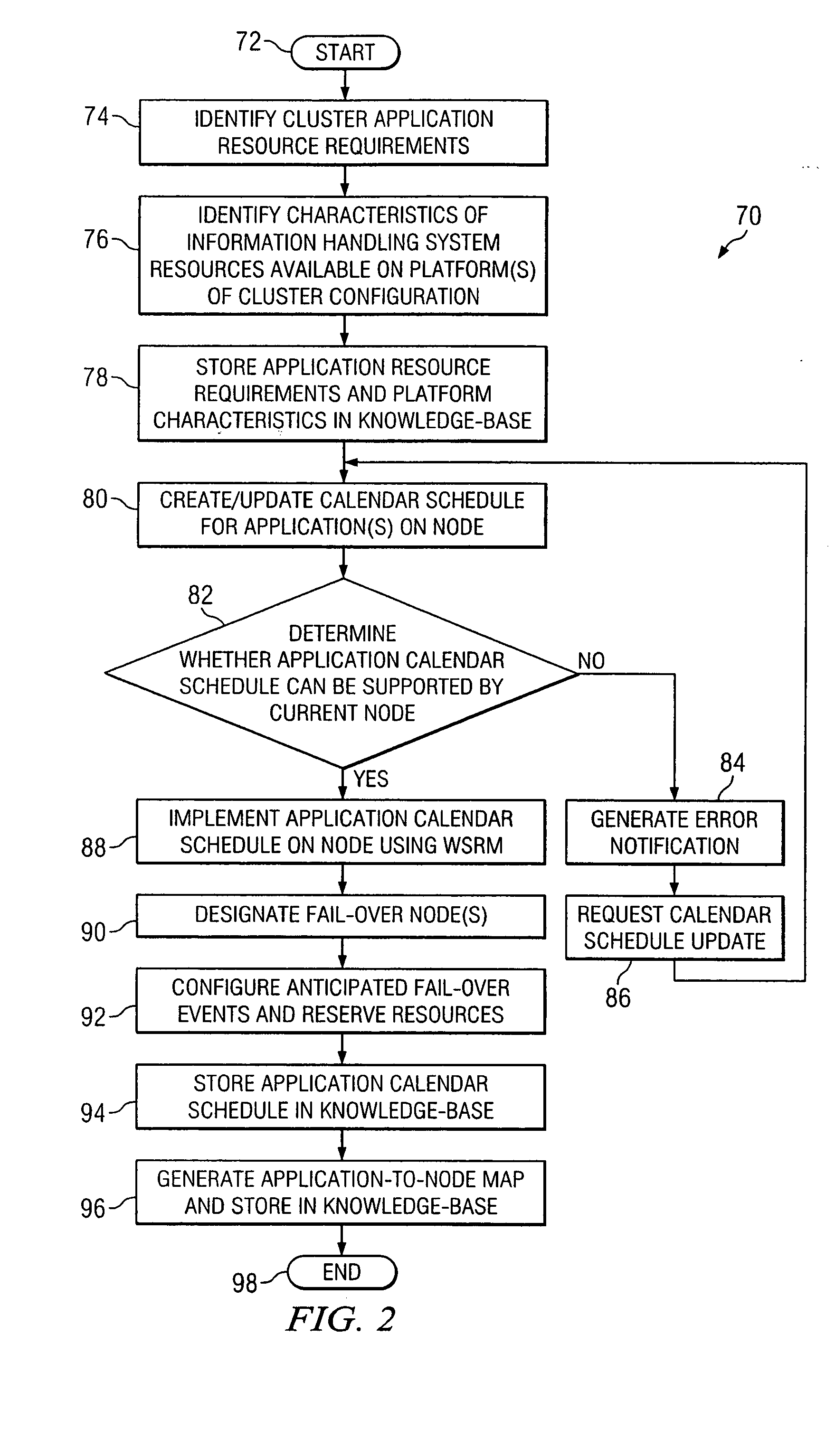

Method, system and software for allocating information handling system resources in response to high availability cluster fail-over events

Inactive | US20050132379A1 | Preventing application starvation | Reducing application resource requirement | Resource allocation | Memory systems | Failover | Information processing

A method, system and software for allocating information handling system resources in response to cluster fail-over events are disclosed. In operation, the method provides for the calculation of a performance ratio between a failing node and a fail-over node and the transformation of an application calendar schedule from the failing node into a new application calendar schedule for the fail-over node. Before implementing the new application calendar schedule for the failing-over application on the fail-over node, the method verifies that the fail-over node includes sufficient resources to process its existing calendar schedule as well as the new application calendar schedule for the failing-over application. A resource negotiation algorithm may be applied to one or more of the calendar schedules to prevent application starvation in the event the fail-over node does not include sufficient resources to process the failing-over application calendar schedule as well as its existing application calendar schedules.

Owner:DELL PROD LP

Starvation prevention scheme for a fixed priority PCI-Express arbiter with grant counters using arbitration pools

Method and apparatus for arbitrating prioritized cycle streams in a manner that prevents starvation. High priority and low priority arbitration pools are employed for arbitrating multiple input cycle streams. Each cycle stream contains a stream of requests of a given type and associated priority. Under normal circumstances in which resource buffer availability for a destination device is not an issue, higher priority streams are provided grants over lower priority streams, with all streams receiving grants. However, when a resource buffer is not available for a lower priority stream, arbitration of high priority streams with available buffer resources are redirected to the low priority arbitration pool, resulting in generation of grant counts for both the higher and lower priority streams. When the resource buffer for the low priority stream becomes available and a corresponding request is arbitrated in the high priority arbitration pool, a grant for the request can be immediately made since grant counts for the stream already exist.

Owner:INTEL CORP

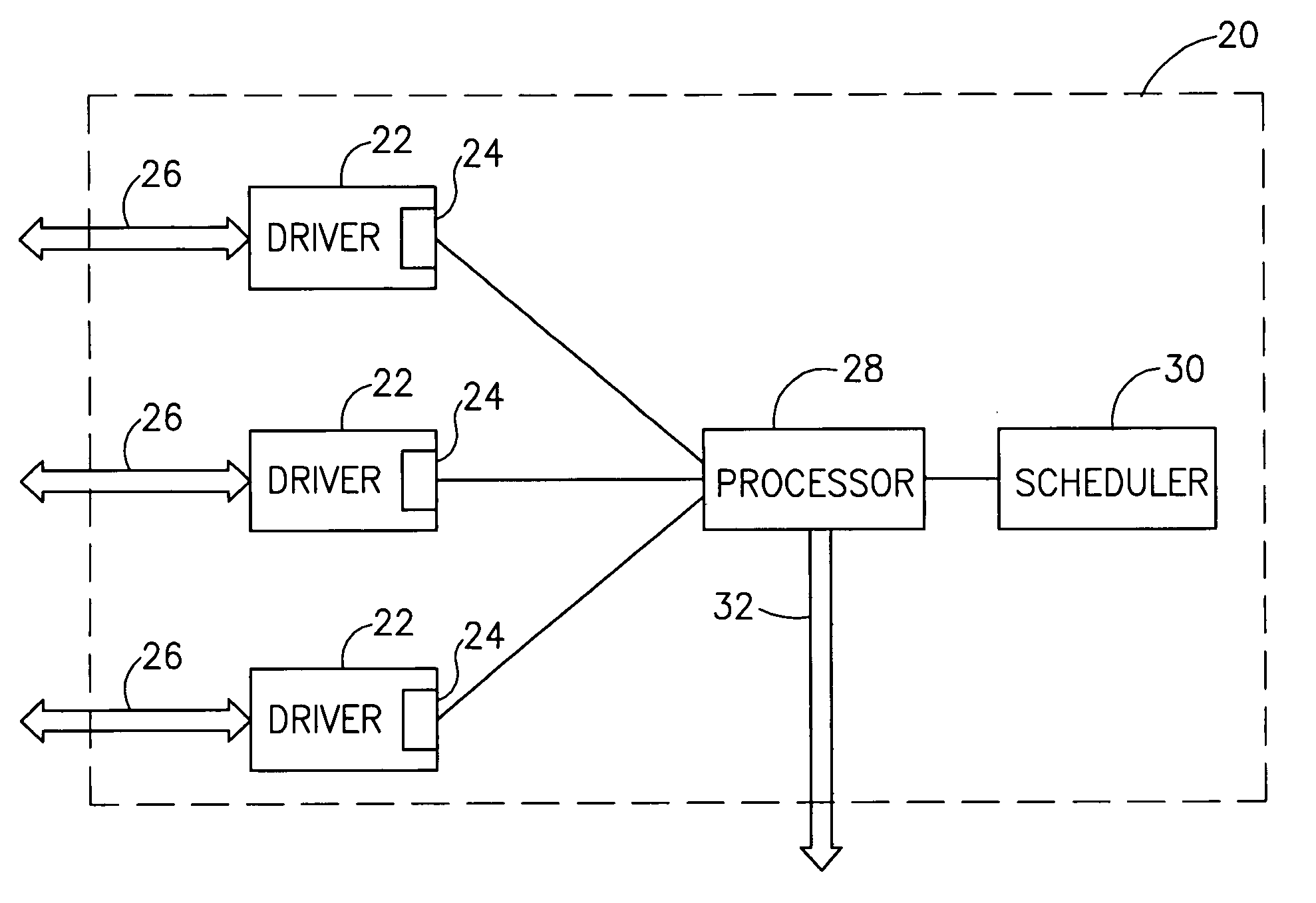

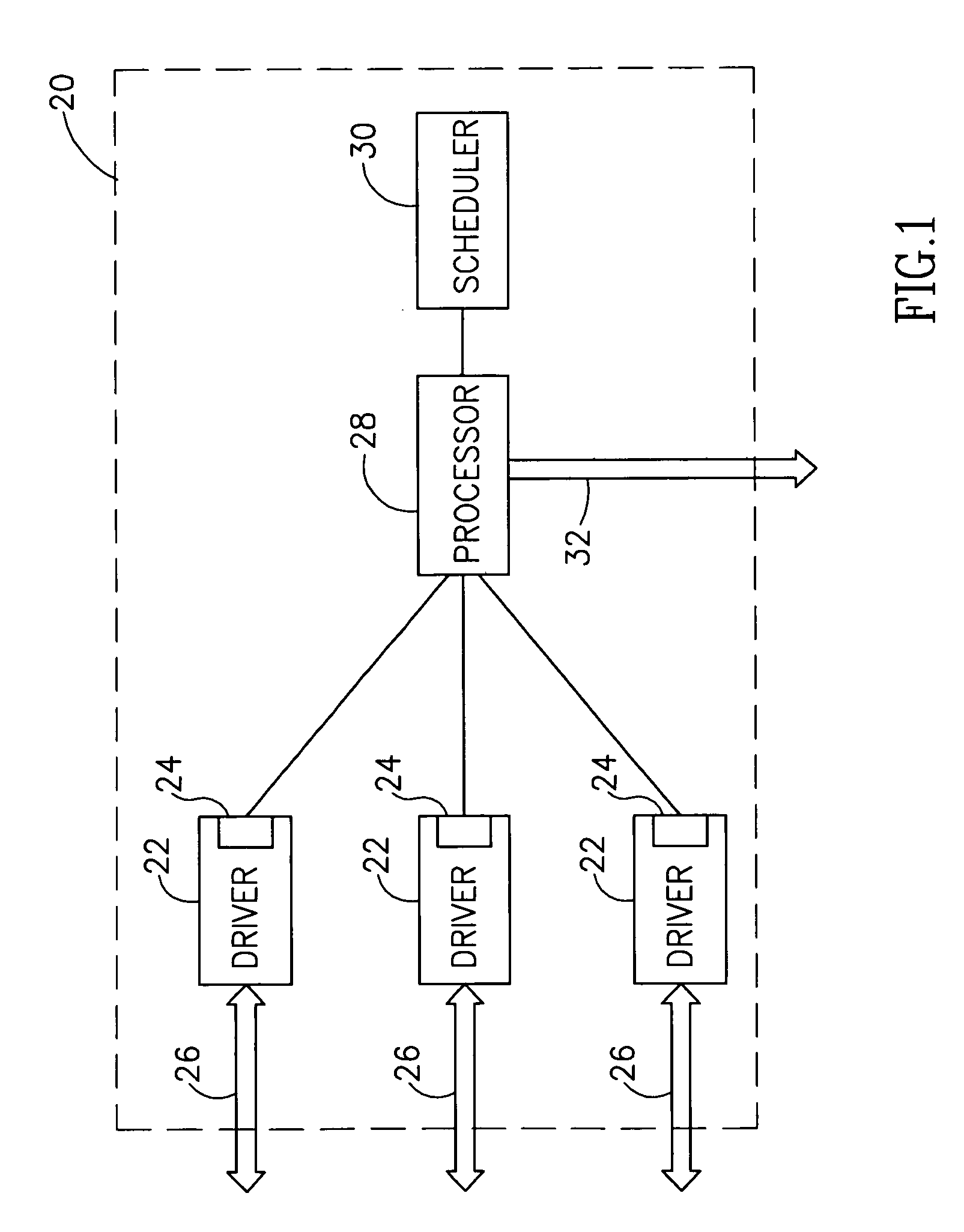

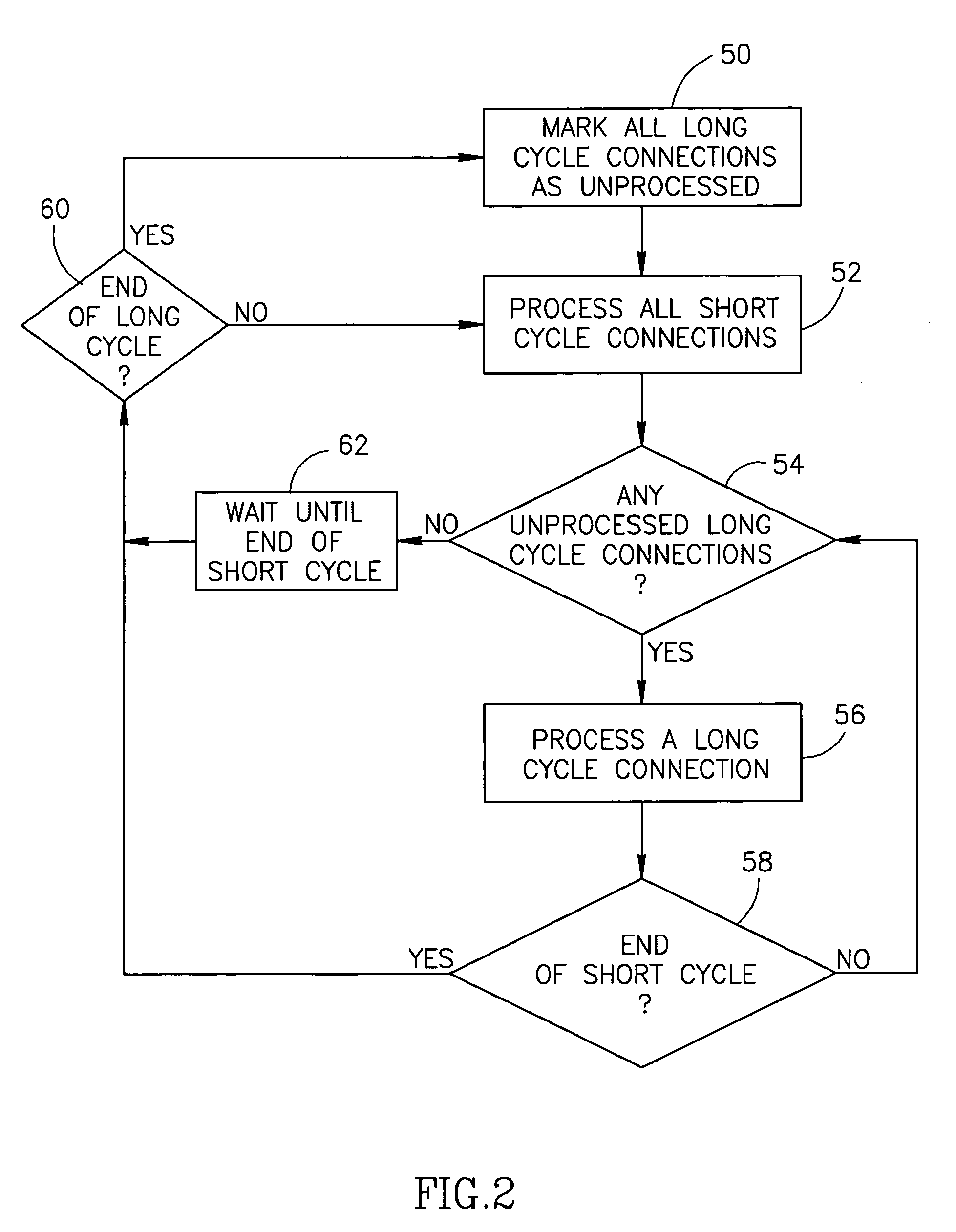

Scheduling in a remote-access server

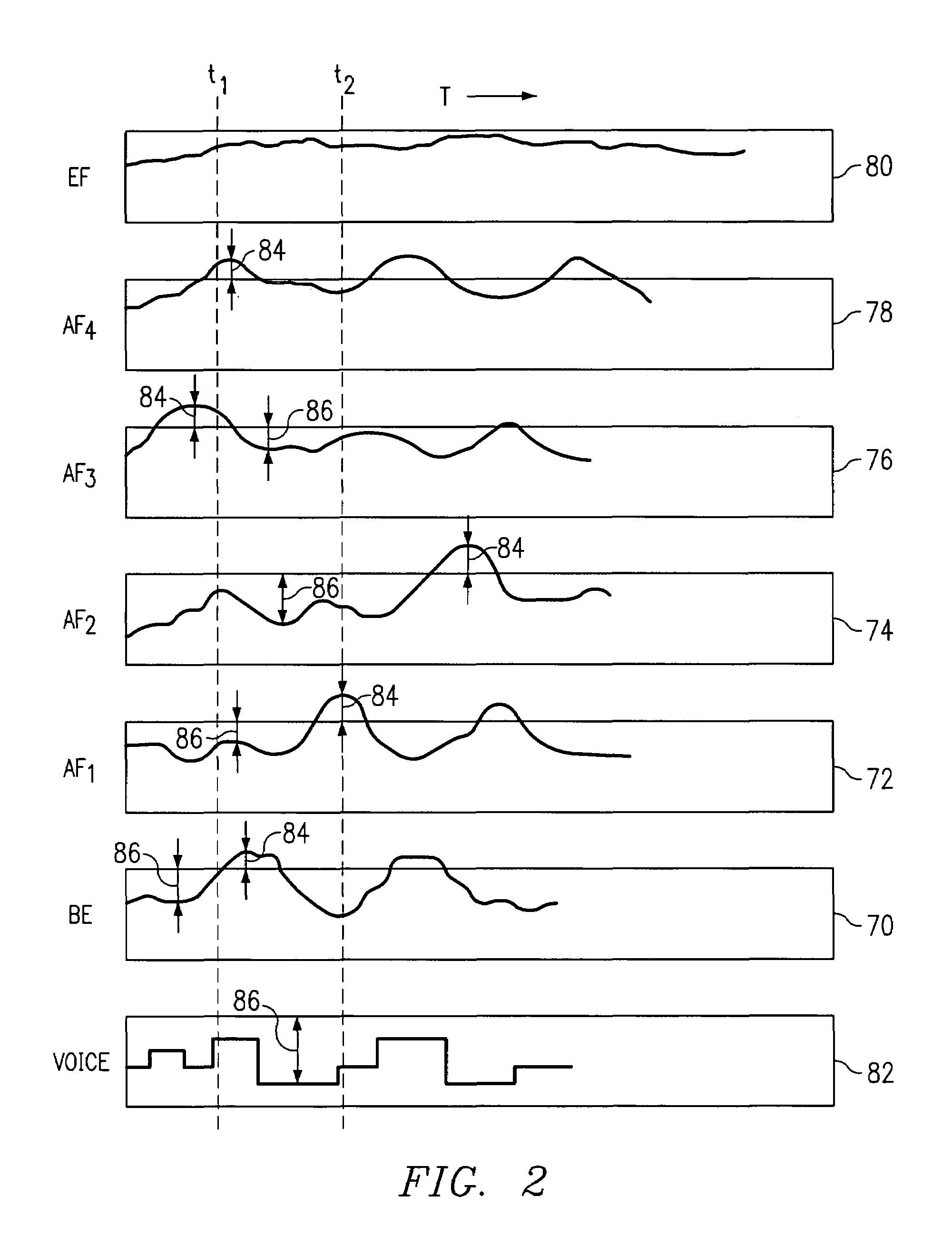

Inactive | US6978311B1 | Avoid hunger | Error prevention | Frequency-division multiplex details | Data treatment | Cycle time

A method of scheduling the handling of data from a plurality of channels. The method includes accumulating data from a plurality of channels by a remote access server, scheduling a processor of the server to handle the accumulated data from at least one first one of the channels, once during a first cycle time, and scheduling the processor to handle the accumulated data from at least one second one of the channels, once during a second cycle time different from the first cycle time.

Owner:SURF COMM SOLUTIONS

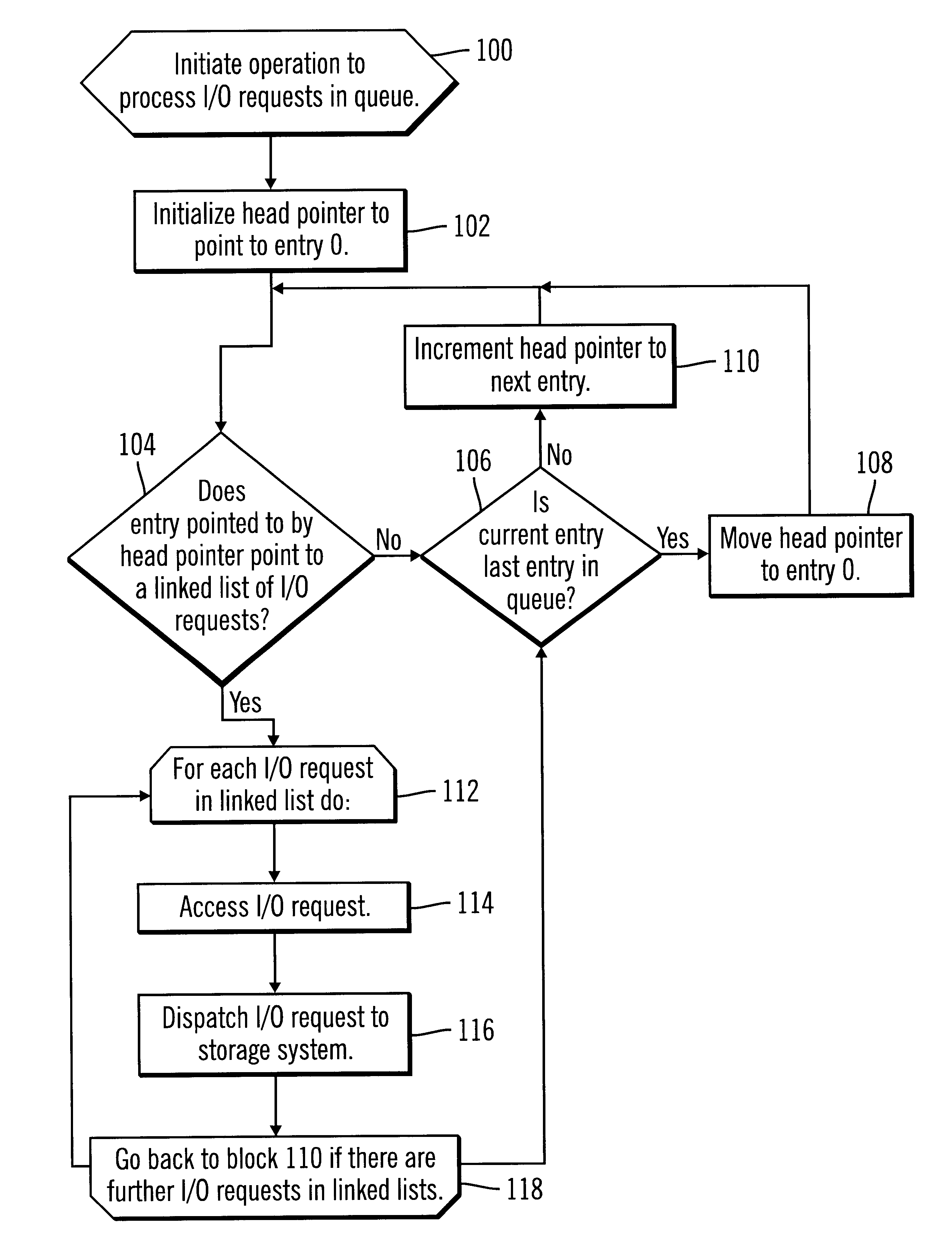

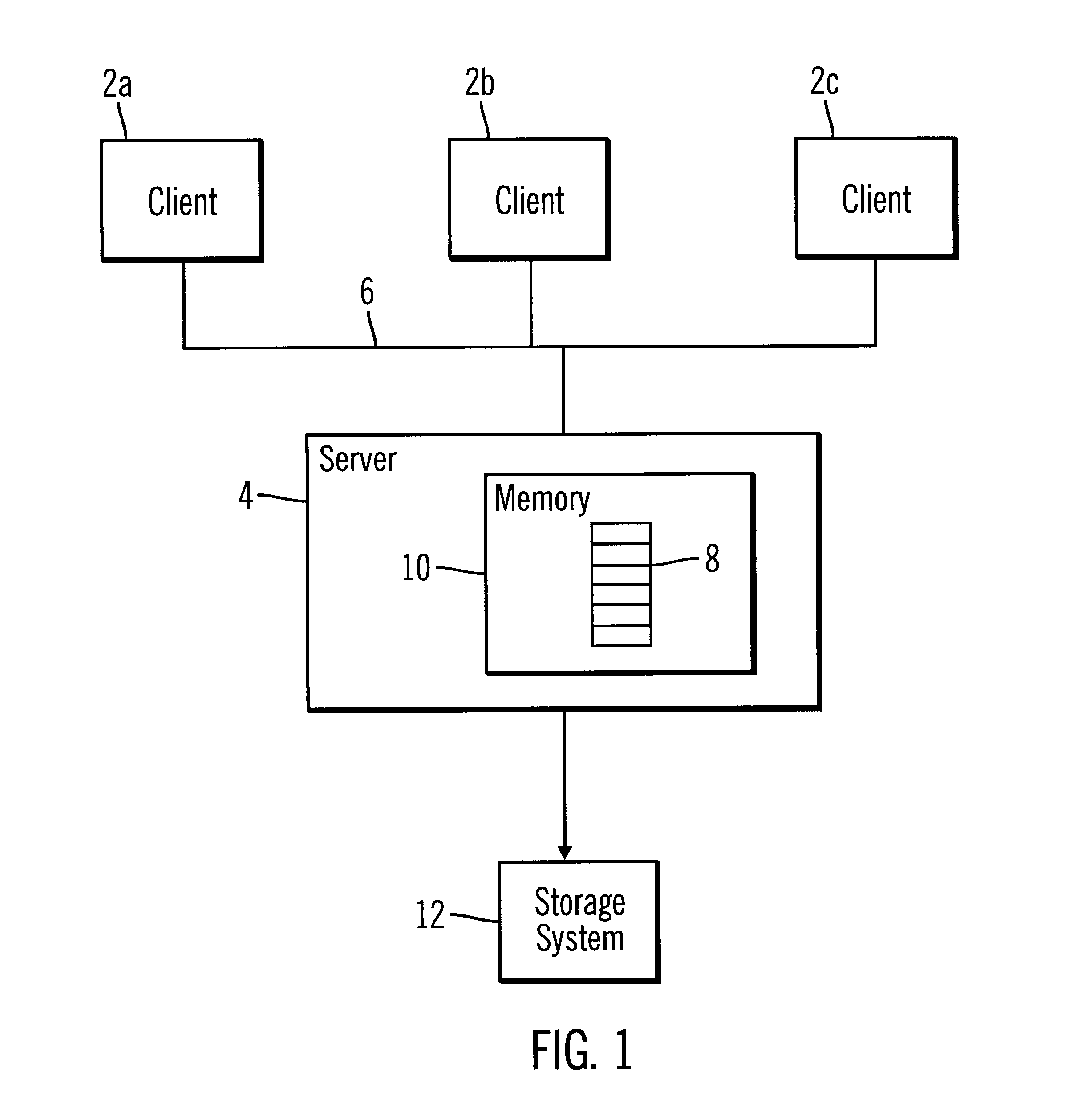

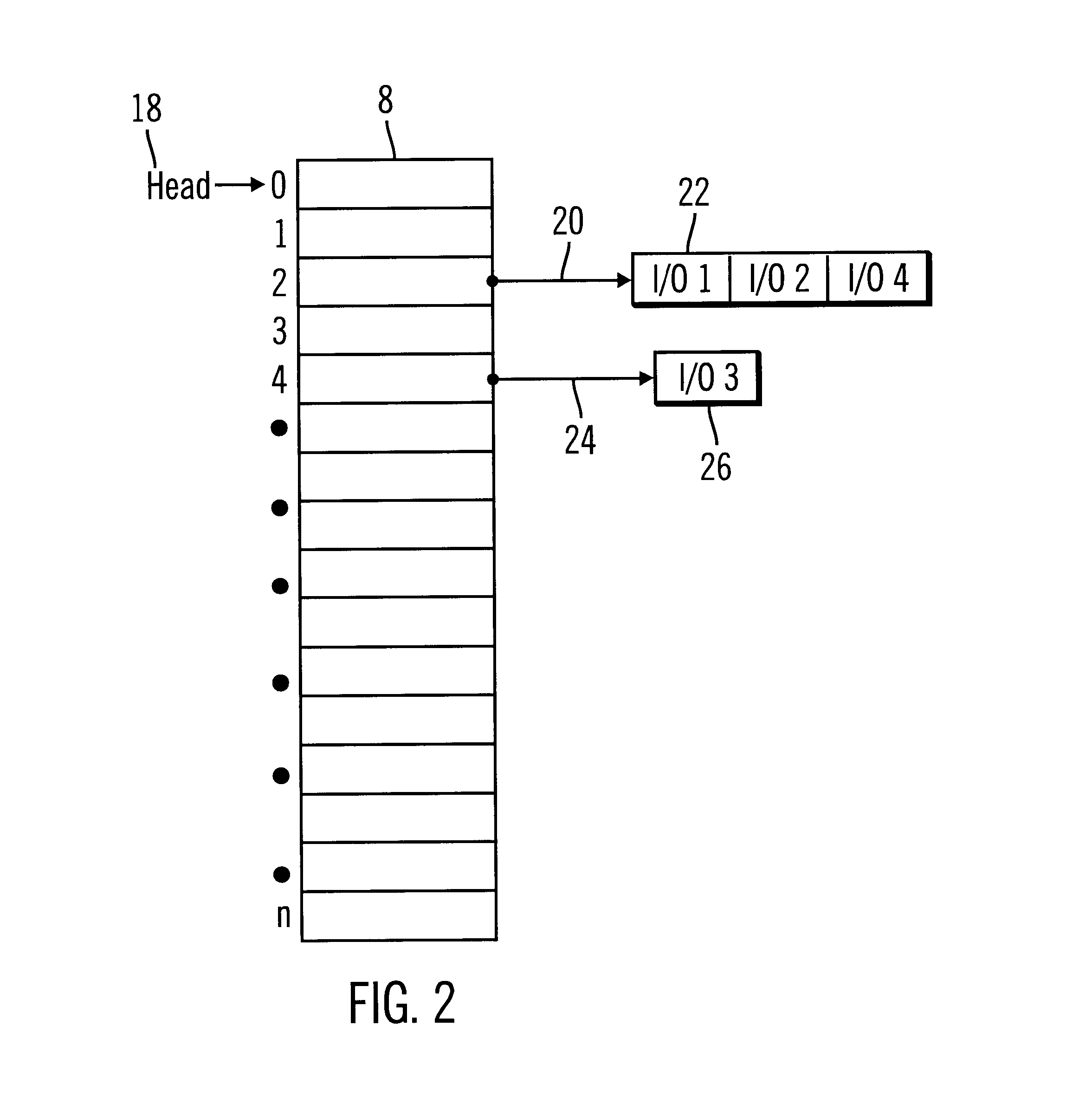

Method, system, program, and data structure for queuing requests having different priorities

Inactive | US6745262B1 | Low priority | Burdening and degrading memory performance | Program initiation/switching | Data switching networks | Entry point | Computer science

Disclosed is a method, system, program, and data structure for queuing requests. Each request is associated with one of a plurality of priority levels. A queue is generated including a plurality of entries. Each entry corresponds to a priority level and a plurality of requests can be queued at one entry. When a new request having an associated priority is received to enqueue on the queue, a determination is made of an entry pointed to by a pointer. The priority associated with the new request is adjusted by a value such that the adjusted priority is associated with an entry different from the entry pointed to by the pointer. The new request is queued at one entry associated with the adjusted priority.

Owner:GOOGLE LLC

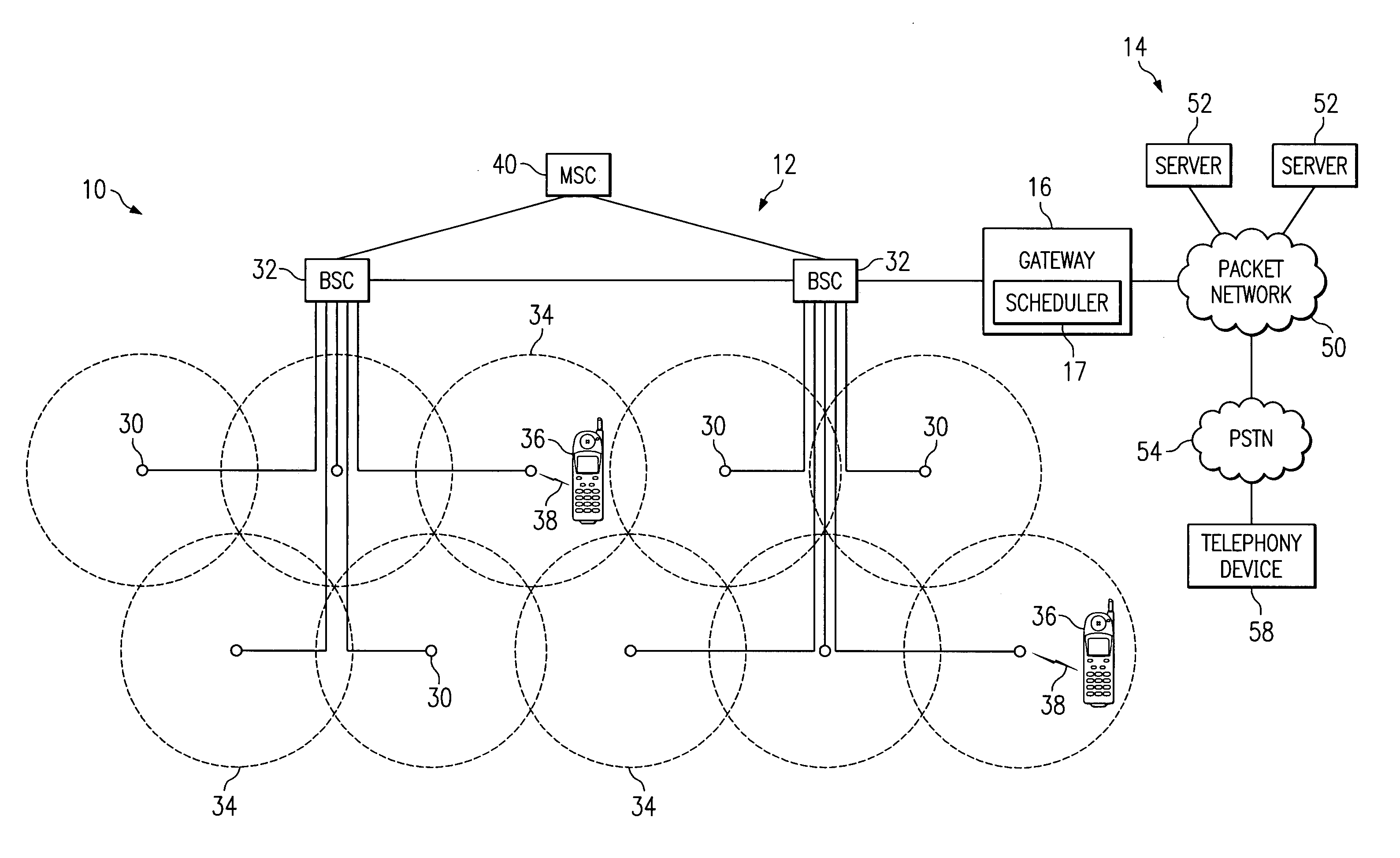

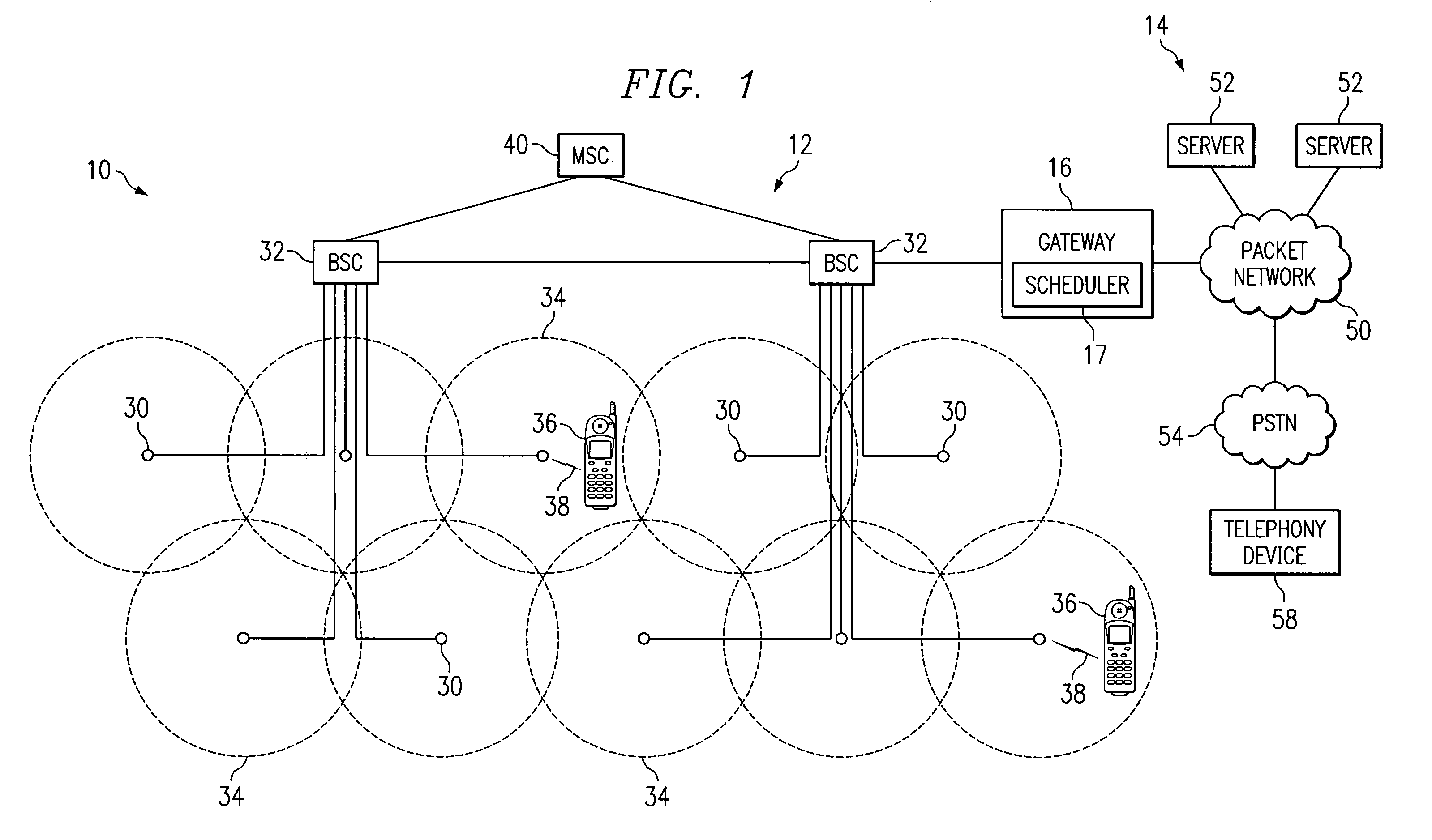

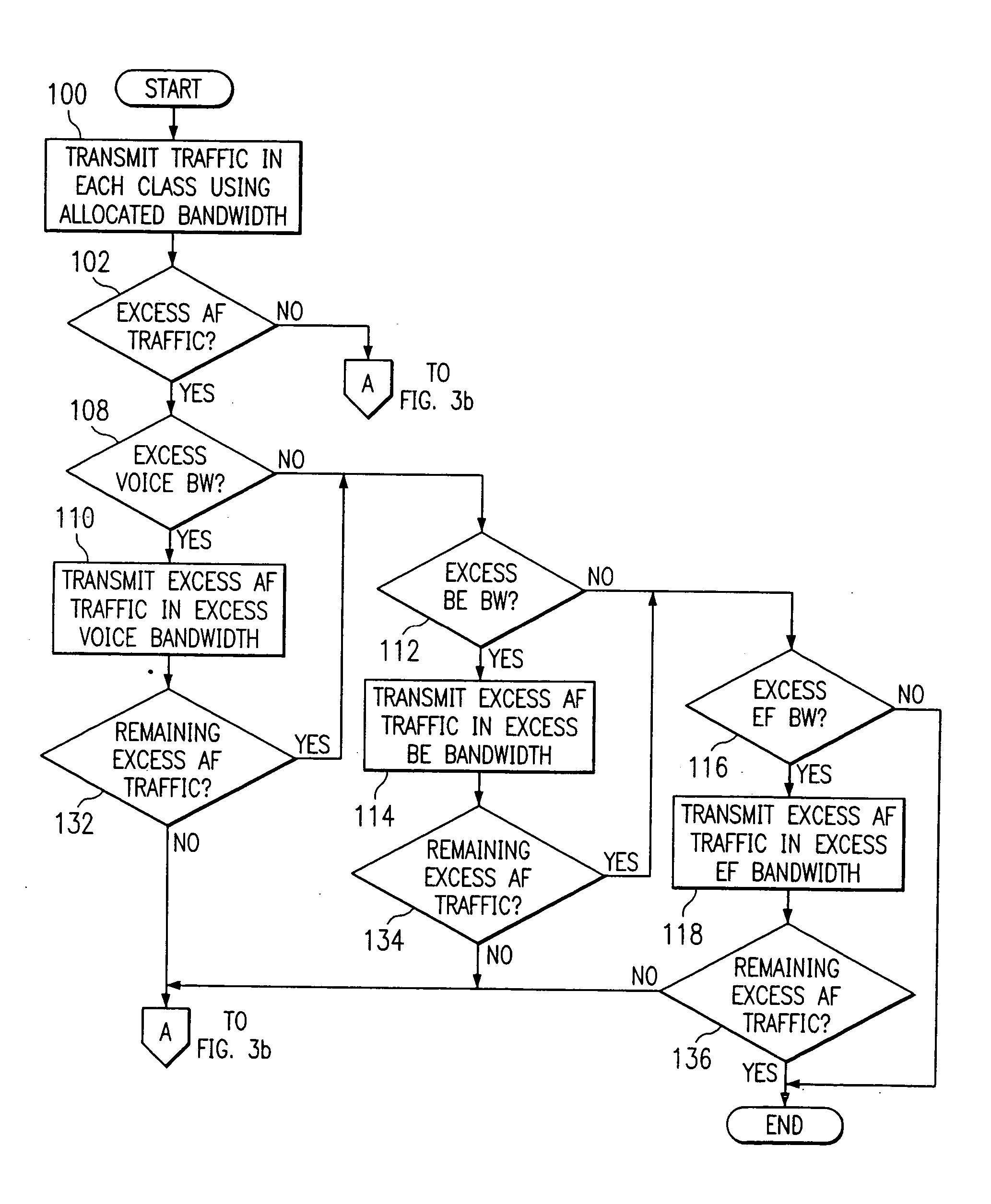

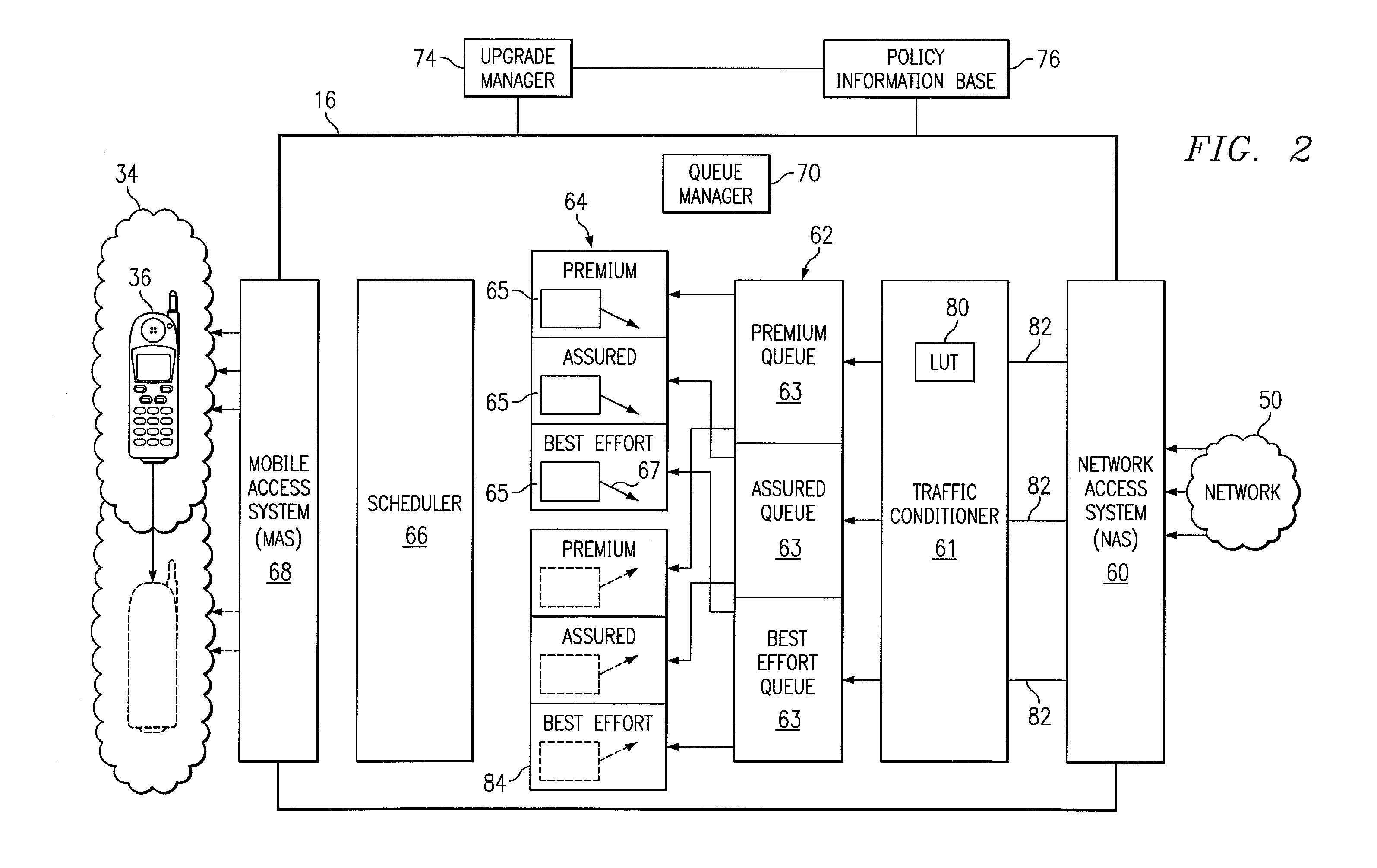

Method and system for sharing over-allocated bandwidth between different classes of service in a wireless network

Inactive | US6973315B1 | Reduce and eliminate and disadvantage | Reduce and eliminate problem | Error prevention | Frequency-division multiplex details | Class of service | Distributed computing

A method and system for sharing over-allocated bandwidth between different classes of service in a wireless network. Traffic is transmitted for a first service class in excess of bandwidth allocated to the first service class using unused bandwidth allocated to a second class. After transmitting traffic for the first service class in excess of bandwidth allocated to the first service class using unused bandwidth allocated to a second class, traffic for a third service class is transmitted in unused bandwidth remaining in the second service class.

Owner:CISCO TECH INC

Method, system and router providing active queue management in packet transmission systems

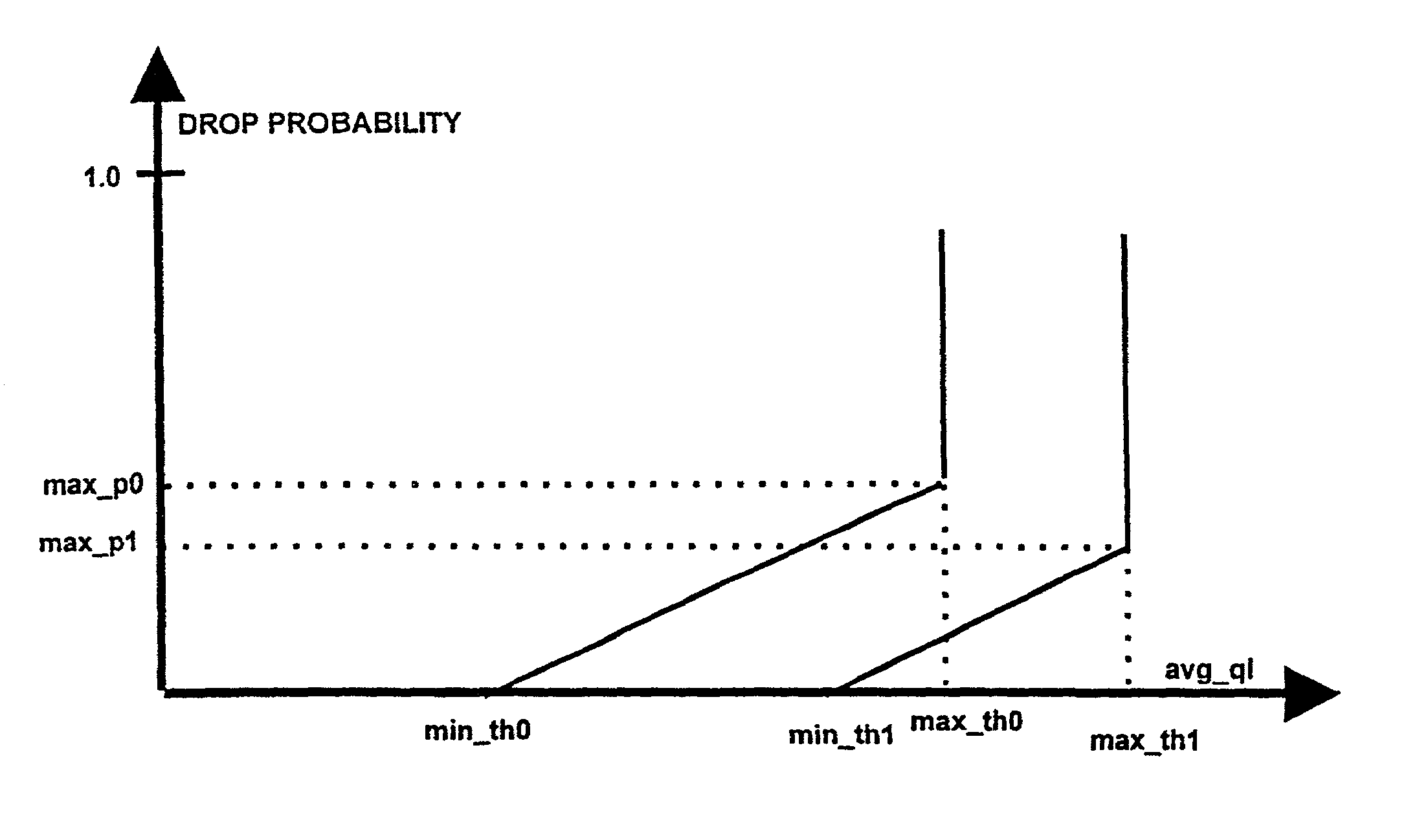

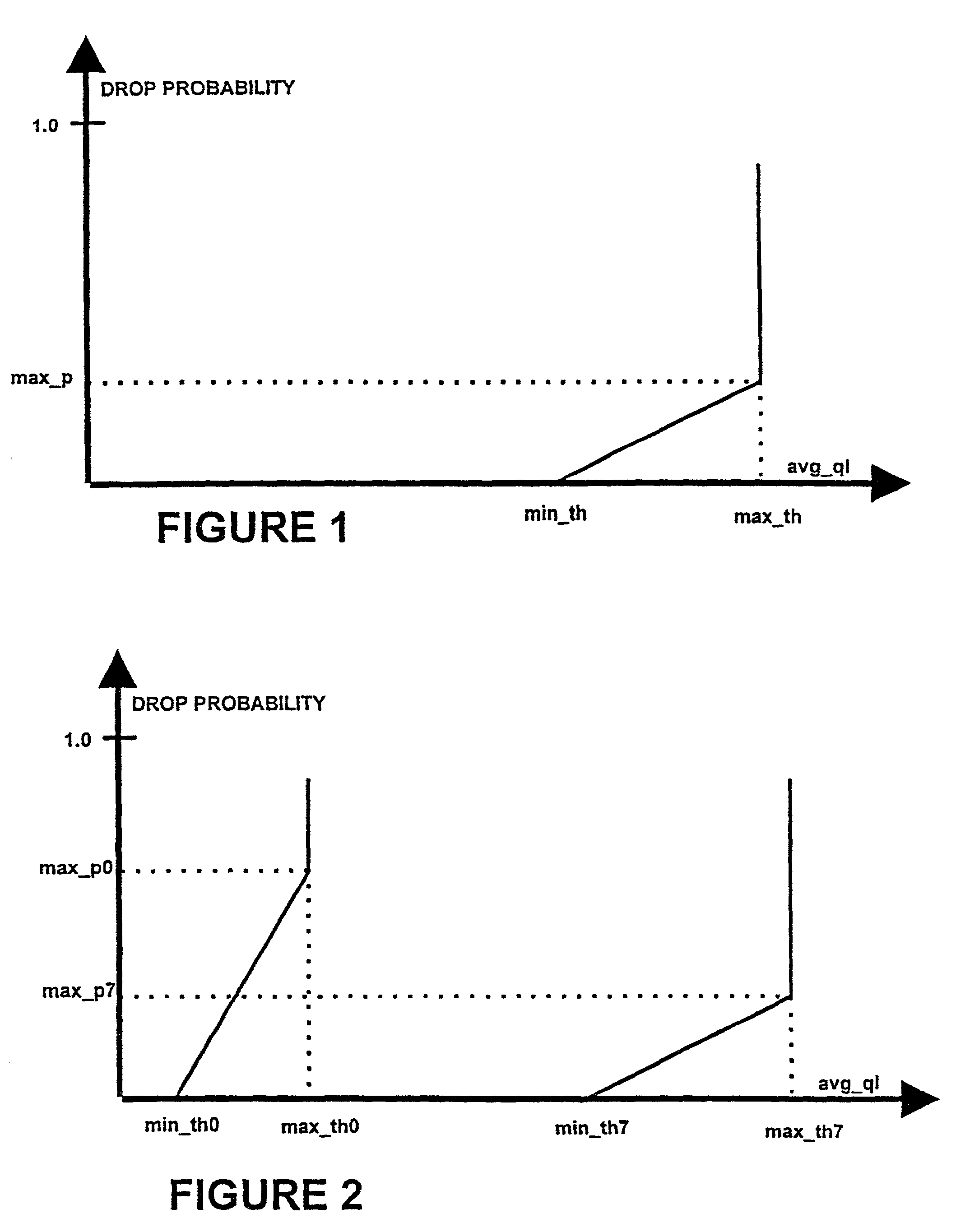

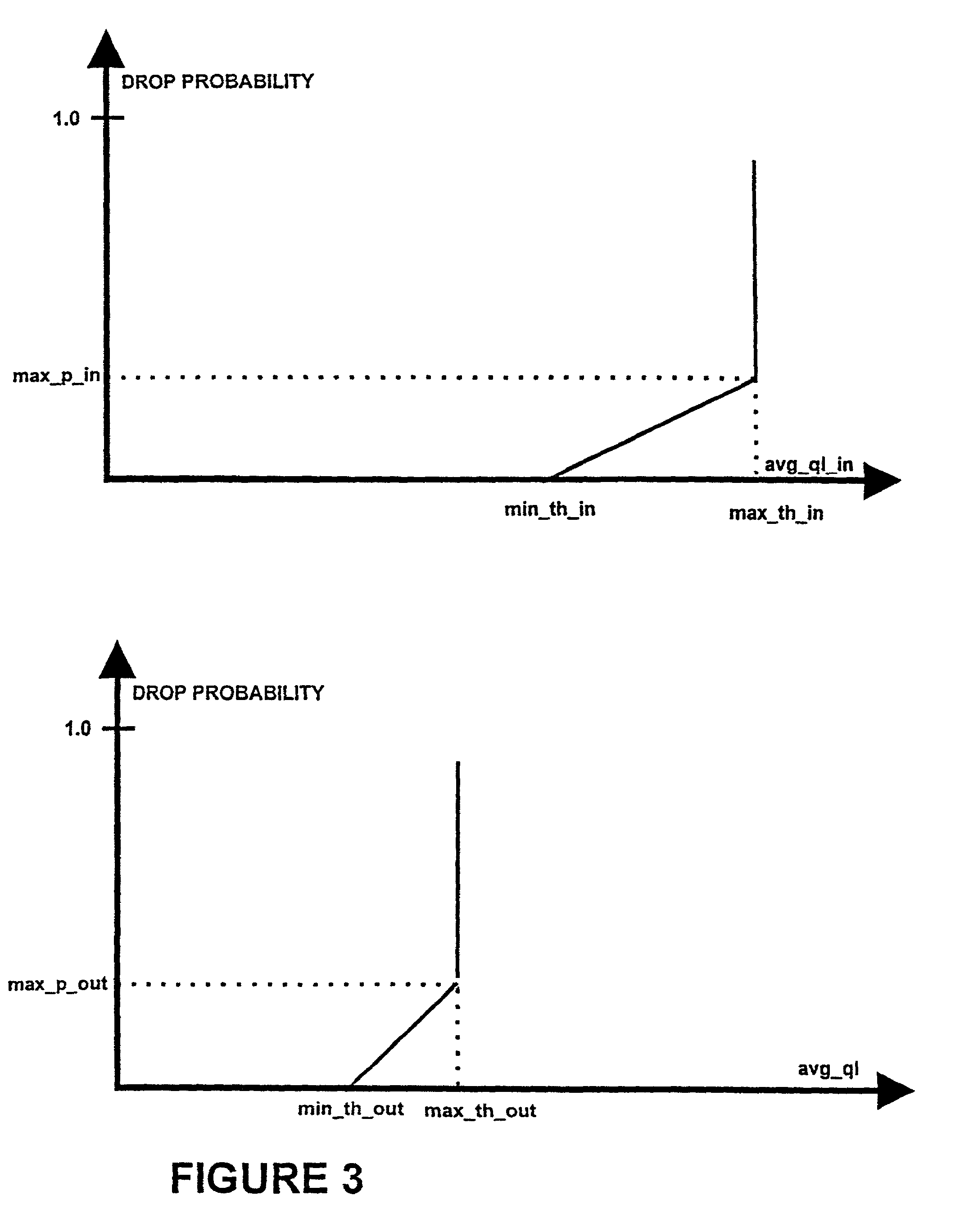

Inactive | US7139281B1 | Avoid hunger | Less probability of loss | Error prevention | Frequency-division multiplex details | Rate adaptation | Packet loss

A method of active queue management, for handling prioritized traffic in a packet transmission system, the method including: providing differentiation between traffic originating from rate adaptive applications that respond to packet loss, wherein traffic is assigned to one of at least two drop precedent levels in-profile and out-profile; preventing starvation of low prioritized traffic; preserving a strict hierarchy among precedence levels; providing absolute differentiation of traffic; and reclassifying a packet of the traffic of the packet transmission system, tagged as in-profile, as out-profile, when a drop probability assigned to the packet is greater than a drop probability calculated from an average queue length for in-profile packets.

Owner:TELIASONERA

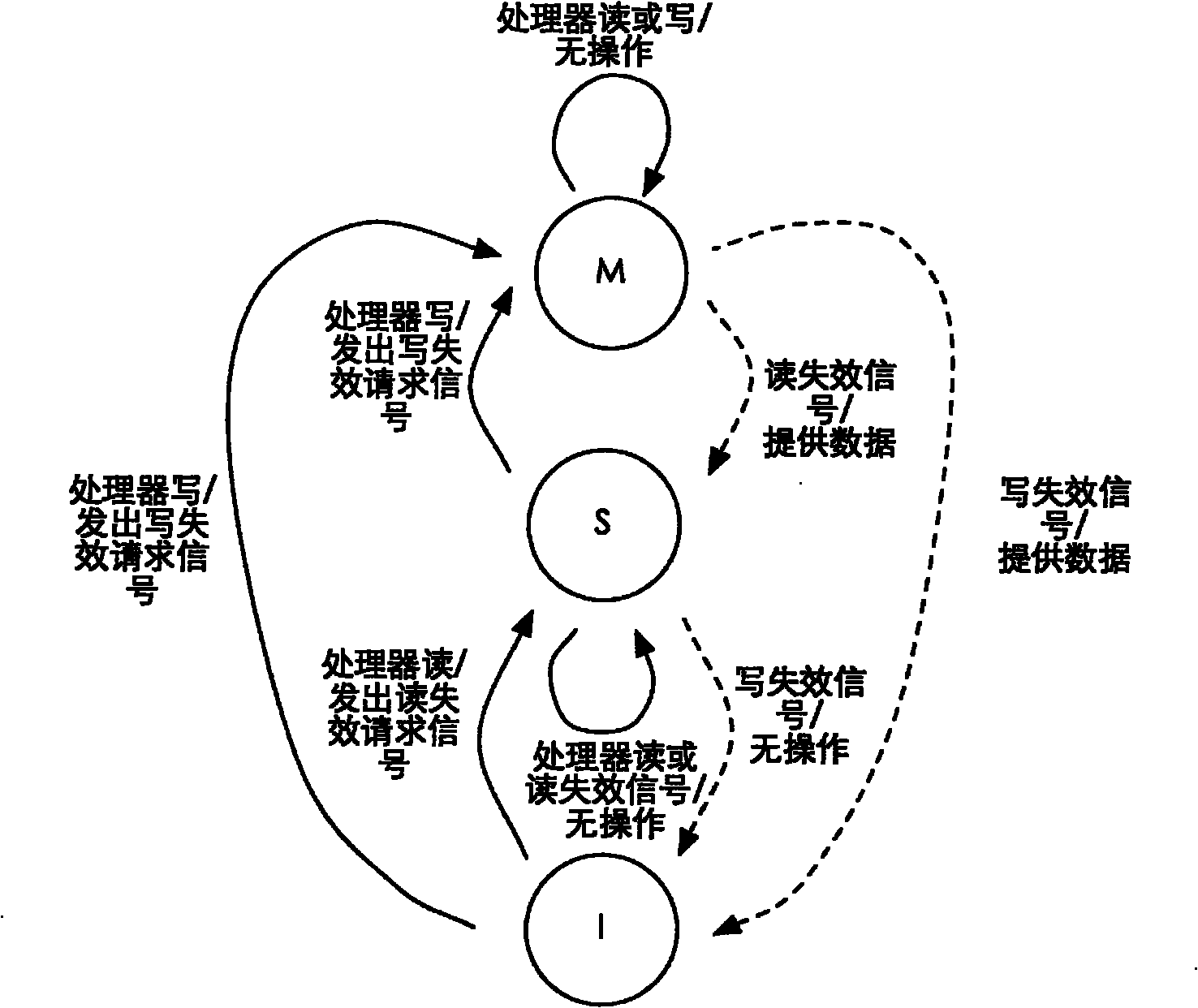

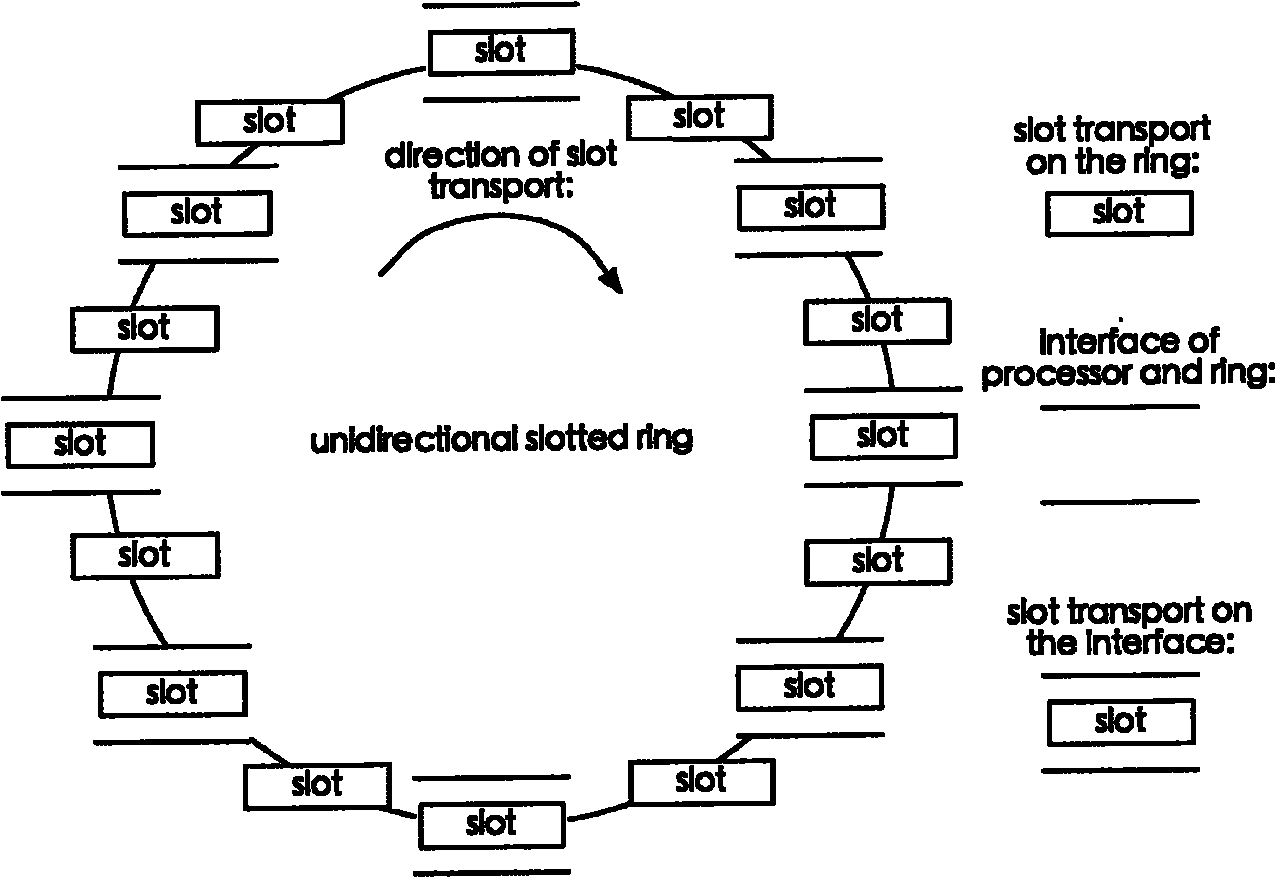

Method for realizing cache coherence protocol of chip multiprocessor (CMP) system

Active | CN102103568A | Improve performance | Improve stability | Digital computer details | Electric digital data processing | Data information | Data provider

The invention discloses a method for realizing a cache coherence protocol of a chip multiprocessor (CMP) system, and the method comprises the following steps: 1, cache is divided into a primary Cache and a secondary Cache, wherein the primary Cache is a private Cache of each processor in the processor system, and the secondary Cache is shared by the processors in the processor system; 2, each processor accesses the private primary Cache, and when the access fails, a failure request information slot is generated, sent to a request information ring, then transmitted to other processors by the request information ring to carry out intercepting; and 3, after a data provider intercepts the failure request, a data information slot is generated and sent to a data information ring, then transmitted to a requestor by the data information ring, finally, the requestor receives data blocks and then completes corresponding access operations. The method disclosed by the invention has the advantages of effectively improving the performance of the system, reducing the power consumption and bandwidth utilization, avoiding the occurrence of starvation, deadlock and livelock, and improving the stability of the system.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

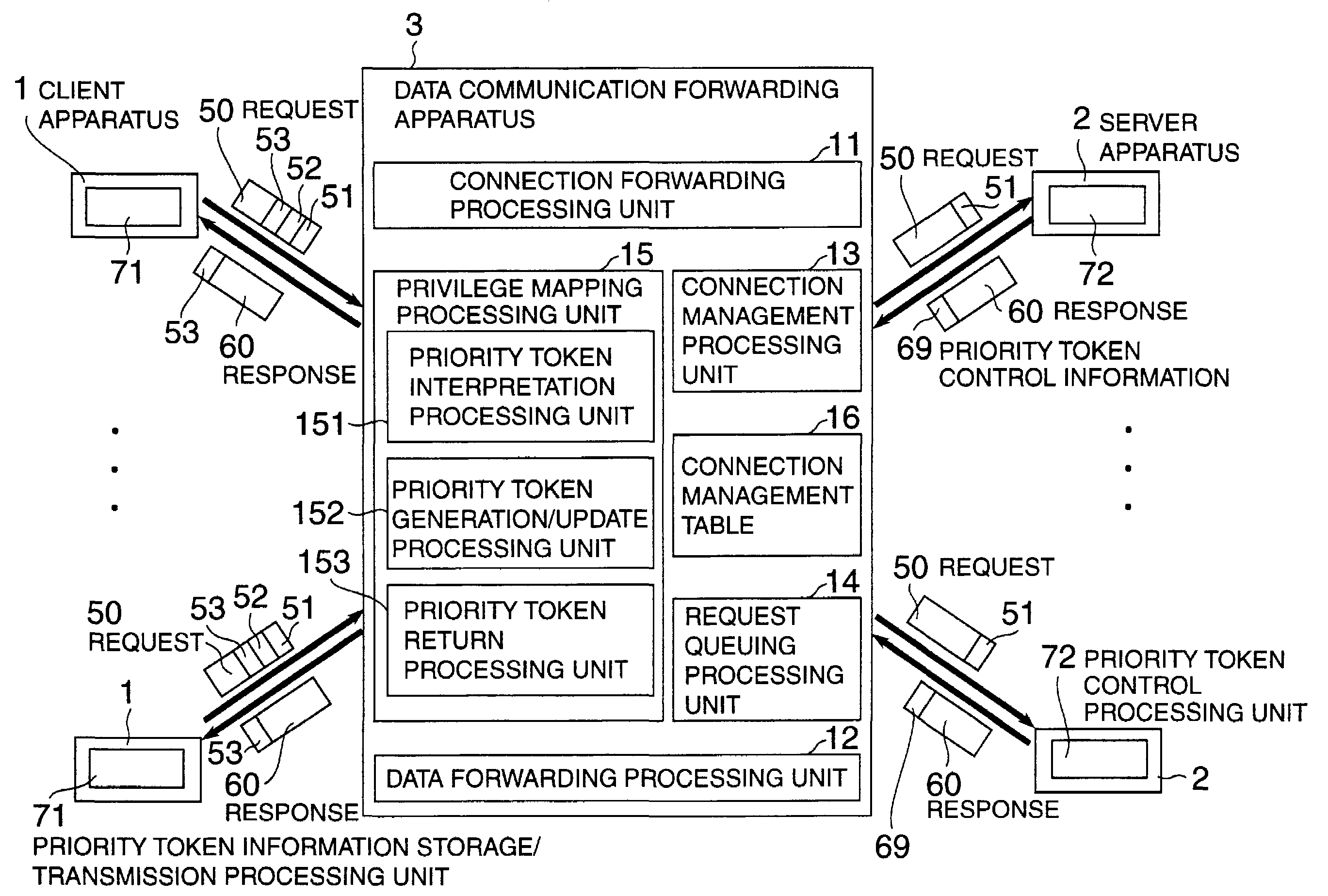

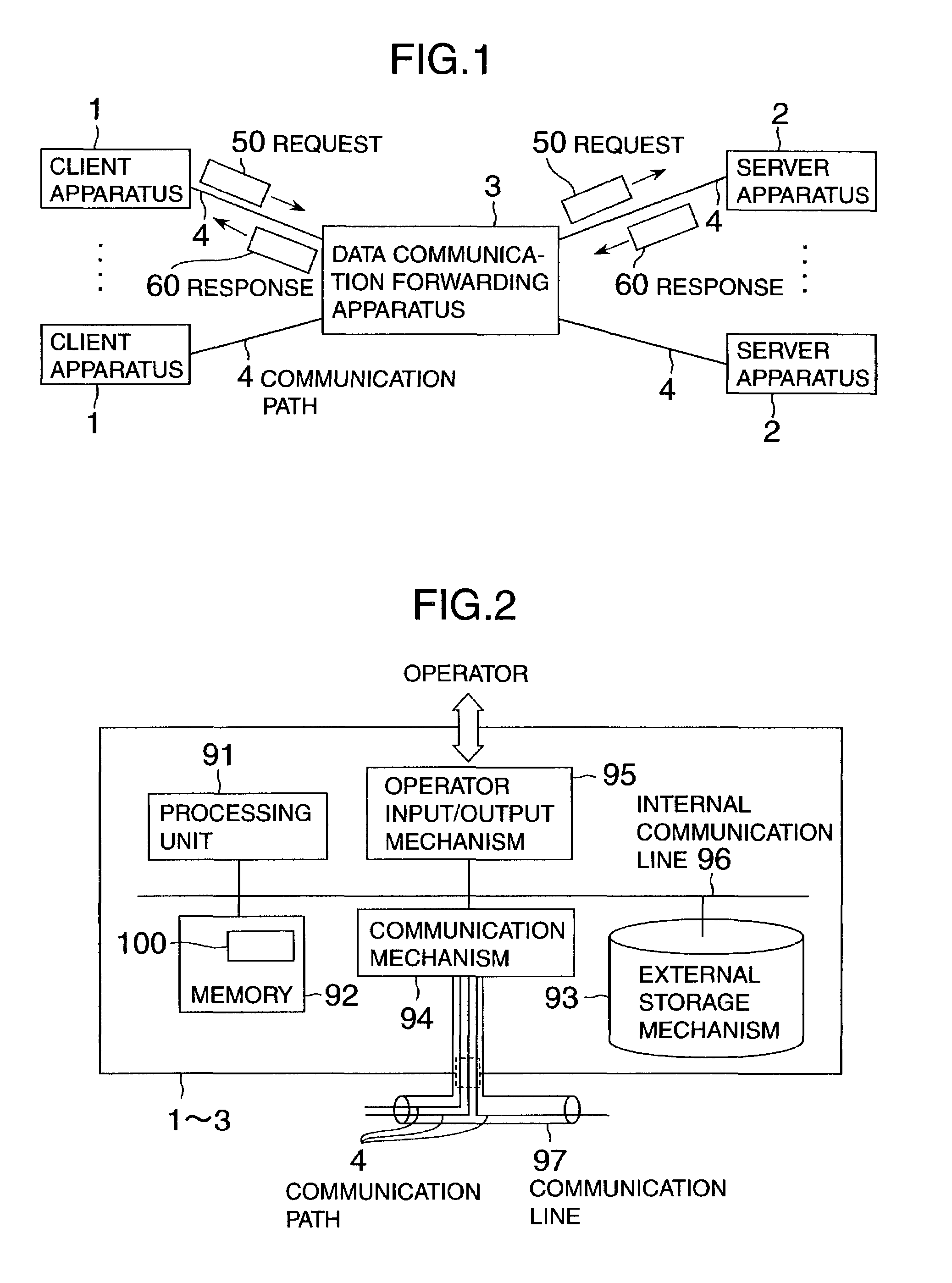

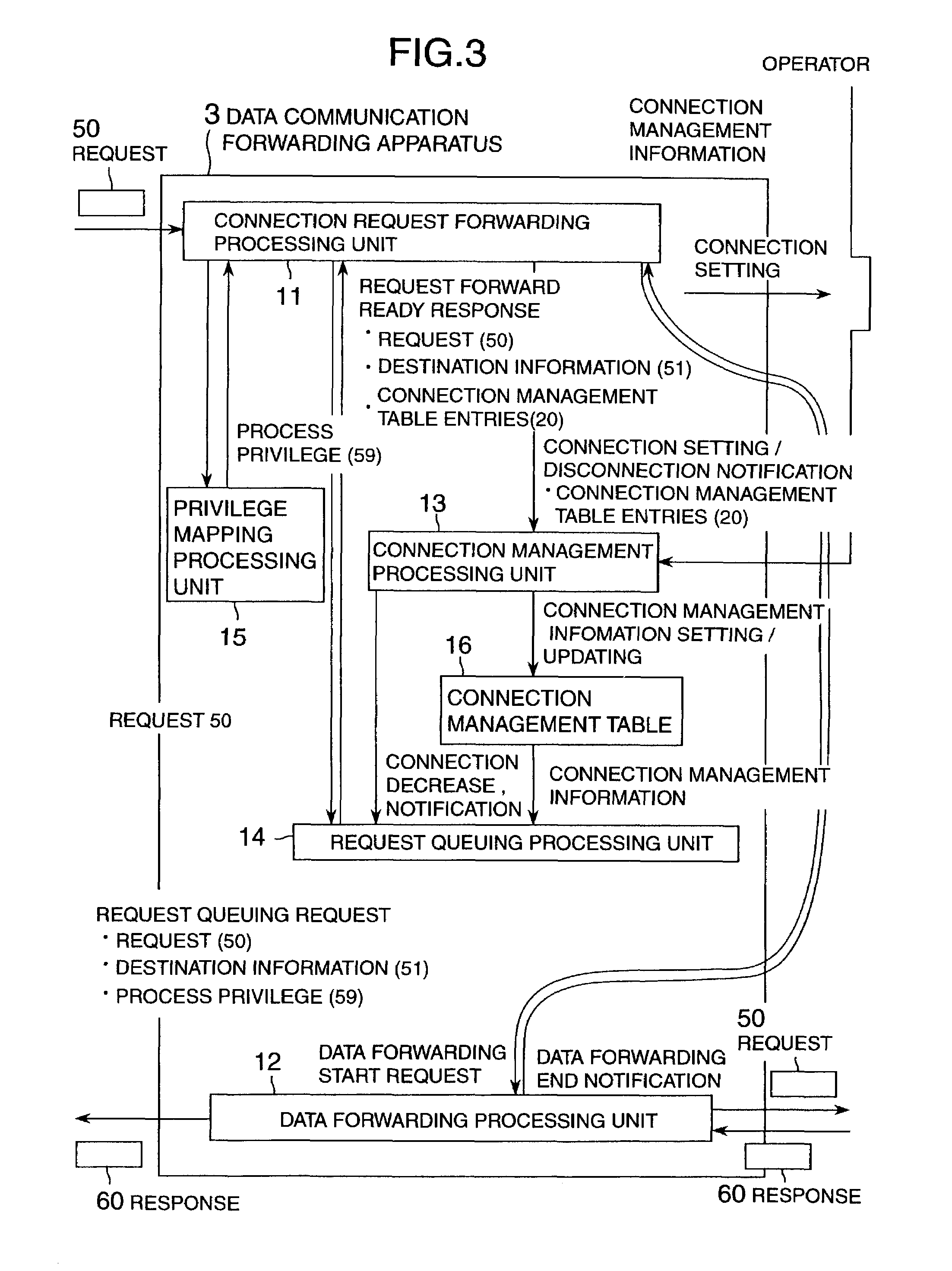

Data communication system using priority queues with wait count information for determining whether to provide services to client requests

Inactive | US7130912B2 | Reduce the value | Raise priority | Data switching by path configuration | Multiple digital computer combinations | Communications system | Client-side

A service system in which a server offers a service in response to a request from a client. The system can offer the stable service even in the case of an access from the client and also can offer a preferential service under certain conditions. The request from the client to the server is carried out via a data communication forwarding apparatus, the apparatus has a unit for queuing the request with a priority and has a unit for changing the forwarding sequence of the request according to the priority. Thereby the number of simultaneous requests to the server can be suppressed to within the processing ability of the server with the stable service. Further, the request can be preferentially processed according to the user, transaction, wait time, etc.

Owner:HITACHI LTD
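
A rough sketch of the forwarding idea in the abstract above: a forwarder caps the number of requests in flight to the server and picks the next request by priority, with waiting requests gaining priority over time. The class `Forwarder`, its parameters, and the aging rule (effective priority = base priority + wait count) are assumptions for illustration. The abstract says only that the order can depend on priority and wait time.

```python
class Forwarder:
    """Toy request forwarder with a concurrency cap and wait-count aging.

    Hypothetical illustration: the names and the aging formula are
    assumptions, not details from the patent.
    """

    def __init__(self, max_in_flight, aging=1):
        self.max_in_flight = max_in_flight
        self.aging = aging
        self.in_flight = 0
        self.waiting = []  # entries: [base_priority, wait_count, seq, request]
        self._seq = 0

    def submit(self, request, priority):
        """Queue a request with its base priority (higher is more urgent)."""
        self.waiting.append([priority, 0, self._seq, request])
        self._seq += 1

    def dispatch(self):
        """Forward the best waiting request, or return None if none can go."""
        if self.in_flight >= self.max_in_flight or not self.waiting:
            return None
        # Effective priority grows with wait count; ties go to the oldest.
        best = max(self.waiting,
                   key=lambda e: (e[0] + self.aging * e[1], -e[2]))
        self.waiting.remove(best)
        for entry in self.waiting:
            entry[1] += 1  # every request passed over has waited one more round
        self.in_flight += 1
        return best[3]

    def complete(self):
        """Record that the server finished one forwarded request."""
        if self.in_flight > 0:
            self.in_flight -= 1
```

Because the wait count is added to the base priority, a low-priority request that keeps being passed over eventually outranks newly arriving high-priority requests.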

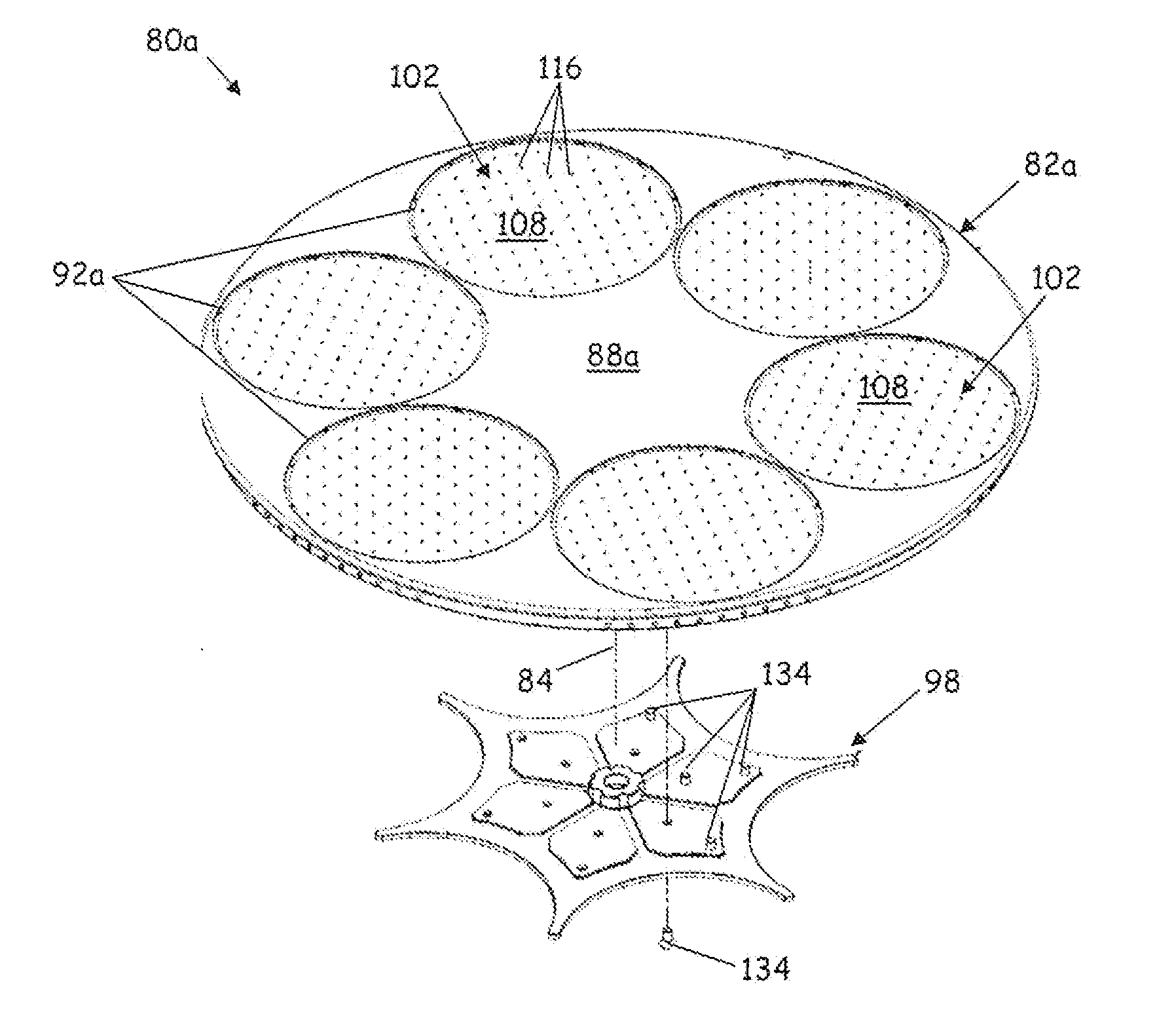

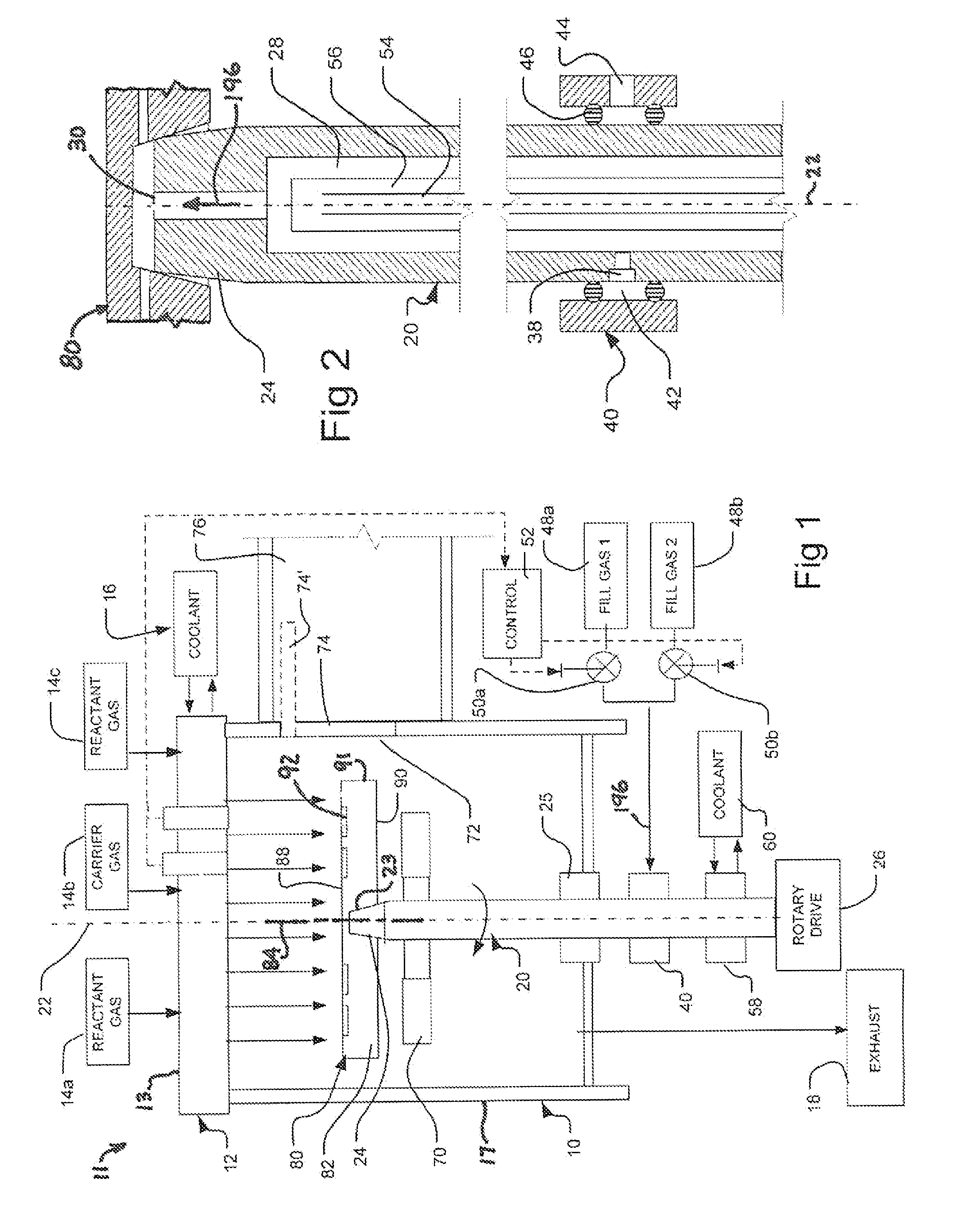

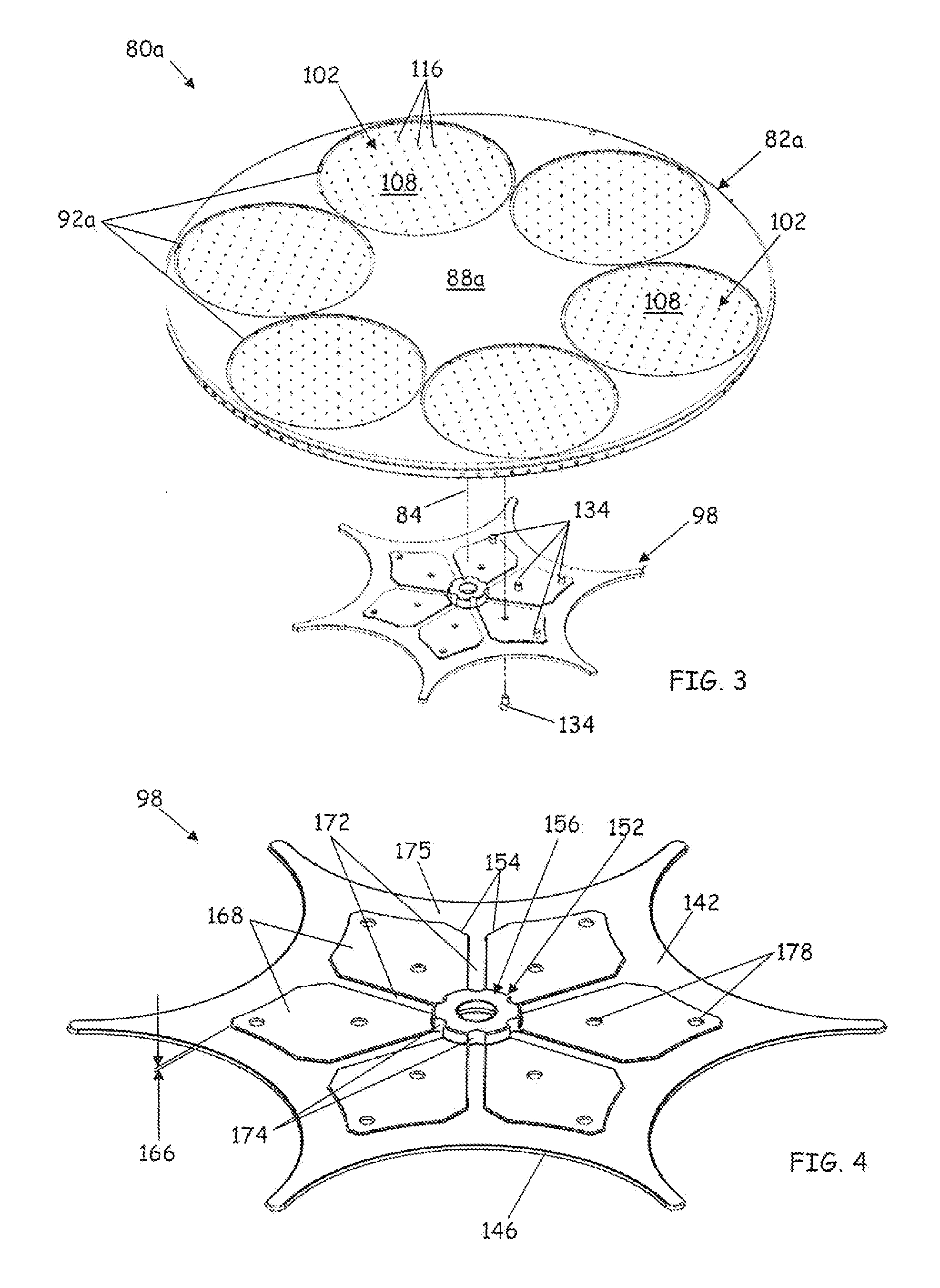

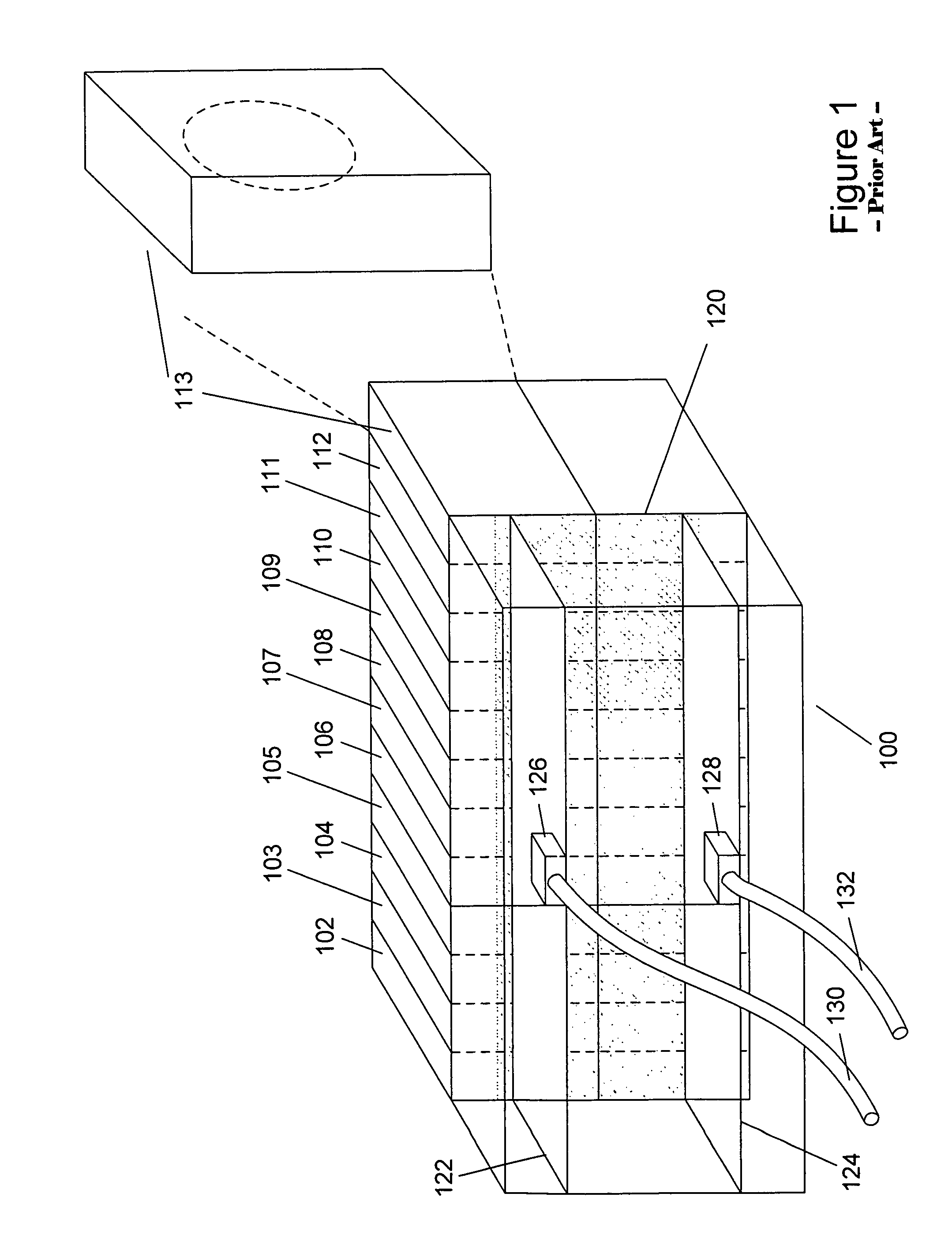

Wafer carrier with temperature distribution control

Inactive | US20140261698A1 | Enhanced temperature uniformity | High conductivity | Polycrystalline material growth | Pipeline systems | Distribution control | Engineering

Wafer carrier arranged to hold a plurality of wafers and to inject a fill gas into gaps between the wafers and the wafer carrier for enhanced heat transfer and to promote uniform temperature of the wafers. The apparatus is arranged to vary the composition, flow rate, or both of the fill gas so as to counteract undesired patterns of temperature non-uniformity of the wafers. In various embodiments, the wafer carrier utilizes at least one plenum structure contained within the wafer carrier to source a plurality of weep holes for passing a fill gas into the wafer retention pockets of the wafer carrier. The plenum(s) promote the uniformity of the flow, thus providing efficient heat transfer and enhanced uniformity of wafer temperatures.

Owner:VEECO INSTR

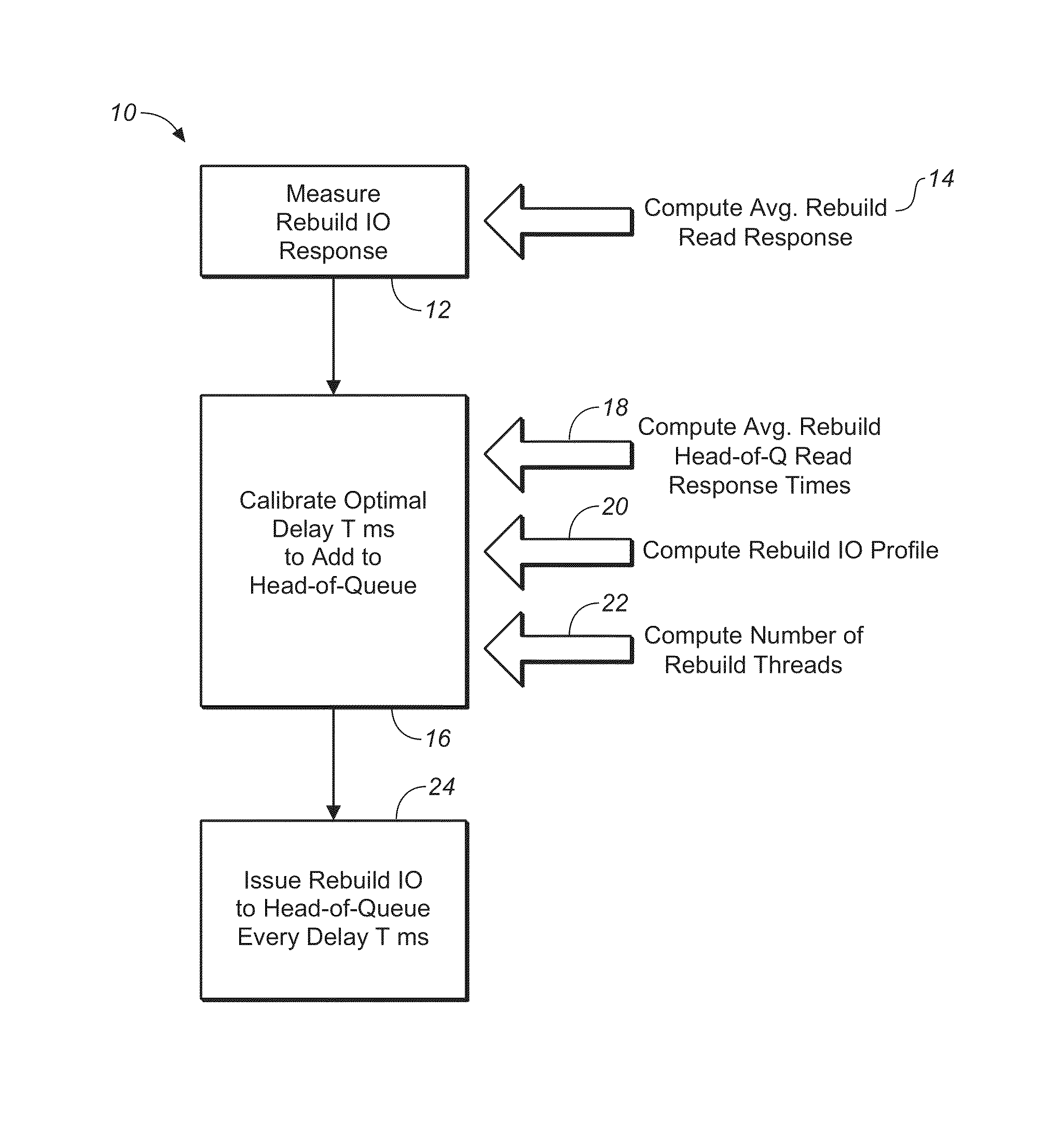

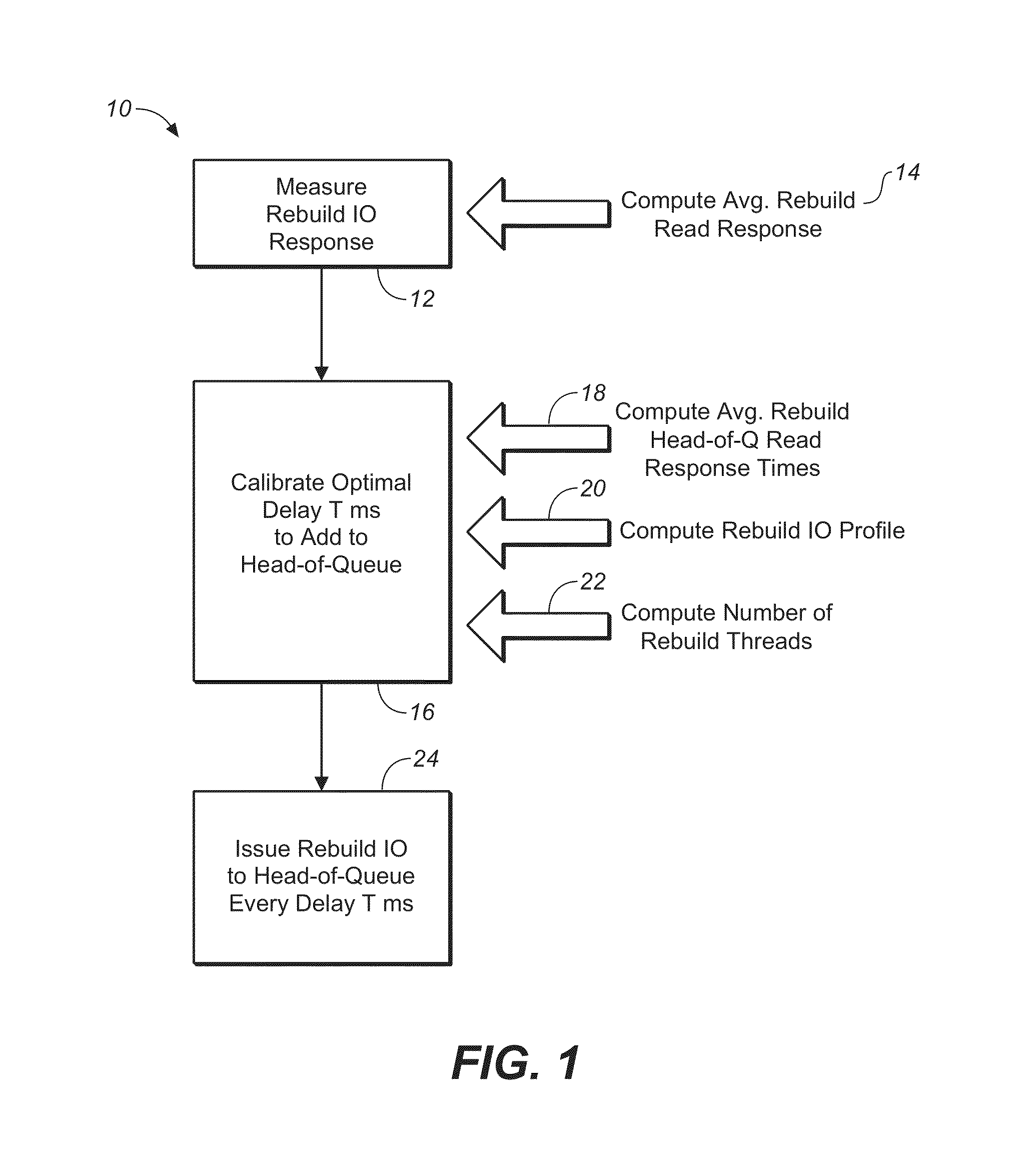

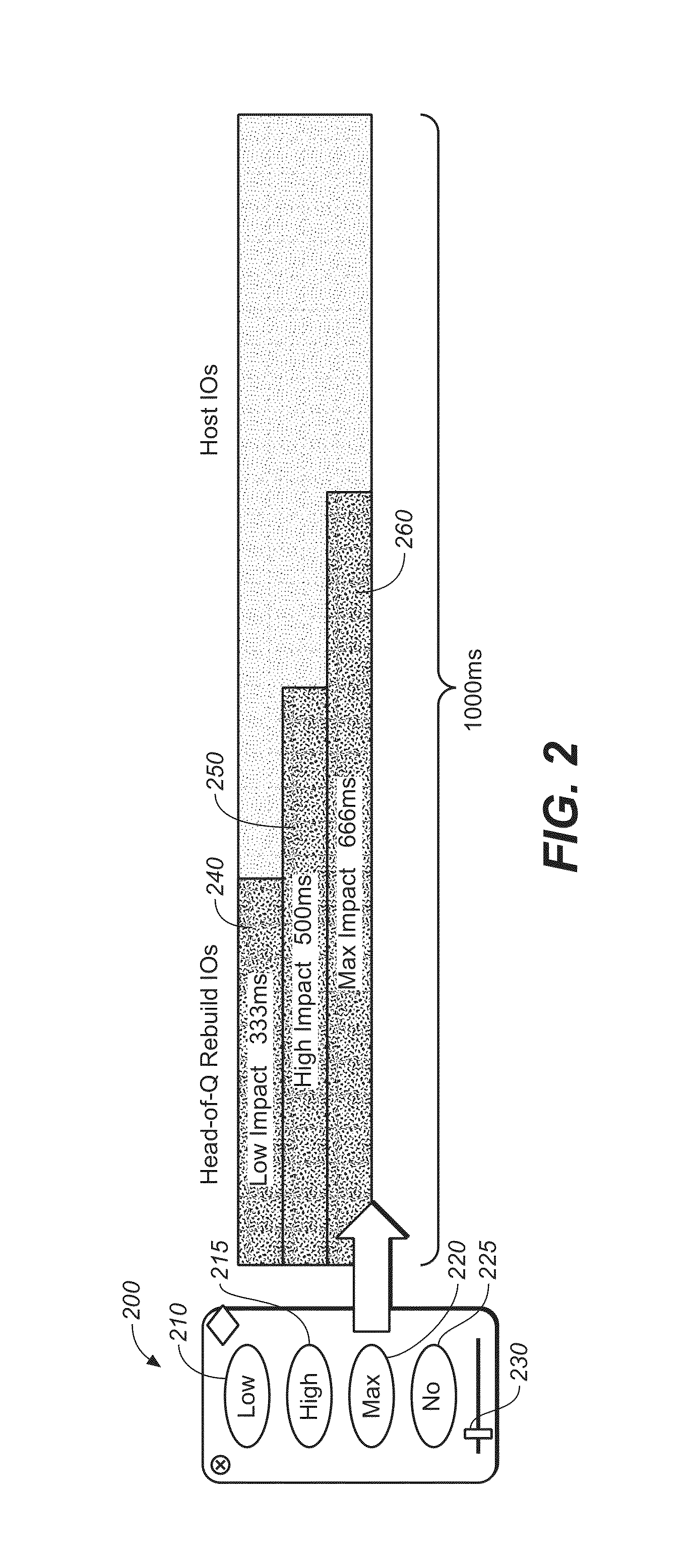

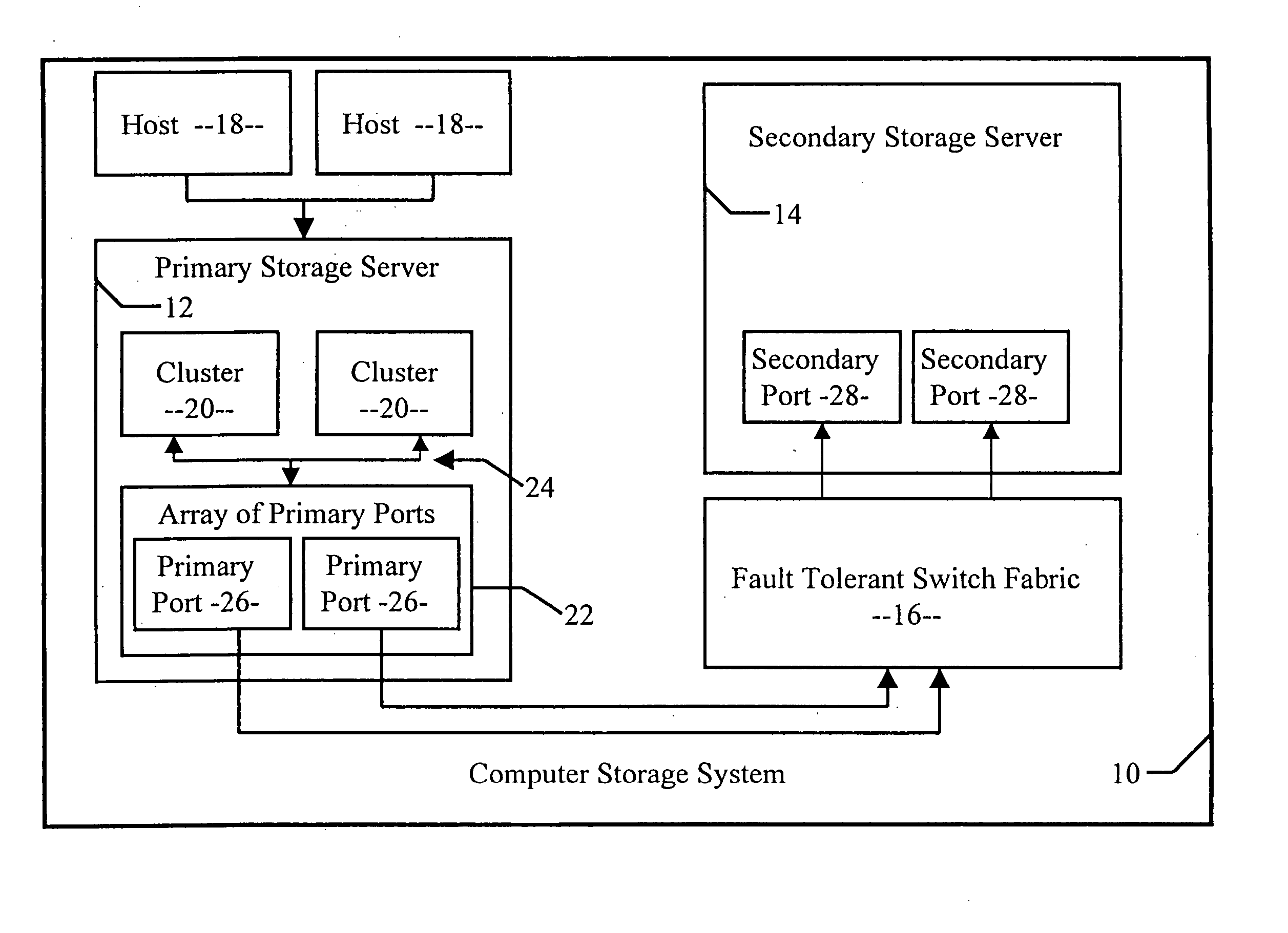

System and method for improved rebuild in RAID

Active | US8751861B2 | Extension of time | Increase delay | Memory loss protection | Redundant hardware error correction | RAID | Heuristic function

Owner:AVAGO TECH INT SALES PTE LTD

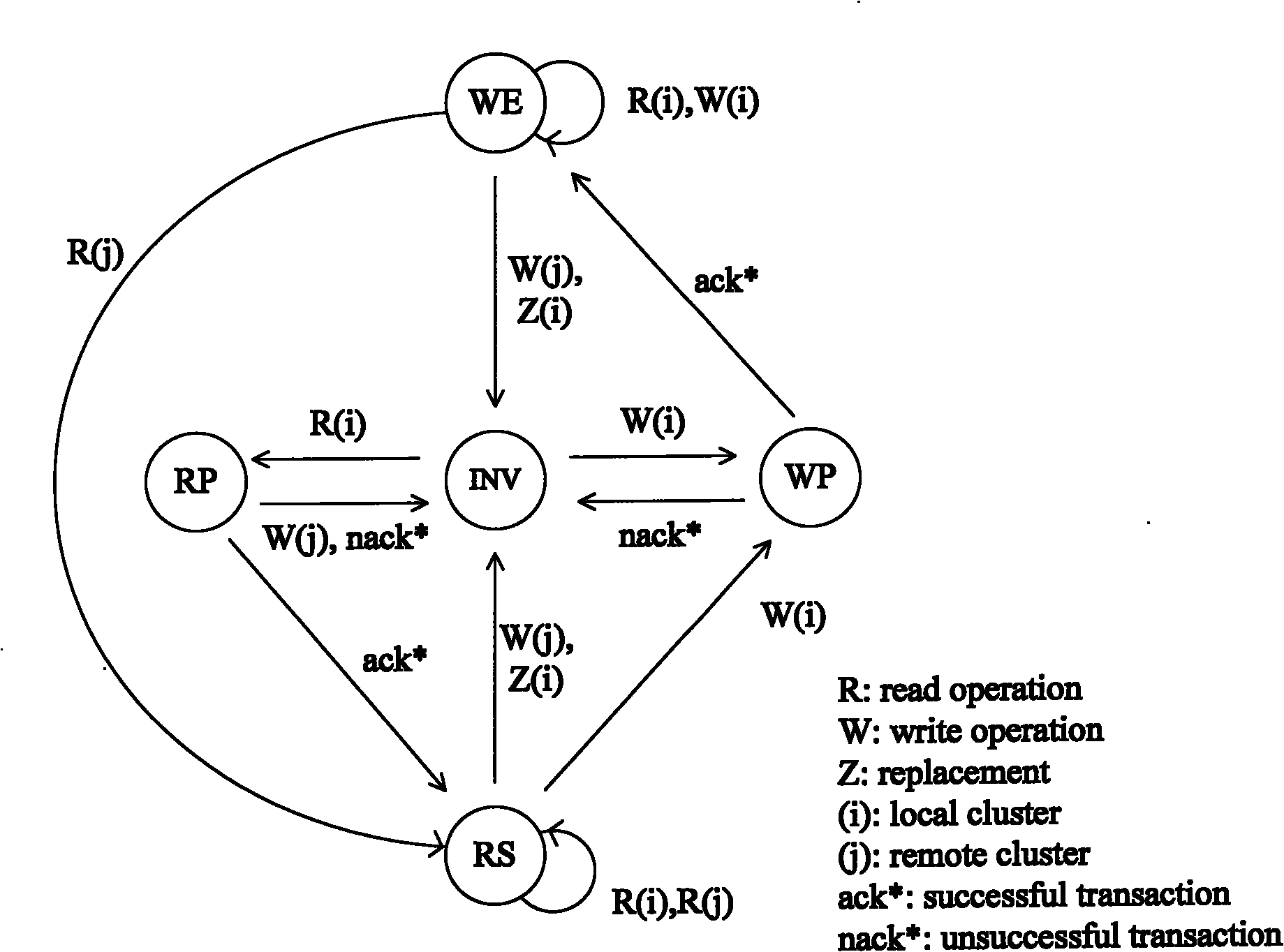

Method of adapting an optical network to provide lightpaths to dynamically assigned higher priority traffic

Inactive | US7499468B2 | Maintain fairness | Avoid hunger | Wavelength-division multiplex systems | Time-division multiplex | Traffic capacity | Telecommunications network

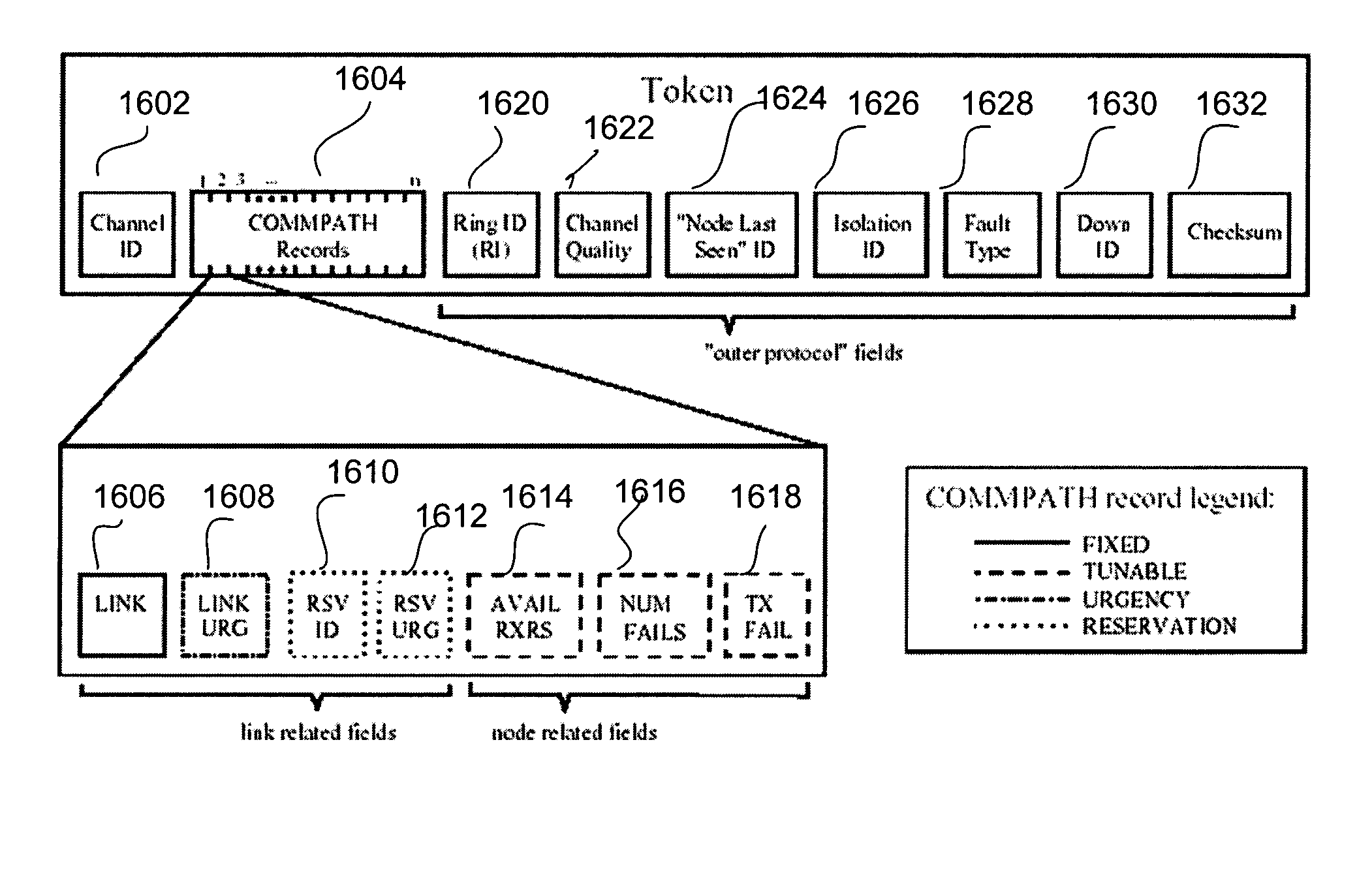

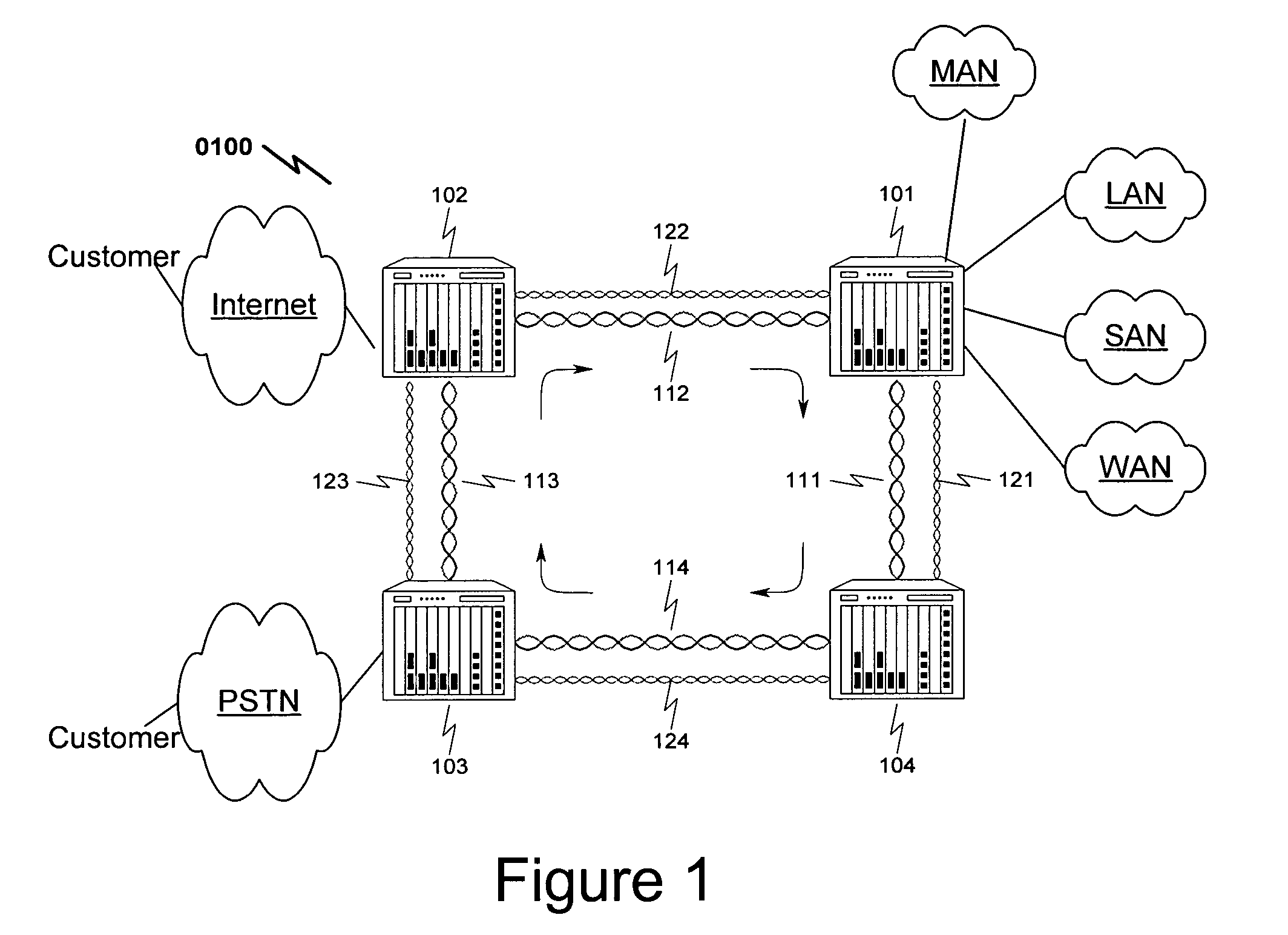

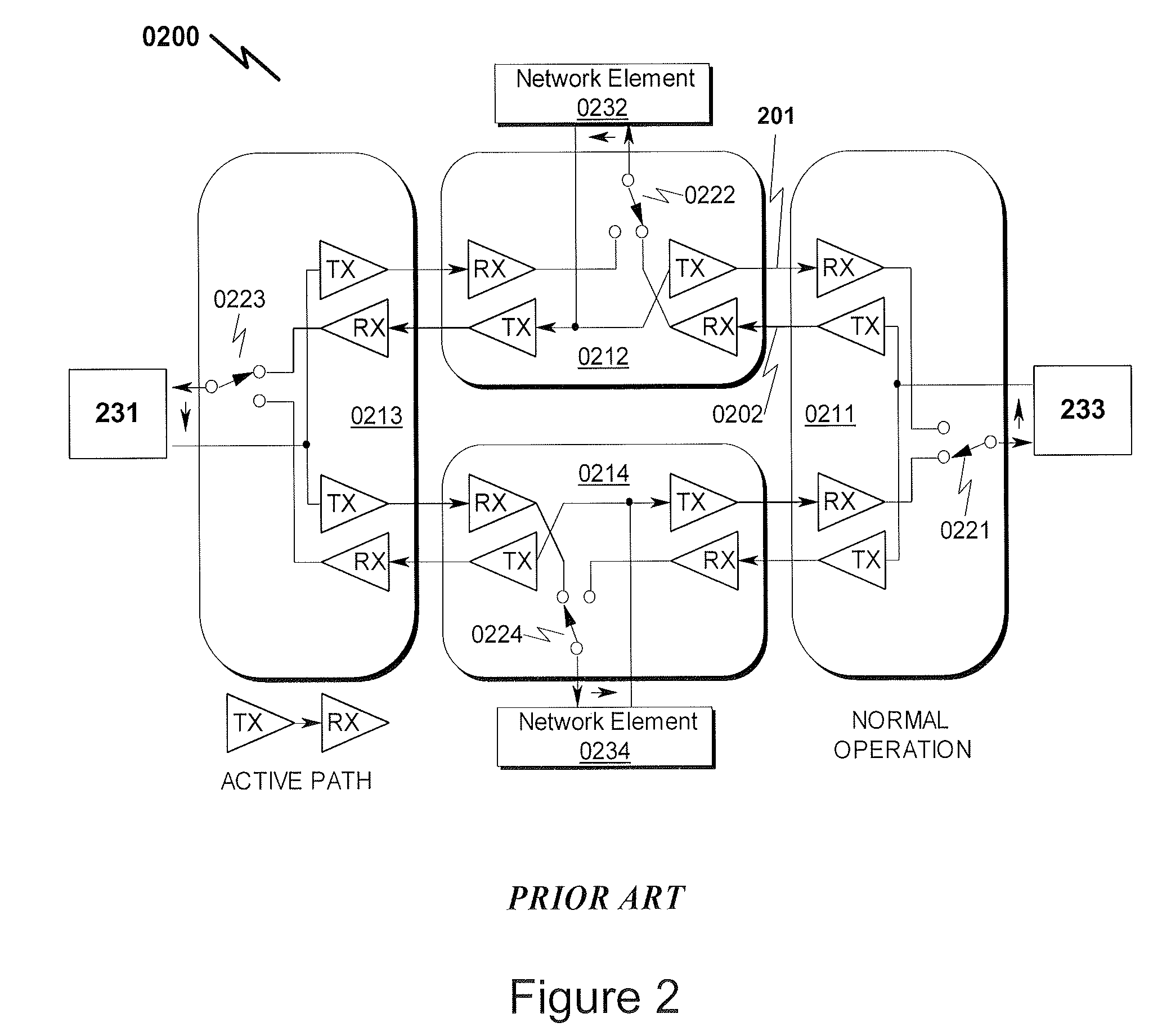

A system and method for dynamically establishing lightpaths in an optical telecommunications network. The system implements tokens which are used to advertise the availability of receivers downstream. The tokens notify a source when a transmission fails. The tokens also include lightpath reservations and indicate priority of reservations. The innovative system preferably comprises a ring topology with chords that connect non-contiguous nodes of the ring.

Owner:MONTGOMERY JR CHARLES DONALD

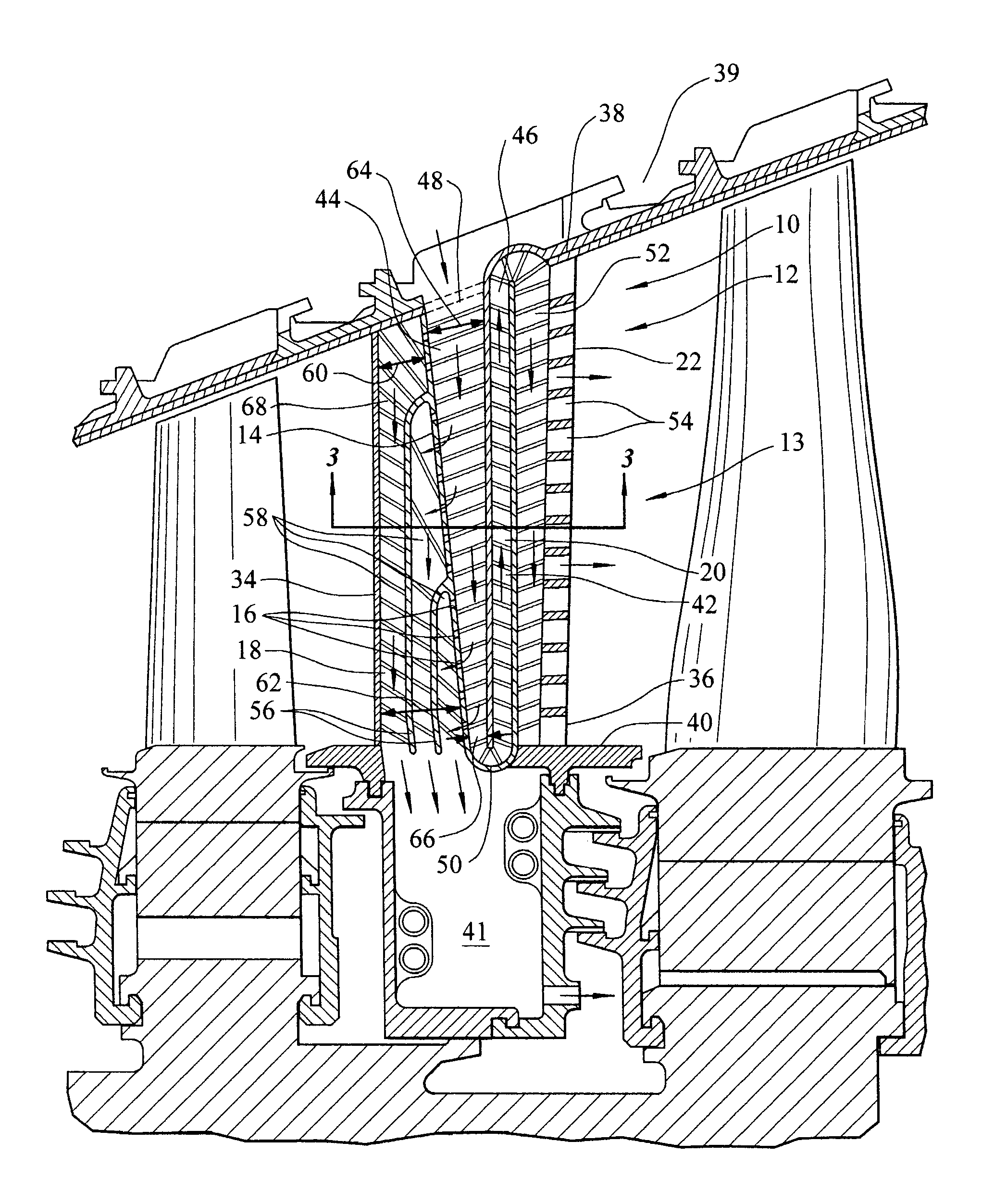

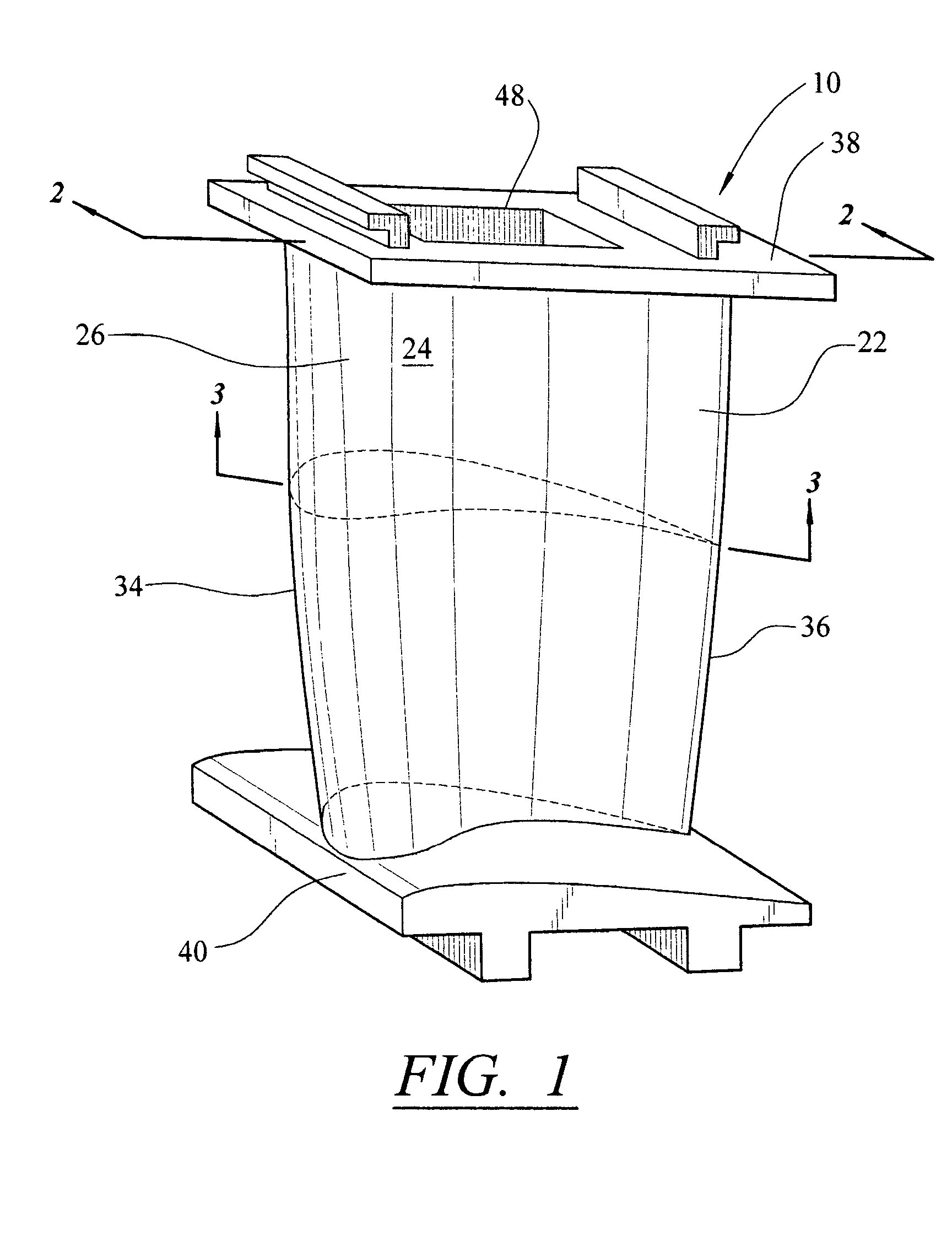

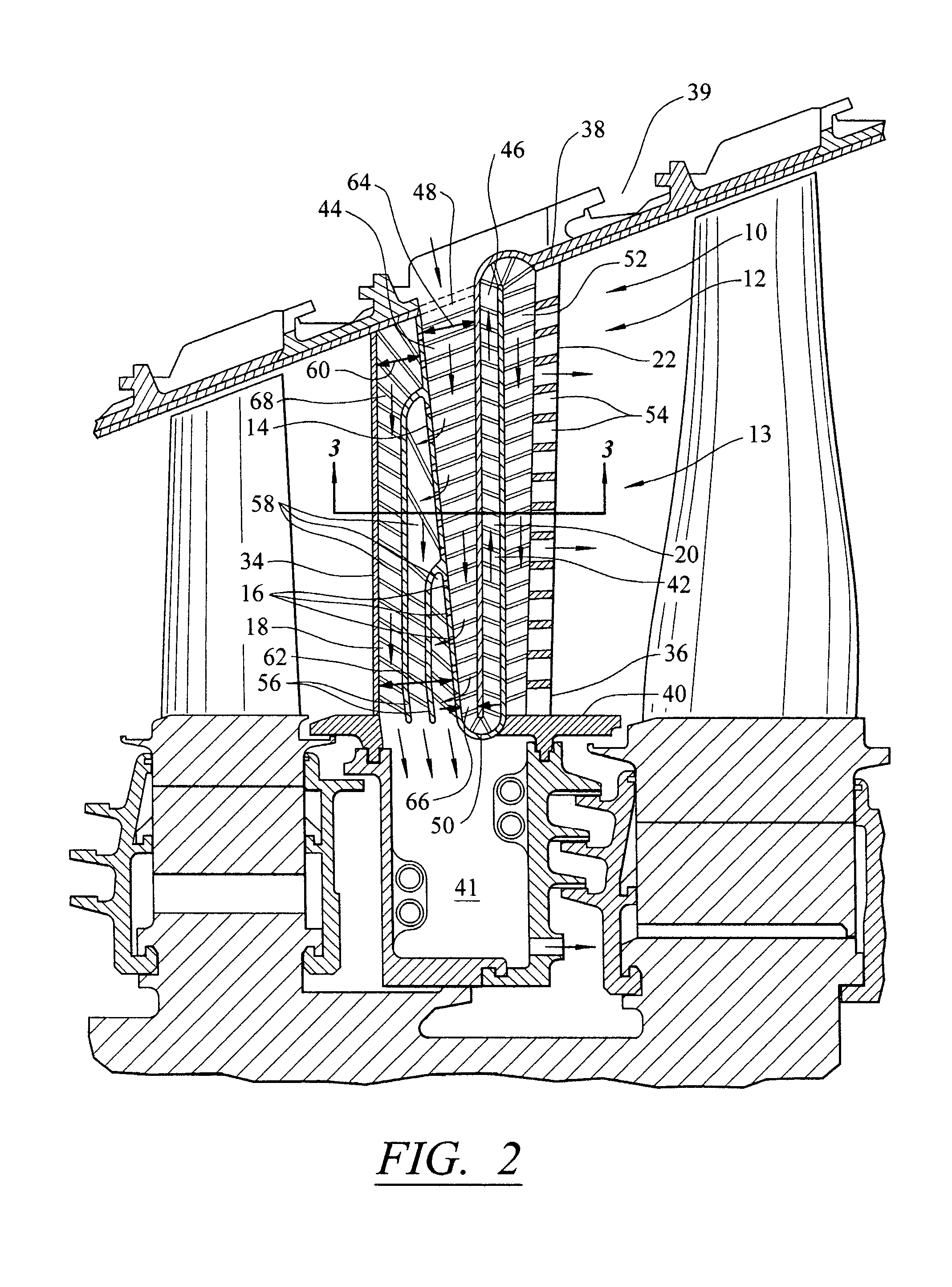

Gas turbine vane with integral cooling flow control system

Active | US7090461B2 | Maximize effectiveness | Minimizing flow separation | Pump components | Engine functions | Leading edge | Control system

A turbine vane usable in a turbine engine and having at least one cooling system. The cooling system includes a leading edge cavity and a trailing edge cavity. The cavities may be separated with a metering rib having one or more metering orifices for regulating flow of cooling fluids to a manifold cooling system and to trailing edge exhaust orifices. In at least one embodiment, the trailing edge cavity may be a serpentine cooling pathway and the leading edge cavity may include a plurality of leading edge cooling paths.

Owner:SIEMENS ENERGY INC

Method and system for sharing over-allocated bandwidth between different classes of service in a wireless network

Inactive | US20050286559A1 | Reduce and eliminate and disadvantage | Reduce and eliminate problem | Error prevention | Network traffic/resource management | Class of service | Distributed computing

A method and system for sharing over-allocated bandwidth between different classes of service in a wireless network. Traffic is transmitted for a first service class in excess of bandwidth allocated to the first service class using unused bandwidth allocated to a second class. After transmitting traffic for the first service class in excess of bandwidth allocated to the first service class using unused bandwidth allocated to a second class, traffic for a third service class is transmitted in unused bandwidth remaining in the second service class.

Owner:CISCO TECH INC

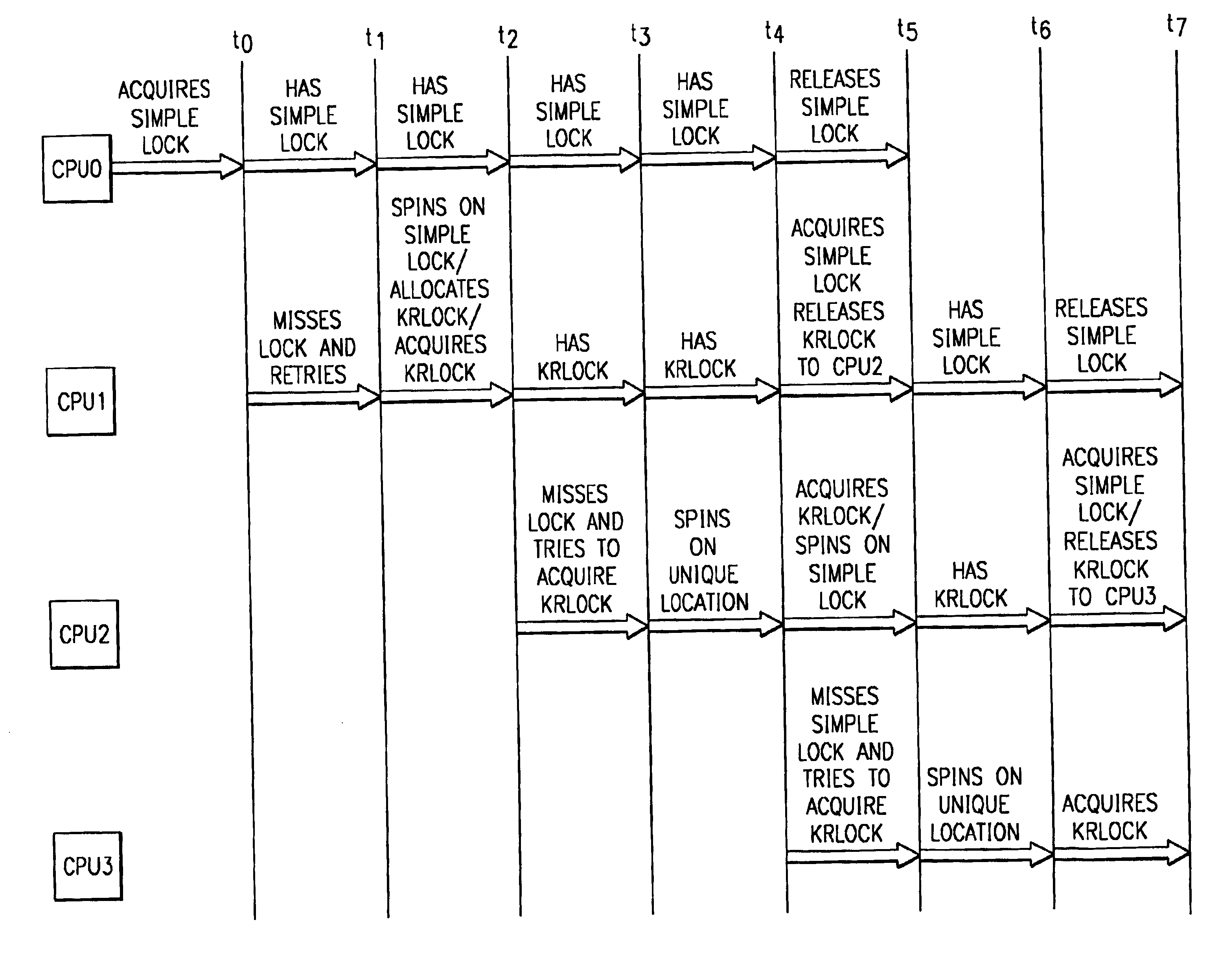

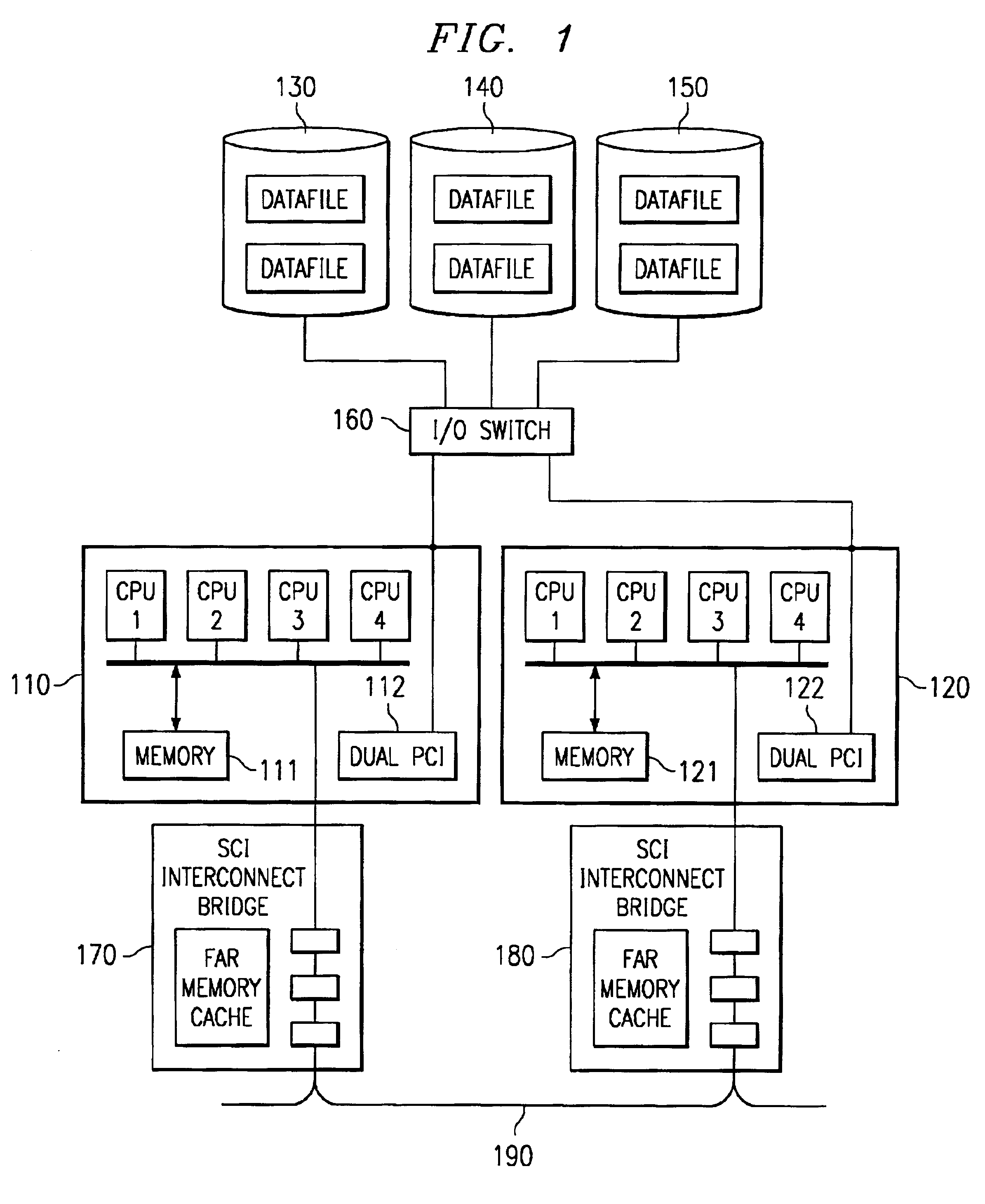

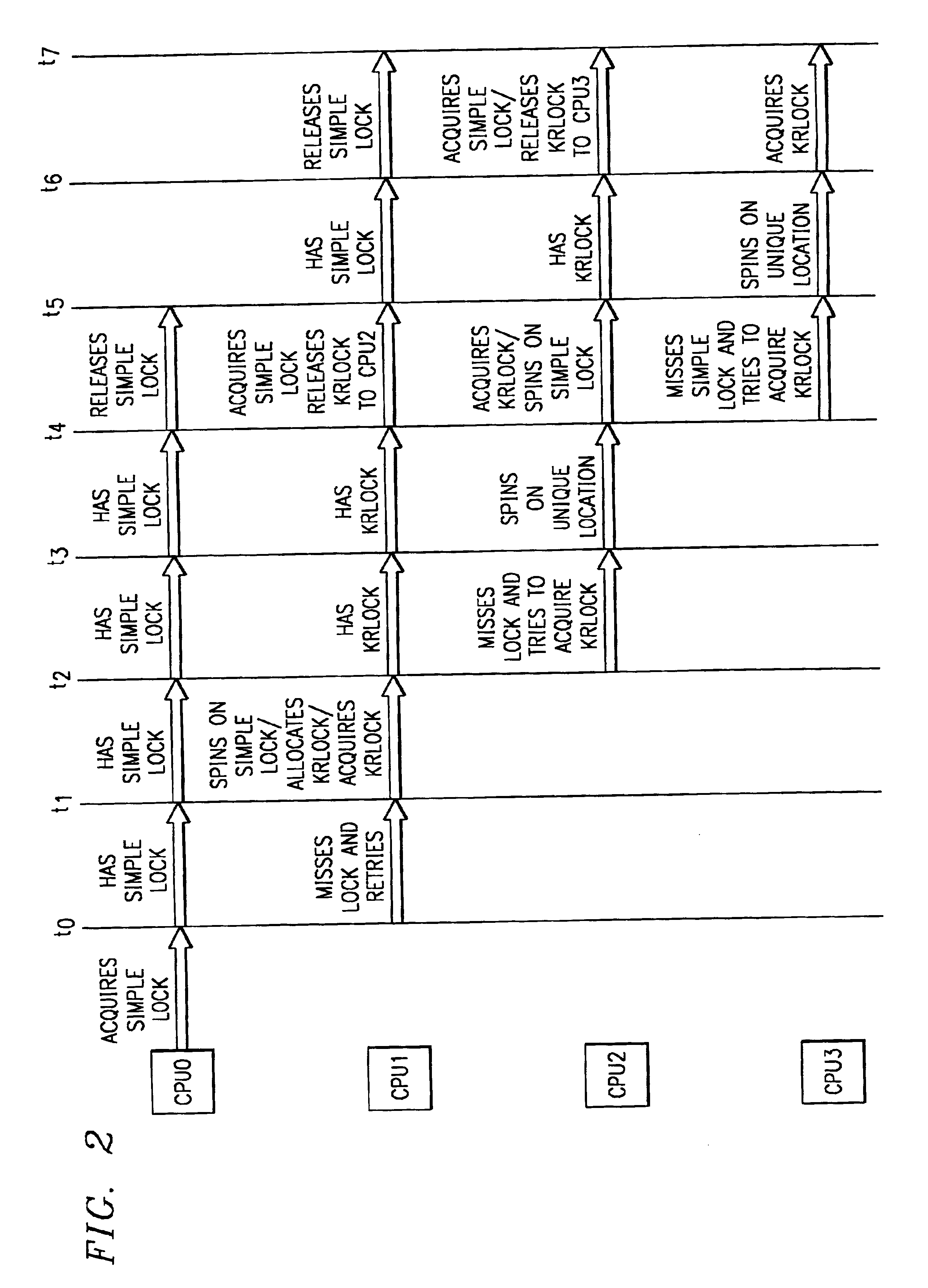

Apparatus, method and computer program product for converting simple locks in a multiprocessor system

Inactive | US6842809B2 | Avoid hunger | Extra overhead is avoided | Unauthorized memory use protection | Multiprogramming arrangements | Multi processor | Parallel computing

An apparatus, method and computer program product for minimizing the negative effects that occur when simple locks are highly contended among processors which may or may not have identical latencies to the memory that represents a given lock, are provided. The apparatus, method and computer program product minimize these effects by converting simple locks such that they act as standard simple locks when there is no contention and act as krlocks when there is contention for a lock. In this way, the number of processors spinning on a lock is limited to a single processor, thereby reducing the number of processors that are in a wait state and not performing any useful work.

Owner:IBM CORP

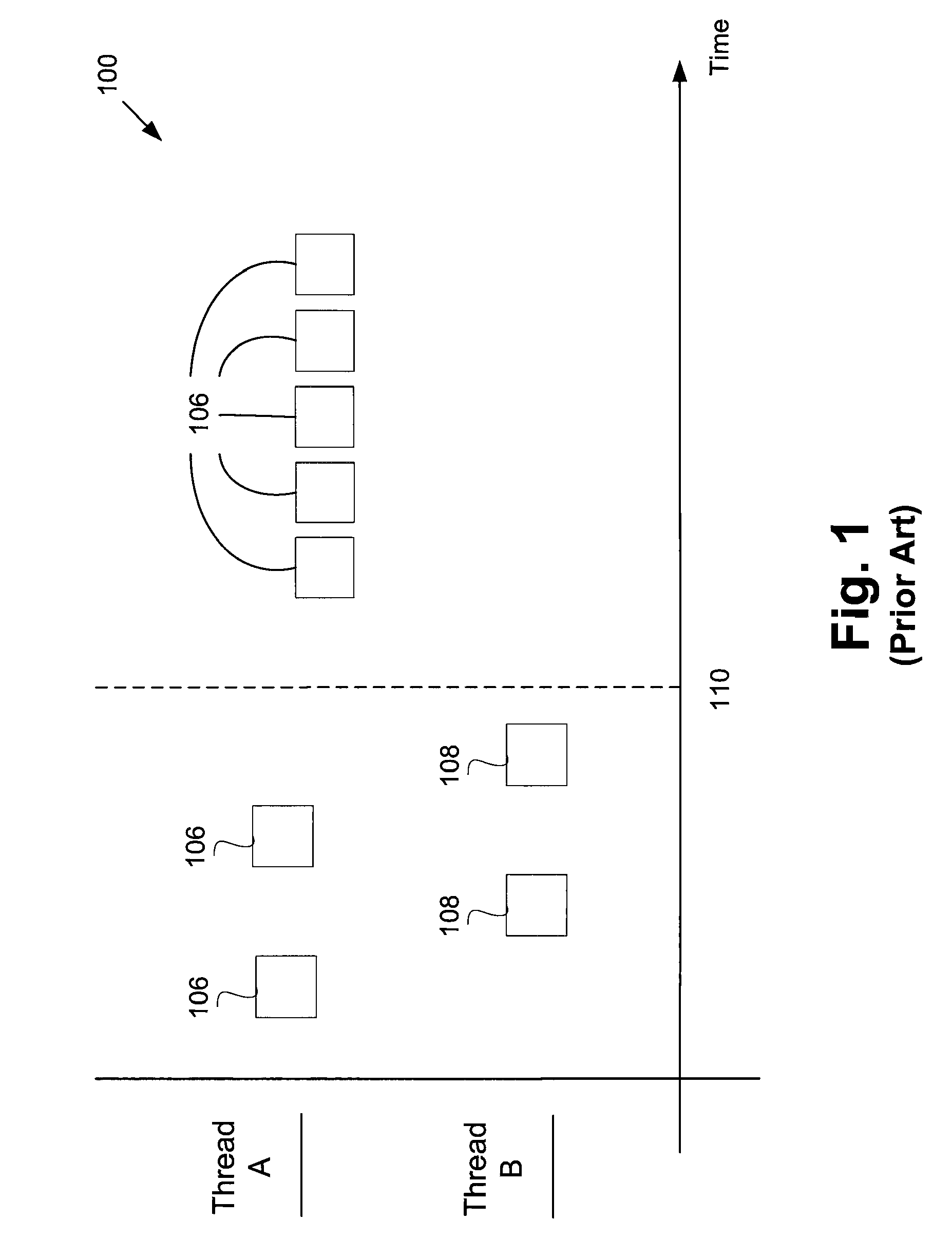

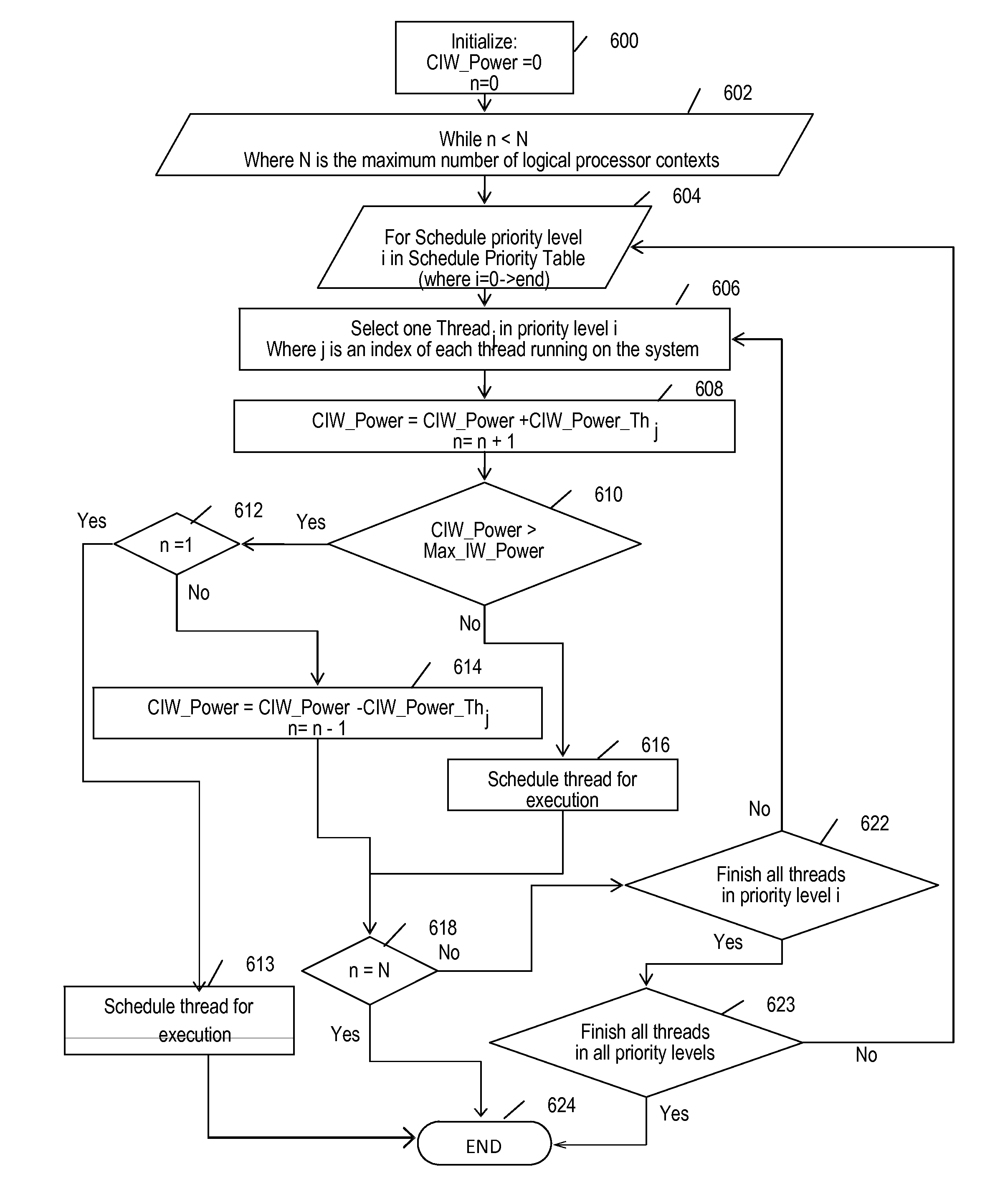

Scheduling threads

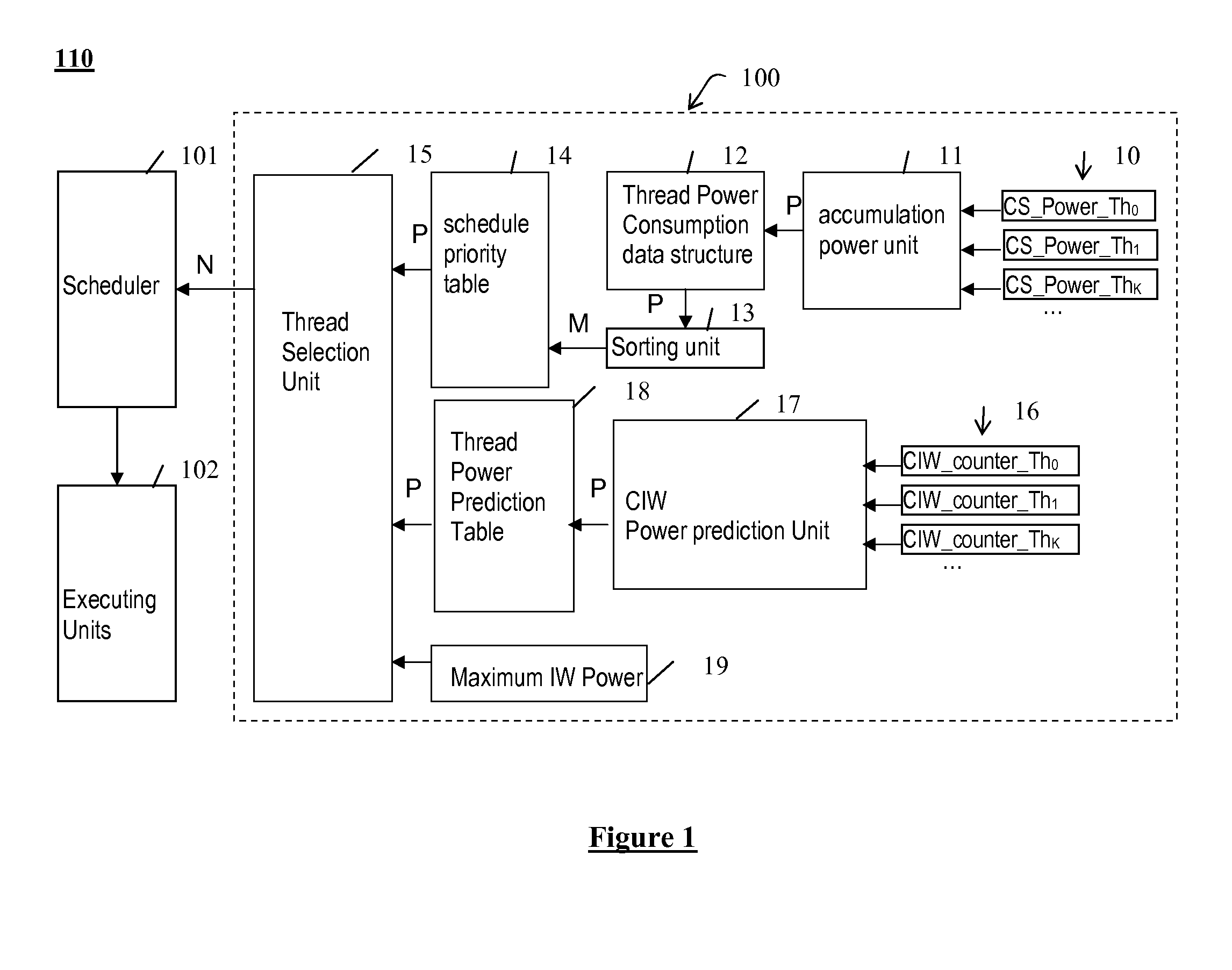

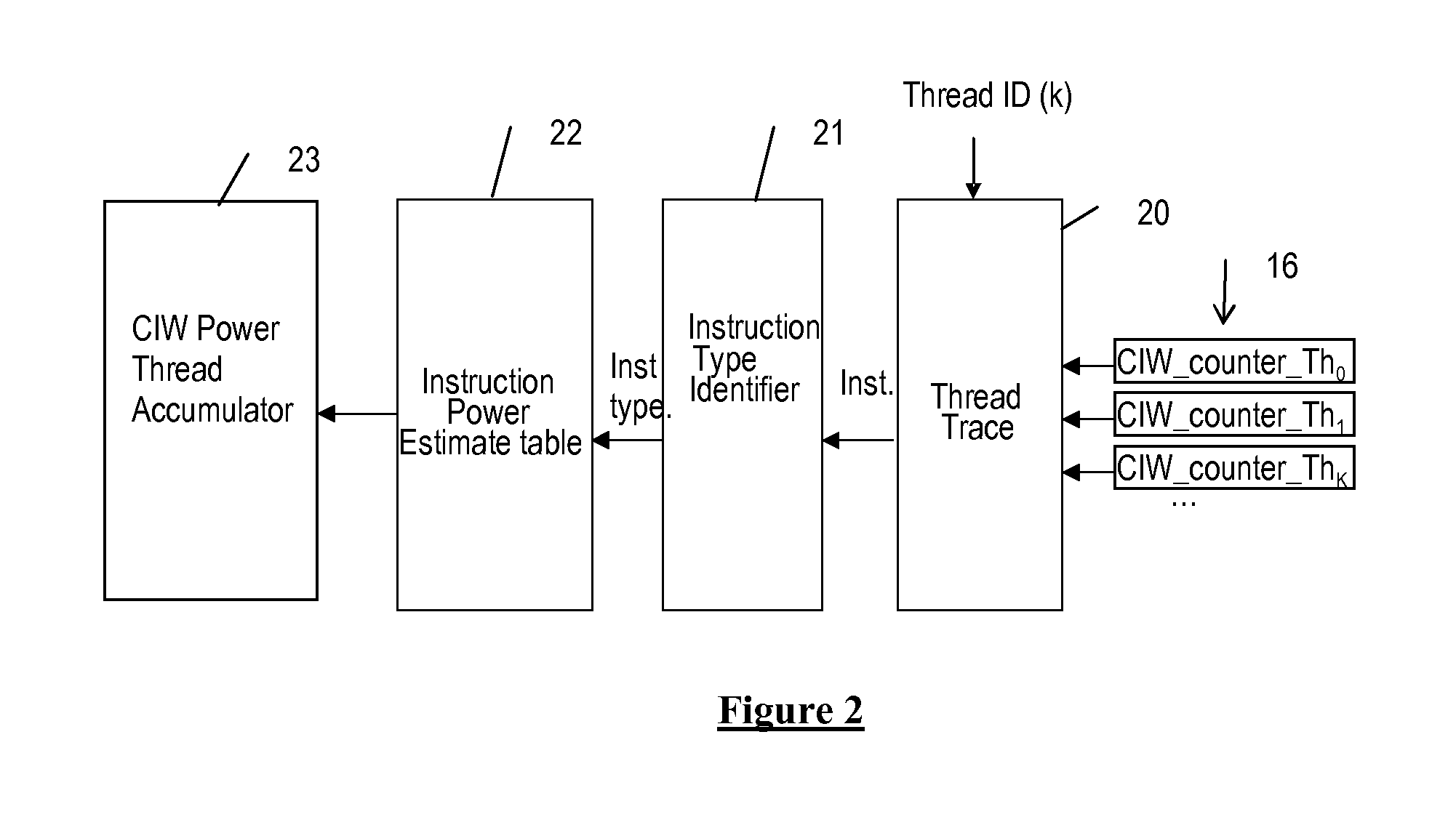

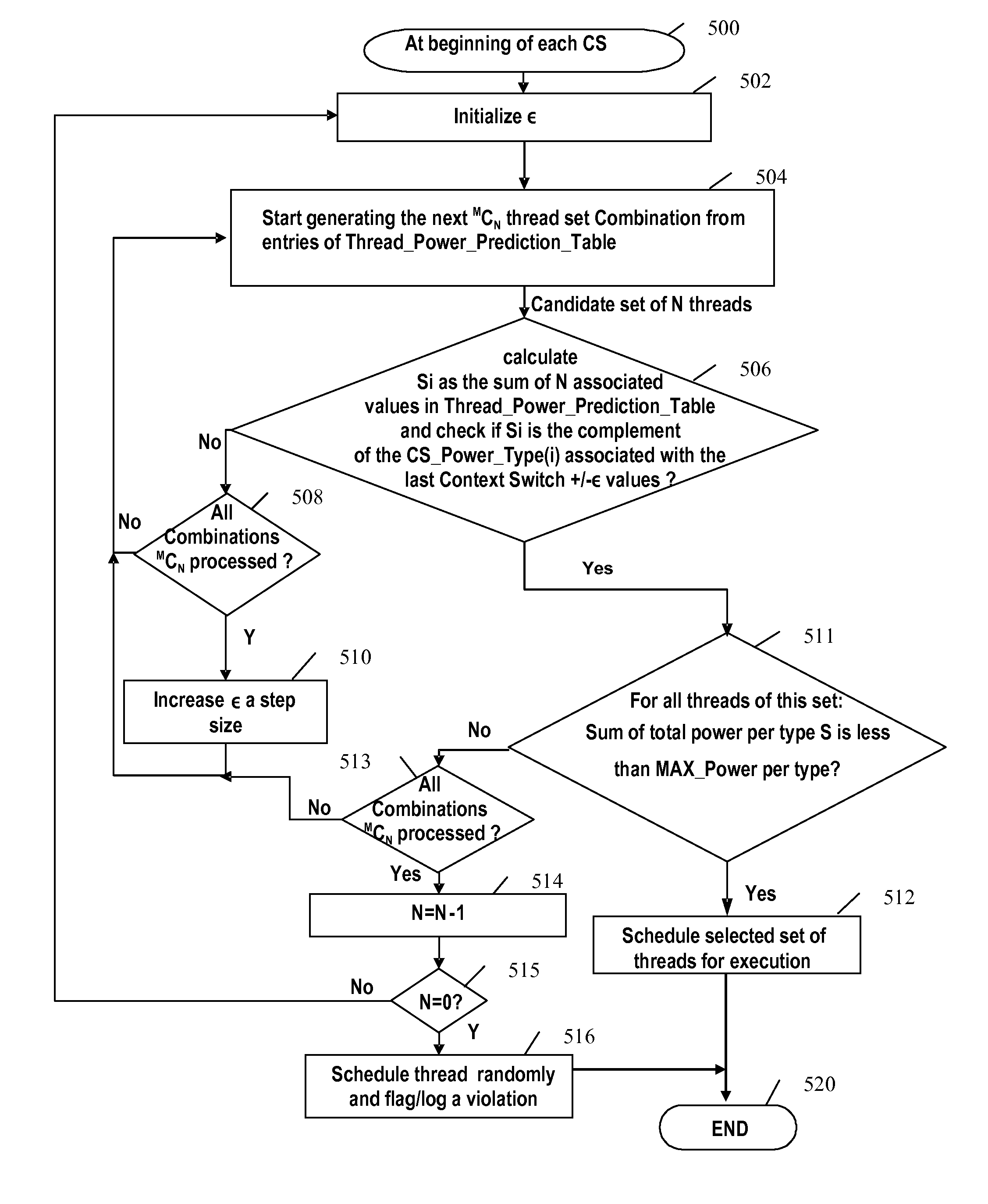

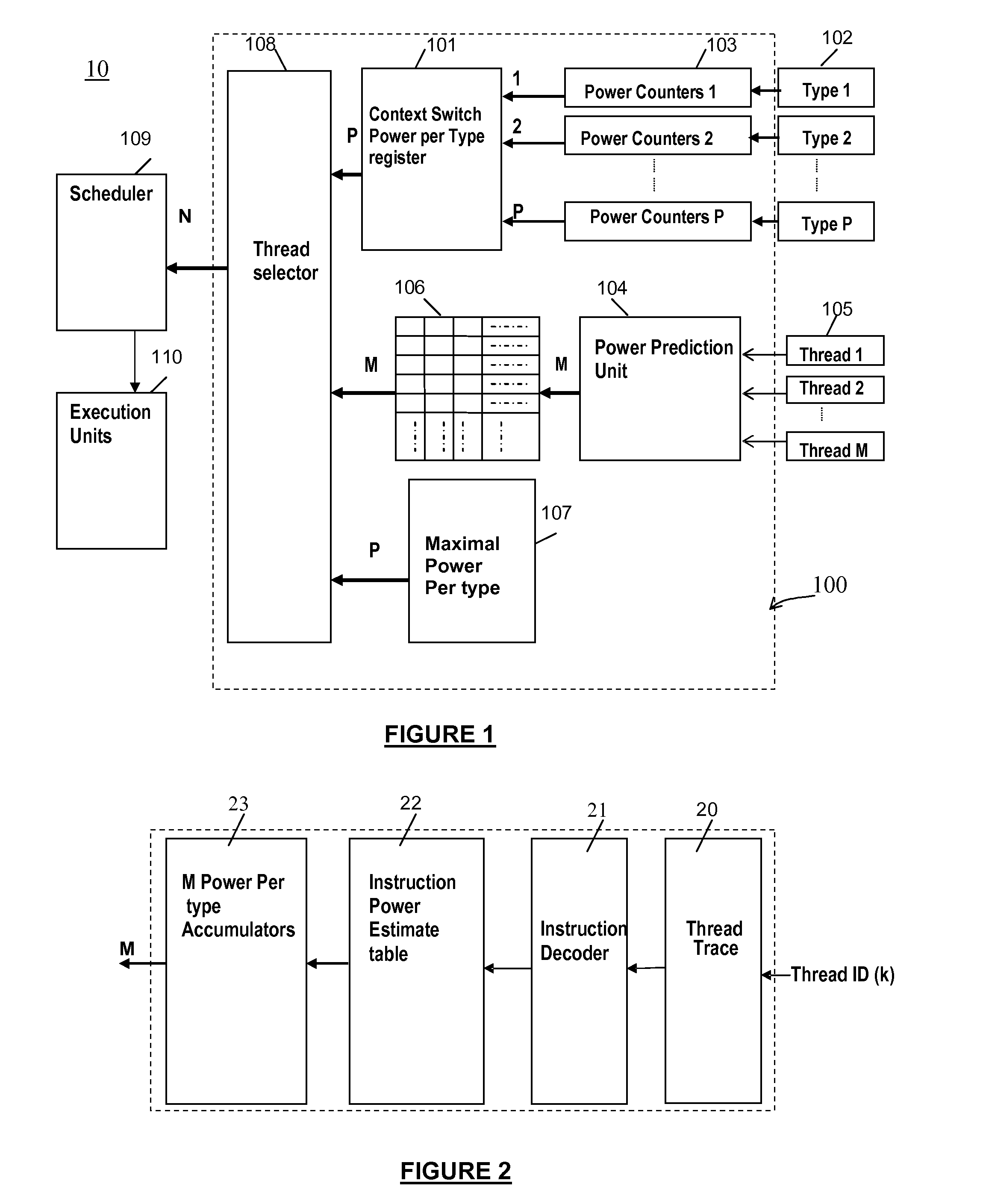

Inactive | US20120192195A1 | Easy to implement | Avoiding thread starvation | Energy efficient ICT | Multiprogramming arrangements | Instruction window | Multi-core processor

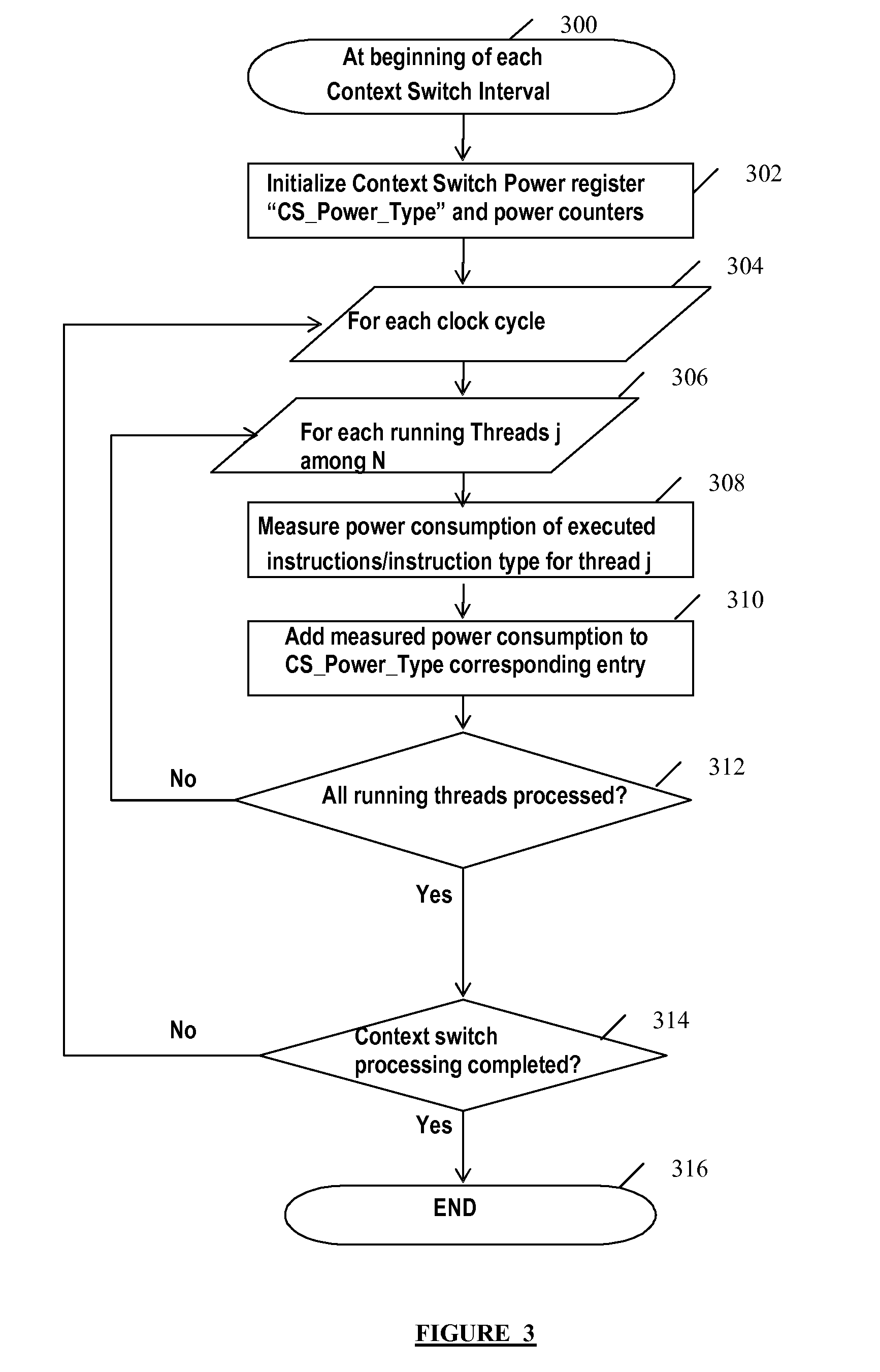

Scheduling threads in a multi-threaded / multi-core processor having a given instruction window, and scheduling a predefined number N of threads among a set of M active threads in each context switch interval are provided. The actual power consumption of each running thread during a given context switch interval is determined, and a predefined priority level is associated with each thread among the active threads based on the actual power consumption determined for the threads. The power consumption expected for each active thread during the next context switch interval in the current instruction window (CIW_Power_Th) is predicted, and a set of threads to be scheduled among the active threads are selected from the priority level associated with each active thread and the power consumption predicted for each active thread in the current instruction window.

Owner:IBM CORP

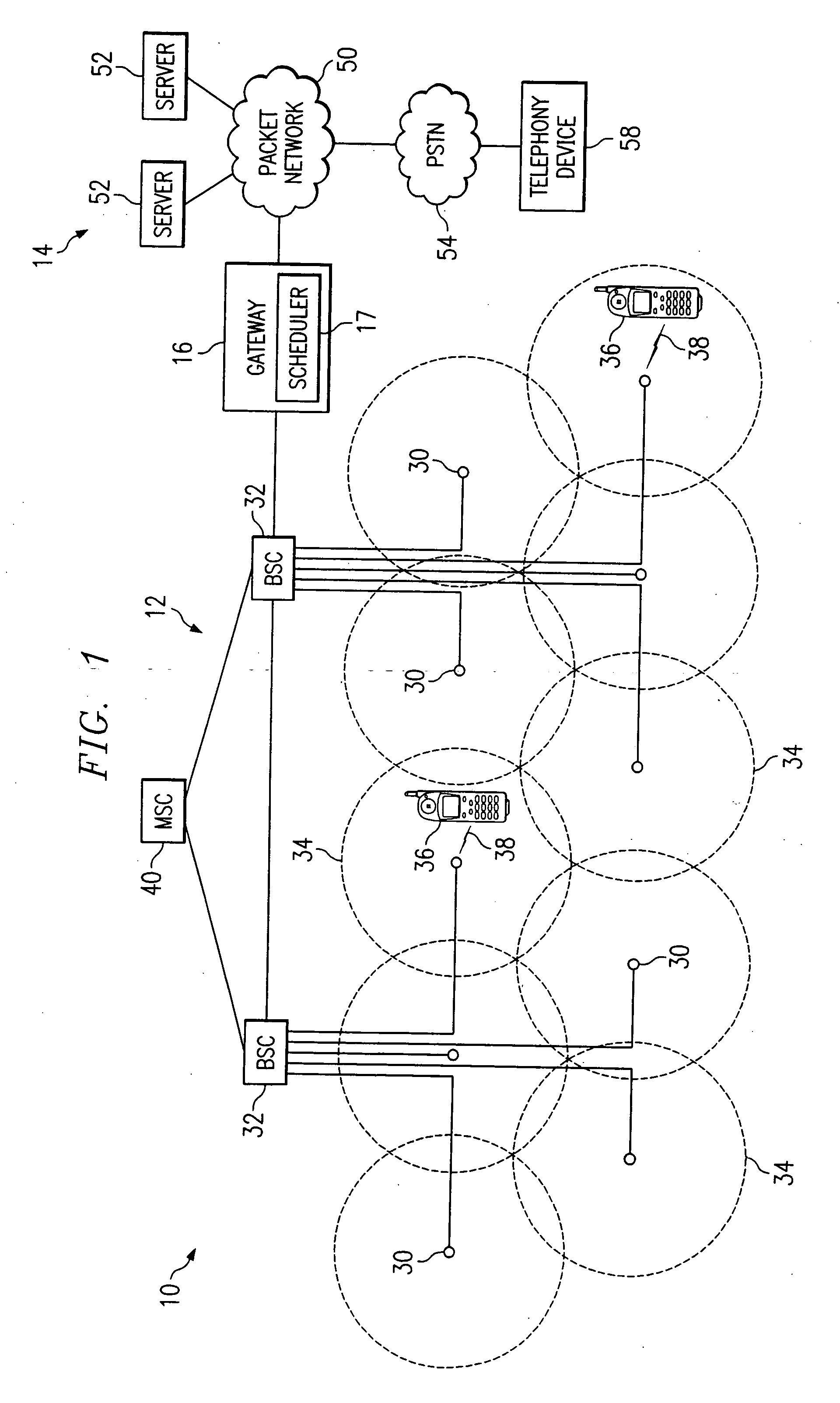

System and method for upgrading service class of a connection in a wireless network

Active | US7155215B1 | Reduce and eliminate and disadvantage | Reduce and eliminate problem | Error prevention | Frequency-division multiplex details | Computer network | Bandwidth availability

A system and method for upgrading service class of a connection in a wireless network includes identifying a congested CoS in a sector of a wireless network. Bandwidth availability in the sector is determined at an enhanced CoS in relation to the congested CoS. A communications session is selected in the congested CoS for upgrading. The communications session is upgraded to the enhanced CoS.

Owner:CISCO TECH INC

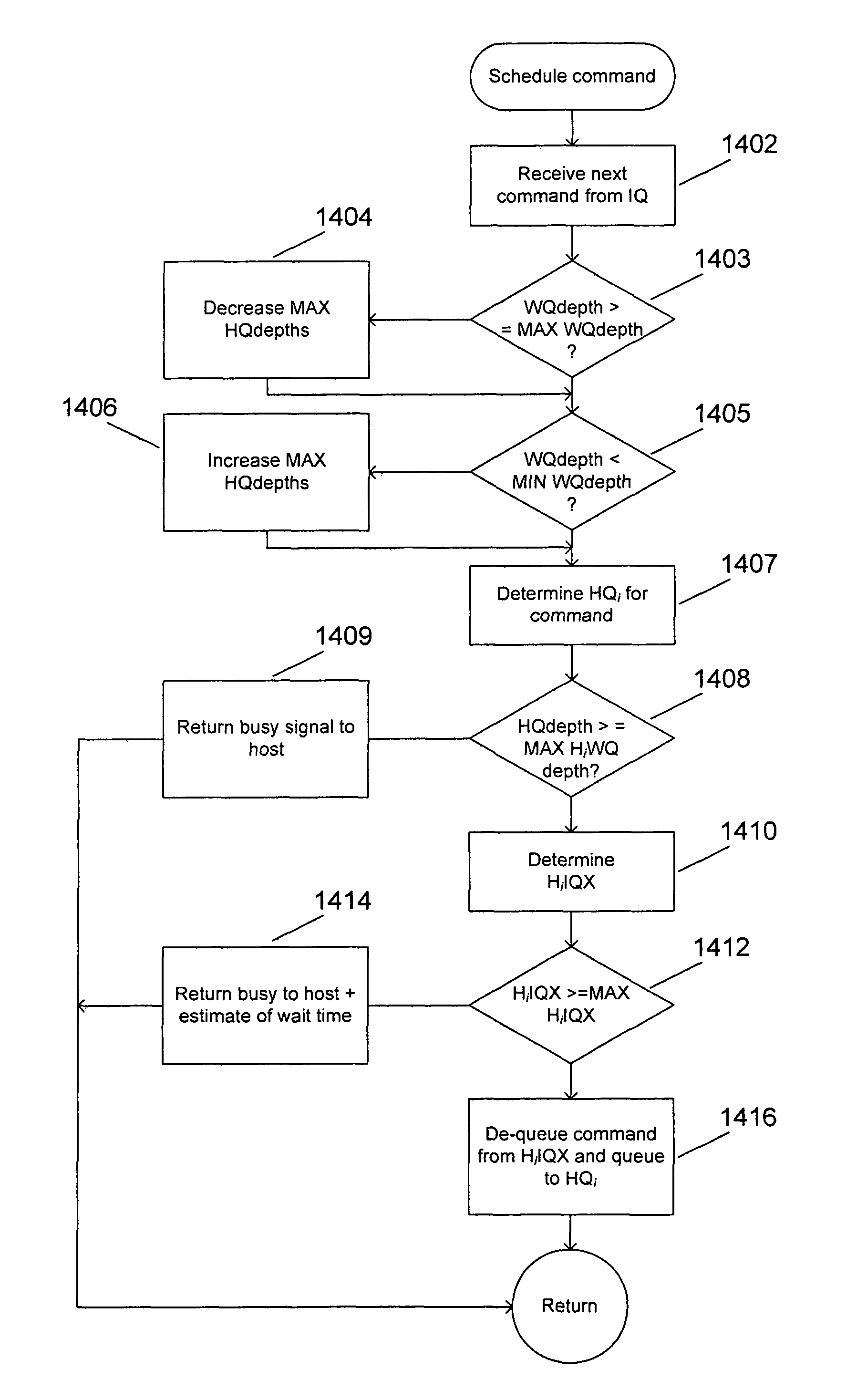

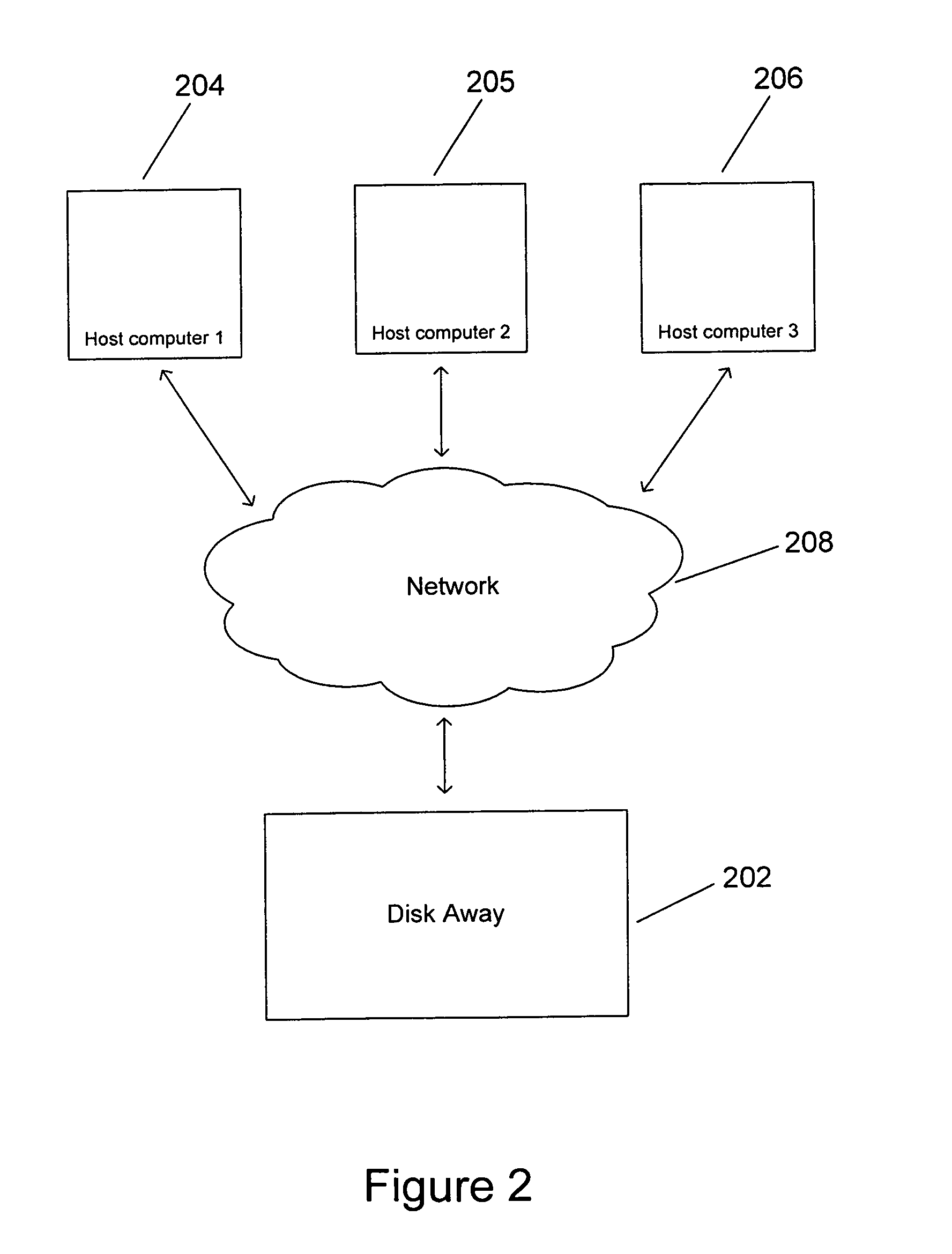

Method and system for achieving fair command processing in storage systems that implement command-associated priority queuing

Active | US7797468B2 | Avoid hunger | Risk minimization | Transmission | Memory systems | Priority inversion | Computer access

In certain, currently available data-storage systems, incoming commands from remote host computers are subject to several levels of command-queue-depth-fairness-related throttles to ensure that all host computers accessing the data-storage systems receive a reasonable fraction of data-storage-system command-processing bandwidth to avoid starvation of one or more host computers. Recently, certain host-computer-to-data-storage-system communication protocols have been enhanced to provide for association of priorities with commands. However, these new command-associated priorities may lead to starvation of priority levels and to a risk of deadlock due to priority-level starvation and priority inversion. In various embodiments of the present invention, at least one additional level of command-queue-depth-fairness-related throttling is introduced in order to avoid starvation of one or more priority levels, thereby eliminating or minimizing the risk of priority-level starvation and priority-related deadlock.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

Distributed least choice first arbiter

Inactive | US7006501B1 | Increase the number of | Improve resource usage | Time-division multiplex | Data switching by path configuration | Resource based | Resource allocation

A distributed arbiter prioritizes requests for resources based on the number of requests made by each requester. Each resource gives the highest priority to servicing requests made by the requester that has made the fewest number of requests. That is, the requester with the fewest requests (least number of choices) is chosen first. Resources may be scheduled sequentially or in parallel. If a requester receives multiple grants from resources, the requester may select a grant based on resource priority, which is inversely related to the number of requests received by a granting resource. In order to prevent starvation, a round robin scheme may be used to allocate a resource to a requester, prior to issuing grants based on requester priority.

Owner:ORACLE INT CORP
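
The "least choice first" rule in the abstract above can be sketched as follows. This is a simplified, sequential version under stated assumptions: the function name `least_choice_first` and the dictionary layout are hypothetical, resources are visited in sorted order, ties are broken by requester name, and the round robin step mentioned in the abstract is left out.

```python
def least_choice_first(requests):
    """Grant each resource to the requester with the fewest requests.

    requests: dict mapping requester -> set of requested resources.
    Returns a dict mapping resource -> granted requester.
    Hypothetical sketch; not the patent's full distributed scheme.
    """
    grants = {}
    matched = set()
    resources = sorted({r for wanted in requests.values() for r in wanted})
    for resource in resources:
        # Only requesters that asked for this resource and hold no grant yet.
        candidates = [q for q, wanted in requests.items()
                      if resource in wanted and q not in matched]
        if not candidates:
            continue
        # Fewest requests wins; the name breaks ties deterministically.
        winner = min(candidates, key=lambda q: (len(requests[q]), q))
        grants[resource] = winner
        matched.add(winner)
    return grants
```

For example, if `A` requests `r0` and `r1` while `B` requests only `r0`, then `B` wins `r0` because it has fewer choices, and `A` still receives `r1`. Granting `r0` to `A` first would have left `B` with nothing.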

Method of balancing work load with prioritized tasks across a multitude of communication ports

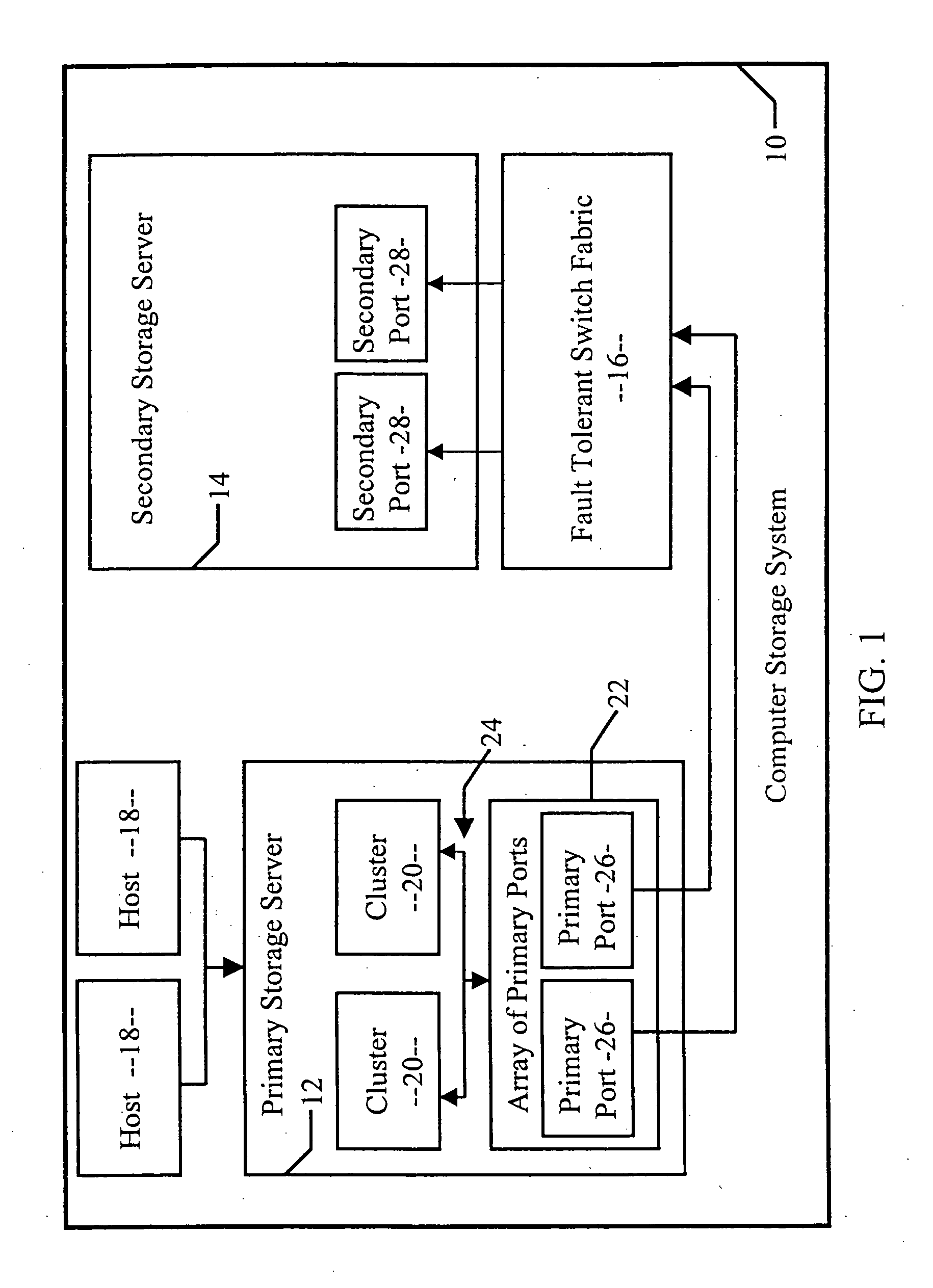

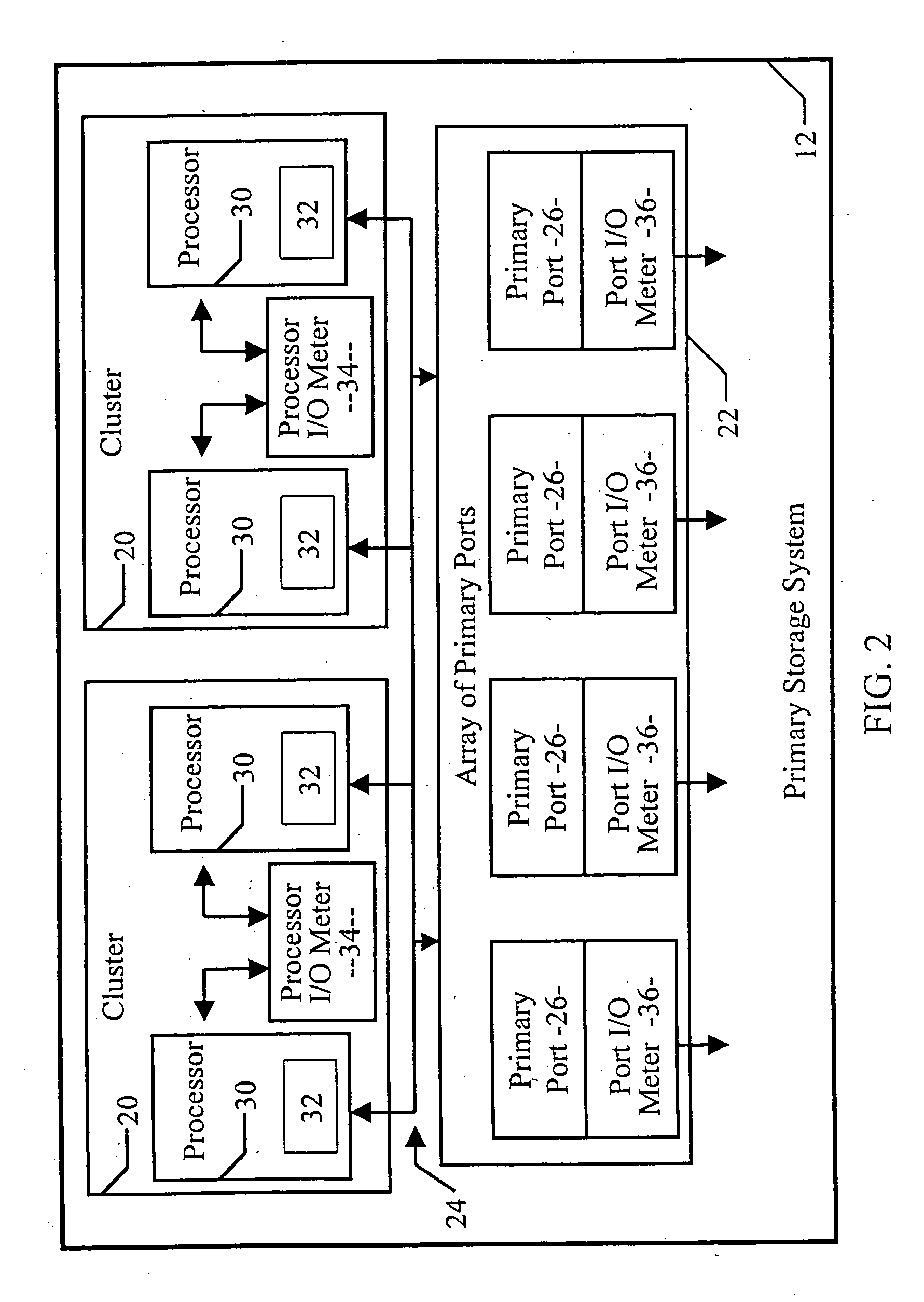

Active | US20050210321A1 | Maximize high-priority task completion | Avoid hunger | Error detection/correction | Digital computer details | Data transmission | Computer science

A processor is used to evaluate information regarding the number, size, and priority level of data transfer requests sent to a plurality of communication ports. Additional information regarding the number, size, and priority level of data requests received by the communication ports from this and other processors is evaluated as well. This information is applied to a control algorithm that, in turn, determines which of the communication ports will receive subsequent data transfer requests. The behavior of the control algorithm varies based on the current utilization rate of communication port bandwidths, the size of data transfer requests, and the priority level of these transfer requests.

Owner:IBM CORP

Scheduling threads in a processor based on instruction type power consumption

Active | US8656408B2 | Improve reliability | Avoid hunger | Energy efficient ICT | Digital data processing details | Thread scheduling | Computer science

Guiding OS thread scheduling in multi-core and / or multi-threaded microprocessors by: determining, for each thread among the active threads, the power consumed by each instruction type associated with an instruction executed by the thread during the last context switch interval; determining for each thread among the active threads, the power consumption expected for each instruction type associated with an instruction scheduled by said thread during the next context switch interval; generating at least one combination of N threads among the active threads (M), and for each generated combination determining if the combination of N threads satisfies a main condition related to the power consumption per instruction type expected for each thread of the thread combination during the next context switch interval and to the thread power consumption per instruction type determined for each thread of the thread combination during the last context switch interval; and selecting a combination of N threads.

Owner:INT BUSINESS MASCH CORP

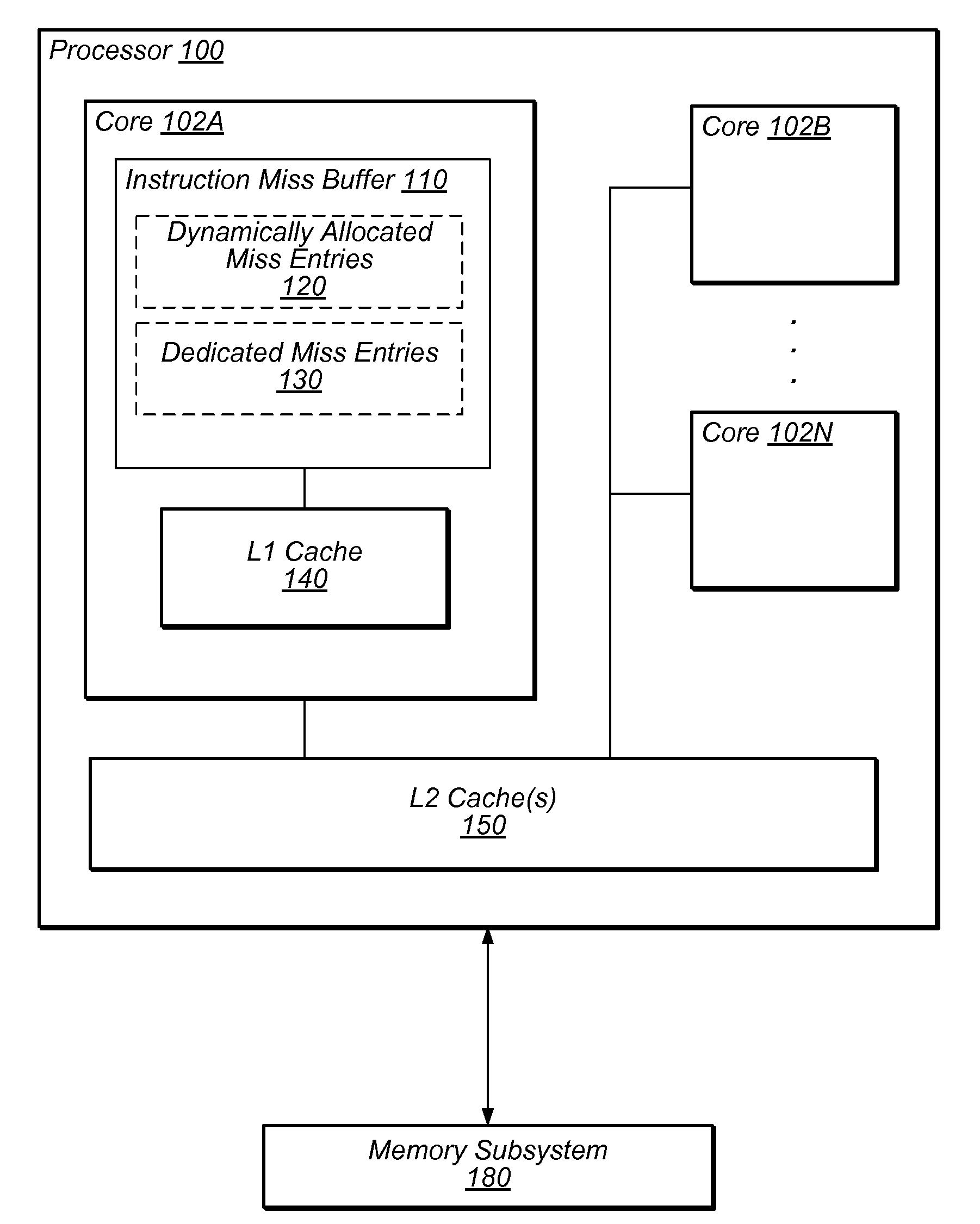

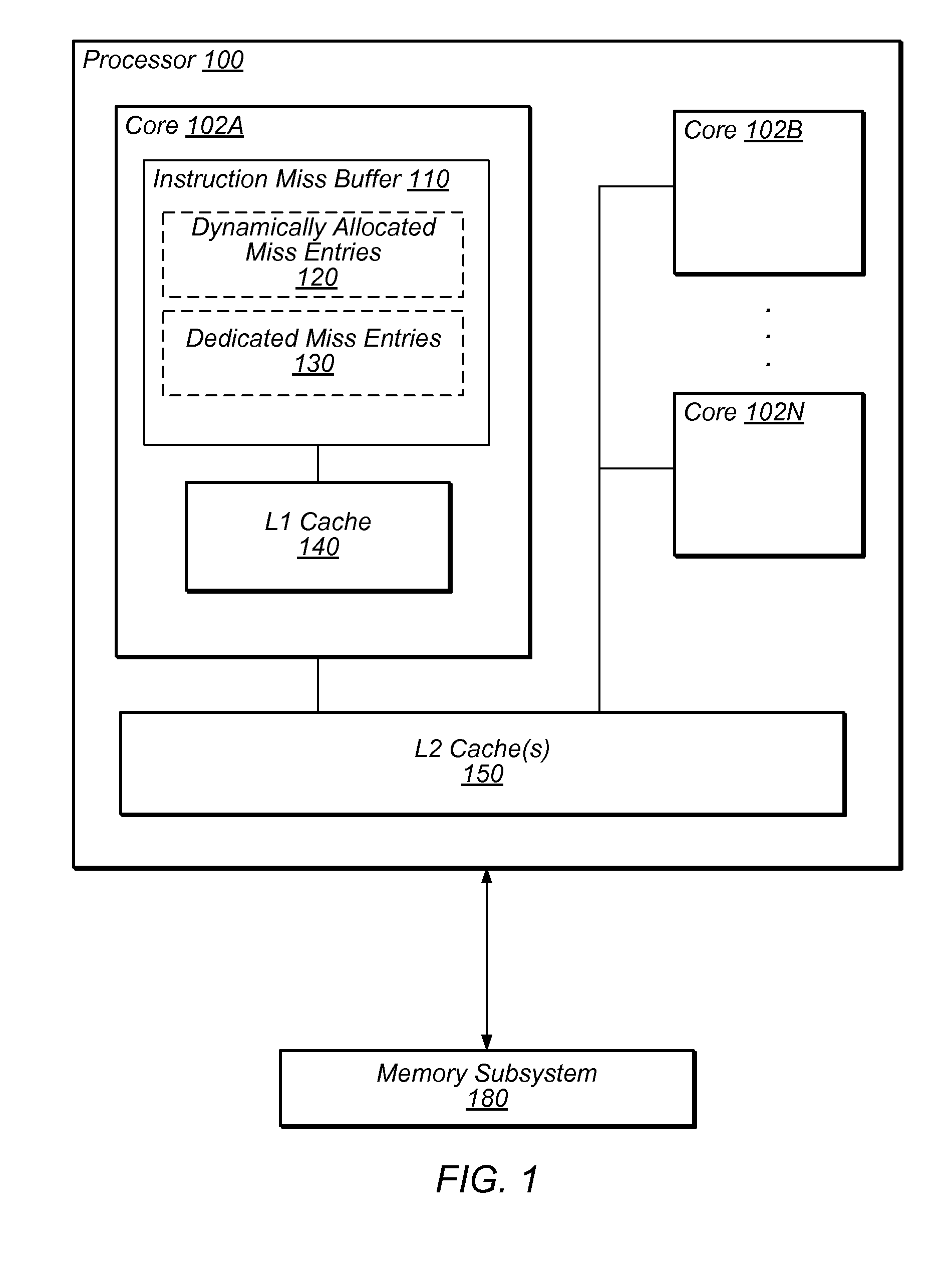

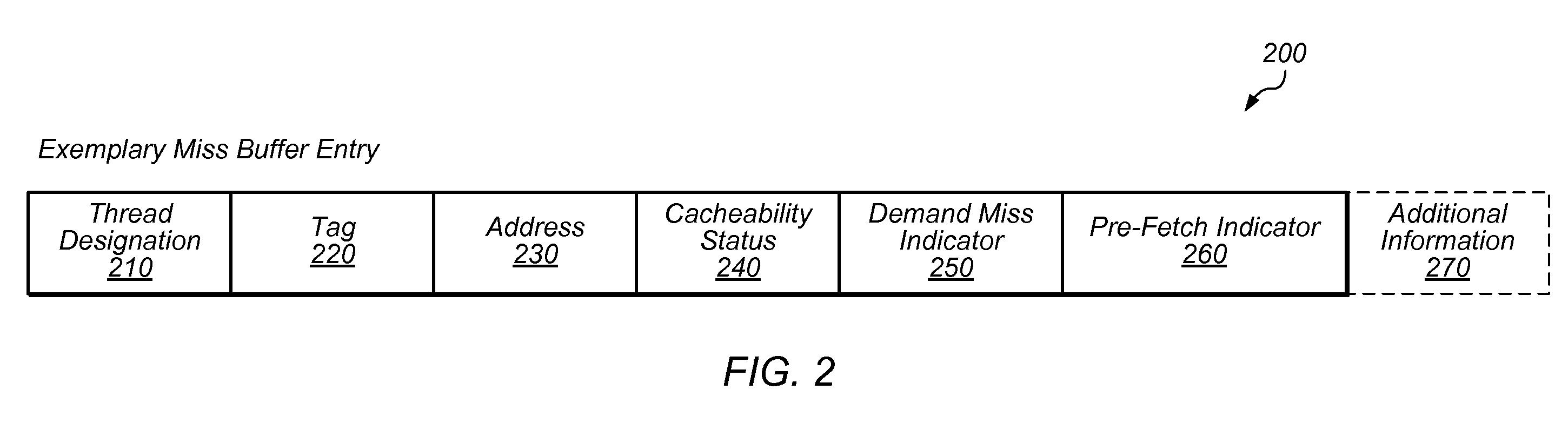

Miss buffer for a multi-threaded processor

Active | US20120137077A1 | Period can be reduced and eliminated | Avoid hunger | Memory addressing/allocation/relocation | Program control | Operating system | Computer architecture

A multi-threaded processor configured to allocate entries in a buffer for instruction cache misses is disclosed. Entries in the buffer may store thread state information for a corresponding instruction cache miss for one of a plurality of threads executable by the processor. The buffer may include dedicated entries and dynamically allocable entries, where the dedicated entries are reserved for a subset of the plurality of threads and the dynamically allocable entries are allocable to a group of two or more of the plurality of threads. In one embodiment, the dedicated entries are dedicated for use by a single thread and the dynamically allocable entries are allocable to any of the plurality of threads. The buffer may store two or more entries for a given thread at a given time. In some embodiments, the buffer may help ensure none of the plurality of threads experiences starvation with respect to instruction fetches.

Owner:ORACLE INT CORP
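
A small software model of the buffer layout described in the abstract above, assuming the embodiment in which each thread has dedicated entries and the remaining entries are allocable to any thread. The class `MissBuffer`, the choice of exactly one dedicated entry per thread, and the method names are hypothetical.

```python
class MissBuffer:
    """Toy miss buffer with one dedicated entry per thread plus a shared pool.

    Hypothetical illustration: the names and entry counts are assumptions,
    not details from the patent.
    """

    def __init__(self, threads, dynamic_entries):
        self.dedicated_free = {t: True for t in threads}
        self.dynamic_free = dynamic_entries

    def allocate(self, thread):
        """Return the kind of entry allocated, or None if the thread must wait."""
        if self.dedicated_free[thread]:
            self.dedicated_free[thread] = False
            return "dedicated"
        if self.dynamic_free > 0:
            self.dynamic_free -= 1
            return "dynamic"
        return None

    def free(self, thread, kind):
        """Release an entry previously returned by allocate()."""
        if kind == "dedicated":
            self.dedicated_free[thread] = True
        else:
            self.dynamic_free += 1
```

A thread with many outstanding misses can use up the shared pool, but it cannot take another thread's dedicated entry, so every thread can always track at least one instruction cache miss.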

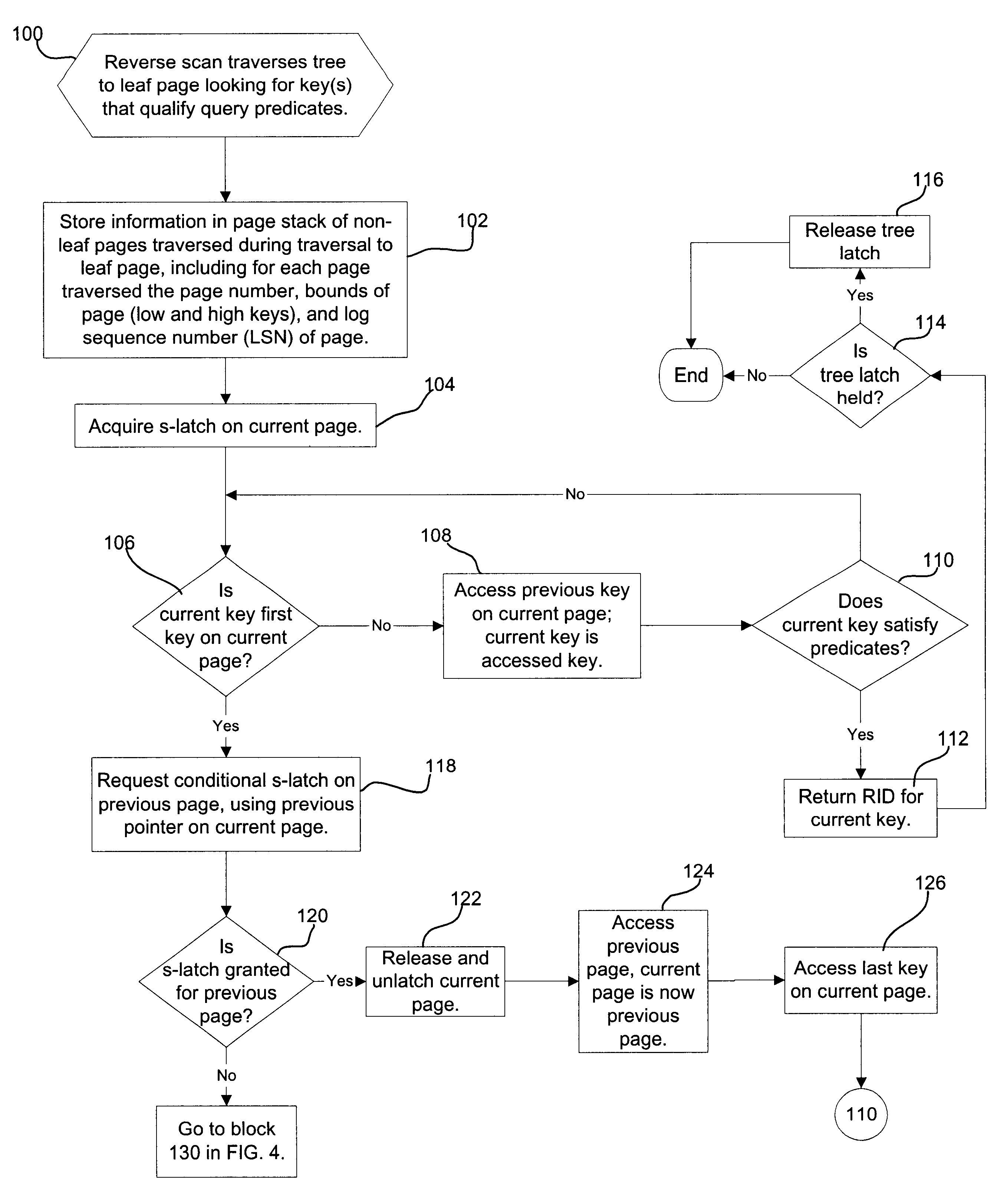

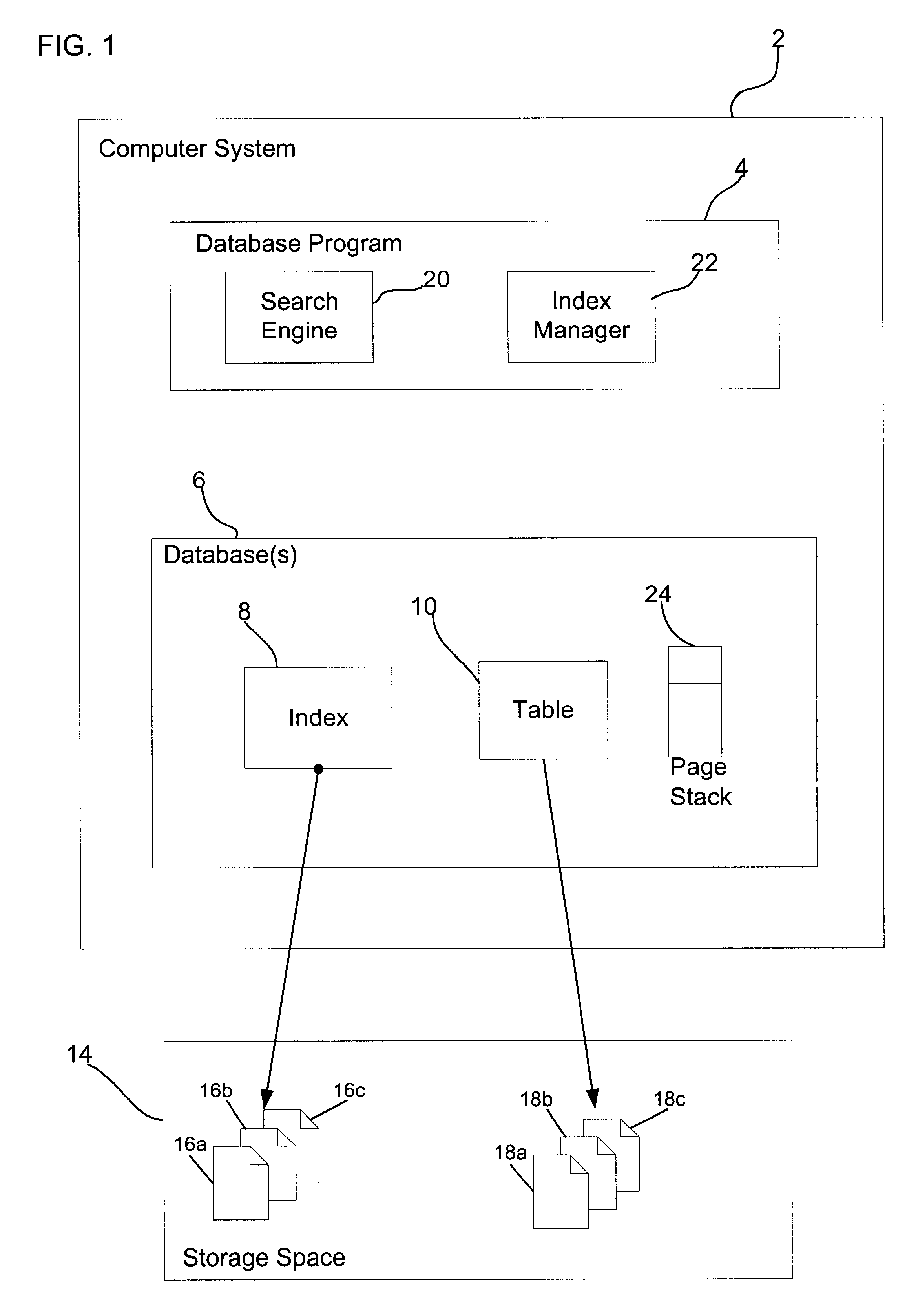

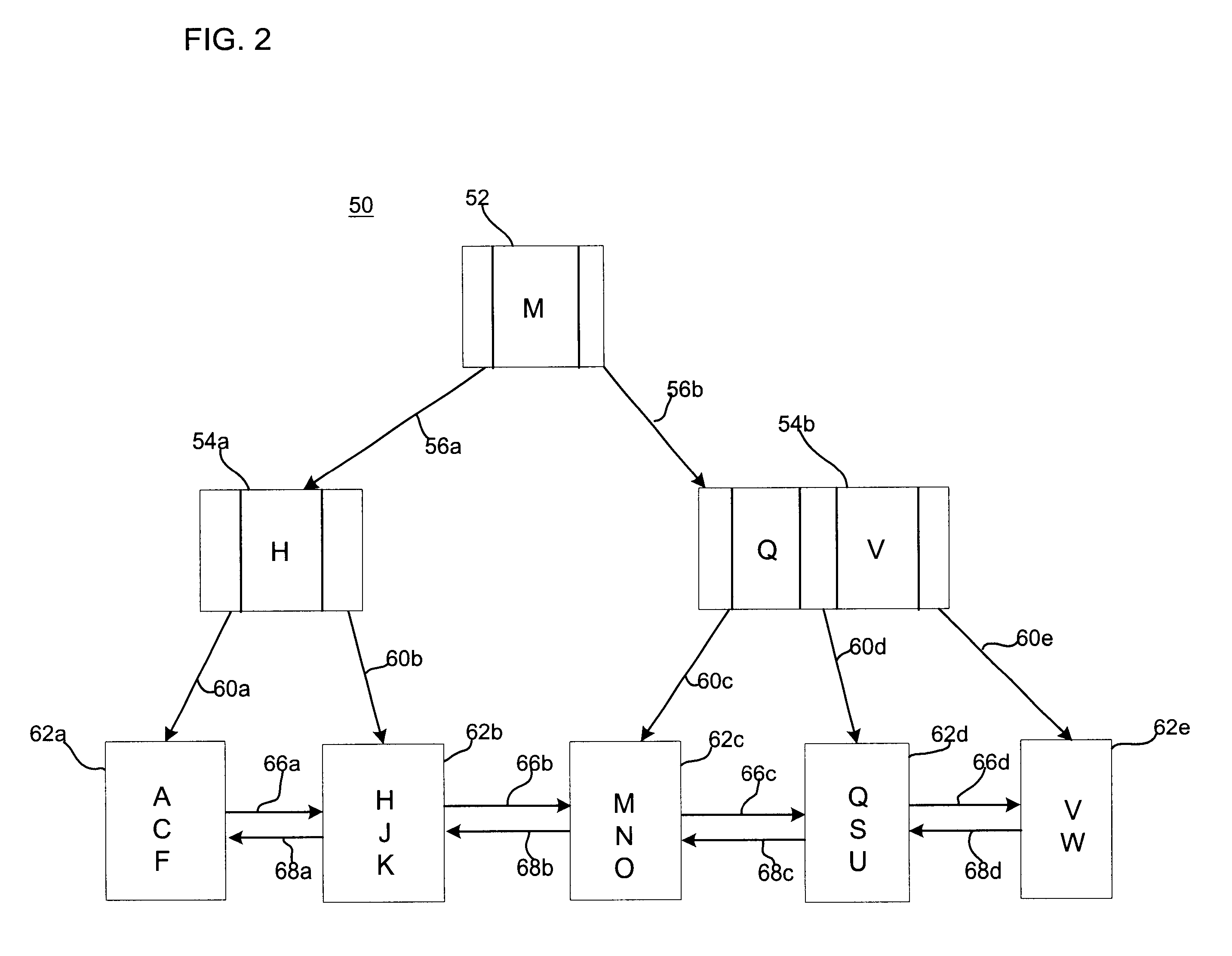

Method, system, and program for reverse index scanning

Inactive | US6647386B2 | Avoid deadlock | Avoid hunger | Data processing applications | Digital data information retrieval | Reverse index | World Wide Web

Provided is a system, method, and program for performing a reverse scan of an index implemented as a tree of pages. Each leaf page includes one or more ordered index keys and previous and next pointers to the previous and next pages, respectively. The scan is searching for keys in the leaf pages that satisfy the search criteria. If a current index key is a first key on a current page, then a request is made for a conditional shared latch on a previous page prior to the current page. If the requested conditional shared latch is not granted, then the latch on the current page is released and a request is made for unconditional latches on the previous page and the current page. After receiving the latches on the previous and current pages, a determination is made of whether the current index key is on the current page if the current page was modified since the unconditional latch was requested. The current index key is located on the current page if the current index key is on the current page. A determination is then made of whether the located current index key satisfies the search criteria.

Owner:GOOGLE LLC

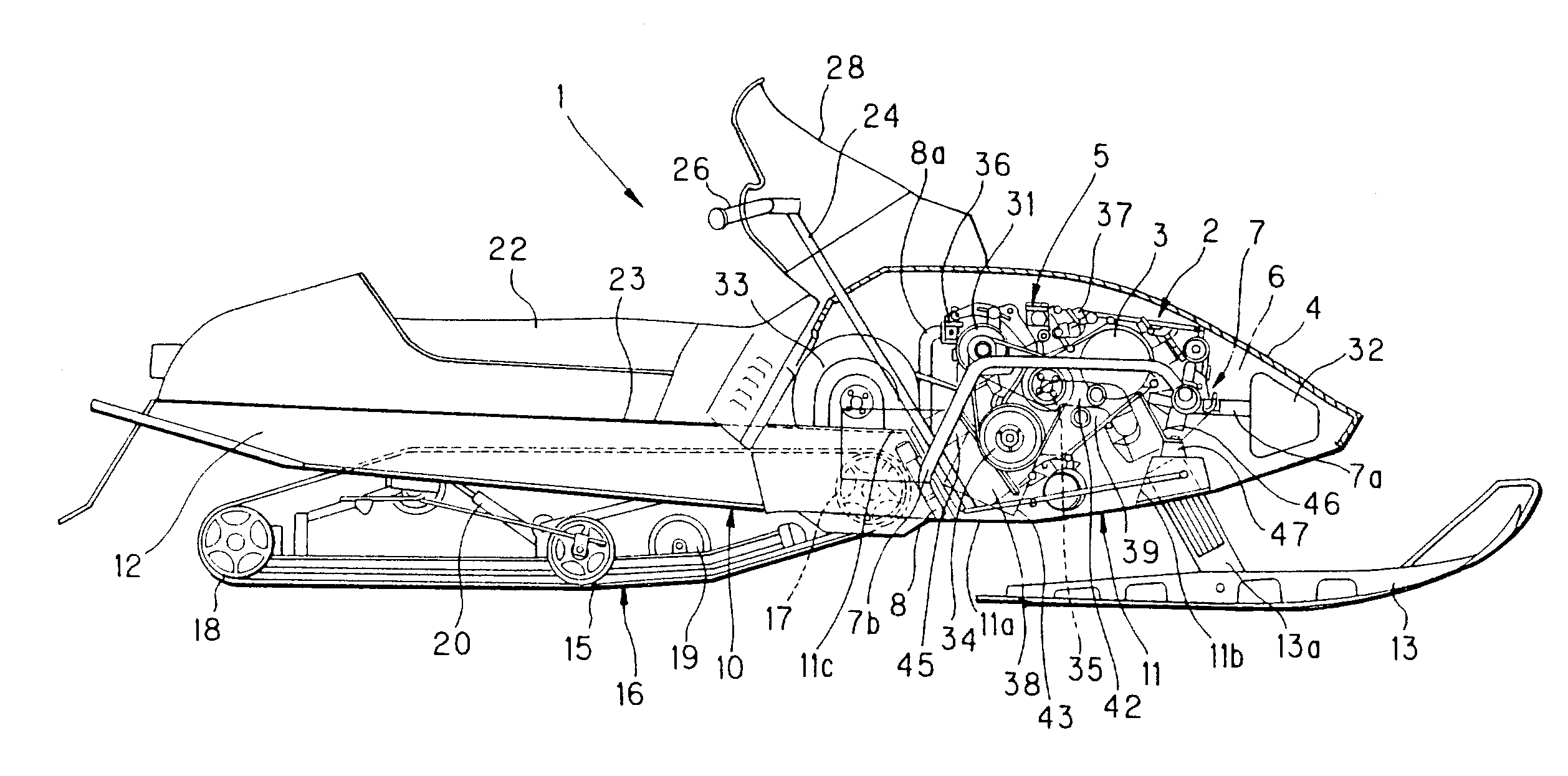

Snowmobile four-cycle engine arrangement

Inactive | US7036619B2 | Simplify communication path | Low cost | Machines/engines | Rider propulsion | Cylinder head | Fuel tank

A snowmobile four-cycle engine arrangement, includes: a four-cycle engine arranged in an engine compartment formed in the front body of a snowmobile with its crankshaft laid substantially parallel to the body width and having a cylinder case inclined forwards with respect to the vehicle's direction of travel. The engine employs a dry sump oil supplying system and an oil tank separate from the engine is provided. Another snowmobile four-cycle engine arrangement includes: a four-cycle engine having a cylinder head at its top, arranged in the engine compartment and inclined forwards with respect to the vehicle's direction of travel with an intake path provided on the upper portion of the engine body. An intercooler for cooling the intake air is arranged in a tunnel created inside the body frame for accommodating a track belt.

Owner:SUZUKI MOTOR CORP

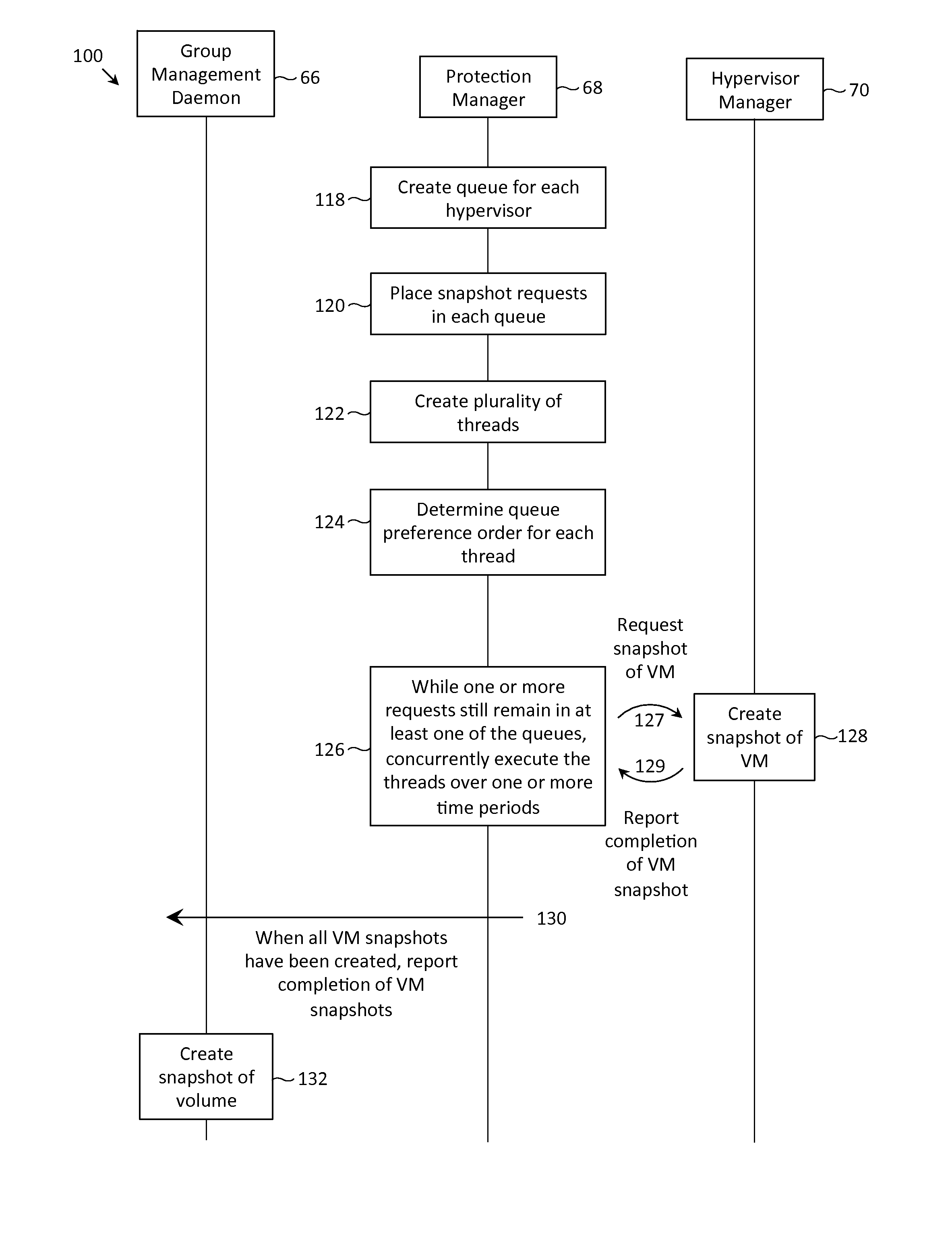

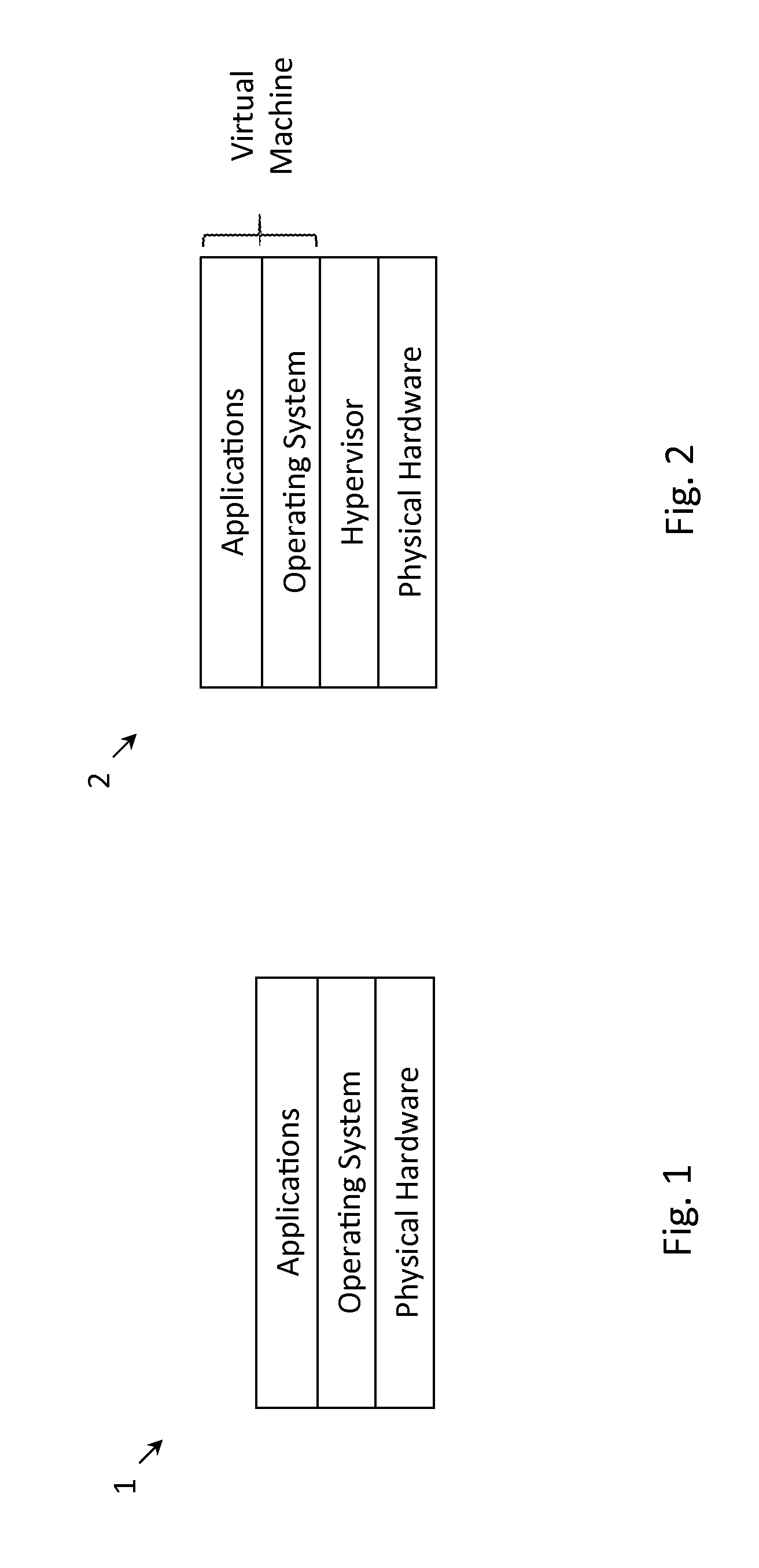

Methods and systems for concurrently taking snapshots of a plurality of virtual machines

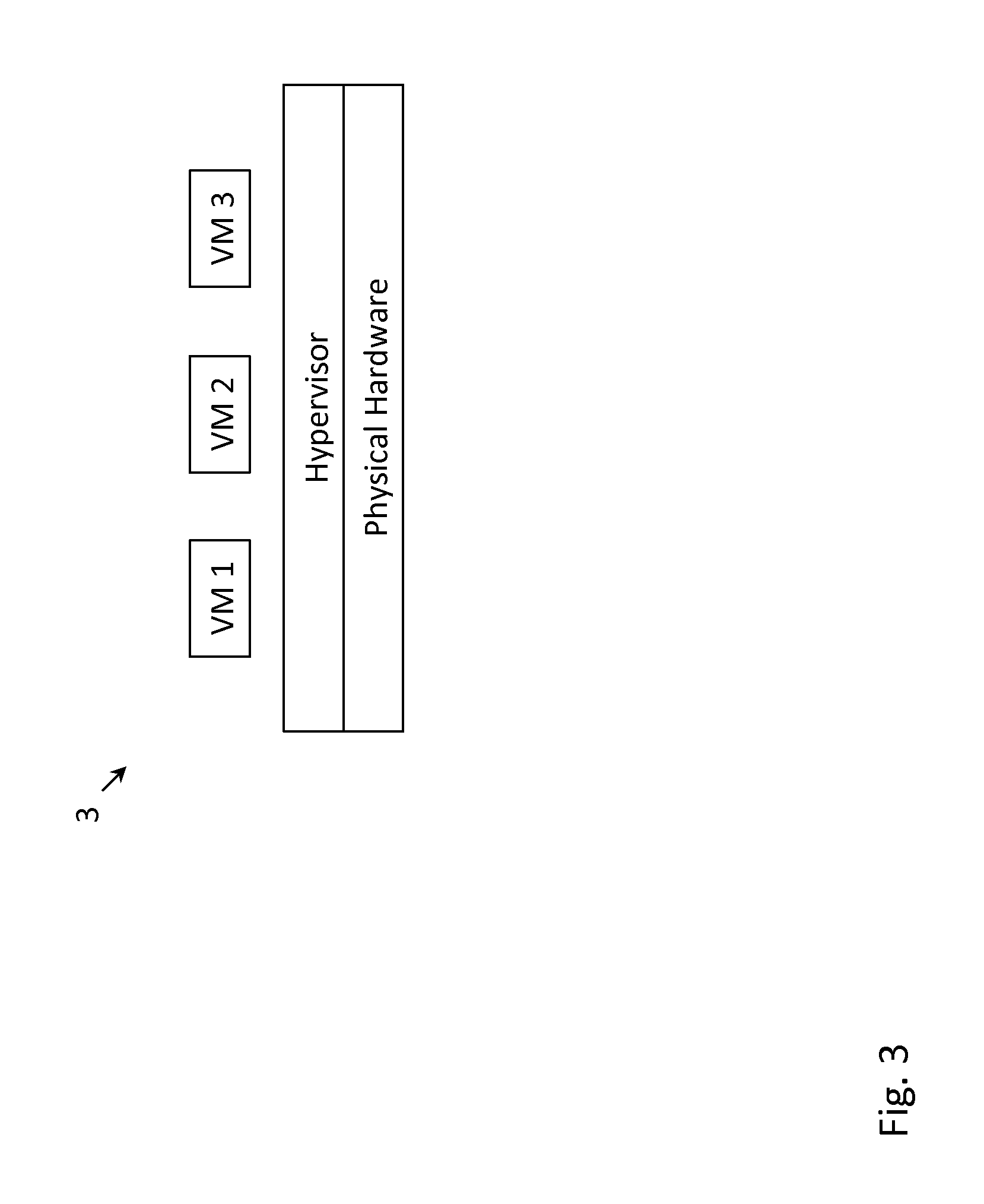

Active | US20160103738A1 | Avoid hunger | Digital data processing details | Website content management | Virtual machine | Hypervisor

Techniques are described herein which minimize the impact of virtual machine snapshots on the performance of virtual machines and hypervisors. In the context of a volume snapshot which may involve (i) taking virtual machine snapshots of all virtual machines associated with the volume, (ii) taking the volume snapshot, and (iii) removing all the virtual machine snapshots, multiple virtual machine snapshots may be created in parallel. In the process of creating virtual machine snapshots, a storage system may determine which snapshots to create in parallel. The storage system may also prioritize snapshots from certain hypervisors in order to avoid the problem of “starvation”, in which busy hypervisors prevent less busy hypervisors from creating snapshots. The techniques described herein, while mainly described in the context of snapshot creation, are readily applied to snapshot removal.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

© 2025 PatSnap. All rights reserved.