547 results about "Context switch" patented technology

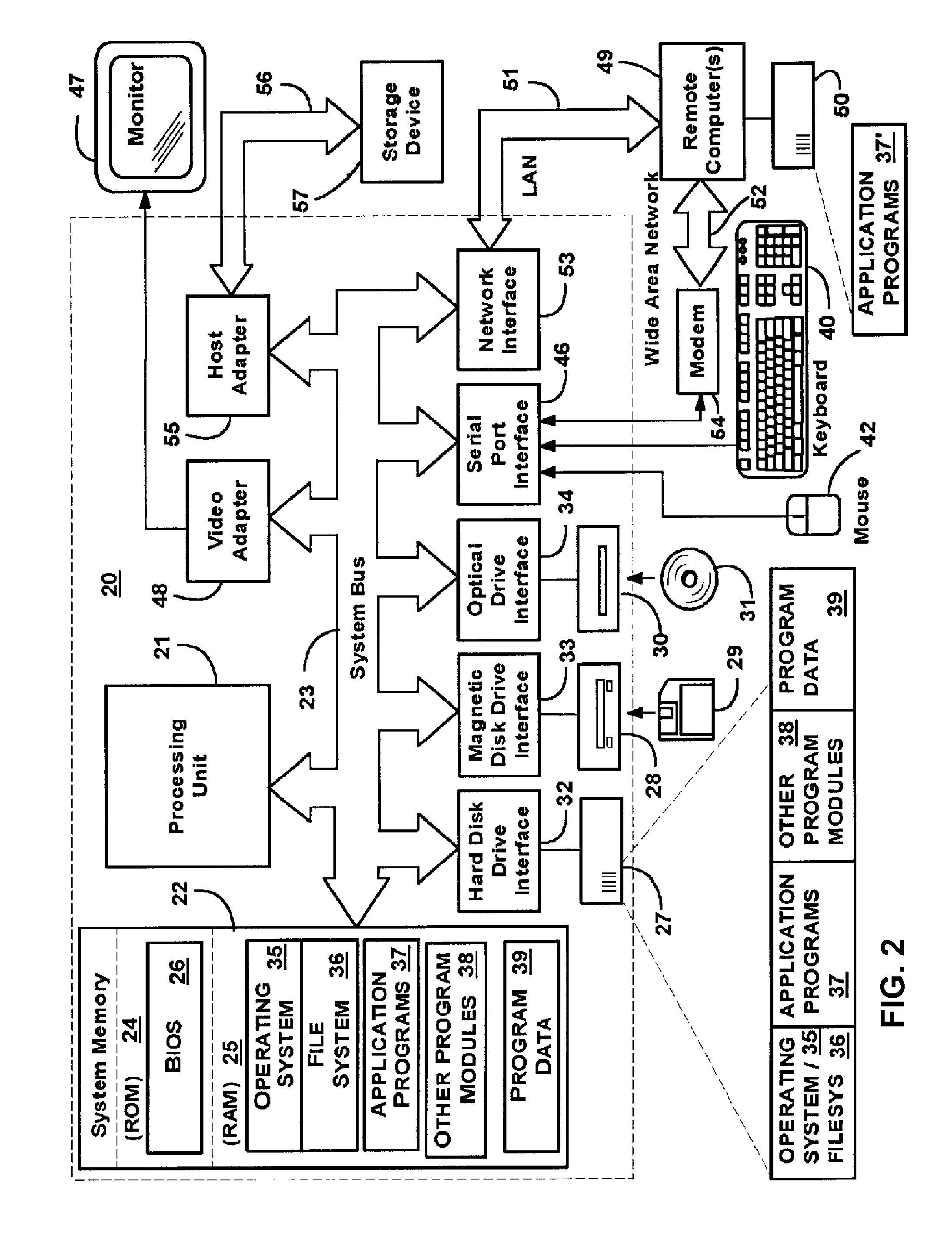

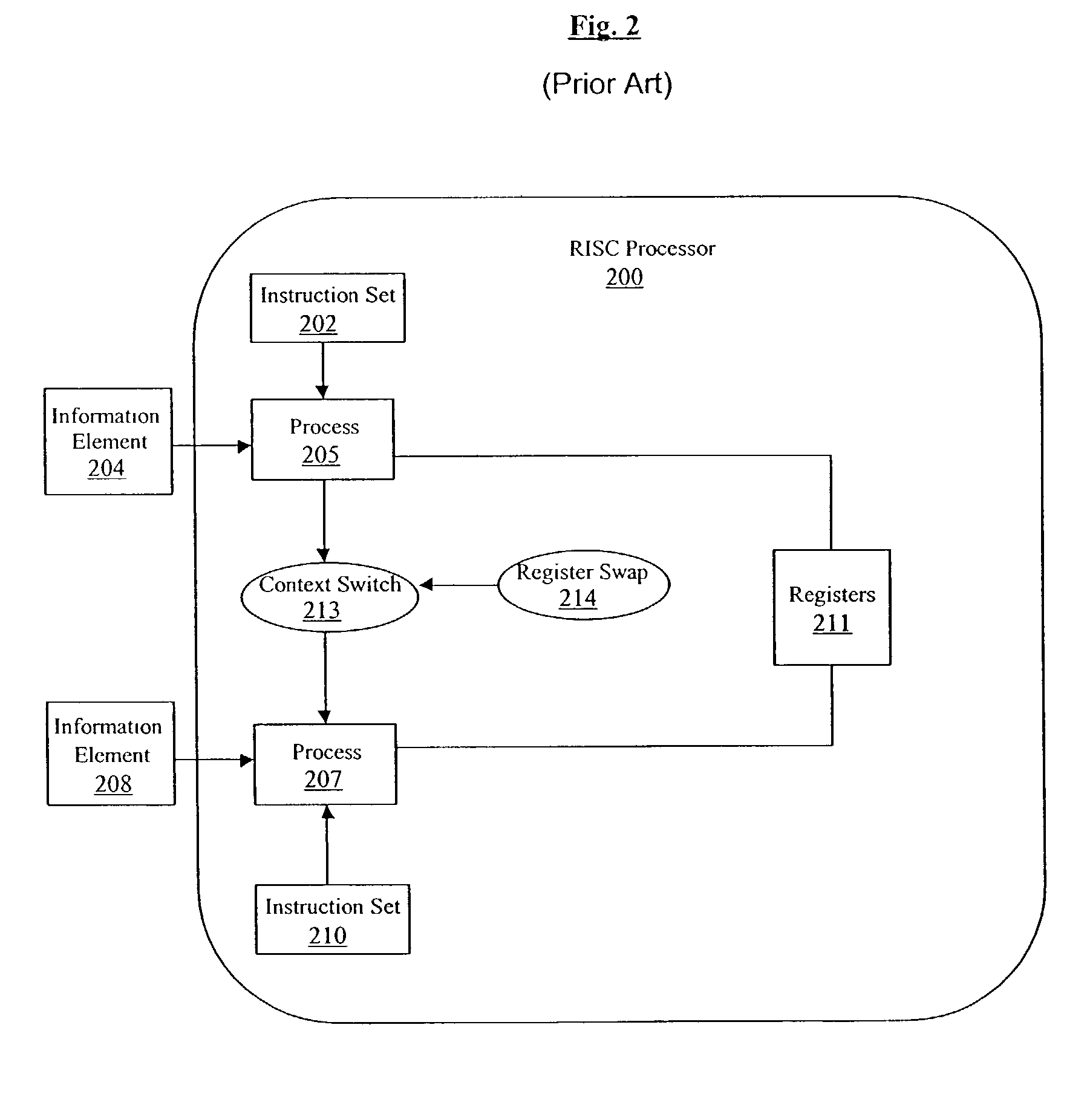

In computing, a context switch is the process of storing the state of a process or of a thread, so that it can be restored and execution resumed from the same point later. This allows multiple processes to share a single CPU, and is an essential feature of a multitasking operating system.
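As a rough illustration of the save-and-restore idea, the sketch below models a single CPU whose state is copied into a per-task record when the task is switched out and copied back when it resumes. The names `Cpu`, `Task`, and `context_switch` are hypothetical and exist only for this example; real kernels do this work in architecture-specific code.

```python
from dataclasses import dataclass, field


@dataclass
class Cpu:
    """Toy CPU: a program counter plus two general-purpose registers."""
    pc: int = 0
    regs: dict = field(default_factory=lambda: {"r0": 0, "r1": 0})


@dataclass
class Task:
    """Per-task record holding the saved CPU state (hypothetical layout)."""
    name: str
    saved_pc: int = 0
    saved_regs: dict = field(default_factory=lambda: {"r0": 0, "r1": 0})


def context_switch(cpu: Cpu, outgoing: Task, incoming: Task) -> None:
    """Store the outgoing task's state, then restore the incoming task's."""
    outgoing.saved_pc = cpu.pc
    outgoing.saved_regs = dict(cpu.regs)   # copy, so later CPU writes do not leak in
    cpu.pc = incoming.saved_pc
    cpu.regs = dict(incoming.saved_regs)


cpu = Cpu()
a, b = Task("A"), Task("B", saved_pc=100)

# Task A runs for a while.
cpu.pc, cpu.regs["r0"] = 7, 42
context_switch(cpu, a, b)      # A is switched out, B resumes at pc=100
cpu.pc += 3                    # B makes progress
context_switch(cpu, b, a)      # B is switched out, A resumes where it left off
```

After the second switch the CPU holds task A's program counter and registers again, while task B's progress is kept in its own record.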

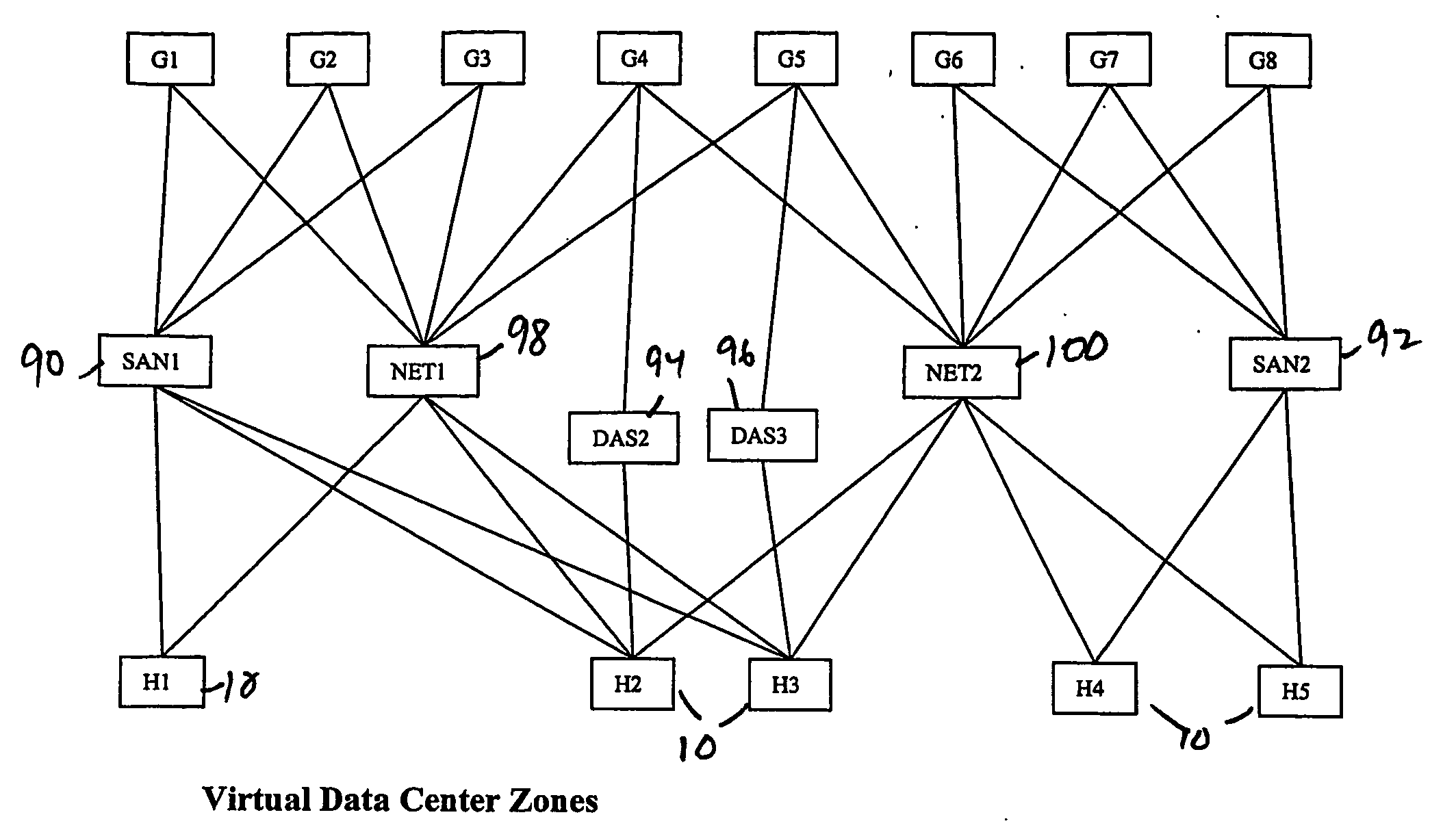

Virtual data center that allocates and manages system resources across multiple nodes

Active · US20070067435A1 · Improve security · Excessive removal · Error detection/correction · Memory addressing/allocation/relocation · Operational system · Data center

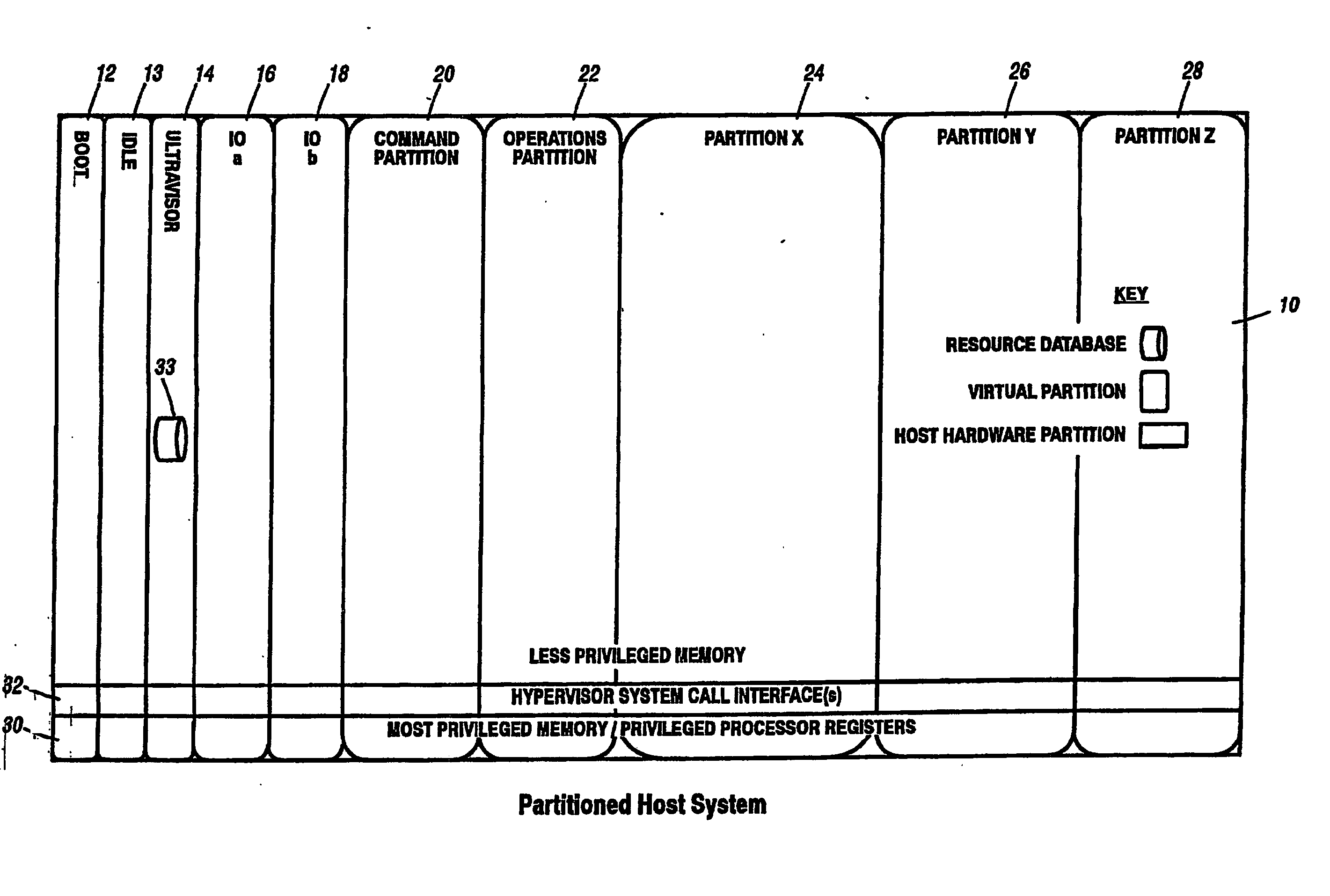

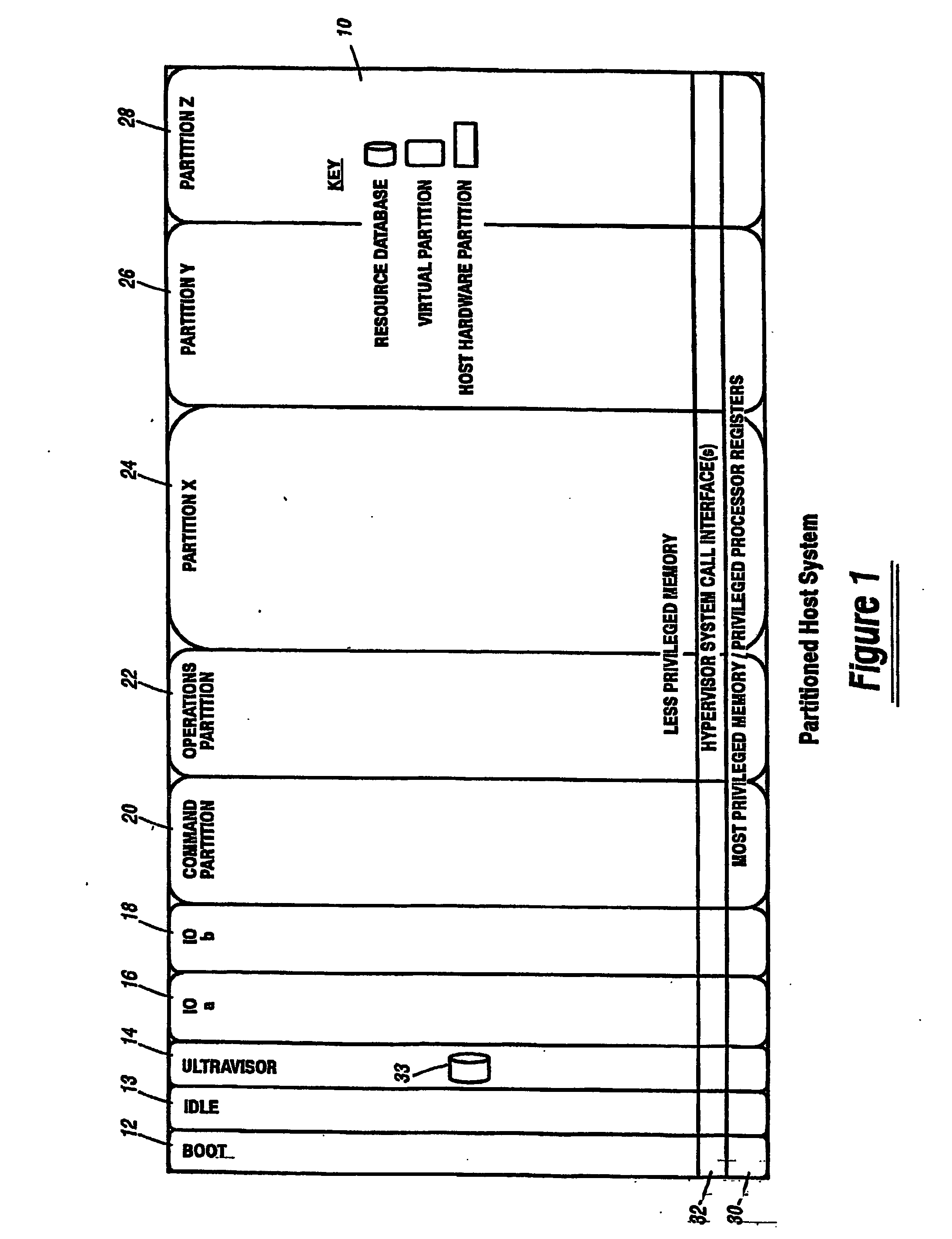

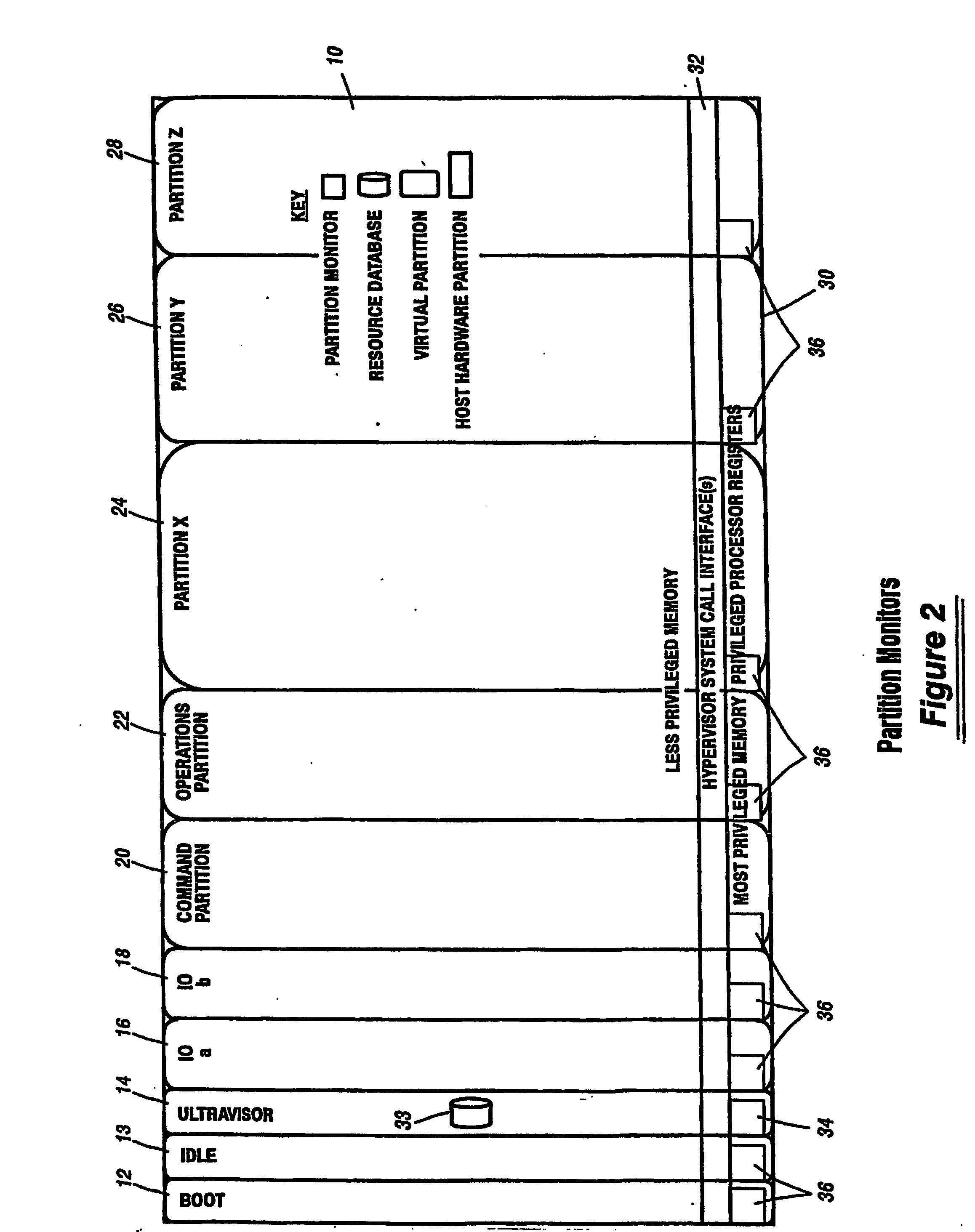

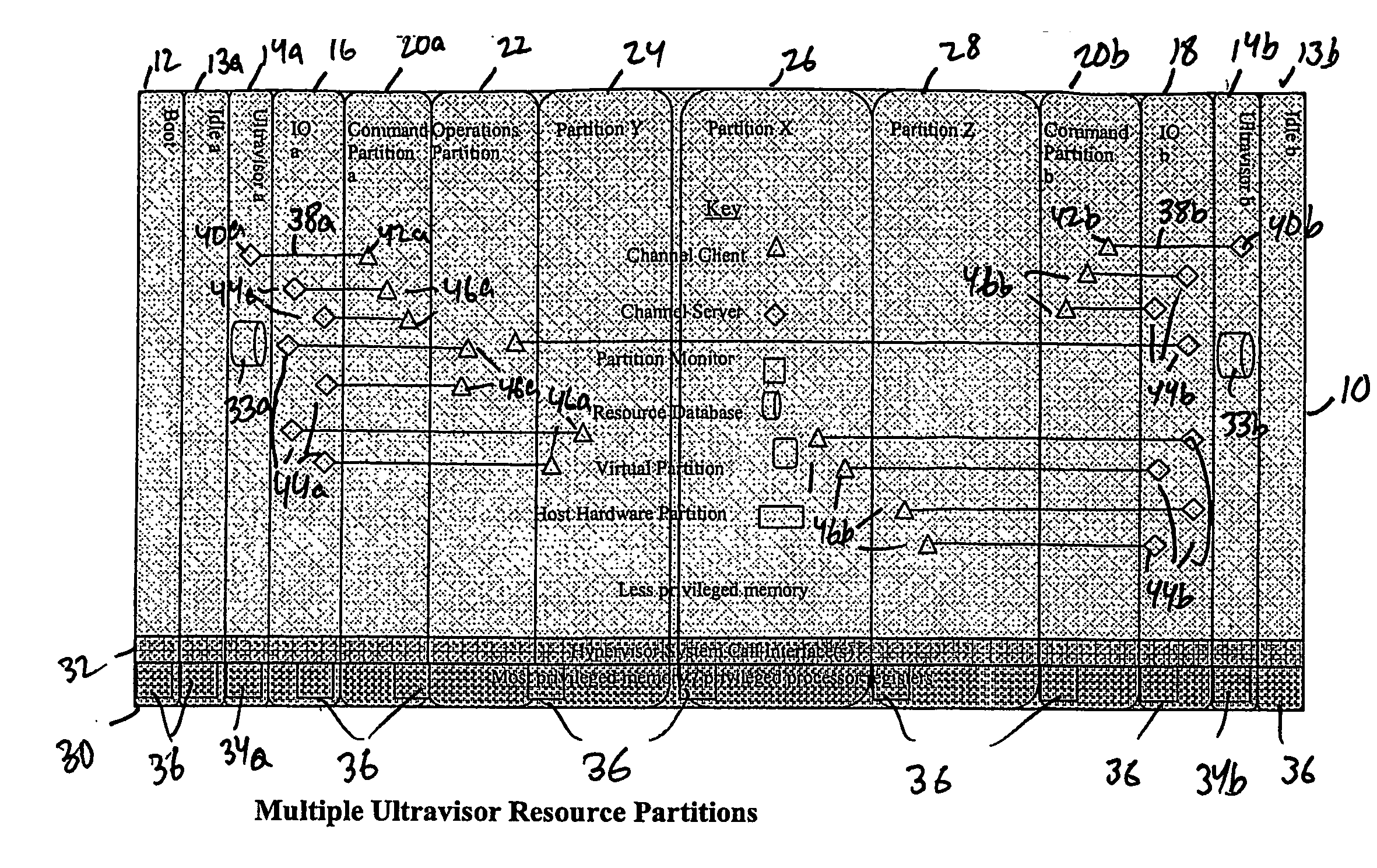

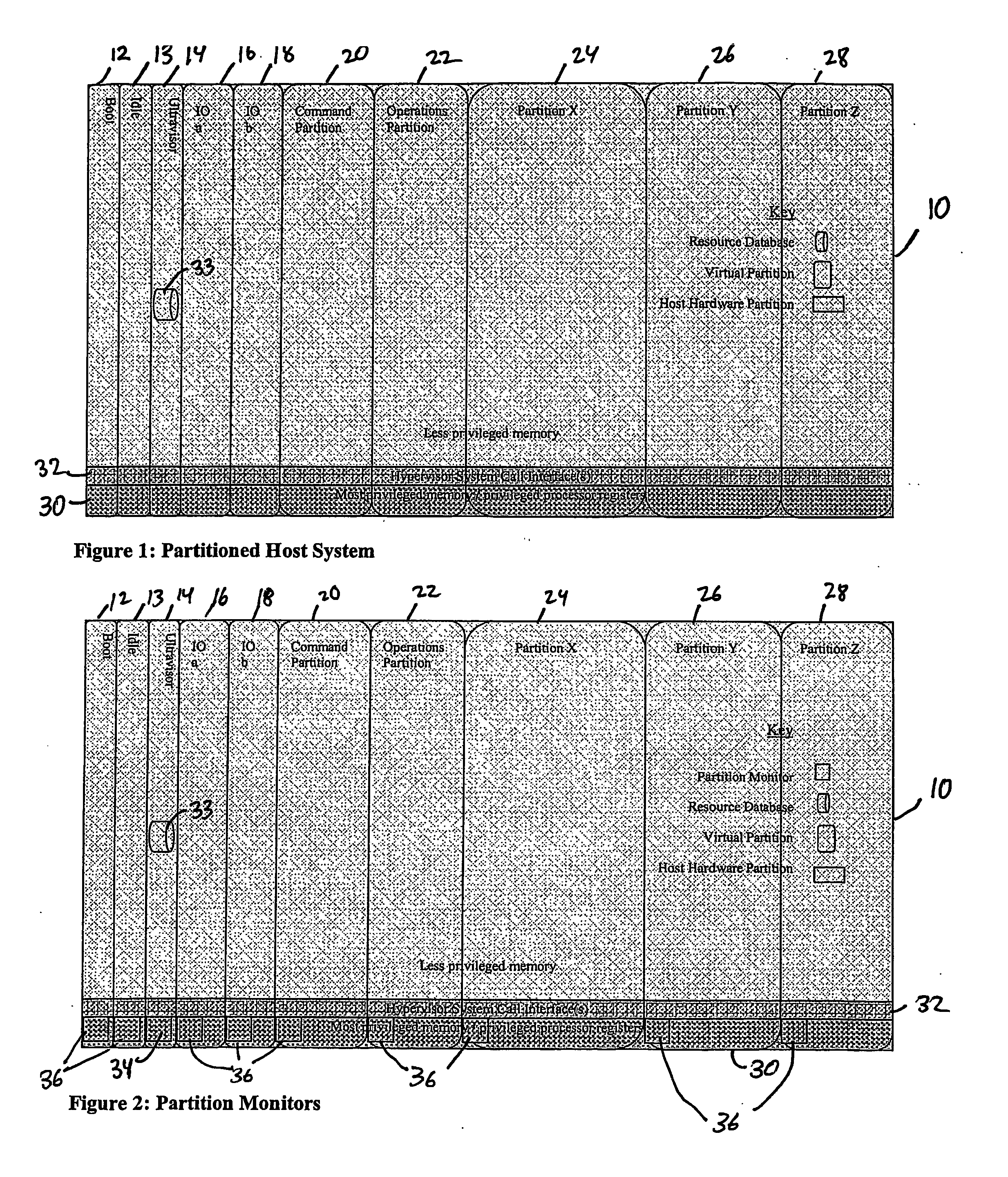

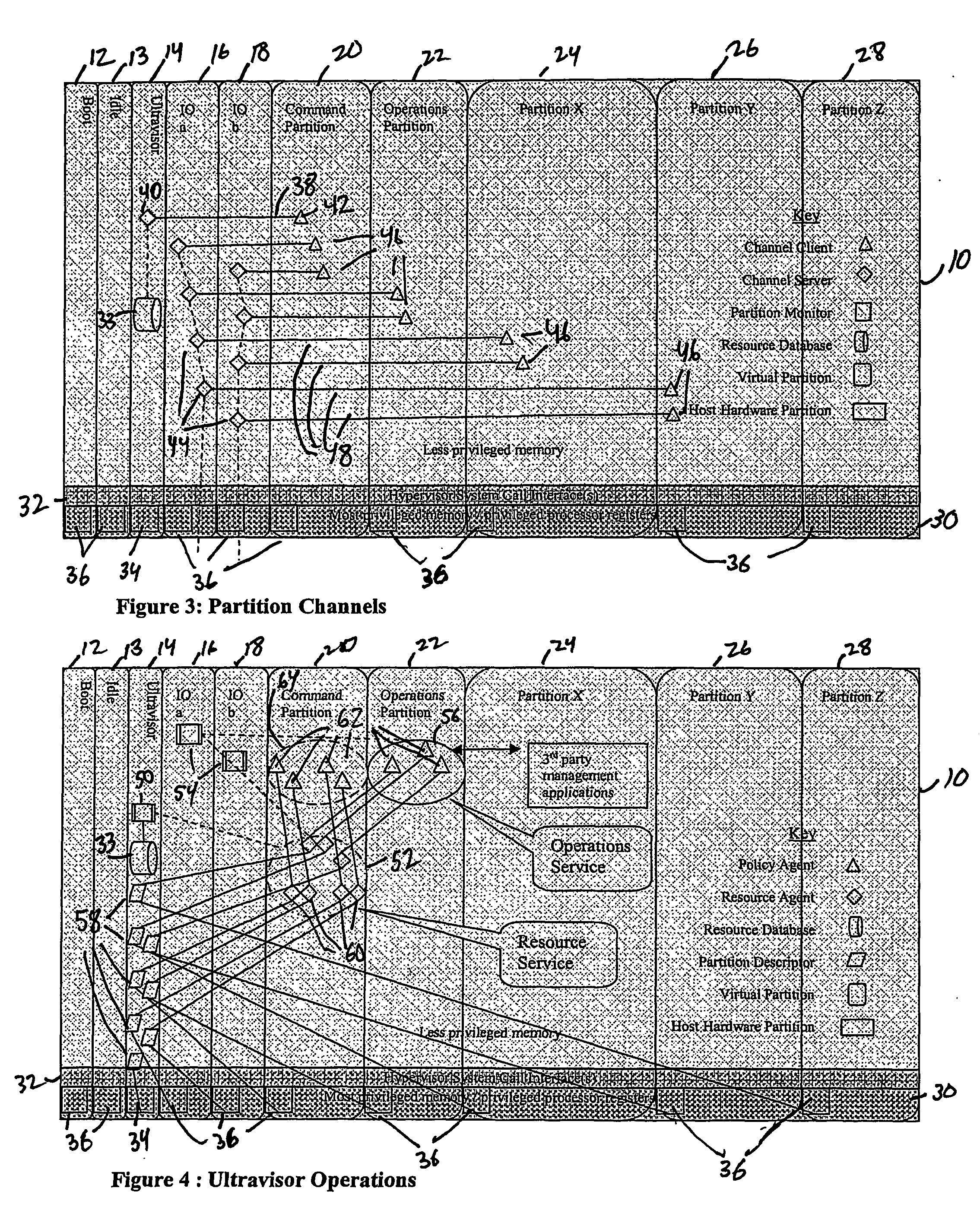

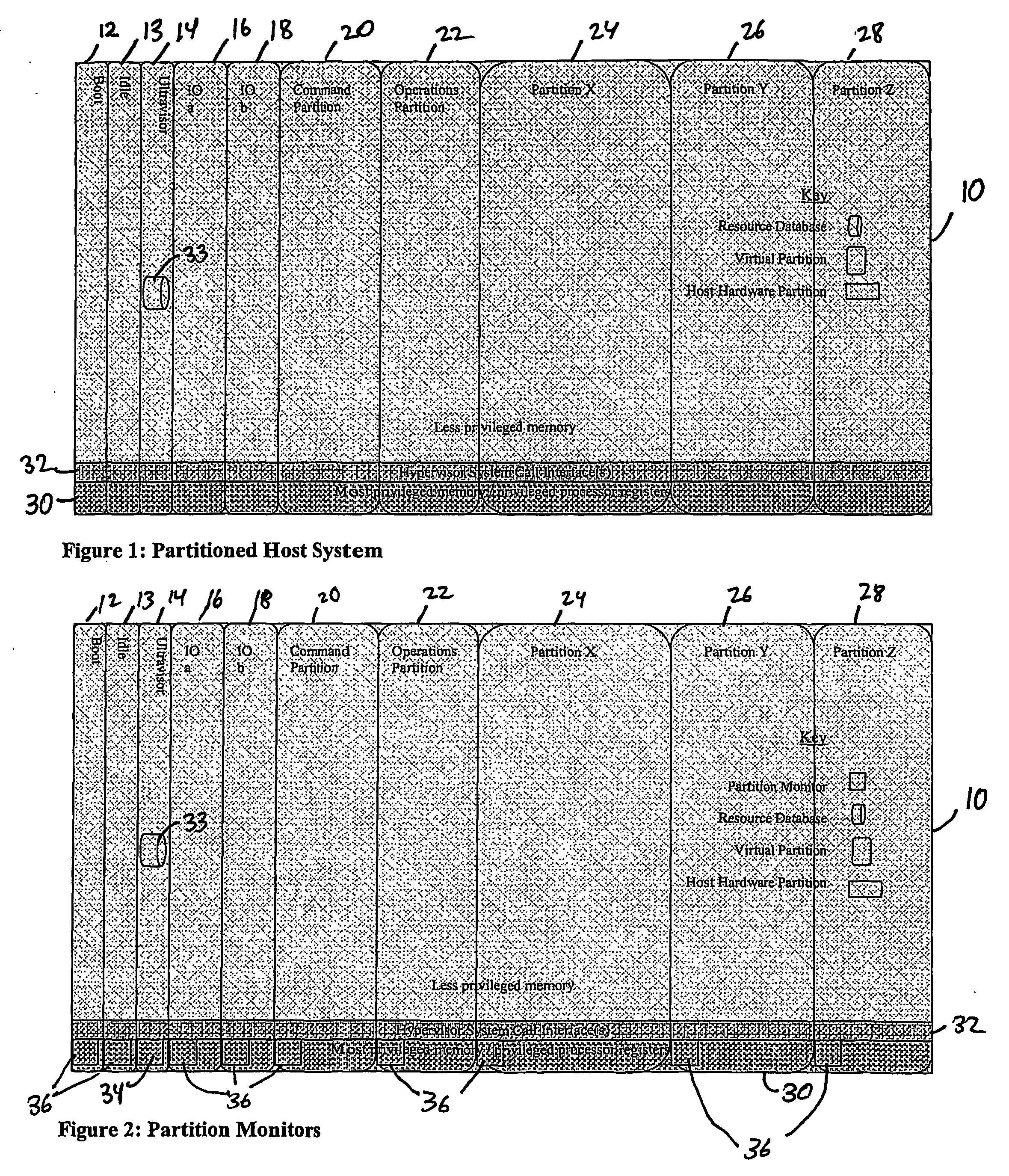

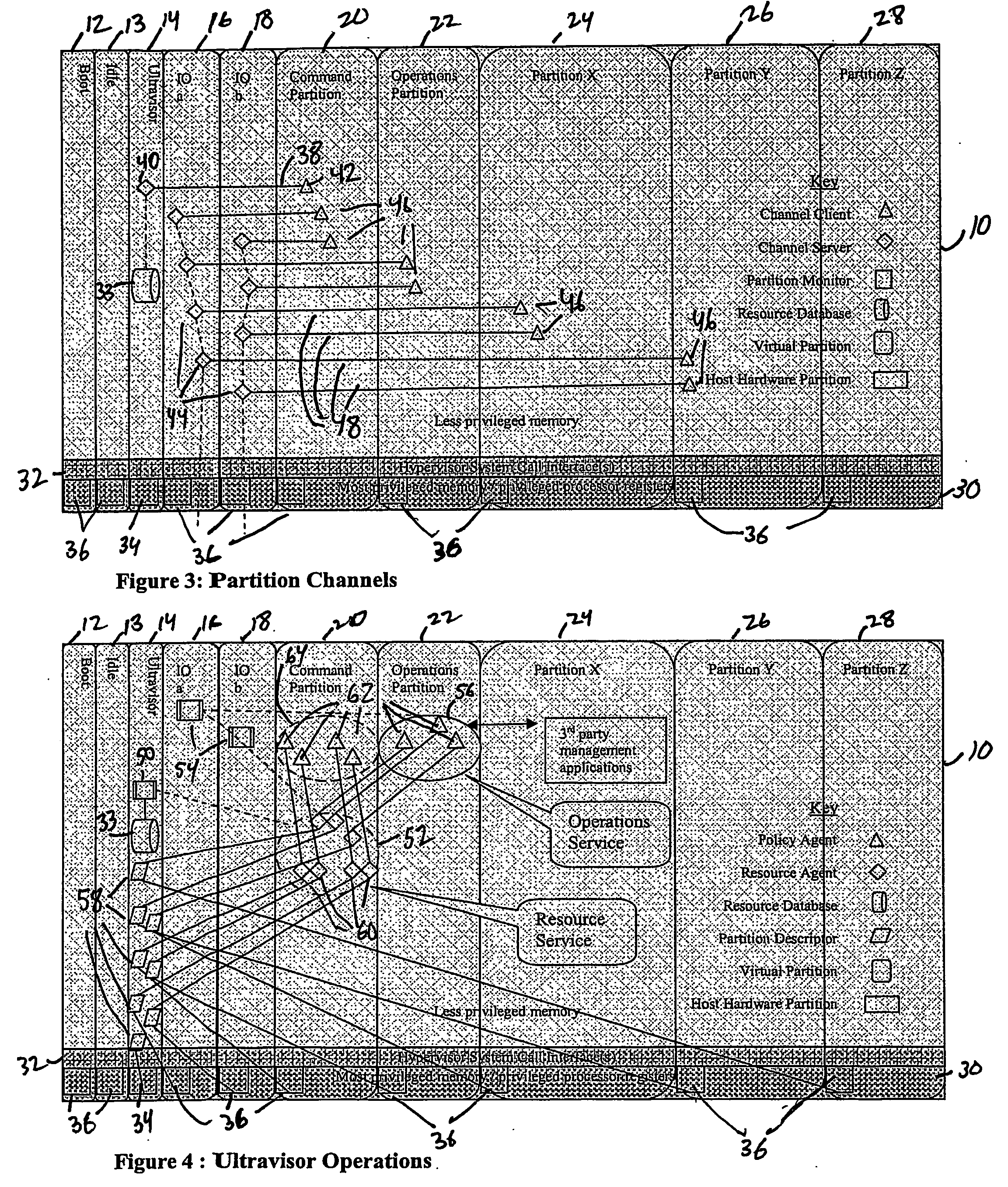

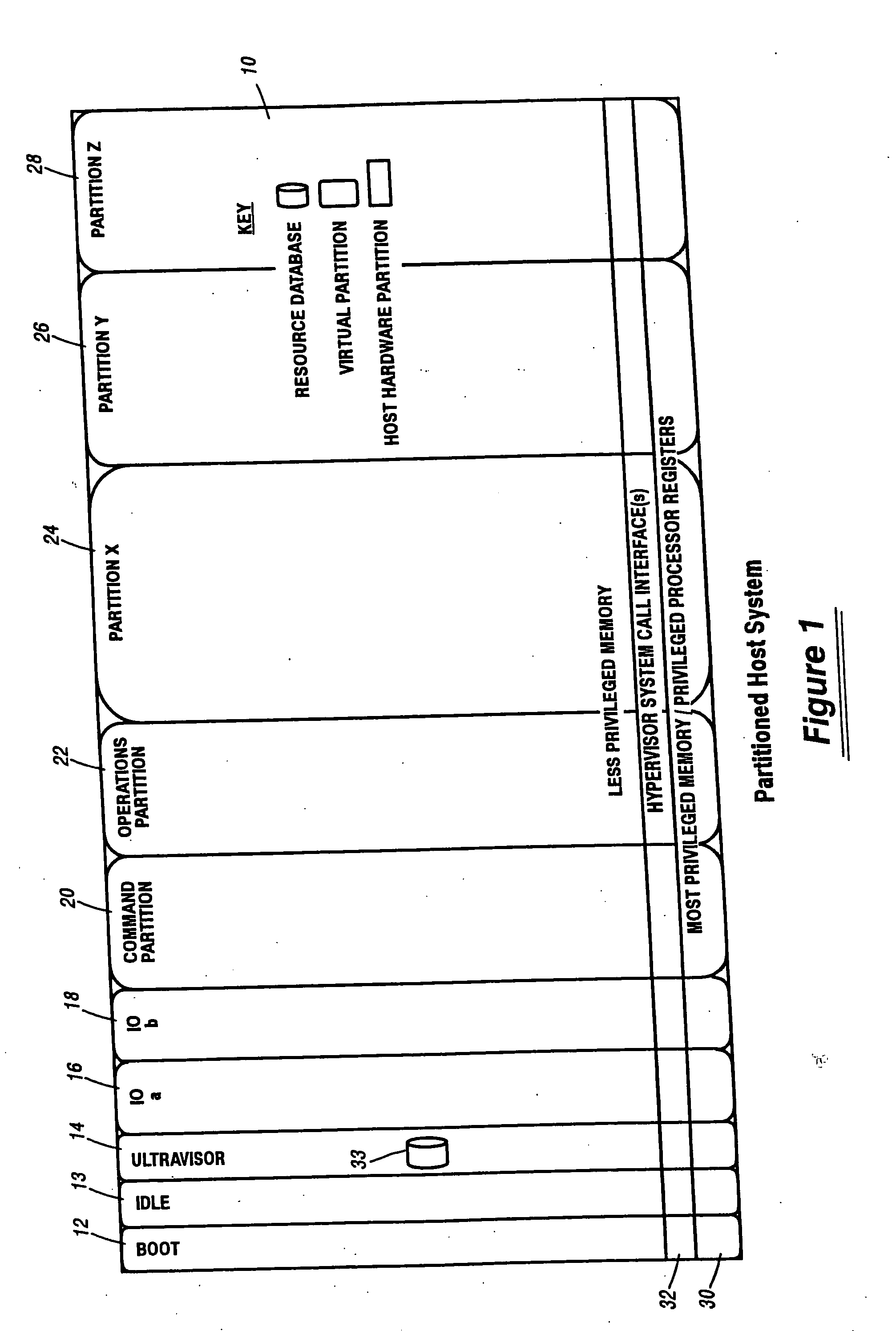

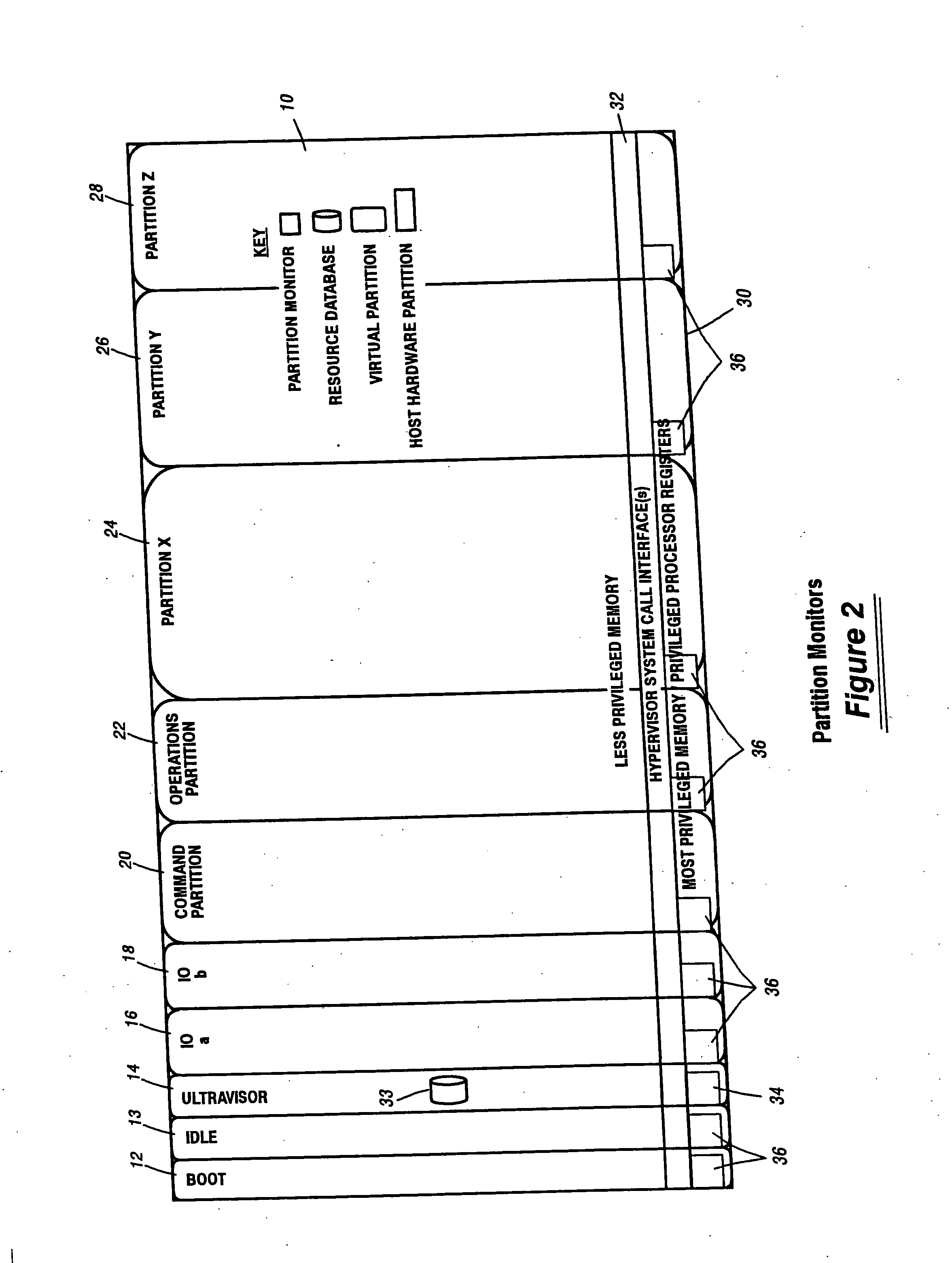

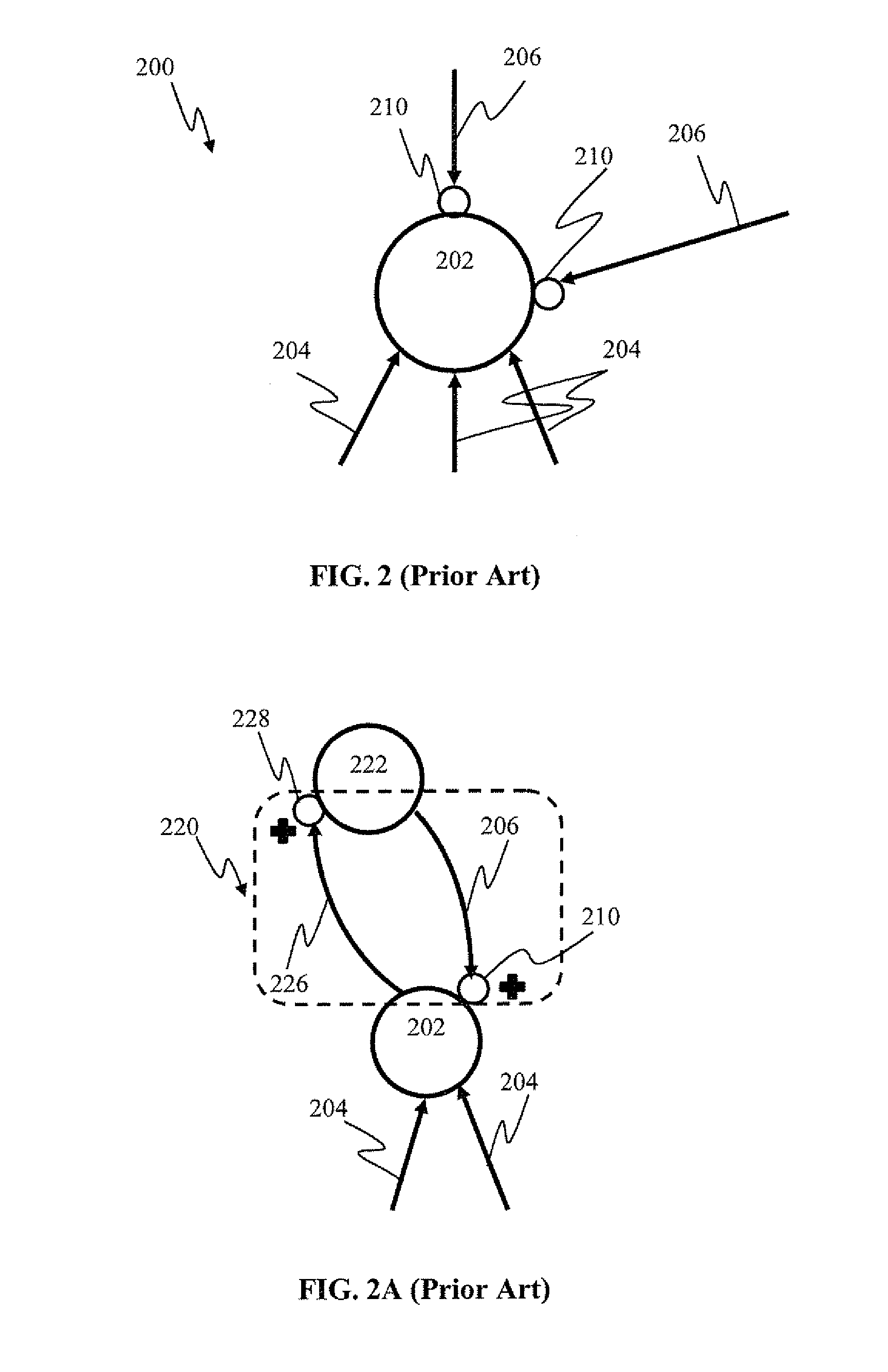

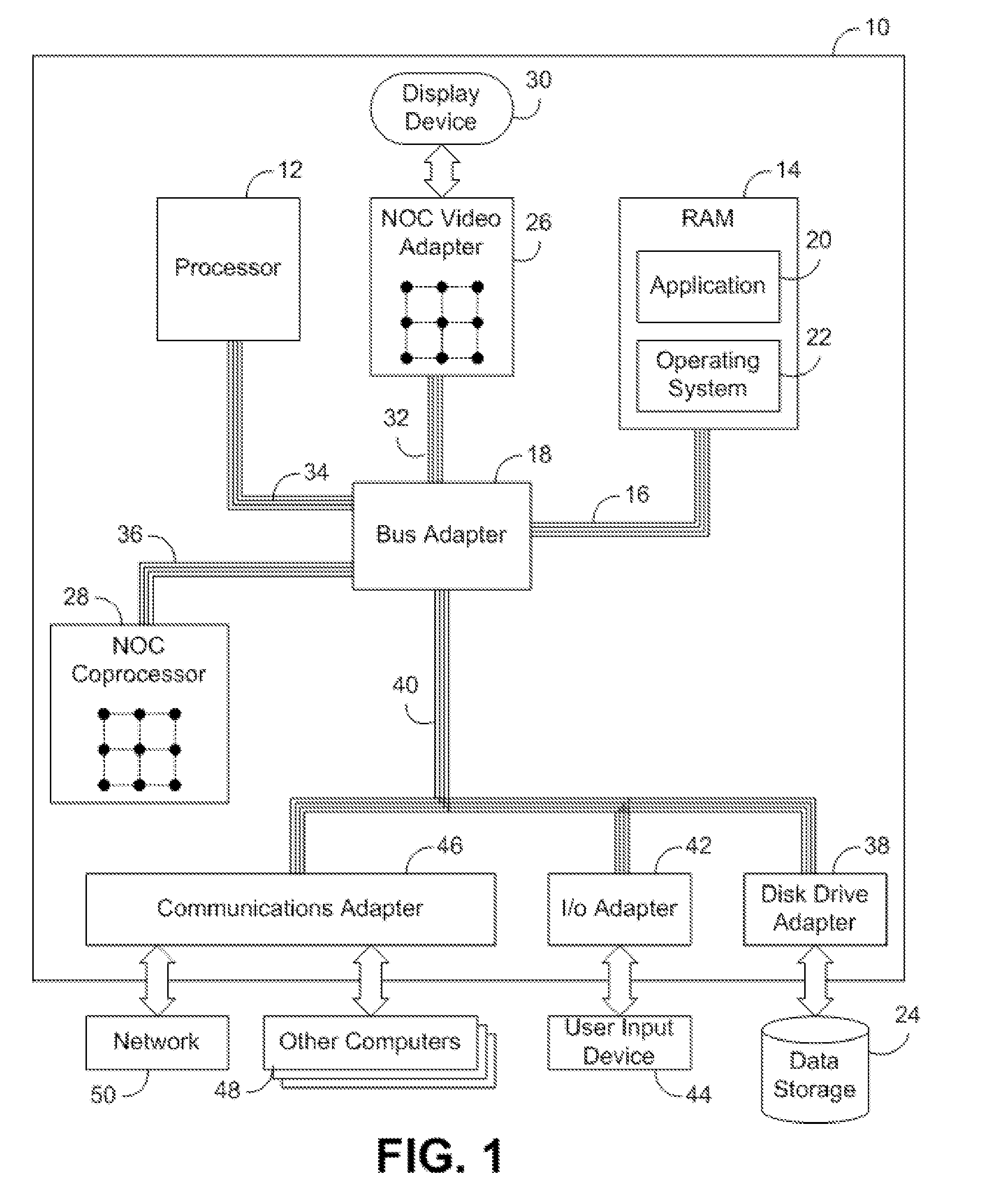

A virtualization infrastructure that allows multiple guest partitions to run within a host hardware partition. The host system is divided into distinct logical or virtual partitions and special infrastructure partitions are implemented to control resource management and to control physical I/O device drivers that are, in turn, used by operating systems in other distinct logical or virtual guest partitions. Host hardware resource management runs as a tracking application in a resource management “ultravisor” partition, while host resource management decisions are performed in a higher level command partition based on policies maintained in a separate operations partition. The conventional hypervisor is reduced to a context switching and containment element (monitor) for the respective partitions, while the system resource management functionality is implemented in the ultravisor partition. The ultravisor partition maintains the master in-memory database of the hardware resource allocations and serves a command channel to accept transactional requests for assignment of resources to partitions. It also provides individual read-only views of individual partitions to the associated partition monitors. Host hardware I/O management is implemented in special redundant I/O partitions. Operating systems in other logical or virtual partitions communicate with the I/O partitions via memory channels established by the ultravisor partition. The guest operating systems in the respective logical or virtual partitions are modified to access monitors that implement a system call interface through which the ultravisor, I/O, and any other special infrastructure partitions may initiate communications with each other and with the respective guest partitions. The guest operating systems are modified so that they do not attempt to use the “broken” instructions in the x86 system that complete virtualization systems must resolve by inserting traps. System resources are separated into zones that are managed by a separate partition containing resource management policies that may be implemented across nodes to implement a virtual data center.

Owner:UNISYS CORP

Computer system para-virtualization using a hypervisor that is implemented in a partition of the host system

Active · US20070028244A1 · Improve security · Excessive removal · Error detection/correction · Memory addressing/allocation/relocation · Operational system · System call

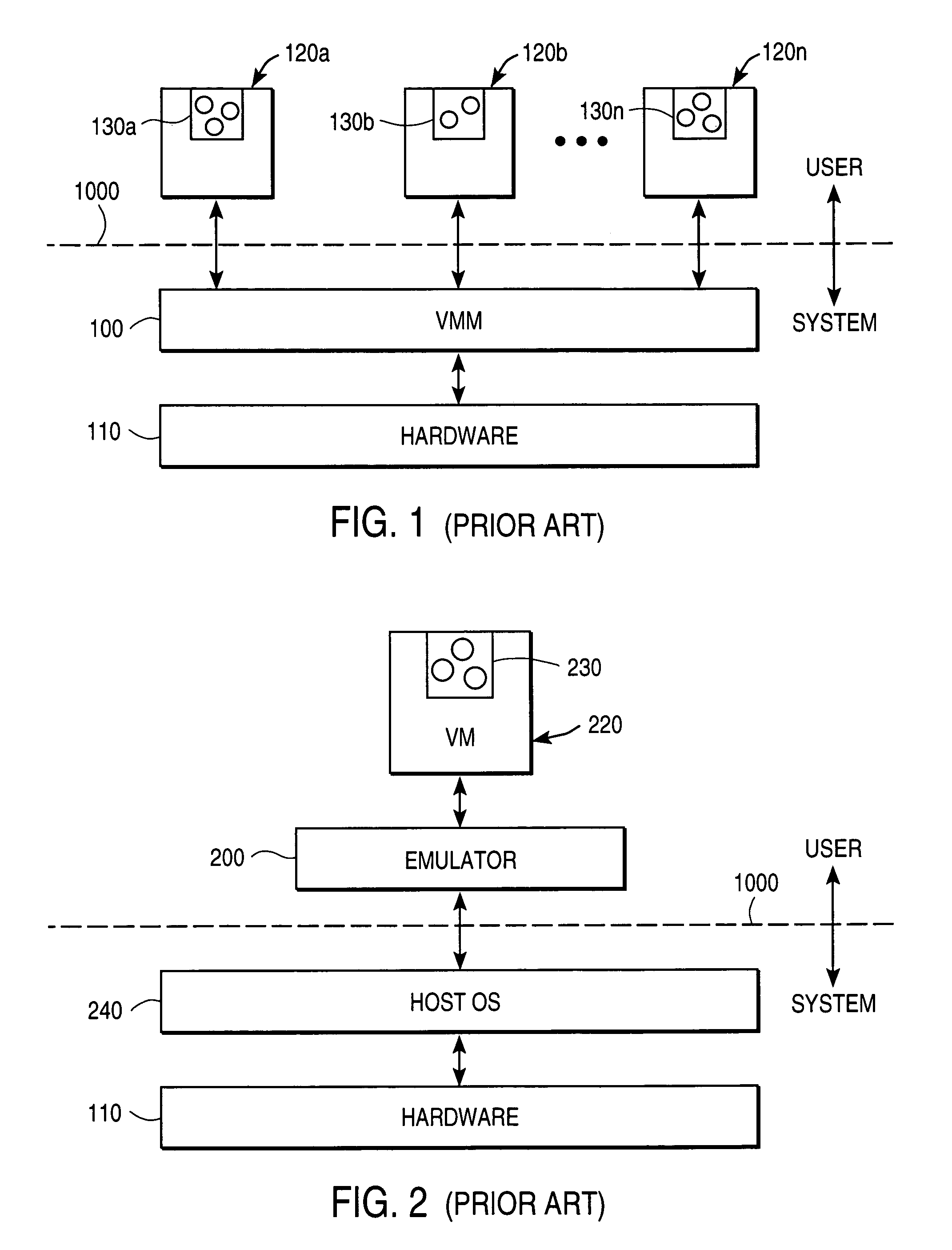

A virtualization infrastructure that allows multiple guest partitions to run within a host hardware partition. The host system is divided into distinct logical or virtual partitions and special infrastructure partitions are implemented to control resource management and to control physical I/O device drivers that are, in turn, used by operating systems in other distinct logical or virtual guest partitions. Host hardware resource management runs as a tracking application in a resource management “ultravisor” partition, while host resource management decisions are performed in a higher level command partition based on policies maintained in a separate operations partition. The conventional hypervisor is reduced to a context switching and containment element (monitor) for the respective partitions, while the system resource management functionality is implemented in the ultravisor partition. The ultravisor partition maintains the master in-memory database of the hardware resource allocations and serves a command channel to accept transactional requests for assignment of resources to partitions. It also provides individual read-only views of individual partitions to the associated partition monitors. Host hardware I/O management is implemented in special redundant I/O partitions. Operating systems in other logical or virtual partitions communicate with the I/O partitions via memory channels established by the ultravisor partition. The guest operating systems in the respective logical or virtual partitions are modified to access monitors that implement a system call interface through which the ultravisor, I/O, and any other special infrastructure partitions may initiate communications with each other and with the respective guest partitions. The guest operating systems are modified so that they do not attempt to use the “broken” instructions in the x86 system that complete virtualization systems must resolve by inserting traps.

Owner:UNISYS CORP

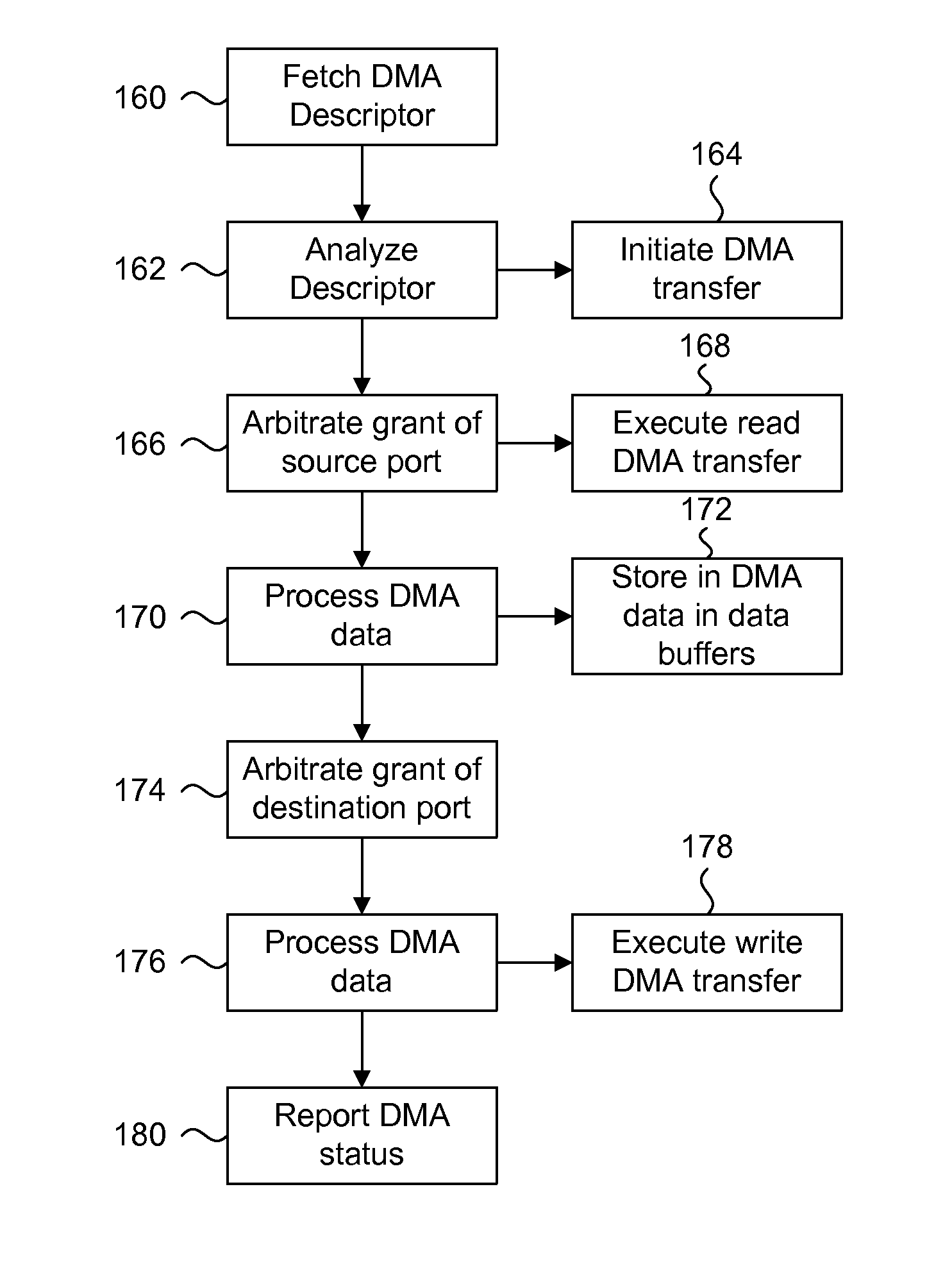

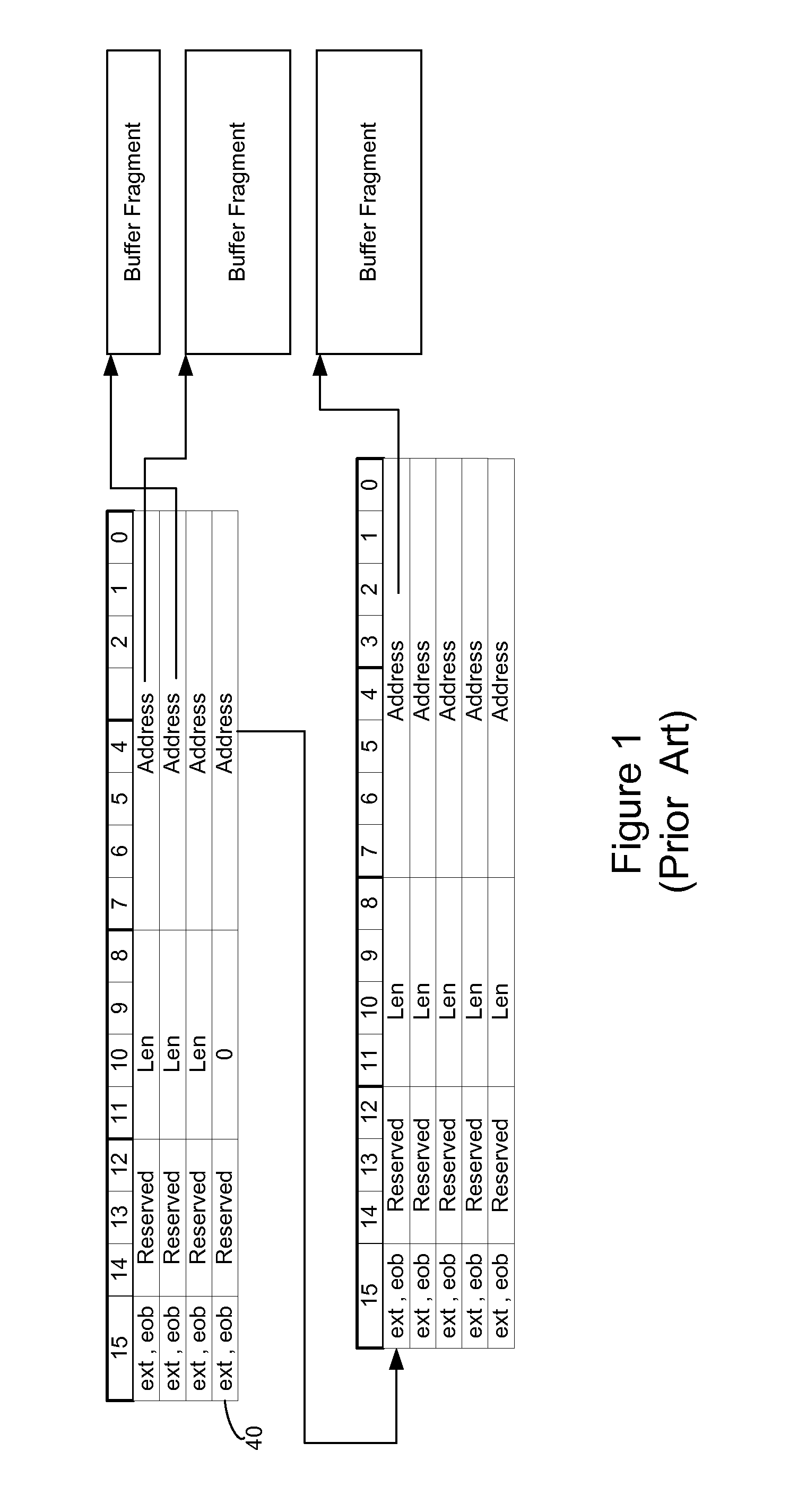

Logical address direct memory access with multiple concurrent physical ports and internal switching

A DMA engine is provided that is suitable for higher performance System On a Chip (SOC) devices that have multiple concurrent on-chip/off-chip memory spaces. The DMA engine operates using either a logical addressing method or a physical addressing method and provides random and sequential mapping functions from logical addresses to physical addresses while supporting frequent context switching among a large number of logical address spaces. Embodiments of the present invention utilize per-direction (source-destination) queuing and an internal switch to support non-blocking concurrent transfer of data in multiple directions. A caching technique can be incorporated to reduce the overhead of address translation.

Owner:MICROSEMI SOLUTIONS (US) INC

Para-virtualized computer system with I/O server partitions that map physical host hardware for access by guest partitions

Inactive · US20070061441A1 · Improve efficiency · Improve security · Error detection/correction · Digital computer details · Operational system · System call

A virtualization infrastructure that allows multiple guest partitions to run within a host hardware partition. The host system is divided into distinct logical or virtual partitions and special infrastructure partitions are implemented to control resource management and to control physical I/O device drivers that are, in turn, used by operating systems in other distinct logical or virtual guest partitions. Host hardware resource management runs as a tracking application in a resource management “ultravisor” partition, while host resource management decisions are performed in a higher level command partition based on policies maintained in a separate operations partition. The conventional hypervisor is reduced to a context switching and containment element (monitor) for the respective partitions, while the system resource management functionality is implemented in the ultravisor partition. The ultravisor partition maintains the master in-memory database of the hardware resource allocations and serves a command channel to accept transactional requests for assignment of resources to partitions. It also provides individual read-only views of individual partitions to the associated partition monitors. Host hardware I/O management is implemented in special redundant I/O partitions. Operating systems in other logical or virtual partitions communicate with the I/O partitions via memory channels established by the ultravisor partition. The guest operating systems in the respective logical or virtual partitions are modified to access monitors that implement a system call interface through which the ultravisor, I/O, and any other special infrastructure partitions may initiate communications with each other and with the respective guest partitions. The guest operating systems are modified so that they do not attempt to use the “broken” instructions in the x86 system that complete virtualization systems must resolve by inserting traps.

Owner:UNISYS CORP

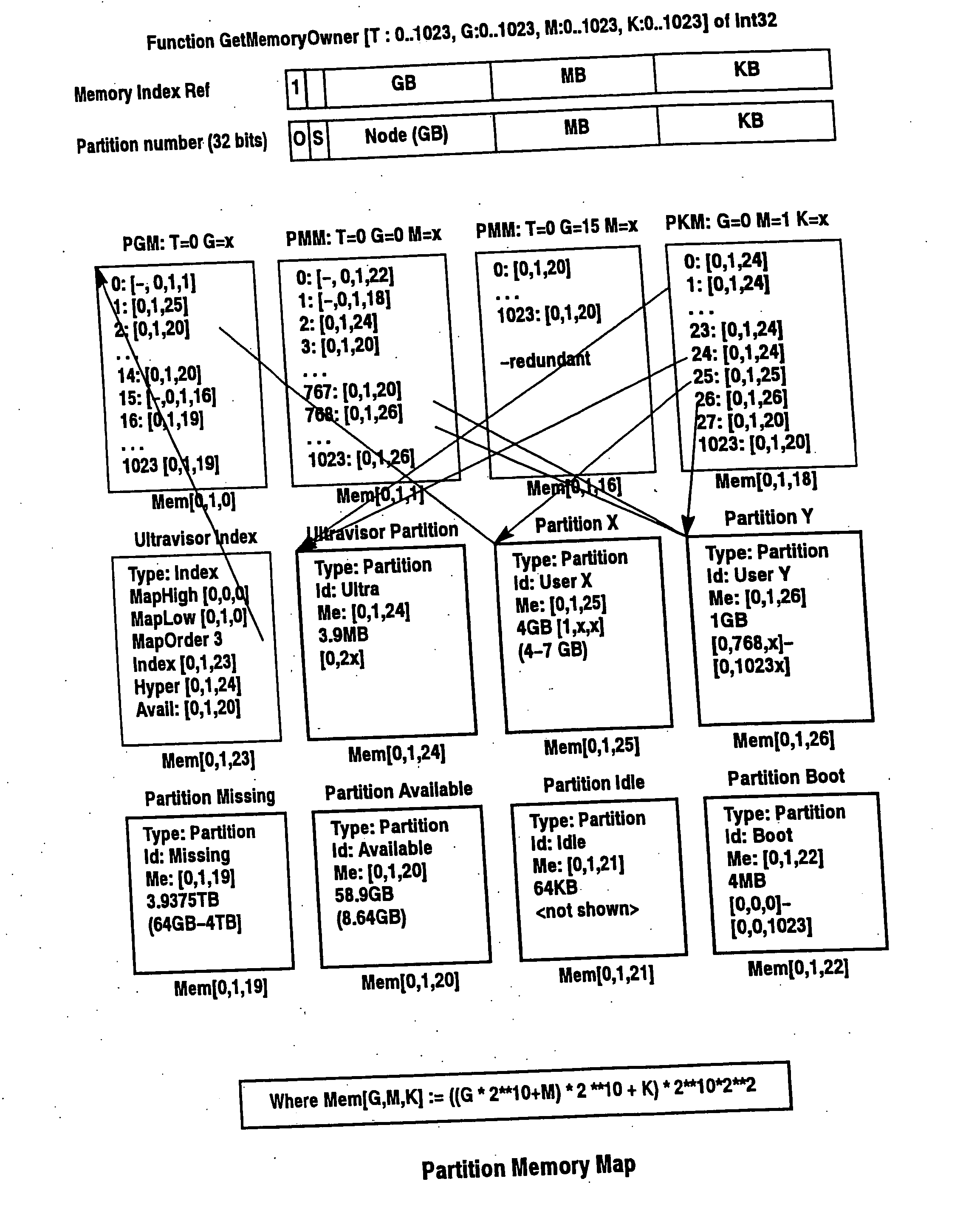

Scalable partition memory mapping system

Inactive · US20070067366A1 · Improve efficiency · Improve security · Memory architecture accessing/allocation · Software simulation/interpretation/emulation · In-memory database · Operational system

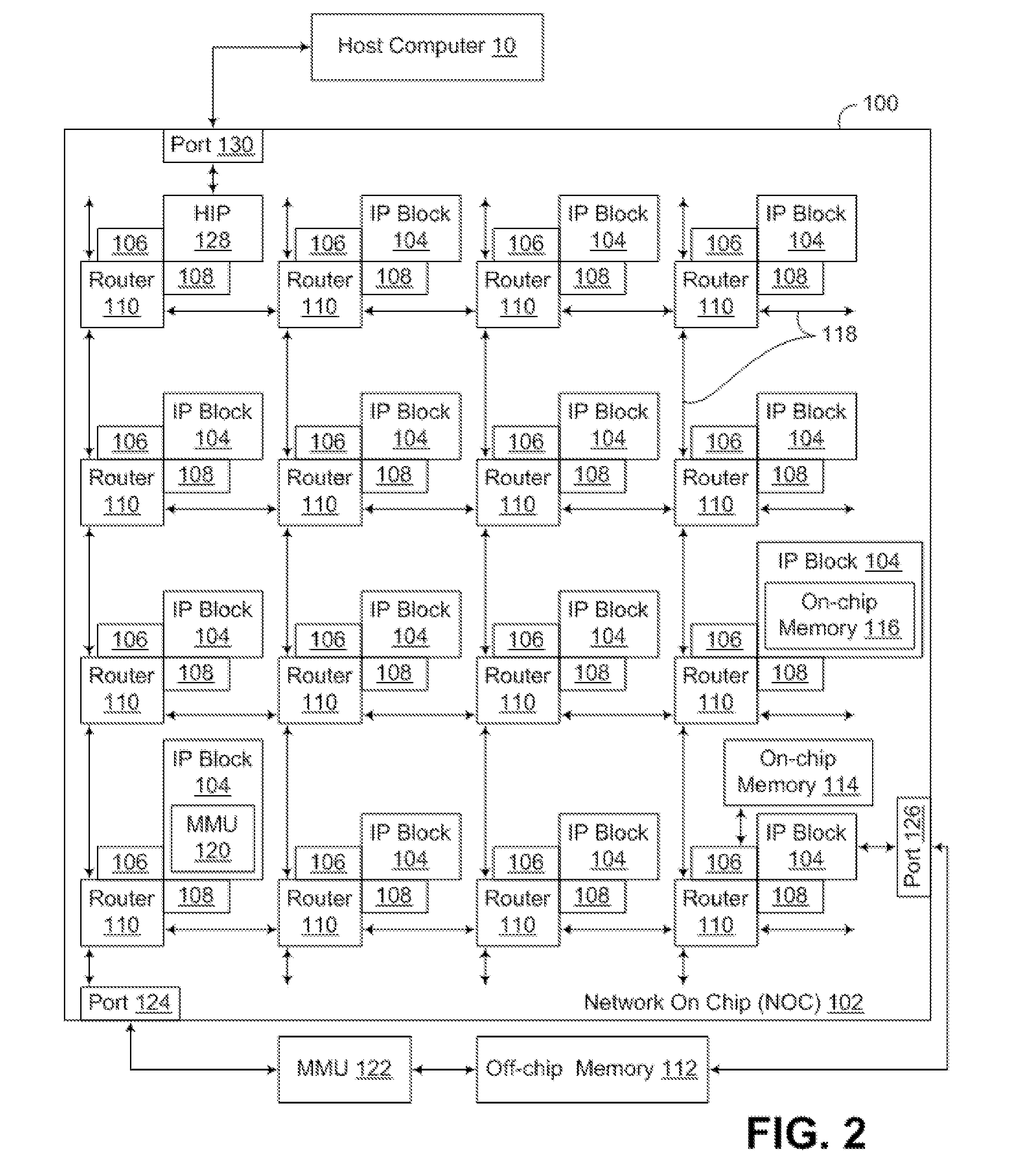

A virtualization infrastructure that allows multiple guest partitions to run within a host hardware partition. The host system is divided into distinct logical or virtual partitions and special infrastructure partitions are implemented to control resource management and to control physical I/O device drivers that are, in turn, used by operating systems in other distinct logical or virtual guest partitions. Host hardware resource management runs as a tracking application in a resource management “ultravisor” partition, while host resource management decisions are performed in a higher level command partition based on policies maintained in a separate operations partition. The conventional hypervisor is reduced to a context switching and containment element (monitor) for the respective partitions, while the system resource management functionality is implemented in the ultravisor partition. The ultravisor partition maintains the master in-memory database of the hardware resource allocations and serves a command channel to accept transactional requests for assignment of resources to partitions. It also provides individual read-only views of individual partitions to the associated partition monitors. Host hardware I/O management is implemented in special redundant I/O partitions. A scalable partition memory mapping system is implemented in the ultravisor partition so that the virtualized system is scalable to a virtually unlimited number of pages. A log (210) based allocation allows the virtual partition memory sizes to grow over multiple generations without increasing the overhead of managing the memory allocations. Each page of memory is assigned to one partition descriptor in the page hierarchy and is managed by the ultravisor partition.

Owner:UNISYS CORP

Speech Recognition Dialog Management

Inactive · US20090018829A1 · Increase flexibility · Easy and faster and more productive to interact · Speech recognition · Natural language processing · Dialog management

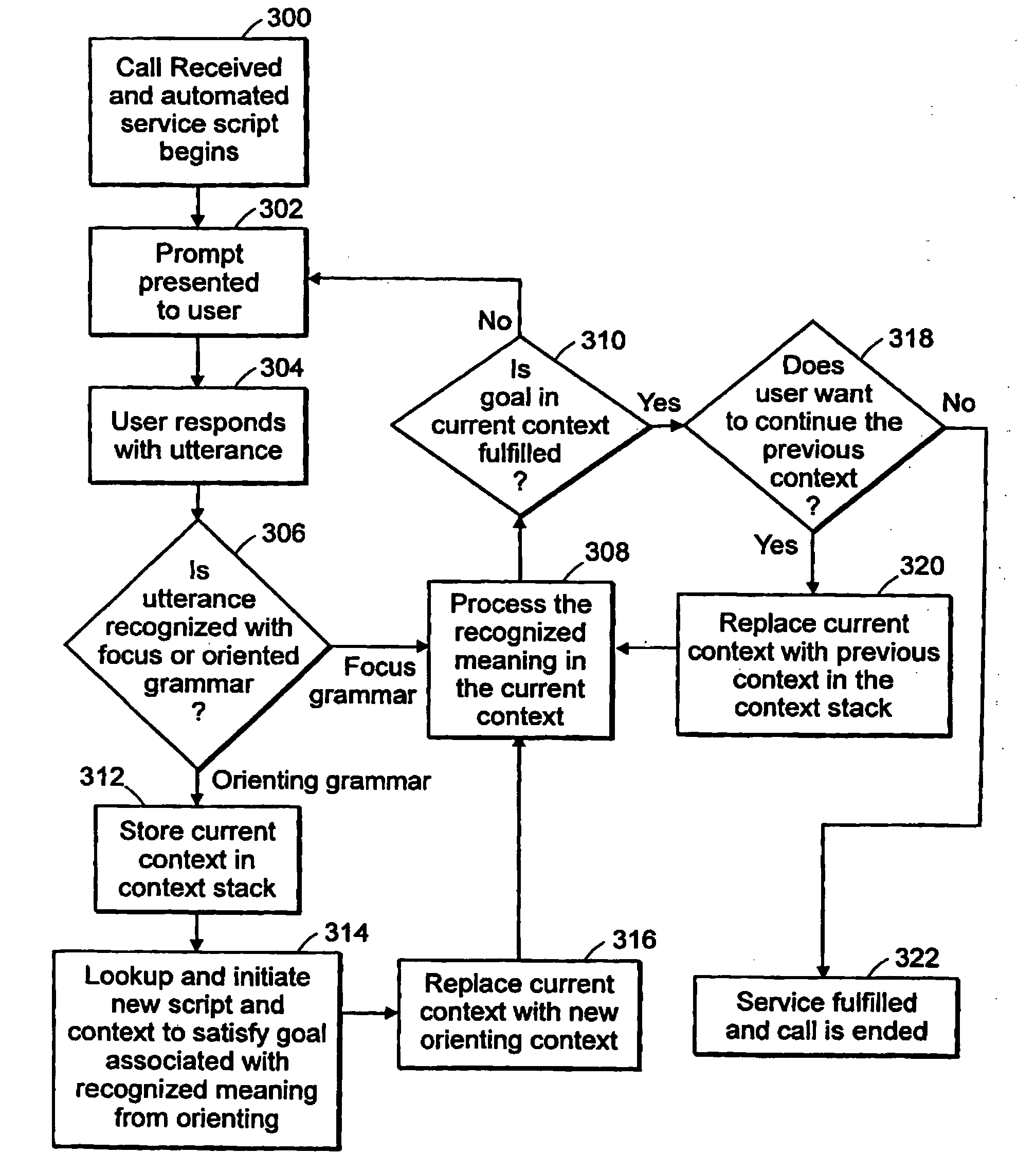

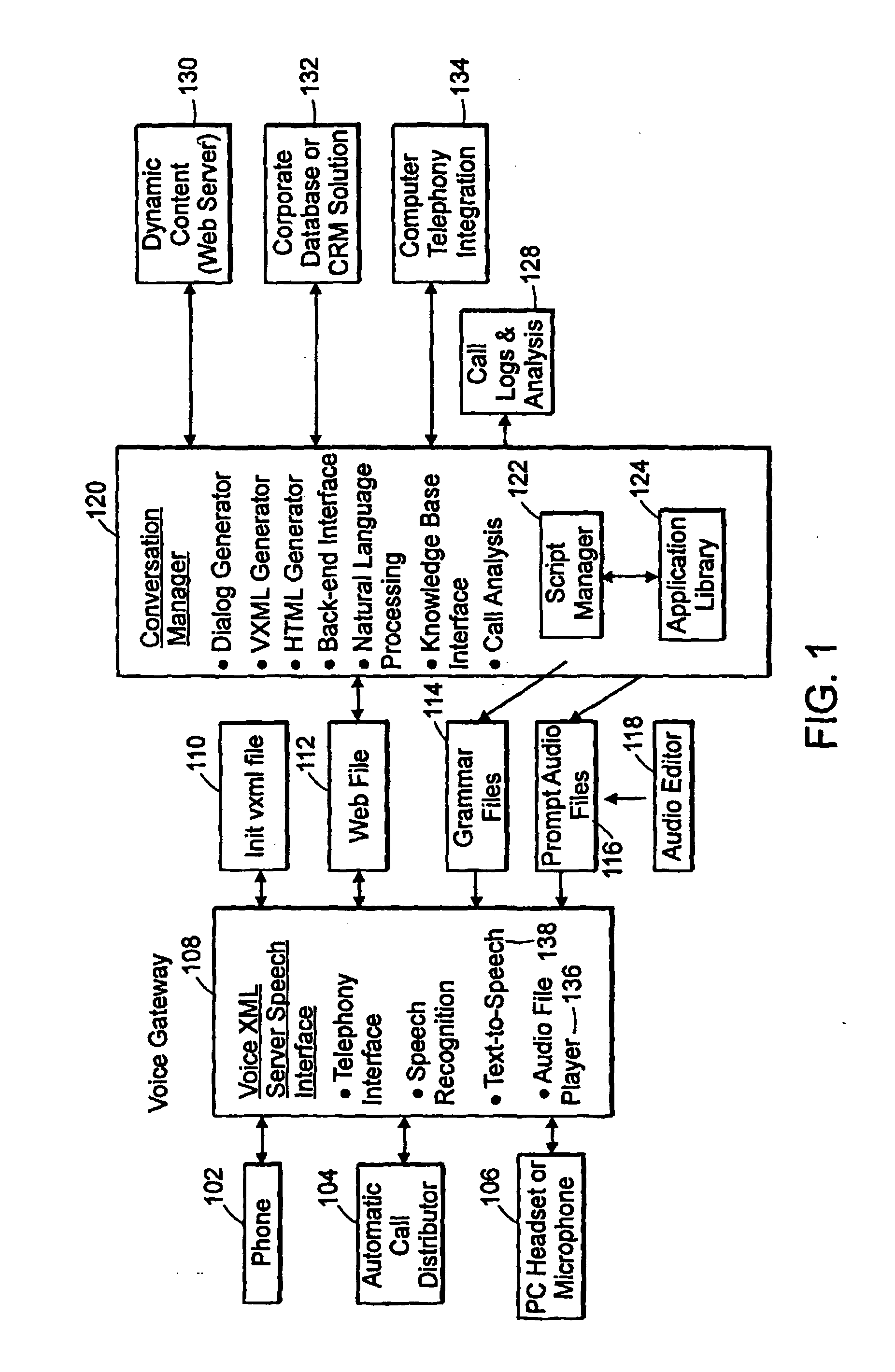

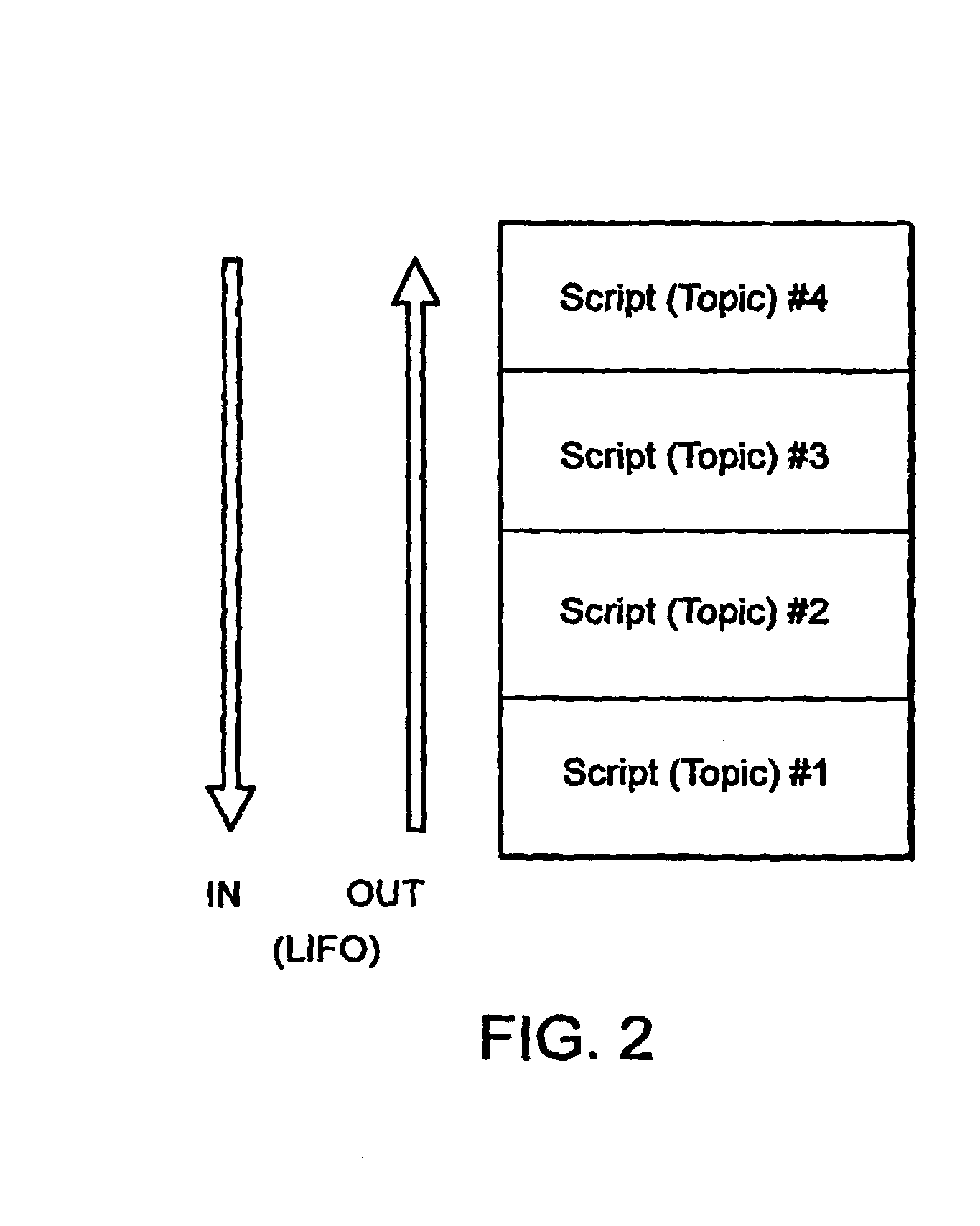

Described is a speech recognition dialog management system that allows more open-ended conversations between virtual agents and people than are possible using just agent-directed dialogs. The system uses both novel dialog context switching and learning algorithms based on spoken interactions with people. The context switching is performed through processing multiple dialog goals in a last-in-first-out (LIFO) pattern. The recognition accuracy for these new flexible conversations is improved through automated learning from processing errors and addition of new grammars.
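The last-in-first-out handling of dialog goals can be pictured as a stack. The sketch below is only an illustration of that ordering; the `DialogManager` class and the goal names are hypothetical and are not taken from the patent.

```python
class DialogManager:
    """Tracks dialog goals on a stack so the newest goal is handled first."""

    def __init__(self):
        self._goals = []          # top of the stack is the end of the list

    def push_goal(self, goal: str) -> None:
        """Switch context to a new goal, suspending the current one."""
        self._goals.append(goal)

    def current_goal(self):
        """Return the goal being worked on, or None when idle."""
        return self._goals[-1] if self._goals else None

    def complete_goal(self) -> str:
        """Finish the current goal; the most recently suspended one resumes."""
        if not self._goals:
            raise RuntimeError("no active dialog goal")
        return self._goals.pop()


dm = DialogManager()
dm.push_goal("book_flight")
dm.push_goal("check_weather")     # caller digresses in the middle of booking
finished = dm.complete_goal()     # the digression is answered first ...
resumed = dm.current_goal()       # ... and then the booking dialog resumes
```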

Owner:METAPHOR SOLUTIONS

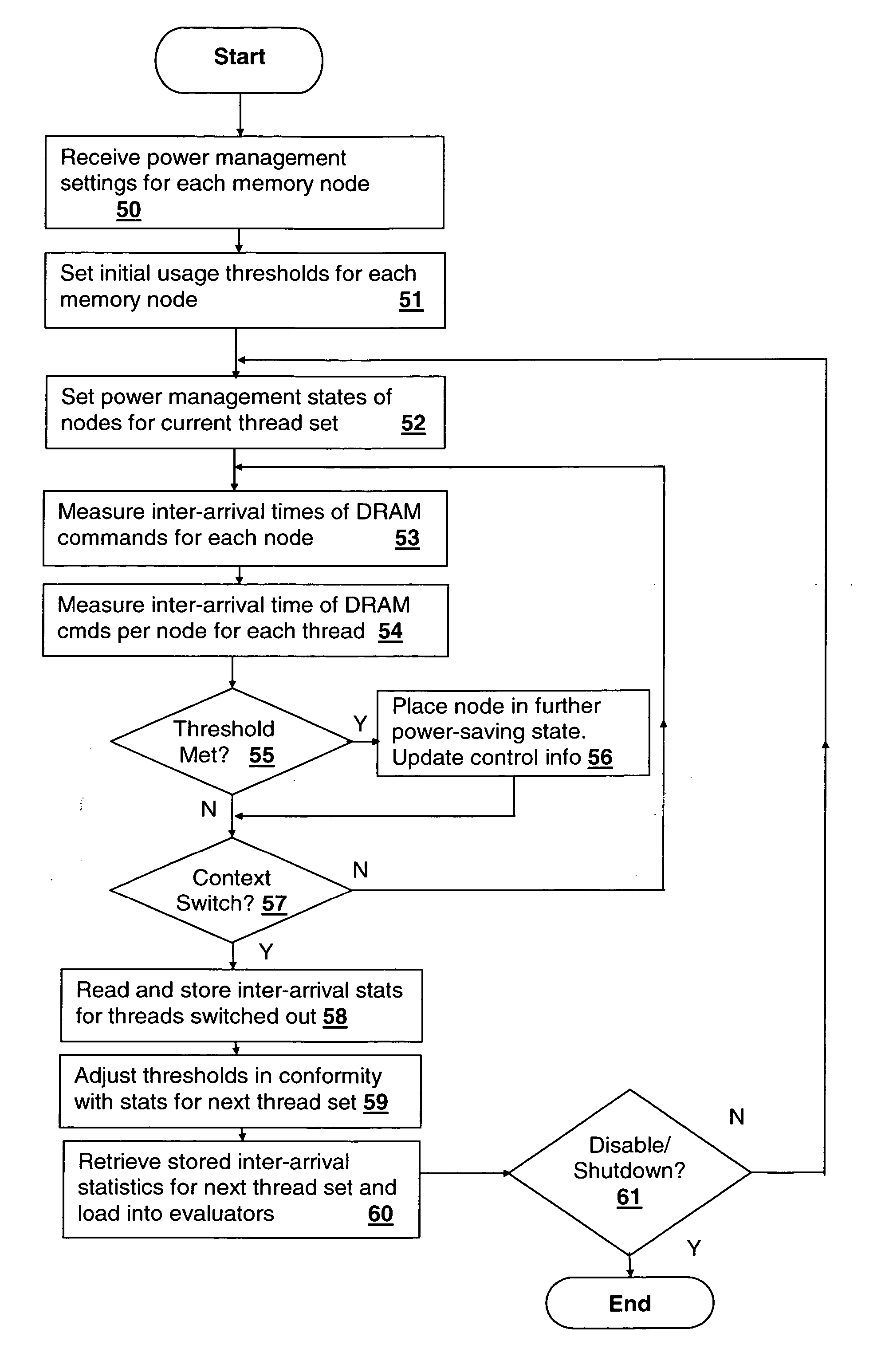

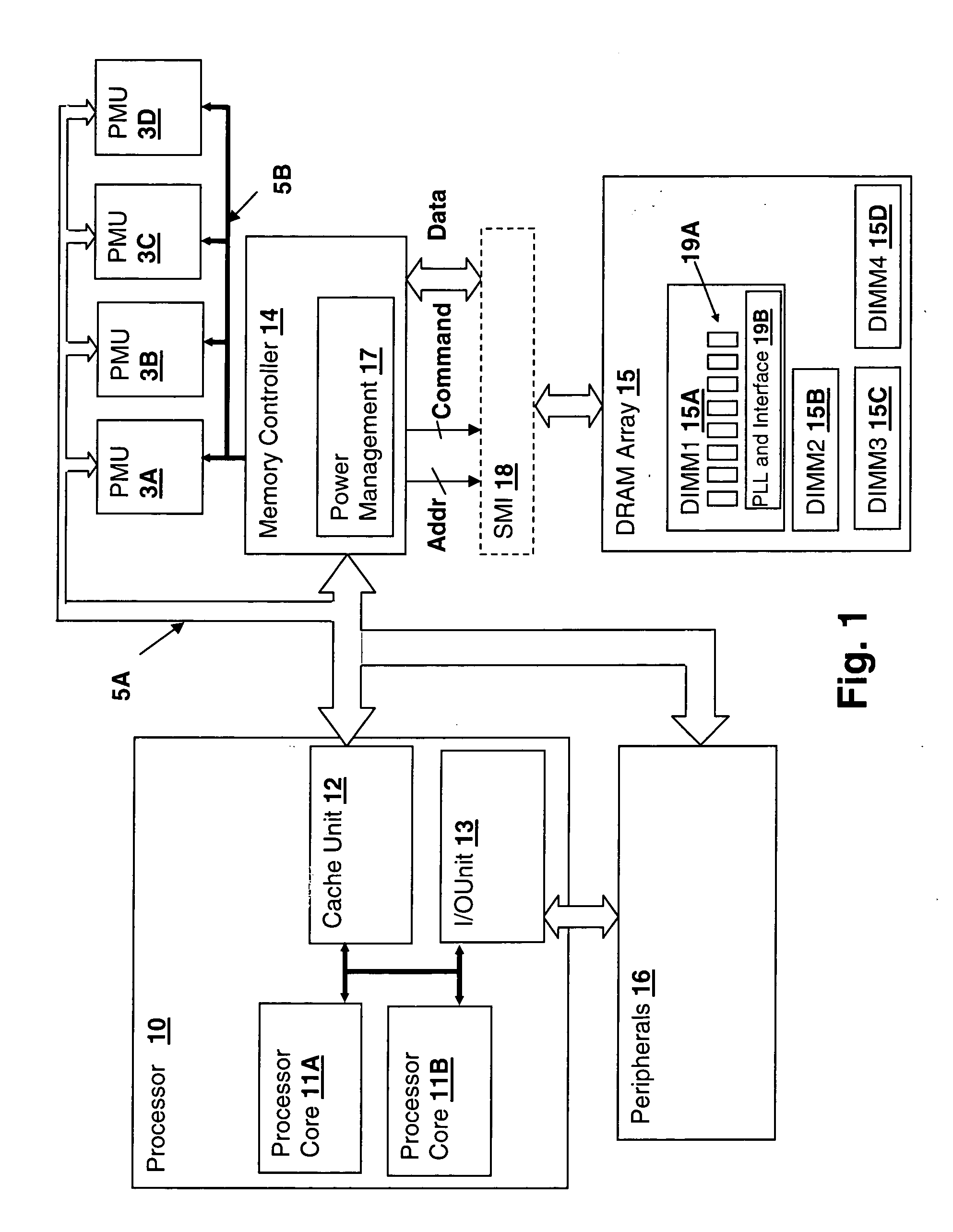

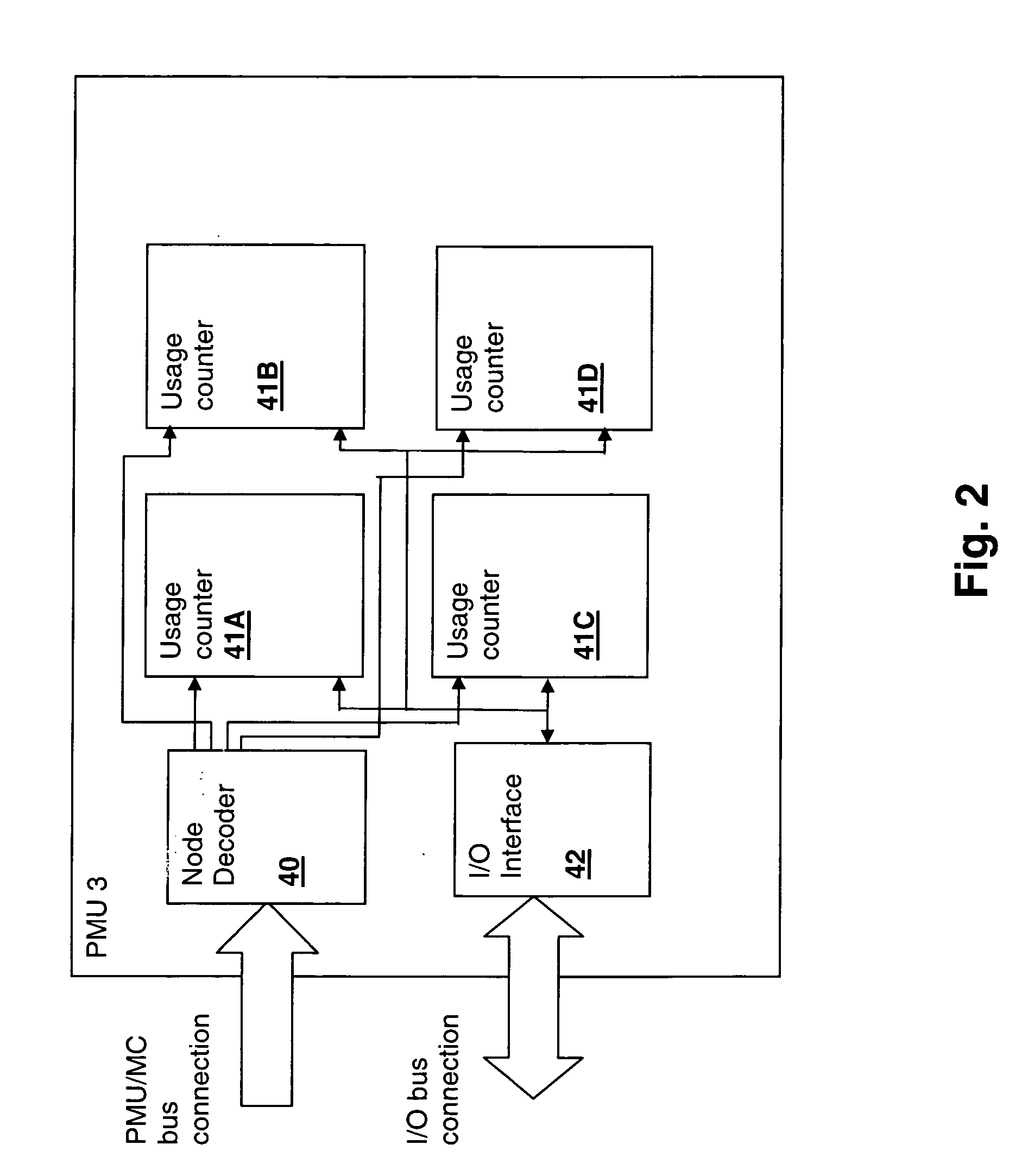

Method and system for power management including device controller-based device use evaluation and power-state control

Inactive · US20050125702A1 · Lower latency · Reduce power consumption · Energy efficient ICT · Volume/mass flow measurement · Operational system · Device Usage

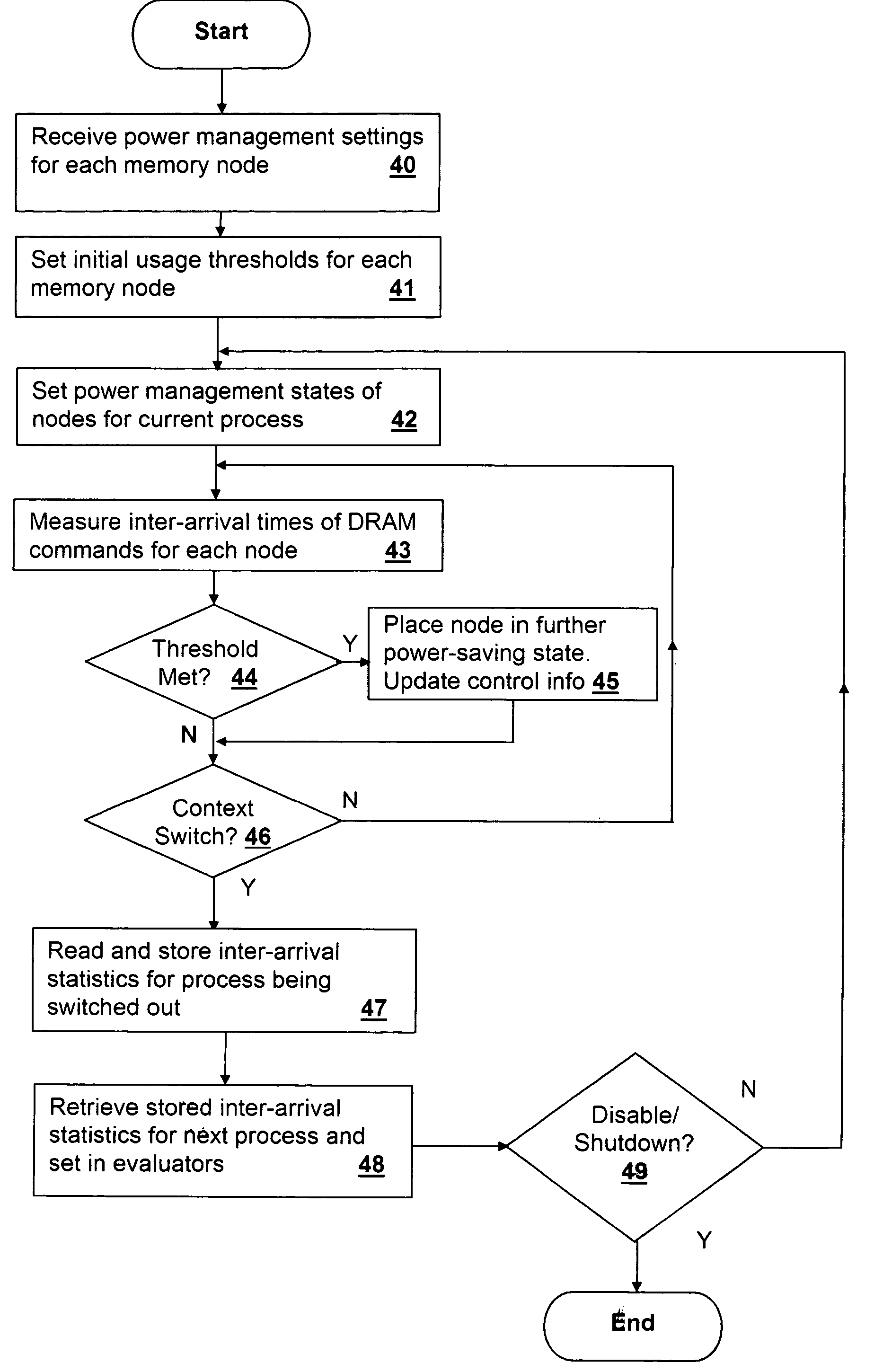

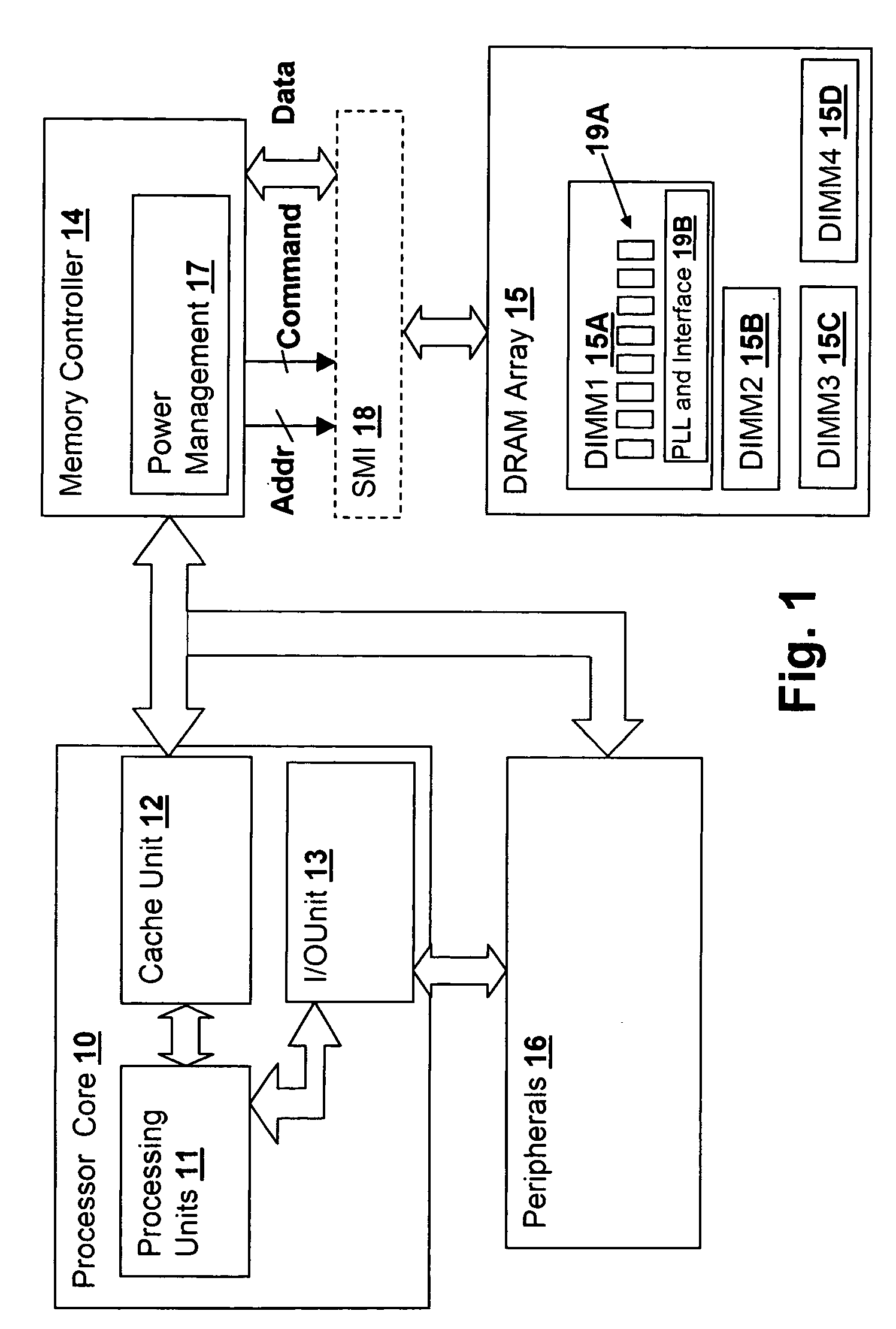

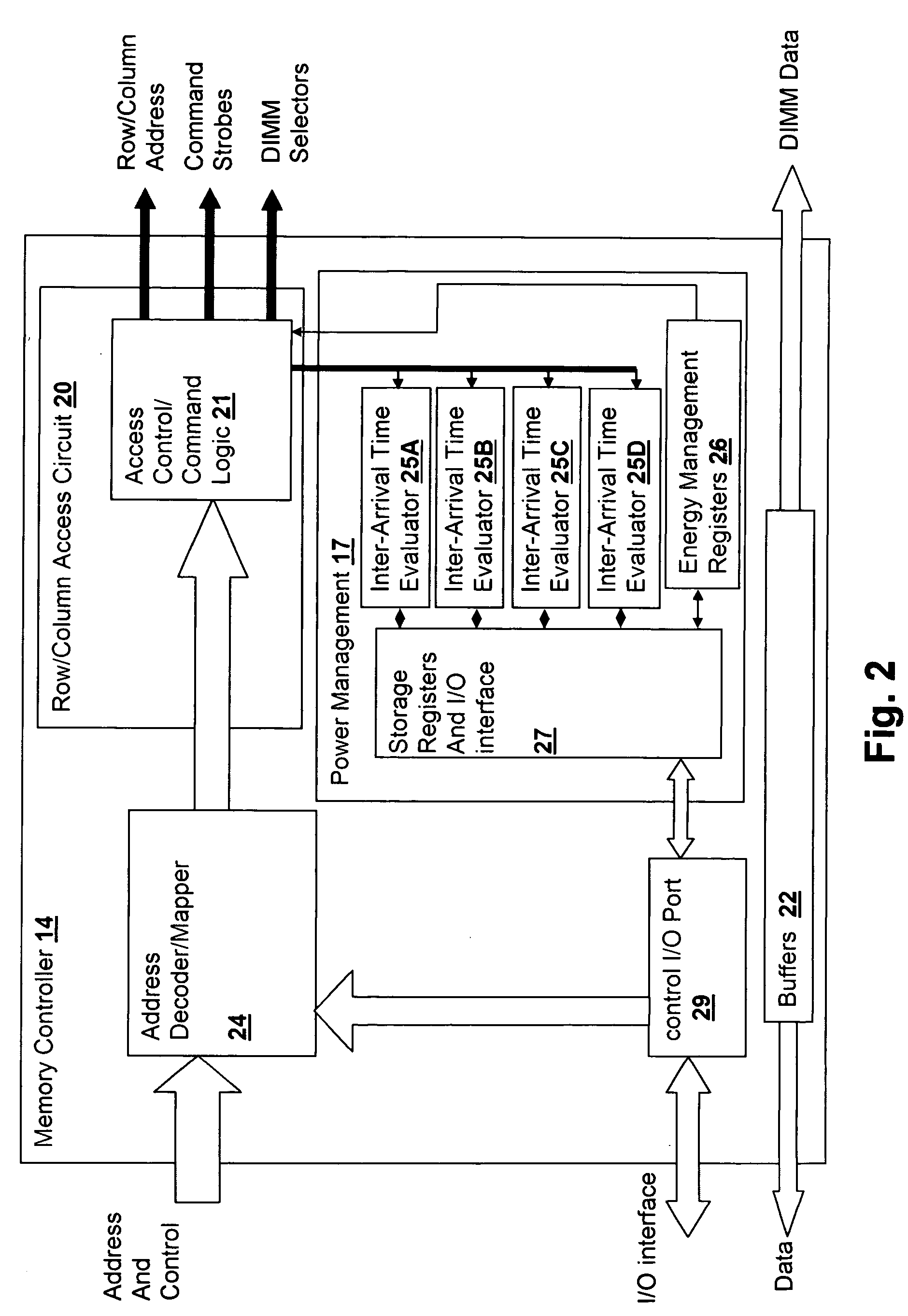

A method and system for power management including device controller-based device use evaluation and power-state control provides improved performance in a power-managed processing system. Per-device usage information is measured and evaluated during process execution and is retrieved from the device controller upon a context switch, so that upon reactivation of the process, the previous usage evaluation state can be restored. The device controller can then provide for per-process control of attached device power management states without intervention by the processor and without losing the historical evaluation state when a process is switched out. The device controller can control power-saving states of connected devices in conformity with the usage evaluation without processor intervention and across multiple process execution slices. The device controller may be a memory controller and the controlled devices memory modules or banks within modules if individual banks can be power-managed. Local thresholds provide the decision-making mechanism for each controlled device. The thresholds may be history-based, fixed or adaptive and are generally set initially by the operating system and may be updated by the memory controller adaptively or using historical collected usage evaluation counts or alternatively by the operating system via a system processor.
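The retrieve-and-restore step for usage evaluation state might look roughly like the following. This is a simplified sketch under stated assumptions: `DeviceController`, `Scheduler`, the single access counter, and the fixed threshold are all hypothetical stand-ins, and the adaptive threshold updates described in the abstract are not modeled.

```python
class DeviceController:
    """Toy controller that counts device accesses for the running process."""

    def __init__(self, threshold: int):
        self.threshold = threshold     # local power-management threshold
        self.usage = 0                 # usage evaluation state of the current process

    def record_access(self) -> None:
        self.usage += 1

    def low_power_allowed(self) -> bool:
        """The device may enter a power-saving state while usage is under the threshold."""
        return self.usage < self.threshold


class Scheduler:
    """Stands in for the operating system's context-switch path."""

    def __init__(self, controller: DeviceController):
        self.controller = controller
        self.saved_usage = {}          # process id -> saved usage state
        self.current = None

    def switch_to(self, pid: str) -> None:
        if self.current is not None:
            # Retrieve the outgoing process's usage state from the controller.
            self.saved_usage[self.current] = self.controller.usage
        # Restore the incoming process's previous state (0 for a new process).
        self.controller.usage = self.saved_usage.get(pid, 0)
        self.current = pid


ctrl = DeviceController(threshold=3)
sched = Scheduler(ctrl)

sched.switch_to("P1")
for _ in range(5):
    ctrl.record_access()
p1_busy = not ctrl.low_power_allowed()   # P1 uses the device heavily

sched.switch_to("P2")                    # P1's history is saved, P2 starts fresh
p2_idle = ctrl.low_power_allowed()

sched.switch_to("P1")                    # P1's history is back in the controller
p1_usage_restored = ctrl.usage
```

Keeping the counter per process means a heavy user of the device does not lose its history, and a light user does not inherit it, when the two alternate on the processor.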

Owner:IBM CORP

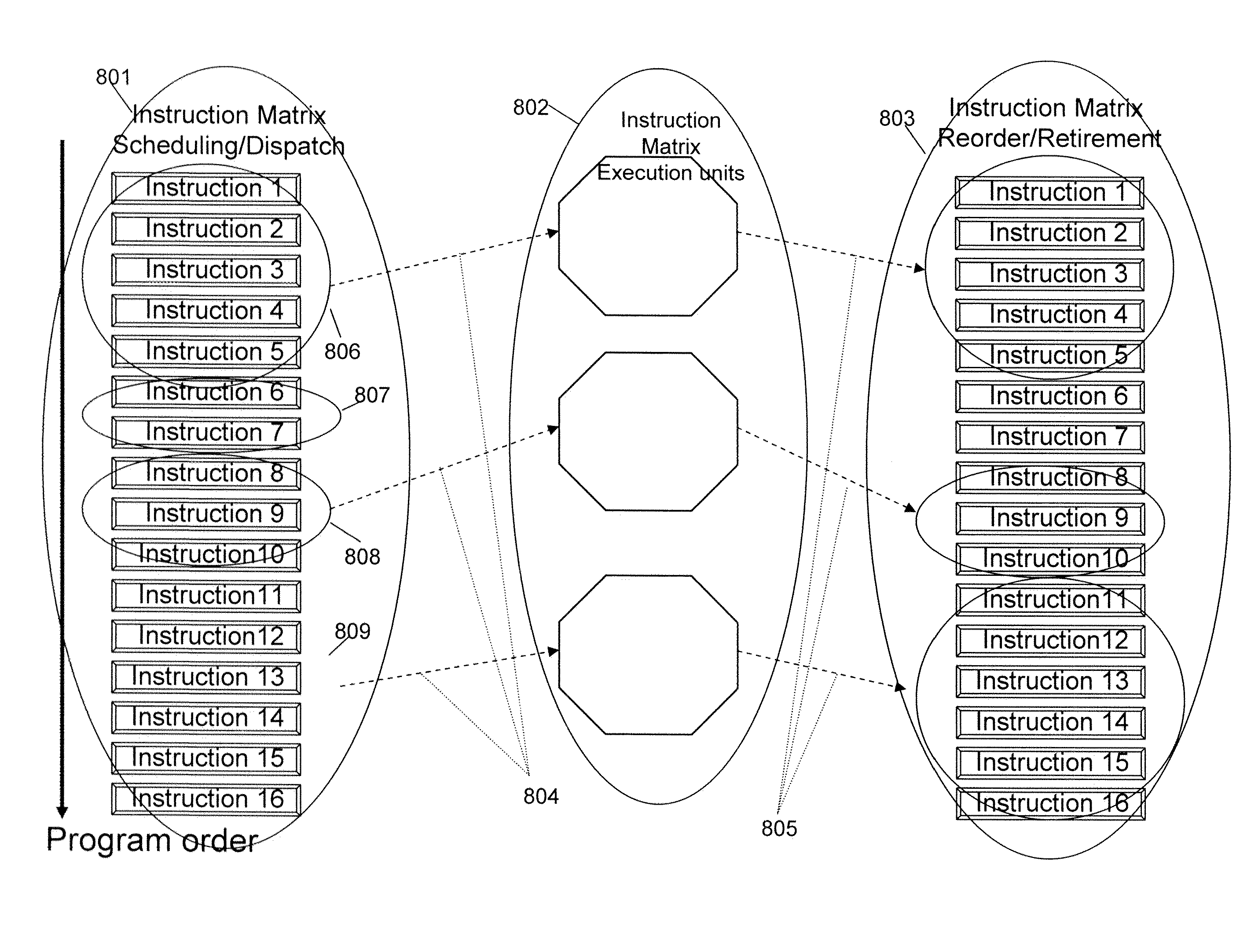

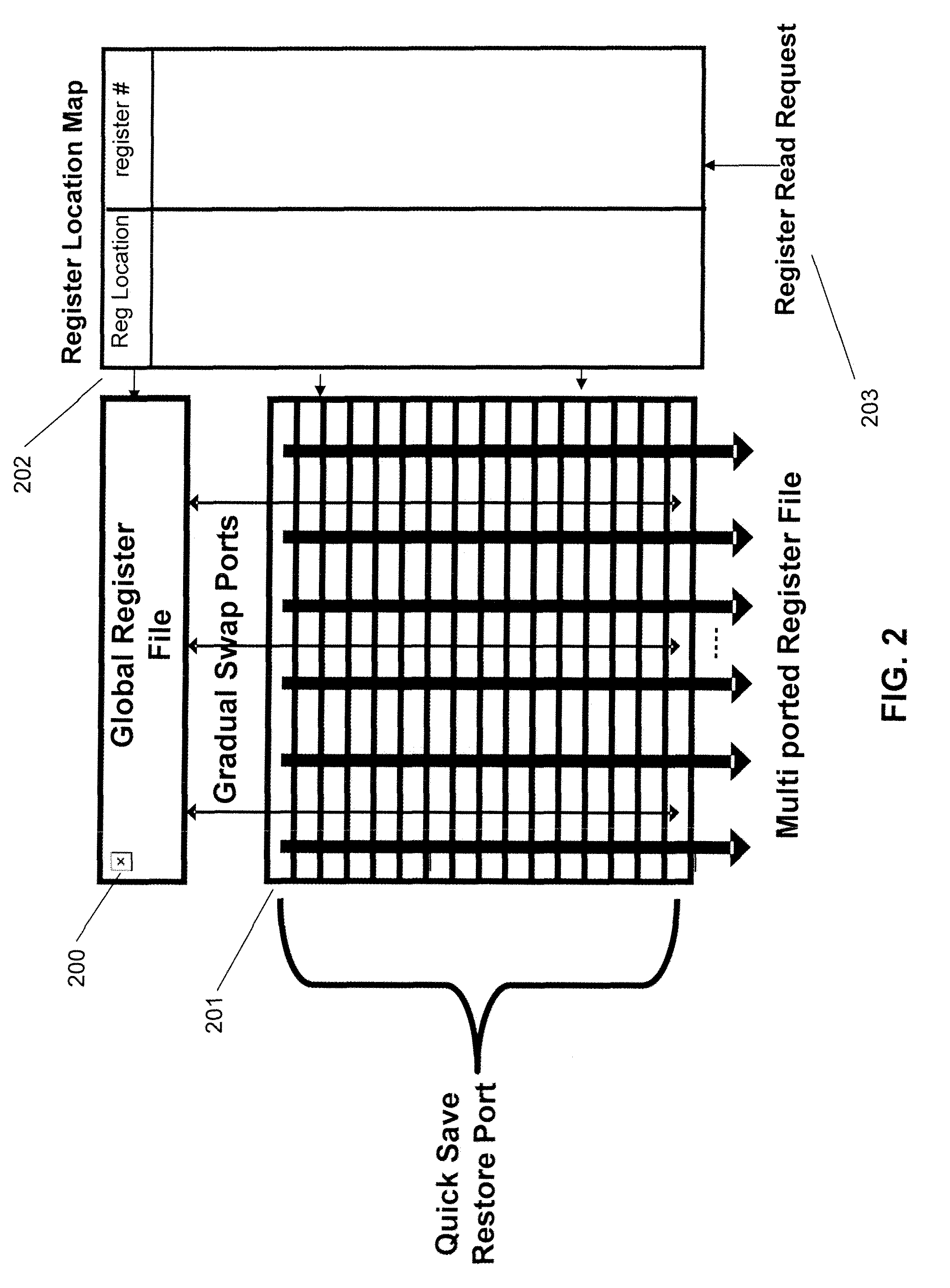

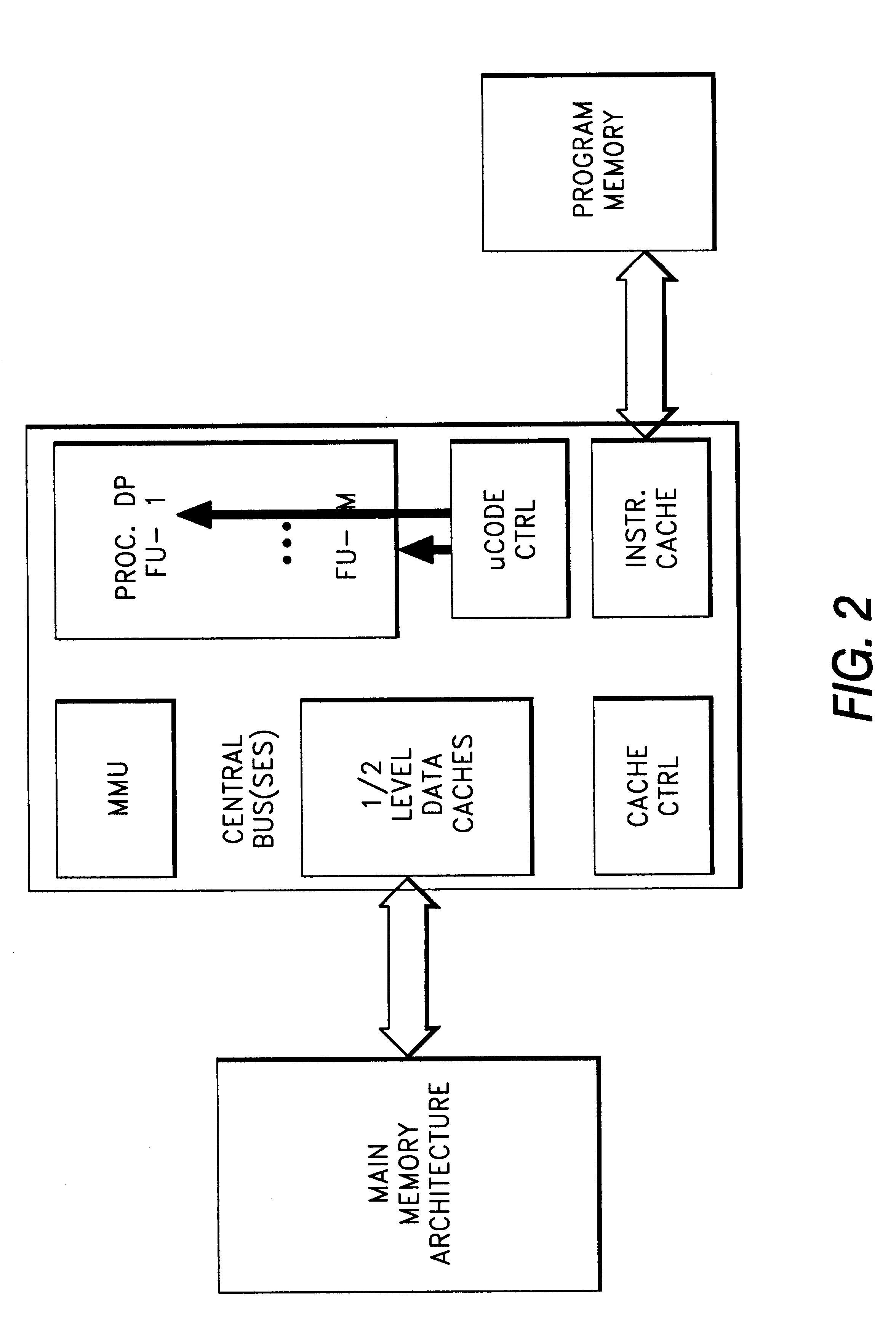

Apparatus and Method for Processing Complex Instruction Formats in a Multi-Threaded Architecture Supporting Various Context Switch Modes and Virtualization Schemes

Active · US20100161948A1 · Efficient context switching · Efficient switching · Instruction analysis · Digital computer details · Virtualization · Processor register

A unified architecture for dynamic generation, execution, synchronization and parallelization of complex instruction formats includes a virtual register file, register cache and register file hierarchy. A self-generating and synchronizing dynamic and static threading architecture provides efficient context switching.

Owner:INTEL CORP

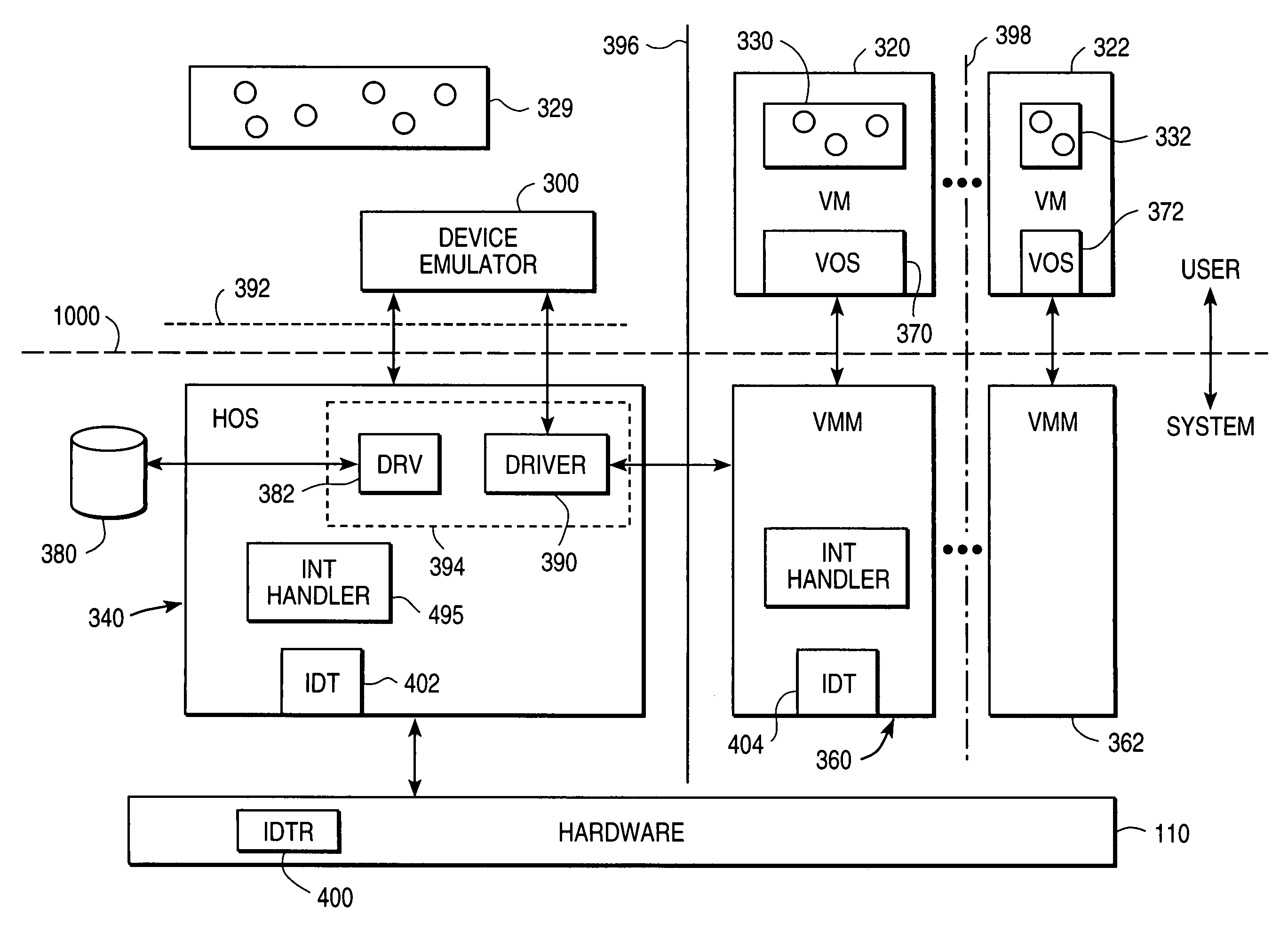

System and method for facilitating context-switching in a multi-context computer system

Inactive · US6944699B1 · Software simulation/interpretation/emulation · Memory systems · Operational system · Procedure calls

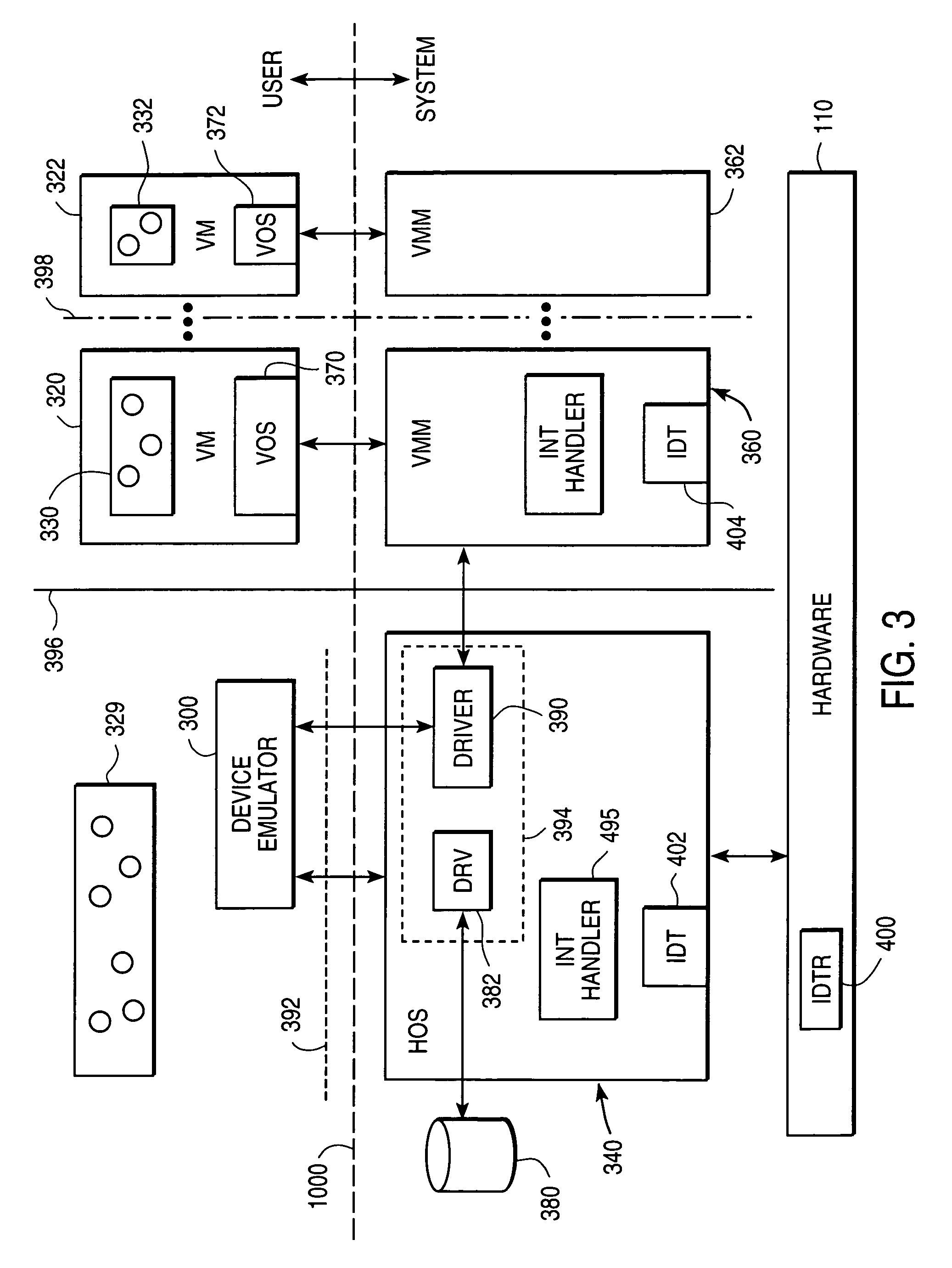

A virtual machine monitor (VMM) is included in a computer system that has a protected host operating system (HOS). A virtual machine running at least one application via a virtual operating system is connected to the VMM. Both the HOS and the VMM have separate operating contexts and disjoint address spaces, but are both co-resident at system level. A driver that is downloadable into the HOS at system level forms a total context switch between the VMM and HOS contexts. A user-level emulator accepts commands from the VMM via the system-level driver and processes these commands as remote procedure calls. The emulator is able to issue host operating system calls and thereby access the physical system devices via the host operating system. The host operating system itself thus handles execution of certain VMM instructions, such as accessing physical devices.

Owner:VMWARE INC

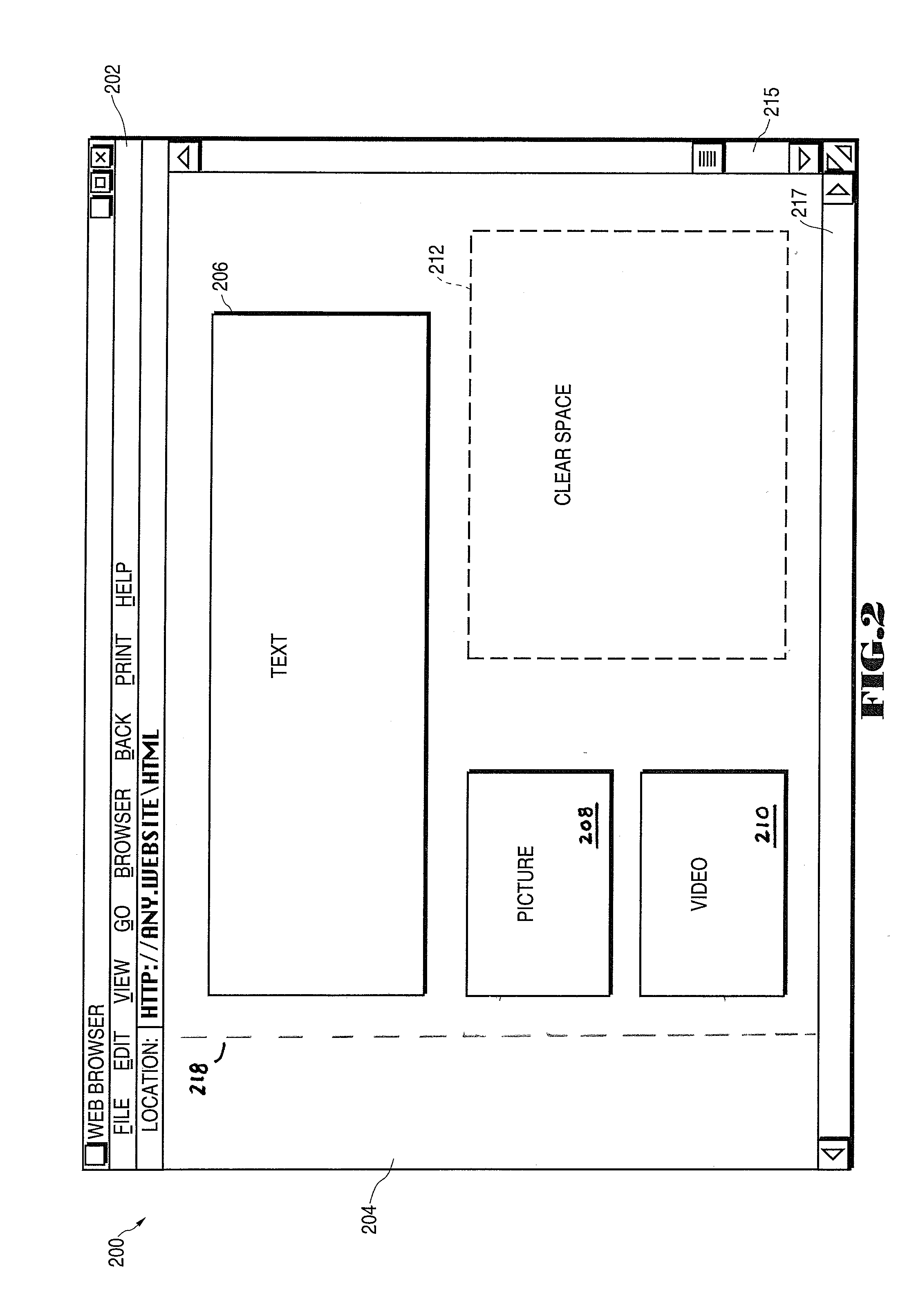

Displaying Advertising Messages in the Unused Portion and During a Context Switch Period of a Web Browser Display Interface

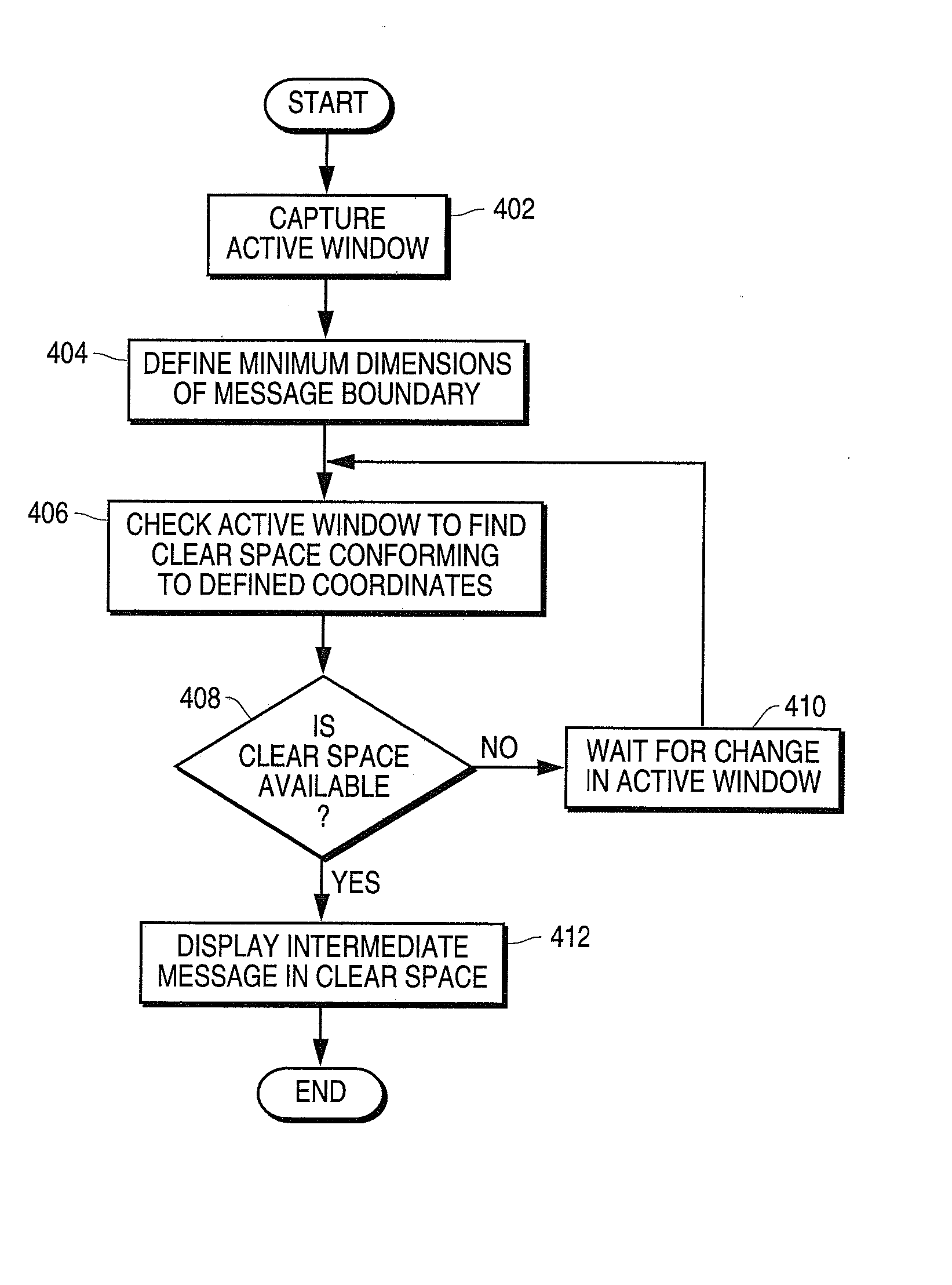

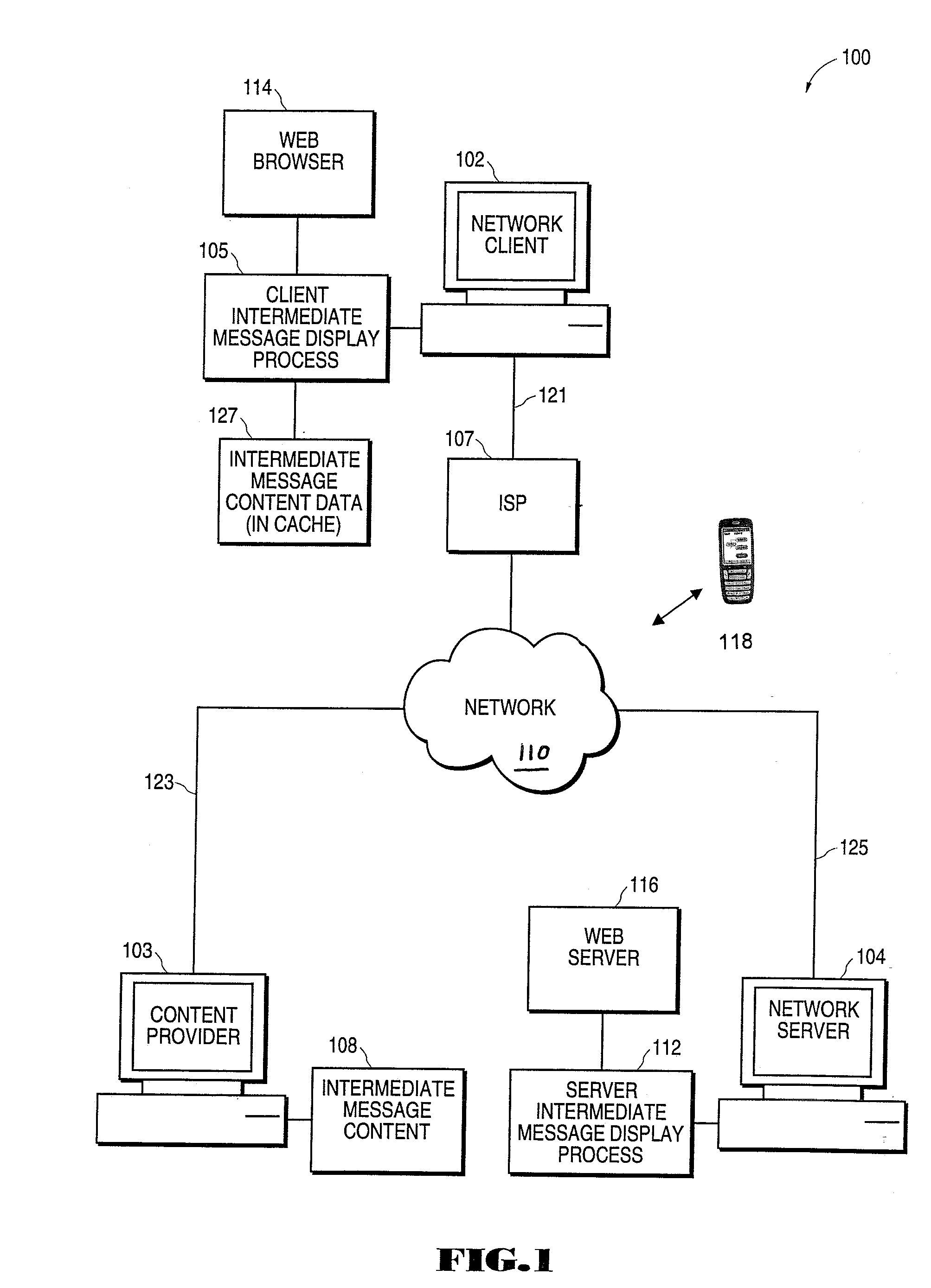

A system for displaying intermediate message content over the unused area of a web browser is described. An intermediate message display process is linked to the web browser program executed on a client computer. The process monitors user activity on the client computer and identifies areas of the web browser display area that are not used. Upon detection of an unused clear space within the web browser display area, an intermediate message is displayed in the clear area of the web browser. The intermediate message could be an advertisement display provided by a third party content provider. A timer process and clear space detection routine within the intermediate message display process govern the display of the intermediate message in accordance with specified background pattern and message window dimension parameters.

Owner:KHOO SOON HUAT

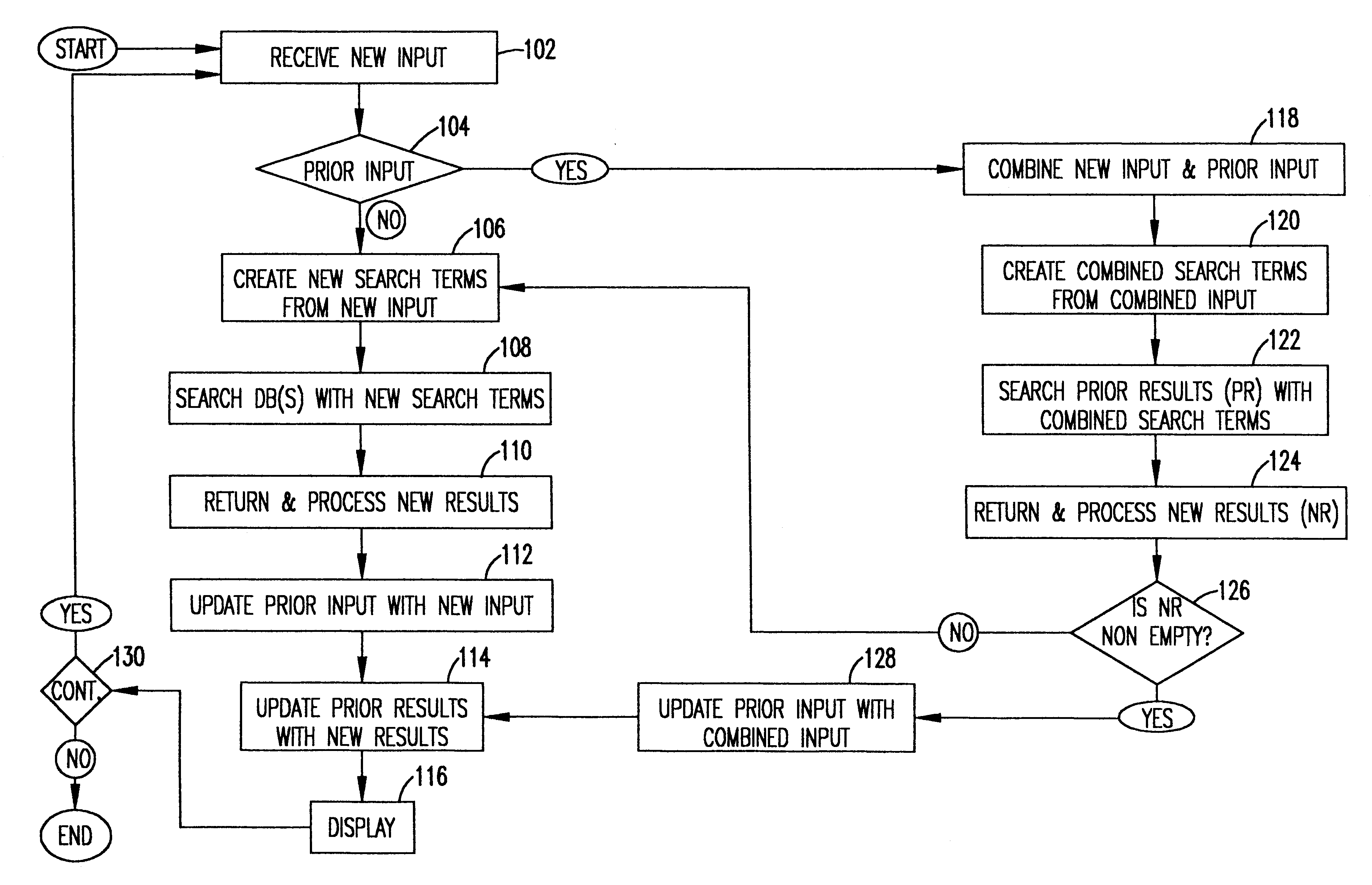

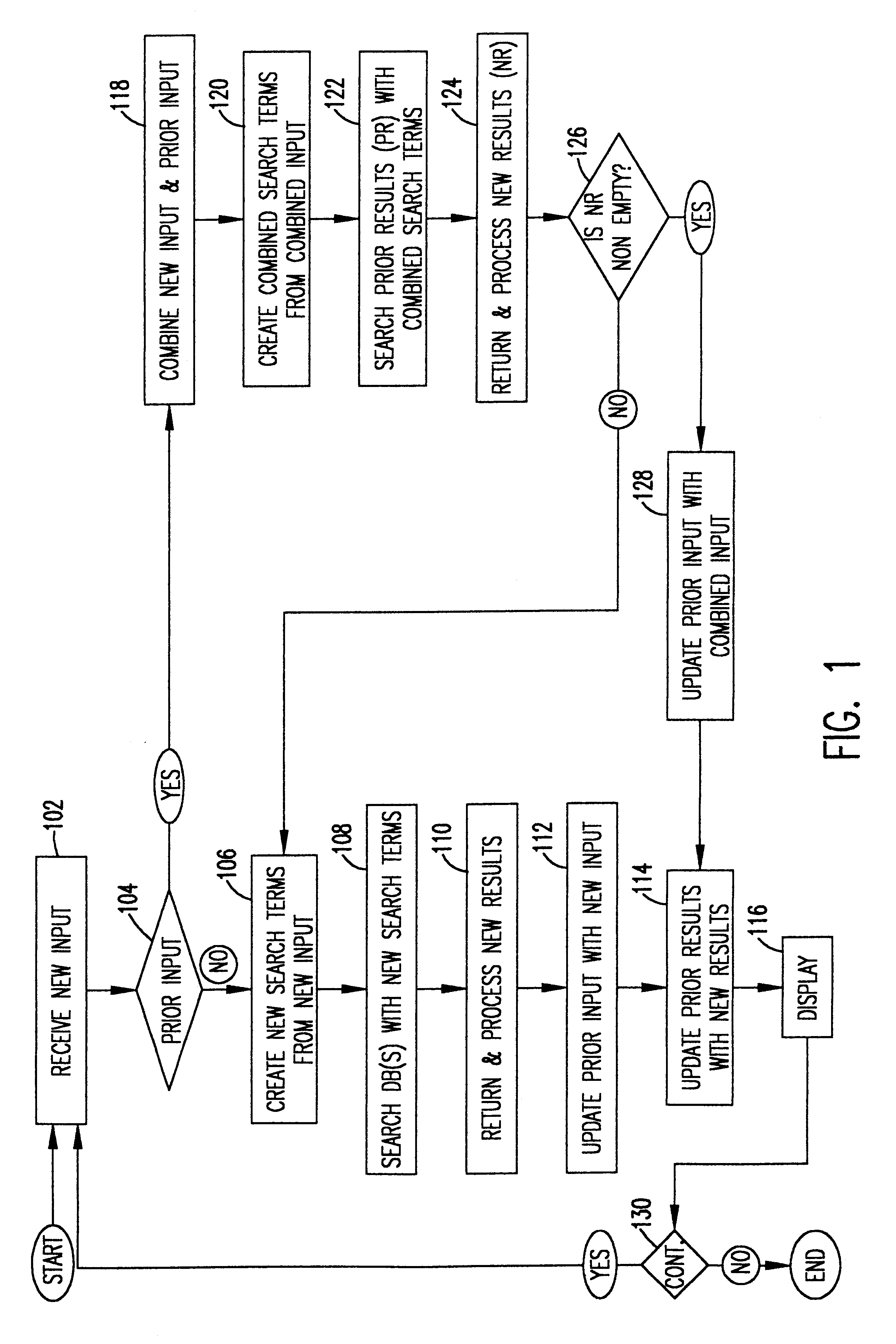

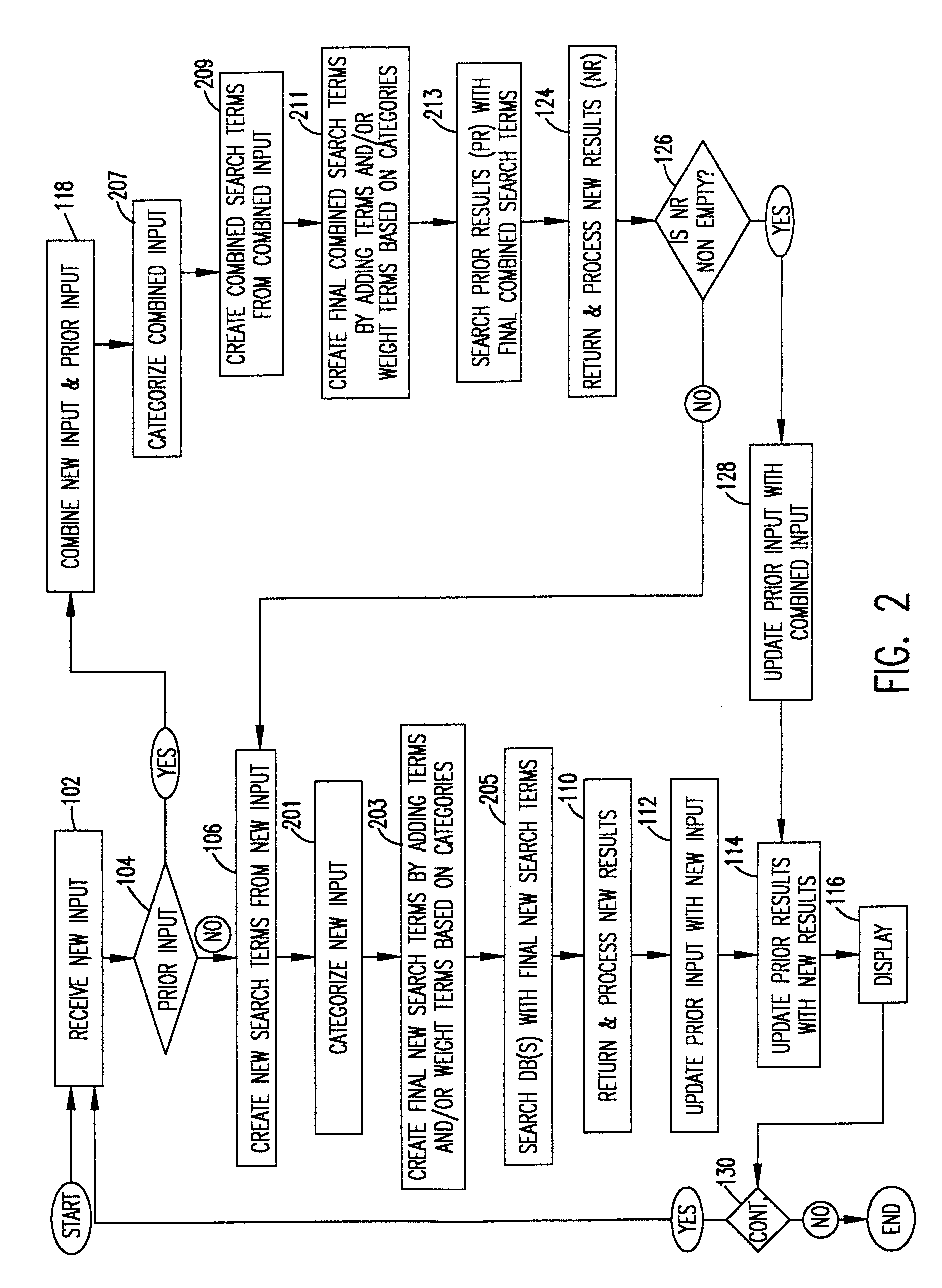

Automatic topic identification and switch for natural language search of textual document collections

Inactive · US6574624B1 · Data processing applications · Digital data information retrieval · Drill down · Free form

A method for iteratively drilling-down on a user's textual free-form natural language query uses a session history to interpret successive queries in the context of previous queries on a topic or topics and to detect an implicit switch in topic. By maintaining a session history of the user's free-form natural language input and by automatically determining whether there is a topic or context switch, the search process is substantially simplified and is more effective; that is, more accurate answers to a user's queries are found faster. In addition, as the system operates on free-form natural language input, automatically constructing the actual search expressions, the complexity of constructing successive search expressions is obviated. If the system determines the user is, according to its session history and tests, asking successive questions within a given topic or context, the system keeps searching within a previously determined given set of previous responses on that context or topic. This effectively narrows the documents found allowing the user to quickly and accurately find just the documents of interest. If the system determines the user has implicitly changed context or topic, based on its session history and tests, it searches all the information at its disposal; i.e., all of the collections of documents.

Owner:IBM CORP

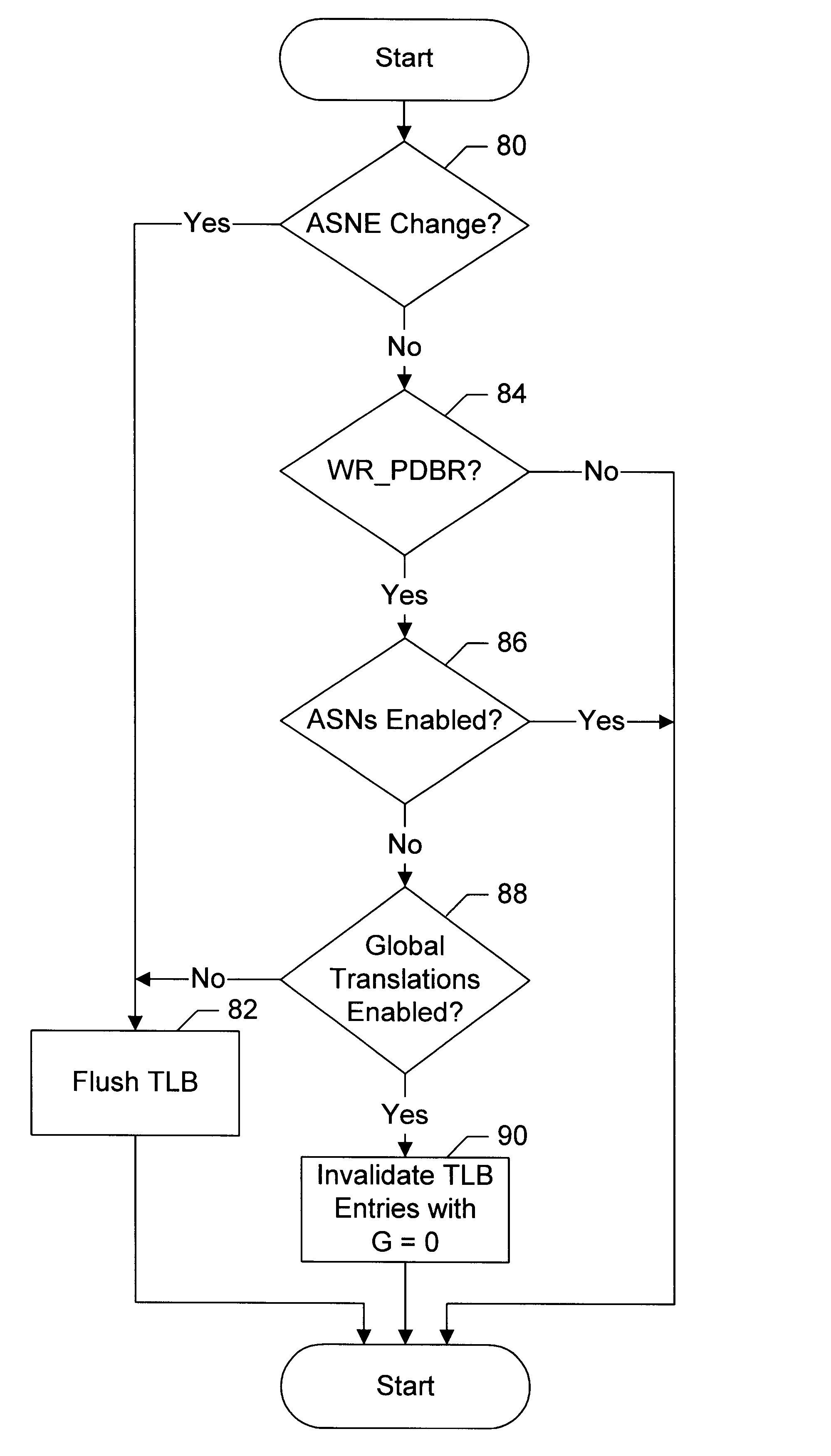

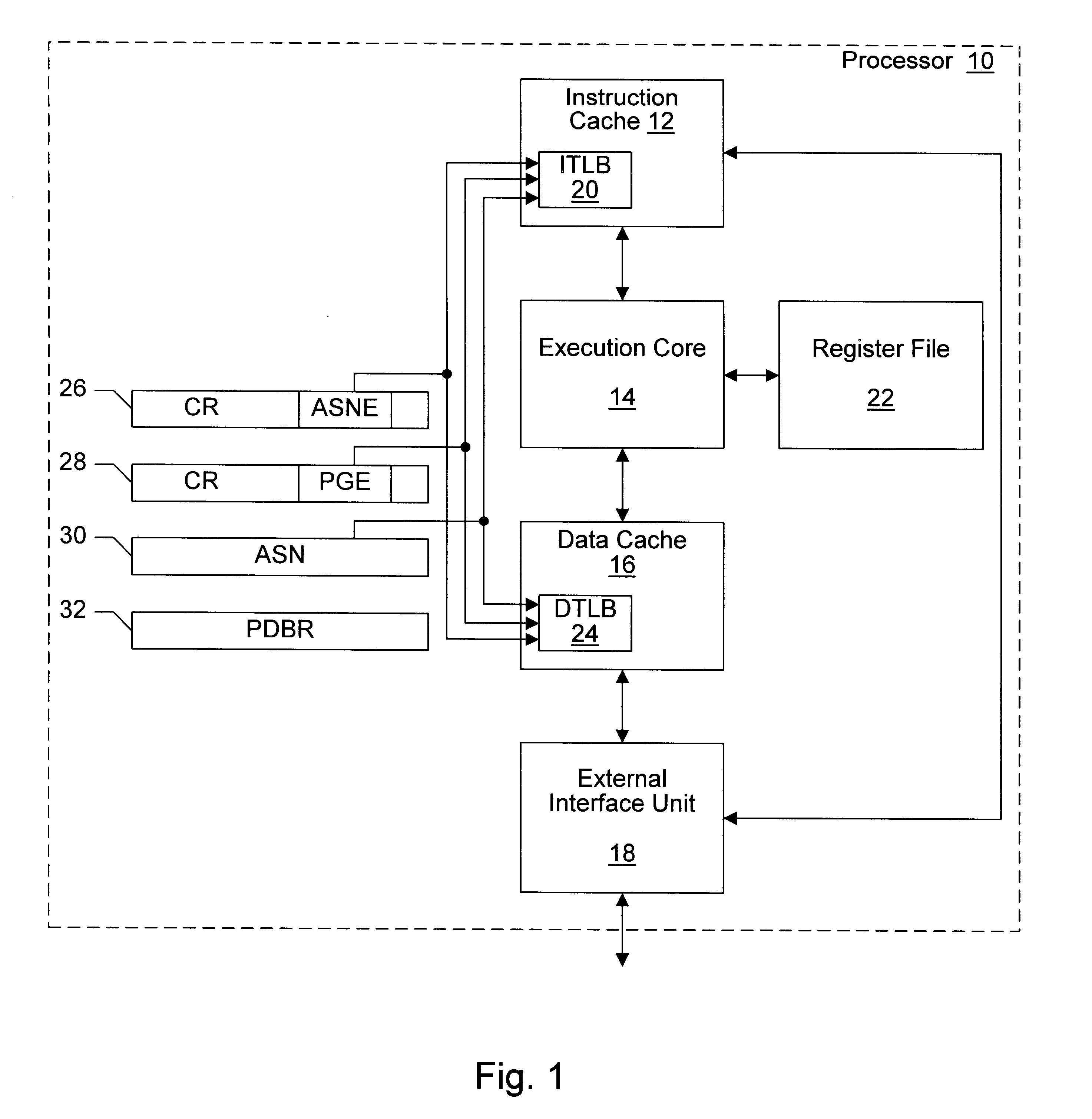

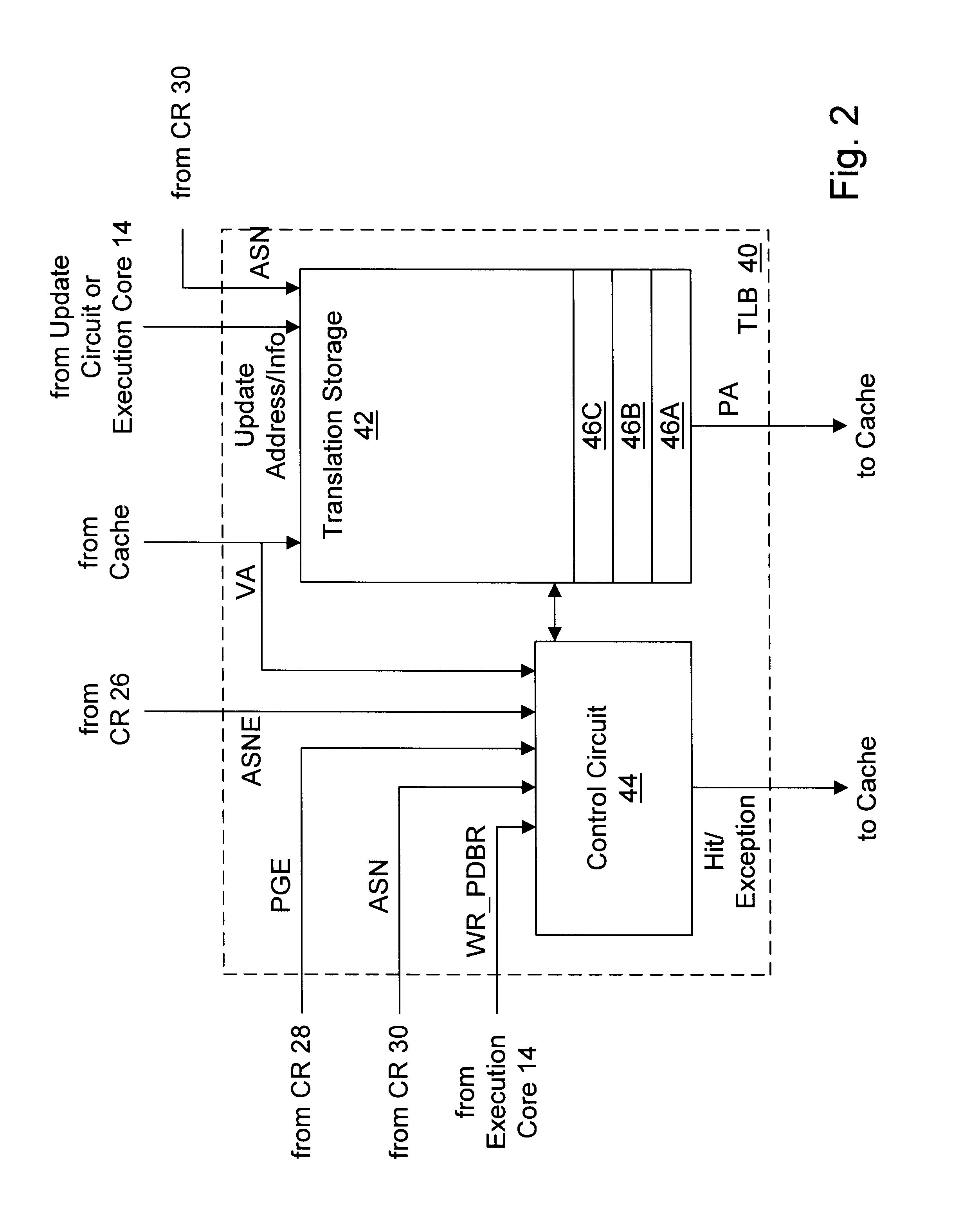

Providing global translations with address space numbers

Inactive · US6604187B1 · Easy to use · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Operational system · Processor register

A processor provides a register for storing an address space number (ASN). Operating system software may assign different ASNs to different processes. The processor may include a TLB to cache translations, and the TLB may record the ASN from the ASN register in a TLB entry being loaded. Thus, translations may be associated with processes through the ASNs. Generally, a TLB hit will be detected in an entry if the virtual address to be translated matches the virtual address tag and the ASN matches the ASN stored in the register. Additionally, the processor may use an indication from the translation table entries to indicate whether or not a translation is global. If a translation is global, then the ASN comparison is not included in detecting a hit in the TLB. Thus, translations which are used by more than one process may not occupy multiple TLB entries. Instead, a hit may be detected on the TLB entry storing the global translation even though the recorded ASN may not match the current ASN. In one embodiment, if ASNs are disabled, the TLB may be flushed on context switches. However, the indication from the translation table entries used to indicate that the translation is global may be used (when ASNs are disabled) by the TLB to selectively invalidate non-global translations on a context switch while not invalidating global translations.
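The hit rule and the selective invalidation described above can be sketched in a few lines. This is a toy software model, not the patented hardware: the `Tlb` and `TlbEntry` classes, the page numbers, and the linear search are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class TlbEntry:
    vpage: int          # virtual page number used as the tag
    ppage: int          # physical page number
    asn: int            # ASN recorded when the entry was loaded
    is_global: bool     # indication taken from the translation table entry


class Tlb:
    def __init__(self, asn_enabled: bool = True):
        self.entries = []
        self.current_asn = 0              # models the ASN register
        self.asn_enabled = asn_enabled

    def load(self, vpage: int, ppage: int, is_global: bool = False) -> None:
        self.entries.append(TlbEntry(vpage, ppage, self.current_asn, is_global))

    def lookup(self, vpage: int):
        """Return the physical page on a hit, or None on a miss."""
        for e in self.entries:
            if e.vpage != vpage:
                continue
            # Global entries skip the ASN comparison. With ASNs disabled
            # there is nothing to compare, so the tag match alone decides.
            if e.is_global or not self.asn_enabled or e.asn == self.current_asn:
                return e.ppage
        return None

    def context_switch(self, new_asn: int) -> None:
        if self.asn_enabled:
            self.current_asn = new_asn    # entries stay; stale ones stop matching
        else:
            # Selective invalidation: drop non-global entries, keep global ones.
            self.entries = [e for e in self.entries if e.is_global]


# With ASNs: nothing is flushed, and the global entry still hits.
tlb = Tlb()
tlb.current_asn = 1
tlb.load(0x10, 0xA0)                      # private to the process with ASN 1
tlb.load(0x20, 0xB0, is_global=True)      # shared by several processes
tlb.context_switch(2)
private_hit = tlb.lookup(0x10)
global_hit = tlb.lookup(0x20)

# Without ASNs: only the non-global entry is invalidated on the switch.
legacy = Tlb(asn_enabled=False)
legacy.load(0x10, 0xA0)
legacy.load(0x20, 0xB0, is_global=True)
legacy.context_switch(2)
legacy_private = legacy.lookup(0x10)
legacy_global = legacy.lookup(0x20)
```

In both modes the shared translation survives the switch and occupies a single entry, while the private translation stops being visible to the next process.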

Owner:GLOBALFOUNDRIES US INC

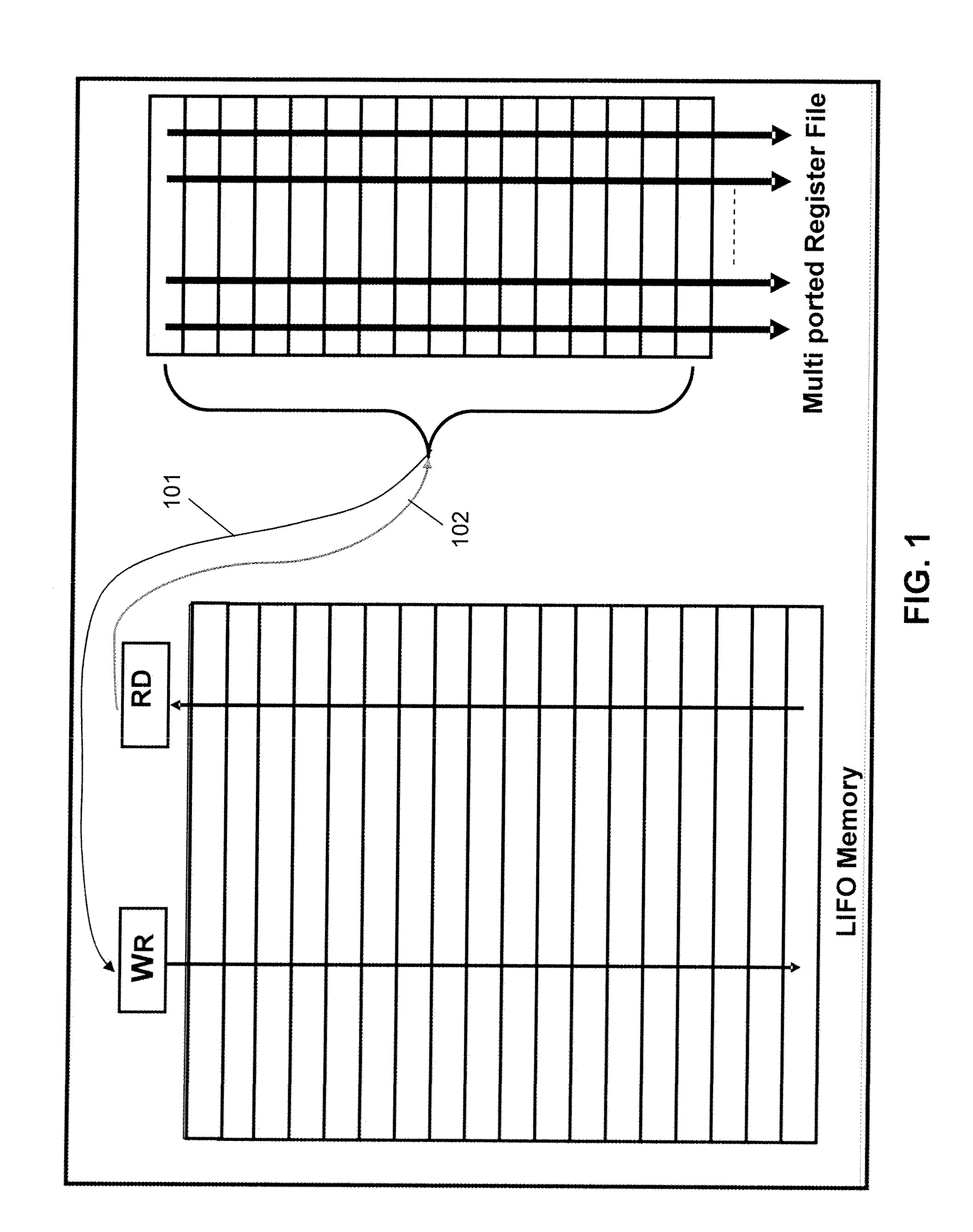

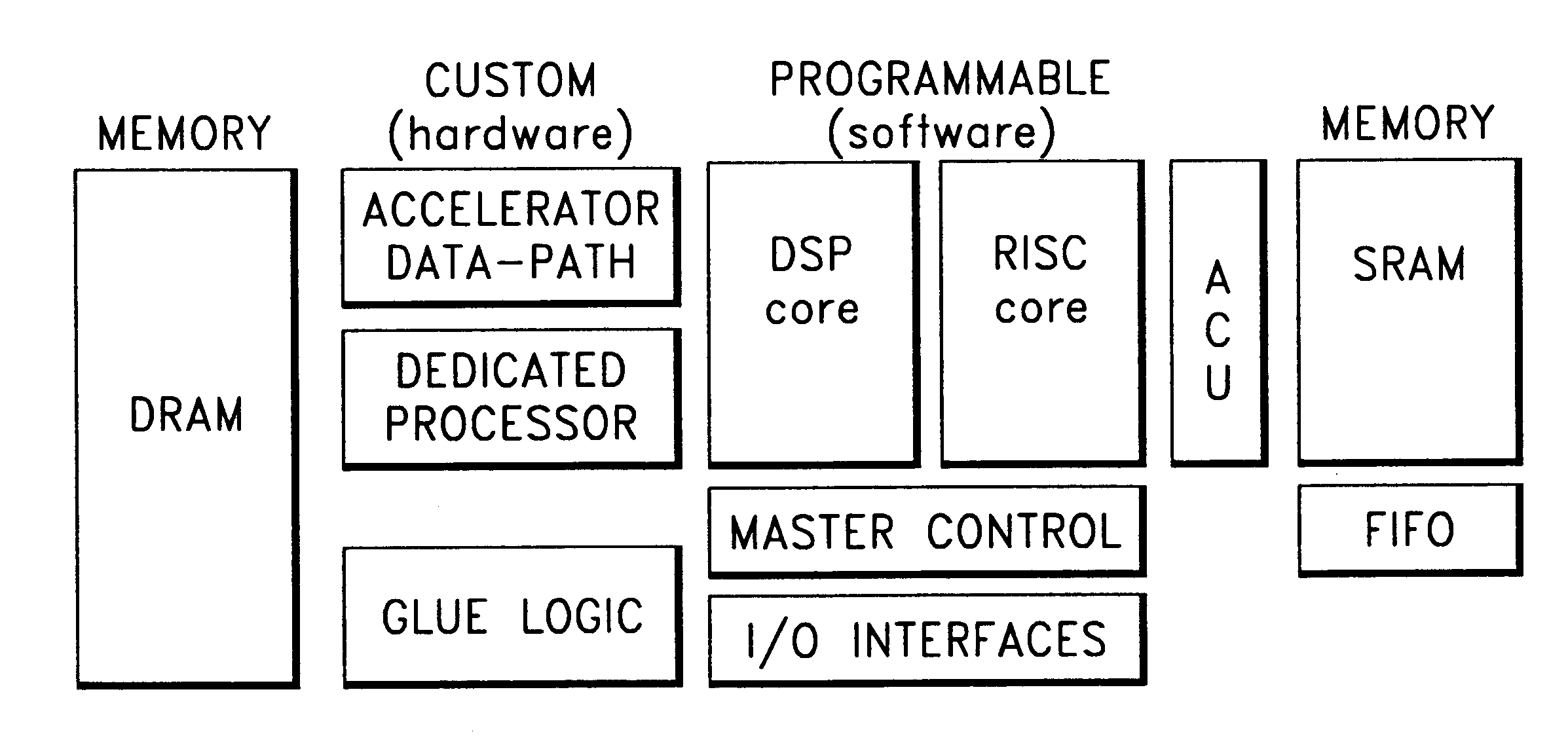

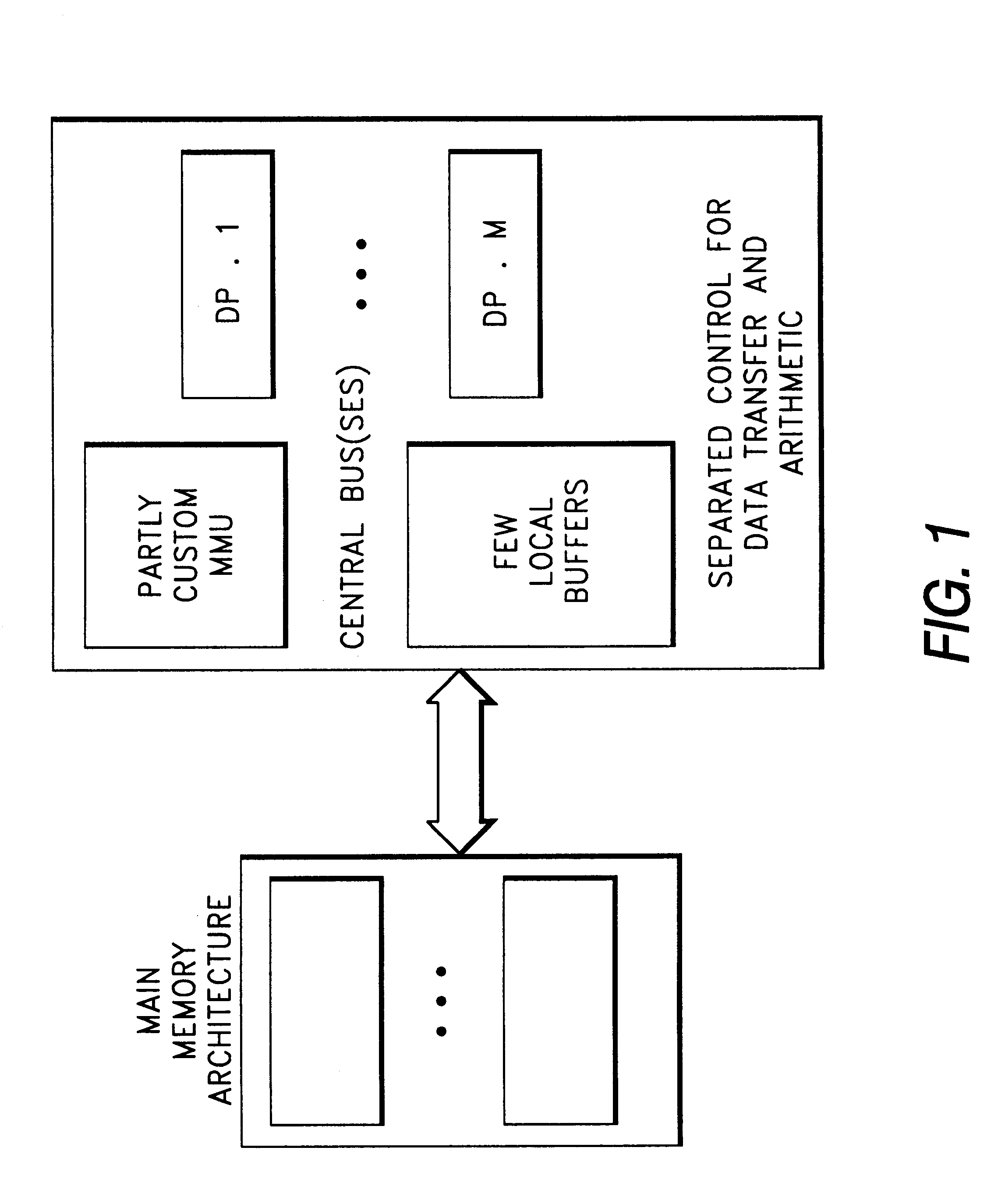

Power- and speed-efficient data storage/transfer architecture models and design methodologies for programmable or reusable multi-media processors

Inactive · US6223274B1 · Reduce decrease · Stay flexible · Energy efficient ICT · Memory addressing/allocation/relocation · Parallel computing · Data memory

A programmable processing engine and a method of operating the same are described, the processing engine including a customized processor, a flexible processor and a data store commonly sharable between the two processors. The customized processor normally executes a sequence of a plurality of pre-customized routines, usually for which it has been optimized. To provide some flexibility for design changes and optimizations, a controller for monitoring the customized processor during execution of routines is provided to select one of a set of pre-customized processing interruption points and for switching context from the customized processor to the flexible processor at the interruption point. The customized processor can then be switched off and the flexible processor carries out a modified routine. By using a sharable data store, the context switch can be chosen at a time when all relevant data is in the sharable data store. This means that the flexible processor can pick up the modified processing cleanly. After the modified processing, the flexible processor writes back new data into the data store and the customized processor can continue processing either where it left off or may skip a certain number of cycles as instructed by the flexible processor, before beginning processing of the new data.

Owner:INTERUNIVERSITAIR MICRO ELECTRONICS CENT (IMEC VZW)

Method and system for energy management in a simultaneous multi-threaded (SMT) processing system including per-thread device usage monitoring

Inactive · US20050138442A1 · Lower latency · Reduce power consumption · Energy efficient ICT · Volume/mass flow measurement · Decision control · Operational system

A method and system for energy management in a simultaneous multi-threaded (SMT) processing system including per-thread device usage monitoring provides control of energy usage that accommodates thread parallelism. Per-device usage information is measured and stored on a per-thread basis, so that upon a context switch, the previous usage evaluation state can be restored. The per-thread usage information is used to adjust the thresholds of device energy management decision control logic, so that energy use can be managed with consideration as to which threads will be running in a given execution slice. A device controller can then provide for per-thread control of attached device power management states without intervention by the processor and without losing the historical evaluation state when a process is switched out. The device controller may be a memory controller and the controlled devices memory modules or banks within modules if individual banks can be power-managed. Local thresholds provide the decision-making mechanism for each controlled device and are adjusted by the operating system in conformity with the measured usage level for threads executing within the processing system. The per-thread usage information may be obtained from a performance monitoring unit that is located within or external to the device controller and the usage monitoring state is then retrieved and replaced by the operating system at each context switch.

Owner:IBM CORP

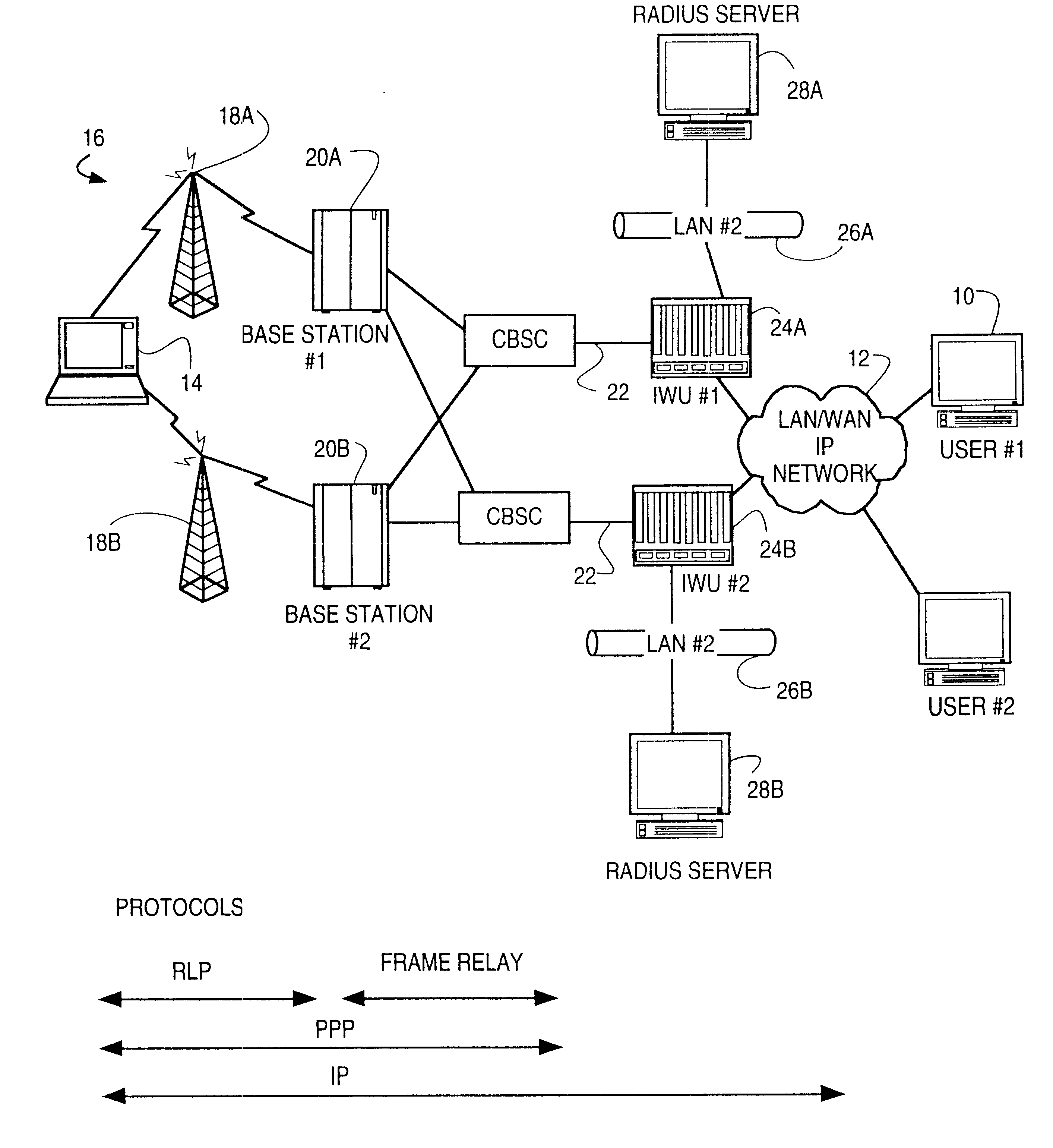

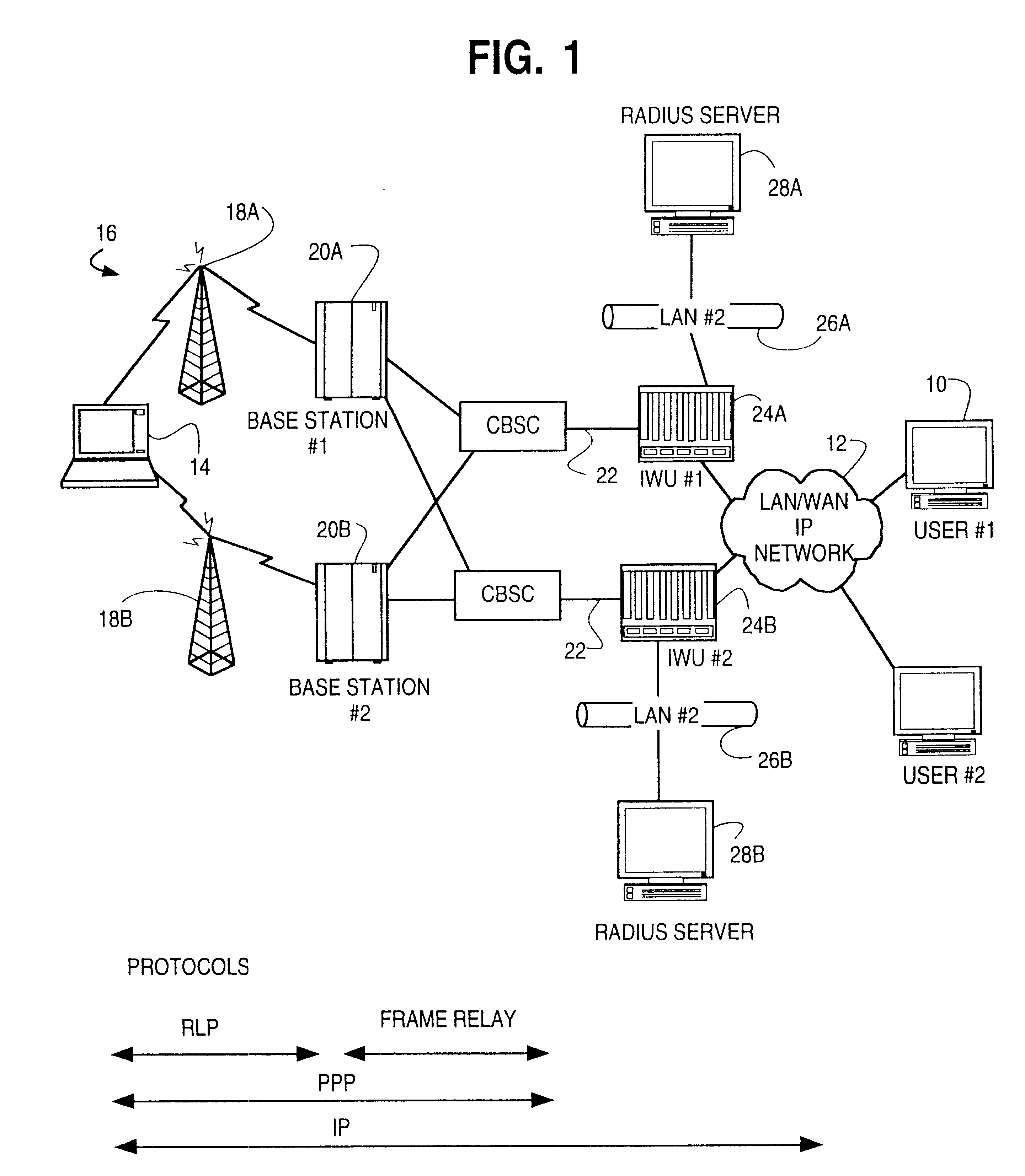

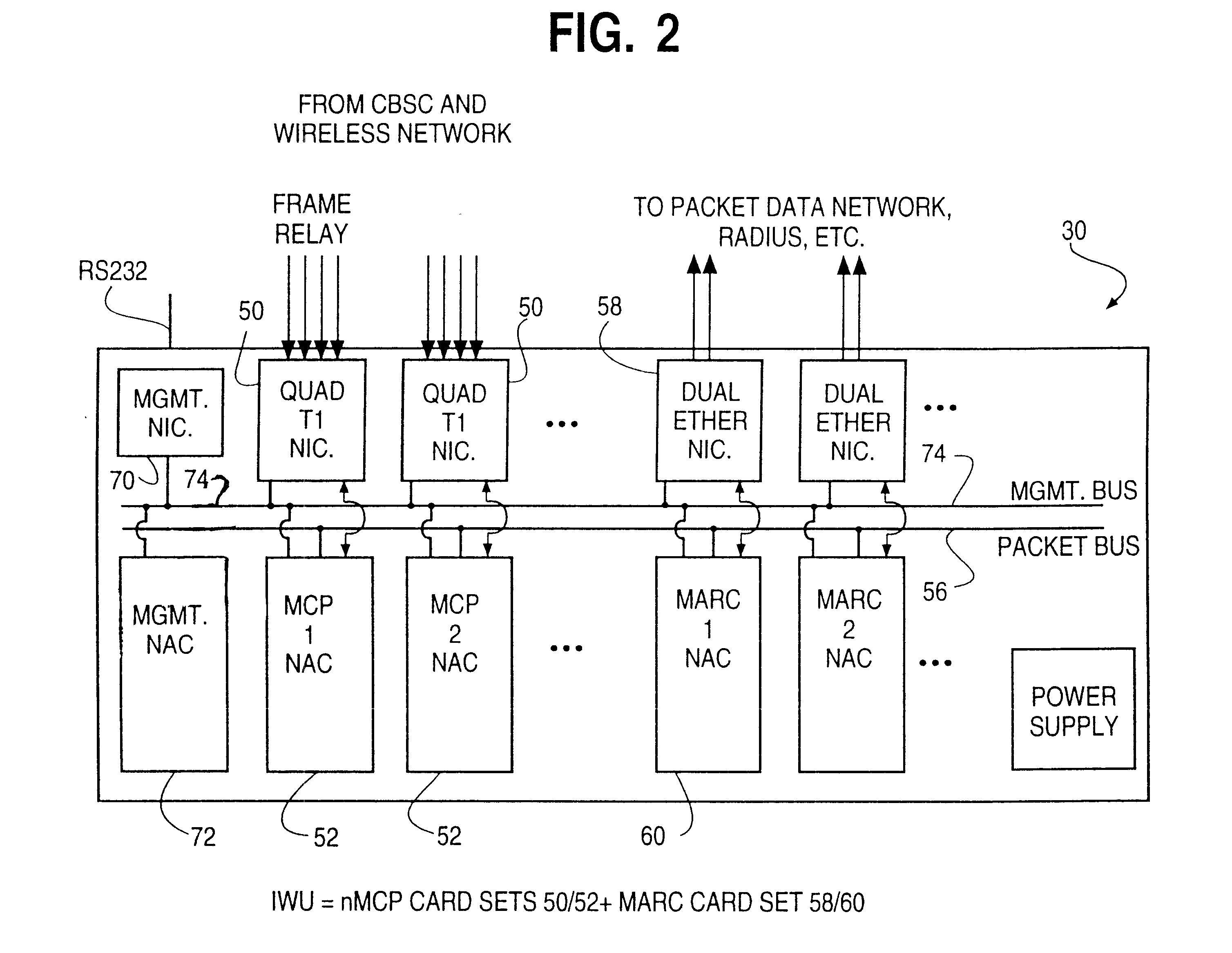

Instant activation of point-to-point protocol (PPP) connection using existing PPP state

Inactive · US6628671B1 · Time-division multiplex · Wide area networks · Network access server · Point-to-Point Protocol

A network access server providing remote access to an IP network for a remote client initiates a PPP connection for a remote client quickly, and without requiring re-negotiation of Link Control Protocols and Network Control Protocols. The network access server has a PPP session with the remote client go dormant, for example when the user is a wireless user and goes out of range of a radio tower and associated base station. The network access server does not get rid of the PPP state for the dormant session, but rather switches that PPP state to a new session, such as when the client moves into range of a different radio tower and associated base station and initiates a new active session on the interface to the wireless network. The switching of PPP states may be within a single network access server, or from one network access server to another. This "context switching" of the active PPP session allows the mobile user to seamlessly move about the wireless network without having to re-negotiate Link Control Protocols and Network Control Protocols every time they move out of range of one radio tower and into range of another radio tower.
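A minimal sketch of re-using dormant PPP state, assuming a single network access server: the `NetworkAccessServer` class, the dictionary used as "PPP state", and the client and session identifiers are hypothetical, and no real PPP negotiation takes place.

```python
class NetworkAccessServer:
    """Keeps PPP state for dormant clients so a new session can re-use it."""

    def __init__(self):
        self.active = {}        # session id -> PPP state
        self.dormant = {}       # client id -> PPP state kept after the link is lost
        self.negotiations = 0   # how many times LCP/NCP had to be negotiated

    def connect(self, client_id: str, session_id: str) -> dict:
        state = self.dormant.pop(client_id, None)
        if state is None:
            # No stored state: a full negotiation is needed (simulated here).
            self.negotiations += 1
            state = {"client": client_id, "lcp": "opened", "ncp": "opened"}
        # Otherwise the existing state is switched onto the new session.
        self.active[session_id] = state
        return state

    def go_dormant(self, session_id: str) -> None:
        """The session ends, but its PPP state is retained rather than discarded."""
        state = self.active.pop(session_id)
        self.dormant[state["client"]] = state


nas = NetworkAccessServer()
first = nas.connect("mobile-1", "tower-A")    # negotiated once
nas.go_dormant("tower-A")                     # user moves out of range
second = nas.connect("mobile-1", "tower-B")   # same state, new session
```

The second connection returns the very same state object as the first, so the negotiation counter stays at one.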

Owner:UTSTARCOM INC

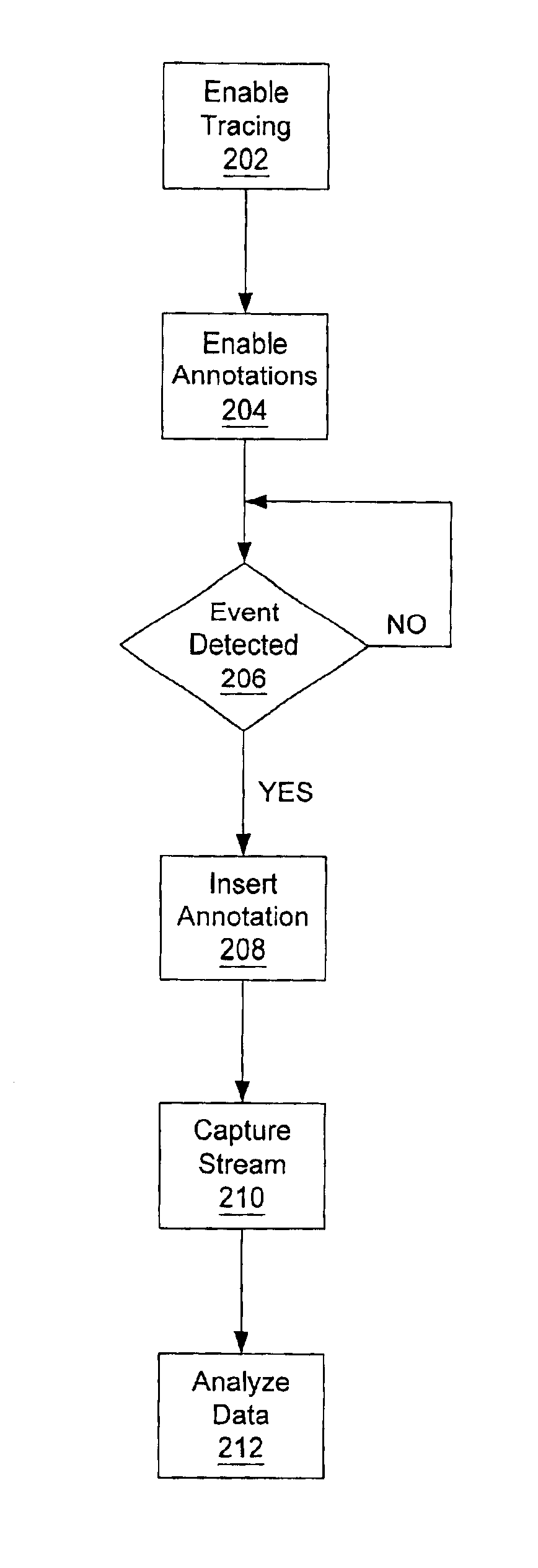

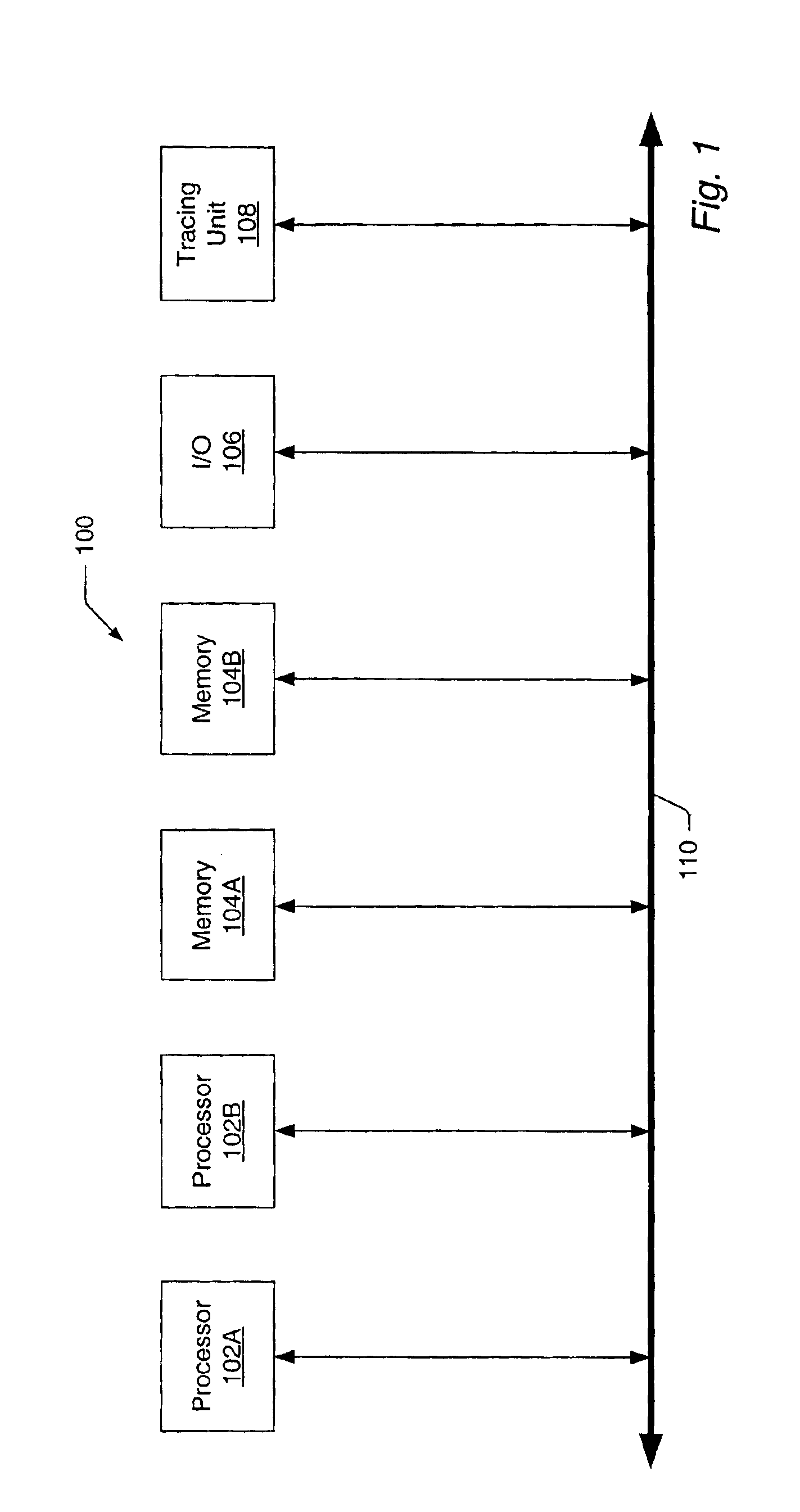

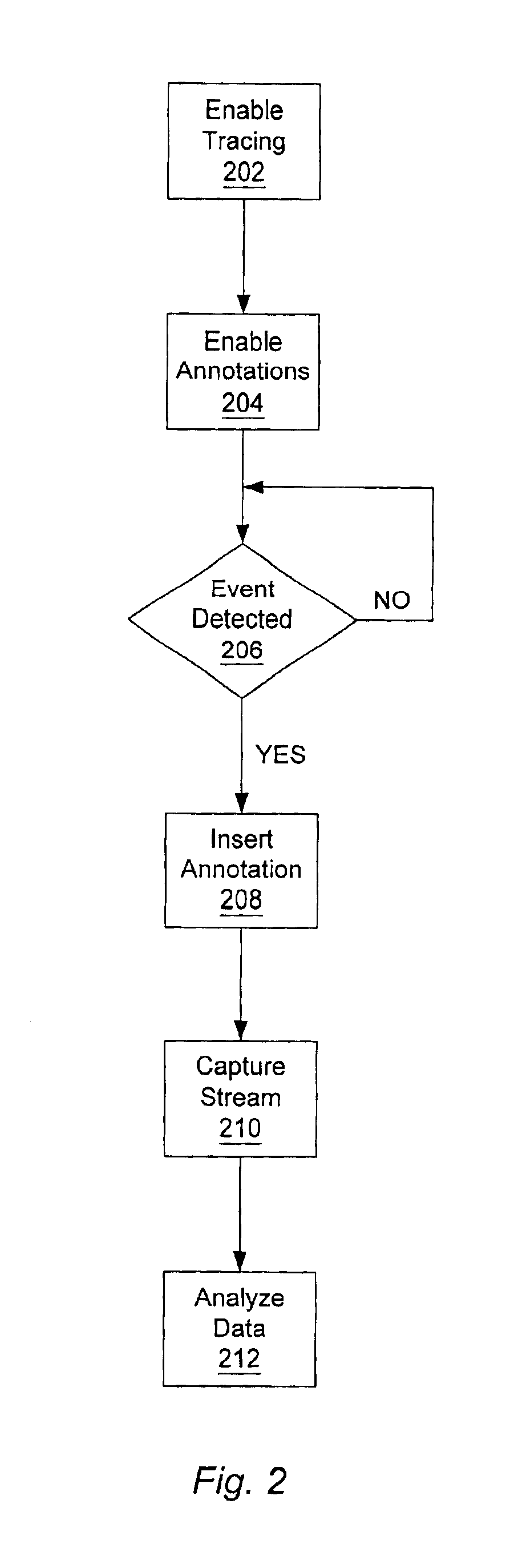

Annotations for transaction tracing

Inactive · US6883162B2 · Enhanced debug · Enhanced performance analysis · Digital computer details · Hardware monitoring · Timestamp · Operational system

A method and mechanism for annotating a transaction stream. A processing unit is configured to generate annotation transactions which are inserted into a transaction stream. The transaction stream, including the annotations, is subsequently observed by a trace unit for debug or other analysis. In one embodiment, a processing unit includes a trace address register and an annotation enable bit. The trace address register is configured to store an address corresponding to a trace unit and the enable bit is configured to indicate whether annotation transactions are to be generated. Annotation instructions are added to operating system or user code at locations where annotations are desired. In one embodiment, annotation transactions correspond to transaction types which are not unique to annotation transactions. In one embodiment, an annotation instruction includes a reference to the trace address register which contains the address of the trace unit. Upon detecting the annotation instruction, and detecting that annotations are enabled, the processing unit generates an annotation transaction addressed to the trace unit. In one embodiment, annotation transactions may be used to indicate context switches, processor mode changes, timestamps, or address translation information.

Owner:ORACLE INT CORP

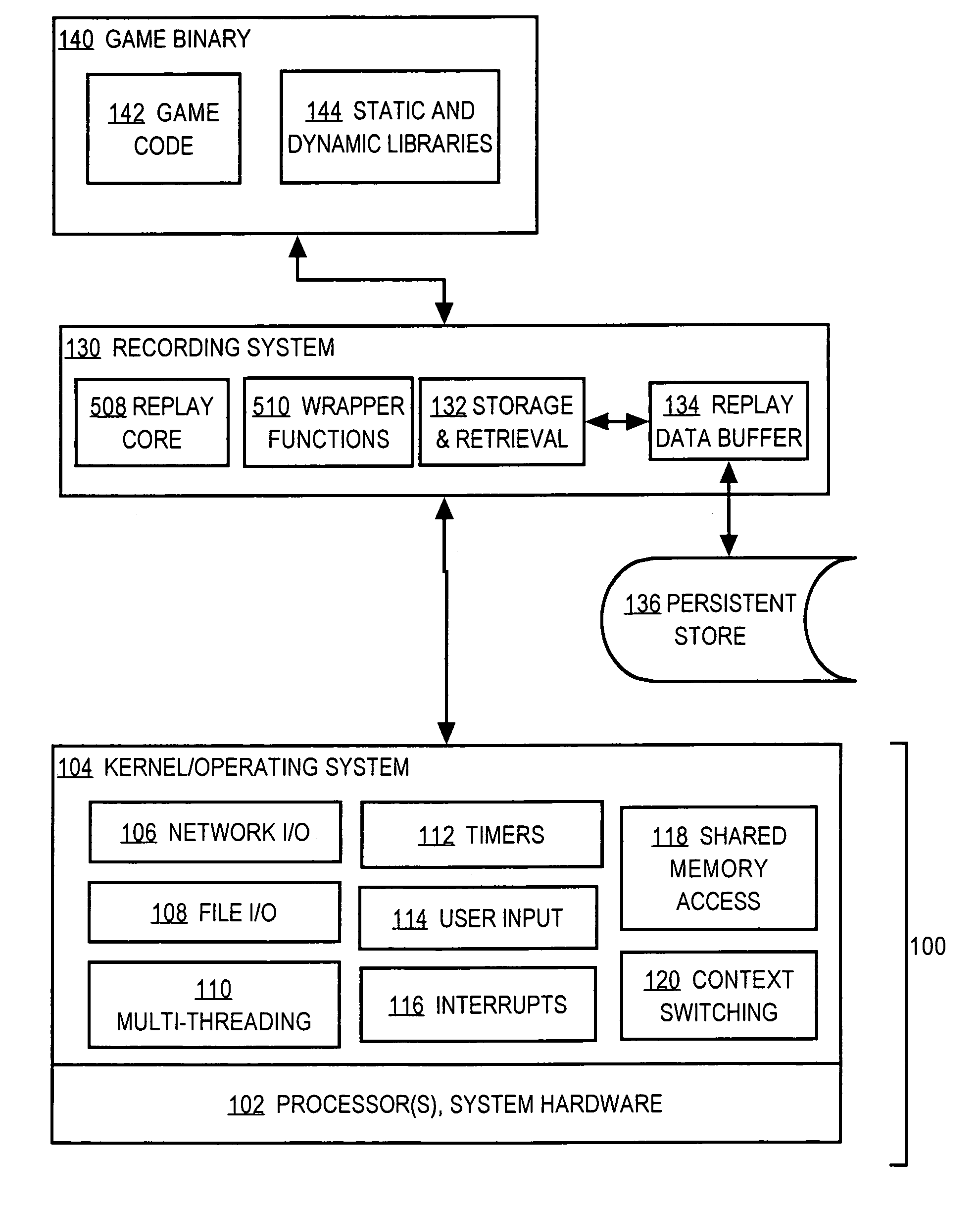

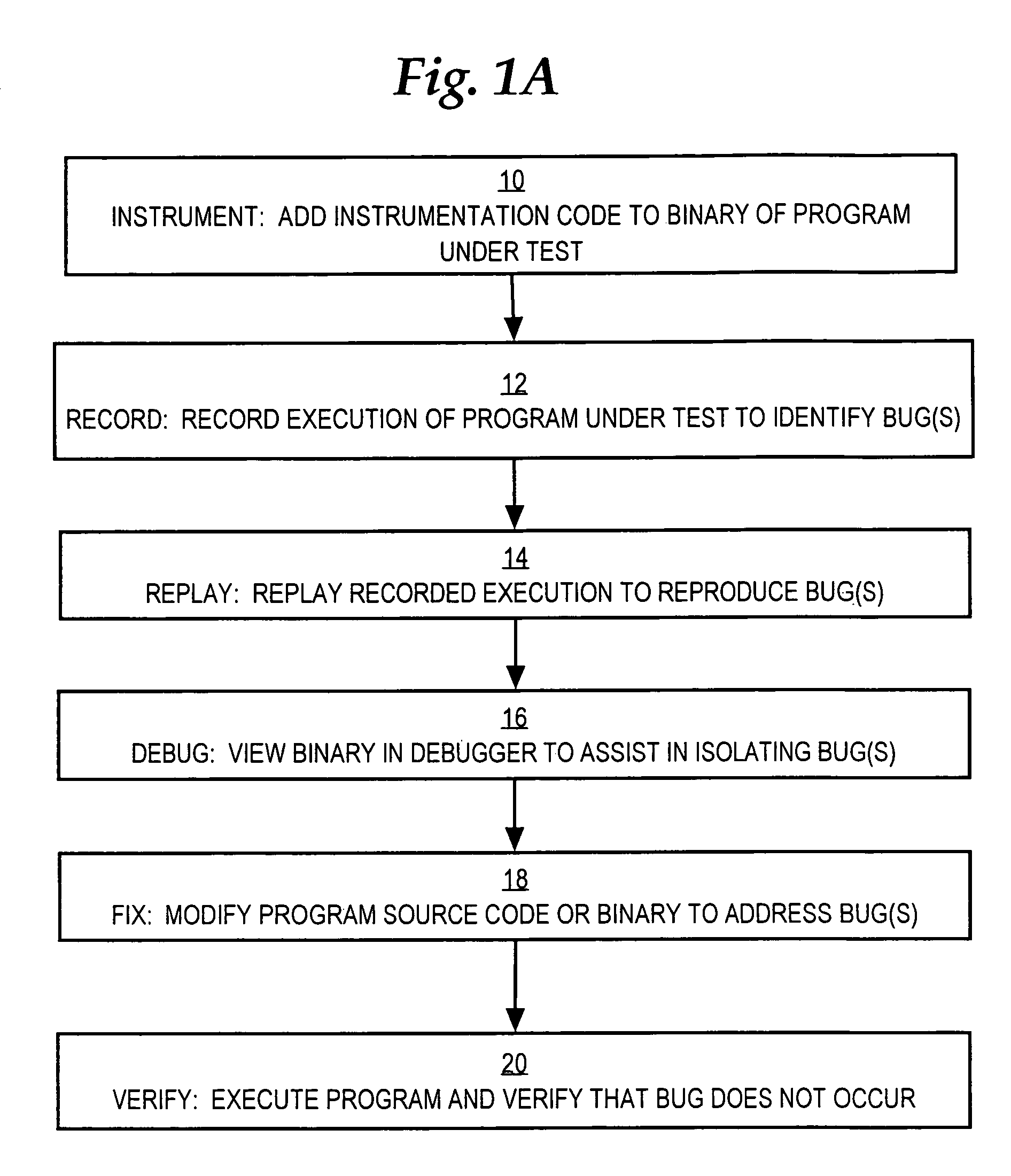

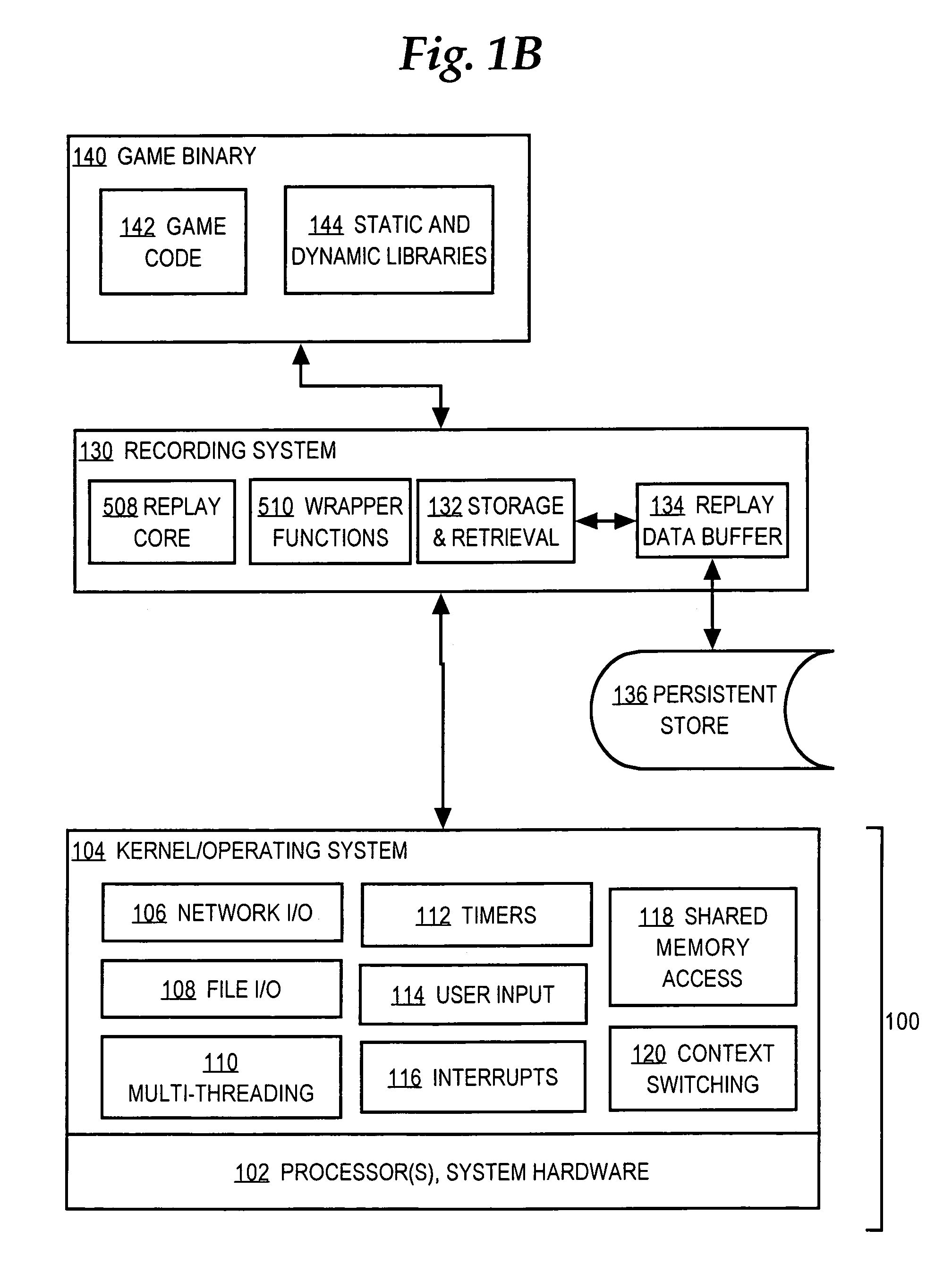

Recording and replaying computer programs

Inactive · US7506318B1 · Error detection/correction · Specific program execution arrangements · Parallel computing · Coded element

A method is disclosed for recording and replaying computer programs. In one embodiment, a method of modifying a computer program to support recording execution, comprises the computer-implemented steps of receiving an executable application binary; modifying the executable application binary by adding one or more proxy code elements to result in creating a modified application binary, wherein upon execution of the modified application binary, the one or more proxy code elements create and store recorded information representing all non-deterministic events that occur during the execution. For example, asynchronous callbacks and thread context switches are recorded and can be replayed.
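The record-then-replay idea can be shown with a hand-written proxy. Note the assumption: the abstract describes inserting proxy code into an executable binary, whereas this sketch routes calls through a hypothetical `Recorder` object at source level and uses a random number generator as the only non-deterministic event.

```python
import random


class Recorder:
    """Proxy that logs the results of non-deterministic calls, or replays a log."""

    def __init__(self, log=None):
        self.replaying = log is not None
        self.log = list(log) if log is not None else []
        self._cursor = 0

    def call(self, fn, *args):
        if self.replaying:
            # Return the recorded result instead of invoking fn again.
            value = self.log[self._cursor]
            self._cursor += 1
            return value
        value = fn(*args)
        self.log.append(value)
        return value


def program(proxy: Recorder) -> int:
    """Application logic whose only non-determinism goes through the proxy."""
    rolls = [proxy.call(random.randint, 1, 6) for _ in range(5)]
    return sum(rolls)


rec = Recorder()
original = program(rec)                  # recorded run
replayed = program(Recorder(rec.log))    # replay run sees the same events
```

Because the replay run never calls the random number generator, it reproduces the recorded run exactly, whatever values were drawn the first time.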

Owner:CA TECH INC

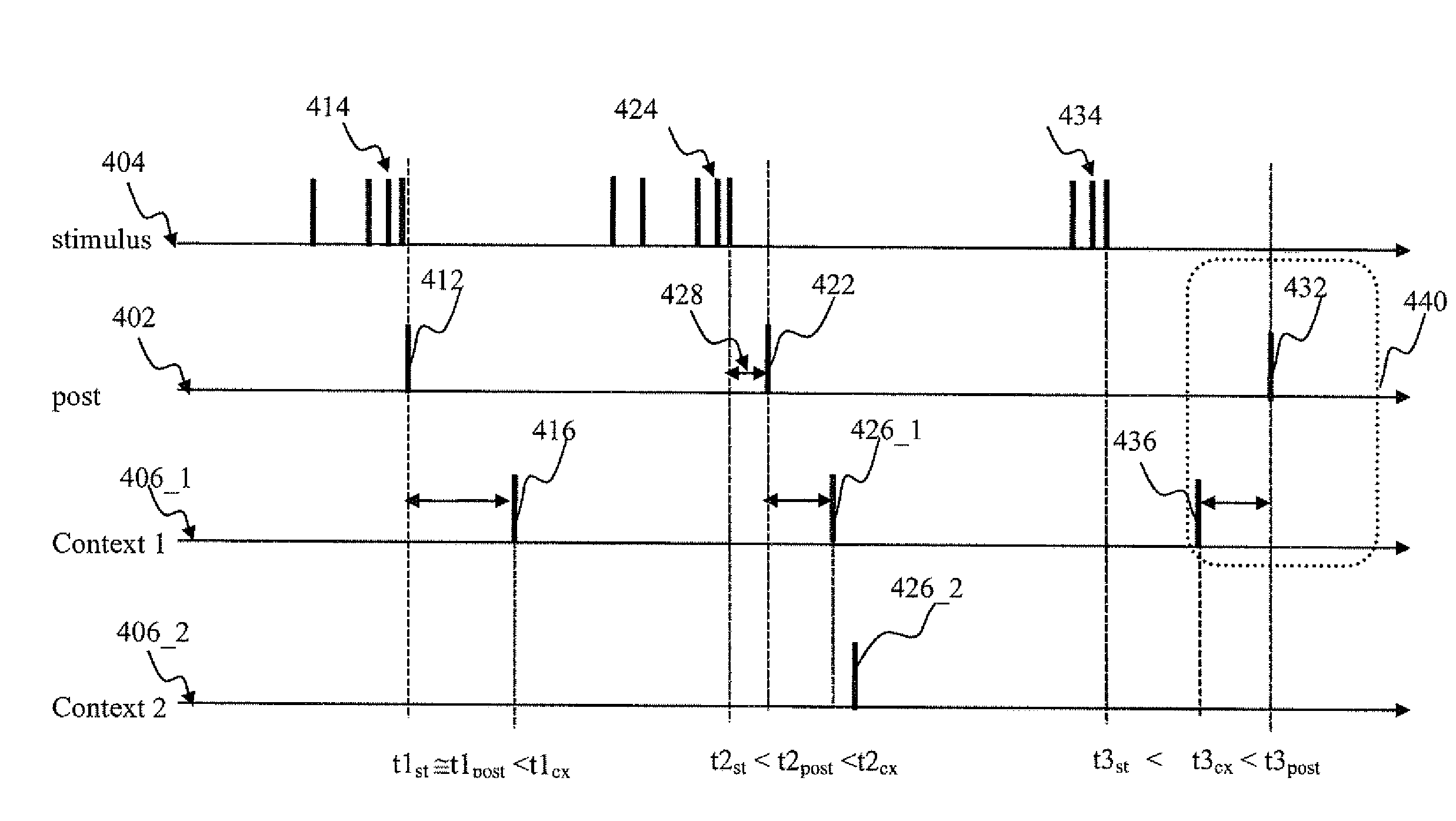

Sensory input processing apparatus in a spiking neural network

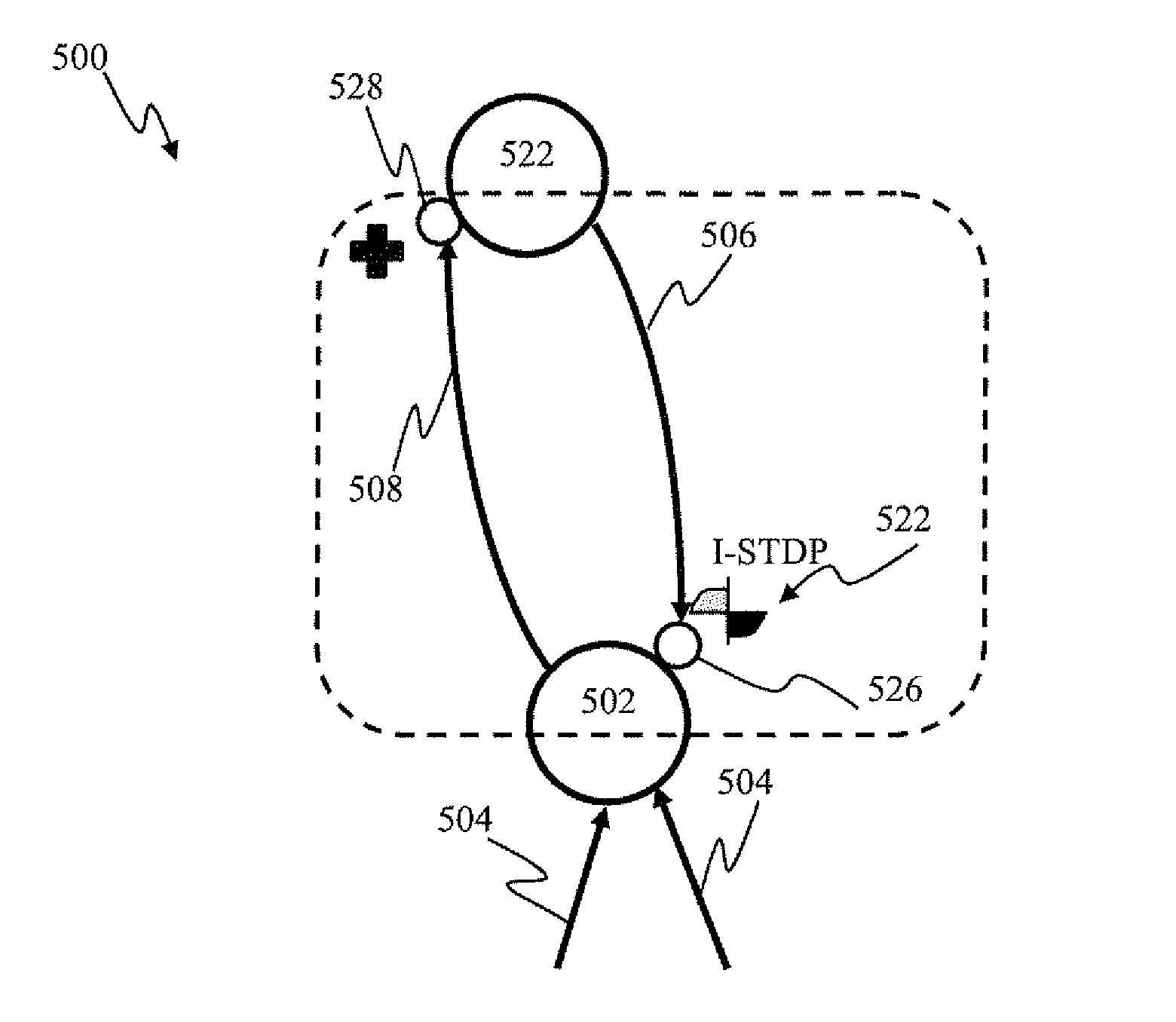

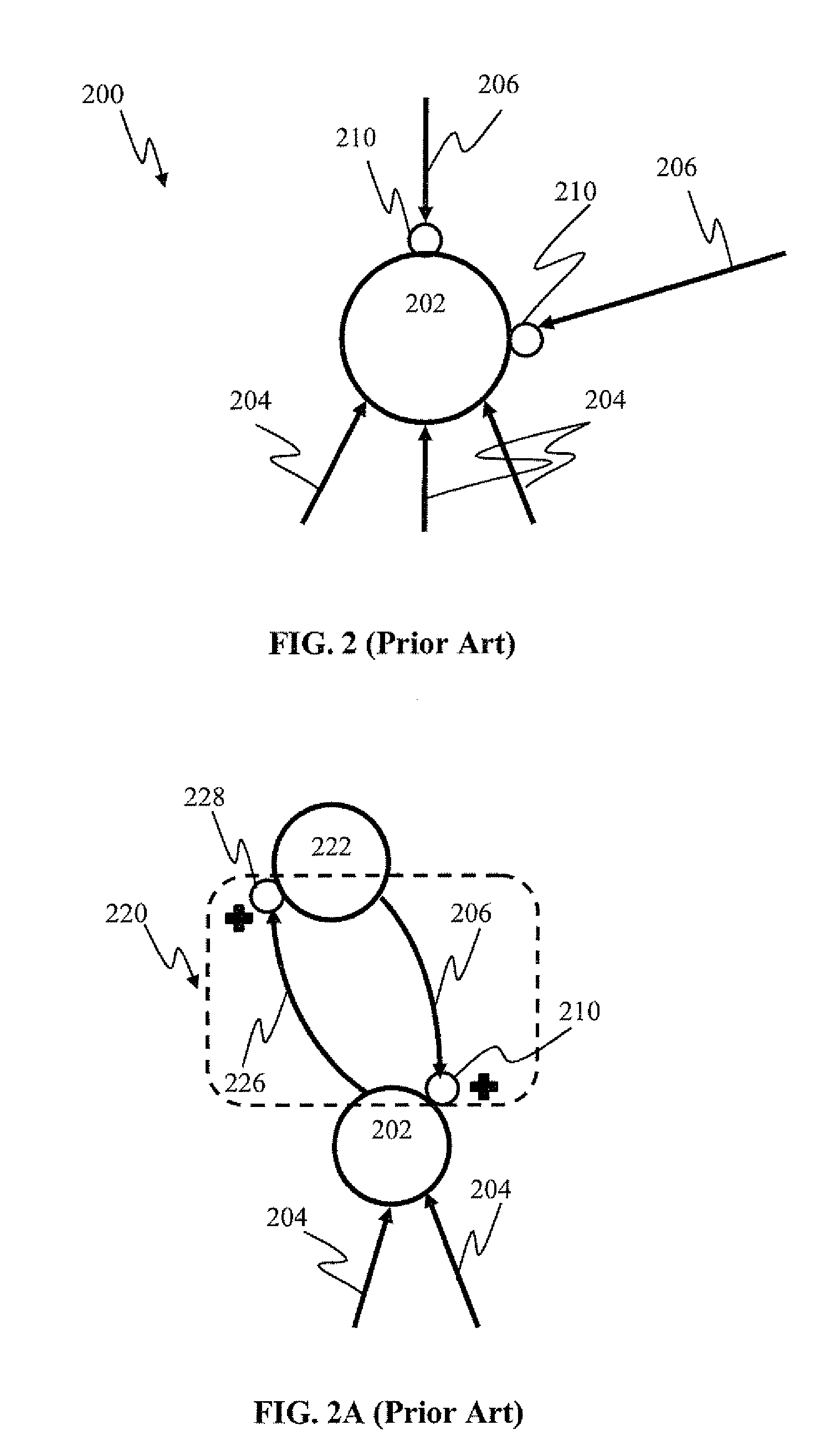

Active · US20130297542A1 · Reduce formation · Digital computer details · Digital data · Spiking neural network · Spike-timing-dependent plasticity

Apparatus and methods for feedback in a spiking neural network. In one approach, spiking neurons receive a sensory stimulus and a context signal that correspond to the same context. When the stimulus provides sufficient excitation, the neurons generate a response. Context connections are adjusted according to inverse spike-timing dependent plasticity (STDP). When the context signal precedes the post-synaptic spike, context synaptic connections are depressed. Conversely, whenever the context signal follows the post-synaptic spike, the connections are potentiated. The inverse STDP connection adjustment ensures precise control of feedback-induced firing, eliminates runaway positive feedback loops, and enables self-stabilizing network operation. In another aspect of the invention, the connection adjustment methodology facilitates robust context switching when processing visual information. When a context (such as an object) becomes intermittently absent, prior context connection potentiation enables firing for a period of time. If the object remains absent, the connection becomes depressed, thereby preventing further firing.

Owner:BRAIN CORP

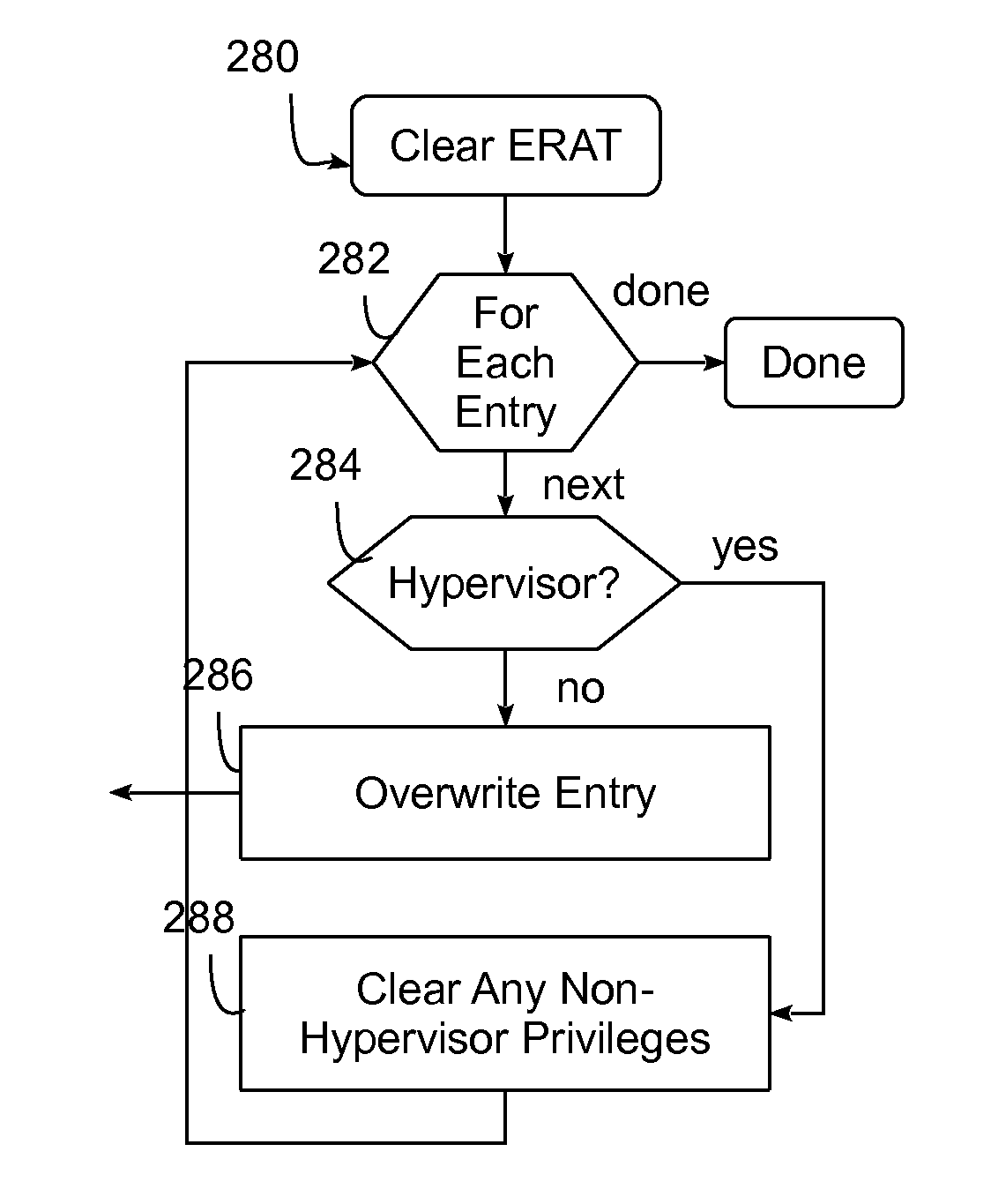

Instruction set architecture with secure clear instructions for protecting processing unit architected state information

Inactive · US20140230077A1 · Digital data processing details · Analogue secrecy/subscription systems · Computer hardware · Supervisory program

A method and circuit arrangement utilize secure clear instructions defined in an instruction set architecture (ISA) for a processing unit to clear, overwrite or otherwise restrict unauthorized access to the internal architected state of the processing unit in association with context switch operations. The secure clear instructions are executable by a hypervisor, operating system, or other supervisory program code in connection with a context switch operation, and the processing unit includes security logic that is responsive to such instructions to restrict access by an operating system or process associated with an incoming context to architected state information associated with an operating system or process associated with an outgoing context.

Owner:IBM CORP

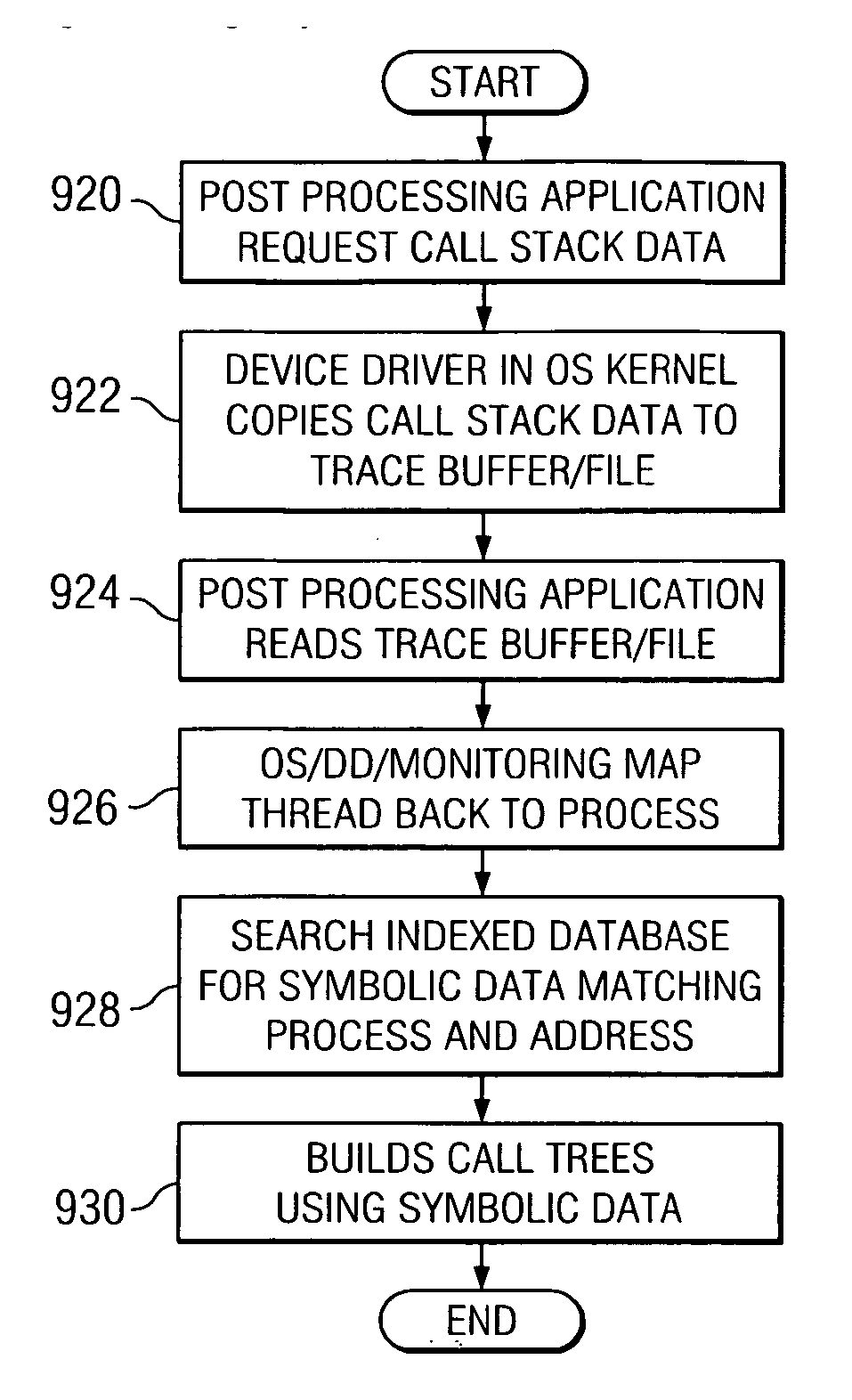

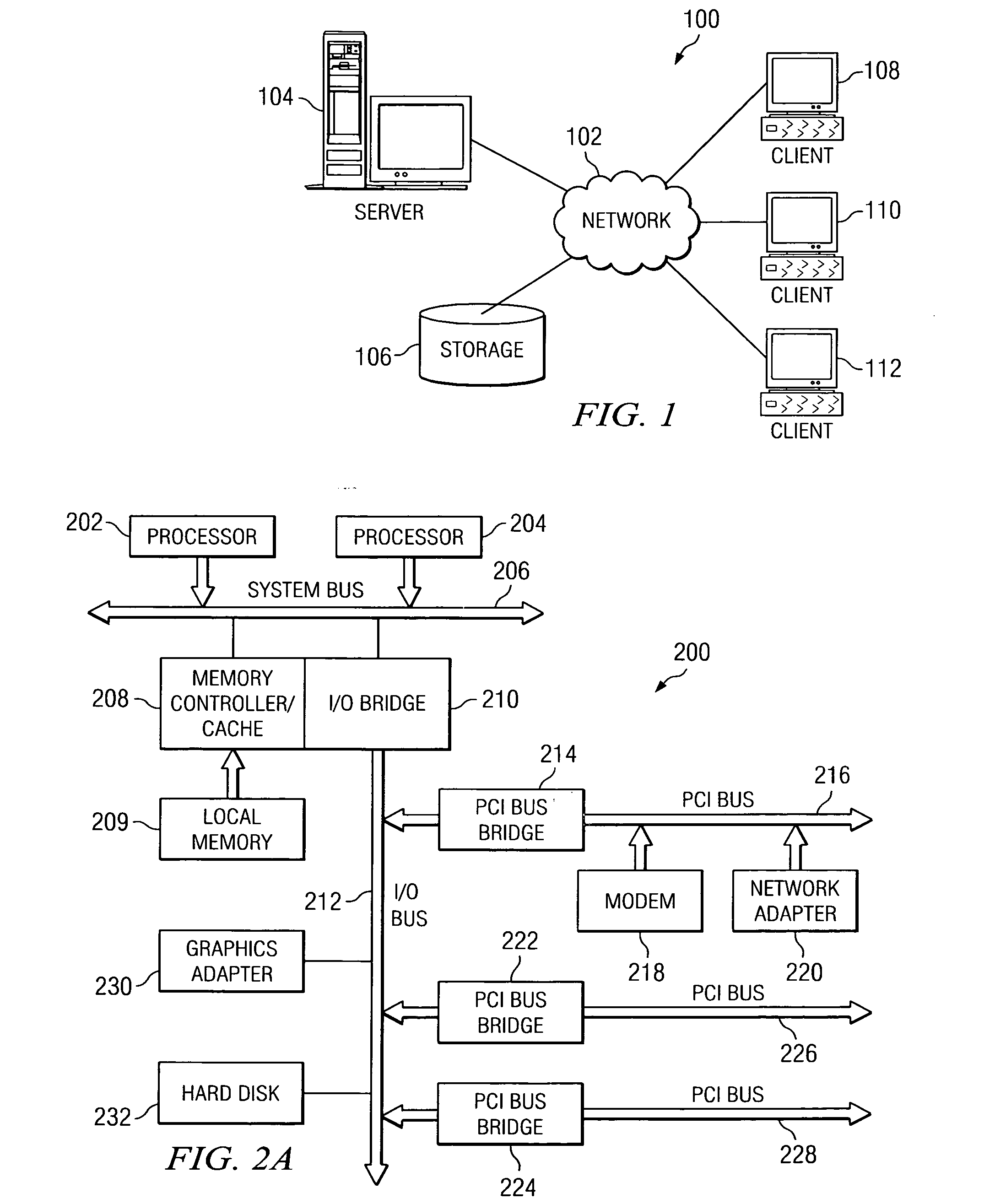

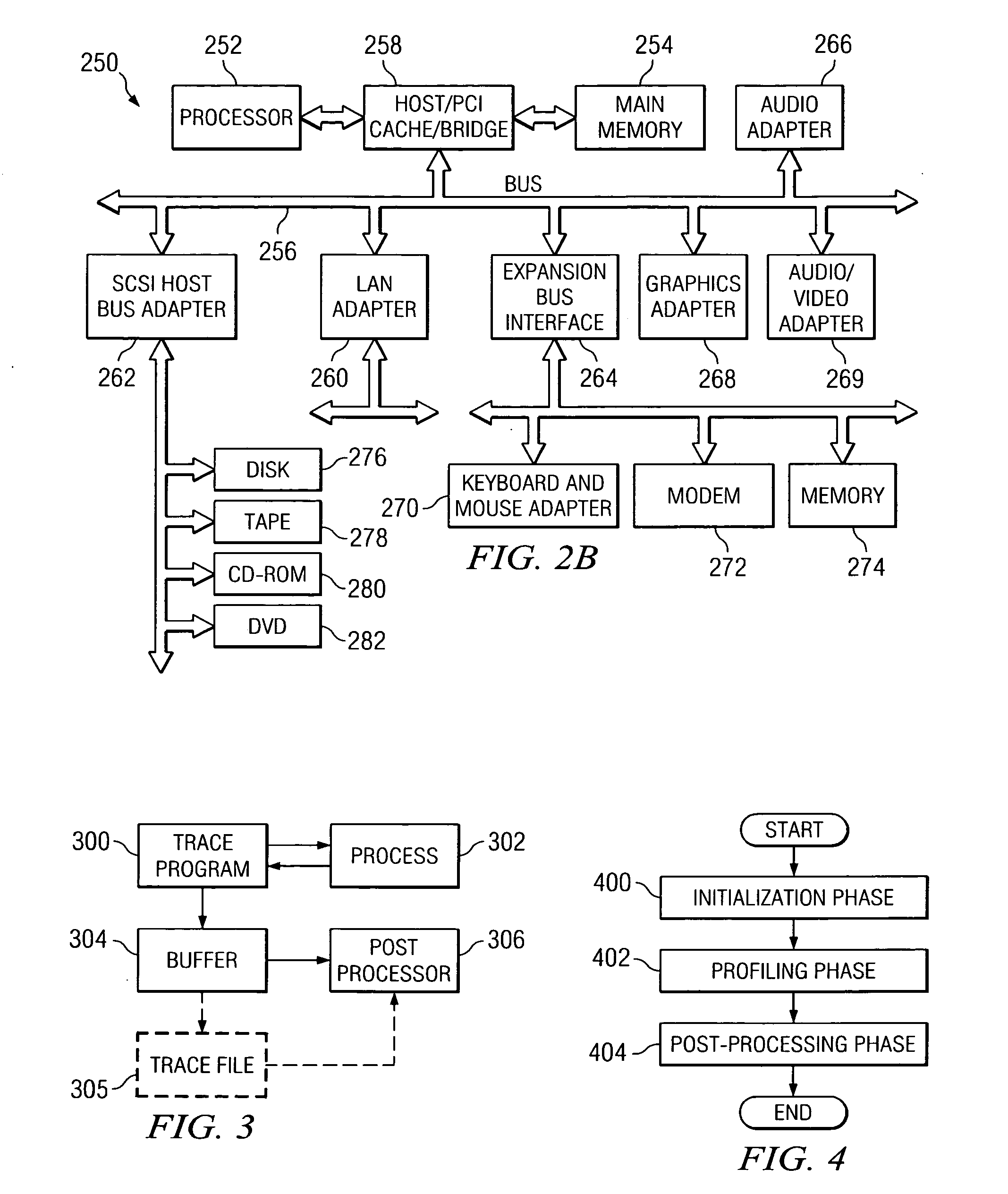

Method and apparatus for determining computer program flows autonomically using hardware assisted thread stack tracking and cataloged symbolic data

Inactive · US20050210454A1 · Error detection/correction · Digital computer details · Call stack · Operational system

A method, apparatus, and computer instructions for determining computer flows autonomically using hardware assisted thread stack and cataloged symbolic data. When a new thread is spawned during execution of a computer program, a new thread work area is allocated by the operating system in memory for storage of call stack information for the new thread. Hardware registers are set with values corresponding to the new thread work area. Upon a context switch, values of the registers are saved in a context save area for future restoration. When call stack data is post-processed, the operating system or a device driver copies call stack data from the thread work areas to a consolidated buffer and each thread is mapped to a process. Symbolic data may be obtained based on the process identifier and address of the method/routine that was called/returned in the thread. Corresponding program flow is determined using retrieved symbolic data and call stack data.

Owner:IBM CORP

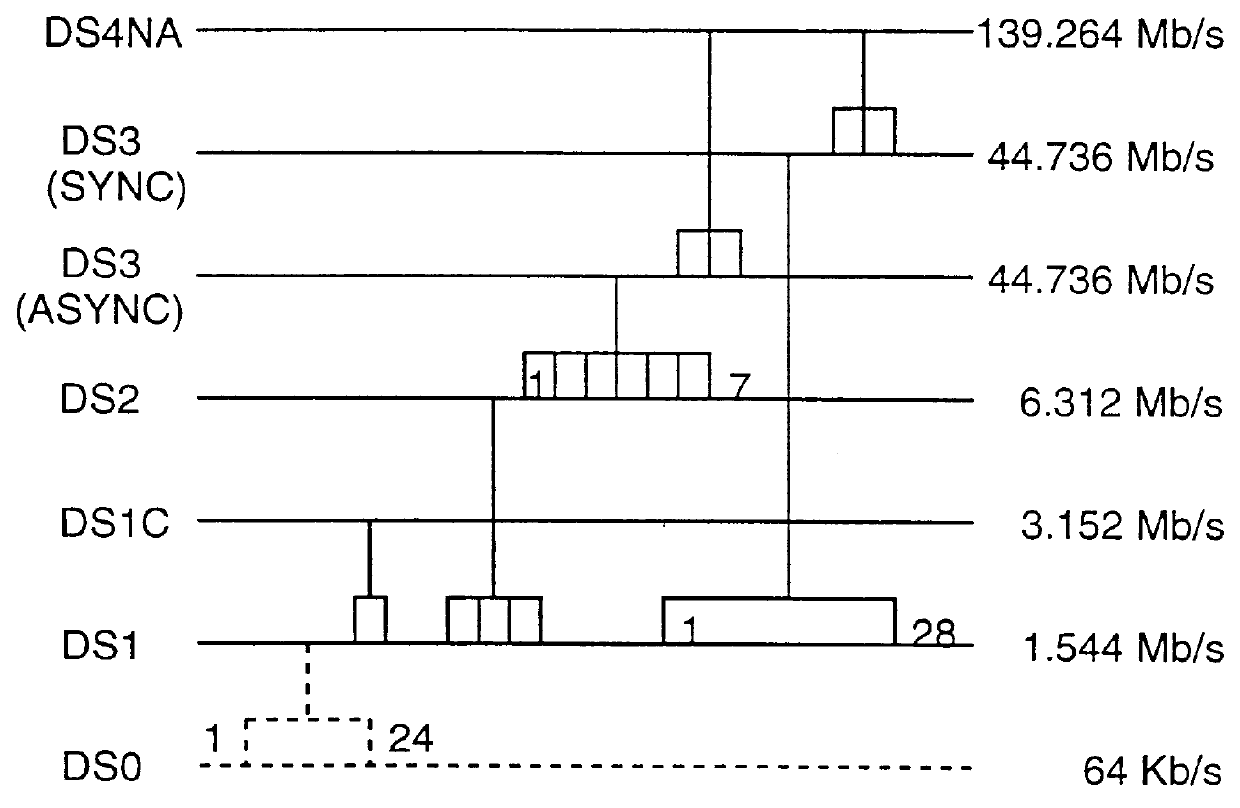

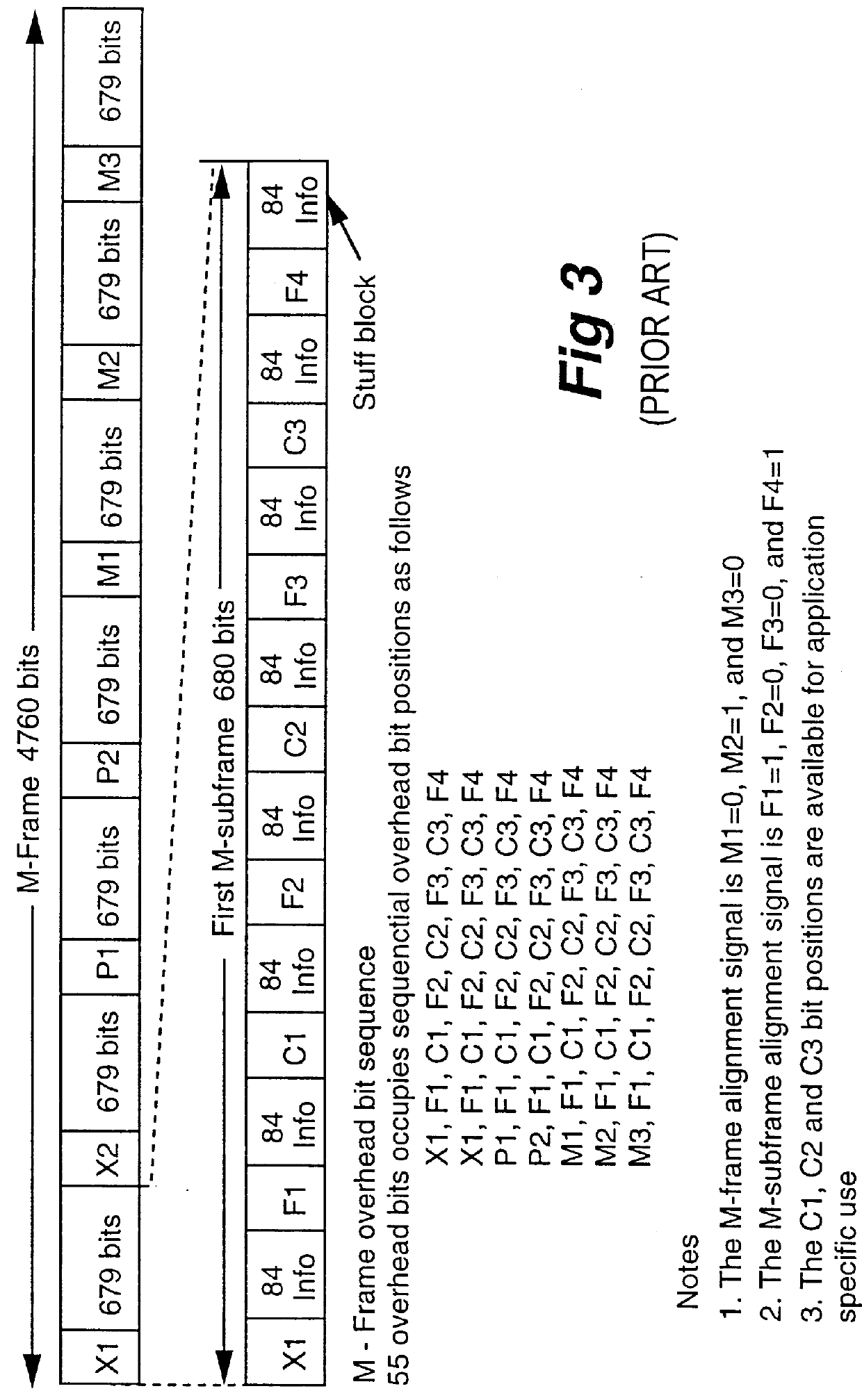

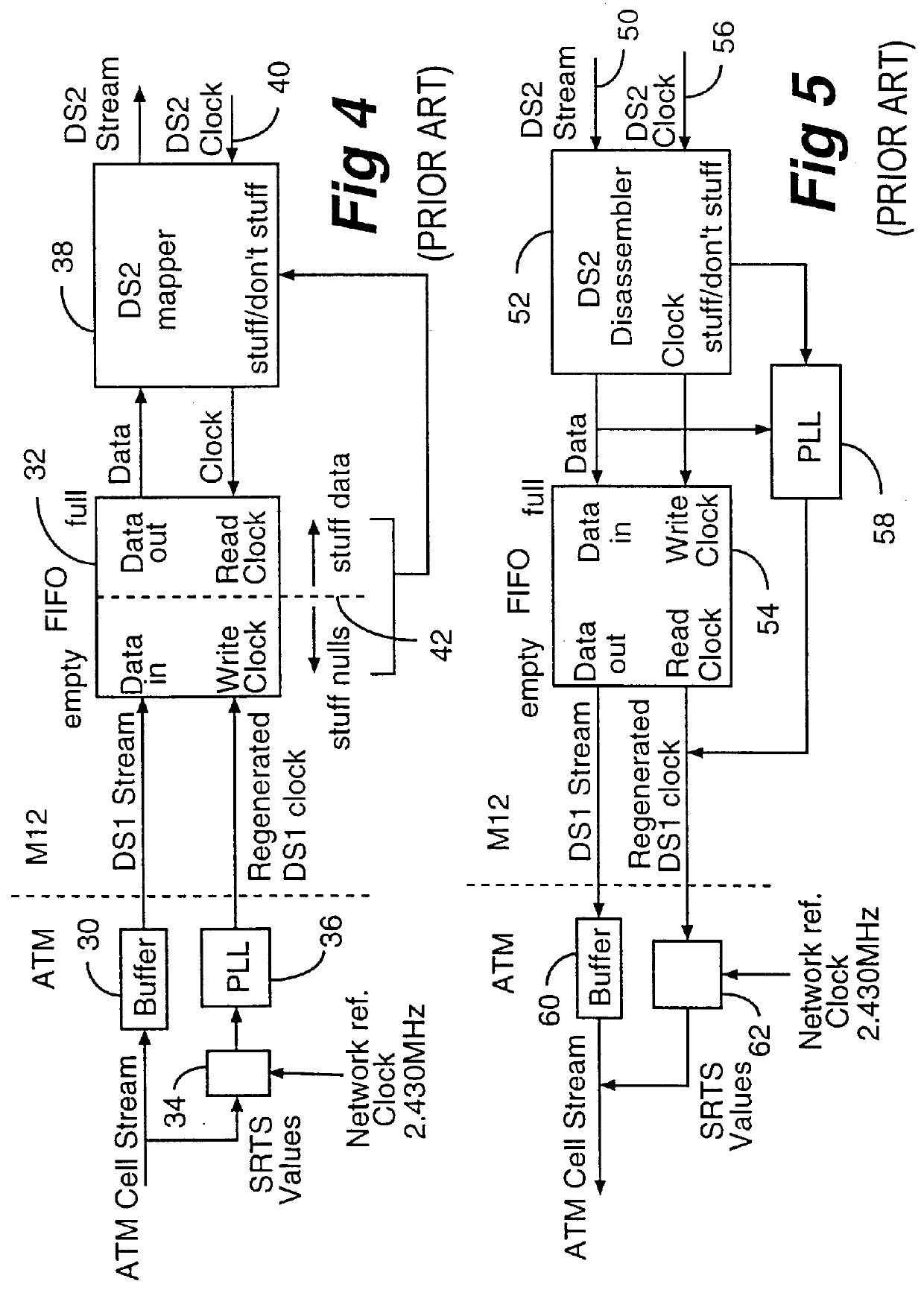

Method of and apparatus for multiplexing and demultiplexing digital signal streams

Multiplexing and demultiplexing are commonplace for efficient bandwidth utilization in telecommunications. The SRTS (Synchronous Residual Time Stamp) technique is widely used for timing recovery in the processing of digital signal streams. Bit stuffing is also prevalent for various purposes, one being rate adjustment. The invention performs the SRTS technique entirely digitally to monitor the rate of slower speed signal streams in relation to the rate of a higher speed stream. The digital implementation permits the use of context switching for processing a plurality of digital signal streams. As a result, the hardware requirement is greatly reduced.

Owner:NORTEL NETWORKS LTD

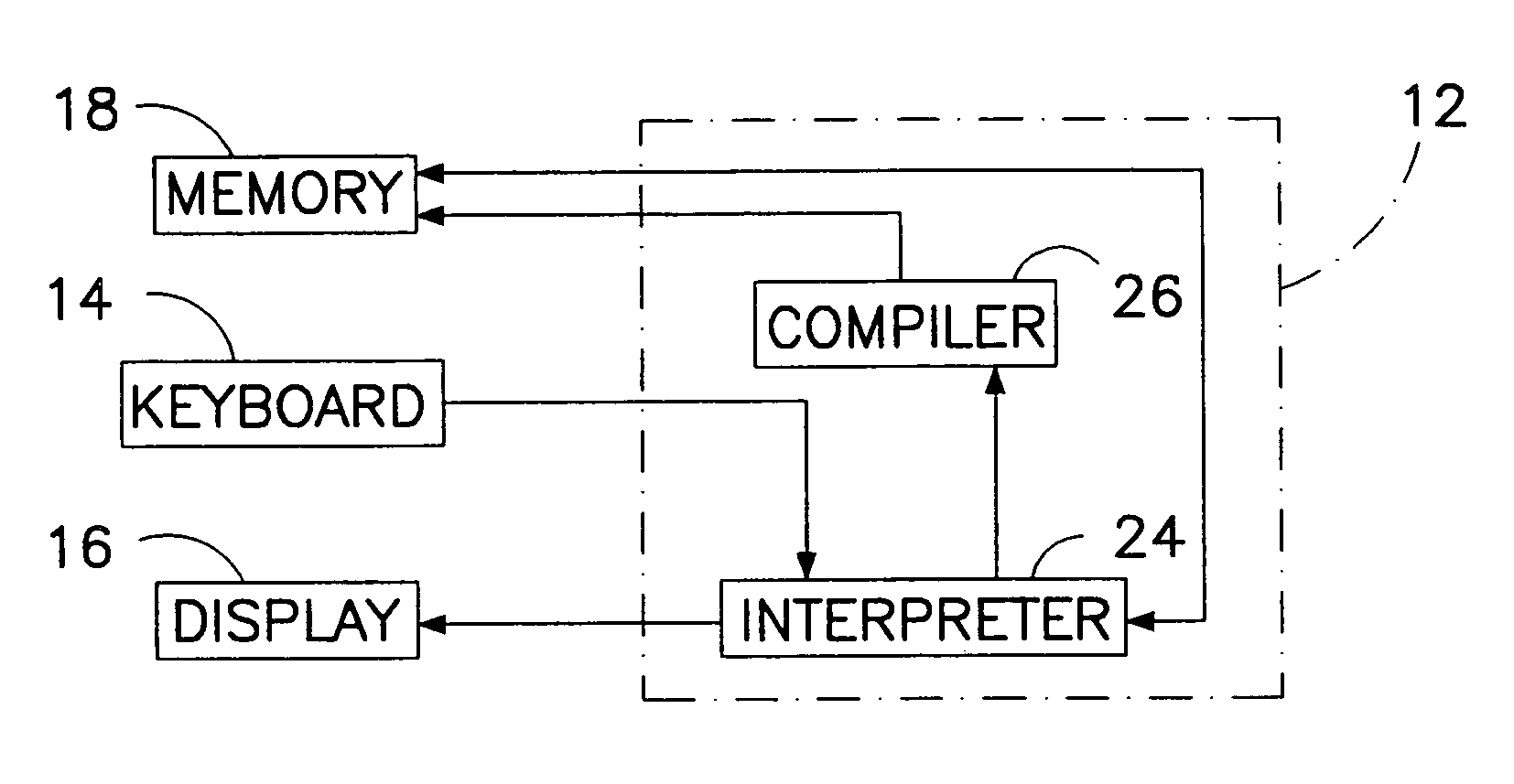

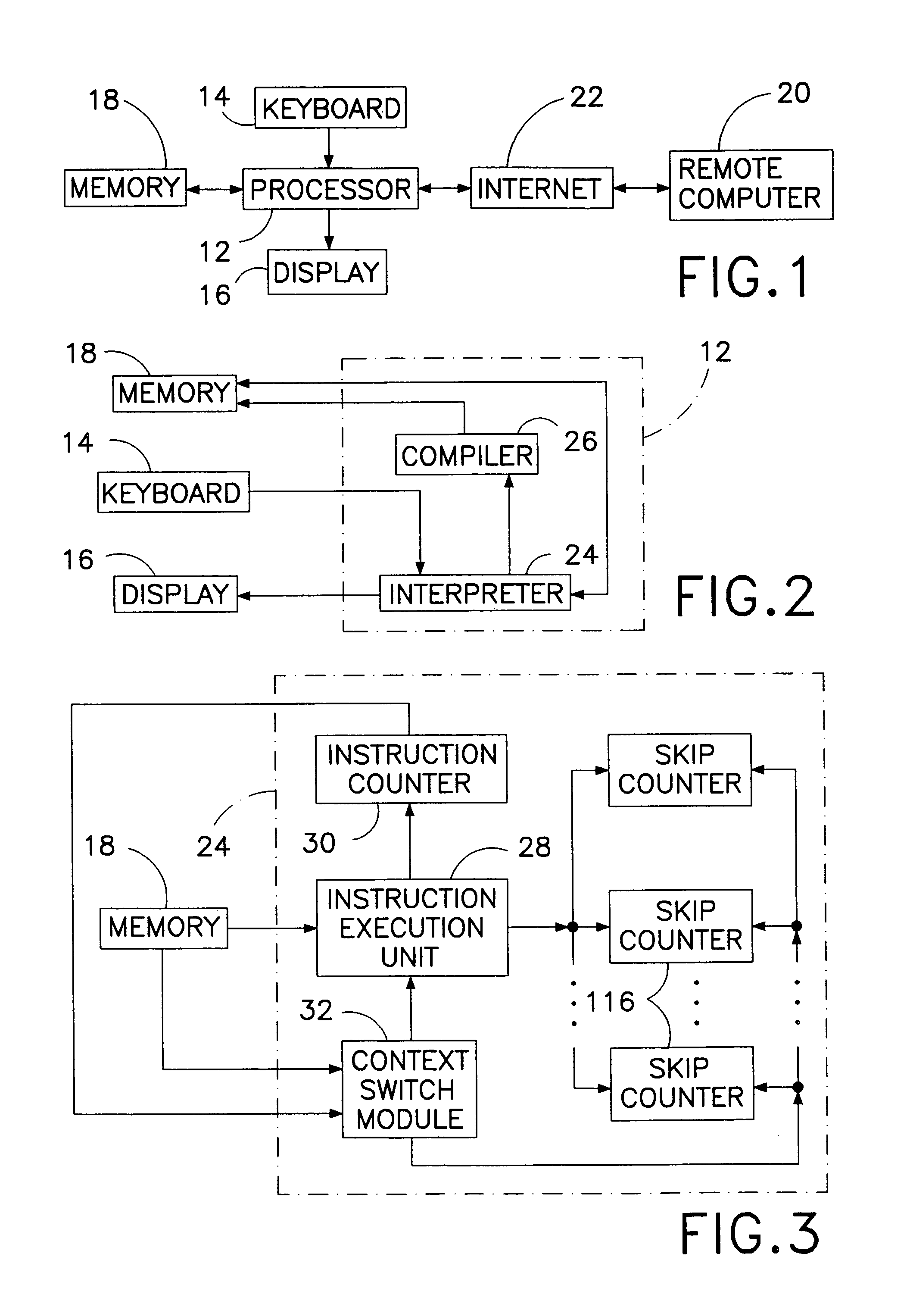

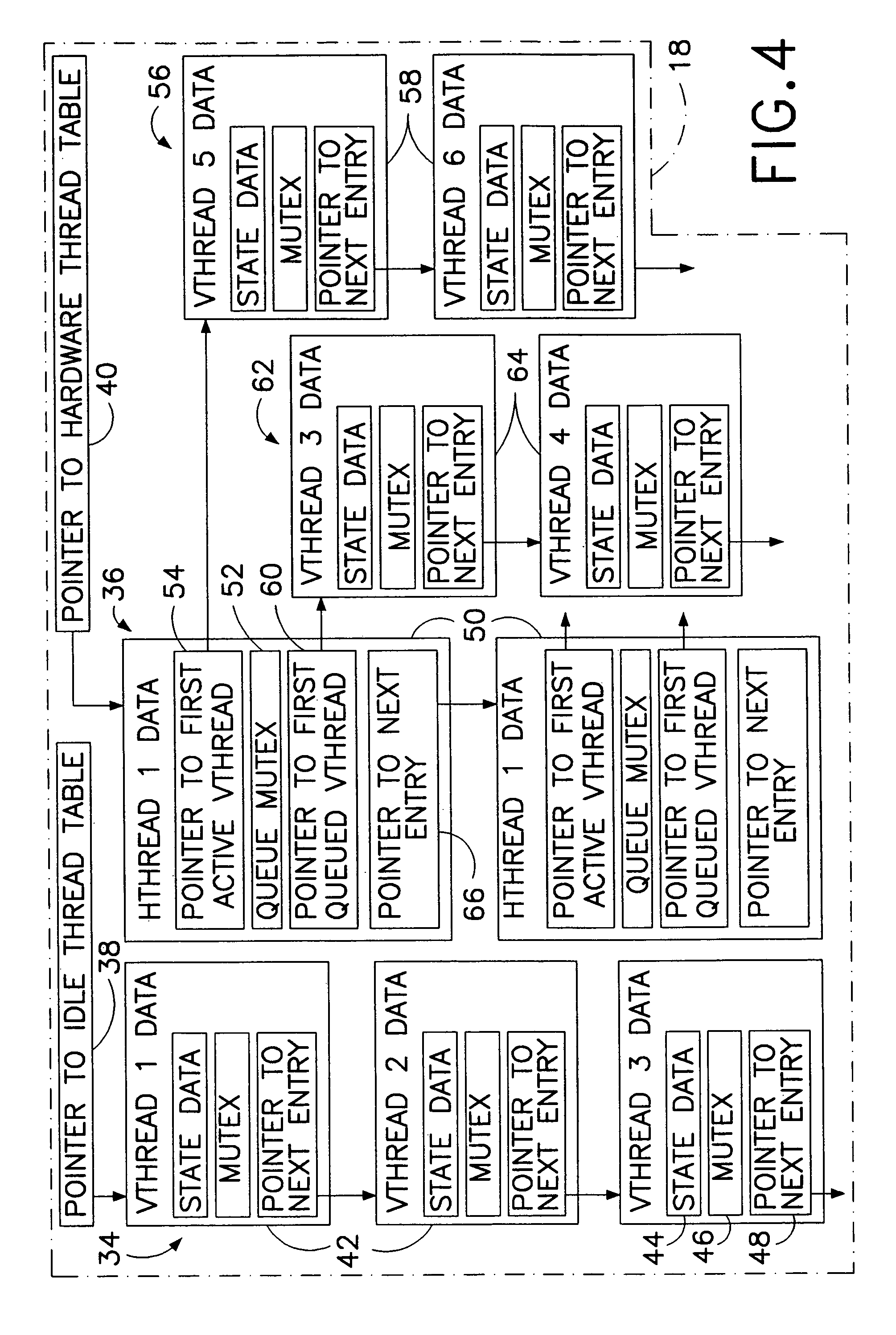

Computer multi-tasking via virtual threading using an interpreter

Inactive · US7234139B1 · Eliminate dependencies · Different operational · Program initiation/switching · Interprogram communication · Local variable · Pseudocode

In the operation of a computer, a plurality of bytecode or pseudocode instructions, at least some of the pseudocode instructions comprising a plurality of machine code instructions, are stored in a computer memory. For each of a plurality of tasks or jobs to be performed by the computer, a respective virtual thread of execution context data is automatically created. The virtual threads each include (a) a memory location of a next one of the pseudocode instructions to be executed in carrying out the respective task or job and (b) the values of any local variables required for carrying out the respective task or job. At least some of the tasks or jobs each entail execution of a respective one of the pseudocode instructions comprising a plurality of machine language instructions. Each of the tasks or jobs is processed in a respective series of time slices or processing slots under the control of the respective virtual thread, and, in every context switch between different virtual threads, such context switch is undertaken only after completed execution of a currently executing one of the pseudocode instructions.
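The scheduling rule in this abstract can be illustrated with a small interpreter. The following is only a sketch under stated assumptions, not the patented implementation: the names `VirtualThread`, `execute`, and `run`, the two opcodes `set` and `add`, and the round-robin policy are all hypothetical. Each virtual thread stores the index of its next pseudocode instruction and its local variables, and the scheduler changes threads only between whole instructions.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualThread:
    """Execution context: index of the next instruction plus local variables."""
    name: str
    program: list                      # list of (opcode, operand) pairs
    pc: int = 0                        # next pseudocode instruction to execute
    local_vars: dict = field(default_factory=dict)

    def done(self):
        return self.pc >= len(self.program)


def execute(thread, trace):
    """Run exactly one pseudocode instruction to completion."""
    op, arg = thread.program[thread.pc]
    if op == "set":                    # hypothetical opcode: acc = arg
        thread.local_vars["acc"] = arg
    elif op == "add":                  # hypothetical opcode: acc += arg
        thread.local_vars["acc"] = thread.local_vars.get("acc", 0) + arg
    else:
        raise ValueError(f"unknown opcode: {op}")
    trace.append((thread.name, thread.pc))
    thread.pc += 1


def run(threads, slice_len=2):
    """Round-robin scheduler that switches only at instruction boundaries."""
    trace = []
    ready = list(threads)
    while ready:
        thread = ready.pop(0)
        for _ in range(slice_len):     # one time slice = up to slice_len instructions
            if thread.done():
                break
            execute(thread, trace)     # never interrupted mid-instruction
        if not thread.done():
            ready.append(thread)       # context switch: pc and locals stay in the object
    return trace
```

Because the saved context is just `pc` and `local_vars`, a switch in this sketch costs no more than moving to another object in the ready queue.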

Owner: CERINET USA

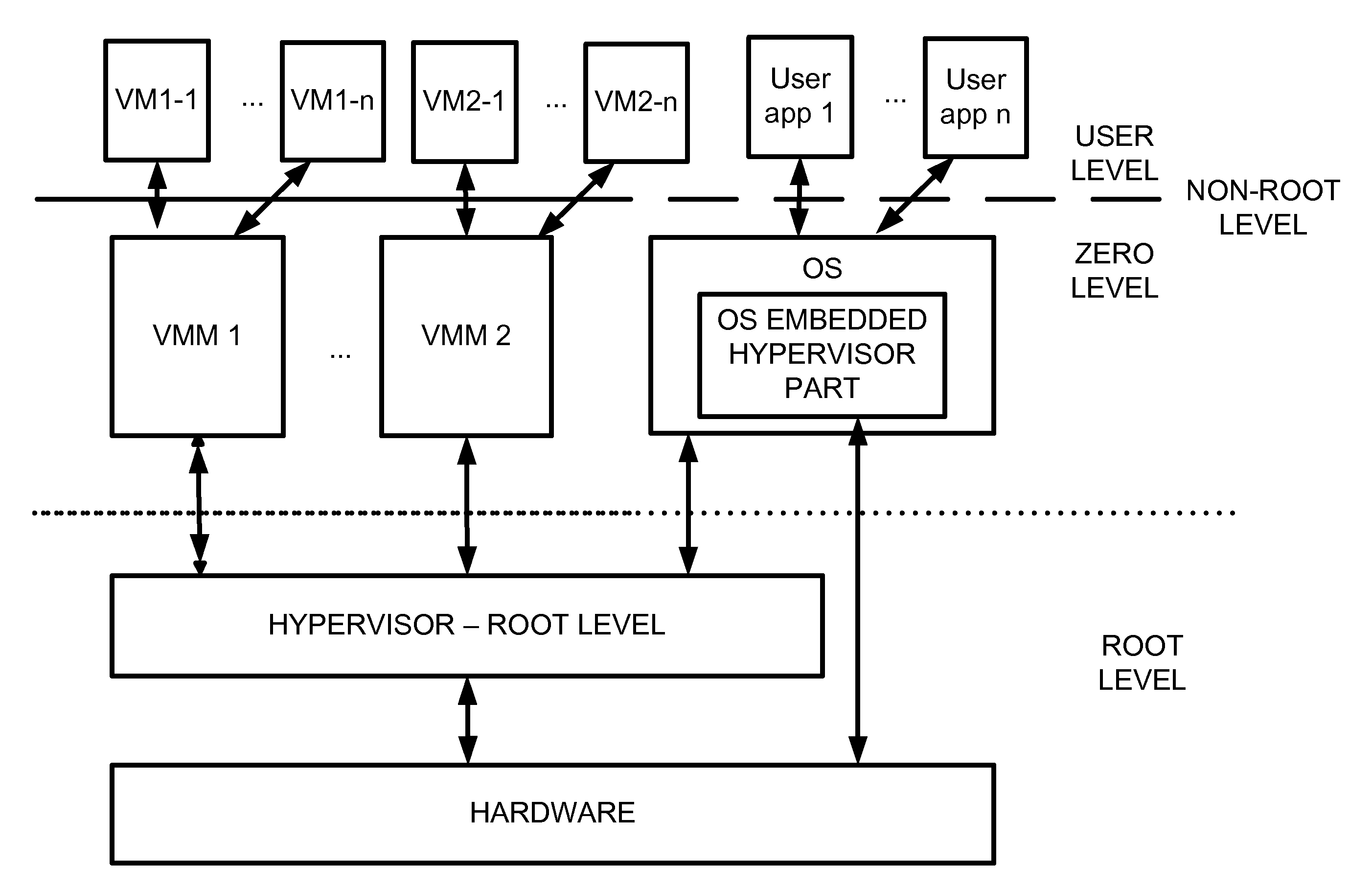

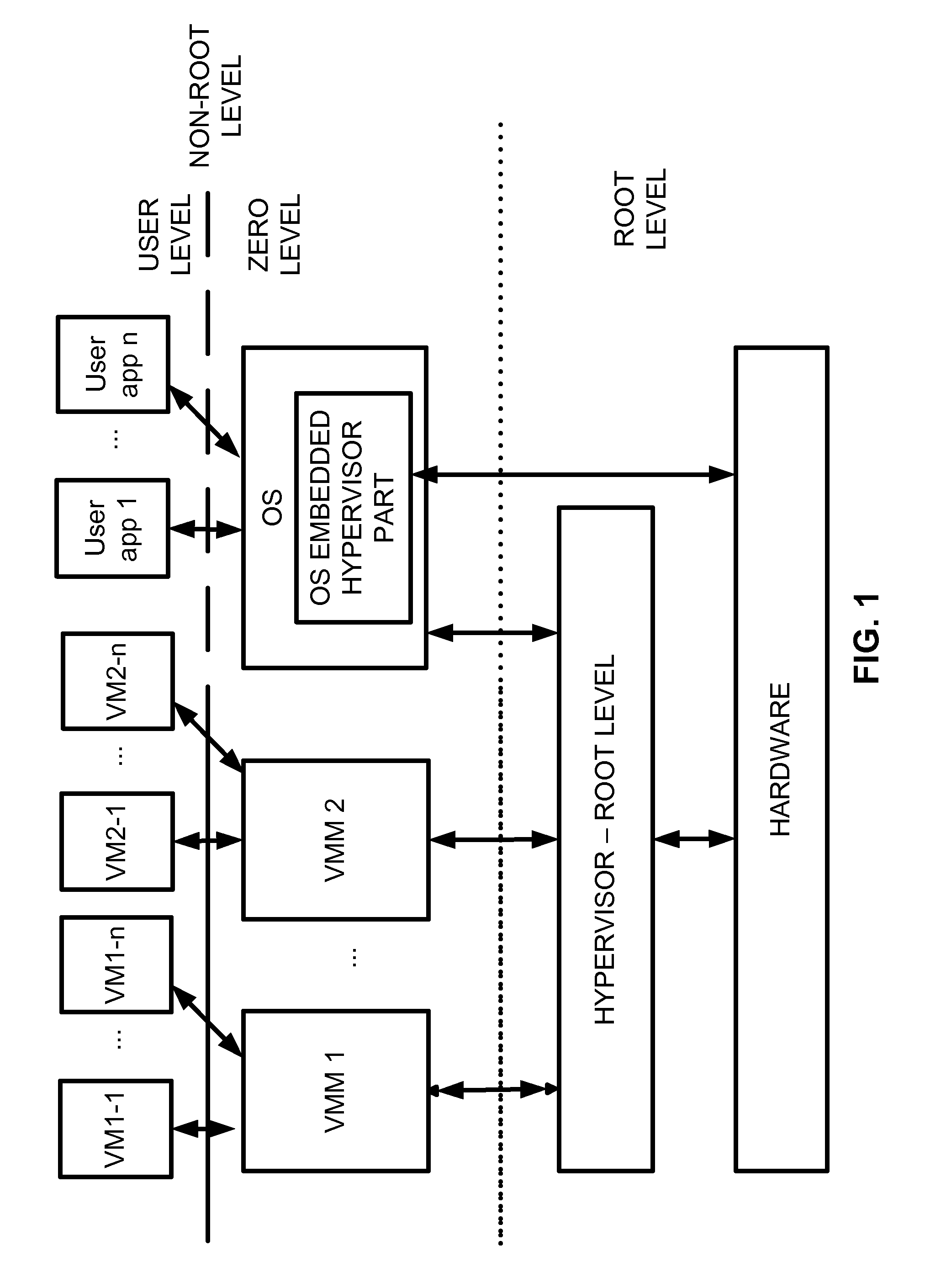

Virtualization system with hypervisor embedded in BIOS or using extensible firmware interface

In a computer system, a first portion of a Hypervisor is loaded into memory as part of an Extensible Firmware Interface upon start-up and prior to loading of an operating system. The first portion is responsible for context switching, at least some interrupt handling, and memory protection fault handling. The first portion runs on a root level. An operating system is loaded into a highest privilege level. A second portion of the Hypervisor is loaded into operating system space together with the operating system, runs on the highest privilege level, and is responsible for (a) servicing the VMM, (b) servicing the VMs, and (c) enabling communication between code launched on non-root level and the second portion of the Hypervisor to perform security checks of trusted code portions and to enable root mode for the code portions if allowable. The VMM runs on the highest privilege level. A Virtual Machine runs under control of the VMM. Trusted code runs on non-root level. The first portion of the Hypervisor verifies trusted code portions during their loading or launch time, and the trusted code is executed on root level.

Owner: PARALLELS INT GMBH

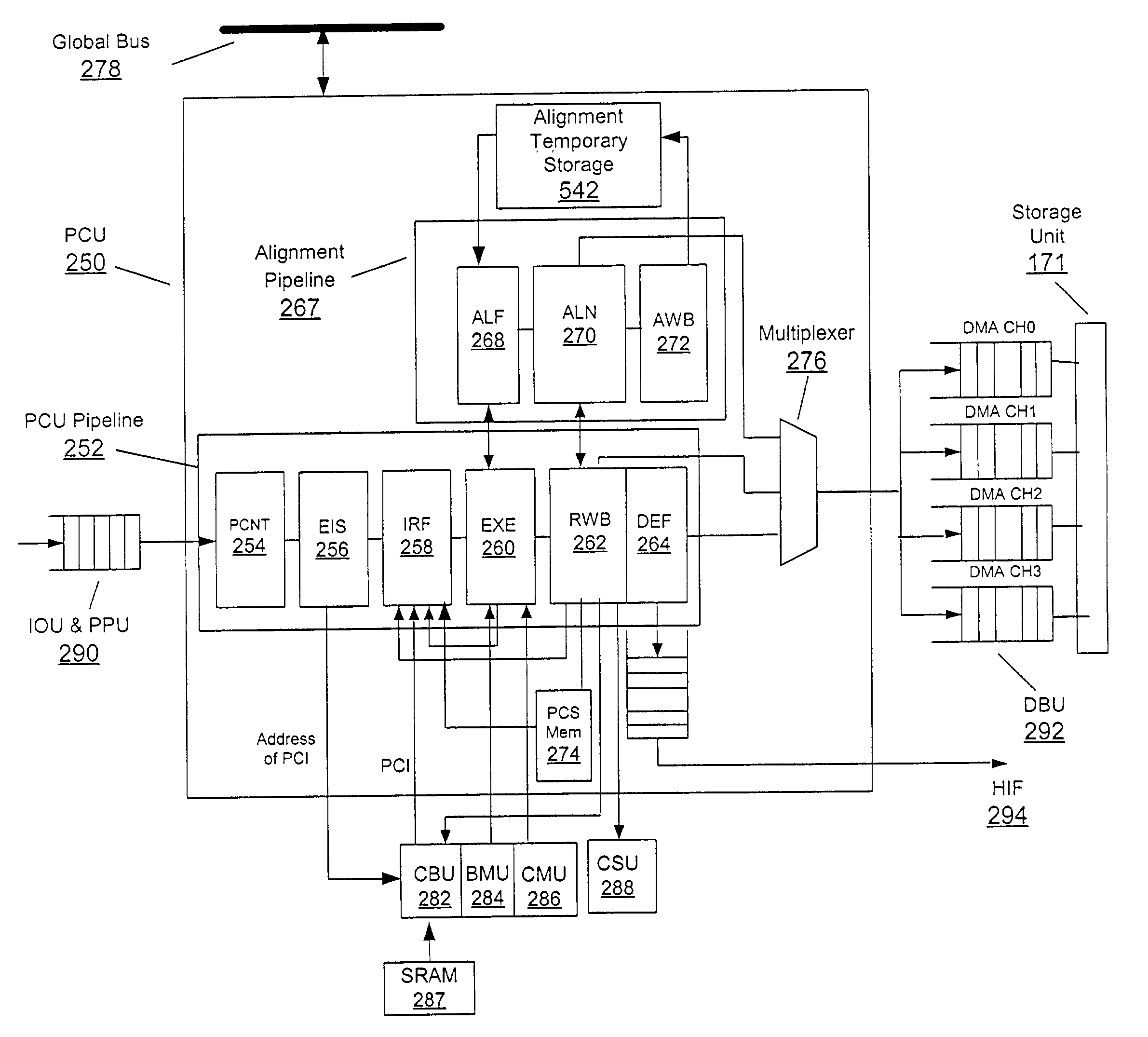

Vertical instruction and data processing in a network processor architecture

Active · US6996117B2 · Time-division multiplex · General purpose stored program computer · Rollover · Network architecture

An embodiment of this invention pertains to a network processor that processes incoming information element segments at very high data rates due, in part, to the fact that the processor is deterministic (i.e., the time to complete a process is known) and that it employs a pipelined “multiple instruction single data” (“MISD”) architecture. This MISD architecture is triggered by the arrival of the incoming information element segment. Each process is provided with dedicated registers, thus eliminating context switches. The pipeline, the instructions fetched, and the incoming information element segment are very long. The network processor includes a MISD processor that performs policy control functions such as network traffic policing, buffer allocation and management, protocol modification, timer rollover recovery, an aging mechanism to discard idle flows, and segmentation and reassembly of incoming information elements.

Owner: BAY MICROSYSTEMS INC

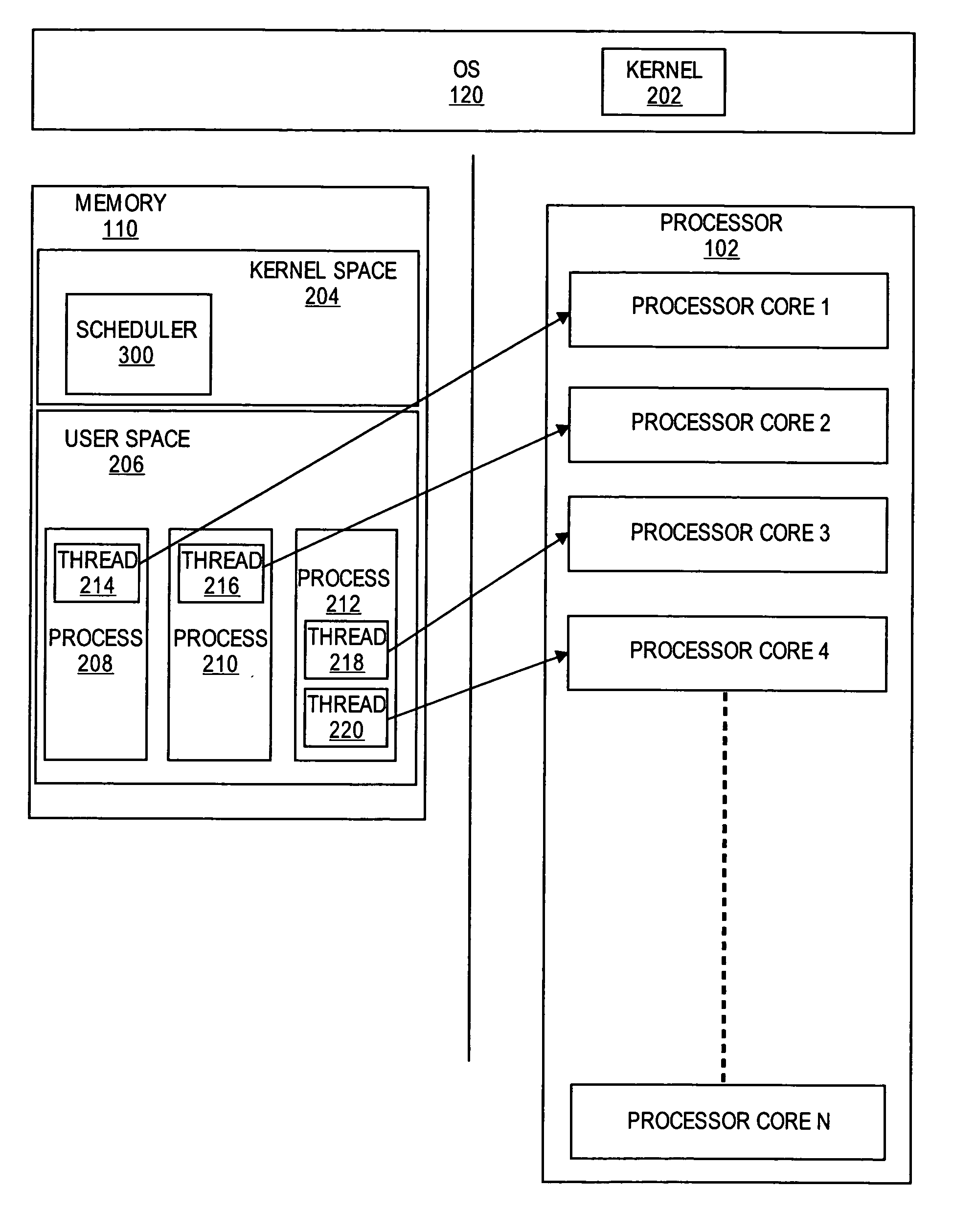

Methods and systems for scheduling processes in a multi-core processor environment

Inactive · US20070204268A1 · Energy efficient ICT · Energy efficient computing · Operational system · Multi-core processor

Embodiments of the present invention provide efficient scheduling in a multi-core processor environment. In some embodiments, each core is assigned, at most, one execution context. Each execution context may then asynchronously run on its assigned core. If an execution context is blocked, then its dedicated core may be suspended or powered down until the execution context resumes operation. The processor core may remain dedicated to a particular thread and thus avoid the costly operations of a process or context switch, such as clearing register contents. In other embodiments, execution contexts are partitioned into two groups. The execution contexts may be partitioned based on various factors, such as their relative priority. One group of the execution contexts may be assigned their own dedicated cores and allowed to run asynchronously. The other group of execution contexts, such as those with a lower priority, are co-scheduled among the remaining cores by the scheduler of the operating system.
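The two-group partitioning described in this abstract might be sketched as follows. This is a hypothetical illustration only: the function name `partition_contexts`, the `(name, priority)` tuple format, and the convention that a larger number means higher priority are assumptions, and the abstract names priority as just one possible partitioning factor.

```python
def partition_contexts(contexts, num_cores, num_dedicated):
    """Split execution contexts into a dedicated group and a co-scheduled group.

    contexts      -- list of (name, priority); larger priority = more important (assumed)
    num_cores     -- total number of processor cores
    num_dedicated -- how many cores may be given to a single context each
    """
    if not 0 <= num_dedicated <= num_cores:
        raise ValueError("num_dedicated must be between 0 and num_cores")

    # Highest-priority contexts first; sorted() is stable, so ties keep input order.
    ranked = sorted(contexts, key=lambda c: c[1], reverse=True)

    # Each dedicated core runs at most one context and never switches away from it.
    dedicated = {core: name for core, (name, _) in enumerate(ranked[:num_dedicated])}

    # Everything else is left to the ordinary OS scheduler on the remaining cores.
    shared_cores = list(range(len(dedicated), num_cores))
    co_scheduled = [name for name, _ in ranked[num_dedicated:]]
    if co_scheduled and not shared_cores:
        raise ValueError("no cores left for the co-scheduled group")

    return dedicated, shared_cores, co_scheduled
```

For example, with four cores and two of them dedicated, the two highest-priority contexts each receive their own core, and the rest share cores 2 and 3.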

Owner: RED HAT

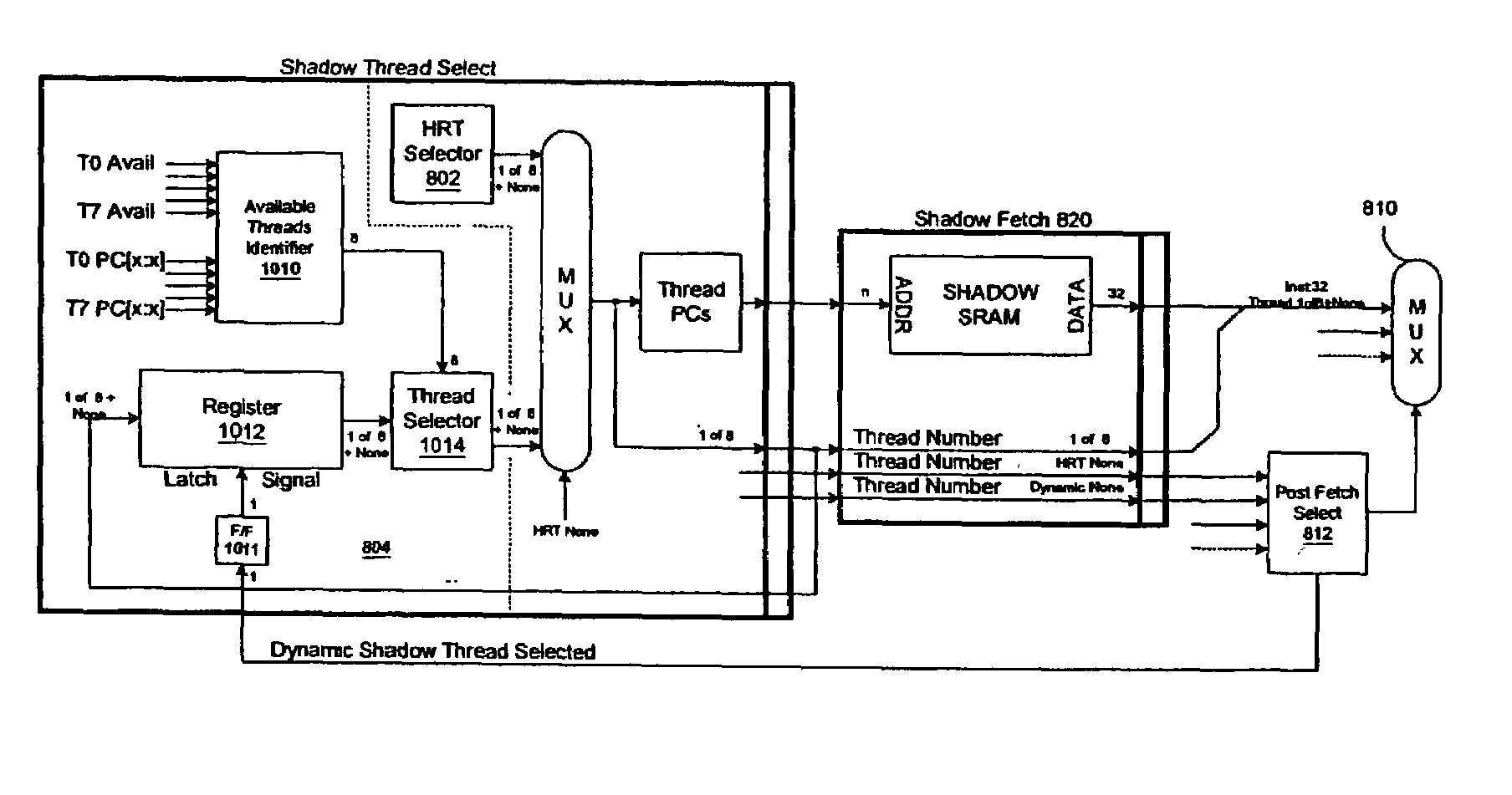

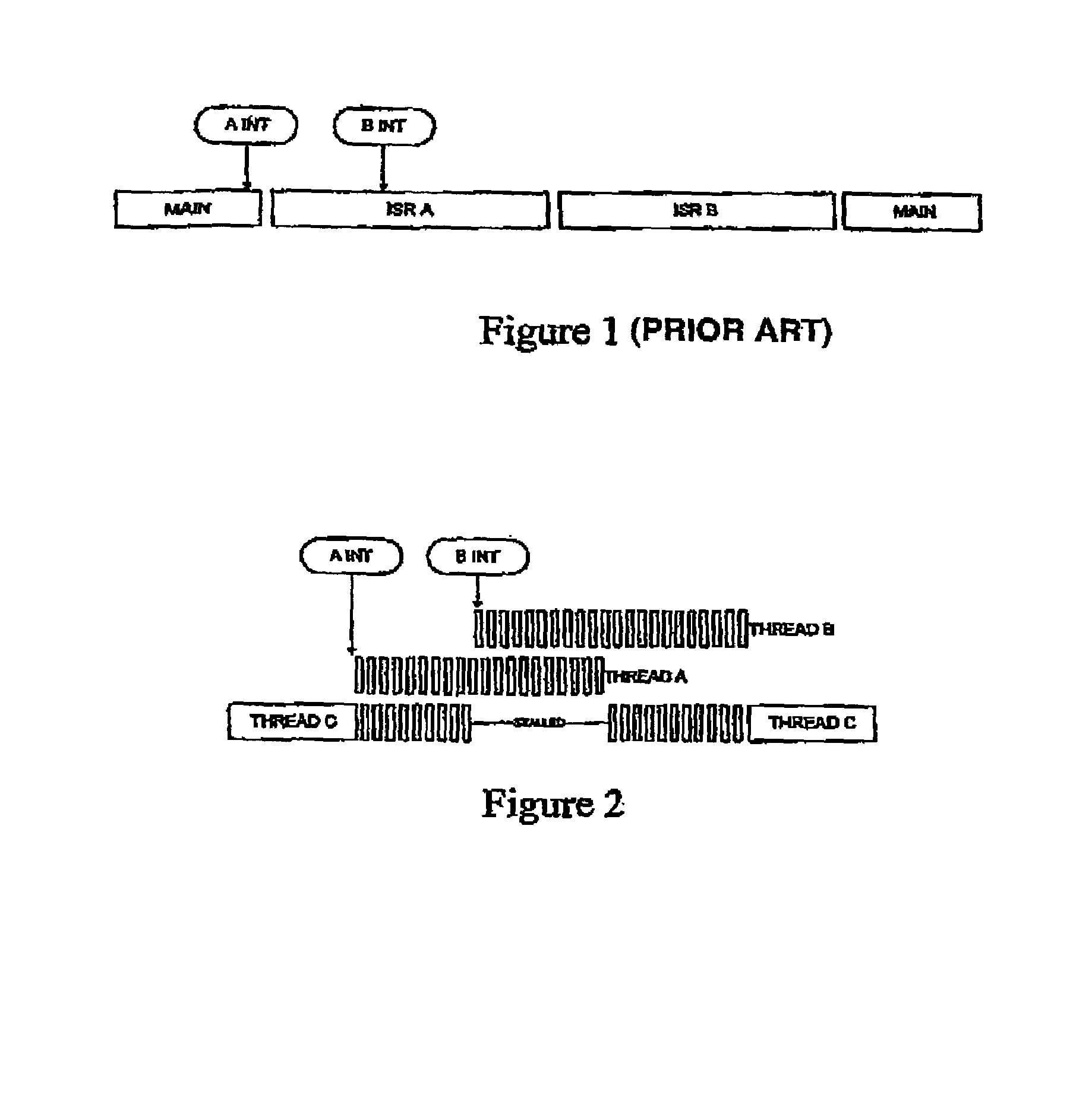

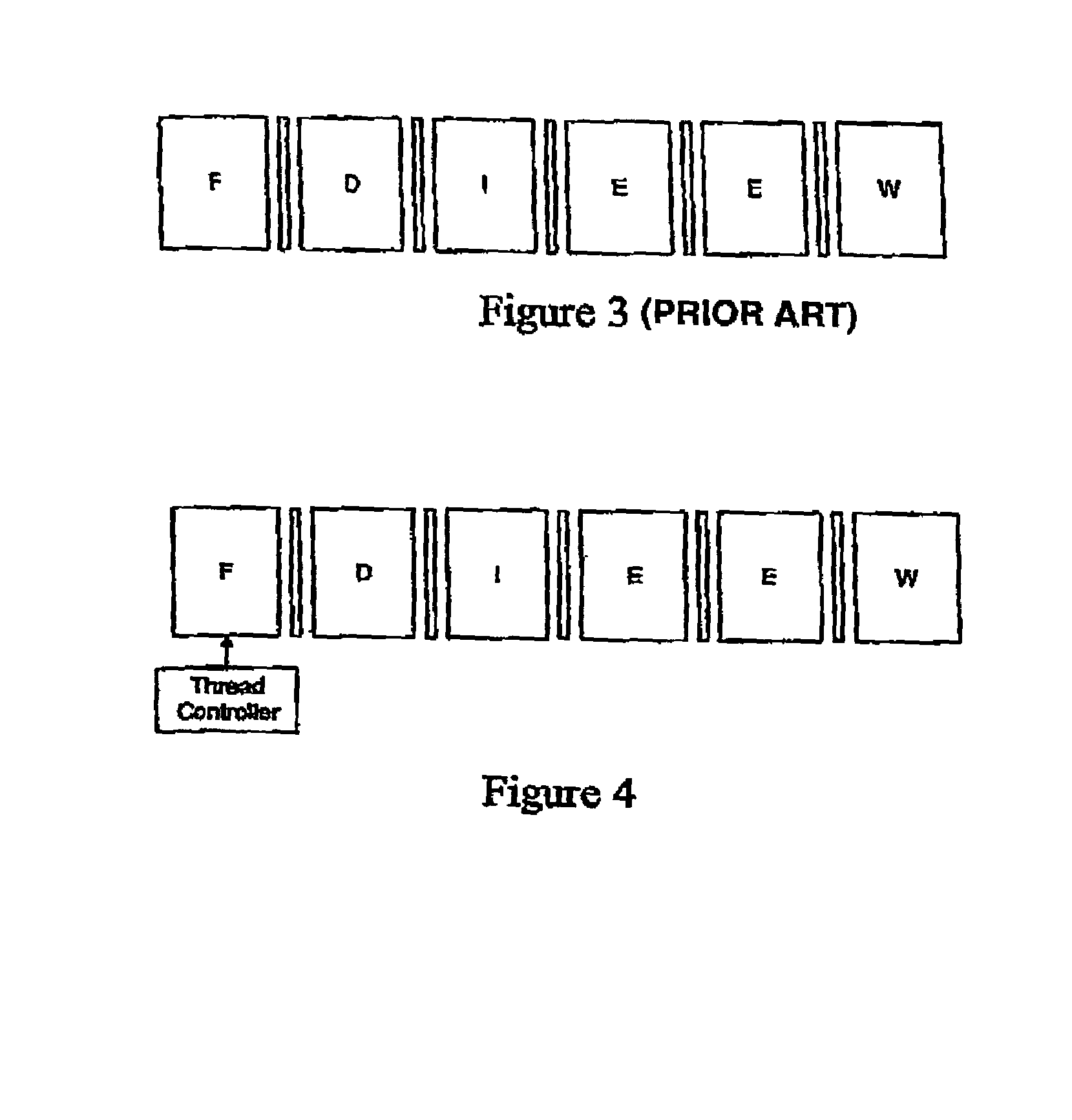

System and method for instruction level multithreading scheduling in an embedded processor

A system and method for enabling multithreading in an embedded processor, invoking zero-time context switching in a multithreading environment, scheduling multiple threads to permit numerous hard real-time and non-real-time priority levels, fetching data and instructions from multiple memory blocks in a multithreading environment, and enabling a particular thread to modify the multiple states of the multiple threads in the processor core.

Owner: MAYFIELD XI +9

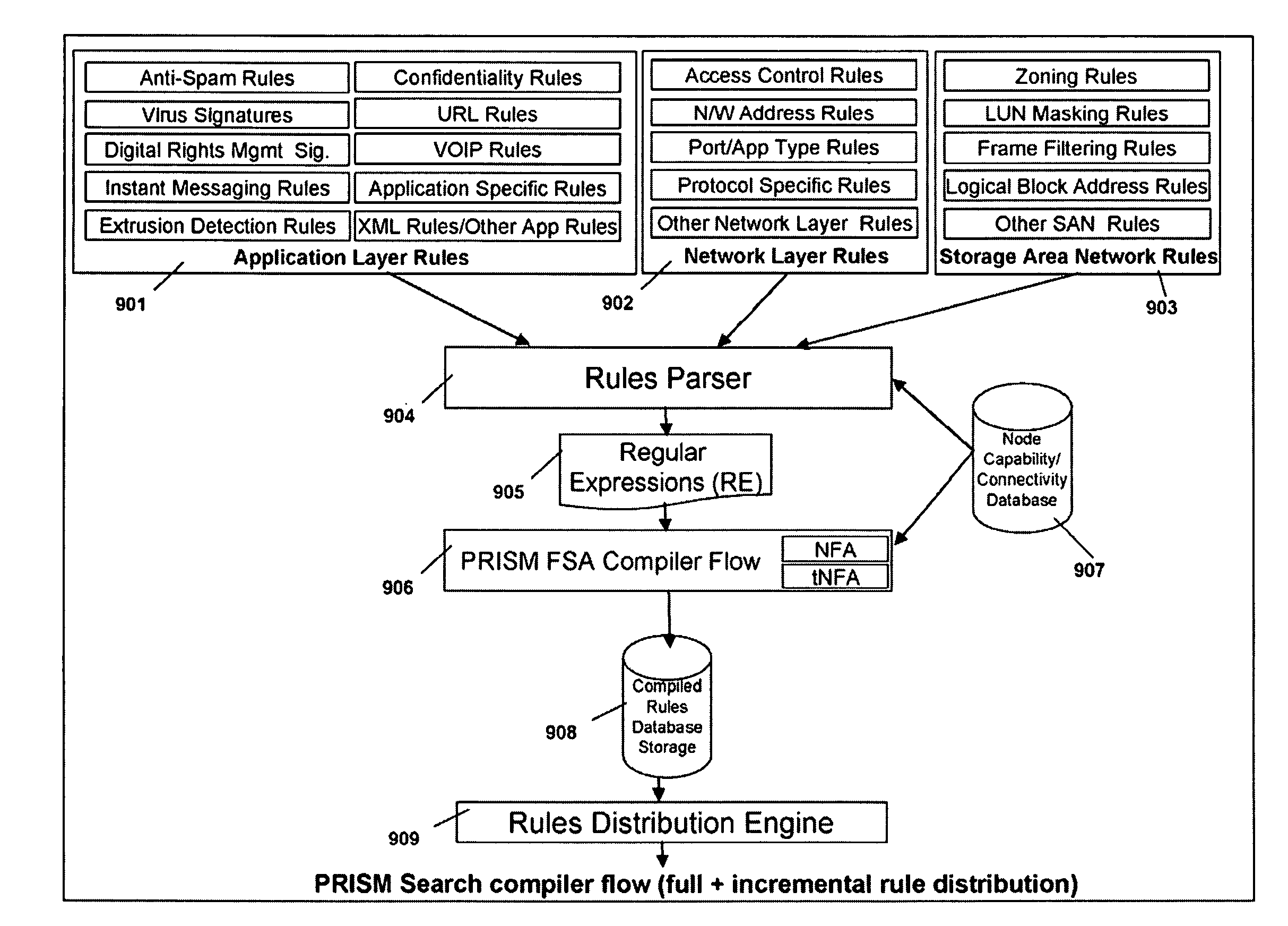

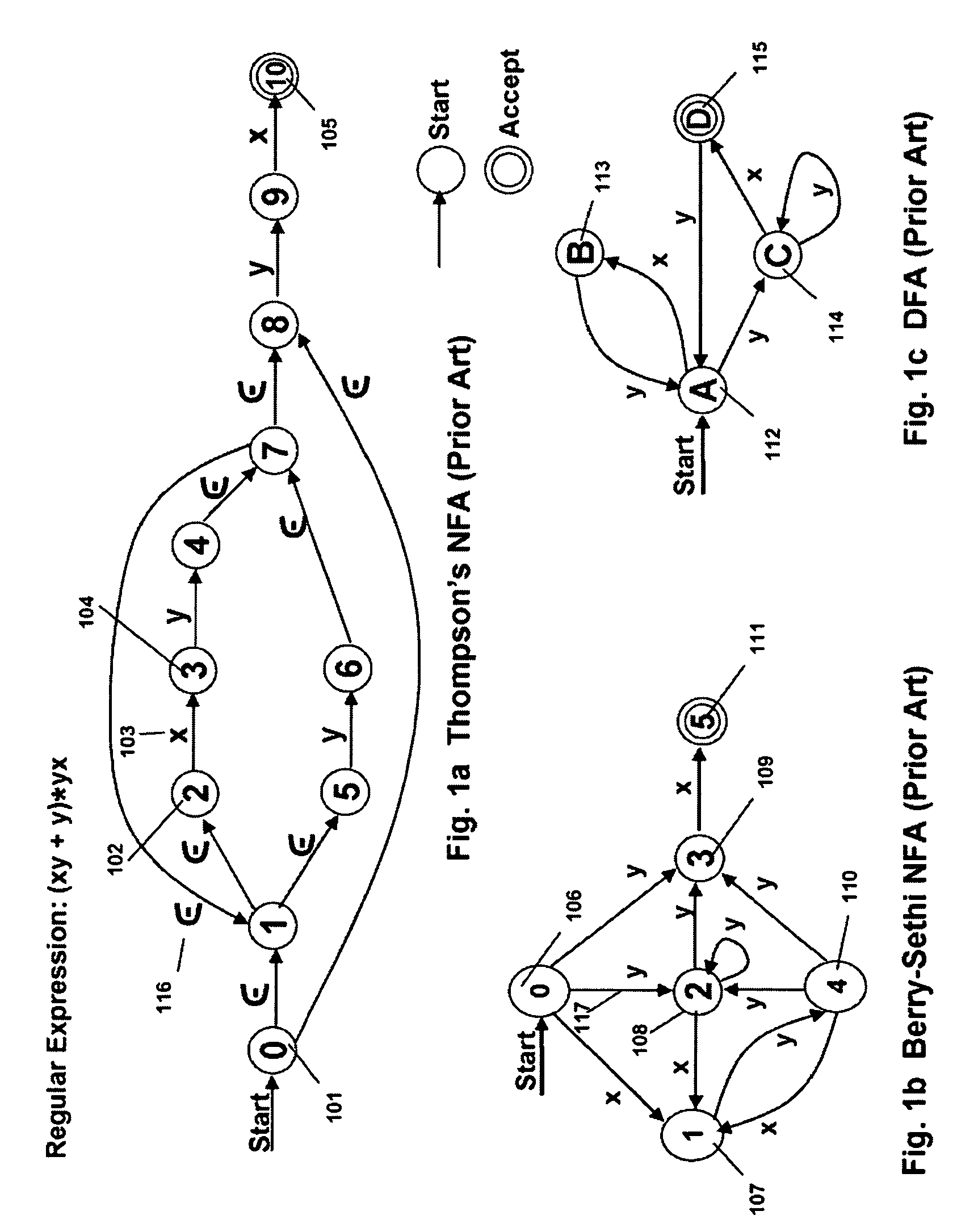

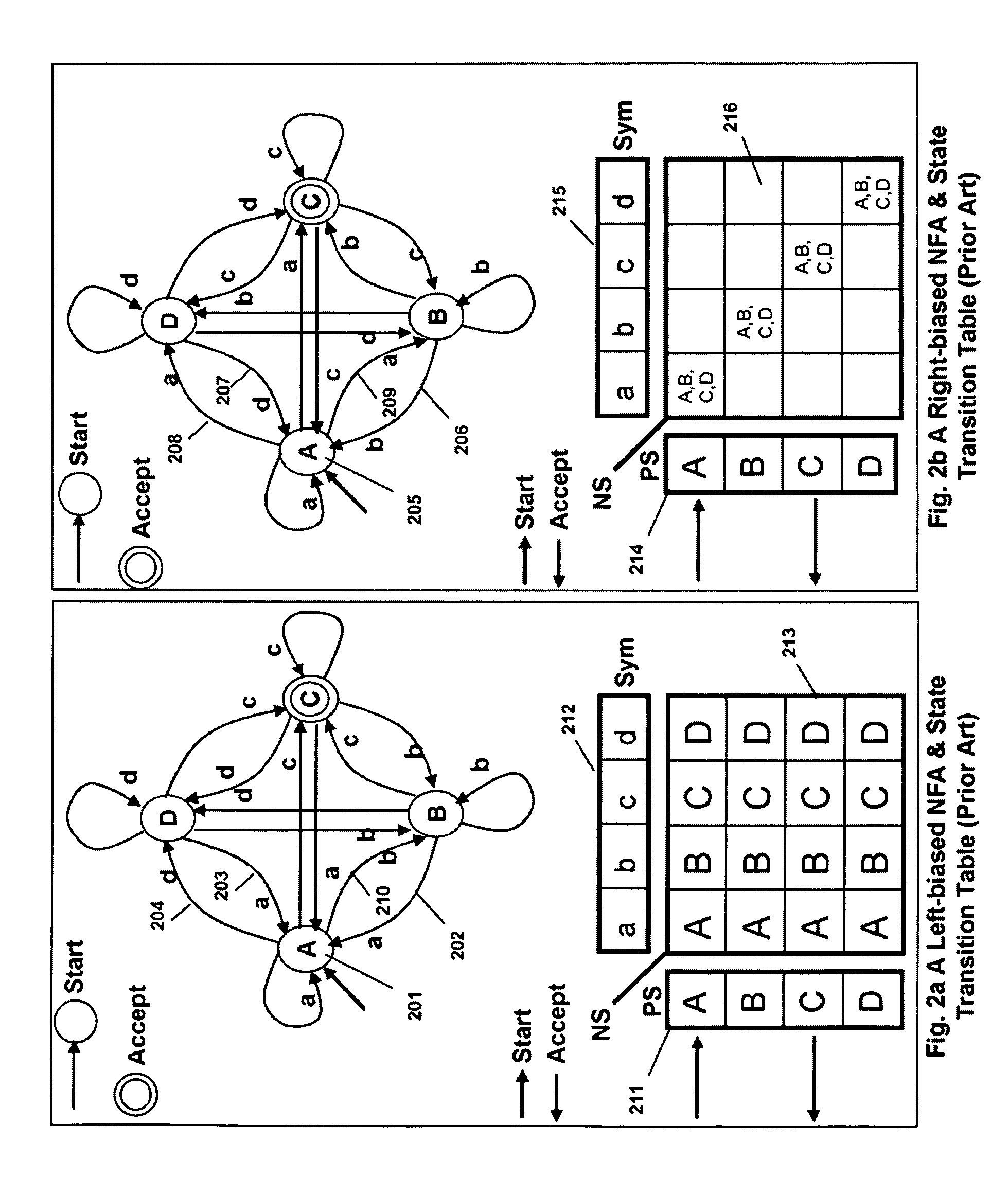

FSA Context Switch Architecture for Programmable Intelligent Search Memory

Active · US20090049230A1 · Reduce overhead · Soap detergents with organic compounding agents · Digital data information retrieval · Memory architecture · Automaton

A memory architecture provides capabilities for high-performance content search. The architecture creates an innovative memory that can be programmed with content search rules, which the memory uses to evaluate presented content for matches with the programmed rules. When the content being searched matches any of the rules programmed in the Programmable Intelligent Search Memory (PRISM), the action(s) associated with the matched rule(s) are taken. Content search rules comprise regular expressions, which are converted to finite state automata (FSA) and then programmed in PRISM for evaluating content with the search rules. The PRISM architecture comprises a plurality of programmable PRISM Search Engines (PSE) organized in PRISM memory clusters that are used simultaneously to search content presented to PRISM. A context switching architecture enables transitioning of PSE states between different input contexts.

Owner: INFOSIL INC

Spiking neural network object recognition apparatus and methods

Inactive · US20130297539A1 · Reduce probability · Digital computer details · Digital data · Synapse · Spiking neural network

Apparatus and methods for feedback in a spiking neural network. In one approach, spiking neurons receive a sensory stimulus and a context signal that correspond to the same context. When the stimulus provides sufficient excitation, the neurons generate a response. Context connections are adjusted according to inverse spike-timing dependent plasticity (STDP). When the context signal precedes the post-synaptic spike, context synaptic connections are depressed. Conversely, whenever the context signal follows the post-synaptic spike, the connections are potentiated. The inverse STDP connection adjustment ensures precise control of feedback-induced firing, eliminates runaway positive feedback loops, and enables self-stabilizing network operation. In another aspect of the invention, the connection adjustment methodology facilitates robust context switching when processing visual information. When a context (such as an object) becomes intermittently absent, prior context connection potentiation enables firing for a period of time. If the object remains absent, the connection becomes depressed, thereby preventing further firing.
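The sign convention of the inverse STDP rule can be made concrete with a short sketch. The abstract specifies only the direction of each adjustment, so the exponential timing window, the amplitudes `a_plus` and `a_minus`, the time constant `tau`, and the weight bounds below are all assumptions chosen for illustration.

```python
import math


def inverse_stdp_delta(t_context, t_post, a_plus=0.05, a_minus=0.05, tau=20.0):
    """Weight change for one context-spike / post-synaptic-spike pair.

    Times are in milliseconds. The exponential window is an assumed shape;
    only the signs follow the abstract.
    """
    dt = t_post - t_context
    if dt > 0:
        # Context signal preceded the post-synaptic spike: depress.
        return -a_minus * math.exp(-dt / tau)
    if dt < 0:
        # Context signal followed the post-synaptic spike: potentiate.
        return a_plus * math.exp(dt / tau)
    return 0.0


def update_weight(w, t_context, t_post, w_min=0.0, w_max=1.0):
    """Apply the rule and clamp the weight to assumed bounds."""
    return min(w_max, max(w_min, w + inverse_stdp_delta(t_context, t_post)))
```

Note that the signs are the reverse of conventional STDP, where a presynaptic spike arriving before the post-synaptic spike strengthens the connection.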

Owner: BRAIN CORP

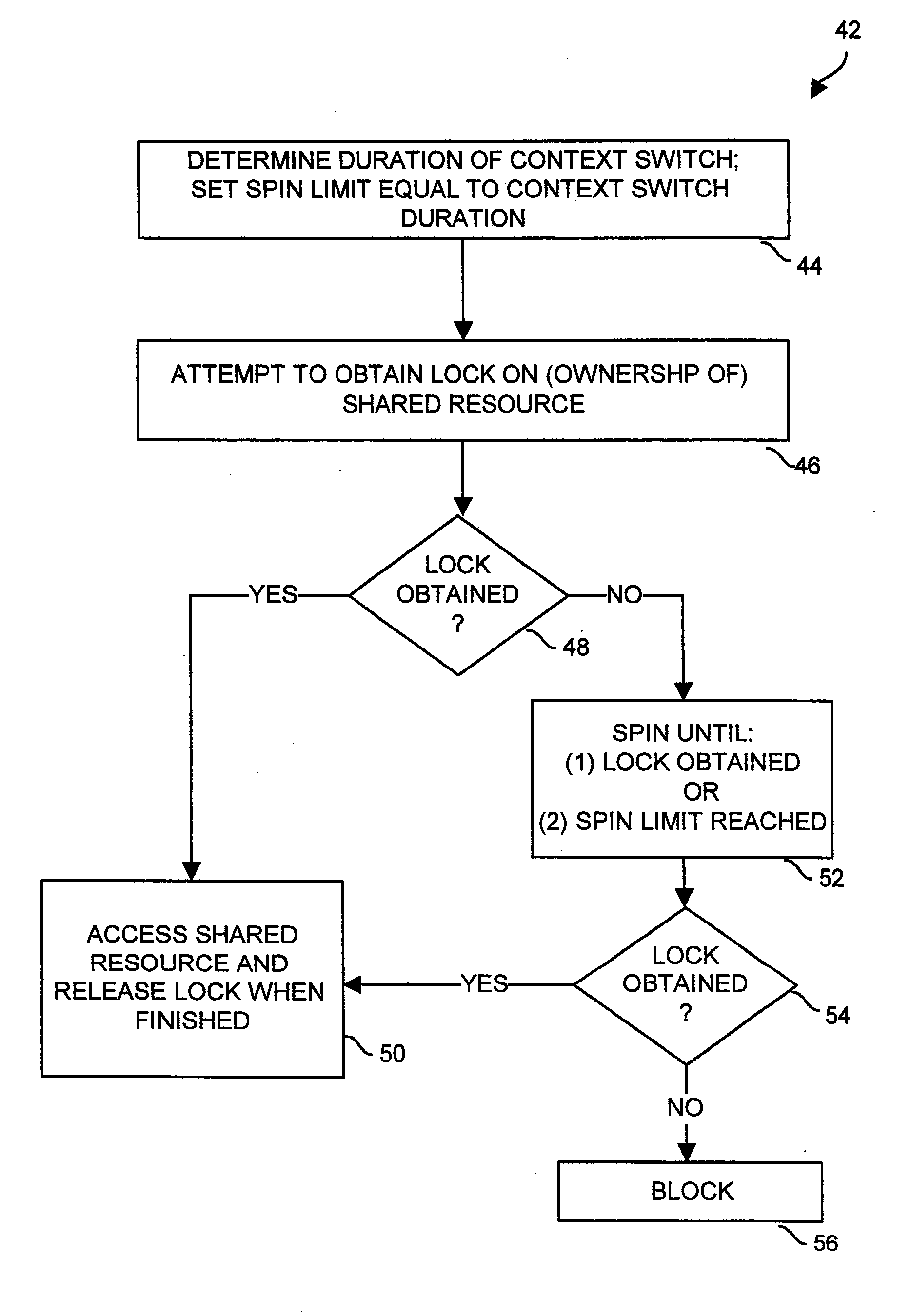

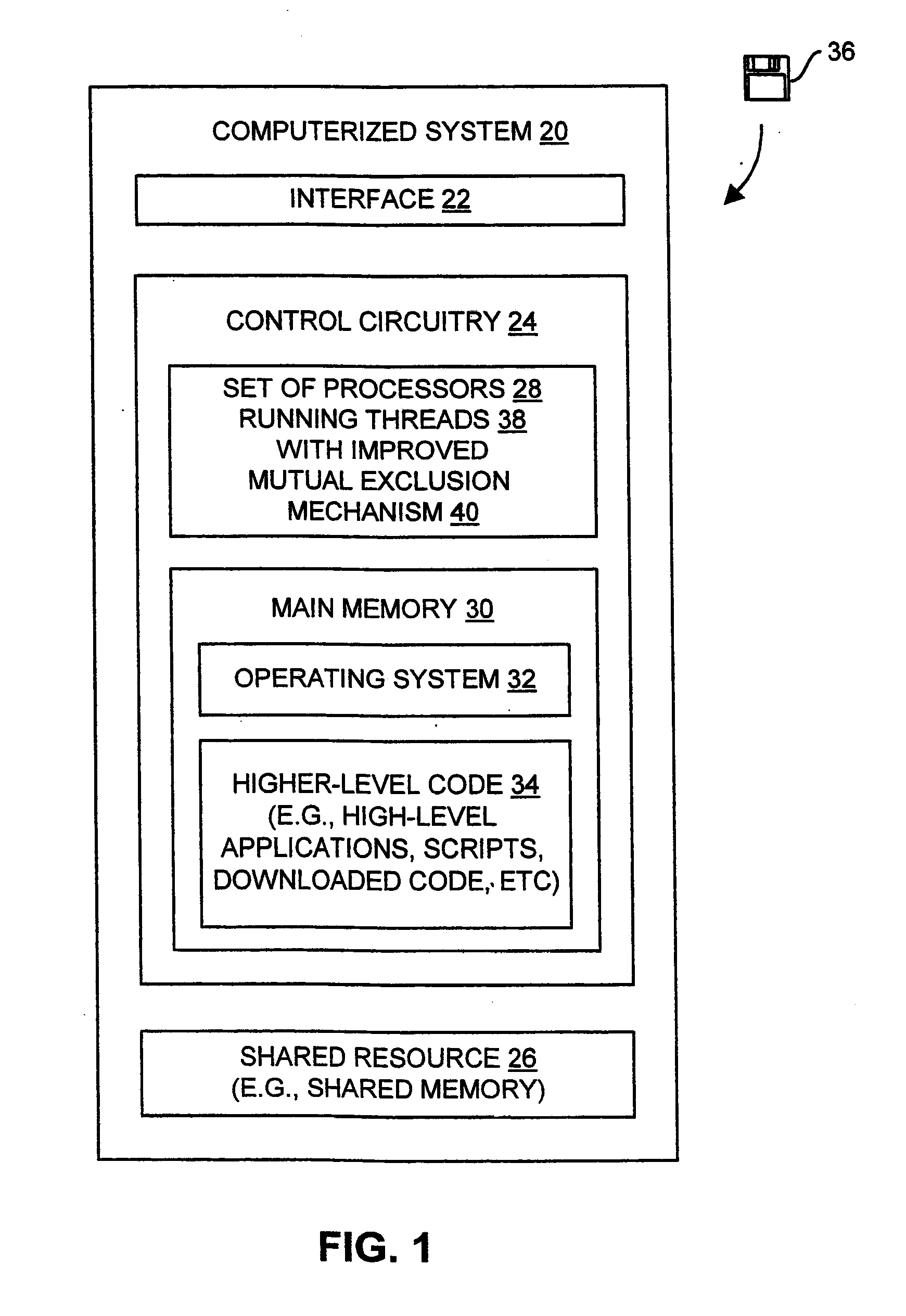

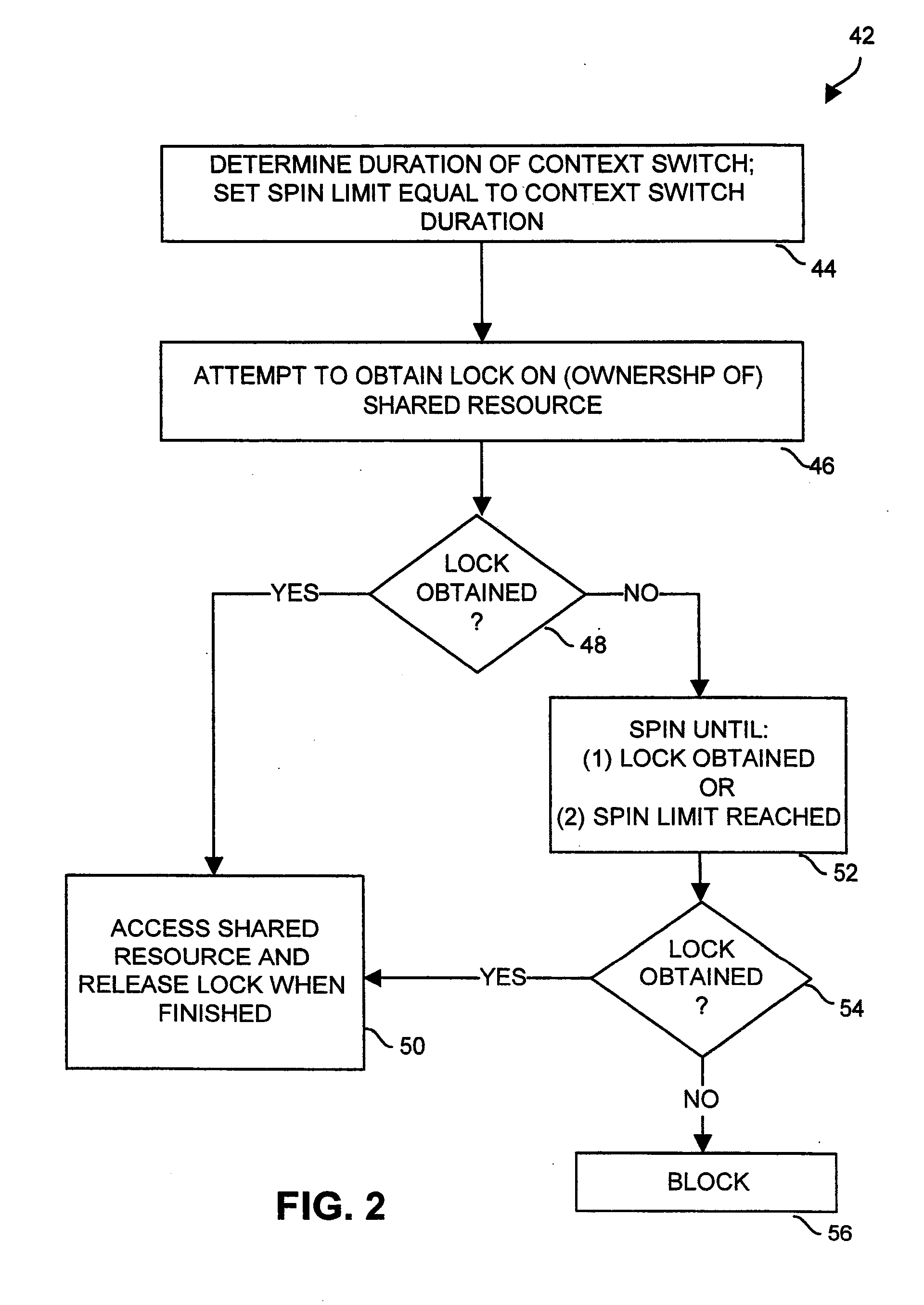

Adaptive spin-then-block mutual exclusion in multi-threaded processing

Active · US20090328053A1 · Avoid excessive delay · Miss migration · Memory systems · Program saving/restoring · Spins · Engineering

Adaptive modifications of spinning and blocking behavior in spin-then-block mutual exclusion include limiting spinning time to no more than the duration of a context switch. Also, the frequency of spinning versus blocking is limited to a desired amount based on the success rate of recent spin attempts. As an alternative, spinning is bypassed if spinning is unlikely to be successful because the owner is not progressing toward releasing the shared resource, as might occur if the owner is blocked or spinning itself. In another aspect, the duration of spinning is generally limited, but longer spinning is permitted if no other threads are ready to utilize the processor. In another aspect, if the owner of a shared resource is ready to be executed, a thread attempting to acquire ownership performs a “directed yield” of the remainder of its processing quantum to the other thread, and execution of the acquiring thread is suspended.
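Two of the ideas in this abstract, capping spin time at the cost of a context switch and limiting spinning according to recent spin success, can be sketched as below. This is a hypothetical illustration and not the patented implementation: the class name `SpinThenBlockLock`, the 50-microsecond default for the context switch cost, the smoothed success estimate, and the threshold are all assumptions. In CPython the busy-wait is illustrative only, since the interpreter lock limits what spinning can gain.

```python
import threading
import time


class SpinThenBlockLock:
    """Illustrative adaptive spin-then-block lock built on threading.Lock."""

    def __init__(self, context_switch_cost=50e-6, min_success_rate=0.25, alpha=0.2):
        self._lock = threading.Lock()
        self._spin_budget = context_switch_cost   # spin no longer than one switch (assumed cost)
        self._min_success_rate = min_success_rate
        self._alpha = alpha                       # smoothing factor for the estimate
        self._success_rate = 1.0                  # optimistic initial estimate

    def _record(self, outcome):
        # Exponentially weighted estimate of how often recent spins succeeded.
        self._success_rate += self._alpha * (outcome - self._success_rate)

    def acquire(self):
        """Acquire the lock; return "spin" or "block" to show which path was taken."""
        if self._success_rate >= self._min_success_rate:
            deadline = time.perf_counter() + self._spin_budget
            while time.perf_counter() < deadline:
                if self._lock.acquire(blocking=False):
                    self._record(1.0)
                    return "spin"
            self._record(0.0)                     # spin attempt failed
        else:
            # Spinning was bypassed; raise the estimate so a later call spins again.
            self._record(1.0)
        self._lock.acquire()                      # fall back to blocking
        return "block"

    def release(self):
        self._lock.release()
```

The sketch omits the other aspects mentioned above, such as checking whether the owner is itself blocked and the directed yield, because `threading.Lock` does not expose owner state.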

Owner: ORACLE INT CORP

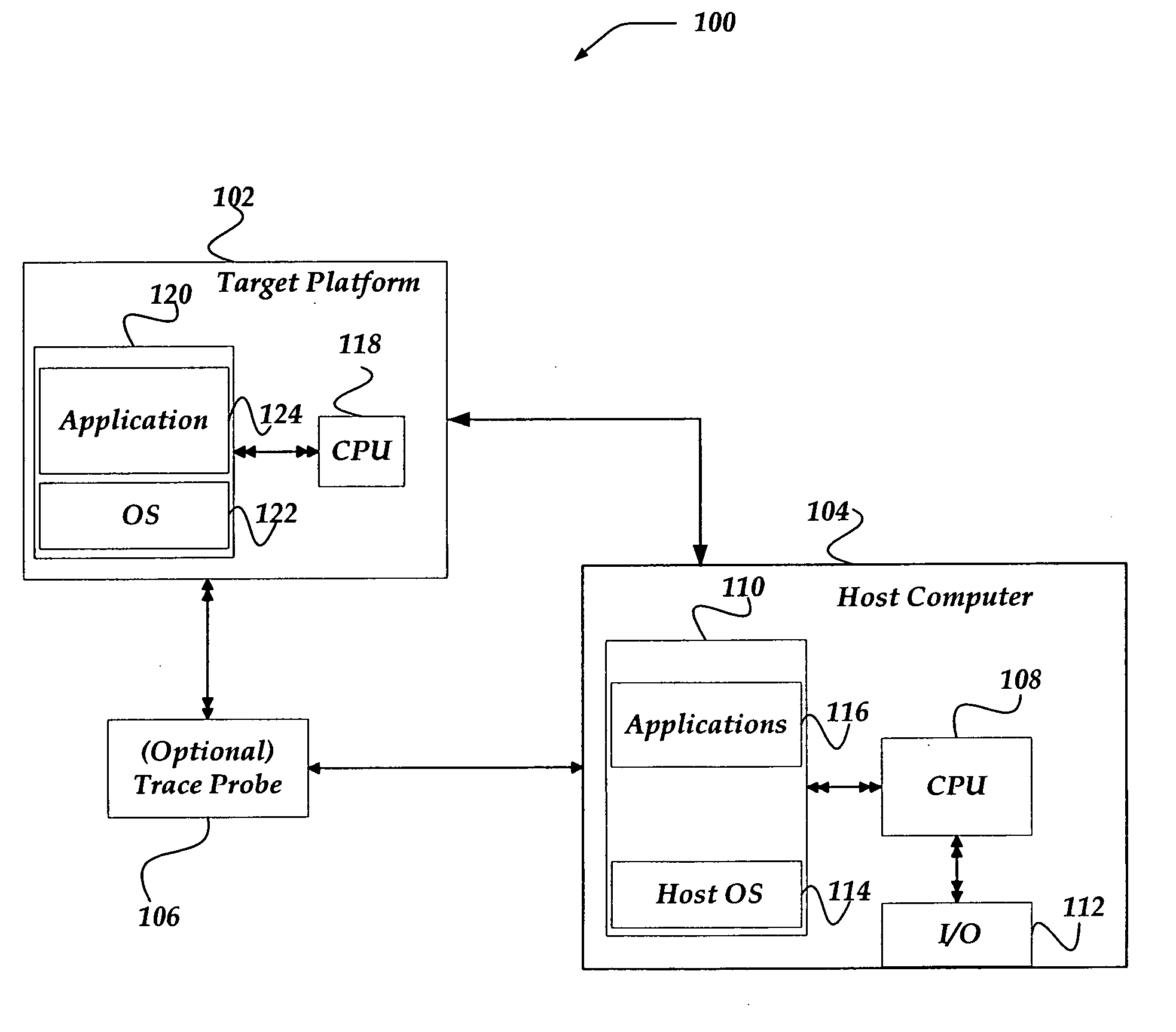

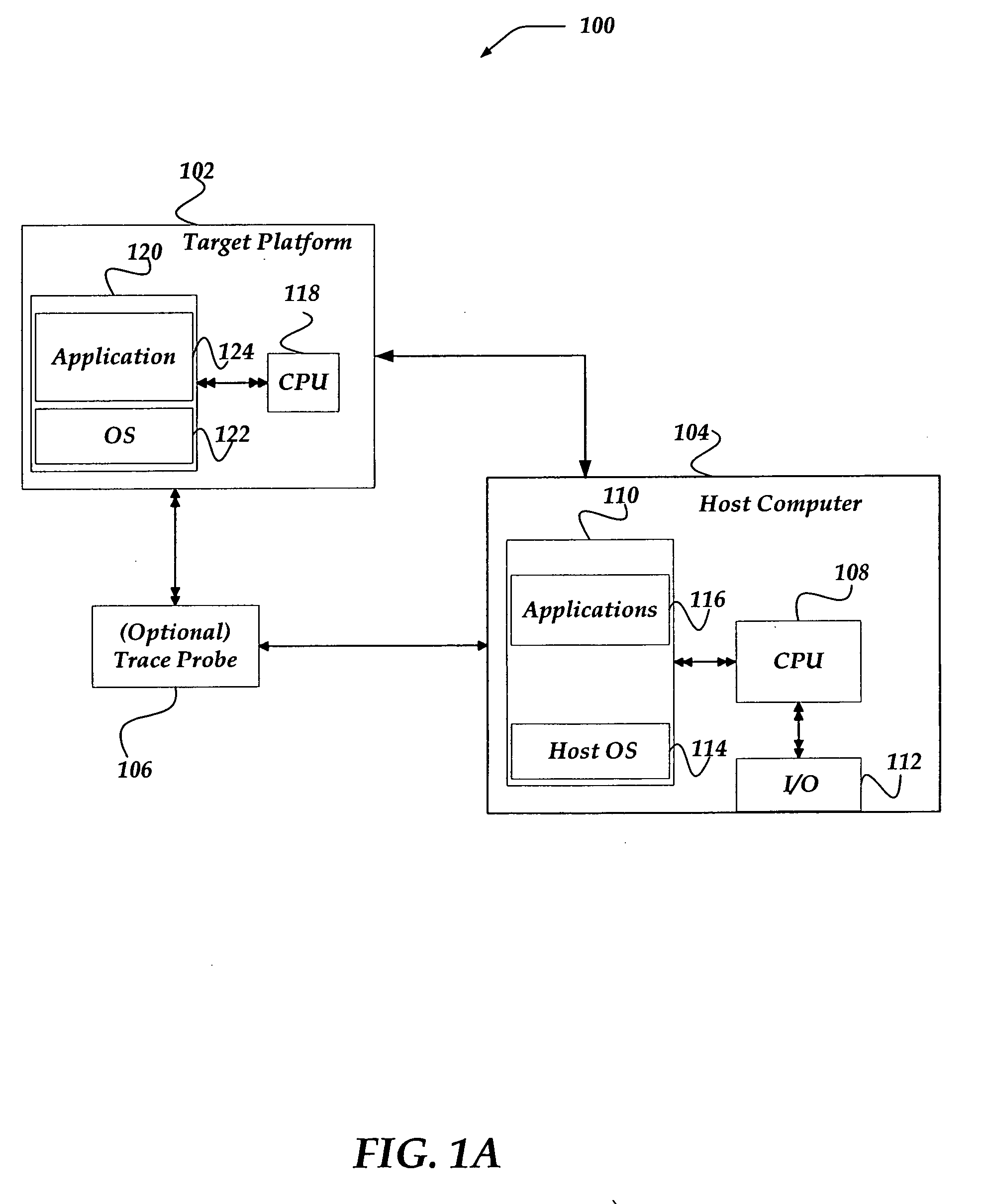

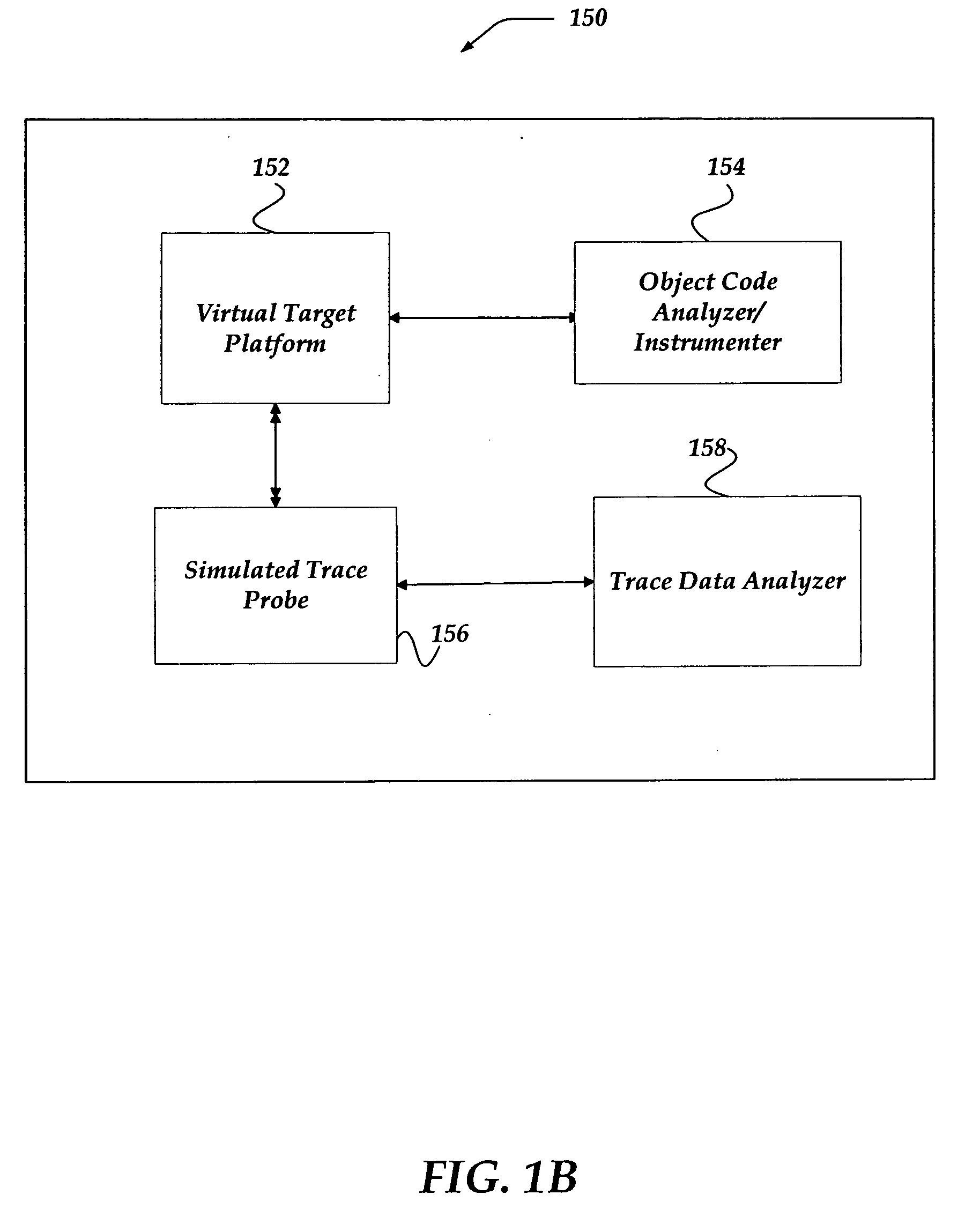

Post-compile instrumentation of object code for generating execution trace data

Active · US20060190930A1 · Error detection/correction · Specific program execution arrangements · Visibility · Operational system

The invention is directed to instrumenting object code of an application and/or an operating system on a target machine so that execution trace data can be generated, collected, and subsequently analyzed for various purposes, such as debugging and performance analysis. Automatic instrumentation may be performed on an application's object code before, during, or after linking. A target machine's operating system's object code can be manually or automatically instrumented. By identifying address space switches and thread switches in the operating system's object code, instrumented code can be inserted at locations that enable the execution trace data to be generated. The instrumentation of the operating system and application can enable visibility of total system behavior by enabling generation of trace information sufficient to reconstruct address space switches and context switches.

Owner: GREEN HILLS SOFTWARE

