135 results about "Active queue management" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

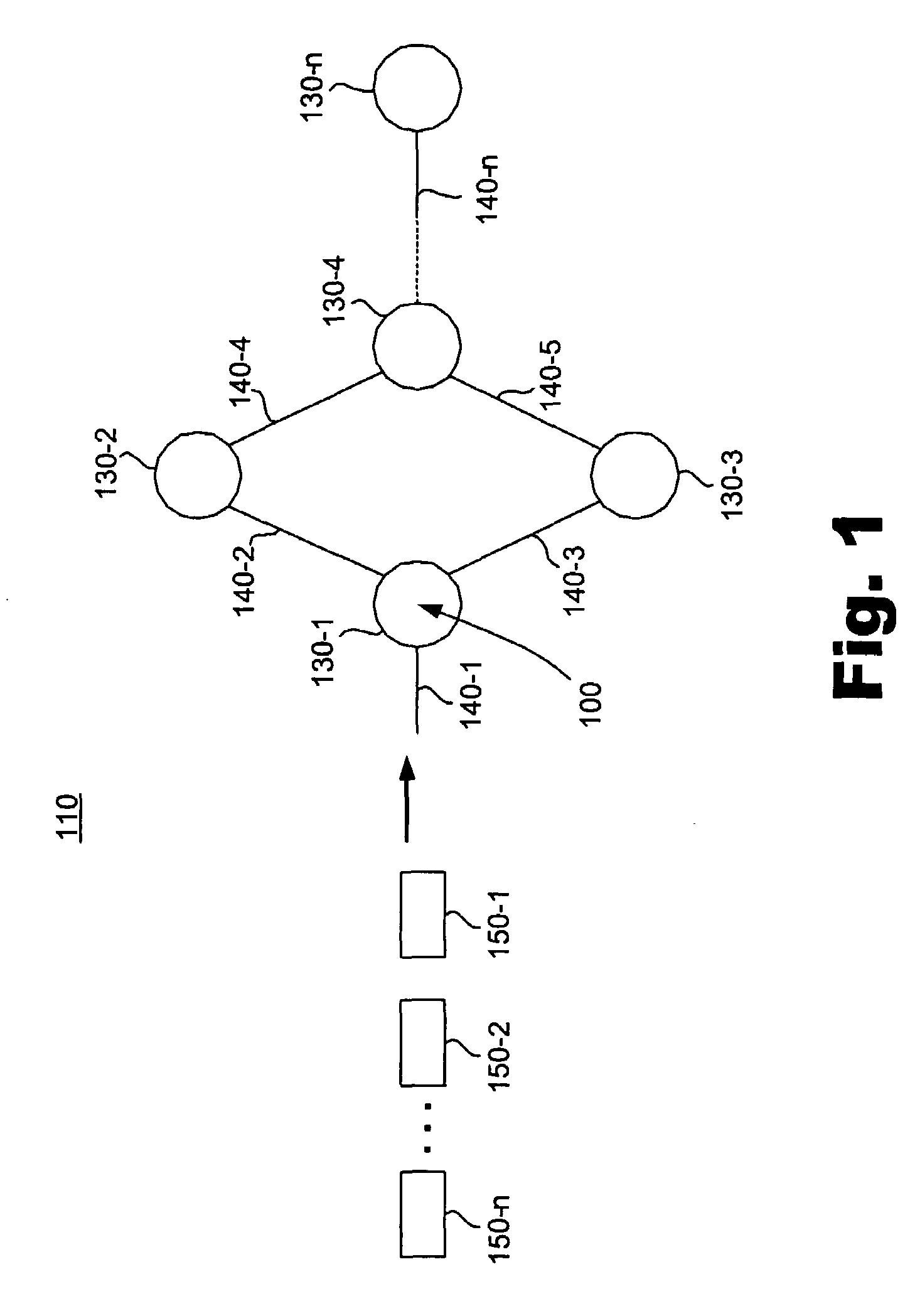

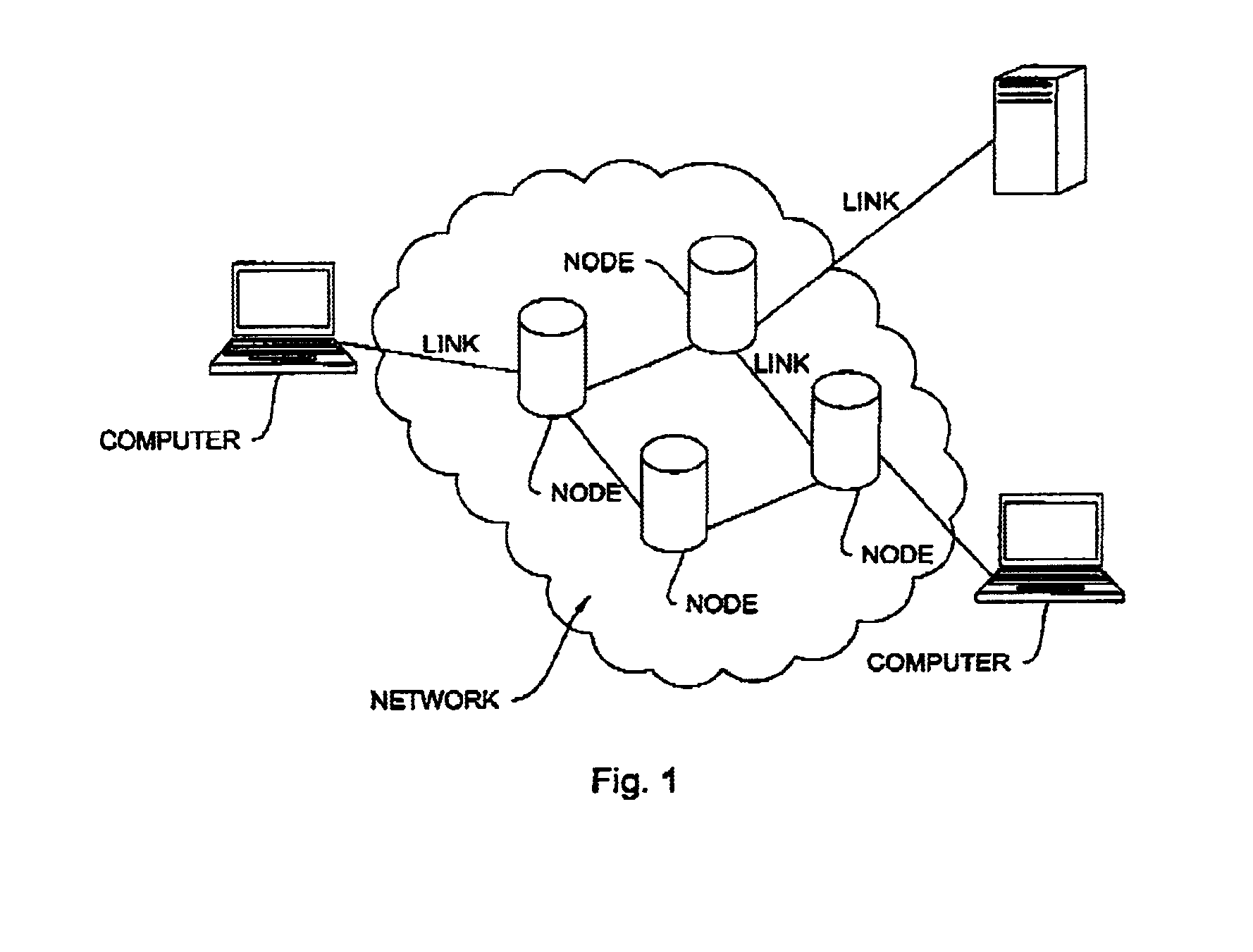

In routers and switches, active queue management (AQM) is the policy of dropping or marking packets inside a buffer associated with a network interface controller (NIC) before that buffer becomes full, often with the goal of reducing network congestion or improving end-to-end latency. This task is performed by the network scheduler, which uses algorithms such as random early detection (RED) or controlled delay (CoDel), optionally signaling congestion through Explicit Congestion Notification (ECN) marks instead of drops. RFC 7567 recommends active queue management as a best practice.

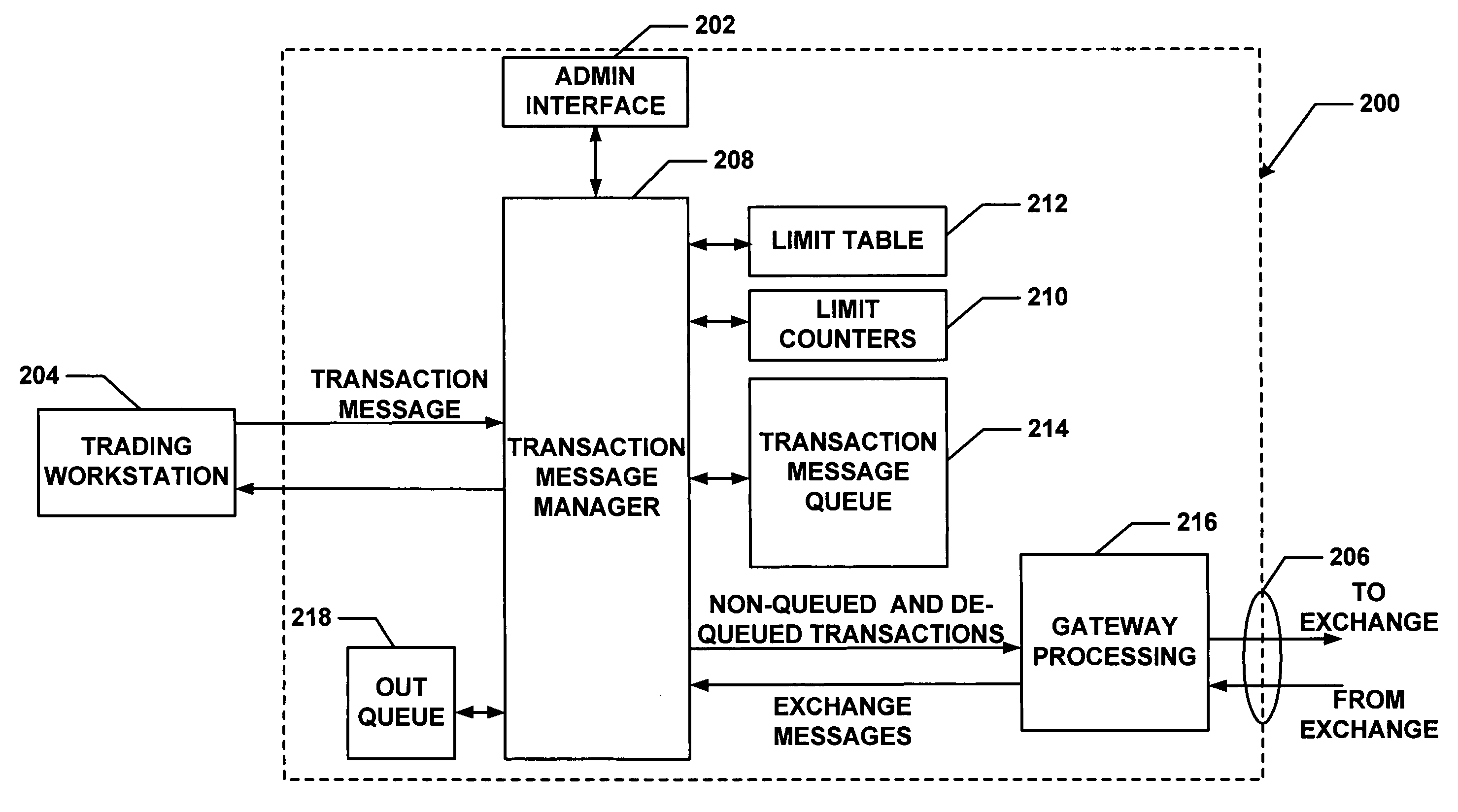

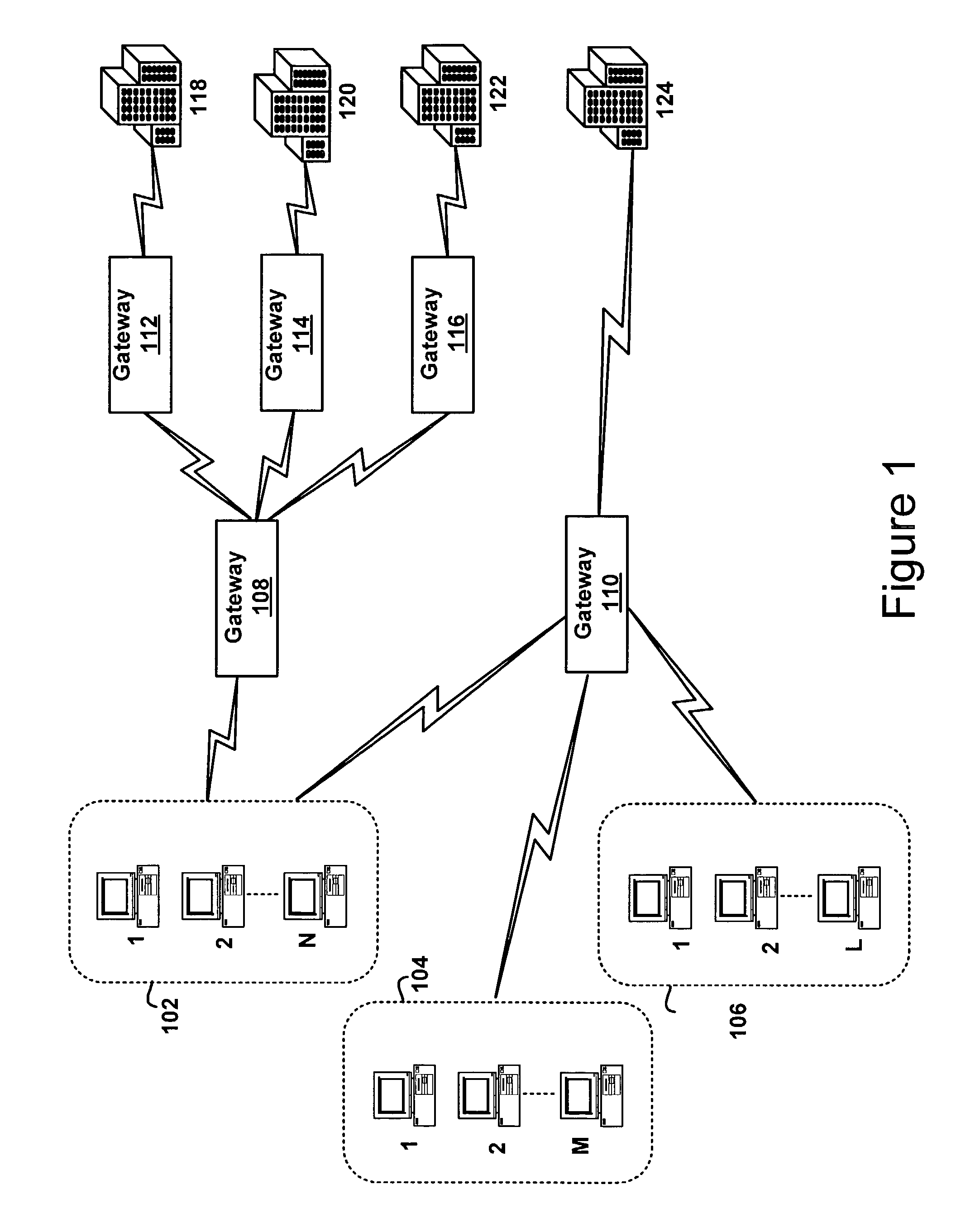

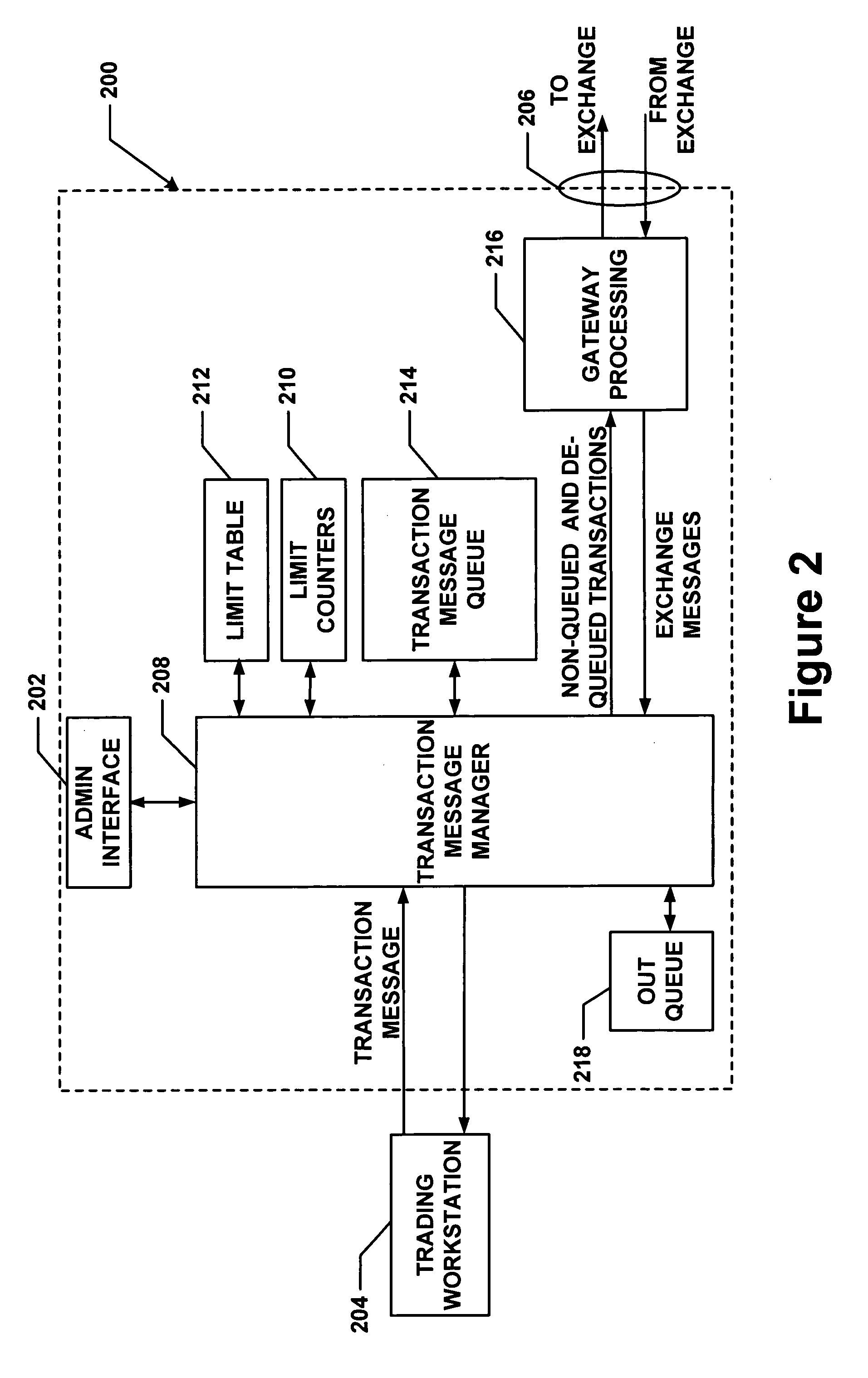

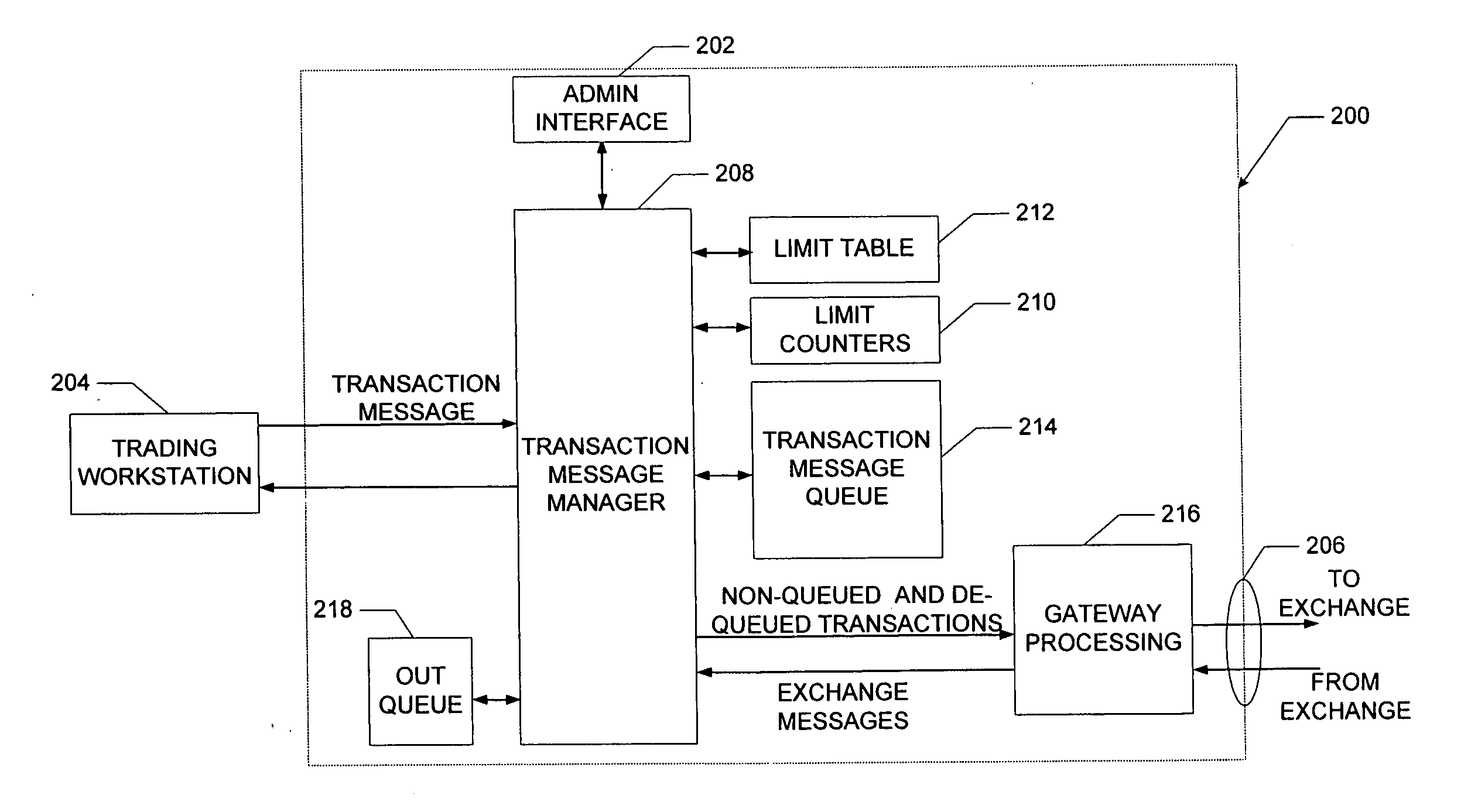

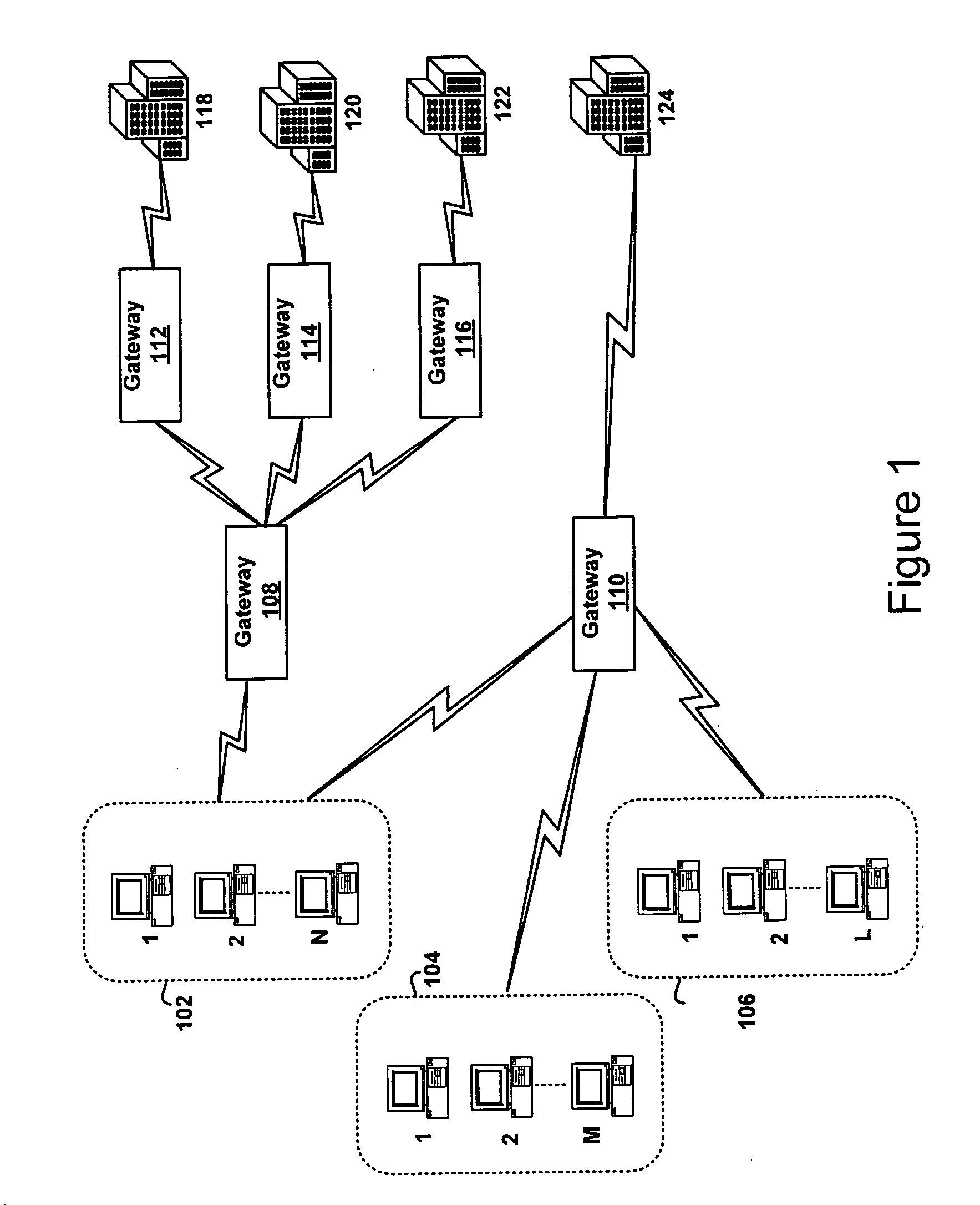

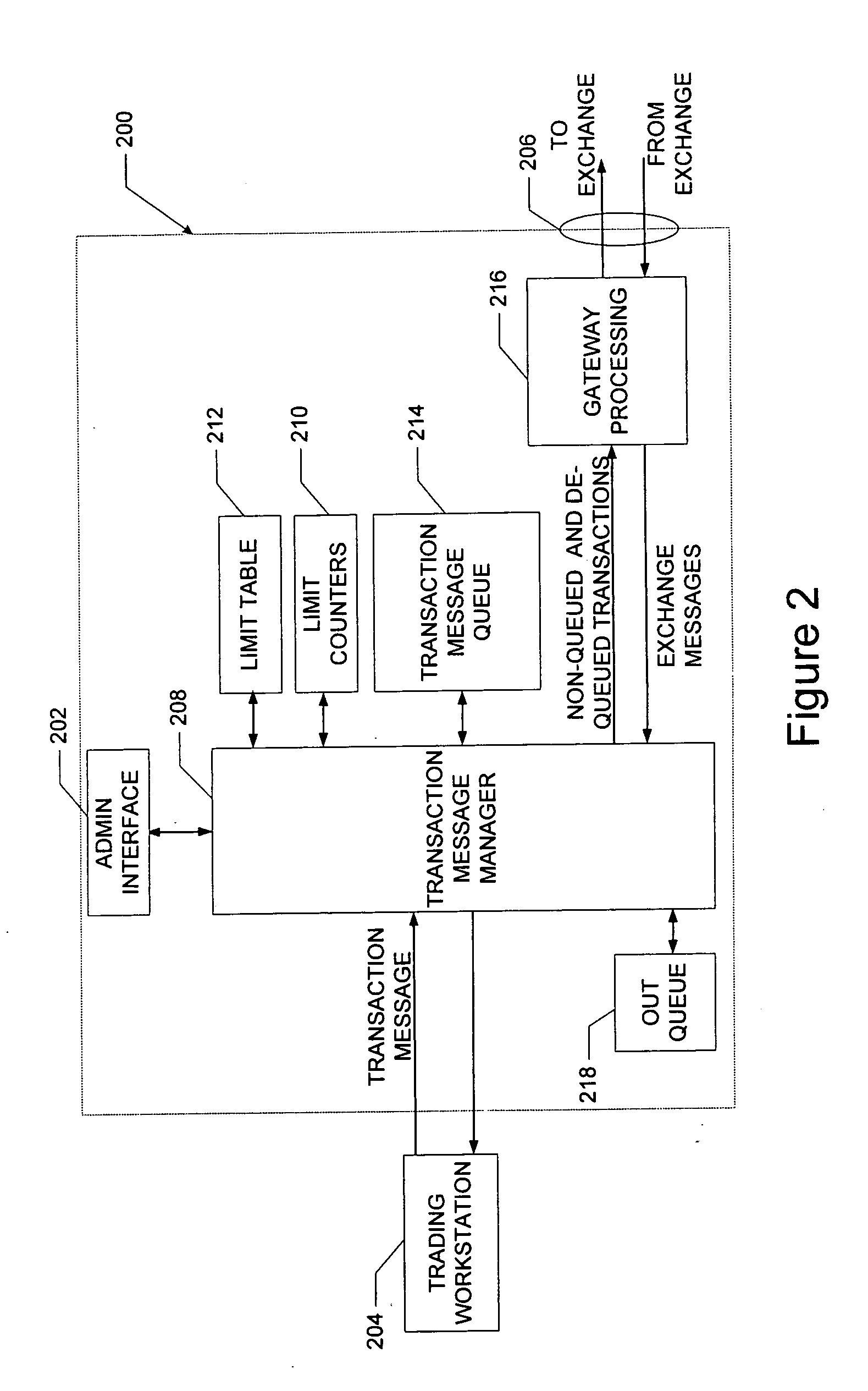

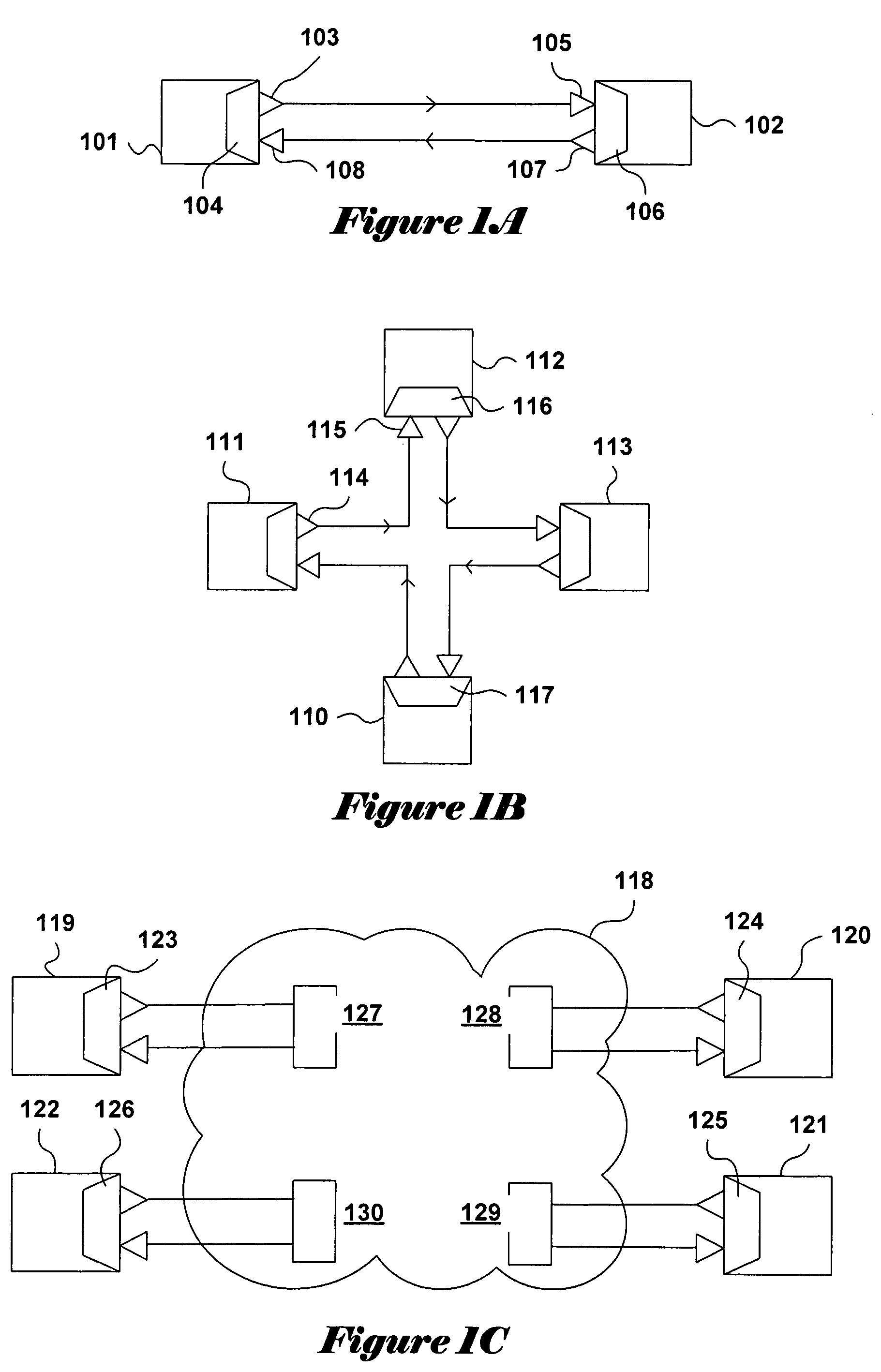

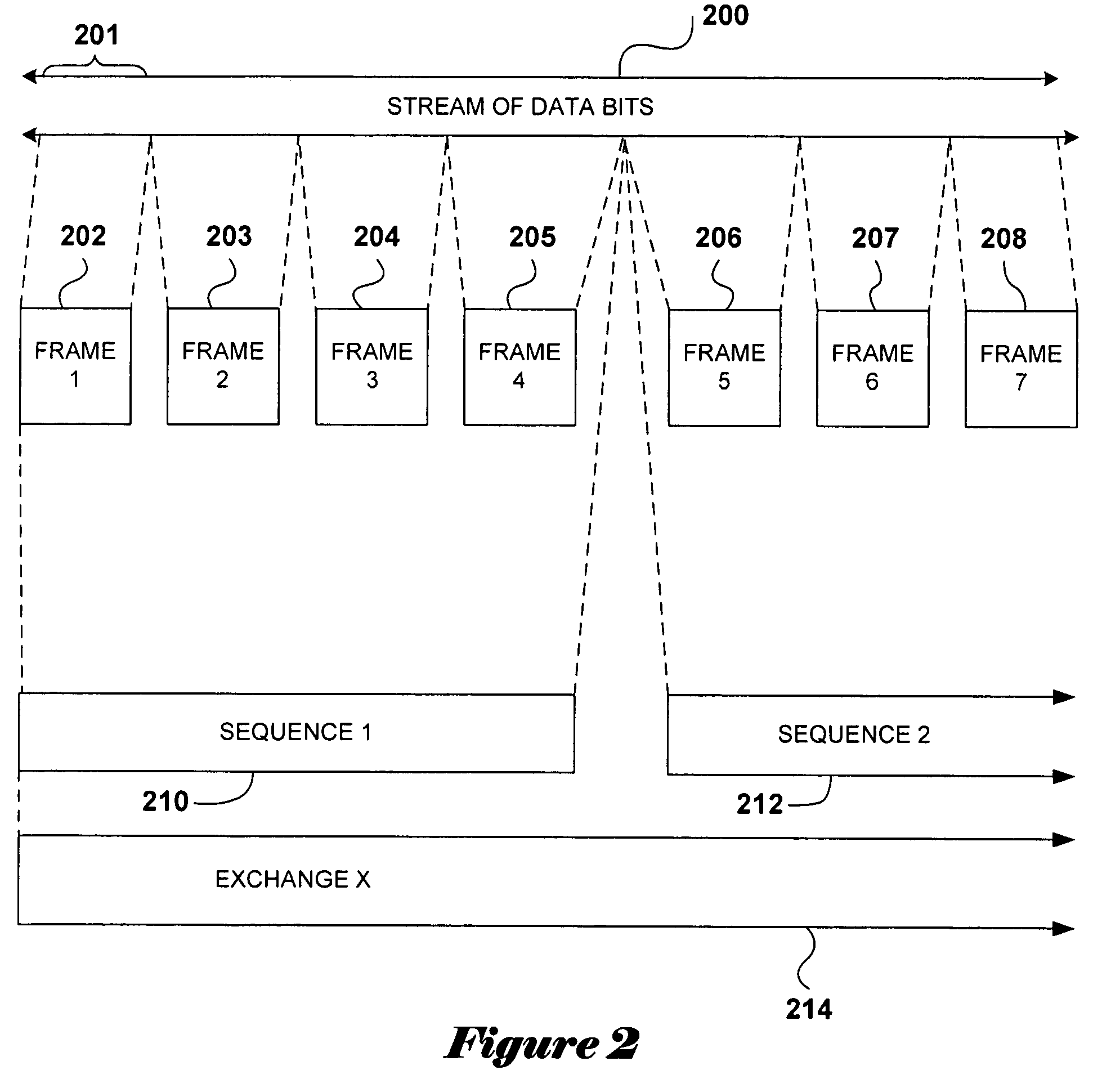

Method and apparatus for message flow and transaction queue management

Management of transaction message flow utilizing a transaction message queue. The system and method are for use in financial transaction messaging systems. The system is designed to enable an administrator to monitor, distribute, control and receive alerts on the use and status of limited network and exchange resources. Users are grouped in a hierarchical manner, preferably including user level and group level, as well as possible additional levels such as account, tradable object, membership, and gateway levels. The message thresholds may be specified for each level to ensure that transmission of a given transaction does not exceed the number of messages permitted for the user, group, account, etc.

Owner:TRADING TECH INT INC
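The per-level message thresholds described in the abstract above can be illustrated with a minimal sketch. The class name `MessageLimiter`, the `"user:..."` / `"group:..."` key format, and the all-or-nothing counting rule are assumptions made for illustration; the patent abstract does not specify them.

```python
class MessageLimiter:
    """Hypothetical per-level message counter (user, group, account, ...)."""

    def __init__(self, limits):
        # limits maps a level key such as "user:alice" to its permitted message count.
        self.limits = dict(limits)
        self.counts = {key: 0 for key in self.limits}

    def allow(self, levels, messages=1):
        """Admit a transaction only if every level it belongs to stays within its limit."""
        if any(self.counts[key] + messages > self.limits[key] for key in levels):
            return False
        for key in levels:
            self.counts[key] += messages
        return True


limiter = MessageLimiter({"user:alice": 2, "user:bob": 5, "group:desk": 3})
limiter.allow(["user:alice", "group:desk"])  # counted against both levels
```

Checking all levels before updating any counter keeps a rejected transaction from consuming quota at a level that would have allowed it.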

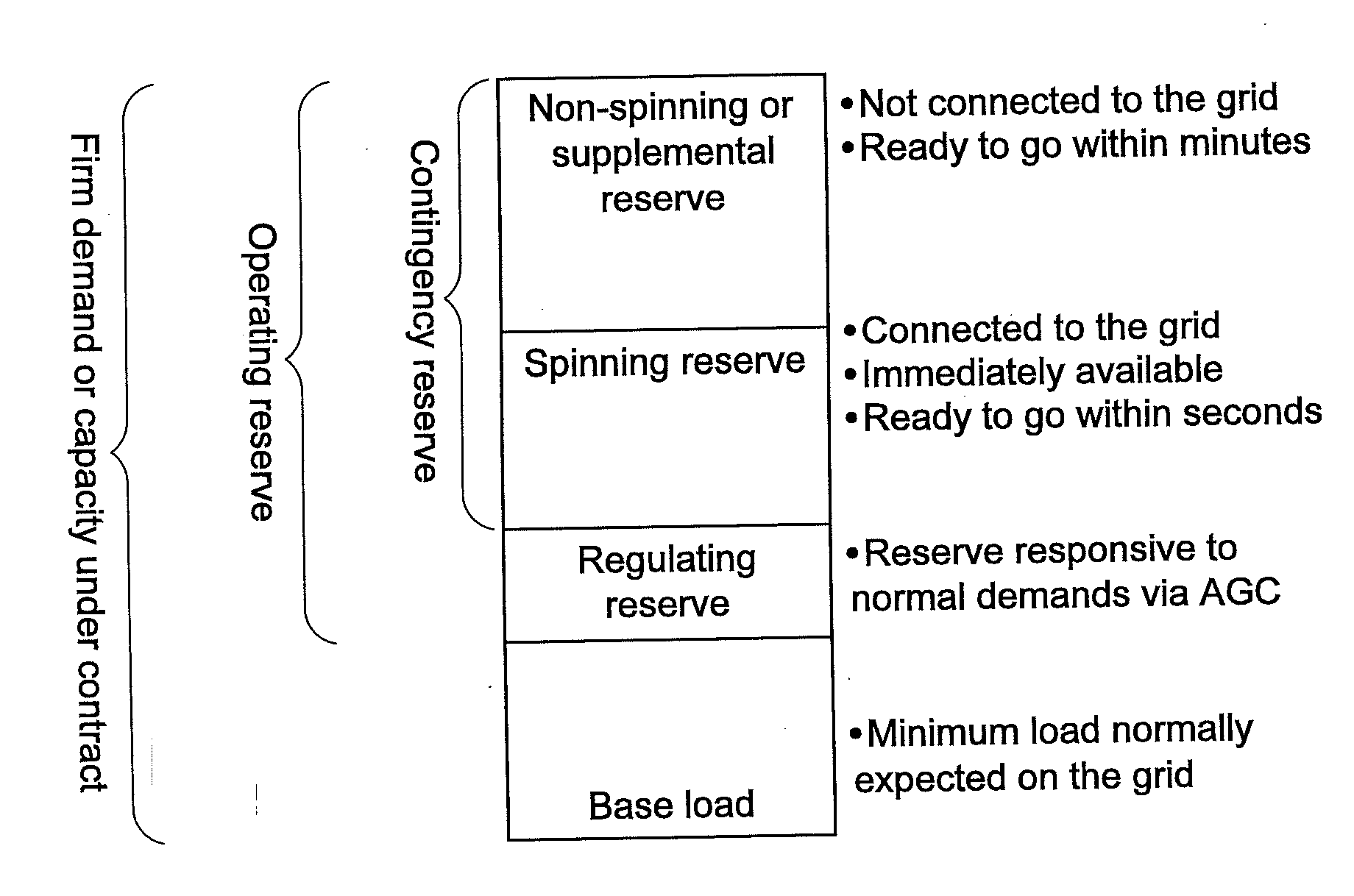

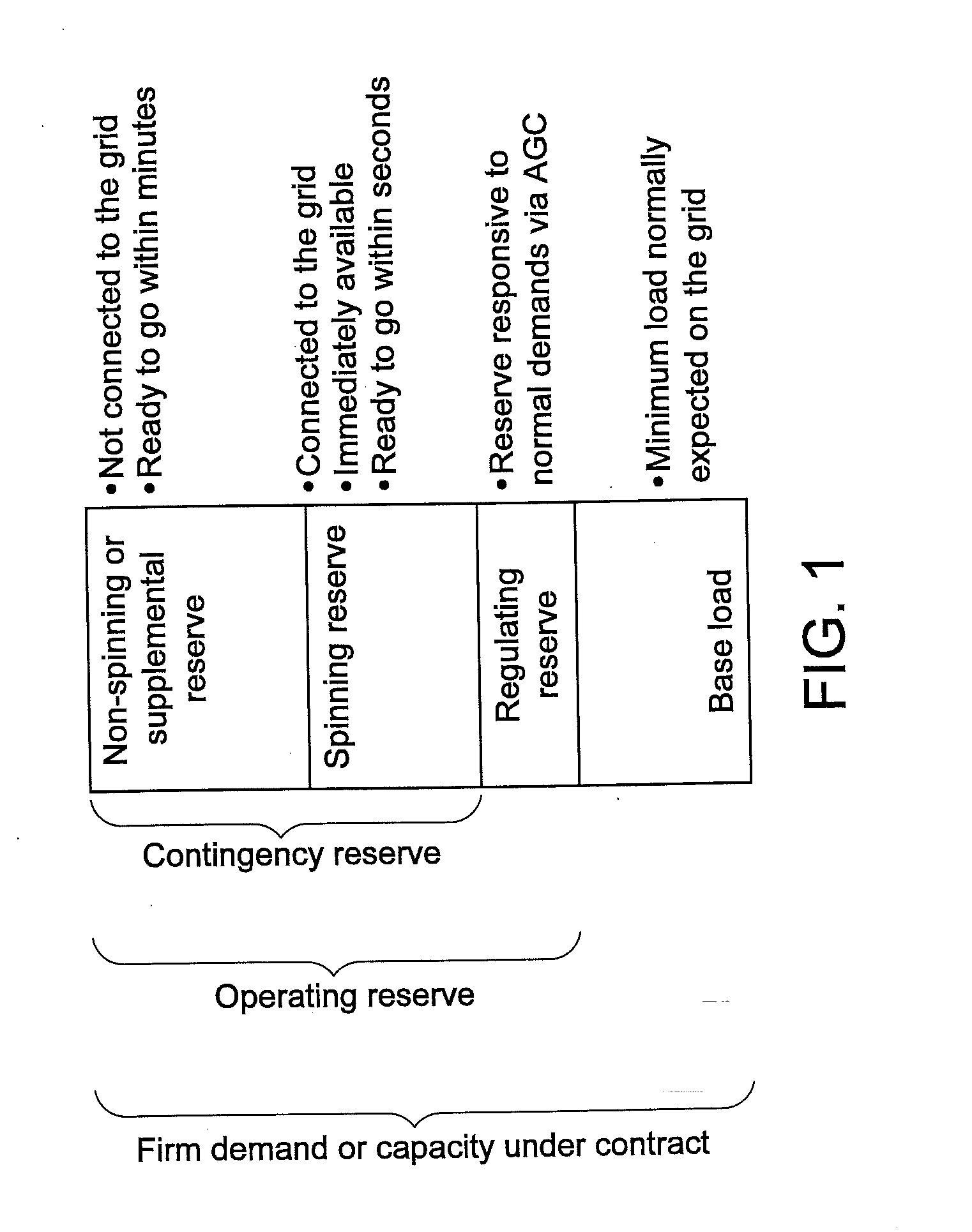

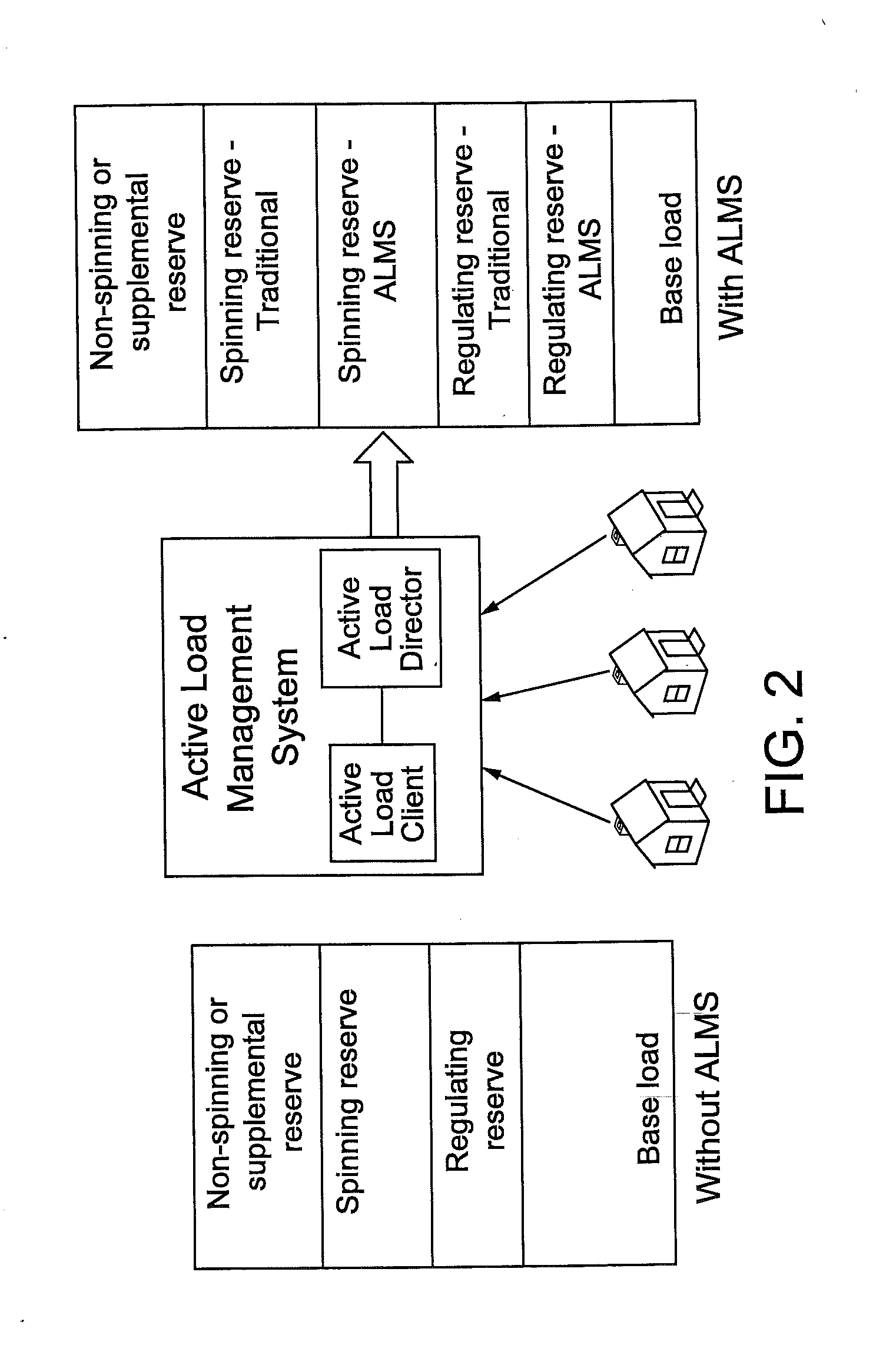

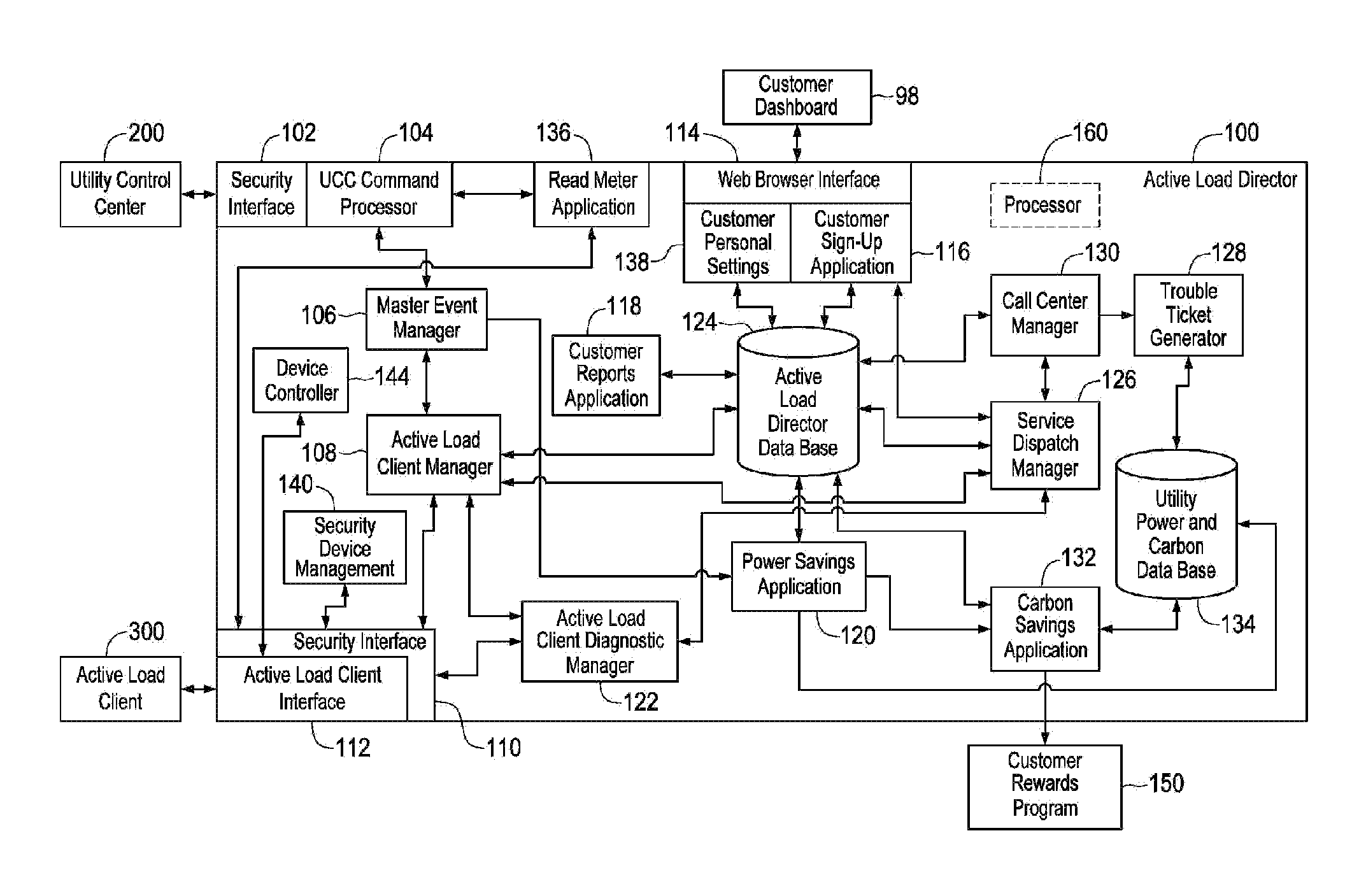

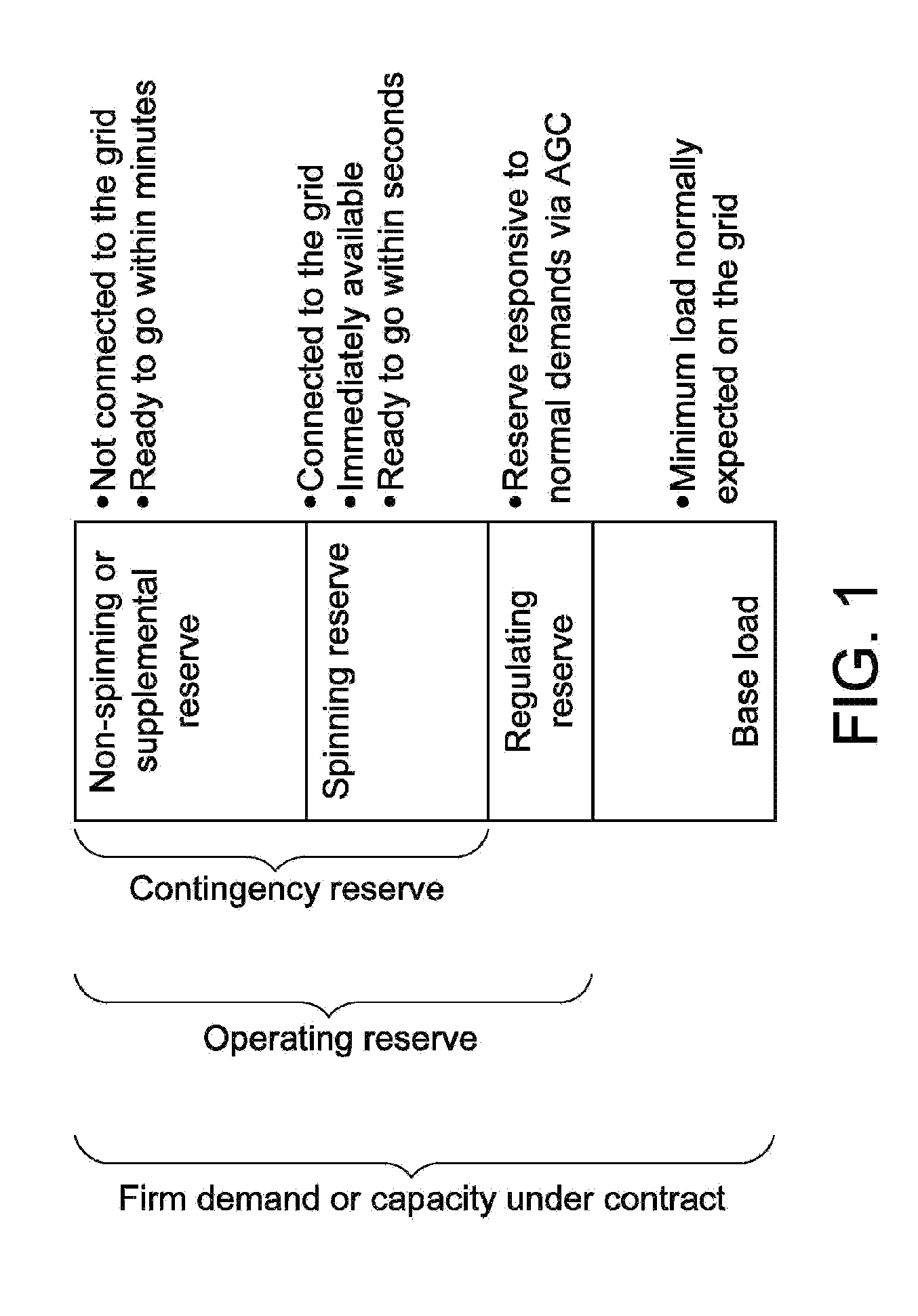

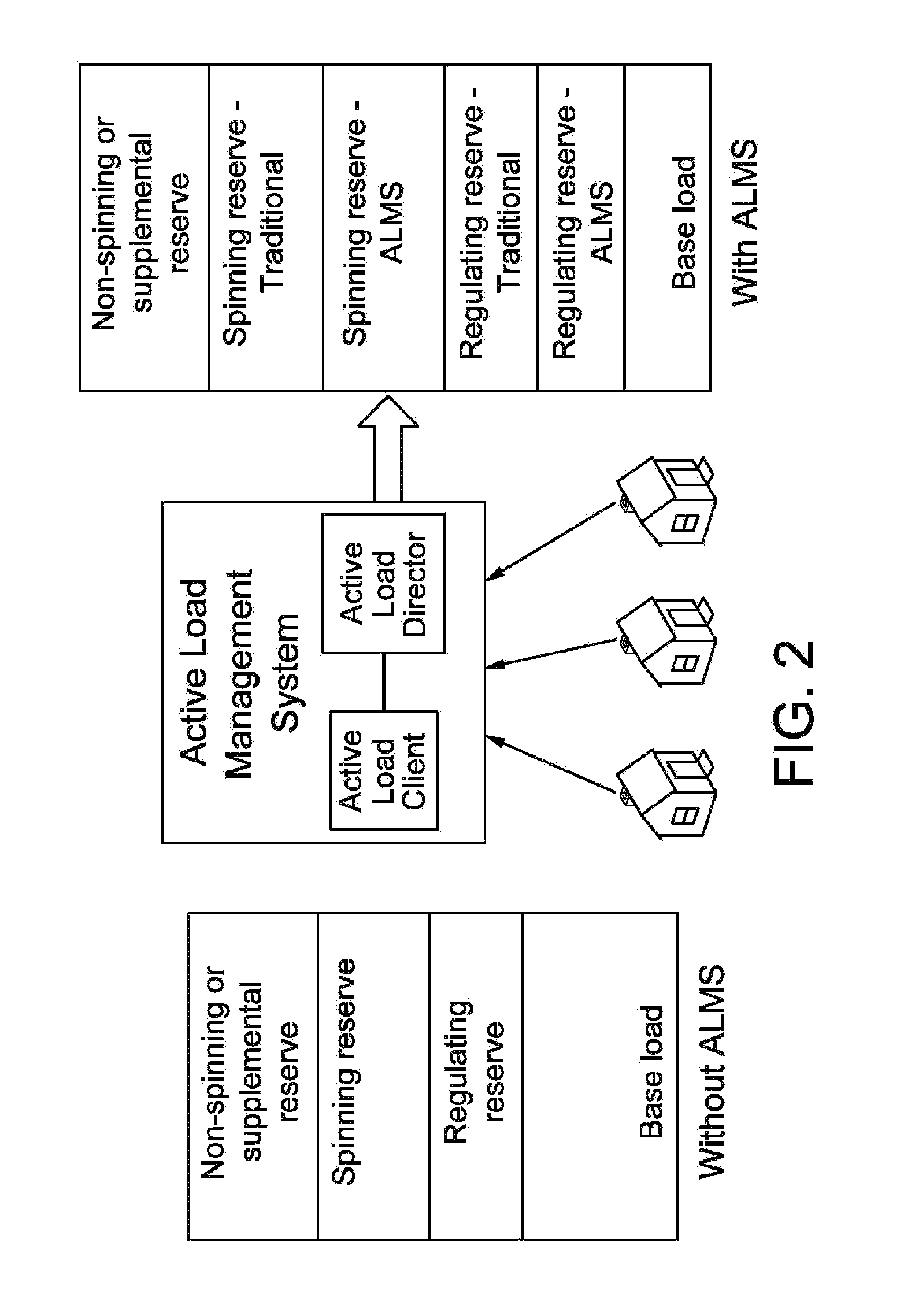

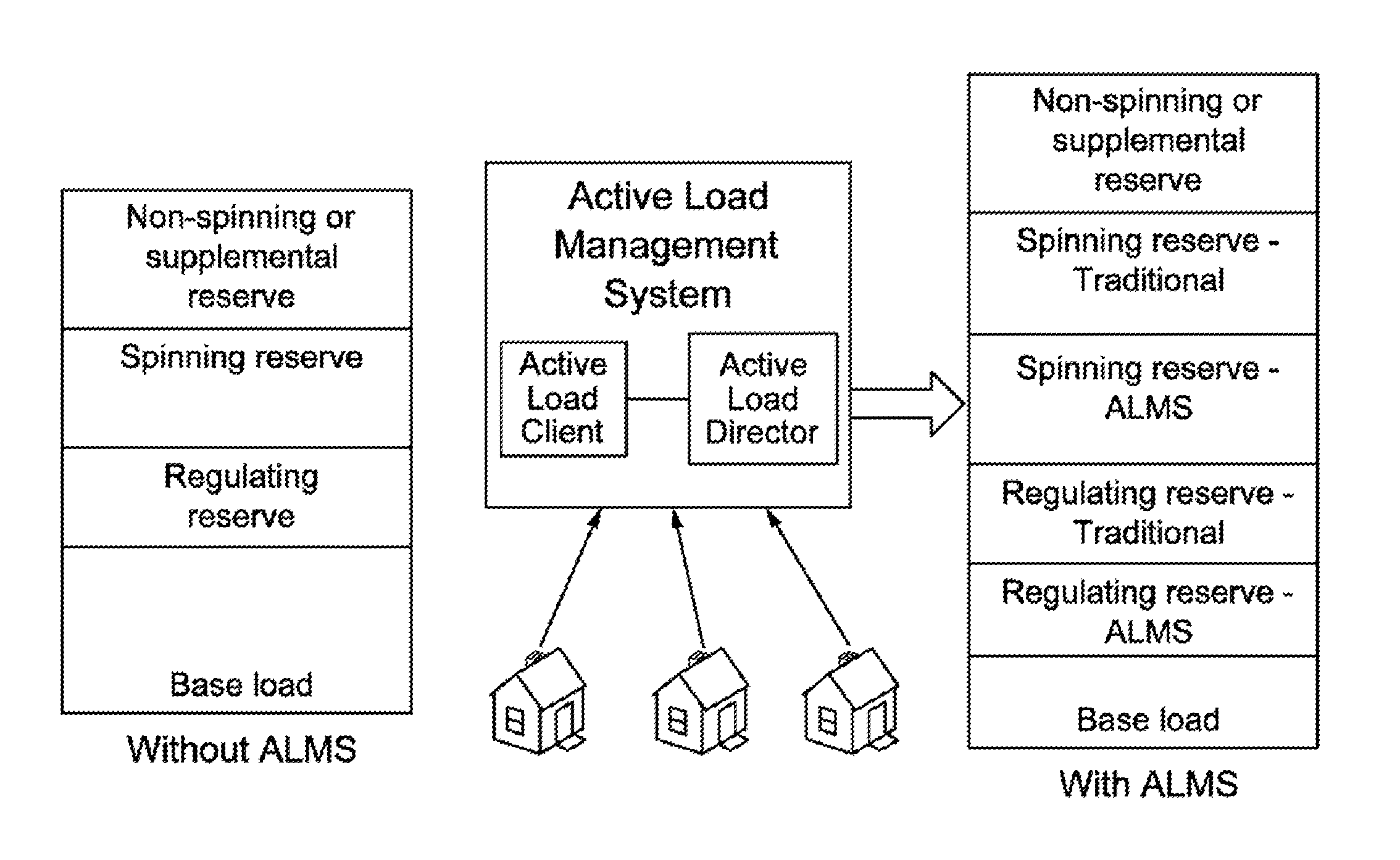

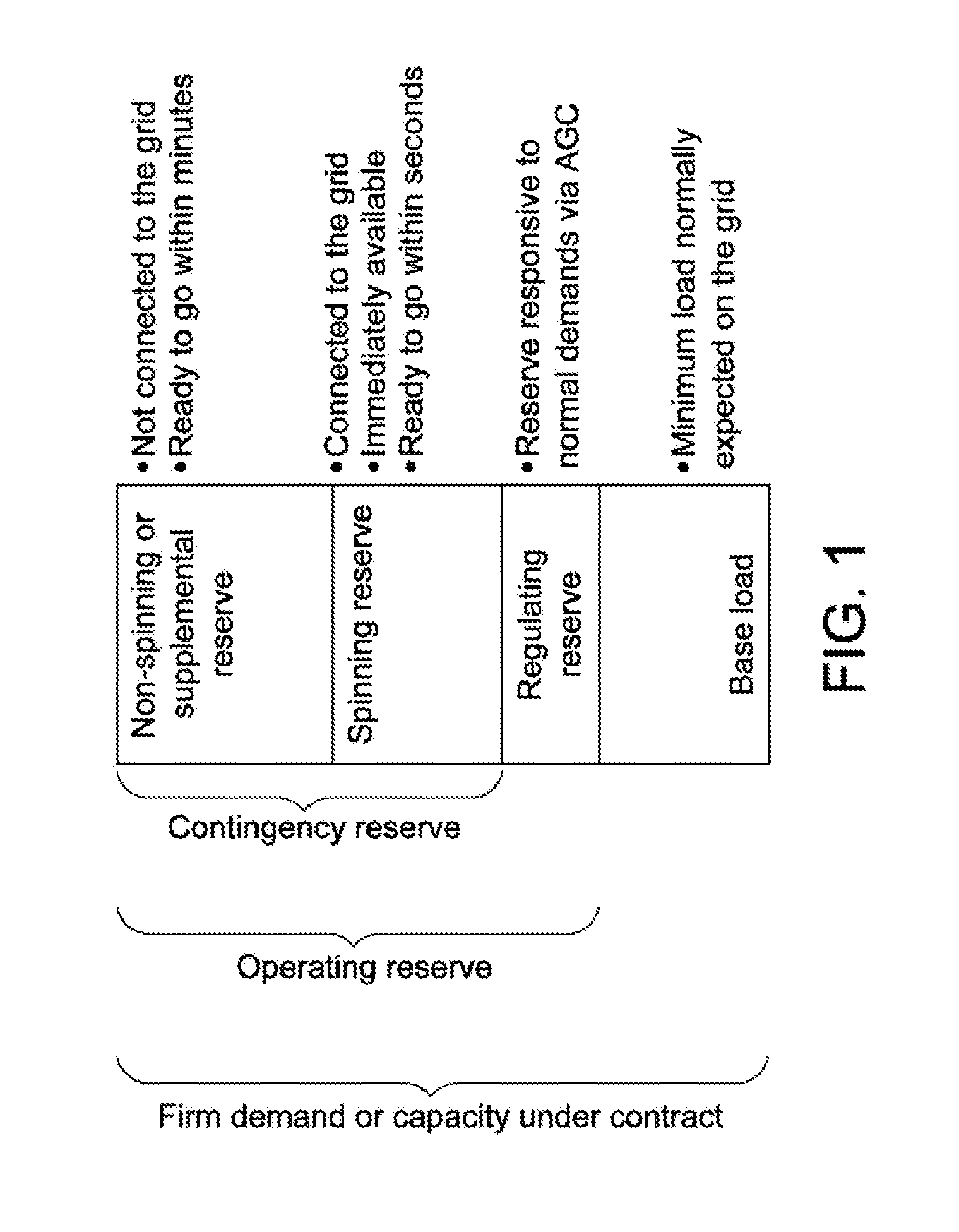

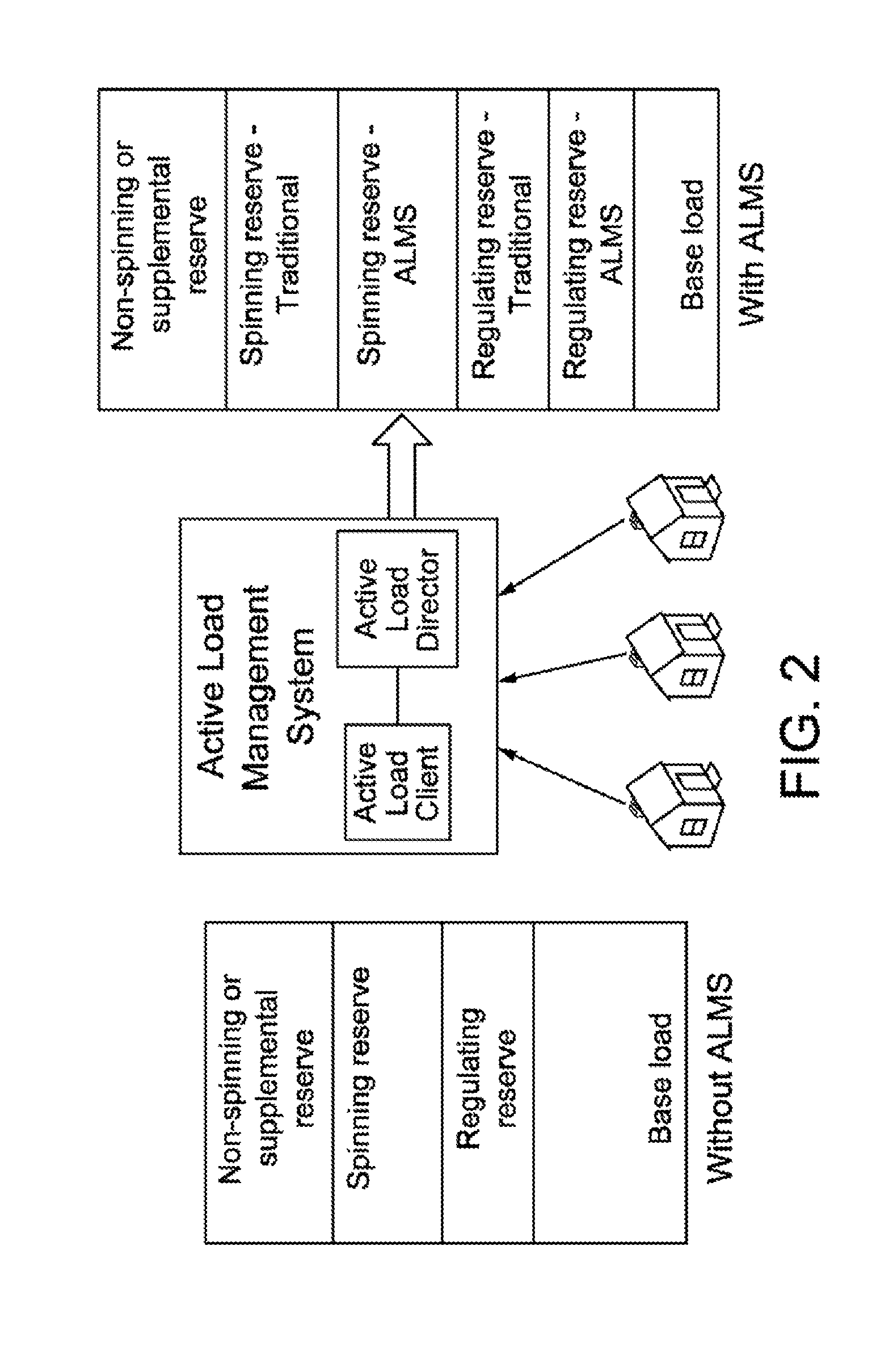

System and method for estimating and providing dispatchable operating reserve energy capacity through use of active load management

Active | US20110172837A1 | Generation forecast in ac network | Level control | Electric vehicle | Active Network Management

A utility employs an active load management system (ALMS) to estimate available operating reserve for possible dispatch to the utility or another requesting entity (e.g., an independent system operator). According to one embodiment, the ALMS determines amounts of electric power stored in power storage devices, such as electric or hybrid electric vehicles, distributed throughout the utility's service area. The ALMS stores the stored power data in a repository. Responsive to receiving a request for operating reserve, the ALMS determines whether the stored power data alone or in combination with projected energy savings from a control event is sufficient to meet the operating reserve requirement. If so, the ALMS dispatches power from the power storage devices to the power grid to meet the operating reserve need. The need for operating reserve may also be communicated to mobile power storage devices to allow them to provide operating reserve as market conditions require.

Owner:JOSEPH W FORBES JR +1

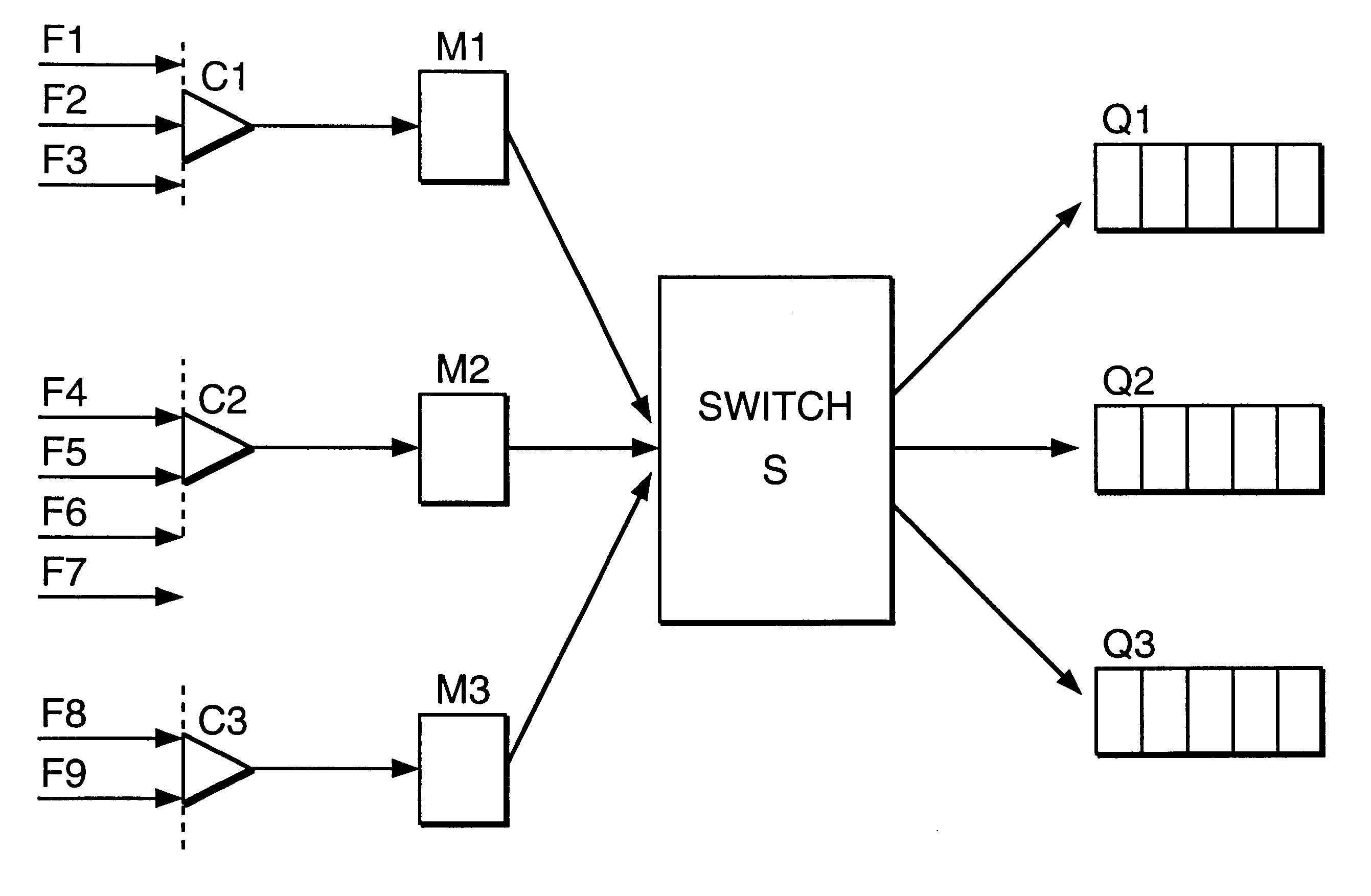

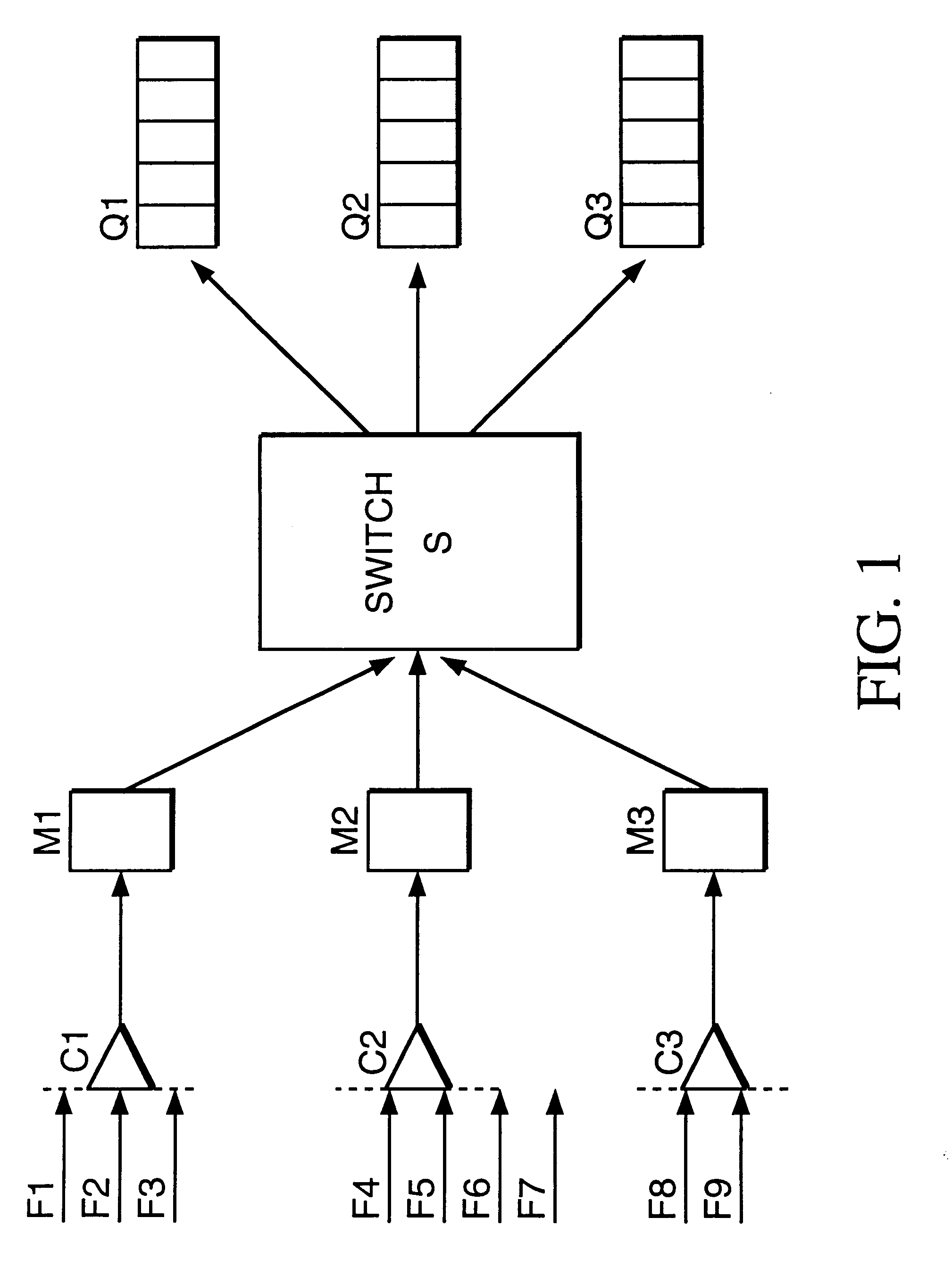

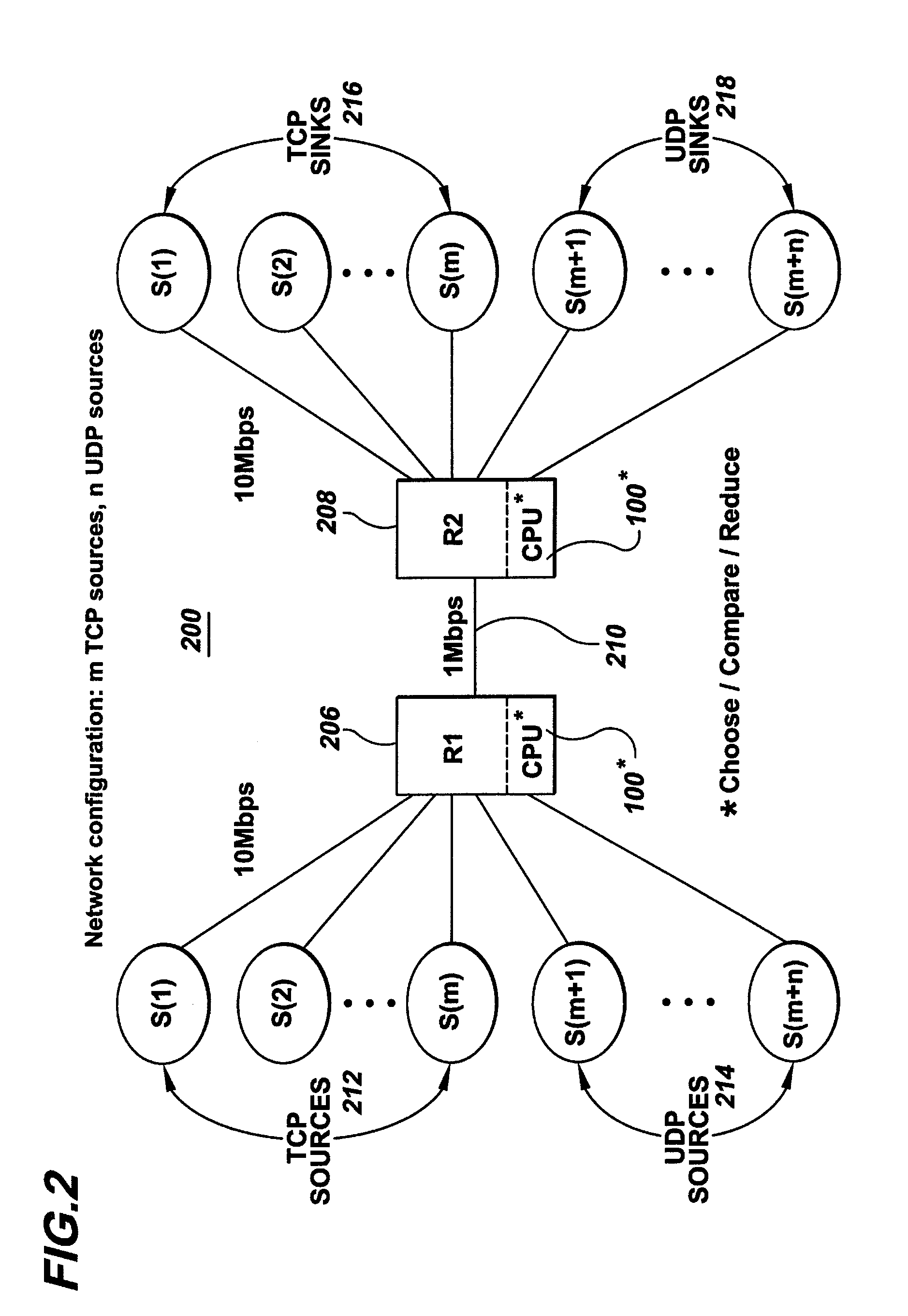

System performance in a data network through queue management based on ingress rate monitoring

Inactive | US6252848B1 | Error prevention | Frequency-division multiplex details | Traffic capacity | Queue management system

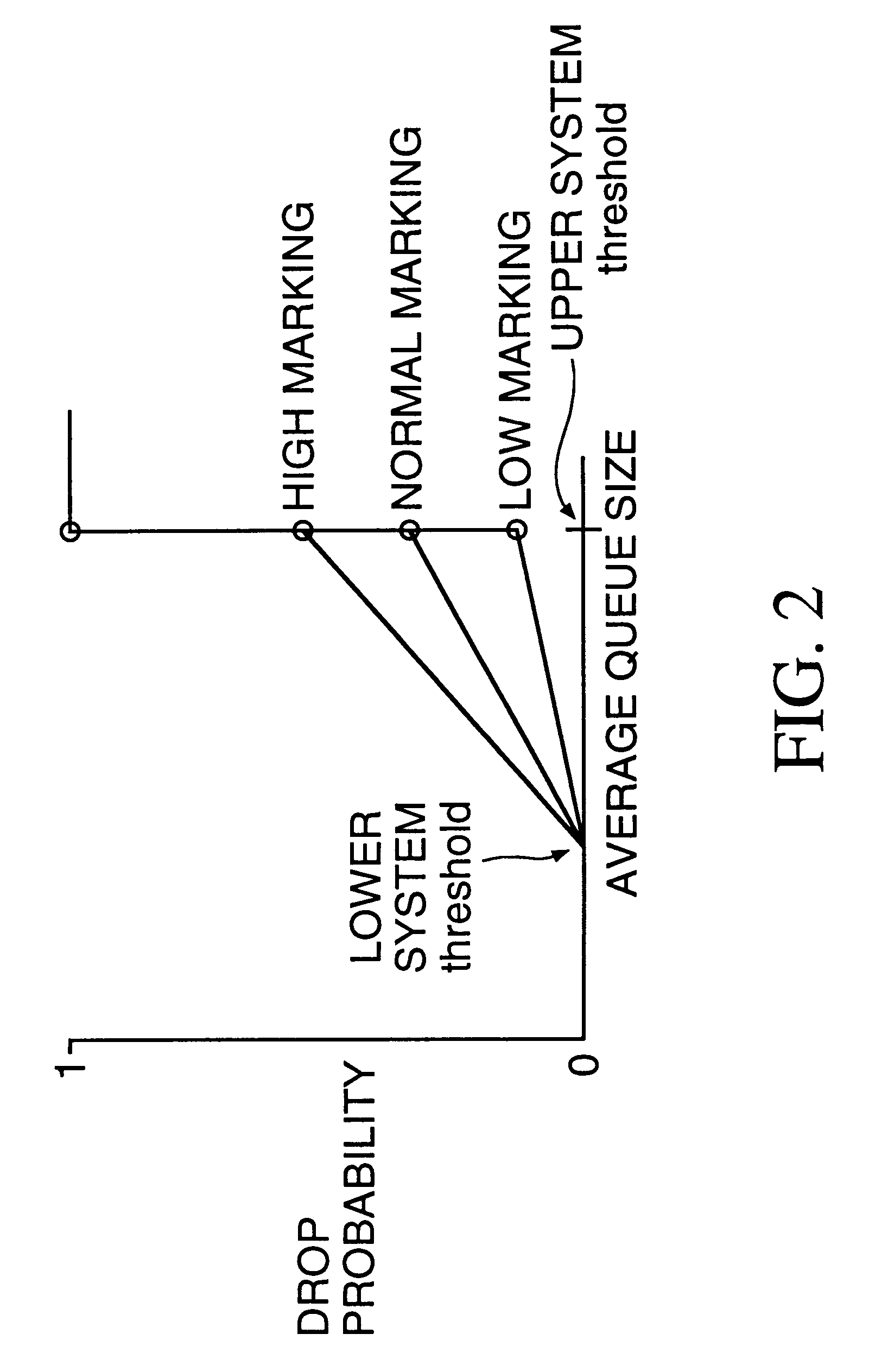

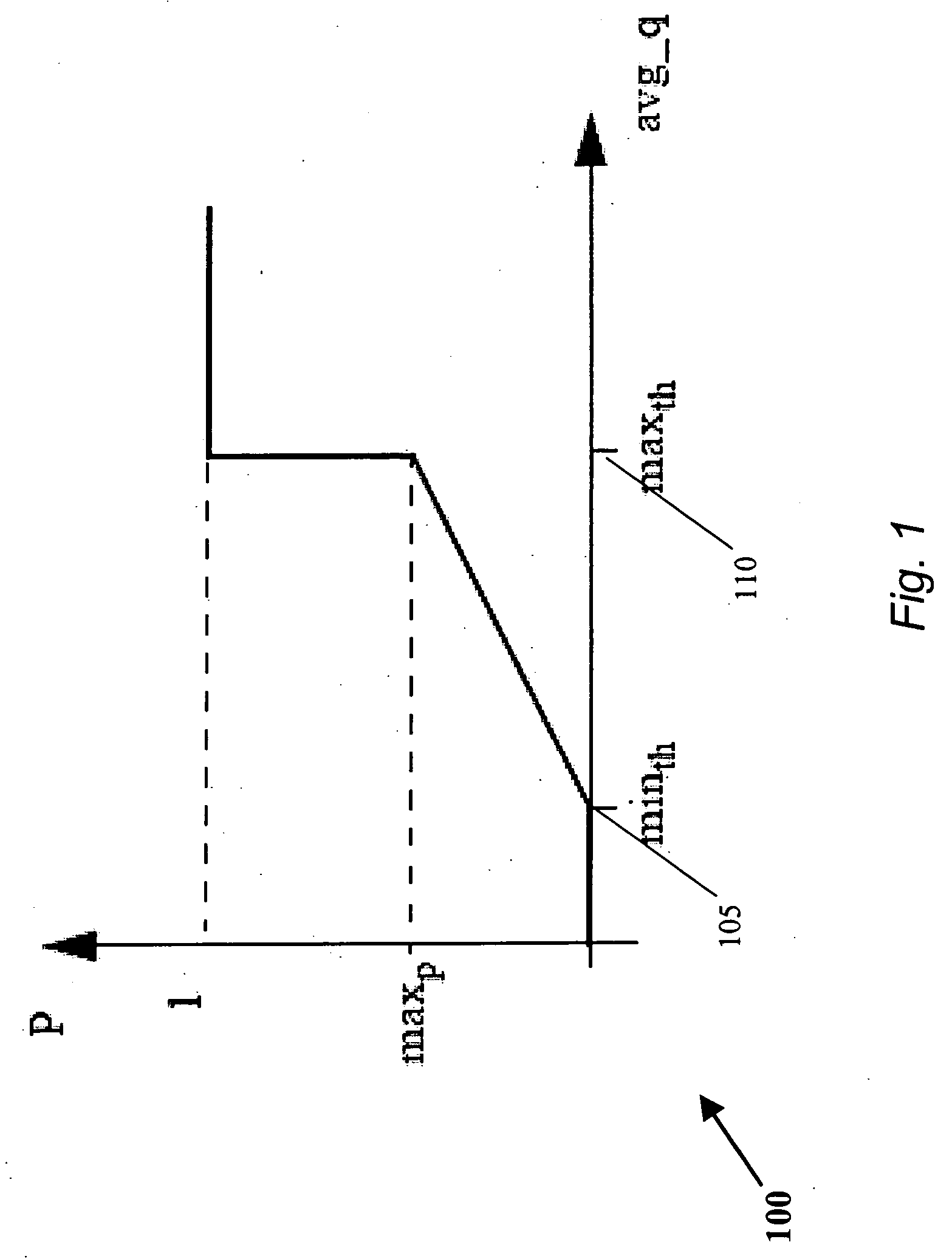

A method optimizes performance in a data network including a plurality of ingress ports and output queues. Ingress rates of a plurality of flows are monitored, where each flow includes a sequence of packets passing from an ingress port to an output queue and each flow has a profile related to flow characteristics. Each packet is marked with a marking based on criteria including the ingress flow rate and the flow profile. A drop probability of each packet is adjusted at an output queue according to a value of a drop function taken as a function of a queue size. The drop function is selected according to the marking on the packet. The drop functions are zero for queue sizes less than a lower threshold range and positive for queue sizes greater than the lower threshold range. By selecting drop functions according to ingress flow rate measurements and flow profiles, the data network can be optimized for overall system performance.

Owner:PLURIS
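As a rough illustration of the marking-selected drop functions described in the abstract above, the sketch below picks a drop curve by packet marking and evaluates it against the queue size. The two markings, the threshold values in `DROP_PROFILES`, and the linear shape of the curve are assumptions; the abstract only states that each function is zero below a lower threshold and positive above it.

```python
import random

# Hypothetical drop curves per marking: (lower threshold, upper threshold, max probability).
DROP_PROFILES = {
    "in_profile": (60, 100, 0.1),
    "out_of_profile": (20, 100, 0.5),
}


def mark_packet(ingress_rate, profile_rate):
    """Mark a packet by comparing the measured ingress rate with the flow profile."""
    return "in_profile" if ingress_rate <= profile_rate else "out_of_profile"


def drop_probability(marking, queue_size):
    """Zero below the lower threshold, then linear, capped at the maximum probability."""
    low, high, max_p = DROP_PROFILES[marking]
    if queue_size < low:
        return 0.0
    if queue_size >= high:
        return max_p
    return max_p * (queue_size - low) / (high - low)


def should_drop(marking, queue_size, rng=random.random):
    """Randomly decide whether to drop, using the curve selected by the marking."""
    return rng() < drop_probability(marking, queue_size)
```

Passing `rng` as a parameter makes the random decision reproducible in tests without changing the drop logic.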

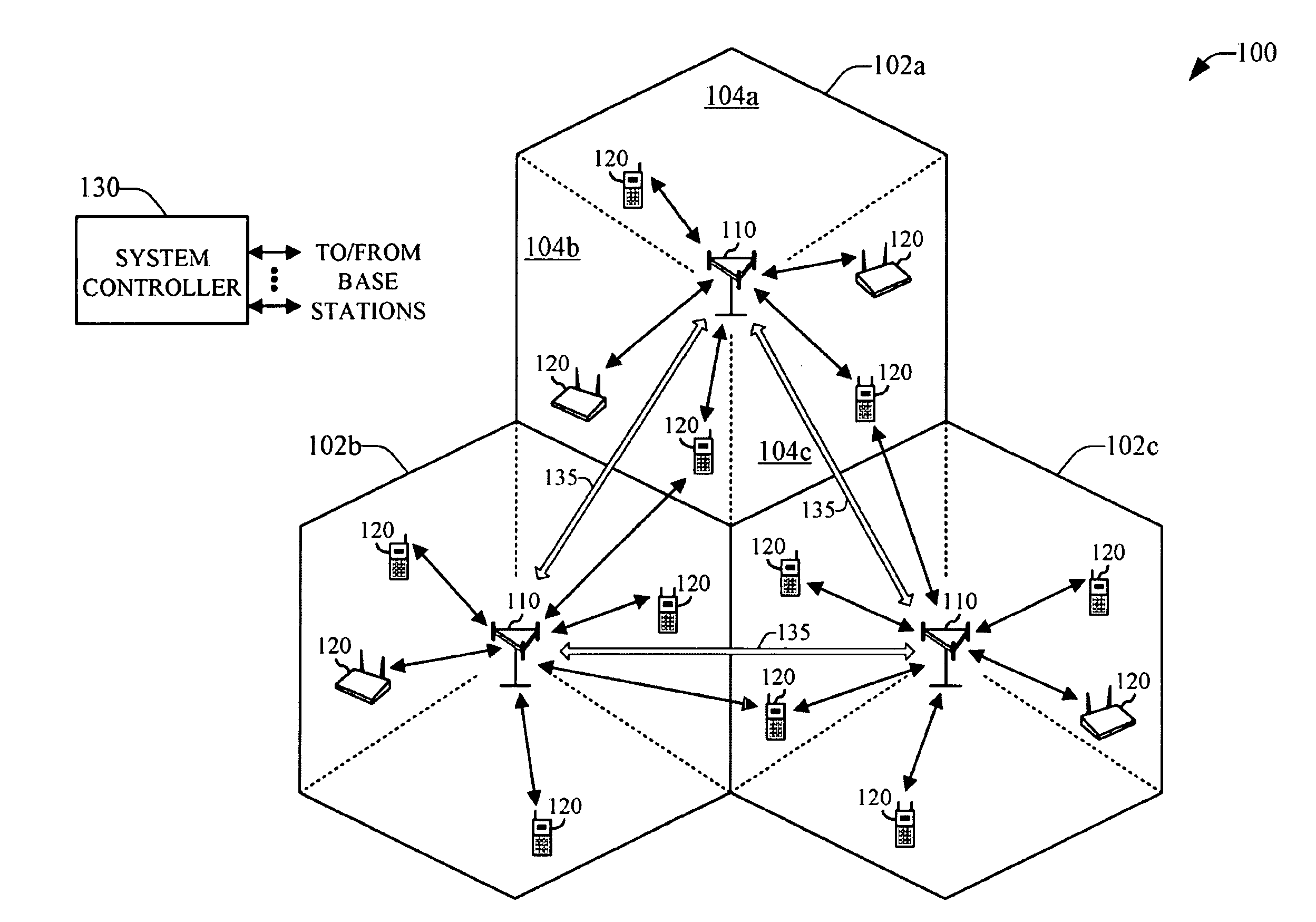

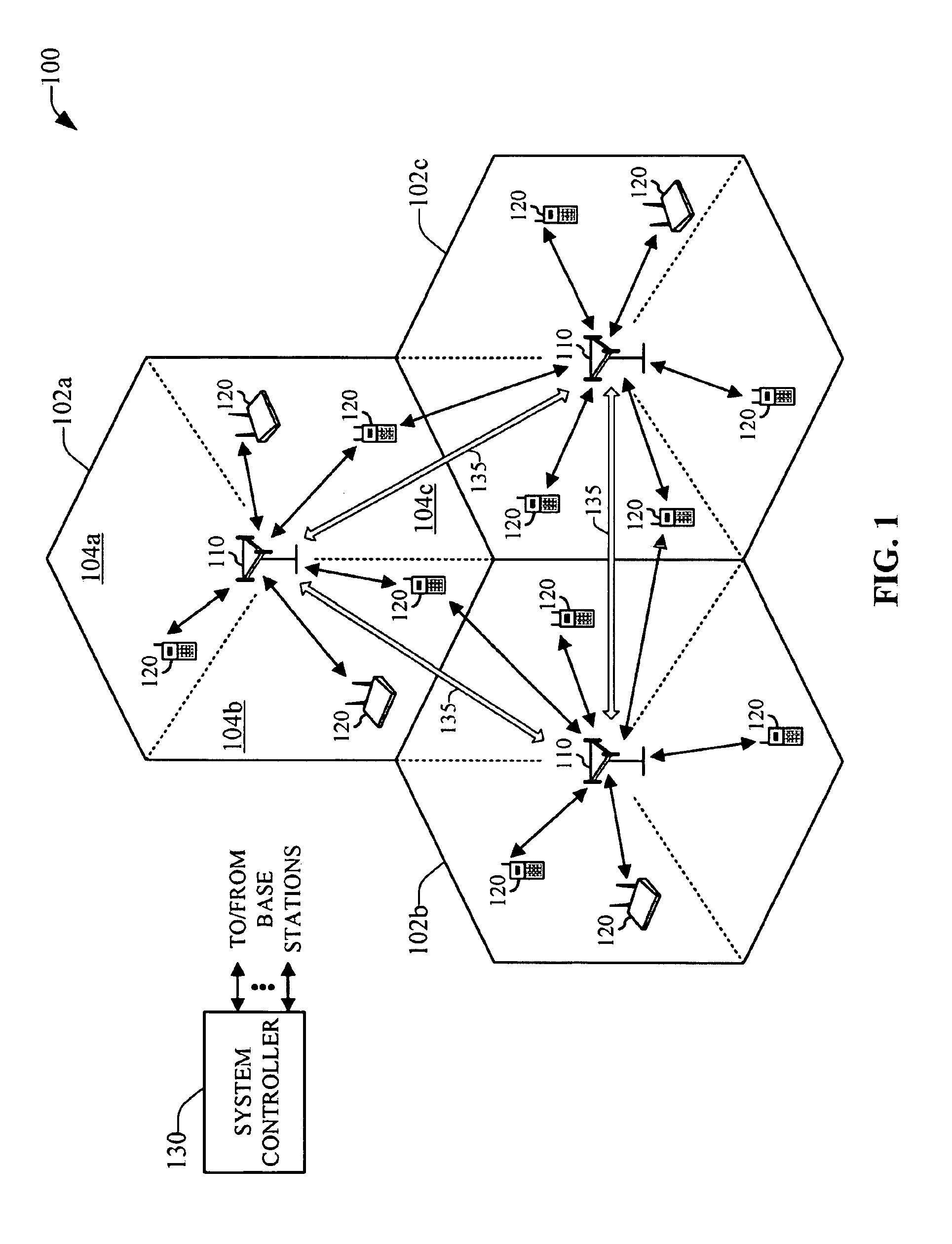

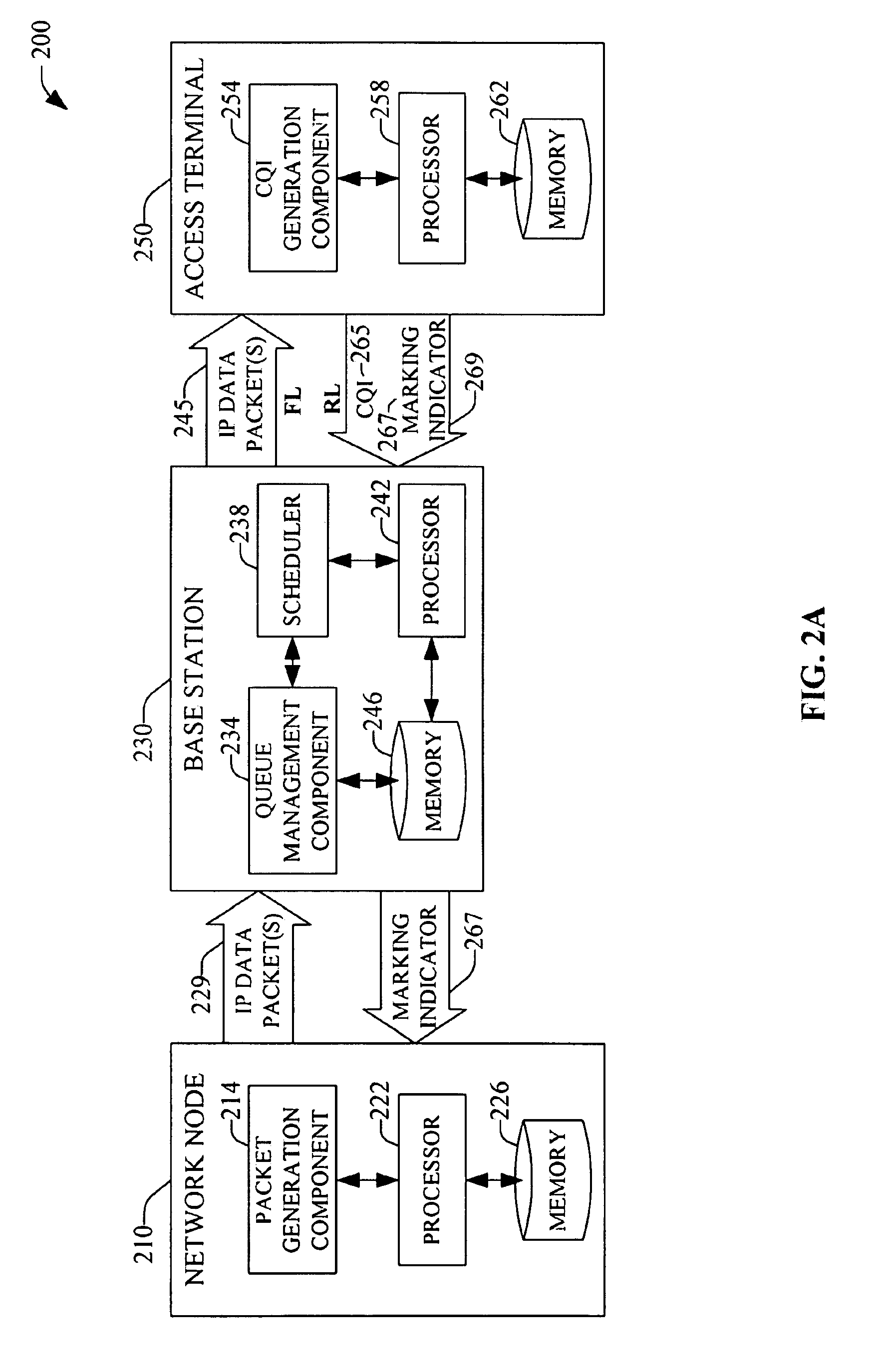

Method and system for reducing backhaul utilization during base station handoff in wireless networks

Inactive | US20080186918A1 | Well formed | Energy efficient ICT | Network traffic/resource management | Wireless mesh network | Frequency reuse

Systems and methods are provided that facilitate active queue management of internet-protocol data packets generated in a data packet switched wireless network. Queue management can be effected in a serving base station as well as in an access terminal, and the application that generates the data packets can be executed locally or remotely to either the base station or access terminal. Management of the generated data packets is effected via marking or dropping of data packets according to an adaptive response function that can be deterministic or stochastic, and can depend on multiple generalized communication indicators, which include packet queue size, queue delay, channel conditions, frequency reuse, operation bandwidth, and bandwidth-delay product. Historical data related to these indicators can be employed to determine response functions via thresholds and rates for marking or dropping data packets.

Owner:QUALCOMM INC

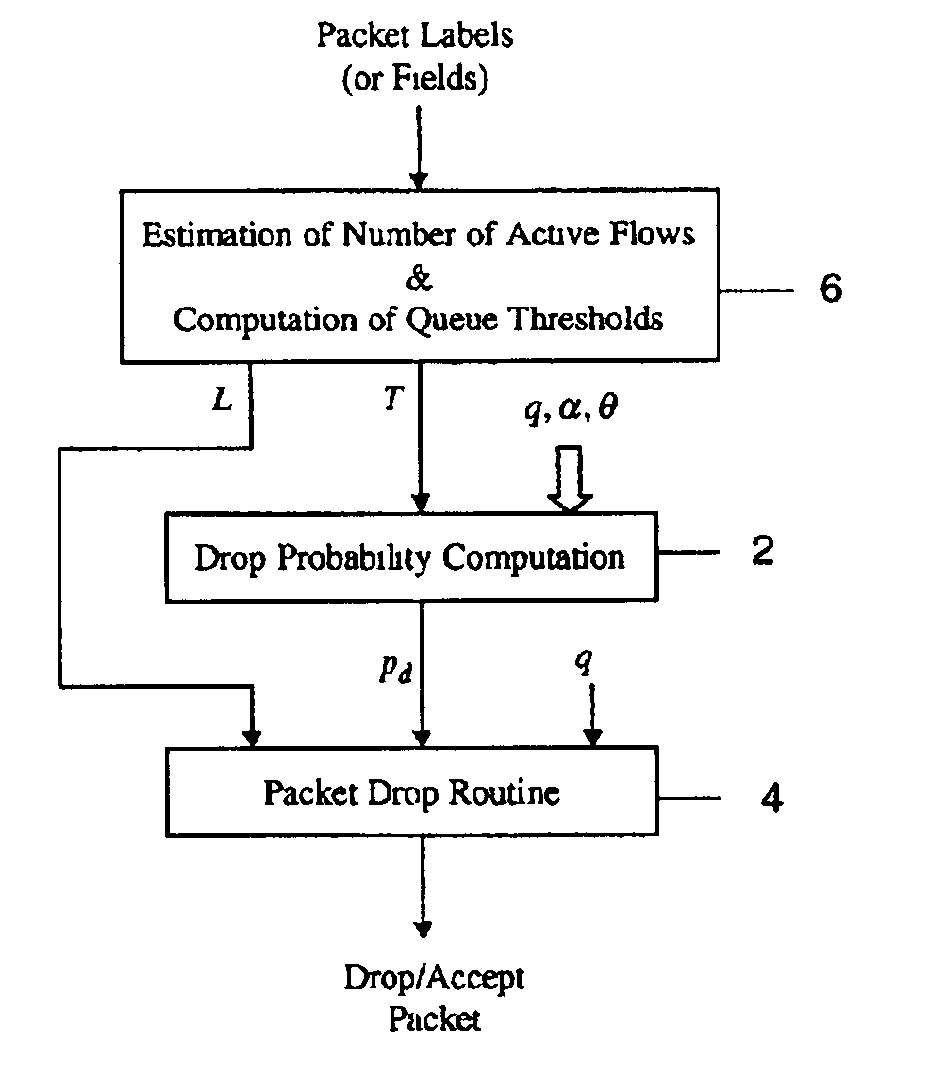

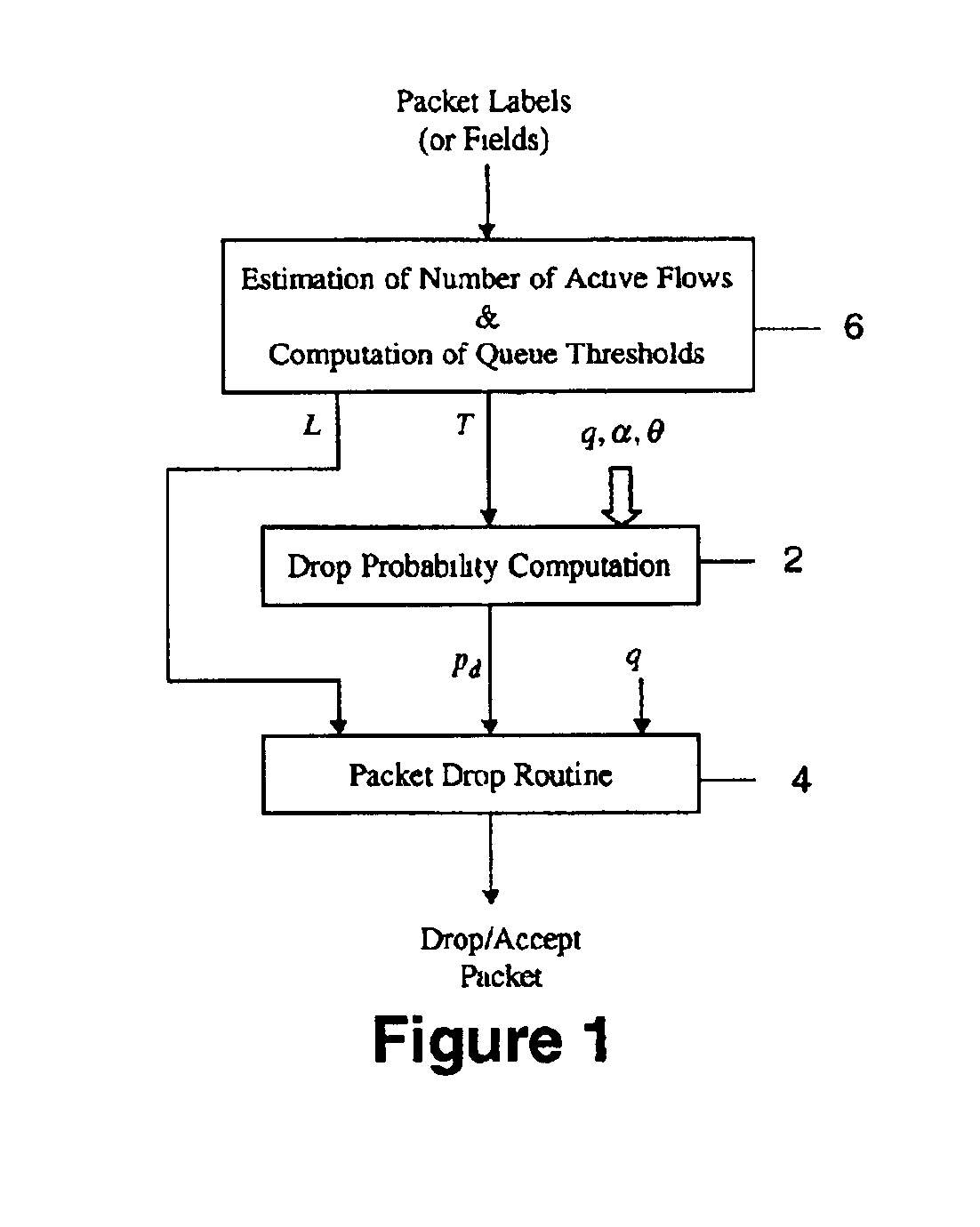

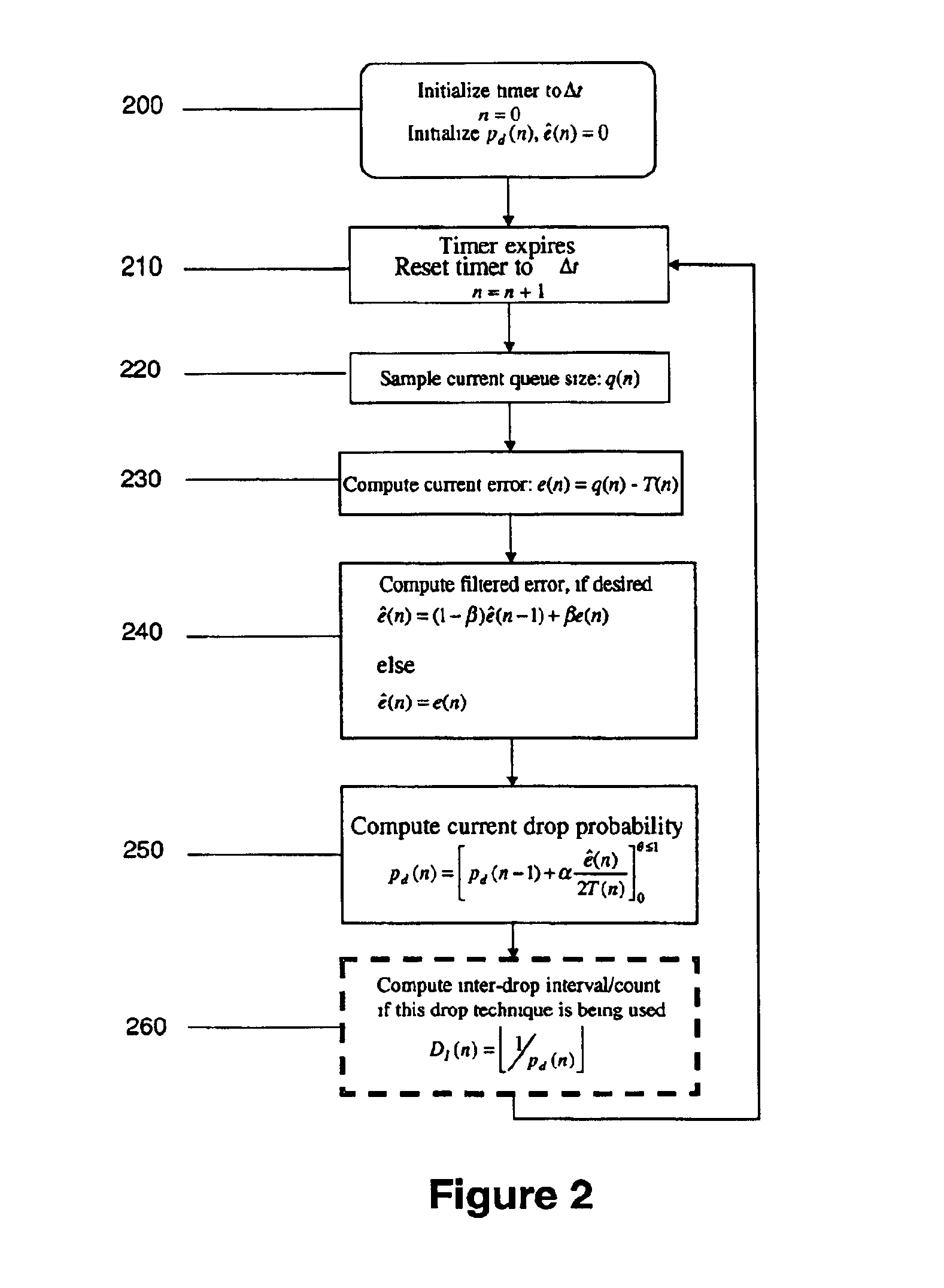

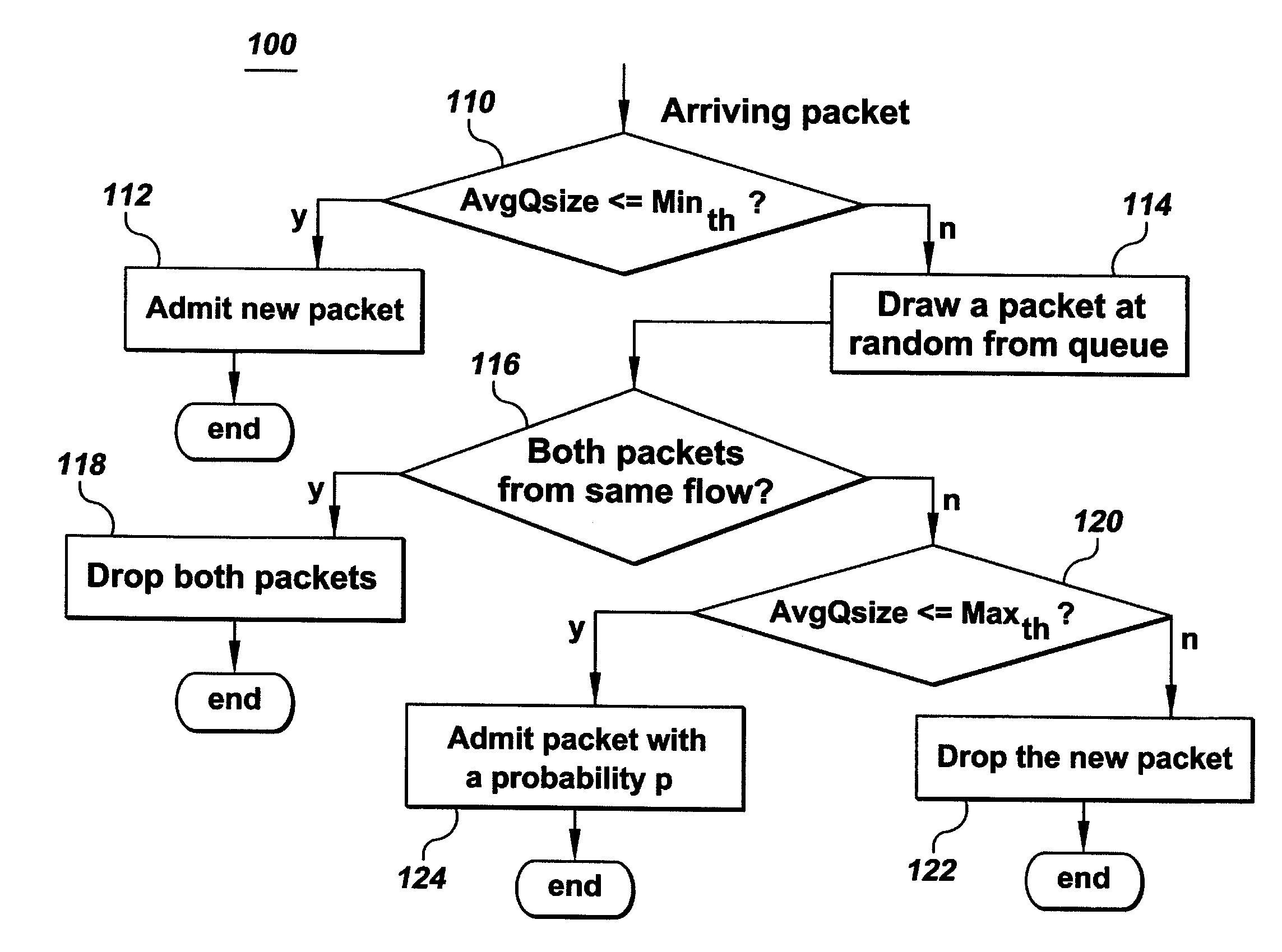

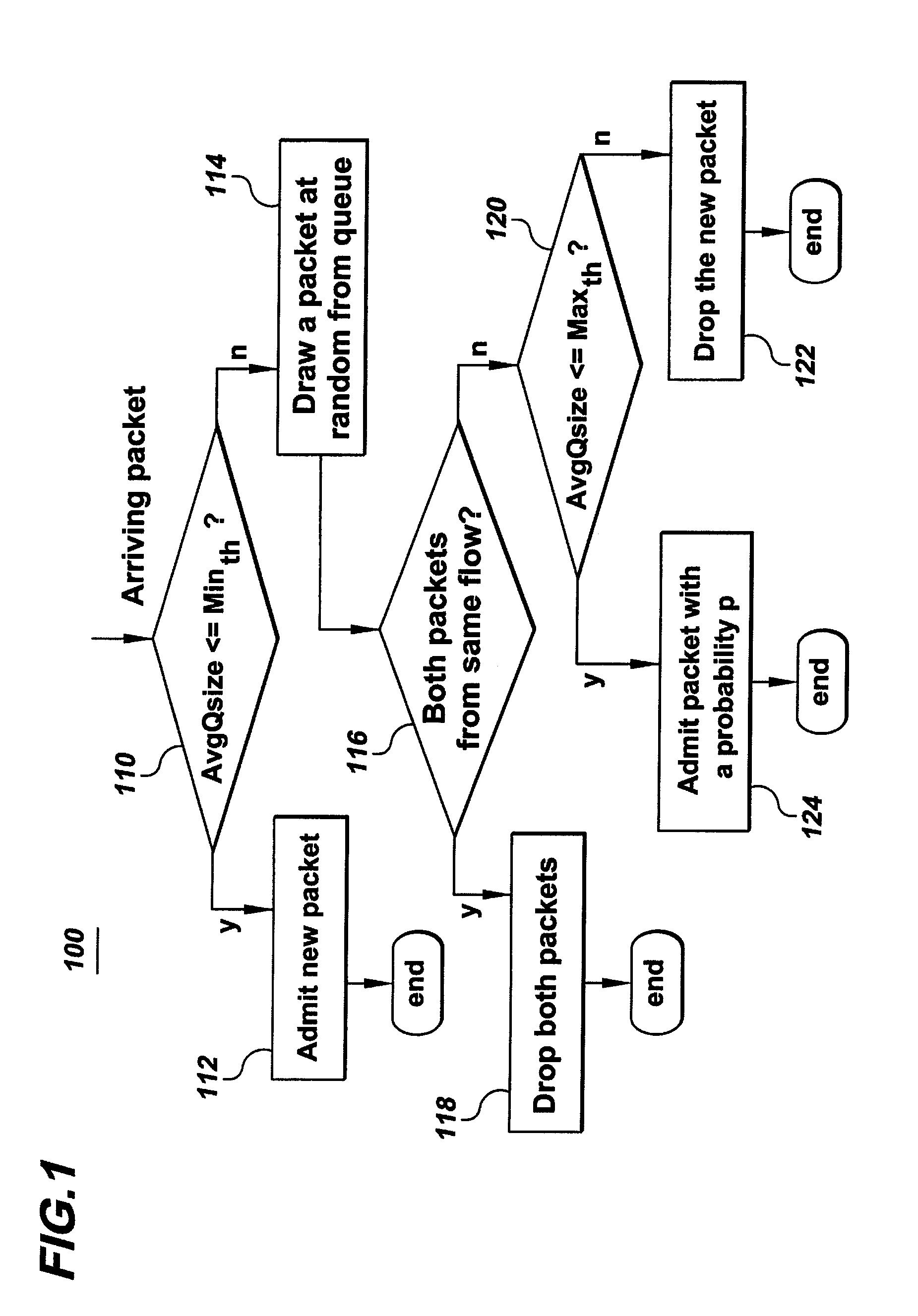

Active queue management with flow proportional buffering

Inactive | US6901593B2 | Guaranteed maximum utilization | Error prevention | Transmission systems | Traffic capacity | Packet loss

A technique for an improved active queue management scheme which dynamically changes its threshold settings as the number of connections (and system load) changes is disclosed. Using this technique, network devices can effectively control packet losses and TCP timeouts while maintaining high link utilization. The technique also allows a network to support a larger number of connections during congestion periods.

Owner:AVAYA MANAGEMENT LP
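One way to picture a threshold that follows the number of connections, as the abstract above describes, is the small sketch below. The function name, the per-connection allowance of three packets, and the clamping bounds are all assumptions; the abstract does not give the actual rule.

```python
def queue_threshold(active_connections, packets_per_connection=3,
                    min_threshold=20, max_threshold=1000):
    """Scale the queue threshold with the number of active connections.

    The result is clamped so that a lightly loaded link keeps a usable
    buffer and a heavily loaded link cannot exceed the physical buffer.
    """
    target = active_connections * packets_per_connection
    return max(min_threshold, min(max_threshold, target))


# The threshold grows with load until it reaches the configured ceiling.
thresholds = [queue_threshold(n) for n in (2, 100, 10000)]
```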

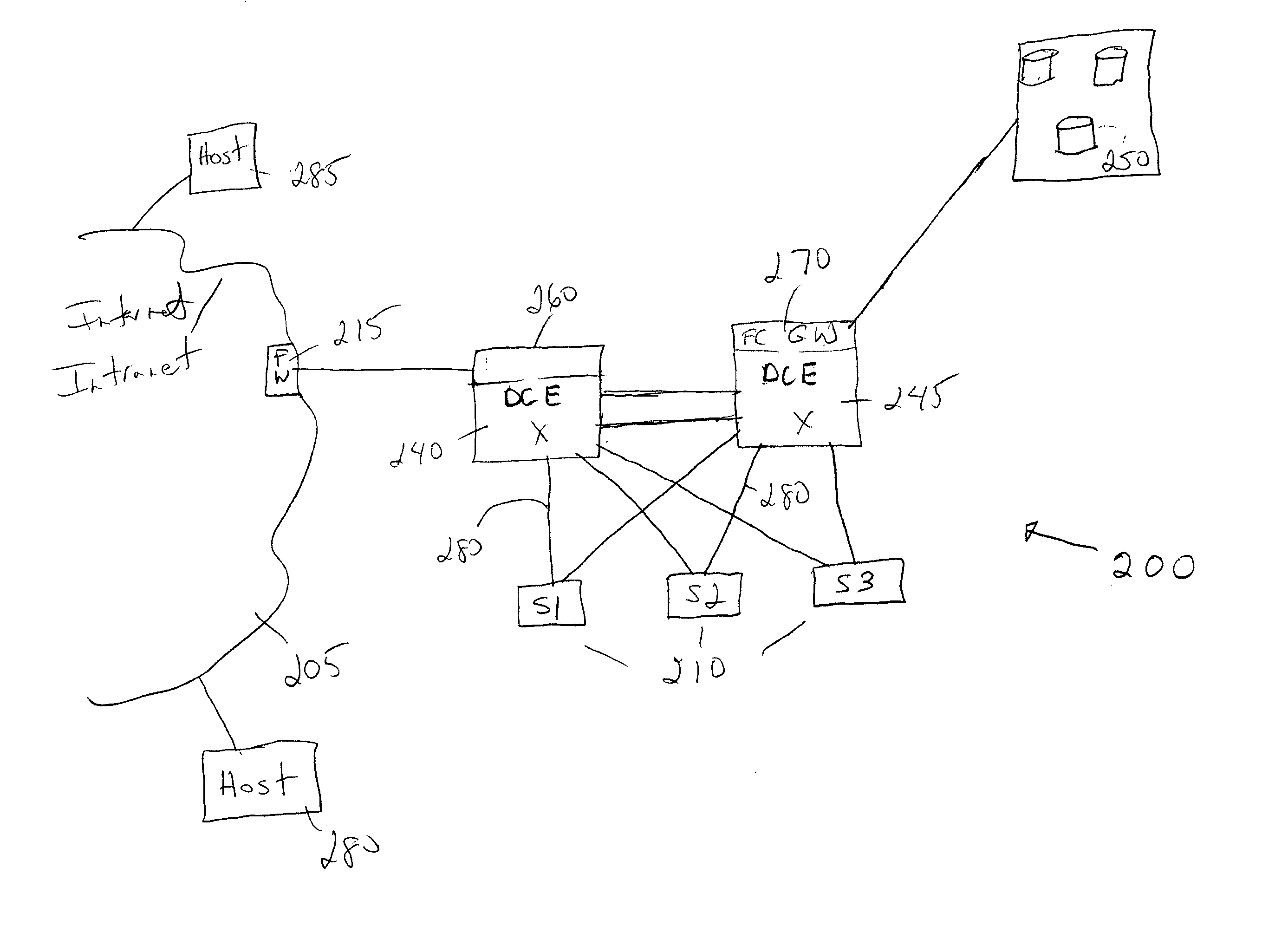

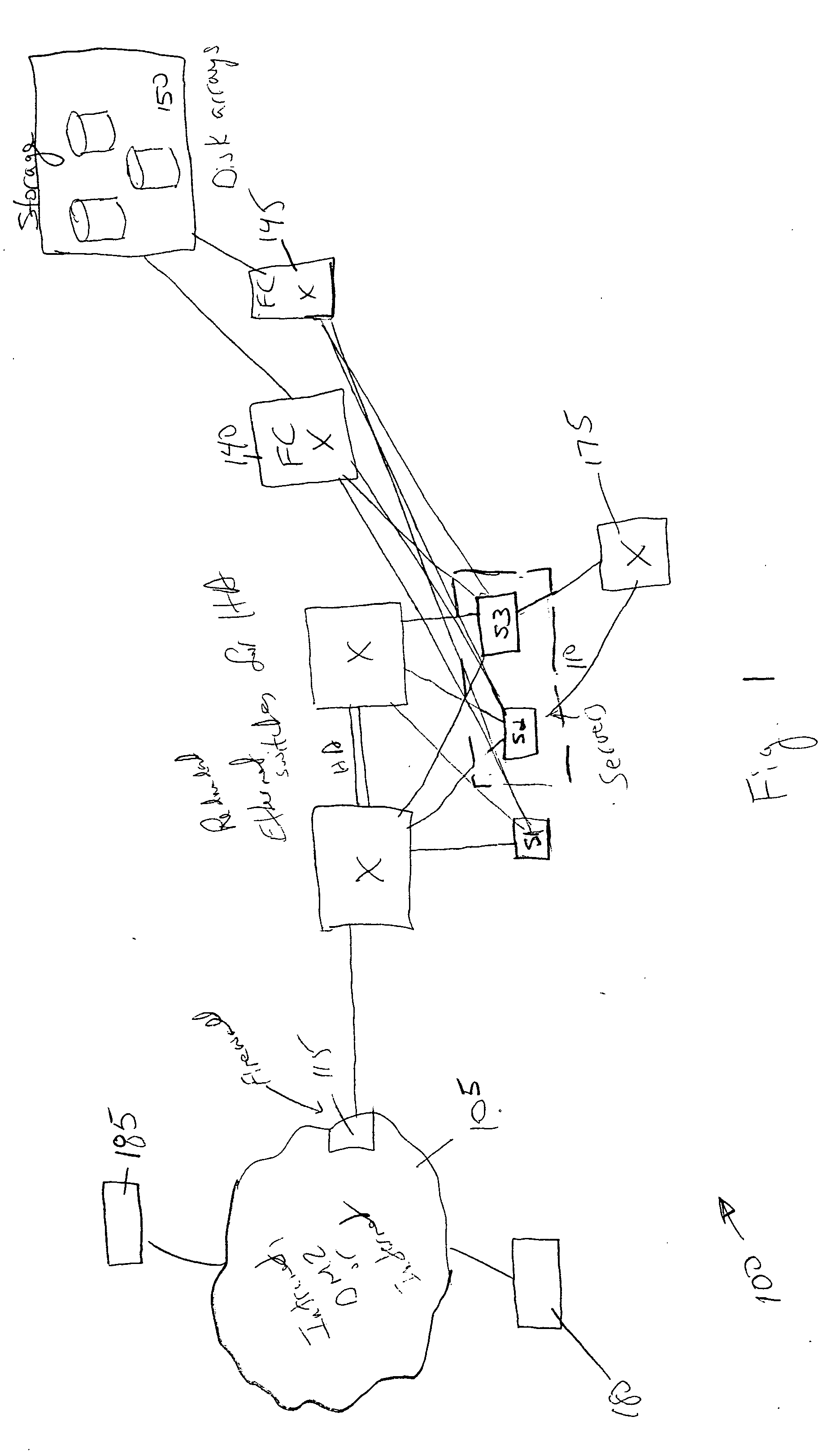

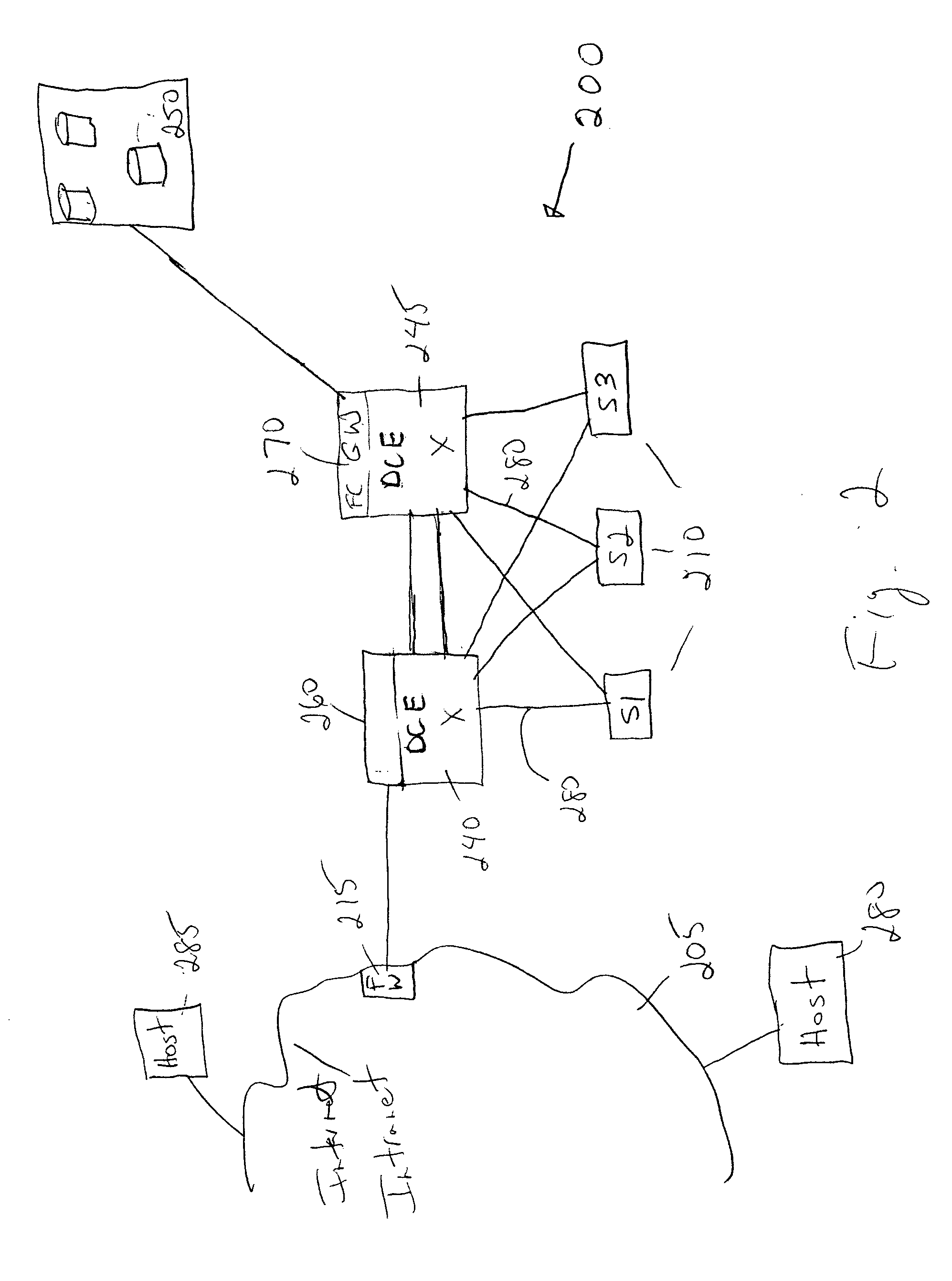

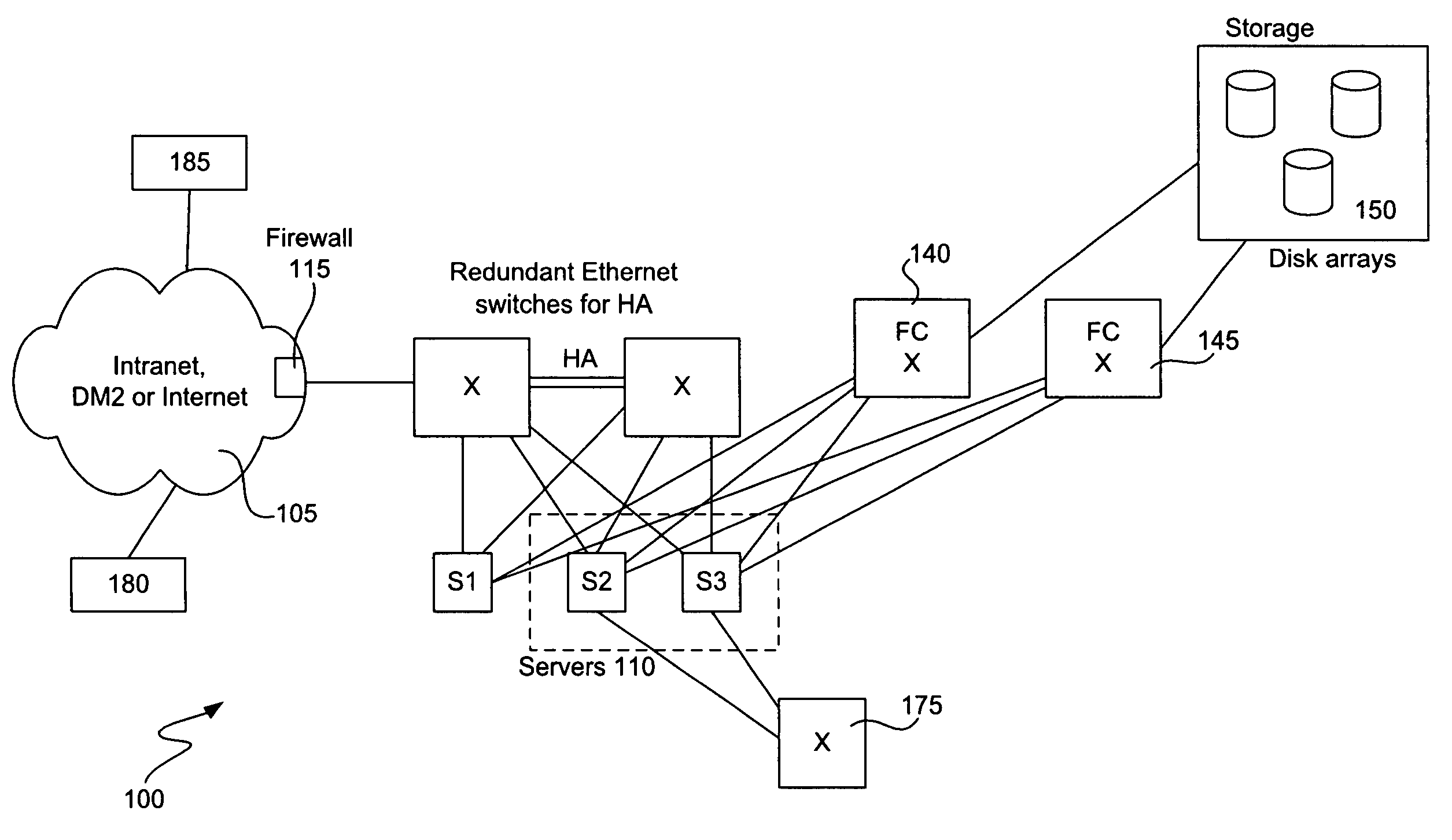

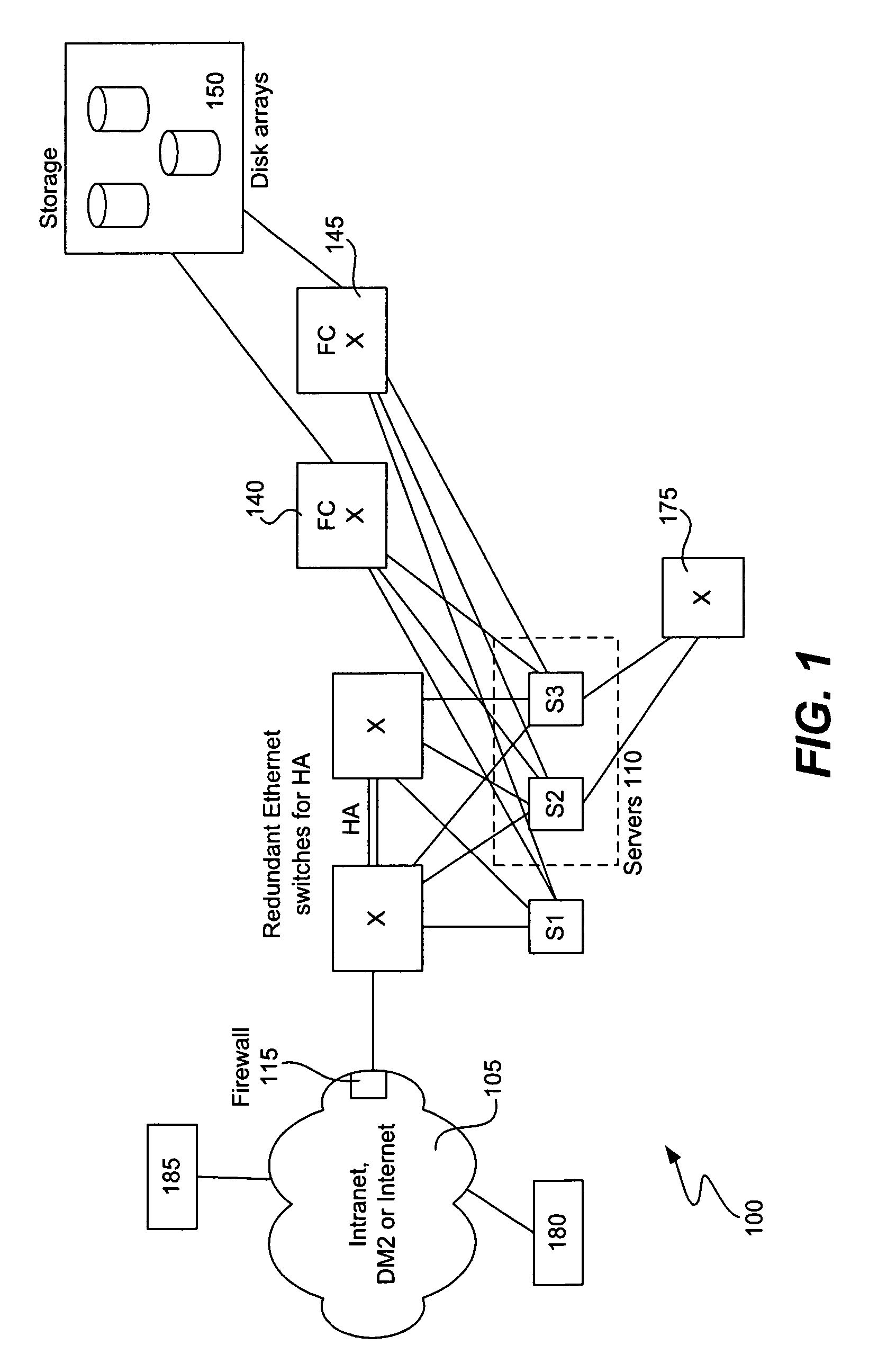

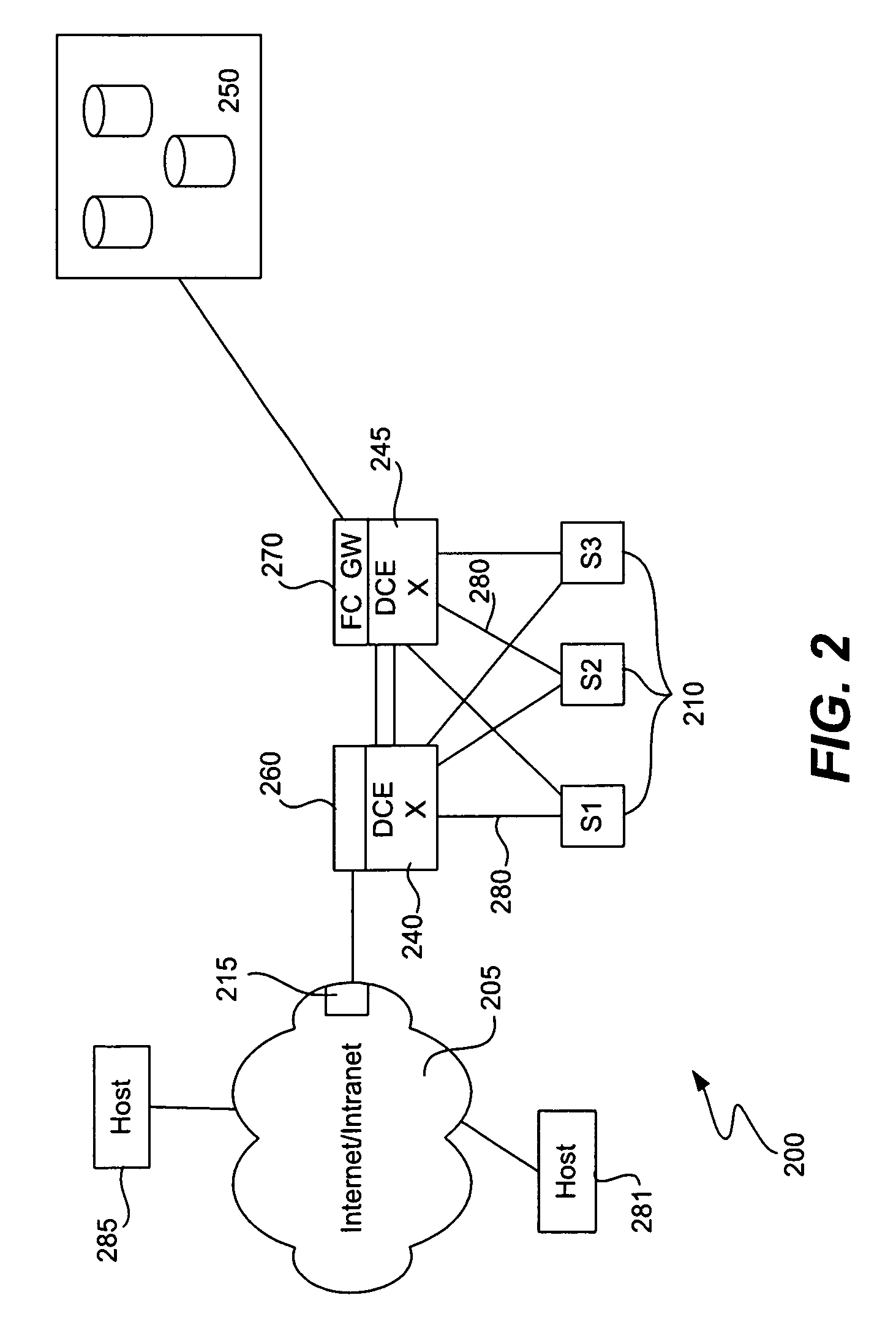

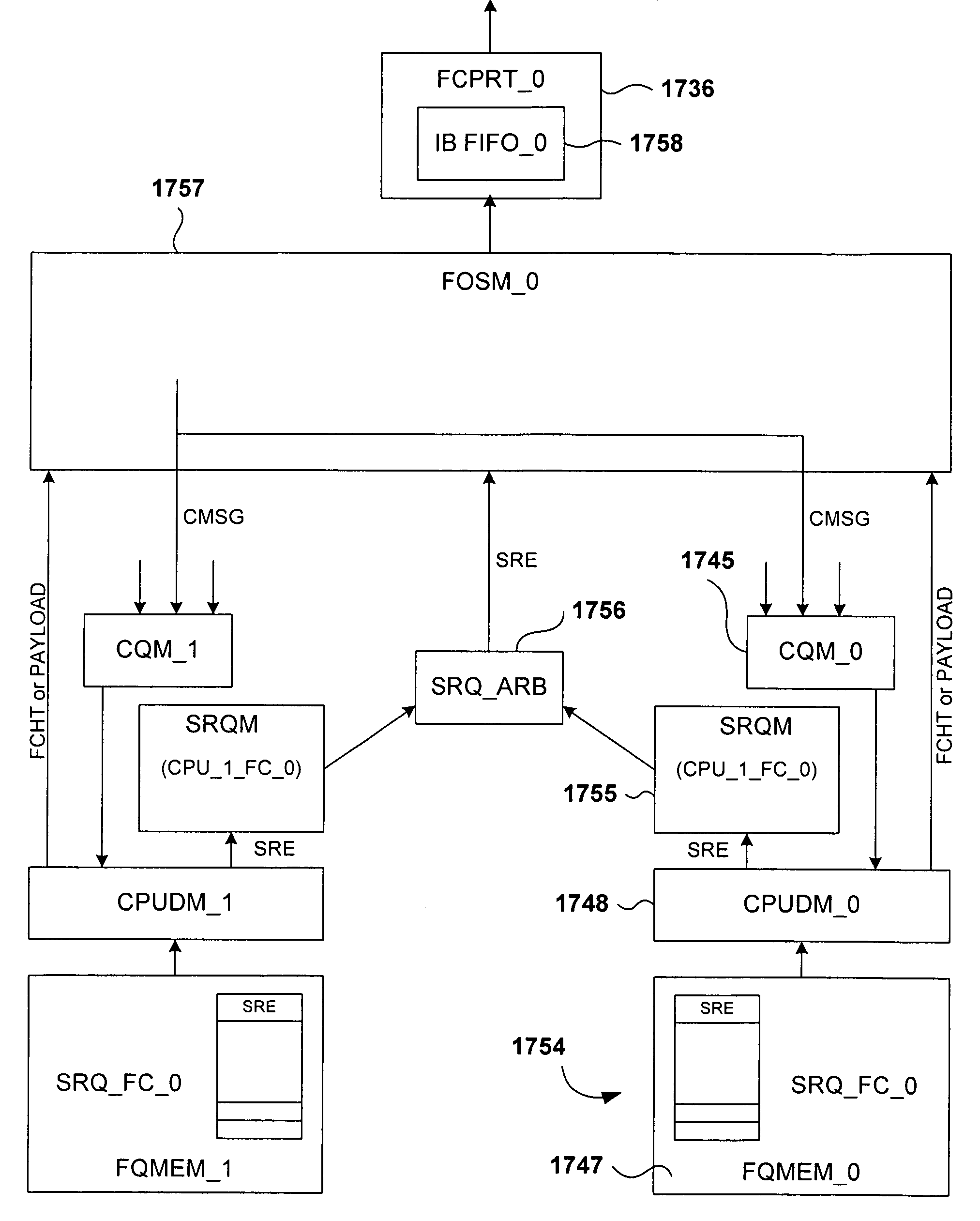

Ethernet extension for the data center

Active | US20060101140A1 | Improve reliability | Lower latency | Digital computer details | Data switching networks | Traffic capacity | High bandwidth

The present invention provides methods and devices for implementing a Low Latency Ethernet (“LLE”) solution, also referred to herein as a Data Center Ethernet (“DCE”) solution, which simplifies the connectivity of data centers and provides a high bandwidth, low latency network for carrying Ethernet and storage traffic. Some aspects of the invention involve transforming FC frames into a format suitable for transport on an Ethernet. Some preferred implementations of the invention implement multiple virtual lanes (“VLs”) in a single physical connection of a data center or similar network. Some VLs are “drop” VLs, with Ethernet-like behavior, and others are “no-drop” lanes with FC-like behavior. Some preferred implementations of the invention provide guaranteed bandwidth based on credits and VL. Active buffer management allows for both high reliability and low latency while using small frame buffers. Preferably, the rules for active buffer management are different for drop and no drop VLs.

Owner:CISCO TECH INC

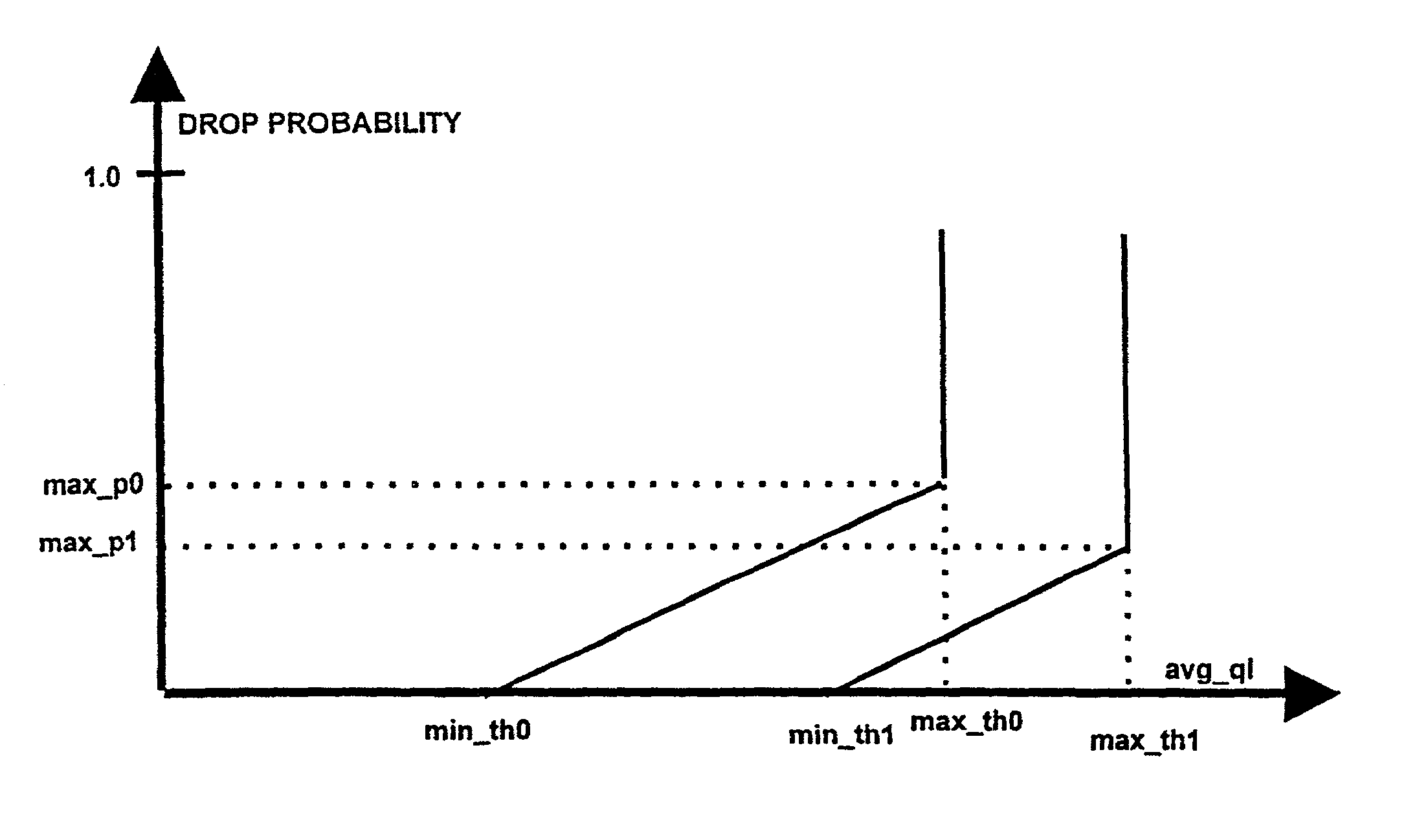

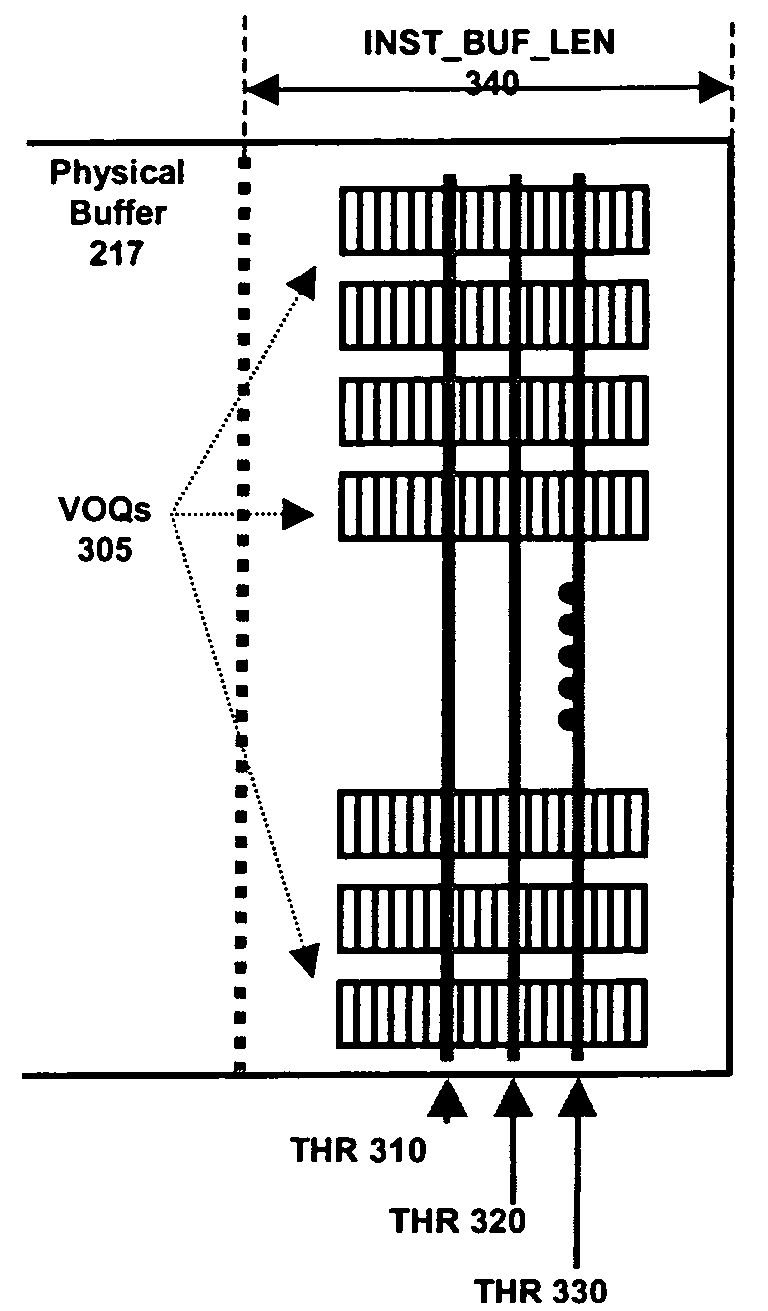

Queue based multi-level AQM with drop precedence differentiation

Inactive | US7286485B1 | Minimize occurrence | Reduce complexity | Error prevention | Transmission systems | Network conditions | Active queue management

Disclosed is a queue based multi-level Active Queue Management with drop precedence differentiation method and apparatus which uses queue size information for congestion control. The method provides for a lower complexity in parameter configuration and greater ease of configuration over a wide range of network conditions. A key advantage is a greater ability to maintain stabilized network queues, thereby minimizing the occurrences of queue overflows and underflows, and providing high system utilization.

Owner:AVAYA HLDG

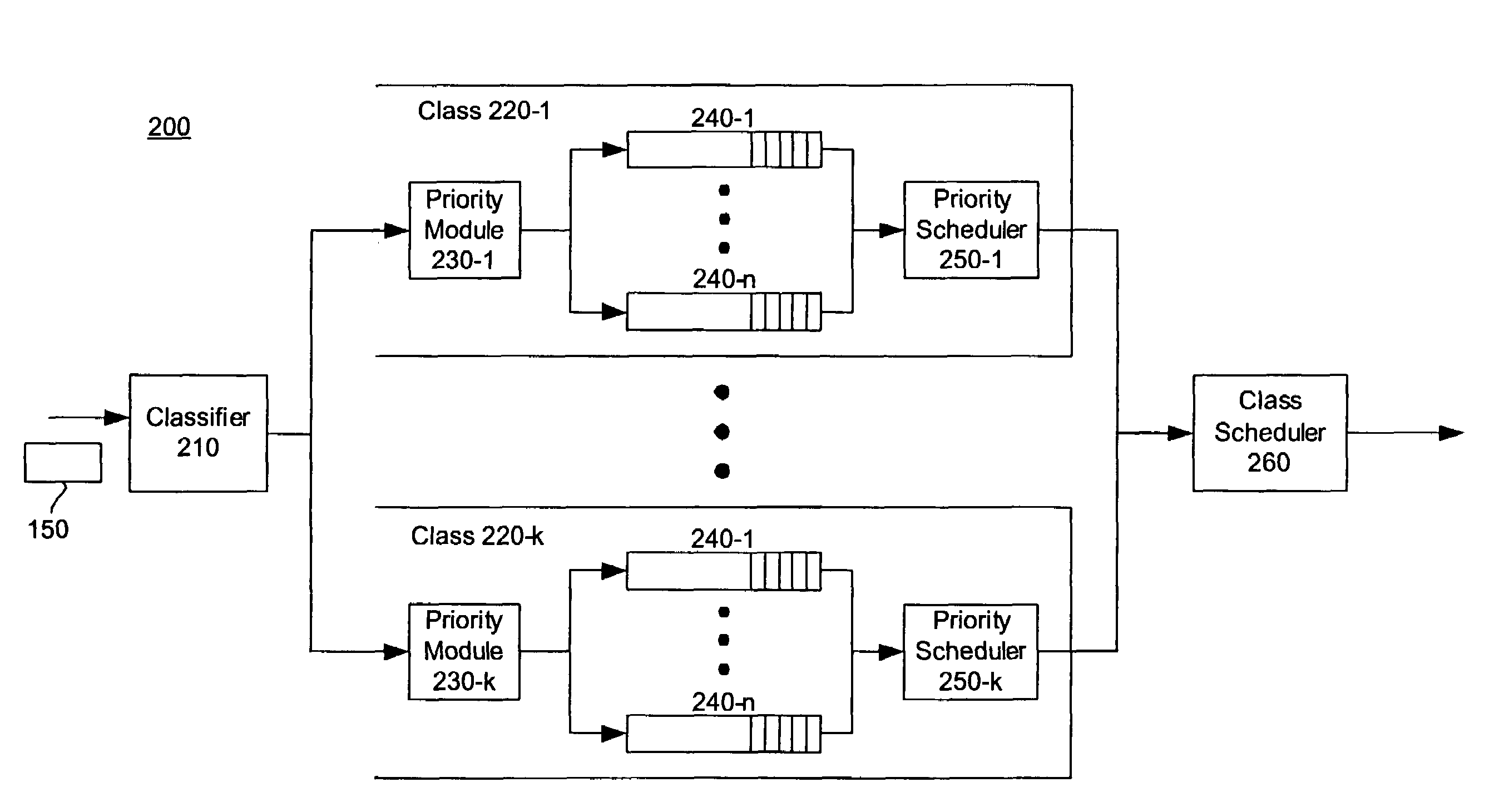

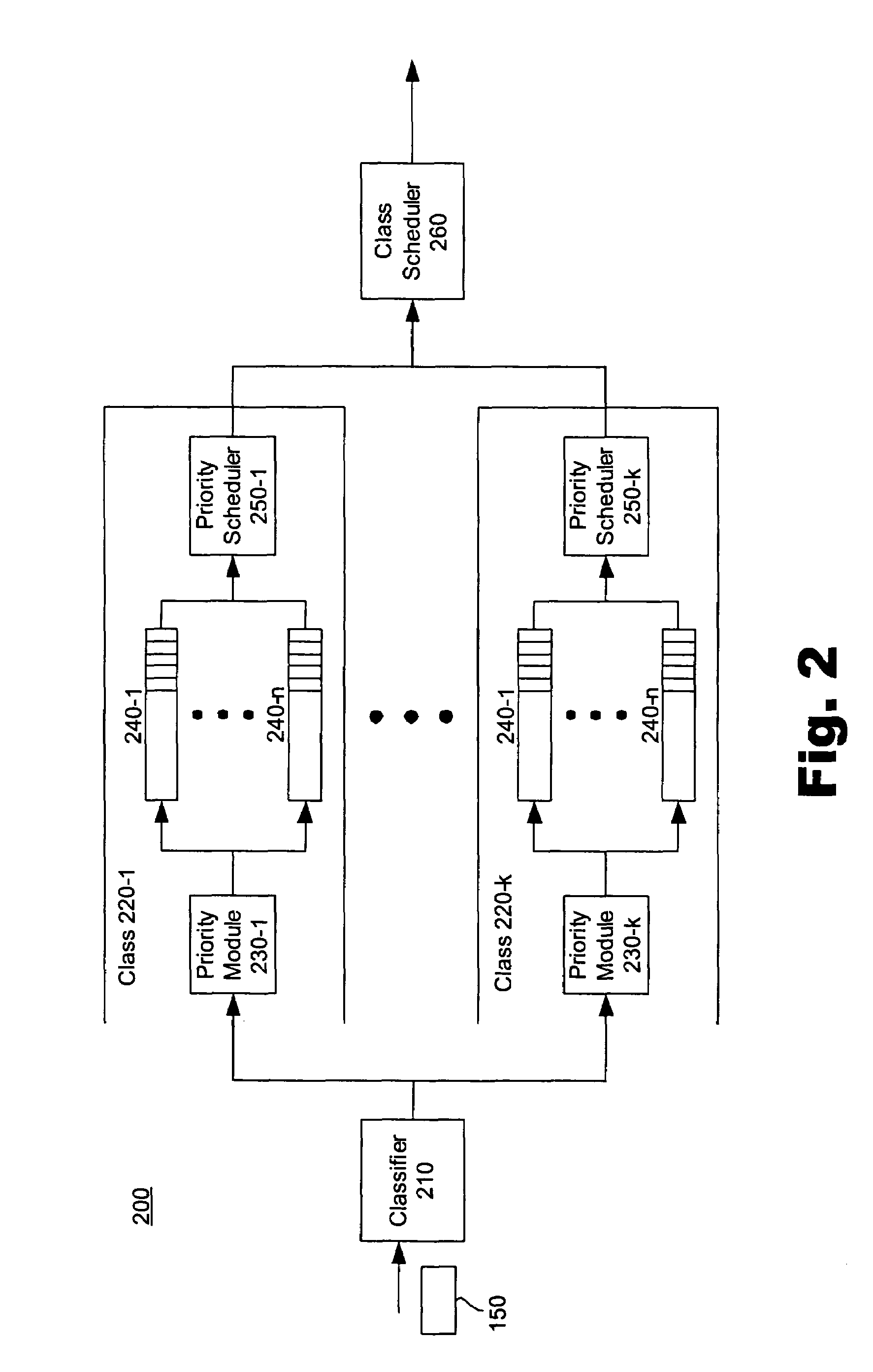

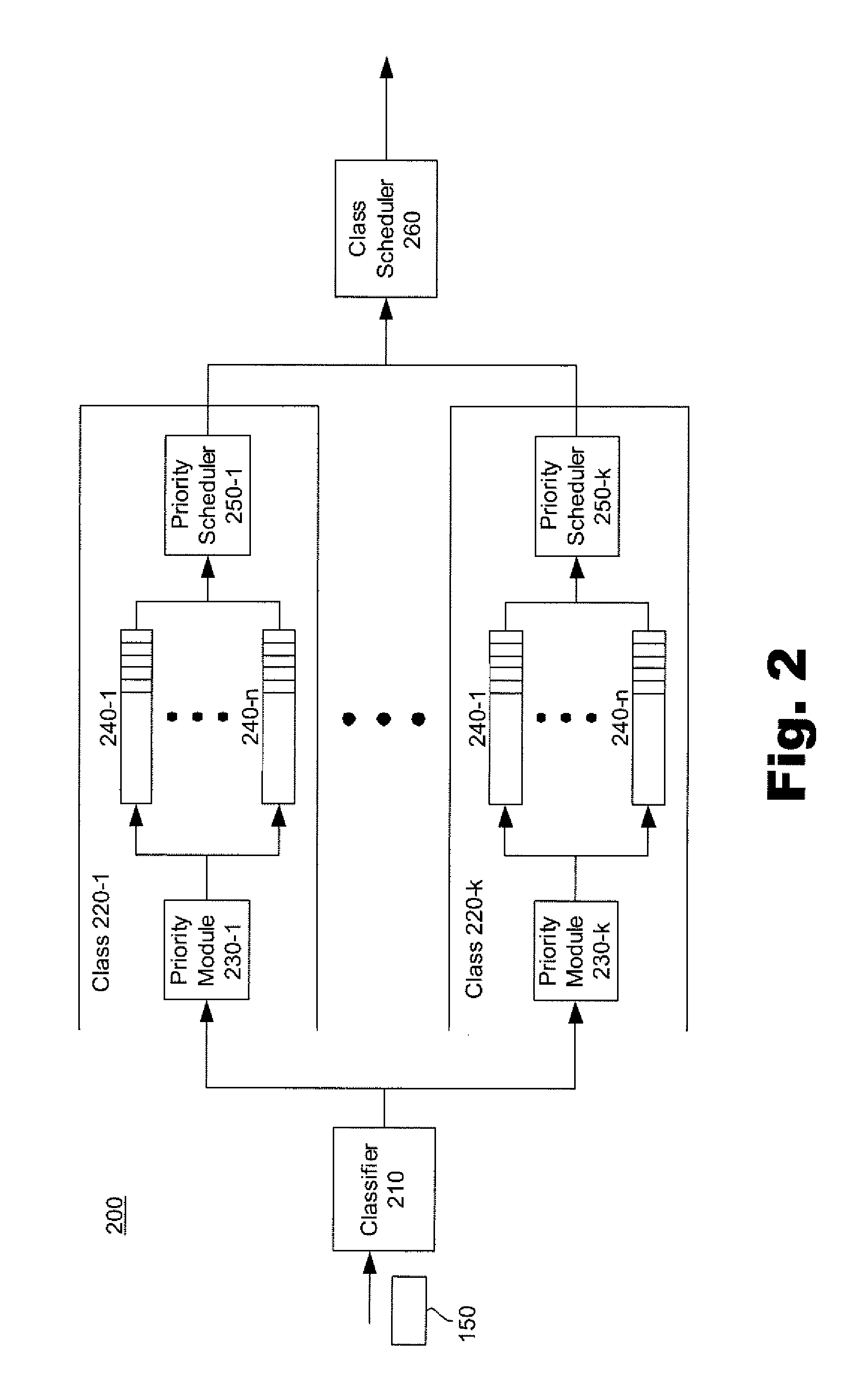

Systems and methods for queue management in packet-switched networks

Inactive | US7680139B1 | Error prevention | Transmission systems | Queue management system | Active queue management

This disclosure relates to methods and systems for queuing traffic in packet-switched networks. In one of many possible embodiments, a queue management system includes a plurality of queues and a priority module configured to assign incoming packets to the queues based on priorities associated with the incoming packets. The priority module is further configured to drop at least one of the packets already contained in the queues. The priority module is configured to operate across multiple queues when determining which of the packets contained in the queues to drop. Some embodiments provide for hybrid queue management that considers both classes and priorities of packets.

Owner:VERIZON PATENT & LICENSING INC +1
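The idea of a priority module that looks across several queues when choosing a packet to drop can be sketched as follows. The class `PriorityQueues`, the shared `capacity`, and the eviction rule (evict the newest packet of the lowest-priority non-empty queue, but only for a strictly higher-priority arrival) are assumptions chosen for illustration, not details taken from the patent.

```python
from collections import deque


class PriorityQueues:
    """Per-priority queues sharing one buffer; priority 0 is the highest."""

    def __init__(self, capacity, levels):
        self.capacity = capacity
        self.queues = {p: deque() for p in range(levels)}

    def total(self):
        return sum(len(q) for q in self.queues.values())

    def enqueue(self, packet, priority):
        """Queue a packet, evicting an already-queued lower-priority packet if full."""
        if self.total() >= self.capacity:
            # Look across all queues for the lowest-priority packet currently held.
            victim = max((p for p, q in self.queues.items() if q), default=None)
            if victim is None or victim <= priority:
                return False  # nothing less important is queued: drop the arrival
            self.queues[victim].pop()  # drop the newest packet of that queue
        self.queues[priority].append(packet)
        return True
```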

System and method for generating and providing dispatchable operating reserve energy capacity through use of active load management to compensate for an over-generation condition

Inactive | US20120245753A1 | Level control | Load forecast in ac network | Utility company | Active Network Management

A utility employs a method for generating available operating reserve. Electric power consumption by device(s) serviced by the utility is determined during period(s) of time to produce power consumption data, which is stored in a repository. A determination is made that a control event is to occur during which power is to be reduced to one or more devices. Prior to the control event, and under an assumption that it is not to occur, the power consumption behavior expected of the device(s) is generated for the time period during which the control event is expected to occur, based on the stored power consumption data. Additionally, prior to the control event, projected energy savings resulting from the control event, and associated with a power supply value (PSV), are determined based on the devices' power consumption behavior. An amount of available operating reserve is determined based on the projected energy savings.

Owner:CAUSAM ENERGY INC

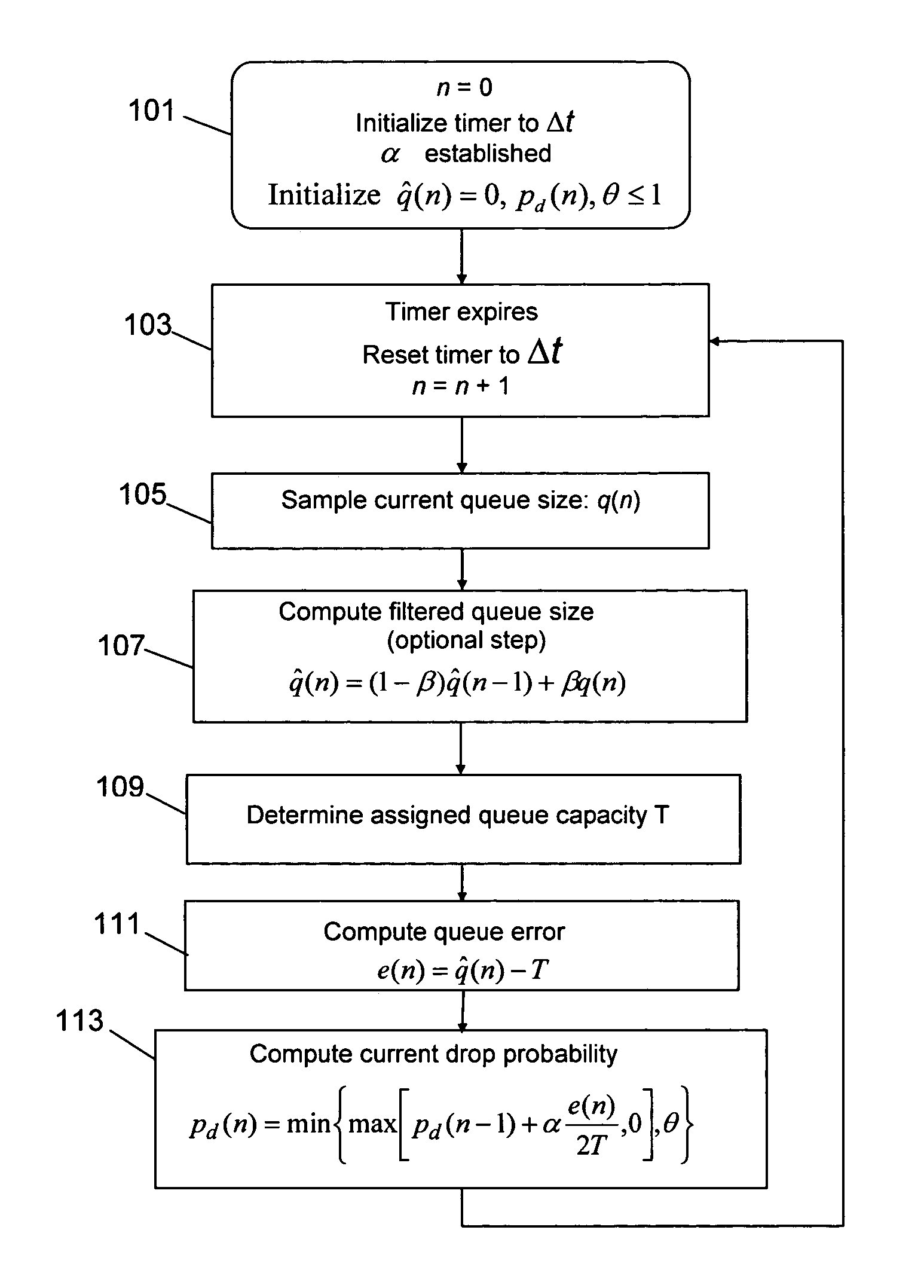

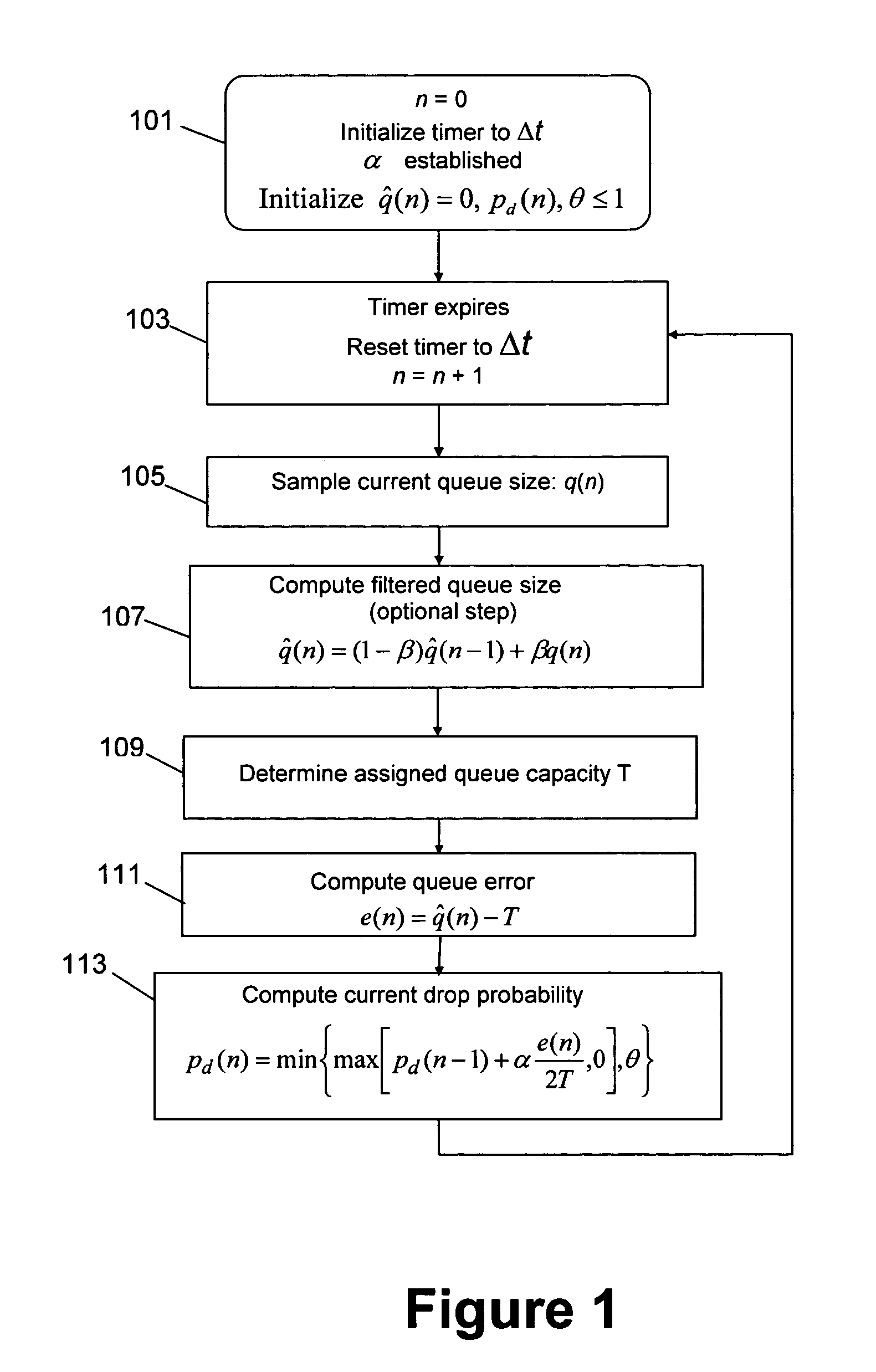

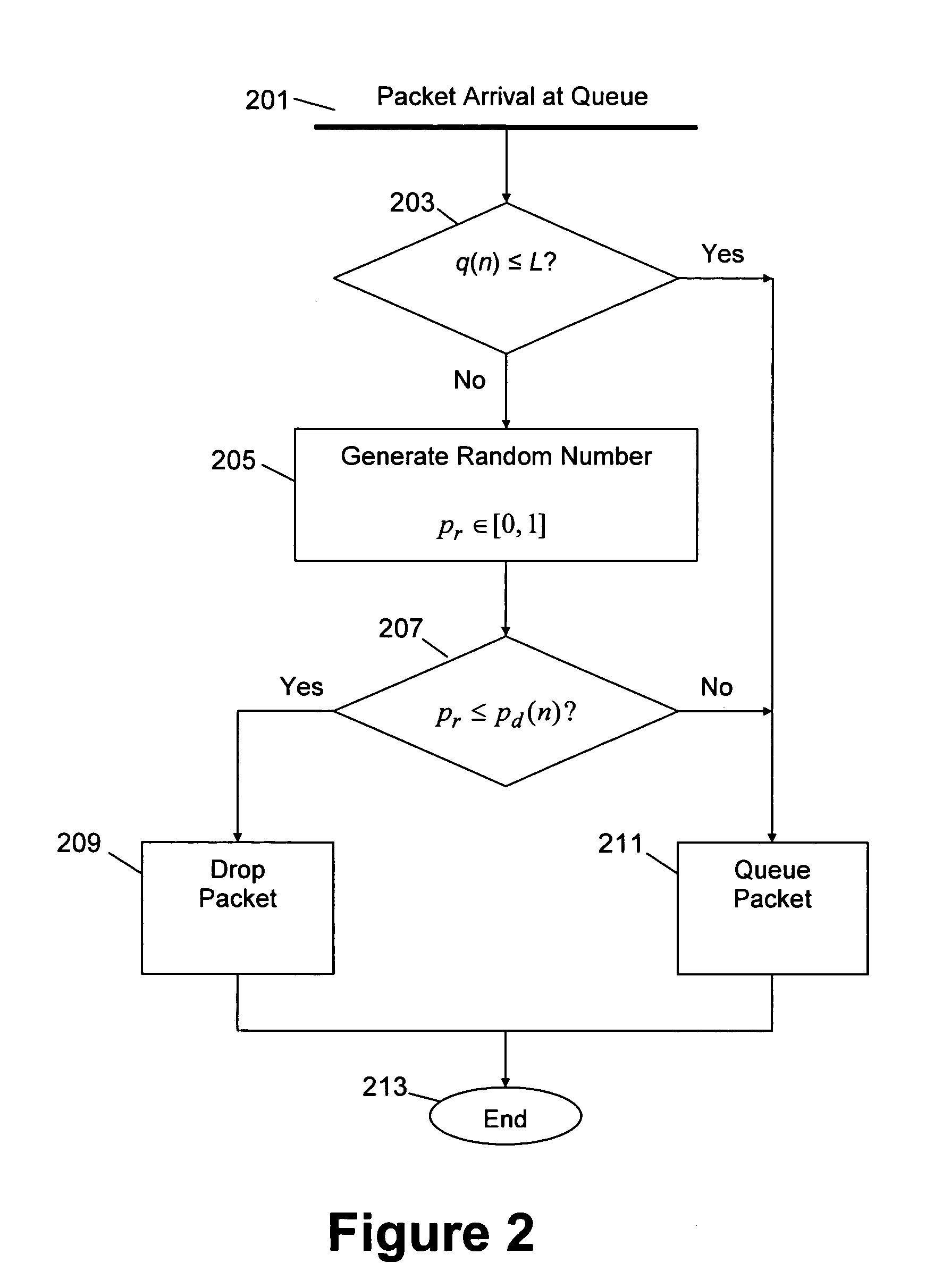

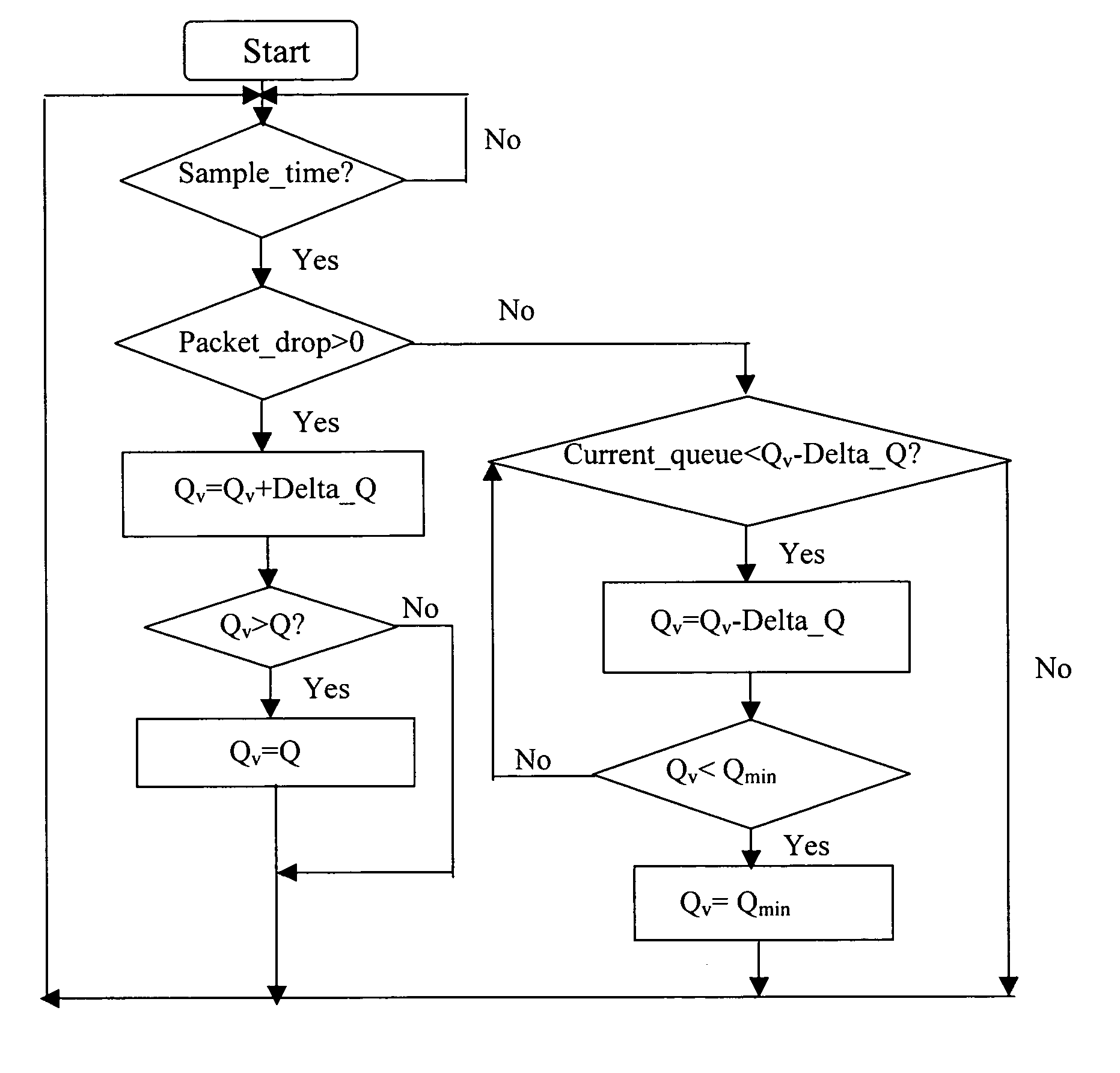

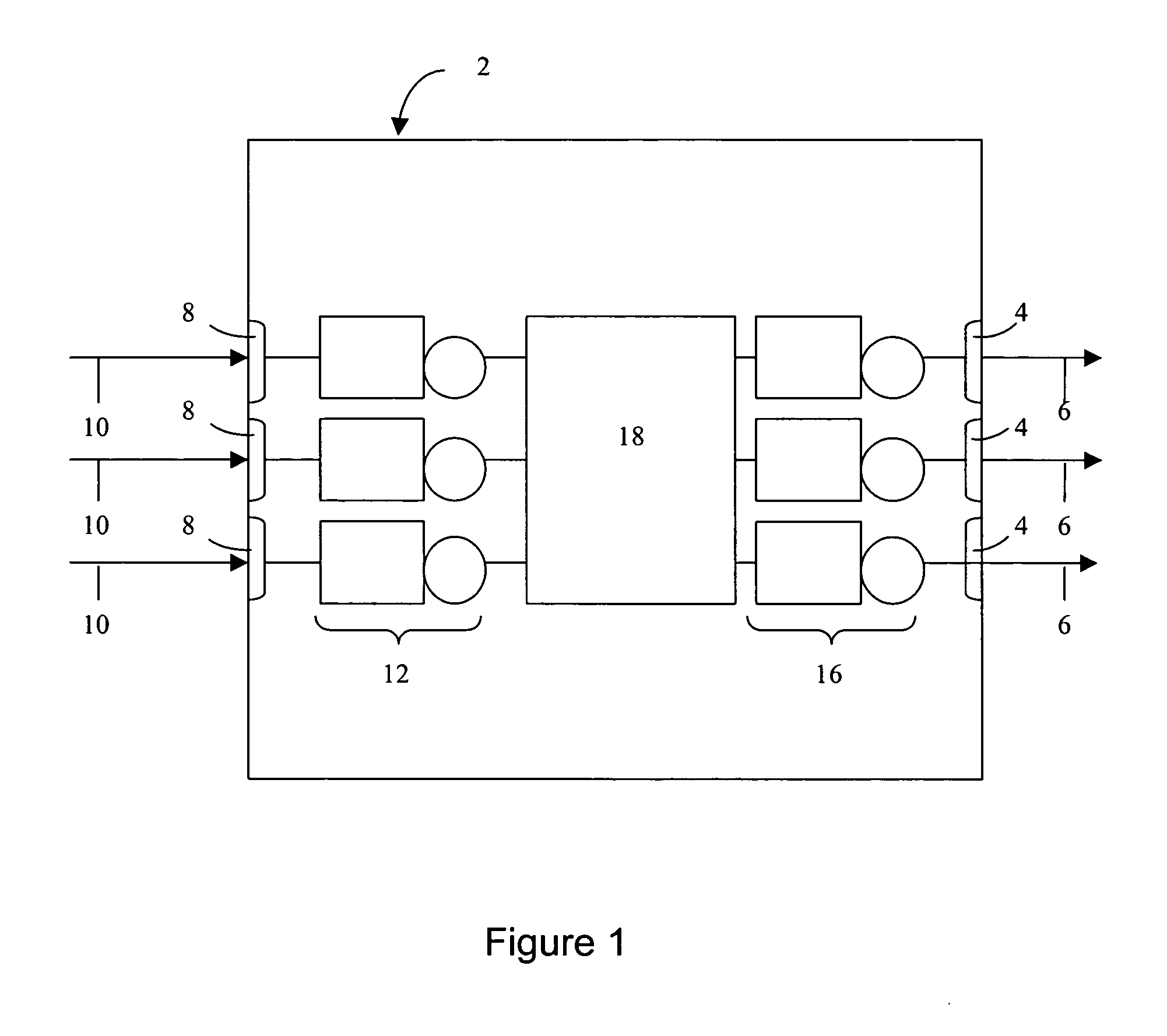

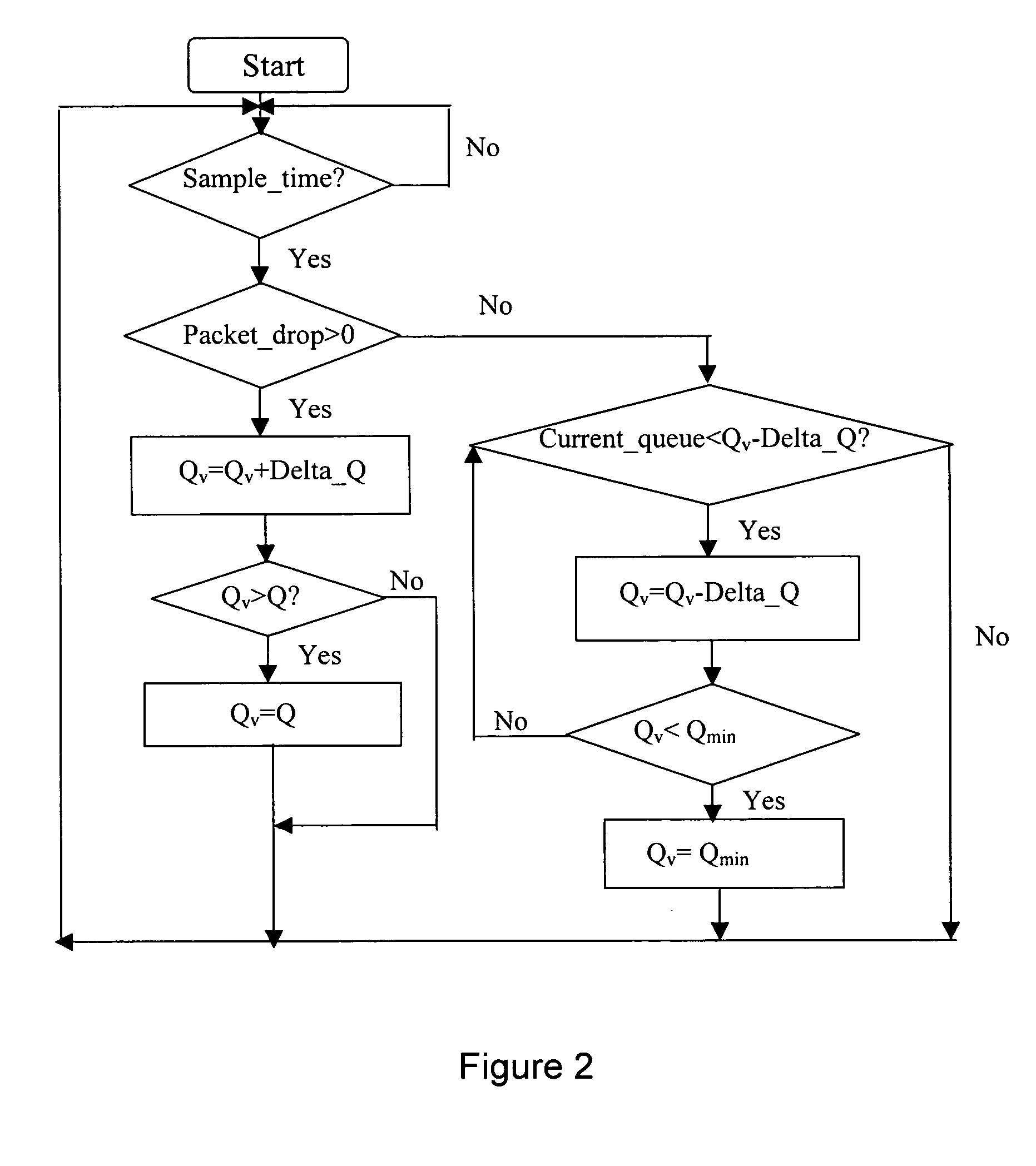

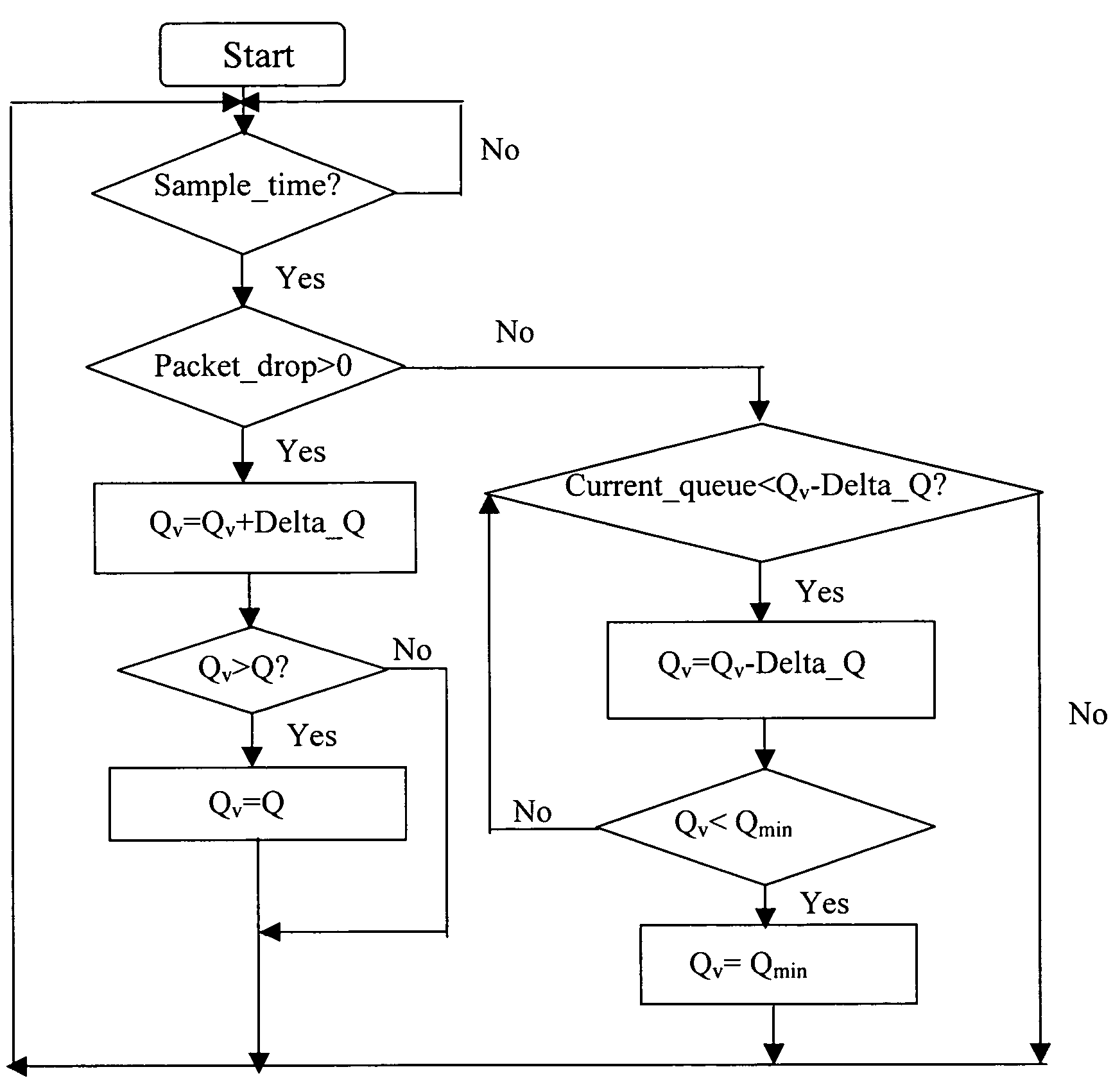

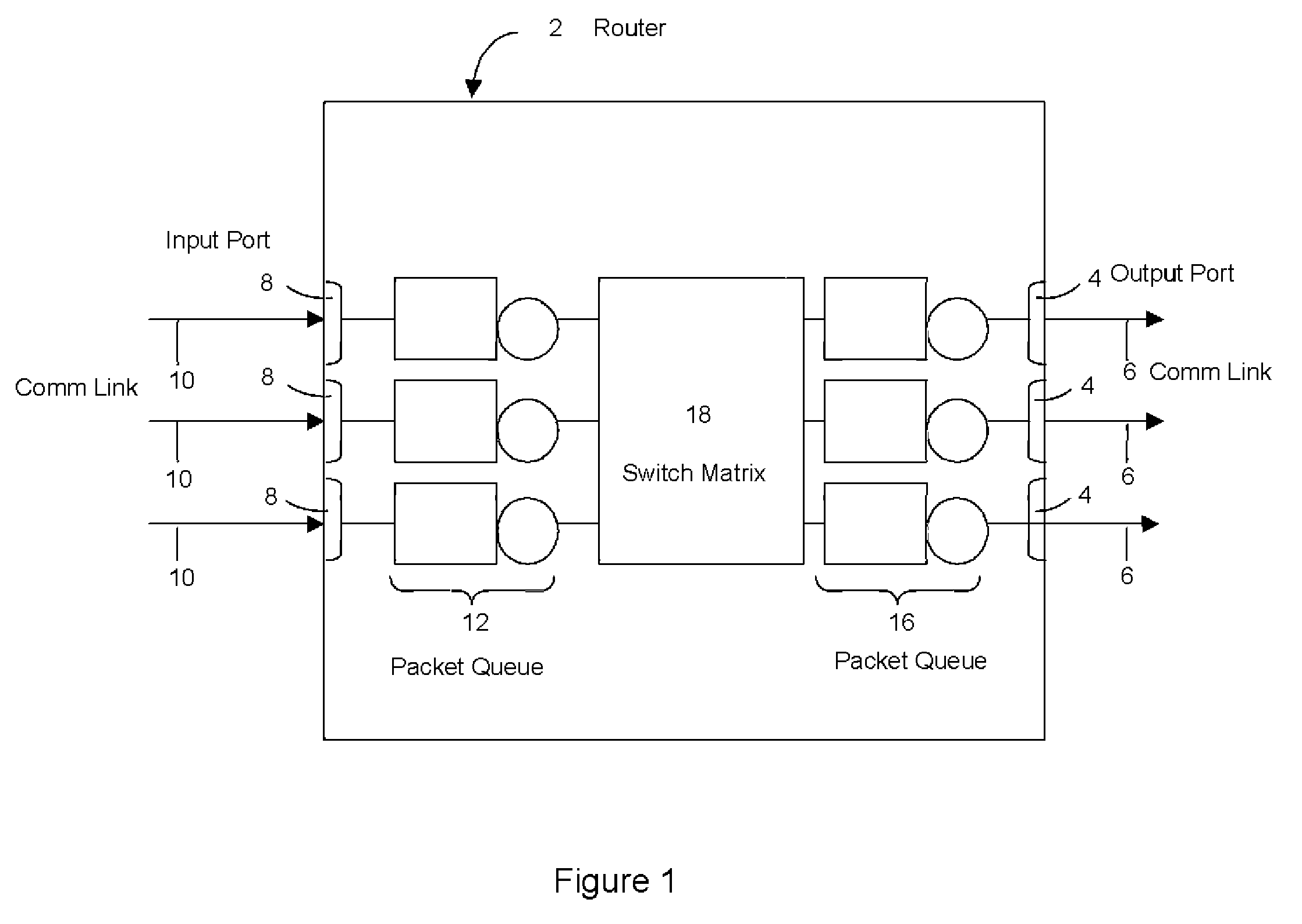

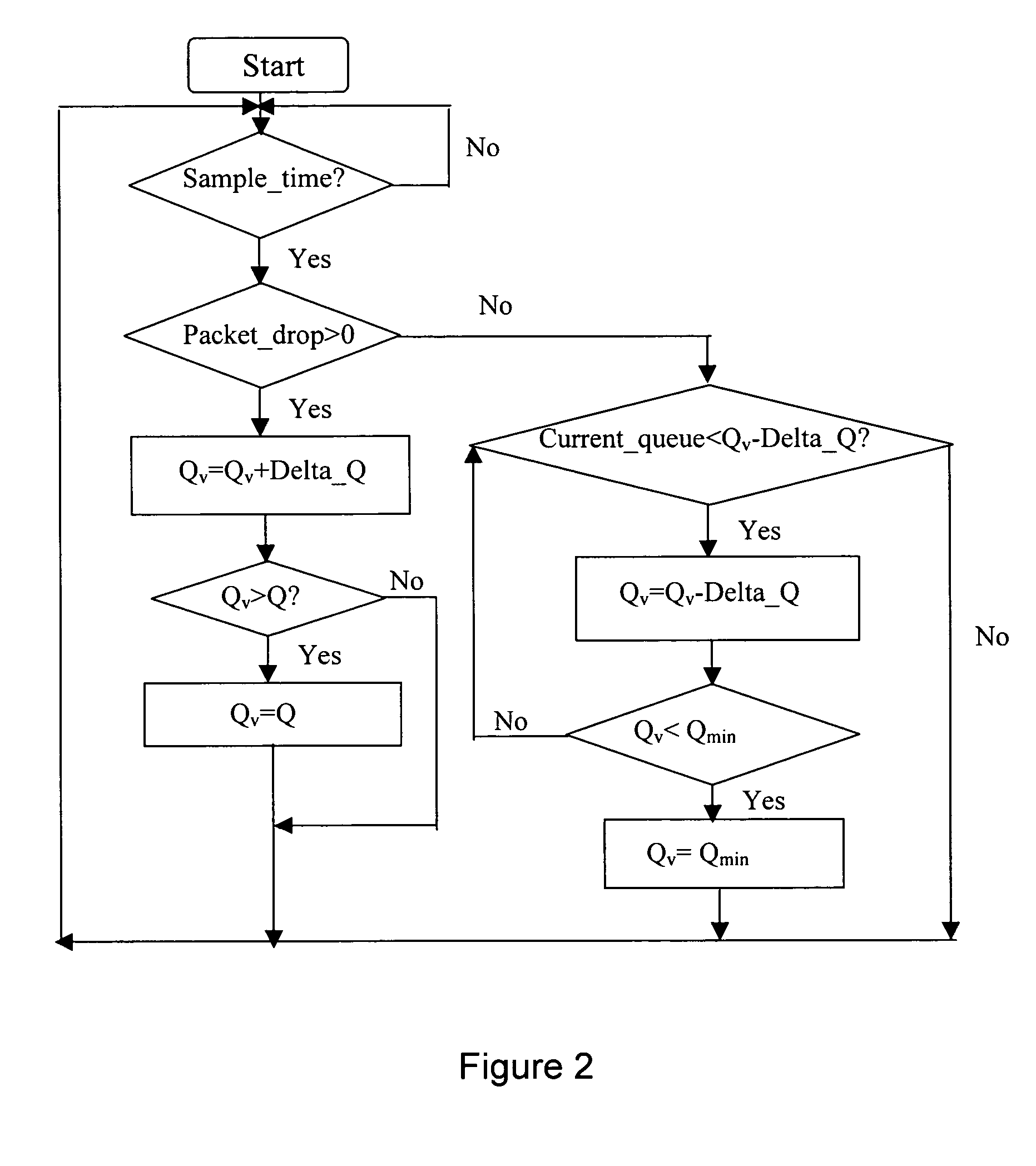

Queue-based active queue management process

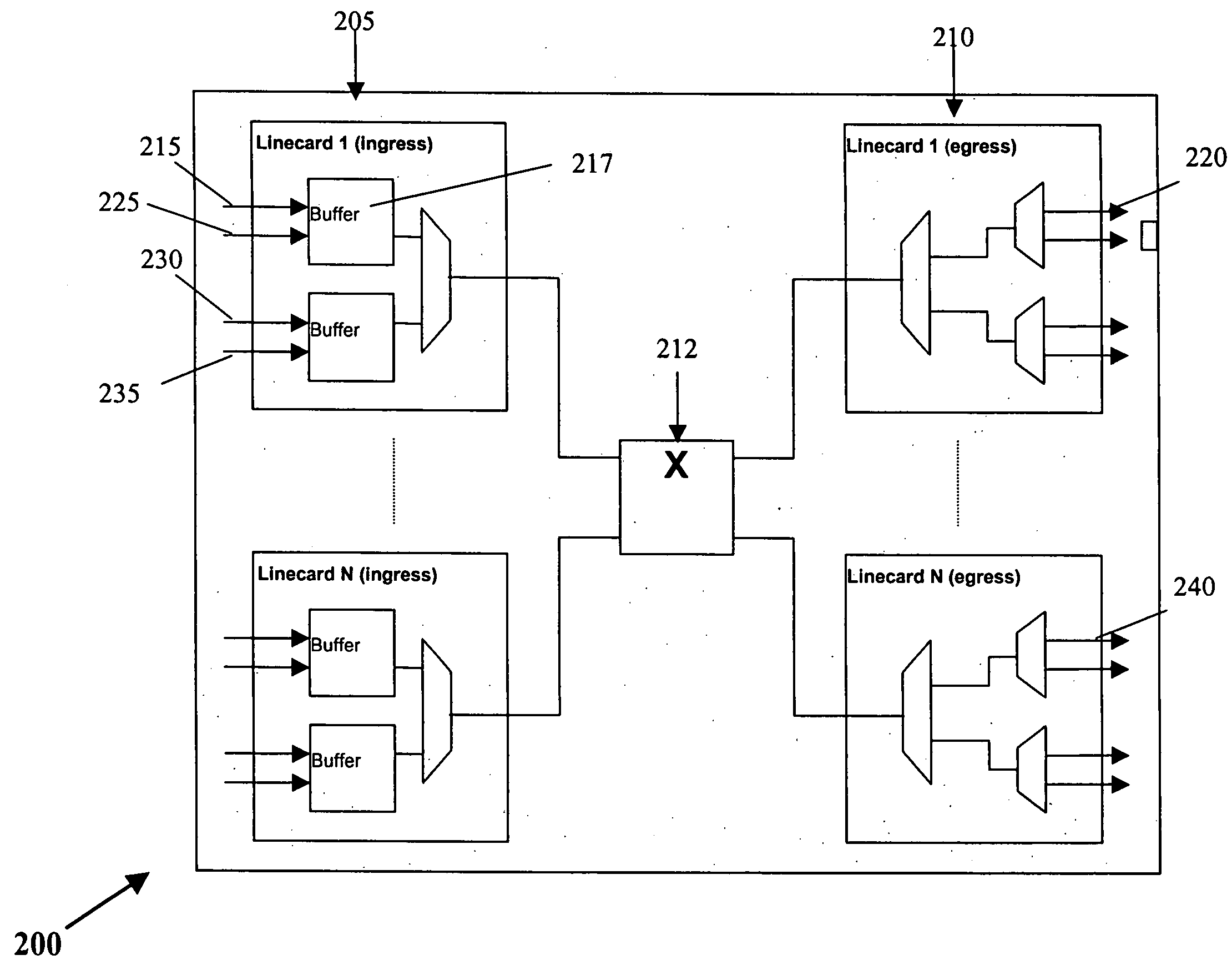

Active | US20060045008A1 | More buffer capacity | Increasing queue size | Error prevention | Transmission systems | Network communication | Active queue management

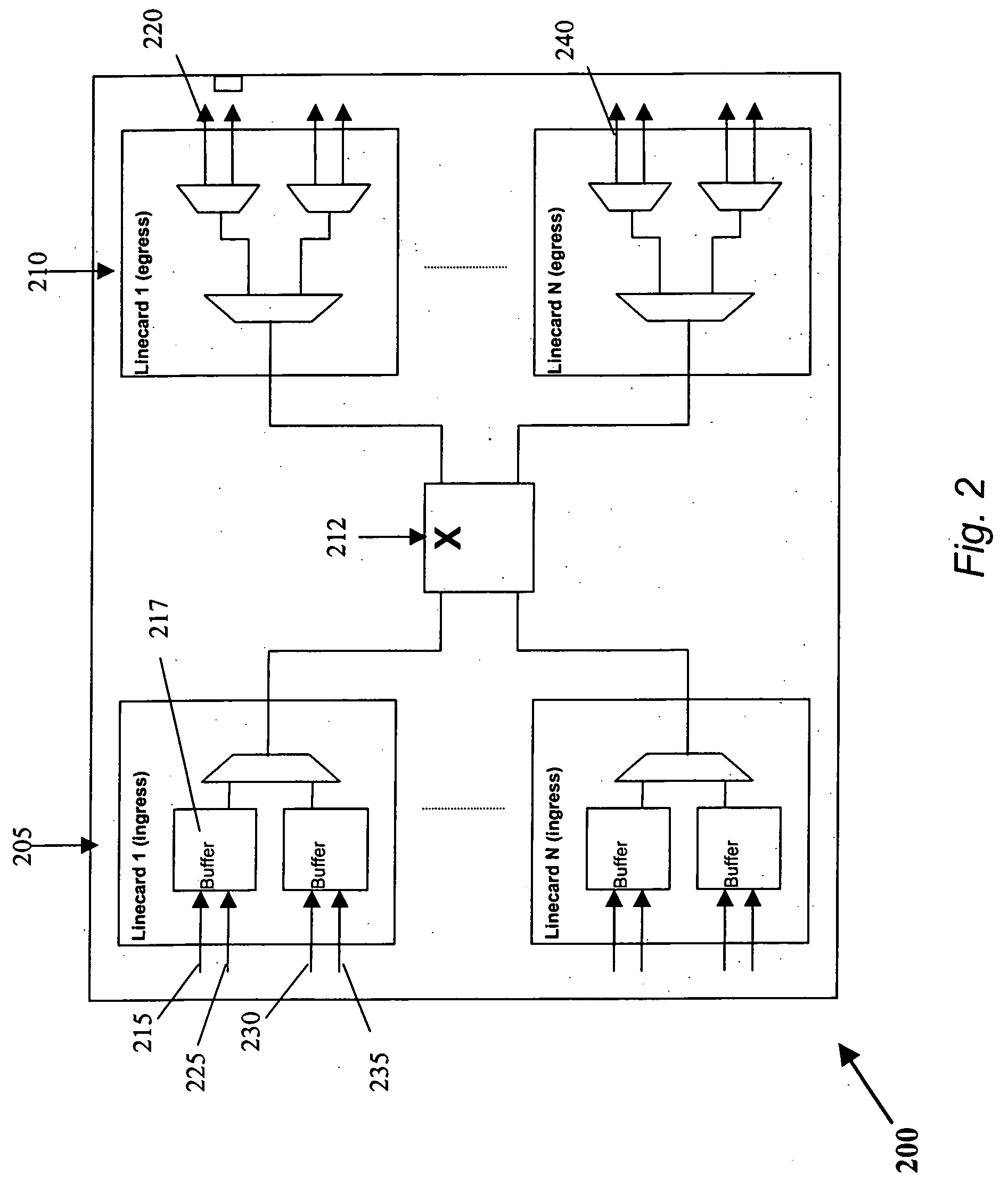

An active queue management (AQM) process for network communications equipment. The AQM process is queue based and involves applying at a queue size threshold congestion notification to communications packets in a queue of a link via packet dropping; and adjusting said queue size threshold on the basis of the congestion level. The AQM process releases more buffer capacity to accommodate more incoming packets by increasing said queue size threshold when congestion increases; and decreases buffer capacity by reducing said queue size threshold when congestion decreases. Network communications equipment includes a switch component for switching communications packets between input ports and output ports, packet queues for at least the output ports, and an active queue manager for applying congestion notification to communications packets in the queues for the output ports via packet dropping. The congestion notification is applied at respective queue size thresholds for the queues, and the thresholds adjusted on the basis of the respective congestion levels of the queues of the output ports.

Owner:INTELLECTUAL VENTURES II
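The adjustable queue-size threshold described in the abstract above might look roughly like the sketch below. How the congestion level is measured is not stated in the abstract, so the caller supplies it here as a boolean; the class name, step size, and bounds are likewise assumptions.

```python
from collections import deque


class AdaptiveThresholdQueue:
    """Queue that drops arrivals at a movable queue-size threshold."""

    def __init__(self, threshold=50, min_threshold=10, max_threshold=200, step=10):
        self.threshold = threshold
        self.min_threshold = min_threshold
        self.max_threshold = max_threshold
        self.step = step
        self.packets = deque()

    def enqueue(self, packet):
        """Return True if queued, False if dropped as a congestion notification."""
        if len(self.packets) >= self.threshold:
            return False
        self.packets.append(packet)
        return True

    def adjust(self, congestion_increased):
        """Raise the threshold when congestion rises, lower it when congestion falls."""
        if congestion_increased:
            self.threshold = min(self.max_threshold, self.threshold + self.step)
        else:
            self.threshold = max(self.min_threshold, self.threshold - self.step)
```

Raising the threshold under congestion releases more buffer to absorb arrivals, which matches the direction of adjustment the abstract describes.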

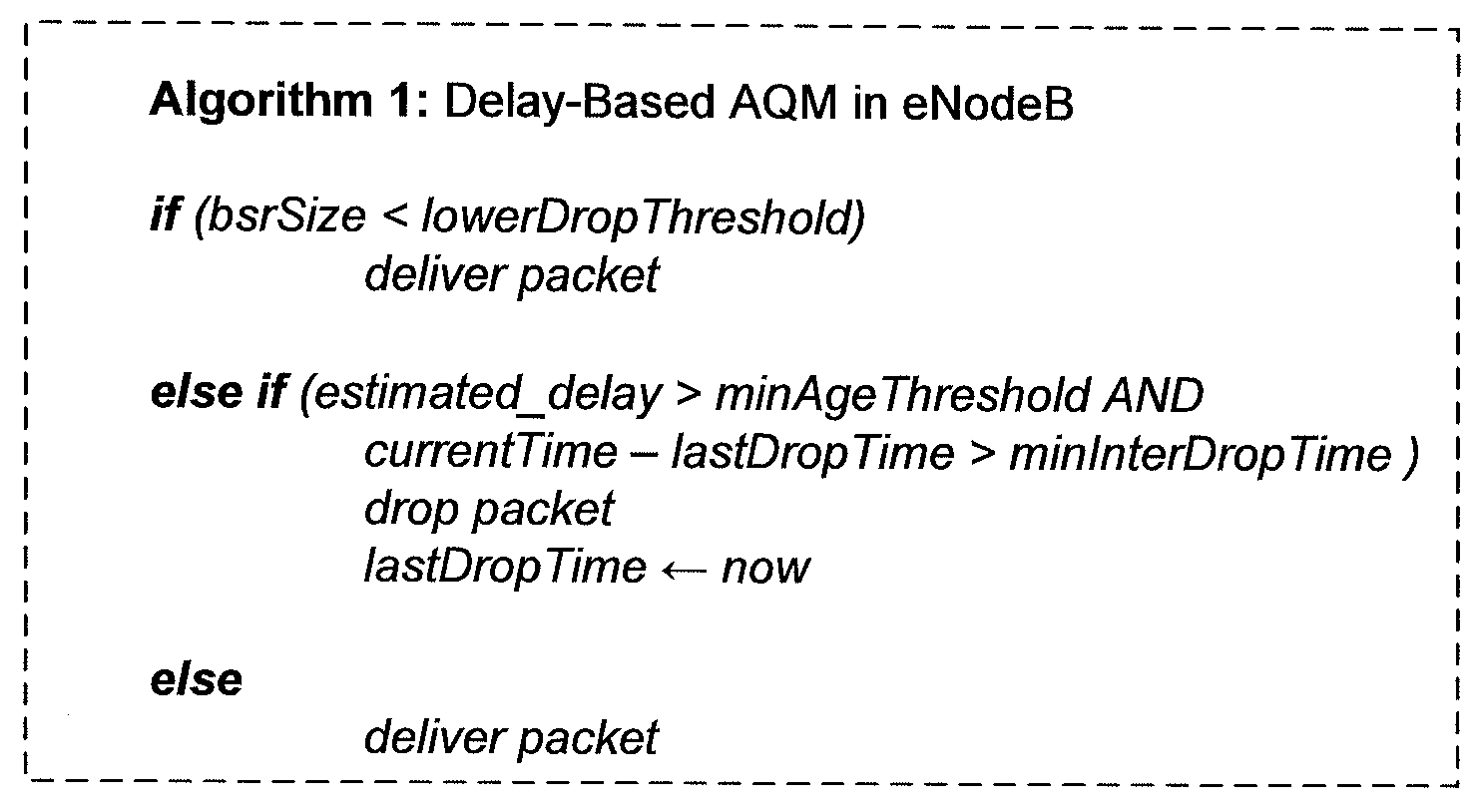

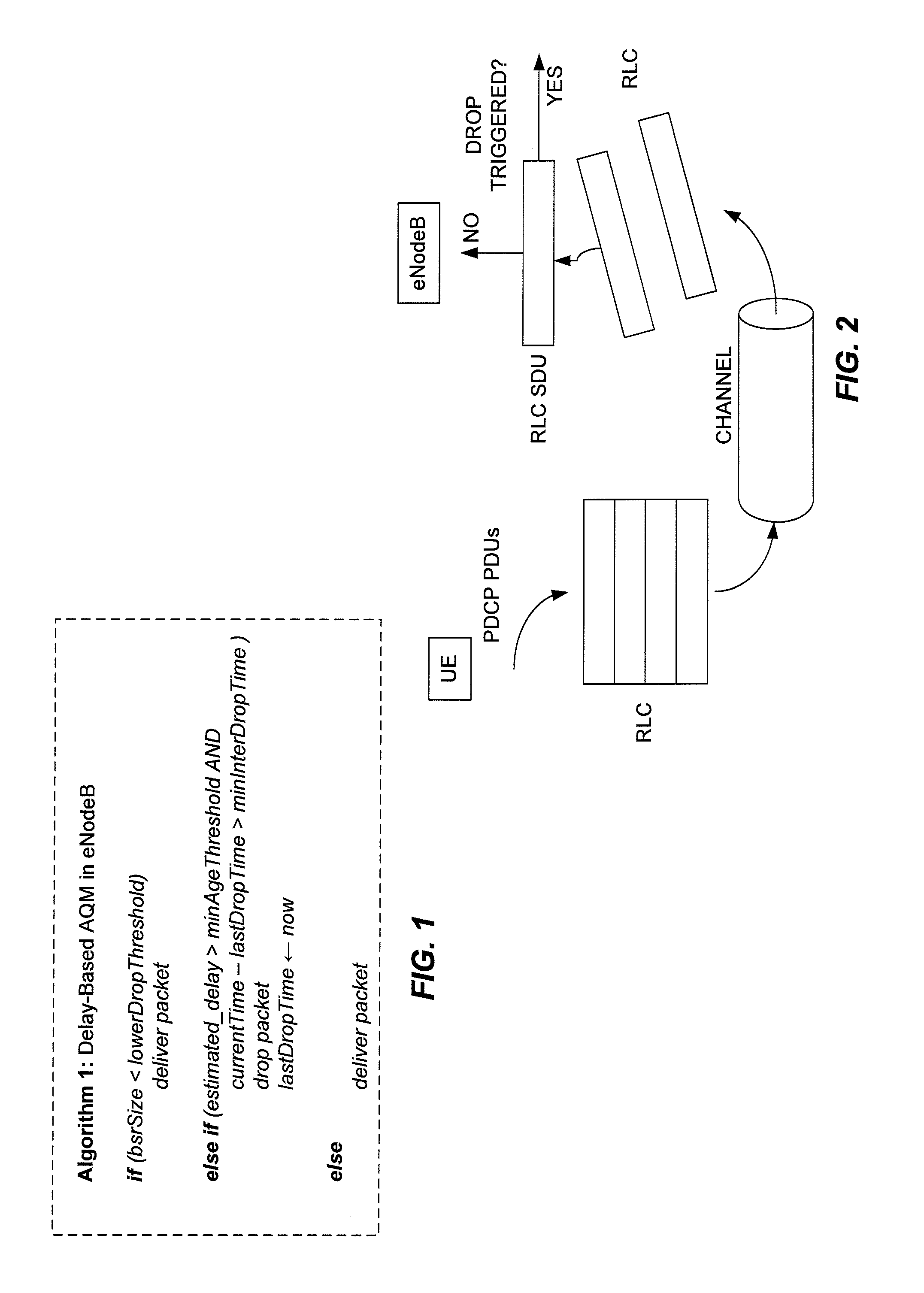

Active Queue Management for Wireless Communication Network Uplink

Inactive | US20120039169A1 | Process control | Queue size be small | Error prevention | Transmission systems | Uplink transmission | Computer terminal

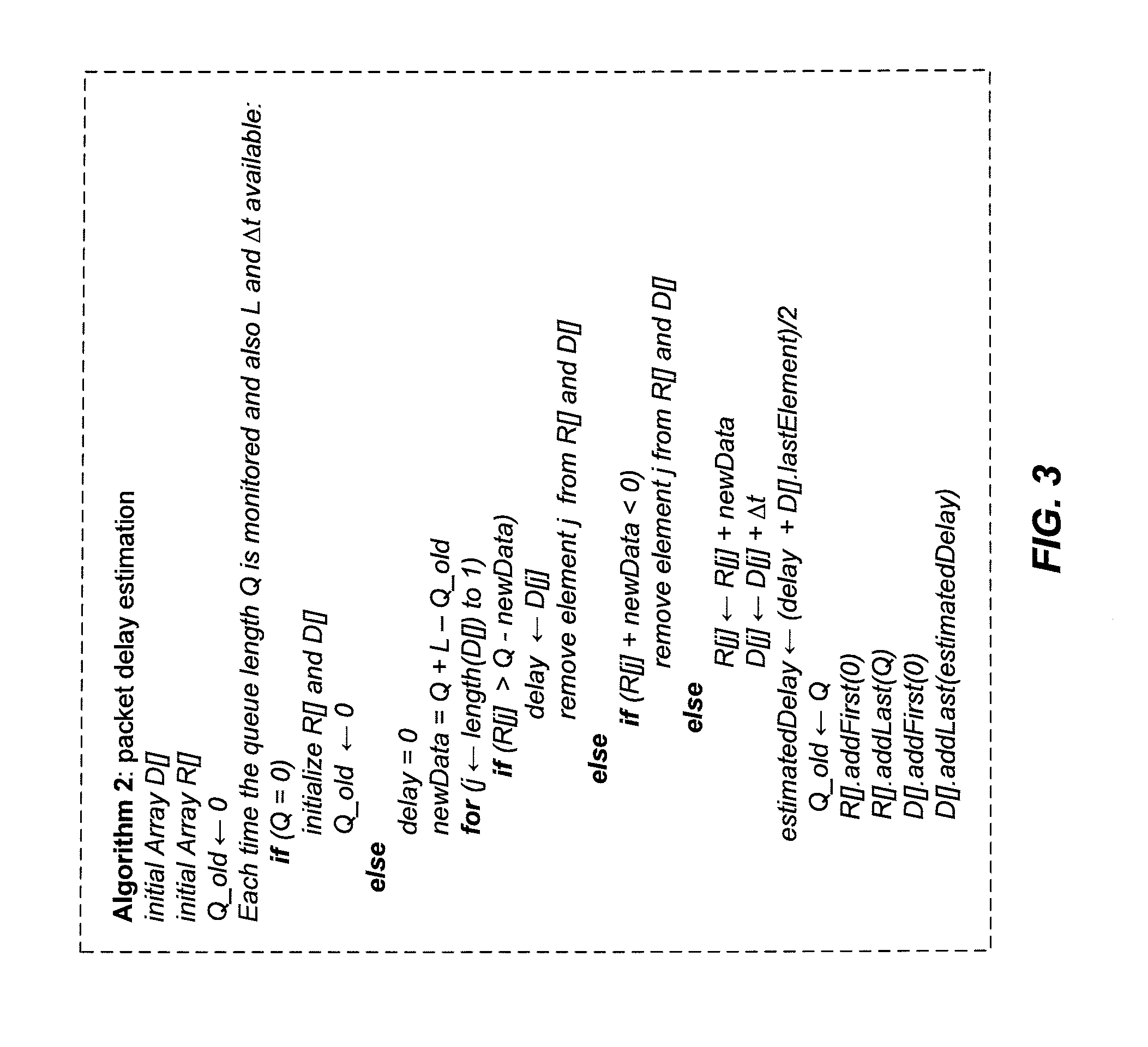

According to the teachings presented herein, a base station implements active queue management (AQM) for uplink transmissions from user equipment (UE), such as a mobile terminal. The base station, e.g., an eNodeB in a Long Term Evolution (LTE) network, uses buffer status reports, for example, to estimate packet delays for packets in the UE's uplink transmit buffer. In one embodiment, a (base station) method of AQM for the uplink includes estimating at least one of a transmit buffer size and a transmit buffer queuing delay for a UE, and selectively dropping or congestion-marking packets received at the base station from the UE. The selective dropping or marking is based on the estimated transmit buffer size and / or the estimated transmit buffer queuing delay.

Owner:TELEFON AB LM ERICSSON (PUBL)
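A base-station-side decision of the kind described in the abstract above could be sketched as follows. The delay estimate (reported buffer size divided by the recent uplink rate), the 100 ms target, and the function names are assumptions; the abstract only says that buffer status reports are used to estimate delay and that packets are selectively dropped or congestion-marked.

```python
def estimate_queuing_delay(buffer_bytes, uplink_rate_bytes_per_s):
    """Estimate how long the UE's reported backlog would take to drain."""
    if uplink_rate_bytes_per_s <= 0:
        return float("inf")
    return buffer_bytes / uplink_rate_bytes_per_s


def aqm_action(buffer_bytes, uplink_rate_bytes_per_s,
               target_delay_s=0.1, ecn_capable=False):
    """Return "forward", "mark", or "drop" for a packet received from the UE."""
    delay = estimate_queuing_delay(buffer_bytes, uplink_rate_bytes_per_s)
    if delay <= target_delay_s:
        return "forward"
    # Above the delay target: signal congestion by marking if possible, else by dropping.
    return "mark" if ecn_capable else "drop"
```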

Token-based active queue management

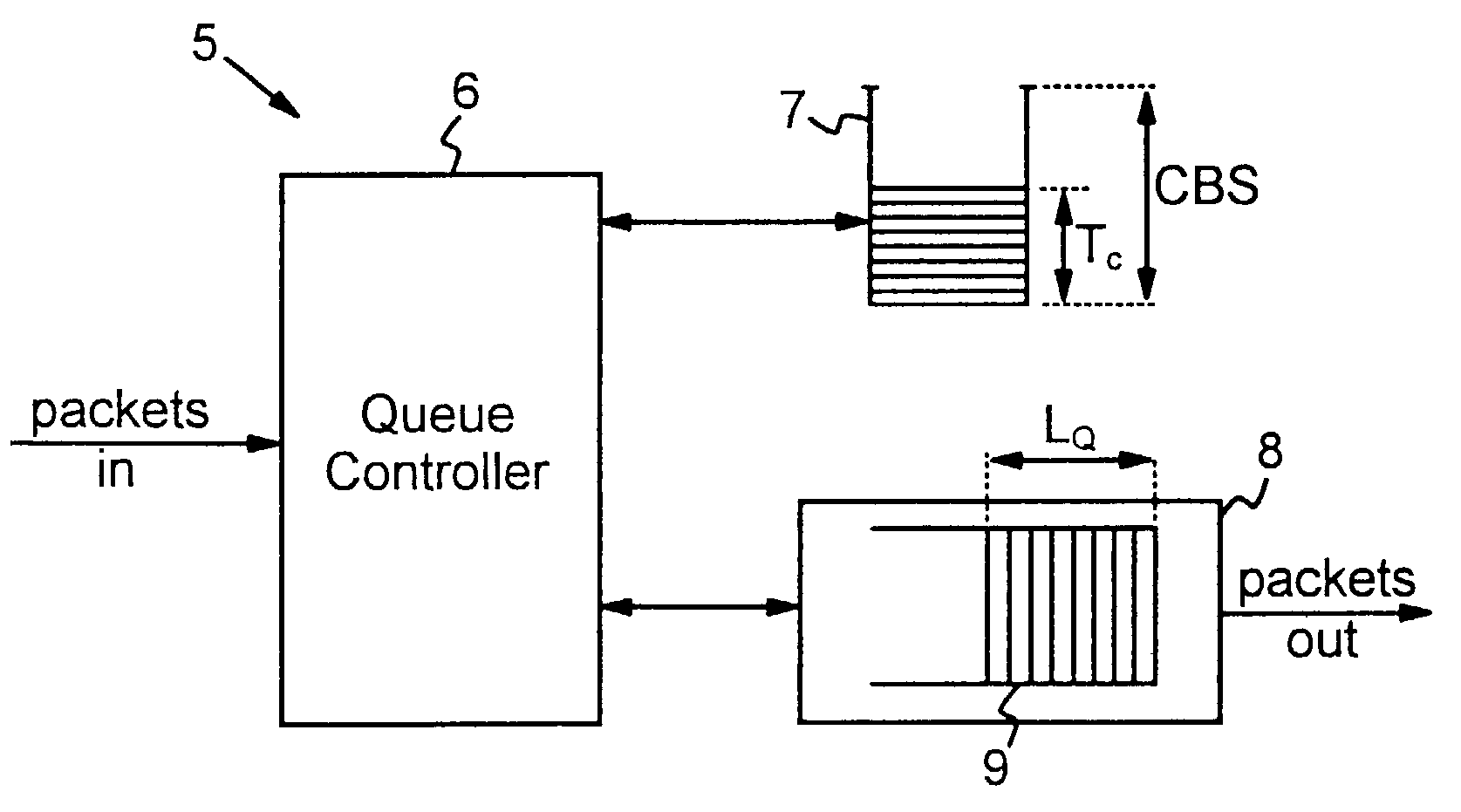

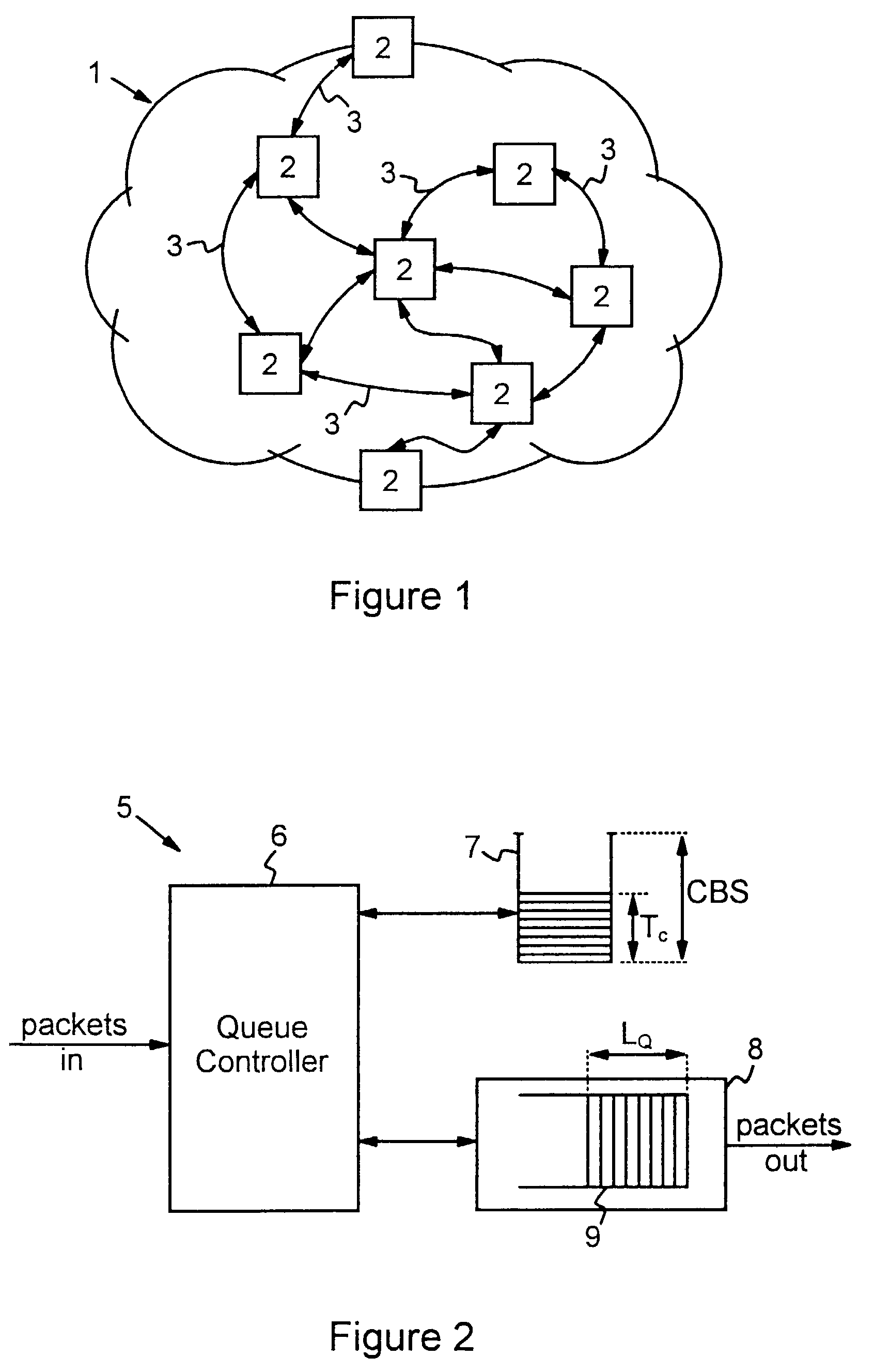

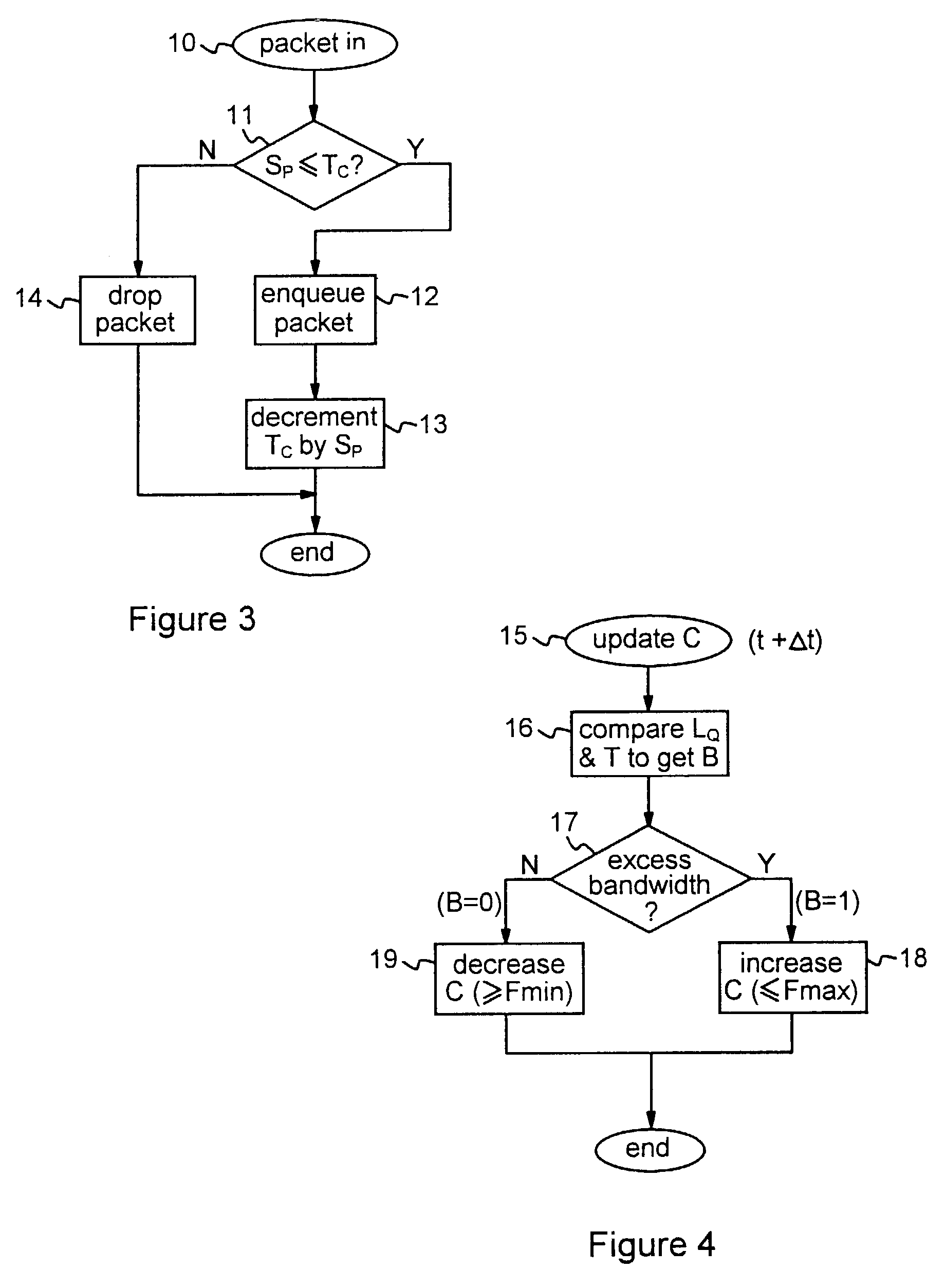

Inactive | US7280477B2 | Avoid potential instability | Increase ratings | Error prevention | Frequency-division multiplex details | Active queue management | Distributed computing

Methods and apparatus are provided for managing a data packet queue corresponding to a resource of a network device. A token count TC is maintained for a predefined flow of data packets, and the transmission of packets in the flow into the queue is controlled in dependence on this token count. The token count is decremented when packets in the flow are transmitted into the queue, and the token count is incremented at a token increment rate C. A bandwidth indicator, indicative of bandwidth availability in the resource, is monitored, and the token increment rate C is varied in dependence on this bandwidth indicator. The bandwidth-dependent variation of the token increment rate C is such that, when available bandwidth is indicated, the increment rate C is increased, and when no available bandwidth is indicated the increment rate C is decreased.

Owner:GOOGLE LLC
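The token count TC and the bandwidth-dependent increment rate C from the abstract above can be sketched as a small class. The multiplicative `gain`, the rate bounds, the token cap, and the boolean bandwidth indicator are assumptions for illustration; the abstract only states that C rises when bandwidth is available and falls when it is not.

```python
class TokenAQM:
    """Token-gated admission into a queue, with an adaptive token increment rate."""

    def __init__(self, rate, max_tokens, min_rate=1.0, max_rate=1000.0, gain=1.1):
        self.rate = rate              # token increment rate C (tokens per second)
        self.max_tokens = max_tokens  # cap on the token count TC
        self.tokens = max_tokens
        self.min_rate = min_rate
        self.max_rate = max_rate
        self.gain = gain

    def tick(self, dt, bandwidth_available):
        """Adapt C to the bandwidth indicator, then add C * dt tokens."""
        if bandwidth_available:
            self.rate = min(self.max_rate, self.rate * self.gain)
        else:
            self.rate = max(self.min_rate, self.rate / self.gain)
        self.tokens = min(self.max_tokens, self.tokens + self.rate * dt)

    def admit(self, cost=1):
        """Admit a packet into the queue if enough tokens remain, decrementing TC."""
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```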

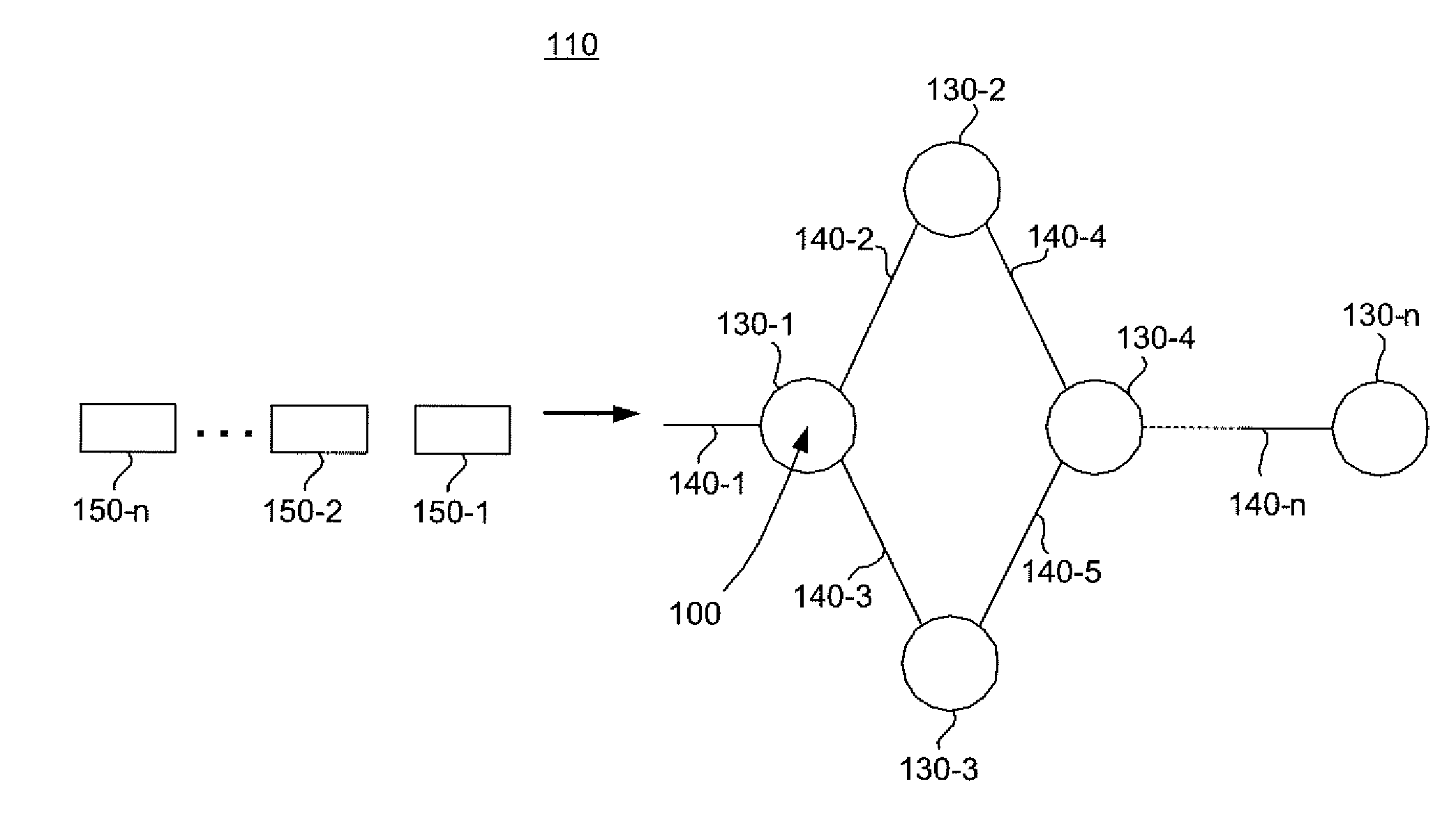

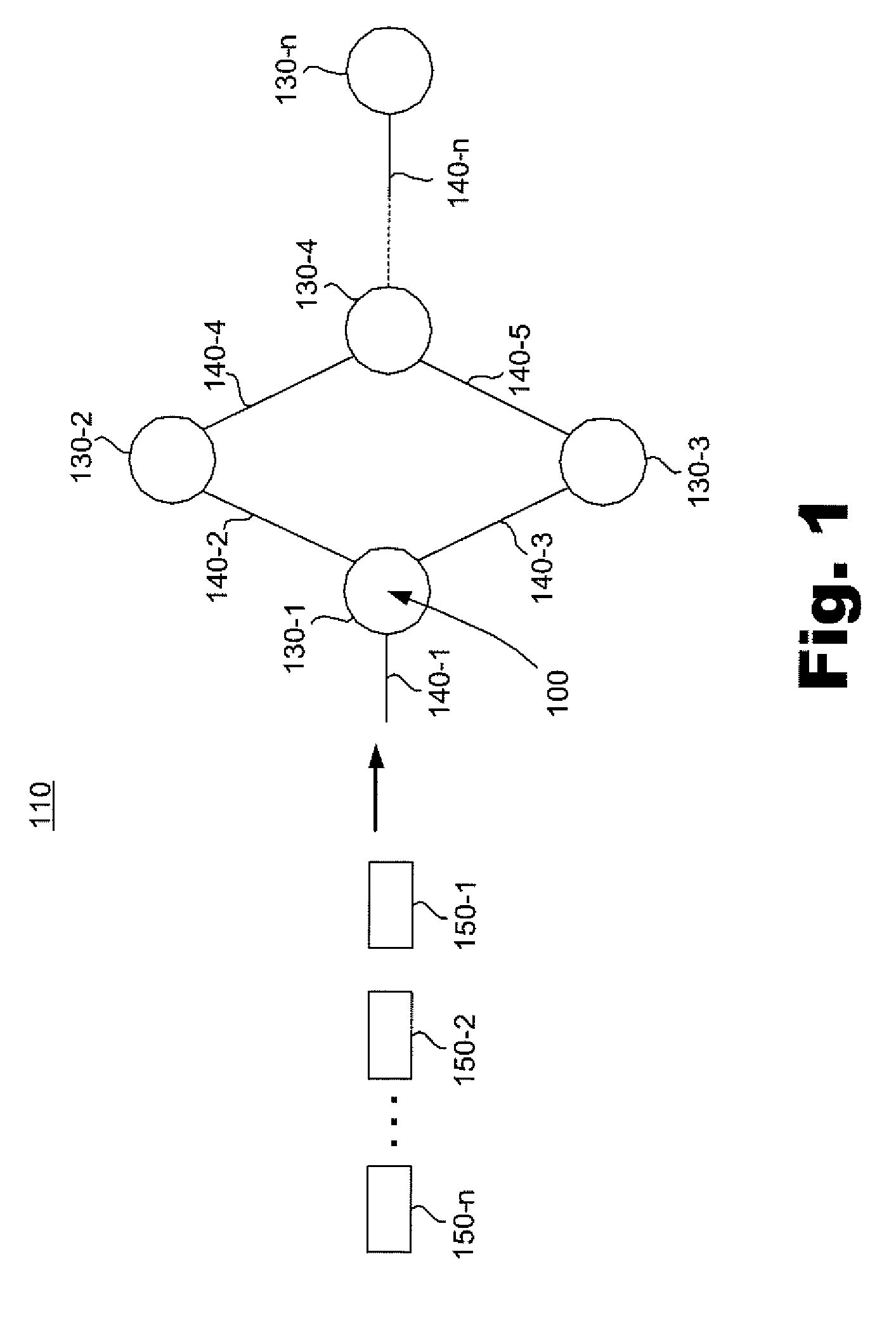

Active queue management methods and devices

Owner:CISCO TECH INC

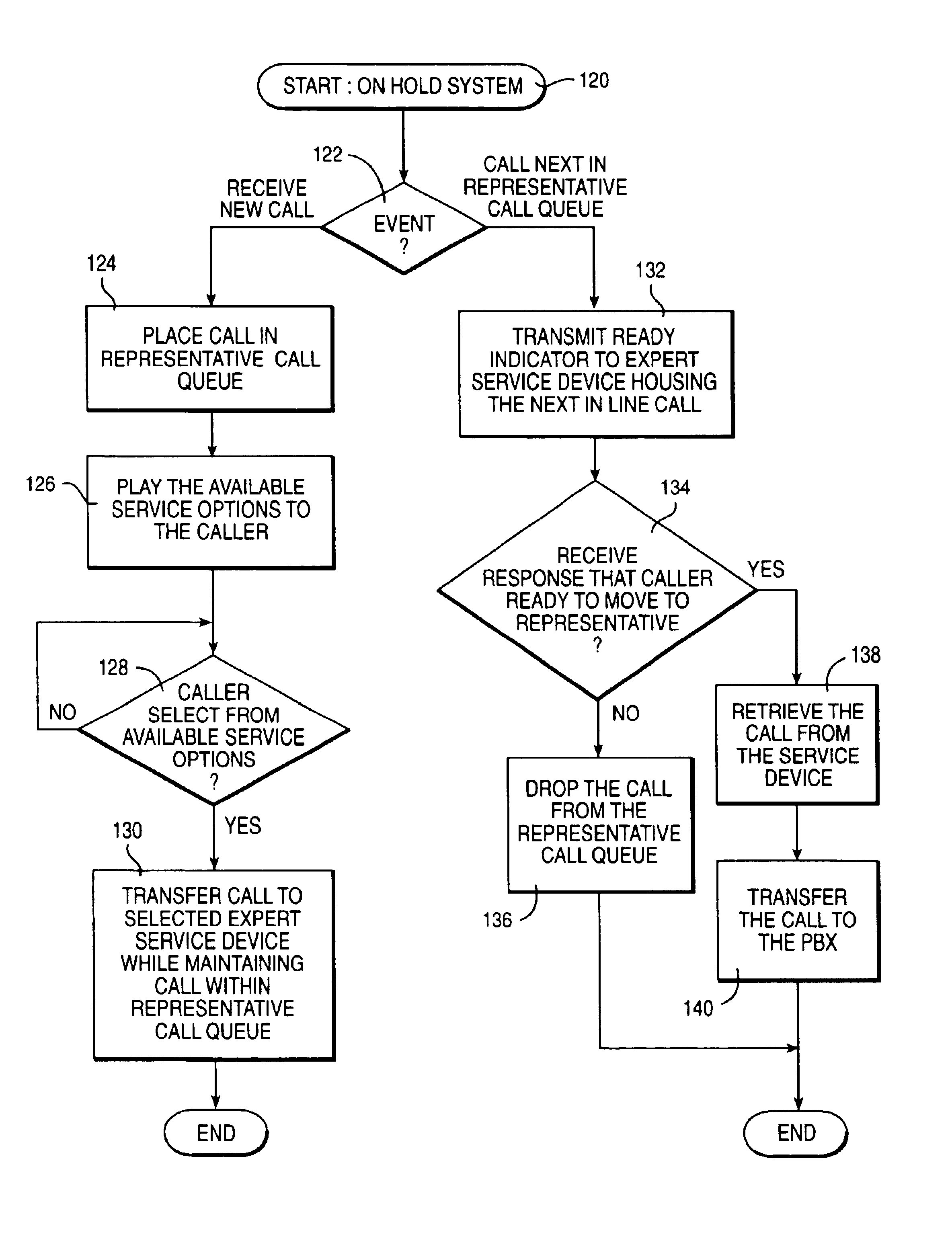

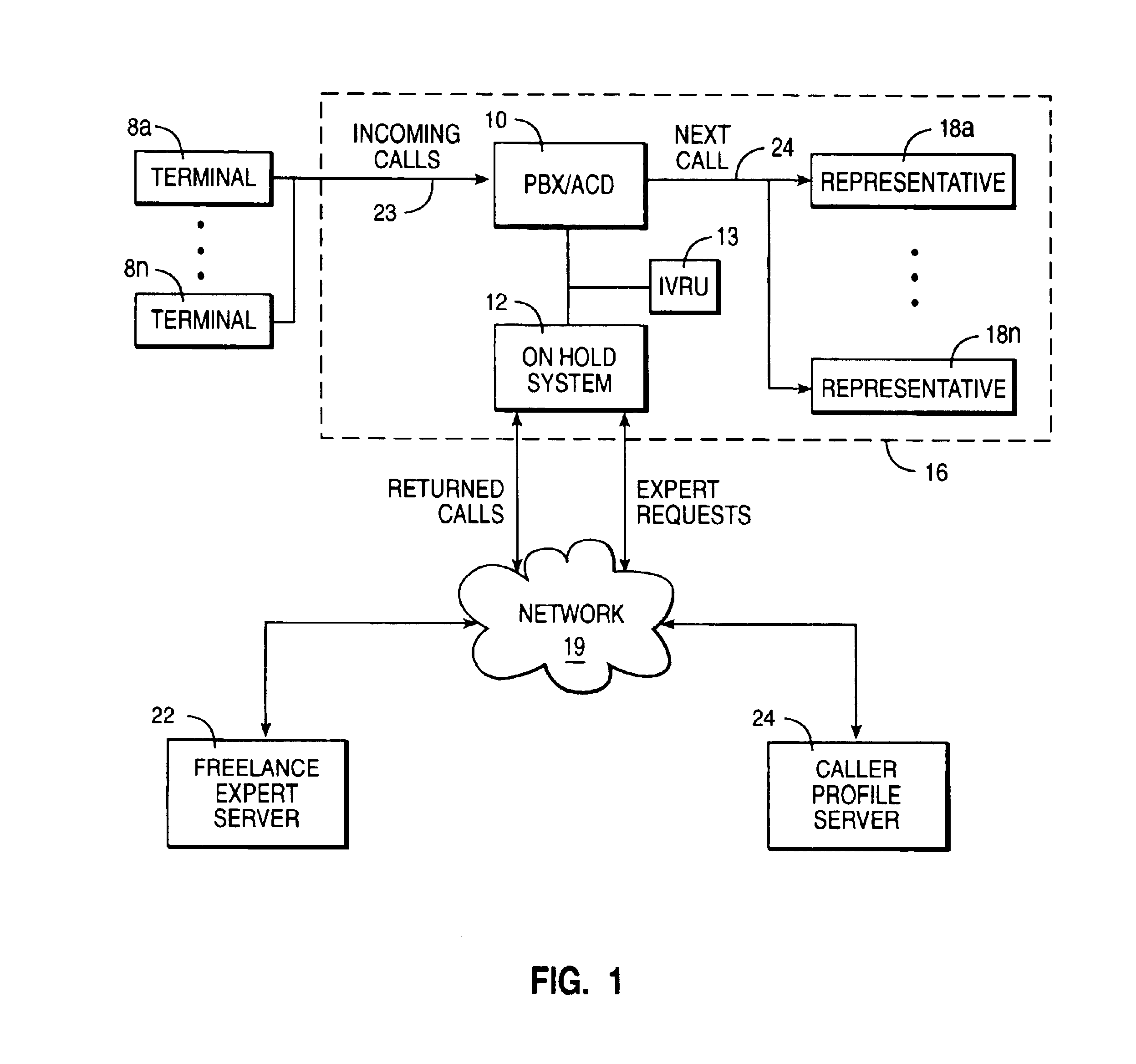

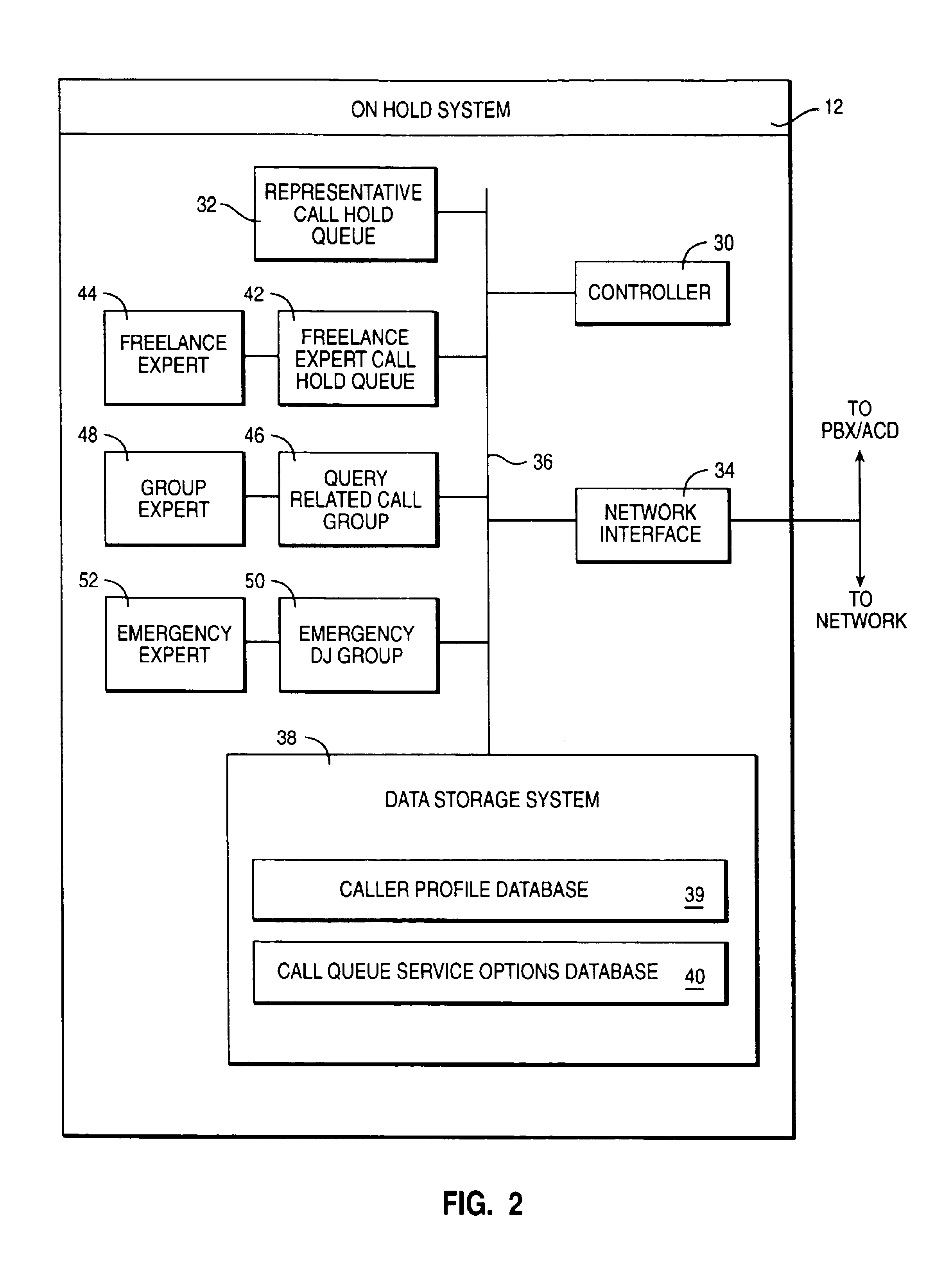

Expert hold queue management

Inactive | US6959081B2 | Special service for subscribers | Manual exchanges | Queue management system | Call forwarding

A method, system, and program for expert hold queue management are provided. A call is received at a call center. The call is placed on hold in a hold queue until a representative of the call center is available to answer the call. While on hold in the hold queue, the call is transferred to an expert. In particular, the call may be transferred to a second hold queue within the first hold queue if the expert is not immediately available. Experts may include freelance experts, query based experts, and emergency response experts. Then, responsive to detecting the call at the top of the call queue, the caller is notified of an availability of a representative. The caller may select to remain with the expert or transfer to the representative.

Owner:IBM CORP

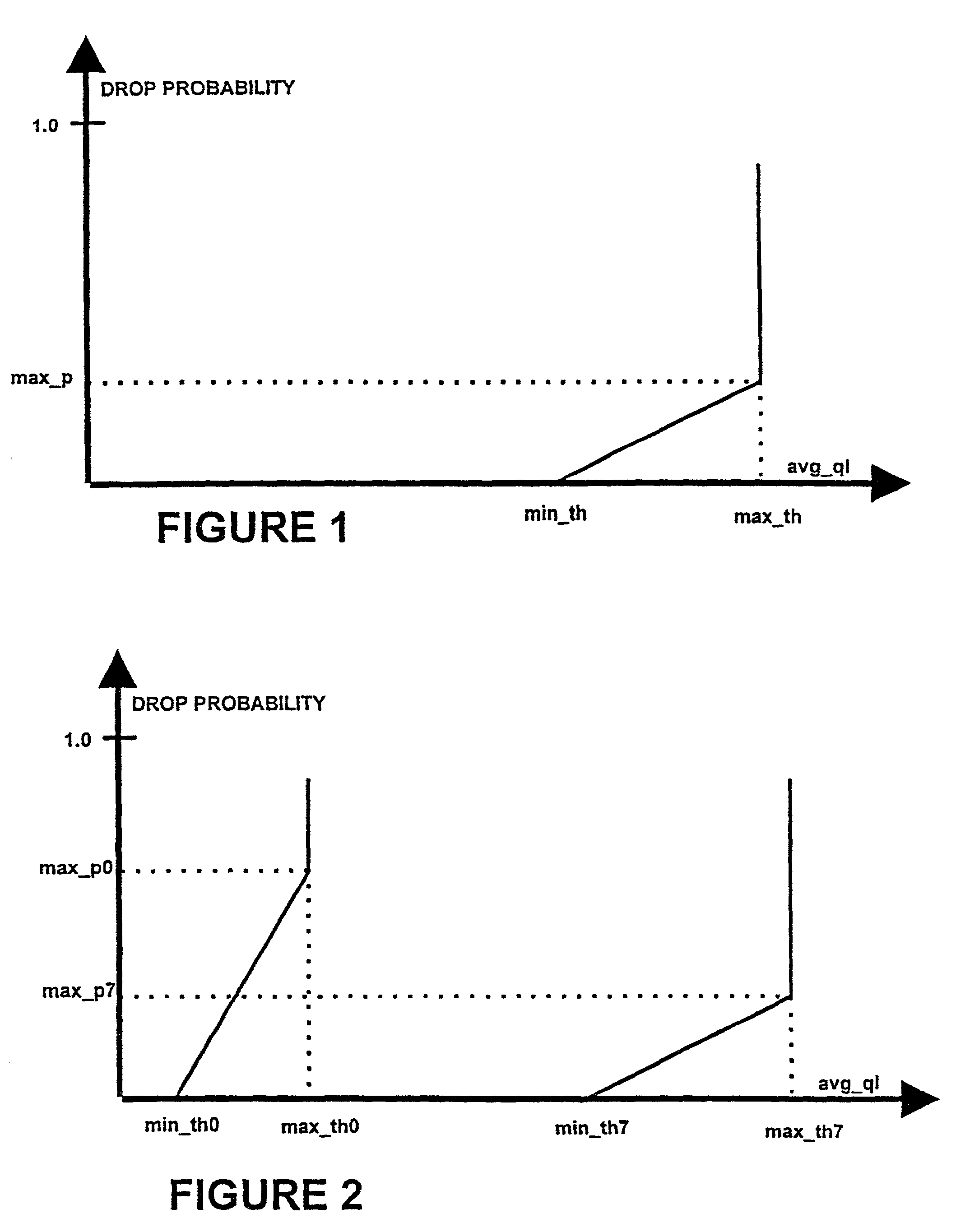

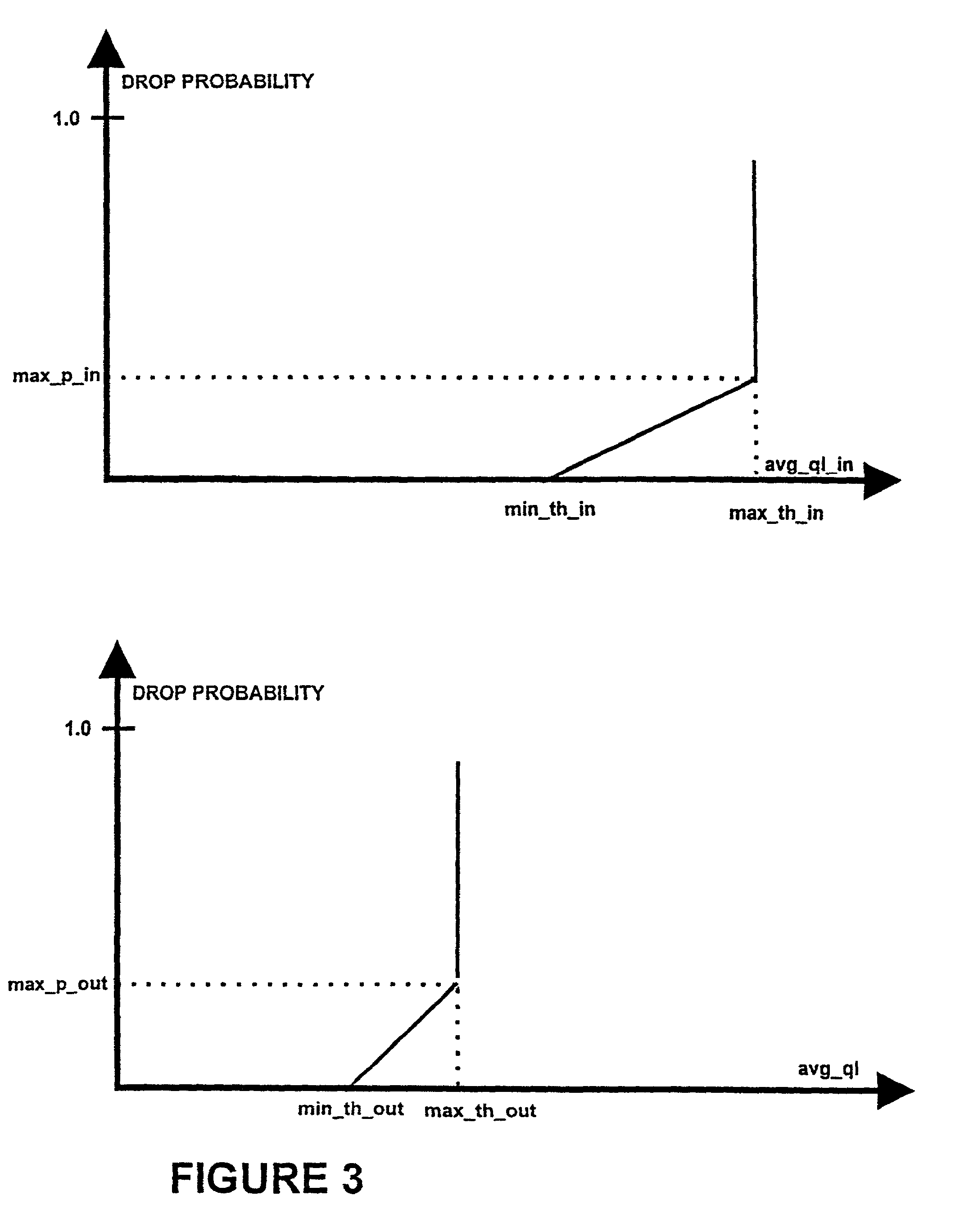

Method, system and router providing active queue management in packet transmission systems

Inactive | US7139281B1 | Avoid hunger | Less probability of loss | Error prevention | Frequency-division multiplex details | Rate adaptation | Packet loss

A method of active queue management, for handling prioritized traffic in a packet transmission system, the method including: providing differentiation between traffic originating from rate adaptive applications that respond to packet loss, wherein traffic is assigned to one of at least two drop precedence levels, in-profile and out-profile; preventing starvation of low prioritized traffic; preserving a strict hierarchy among precedence levels; providing absolute differentiation of traffic; and reclassifying a packet of the traffic of the packet transmission system, tagged as in-profile, as out-profile, when a drop probability assigned to the packet is greater than a drop probability calculated from an average queue length for in-profile packets.

Owner:TELIASONERA

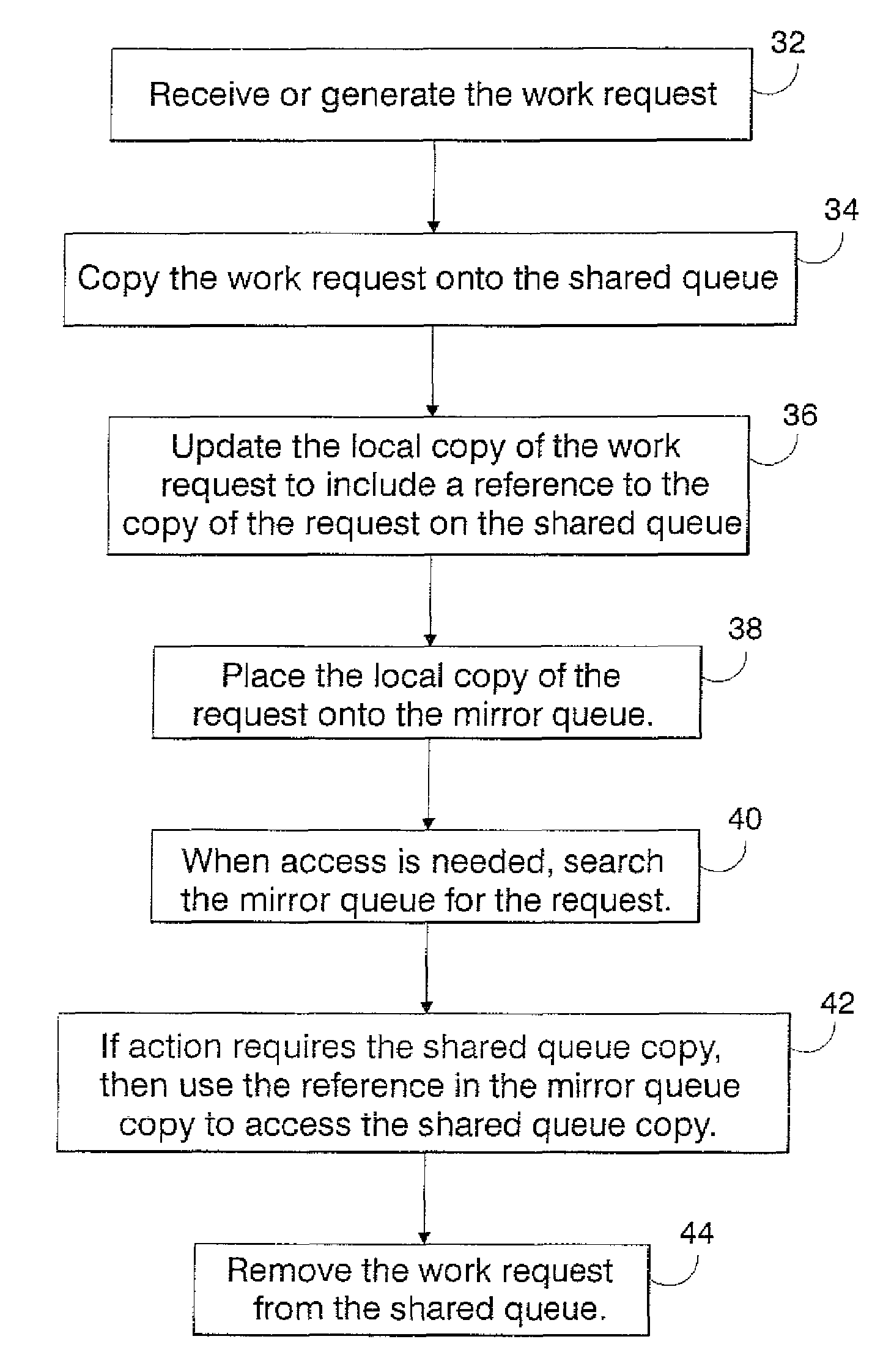

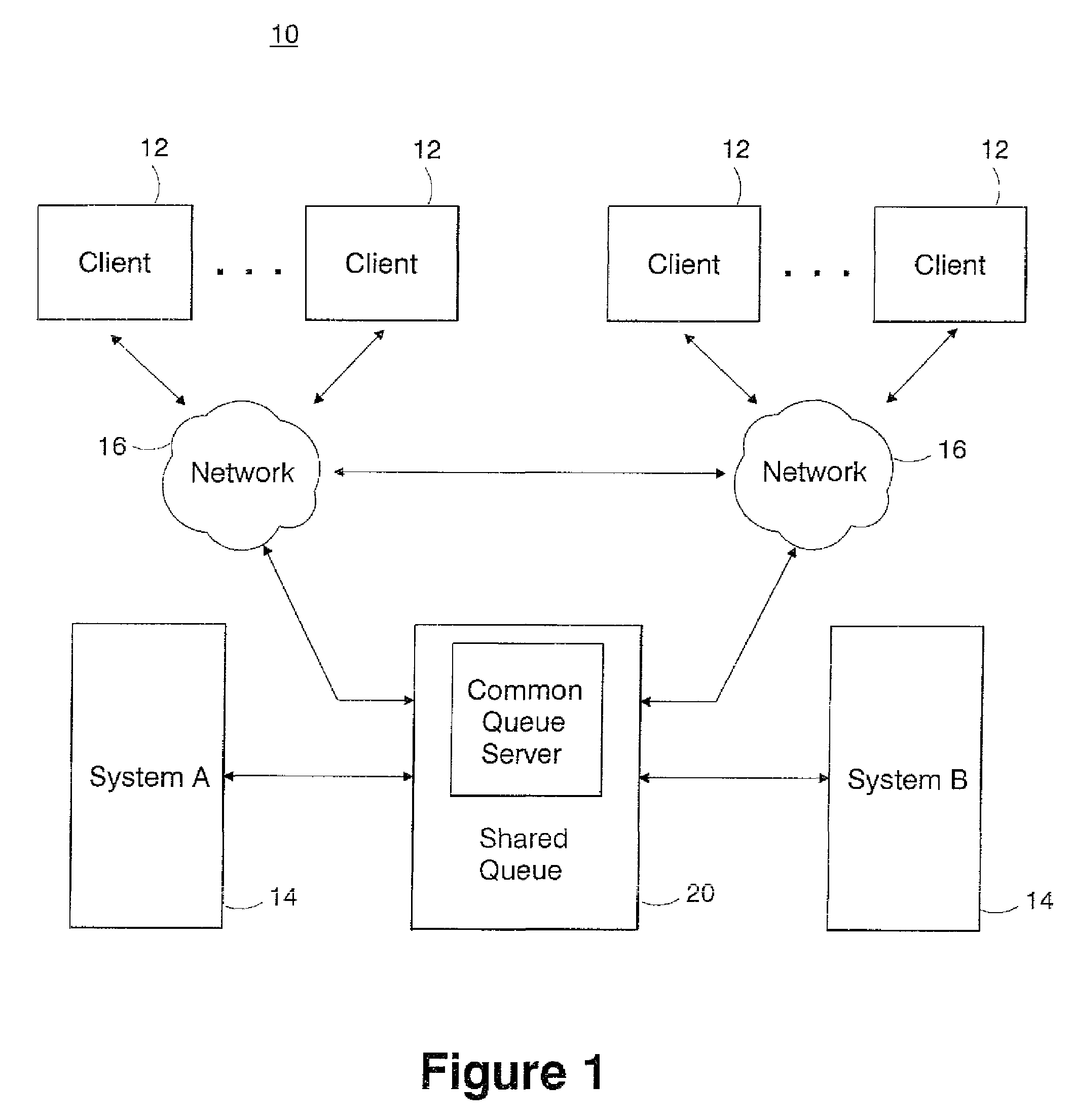

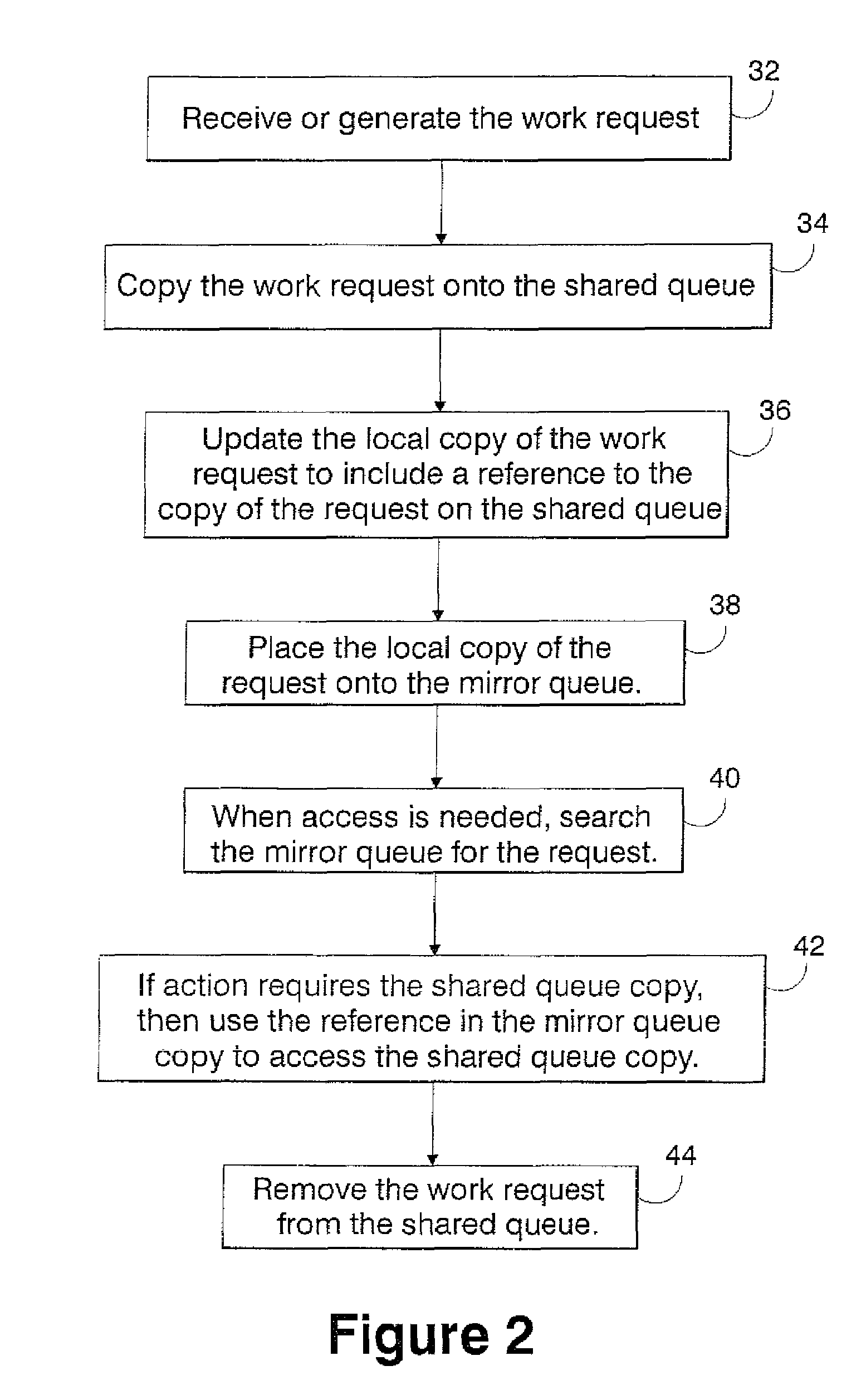

Mirror queue in a shared queue environment

Inactive | US7464138B2 | Increase height | Multiple digital computer combinations | Memory systems | Queue management system | Client-side

Disclosed are a queue management system and a method of managing a queue. This system and method are for use with a parallel processing system including a plurality of clients and a plurality of processors. The clients receive messages and transmit the messages to a shared queue for storage, and the processors retrieve messages from the shared queue and process said messages. The queue management system includes a mirror queue for maintaining a copy of each message transmitted to the shared queue by one of the clients; and the queue management system stores to the mirror queue a copy of each message transmitted to the shared queue by that one of the clients. The mirror queue provides the system with continuity in case of an outage of the shared queue. In the event of such an outage, each instance of an application can simply discontinue using the shared queue and process requests from the mirror queue. The mirror queue is used until the shared queue is once again available. Preferably, the copy of each message transmitted to the mirror queue is provided with a reference, such as a pointer, to the location of the message on the shared queue.

Owner:IBM CORP

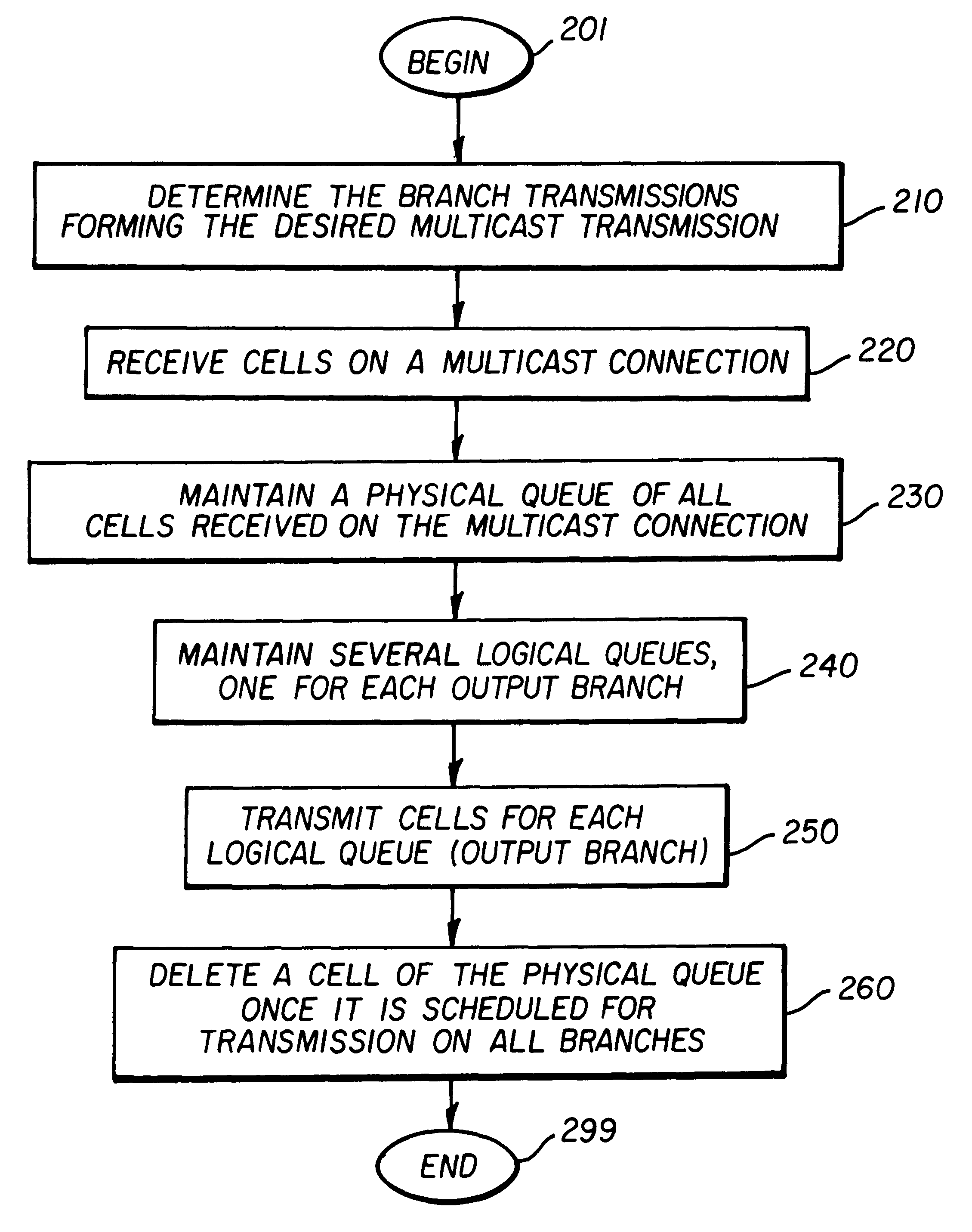

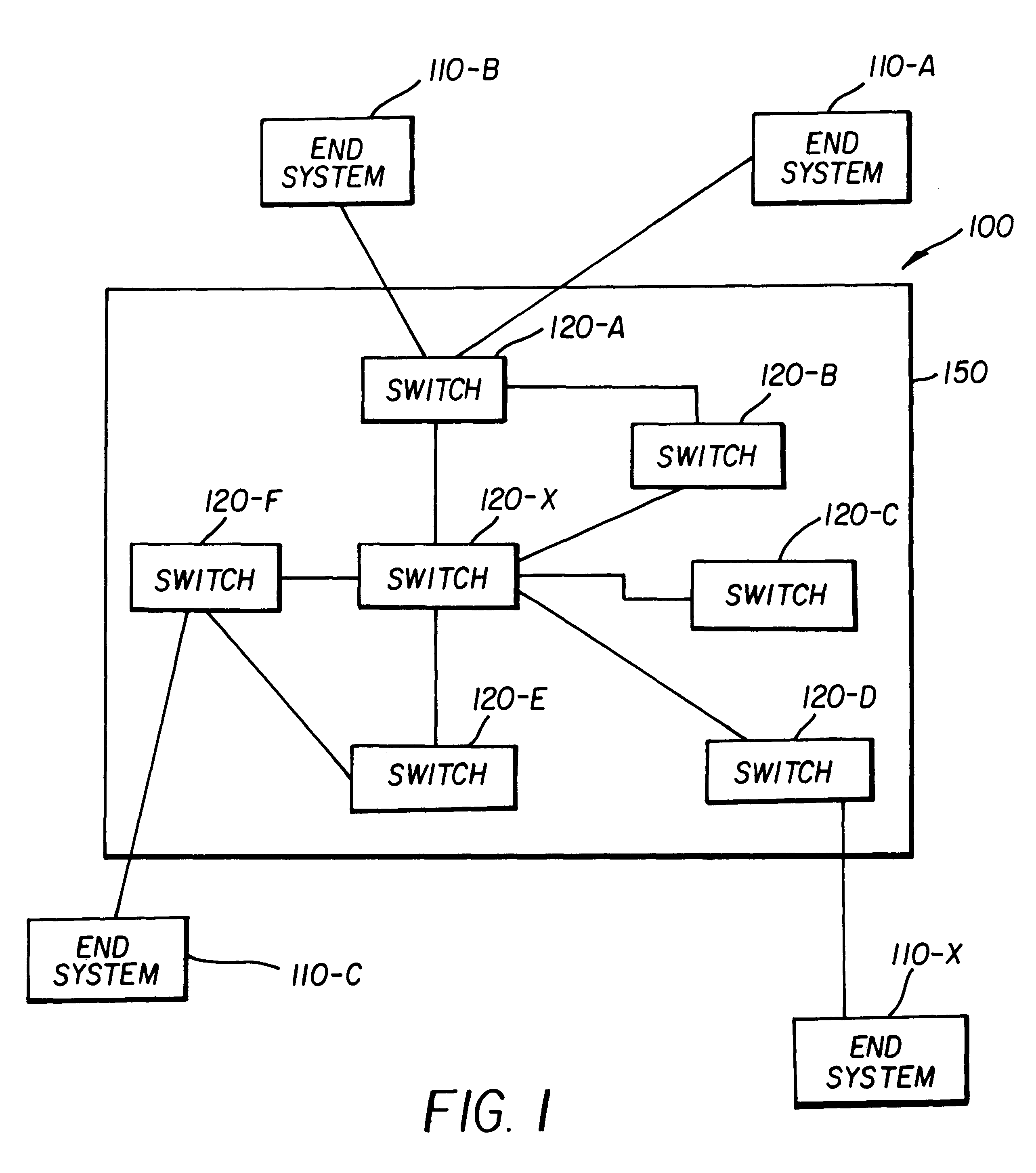

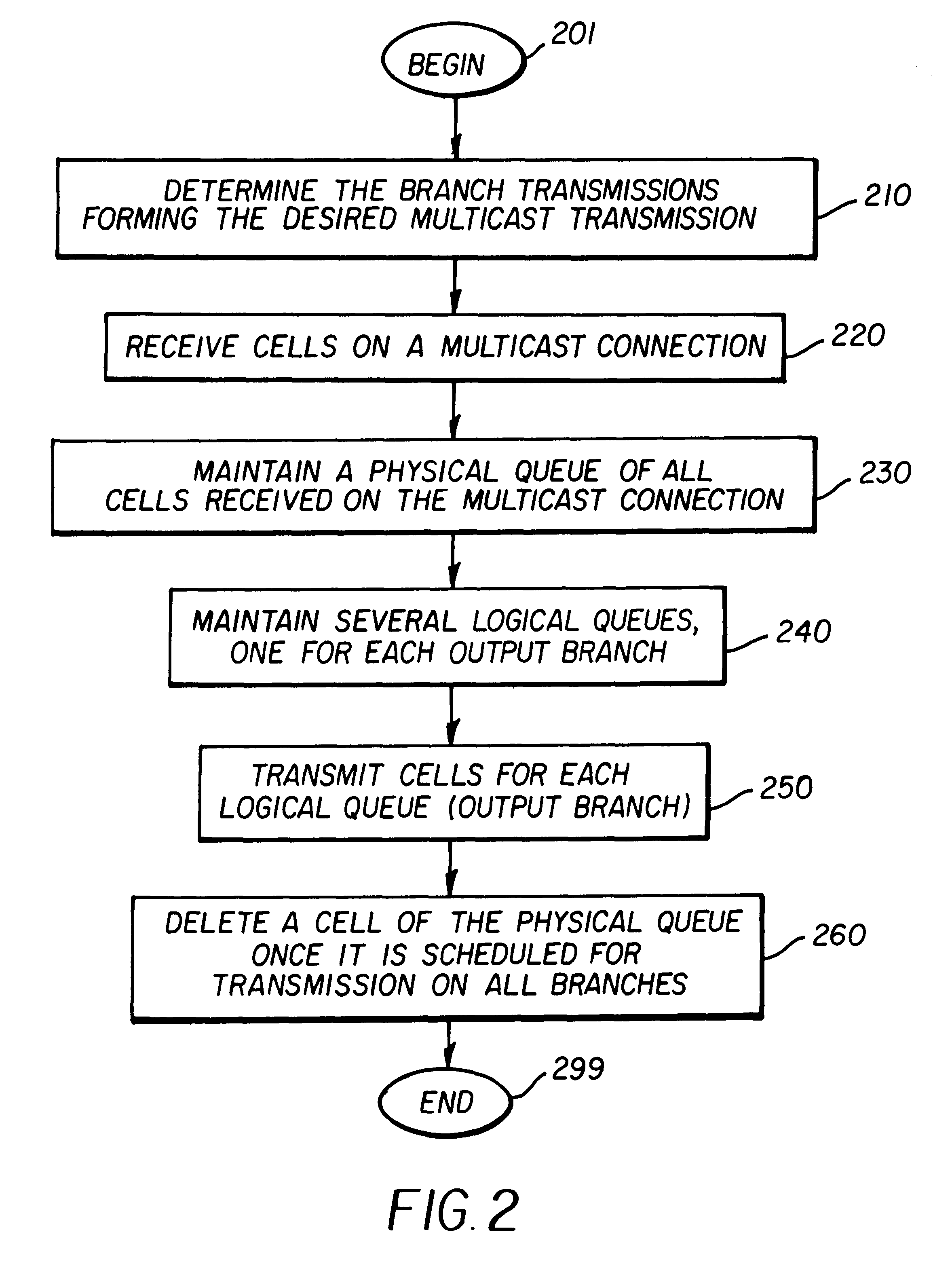

Queue management with support for multicasts in an asynchronous transfer mode (ATM) switch

Inactive | US6219352B1 | Quantity minimization | Improve throughput performance | Special service provision for substation | Time-division multiplex | Queue management system | Asynchronous Transfer Mode

An ATM switch supporting multicast transmissions and efficient transmission of frames. A cell is received on a multicast connection and transmitted on several branches / ports. Instead of copying a multicast cell several times for each output branch, only one copy of each multicast cell is maintained. The cell order and the stored cell data form a physical queue. Several logical queues are maintained, one for each output branch. In one embodiment, linked lists are used to maintain the queues. A cell in the physical queue is deleted after all logical queues traverse that cell. A shared tail pointer is used for all the logical queues to minimize additional processing and memory requirements due to the usage of logical queues. The queues enable cells forming a frame to be buffered until the end of frame cell is received, which provides for efficient handling of frames.

Owner:RIVERSTONE NETWORKS
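The single-copy multicast queue described in the abstract above, with one physical queue, one logical queue per output branch, and a shared tail pointer, can be sketched with a linked list. The class and attribute names, and the use of a per-cell pending-reader count to decide when a cell is deleted, are assumptions made for illustration.

```python
class Cell:
    def __init__(self, data, readers):
        self.data = data
        self.pending = readers  # branches that have not yet traversed this cell
        self.next = None


class MulticastQueue:
    """One stored copy per cell, one logical head per branch, one shared tail."""

    def __init__(self, branches):
        self.branches = list(branches)
        self.heads = {b: None for b in self.branches}  # per-branch logical queue heads
        self.tail = None                               # shared by all logical queues
        self.stored = 0                                # cells currently held

    def enqueue(self, data):
        cell = Cell(data, len(self.branches))
        if self.tail is not None:
            self.tail.next = cell
        self.tail = cell
        for b in self.branches:
            if self.heads[b] is None:  # this branch had already drained its queue
                self.heads[b] = cell
        self.stored += 1

    def dequeue(self, branch):
        cell = self.heads[branch]
        if cell is None:
            return None
        self.heads[branch] = cell.next
        cell.pending -= 1
        if cell.pending == 0:          # every logical queue has traversed the cell
            self.stored -= 1
            if cell is self.tail:
                self.tail = None
        return cell.data
```

Because every logical queue ends at the same tail, an arriving cell is appended once regardless of how many branches will transmit it.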

System and method for estimating and providing dispatchable operating reserve energy capacity through use of active load management

A method for generating a value for available operating reserve for an electric utility. Electric power consumption by at least one device is determined during at least one period of time to produce power consumption data, which is stored in a repository. Prior to a control event for power reduction, and under an assumption that it is not to occur, the power consumption behavior expected of the device(s) is determined for the time period during which the control event is expected to occur, based on the stored power consumption data. Additionally, prior to the control event, projected energy savings resulting from the control event, and associated with a power supply value (PSV), are determined based on the devices' power consumption behavior. The amount of available operating reserve is determined based on the projected energy savings.

Owner:CAUSAM ENERGY INC

Active queue management toward fair bandwidth allocation

Inactive | US7324442B1 | Low priority | Minimal implementation overhead | Error prevention | Transmission systems | Queue management system | Management process

In a packet-queue management system, a bandwidth allocation approach fairly addresses each of n flows that share the outgoing link of an otherwise congested router. According to an example embodiment of the invention, a buffer at the outgoing link is a simple FIFO, shared by packets belonging to the n flows. A packet priority-reduction (e.g., packet dropping) process is used to discriminate against the flows that submit more packets / sec than is allowed by their fair share. This packet management process therefore attempts to approximate a fair queuing policy. The embodiment is advantageously easy to implement and can control unresponsive or misbehaving flows with a minimum overhead.

Owner:SANDFORD UNIV

Ethernet extension for the data center

Active | US7969971B2 | Improve reliability | Lower latency | Time-division multiplex | Data switching by path configuration | High bandwidth | Data center

Owner:CISCO TECH INC

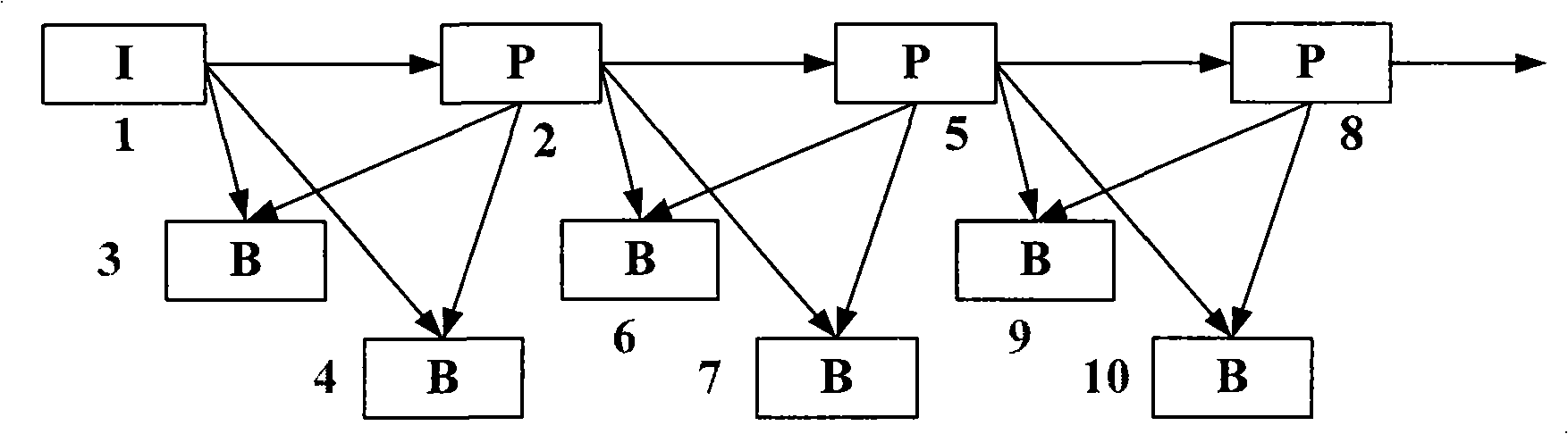

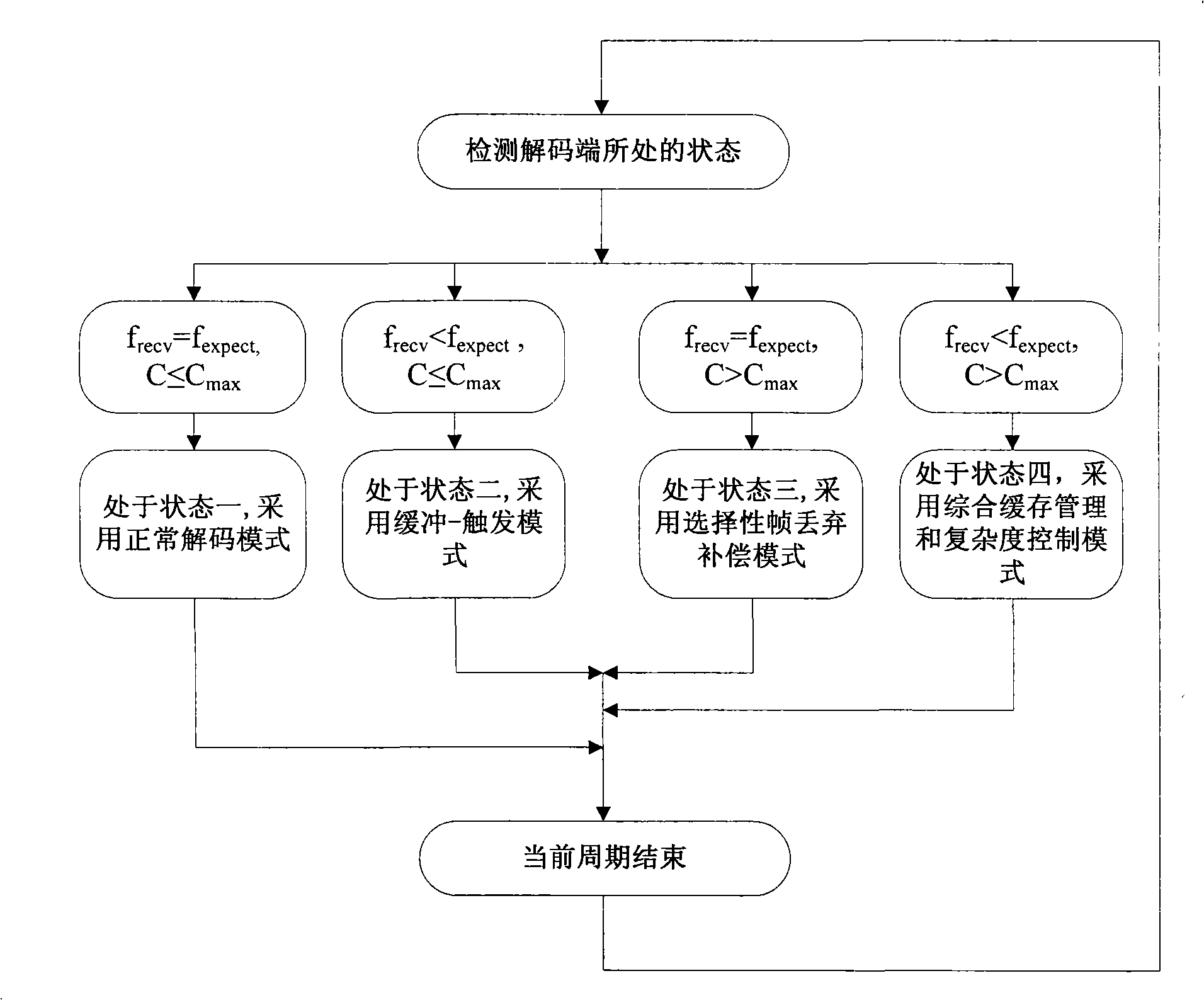

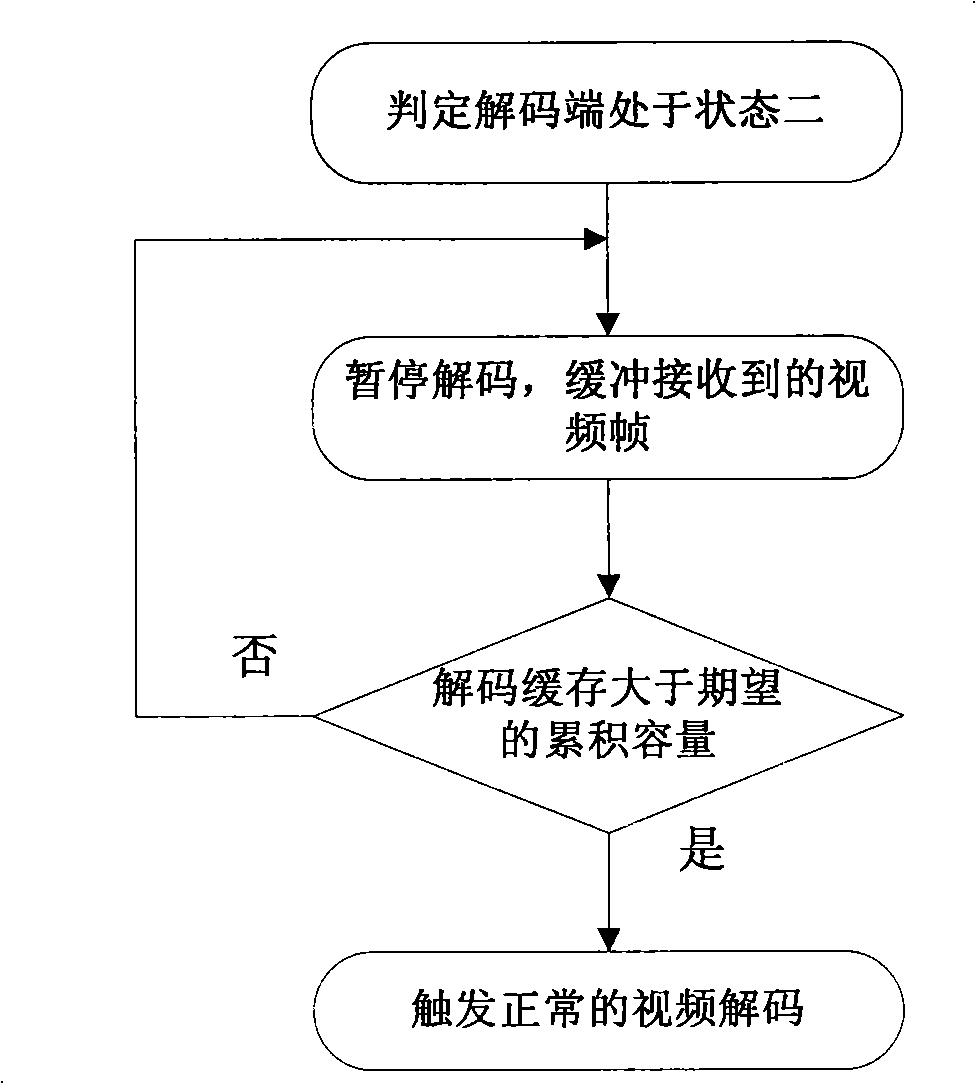

Video decoding method with active buffer management and complexity control function

Active | CN101287122A | Decoding Complexity Control | Decoding and playback effect is good | Television systems | Digital video signal modification | Cache management | Video decoding

The invention relates to a video decoding method with active cache management and complexity control functions, which pertains to the technical field of multimedia communication. The method comprises the steps as follows: frame rate f, maximum decoding capacity Cmax, required decoding capacity C predicted currently and the parameters of decoding buffer occupied amount Bf are tested and received periodically by a decoding end, the state of the decoding end is judged and self-adaptive decoding video streaming is carried out to different states by respectively adopting one of the following models, namely, a normal decoding model, a buffering-triggering model, a selective frame discard-and-compensation model and a comprehensive cache management and complexity control model, thereby obtaining continuous and high-quality video playing effect. The method is simple and practical, is effective to video coding standards that define an I-frame (Intra-frame frame), a P-frame (unidirectional predictive frame) and a B-frame (bidirectional predictive frame), and the decoding end can realize decoding cache management and complexity control without the coordination of the coding end.

Owner:TSINGHUA UNIV

Active queue management methods and devices

Owner:CISCO TECH INC

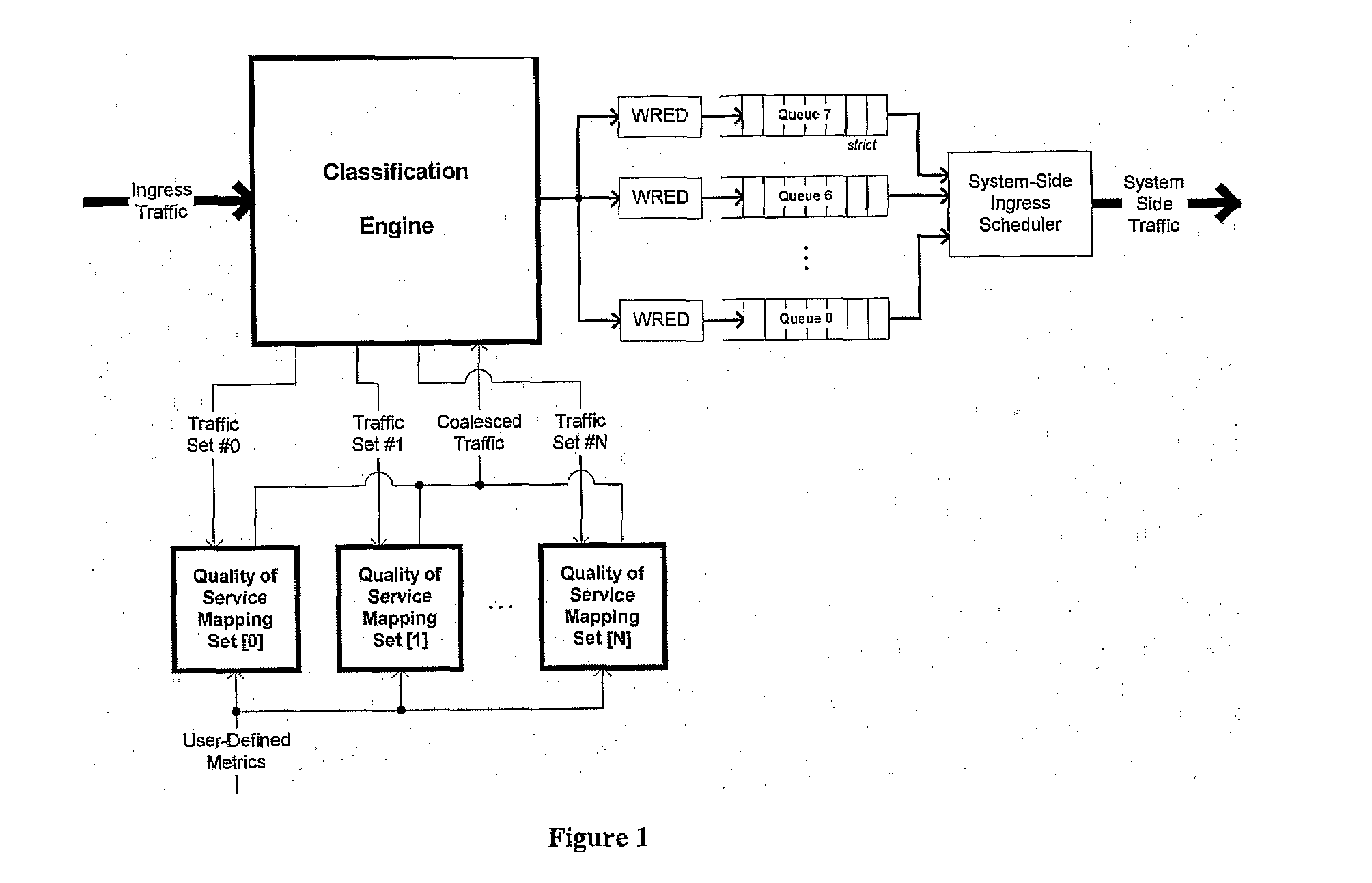

Coalescence of Disparate Quality of Service Matrics Via Programmable Mechanism

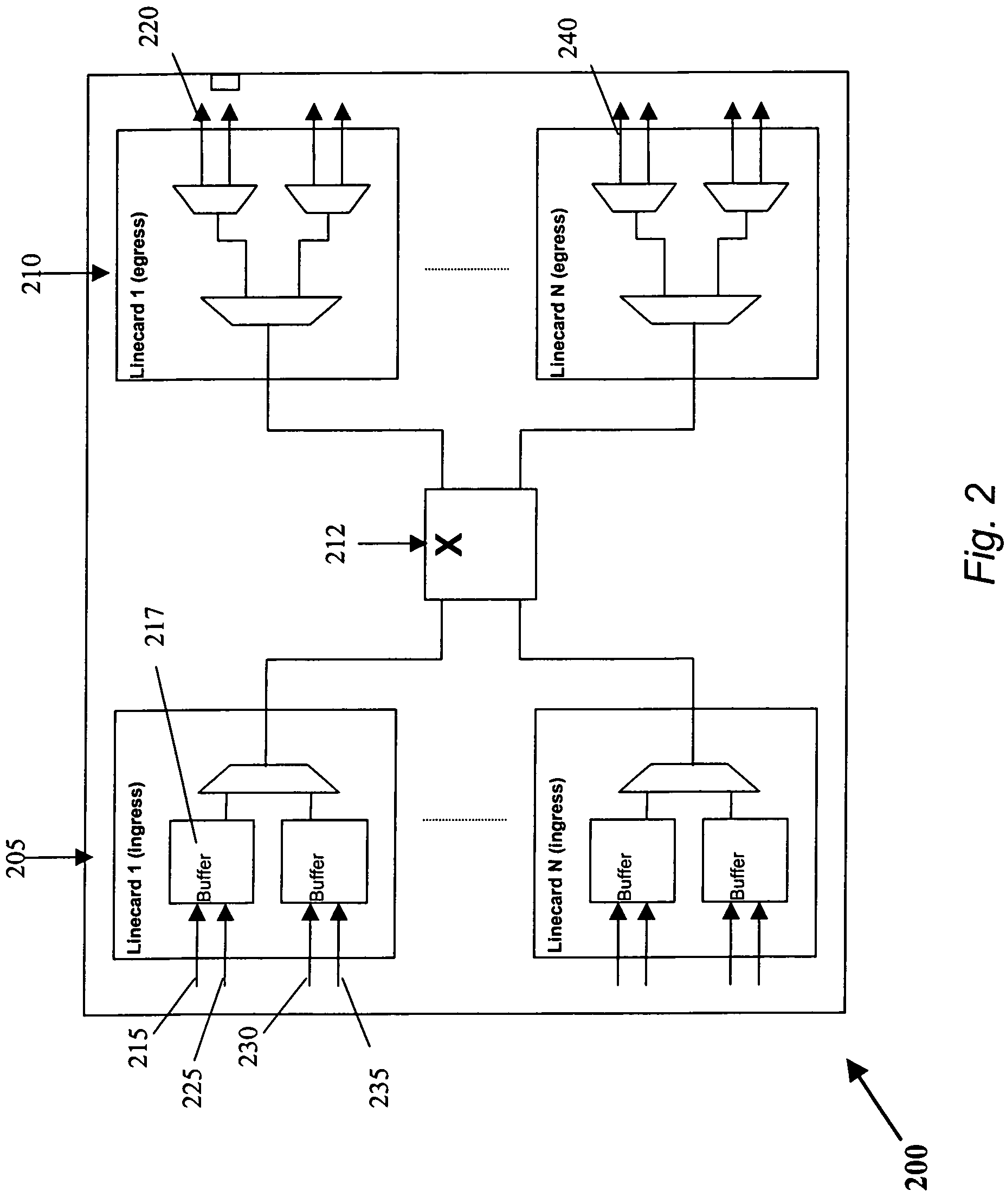

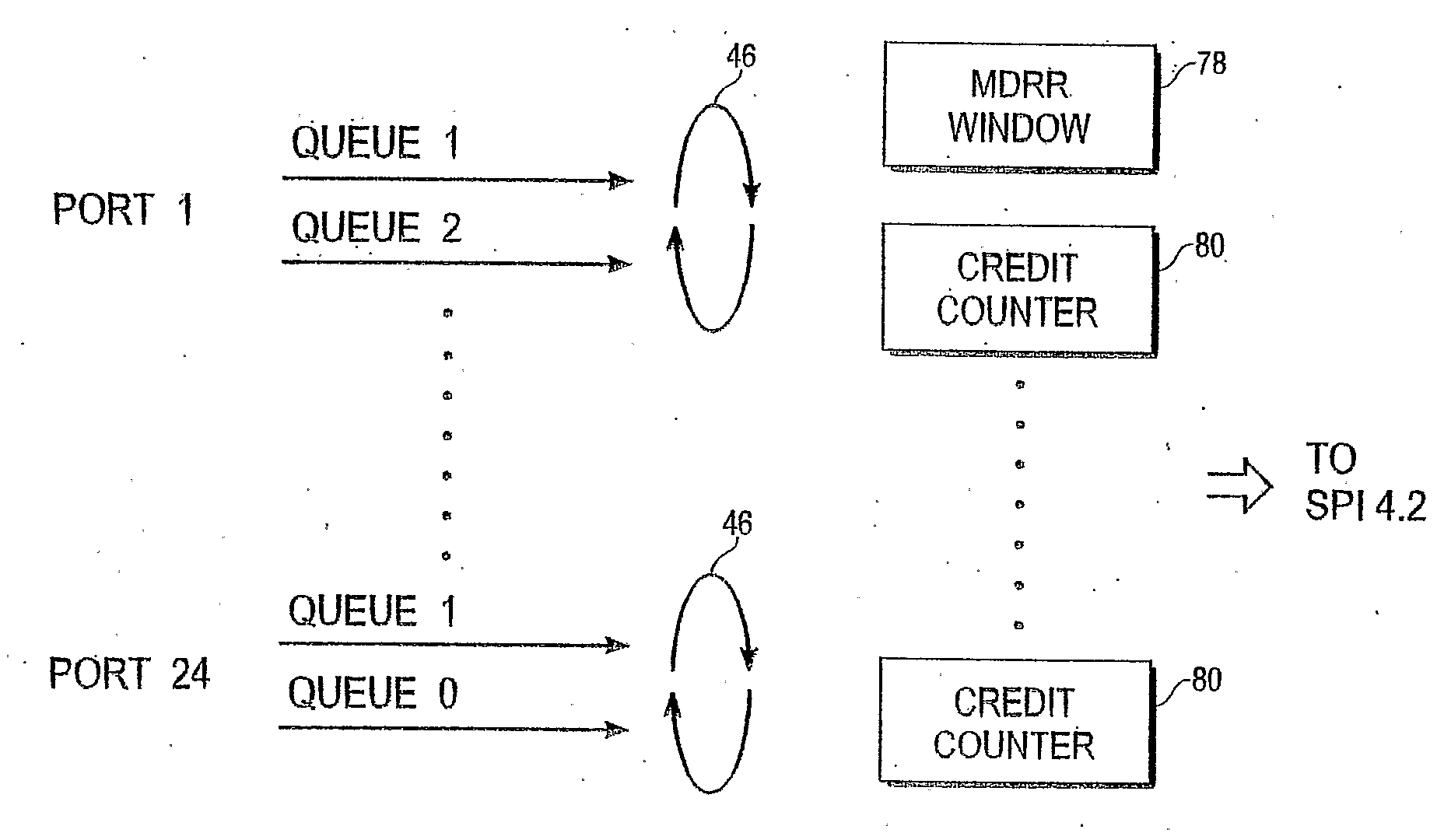

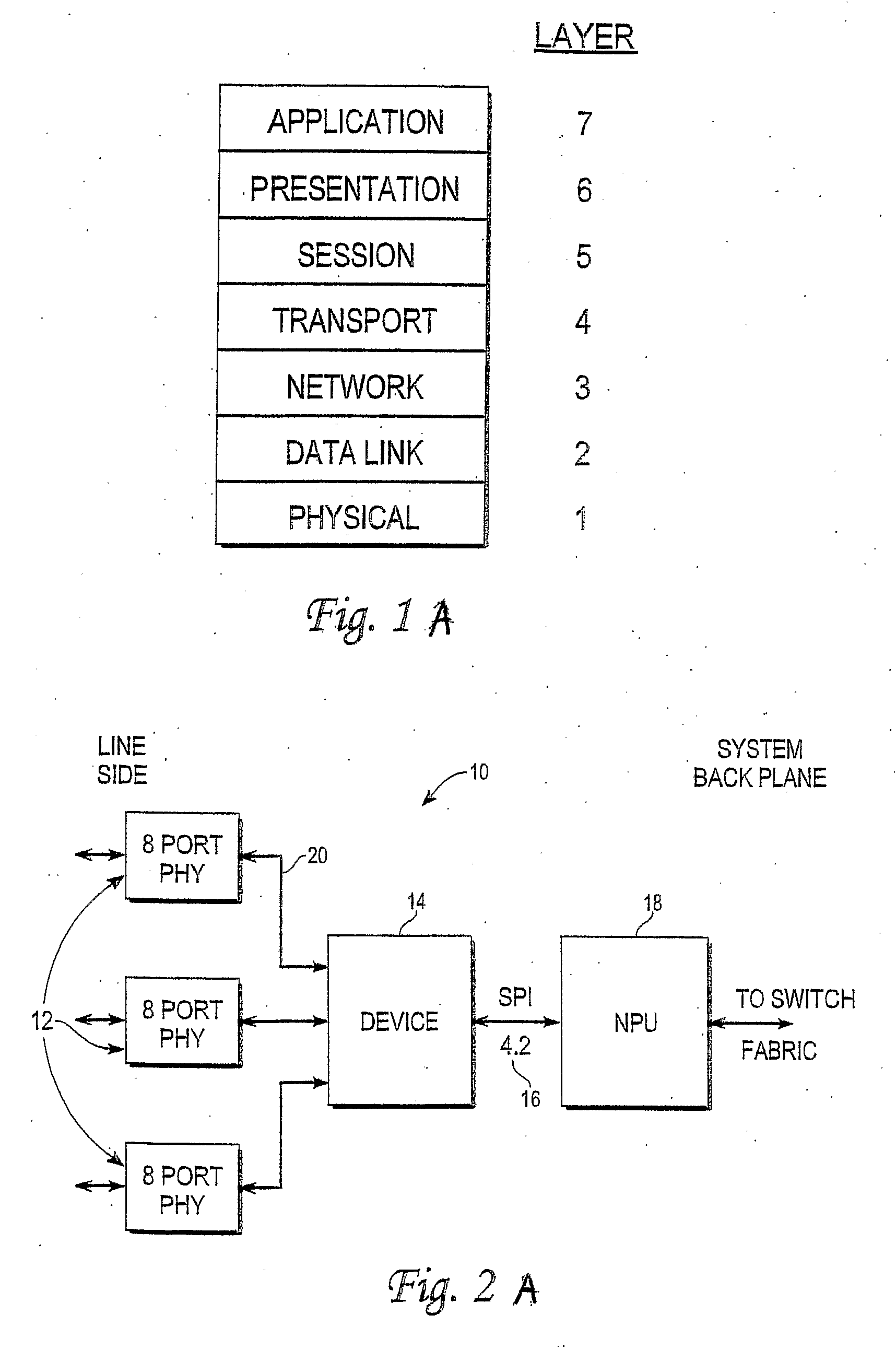

A method for classifying the Quality of Service of the incoming data traffic before the traffic is placed into the priority queues of the Active Queue Management Block of the device is disclosed. By employing a range of mapping schemes during the classification stage of the ingress traffic processing, the invention permits the traffic from a number of users to be coalesced into the appropriate Quality of Service level in the device.

Owner:MINDSPEED TECH INC

Systems and methods for queue management in packet-switched networks

Owner:VERIZON PATENT & LICENSING INC +1

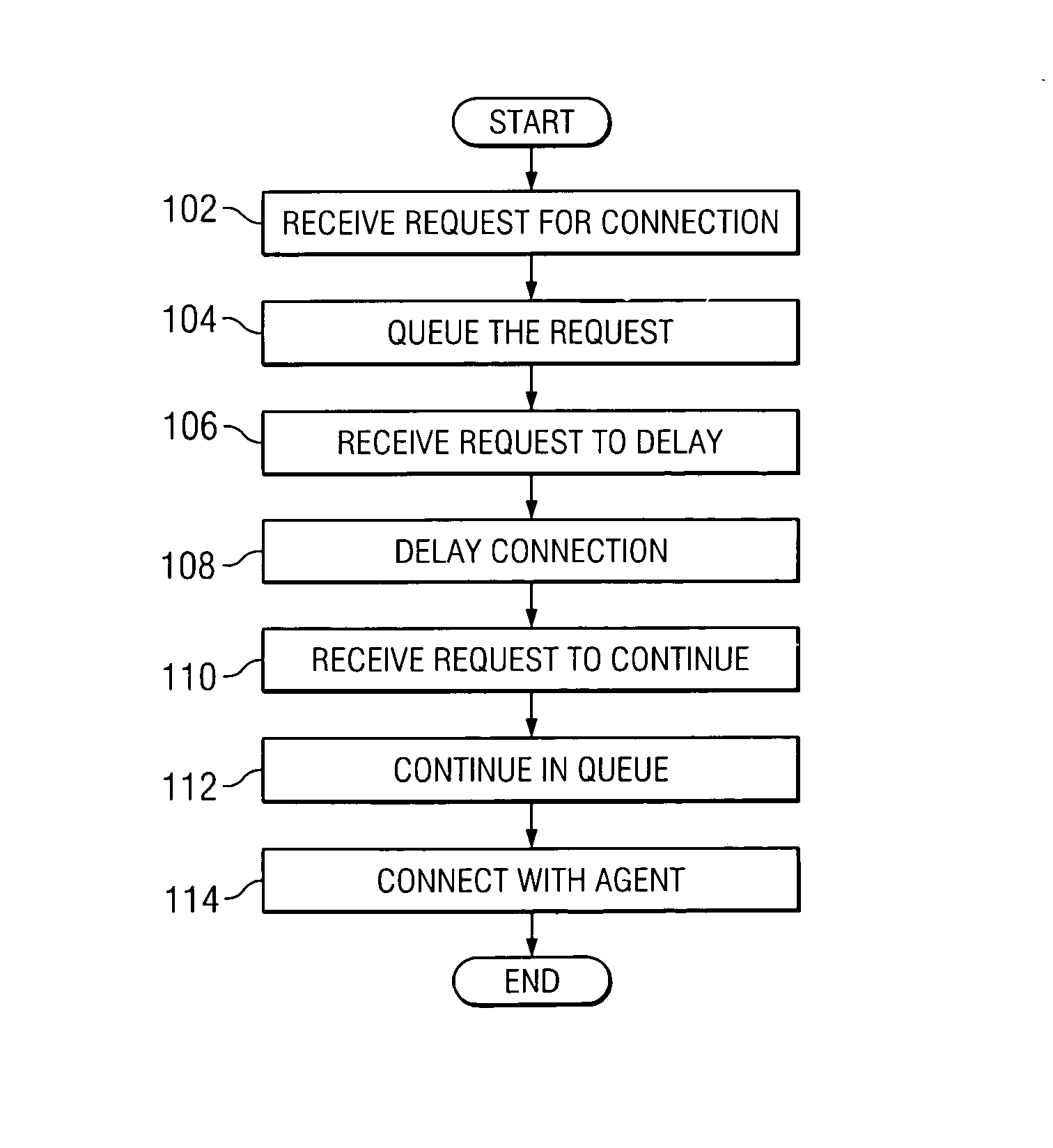

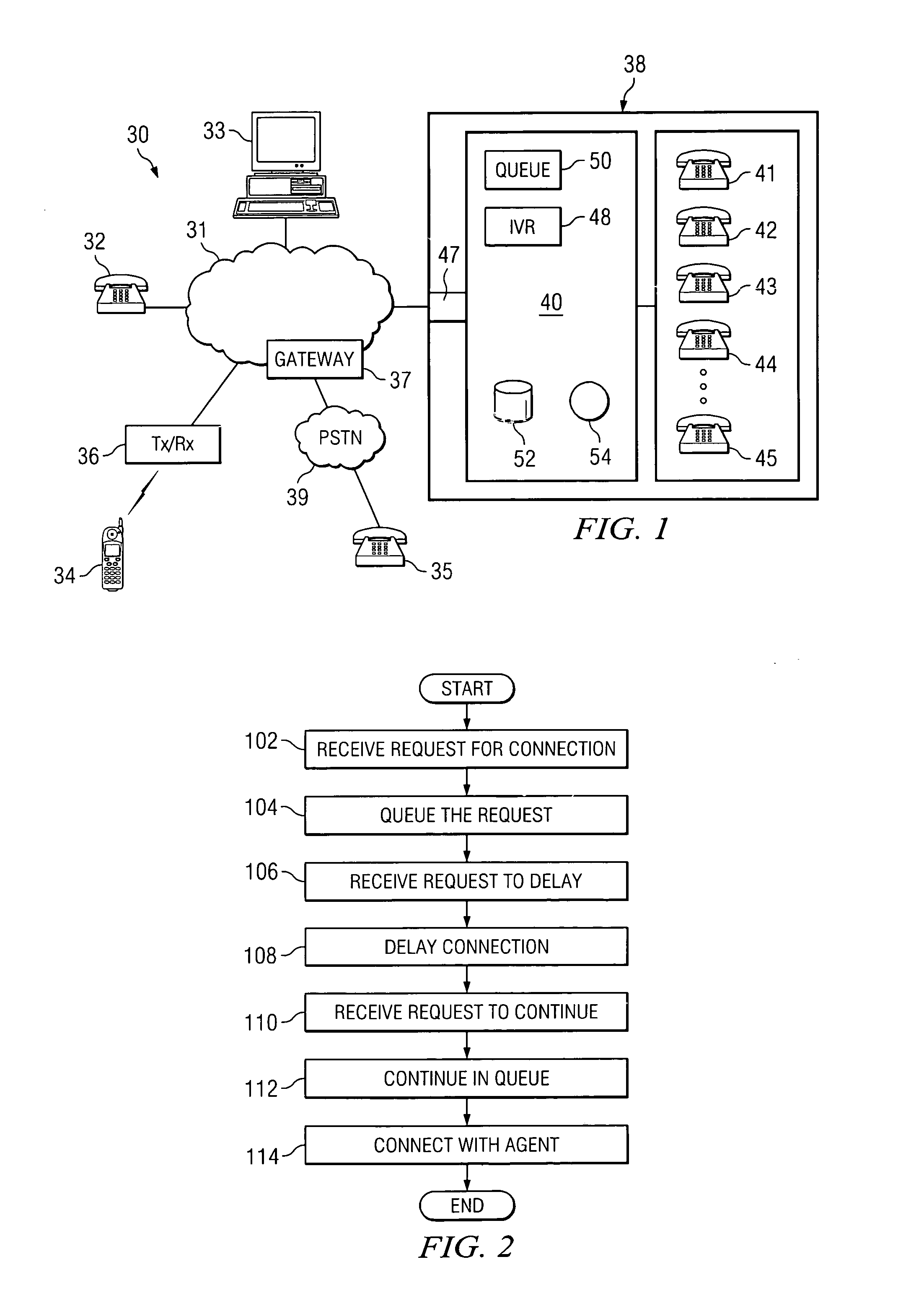

System and method for integrated queue management

Active | US8081749B1 | Eliminate and reduce and disadvantage | Eliminate and reduce problem | Special service for subscribers | Manual exchanges | Queue management system | Active queue management

A system and method for integrated queue management are provided. The method may include receiving, from a user, a request for a connection to one of a plurality of agents. The request for a connection may be queued if the plurality of agents are unavailable. A request to delay the connection with the one of the plurality of agents may be received, from the user. In accordance with a particular embodiment of the present invention, the connection with the one of the plurality of agents is delayed. A request to continue in the queue may be received from the user. Finally, the user may be connected with one of the plurality of agents.

Owner:CISCO TECH INC

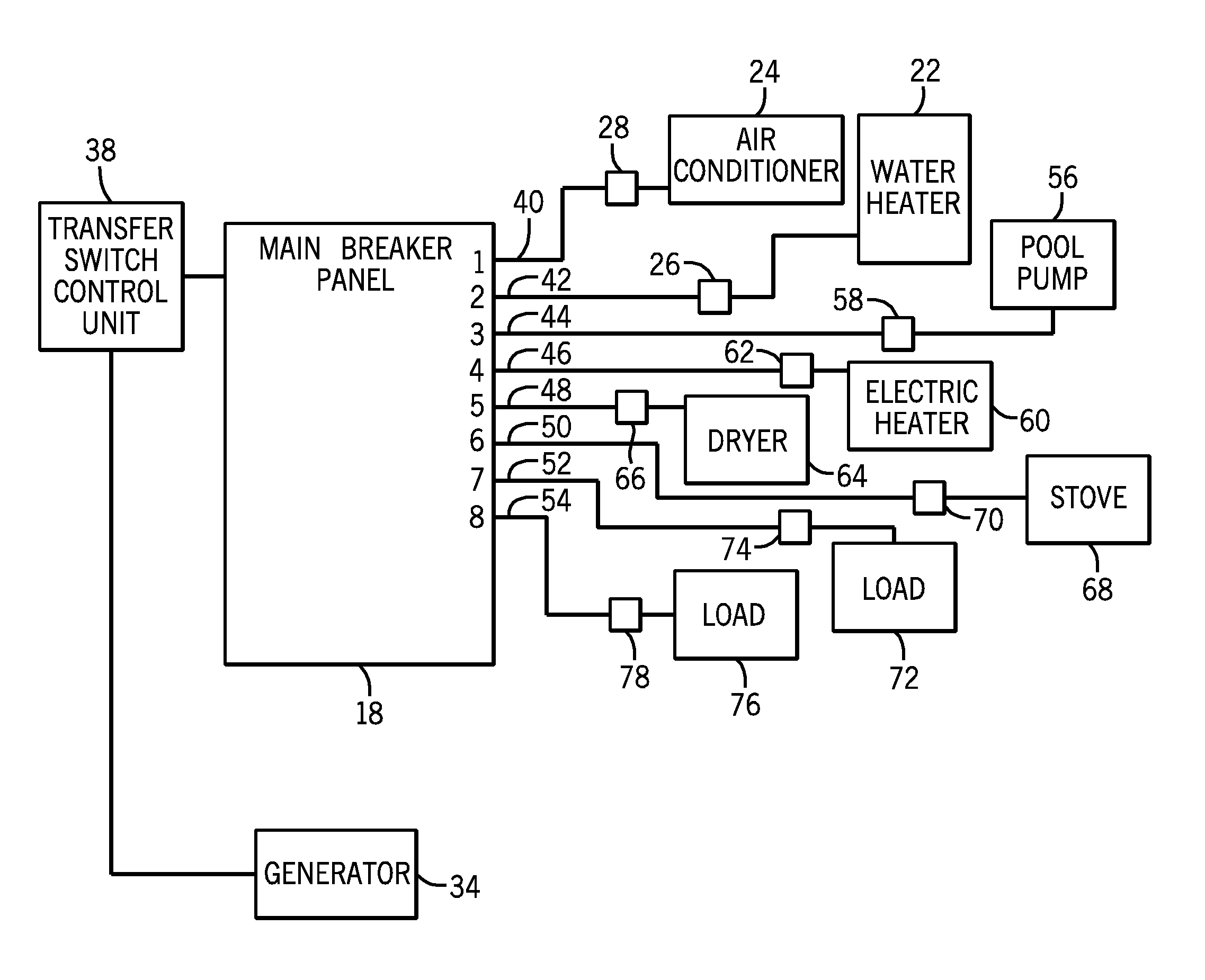

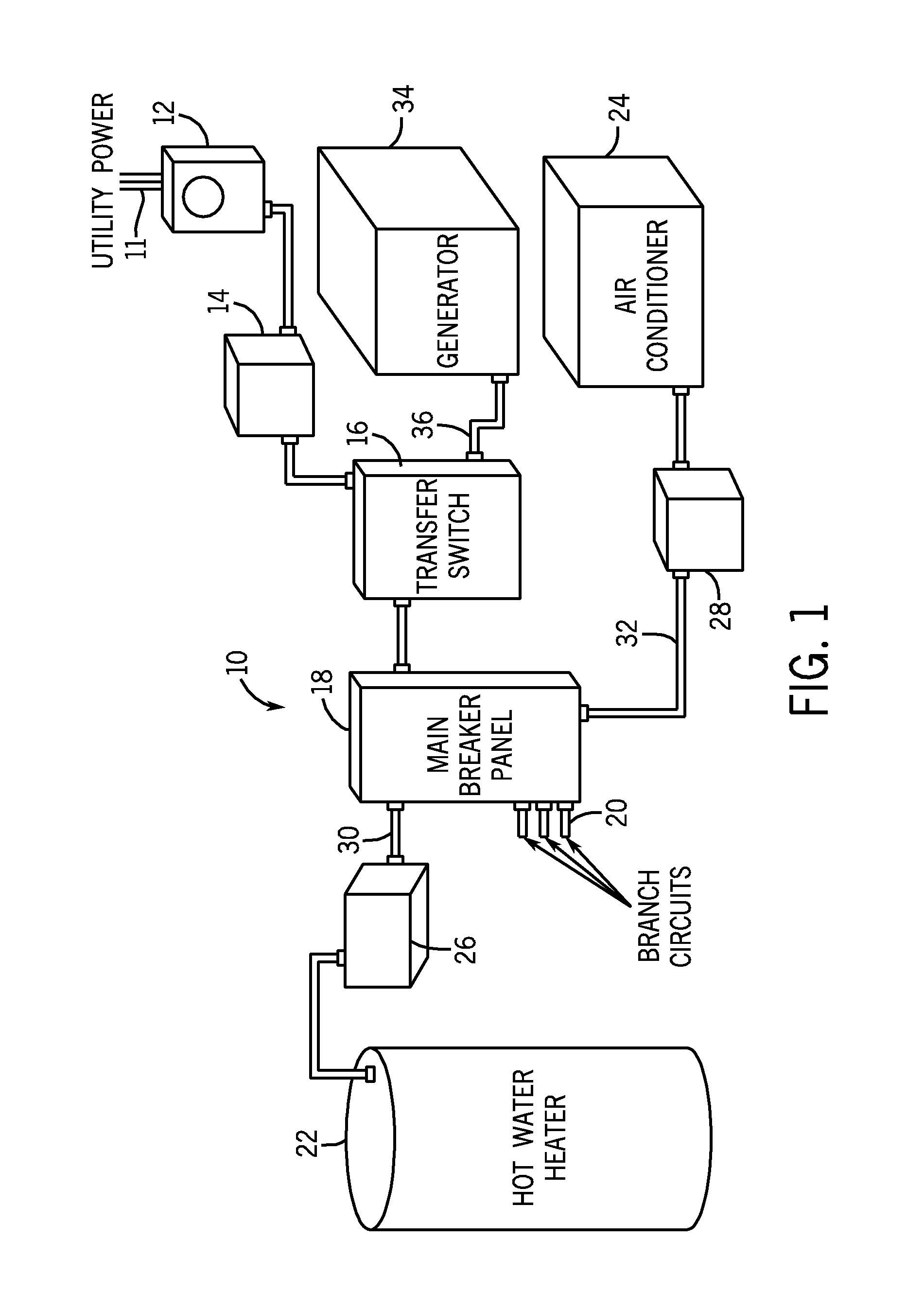

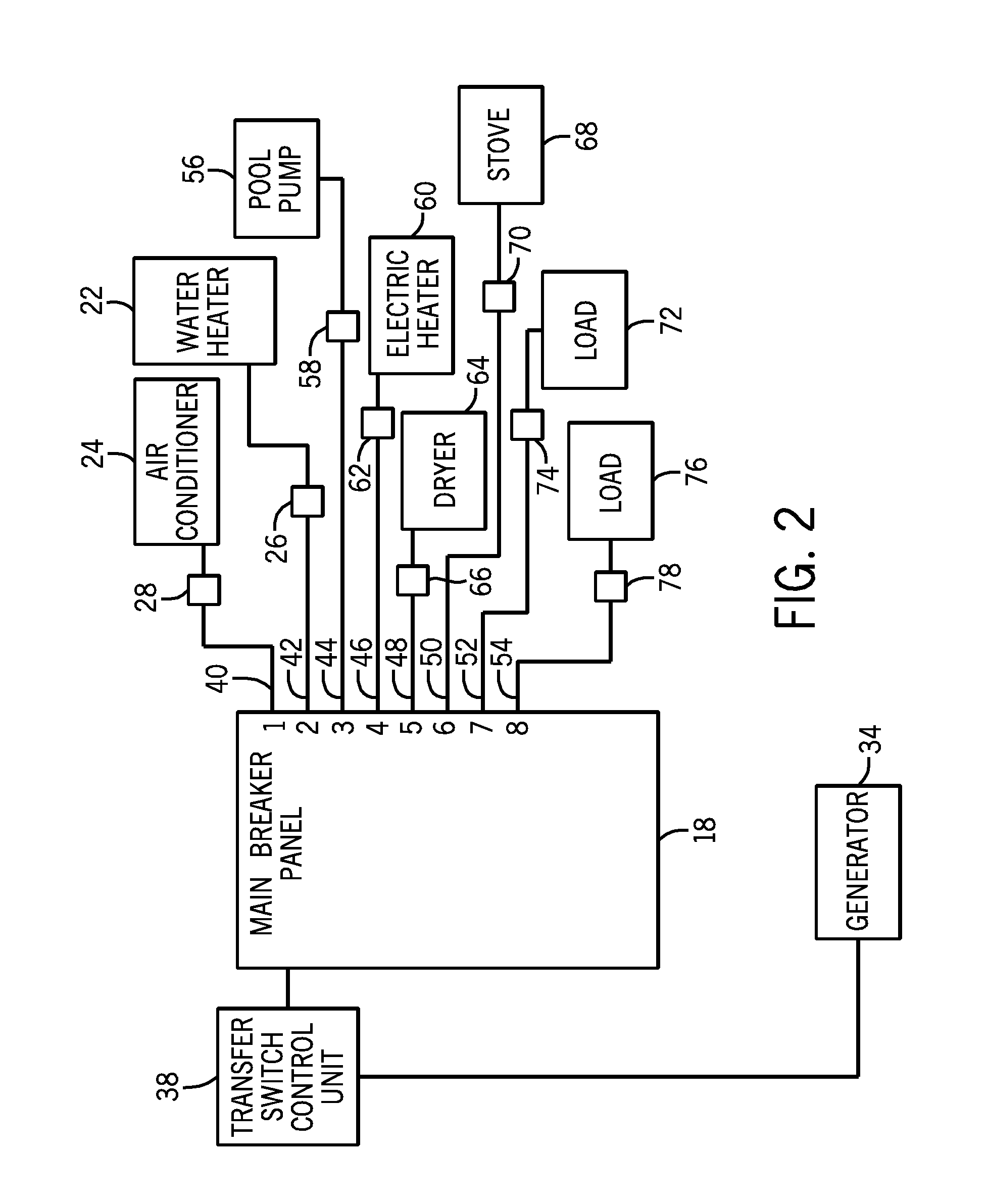

Active load management system

Active | US20110298285A1 | Management load | Dc network circuit arrangements | Conversion with intermediate conversion to dc | Transfer switch | Engineering

A method and system for managing loads powered by a standby generator. The method includes utilizing a transfer switch control unit to selectively shed loads each associated with one of a series of priority circuits. The loads are shed in a sequential order based upon the priority circuit to which the load is applied. Once a required load has been shed, the control unit determines whether any of the lower priority loads can be reconnected to the generator without exceeding the rating of the generator while one of the higher priority circuits remains open.

Owner:BRIGGS & STRATTON LLC
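The shed-then-reconnect behaviour described in the abstract above can be sketched as a two-pass routine. The function name, the `(name, watts)` representation, and the example loads are assumptions; a real transfer switch controller would work from measured current rather than nameplate values.

```python
def manage_loads(loads, rating):
    """Shed loads by priority, then reconnect any shed load that still fits.

    loads: list of (name, watts) ordered from highest to lowest priority.
    Returns (connected, shed).
    """
    connected = list(loads)
    shed = []
    # Pass 1: shed sequentially, lowest priority first, until demand fits the rating.
    while connected and sum(w for _, w in connected) > rating:
        shed.append(connected.pop())
    # Pass 2: revisit shed loads, highest priority first; a smaller low-priority
    # load may fit even while a larger higher-priority circuit stays open.
    still_shed = []
    for name, watts in reversed(shed):
        if sum(w for _, w in connected) + watts <= rating:
            connected.append((name, watts))
        else:
            still_shed.append((name, watts))
    return connected, still_shed


connected, shed = manage_loads(
    [("fridge", 800), ("ac", 3500), ("dryer", 3000), ("lights", 400)], rating=5000
)
```

In this example the dryer stays open while the lower-priority lights are reconnected, because only the lights fit within the remaining generator capacity.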

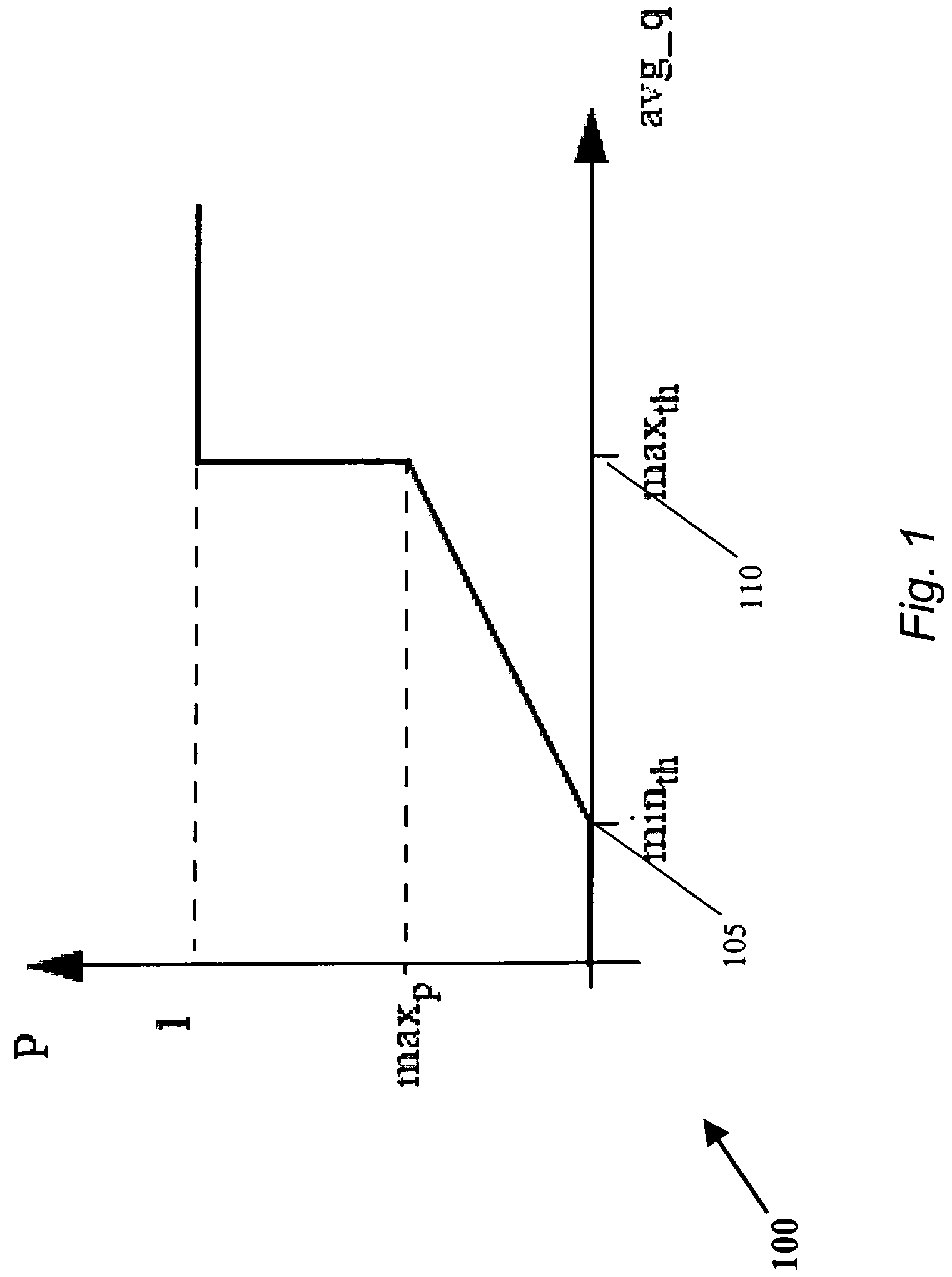

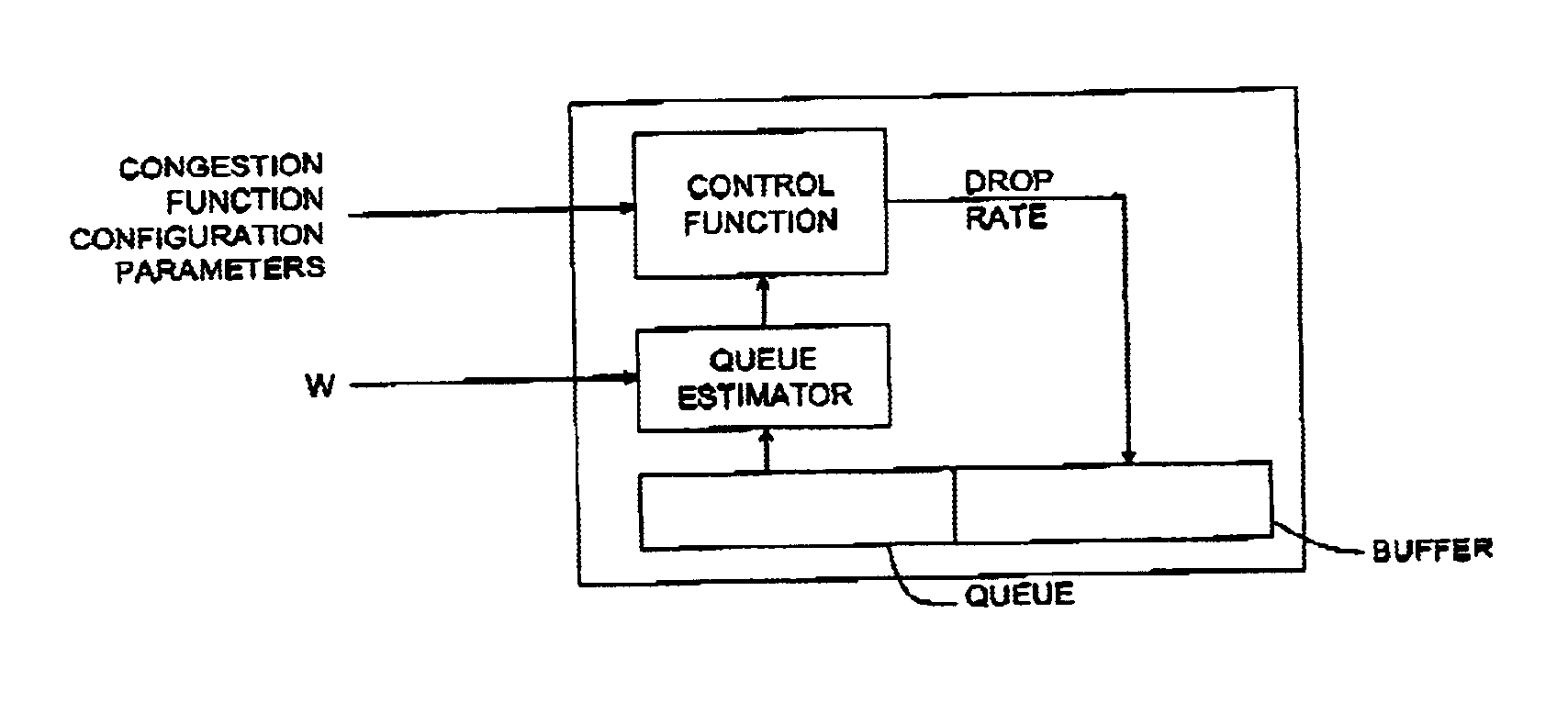

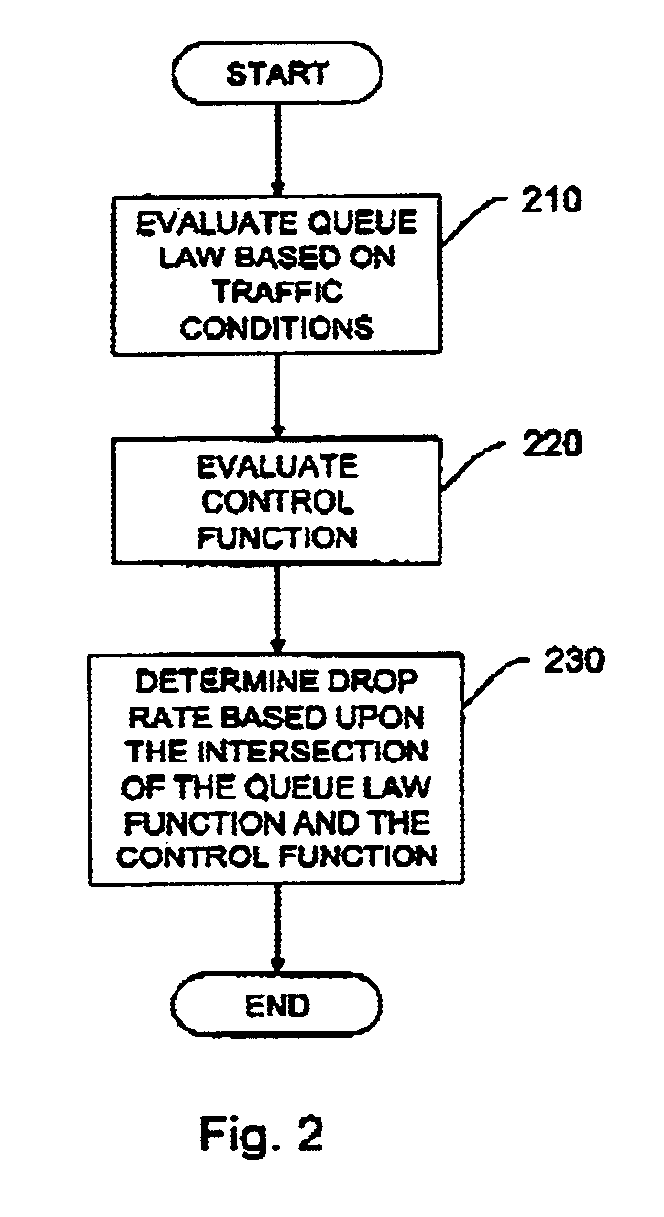

Method and apparatus for queue management

A method, apparatus, and computer program product for determining a drop probability for use in a congestion control module located in a node in a network is disclosed. A weight value for determining a weighted moving average of a queue in a node is first systematically calculated. The weighted moving average is then calculated, and an average queue size for the node is determined based upon the weighted moving average. A control function associated with the congestion control module is evaluated using the average queue size to determine the drop probability. In a further embodiment, the control function is calculated based upon a queue function, where the queue function is calculated based upon predetermined system parameters. Thus, when the congestion control module drops packets based upon the drop probability determined by the control function, the queue will not oscillate.

Owner:AVAYA INC
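The two steps named in the abstract above, a weighted moving average of the queue size followed by a control function that maps the average to a drop probability, can be sketched in the style of random early detection. The RED-shaped control function and its thresholds are a stand-in chosen for illustration, and the weight is passed in as a parameter; the patent's systematic weight calculation and its actual control function are not given in the abstract.

```python
def update_average(avg, queue_size, weight):
    """Exponentially weighted moving average of the instantaneous queue size."""
    return (1 - weight) * avg + weight * queue_size


def control_function(avg, min_th, max_th, max_p):
    """RED-style mapping from average queue size to drop probability (assumed shape)."""
    if avg < min_th:
        return 0.0
    if avg >= max_th:
        return 1.0
    return max_p * (avg - min_th) / (max_th - min_th)


# Feed a few queue samples through the average, then evaluate the control function.
avg = 0.0
for sample in (100, 100, 100):
    avg = update_average(avg, sample, weight=0.25)
p_drop = control_function(avg, min_th=20, max_th=80, max_p=0.2)
```

A small weight makes the average respond slowly to bursts, which is the usual lever for damping oscillation in schemes of this type.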

Method and system for efficient queue management

Inactive | US7801120B2 | Multiplex system selection arrangements | Data switching by path configuration | Active queue management | Operating system

Embodiments of the present invention are directed to methods for efficient queue management, and device implementations that incorporate these methods, for systems that include two or more electronic devices that share a queue residing in the memory of one of the two or more electronic devices. In certain embodiments of the present invention, a discard field or bit is included in each queue entry. The bit or field is set to a first value, such as the Boolean value “0,” by a producing device to indicate that the entry is valid, or, in other words, that the entry can be consumed by the consuming device. After placing entries into the queue, the producing device may subsequently remove one or more entries from the queue by setting the discard field or bit to a second value, such as Boolean value “1.” The consuming device removes each entry from the queue, in turn, as the consuming device processes queue entries, discarding, without further processing, those entries with the discard bit or field set to the second value.

Owner:AVAGO TECH INT SALES PTE LTD
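The discard-bit scheme described in the abstract above can be sketched in a few lines. The `Entry` and `SharedQueue` names are hypothetical, and an in-process `deque` stands in for the queue that, in the patent, resides in the memory of one of the devices.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Entry:
    payload: str
    discard: bool = False  # False: valid entry, True: producer has withdrawn it


class SharedQueue:
    def __init__(self):
        self._entries = deque()

    def produce(self, payload):
        """Producer side: append a valid entry and keep a handle to it."""
        entry = Entry(payload)
        self._entries.append(entry)
        return entry

    @staticmethod
    def cancel(entry):
        """Producer side: logically remove an entry by setting its discard bit."""
        entry.discard = True

    def consume(self):
        """Consumer side: return the next valid payload, skipping discarded entries."""
        while self._entries:
            entry = self._entries.popleft()
            if not entry.discard:
                return entry.payload
        return None
```

The producer never restructures the queue to withdraw an entry; it only flips one field, and the consumer pays the small cost of skipping it later.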

Queue-based active queue management process

Active | US7706261B2 | More buffer capacity | Increase in size | Error prevention | Frequency-division multiplex details | Network communication | Active queue management

Owner:INTELLECTUAL VENTURES II
