77 results about How to "Solve load imbalance" patented technology

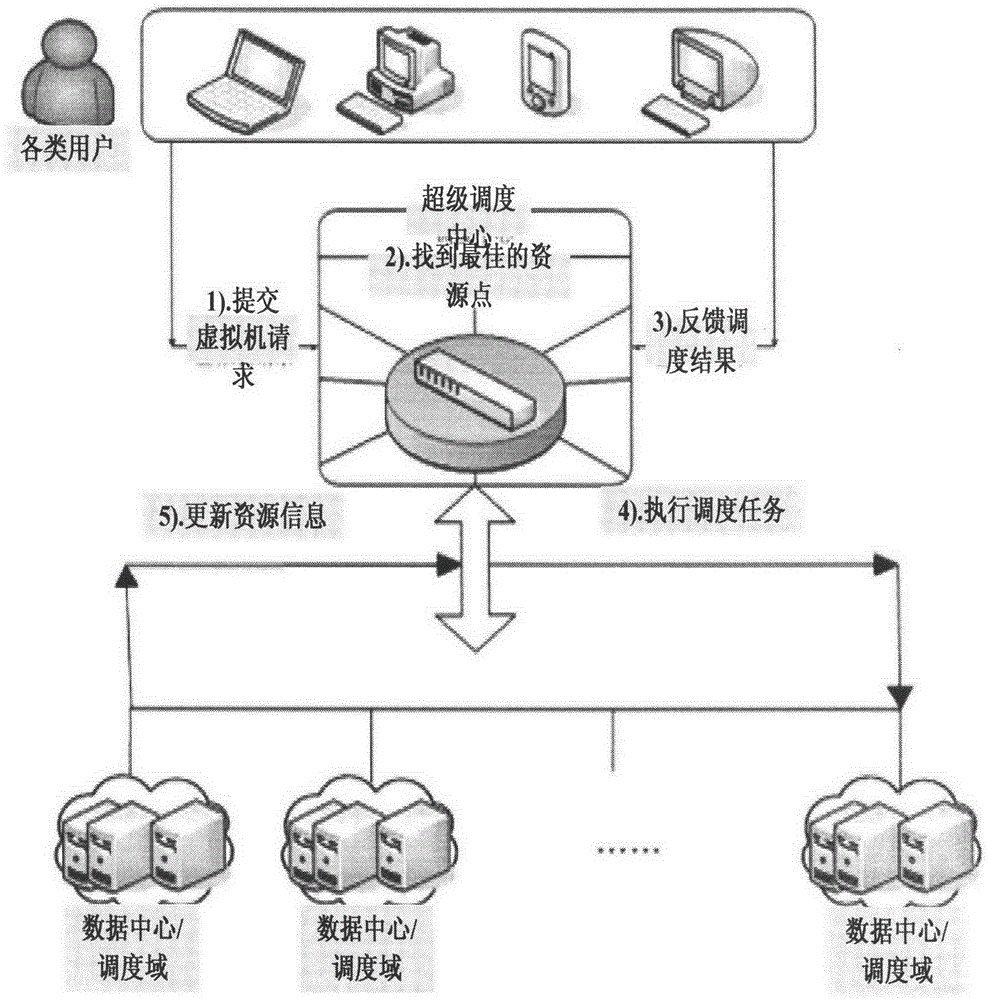

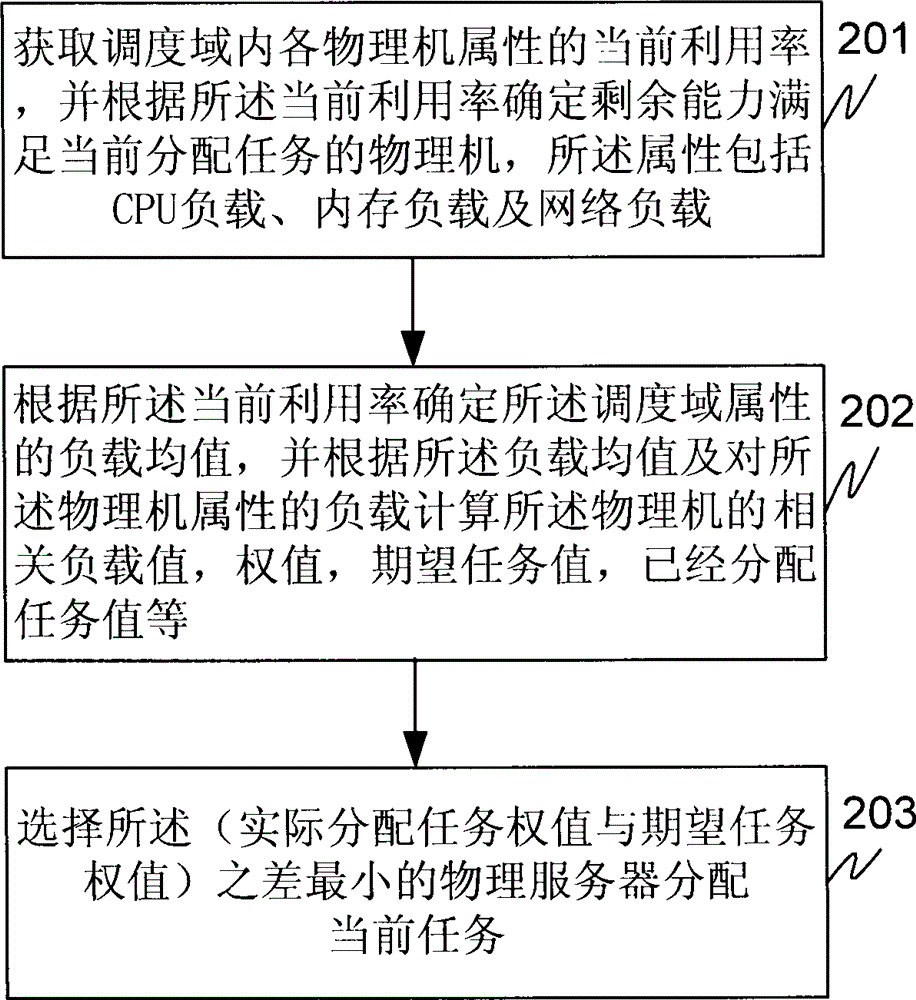

Method and device for realizing data center resource load balance in proportion to comprehensive allocation capability

Active · CN102185779A · Timely determination of load status · Solve load imbalance · Data switching networks · User needs · Data center

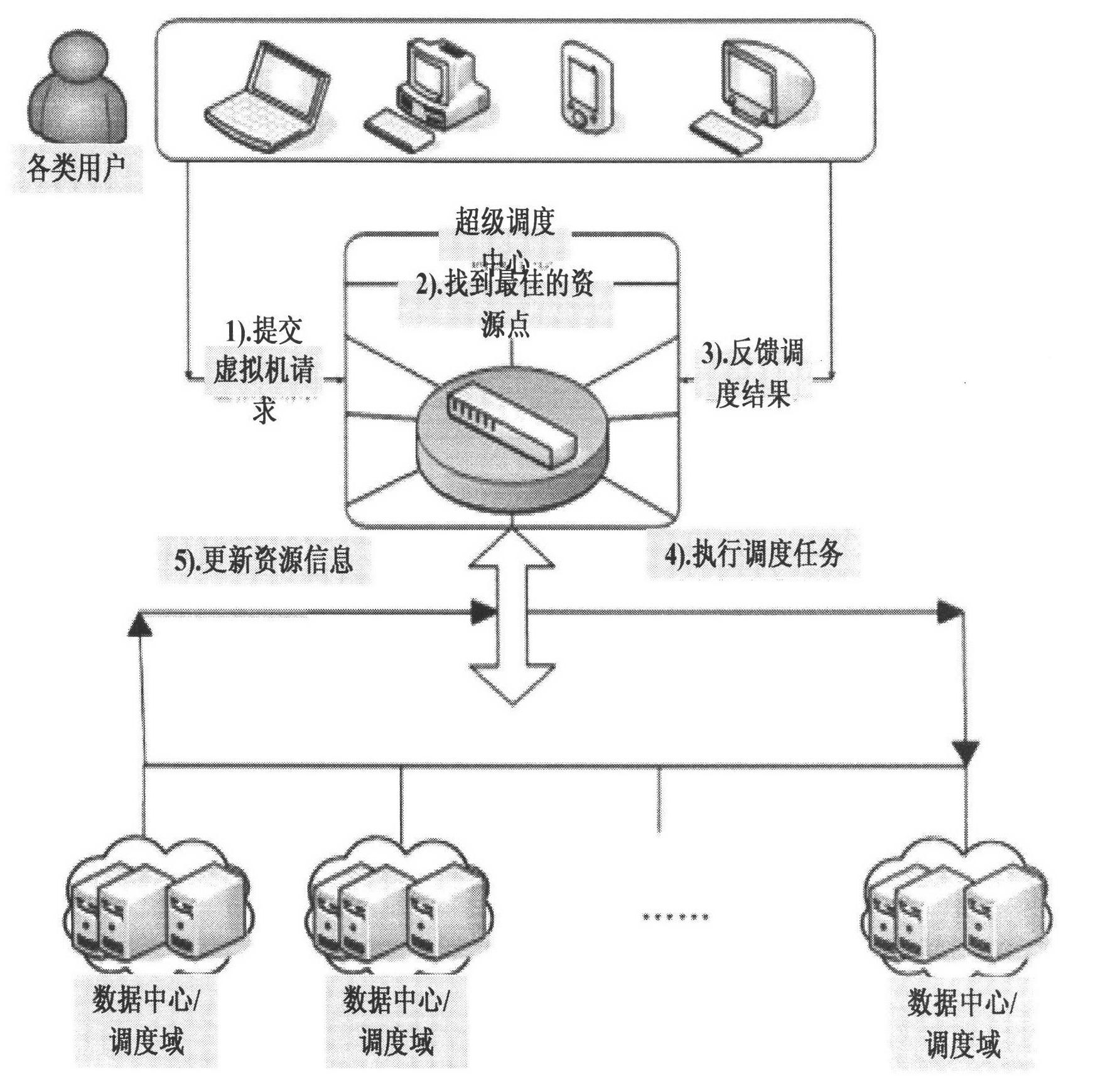

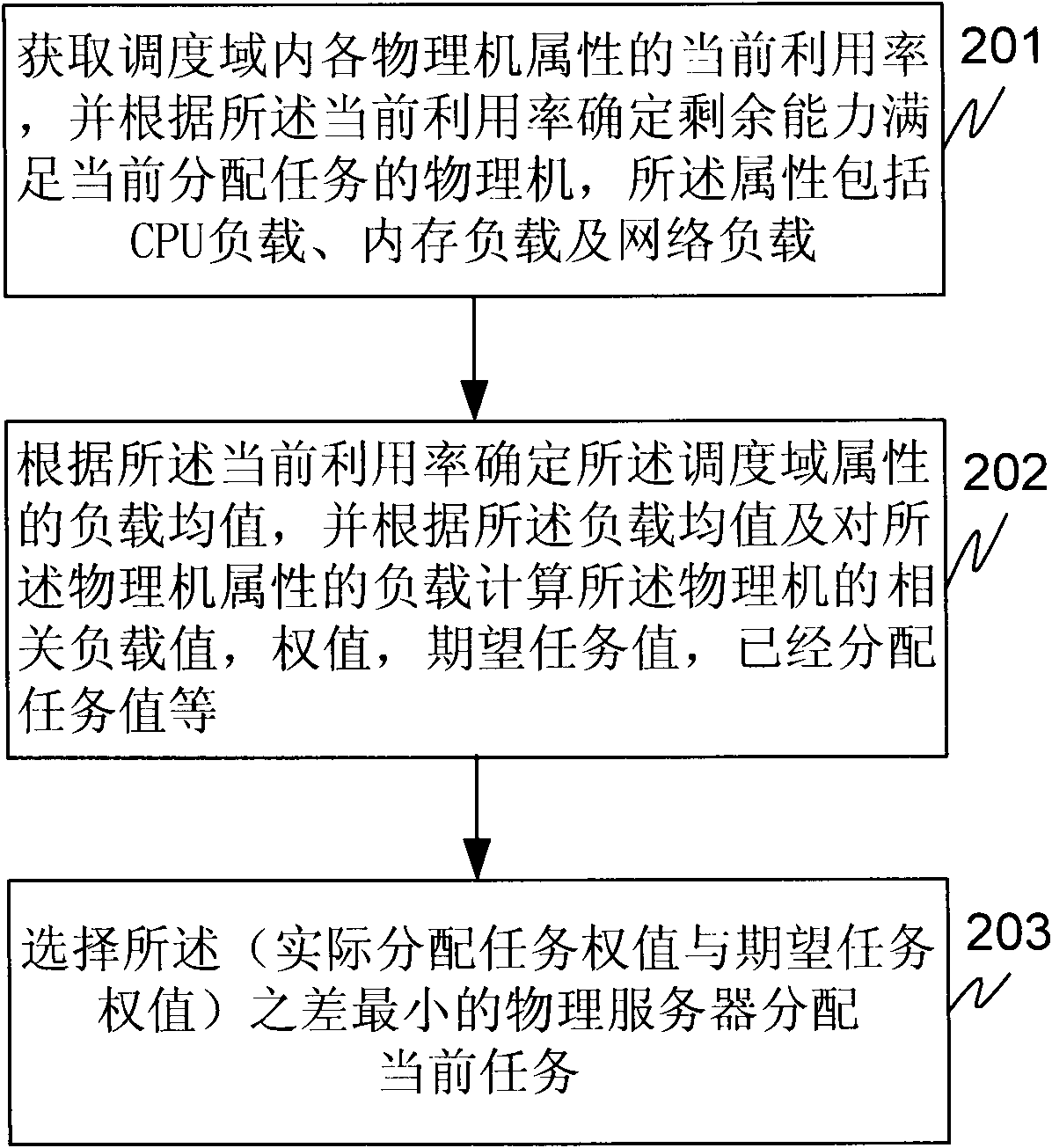

The invention relates to a method and a device for balancing data center resource load. The method comprises the following steps: acquiring the current utilization rates of the attributes of each physical machine in a scheduling domain, where the attributes comprise central processing unit (CPU) load, memory load and network load, and determining the physical machine for the task currently being allocated according to the principle of fair distribution in proportion to each server's allocation capability, the actual allocated task weight value and the expected task weight value; determining a mean load value for each attribute of the scheduling domain from the current utilization rates, and calculating the difference between the actual allocated task weight value and the expected task weight value of each physical machine from the mean load values and the predicted load values of that machine's attributes; and selecting, for the task currently being allocated, the physical machine for which this difference is the smallest. The device provided by the invention comprises a selection control module, a calculation processing module and an allocation execution module. The technical scheme solves the physical server load imbalance caused by a mismatch between the specifications users request and the specifications of the physical servers.

Owner:田文洪
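
The selection rule in the CN102185779A abstract can be illustrated with a minimal sketch. The abstract does not give the formulas, so everything concrete here is an assumption: the `Machine` fields, the single `capacity` score standing in for the combined CPU, memory and network capability, and the rule that a machine's expected weight is its capacity-proportional share of all task weight.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    name: str
    capacity: float         # assumed combined allocation-capability score
    assigned_weight: float  # total weight of tasks already placed here


def pick_machine(machines, task_weight):
    """Return the machine whose actual weight is furthest below its expected weight."""
    total_capacity = sum(m.capacity for m in machines)
    total_weight = sum(m.assigned_weight for m in machines) + task_weight

    def gap(m):
        # Assumed expected weight: a share of all weight proportional to capacity.
        expected = total_weight * m.capacity / total_capacity
        return m.assigned_weight - expected  # negative means under-allocated

    return min(machines, key=gap)


machines = [
    Machine("pm-a", capacity=4.0, assigned_weight=6.0),
    Machine("pm-b", capacity=2.0, assigned_weight=1.0),
    Machine("pm-c", capacity=2.0, assigned_weight=3.0),
]
chosen = pick_machine(machines, task_weight=2.0)
chosen.assigned_weight += 2.0
print(chosen.name)
```

In this example `pm-b` is chosen because it holds less weight than its capacity share would suggest, while the other two machines already sit at their expected values.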

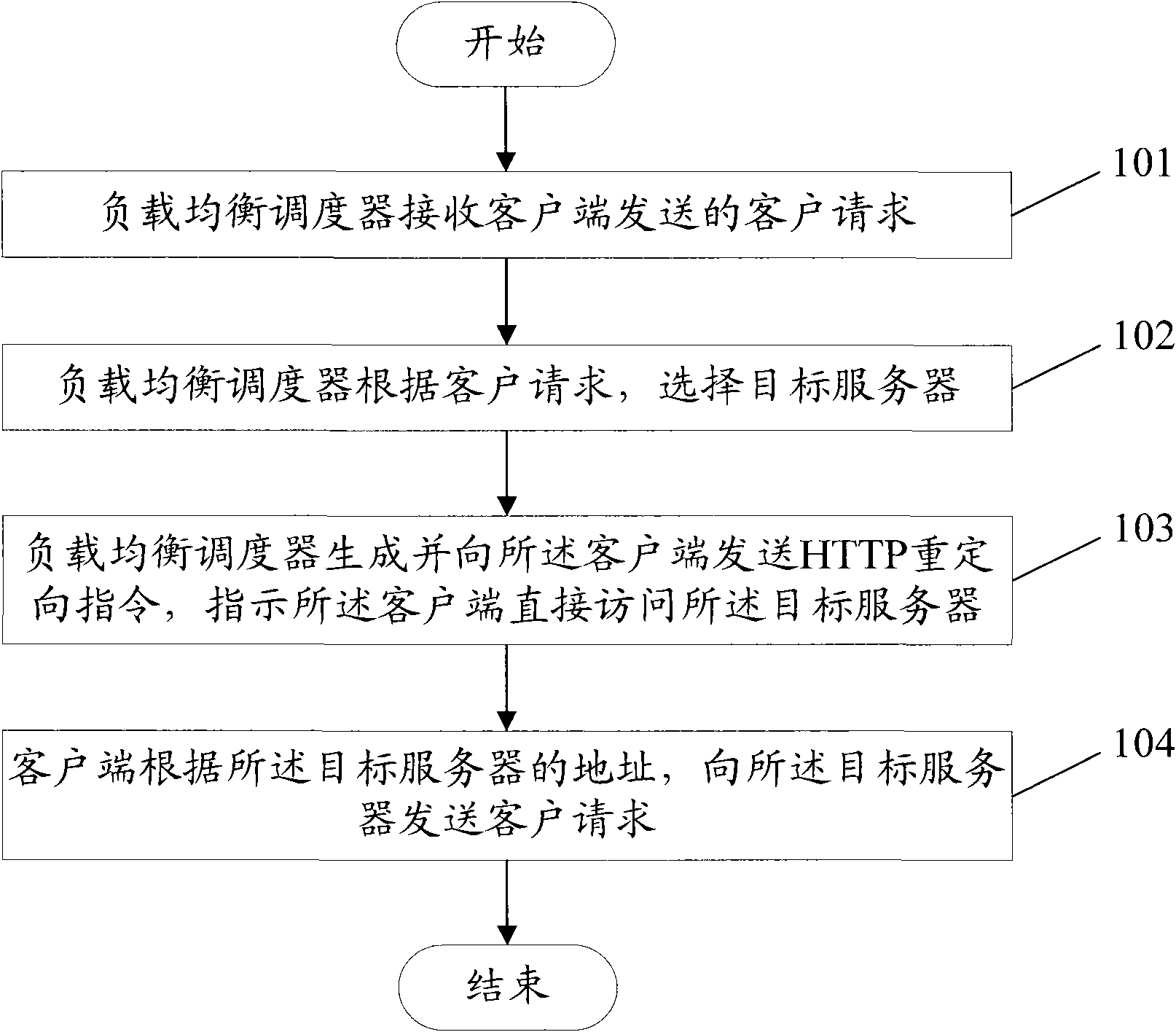

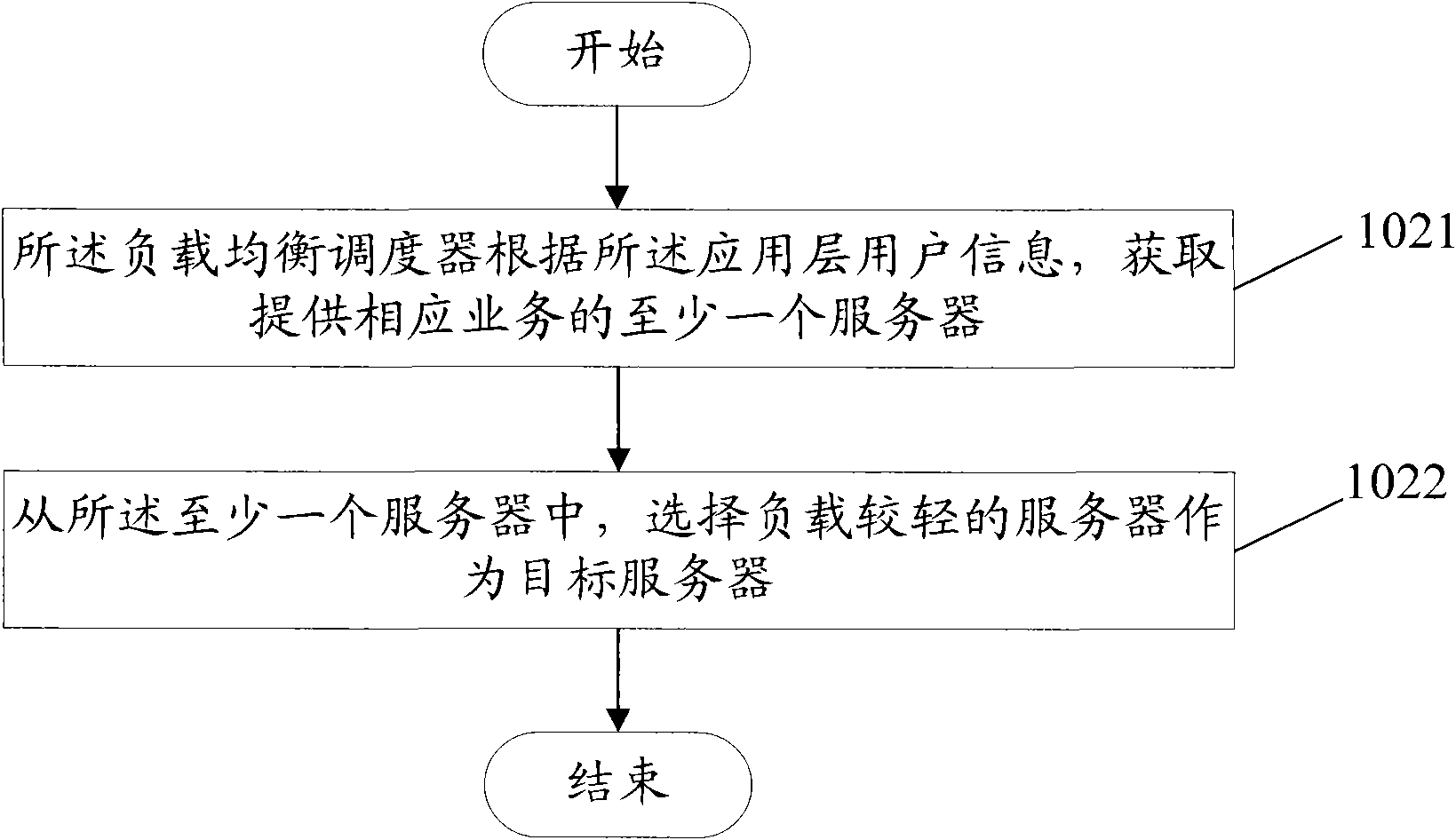

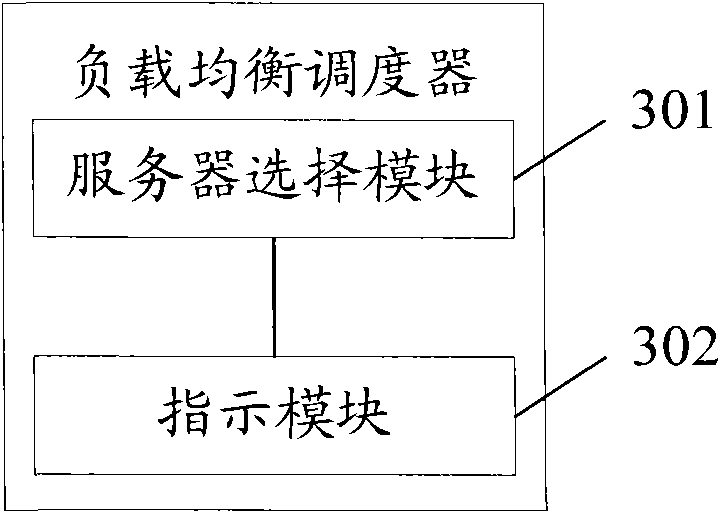

Access method, device and system of server

Inactive · CN101808118A · Improve access efficiency · Solve load imbalance · Data switching networks · Client-side · User information

The invention provides a server access method, device and system, relating to the field of computing, which solve the resource waste and low efficiency that arise when a client accesses a server through layer-4 load balancing. In the access method, a load balancing dispatcher selects a target server for the client according to application-layer user information contained in the client's request, and then instructs the client to access the target server directly. The invention is suitable for layer-4 load balancing in a network.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

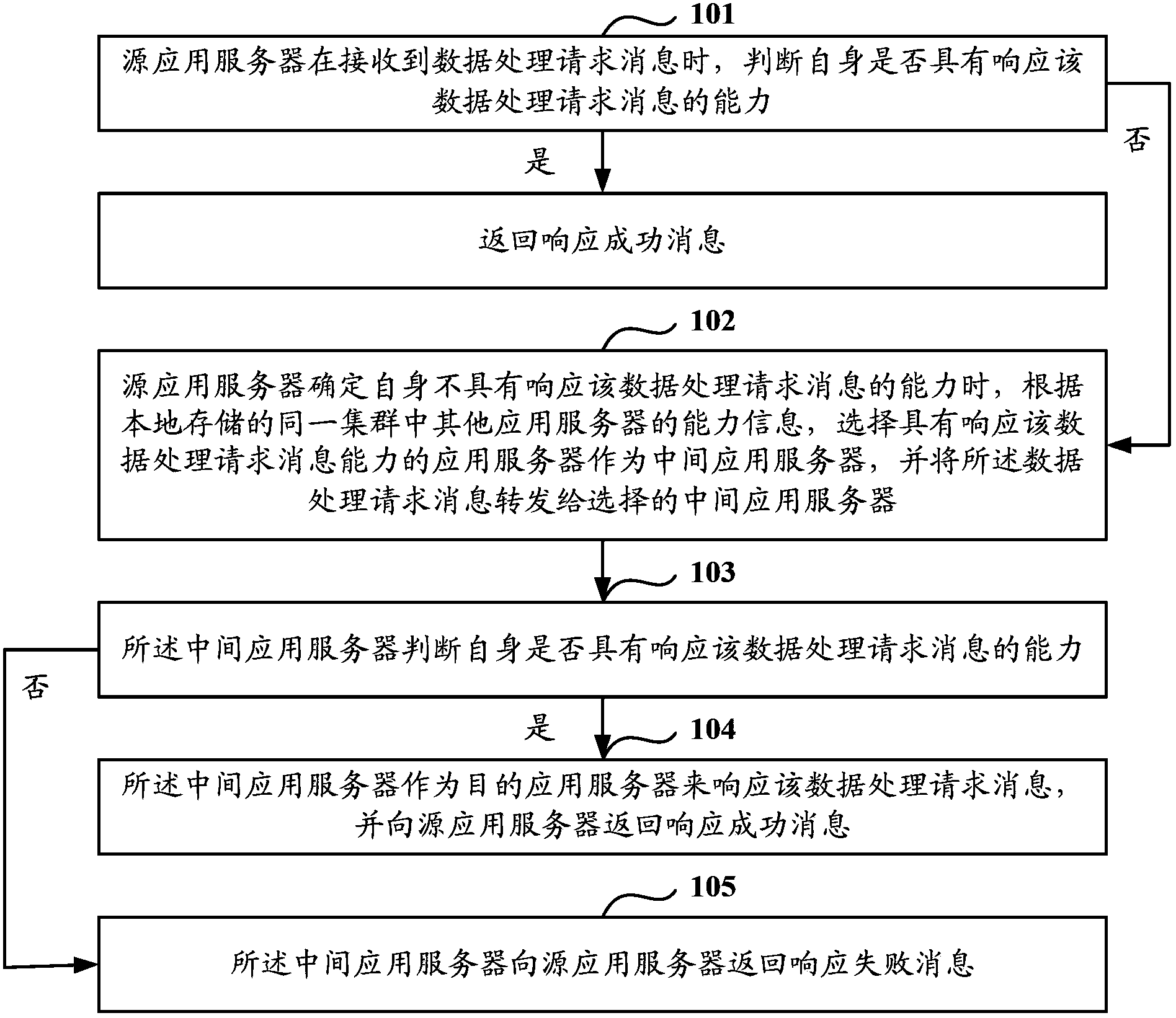

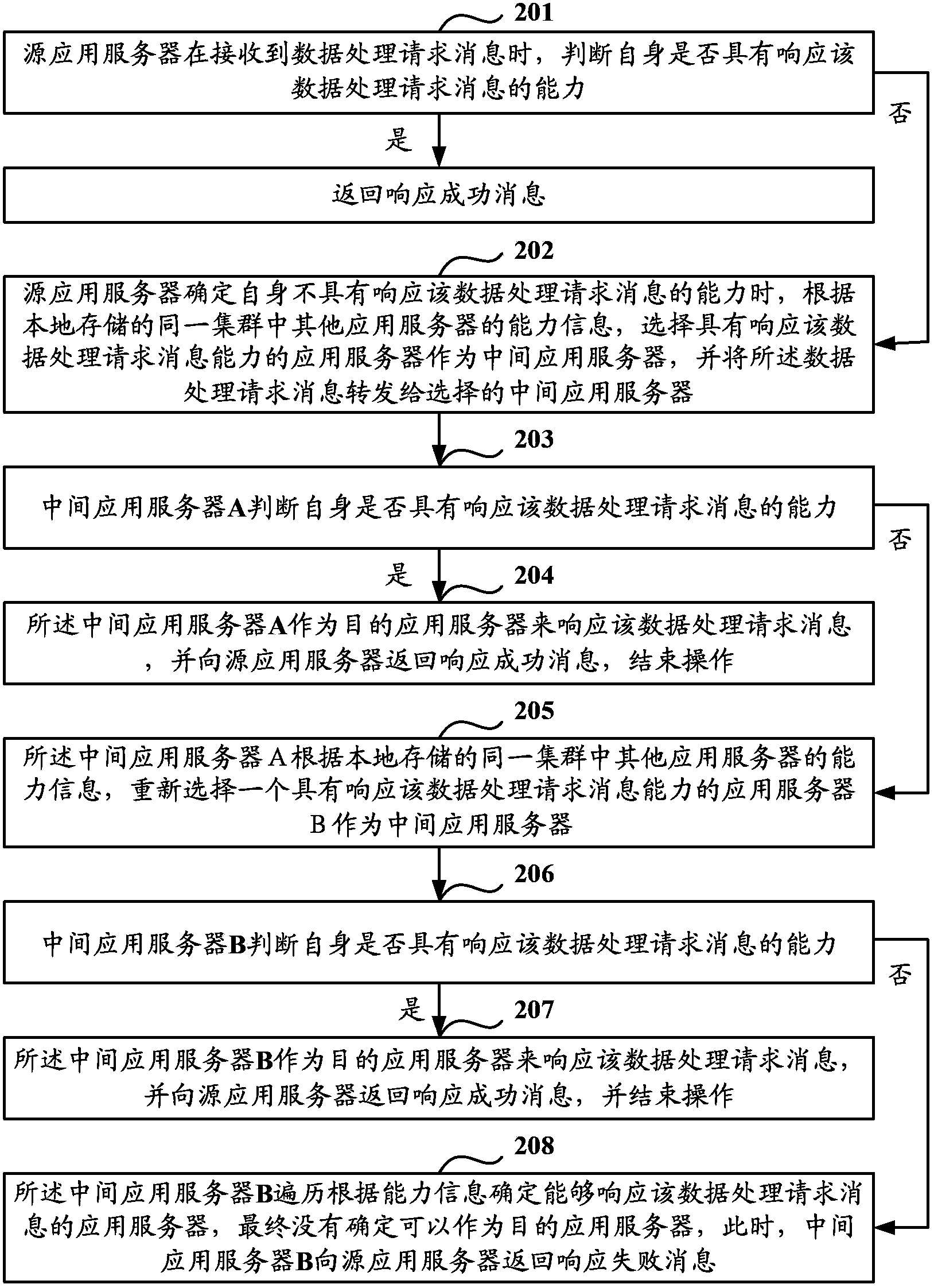

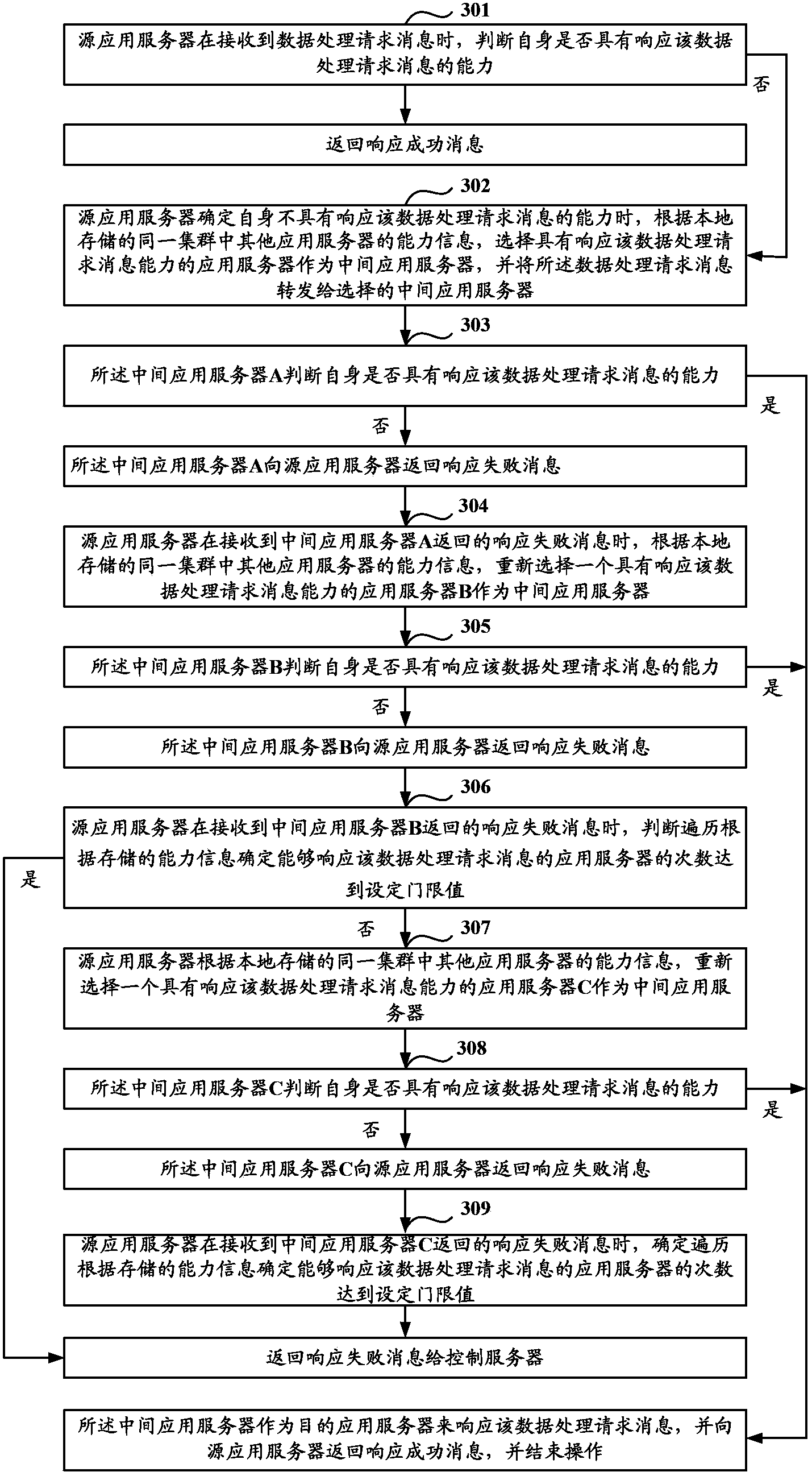

A data processing method, an application server and an application server cluster

Inactive · CN103516744A · Solve load imbalance · Improve processing efficiency · Transmission · Application server · Distributed computing

The application discloses a data processing method, an application server and an application server cluster. The method mainly comprises the steps of selecting, based on locally stored ability messages of other application servers in the same cluster, an application server that is capable of responding to data processing request messages to make the server serve as a central application server when a source application server receives the data processing request messages and is determined not to be able to respond to data processing request messages; and then forwarding the data processing request messages to the selected central application server. Therefore, if a source application server selected by a control server on the basis of a load balancing algorithm or a load balancing configuration strategy cannot meet load demands, other application servers with processing abilities in the same cluster can be selected by the source application server to replace the source application server to respond to data processing request messages, thus solving problems of the prior art that a source application server is forced to respond to data processing request messages to cause load imbalance.

Owner:ALIBABA GRP HLDG LTD
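
The forwarding decision described in the CN103516744A abstract can be sketched roughly as follows. The capability table layout (`spare_capacity`), the numeric request cost, and the tie-breaking rule of preferring the peer with the most spare capacity are all assumptions for illustration; the abstract only says that a capable peer is selected from locally stored capability messages.

```python
def choose_responder(source, request_cost, spare_capacity):
    """Serve locally when possible; otherwise pick a capable peer from the local table.

    spare_capacity maps server name -> spare capacity, as last reported by each
    server in the cluster (hypothetical representation of the "ability messages").
    """
    if spare_capacity[source] >= request_cost:
        return source
    capable = {
        peer: spare
        for peer, spare in spare_capacity.items()
        if peer != source and spare >= request_cost
    }
    if not capable:
        return None  # no server in the cluster can respond right now
    # Assumed policy: forward to the peer with the most spare capacity.
    return max(capable, key=capable.get)


table = {"app-1": 1, "app-2": 5, "app-3": 3}
print(choose_responder("app-1", 2, table))
```

Here `app-1` cannot absorb a request of cost 2, so it forwards to `app-2`, which plays the role the abstract calls the central application server.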

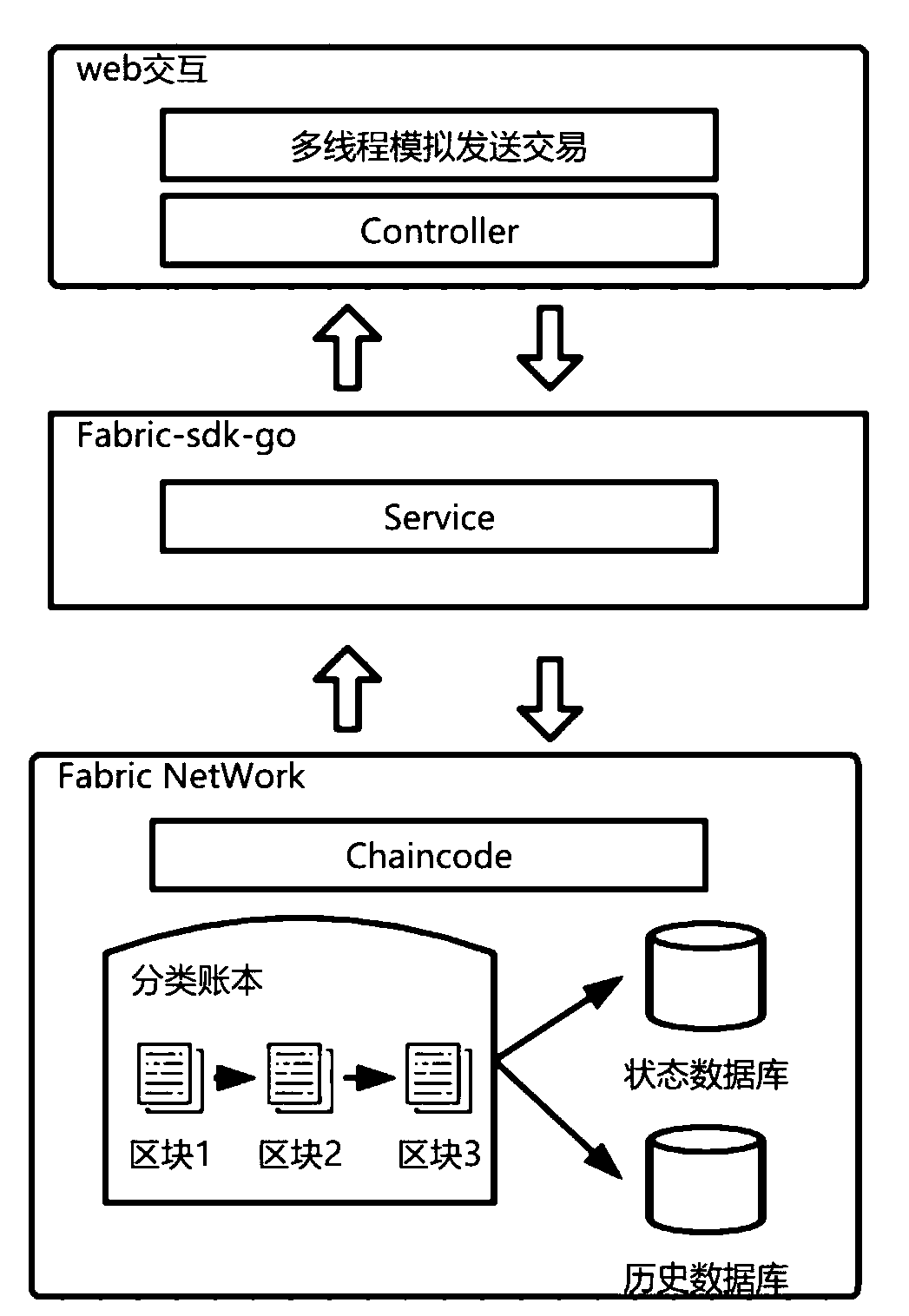

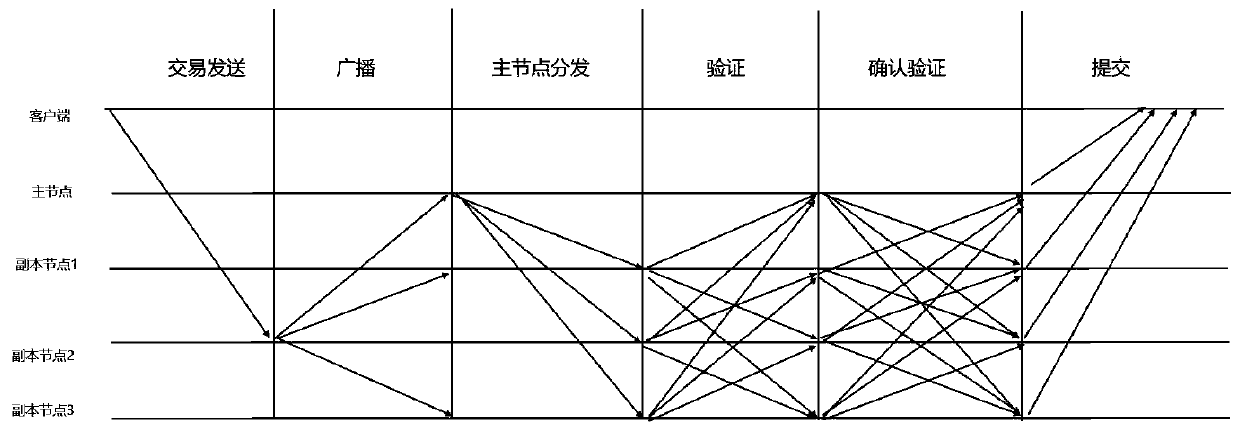

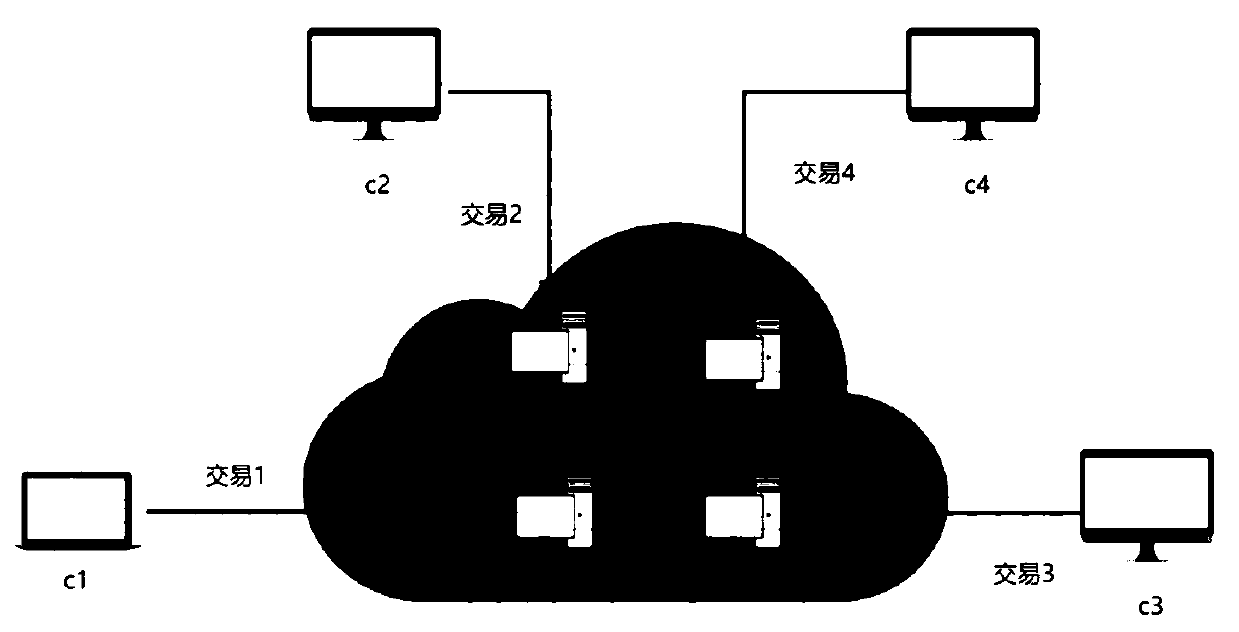

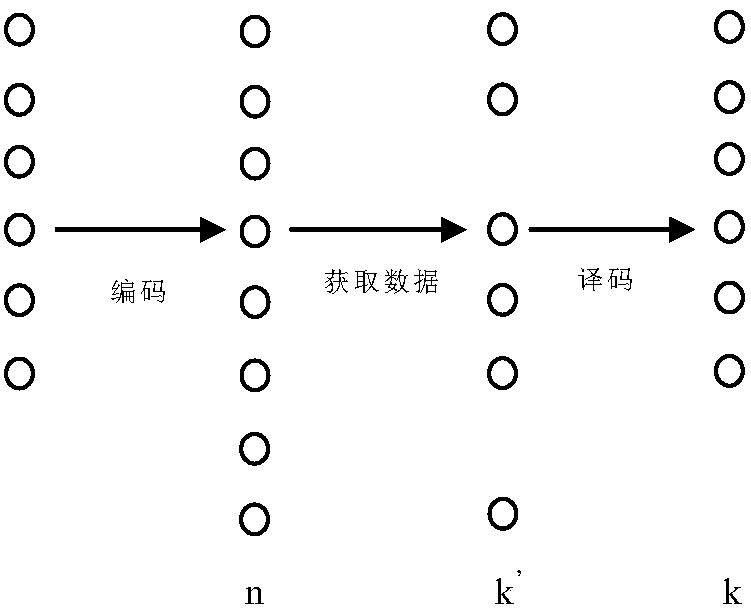

Block chain network node load balancing method based on PBFT

Active · CN110971684A · Guaranteed validity · Guaranteed fault tolerance · Finance · User identity/authority verification · Engineering · Financial transaction

The invention discloses a PBFT-based load balancing method for blockchain network nodes. The method is implemented on the consortium chain platform Hyperledger Fabric, and the system is divided into a web layer, a business layer (Fabric-sdk-go), a smart contract layer (the chaincode layer), and Fabric itself as the blockchain storage layer. In PBFT, the primary node carries out transaction legality judgment, verification, hash calculation and so on. When a large number of transactions arrive at the primary node at the same time, its workload is very large while the other nodes sit idle, and when block information is broadcast, the large number of transactions makes the primary node's outbound bandwidth and load too high, which is an important performance bottleneck of a blockchain system. The load balancing method addresses this problem: part of the primary node's work is completed by other nodes and the transaction information is compressed before broadcast, which reduces outbound network bandwidth and improves the transaction throughput of the system while still ensuring the security and fault tolerance of the system.

Owner:BEIJING UNIV OF TECH

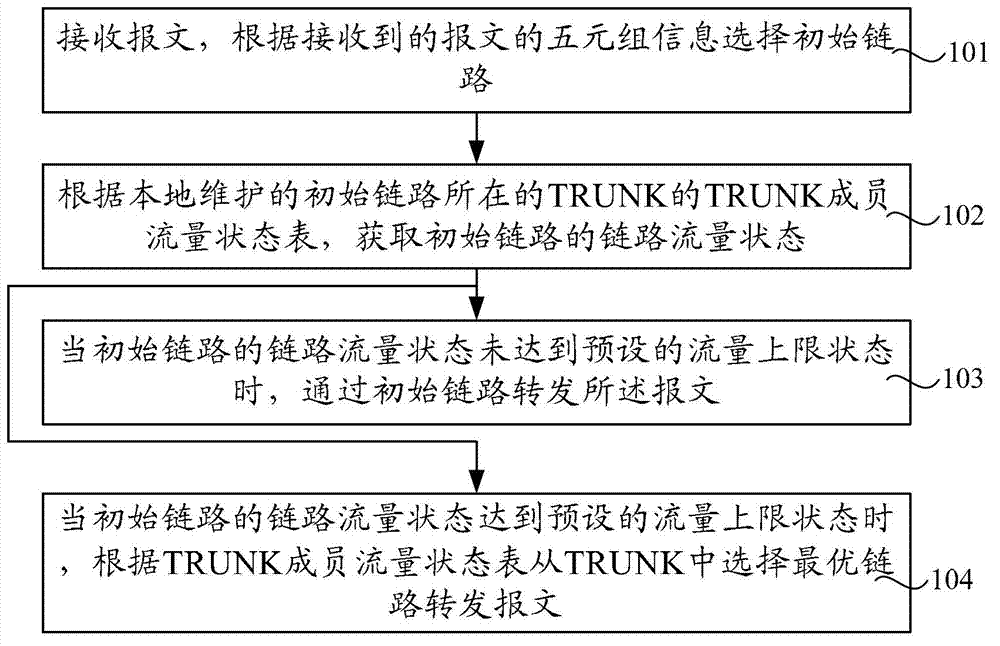

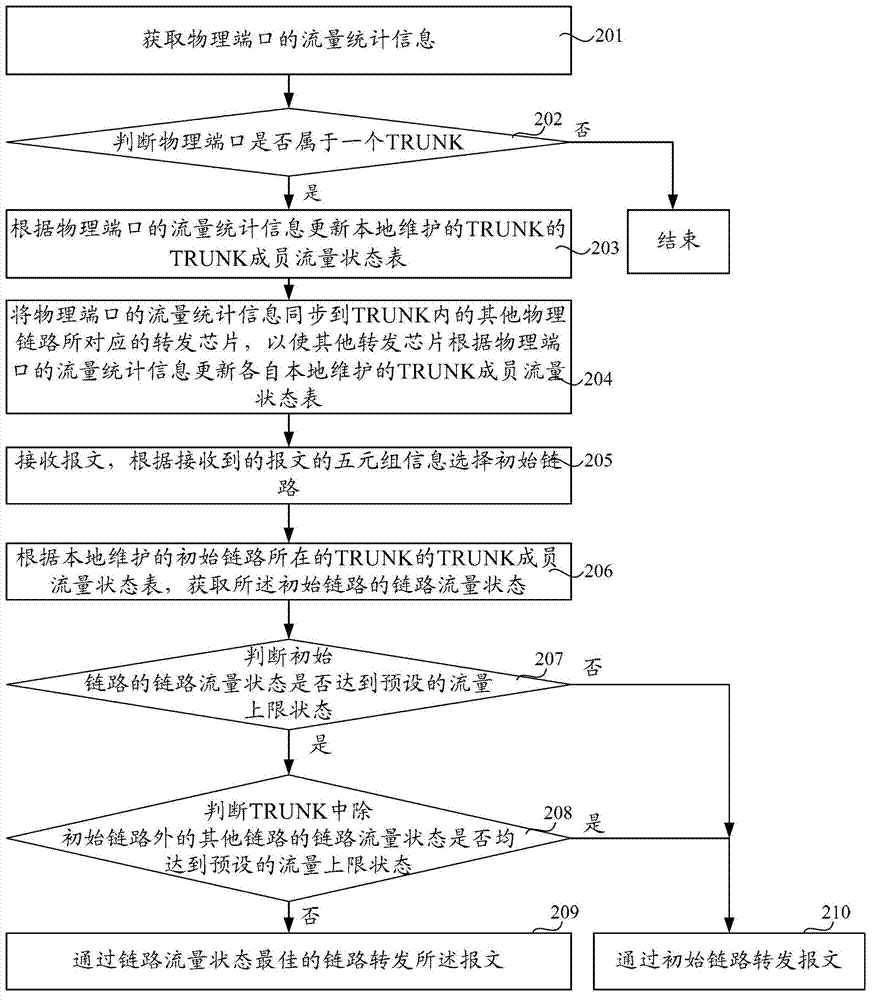

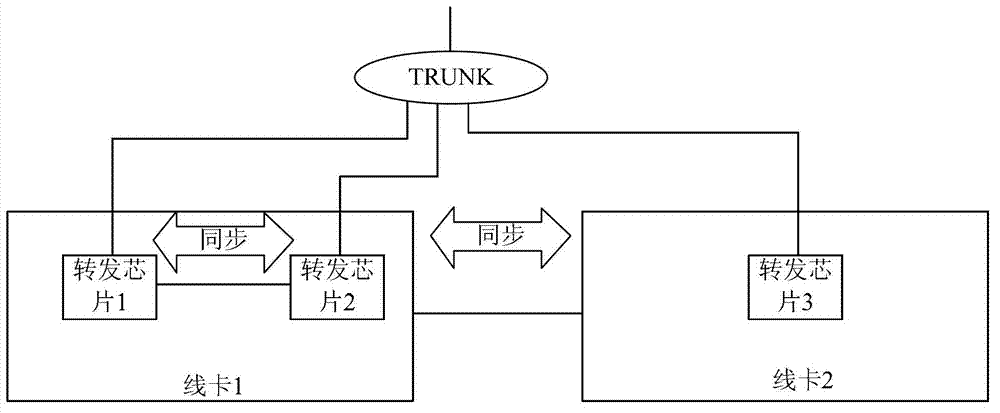

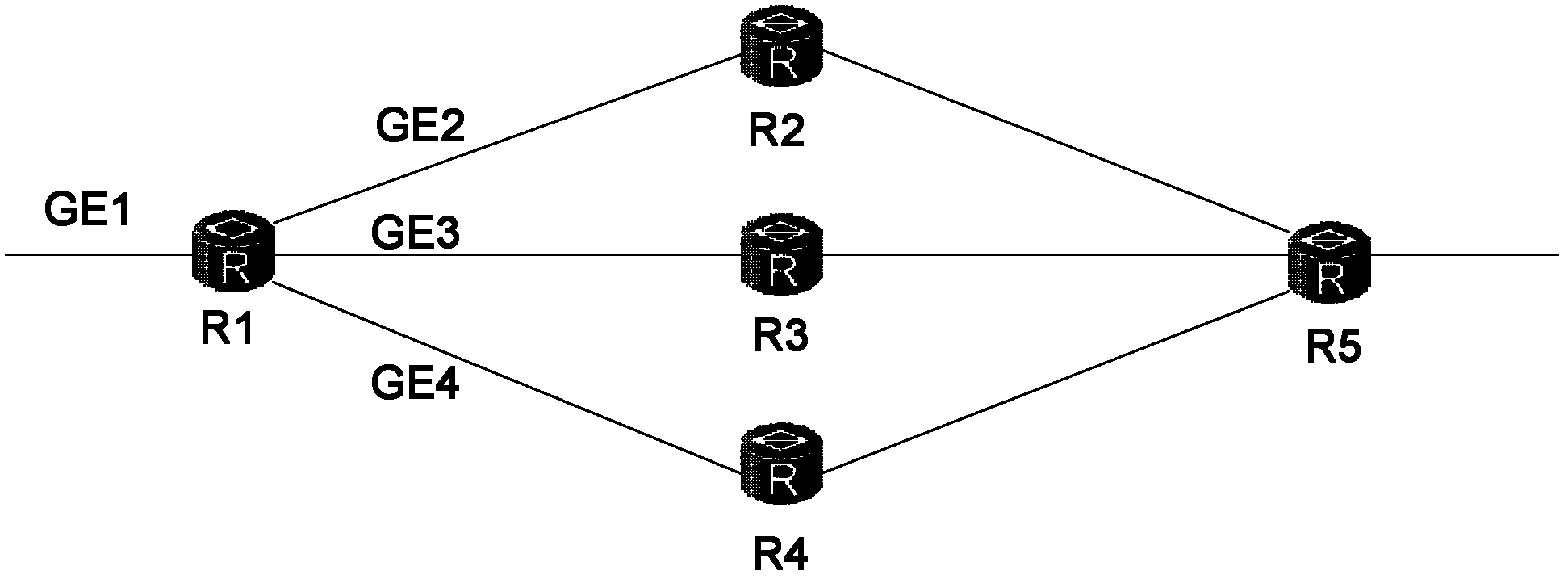

Method for selecting link and device therefor

Active · CN102761479A · Solve load imbalance · Increase profit · Data switching networks · Traffic capacity · Real-time computing

The embodiment of the invention provides a method and a device for selecting a link. The method comprises the following steps: receiving a message, and selecting an initial link according to the five-tuple information of the received message; looking up the locally maintained TRUNK member flow status table for the TRUNK to which the port of the initial link belongs, to obtain the link flow status of the initial link; when the link flow status of the initial link has not reached the preset flow upper limit, transmitting the message through the initial link; and when it has reached the preset flow upper limit, selecting the optimal link from the TRUNK according to the TRUNK member flow status table to transmit the message. According to the embodiments, link load imbalance can be effectively resolved and the utilization of link resources improved.

Owner:HUAWEI TECH CO LTD
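
The two-stage choice in the CN102761479A abstract (hash the five-tuple first, fall back to the flow status table when the hashed link is saturated) can be sketched as below. The CRC32 hash, the dictionary used as the TRUNK member flow status table, and the reading of "optimal link" as "least-loaded member" are assumptions, not details from the patent.

```python
import zlib


def select_link(five_tuple, trunk_flow, limit):
    """Pick a TRUNK member link for a packet.

    trunk_flow maps link name -> current flow (hypothetical status table);
    limit is the preset flow upper bound.
    """
    links = sorted(trunk_flow)
    key = "|".join(map(str, five_tuple)).encode()
    initial = links[zlib.crc32(key) % len(links)]  # hash-based initial link
    if trunk_flow[initial] < limit:
        return initial
    # Initial link is at its limit: assume "optimal" means the least-loaded member.
    return min(links, key=lambda link: trunk_flow[link])


flow = {"l0": 100, "l1": 100, "l2": 10}
packet = ("10.0.0.1", "10.0.0.2", 1234, 80, "tcp")
print(select_link(packet, flow, limit=100))
```

With two of the three links at their limit, the packet ends up on `l2` whichever link the hash picks first, which is the behavior the abstract describes for a saturated initial link.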

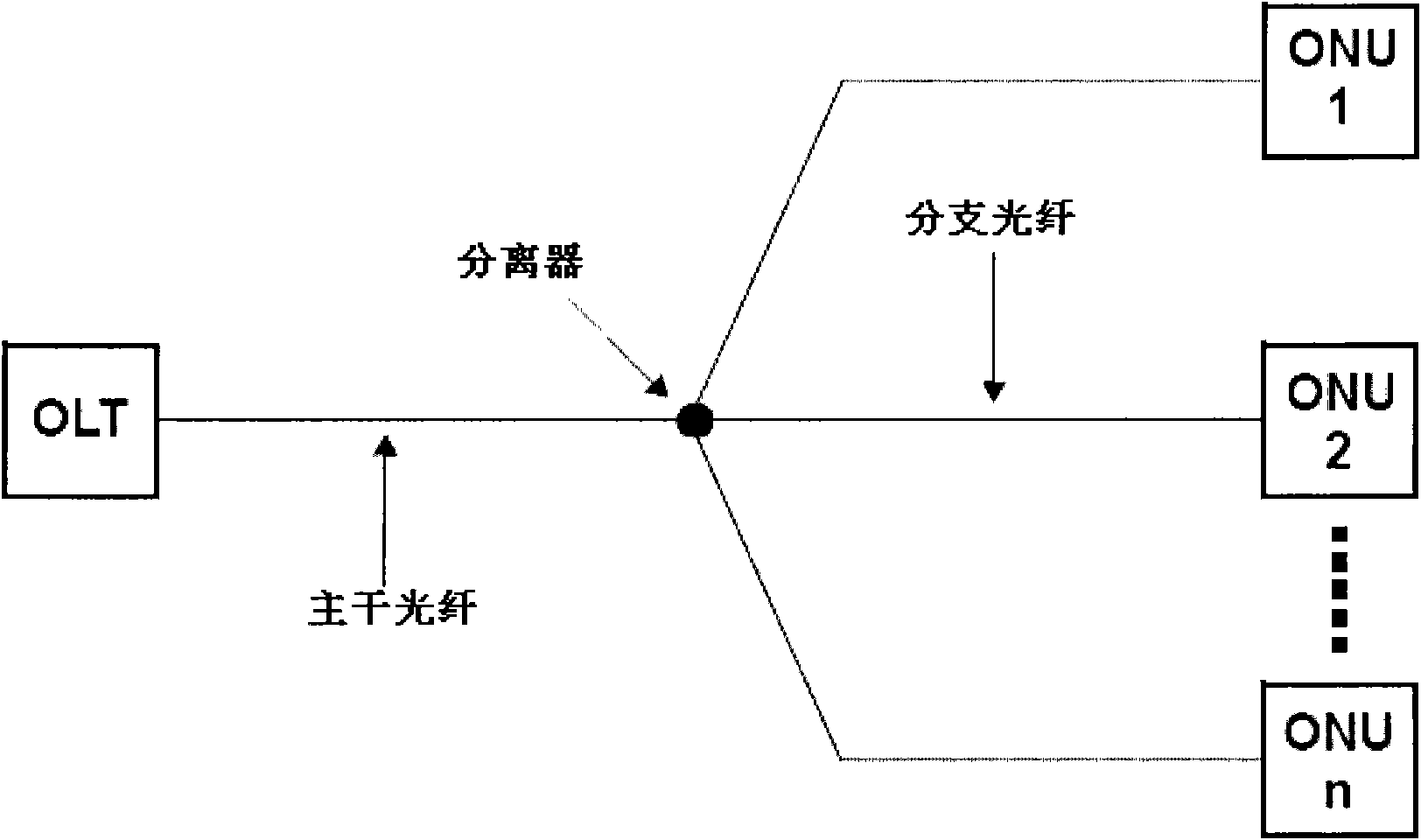

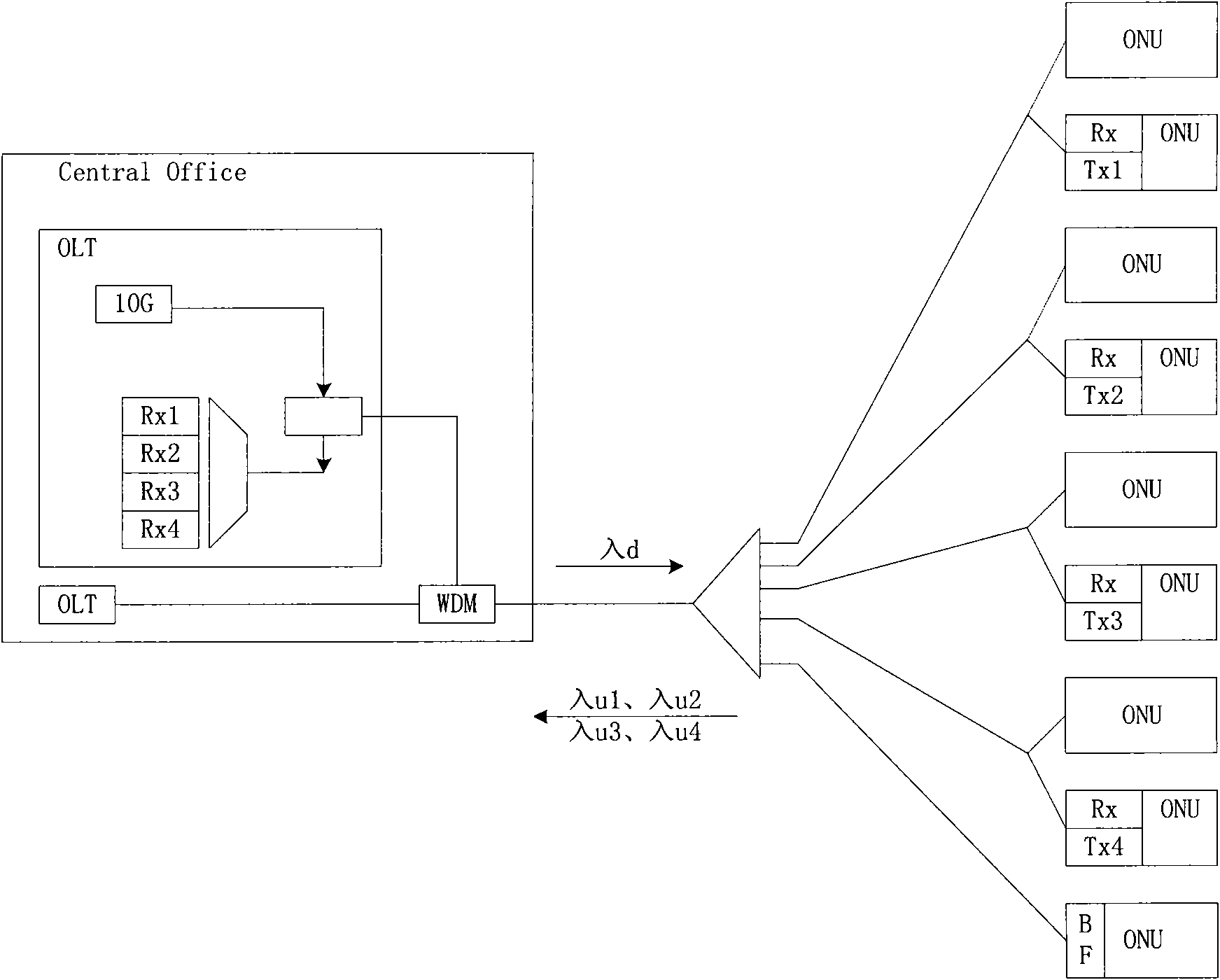

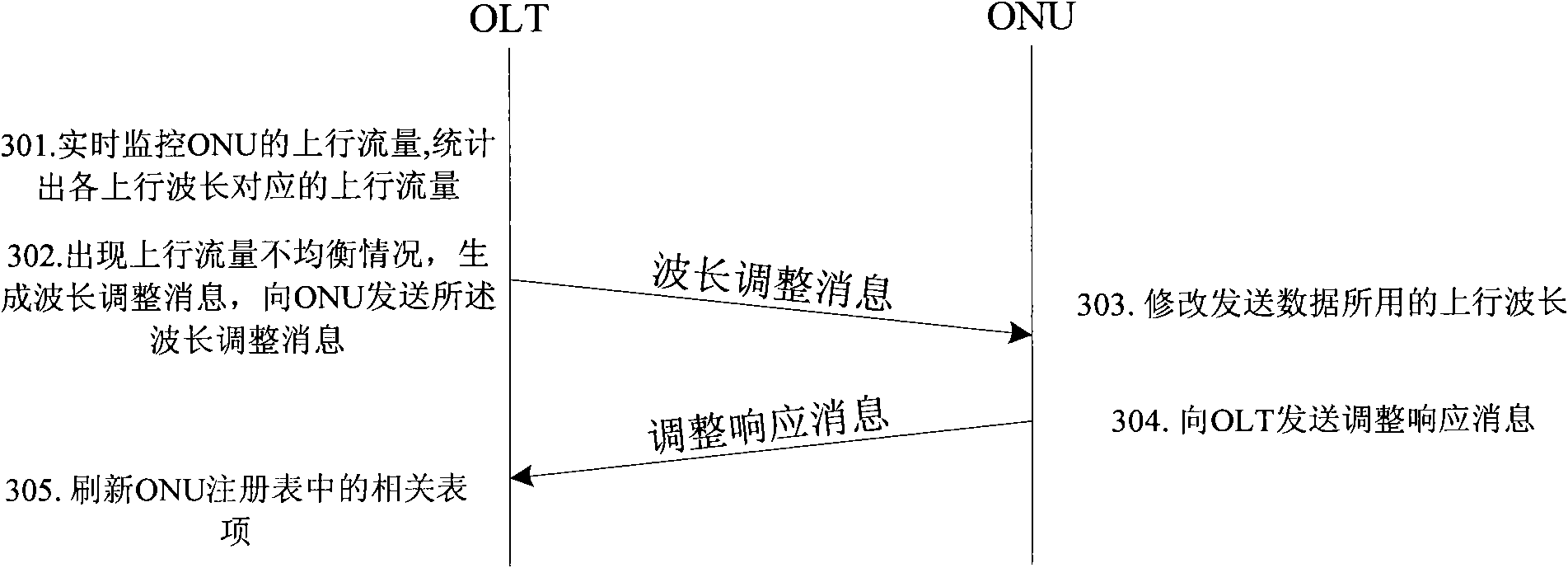

Passive optical network system, optical line terminal and optical network units

Active · CN101621454A · Take advantage of · Avoid load imbalance · Time-division multiplex · Data switching by path configuration · Optical line termination · Length wave

The invention discloses a passive optical network system, an optical line terminal, optical network units and a method for realizing load balance. The system comprises the optical line terminal OLT and the optical network units ONU, wherein the OLT is used for monitoring the uplink flow rate of at least one ONU, counting the uplink flow rates of uplink wavelengths according to the monitored uplink flow rates of the ONUs, judging whether uplink flow rate unbalance occurs among the uplink wavelengths according to preset conditions, determining the ONUs needing wavelength adjustment if the uplink flow rate unbalance occurs, generating wavelength adjustment messages and transmitting the messages to the ONUs needing wavelength adjustment; and the ONUs receive the wavelength adjustment messages from the OLT and perform corresponding wavelength adjustment according to the wavelength adjustment messages.

Owner:HUAWEI TECH CO LTD
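
A rough sketch of the OLT-side decision in the CN101621454A abstract follows. The abstract leaves the "preset conditions" and the ONU selection rule open, so the `max_gap` threshold and the choice of moving the single ONU whose rate is closest to half the gap are assumptions made only to keep the example concrete.

```python
def plan_wavelength_moves(onu_rate, onu_wavelength, wavelengths, max_gap):
    """Return a list of (onu, from_wavelength, to_wavelength) adjustments.

    onu_rate: ONU -> monitored uplink rate; onu_wavelength: ONU -> current wavelength.
    """
    load = {w: 0.0 for w in wavelengths}
    for onu, rate in onu_rate.items():
        load[onu_wavelength[onu]] += rate  # per-wavelength uplink total

    busiest = max(load, key=load.get)
    idlest = min(load, key=load.get)
    gap = load[busiest] - load[idlest]
    if gap <= max_gap:  # assumed imbalance condition
        return []

    # Assumed rule: move the ONU whose rate best closes half of the gap.
    candidates = [o for o in onu_rate if onu_wavelength[o] == busiest]
    onu = min(candidates, key=lambda o: abs(onu_rate[o] - gap / 2))
    return [(onu, busiest, idlest)]


rates = {"onu1": 40, "onu2": 30, "onu3": 10}
current = {"onu1": "w1", "onu2": "w1", "onu3": "w2"}
print(plan_wavelength_moves(rates, current, ["w1", "w2"], max_gap=20))
```

Each returned tuple corresponds to one wavelength adjustment message that the OLT would send to the named ONU.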

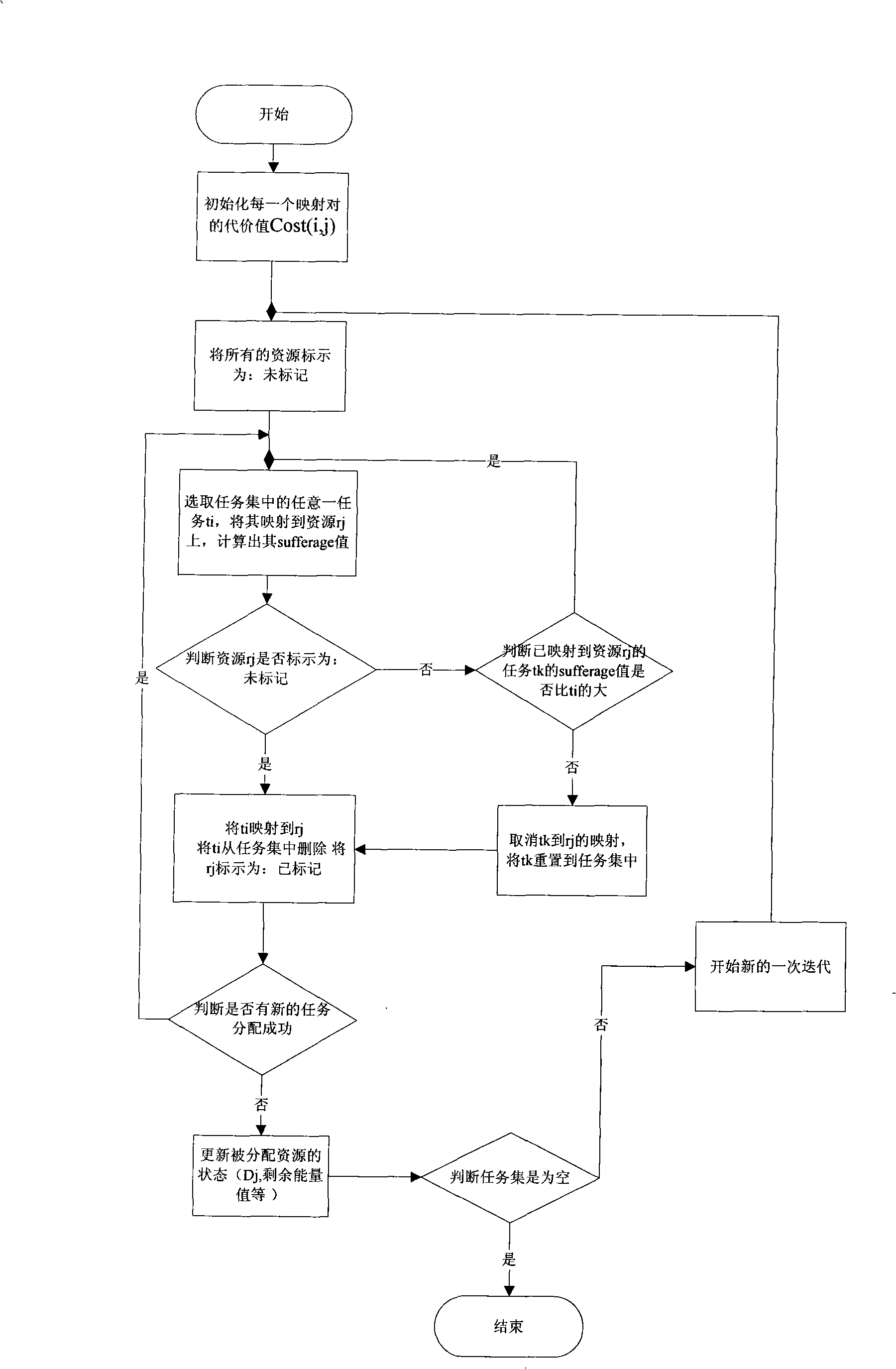

Gridding scheduling method based on energy optimization

Inactive · CN101271407A · Achieve optimization · Consumption value is small · Energy efficient ICT · Resource allocation · Grid resources · Energy based

The invention relates to a grid scheduling method based on energy optimization, which includes: 1. energy resources are the research focus of scheduling and factors such as initial energy value, network bandwidth, etc. are taken into consideration; 2. the energy optimization is achieved to ensure minimum energy consumption in the resource scheduling and the energy consumption in the grid resource scheduling is divided into calculation consumption and network communication consumption; 3. the optimization of time span is taken into consideration so as to ensure the load balance of the resource in the process of energy optimization; 4. a grid scheduling method based on energy optimization with a comprehensive consideration of energy constraint and time constraint is proposed. The method has the advantages that: 1. the limitation of singleness of resource type in the traditional resource scheduling calculation is changed; 2. the energy optimization is taken as the research focus of the scheduling model; 3. the problem of load imbalance caused in the process of energy optimization is solved; 4. an energy cost function is defined on the basis of time span optimization and energy optimization and the grid resource scheduling method based on energy optimization is proposed on the basis of the cost function.

Owner:WUHAN UNIV OF TECH

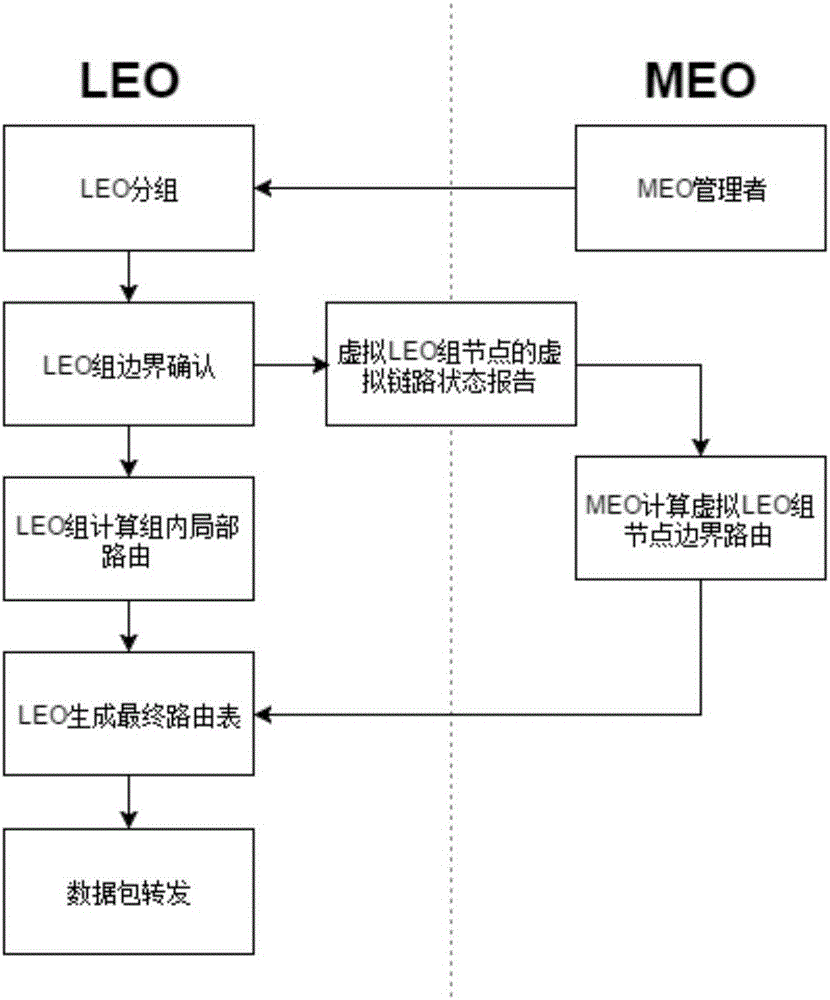

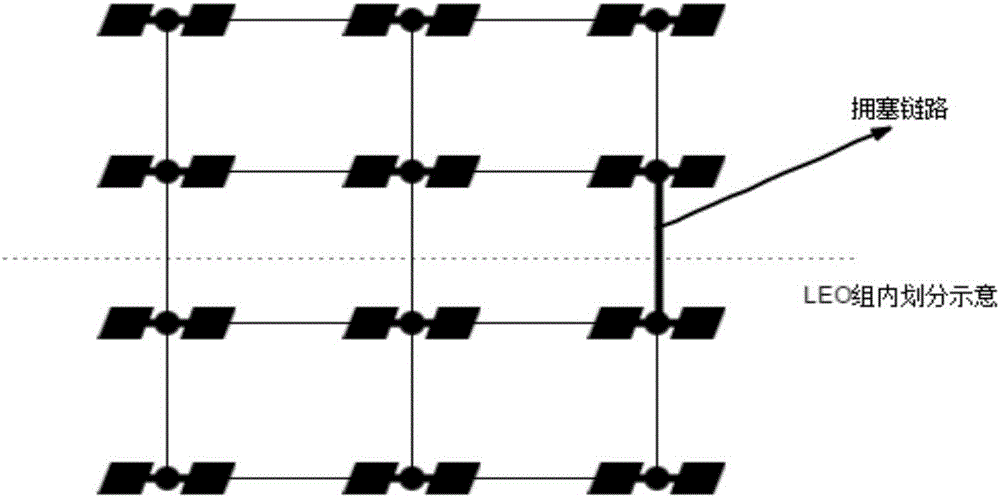

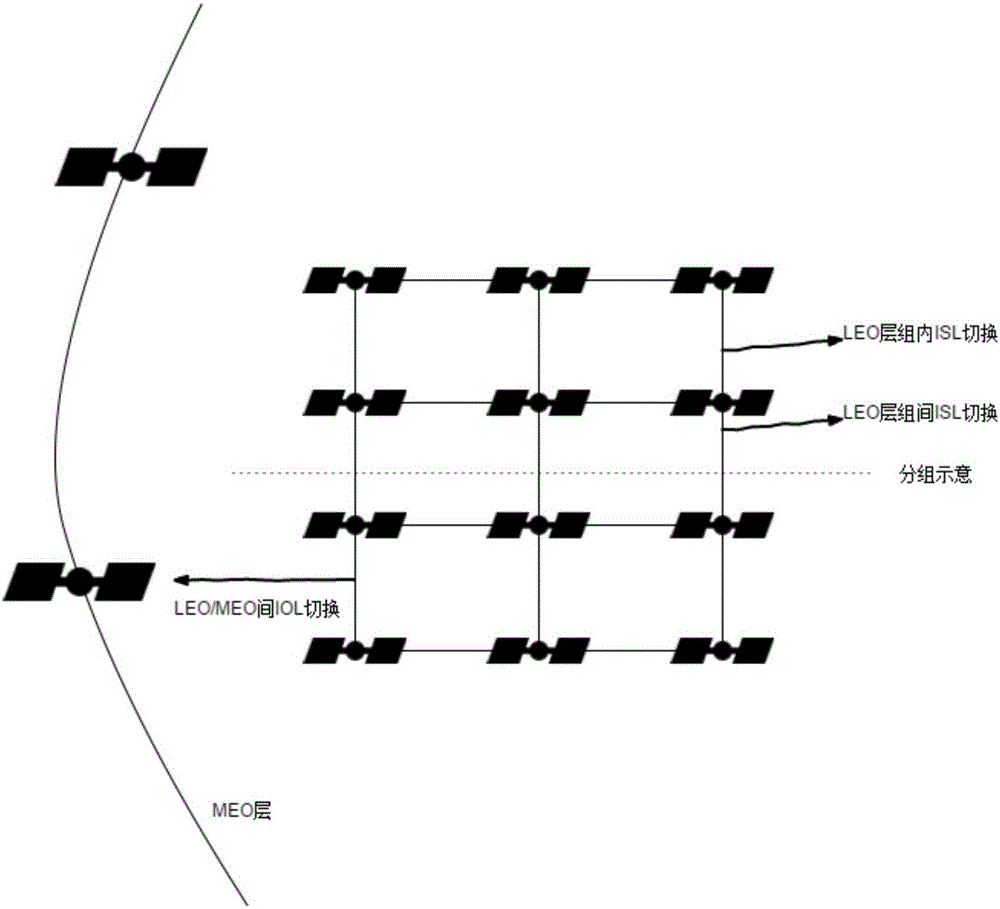

Double-layer satellite packet routing method based on virtual LEO group node

Active · CN106788666A · Solve load imbalance · Solve the problem of frequent snapshot switching · Radio transmission · Data switching networks · State variation · State switching

The invention provides a double-layer satellite packet routing method based on a virtual LEO group node. The method comprises step 1, grouping LEOs; step 2, obtaining an intra-group local routing algorithm and a border routing algorithm according to an LEO intra-group link state report and a link state report of the virtual LEO group node, and forwarding data; step 3, updating an algorithm during LEO layer intra-group ISL switching, LEO layer inter-group ISL switching and LEO / MEO layer IOL state switching; and step 4, performing congestion control and load balance on the situation of congestion in the LEO group and / or congestion at the border outside the LEO group. The method solves the problem of frequent satellite snapshot switching and the problem of load imbalance in network topology, hides LEO layer ISL link state changes and LEO / MEO interlayer IOL link state changes, reduces unnecessary snapshot switching, and improves the working efficiency of satellites by corresponding congestion control.

Owner:SHANGHAI JIAO TONG UNIV

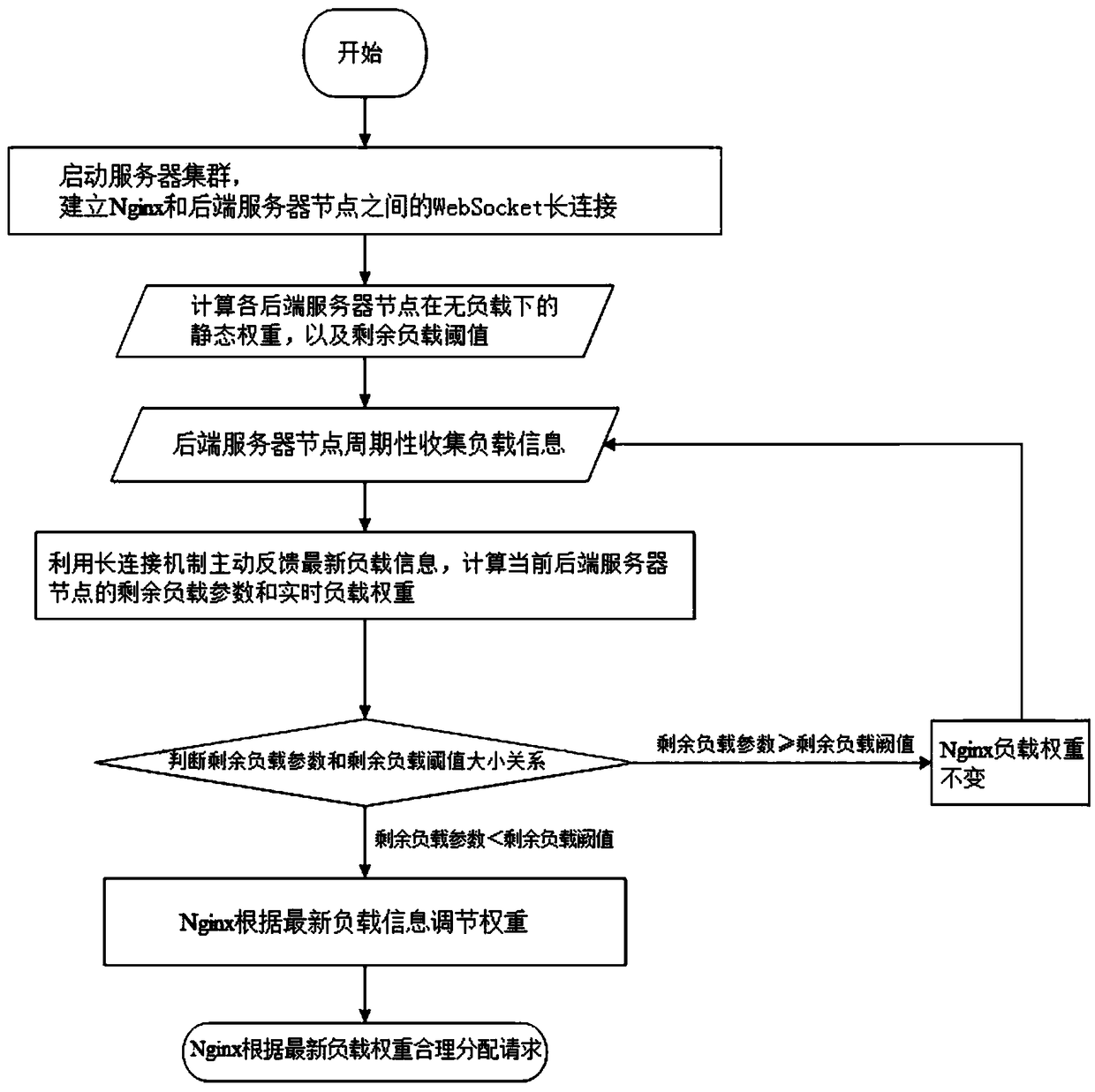

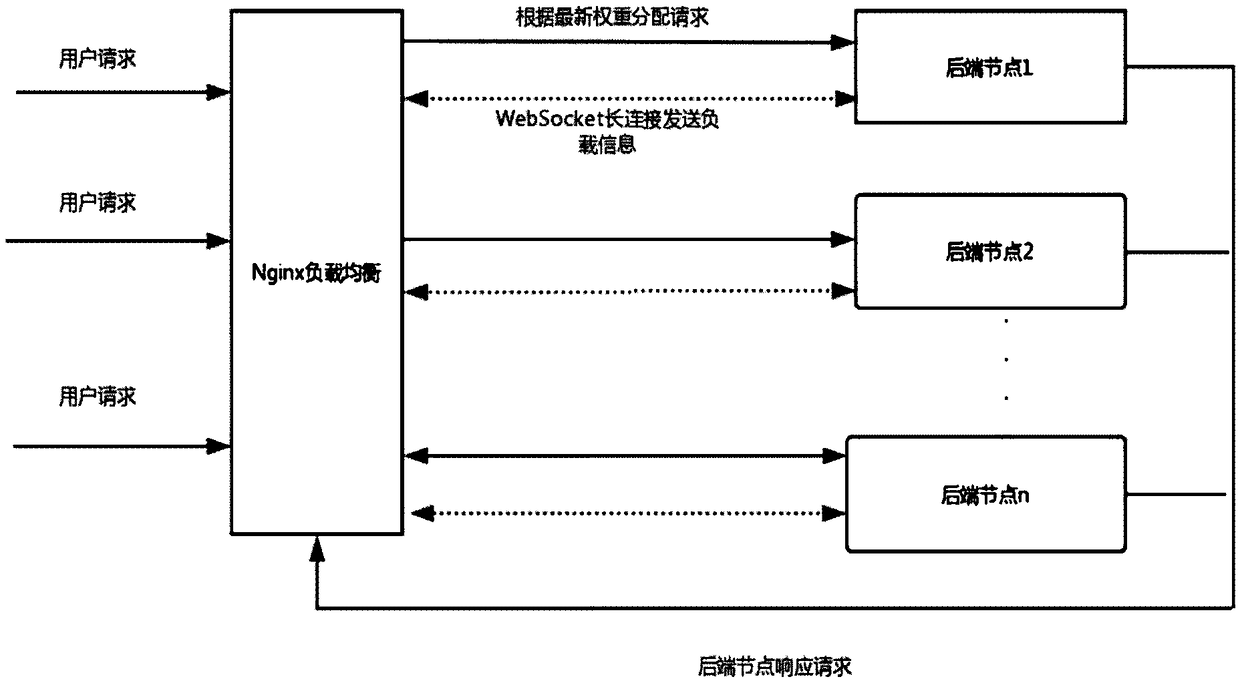

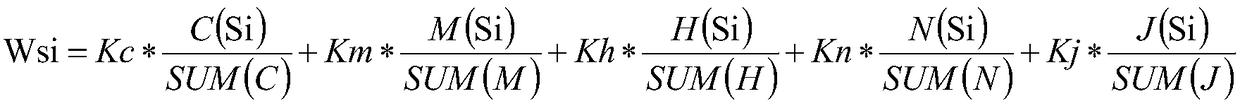

A dynamic load balancing method of Nginx based on WebSocket long connection

Active · CN109308221A · Solve load imbalance · Solve the problem of the influence of load weight · Resource allocation · Software simulation/interpretation/emulation · WebSocket · Dynamic load balancing

The invention discloses an Nginx dynamic load balancing method based on WebSocket long connections. The method includes: establishing a long WebSocket connection between Nginx and each back-end server node; calculating the static weight and the remaining load threshold of each back-end server node under no load; periodically collecting load information from the back-end server nodes; calculating the residual load parameter and real-time load weight of each back-end server node; comparing the residual load parameter with the residual load threshold; and having Nginx distribute requests according to the latest load weights. It mainly solves the problem that, in traditional static load balancing, the load weight cannot change with the actual state of the back-end servers, which leads to load imbalance.

Owner:NANJING UNIV OF POSTS & TELECOMM

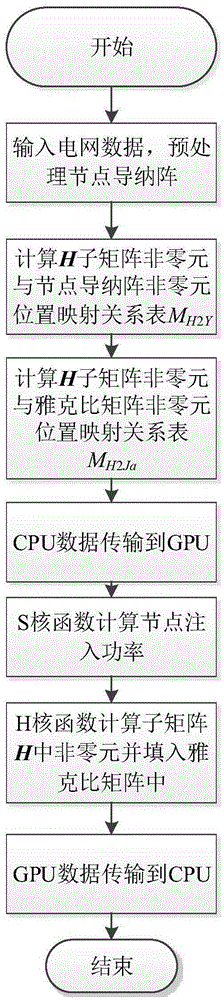

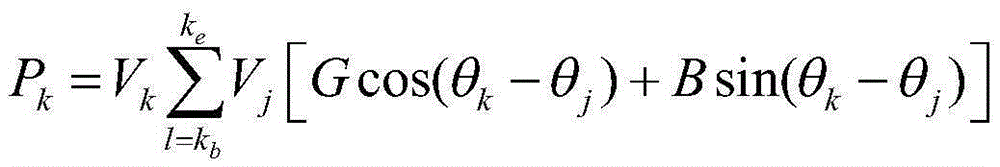

GPU thread design method of power flow Jacobian matrix calculation

Inactive · CN105391057A · Improve branch execution efficiency · Solve load imbalance · Ac network circuit arrangements · NODAL · Power flow

The invention discloses a GPU thread design method for power flow Jacobian matrix calculation. The method comprises the following steps: power grid data is input and the node admittance matrix Y is pre-processed; the CPU calculates position mapping tables between the non-zero elements of each sub-matrix and the non-zero elements of the node admittance matrix Y; the CPU calculates position mapping tables between the non-zero elements of each sub-matrix and the non-zero elements of the Jacobian matrix; on the GPU, the injection power of each node is calculated by an injection power kernel function S; and on the GPU, the non-zero elements of each sub-matrix are calculated by the corresponding Jacobian sub-matrix kernel function and stored in the Jacobian matrix. Because the non-zero elements of the Jacobian matrix are calculated sub-matrix by sub-matrix, the branch that a single kernel function would need in order to decide which sub-matrix region each element belongs to is avoided, so execution efficiency is improved. In addition, non-zero elements that share the same calculation formula are calculated together, so the problem of unbalanced thread loads is solved and the efficiency of parallel calculation is improved.

Owner:STATE GRID CORP OF CHINA +3

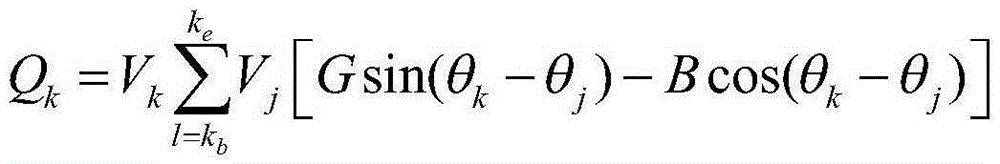

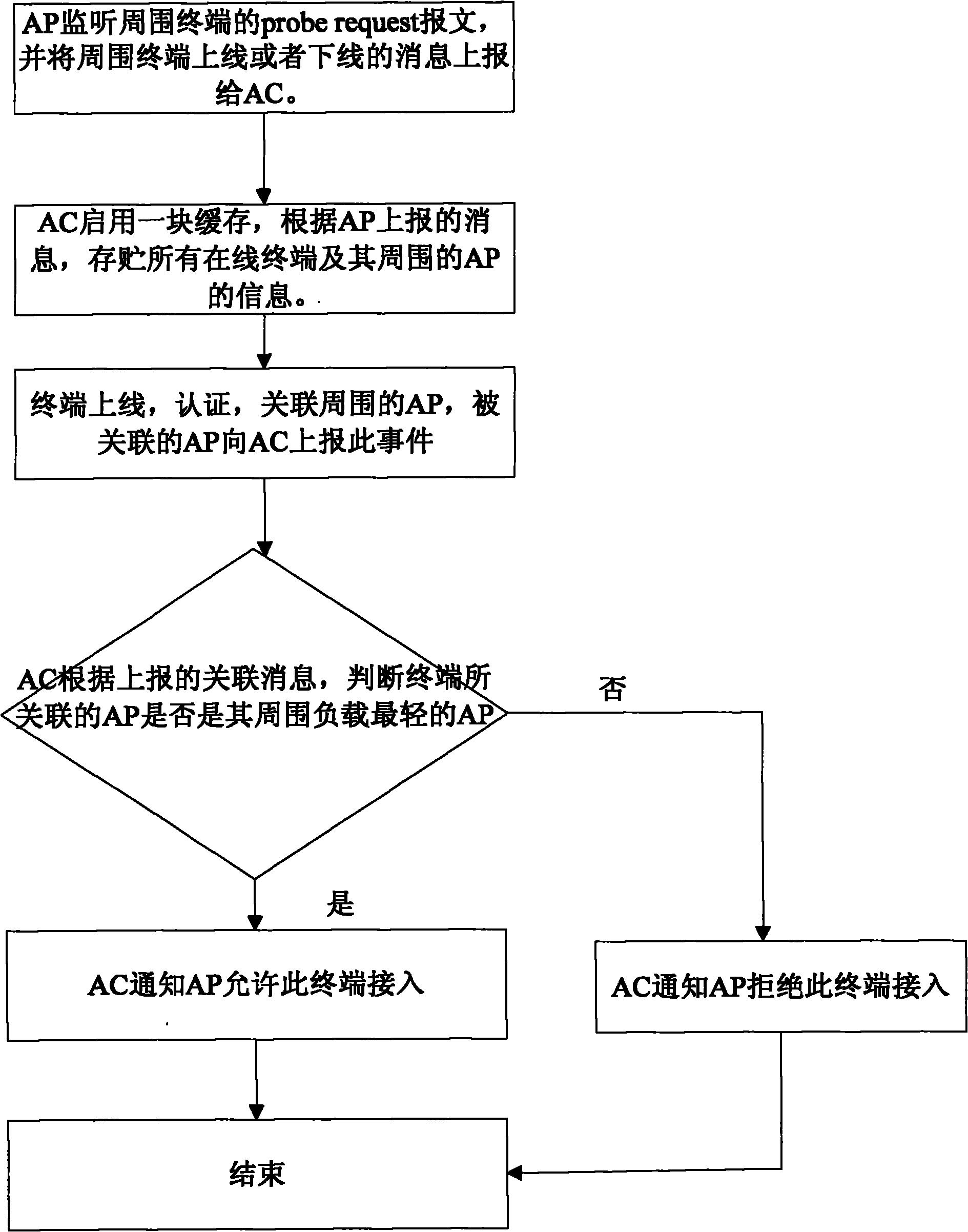

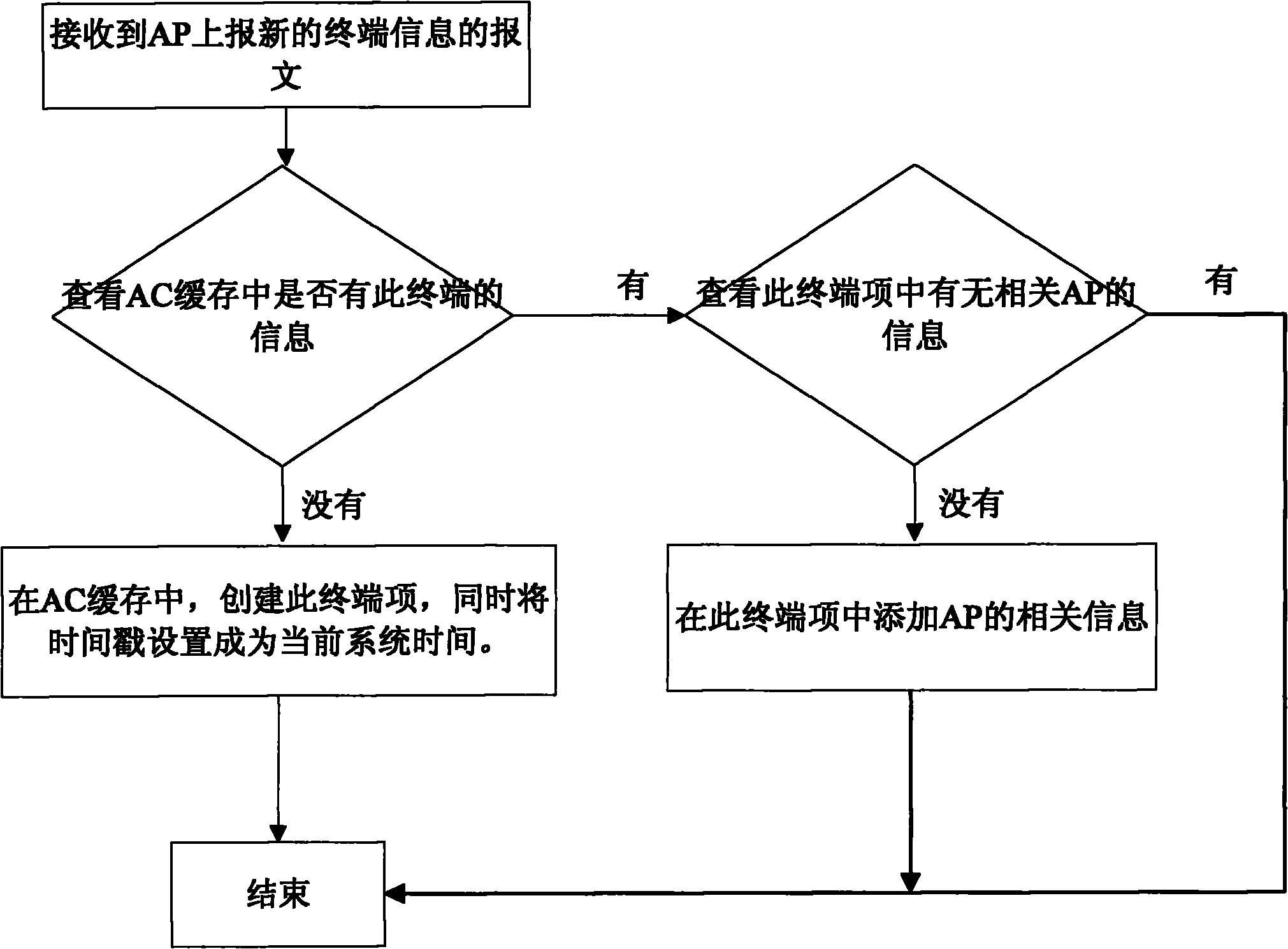

Method for balancing wireless local area network load by wireless access controller

Active · CN101938785A · Solve load imbalance · Improve load balancing effect · Network traffic/resource management · Network topologies · Network topology · Wireless LAN controller

The invention discloses a method for balancing wireless local area network loads by a wireless access controller, and the wireless access controller receives information of a wireless terminal actively reported by a wireless access point (AP) when the wireless terminal in the wireless local area network sends a Probe Request message to the wireless AP. The method is characterized by comprising the following steps: (1) when the wireless terminal begins to associate, the wireless access controller (AC) performs load balancing treatment on the current wireless terminal based on the currently collected information; or (2) the wireless AC checks the load conditions of wireless AP associated with all on-line wireless terminals at frequent intervals and performs load balancing treatment based on the load conditions. The method in the invention can better cope with the condition that wireless network topology changes abruptly and has good actual load balancing effect.

Owner:TAICANG T&W ELECTRONICS CO LTD

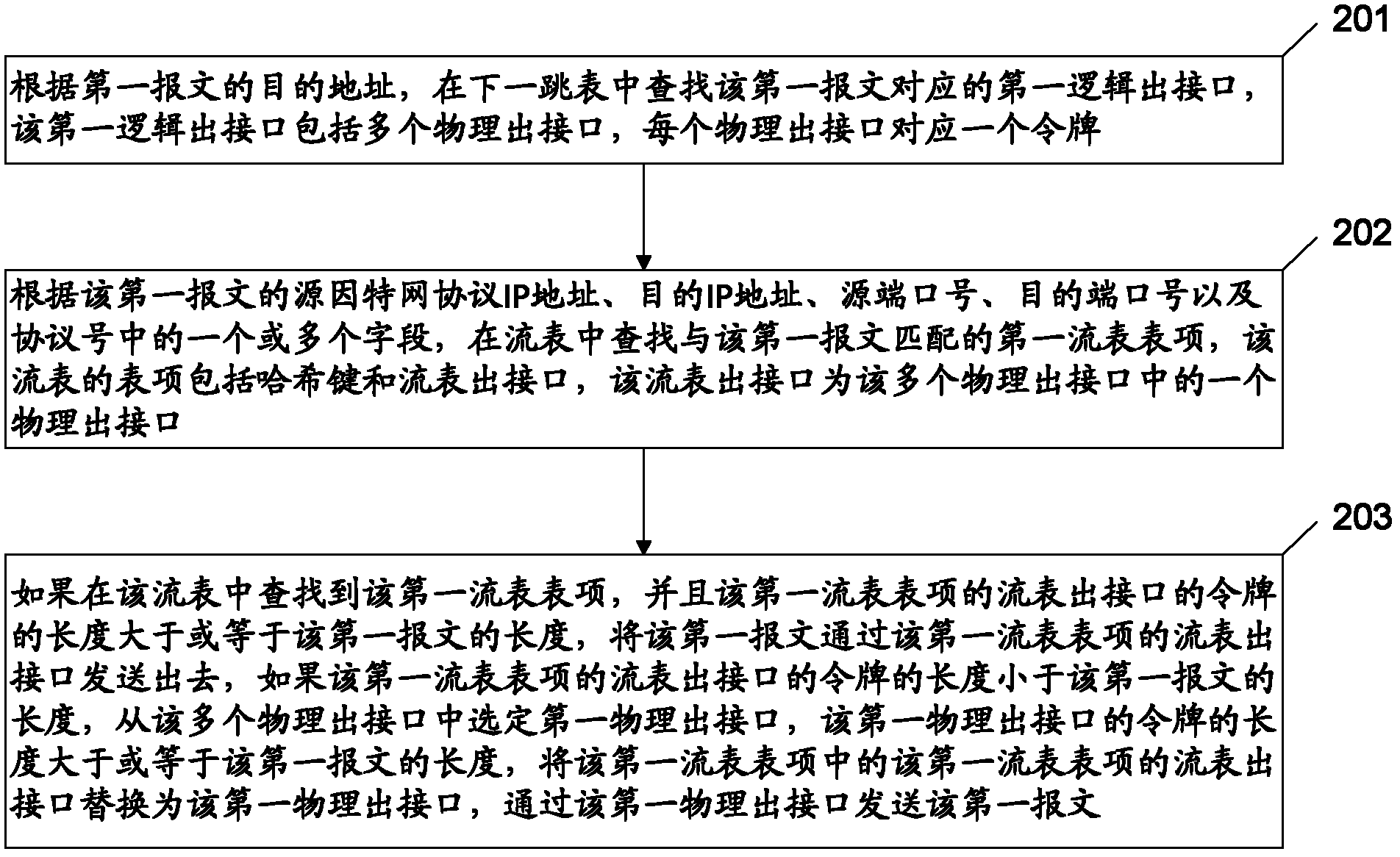

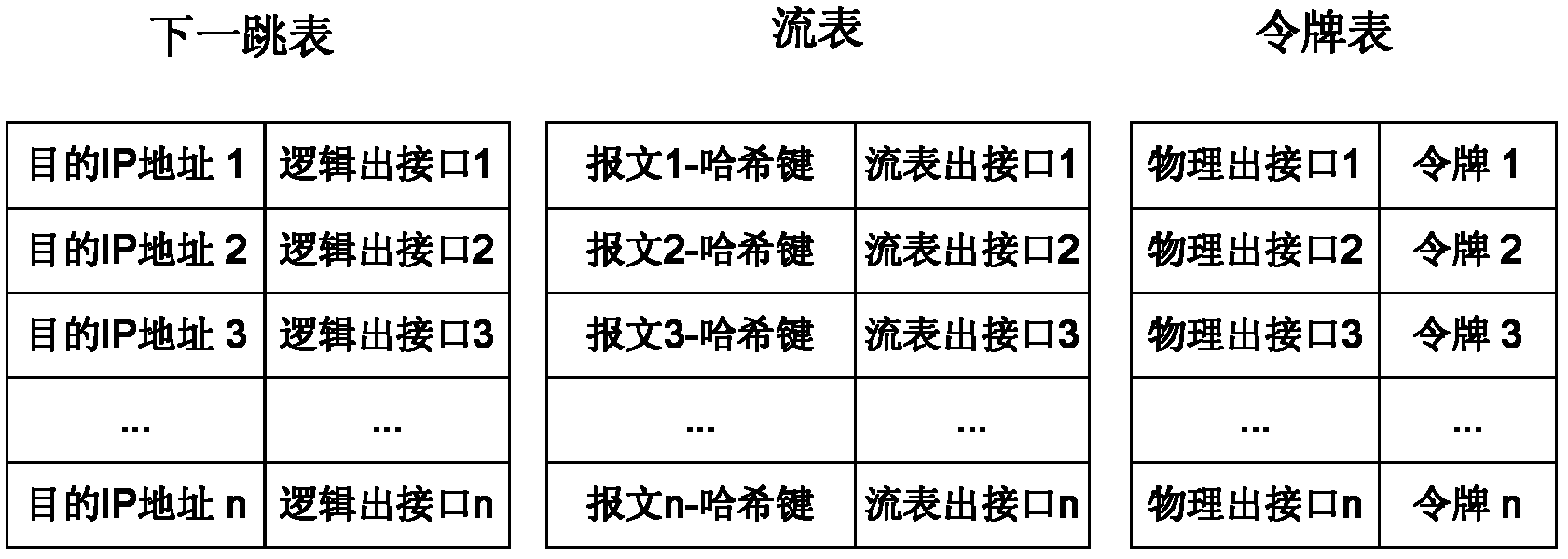

Method and device for load sharing

Inactive · CN102255816A · Solve load imbalance · Improve overall utilization · Data switching networks · Traffic volume · Computer hardware

The embodiment of the invention discloses a method for load sharing, comprising the steps of: searching for a corresponding first logical output interface according to the destination address of a first message; searching the stream table for a matching first stream table entry according to one or more fields in the five-tuple of the first message; if the length of the stream table output interface of the first stream table entry is greater than or equal to the length of the first message, sending the first message via the stream table output interface of the first stream table entry; otherwise, selecting a first physical output interface from a plurality of physical output interfaces, replacing the stream table output interface of the first stream table entry with the first physical output interface, and sending the first message via the first physical output interface. The embodiment of the invention further provides a corresponding device for load sharing as well as another method and device for load sharing. With the method and device provided by the embodiment of the invention, the load imbalance caused by traffic hashing can be solved, and the overall utilization rate of the physical output interfaces is increased.

Owner:BEIJING HUAWEI DIGITAL TECH

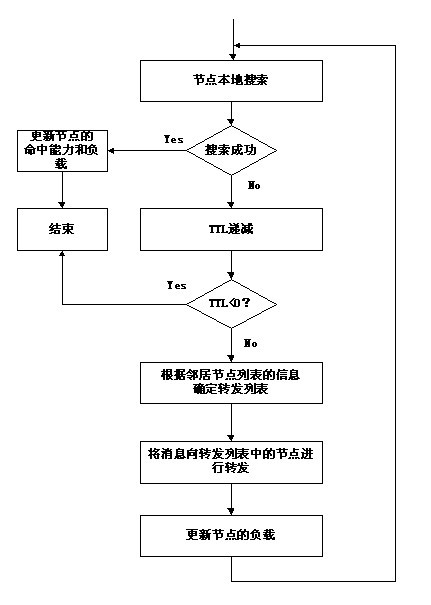

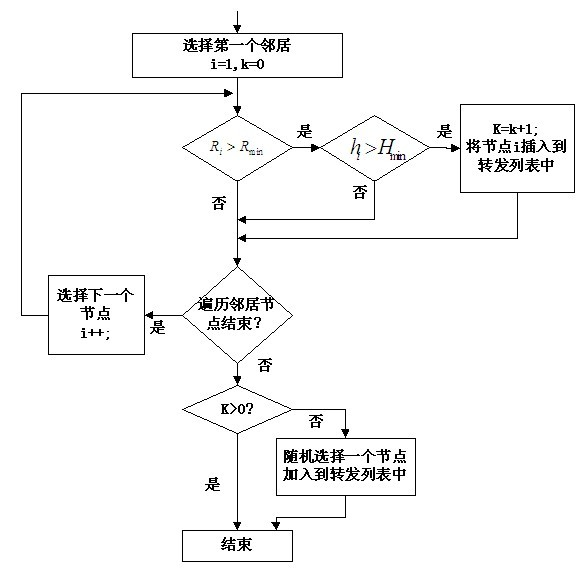

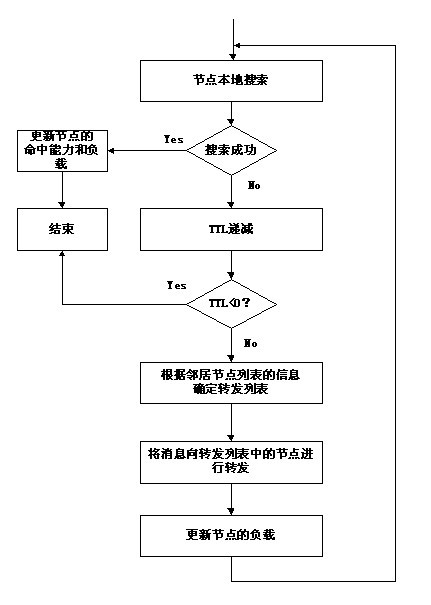

Balanced quick searching method in structureless P2P (Peer-to-Peer) network

Inactive · CN102006238A · Solve load imbalance · Avoid congestion · Data switching networks · NODAL · Network overhead

The invention relates to the technical field of peer-to-peer (P2P) networks, in particular to a balanced quick searching method in an unstructured P2P network. The method comprises the following steps: a node receives a query message and checks whether the target file is stored locally; if yes, the search succeeds and the node updates its own hit capability and load; the node judges whether the life cycle of the query message has expired; if yes, the message is not forwarded, otherwise the next nodes are selected for forwarding; a forwarding list is determined according to the information in the neighbor node list; the message is forwarded to the nodes in the forwarding list; and the load of the node is updated. The invention significantly increases system throughput, increases the search hit rate, reduces the average search delay and saves network overhead while avoiding node congestion in the network as much as possible.

Owner:WUHAN UNIV

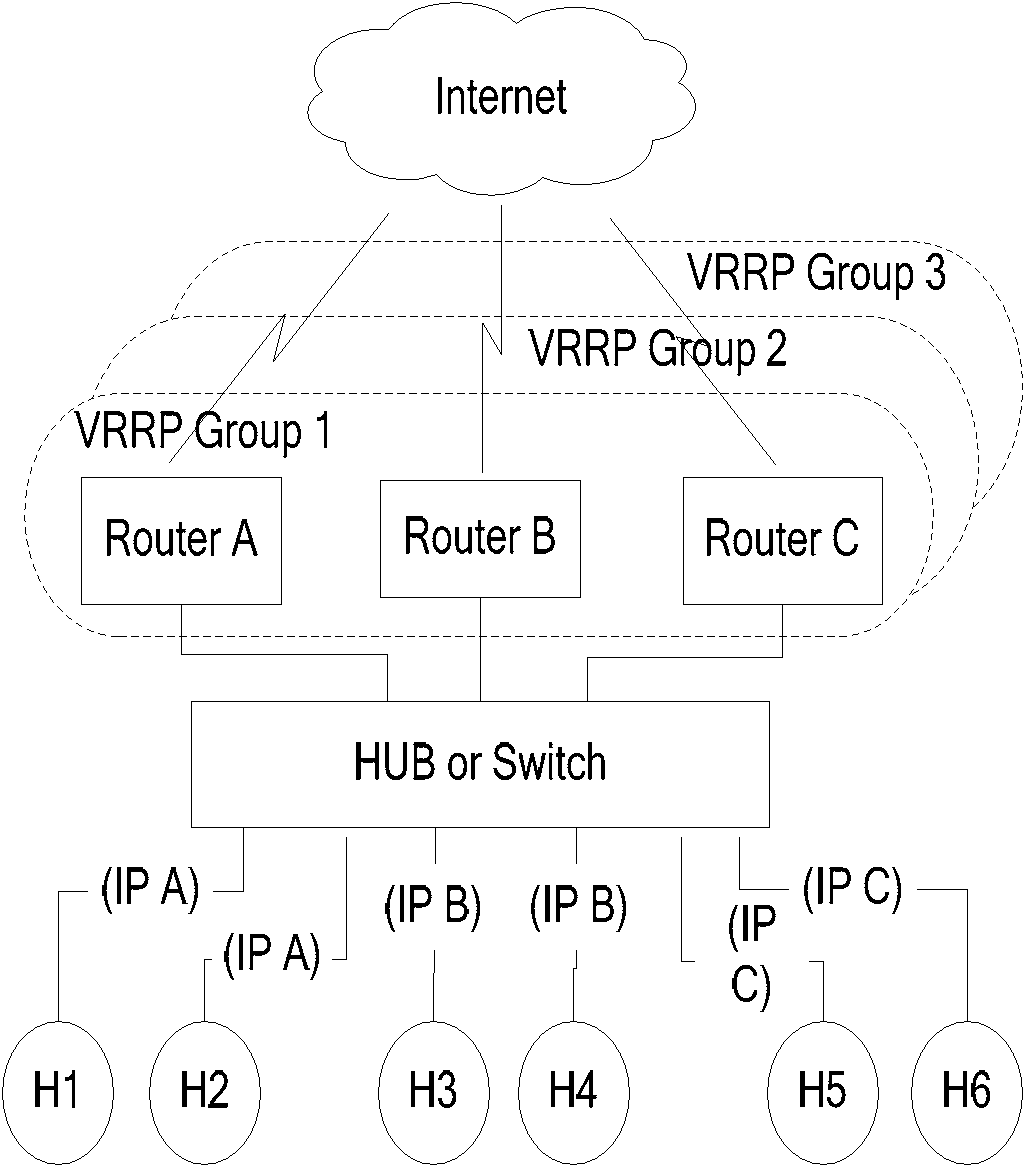

Equipment priority realization method and device in virtual router redundancy protocol (VRRP) backup group

Active · CN102469018A · Solve load imbalance · Balanced sharing · Data switching networks · Traffic volume · Traffic capacity

The invention discloses an equipment priority realization method and a device in a virtual router redundancy protocol (VRRP) backup group, wherein the method comprises the following steps that: when a preset moment is reached, the current forwarding capability and the current carrying flow rate of each equipment are obtained; and the recorded priority of each equipment in each VRRP backup group is updated respectively according to the recorded priority of each equipment in each VRRP backup group, the recorded rest bandwidth of the equipment and the obtained current forwarding capability and the current carrying flow rate of the equipment. Through the method and the device, the VRRP equipment can realize the balanced sharing of forwarding loads of a network in an environment of a plurality of VRRP backup groups.

Owner:ZTE CORP

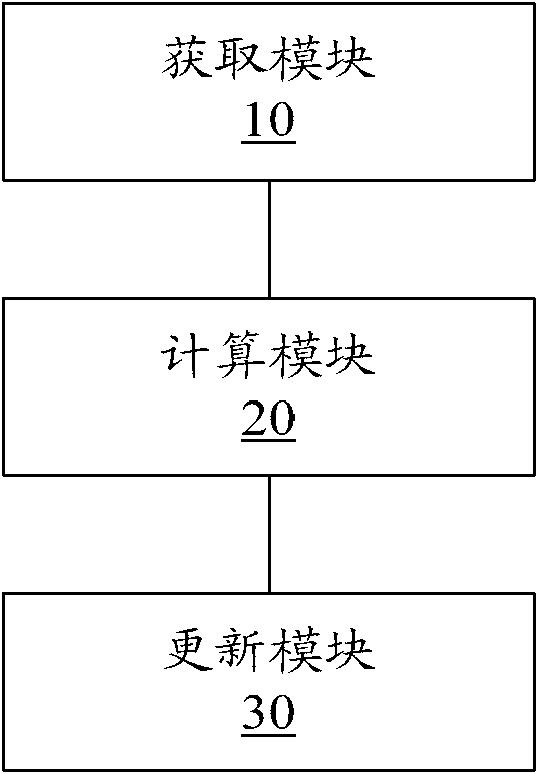

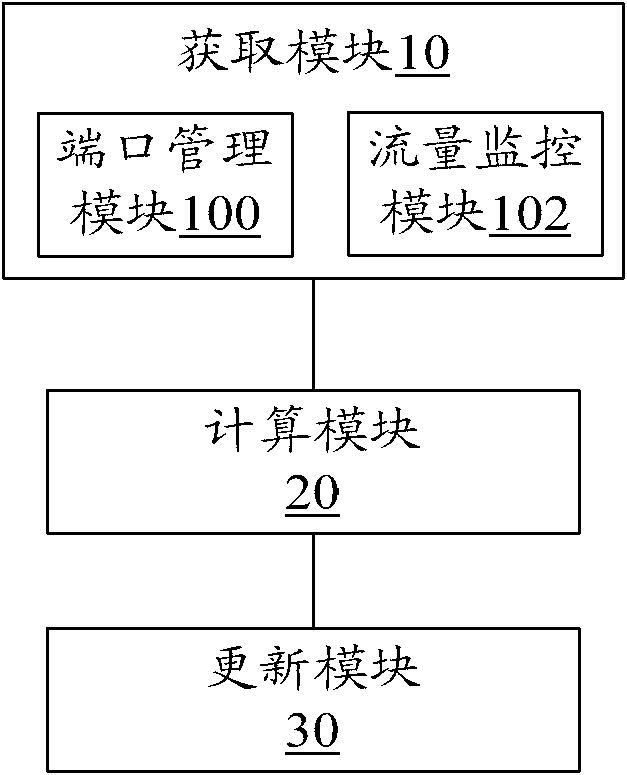

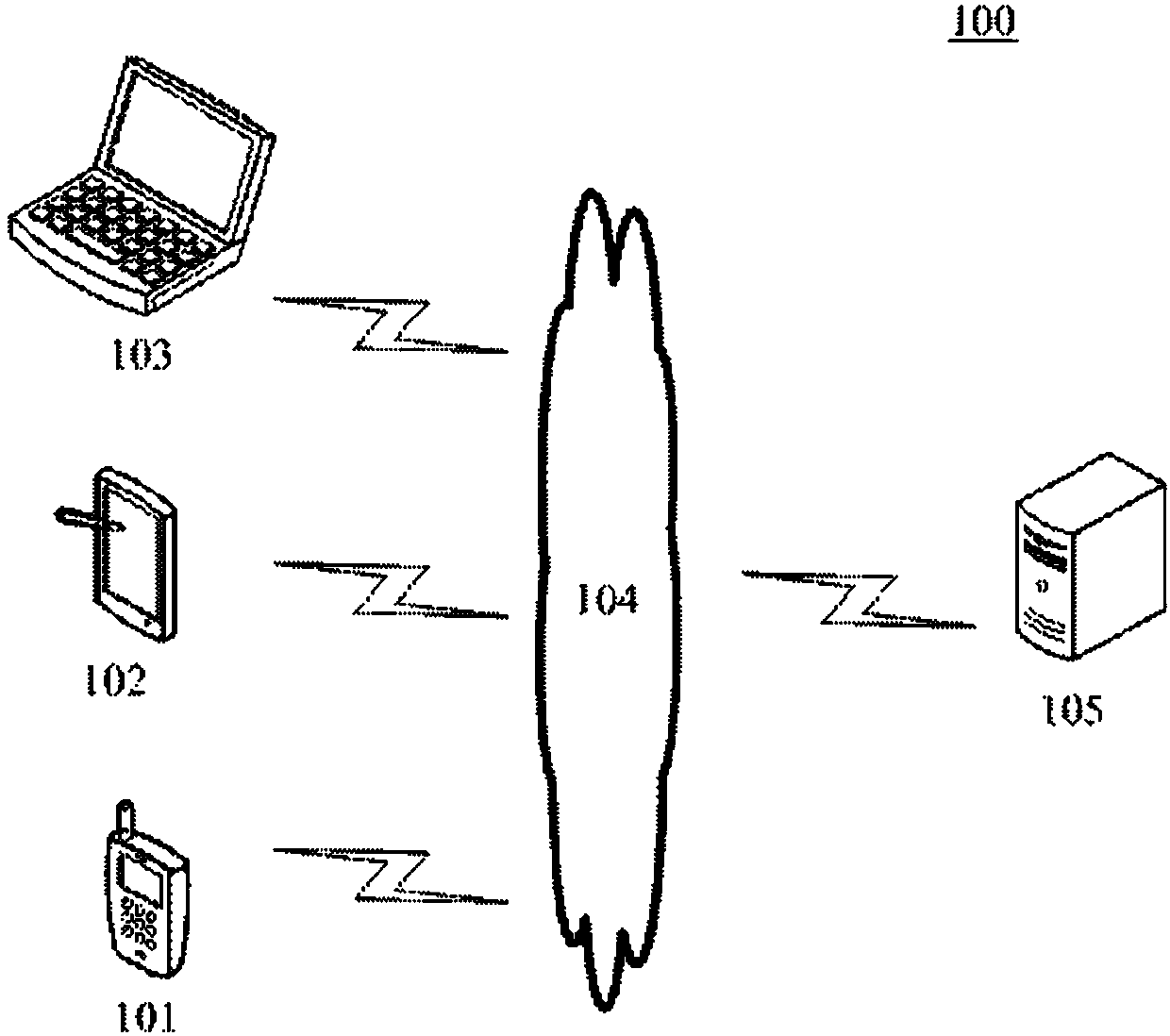

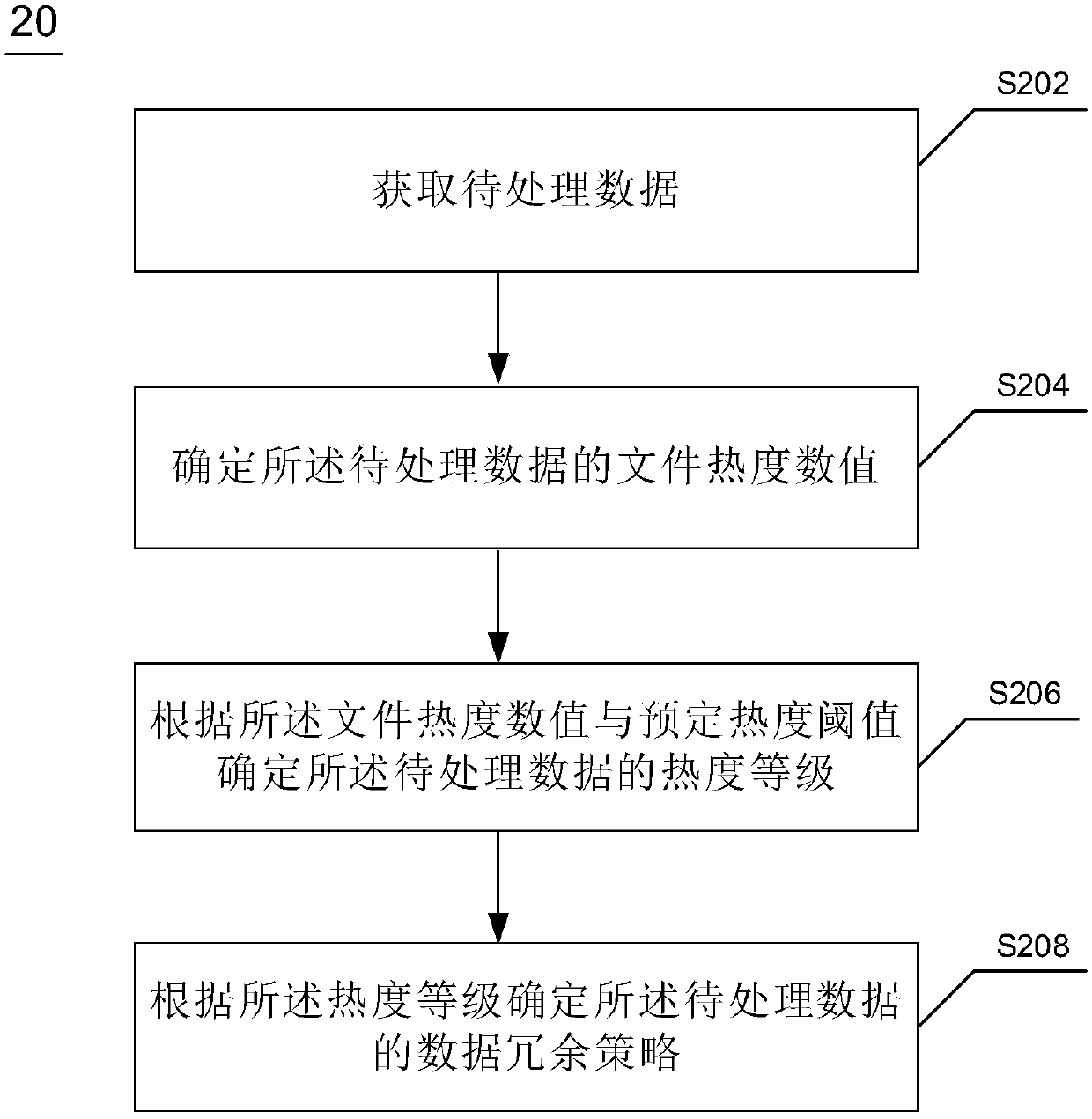

A method and apparatus for redundant storage of data

Inactive · CN109522151A · Solve wasted storage space · Solve load imbalance · Finance · Redundant operation error correction · Computer data processing · Data processing

A method and apparatus for redundant storage of data are disclosed. The invention relates to the field of computer data processing. The method comprises the following steps: acquiring data to be processed; determining a file heat value of the data to be processed; determining a heat level of the data to be processed according to the file heat value and a predetermined heat threshold; and determining a data redundancy policy for the data to be processed according to the heat level. The method and device for redundant data storage disclosed in the present application can solve the node load imbalance caused by heavily accessed data, and can also solve the waste of storage space caused by rarely accessed data.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1
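
The heat-to-policy mapping in the CN109522151A abstract can be sketched in a few lines. The threshold values and the three policies are invented for illustration; the abstract only states that a heat level is derived from the file heat value and predetermined thresholds, and that the redundancy policy follows from that level.

```python
import bisect

# Hypothetical heat boundaries (e.g. accesses per day) and per-level policies.
HEAT_THRESHOLDS = [100, 1000]
POLICIES = ["erasure coding", "2 replicas", "3 replicas"]


def redundancy_policy(heat_value):
    """Map a file heat value to (heat level, redundancy policy)."""
    level = bisect.bisect_right(HEAT_THRESHOLDS, heat_value)
    return level, POLICIES[level]


for heat in (5, 100, 5000):
    print(heat, redundancy_policy(heat))
```

Hot data gets more replicas so that reads spread over more nodes, and cold data gets a cheaper scheme so that it does not waste space, which matches the two problems the abstract names.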

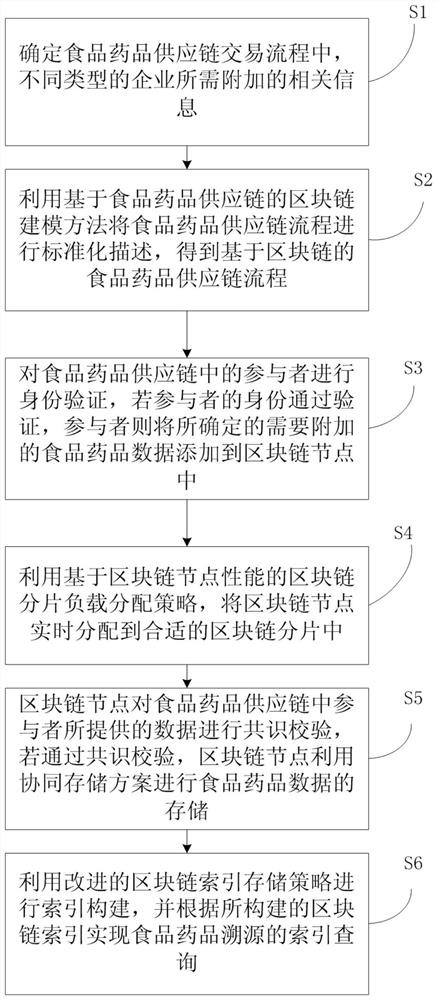

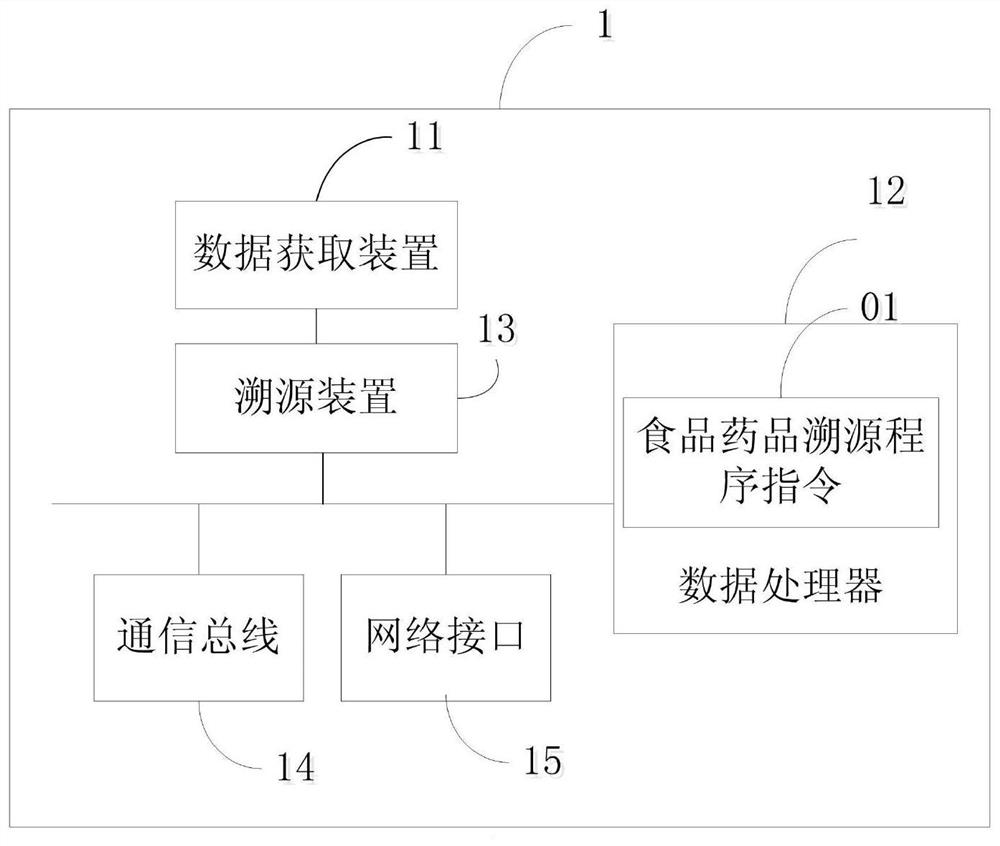

Food and drug tracing method and system based on blockchain

Inactive · CN112488734A · Improve accuracy · Guarantee authenticity · Digital data protection · Commerce · Drug · Chemistry

The invention relates to the technical field of blockchains and discloses a blockchain-based food and drug traceability method. The method comprises the steps of: determining the information that different types of enterprises need to add during transactions in the food and drug supply chain; obtaining a blockchain-based food and drug supply chain process by applying a blockchain modeling method to the supply chain; performing identity verification on participants in the food and drug supply chain; applying a blockchain sharding load distribution strategy based on blockchain node performance; having the blockchain nodes perform consensus verification on the data provided by the participants and, if the data passes the consensus verification, store the food and drug data using a collaborative storage scheme; and constructing an index with an improved blockchain index storage strategy and serving food and drug traceability queries from the constructed index. The invention further provides a blockchain-based food and drug traceability system. According to the invention, traceability of food and drugs is realized.

Owner:崔艳兰

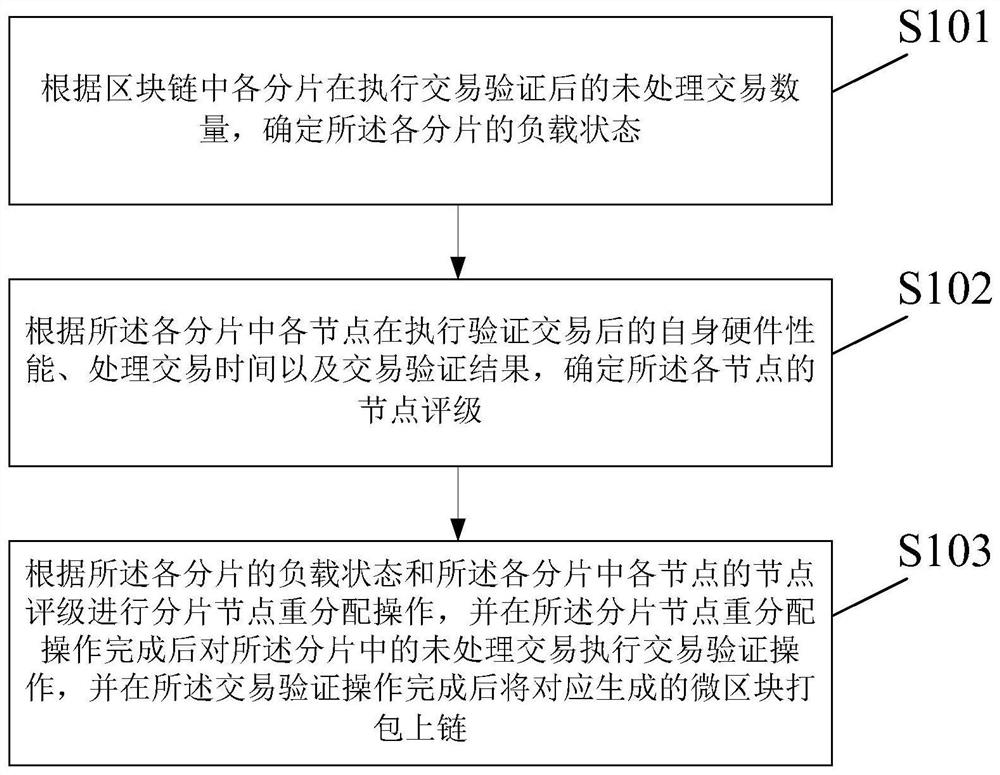

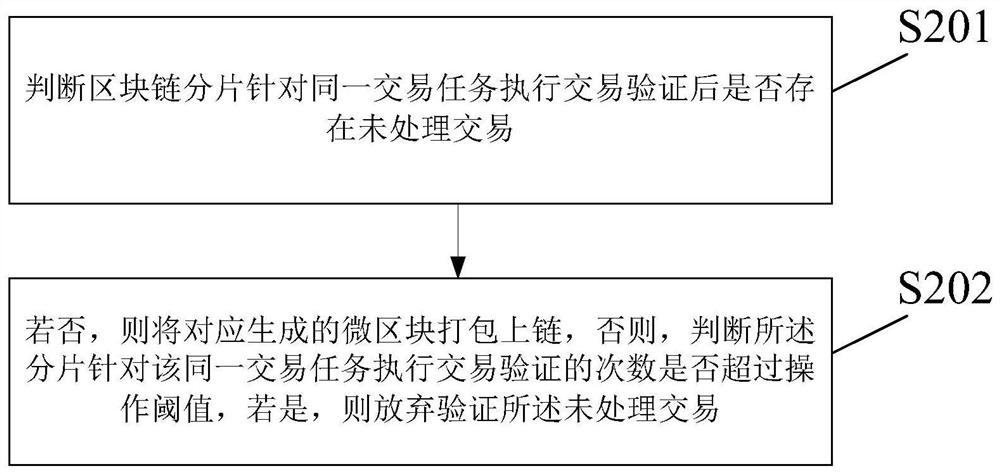

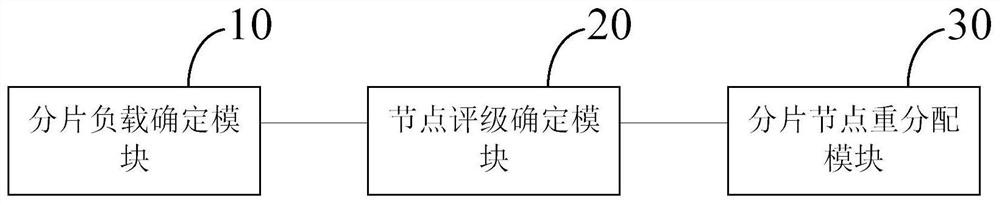

Block chain fragment load balancing method and device

Pending · CN113157457A · Improve performance · Solve load imbalance · Resource allocation · Parallel computing · Financial transaction

Embodiments of the invention provide a block chain fragment load balancing method and device, belonging to the financial field. The method comprises the following steps: determining the load state of each fragment according to the number of unprocessed transactions of each fragment in a block chain after transaction verification is executed; determining the node rating of each node according to the own hardware performance, the transaction processing time and the transaction verification result of each node in each fragment after the verification transaction is executed; and performing fragment node redistribution operation according to the load state of each fragment and the node rating of each node in each fragment, executing transaction verification operation on unprocessed transactions in the fragment after the fragment node redistribution operation is completed, and after the transaction verification operation is completed, packaging and linking the correspondingly generated micro-blocks. According to the method, the load balance of each fragment of the block chain can be effectively improved, and block chain verification transaction efficiency is improved.

Owner:工银科技有限公司 +1

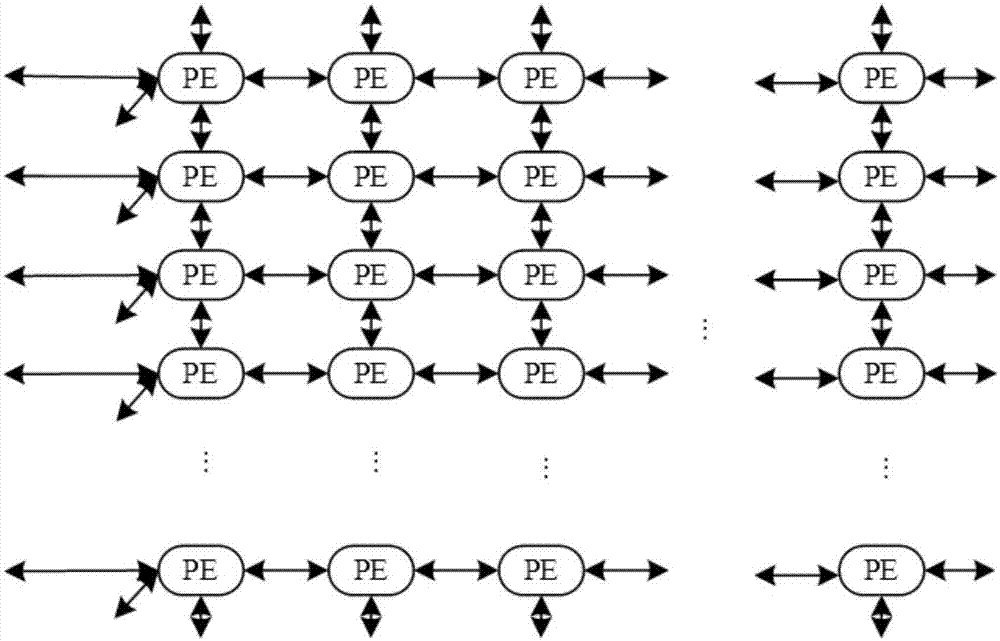

Instruction scheduling method and device based on data streams

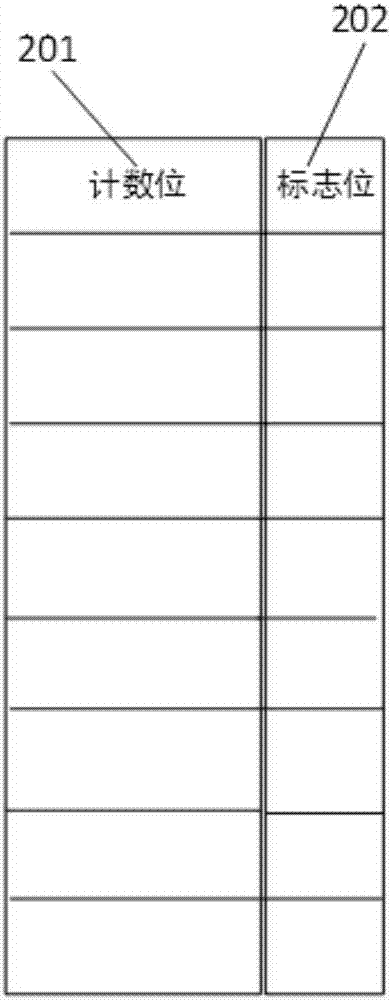

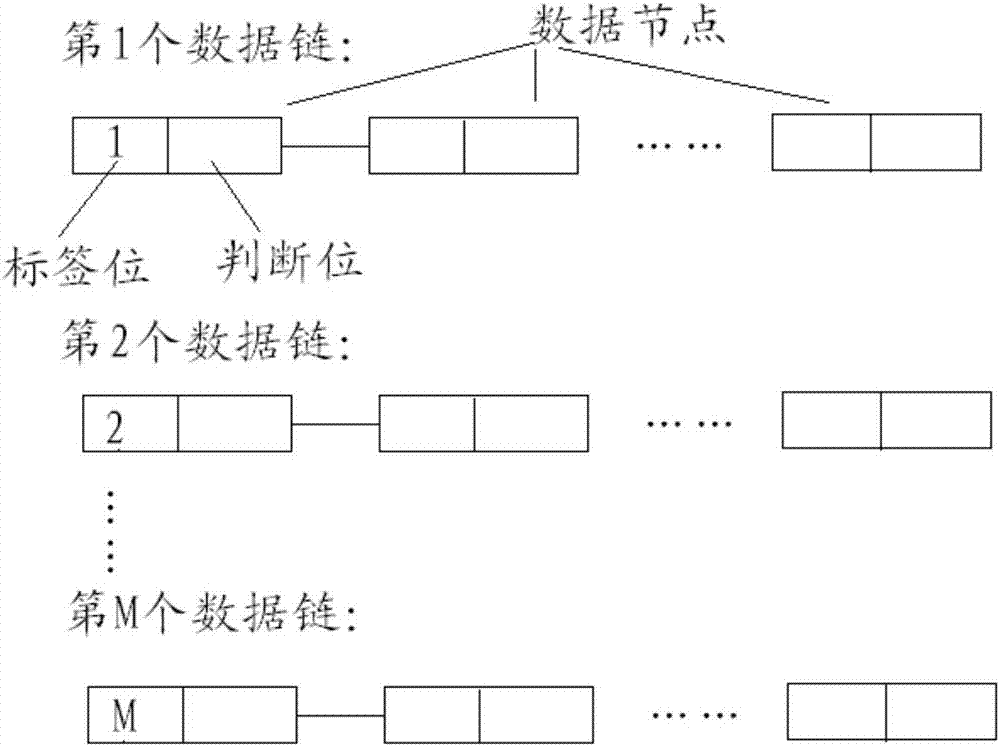

Active · CN106909343A · Realize dynamic regulation · Realize distribution · Concurrent instruction execution · Data stream · Scheduling instructions

The invention discloses an instruction scheduling method and device based on data streams. The device includes M instruction counting boards, M independent counting devices and a PE scheduling counting device, and a plurality of counting bits and a plurality of flag bits are arranged in each instruction counting board, wherein the counting bits and the flag bits are in one-to-one correspondence; each independent counting device is used for recording the total number of flag bits which are set as 1 in a current function unit, and according to the execution conditions of instructions in function units, the PE scheduling counting device schedules the instructions. According to the instruction scheduling method and device, structural features of the data streams are fully taken into account and used, under the principle of compatibility, by monitoring the execution conditions of various instructions in a processor in real time and scheduling the instructions according to the execution conditions of the various instructions, the load imbalance problem existing in an instruction distribution stage in current computers is solved, the dynamic regulation, control and distribution of the instructions are achieved, not only are the utilization rate of each function unit and the controllable distributivity of resources greatly improved, but also the energy consumption is greatly reduced, and the instruction scheduling method and device have very wide application prospects.

Owner:北京睿芯数据流科技有限公司

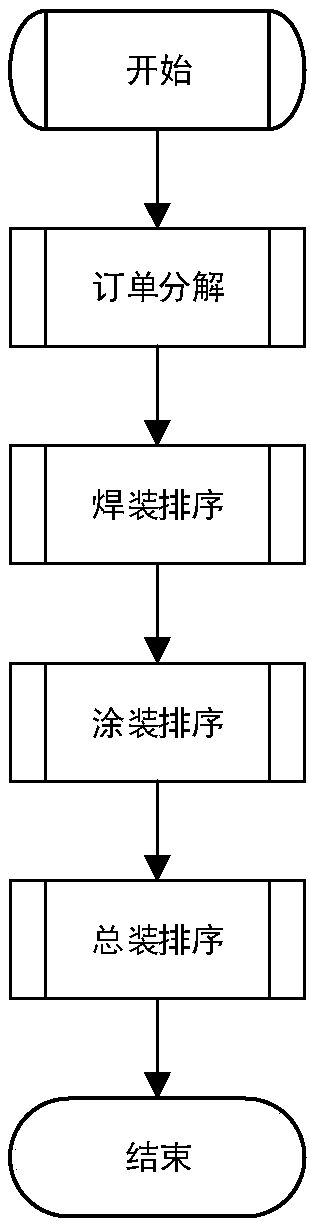

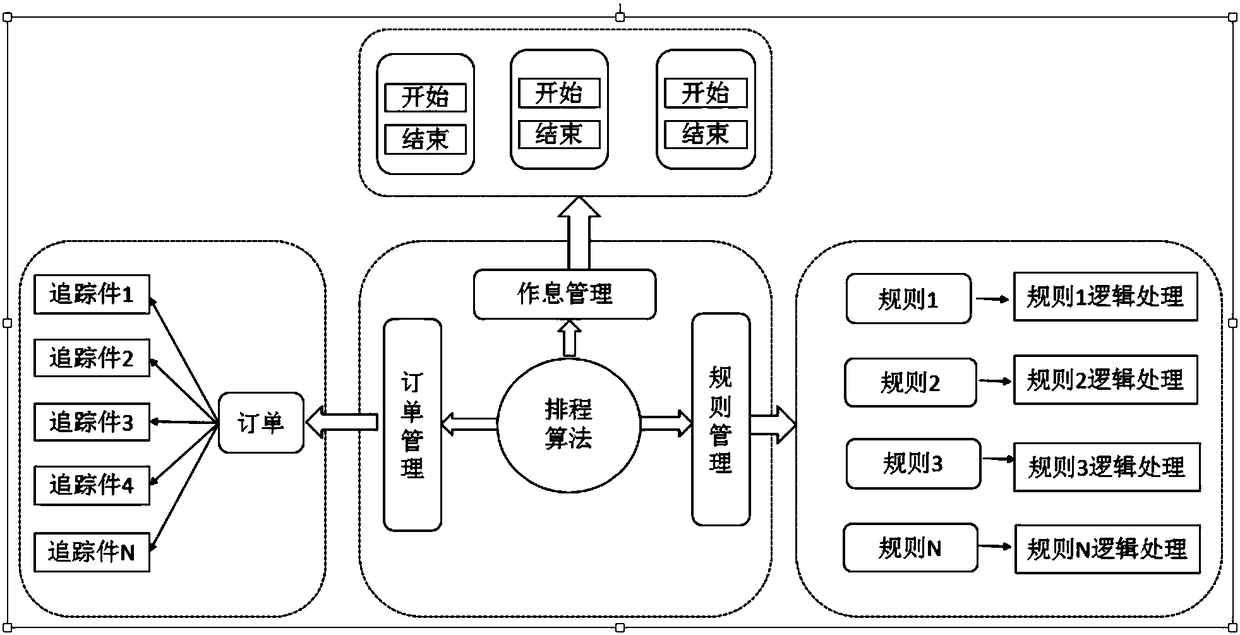

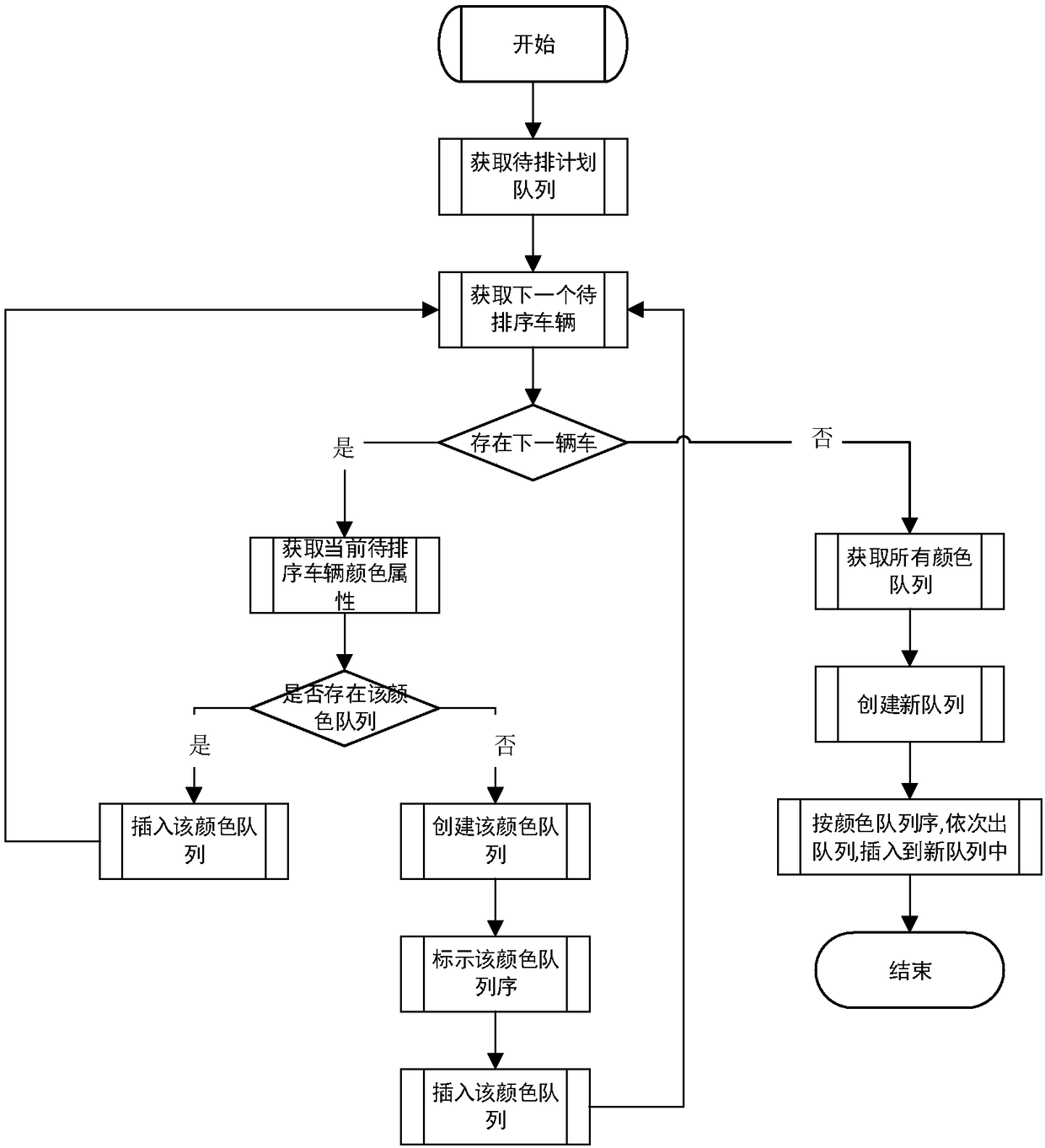

A production scheduling method with multi-rule constraints

Active · CN109242229A · Fully consider production constraints · Reduce replacement frequency · Resources · Manufacturing computing systems · General assembly · Production planning

The invention relates to a production scheduling method with multi-rule constraints, in particular for multi-variety mixed-line production in the vehicle industry. Orders to be scheduled are arranged so as to ensure on-time delivery, reduce the paint change frequency of the painting workshop, and improve the load balance of the general assembly workshop. The steps are as follows: Step 1: initializing; Step 2: decomposing the orders to be scheduled; Step 3: generating a welding workshop production plan based on constraint rules; Step 4: generating a painting workshop production plan according to the result of Step 3 and the constraint rules of the painting workshop; Step 5: generating a general assembly workshop production plan according to the result of Step 4 and the constraint rules of the general assembly workshop. The invention can effectively solve the problems of frequent paint color changes, unbalanced load in the general assembly workshop, reduced production capacity, high spraying cost and low production efficiency caused by unsorted mixed-line production in the vehicle industry.

Owner:SHENYANG INST OF AUTOMATION - CHINESE ACAD OF SCI

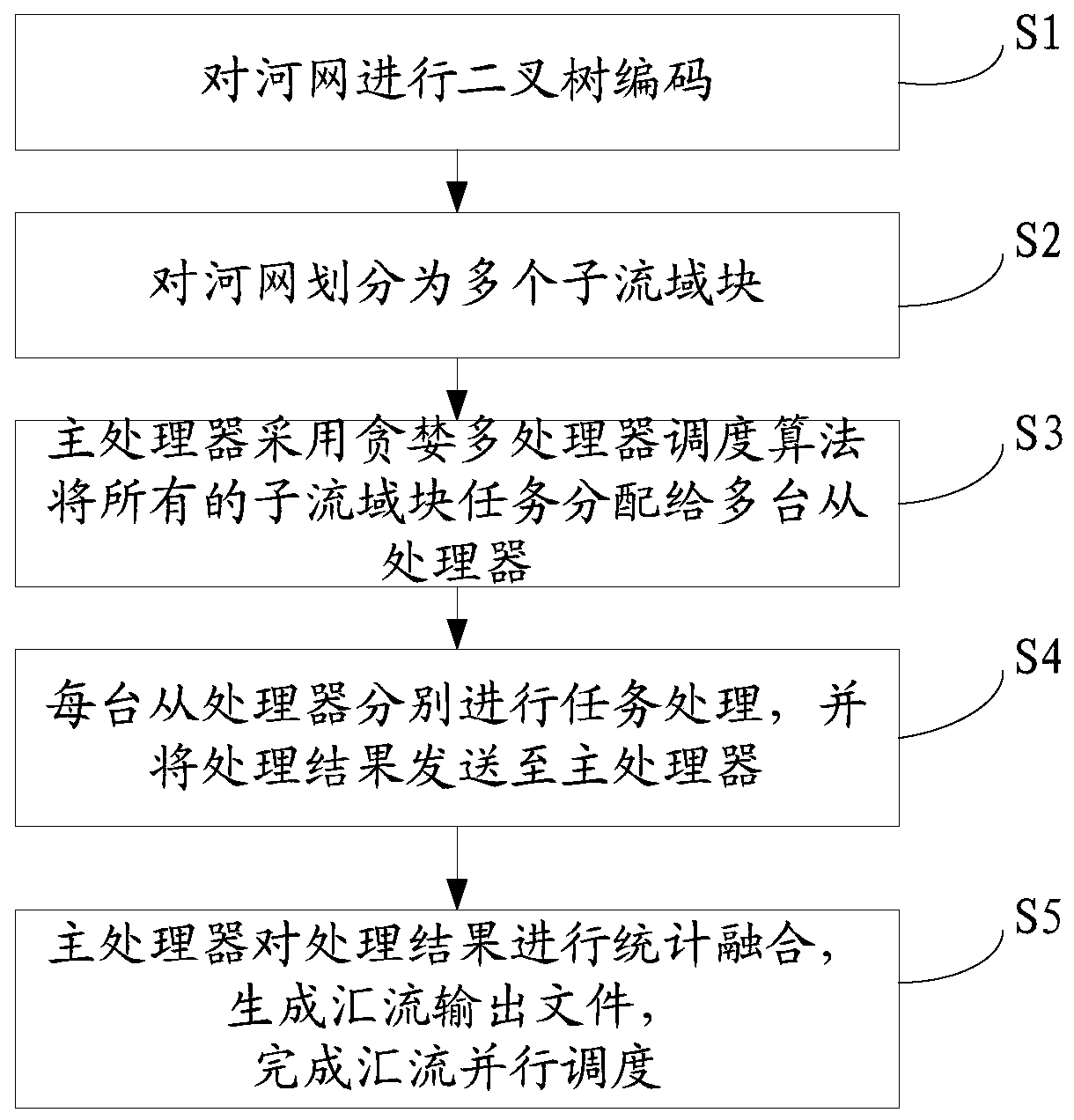

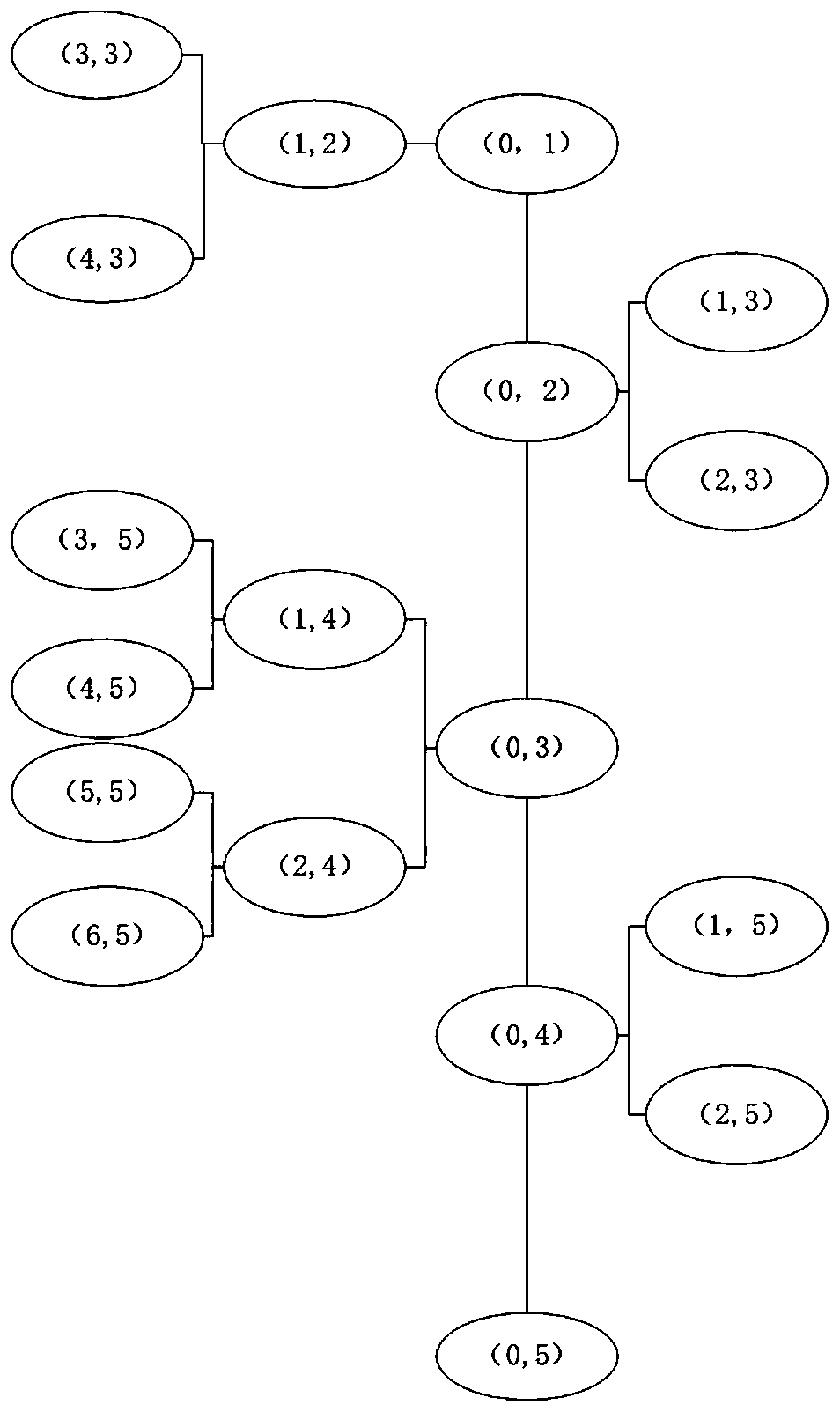

Confluence parallel scheduling method for distributed hydrological model

Active · CN109753362A · Improve computing efficiency · Solve problems that cannot be parallelized · Resource allocation · Special data processing applications · Hydrological modelling · Processor scheduling

The invention discloses a confluence parallel scheduling method for a distributed hydrological model, and relates to the technical field of water resource scheduling. The method comprises the following steps: firstly, carrying out binary tree coding on a river network, dividing a plurality of sub-drainage-basins in the river network into a plurality of sub-drainage-basin blocks, distributing the plurality of sub-drainage-basin blocks into a plurality of slave processors by adopting a greedy multi-processor scheduling algorithm through a master processor, respectively processing the sub-drainage-basin blocks by the slave processors, and carrying out data fusion by the master processor. Therefore, the problem that the distributed hydrological model confluence module cannot perform parallel calculation due to complex dependency is effectively solved. The greedy multiprocessor scheduling algorithm is used for processing task scheduling of confluence sub-basin blocks, and the problem of load imbalance of multiprocessors during parallel computing is solved. By using the master processor and the slave processor to perform parallel transformation on the confluence module, the calculation efficiency of the confluence process of the distributed hydrological model is improved.

Owner:CHINA INST OF WATER RESOURCES & HYDROPOWER RES
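
The CN109753362A abstract names a greedy multiprocessor scheduling algorithm without giving details. A common variant, used here as an assumption, is longest-processing-time-first: sort the sub-basin blocks by estimated cost and give each one to the currently least-loaded slave processor. The block names and costs are hypothetical, and the sketch ignores the upstream/downstream dependencies that the binary tree coding of the river network is meant to handle.

```python
import heapq


def greedy_schedule(block_costs, n_workers):
    """Assign blocks to workers, largest first, always to the least-loaded worker."""
    heap = [(0.0, w) for w in range(n_workers)]  # (current load, worker id)
    heapq.heapify(heap)
    assignment = {w: [] for w in range(n_workers)}
    for block, cost in sorted(block_costs.items(), key=lambda kv: -kv[1]):
        load, w = heapq.heappop(heap)
        assignment[w].append(block)
        heapq.heappush(heap, (load + cost, w))
    loads = {w: load for load, w in heap}
    return assignment, loads


costs = {"b1": 7, "b2": 5, "b3": 4, "b4": 3, "b5": 1}
assignment, loads = greedy_schedule(costs, n_workers=2)
print(assignment)
print(loads)
```

For these five blocks both workers finish with a total cost of 10, so neither slave processor waits on the other before the master performs data fusion.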

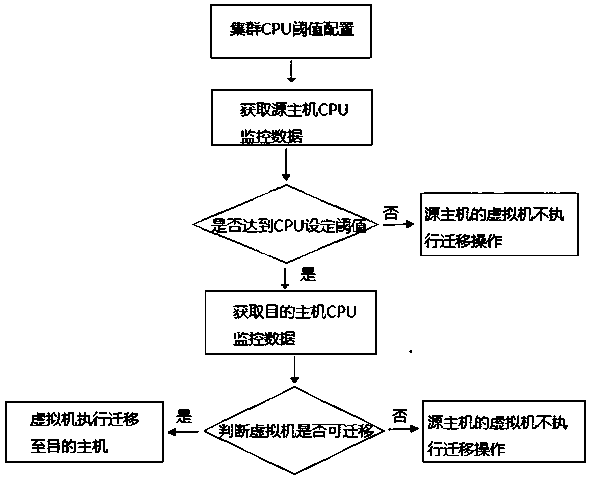

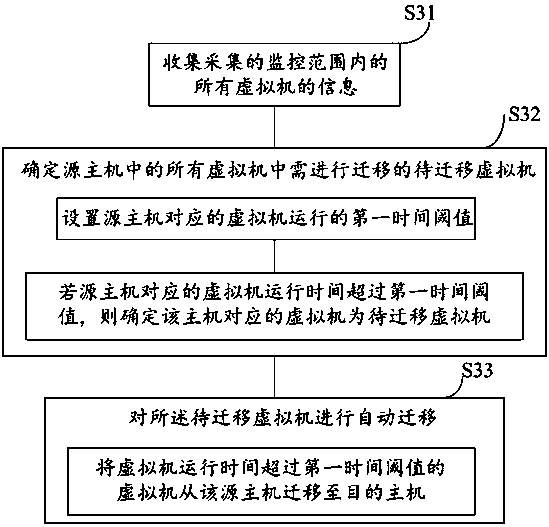

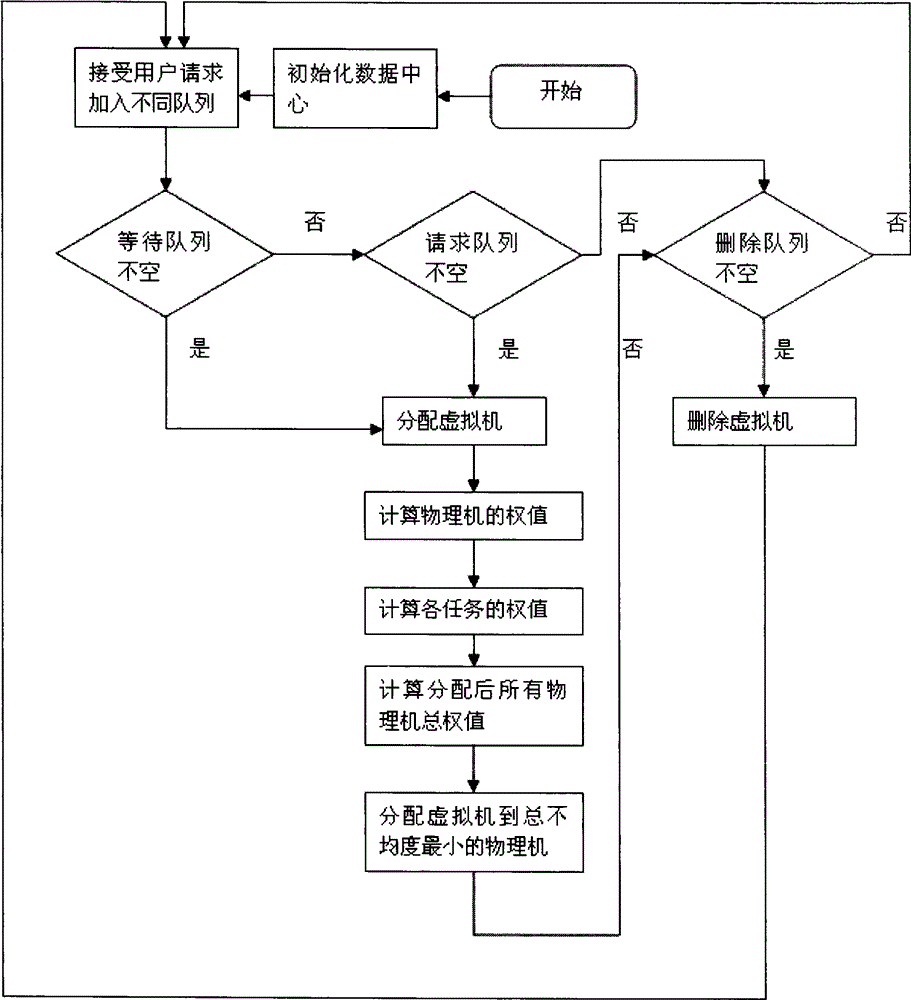

Load balance scheduling method for cloud computing virtual server system

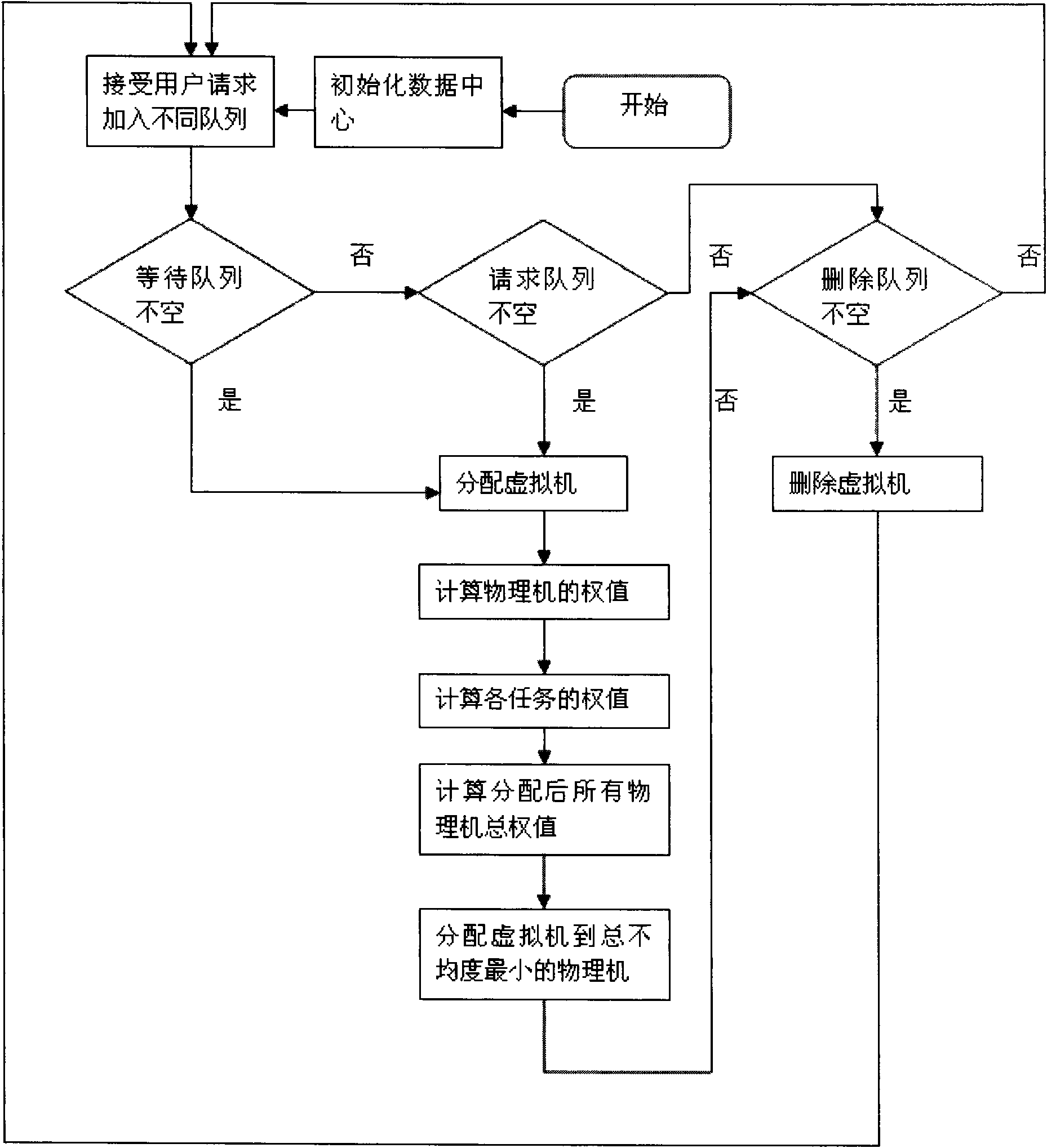

Inactive · CN108874508A · Solve load imbalance · Improve effective utilization · Resource allocation · Software simulation/interpretation/emulation · Distributed computing · Virtual servers

The invention provides a load balance scheduling method for a cloud computing virtual server system, in which a computing pool contains a cluster, multiple hosts run under the cluster, each host uses shared storage, and each host runs multiple virtual machines. The method comprises the following steps: configuring thresholds for the host parameters of the cluster under the computing pool; obtaining monitoring data of a host; determining, from the parameter values in the monitoring data, whether the host is a source host that should perform virtual machine migration; obtaining monitoring data of the other hosts; and determining from that data a destination host that can accept the migrated virtual machine. The method solves the problem of unbalanced host load.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD
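
The threshold check in the CN108874508A abstract can be sketched as follows, assuming a single utilization figure per host. The abstract mentions configurable host parameters without listing them, so the single metric, the `vm_cost` estimate, and the preference for the busiest source and the idlest destination are all illustrative assumptions.

```python
def plan_migration(host_util, vm_cost, threshold):
    """Return (source, destination) for one VM migration, or None.

    host_util maps host name -> monitored utilization in [0, 1];
    vm_cost is the utilization the migrated VM is assumed to add.
    """
    overloaded = [h for h, u in host_util.items() if u > threshold]
    if not overloaded:
        return None
    source = max(overloaded, key=host_util.get)
    # A destination must stay within the threshold after accepting the VM.
    targets = [
        h for h, u in host_util.items()
        if h != source and u + vm_cost <= threshold
    ]
    if not targets:
        return None
    return source, min(targets, key=host_util.get)


print(plan_migration({"h1": 0.9, "h2": 0.5, "h3": 0.3}, vm_cost=0.2, threshold=0.8))
```

Because every host in the cluster uses shared storage, a migration like this moves only the running virtual machine and not its disks.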

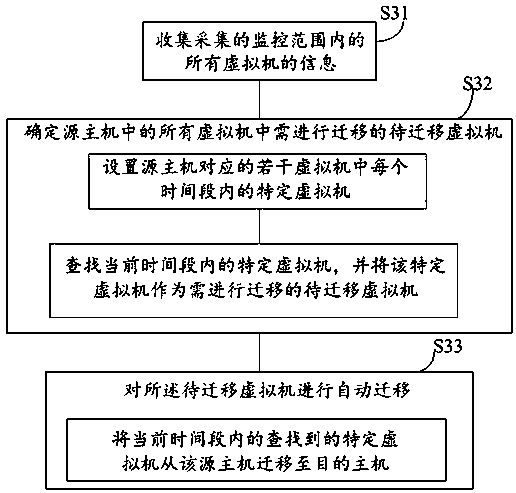

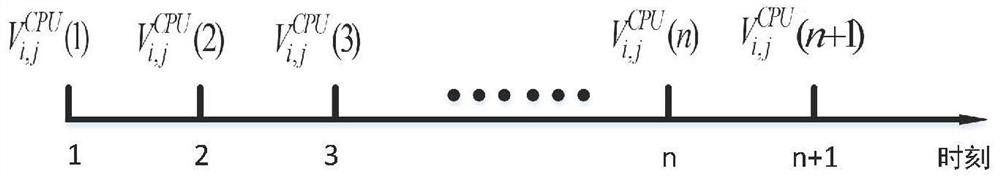

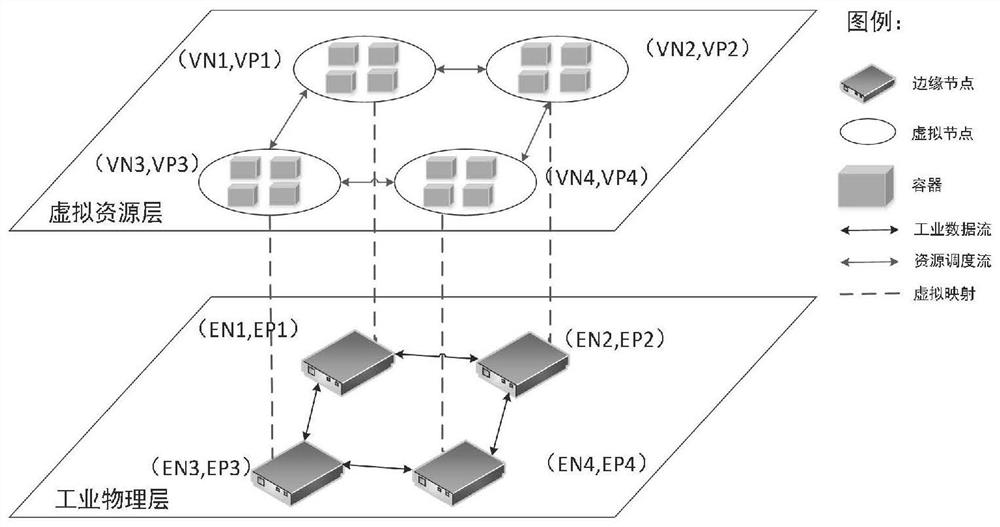

Computing resource management scheduling method for industrial edge nodes

Pending · CN111813502A · Solve load imbalance · Realize unified management · Resource allocation · Software simulation/interpretation/emulation · Resource scheduling · Internet of Things

The invention relates to a computing resource management scheduling method for industrial edge nodes, and belongs to the field of Internet of Things. The method comprises the following steps: S1: constructing a computing resource virtualization architecture for industrial edge nodes; S2: scheduling computing resources. The invention provides an industrial edge node-oriented computing resource virtualization architecture, which comprises an industrial physical layer, a virtual resource layer and a control layer, and realizes unified management of computing resources. A computing resource scheduling strategy is also provided, which predicts the computing resource usage of each edge node at a future moment and combines it with the industrial computing priority and the overload condition of the edge node, so that the problem of unbalanced edge node load in an industrial environment is solved.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

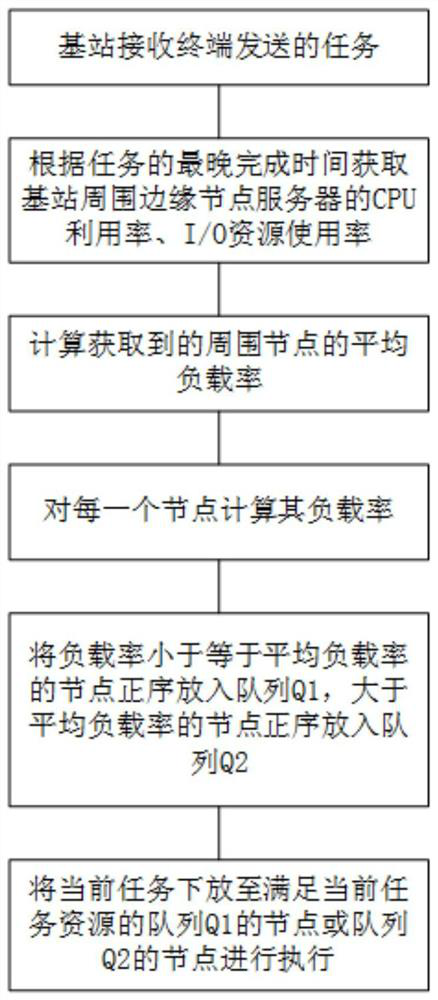

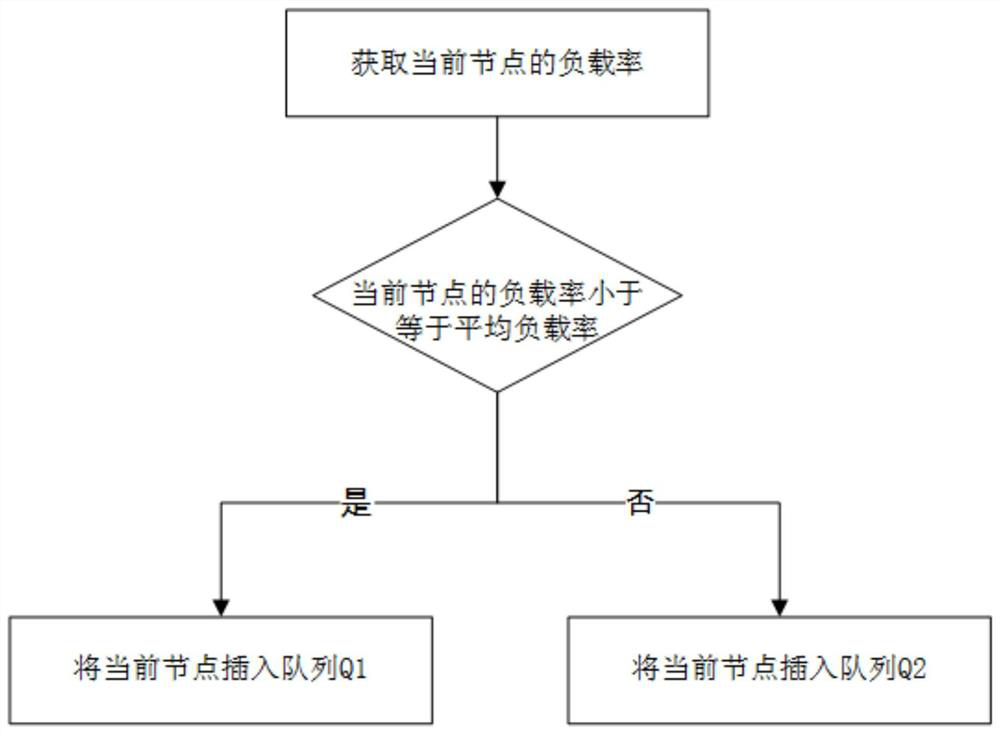

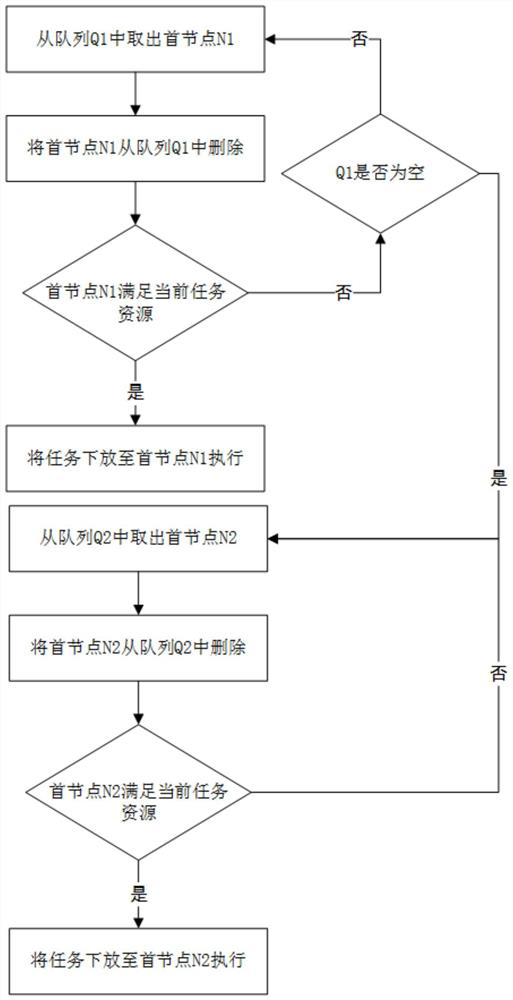

Node load balancing scheduling method for edge computing environment

Inactive · CN112887345A · Solve load imbalance · Reduce the possibility · Resource allocation · Transmission · Completion time · Edge computing

The invention discloses a node load balancing scheduling method in an edge computing environment. The method comprises the following steps: acquiring a CPU utilization rate and an I / O resource utilization rate of edge nodes around a base station according to the latest completion time of a task sent by a base station receiving terminal; calculating the average load rate of the obtained surrounding nodes, and calculating the load rate of each node; putting the nodes with the load rates smaller than or equal to the average load rate into the first queue in a positive sequence, putting the nodes with the load rates larger than the average load rate into the second queue in a positive sequence, and finally putting the current task into the nodes of the first queue or the nodes of the second queue meeting the current task resource to be executed. According to the method, the nodes are screened, so that the problem of load imbalance caused by only selecting the nearest node in a common method is avoided; when the nodes are released for the tasks, the adopted measurement index is the load rate instead of the load, so the tasks can be allocated to the nodes with fewer resources under the condition that the resource difference between the nodes is very large, and the load balancing is really achieved.

Owner:SHANGHAI JIAO TONG UNIV

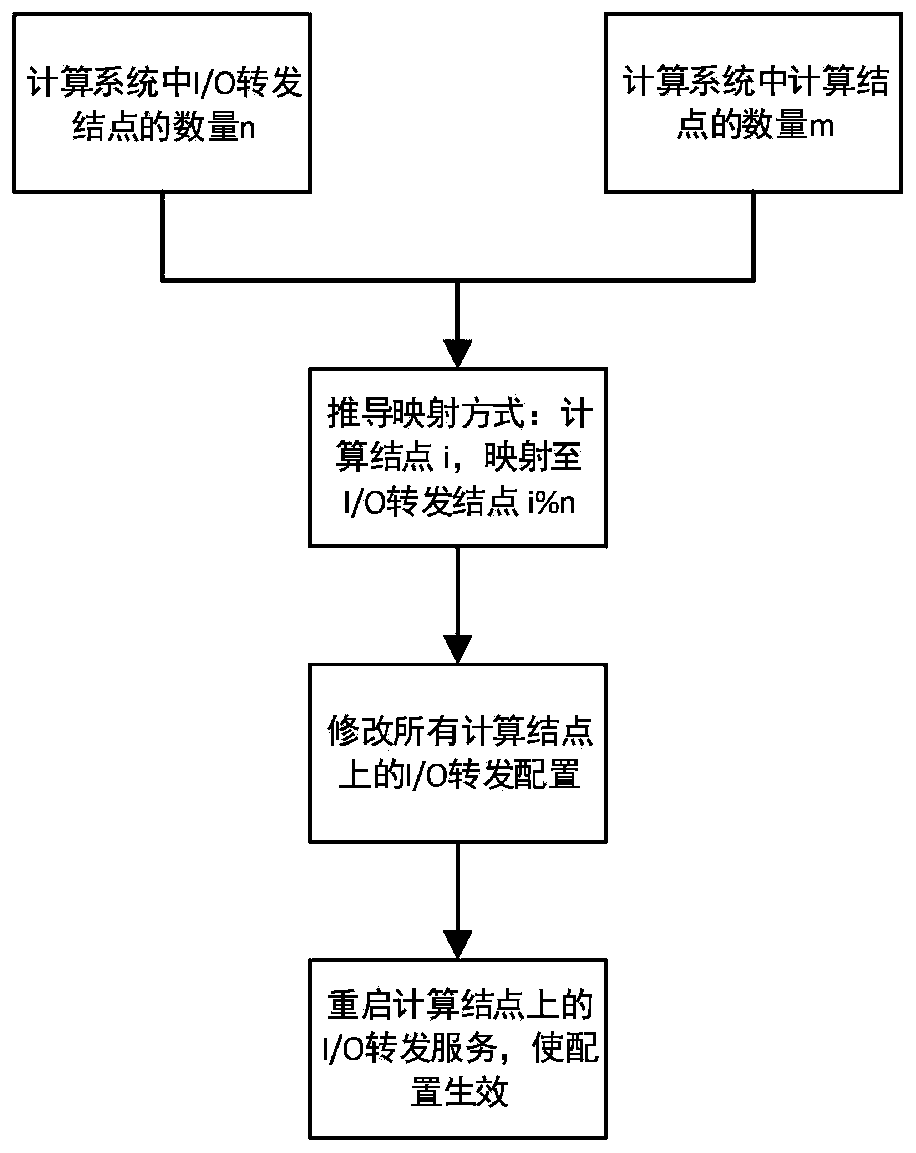

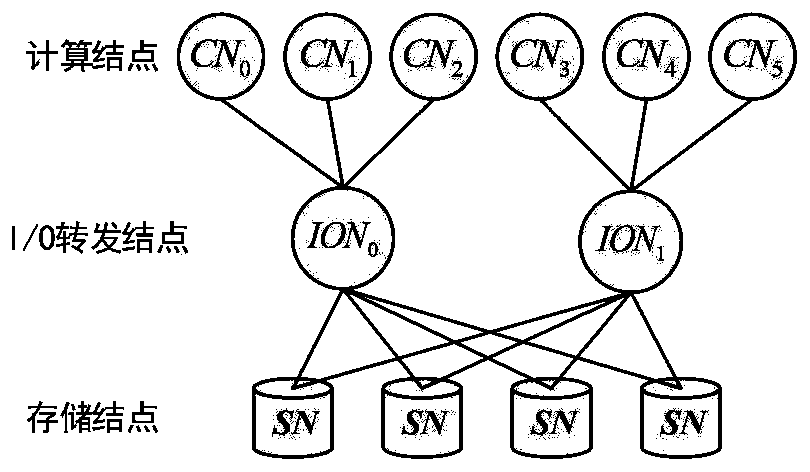

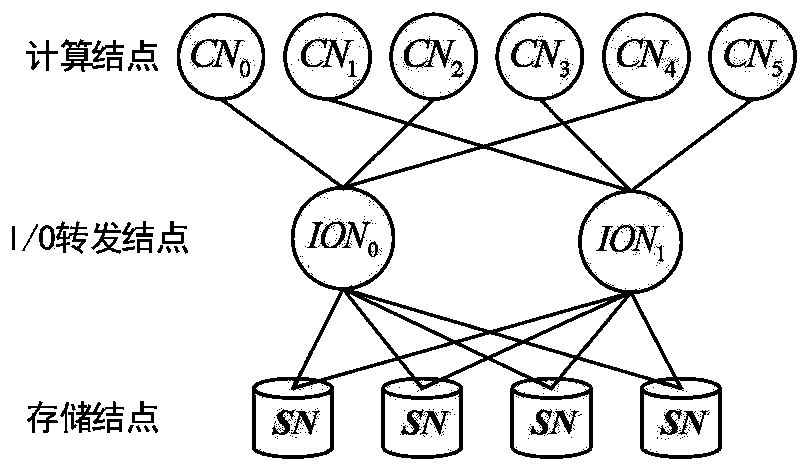

Super computer I/O forwarding node polling mapping method

Active | CN111597038A | Solve load imbalance | Simple method | Resource allocation | Energy efficient computing | Supercomputer | Computer network

The invention discloses a supercomputer I/O forwarding node polling mapping method, which comprises the following steps: determining the number of I/O forwarding nodes in the supercomputer and recording it as n; numbering the n I/O forwarding nodes as ION0, ION1, ..., IONn-1; determining the number of compute nodes in the supercomputer and recording it as m, the compute nodes being numbered CN0, CN1, ..., CNm-1; mapping compute node CNi to I/O forwarding node ION(i % n) by the polling mapping calculation; modifying the I/O forwarding node configuration on each compute node, namely changing it for compute node CNi from the traditional partition mapping to ION(i % n) of the polling mapping, so that all I/O requests sent from compute node CNi are processed by I/O forwarding node ION(i % n); and, after the I/O forwarding node configurations on all compute nodes have been modified, restarting the I/O forwarding services on the compute nodes so that the configurations take effect. The method solves the problem of load imbalance among the I/O forwarding nodes.
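The polling (round-robin) mapping in this abstract reduces to a modulo operation. The sketch below only computes the mapping table; the function names are hypothetical, and writing the configuration to each compute node and restarting the forwarding services are outside its scope.

```python
from collections import Counter


def round_robin_mapping(m, n):
    """Map compute node CNi to I/O forwarding node ION(i % n)."""
    if n <= 0:
        raise ValueError("at least one I/O forwarding node is required")
    return {f"CN{i}": f"ION{i % n}" for i in range(m)}


def compute_nodes_per_ion(mapping):
    """Count how many compute nodes each I/O forwarding node serves."""
    return Counter(mapping.values())


mapping = round_robin_mapping(m=5, n=2)
print(mapping)
print(compute_nodes_per_ion(mapping))
```

With this mapping, consecutive compute nodes land on different I/O forwarding nodes, and the per-node counts differ by at most one.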

Owner:CALCULATION AERODYNAMICS INST CHINA AERODYNAMICS RES & DEV CENT

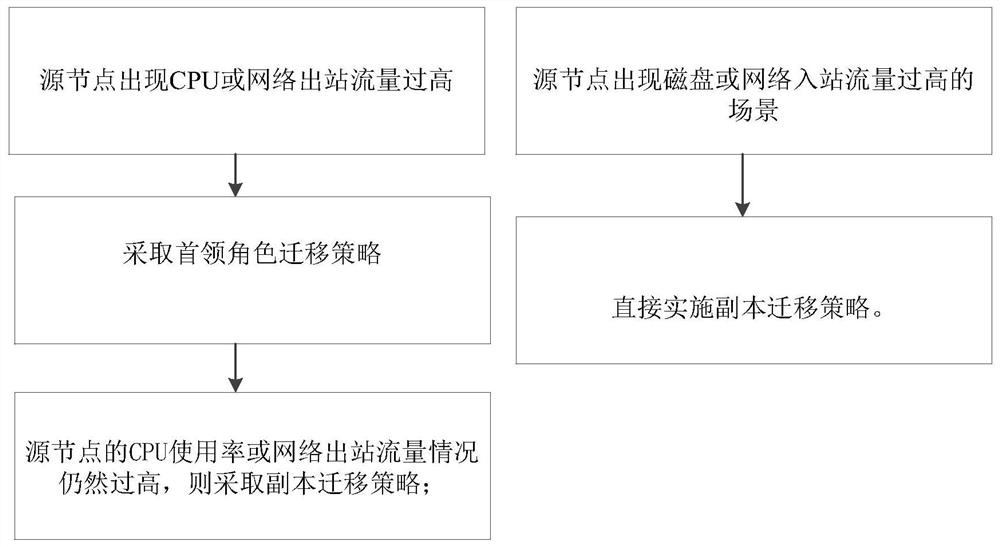

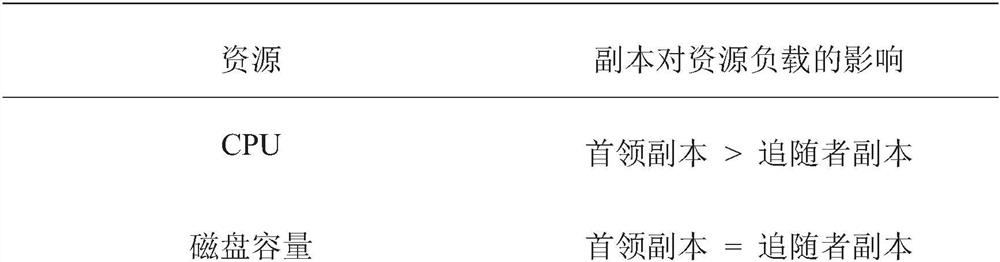

Load balancing method for distributed message system in cloud computing

Pending | CN111767147A | Solve load imbalance | Load balancing | Resource allocation | Data switching networks | Parallel computing | Engineering

The invention provides a load balancing method for a distributed message system. For the different functional roles of replicas in a distributed message system, two load migration strategies are provided: leader role migration and replica migration. For both strategies, the basis for selecting the target replica and the target node is designed in detail. While load migration is carried out, replica consistency, partition availability and data reliability in the distributed environment are ensured, and the resource usage of different users in a shared cluster does not interfere with each other.

Owner:BEIJING UNIV OF TECH

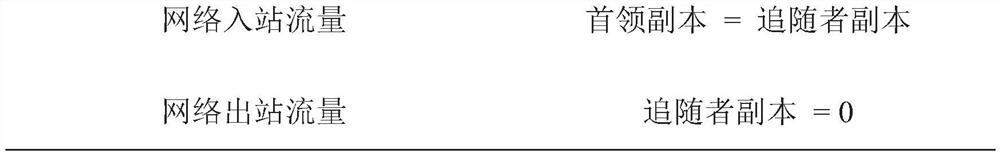

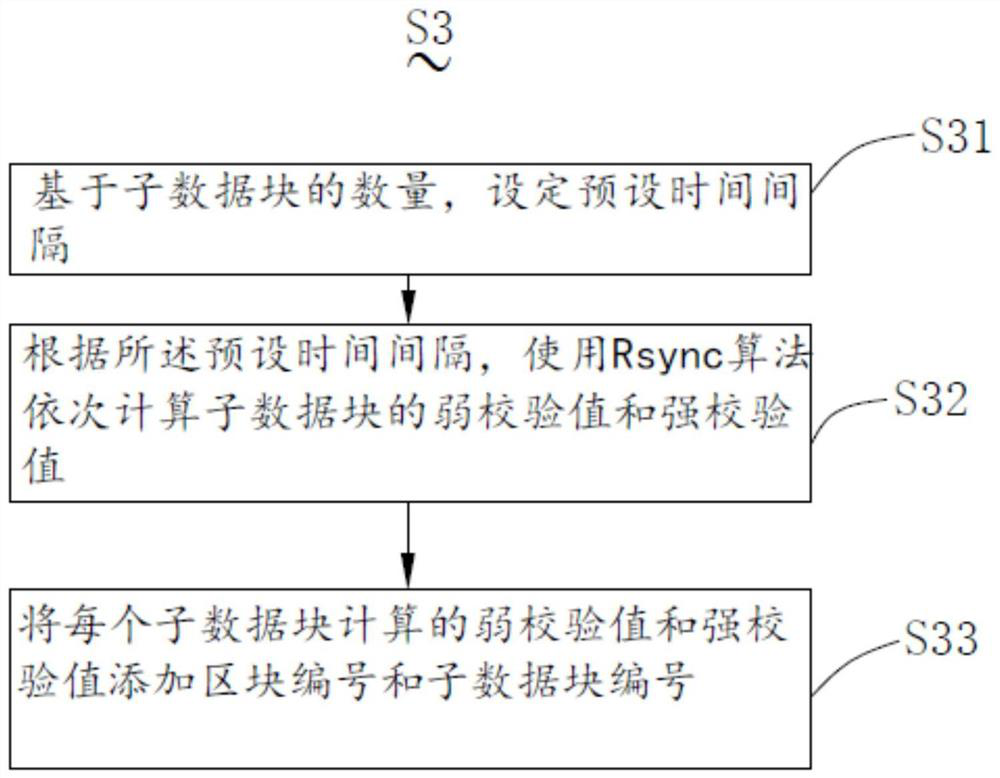

Blockchain state data synchronization method and system based on Rsync

Pending | CN113094437A | Improve speed and efficiency | Improve performance | Resource allocation | Interprogram communication | Blockchain | Computational resource

The invention discloses a blockchain state data synchronization method based on Rsync. The method comprises the following steps: packaging the state data of all block nodes in a blockchain into an object, storing the object in a message queue, and reading the data of each block node from the message queue one by one; based on the block data of each block node, uniformly dividing the data into a plurality of sub-data blocks according to the data volume, and numbering the sub-data blocks; based on the Rsync algorithm, calculating a strong check value and a weak check value for each sub-data block; inputting the sub-data blocks and the corresponding strong and weak check values to the Rsync client; based on the synchronization source file of the Rsync client, looking up the corresponding weak and strong check values and screening out the sub-data blocks whose weak check value and/or strong check value is missing; and, on the basis of the synchronization source file, replacing the sub-data blocks that have no matching check value. The problem of unbalanced node load during blockchain data synchronization is solved, data synchronization performance in the block network is improved, and fewer computing resources are required.
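The block-splitting and two-level check described above can be illustrated with a small sketch. It is a simplification under stated assumptions: `zlib.adler32` and SHA-256 are stand-ins for the weak and strong check values, the helper names are hypothetical, and only block-aligned comparison is done, whereas the full rsync algorithm slides a rolling checksum over every byte offset.

```python
import hashlib
import zlib


def split_blocks(data, block_size):
    """Split data into consecutive sub-blocks of at most block_size bytes."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def check_values(block):
    """Return (weak, strong) check values; both algorithms are stand-ins."""
    weak = zlib.adler32(block)
    strong = hashlib.sha256(block).hexdigest()
    return weak, strong


def blocks_to_send(source, target, block_size):
    """List (index, block) for source blocks with no match on the target."""
    known = {}
    for block in split_blocks(target, block_size):
        weak, strong = check_values(block)
        known.setdefault(weak, set()).add(strong)
    missing = []
    for index, block in enumerate(split_blocks(source, block_size)):
        weak, strong = check_values(block)
        # The cheap weak value filters first; the strong value confirms.
        if strong not in known.get(weak, set()):
            missing.append((index, block))
    return missing


print(blocks_to_send(b"aaaabbbbcccc", b"aaaaXXXXcccc", block_size=4))
```

Only the blocks returned by `blocks_to_send` would need to be transferred; matching blocks are reused from the target side.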

Owner:深圳前海移联科技有限公司

Method and device for realizing data center resource load balance in proportion to comprehensive allocation capability

Active | CN102185779B | Timely determination of load status | Solve load imbalance | Data switching networks | User needs | Data center

The invention relates to a method and a device for realizing data center resource load balance. The method of the technical scheme comprises the following steps of: acquiring the current utilization rates of attributes of each physical machine in a scheduling domain, and determining the physical machine for a currently allocated task according to the principle of fair distribution in proportion to the allocation capability of a server, an actual allocated task weight value and an expected task weight value, wherein the attributes comprise a central processing unit (CPU) load, a memory load and a network load; determining a mean load value of the attributes of the scheduling domain according to the current utilization rates, and calculating a difference between the actual allocated task weight value and expected task weight value of the physical machine according to the mean load value and predicted load values of the attributes of the physical machine; and selecting the physical machine of which the difference between the actual allocated task weight value and the expected task weight value is the smallest for the currently allocated task. The device provided by the invention comprises a selection control module, a calculation processing module and an allocation execution module. By the technical scheme provided by the invention, the problem of physical server load unbalance caused by inconsistency between user need provisions and physical server provisions can be solved.

Owner:田文洪

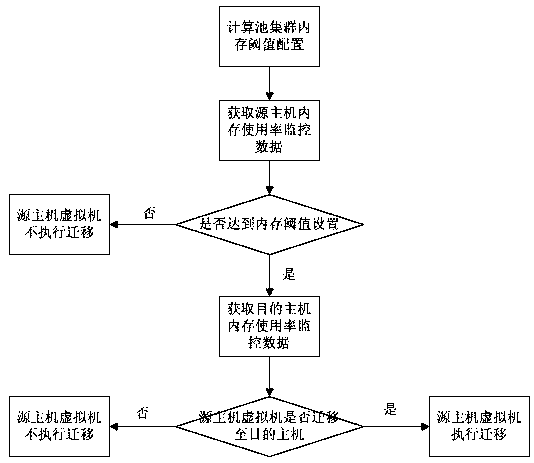

A load balancing scheduling method and system based on virtualized memory load monitoring

Inactive | CN109189574A | Improve effective utilization | Improve rational use | Resource allocation | Host memory | Memory load

The invention discloses a load balancing scheduling method and system based on virtualized memory load monitoring. The method comprises the following steps: judging whether the memory utilization rate of a source host exceeds a preset memory threshold; if so, judging whether the memory utilization rate of a destination host is lower than the preset memory threshold; and, if the memory utilization rate of the destination host is below the preset memory threshold, migrating a virtual machine from the source host to the destination host. The invention makes full use of the monitoring data on host memory utilization to judge whether a virtual machine can be migrated and to which destination host, thereby solving the problem of unbalanced host memory load, making the use of host memory resources more rational and improving the overall effective utilization rate of host memory.
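The threshold check in this abstract can be sketched in a few lines. The function name and the dictionary of per-host utilization are hypothetical, and choosing the least-utilized eligible host is an added assumption: the abstract only requires the destination to be below the threshold.

```python
def plan_migration(hosts, source, threshold):
    """Pick a destination host for a VM on `source`, or None.

    hosts maps a host name to its memory utilization as a fraction in [0, 1].
    """
    # Step 1: migrate only if the source host exceeds the memory threshold.
    if hosts[source] <= threshold:
        return None
    # Step 2: a destination qualifies only if it is below the same threshold.
    candidates = [
        (utilization, name)
        for name, utilization in hosts.items()
        if name != source and utilization < threshold
    ]
    if not candidates:
        return None
    # Assumption: prefer the least-utilized eligible host.
    return min(candidates)[1]


hosts = {"h1": 0.92, "h2": 0.55, "h3": 0.40}
print(plan_migration(hosts, source="h1", threshold=0.80))
```

A real scheduler would also need to check that the destination stays below the threshold after receiving the virtual machine, which the abstract does not describe.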

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

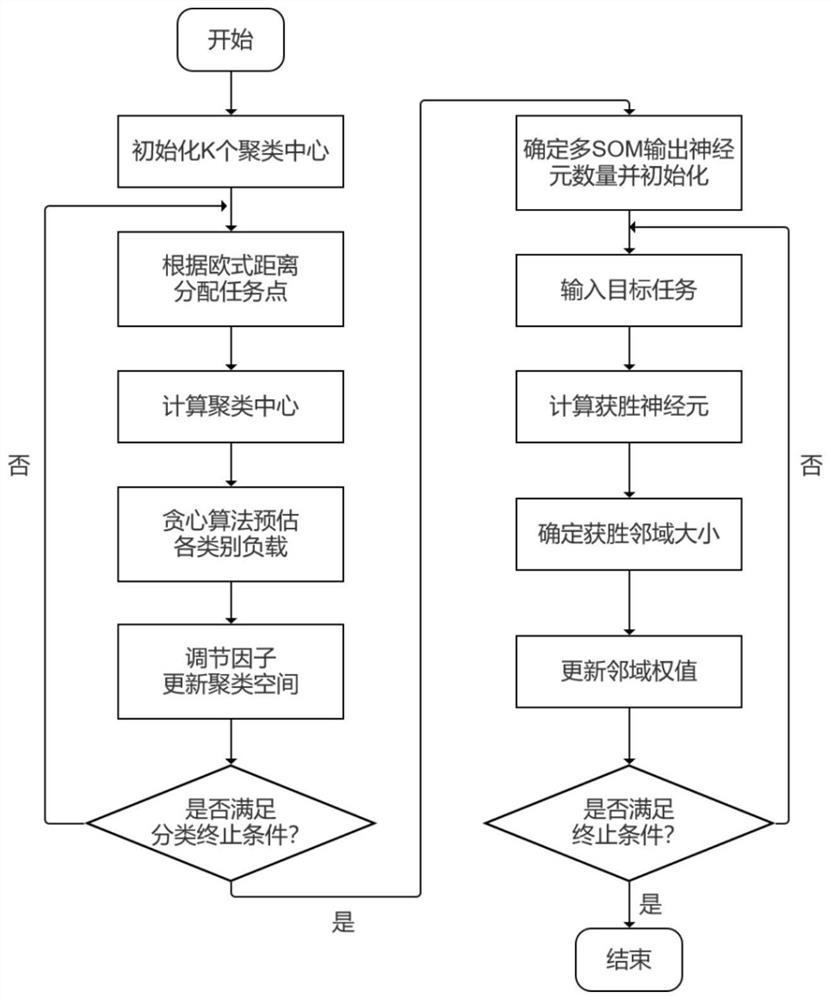

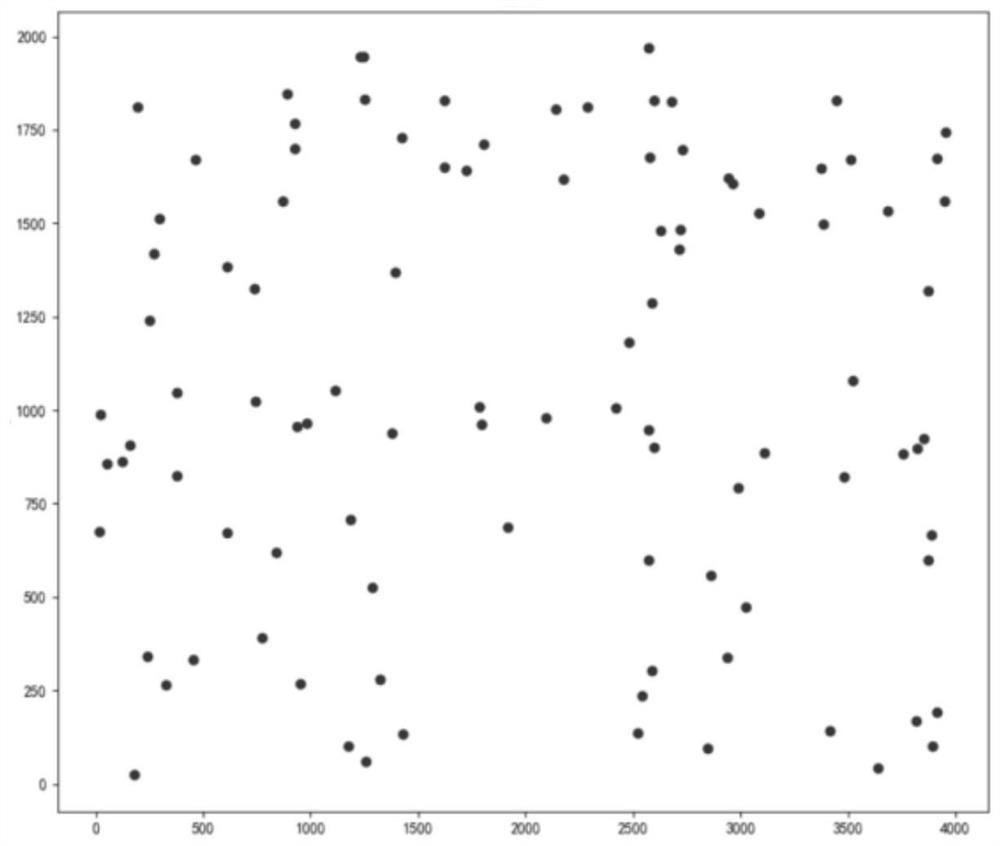

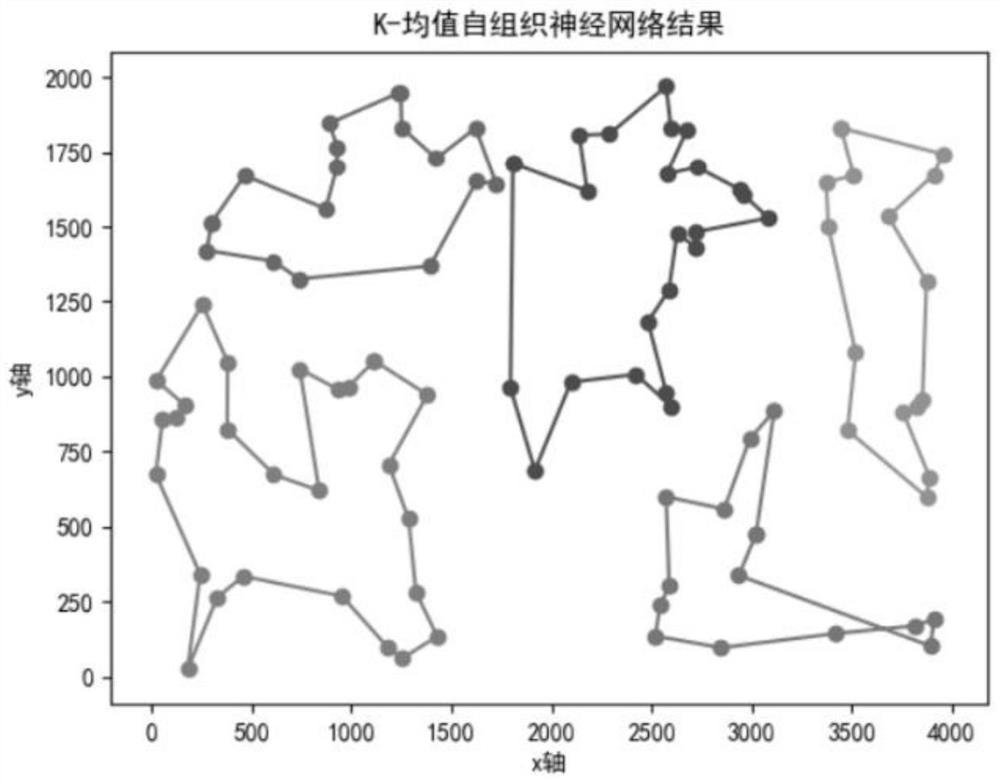

Greedy K-mean self-organizing neural network multi-robot path planning method

Active | CN113281993A | Solve load imbalance | Improve load imbalance | Adaptive control | Robotic systems | Cluster algorithm

The invention relates to a greedy K-mean self-organizing neural network multi-robot path planning method, and belongs to the technical field of artificial intelligence and robot system control. According to the method, the task allocation problem and the path planning problem are considered together, a two-stage solving mode is adopted, robot task allocation is completed in the first stage, and path planning is conducted in the second stage according to the task allocation result in the first stage. In a K-mean iteration process, a greedy algorithm is used to estimate path cost required for task execution by each robot, the size of an adjustment factor is guided through the path cost, and a task allocation result in a clustering process is adjusted through the adjustment factor. The problem of unbalanced robot load of a K-means clustering algorithm and a self-organizing neural network algorithm is solved, a task execution path scheme of load balancing is efficiently planned for each robot, and the method has the advantages of being high in universality and robustness.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

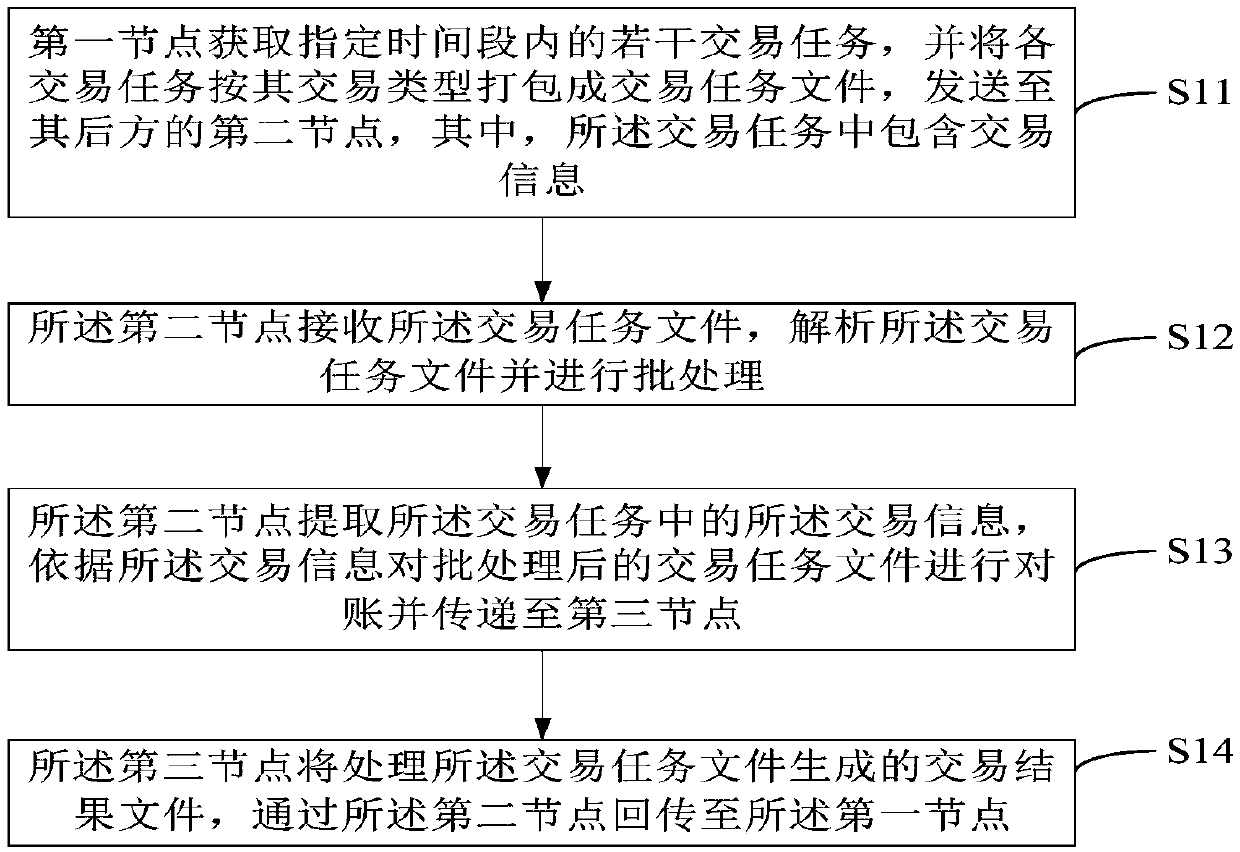

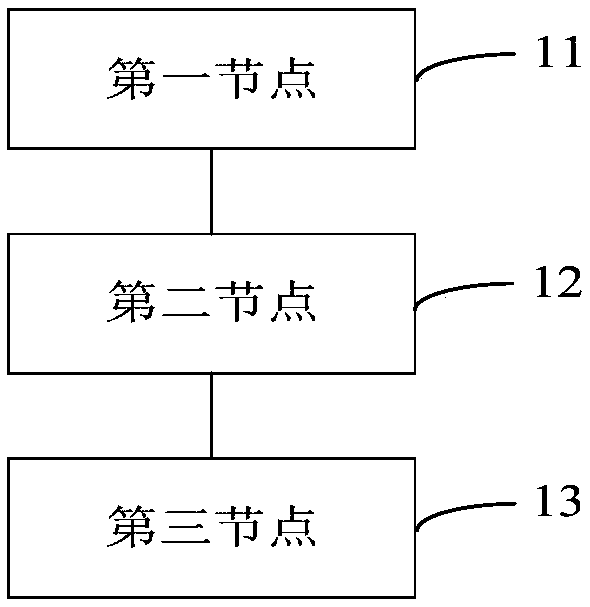

Batch transaction task processing method and system among multiple systems

Pending | CN109583859A | Solve the cumbersome problem of reconciliation | Solve load imbalance | Finance | Payment protocols | Batch processing | Resource utilization

The invention provides a batch transaction task processing method among multiple systems, which comprises the following steps: a first node acquires a plurality of transaction tasks in a specified time period, packs the transaction tasks into transaction task files according to their transaction types, and sends each transaction task file to the second node that follows it; the second node receives the transaction task file, parses it and performs batch processing; the second node reconciles the batch-processed transaction task files and transmits them to a third node; and the third node transmits the transaction result file generated by processing the transaction task file back to the first node through the second node. With this method, resources are used more efficiently according to the proportion of idle resources to total resources, batch processing does not affect real-time transactions, real-time transactions are guaranteed to complete efficiently, reconciliation is completed within the overall transaction process, and the problem of cumbersome reconciliation in the prior art is solved.

Owner:CHINA PING AN LIFE INSURANCE CO LTD

