532 results about "Scheduling (computing)" patented technology

In computing, scheduling is the method by which work is assigned to resources that complete the work. The work may be virtual computation elements such as threads, processes or data flows, which are in turn scheduled onto hardware resources such as processors, network links or expansion cards.

Using execution statistics to select tasks for redundant assignment in a distributed computing platform

Inactive | US7093004B2 | Minimizes unnecessary network congestion | Improve deployment | Resource allocation | Multiple digital computer combinations | Off the shelf | Self adaptive

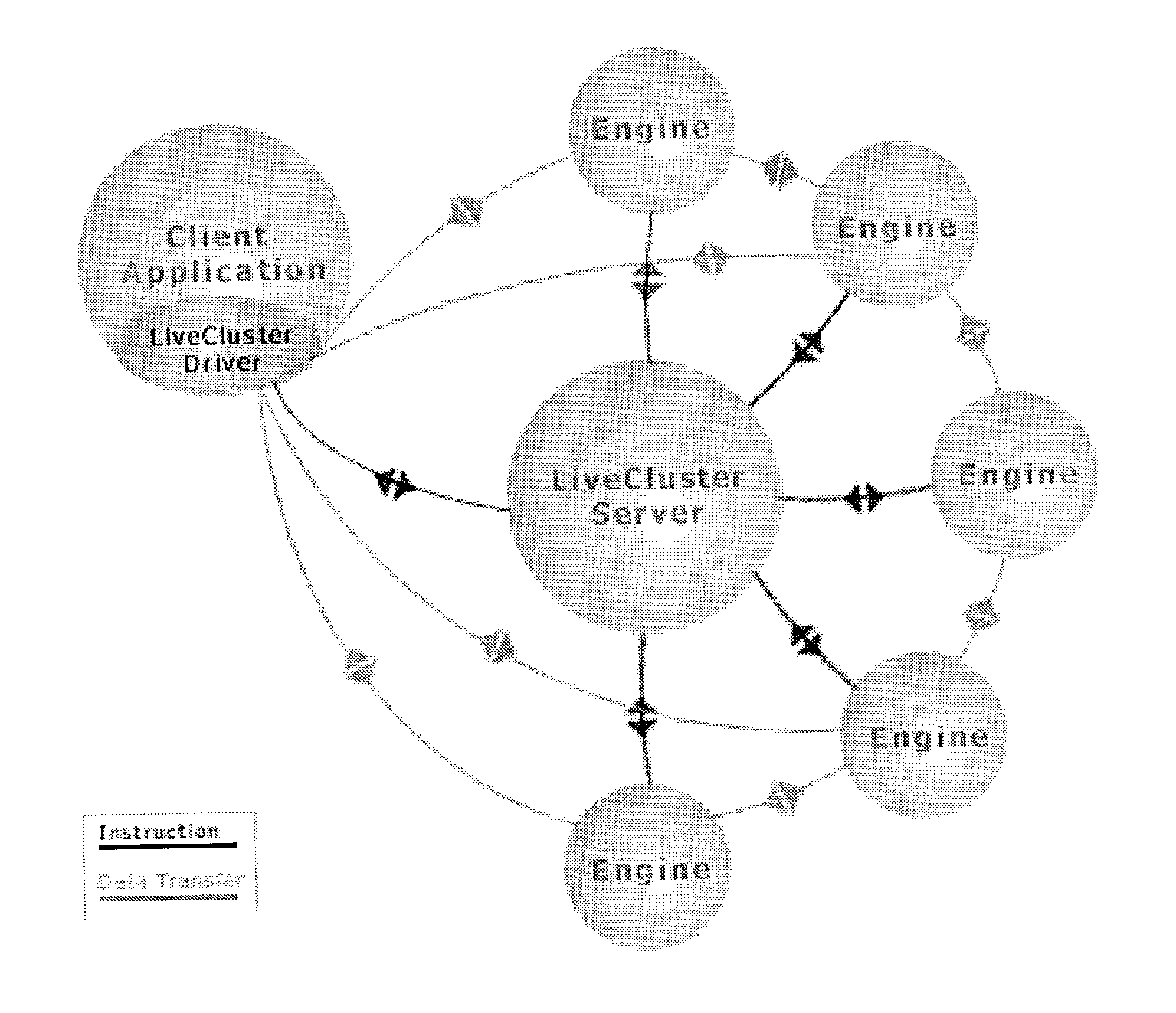

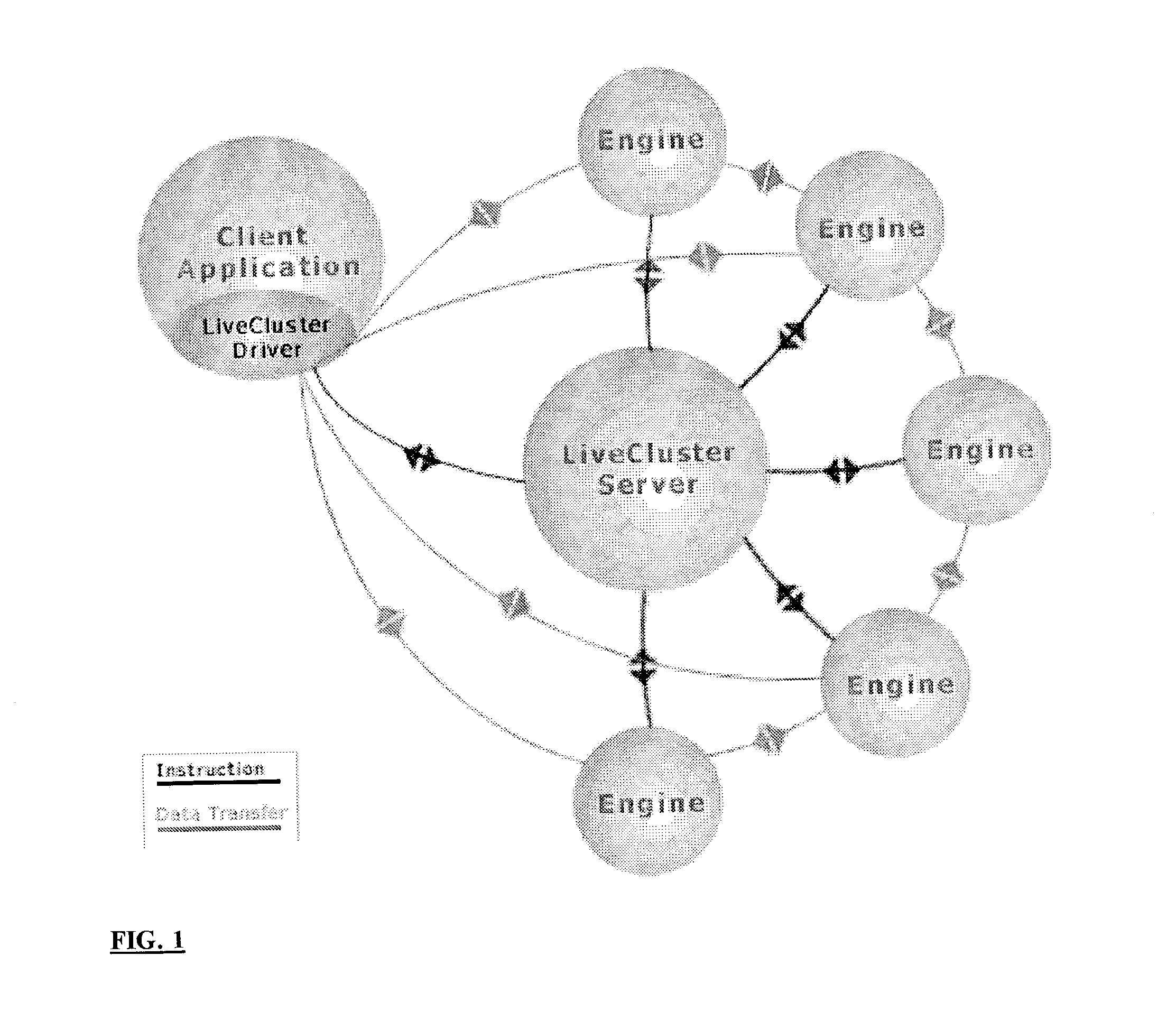

The invention provides an off-the-shelf product solution to target the specific needs of commercial users with naturally parallel applications. A top-level, public API provides a simple “compute server” or “task farm” model that dramatically accelerates integration and deployment. A number of described and claimed adaptive scheduling and caching techniques provide for efficient resource and / or network utilization of intermittently-available and interruptible computing resource in distributed computing systems.

Owner:DATASYNAPSE

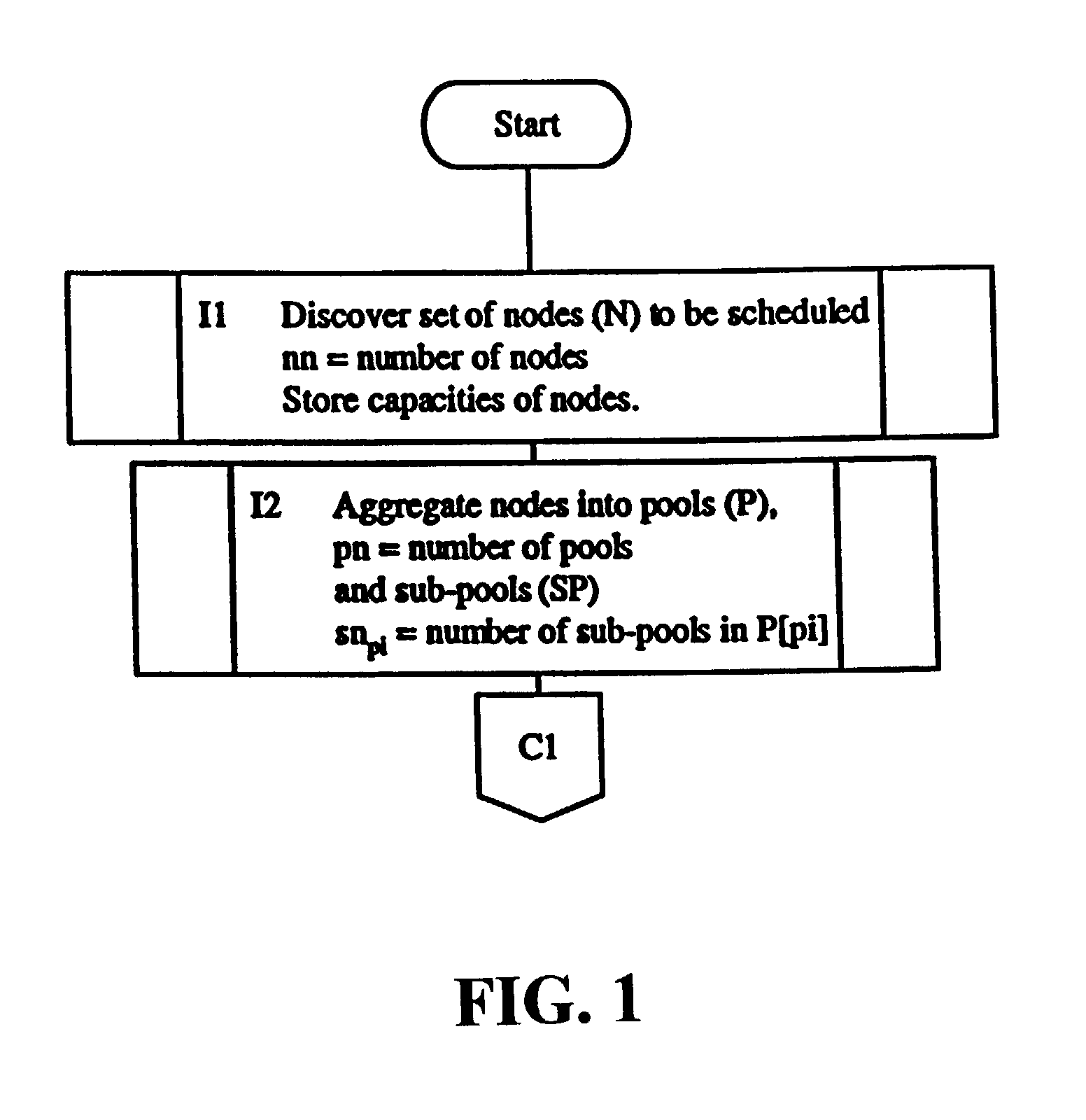

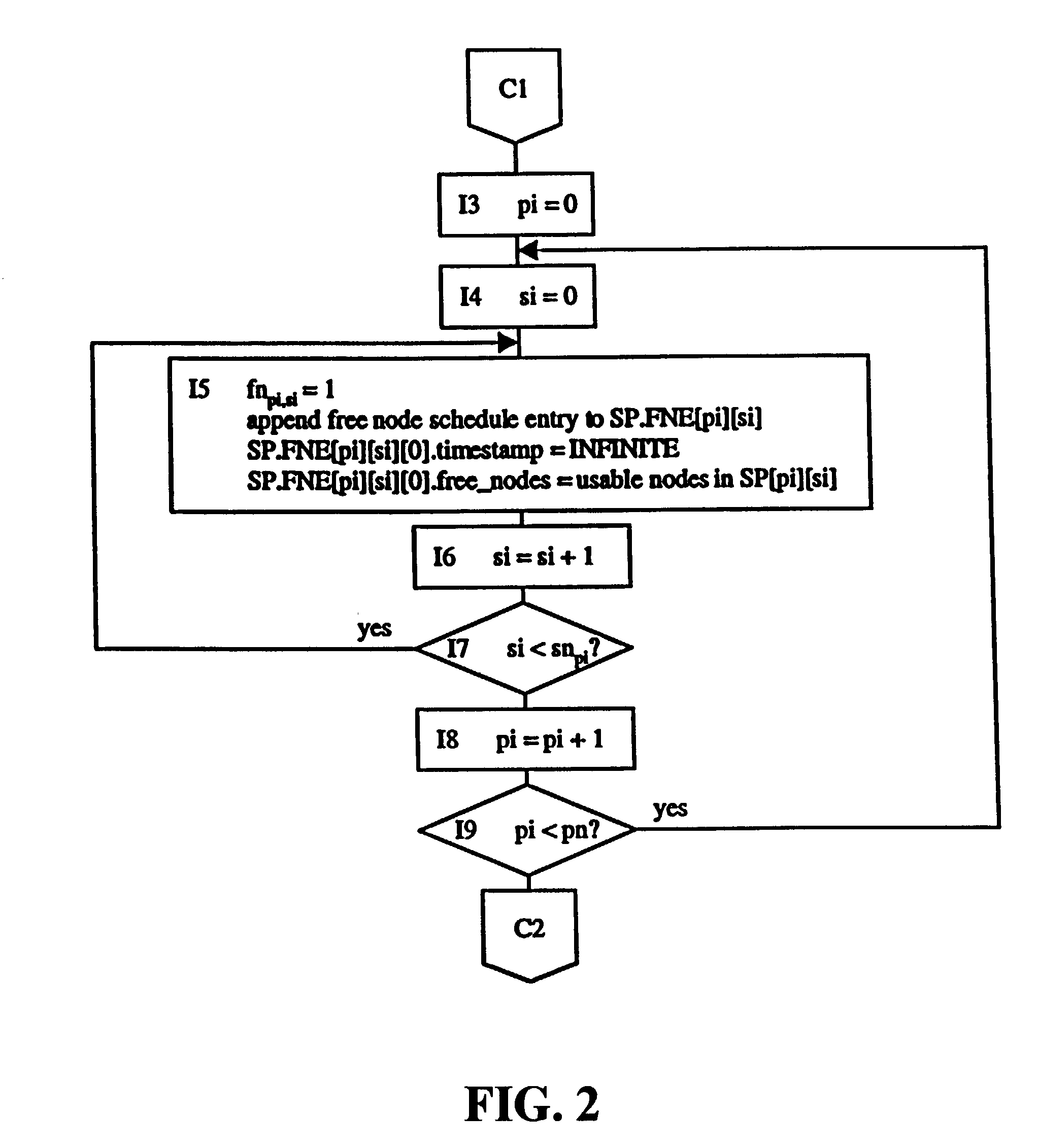

Dedicated heterogeneous node scheduling including backfill scheduling

Inactive | US7082606B2 | Improve throughput | Improve utilization | Resource allocation | Error detection/correction | Start time | Parallel computing

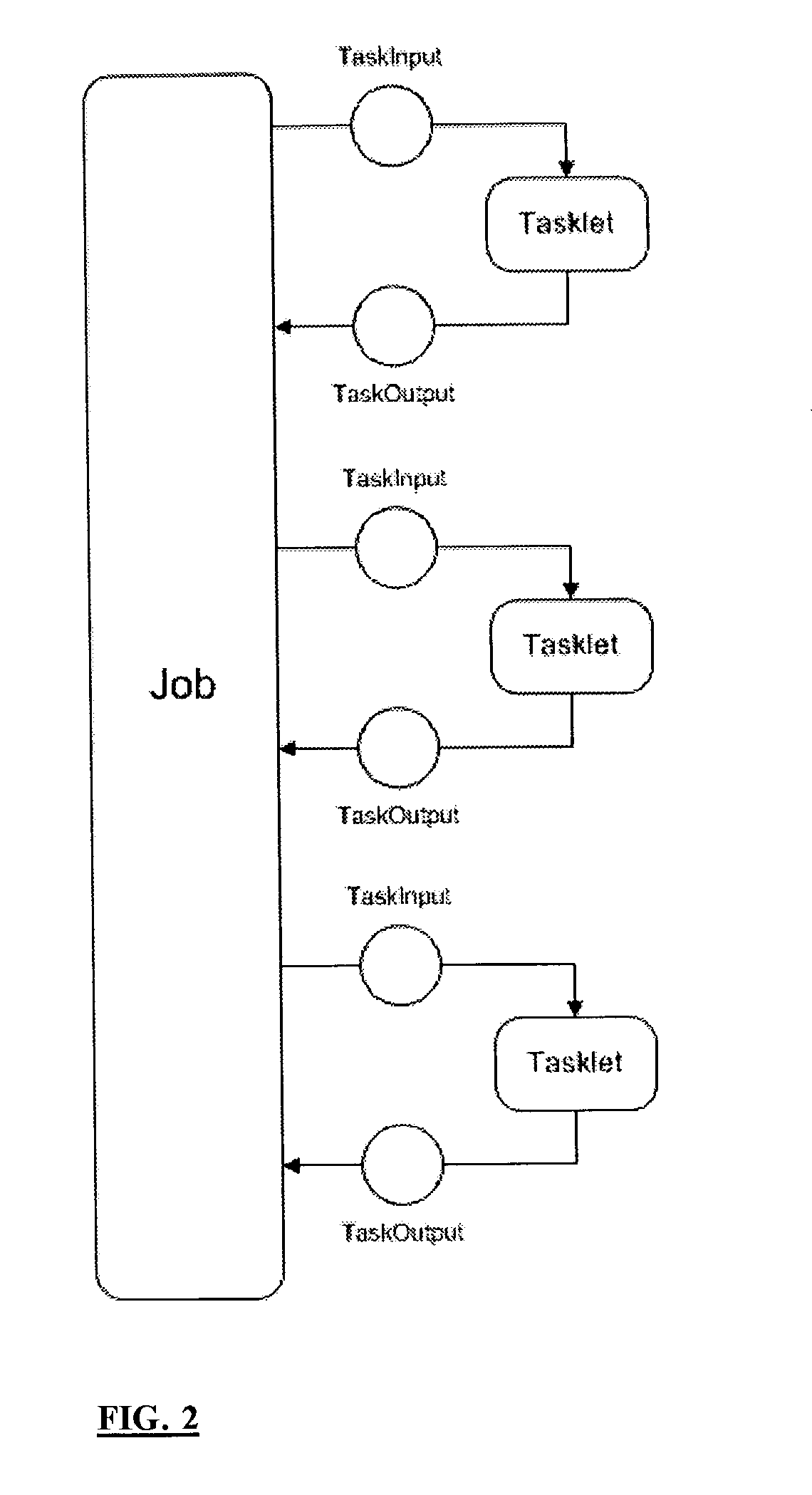

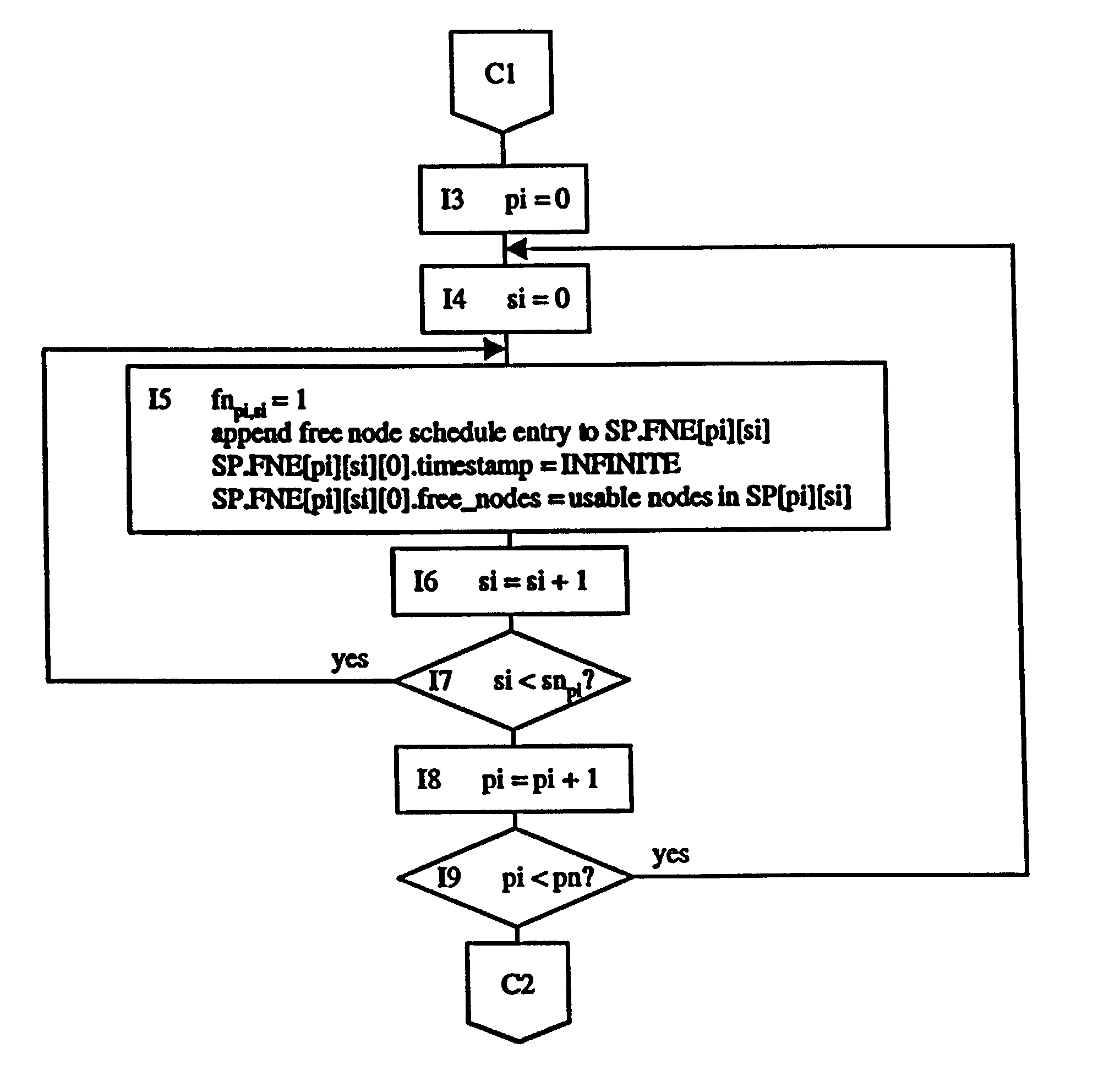

A method and system for backfill scheduling of jobs on dedicated heterogeneous nodes in a multi-node computing environment. Heterogeneous nodes are grouped into homogeneous node sub-pools. For each sub-pool, a free node schedule (FNS) is created to chart the number of free nodes over time. For each prioritized job, the FNS of every sub-pool having nodes usable by that job is used to determine the earliest time range (ETR) capable of running the job. Once the ETR is determined, the job is scheduled to run in it. If the ETR determined for a lower priority job (LPJ) has a start time earlier than that of a higher priority job (HPJ), the LPJ is scheduled in that ETR only if doing so would not disturb the anticipated start times of any HPJ previously scheduled for a future time. Thus, utilization and throughput of such computing environments may be increased by using resources that would otherwise remain idle.

Owner:LAWRENCE LIVERMORE NAT SECURITY LLC
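
The backfill idea in this abstract can be illustrated with a small sketch. It is not the patented method: it assumes a single homogeneous sub-pool, discrete time slots, and a hypothetical job tuple `(name, nodes, duration)` listed from highest to lowest priority. The list `free` plays the role of the free node schedule.

```python
def earliest_start(free, nodes, duration):
    """Return the first slot where `nodes` nodes are free for `duration` slots."""
    for start in range(len(free) - duration + 1):
        if all(free[t] >= nodes for t in range(start, start + duration)):
            return start
    return None


def backfill_schedule(total_nodes, horizon, jobs):
    """Schedule jobs (highest priority first) into their earliest time range.

    `free[t]` is the number of free nodes in slot t (a simplified FNS).
    Because higher-priority reservations are subtracted before lower-priority
    jobs are considered, a later job can start earlier only in capacity that
    the earlier reservations left idle.
    """
    free = [total_nodes] * horizon
    starts = {}
    for name, nodes, duration in jobs:
        start = earliest_start(free, nodes, duration)
        if start is None:
            continue  # does not fit within the planning horizon
        for t in range(start, start + duration):
            free[t] -= nodes
        starts[name] = start
    return starts


jobs = [("A", 3, 2), ("B", 4, 3), ("C", 1, 2)]
starts = backfill_schedule(total_nodes=4, horizon=10, jobs=jobs)
```

In this example job C has the lowest priority but starts in slot 0 alongside A, using the one node that would otherwise sit idle, while B still starts in slot 2.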

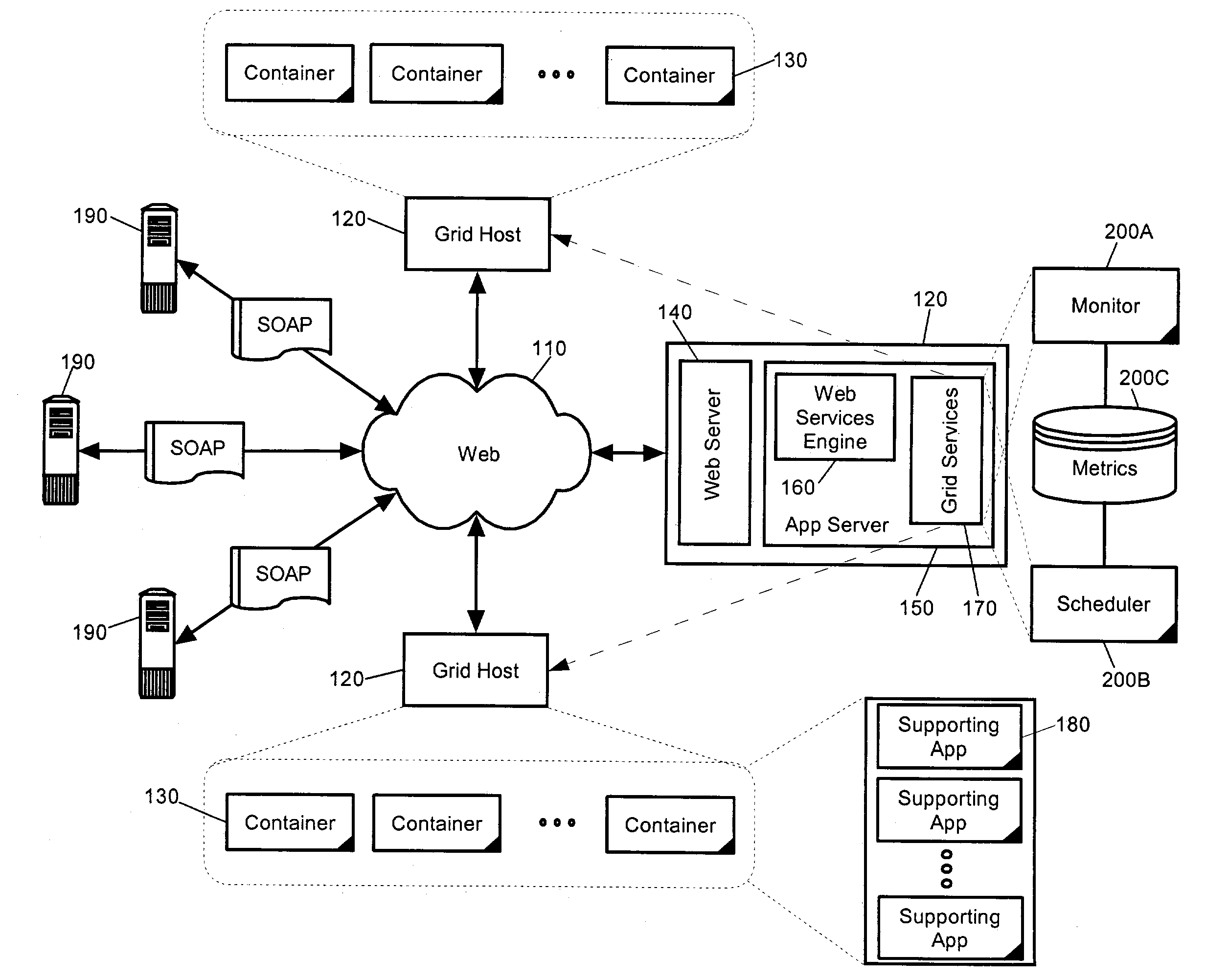

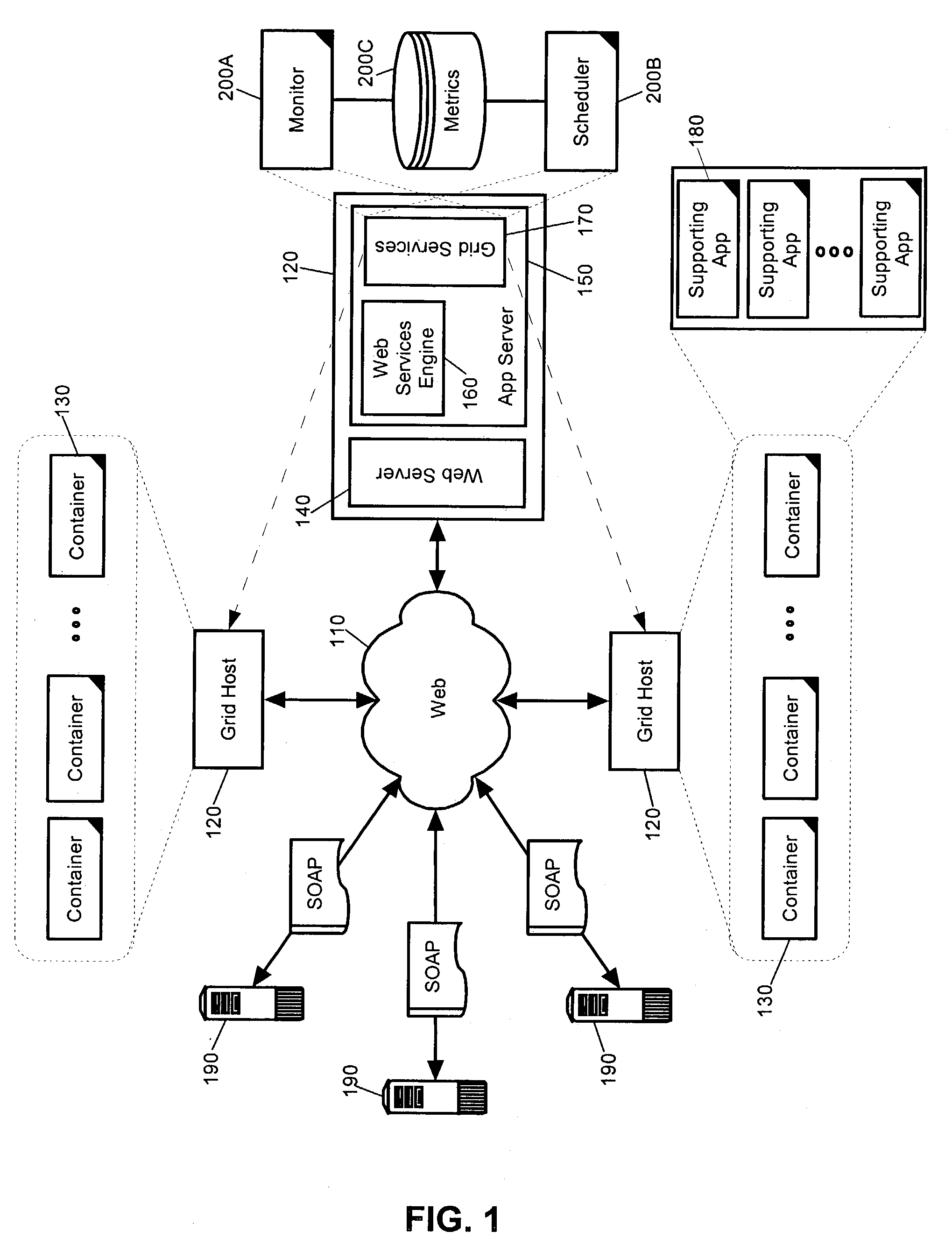

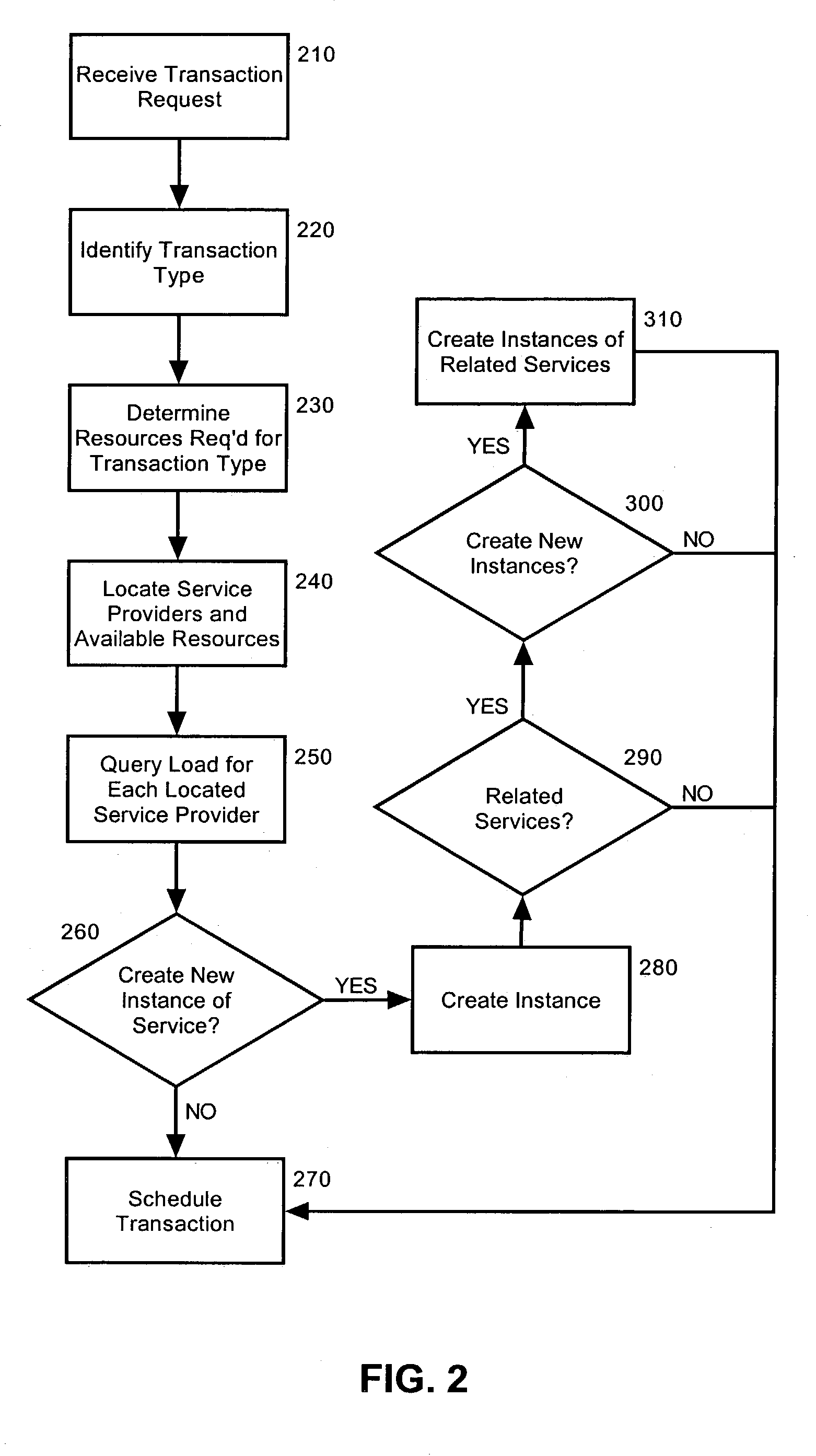

Grid service scheduling of related services using heuristics

Active | US7171470B2 | Increase capacity | Easy loading | Resource allocation | Multiple digital computer combinations | Engineering | Scheduling (computing)

A method and system for creating service instances in a computing grid. The method can include scheduling a service in the computing grid to process at least a portion of a requested transaction. At least one additional service related to the scheduled service can be identified, and a load condition can be assessed in the at least one additional service related to the scheduled service. A new instance of the at least one additional service can be created if the load condition exceeds a threshold load. In this way, an enhanced capacity for processing transactions can be established in the related services in advance of a predicted increase in load in the grid.

Owner:IBM CORP

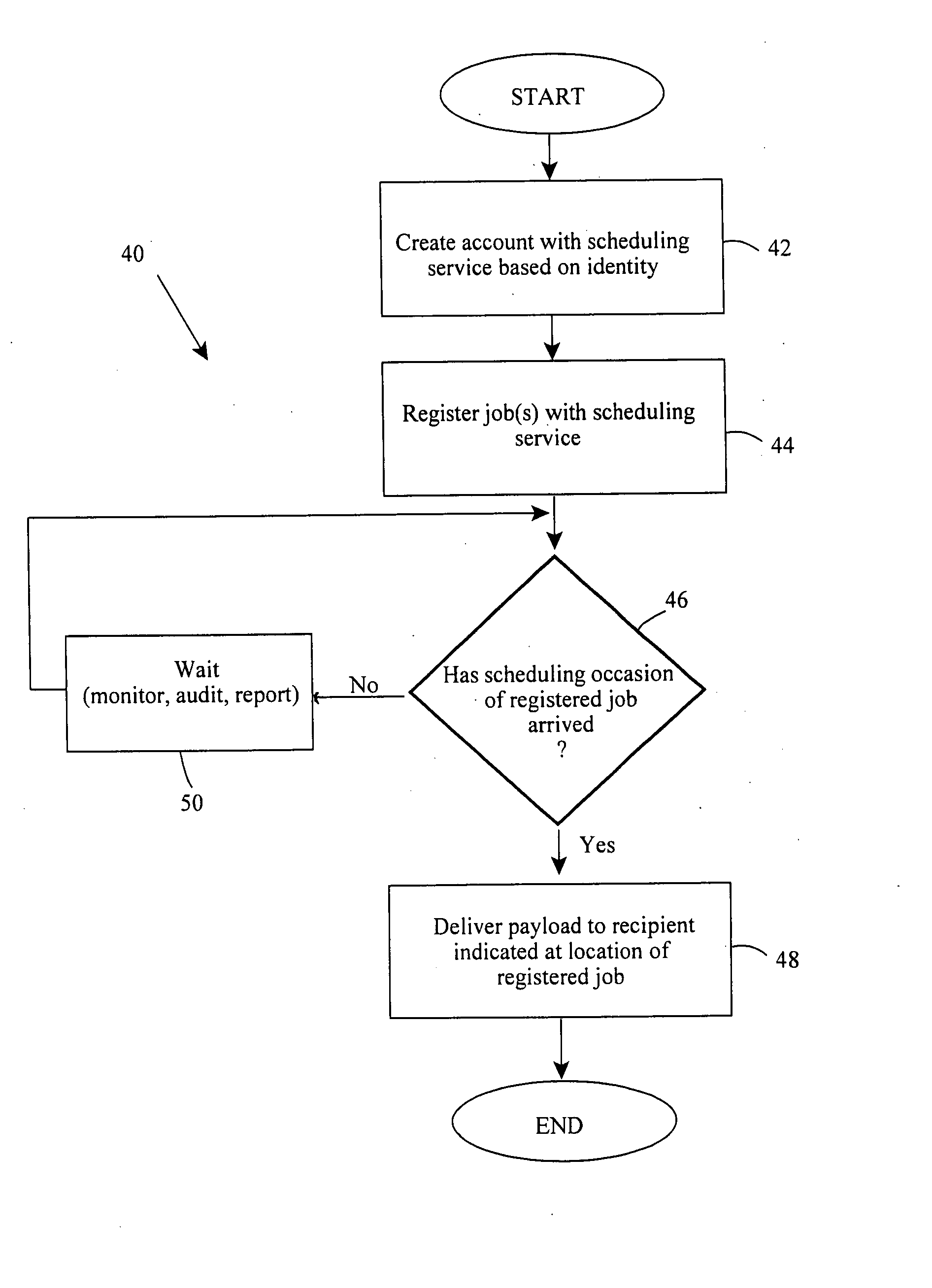

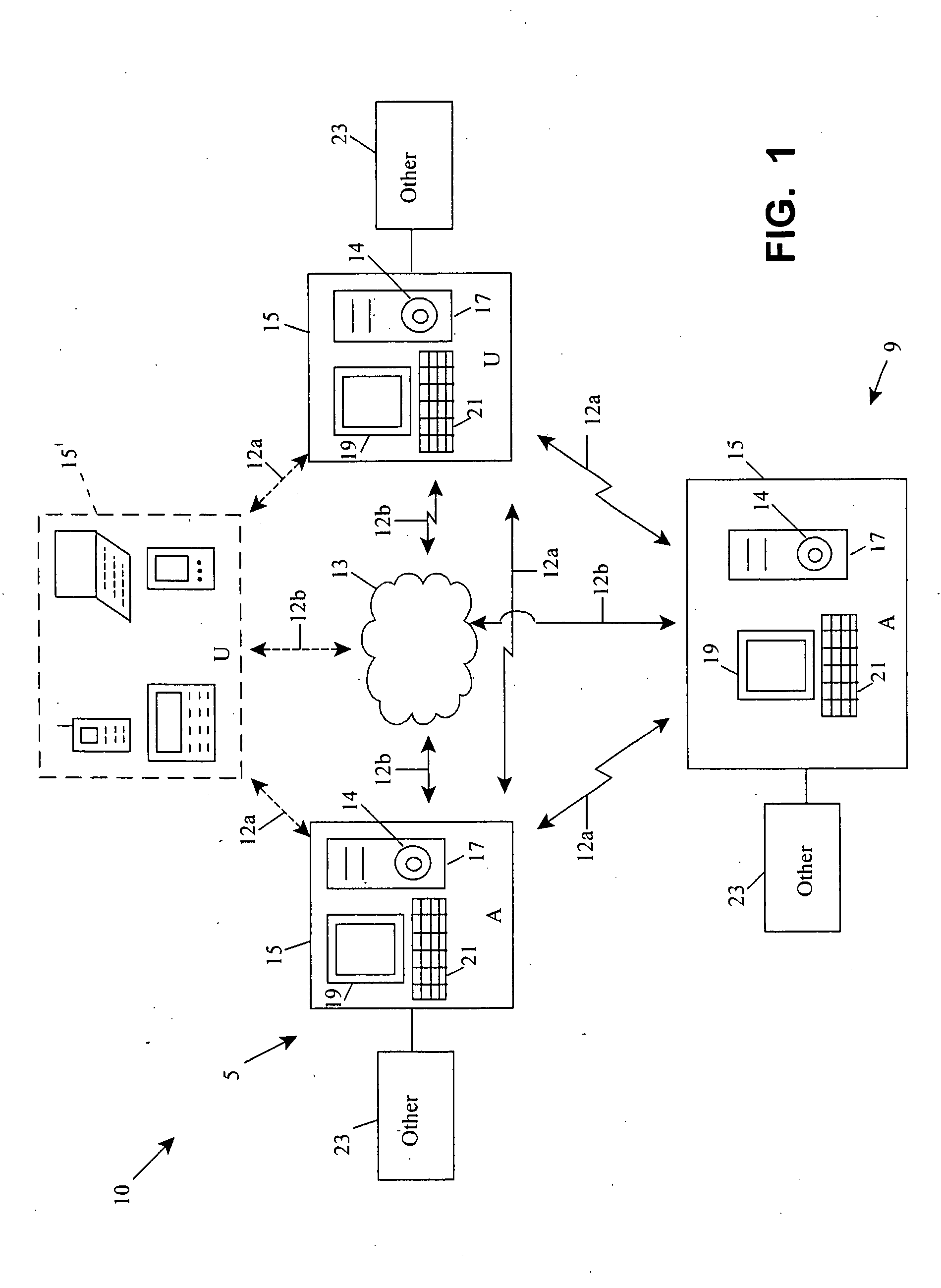

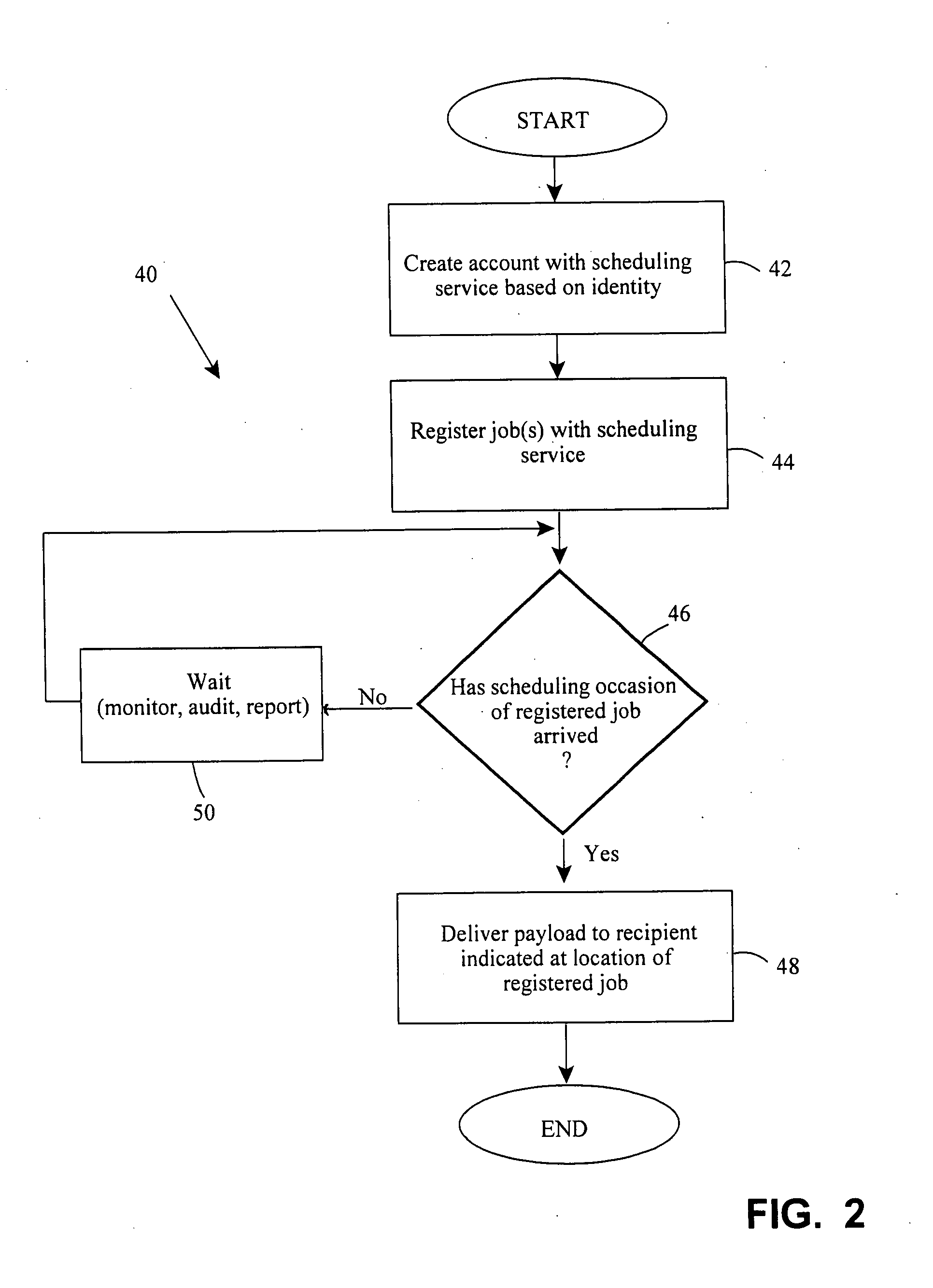

Identity-aware scheduler service

Inactive | US20080301685A1 | Low cost | Improve security | Finance | Digital data processing details | Third party | Client-side

In a computing environment, clients and scheduling services are arranged to coordinate time-based services. Representatively, the client and scheduler engage in an http session whereby the client creates an account (if the first usage) indicating various identities and rights of the client for use with a scheduling job. Thereafter, one or more scheduling jobs are registered including an indication of what payloads are needed, where needed and when needed. Upon appropriate timing, the payloads are delivered to the proper locations, but the scheduling of events is no longer entwined with underlying applications in need of scheduled events. Monitoring of jobs is also possible as is establishment of appropriate communication channels between the parties. Noticing, encryption, and authentication are still other aspects as are launching third party services before payload delivery. Still other embodiments contemplate publishing an API or other particulars so the service can be used in mash-up applications.

Owner:APPLE INC

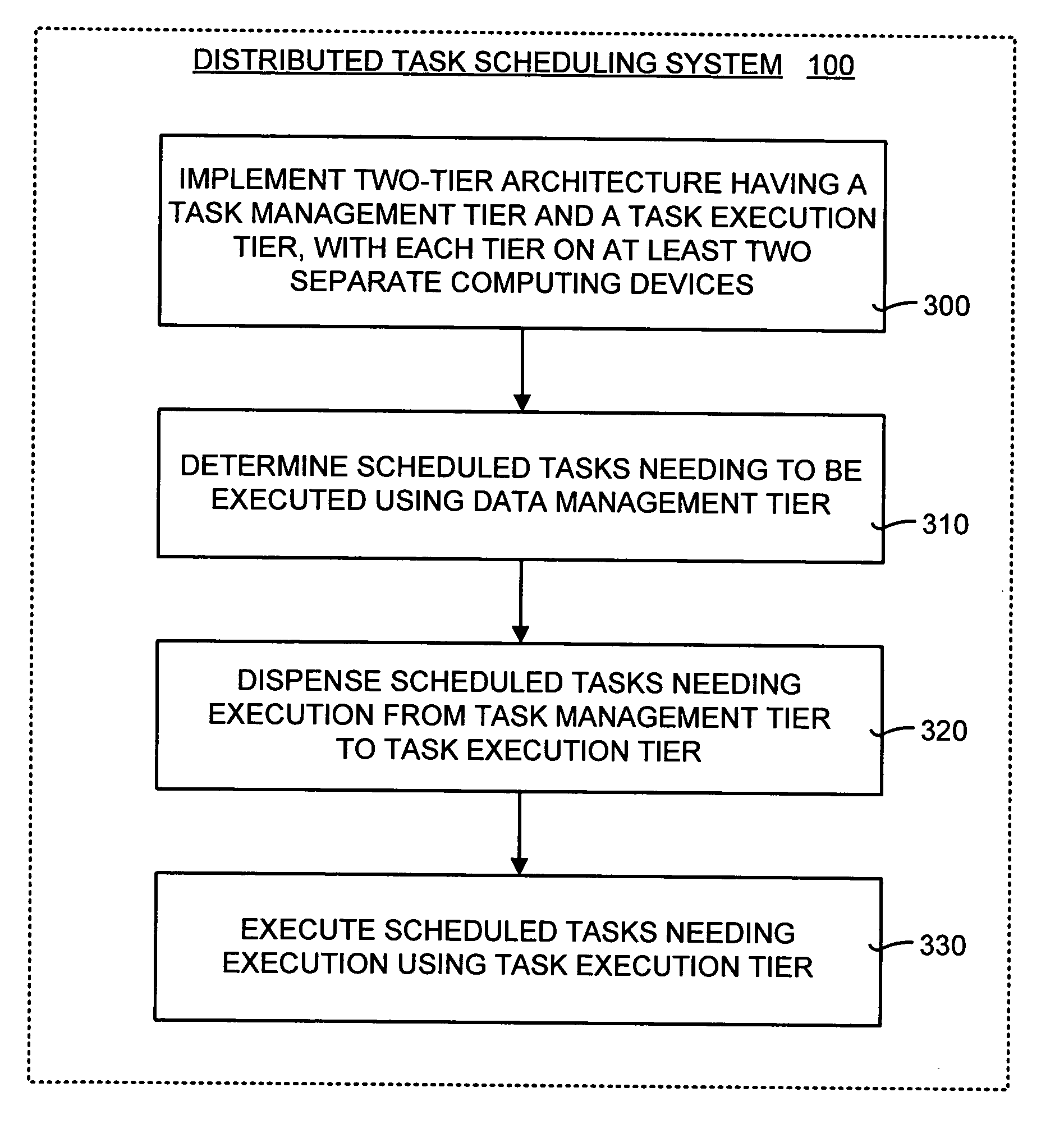

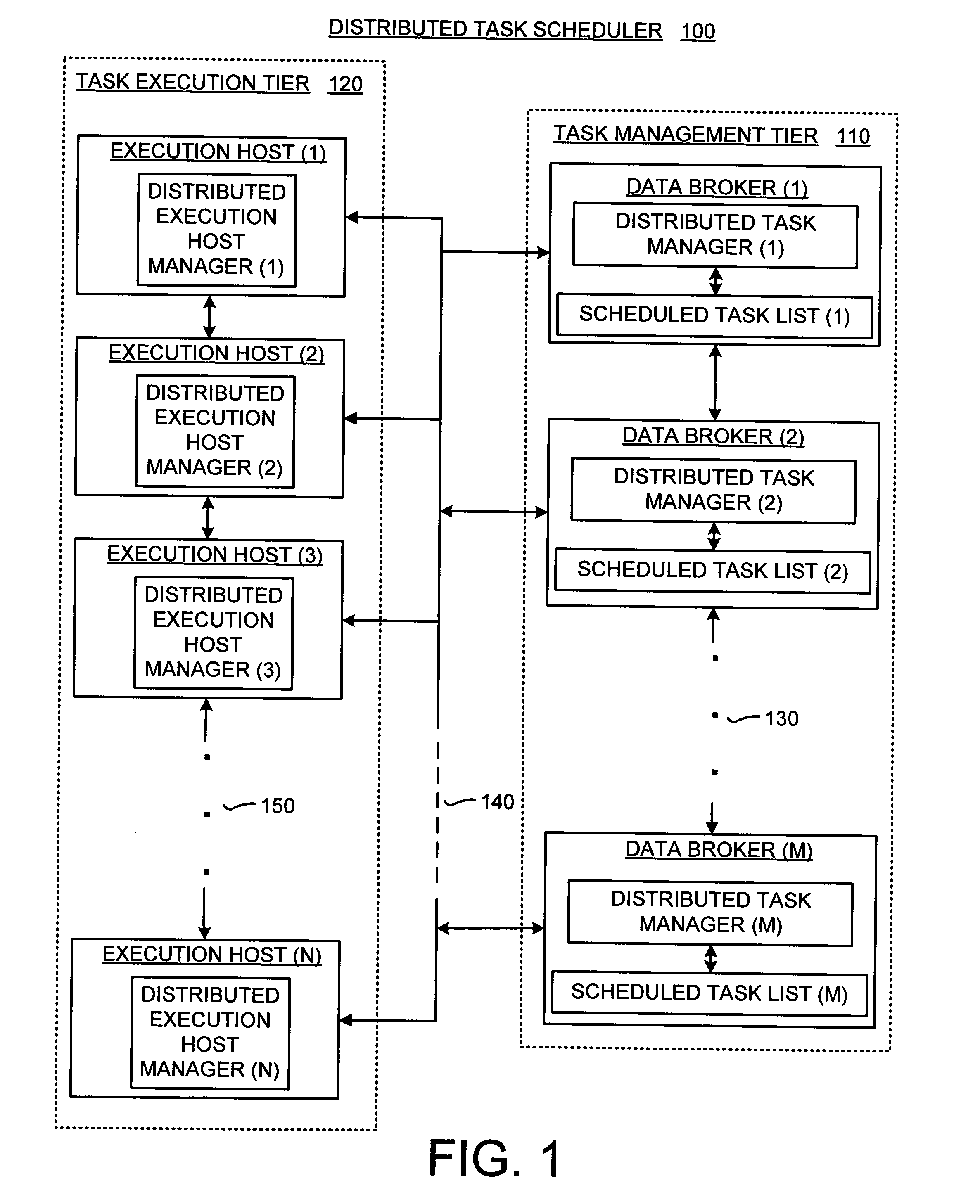

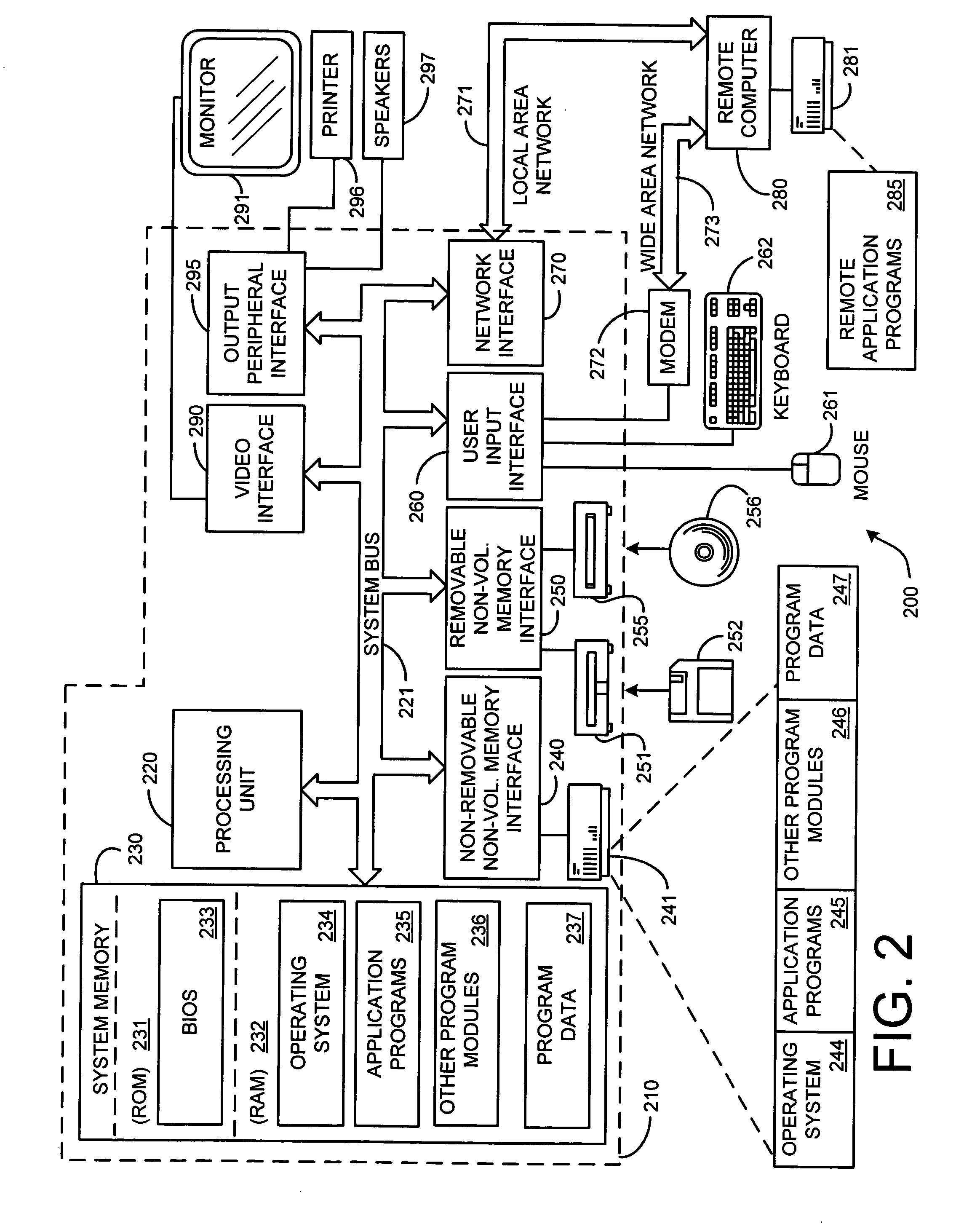

Distributed task scheduler for computing environments

Inactive | US20050268300A1 | Improve scalability | Resource allocation | Memory systems | Parallel computing | Engineering

A distributed task scheduling method and system that separates and performs task management and task execution on separate computing devices and distributes task execution over multiple computing devices. The distributed task scheduler includes two-tier architecture having at least one execution host and at least one data broker. The execution hosts handle the tasks and the data broker manages the task schedule. The data broker determines any scheduled tasks that need to be executed. Once an available task is found, the data broker dispenses the scheduled task to an execution host. A timeout period is selected for each assigned task. If the assigned execution host does not report back to the data broker within the timeout period the completion of the assigned task, the data broker is free to assign the task to another execution host to ensure reliable execution of the task.

Owner:MICROSOFT TECH LICENSING LLC
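
The timeout-and-reassign behaviour described in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented design: the class name `DataBroker`, its methods, and the use of an explicit `now` argument instead of a real clock are all hypothetical.

```python
class DataBroker:
    """Holds the task schedule and hands tasks to execution hosts."""

    def __init__(self, tasks, timeout):
        self.pending = list(tasks)
        self.timeout = timeout
        self.assigned = {}  # task -> (host, deadline)
        self.done = set()

    def dispense(self, host, now):
        """Give `host` the next available task, reclaiming timed-out ones first."""
        for task, (_, deadline) in list(self.assigned.items()):
            if now >= deadline:
                # The assigned host did not report back in time.
                del self.assigned[task]
                self.pending.append(task)
        if not self.pending:
            return None
        task = self.pending.pop(0)
        self.assigned[task] = (host, now + self.timeout)
        return task

    def report_done(self, host, task):
        """Accept a completion report only from the currently assigned host."""
        entry = self.assigned.get(task)
        if entry is None or entry[0] != host:
            return False
        del self.assigned[task]
        self.done.add(task)
        return True


broker = DataBroker(["backup"], timeout=5)
first = broker.dispense("host-1", now=0)   # host-1 gets the task
early = broker.dispense("host-2", now=3)   # nothing available yet
retry = broker.dispense("host-2", now=5)   # timeout expired, task reassigned
```

Passing `now` explicitly keeps the sketch deterministic; a real broker would presumably read a clock and persist its schedule.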

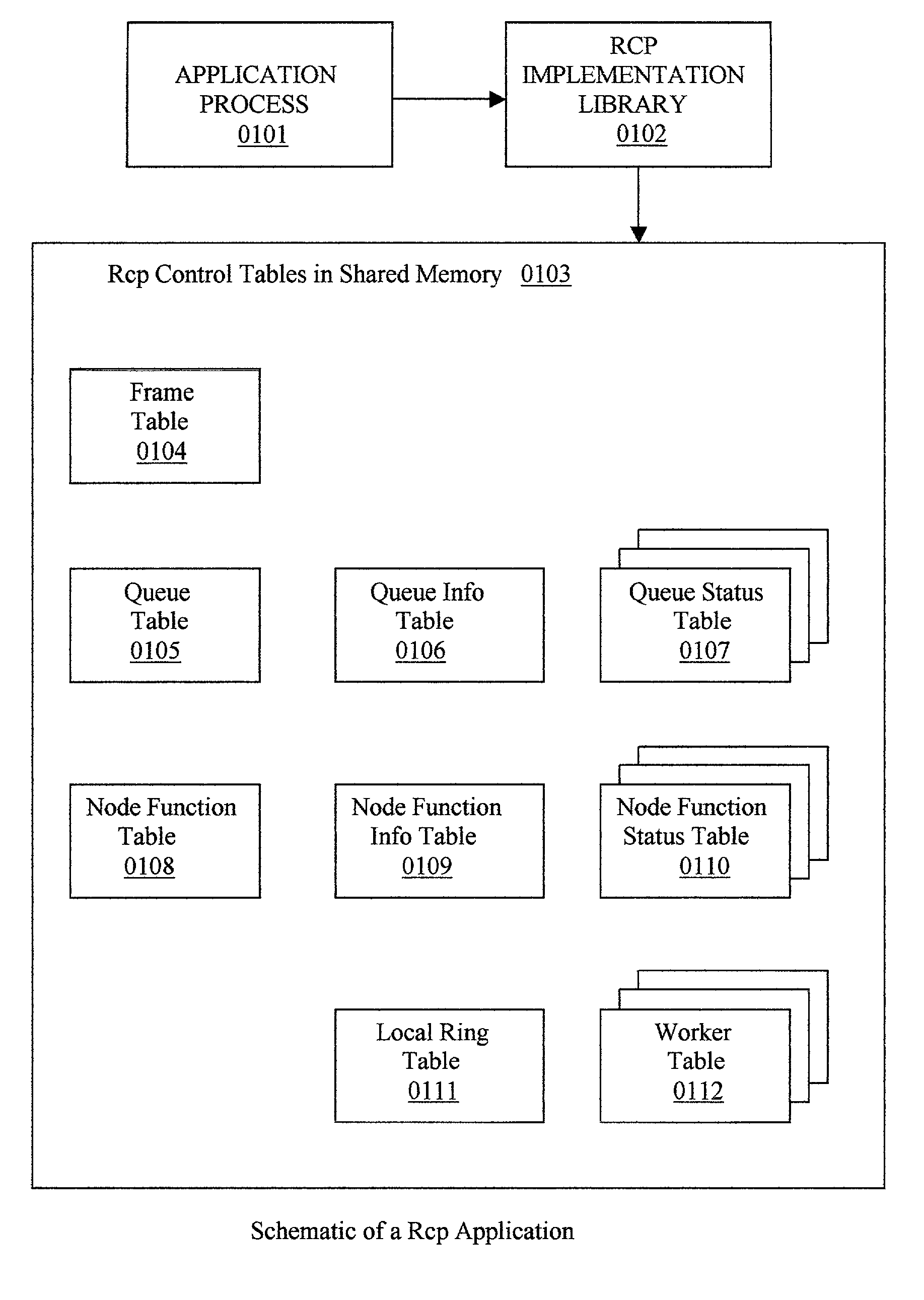

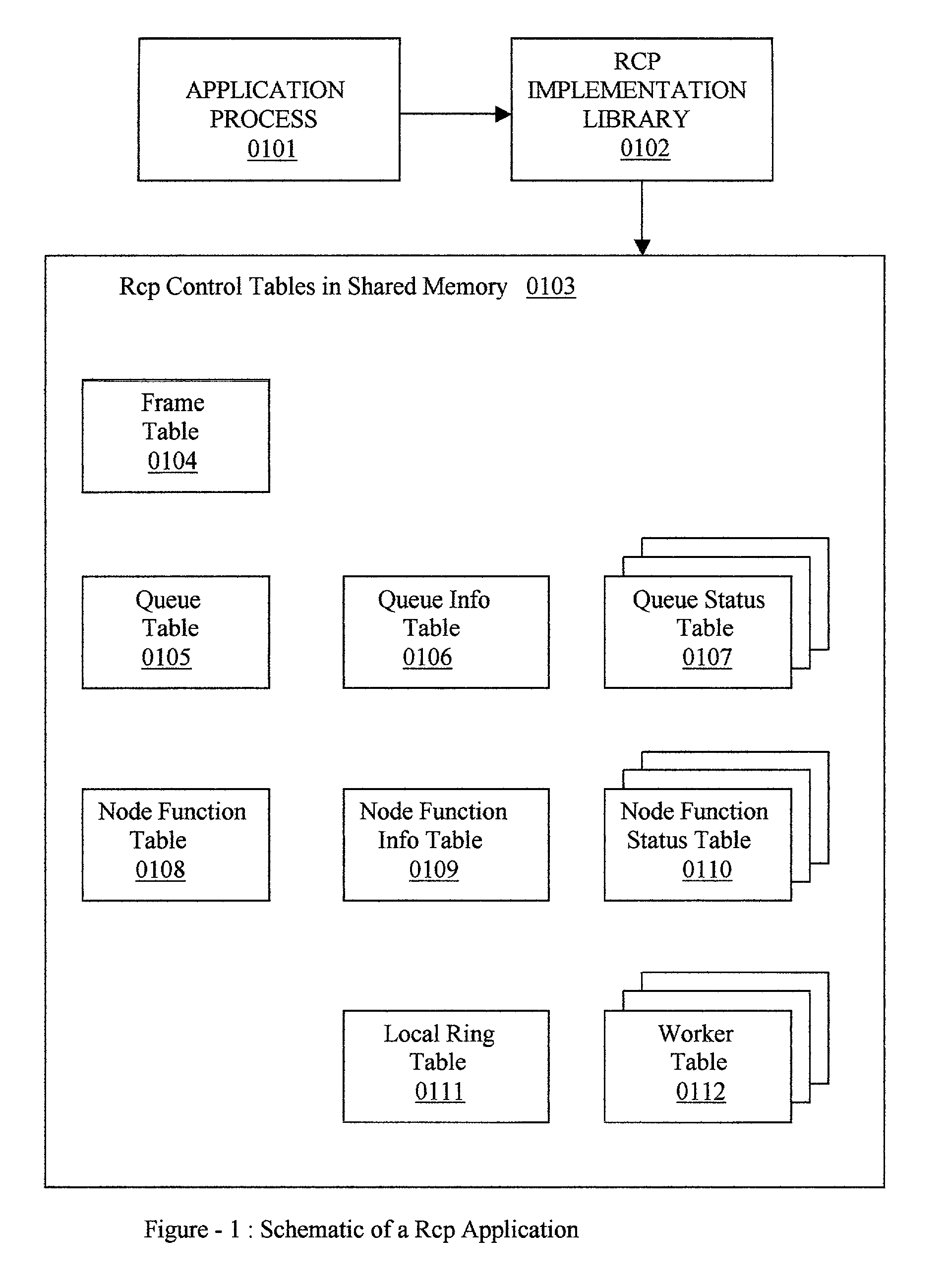

Parallel processing system design and architecture

Inactive | US20030188300A1 | Resource allocation | Specific program execution arrangements | Virtualization | Computer architecture

An architecture and design called Resource control programming (RCP), for automating the development of multithreaded applications for computing machines equipped with multiple symmetrical processors and shared memory. The Rcp runtime (0102) provides a special class of configurable software device called Rcp Gate (0600), for managing the inputs and outputs and user functions with a predefined signature called node functions (0500). Each Rcp gate manages one node function, and each node function can have one or more invocations. The inputs and outputs of the node functions are virtualized by means of virtual queues and the real queues are bound to the node function invocations, during execution. Each Rcp gate computes its efficiency during execution, which determines the efficiency at which the node function invocations are running. The Rcp Gate will schedule more node function invocations or throttle the scheduling of the node functions depending on the efficiency of the Rcp gate. Thus automatic load balancing of the node functions is provided without any prior knowledge of the load of the node functions and without computing the time taken by each of the node functions.

Owner:PATRUDU PILLA G

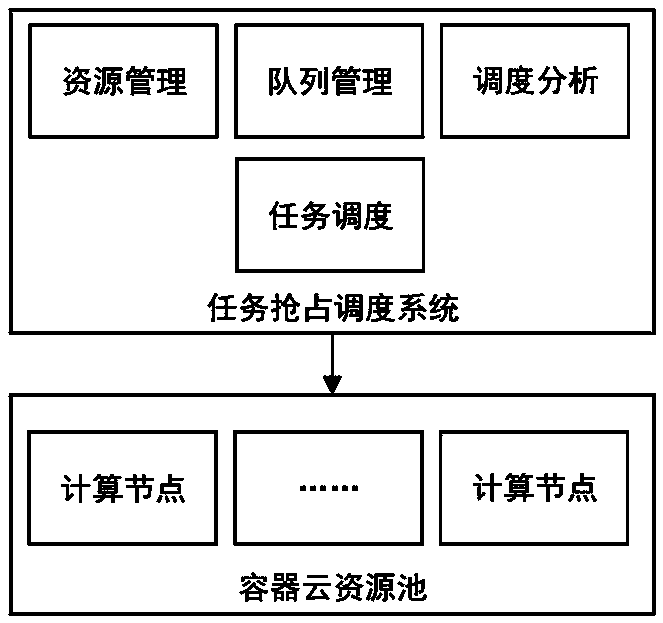

Management scheduling technology based on hyper-converged framework

Pending | CN112000421A | Improve virtualization capabilities | Improve management ability | Resource allocation | Software simulation/interpretation/emulation | Resource pool | System architecture design

The invention discloses a management scheduling technology based on a hyper-converged framework. The method comprises a hyper-converged system architecture design, integrated resource management based on a hyper-converged architecture, unified computing virtualization oriented to a domestic heterogeneous platform, storage virtualization based on distributed storage, network virtualization based on software definition, and a container dynamic scheduling management technology oriented to a high-mobility environment. The technology improves the virtualization capability and the management capability of the tactical cloud platform and provides key technical support for constructing an army maneuvering tactical cloud full-link ecology. An on-demand, flexible virtualized computing and storage resource pool is provided, and heterogeneous fusion computing virtualization is achieved. A distributed storage technology is used to construct a storage resource pool and a software definition technology is used to construct a virtual network, forming a hyper-converged resource pool. Localized data and network access for application services are achieved, the I/O bottleneck of the traditional virtualization deployment mode is resolved, and service response performance is improved.

Owner:BEIJING INST OF COMP TECH & APPL

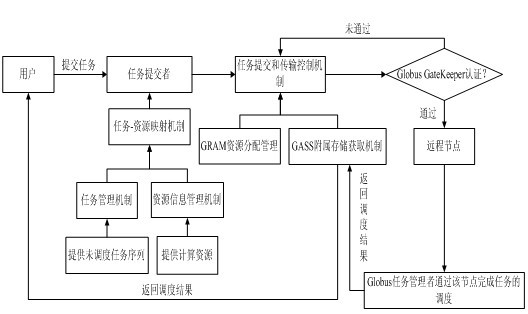

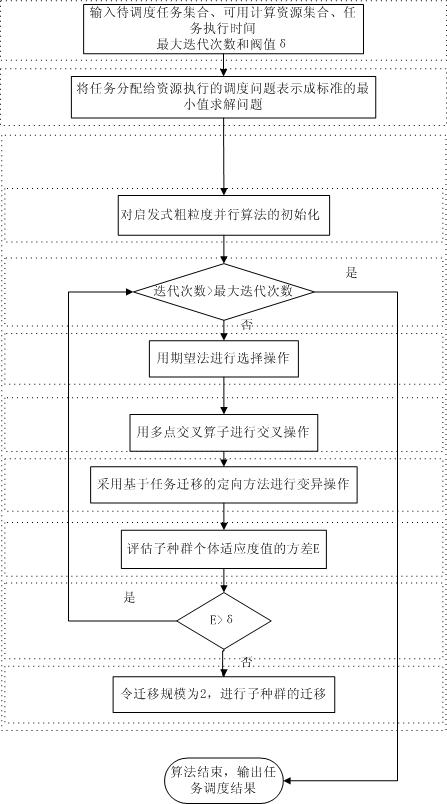

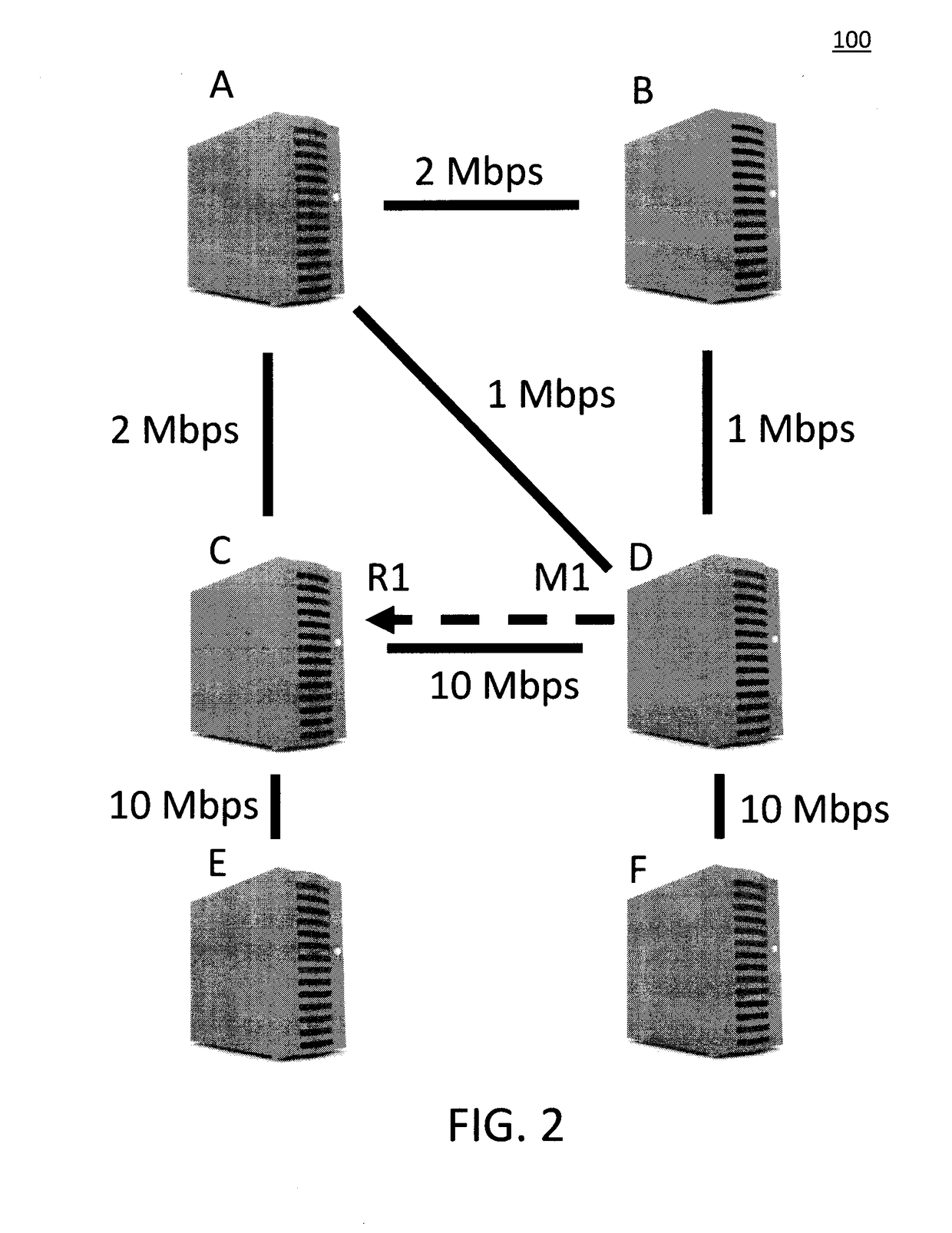

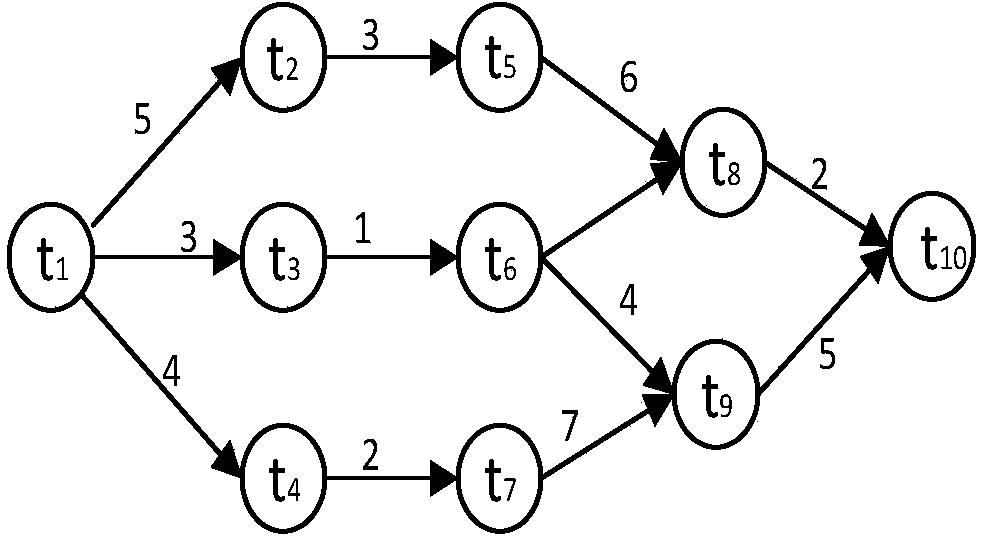

Heuristic type coarse grain parallel grid task scheduling method

The invention discloses a heuristic coarse-grain parallel grid task scheduling method comprising the following steps: first, a task submitter inputs a set of tasks to be scheduled, a set of usable computing resources, a set of execution times of the tasks on the computing resources, a maximum number of iterations, a threshold value delta and an entropy value epsilon; second, a task scheduler expresses the problem of allocating resources to execute the tasks as a standard minimization problem subject to task optimization and constraint conditions; third, the grid task scheduling problem is solved by the iterative process of the heuristic coarse-grain parallel algorithm; and fourth, after the algorithm finishes, the task scheduling result is output. The invention provides a new multipoint crossover method, and an elitist strategy maintains the monotonic improvement of the population's best solution; during the mutation stage, a directed mutation method based on task immigration is adopted to prevent degeneration of the population. The method is described as outperforming the traditional random algorithm in computing power and convergence rate.

Owner:NANJING UNIV OF INFORMATION SCI & TECH

Joint Network and Task Scheduling

Inactive | US20170286180A1 | Program initiation/switching | Resource allocation | Resource pool | Distributed computing

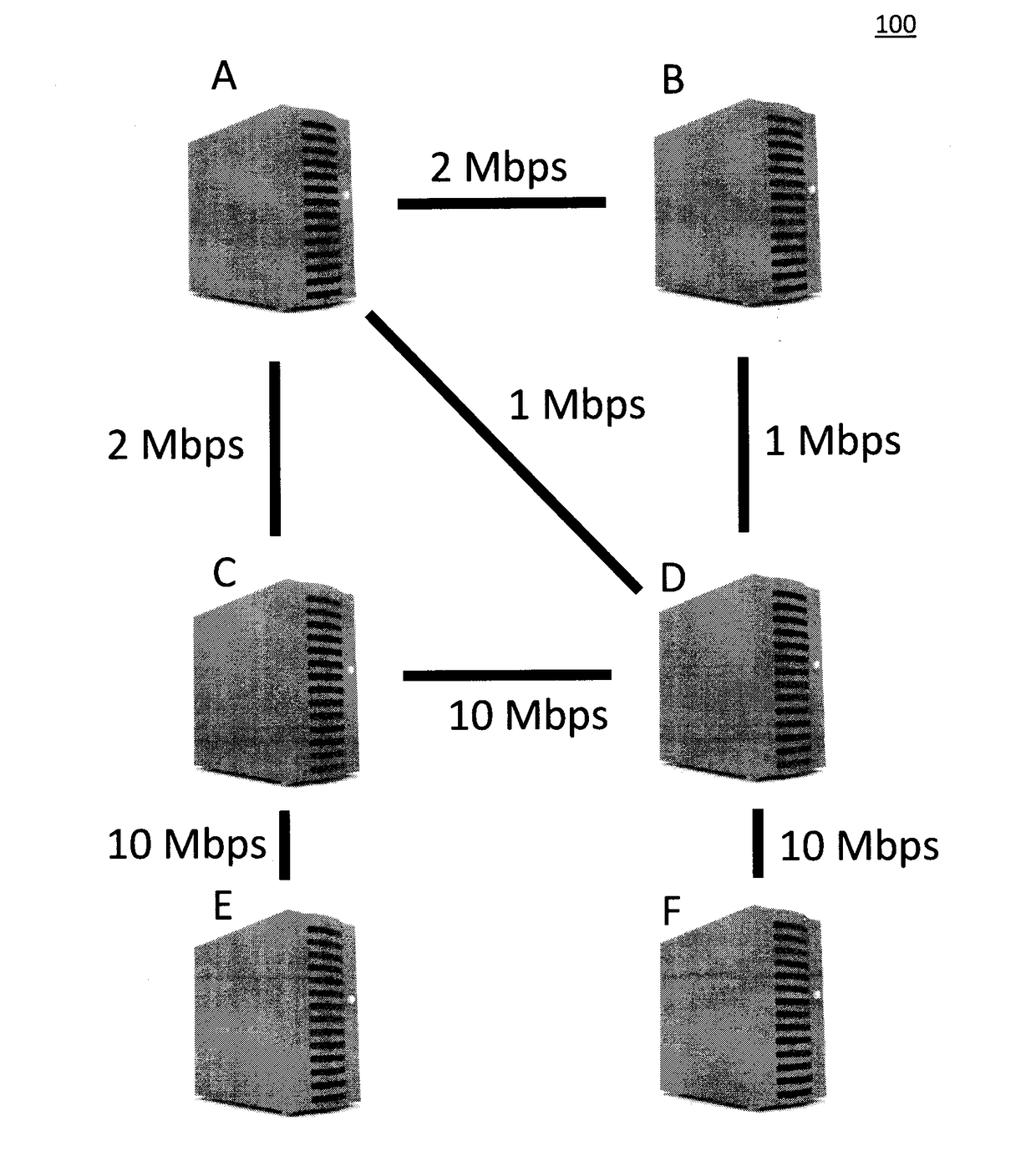

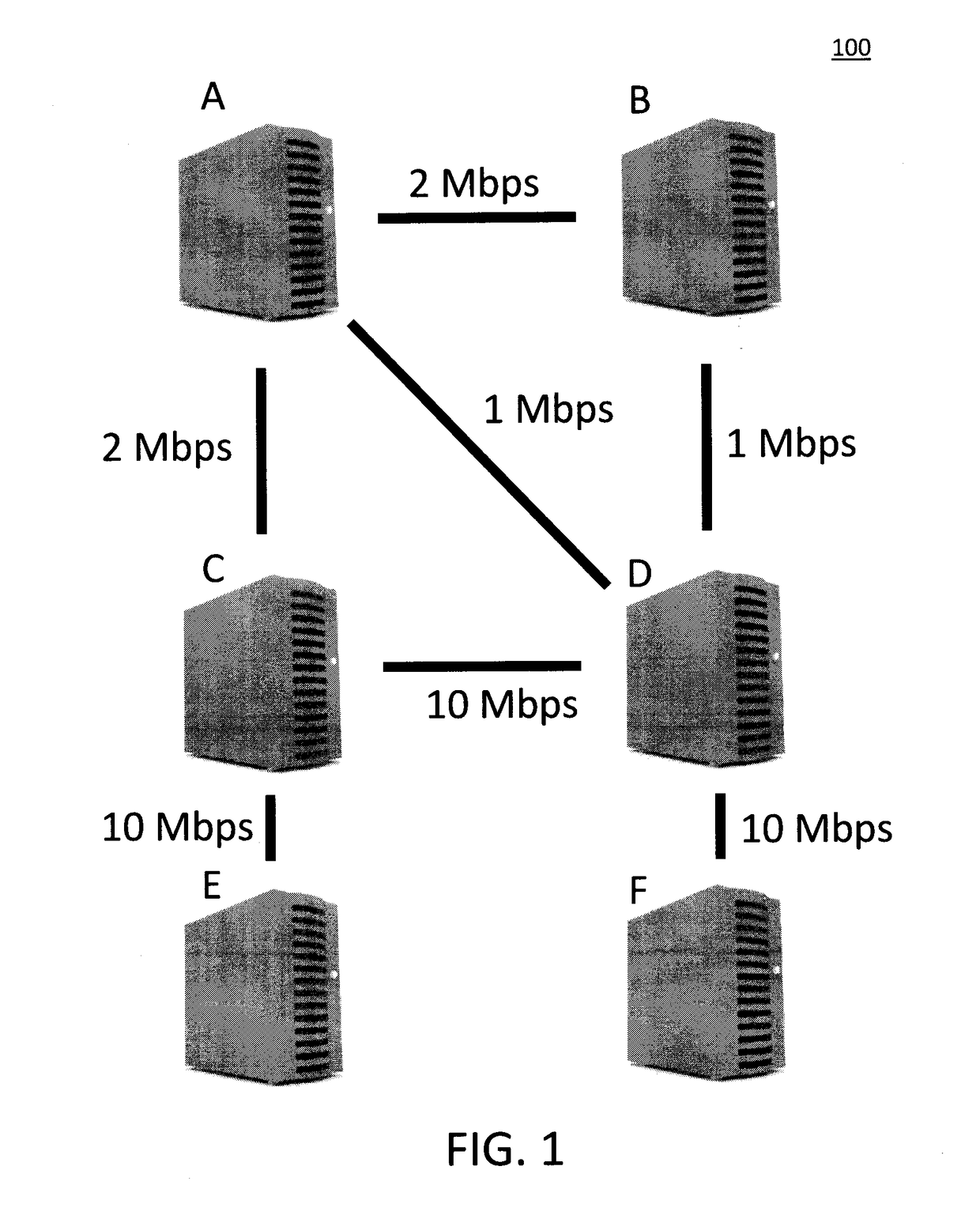

Techniques for network state-aware and network scheduling policy-aware task scheduling are provided. In one aspect, a method for scheduling tasks in a distributed computing network includes the steps of: collecting a pool of candidate resources in the distributed computing network for performing a given one of the tasks; predicting a performance of each of the candidate resources in performing the given task based on both i) a state and ii) a scheduling policy of the distributed computing network; and selecting a best candidate resource for the given task based on the performance. A system for scheduling tasks in a distributed computing network is also provided which includes a task scheduler; and a network scheduler, wherein the task scheduler is configured to schedule the tasks in the distributed computing network based on both i) the state and ii) the scheduling policy of the distributed computing network.

Owner:IBM CORP

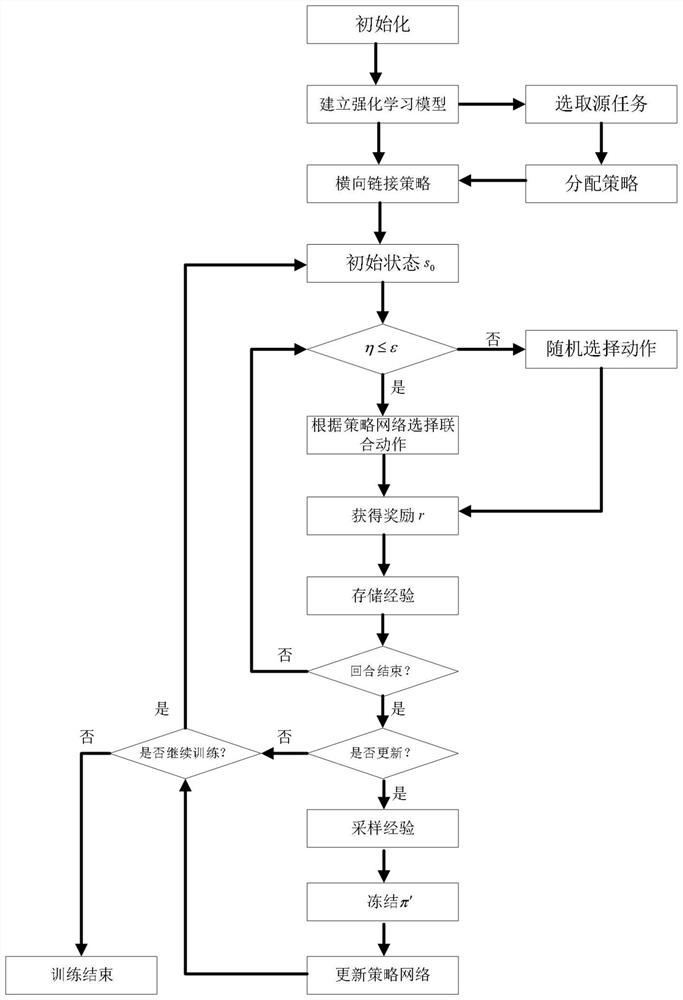

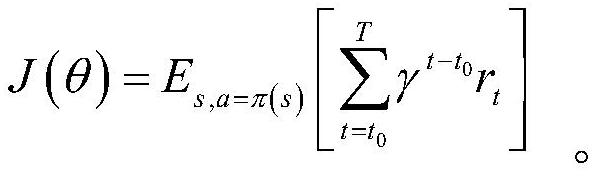

Mobile edge computing system task scheduling method based on migration and reinforcement learning

Active | CN111858009A | Increase usage | Avoid converging to a local optimum | Program initiation/switching | Resource allocation | Edge server | Edge computing

Aiming at the task scheduling problem of edge computing servers, the invention provides a mobile edge computing system task scheduling method based on migration and reinforcement learning. The method comprises constructing an Actor-Critic network for each server to train that server's scheduling strategy: the Actor network determines the action according to its own state, and the Critic network evaluates the quality of the action according to the actions and states of all the servers. All the servers share one Critic network. When the scheduling strategies of a plurality of edge servers are trained with multi-agent reinforcement learning, a strategy network with the same structure is constructed for each server's scheduling strategy. These policy networks have not only the same network layers but also the same number of nodes in each layer. The strategies are trained with a centralized-training, decentralized-execution mechanism, which avoids the curse of dimensionality caused by too many servers.

Owner:NORTHWESTERN POLYTECHNICAL UNIV +1

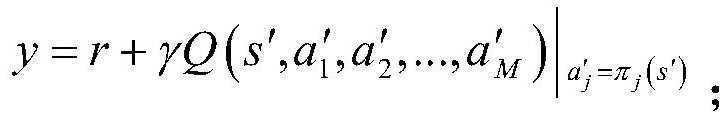

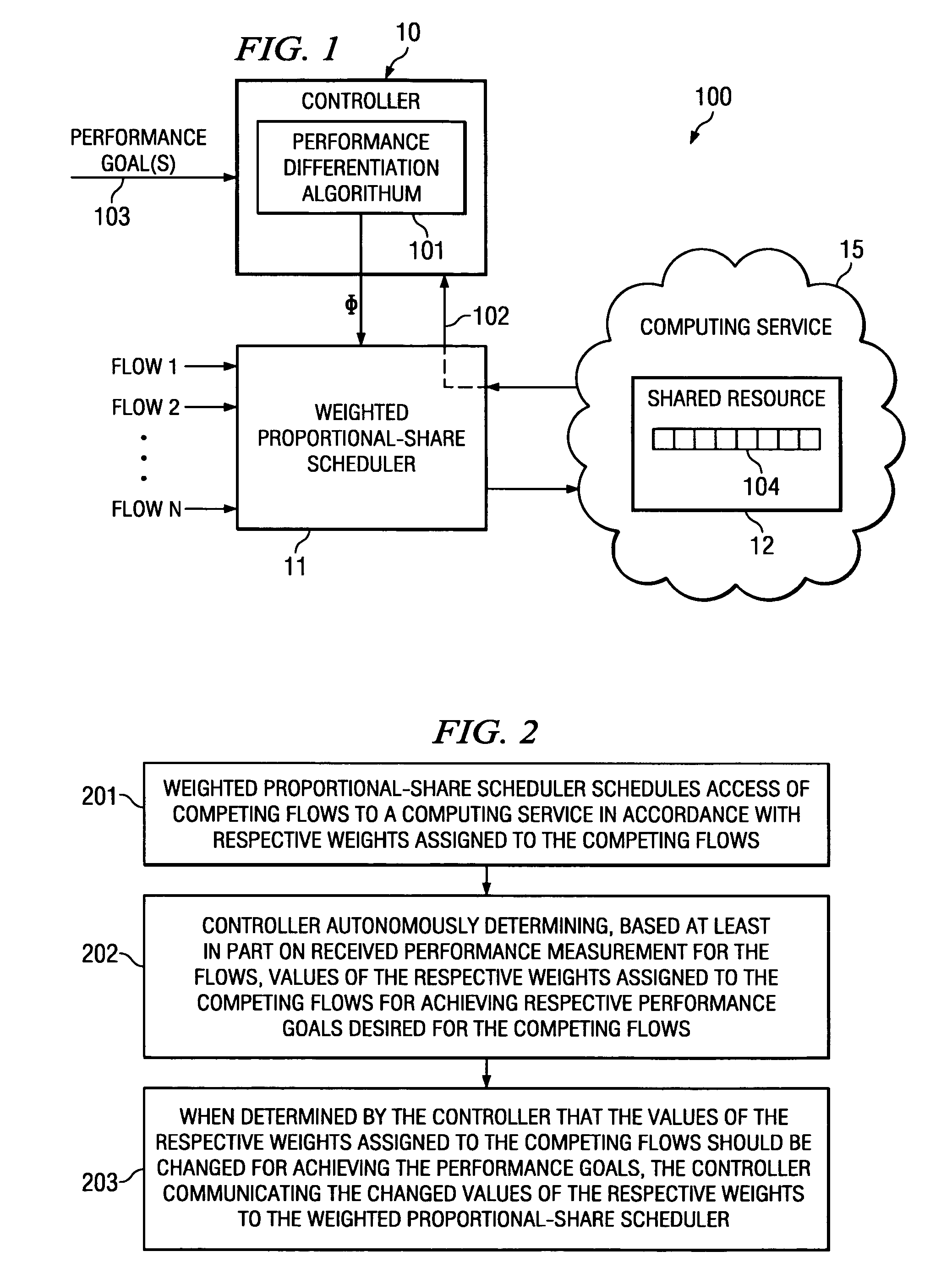

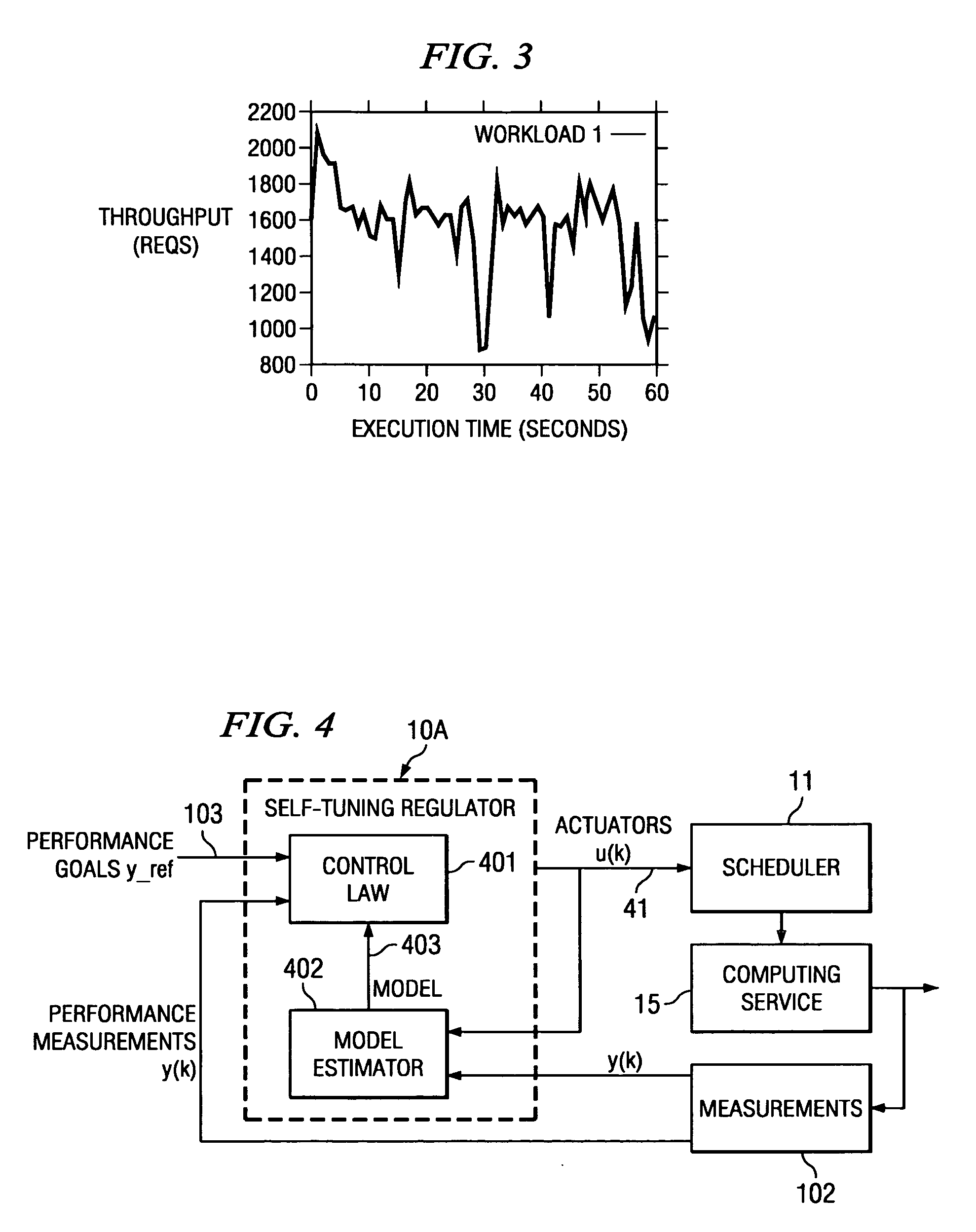

System and method for dynamically controlling weights assigned to consumers competing for a shared resource

Inactive | US20060294044A1 | Error prevention | Frequency-division multiplex details | Proportional share | Shared resource

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

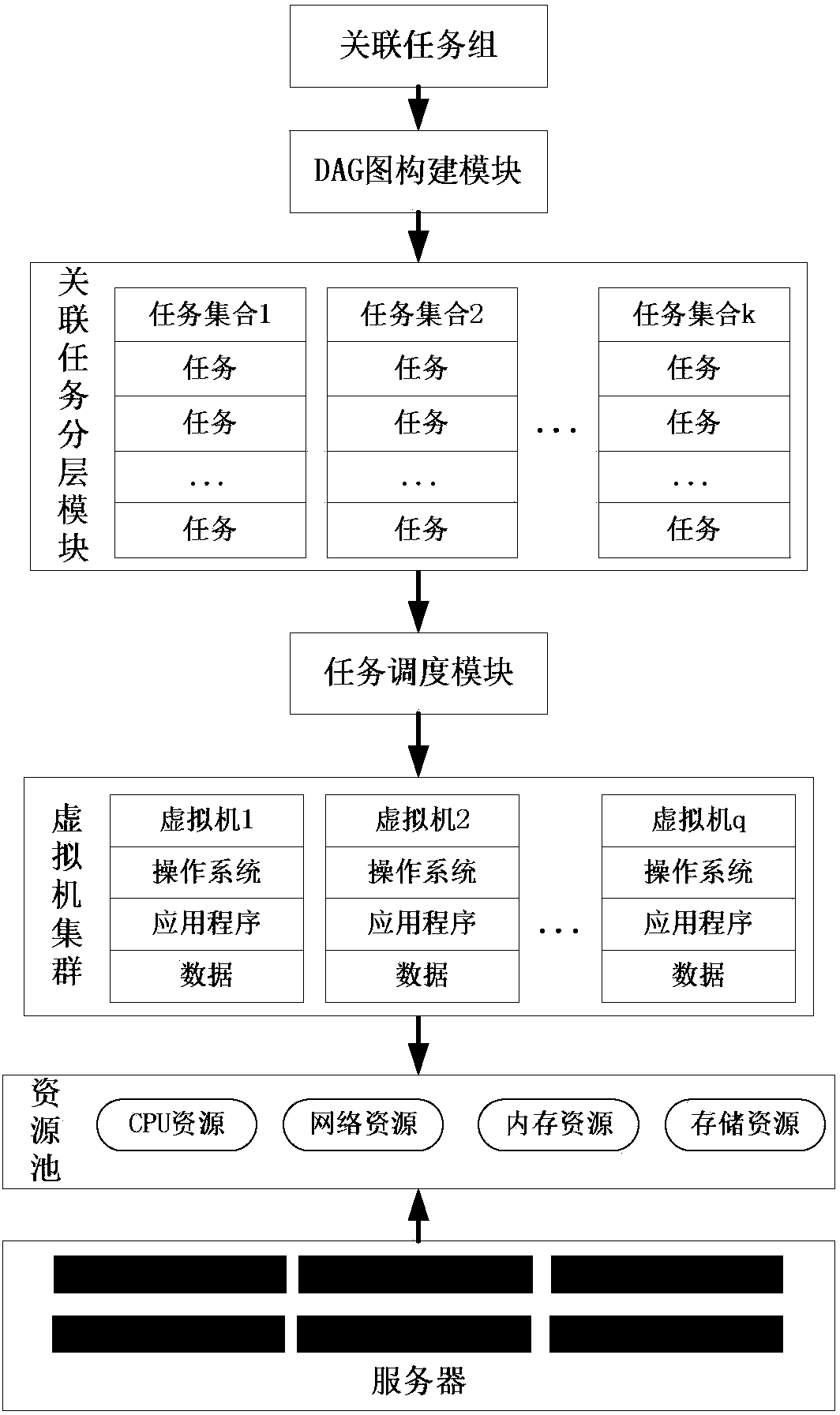

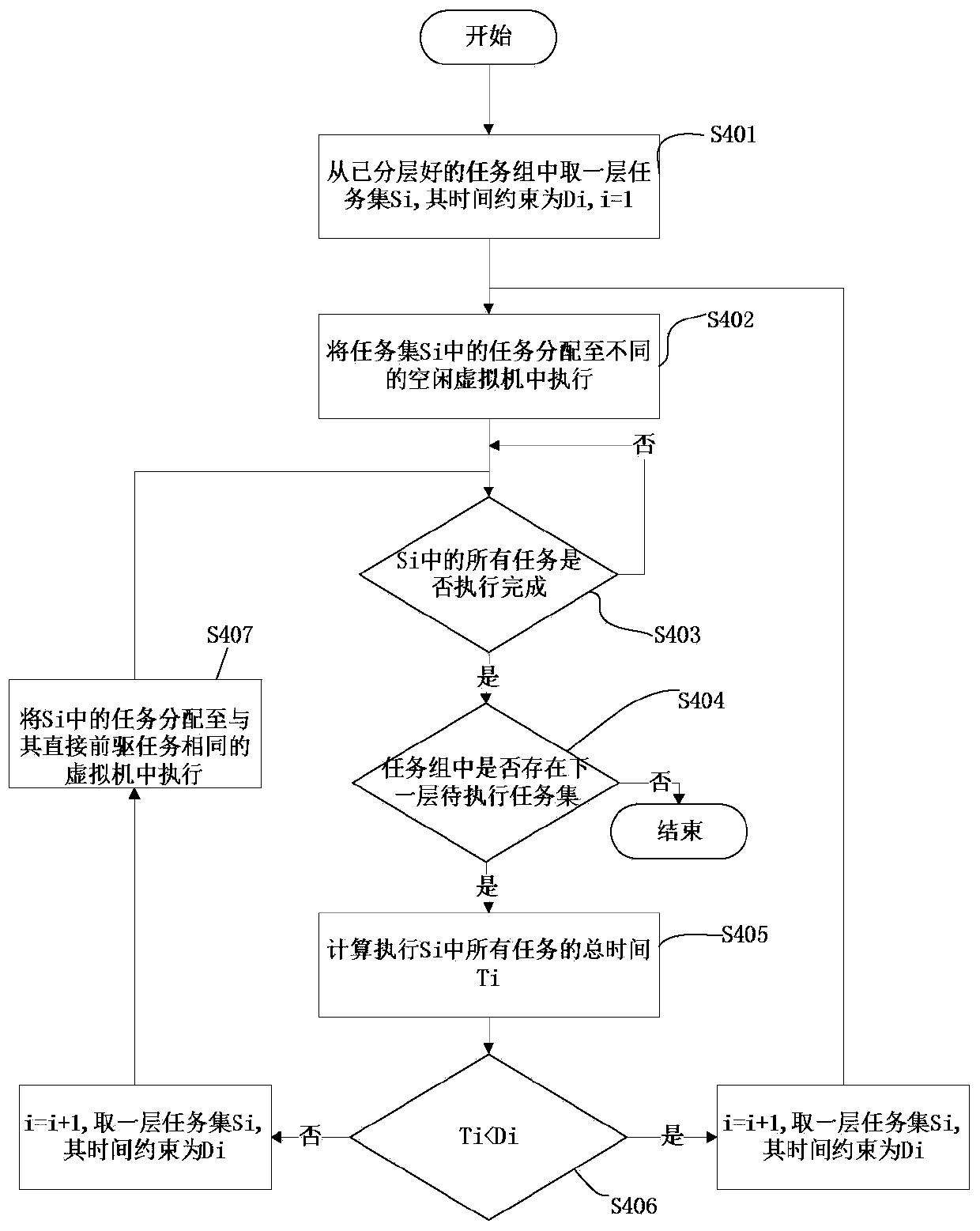

Cloud computing associated task scheduling method and device based on time constraint

Active | CN104021040A | Fix execution delays | Lower latency | Program initiation/switching | Software simulation/interpretation/emulation | Cloud computing | Virtual machine

The invention discloses a cloud computing associated task scheduling method and device based on time constraint. In the method, the association between tasks in an associated task set is first expressed by building a DAG; the DAG is then layered so that mutually unassociated tasks in the same layer fall into the same task set; hardware resources are virtualized and a virtual machine cluster is built to provide places for task execution; finally, a layered scheduling method based on the time constraint schedules the tasks in each layer's task set to the most appropriate virtual machine, so that the tasks are completed on schedule. The technical scheme addresses the prior-art problem that cloud computing associated tasks are delayed during scheduling: task delays are reduced as far as possible, tasks are completed within the time expected by the user, and virtual machine resources are used effectively.

Owner:HOHAI UNIV
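
The DAG layering step in this abstract can be sketched as below. This is only an illustration of layering by dependency depth; the function name `layer_dag` and the input shape (a dict mapping each task to the set of tasks it depends on, with every dependency also present as a key) are assumptions, and the time-constrained placement onto virtual machines is not covered.

```python
def layer_dag(deps):
    """Split a DAG into layers of mutually independent tasks.

    `deps` maps each task to the set of tasks it depends on. A task is placed
    in the first layer where all of its dependencies are already placed.
    """
    remaining = {task: set(d) for task, d in deps.items()}
    layers = []
    while remaining:
        ready = sorted(task for task, d in remaining.items() if not d)
        if not ready:
            raise ValueError("dependency cycle or unknown dependency")
        layers.append(ready)
        for task in ready:
            del remaining[task]
        for d in remaining.values():
            d.difference_update(ready)
    return layers


deps = {
    "a": set(),
    "b": set(),
    "c": {"a"},
    "d": {"a", "b"},
    "e": {"c", "d"},
}
layers = layer_dag(deps)
```

Tasks within one layer have no dependencies on each other, so each layer can be handed to a scheduler as an independent task set.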

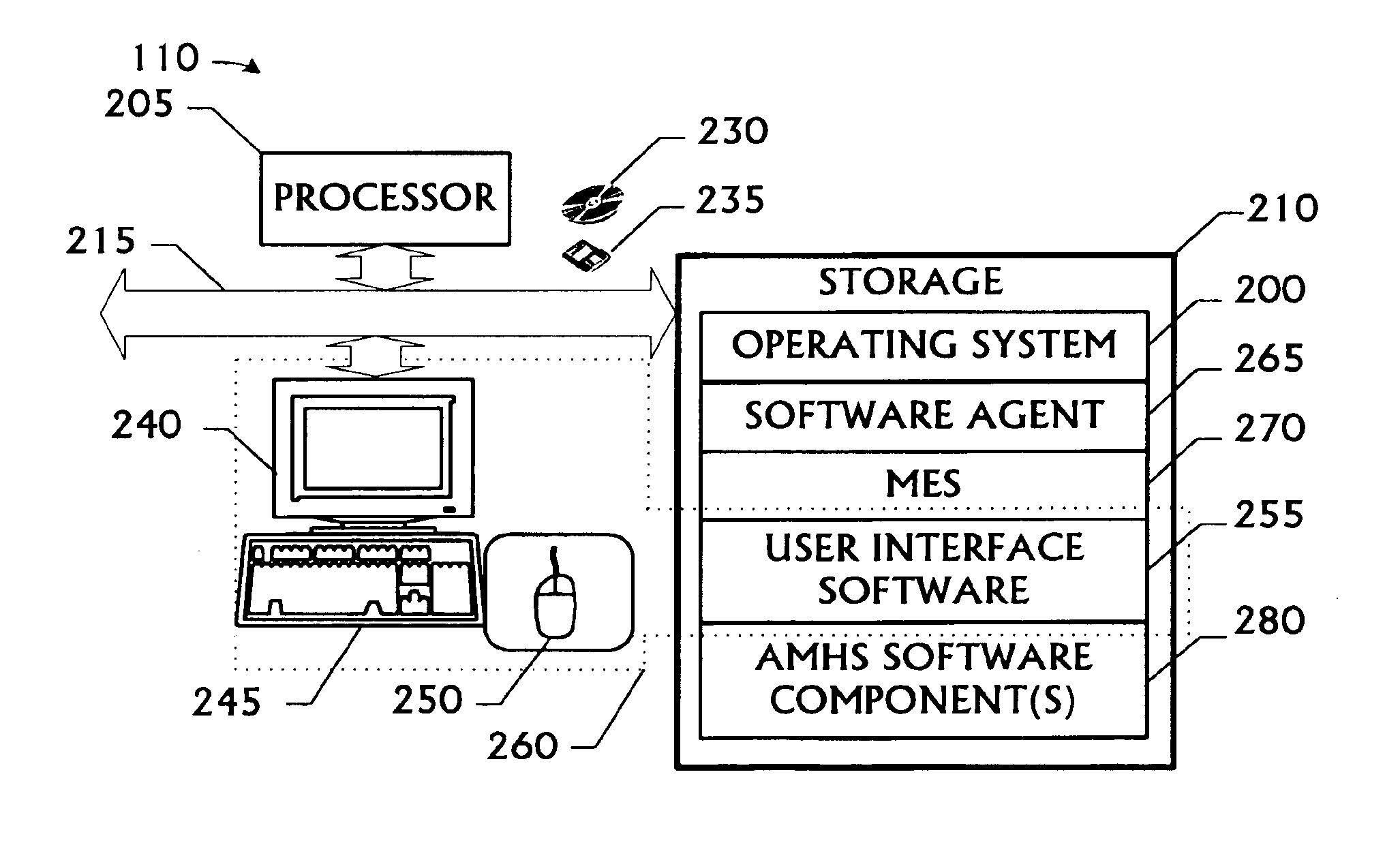

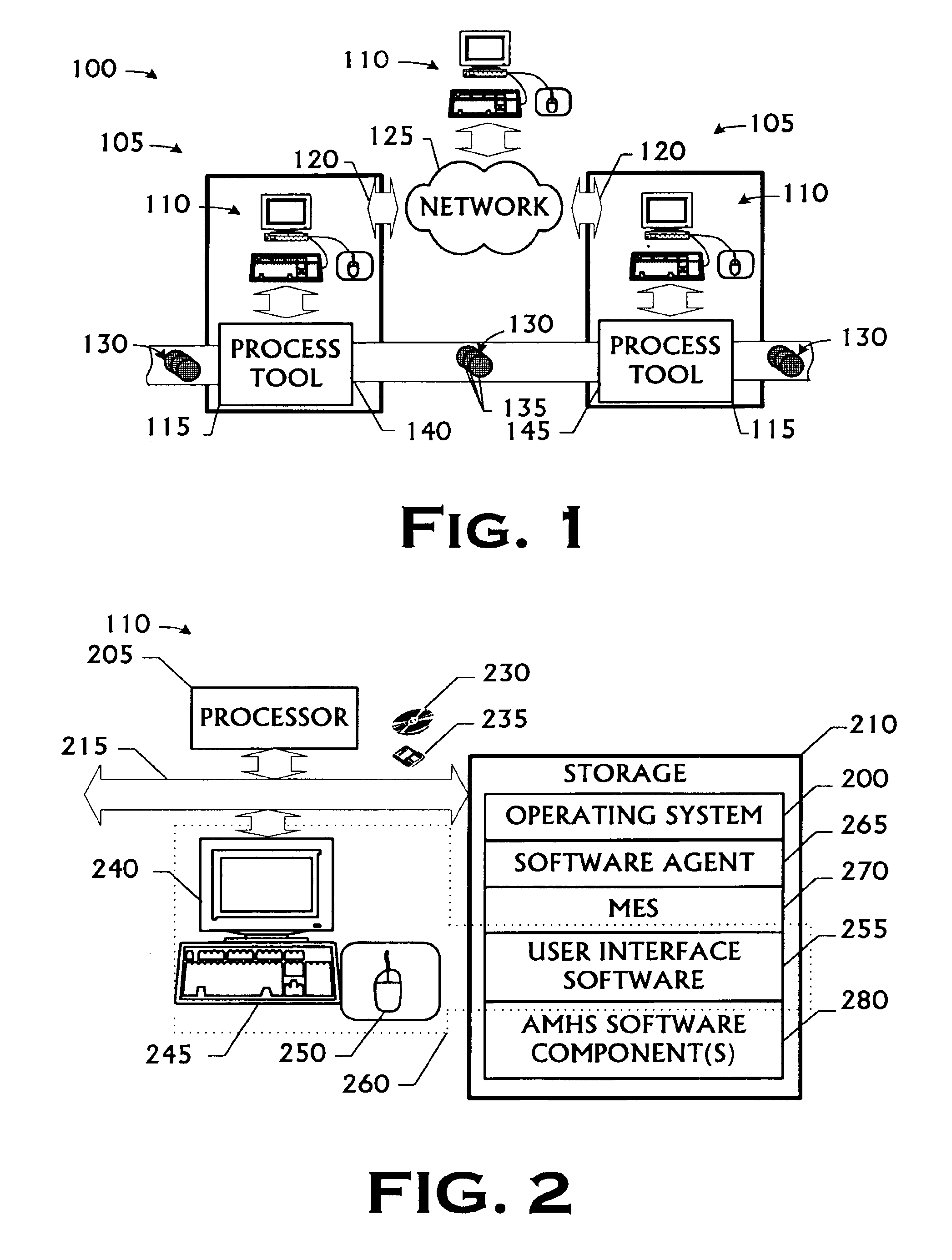

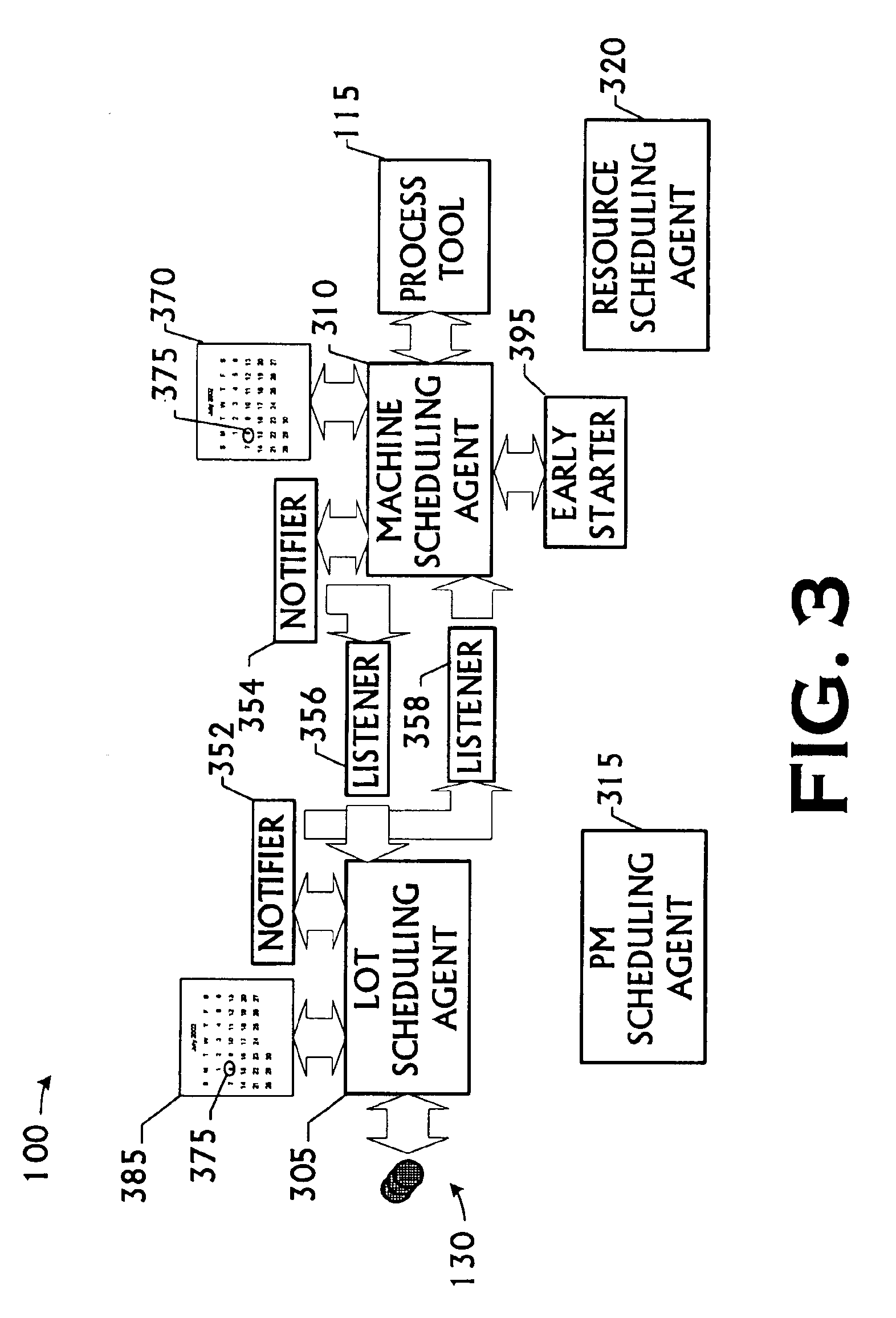

Agent reactive scheduling in an automated manufacturing environment

A method and apparatus for scheduling in an automated manufacturing environment are disclosed. The method includes detecting an occurrence of a predetermined event in a process flow; notifying a software scheduling agent of the occurrence; and reactively scheduling an action from the software scheduling agent responsive to the detection of the predetermined event. The apparatus is an automated manufacturing environment including a process flow and a computing system. The computing system further includes a plurality of software scheduling agents residing thereon, the software scheduling agents being capable of reactively scheduling appointments for activities in the process flow responsive to a plurality of predetermined events.

Owner:OCEAN SEMICON LLC

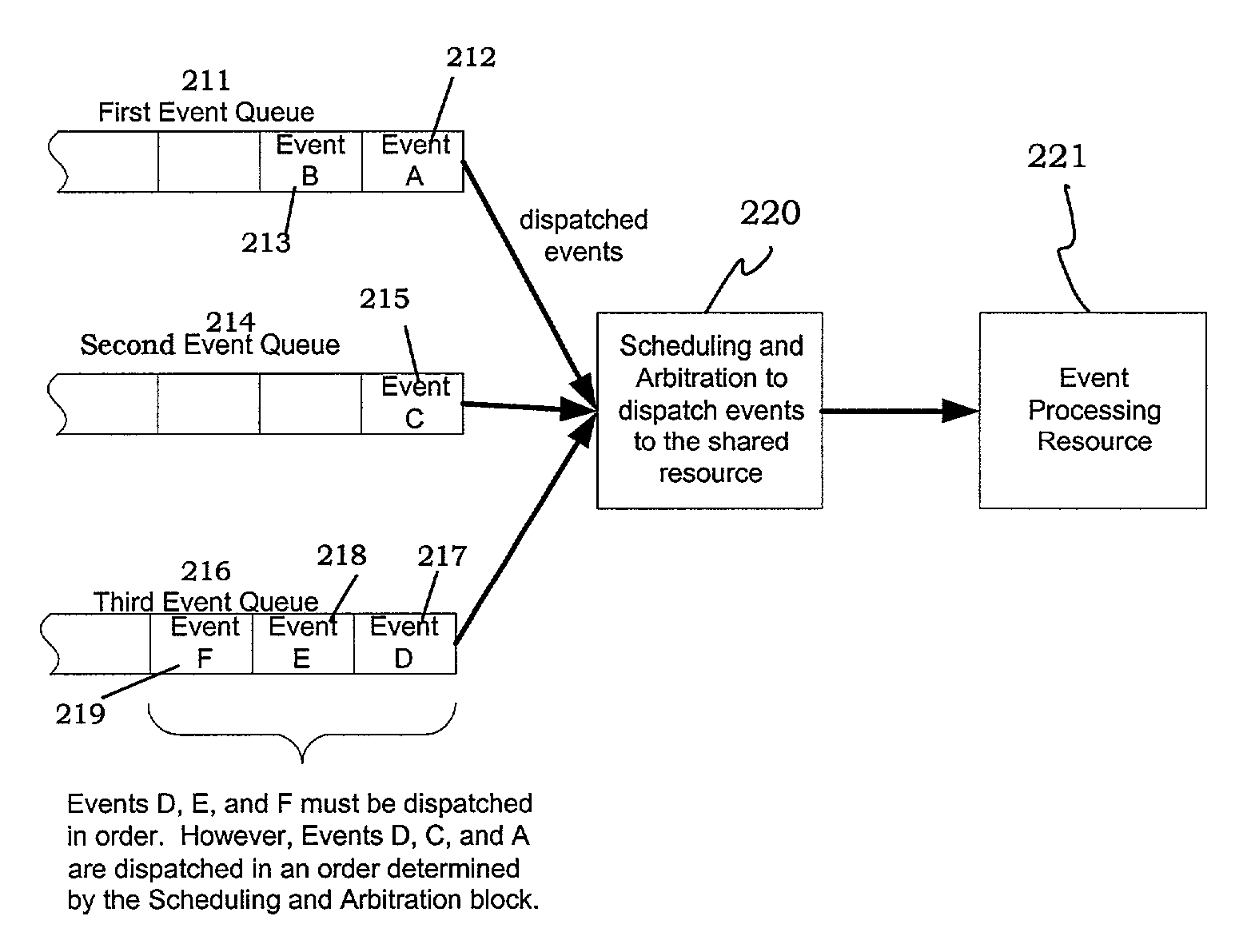

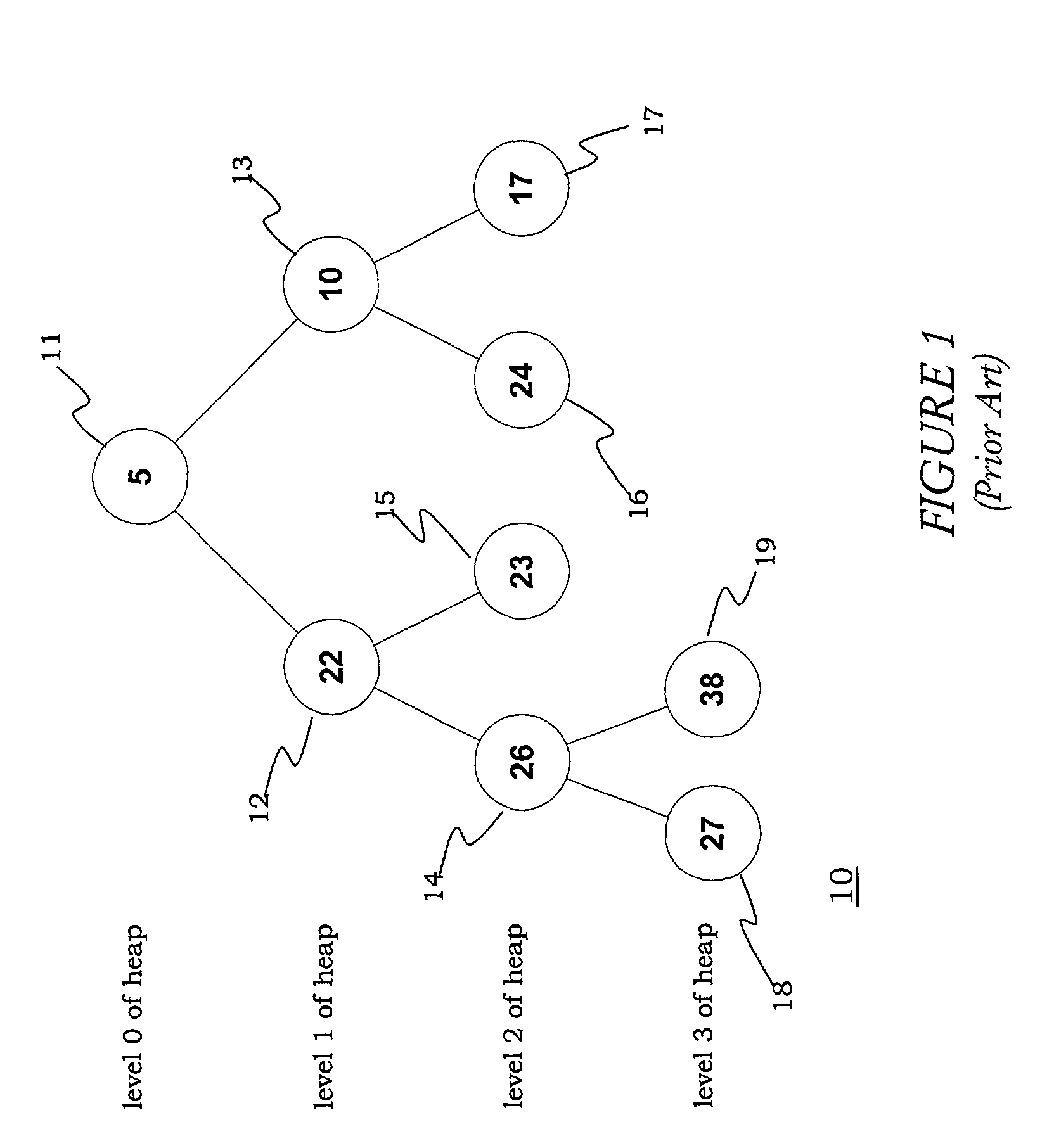

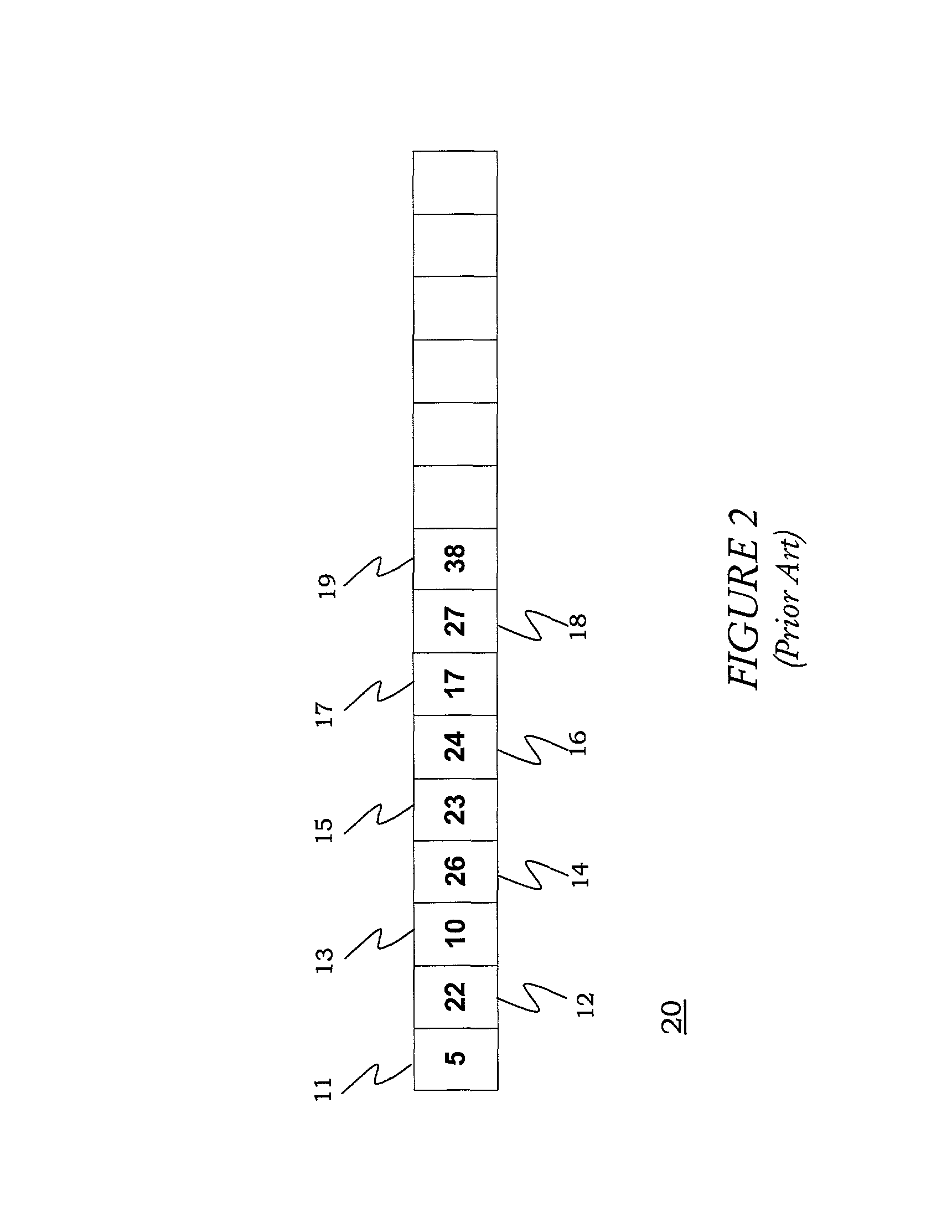

System and method for scheduling and arbitrating events in computing and networking

Inactive | US8032561B1 | Increase speed | Increase the number of | Digital data information retrieval | Data processing applications | Hardware implementations | Data structure

A method for high-speed scheduling and arbitration of events for computing and networking is disclosed. The method includes the software and hardware implementation of a unique data structure, known as a pile, for scheduling and arbitration of events. According to the method, events are stored in loosely sorted order in piles, with the next event to be processed residing in the root node of the pile. The pipelining of the insertion and removal of events from the piles allows for simultaneous event removal and next event calculation. The method's inherent parallelisms thus allow for the automatic rescheduling of removed events for re-execution at a future time, also known as event swapping. The method executes in O(1) time.

Owner:ALTERA CORP

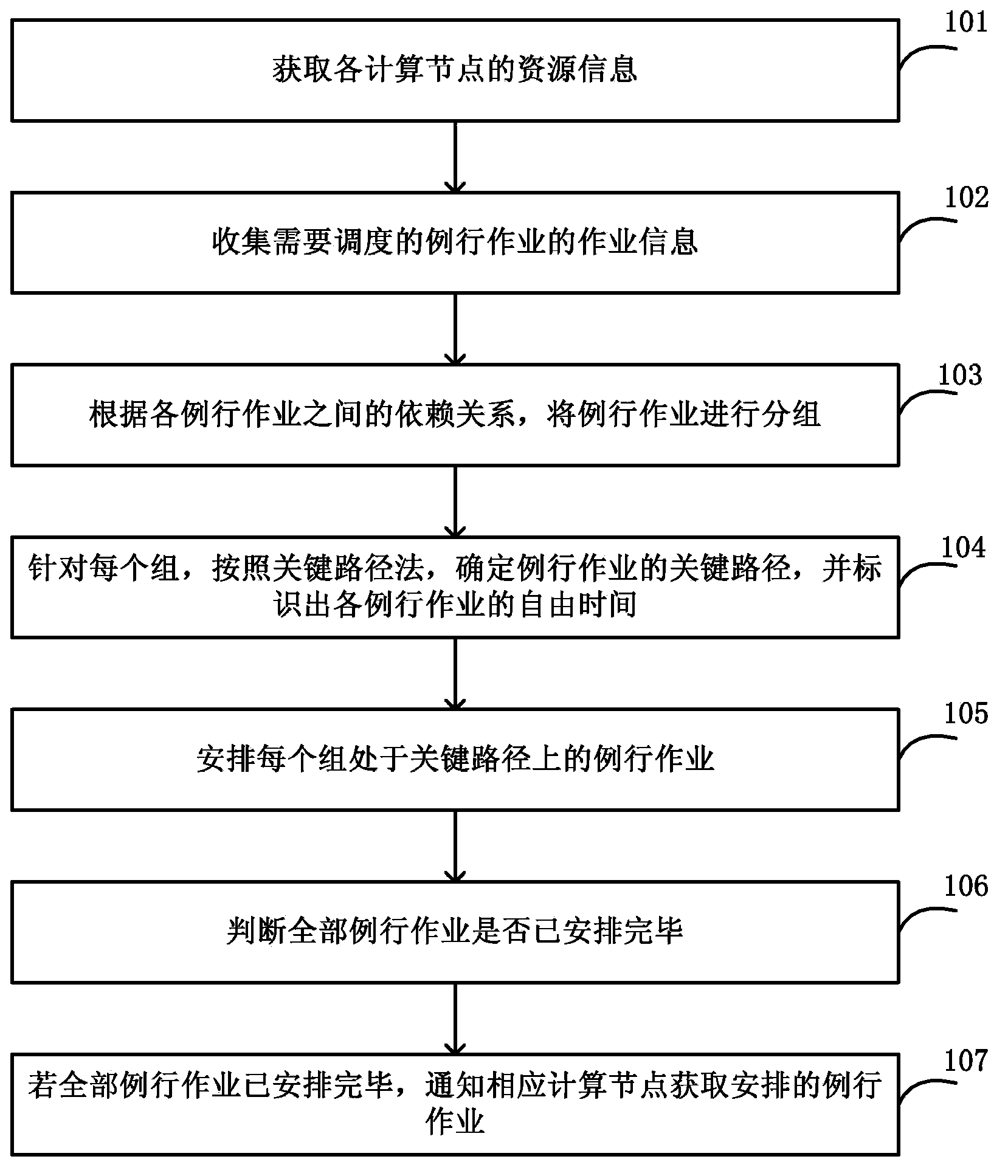

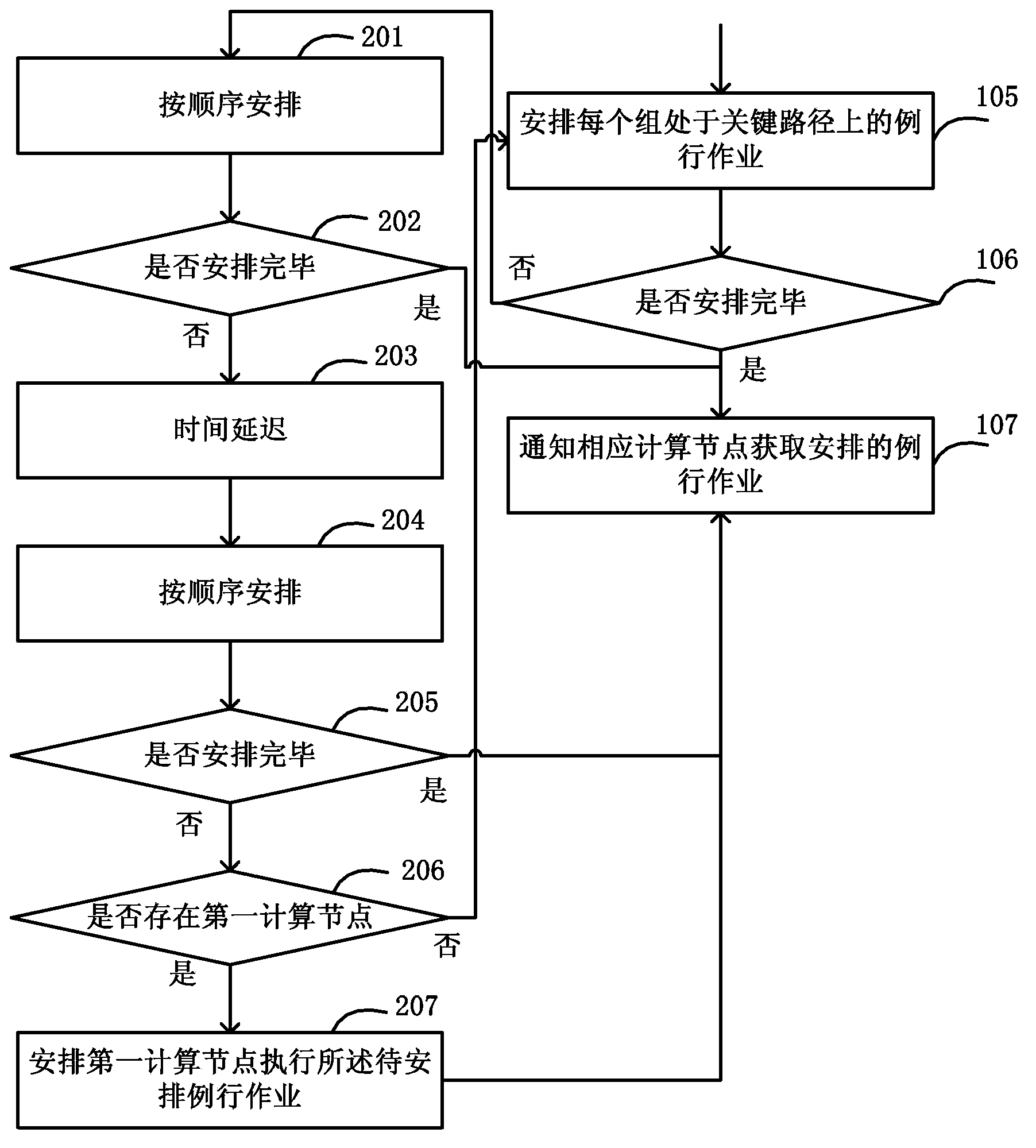

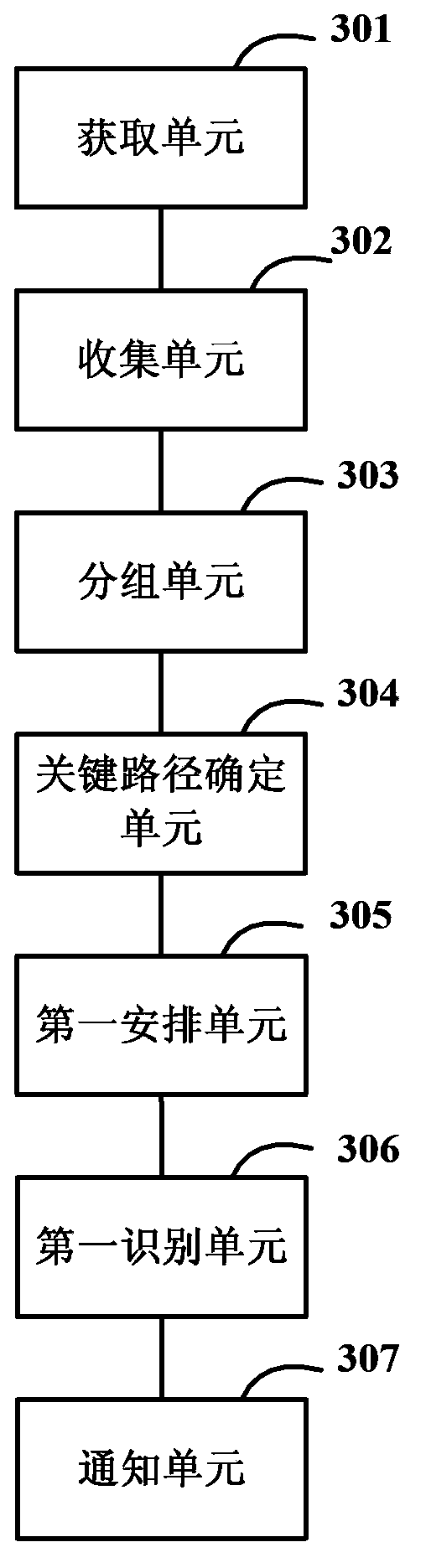

Method and system for scheduling routine work and scheduling nodes

Active | CN103838621A | Change resource | Change utilization | Resource allocation | Resource information | Critical path method

The invention discloses a method and system for scheduling routine work and scheduling nodes. The method for scheduling the routine work comprises the steps of obtaining resource information of all computational nodes, collecting work information of the routine work to be scheduled, dividing the routine work into groups according to its dependency relationships, determining a critical path through the routine work of each group according to the critical path method, arranging the routine work of each group that lies on its critical path, and judging whether all the routine work has been arranged; if so, the corresponding computational nodes are informed to obtain the arranged routine work and process it accordingly. Arranging the routine work by the critical path method fills a gap in routine work processing in cloud computing environments and overcomes the uneven load and low utilization that resulted from manual arrangement.

Owner:CHINA TELECOM CORP LTD
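
The critical path method named in this entry can be illustrated with a short sketch. It computes the longest duration-weighted chain through one dependency group; the function name `critical_path`, the `durations` and `deps` dictionaries, and the sample jobs are hypothetical, and grouping and node notification are left out.

```python
from functools import lru_cache


def critical_path(durations, deps):
    """Return (total_length, path) of the longest chain of dependent jobs.

    `durations` maps job -> processing time; `deps` maps job -> list of jobs
    that must finish first (jobs without dependencies may be omitted).
    """
    @lru_cache(maxsize=None)
    def finish(job):
        # Earliest finish time: own duration after the slowest prerequisite.
        return durations[job] + max(
            (finish(d) for d in deps.get(job, ())), default=0
        )

    end = max(durations, key=finish)
    path = [end]
    while deps.get(path[-1]):
        # Walk back through the prerequisite that finishes last.
        path.append(max(deps[path[-1]], key=finish))
    return finish(end), path[::-1]


durations = {"extract": 3, "lookup": 2, "join": 4, "report": 1}
deps = {"join": ["extract", "lookup"], "report": ["join"]}
length, path = critical_path(durations, deps)
```

Jobs on the returned path determine the group's overall completion time, which is why a scheduler would plausibly place them first.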

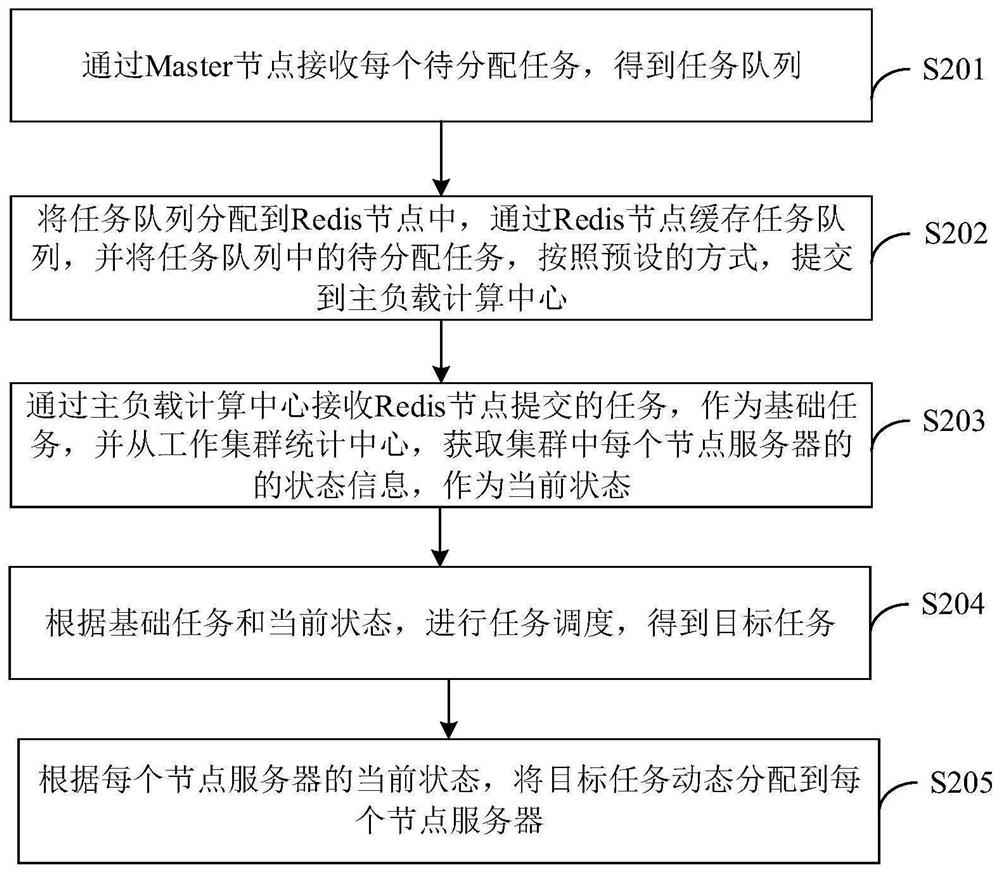

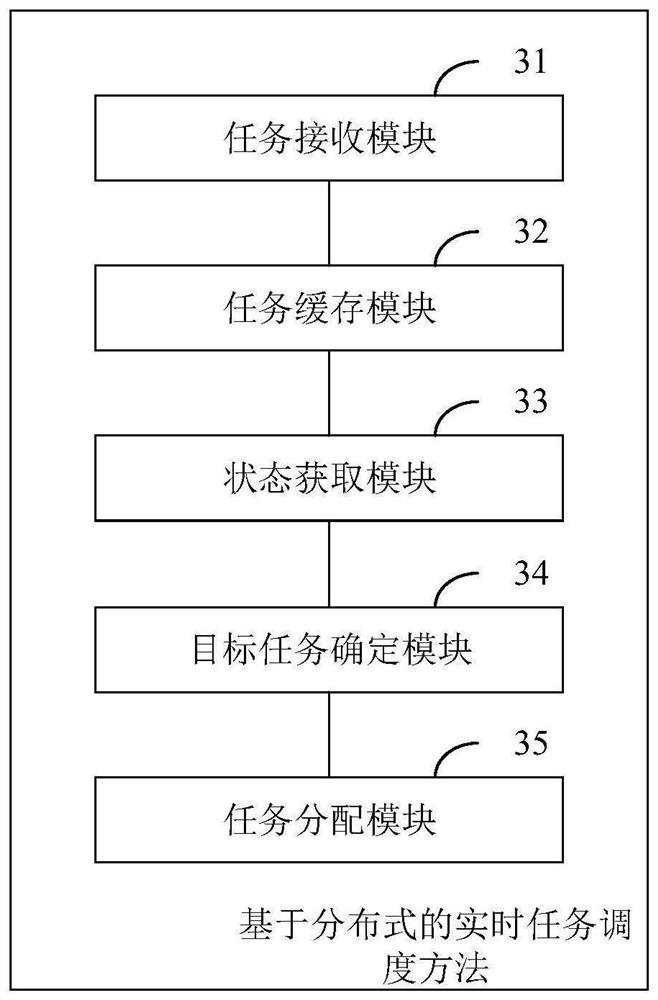

Distributed real-time task scheduling method and device, equipment and medium

Pending | CN111813513A | Improve availability | Improve task allocation efficiency | Program initiation/switching | Resource allocation | Computing center | Engineering

The invention discloses a distributed real-time task scheduling method and device, equipment and a storage medium. The distributed real-time task scheduling method comprises the following steps: receiving each task to be distributed through a Master node to obtain a task queue; distributing the task queue to a Redis node; caching the task queue through the Redis node; submitting the tasks to be distributed in the task queue to a main load computing center according to a preset mode; receiving, through the main load computing center, a task submitted by the Redis node as a basic task; acquiring state information of each node server in the cluster from a work cluster statistics center as the current state; performing task scheduling according to the basic task and the current state to obtain a target task; and dynamically distributing the target task to each node server according to the current state of each node server. The invention further relates to blockchain technology, wherein the basic task, the current state and the target task can be stored in a blockchain node. The method and device can improve task scheduling efficiency.

Owner:CHINA PING AN LIFE INSURANCE CO LTD

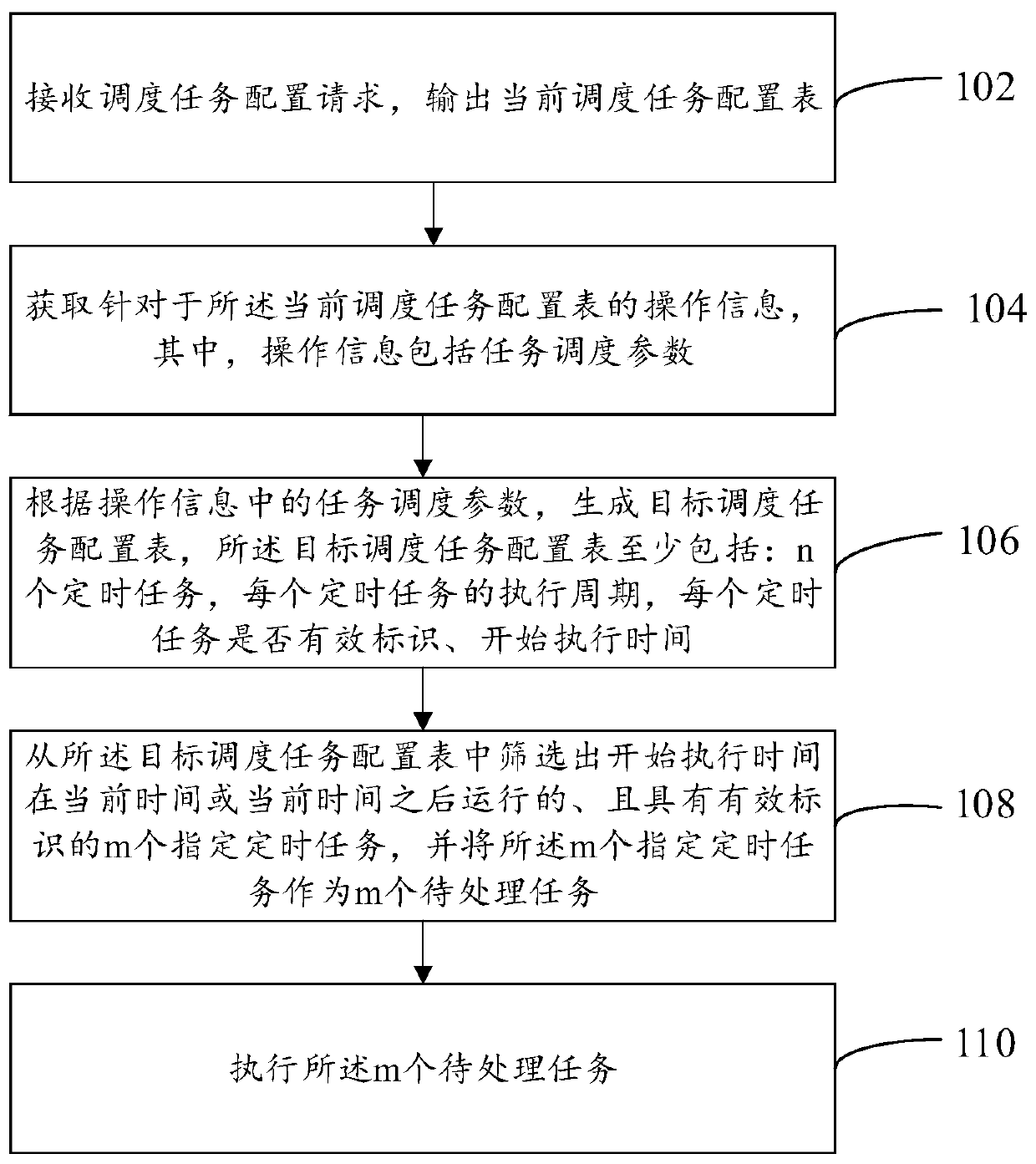

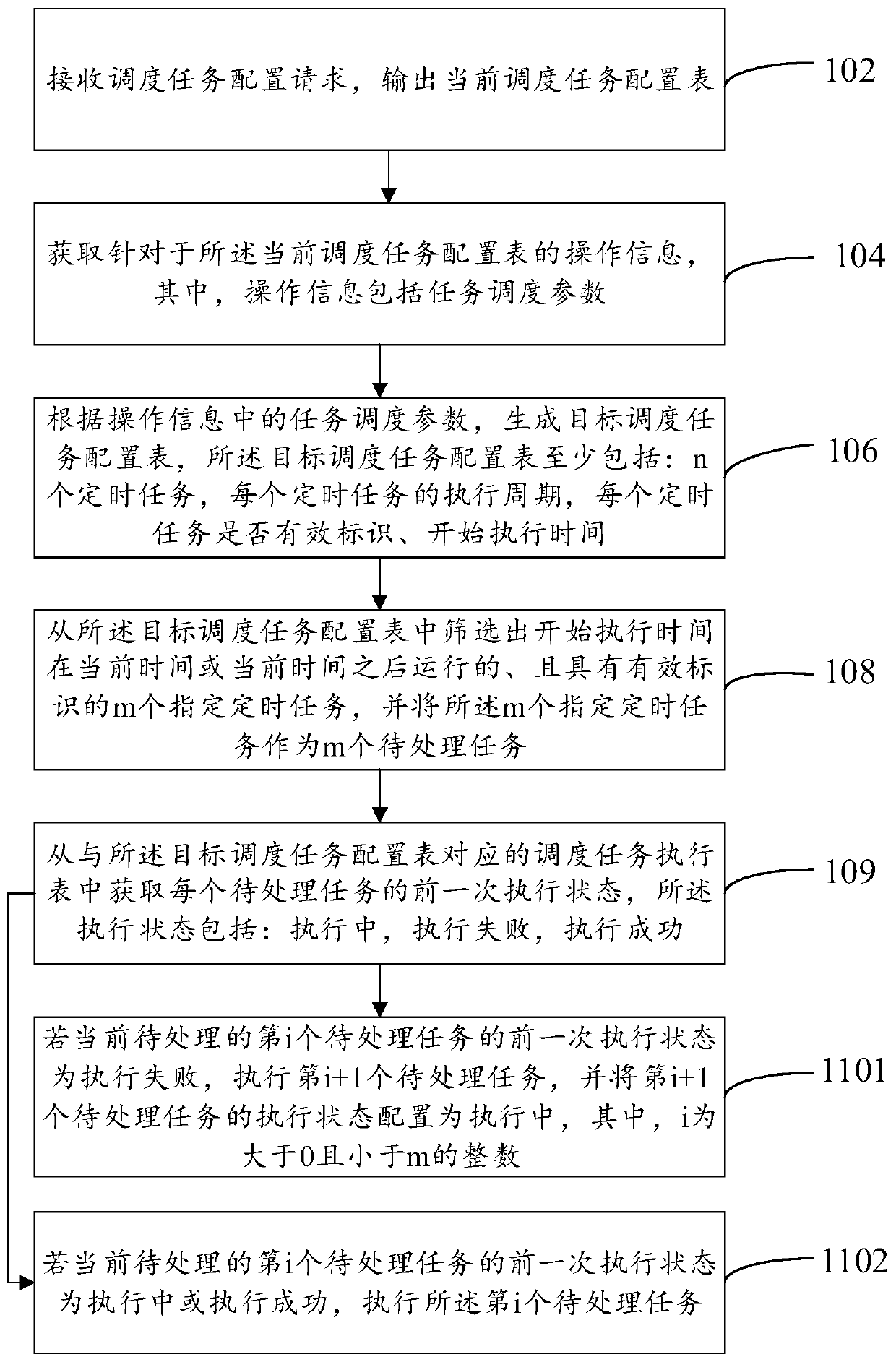

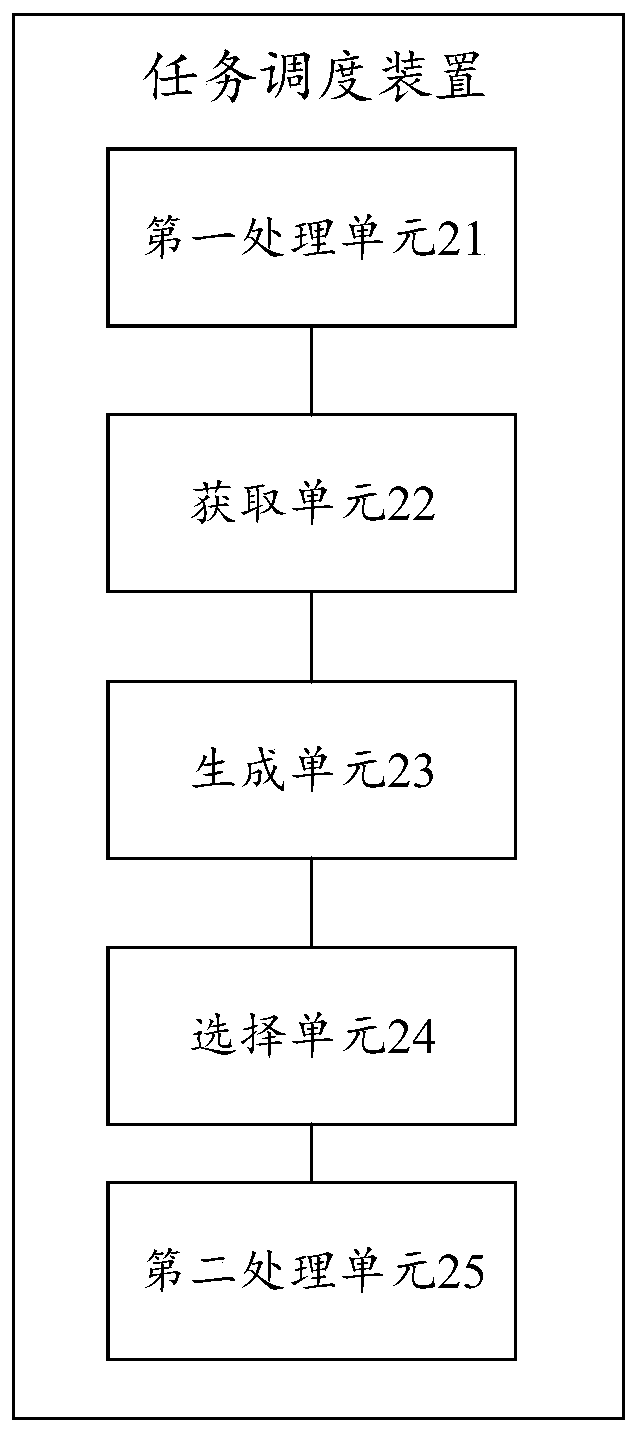

Task scheduling method and device and electronic terminal

Pending | CN109901920A | Modification of scheduling requirements | Program initiation/switching | Start time | Computer terminal

The embodiment of the invention provides a task scheduling method and device and an electronic terminal, and relates to the technical field of computing. The method comprises the steps of: receiving a scheduling task configuration request and outputting a current scheduling task configuration table; obtaining operation information for the current scheduling task configuration table, the operation information comprising task scheduling parameters; generating a target scheduling task configuration table according to the task scheduling parameters in the operation information, the target table comprising at least n timing tasks, whether each timing task is effective, and its execution start time; screening out from the target table m specified timing tasks that carry an effective identifier and whose execution start time is at or after the current time, and taking them as m to-be-processed tasks; and executing the m to-be-processed tasks. The technical scheme can therefore reduce the switch workload and problems in the development process, and meets the requirement of flexibly adding and modifying quartz scheduling.

Owner:CHINA PING AN PROPERTY INSURANCE CO LTD
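
The screening step in this abstract (keep tasks that are marked effective and whose start time is at or after the current time) can be sketched as below. The row fields `name`, `enabled` and `start`, and the function name `select_pending`, are hypothetical stand-ins for the configuration table described above.

```python
from datetime import datetime


def select_pending(config_table, now):
    """Return the timing tasks that are enabled and start at or after `now`."""
    return [
        row for row in config_table
        if row["enabled"] and row["start"] >= now
    ]


now = datetime(2024, 1, 1, 12, 0)
table = [
    {"name": "sync", "enabled": True, "start": datetime(2024, 1, 1, 13, 0)},
    {"name": "purge", "enabled": False, "start": datetime(2024, 1, 1, 14, 0)},
    {"name": "audit", "enabled": True, "start": datetime(2024, 1, 1, 9, 0)},
    {"name": "mail", "enabled": True, "start": datetime(2024, 1, 1, 12, 0)},
]
pending = [row["name"] for row in select_pending(table, now)]
```

Keeping the enabled flag and start time in a table means schedules can be changed by editing data rather than code, which matches the flexibility the abstract claims.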

Multi-timing-sequence task scheduling method and system

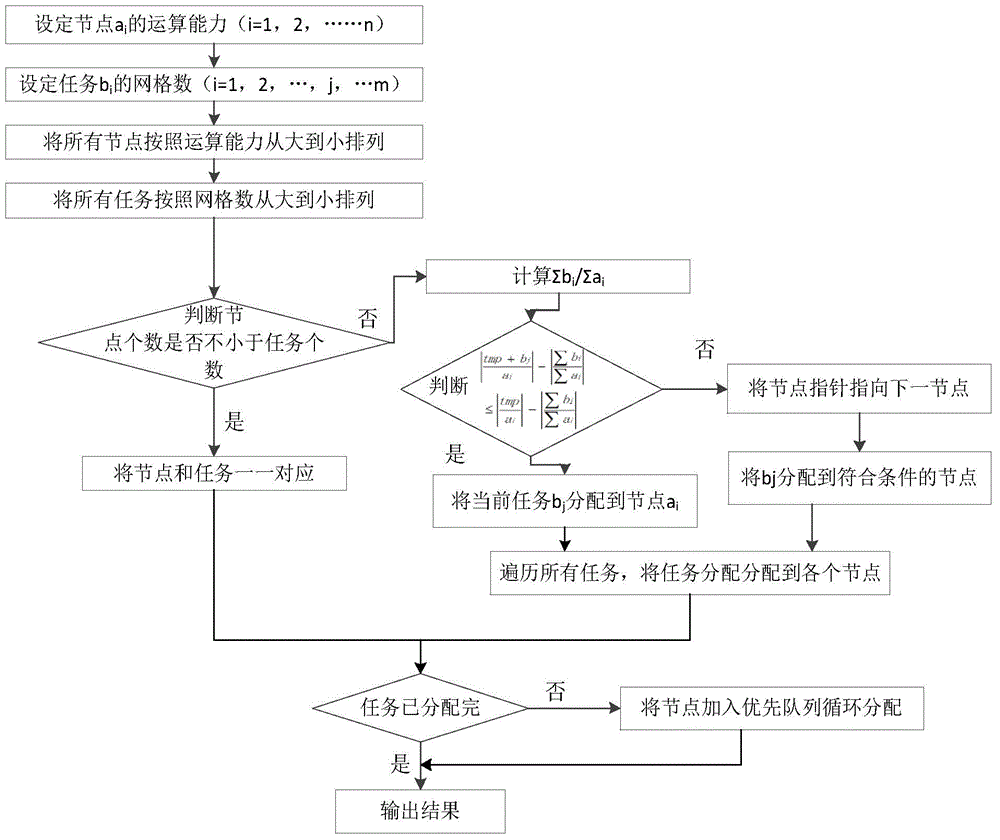

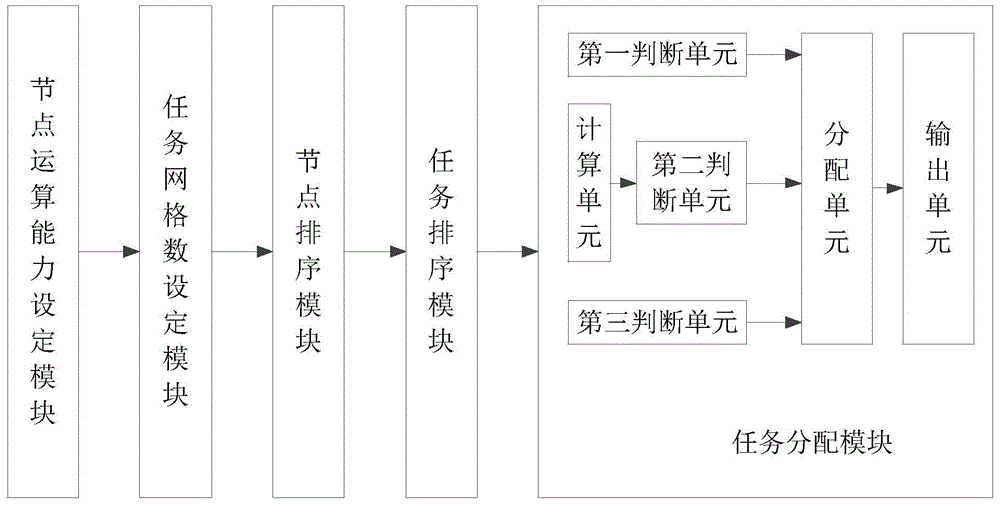

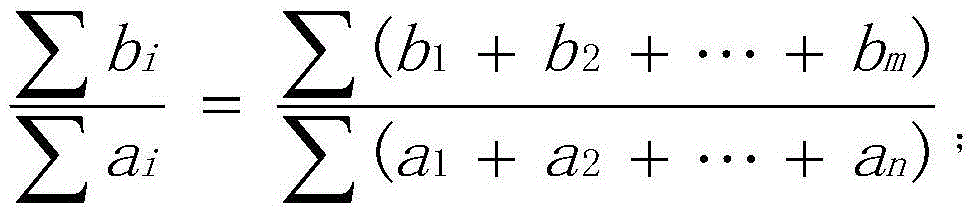

Active | CN104793990A | Avoid load imbalance | Solve low resource utilization | Resource allocation | Greedy algorithm | Operational capabilities

The invention relates to a task scheduling method and system, in particular to a multi-timing-sequence task scheduling method and system. The method comprises the steps that node information is collected and the operational capability of each node is set according to it; task information is collected and the grid number of each task is set according to it; all nodes are sorted by operational capability; all tasks are sorted by grid number; and, according to the load state of each node, a greedy algorithm distributes the tasks to the nodes so that nodes with high operational capability process tasks with large grid numbers. Resource distribution on the current platform and the loading capacity and state of the nodes in the platform are considered; during task scheduling, tasks with a large amount of computation are distributed to nodes with high computing capacity, so that starvation and low system resource utilization can be effectively avoided, overall operational efficiency is improved, and operation time is optimized.

Owner:OCEAN UNIV OF CHINA
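
One possible reading of the greedy step is sketched below. It is an interpretation rather than the patented algorithm: tasks are taken in descending grid number and each goes to the node with the smallest estimated finish time, where finish time is assumed to be accumulated grid load divided by capability. The names `greedy_assign`, `capability` and `grid_numbers` are hypothetical.

```python
def greedy_assign(capability, grid_numbers):
    """Assign tasks to nodes greedily, largest task first.

    `capability` maps node -> relative compute capability.
    `grid_numbers` maps task -> grid number (a proxy for work size).
    Ties go to the more capable node because nodes are sorted by capability.
    """
    nodes = sorted(capability, key=capability.get, reverse=True)
    tasks = sorted(grid_numbers, key=grid_numbers.get, reverse=True)
    load = {node: 0 for node in nodes}
    assignment = {}
    for task in tasks:
        work = grid_numbers[task]
        best = min(nodes, key=lambda n: (load[n] + work) / capability[n])
        load[best] += work
        assignment[task] = best
    return assignment


assignment = greedy_assign(
    capability={"fast": 4, "slow": 1},
    grid_numbers={"big": 8, "mid": 4, "small": 1},
)
```

Here the two large tasks land on the capable node and the small one goes to the slower node once the fast node is loaded, so neither node is left idle.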

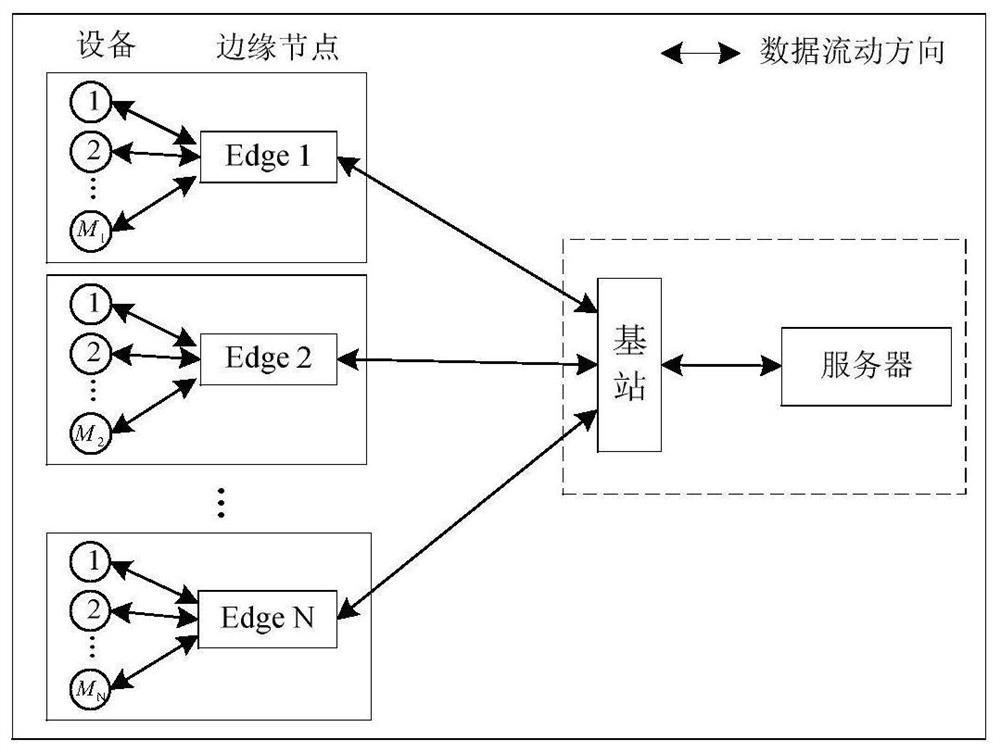

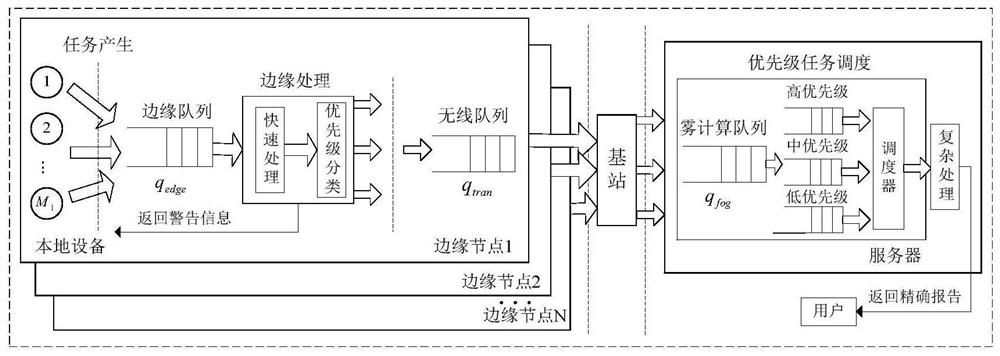

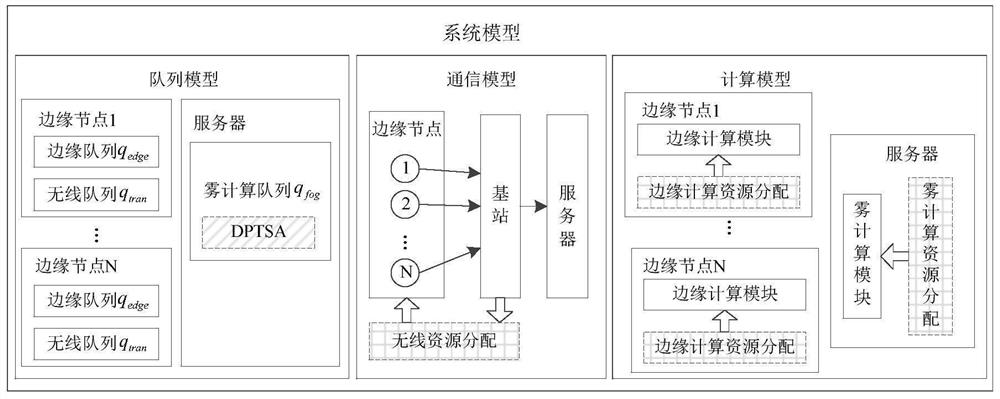

Hierarchical edge computing unloading method based on priority

Active | CN111954236A | Reduce latency | Improve security | Transmission | Wireless communication | User device | Time delays

The invention discloses a hierarchical edge computing unloading method based on priority. The method comprises a resource allocation and task scheduling strategy taking time delay minimization as an optimization target. On one hand, a layered edge computing framework is constructed for an emergency scene, edge nodes which are closer to user equipment and have fewer computing resources process simple tasks so as to quickly return warning information, and servers which are farther from the user equipment and have more computing resources process complex tasks so as to finally return accurate results. And on the other hand, a resource allocation and task scheduling optimization scheme based on the service emergency priority is provided for the problem that the time delay is increased due to limited resource competition, so that the total time delay of the system is minimized. A dynamic priority task scheduling algorithm (DPTSA) on a server is designed in order to ensure that the time delay of high priority tasks is still minimum under the condition that the tasks are very dense. Therefore, the user can obtain real-time response as soon as possible in case of emergency.

Owner:HOHAI UNIV

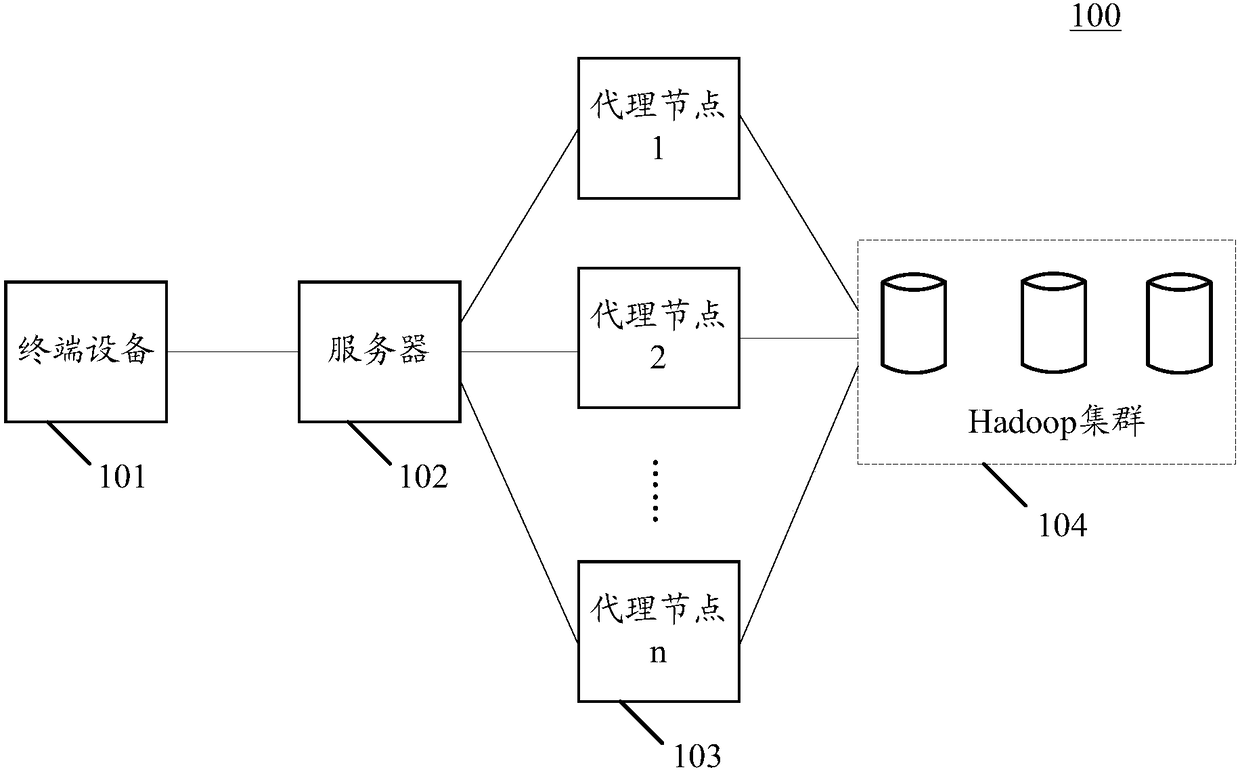

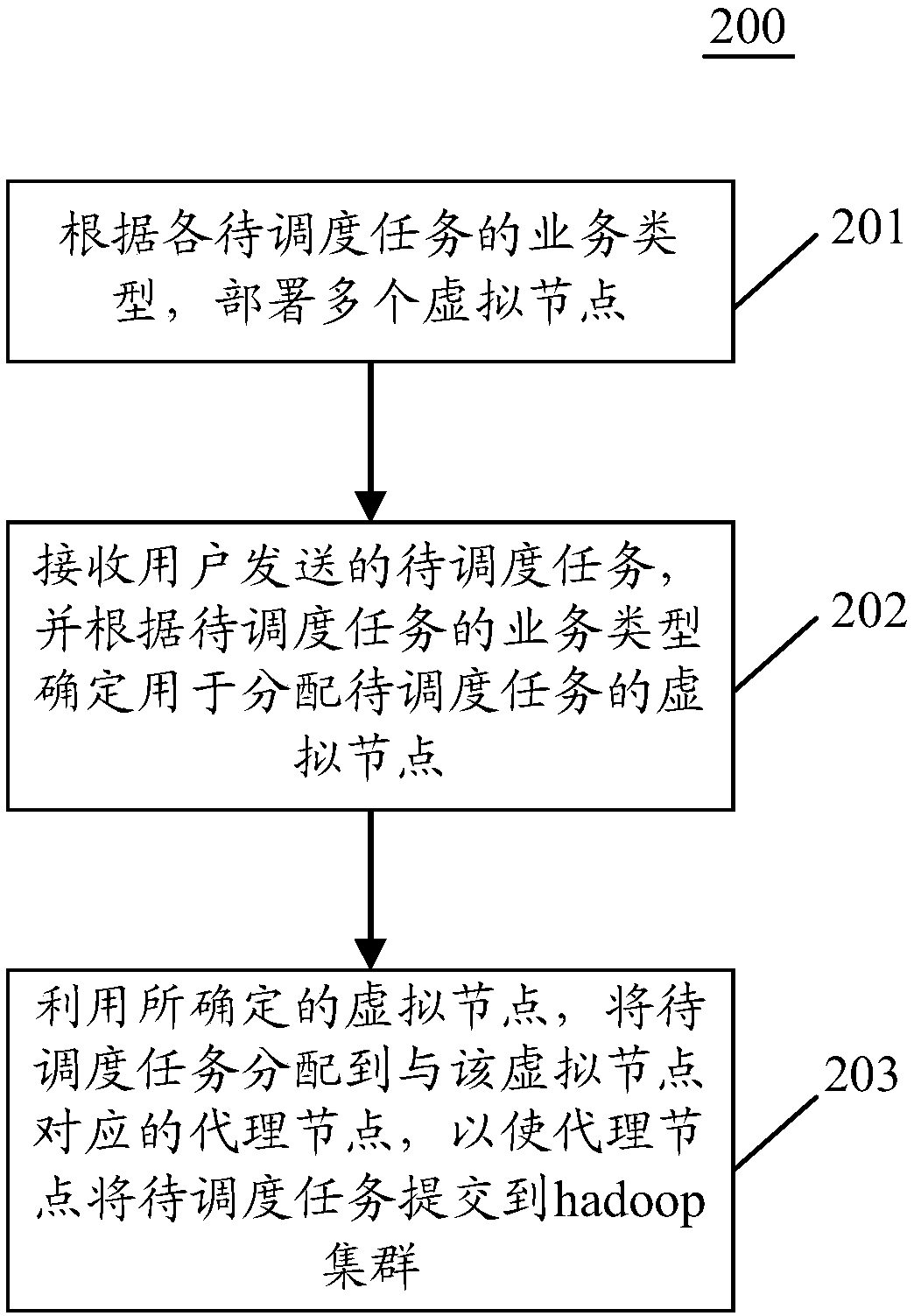

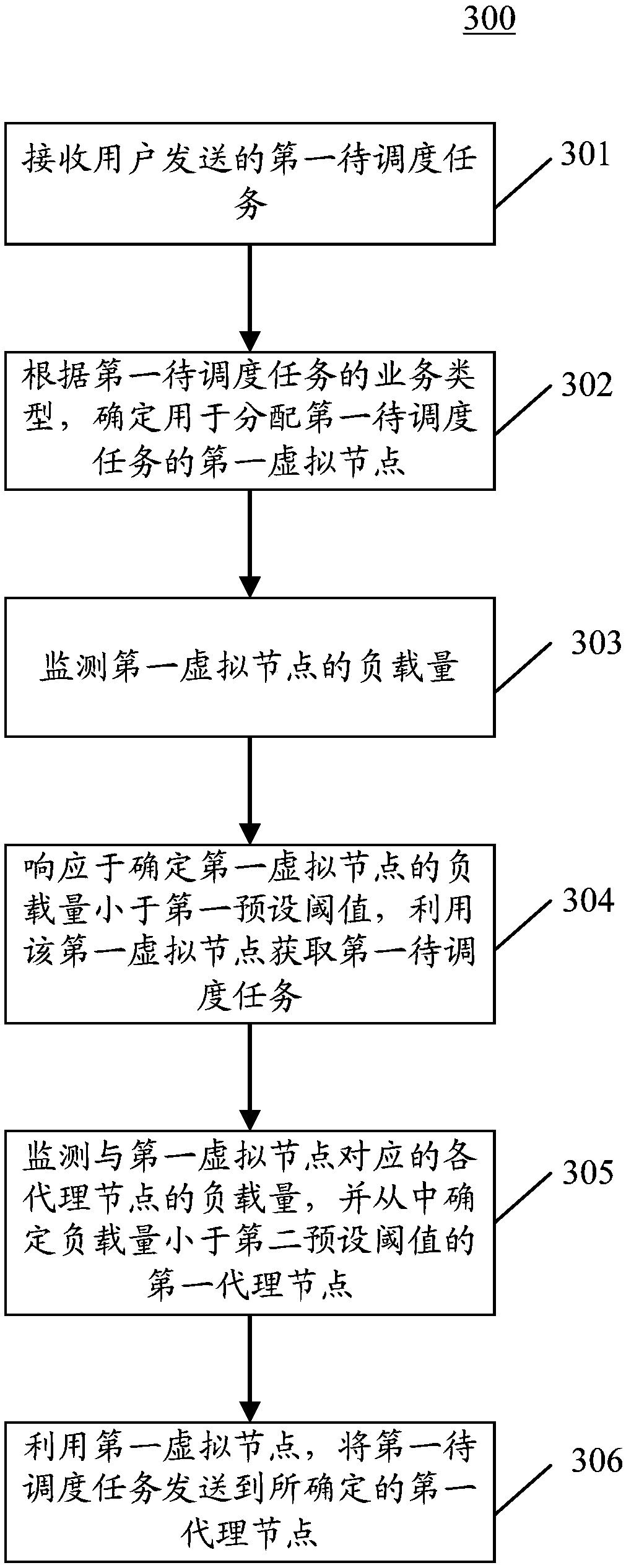

Task scheduling method and device based on hadoop cluster

Active | CN109408205A | Avoid resource grabbing | Big amount of data | Program initiation/switching | Resource allocation | Distributed computing | Concurrent computation

An embodiment of the present application discloses a task scheduling method and device based on a hadoop cluster. A specific embodiment of the method includes: deploying a plurality of virtual nodes according to the service type of each task to be scheduled, wherein each service type corresponds to at least one virtual node; receiving a task to be scheduled sent by a user, and determining, according to the service type of the task, a virtual node for allocating it; and using the determined virtual node to assign the task to a proxy node corresponding to that virtual node, so that the proxy node submits the task to the hadoop cluster, wherein each virtual node corresponds to at least one proxy node. The embodiment determines a virtual node and a corresponding proxy node for submitting a task to the hadoop cluster according to the task's service type, and can meet the parallel computing requirements of a large number of tasks of different service types.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

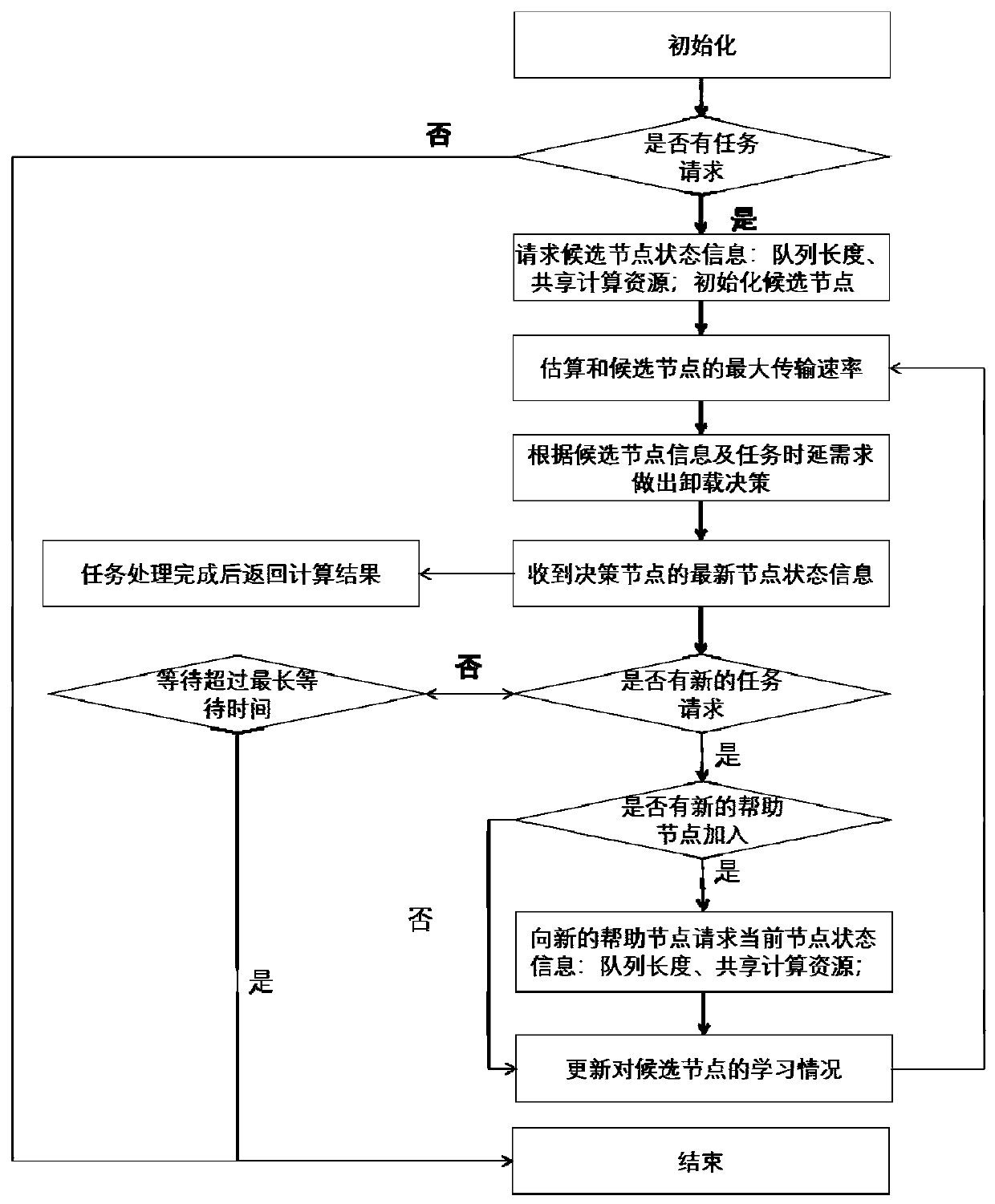

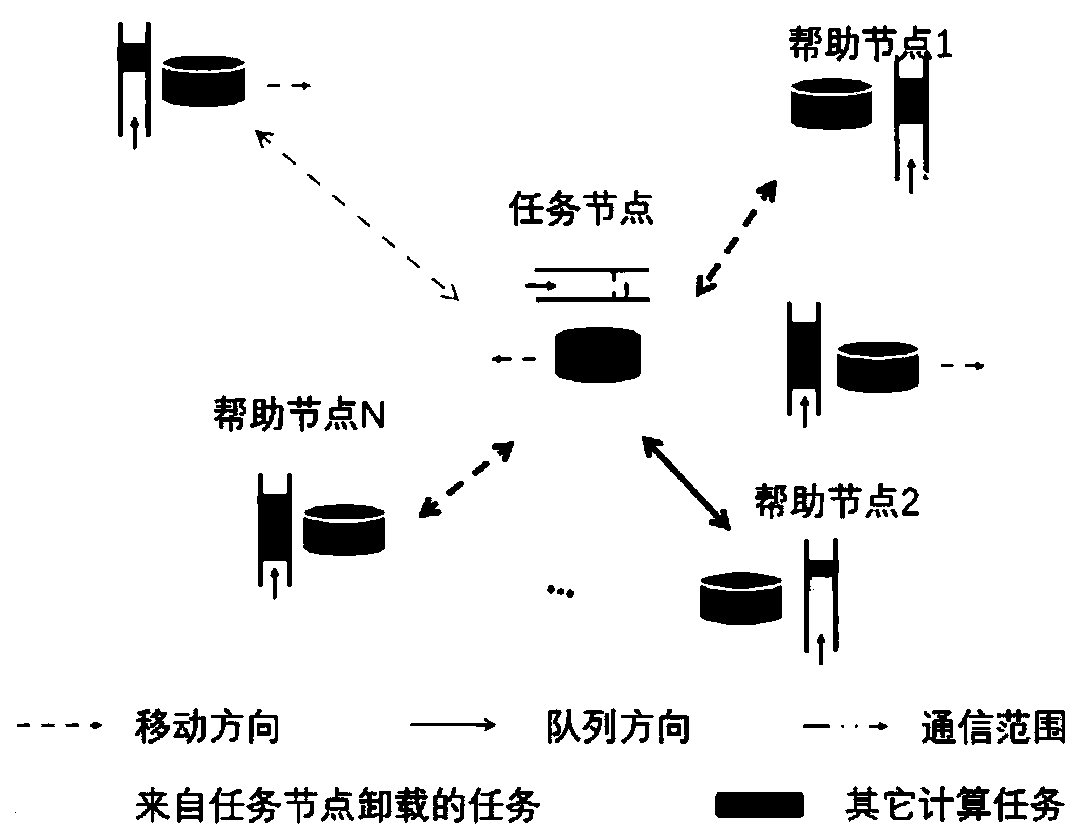

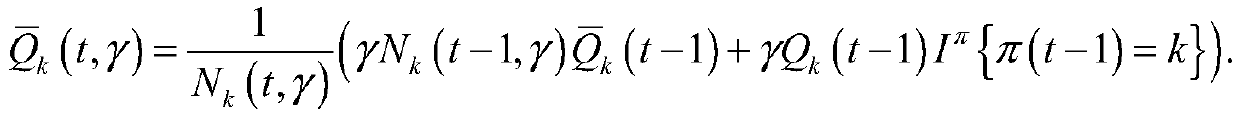

Low-delay task scheduling method for a dynamic fog computing network

The invention provides a low-delay task scheduling method for a dynamic fog computing network in which a help node cannot broadcast its own state information, such as task queue information and sharable computing resource information, in real time, or cannot respond in real time to requests for that information. Each time a task offloading requirement arises, the task node must make an offloading decision in real time and select, from the current candidate help nodes, the ones to offload the task to. Because the states of the help nodes are unknown to the task node and the tasks have delay requirements, the task node needs to learn from its previous task offloading experience to inform the current decision. The invention provides a one-to-many task offloading algorithm based on an online learning method for non-dynamic and dynamically changing fog computing or edge computing networks; the method can greatly reduce the energy expenditure caused by information transmission in the network and prolong the service time of task nodes and help nodes.

Owner:SHANGHAI TECH UNIV

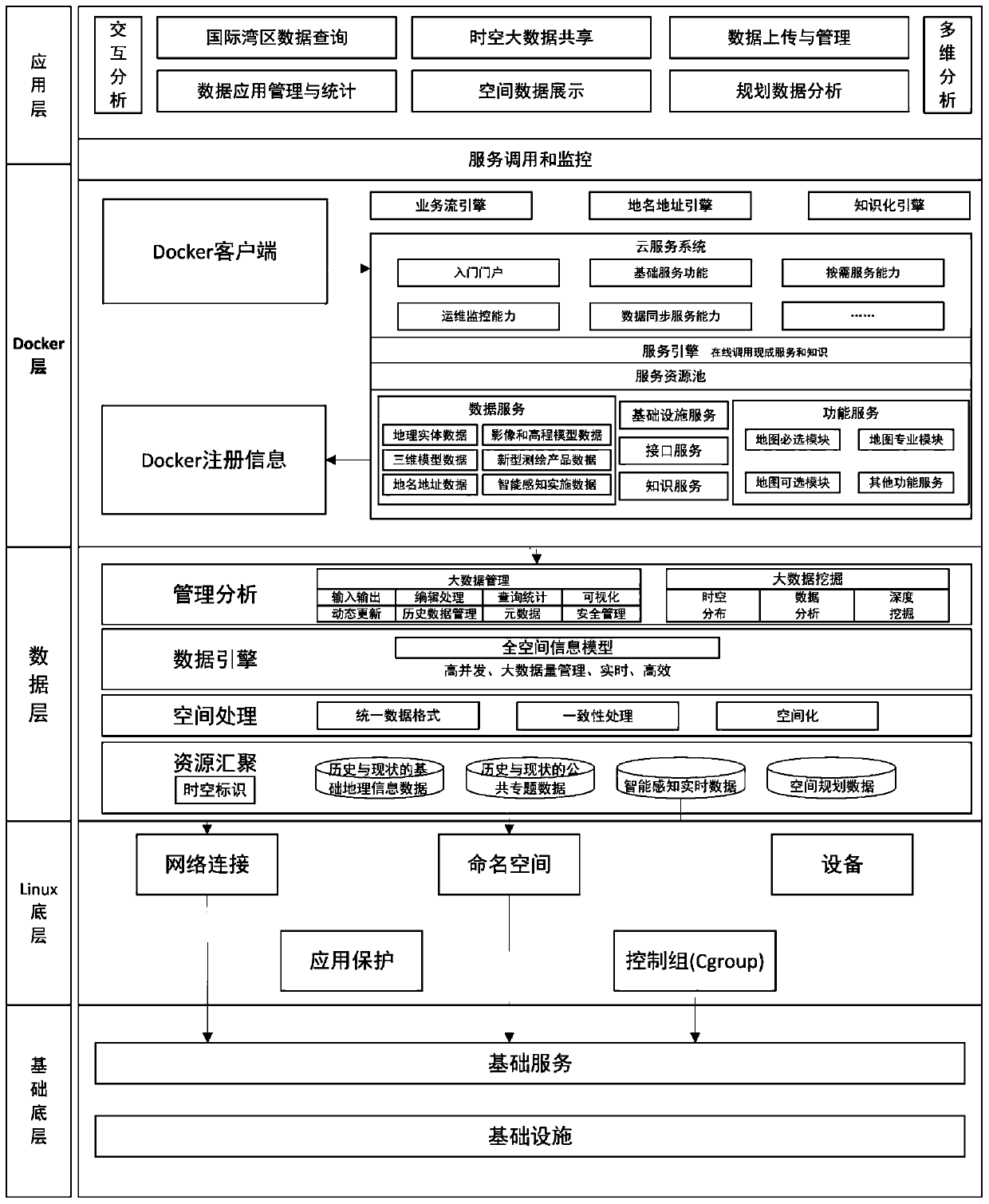

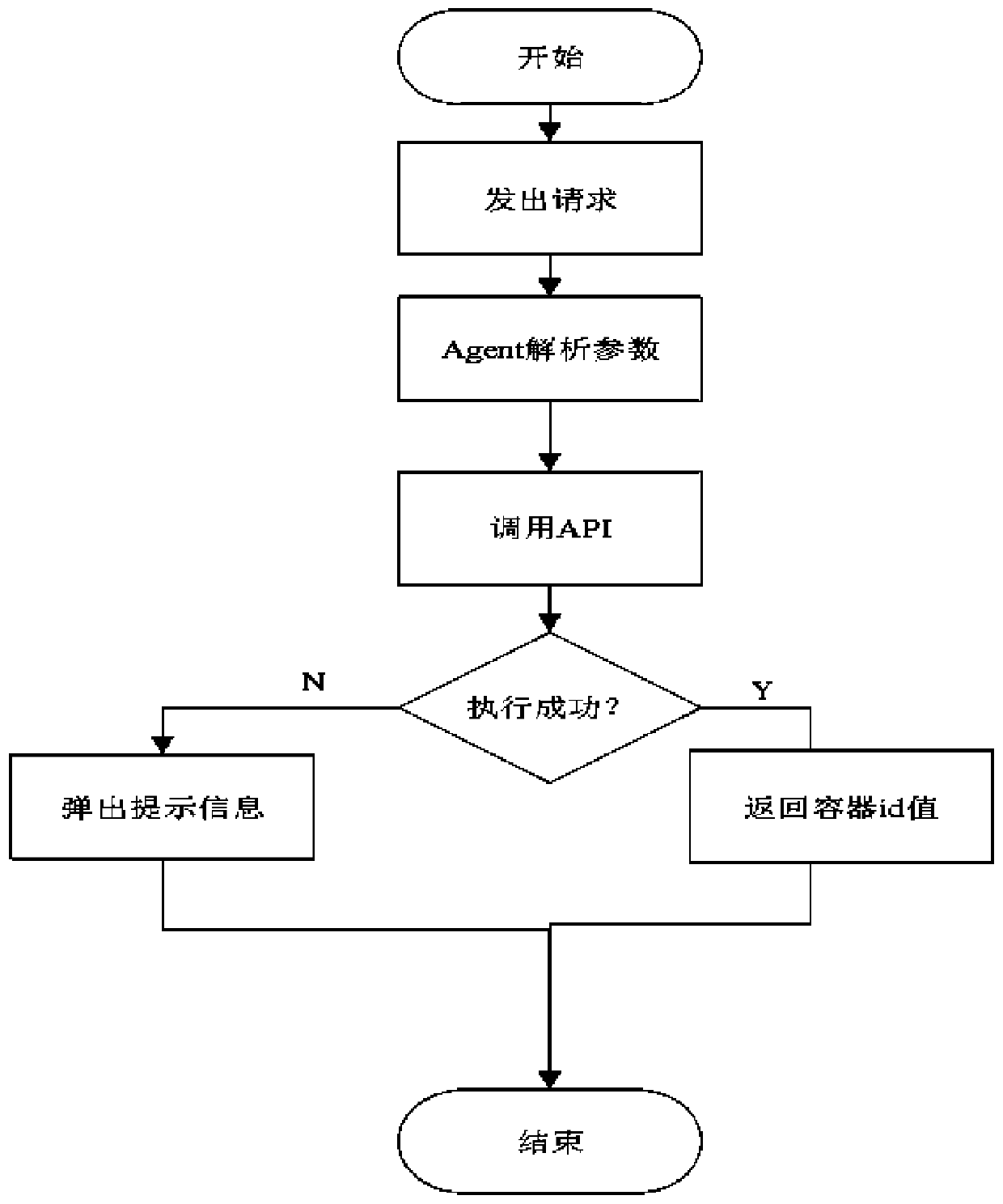

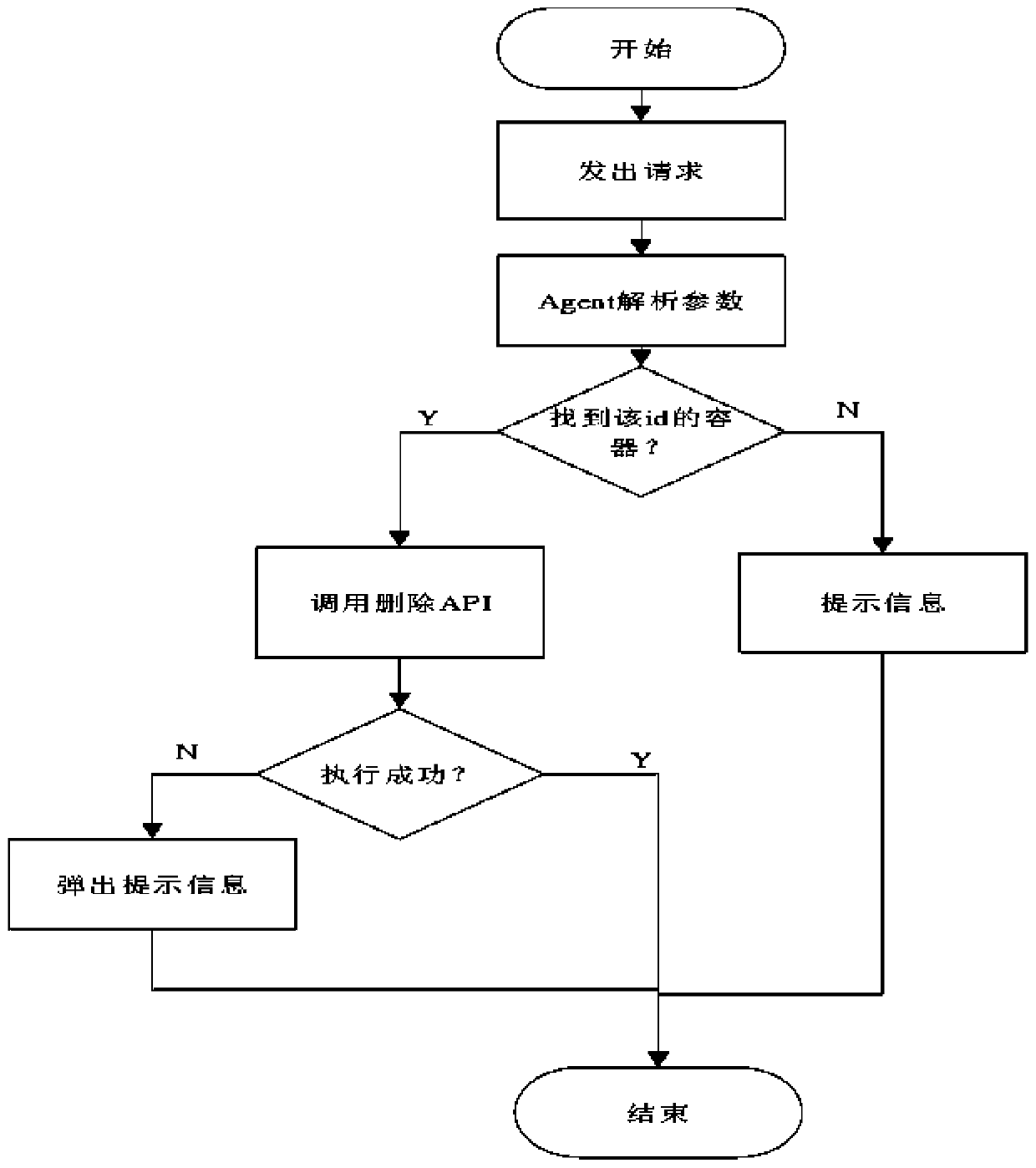

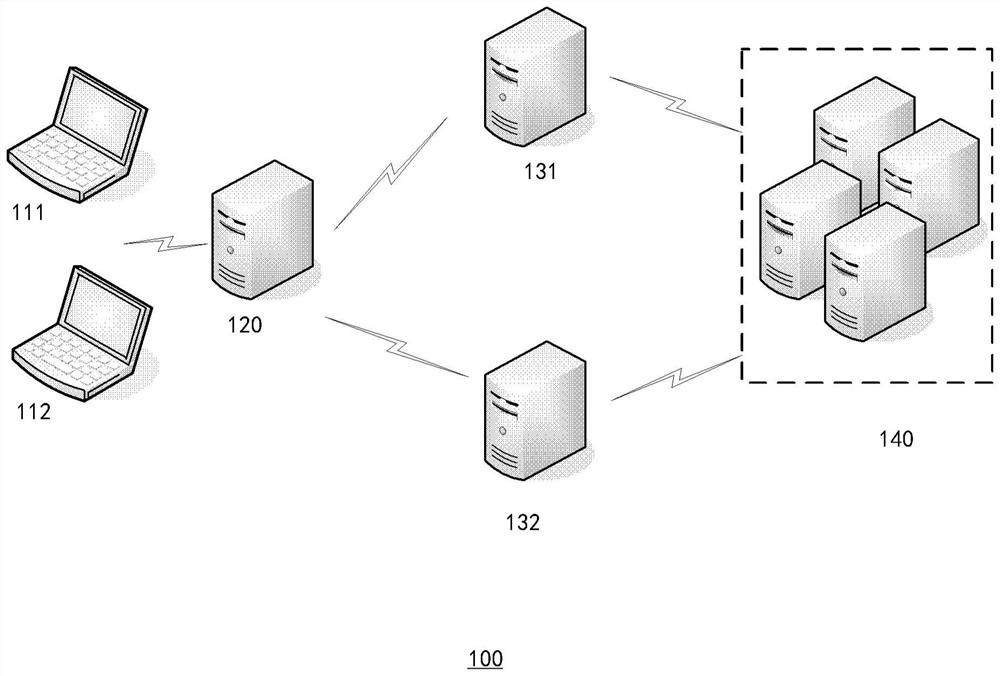

Intelligent planning space-time cloud GIS platform based on Docker container and micro-service architecture

Active | CN110543537A | Automate deployment | Realize unified management | Geographical information databases | Special data processing applications | Network connection | Mirror image

Owner:GUANGDONG URBAN & RURAL PLANNING & DESIGN INST

Task scheduling method, device and system, electronic equipment and storage medium

Pending | CN112486648A | Realize unified management | Development focus | Program initiation/switching | Resource allocation | Parallel computing | Scheduling (computing)

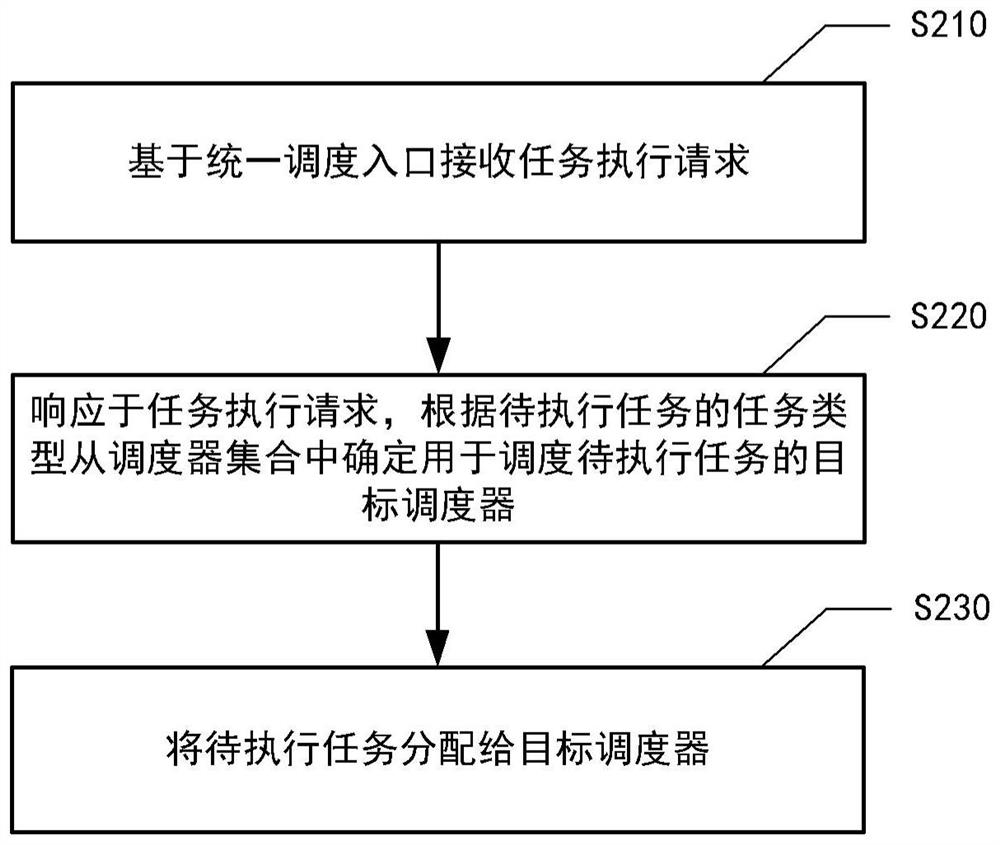

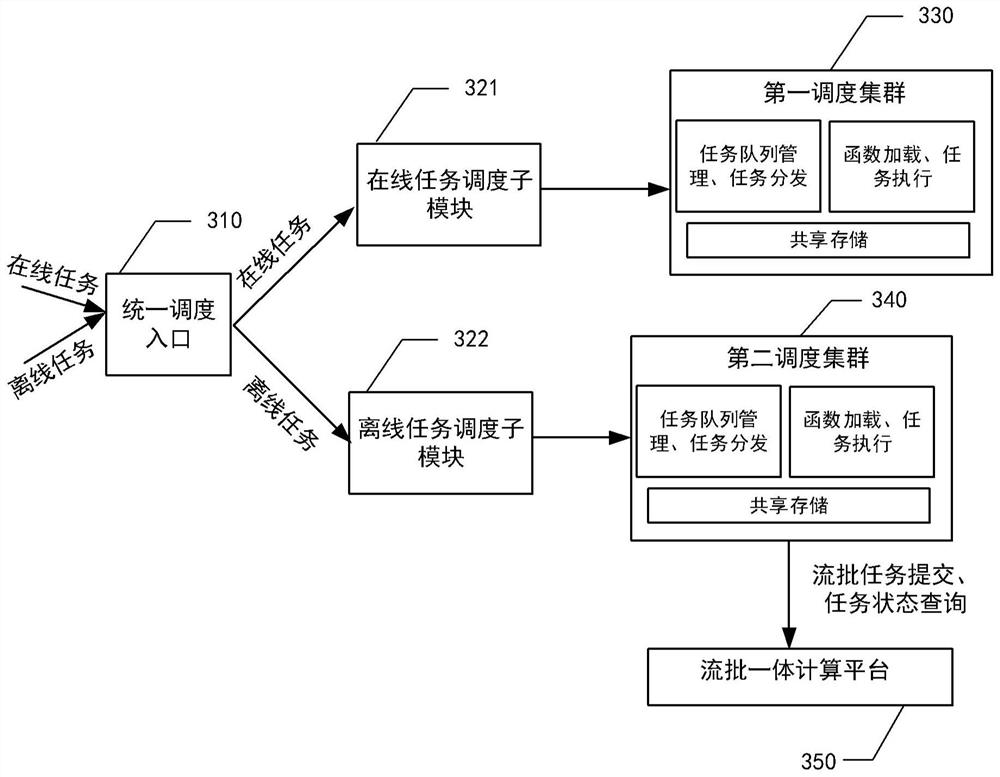

The invention discloses a task scheduling method, device and system, electronic equipment and a storage medium, relates to the technical field of computers, and can be used for cloud computing and the like. In the specific implementation scheme, the task scheduling method comprises the steps of: receiving a task execution request through a unified scheduling entrance, the request carrying the task type of a to-be-executed task; in response to the request, determining from a scheduler set, according to the task type, a target scheduler for scheduling the to-be-executed task, the target scheduler being used for allocating the task to an idle working node for execution and the scheduler set comprising a plurality of schedulers of different types; and allocating the to-be-executed task to the target scheduler.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
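
The unified entrance described in this abstract can be sketched as a dispatcher that looks up a scheduler by task type. The class names `TypedScheduler` and `SchedulingEntrance`, the task types, and the worker names are hypothetical; the sketch only shows the routing step and a trivial idle-worker pick.

```python
class TypedScheduler:
    """Schedules tasks of one type onto its own pool of idle workers."""

    def __init__(self, idle_workers):
        self.idle = list(idle_workers)
        self.running = {}  # task_id -> worker

    def schedule(self, task_id):
        if not self.idle:
            return None  # no idle working node right now
        worker = self.idle.pop(0)
        self.running[task_id] = worker
        return worker


class SchedulingEntrance:
    """Single entry point that routes each request to the matching scheduler."""

    def __init__(self, schedulers):
        self.schedulers = schedulers  # task_type -> TypedScheduler

    def submit(self, task_id, task_type):
        scheduler = self.schedulers.get(task_type)
        if scheduler is None:
            raise KeyError(f"no scheduler registered for type {task_type!r}")
        return scheduler.schedule(task_id)


entrance = SchedulingEntrance({
    "batch": TypedScheduler(["batch-node-1", "batch-node-2"]),
    "stream": TypedScheduler(["stream-node-1"]),
})
first_worker = entrance.submit("job-1", "batch")
second_worker = entrance.submit("job-2", "stream")
third_worker = entrance.submit("job-3", "stream")
```

Callers only see `submit`, so new scheduler types can be registered without changing client code.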

GPU resource using method and device and storage medium

Active | CN110888743A | Program initiation/switching | Resource allocation | Performance computing | Parallel computing

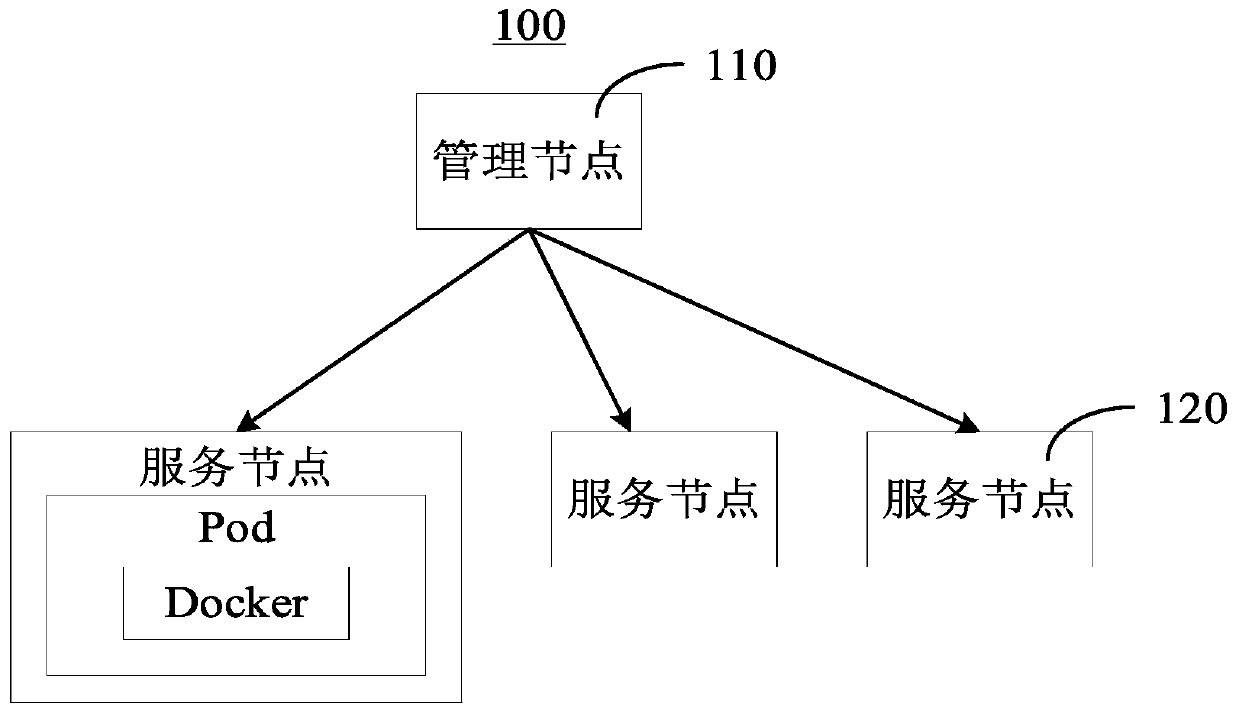

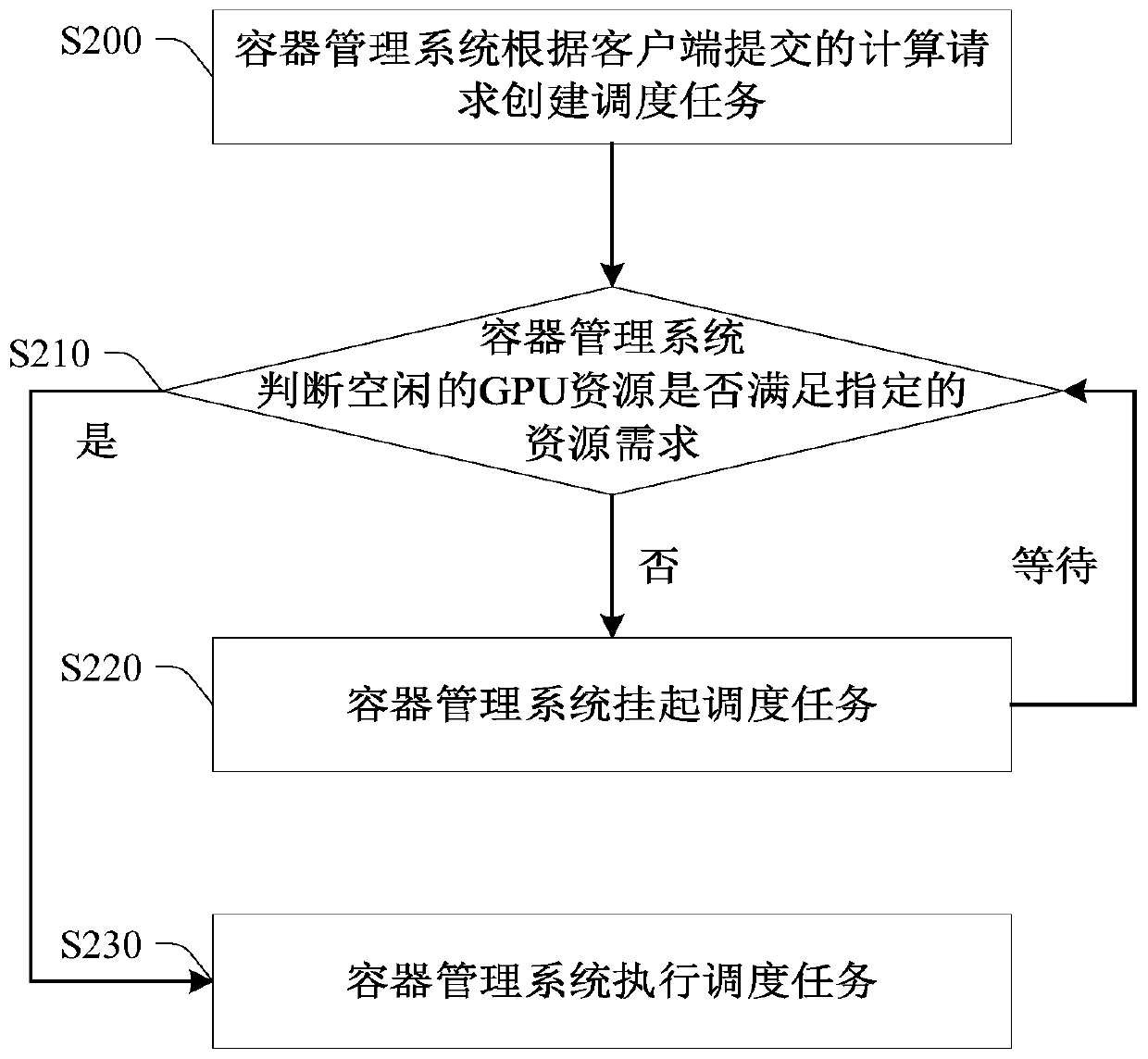

The invention relates to the technical field of high-performance computing, and provides a GPU resource using method and device and a storage medium. The GPU resource using method comprises the steps that a container management system creates a scheduling task according to a calculation request submitted by a client; the container management system judges whether the idle GPU resources in the cluster meet the resource requirements specified in the instruction for creating the GPU container; if the requirement is not met, the container management system suspends the scheduling task and executes it only once the requirement is met; and while the scheduling task is suspended, the container management system does not create the GPU container. In this method, the container management system queues the scheduling tasks according to the usage of the GPU resources; therefore each computing task can use its GPU resources exclusively, without sharing them with other computing tasks, and the execution progress and computing result of the task can proceed as planned without being affected by other computing tasks.

Owner:中科曙光国际信息产业有限公司
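
The suspend-until-resources-fit behaviour in this abstract can be sketched with a small queue. This is an illustration under assumptions: strict first-in, first-out ordering, a single pool of interchangeable GPUs, and the hypothetical class `GpuQueue`; real container creation is represented only by moving a task into `running`.

```python
from collections import deque


class GpuQueue:
    """Queues scheduling tasks until enough idle GPUs are available."""

    def __init__(self, total_gpus):
        self.free = total_gpus
        self.waiting = deque()  # (task, gpus) in submission order
        self.running = {}       # task -> gpus held exclusively

    def submit(self, task, gpus):
        self.waiting.append((task, gpus))
        return self._start_ready()

    def release(self, task):
        self.free += self.running.pop(task)
        return self._start_ready()

    def _start_ready(self):
        """Start waiting tasks in order while the head of the queue fits."""
        started = []
        while self.waiting and self.waiting[0][1] <= self.free:
            task, gpus = self.waiting.popleft()
            self.free -= gpus
            self.running[task] = gpus  # the container would be created here
            started.append(task)
        return started


queue = GpuQueue(total_gpus=4)
started_a = queue.submit("a", 3)     # fits immediately
started_b = queue.submit("b", 2)     # suspended: only 1 GPU is idle
started_c = queue.submit("c", 1)     # waits behind "b" under strict FIFO
after_release = queue.release("a")   # frees 3 GPUs, so "b" then "c" start
```

Because a task only starts once its full request fits, each running task holds its GPUs exclusively, as the abstract describes.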

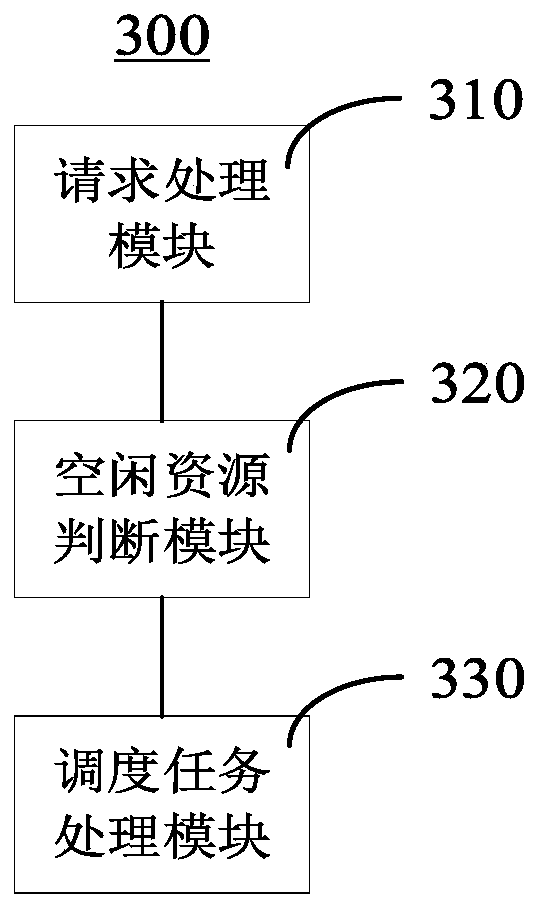

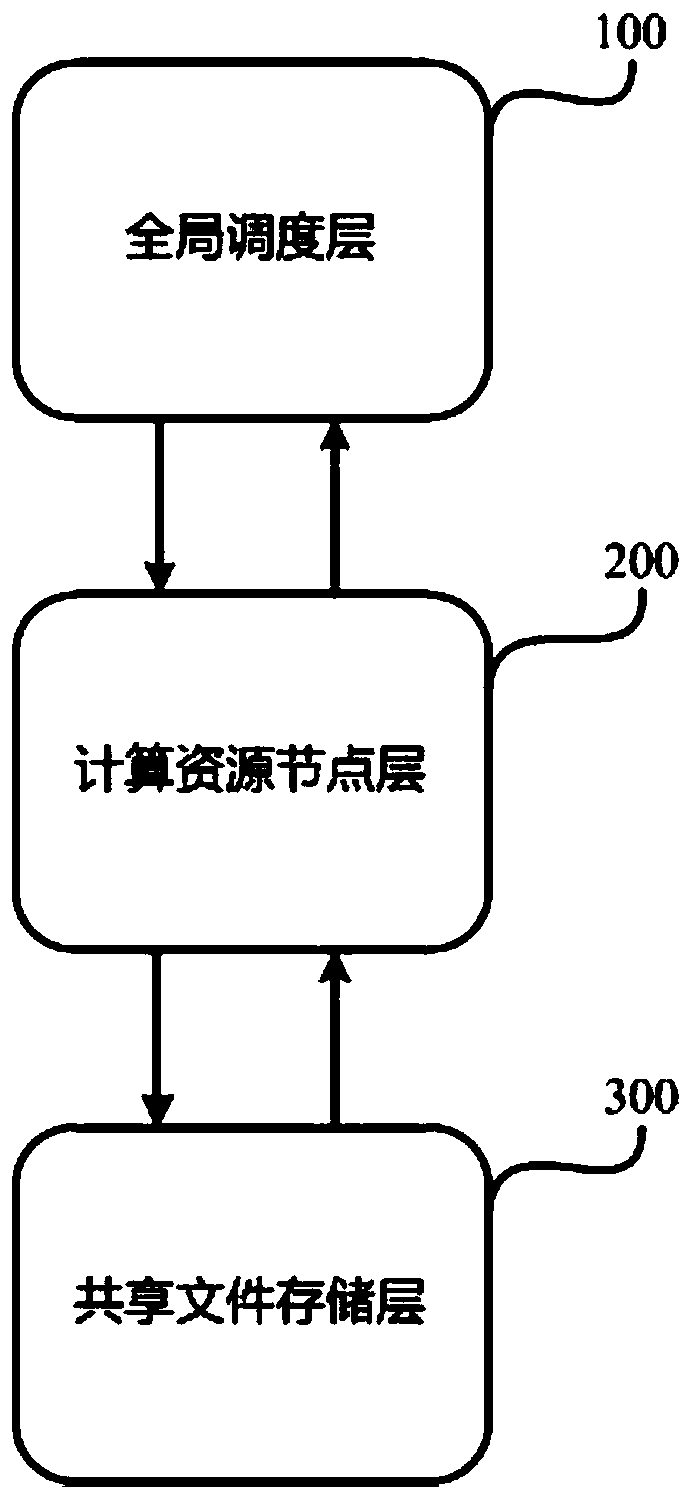

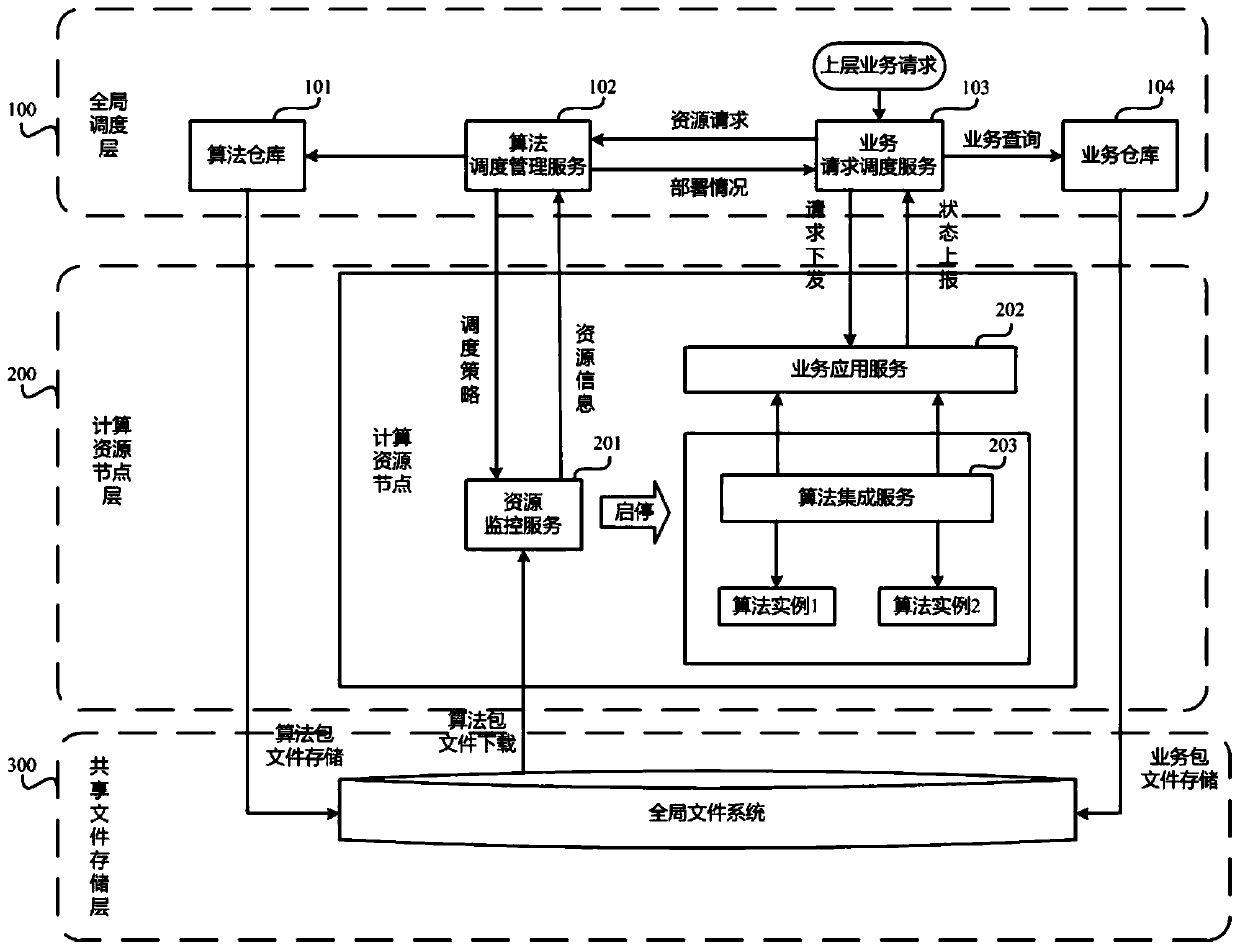

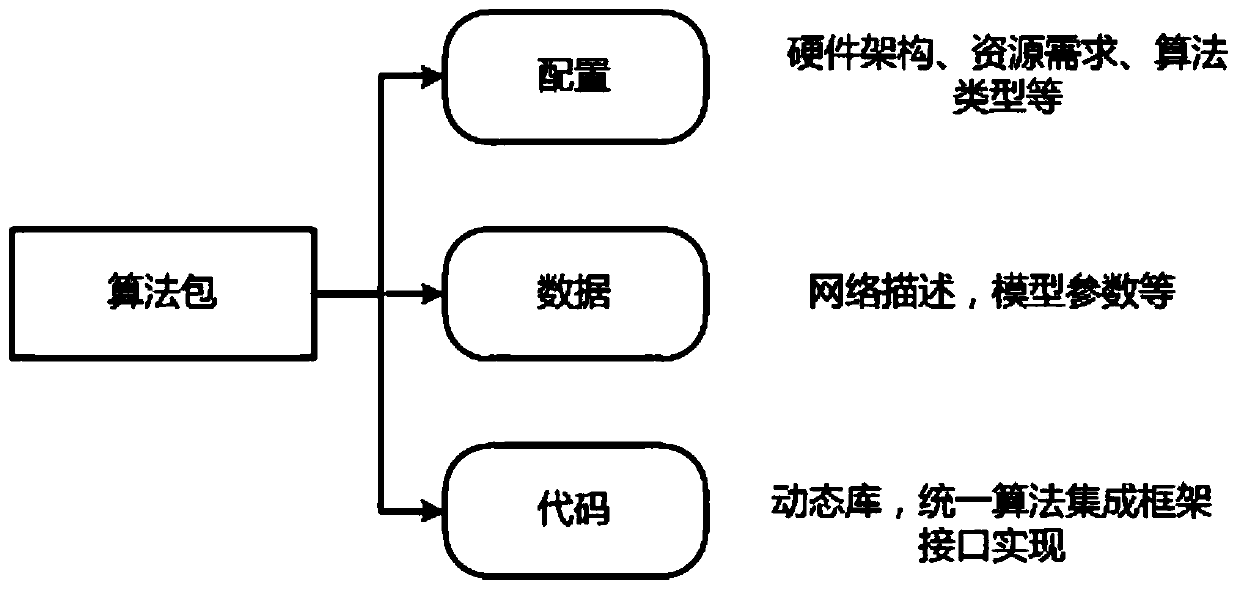

Management warehouse for scheduling intelligent analysis algorithm in complex scene, and scheduling method

Pending | CN111126895A | Flexible use | Realize unified management | Resource allocation | Logistics | Global scheduling | Engineering

The invention discloses a management warehouse for scheduling an intelligent analysis algorithm in a complex scene, and a scheduling method. The management warehouse comprises a global scheduling layer, a computing resource node layer and a shared file storage layer. The global scheduling layer receives a service request that comprises a to-be-executed service, determines according to the request a computing resource node for executing the service, and sends the request to the computing resource node layer. The computing resource node that executes the service then calls one or more intelligent analysis algorithms from the shared file storage layer to execute the to-be-executed service. This solves the prior-art problem that computing resources cannot be managed in a unified way, allows multiple algorithms to be fused, and increases the resource utilization rate.

Owner:QINGDAO HISENSE TRANS TECH

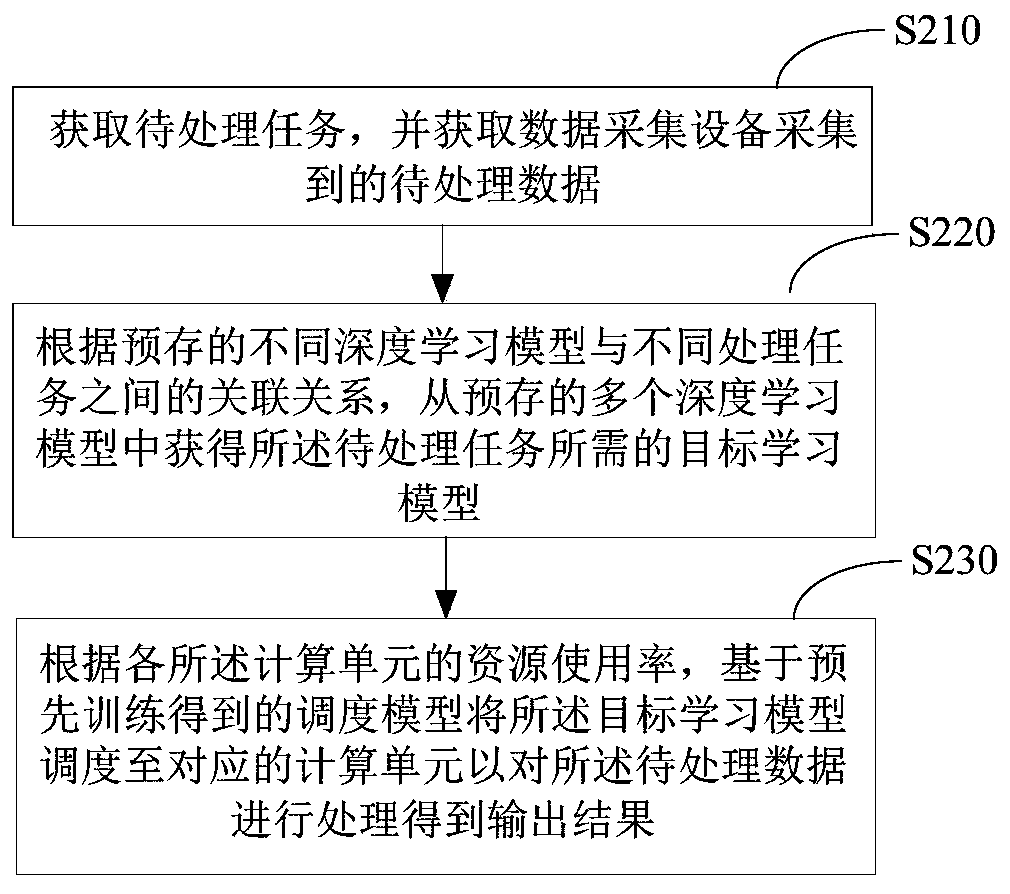

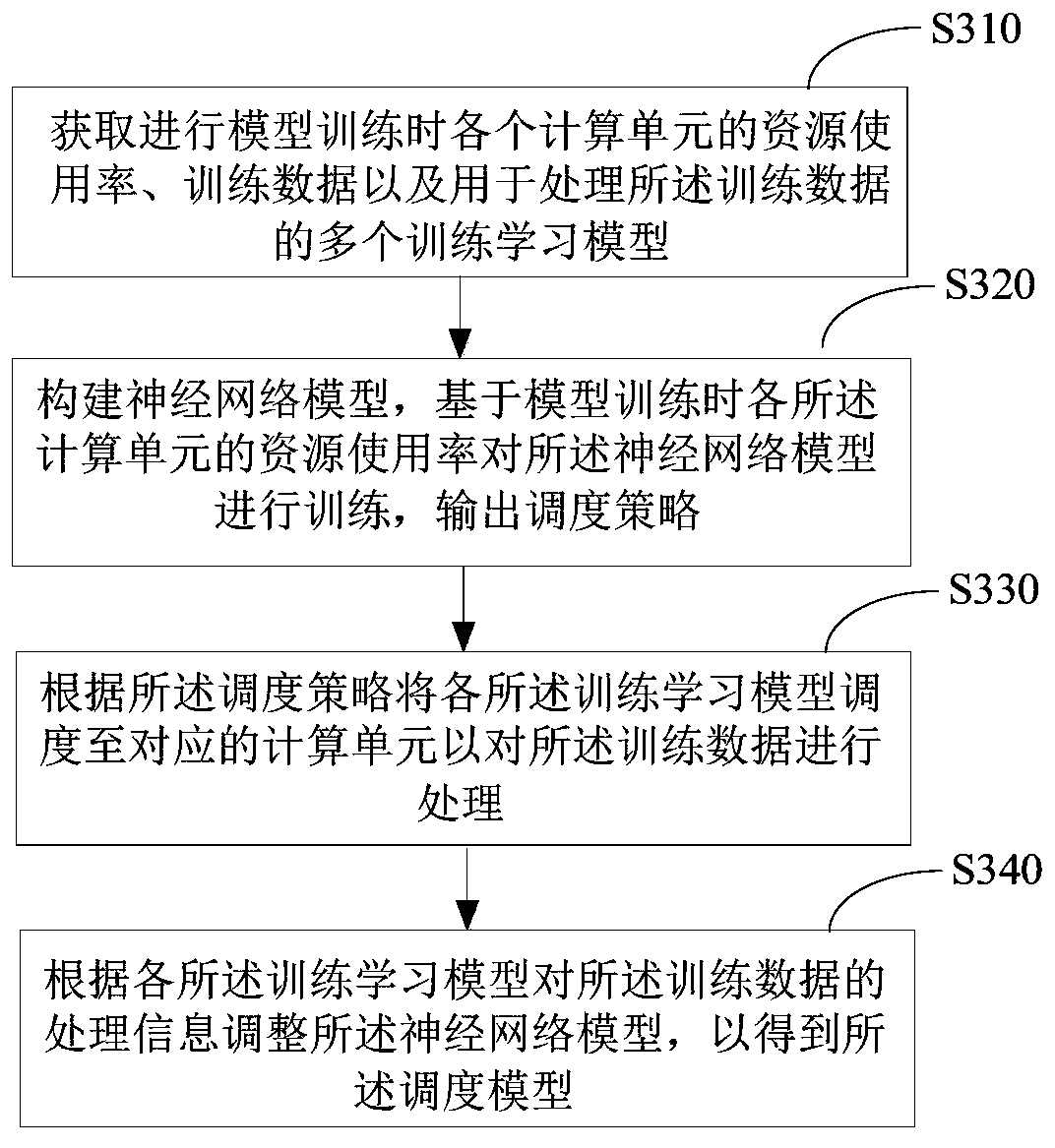

Scheduling method and device, electronic equipment and readable storage medium

ActiveCN110750342AOptimizing Scheduling StrategyImprove execution efficiencyProgram initiation/switchingNeural architecturesResource utilizationScheduling (computing)

The invention provides a scheduling method and device, electronic equipment and a readable storage medium. The method comprises the steps of: obtaining a to-be-processed task and to-be-processed data, and obtaining a target learning model needed by the to-be-processed task from a plurality of pre-stored deep learning models according to the correlation between different pre-stored deep learning models and different processing tasks; and scheduling the target learning model to the corresponding computing unit based on a pre-trained scheduling model according to the resource utilization rate of each computing unit, so as to process the to-be-processed data and obtain an output result. In this way, when the processing task is executed in the end-side equipment, the scheduling model is established in advance and the optimized scheduling strategy is obtained based on the real-time resource utilization rate, so that the overall execution efficiency of the equipment is improved.

Owner:BEIJING DIDI INFINITY TECH & DEV

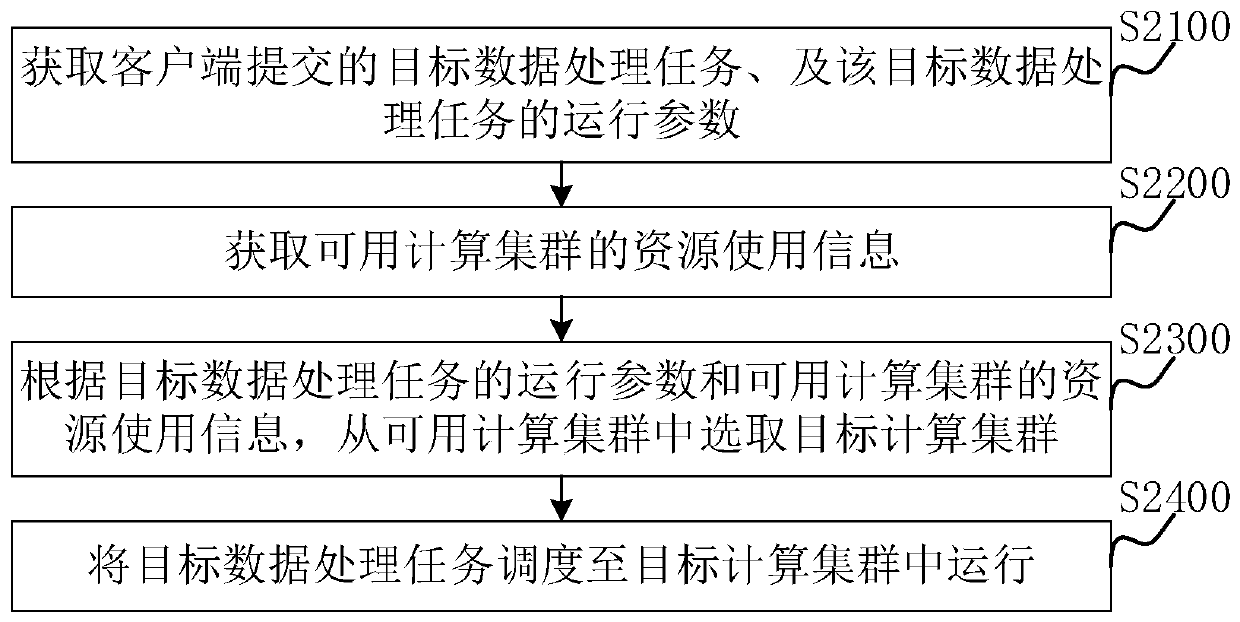

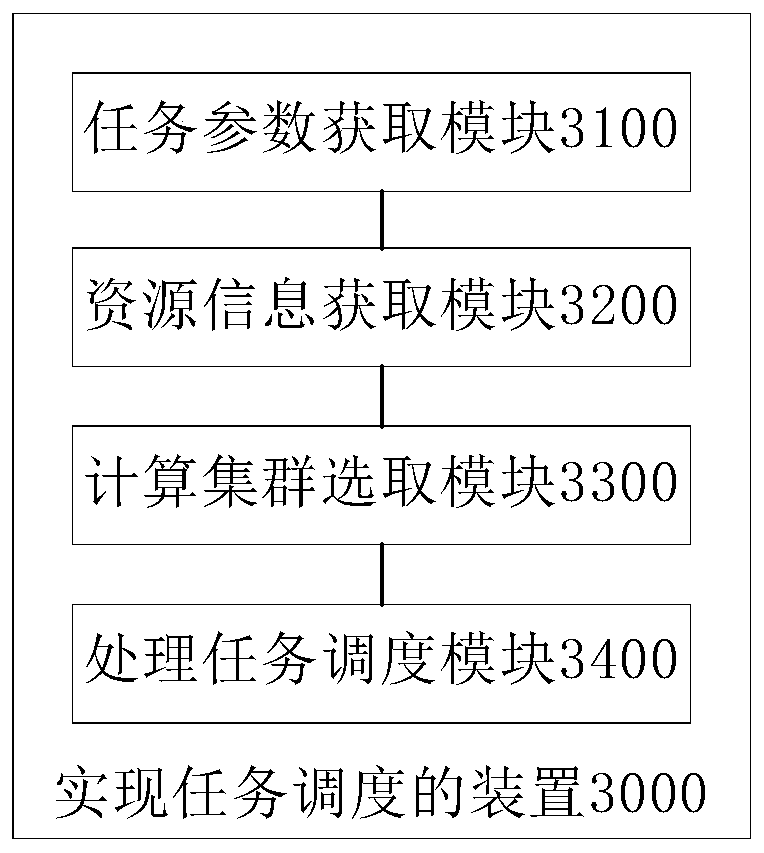

Method, device and system for realizing task scheduling

PendingCN111190718AImprove operational efficiencyImprove collation resource utilizationProgram initiation/switchingResource allocationScheduling (computing)Distributed computing

The invention provides a method, a device and a system for realizing task scheduling. The method comprises the following steps: acquiring a target data processing task submitted by a client and running parameters of the target data processing task; obtaining resource use information of the available computing clusters; selecting a target computing cluster from the available computing clusters according to the running parameters of the target data processing task and the resource use information of the available computing clusters; and scheduling the target data processing task to the target computing cluster for running.

Owner:THE FOURTH PARADIGM BEIJING TECH CO LTD
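
The selection step in this abstract can be sketched as a filter followed by a ranking. This is only an illustration under stated assumptions: the running parameters are reduced to a CPU and memory request, the resource use information to free CPU and memory per cluster, and "most free capacity wins" is a hypothetical tie-breaking policy that the abstract does not specify.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str
    free_cpus: int
    free_mem_gb: int


def select_cluster(clusters, cpus, mem_gb):
    """Return the cluster that fits the request and has the most headroom.

    Returns None when no available cluster can satisfy the request.
    """
    # Keep only clusters whose free resources cover the task's parameters.
    candidates = [
        c for c in clusters
        if c.free_cpus >= cpus and c.free_mem_gb >= mem_gb
    ]
    if not candidates:
        return None
    # Assumed policy: prefer the most free CPUs, then the most free memory.
    return max(candidates, key=lambda c: (c.free_cpus, c.free_mem_gb))
```

A real system would refresh the resource use information before each decision; here it is passed in as a static snapshot.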

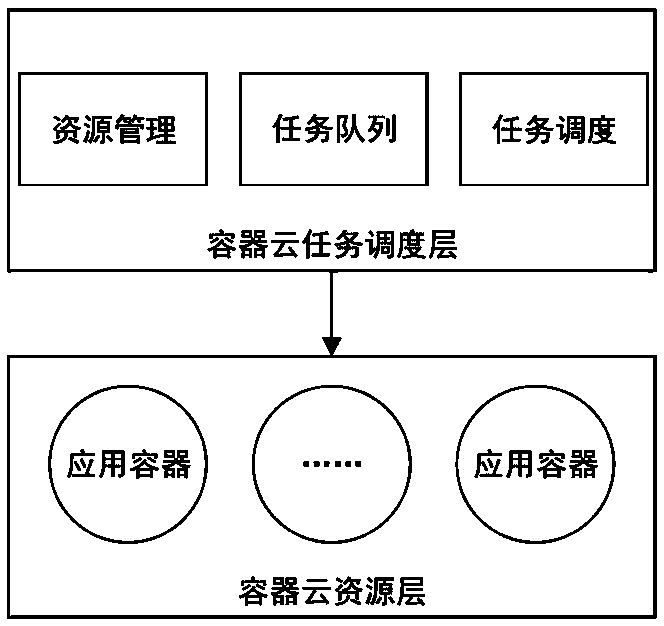

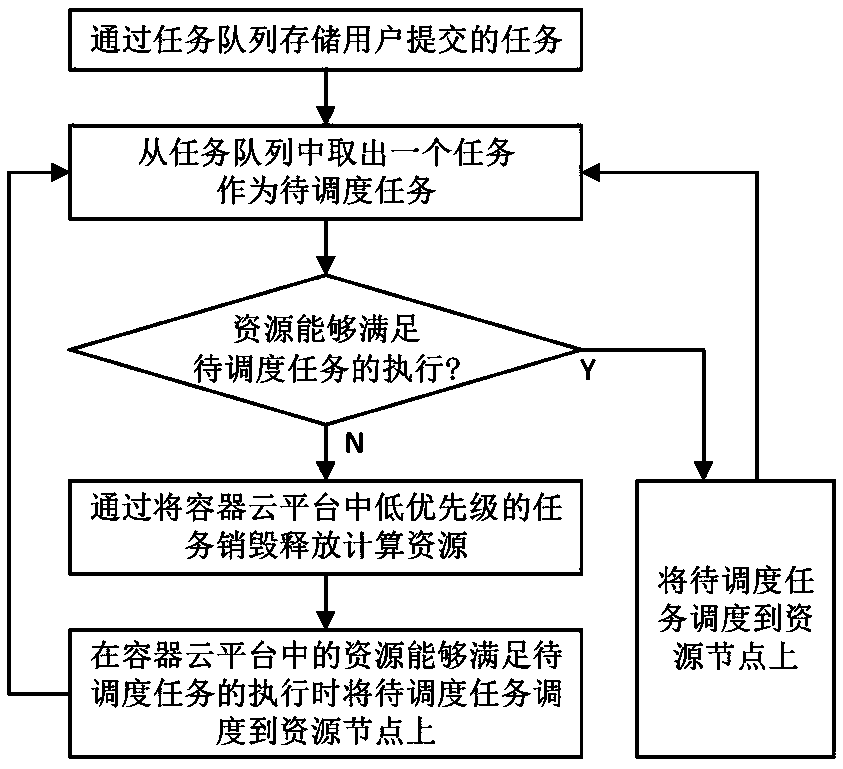

Container cloud-oriented task preemption scheduling method and system

ActiveCN111399989AReduce the number of tasksImprove task scheduling efficiencyProgram initiation/switchingResource allocationParallel computingEngineering

The invention discloses a container cloud-oriented task preemption scheduling method and system. The task preemption scheduling method comprises the steps of: storing tasks submitted by a user in a task queue; taking out to-be-scheduled tasks from the task queue; judging whether the resources in the container cloud platform can meet the execution of the to-be-scheduled task; if so, scheduling the to-be-scheduled task to the resource node; otherwise, destroying low-priority tasks in the container cloud platform to release computing resources, and scheduling the to-be-scheduled task to the resource node once the resources in the container cloud platform can satisfy its execution. According to the task scheduling method and device, low-priority tasks can be destroyed and their computing resources released when resources are fully loaded, so that high-priority tasks are executed in time; meanwhile, because tasks execute smoothly, the number of tasks waiting in the task queue is greatly reduced and the task scheduling efficiency is effectively improved.

Owner:NAT UNIV OF DEFENSE TECH
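
A minimal sketch of the preemption step described in this abstract follows. It makes several assumptions the abstract does not state: resources are a single CPU counter, a larger `priority` value means a more important task, only strictly lower-priority tasks may be destroyed, and victims are chosen lowest priority first. The class name `PreemptiveScheduler` is hypothetical.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    priority: int  # larger value = more important (assumption)
    cpus: int


class PreemptiveScheduler:
    def __init__(self, total_cpus):
        self.free = total_cpus
        self.running = []  # min-heap of (priority, sequence number, Task)
        self._seq = 0      # unique tie-breaker so Task objects are never compared

    def schedule(self, task):
        """Place the task, destroying lower-priority tasks if required.

        Returns the names of destroyed tasks, or None if the task cannot
        be placed even after all lower-priority tasks are reclaimed.
        """
        # Check feasibility first so that no task is destroyed in vain.
        reclaimable = sum(t.cpus for p, _, t in self.running if p < task.priority)
        if self.free + reclaimable < task.cpus:
            return None
        evicted = []
        while self.free < task.cpus:
            # The heap root is always the lowest-priority running task.
            _, _, victim = heapq.heappop(self.running)
            self.free += victim.cpus
            evicted.append(victim.name)
        self.free -= task.cpus
        heapq.heappush(self.running, (task.priority, self._seq, task))
        self._seq += 1
        return evicted
```

The feasibility check guarantees that the eviction loop stops before it reaches any task of equal or higher priority.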

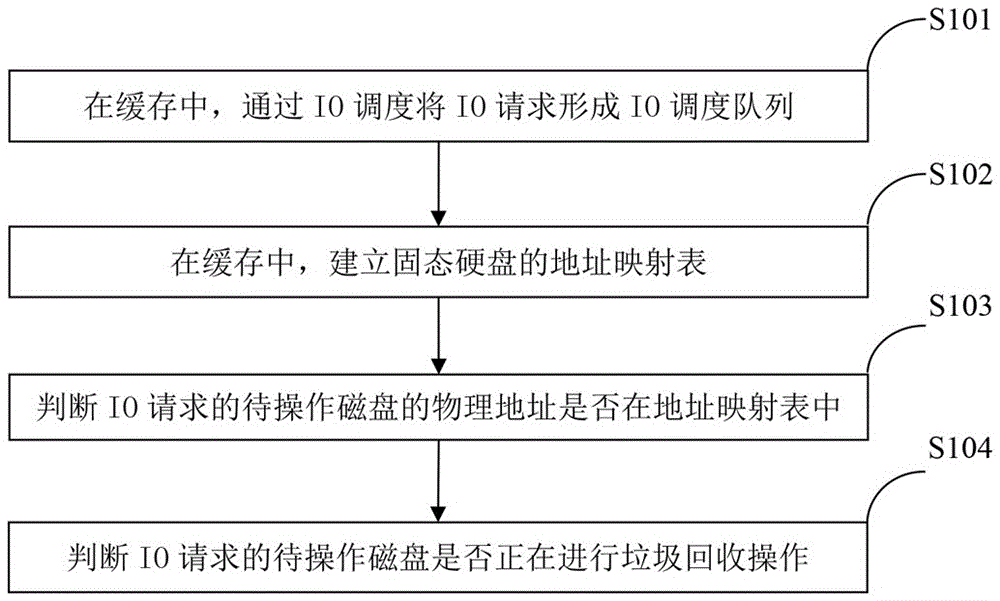

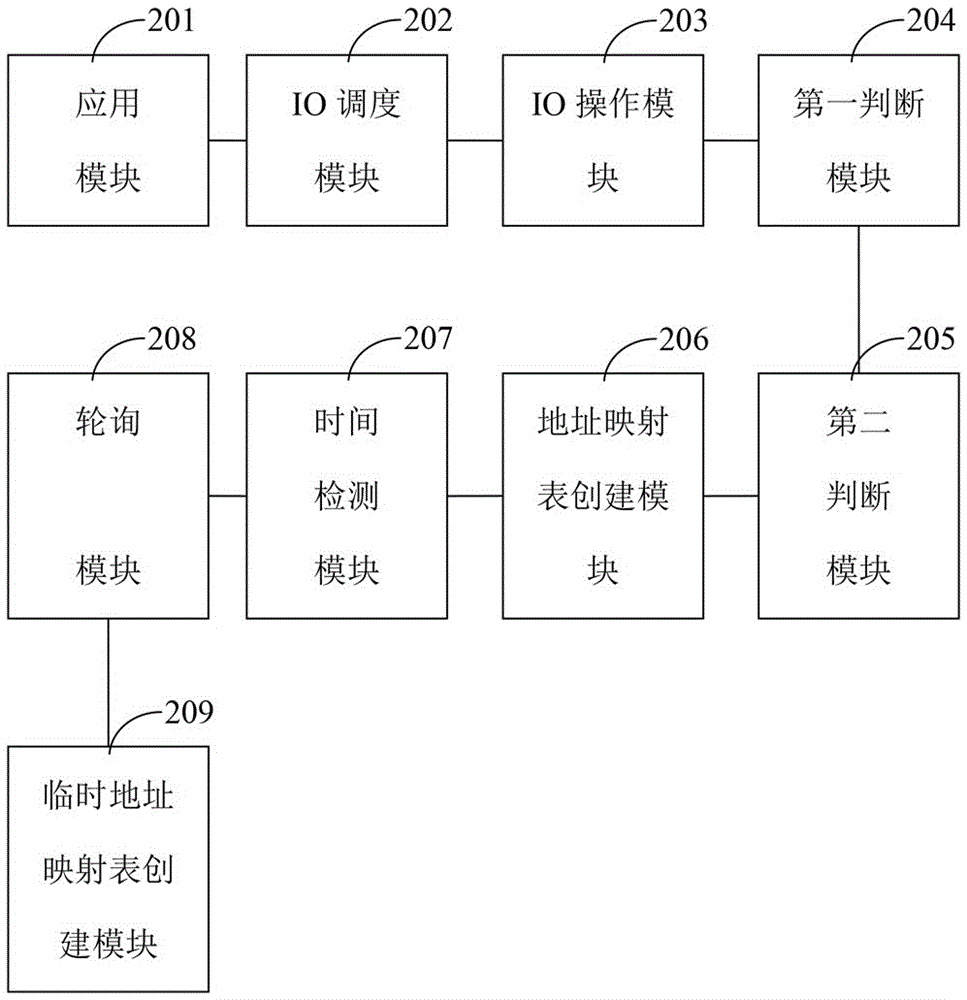

IO (input/output) scheduling method and IO scheduling device

ActiveCN106681660AImprove read and write speedExtended service lifeInput/output to record carriersMemory adressing/allocation/relocationScheduling (computing)Garbage collection

The invention relates to the technical field of computer storage computing, in particular to an IO (input/output) scheduling method and an IO scheduling device. The IO scheduling method carries out scheduling by combining the characteristics of solid-state drive address mapping with the characteristics of garbage collection. The invention also provides the IO scheduling device, comprising an application module, an IO scheduling module and an IO operation module, wherein the IO operation module is used for judging whether an address mapping table of a solid-state drive is present in cache and whether the physical data blocks to be operated on are undergoing garbage collection, and if yes, IO operations are selected according to the priority levels of the IO scheduling queues and issued to the solid-state drive for processing. The method and device address the problems that a solid-state drive must erase before writing and supports only a limited number of write operations; the IO stack is optimized in conjunction with the features of a solid-state drive.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

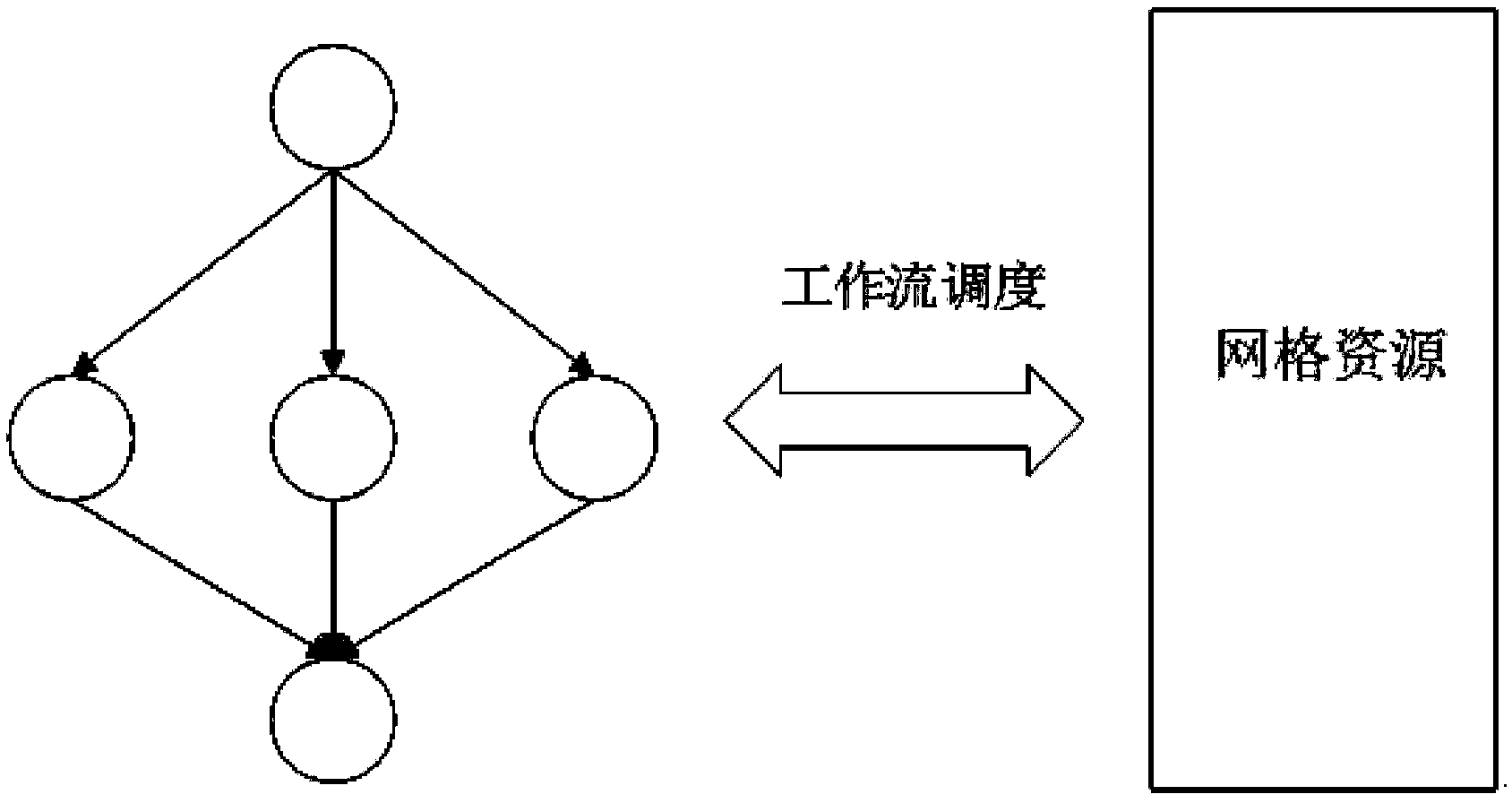

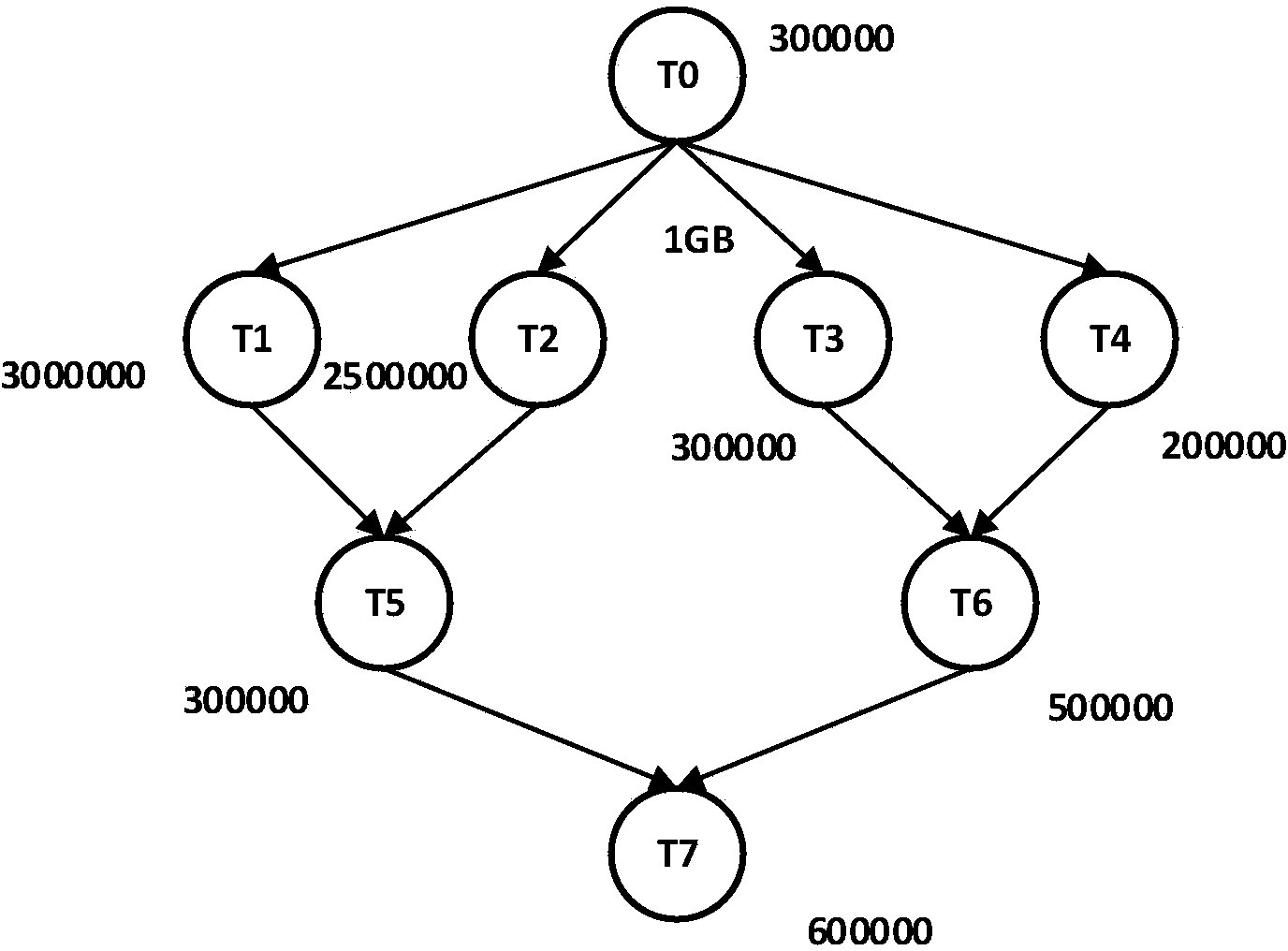

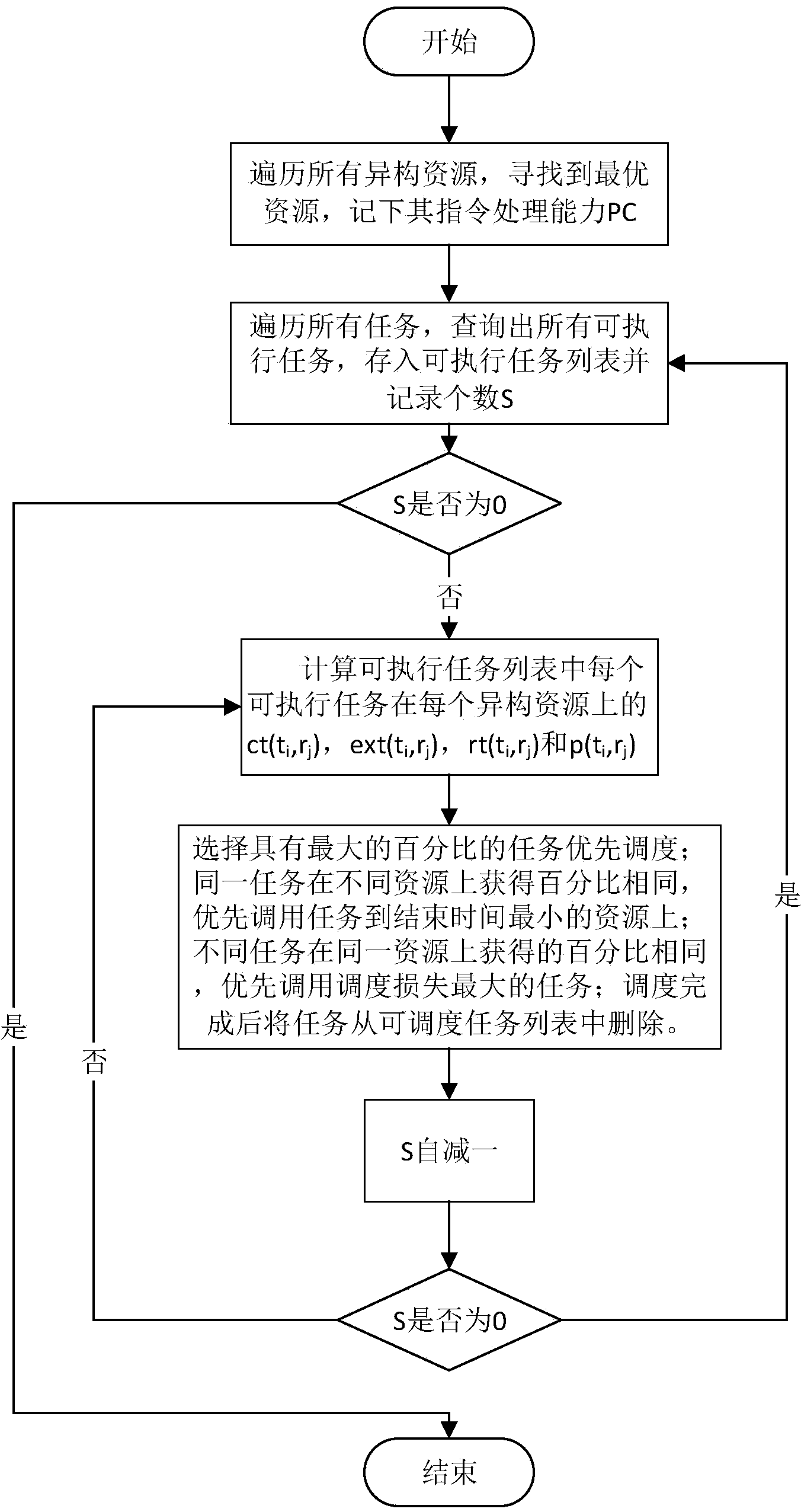

Scientific workflow scheduling method and device

ActiveCN104035819AReduced execution timeReduce usage timeProgram initiation/switchingResource allocationWorkflow schedulingSymmetric multiprocessor system

The invention discloses a scientific workflow scheduling method and device under a network environment. The method comprises the following steps: the heterogeneous computing resources used as targets are queried, and the computing power PC_j of each heterogeneous computing resource is recorded; all schedulable tasks in the workflows used as targets are queried; the task ratio p(t_i, r_j) of each task t_i among the schedulable tasks on the j-th heterogeneous computing resource r_j is computed, where ct(t_i, r_j) = ext(t_i, r_j) + rt(t_i, r_j); among all the obtained task ratios, the largest task ratio p(t_m, r_n) is found, and the m-th task t_m is scheduled to the n-th heterogeneous computing resource r_n for execution. The scientific workflow scheduling method and device achieve good resource load balance, adapt to both static and dynamic environments, and keep scheduling time and total execution time short, giving overall performance that compares favorably with existing scheduling algorithms.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
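
The greedy loop in this abstract can be sketched as follows. The abstract gives ct = ext + rt but does not define the task ratio p or its relation to ct, so the sketch takes the ratio as a caller-supplied function rather than guessing it. Further assumptions, all hypothetical: ext is modelled as task work divided by computing power PC_j, rt as the time at which the resource becomes free, and every task passed in is already schedulable (dependencies are resolved elsewhere).

```python
def completion_time(work, power, ready):
    """ct = ext + rt, with ext assumed to be work / computing power."""
    return work / power + ready


def schedule(tasks, resources, ratio):
    """Greedily assign tasks to resources by largest task ratio.

    tasks:     {task name: amount of work}
    resources: {resource name: computing power PC_j}
    ratio:     callable mapping a completion time to a task ratio p
               (placeholder; the abstract does not define p)
    Returns a list of (task, resource, finish time) in scheduling order.
    """
    ready = {r: 0.0 for r in resources}  # rt: when each resource is free
    remaining = dict(tasks)
    plan = []
    while remaining:
        # Evaluate every (task, resource) pair and keep the largest ratio.
        t, r = max(
            ((t, r) for t in remaining for r in resources),
            key=lambda tr: ratio(
                completion_time(remaining[tr[0]], resources[tr[1]], ready[tr[1]])
            ),
        )
        finish = completion_time(remaining[t], resources[r], ready[r])
        ready[r] = finish
        plan.append((t, r, finish))
        del remaining[t]
    return plan
```

For example, `schedule({"a": 4.0, "b": 2.0}, {"fast": 2.0, "slow": 1.0}, ratio=lambda ct: 1.0 / ct)` uses an illustrative inverse-completion-time ratio, which turns the loop into an earliest-completion-first heuristic.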


© 2025 PatSnap. All rights reserved.