106 results about "Global scheduling" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

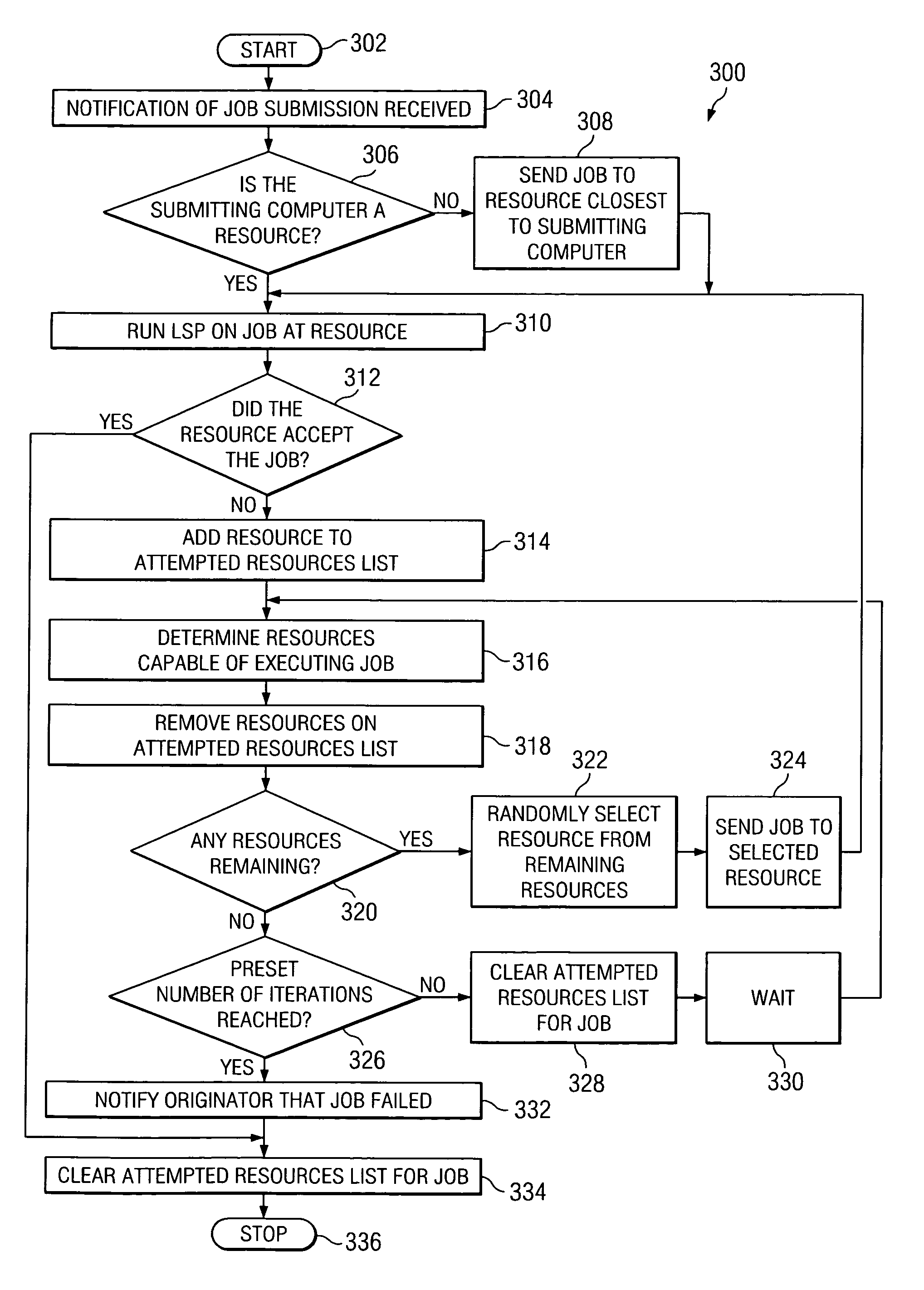

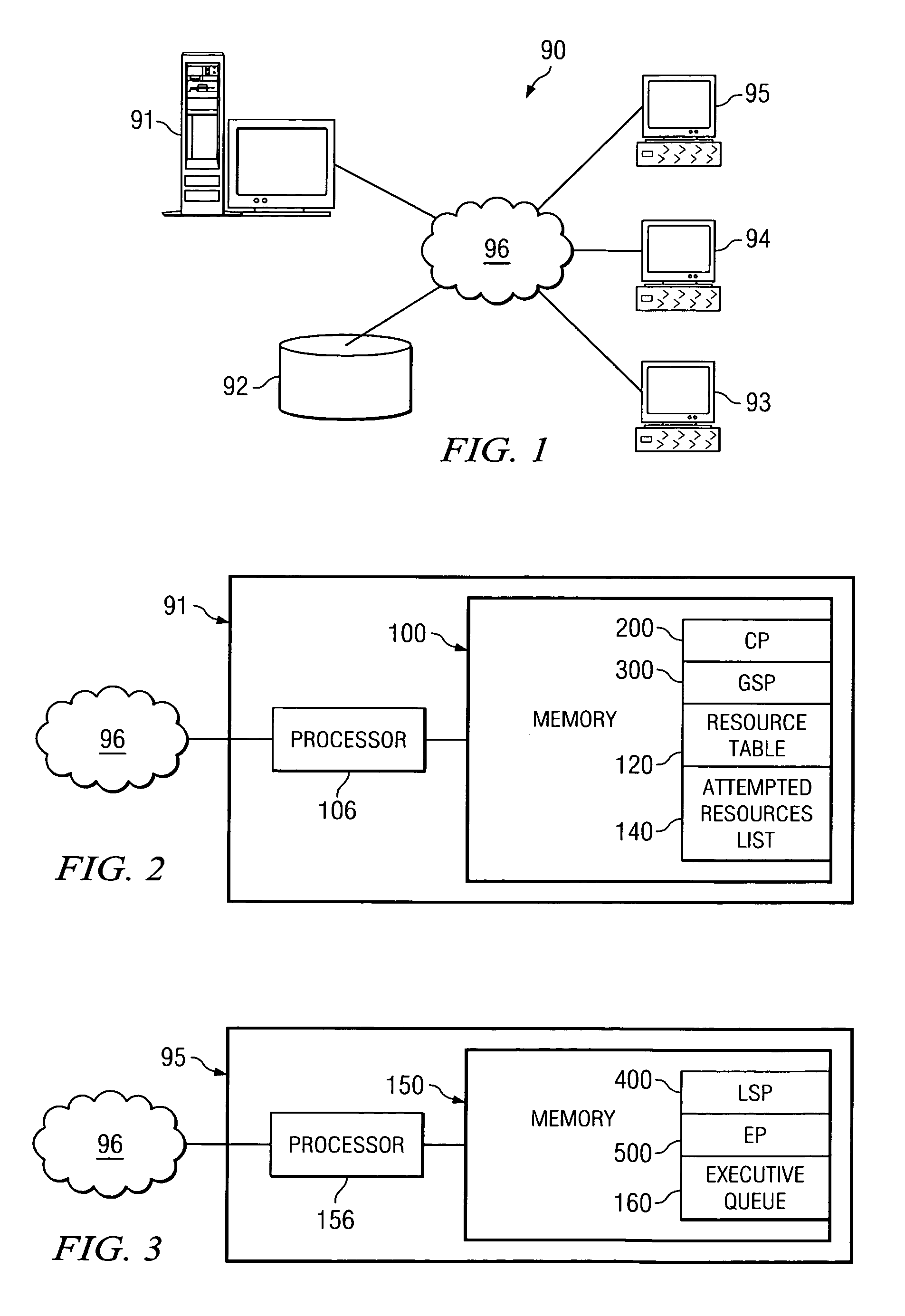

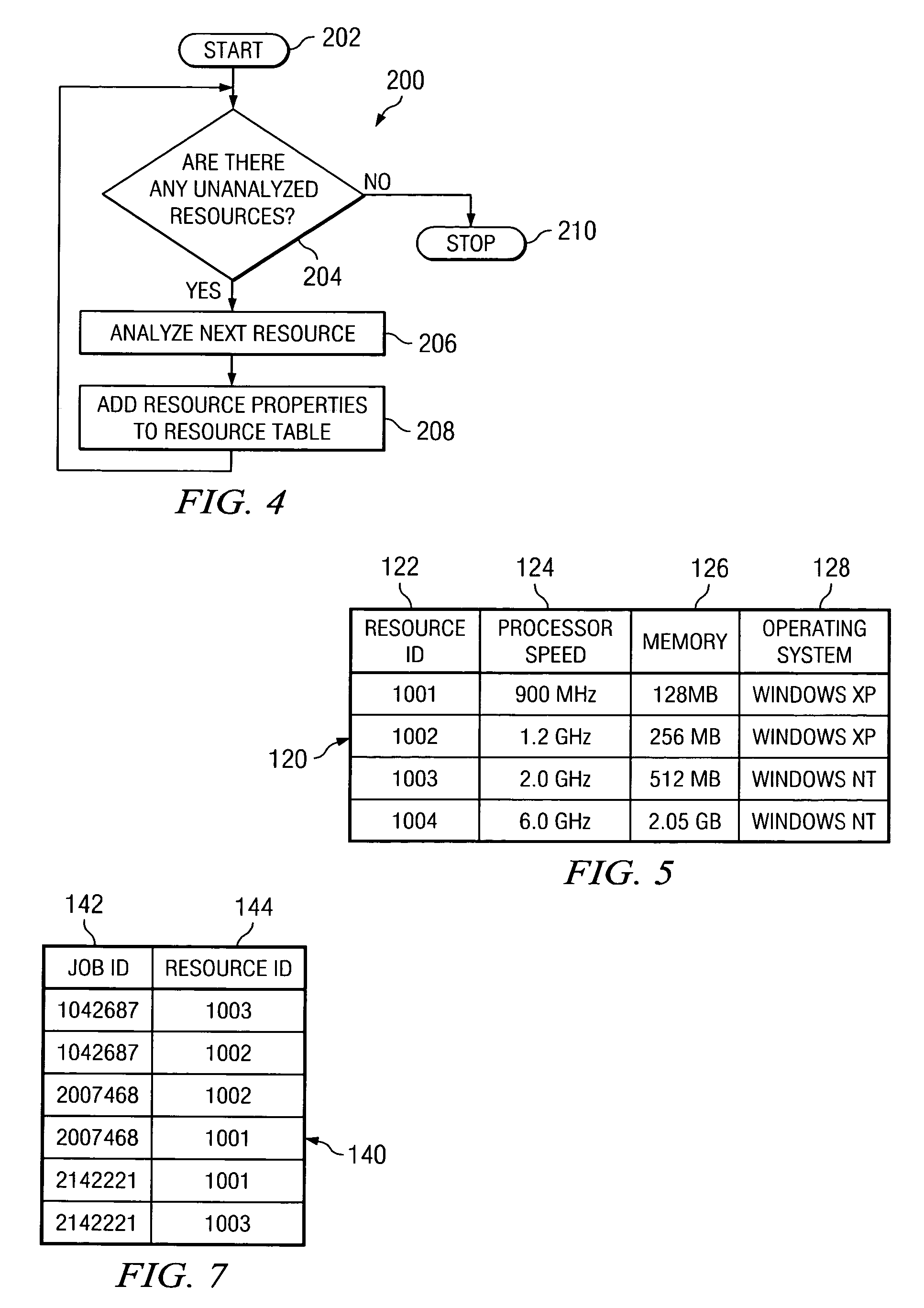

Grid non-deterministic job scheduling

Inactive · US20050262506A1 · Digital computer details · Multiprogramming arrangements · Global scheduling · Computer science

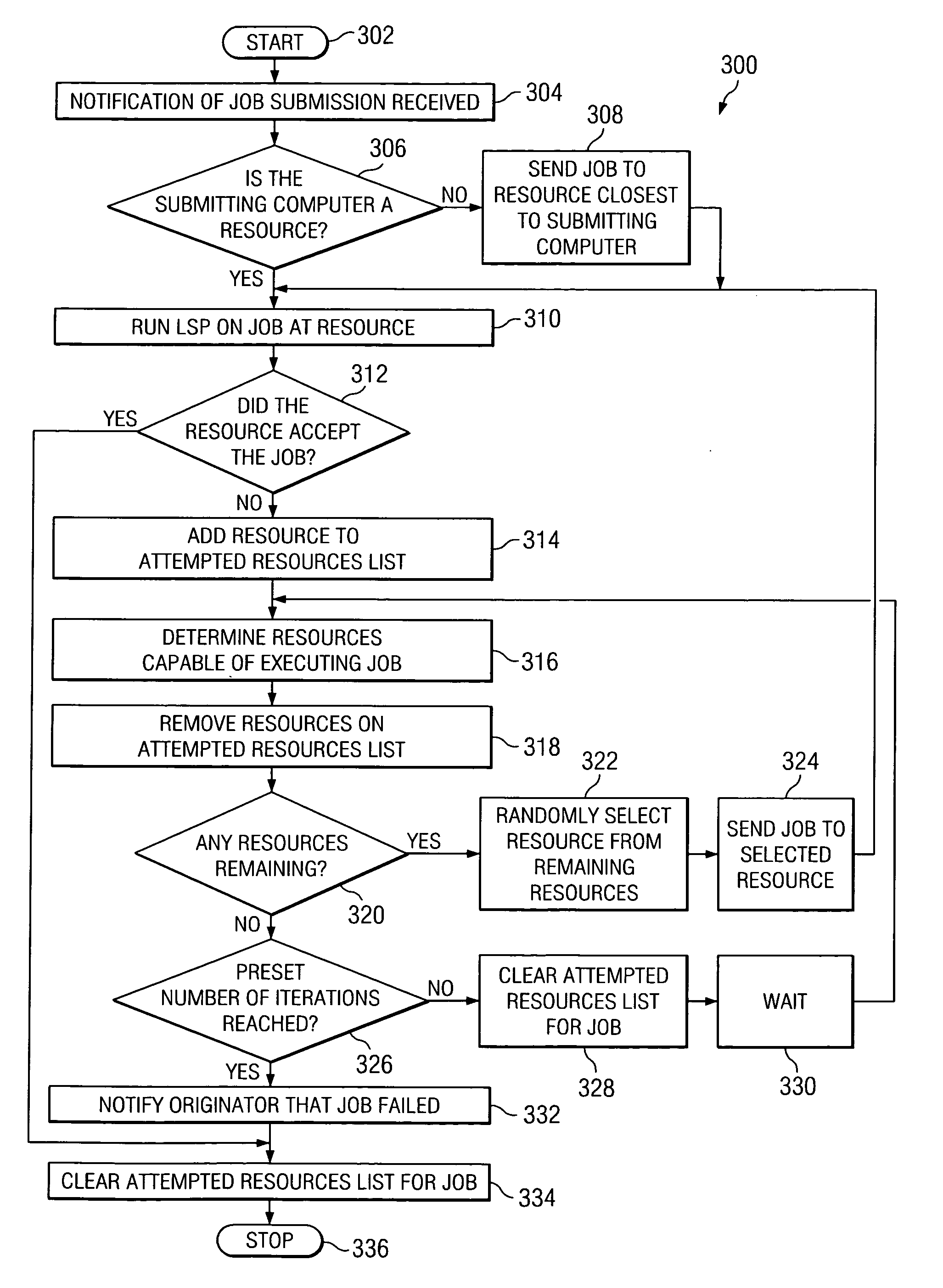

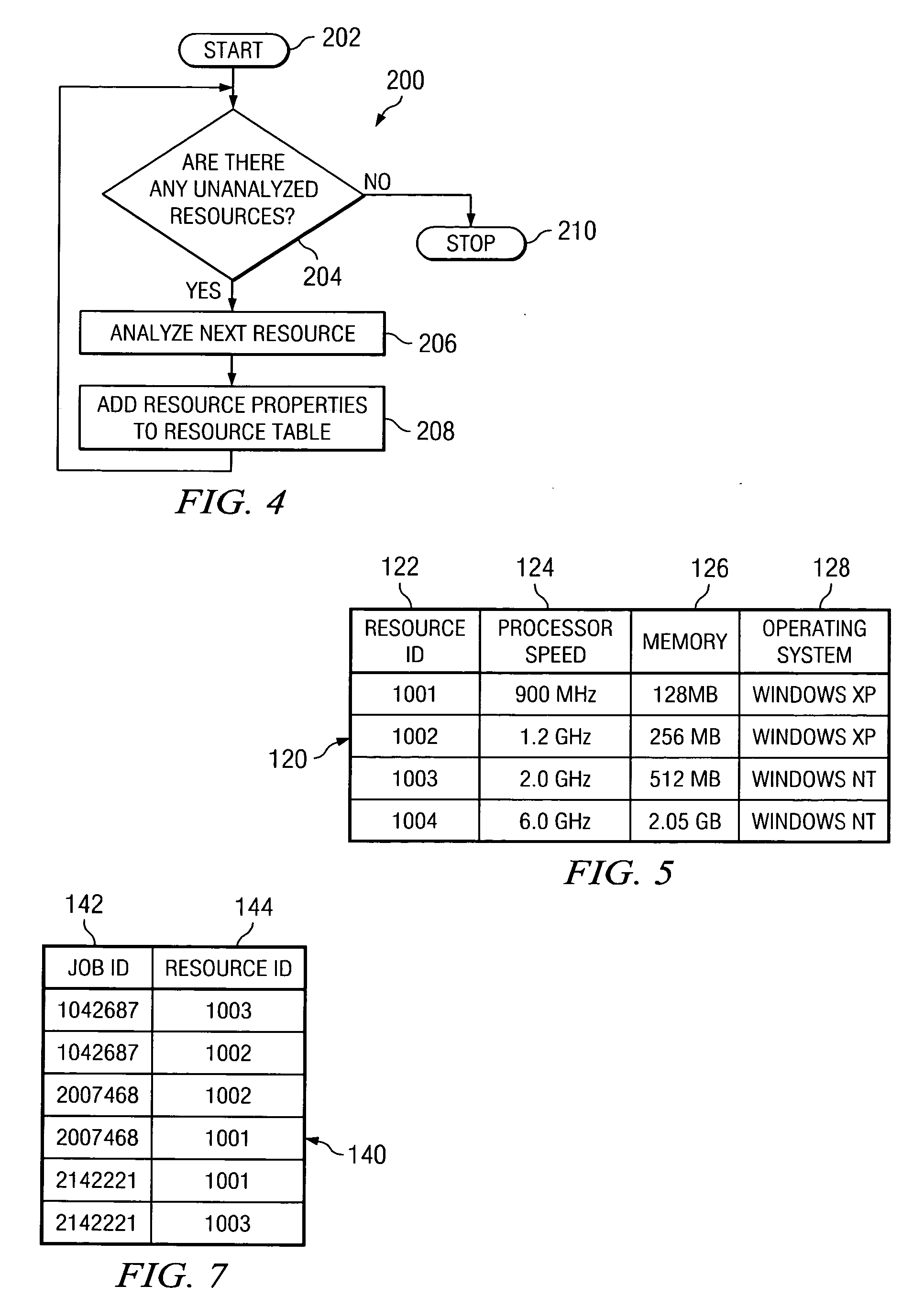

The present invention is a method for scheduling jobs in a grid computing environment without having to monitor the state of the resources on the grid. It comprises a Global Scheduling Program (GSP) and a Local Scheduling Program (LSP). The GSP receives jobs submitted to the grid and distributes each job to the closest resource. The resource then runs the LSP to determine whether it can execute the job under the conditions specified in the job. The LSP either accepts or rejects the job based on the current state of the resource properties and informs the GSP of the acceptance or rejection. If the job is rejected, the GSP uses a resource table to randomly select another resource to send the job to. The resource table contains the state-independent properties of every resource on the grid.

Owner:IBM CORP
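
The accept/reject handshake between the GSP and the LSP described in this abstract can be illustrated with a minimal Python sketch. Everything named here (`Resource`, `distance`, `free_cpus`, `gsp_dispatch`) is a hypothetical stand-in and is not taken from the patent. The sketch also assumes that a rejected resource is not tried again, which the abstract does not specify.

```python
import random
from dataclasses import dataclass


@dataclass
class Resource:
    """A grid resource (hypothetical model, not from the patent)."""
    name: str
    distance: int    # state-independent property, kept in the GSP's resource table
    free_cpus: int   # current state, known only to the resource's own LSP

    def lsp_accepts(self, job_cpus: int) -> bool:
        """Local Scheduling Program: accept the job only if it fits right now."""
        if job_cpus <= self.free_cpus:
            self.free_cpus -= job_cpus
            return True
        return False


def gsp_dispatch(job_cpus, resource_table, rng=random):
    """Global Scheduling Program: try the closest resource, then random others.

    Returns the name of the accepting resource, or None if all reject.
    Assumption: each resource is tried at most once per job.
    """
    untried = sorted(resource_table, key=lambda r: r.distance)
    if not untried:
        return None
    candidate = untried.pop(0)  # first attempt goes to the closest resource
    while True:
        if candidate.lsp_accepts(job_cpus):
            return candidate.name
        if not untried:
            return None  # every resource rejected the job
        # On rejection, pick another resource at random from the table.
        candidate = untried.pop(rng.randrange(len(untried)))
```

Note that `gsp_dispatch` never reads `free_cpus`: the global side only sees the state-independent `distance`, while the current state is consulted solely inside `lsp_accepts`.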

Method and Systems for Self-Service Programming of Content and Advertising in Digital Out-Of-Home Networks

Inactive · US20090049097A1 · Digital data processing details · Analogue secrecy/subscription systems · Content distribution · Global scheduling

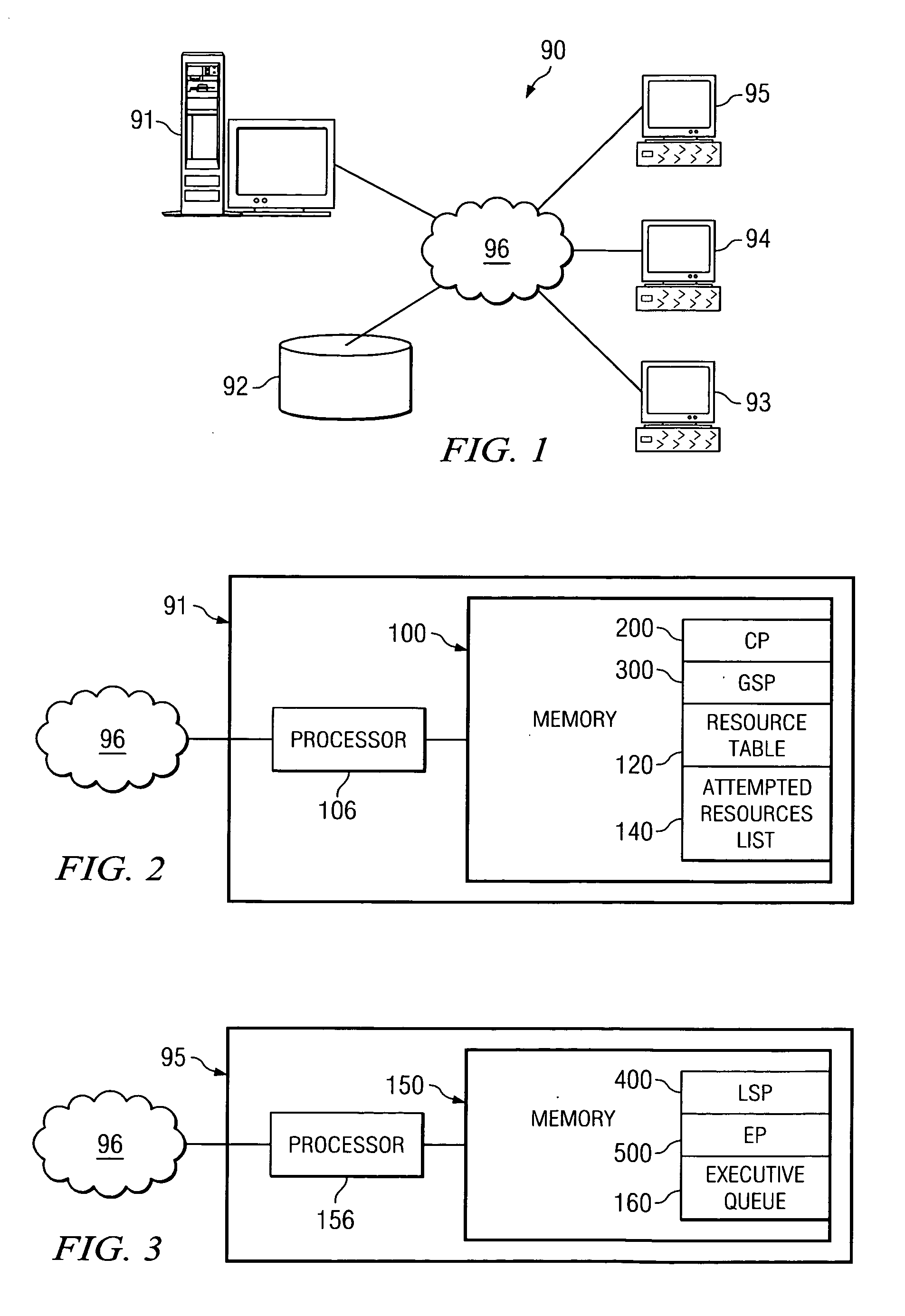

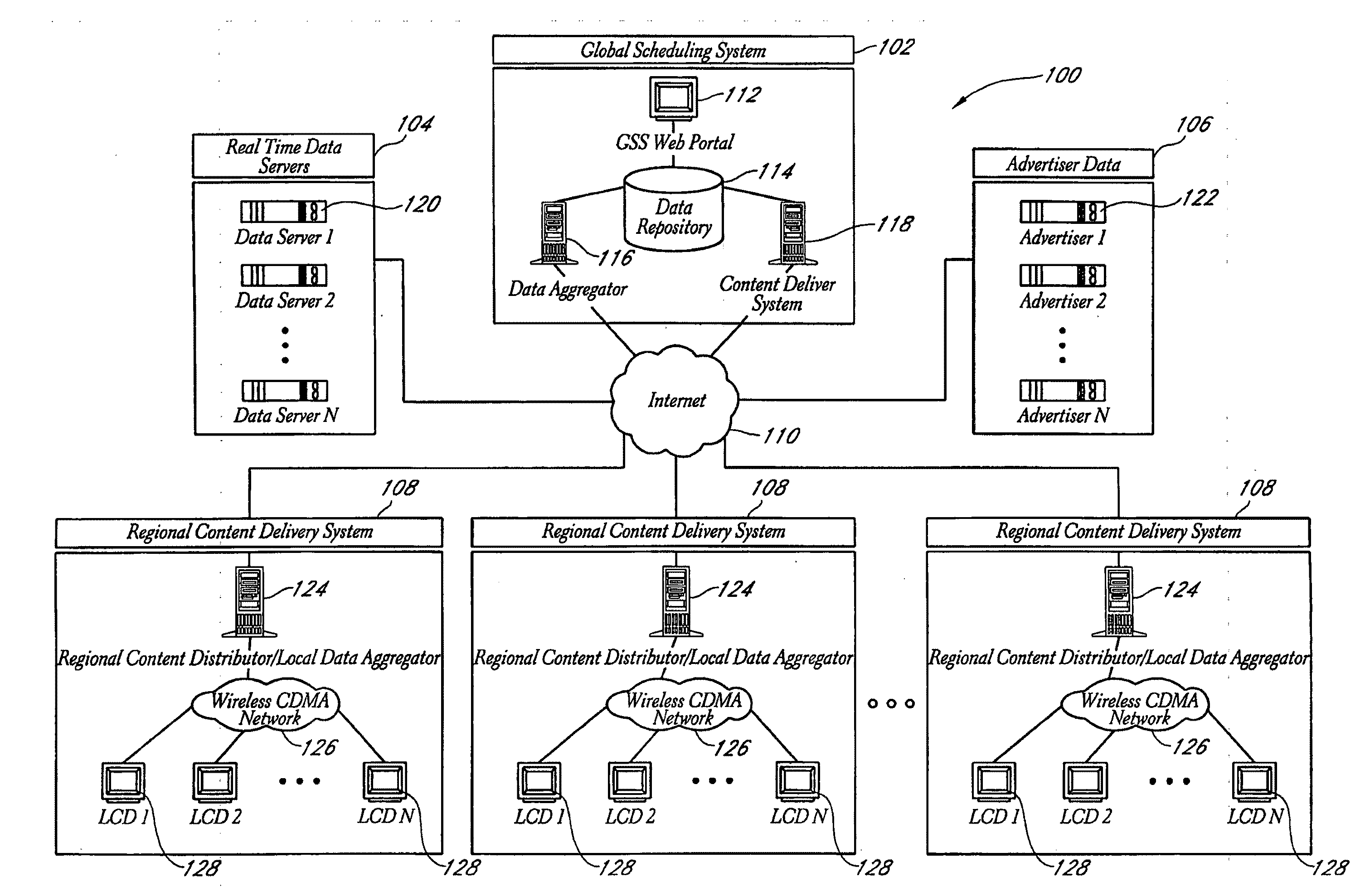

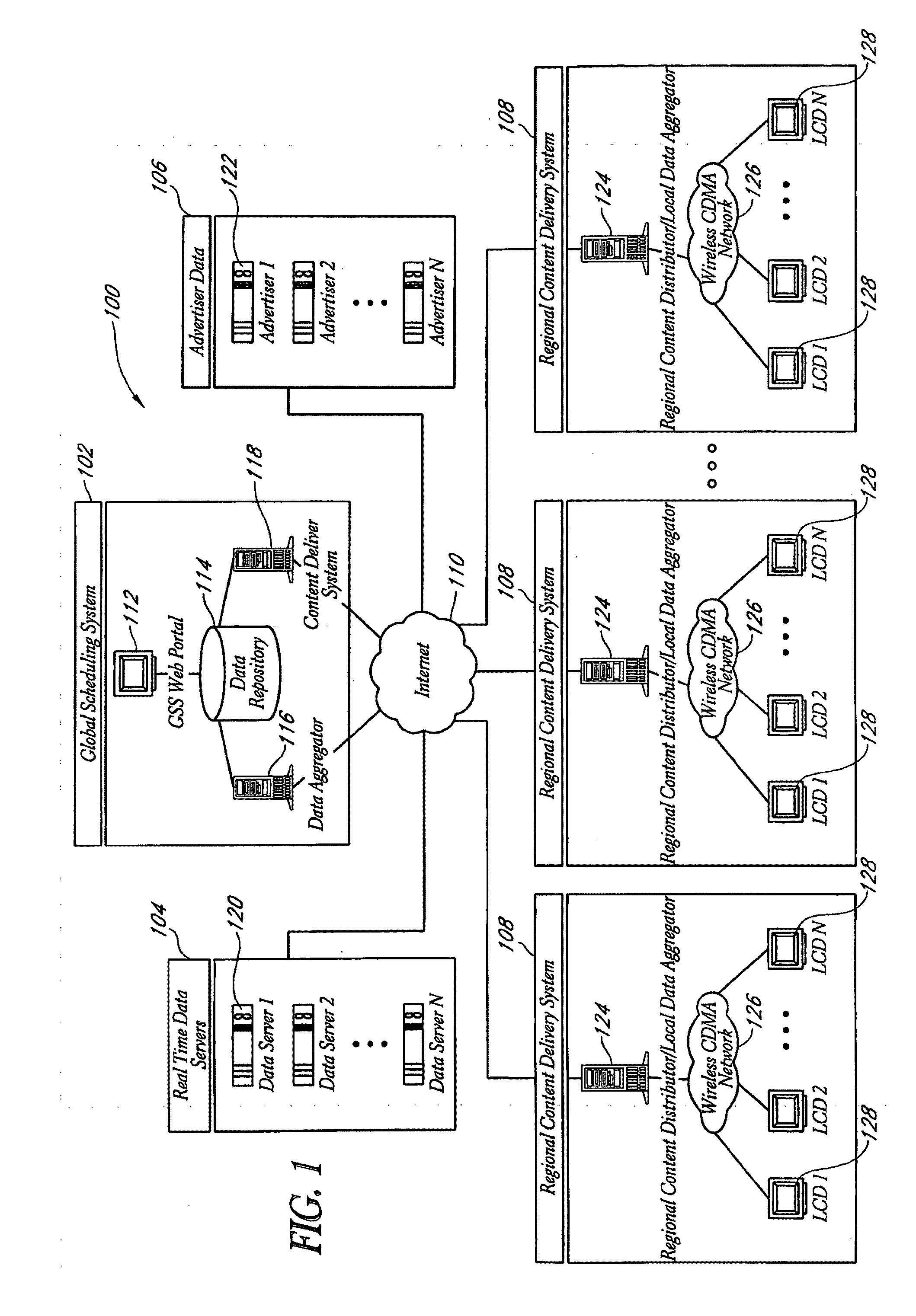

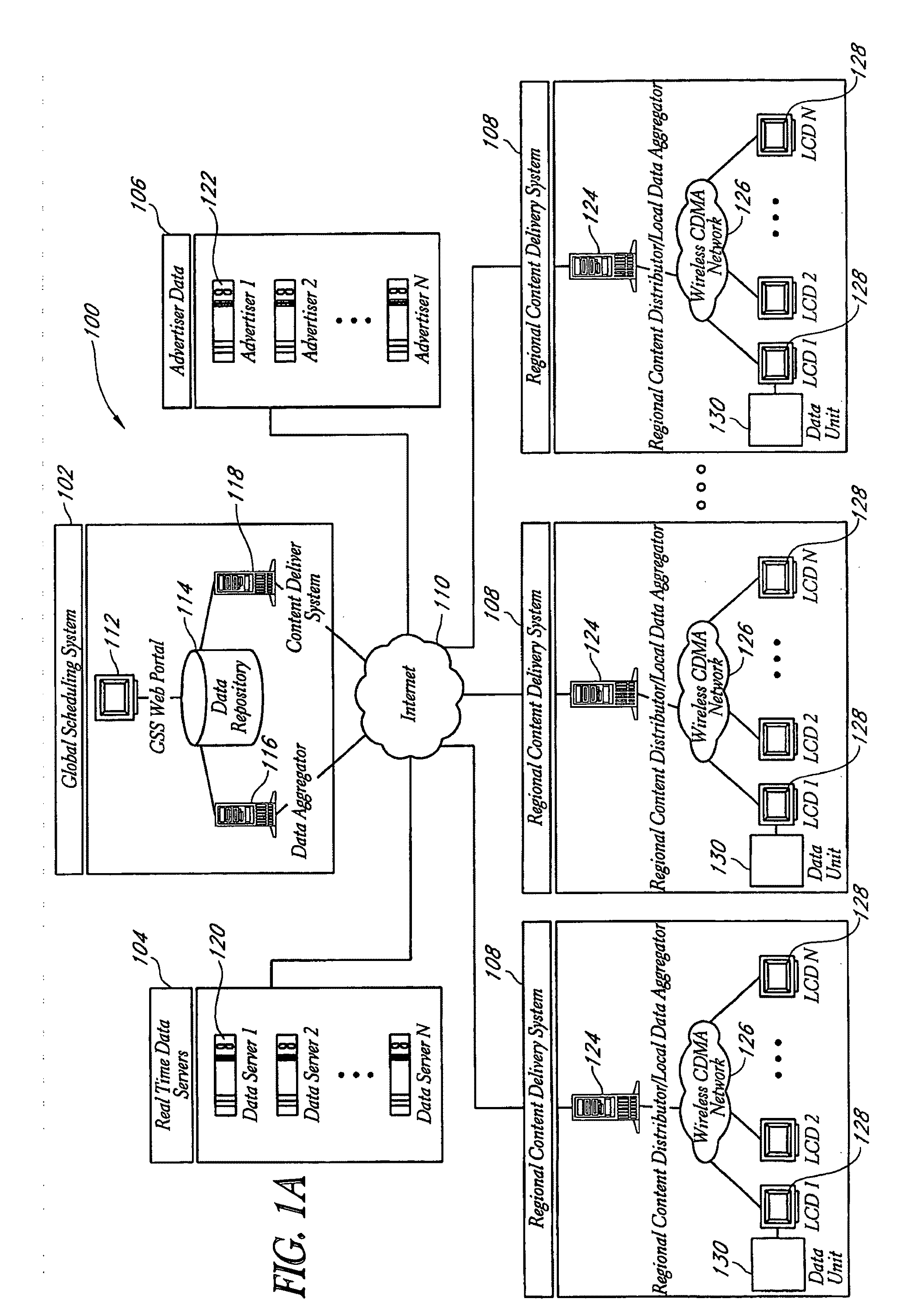

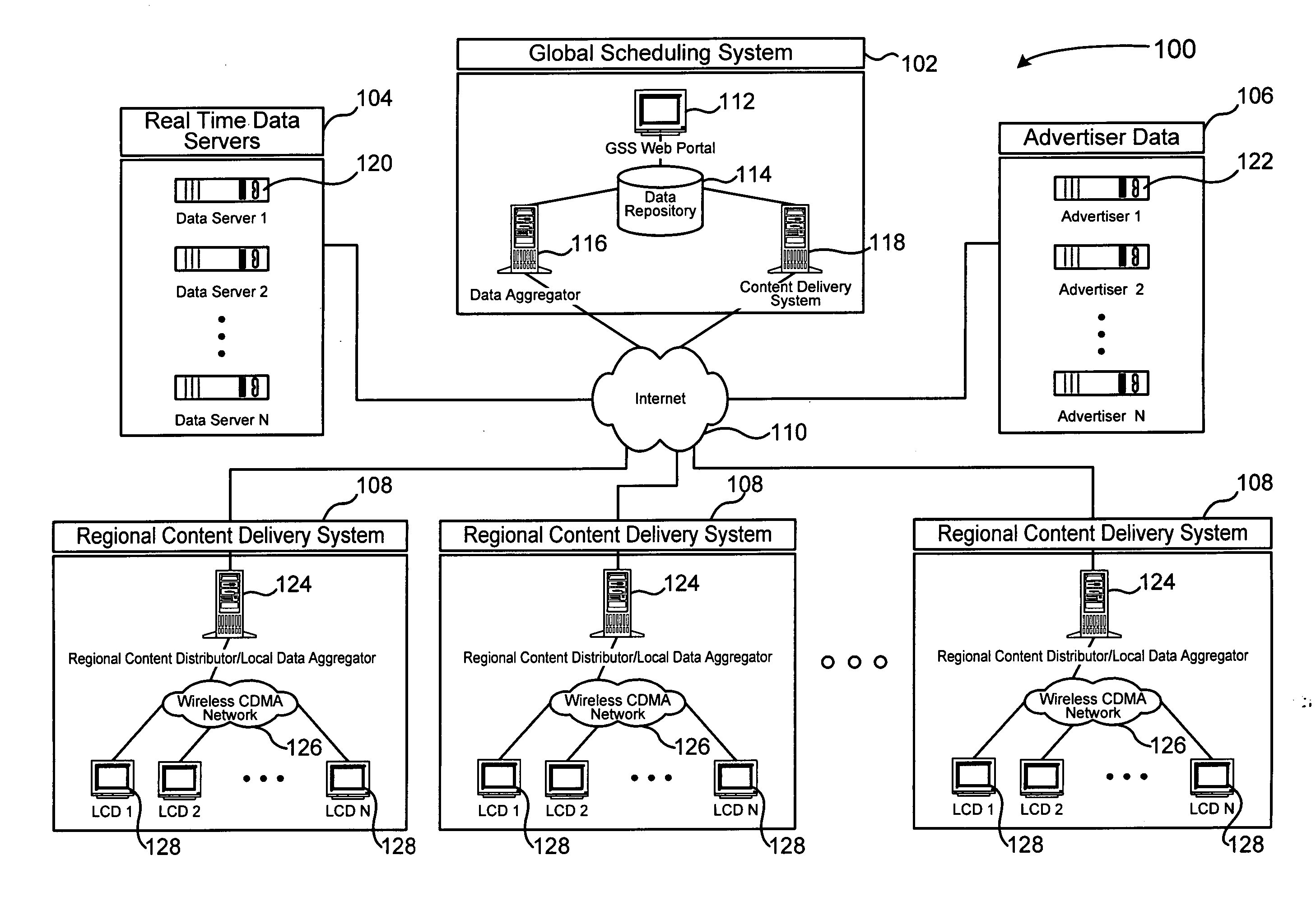

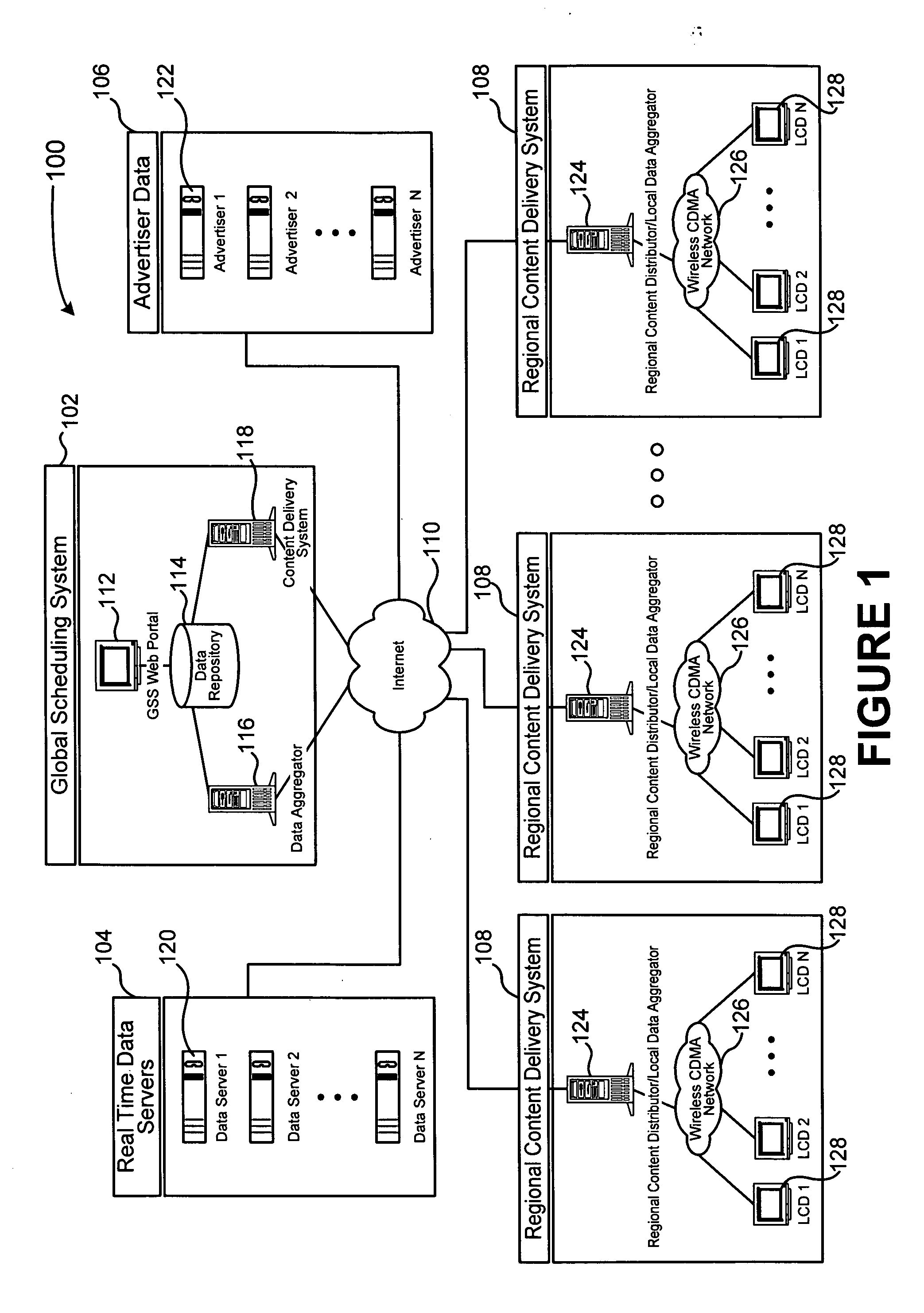

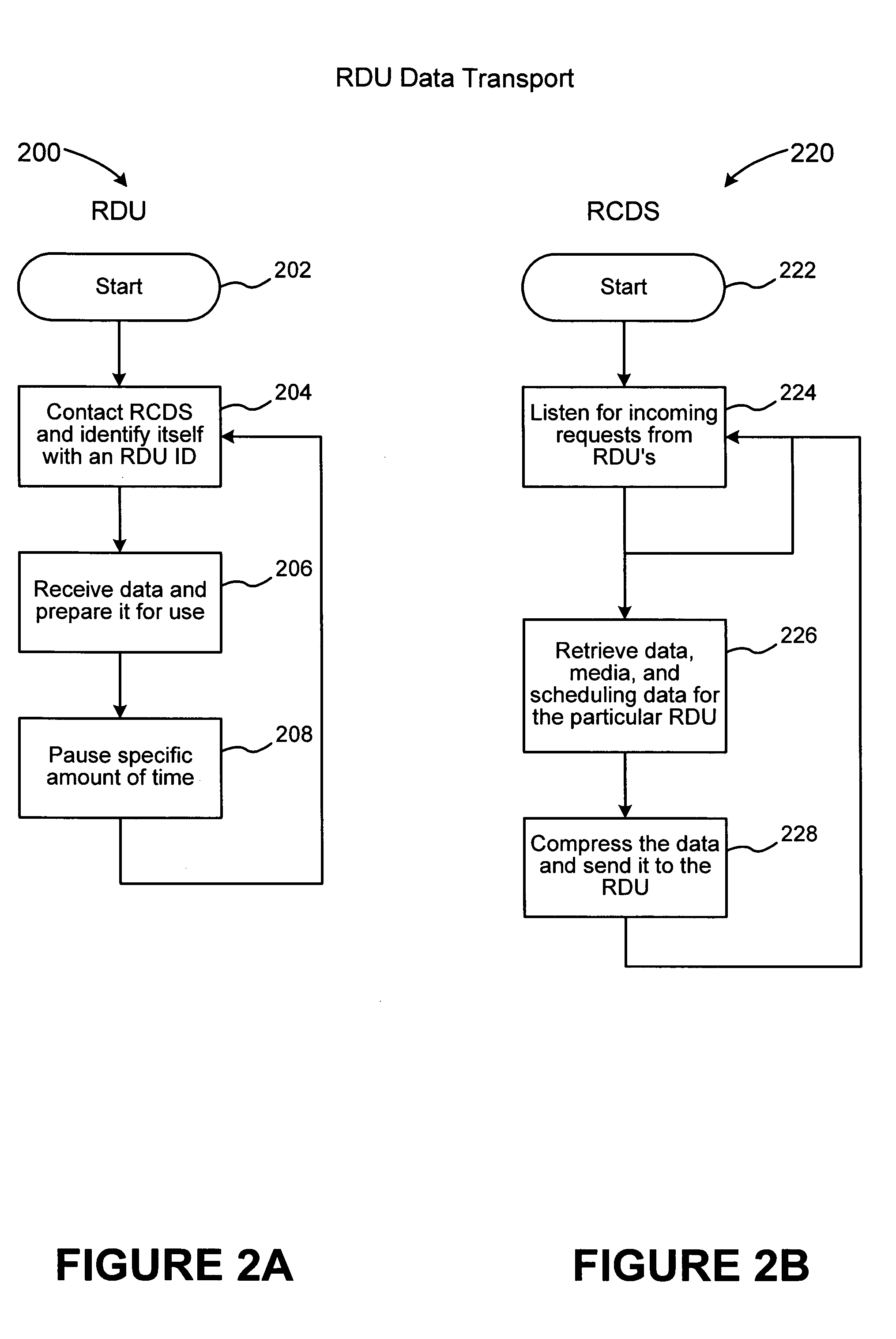

A media and content distribution system comprising a global scheduling system (GSS) configured to collect data and content, an Internet network, and one or more Regional Content Delivery Systems (RCDSs) is disclosed. The one or more RCDSs are configured to receive data and content and communicate with the GSS via the Internet network. The one or more RCDSs further comprise a regional content distributor / local data aggregator configured to receive data and content from the GSS, a cellular or wireless network, one or more remote display units (RDU) configured to display the data and content, and a data unit configured to acquire information related to activity at the one or more RDUs. The data unit is in communication with at least one of the one or more RDUs. The one or more RDUs are in communication with the regional content distributor / local data aggregator via the cellular or wireless network.

Owner:RIPPLE NETWORKS

Cloud storage system and method

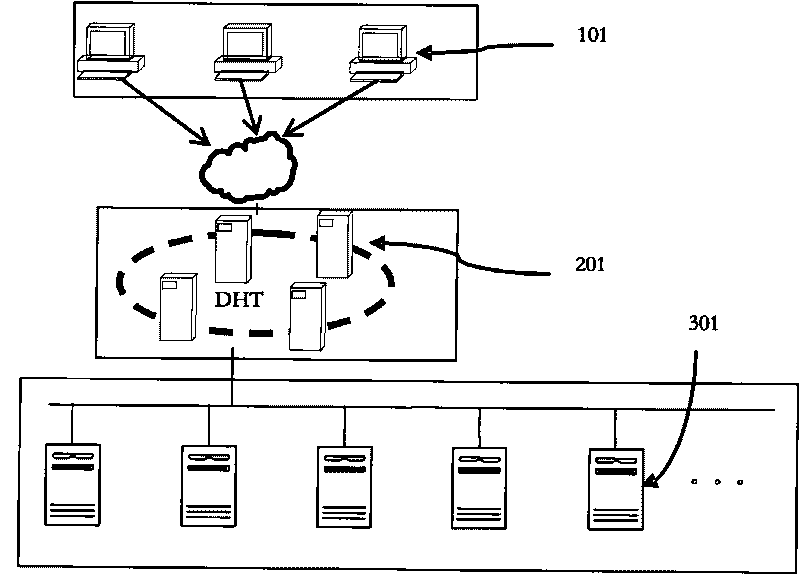

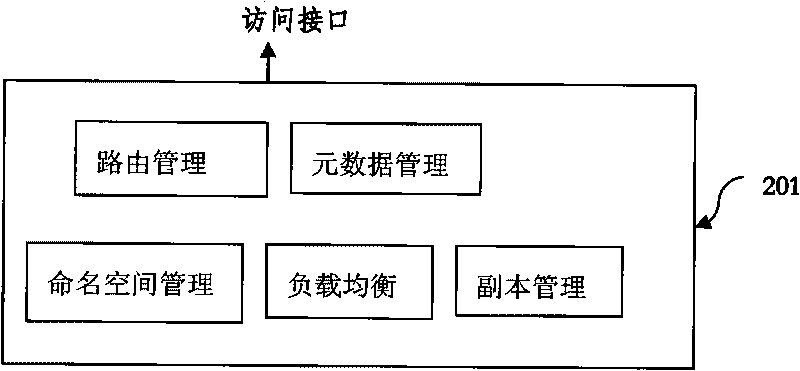

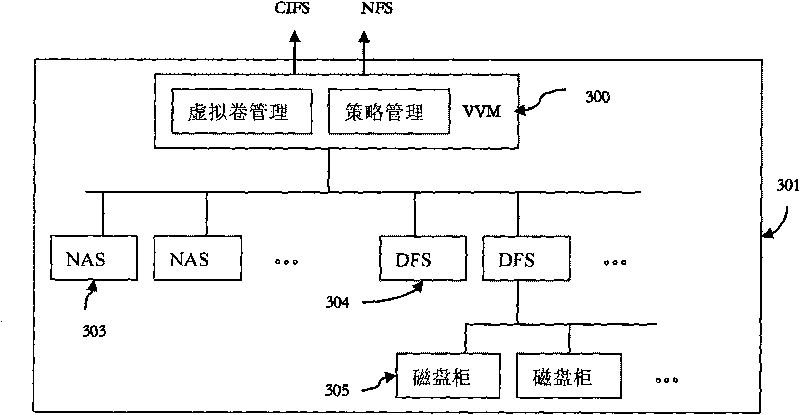

Active · CN101753617A · Improve scalability · Low cost · Input/output to record carriers · Transmission · Global scheduling · Cloud storage system

The invention discloses a cloud storage system and a method. The system comprises a global scheduling layer and a cloud storage layer. The global scheduling layer locates resources in the cloud storage layer according to a received access request and the resources it requests; the global scheduling layer is composed of one or more servers; and the cloud storage layer is composed of at least one cloud storage node. By combining the two layers, the cloud storage system can utilize the advantages of the traditional storage architecture of the global scheduling layer while also utilizing the advantages of the cloud storage layer, including strong extensibility and low cost.

Owner:ZTE CORP

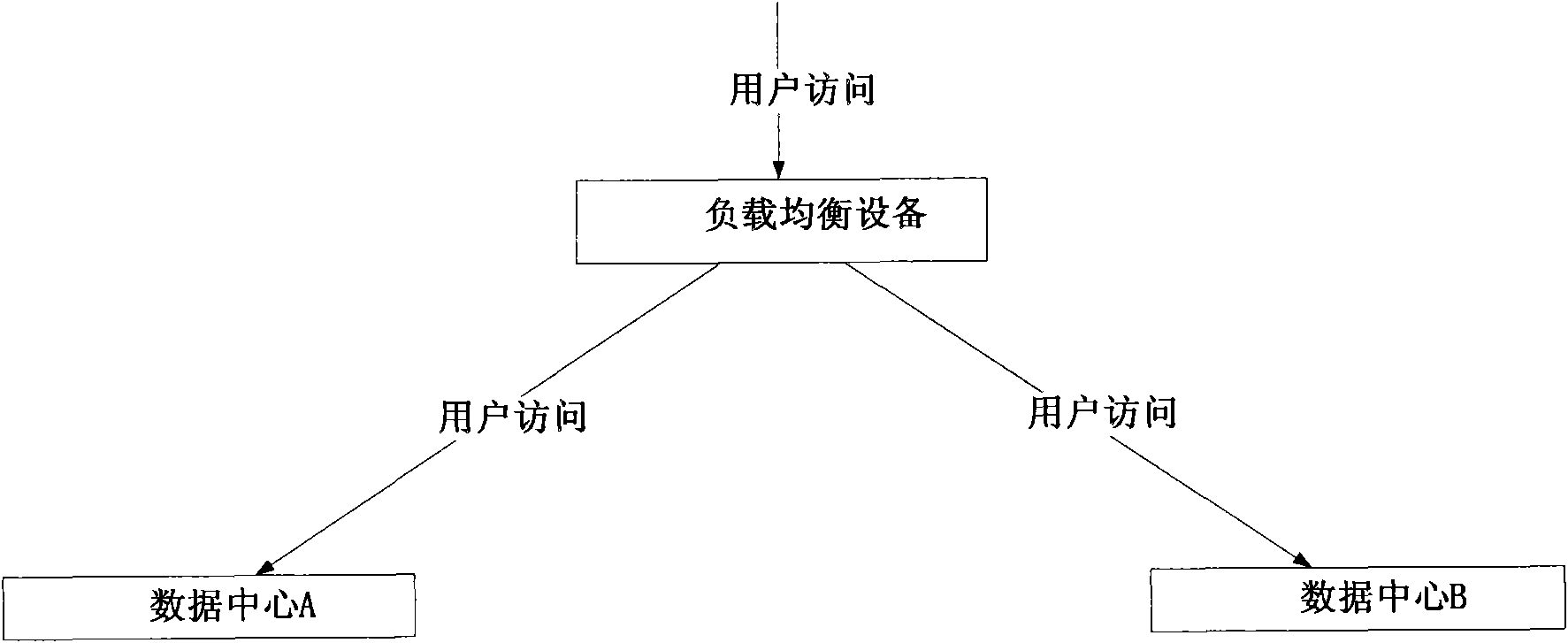

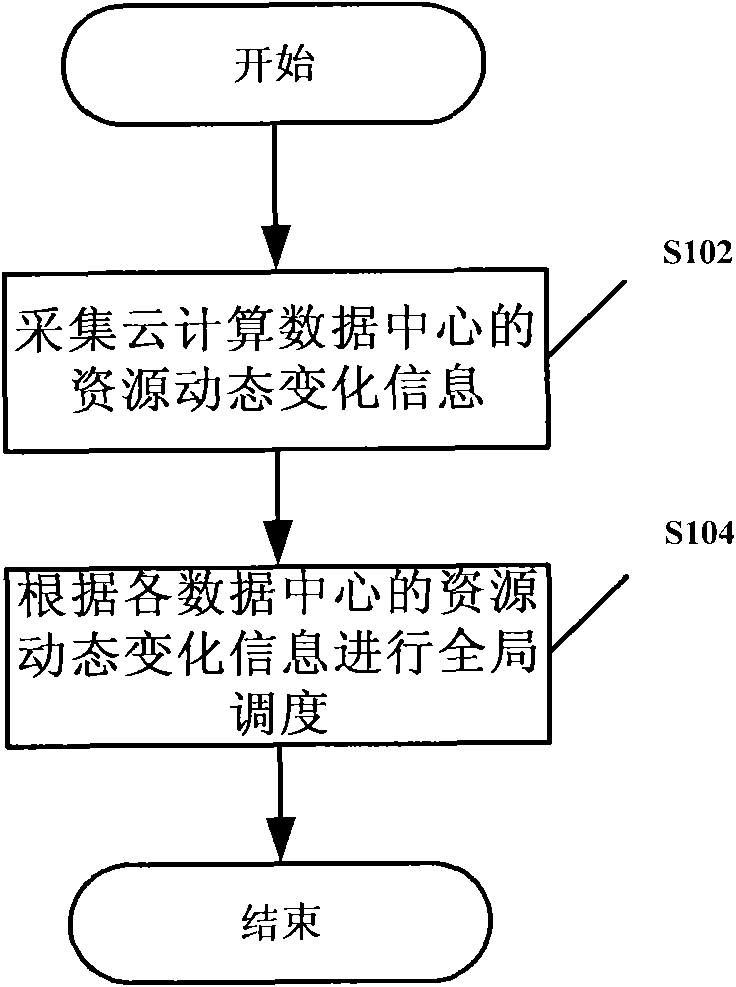

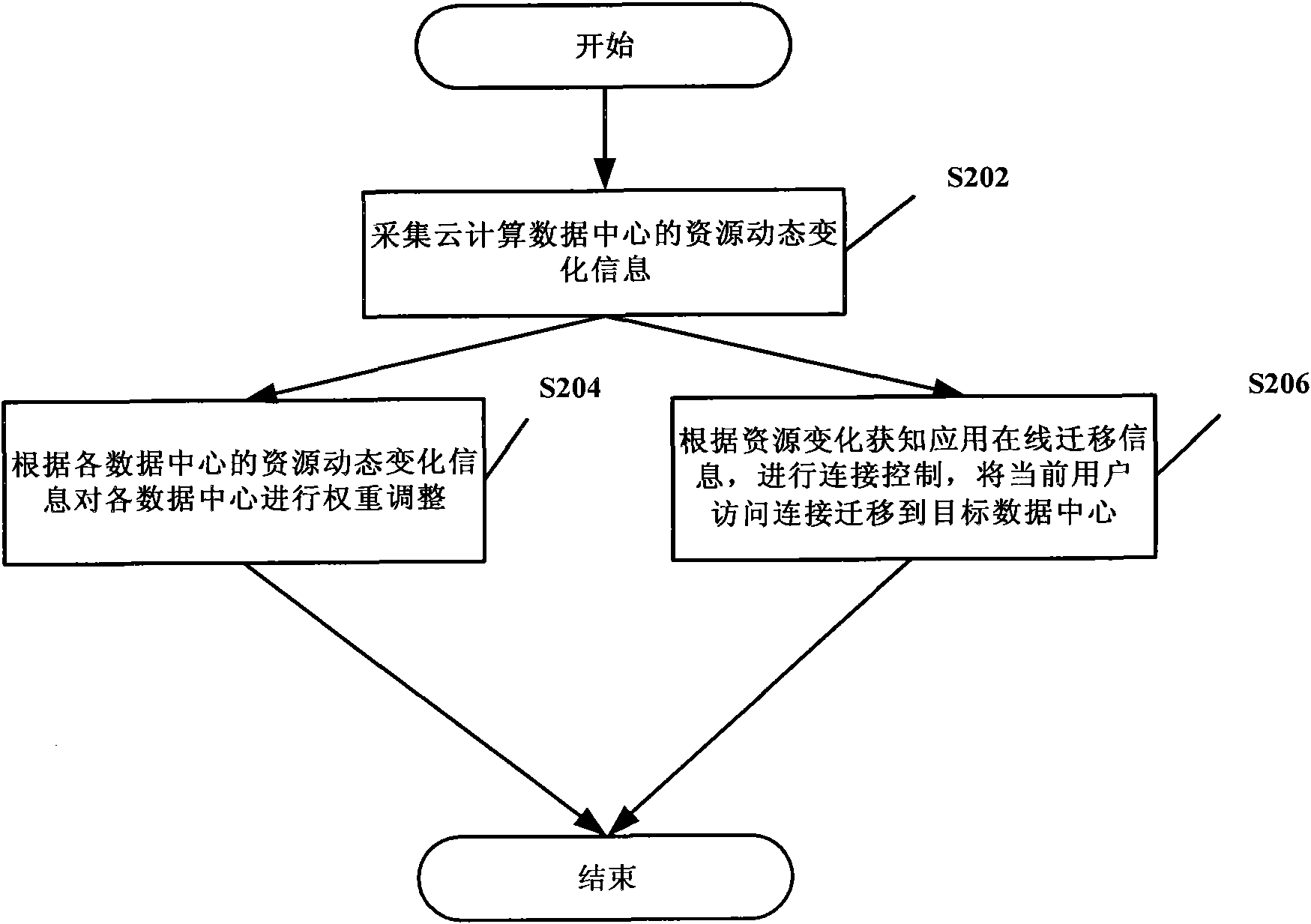

Dispatching method, unit and system based on cloud computing

Active · CN102469023A · Solve the problem of dynamic global load balancing · Improve experience · Data switching networks · Global scheduling · Resource information

The invention discloses a dispatching method, unit and system based on cloud computing. The method includes: collecting resource dynamic-change information from cloud data centers; and performing global scheduling across the data centers in accordance with that information. The invention can track the dynamic resource conditions of an application system in different data centers, which helps ensure a good experience when users access the application system over the network in a dynamic cloud computing environment and improves network access quality. It addresses a defect of the existing technology, in which data centers only passively accept access requests and cannot directly learn specific resource information, which may degrade the quality of application access.

Owner:CHINA MOBILE COMM GRP CO LTD

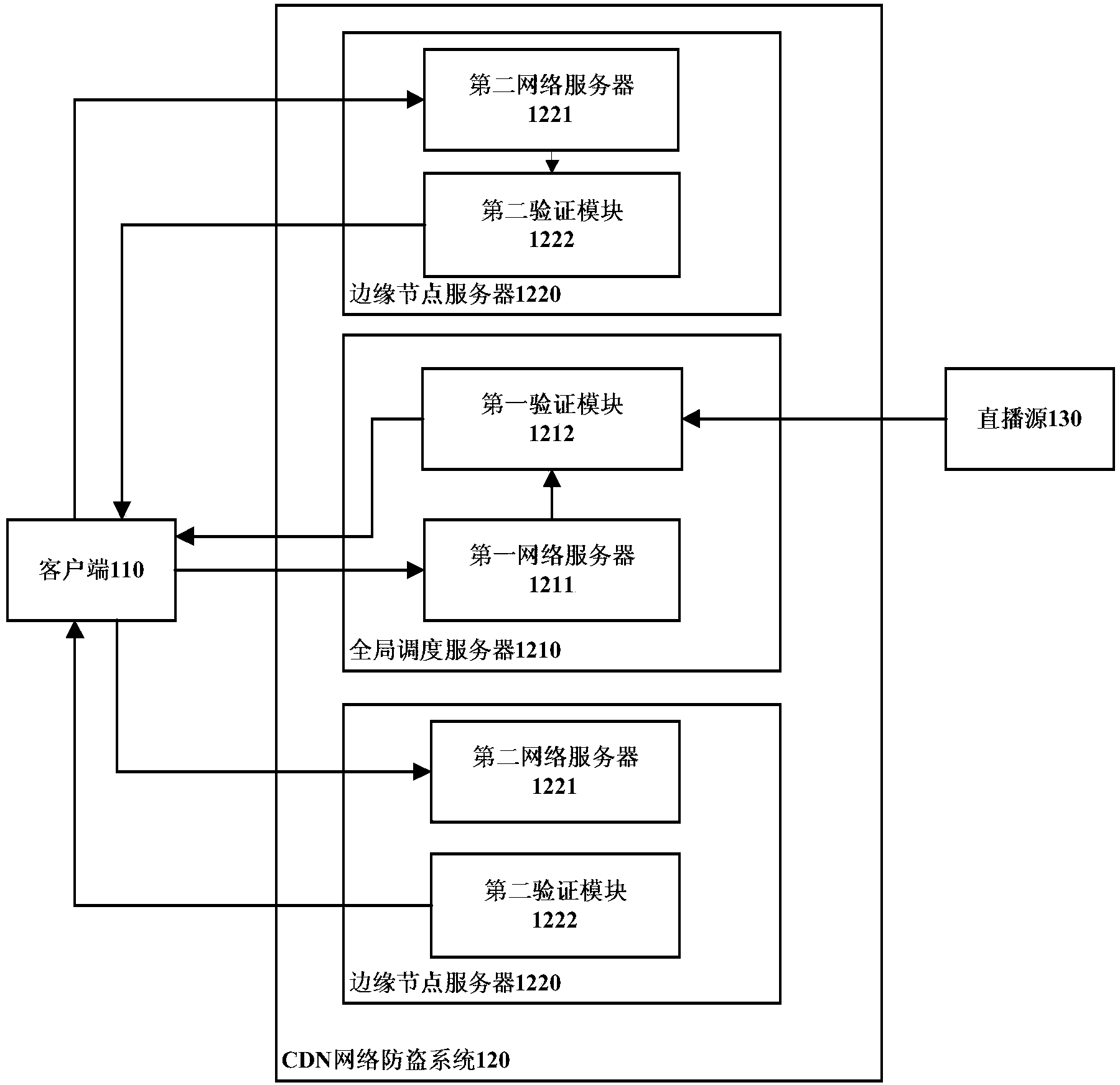

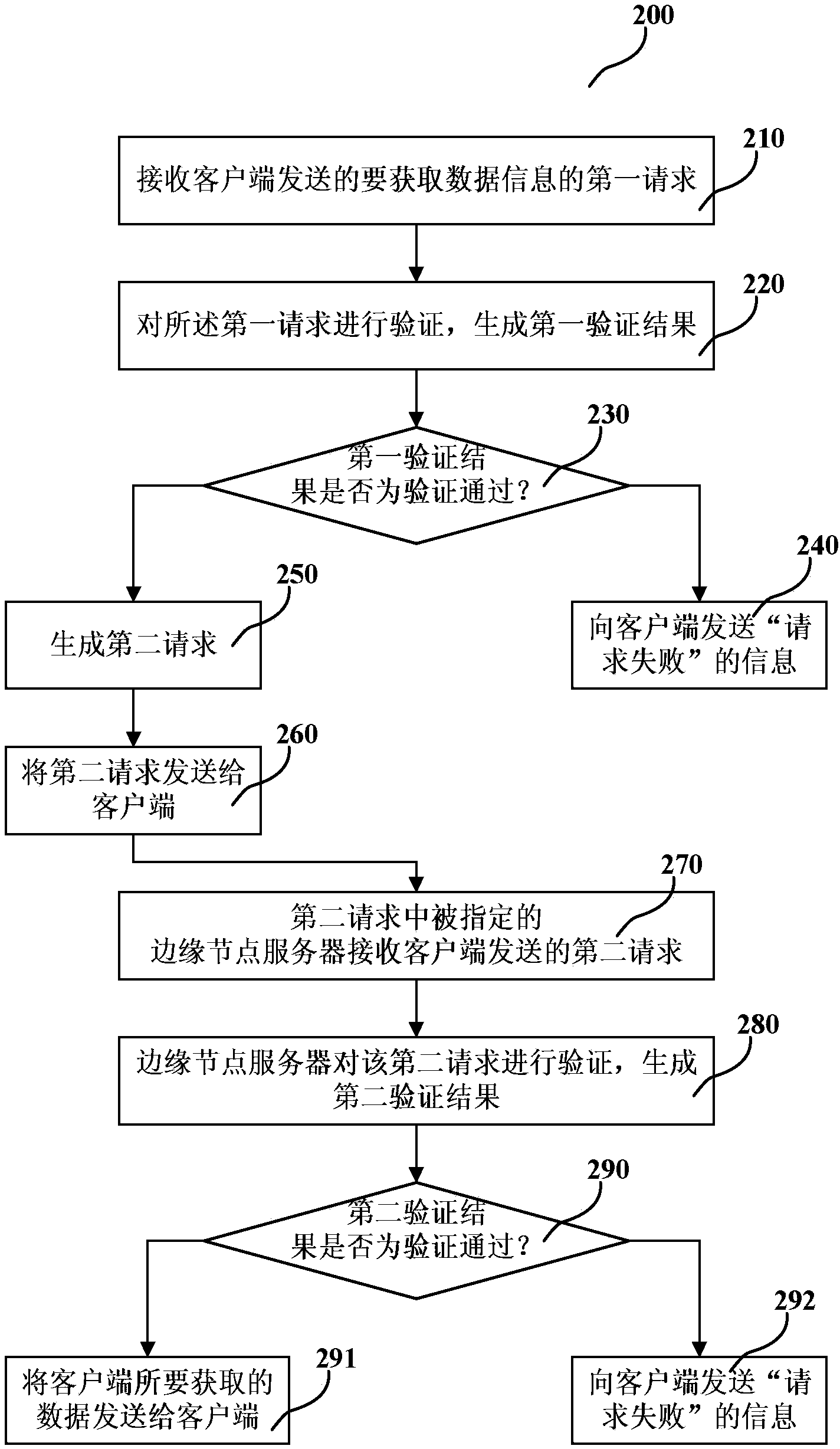

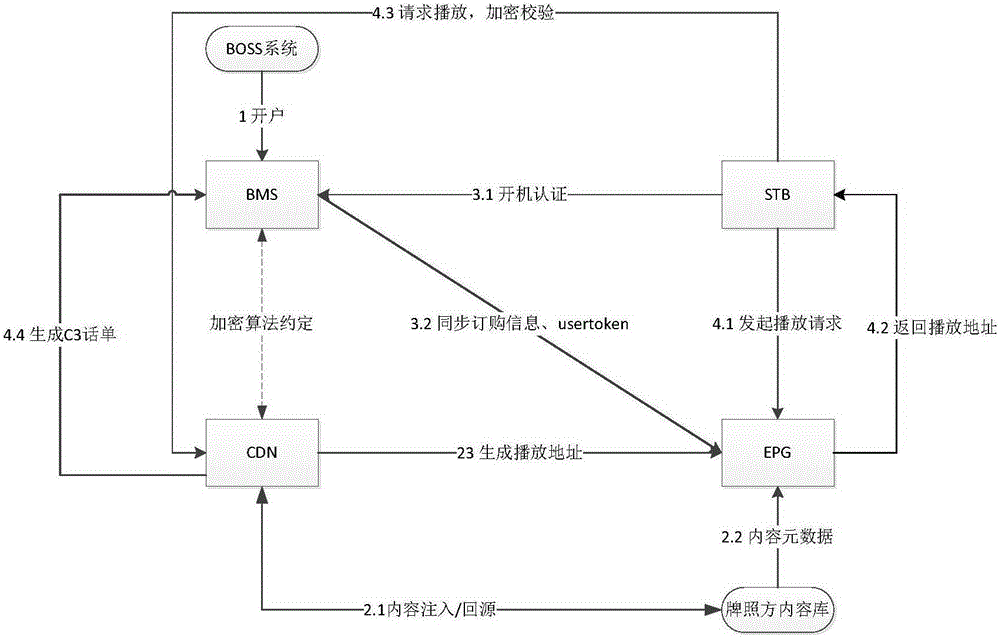

CDN (content distribution network) antitheft system and antitheft method

Inactive · CN103986735A · Solve the live stream anti-leech problem · Reduce implementation complexity · User identity/authority verification · Content distribution · Global scheduling

The invention discloses a CDN (content distribution network) antitheft system comprising a global scheduling server and an edge node server. The global scheduling server receives a first request from a client, verifies it, generates a second request from a first request that passes verification, and returns the second request to the client. The edge node server receives the second request from the client, verifies it, and returns the requested data to the client if verification passes; otherwise it sends a verification-failure message to the client. The invention also provides a CDN antitheft method. The system and method achieve live-stream anti-leech protection when multiple clients use different, frequently changing anti-leech strategies, while reducing the complexity and maintenance cost of the system.

Owner:BEIJING SOOONER TECH DEV

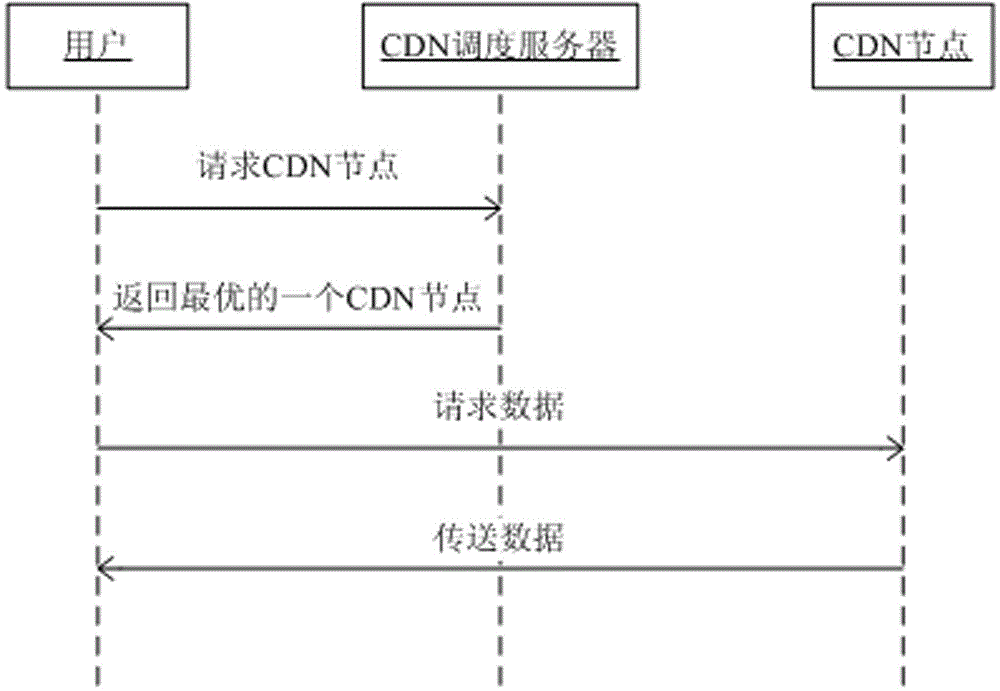

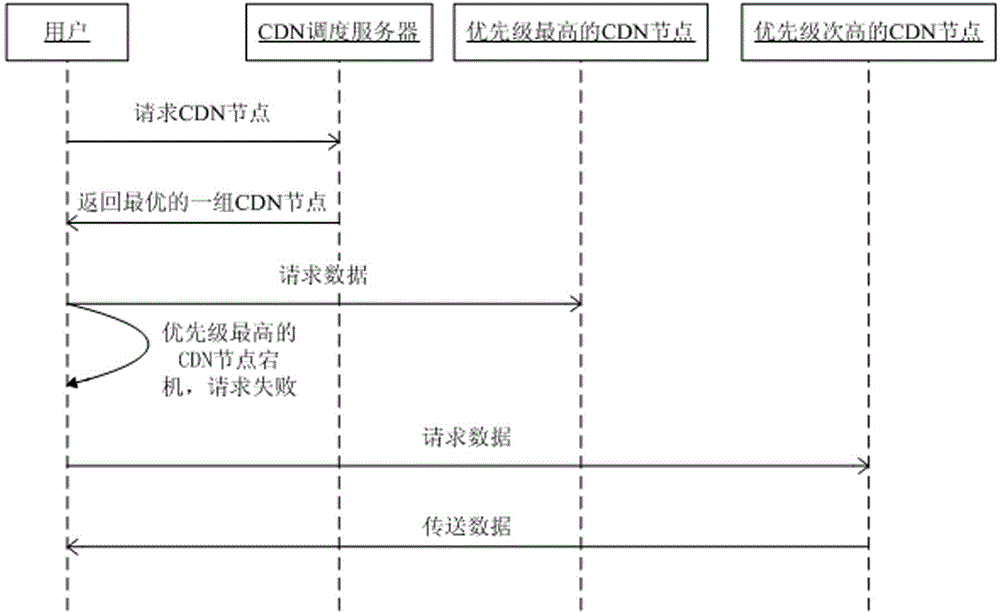

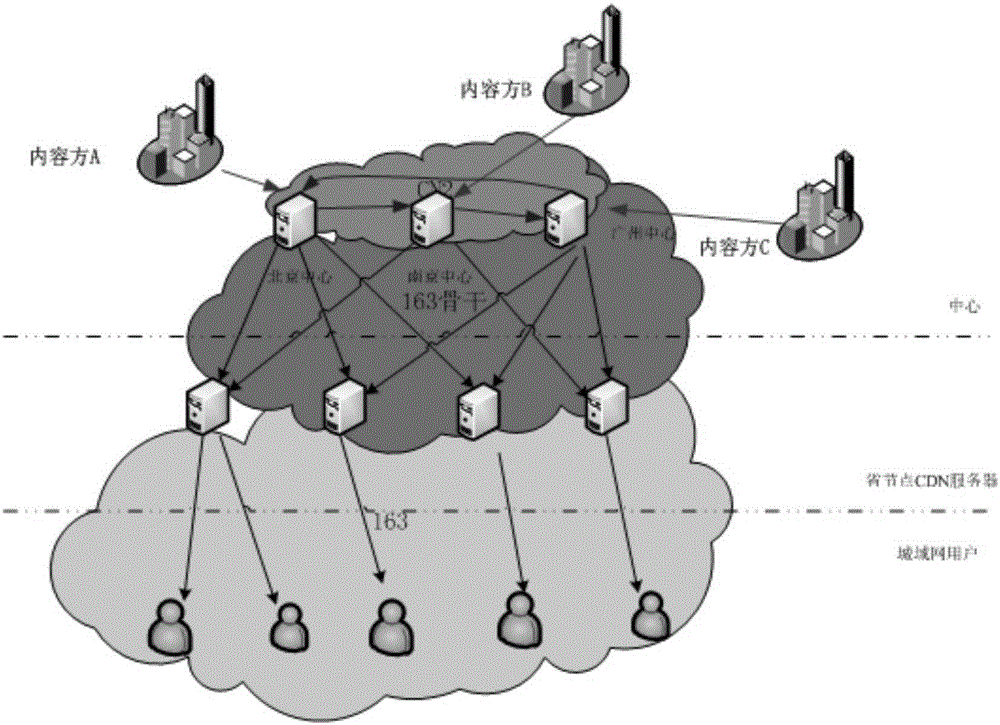

Scheduling method for responding multiple sources in content distribution network (CDN)

Inactive · CN102868935A · Fix usability issues · Improve usability · Selective content distribution · Fault tolerance · Content distribution

The invention discloses a scheduling method for responding with multiple sources in a content distribution network (CDN), aiming to solve the availability problem of the CDN system. The method comprises: when a user requests global scheduling, returning a plurality of CDN node addresses for a single request; and, when the user's access fails, retrying with one of the other backup addresses. In other words, a failure-retry mechanism is added to the existing flow to address access failures in the CDN system and to improve system availability and user experience. The method can enhance the fault tolerance and disaster tolerance of the CDN system and thereby improve overall system performance, so that the user's online video experience is greatly improved; all of this is transparent to the user, who does not need to perform any additional operation.

Owner:LETV CLOUD COMPUTING CO LTD
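
The failure-retry flow in this abstract (several node addresses returned for one scheduling request, with the client falling back to the next address on failure) can be sketched in Python as follows. The function names, the load-based ranking and the use of `ConnectionError` to signal a failed access are illustrative assumptions; the abstract does not say how addresses are chosen or how failure is detected.

```python
from typing import Callable, Optional, Sequence


def schedule(nodes_by_load: dict[str, float], count: int = 3) -> list[str]:
    """Global scheduler: return several node addresses for a single request.

    Assumption: nodes are ranked by current load, least loaded first.
    """
    return sorted(nodes_by_load, key=nodes_by_load.get)[:count]


def fetch_with_retry(
    addresses: Sequence[str],
    fetch: Callable[[str], bytes],
) -> Optional[bytes]:
    """Client side: try each returned address in order until one succeeds."""
    for address in addresses:
        try:
            return fetch(address)
        except ConnectionError:
            continue  # access failed, fall back to the next backup address
    return None  # every address failed
```

Because the retry happens inside the client library, the caller only sees a single request that either succeeds or fails, which matches the abstract's point that the mechanism is transparent to the user.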

System and method for media content distribution

Inactive · US20060059511A1 · Television system details · Specific information broadcast systems · Global scheduling · Web technology

A media and content distribution system and method are provided, wherein specific media and content, including advertising material, real time traffic, news, sports, weather, and financial stock and / or other like information is delivered via cellular or other wireless network technology to locally distributed display panels, such as large plasma and LCD screens, positioned in high traffic or viewing areas. In addition, an exemplary media and content distribution system can include a highly complex scheduling and rotating capability that enables multiple media files to display at multiple locations based on each individual display requirement for advertising, training, entertainment, and other purposes. An exemplary media and content distribution system comprises a Global Scheduling System (GSS) and a Regional Content Delivery System (RCDS) configured to communicate through a communications network such as the Internet. Content and media can suitably be delivered from the Regional Content Delivery System to a Remote Display Unit (RDU) through cellular or other wireless network. The Remote Display Unit can suitably “poll” the Regional Content Delivery System to receive data and information, and then proceed to store the data and information locally to suitably reduce network utilization.

Owner:ACTIVEMAP

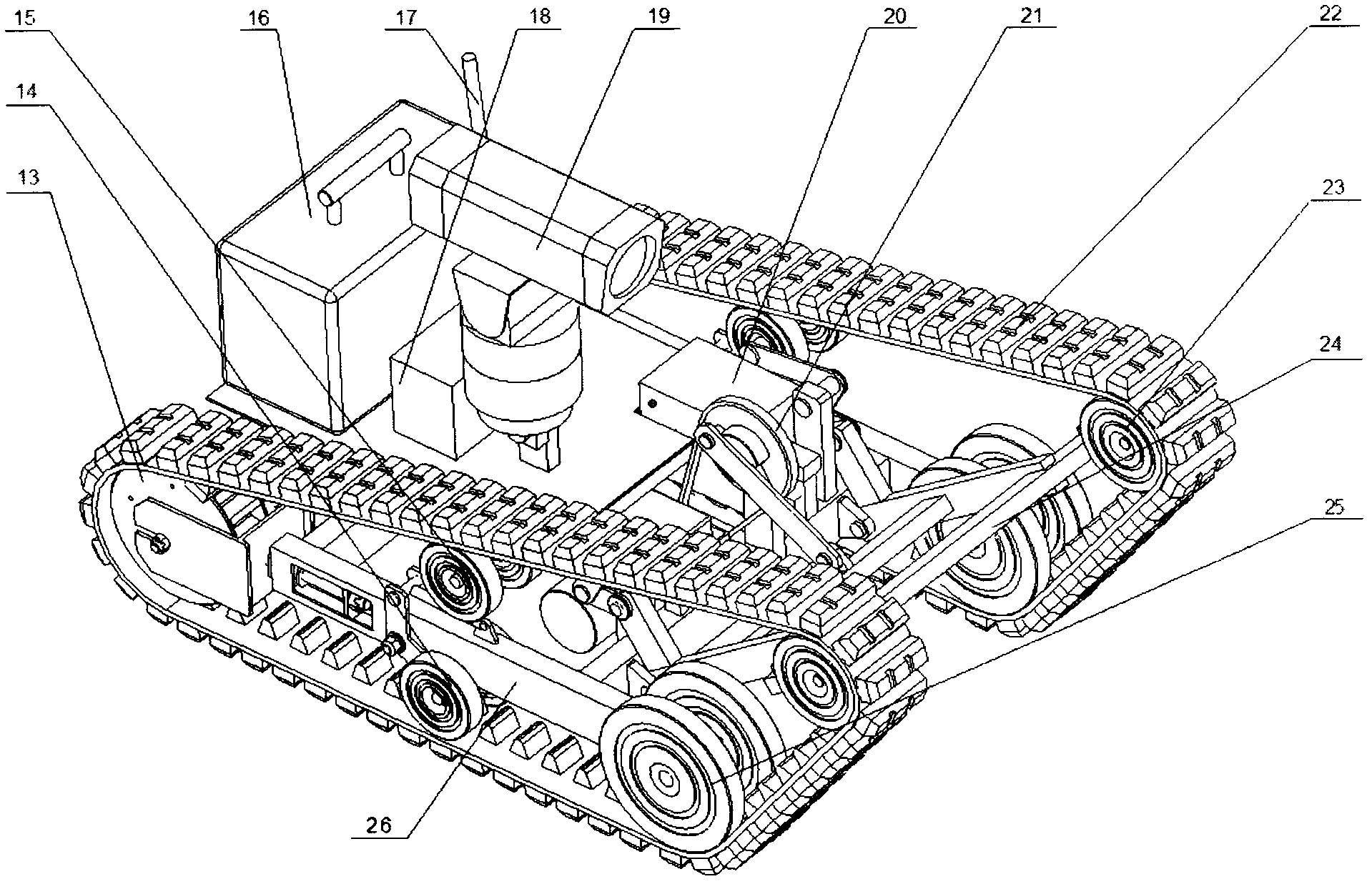

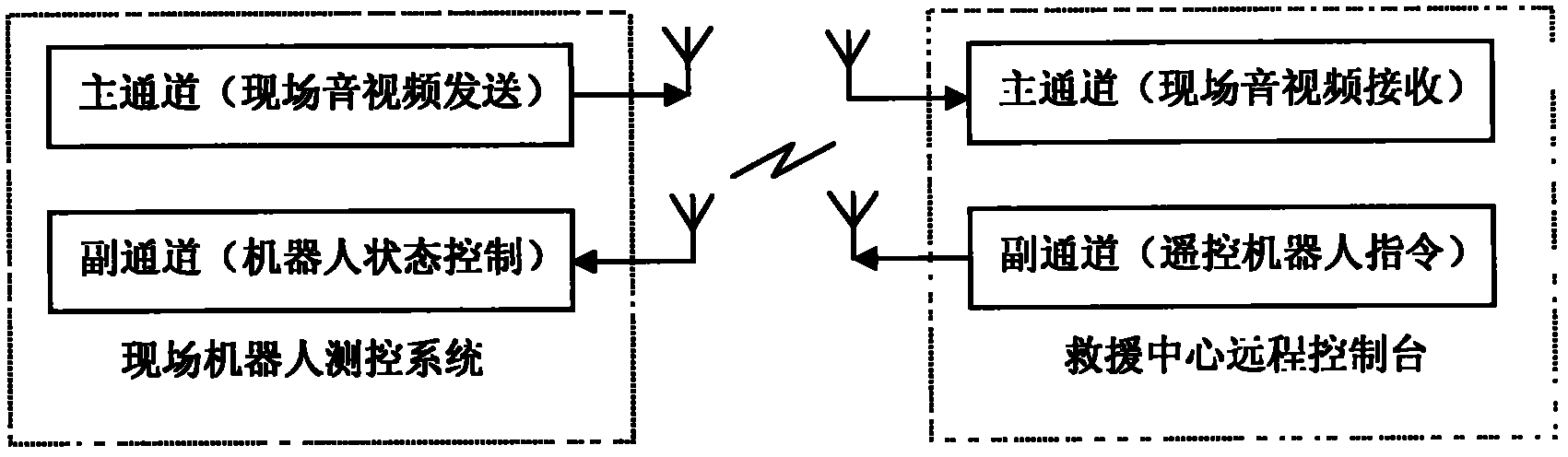

Information rapid access and emergency rescue airdrop robot system in disaster environment

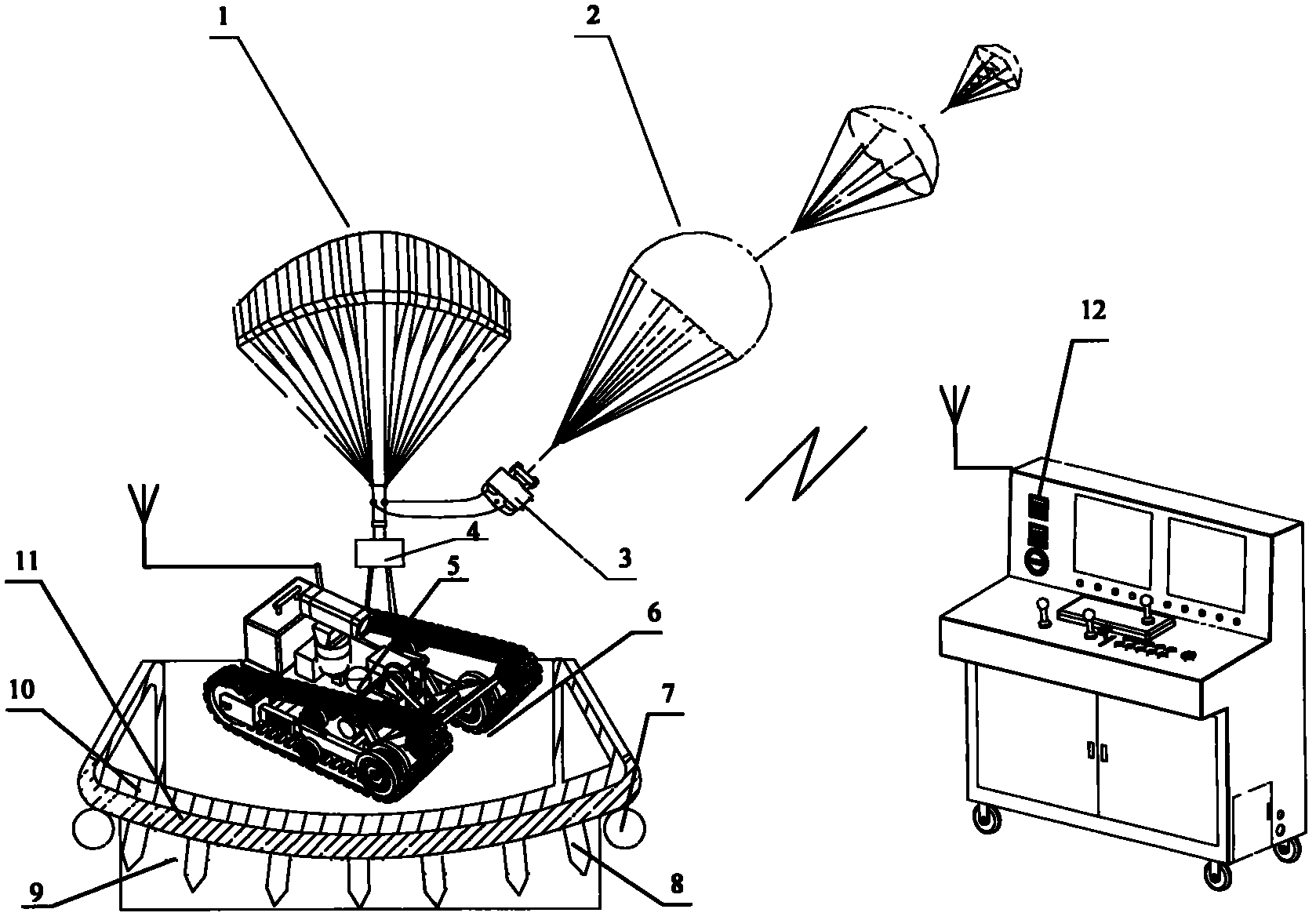

Inactive · CN103029836A · Low cost · Improve stability · Aircraft components · Mining devices · Global scheduling · Communications system

The invention relates to an information rapid access and emergency rescue airdrop robot system in a disaster environment. The system comprises V-shaped rail wheels, a parachuting system, an automatic tripping device, an airdrop robot body, an airdrop robot landing unlocking device, a buffer landing system, a remote wireless communication system and sensors. The V-shaped rail wheels are mounted on two sides of a landing chassis. The parachuting system and the buffer landing system are connected through the automatic tripping device. The airdrop robot body is fastened in the buffer landing system through the airdrop robot landing unlocking device. The remote wireless communication system comprises a wireless communication module arranged on the airdrop robot body, and a remote console arranged in a rescue command center. The information rapid access and emergency rescue airdrop robot system can be airdropped to a disaster scene immediately after a disaster happens, and achieves intelligent control and precise fixed point airdrop, safe landing of the airdrop robot and real-time multi-sensor detecting remote information interaction in the airdropping process, so the information rapid access and emergency rescue airdrop robot system has great significance in emergency rescue and global scheduling.

Owner:TIANJIN UNIV OF TECH & EDUCATION TEACHER DEV CENT OF CHINA VOCATIONAL TRAINING & GUIDANCE

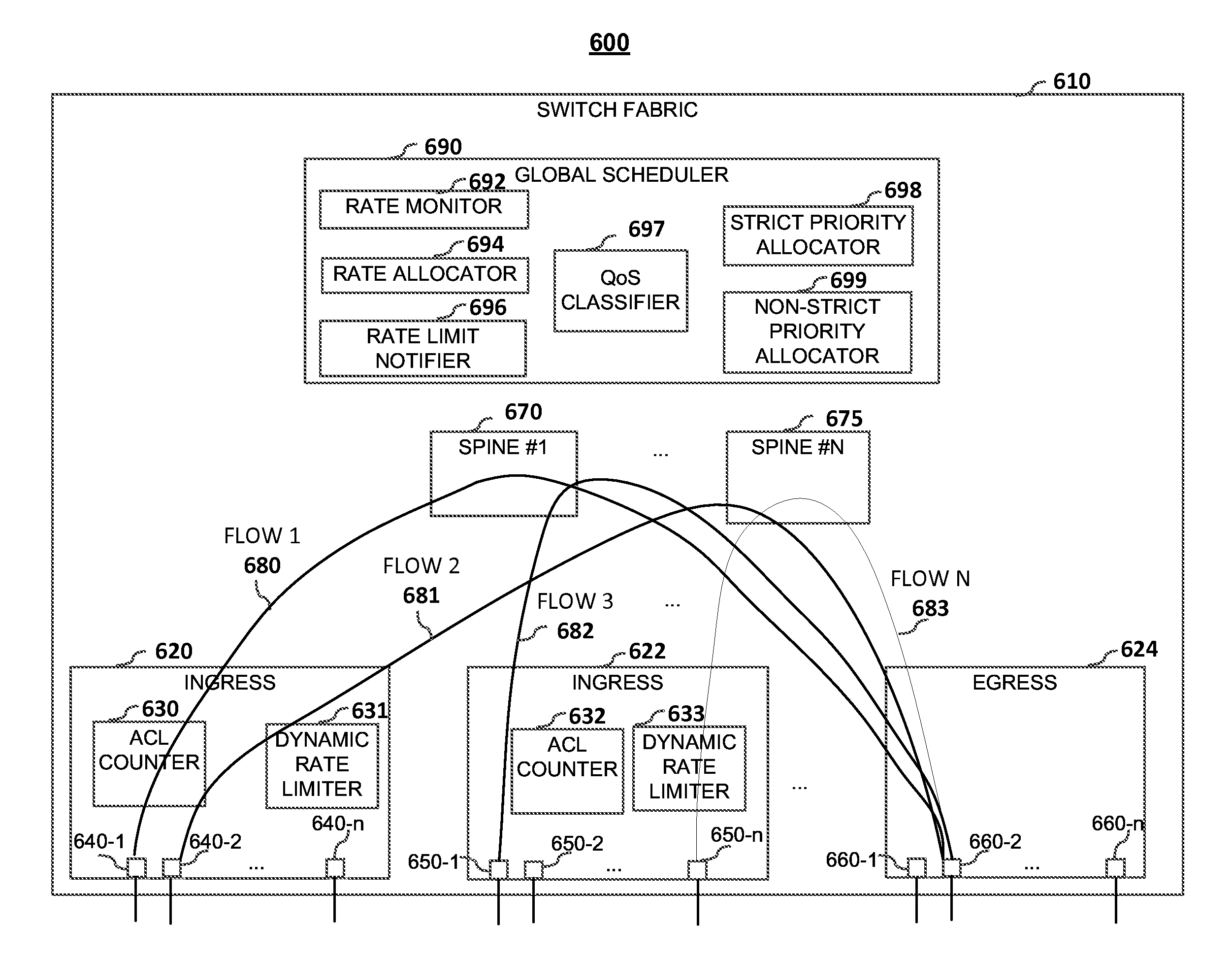

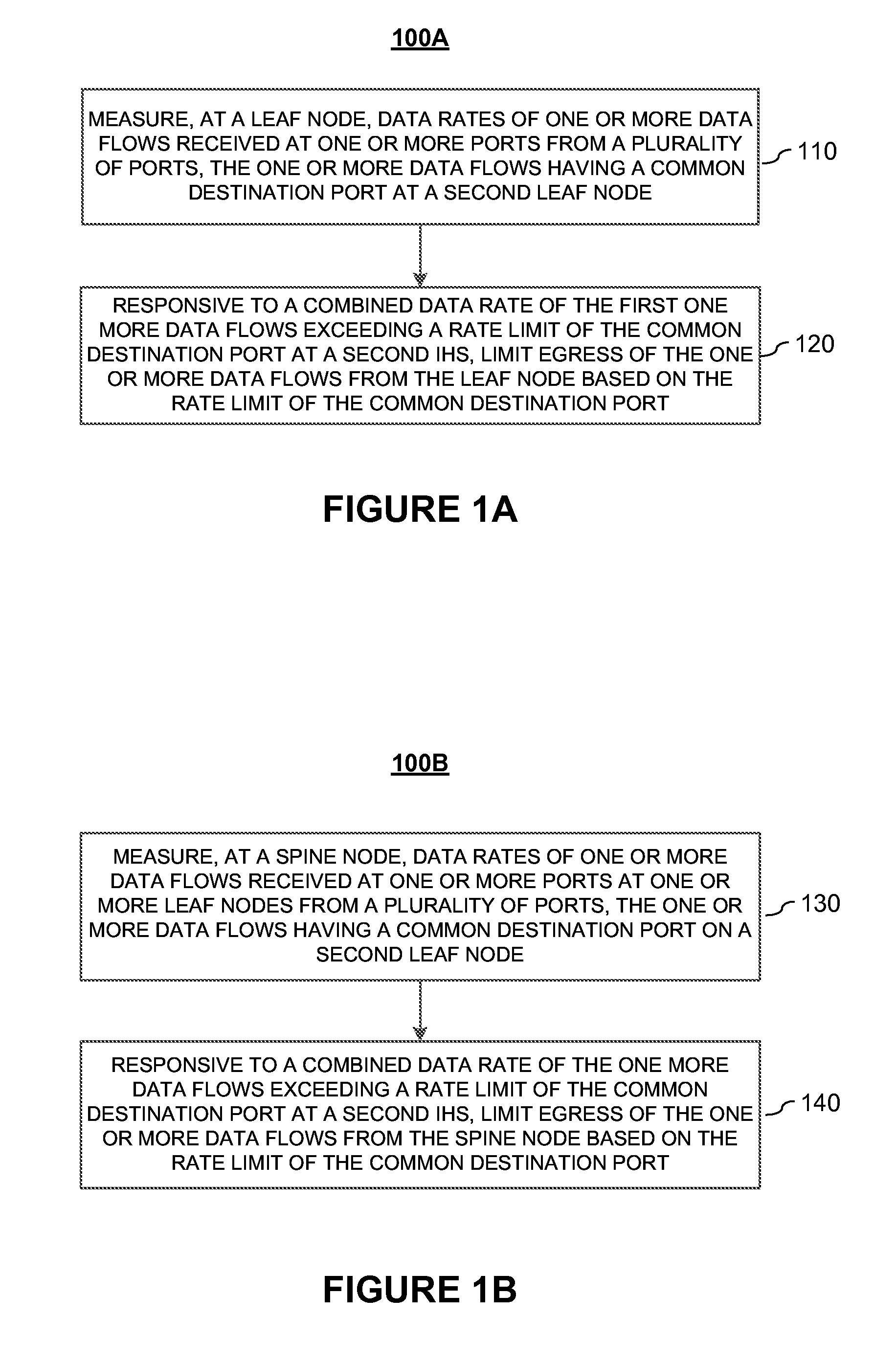

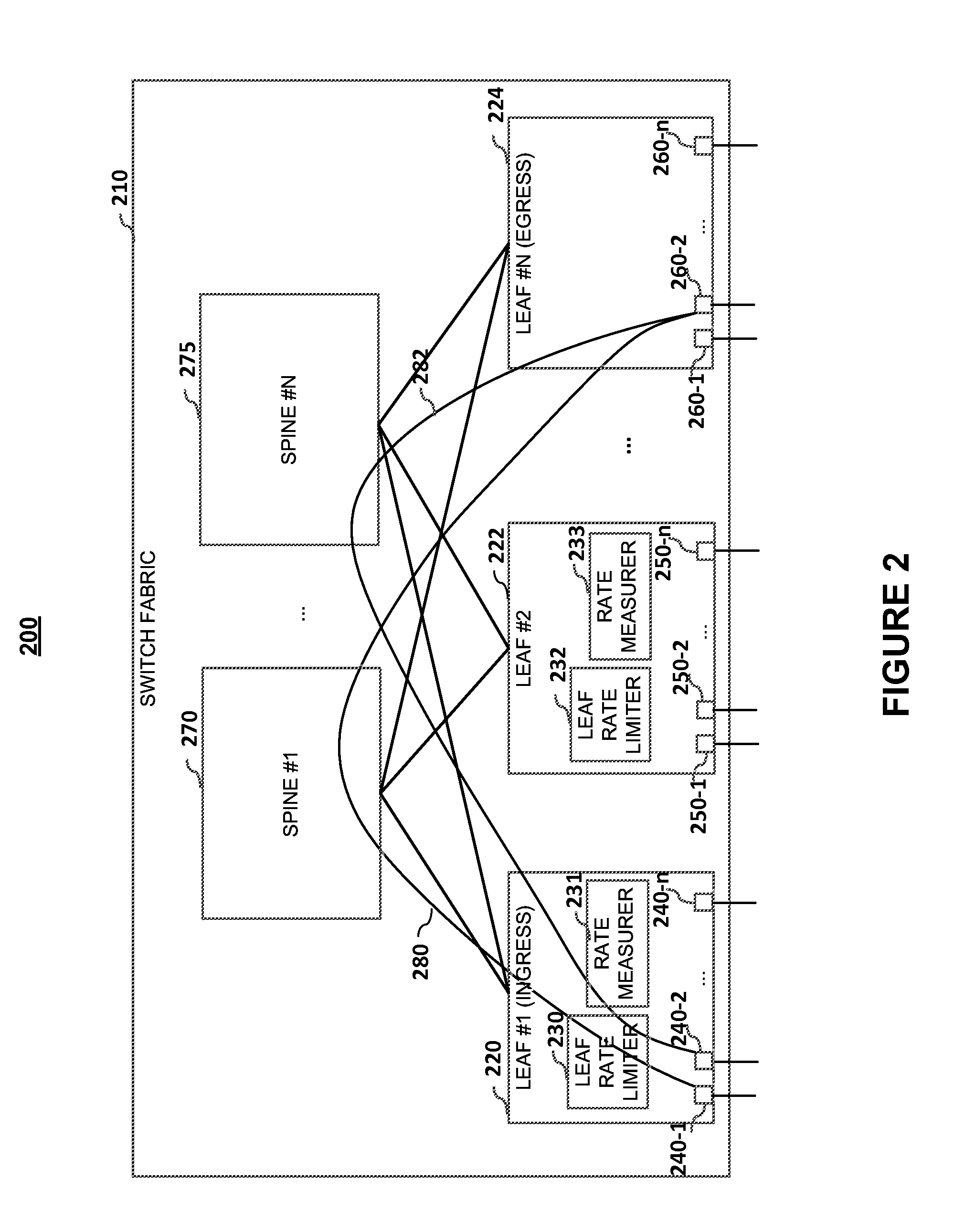

Reducing internal fabric congestion in leaf-spine switch fabric

Embodiments of the present invention provide methods and systems to reduce needless data traffic in a leaf-spine switch fabric. In embodiments, in a static solution, the data rates of data flows having a common destination port may be measured and, responsive to the data flows having a combined data rate that exceeds a rate limit of the common destination port, one or more of the data flows may be limited. Embodiments may also comprise a global scheduler that provides dynamic data rate control of traffic flows from source ports to destination ports, reducing the handling of data traffic that would otherwise be discarded due to oversubscription.

Owner:DELL PROD LP
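
The static solution in this abstract (measure the flows that share a destination port, then limit them when their combined rate exceeds the port's limit) can be illustrated with a small Python sketch. The abstract does not say how the limit is divided among flows; proportional scaling is used here purely as an assumption, and `limit_flows` is a hypothetical name.

```python
def limit_flows(flow_rates: dict[str, float], port_limit: float) -> dict[str, float]:
    """Return the allowed rate per flow for one common destination port.

    flow_rates maps a flow identifier to its measured data rate.
    Assumption: when the port is oversubscribed, every flow is scaled
    down by the same factor so the combined rate equals port_limit.
    """
    total = sum(flow_rates.values())
    if total <= port_limit:
        return dict(flow_rates)  # no oversubscription, nothing to limit
    scale = port_limit / total
    return {flow: rate * scale for flow, rate in flow_rates.items()}
```

Limiting at the source side means the excess traffic never crosses the fabric, rather than being carried to the destination port and discarded there.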

Grid non-deterministic job scheduling

Inactive · US7441241B2 · Multiprogramming arrangements · Multiple digital computer combinations · Global scheduling · Distributed computing

Owner:INT BUSINESS MASCH CORP

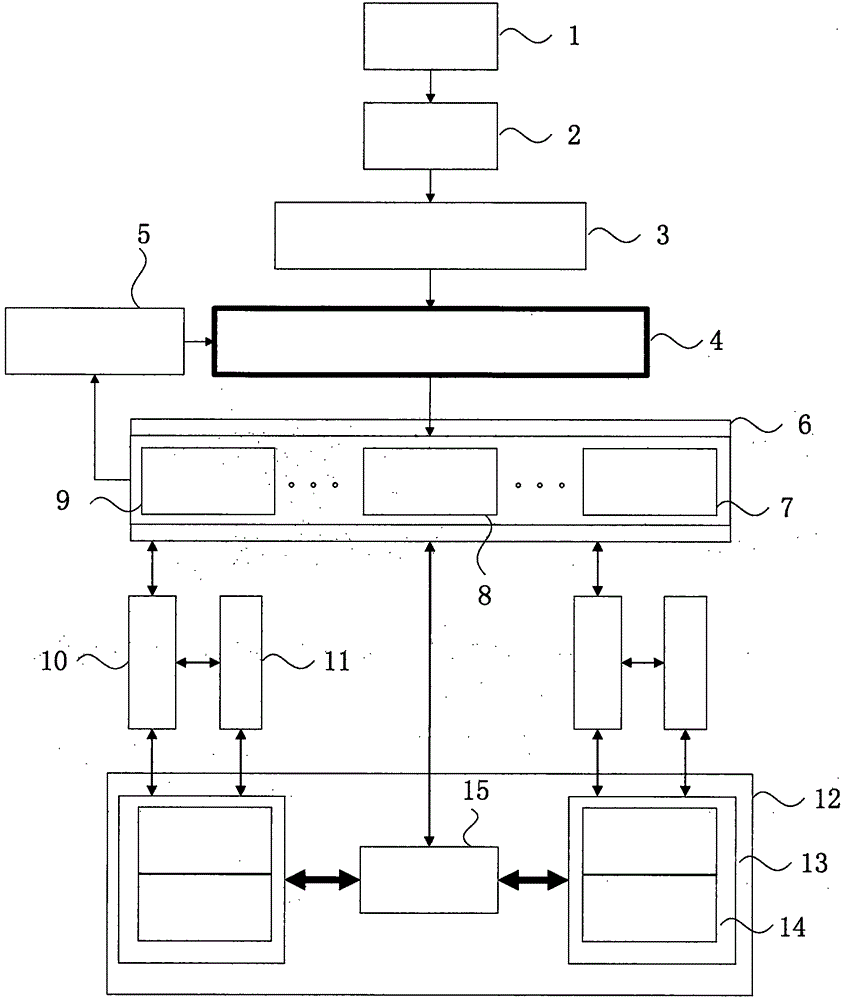

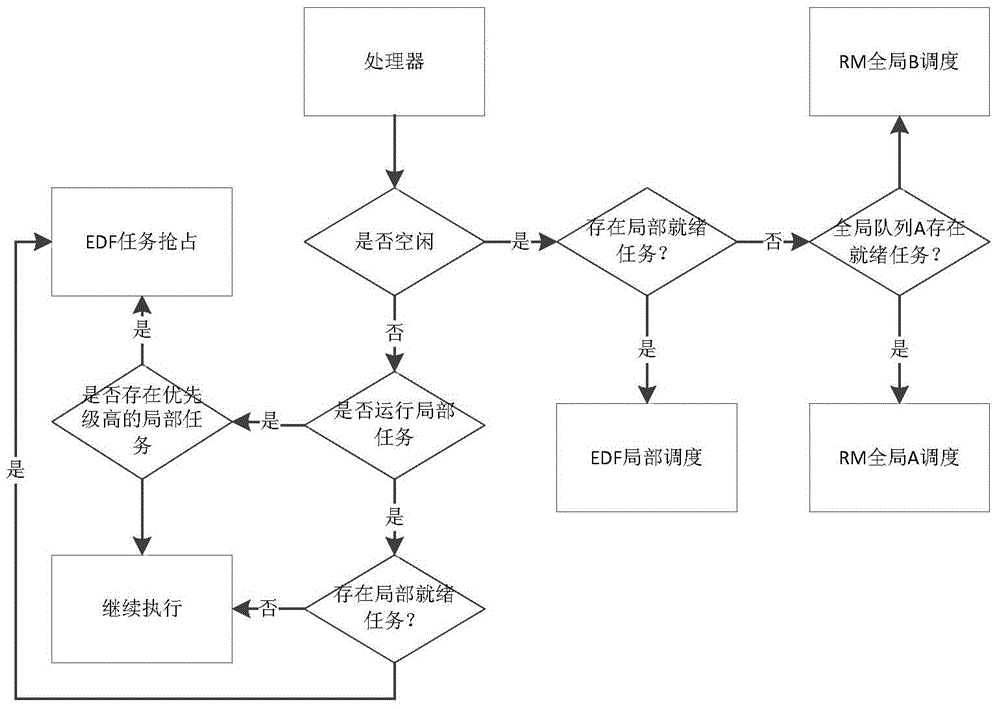

Big data hybrid scheduling model on private cloud condition

Inactive · CN105893158A · Reduce migration costs · Fast execution · Resource allocation · Transmission · NODAL · Global scheduling

The invention relates to the technical field of cloud computing and big data, in particular to a big data hybrid scheduling model for private cloud environments. The model comprises an access control module, a task decomposition module, a global scheduler module based on virtual resources, a resource virtualization mapping module, a virtual resource monitoring module, a physical resource monitoring module, and a local scheduler module based on physical resources. It is a hybrid scheduler that includes physical resource scheduling, virtual resource scheduling, global scheduling and local scheduling. User tasks are decomposed into a plurality of independent subtasks, which are distributed to different clusters or computing nodes. This ensures parallel and rapid execution of the tasks and reduces network and data transfer costs; physical resource scheduling is used to further decompose subtasks, cluster resources are utilized to the maximum extent, and task execution time is further shortened.

Owner:BEIJING UNIV OF TECH

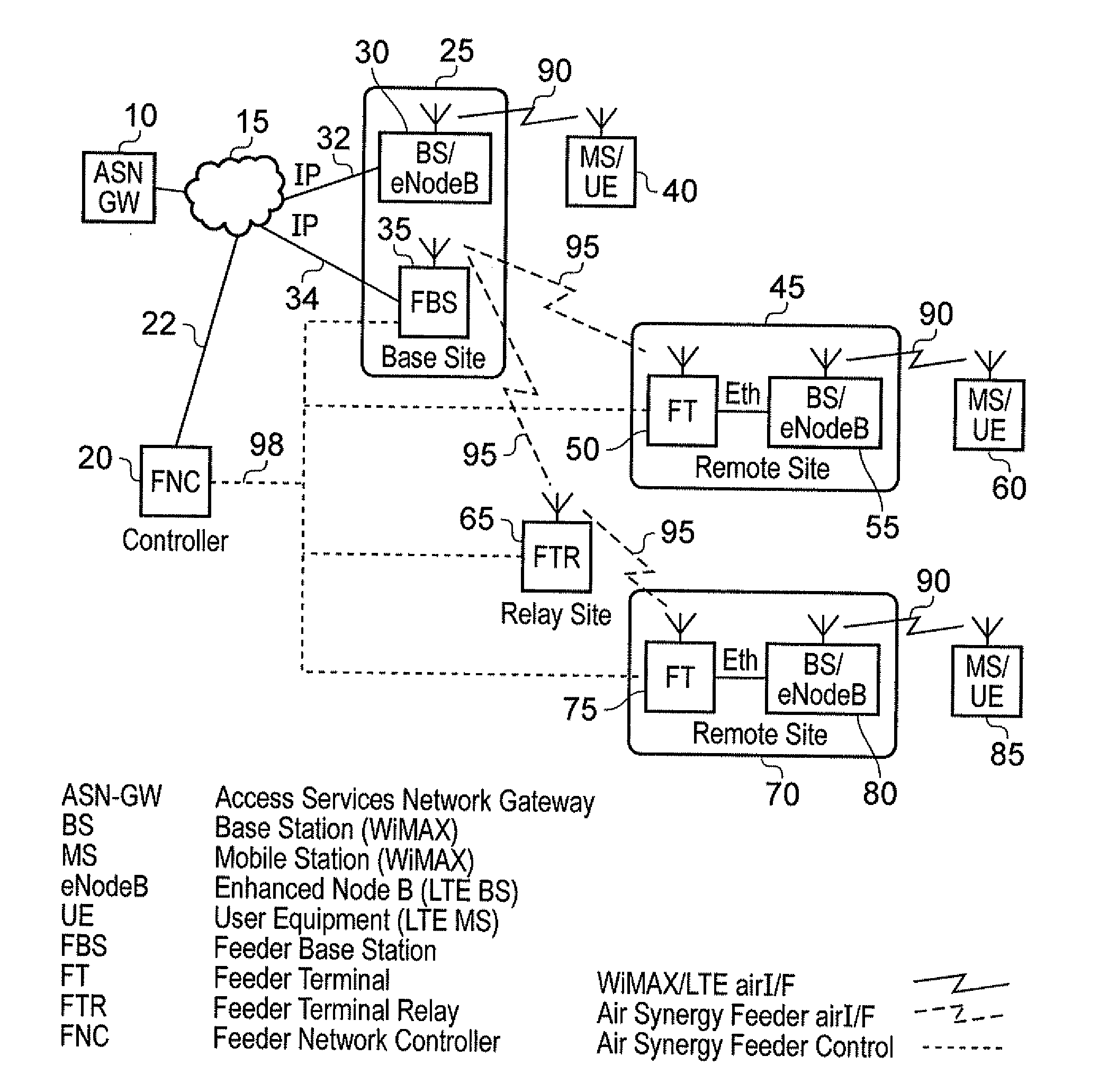

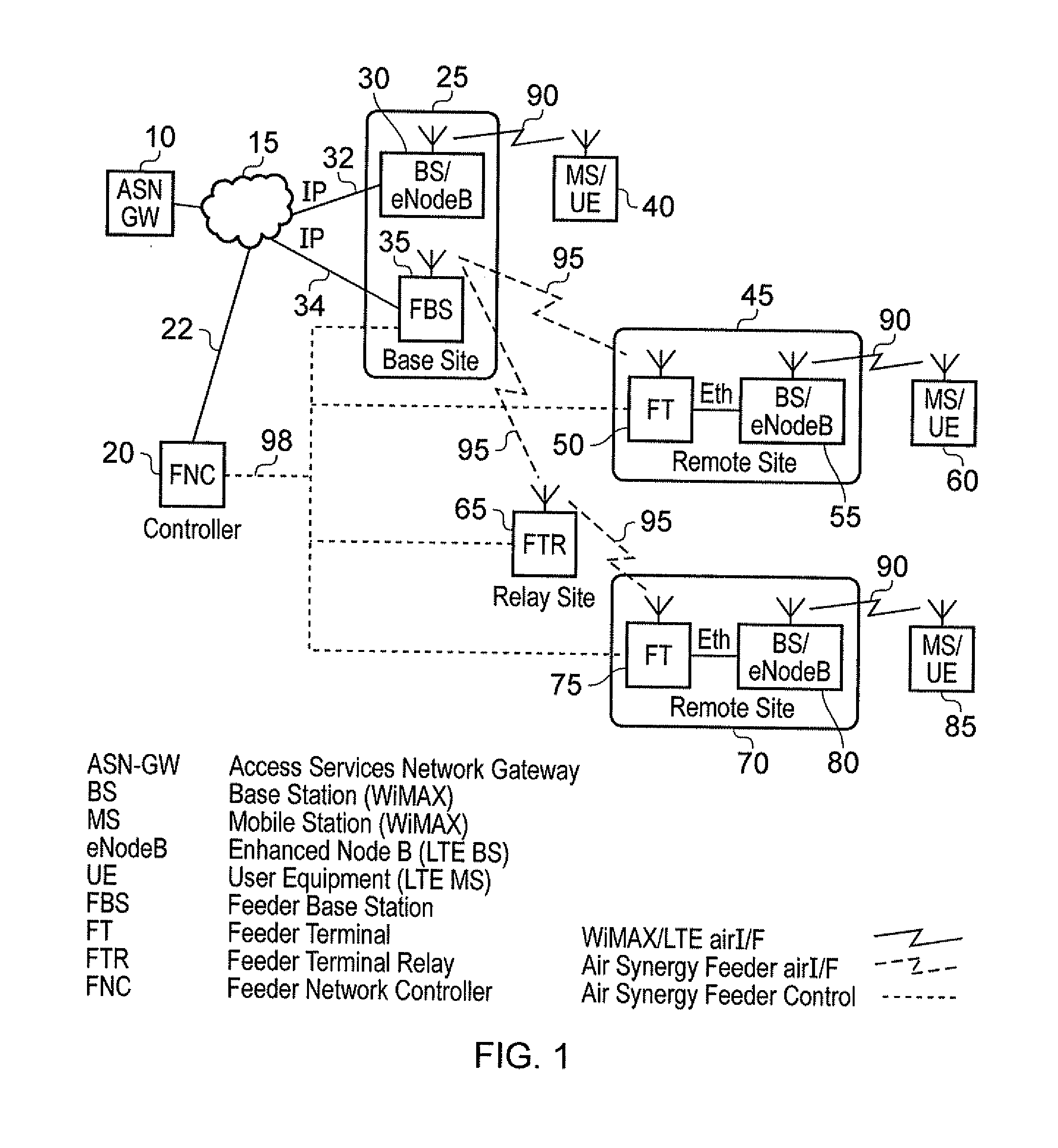

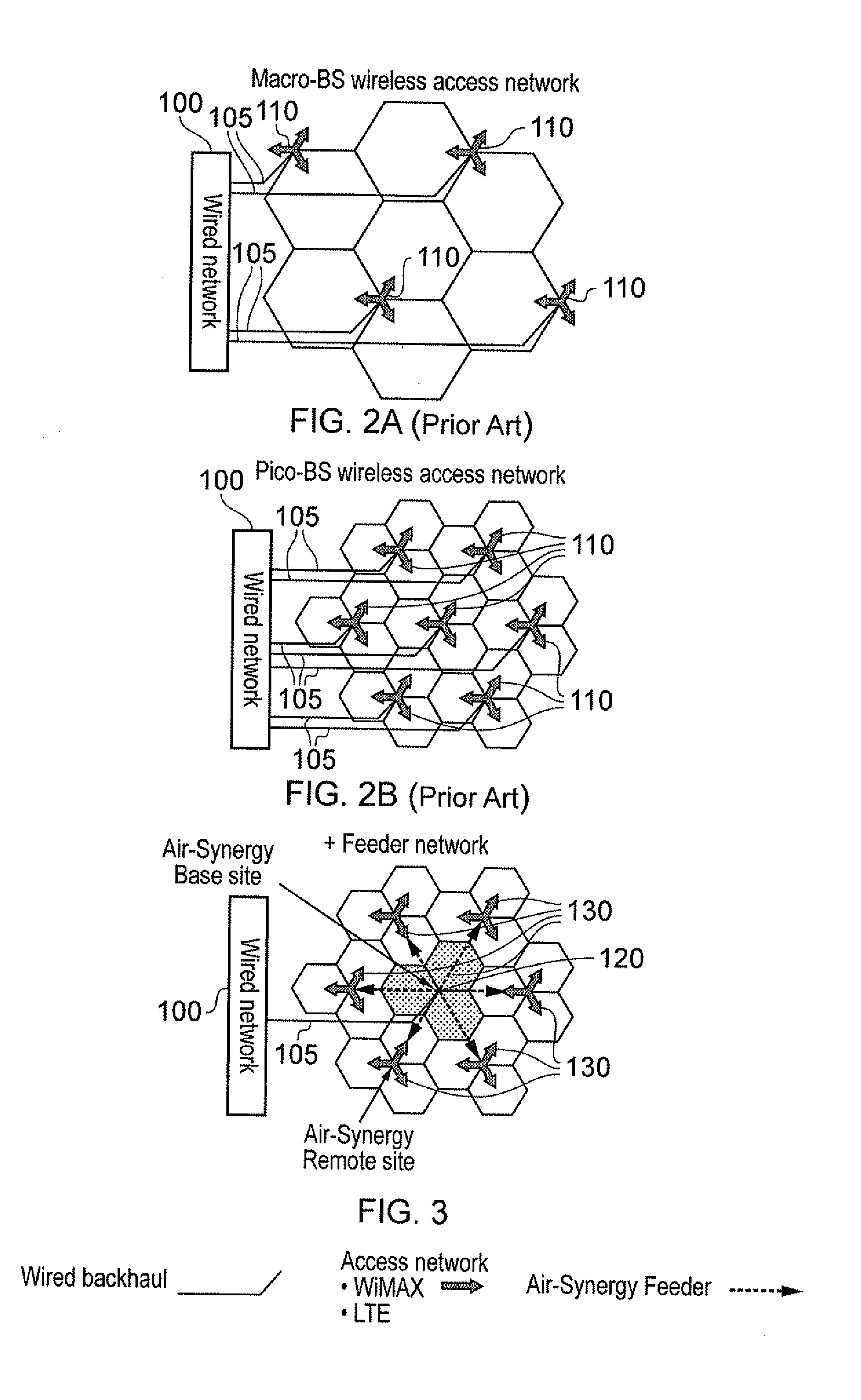

Apparatus and method for controlling a wireless feeder network

Active · US20120082044A1 · Improve spectral efficiency · Uniform traffic loading · Error prevention · Transmission systems · Access network · Wireless mesh network

An apparatus and method are provided for controlling a wireless feeder network used to couple access base stations of an access network with a communications network. The wireless feeder network comprises a plurality of feeder base stations coupled to the communications network and a plurality of feeder terminals coupled to associated access base stations. Each feeder terminal has a feeder link with a feeder base station, and the feeder links are established over a wireless resource comprising a plurality of resource blocks. Sounding data obtained from the wireless feeder network is used to compute an initial global schedule to allocate to each feeder link at least one resource block, and the global schedule is distributed whereafter the wireless feeder network operates in accordance with the currently distributed global schedule to pass traffic between the communications network and the access base stations. Using traffic reports received during use, an evolutionary algorithm is applied to modify the global schedule, with the resultant updated global schedule then being distributed for use. This enables the allocation of resource blocks to individual feeder links to be varied over time taking account of traffic within the wireless feeder network, thereby improving spectral efficiency.

Owner:AIRSPAN IP HOLDCO LLC

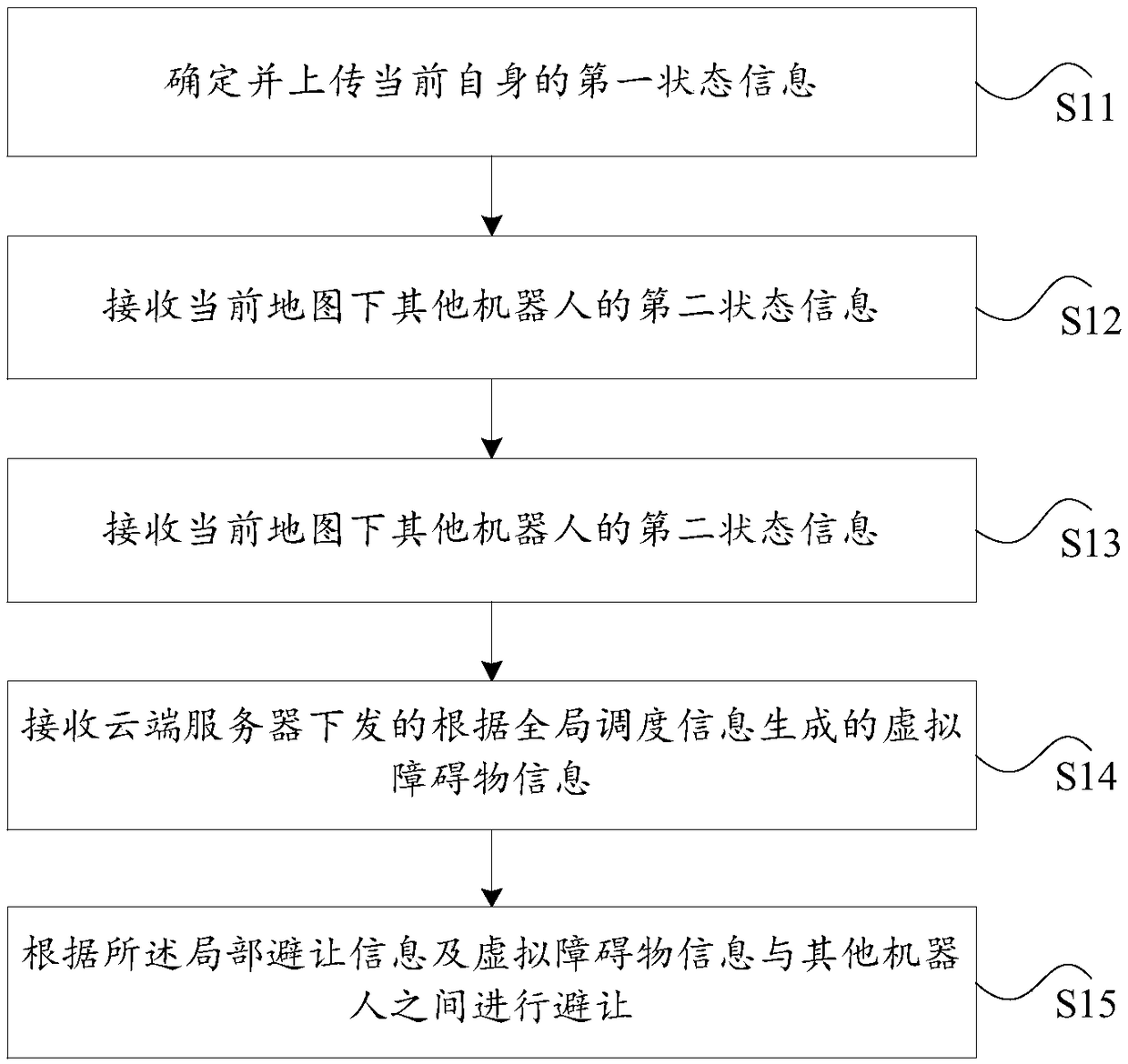

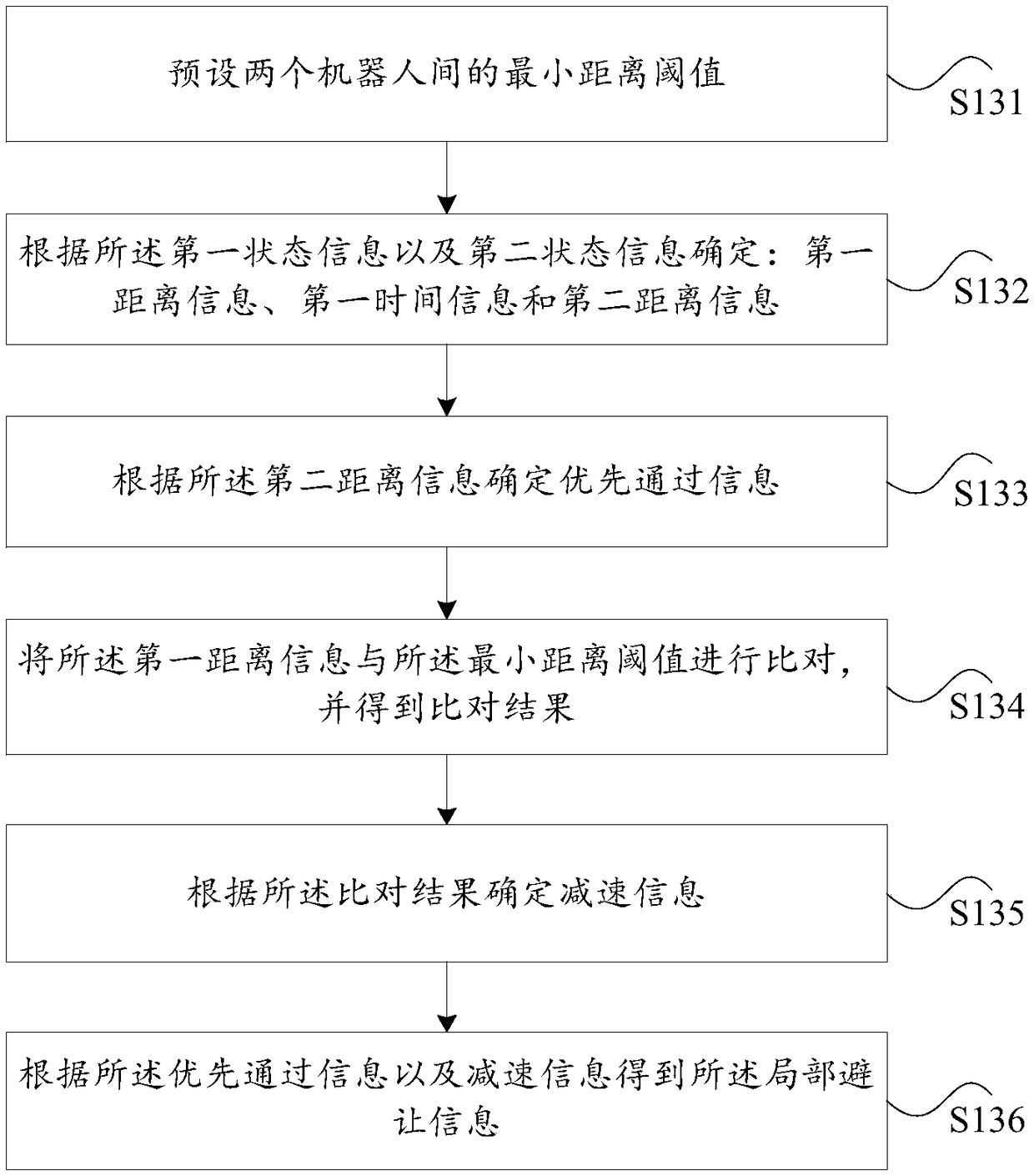

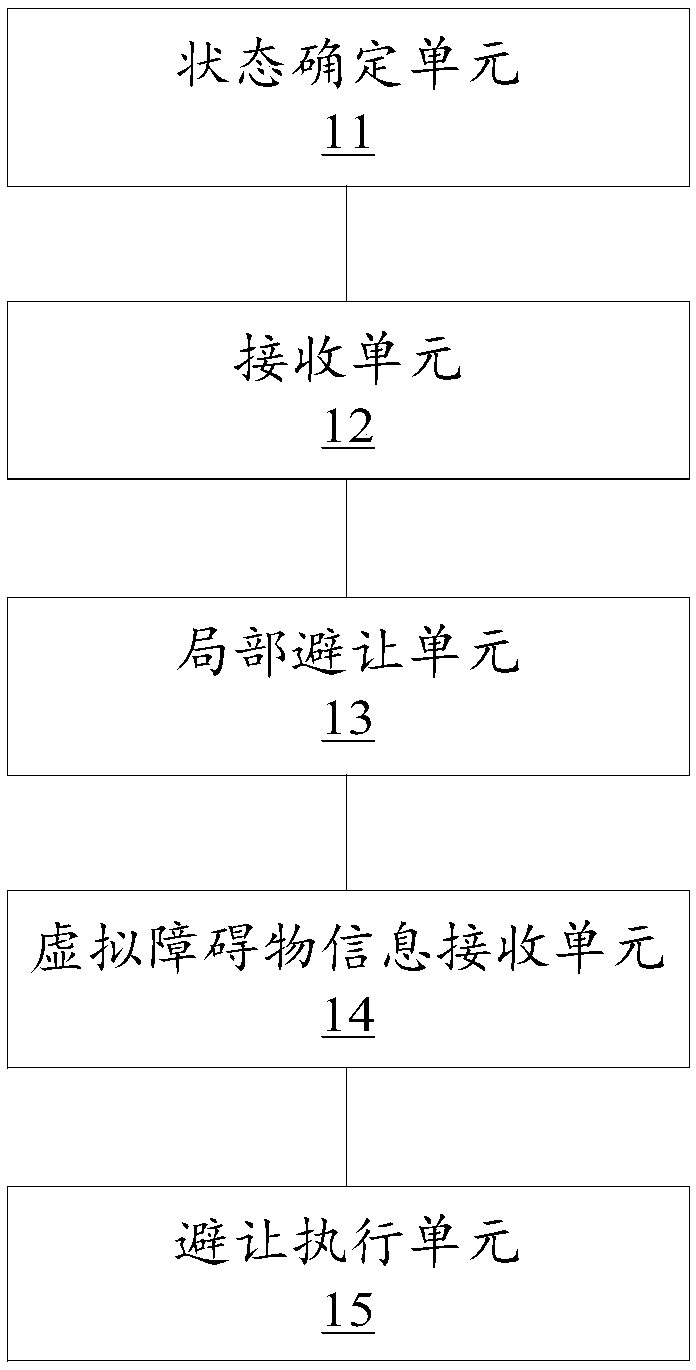

Scheduling methods, scheduling device and scheduling server used for multi-robot mutual avoidance

Active · CN109213155A · Ensure safety · Guaranteed traffic capacity · Position/course control in two dimensions · Channel state information · Global scheduling

The application discloses scheduling methods, a scheduling device and a scheduling server used for multi-robot mutual avoidance. The method includes: determining and uploading current first state information of the current robot; receiving second state information of other robots in the current map; obtaining local avoidance information according to the first state information and the second state information; receiving virtual obstacle information which is issued by a cloud-side server and generated according to global scheduling information, wherein the global scheduling information is obtained by the cloud-side server processing the first state information and the second state information; and carrying out avoidance with respect to the other robots according to the local avoidance information and the virtual obstacle information. This effectively unifies the local avoidance and global scheduling strategies, thereby better guaranteeing the safety and traffic capacity of multiple robots running in the same scene and preventing problems such as collisions among the robots.

Owner:北京云迹科技股份有限公司

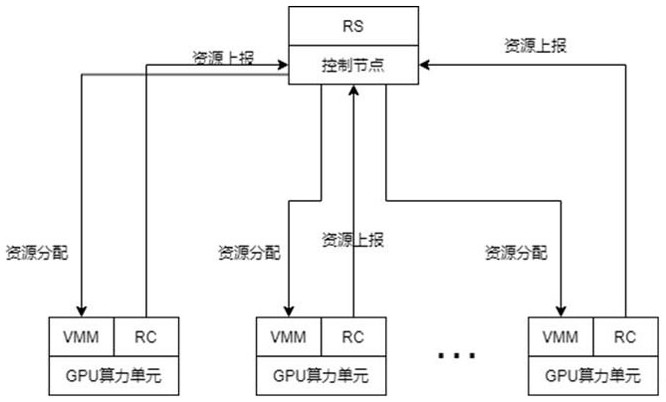

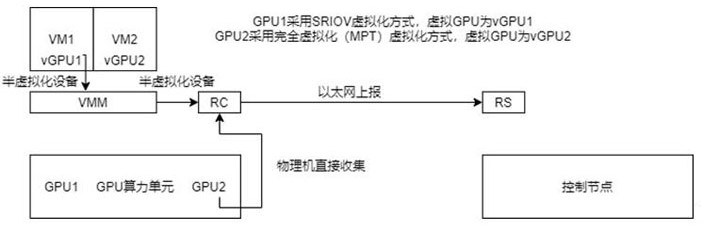

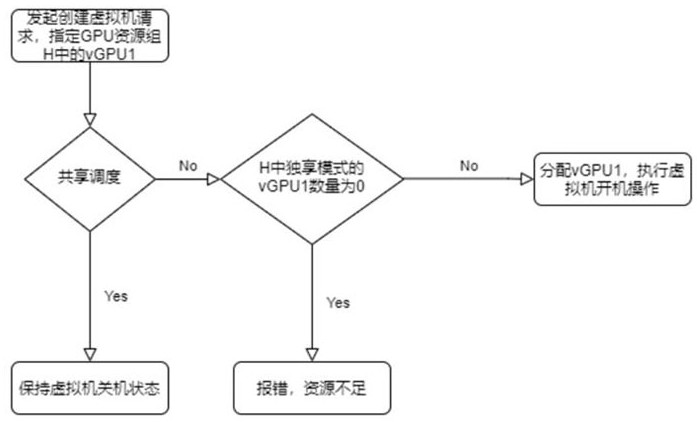

GPU resource pool scheduling system and method

Active · CN112286645A · Improve agility · Increase elasticity · Resource allocation · Software simulation/interpretation/emulation · Resource pool · Virtualization

The invention discloses a GPU resource pool scheduling system comprising a GPU cloud computing power center and a GPU cloud control node. The GPU cloud computing power center comprises a plurality of GPU computing power units, each of which comprises a VMM and an RC; the GPU cloud control node comprises an RS. The GPU computing power units provide the computing power of GPUs. Each VMM provides a control interface, receives resource scheduling instructions sent by the RS, creates a virtual machine according to the instruction, allocates vGPU resources to the virtual machine and starts it. Each RC collects resource data of its GPU computing power unit and reports the data to the RS. The RS collects the resource data reported by each RC, sends resource scheduling instructions to each VMM, and schedules the resources of the GPU computing power units globally, which includes gathering GPU resources into a plurality of groups of GPU hardware sets and forming a GPU resource pool from those sets. The system realizes unified resource pool scheduling management across various manufacturers, GPU models and GPU virtualization modes in a cloud computing platform. The invention further discloses a GPU resource pool scheduling method.

Owner:WUHAN UNIV OF TECH +1

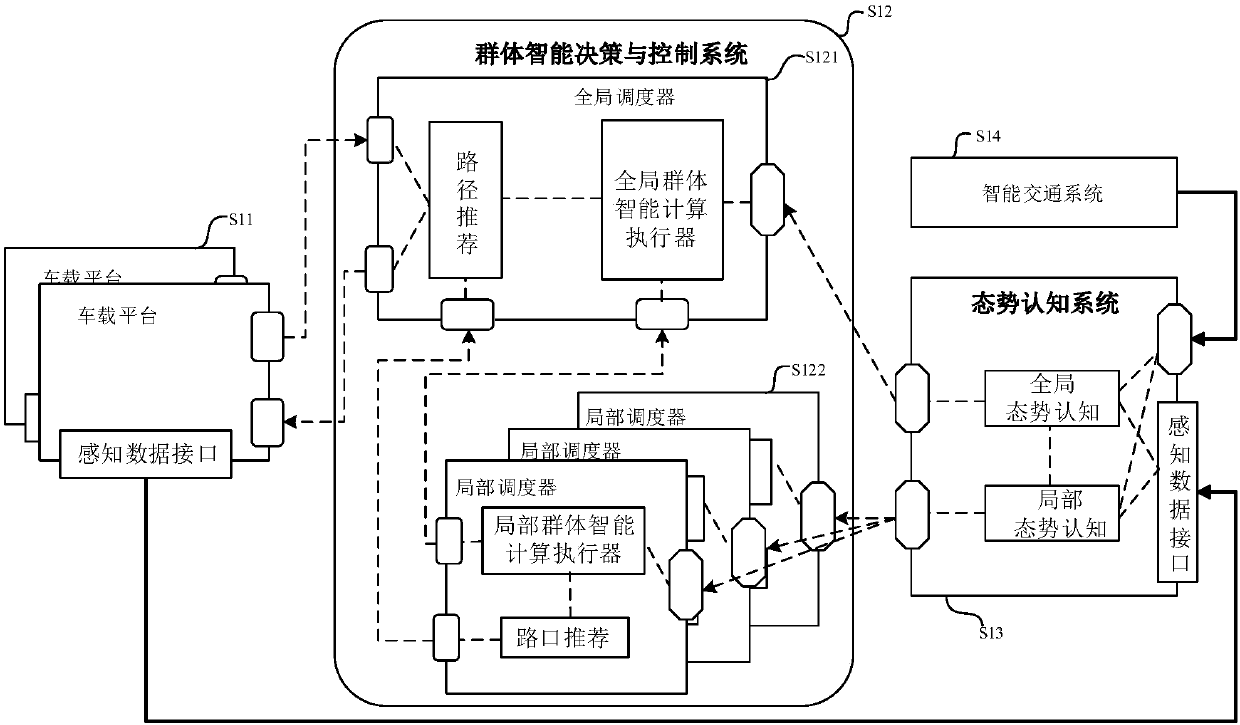

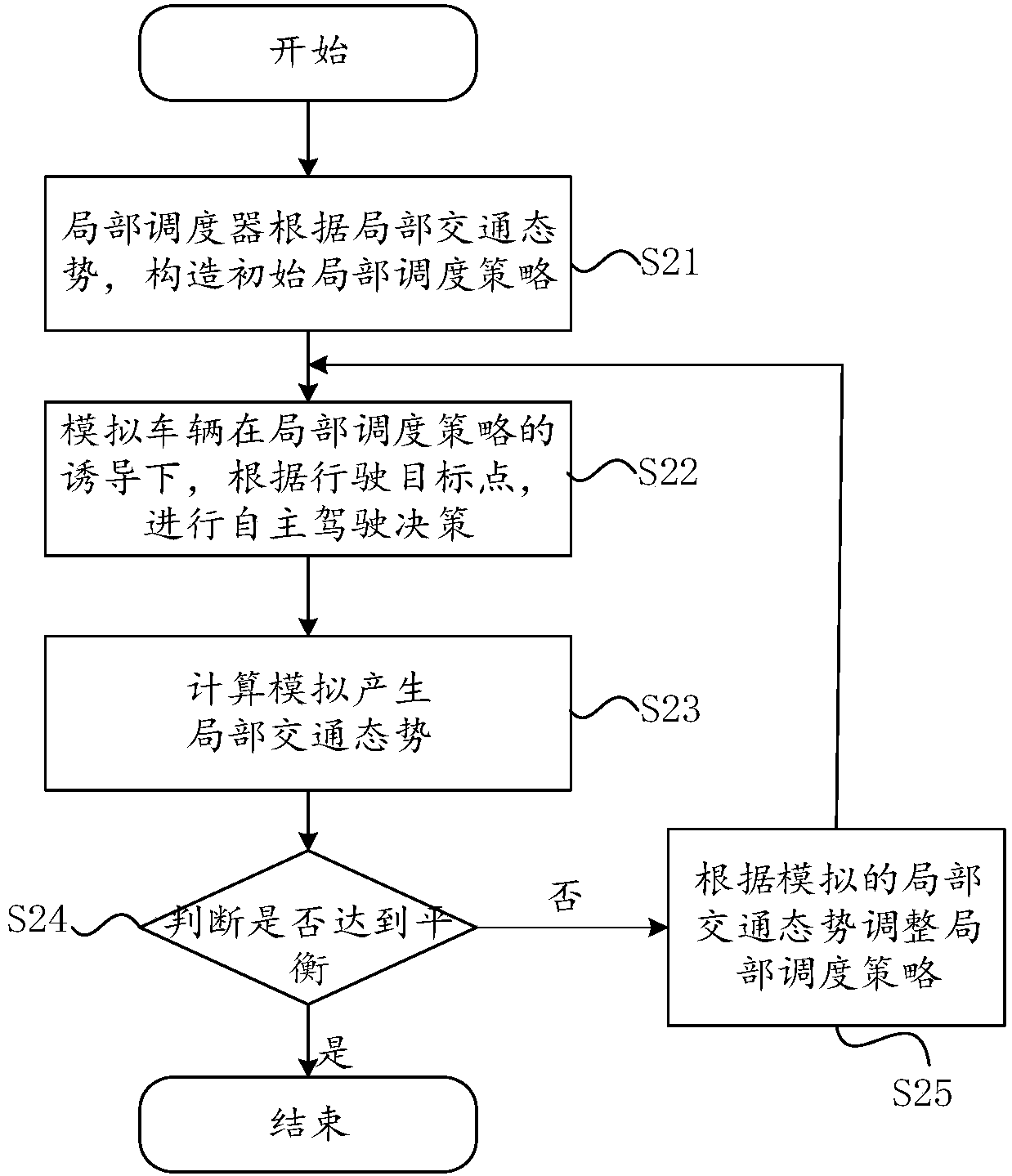

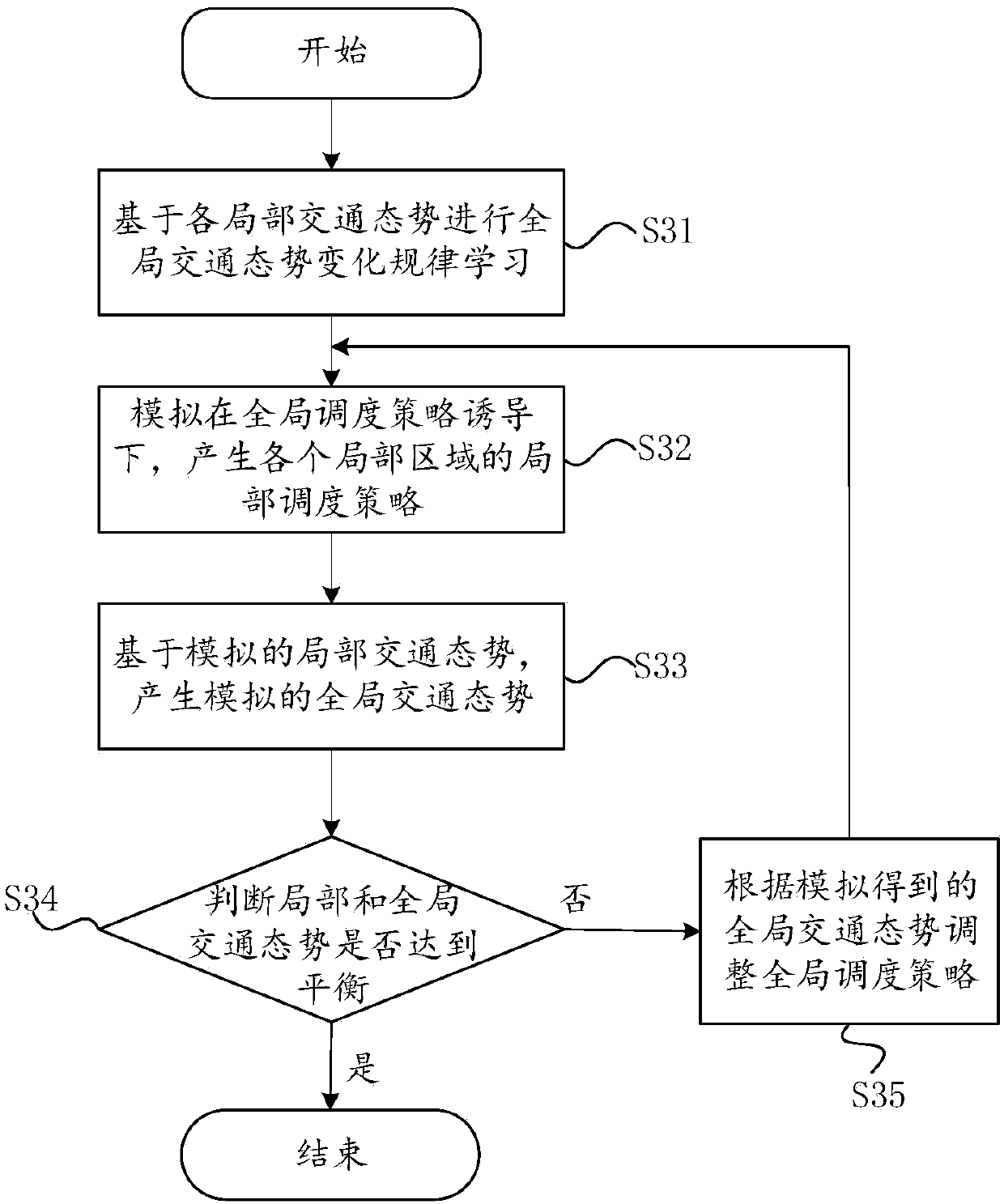

City traffic optimization service method and system based on swarm intelligence

The invention discloses a city traffic optimization service method and system based on swarm intelligence. The method comprises the following steps: simulating local traffic situations using swarm intelligence, and selecting, according to the simulation result and a local traffic optimization target, a local scheduling strategy that balances each vehicle's driving time against the local traffic situation; simulating the global traffic situation using swarm intelligence, and selecting, according to the simulation result and a global traffic optimization target, a global scheduling strategy that balances the local traffic situation against the global traffic situation; and recommending optimal routes for each participating vehicle according to the swarm intelligence calculation result, thus realizing city traffic optimization services. The method is then used to realize the city traffic optimization service system based on swarm intelligence. The system comprises a vehicle-mounted platform, a situation cognition system and a swarm intelligence decision and control system, and aims to improve the enforceability and validity of city traffic optimization service decisions.

Owner:BEIJING UNIV OF POSTS & TELECOMM

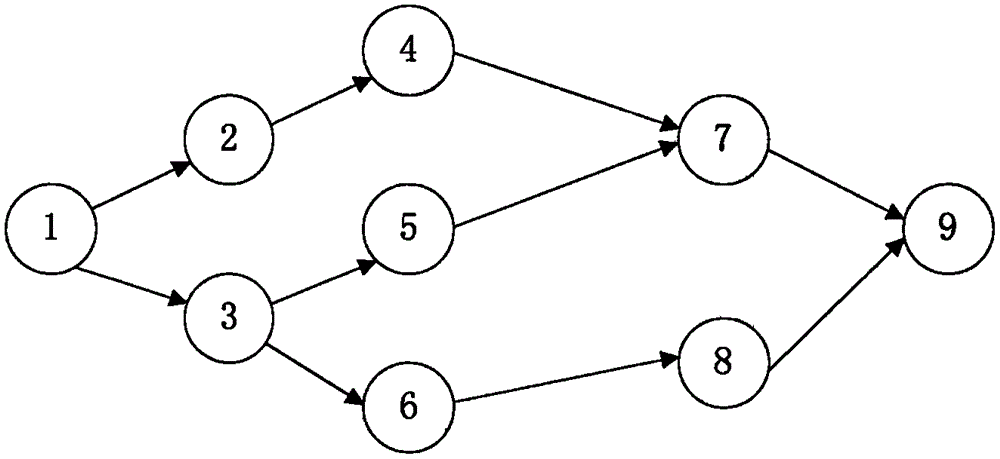

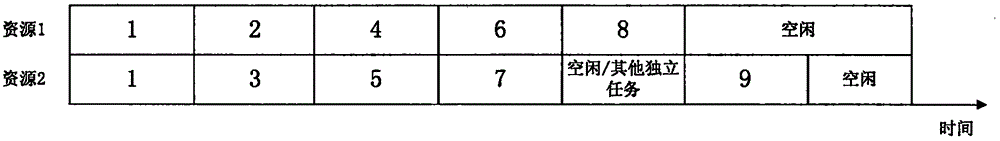

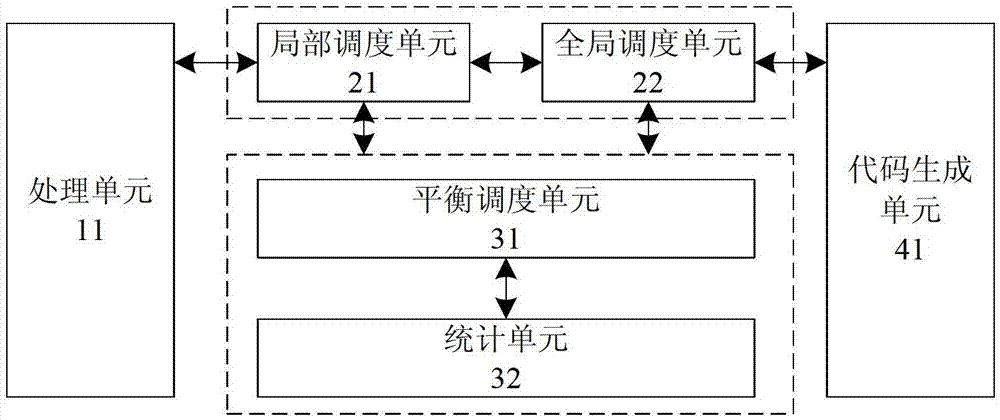

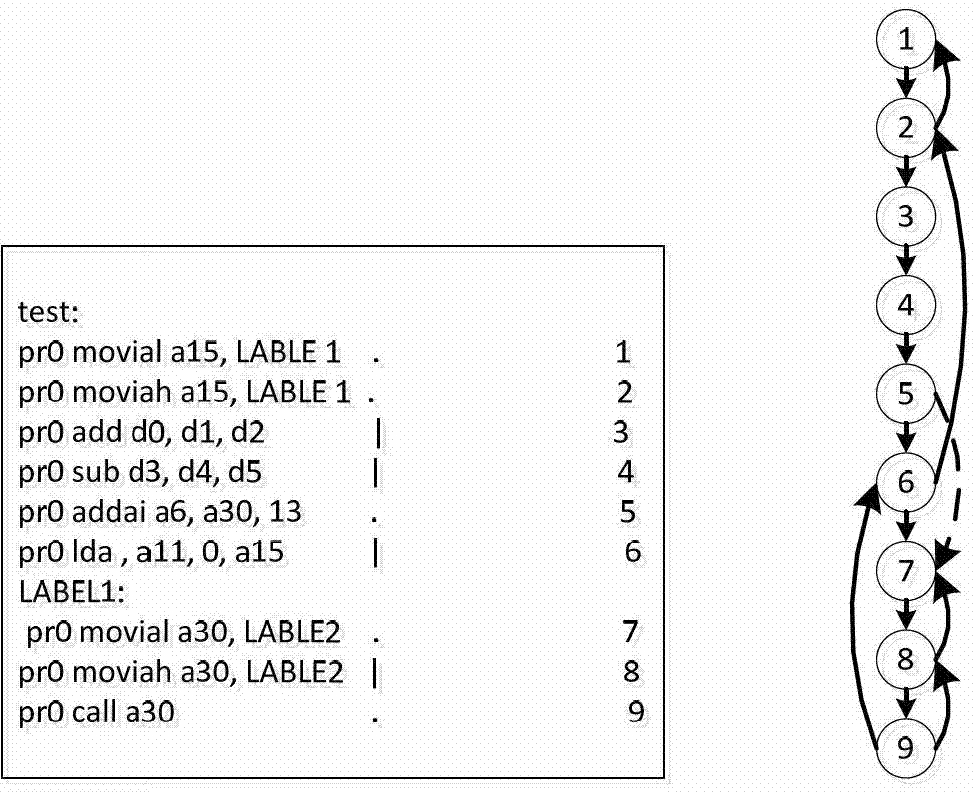

Method and system for scheduling delay slot in very-long instruction word structure

Inactive · CN102880449A · Improve execution efficiency · Concurrent instruction execution · Global scheduling · Scheduling instructions

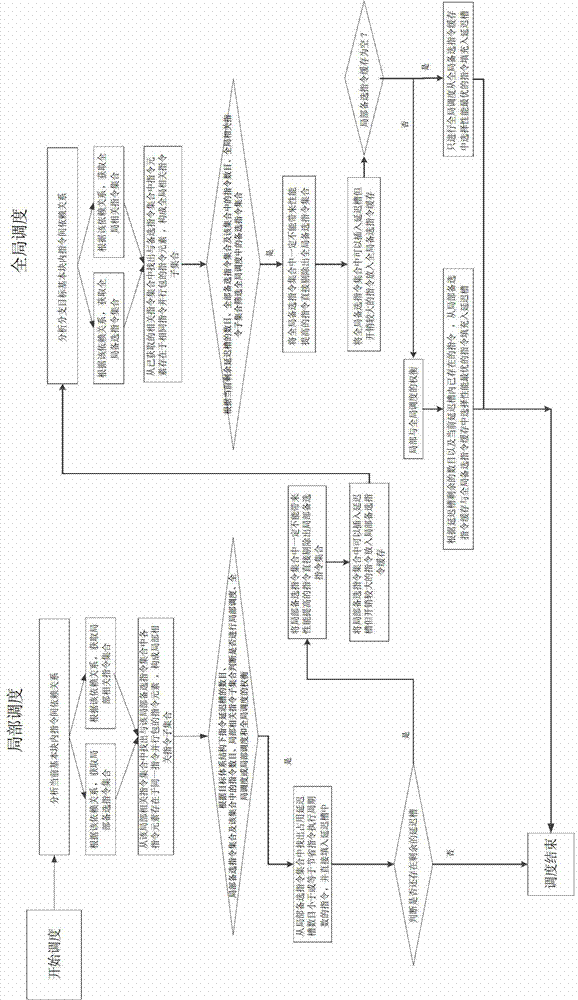

The invention discloses a method and a system for scheduling a delay slot in a very-long instruction word structure. The method comprises the steps of locally scheduling instructions in a current basic block; after the local scheduling is finished, judging whether a residual instruction delay slot exists, if not, ending the scheduling, otherwise, putting an instruction which can be filled into the instruction delay slot and is high in spending into a local standby instruction cache; globally scheduling instructions in a basic block of a branch target, selecting an instruction which can be filled into the instruction delay slot and placing the instruction in a global standby instruction cache; and selecting an instruction from the local standby instruction cache and / or the global standby instruction cache and filling the instruction into the residual instruction delay slot. The system comprises a local scheduling unit, a global scheduling unit and a balanced scheduling unit. According to the method and the system for scheduling the delay slot in the very-long instruction word structure disclosed by the invention, through balance between scheduling of the delay slot and program parallelism, as well as balance between local scheduling and global scheduling, high execution efficiency of programs can be implemented.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI

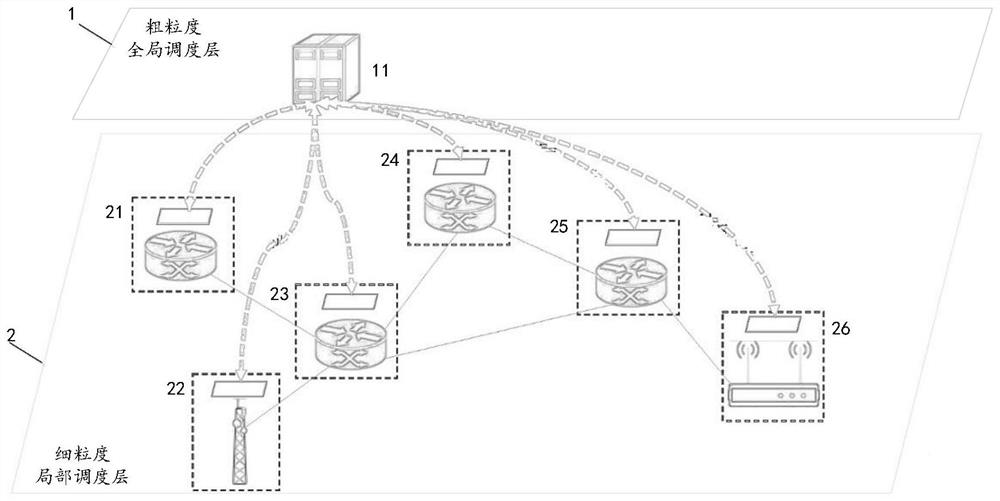

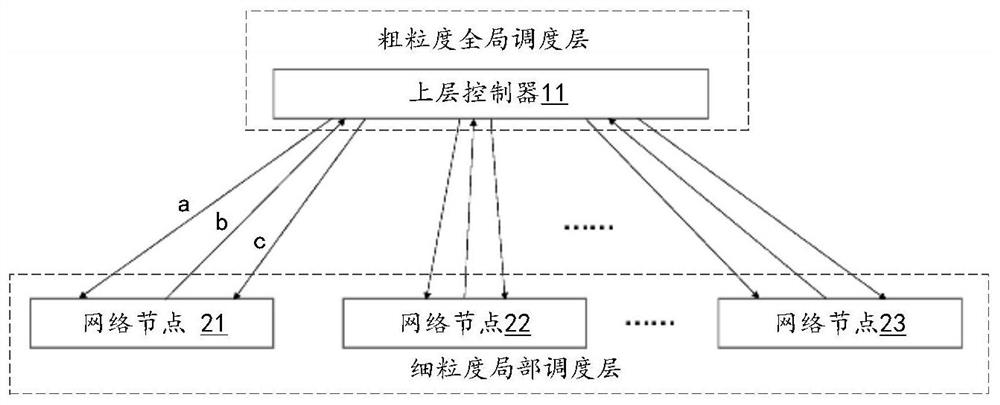

Hierarchical collaborative decision-making intra-network resource scheduling method, system, and storage medium

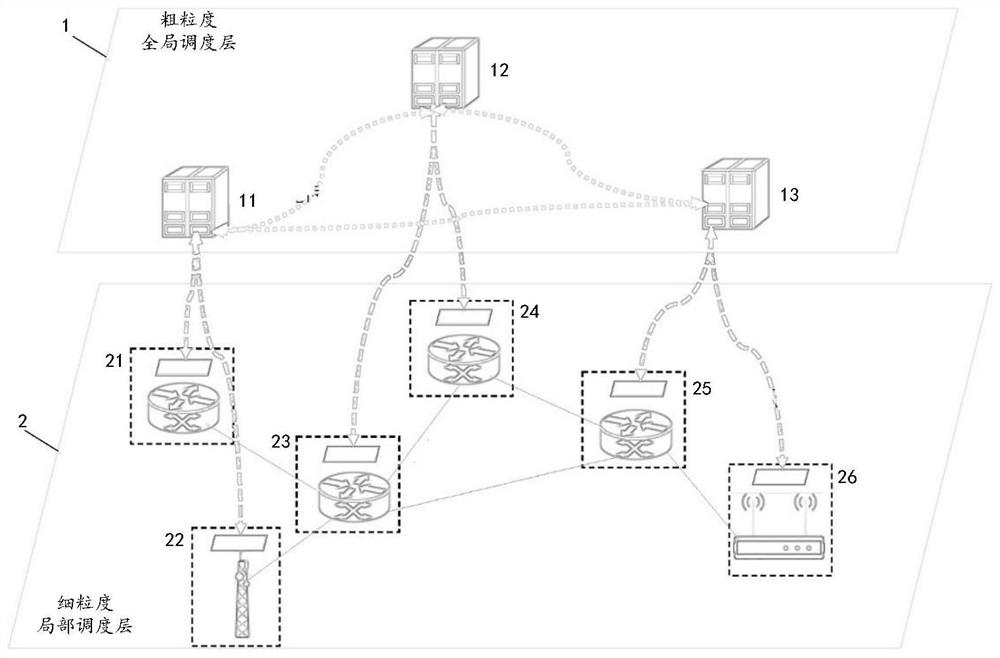

Active · CN112346854A · Quick dispatch · Fast scheduling decisions · Resource allocation · Transmission · Global scheduling · Engineering

The invention discloses a hierarchical collaborative decision-making intra-network resource scheduling method, a system, and a storage medium. The intra-network resource scheduling method comprises the steps of obtaining a computing power demand interest packet requested by an upstream network node in a computing power network; judging whether the current network node meets the computing power demand of the computing power demand interest packet or not, if yes, providing computing power service for data in the computing power demand interest packet according to a deployed performance function, and if not, providing forwarding service for the computing power demand interest packet. According to the technical scheme, the scheduling decision mechanism of the fine-grained local scheduling layer is optimized by means of global information, so that the utilization rate of resources such as global computing power and storage of the computing power network is improved, and load balancing of resources in the network is realized; besides, through the mode of combining the coarse-grained global scheduling layer and the fine-grained local scheduling layer, the non-end-to-end hierarchical collaborative decision-making intra-network resource scheduling capability is provided, the realization of an efficient and balanced intra-network resource scheduling function from the technical level is facilitated, and the overall performance of the computing power network is also improved.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL

Management warehouse for scheduling intelligent analysis algorithm in complex scene, and scheduling method

Pending · CN111126895A · Flexible use · Realize unified management · Resource allocation · Logistics · Global scheduling · Engineering

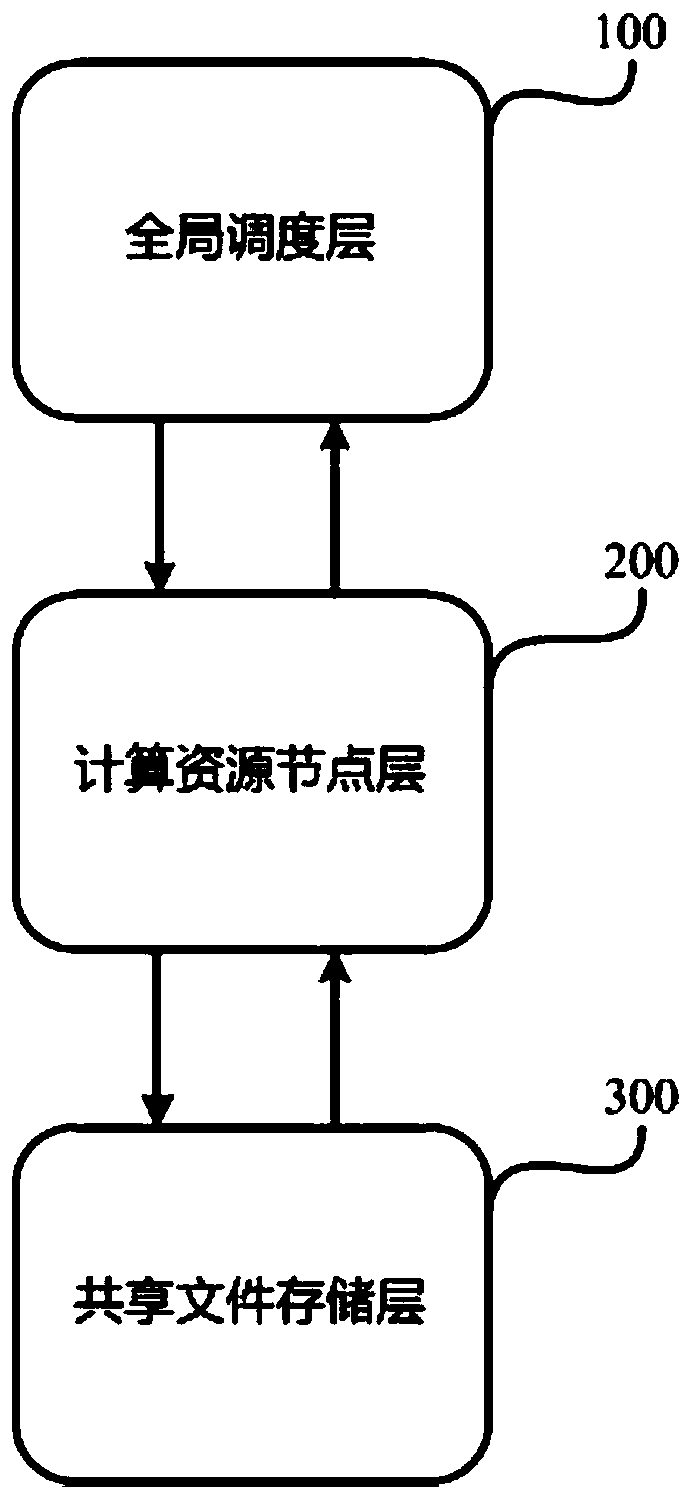

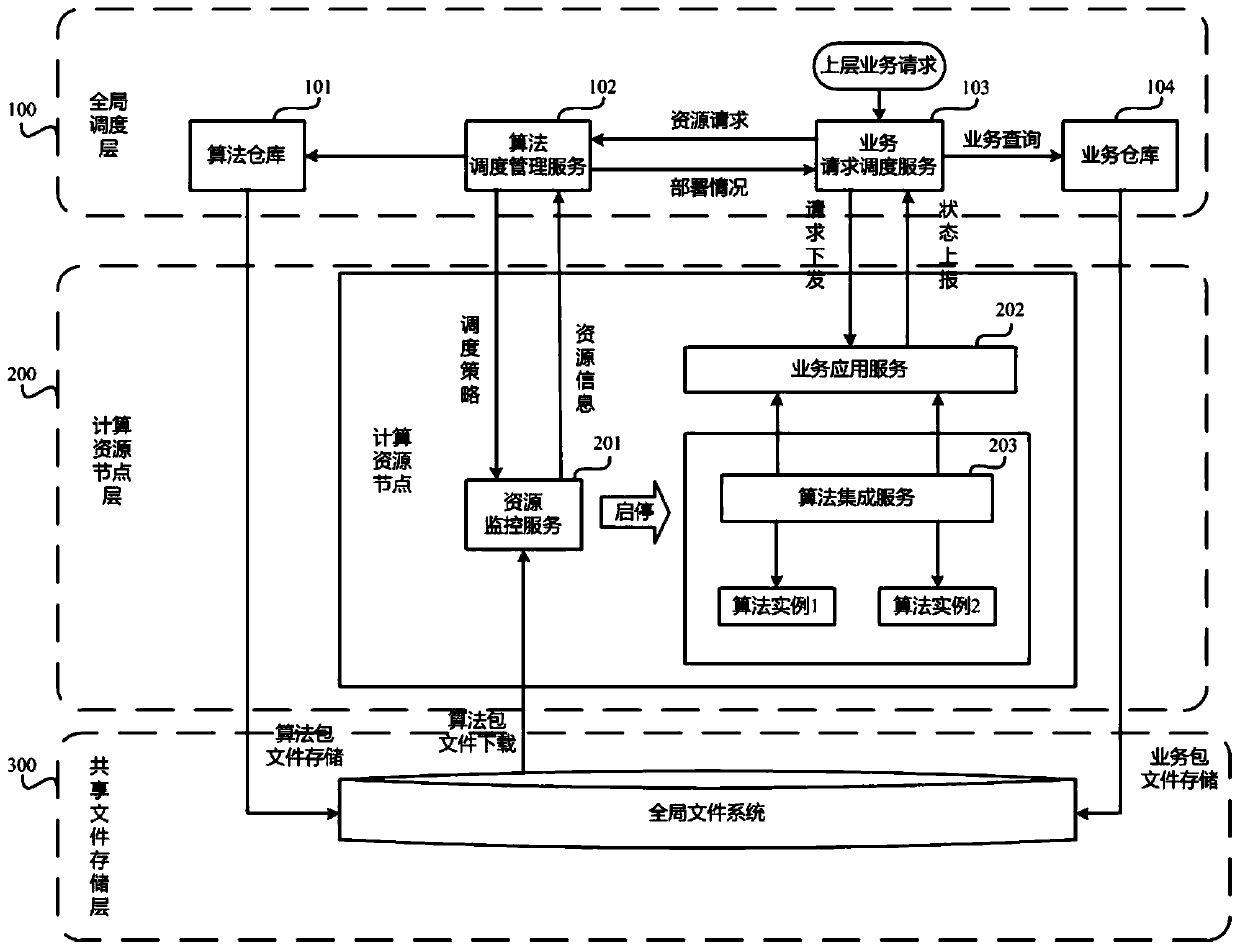

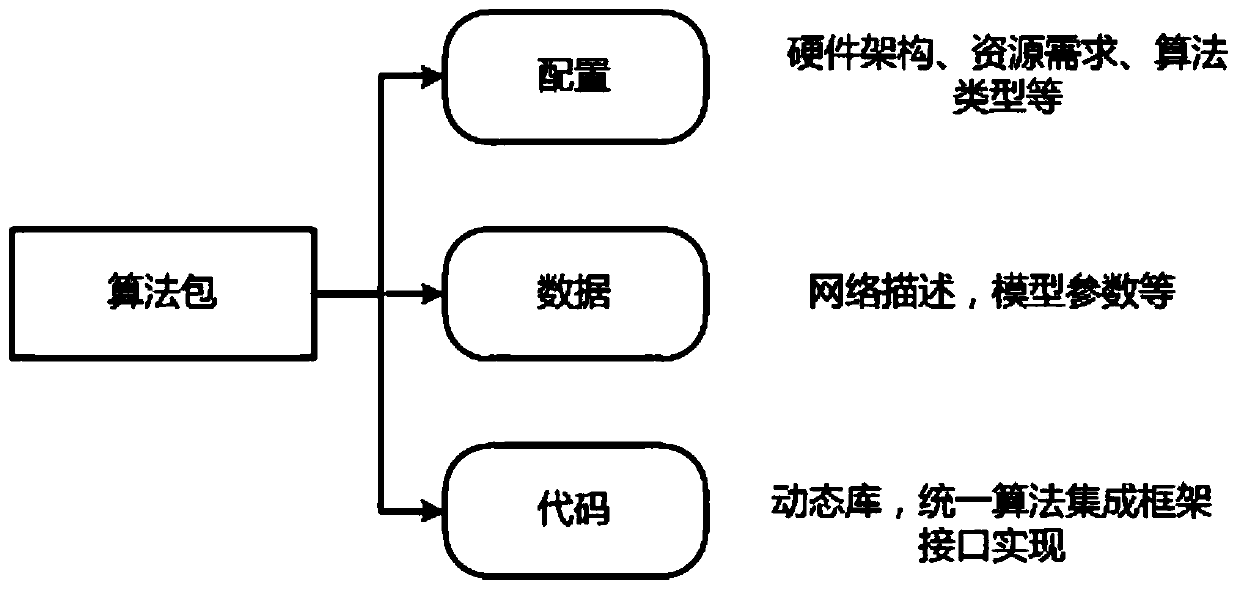

The invention discloses a management warehouse for scheduling intelligent analysis algorithms in complex scenes, and a scheduling method. The management warehouse comprises a global scheduling layer, a computing resource node layer and a shared file storage layer. The global scheduling layer receives a service request that comprises a to-be-executed service, determines a computing resource node for executing the service according to the request, and sends the request to the computing resource node layer. The selected computing resource node calls one or more intelligent analysis algorithms from the shared file storage layer to execute the service. This solves the prior-art problem that computing resources cannot be managed in a unified way, allows multiple algorithms to be combined, and increases the resource utilization rate.

Owner:QINGDAO HISENSE TRANS TECH

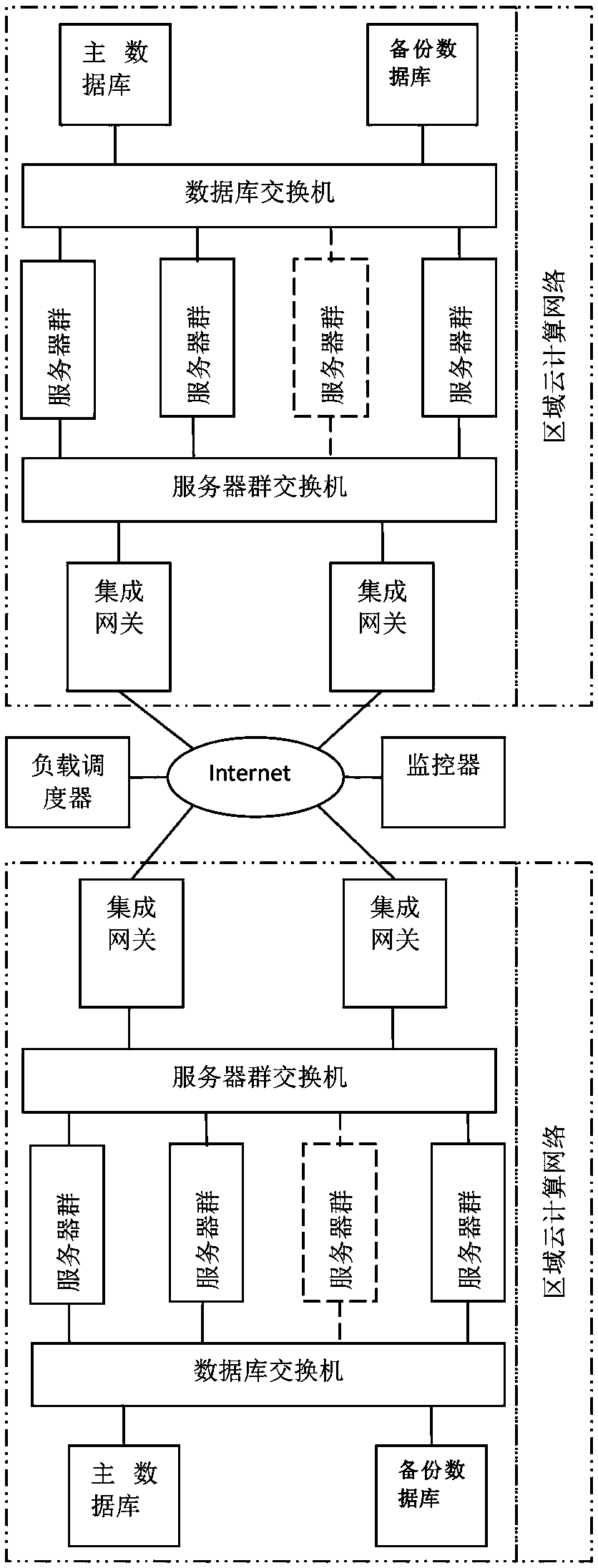

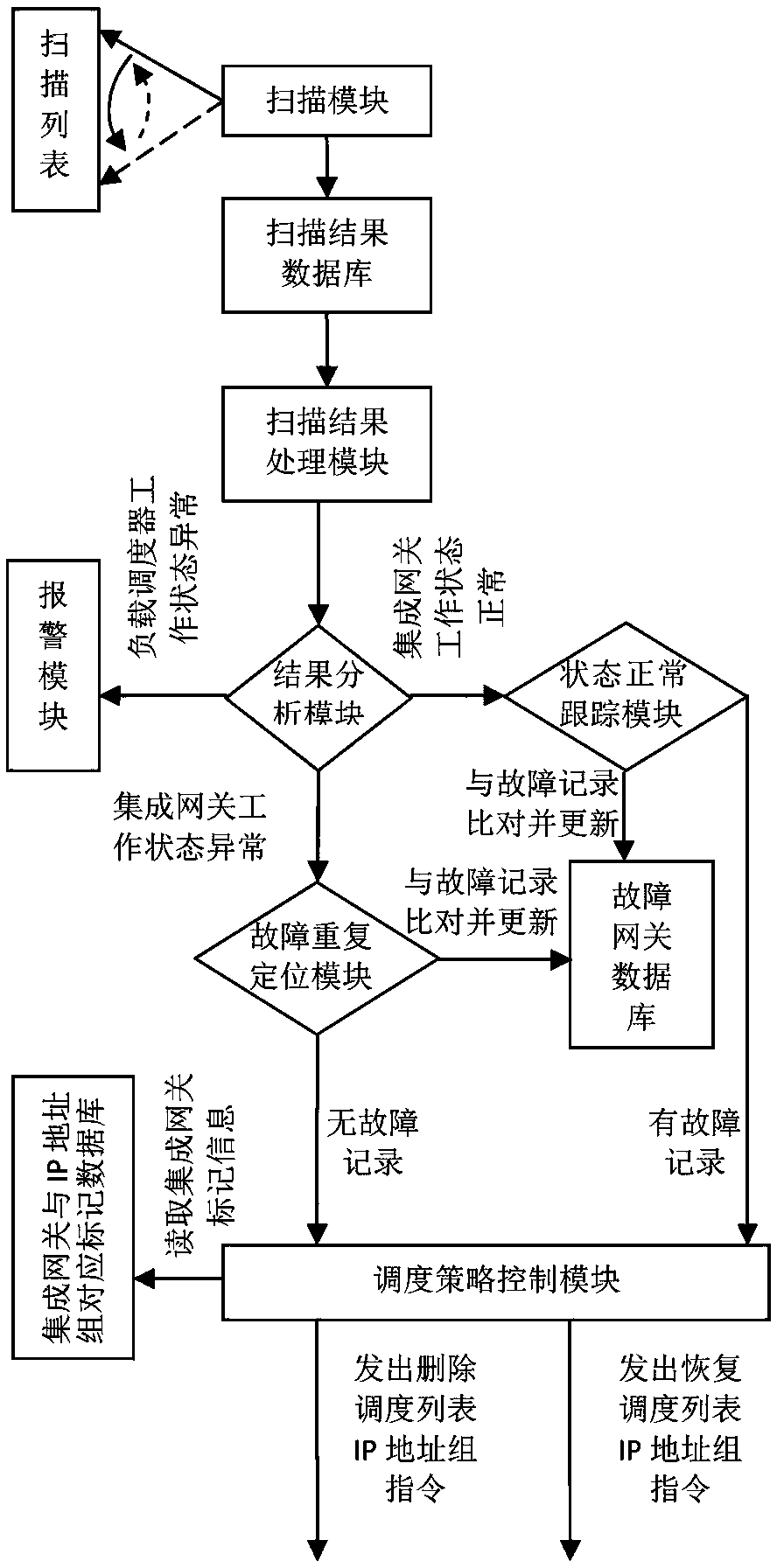

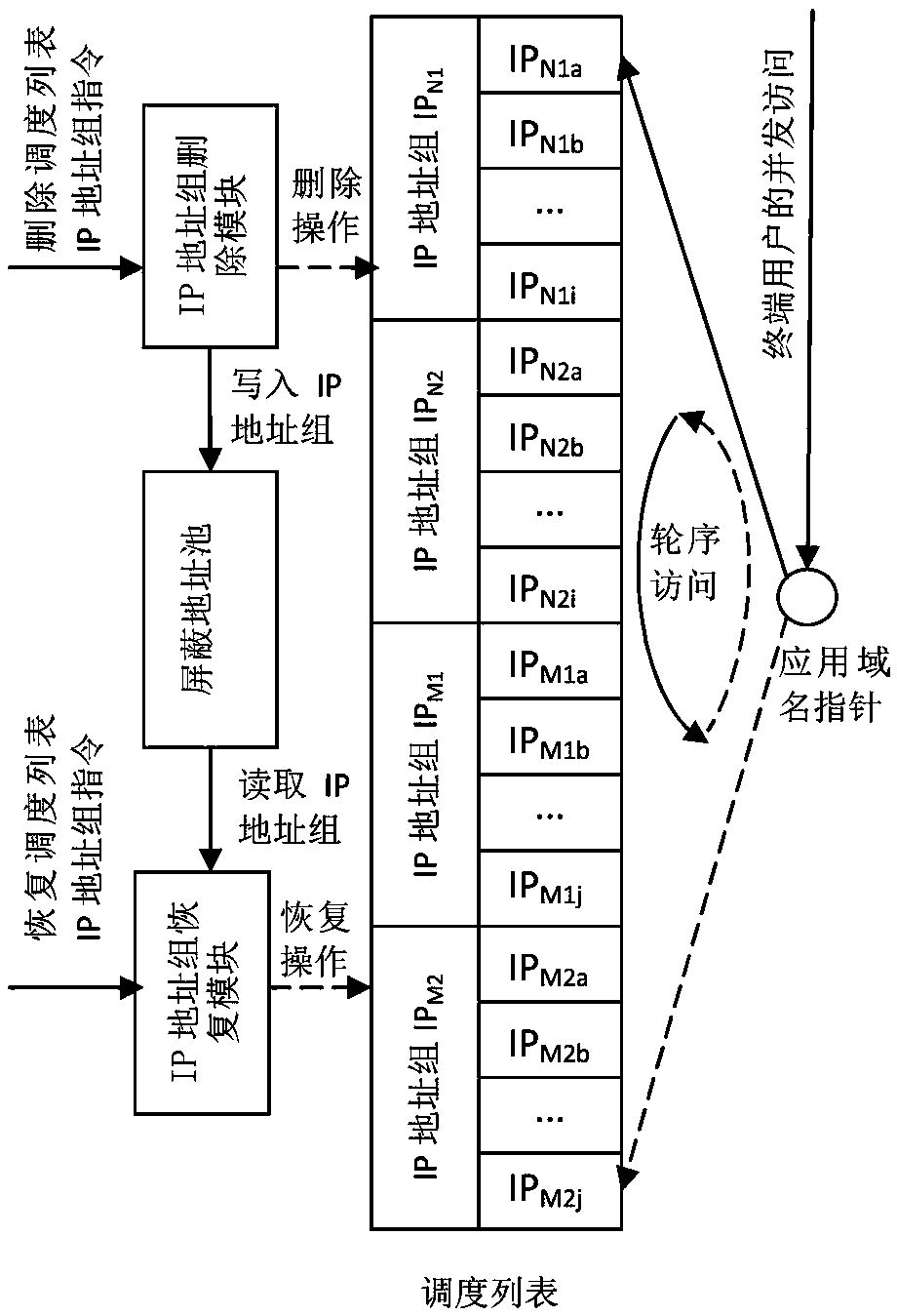

Intelligent disaster recovery configuration of cloud computing network

Active · CN105376305A · State of innovation · Innovation control mechanism · Transmission · Data synchronization · Global scheduling

An intelligent disaster recovery configuration of a cloud computing network relates to an Internet of Things system based on multi-level disaster recovery. The configuration includes four levels, namely a load global scheduling layer, an area network access layer, a server farm layer and a database layer, together with area cloud computing networks; the load global scheduling layer is connected with multiple area cloud computing networks, and each area cloud computing network comprises the area network access layer, the server farm layer and the database layer. Compared with the prior art, the configuration has the following advantages: a multi-level disaster recovery mechanism is realized within the same area network, which improves the local disaster recovery capability of the system and reduces system disasters caused by local equipment faults; a global load regulator and an integrated gateway are monitored intelligently, providing an automatic state monitoring and control mechanism for key devices of the cloud computing network; and a long-distance network data synchronization method and implementation scheme are further provided, which improves the safety level of data.

Owner:鞠洪尧

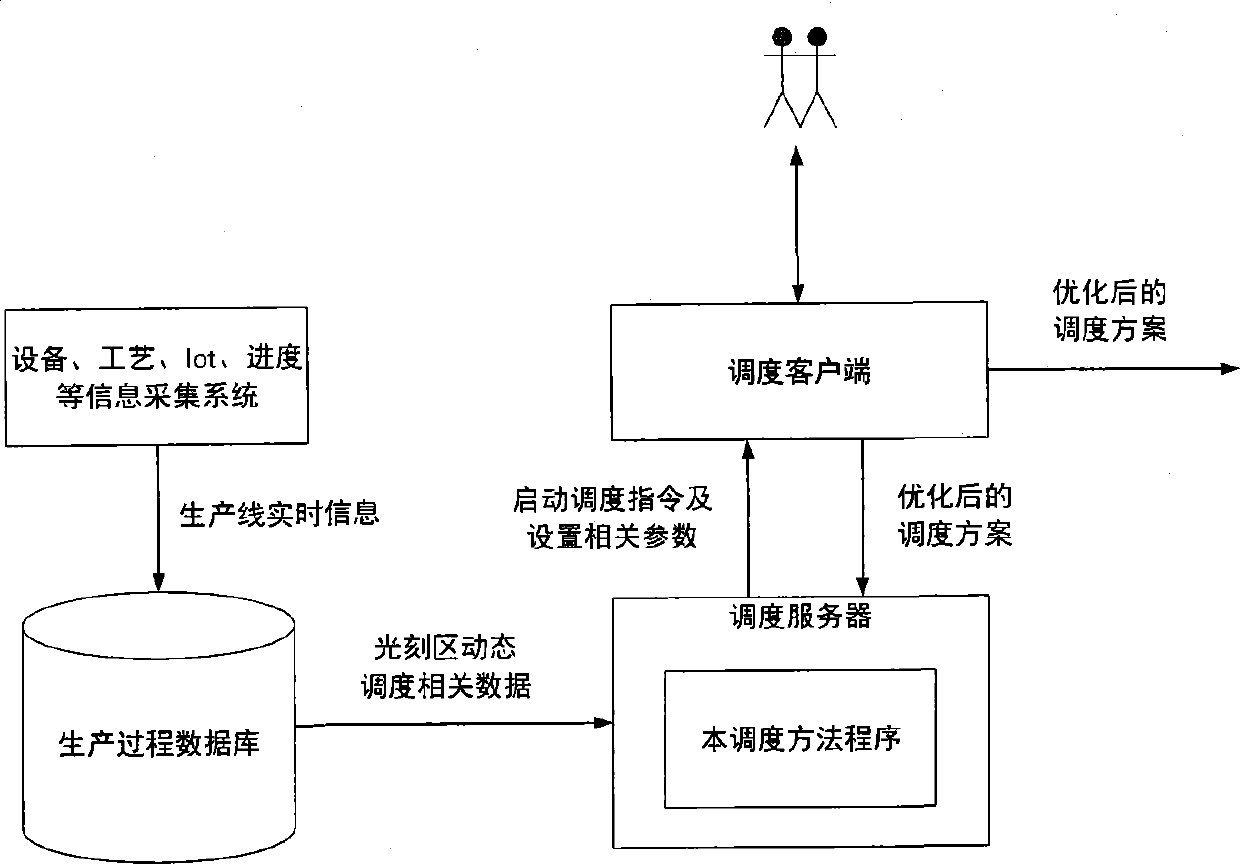

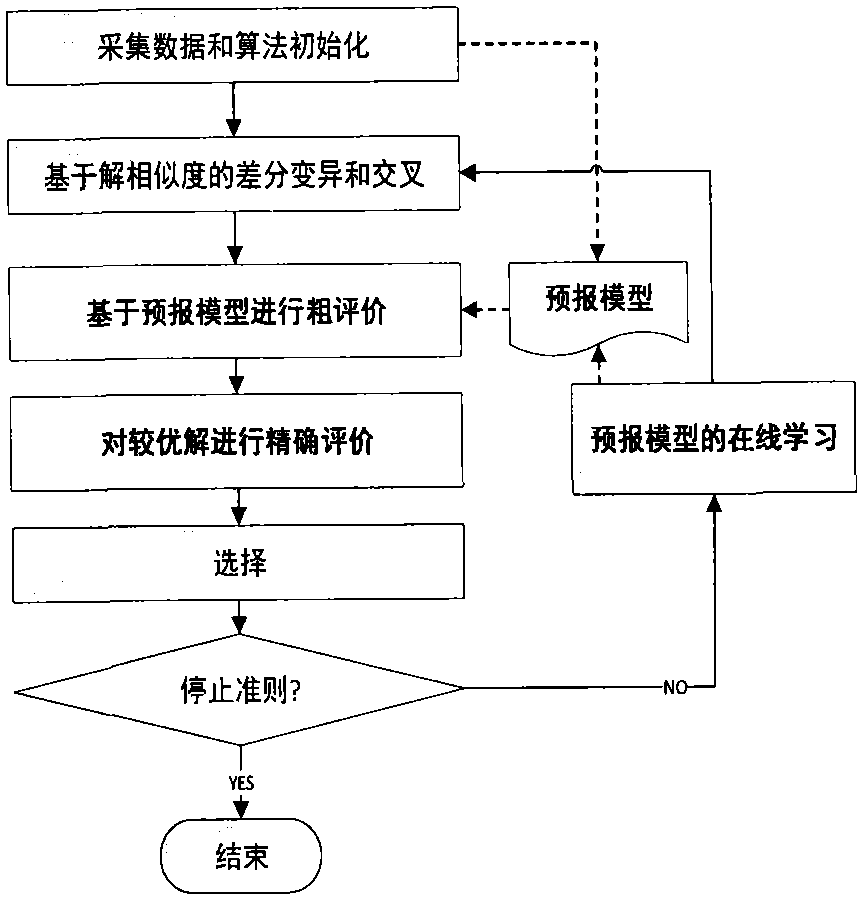

Photoetching procedure dynamic scheduling method based on index forecasting and solution similarity analysis

Active · CN104536412A · Total factory control · Programme total factory control · Global scheduling · Performance index

The invention discloses a photoetching procedure dynamic scheduling method based on index forecasting and solution similarity analysis, and belongs to the fields of advanced manufacturing, automation and information. The method targets dynamic scheduling of the photoetching procedure on a semiconductor production line. It includes the following steps: the photoetching procedure dynamic scheduling problem is divided into an equipment selection scheduling sub-problem and a workpiece sequencing scheduling sub-problem, and a performance index forecasting model of the workpiece sequencing scheduling sub-problem is established online; the original scheduling problem is then solved using a differential evolution algorithm based on solution similarity analysis. In the differential evolution algorithm, the performance index forecasting model of the workpiece sequencing scheduling sub-problem is used to quickly and roughly estimate the global scheduling performance of solutions to the equipment selection scheduling sub-problem. When evaluating scheduling solutions, the method combines accurate estimation with rough estimation to evaluate the performance of solutions in the differential evolution algorithm. Using this dynamic scheduling method, the efficiency and effect of photoetching procedure production and scheduling can be remarkably improved.

Owner:正大业恒生物科技(上海)有限公司

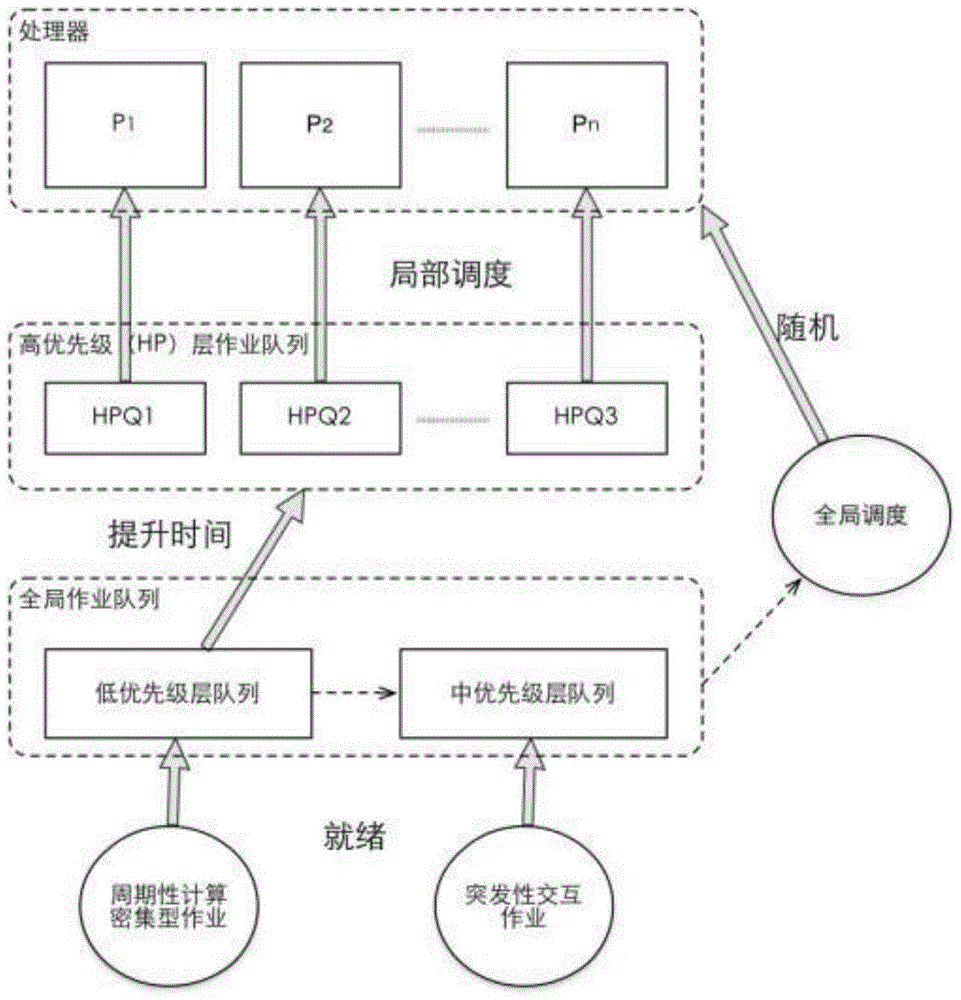

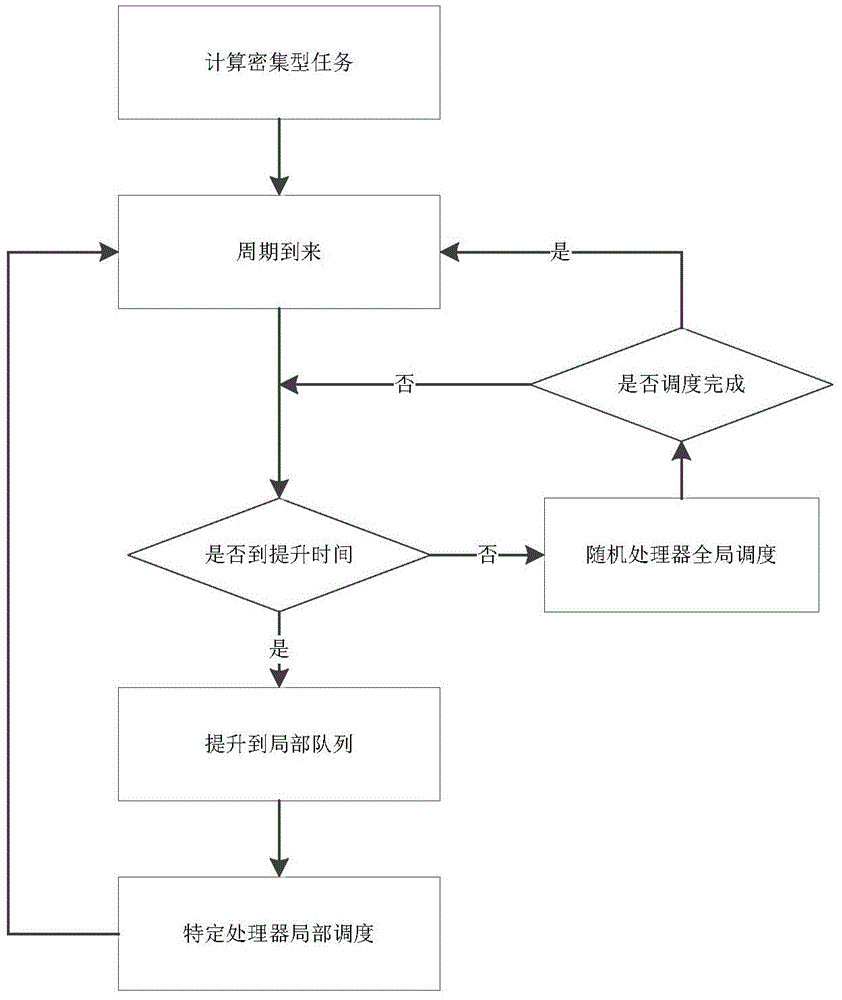

RTLinux (real-time Linux) based real-time scheduling method for analog simulation of controlled object model

Inactive · CN103823706A · Fast response time · Improve real-time response · Program initiation/switching · Software simulation/interpretation/emulation · Loss rate · Global scheduling

The invention discloses an RTLinux (real-time Linux) based real-time scheduling method for analog simulation of a controlled object model. When their period arrives, periodic computation-intensive tasks participate in global scheduling at low priority rather than directly preempting bursty interactive tasks. Interactive response time is therefore shortened and the task loss rate is decreased. After the raising time, the computation-intensive tasks enter a high-priority queue bound to specific processors and participate in local scheduling; missing pages caused by switching tasks between different processors are decreased and scheduling delay is reduced, so that the real-time response of the computation-intensive tasks is effectively improved, the interactive tasks and the computation-intensive tasks can be executed concurrently, and the performance of multi-core processors is fully utilized.

Owner:ZHEJIANG UNIV

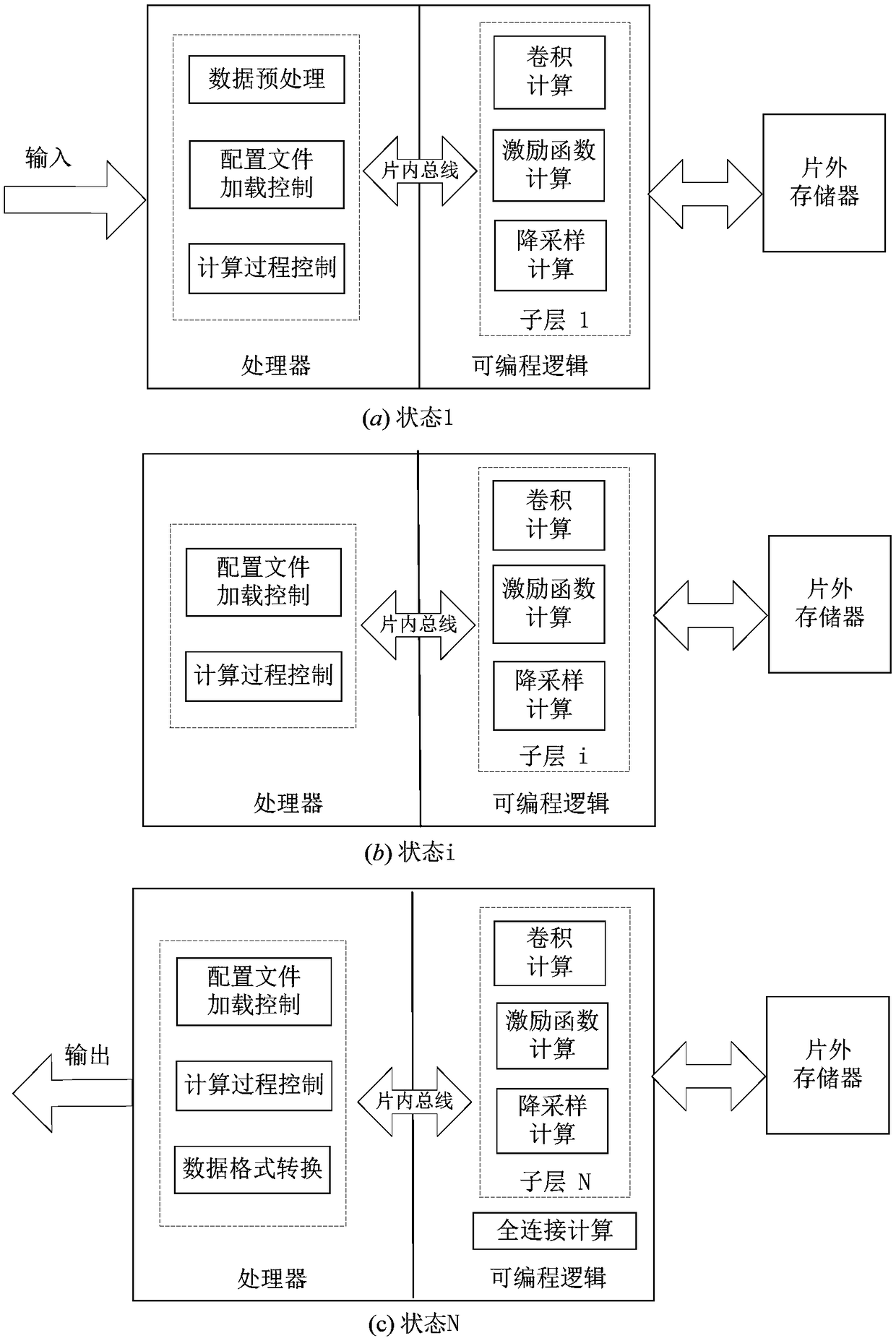

SoC chip-based deep neural network embedded realization method

Inactive · CN108171321A · To make up for the shortcomings of weak scheduling ability · Increase flexibility · Neural architectures · Physical realisation · Global scheduling · Programmable logic device

The invention belongs to the field of airborne intelligent computing, and provides an SoC chip-based deep neural network embedded realization method. The method is realized based on an SoC chip; a neural network main program is realized in a processor core and finishes a global scheduling task; a computing-intensive part is realized in programmable logic and finishes a parallel computing task; and the two parts perform control instruction and state information exchange through an on-chip high-speed bus to realize cooperative work of a processor and the programmable logic. According to the method provided by the invention, the processor core in an SoC is used for executing an overall scheduling task, so that the dynamical reconfiguration characteristic of an FPGA is better utilized and the network flexibility is improved.

Owner:XIAN AVIATION COMPUTING TECH RES INST OF AVIATION IND CORP OF CHINA

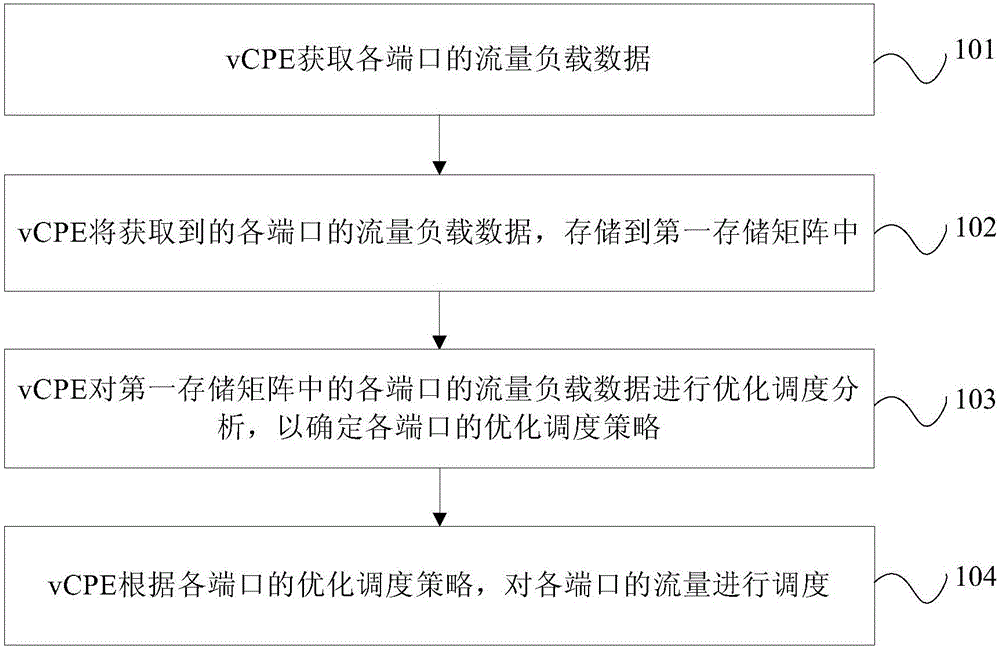

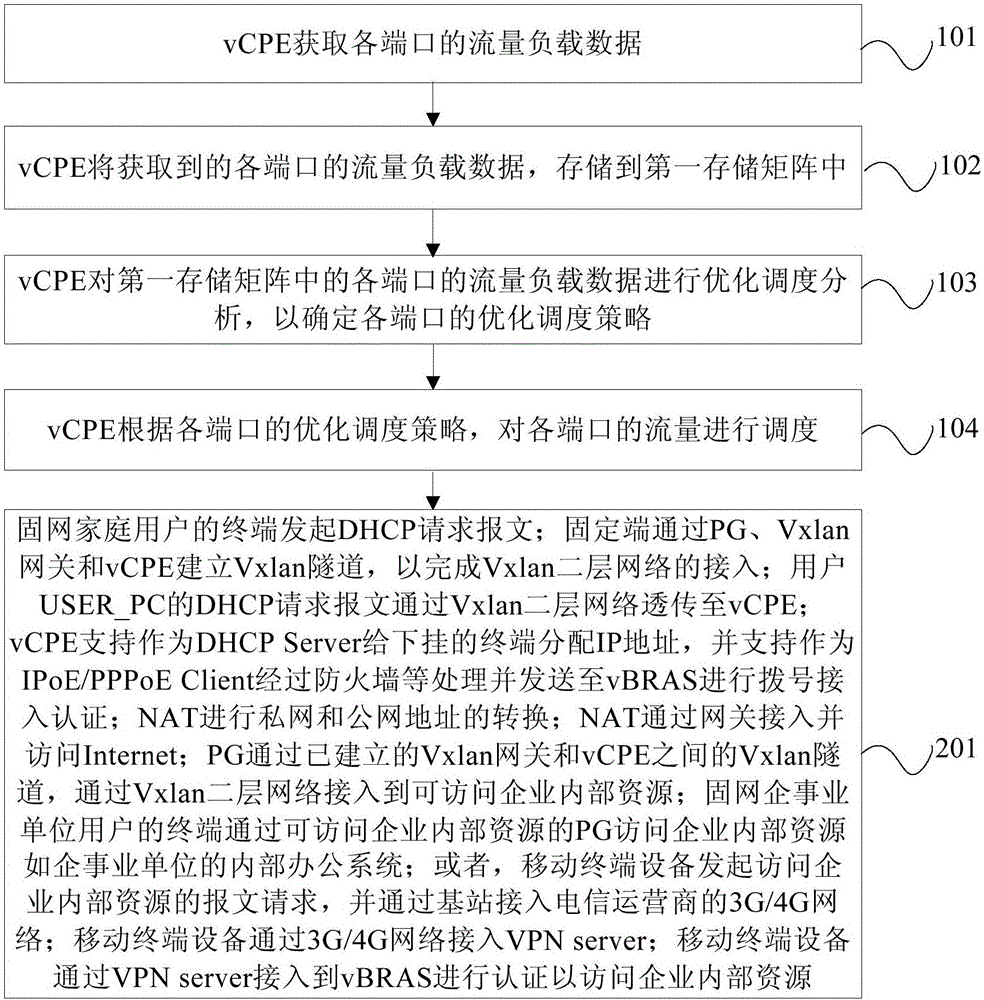

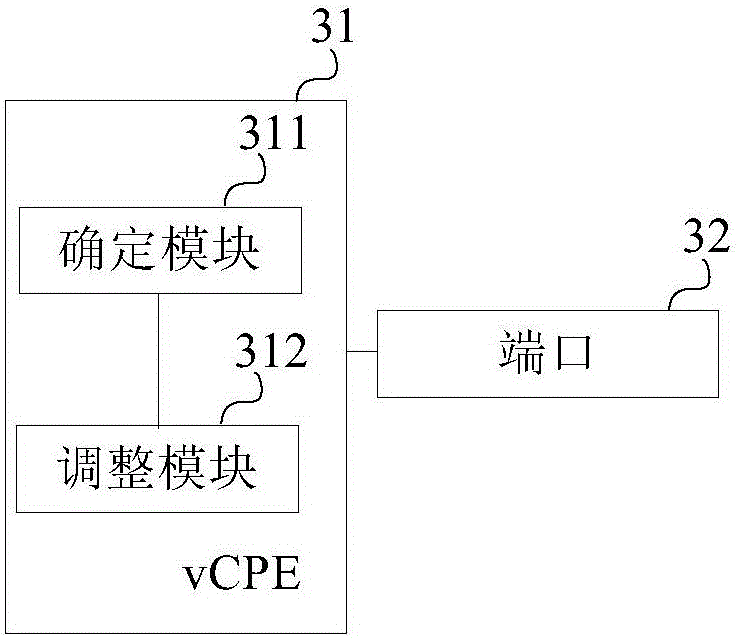

Method and system for traffic scheduling based on end office cloudization

Active · CN106302221A · Improve throughput · Reduce congestion · Data switching networks · Traffic capacity · Global scheduling

The invention provides a method and a system for traffic scheduling based on end office cloudization. The method comprises the following steps of enabling vCPE (virtual customer premise equipment) to obtain traffic loading data of each port; enabling the vCPE to store the obtained traffic loading data of each port into a first storage matrix; enabling the vCPE to optimize scheduling and analyze the traffic loading data of each port in the first storage matrix, so as to determine the scheduling optimizing strategy of each port; enabling the vCPE to schedule the traffic of each port according to the scheduling optimizing strategy of each port. The method has the advantage that a new adjusting strategy is obtained according to the real-time traffic state of each port, the unscheduled port is determined and adjusted, and the network traffic of each port, namely the node, is performed with global scheduling optimizing, so that the traffic resource of each port is optimum, the business response time is minimum, the throughput rate of network traffic is maximum, the network blockage degree is reduced, the utilization rate of network is improved, and the consumption is decreased.

Owner:CHINA UNITED NETWORK COMM GRP CO LTD

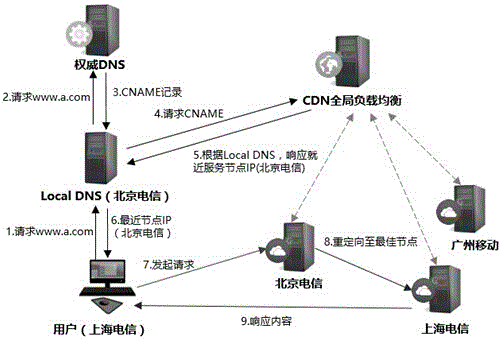

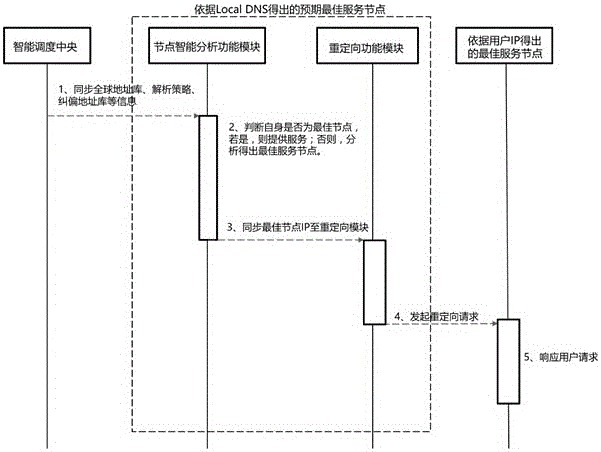

Redirection mechanism based intelligent CDN (content delivery network) scheduling method

The invention discloses a redirection mechanism based intelligent CDN (content delivery network) scheduling method and relates to the field of CDN. The method divides selection of the nearest CDN service node into two steps: 1) when a user initiates a request, global intelligent scheduling selects an expected nearest service node according to the user's Local DNS (local domain name server); 2) the user sends the request to the expected nearest service node, which judges from the user's IP (internet protocol) address whether it is the optimal nearest service node; if yes, it provides service directly, and if not, it redirects the request to the optimal nearest service node by means of the redirection mechanism. The method involves three functional modules: an intelligent scheduling center module, an intelligent node analyzing module and a node redirecting module. The method can effectively improve the accuracy of CDN global scheduling and the quality of service for the user.

Owner:云之端网络(江苏)股份有限公司
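
The two-step selection described in this abstract can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the lookup tables, node names, address ranges, and the function names `global_schedule` and `handle_request` are all hypothetical, and matching the client IP against CIDR blocks is only one assumed way of deciding which node is "optimal".

```python
import ipaddress
from typing import Optional, Tuple

# Hypothetical data: which node the global scheduler expects to be nearest
# for a given Local DNS resolver, and which client networks each node serves best.
LDNS_TO_NODE = {"198.51.100.53": "node-east", "203.0.113.53": "node-west"}
NODE_NETWORKS = {
    "node-east": ["10.1.0.0/16"],
    "node-west": ["10.2.0.0/16"],
}
DEFAULT_NODE = "node-east"


def global_schedule(local_dns_ip: str) -> str:
    """Step 1: choose the expected nearest node from the user's Local DNS address."""
    return LDNS_TO_NODE.get(local_dns_ip, DEFAULT_NODE)


def optimal_node_for(client_ip: str) -> Optional[str]:
    """Find the node whose networks contain the client IP, if any."""
    addr = ipaddress.ip_address(client_ip)
    for node, networks in NODE_NETWORKS.items():
        if any(addr in ipaddress.ip_network(net) for net in networks):
            return node
    return None


def handle_request(node: str, client_ip: str) -> Tuple[str, str]:
    """Step 2: the contacted node serves the request or redirects it."""
    best = optimal_node_for(client_ip)
    if best is None or best == node:
        # No better node is known, so serve directly.
        return ("serve", node)
    return ("redirect", best)


# A client behind the "east" resolver whose own IP belongs to the west range.
first_hop = global_schedule("198.51.100.53")
decision = handle_request(first_hop, "10.2.3.4")
```

The redirect step exists because the Local DNS address is only a proxy for the user's location; the first-hop node sees the real client IP and can correct a wrong guess.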

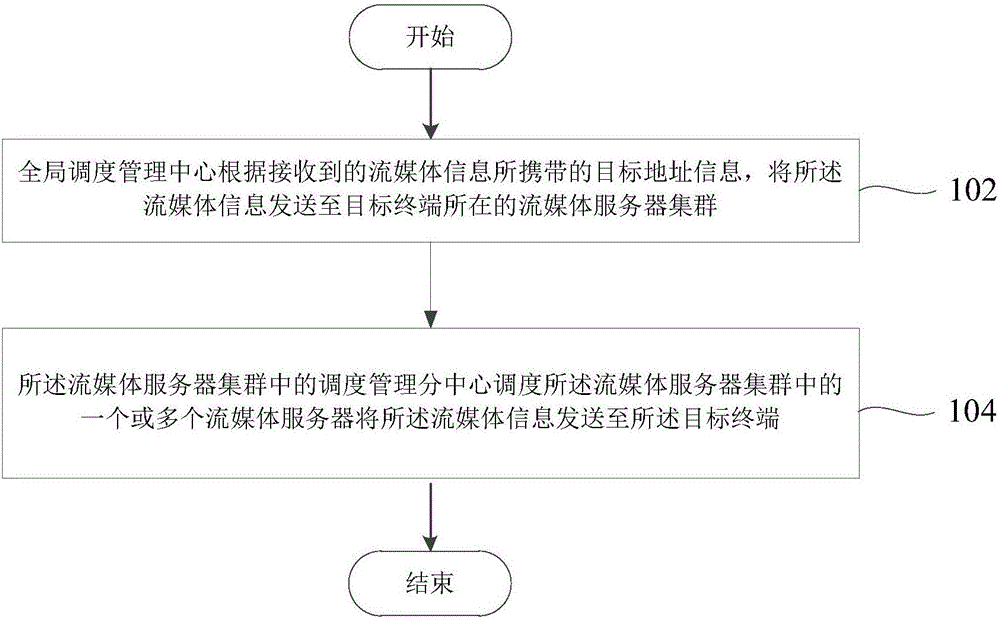

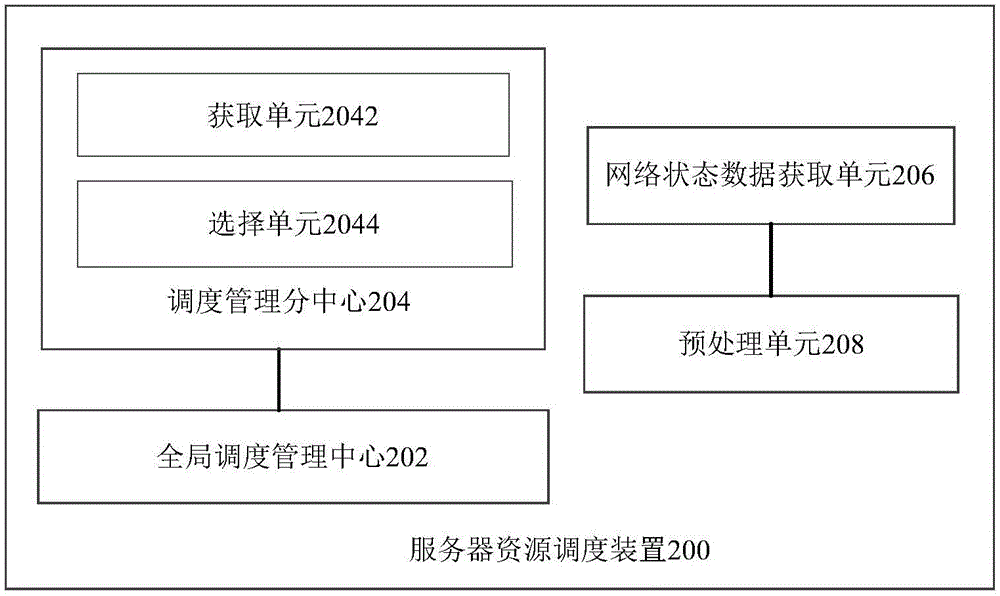

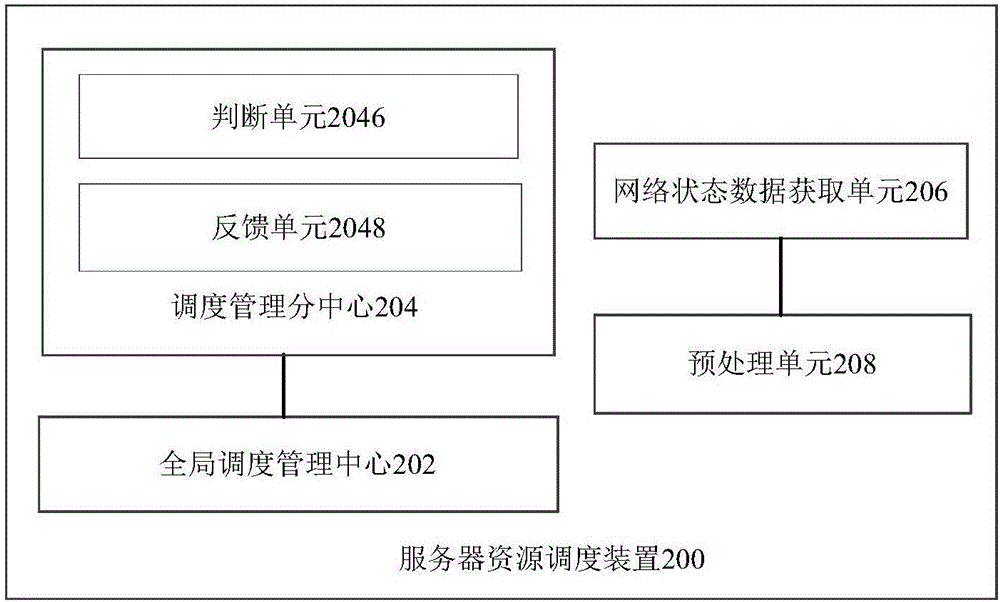

Server resource scheduling method and device

The invention provides a server resource scheduling method and device. The method includes: a global scheduling management center sends streaming media information to the streaming media server cluster of the target terminal according to the target address information carried by the received streaming media information; a scheduling management sub-center in that streaming media server cluster then schedules one or more streaming media servers in the cluster to send the streaming media information to the target terminal. With this arrangement, global resource scheduling nodes are established, the entire streaming media server network is monitored in real time, server resources are scheduled reasonably through an intelligent scheduling method, and classroom interaction and live broadcast are guaranteed.

Owner:SUZHOU CODYY NETWORK SCI & TECH

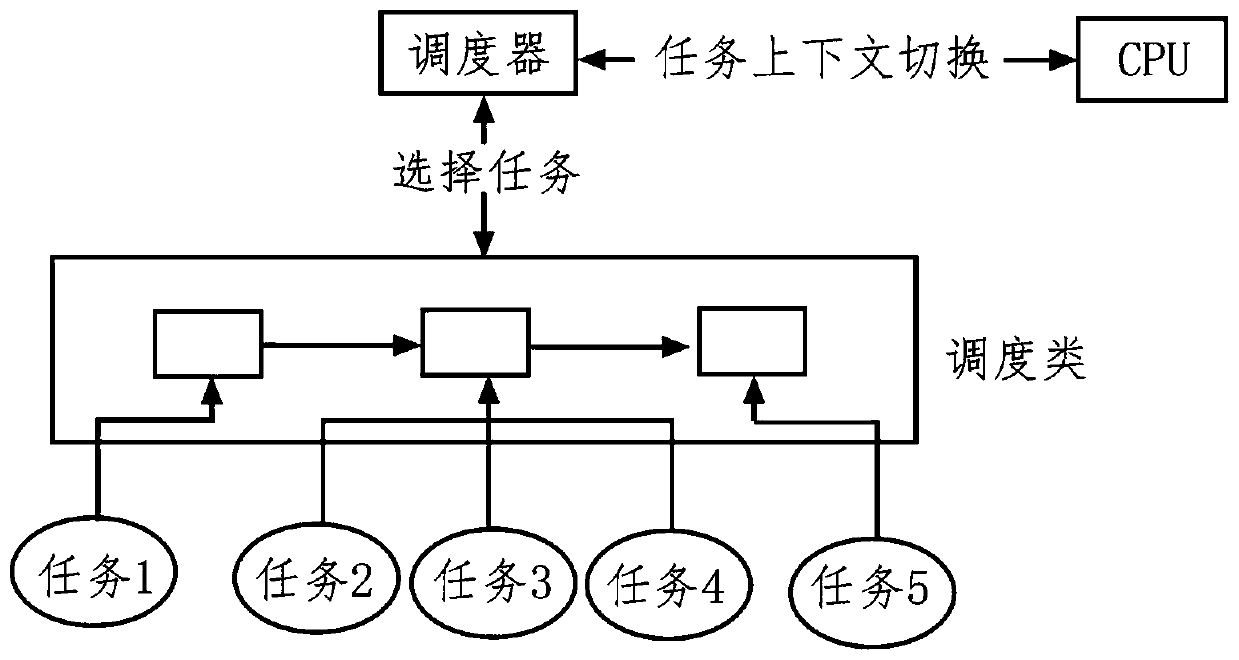

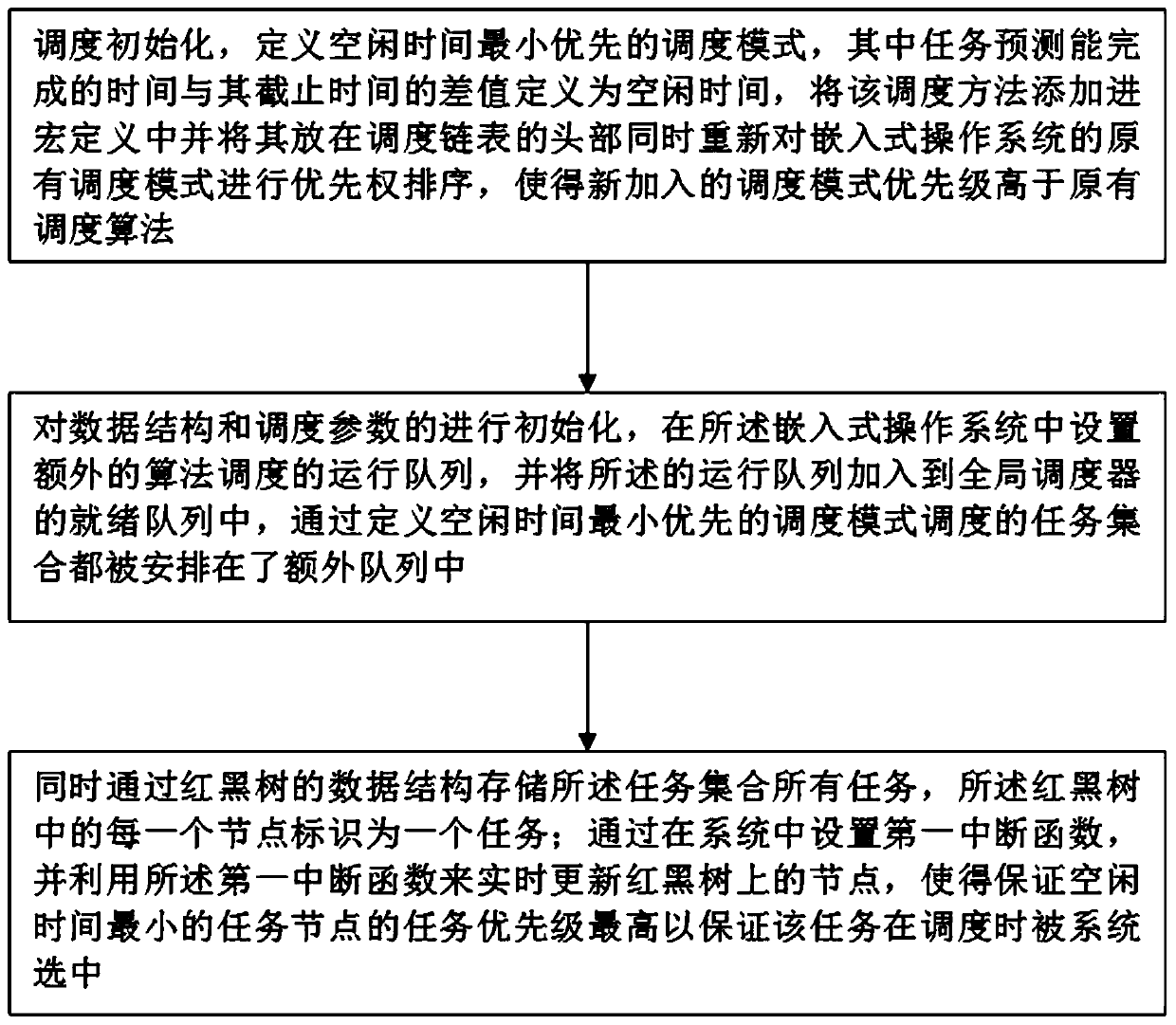

Task scheduling method and device for embedded operating system and storage medium

PendingCN110825506AImproved task distribution balanceSelf-aware affinityProgram initiation/switchingGlobal schedulingOperational system

The invention discloses a task scheduling method for an embedded operating system. The method comprises: performing scheduling initialization and defining a minimum-idle-time-first scheduling mode, where idle time is defined as the difference between a task's predicted completion time and its deadline; adding the scheduling mode to the macro definitions and placing it at the head of the scheduling linked list, then re-ranking the priorities of the embedded operating system's original scheduling modes so that the newly added mode has higher priority than the original scheduling algorithms; initializing the data structures and scheduling parameters, setting up an additional run queue for the new algorithm in the embedded operating system, and adding that run queue to the ready queue of the global scheduler, where all task sets scheduled under the minimum-idle-time-first mode are placed in the additional queue; and storing all tasks of the task set in a red-black tree.

Owner:湖南智领通信科技有限公司
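
As a rough illustration of the minimum-idle-time-first ordering in this abstract, the sketch below keeps tasks in a priority queue keyed by idle time (deadline minus predicted completion time). It is an assumption-laden toy: the class name `MinIdleTimeQueue`, the task names, and the time values are hypothetical, and Python's `heapq` binary heap stands in for the red-black tree the abstract mentions, since both give ordered access to the smallest key.

```python
import heapq
import itertools


class MinIdleTimeQueue:
    """Run queue that always yields the task with the smallest idle time."""

    def __init__(self):
        self._heap = []
        # Tie-breaker so tasks with equal idle time come out in insertion order.
        self._counter = itertools.count()

    def add(self, name, predicted_completion, deadline):
        # Idle time as defined in the abstract: deadline minus predicted completion.
        idle_time = deadline - predicted_completion
        heapq.heappush(self._heap, (idle_time, next(self._counter), name))

    def pop_next(self):
        idle_time, _, name = heapq.heappop(self._heap)
        return name, idle_time

    def __len__(self):
        return len(self._heap)


queue = MinIdleTimeQueue()
queue.add("logging", predicted_completion=4, deadline=20)    # idle time 16
queue.add("control", predicted_completion=9, deadline=10)    # idle time 1
queue.add("telemetry", predicted_completion=5, deadline=12)  # idle time 7

order = [queue.pop_next()[0] for _ in range(len(queue))]
```

A real kernel scheduler would also need to recompute idle time as tasks run and handle removal of arbitrary tasks, which is where a balanced tree is more convenient than a heap.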

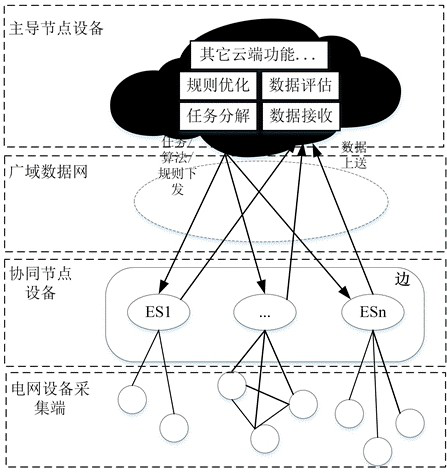

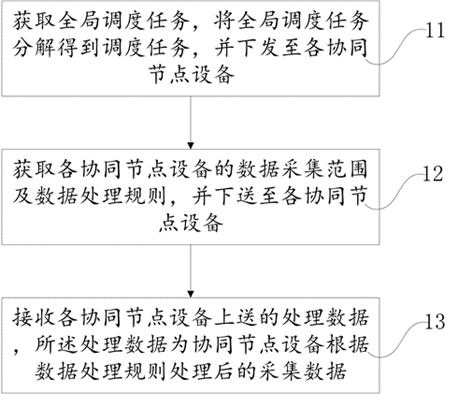

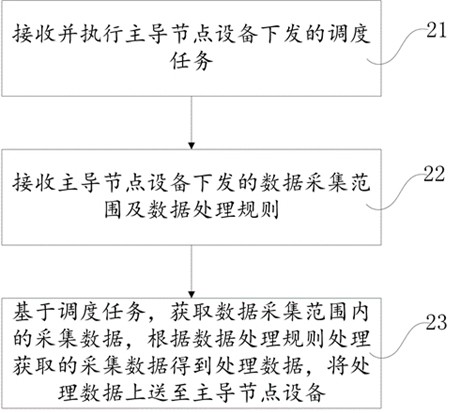

Regulation and control cloud data processing method, device and system

ActiveCN112104751AReduce transmissionCollaborative ComputingResourcesTransmissionGlobal schedulingData acquisition

The invention belongs to the field of data processing and discloses a regulation and control cloud data processing method, device and system. In the method, the leading node equipment obtains a global scheduling task, decomposes it into scheduling tasks, and transmits the scheduling tasks to the cooperative node equipment; it also obtains the data acquisition range and data processing rule of each piece of cooperative node equipment and sends them to that equipment. Each piece of cooperative node equipment receives and executes the scheduling task issued by the leading node equipment, receives the data acquisition range and data processing rule issued by the leading node equipment, obtains the acquired data within the data acquisition range based on the scheduling task, processes the acquired data according to the data processing rule to obtain processed data, and uploads the processed data to the leading node equipment. The leading node equipment receives the processed data sent by each piece of cooperative node equipment. This addresses the heavy bandwidth pressure on the wide-area data network caused by the large computing and storage load on the cloud center and by repeated uploading of data, and it improves the quality of regulation and control cloud data.

Owner:CHINA ELECTRIC POWER RES INST

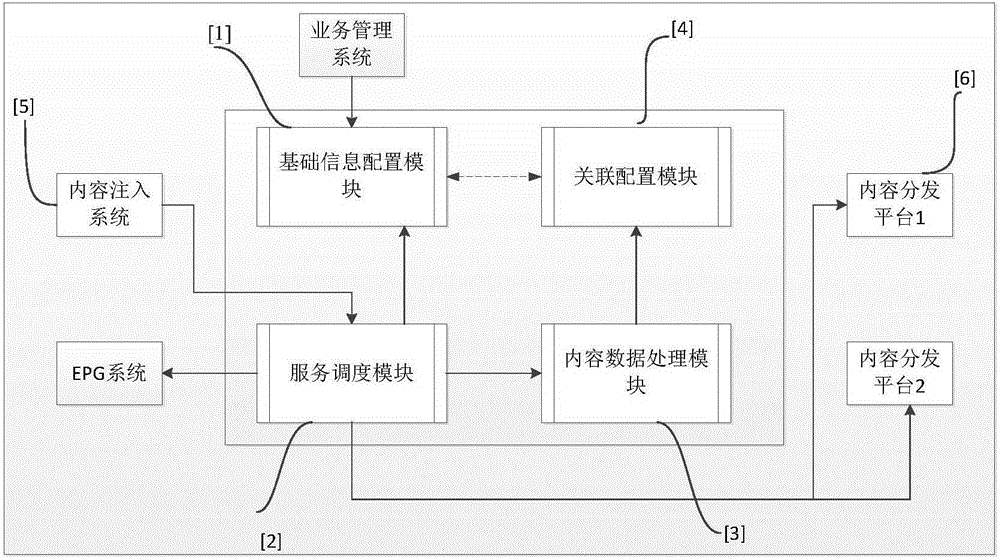

Video content distributed issuing method

ActiveCN105208414AMeet localized loading requirementsConvenient and fast docking methodSelective content distributionGlobal schedulingButt joint

The invention discloses a distributed video content issuing method comprising an injection procedure for issued video-on-demand content, an online procedure for live broadcast and playback, and support for localized channels. Based on nationwide media asset issuing systems, the invention achieves single-point access and global scheduling while still meeting requirements for localized service loading, and it provides every party in the industry chain with a docking method that is standardized, convenient and efficient. The original technical framework of the IPTV system is retained; by defining interconnection and interworking standards and processing procedures, the invention overcomes the scattered information and poor flexibility of existing systems and can significantly improve processing efficiency and service support capacity. It also ends the repeated investment caused by each region building its IPTV system independently, achieves loose coupling among the systems based on cloud technologies, and greatly reduces server hardware cost.

Owner:天翼智慧家庭科技有限公司

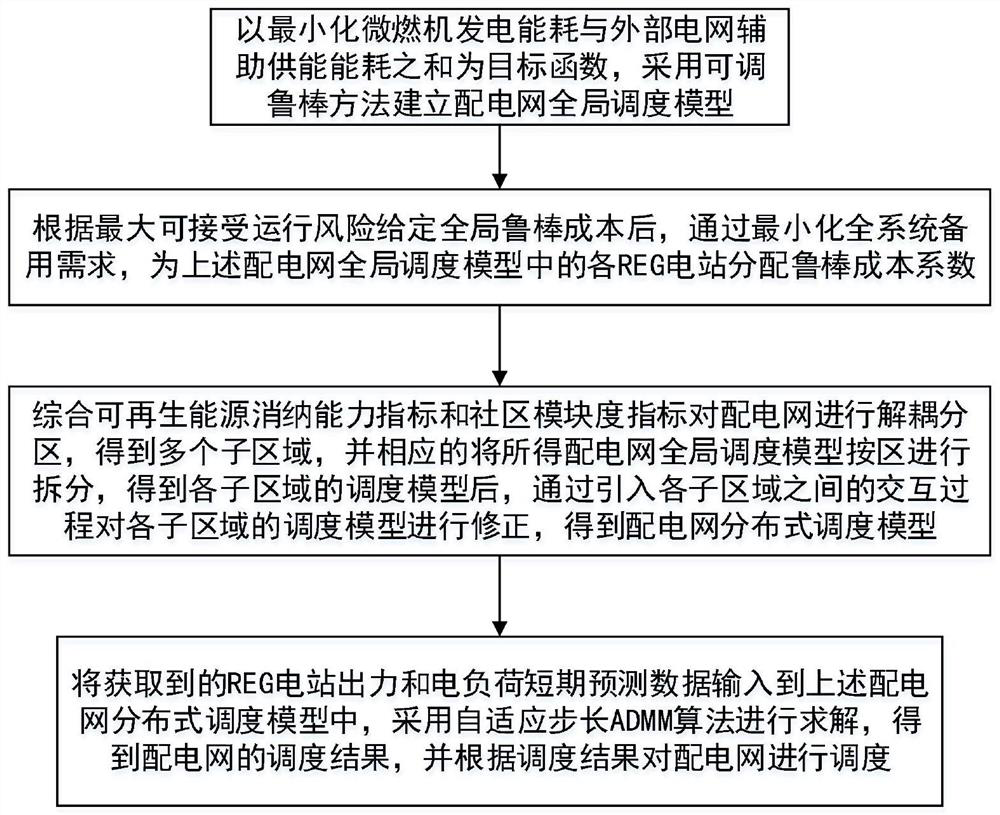

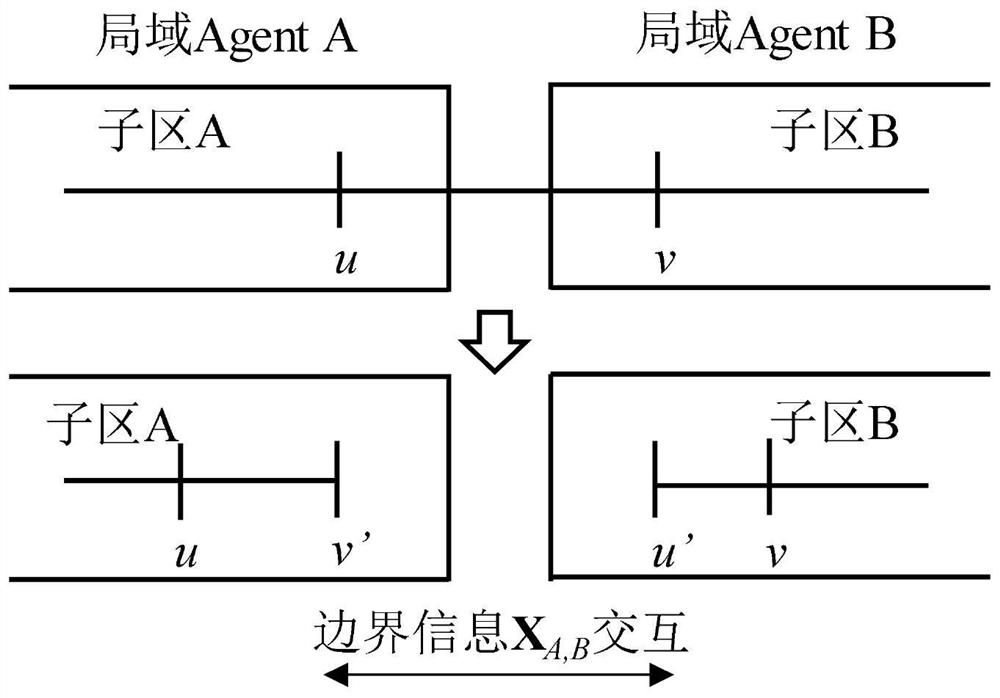

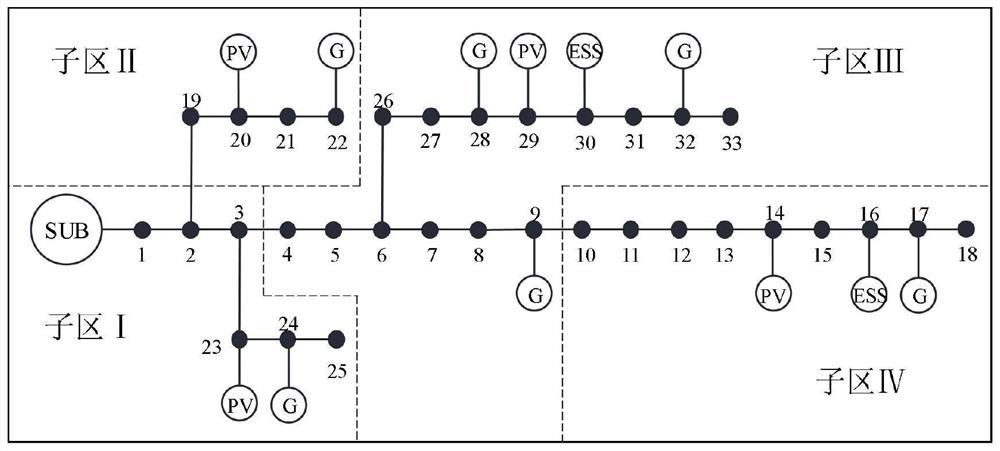

Power distribution network distributed scheduling method

PendingCN112183865ACut back-up burdenIncrease the adjustable rangeSingle network parallel feeding arrangementsForecastingGlobal schedulingEngineering

The invention discloses a distributed scheduling method for a power distribution network. First, a global scheduling model of the power distribution network is established, a global robust cost is given according to the maximum acceptable operation risk, and robust cost coefficients are allocated to the REG power stations in the global scheduling model by minimizing the standby requirement of the whole system. Next, a distributed scheduling model of the power distribution network is constructed through regional decomposition and model correction. Finally, the distributed scheduling model is solved with an adaptive step length ADMM algorithm to obtain the scheduling result, and the power distribution network is scheduled accordingly. While ensuring the operational reliability of the system, the method reduces the system energy consumption caused by the scheduling strategy and can balance the reliability and economy of the power distribution network. In addition, the interaction among sub-regions is taken into account when the distributed scheduling model is constructed, and solving the model with an adaptive step length ADMM algorithm greatly improves the solving efficiency of the system scheduling model.

Owner:HUAZHONG UNIV OF SCI & TECH +2
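
To give a feel for what an adaptive step length ADMM solve looks like, here is a toy consensus problem rather than the patent's distribution network model. Every detail is an assumption made for illustration: each "region" i holds a scalar quadratic cost (a_i / 2)(x_i - b_i)^2, all regions must agree on a shared value z, and the penalty parameter rho is adapted with the widely used residual-balancing heuristic, which may differ from the adaptation rule in the patent. The function name `consensus_admm` and its parameters are hypothetical.

```python
import math


def consensus_admm(a, b, rho=1.0, mu=10.0, tau=2.0, adapt_iters=50,
                   rho_min=0.1, rho_max=10.0, tol=1e-9, max_iters=10000):
    """Minimise sum_i (a_i/2)(x_i - b_i)^2 subject to x_i = z for all i."""
    n = len(a)
    z = 0.0
    u = [0.0] * n  # scaled dual variables, one per region
    for k in range(max_iters):
        # Local update: each region solves its own small problem in closed form.
        x = [(a[i] * b[i] + rho * (z - u[i])) / (a[i] + rho) for i in range(n)]
        # Coordination update: average the regional proposals.
        z_old = z
        z = sum(x[i] + u[i] for i in range(n)) / n
        # Dual update: accumulate each region's disagreement with z.
        u = [u[i] + x[i] - z for i in range(n)]

        primal = math.sqrt(sum((xi - z) ** 2 for xi in x))
        dual = rho * math.sqrt(n) * abs(z - z_old)
        if primal < tol and dual < tol:
            break

        # Residual balancing, applied only in early iterations (an assumption
        # made here so that rho is eventually fixed).
        if k < adapt_iters:
            new_rho = rho
            if primal > mu * dual:
                new_rho = min(rho * tau, rho_max)
            elif dual > mu * primal:
                new_rho = max(rho / tau, rho_min)
            if new_rho != rho:
                # The scaled dual is y / rho, so rescale it when rho changes.
                u = [ui * rho / new_rho for ui in u]
                rho = new_rho
    return z, rho, k + 1


weights = [1.0, 2.0, 4.0]
targets = [1.0, 3.0, 2.0]
z_star, final_rho, iterations = consensus_admm(weights, targets)
# Closed-form optimum for comparison: the weighted mean of the targets.
expected = sum(w * t for w, t in zip(weights, targets)) / sum(weights)
```

For this toy problem the answer is simply the weighted mean of the targets, which makes it easy to check that the iteration lands on the right value.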

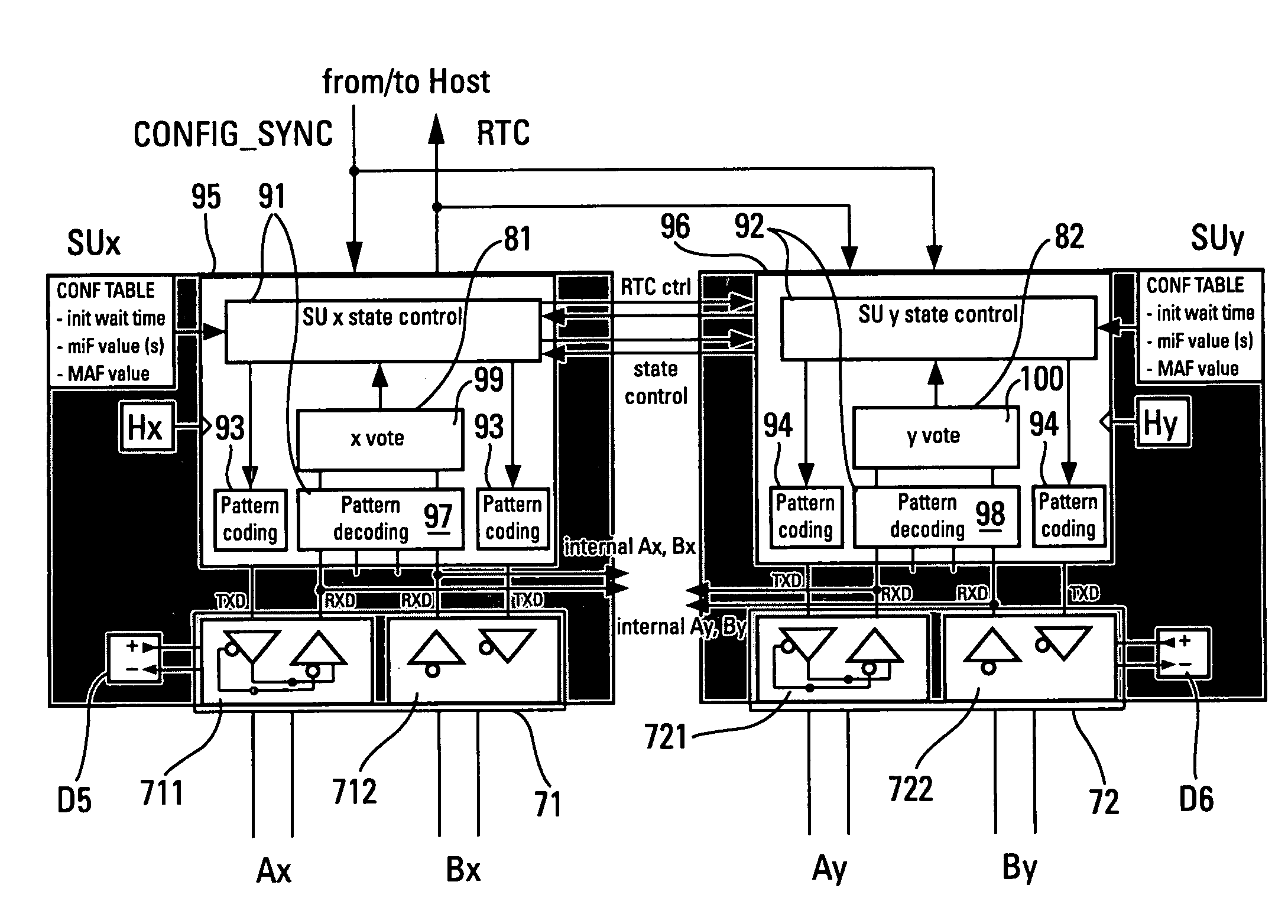

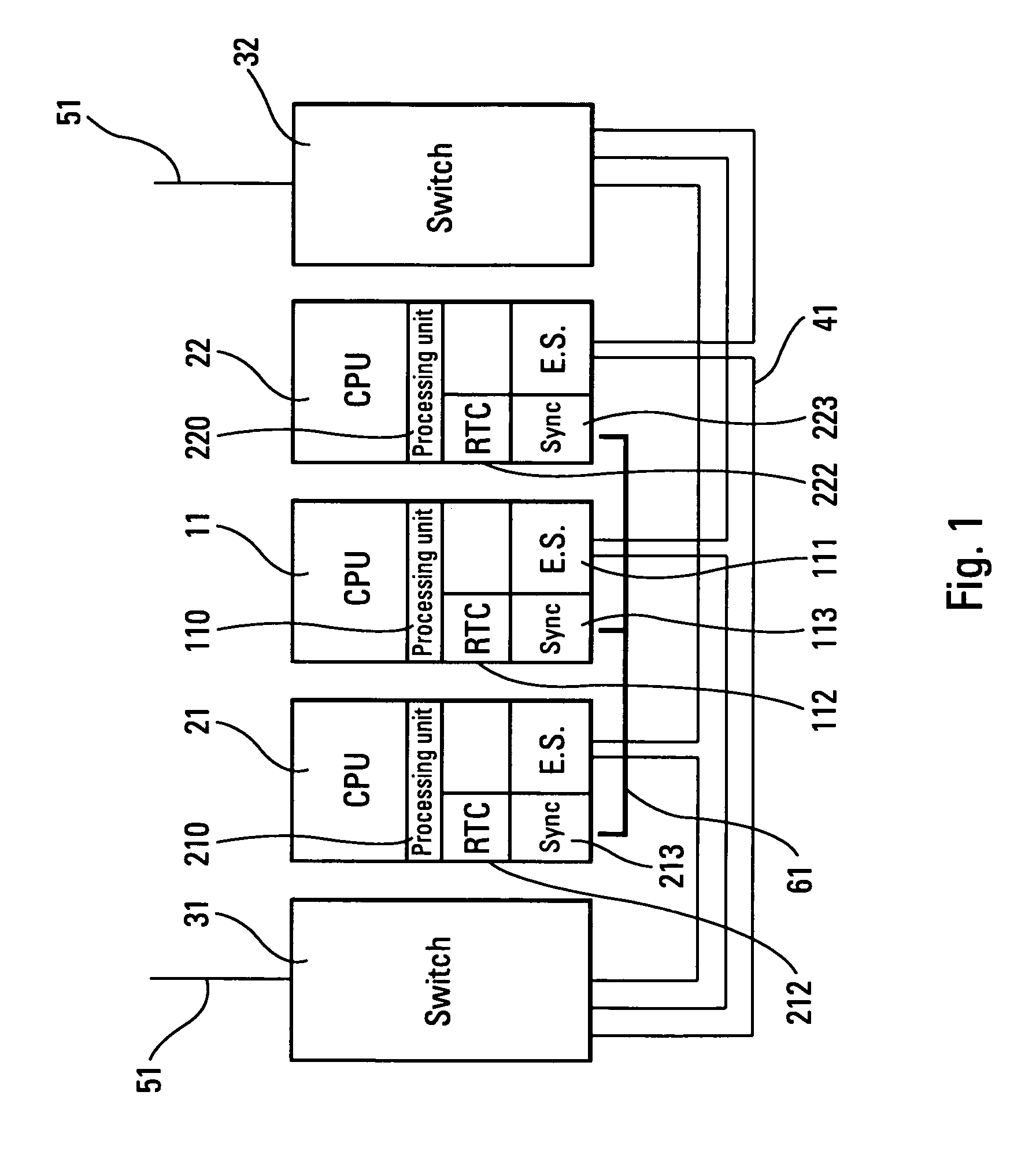

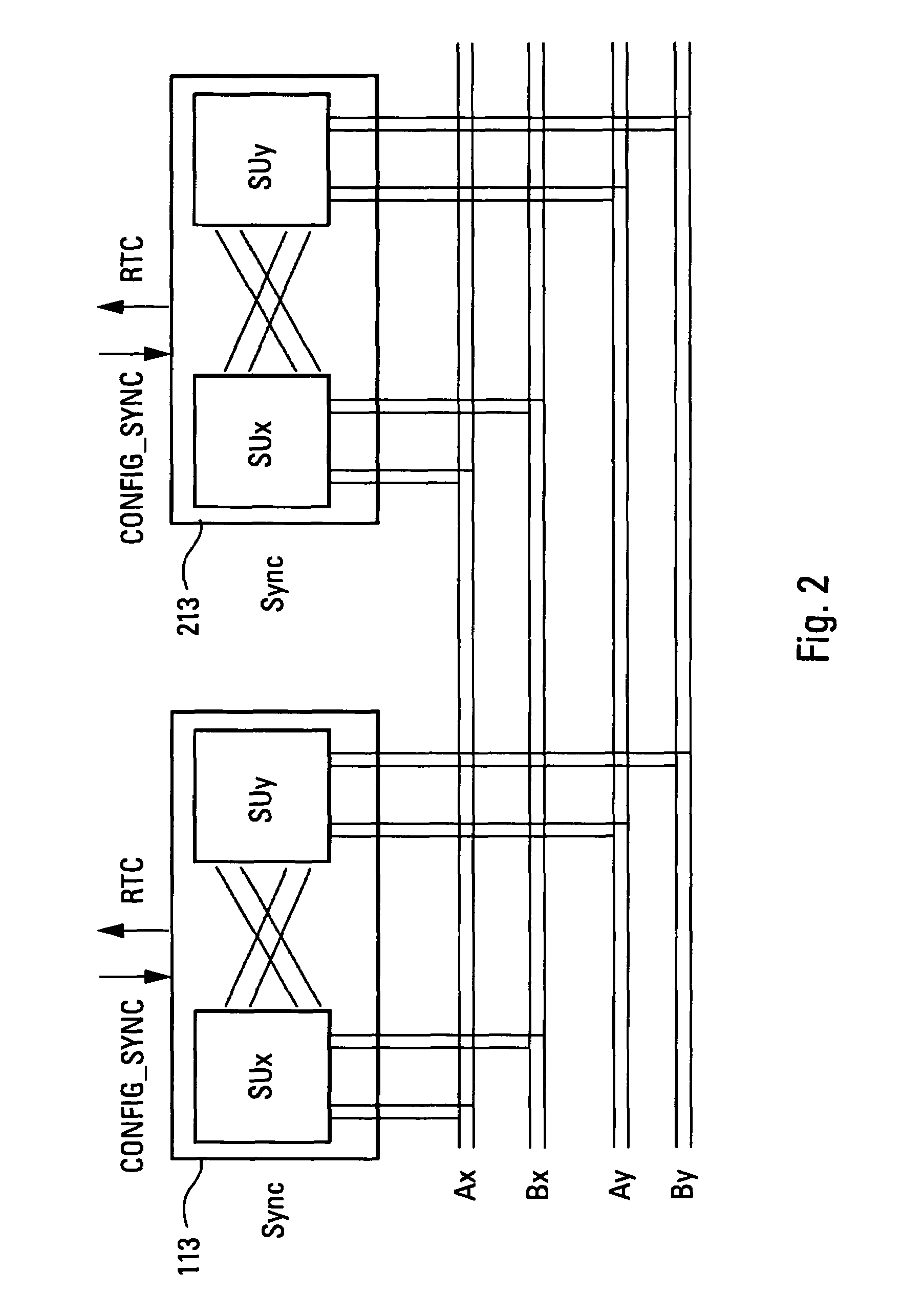

Fault-tolerant synchronisation device for a real-time computer network

InactiveUS7509513B2Convenient distanceLess accurateMultiple digital computer combinationsGenerating/distributing signalsReal-time clockGlobal scheduling

Fault-tolerant synchronization of real-time equipment connected to a computer network of several tens of meters with an option of including or not including such equipment in the synchronization device is disclosed. Global scheduling of the real-time computer platform in the form of minor and major cycles is provided in order to reduce latency during sensor acquisition. The associated calculation and preparation of output to the actuator is provided in an integrated modular avionic (IMA) architecture. To achieve the foregoing, a synchronization bus separate from the data transfer network and circuits interfacing with this specific bus for processing the local real-time clocks in each piece of equipment in a fault-tolerant, decentralized manner is provided.

Owner:THALES SA
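
Scheduling in minor and major cycles, as mentioned in this abstract, is commonly laid out as a fixed table: the major cycle is divided into equal minor cycles, and each task runs in the minor cycles that match its period. The sketch below builds such a table under assumed inputs; the task names, the periods, and the function name `build_major_cycle` are hypothetical and not taken from the patent. It relies on `math.lcm`, which is available from Python 3.9.

```python
import math


def build_major_cycle(task_periods):
    """Return a list of minor cycles, each listing the tasks released in it.

    task_periods maps a task name to its period, counted in minor cycles.
    """
    # The major cycle repeats once every task has completed a whole number of periods.
    major_length = math.lcm(*task_periods.values())
    frames = [[] for _ in range(major_length)]
    for name, period in task_periods.items():
        for frame_index in range(0, major_length, period):
            frames[frame_index].append(name)
    return frames


# Hypothetical avionics-style task set.
table = build_major_cycle({
    "sensor_acquisition": 1,  # every minor cycle
    "control_law": 2,         # every second minor cycle
    "actuator_output": 4,     # once per major cycle
})
```

Because the table is fixed ahead of time, every piece of equipment that shares the same synchronized clock can tell which minor cycle is current and therefore which tasks are due.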

