Low-delay task scheduling method for a dynamic fog computing network

A task scheduling technology for fog computing, applied in electrical components, transmission systems, and related fields. It addresses problems such as reduced device usage time, and achieves the effects of extending usage time and reducing energy overhead.

Embodiment Construction

[0030] The present invention will be further described below in conjunction with specific embodiments.

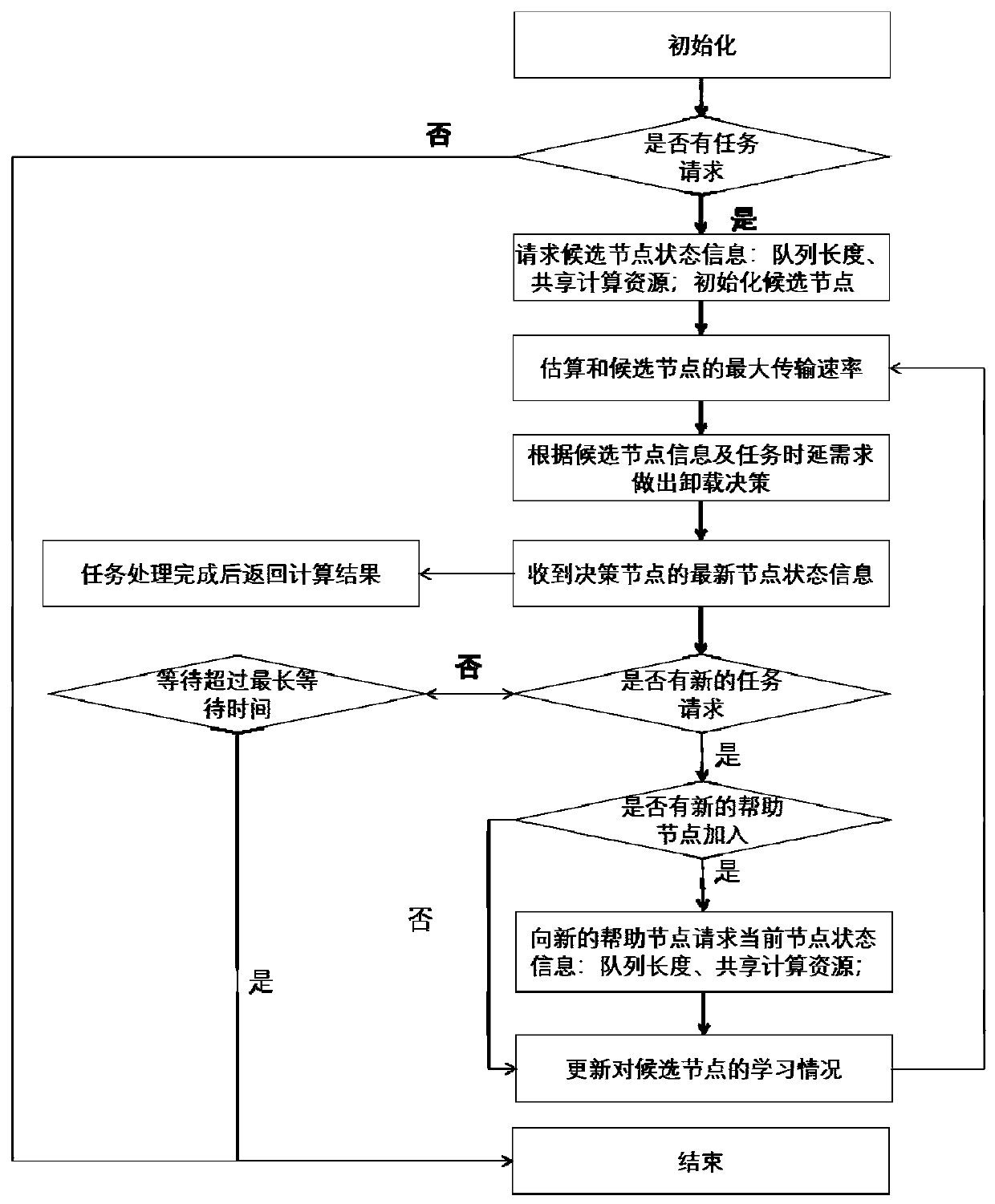

[0031] Figure 1 is the flowchart of the low-latency task scheduling method for dynamic fog computing networks provided in this embodiment. Here, "dynamic" has three meanings: (1) the motion state of nodes in the network is variable; (2) the size of the network is variable, since nodes can freely enter and exit the network; (3) the computing resources provided by the helper nodes can change.

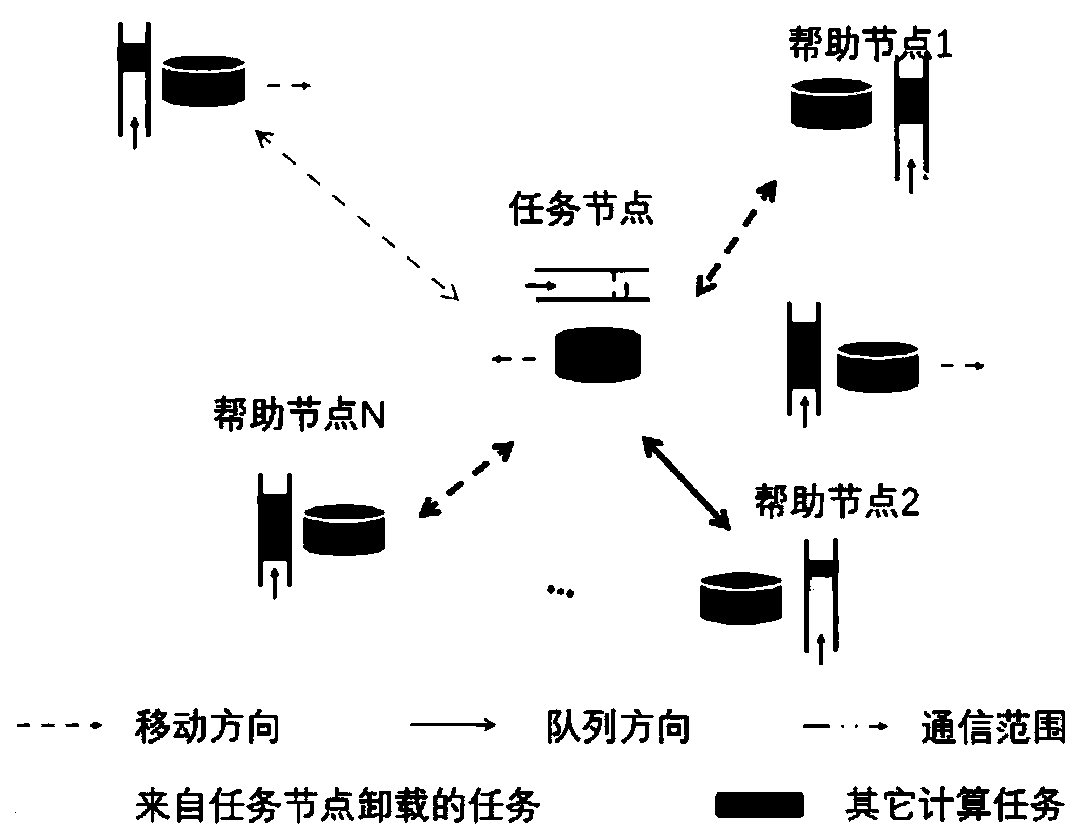

[0032] As an example, this embodiment provides a system block diagram as shown in Figure 2. Assume that at time t there are N candidate helper nodes (N is a positive integer) within the communication range of the task node. Since nodes are mobile, the set of nodes within the communication range of the task node changes over time. For example, in Figure 2, the upper left node may enter the communication range of the task node in the n...
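The idea that the candidate helper set changes as nodes move can be illustrated with a minimal sketch. It is not the method of this embodiment: the `Node` class, the `candidate_helpers` function, the straight-line motion model, and the circular communication range in a 2D plane are all assumptions made purely for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical node with a position and a constant velocity (assumed model)."""
    name: str
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0

    def position_at(self, t: float) -> tuple[float, float]:
        # Assumption: straight-line motion at constant velocity.
        return (self.x + self.vx * t, self.y + self.vy * t)


def candidate_helpers(task_node: Node, others: list[Node],
                      comm_range: float, t: float) -> list[str]:
    """Return names of nodes within comm_range of the task node at time t."""
    tx, ty = task_node.position_at(t)
    in_range = []
    for node in others:
        nx, ny = node.position_at(t)
        # Assumption: communication range is a disc of radius comm_range.
        if math.hypot(nx - tx, ny - ty) <= comm_range:
            in_range.append(node.name)
    return in_range


task = Node("task", 0.0, 0.0)
nodes = [
    Node("a", 5.0, 0.0),                        # stationary, stays in range
    Node("b", -30.0, 20.0, vx=12.0, vy=-10.0),  # approaches the task node
    Node("c", 8.0, 0.0, vx=5.0),                # drifts out of range
]

print(candidate_helpers(task, nodes, comm_range=10.0, t=0.0))
print(candidate_helpers(task, nodes, comm_range=10.0, t=2.0))
```

In this toy setup, N is simply the length of the returned list at each time t, so a scheduler built on top of it would need to re-evaluate the candidate set whenever it makes a decision.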
