544 results about "Symmetric multiprocessor system" patented technology

Heterogeneous computing refers to systems that use more than one kind of processor or core. These systems gain performance or energy efficiency not just by adding more processors of the same type, but by adding dissimilar coprocessors, usually with specialized processing capabilities for particular tasks.

System and method for submitting and performing computational tasks in a distributed heterogeneous networked environment

Active | US20040098447A1 | Resource allocation | Multiple digital computer combinations | Symmetric multiprocessor system | Operating environment

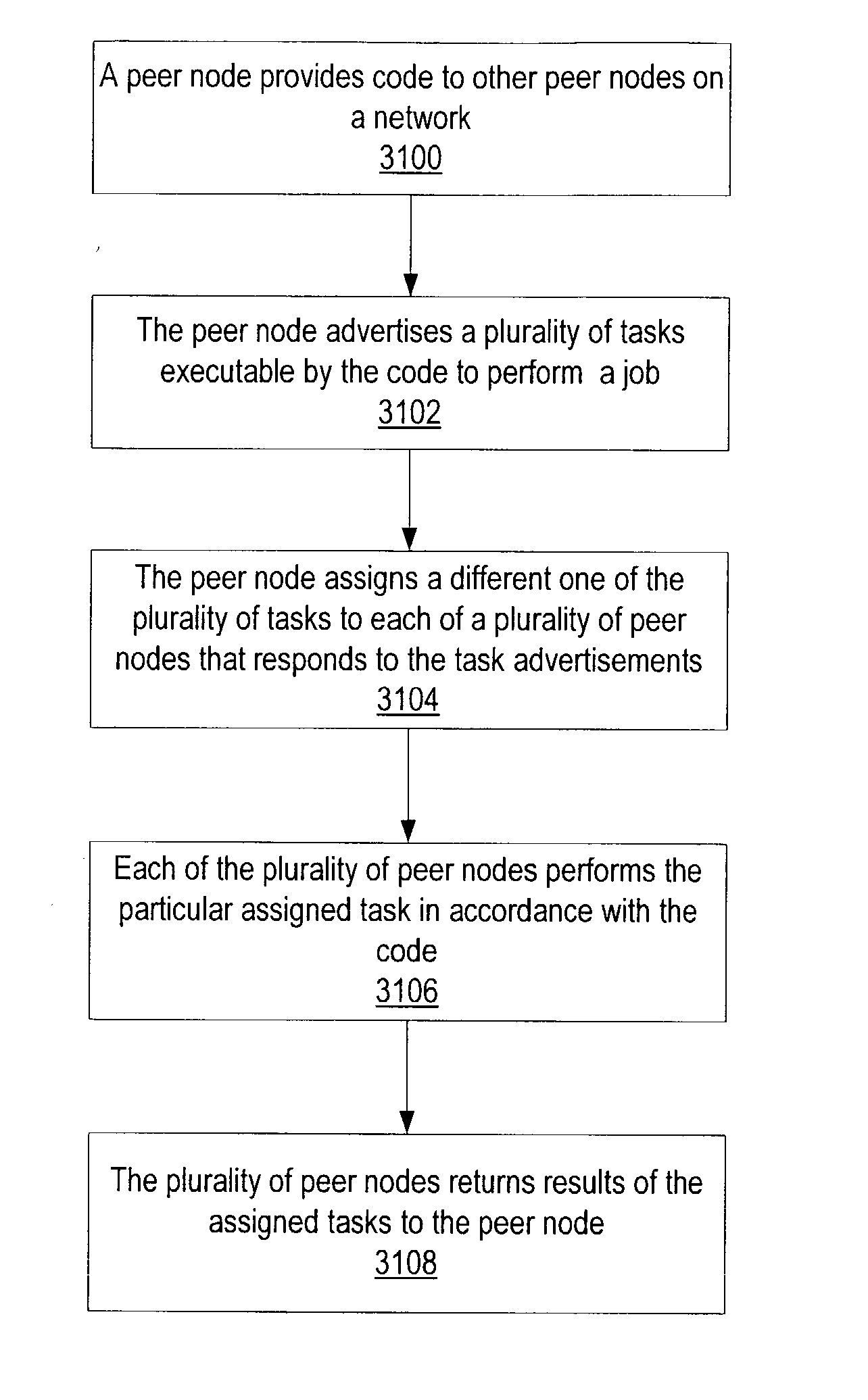

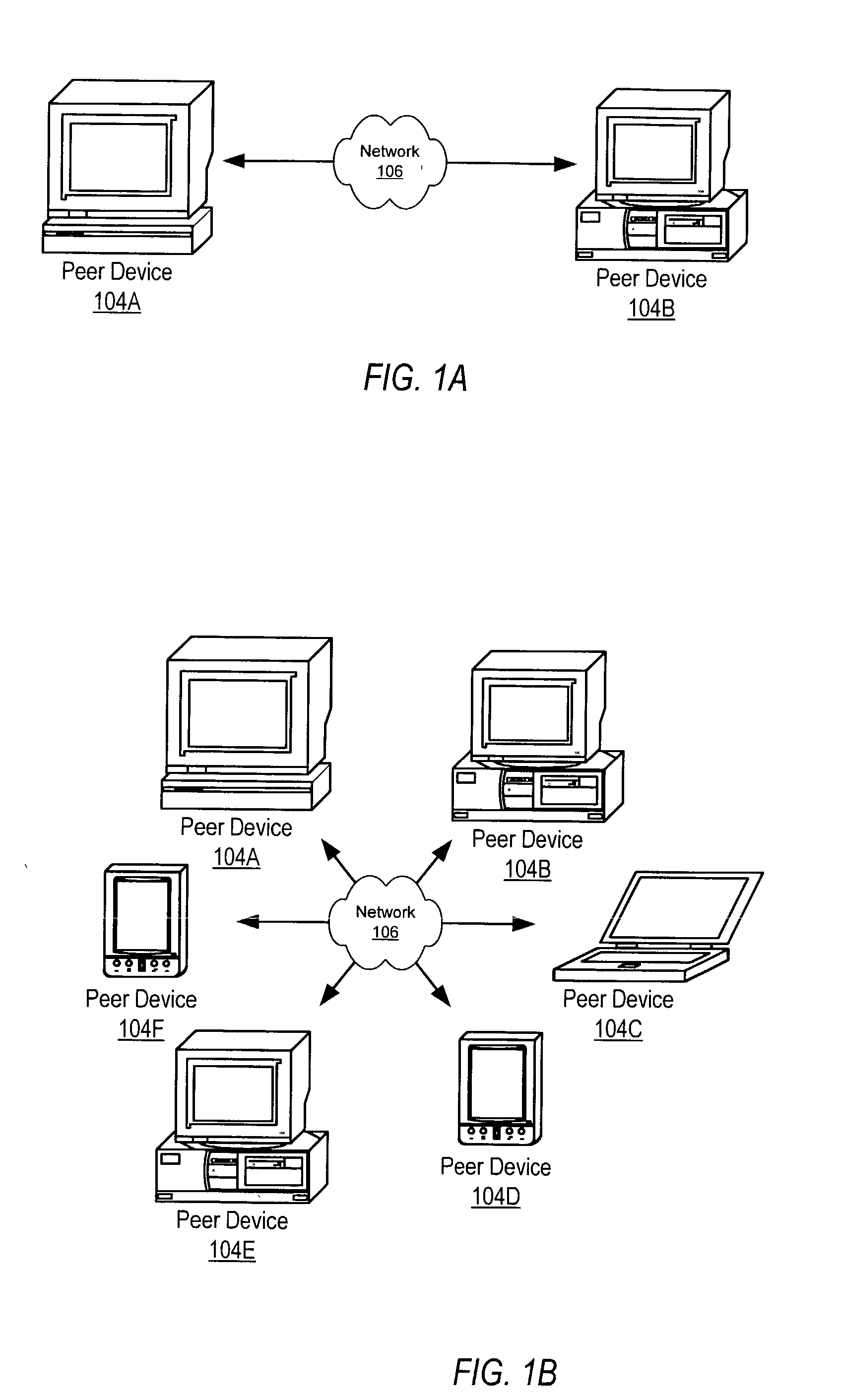

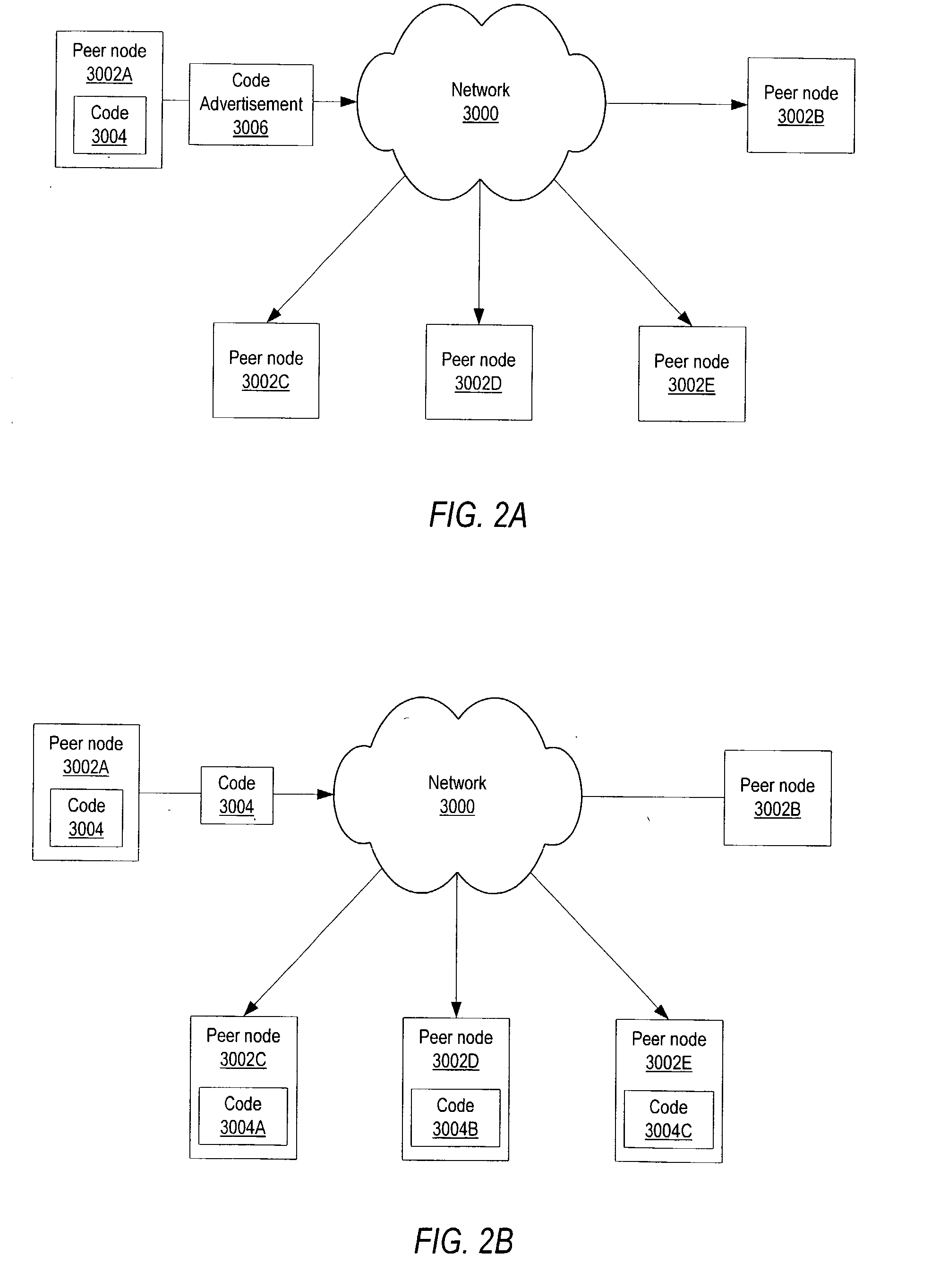

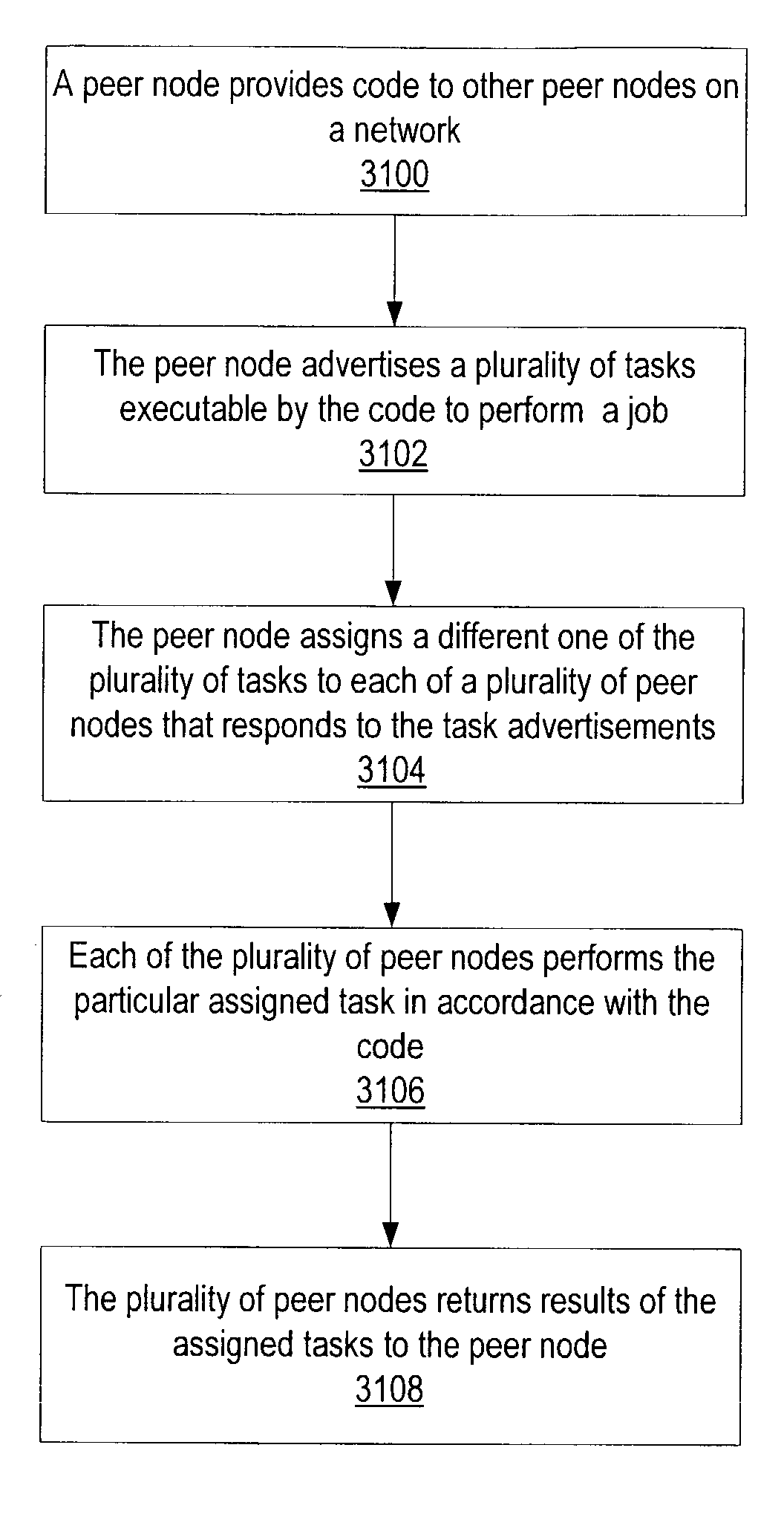

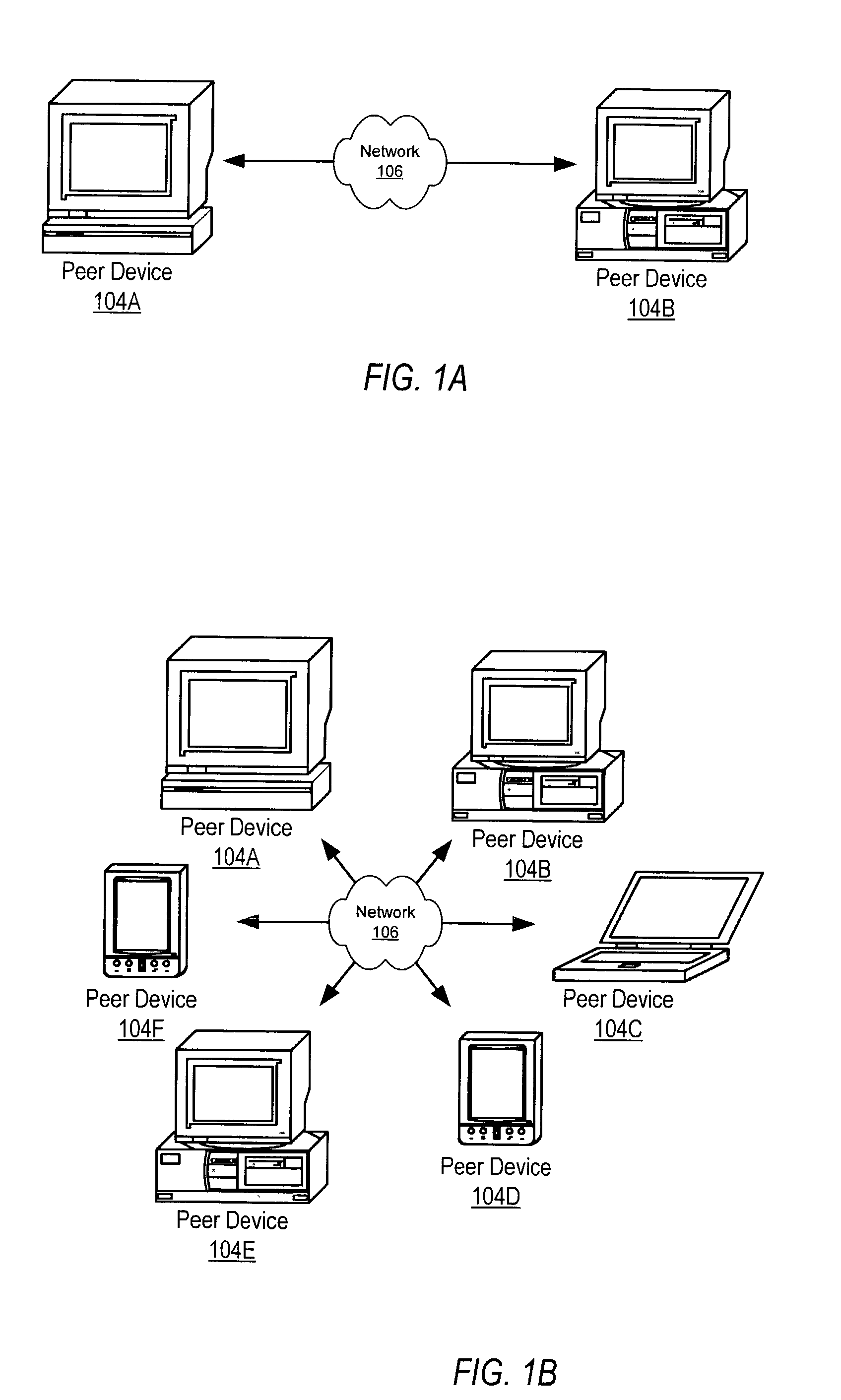

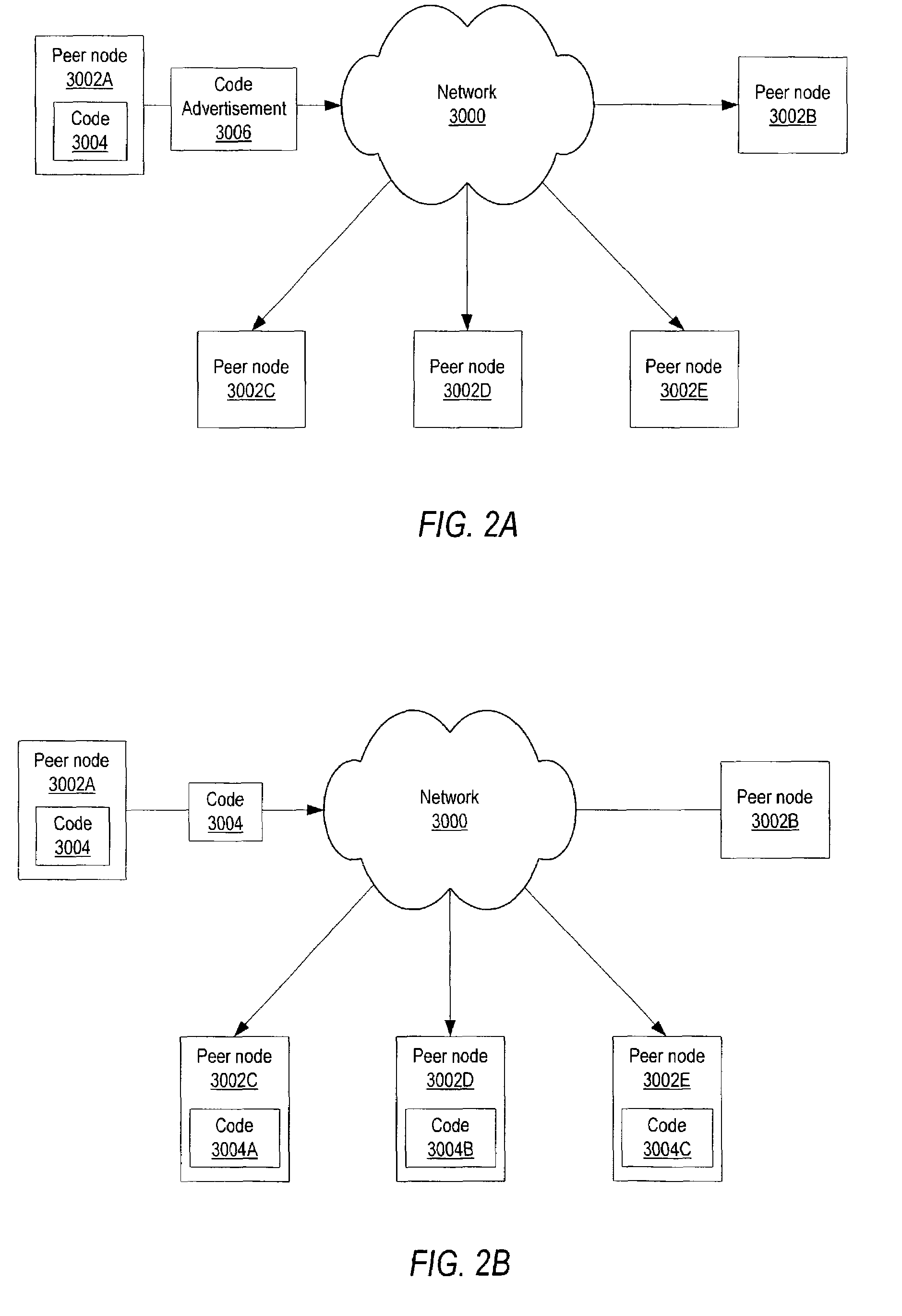

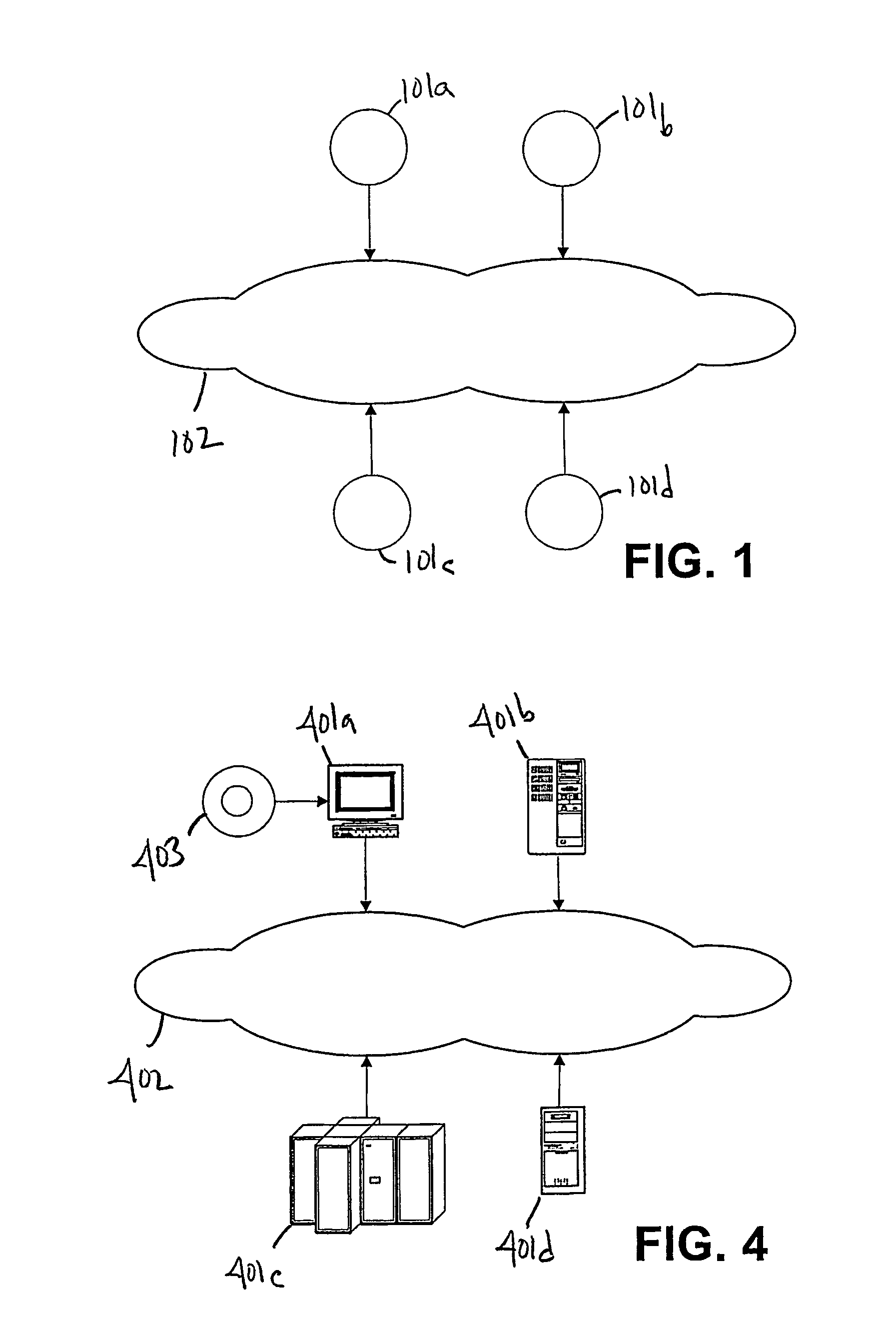

System and method for submitting and performing computational tasks in a distributed heterogeneous networked environment. Embodiments may allow tasks to be submitted and run in parallel on a network of heterogeneous computers implementing a variety of operating environments. In one embodiment, a user on an originating node may advertise code on the network. Peer nodes that respond to the advertisement may receive the code. A job to be executed by the code may be split into separate tasks to be distributed to the peer nodes that received the code. These tasks may be advertised on the network. Tasks may be assigned to peer nodes that respond to the task advertisements. The peer nodes may then work on the assigned tasks. Once a peer node's work on a task is completed, the peer node may return the results of the task to the originating node.

Owner:ORACLE INT CORP
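The split-and-assign flow in the abstract above can be illustrated with a small single-process sketch. It is not the patented implementation: `split_job`, `run_job`, the peer names, and the round-robin assignment are all assumptions made for illustration, and the "peers" are simulated locally rather than contacted over a network.

```python
from collections import defaultdict

def split_job(items, n_tasks):
    """Split a job's work items into up to n_tasks roughly equal tasks."""
    size, extra = divmod(len(items), n_tasks)
    tasks, start = [], 0
    for i in range(n_tasks):
        end = start + size + (1 if i < extra else 0)
        if end > start:              # skip empty tasks when items < n_tasks
            tasks.append(items[start:end])
        start = end
    return tasks

def run_job(items, peers, work):
    """Assign tasks round-robin to responding peers and gather the results.

    `peers` stands in for nodes that answered the task advertisement and
    `work` stands in for the advertised code (both hypothetical).
    """
    tasks = split_job(items, len(peers))
    assignments = defaultdict(list)
    results = []
    for i, task in enumerate(tasks):
        peer = peers[i % len(peers)]
        assignments[peer].append(task)
        results.append(work(task))   # a real system would run this on the peer
    return dict(assignments), results

# The originating node combines the partial results returned by the peers.
assignments, results = run_job(list(range(10)), ["peer-a", "peer-b", "peer-c"], sum)
total = sum(results)
```

Here the originating node sums three partial sums; any associative combination step would fit the same shape.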

Cross-platform development for devices with heterogeneous capabilities

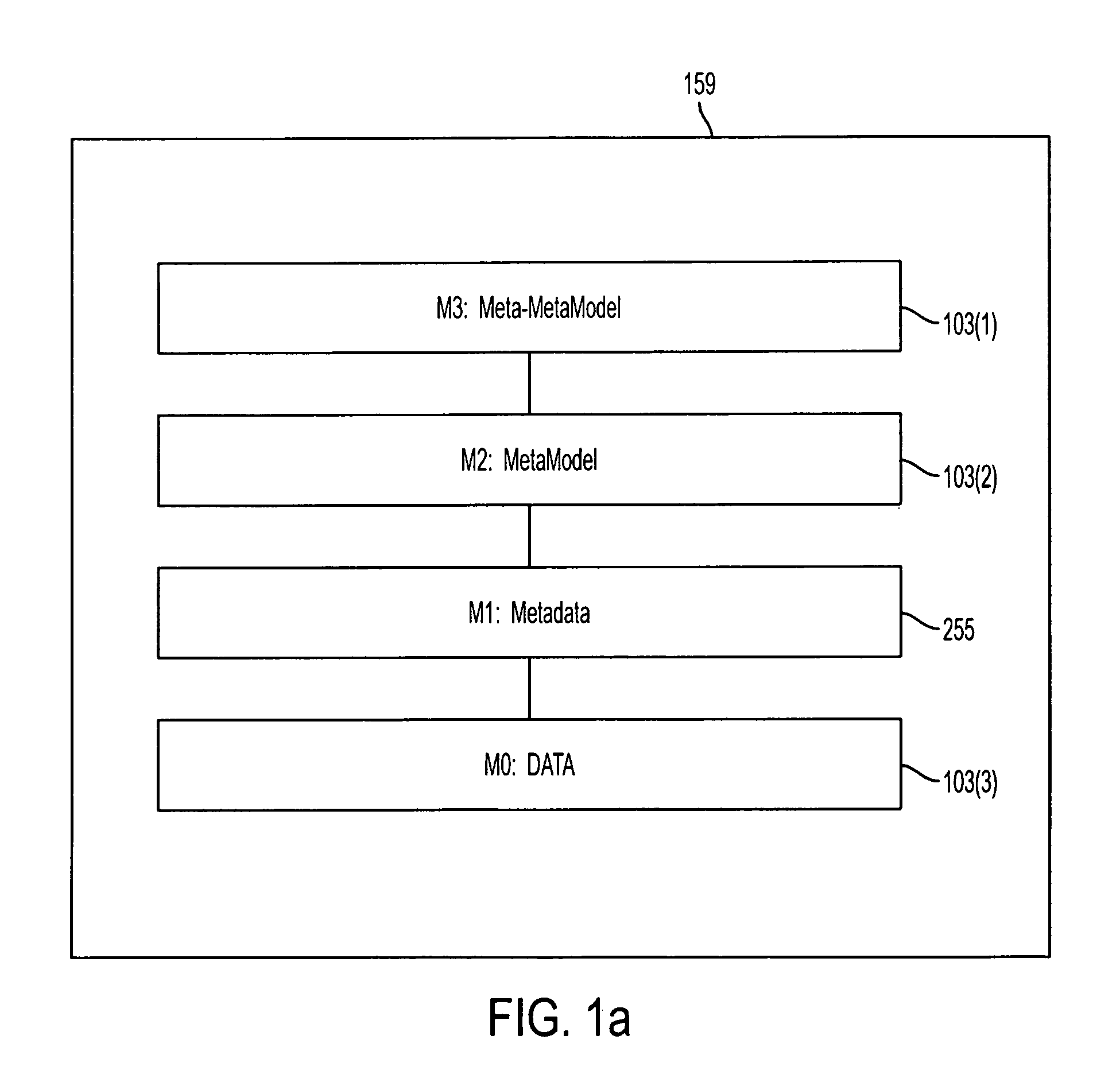

Active | US7240327B2 | Good service | Function increase | Multiple digital computer combinations | Specific program execution arrangements | Software engineering | Output device

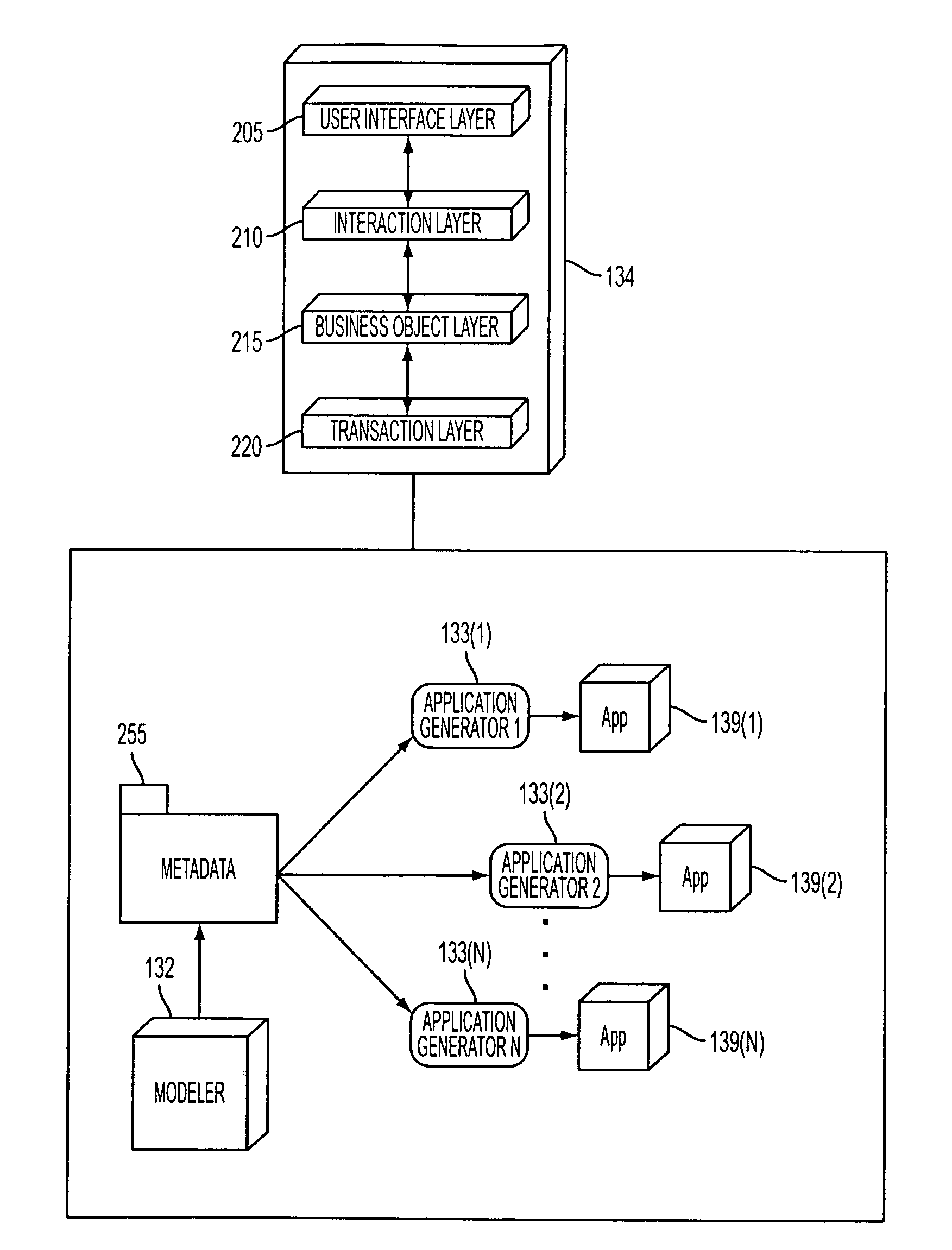

A system for generating software applications for a plurality of heterogeneous computing devices. Each computing device has different capabilities. The system outputs device-specific versions of a software application each tailored to the capabilities of the associated computing device in response to receiving device-independent modeling information characterizing the software application. The system includes a framework, a plurality of object types, a modeling tool, and a plurality of device-specific code generators. The framework defines common services on the computing devices. Each object type has a functional relationship to the common services provided by the framework. The modeling tool defines instances of the plurality of object types based on modeling information received as input, outputting a metadata structure describing the behavior and functionality of a software application. From the metadata, the code generators generate device-specific application code tailored to the capabilities of the associated devices.

Owner:SAP AG

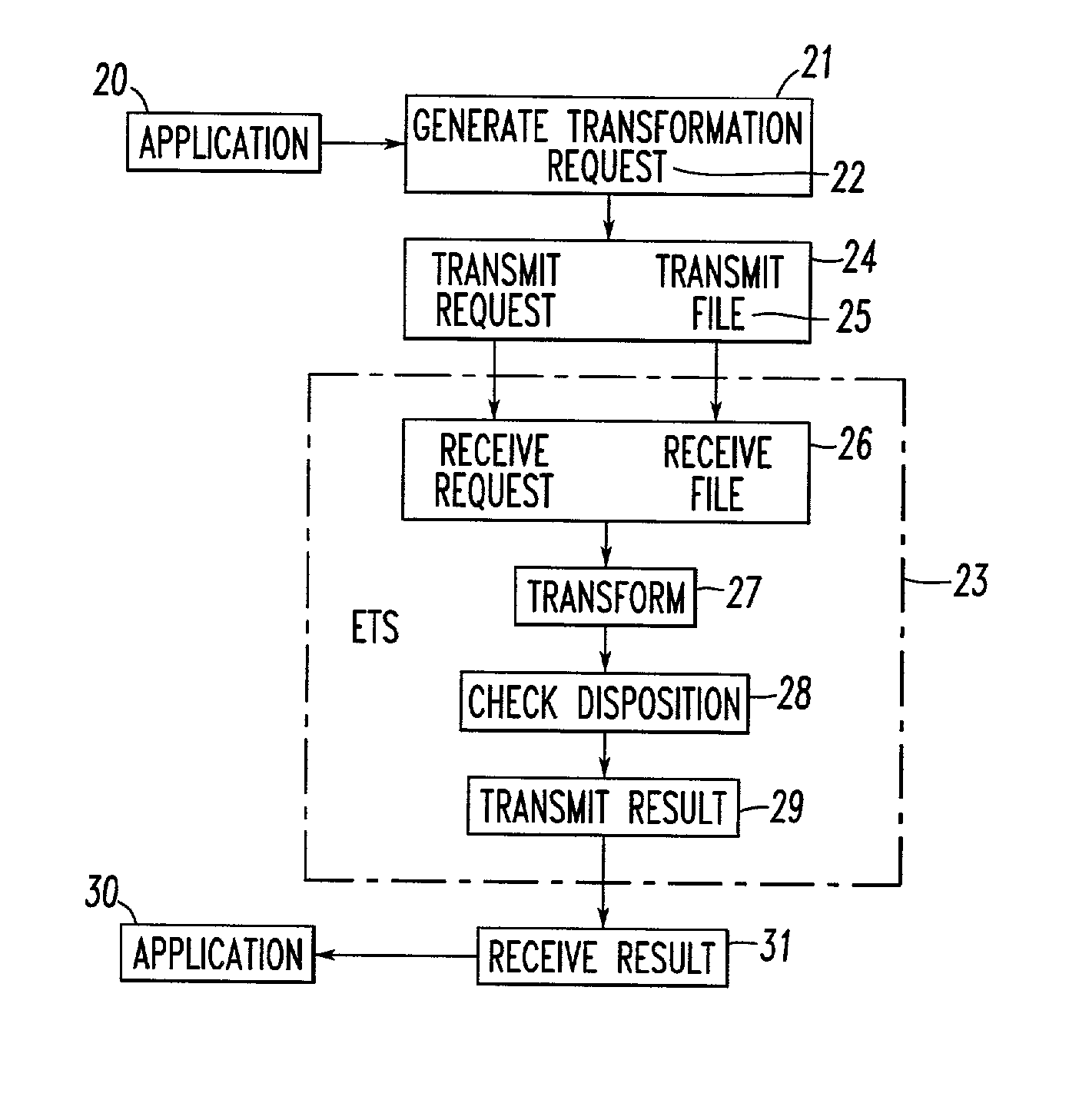

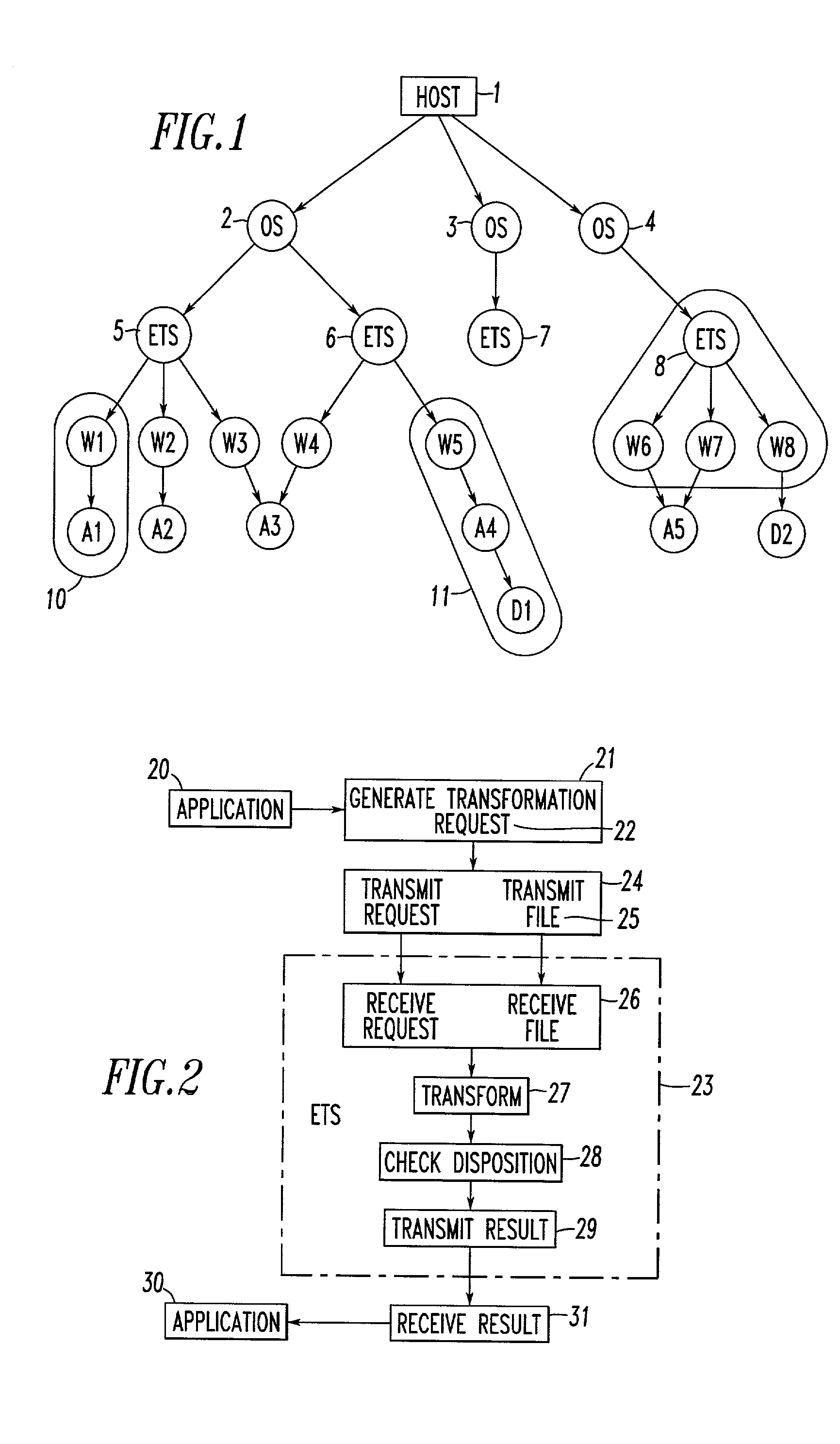

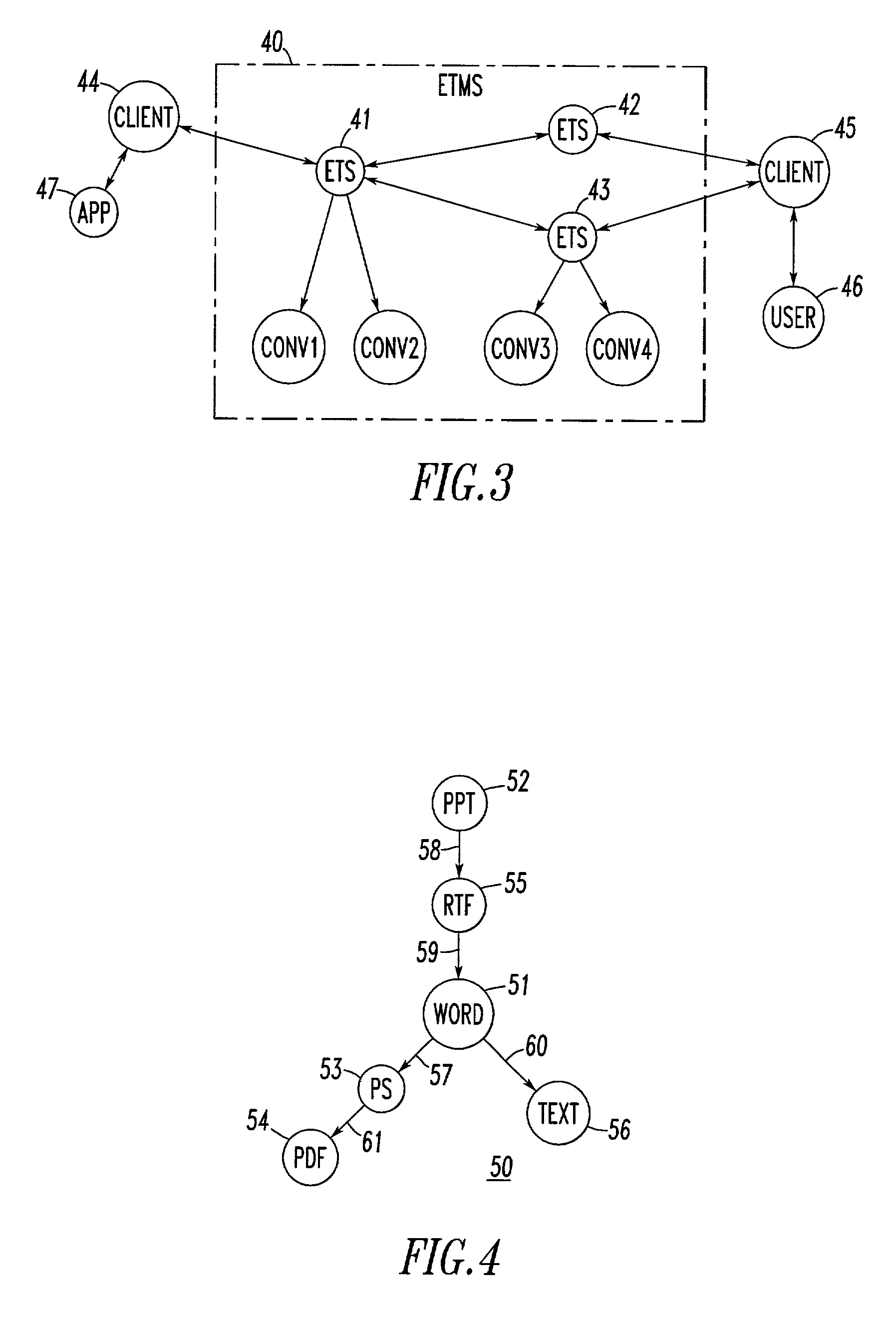

Method and system for data transformation in a heterogeneous computer system

Inactive | US20030041095A1 | Digital data information retrieval | Multiple digital computer combinations | Graphics | Client-side

A data transformation system includes clients, which initiate requests for transformation of data between first and second data formats. The system also includes peer transformation servers having data converters and graphs of available transformations between input and output data formats of such servers. The graph includes unidirectional edges, which extend between corresponding pairs of the formats. The servers collectively include one or more converters for each of the edges. The servers receive the requests and select plural intermediate transformations from the first format to plural intermediate formats, and a final transformation from an intermediate format to the second format. Each of the intermediate and final transformations is associated with a corresponding one of the edges. The servers initiate the converters corresponding to the selected transformations, in order to obtain and dispose the data in the second format. A communication network provides communication among the client and servers.

Owner:CHAAVI
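Selecting a chain of intermediate transformations over a graph of unidirectional format edges, as the abstract above describes, amounts to a path search in a directed graph. The sketch below uses breadth-first search to find a shortest chain; the function name, the format names, and the choice of BFS are assumptions for illustration and are not taken from the patent.

```python
from collections import deque

def find_conversion_path(edges, src, dst):
    """Breadth-first search over a directed graph of format conversions.

    Returns the list of formats from src to dst, or None if no chain exists.
    """
    graph = {}
    for a, b in edges:
        graph.setdefault(a, []).append(b)   # edges are one-way: a -> b
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        if path[-1] == dst:
            return path
        for nxt in graph.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None

# Hypothetical converters available across the peer servers.
EDGES = [("doc", "rtf"), ("rtf", "html"), ("html", "pdf"), ("doc", "txt")]
path = find_conversion_path(EDGES, "doc", "pdf")
```

Each consecutive pair in `path` corresponds to one edge, so a server would invoke one converter per pair.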

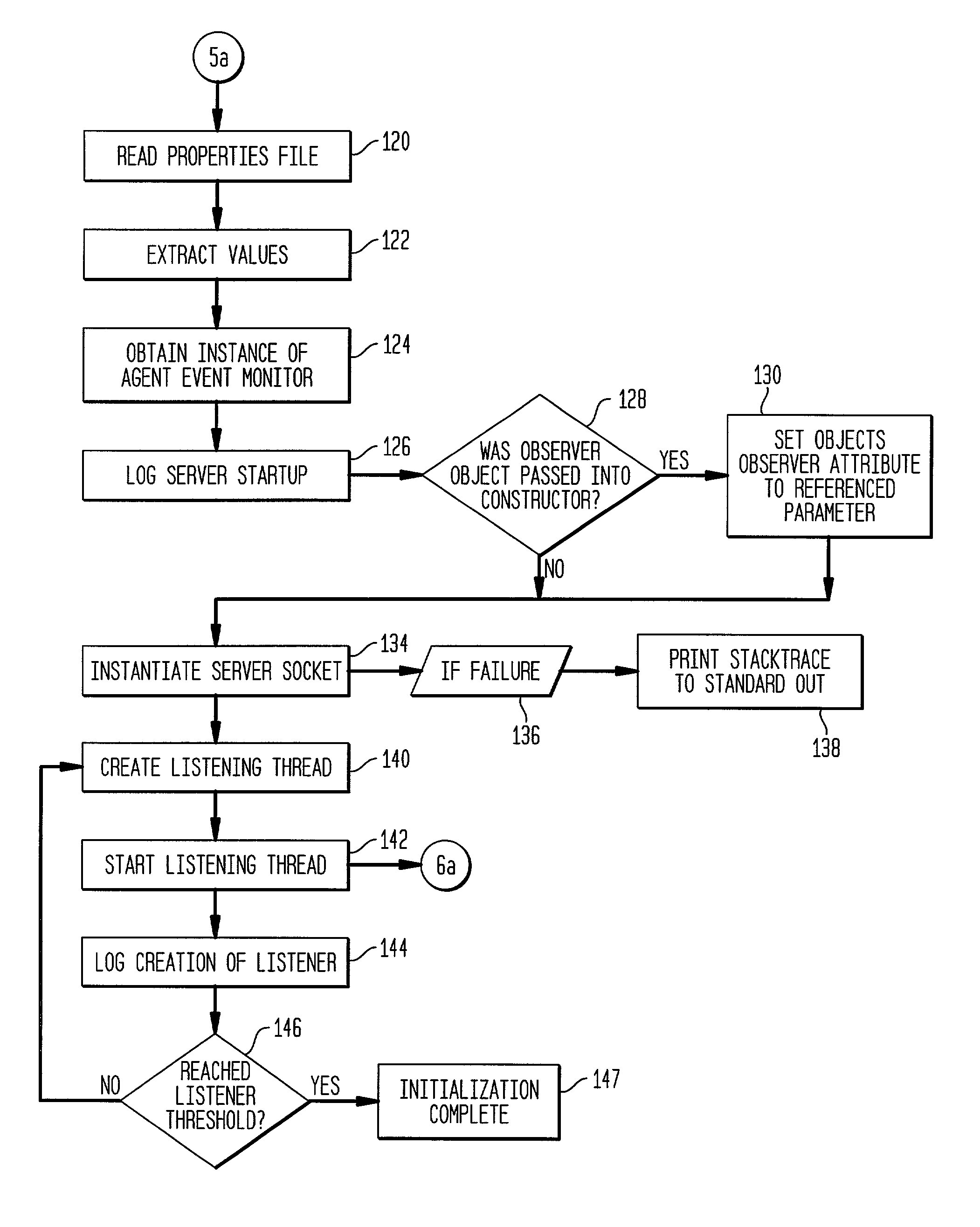

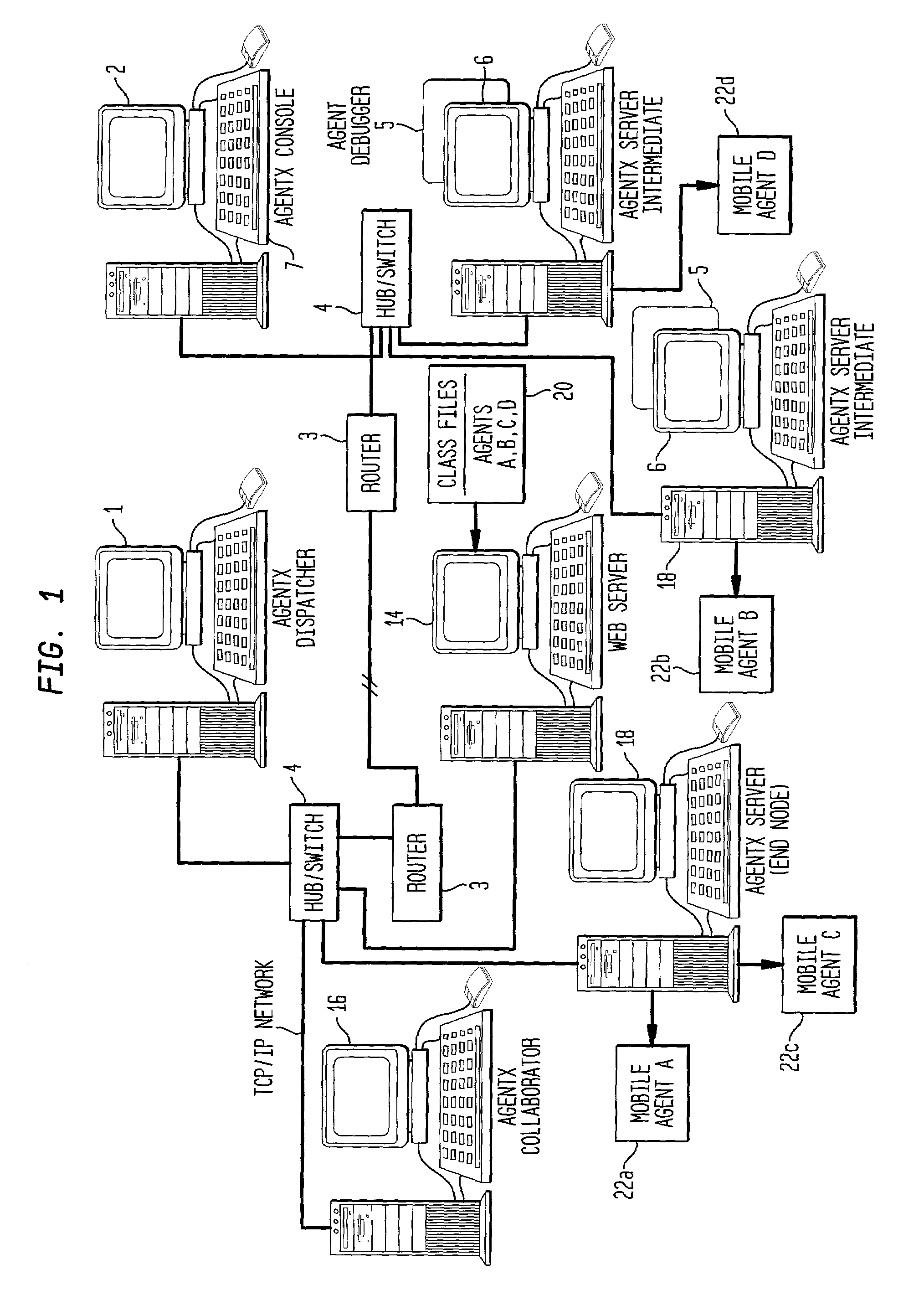

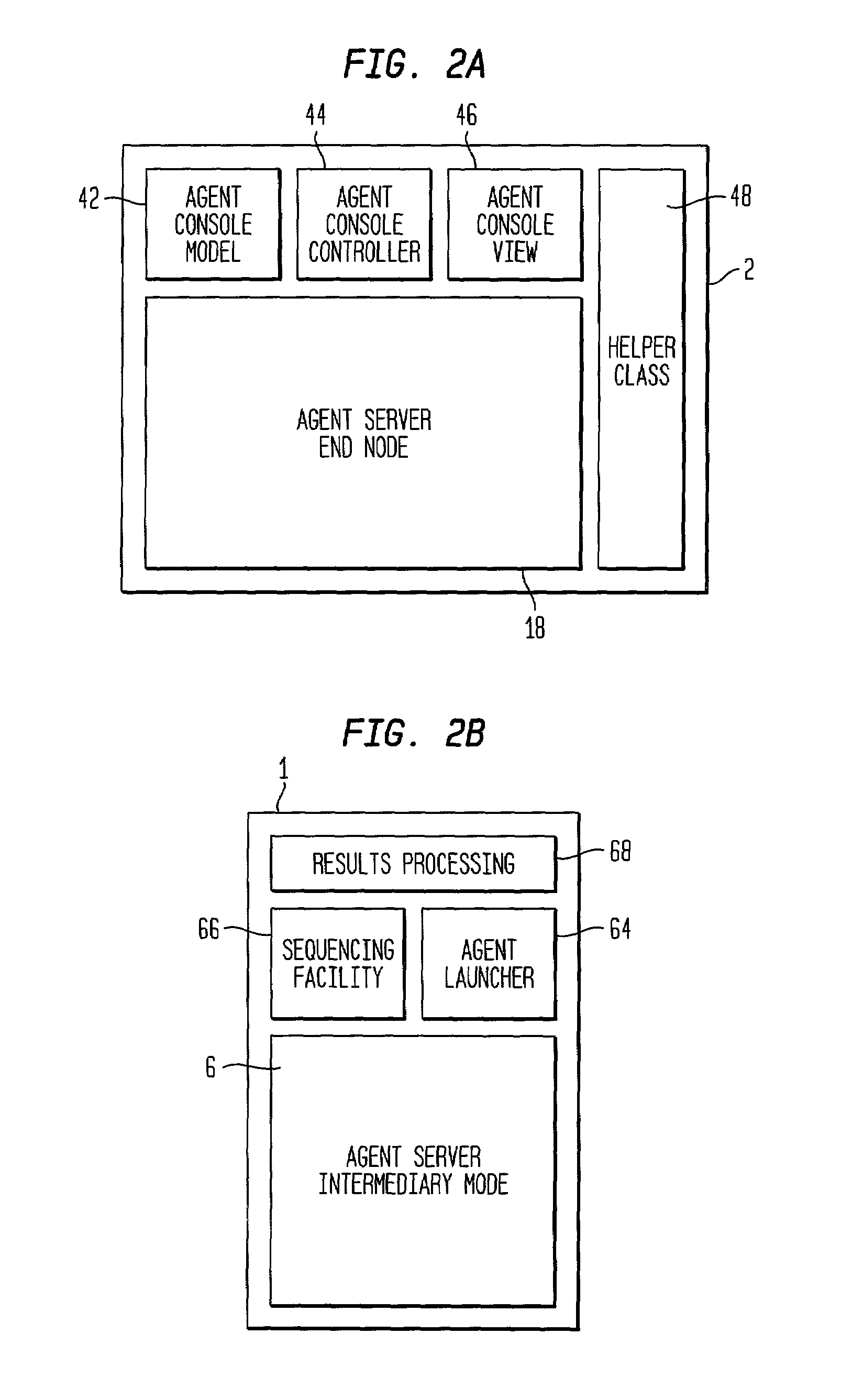

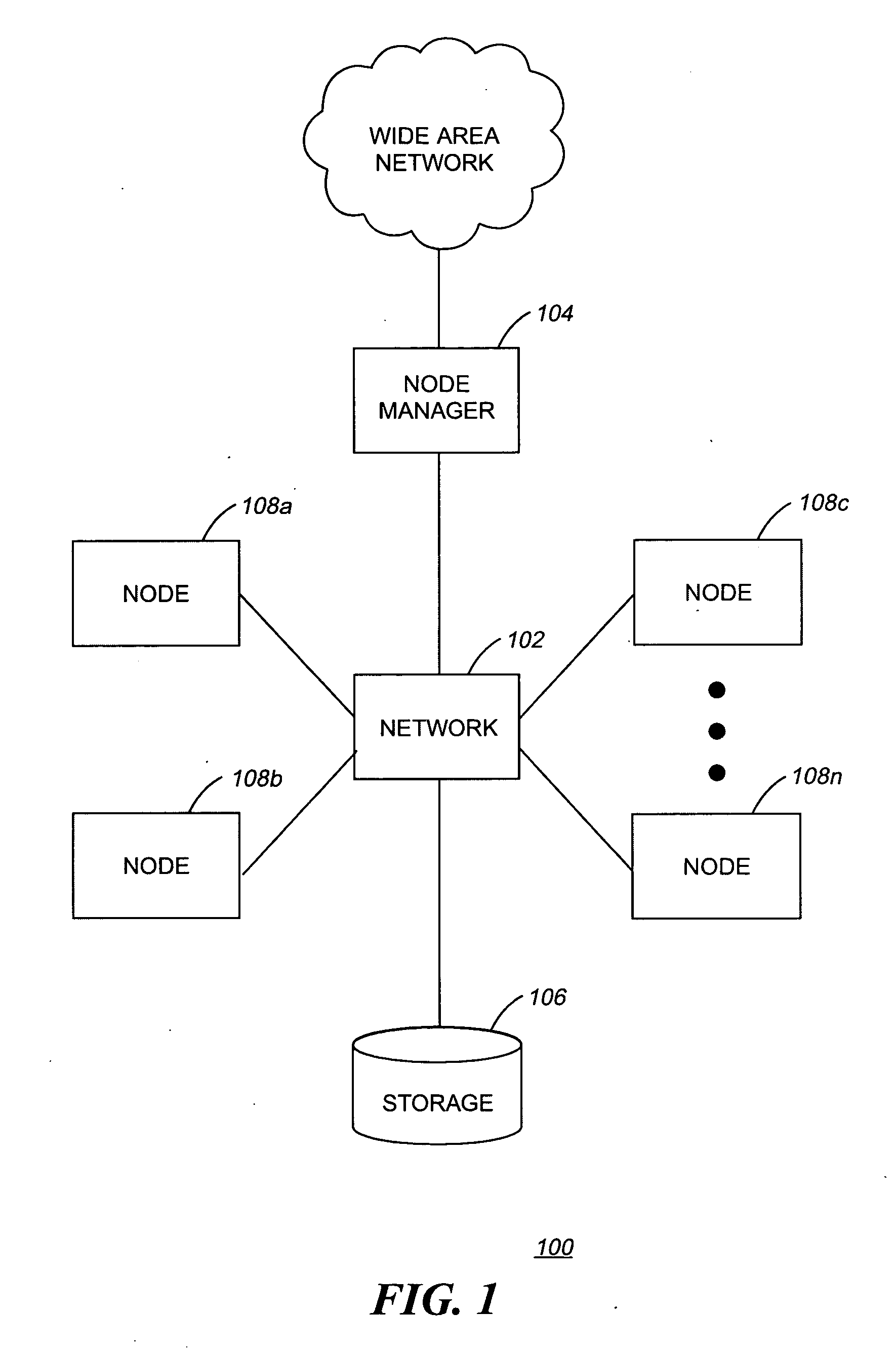

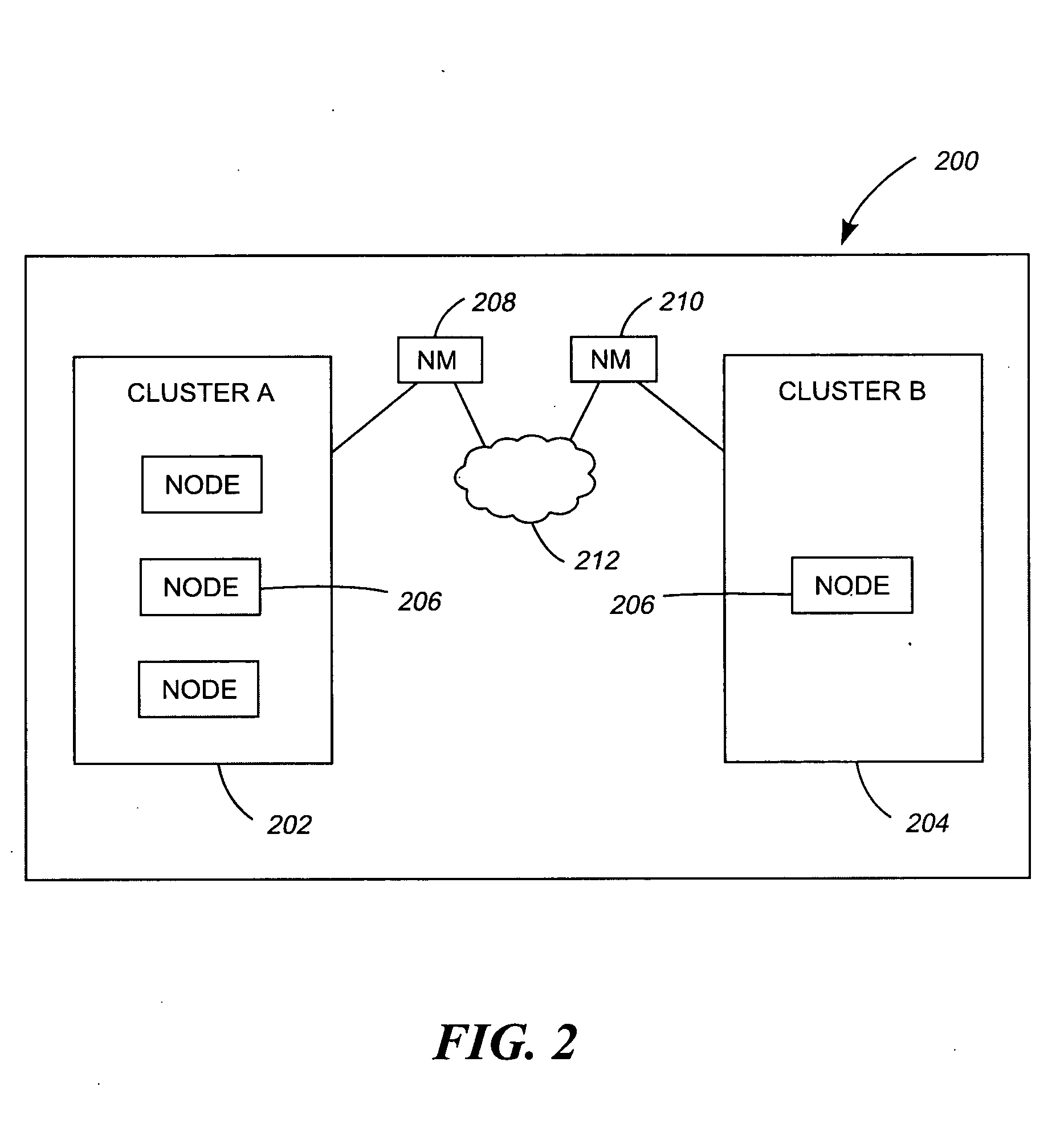

Method and apparatus for breaking down computing tasks across a network of heterogeneous computer for parallel execution by utilizing autonomous mobile agents

Inactive | US7082604B2 | Ease of parallel processing | Program initiation/switching | Resource allocation | Application software | Large applications

A method and apparatus are provided for breaking down computing tasks within a larger application and distributing such tasks across a network of heterogeneous computers for simultaneous execution. The heterogeneous computers may be connected across a wide or local area network. The invention supports mobile agents that are self-migrating and can transport state information and stack trace information as they move from one host to another, continuing execution where the mobile agents may have left off. The invention includes a server component for providing an execution environment for the agents, in addition to sub-components which handle real-time collaboration between the mobile agents as well as facilities monitoring during execution. Additional components provide realistic thread migration for the mobile agents. Real-time stack trace information is stored as the computing tasks are executed, and if over-utilization of the computing host occurs, execution of the computing task can be halted and the computing task can be transferred to another computing host, where execution can be seamlessly resumed using the stored, real-time state information and stack trace information.

Owner:MOBILE AGENT TECH

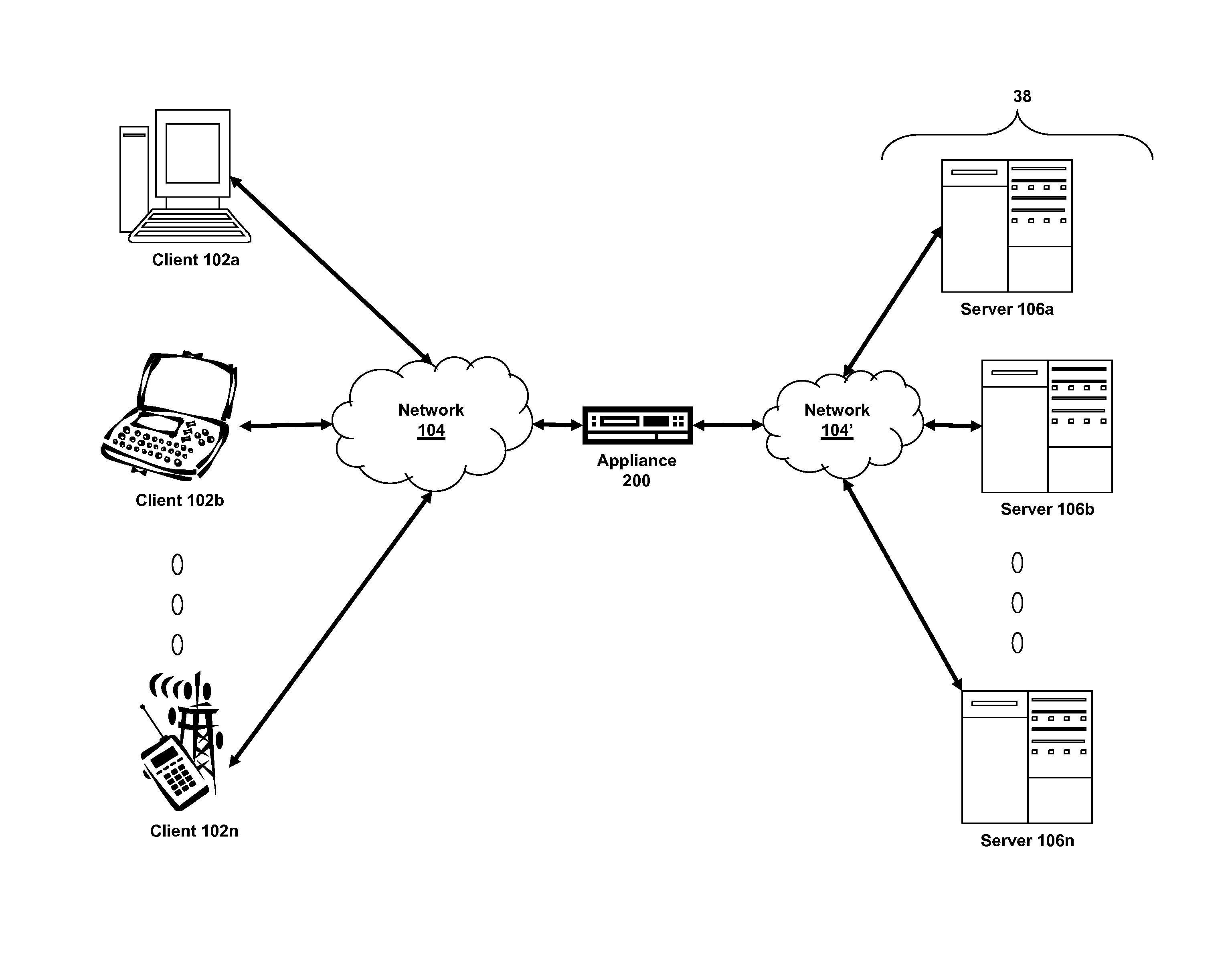

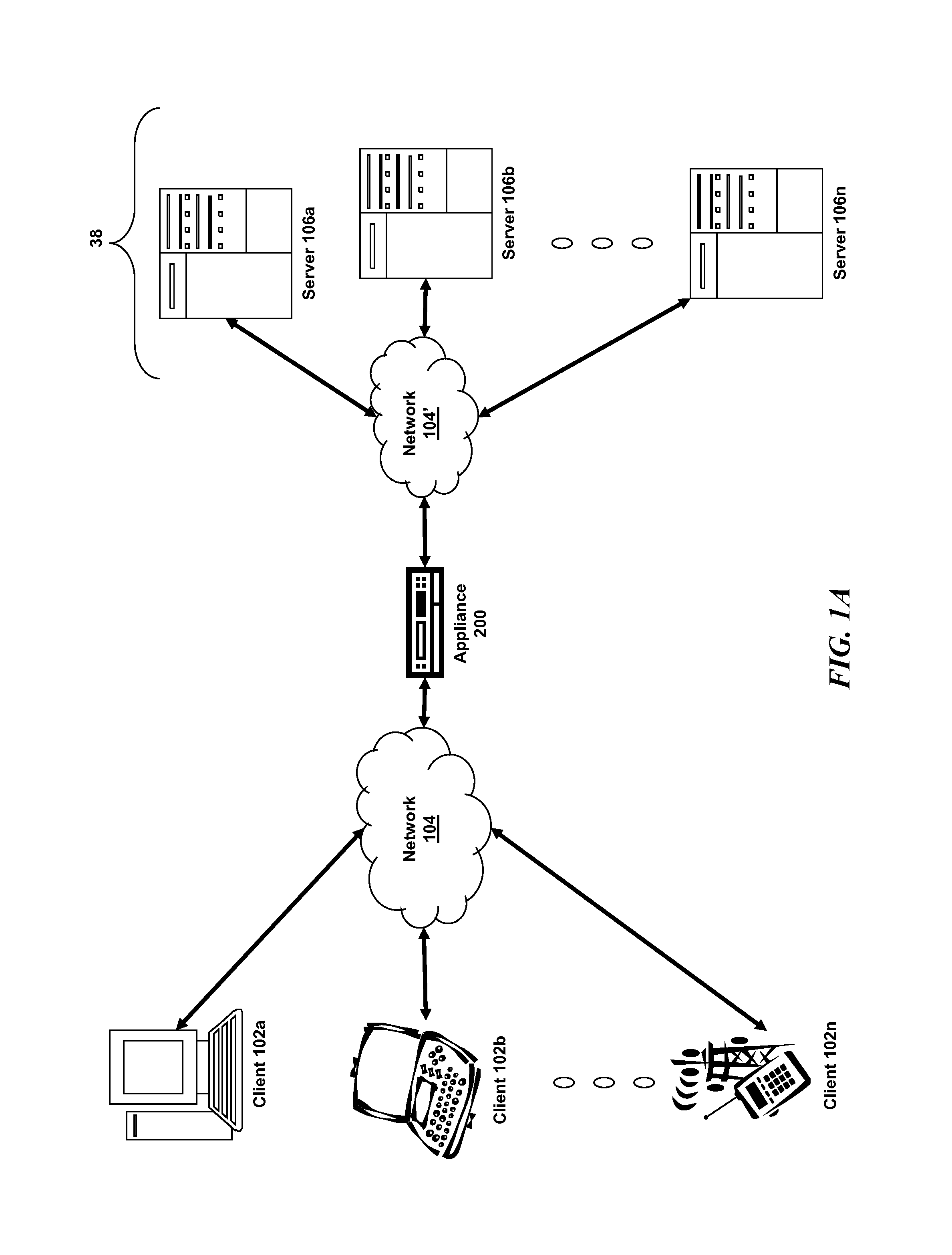

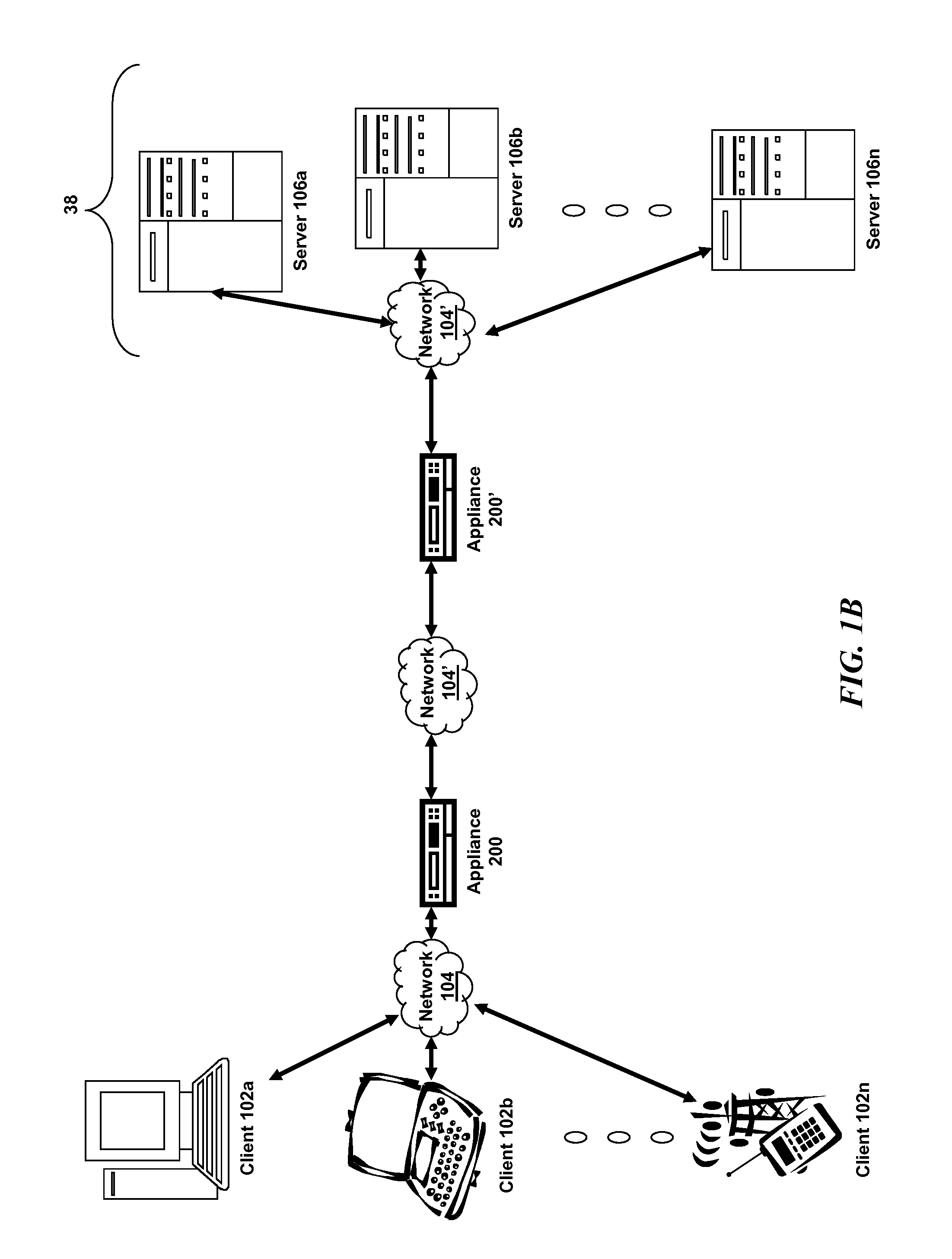

Systems and methods for cloud-based probing and diagnostics

Active | US20150195182A1 | Accelerated settlement | Detecting faulty hardware by configuration test | Detecting faulty hardware by remote test | Data center | Virtual device

Systems and methods of the present disclosure are directed to providing centralized diagnostic services to a plurality of heterogeneous computing environments deployed at different data centers on different networks. In some embodiments, a centralized diagnostic tool establishes a connection to a server of a data center that deploys a computing environment with components. The centralized diagnostic tool validates automatically a component of the computing environment based on a corresponding configuration file received from the server for the component. The centralized diagnostic tool establishes a virtual device simulating a client application executing on a client device. The client application can be configured to communicate with the component. The centralized diagnostic tool automatically initiates a request using a predetermined protocol flow, and the virtual device transmits the request to the component. The virtual device receives a response to the request indicative of a status of the computing environment.

Owner:CITRIX SYST INC

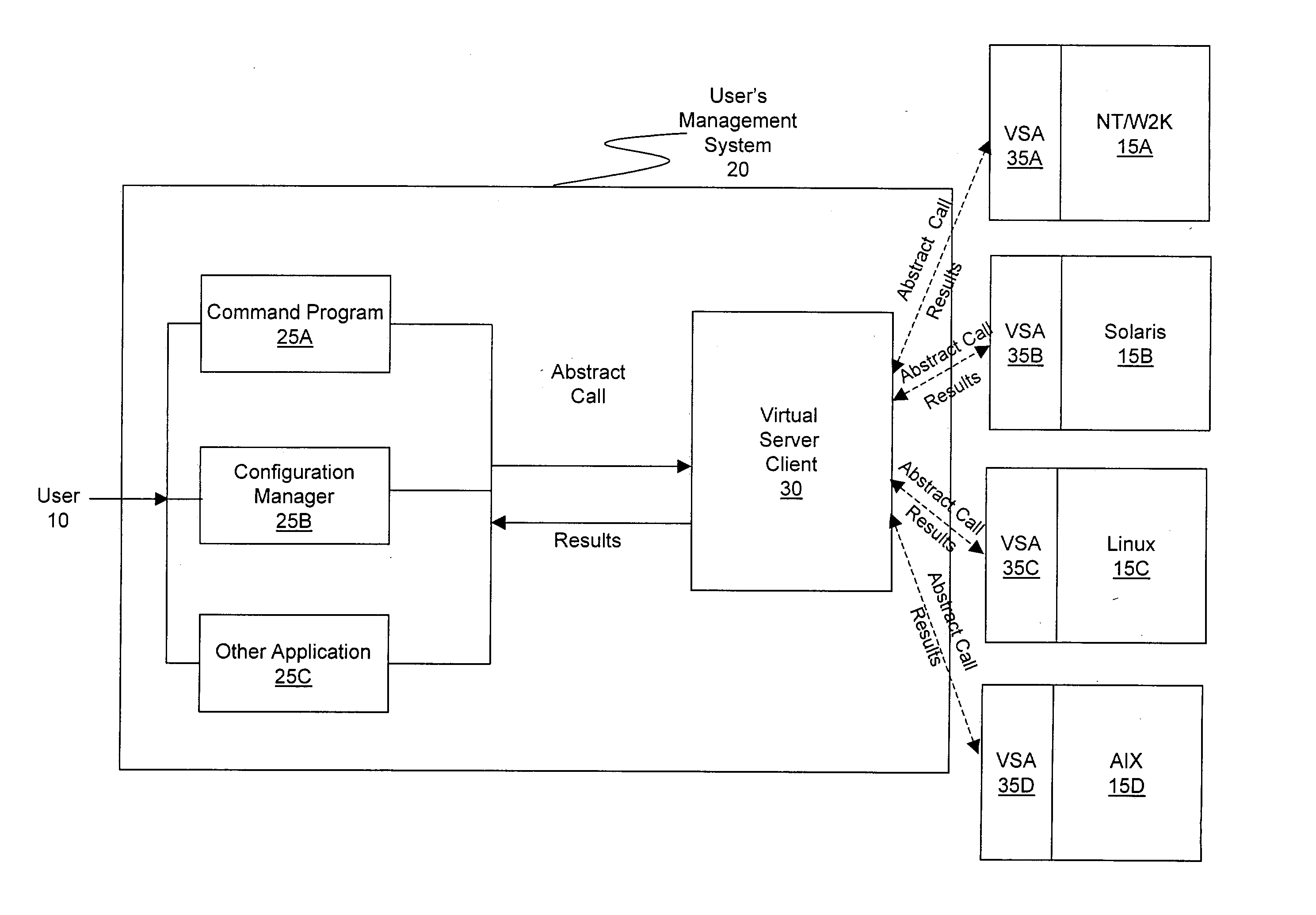

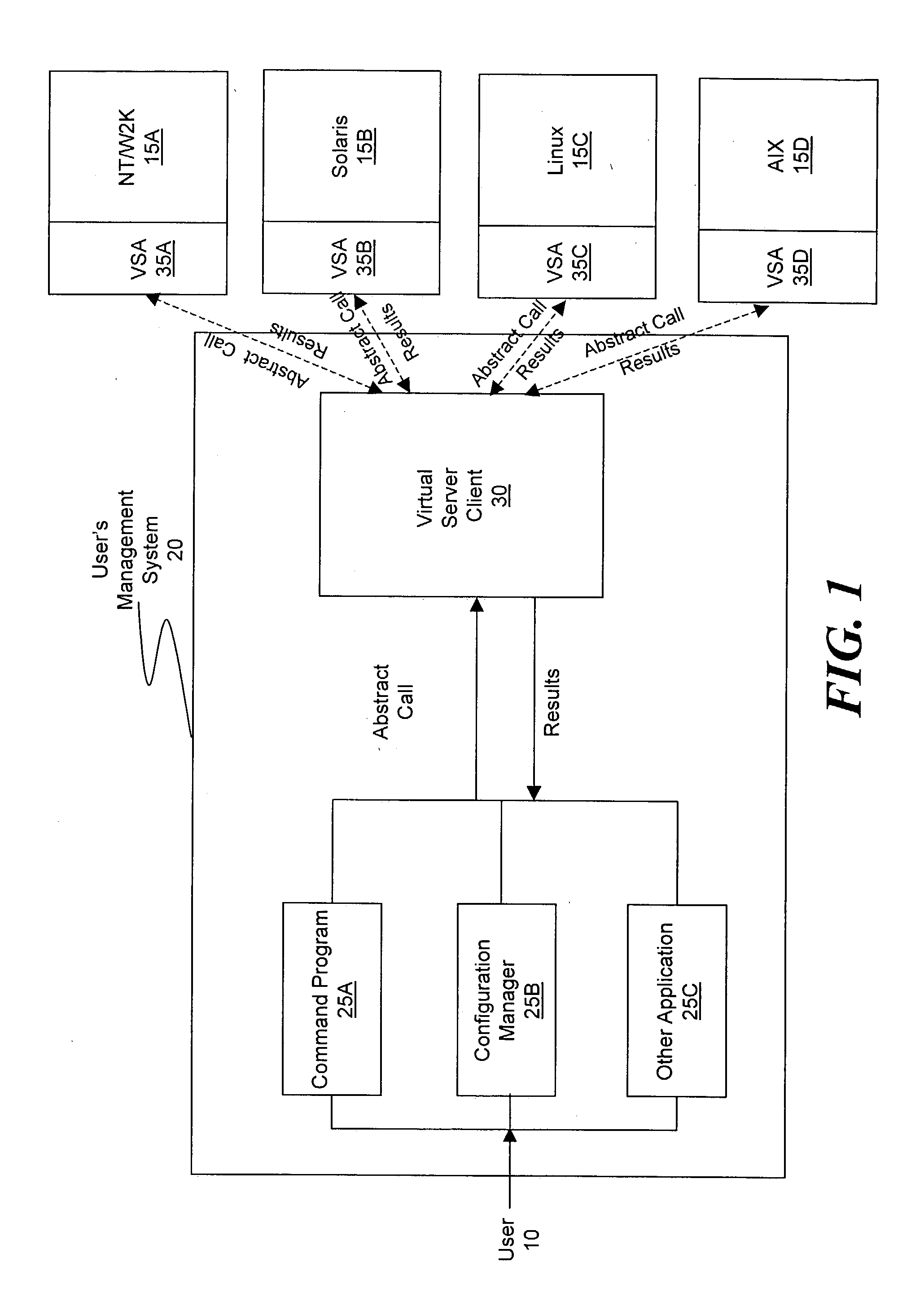

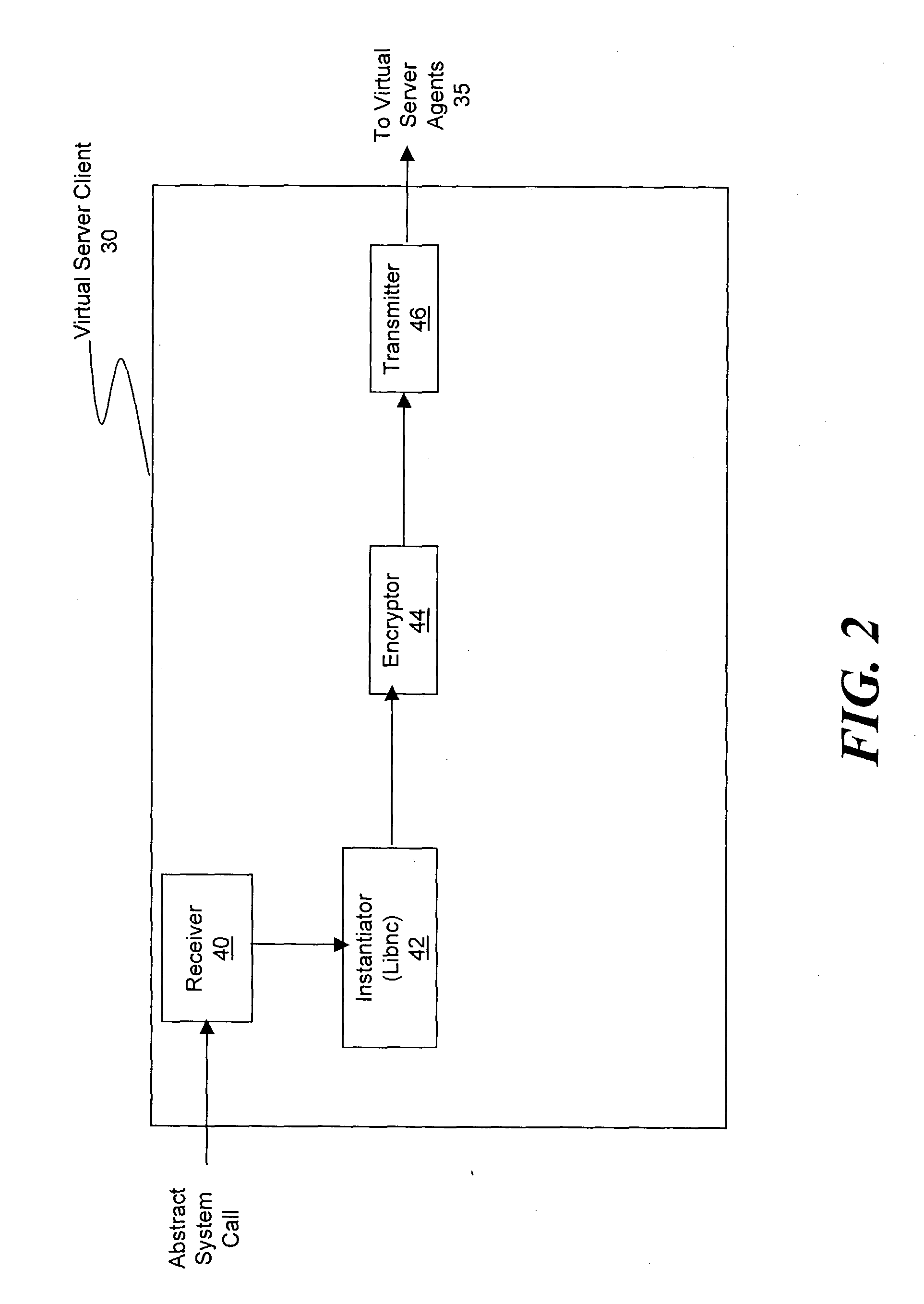

Method and system for simplifying distributed server management

Active | US20030233571A1 | Error detection/correction | Interprogram communication | Operational system | System call

A method and system for managing a large number of servers and their server components distributed throughout a heterogeneous computing environment is provided. In one embodiment, an authenticated user, such as an IT system administrator, can securely and simultaneously control and configure multiple servers, supporting different operating systems, through a "virtual server." A virtual server is an abstract model representing a collection of actual target servers. To represent multiple physical servers as one virtual server, abstract system calls that extend execution of operating-system-specific system calls to multiple servers, regardless of their supported operating systems, are used. A virtual server is implemented by a virtual server client and a collection of virtual server agents associated with a collection of actual servers.

Owner:BLADELOGIC

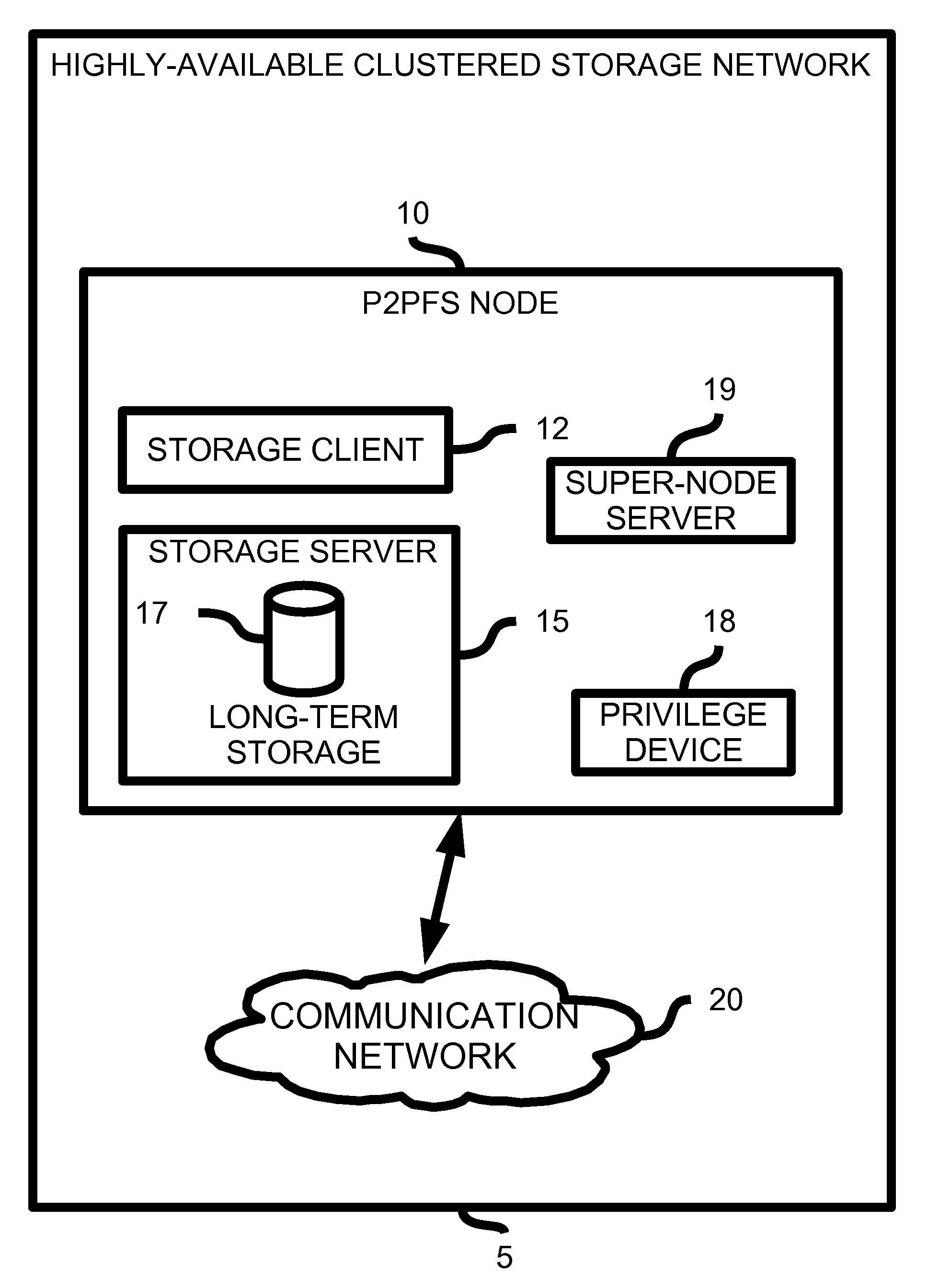

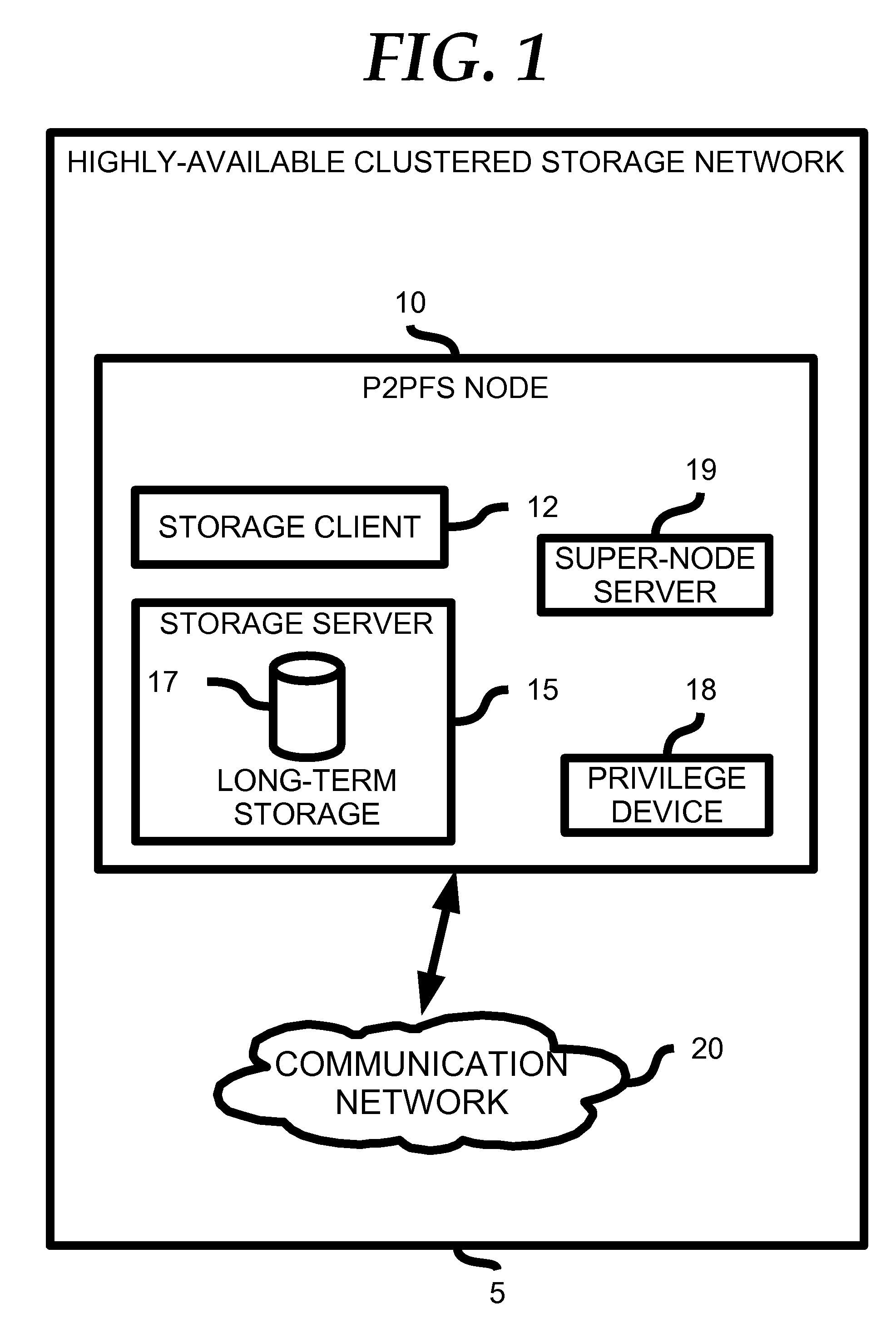

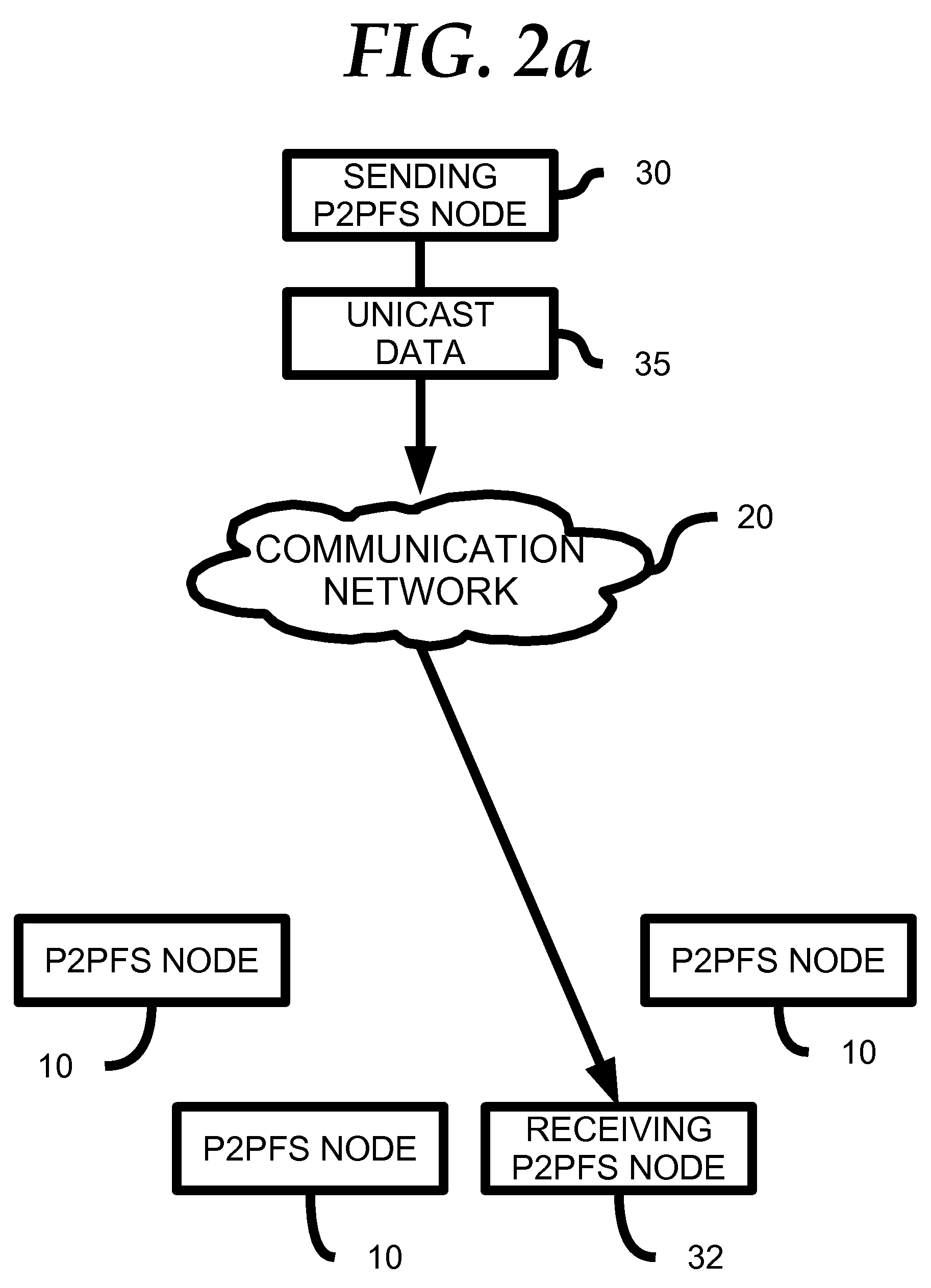

Highly Available Clustered Storage Network

Inactive | US20080077635A1 | Highly auto-configuring | Improve scalability | Digital data information retrieval | Special data processing applications | Fault tolerance | Clustered file system

A computing method and system are presented that allow multiple heterogeneous computing systems containing file storage mechanisms to work together in a peer-to-peer fashion to provide a fault-tolerant, decentralized, highly available clustered file system. The file system can be used by multiple heterogeneous systems to store and retrieve files. The system automatically ensures fault tolerance by storing files in multiple locations and requires almost no configuration for a computing device to join the clustered file system. Most importantly, there is no central authority for metadata storage, so there is no single point of failure.

Owner:DIGITAL BAZAR

System and method for submitting and performing computational tasks in a distributed heterogeneous networked environment

Active | US7395536B2 | Resource allocation | Multiple digital computer combinations | Symmetric multiprocessor system | Operating environment

System and method for submitting and performing computational tasks in a distributed heterogeneous networked environment. Embodiments may allow tasks to be submitted and run in parallel on a network of heterogeneous computers implementing a variety of operating environments. In one embodiment, a user on an originating node may advertise code on the network. Peer nodes that respond to the advertisement may receive the code. A job to be executed by the code may be split into separate tasks to be distributed to the peer nodes that received the code. These tasks may be advertised on the network. Tasks may be assigned to peer nodes that respond to the task advertisements. The peer nodes may then work on the assigned tasks. Once a peer node's work on a task is completed, the peer node may return the results of the task to the originating node.

Owner:ORACLE INT CORP

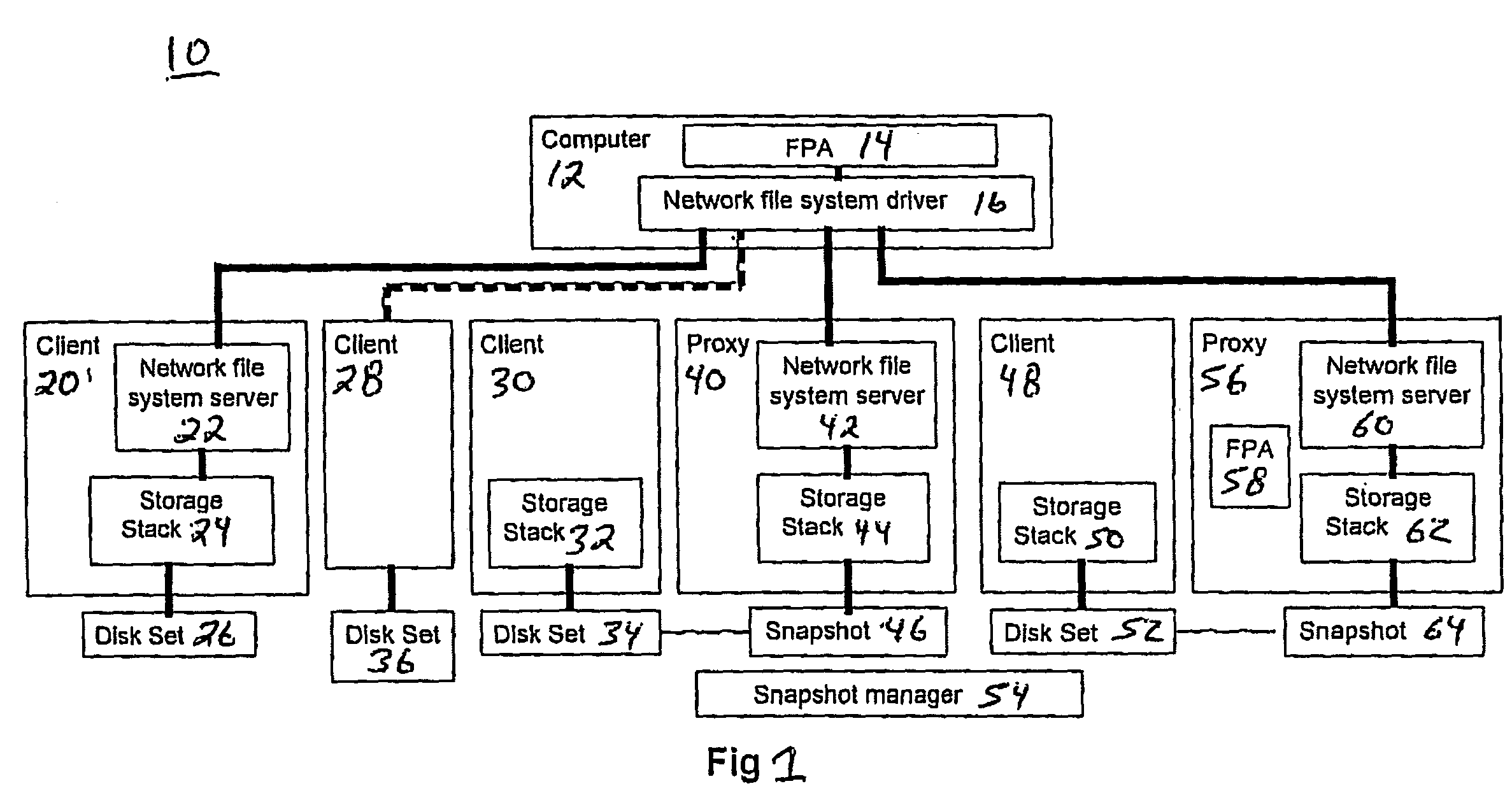

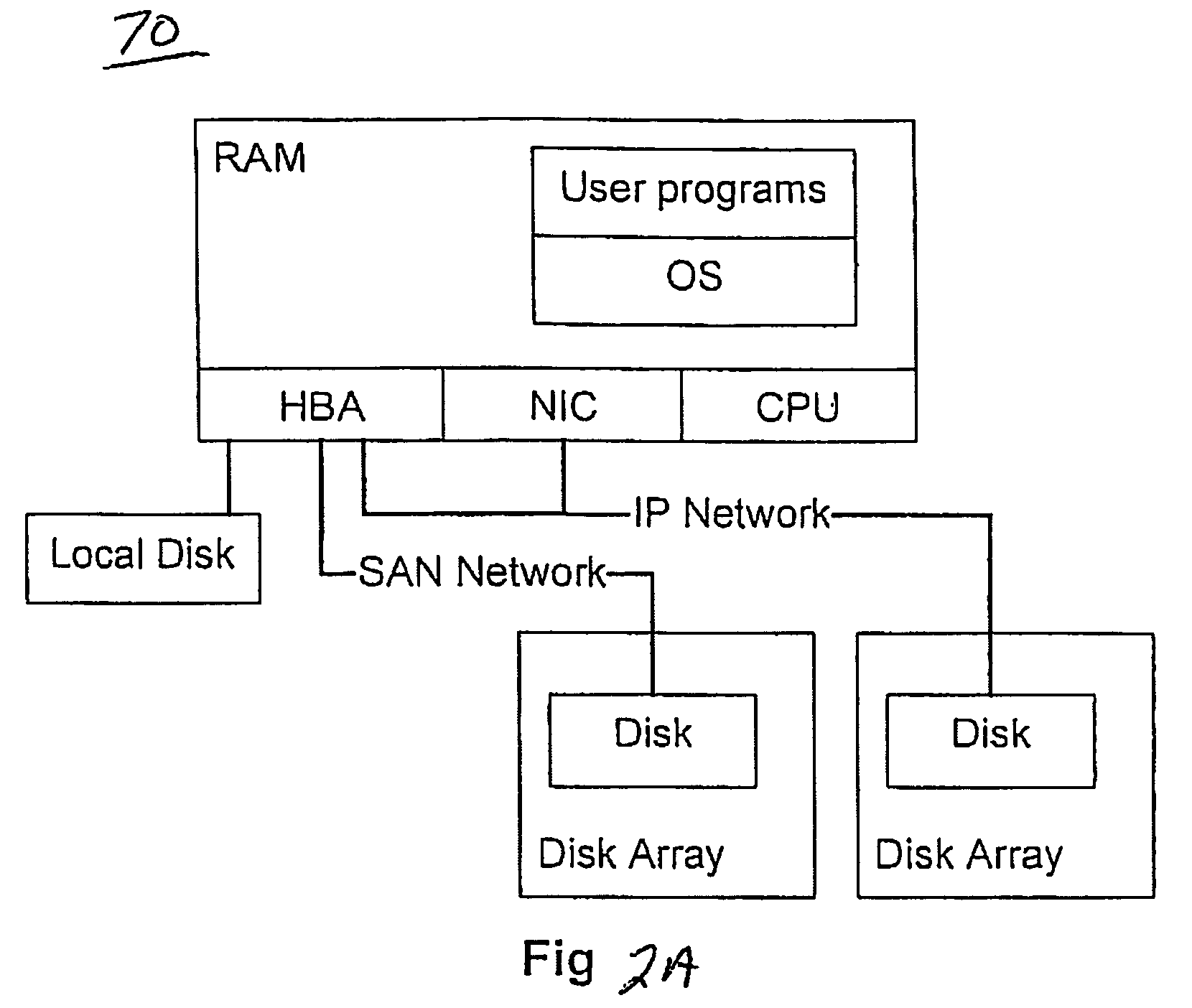

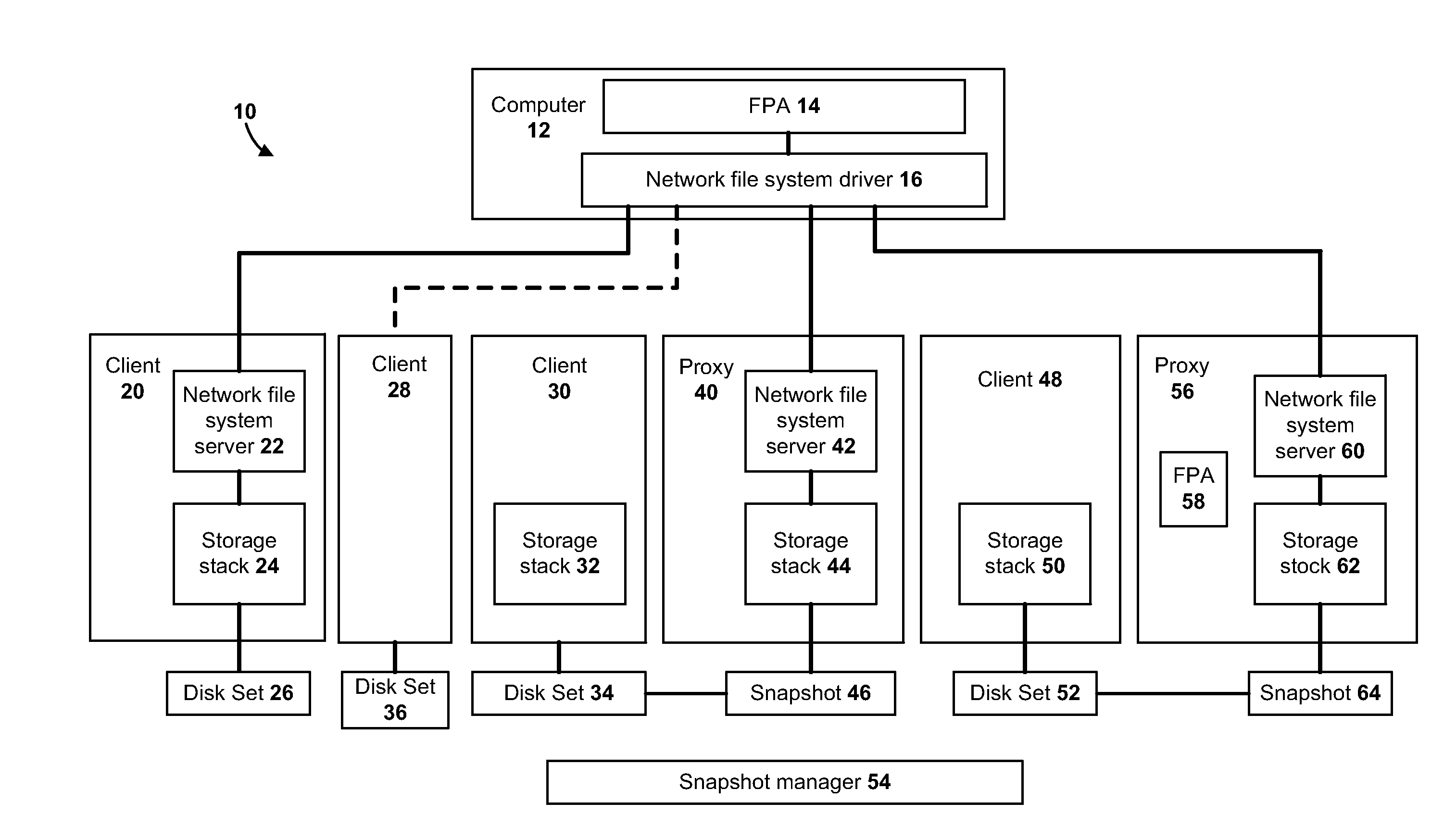

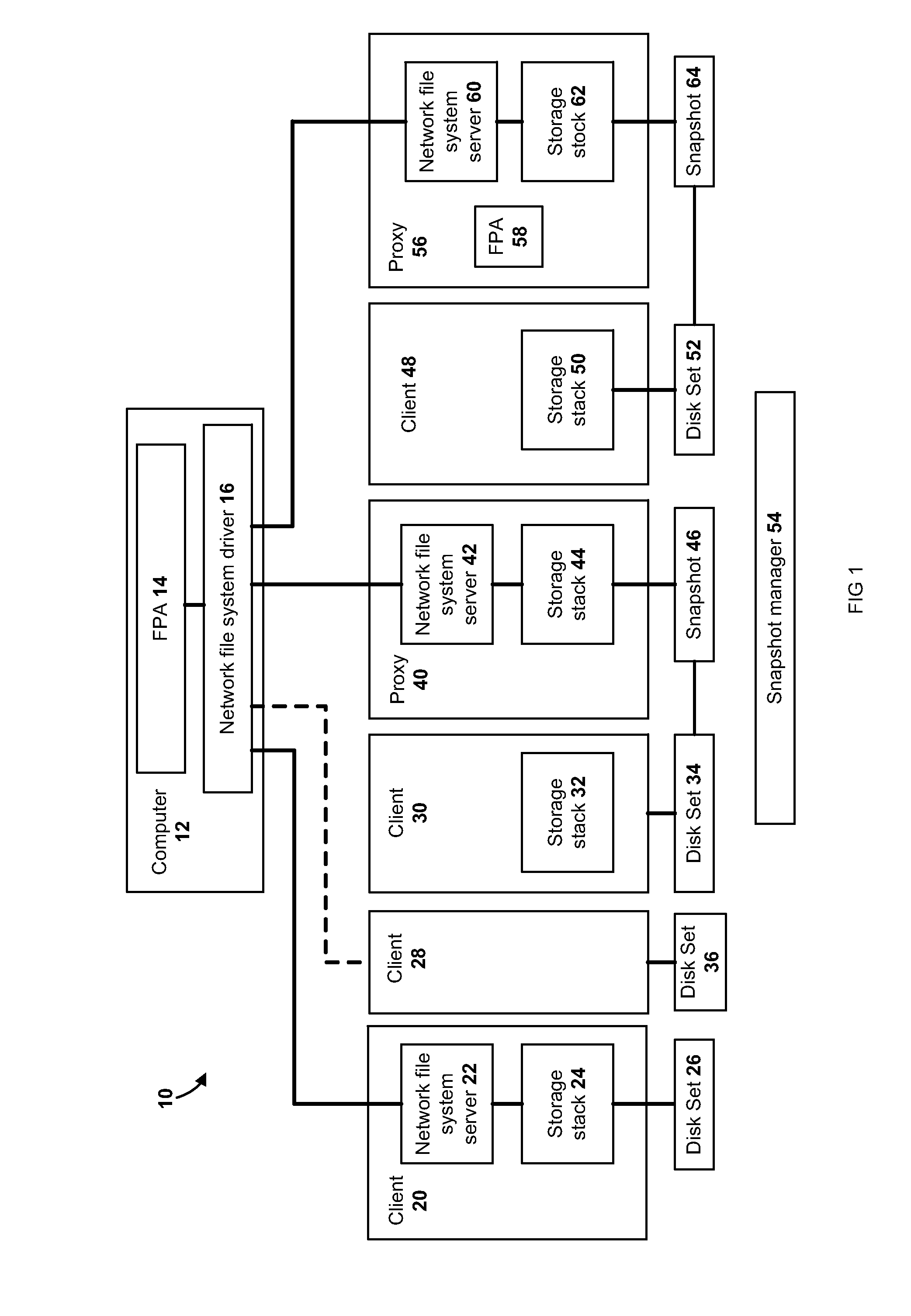

Method of universal file access for a heterogeneous computing environment

Active | US7774391B1 | Simple method | Reduce the number | Data processing applications | File access structures | Operational system | Auto-configuration

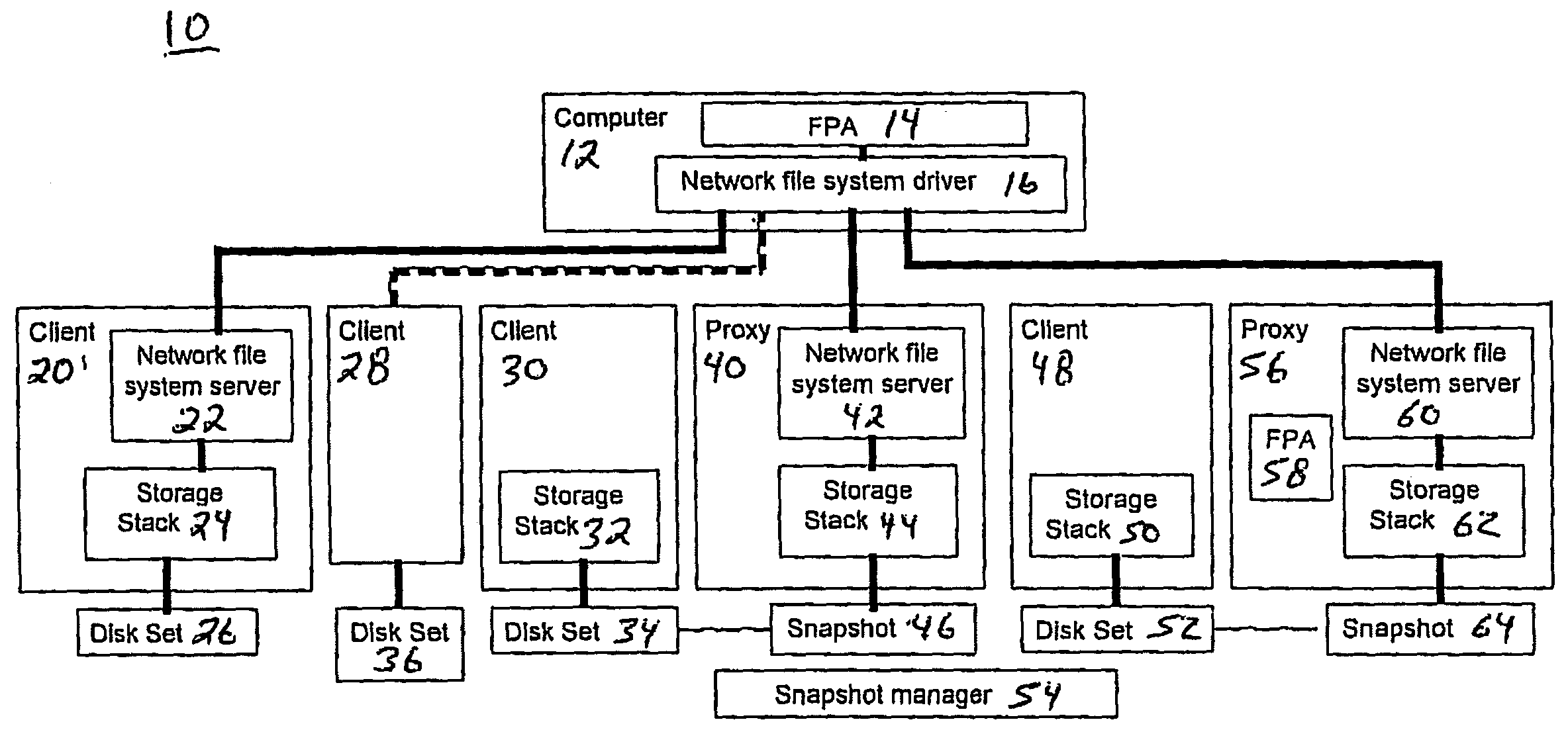

An architecture and system are described that provide a unified file access service within a managed computing environment composed of diverse networks, computing devices, and storage devices. The service provides mechanisms for remotely accessing the file systems of any managed computer or disk snapshot, independently of the computer's current state (powered on, suspended or powered off), its location within the environment, its hardware type (virtual vs. physical), its operating system type, and its file system formats. The system isolates centralized FPAs from the details of clients, proxies and storage elements by providing a service that decomposes offloaded file system access into two steps. An FPA or a requester acting on behalf of the FPA first expresses the disk set or the computer containing the file systems it wishes to access, along with requirements and preferences about the access method. The service figures out an efficient data path satisfying the FPA's needs, and then automatically configures a set of storage and computing resources to provide the data path. The service then replies with information about the resources and instructions for using them. The FPA then accesses the requested file systems using the returned information.

Owner:VMWARE INC

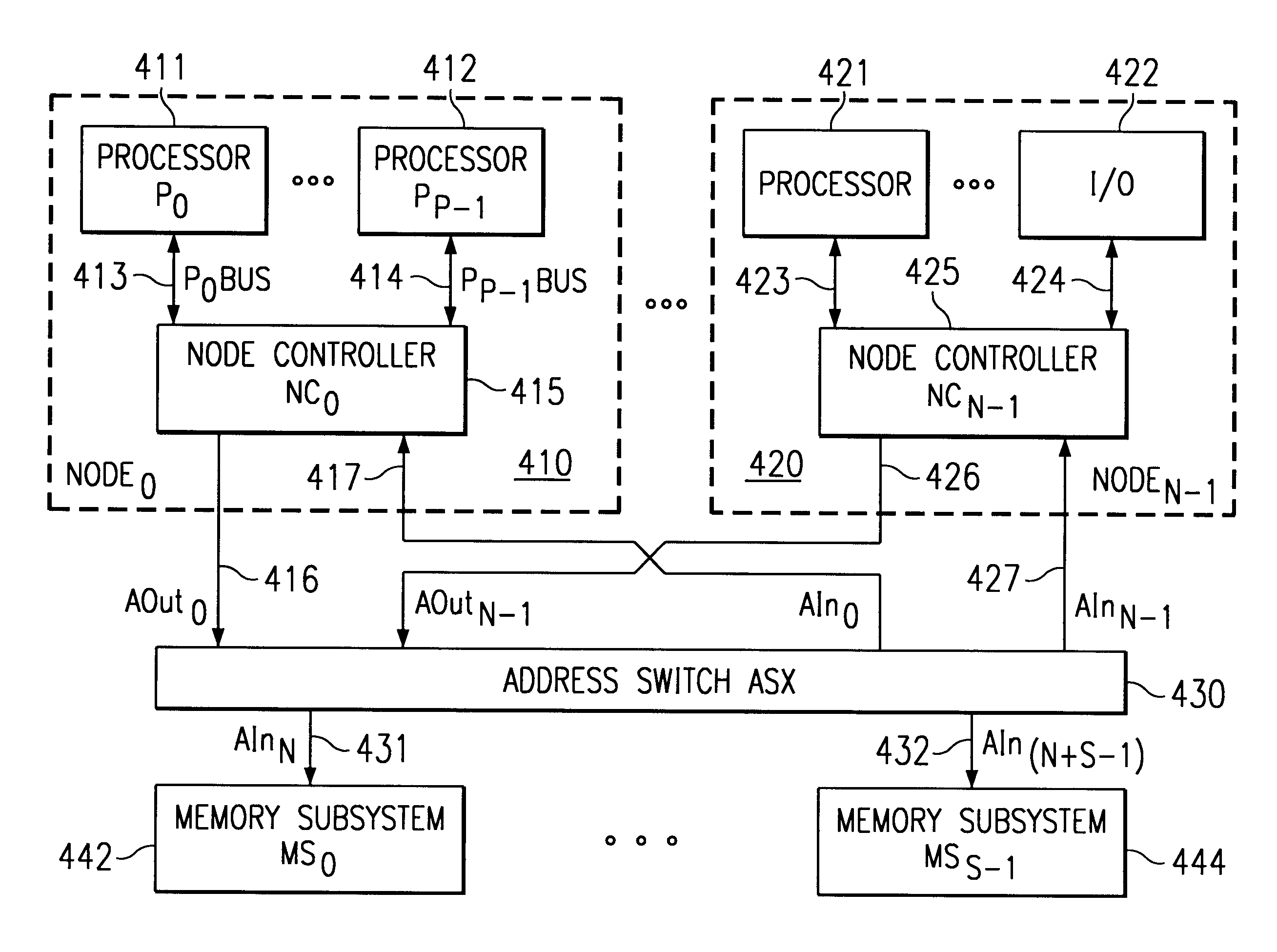

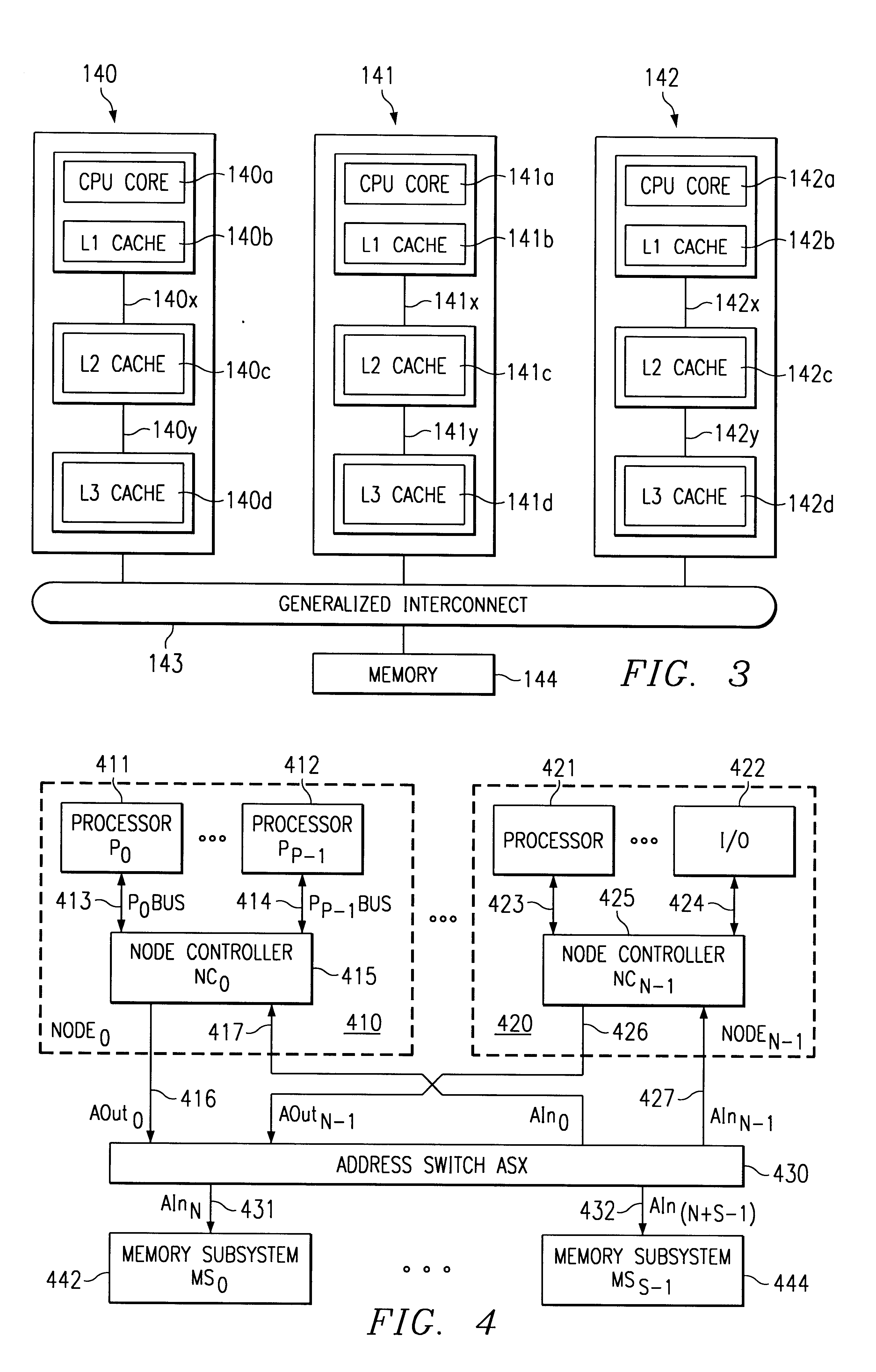

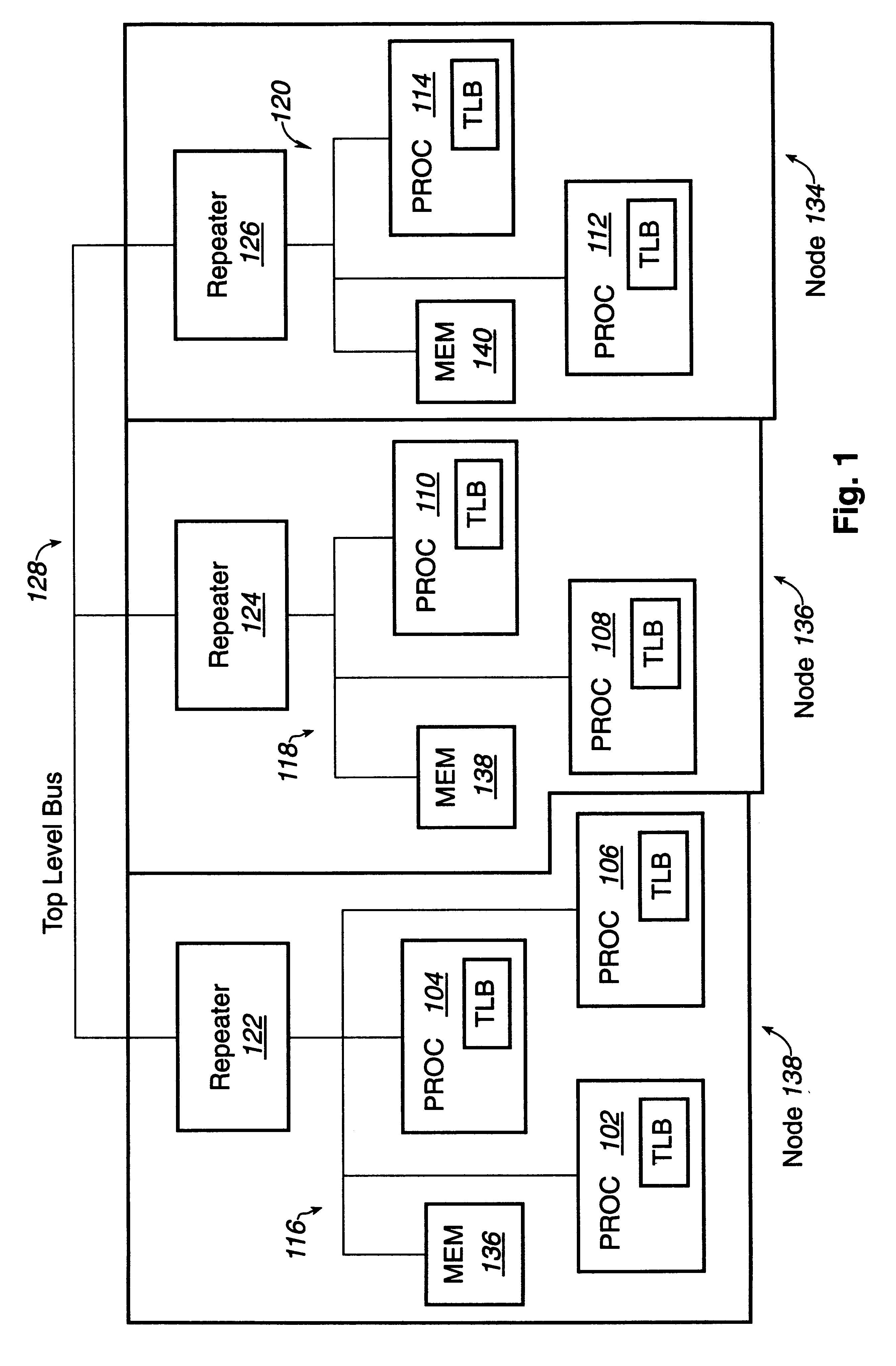

Method and apparatus to distribute interrupts to multiple interrupt handlers in a distributed symmetric multiprocessor system

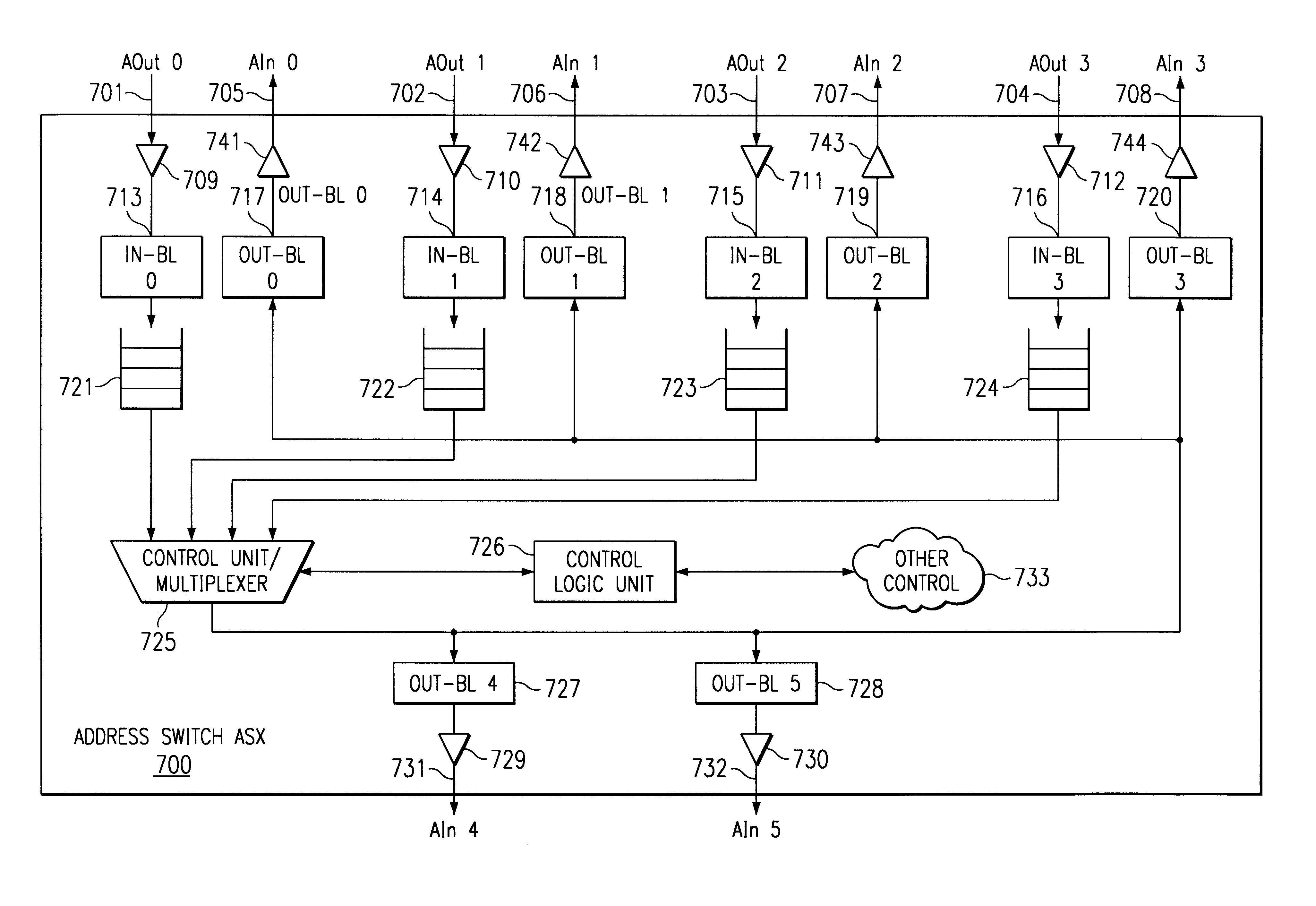

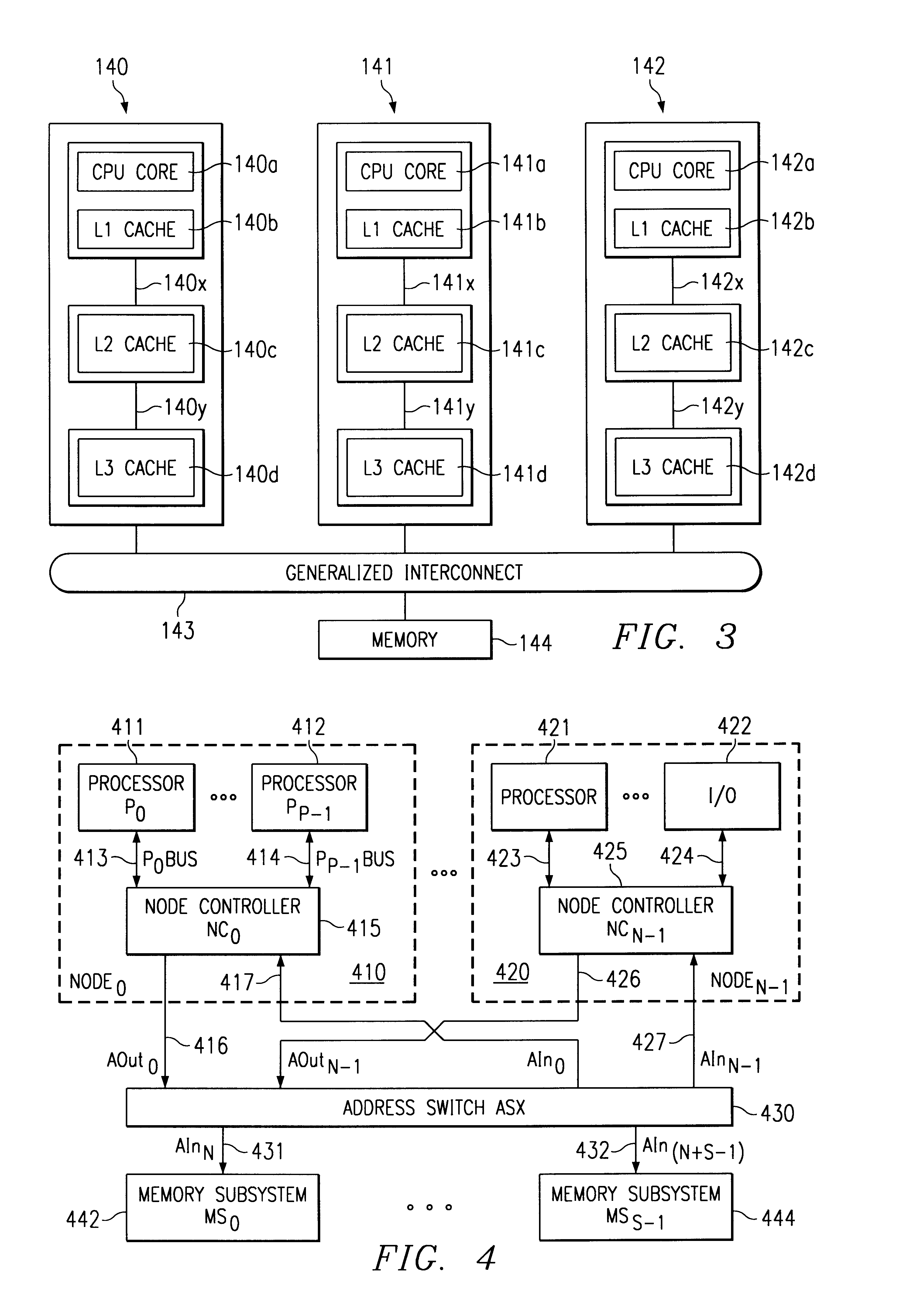

A distributed system structure for a large-way, symmetric multiprocessor system using a bus-based cache-coherence protocol is provided. The distributed system structure contains an address switch, multiple memory subsystems, and multiple master devices, either processors, I/O agents, or coherent memory adapters, organized into a set of nodes supported by a node controller. The node controller receives transactions from a master device, communicates with a master device as another master device or as a slave device, and queues transactions received from a master device. Since the achievement of coherency is distributed in time and space, the node controller helps to maintain cache coherency. The node controller also implements an interrupt arbitration scheme designed to choose among multiple eligible interrupt distribution units without using dedicated sideband signals on the bus.

Owner:GOOGLE LLC

Method of universal file access for a heterogeneous computing environment

Active | US20110047195A1 | Simplify the management process | Reduce the number | Data processing applications | Digital data processing details | Operational system | Auto-configuration

An architecture and system are described that provide a unified file access service within a managed computing environment composed of diverse networks, computing devices, and storage devices. The service provides mechanisms for remotely accessing the file systems of any managed computer or disk snapshot, independently of the computer's current state (powered on, suspended or powered off), its location within the environment, its hardware type (virtual vs. physical), its operating system type, and its file system formats. The system isolates centralized FPAs from the details of clients, proxies and storage elements by providing a service that decomposes offloaded file system access into two steps. An FPA or a requester acting on behalf of the FPA first expresses the disk set or the computer containing the file systems it wishes to access, along with requirements and preferences about the access method. The service figures out an efficient data path satisfying the FPA's needs, and then automatically configures a set of storage and computing resources to provide the data path. The service then replies with information about the resources and instructions for using them. The FPA then accesses the requested file systems using the returned information.

Owner:VMWARE INC

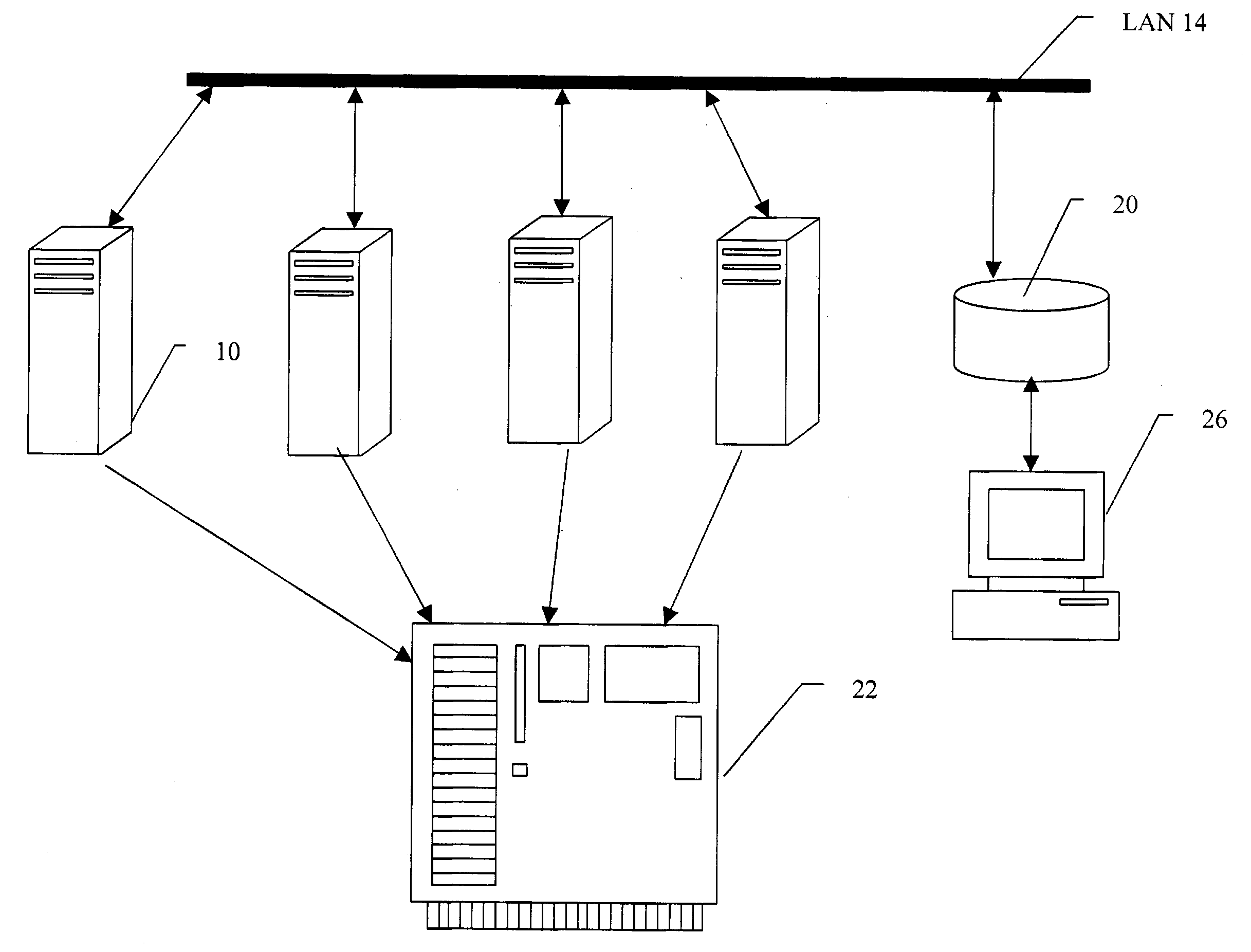

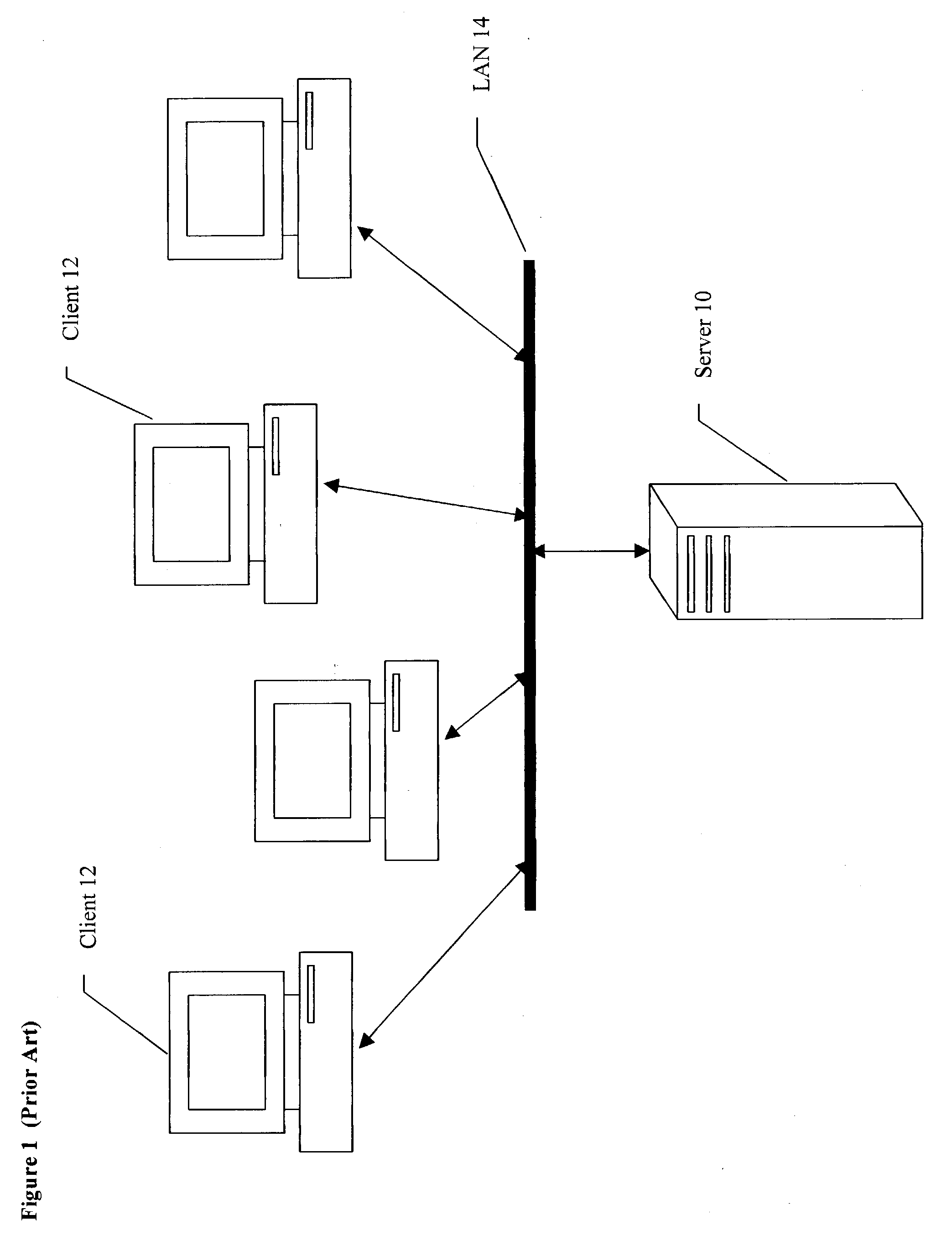

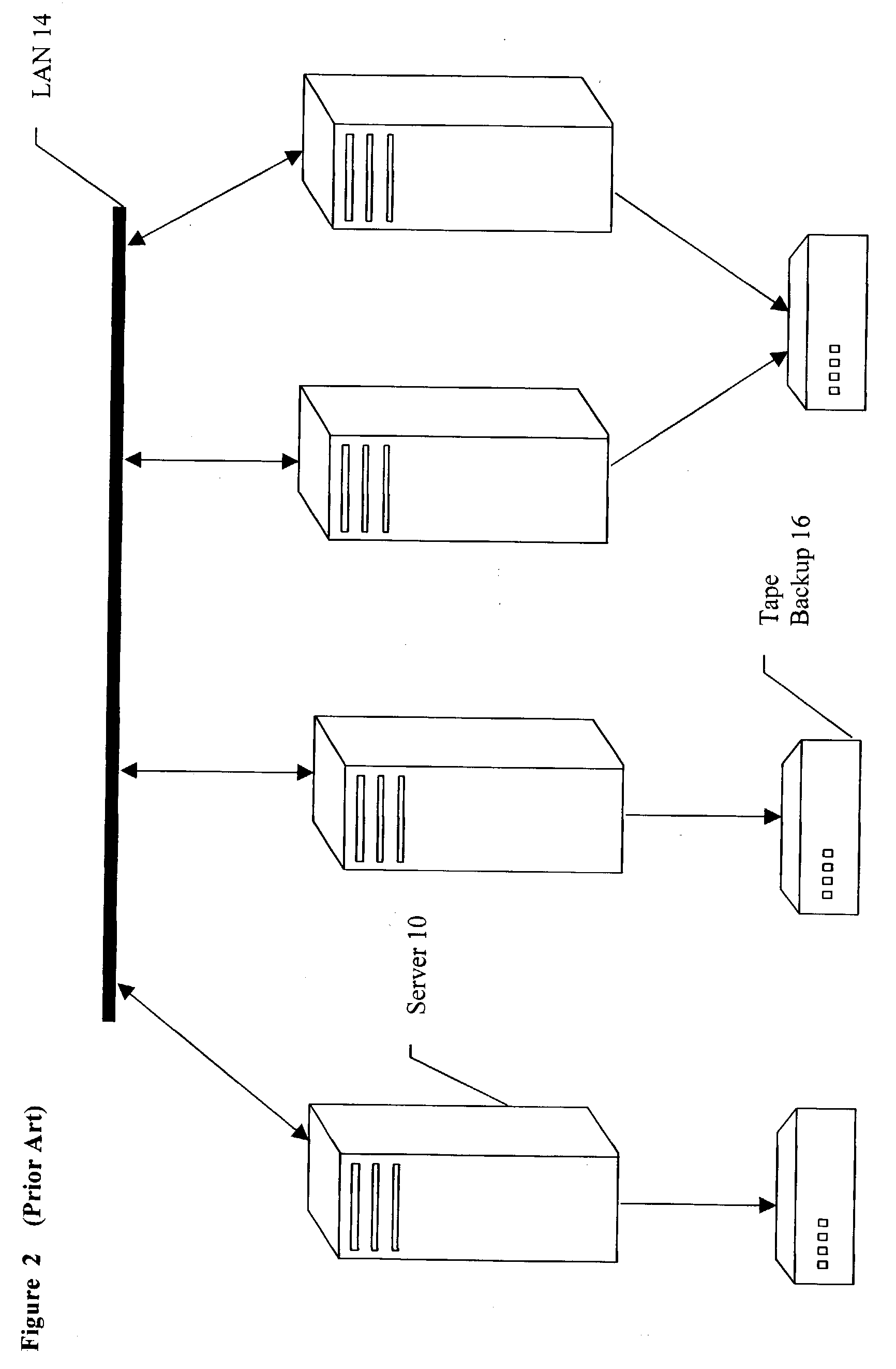

System and method of managing backup media in a computing environment

Inactive | US20050021524A1 | Digital data processing details | Redundant operation error correction | Operational system | Centralized management

A storage media management system operating in a heterogeneous computing environment having different servers, different operating systems, and different backup applications. The management system extracts backup information from each backup application and consolidates the information in a tracking database. A centralized interface to the tracking database permits centralized management of backup storage media, such as movement, retention, vaulting, reporting, utilization, and duplication.

Owner:OLIVER JACK K

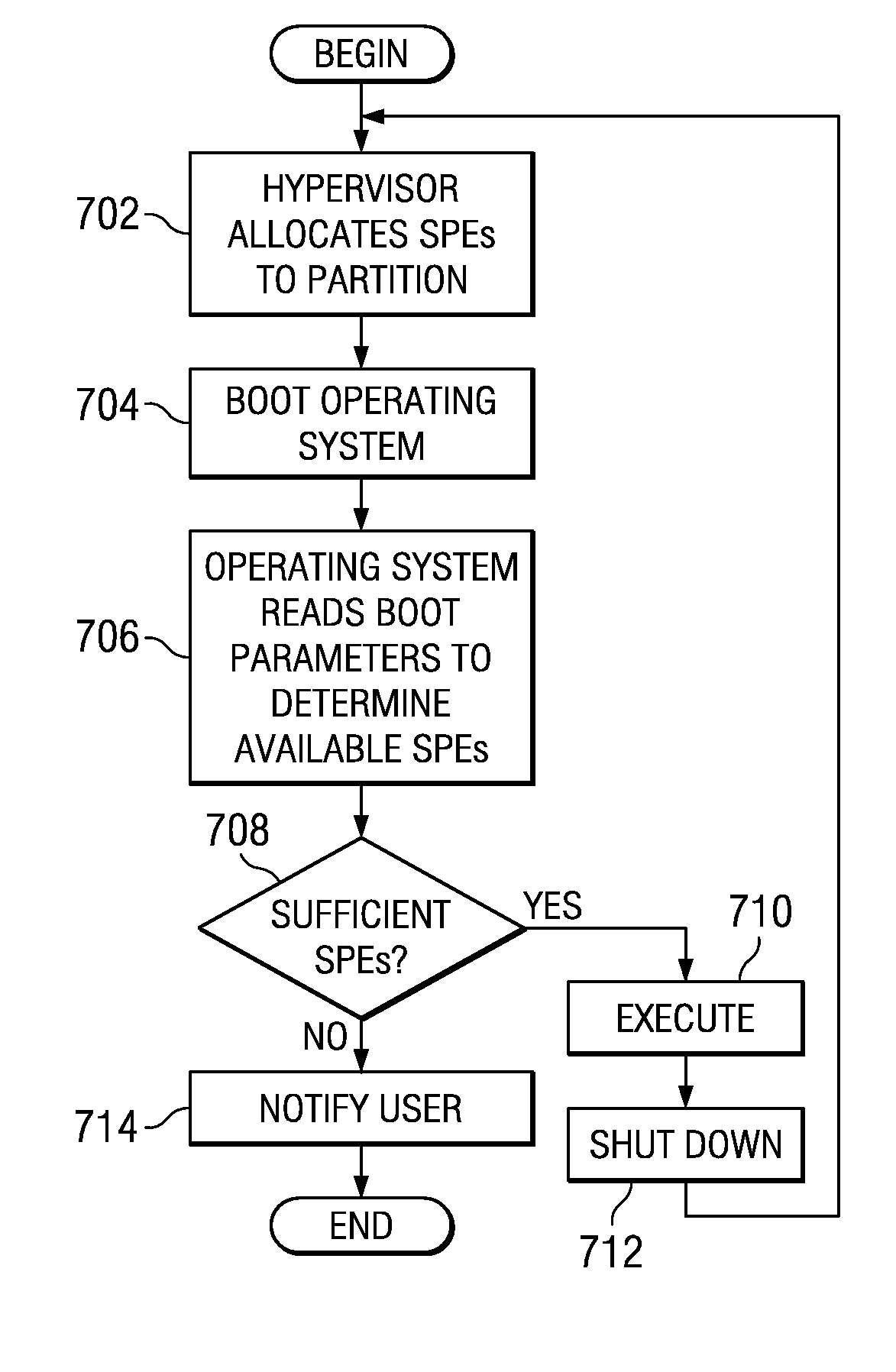

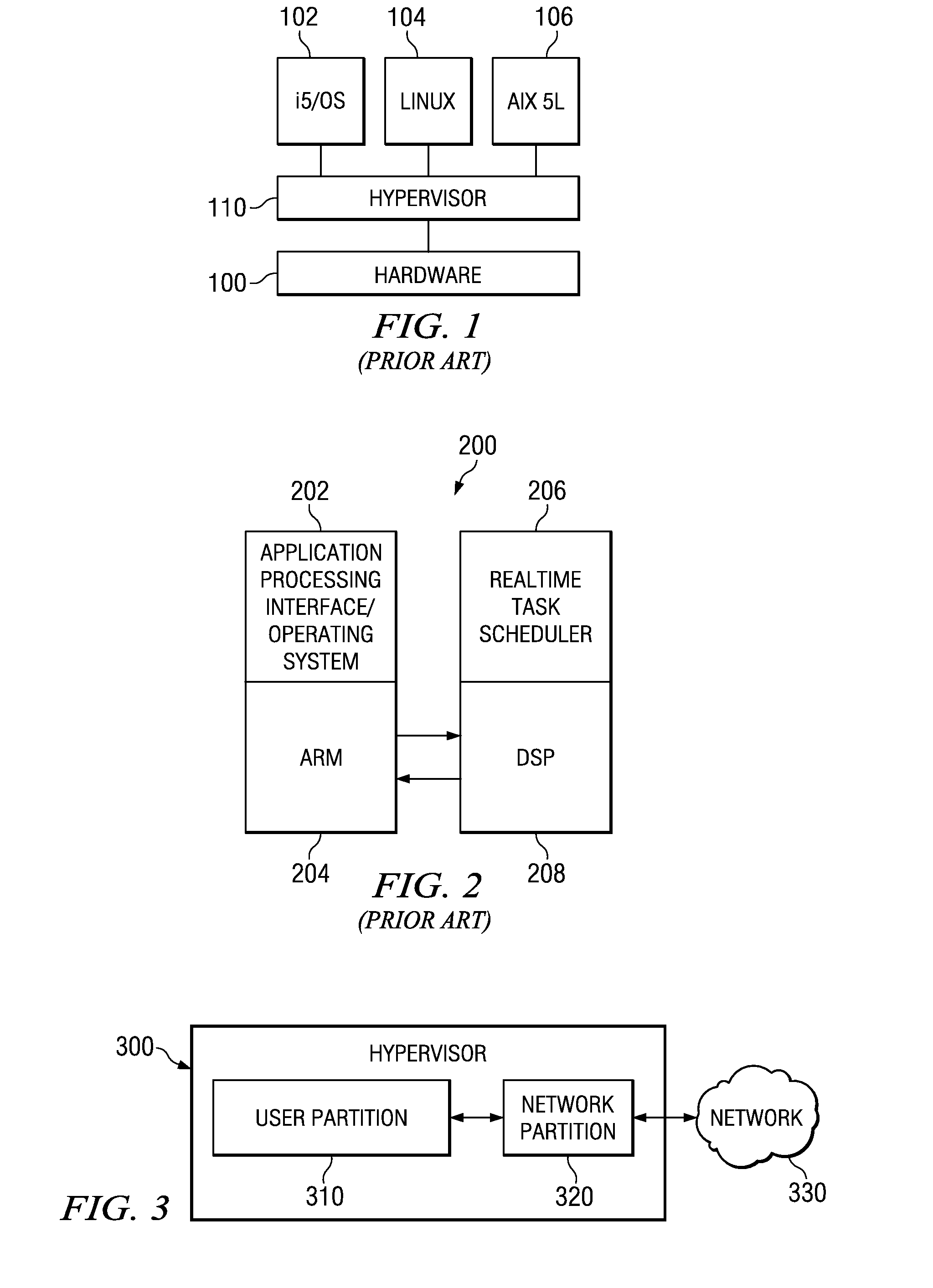

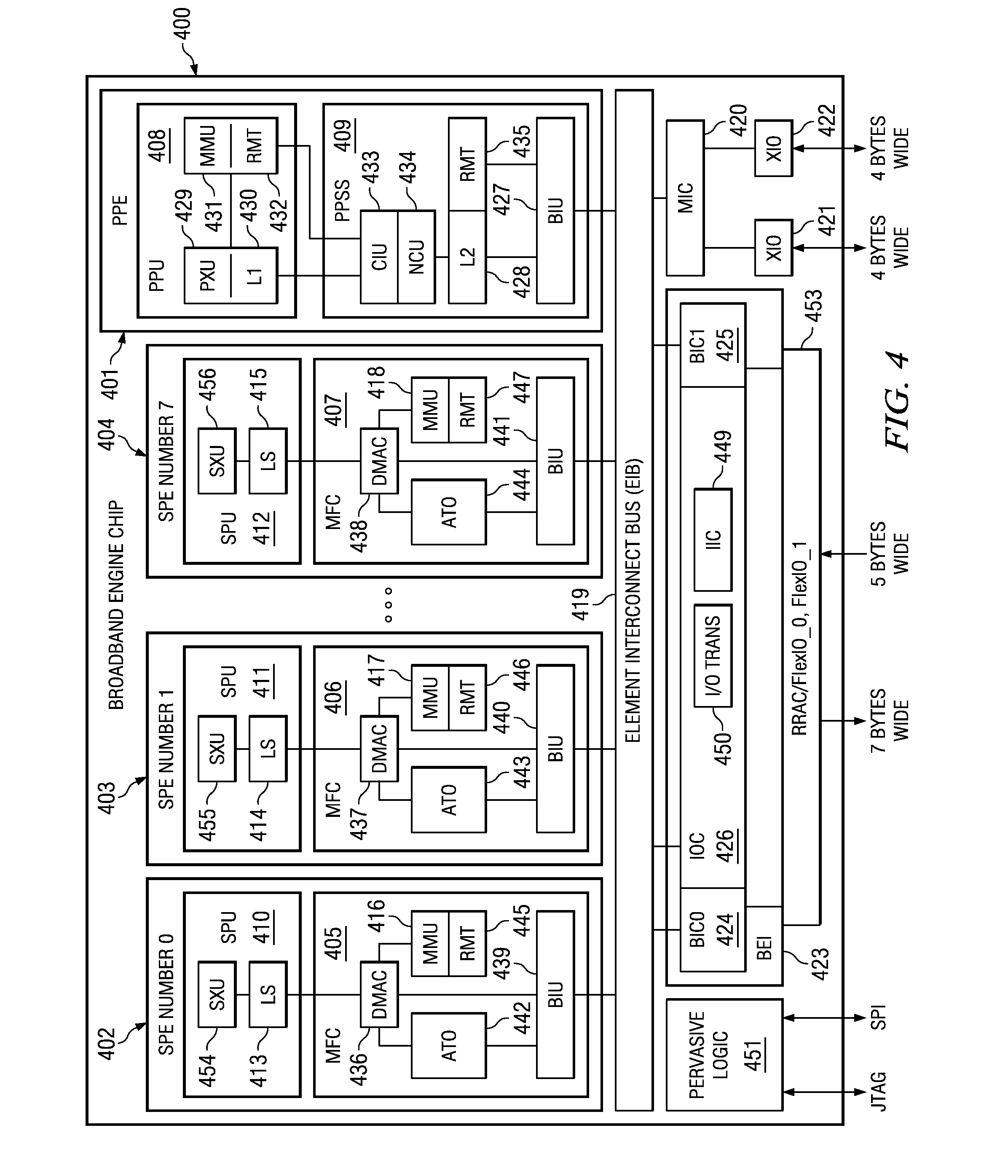

Logical partitioning and virtualization in a heterogeneous architecture

A method, apparatus, and computer usable program code for logical partitioning and virtualization in heterogeneous computer architecture. In one illustrative embodiment, a portion of a first set of processors of a first type is allocated to a partition in a heterogeneous logically partitioned system and a portion of a second set of processors of a second type is allocated to the partition.

Owner:IBM CORP

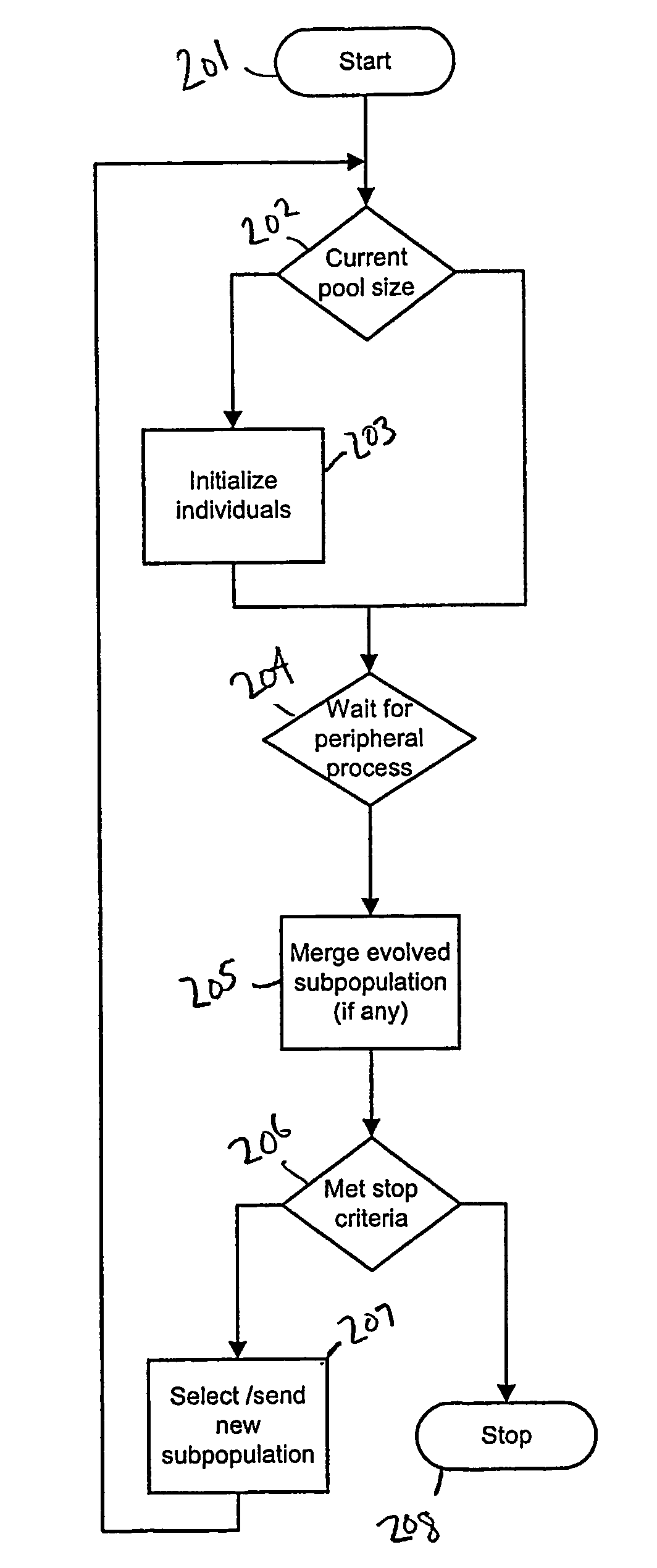

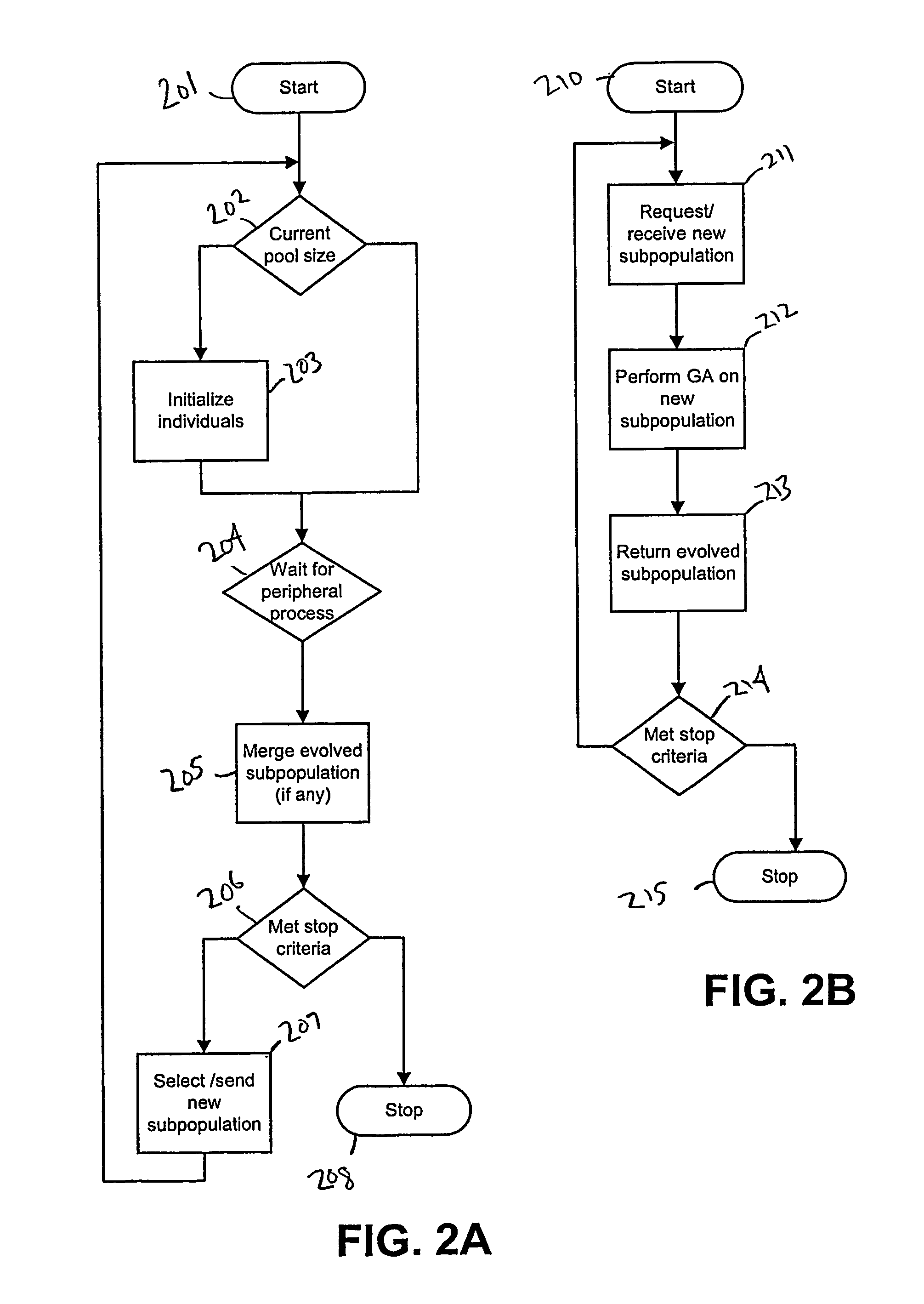

Method and system for implementing evolutionary algorithms

Inactive | US7444309B2 | Efficient and effective | Limit competition | Computer control | Simulator control | Computer science | Symmetric multiprocessor system

A method, computer program storage medium and system that implement evolutionary algorithms on heterogeneous computers, in which a central process resident in a central computer delegates subpopulations of individuals of similar fitness from a central pool to separate processes resident on peripheral computers, where they evolve for a certain number of generations, after which they return to the central pool before the delegation is repeated.

Owner:ICOSYST CORP
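The delegate, evolve, and return cycle in the abstract above can be sketched as a loop over a central pool. This is a toy, single-process illustration: the objective function, the mutation operator, and all names (`fitness`, `evolve`, `run`) are assumptions, and the "peripheral computers" are simulated by ordinary function calls.

```python
import random

def fitness(x):
    """Toy objective: higher is better, with the maximum at x = 0."""
    return -x * x

def evolve(subpop, generations, rng):
    """Stand-in for a peripheral process: mutate, keep the better individual."""
    for _ in range(generations):
        subpop = [max(x, x + rng.gauss(0, 1), key=fitness) for x in subpop]
    return subpop

def run(pool, n_islands, rounds, generations, seed=0):
    rng = random.Random(seed)
    for _ in range(rounds):
        pool.sort(key=fitness)                 # neighbors have similar fitness
        size = -(-len(pool) // n_islands)      # ceiling division
        islands = [pool[i:i + size] for i in range(0, len(pool), size)]
        # Each subpopulation evolves separately, then returns to the pool.
        pool = [x for isl in islands for x in evolve(isl, generations, rng)]
    return pool

start = [float(x) for x in range(-10, 11, 2)]
final = run(list(start), n_islands=3, rounds=4, generations=5)
```

Sorting the pool before slicing is one simple way to group individuals of similar fitness; the patent may use a different grouping rule.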

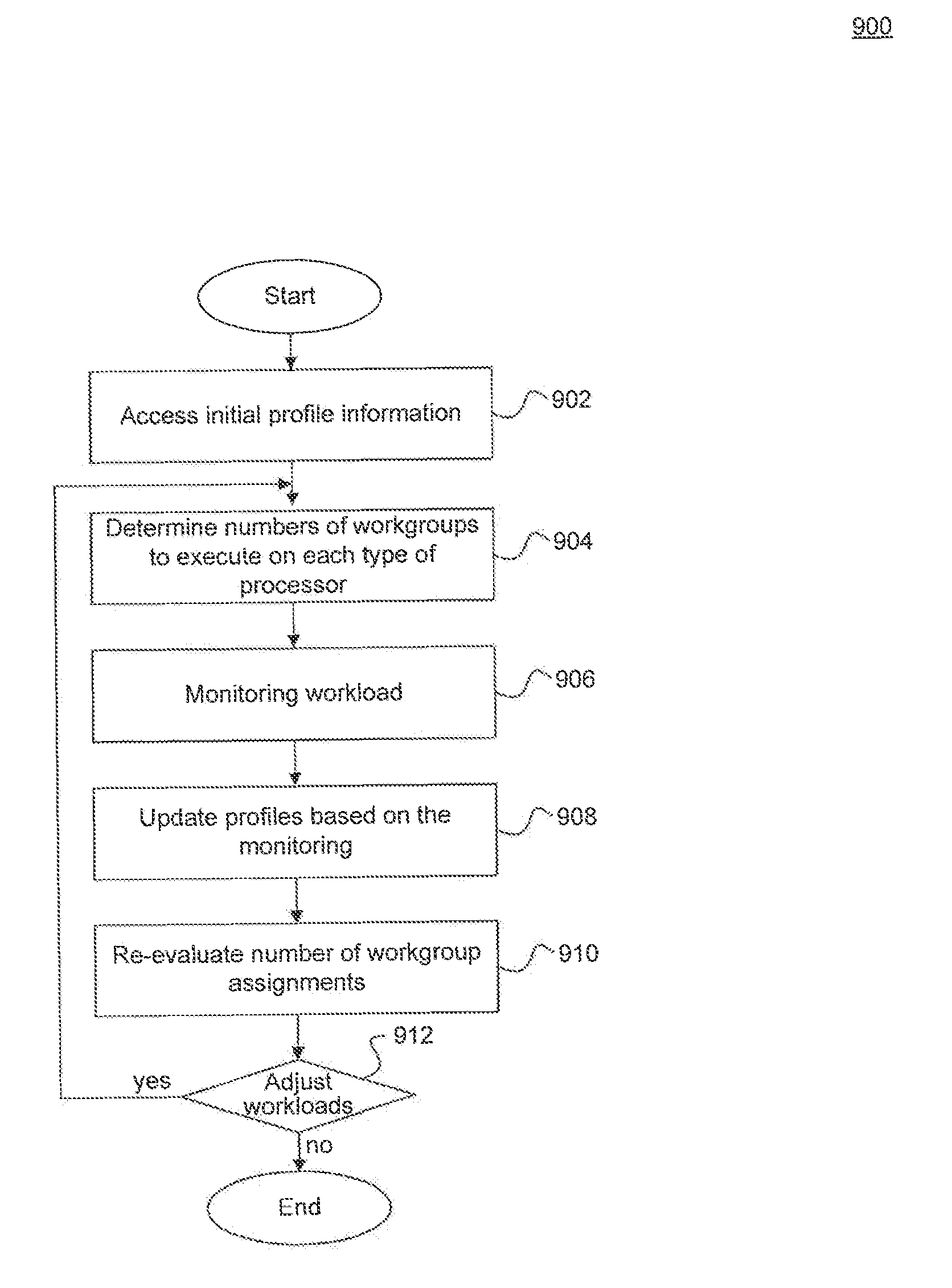

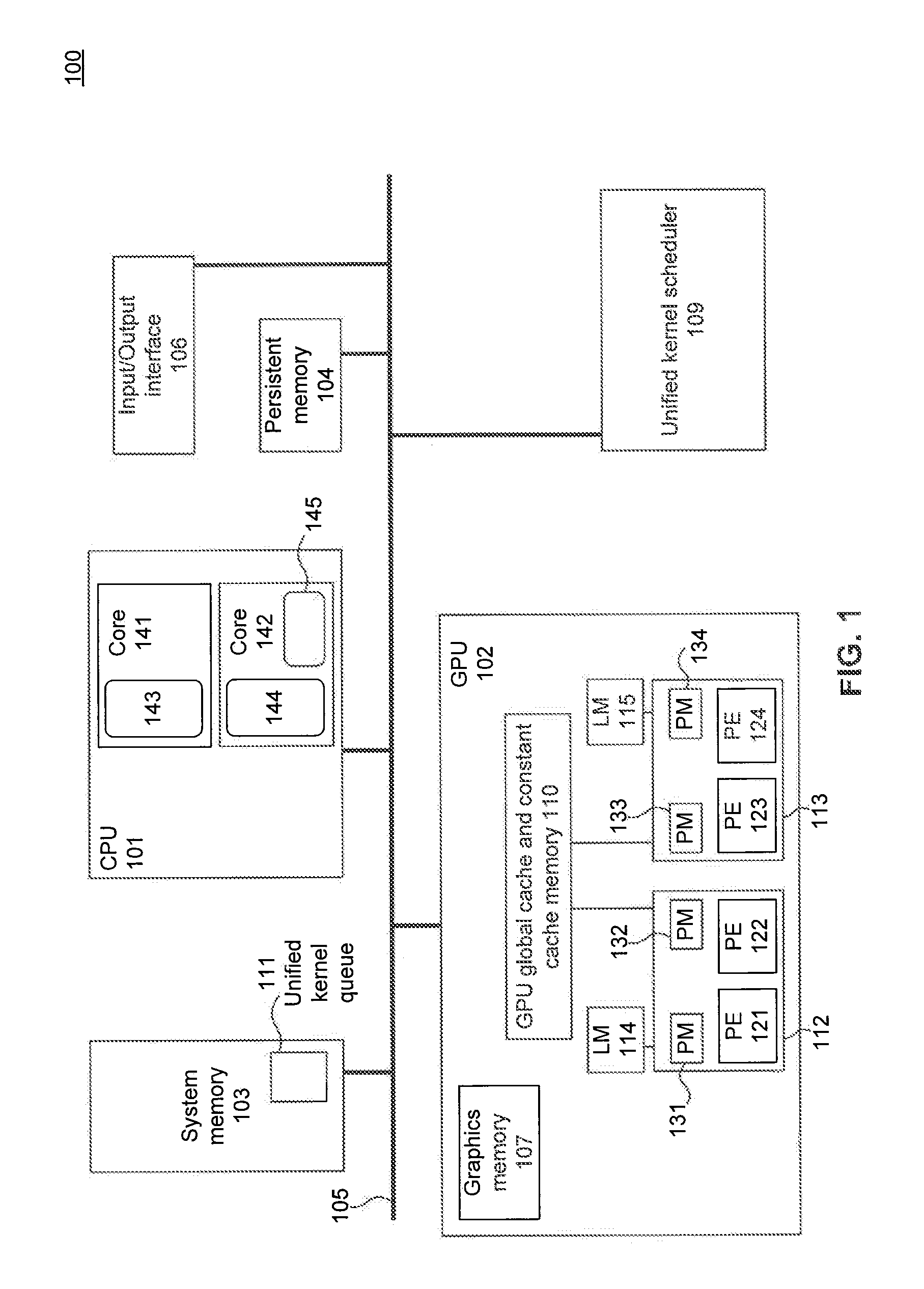

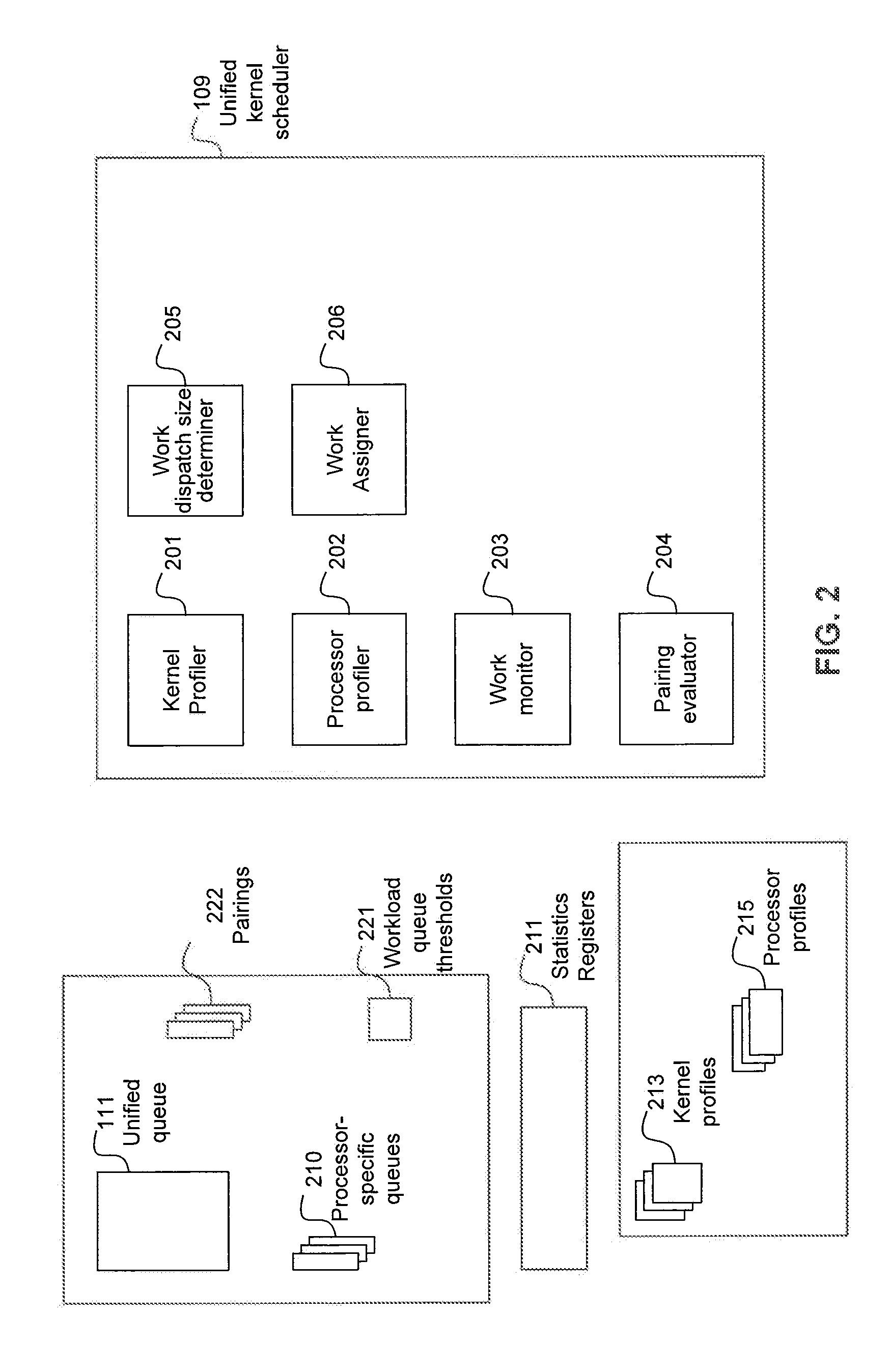

Scheduling compute kernel workgroups to heterogeneous processors based on historical processor execution times and utilizations

Active | US8707314B2 | Energy efficient ICT | Resource allocation | Symmetric multiprocessor system | Compute kernel

System and method embodiments for optimally allocating compute kernels to different types of processors, such as CPUs and GPUs, in a heterogeneous computer system are disclosed. These include comparing a kernel profile of a compute kernel to respective processor profiles of a plurality of processors in a heterogeneous computer system, selecting at least one processor from the plurality of processors based upon the comparing, and scheduling the compute kernel for execution in the selected at least one processor.

Owner:ADVANCED MICRO DEVICES INC
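Comparing a kernel profile against processor profiles, as in the abstract above, can be pictured as scoring each processor and picking the best match. The sketch below is an assumption-laden illustration: the feature names, the numeric weights, and the dot-product score are hypothetical and are not the comparison the patent defines.

```python
def pick_processor(kernel_profile, processor_profiles):
    """Return the name of the processor whose profile best matches the kernel.

    Profiles are dicts of hypothetical feature weights; the score is a plain
    dot product, chosen only for illustration.
    """
    def score(proc):
        return sum(kernel_profile.get(k, 0.0) * w for k, w in proc.items())
    return max(processor_profiles, key=lambda name: score(processor_profiles[name]))

# Hypothetical processor profiles: how well each device handles each trait.
PROCESSORS = {
    "cpu": {"branching": 0.9, "data_parallel": 0.2},
    "gpu": {"branching": 0.1, "data_parallel": 0.95},
}

# Hypothetical kernel profiles: how strongly each kernel exhibits each trait.
stencil = {"branching": 0.1, "data_parallel": 0.9}
parser = {"branching": 0.8, "data_parallel": 0.1}

stencil_target = pick_processor(stencil, PROCESSORS)
parser_target = pick_processor(parser, PROCESSORS)
```

A scheduler following the patent title would presumably derive such profiles from historical execution times and utilizations rather than fixed constants.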

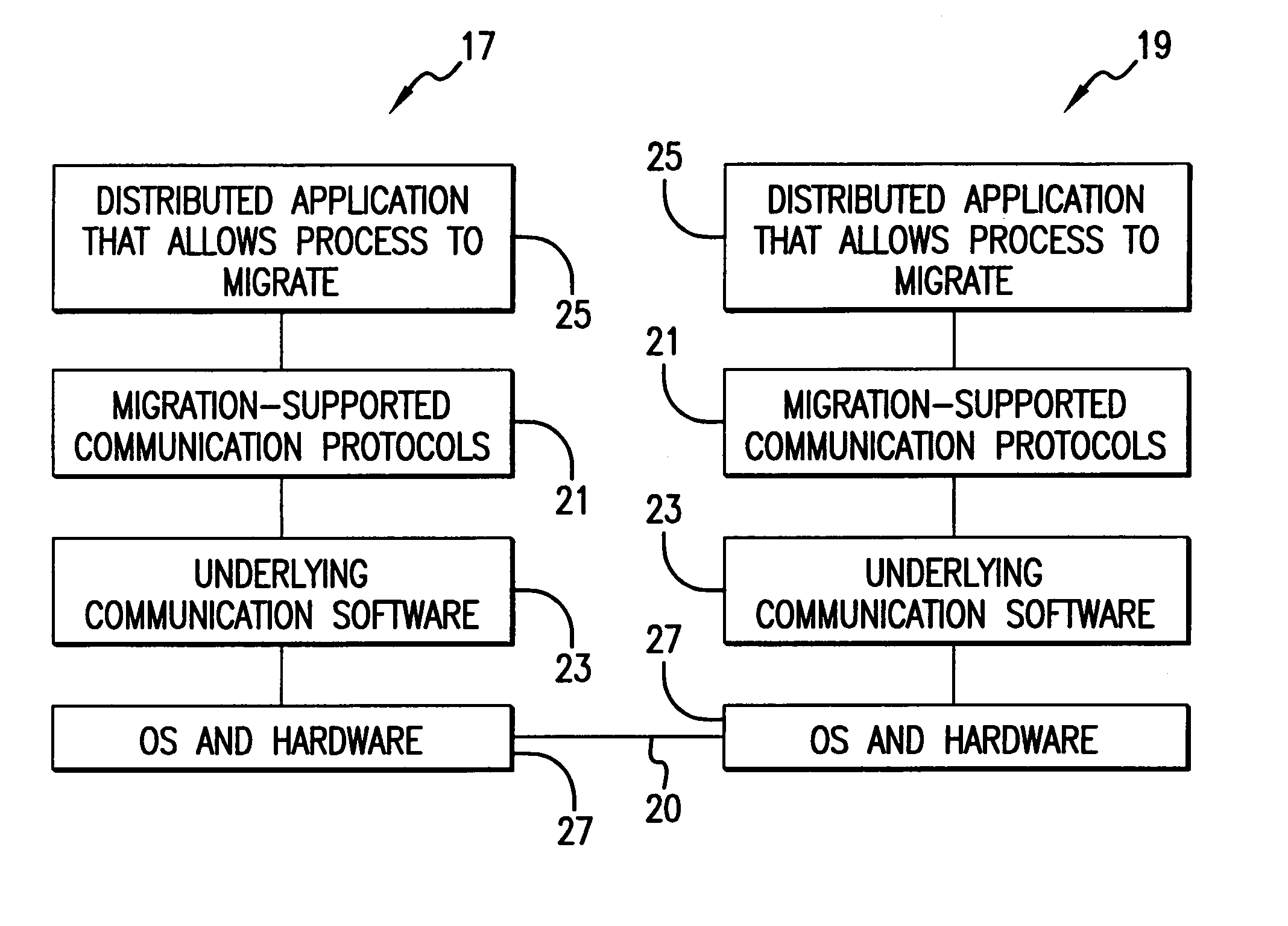

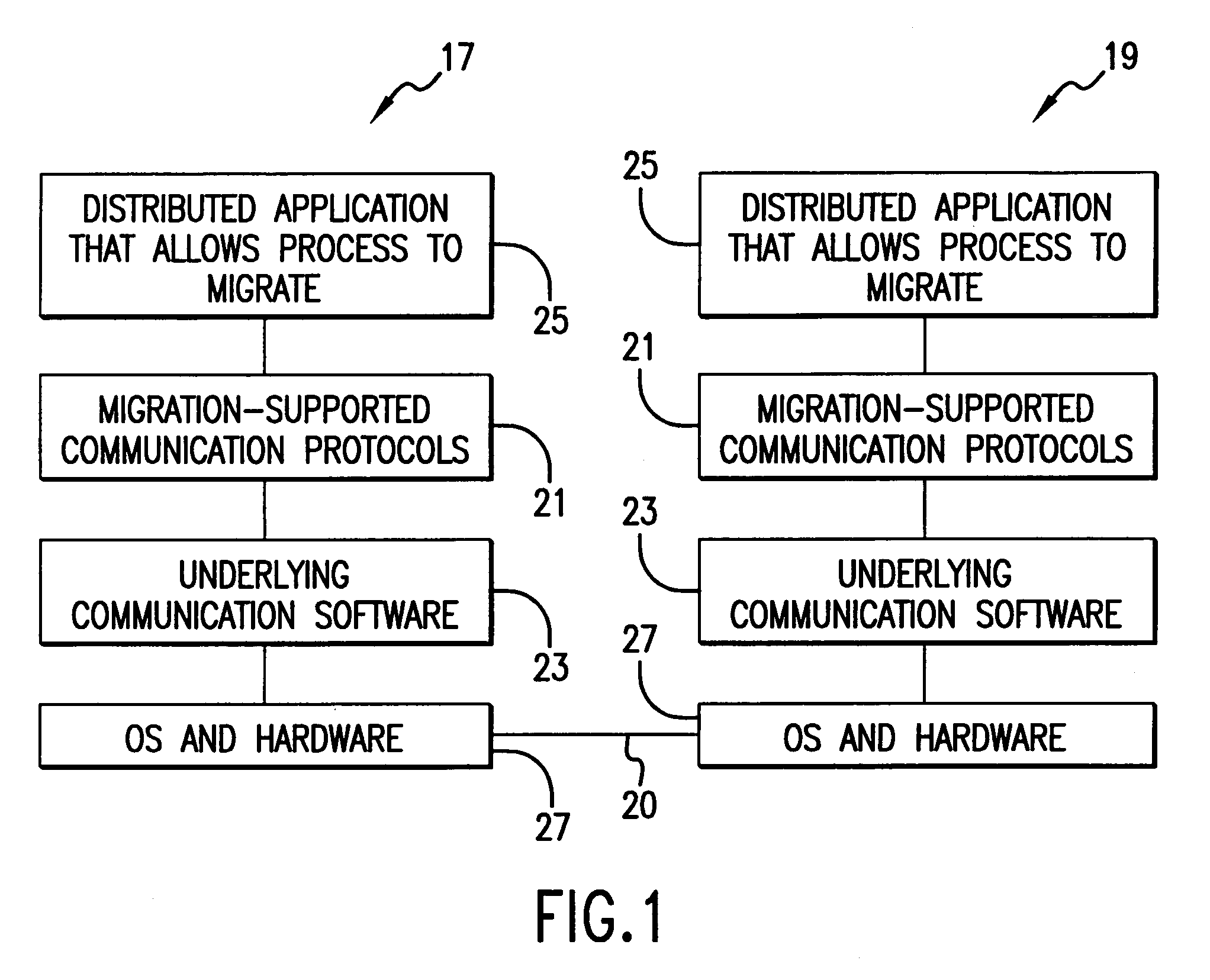

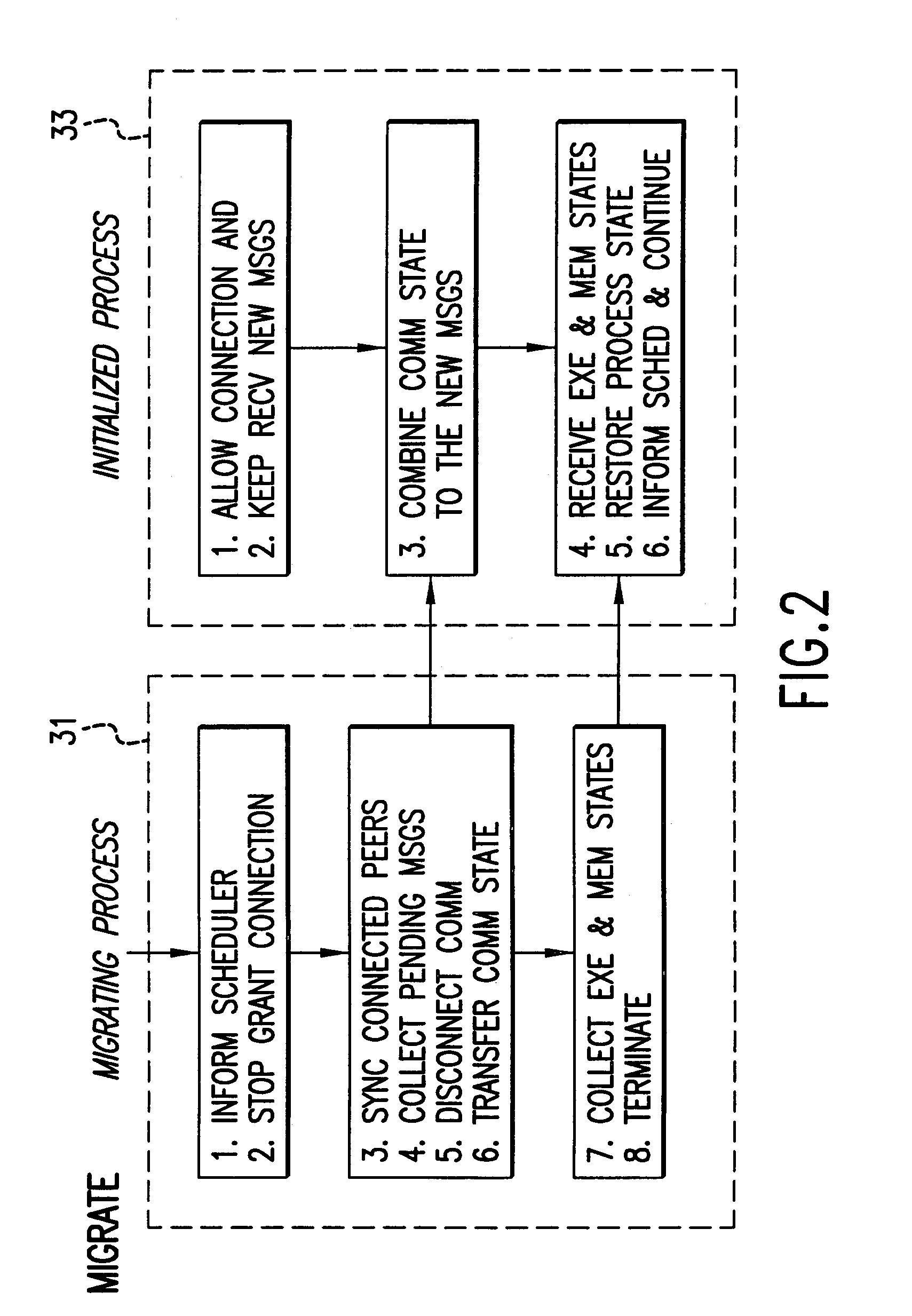

Communication and process migration protocols for distributed heterogeneous computing

Active | US7065549B2 | Effective support | Small communication overhead | Multiprogramming arrangements | Multiple digital computer combinations | Traffic capacity | High effectiveness

Communication and Process Migration Protocols instituted in an independent layer of a virtual machine environment allow for heterogeneous or homogeneous process migration. The protocols manage message traffic for processes communicating in the virtual machine environment. The protocols manage message traffic for migrating processes so that no message traffic is lost during migration, and proper message order is maintained for the migrating process. In addition to correctness of migration operations, low overhead and high efficiency are achieved for supporting scalable, point-to-point communications.

Owner:ILLINOIS INSTITUTE OF TECHNOLOGY

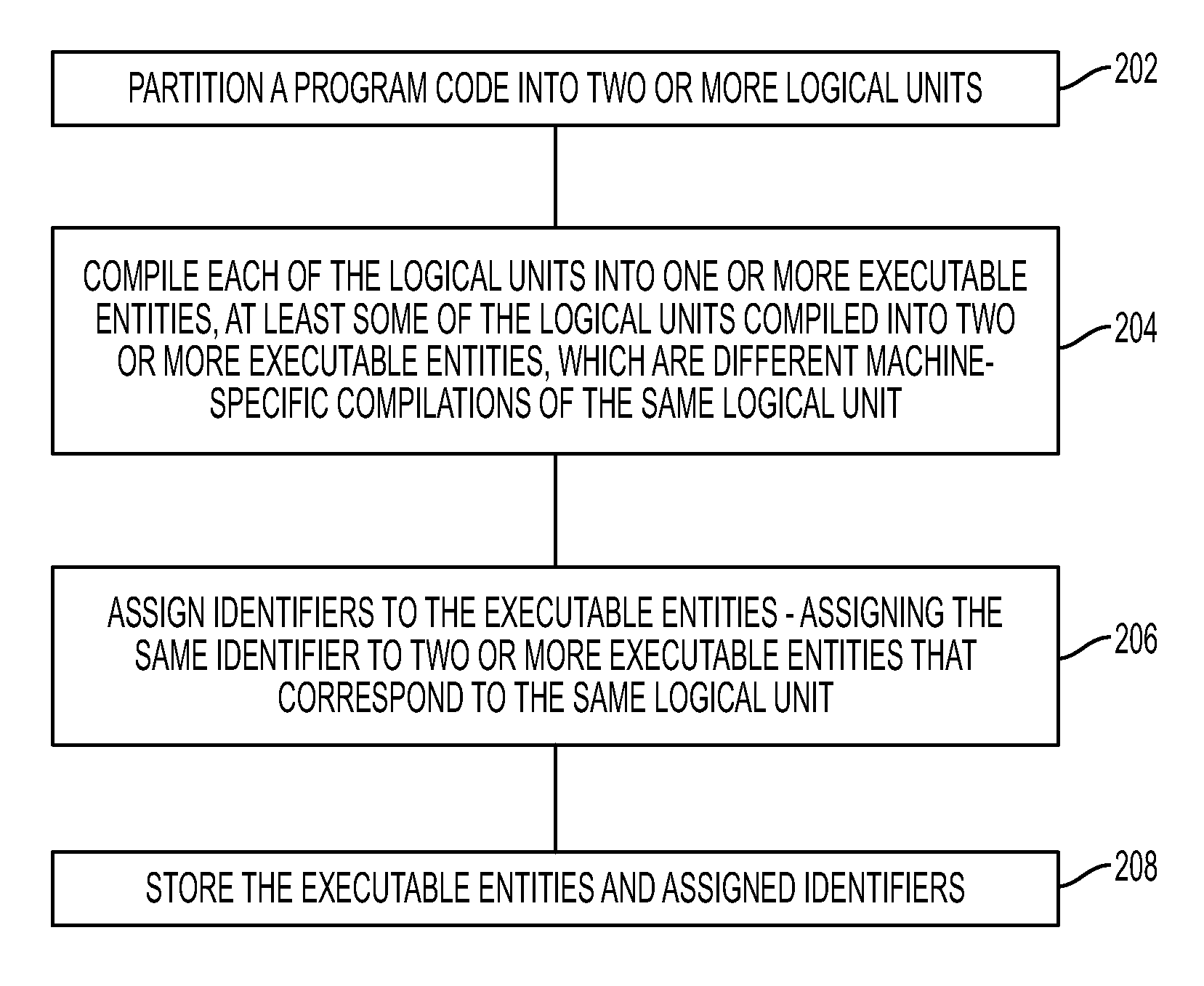

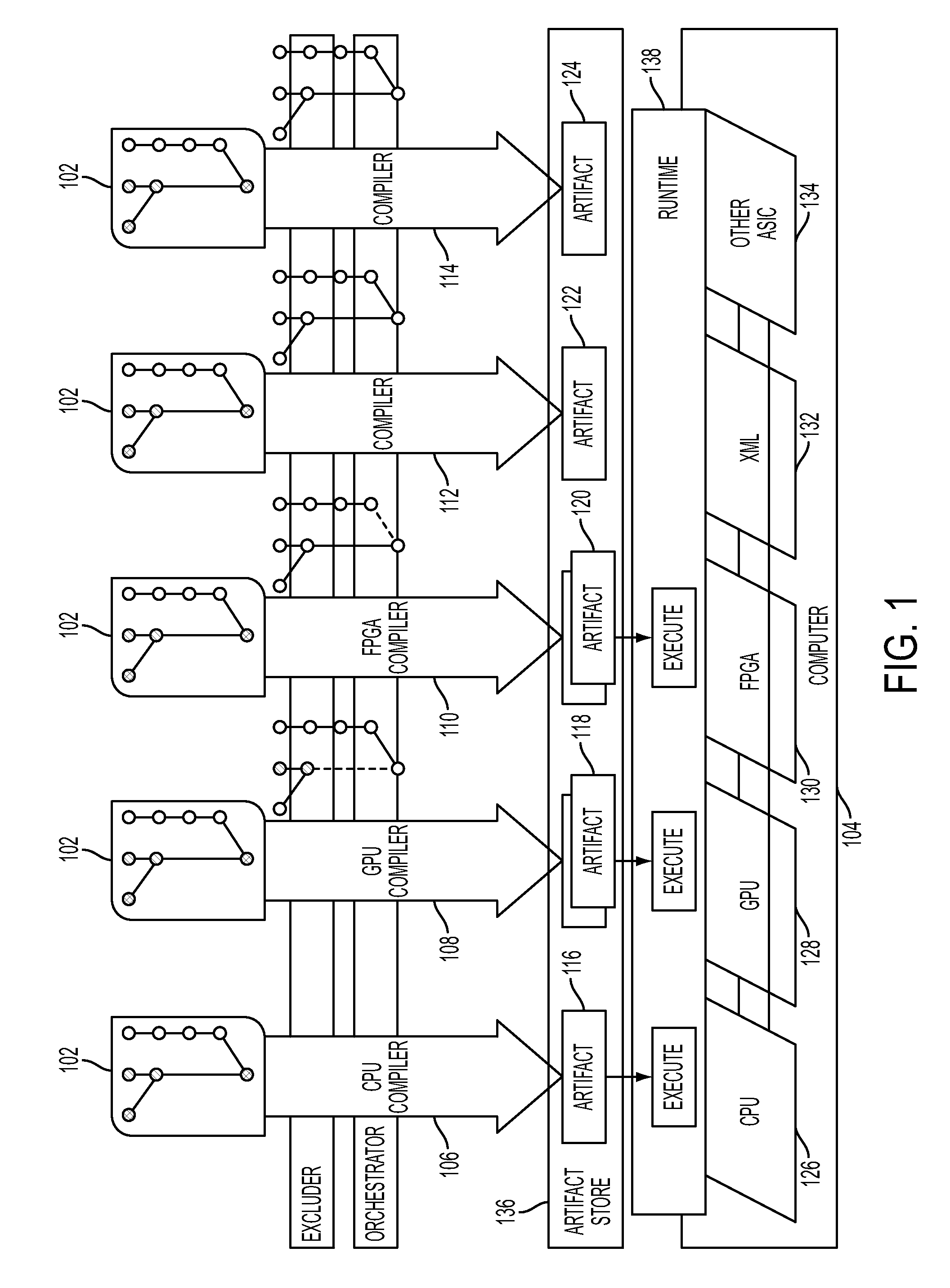

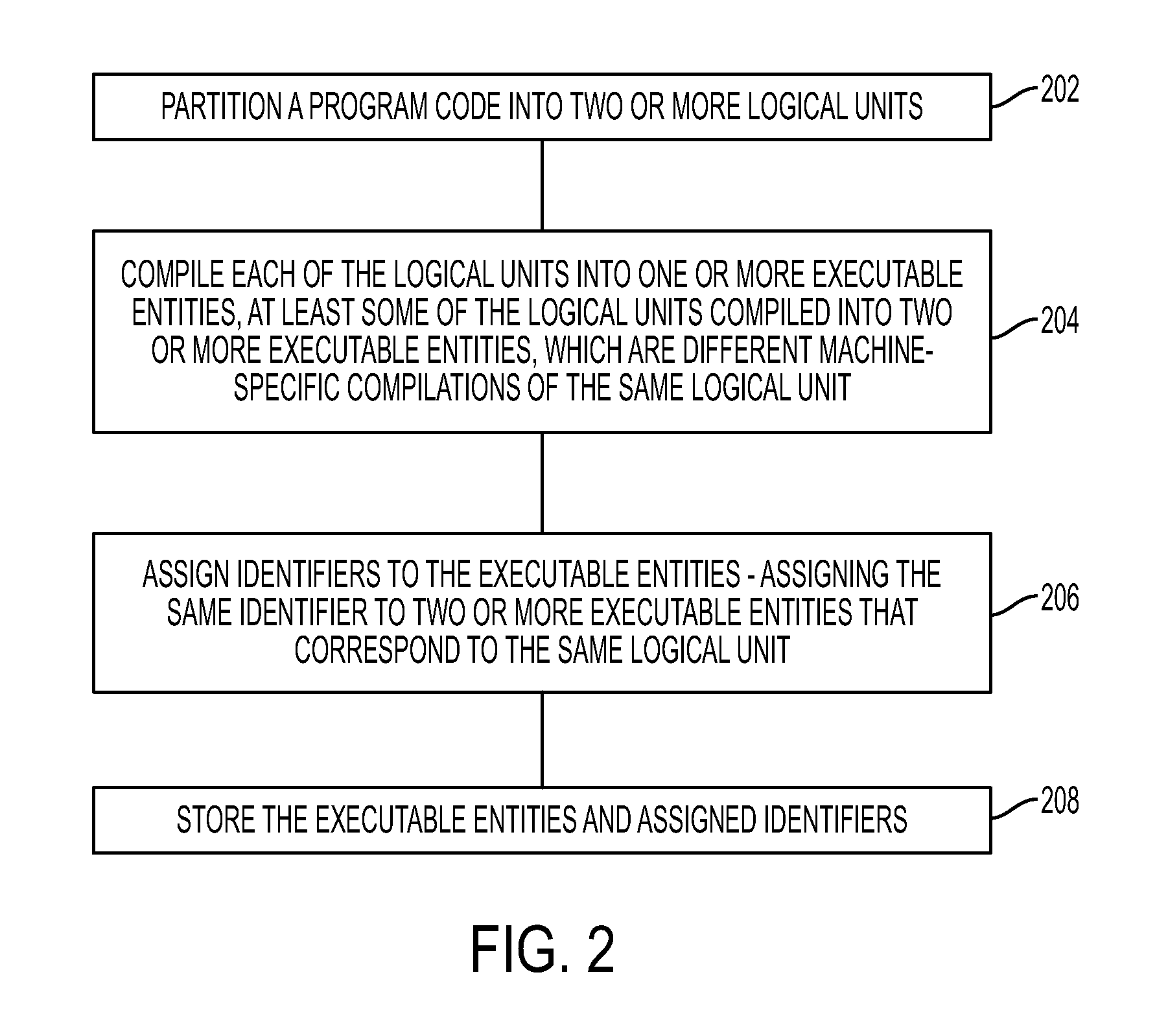

Technique for compiling and running high-level programs on heterogeneous computers

A technique for compiling and running high-level programs on heterogeneous computers may include partitioning a program code into two or more logical units, and compiling each of the logical units into one or more executable entities. At least some of the logical units are compiled into two or more executable entities, the two or more executable entities being different compilations of the same logical unit. The two or more executable entities are compatible to run on respective two or more platforms that have different architectures.

Owner:IBM CORP

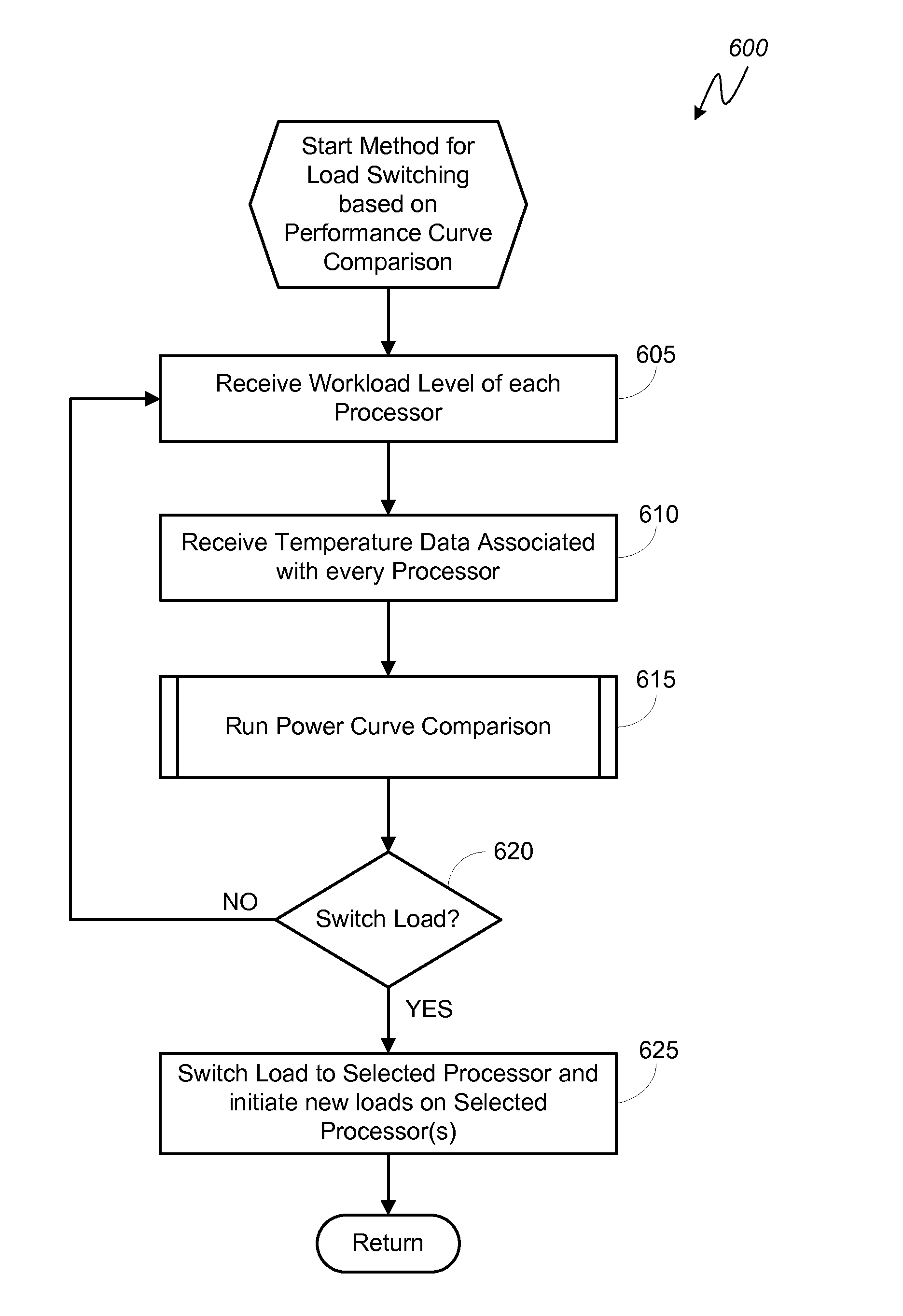

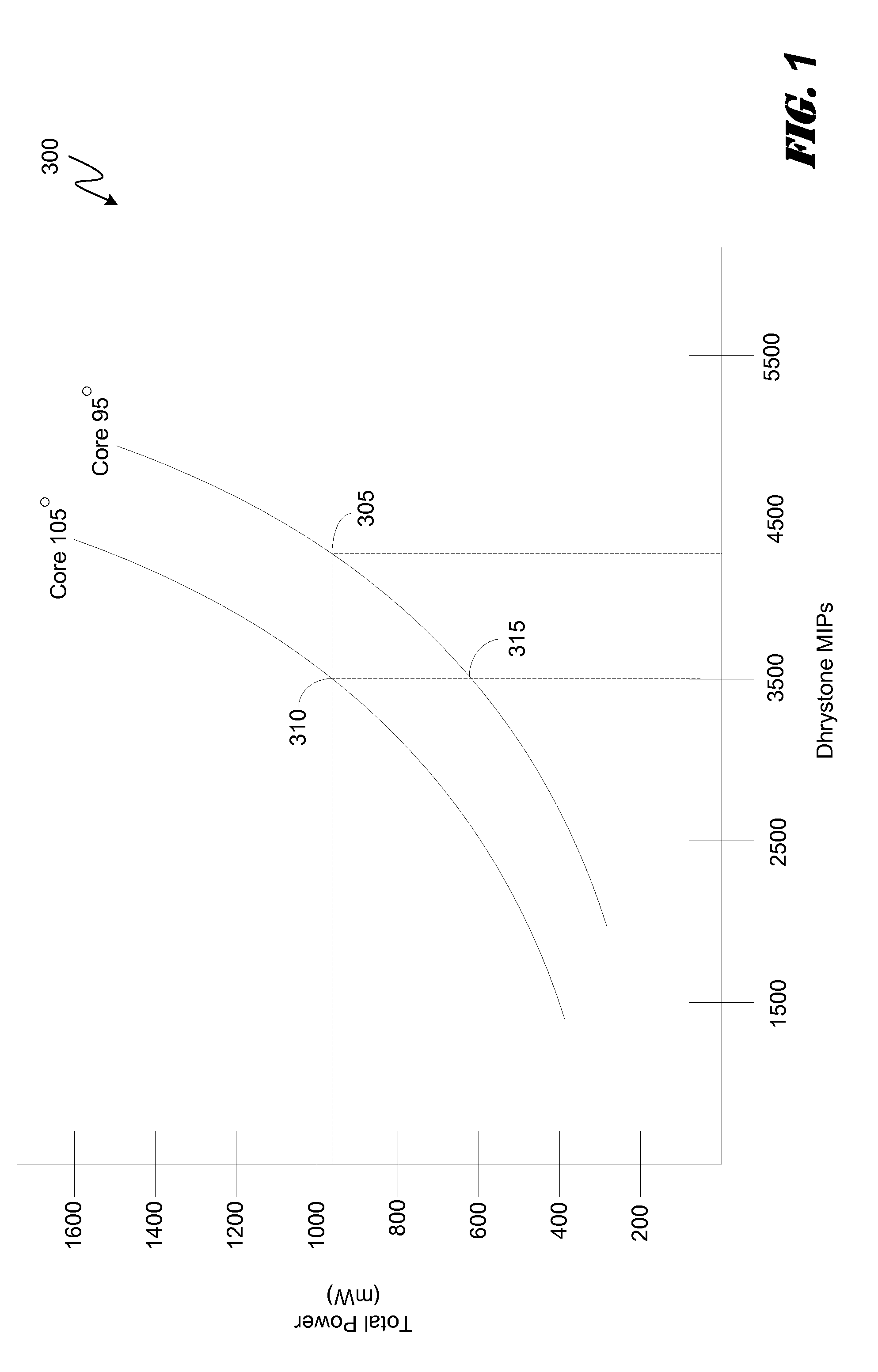

System and method for managing thermal energy generation in a heterogeneous multi-core processor

Inactive | US20130073875A1 | Efficient processing | Saving energy thermal energy generation | Energy efficient ICT | Volume/mass flow measurement | Thermal energy | Engineering

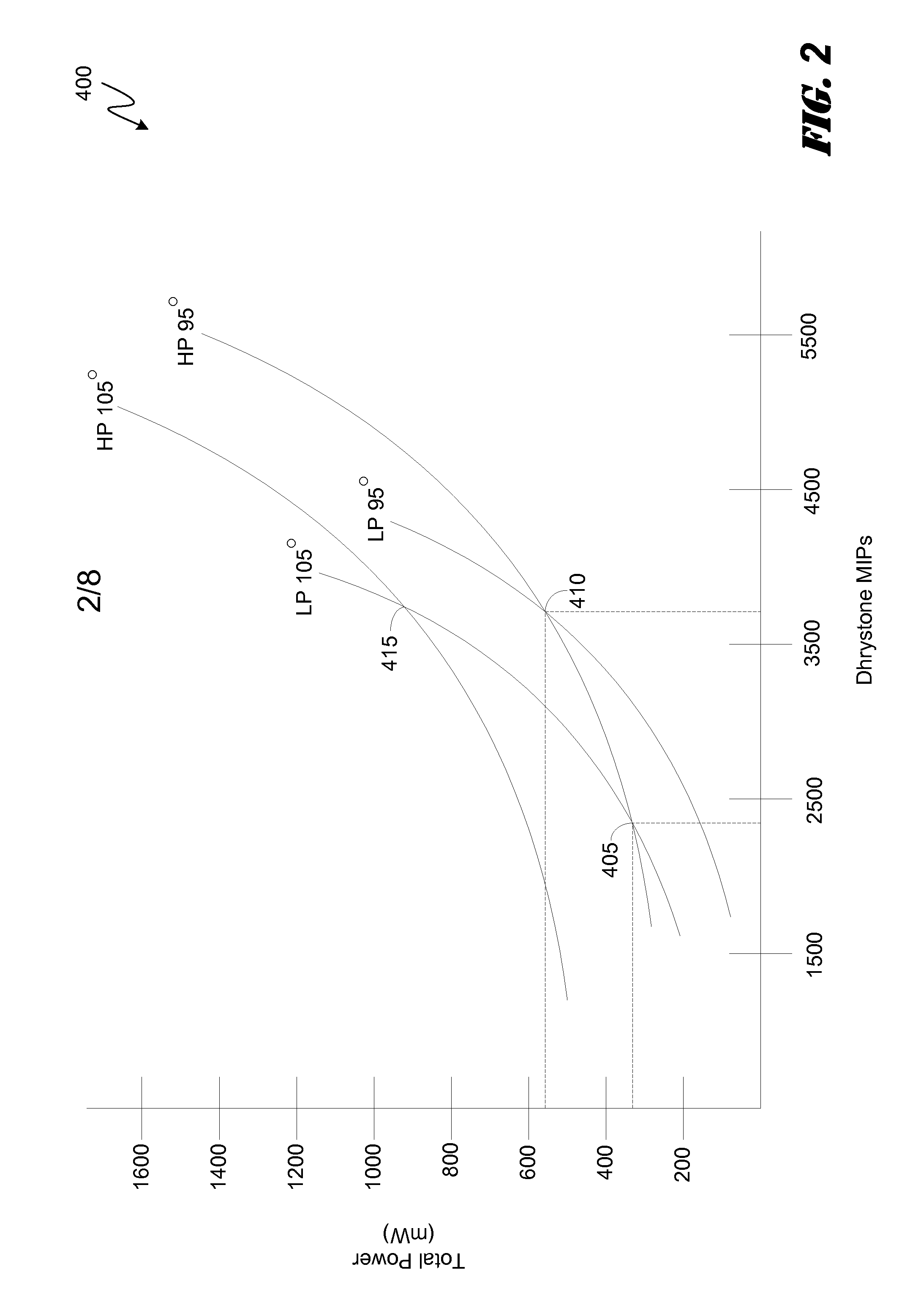

Various embodiments of methods and systems for controlling and/or managing thermal energy generation on a portable computing device (PCD) that contains a heterogeneous multi-core processor are disclosed. Because individual cores in a heterogeneous processor may exhibit different processing efficiencies at a given temperature, thermal mitigation techniques that compare performance curves of the individual cores at their measured operating temperatures can be leveraged to manage thermal energy generation in the PCD by allocating and/or reallocating workloads among the individual cores based on the performance curve comparison.

Owner:QUALCOMM INC
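The performance-curve comparison described above can be sketched as a lookup of each core's efficiency at its own measured temperature, followed by picking the most efficient core. Everything concrete here is assumed for illustration: the curve points, the efficiency units, linear interpolation, and the names `efficiency` and `choose_core`.

```python
def efficiency(curve, temp_c):
    """Linearly interpolate a (temperature, efficiency) curve, clamping at the ends."""
    pts = sorted(curve)
    if temp_c <= pts[0][0]:
        return pts[0][1]
    for (t0, e0), (t1, e1) in zip(pts, pts[1:]):
        if temp_c <= t1:
            return e0 + (e1 - e0) * (temp_c - t0) / (t1 - t0)
    return pts[-1][1]

def choose_core(curves, temps):
    """Pick the core that is most efficient at its own measured temperature."""
    return max(curves, key=lambda core: efficiency(curves[core], temps[core]))

# Hypothetical curves: efficiency (arbitrary units) versus temperature in Celsius.
CURVES = {
    "big":    [(40, 10.0), (80, 4.0)],
    "little": [(40, 6.0), (80, 5.0)],
}

cool_choice = choose_core(CURVES, {"big": 50, "little": 50})
hot_choice = choose_core(CURVES, {"big": 75, "little": 45})
```

With these made-up curves the larger core wins while cool, and the workload moves to the smaller core once the larger one heats up.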

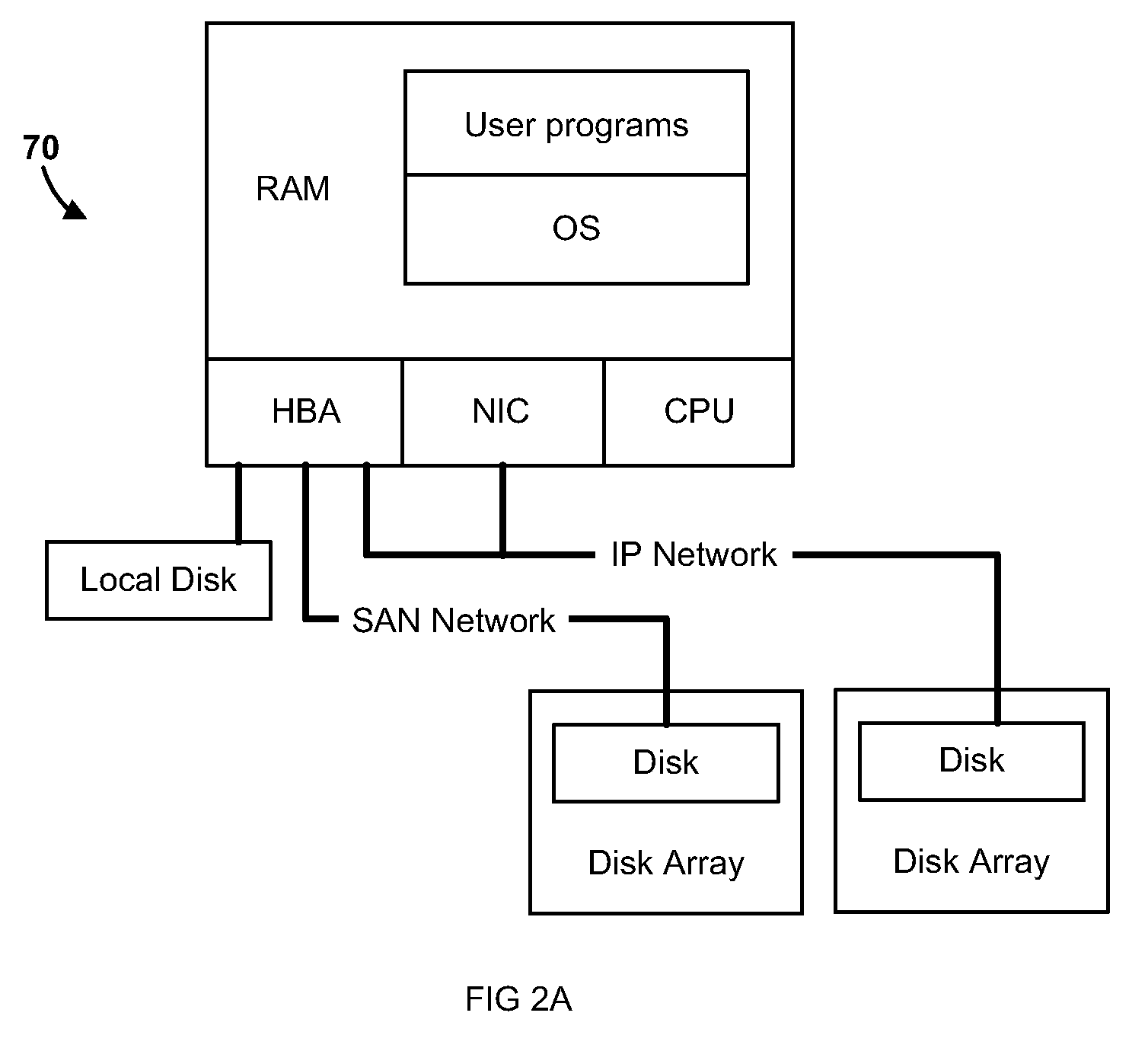

Method and apparatus for avoiding data bus grant starvation in a non-fair, prioritized arbiter for a split bus system with independent address and data bus grants

Inactive | US6535941B1 | Reduce delays | Speeding up data bus grant process | Memory systems | Multi processor | Address bus

A distributed system structure for a large-way, symmetric multiprocessor system using a bus-based cache-coherence protocol is provided. The distributed system structure contains an address switch, multiple memory subsystems, and multiple master devices, either processors, I/O agents, or coherent memory adapters, organized into a set of nodes supported by a node controller. The node controller receives transactions from a master device, communicates with a master device as another master device or as a slave device, and queues transactions received from a master device. Since the achievement of coherency is distributed in time and space, the node controller helps to maintain cache coherency. In order to reduce the delays in giving address bus grants, a bus arbiter for a bus connected to a processor and a particular port of the node controller parks the address bus towards the processor. A history of address bus grants is kept to determine whether any of the previous address bus grants could be used to satisfy an address bus request associated with a data bus request. If one of them qualifies, the data bus grant is given immediately, speeding up the data bus grant process by anywhere from one to many cycles depending on the requests for the address bus from the higher priority node controller.

Owner:IBM CORP

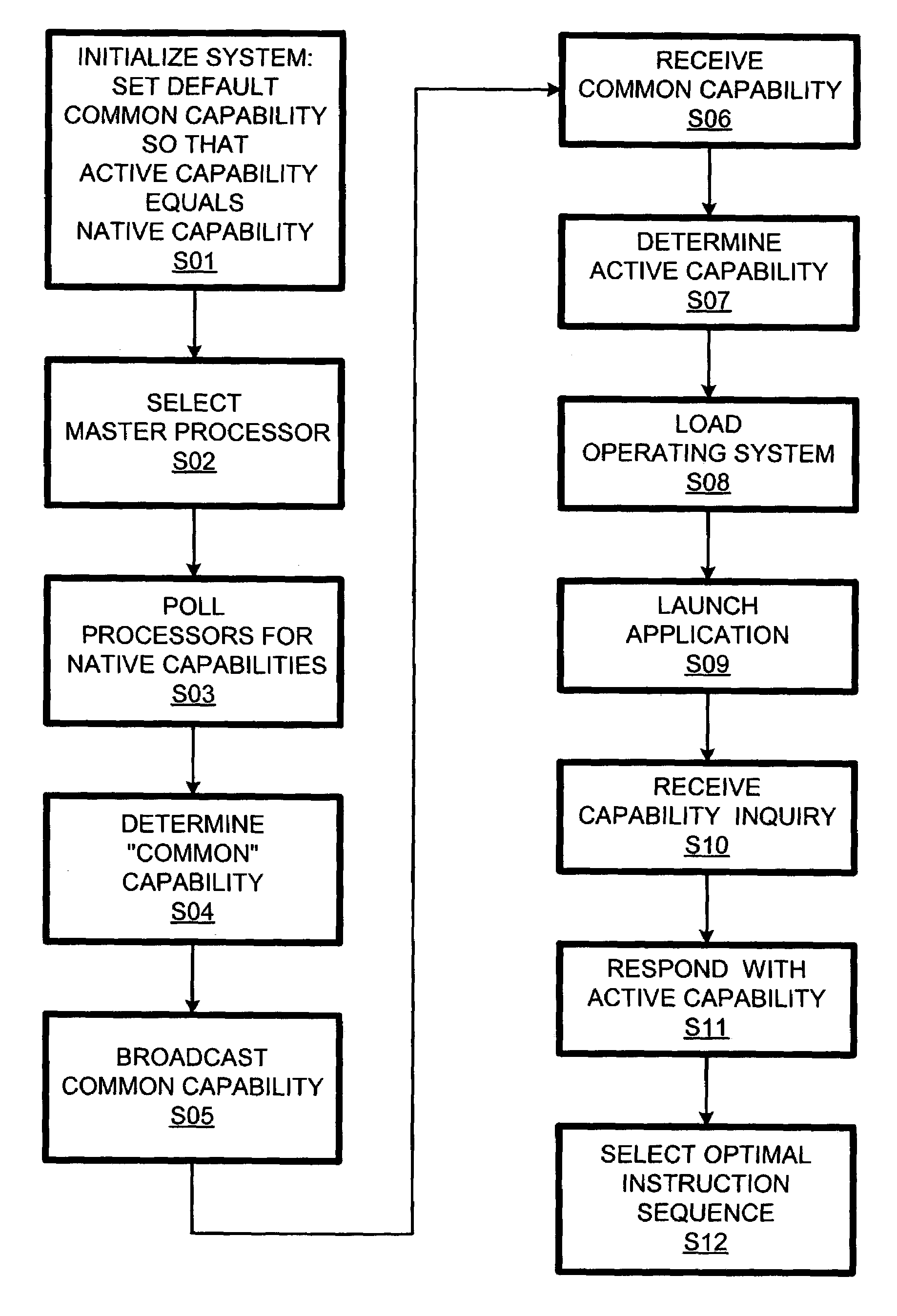

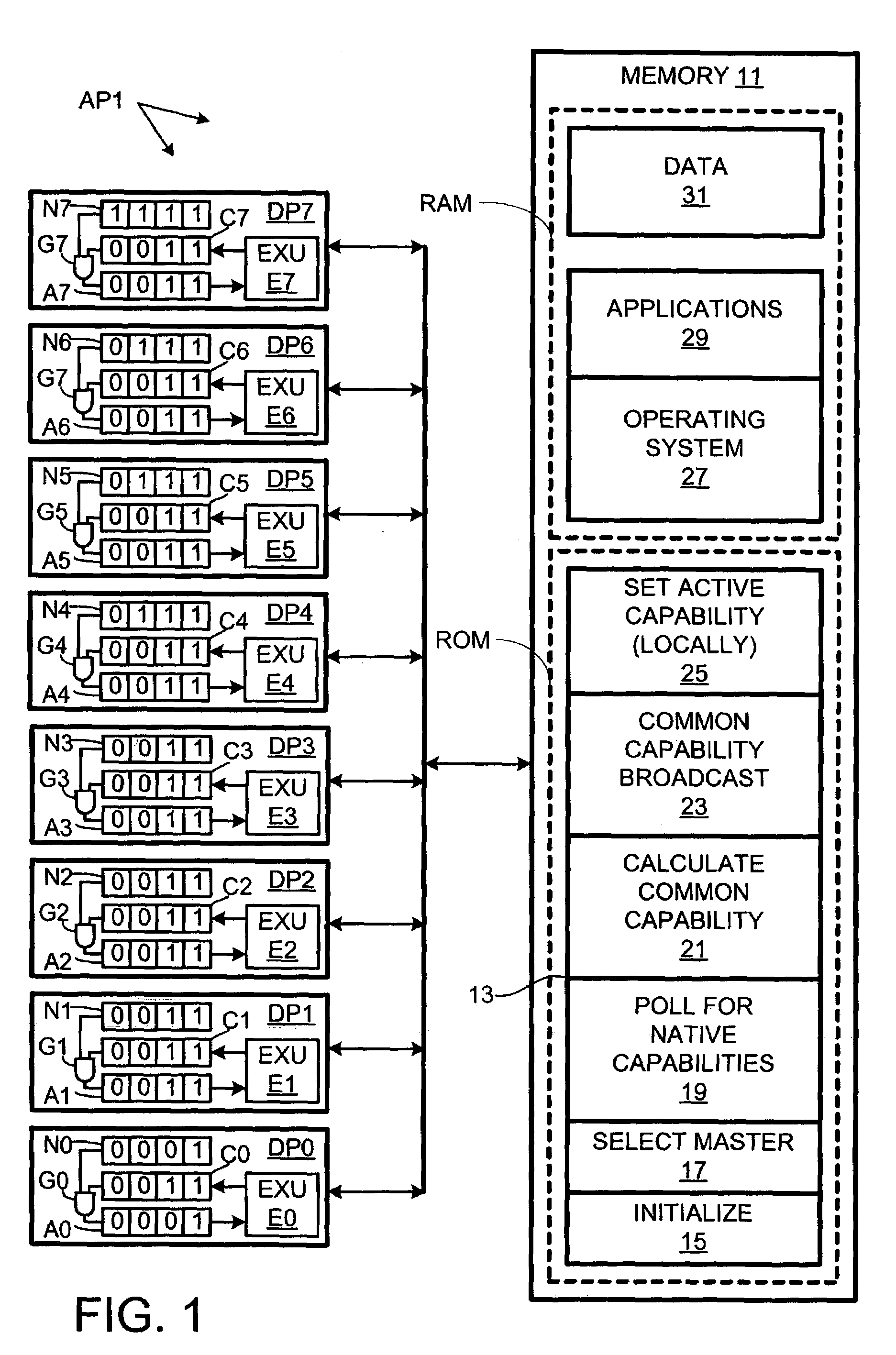

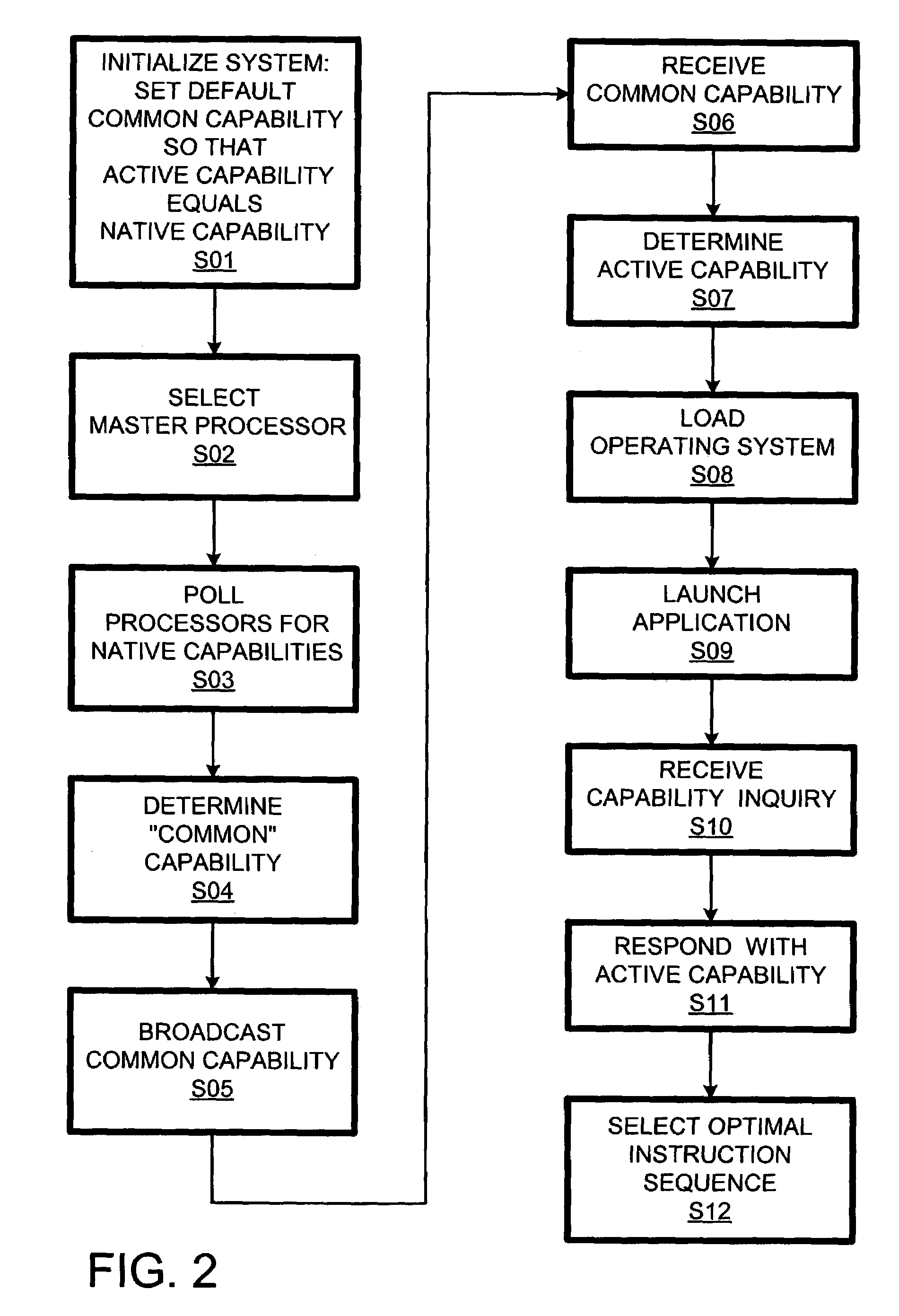

Instruction set reconciliation for heterogeneous symmetric-multiprocessor systems

Active | US7080242B2 | Improve performance | Resource allocation | Digital computer details | Multi processor | Symmetric multiprocessor system

In a symmetric multiprocessing system using processors (DP0–DP7) of different capabilities (instruction sets), a processor responds (S11) to a query regarding its capabilities (instruction set) with its “active” capability, which is the intersection of its native capability and a common capability across processors determined (S04) during a boot sequence (13). The querying application (29) can select (S12) a program variant optimized for the active capability of the selected processor. If the application is subsequently subjected to a blind transfer to another processor, it is more likely than it would otherwise be (if the processors responded with their native capabilities) that the previously selected program variant runs without encountering unimplemented instructions.

Owner:VALTRUS INNOVATIONS LTD
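The "active" capability described above is a set intersection, which makes it easy to illustrate. In the sketch below the processor names follow the abstract's DP0 style, but the instruction-set feature names, the variant list, and `pick_variant` are hypothetical.

```python
# Hypothetical native instruction-set features for three processors.
NATIVE = {
    "DP0": {"base", "simd", "crypto"},
    "DP1": {"base", "simd"},
    "DP2": {"base", "simd", "fp16"},
}

# Common capability across all processors, determined once (e.g. at boot).
common = set.intersection(*NATIVE.values())

# Each processor reports native ∩ common instead of its full native set.
active = {cpu: caps & common for cpu, caps in NATIVE.items()}

def pick_variant(variants, capability):
    """Return the first program variant whose requirements the capability meets."""
    for required, name in variants:
        if required <= capability:
            return name
    raise LookupError("no runnable variant")

# Variants are listed from most to least specialized.
VARIANTS = [
    ({"base", "simd", "crypto"}, "crypto-optimized"),
    ({"base", "simd"}, "simd"),
    ({"base"}, "generic"),
]

safe_choice = pick_variant(VARIANTS, active["DP0"])
risky_choice = pick_variant(VARIANTS, NATIVE["DP0"])
```

`safe_choice` runs on every processor after a blind transfer, whereas `risky_choice` would hit unimplemented instructions on DP1 or DP2.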

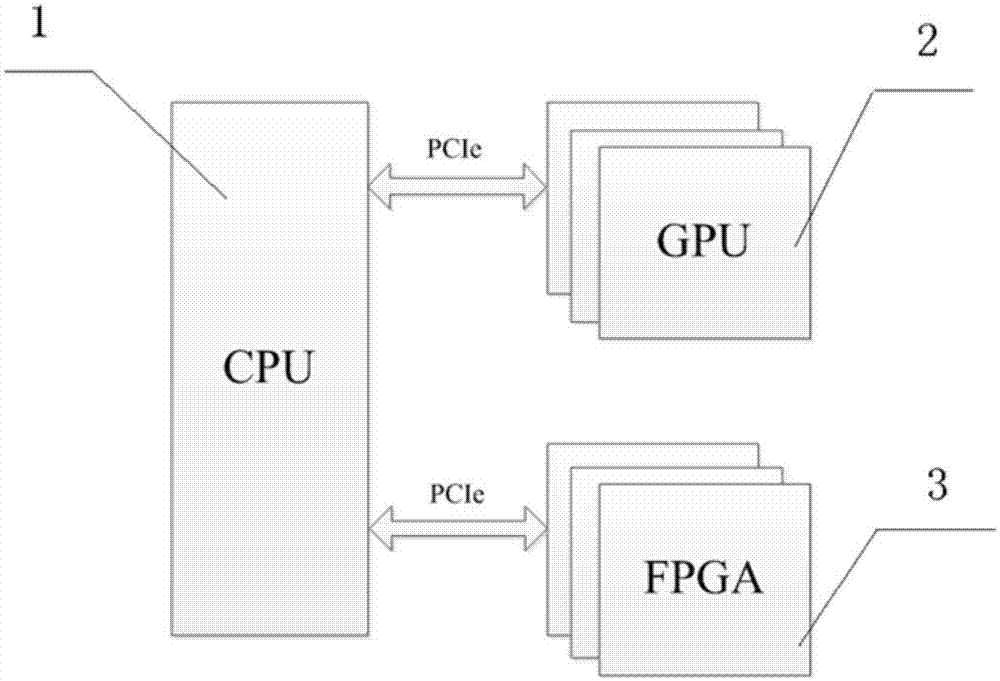

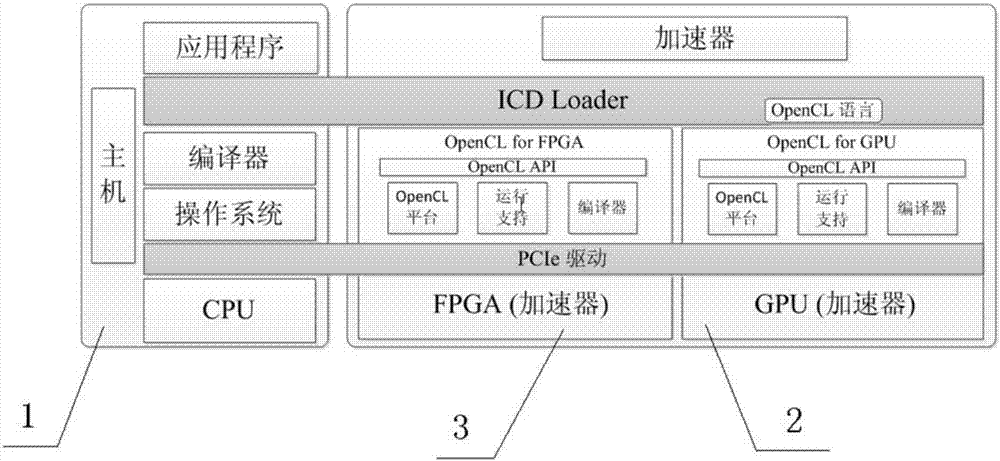

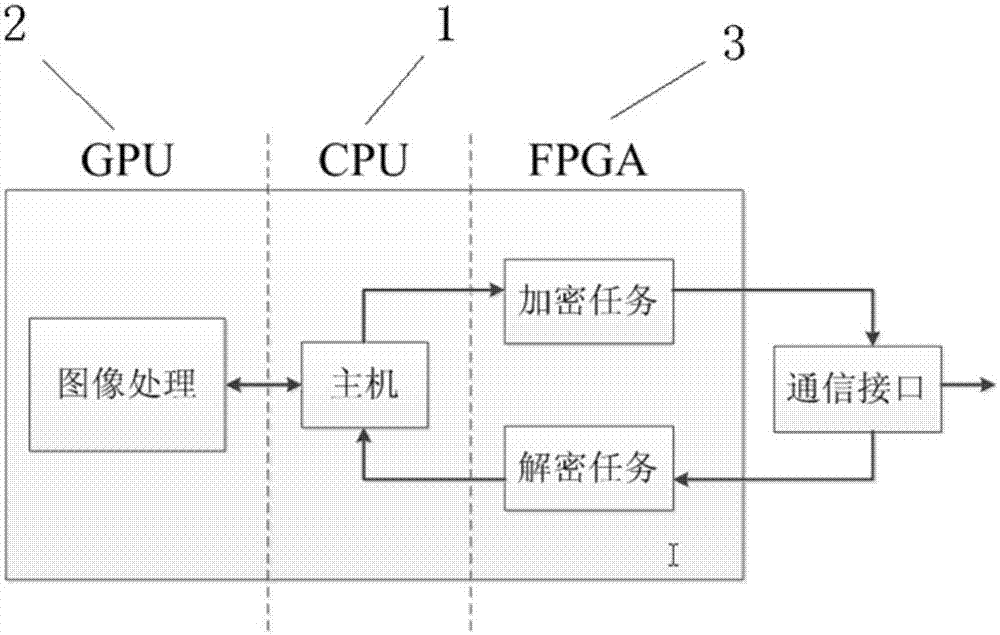

Heterogeneous computing system and method based on CPU+GPU+FPGA architecture

Inactive | CN107273331A | Give full play to the advantages of management and control | Take full advantage of parallel processing | Architecture with single central processing unit | Energy efficient computing | Fpga architecture | Resource management

The invention provides a heterogeneous computing system based on CPU+GPU+FPGA architecture. The system comprises a CPU host unit, one or more GPU heterogeneous acceleration units and one or more FPGA heterogeneous acceleration units. The CPU host unit is in communication connection with the GPU heterogeneous acceleration units and the FPGA heterogeneous acceleration units. The CPU host unit is used for managing resources and allocating processing tasks to the GPU heterogeneous acceleration units and/or the FPGA heterogeneous acceleration units. The GPU heterogeneous acceleration units are used for carrying out parallel processing on tasks from the CPU host unit. The FPGA heterogeneous acceleration units are used for carrying out serial or parallel processing on the tasks from the CPU host unit. The heterogeneous computing system provided by the invention can fully exploit the control advantages of the CPU, the parallel processing advantages of the GPU, and the performance-to-power ratio and flexible configuration of the FPGA. As a result, it can adapt to different application scenarios and satisfy different kinds of task demands. The invention also provides a heterogeneous computing method based on the CPU+GPU+FPGA architecture.

Owner:SHANDONG CHAOYUE DATA CONTROL ELECTRONICS CO LTD

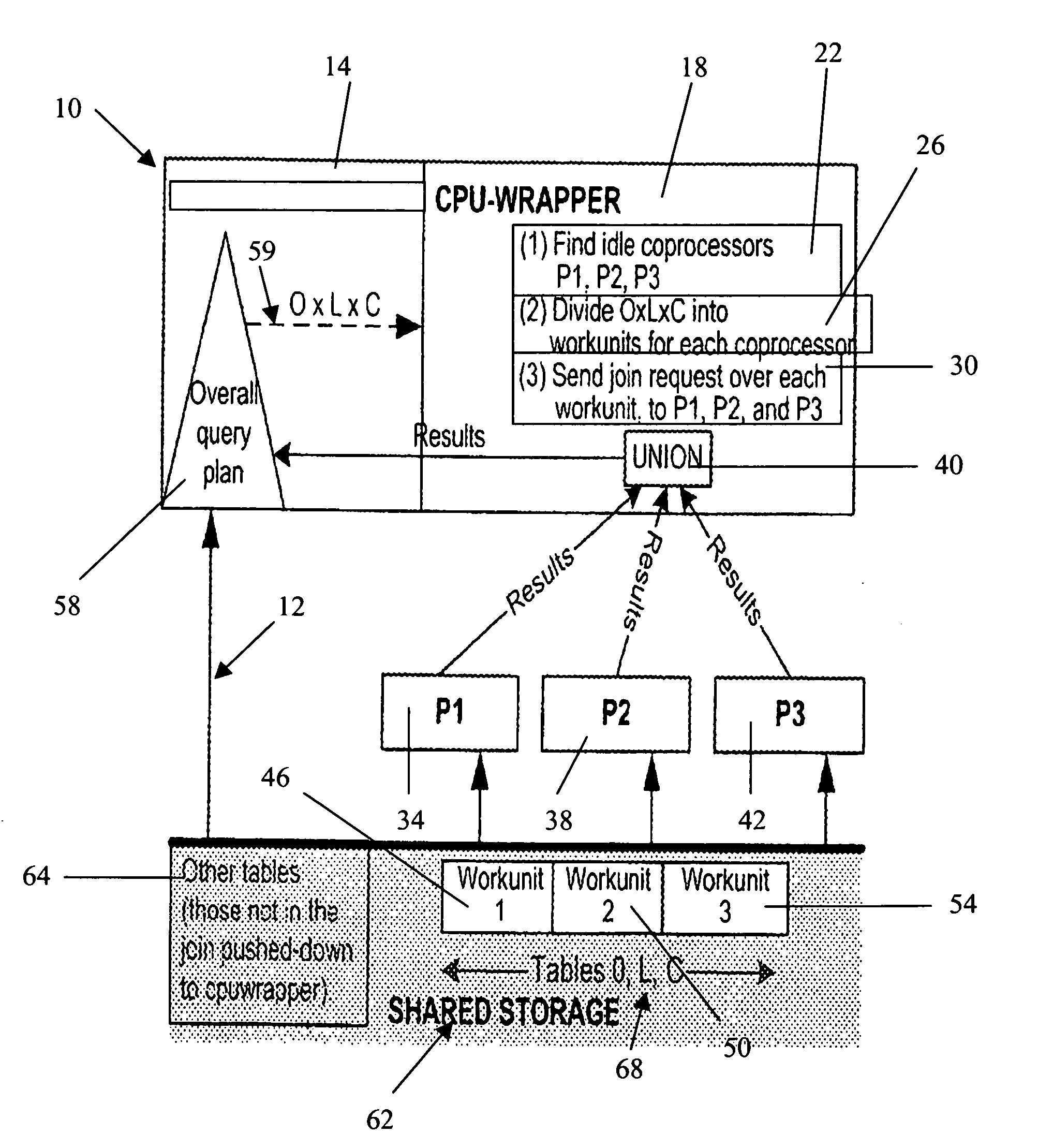

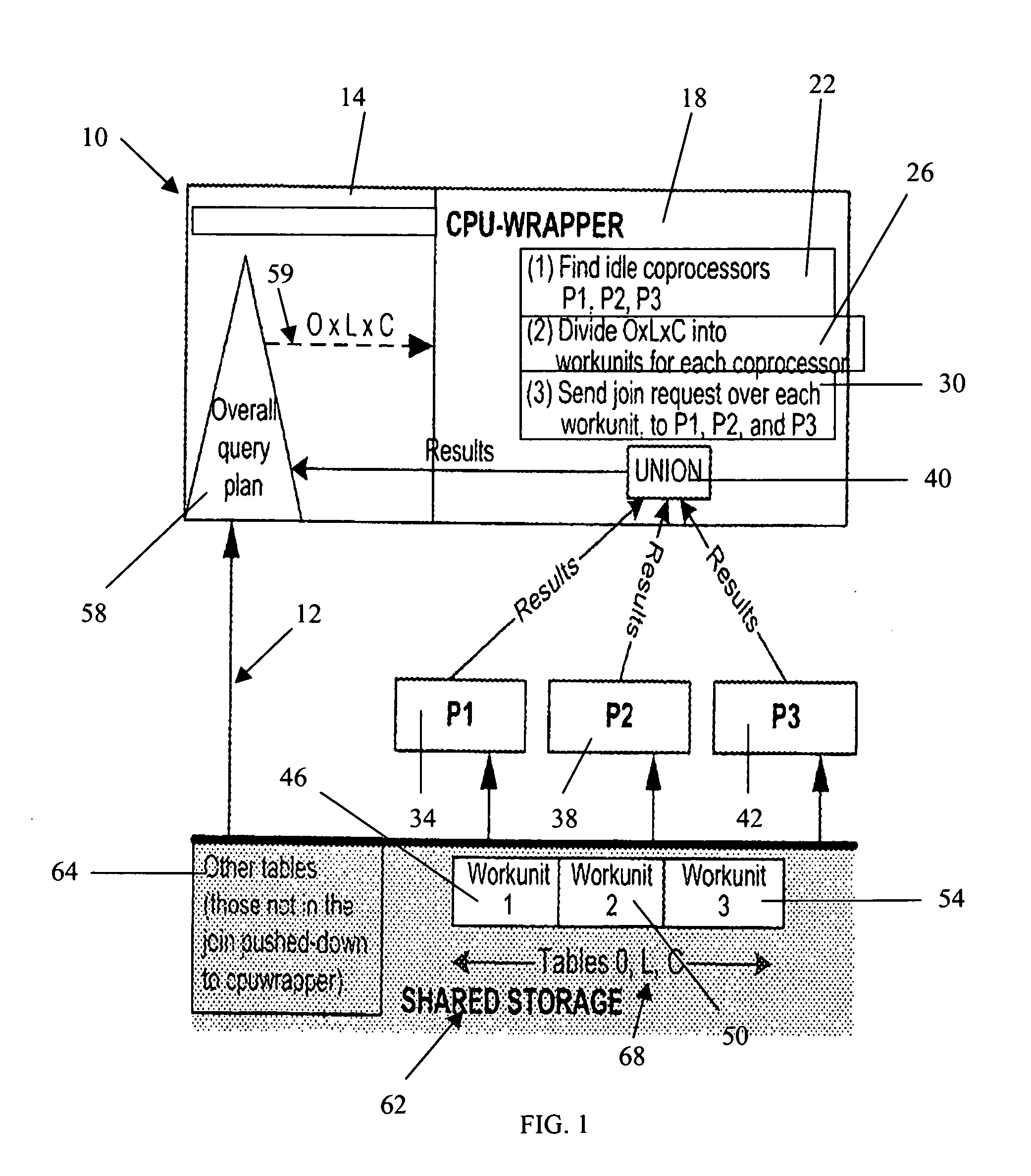

Method for parallel query processing with non-dedicated, heterogeneous computers that is resilient to load bursts and node failures

Inactive | US20080059489A1 | Increase speed | Digital data information retrieval | Digital data processing details | Parallel processing | Data store

A method is provided for query processing in a grid computing infrastructure. The method entails storing data in a data storage system accessible to a plurality of computing nodes. Computationally-expensive query operations are identified and query fragments are allocated to individual nodes according to computing capability. The query fragments are independently executed on individual nodes. The query fragment results are combined into a final query result.

Owner:IBM CORP
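Allocating query fragments "according to computing capability" can be sketched as a greedy assignment that minimizes each node's projected finish time. The abstract above does not specify the allocation rule, so the greedy heuristic, the cost and capability numbers, and the name `allocate` are all assumptions.

```python
def allocate(fragments, capability):
    """Greedily assign each fragment to the node with the earliest projected finish.

    `fragments` maps fragment name -> estimated cost (hypothetical units);
    `capability` maps node name -> relative speed (hypothetical units).
    """
    load = {node: 0.0 for node in capability}
    plan = {node: [] for node in capability}
    # Place expensive fragments first so they are spread before small ones.
    for frag, cost in sorted(fragments.items(), key=lambda kv: -kv[1]):
        node = min(load, key=lambda n: (load[n] + cost) / capability[n])
        plan[node].append(frag)
        load[node] += cost
    return plan

FRAGMENTS = {"f1": 8, "f2": 4, "f3": 4, "f4": 2}
CAPABILITY = {"fast": 2.0, "slow": 1.0}
plan = allocate(FRAGMENTS, CAPABILITY)

# Projected finish time per node: total assigned cost divided by node speed.
finish = {n: sum(FRAGMENTS[f] for f in fs) / CAPABILITY[n] for n, fs in plan.items()}
```

After the fragments run independently, a coordinator would merge the per-fragment results into the final query result.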

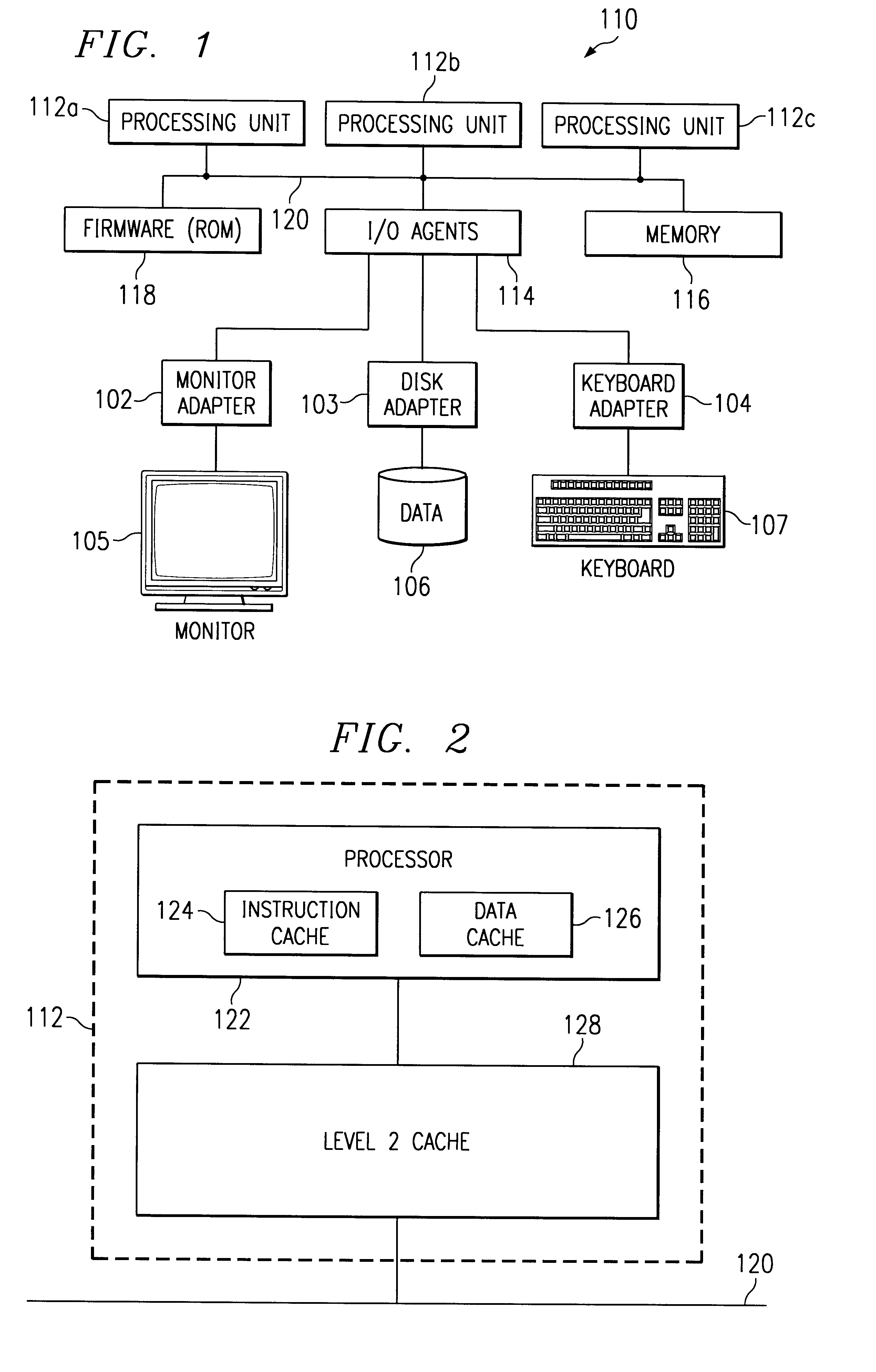

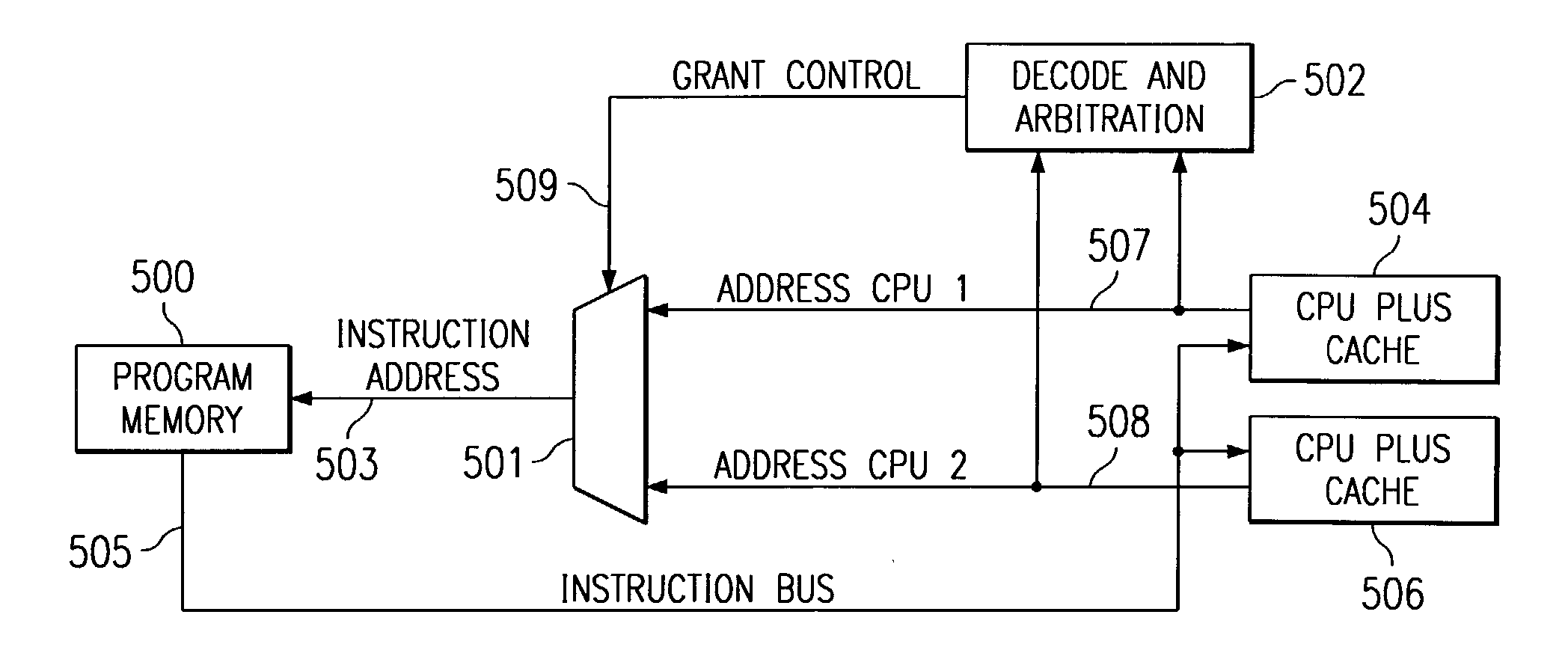

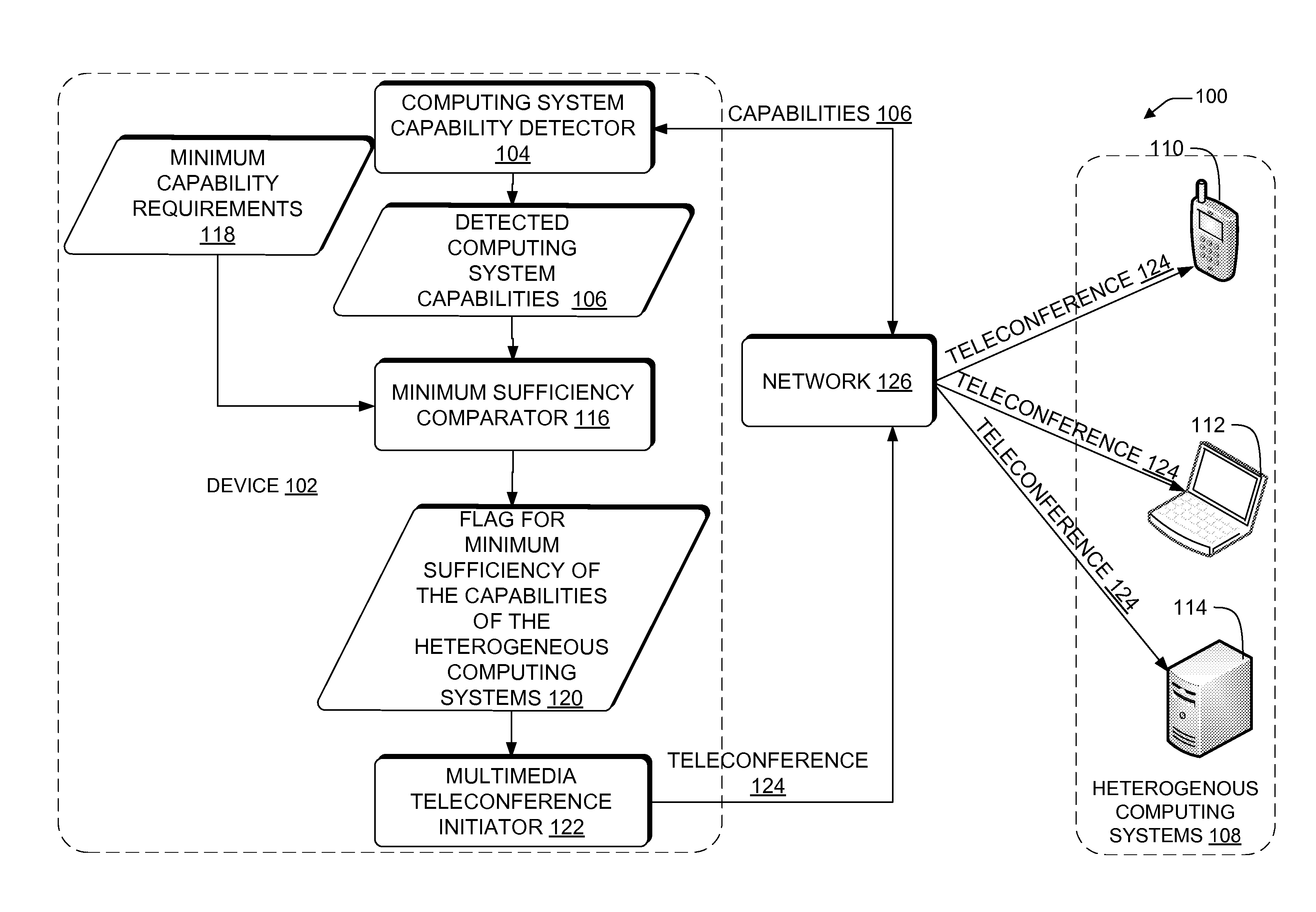

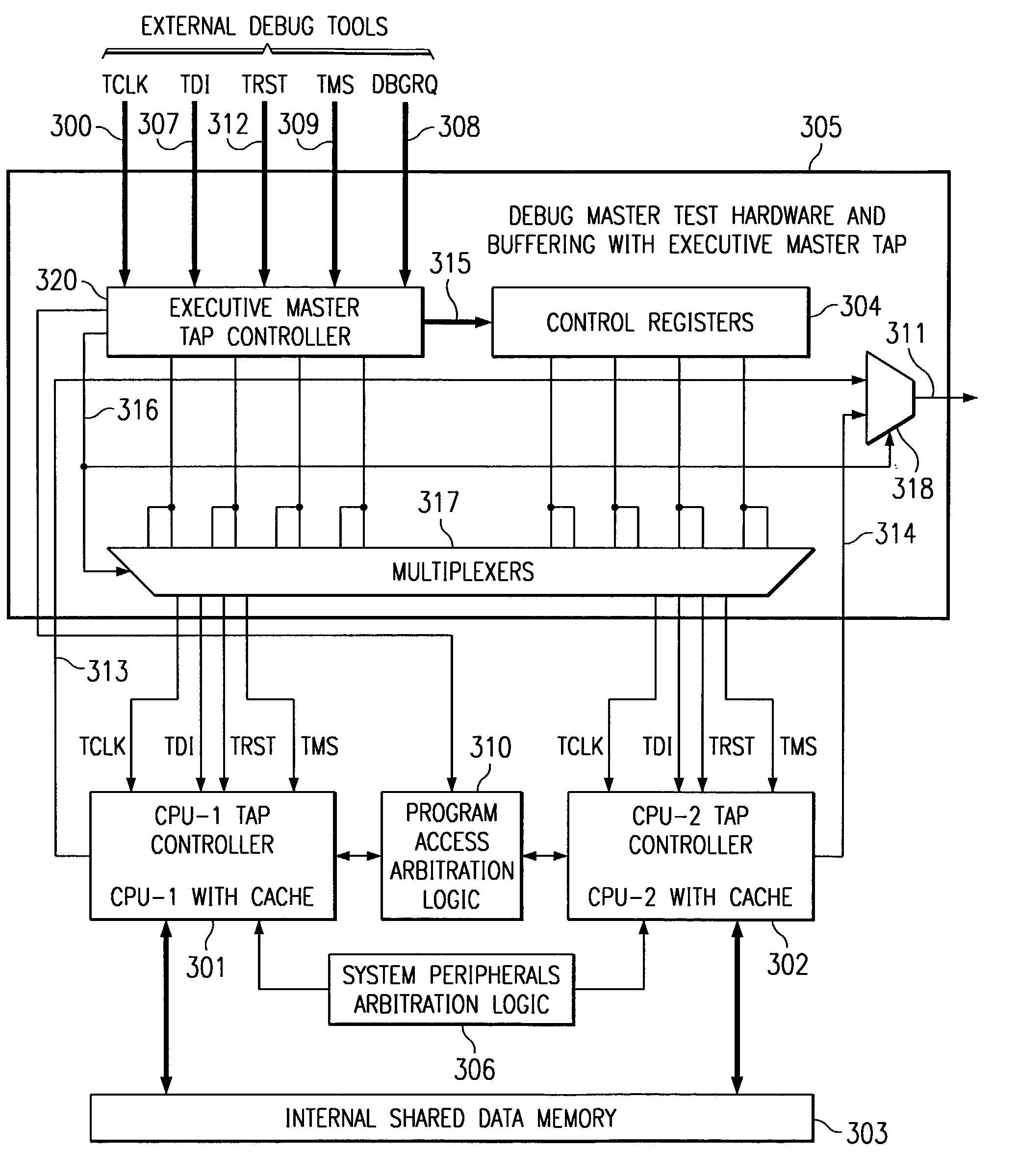

Embedded symmetric multiprocessor system with arbitration control of access to shared resources

Inactive | US7237071B2 | Low cost | Improve performance | Energy efficient ICT | Memory addressing/allocation/relocation | Memory bank | Single chip

A single chip, embedded symmetric multiprocessor (ESMP) having parallel multiprocessing architecture composed of identical processors includes a single program memory. Program access arbitration logic supplies an instruction to a single requesting central processing unit at a time. Shared memory access arbitration logic can supply data from separate simultaneously accessible memory banks or arbitrate among central processing units for access. The system may simulate an atomic read/modify/write instruction: following a read access to one of a predetermined set of addresses in the shared memory, it prohibits access to that address by any other central processing unit for a predetermined number of memory cycles.

Owner:TEXAS INSTR INC

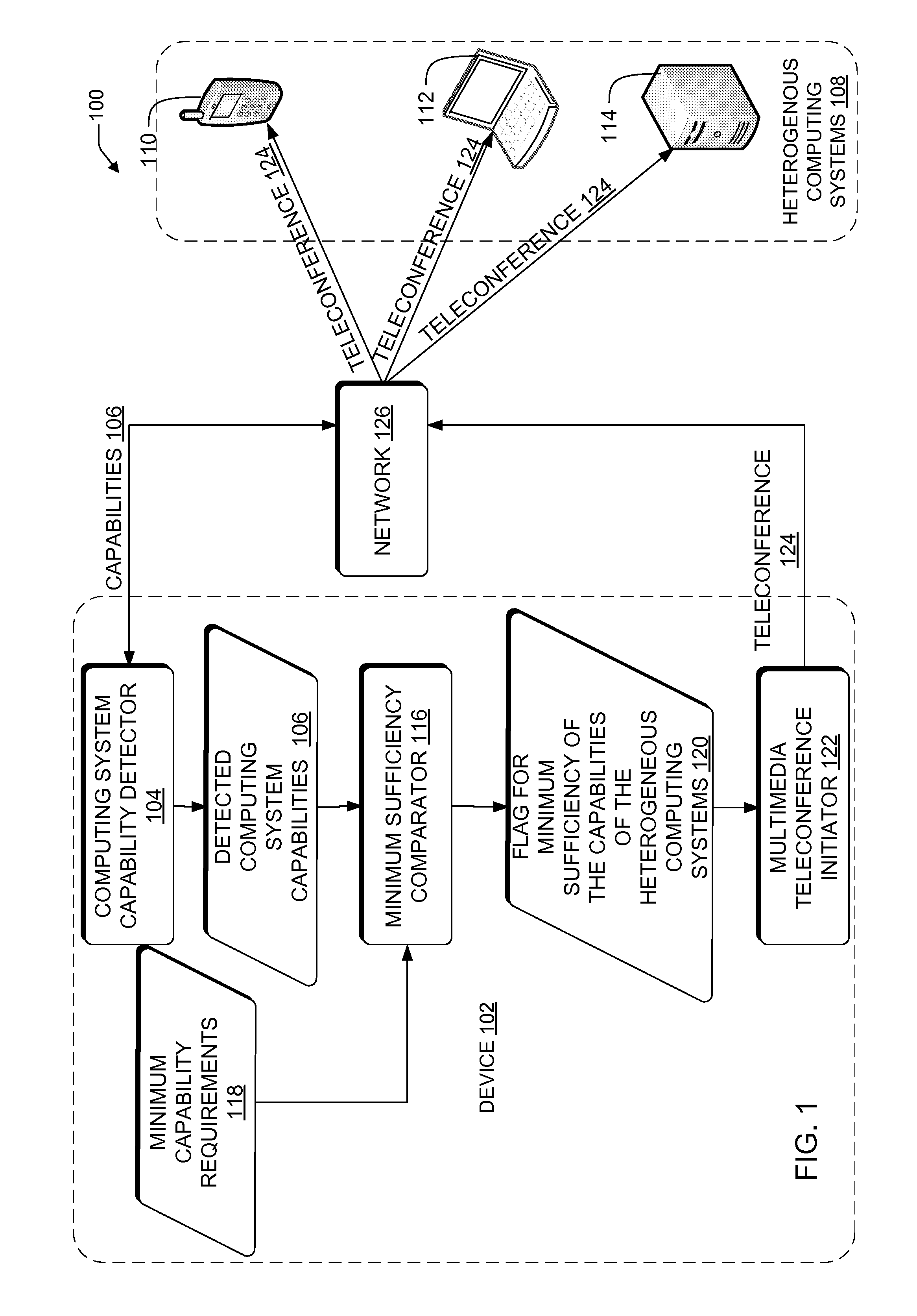

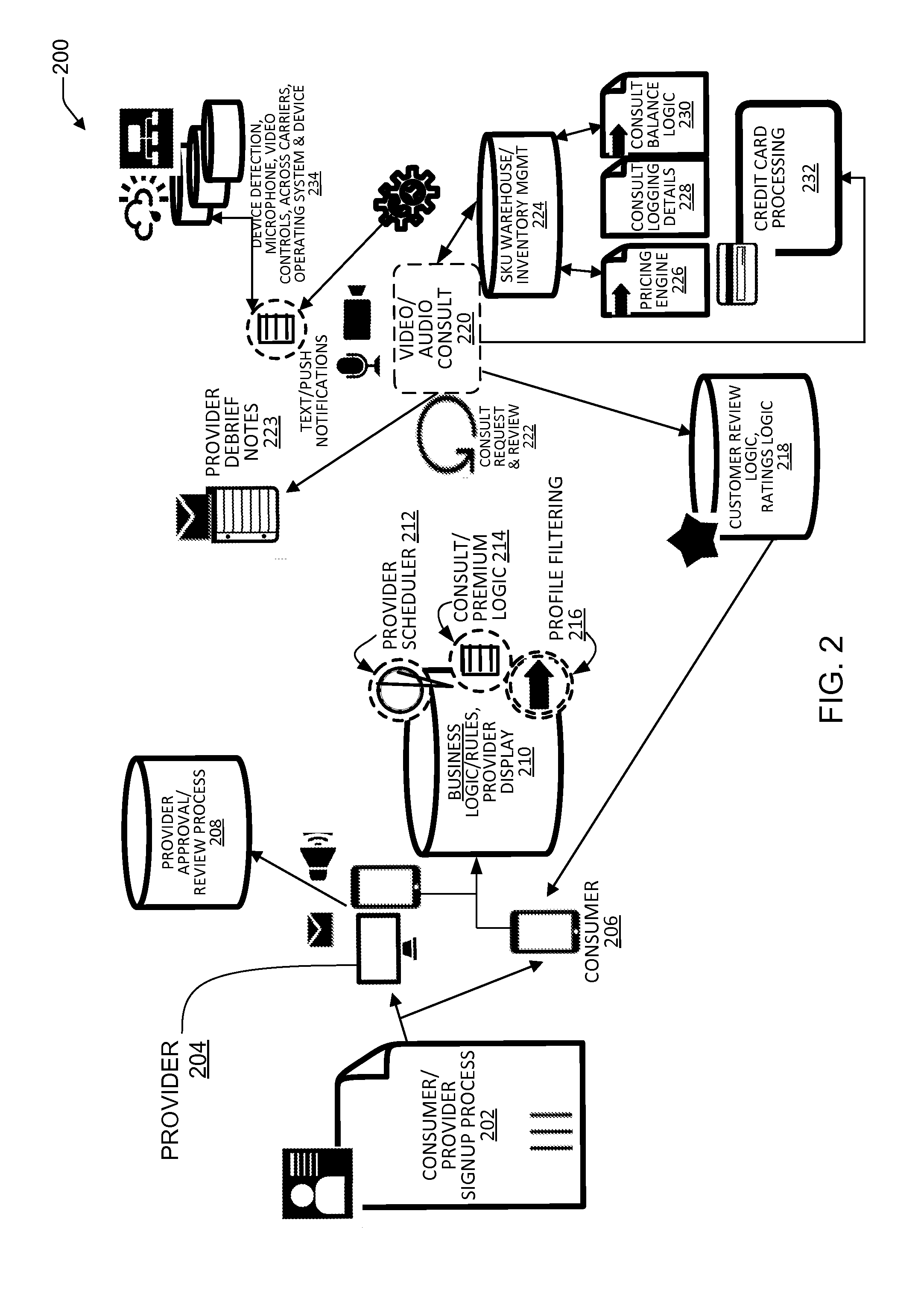

Multimedia teleconference streaming architecture between heterogeneous computer systems

In one implementation, an apparatus includes a detector, a determiner, and an initiator. The detector detects the capabilities of each of several heterogeneous computing systems, the capabilities including a model, existence of a microphone, video controls, specifications of a camera, a processor speed, a bus speed, an amount of cache memory, an amount of non-cache memory, a telecom carrier and an operating system. The determiner, operably coupled to the detector, determines the minimum sufficiency of the capabilities of each of the heterogeneous computing systems and is operable to set a state in a memory location indicating an affirmative or a negative state of that minimum sufficiency. The initiator, operably coupled to the determiner, is operable to initiate a multimedia teleconference between the heterogeneous computing systems in reference to that memory location.

Owner:ONE TOUCH BRANDS

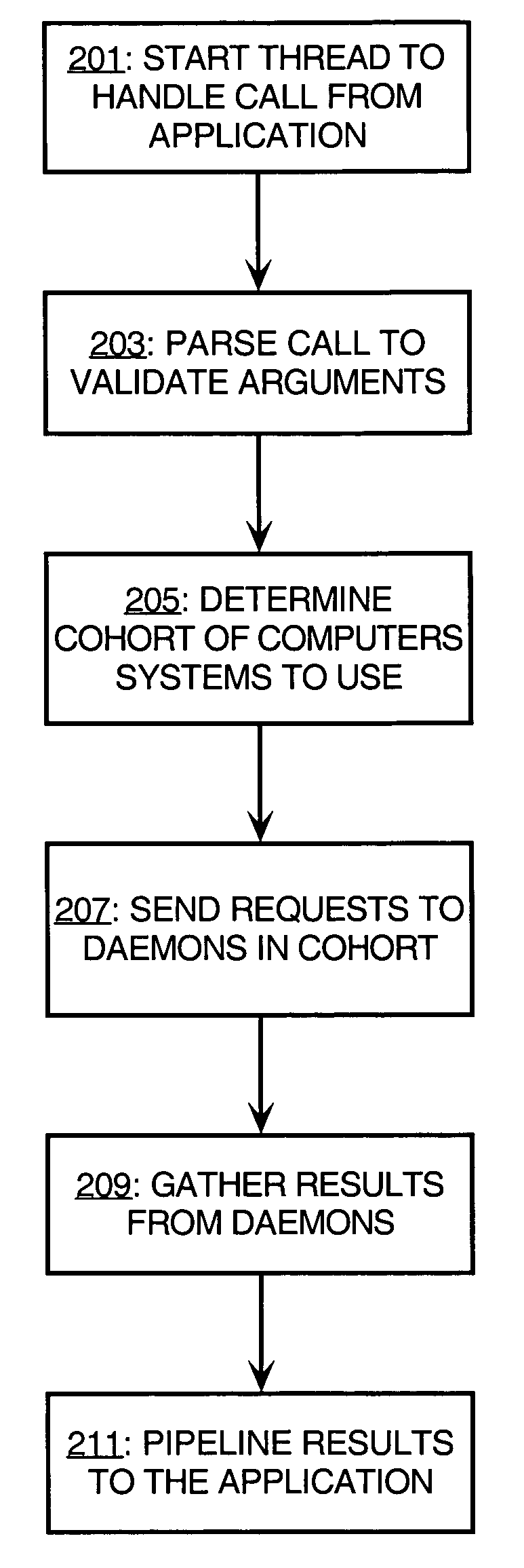

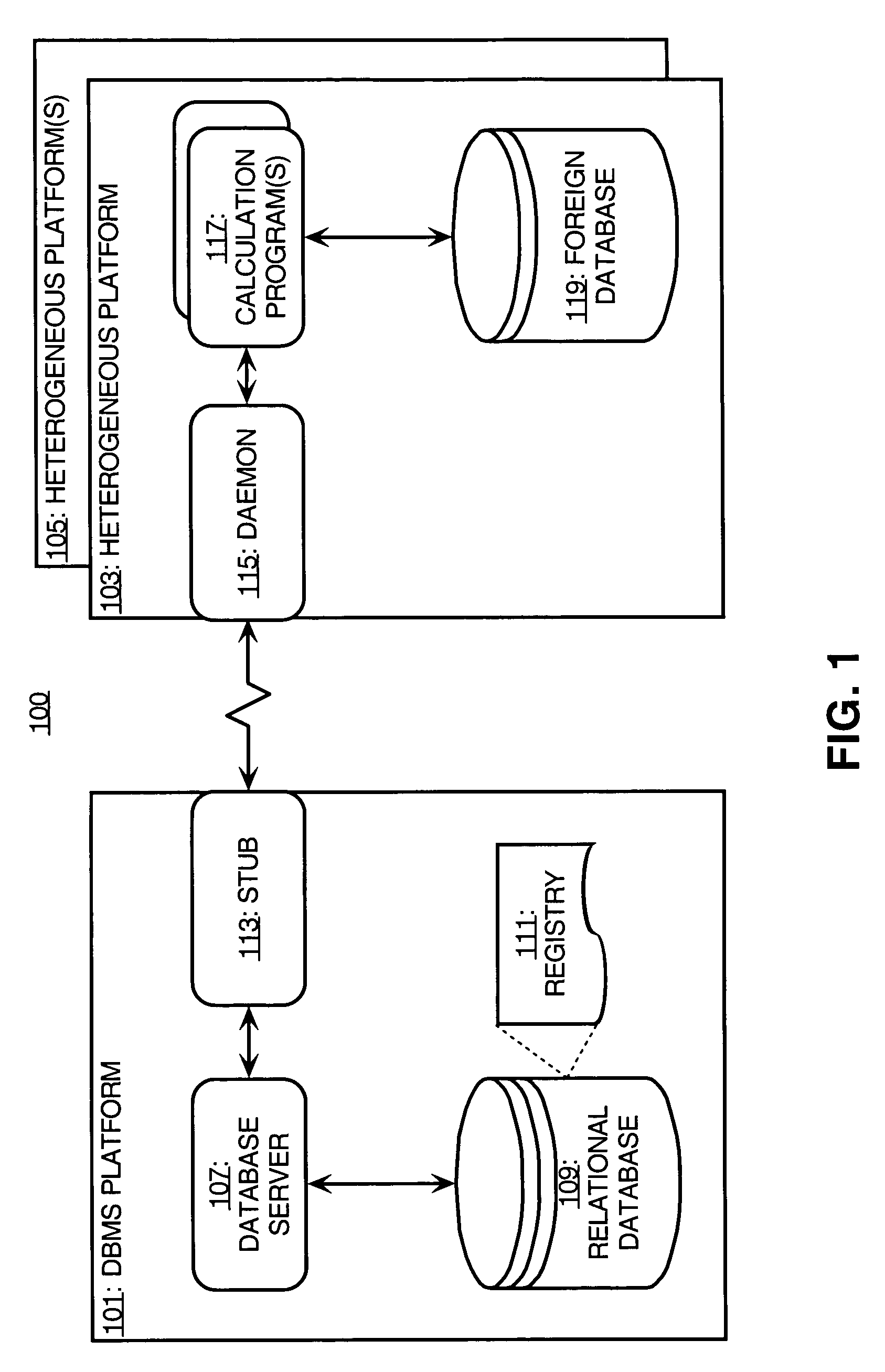

Complex computation across heterogenous computer systems

A programmatic interface to allow external functions to be registered and called in parallel from within a database management system is described for coordinating a computation at multiple nodes. In one embodiment, each node executes a process configured for starting a program to perform the computation in response to a command received from a database system. In response to receiving a query at the database system, multiple commands are transmitted to the processes for concurrently performing the computation at each said corresponding process. Results are received from each of the processes and execution of the statement is completed based on the results received.

Owner:ORACLE INT CORP

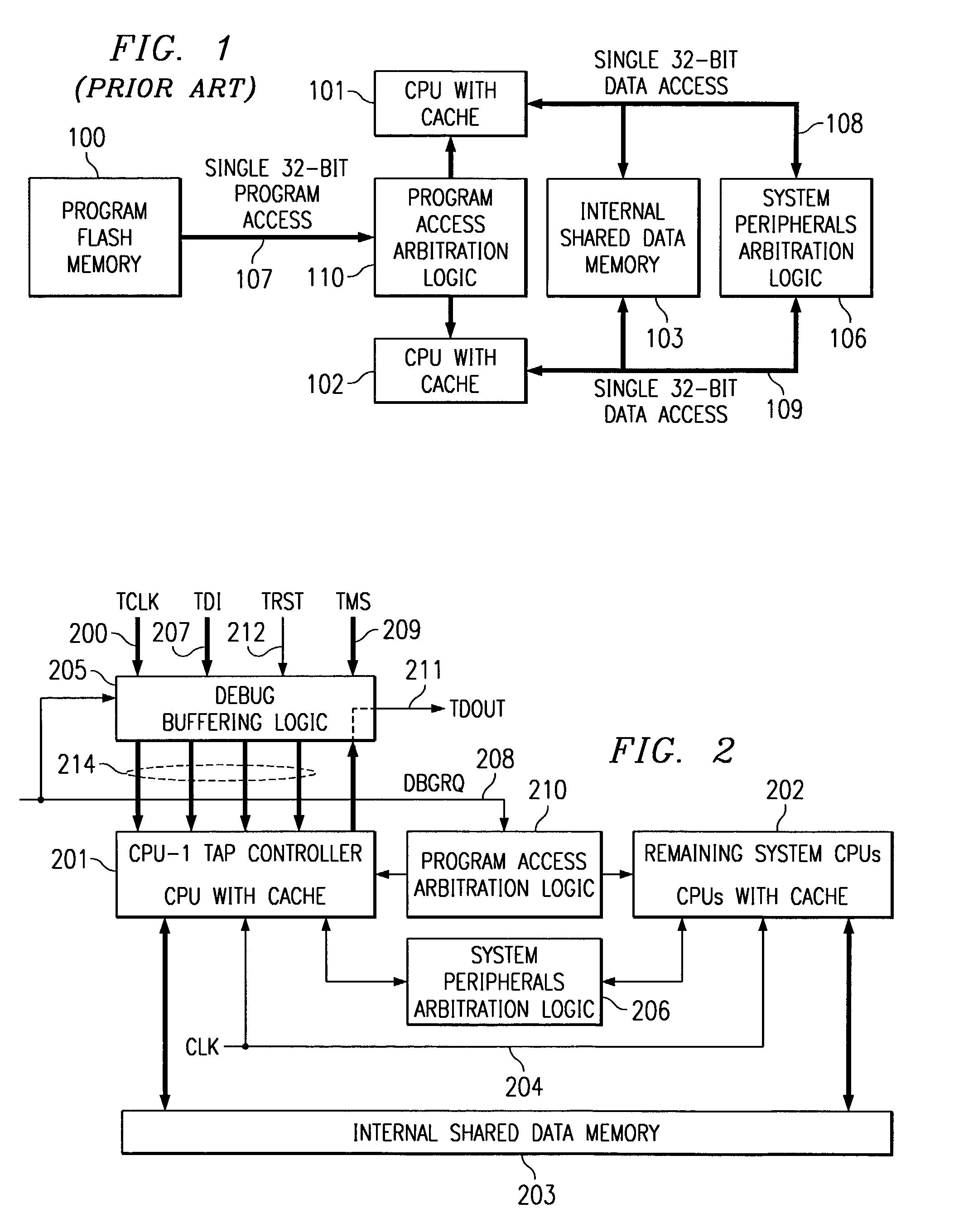

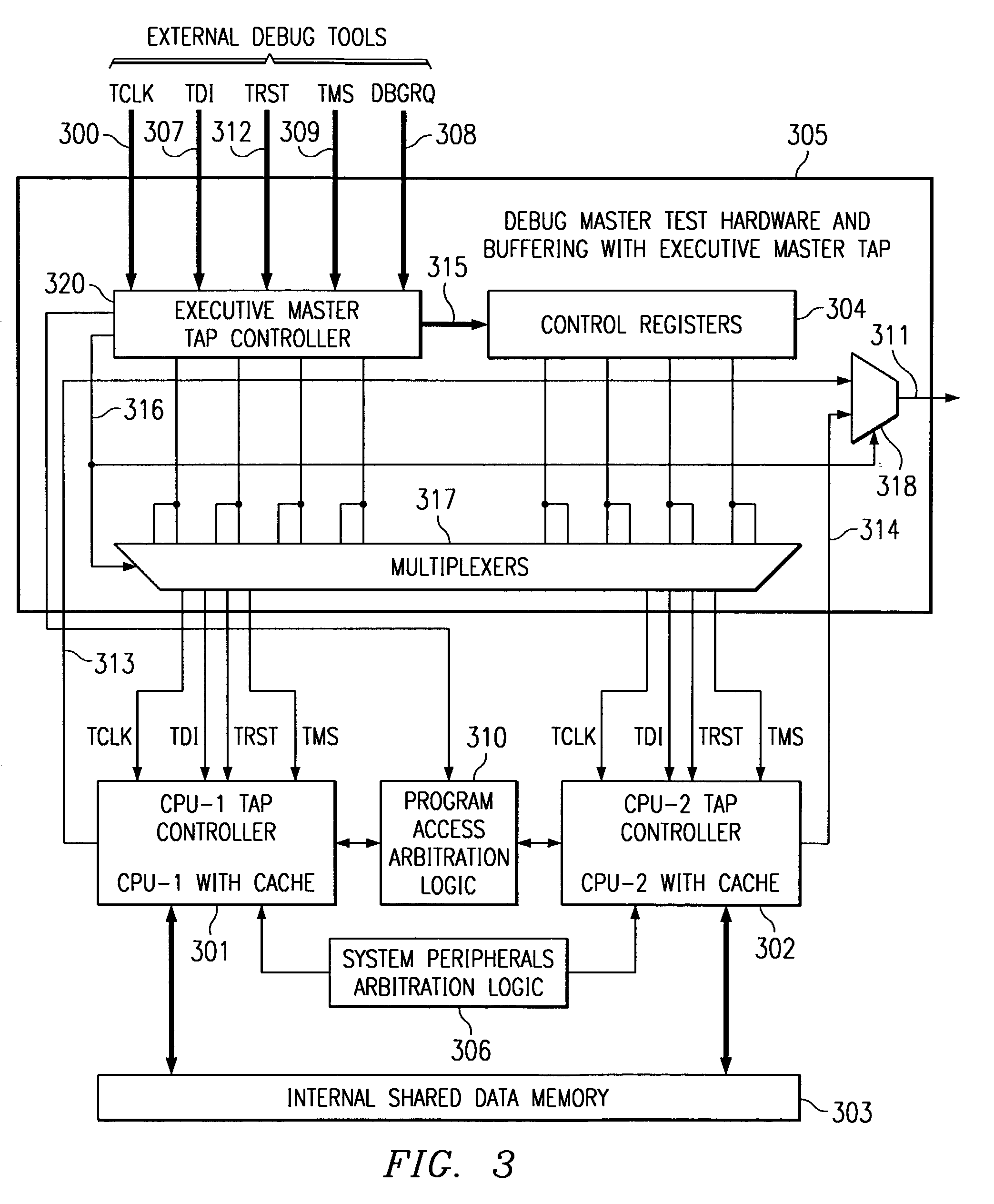

Embedded symmetric multiprocessor system debug

Active | US7010722B2 | Low advantage | Reduce development costs | Electronic circuit testing | Functional testing | Multiplexer | Normal mode

A test signal multiplexer receives external test signals and supplies them to a selected debug master central processing unit in a symmetrical multiprocessor system, and supplies debug slave signals to debug slave central processing units. An executive master test access port controller responds to the external test signals and controls the test signal multiplexer. A control register loadable via the executive master test access port stores the debug slave signals. A test data output multiplexer connects the test data output line of the selected debug master central processing unit to an external test data output line. The external test signals include a debug state signal supplied to each central processing unit. This selects either a normal mode or a debug mode at each central processing unit.

Owner:TEXAS INSTR INC

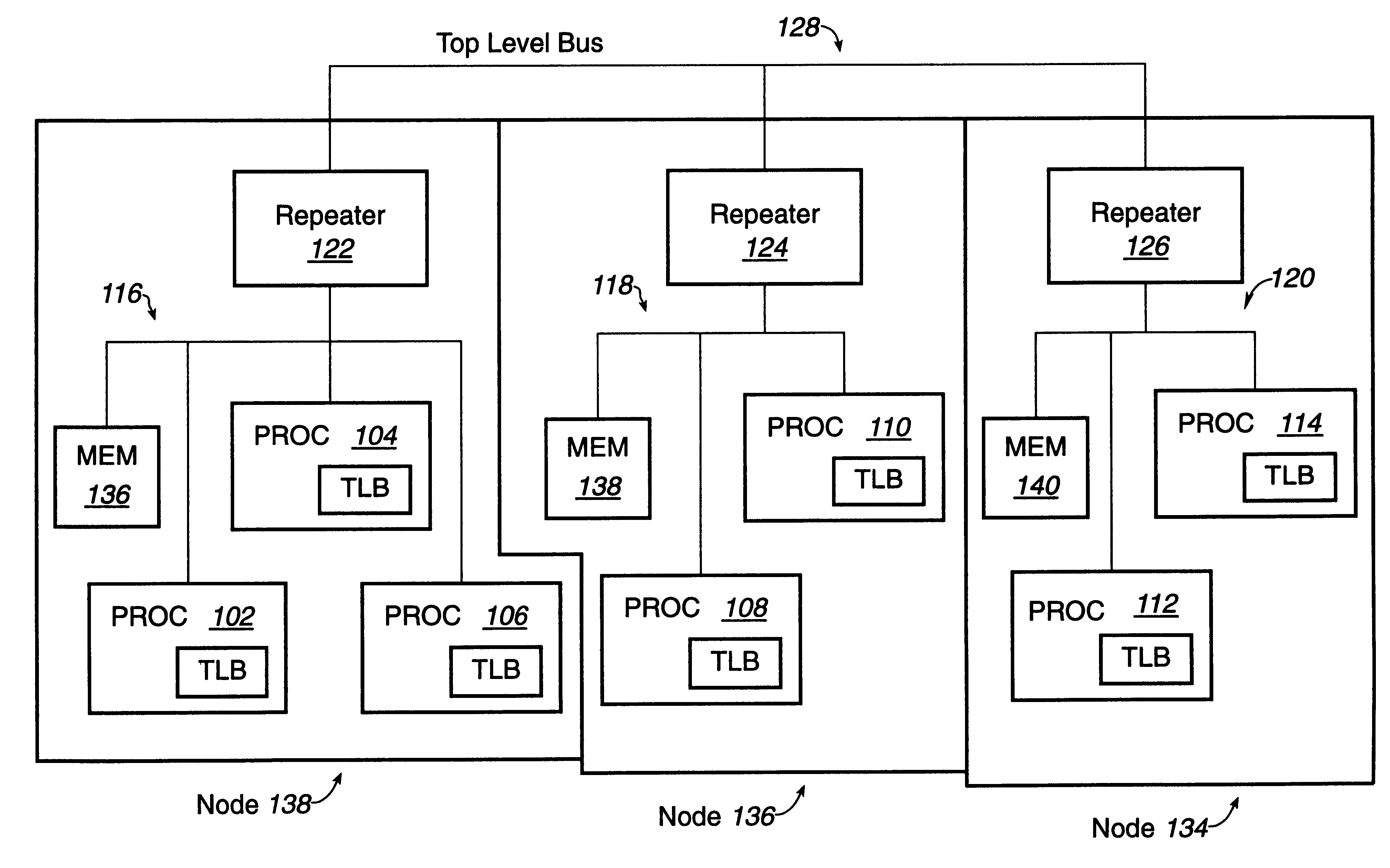

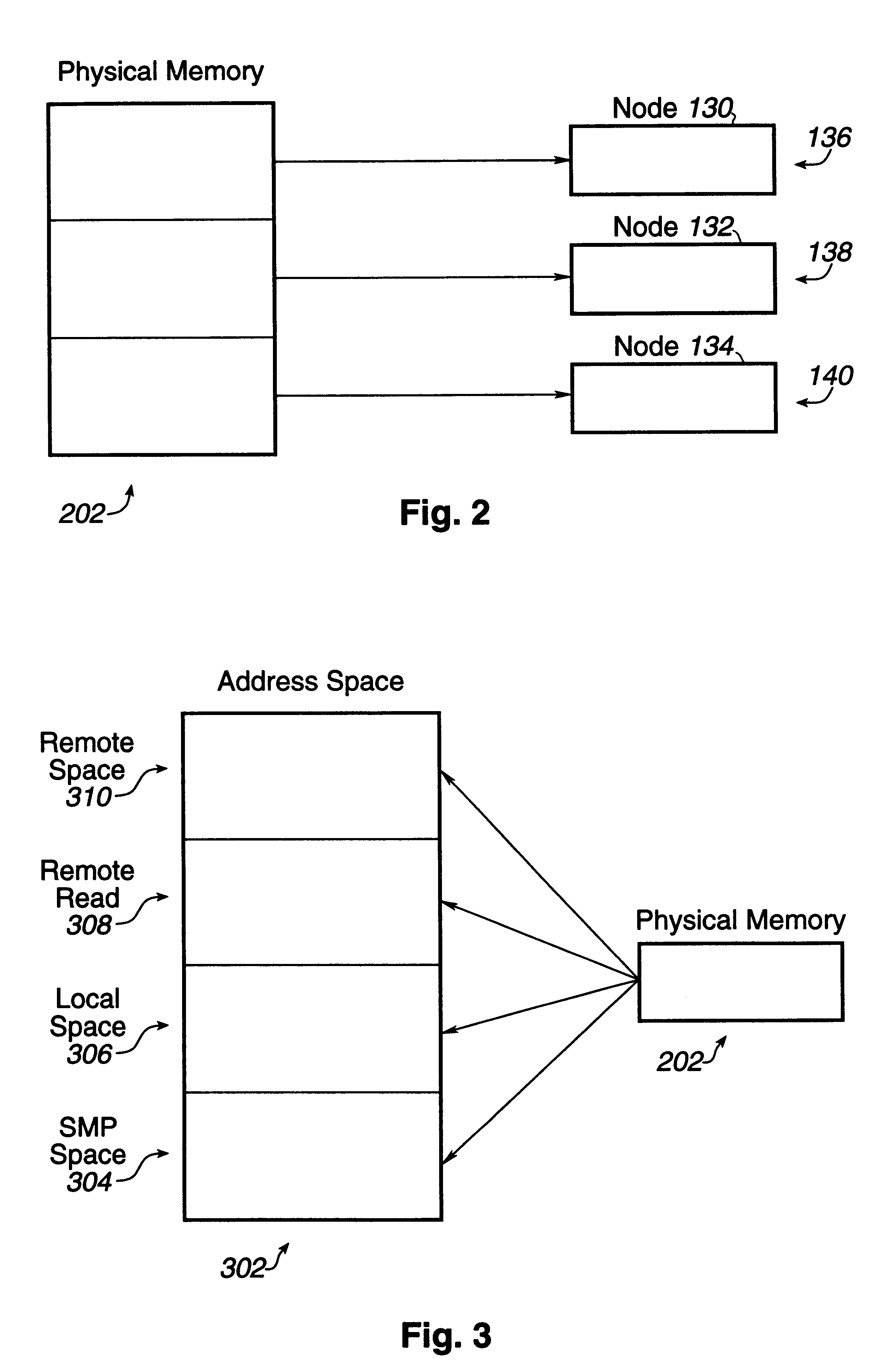

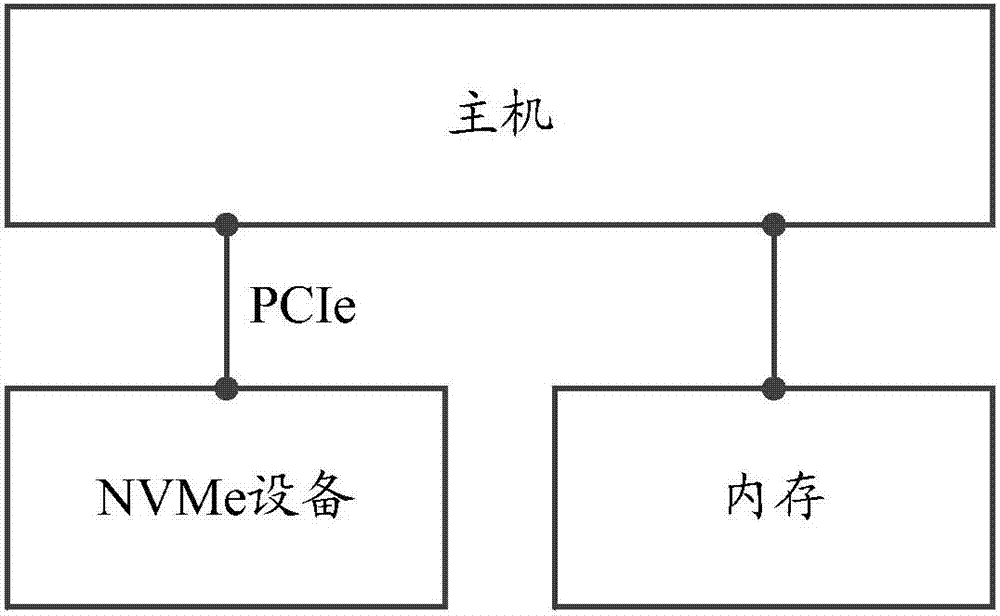

Shared memory system for symmetric multiprocessor systems

Inactive | US6226671B1 | Memory addressing/allocation/relocation | Multiple digital computer combinations | Symmetric multiprocessor system | Symmetric multiprocessing

A shared memory system for symmetric multiprocessing systems including a plurality of physical memory locations in which the locations are either allocated to one node of a plurality of processing nodes, equally distributed among the processing nodes, or unequally distributed among the processing nodes. The memory locations are configured to be accessed by the plurality of processing nodes by mapping all memory locations into a plurality of address partitions within a hierarchy bus. The memory locations are addressed by a plurality of address aliases within the bus while the properties of the address partitions are employed to control transaction access generated in the processing nodes to memory locations allocated locally and globally within the processing nodes.

Owner:ORACLE INT CORP

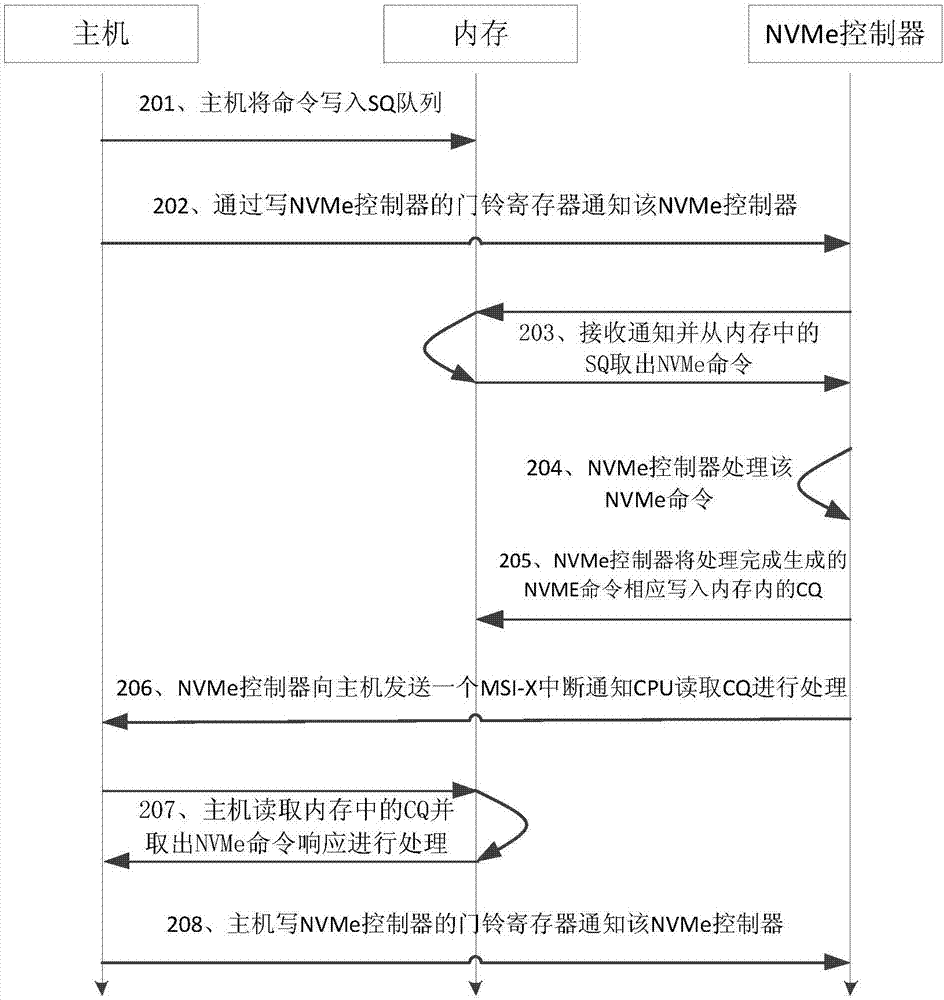

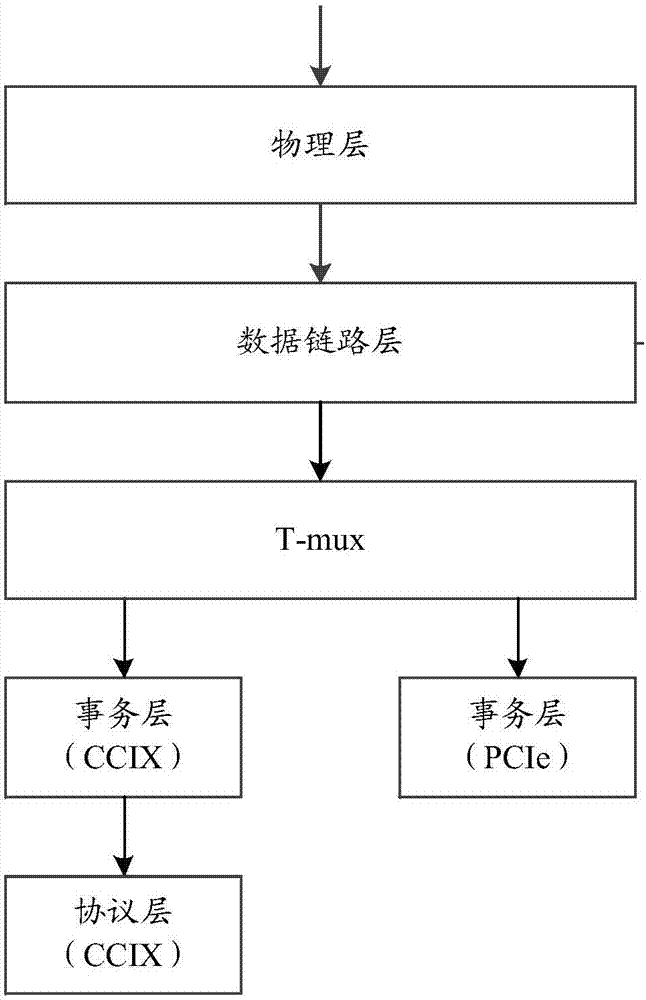

NVMe (Non-Volatile Memory Express) data read-write method and NVMe equipment

Active | CN107992436A | Reduce consumption | Implement input | Memory architecture accessing/allocation | Memory systems | Transceiver | Solid-state drive

The invention relates to the technical field of storage, in particular to an NVMe (Non-Volatile Memory Express) data read-write method and NVMe equipment. The method comprises the following steps: a transceiver receives an NVMe command that a host writes into an SQ (Submission Queue); when the SQ control module detects that the SQ has changed in an SQ cache, the NVMe command in the SQ is sent to an SSD (Solid State Drive) controller; the SSD controller executes the NVMe command and writes the generated NVMe command response into a CQ (Completion Queue) through a CQ control module; and the SSD controller notifies the host to read the CQ by triggering an interrupt, so that the host processes the NVMe command response in the CQ. Since the SQ and the CQ are both located in the NVMe equipment, the CPU (Central Processing Unit) can directly read the NVMe command response in the CQ or directly write the NVMe command into the SQ, which further lowers the consumption of CPU resources. In addition, NVMe equipment designed this way can support CCIX (Cache Coherent Interconnect for Accelerators) in hardware, so heterogeneous computing with software memory unification and I/O operations can be realized.

Owner:HUAWEI TECH CO LTD
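The submission and completion flow in the abstract above can be modeled with two in-device queues. This is a toy simulation only: the class and method names, the command tuple layout, and the dictionary used as storage are assumptions, and no real NVMe driver interface is involved.

```python
from collections import deque

class NvmeDevice:
    """Toy model in which both queues live inside the device."""

    def __init__(self):
        self.sq = deque()        # submission queue (SQ)
        self.cq = deque()        # completion queue (CQ)
        self.storage = {}        # stand-in for flash: block address -> data
        self.interrupts = 0      # count of interrupts raised toward the host

    def host_submit(self, command):
        self.sq.append(command)  # the host writes the command into the SQ
        self._on_sq_change()     # the SQ control module notices the change

    def _on_sq_change(self):
        # The controller drains the SQ, executes each command, posts a response.
        while self.sq:
            op, lba, data = self.sq.popleft()
            if op == "write":
                self.storage[lba] = data
                result = None
            else:
                result = self.storage.get(lba)
            self.cq.append((op, lba, result))
            self.interrupts += 1  # notify the host that the CQ has an entry

    def host_reap(self):
        """The host reads and clears the pending completions."""
        done = list(self.cq)
        self.cq.clear()
        return done

dev = NvmeDevice()
dev.host_submit(("write", 7, b"abc"))
dev.host_submit(("read", 7, None))
completions = dev.host_reap()
```

In this model the host only touches device-resident queues, which mirrors the abstract's point about reducing CPU-side queue handling.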

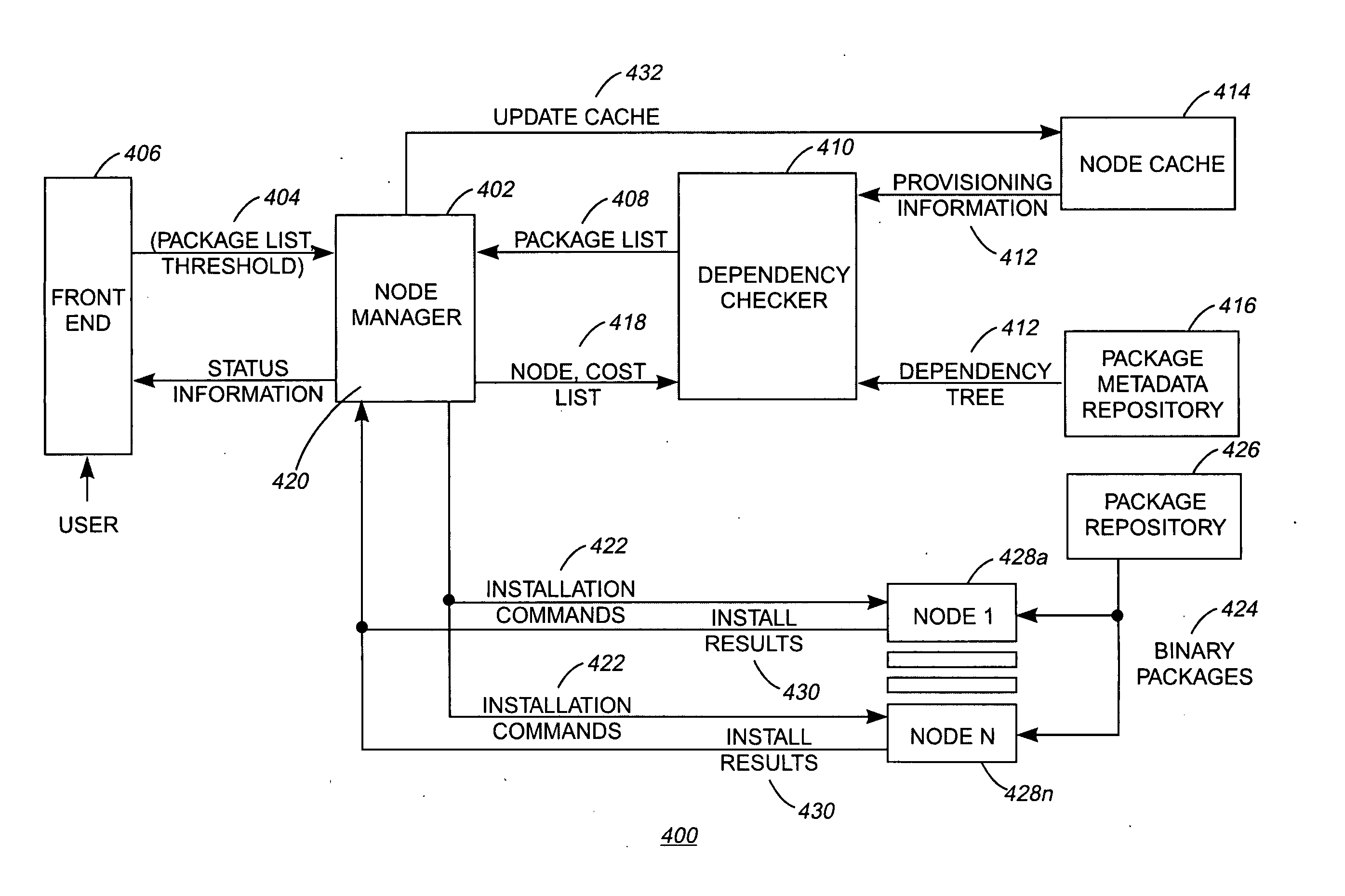

Method and apparatus for provisioning software on a network of computers

Inactive | US20070169113A1 | Program loading/initiating | Transmission | Symmetric multiprocessor system | Software

An apparatus and method for provisioning software on a network of heterogeneous computers. The provisioner receives a list of packages and the deployment scope of the packages, then checks each node for installed applications and records dependencies and potential application conflicts. In addition, the provisioner measures a plurality of network and node metrics. Based on the dependency information, conflict information, and metrics, one or more nodes are selected and software is provisioned and/or removed in accordance with the dependency and conflict information.

Owner:IBM CORP

System and method for efficient data exchange in a multi-platform network of heterogeneous devices

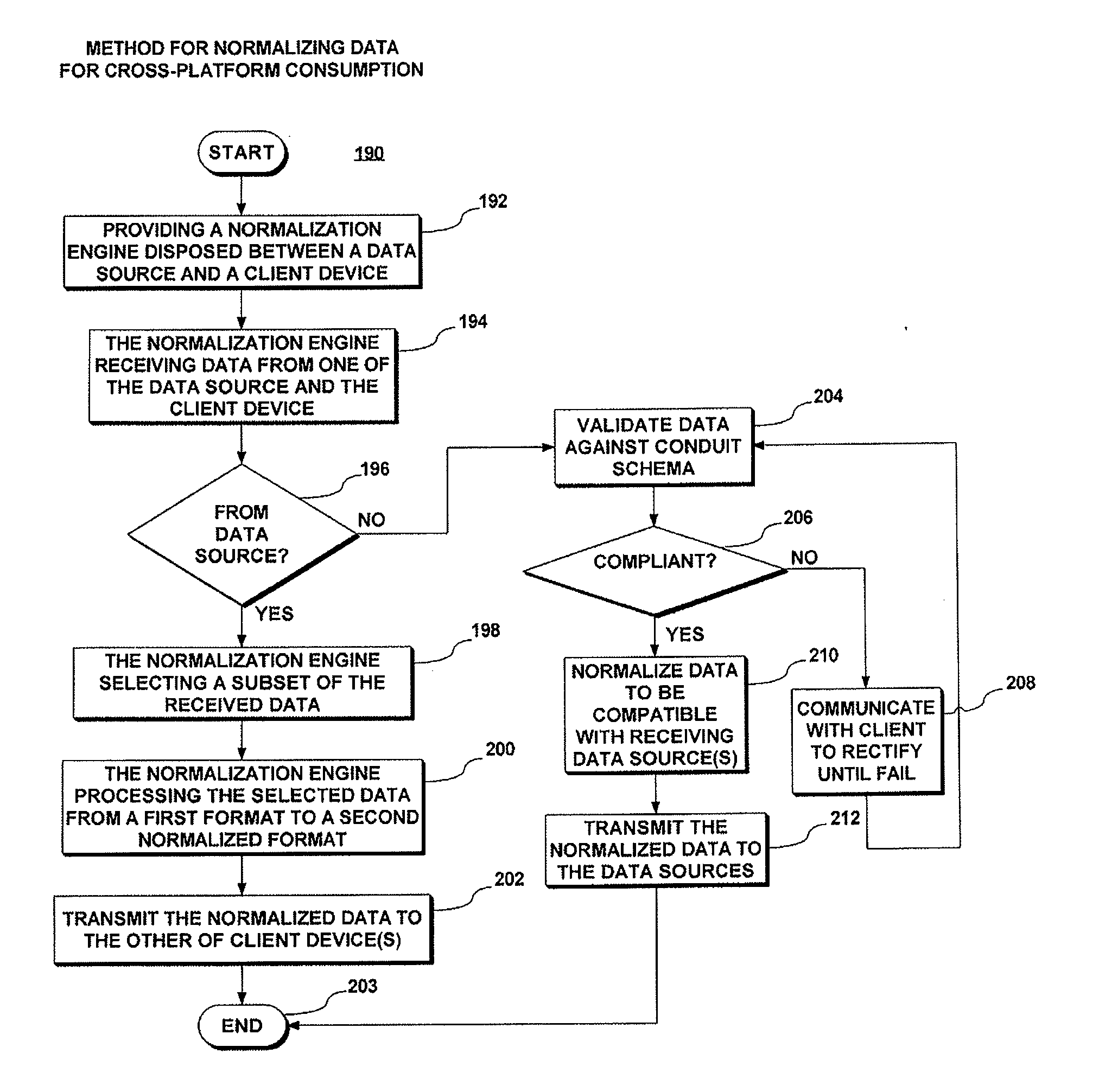

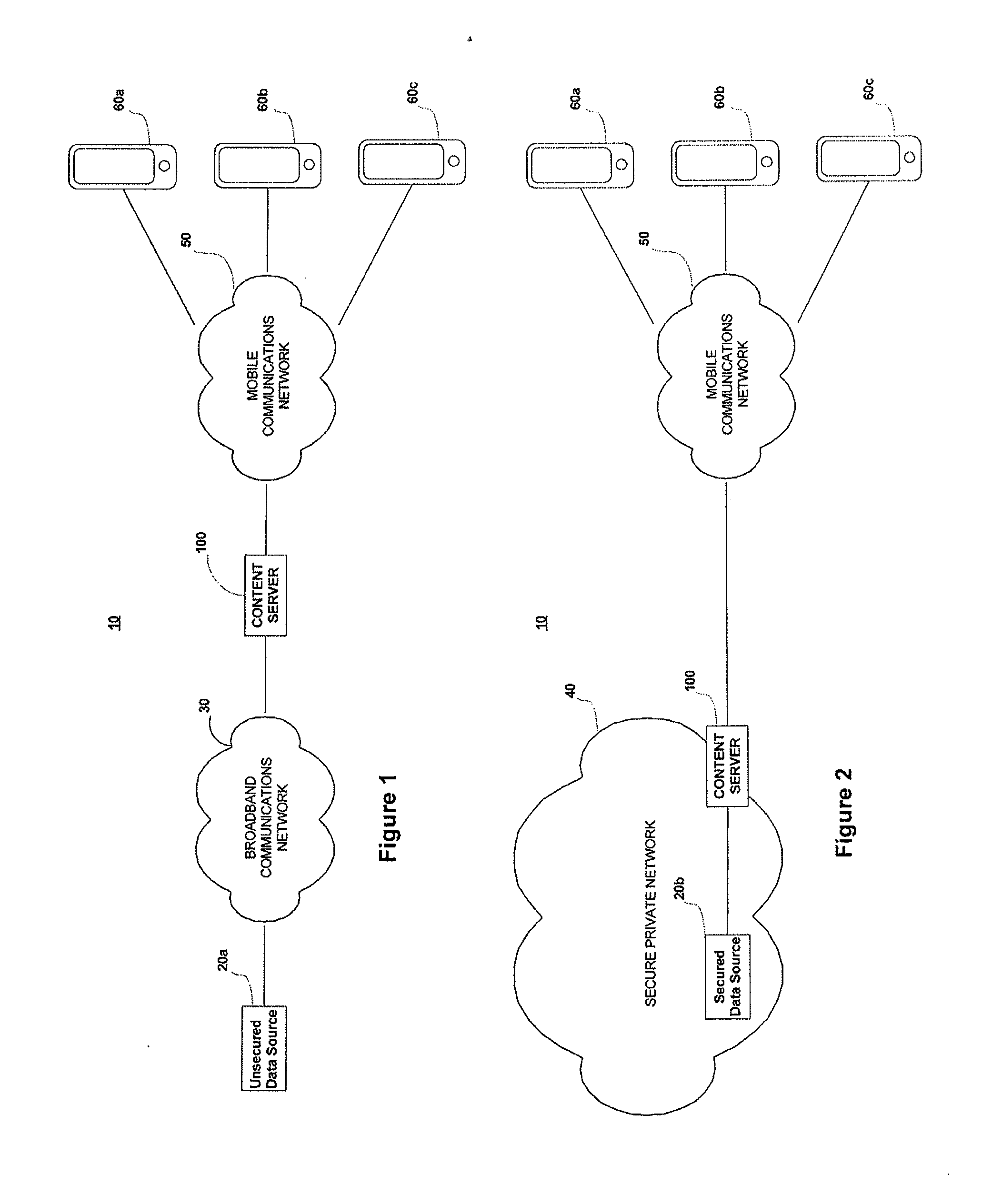

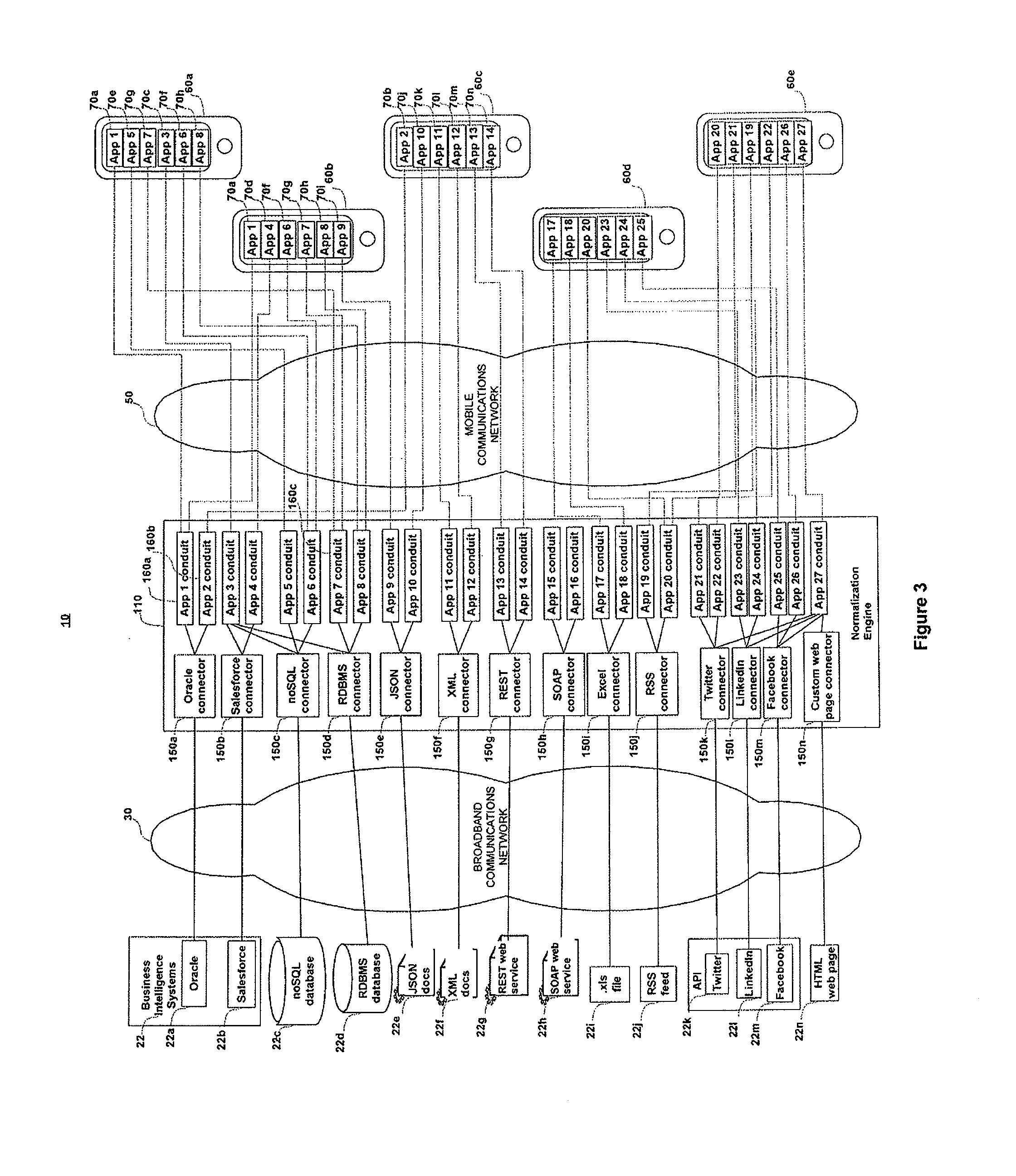

Inactive | US20120310899A1 | Facilitate efficient communication | Effective resources | Digital data processing details | Special data processing applications | Web service | Multi platform

A normalization engine, system and method provide normalization of and access to data between heterogeneous data sources and heterogeneous computing devices. The engine includes connectors for heterogeneous data sources, and conduits for gathering a customized subset of data from the data sources, as required by a software application with which the conduit is compatible. Working together, the connector and conduit may gather large amounts of data from multiple data sources and prepare a subset of the data that includes only that data required by the application, which is particularly advantageous for mobile computing devices. Further, the conduit may process the subset data in various formats to provide normalized data in a single format, such as a JSON-formatted REST web service communication compatible with heterogeneous devices. As an intermediary, the normalization engine may further provide caching, authentication, discovery and targeted advertising to mobile computing and other computing devices.

Owner:TUNE
