105 patent results for "Compute kernel"

In computing, a compute kernel is a routine compiled for high-throughput accelerators (such as graphics processing units (GPUs), digital signal processors (DSPs) or field-programmable gate arrays (FPGAs)), separate from but used by a main program (typically running on a central processing unit). Compute kernels are sometimes called compute shaders; on GPUs they share execution units with vertex shaders and pixel shaders, but they are not limited to one class of device or to graphics APIs.
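
To make the host/kernel split concrete, here is a minimal sketch that models, serially, what a device runtime does when a host program launches a kernel over a one-dimensional index space. `saxpy_kernel` and `launch` are hypothetical names chosen for illustration; a real kernel would be written in a device language such as CUDA C++, OpenCL C or Metal, and its invocations would run concurrently on the accelerator.

```python
from typing import Callable, List


# The "kernel": computes exactly one output element for one global index.
def saxpy_kernel(gid: int, a: float, x: List[float], y: List[float], out: List[float]) -> None:
    out[gid] = a * x[gid] + y[gid]


def launch(kernel: Callable[..., None], global_size: int, *args) -> None:
    """Hypothetical host-side dispatcher: invokes the kernel once per index.

    A GPU runtime would execute these invocations in parallel across many
    execution units; this serial loop only models the semantics.
    """
    for gid in range(global_size):
        kernel(gid, *args)


# Host program: prepare buffers, launch the kernel, read the result back.
x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)
launch(saxpy_kernel, len(x), 2.0, x, y, out)
print(out)  # [12.0, 24.0, 36.0, 48.0]
```

The kernel never loops over the data itself; the index space supplied at launch decides how many times it runs, which is what lets a runtime spread the work across whatever processor it targets.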

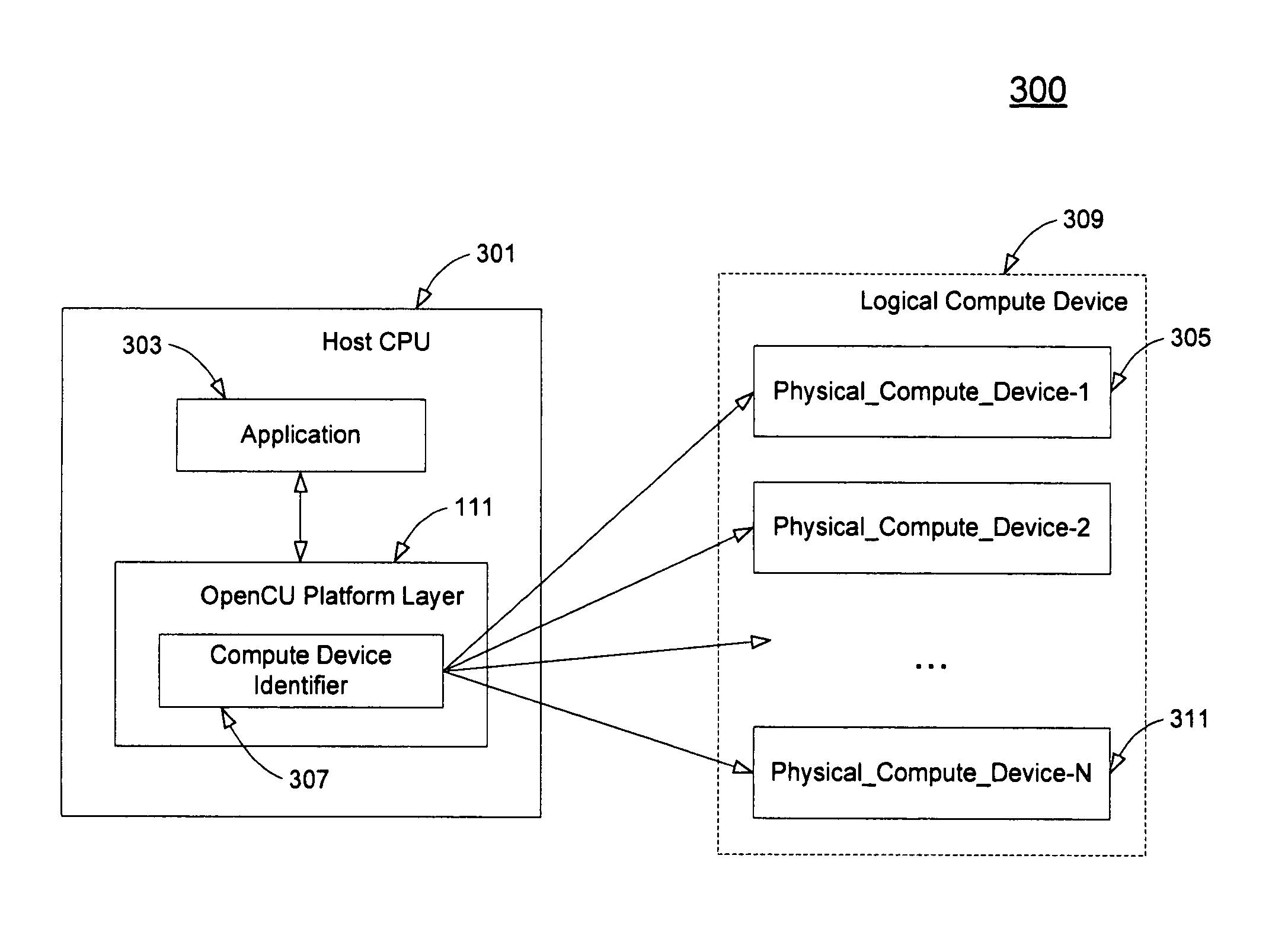

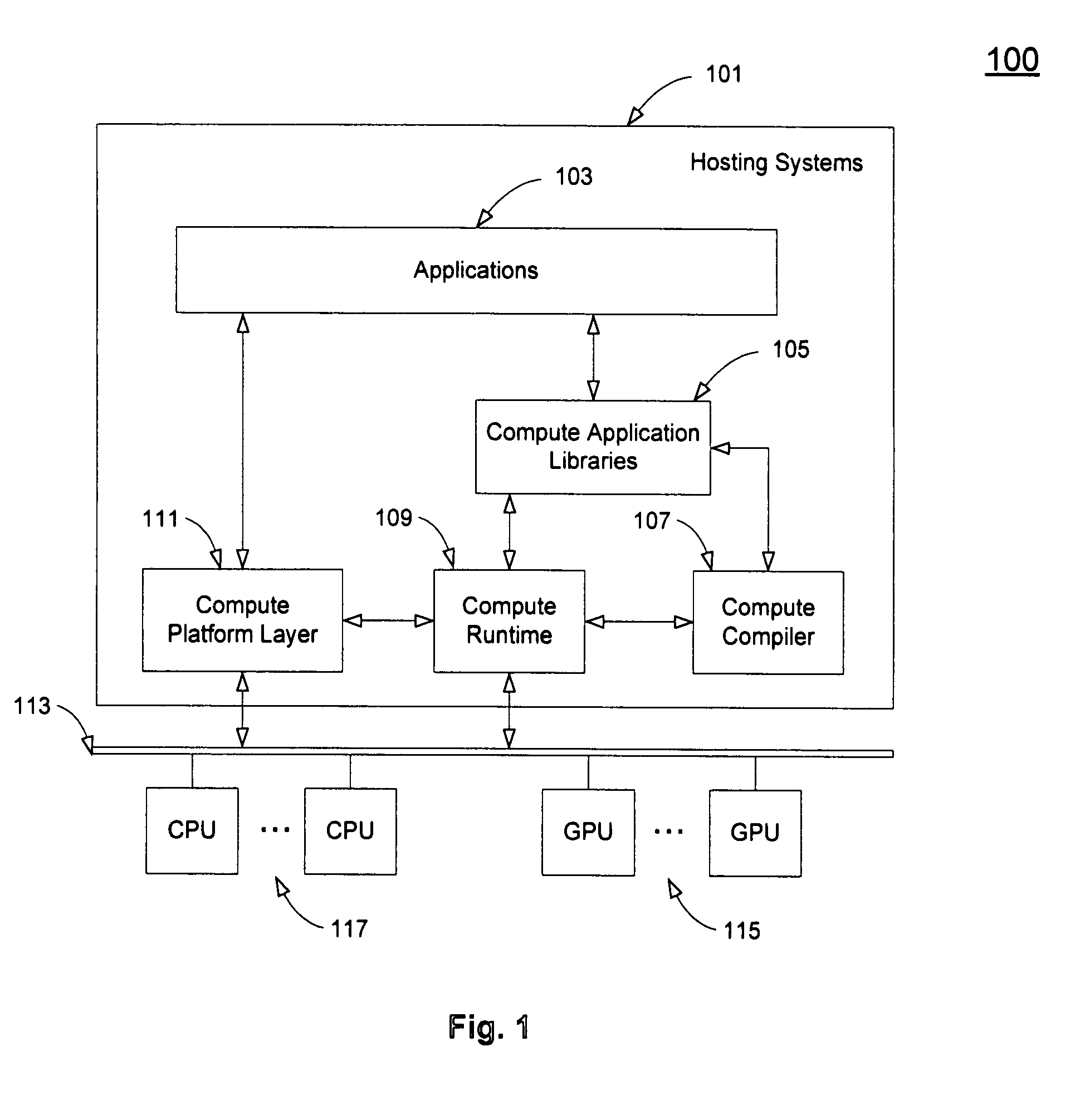

Systems and methods for dynamically choosing a processing element for a compute kernel

Active | US20070294512A1 | Error detection/correction, Software engineering, Performance computing, Processing element

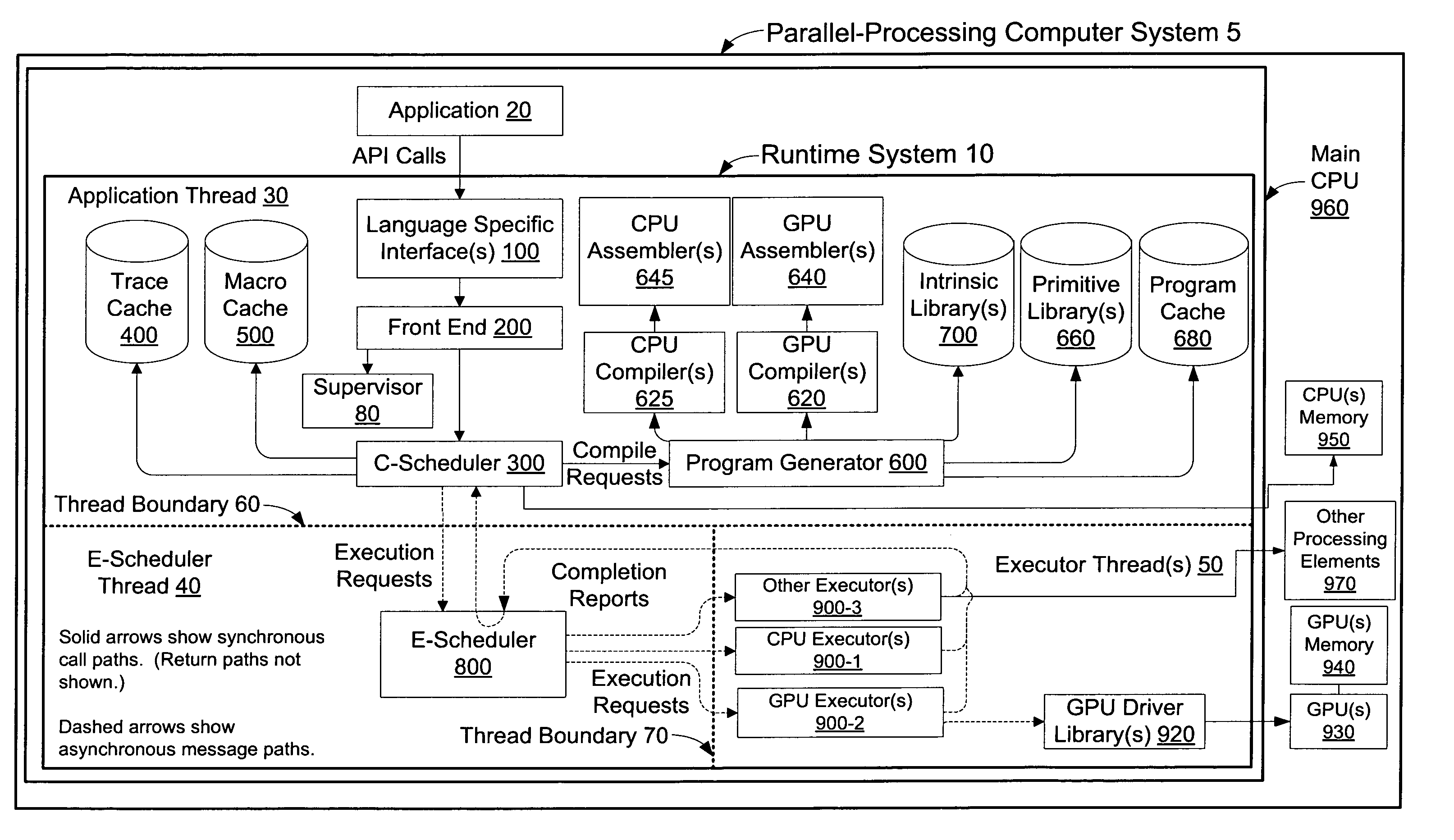

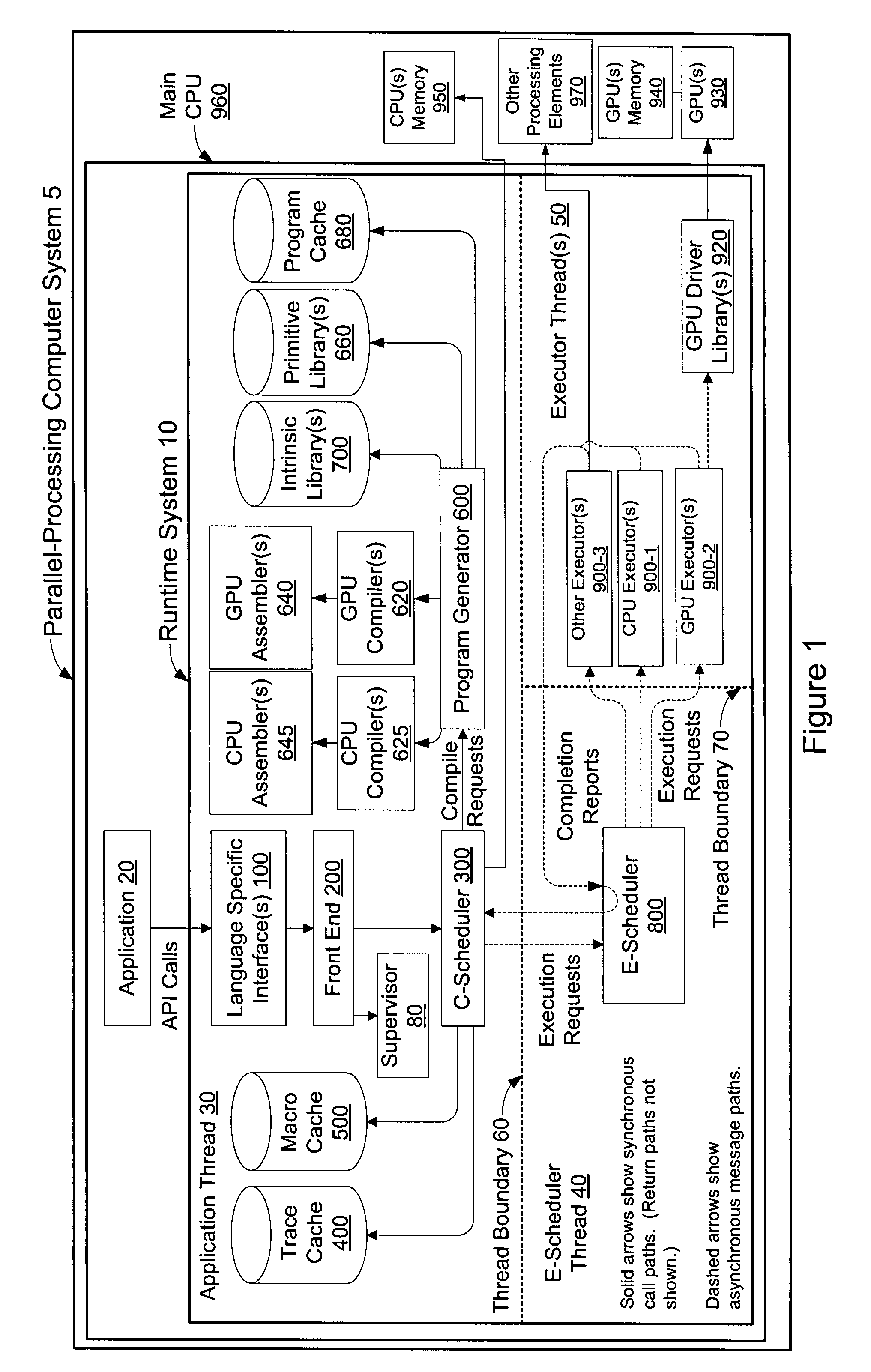

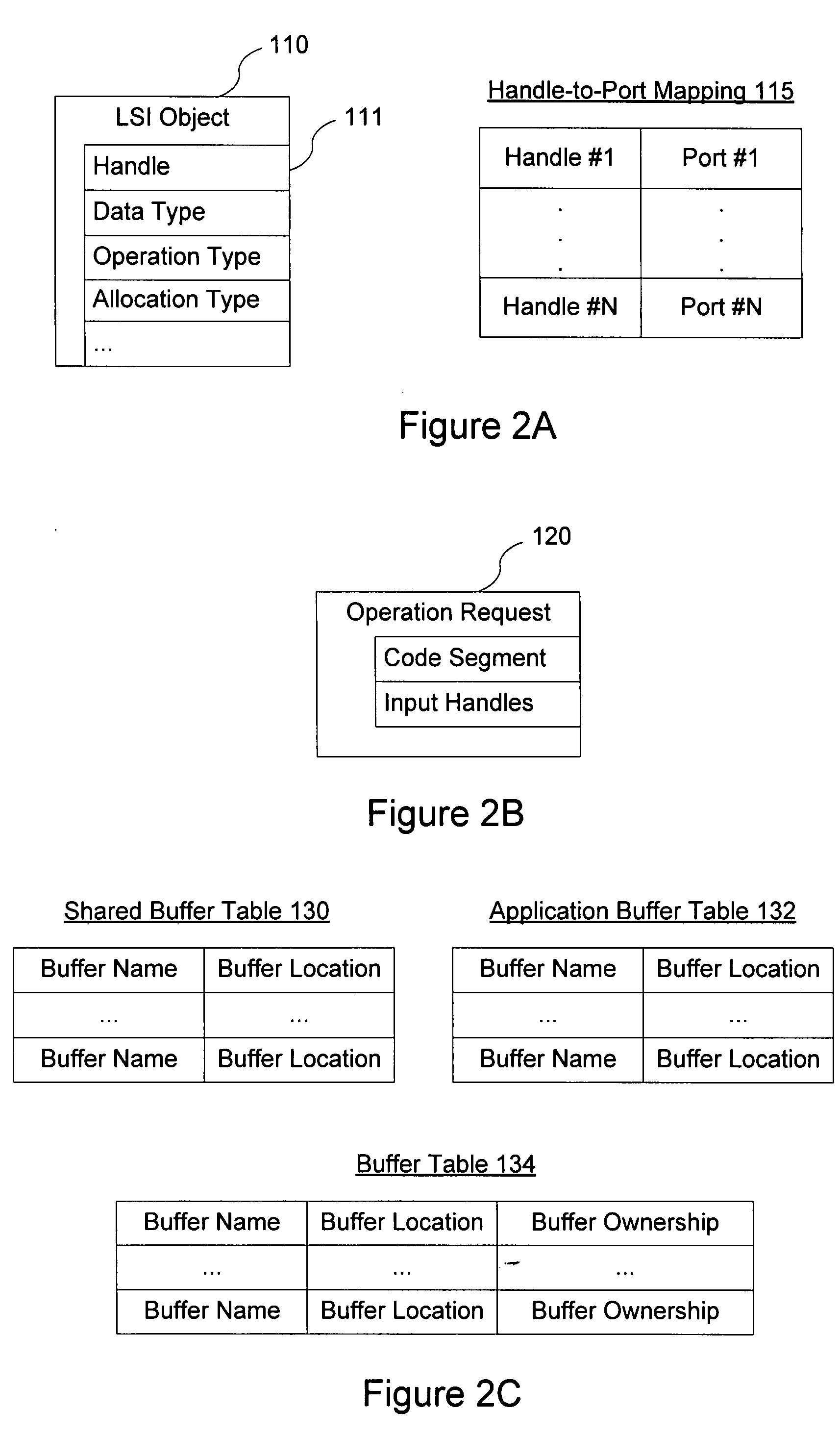

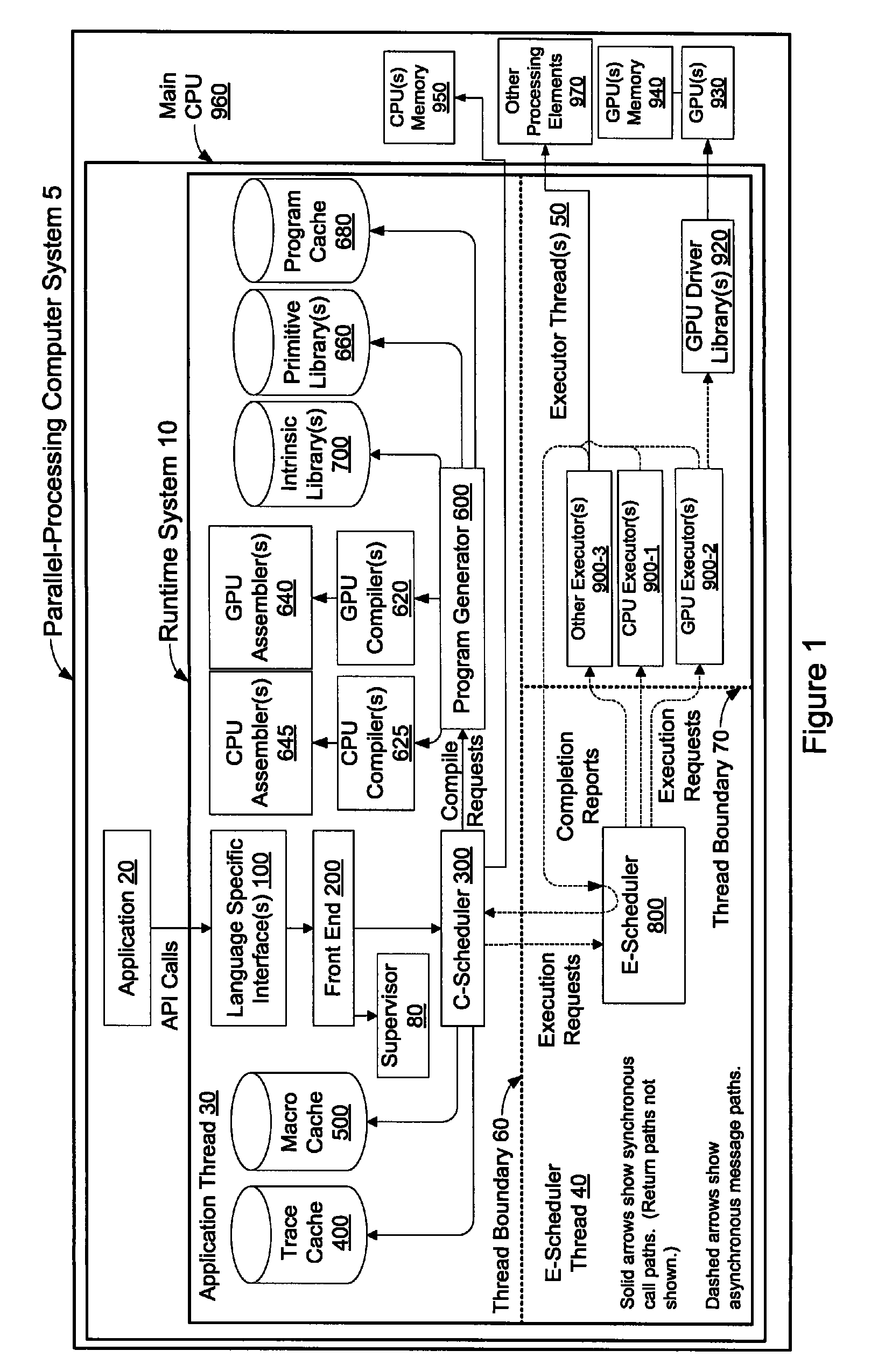

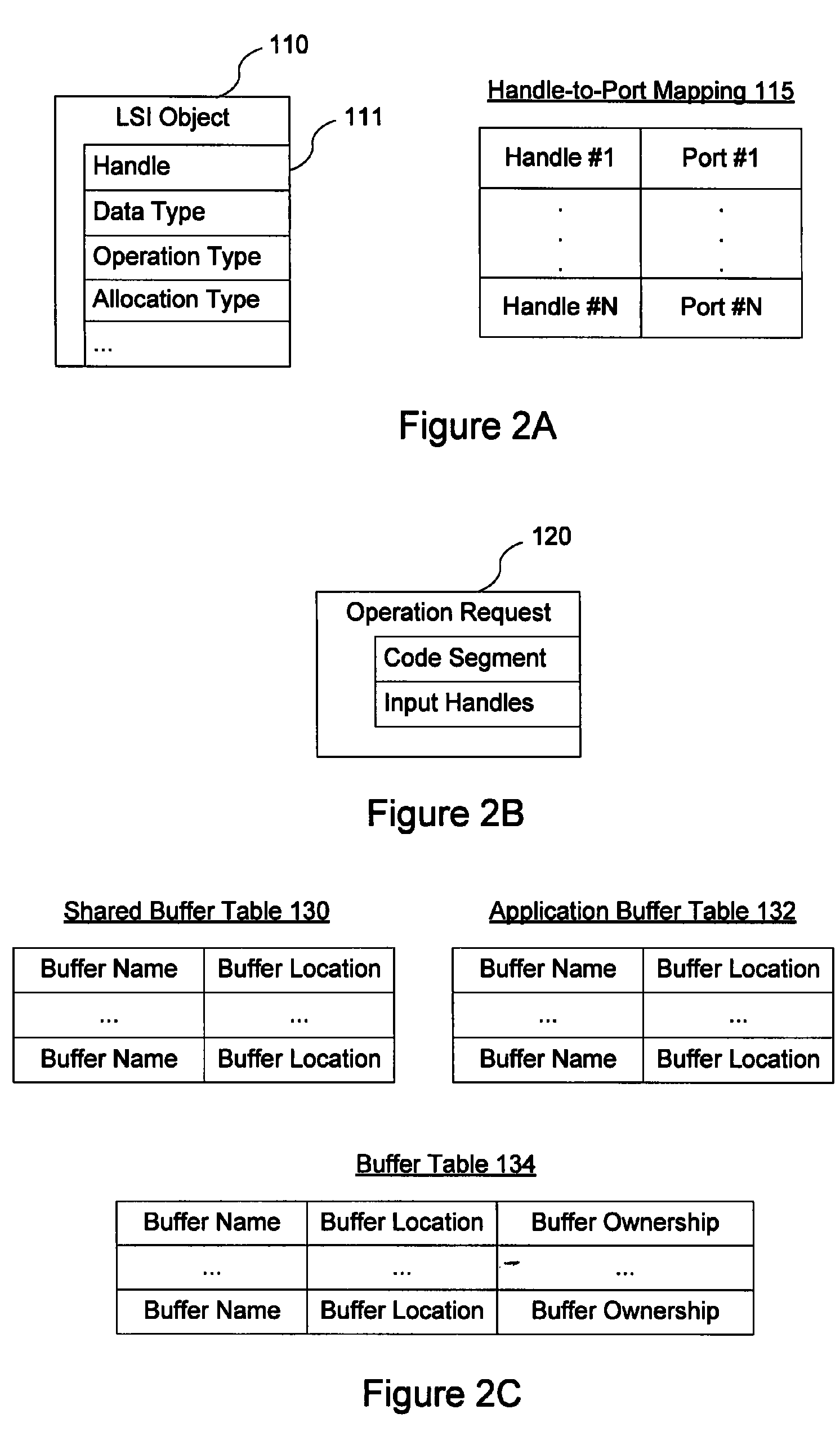

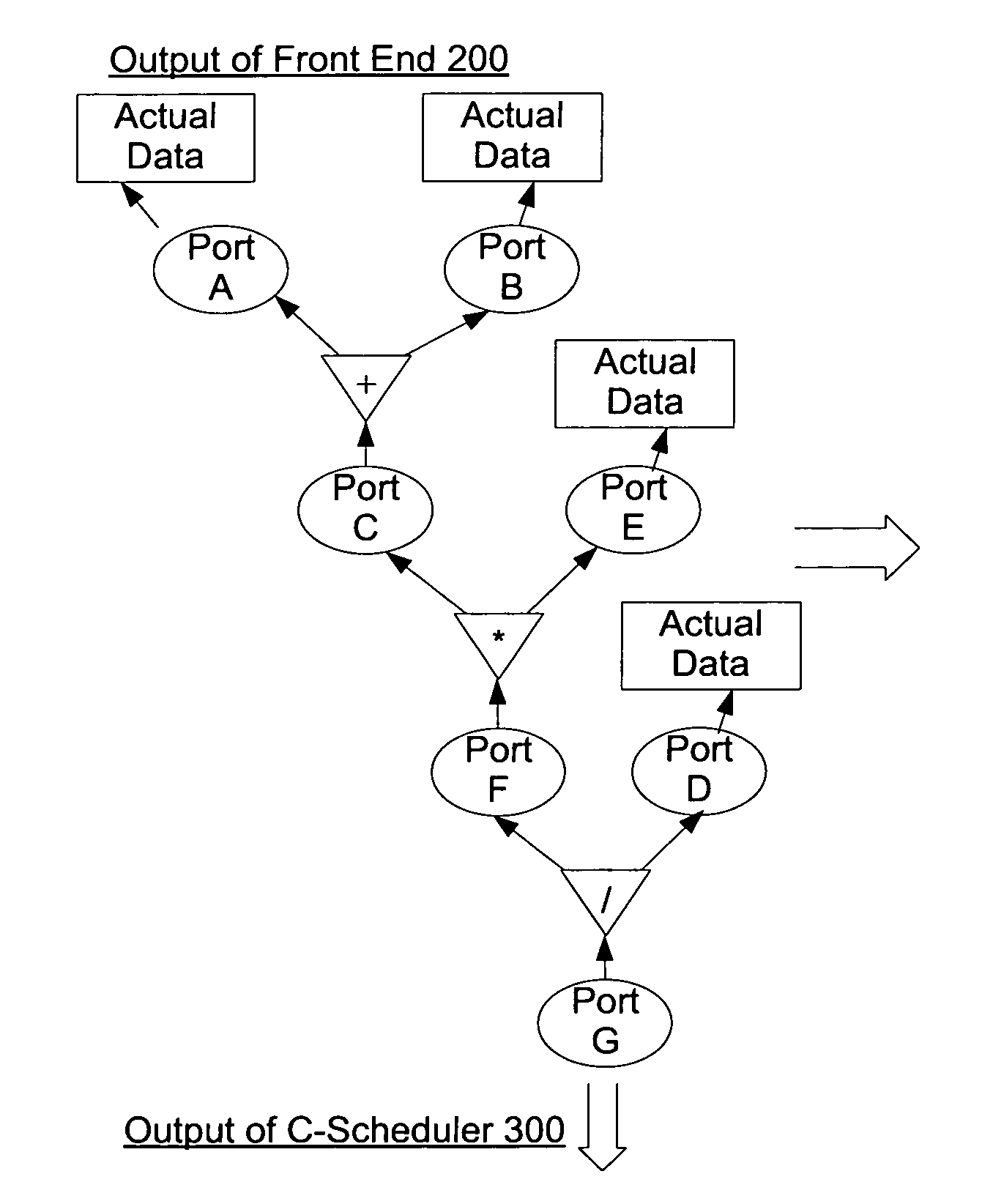

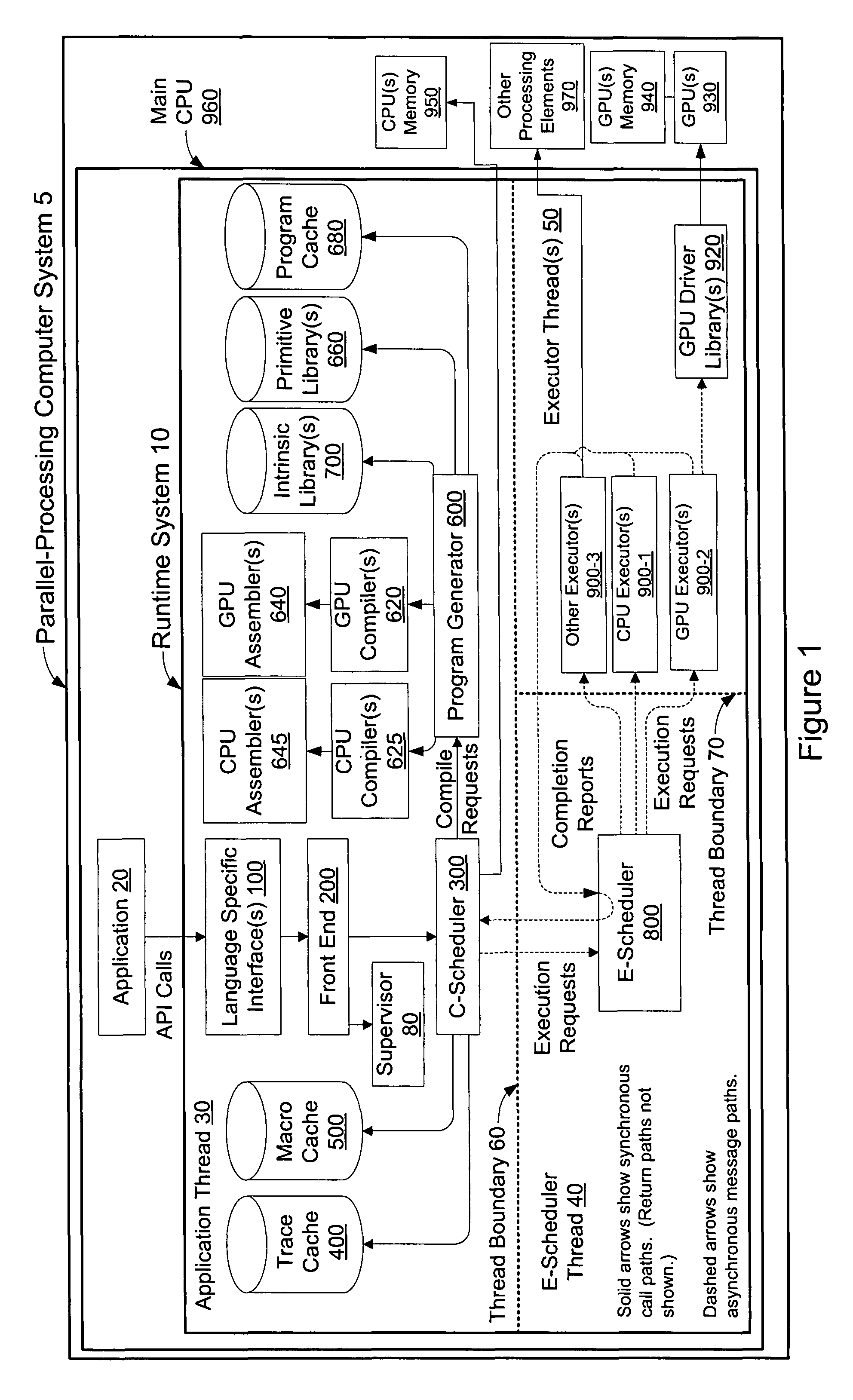

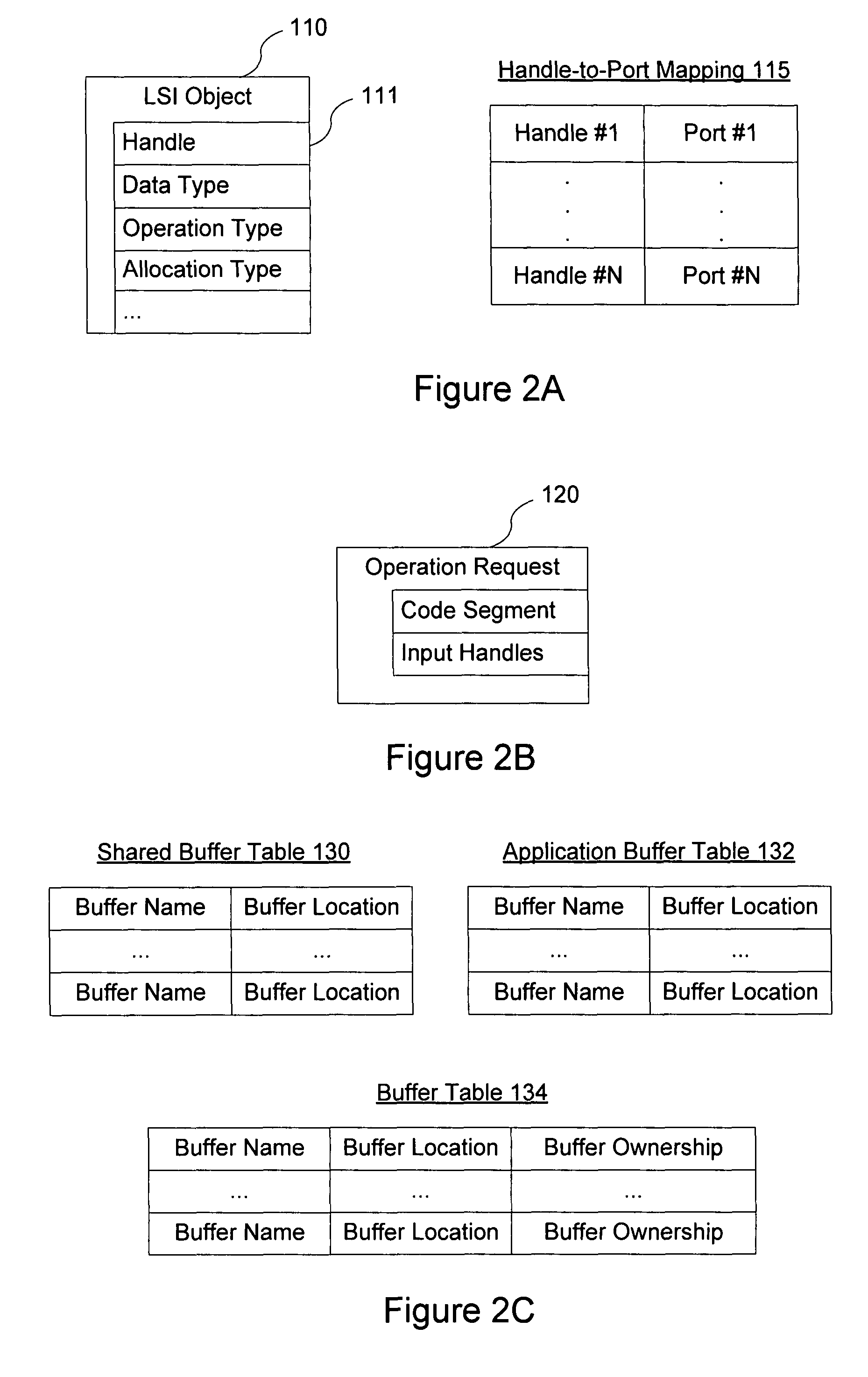

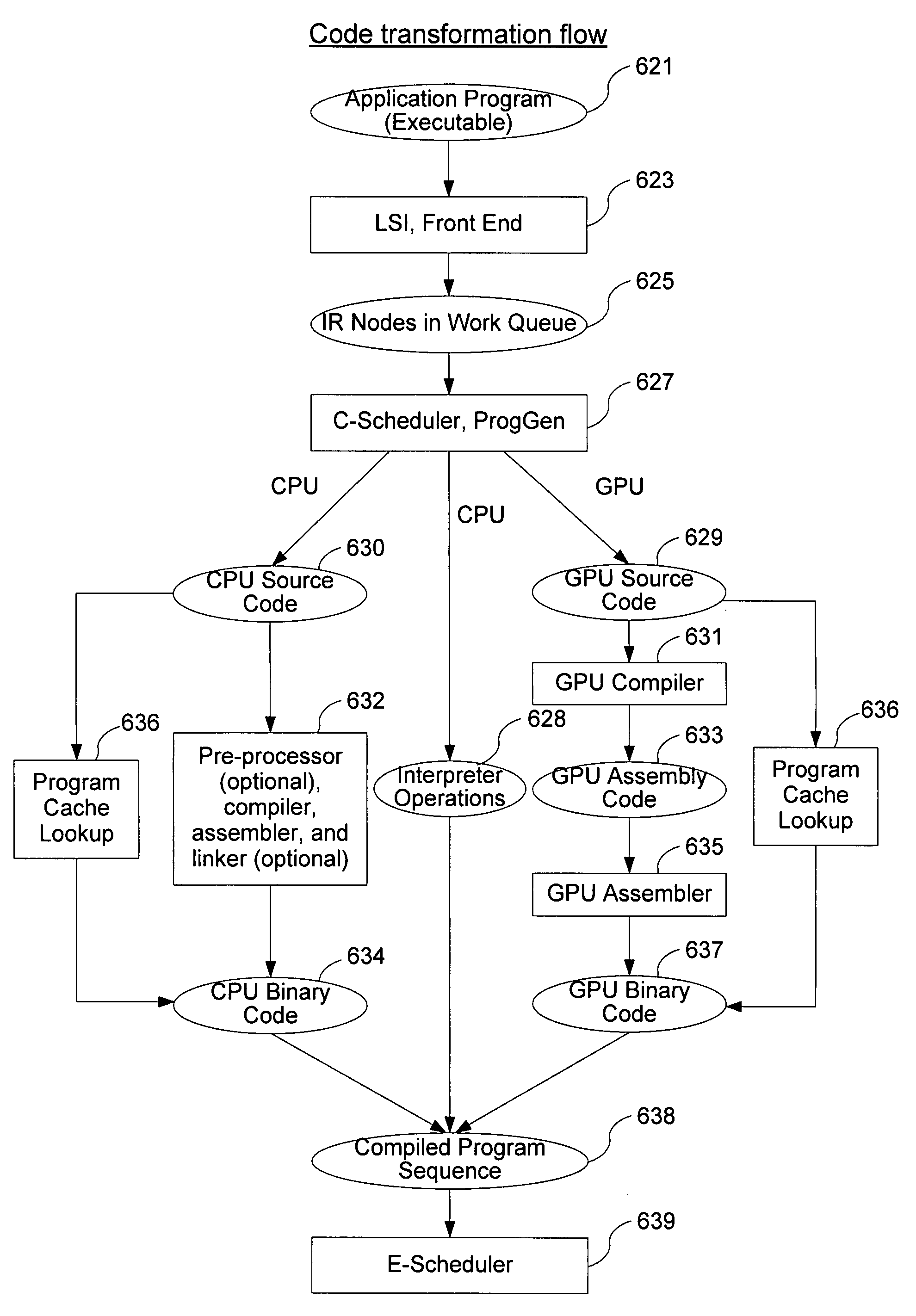

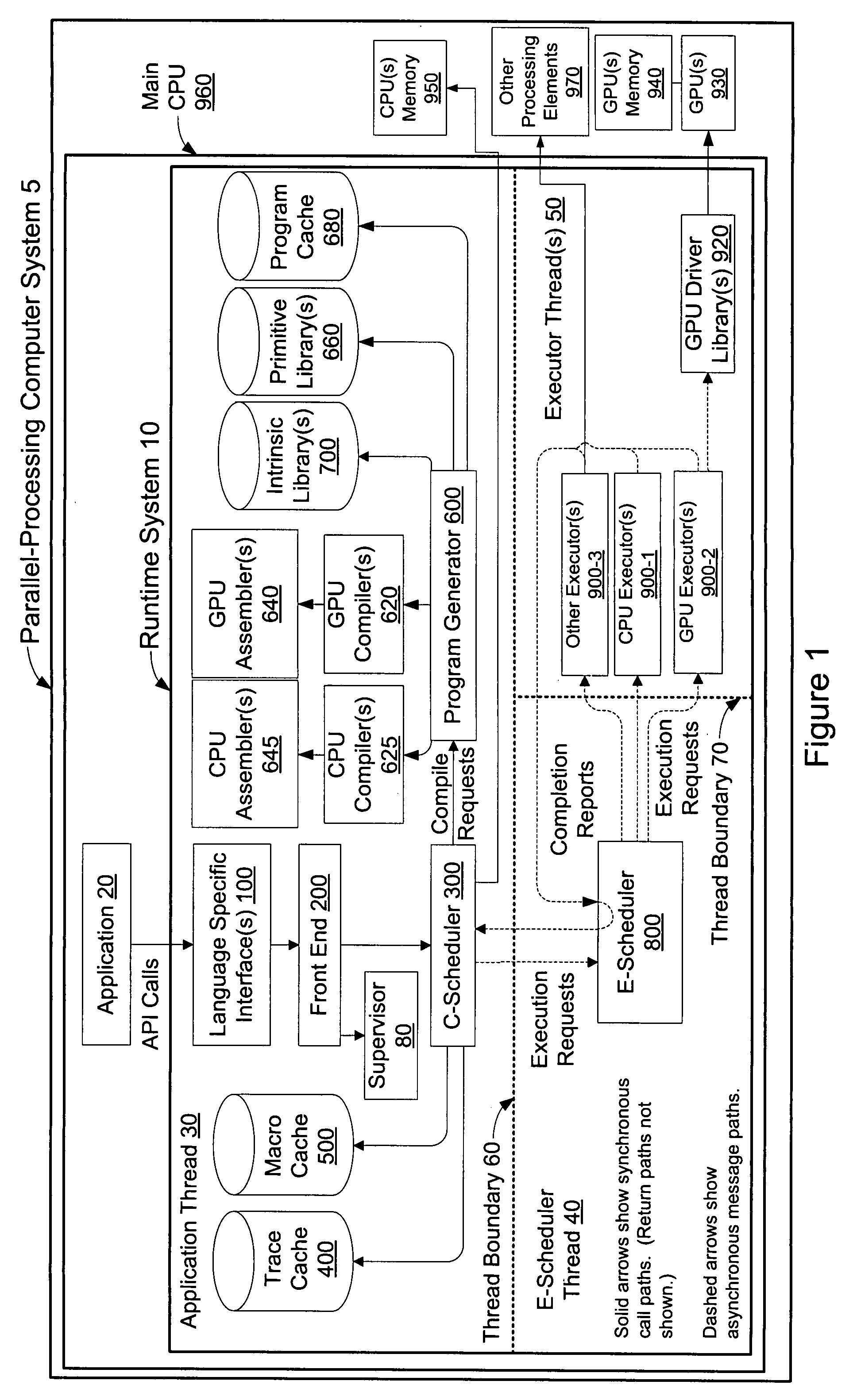

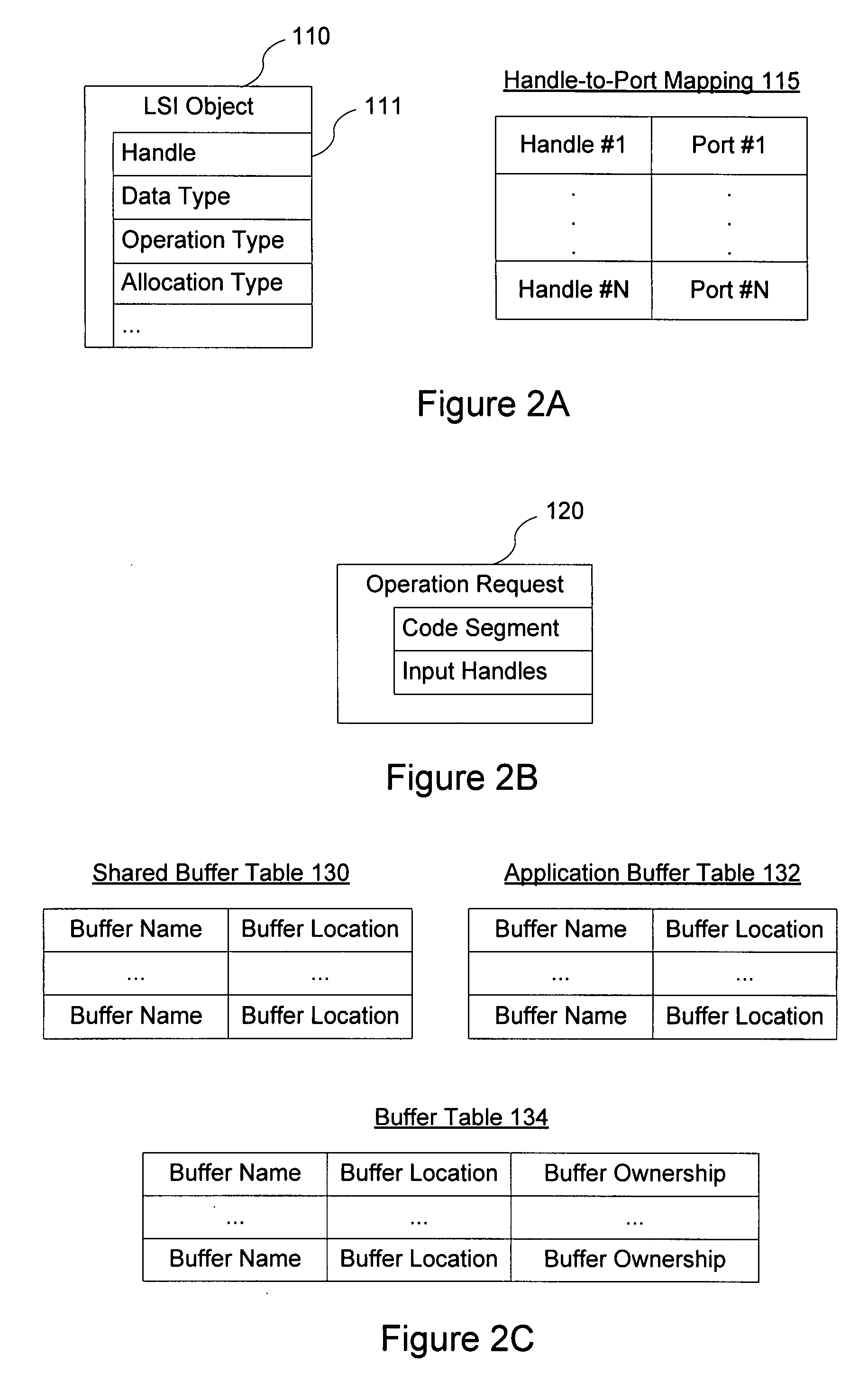

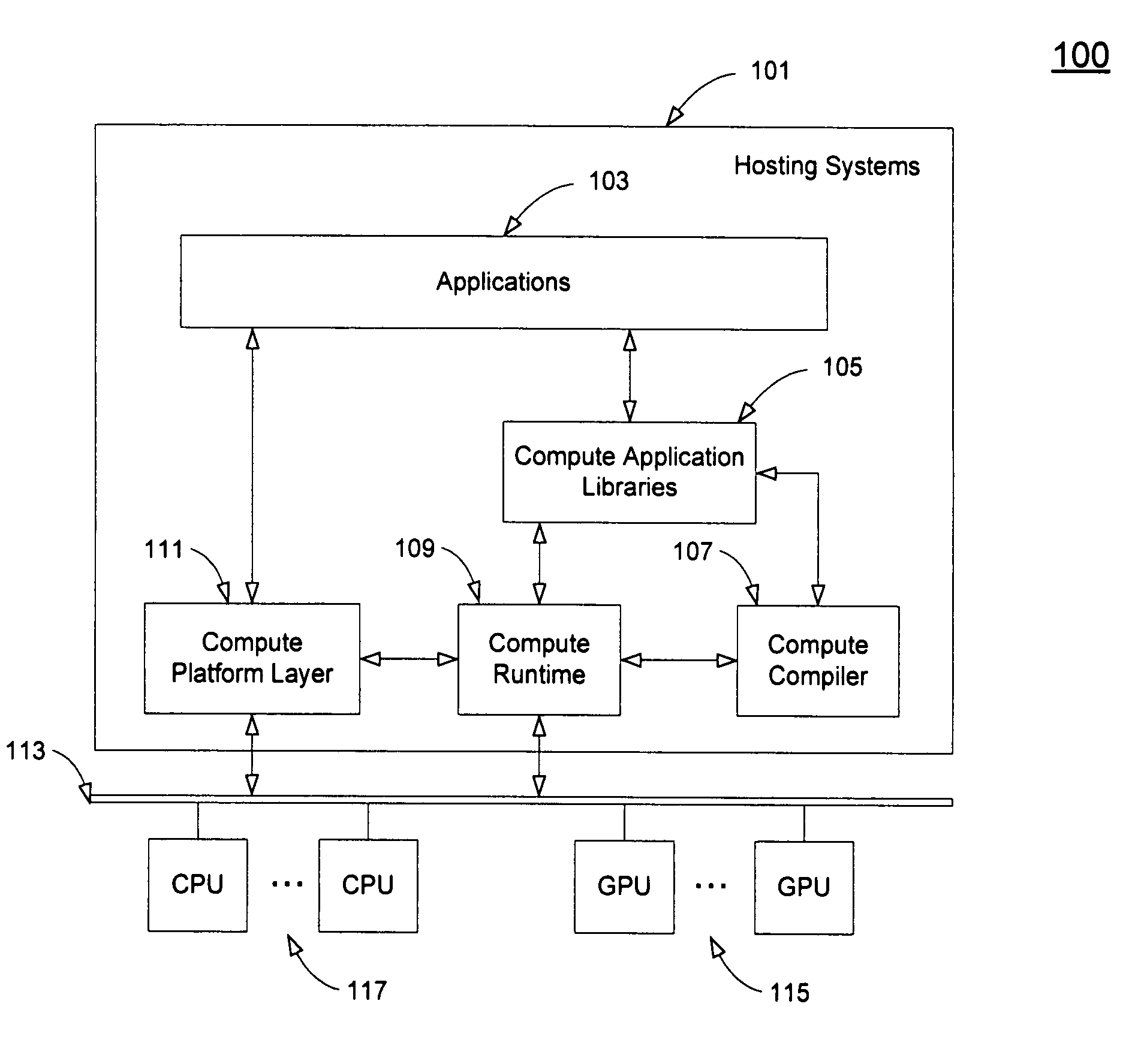

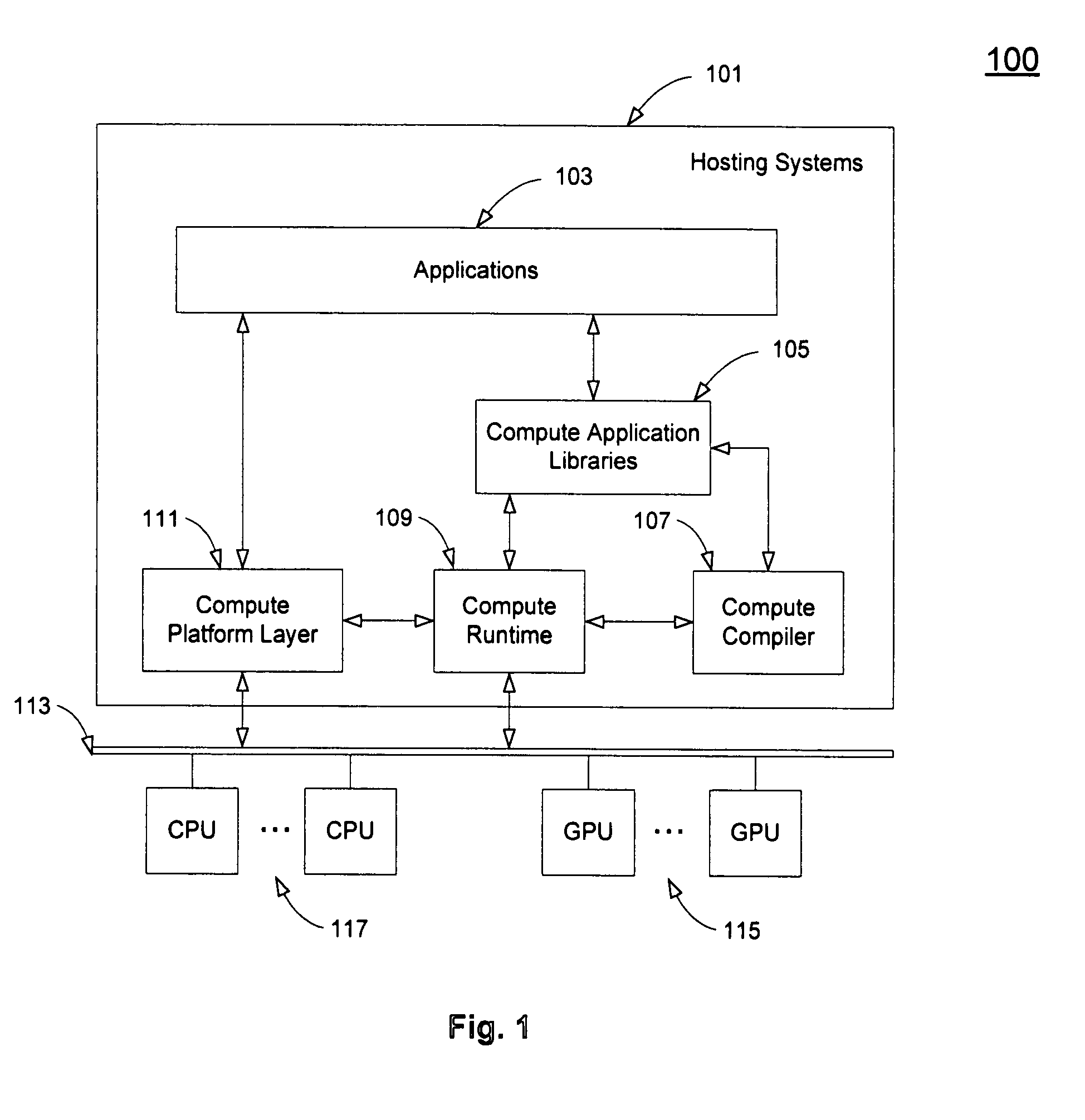

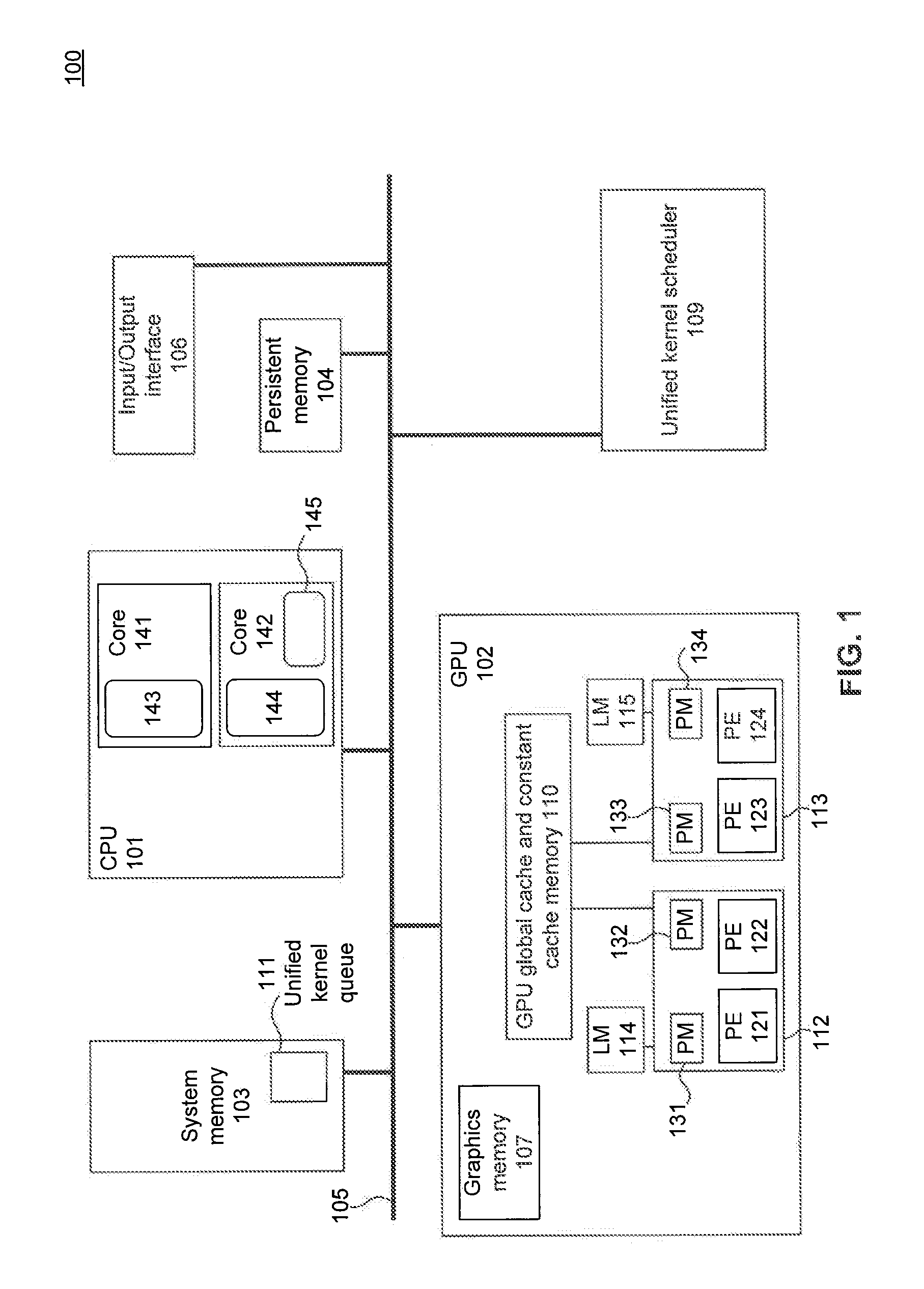

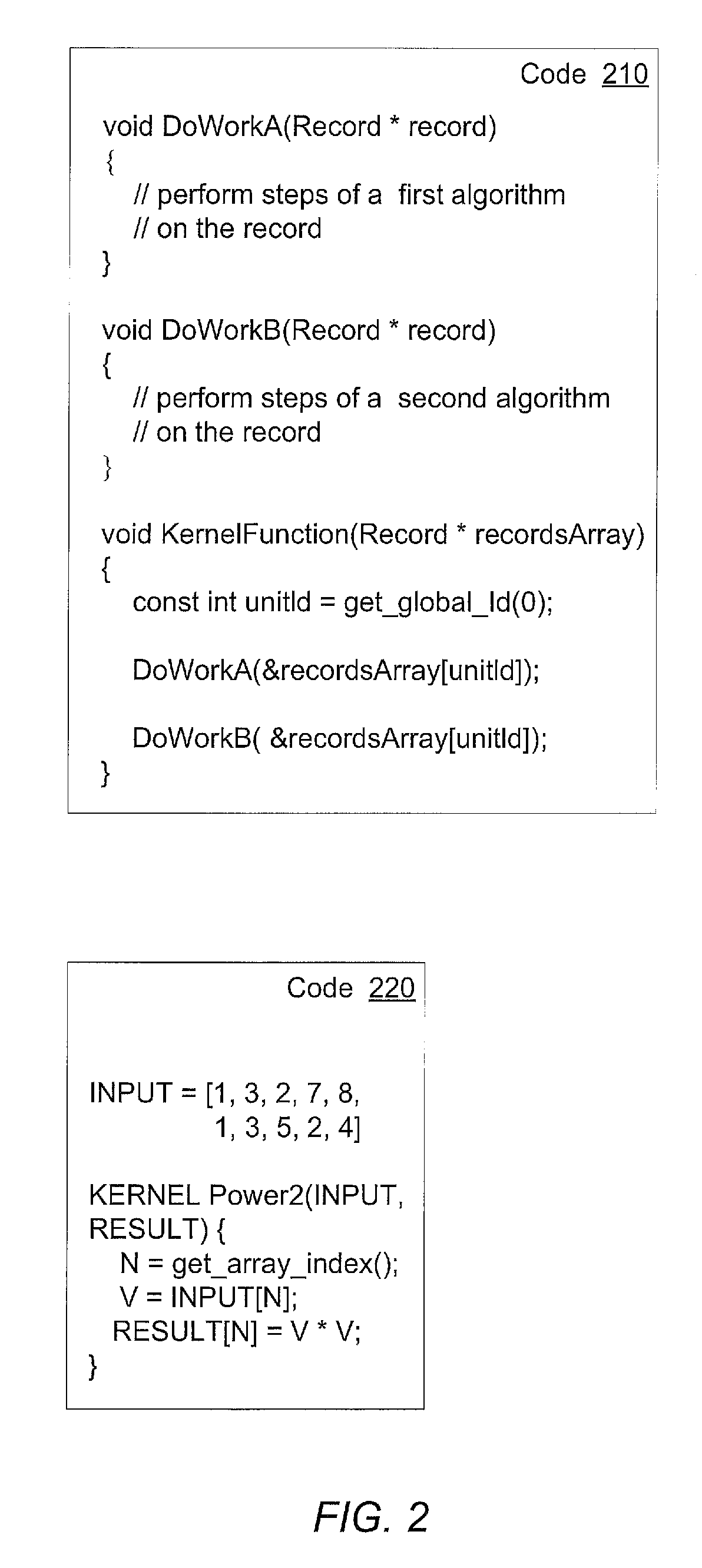

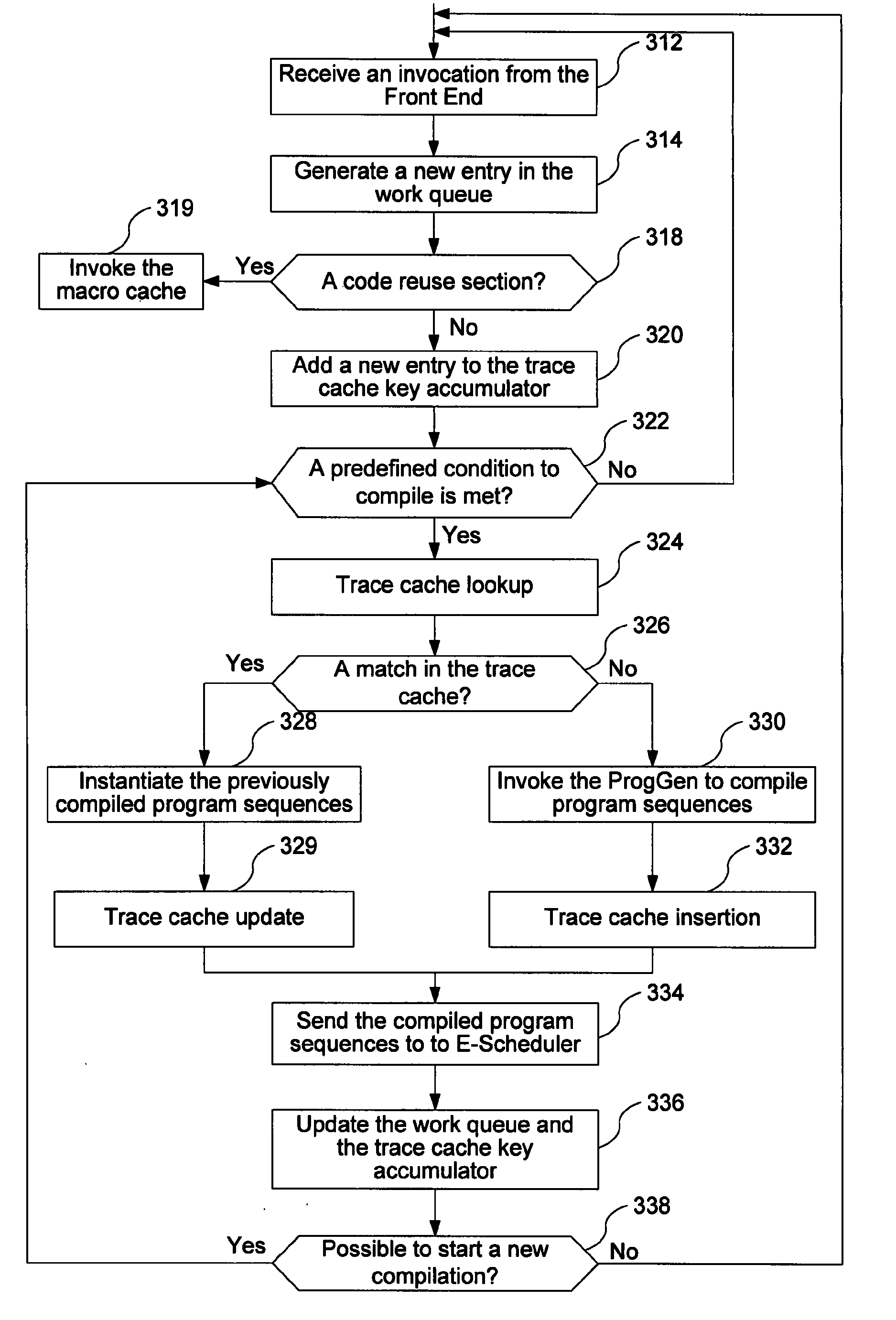

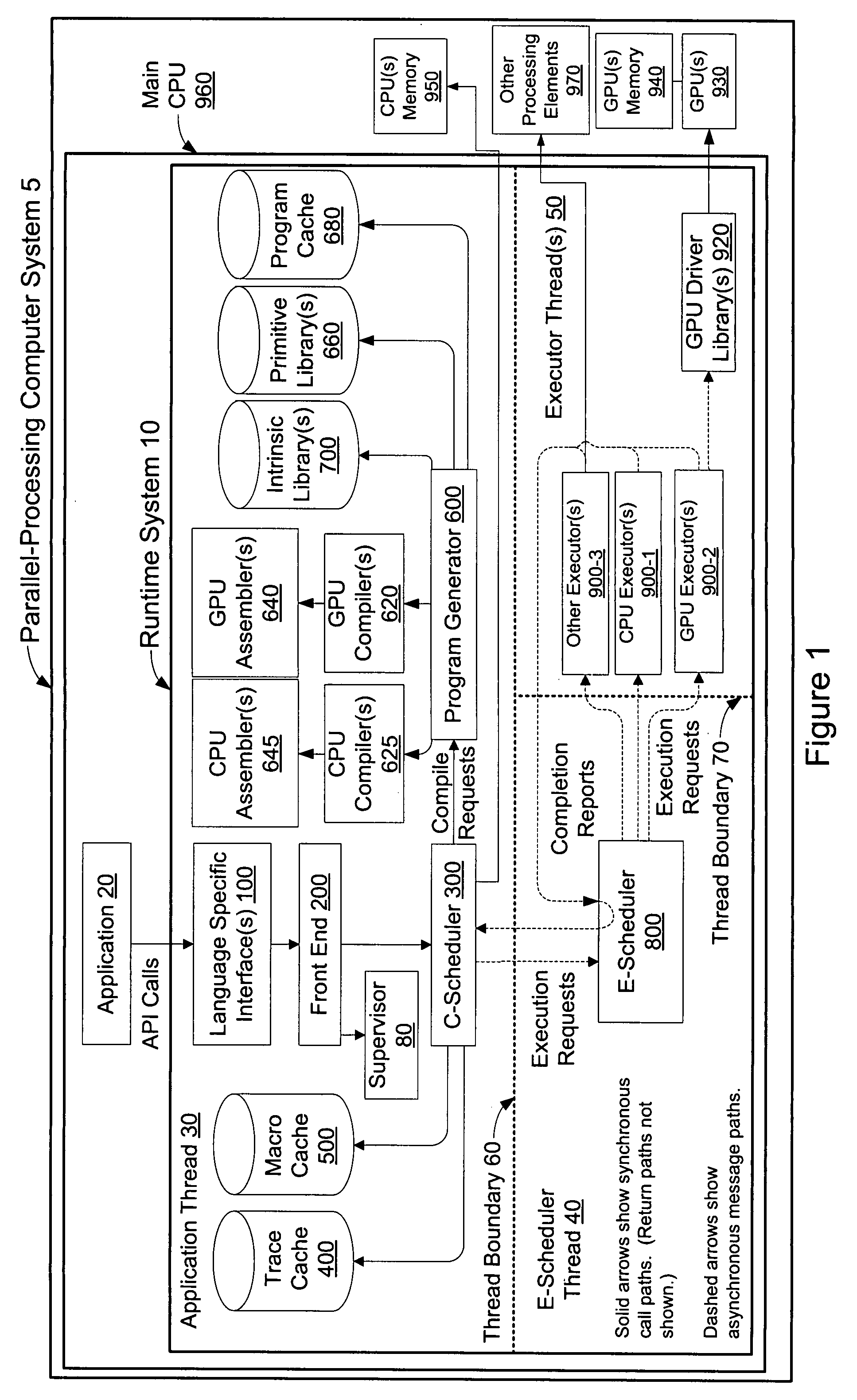

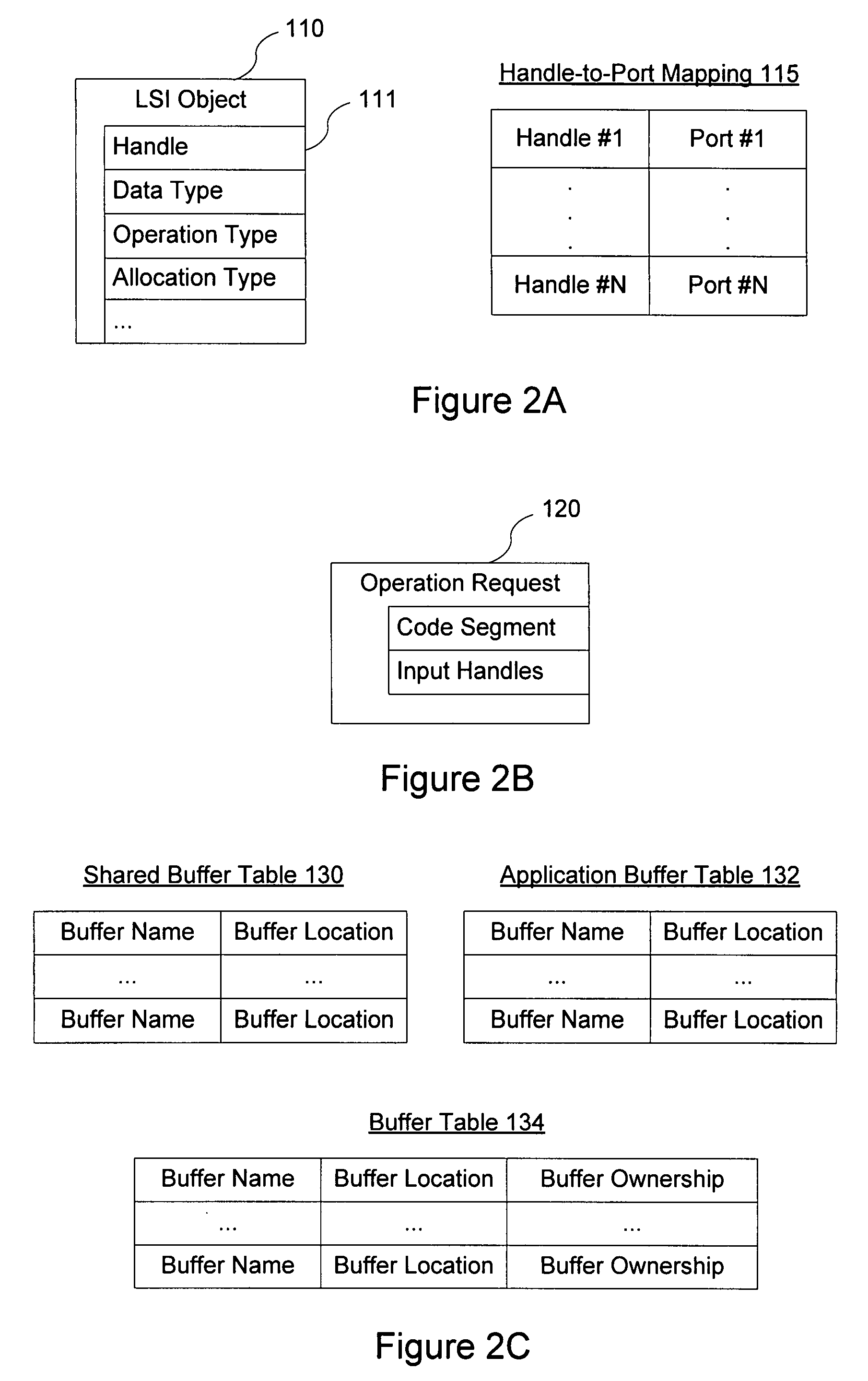

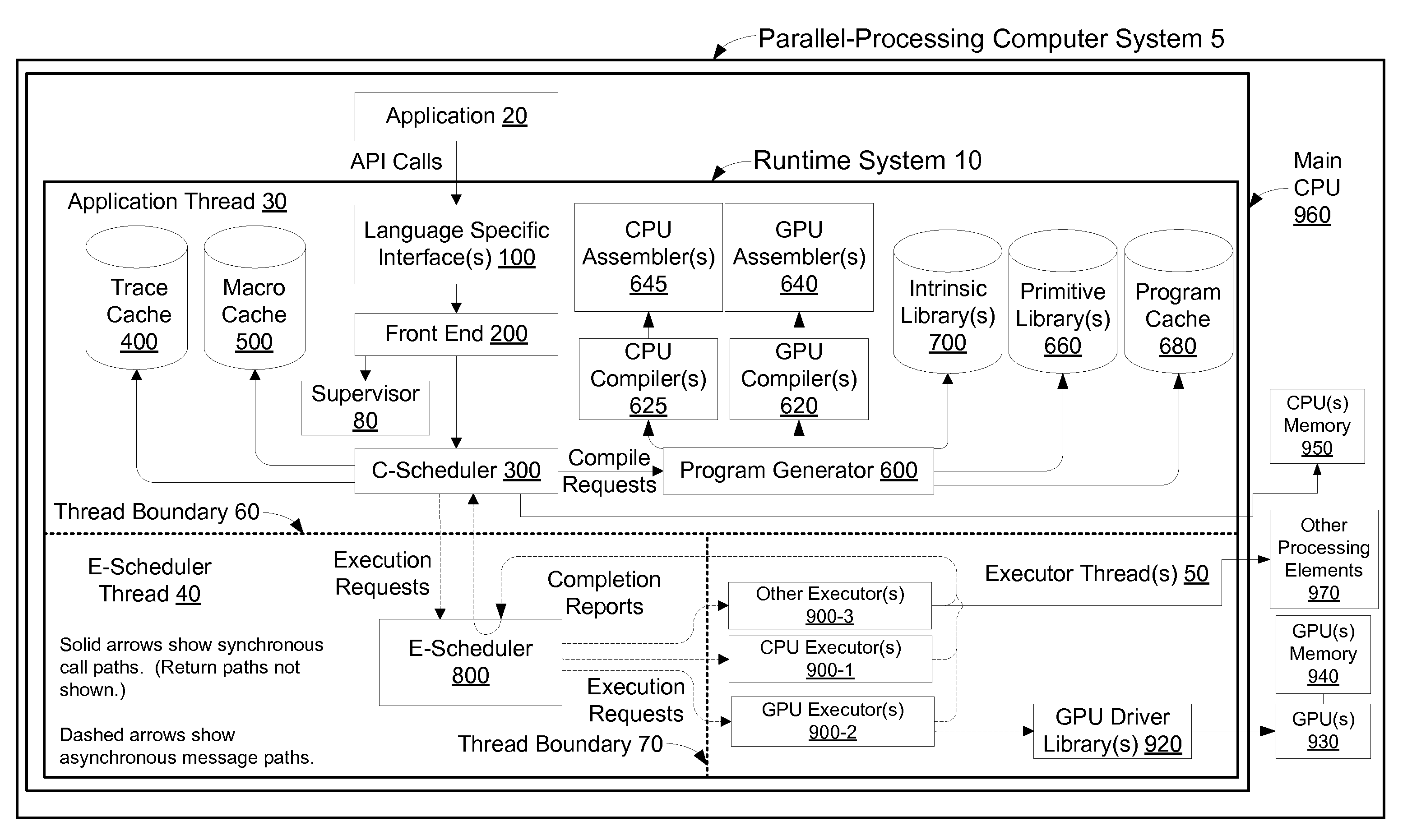

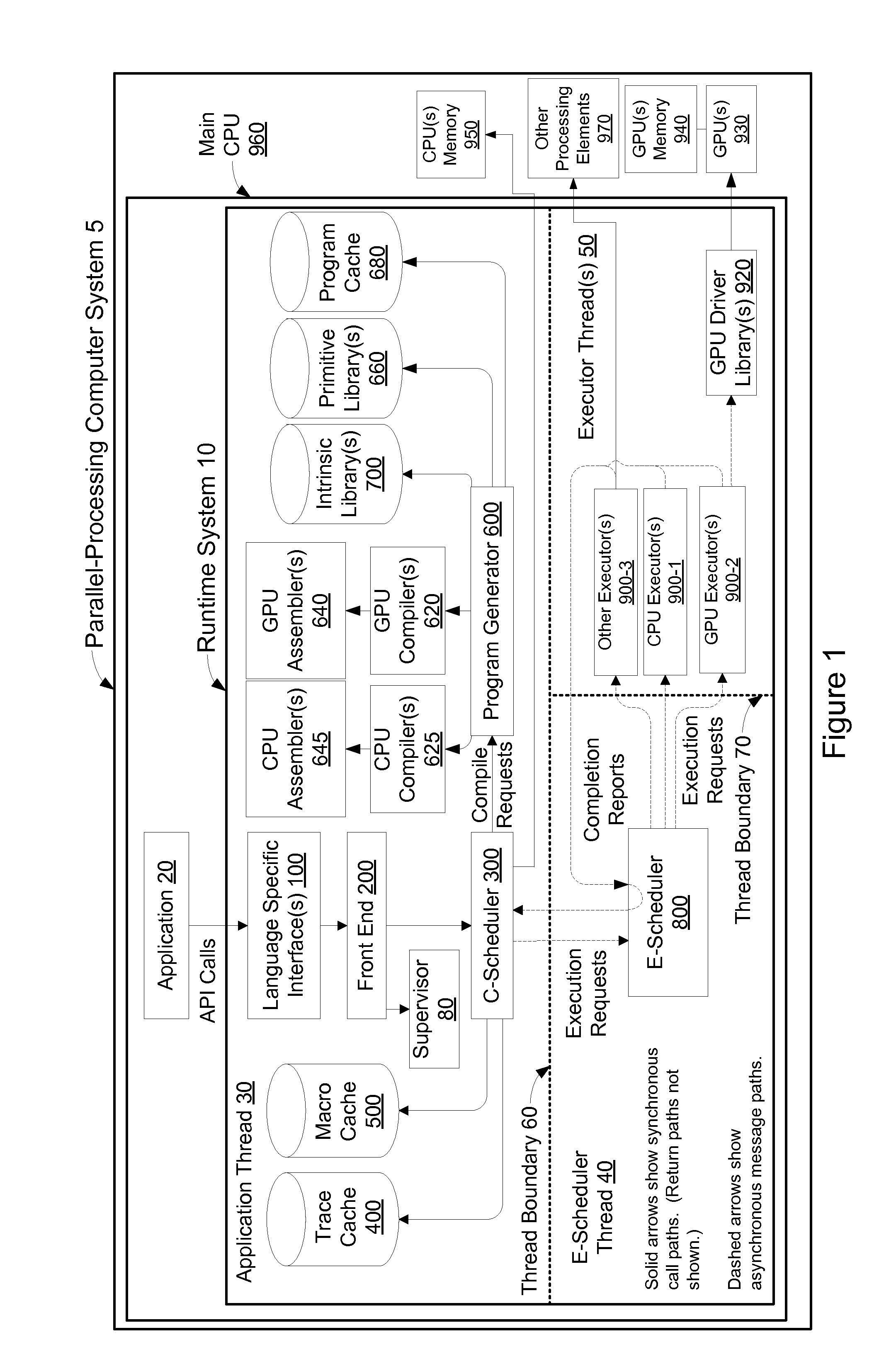

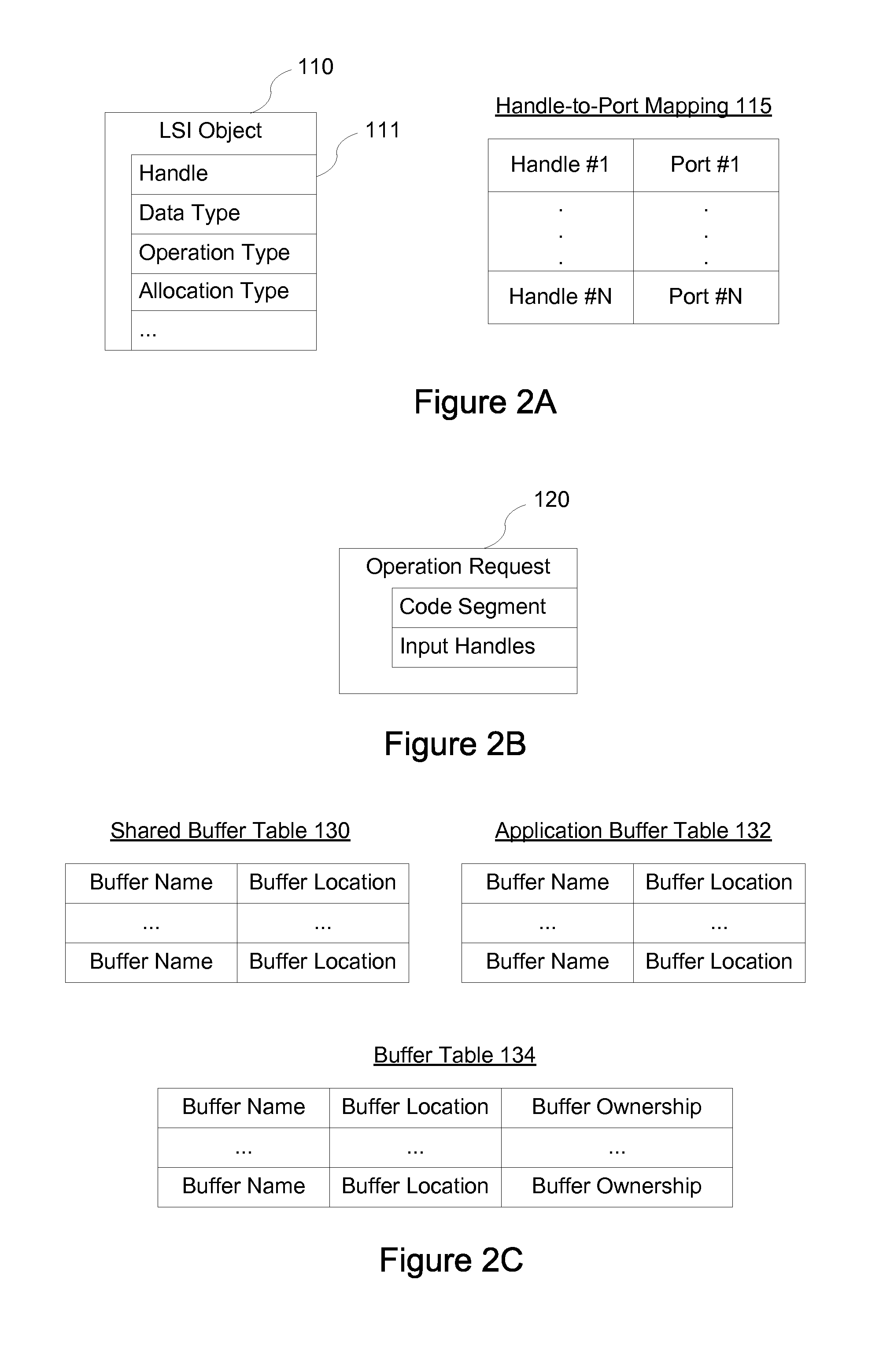

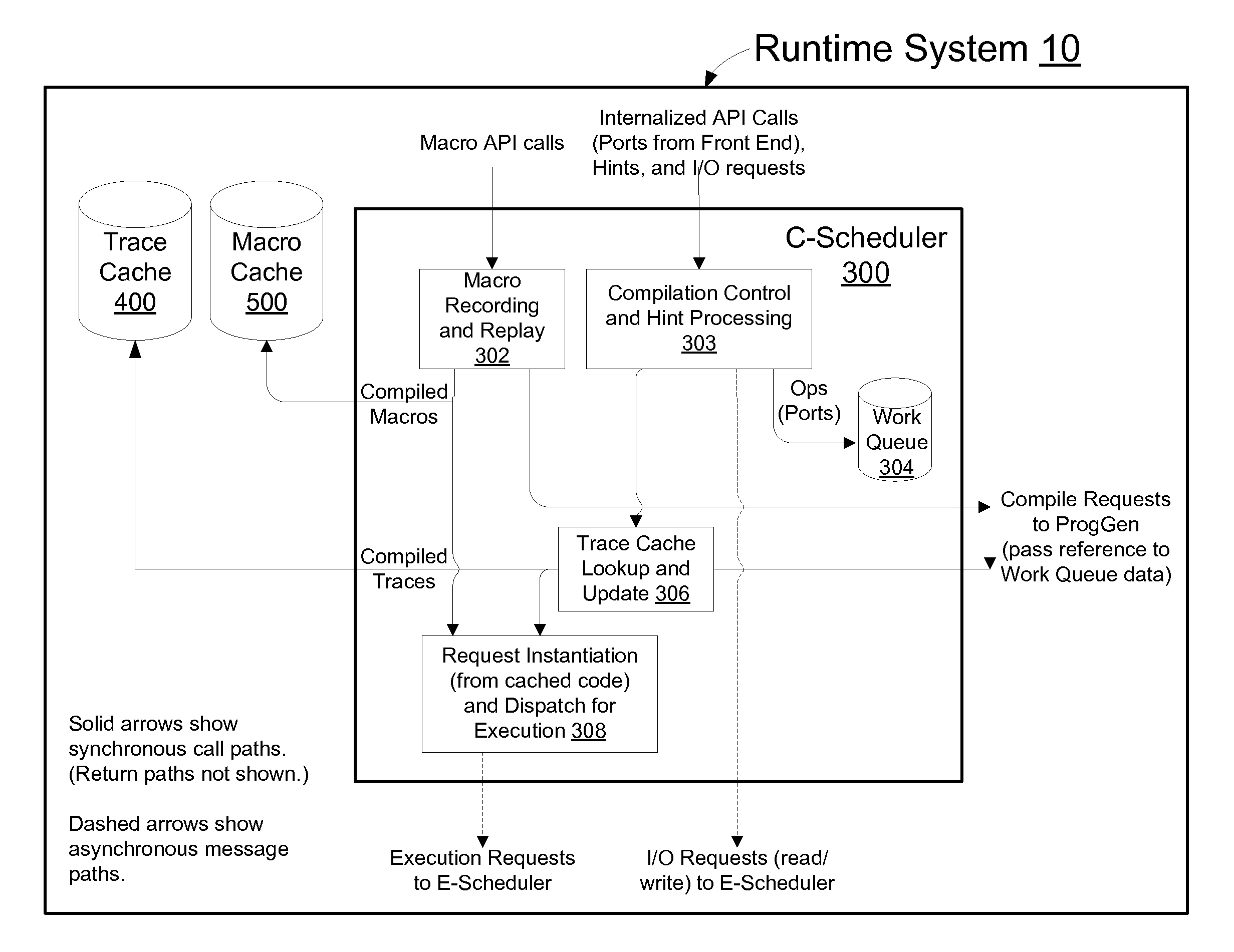

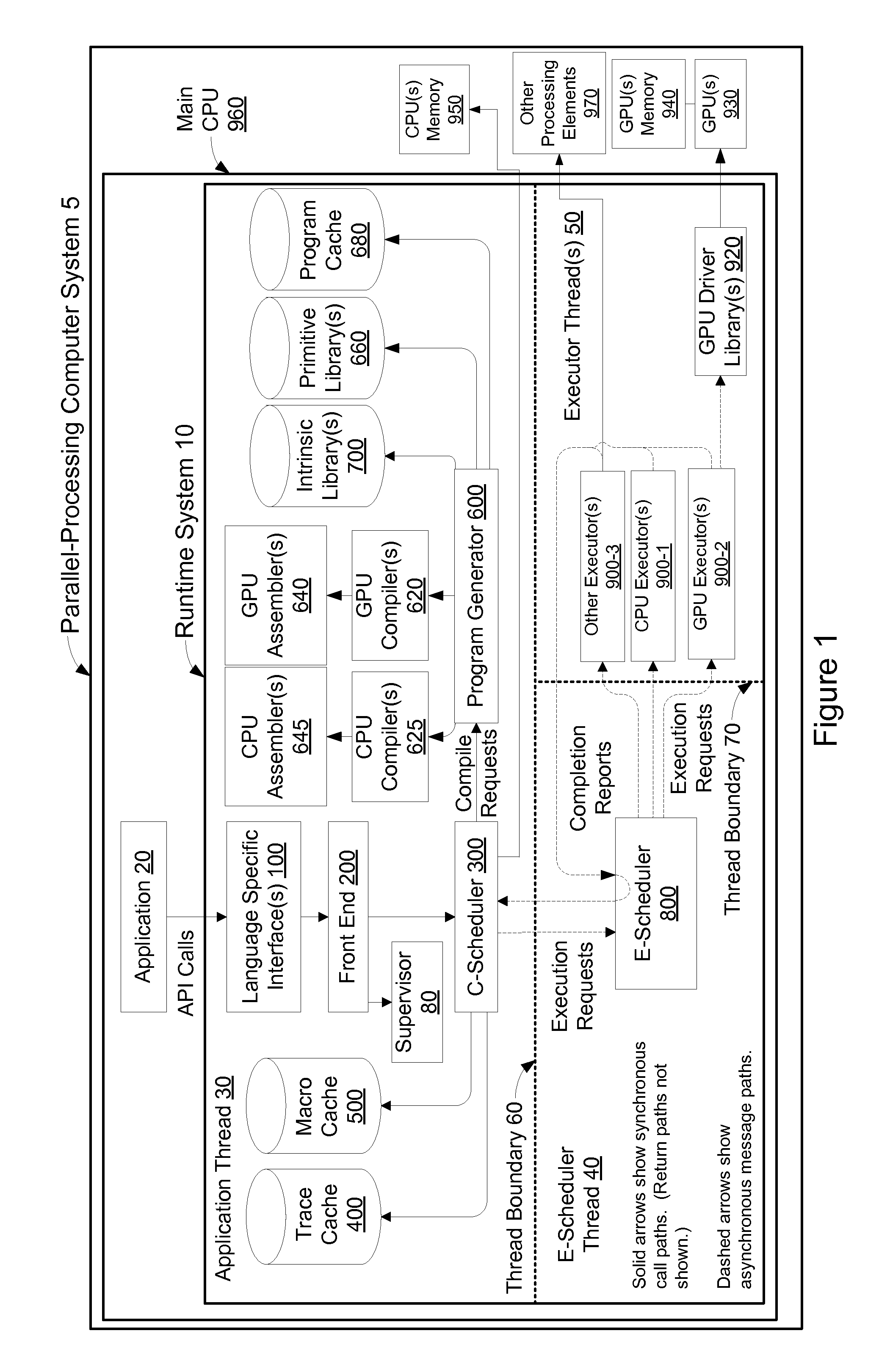

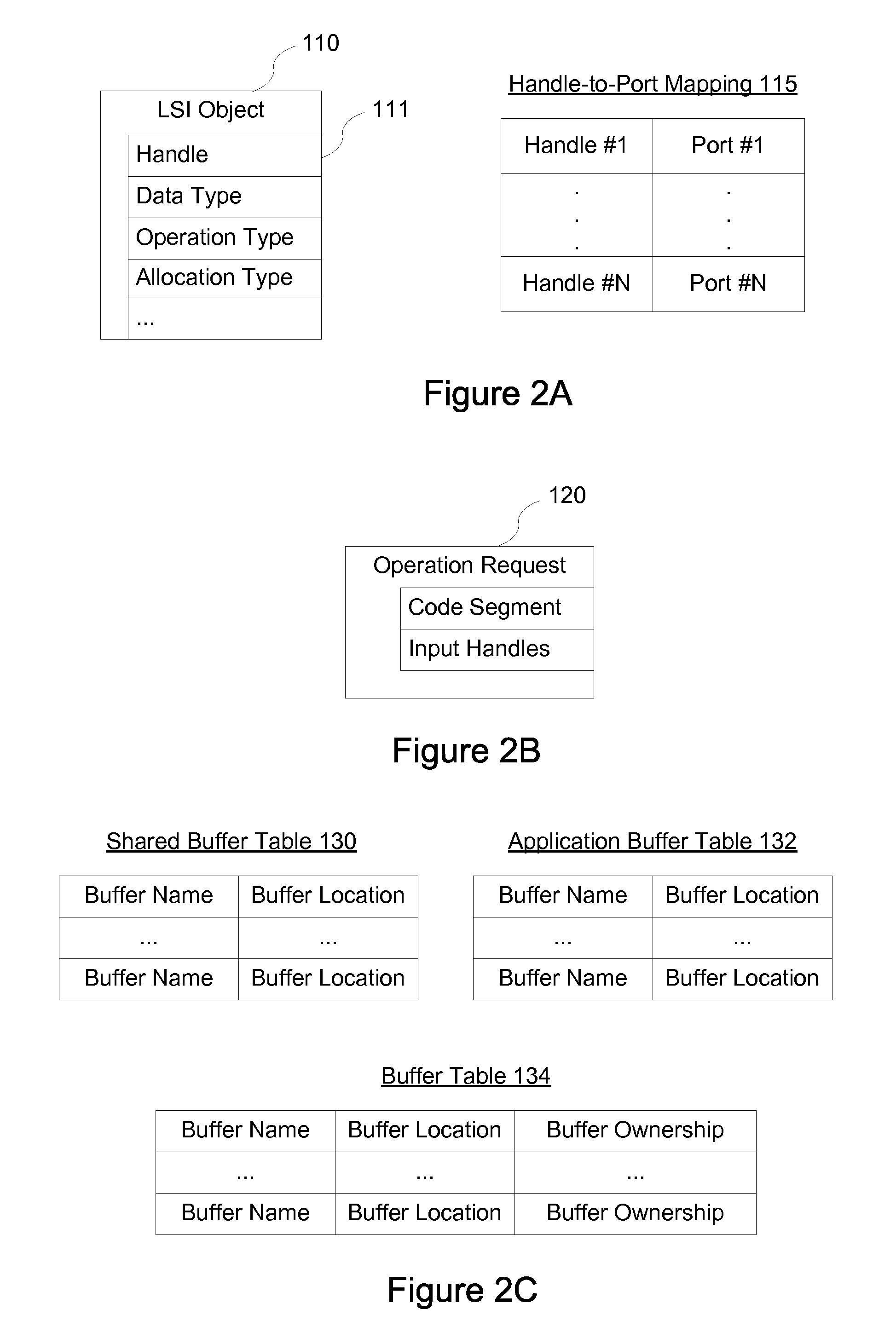

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate and optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

Systems and methods for dynamically choosing a processing element for a compute kernel

Active | US8108844B2 | Error detection/correction, Software engineering, Performance computing, Processing element

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate and optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

Systems and methods for determining compute kernels for an application in a parallel-processing computer system

Active | US8136104B2 | Error detection/correction, Multiprogramming arrangements, Performance computing, Parallel processing

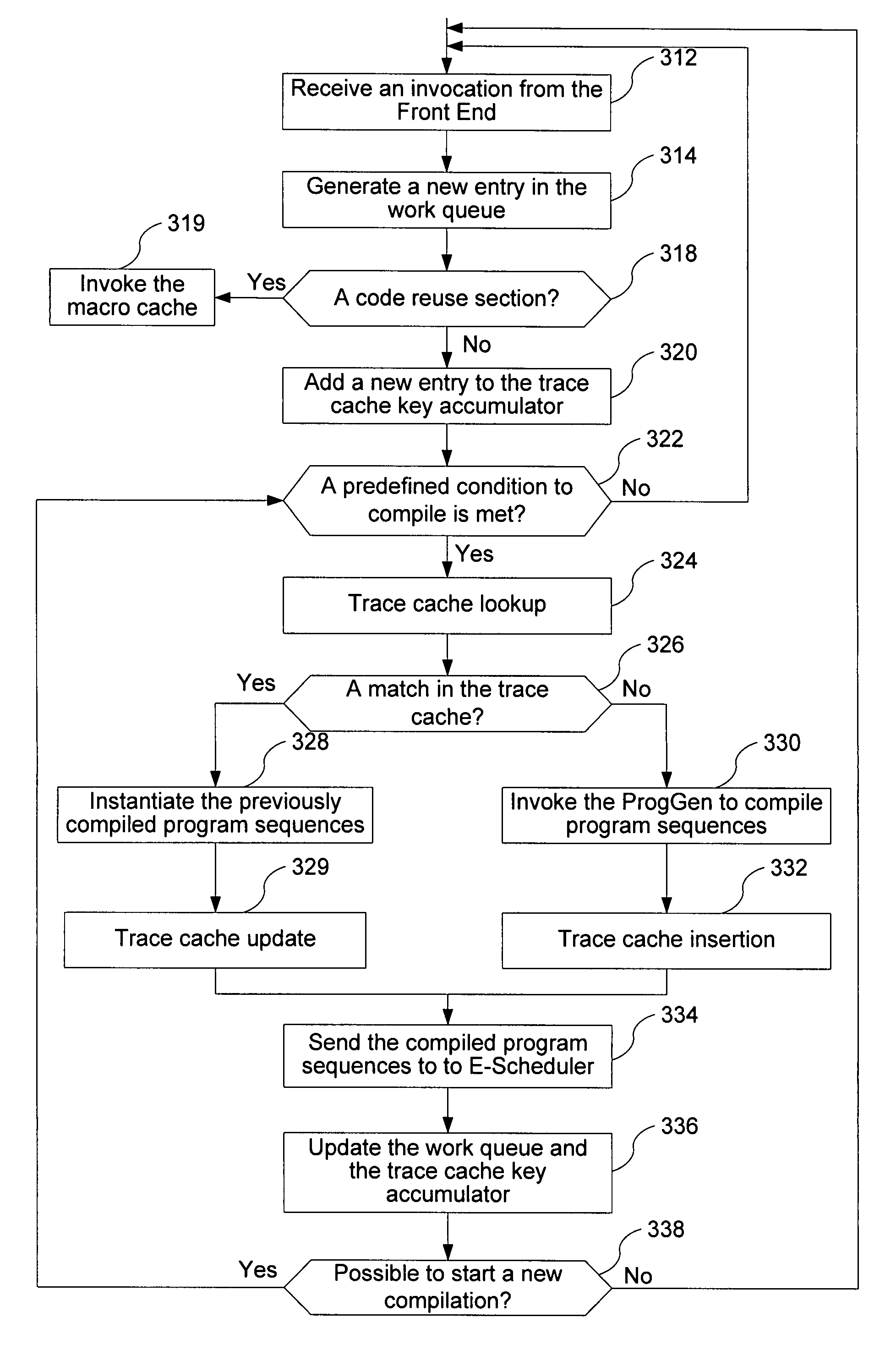

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate and optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

Systems and methods for caching compute kernels for an application running on a parallel-processing computer system

Active | US20070294682A1 | Multiprogramming arrangements, Memory systems, Performance computing, Application software

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate and optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

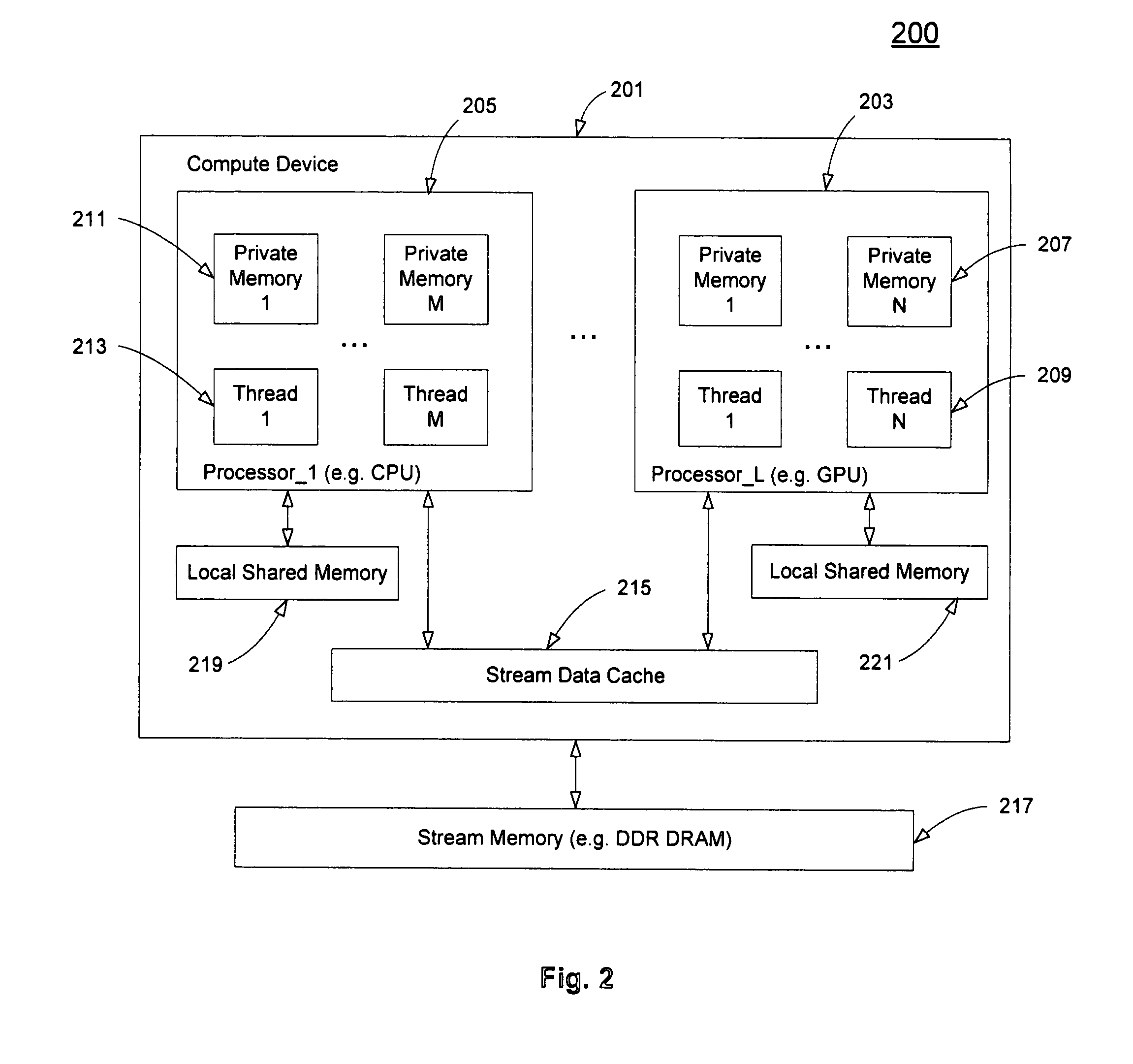

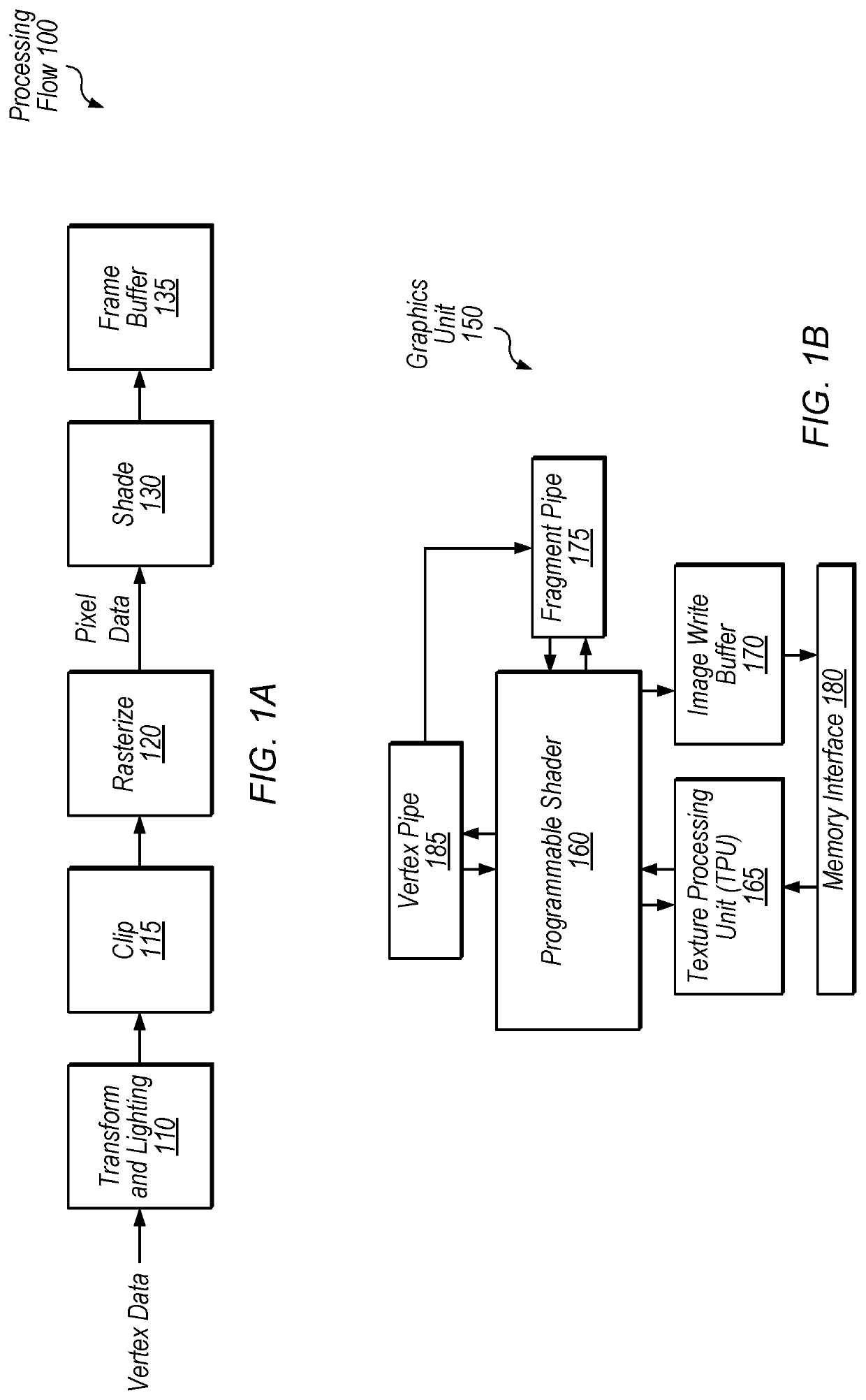

Application interface on multiple processors

Active | US20080276220A1 | Processor architectures/configuration, Specific program execution arrangements, Graphics, Multi processor

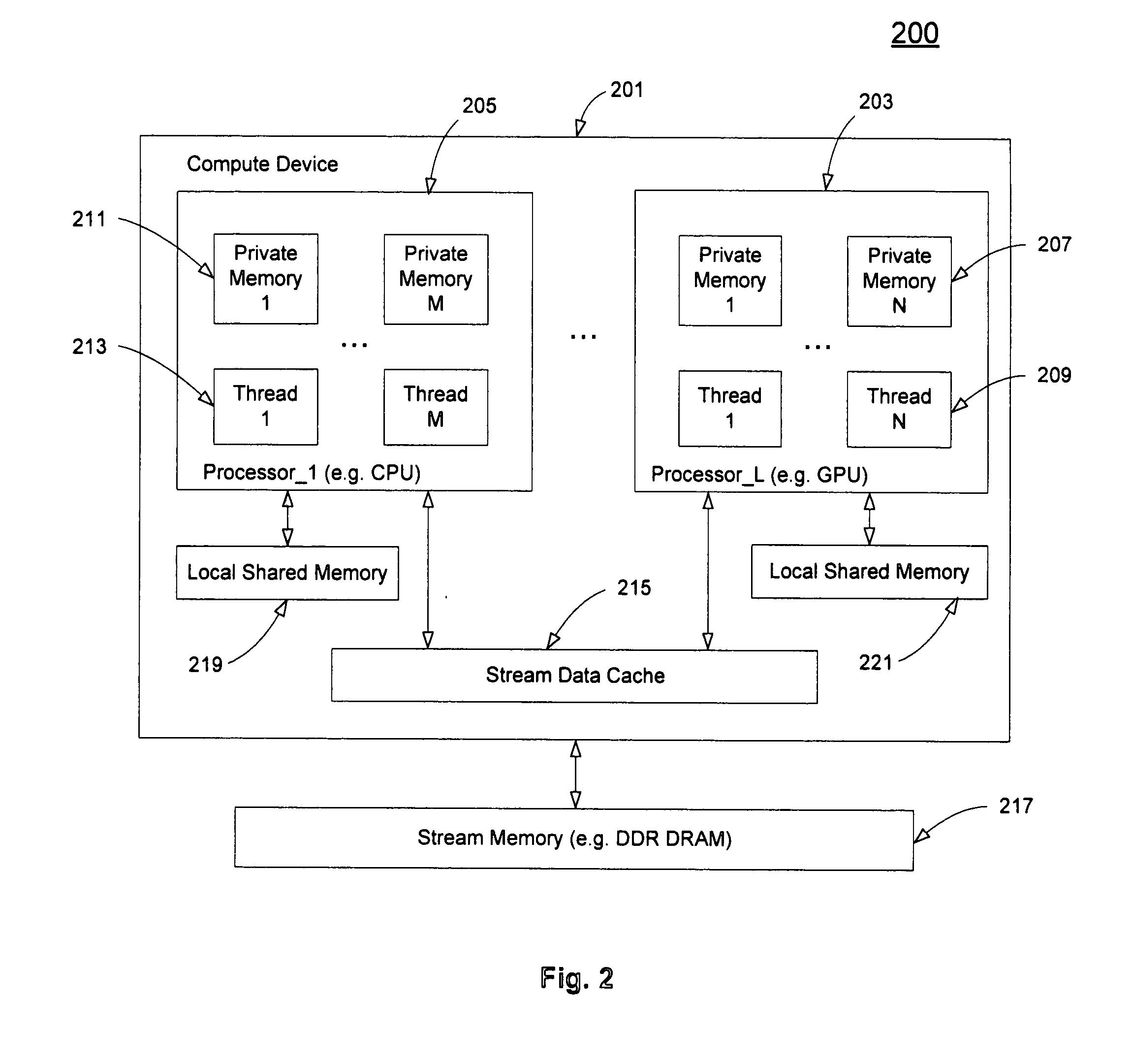

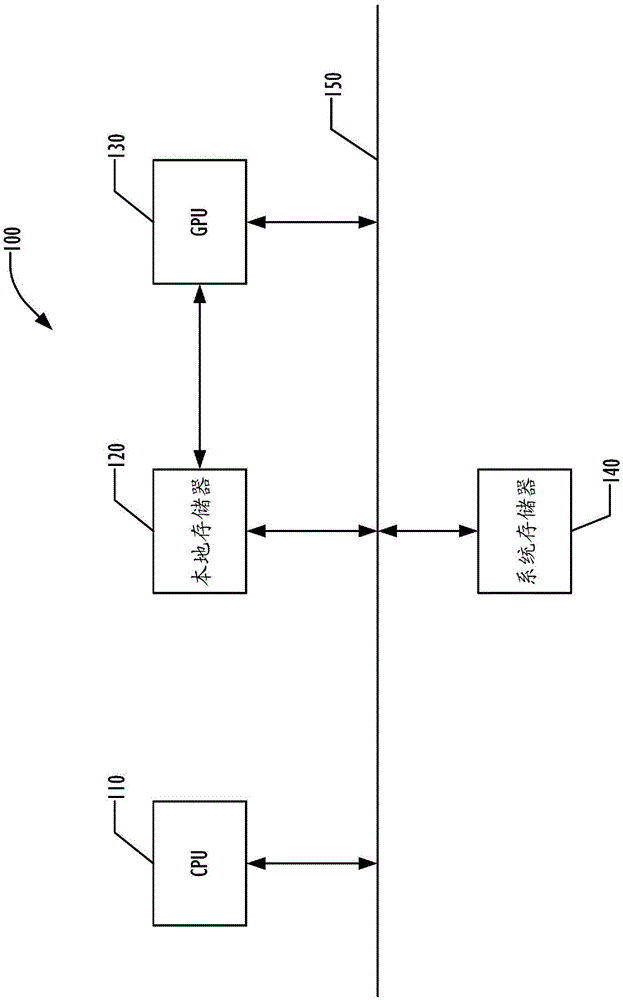

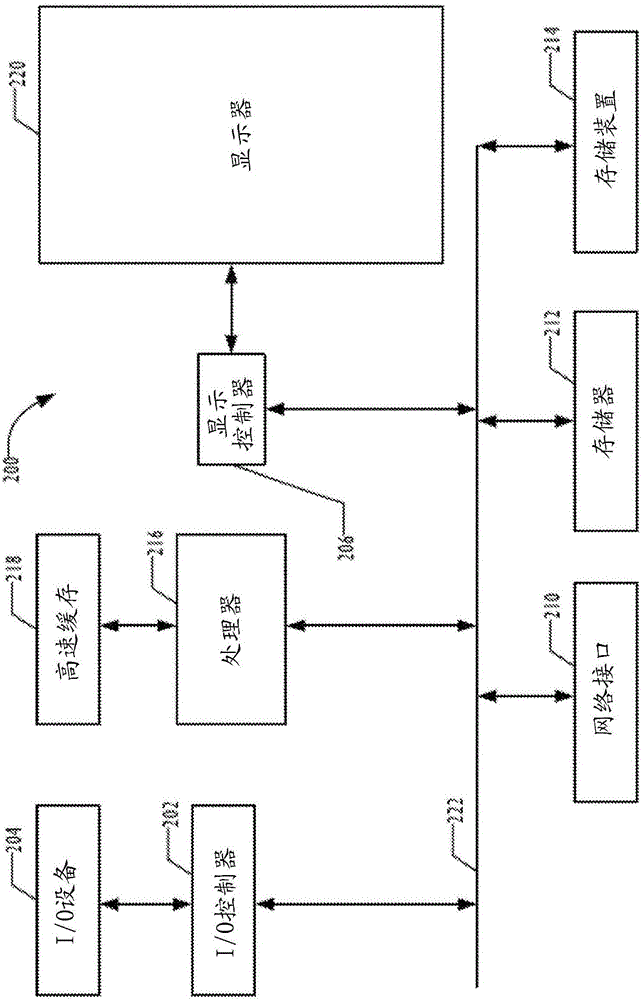

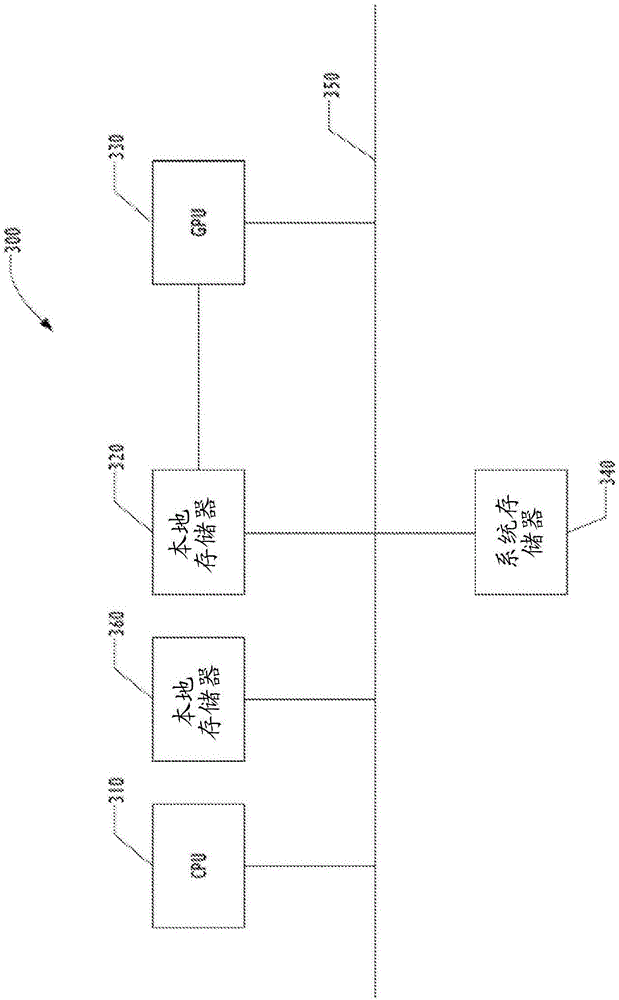

A method and an apparatus that execute a parallel computing program in a programming language for a parallel computing architecture are described. The parallel computing program is stored in memory in a system with parallel processors. The system includes a host processor, a graphics processing unit (GPU) coupled to the host processor and a memory coupled to at least one of the host processor and the GPU. The parallel computing program is stored in the memory to allocate threads between the host processor and the GPU. The programming language includes an API to allow an application to make calls using the API to allocate execution of the threads between the host processor and the GPU. The programming language includes host function data tokens for host functions performed in the host processor and kernel function data tokens for compute kernel functions performed in one or more compute processors, e.g. GPUs or CPUs, separate from the host processor. Standard data tokens in the programming language schedule a plurality of threads for execution on a plurality of processors, such as CPUs or GPUs in parallel. Extended data tokens in the programming language implement executables for the plurality of threads according to the schedules from the standard data tokens.

Owner:APPLE INC

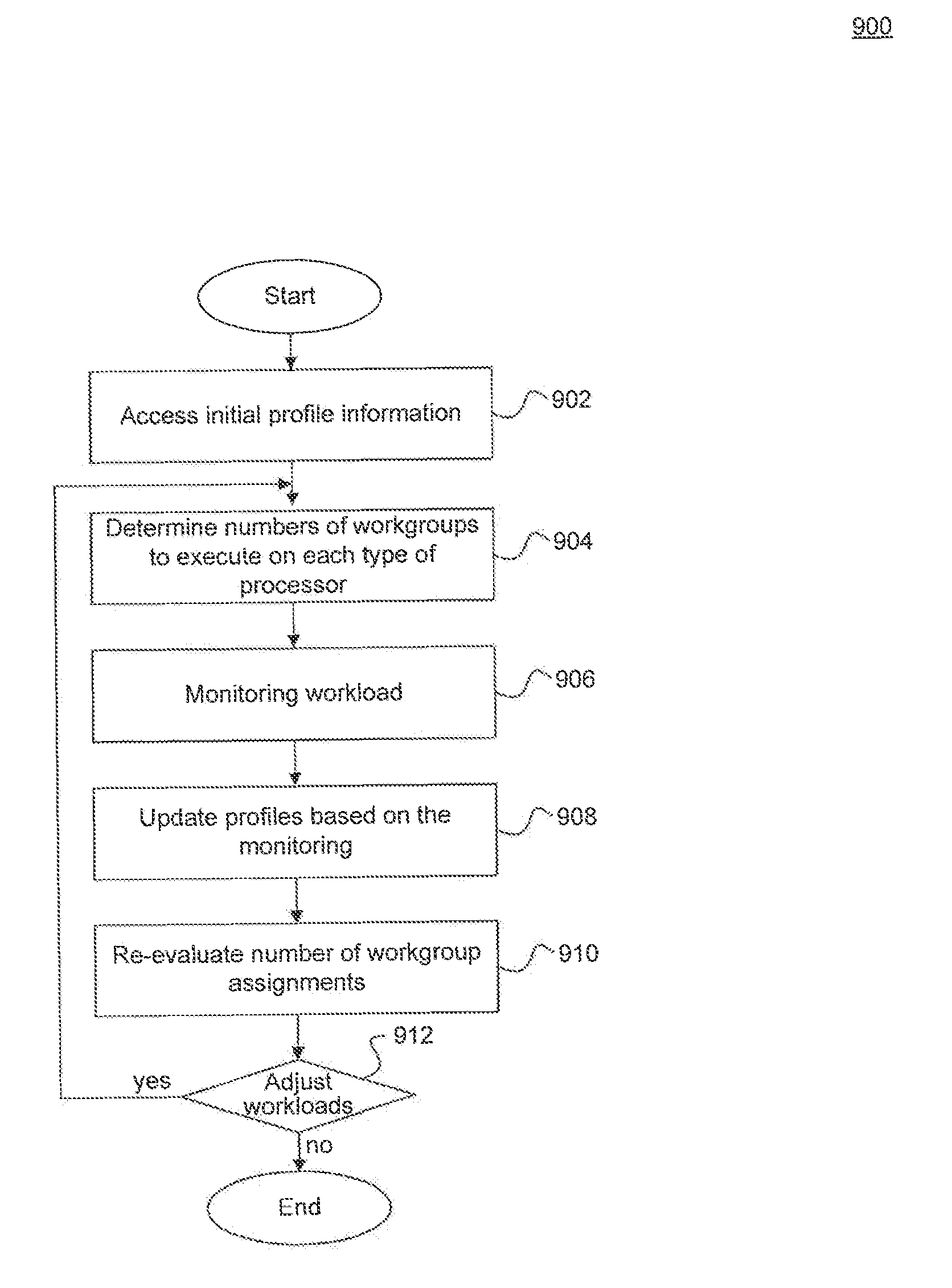

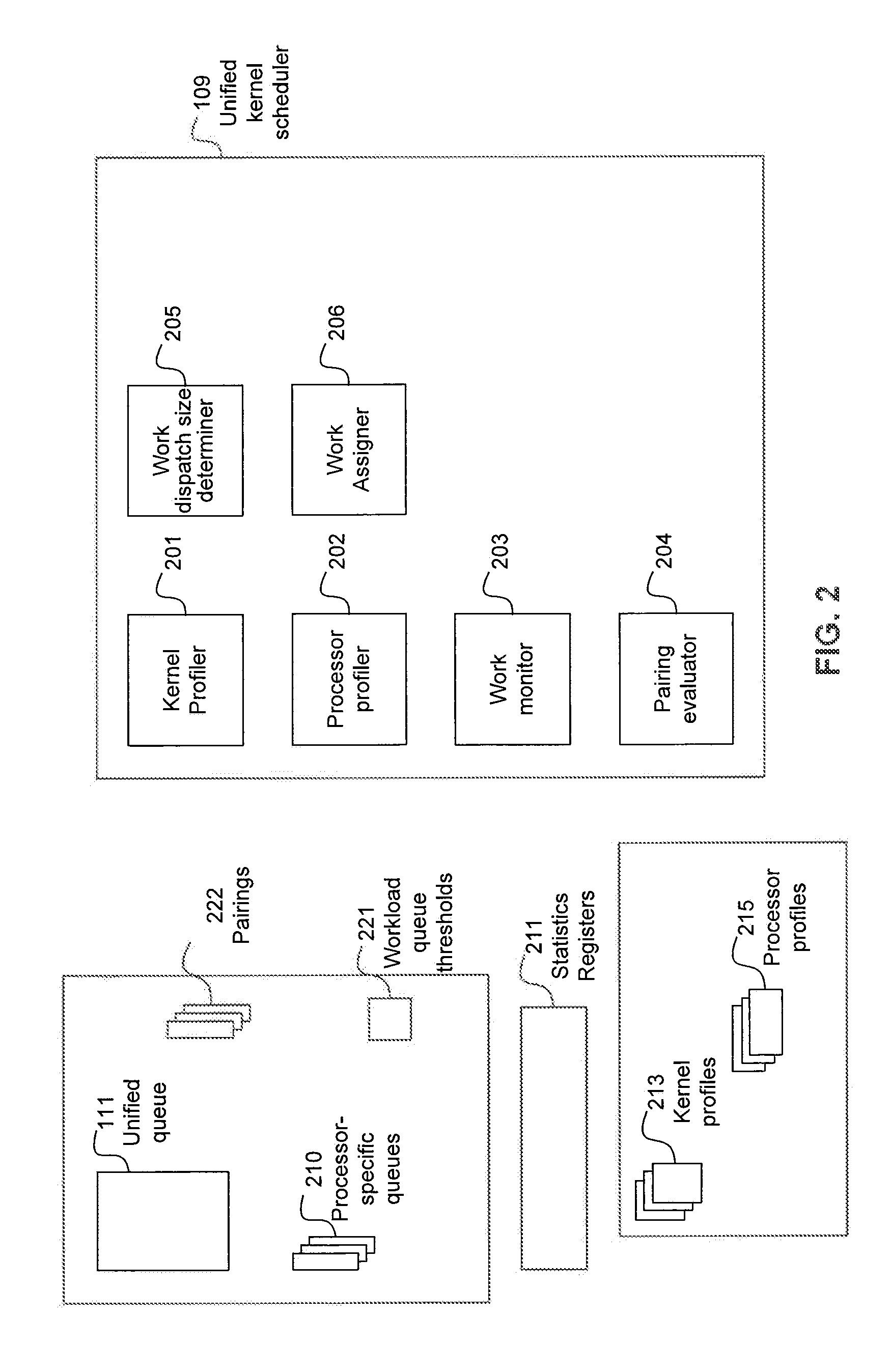

Scheduling compute kernel workgroups to heterogeneous processors based on historical processor execution times and utilizations

Active | US8707314B2 | Energy efficient ICT, Resource allocation, Symmetric multiprocessor system, Compute kernel

System and method embodiments for optimally allocating compute kernels to different types of processors, such as CPUs and GPUs, in a heterogeneous computer system are disclosed. These include comparing a kernel profile of a compute kernel to the respective processor profiles of a plurality of processors in the heterogeneous computer system, selecting at least one processor from the plurality of processors based on the comparison, and scheduling the compute kernel for execution on the selected processor or processors.

Owner:ADVANCED MICRO DEVICES INC
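
As a rough illustration of profile-based selection, the sketch below compares one kernel profile against several processor profiles and picks the processor with the lowest estimated completion time. The scoring rule (historical mean time for that processor type, inflated by current utilization) is an assumed heuristic chosen to echo the title's "historical processor execution times and utilizations"; it is not the patented method, and all class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ProcessorProfile:
    name: str
    kind: str           # e.g. "cpu" or "gpu"
    utilization: float  # current busy fraction in [0, 1)


@dataclass
class KernelProfile:
    name: str
    hist_time_ms: Dict[str, float]  # historical mean run time per processor kind


def estimated_time(k: KernelProfile, p: ProcessorProfile) -> float:
    """Assumed heuristic: historical time scaled up by how busy the processor is."""
    base = k.hist_time_ms.get(p.kind)
    if base is None:
        return float("inf")  # no history for this kind: treat as unsuitable here
    return base / max(1e-9, 1.0 - p.utilization)


def select_processor(k: KernelProfile, procs: List[ProcessorProfile]) -> ProcessorProfile:
    # Compare the kernel profile against every processor profile; keep the best.
    return min(procs, key=lambda p: estimated_time(k, p))


procs = [ProcessorProfile("cpu0", "cpu", 0.10), ProcessorProfile("gpu0", "gpu", 0.75)]
k = KernelProfile("matmul", {"cpu": 40.0, "gpu": 8.0})
print(select_processor(k, procs).name)  # gpu0
```

With the GPU 75% busy its estimate is 32 ms against about 44 ms for the CPU; if GPU utilization rose to 90%, the same rule would send the kernel to the CPU instead.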

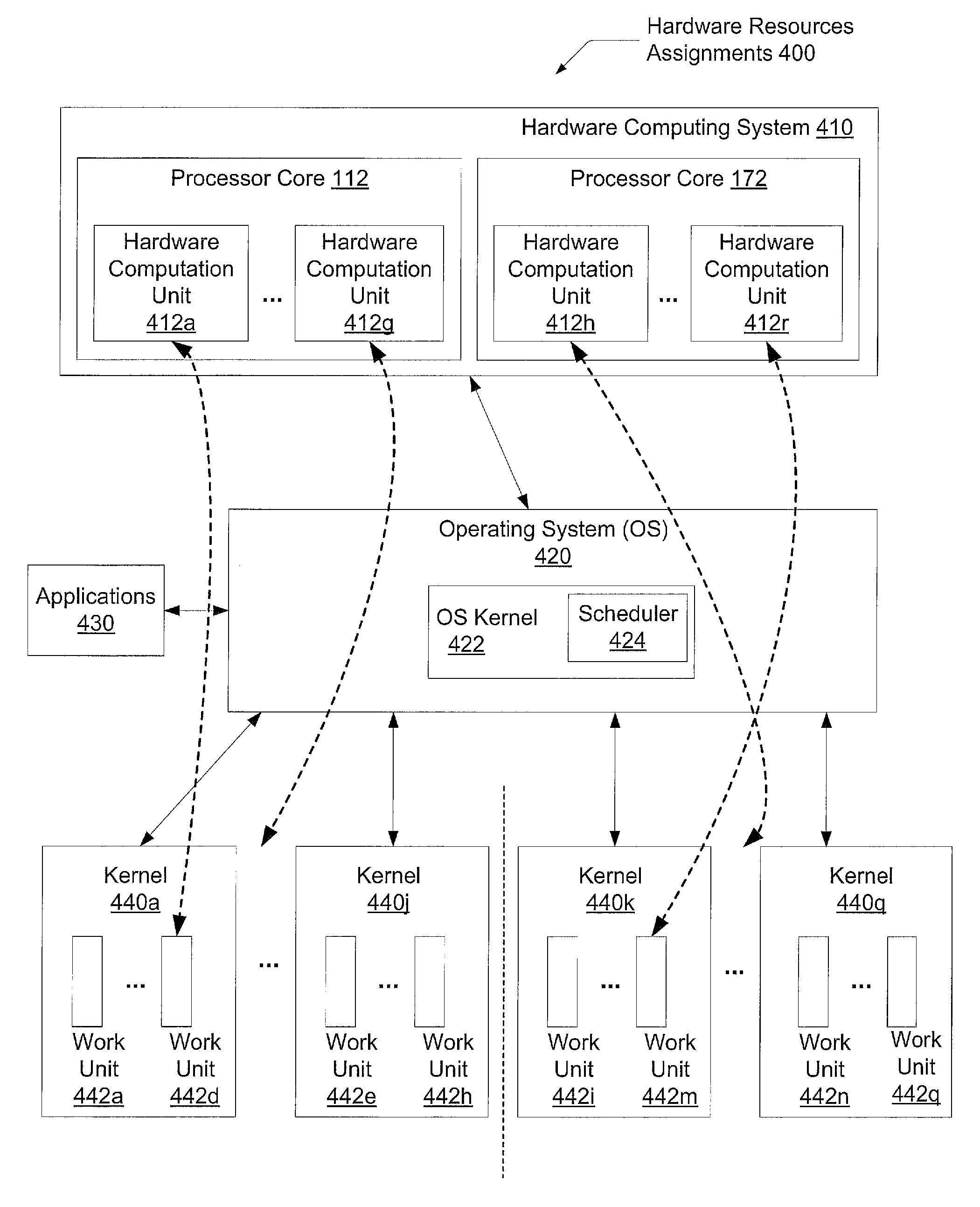

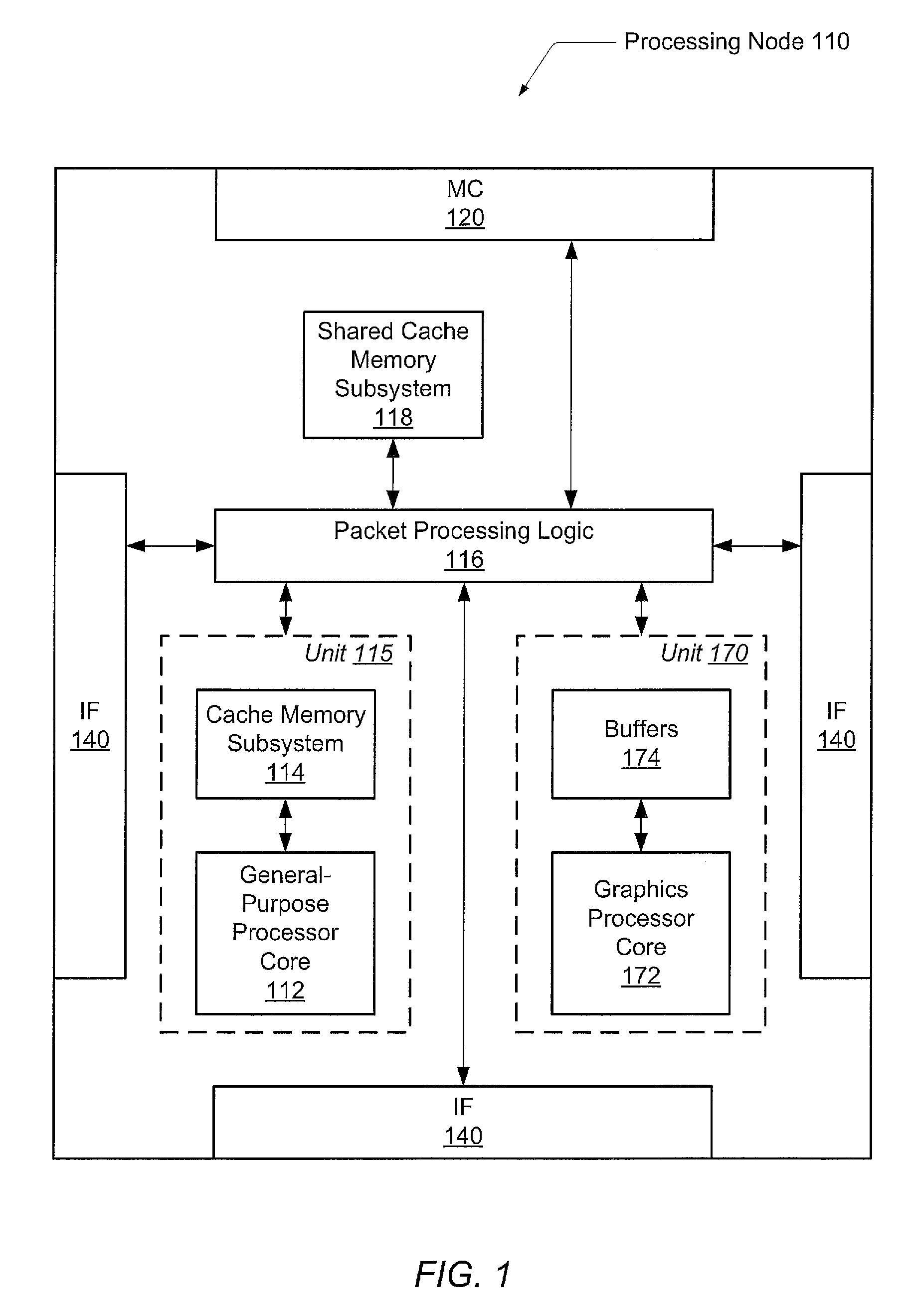

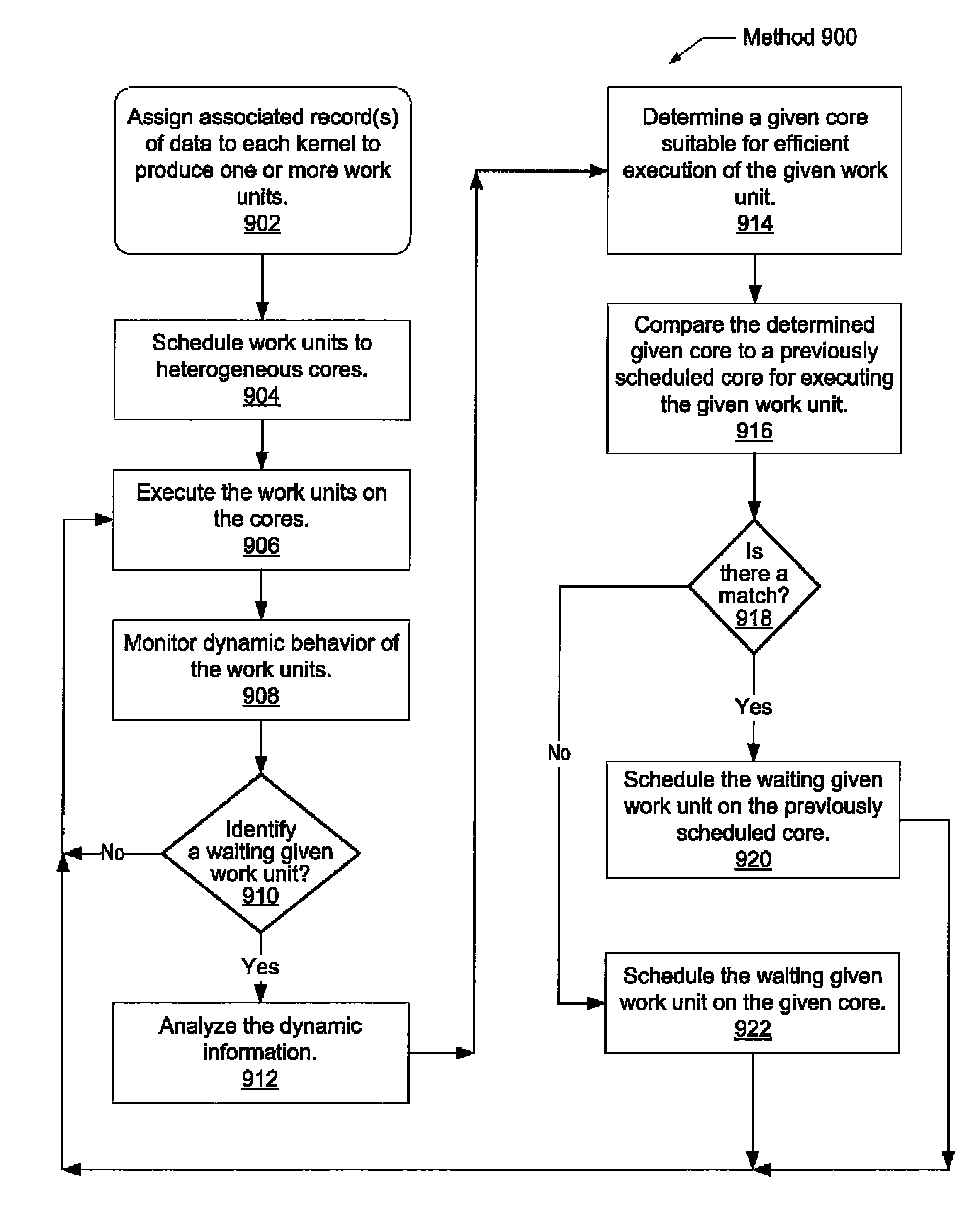

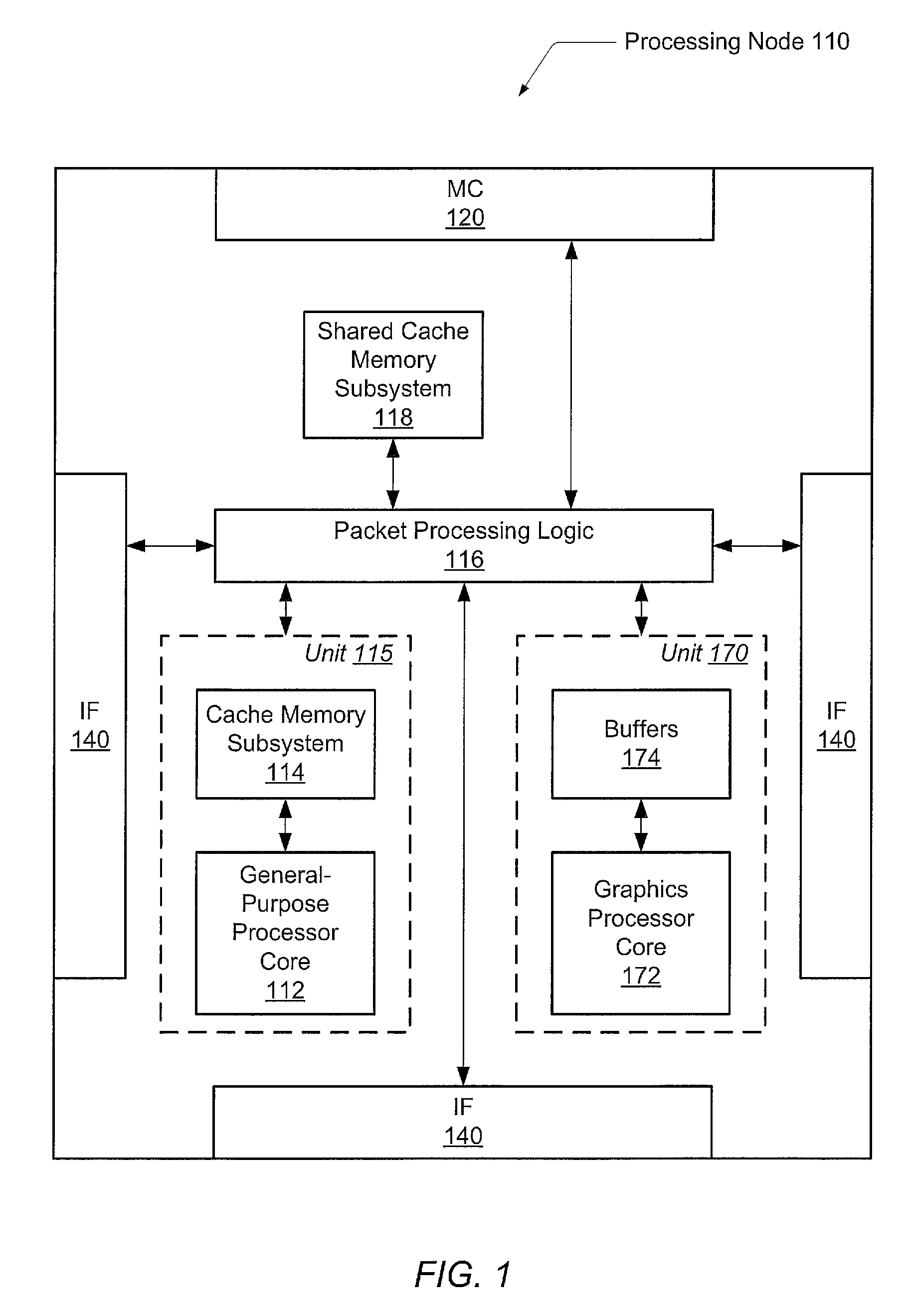

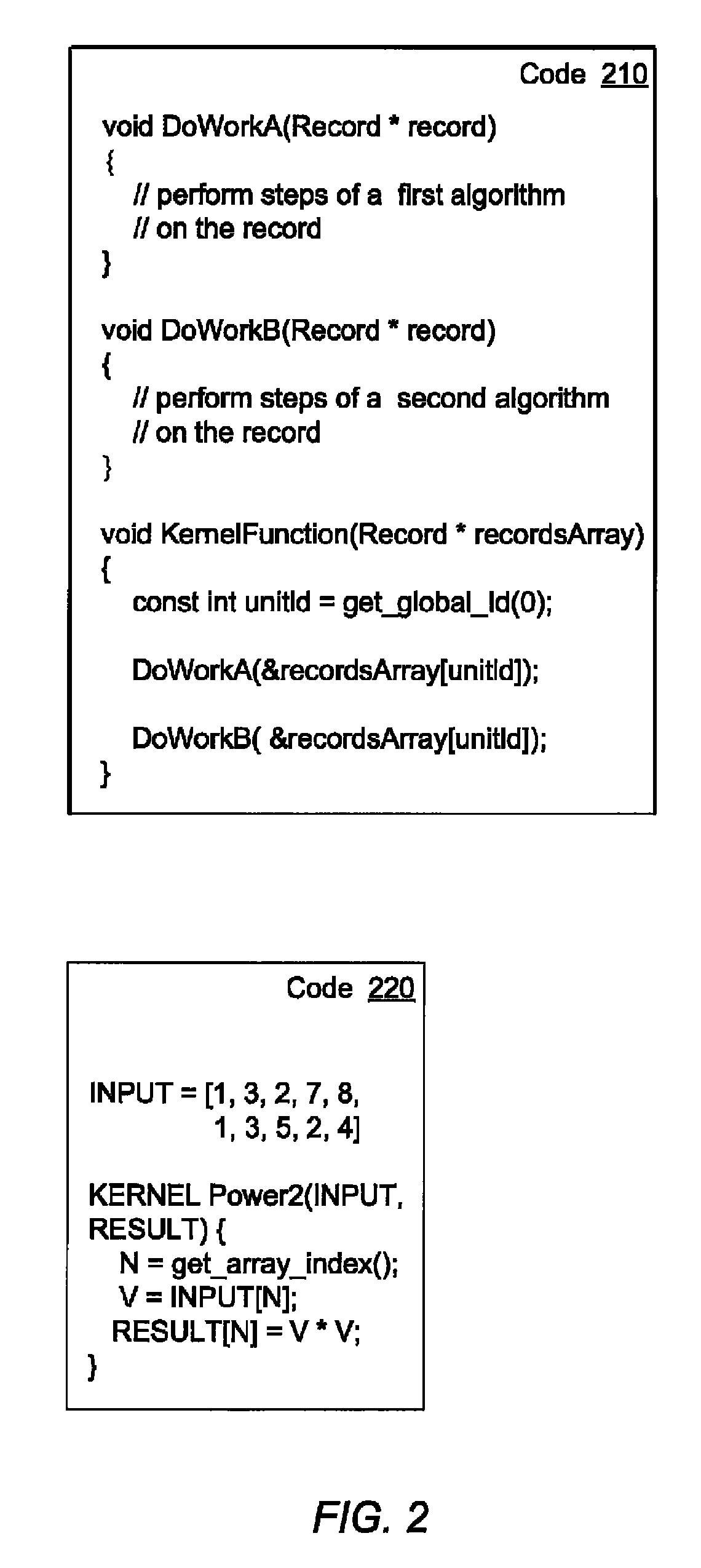

Automatic load balancing for heterogeneous cores

A system and method for efficient automatic scheduling of the execution of work units between multiple heterogeneous processor cores. A processing node includes a first processor core with a general-purpose micro-architecture and a second processor core with a single instruction, multiple data (SIMD) micro-architecture. A computer program comprises one or more compute kernels, or function calls. A compiler computes pre-runtime information about a given function call. A runtime scheduler produces one or more work units by matching each kernel with an associated record of data. The scheduler assigns each work unit to either the first or the second processor core based at least in part on the computed pre-runtime information. In addition, the scheduler can change the original assignment of a waiting work unit based on the dynamic runtime behavior of other work units corresponding to the same kernel.

Owner:ADVANCED MICRO DEVICES INC
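
The two-phase idea in this abstract (an initial assignment from compile-time information, then reassignment from runtime behavior of sibling work units) can be sketched as follows. The `simd_score` threshold of 0.5 and the "move to whichever core type has the lower mean observed time" rule are assumptions for illustration only; the abstract does not say what pre-runtime information is computed or how the reassignment decision is made.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import DefaultDict, Dict, List, Tuple


@dataclass
class WorkUnit:
    kernel: str     # which compute kernel this unit runs
    record: int     # index of the data record matched with the kernel
    core: str = ""  # "general" (CPU-like) or "simd"


@dataclass
class Scheduler:
    # Hypothetical pre-runtime info: compiler-estimated fraction of data-parallel work.
    simd_score: Dict[str, float]
    # Observed run times (ms) of finished work units, keyed by (kernel, core).
    observed: DefaultDict[Tuple[str, str], List[float]] = field(
        default_factory=lambda: defaultdict(list))

    def initial_assign(self, wu: WorkUnit) -> None:
        wu.core = "simd" if self.simd_score.get(wu.kernel, 0.0) >= 0.5 else "general"

    def record_finish(self, wu: WorkUnit, ms: float) -> None:
        self.observed[(wu.kernel, wu.core)].append(ms)

    def maybe_reassign(self, waiting: WorkUnit) -> None:
        """Move a waiting unit if same-kernel units ran faster on the other core type."""
        means = {}
        for core in ("general", "simd"):
            times = self.observed.get((waiting.kernel, core))
            if times:
                means[core] = sum(times) / len(times)
        if len(means) == 2:  # only act once both core types have evidence
            waiting.core = min(means, key=means.get)


sched = Scheduler(simd_score={"blur": 0.9})
waiting = WorkUnit("blur", record=3)
sched.initial_assign(waiting)
print(waiting.core)  # simd
# Runtime feedback from other work units of the same kernel:
sched.record_finish(WorkUnit("blur", 0, "general"), 3.0)
sched.record_finish(WorkUnit("blur", 1, "simd"), 9.0)
sched.maybe_reassign(waiting)
print(waiting.core)  # general
```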

Systems and methods for caching compute kernels for an application running on a parallel-processing computer system

Active | US8146066B2 | Multiprogramming arrangements, Memory systems, Performance computing, Parallel processing

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate and optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

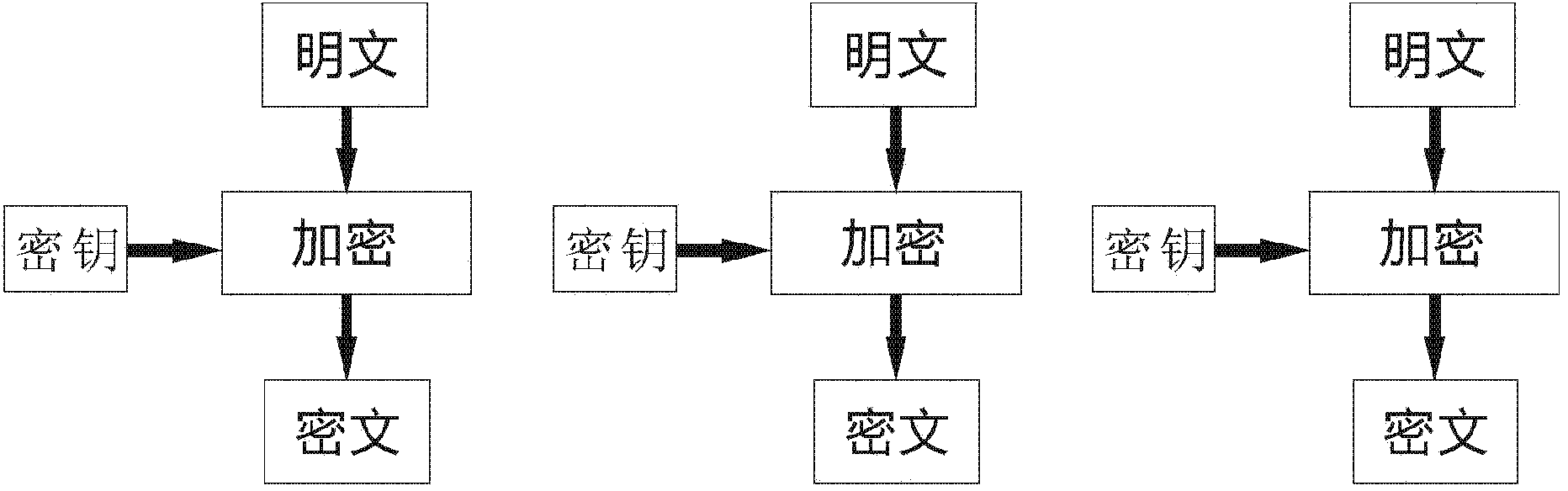

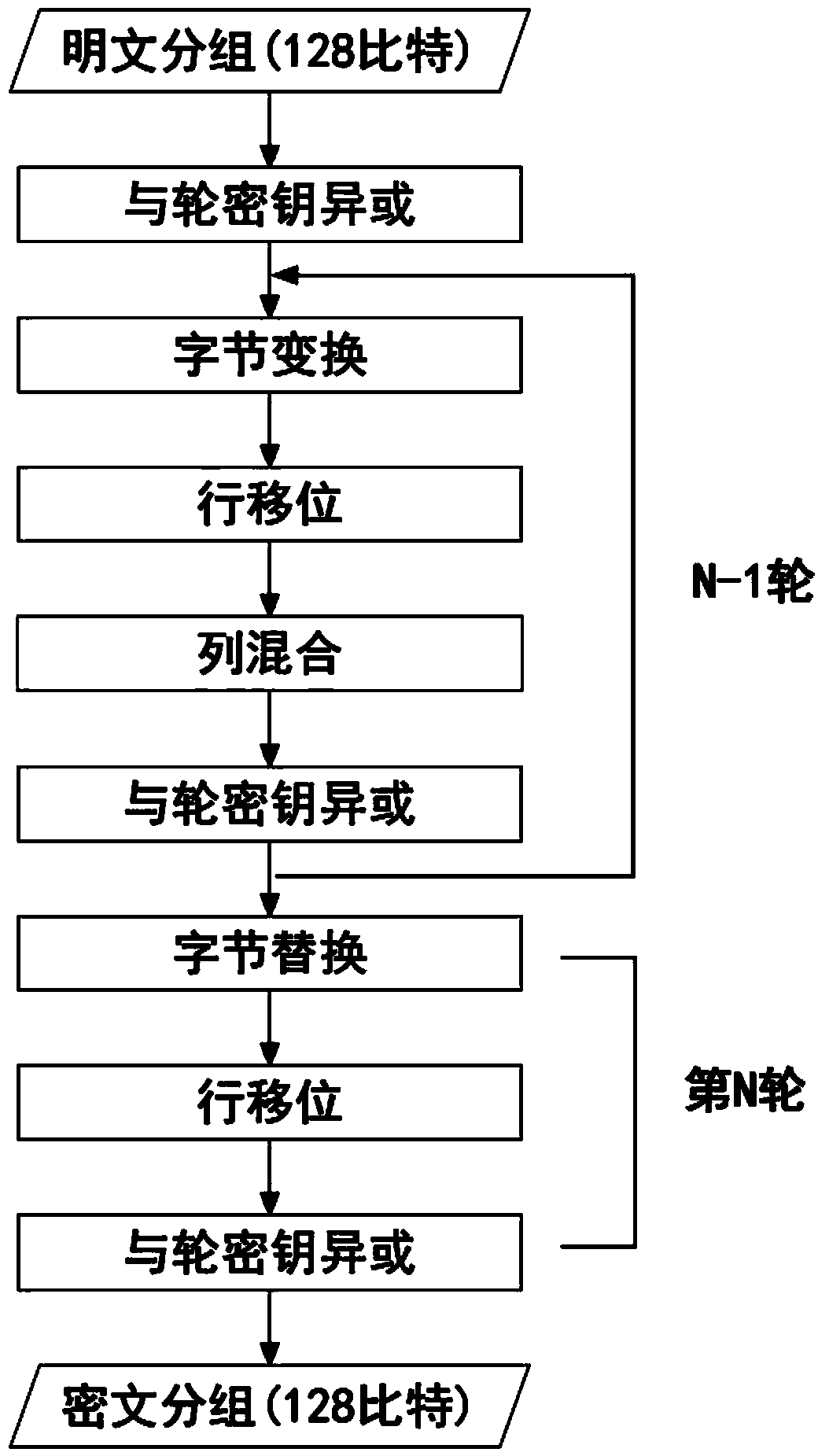

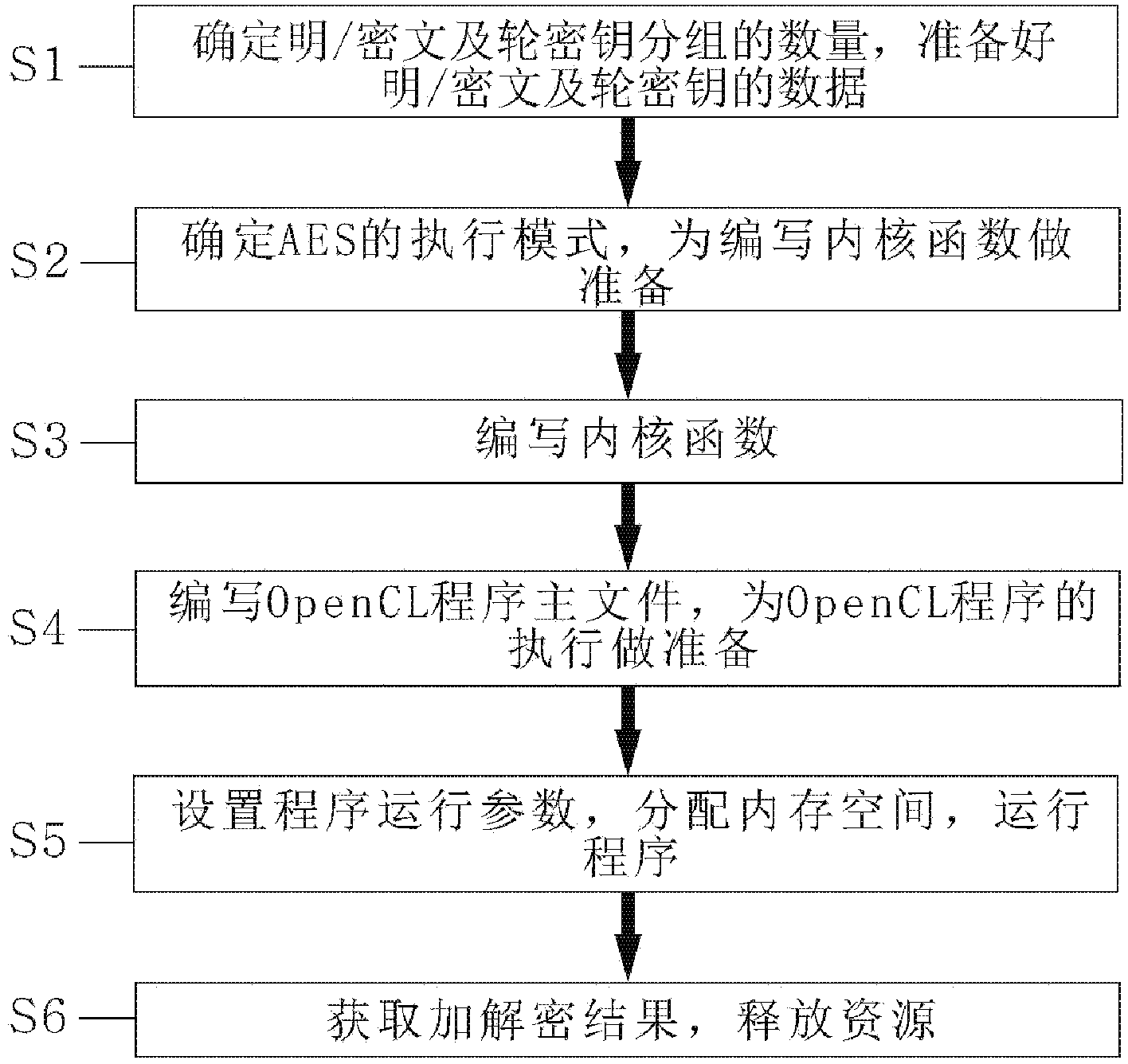

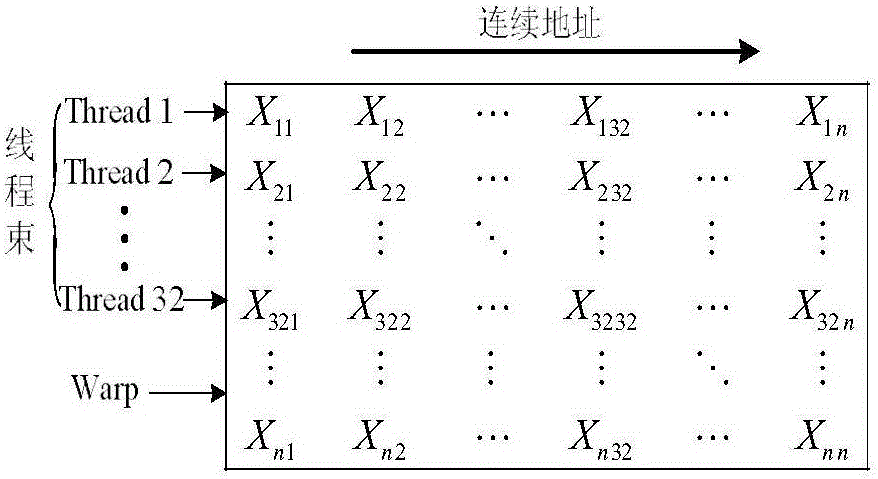

AES parallel implementation method based on OpenCL

Active | CN103973431A | High-speed programming, Improve match, Encryption apparatus with shift registers/memories, Concurrent instruction execution, Password cracking, Computer hardware

The invention discloses an AES parallel implementation method based on OpenCL, designed so that AES achieves optimal performance when it runs in parallel on an AMD GPU through OpenCL. The method includes the following steps: first, the number of plaintext/ciphertext blocks and the number of round-key groups are determined, and the plaintext/ciphertext and round-key data are prepared; second, the AES execution mode is determined in preparation for writing the kernel function; third, the kernel function is written; fourth, the host OpenCL program file is written in preparation for executing the OpenCL program; fifth, the program's runtime parameters are set, memory is allocated, and the program runs; sixth, the encryption or decryption result is retrieved and resources are released. By allocating data sensibly in memory and choosing an appropriate parallel granularity during parallel AES execution, the method improves runtime performance, and it can be used for fast encryption and decryption or in password-cracking machines.

Owner:SOUTH CHINA NORMAL UNIVERSITY

Application interface on multiple processors

Active | US8341611B2 | Processor architectures/configuration, Details involving image processing hardware, Application software, Human language

Owner:APPLE INC

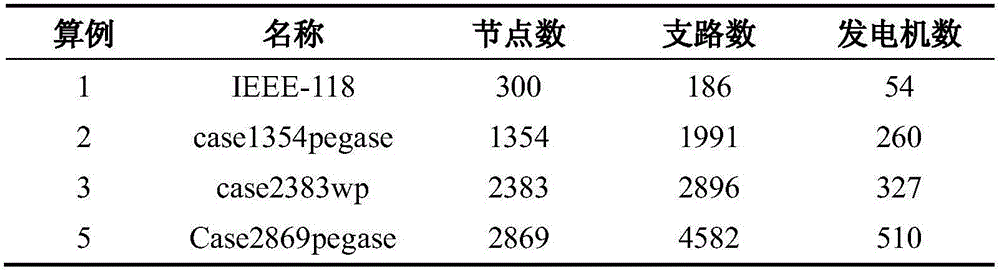

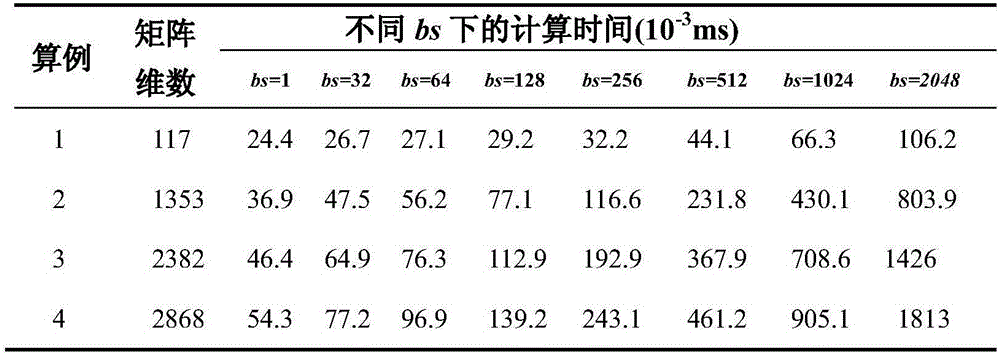

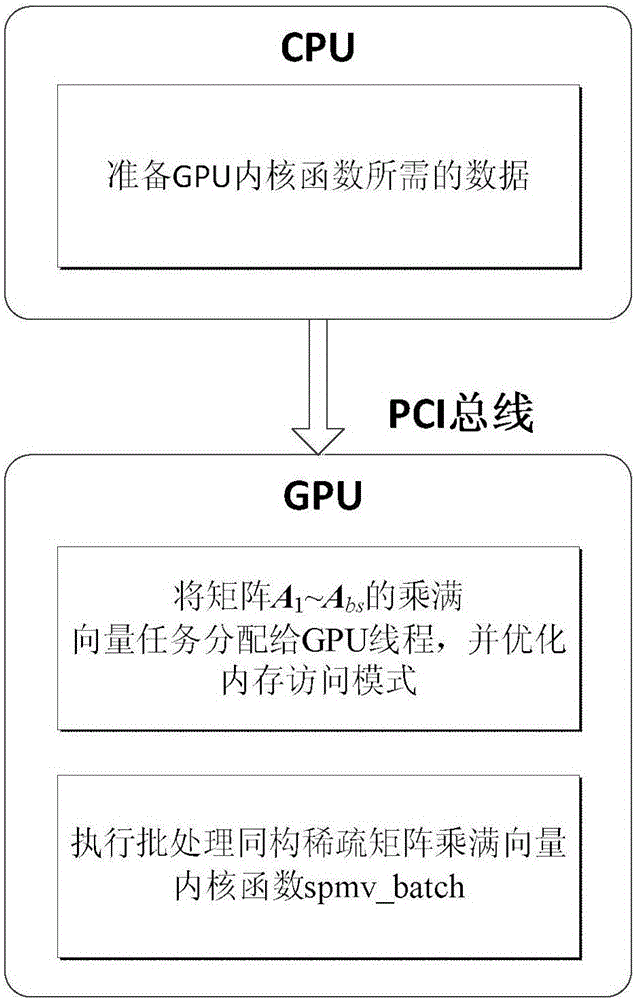

GPU accelerated method for performing batch processing of isomorphic sparse matrixes multiplied by full vectors

Active | CN106407158A | Improve parallelism, Improve efficiency, Processor architectures/configuration, Complex mathematical operations, Data transmission, Sparse matrix

The invention discloses a GPU-accelerated method for batch processing of isomorphic sparse matrices (matrices that share the same sparsity structure) multiplied by dense vectors. The method comprises the following steps: (1) storing all matrices A_1, ..., A_bs in compressed sparse row (CSR) format on the CPU; (2) transferring the data required by the GPU kernel function from the CPU to the GPU; (3) distributing the matrix-vector multiplication tasks for A_1, ..., A_bs across GPU threads and optimizing the memory access pattern; and (4) launching the batched kernel function spmv_batch on the GPU, which computes the isomorphic sparse matrix-vector products in parallel. In this method, the CPU controls the overall program flow and prepares the data, while the GPU performs the compute-intensive matrix-vector multiplication; batching increases both algorithmic parallelism and memory access efficiency, which greatly reduces the time needed to compute the batch of sparse matrix-vector products.

Owner:SOUTHEAST UNIV
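
Because the matrices are isomorphic, the CSR index arrays (`row_ptr`, `col_idx`) need to be stored only once, and only the nonzero values differ per matrix. The serial sketch below shows that data layout and the per-(matrix, row) work that a batched GPU kernel would hand to individual threads. It assumes one dense vector per matrix; the function name mirrors the abstract's `spmv_batch`, but the signature and thread mapping are illustrative assumptions, not the patented kernel.

```python
from typing import List


def spmv_batch(row_ptr: List[int], col_idx: List[int],
               values: List[List[float]], x: List[List[float]]) -> List[List[float]]:
    """Compute y_b = A_b @ x_b for a batch of matrices sharing one CSR pattern.

    row_ptr/col_idx are shared; values[b] holds A_b's nonzeros in the same order.
    On a GPU, each (b, i) pair below would typically be handled by one thread.
    """
    n_rows = len(row_ptr) - 1
    y = [[0.0] * n_rows for _ in values]
    for b in range(len(values)):      # batch index
        for i in range(n_rows):       # row index
            acc = 0.0
            for k in range(row_ptr[i], row_ptr[i + 1]):
                acc += values[b][k] * x[b][col_idx[k]]
            y[b][i] = acc
    return y


# Two 3x3 matrices with the same sparsity pattern:
#   [a 0 b]
#   [0 c 0]
#   [d 0 e]
row_ptr = [0, 2, 3, 5]
col_idx = [0, 2, 1, 0, 2]
values = [[1.0, 2.0, 3.0, 4.0, 5.0],
          [2.0, 0.5, 1.0, 1.0, 1.0]]
x = [[1.0, 1.0, 1.0], [2.0, 4.0, 6.0]]
print(spmv_batch(row_ptr, col_idx, values, x))  # [[3.0, 3.0, 9.0], [7.0, 4.0, 8.0]]
```

Sharing the index arrays means consecutive threads in a batch read the same `col_idx` entries, which is one plausible source of the memory-access improvement the abstract mentions.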

Method and system for rescaling image files

Active | CN104952037A | Image compression is fast, Reduce power consumption, Television system details, Recording carrier details, Computer hardware, Image compression

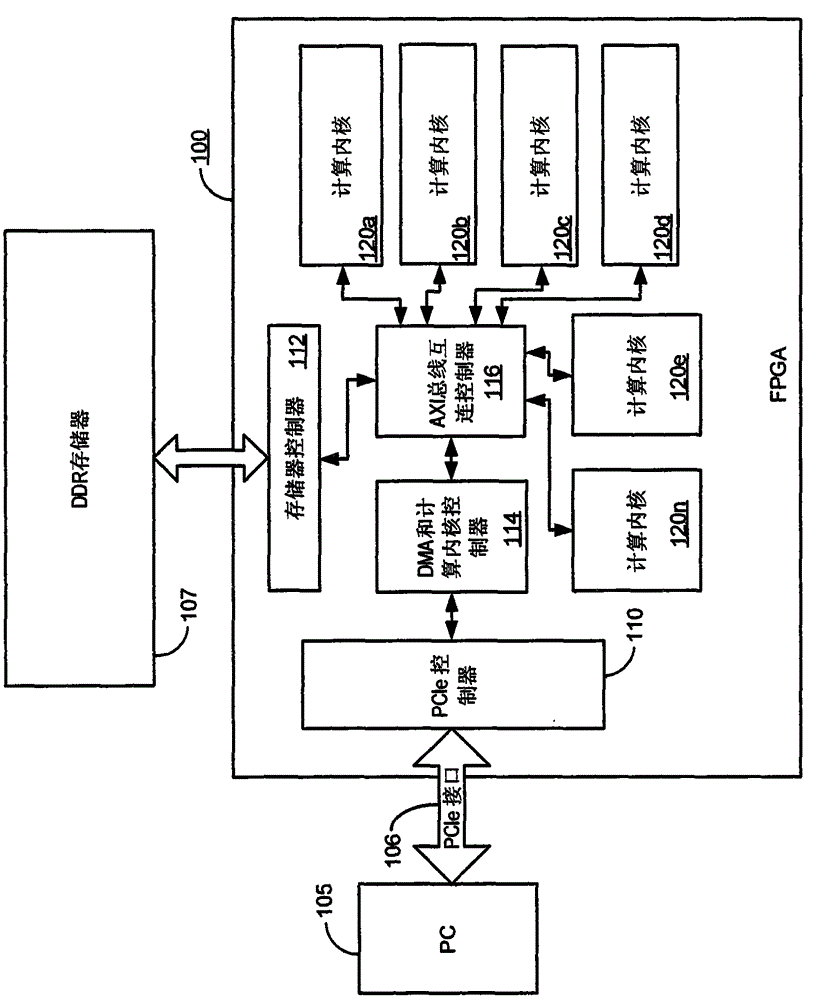

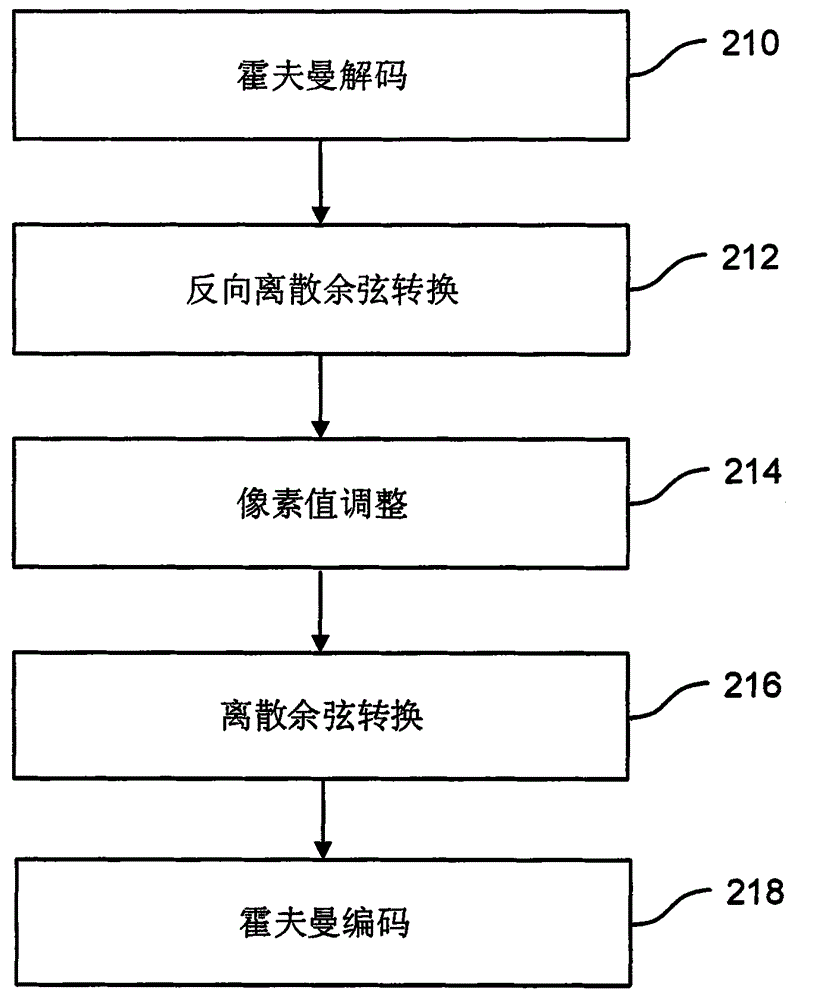

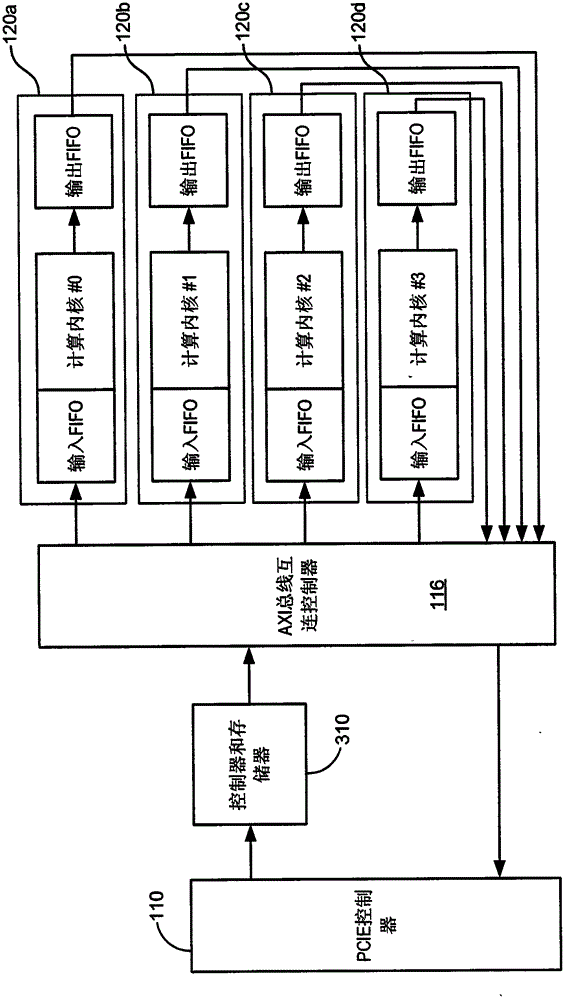

A system for resizing image files includes an FPGA including an interface controller operable to receive a plurality of image files through an interface, a computing kernel controller, and a memory controller. The FPGA also includes an interconnect coupled to the computing kernel controller and the memory controller and a plurality of computing kernels coupled to the interconnect. The system also includes a memory coupled to the FPGA.

Owner:CTACCEL LIMITED

Systems and methods for determining compute kernels for an application in a parallel-processing computer system

Active | US20120144162A1 | Error detection/correction, Digital computer details, Performance computing, Parallel processing

Owner:GOOGLE LLC

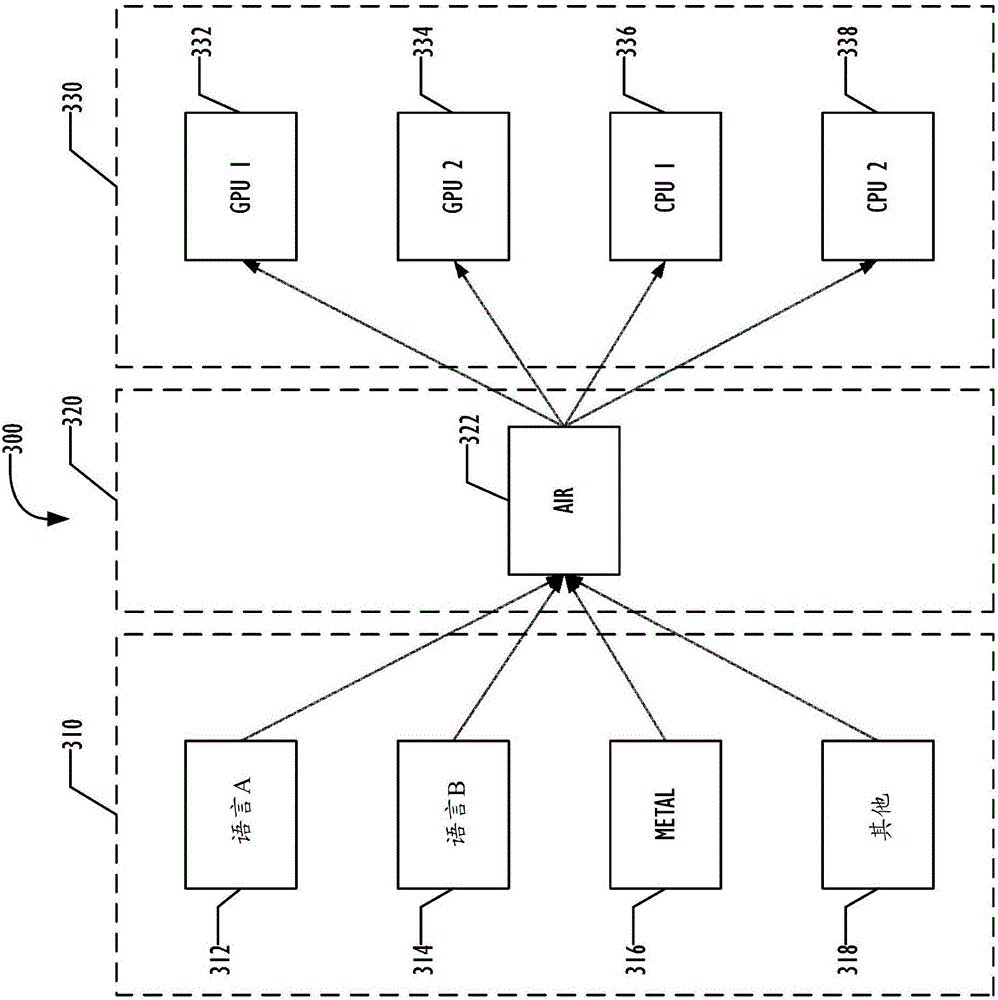

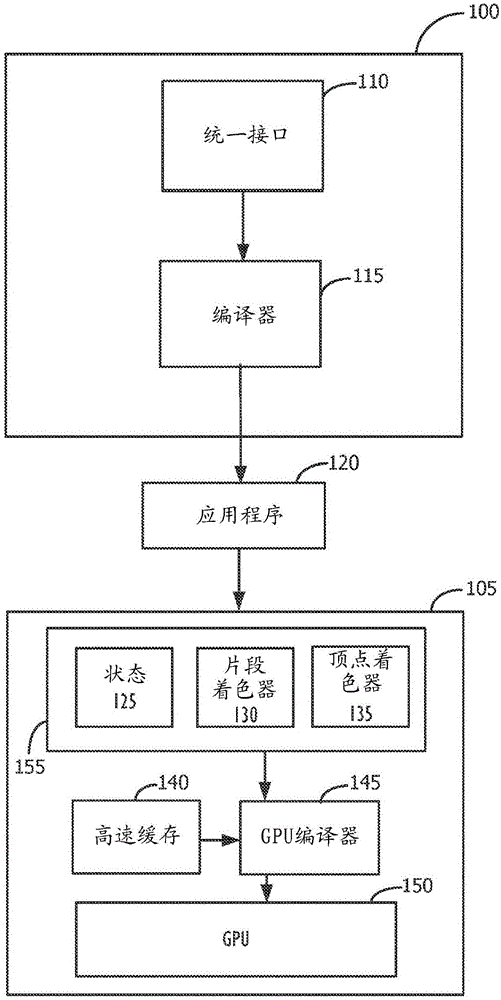

Unified intermediate representation

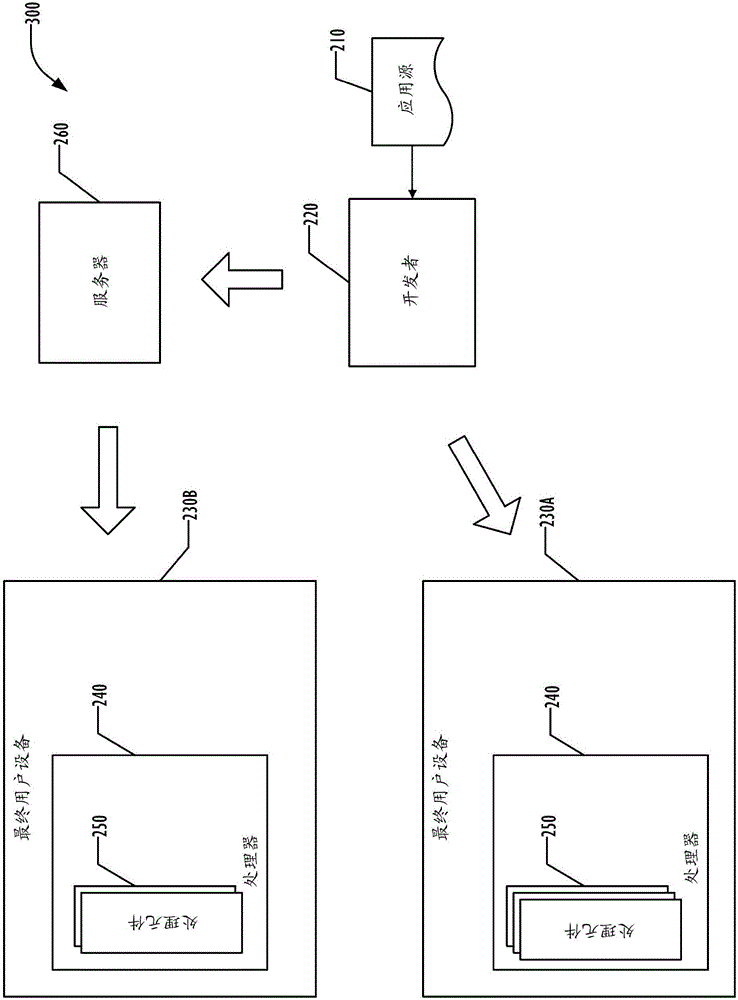

A system decouples the source code language from the eventual execution environment by compiling the source code language into a unified intermediate representation that conforms to a language model allowing both parallel graphical operations and parallel general-purpose computational operations. The intermediate representation may then be distributed to end-user computers, where an embedded compiler can compile the intermediate representation into an executable binary targeted for the CPUs and GPUs available in that end-user device. The intermediate representation is sufficient to define both graphics and non-graphics compute kernels and shaders. At install-time or later, the intermediate representation file may be compiled for the specific target hardware of the given end-user computing system. The CPU or other host device in the given computing system may compile the intermediate representation file to generate an instruction set architecture binary for the hardware target, such as a GPU, within the system.

Owner:APPLE INC

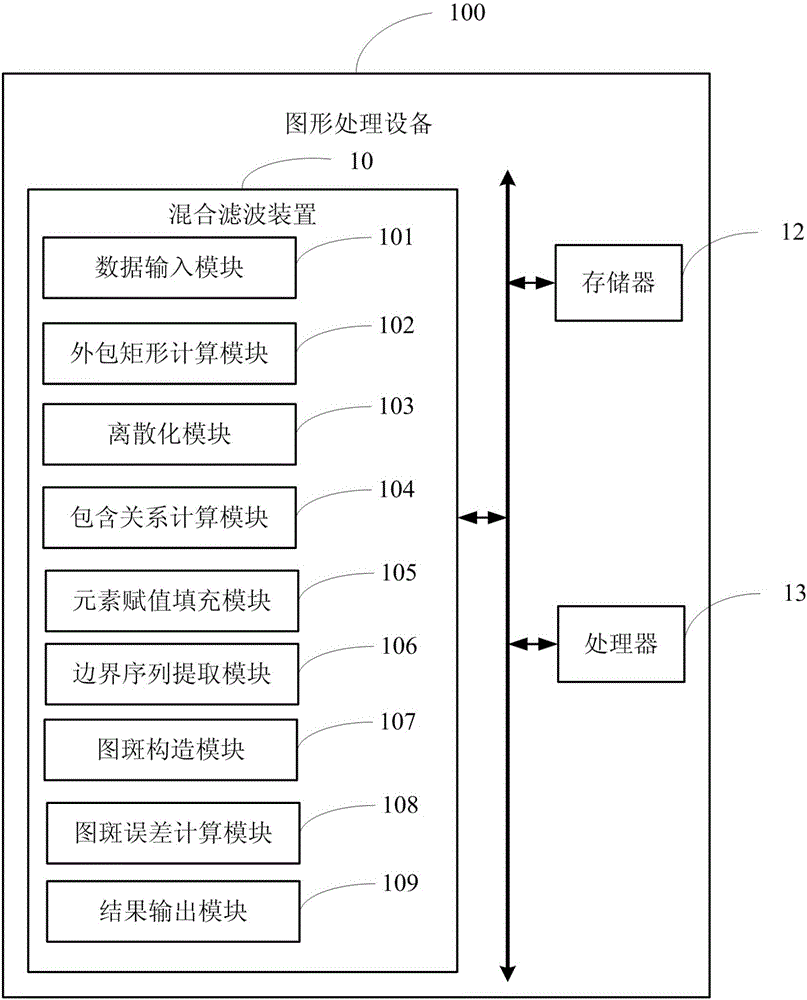

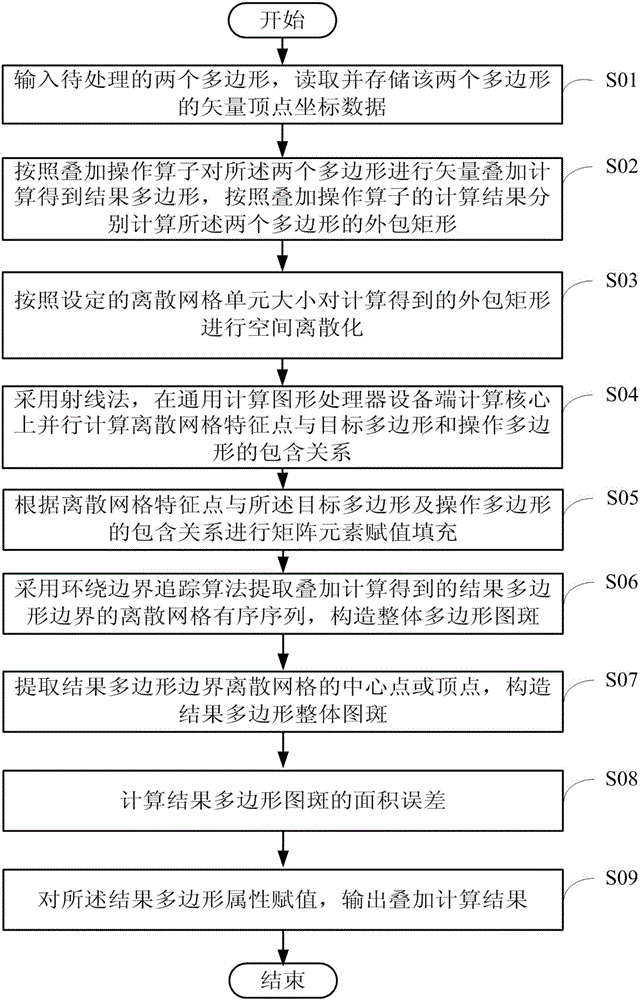

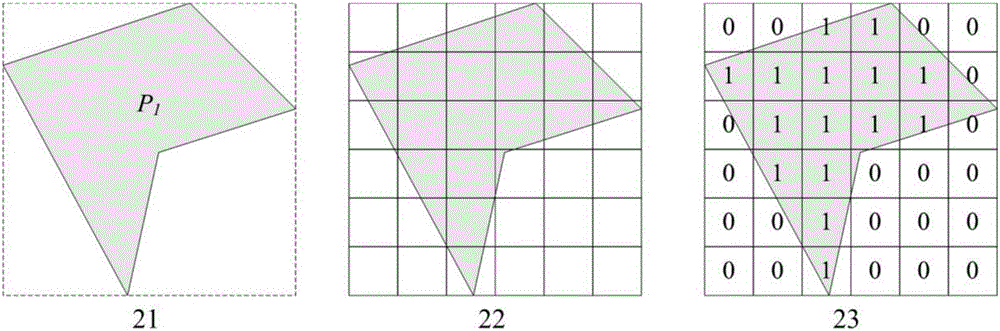

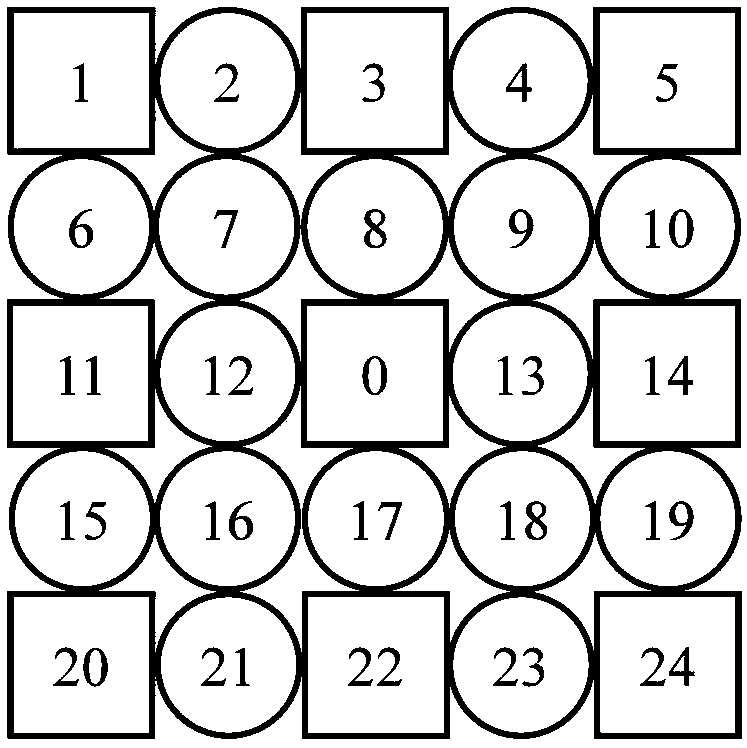

Graph processing method and device based on rasterized superposition analysis

Inactive | CN105956994A | Increase profit, Low coupling properties, Image analysis, Processor architectures/configuration, Graphics, Inclusion relation

The embodiment of the invention provides a graph processing method and device based on rasterized superposition (overlay) analysis, addressing a problem in the prior art whereby computational efficiency drops rapidly as the number of polygon vertices increases. The method comprises: computing the bounding rectangle of the overlay result of the two vector polygons participating in the overlay computation; spatially discretizing the bounding rectangle according to a configured grid cell size; computing in parallel, with a ray method running on the compute kernels of a general-purpose GPU device, the inclusion relation between each discrete grid feature point and the two polygons; filling matrix elements according to those inclusion relations; extracting an ordered sequence of the grid cells along the result polygon boundary with a surround boundary tracing algorithm; taking the center point or a vertex of each boundary cell to construct the overall result polygon patch; computing the area error of the result polygon patch; and assigning attributes to the result polygon to output the overlay result.

Owner:SHANDONG UNIV OF TECH
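
The inclusion-test step is the part that parallelizes naturally: every grid point is classified independently. The sketch below uses even-odd ray casting (a ray toward +x, counting edge crossings) as an assumed reading of the abstract's "ray method", and encodes membership as bits (1 = inside A, 2 = inside B, 3 = inside both); the bit encoding and function names are illustrative choices. Boundary tracing, polygon reconstruction and the area-error step are not shown.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def inside(pt: Point, poly: List[Point]) -> bool:
    """Even-odd ray casting: count polygon edges crossed by a ray toward +x."""
    x, y = pt
    crossings = 0
    n = len(poly)
    for i in range(n):
        (x1, y1), (x2, y2) = poly[i], poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # the edge straddles the ray's y coordinate
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x_cross > x:
                crossings += 1
    return crossings % 2 == 1


def classify_grid(poly_a: List[Point], poly_b: List[Point], xmin: float, ymin: float,
                  xmax: float, ymax: float, cell: float) -> List[List[int]]:
    """Label each cell center: bit 0 = inside A, bit 1 = inside B.

    On a GPU each cell would be classified by its own thread; this loop is serial.
    """
    cols = math.ceil((xmax - xmin) / cell)
    rows = math.ceil((ymax - ymin) / cell)
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            p = (xmin + (c + 0.5) * cell, ymin + (r + 0.5) * cell)
            row.append(int(inside(p, poly_a)) | (int(inside(p, poly_b)) << 1))
        grid.append(row)
    return grid


a = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]
b = [(1.0, 1.0), (3.0, 1.0), (3.0, 3.0), (1.0, 3.0)]
grid = classify_grid(a, b, 0.0, 0.0, 3.0, 3.0, 1.0)
print(grid)  # [[1, 1, 0], [1, 3, 2], [0, 2, 2]]
```

Cells labelled 3 approximate the intersection of the two squares (here a single 1 x 1 cell, matching the exact overlap area); a finer `cell` trades more threads for a smaller area error.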

System and method for unified application programming interface and model

Active | CN106462393A | Program synchronisation, Instruction analysis, Application programming interface, Parallel computing

Systems, computer-readable media, and methods for a unified programming interface and language are disclosed. In one embodiment, the unified programming interface and language help program developers write multithreaded programs that can perform both graphics and data-parallel compute processing on GPUs. The same GPU programming language model can be used to describe both graphics shaders and compute kernels, and the same data structures and resources may be used for both graphics and compute operations. Developers can use multithreading efficiently to create and submit command buffers in parallel.

Owner:APPLE INC

Systems and methods for generating reference results using parallel-processing computer system

Active | US20130061230A1 | Multiprogramming arrangements, Memory systems, Theoretical computer science, Parallel processing

Owner:GOOGLE LLC

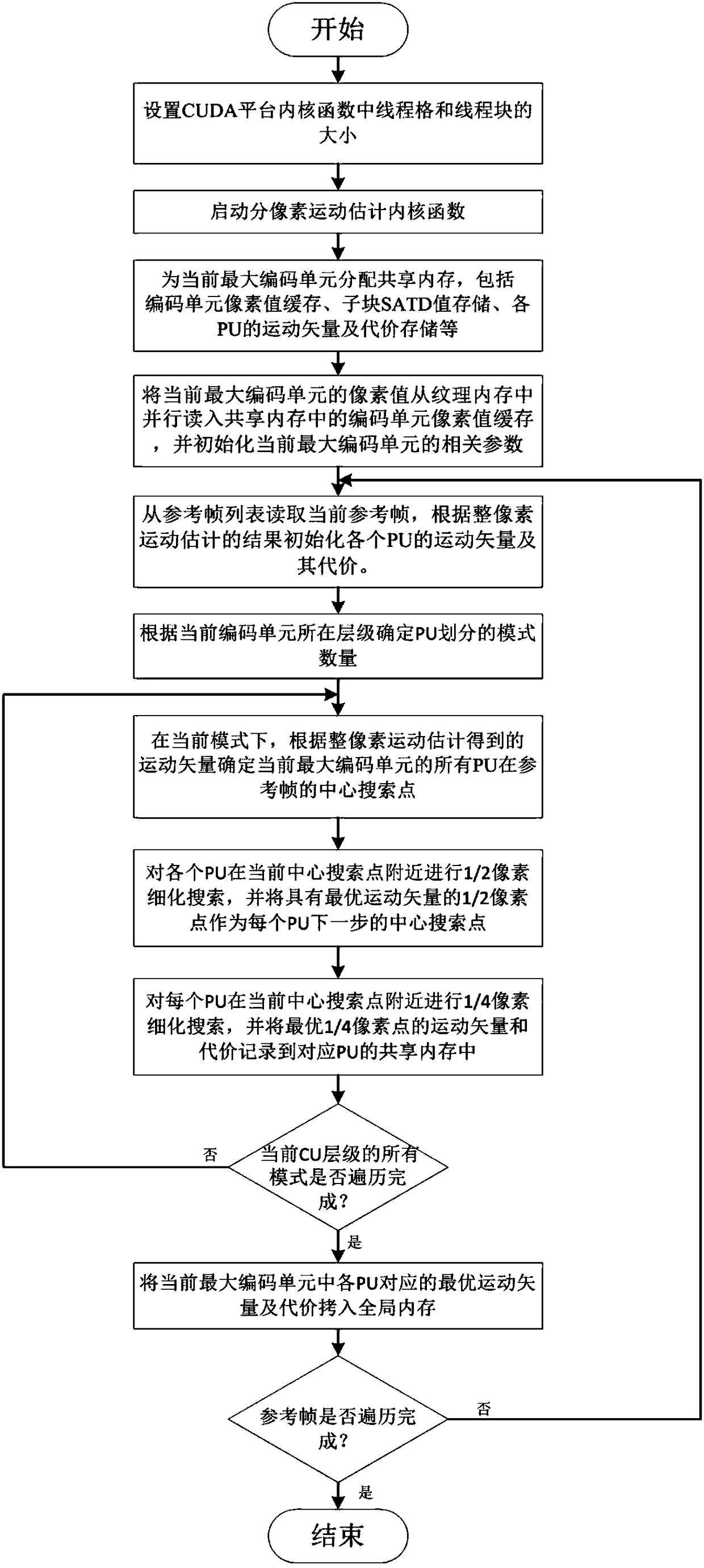

Parallel implementation method of sub-pixel motion estimation

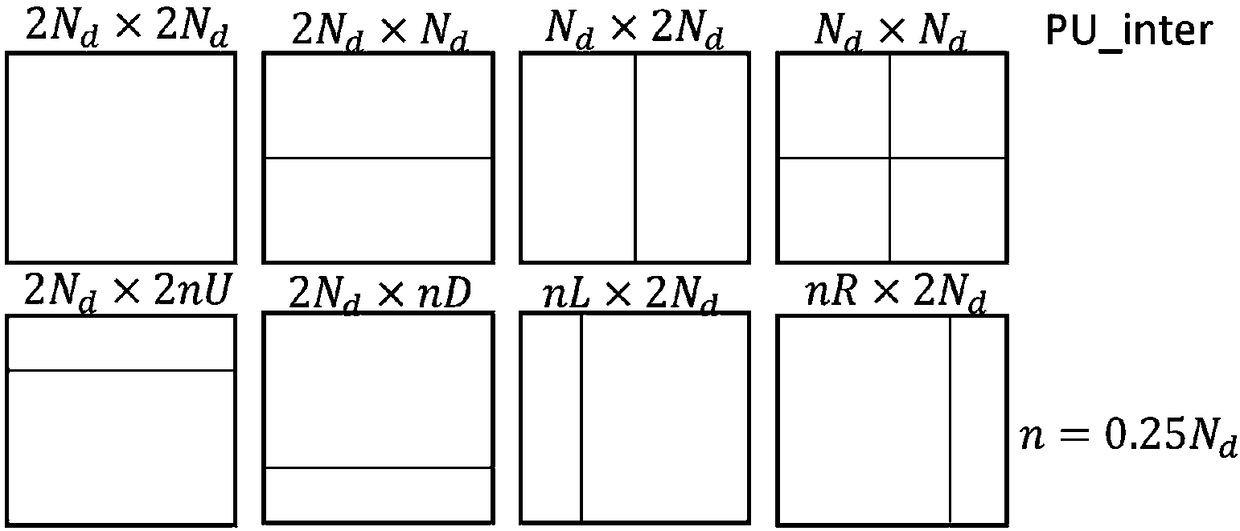

Inactive | CN108259912A | Reduce computational complexity, Reduce search time, Digital video signal modification, Motion vector, Motion estimation

A parallel implementation method of sub-pixel motion estimation comprises the following steps: setting the sizes of the thread grid and thread blocks for the kernel function on the CUDA platform; launching the sub-pixel motion estimation kernel function on the CUDA platform; allocating shared memory on the device side for the current largest coding unit; initializing the motion vector and motion vector cost of each prediction unit (PU); determining the number of PU partition modes; determining, from the result of the integer-pixel motion estimation, the center search point in the reference frame for every PU of the current largest coding unit; performing a 1/2-pixel refinement search for each PU around the current center search point; performing a 1/4-pixel refinement search for each PU around the current center search point, and recording the result in the PU's motion vector and motion vector cost storage in shared memory; checking whether all partition modes have been traversed; and copying the motion vector and motion vector cost of each PU in the current largest coding unit to global memory. The invention effectively accelerates inter-frame sub-pixel motion estimation.

Owner:TIANJIN UNIV
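
The search pattern behind the two refinement steps can be sketched for a single PU: convert the integer-pixel result to quarter-pixel units, test the center and its 8 neighbors at a half-pixel step, then repeat at a quarter-pixel step around the winner. The 8-neighbor pattern is an assumption (the abstract only says "near the current center search point"), and `synthetic_cost` is a made-up bowl-shaped function standing in for a real encoder's distortion-plus-rate cost on the interpolated reference. The CUDA thread mapping and shared-memory handling are not modelled.

```python
from typing import Callable, Tuple

MV = Tuple[int, int]  # motion vector in quarter-pixel units


def refine(center: MV, step: int, cost: Callable[[MV], float]) -> MV:
    """Evaluate the center and its 8 neighbors at distance `step`; keep the cheapest."""
    best, best_cost = center, cost(center)
    for dy in (-step, 0, step):
        for dx in (-step, 0, step):
            cand = (center[0] + dx, center[1] + dy)
            c = cost(cand)
            if c < best_cost:
                best, best_cost = cand, c
    return best


def subpel_search(int_mv: MV, cost: Callable[[MV], float]) -> MV:
    center = (int_mv[0] * 4, int_mv[1] * 4)  # integer-pel result -> quarter-pel units
    half = refine(center, 2, cost)           # 1/2-pixel refinement around the center
    return refine(half, 1, cost)             # 1/4-pixel refinement around the half-pel best


def synthetic_cost(mv: MV) -> float:
    # Hypothetical cost with its minimum near (5.2, -2.7) quarter-pels.
    return (mv[0] - 5.2) ** 2 + (mv[1] + 2.7) ** 2


print(subpel_search((1, -1), synthetic_cost))  # (5, -3)
```

Starting from the integer vector (1, -1), the half-pixel stage moves to (6, -2) and the quarter-pixel stage settles on (5, -3), i.e. 1.25 pixels right and 0.75 pixels up of the origin.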

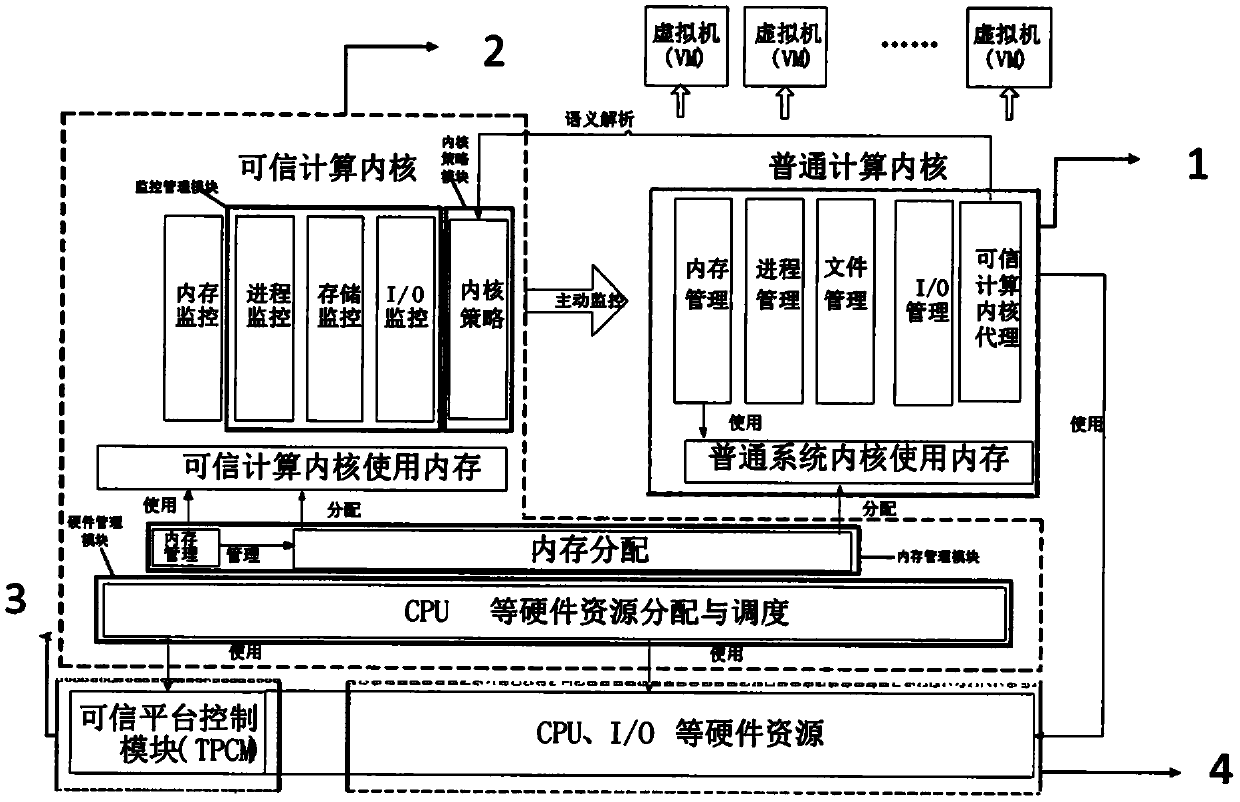

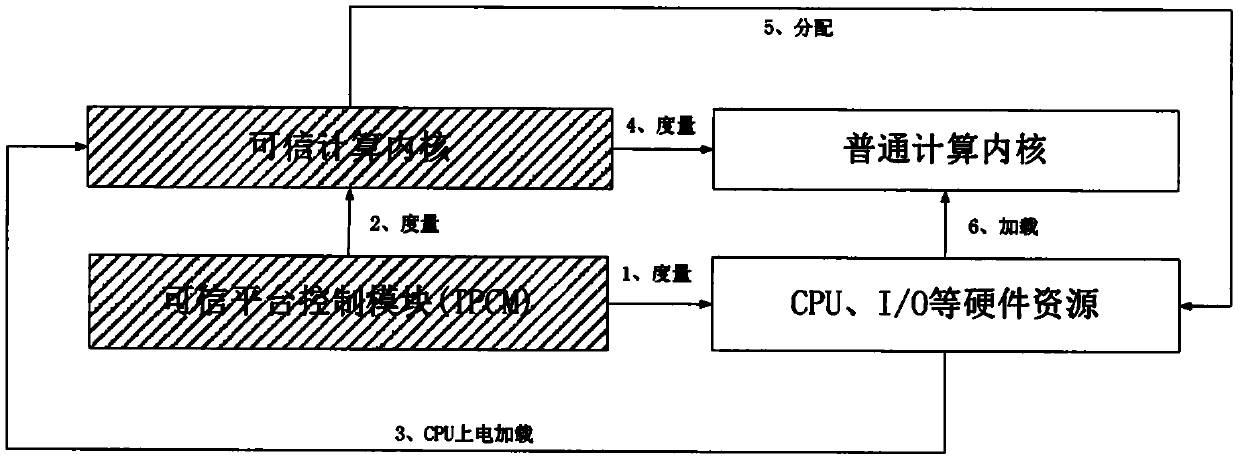

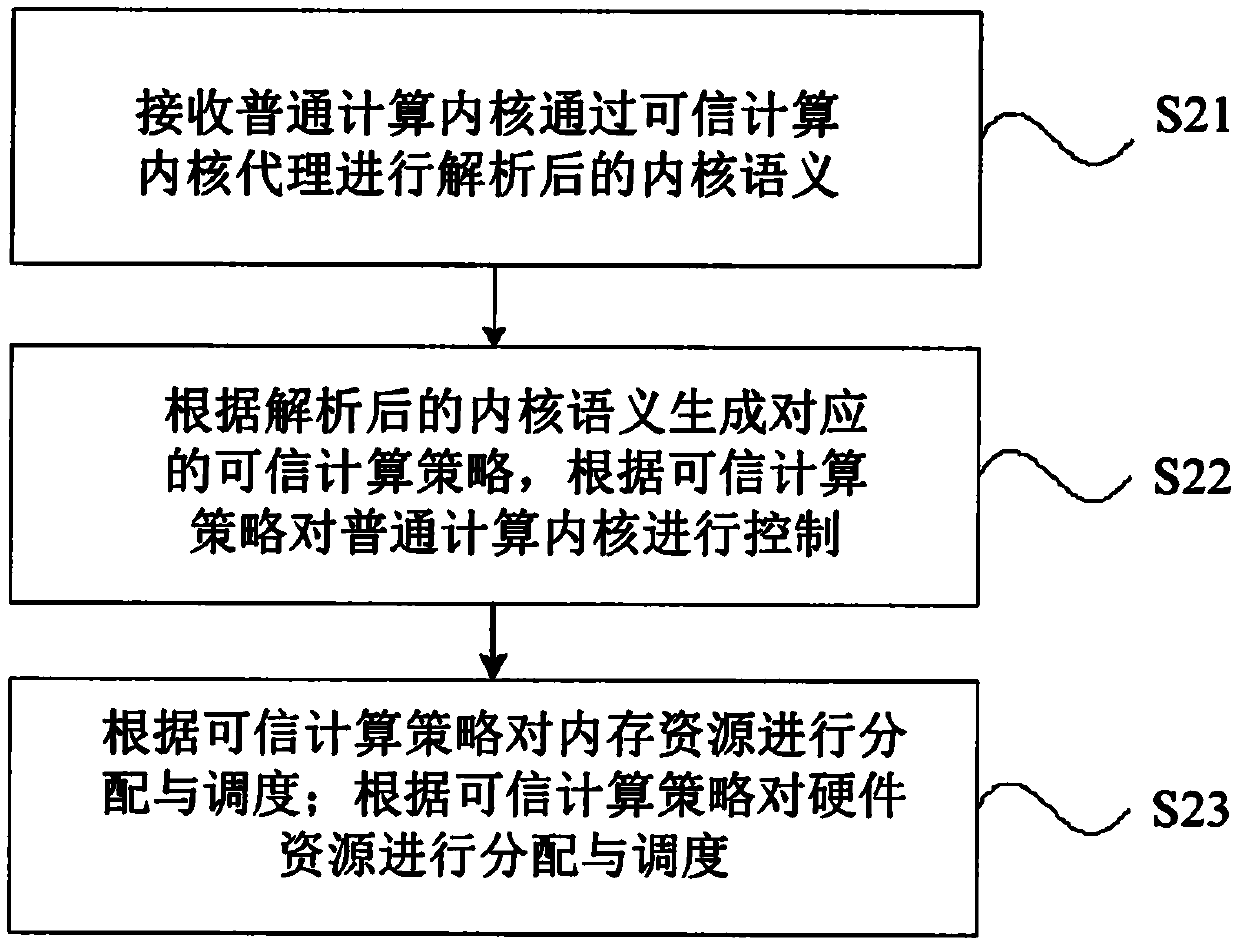

Dual-architecture trusted operating system and method

Active | CN110175457A | Ensure safety, Guaranteed safe operation, Resource allocation, Platform integrity maintenance, Operational system, Structure of Management Information

The invention discloses a dual-architecture trusted operating system and method. The system comprises a common computing kernel, a trusted computing kernel, a monitoring management module, a hardware management module, and a memory management module. The common computing kernel is configured with a trusted computing kernel agent, which obtains state requests from the common computing kernel for processing, performs semantic analysis of the kernel semantics, and sends them to the trusted computing kernel. The trusted computing kernel comprises a kernel policy module that generates the trusted computing policy and analyzes the semantics of the common computing kernel. The monitoring management module monitors, measures, and controls the state of the common computing kernel. The hardware management module allocates and schedules hardware resources according to the trusted computing policy, and the memory management module allocates and schedules memory resources according to the same policy. System operation can be actively protected without interfering with system services, and the trusted computing kernel is compatible with various computing architectures, which makes it well suited to terminals with diverse software and hardware environments or high security levels.

Owner:GLOBAL ENERGY INTERCONNECTION RES INST CO LTD +2

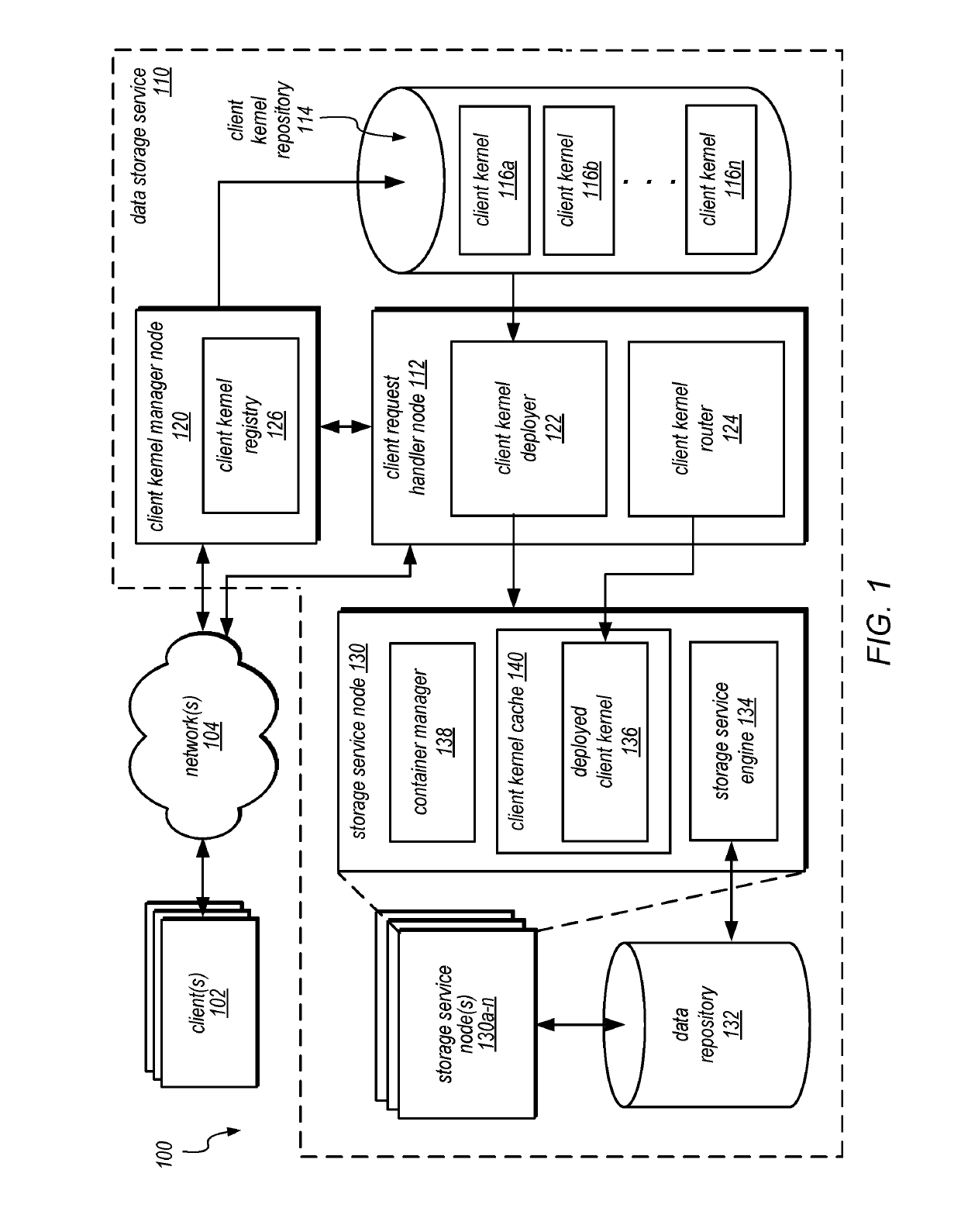

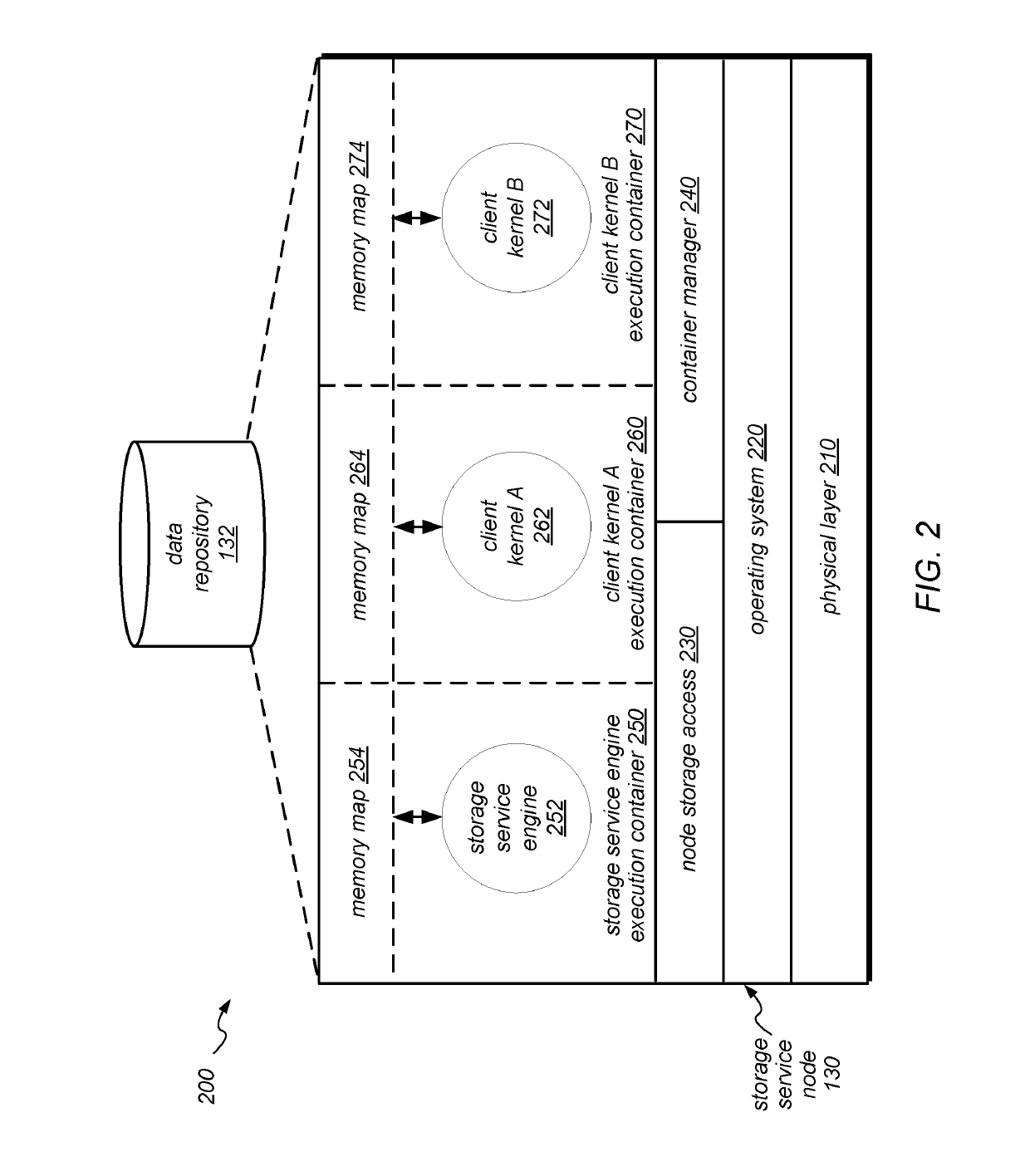

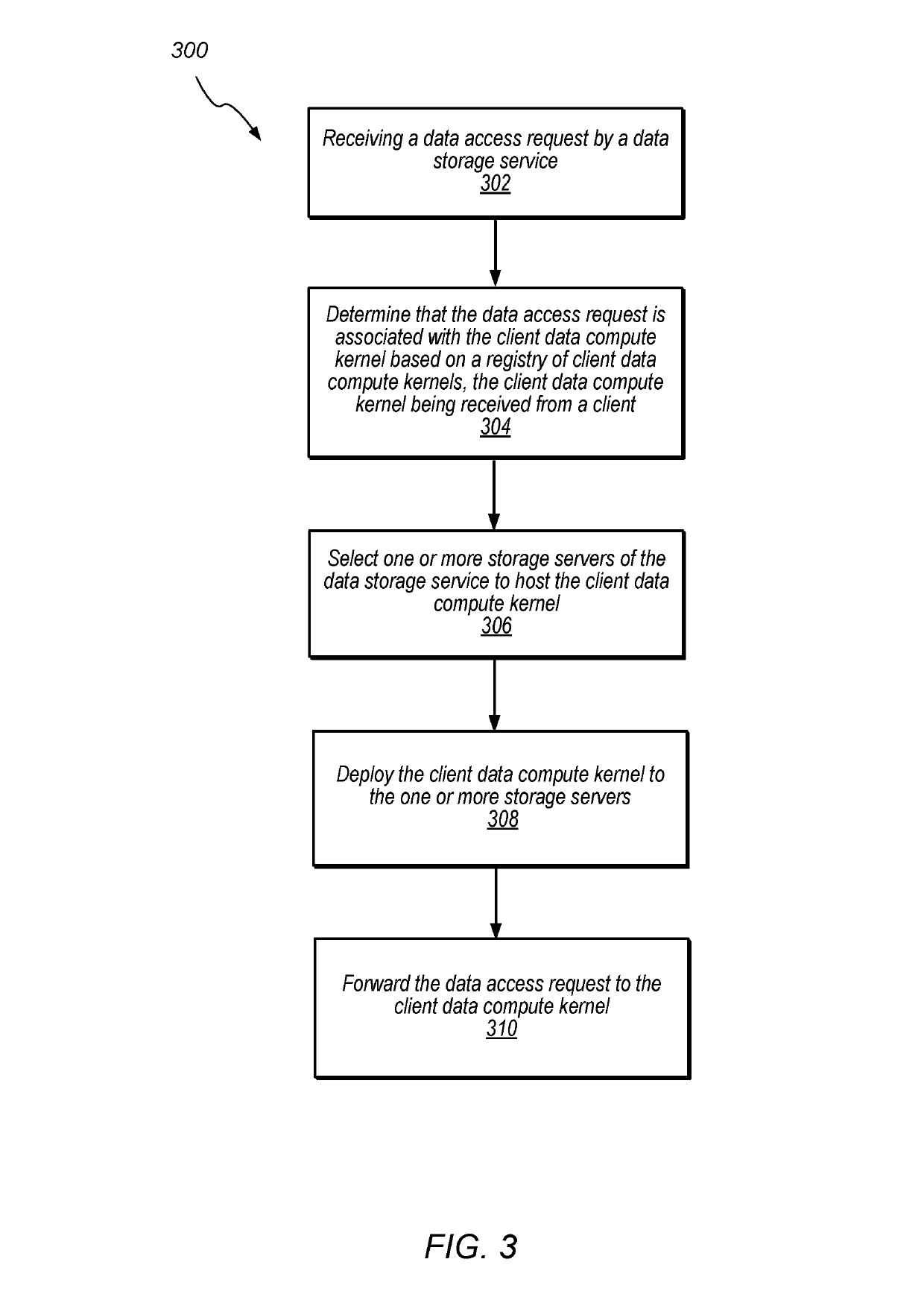

Deployment of client data compute kernels in cloud

A data storage service includes a client data compute kernel manager that receives and registers a client data compute kernel in a client kernel repository. The client data compute kernel may be a custom data compute kernel that is received from a client. The data storage service includes a client request handler that receives data access requests to a client data store. The client request handler may determine that a data access request is associated with the client data compute kernel. The client request handler may then deploy the client data compute kernel to one or more selected storage servers, and then forward the data access request to the client data compute kernel. A storage server may execute a storage service engine of the data storage service in one execution container on the storage server and the client data compute kernel on a second execution container on the storage server.

Owner:AMAZON TECH INC

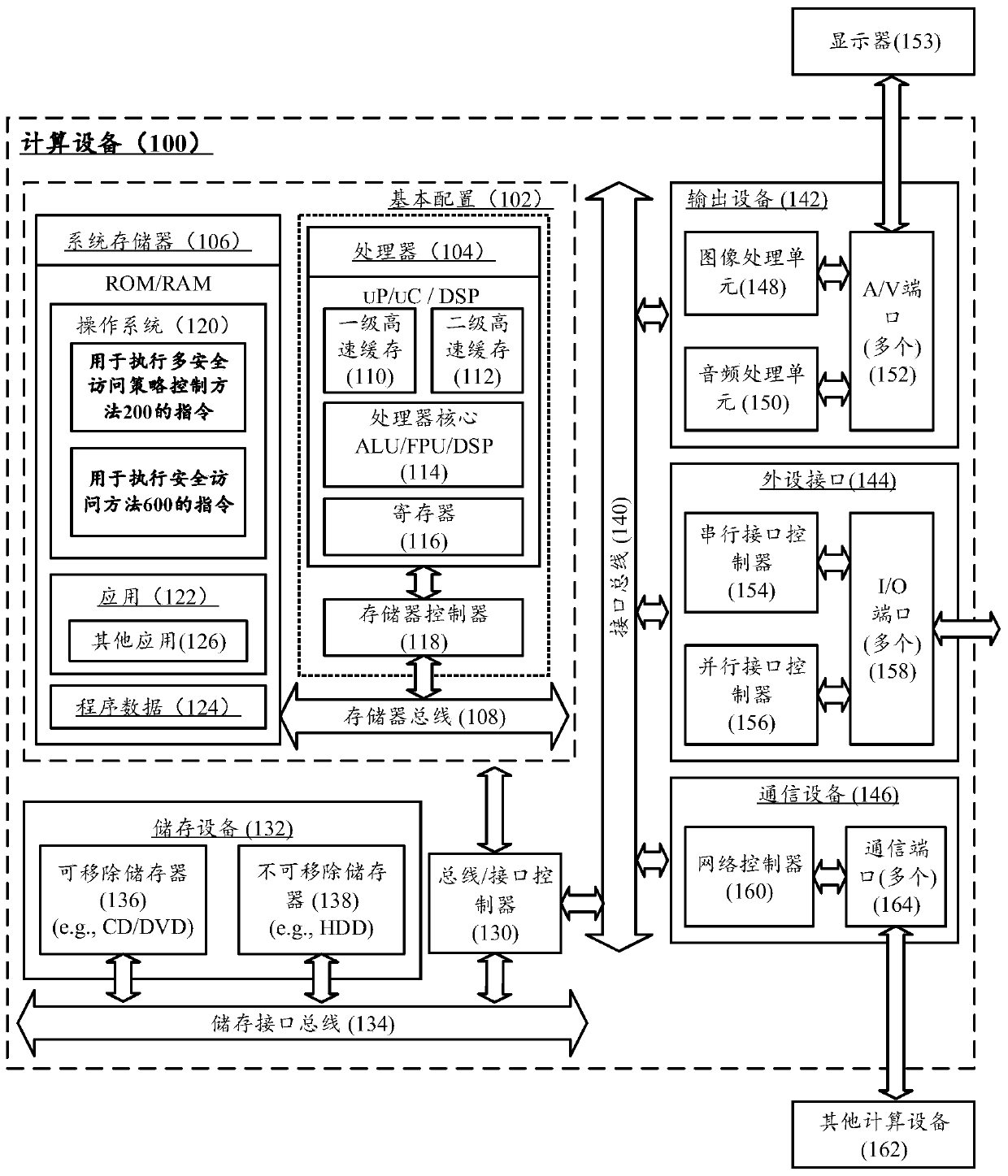

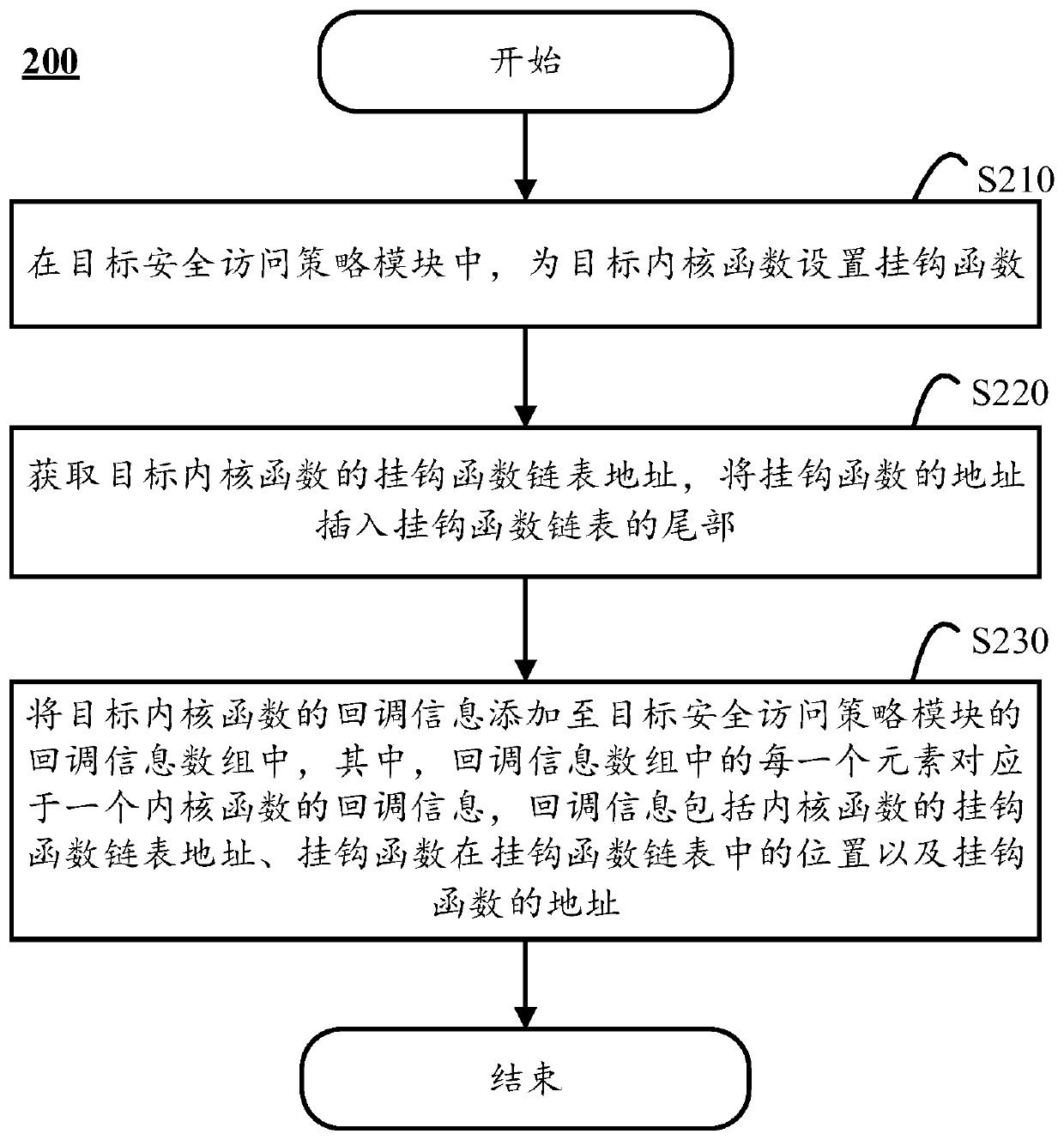

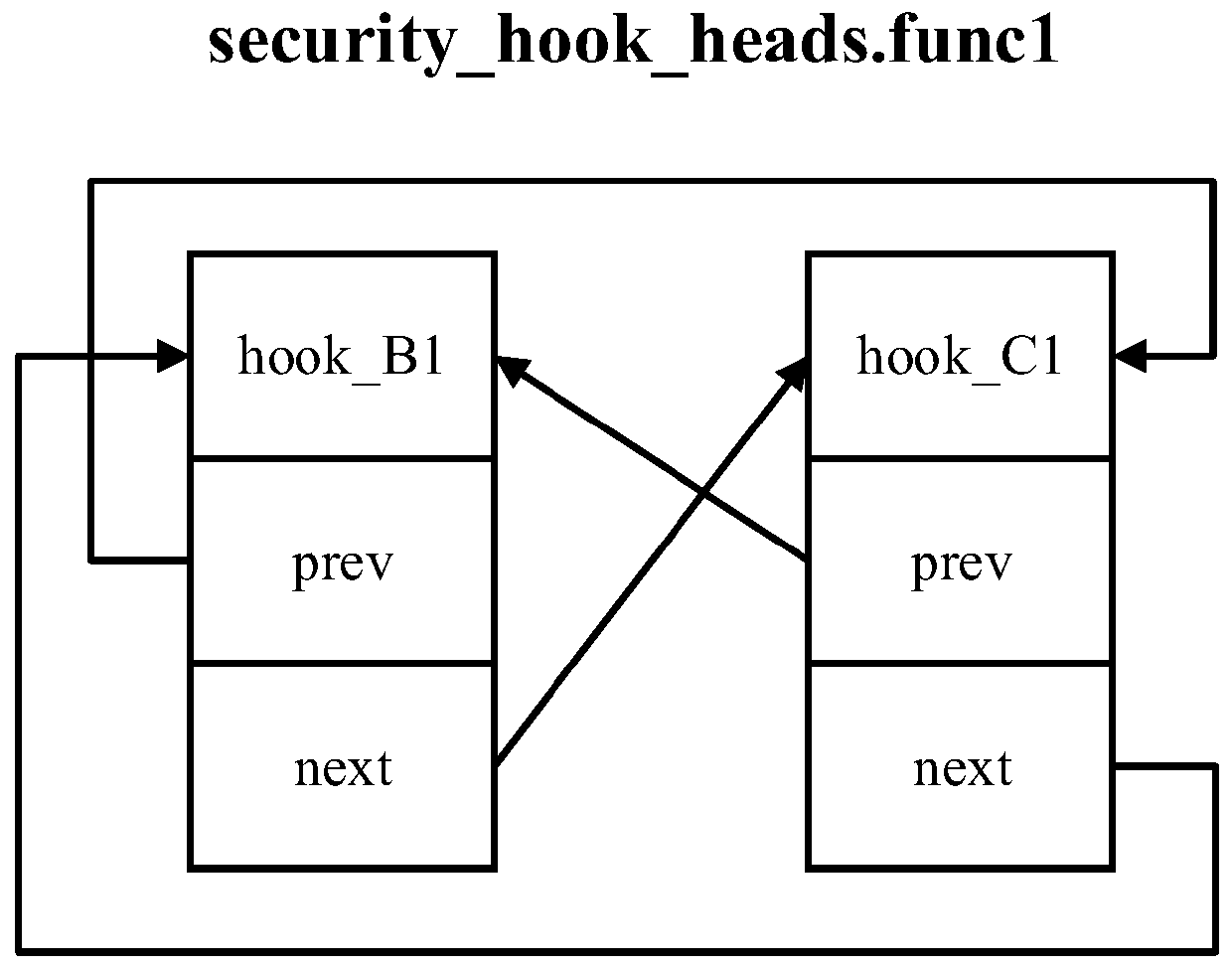

Multi-security access strategy control method and computing equipment

The invention discloses a multi-security access strategy control method, executed in computing equipment, comprising the following steps: setting a hook function for a target kernel function in a target security access strategy module; acquiring the address of the target kernel function's hook function linked list, and inserting the address of the hook function at the tail of that linked list; and adding the callback information of the target kernel function to the callback information array of the target security access strategy module, where each element of the callback information array corresponds to the callback information of one kernel function, and the callback information comprises the address of the kernel function's hook function linked list, the position of the hook function in that linked list, and the address of the hook function. The invention also discloses a corresponding security access method and computing equipment.

Owner:BEIJING UNIONTECH SOFTWARE TECHNOLOGY CO LTD (北京统信软件技术有限公司)
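
The registration bookkeeping described in this abstract can be modelled in a few lines: each hooked kernel function owns a hook list, a module appends its hook at the tail, and the module records where it landed (list, position, hook) in its own callback array. This is a user-space sketch under stated assumptions: a Python list stands in for the kernel's linked list, the kernel function names `file_open` and `task_kill` are hypothetical, and the "all hooks must allow" policy is an illustrative choice rather than something the abstract specifies.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A hook returns True to allow the intercepted operation, False to deny it.
Hook = Callable[[str], bool]


@dataclass
class CallbackInfo:
    hook_list: List[Hook]  # the hook list of the target kernel function
    position: int          # where this module's hook sits in that list
    hook: Hook             # the hook itself


@dataclass
class SecurityModule:
    name: str
    callbacks: List[CallbackInfo] = field(default_factory=list)  # one entry per hooked function


# One hook list per hooked kernel function (function names are hypothetical).
hook_lists: Dict[str, List[Hook]] = {"file_open": [], "task_kill": []}


def register(module: SecurityModule, kernel_fn: str, hook: Hook) -> None:
    hooks = hook_lists[kernel_fn]
    hooks.append(hook)  # insert at the tail so earlier modules keep their order
    module.callbacks.append(CallbackInfo(hooks, len(hooks) - 1, hook))


def run_hooks(kernel_fn: str, arg: str) -> bool:
    """Assumed policy: every registered module must allow the operation."""
    return all(h(arg) for h in hook_lists[kernel_fn])


audit = SecurityModule("audit")
mac = SecurityModule("mac")
register(audit, "file_open", lambda path: True)                 # log-only module
register(mac, "file_open", lambda path: path != "/etc/shadow")  # denies one path
print(run_hooks("file_open", "/tmp/a"), run_hooks("file_open", "/etc/shadow"))  # True False
```

Keeping the position in each module's callback record is what would let a module later find and unlink only its own hook without disturbing the others.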

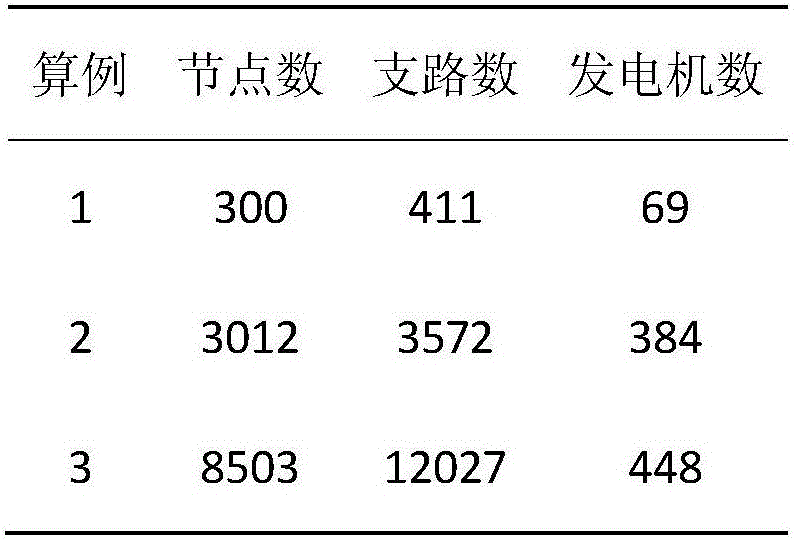

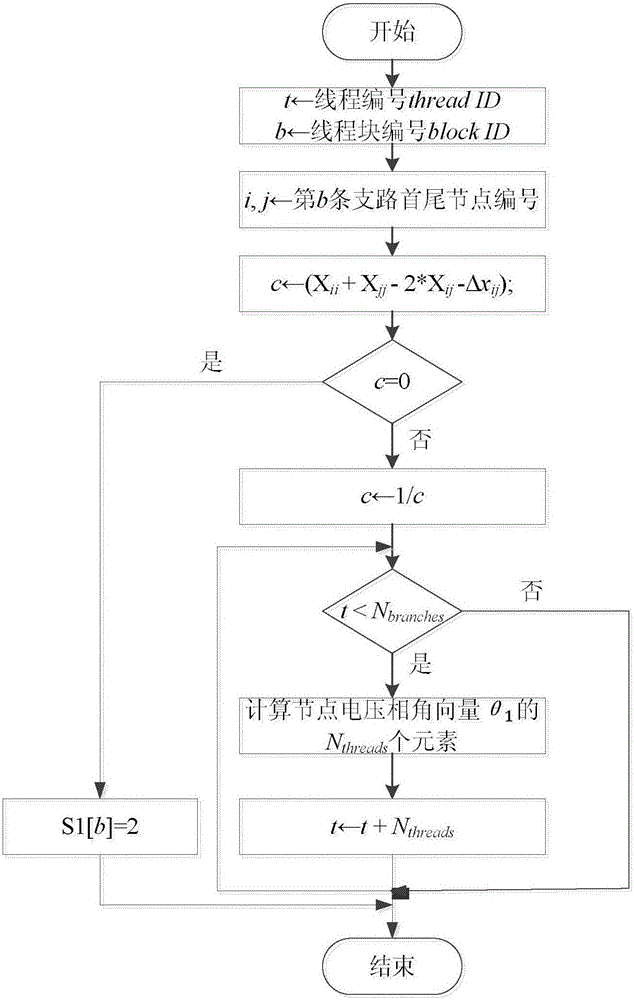

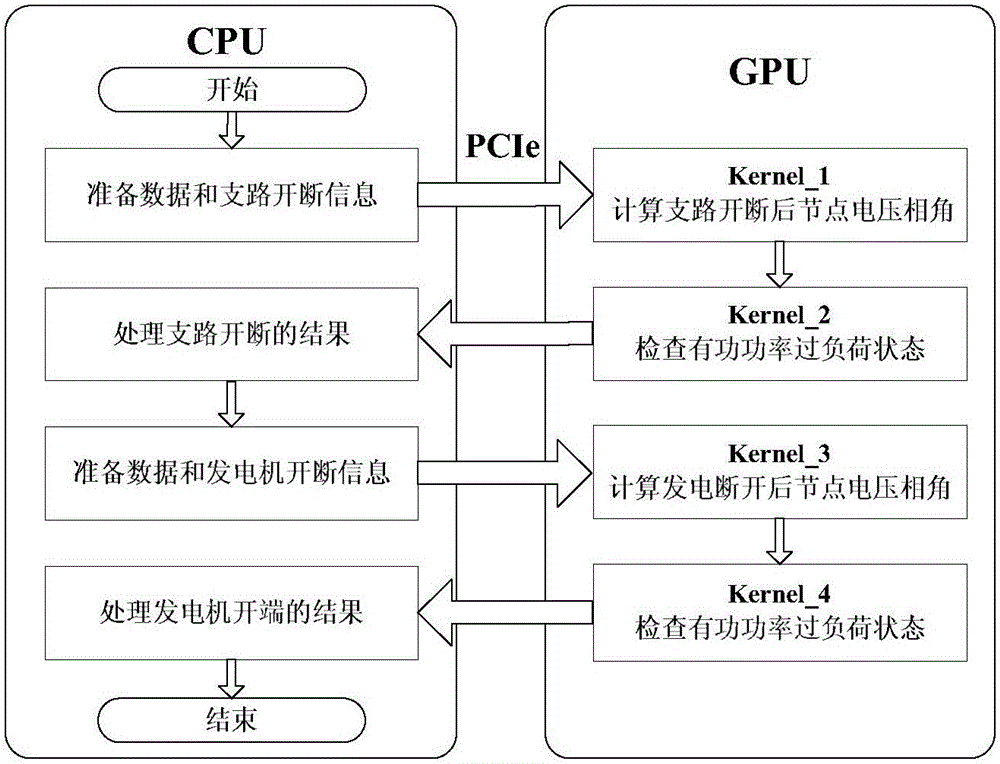

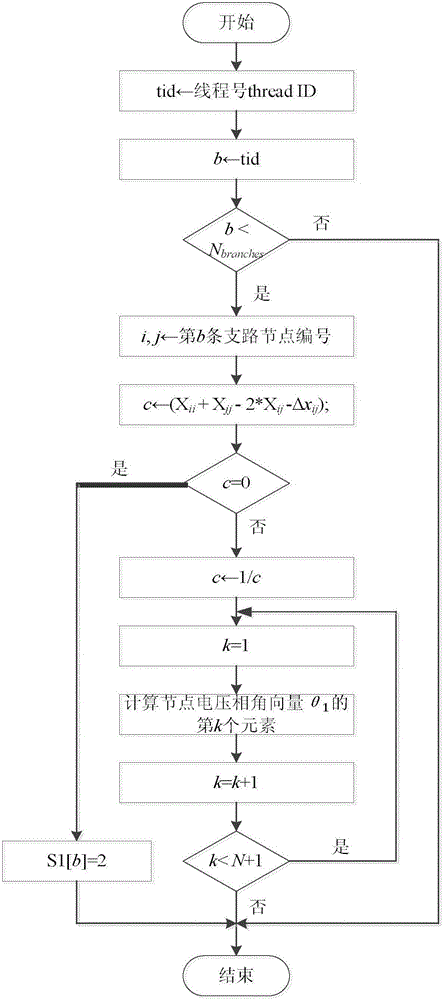

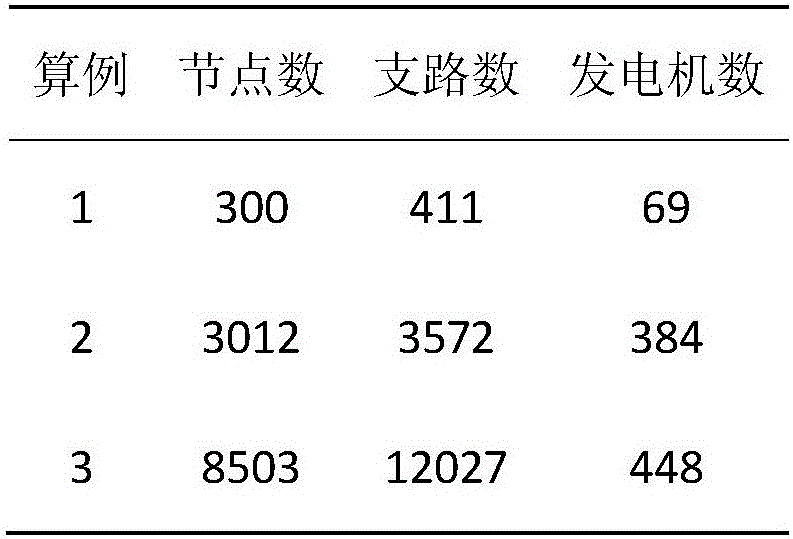

Direct current fault screening method designed in combination with GPU hardware and software architecture characteristics

Active | CN106021943A | Time-consuming to solve, Expansion quantity, Informatics, Special data processing applications, Software architecture, Screening method

The invention discloses a direct current fault screening method designed in combination with the characteristics of the GPU hardware and software architecture. The method includes the following steps: the task assignment of the GPU kernel functions is optimized according to GPU hardware and software parameters, with one thread block computing one outage; the CPU reads the power network data and sends the required data to the GPU; two CUDA streams are used so that branch-outage fault screening and generator-outage fault screening execute asynchronously while the GPU runs the kernel functions; in the first CUDA stream, the first and second kernel functions on the GPU screen the branch-outage fault set S1 and send it back to the CPU; in the second CUDA stream, the third and fourth kernel functions on the GPU screen the generator-outage fault set S2 and send it back to the CPU. Using one thread block per outage increases the total number of threads used and reduces the computation per thread, making full use of the GPU's hardware resources and computing capacity.

Owner:SOUTHEAST UNIV

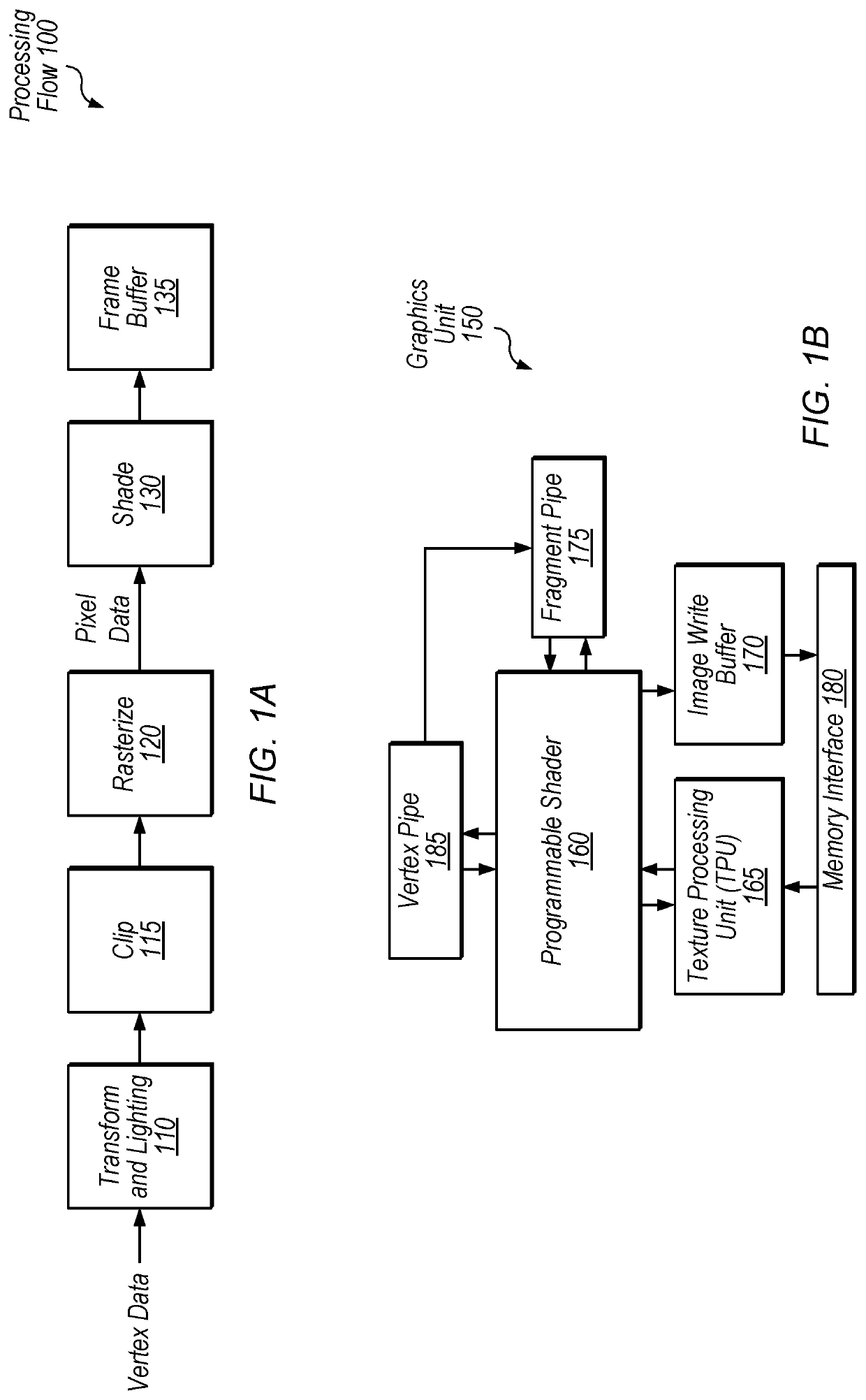

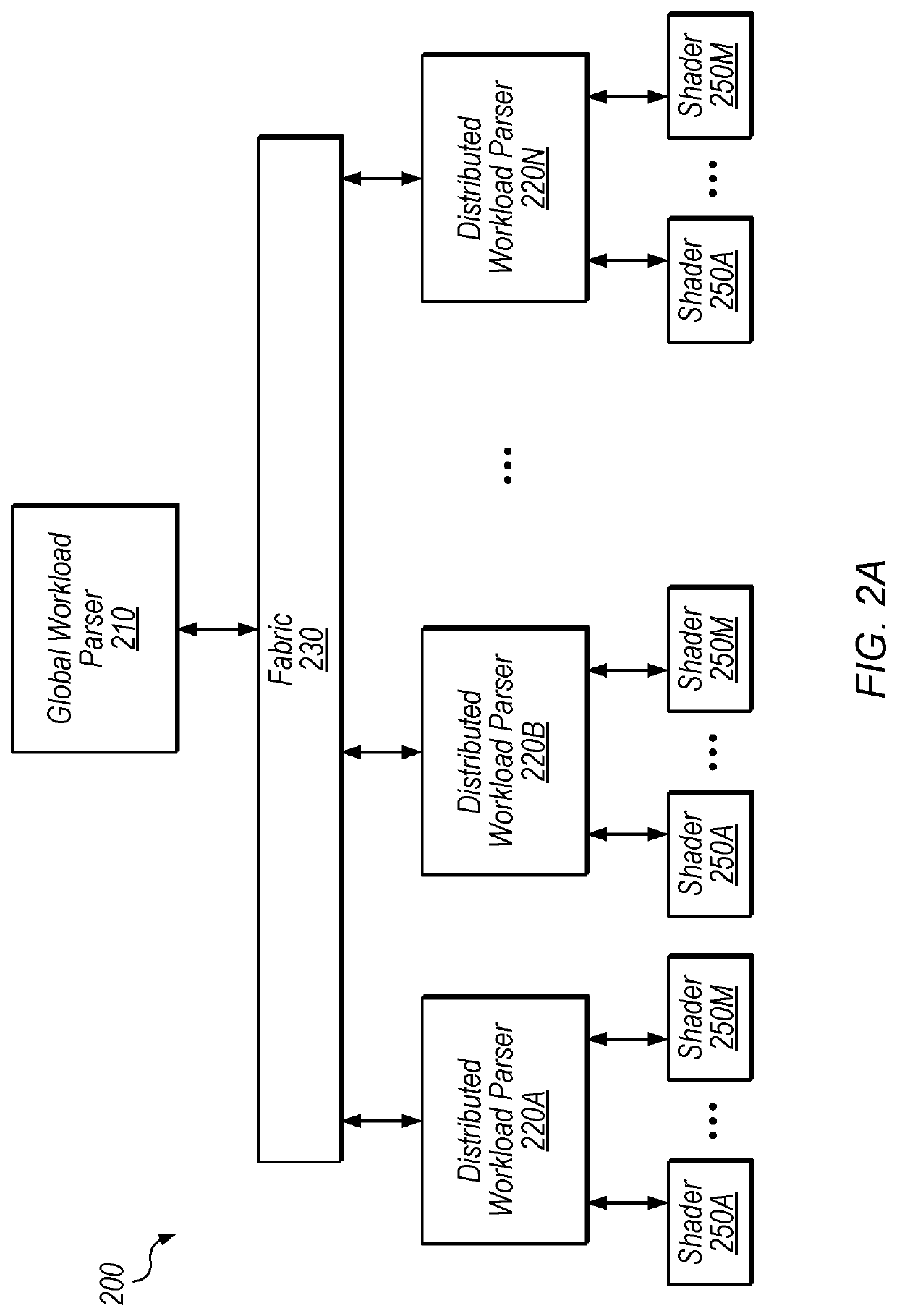

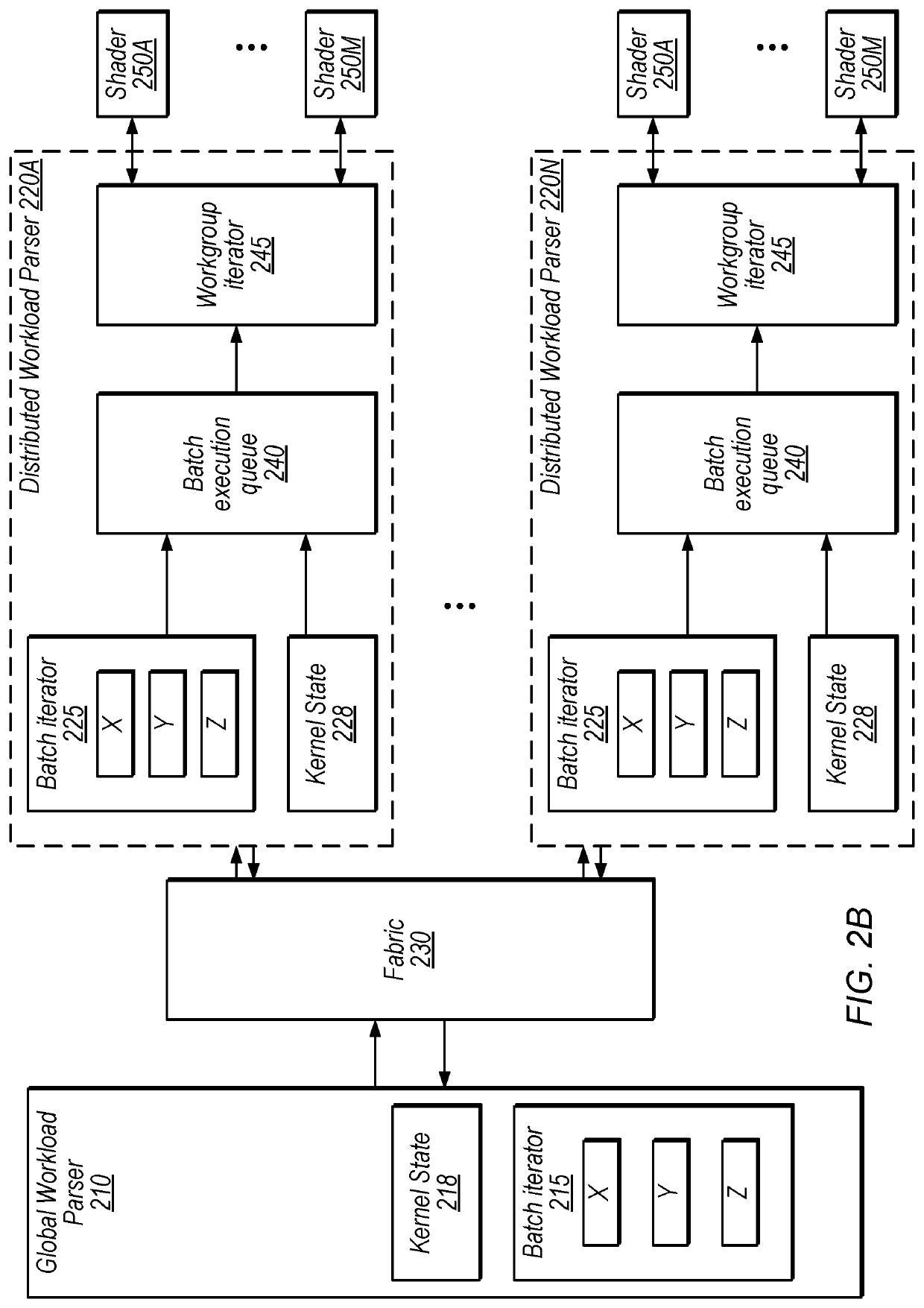

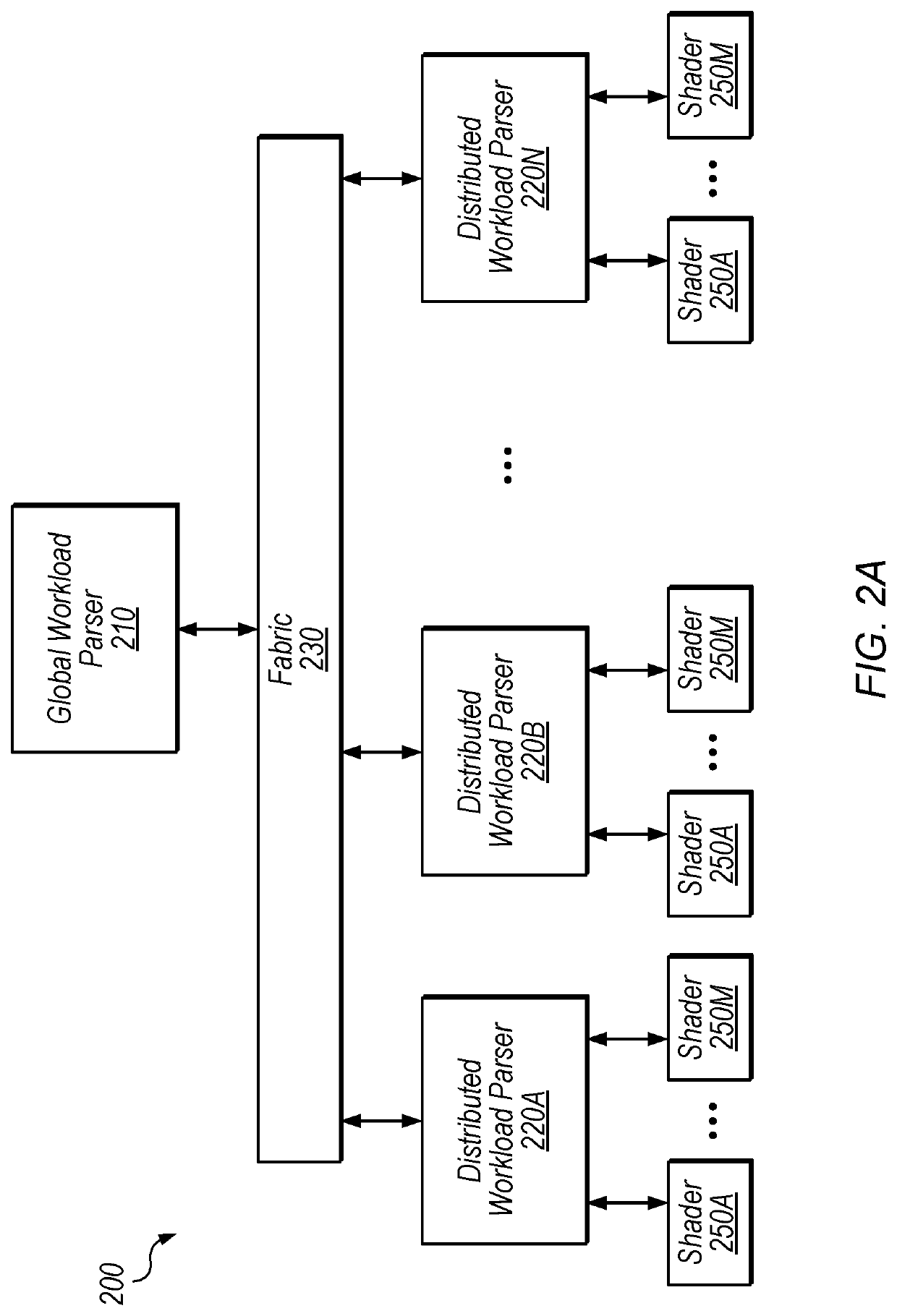

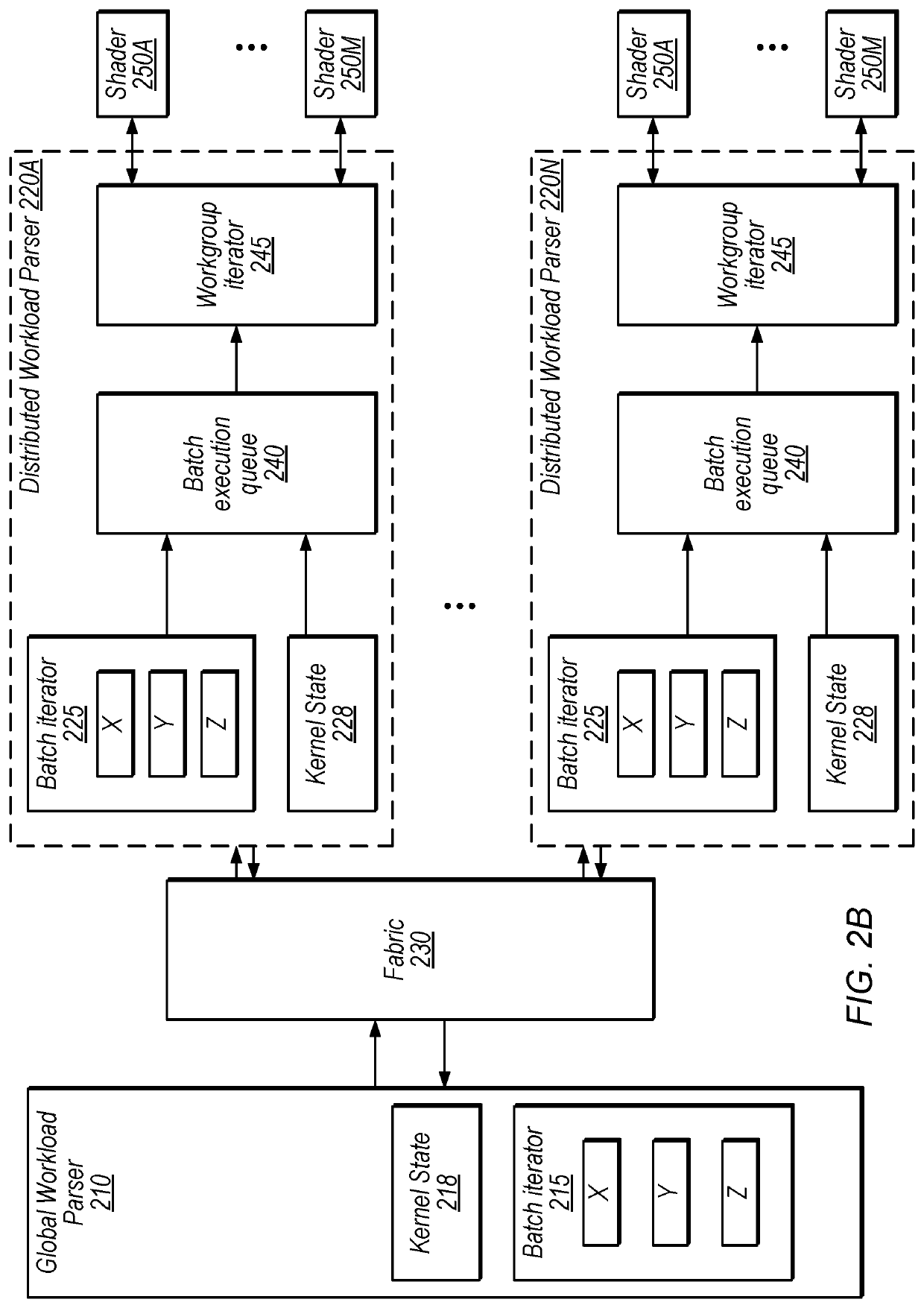

Distributed Compute Work Parser Circuitry using Communications Fabric

Active | US20200098160A1 | Resource allocation, Details involving image processing hardware, Batch processing, Parallel computing

Techniques are disclosed relating to distributing work from compute kernels using a distributed hierarchical parser architecture. In some embodiments, an apparatus includes a plurality of shader units configured to perform operations for compute workgroups included in compute kernels processed by the apparatus, a plurality of distributed workload parser circuits, and a communications fabric connected to the plurality of distributed workload parser circuits and a master workload parser circuit. In some embodiments, the master workload parser circuit is configured to iteratively determine a next position in multiple dimensions for a next batch of workgroups from the kernel and send batch information to the distributed workload parser circuits via the communications fabric to assign the batch of workgroups. In some embodiments, the distributed parsers maintain coordinate information for the kernel and update the coordinate information in response to the batch information, even when the distributed parsers are not assigned to execute the batch.

Owner:APPLE INC
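
A software model of the parser hierarchy may help: the master walks the kernel's workgroup grid in batches, broadcasts each batch (start position and count) to every distributed parser, and every parser advances its own copy of the grid coordinates even when the batch was assigned elsewhere. The x-major traversal order and round-robin assignment are assumptions for illustration; the abstract does not specify either, and the classes below are hypothetical stand-ins for the circuits and the communications fabric.

```python
from typing import List, Tuple

Dim3 = Tuple[int, int, int]  # (x, y, z) workgroup coordinates


def advance(pos: Dim3, count: int, grid: Dim3) -> Dim3:
    """Step a workgroup position forward by `count`: x fastest, then y, then z."""
    gx, gy, _ = grid
    linear = pos[0] + gx * (pos[1] + gy * pos[2]) + count
    return (linear % gx, (linear // gx) % gy, linear // (gx * gy))


class DistributedParser:
    def __init__(self, pid: int, grid: Dim3) -> None:
        self.pid, self.grid = pid, grid
        self.pos: Dim3 = (0, 0, 0)                  # local copy of the kernel's next position
        self.assigned: List[Tuple[Dim3, int]] = []  # (start, count) batches for this parser

    def on_batch(self, start: Dim3, count: int, target: int) -> None:
        if target == self.pid:
            self.assigned.append((start, count))
        # Every parser updates its coordinates, even when the batch went elsewhere.
        self.pos = advance(start, count, self.grid)


def master_dispatch(grid: Dim3, batch: int, parsers: List[DistributedParser]) -> None:
    total = grid[0] * grid[1] * grid[2]
    pos, issued, n_batches = (0, 0, 0), 0, 0
    while issued < total:
        count = min(batch, total - issued)
        target = n_batches % len(parsers)  # round-robin assignment: an assumption
        for p in parsers:                  # stands in for a broadcast over the fabric
            p.on_batch(pos, count, target)
        pos = advance(pos, count, grid)
        issued += count
        n_batches += 1


parsers = [DistributedParser(i, (4, 2, 1)) for i in range(2)]
master_dispatch((4, 2, 1), batch=3, parsers=parsers)
print(parsers[0].assigned)  # [((0, 0, 0), 3), ((2, 1, 0), 2)]
print(parsers[1].assigned)  # [((3, 0, 0), 3)]
```

Because each parser tracks coordinates locally, the broadcast only needs the batch size and target rather than a full coordinate list, which is one reading of why the abstract has unassigned parsers update their state too.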

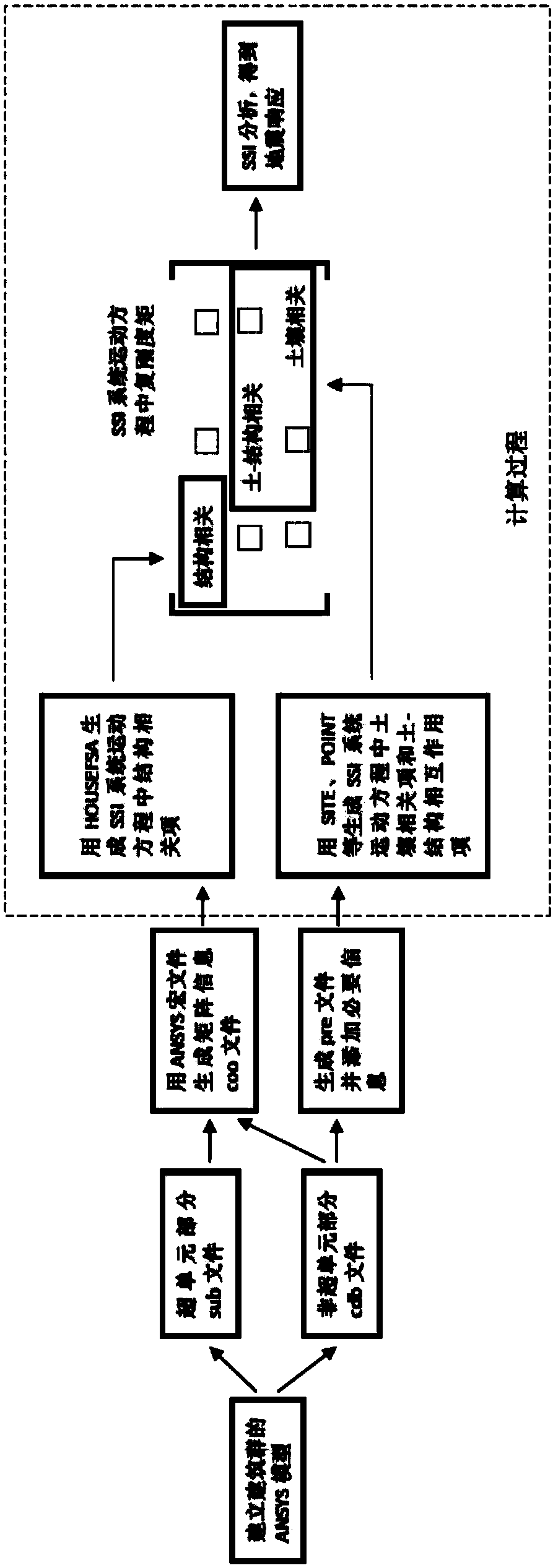

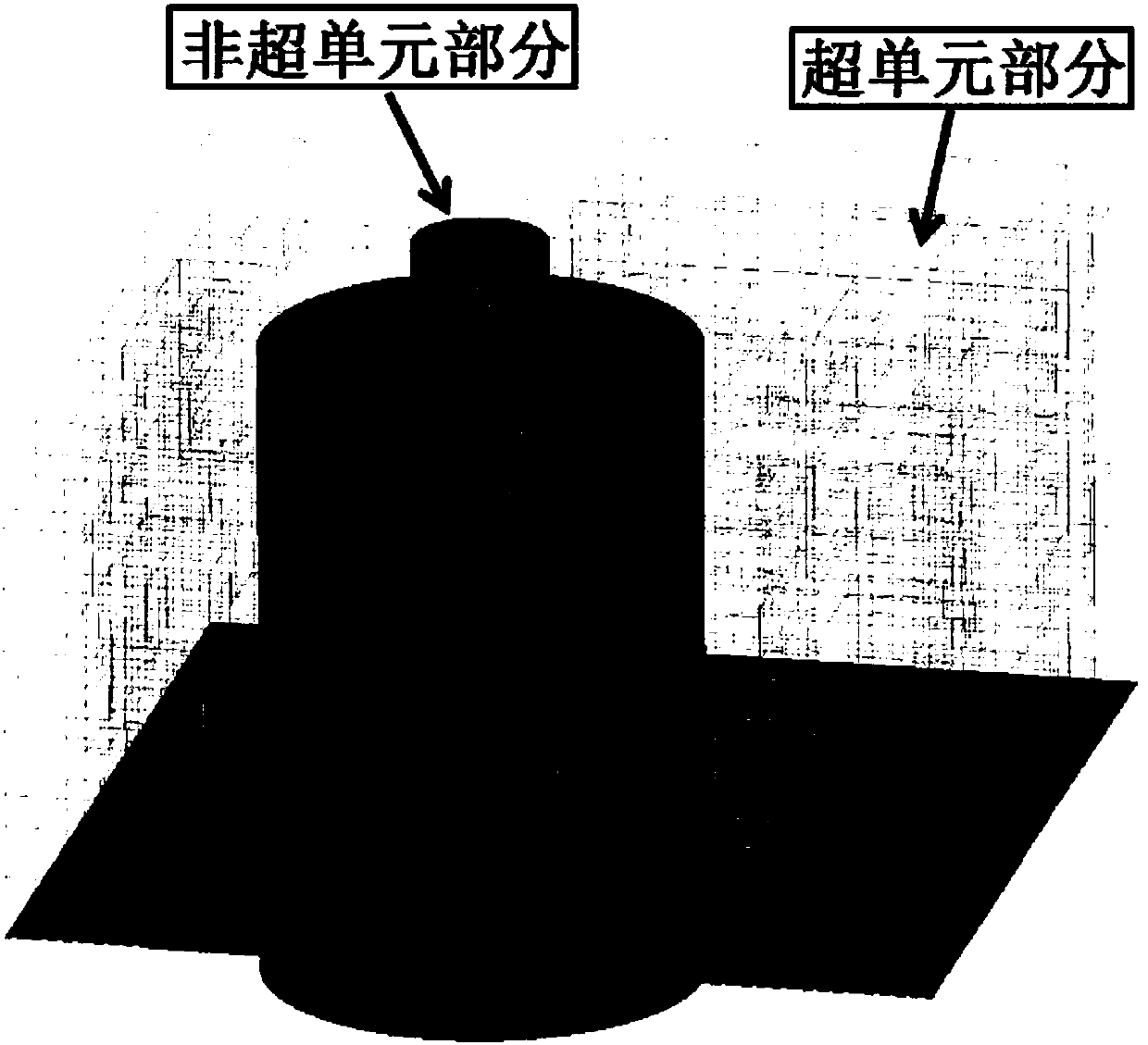

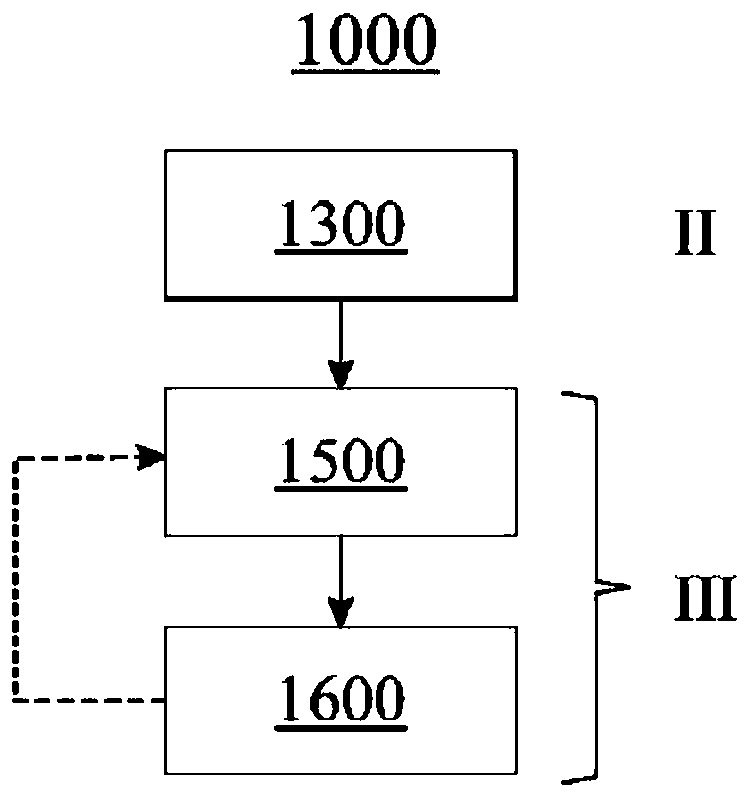

Floor response spectrum simplified calculation method considering SSSI effect

Active | CN108038262A | Consider real, Consider efficient, Geometric CAD, Design optimisation/simulation, Super element, Building design

The invention belongs to the technical field of nuclear building design and relates to a simplified floor response spectrum calculation method that considers the SSSI (structure-soil-structure interaction) effect. The method combines two kinds of finite element programs: a general finite element program and an SSI analysis program. On the one hand, some of the structural units in a building group are assembled into a single unit by means of super-elements in the general finite element program; on the other hand, through format conversion of the dynamic characteristic matrices, the SSI analysis program can directly read the element matrix information of the general finite element program, and the simplified floor response spectrum calculation considering the SSI effect is carried out with the computing kernel of the SSI analysis program. The method accounts for the SSSI effect realistically, efficiently, and quickly; while preserving model consistency and accuracy, its results agree with reality and its computing cost is low.

Owner:CHINA NUCLEAR POWER ENG CO LTD

Computer-implemented method, computer-readable medium and heterogeneous computing system

A computer-implemented method includes initializing a first processing unit (71) of a heterogeneous computing system with a first compute kernel (140-144) and a second processing unit (72) of the heterogeneous computing system with a second compute kernel (150-154). Both the first compute kernel (140-144) and the second compute kernel (150-154) are configured to perform a numerical operation derived from a program segment (220) configured to receive a first data structure (A) storing multiple elements of a common data type. The program segment (220) includes function meta information comprising data related to the size of the output of the numerical operation, the structure of the output, and/or the effort for generating the output. The function meta information and the data meta information of a runtime instance (A1) of the first data structure (A) are used to determine first expected costs of executing the first kernel (140-144) on the first processing unit (71) to perform the numerical operation with the runtime instance (A1), and to determine second expected costs of executing the second kernel (150-154) on the second processing unit (72) to perform the numerical operation with the runtime instance (A1). The data meta information includes at least one of runtime size information, runtime location information, runtime synchronization information, and runtime type information of the runtime instance (A1). The method further includes either executing the first compute kernel (140-144) on the first processing unit (71) to perform the numerical operation on the runtime instance (A1) if the first expected costs are lower than or equal to the second expected costs, or executing the second compute kernel (150-154) on the second processing unit (72) to perform the numerical operation on the runtime instance (A1) if the first expected costs are higher than the second expected costs.

Owner:ILNUMERICS GMBH
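
The final decision rule in this abstract (run on the first unit when its expected cost is lower than or equal to the second's) is easy to state in code; the hard part is the cost model. The sketch below assumes a simple linear model built from two pieces of data meta information (runtime size and runtime location) plus an "effort" value standing in for the function meta information. The parameter values and class names are hypothetical and not taken from ILNumerics.

```python
from dataclasses import dataclass


@dataclass
class DataMeta:        # runtime meta information of one array instance
    n_elements: int    # runtime size
    location: str      # runtime location: "host" or "device"


@dataclass
class UnitModel:                  # hypothetical cost parameters for one processing unit
    name: str
    location: str                 # where this unit reads its operands from
    launch_overhead: float        # fixed cost per kernel invocation
    per_element: float            # cost per element per unit of effort
    transfer_per_element: float   # cost to move one element to this unit


def expected_cost(effort: float, data: DataMeta, unit: UnitModel) -> float:
    """Assumed linear cost model; `effort` stands in for the function meta information."""
    transfer = 0.0 if data.location == unit.location else data.n_elements * unit.transfer_per_element
    return unit.launch_overhead + data.n_elements * effort * unit.per_element + transfer


def choose(effort: float, data: DataMeta, first: UnitModel, second: UnitModel) -> UnitModel:
    # As in the abstract: use the first unit if its expected cost is lower or equal.
    if expected_cost(effort, data, first) <= expected_cost(effort, data, second):
        return first
    return second


cpu = UnitModel("cpu", "host", launch_overhead=1.0, per_element=0.01, transfer_per_element=0.0)
gpu = UnitModel("gpu", "device", launch_overhead=20.0, per_element=0.0005, transfer_per_element=0.002)
print(choose(1.0, DataMeta(1_000, "host"), cpu, gpu).name)      # cpu
print(choose(1.0, DataMeta(1_000_000, "host"), cpu, gpu).name)  # gpu
```

Under these made-up parameters, a small host-resident array stays on the CPU (11 vs 22.5 cost units) because the GPU's launch and transfer overheads dominate, while a large one moves to the GPU (10001 vs 2520).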

Method and device for detecting errors of kernel extension module on basis of access rule control

Inactive | CN103049381A | Improve security, Reduce workload, Software testing/debugging, Computer hardware, Modula

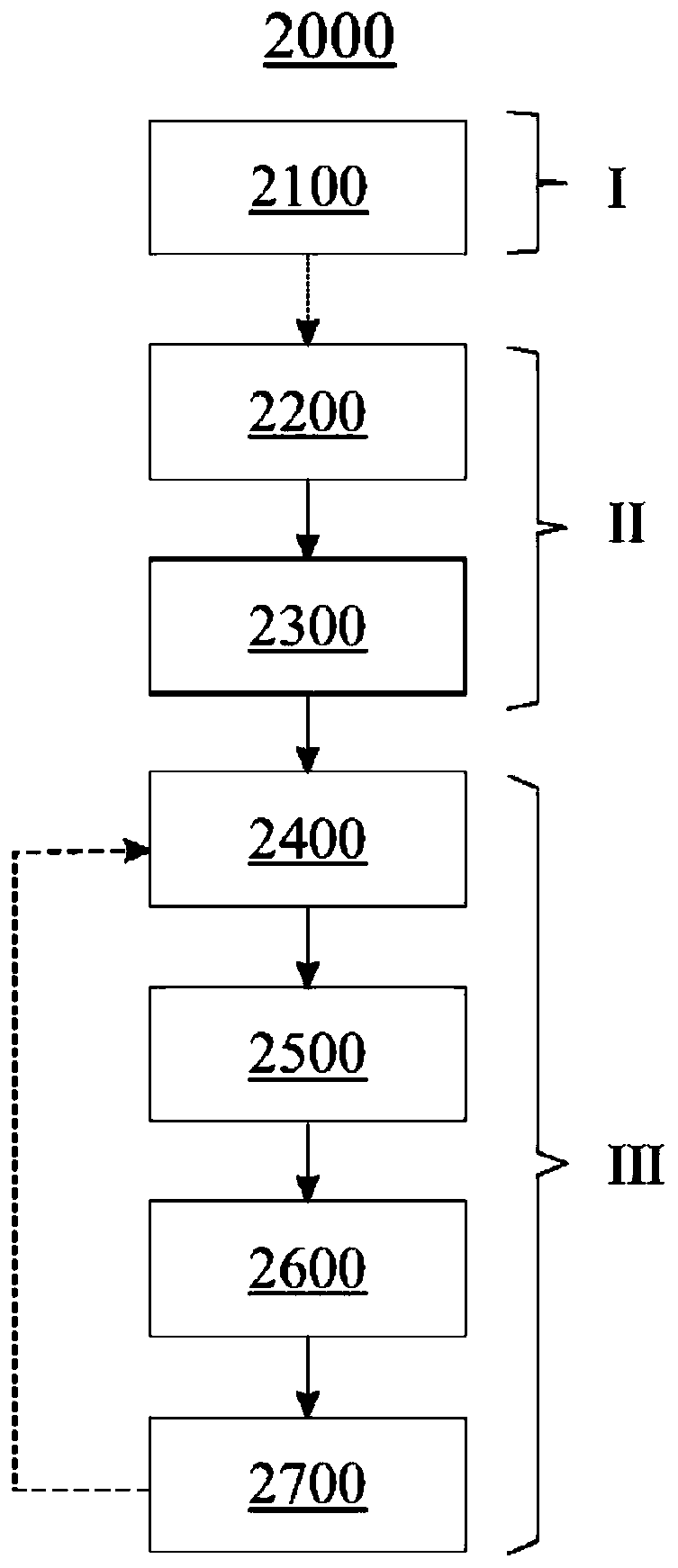

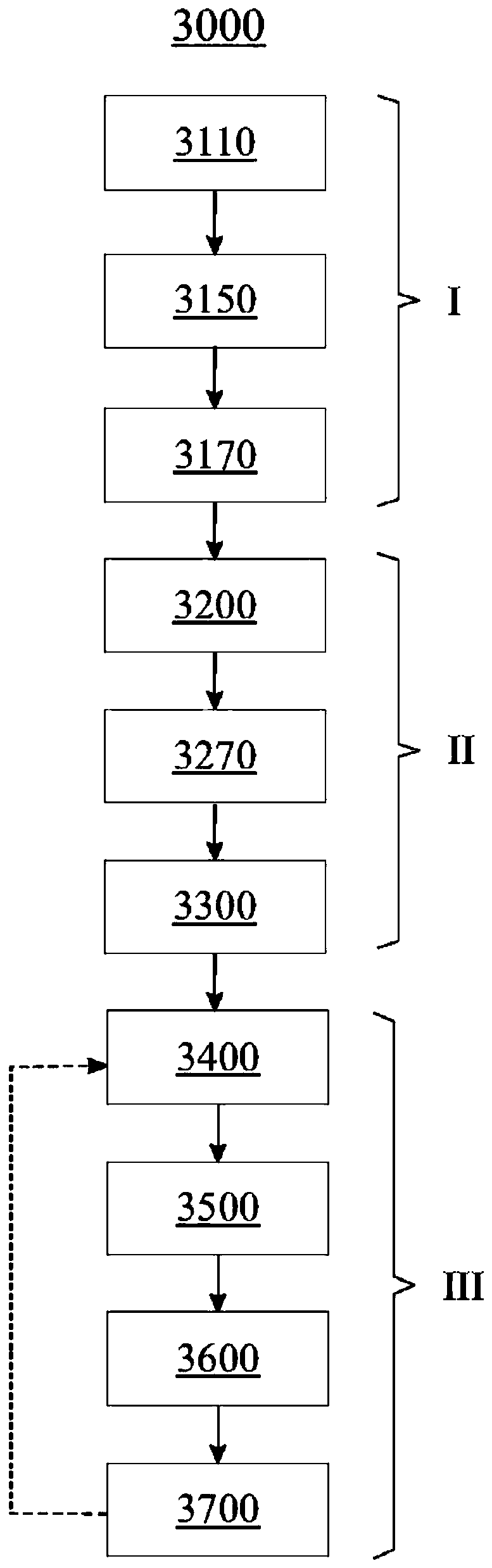

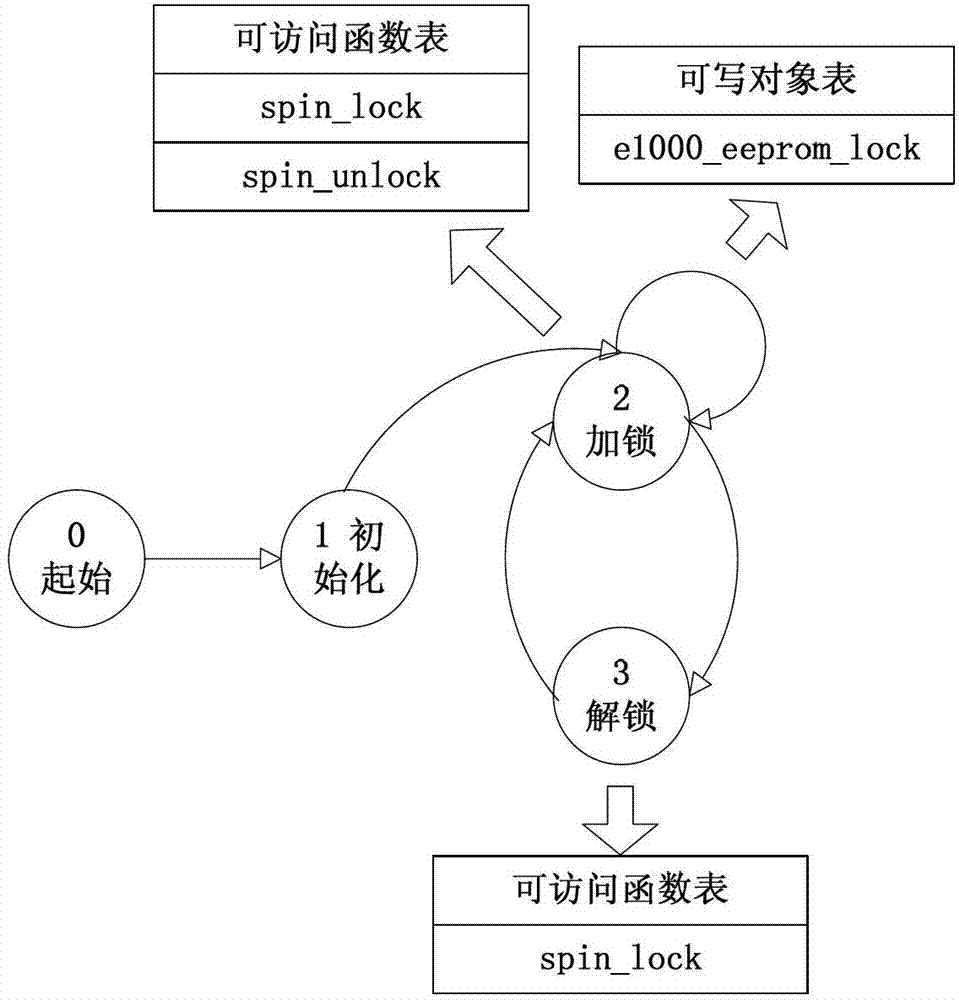

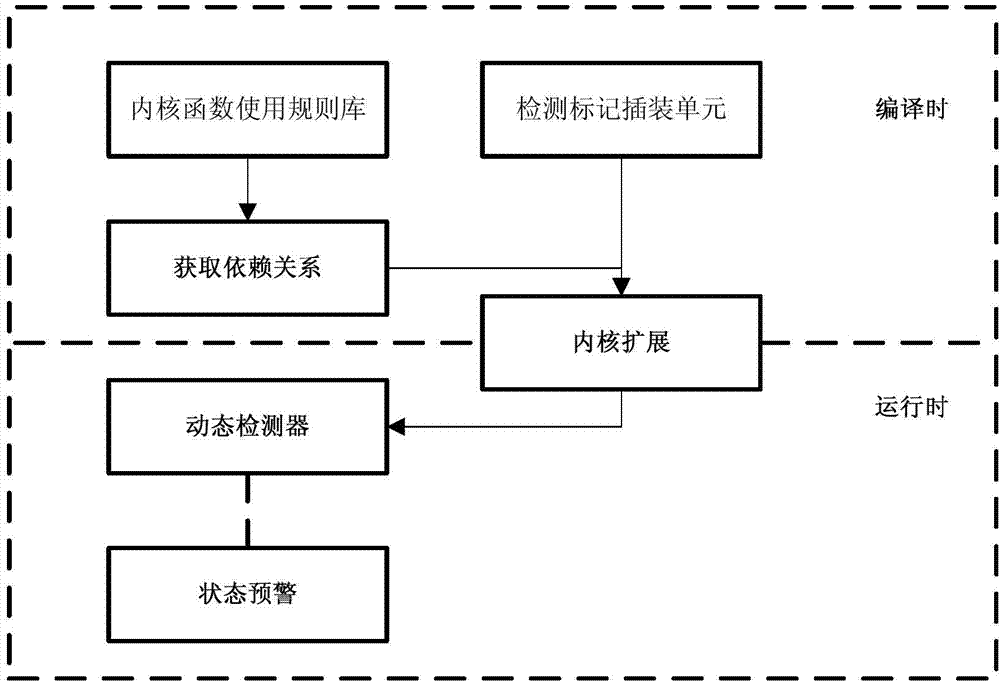

The invention relates to the technical field of computer security protection, and in particular to a method and device for detecting errors in a kernel extension module based on access rule control. The error detection method includes: S1, setting usage rules of kernel functions for the kernel extension module; S2, analyzing the dependencies between the usage rules and the kernel extension module and creating a state transition diagram from those dependencies; S3, adding instrumentation marks to the kernel extension module according to the transition conditions in the state transition diagram; and S4, triggering detection according to the usage rules when execution of the kernel extension module reaches an instrumentation mark. The method and device can accurately detect in real time whether the operation of the kernel extension module involves insecure factors, which improves the security of the operating system kernel, reduces unnecessary losses, and reduces programmer workload.

Owner:TSINGHUA UNIV
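
Steps S2 to S4 amount to running a small state machine that is advanced by events fired at instrumentation marks. The sketch below encodes one hypothetical usage rule for a lock API ("lock and unlock must alternate, and the function must not return while holding the lock") as a transition table; any (state, event) pair missing from the table is reported as a violation. The rule, states and event names are illustrative assumptions, not rules from the patent.

```python
from typing import Dict, List, Tuple

# Hypothetical usage rule as a state transition table:
# (current state, event at an instrumentation mark) -> next state.
# Any (state, event) pair missing from the table is a rule violation.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("unlocked", "lock"): "locked",
    ("locked", "unlock"): "unlocked",
    ("unlocked", "return"): "done",
}


class RuleChecker:
    def __init__(self) -> None:
        self.state = "unlocked"
        self.violations: List[str] = []

    def on_mark(self, event: str) -> None:
        """Called when execution reaches an instrumentation mark."""
        nxt = TRANSITIONS.get((self.state, event))
        if nxt is None:
            self.violations.append(f"{event!r} not allowed in state {self.state!r}")
        else:
            self.state = nxt


def check(trace: List[str]) -> List[str]:
    checker = RuleChecker()
    for event in trace:
        checker.on_mark(event)
    return checker.violations


print(check(["lock", "unlock", "return"]))  # []
print(check(["lock", "lock", "return"]))
```

The second trace reports both the double lock and the return while still holding the lock; the checker keeps its current state after a violation so later events are still judged against the rule.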

Distributed compute work parser circuitry using communications fabric

Active | US10593094B1 | Resource allocation, Details involving image processing hardware, Batch processing, Parallel computing

Techniques are disclosed relating to distributing work from compute kernels using a distributed hierarchical parser architecture. In some embodiments, an apparatus includes a plurality of shader units configured to perform operations for compute workgroups included in compute kernels processed by the apparatus, a plurality of distributed workload parser circuits, and a communications fabric connected to the plurality of distributed workload parser circuits and a master workload parser circuit. In some embodiments, the master workload parser circuit is configured to iteratively determine a next position in multiple dimensions for a next batch of workgroups from the kernel and send batch information to the distributed workload parser circuits via the communications fabric to assign the batch of workgroups. In some embodiments, the distributed parsers maintain coordinate information for the kernel and update the coordinate information in response to the batch information, even when the distributed parsers are not assigned to execute the batch.

Owner:APPLE INC
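The master/distributed parser scheme in the Apple abstract can be illustrated in software. This is a minimal sketch that assumes a 3-D workgroup grid walked with x varying fastest and batches assigned round-robin. `BatchInfo`, `advance`, `DistributedParser`, and `dispatch` are hypothetical names. The patent describes hardware circuits connected by a communications fabric, not Python objects. The sketch shows that every distributed parser applies each broadcast batch to its own copy of the coordinates, so all copies stay consistent even for batches that parser does not execute.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BatchInfo:
    start: tuple  # (x, y, z) workgroup ID at which the batch begins
    count: int    # number of workgroups in the batch
    target: int   # ID of the distributed parser assigned to execute the batch


def advance(pos, count, dims):
    """Move a 3-D workgroup position forward by `count`, x varying fastest."""
    dx, dy, _ = dims
    x, y, z = pos
    linear = x + dx * (y + dy * z) + count
    return (linear % dx, (linear // dx) % dy, linear // (dx * dy))


class DistributedParser:
    def __init__(self, pid, dims):
        self.pid = pid
        self.dims = dims
        self.next_pos = (0, 0, 0)  # local copy of the kernel's coordinate state
        self.assigned = []

    def on_batch(self, info):
        # Every parser updates its coordinates, even when it is not the target,
        # as the abstract describes.
        self.next_pos = advance(info.start, info.count, self.dims)
        if info.target == self.pid:
            self.assigned.append(info)


def dispatch(dims, parsers, batch_size):
    """Master parser: walk the kernel's grid and broadcast one batch at a time."""
    total = dims[0] * dims[1] * dims[2]
    pos, issued, target = (0, 0, 0), 0, 0
    while issued < total:
        count = min(batch_size, total - issued)
        info = BatchInfo(pos, count, target)
        for p in parsers:  # stands in for the communications fabric
            p.on_batch(info)
        pos = advance(pos, count, dims)  # next position in multiple dimensions
        issued += count
        target = (target + 1) % len(parsers)  # round-robin: an assumption
```

For example, consider a 4x3x2 grid dispatched in batches of 5 to two parsers. Parser 0 receives batches of 5, 5, and 4 workgroups, and parser 1 receives two batches of 5. Both parsers end with the same coordinate state.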

Direct current fault screening method based on GPU acceleration

Active | CN105955712A | Improve computing efficiency, Reduce the number of transfers, Data processing applications, Program initiation/switching, Electric power system, Screening method

The invention discloses a direct current (DC) fault screening method based on GPU (Graphics Processing Unit) acceleration. The method comprises the following steps:

1. The DC power flow algorithm is optimized.
2. A CPU (Central Processing Unit) reads the power grid data and calculates the node reactance matrix X0.
3. The node voltage phase angles θ⁰ of the base-case grid are calculated.
4. The basic grid data are transferred to the GPU.
5. GPU kernel function 1 calculates the node voltage phase angles θ¹ for each branch outage (open-circuit) case.
6. GPU kernel function 2 calculates the active power flow on each branch for each branch outage case.
7. The branch outage fault set S1 is screened out and transferred back to the CPU.
8. GPU kernel function 3 calculates the node voltage phase angles θ² for each generator outage case.
9. GPU kernel function 4 calculates the active power flow on each branch for each generator outage case.
10. The generator outage fault set S2 is screened out and transferred back to the CPU.

The method improves computational efficiency and addresses the long computation time of DC fault screening in the static security analysis of power systems.

Owner:SOUTHEAST UNIV
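The DC power flow model behind the Southeast University abstract solves Bθ = P for the bus angles, with X0 = B⁻¹ (slack bus removed). The flow on a branch from bus i to bus j is then (θ_i − θ_j)/x_ij. The abstract does not say how the post-outage angles are obtained from X0. This minimal sketch assumes the standard Sherman-Morrison (compensation) update for removing one branch, a hypothetical `screen_branch_outages` function, and that outages which island part of the grid count as faults. Each iteration of the outage loop is independent, which is what makes a one-thread-per-outage GPU kernel natural. Generator outages (kernels 3 and 4) would instead change the injection vector and reuse X0 directly, assuming the slack bus picks up the lost generation. They are omitted here.

```python
def invert(a):
    """Gauss-Jordan inverse with partial pivoting (dense, small systems only)."""
    n = len(a)
    m = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        piv = m[c][c]
        m[c] = [v / piv for v in m[c]]
        for r in range(n):
            if r != c:
                f = m[r][c]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[c])]
    return [row[n:] for row in m]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(a, v):
    return [dot(row, v) for row in a]


def screen_branch_outages(n_bus, branches, p_inj, limits, slack=0, tol=1e-9):
    """Return base-case angles and the indices of branch outages that overload
    another branch or split the network.

    branches: list of (from_bus, to_bus, reactance) in per unit.
    """
    keep = [i for i in range(n_bus) if i != slack]
    idx = {bus: k for k, bus in enumerate(keep)}
    n, nb = len(keep), len(branches)
    b = [1.0 / x for _, _, x in branches]  # branch susceptances
    E = []  # e_k = e_from - e_to, with the slack entry dropped
    for f, t, _ in branches:
        e = [0.0] * n
        if f in idx:
            e[idx[f]] += 1.0
        if t in idx:
            e[idx[t]] -= 1.0
        E.append(e)
    B = [[sum(b[k] * E[k][i] * E[k][j] for k in range(nb)) for j in range(n)]
         for i in range(n)]
    X = invert(B)  # node reactance matrix X0
    theta0 = matvec(X, [p_inj[i] for i in keep])  # base-case angles
    violating = []
    for k in range(nb):  # outages are independent: one GPU thread each on a device
        Xe = matvec(X, E[k])
        denom = 1.0 - b[k] * dot(E[k], Xe)
        if abs(denom) < tol:  # removing branch k islands part of the grid
            violating.append(k)
            continue
        # Sherman-Morrison update of the angles for B with branch k removed
        scale = b[k] * dot(E[k], theta0) / denom
        theta = [t0 + scale * xe for t0, xe in zip(theta0, Xe)]
        for m in range(nb):
            flow = 0.0 if m == k else b[m] * dot(E[m], theta)
            if abs(flow) > limits[m]:
                violating.append(k)
                break
    return theta0, violating
```

As an example, take a 3-bus triangle with 0.1 p.u. reactances and a 1 p.u. load at bus 2. Branch (0, 2) has a 1.5 p.u. limit and the other branches have 0.9 p.u. limits. Only the outage of branch (0, 2) is flagged, because it forces the full 1 p.u. through branches (0, 1) and (1, 2).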

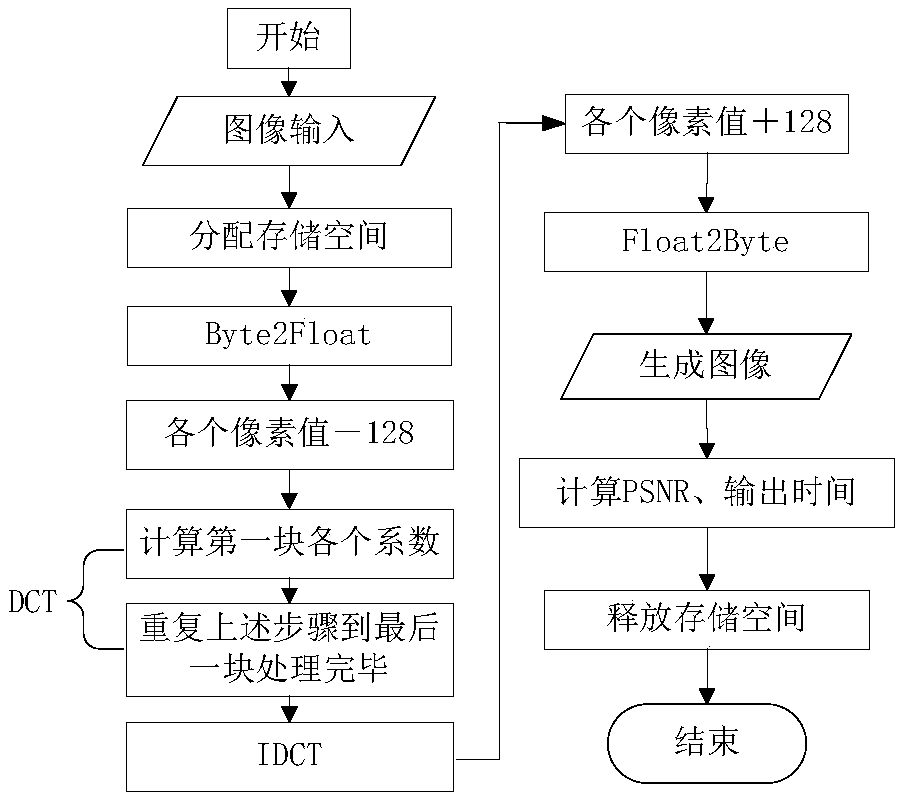

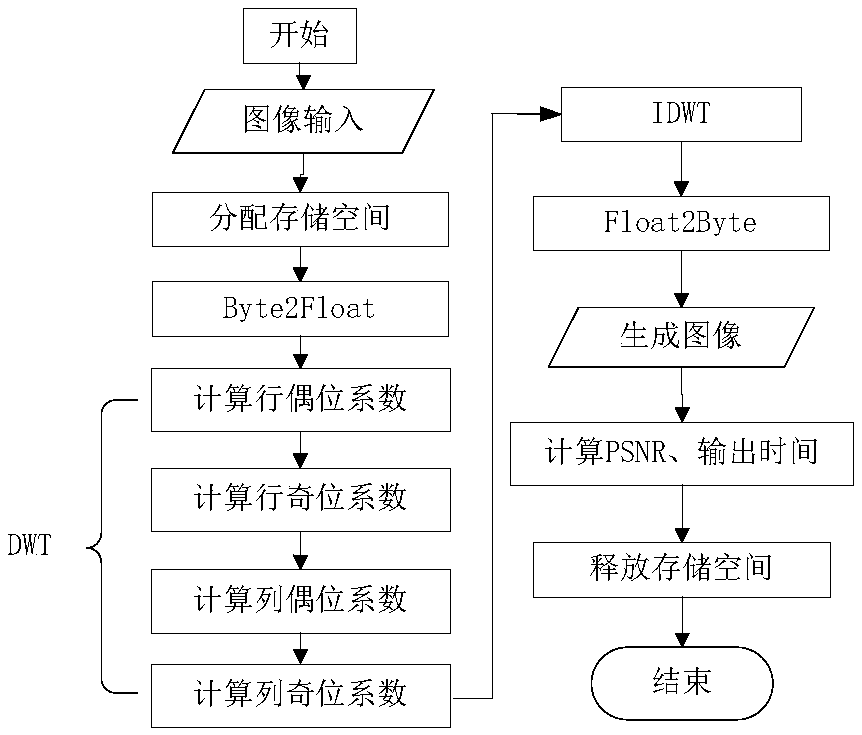

Accelerated implementation method of DCT algorithm and DWT algorithm based on CUDA architecture for image compression

Active | CN109451322A | Parallel Execution High Speed, Convenient research, Digital video signal modification, Operational system, Imaging processing

The invention provides an accelerated implementation of the DCT and DWT algorithms for image compression based on the CUDA architecture, and belongs to the field of image compression. Existing image processing approaches suffer from a low compression rate. The method comprises the following steps: analyzing the software and hardware systems of the CUDA platform and building the CUDA platform with VS2010 under the Windows operating system; mapping the DCT algorithm and the DWT algorithm onto kernel functions of the two-level CUDA execution model to obtain an improved DCT algorithm and an improved DWT algorithm, and running each of them on the GPU; and running the improved DCT algorithm on the CUDA platform. The method is applicable to implementing the DCT and DWT algorithms on the CUDA platform. During parallel execution, it achieves a compression rate several times that of the CPU, which effectively speeds up the compression of digital images.

Owner:BEIJING INST OF AEROSPACE CONTROL DEVICES +1
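The "two-level CUDA execution model" in the abstract above presumably refers to CUDA's grid of thread blocks, each containing threads. This is a minimal, serial Python sketch of one plausible mapping. For the DCT, it assumes 8x8 tiles with one thread block per tile and one thread per coefficient. For the DWT, it assumes a one-level orthonormal Haar wavelet with one thread per output position. The patent does not state its tile size, wavelet, or thread mapping, and all function names here are hypothetical. On a GPU, the outer loops would become block and thread indices.

```python
import math

N = 8  # tile size: an assumption (8x8, as in JPEG-style DCT coding)


def alpha(u):
    return math.sqrt(1.0 / N) if u == 0 else math.sqrt(2.0 / N)


def dct_thread(img, bx, by, u, v):
    """Work of one 'thread': orthonormal DCT-II coefficient (u, v) of tile (bx, by)."""
    s = 0.0
    for y in range(N):
        for x in range(N):
            s += (img[by * N + y][bx * N + x]
                  * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                  * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
    return alpha(u) * alpha(v) * s


def blockwise_dct(img):
    """Image height and width must be multiples of N."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for by in range(h // N):  # grid level: one thread block per tile
        for bx in range(w // N):
            for v in range(N):  # block level: one thread per coefficient
                for u in range(N):
                    out[by * N + v][bx * N + u] = dct_thread(img, bx, by, u, v)
    return out


def haar_dwt_level(img):
    """One level of an orthonormal 2-D Haar DWT; height and width must be even."""
    h, w = len(img), len(img[0])
    ll, lr, tb, dg = ([[0.0] * (w // 2) for _ in range(h // 2)] for _ in range(4))
    for i in range(h // 2):  # each (i, j) is independent: one thread each
        for j in range(w // 2):
            a, b = img[2 * i][2 * j], img[2 * i][2 * j + 1]
            c, d = img[2 * i + 1][2 * j], img[2 * i + 1][2 * j + 1]
            ll[i][j] = (a + b + c + d) / 2  # scaled local average
            lr[i][j] = (a - b + c - d) / 2  # left minus right
            tb[i][j] = (a + b - c - d) / 2  # top minus bottom
            dg[i][j] = (a - b - c + d) / 2  # diagonal difference
    return ll, lr, tb, dg
```

Both transforms are orthonormal, so the sum of squared values is preserved. For a constant image, all the energy lands in the DC coefficient of each tile and in the `ll` band.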

© 2025 PatSnap. All rights reserved. Contact us: help@patsnap.com