568 results about "Parallel running" patented technology

Parallel running is a system implementation strategy in which a new system gradually takes over the roles of an older system while both systems operate simultaneously. The conversion typically takes place because the old system's technology has become outdated and a replacement must be installed. After a period of time, once the new system has been shown to work correctly, the old system is removed completely and users rely solely on the new system. The term can refer either to migrating part of a business's information technology operations to a new system, or to a technique used by human resources departments in which existing staff remain on board during the transition to new staff.
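
In software terms, the period in which the new system is "shown to work correctly" usually means feeding the same inputs to both systems and comparing their results before cutting over. The sketch below assumes both systems can be called as Python functions. The names `parallel_run`, `ParallelRunReport` and `ready_to_cut_over`, and the zero-mismatch acceptance rule, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Iterable


@dataclass
class ParallelRunReport:
    """Summary of one parallel-running period (hypothetical structure)."""
    total: int = 0
    mismatches: list[tuple[Any, Any, Any]] = field(default_factory=list)

    @property
    def match_rate(self) -> float:
        return 1.0 if self.total == 0 else 1 - len(self.mismatches) / self.total


def parallel_run(old: Callable[[Any], Any], new: Callable[[Any], Any],
                 inputs: Iterable[Any]) -> ParallelRunReport:
    """Feed every input to both systems; the old system's output stays authoritative."""
    report = ParallelRunReport()
    for item in inputs:
        expected = old(item)   # result users still rely on
        candidate = new(item)  # result of the system under evaluation
        report.total += 1
        if candidate != expected:
            report.mismatches.append((item, expected, candidate))
    return report


def ready_to_cut_over(report: ParallelRunReport, min_runs: int = 1000) -> bool:
    """Hypothetical acceptance rule: enough runs and no discrepancies at all."""
    return report.total >= min_runs and not report.mismatches
```

Keeping the old system's output authoritative means users are unaffected by defects in the new system until the cut-over decision is made.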

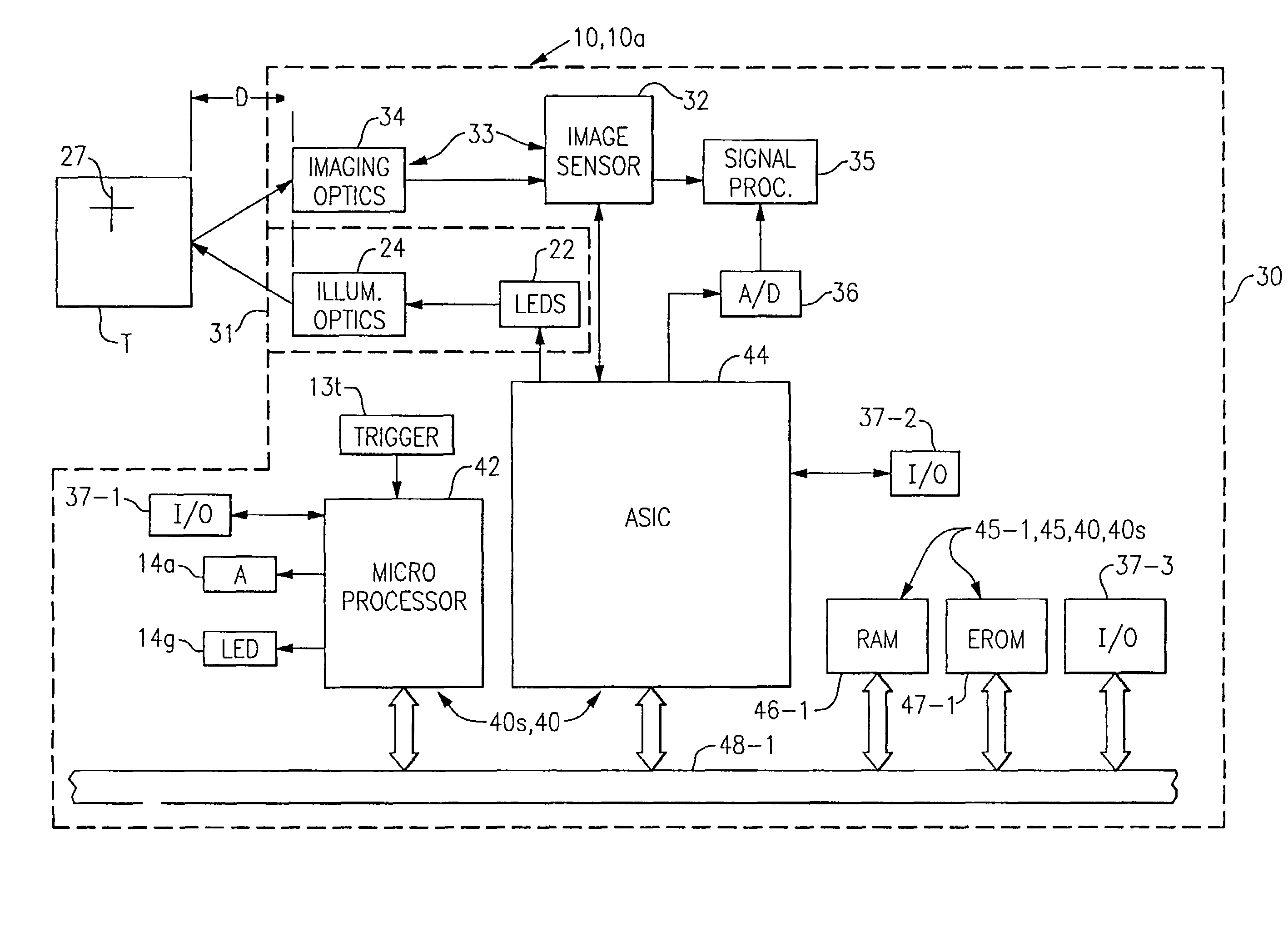

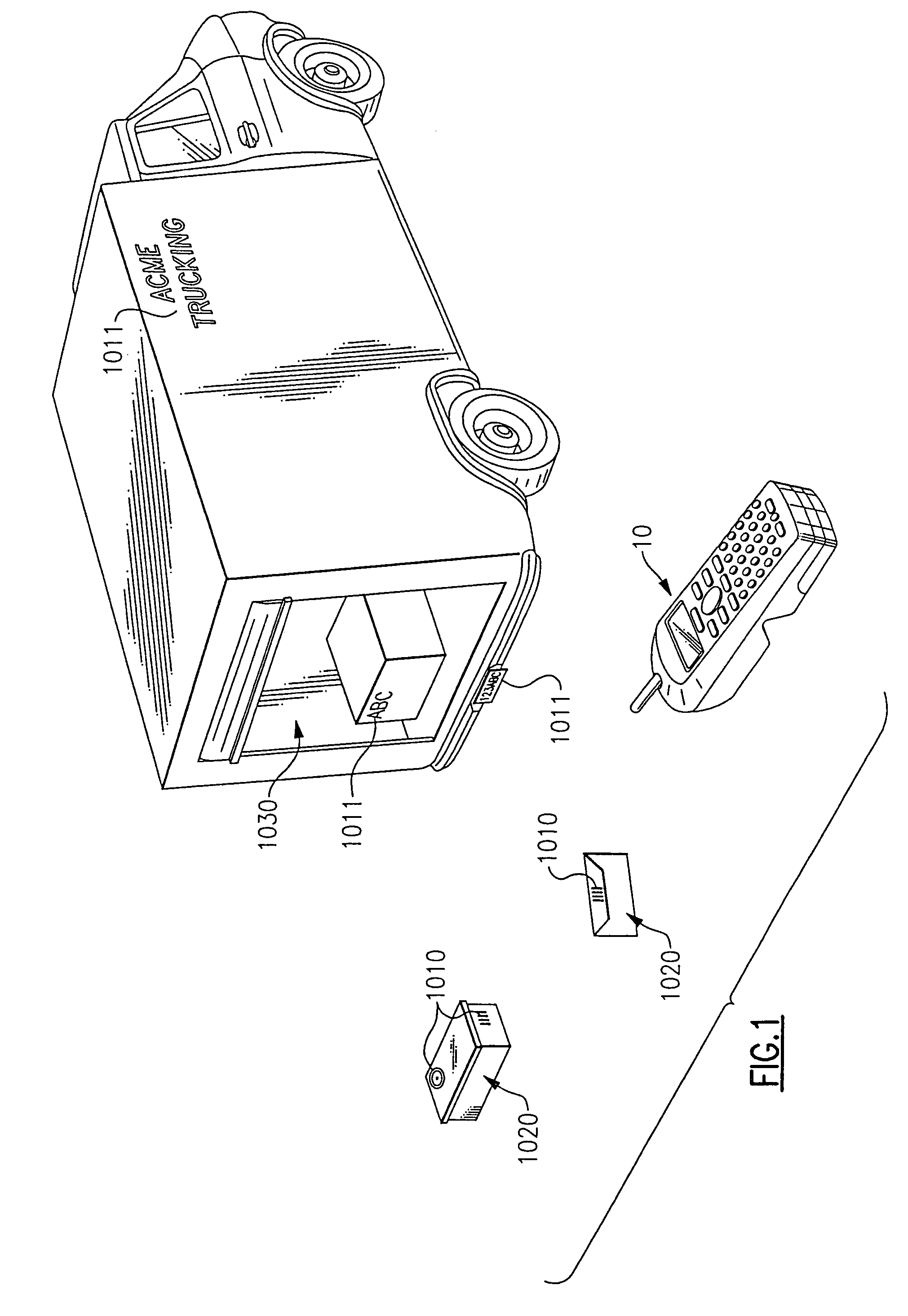

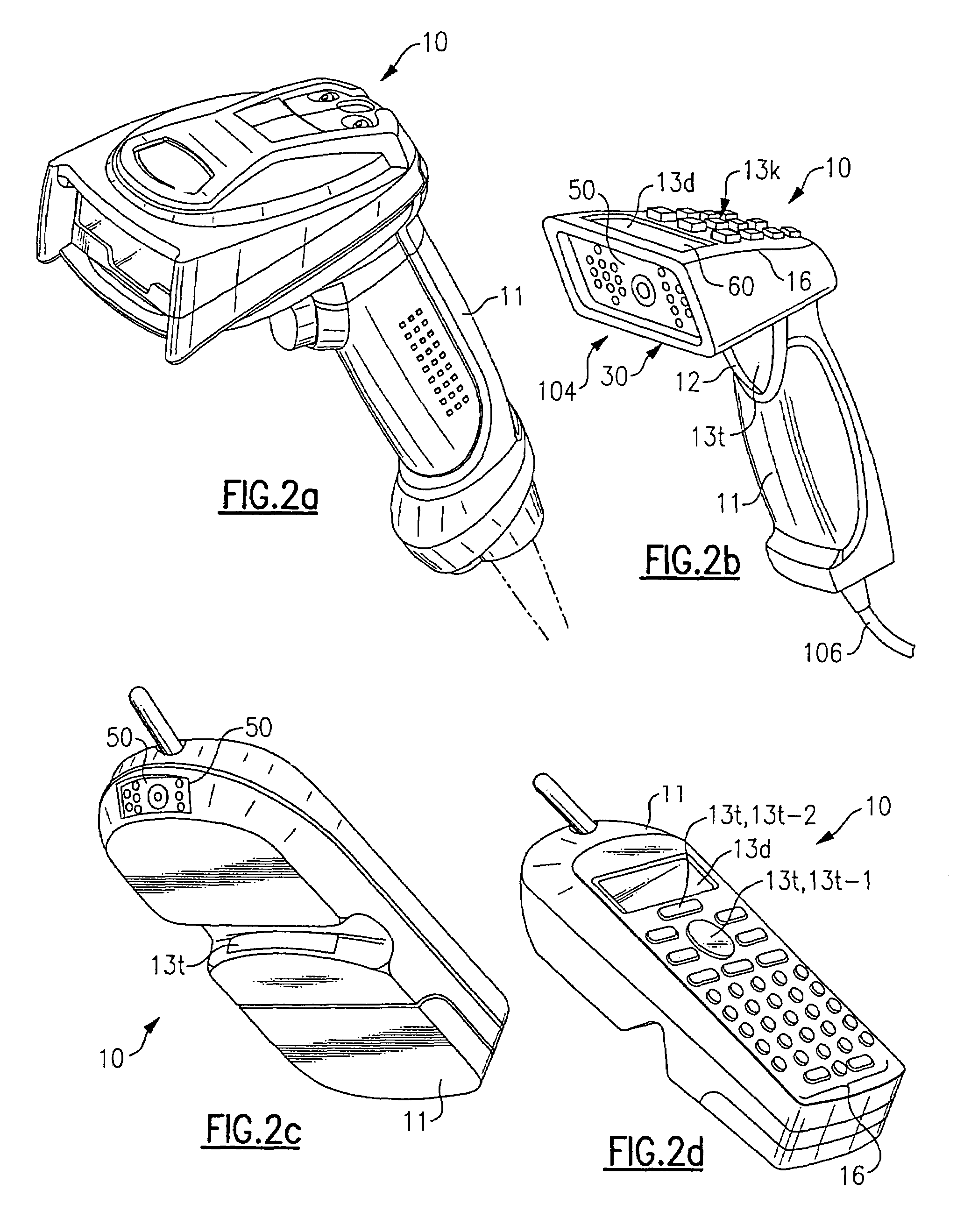

Adaptive optical image reader

Inactive | US7331523B2 | Character and pattern recognition, Exposure control, Digital converter, Computer science

Owner:HAND HELD PRODS

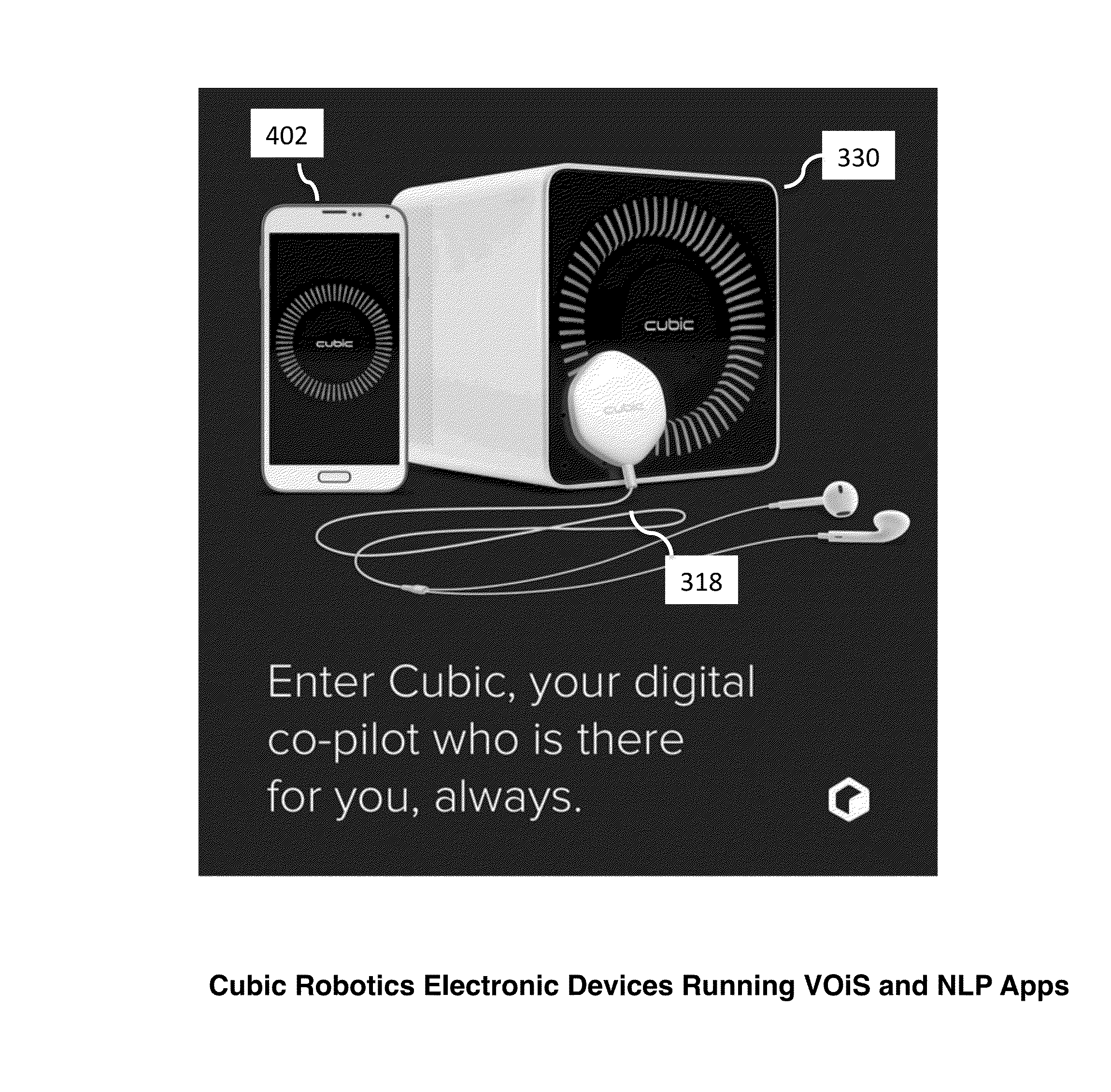

Voice driven operating system for interfacing with electronic devices: system, method, and architecture

Inactive | US20150279366A1 | Keep users engaged in conversation, Natural language data processing, Programming languages/paradigms, Operational system, Software architecture

A system comprising an electronic device, a means for the electronic device to receive input text, and a means to generate a response, wherein the means to generate the response is a software architecture organized as a stack of functional elements. These functional elements comprise an operating system kernel whose blocks and elements are dedicated to natural language processing, a dedicated programming language specifically for developing programs that run on the operating system, and one or more natural language processing applications developed using the dedicated programming language, which may run in parallel. Moreover, one or more of these natural language processing applications employ an emotional overlay.

Owner:CUBIC ROBOTICS

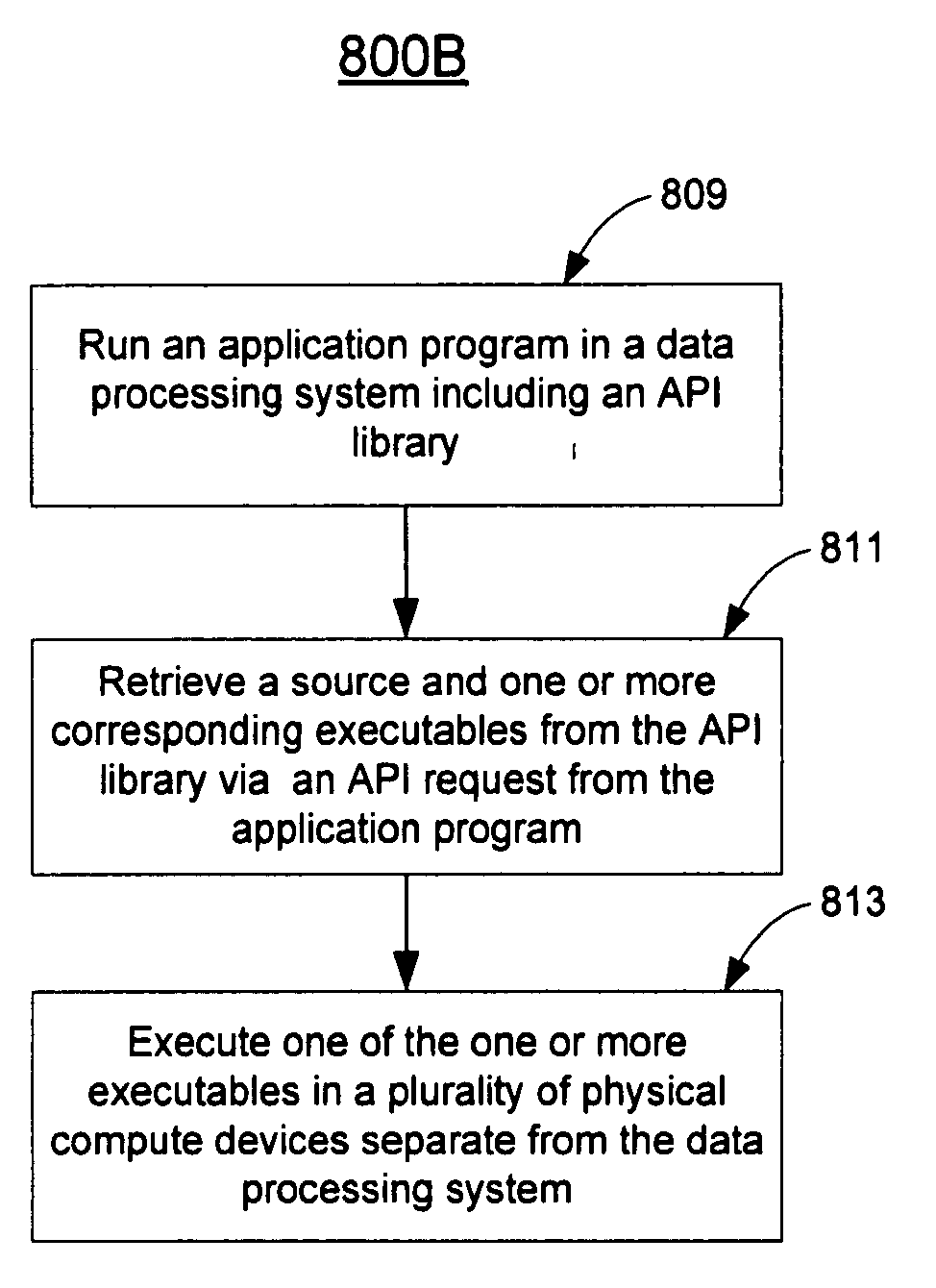

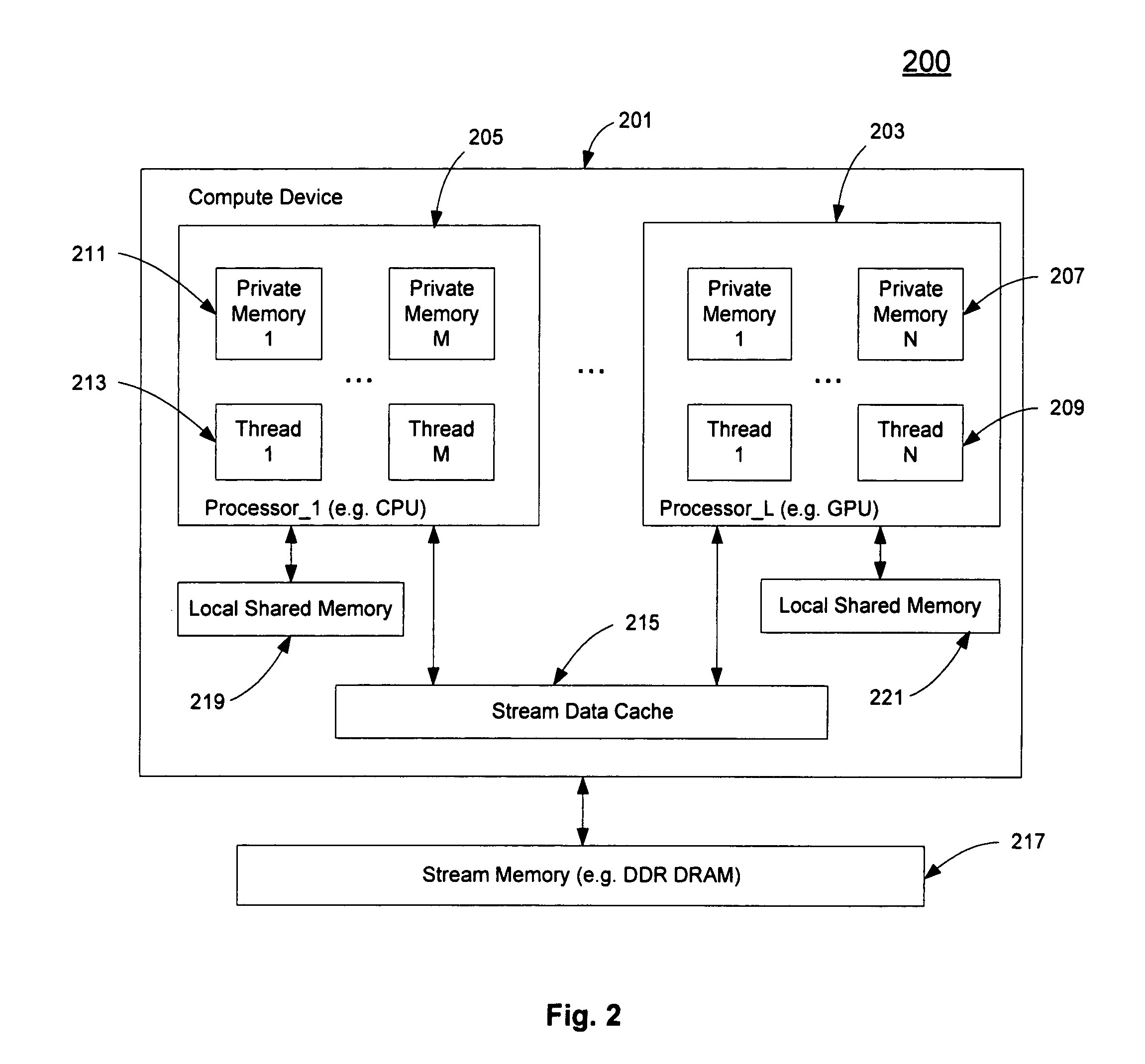

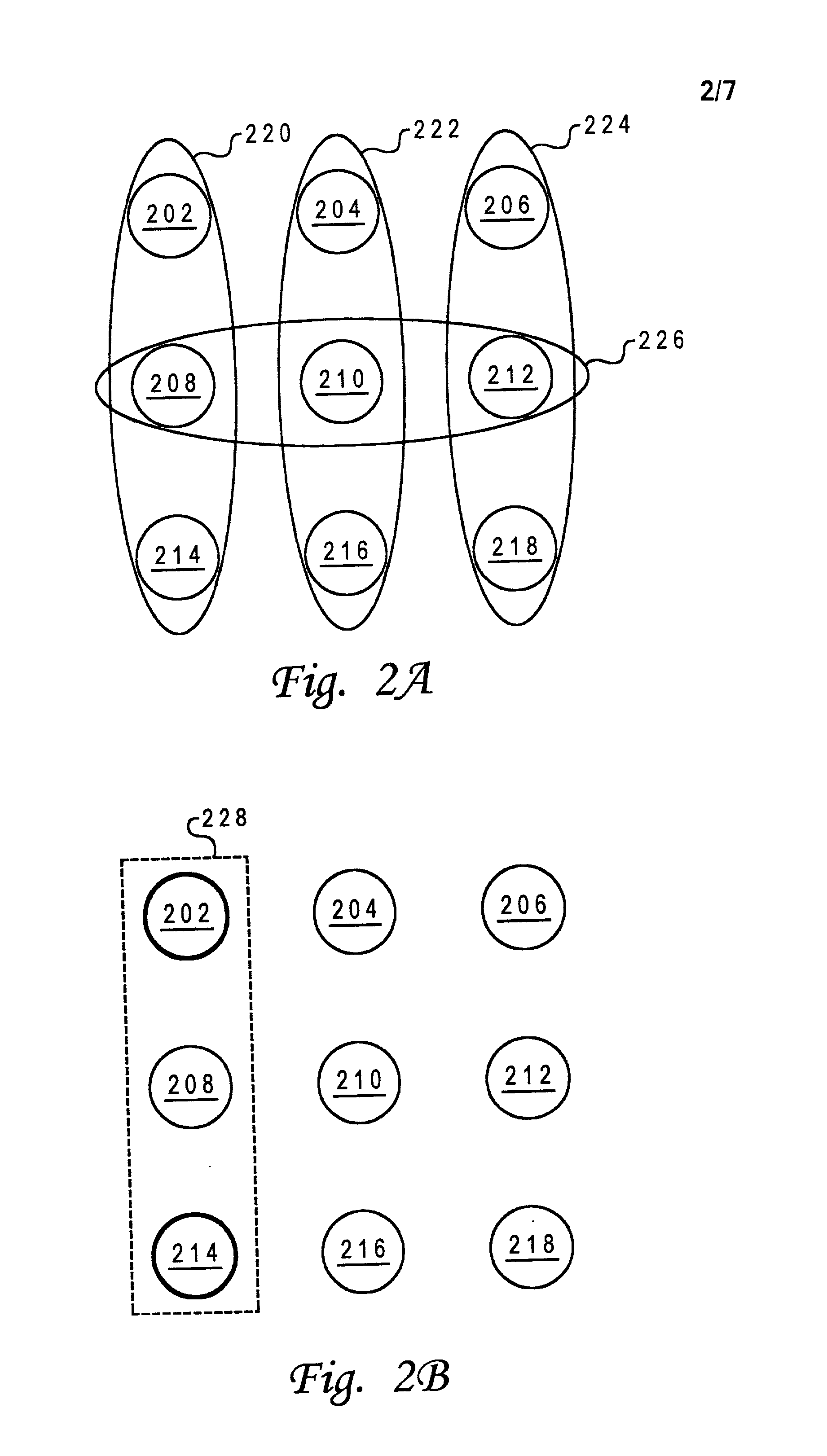

Parallel runtime execution on multiple processors

Active | US20080276262A1 | Multiprogramming arrangements, Program loading/initiating, Graphics, Parallel computing

A method and an apparatus are described that schedule a plurality of executables in a schedule queue for concurrent execution on one or more physical compute devices, such as CPUs or GPUs. One or more executables are compiled online from a source that already has an existing executable for a type of physical compute device different from the target devices. Dependency relations among elements corresponding to the scheduled executables are determined in order to select an executable to be run concurrently by multiple threads on more than one physical compute device. A thread initialized to execute an executable on a GPU is instead initialized for execution on a CPU if the GPU is busy with graphics processing threads. Sources and existing executables for an API function are stored in an API library so that multiple executables, including both the existing executables and those compiled online from the sources, can be executed on multiple physical compute devices.

Owner:APPLE INC
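
The dependency-driven scheduling and GPU-to-CPU fallback in the Apple abstract above can be illustrated with a topological sort (Kahn's algorithm). This is a toy sketch and not the patented scheduler. The task-dictionary format, the `schedule` function and the single `gpu_busy` flag are hypothetical simplifications.

```python
from collections import deque


def schedule(tasks: dict[str, list[str]], gpu_busy: bool) -> list[tuple[str, str]]:
    """Order tasks so each runs after its dependencies (Kahn's topological sort).

    tasks maps a task name to the names of the tasks it depends on
    (hypothetical format). Tasks that sit in the ready queue at the same
    time do not depend on each other, so they could run concurrently.
    """
    indegree = {t: len(deps) for t, deps in tasks.items()}
    dependents: dict[str, list[str]] = {t: [] for t in tasks}
    for t, deps in tasks.items():
        for d in deps:
            dependents[d].append(t)
    ready = deque(sorted(t for t, n in indegree.items() if n == 0))
    plan: list[tuple[str, str]] = []
    while ready:
        task = ready.popleft()
        # Fallback described in the abstract: use a CPU while the GPU is busy with graphics.
        plan.append((task, "CPU" if gpu_busy else "GPU"))
        for nxt in dependents[task]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)
    if len(plan) != len(tasks):
        raise ValueError("dependency cycle: some tasks can never become ready")
    return plan
```

For example, with `{"a": [], "b": ["a"], "c": ["a"], "d": ["b", "c"]}`, tasks `b` and `c` become ready together after `a` finishes and could run in parallel. Task `d` waits for both.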

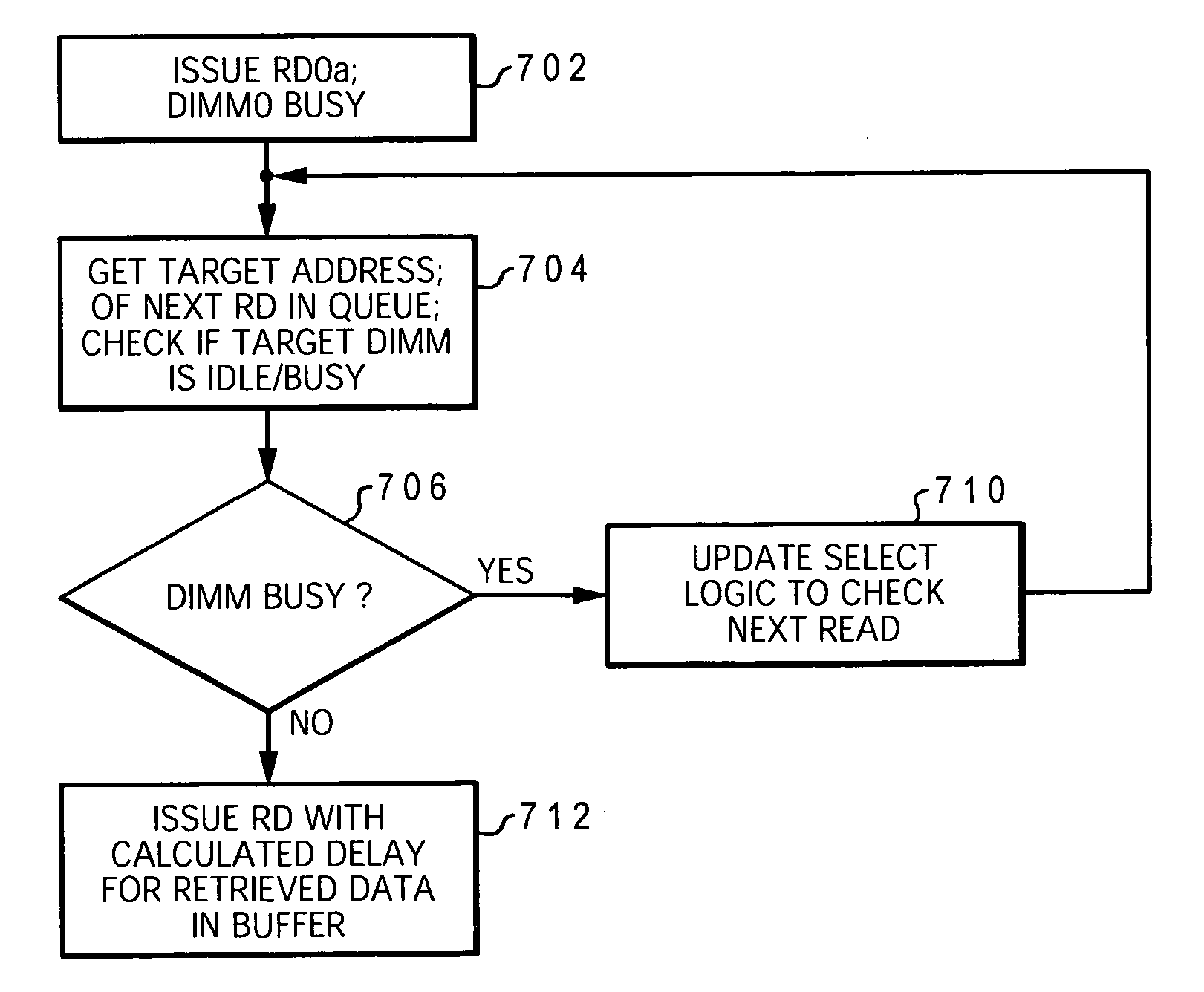

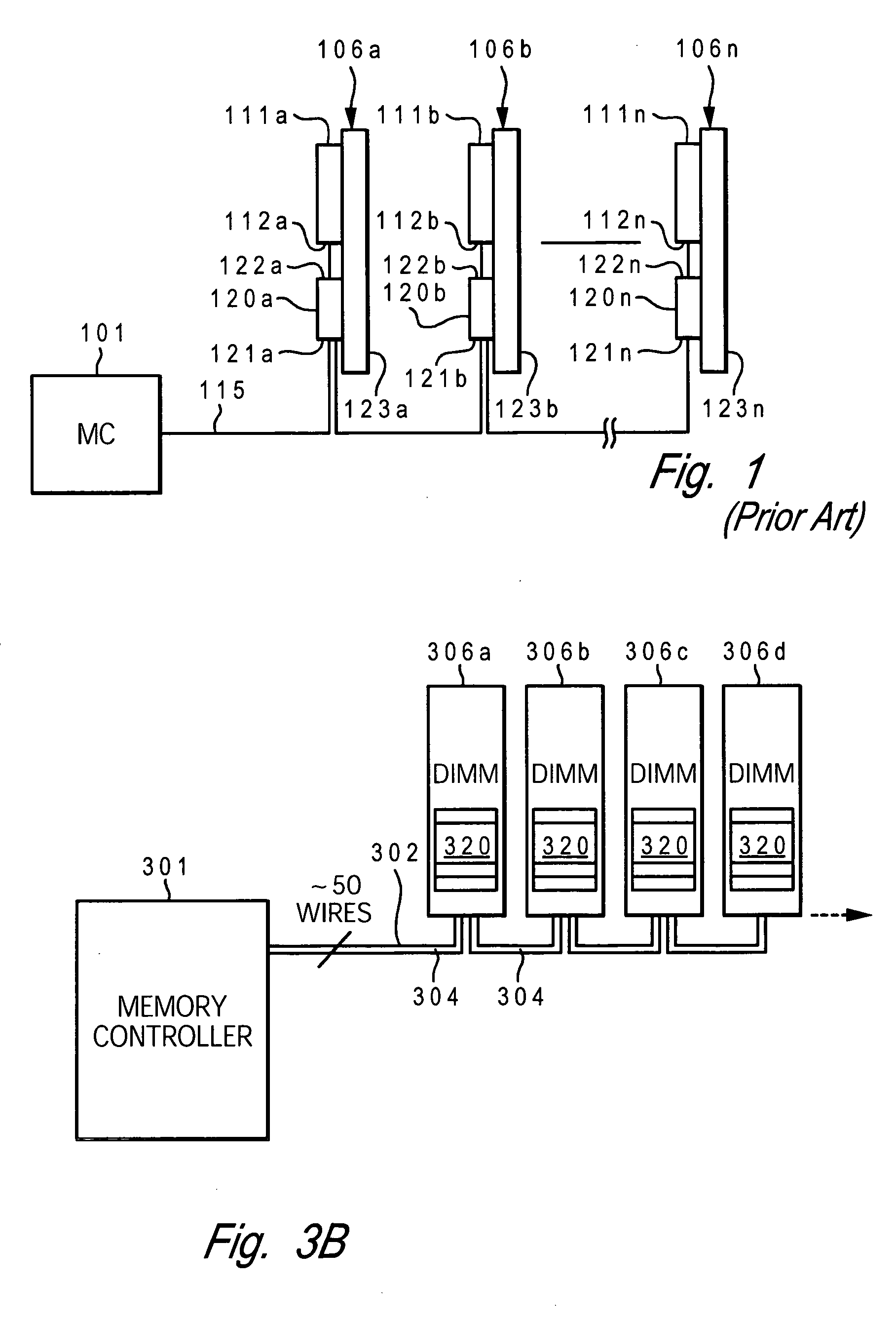

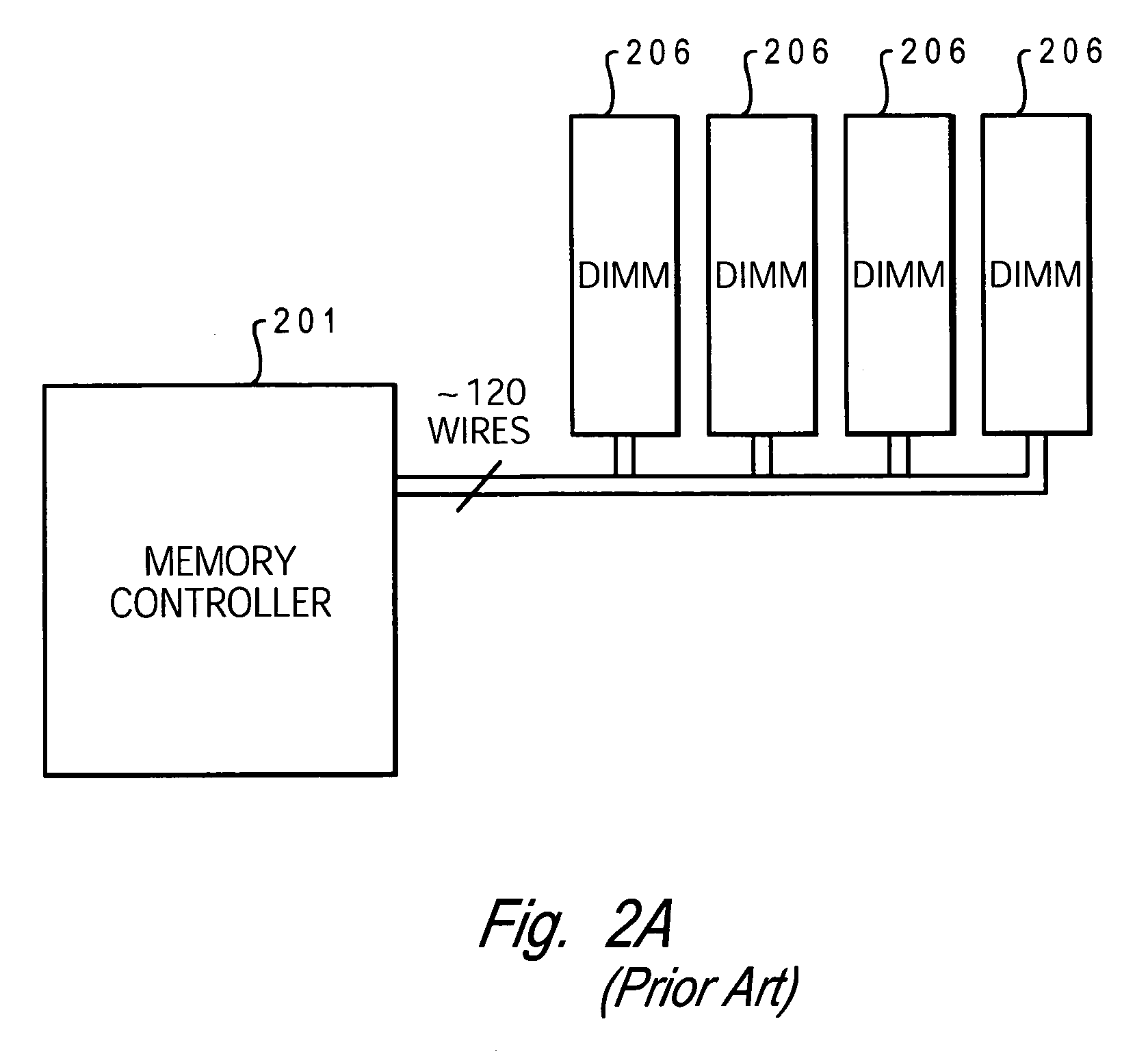

Streaming reads for early processing in a cascaded memory subsystem with buffered memory devices

Inactive | US20060179262A1 | Complete efficiently, Significant utility, Memory systems, Control store, Term memory

A memory subsystem completes multiple read operations in parallel, utilizing the functionality of buffered memory modules in a daisy chain topology. A variable read latency is provided with each read command to enable memory modules to run independently in the memory subsystem. Busy periods of the memory device architecture are hidden by allowing data buses on multiple memory modules attached to the same data channel to run in parallel rather than in series and by issuing reads earlier than required to enable the memory devices to return from a busy state earlier. During scheduling of reads, the earliest received read whose target memory module is not busy is immediately issued at a next command cycle. The memory controller provides a delay parameter with each issued read. The number of cycles of delay is calculated to allow maximum utilization of the memory modules' data bus bandwidth without causing collisions on the memory channel.

Owner:IBM CORP
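
The IBM abstract's read-issue rule can be sketched as a small simulation. Each cycle, the earliest-received read whose target module is not busy is issued. A per-read delay is chosen so that data bursts on the shared channel never overlap. The latency table, the burst length and the assumption that a module stays busy until its own burst ends are all hypothetical simplifications, not the patented controller.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Read:
    rid: int     # order of arrival at the memory controller
    module: int  # target memory module on the daisy chain


def issue_reads(reads: list[Read], latency: dict[int, int], burst: int,
                busy_until: Optional[dict[int, int]] = None) -> list[tuple[int, int, int]]:
    """Return (read id, issue cycle, delay cycles) for every read.

    latency: module -> cycles from command to data with no added delay (assumed)
    burst:   cycles one read's data occupies the shared data channel
    """
    busy = dict(busy_until or {})
    pending = list(reads)
    channel_free = 0  # first cycle at which the shared data channel is idle
    cycle = 0
    issued = []
    while pending:
        # Earliest-received read whose target module is not busy.
        pick = next((r for r in pending if busy.get(r.module, 0) <= cycle), None)
        if pick is not None:
            pending.remove(pick)
            natural = cycle + latency[pick.module]  # when data would arrive undelayed
            delay = max(0, channel_free - natural)  # extra cycles so bursts never collide
            data_start = natural + delay
            channel_free = data_start + burst
            busy[pick.module] = channel_free        # assumed: busy until its burst ends
            issued.append((pick.rid, cycle, delay))
        cycle += 1  # at most one read command per command cycle
    return issued
```

With latencies `{0: 6, 1: 2}` and a 2-cycle burst, the read to module 1 is issued at cycle 1 rather than held back. It carries a 5-cycle delay, so its data lands right after module 0's burst. This matches the abstract's idea of issuing reads early and using a delay parameter to avoid channel collisions.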

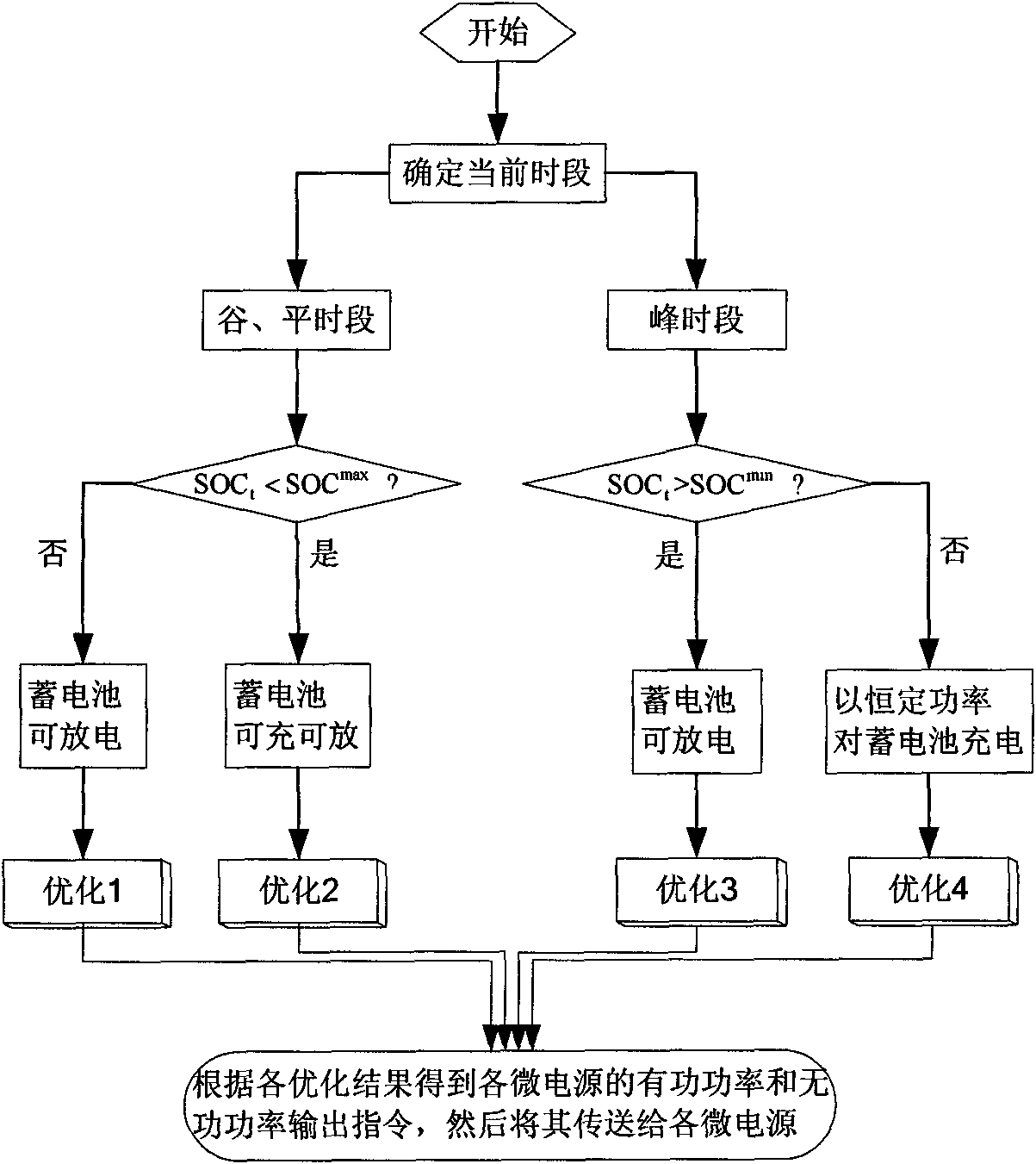

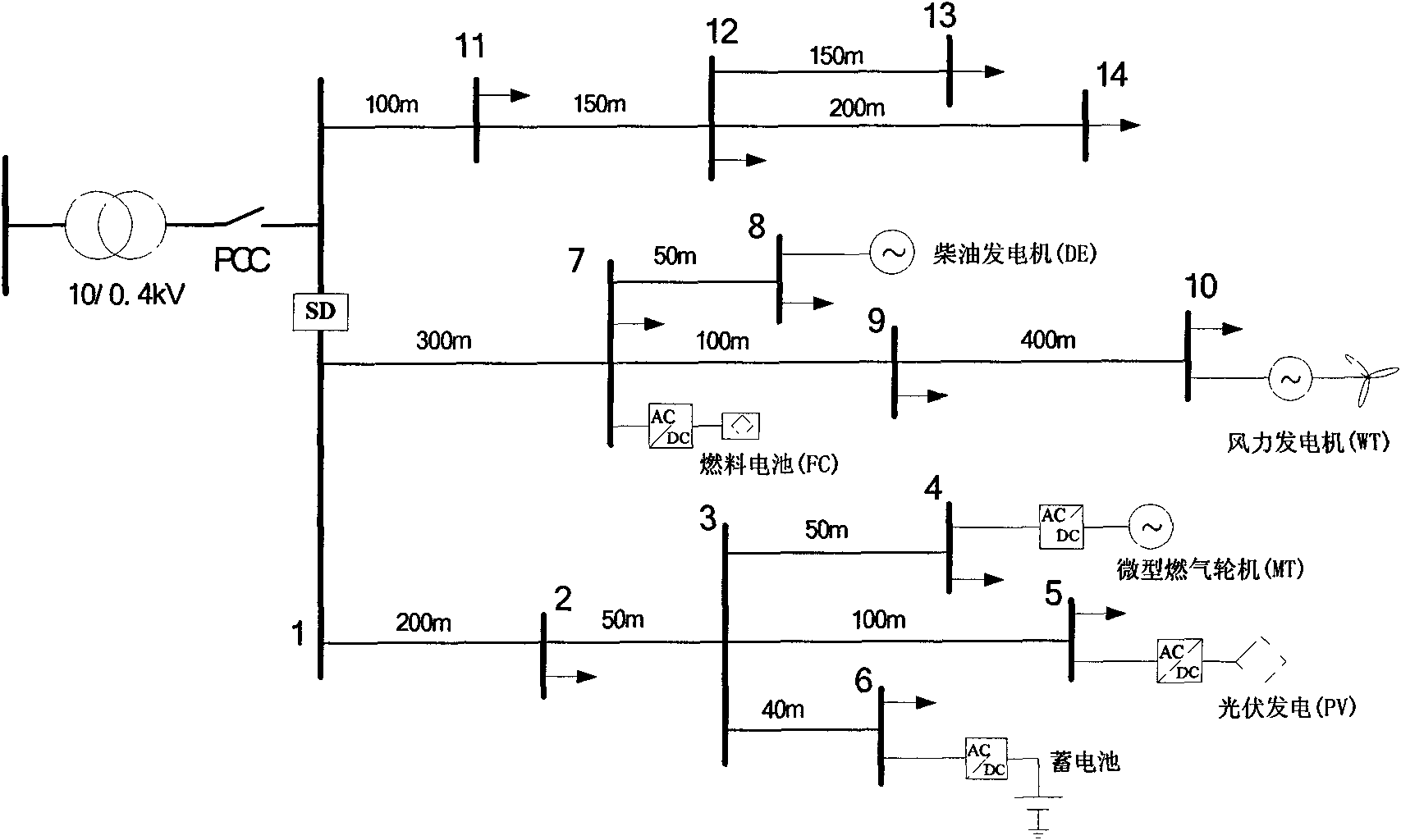

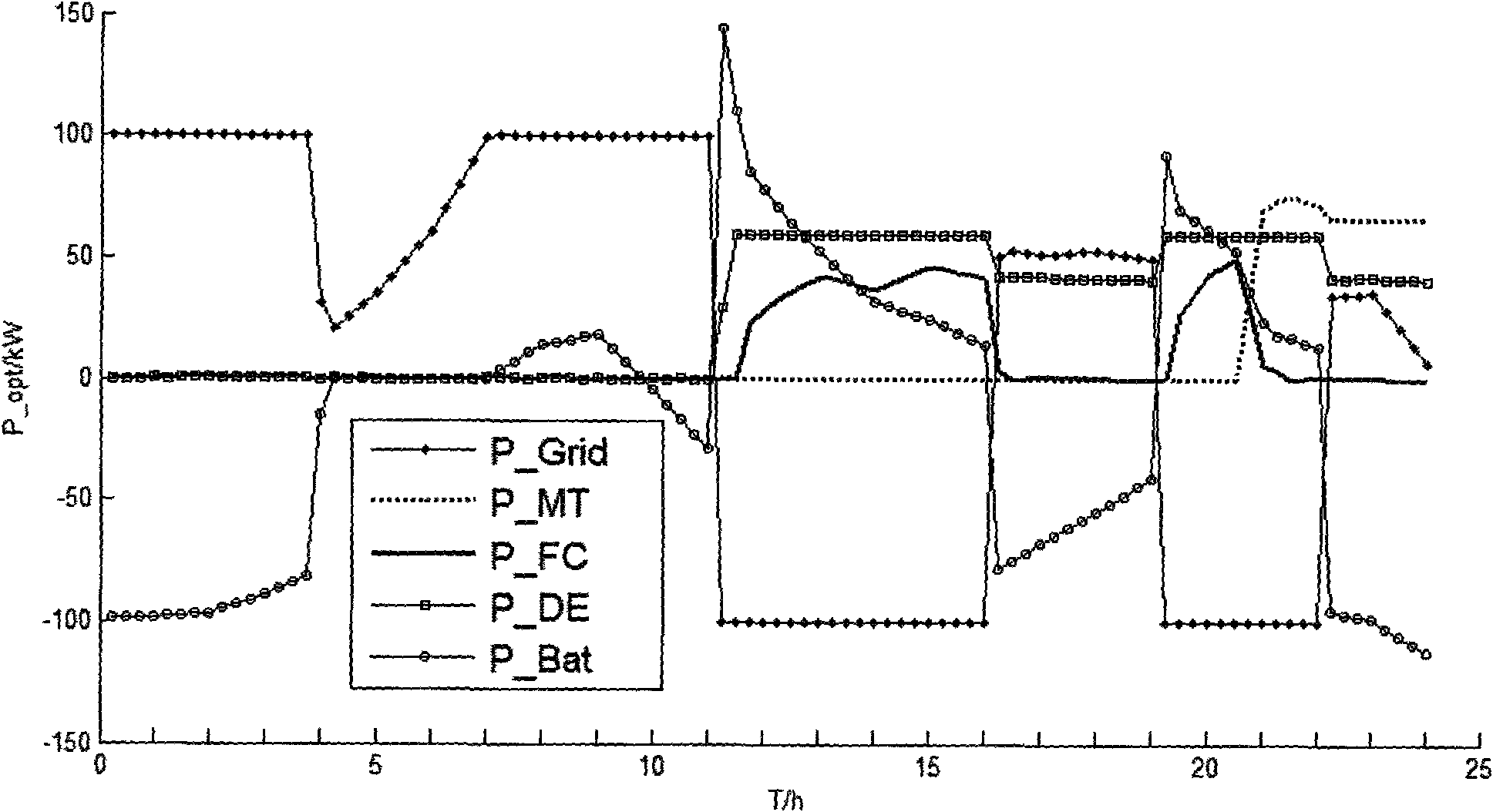

Microgrid real-time energy optimizing and scheduling method in parallel running mode

Active | CN102104251A | Improve operational efficiency, Contribute to "shaving peaks and filling valleys", Energy industry, AC network load balancing, Microgrid, Scheduling instructions

The invention discloses a real-time energy optimization and scheduling method for a microgrid operating in parallel (grid-connected) running mode. First, the 24 hours of a day are divided into peak, normal and trough periods according to the load on the main power grid. Then, during real-time operation, the working state of the storage battery (accumulator) in the microgrid is monitored continuously. Different energy optimization strategies are applied depending on the period and the battery's state. These strategies determine the active power output of each dispatchable micro power source, the charging and discharging power of the battery, the scheduling instructions for active power exchanged with the grid, and the reactive power of the adjustable reactive power sources in the microgrid. A microgrid suitable for the invention comprises renewable energy generators, the dispatchable micro power sources and the battery. The invention improves the economy and reliability of the microgrid, helps the main grid shave peaks and fill troughs, and helps prolong the service life of the battery.

Owner:ZHEJIANG UNIV
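
The period-dependent strategy in the Zhejiang University abstract can be sketched for the battery alone. The hour boundaries, state-of-charge limits and power sign convention below are hypothetical. The described method also dispatches the micro power sources, grid exchange and reactive power, which this sketch omits.

```python
from enum import Enum


class Period(Enum):
    PEAK = "peak"
    NORMAL = "normal"
    TROUGH = "trough"


# Hypothetical time-of-use split; the abstract derives it from the main grid's load curve.
PEAK_HOURS = set(range(8, 12)) | set(range(18, 22))
TROUGH_HOURS = set(range(0, 7)) | {23}


def classify(hour: int) -> Period:
    """Map an hour of the day (0-23) to a grid load period."""
    if hour in PEAK_HOURS:
        return Period.PEAK
    if hour in TROUGH_HOURS:
        return Period.TROUGH
    return Period.NORMAL


def battery_setpoint(hour: int, soc: float, p_max: float,
                     soc_min: float = 0.2, soc_max: float = 0.9) -> float:
    """Battery power command in kW (assumed sign: + discharge, - charge).

    soc is the state of charge in [0, 1]; the limits are illustrative values.
    """
    period = classify(hour)
    if period is Period.PEAK and soc > soc_min:
        return p_max    # discharge to help shave the grid's peak
    if period is Period.TROUGH and soc < soc_max:
        return -p_max   # charge to help fill the grid's trough
    return 0.0          # otherwise idle, avoiding needless cycling of the battery
```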

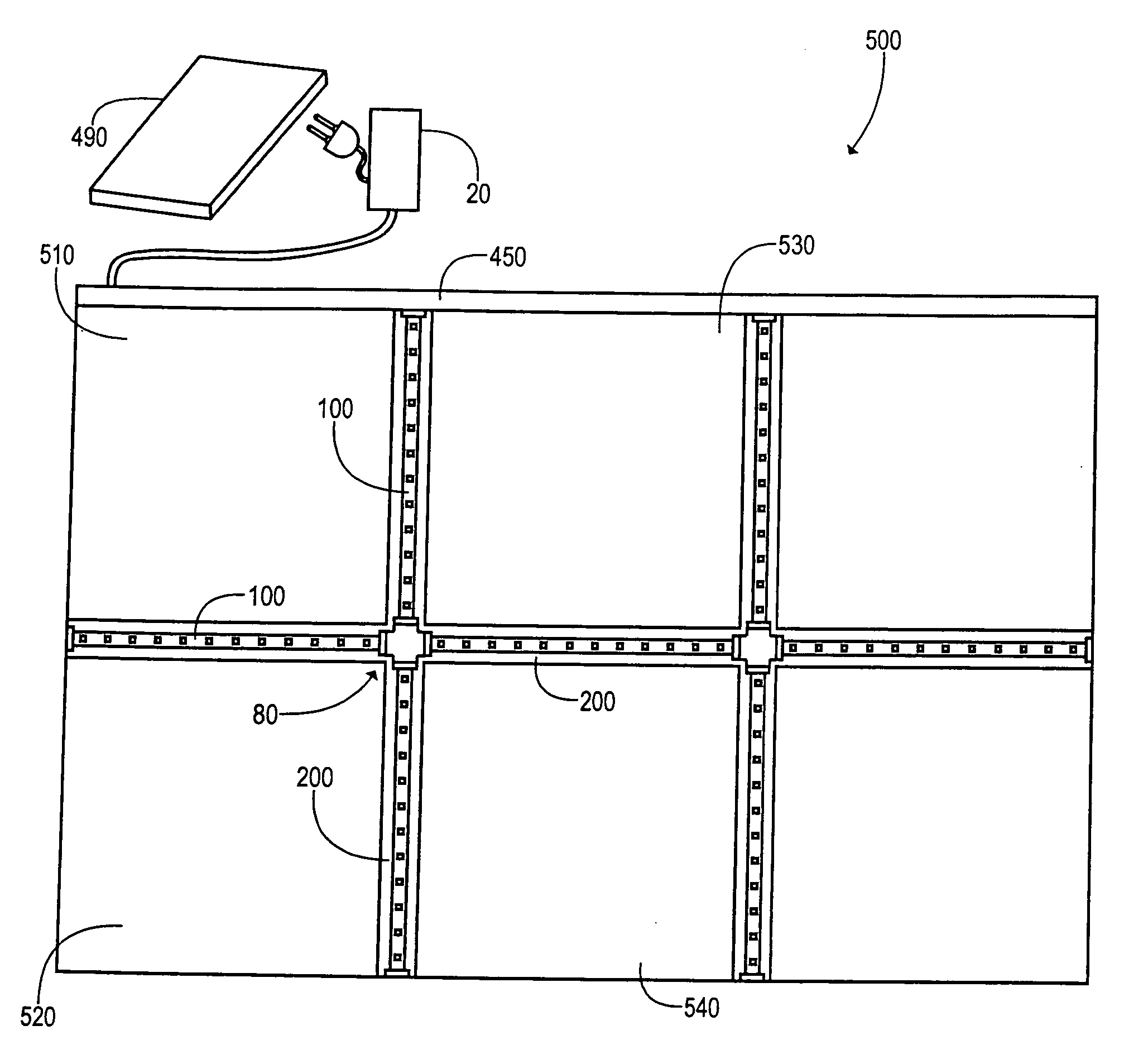

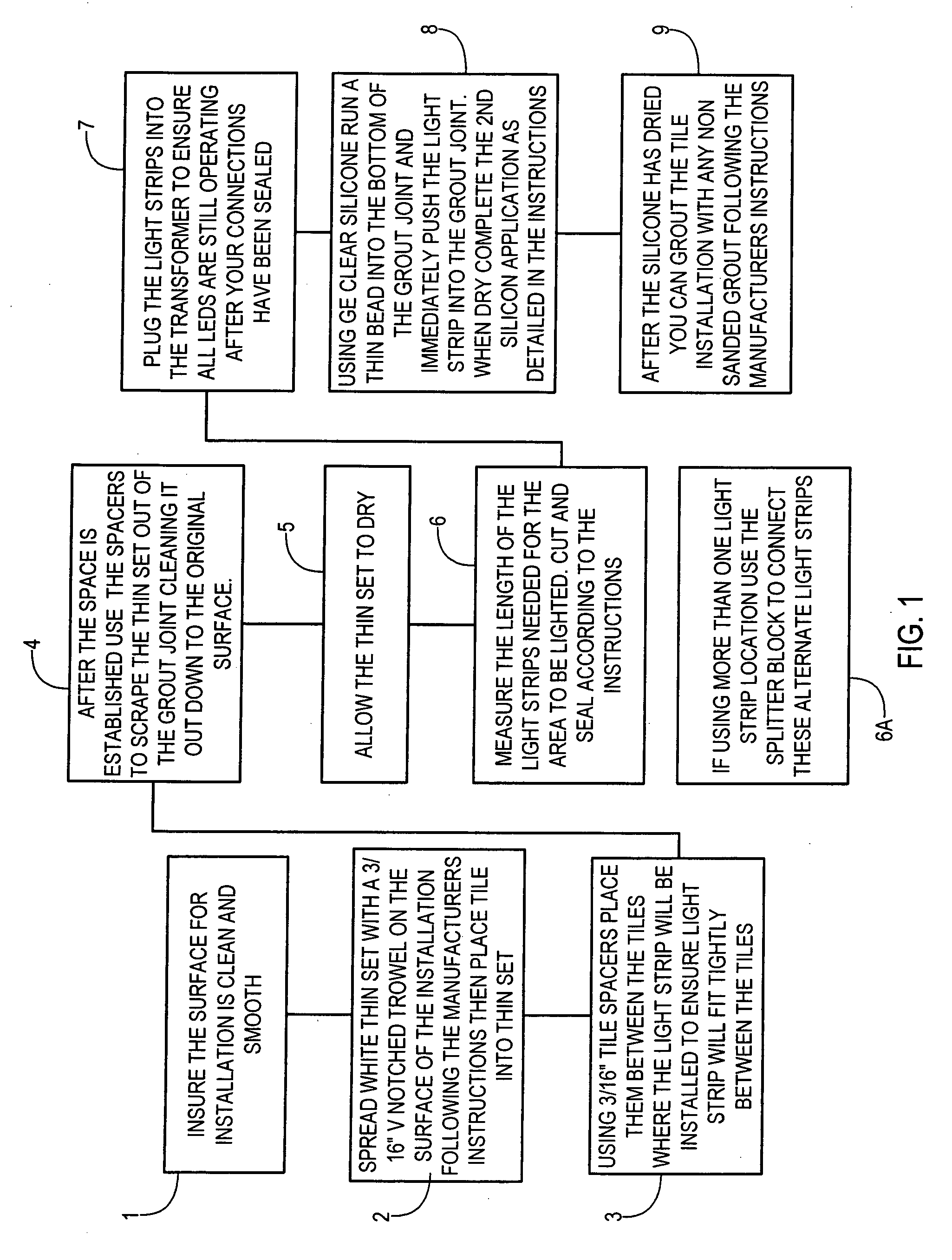

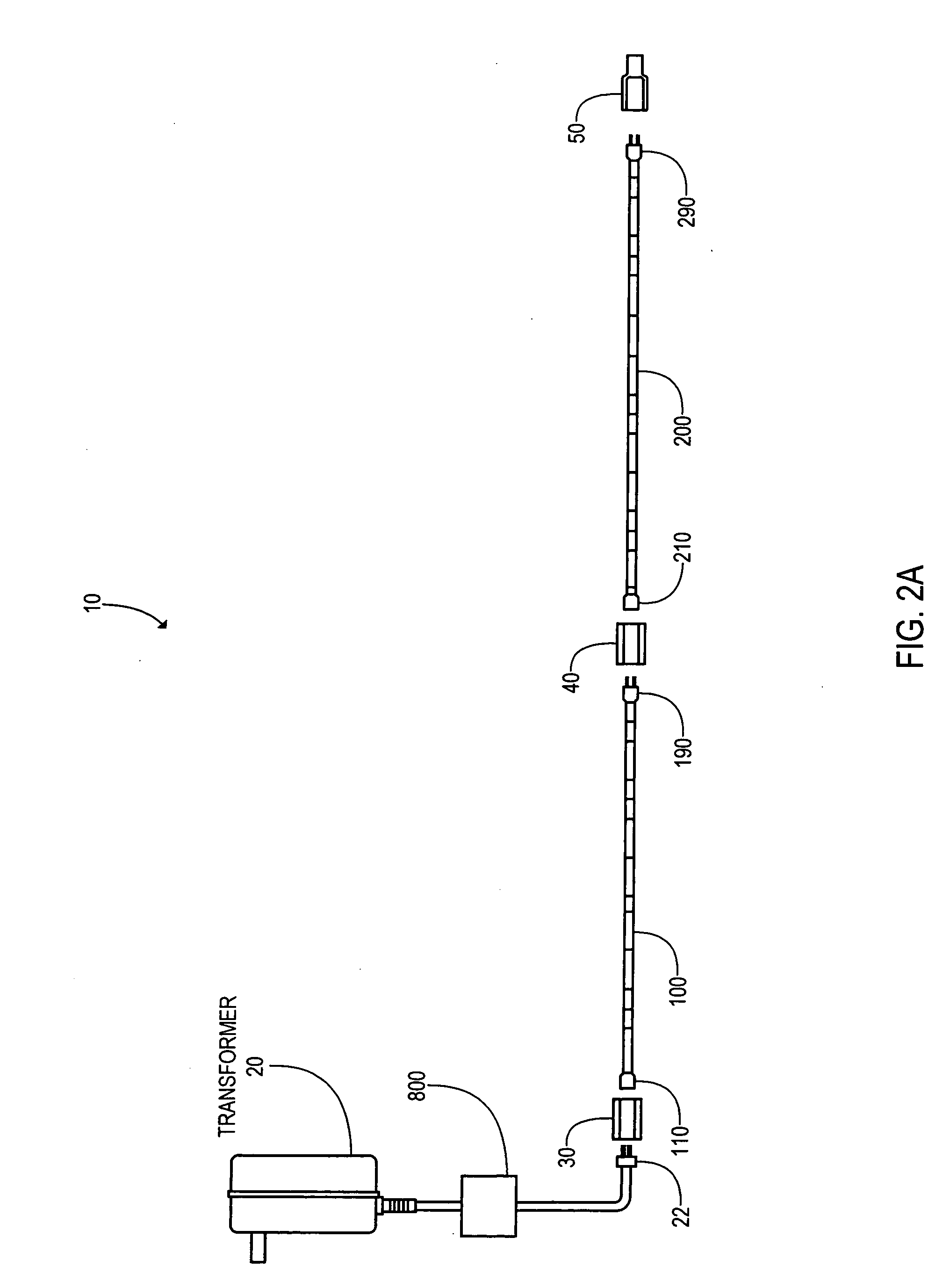

LED lighting for glass tiles

Inactive | US20090147504A1 | Easy to remove, Easy to replace, Covering/linings, Point-like light source, Brick, Surface layer

Devices, apparatus, systems and methods for installing LEDs (light-emitting diodes) in glass tiles and glass blocks. The LEDs can be housed in flexible strips with flexible, bendable, transparent housing sleeves. The sleeves' male and female ends interconnect with one another using various types of interconnectors. Each sleeve can house up to 33 LEDs. A transparent connector sleeve can be slid over the interconnected ends and heat-shrunk in place. The glass tiles can be laid out with uniform joint spacing of approximately 3/16 of an inch between them. The LED strips can be placed on a surface layer of transparent grout laid in the joint spacing, followed by a top layer of transparent grout. The transparent grout can be removable and can include clear silicone. The LED strips can have a peel-and-stick adhesive backing that allows mounting to the lower surface. The LED strips can be used with glass tiles, with other types of tiles such as (but not limited to) ceramic and stone tiles, and with glass blocks. Splitters can be used to run parallel runs of LED strips at different, spaced-apart locations.

Owner:NEW HORIZON DESIGNS

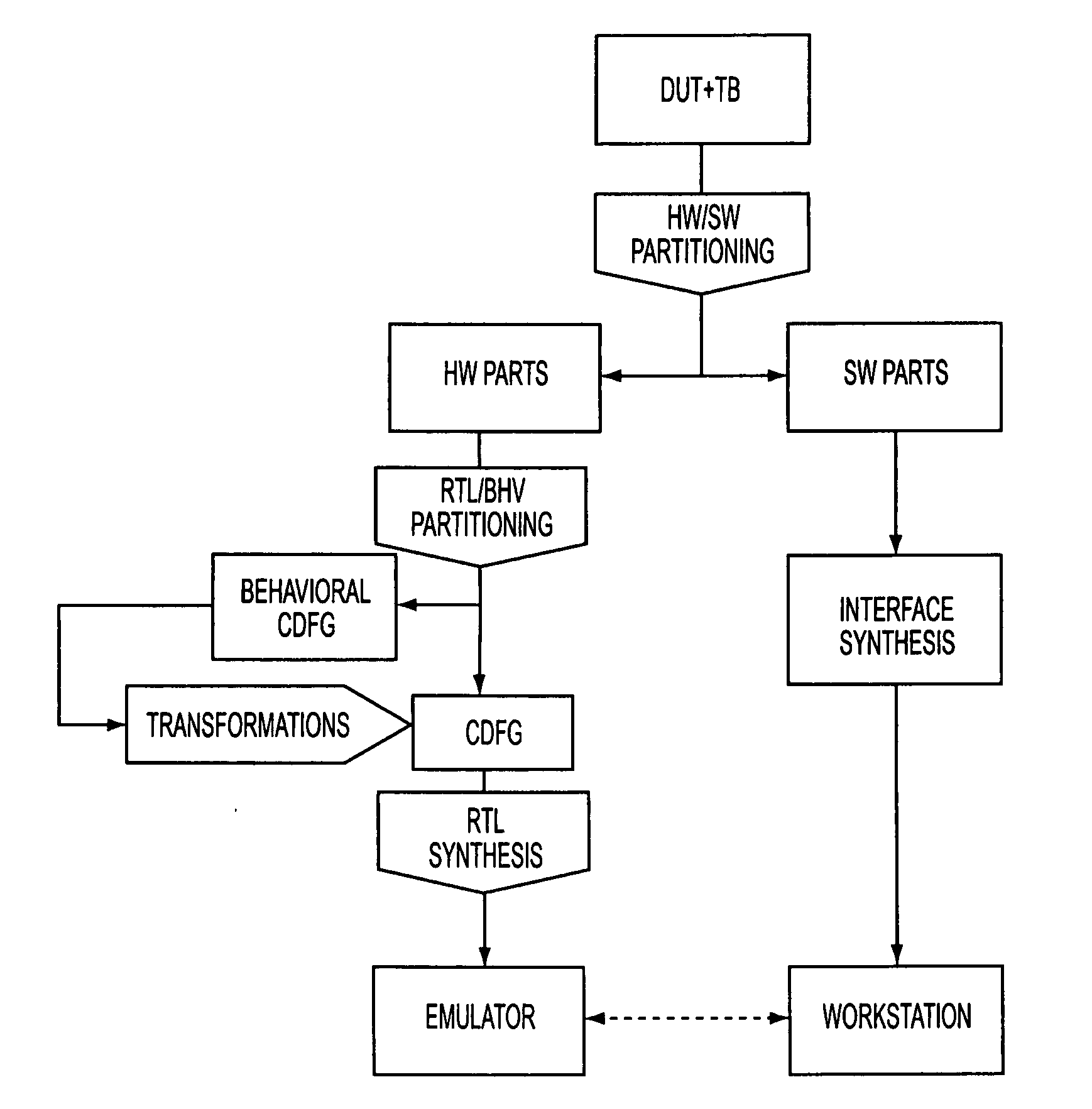

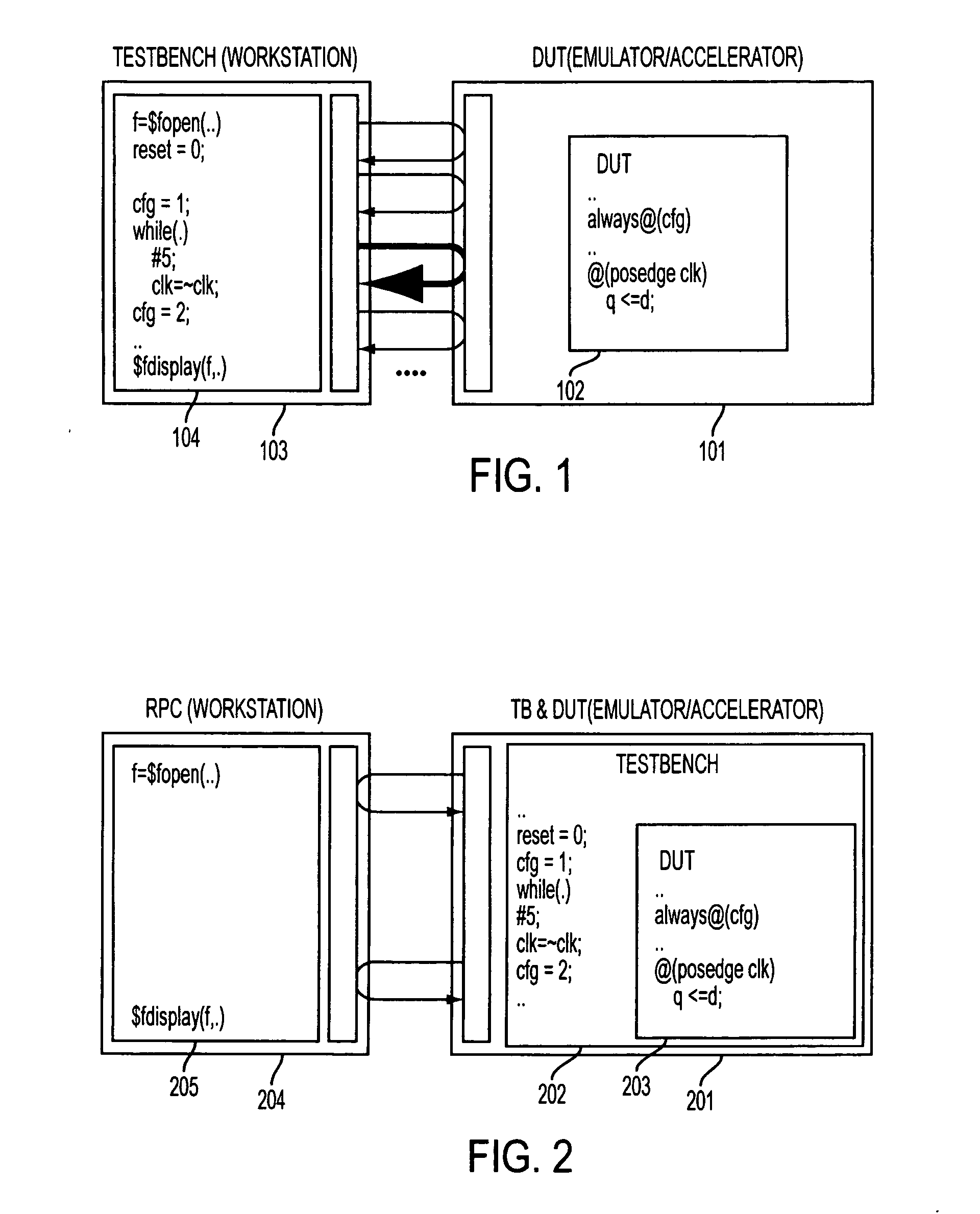

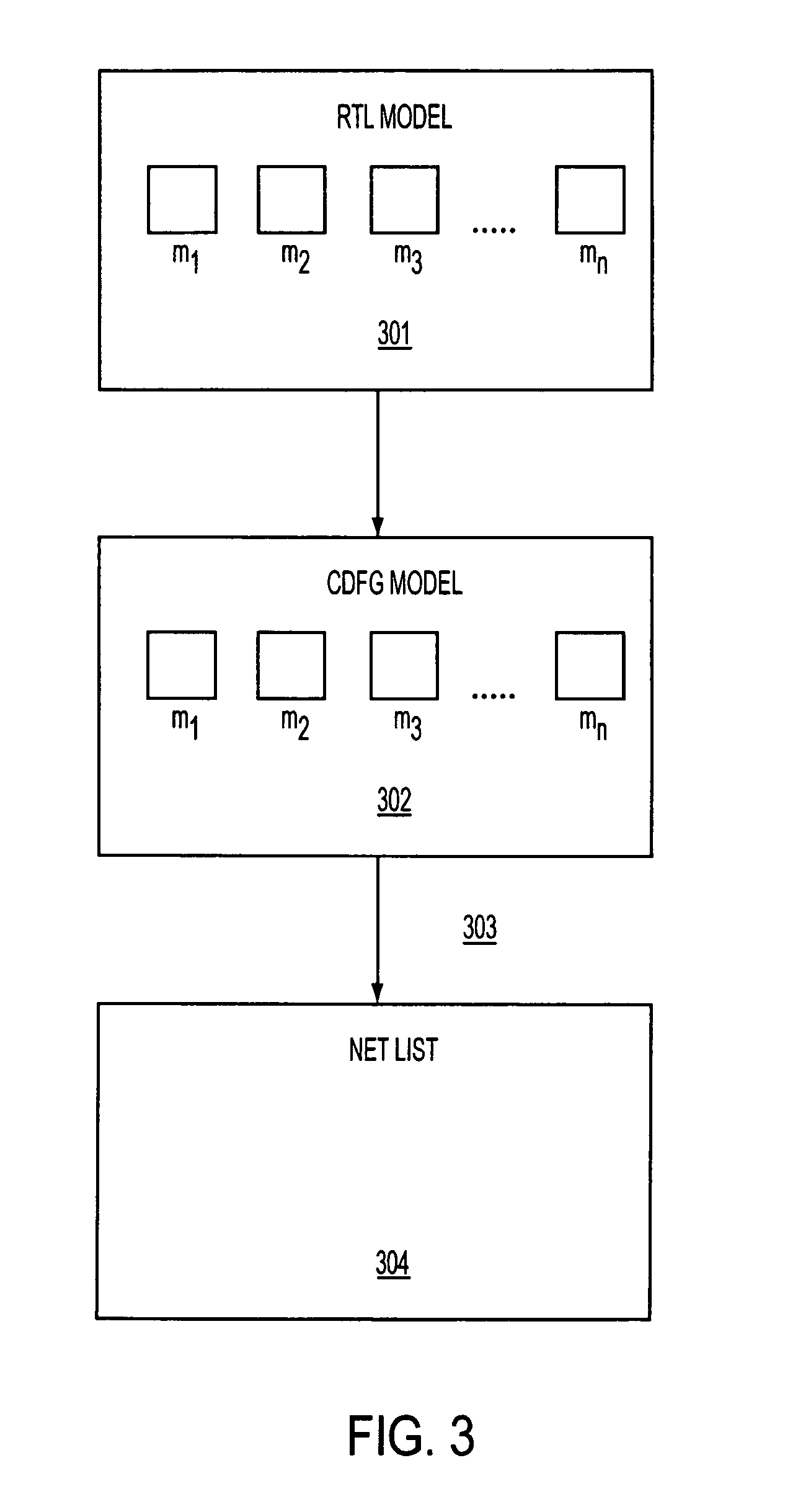

Compilation of remote procedure calls between a timed HDL model on a reconfigurable hardware platform and an untimed model on a sequential computing platform

Active | US20050198606A1 | Guaranteed repeatability, Easy to use, Electrical testing, Software simulation/interpretation/emulation, Telecommunications link, Procedure calls

A system is described for managing interaction between an untimed HAL portion and a timed HDL portion of the testbench, wherein the timed portion is embodied on an emulator and the un-timed portion executes on a workstation. Repeatability of verification results may be achieved even though the HAL portion and the HDL portion run in parallel with each other. A communication interface is also described for synchronizing and passing data between multiple HDL threads on the emulator domain and simultaneously-running multiple HAL threads on the workstation domain. In addition, a remote procedural-call-based communication link, transparent to the user, is generated between the workstation and the emulator. A technique provides for repeatability for blocking and non-blocking procedure calls. FSMs and synchronization logic are automatically inferred to implement remote procedural calls. A subset of behavioral language is identified that combines the power of conventional modeling paradigms with RTL performance.

Owner:SIEMENS PROD LIFECYCLE MANAGEMENT SOFTWARE INC

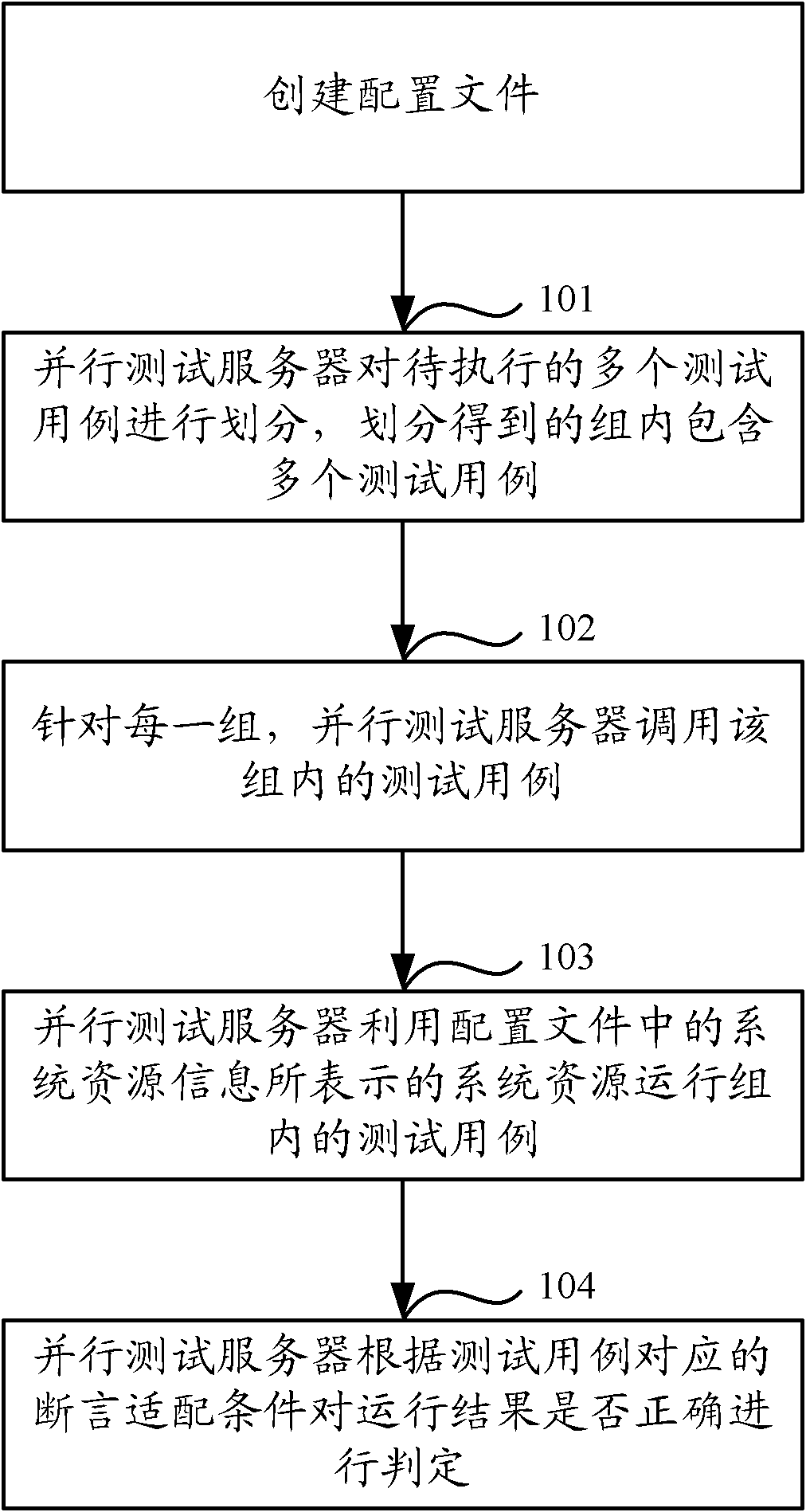

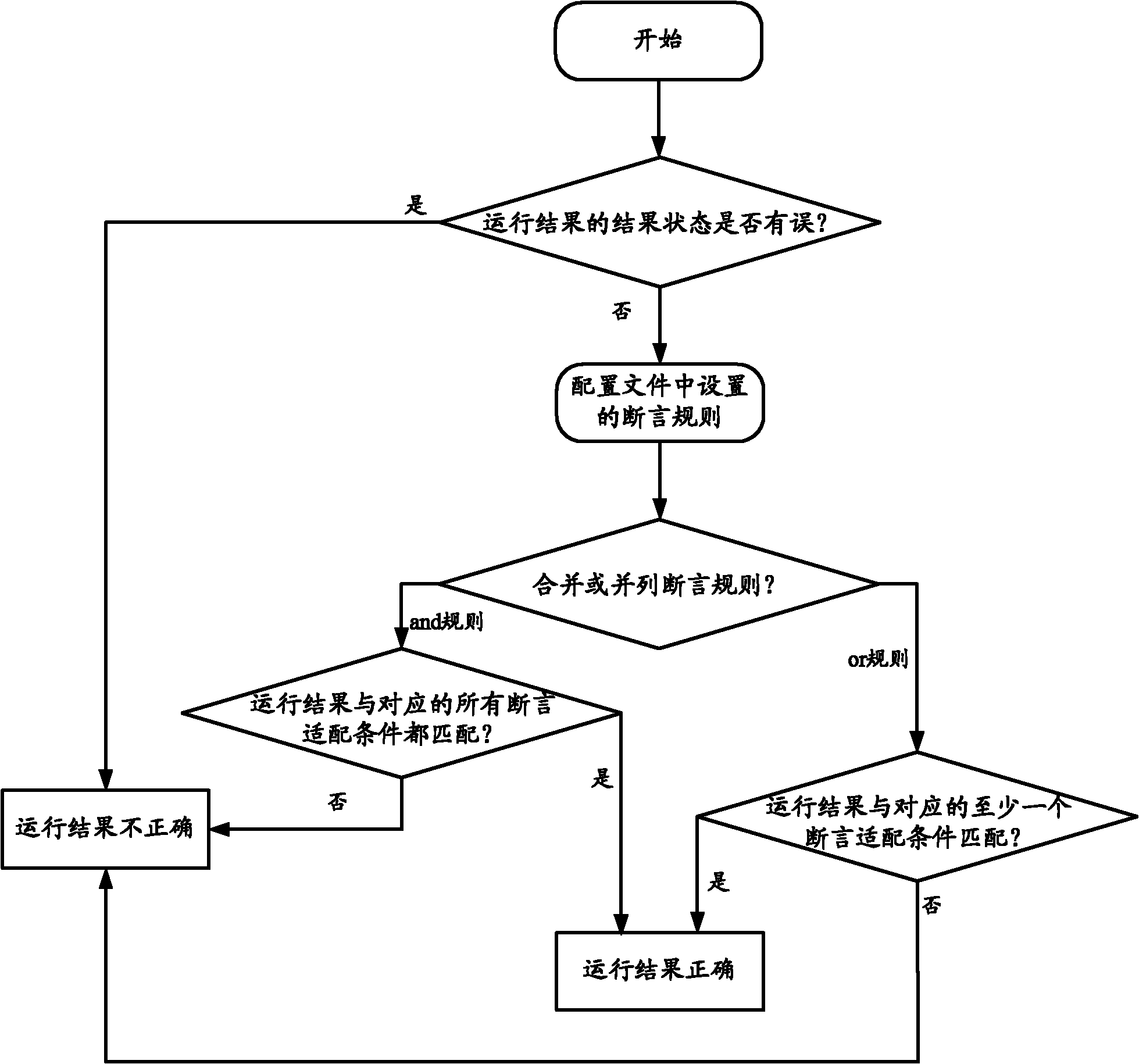

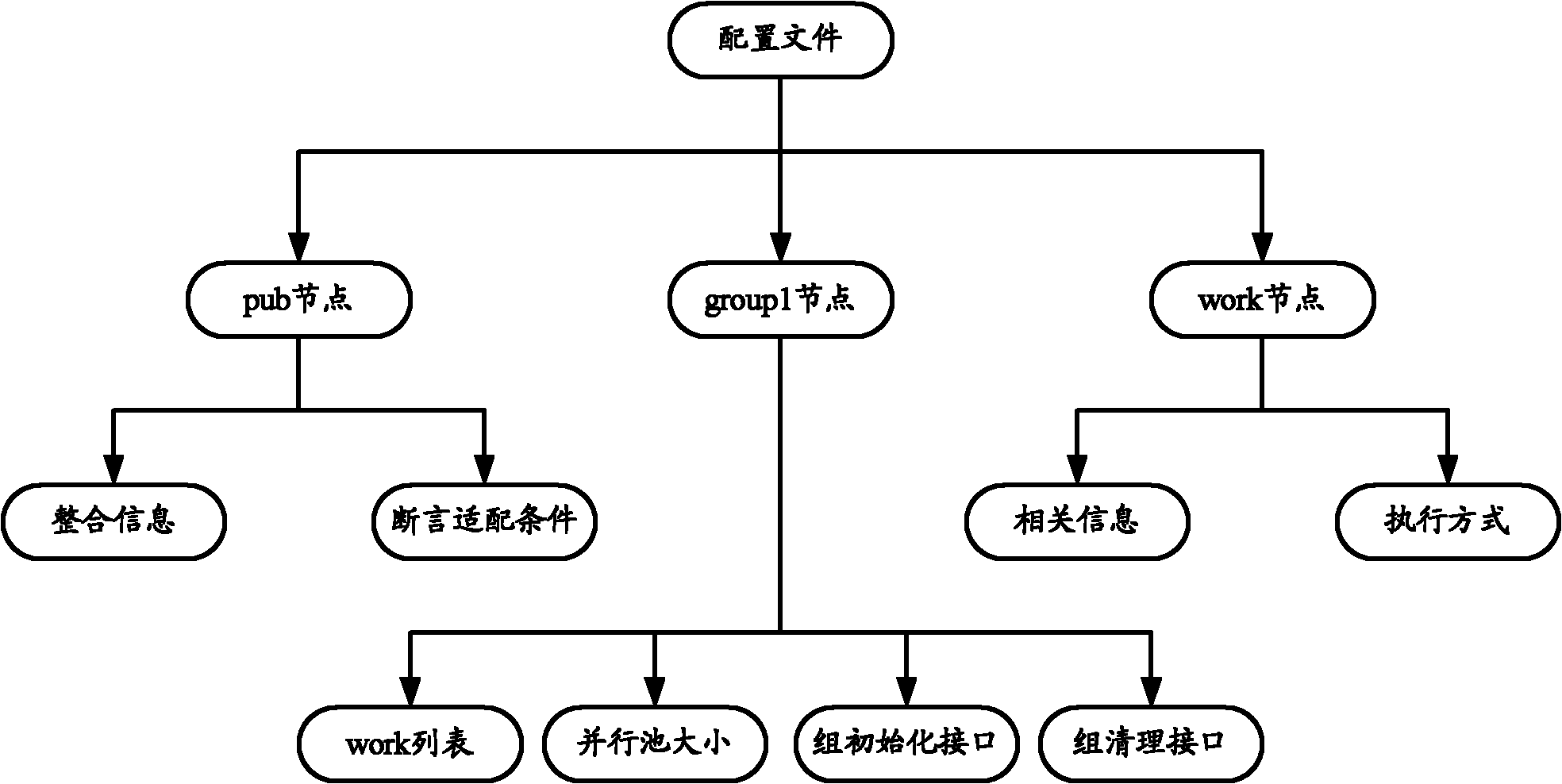

Parallel testing method and parallel testing server

The invention discloses a parallel testing method and a parallel testing server. The method mainly comprises the following steps:

- specifying the test cases to be executed through a configuration file;
- dividing those test cases into configuration groups;
- allocating system resources separately to each resulting group;
- running multiple test cases within each group in parallel.

Compared with the prior art, a separate test system does not need to be written for each set of test cases run in parallel, and a separate test script does not need to be written for each test case. Instead, a single configuration file is created and shared by all test cases, which can effectively improve parallel testing efficiency.

Owner:ALIBABA GRP HLDG LTD
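
The Alibaba workflow (one shared configuration file, test cases divided into groups, resources allocated per group, cases within a group run in parallel) can be sketched with `concurrent.futures`. The JSON layout, the resource names and the always-passing `run_case` stand-in are hypothetical, and this is not the patented server.

```python
import json
from concurrent.futures import ThreadPoolExecutor

# Hypothetical configuration format: one file shared by all test cases.
CONFIG = json.loads("""
{
  "groups": {
    "db":  {"resource": "db-sandbox-1",  "cases": ["test_insert", "test_query"]},
    "api": {"resource": "api-port-8081", "cases": ["test_login", "test_logout", "test_ping"]}
  }
}
""")


def run_case(name: str, resource: str) -> tuple[str, str, bool]:
    """Stand-in for a real test; here every case simply reports success."""
    return name, resource, True


def run_group(group_cfg: dict, workers: int = 4) -> list[tuple[str, str, bool]]:
    """Run all cases of one group in parallel against the group's own resource."""
    resource = group_cfg["resource"]  # resources are allocated per group
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input cases
        return list(pool.map(lambda case: run_case(case, resource), group_cfg["cases"]))


def run_all(config: dict) -> dict[str, list[tuple[str, str, bool]]]:
    return {name: run_group(cfg) for name, cfg in config["groups"].items()}
```

Adding a test case here means editing the configuration, not writing a new script. That is the benefit the abstract claims.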

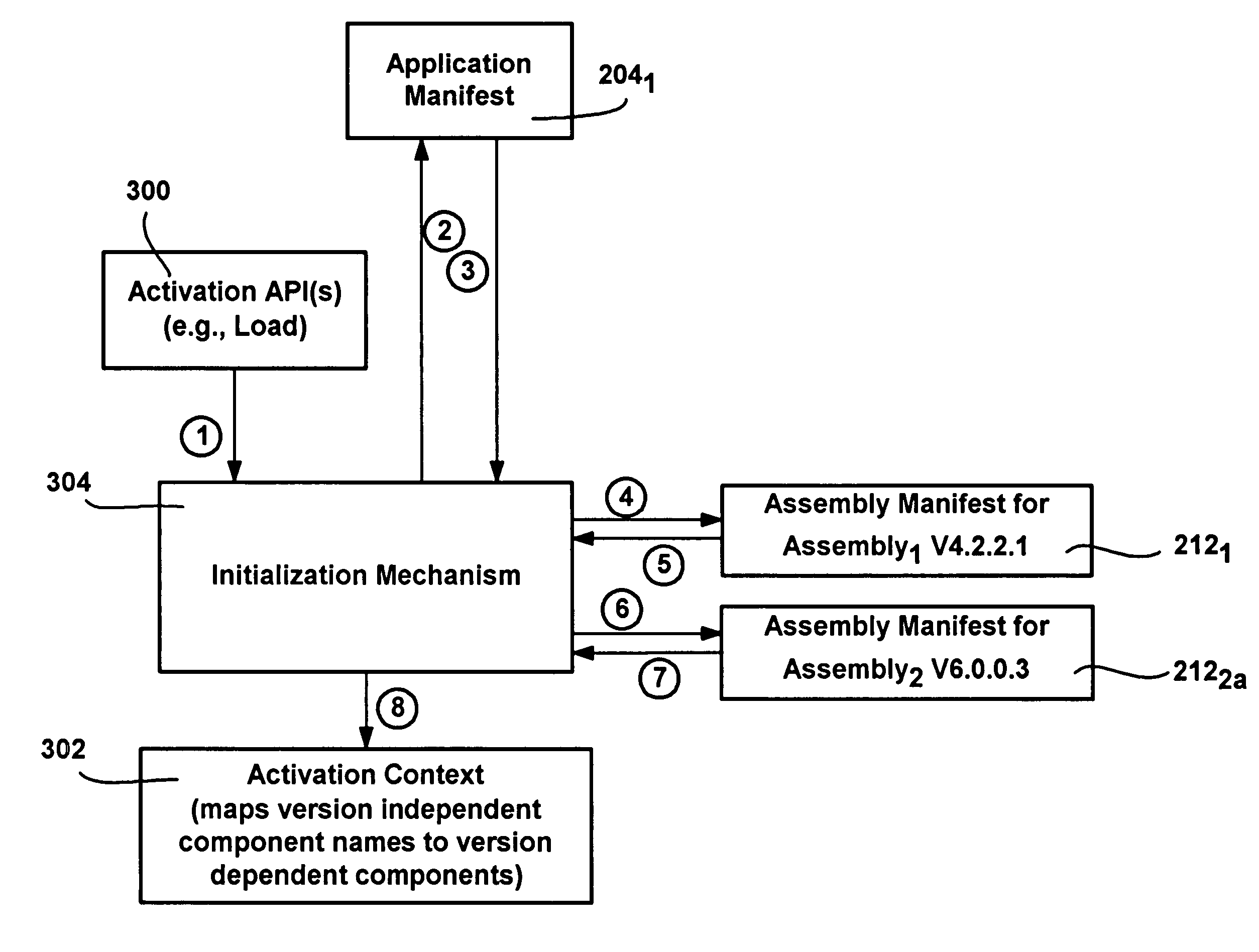

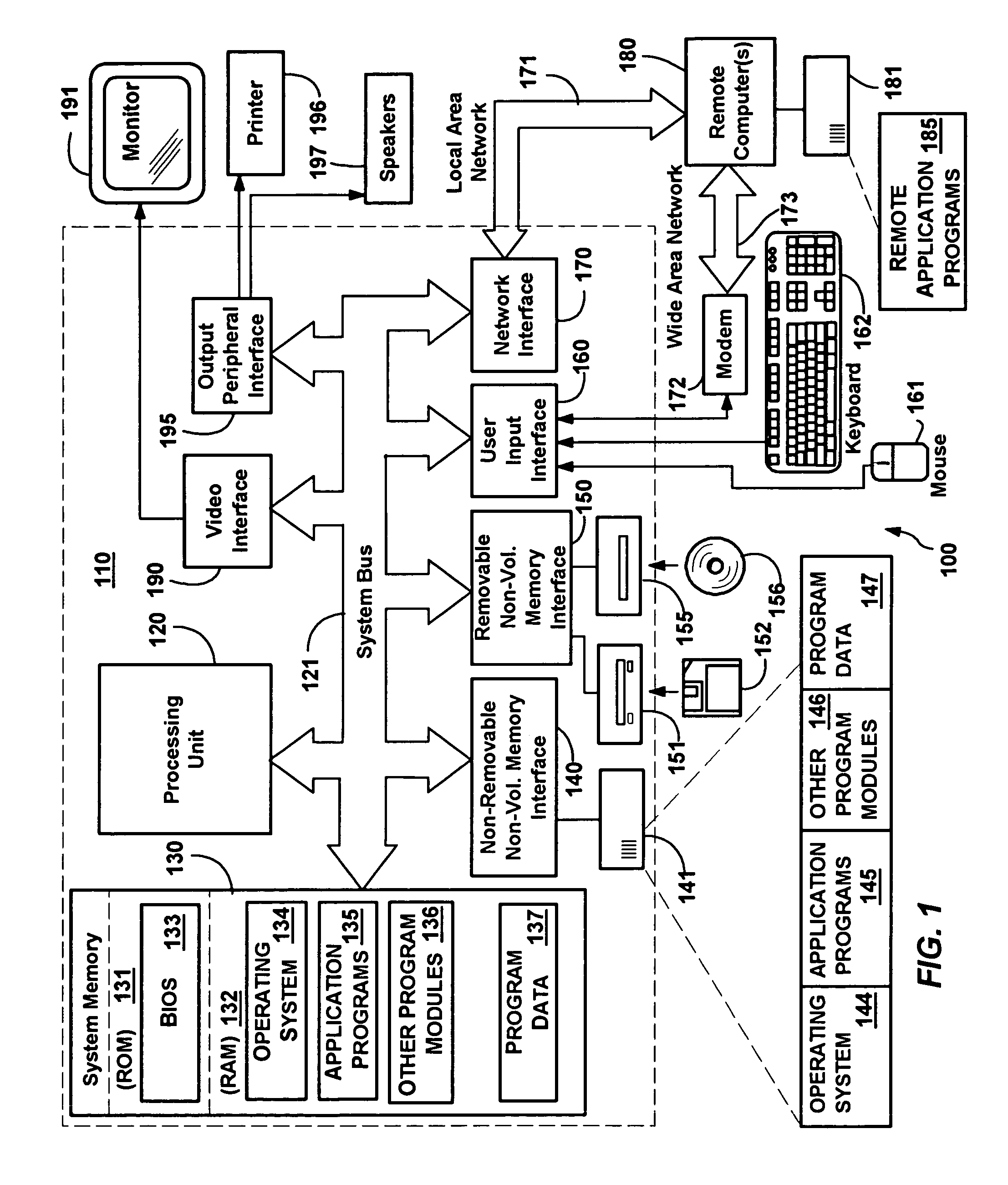

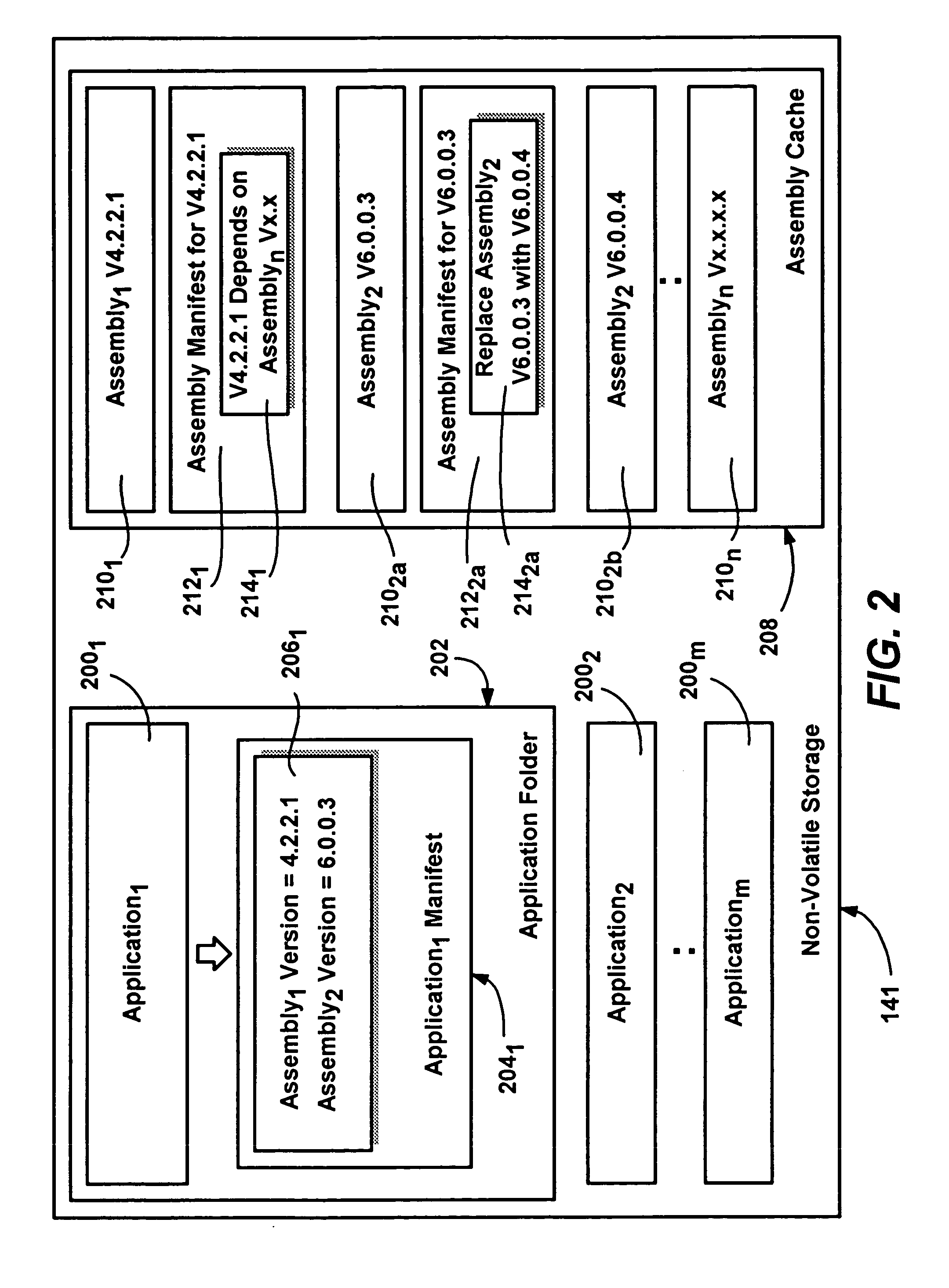

Isolating assembly versions for binding to application programs

Inactive | US7287259B2 | Version control, Specific program execution arrangements, Parallel computing, Biological activation

An infrastructure that allows applications to run with specified versions of dependent assemblies, wherein each assembly may exist and run side-by-side on the system with other versions of the same assembly being used by other applications. An application provides a manifest to specify any desired assembly versions on which it is dependent. Similarly, each assembly may have an assembly manifest that specifies the versions of assemblies on which it is dependent. During an initialization phase, an activation context is created for the application, based on the manifests, to map version independent names to a particular assembly version maintained on the system. While the application is in a running phase, for any globally named object that the application wants created, the activation context is accessed to locate the application's or assembly's manifest-specified version. The manifests and activation context constructed therefrom thus isolate an application from assembly version changes.

Owner:MICROSOFT TECH LICENSING LLC
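
The two phases in the Microsoft abstract can be sketched as follows. Initialization builds an activation context from manifests, and the running phase looks names up in it. The `Manifest` class, the function names and the rule "one version per name per context" are hypothetical simplifications, not the Windows side-by-side API.

```python
from dataclasses import dataclass, field


@dataclass
class Manifest:
    """Hypothetical manifest: dependent assembly name -> required version."""
    dependencies: dict[str, str] = field(default_factory=dict)


def build_activation_context(app: Manifest,
                             assembly_manifests: dict[tuple[str, str], Manifest],
                             installed: set[tuple[str, str]]) -> dict[str, str]:
    """Initialization phase: map each version-independent name to one installed version.

    Follows the manifests of dependent assemblies transitively. Simplification:
    a context binds each name to a single version and rejects conflicts.
    """
    context: dict[str, str] = {}
    stack = list(app.dependencies.items())
    while stack:
        name, version = stack.pop()
        if (name, version) not in installed:
            raise LookupError(f"{name} {version} is not installed")
        if name in context:
            if context[name] != version:
                raise ValueError(f"conflicting versions requested for {name}")
            continue
        context[name] = version
        manifest = assembly_manifests.get((name, version))
        if manifest is not None:
            stack.extend(manifest.dependencies.items())
    return context


def resolve(context: dict[str, str], name: str) -> tuple[str, str]:
    """Running phase: find the version this application is bound to."""
    return name, context[name]
```

Two applications can request `ui` 1.0 and `ui` 2.0 respectively. Each gets its own context, so both versions run side by side.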

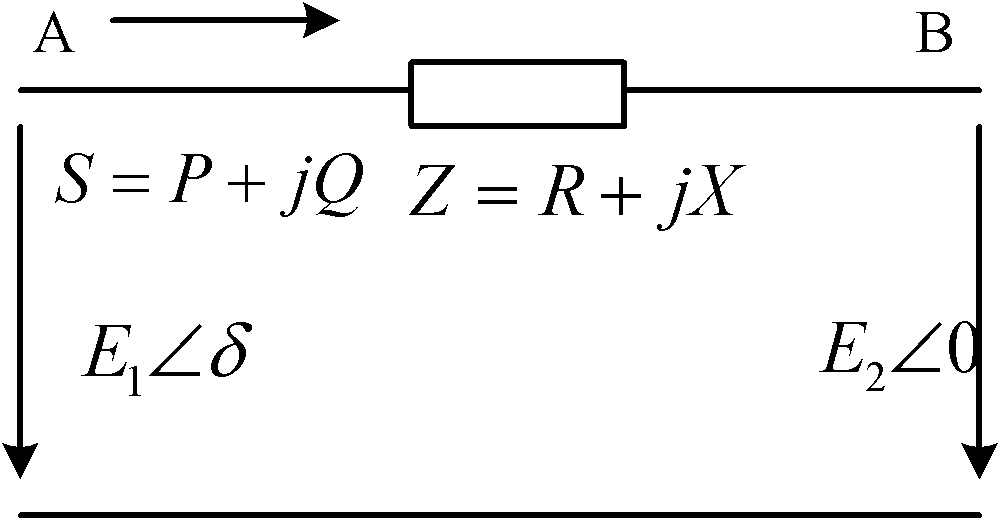

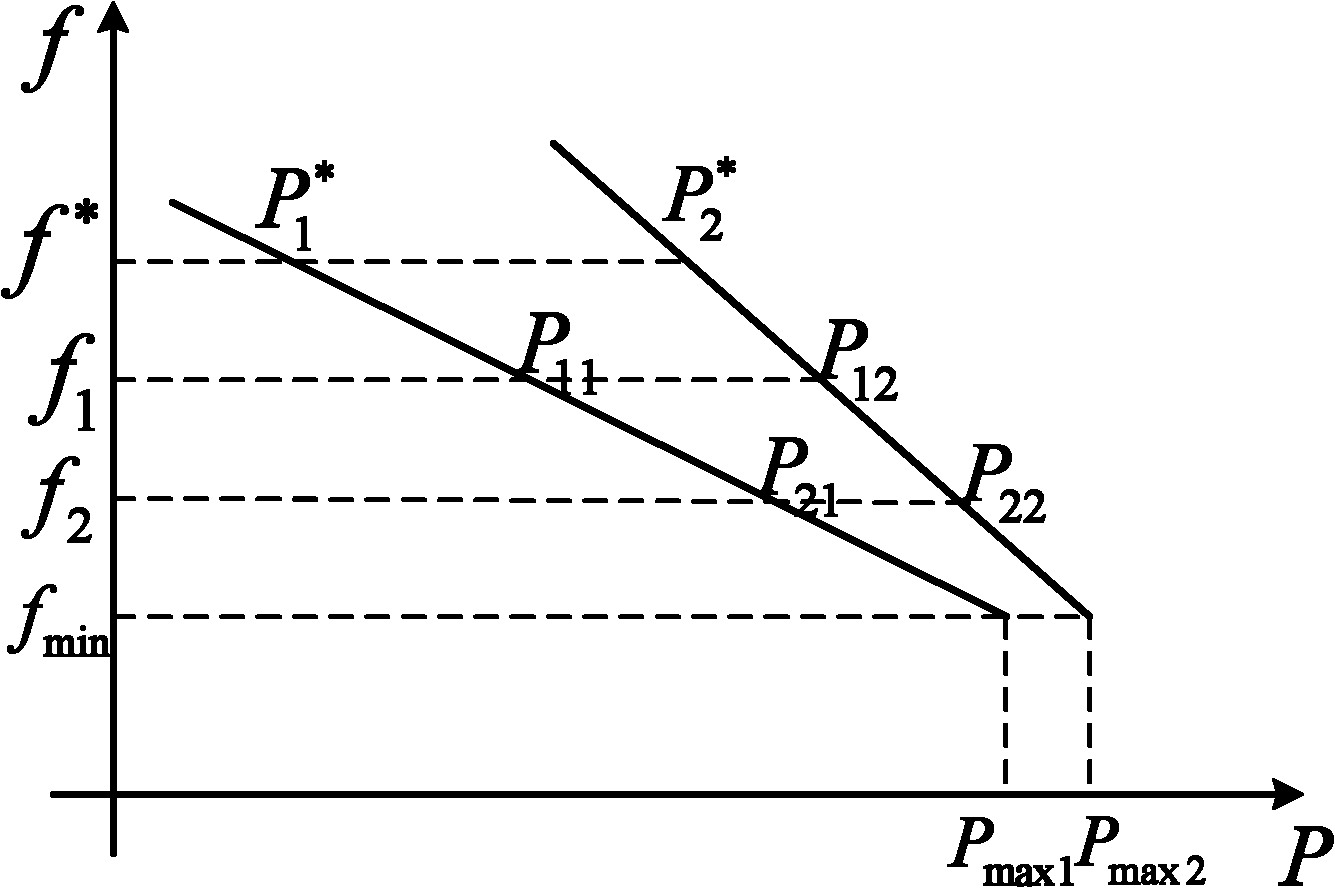

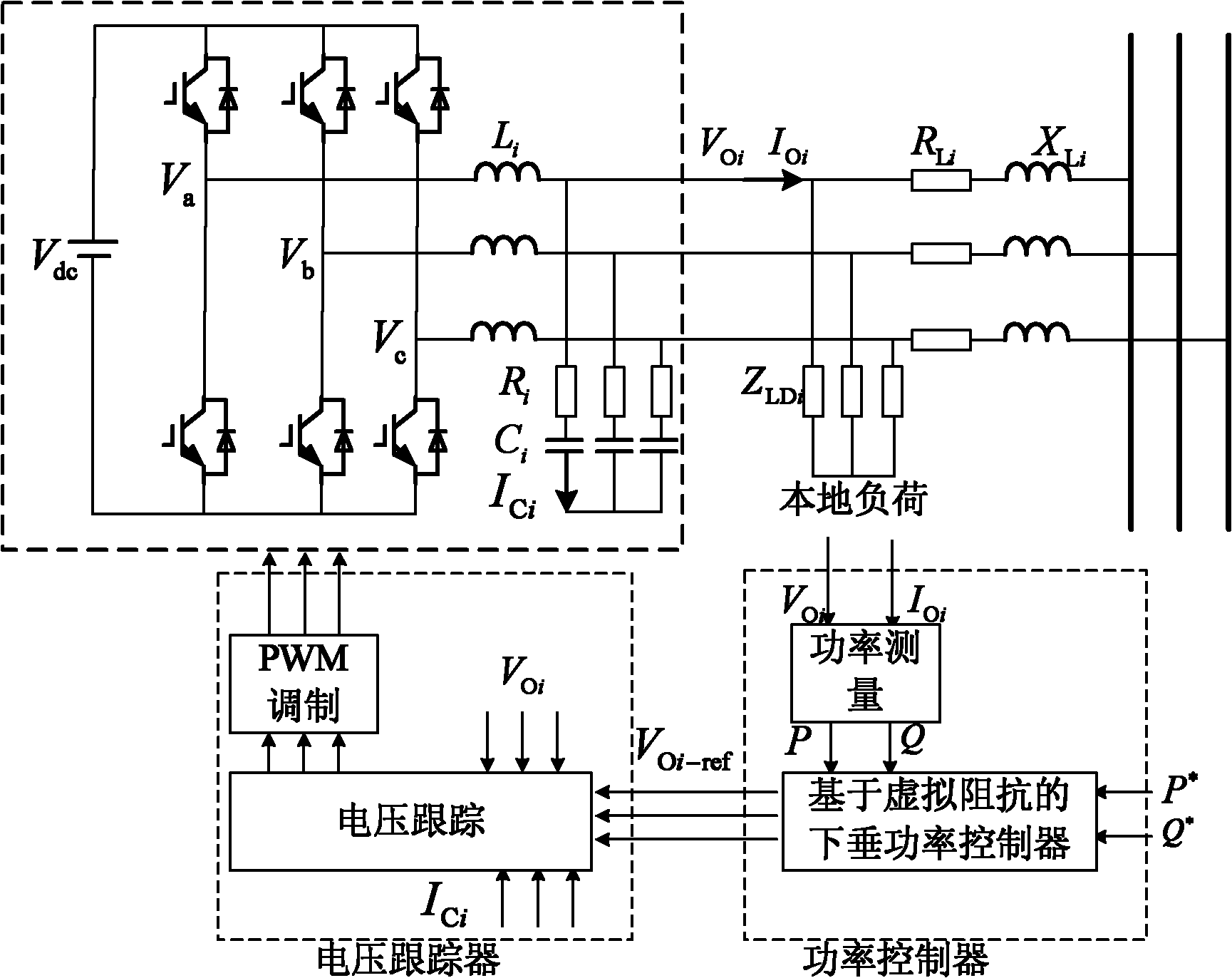

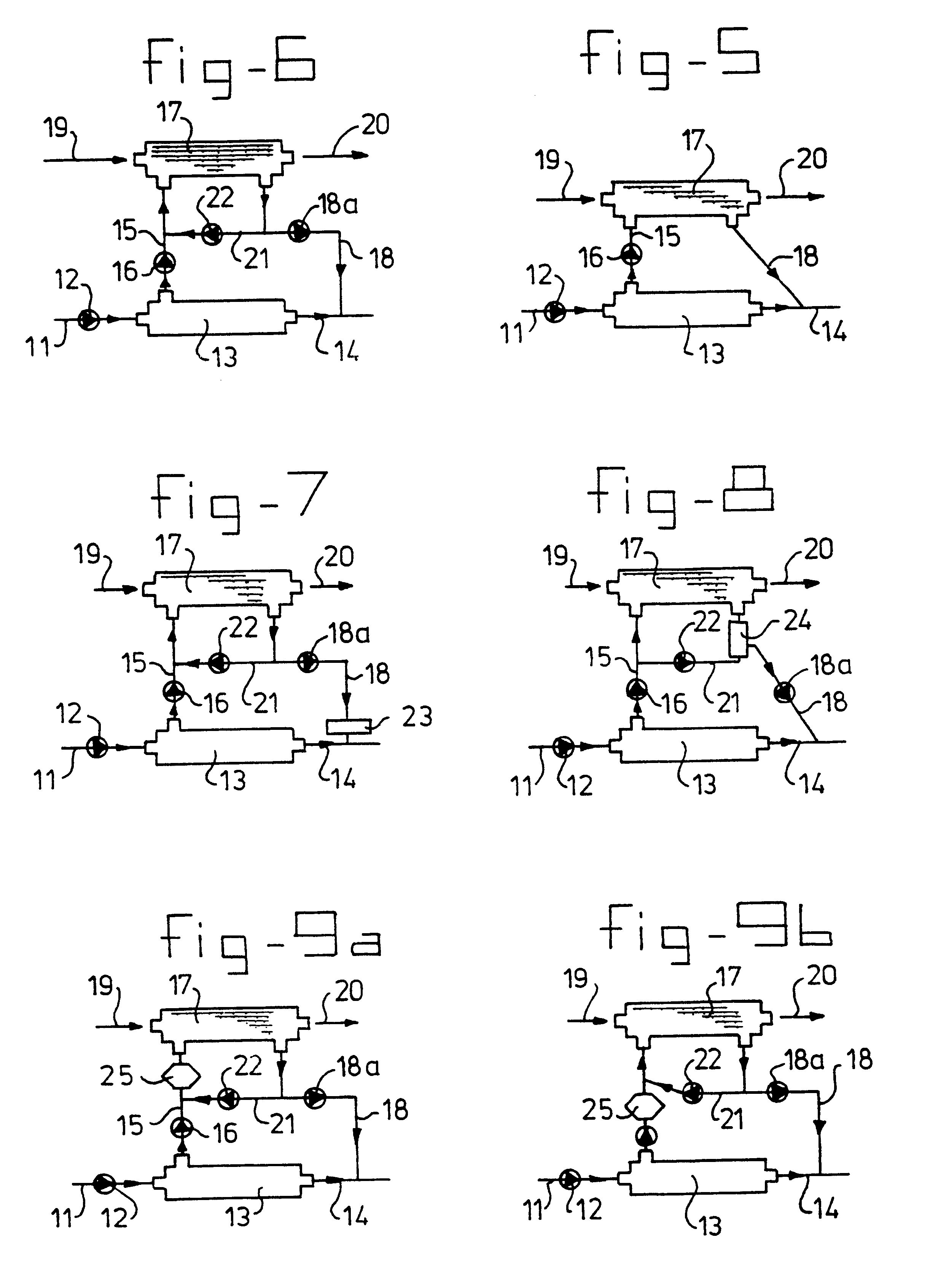

Virtual-impedance-based inverter parallel running method

Active | CN102157956A | Increase investment, Easy to use, Flexible AC transmission, Single network parallel feeding arrangements, Power inverter, Voltage amplitude

The invention provides an inverter parallel running method based on virtual impedance, comprising the following steps:

- for each inverter, introducing a virtual generator connected to the inverter's output point through a virtual impedance;
- applying droop control to the virtual generators: the frequency and voltage amplitude of each virtual generator are regulated using the active power and reactive power, respectively, of the corresponding inverter, and the voltage reference values of the virtual generators are calculated from them;
- calculating output voltage reference values for the inverters from those virtual-generator references, and controlling each inverter's output voltage to track them.

This controls the voltages of the virtual generators and ultimately decouples the regulation of active and reactive power. The method uses virtual impedance to implement a control strategy for wireless parallel running of inverters, that is, without control interconnections between them. Compared with conventional control methods, it does not require a significant increase in hardware investment. It also improves the effectiveness of the droop characteristics so that they can be applied in resistive environments.

Owner:STATE GRID ELECTRIC POWER RES INST
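
The two steps in the virtual-impedance abstract above can be sketched at the phasor level using the conventional droop form (frequency falls with active power, voltage amplitude falls with reactive power). The usual virtual-impedance relation is then applied: the inverter's output reference equals the virtual generator's EMF minus the drop across the virtual impedance. The droop coefficients, the 311 V nominal peak amplitude and the function names are hypothetical, and the patented controller may differ in detail.

```python
import cmath


def droop(p: float, q: float, f0: float = 50.0, e0: float = 311.0,
          m: float = 1e-4, n: float = 1e-3) -> tuple[float, float]:
    """Virtual generator frequency (Hz) and EMF amplitude (V) from the inverter's P and Q.

    f = f0 - m * P and E = e0 - n * Q; the coefficients are illustrative only.
    """
    return f0 - m * p, e0 - n * q


def inverter_voltage_ref(e_amp: float, delta: float, i_out: complex,
                         z_virtual: complex) -> complex:
    """Output voltage reference phasor: virtual EMF minus the virtual-impedance drop."""
    e_vg = cmath.rect(e_amp, delta)  # virtual generator EMF phasor E at angle delta
    return e_vg - z_virtual * i_out
```

For example, `droop(1000.0, 2000.0)` gives roughly 49.9 Hz and 309 V. A 10 A output current through a purely inductive virtual impedance of 0.5j ohm then shifts the reference to about `309 - 5j`.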

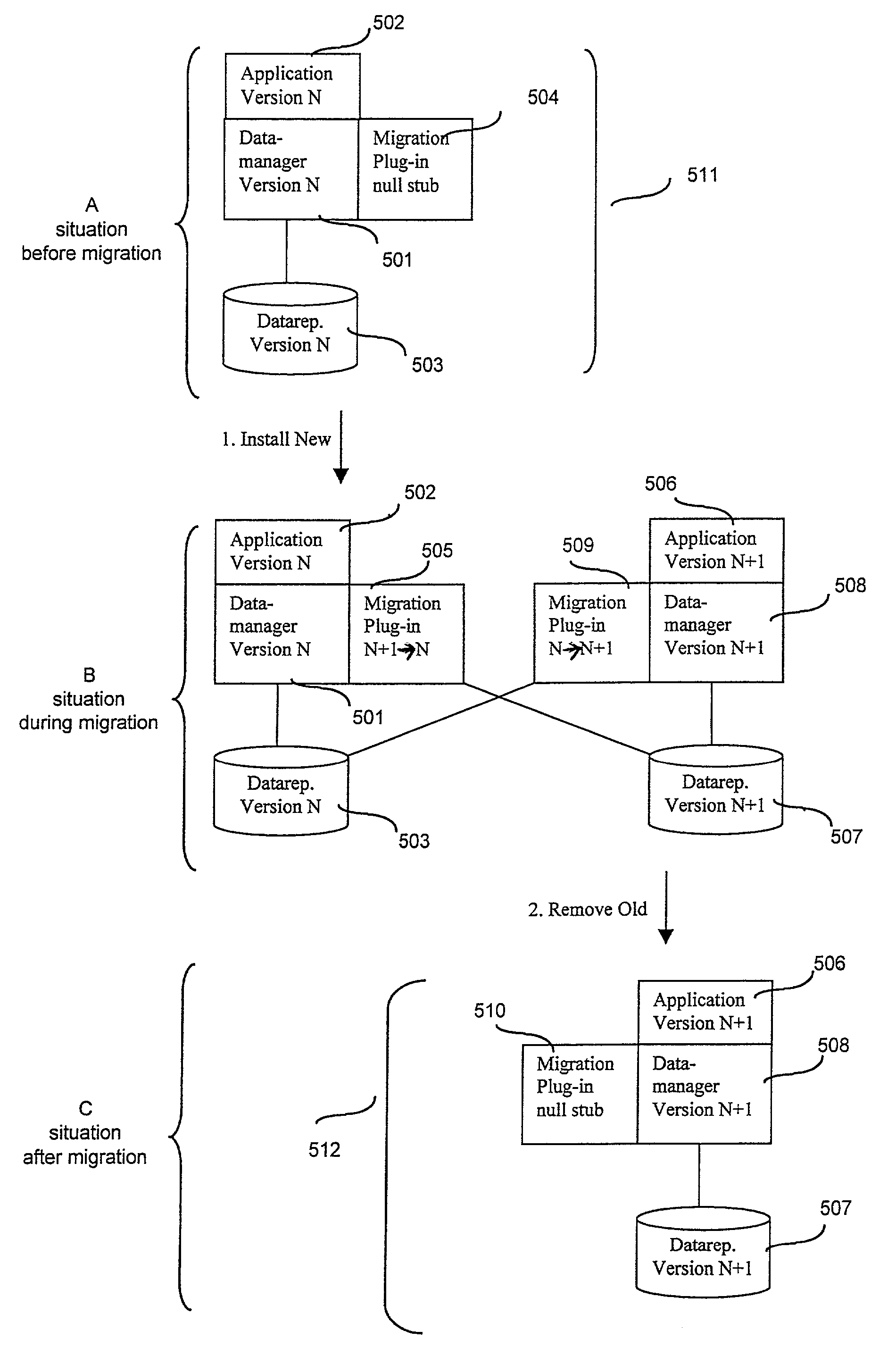

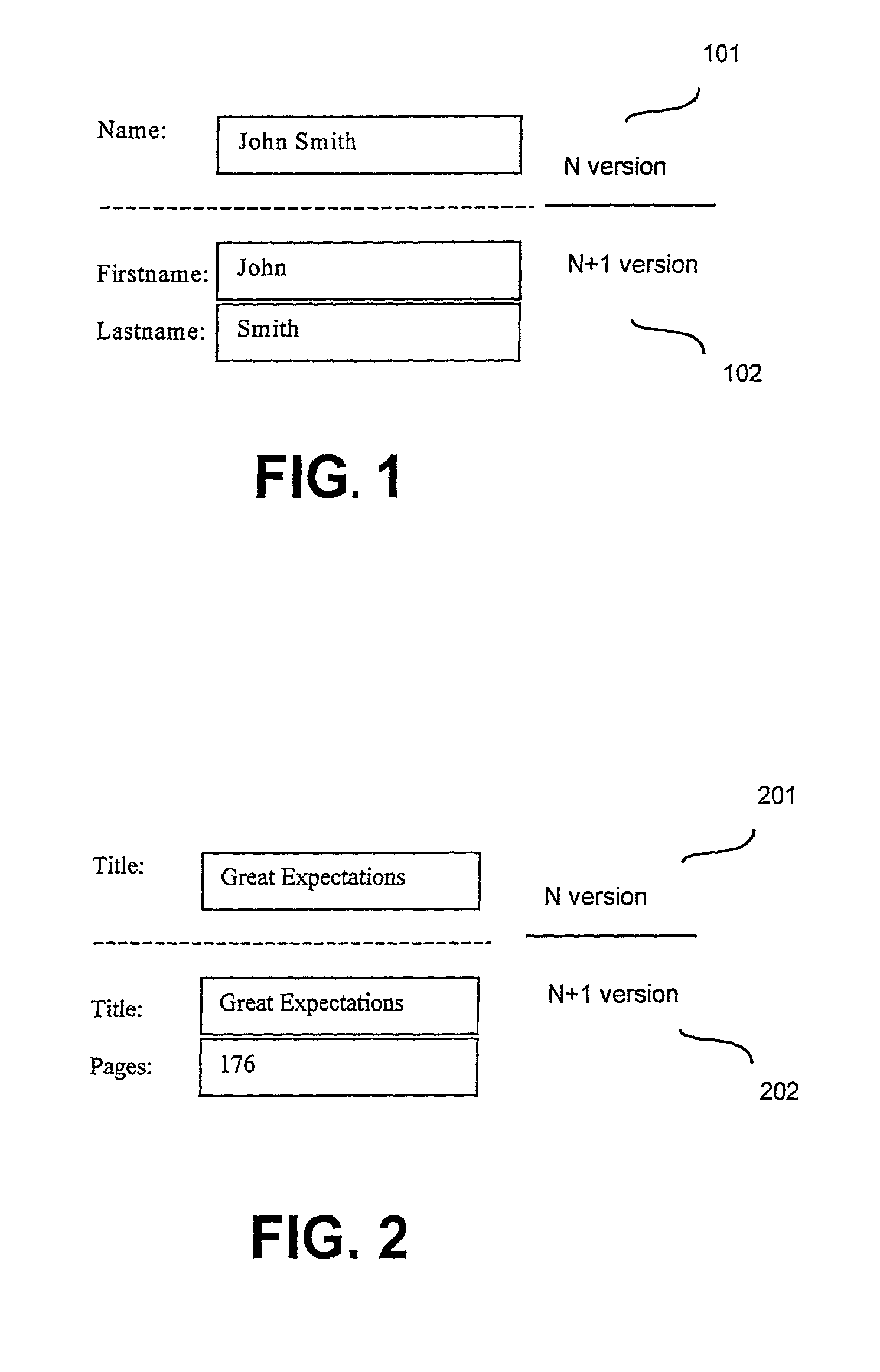

Method, system and computer program for executing hot migrate operation using migration plug-ins

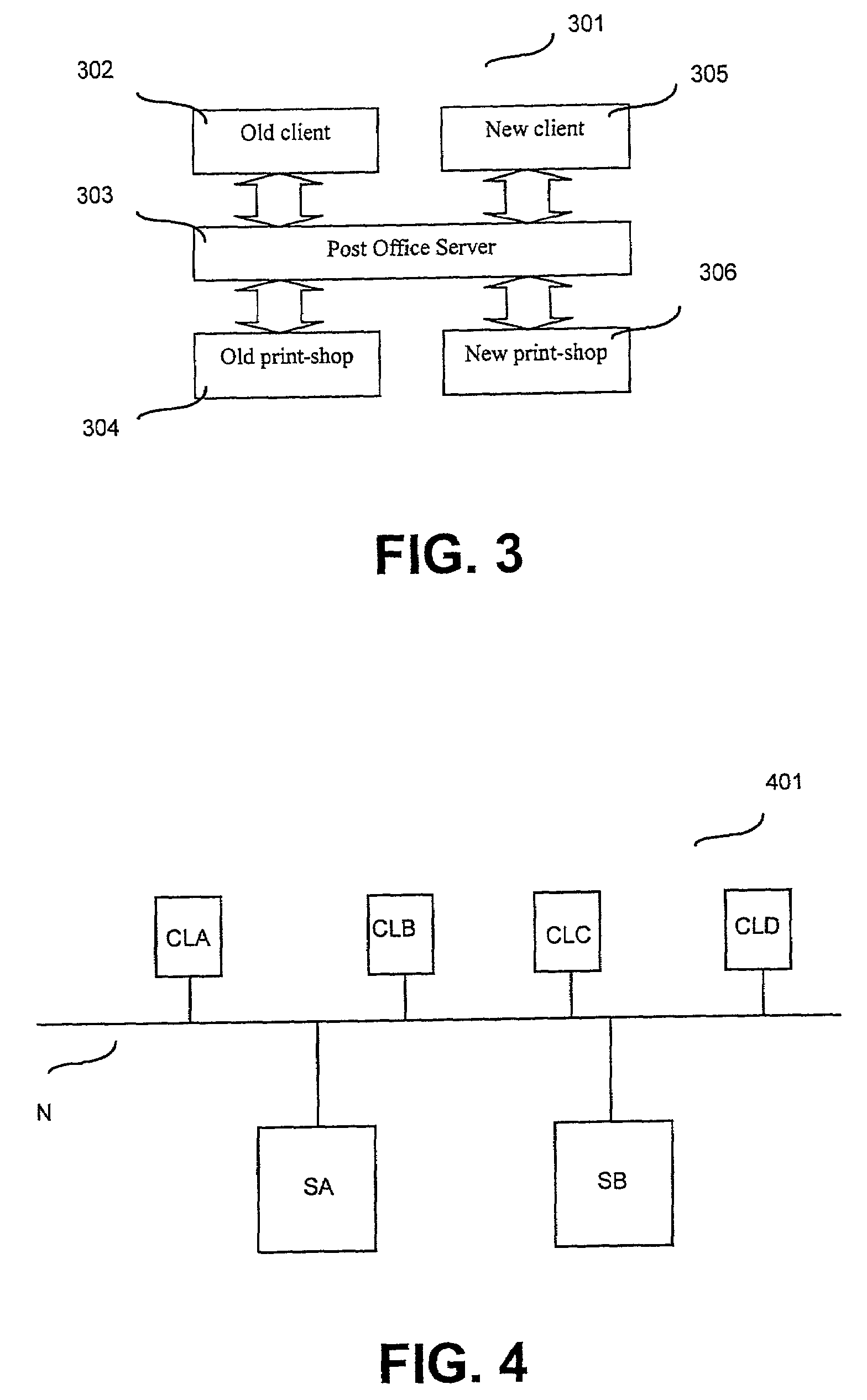

A method, system and computer program are provided for executing a hot migration from a first version of a service using a first data model to a second version of the service using a second data model, modified with respect to the first. A service comprises a client application, a data manager and a data repository. The migration is carried out on a server facility that can run multiple processes in parallel. The following second-version components are installed: client applications; a data manager operating according to the second data model; and a data repository organized according to the second data model and cooperating with the second-version data manager. The first- and second-version data managers are each provided with a migration plug-in. The method uses an incremental roll-over process. In successive steps, the migration plug-in converts data from the first-version repository to the second-version repository until all data have been converted, after which any old version of the service is removed.

Owner:OCE TECH
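
The incremental roll-over in the Océ abstract can be sketched with in-memory dictionaries standing in for the two repositories. Records move in small batches, and reads made mid-migration are still served from whichever side holds the data. The record layout, the `v1_to_v2` plug-in, the batch size and the `lookup` helper are all hypothetical.

```python
from typing import Callable

Record = dict[str, str]


def v1_to_v2(record: Record) -> Record:
    """Hypothetical migration plug-in: the v2 model splits 'name' into two fields."""
    given, _, family = record["name"].partition(" ")
    return {"given": given, "family": family, "email": record["email"]}


def roll_over(old_repo: dict[str, Record], new_repo: dict[str, Record],
              plugin: Callable[[Record], Record], batch_size: int = 2) -> int:
    """Convert records batch by batch until the old repository is empty.

    Returns the number of steps; the service can keep running between steps.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    steps = 0
    while old_repo:
        for key in list(old_repo)[:batch_size]:  # snapshot keys before popping
            new_repo[key] = plugin(old_repo.pop(key))
        steps += 1
    return steps


def lookup(key: str, old_repo: dict[str, Record], new_repo: dict[str, Record],
           plugin: Callable[[Record], Record]) -> Record:
    """Mid-migration read: prefer converted data, else convert on the fly."""
    if key in new_repo:
        return new_repo[key]
    return plugin(old_repo[key])
```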

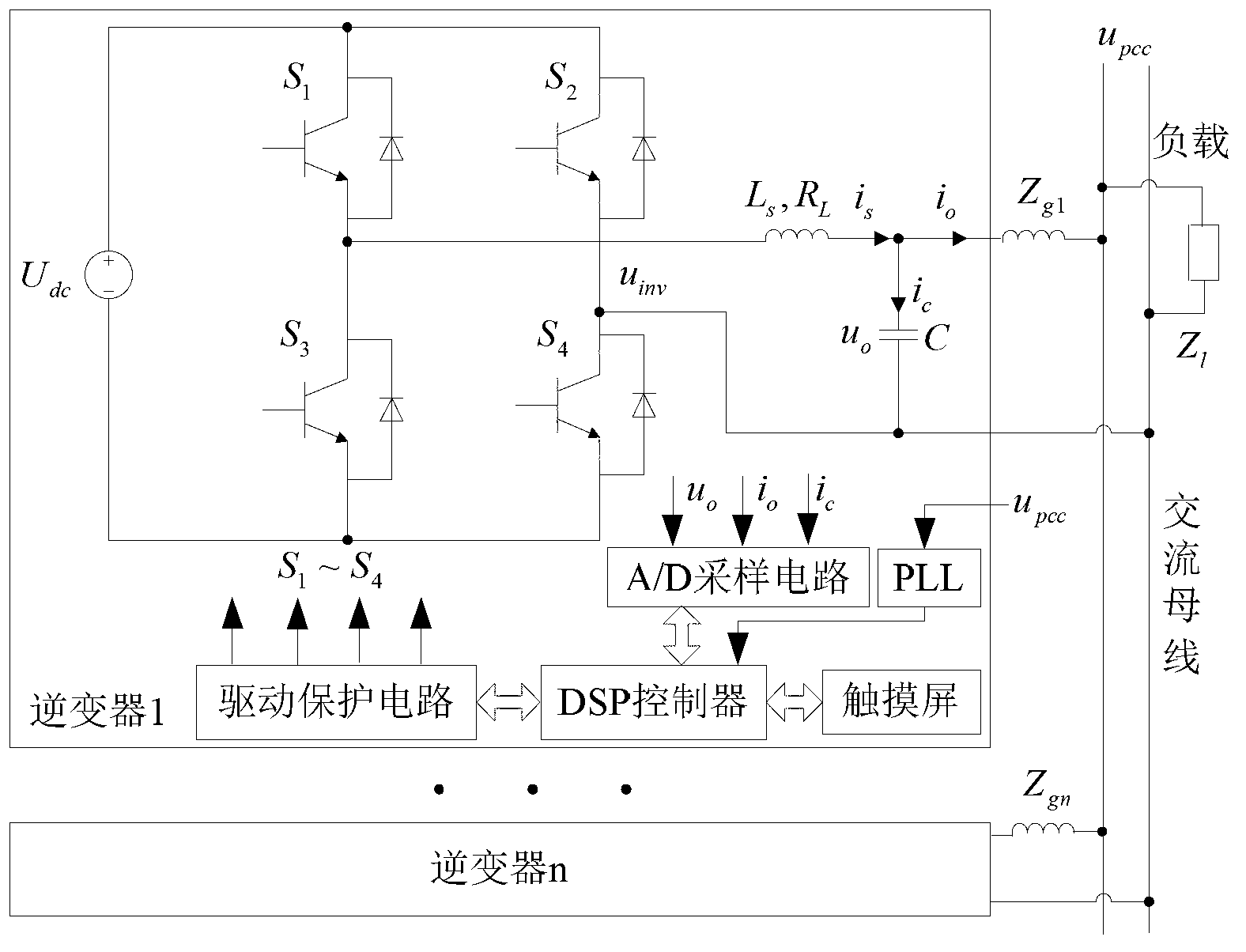

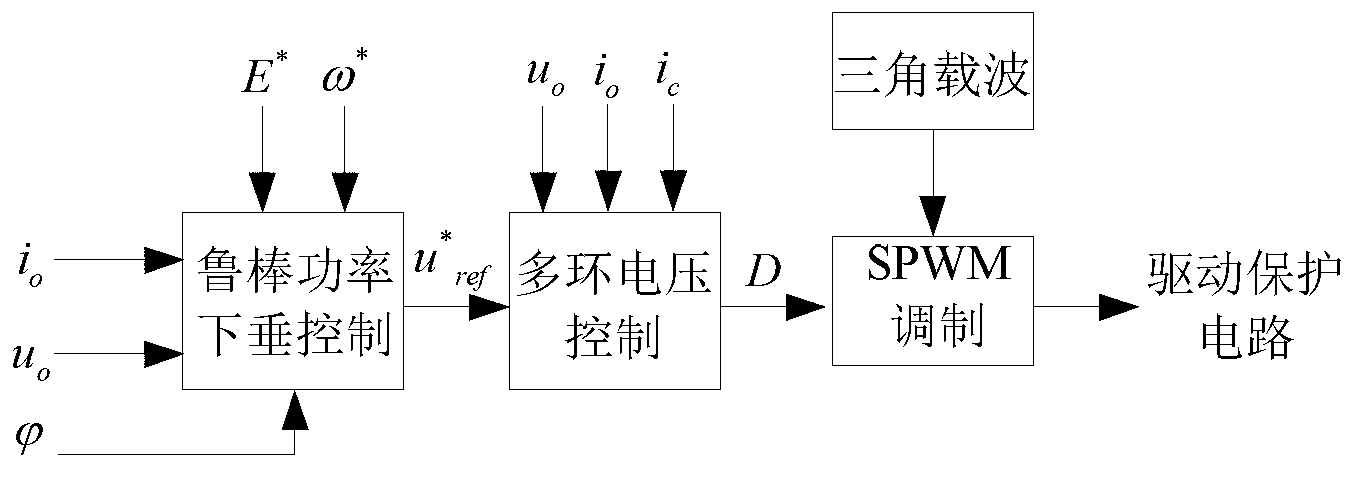

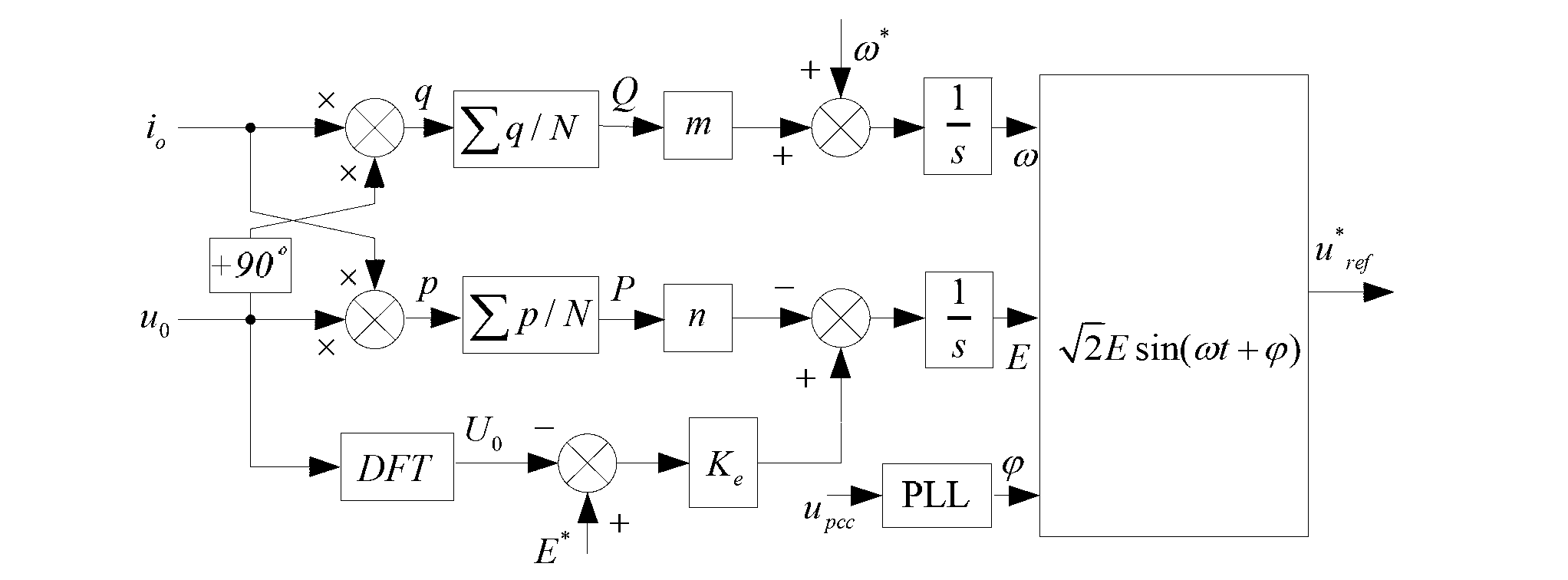

Micro-grid multi-inverter parallel voltage control method for droop control of robust power

Active | CN102842921A | Increase the output resistance, Small output resistance, Single network parallel feeding arrangements, Low voltage, Voltage reference

The invention discloses a voltage control method for parallel operation of multiple inverters in a microgrid, based on robust power droop control. For each inverter in the microgrid, a robust power droop controller computes and synthesizes the inverter's output reference voltage. A virtual complex impedance with both a resistive and an inductive component is introduced. A multi-loop voltage control method based on this virtual impedance and on quasi-resonant PR (proportional-resonant) control keeps the inverter output impedance purely resistive at the power frequency. This enables parallel running of multiple inverters in the microgrid with equal power sharing. It also makes the parallel system more robust to numerical computation errors, parameter drift, noise interference and similar disturbances. The method overcomes drawbacks of the conventional droop method, such as large circulating currents and uneven power distribution in the parallel system, which arise because the inverters' output impedance is inductive. It is suitable for current sharing among multiple parallel inverters in a low-voltage microgrid.

Owner:HUNAN UNIV

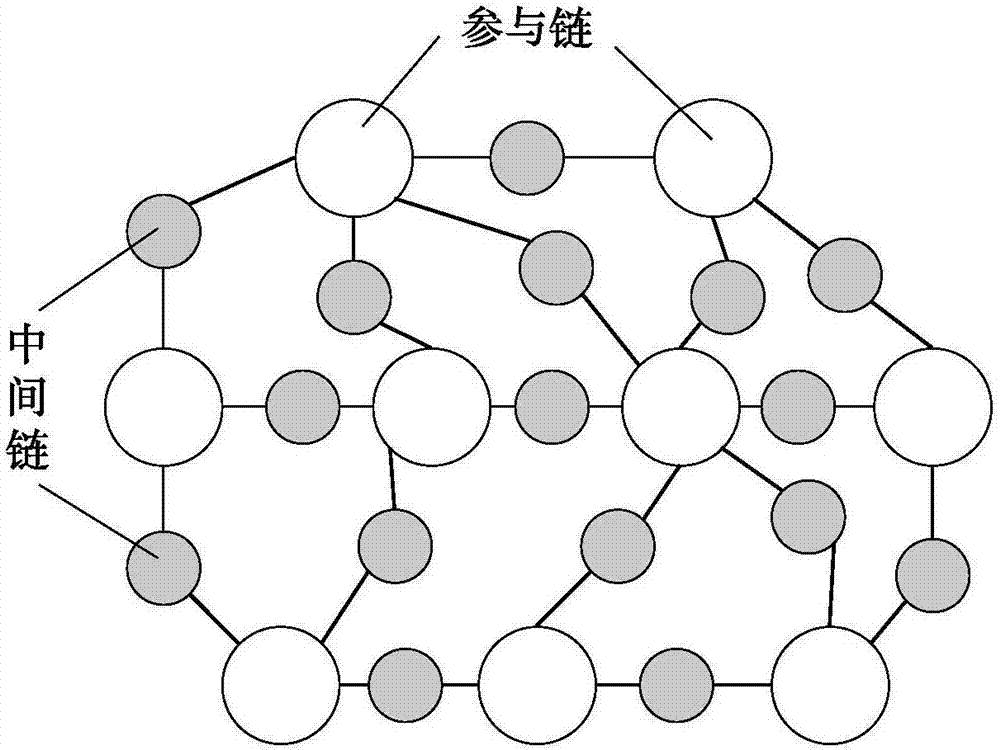

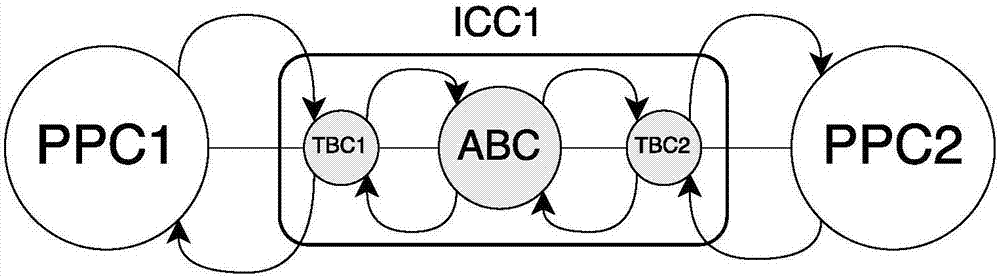

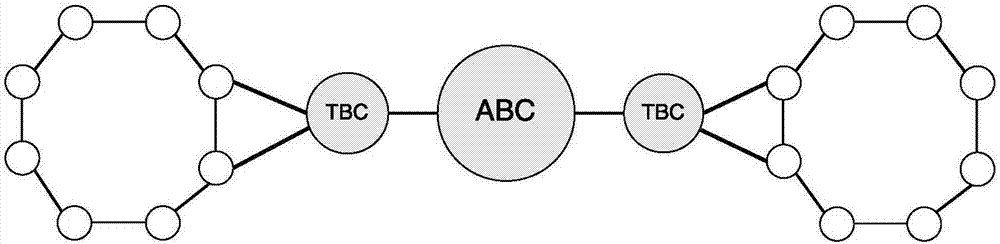

Double chain-type cross chain trading Internet of blockchains model core algorithm

Inactive | CN107248076A | Easy to join, Easy to leave, Finance, Cryptography processing, Fault tolerance, Network architecture

The invention discloses the core algorithm of a double-chain, cross-chain trading Internet-of-blockchains model (the "golden monkey model"). Unlike the traditional centralized architecture, the model uses a fully distributed, multi-chain network architecture. This makes it easier to scale, extend and make fault tolerant than existing architectures. Each chain maintains its own consistency. Consistency between chains is maintained by a fully distributed mechanism rather than managed by a central organization. This removes the limitations of earlier centralized Internet-of-blockchains designs ("chain networks" for short): all chains can operate in parallel on the model, which improves trading efficiency and network speed. The model provides a new, extensible financial market architecture. A financial unit can join or leave the network freely and easily, and the model supports a large-scale network with high transaction volume. The model comprises participant blockchains and inter-chain blockchains, each of which can represent one financial institution or a group of them. An inter-chain blockchain has a double-chain structure in which the trading record and the balance account are kept separately, enabling cross-chain trading. The invention provides a core algorithm for trading between chains. In particular, it provides a protocol by which inter-chain blockchains help two or more participant blockchains trade with one another without any centralized mechanism, while maintaining consistency across the whole network.

Owner:ZEU CRYPTO NETWORKS INC

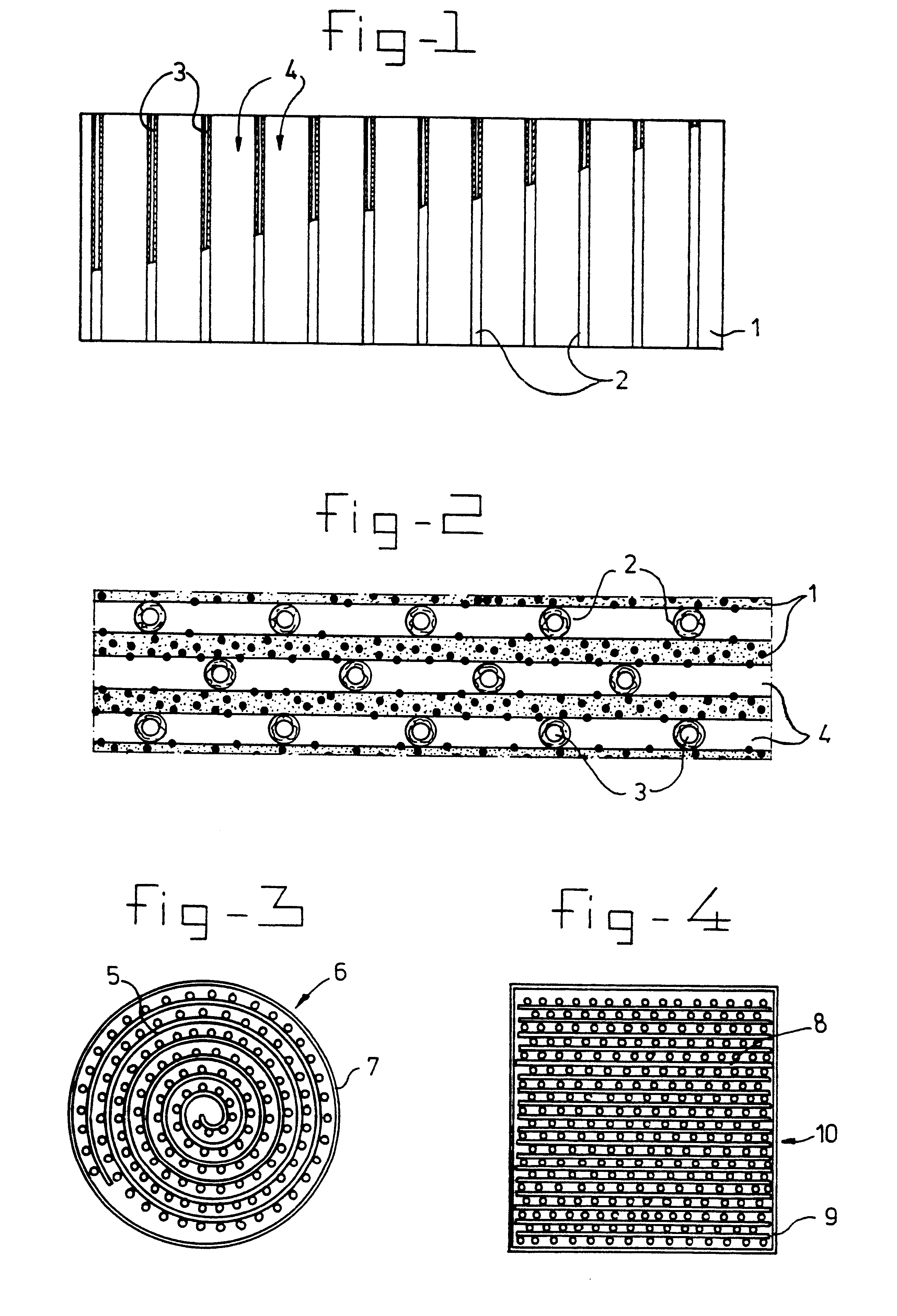

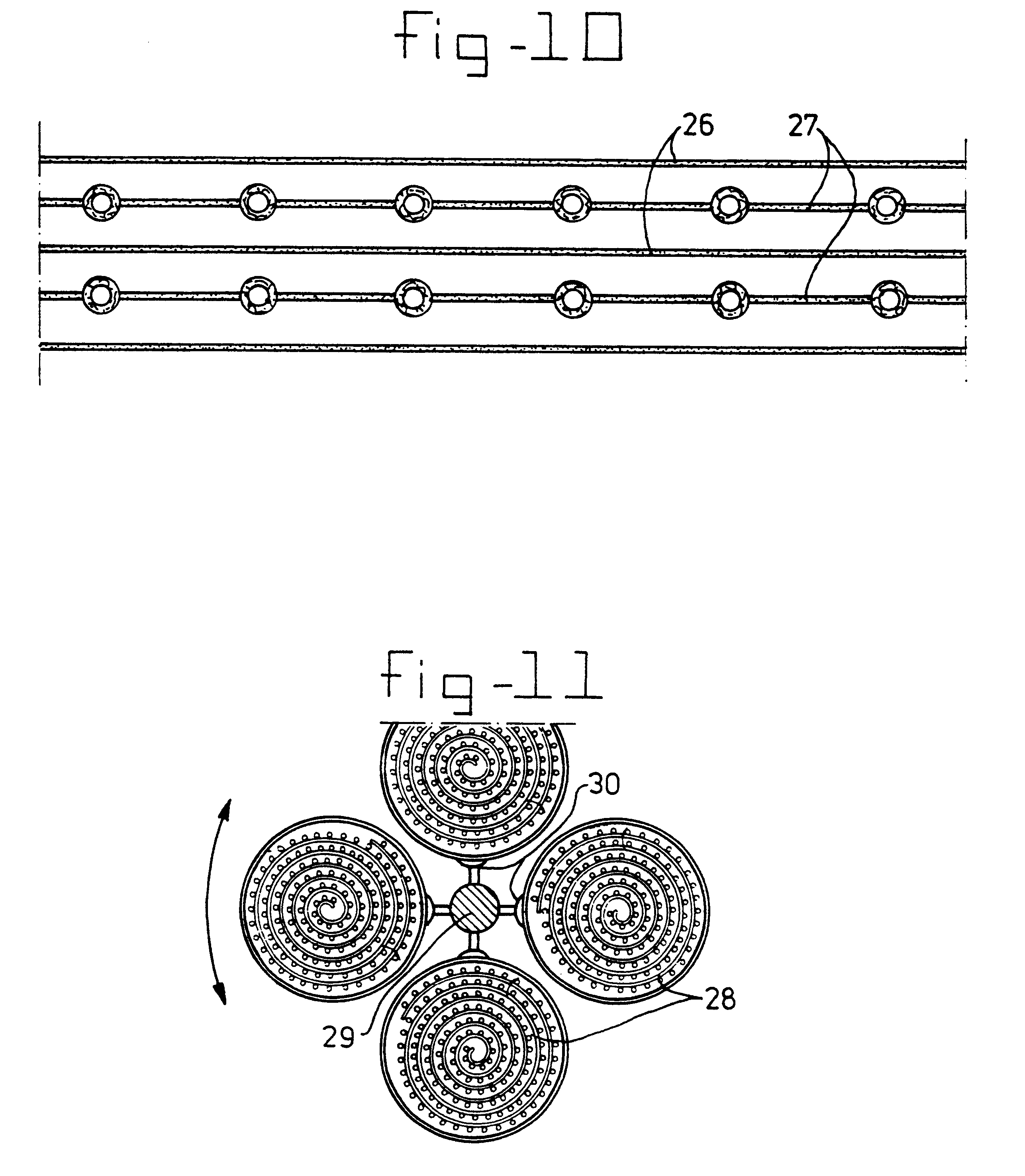

Bio-artificial organ containing a matrix having hollow fibers for supplying gaseous oxygen

Inactive | US6372495B1 | Practical to use, Bioreactor/fermenter combinations, Biological substance pretreatments, Hollow fibre, Fiber

A bio-artificial organ system is provided, comprising a wall surrounding a space that contains a solid support for cell cultivation. The space includes a three-dimensional matrix in the form of a highly porous sheet or mat, made of a physiologically acceptable network of fibers or an open-pore foam structure. Hydrophobic hollow fibers permeable to gaseous oxygen or gaseous carbon dioxide are evenly distributed through the three-dimensional matrix material. They are arranged in parallel, running from one end of the matrix material to the other. Cells obtained from organs are cultured in the extra-fiber space, where they receive sufficient oxygenation and are maintained as small aggregates with three-dimensional attachment.

Owner:ACADEMISCH ZIEKENHUIS BIJ DE UNIV VAN AMSTERDAM ACADEMISCH MEDISCH CENT +1

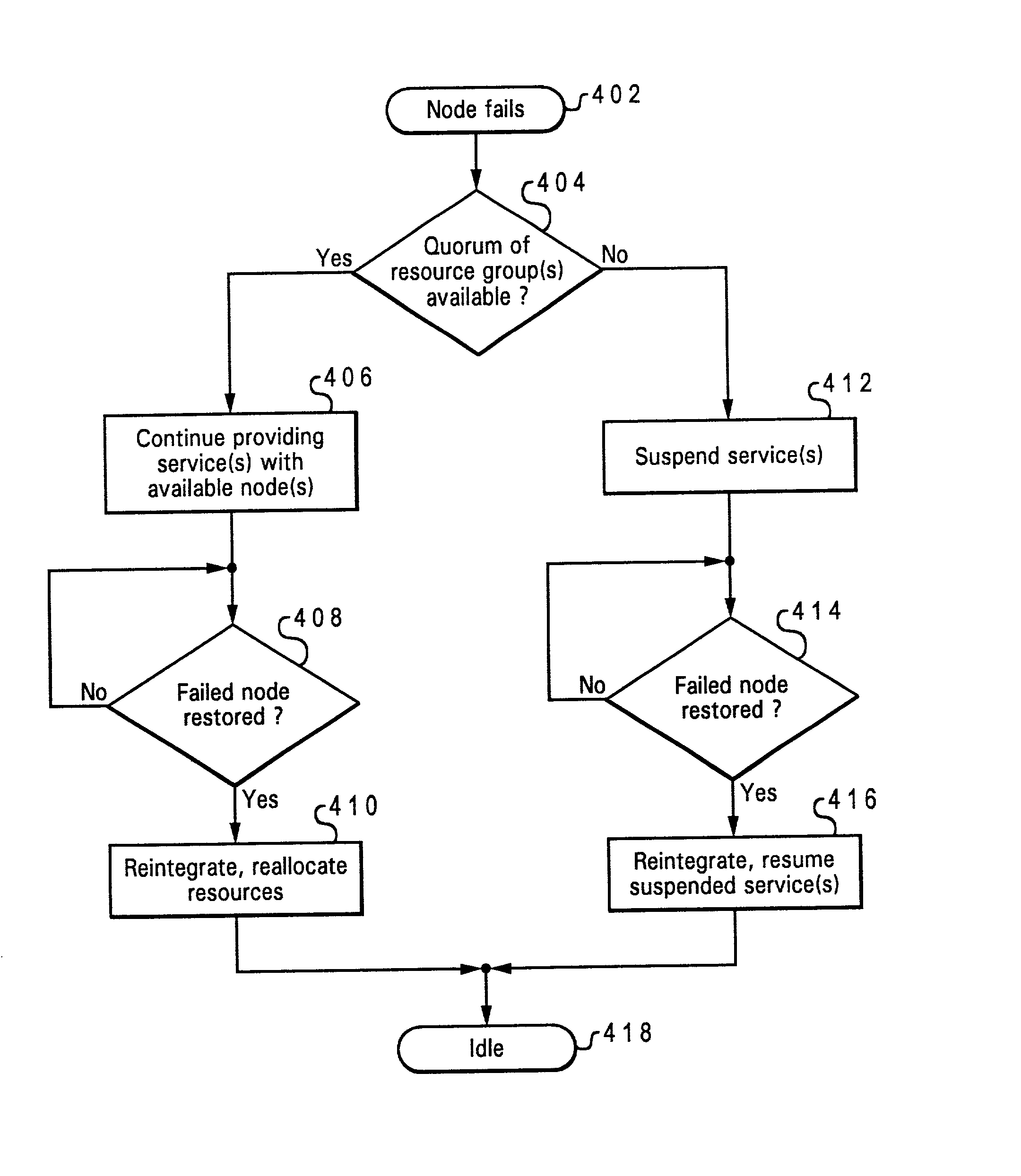

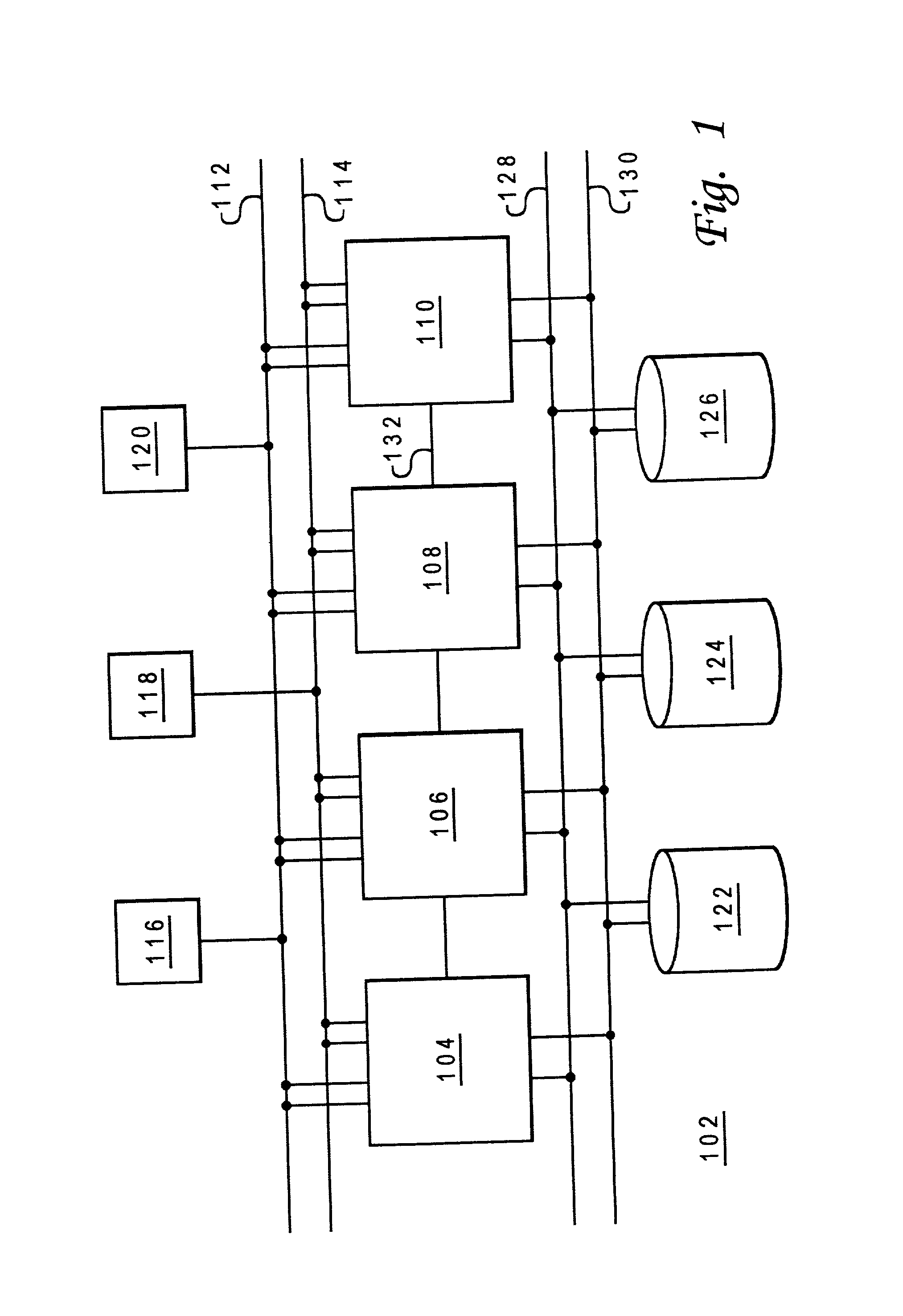

Highly scalable and highly available cluster system management scheme

Inactive | US20020091814A1 | Low cost, Improve scalability, Error detection/correction, Multiple digital computer combinations, Data processing system, Cluster systems

A cluster system is treated as a set of resource groups, each comprising a highly available application and the resources on which it depends. A resource group may contain between 2 and M data processing systems, where M is small relative to the size N of the whole cluster. Configuration and status information for a resource group is fully replicated only on the data processing systems that are members of that group. The configuration object or database record for a resource group has an associated owner list. This list identifies the data processing systems that are members of the group and may therefore manage the application. A data processing system may, however, belong to more than one resource group. Its configuration and status information is replicated to every data processing system that could be affected by its failure, that is, any data processing system that belongs to at least one resource group also containing the subject system. This partial replication scheme has several benefits:

- it allows resource groups to run in parallel;
- it reduces the cost of data replication and access;
- it is highly scalable and applicable to very large clusters;
- it provides better performance after a catastrophe such as a network partition.

Owner:BLUECAT NETWORKS USA
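
The partial-replication rule in the cluster abstract above reduces to two set computations. A group's own configuration lives only on its owner list. A node's status goes to every node that shares at least one resource group with it. The function names and the dictionary-of-sets representation are hypothetical.

```python
def group_replicas(group: str, groups: dict[str, set[str]]) -> set[str]:
    """A resource group's configuration is replicated only on its member (owner) nodes."""
    return set(groups[group])


def replication_targets(node: str, groups: dict[str, set[str]]) -> set[str]:
    """Nodes that must hold `node`'s configuration and status.

    These are all other members of any resource group that contains `node`,
    i.e. exactly the nodes that could be affected if `node` fails.
    """
    peers: set[str] = set()
    for members in groups.values():
        if node in members:
            peers |= members
    peers.discard(node)
    return peers
```

Consider a 6-node cluster with groups `web = {n1, n2}`, `db = {n2, n3, n4}` and `mail = {n5, n6}`. Node `n2`'s status is copied to `n1`, `n3` and `n4` only, never to `n5` or `n6`. This is why replication cost grows with group size M rather than cluster size N.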

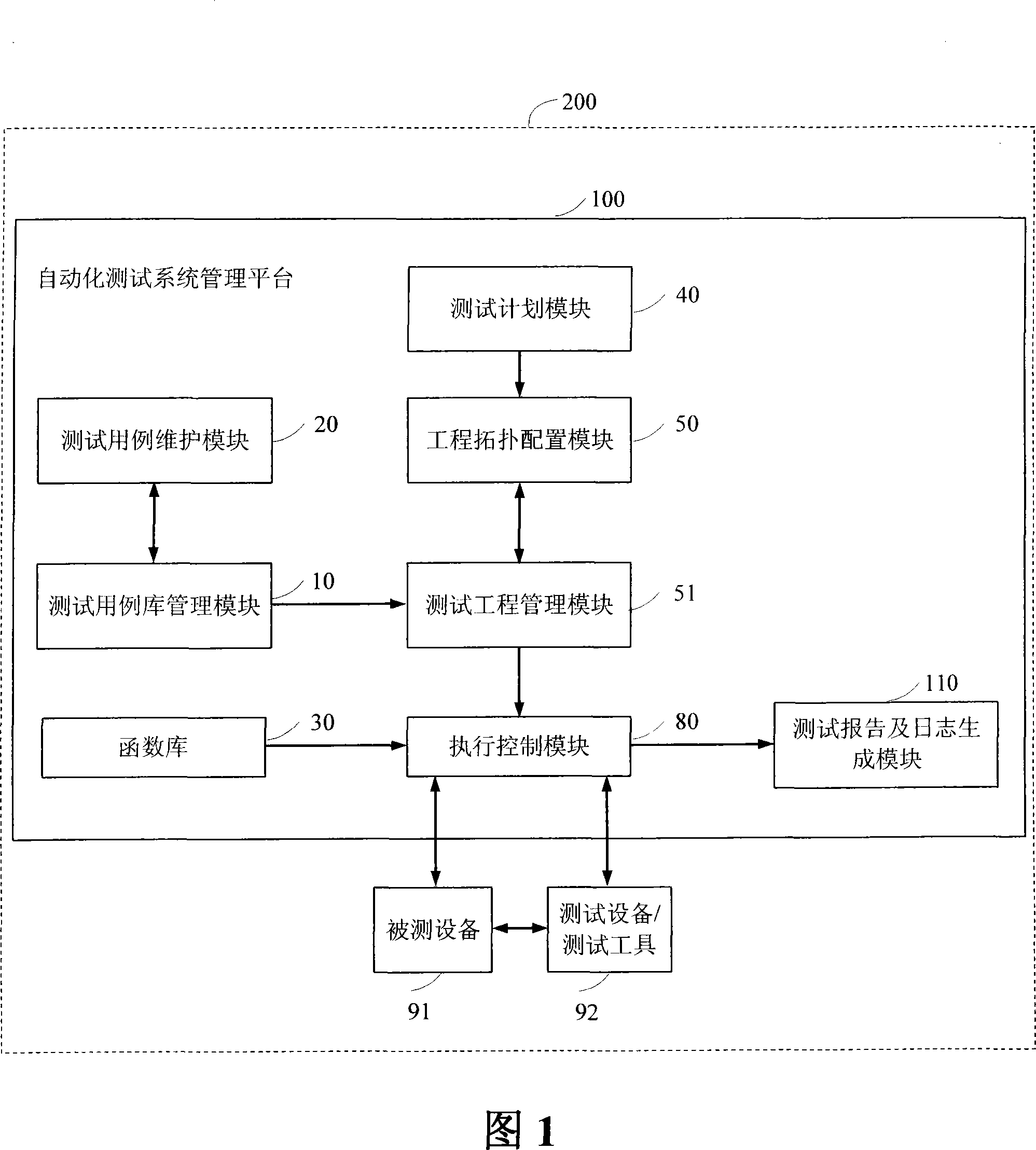

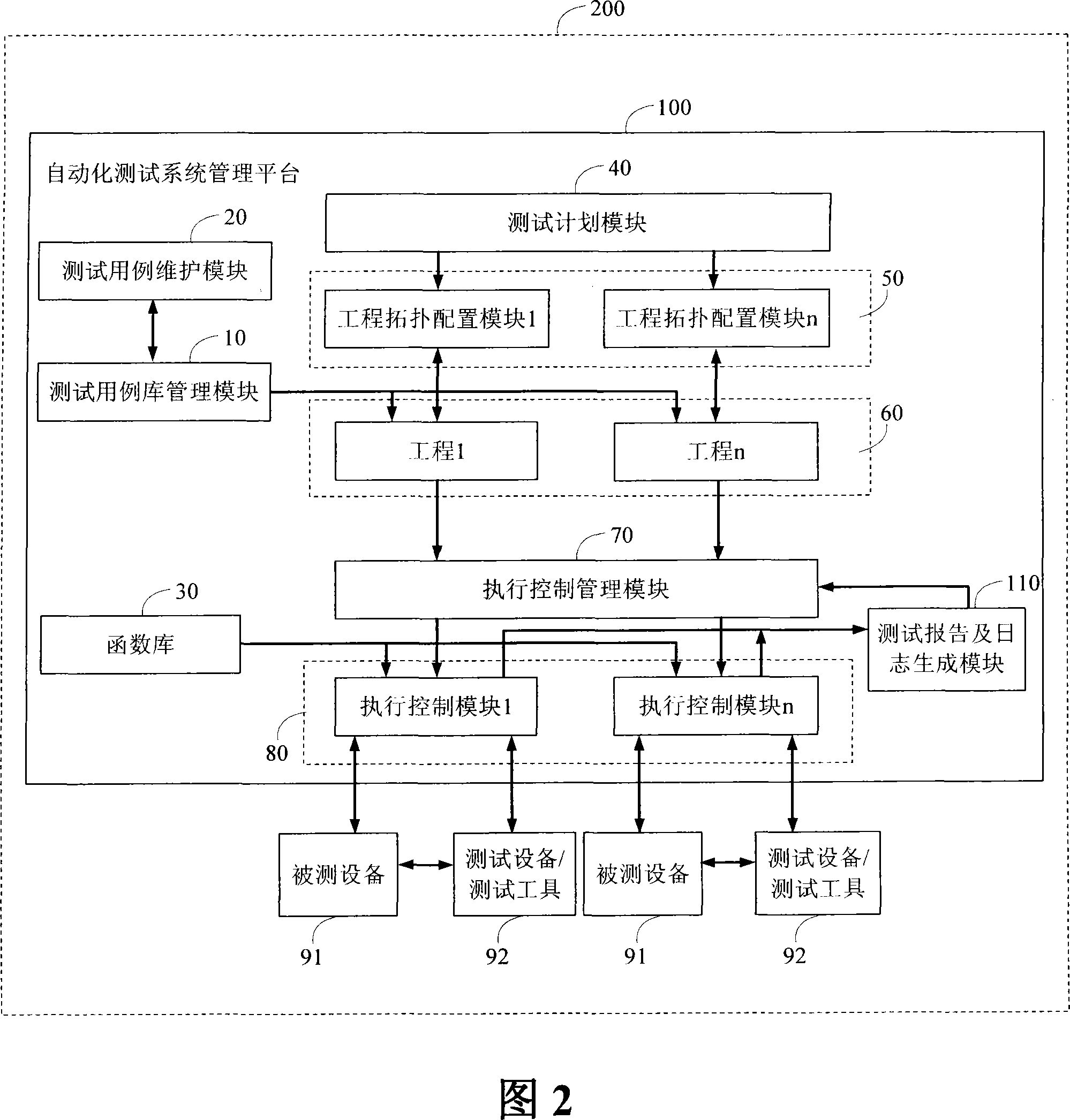

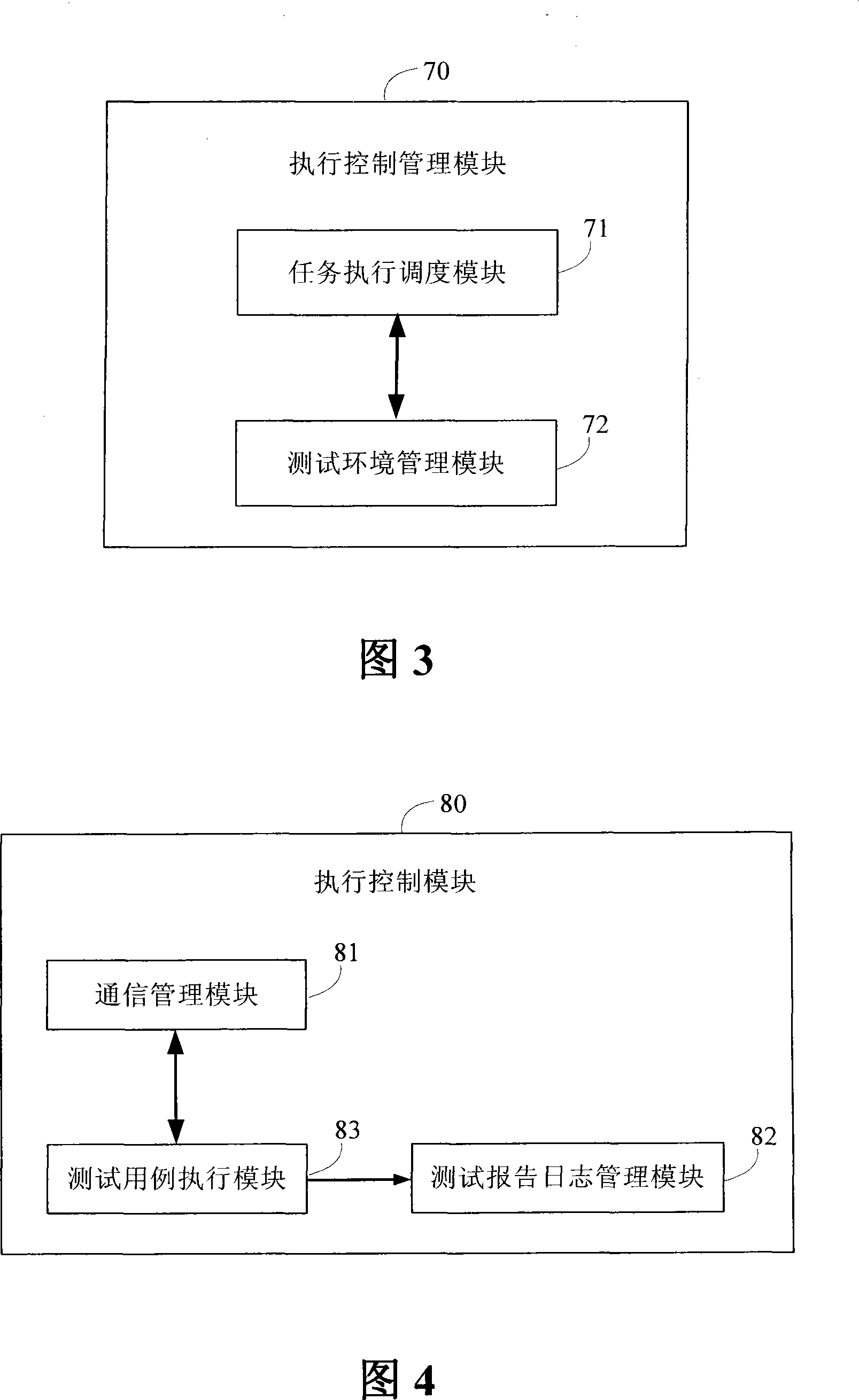

System for parallel executing automatization test based on priority level scheduling and method thereof

Inactive | CN101227350A | Take advantage of, Improve test efficiency, Data switching networks, Resource utilization, Execution control

The invention discloses a parallel automated test system and method based on priority scheduling. The system comprises:

- a test plan module for drawing up and managing test content;
- an engineering topology configuration module for configuring the topology of test environments and managing their essential information;
- a test report and log generation module for generating test logs and/or test reports;
- an execution control module responsible for executing tests in multiple test environments.

The invention further comprises an execution control management module connected to the execution control modules. According to the test case groups, it directs them to run the groups with higher priority first in the test environments. Test case groups with the same priority run in parallel. The invention greatly improves testing efficiency and resource utilization, avoids ineffective tests in good time, and improves test availability.

Owner:ZTE CORP
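
The ZTE scheduling rule (higher-priority test case groups first, equal-priority groups in parallel) can be sketched as follows. The `(priority, name)` tuple format, the `run_by_priority` name and the caller-supplied `run_group` callable are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import groupby
from typing import Any, Callable


def run_by_priority(groups: list[tuple[int, str]],
                    run_group: Callable[[str], Any],
                    workers: int = 4) -> list[tuple[int, list[str], list[Any]]]:
    """Run test case groups from highest to lowest priority.

    Groups that share a priority level run in parallel; the next level starts
    only after every group at the current level has finished.
    """
    ranked = sorted(groups, key=lambda g: g[0], reverse=True)  # stable: ties keep input order
    log = []
    for priority, level in groupby(ranked, key=lambda g: g[0]):
        names = [name for _, name in level]
        with ThreadPoolExecutor(max_workers=workers) as pool:
            results = list(pool.map(run_group, names))
        log.append((priority, names, results))
    return log
```

Leaving the `with` block waits for the whole pool, which enforces the level-by-level ordering without extra synchronization.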

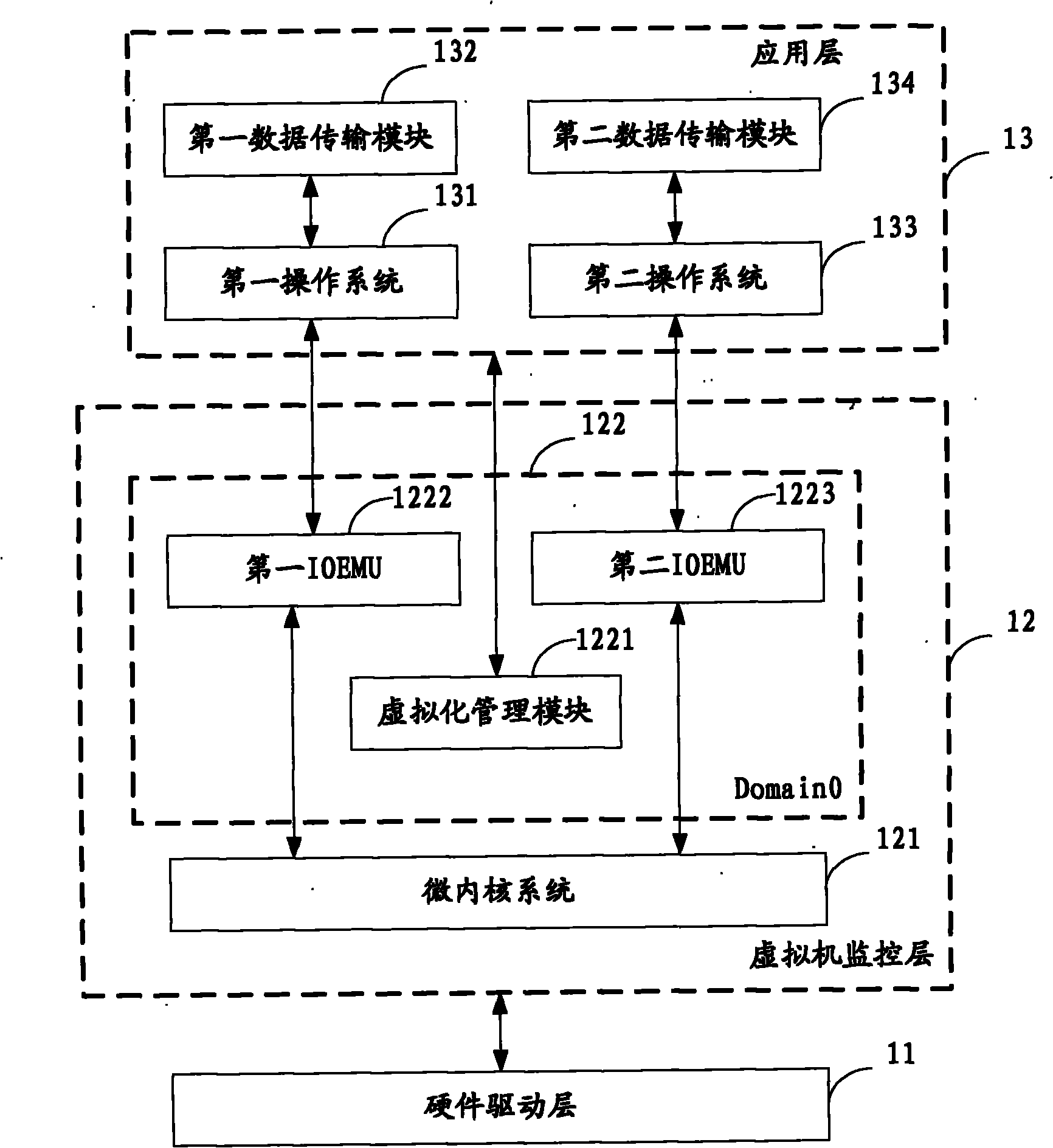

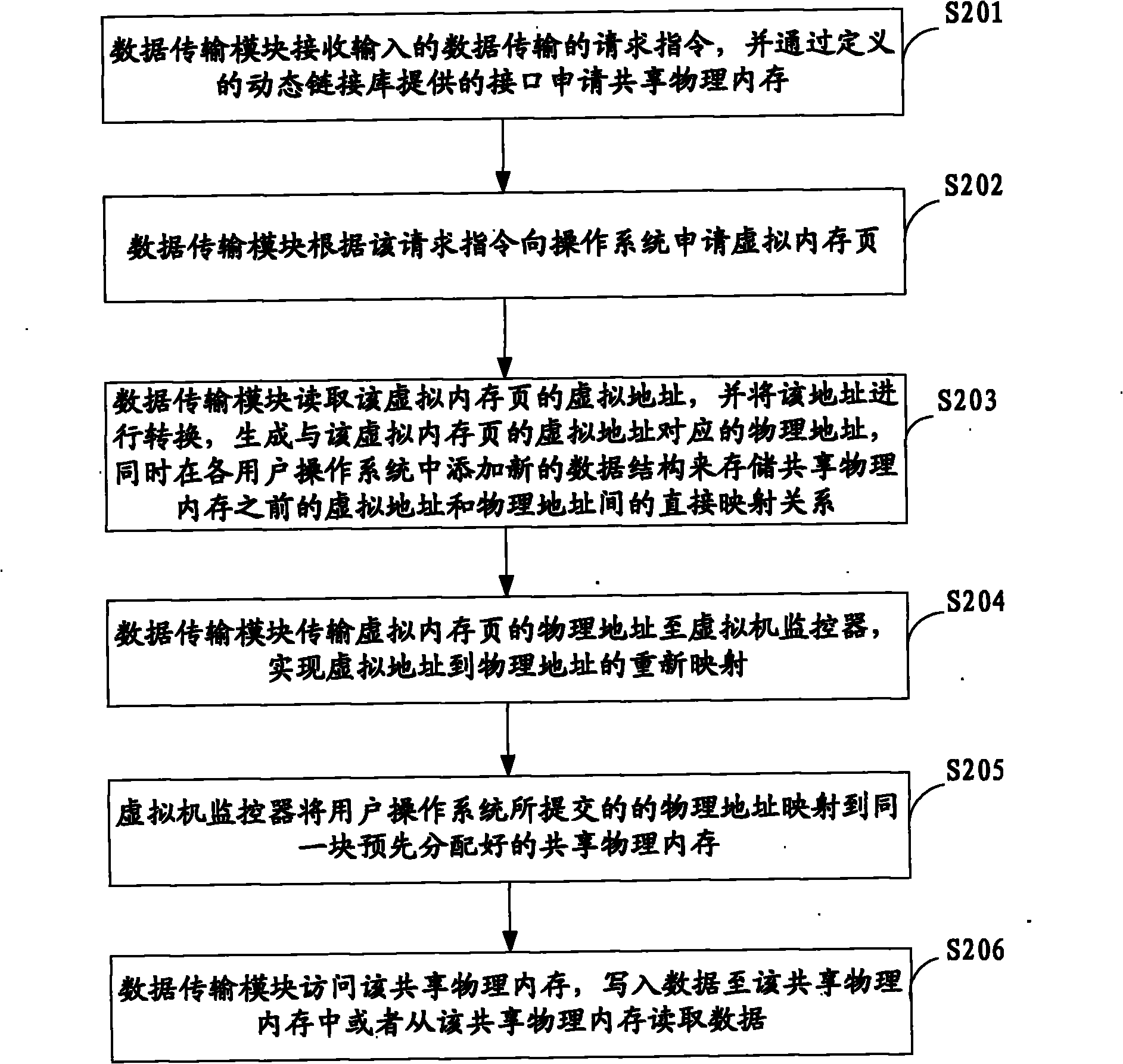

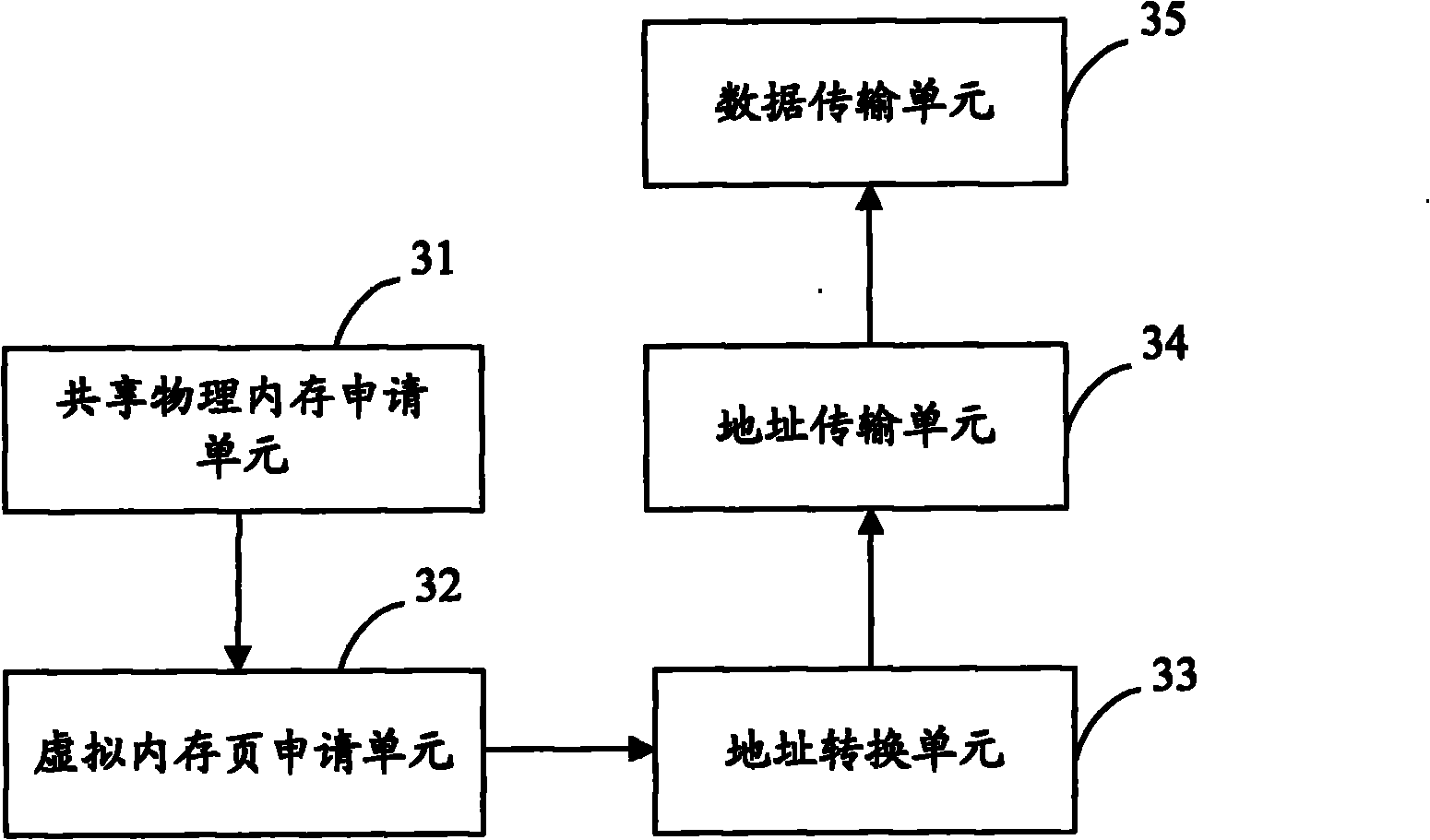

Method and system for safely transmitting data among parallel-running multiple user operating systems

Active | CN102110196A | Guaranteed isolation effect, Easy to operate, Memory addressing/allocation/relocation, Digital data protection, Third party, Virtual memory

The invention belongs to the field of computer technology. It provides a method and system for safely transmitting data among multiple user operating systems running in parallel. The method comprises the following steps:

- a data transmission module requests a shared physical memory region;
- the data transmission module requests a virtual memory page from the operating system;
- the data transmission module translates the virtual address of that page into the corresponding physical address, and stores the direct mapping between the two at the head of the shared physical memory;
- the data transmission module passes the physical address of the virtual memory page to the virtual machine monitor so that the virtual address can be remapped;
- the virtual machine monitor maps the physical addresses submitted by the user operating systems to the same shared physical memory;
- the data transmission module writes data to, or reads data from, the shared physical memory.

With this method and system, data can be exchanged without third-party tools, and the operation is safe and convenient.

Owner:CHINA GREATWALL TECH GRP CO LTD

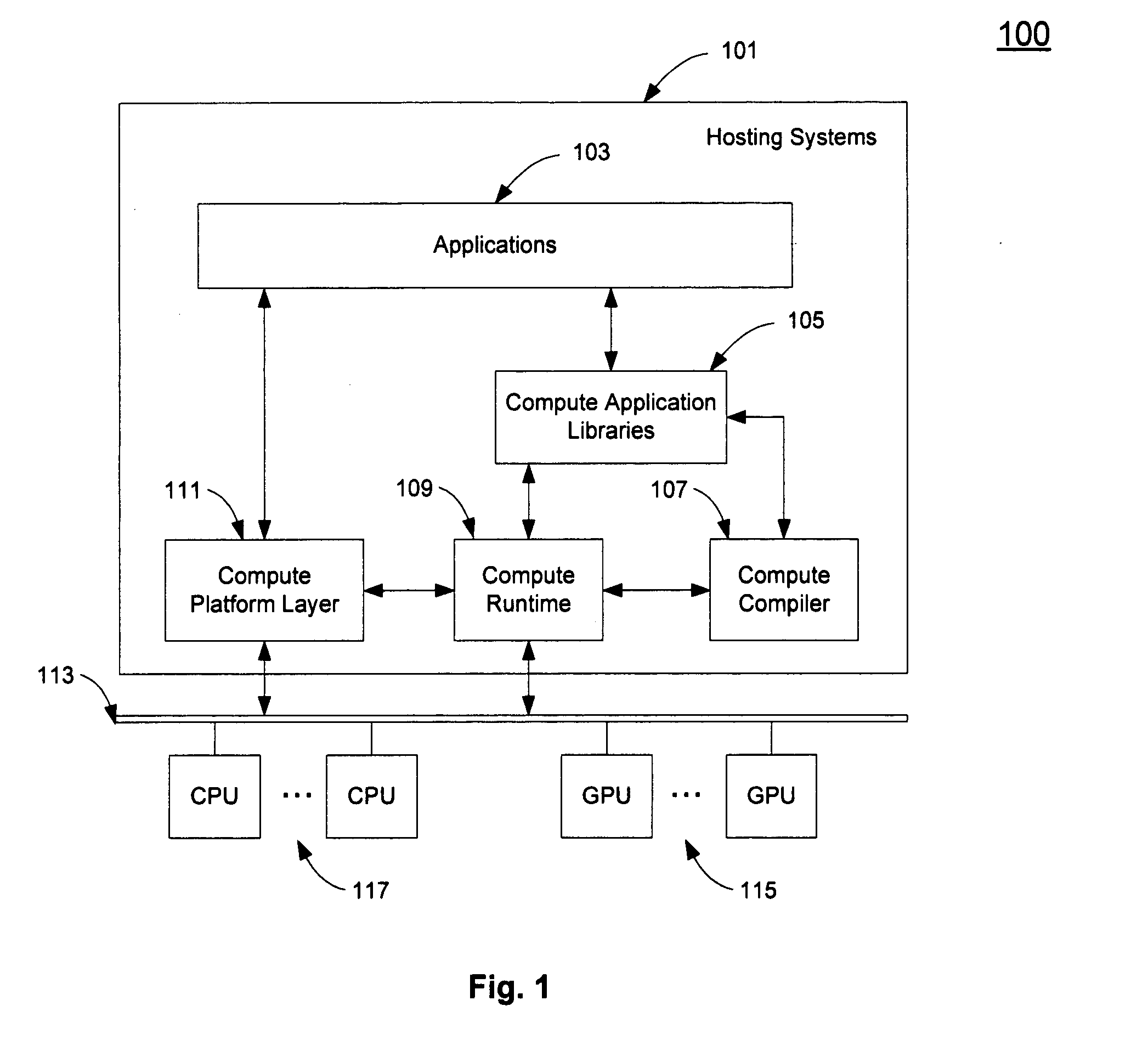

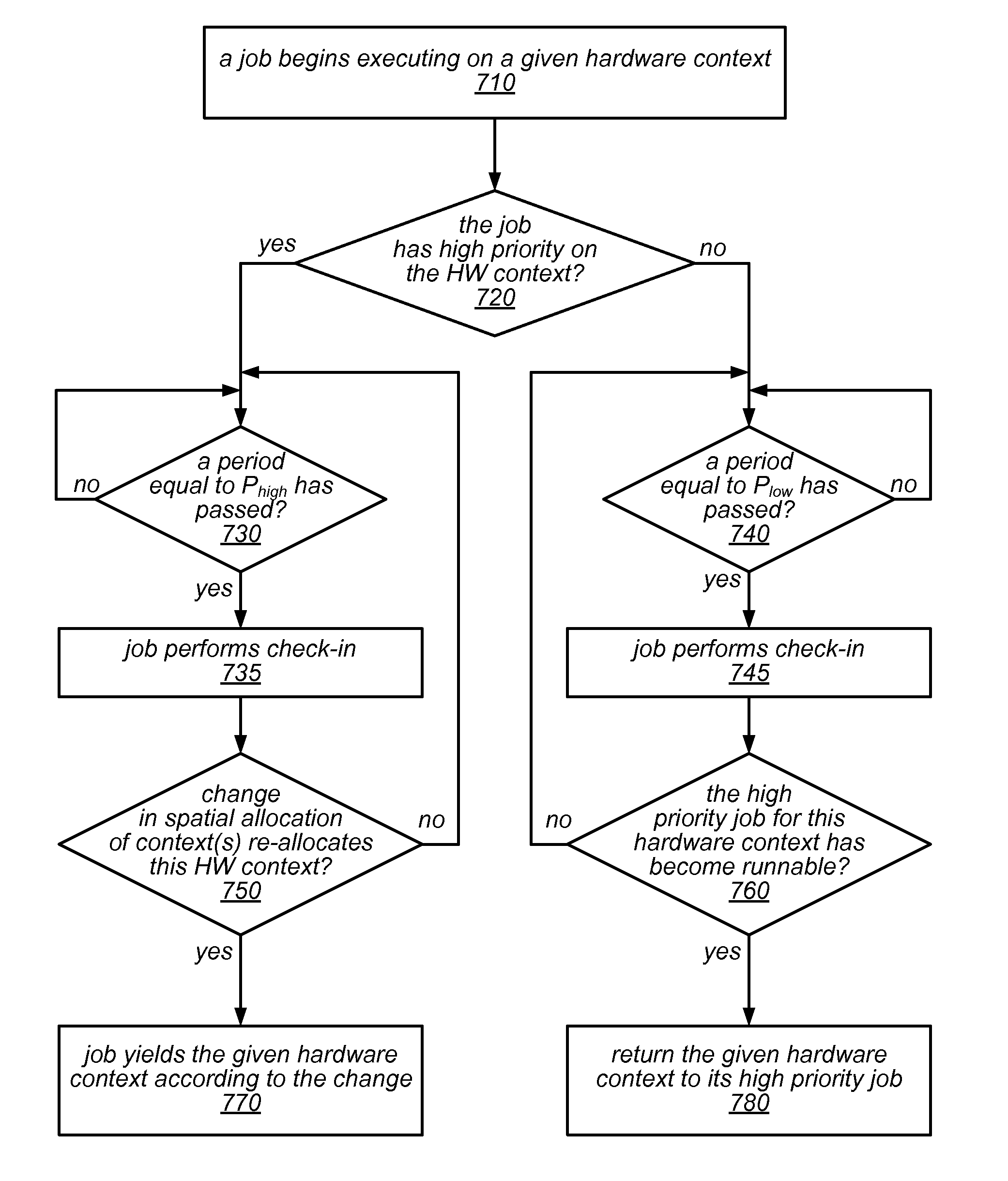

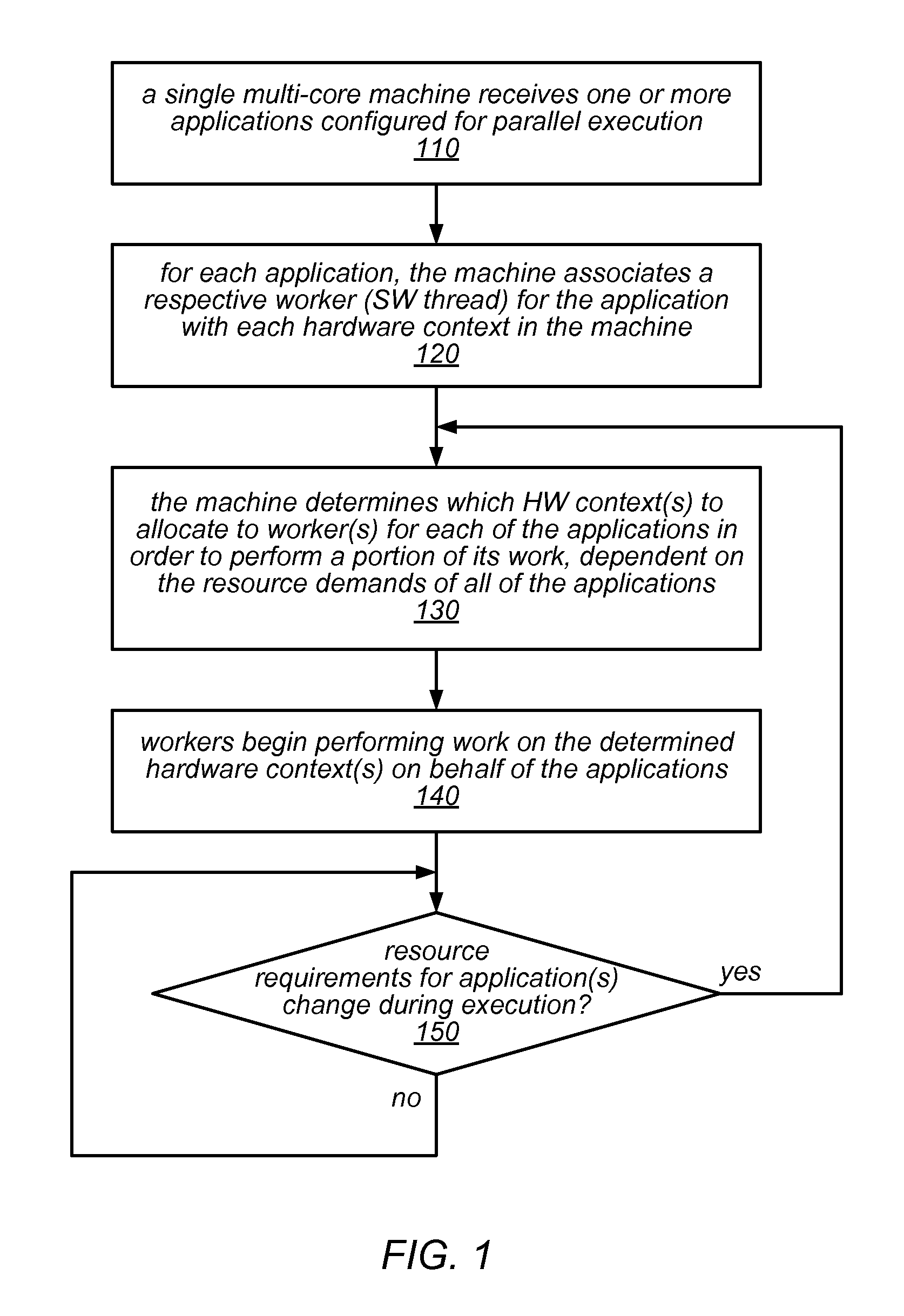

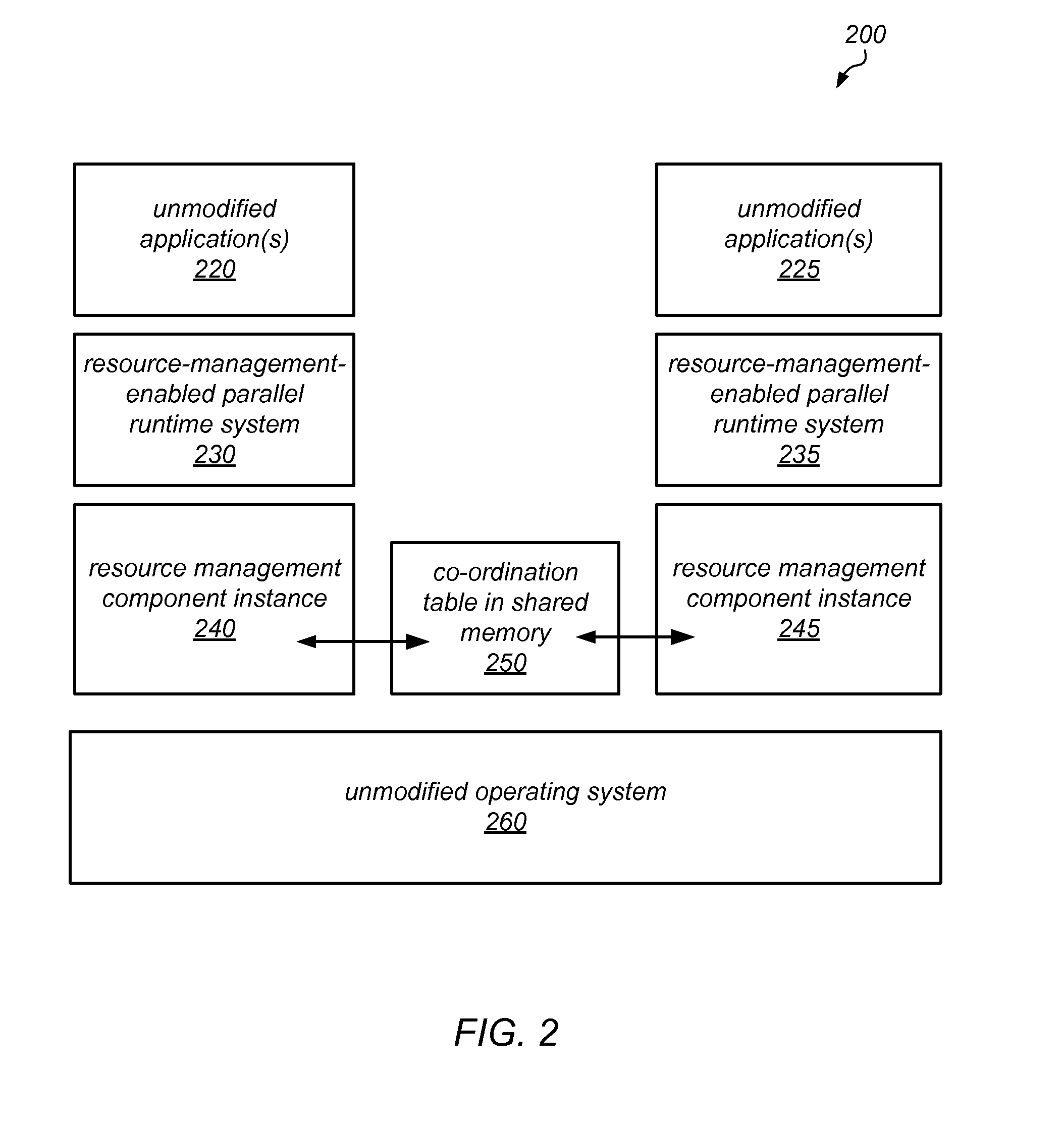

Dynamic Co-Scheduling of Hardware Contexts for Parallel Runtime Systems on Shared Machines

Active | US20150339158A1 | Reduce load imbalance, Easy to use, Program initiation/switching, Resource allocation, Operational system, Resource management

Multi-core computers may implement a resource management layer between the operating system and resource-management-enabled parallel runtime systems. The resource management components and runtime systems may collectively implement dynamic co-scheduling of hardware contexts when executing multiple parallel applications, using a spatial scheduling policy that grants high priority to one application per hardware context and a temporal scheduling policy for re-allocating unused hardware contexts. The runtime systems may receive resources on a varying number of hardware contexts as demands of the applications change over time, and the resource management components may co-ordinate to leave one runnable software thread for each hardware context. Periodic check-in operations may be used to determine (at times convenient to the applications) when hardware contexts should be re-allocated. Over-subscription of worker threads may reduce load imbalances between applications. A co-ordination table may store per-hardware-context information about resource demands and allocations.

Owner:ORACLE INT CORP
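
The coordination table in the co-scheduling abstract above can be sketched with its two policies. The spatial policy gives each hardware context first to its high-priority application. The temporal policy lends contexts that application leaves unused to applications with unmet demand. The data layout and the name-order tie-break are hypothetical. A real runtime would recompute the table at its periodic check-ins rather than once.

```python
from typing import Optional


def allocate(home: dict[int, str], demand: dict[str, int]) -> dict[int, Optional[str]]:
    """Build a coordination table: hardware context id -> application granted.

    home:   context id -> application with high priority on it (spatial policy)
    demand: application -> runnable software threads it currently wants
    Each context is given to at most one application (one runnable thread each).
    """
    remaining = dict(demand)
    table: dict[int, Optional[str]] = {}
    # Spatial policy: a context serves its high-priority application first.
    for ctx, owner in sorted(home.items()):
        if remaining.get(owner, 0) > 0:
            table[ctx] = owner
            remaining[owner] -= 1
        else:
            table[ctx] = None  # the owner does not need this context right now
    # Temporal policy (simplified): lend idle contexts to applications with
    # unmet demand, choosing by application name as an arbitrary tie-break.
    for ctx in sorted(c for c, app in table.items() if app is None):
        needy = sorted(app for app, n in remaining.items() if n > 0)
        if needy:
            table[ctx] = needy[0]
            remaining[needy[0]] -= 1
    return table
```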

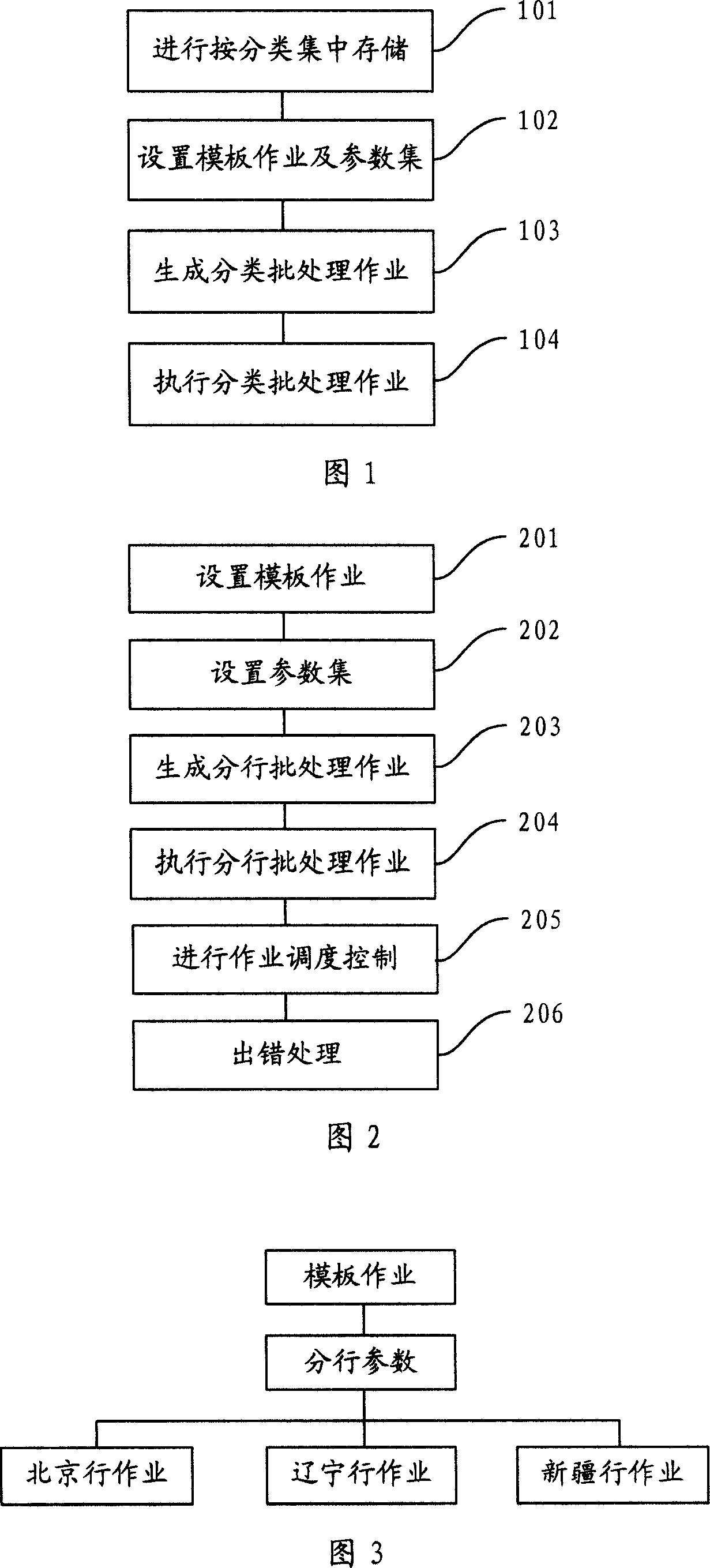

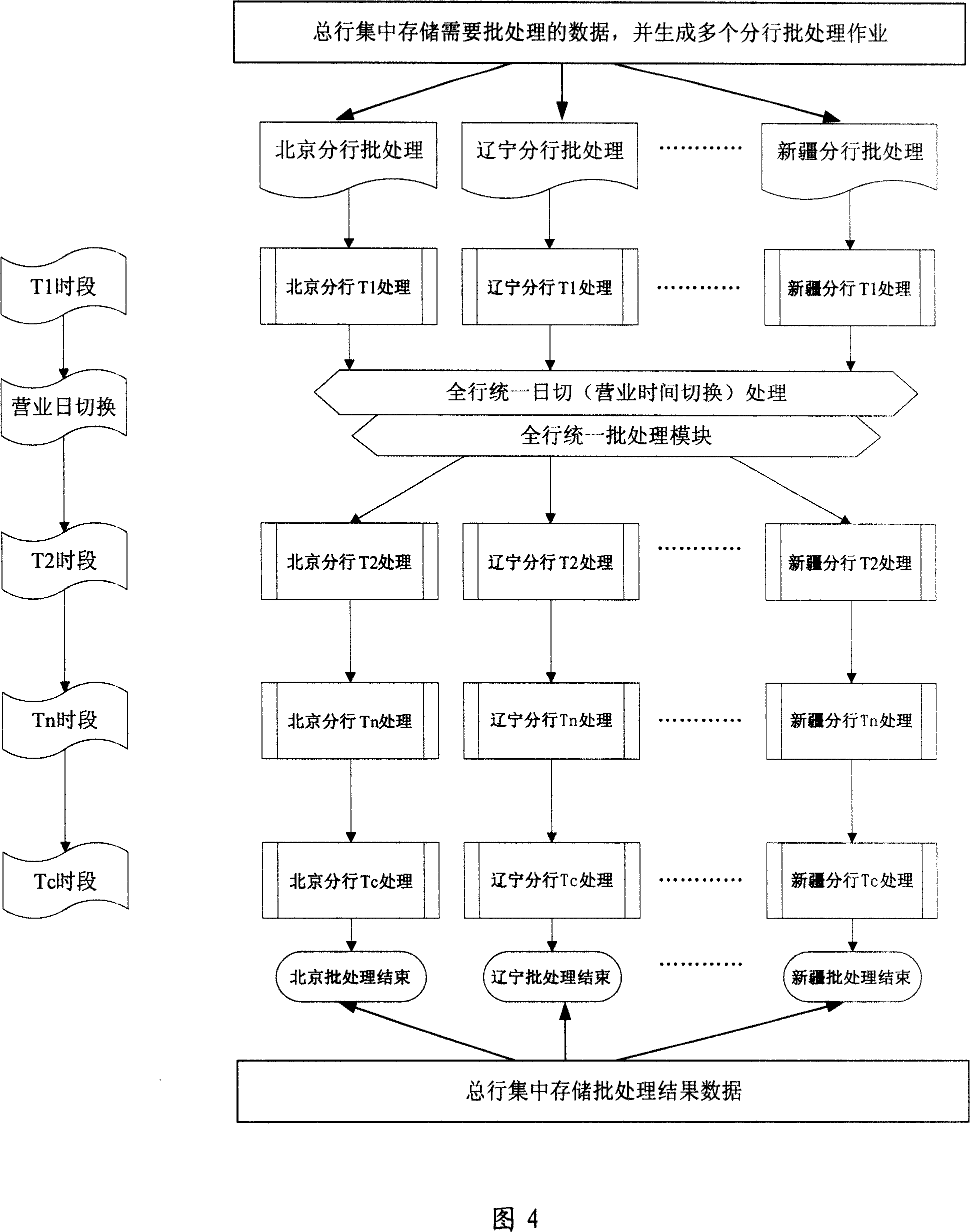

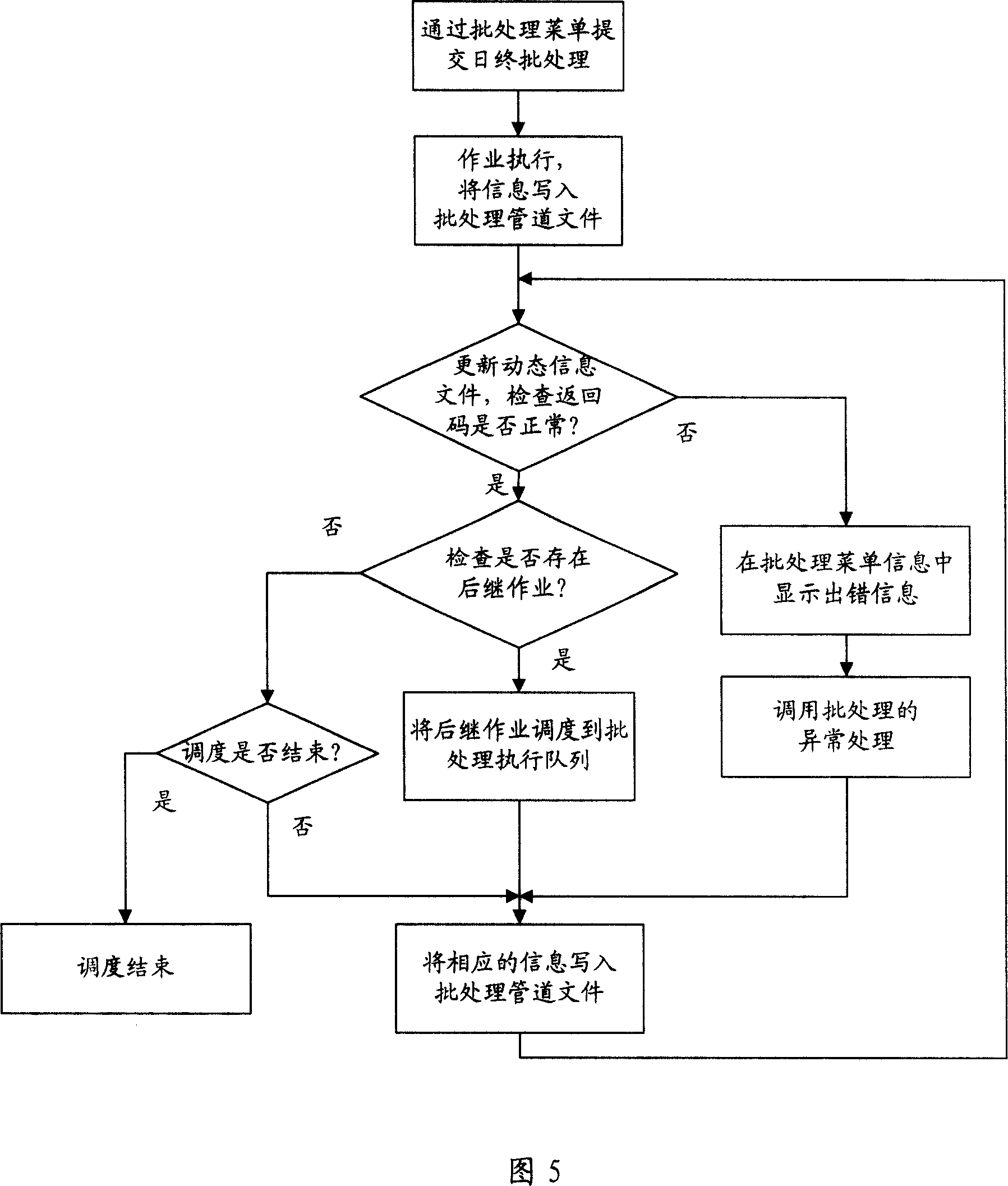

Method and device for categorical data batch processing

Inactive | CN101017546A | Improve processing efficiency, Good processing effectiveness, Finance, Multiprogramming arrangements, Batch processing, Data mining

This invention discloses a method and device for batch processing of categorized data, comprising the following steps:

- consolidating and storing data that was previously stored in a dispersed manner;
- setting up operation templates, and parameter sets that store the processing parameters for the different data categories (the templates are used to generate batch jobs for the different categories);
- generating batch jobs for each data category according to the operation templates and parameters;
- executing those jobs.

The invention makes reasonable use of system resources to speed up processing.

Owner:CHINA CONSTRUCTION BANK
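
The first three steps of the China Construction Bank method (consolidate dispersed data, then generate one batch job per category from a template and its parameter set) can be sketched with the standard library. The template texts, parameter values and record layout are hypothetical. Job execution is left out because the abstract does not describe it.

```python
from collections import defaultdict
from string import Template

# Hypothetical operation templates and per-category parameter sets.
TEMPLATES = {
    "interest": Template("accrue interest at $rate on $count accounts"),
    "fee": Template("charge $amount fee on $count accounts"),
}
PARAMS = {"interest": {"rate": "1.5%"}, "fee": {"amount": "2.00"}}


def consolidate(sources: list[list[dict]]) -> dict[str, list[dict]]:
    """Merge records held in separate stores into one list per category."""
    by_category: dict[str, list[dict]] = defaultdict(list)
    for store in sources:
        for record in store:
            by_category[record["category"]].append(record)
    return by_category


def generate_batches(by_category: dict[str, list[dict]]) -> dict[str, str]:
    """Render one batch job per category from its template and parameter set."""
    return {cat: TEMPLATES[cat].substitute(PARAMS[cat], count=len(recs))
            for cat, recs in by_category.items()}
```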

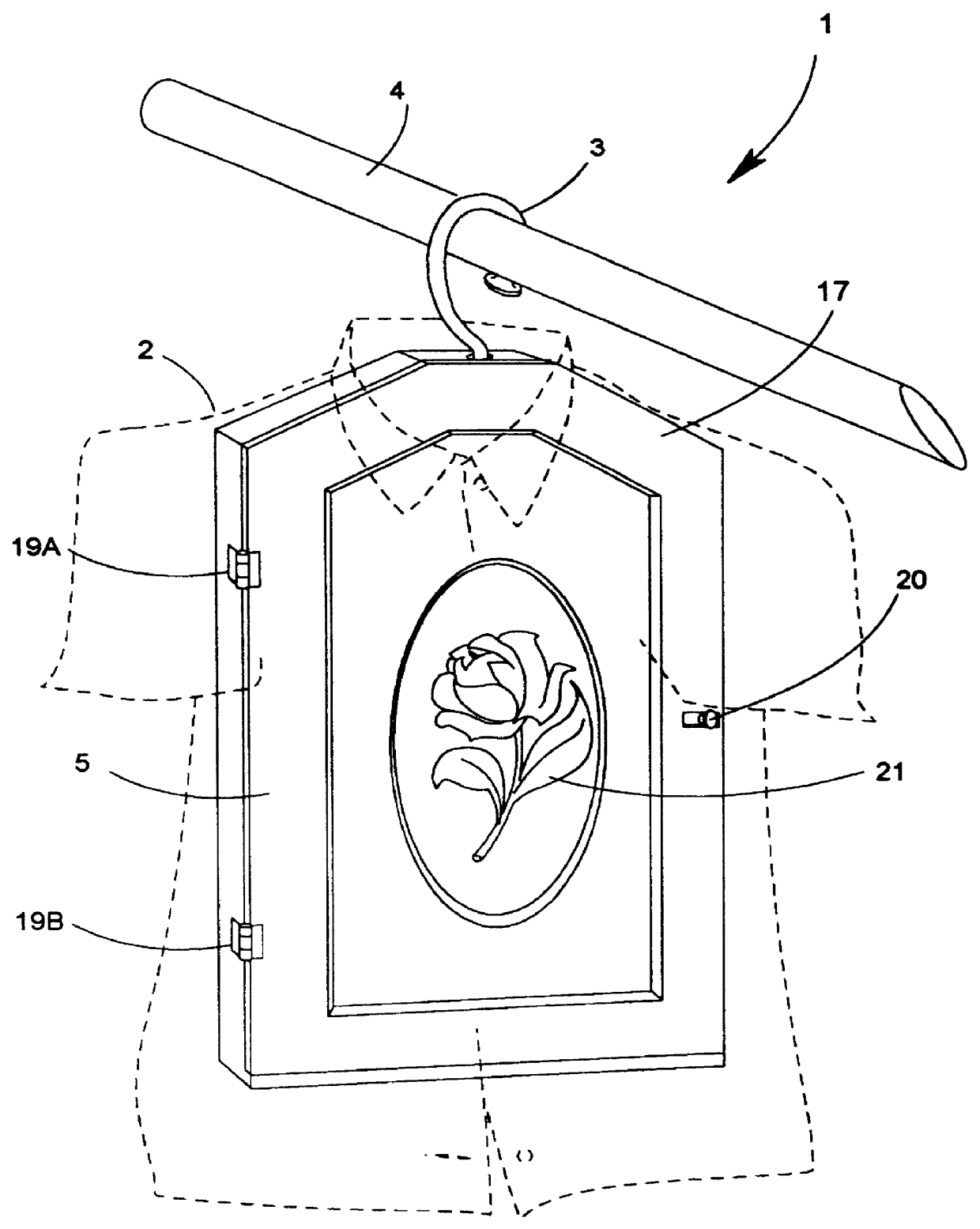

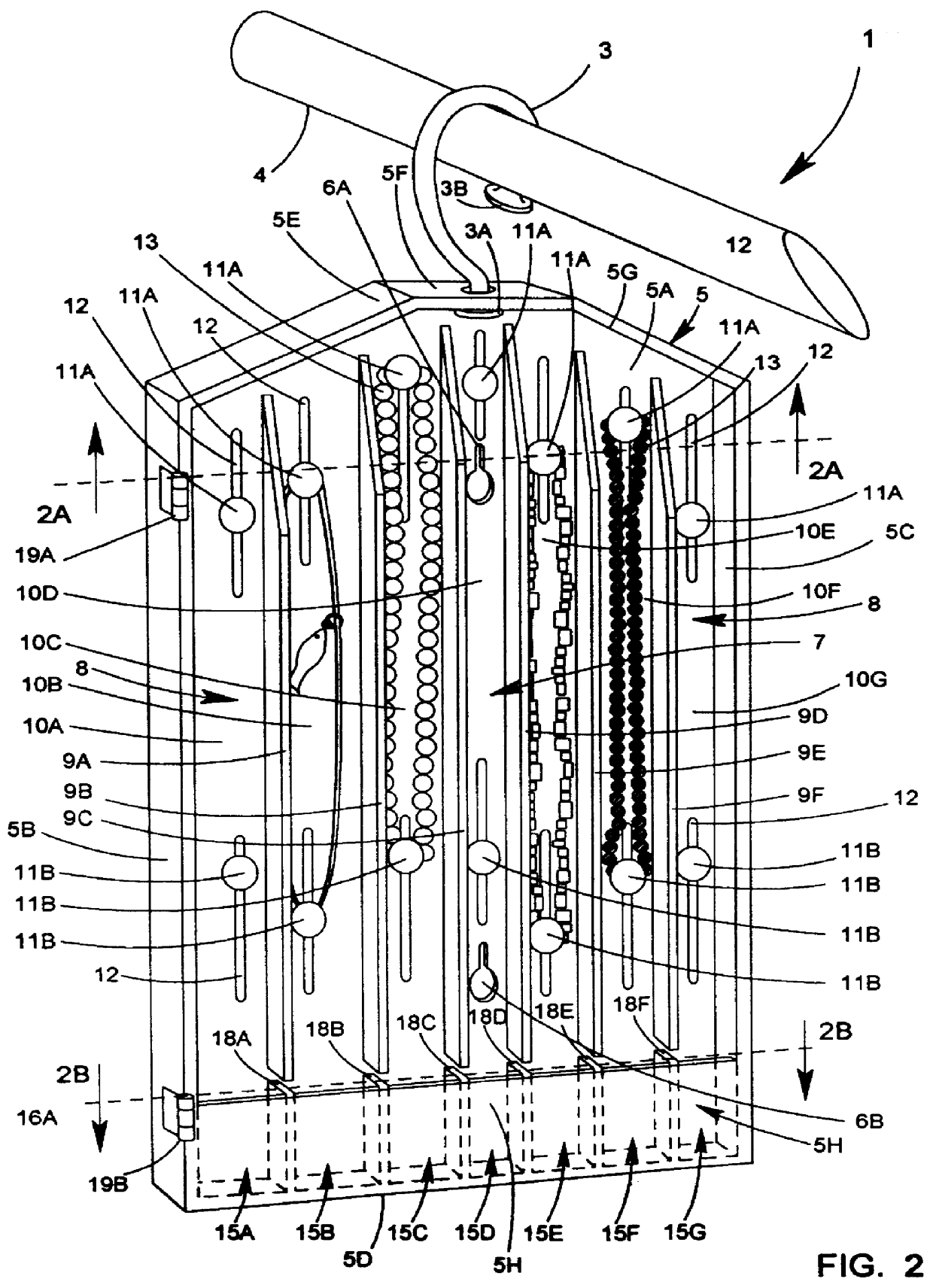

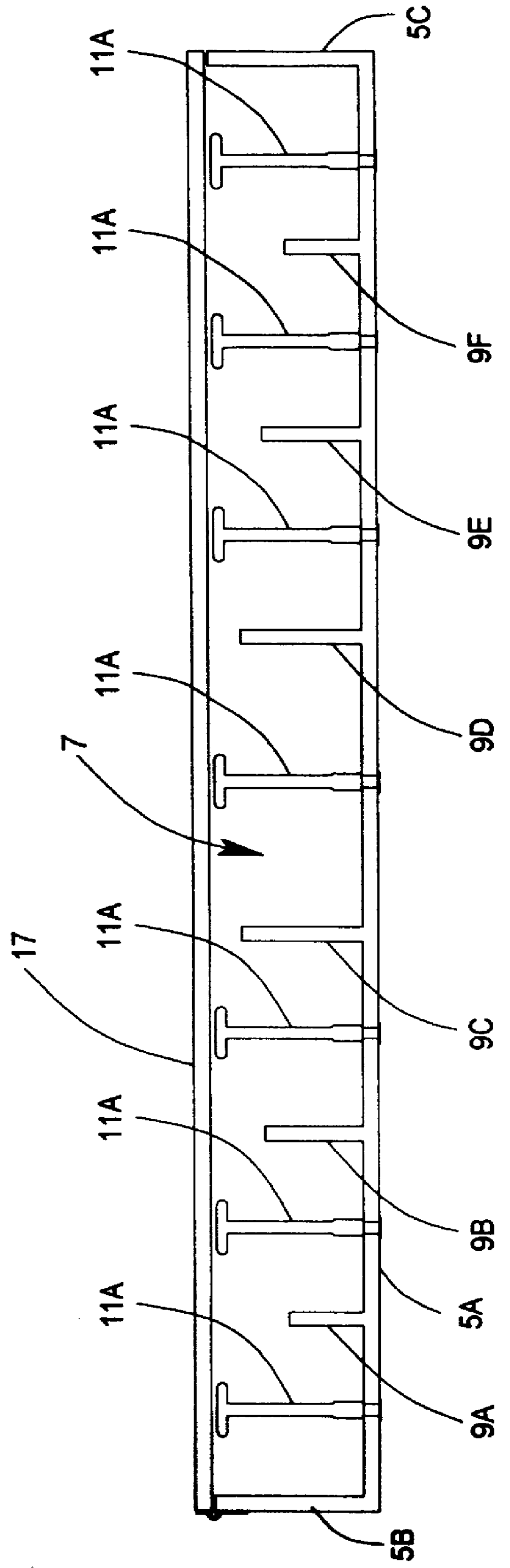

Garment-concealable jewelry case having parallel-running compartments and integrated jewelry trays for storing and organizing jewelry

A garment-concealable jewelry case having a front opening with a front cover panel portion that can be either moved or configured to reveal a plurality of parallel-running isolated storage compartments, each having an interior storage space which is accessible through a front opening revealed when the front cover panel is removed or reconfigured. Through the front opening of each storage compartment, one or more necklaces, pendants, bracelets or other strands of jewelry can be securely hung on a pair of jewelry support posts adapted for spatial separation on the back wall portion of the storage compartment in order to accommodate the length of jewelry strands being supported. The bottom portion of each parallel-running isolated compartment has a stationary front panel portion which, cooperating with the other wall portions of the storage compartment, provides a five-sided stationary storage tray accessible through the opening of the respective storage compartment and within which articles of jewelry such as rings, watches, earrings and/or tie tacks can be placed for organization and storage. The front cover panel has a tray cover panel integrated therewith, which closes off each jewelry storage compartment when the front cover panel is positioned over the access opening formed in the case housing. When the front cover panel is closed, the jewelry support posts contact the rear surface of the front cover panel to prevent supported articles of jewelry from falling off and tossing about within the storage compartment during usage, including travel.

Owner:GEMINI MARKETING

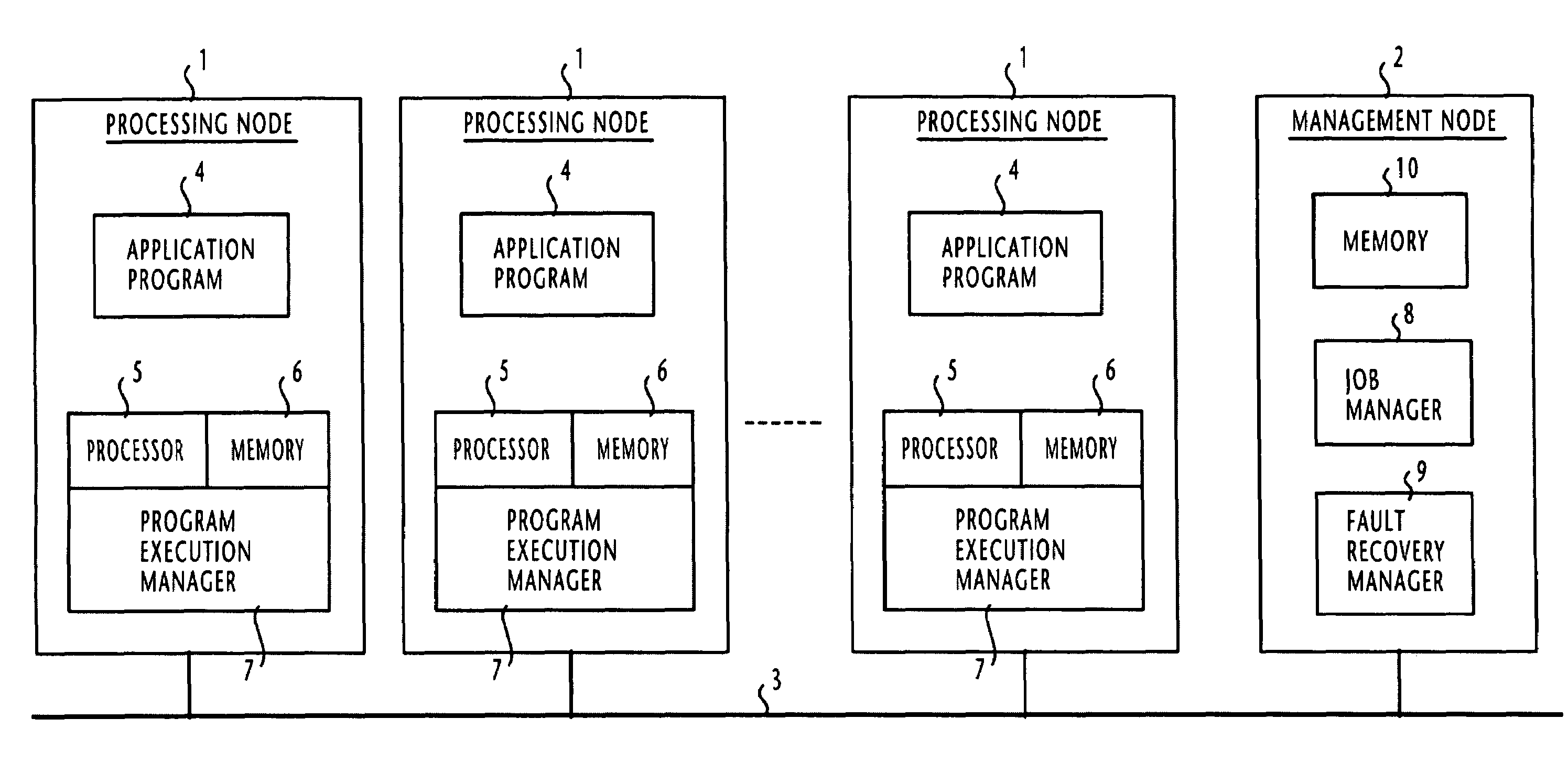

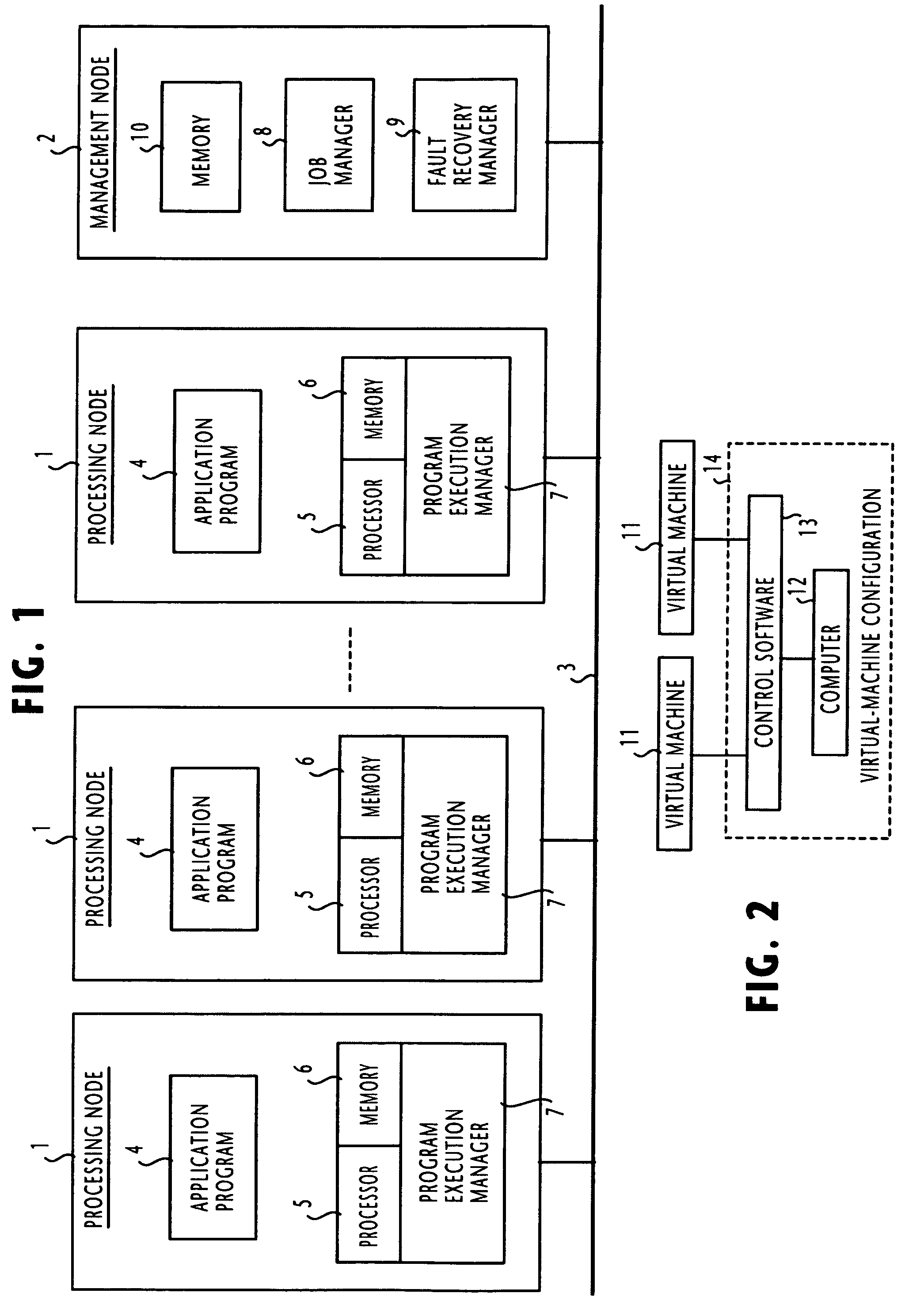

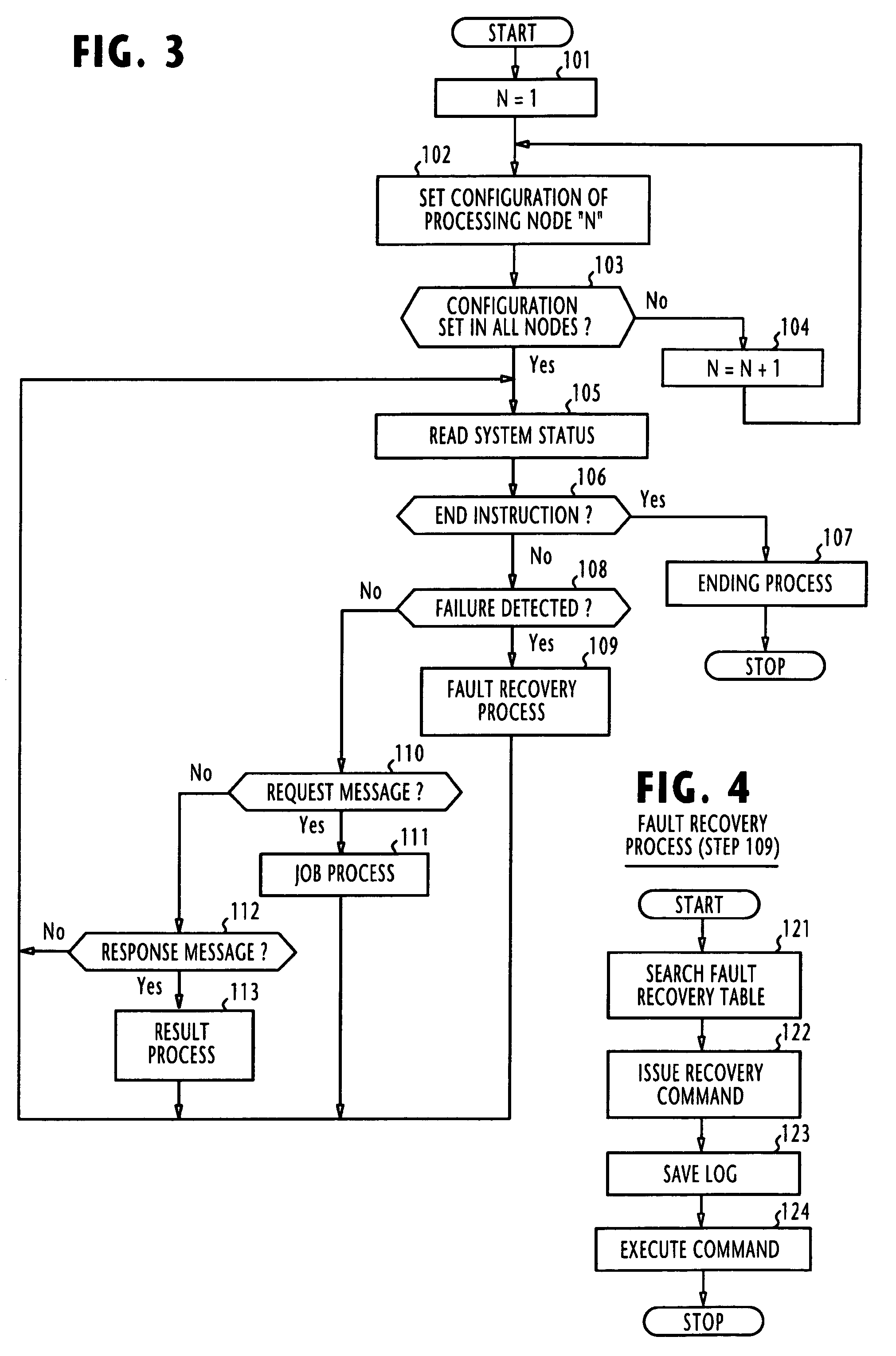

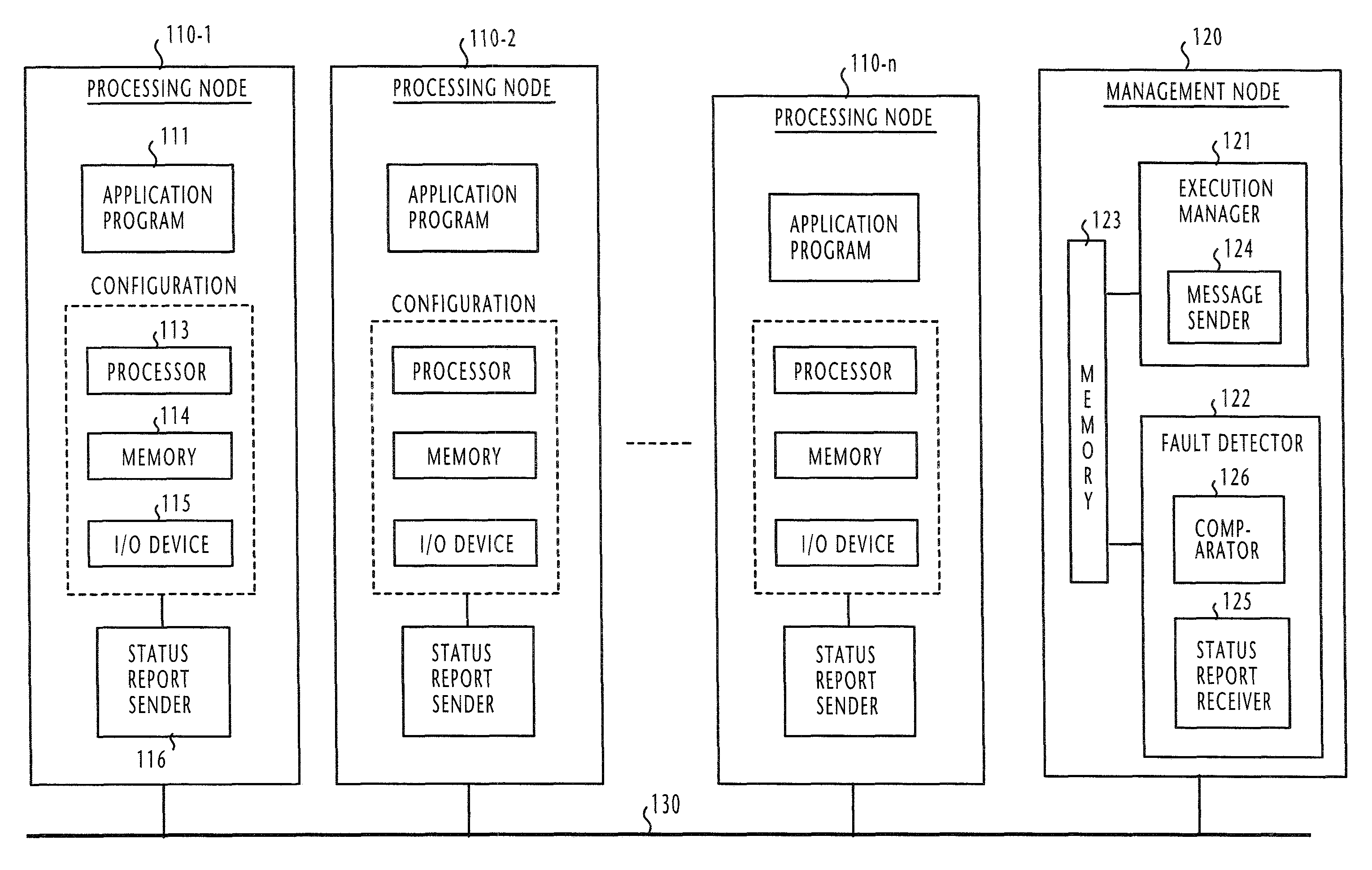

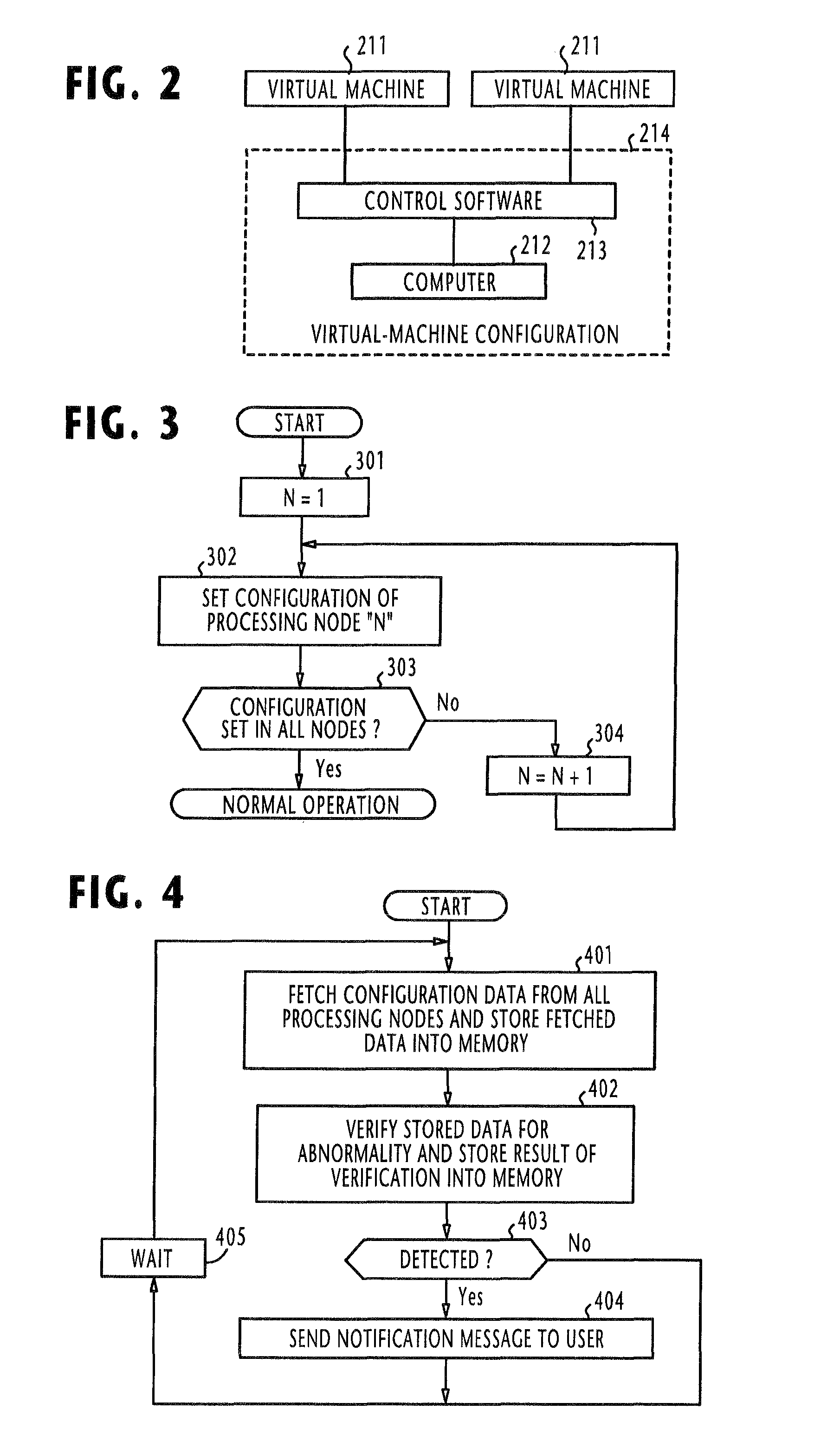

Fault tolerant multi-node computing system for parallel-running a program under different environments

Inactive | US7237140B2 | High software fault tolerance, Avoid it happening again, Program initiation/switching, Digital computer details, Parallel computing, Application procedure

In a fault tolerant computing system, a number of processing nodes are connected via a communication channel to a management node. The management node has the function of setting the processing nodes in uniquely different configurations and operating the processing nodes in parallel to execute common application programs in parallel, so that the application programs are run under diversified environments. The management node has the function of respectively distributing request messages to the processing nodes. The configurations of the processing nodes are sufficiently different from each other to produce a difference in processing result between response messages when a failure occurs in one of the processing nodes. Alternatively, the management node has the function of selecting, from many processing nodes, those processing nodes which are respectively set in uniquely different configurations and operating the selected processing nodes in parallel to execute the common application programs.

Owner:NEC CORP
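
The NEC idea can be sketched as follows. The same request is sent to nodes configured differently so that one node's failure shows up as a differing response. This sketch adds majority voting to single out the odd node. Voting is a swapped-in, commonly used technique, not something the abstract specifies. The node handlers stand in for real processing nodes, and responses must be hashable.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


def run_diverse(request: Any,
                nodes: dict[str, Callable[[Any], Any]]) -> tuple[Any, list[str]]:
    """Send one request to every node in parallel and compare the responses.

    Returns the majority response and the (sorted) names of nodes that disagree.
    """
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        futures = {name: pool.submit(handler, request) for name, handler in nodes.items()}
        responses = {name: f.result() for name, f in futures.items()}
    majority, votes = Counter(responses.values()).most_common(1)[0]
    if votes <= len(nodes) // 2:
        raise RuntimeError("no majority response; the faulty node cannot be identified")
    suspects = sorted(name for name, r in responses.items() if r != majority)
    return majority, suspects
```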

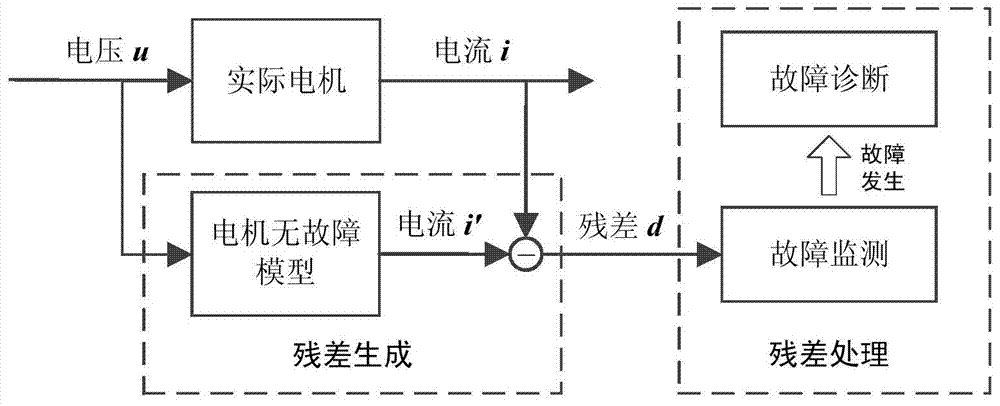

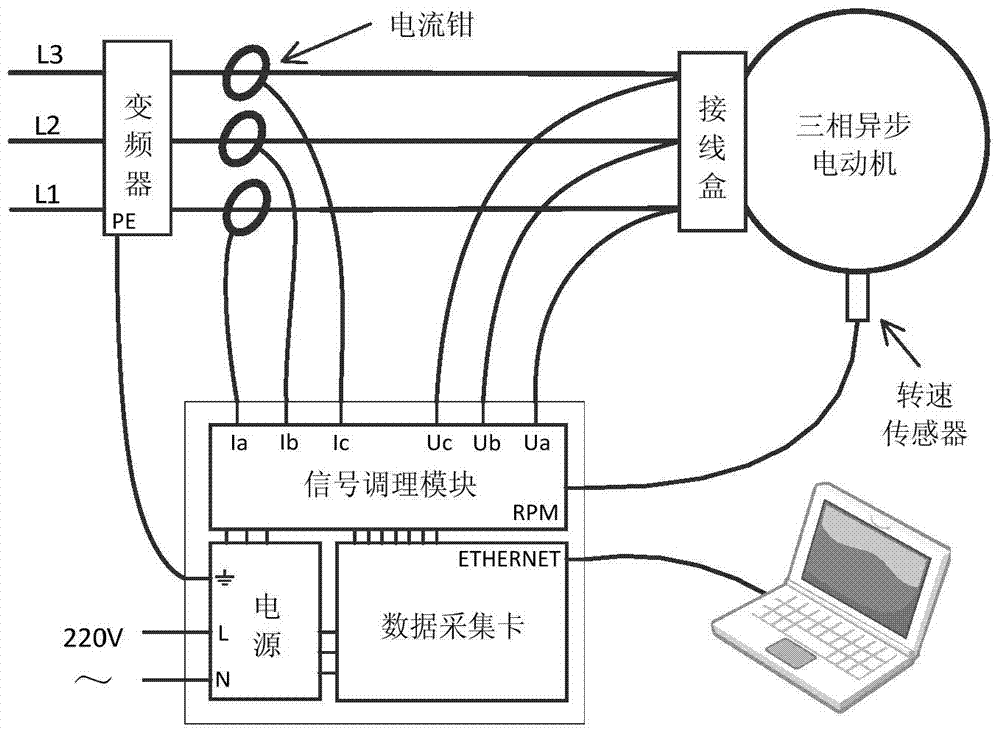

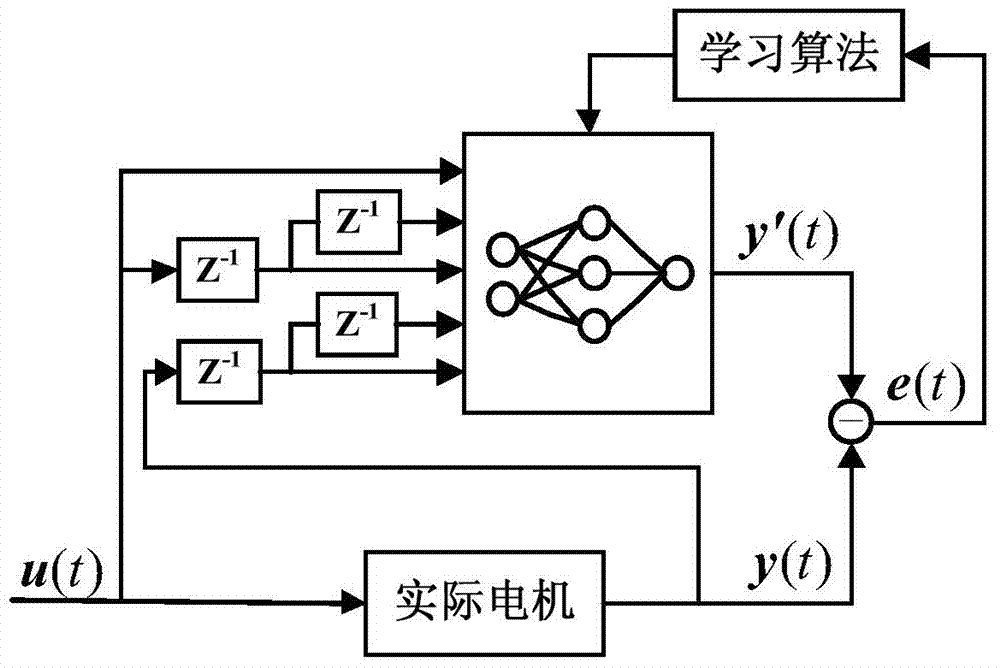

Asynchronous motor fault monitoring and diagnosing method based on model

Active | CN103698699A | High sensitivity, Improve reliability, Biological neural network models, Dynamo-electric machine testing, Frequency spectrum, Mathematical model

The invention discloses a model-based method for monitoring and diagnosing faults in asynchronous motors. The method comprises the following steps:

- acquiring the three-phase input voltage and three-phase output current signals of a normally operating asynchronous motor, and building a mathematical model of it to serve as a fault-free model;
- running the fault-free model in parallel with the motor, driven by the same input voltage u, to obtain a residual signal d (the difference between the measured and modeled outputs);
- performing time-domain analysis on d and determining a threshold η for the motor's residual signal according to the 3σ rule; while the motor is in steady operation, a fault is judged to have occurred when the residual RMS value d_RMS exceeds η;
- performing frequency-domain analysis on d and determining the fault type from the fault characteristic frequency component f_F that appears in the residual spectrum.

The method effectively weakens the adverse influence of the input voltage on motor fault monitoring and diagnosis and improves the signal-to-noise ratio of the fault signal. This improves both the sensitivity of fault monitoring and the reliability of fault diagnosis.

Owner:XI AN JIAOTONG UNIV
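
The time-domain part of the Xi'an Jiaotong method can be sketched with the standard library. It covers computing the residual d, setting η from fault-free RMS values with the 3σ rule, and flagging a fault when d_RMS exceeds η. Reading the 3σ rule as "mean plus three standard deviations of healthy RMS values" is an assumption, and the frequency-domain fault classification is omitted.

```python
import math
import statistics


def residual(measured: list[float], modeled: list[float]) -> list[float]:
    """d = measured motor output minus fault-free model output (same input voltage u)."""
    return [m - p for m, p in zip(measured, modeled)]


def rms(samples: list[float]) -> float:
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def threshold_3sigma(healthy_rms: list[float]) -> float:
    """eta from residual RMS values recorded in fault-free steady-state operation (assumed reading)."""
    return statistics.mean(healthy_rms) + 3 * statistics.stdev(healthy_rms)


def is_faulty(window: list[float], eta: float) -> bool:
    """Flag a fault when the residual's RMS value exceeds the threshold."""
    return rms(window) > eta
```

With healthy RMS values `[0.10, 0.12, 0.11, 0.09, 0.10]` the threshold comes out near 0.138. A residual window oscillating at ±0.5 is then flagged, while one at ±0.1 is not.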

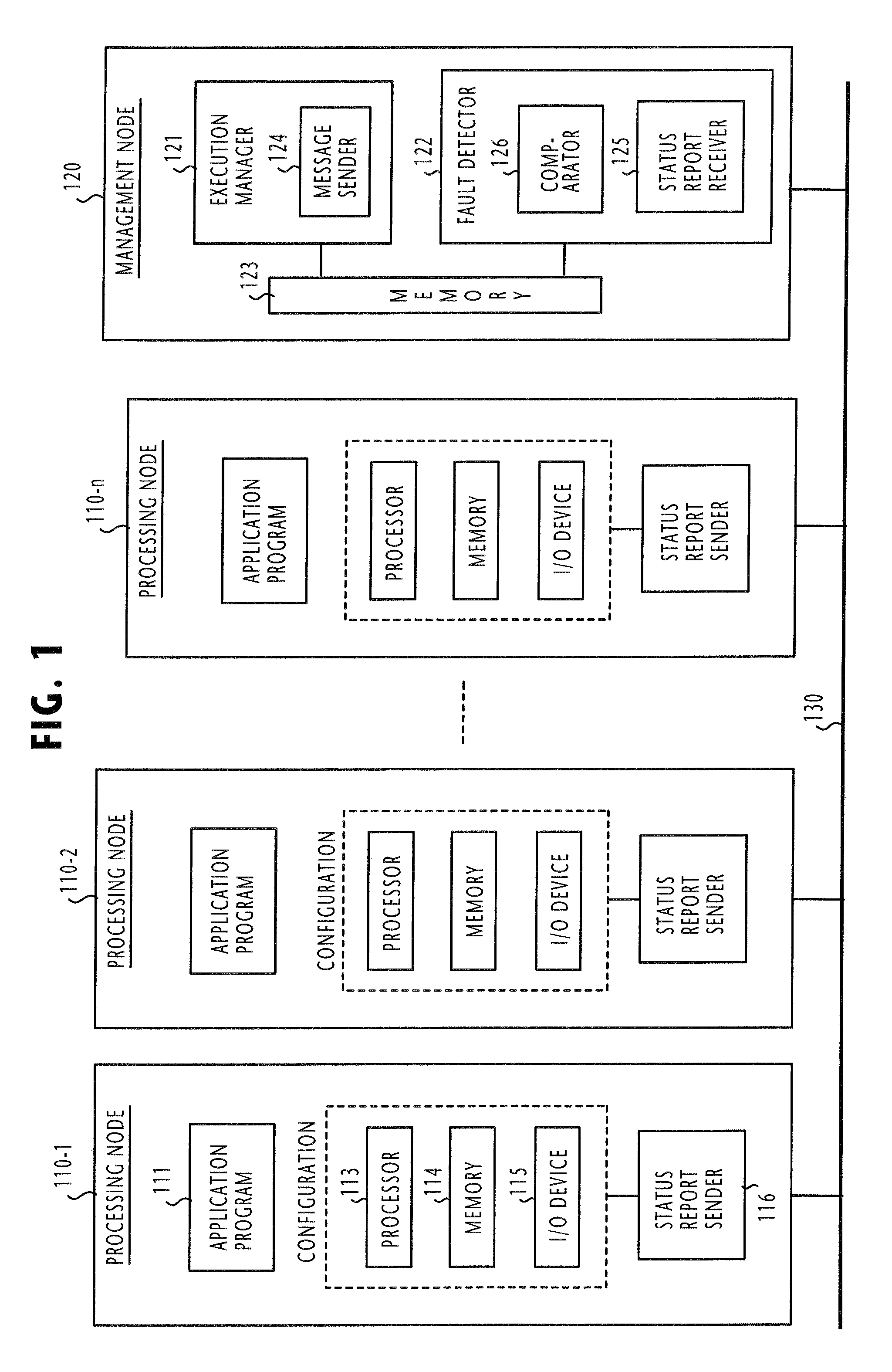

Fault tolerant multi-node computing system using periodically fetched configuration status data to detect an abnormal node

Inactive | US7870439B2 | Less-costly to develop, Less costly to develop application programs, Digital computer details, Emergency protective arrangements for automatic disconnection, Software fault, Communications media

A fault tolerant computing system comprises a plurality of processing nodes interconnected by a communication medium for parallel-running identical application programs. A fault detector is connected to the processing nodes via the communication medium for periodically collecting configuration status data from the processing nodes and mutually verifying the collected configuration status data for detecting an abnormal node. In one preferred embodiment of this invention, the system operates in a version diversity mode in which the processing nodes are configured in a substantially equal configuration and the application programs are identical programs of uniquely different software versions. In a second preferred embodiment, the system operates in a configuration diversity mode in which the processing nodes are respectively configured in uniquely different configurations. The configurations of the processing nodes are sufficiently different from each other that a software fault is not simultaneously activated by the processing nodes.

Owner:NEC CORP
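
The fault detector's cross-check can be illustrated with a simple majority comparison. This is a hypothetical sketch: the abstract does not specify the format of the configuration status data or the comparison rule, so here each node reports a dictionary of invented status fields, and a node is flagged as abnormal when its value for any field that healthy nodes are expected to agree on differs from the majority value. The periodic polling loop is omitted.

```python
from collections import Counter

def find_abnormal_nodes(reports: dict, fields: list) -> set:
    """Flag nodes whose value for any compared field differs from the majority value.

    `reports` maps node name -> status fields collected in one polling period.
    `fields` lists the (hypothetical) fields that healthy nodes should agree on.
    Ties are not handled: Counter.most_common picks one of the tied values.
    """
    if not reports:
        return set()
    abnormal = set()
    for name in fields:
        counts = Counter(report.get(name) for report in reports.values())
        majority_value, _ = counts.most_common(1)[0]
        for node, report in reports.items():
            if report.get(name) != majority_value:
                abnormal.add(node)
    return abnormal

reports = {  # hypothetical status data from one polling cycle
    "node-a": {"app_state": "running", "heartbeat_seq": 42},
    "node-b": {"app_state": "running", "heartbeat_seq": 42},
    "node-c": {"app_state": "hung", "heartbeat_seq": 40},
}
print(find_abnormal_nodes(reports, ["app_state", "heartbeat_seq"]))  # {'node-c'}
```

Majority voting only makes sense if a fault does not strike most nodes at once, which is exactly what the version and configuration diversity modes aim to ensure.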

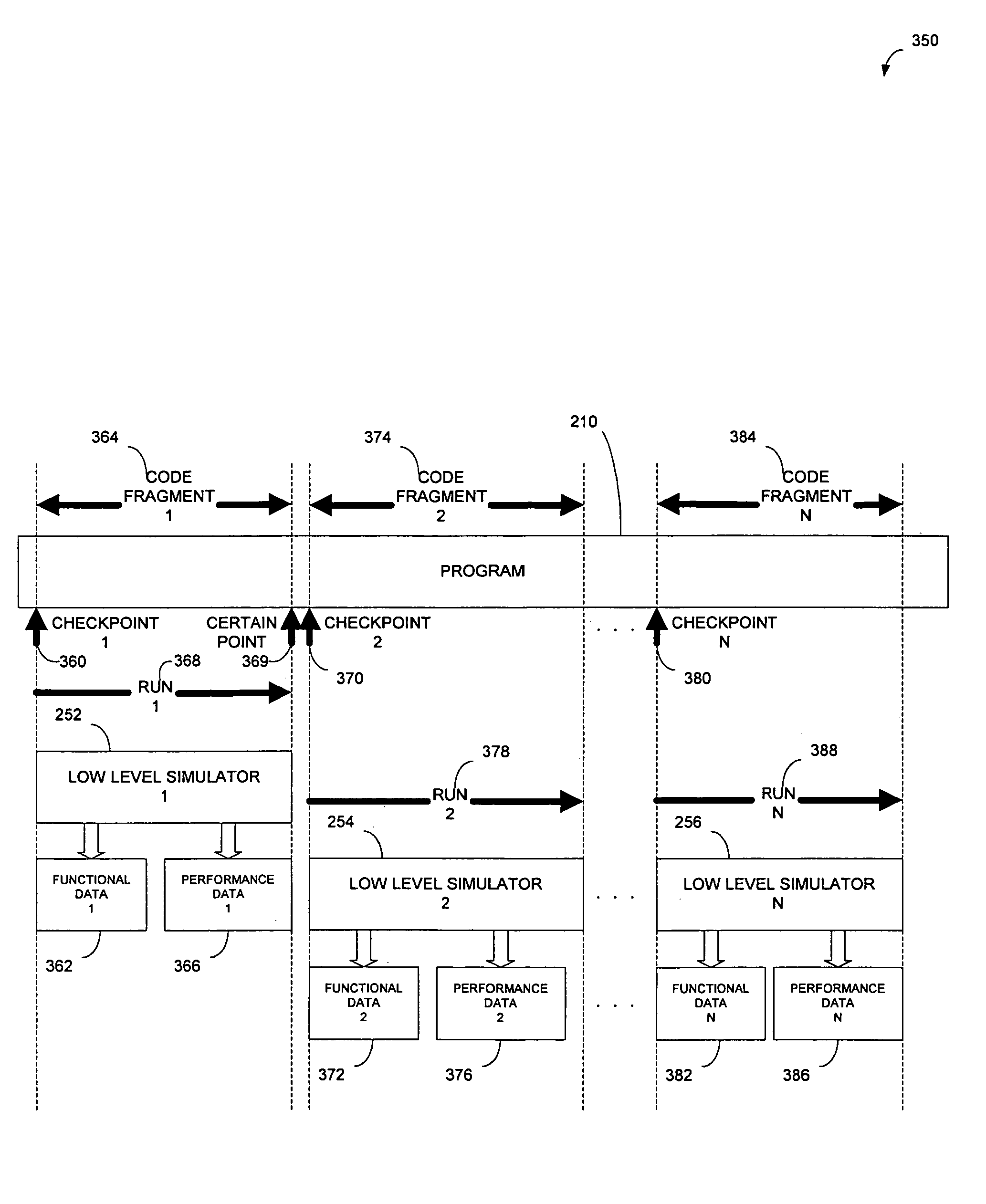

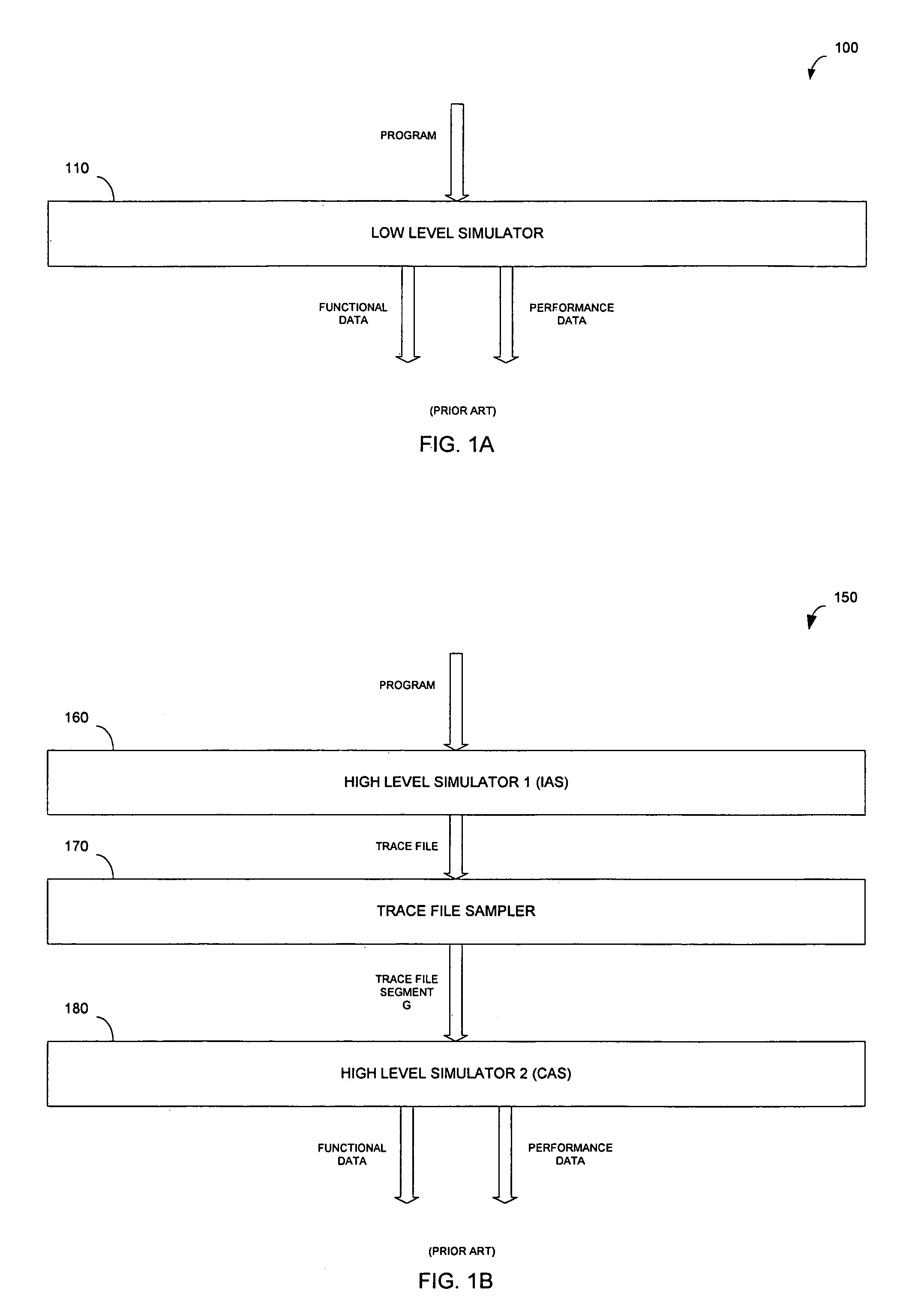

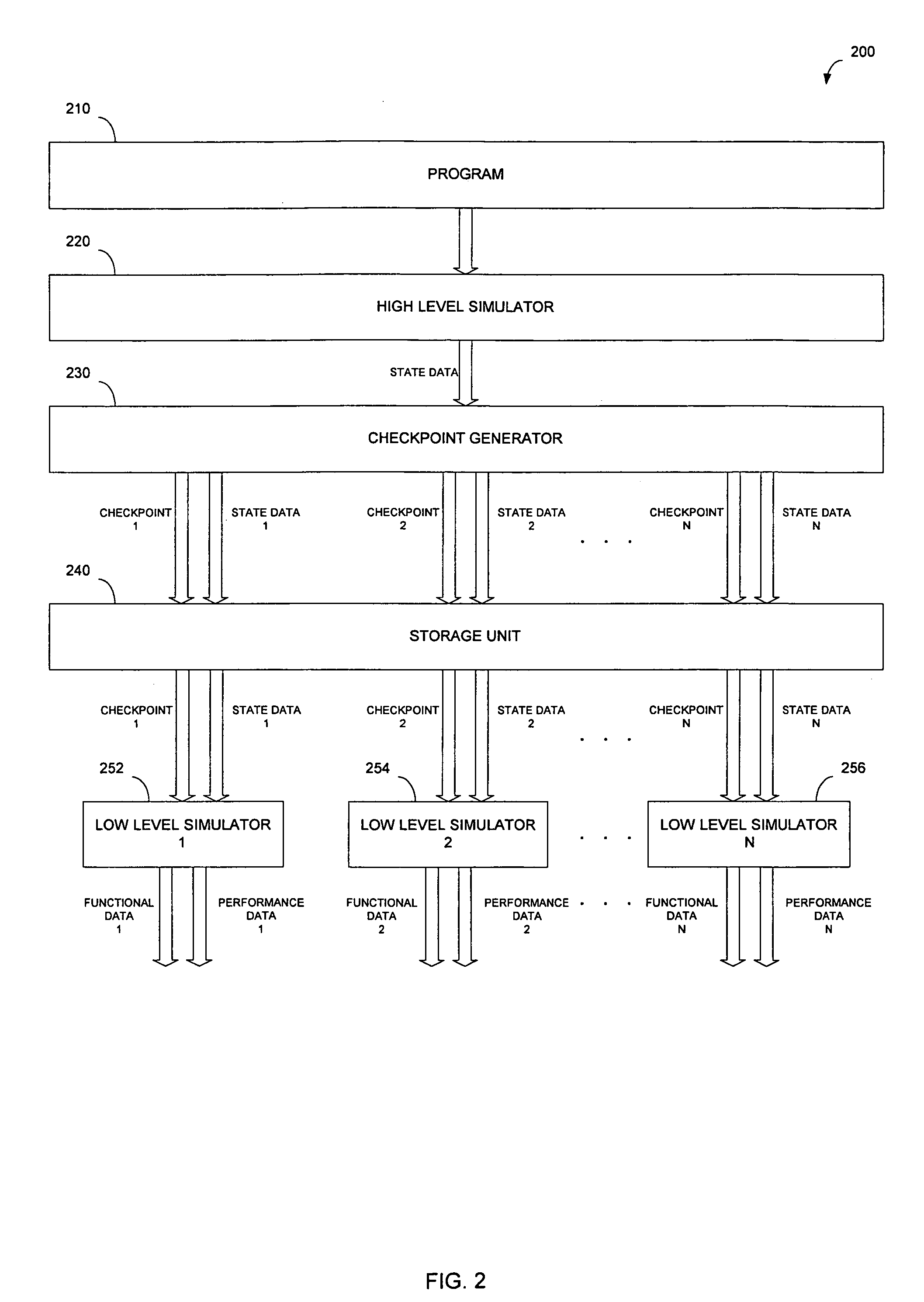

System and method for validating processor performance and functionality

Inactive · US6983234B1 · Analogue computers for electric apparatus · CAD circuit design · Embedded system · Parallel running

A method and system for accurately validating the performance and functionality of a processor in a timely manner is provided. First, a program is executed on a high-level simulator of the processor. Next, a plurality of checkpoints is established, and the state data at each checkpoint is saved. Finally, the program is run on a plurality of low-level simulators of the processor in parallel, each low-level simulator starting at its own checkpoint from the state data saved for that checkpoint.

Owner:ORACLE INT CORP
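
The checkpointing scheme can be illustrated with a toy model. In this hypothetical sketch the "processor" is a single integer accumulator, the program is a list of additions, the fast high-level simulator saves the state every `interval` instructions, and each low-level simulator replays only its own segment starting from its checkpoint. Comparing each segment's end state with the next checkpoint is one assumed way to validate the detailed model; the function names are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

def high_level_run(program: list, interval: int) -> list:
    """Fast functional run: return (instruction index, state) checkpoints every `interval` steps."""
    state = 0
    checkpoints = []
    for pc, value in enumerate(program):
        if pc % interval == 0:
            checkpoints.append((pc, state))  # state saved before executing instruction pc
        state += value
    checkpoints.append((len(program), state))  # final state, used to check the last segment
    return checkpoints

def low_level_segment(program: list, start_pc: int, end_pc: int, state: int) -> int:
    """Stand-in for a slow, detailed simulator: replay one segment from its checkpoint."""
    for pc in range(start_pc, end_pc):
        state += program[pc]  # a real model would also track pipelines, caches, timing, ...
    return state

def validate(program: list, interval: int) -> bool:
    checkpoints = high_level_run(program, interval)
    segments = list(zip(checkpoints, checkpoints[1:]))  # (start checkpoint, end checkpoint)
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(
            lambda seg: low_level_segment(program, seg[0][0], seg[1][0], seg[0][1]),
            segments))
    # Each segment's end state must match the high-level state at the next checkpoint.
    return all(result == end[1] for result, (_start, end) in zip(results, segments))

print(validate(list(range(1, 101)), interval=16))
```

Threads keep the sketch short; because of Python's global interpreter lock, genuinely CPU-bound simulators would more likely run as separate processes or on separate machines, which the checkpoint design allows since segments are independent.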

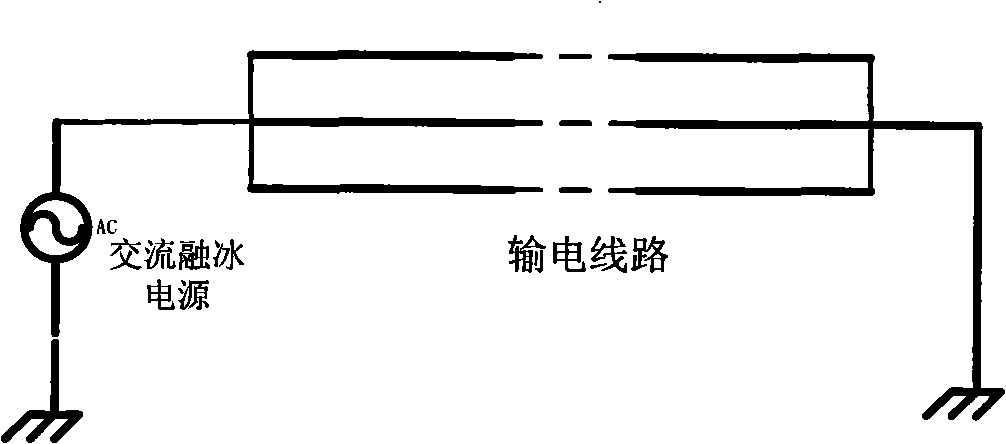

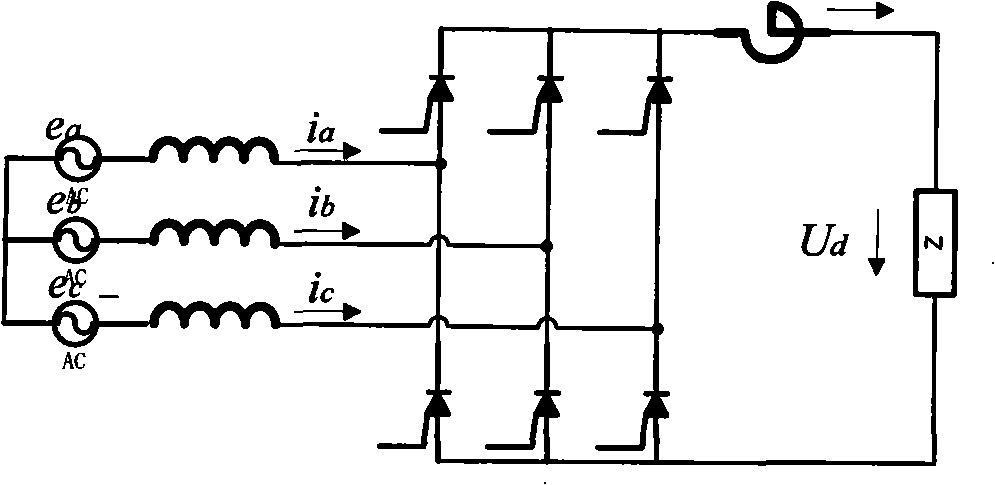

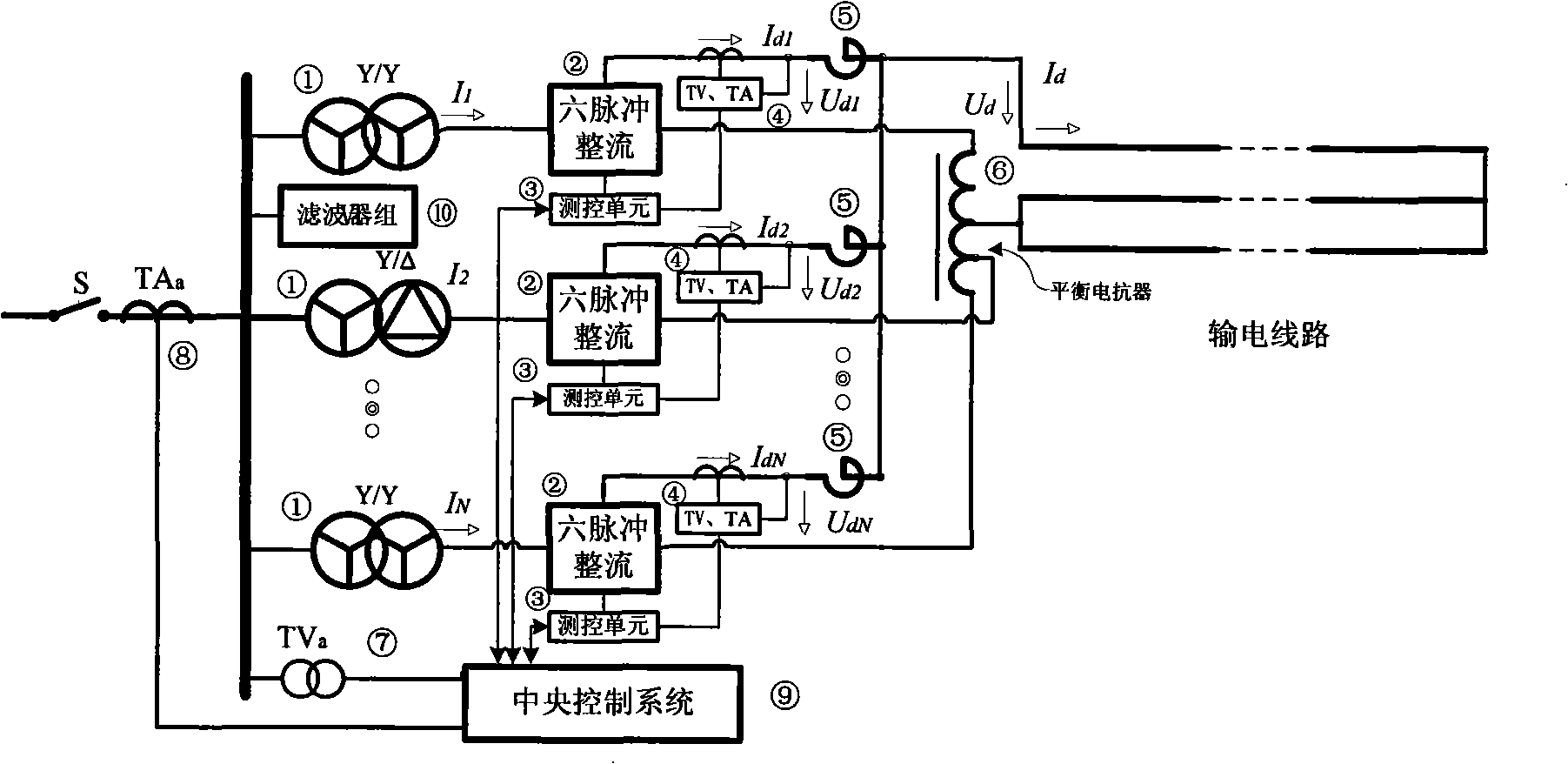

High-capacity direct current de-icing device

Inactive · CN101316033A · Meet ice melting needs · Overhead installation · AC-DC conversion without reversal · Engineering · DC voltage

The invention provides a large-capacity DC de-icing apparatus comprising a rectifier transformer, six-pulse rectifiers, measurement and control units, DC voltage and current transformers TV and TA, a DC smoothing reactor, balancing reactors, an AC voltage transformer TVa, an AC current transformer TAa and a central control system. The apparatus has N six-pulse rectifiers with identical parameters, and N measurement and control units each provide the trigger pulses for one six-pulse rectifier. The AC voltage transformer TVa and AC current transformer TAa are installed at the input of the apparatus, and their outputs are connected to the central control system. The positive outputs of the N six-pulse rectifiers are connected directly in parallel through the smoothing reactor to one end of the transmission line to be de-iced, and their negative outputs are connected in parallel through N tapped balancing reactors to the other end of the line. By running the rectifiers in parallel, the invention meets the large-current, high-voltage and large-capacity demands placed on a large-capacity DC de-icing apparatus.

Owner:STATE GRID ELECTRIC POWER RES INST
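
A rough sizing calculation shows why paralleling identical rectifiers helps. This sketch uses the textbook ideal average DC voltage of a six-pulse bridge, V_d = (3√2/π)·V_LL·cos α, ignores commutation overlap and losses, and assumes the balancing reactors make the N rectifiers share current equally. The AC line voltage, firing angle and current ratings are hypothetical values, not figures from the patent.

```python
import math

def six_pulse_dc_voltage(v_ll_rms: float, alpha_deg: float) -> float:
    """Ideal average DC voltage of a six-pulse bridge (no commutation overlap, no losses)."""
    return 3 * math.sqrt(2) / math.pi * v_ll_rms * math.cos(math.radians(alpha_deg))

def rectifiers_needed(i_deice: float, i_per_rectifier: float) -> int:
    """Number N of identical paralleled rectifiers, assuming perfect current sharing."""
    return math.ceil(i_deice / i_per_rectifier)

i_deice = 4_000.0                                             # hypothetical de-icing current, A
v_d = six_pulse_dc_voltage(v_ll_rms=10_000.0, alpha_deg=30.0)  # hypothetical 10 kV AC side
n = rectifiers_needed(i_deice, i_per_rectifier=1_500.0)       # hypothetical per-unit rating
print(f"V_d = {v_d / 1000:.2f} kV, N = {n}, current per rectifier = {i_deice / n:.0f} A")
```

Paralleling keeps the DC voltage set by each bridge while the output currents add, so the required de-icing current is reached with several moderately rated units instead of one very large one.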

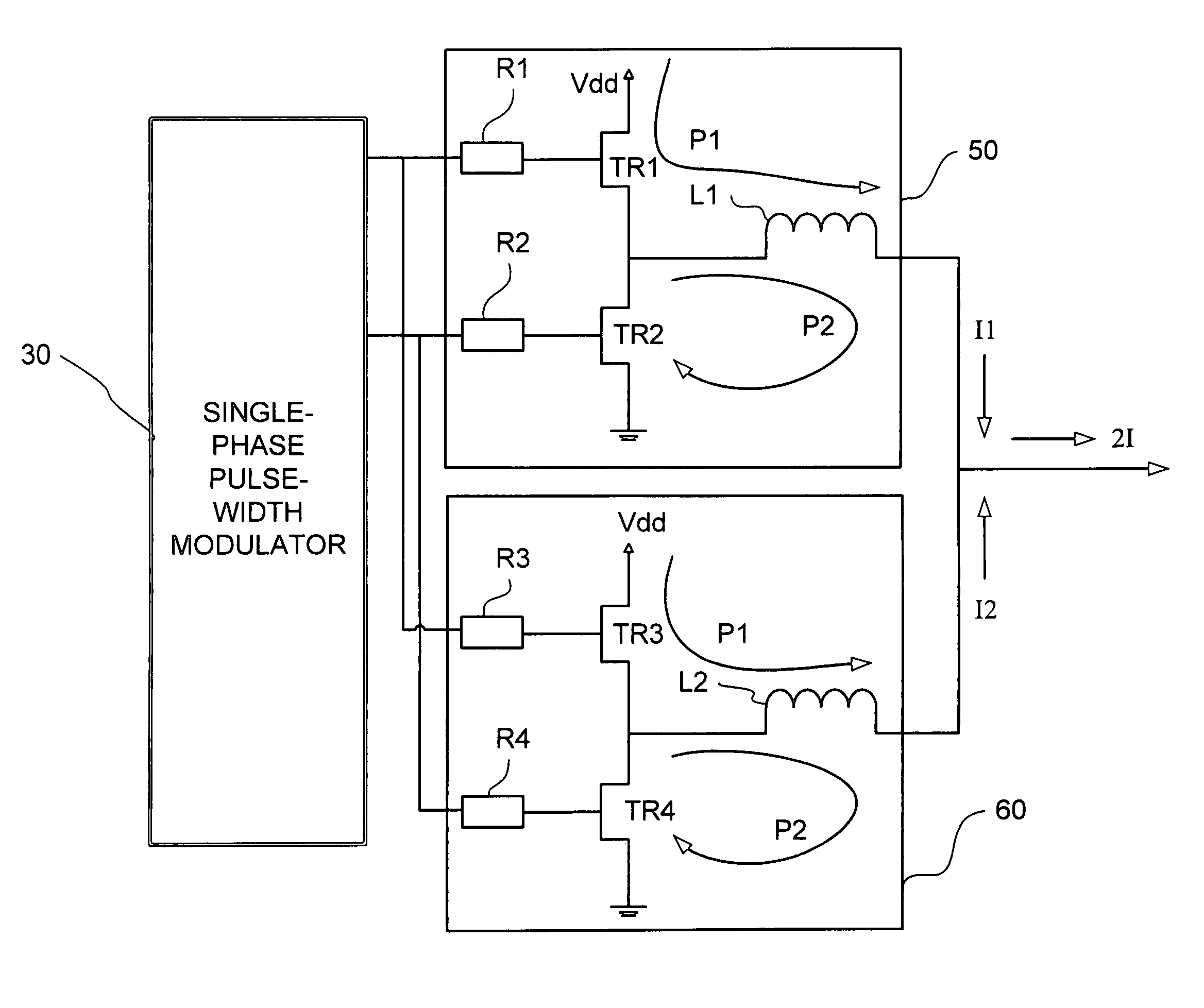

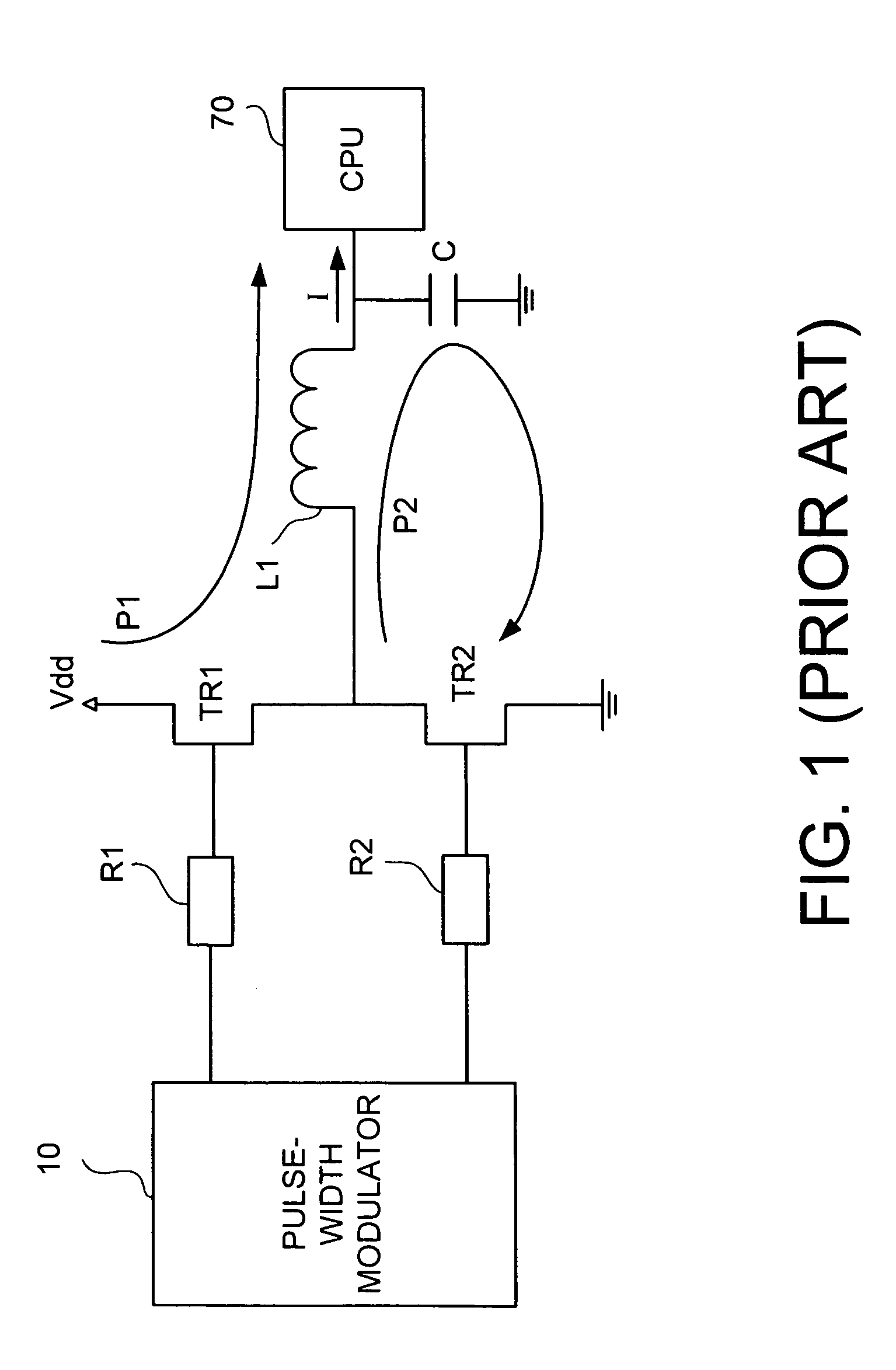

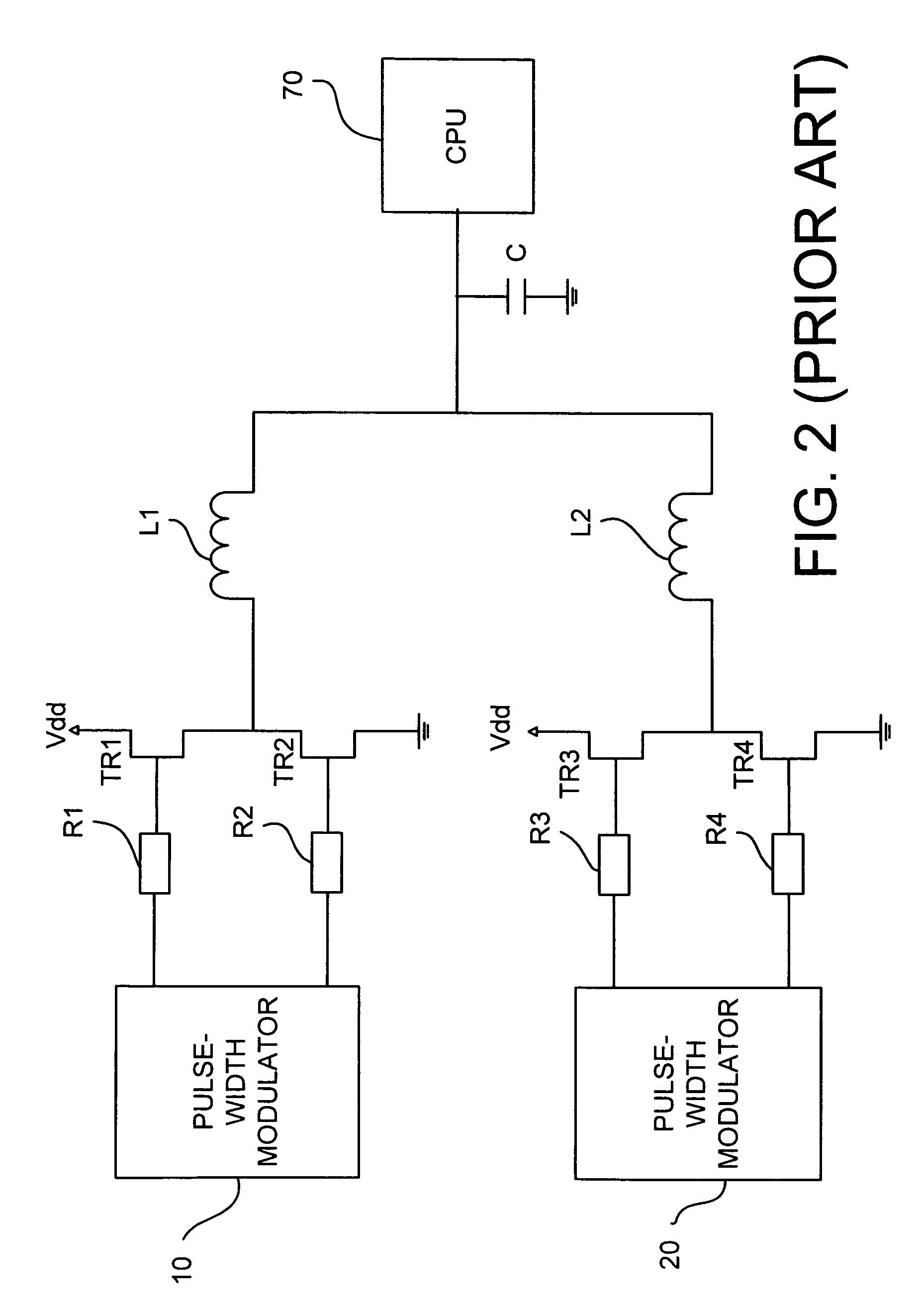

Synchronized parallel running power converter

In prior art, increasing the output current requires additional pulse-width modulators and added cost. The invention provides a synchronized parallel running power converter comprising multiple power converters controlled by single-phase or two-phase pulse-width modulators. Each power converter has a first pulse input port, a second pulse input port and a current output port. All first pulse input ports are coupled together, as are all second pulse input ports, so every power converter is driven by the same pulse signals and supplies the same output current; these currents add, giving a total output current several times that of a single converter.

Owner:MICRO-STAR INTERNATIONAL
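
The current-summing idea can be illustrated numerically. In this hypothetical sketch each converter is modeled as an ideal buck stage whose output voltage is duty × V_in; because all stages are driven by the same pulse signal, they share one duty cycle and, feeding a common load, split its current equally. The ideal-buck model, the per-stage current limit and all numeric values are assumptions for illustration.

```python
def duty_cycle(pulses: list) -> float:
    """Fraction of samples in one period during which the shared pulse signal is high."""
    return sum(pulses) / len(pulses)

def parallel_output(pulses: list, v_in: float, r_load: float,
                    n_stages: int, i_stage_max: float) -> tuple:
    """Ideal buck stages sharing one pulse signal; returns (V_out, total current, current per stage)."""
    d = duty_cycle(pulses)
    v_out = d * v_in                  # same duty cycle for every stage, so the same V_out
    i_total = v_out / r_load
    i_each = i_total / n_stages       # identical stages driven identically share the load equally
    if i_each > i_stage_max:
        raise ValueError("load current exceeds the combined rating of the stages")
    return v_out, i_total, i_each

shared_pulses = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]  # 25 % duty (hypothetical)
print(parallel_output(shared_pulses, v_in=12.0, r_load=0.02, n_stages=2, i_stage_max=100.0))
```

With these values a single 100 A stage cannot supply the 150 A load, while two stages on the same pulse signal can, without adding a second pulse-width modulator.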

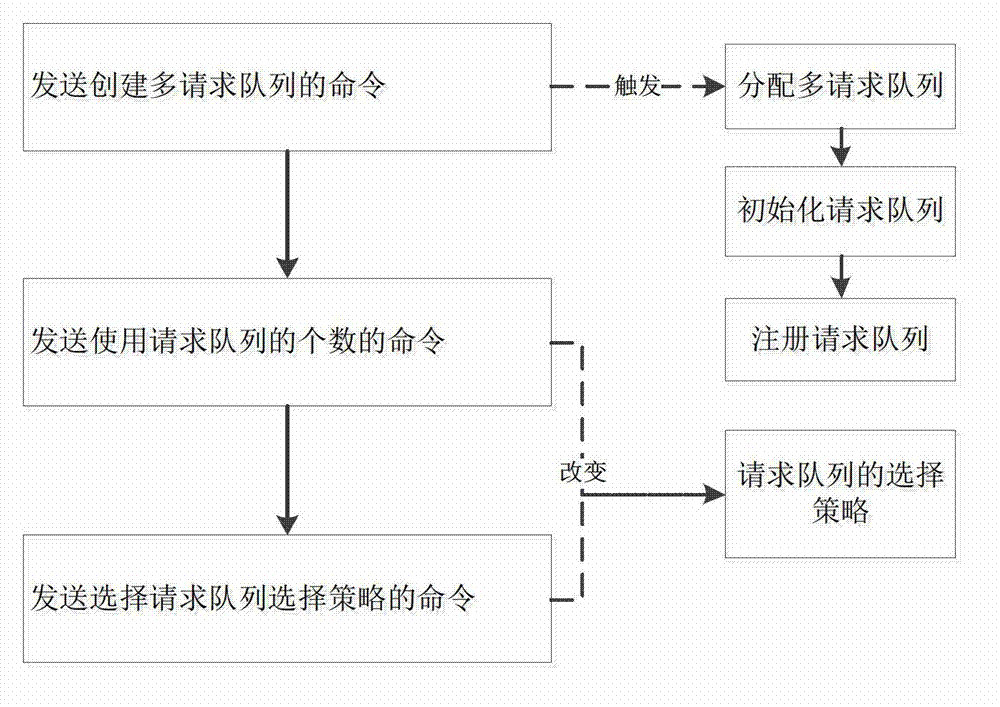

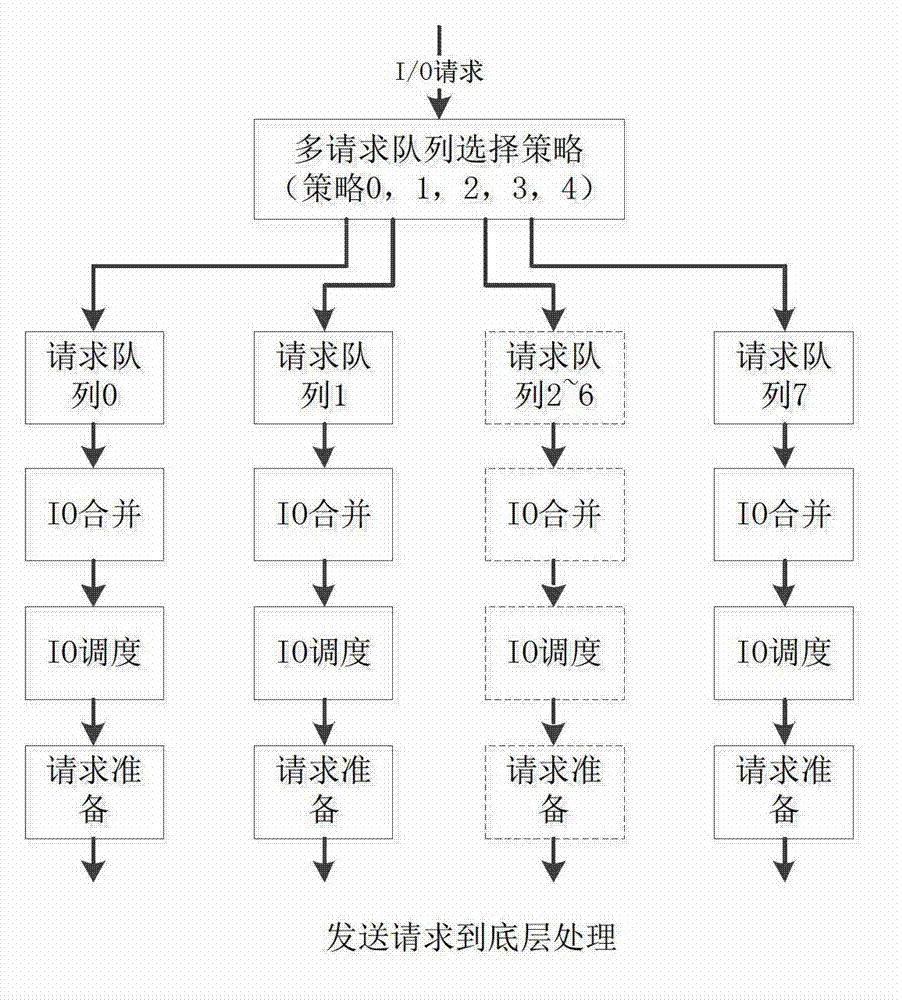

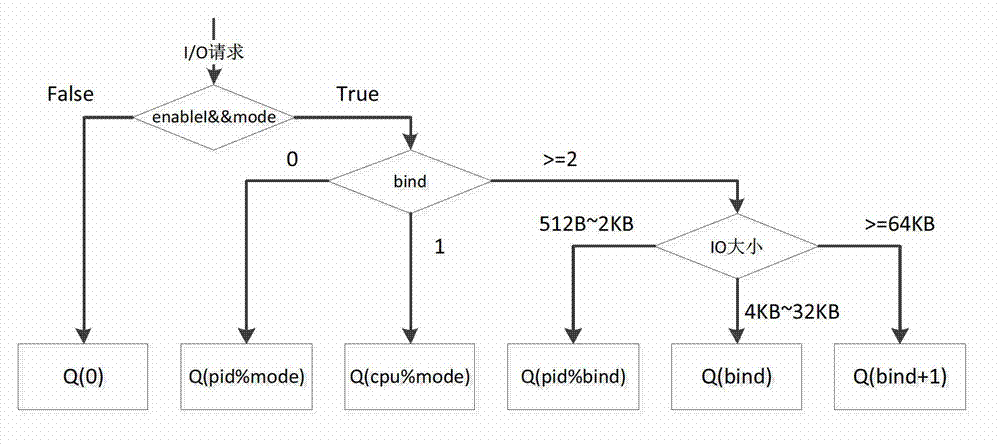

Method for promoting IO (input/output) parallelism and reducing small-IO latency using multiple request queues

Active · CN102831014A · Improve processing efficiency · Improve throughput · Resource allocation · Parallel processing · Distributed computing

The invention discloses a method for promoting IO (input/output) parallelism and reducing small-IO latency using multiple request queues. The method establishes multiple request queues and uses a selection strategy to assign each IO request to a request queue for processing, so that IO requests run in parallel. The selection strategy either binds each process to one request queue, spreading the IO requests of multiple processes evenly across the queues, or binds each CPU (Central Processing Unit) to one request queue, spreading the IO requests issued on multiple CPUs evenly across the queues. The invention further discloses the application of the method in an FC or FCoE storage system. Distributing a large number of IO requests across the request queues according to this strategy processes them in parallel, improving IO processing efficiency and throughput; allocating additional queues to small IO requests improves real-time IO processing efficiency and reduces their processing latency.

Owner:HUAZHONG UNIV OF SCI & TECH
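
The queue-selection strategy can be sketched as a mapping function. The sketch is hypothetical: the 4 KiB cutoff for a "small" request, the queue counts and the modulo mapping are illustrative assumptions, since the abstract states only that requests are bound per process or per CPU and that small IO requests receive more queues.

```python
from dataclasses import dataclass
from enum import Enum

class Policy(Enum):
    PER_PROCESS = "per_process"  # bind each process to one queue
    PER_CPU = "per_cpu"          # bind each CPU to one queue

@dataclass
class IORequest:
    pid: int
    cpu: int
    size_bytes: int

SMALL_IO_LIMIT = 4096  # hypothetical cutoff for a "small" request

class QueueSelector:
    def __init__(self, n_large_queues: int, n_small_queues: int, policy: Policy):
        self.n_large = n_large_queues
        self.n_small = n_small_queues  # extra queues reserved for small requests
        self.policy = policy

    def select(self, req: IORequest) -> int:
        """Return the index of the request queue that should process `req`."""
        key = req.pid if self.policy is Policy.PER_PROCESS else req.cpu
        if req.size_bytes <= SMALL_IO_LIMIT:
            # Small IOs use their own, larger pool, indexed after the regular queues.
            return self.n_large + key % self.n_small
        return key % self.n_large

selector = QueueSelector(n_large_queues=2, n_small_queues=6, policy=Policy.PER_CPU)
print(selector.select(IORequest(pid=100, cpu=5, size_bytes=1 << 20)))  # large request
print(selector.select(IORequest(pid=100, cpu=5, size_bytes=512)))      # small request
```

Binding a process or CPU to a fixed queue keeps its requests from contending for a single shared queue lock, and routing small requests to a separate pool keeps them from waiting behind large transfers.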

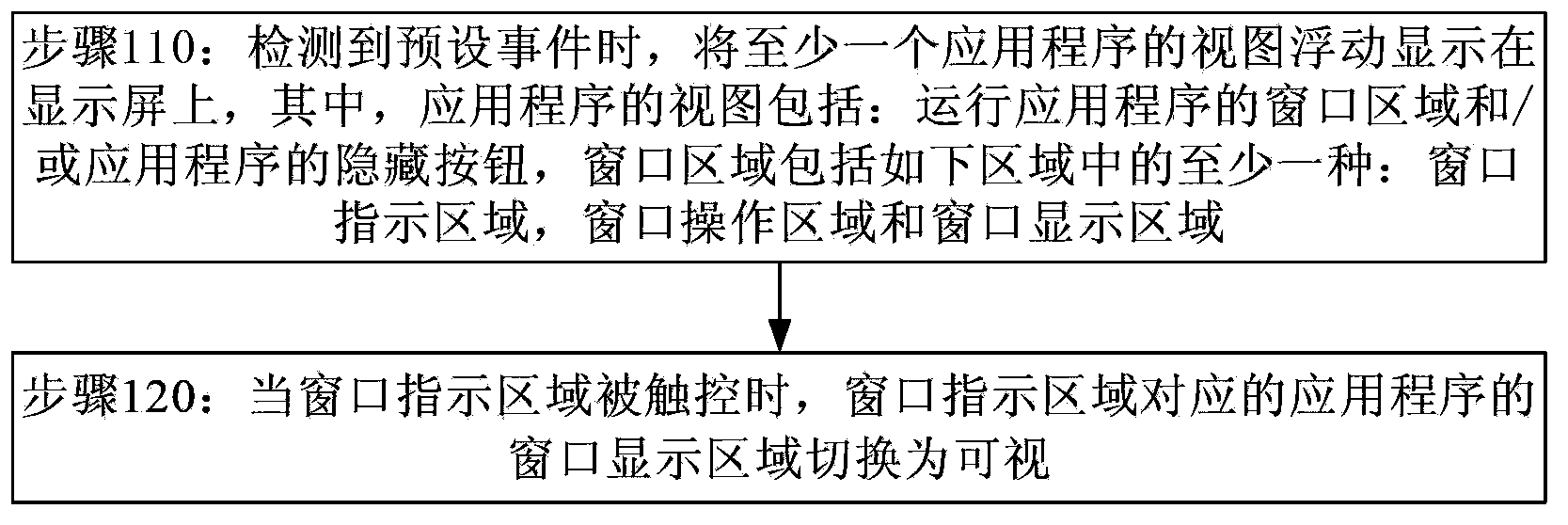

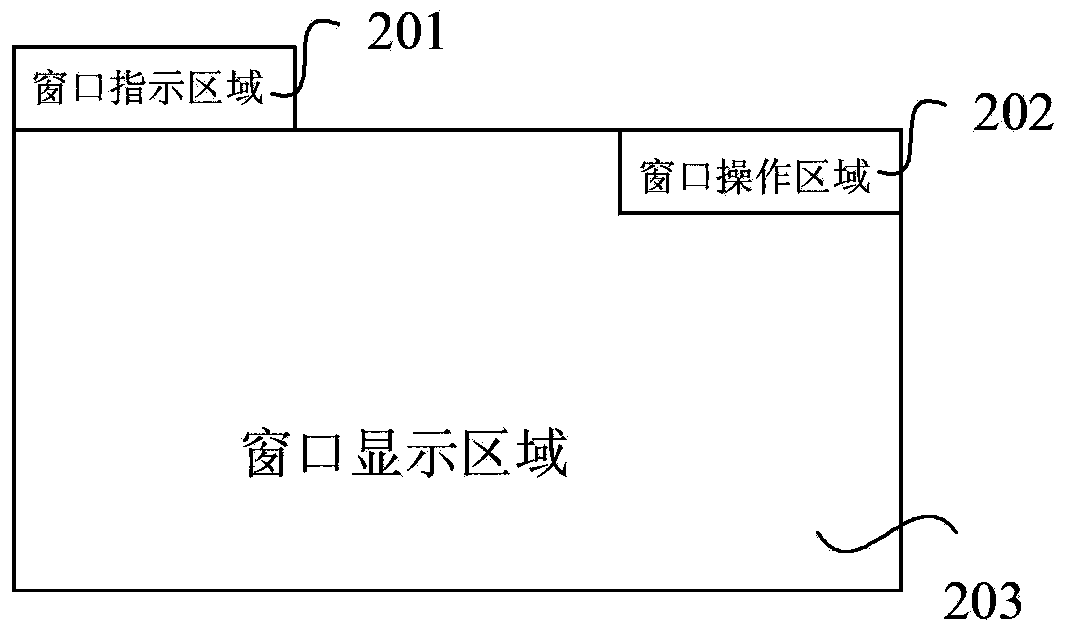

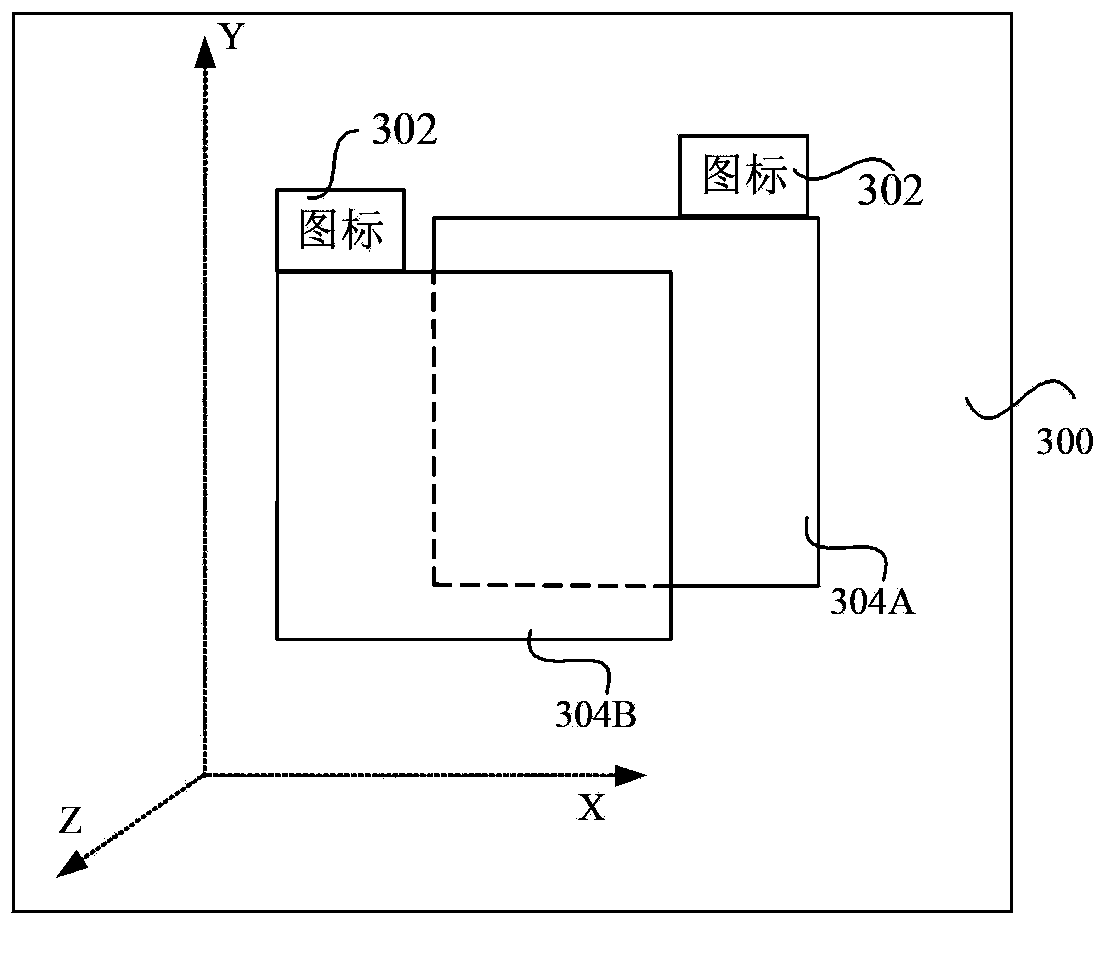

Application program view management method and device for a mobile terminal

Inactive · CN104298417A · Avoid complex operations · Avoid time consuming · Input/output processes for data processing · Application software · Computer engineering

The invention provides a method for managing application program views on a mobile terminal. When a preset event is detected, the views of at least one application program are displayed on the screen as floating windows. Each application's view comprises a window region for operating the application and/or a hidden button of the application, and the window region comprises at least one of a window indication region, a window operation region and a window display region. When the user touches a window indication region, the window display region of the corresponding application is switched to visible. With this scheme the user can select any window on the screen and interact with its application without interrupting the application running in the current window: applications run in parallel, and the user switches between them directly. This removes the complex, time-consuming steps previously needed to switch between running programs and makes switching quick, convenient and efficient.

Owner:BEIJING SAMSUNG TELECOM R&D CENT +1
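
The switching behavior can be modeled as a small state machine. This is a hypothetical sketch of the logic only: the class and method names are invented, real window management would go through the platform's UI framework, and it assumes that exactly one display region is visible at a time. Every application keeps running; touching an indication region only changes which display region is shown.

```python
from dataclasses import dataclass, field

@dataclass
class AppView:
    name: str
    running: bool = True           # apps keep running while their display region is hidden
    display_visible: bool = False

@dataclass
class FloatingViewManager:
    views: dict = field(default_factory=dict)  # app name -> AppView
    floating: bool = False

    def on_preset_event(self, app_names: list) -> None:
        """Show the views of the given applications as floating windows."""
        for name in app_names:
            self.views.setdefault(name, AppView(name))
        self.floating = True

    def on_indication_touched(self, name: str) -> None:
        """Make the touched app's display region visible; hide the others without stopping them."""
        for view in self.views.values():
            view.display_visible = (view.name == name)

mgr = FloatingViewManager()
mgr.on_preset_event(["mail", "browser"])
mgr.on_indication_touched("browser")
print({name: view.display_visible for name, view in mgr.views.items()})
```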

Concurrent Hardware Selftest for Central Storage

Inactive · US20070283104A1 · Improve availability · Improve performance reliability · Error detection/correction · Static storage · Parallel computing · Application software

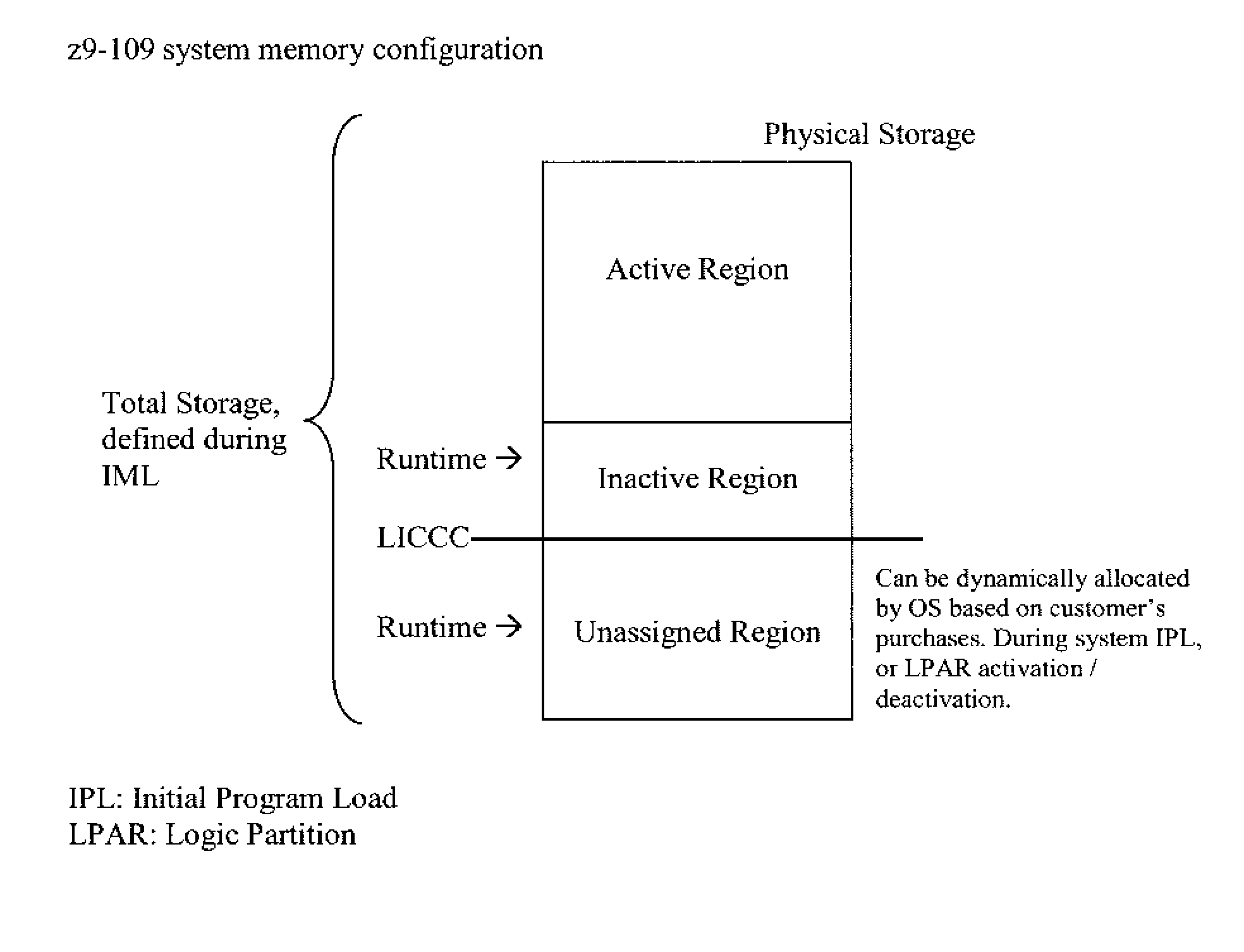

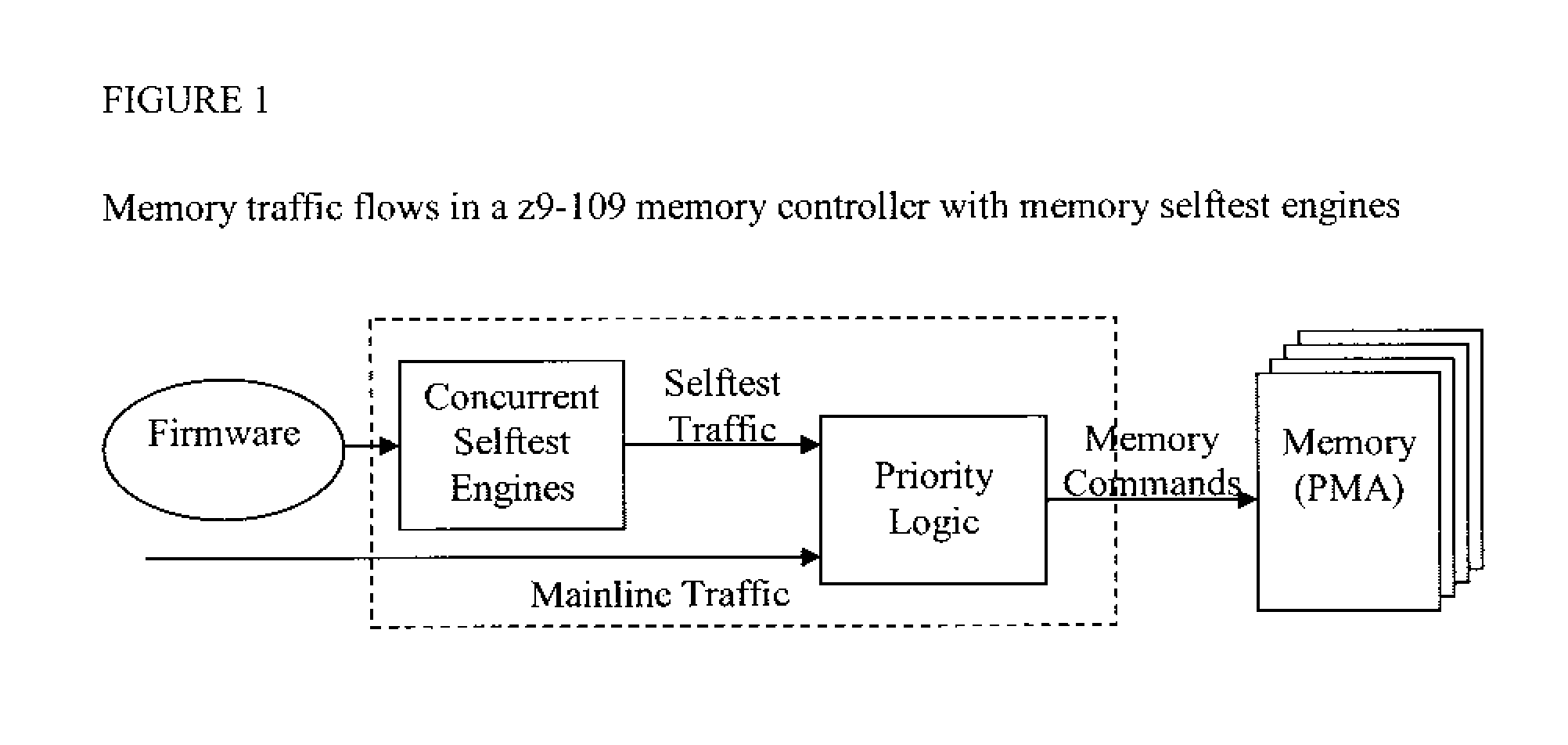

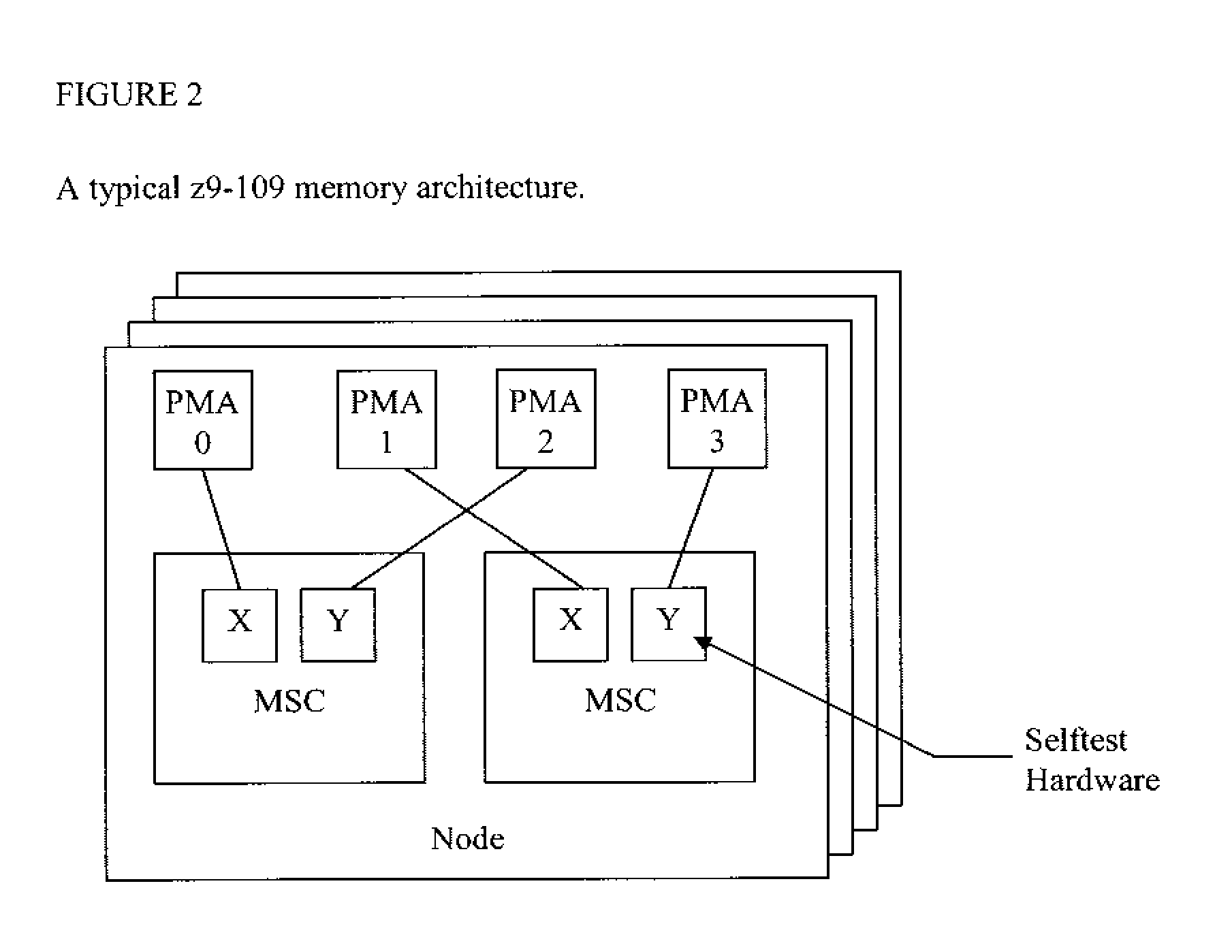

Disclosed are a concurrent selftest engine and its applications for verifying, initializing and scrambling system memory concurrently with mainline operations. In prior art, memory reconfiguration and initialization could only be done by firmware with a full system shutdown and reboot. The disclosed hardware, working together with firmware, allows comprehensive memory test operations to be performed on the extended customer memory area while customer mainline memory accesses run in parallel. The hardware consists of concurrent selftest engines and priority logic. The new design is highly flexible because customer-usable memory can be dynamically allocated, verified and initialized. System performance improves because the selftest is hardware-driven, whereas in prior art firmware drove the selftest. More comprehensive test patterns can also be used to improve system memory RAS (reliability, availability and serviceability).

Owner:IBM CORP
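
The interplay between mainline accesses and the selftest engine can be sketched as a cycle-level arbiter. In this hypothetical model, which the abstract does not spell out, the priority logic always gives the memory port to mainline requests, and the selftest engine writes a test pattern to the next address of the extended memory area and reads it back only in otherwise idle cycles. The stuck-at-zero fault set is an invented way to show a failing cell.

```python
def run_selftest(mainline_busy: list, test_addresses: list, stuck_at_zero: set,
                 pattern: int = 0xA5) -> tuple:
    """Simulate one memory port shared by mainline traffic and a selftest engine.

    `mainline_busy[c]` is True when a customer access occupies cycle c.
    Returns (number of addresses tested, list of failing addresses).
    """
    memory = {}
    tested = 0
    failures = []
    for busy in mainline_busy:
        if busy or tested == len(test_addresses):
            continue  # priority logic: mainline owns the port this cycle (or testing is done)
        addr = test_addresses[tested]
        # Write phase: a (hypothetical) stuck-at-zero cell ignores the written pattern.
        memory[addr] = 0 if addr in stuck_at_zero else pattern
        # Read-back phase: compare against the expected pattern.
        if memory[addr] != pattern:
            failures.append(addr)
        tested += 1
    return tested, failures

busy = [True, False, True, False, False, True, False]  # mainline traffic per cycle
print(run_selftest(busy, [0x1000, 0x1001, 0x1002], stuck_at_zero={0x1001}))
```

Mainline cycles are never delayed in this model; the test simply progresses more slowly when customer traffic is heavy.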

Method and system for performing SW upgrade in a real-time system

Active · US7089550B2 · Reduce complexity · Software engineering · Program loading/initiating · Transaction data

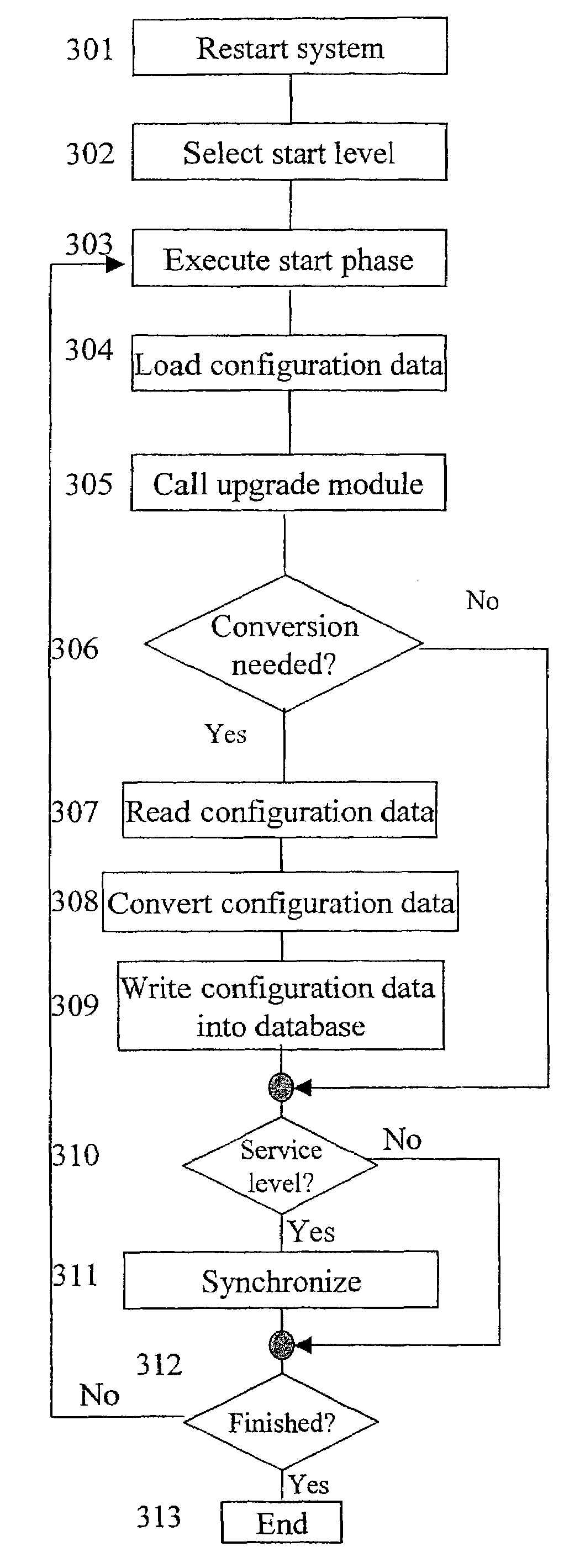

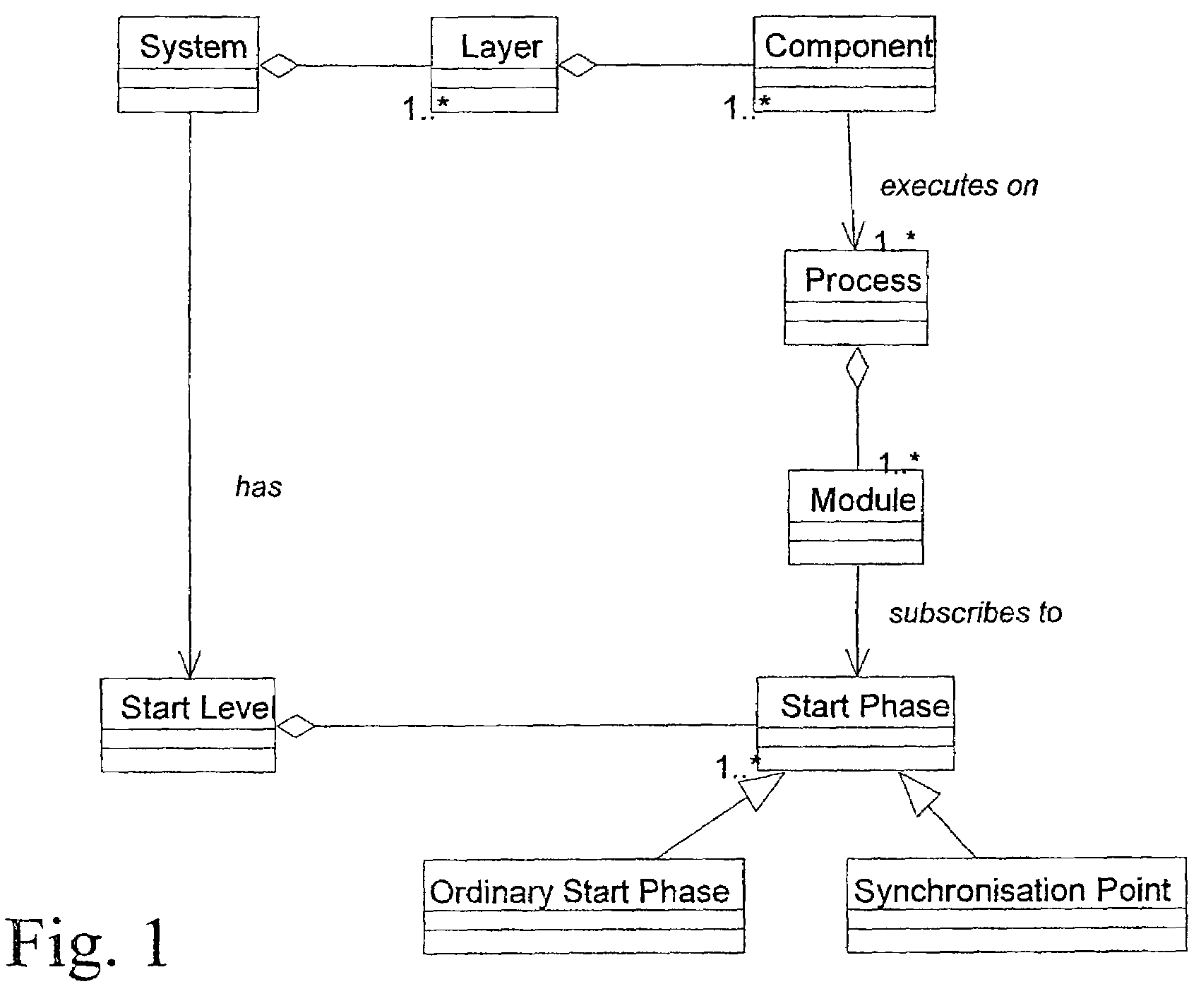

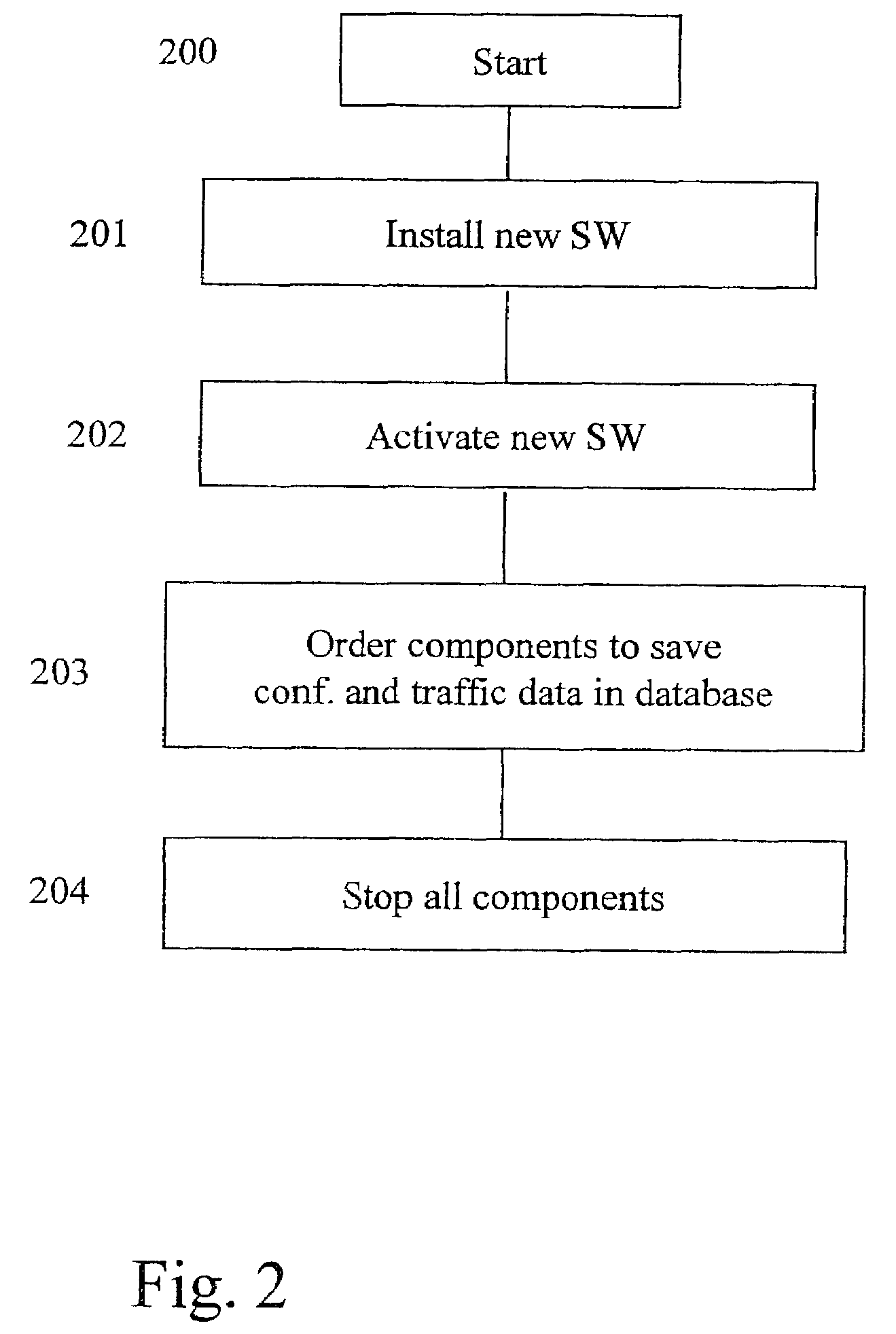

A new method is disclosed for upgrading the software in a real-time distributed software system comprising several processes running in parallel. The method involves a preparatory procedure in which the new software is installed. The software is then activated, the components are ordered to save their configuration data and transaction data in a database, and the components are stopped. When the preparatory procedure is complete, a restart procedure is invoked. This involves selecting a start level: a full upgrade involving all components, or a partial upgrade concerning just one or a few components. The modules concerned are started from the new software version. Each start level defines a number of start phases, which are sequential steps performed during the restart operation, and each module subscribes to a number of these phases. Some of the phases are defined as synchronisation points, here called service levels, which all modules must reach before the process is allowed to continue. During this process, each module is responsible for converting any configuration data or transaction data belonging to it, if needed, by reading the old data from the database and converting it to the format required by the new software.

Owner:TELEFON AB LM ERICSSON (PUBL)
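
The start-phase mechanism, with service levels acting as synchronisation points, maps naturally onto a barrier. The following is a hypothetical sketch: module names, phase numbers, the choice of phase 1 for data conversion and the conversion function itself are invented, and each module runs in its own thread. Modules do work only in the phases they subscribe to, but every module must reach each service level before any of them may proceed.

```python
import threading

PHASES = [1, 2, 3, 4, 5]      # start phases of one start level (hypothetical)
SERVICE_LEVELS = {2, 5}       # phases that act as synchronisation points

def convert(old: dict) -> dict:
    """Hypothetical conversion: old format stores 'timeout' in s, new format wants ms."""
    return {"timeout_ms": old["timeout"] * 1000}

def run_module(name, subscribed, db, log, lock, barrier):
    for phase in PHASES:
        if phase in subscribed:
            with lock:
                if phase == 1:               # convert this module's own saved data
                    db[name] = convert(db[name])
                log.append((phase, name))
        if phase in SERVICE_LEVELS:
            barrier.wait()                   # every module must arrive before any proceeds

def restart(modules: dict, db: dict) -> list:
    log, lock = [], threading.Lock()
    barrier = threading.Barrier(len(modules))
    threads = [threading.Thread(target=run_module, args=(name, phases, db, log, lock, barrier))
               for name, phases in modules.items()]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log

db = {"call_ctrl": {"timeout": 3}, "billing": {"timeout": 10}}  # data saved before the stop
log = restart({"call_ctrl": {1, 2, 4}, "billing": {1, 3, 5}}, db)
print(db)
```

Every module calls `barrier.wait()` at each service level whether or not it subscribes to that phase; otherwise the remaining modules would block forever.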

© 2025 PatSnap. All rights reserved.