Method for promoting IO (input/output) parallelism and reducing small-IO latency by using multiple request queues

Multi-request-queue technology, applied to multiprogramming arrangements, resource allocation, and related fields, addresses problems such as reduced efficiency, the inability to make full use of multi-core systems, and limited parallelism in IO processing. Its effect is to reduce latency, improve processing efficiency, and increase IO throughput.


Embodiment Construction

[0030] The present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments. The following examples are illustrative only, and are not intended to limit the present invention.

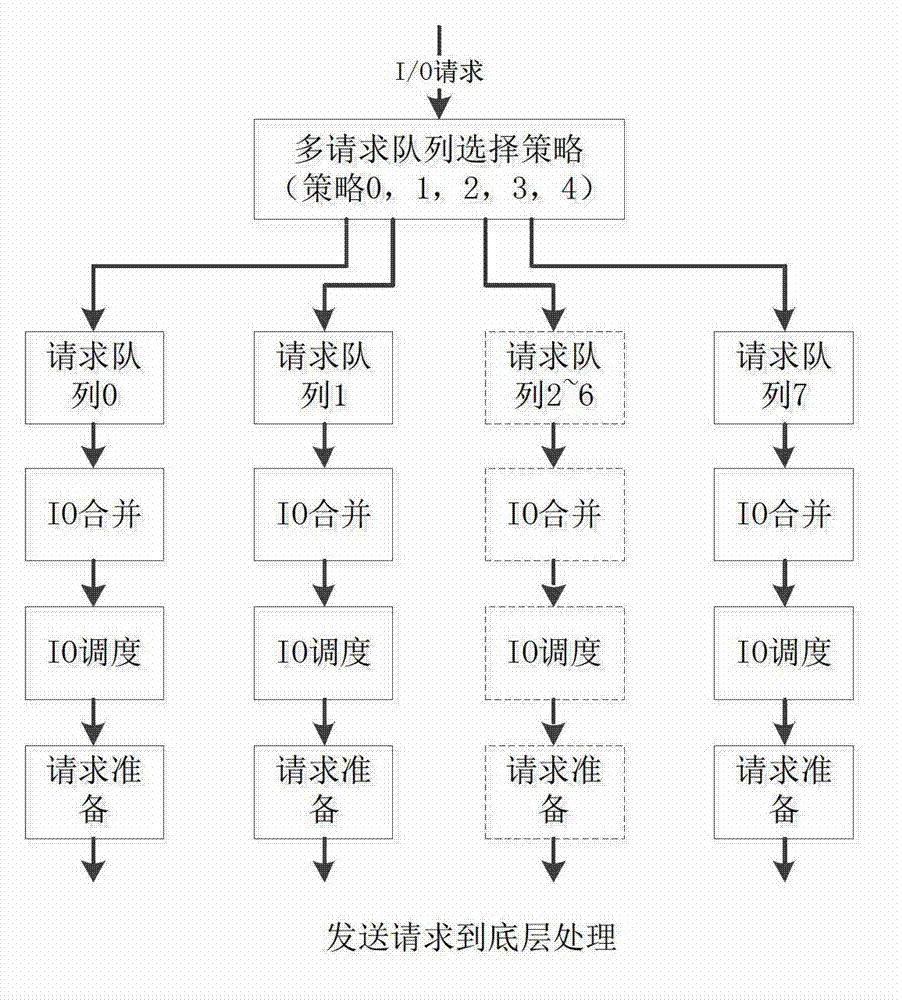

[0031] The present invention aims to improve parallel IO processing capability and to meet the real-time requirements of small IOs by implementing multiple request queues and a request-queue selection strategy in a multi-core environment.

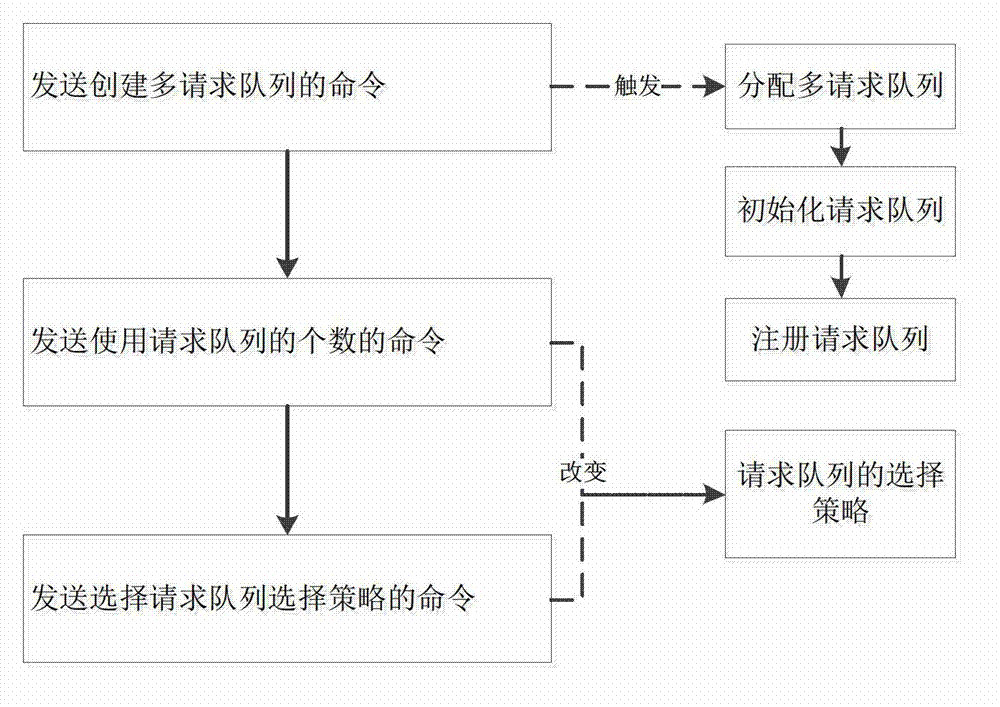

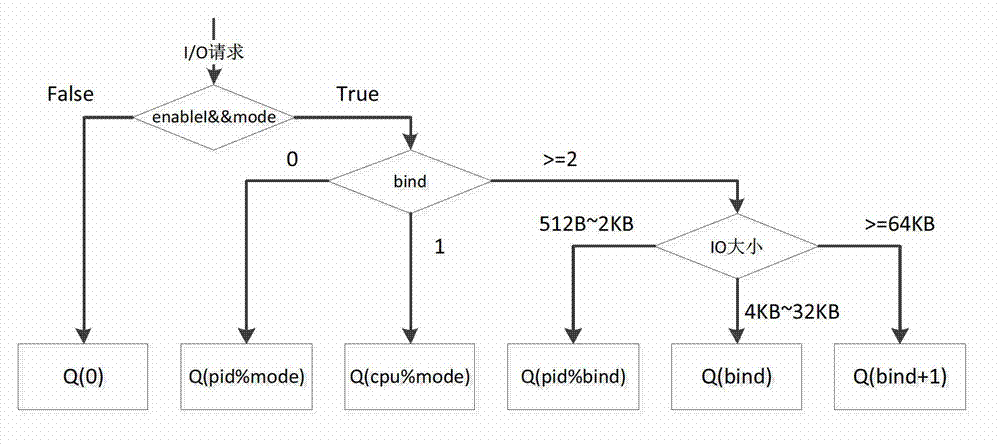

[0032] The present invention first establishes multiple request queues. To implement them, a unified interface is created for block devices, made up of three interfaces: enable, mode, and bind. enable turns the multi-request queue on or off, mode sets the number of request queues to use, and bind selects the queue-selection policy. The operation mode of the multi-request queue can be modified through these three interface...
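As a rough illustration of how the three interfaces could interact, the following Python sketch models a block device with enable, mode, and bind settings. It is not the patented implementation: the class name `MultiQueueDevice`, the policy names `"cpu"` and `"size"`, and the 4 KiB small-IO threshold are all assumptions introduced here, since the text above does not specify the available policies.

```python
from collections import deque
from dataclasses import dataclass, field

SMALL_IO_BYTES = 4096  # assumed threshold for a "small" IO (not from the source)


@dataclass
class MultiQueueDevice:
    """Hypothetical model of a block device with enable/mode/bind settings."""
    enable: bool = False   # multi-request queue on or off
    mode: int = 1          # number of request queues to use when enabled
    bind: str = "cpu"      # selection policy; "cpu" and "size" are assumed names
    queues: list = field(default_factory=list)

    def __post_init__(self):
        self.reconfigure()

    def reconfigure(self):
        """Rebuild the queues after a setting changes (pending requests are dropped)."""
        count = self.mode if self.enable else 1
        if count < 1:
            raise ValueError("mode must be at least 1")
        self.queues = [deque() for _ in range(count)]

    def select_queue(self, cpu, size):
        """Return the index of the queue that should receive a request."""
        n = len(self.queues)
        if n == 1:
            return 0                      # single-queue fallback
        if self.bind == "cpu":
            return cpu % n                # spread requests by submitting CPU
        if self.bind == "size":
            if size <= SMALL_IO_BYTES:
                return 0                  # queue 0 is reserved for small IOs
            return 1 + cpu % (n - 1)      # large IOs share the remaining queues
        raise ValueError(f"unknown bind policy: {self.bind}")

    def submit(self, cpu, size):
        """Enqueue a request and return the chosen queue index."""
        index = self.select_queue(cpu, size)
        self.queues[index].append((cpu, size))
        return index


dev = MultiQueueDevice(enable=True, mode=4, bind="size")
small_index = dev.submit(cpu=2, size=512)      # small IO goes to the reserved queue
large_index = dev.submit(cpu=2, size=1 << 20)  # large IO goes to one of the others
```

Reserving one queue for small requests is only one possible way to keep them from waiting behind large transfers; a real policy might instead prioritize by deadline or by the submitting process.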
