38 results about "Network scheduler" patented technology

A network scheduler, also called a packet scheduler, queueing discipline, qdisc or queueing algorithm, is an arbiter on a node in a packet-switching communication network. It manages the sequence of network packets in the transmit and receive queues of the network interface controller. Several network schedulers are available for different operating systems, and they implement many of the existing network scheduling algorithms.
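
To make the idea of a queueing discipline concrete, here is a minimal Python sketch that contrasts two well-known disciplines, first-in-first-out and strict priority. The class names (`FifoQdisc`, `StrictPriorityQdisc`), the `drain` helper and the three-band layout are illustrative assumptions, not the API of any operating system.

```python
from collections import deque


class FifoQdisc:
    """First in, first out: packets leave in arrival order."""

    def __init__(self):
        self._queue = deque()

    def enqueue(self, packet, priority=0):
        # The priority argument is accepted but ignored by FIFO.
        self._queue.append(packet)

    def dequeue(self):
        return self._queue.popleft() if self._queue else None


class StrictPriorityQdisc:
    """One FIFO band per priority; band 0 is always served first."""

    def __init__(self, bands=3):
        self._bands = [deque() for _ in range(bands)]

    def enqueue(self, packet, priority=0):
        self._bands[priority].append(packet)

    def dequeue(self):
        for band in self._bands:
            if band:
                return band.popleft()
        return None


def drain(qdisc):
    """Dequeue until empty and return the transmit order."""
    order = []
    packet = qdisc.dequeue()
    while packet is not None:
        order.append(packet)
        packet = qdisc.dequeue()
    return order
```

Given arrivals `bulk` (band 2), `voice` (band 0) and `web` (band 1), the FIFO discipline transmits them in arrival order, while the strict-priority discipline transmits `voice`, `web`, `bulk`.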

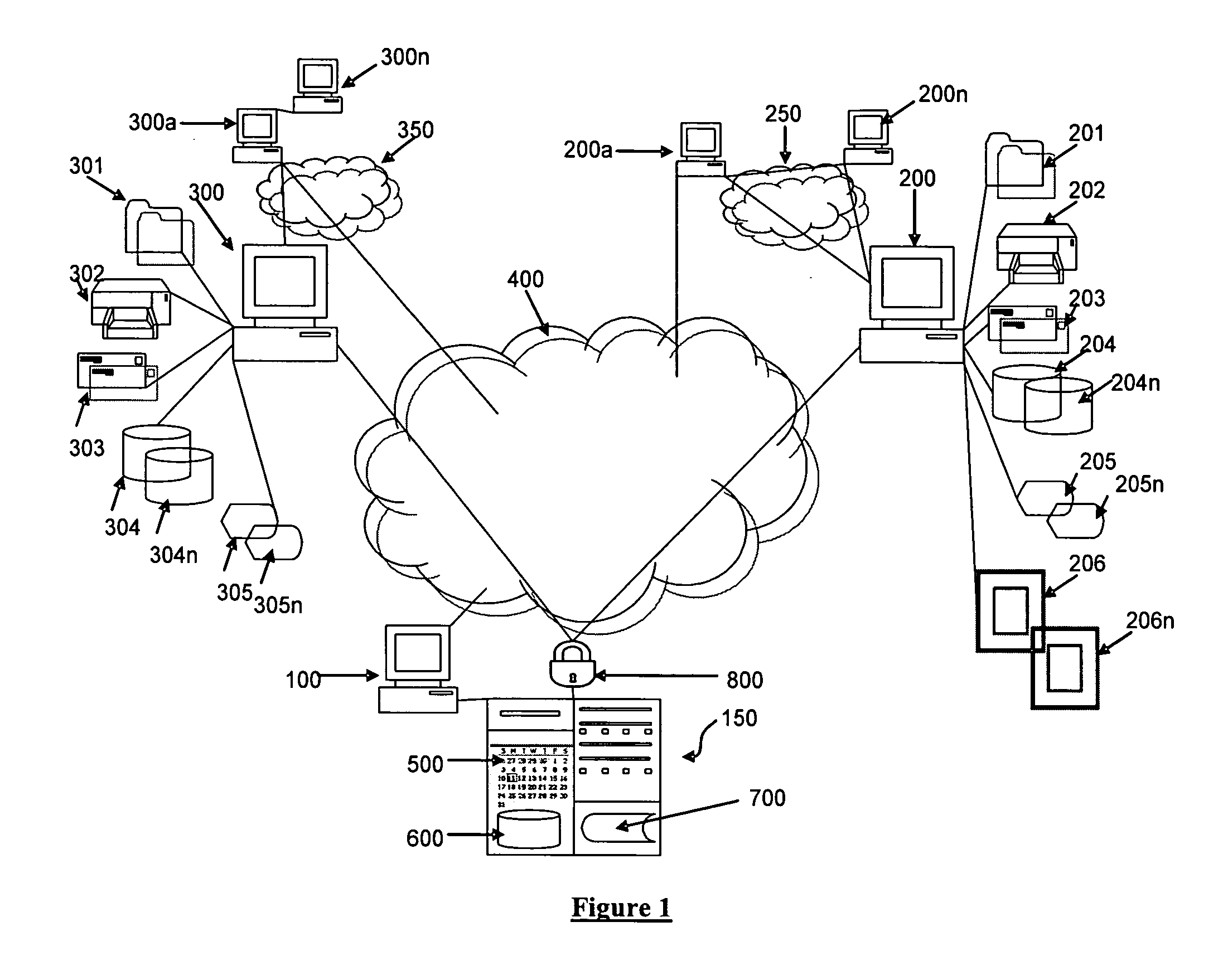

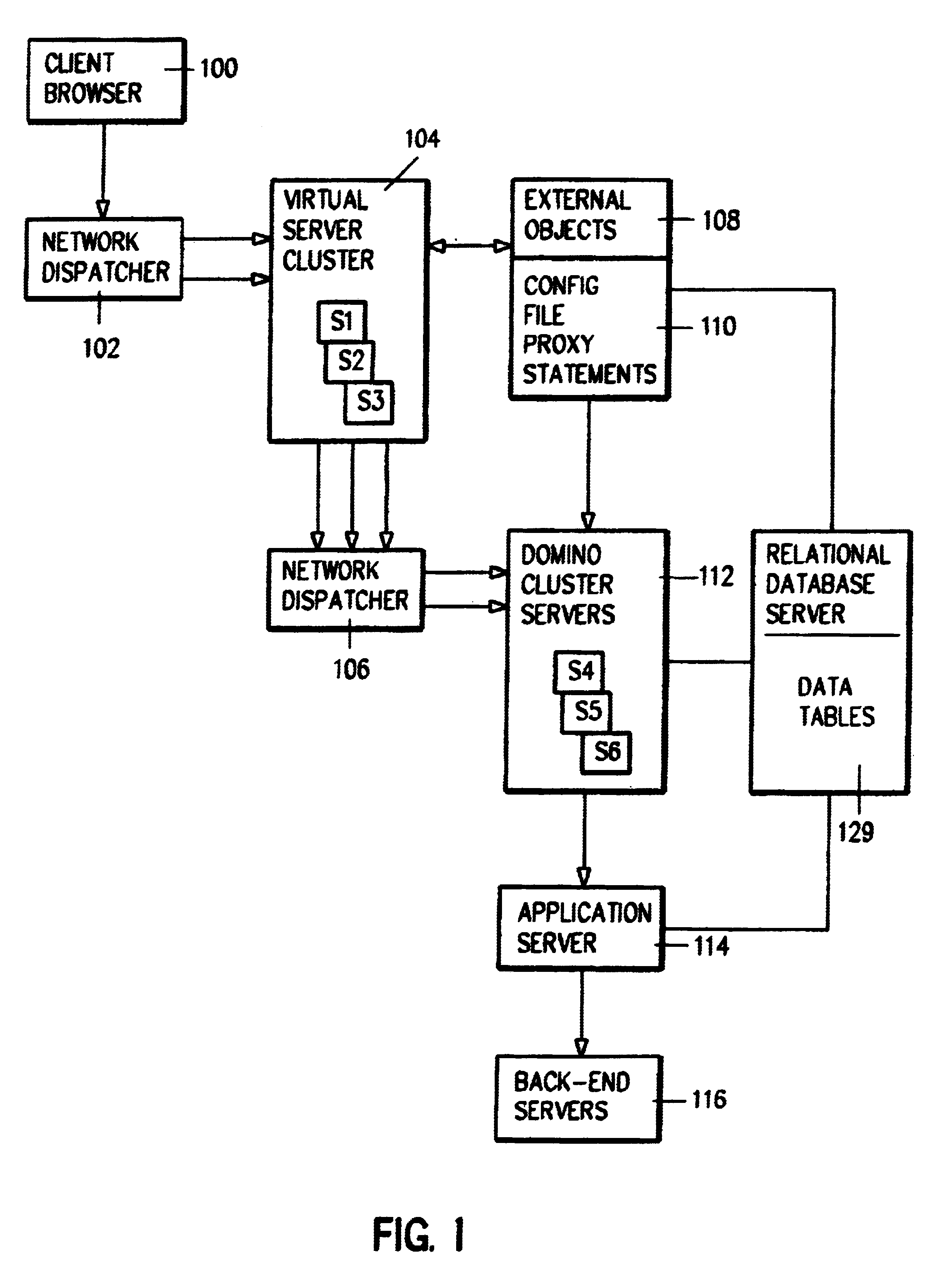

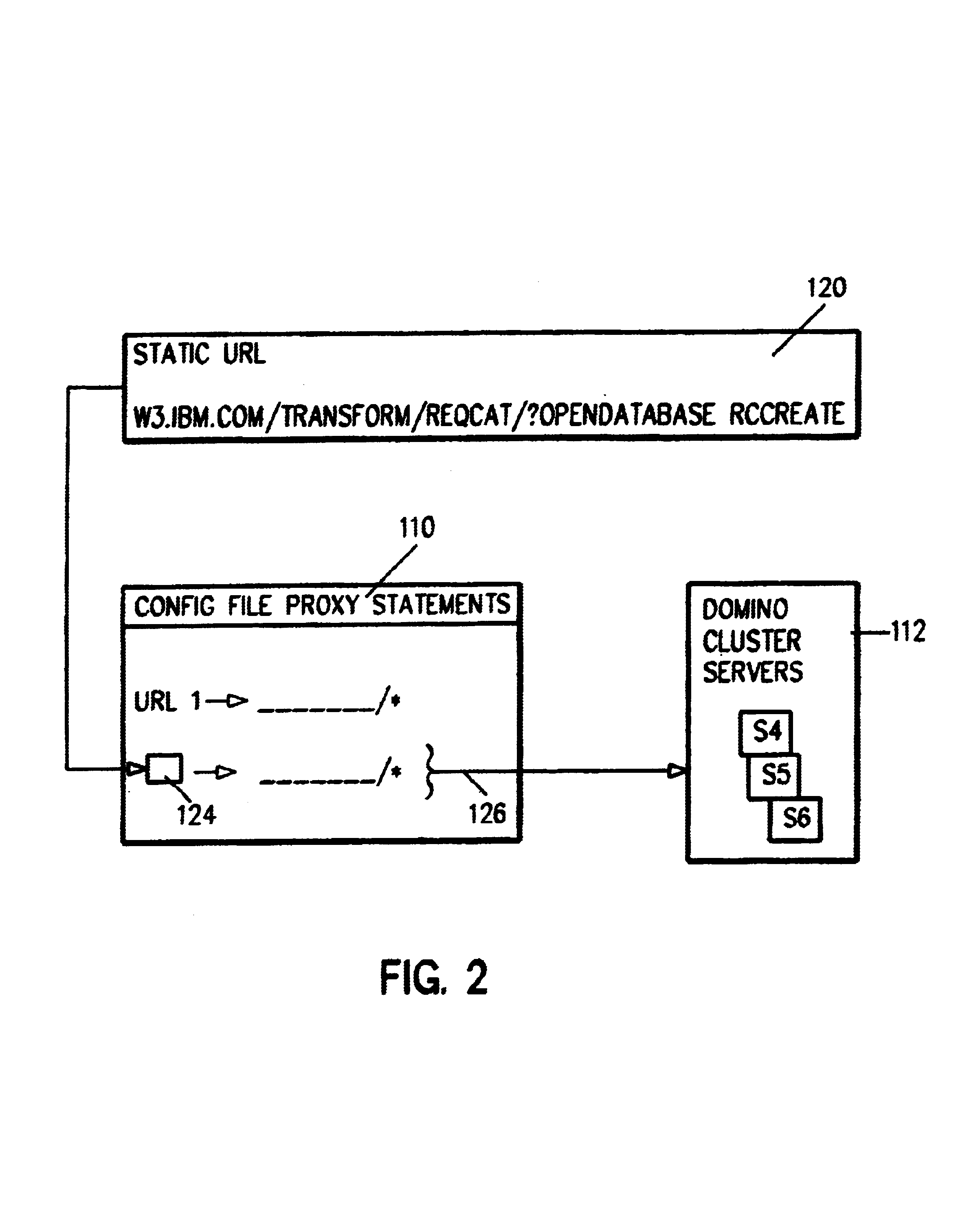

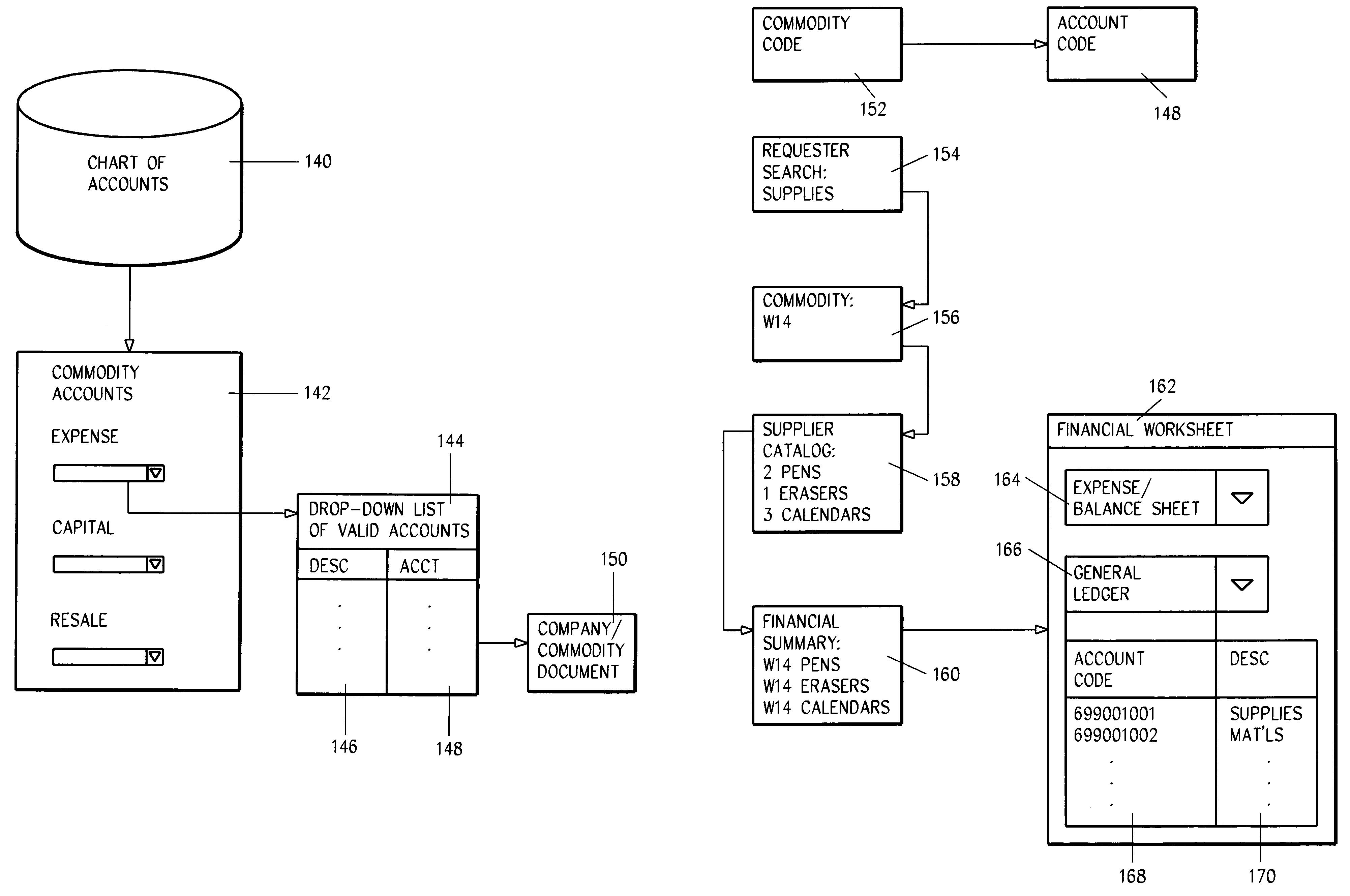

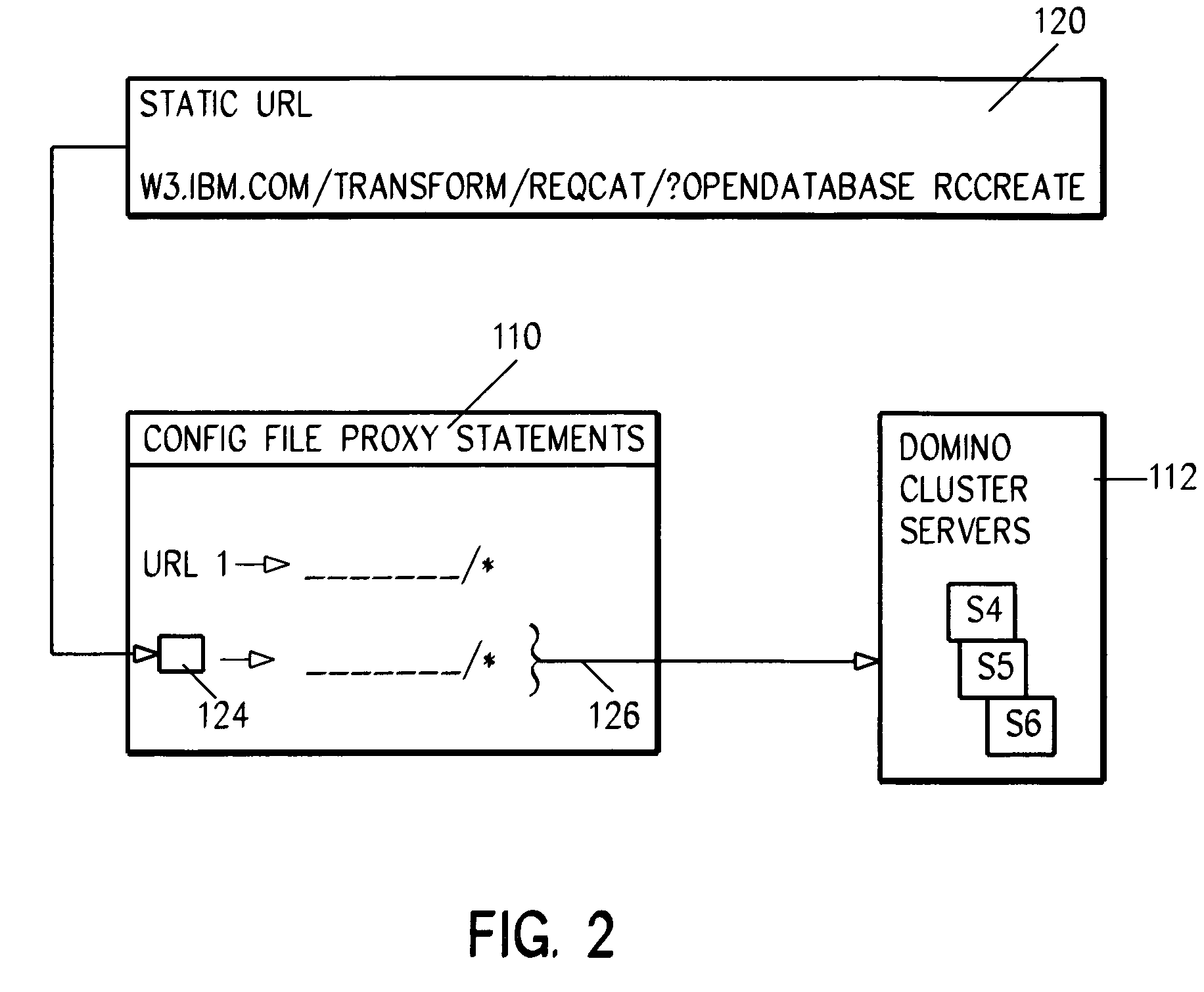

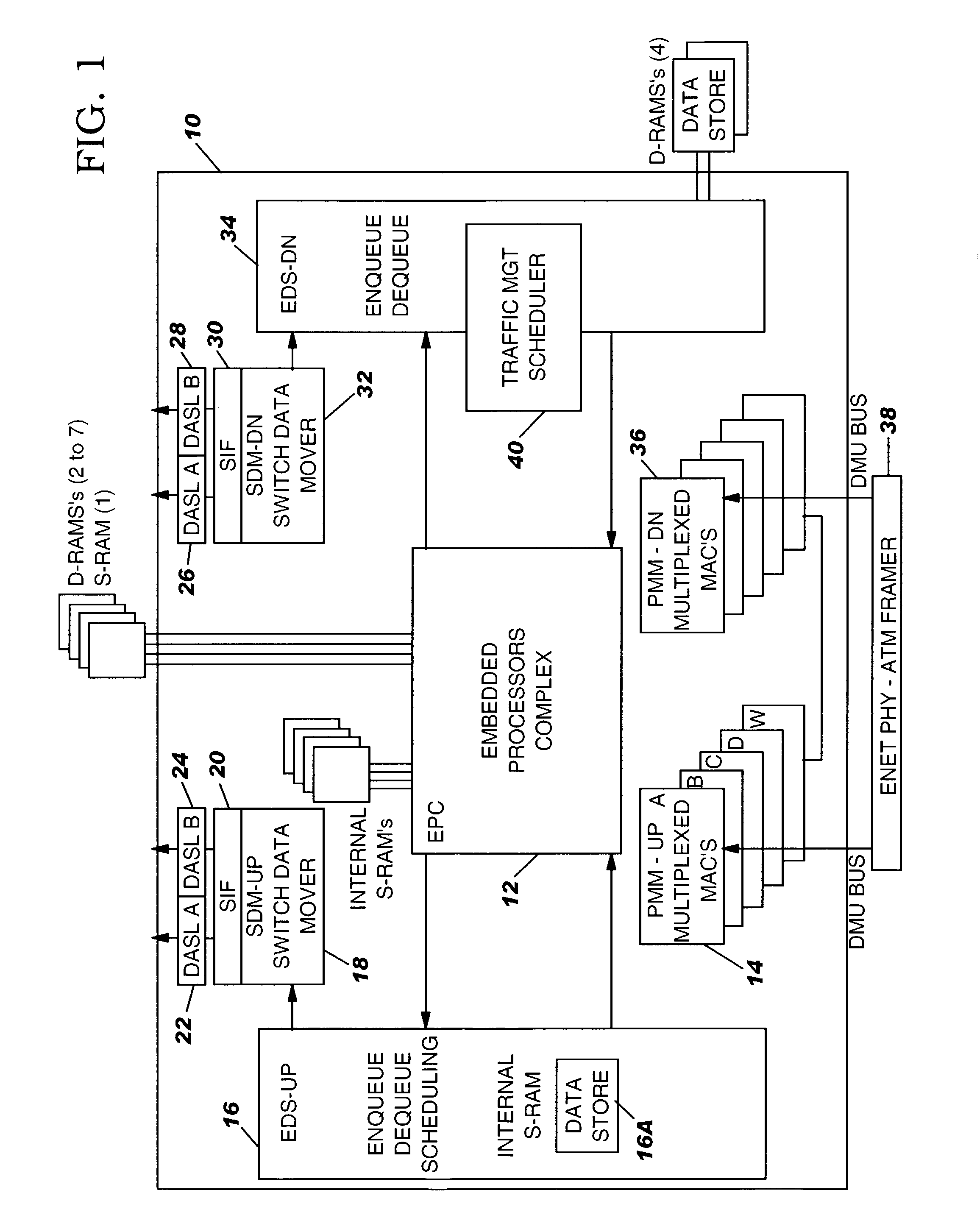

System and method for populating HTML forms using relational database agents

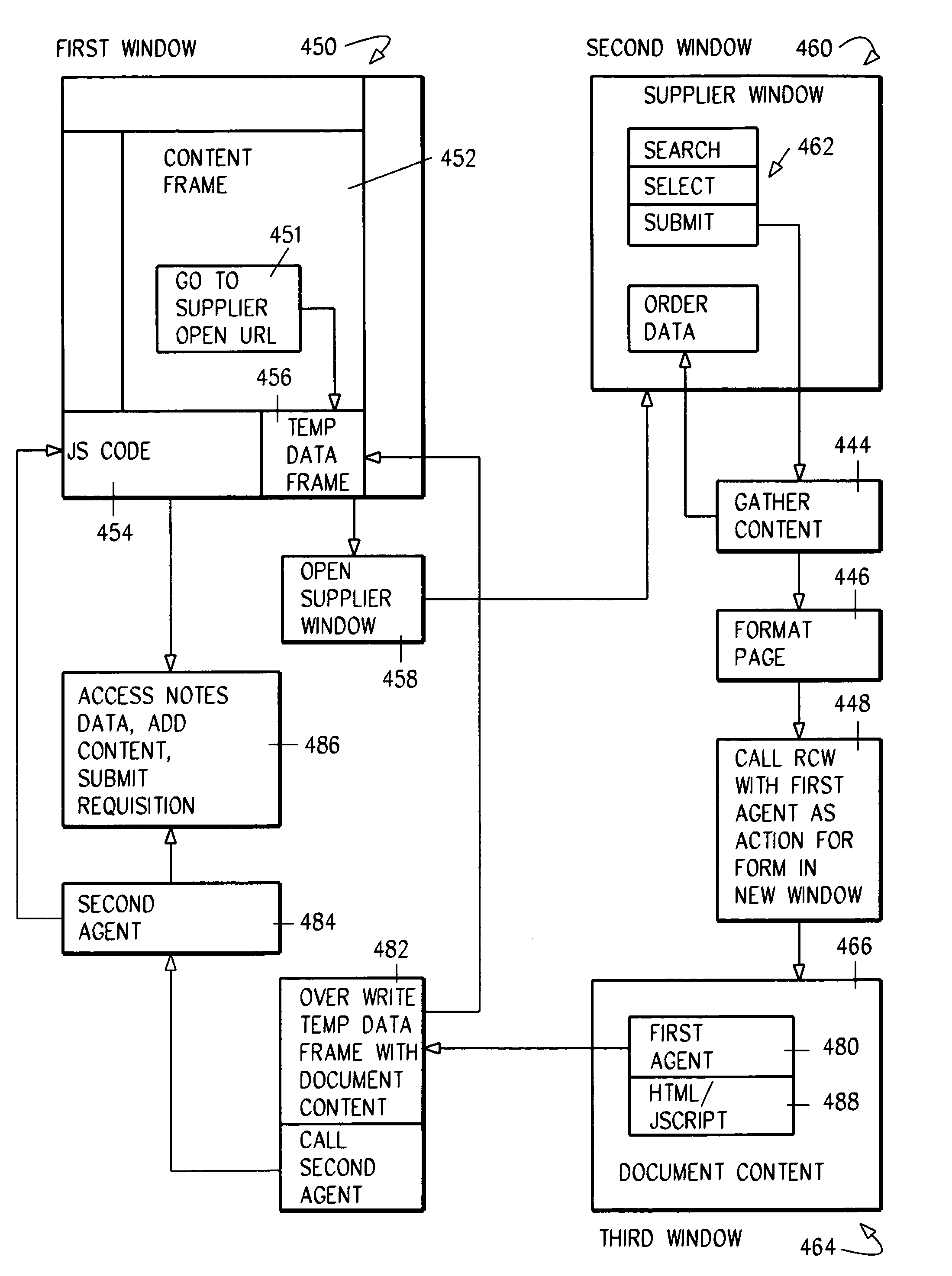

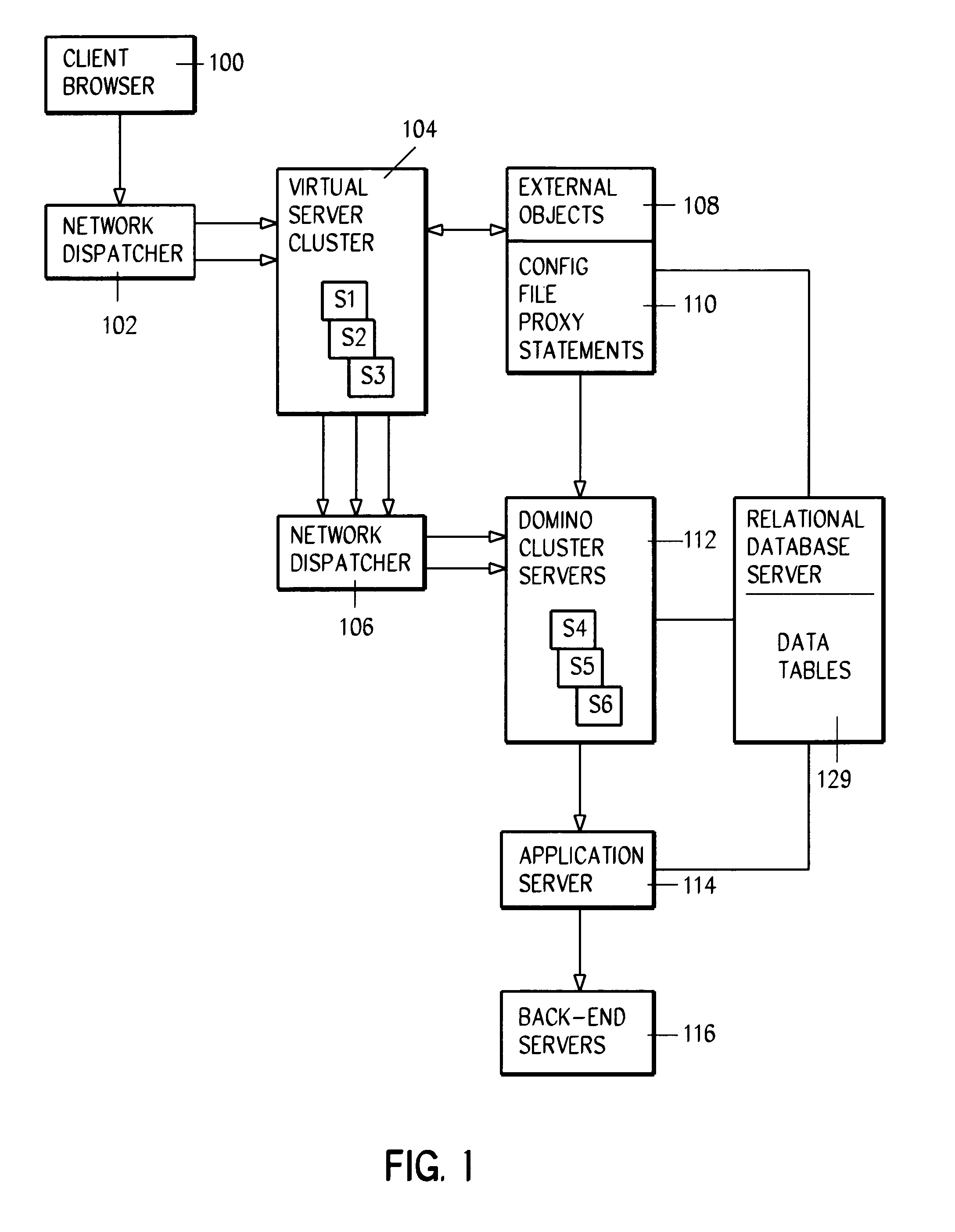

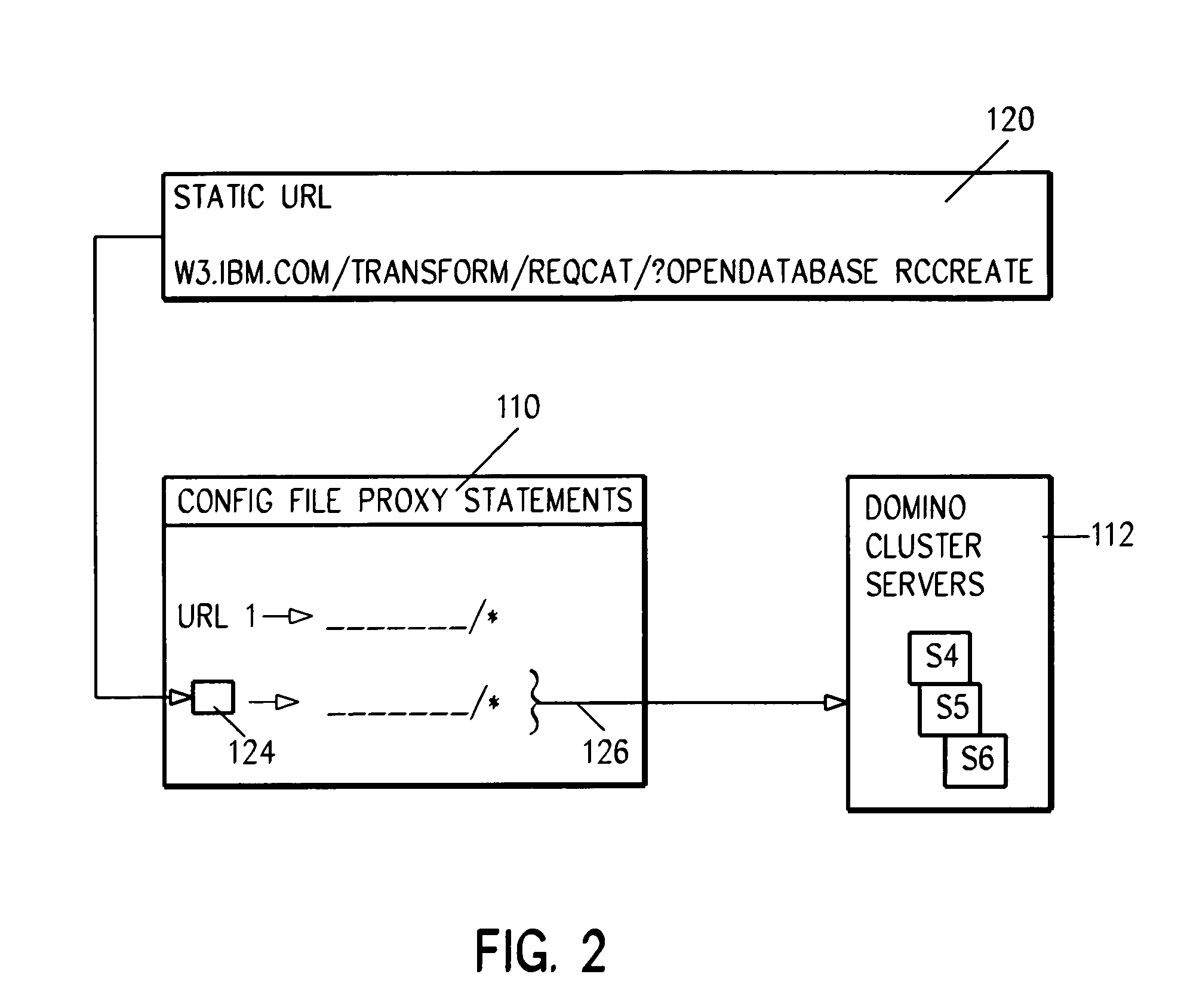

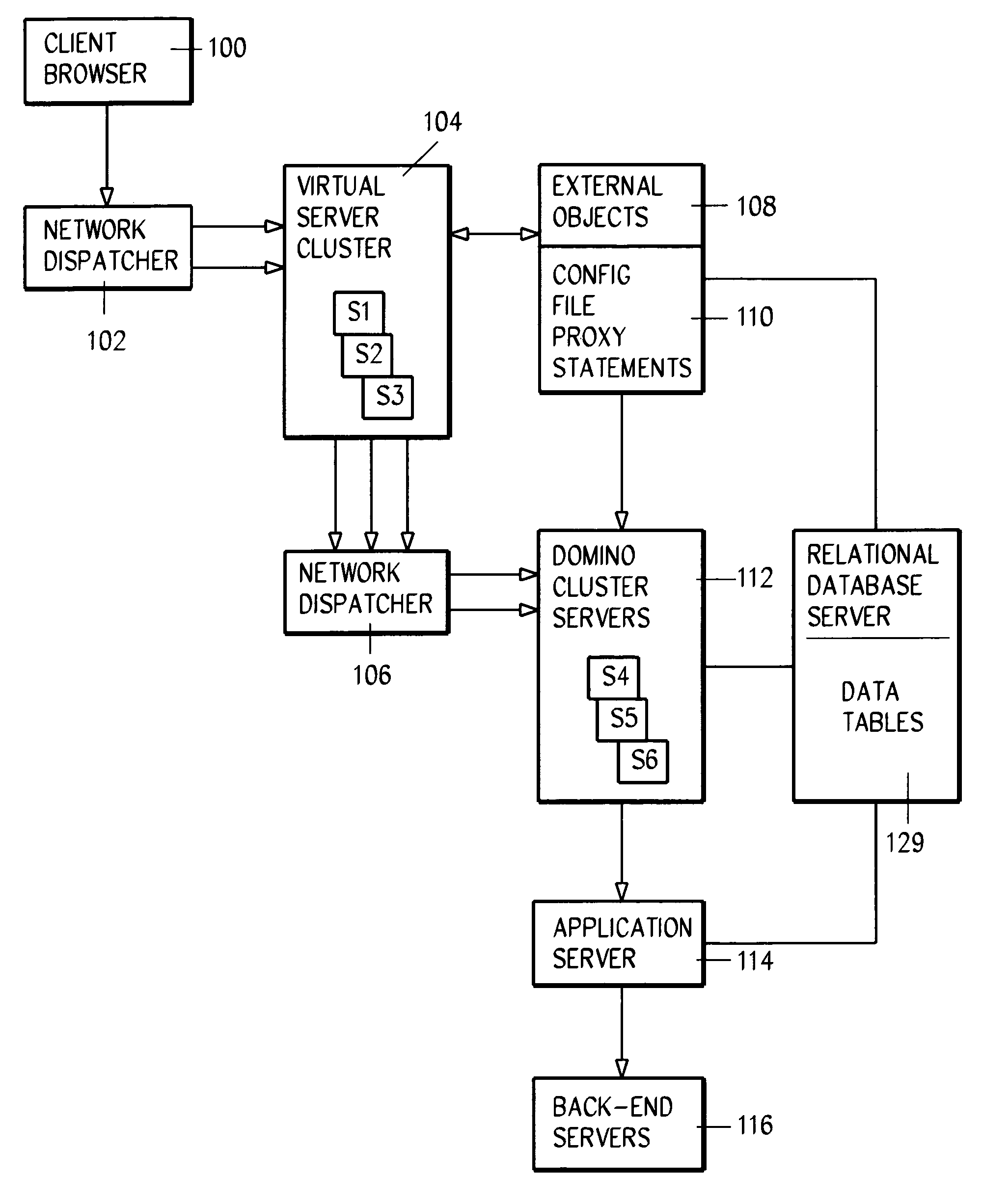

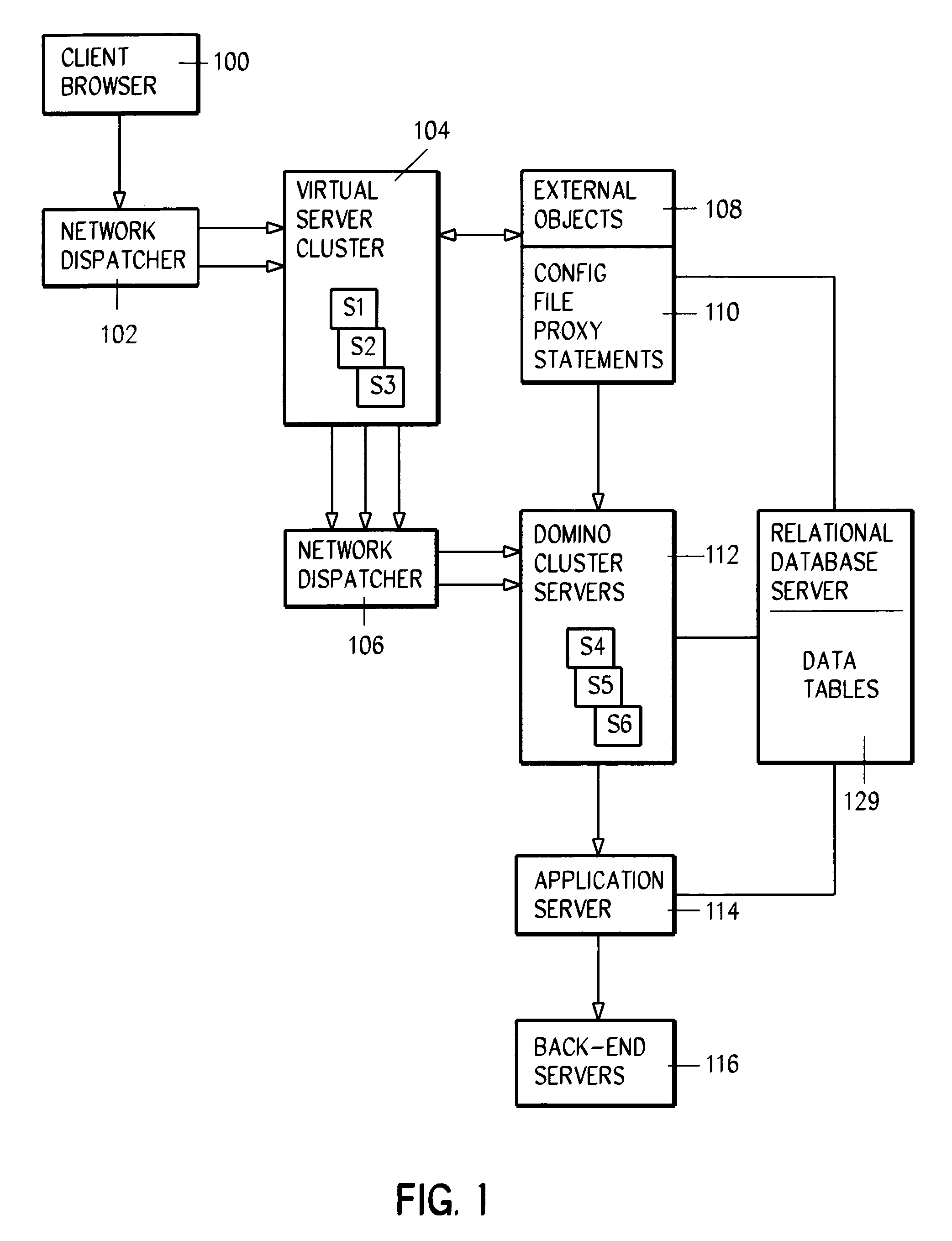

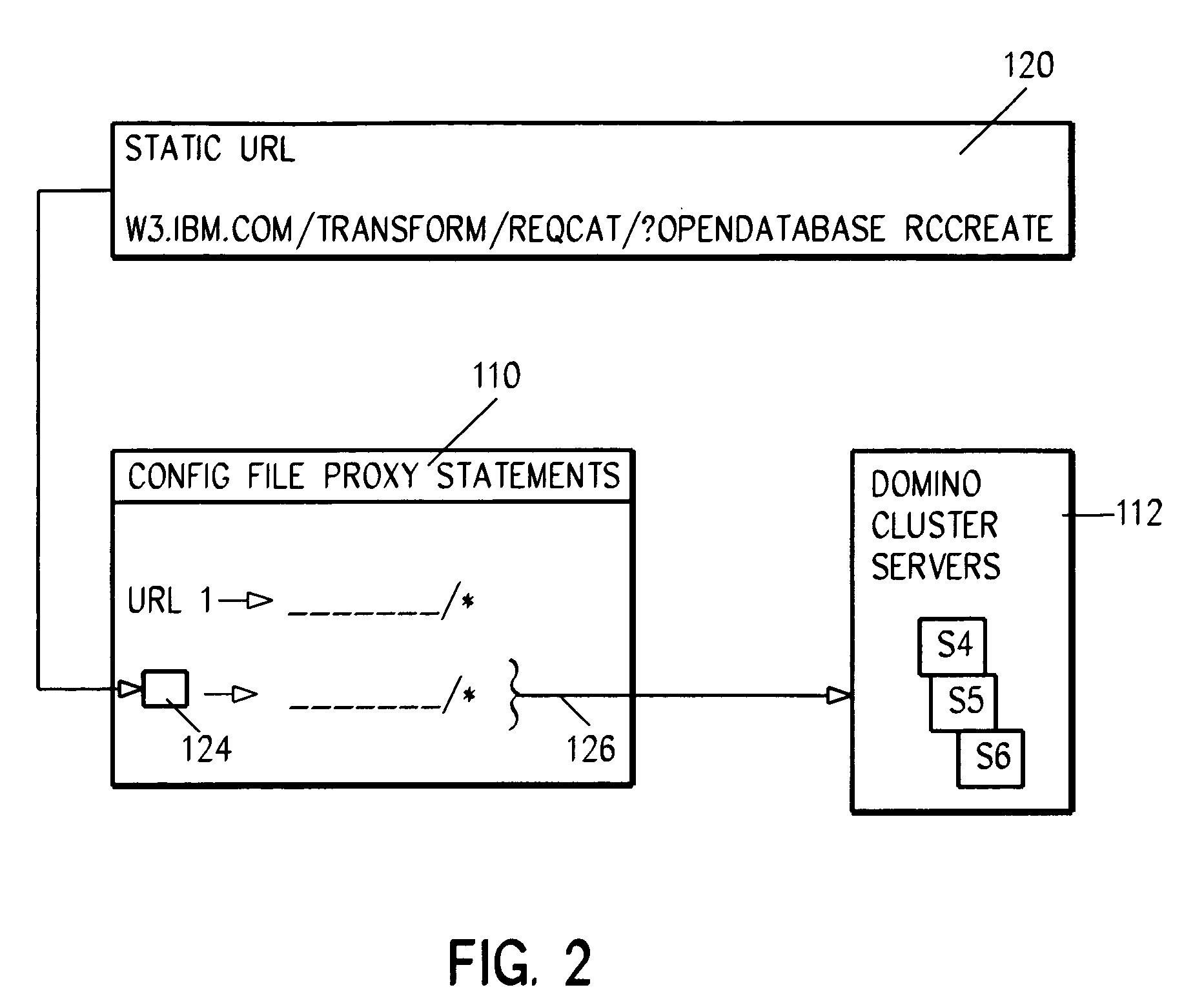

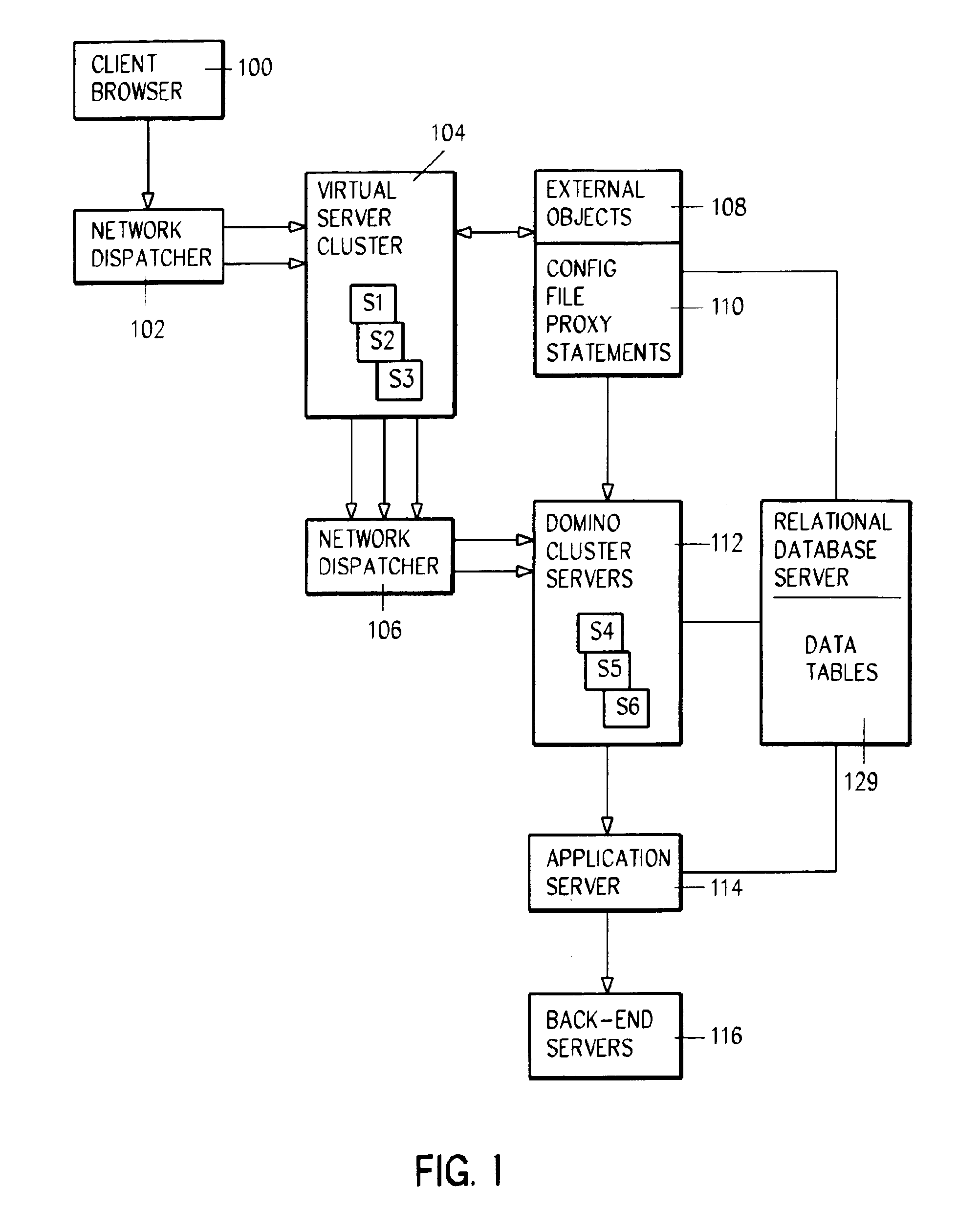

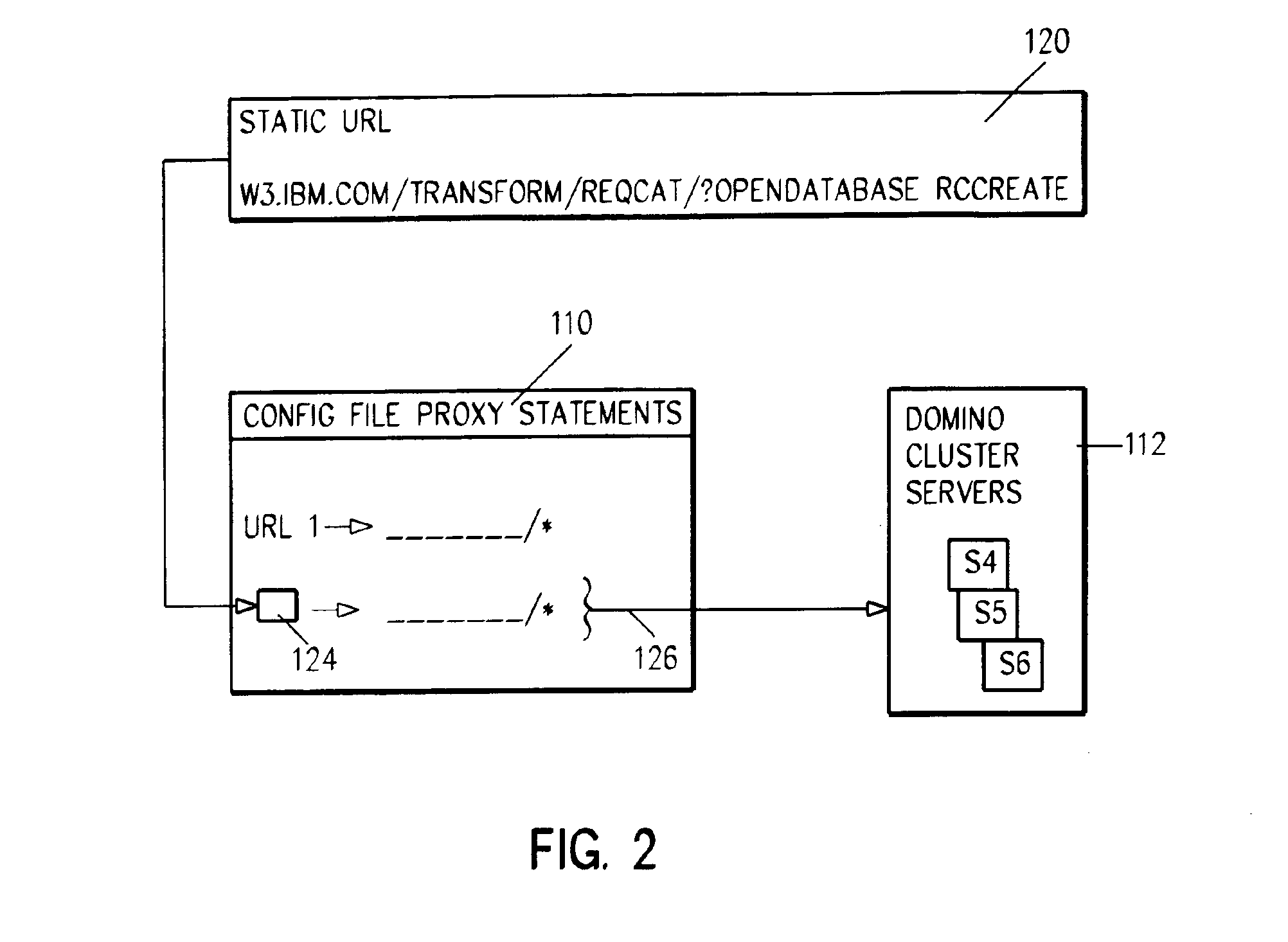

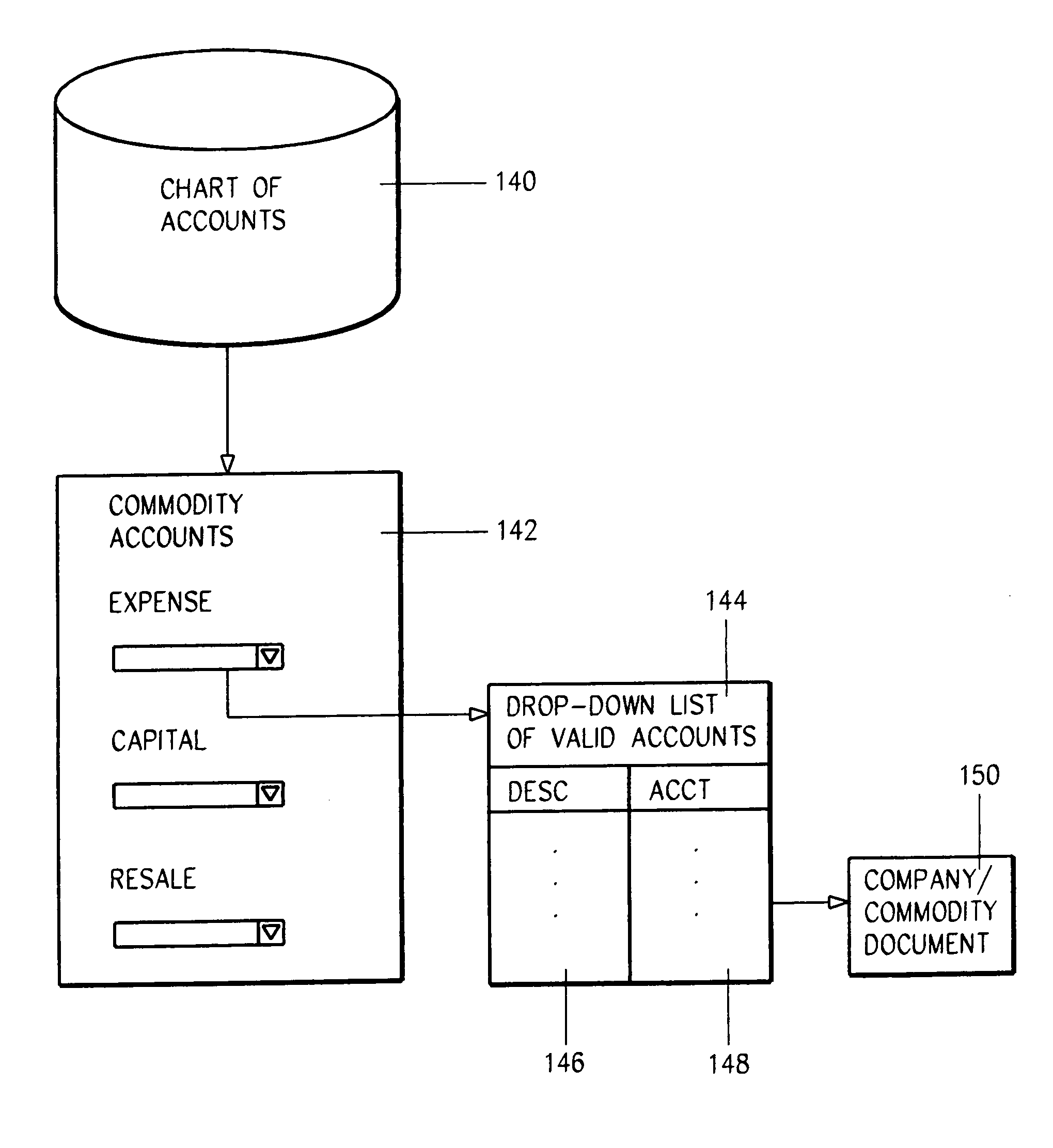

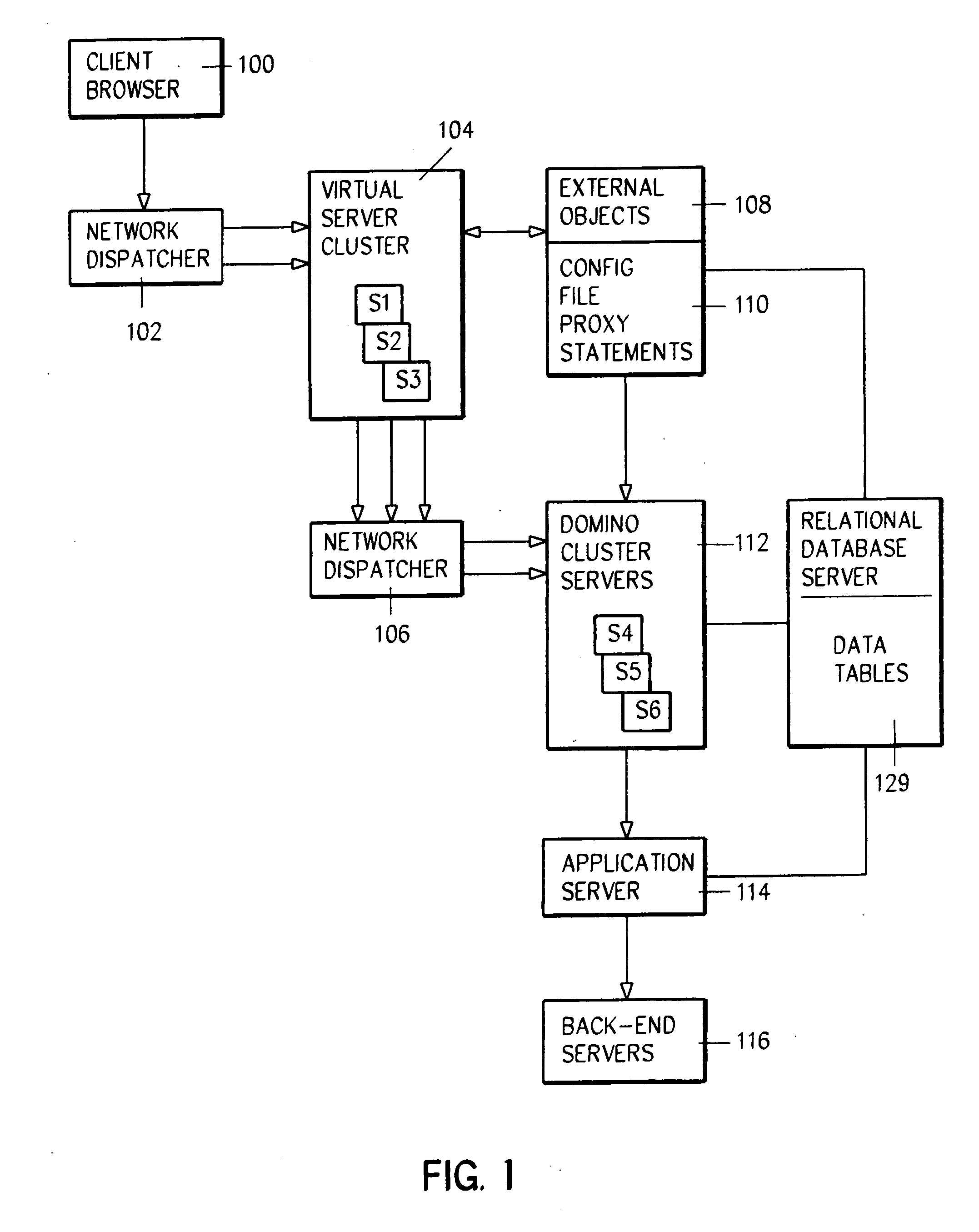

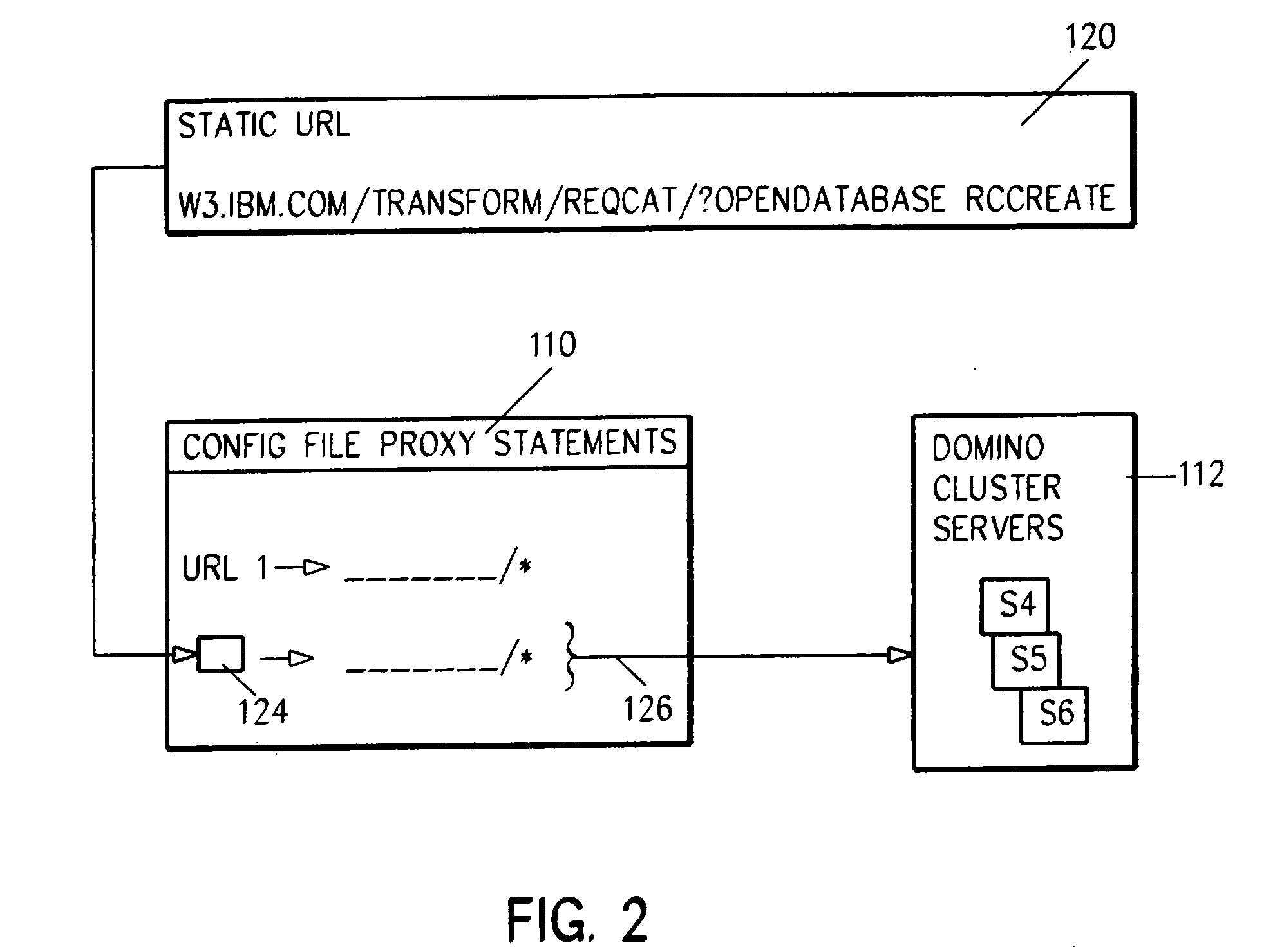

A hybrid Notes / DB2 environment provides a requisition catalog on the Web. Client browsers are connected to a GWA infrastructure including a first network dispatcher and a virtual cluster of Domino.Go servers. The network dispatcher sprays out browser requests among configured .nsf servers in the virtual server cluster. Communications from this virtual server cluster are, in turn, dispatched by a second network dispatcher to servers in a Domino cluster. External objects, primarily for a GUI, are served in a .dfs and include graphic files, Java files, HTML images and net.data macros. The catalog is built from supplier-provided flat files. A front end is provided for business logic and validation, as is a relational database backend. HTML forms are populated using relational database agents. A role table is used for controlling access both to Notes code and DB2 data. Large amounts of data are quickly transferred using an intermediate agent and window.

Owner:KYNDRYL INC

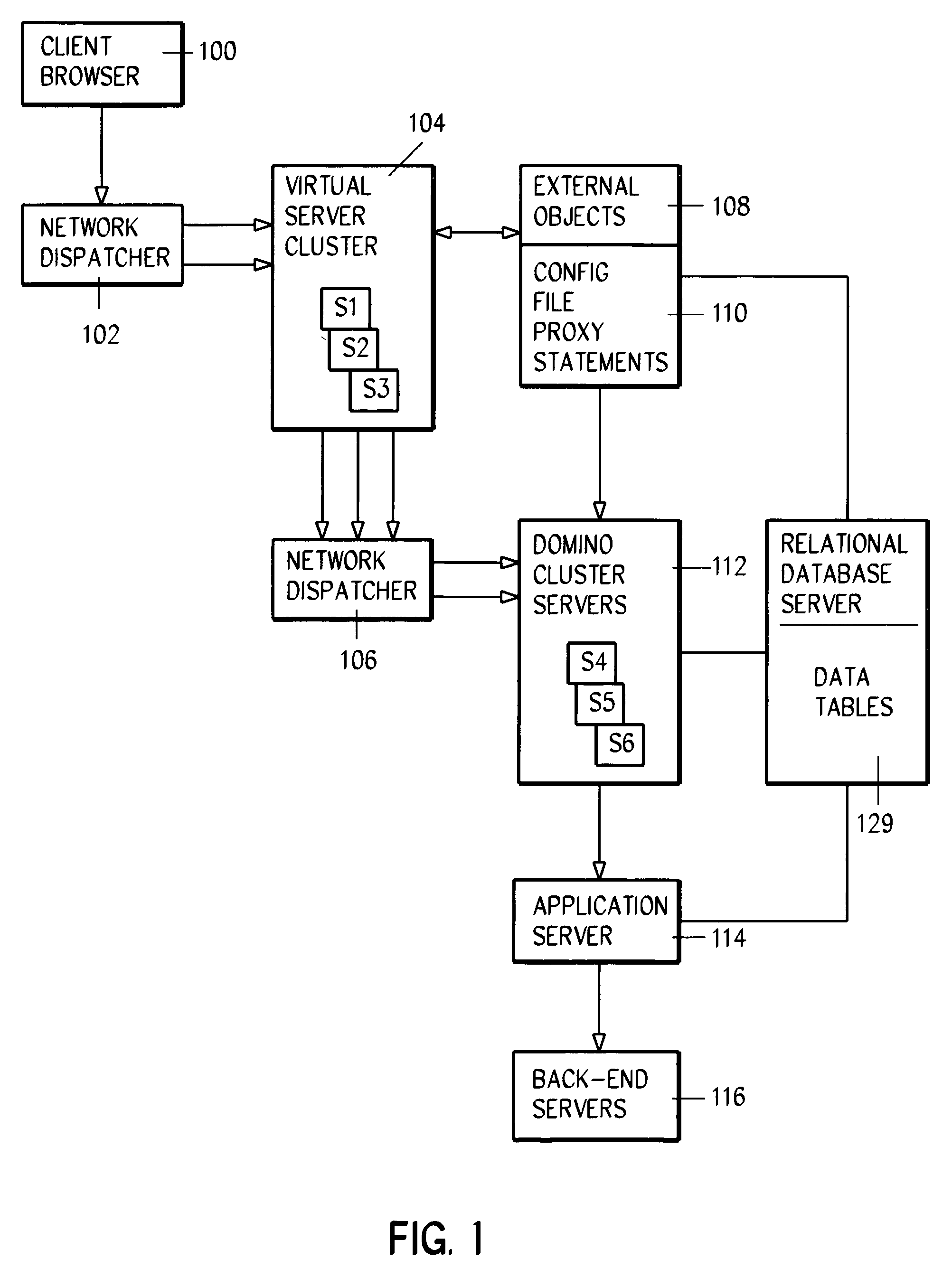

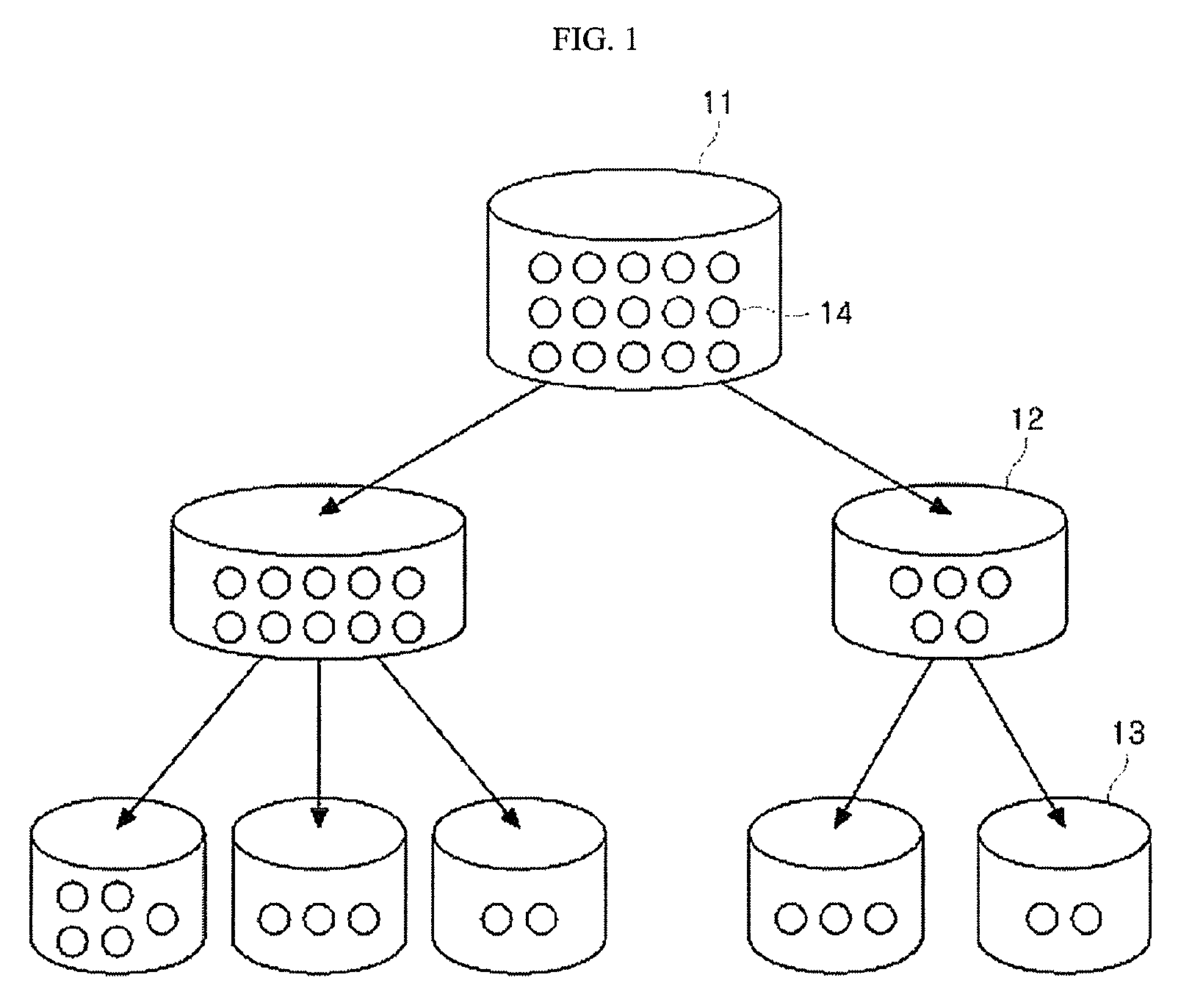

System and method for clustering servers for performance and load balancing

Inactive | US6965938B1 | Choose accurately | Multiple digital computer combinations | Transmission | Graphics | Relational database

A hybrid Notes / DB2 environment provides a requisition catalog on the Web. Client browsers are connected to a GWA infrastructure including a first network dispatcher and a virtual cluster of Domino.Go servers. The network dispatcher sprays out browser requests among configured .nsf servers in the virtual server cluster. Communications from this virtual server cluster are, in turn, dispatched by a second network dispatcher to servers in a Domino cluster. External objects, primarily for a GUI, are served in a .dfs and include graphic files, Java files, HTML images and net.data macros. The catalog is built from supplier-provided flat files. A front end is provided for business logic and validation, as is a relational database backend. HTML forms are populated using relational database agents. A role table is used for controlling access both to Notes code and DB2 data. Large amounts of data are quickly transferred using an intermediate agent and window.

Owner:KYNDRYL INC +1

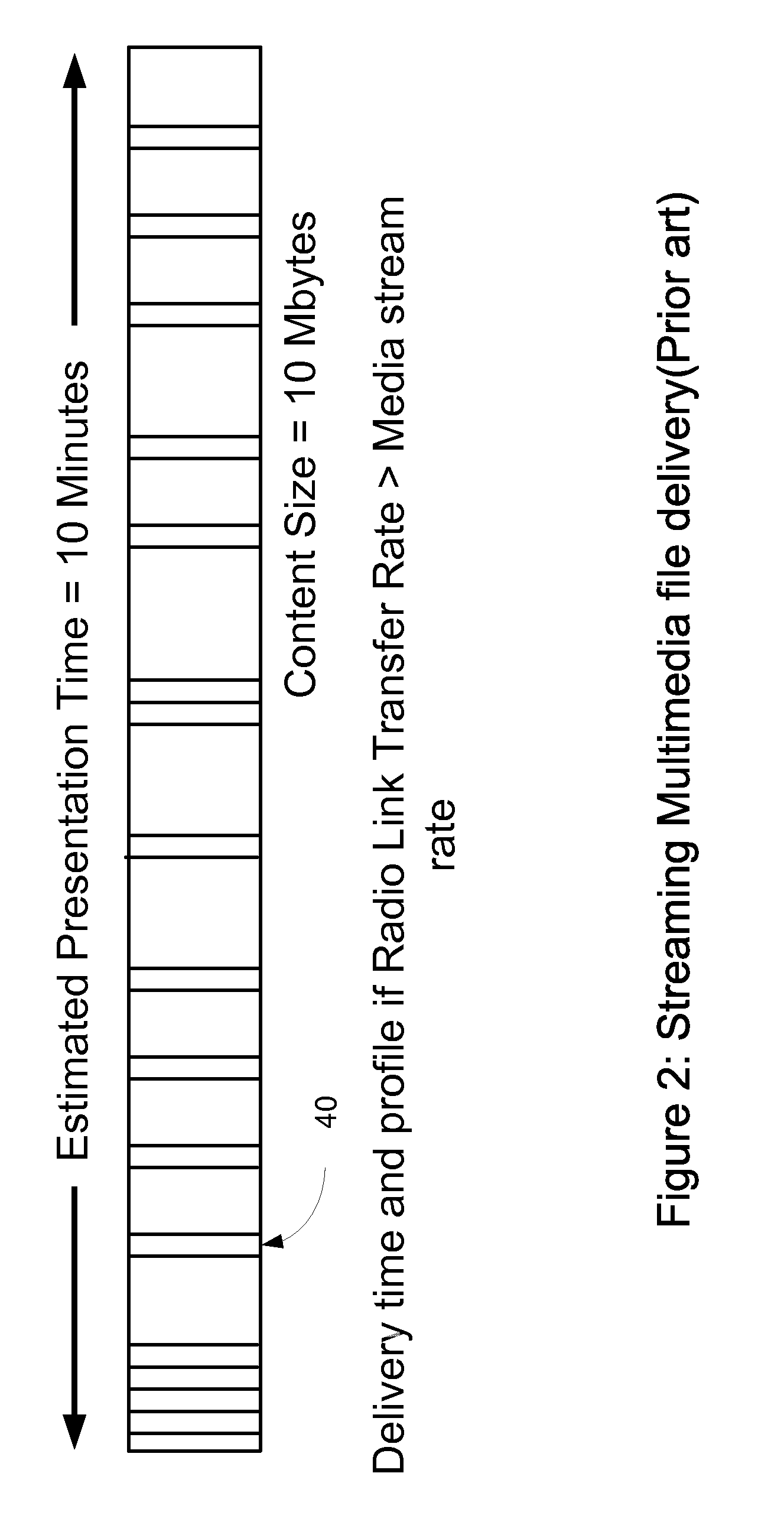

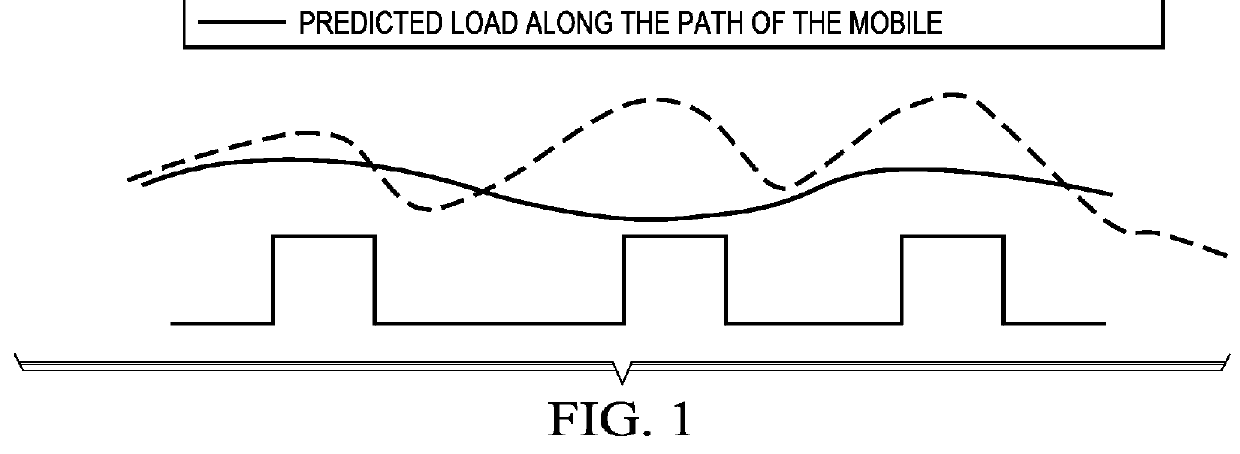

Application, Usage & Radio Link Aware Transport Network Scheduler

Active | US20100195602A1 | Reduce waste | Facilitates optimal sharing | Network traffic/resource management | Wireless communication services | Transit network | Third generation

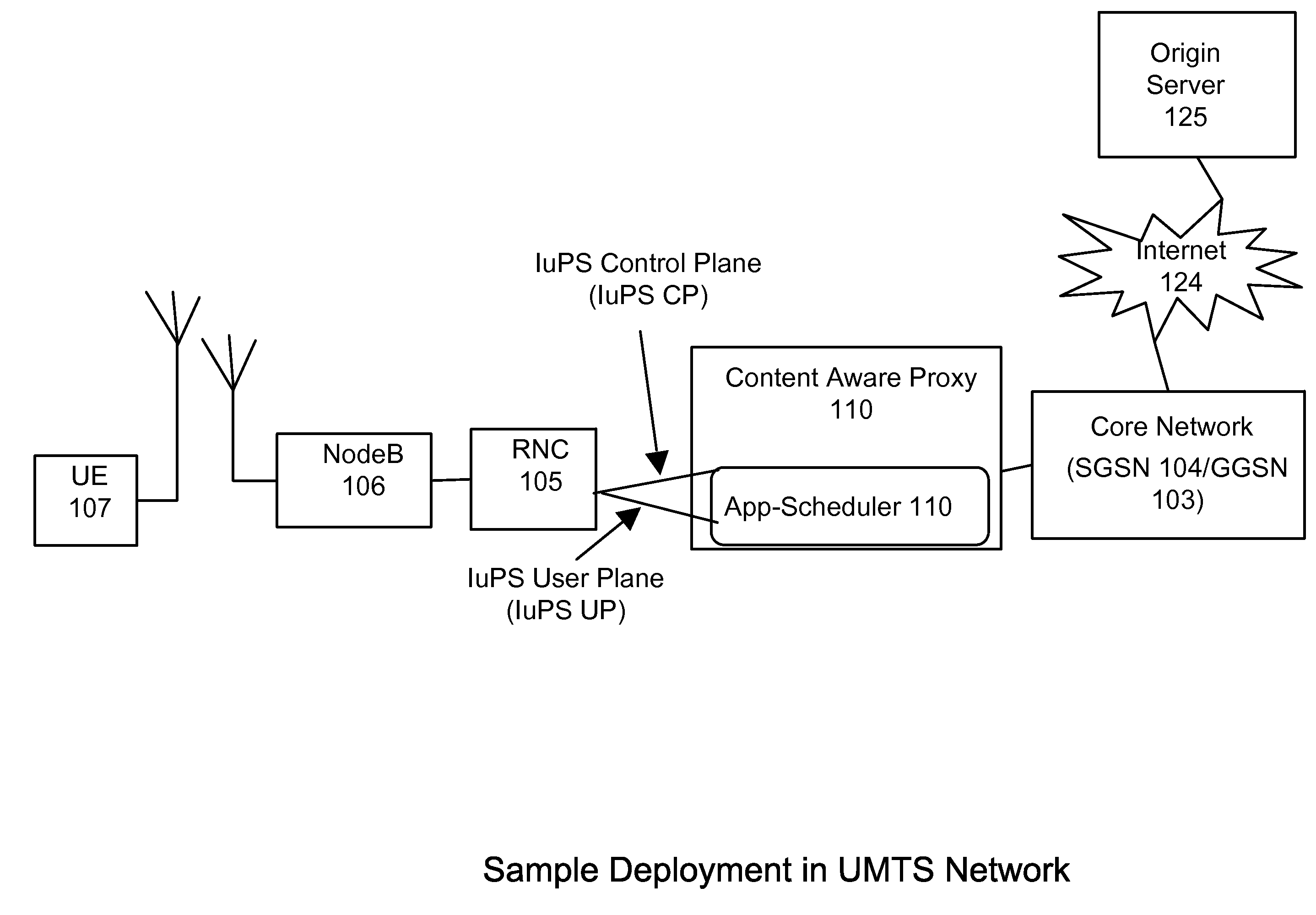

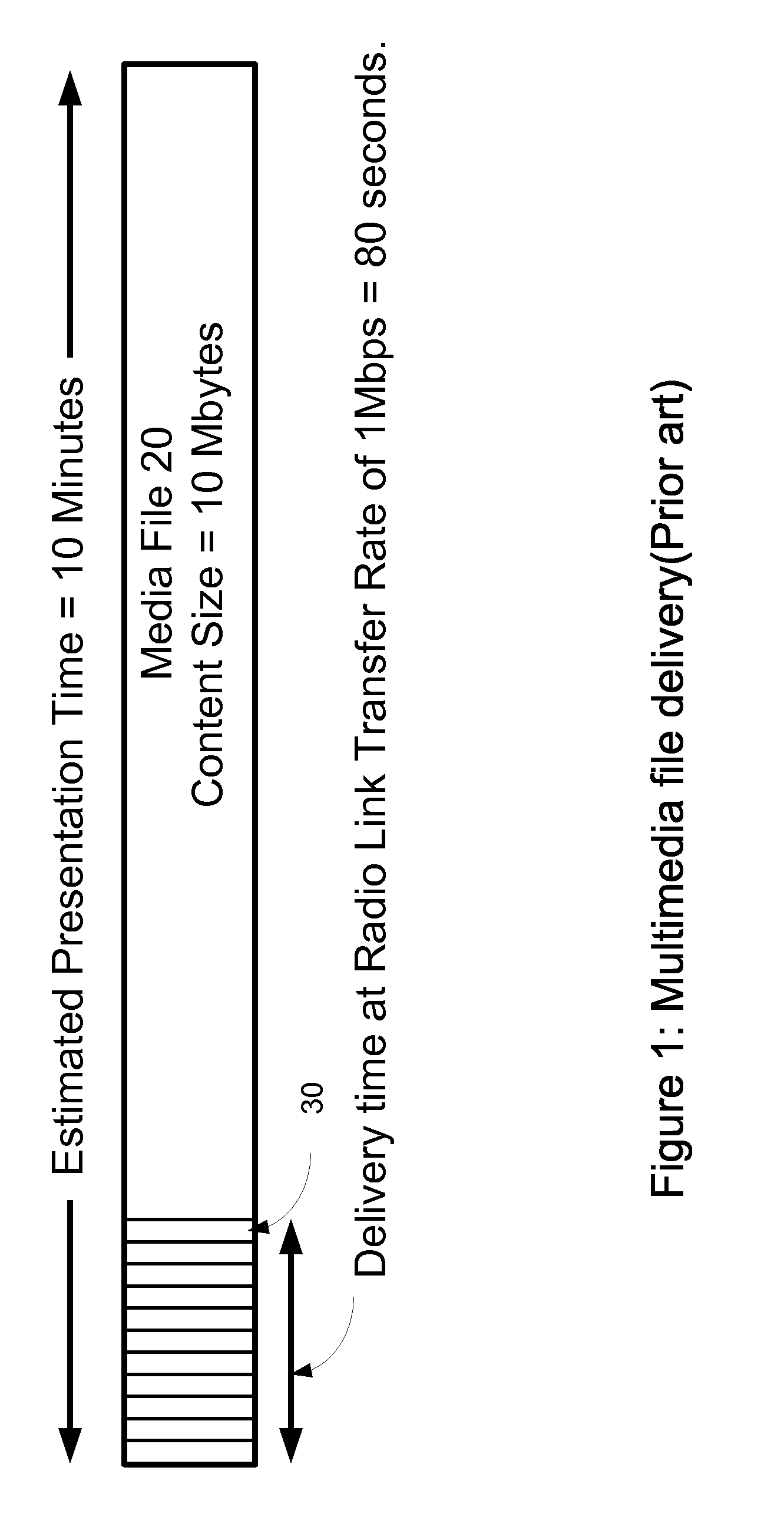

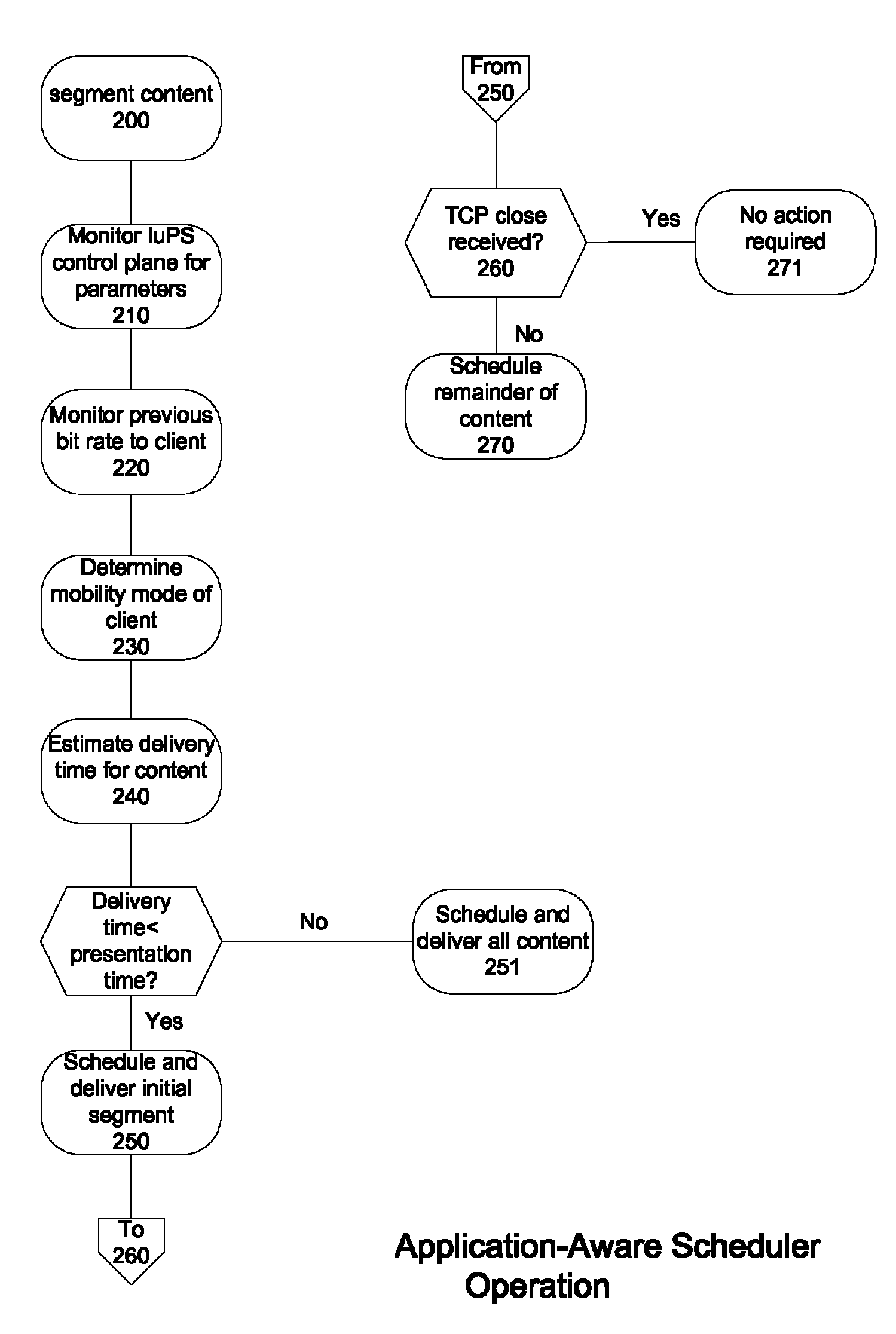

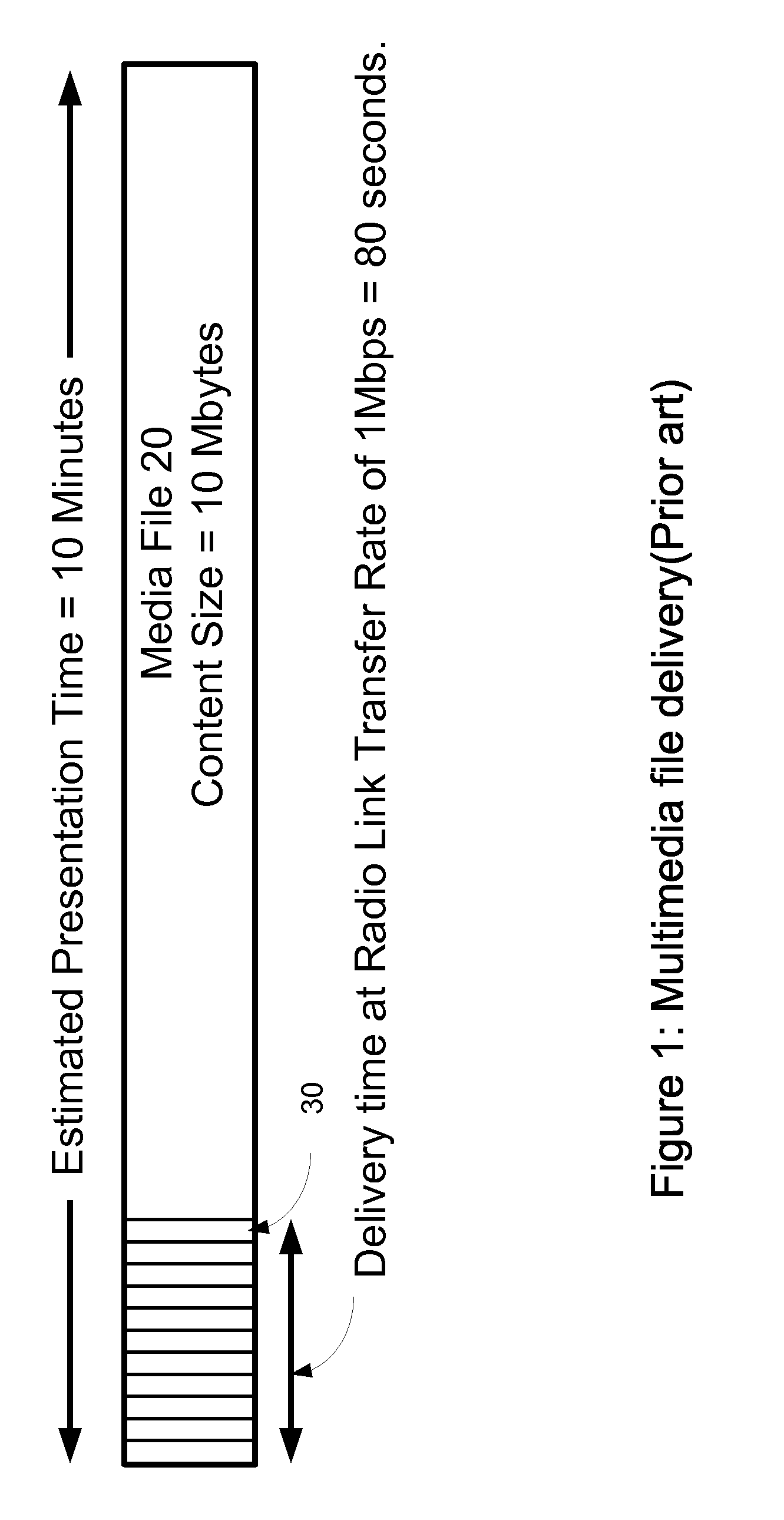

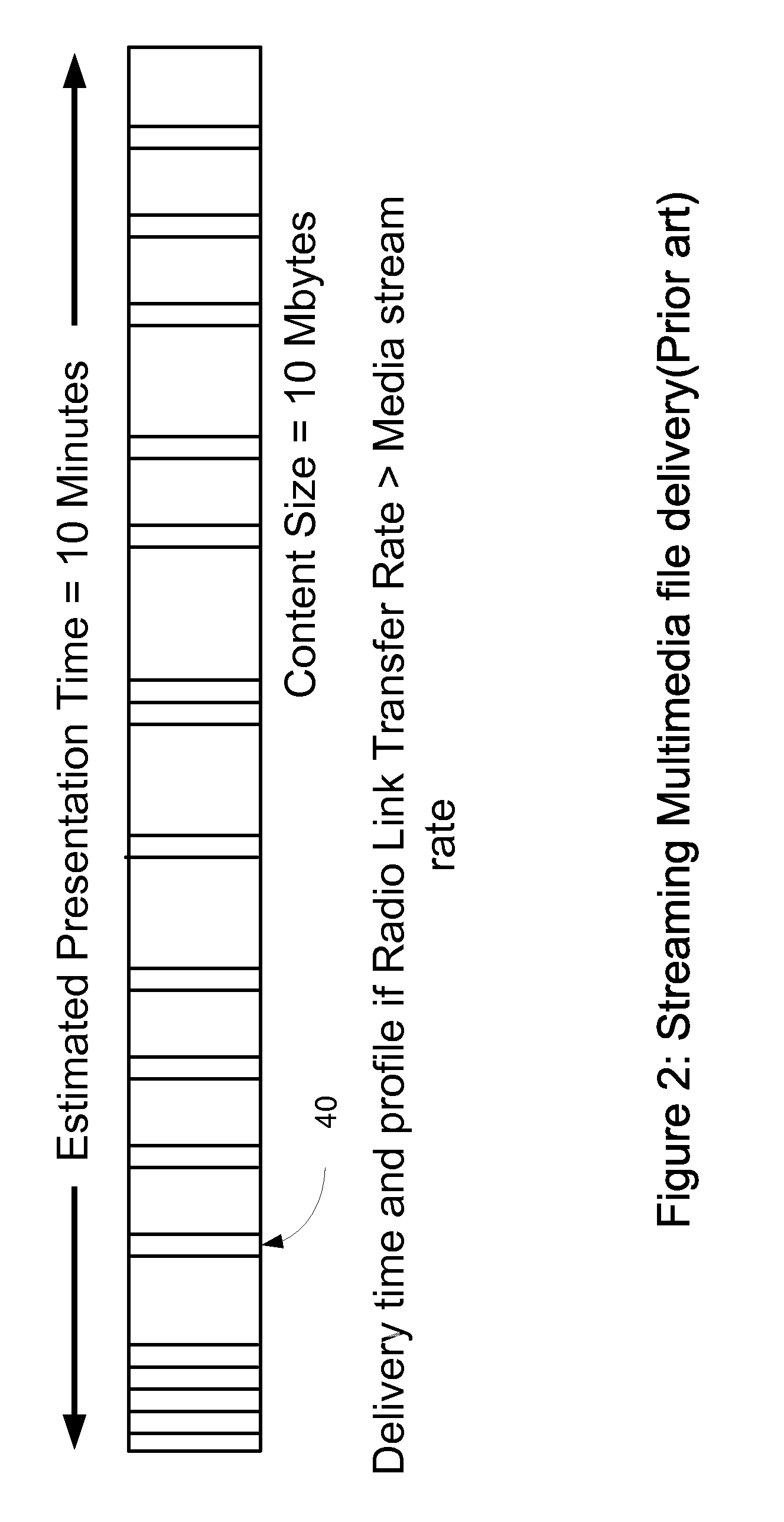

A packet scheduling method and apparatus with the knowledge of application behavior, anticipated usage / behavior based on the type of content, and underlying transport conditions during the time of delivery, is disclosed. This type of scheduling is applicable to a content server or a transit network device in wireless (e.g., 3G, WIMAX, LTE, WIFI) or wire-line networks. Methods for identifying or estimating rendering times of multi-media objects, segmenting a large media content, and automatically pausing or delaying delivery are disclosed. The scheduling reduces transit network bandwidth wastage, and facilitates optimal sharing of network resources such as in a wireless network.

Owner:RIBBON COMM SECURITIES CORP

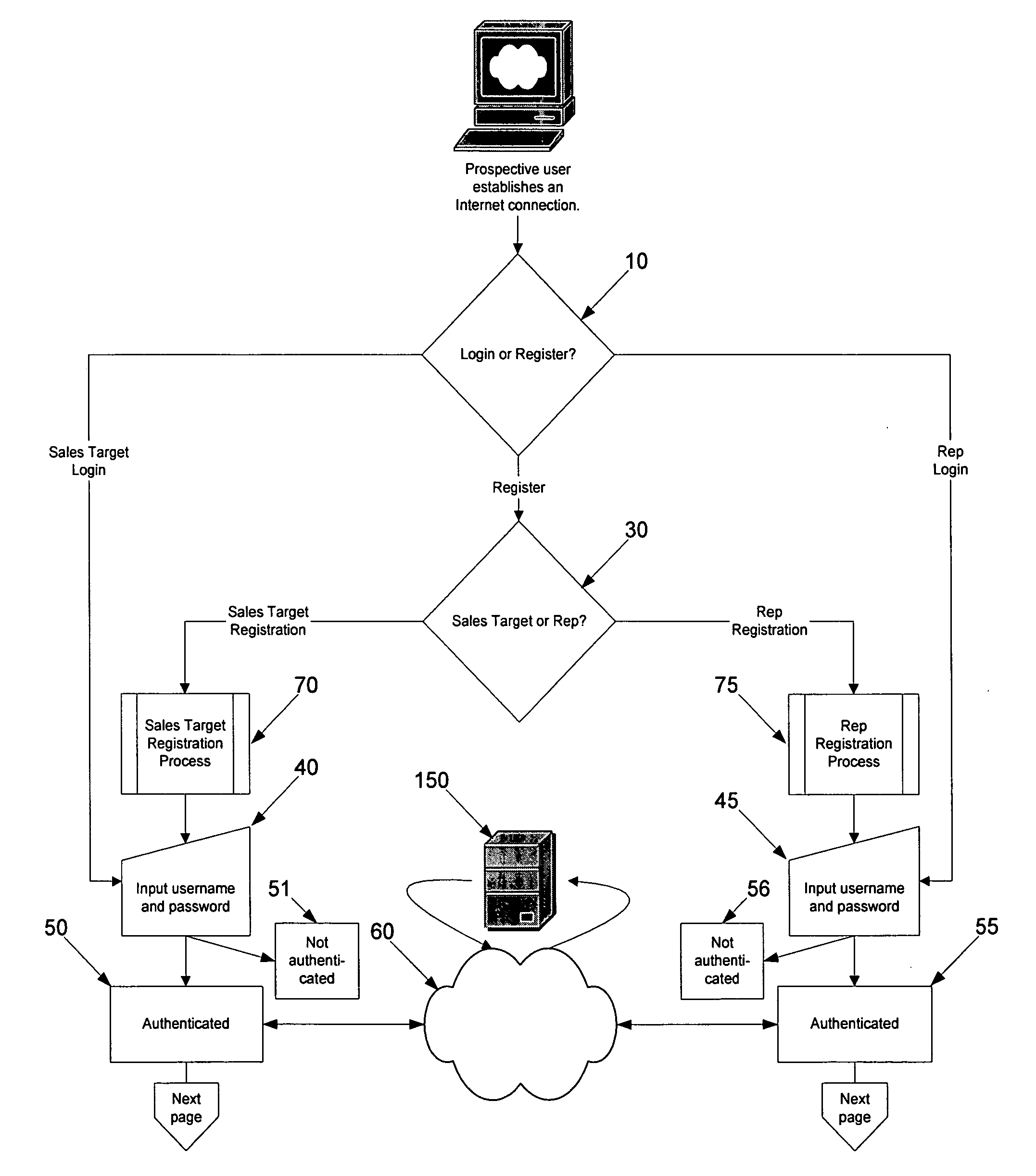

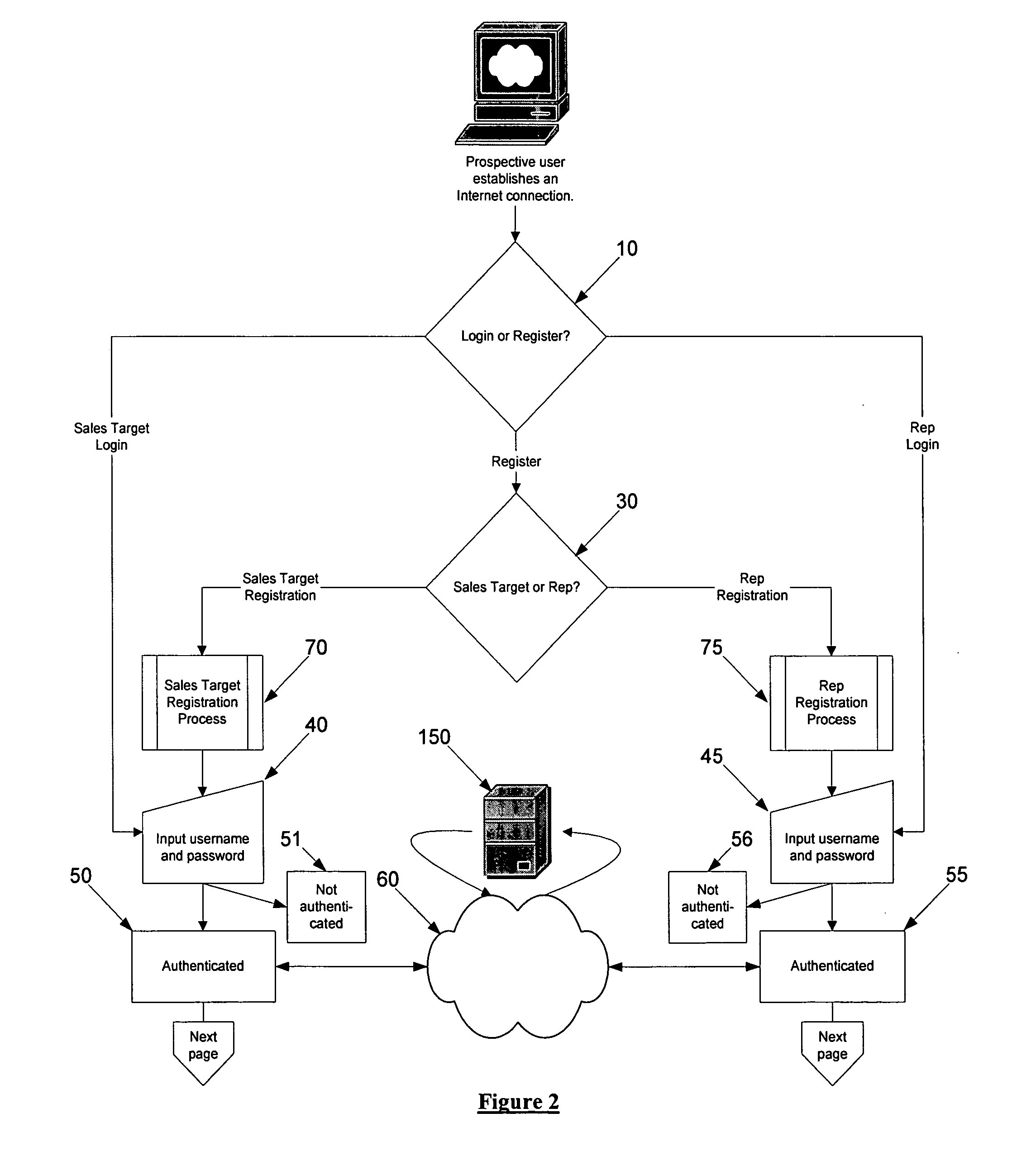

Network scheduler with linked information

Inactive | US20060116918A1 | Present invention | Flexible design | Multiprogramming arrangements | Office automation | Computer science | Distributed computing

A system and method of scheduling events offered and/or created by one or more sales targets having a network association with one or more reps, and of linking information to the event.

Owner:FLORA JOEL L +2

System and method for data transfer with respect to external applications

A hybrid Notes / DB2 environment provides a requisition catalog on the Web. Client browsers are connected to a GWA infrastructure including a first network dispatcher and a virtual cluster of Domino.Go servers. The network dispatcher sprays out browser requests among configured .nsf servers in the virtual server cluster. Communications from this virtual server cluster are, in turn, dispatched by a second network dispatcher to servers in a Domino cluster. External objects, primarily for a GUI, are served in a .dfs and include graphic files, Java files, HTML images and net.data macros. The catalog is built from supplier-provided flat files. A front end is provided for business logic and validation, as is a relational database backend. HTML forms are populated using relational database agents. A role table is used for controlling access both to Notes code and DB2 data. Large amounts of data are quickly transferred using an intermediate agent and window.

Owner:KYNDRYL INC

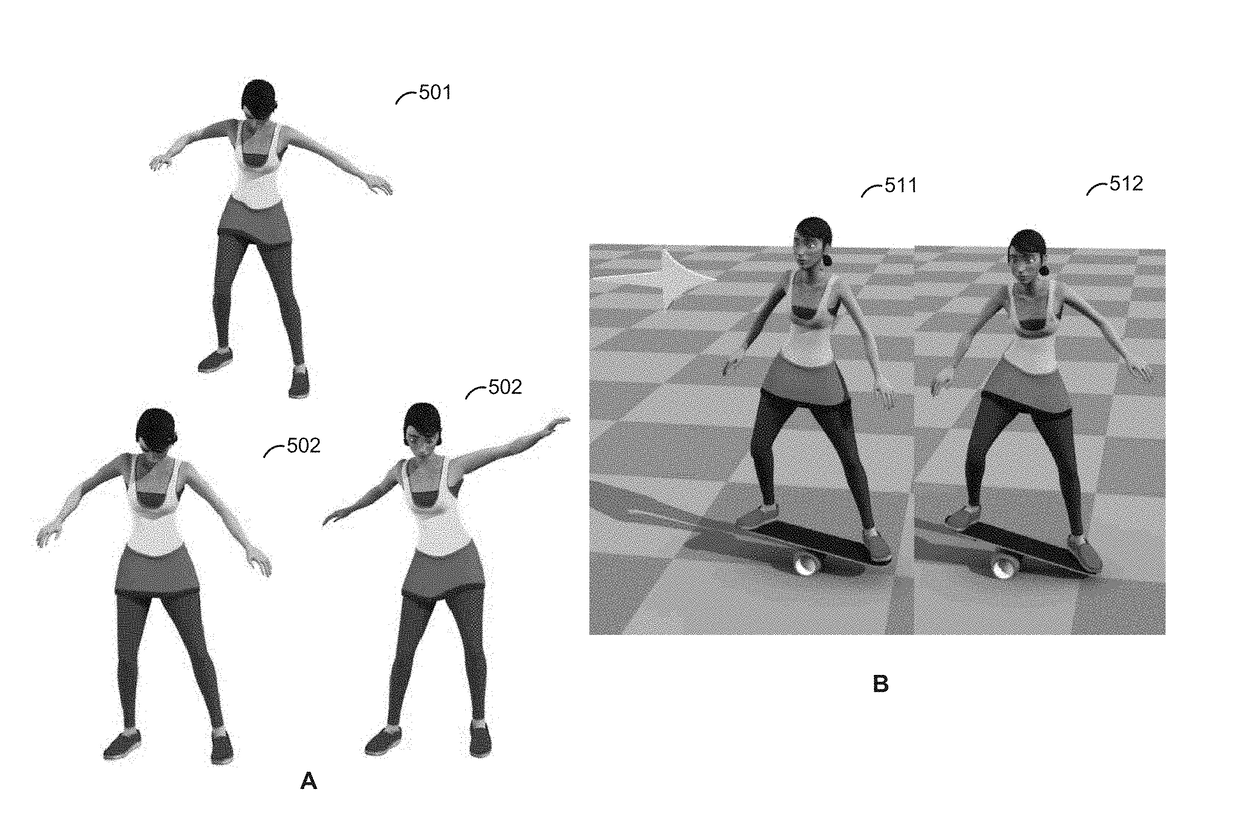

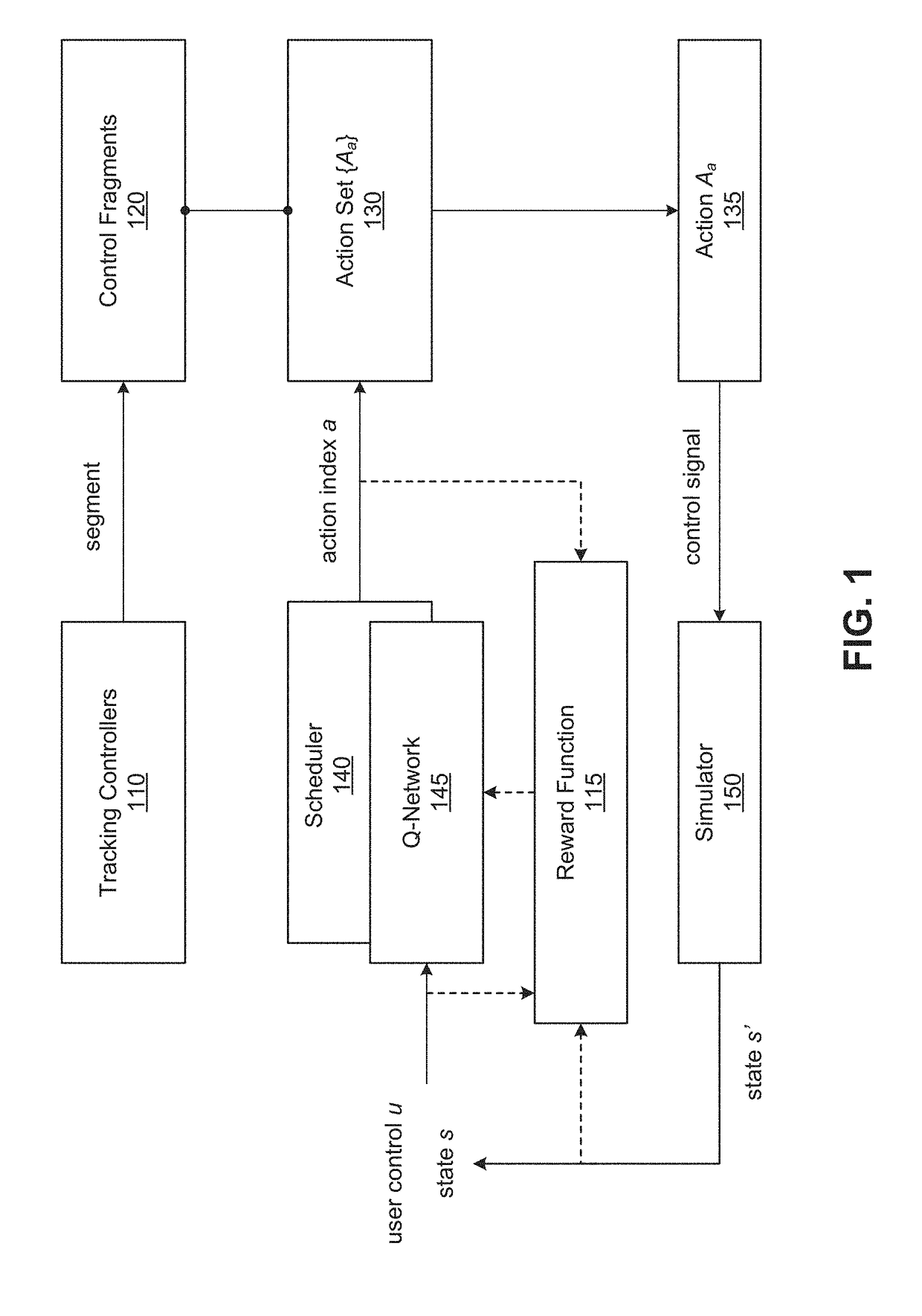

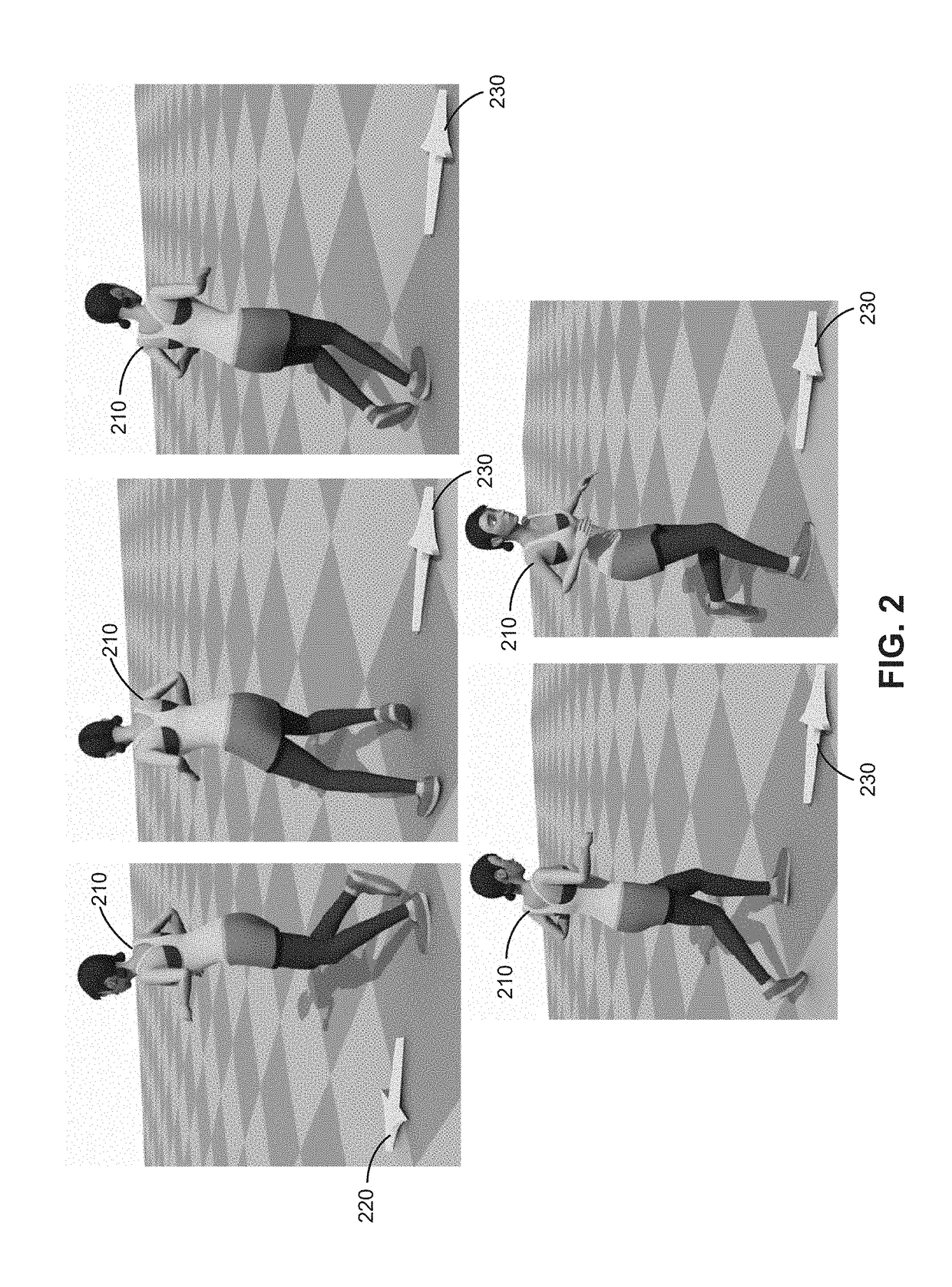

Learning to schedule control fragments for physics-based character simulation and robots using deep q-learning

The disclosure provides an approach for learning to schedule control fragments for physics-based virtual character simulations and physical robot control. Given precomputed tracking controllers, a simulation application segments the controllers into control fragments and learns a scheduler that selects control fragments at runtime to accomplish a task. In one embodiment, each scheduler may be modeled with a Q-network that maps a high-level representation of the state of the simulation to a control fragment for execution. In such a case, the deep Q-learning algorithm applied to learn the Q-network schedulers may be adapted to use a reward function that prefers the original controller sequence and an exploration strategy that gives more chance to in-sequence control fragments than to out-of-sequence control fragments. Such a modified Q-learning algorithm learns schedulers that are capable of following the original controller sequence most of the time while selecting out-of-sequence control fragments when necessary.

Owner:DISNEY ENTERPRISES INC
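
The exploration strategy described in the abstract above, which favors in-sequence control fragments over out-of-sequence ones, can be sketched as a biased epsilon-greedy rule. This is only an illustration under stated assumptions: the function name `select_fragment`, the parameters `epsilon` and `in_seq_prob`, and the two-stage random draw are hypothetical and are not taken from the patent.

```python
import random


def select_fragment(q_values, in_sequence, epsilon, in_seq_prob, rng):
    """Pick the index of the next control fragment.

    q_values    -- one Q estimate per control fragment
    in_sequence -- index of the fragment that follows in the original
                   controller sequence
    epsilon     -- probability of exploring instead of exploiting (assumed)
    in_seq_prob -- when exploring, probability of choosing the in-sequence
                   fragment rather than a random other one (assumed)
    rng         -- a random.Random instance
    """
    if rng.random() >= epsilon:
        # Exploit: take the fragment with the highest Q estimate.
        return max(range(len(q_values)), key=q_values.__getitem__)
    if rng.random() < in_seq_prob:
        # Explore, biased toward the original sequence.
        return in_sequence
    others = [i for i in range(len(q_values)) if i != in_sequence]
    return rng.choice(others) if others else in_sequence
```

Setting `in_seq_prob` well above `1 / len(q_values)` gives in-sequence fragments more chance during exploration, which is the bias the abstract describes.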

System and method for front end business logic and validation

A hybrid Notes / DB2 environment provides a requisition catalog on the Web. Client browsers are connected to a GWA infrastructure including a first network dispatcher and a virtual cluster of Domino.Go servers. The network dispatcher sprays out browser requests among configured .nsf servers in the virtual server cluster. Communications from this virtual server cluster are, in turn, dispatched by a second network dispatcher to servers in a Domino cluster. External objects, primarily for a GUI, are served in a .dfs and include graphic files, Java files, HTML images and net.data macros. The catalog is built from supplier-provided flat files. A front end is provided for business logic and validation, as is a relational database backend. HTML forms are populated using relational database agents. A role table is used for controlling access both to Notes code and DB2 data. Large amounts of data are quickly transferred using an intermediate agent and window.

Owner:PAYPAL INC

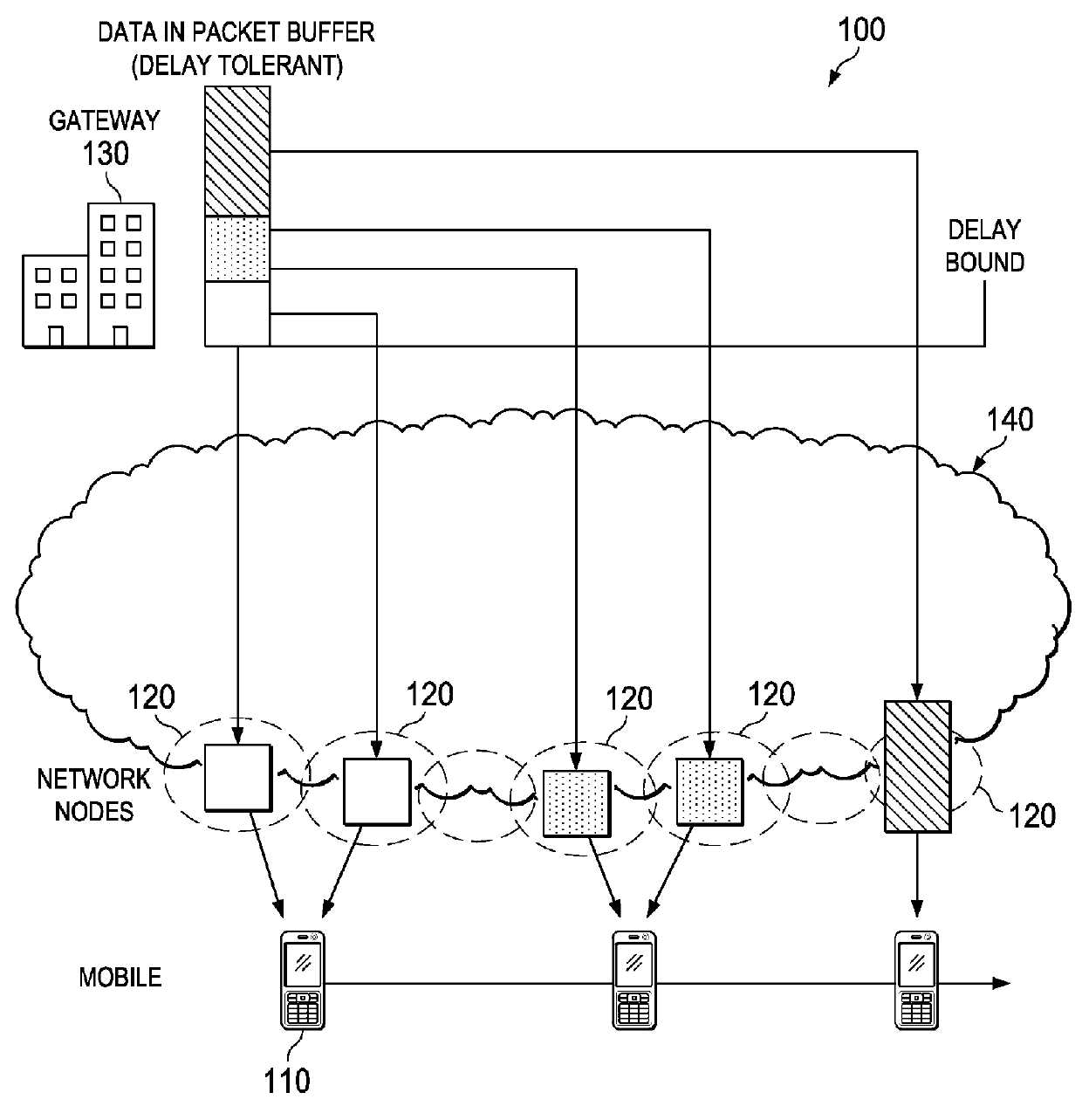

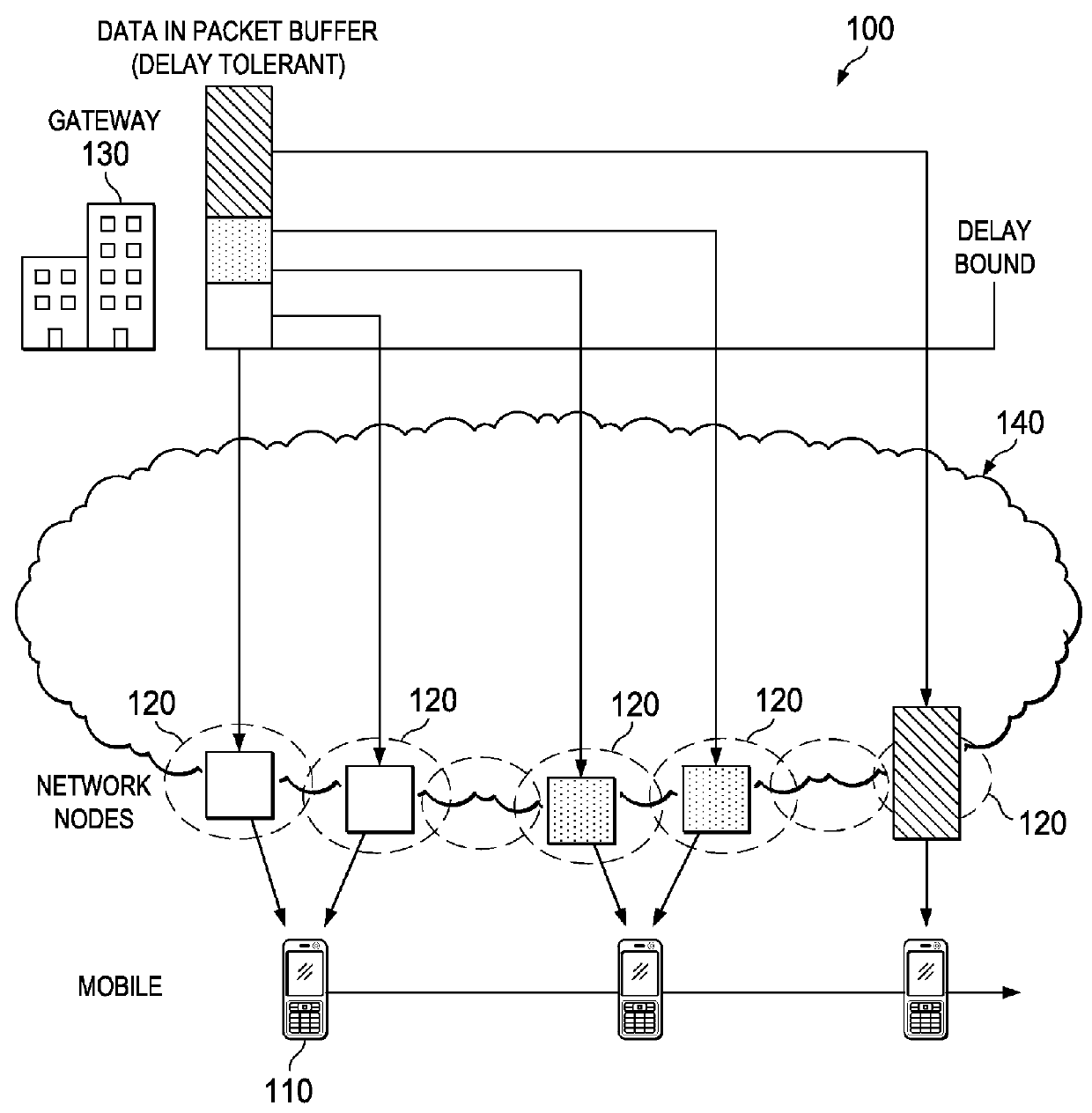

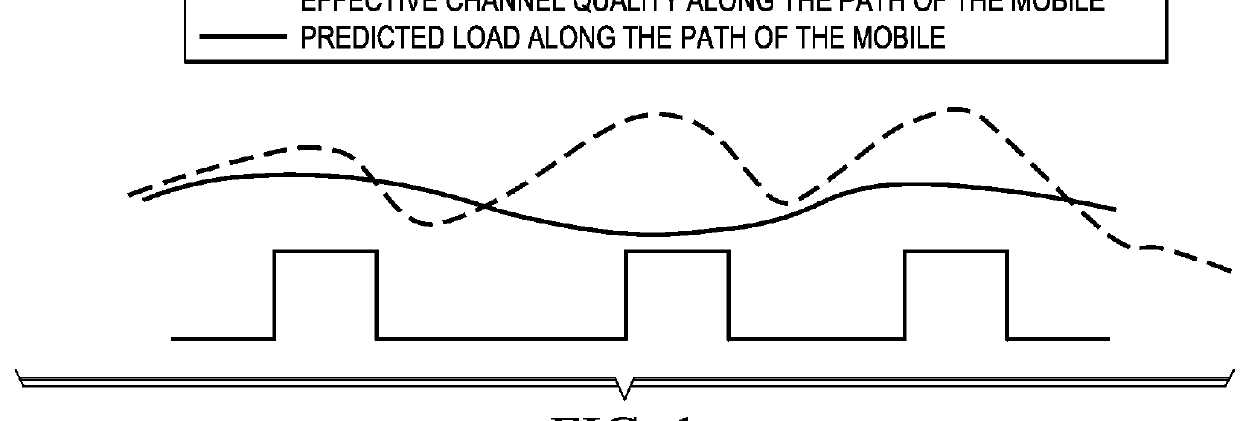

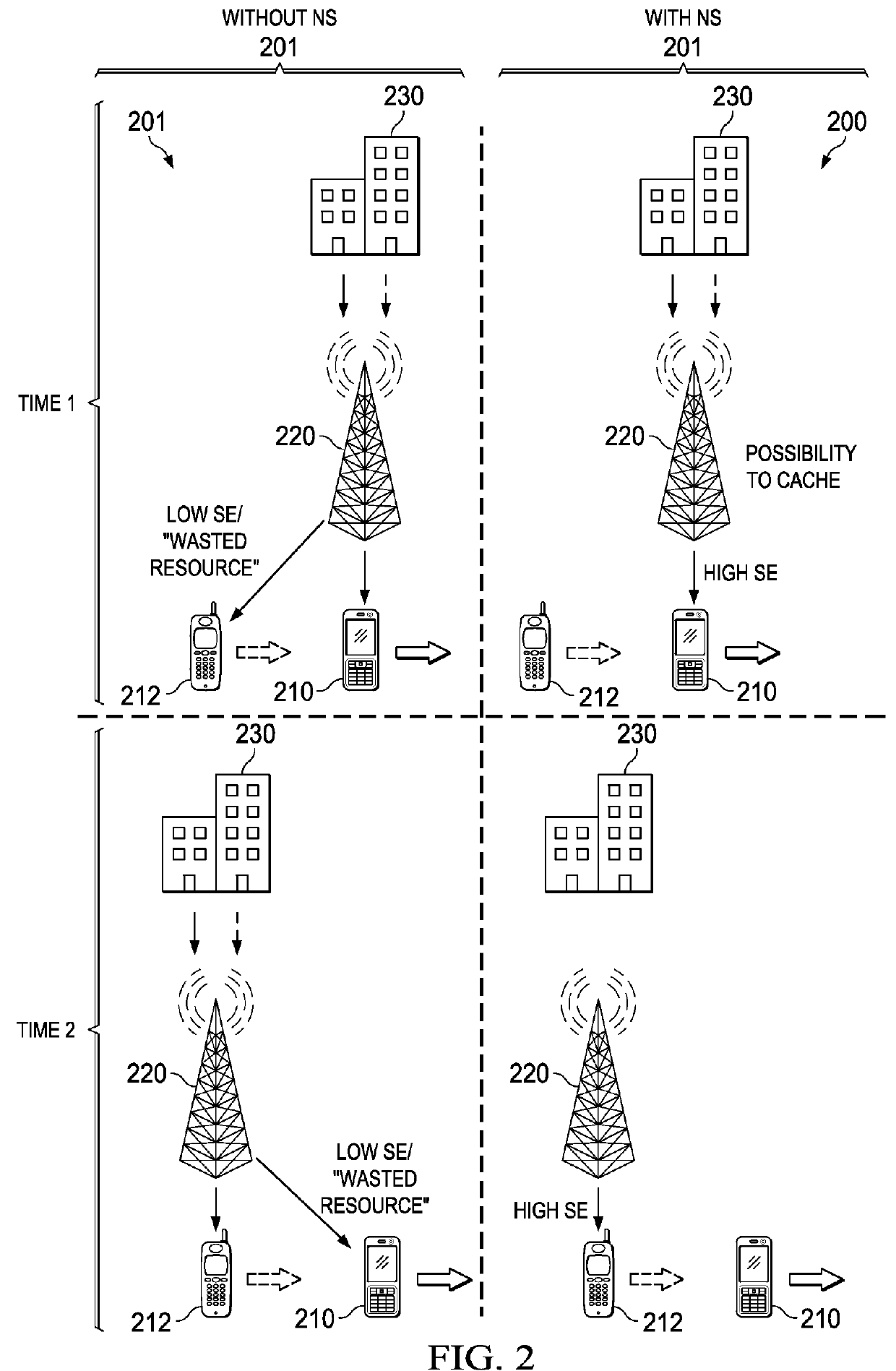

System and Method for a Location Prediction-Based Network Scheduler

Embodiments are provided for traffic scheduling based on user equipment (UE) in wireless networks. A location prediction-based network scheduler (NS) interfaces with a traffic engineering (TE) function to enable location-prediction-based routing for UE traffic. The NS obtains location prediction information for a UE for a next time window comprising a plurality of next time slots, and obtains available network resource prediction for the next time slots. The NS then determines, for each of the next time slots, a weight value as a priority parameter for forwarding data to the UE, in accordance with the location prediction information and the available network resource prediction. The result for the first time slot is then forwarded from the NS to the TE function, which optimizes, for the first time slot, the weight value with a route and data for forwarding the data to the UE.

Owner:HUAWEI TECH CO LTD

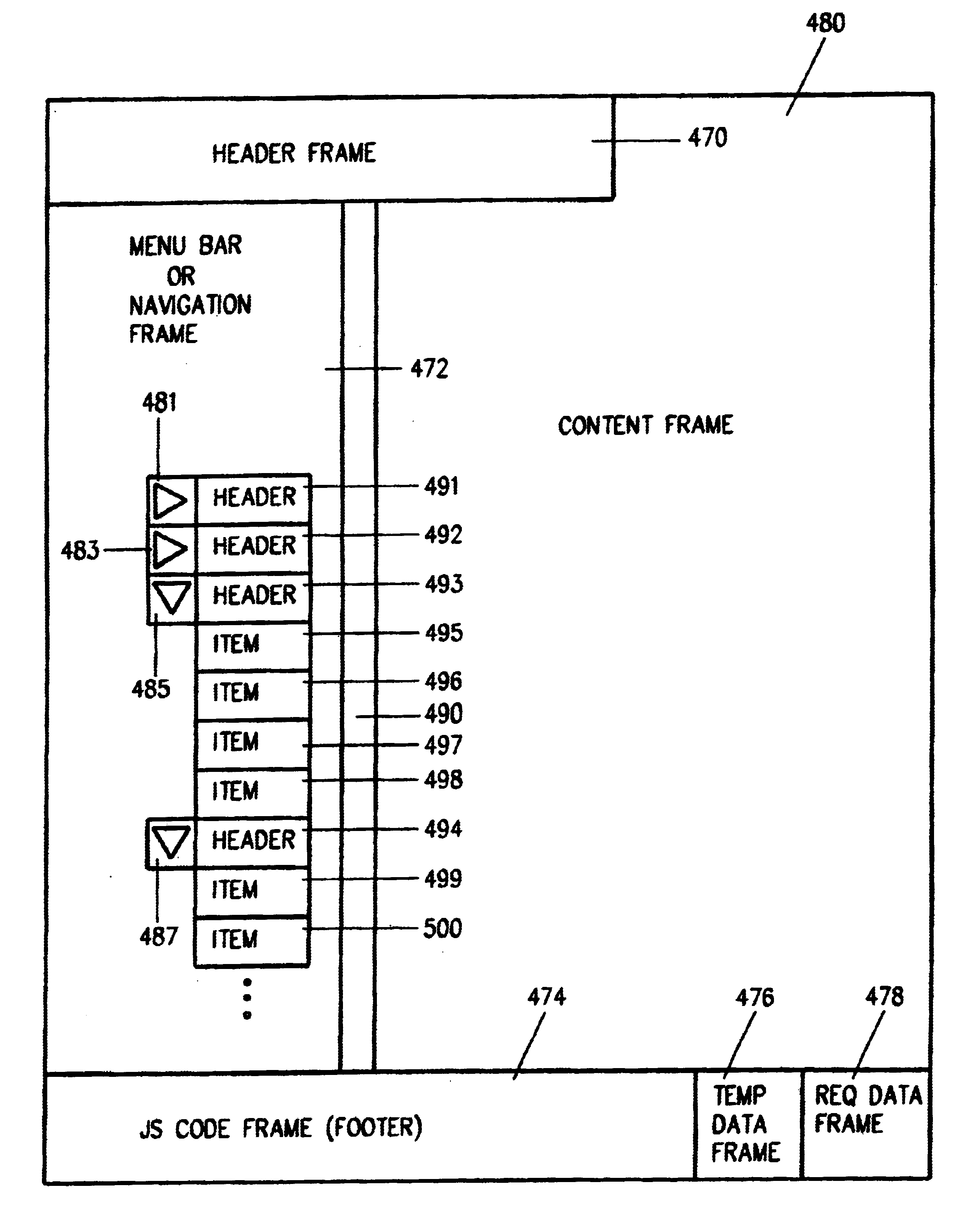

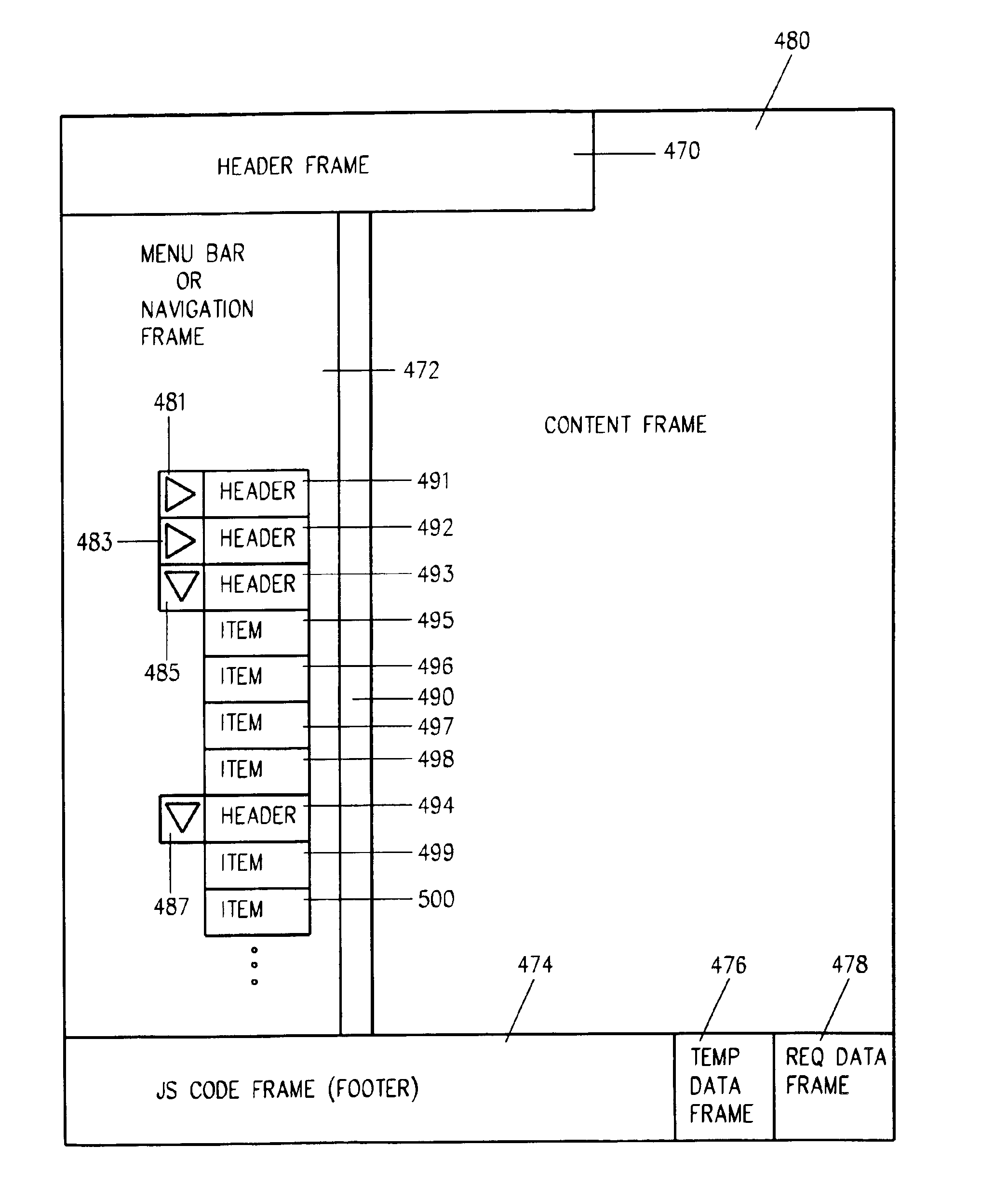

System and method for providing an application navigator client menu side bar

Inactive | US6886134B1 | Choose accurately | Cathode-ray tube indicators | Website content management | Graphics | Relational database

A hybrid Notes / DB2 environment provides a requisition catalog on the Web. Client browsers are connected to a GWA infrastructure including a first network dispatcher and a virtual cluster of Domino.Go servers. The network dispatcher sprays out browser requests among configured .nsf servers in the virtual server cluster. Communications from this virtual server cluster are, in turn, dispatched by a second network dispatcher in a Domino cluster. External objects, primarily for a GUI, are served in a .dfs and include graphic files, Java files, HTML images and net.data macros. The catalog is built from supplier-provided flat files. A front end is provided for business logic and validation, as is a relational database backend. HTML forms are populated using relational database agents. A role table is used for controlling access both to Notes code and DB2 data. Large amounts of data are quickly transferred using an intermediate agent and window.

Owner:IBM CORP

System and method for front end business logic and validation

A hybrid Notes / DB2 environment provides a requisition catalog on the Web. Client browsers are connected to a GWA infrastructure including a first network dispatcher and a virtual cluster of Domino.Go servers. The network dispatcher sprays out browser requests among configured .nsf servers in the virtual server cluster. Communications from this virtual server cluster are, in turn, dispatched by a second network dispatcher to servers in a Domino cluster. External objects, primarily for a GUI, are served in a .dfs and include graphic files, Java files, HTML images and net.data macros. The catalog is built from supplier-provided flat files. A front end is provided for business logic and validation, as is a relational database backend. HTML forms are populated using relational database agents. A role table is used for controlling access both to Notes code and DB2 data. Large amounts of data are quickly transferred using an intermediate agent and window.

Owner:PAYPAL INC

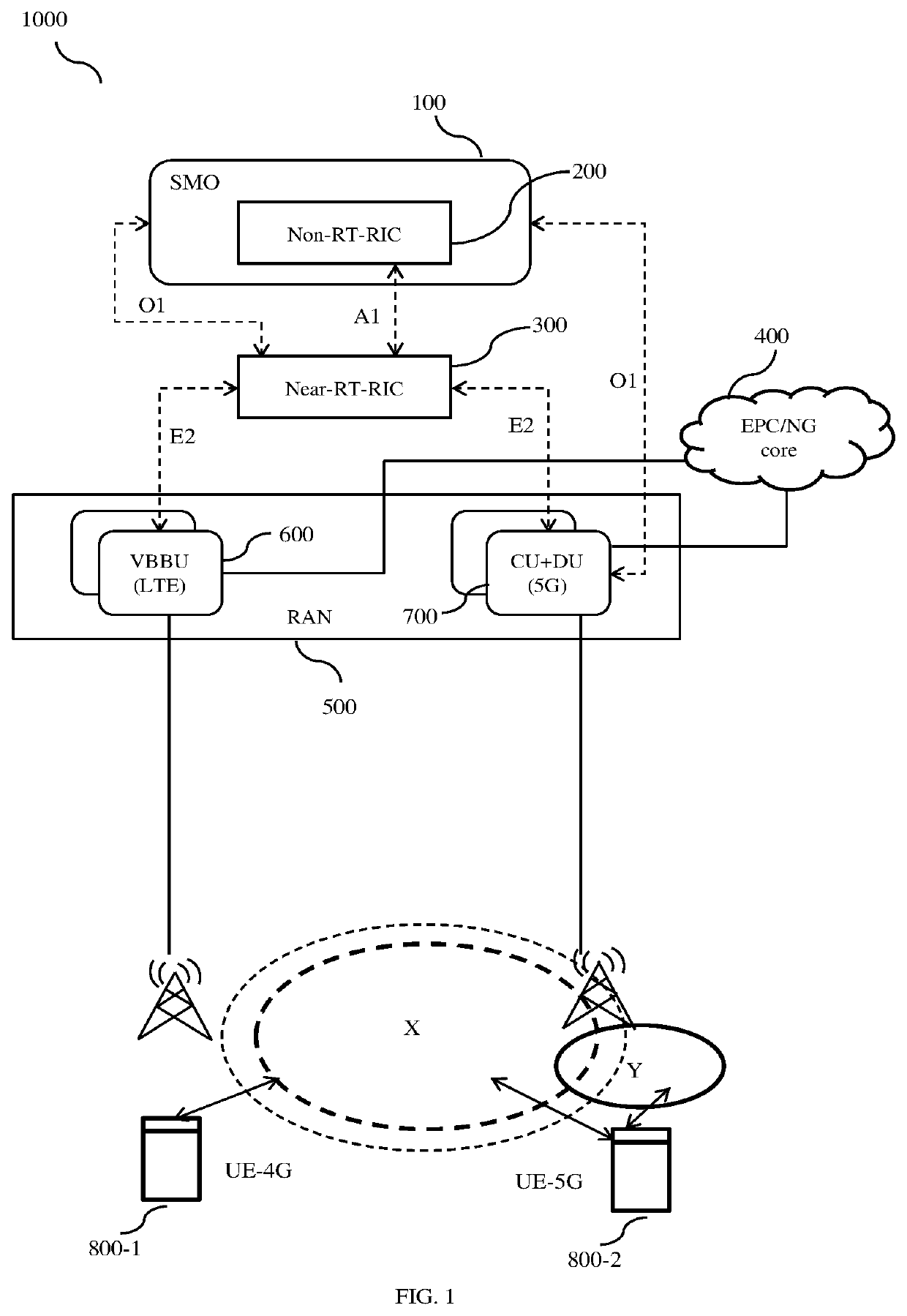

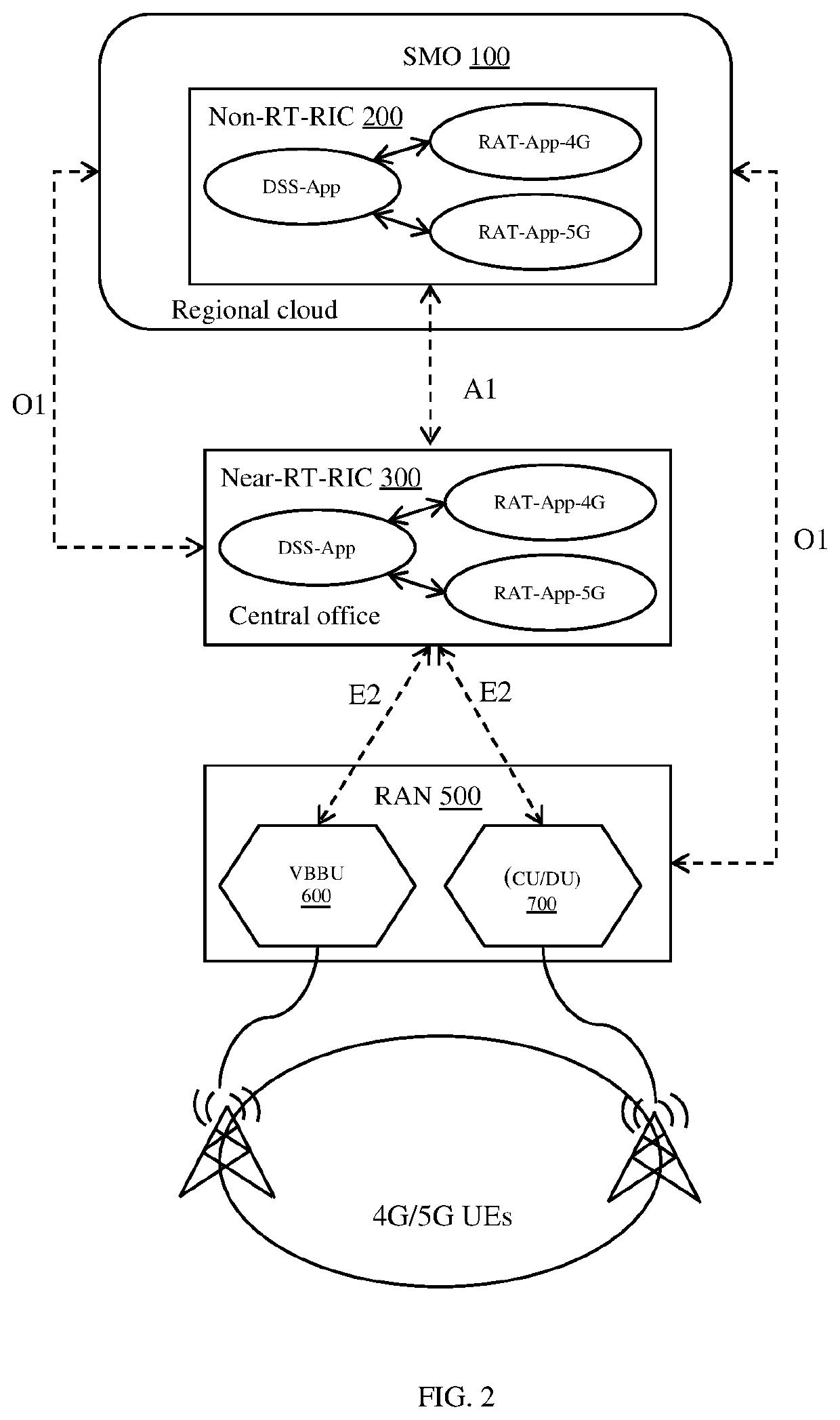

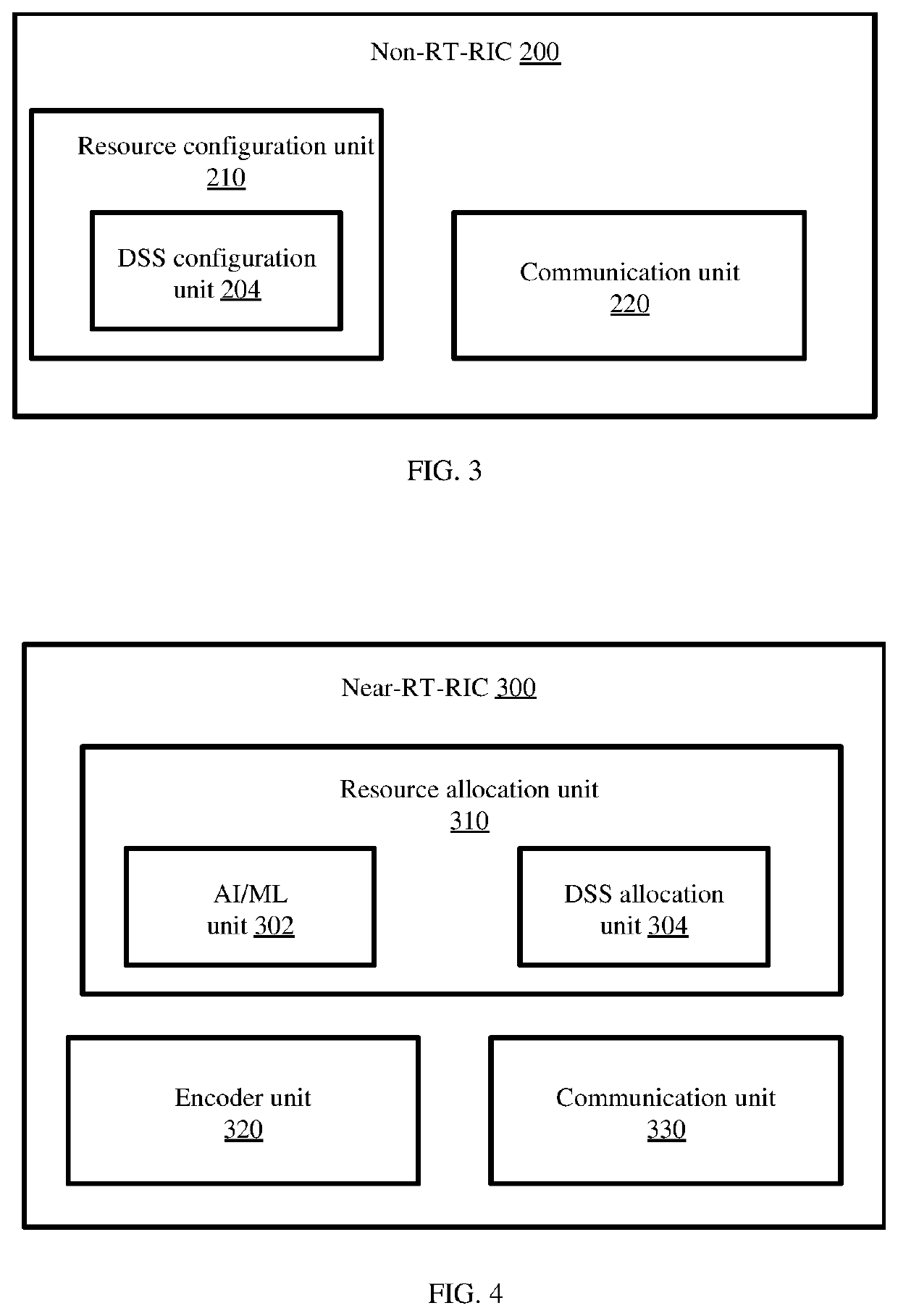

Method and apparatus for dynamically allocating radio resources in a wireless communication system

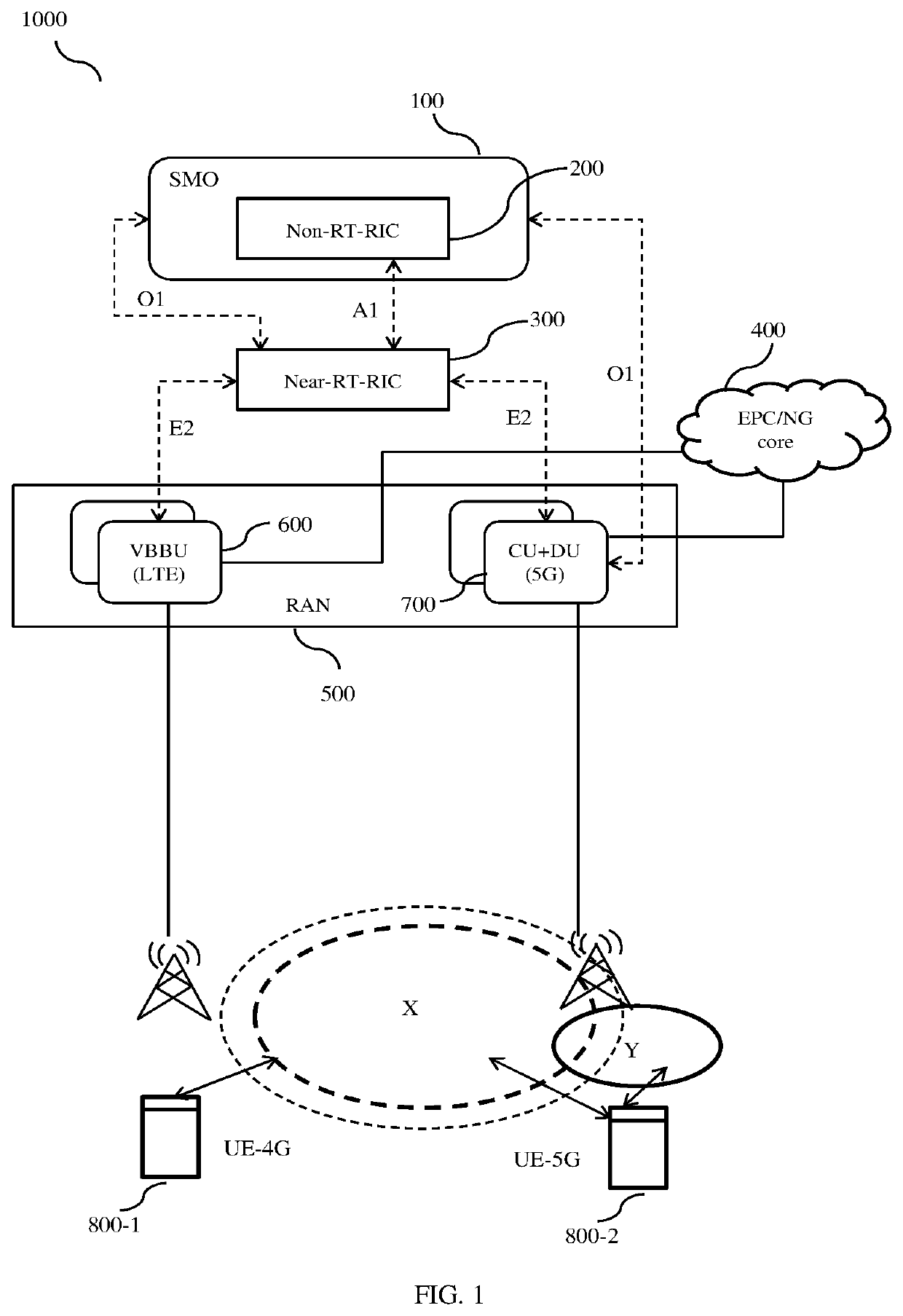

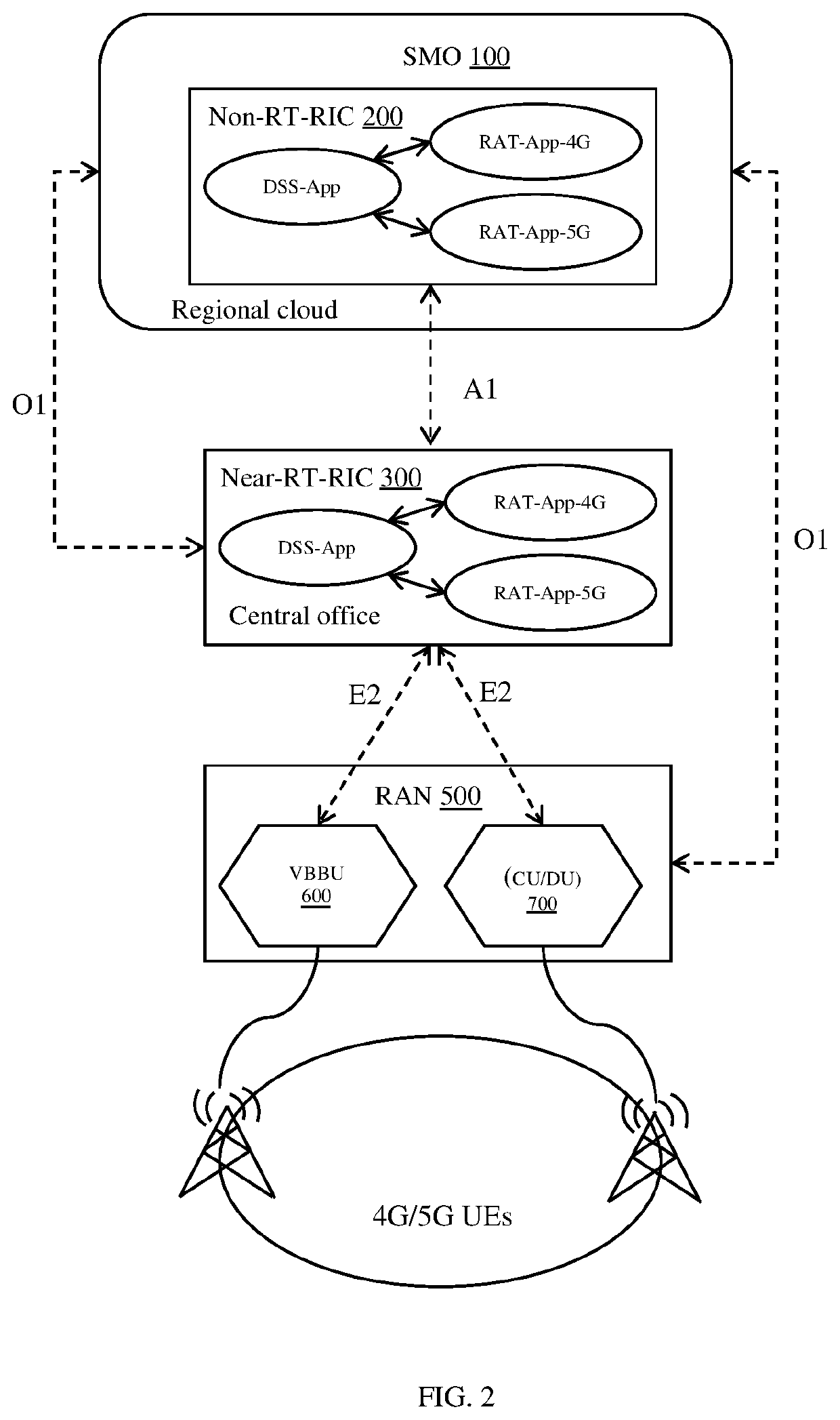

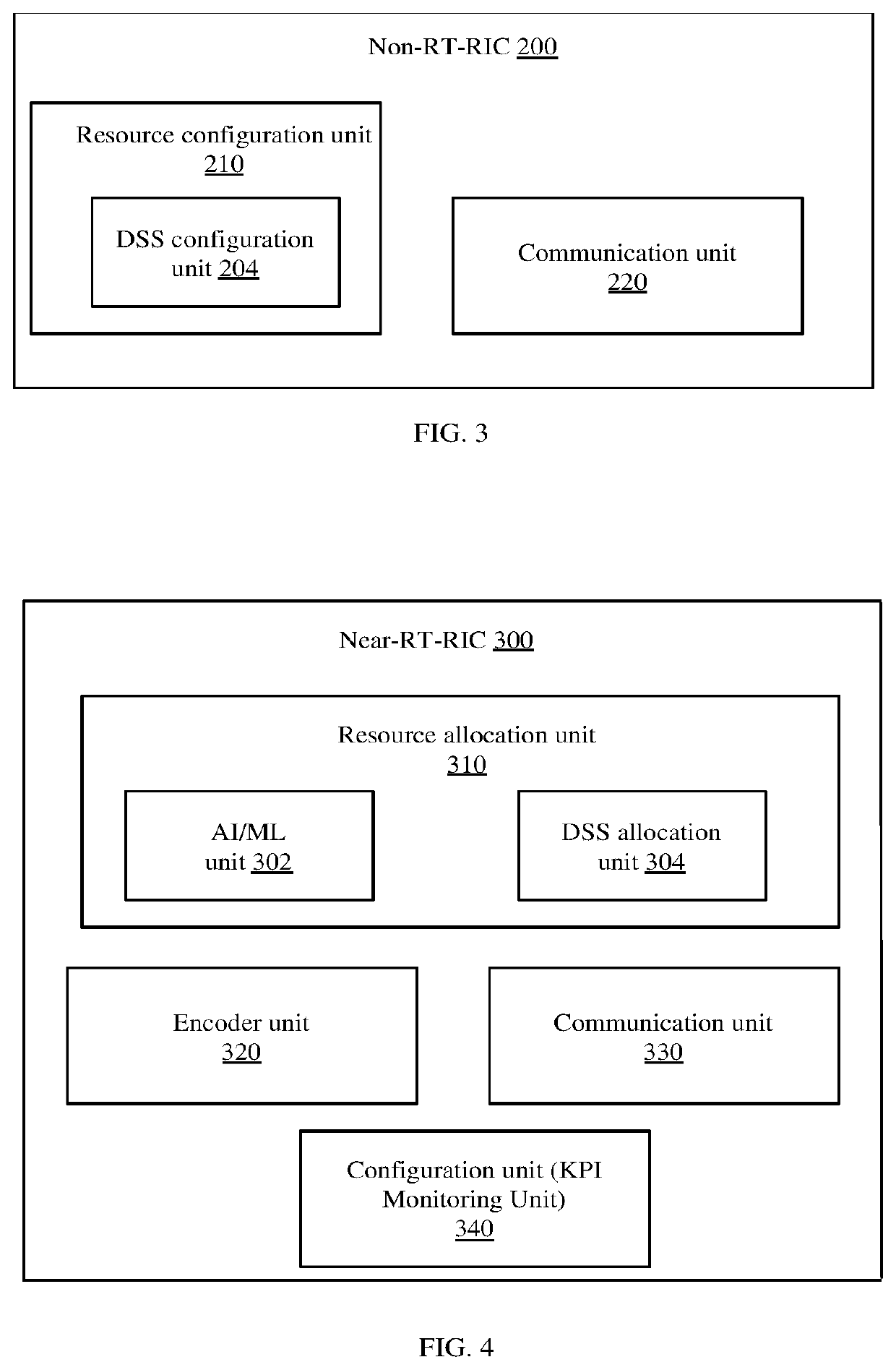

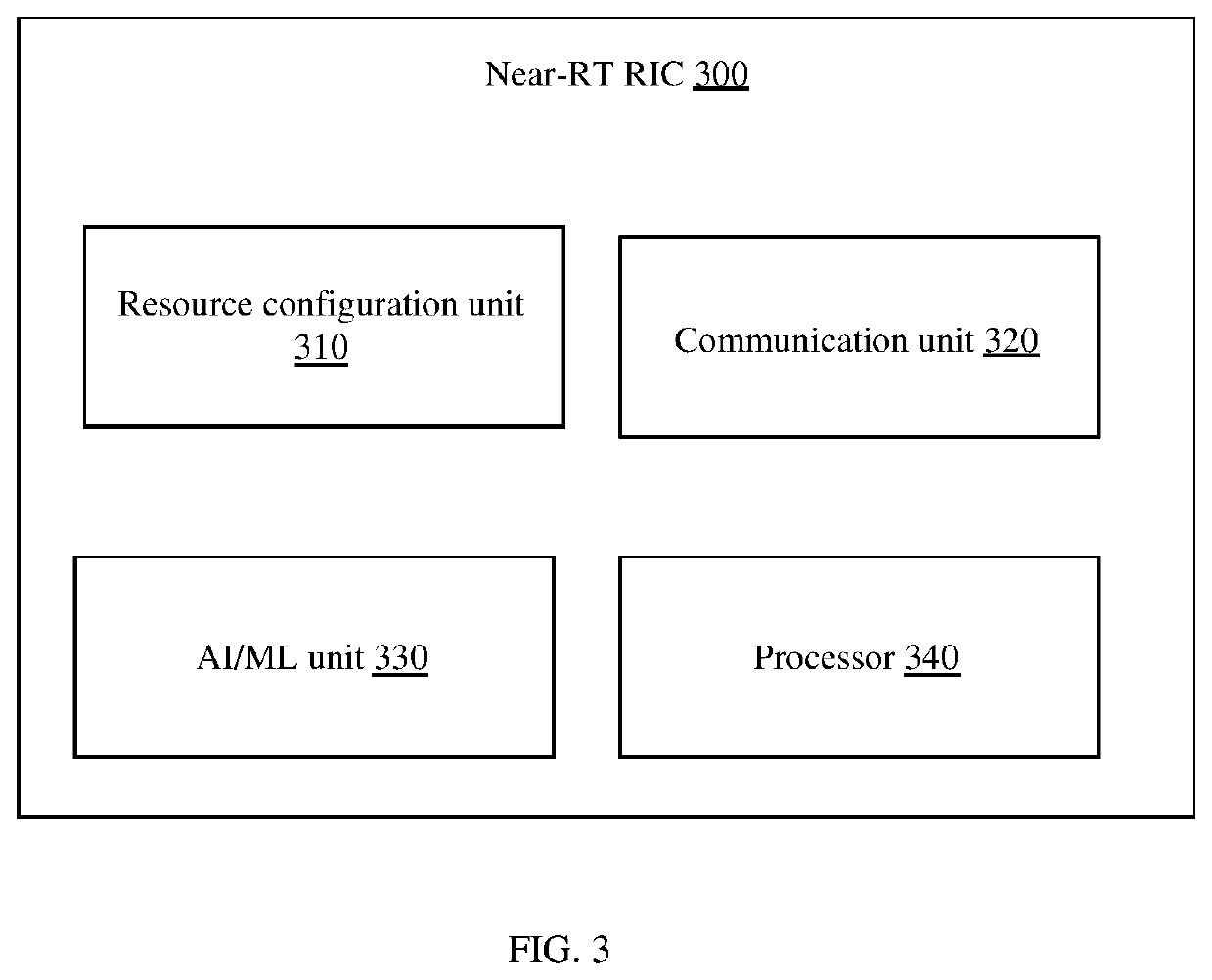

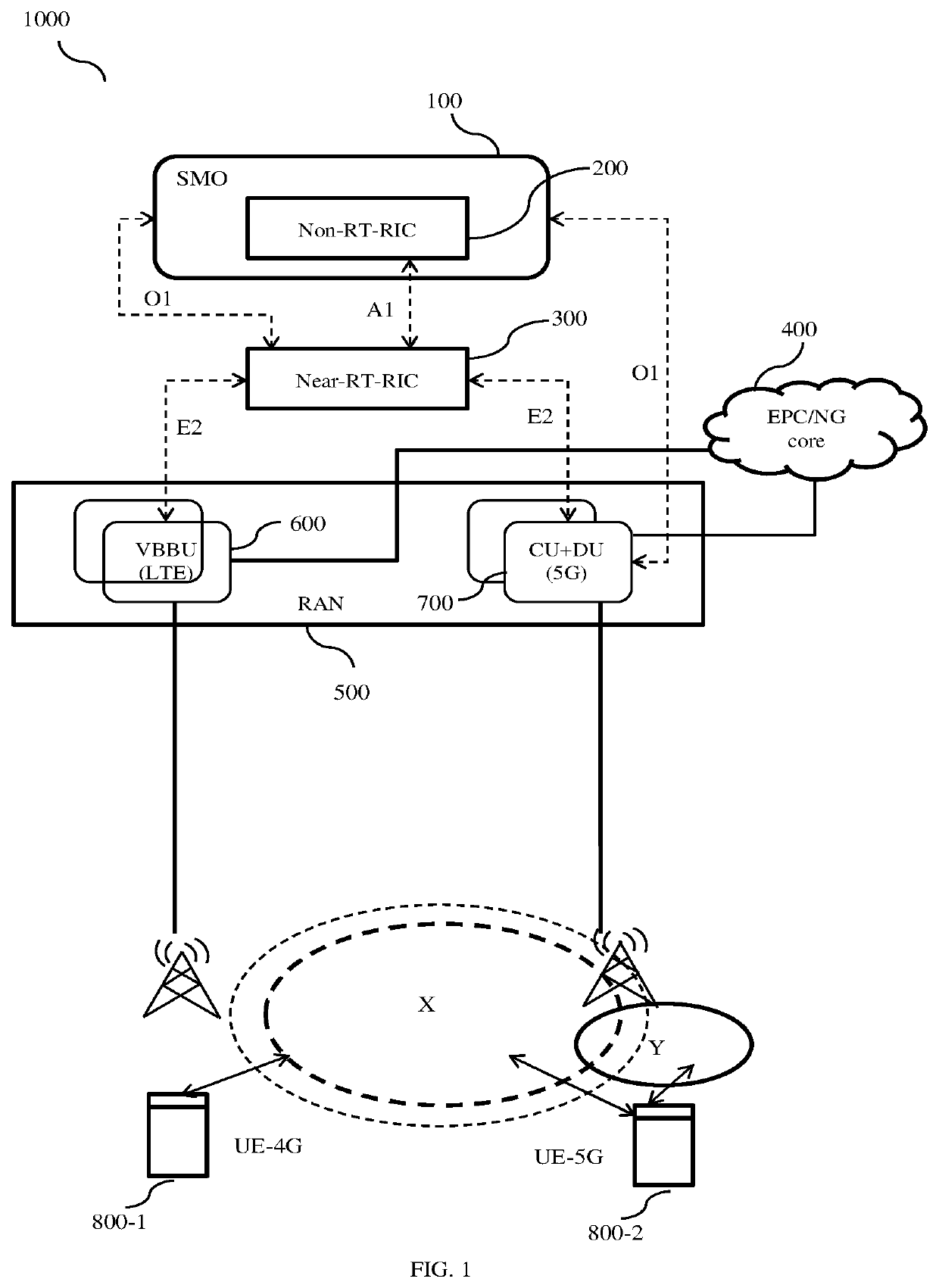

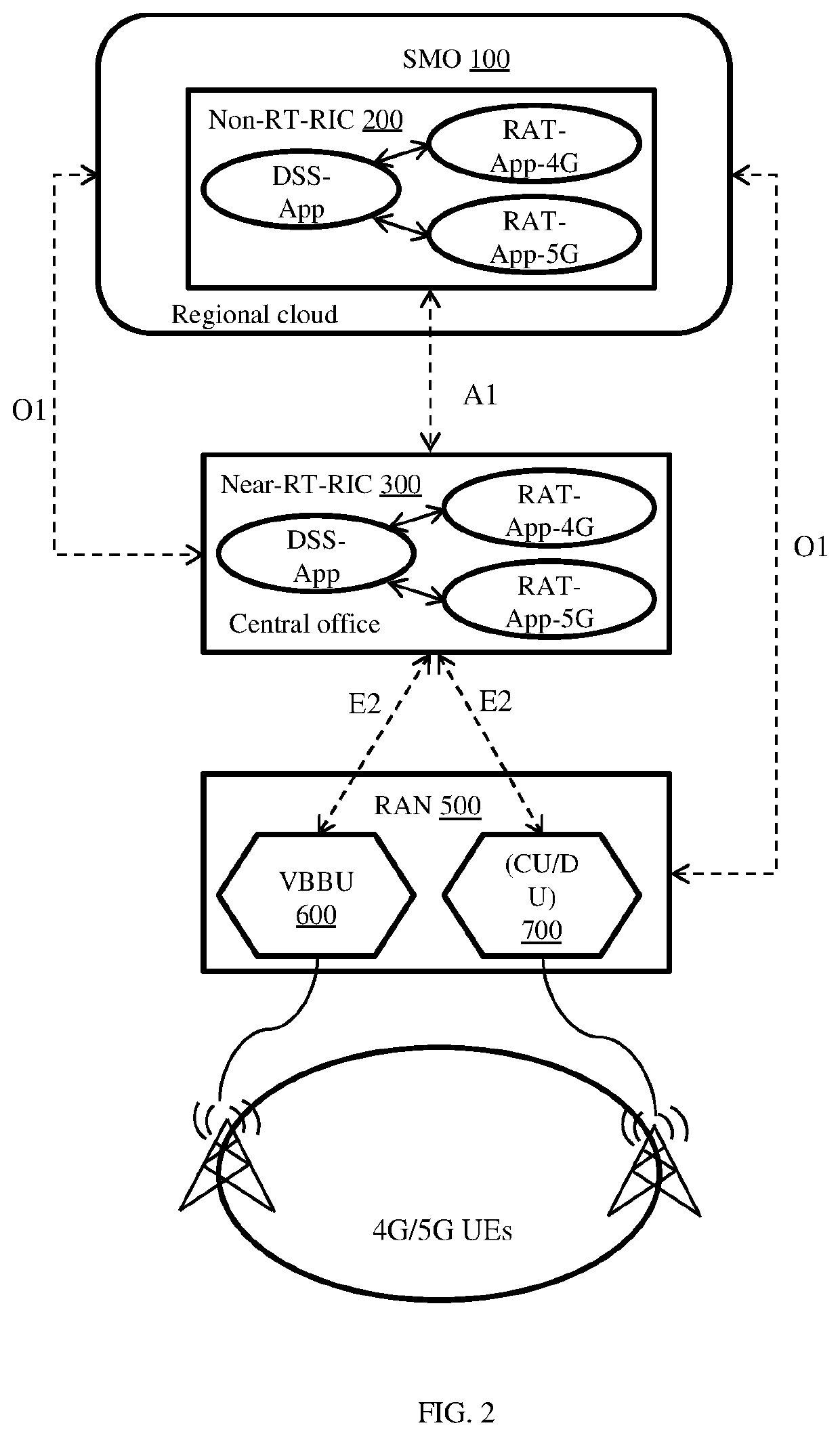

Inactive | US20210235277A1 | Improved centralized resource allocation-based spectrum sharing | Network planning | Communications system | Resource assignment

A method and apparatus for providing dynamic allocation of radio resources in a wireless communication system is disclosed. The method includes receiving, by a first controller from a second controller, a dynamic spectrum sharing (DSS) policy configuration message, wherein the DSS policy configuration message comprises a resource allocation proportion between a type one network scheduler and a type two network scheduler. The method further includes receiving, by the first controller from the type one network scheduler and the type two network scheduler, a plurality of report messages and a plurality of key performance indicators (KPIs). The method further includes assigning, by the first controller to the type one network scheduler and the type two network scheduler, a plurality of physical resource blocks (PRBs) based on the DSS policy configuration message, the plurality of report messages and the plurality of KPIs.

Owner:STERLITE TECHNOLOGIES

Application, usage and radio link aware transport network scheduler

Active | US8717890B2 | Reduce waste | Facilitates optimal sharing | Error prevention | Frequency-division multiplex details | Transit network | Third generation

A packet scheduling method and apparatus with the knowledge of application behavior, anticipated usage / behavior based on the type of content, and underlying transport conditions during the time of delivery, is disclosed. This type of scheduling is applicable to a content server or a transit network device in wireless (e.g., 3G, WIMAX, LTE, WIFI) or wire-line networks. Methods for identifying or estimating rendering times of multi-media objects, segmenting a large media content, and automatically pausing or delaying delivery are disclosed. The scheduling reduces transit network bandwidth wastage, and facilitates optimal sharing of network resources such as in a wireless network.

Owner:RIBBON COMM SECURITIES CORP

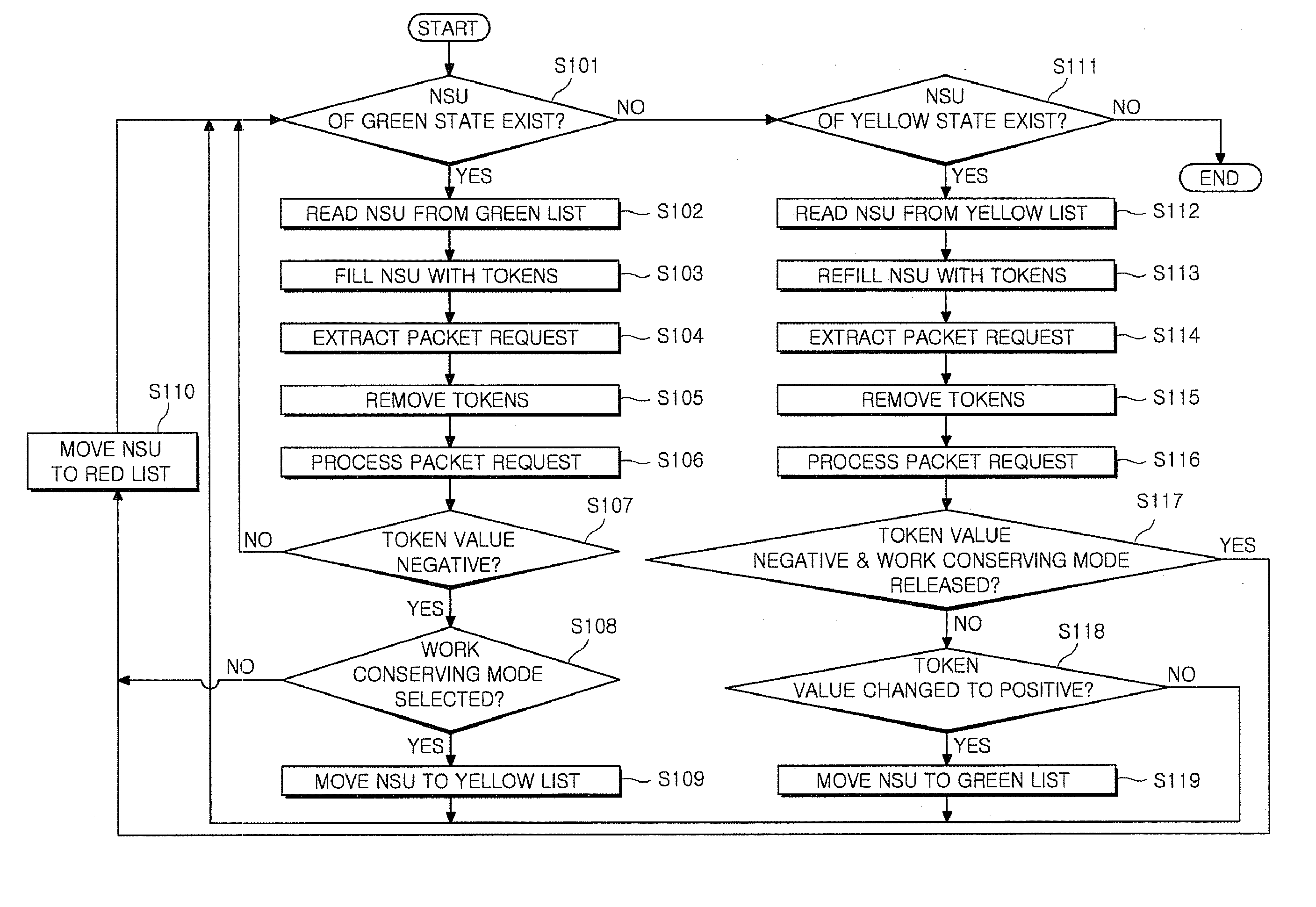

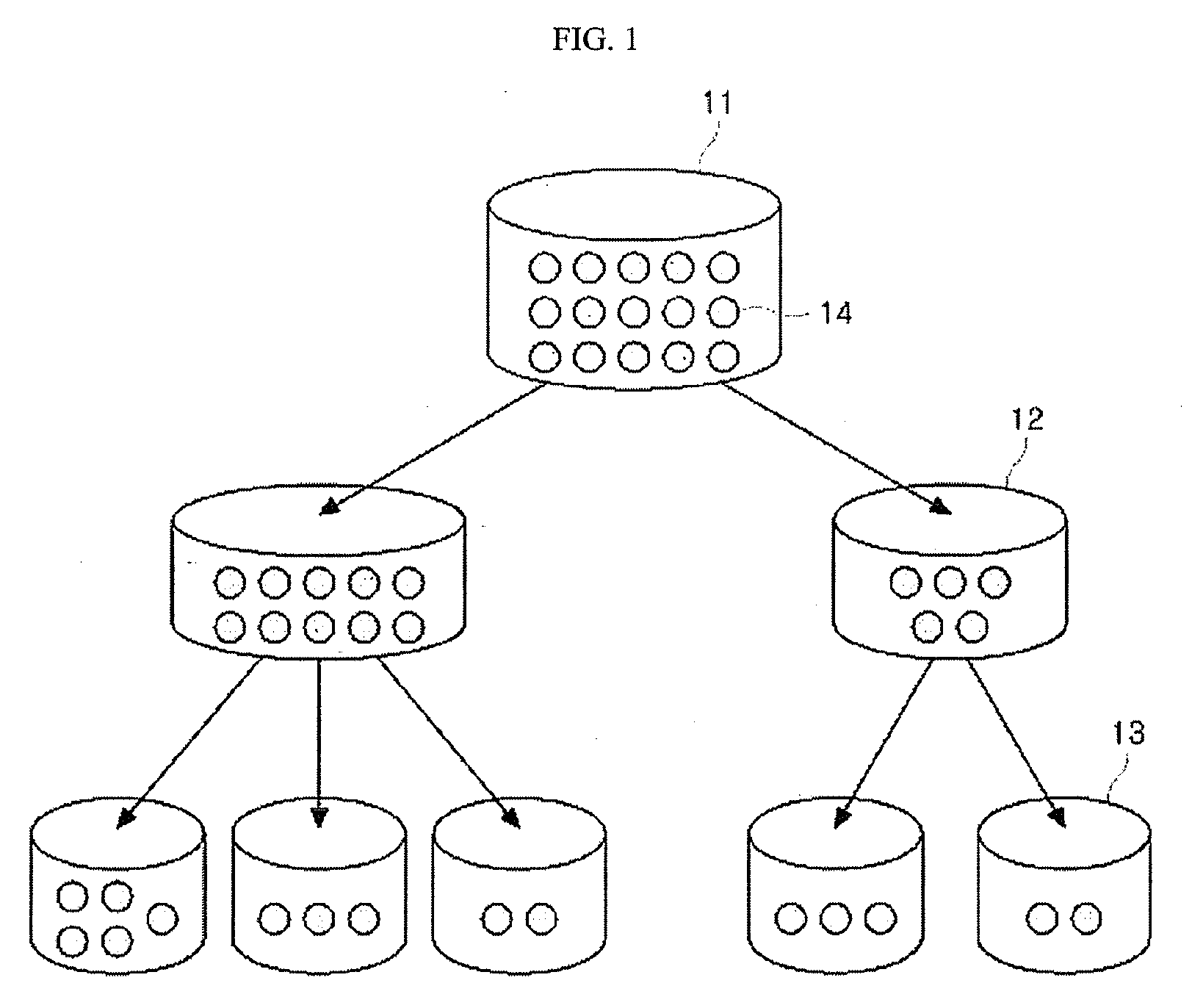

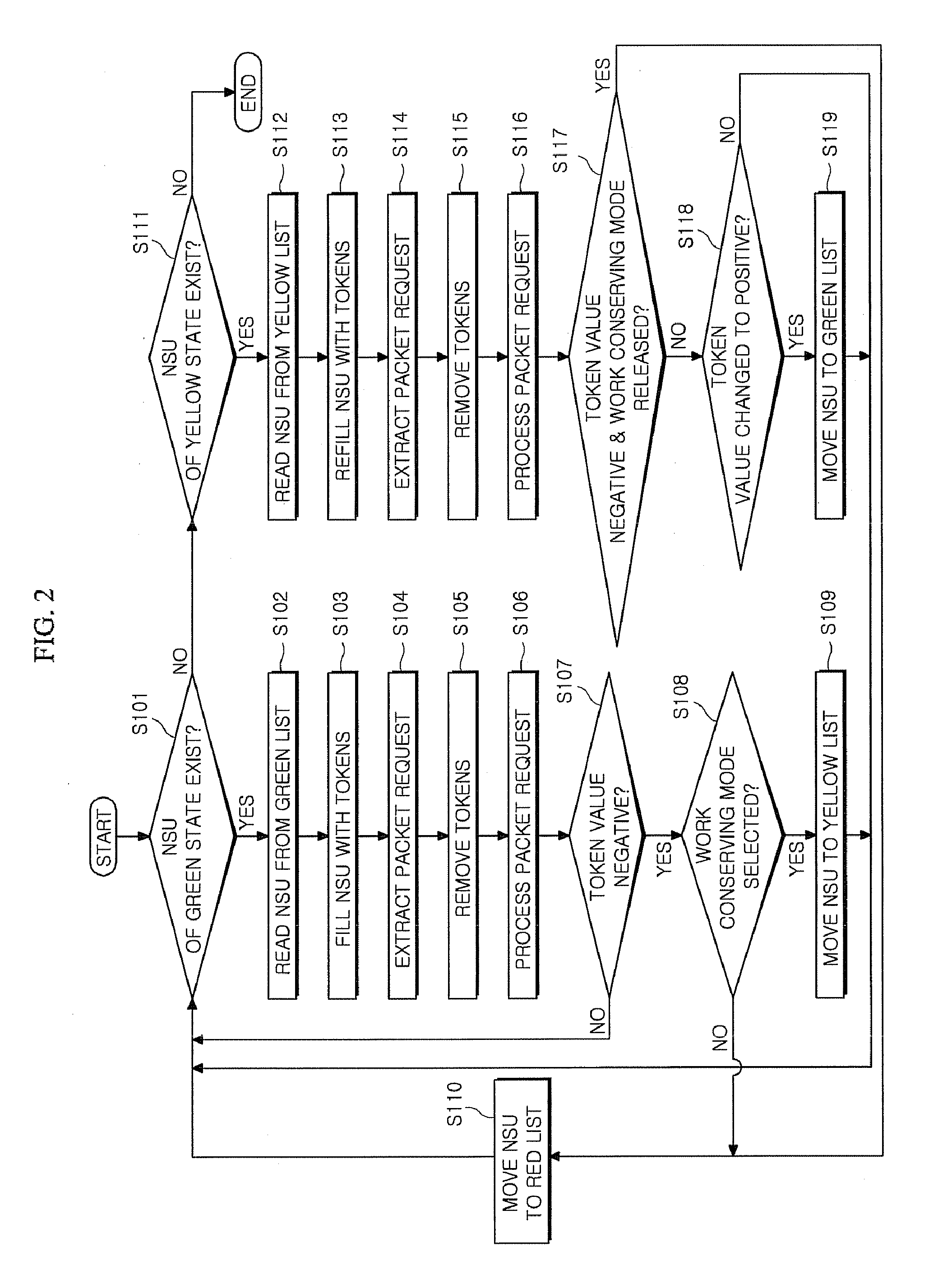

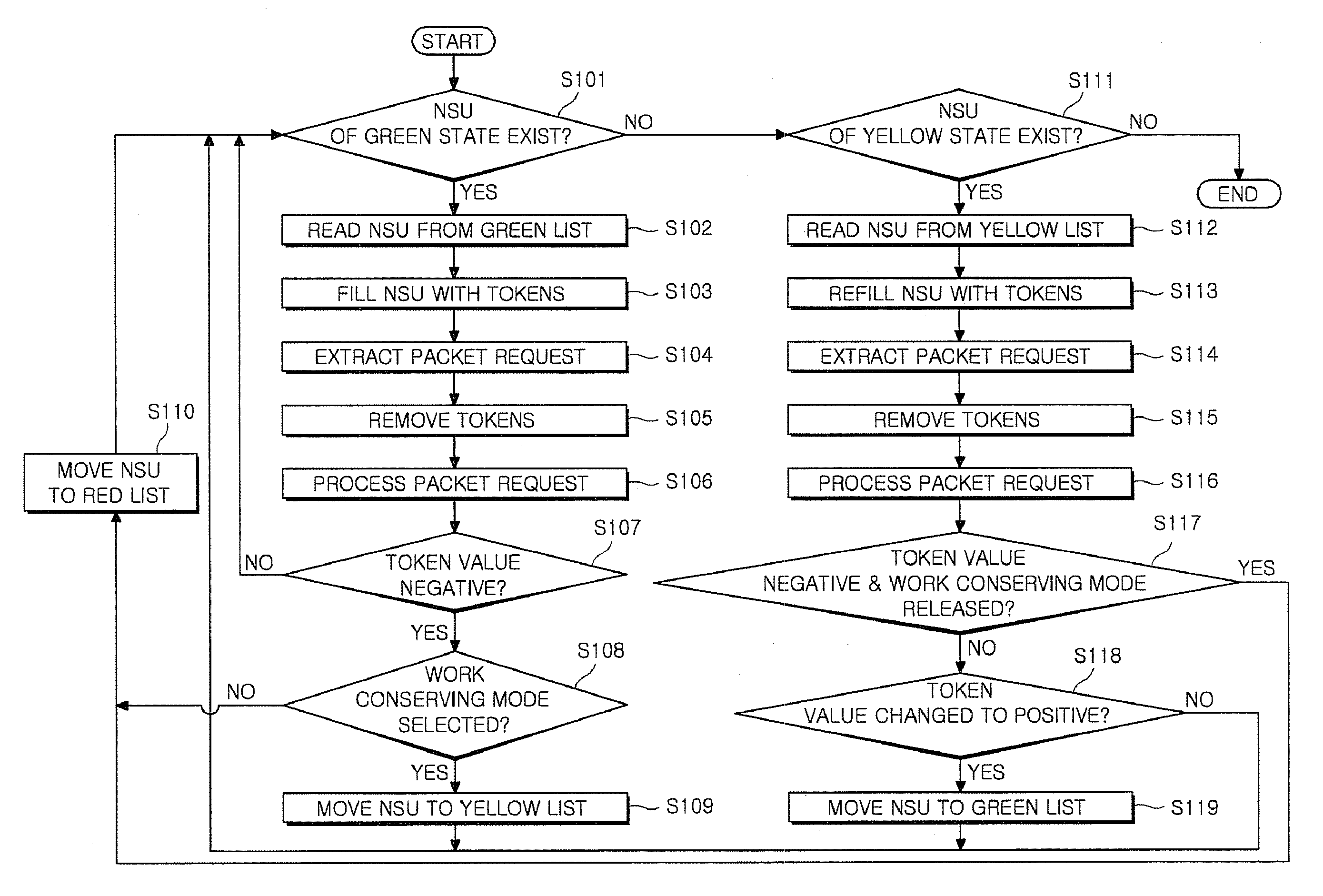

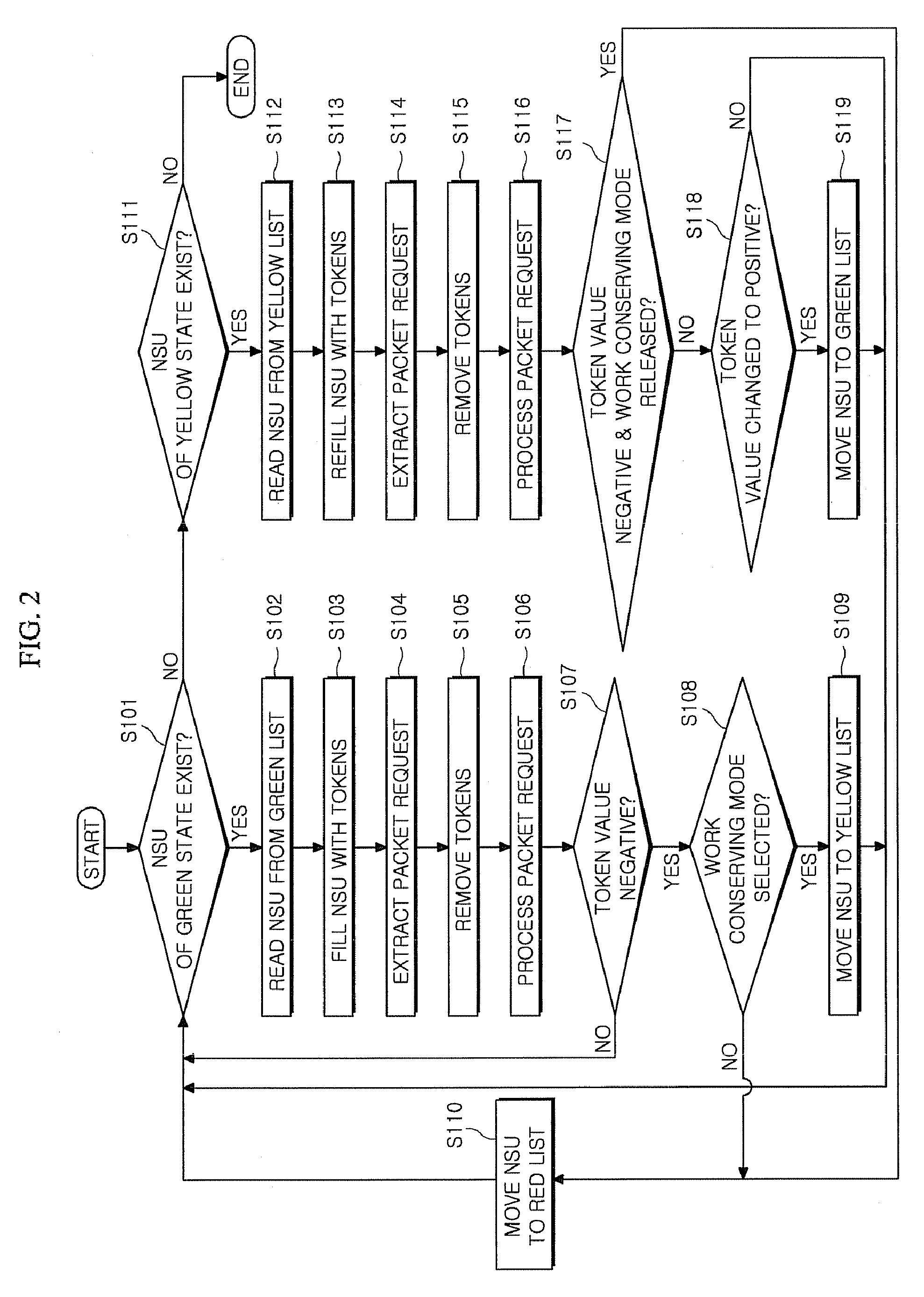

Network scheduler for selectively supporting work conserving mode and network scheduling method thereof

Inactive | US20090154351A1 | Effective bandwidth | Improve efficiency | Error prevention | Frequency-division multiplex details | Distributed computing | Green state

Provided are a network scheduler and a network scheduling method capable of effectively managing network bandwidths by selectively supporting a work conserving mode to network entities by using an improved token bucket scheme. The network scheduler selectively supports a work conserving mode to network scheduling units (NSUs) serving as network entities by using a token bucket scheme, such that the network scheduler ensures an allocated network bandwidth or enables the NSUs to use a remaining bandwidth. The network scheduler manages the NSUs by classifying the NSUs into a green state, a red state, a yellow state, and a black state according to a token value, a selection / non-selection of the work conserving mode, and an existence / non-existence of the packet request to be processed.

Owner:ELECTRONICS & TELECOMM RES INST
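
As a rough illustration of the token bucket scheme with an optional work conserving mode mentioned in the abstract above, the following Python sketch lets a unit send while it has tokens and, only if it opted into work conserving mode, borrow bandwidth when the link would otherwise sit idle. The class `TokenBucket`, its parameters and the `link_idle` flag are assumptions made for illustration. The sketch does not attempt to reproduce the patent's green, red, yellow and black state classification, whose exact rules are not given in the abstract.

```python
class TokenBucket:
    """Token bucket for one network scheduling unit (illustrative only)."""

    def __init__(self, rate, capacity, work_conserving=False):
        self.rate = rate                  # tokens added per second
        self.capacity = capacity          # maximum tokens the bucket holds
        self.tokens = capacity            # start with a full bucket
        self.work_conserving = work_conserving

    def refill(self, elapsed_seconds):
        """Add tokens for the elapsed time, capped at the bucket capacity."""
        self.tokens = min(self.capacity,
                          self.tokens + self.rate * elapsed_seconds)

    def try_send(self, size, link_idle=False):
        """Return True if a packet of `size` tokens may be sent now."""
        if self.tokens >= size:
            # Within the allocated bandwidth: spend tokens and send.
            self.tokens -= size
            return True
        # Out of tokens: only a work-conserving unit may use leftover
        # bandwidth, and only when no other unit needs the link.
        return self.work_conserving and link_idle
```

A unit created with `work_conserving=False` is held to its allocated rate even when the link is idle, while a unit created with `work_conserving=True` can use remaining bandwidth.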

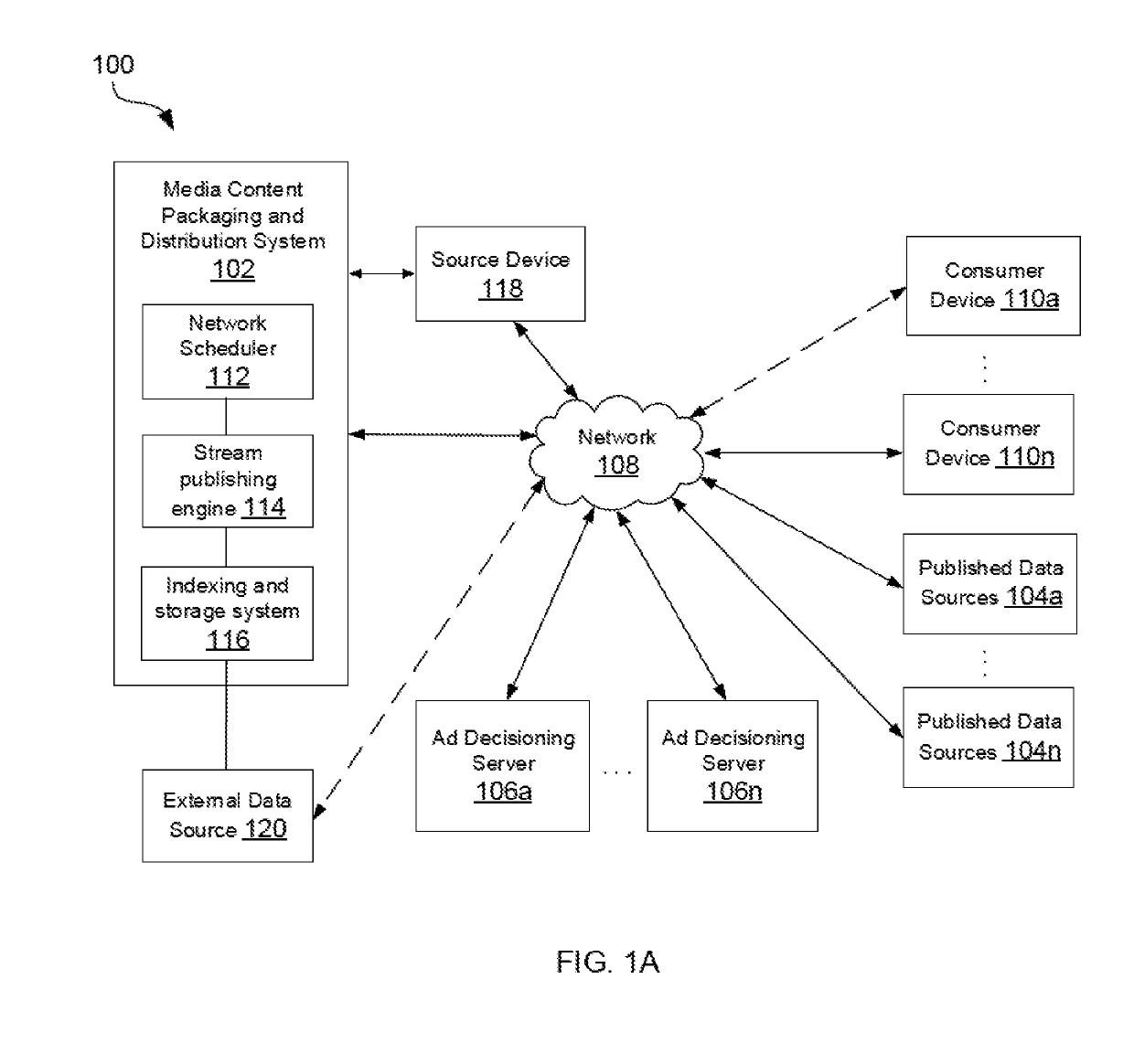

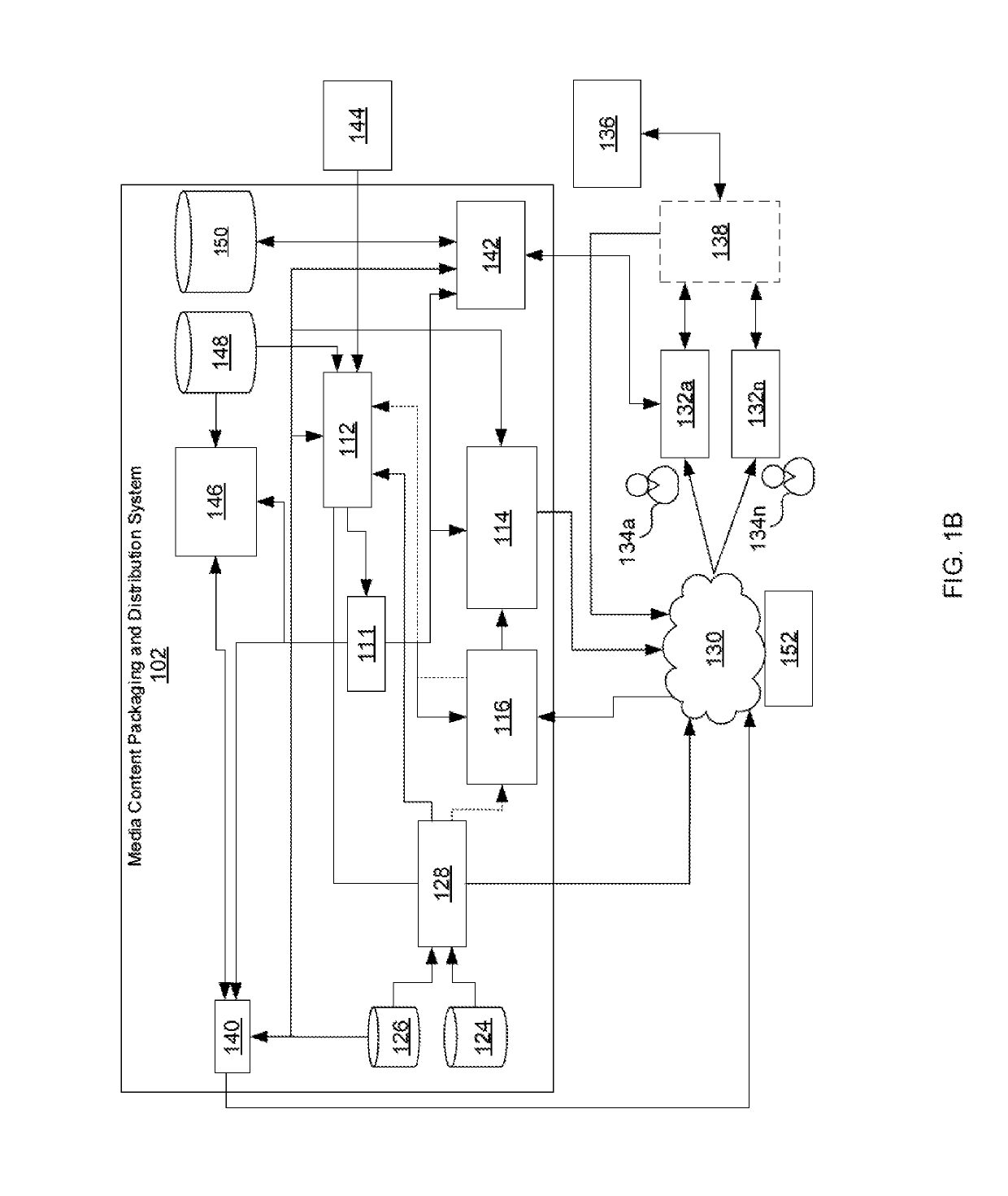

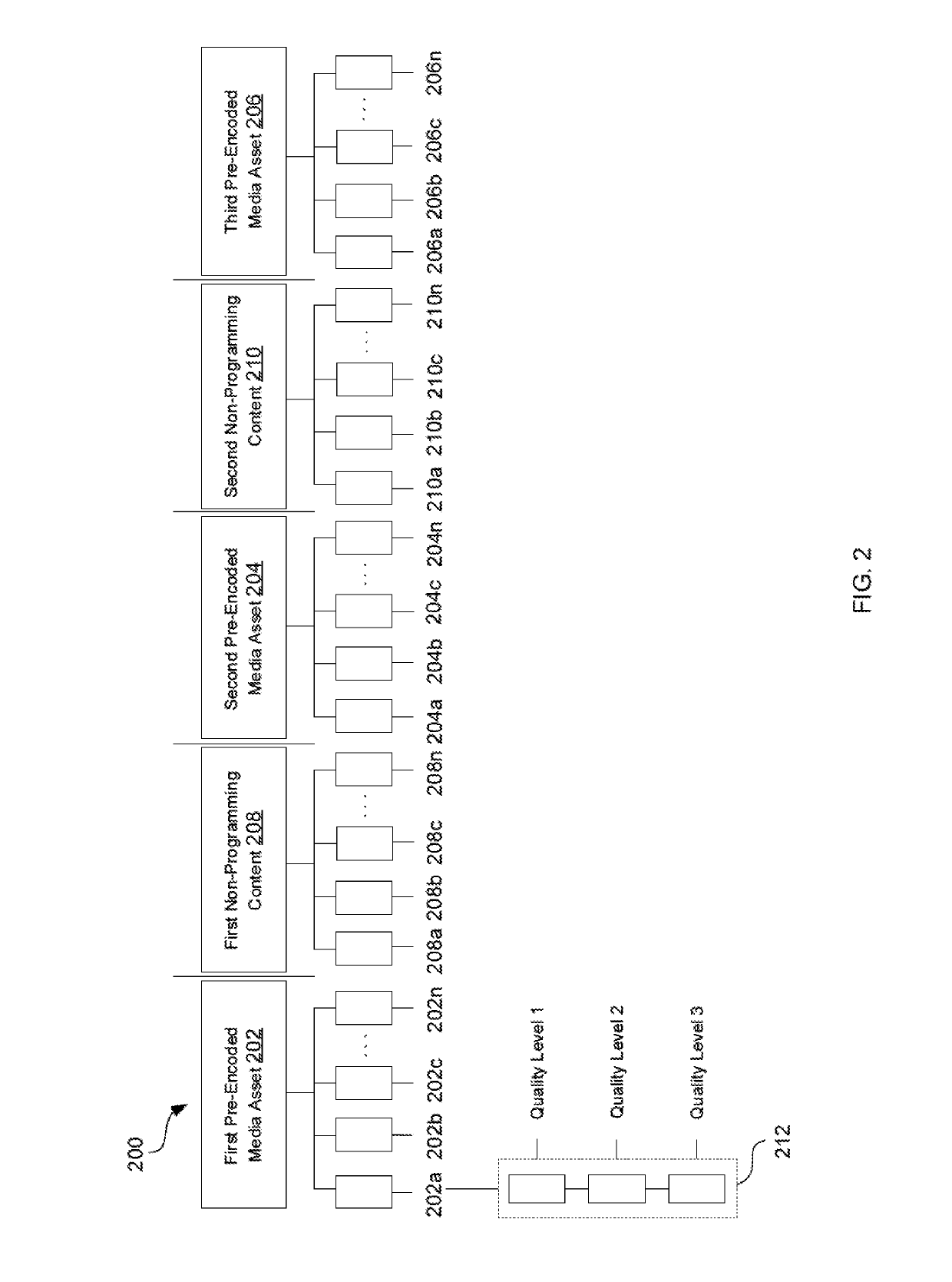

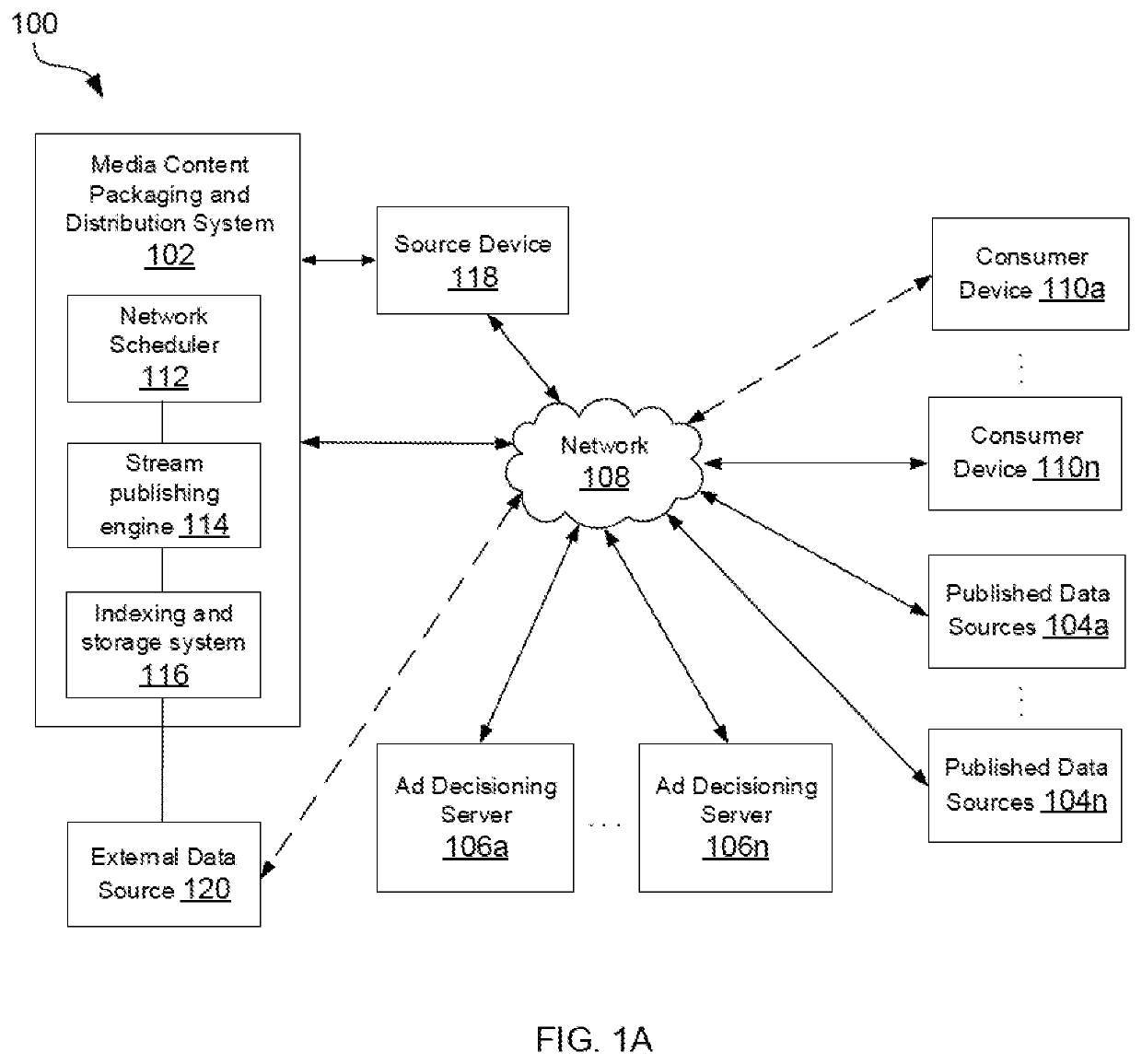

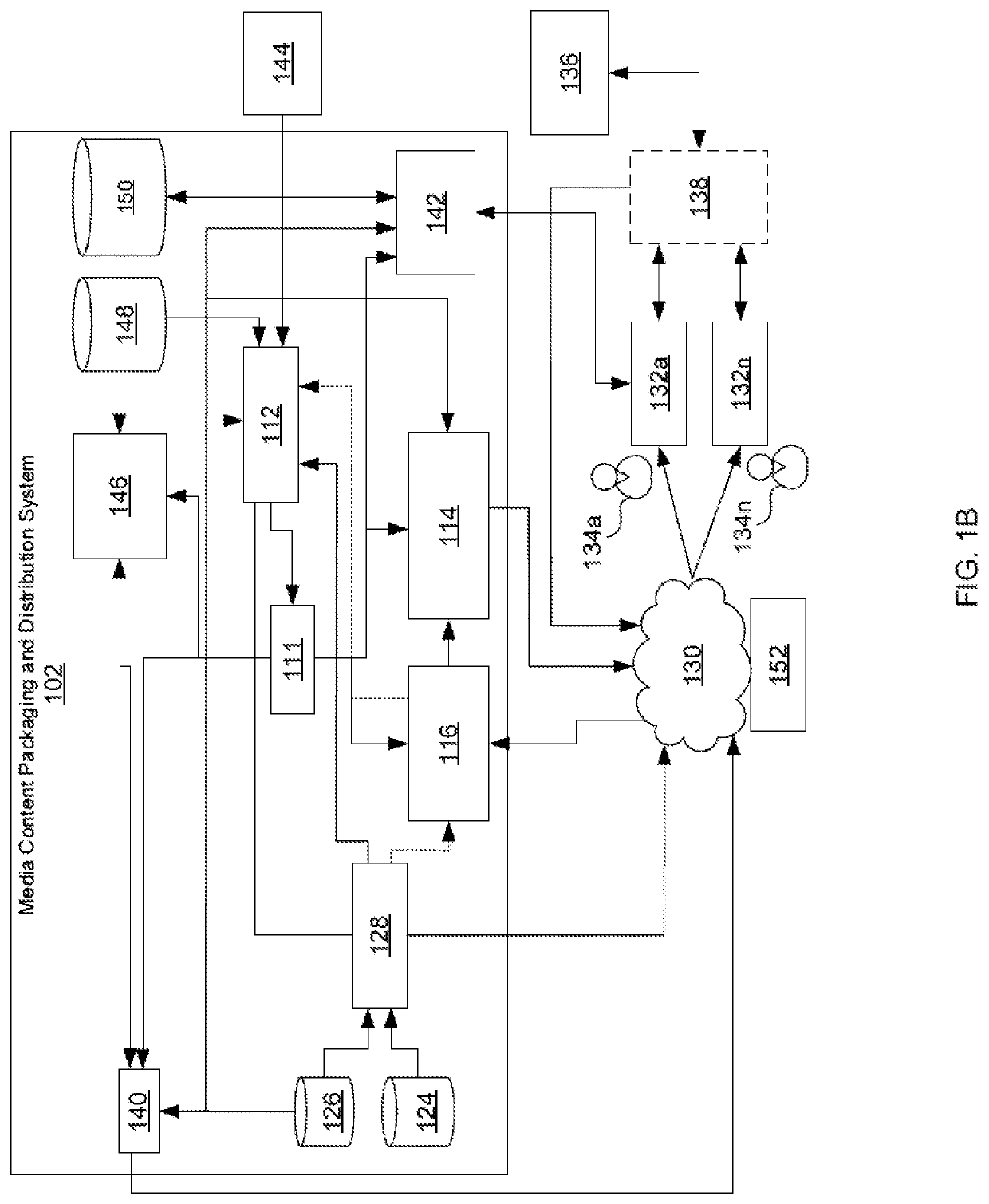

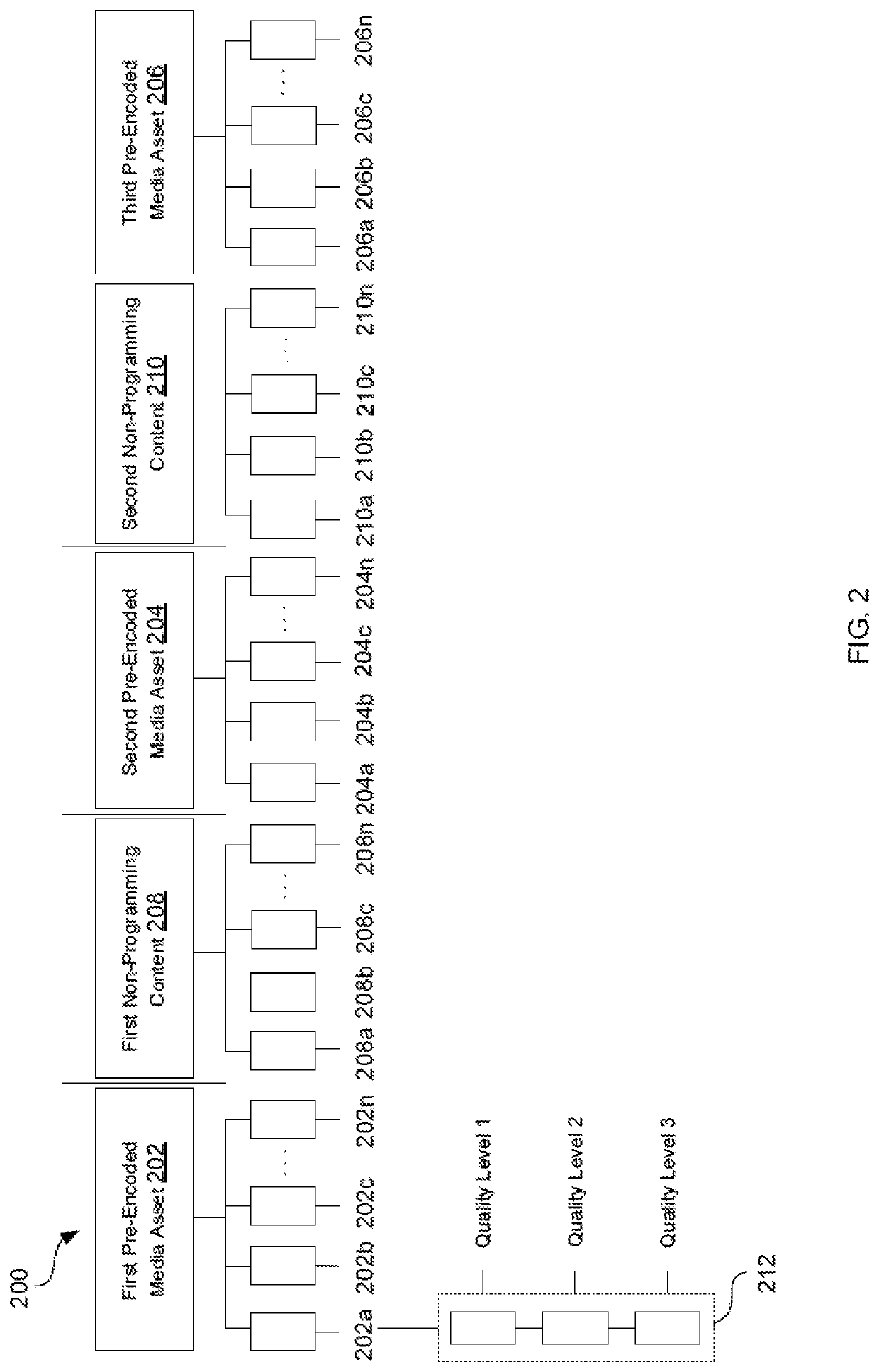

Publishing a disparate live media output stream using pre-encoded media assets

A media content packaging and distribution system that generates a plurality of disparate live media output streams to be viewed on a plurality of consumer devices, receives a programming schedule for a channel from a network scheduler. The programming schedule corresponds to at least a first manifest associated with a first pre-encoded media asset. Information related to a plurality of first media segments and one or more events from the first pre-encoded media asset indicated in the first manifest is inserted into a live output stream manifest at defined intervals. A disparate live media output stream, to be viewed by a consumer device for a channel via a media player, is generated based on the insertion of the information related to the plurality of first media segments from the first pre-encoded media asset indicated in the first manifest into the live output stream manifest.

Owner:TURNER BROADCASTING SYST INC

Method and apparatus for orthogonal resource allocation in a wireless communication system

Inactive | US20210235323A1 | Network traffic/resource management | Interprogram communication | Communications system | Resource assignment

A method and an apparatus for providing dynamic orthogonal assignment of radio resources in a wireless communication system is disclosed. The method includes receiving a dynamic spectrum sharing (DSS) policy configuration message from a second controller and receiving a physical resource block (PRB) assignment bitmap proposal and a protected bitmap indication data from a type one network scheduler and a type two network scheduler. The method includes computing an available bandwidth based on the PRB assignment bitmap proposal and the protected bitmap indication data and further computing a bandwidth allocation for the type one network scheduler and the type two network scheduler based on the computed available bandwidth and the DSS policy configuration message from the type one network scheduler and the type two network scheduler. Lastly, the method includes allocating the computed bandwidth to the type one network scheduler and the type two network scheduler.

Owner:STERLITE TECHNOLOGIES
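
The bandwidth computation outlined in the abstract above can be illustrated with a small sketch. It rests on several assumptions that the abstract does not confirm: each scheduler's protected bitmap marks PRBs that must stay with that scheduler, the DSS policy is reduced to a single integer percentage for the type one scheduler, and the function name `split_bandwidth` is hypothetical.

```python
def split_bandwidth(protected_one, protected_two, share_one_percent):
    """Return (prbs_for_type_one, prbs_for_type_two).

    protected_one / protected_two -- equal-length lists of 0/1 flags, one per
        PRB, marking PRBs each scheduler declares as protected (assumed
        meaning of the "protected bitmap indication")
    share_one_percent -- share of the unprotected PRBs given to the type one
        scheduler, as an integer percentage (assumed form of the DSS policy)
    """
    if len(protected_one) != len(protected_two):
        raise ValueError("bitmaps must cover the same PRBs")
    if any(a and b for a, b in zip(protected_one, protected_two)):
        raise ValueError("a PRB cannot be protected by both schedulers")

    total = len(protected_one)
    kept_one = sum(protected_one)
    kept_two = sum(protected_two)

    # Available bandwidth: PRBs that neither scheduler has protected.
    available = total - kept_one - kept_two

    # Integer split, so the two shares always add up to `available`.
    extra_one = available * share_one_percent // 100
    extra_two = available - extra_one
    return kept_one + extra_one, kept_two + extra_two
```

Integer arithmetic is used for the split so that no PRB is lost or double-counted to rounding.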

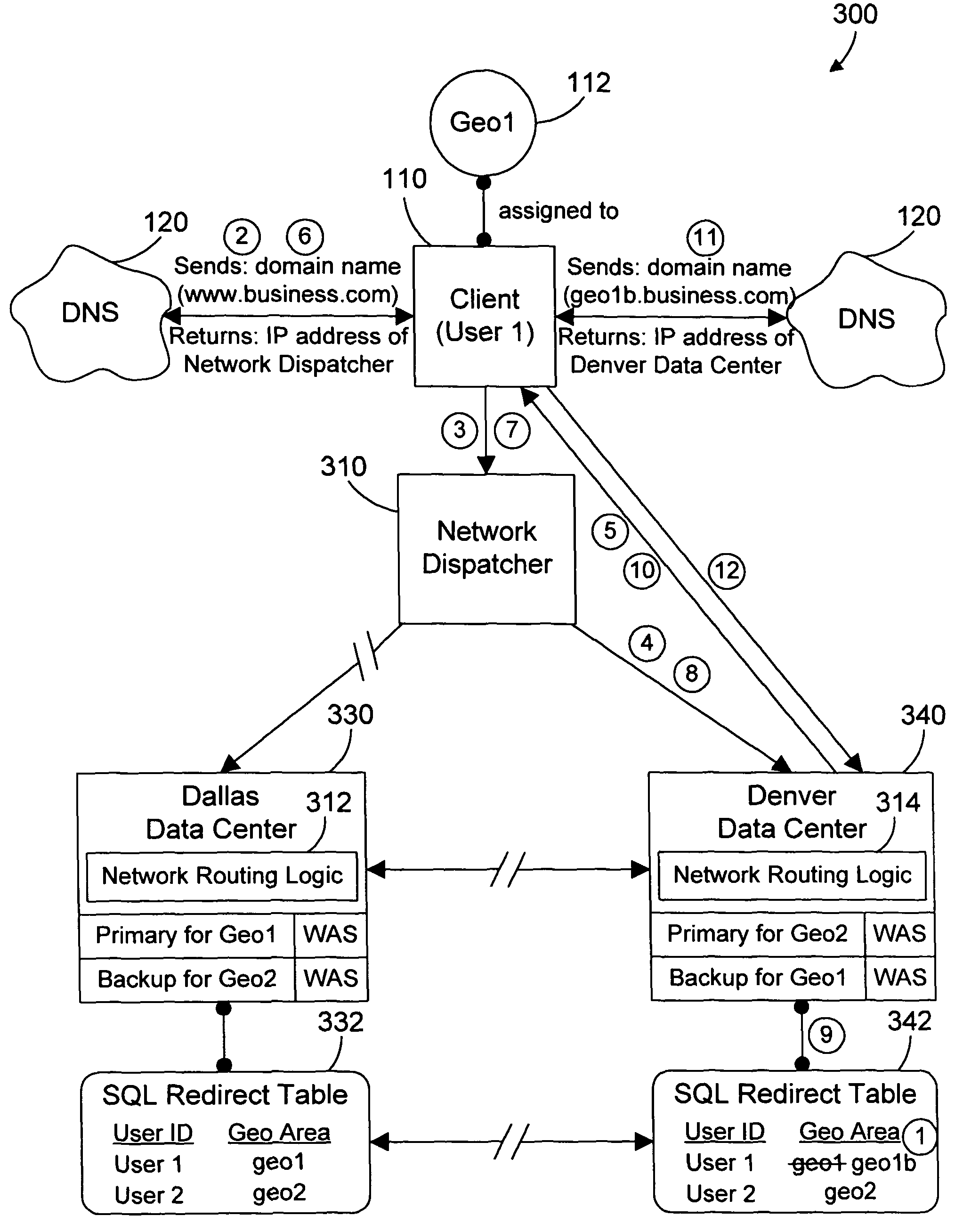

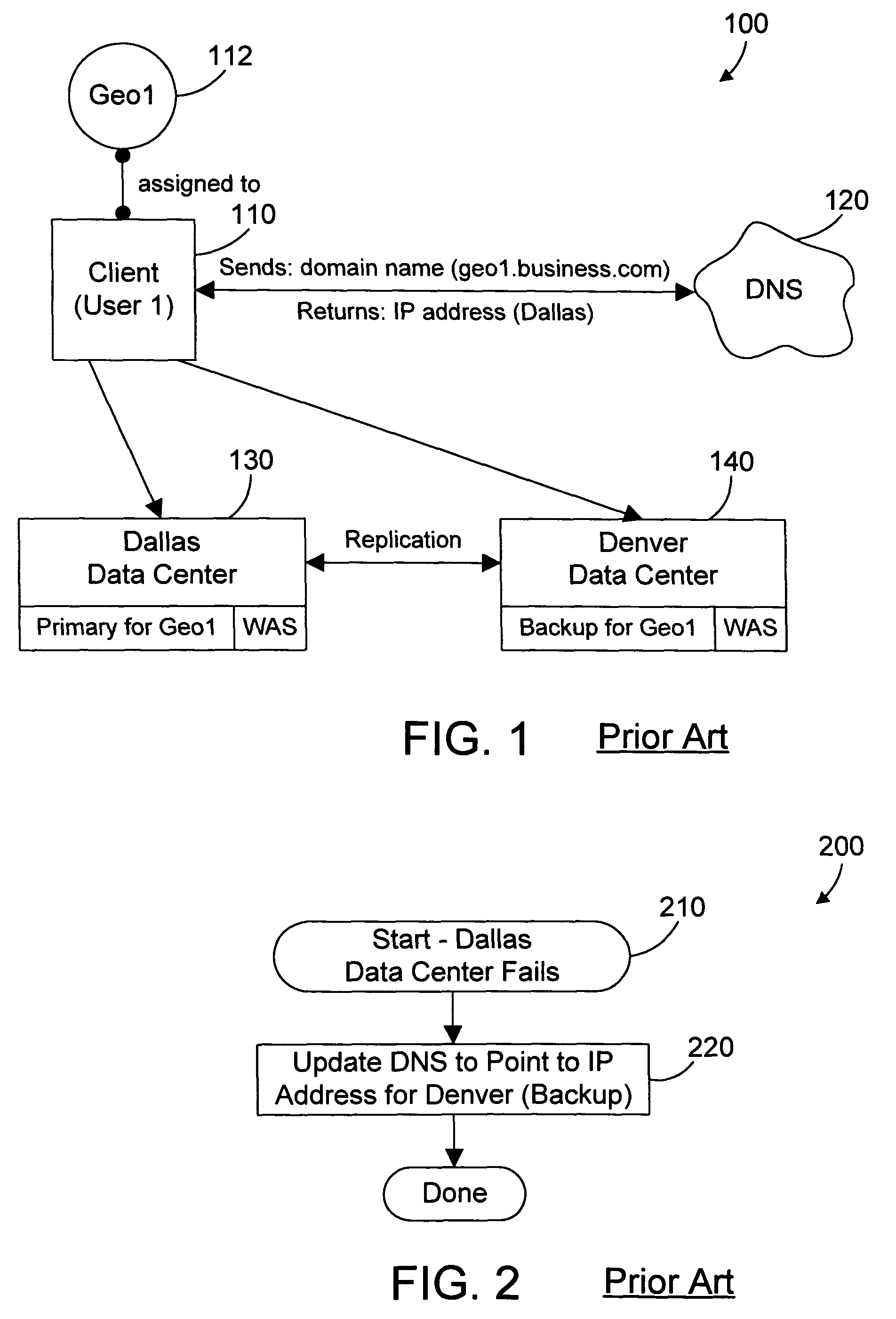

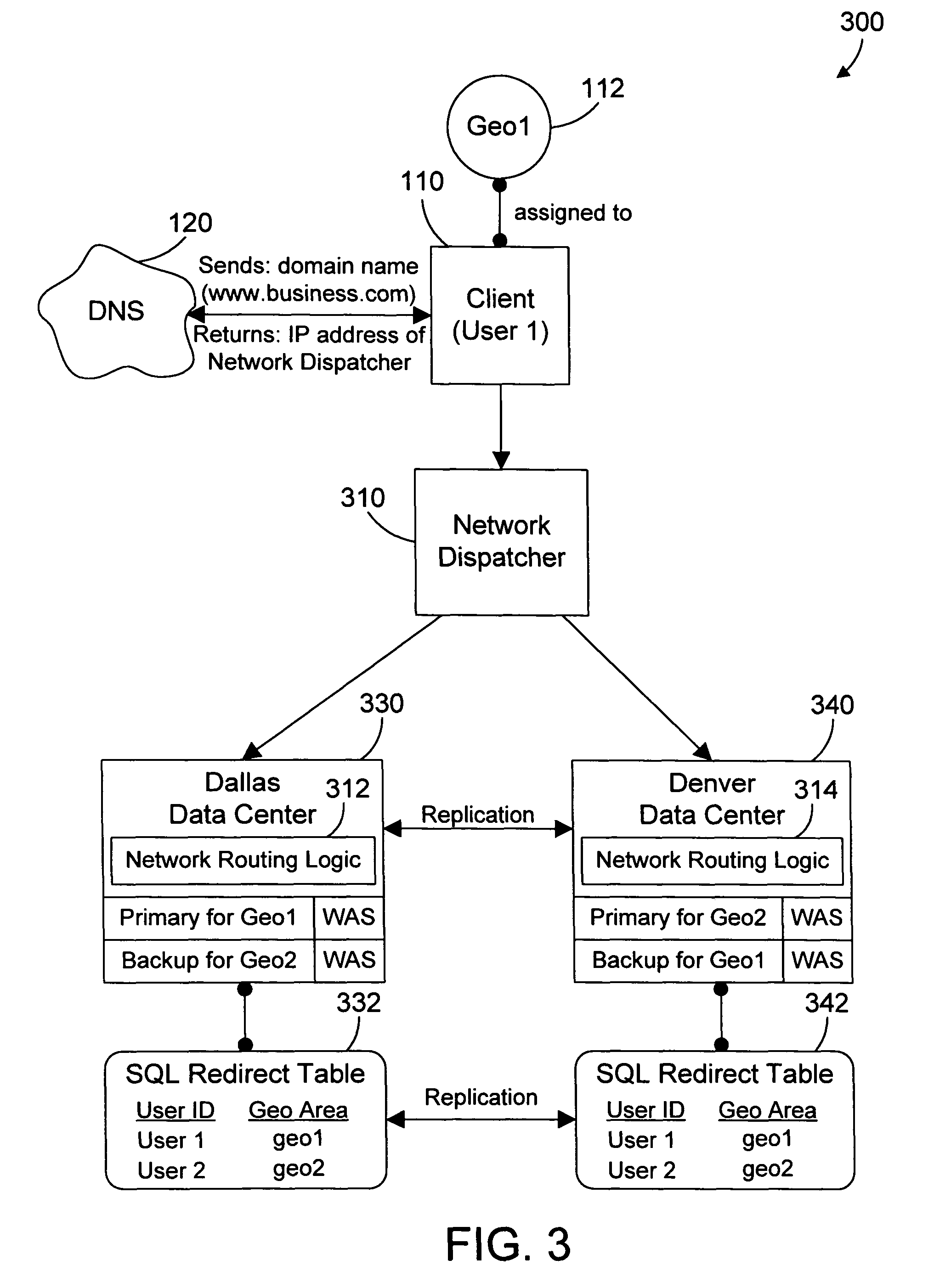

Near real-time data center switching for client requests

A networked computer system provides a way to quickly switch to a backup data center when a primary data center fails. Each data center includes a redirect table that specifies a geographical area corresponding to each user. The redirect table is replicated on one or more data centers so that each data center has the same information. When a data center fails, the redirect table in one of the non-failed data centers is updated to specify a new data center for each client that used the failed data center as its primary data center. A network dispatcher recognizes that the failed data center is unavailable, and routes a request to the backup data center. Network routing logic then issues a redirection command that causes all subsequent requests from that client to be redirected directly to the backup data center.

Owner:TWITTER INC
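
The failover flow in the abstract above can be sketched with a dictionary standing in for the redirect table. The simplification to a plain client-to-data-center mapping, the `backup_for` lookup, and the function names `fail_over` and `route` are assumptions for illustration, not the patented design.

```python
def fail_over(redirect_table, failed, backup_for):
    """Return an updated copy of the redirect table.

    Every client whose primary data center is `failed` is pointed at that
    data center's backup; all other entries are left unchanged.
    """
    return {
        client: backup_for[center] if center == failed else center
        for client, center in redirect_table.items()
    }


def route(redirect_table, client, available):
    """Return the data center that should serve `client`."""
    center = redirect_table[client]
    if center not in available:
        raise LookupError(f"{center} is unavailable for {client}")
    return center
```

Returning a new table rather than mutating the old one makes it straightforward to replicate the updated table to the other data centers, as the abstract describes.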

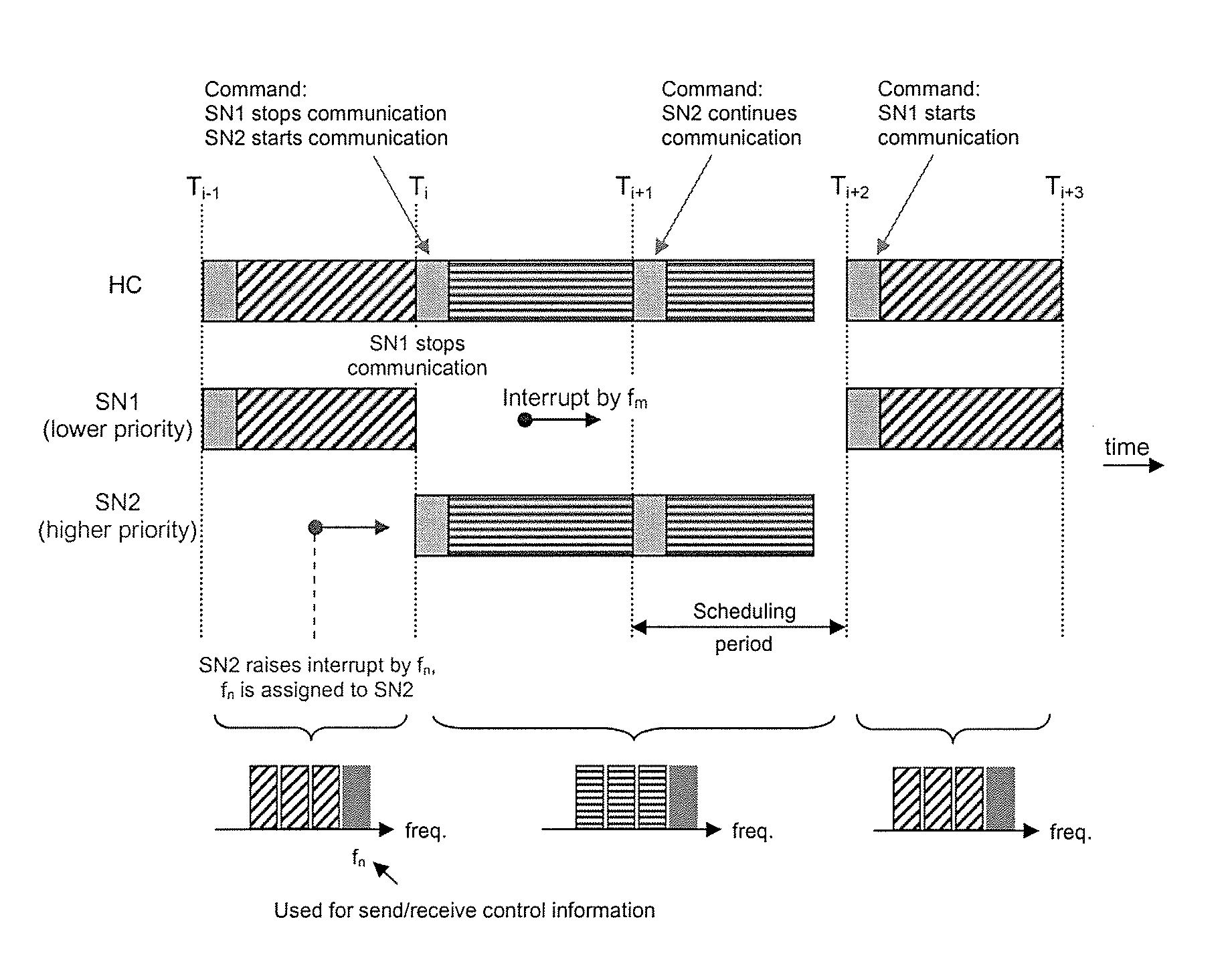

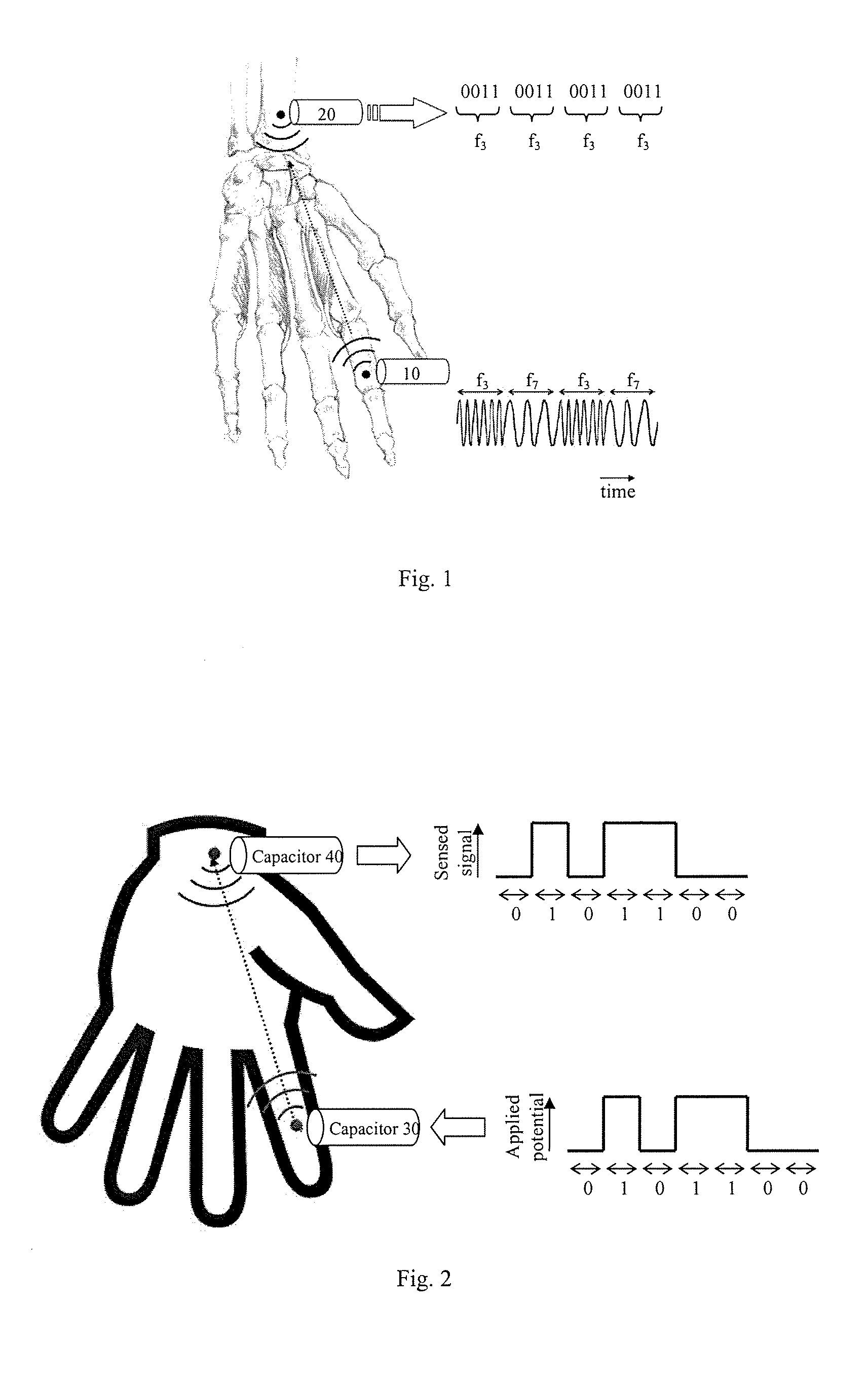

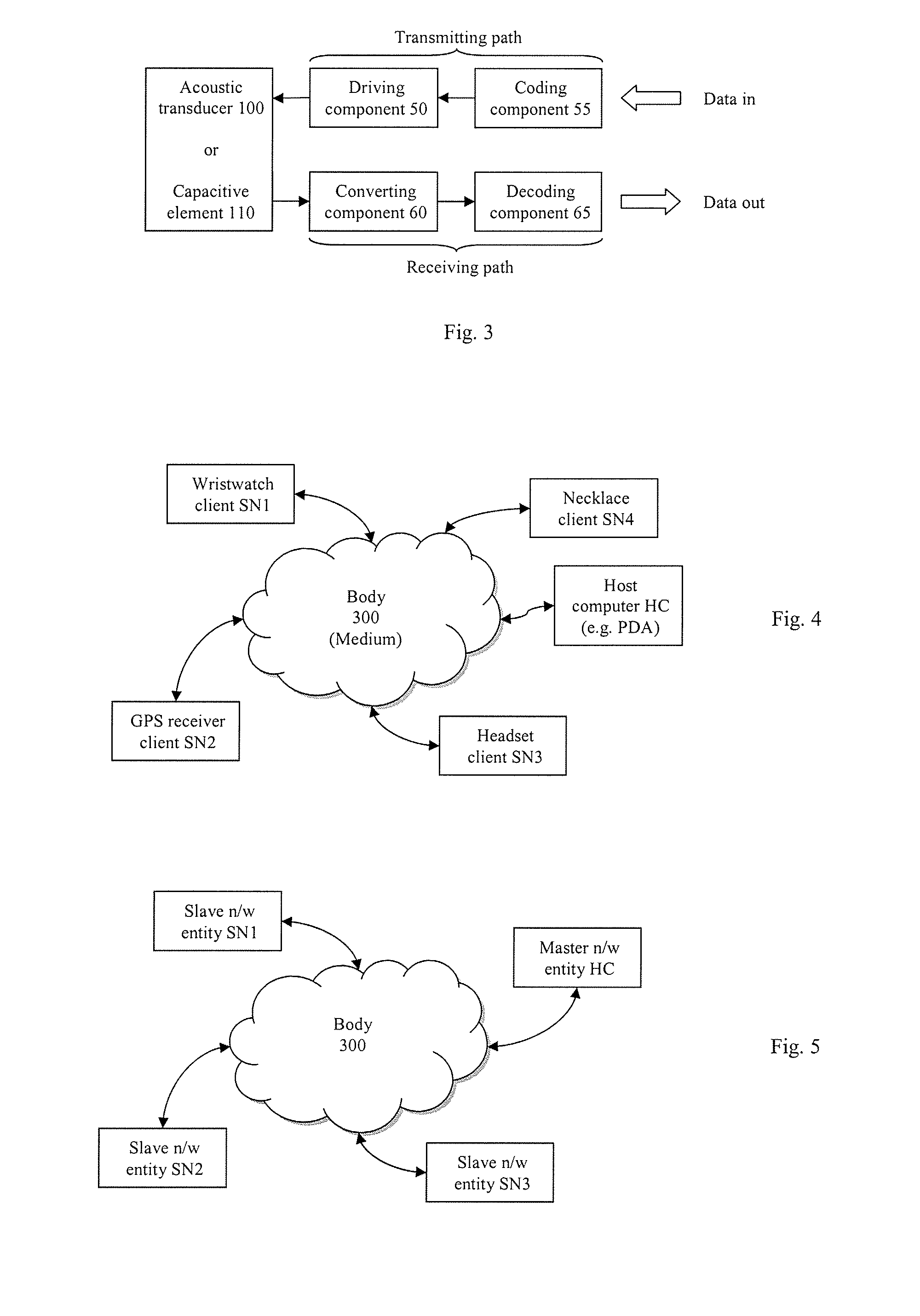

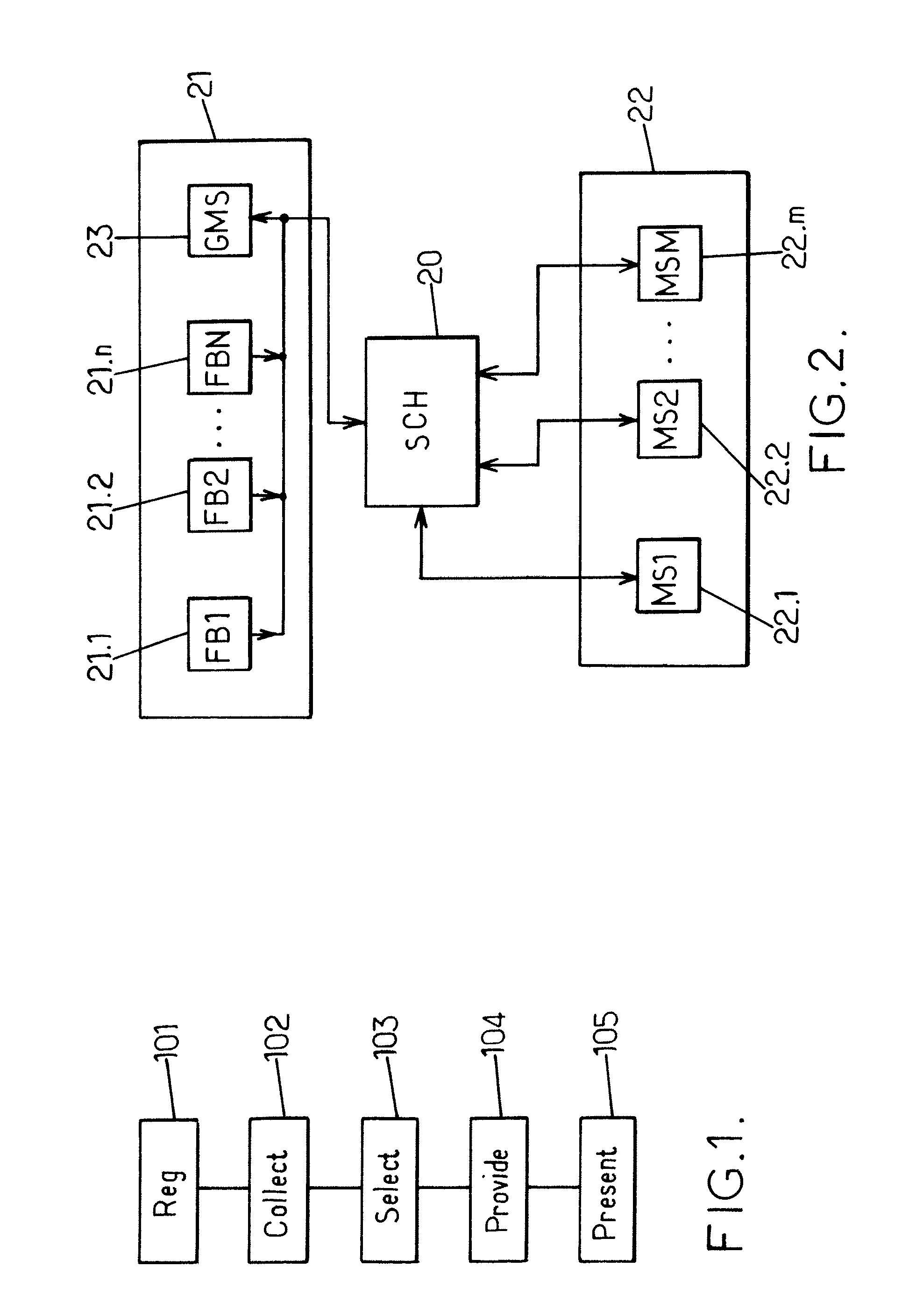

Intra-body communication network scheduler and method of operation thereof

Inactive | US20080298389A1 | Time-division multiplex | Data switching networks | Distributed computing | Network scheduler

An intra-body communication network scheduler and method of operation thereof are provided. At a scheduler, network entities of an intra-body communication network are registered, which have raised interrupts to obtain the right to communicate data over the intra-body communication network. The network entities are queued at the scheduler in accordance with priorities of the network entities. The scheduler allocates the right to communicate data over the intra-body communication network during a next scheduling period to the next network entity in the queue.

Owner:NOKIA CORP
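
The register, queue and allocate cycle in the abstract above maps naturally onto a priority queue. The sketch below uses Python's `heapq`; the class name `PeriodScheduler`, the convention that a lower number means higher priority, and the first-come tie-breaking are assumptions, since the abstract does not specify them.

```python
import heapq
import itertools


class PeriodScheduler:
    """Grants one scheduling period at a time to the best-ranked entity."""

    def __init__(self):
        self._heap = []
        # Monotonic counter: entities with equal priority are served in
        # the order in which they registered.
        self._arrival = itertools.count()

    def register(self, entity, priority):
        """Queue an entity that has raised an interrupt to communicate."""
        heapq.heappush(self._heap, (priority, next(self._arrival), entity))

    def grant_next_period(self):
        """Pop and return the entity allowed to transmit next, or None."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]
```

Each call to `grant_next_period` corresponds to one scheduling period. An entity that needs to transmit again would register again.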

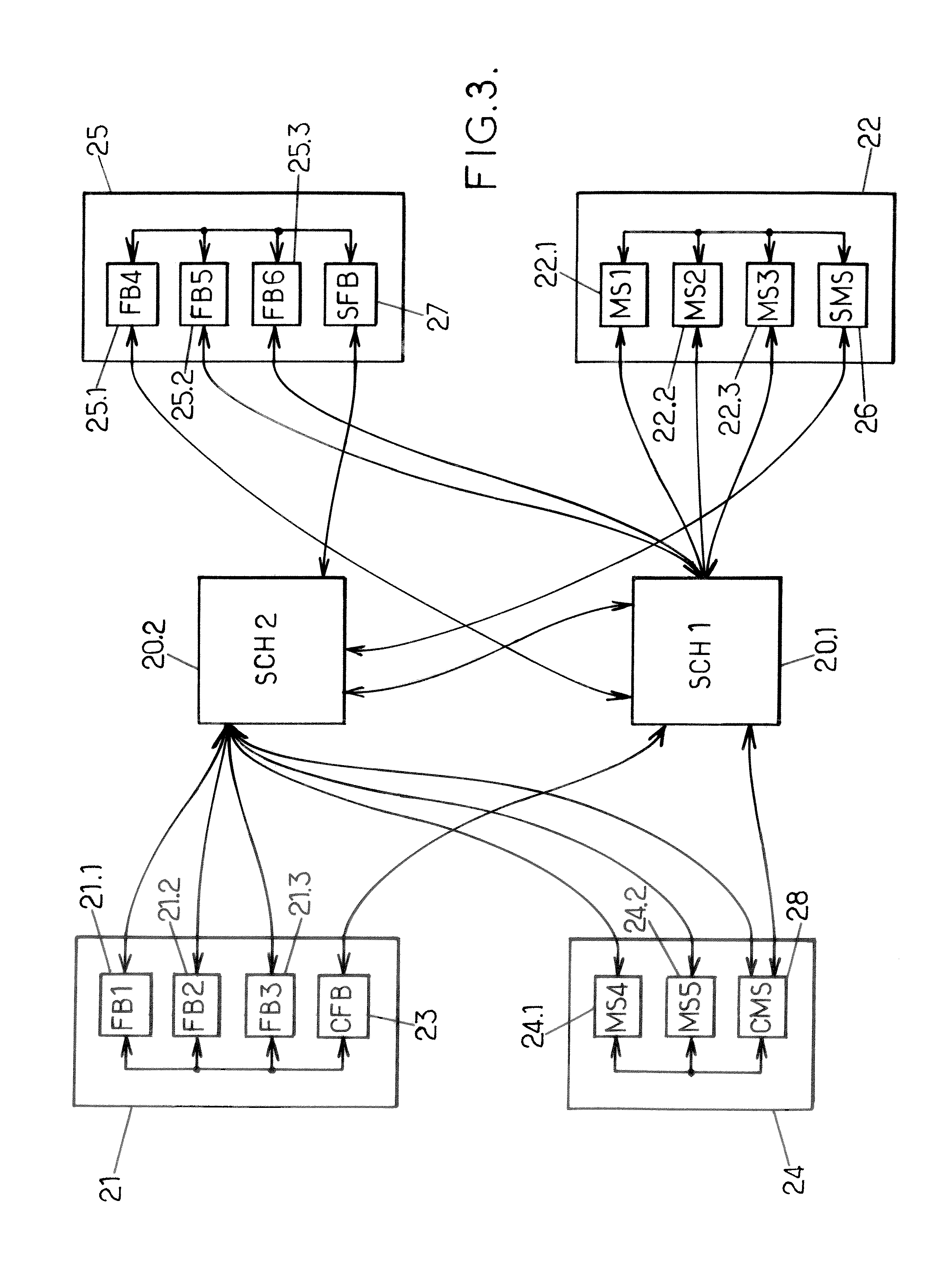

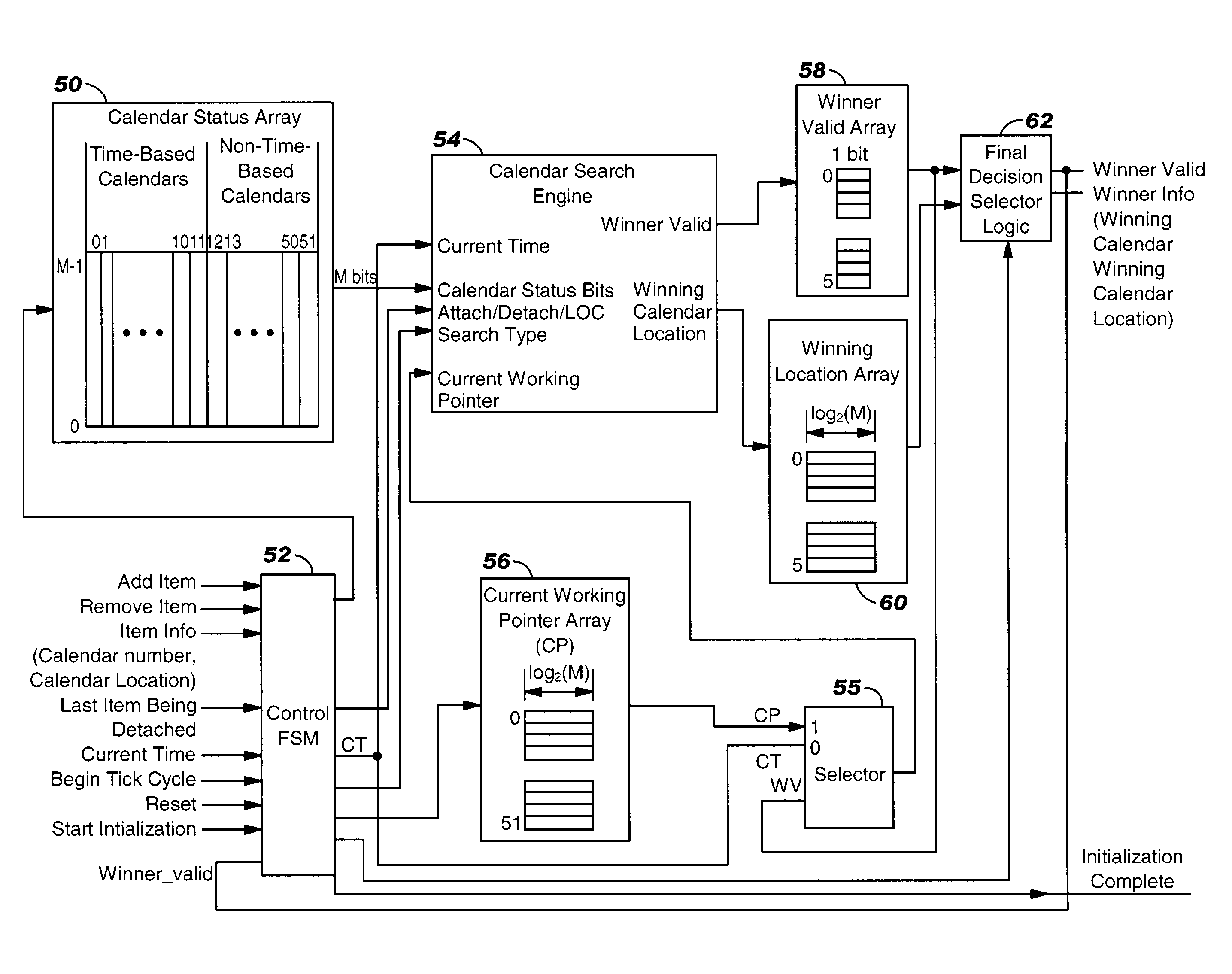

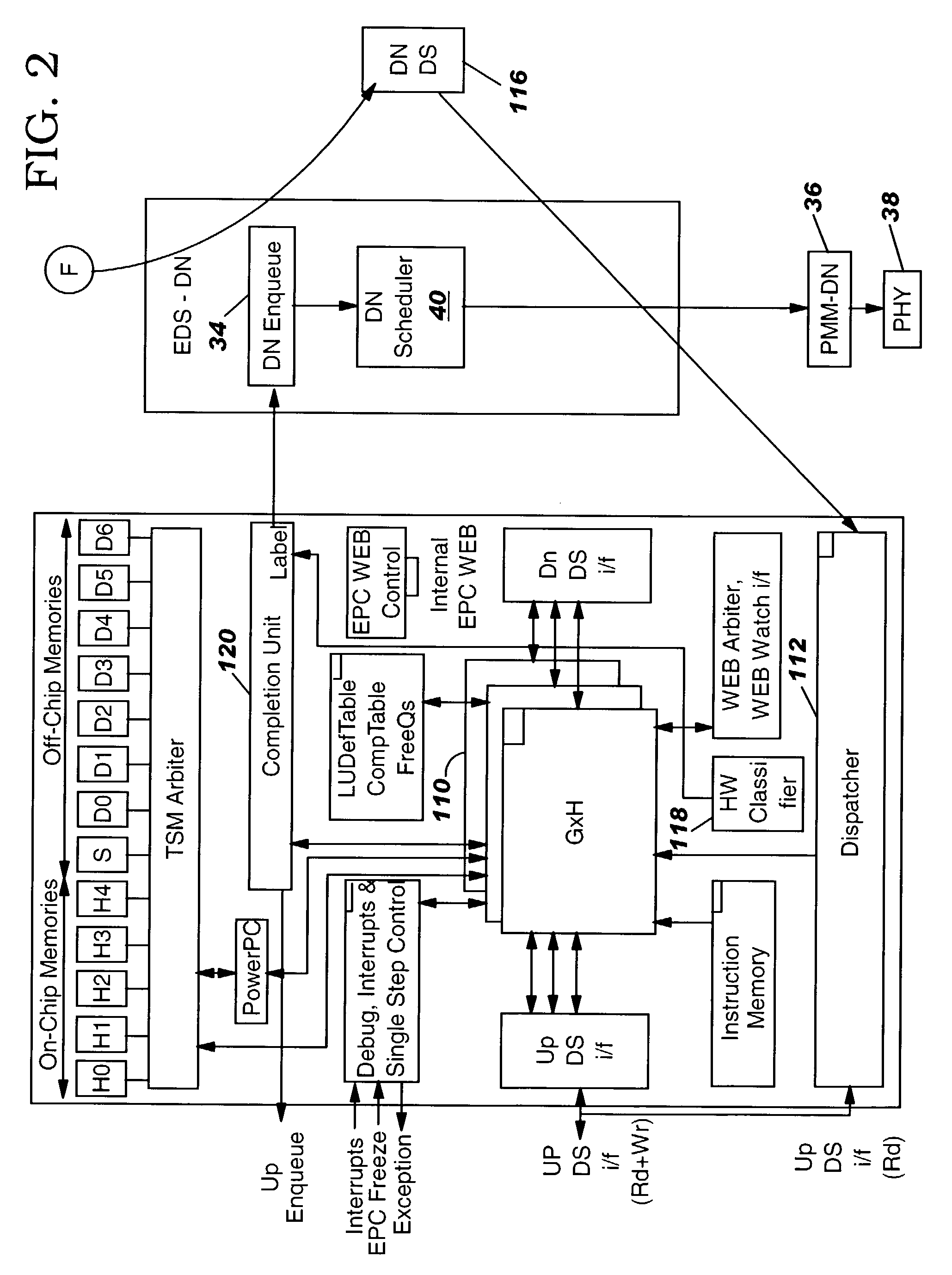

Apparatus and method to coordinate calendar searches in a network scheduler given limited resources

Inactive | US20050008021A1 | Minimal/negligible impact | Saving in chip surface area | Time-division multiplex | Data switching by path configuration | Limited resources | Finite-state machine

A system that indicates which frame should next be removed by a scheduler from flow queues within a network device, such as a router, network processor, and like devices is disclosed. The system includes a search engine that searches a set of calendars under the control of a Finite State Machine (FSM), a current pointer, and input signals from an array and a clock line providing current time. Also included is a decision block that determines which of the searches are critical and which, during peak calendar search periods, can be postponed with minimal impact to the system. The postponed searches are then conducted at a time when there is available calendar search capacity.

Owner:IBM CORP

Method and apparatus for distribution and synchronization of radio resource assignments in a wireless communication system

Inactive | US20210234648A1 | Efficiently provided | Synchronisation arrangement | Criteria allocation | Communications system | Resource assignment

A method and an apparatus for providing dynamic synchronization of radio resources in a radio access network (RAN) in a wireless communication system are disclosed. The RAN has a type one network scheduler and a type two network scheduler. The method includes receiving a first physical resource block (PRB) assignment configuration from the type one network scheduler and the type two network scheduler. The first PRB assignment configuration is received by a first controller. Further, the method includes determining a second PRB assignment configuration using the first PRB assignment configuration, wherein the second PRB assignment configuration is determined by the first controller. Furthermore, the method includes allocating the second PRB assignment configuration at the synchronization time(s) to the type one network scheduler and the type two network scheduler by the first controller.

Owner:STERLITE TECHNOLOGIES

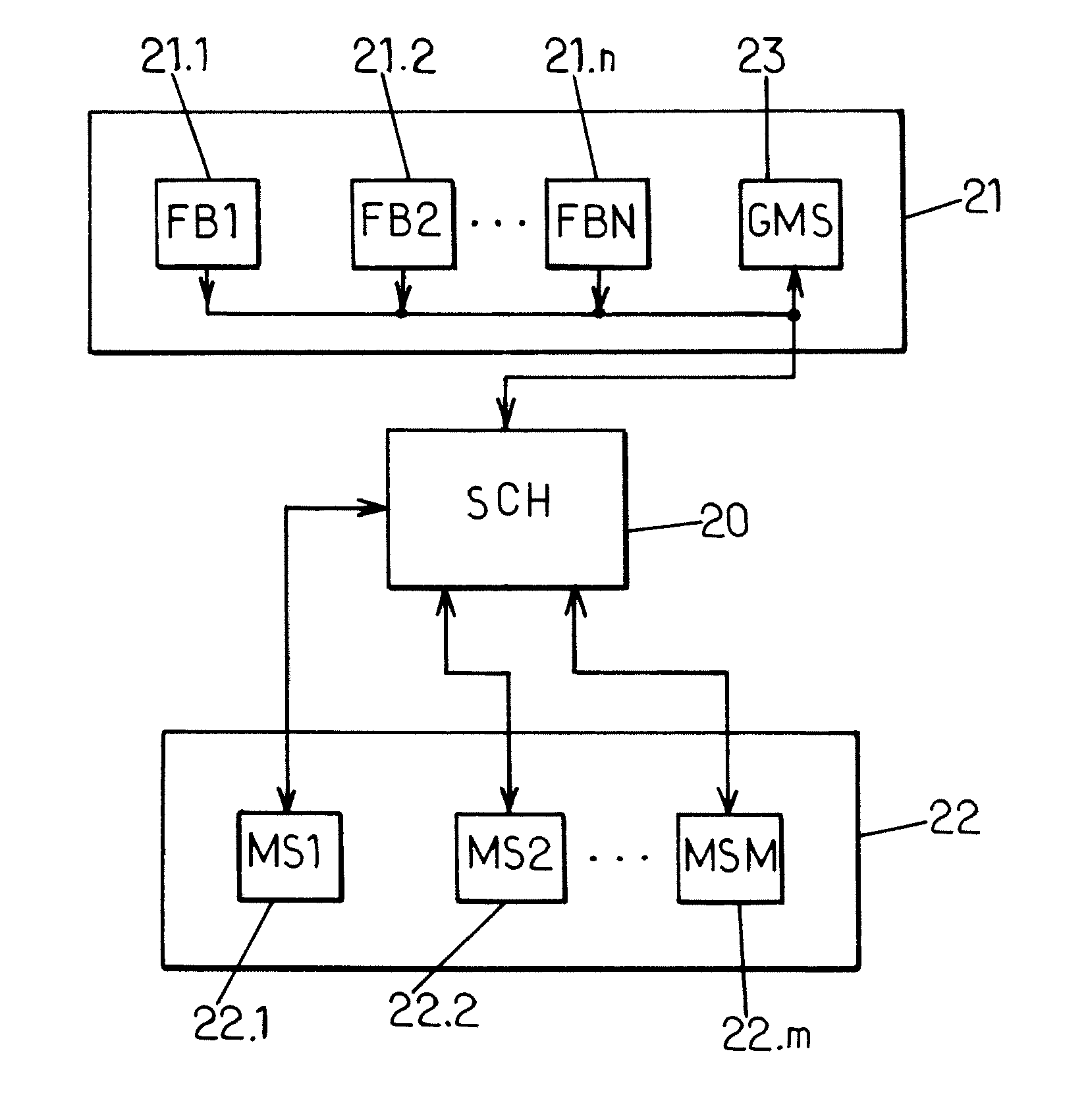

Social network enabler

Active | US20120259931A1 | Cost-effective | Guaranteed connectivity | Multiple digital computer combinations | Data switching networks | Social web | Network scheduler

A method and apparatus are provided for connecting a first group of users from at least one first social network with users from at least one second social network. The first group of users is registered on the second social network as a generic first user. The method is carried out by a network scheduler and includes collecting a first message from at least one second user from the second social network intended for the generic first user, selecting a user from the first group of users based on a predefined criterion, and providing the first message to the selected user and presenting the second user as the sender of the first message.

Owner:FRANCE TELECOM SA

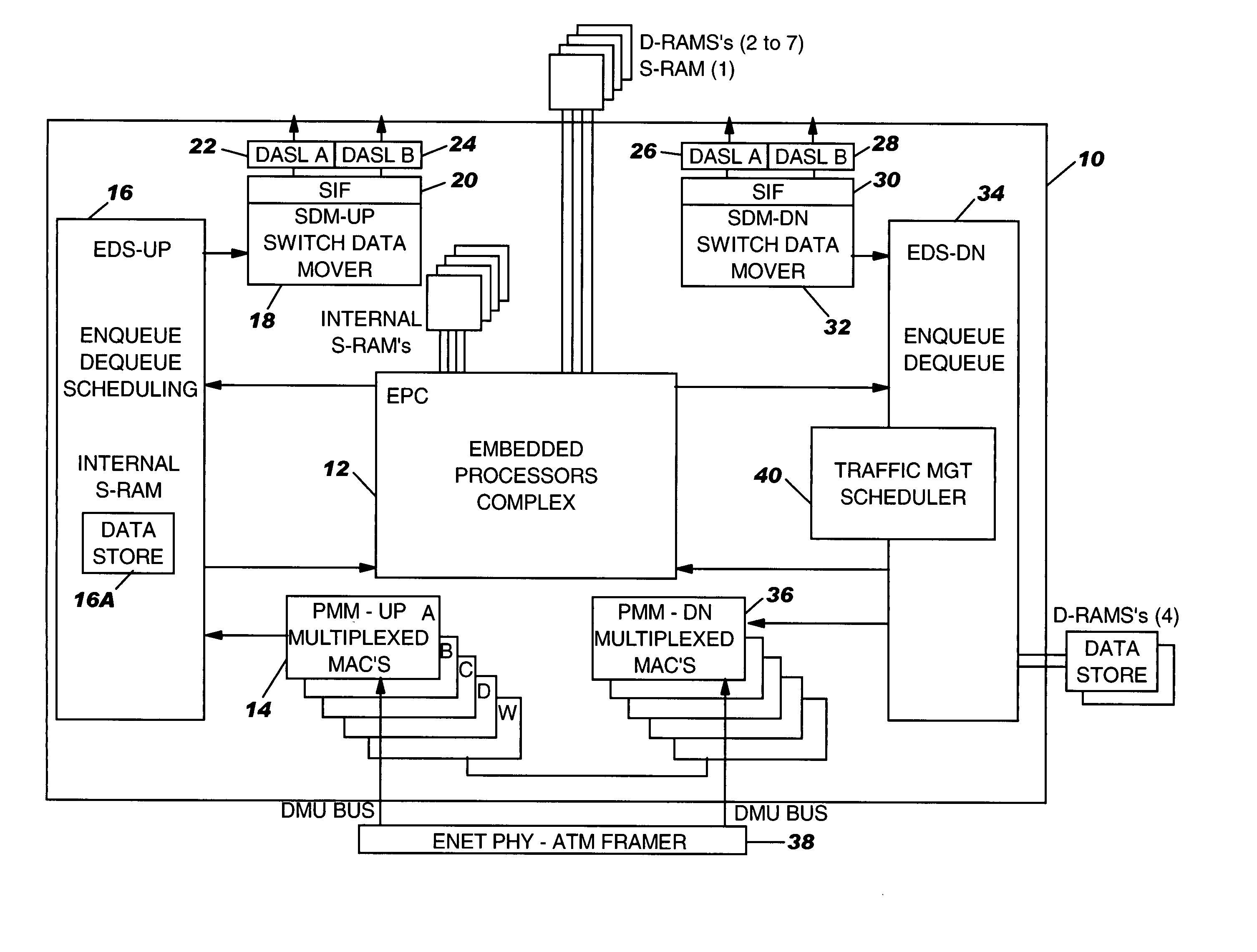

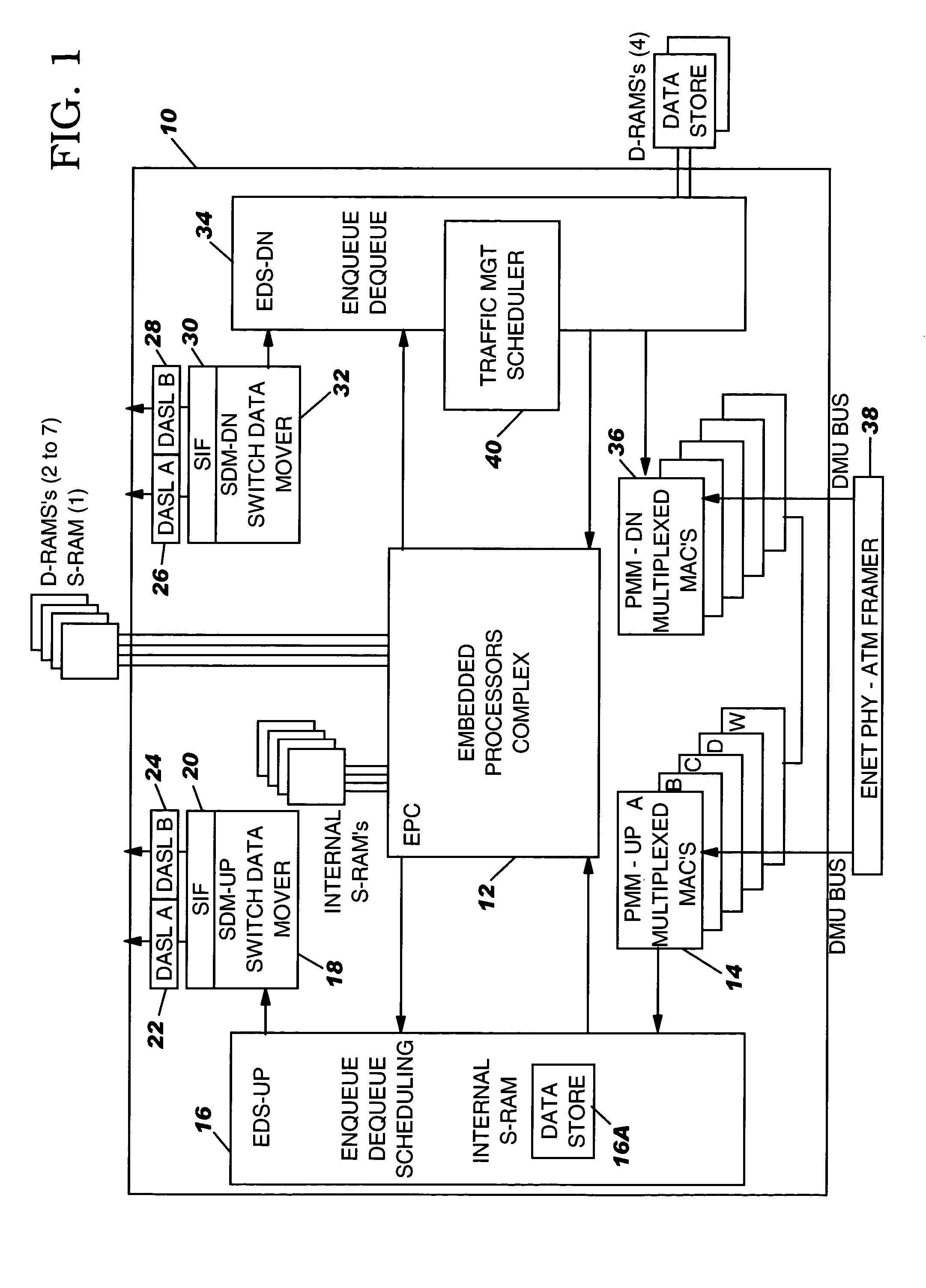

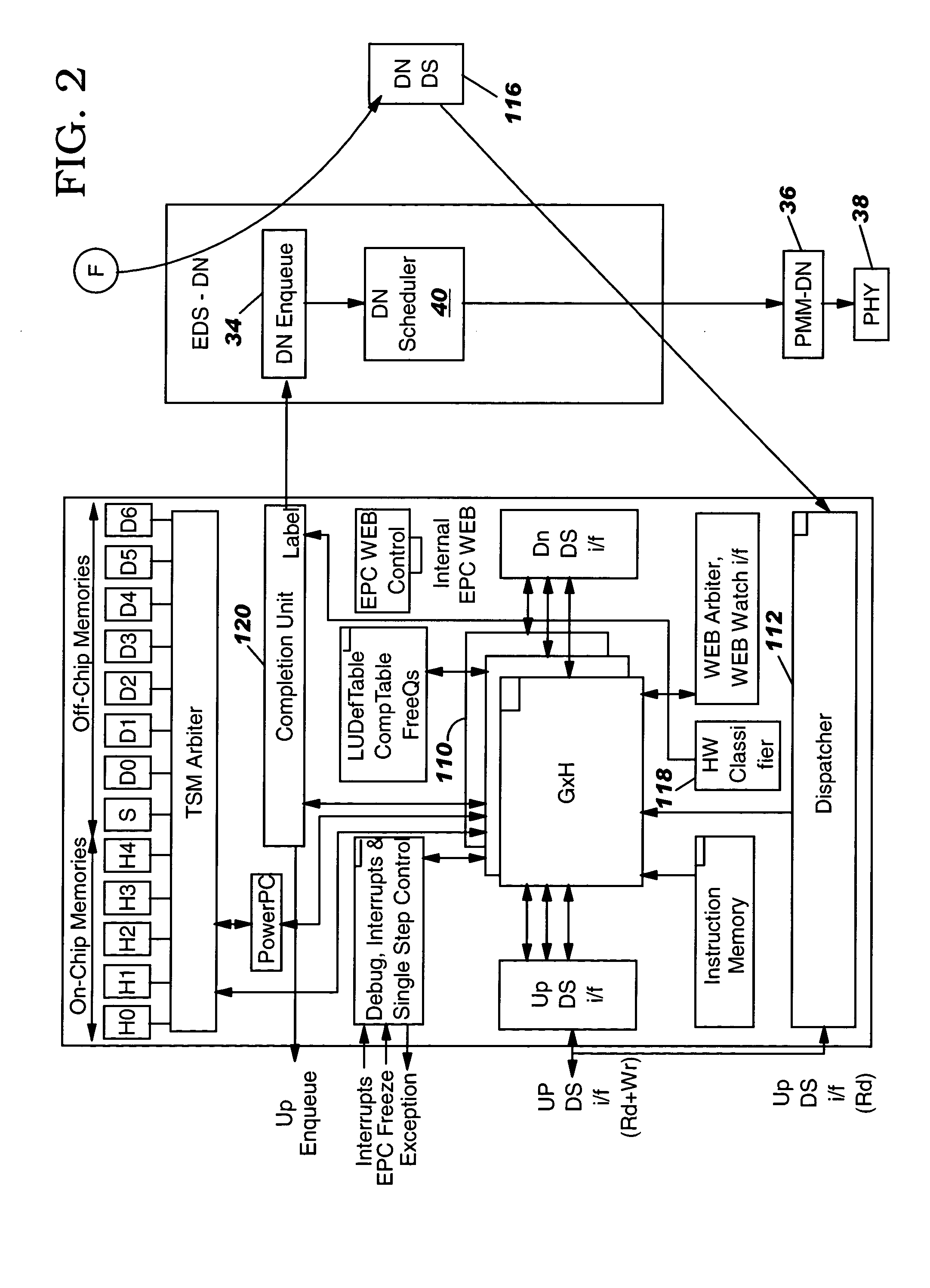

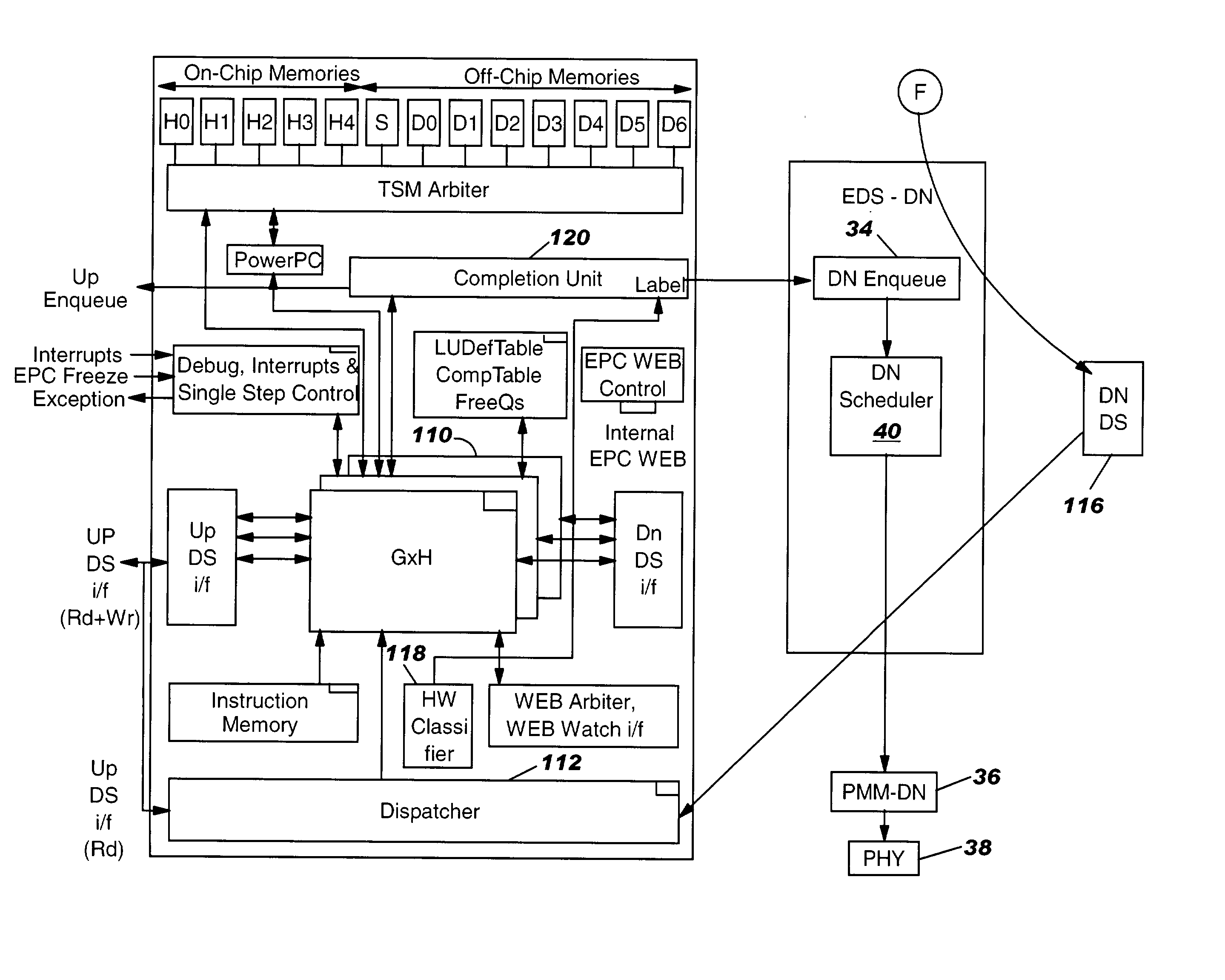

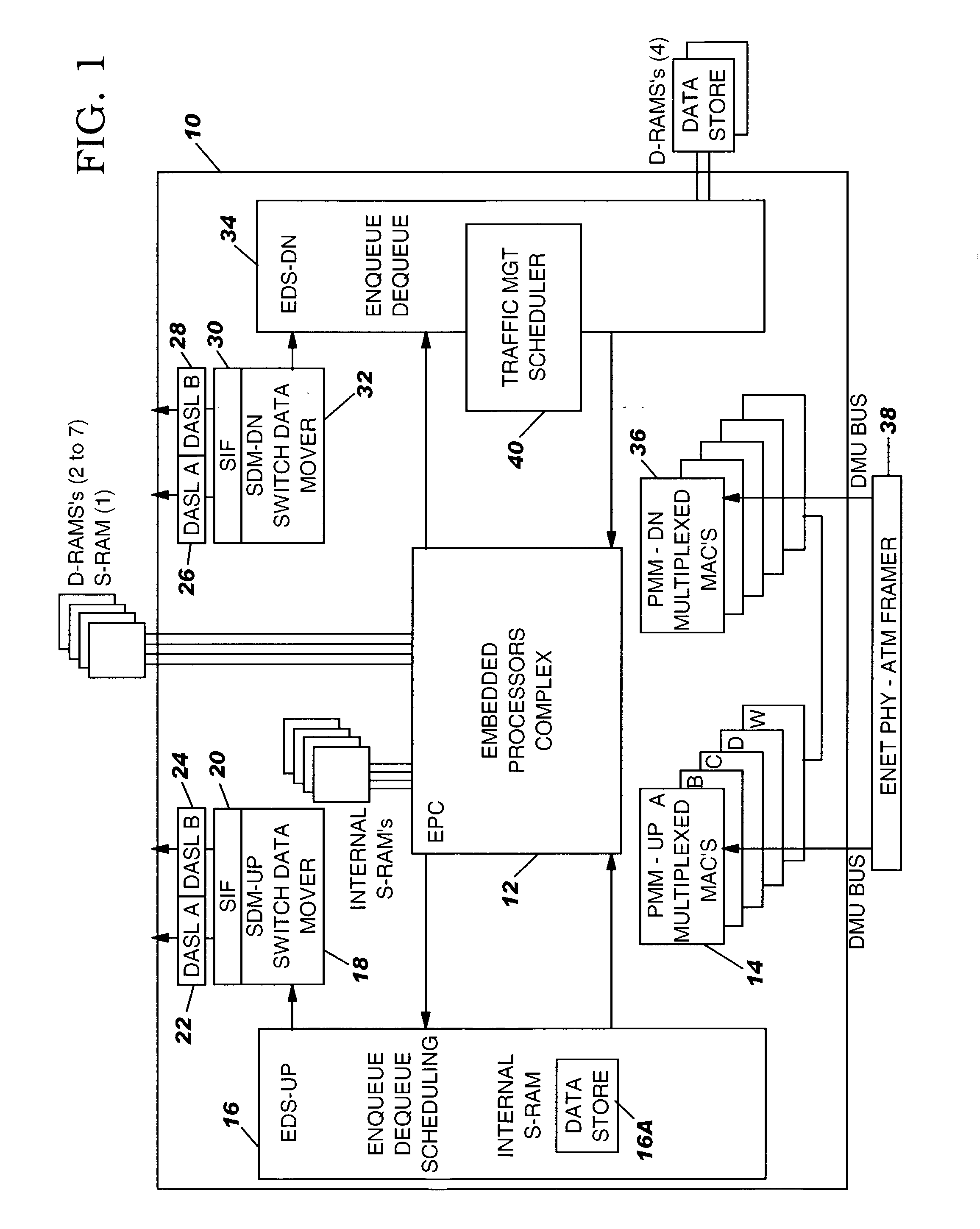

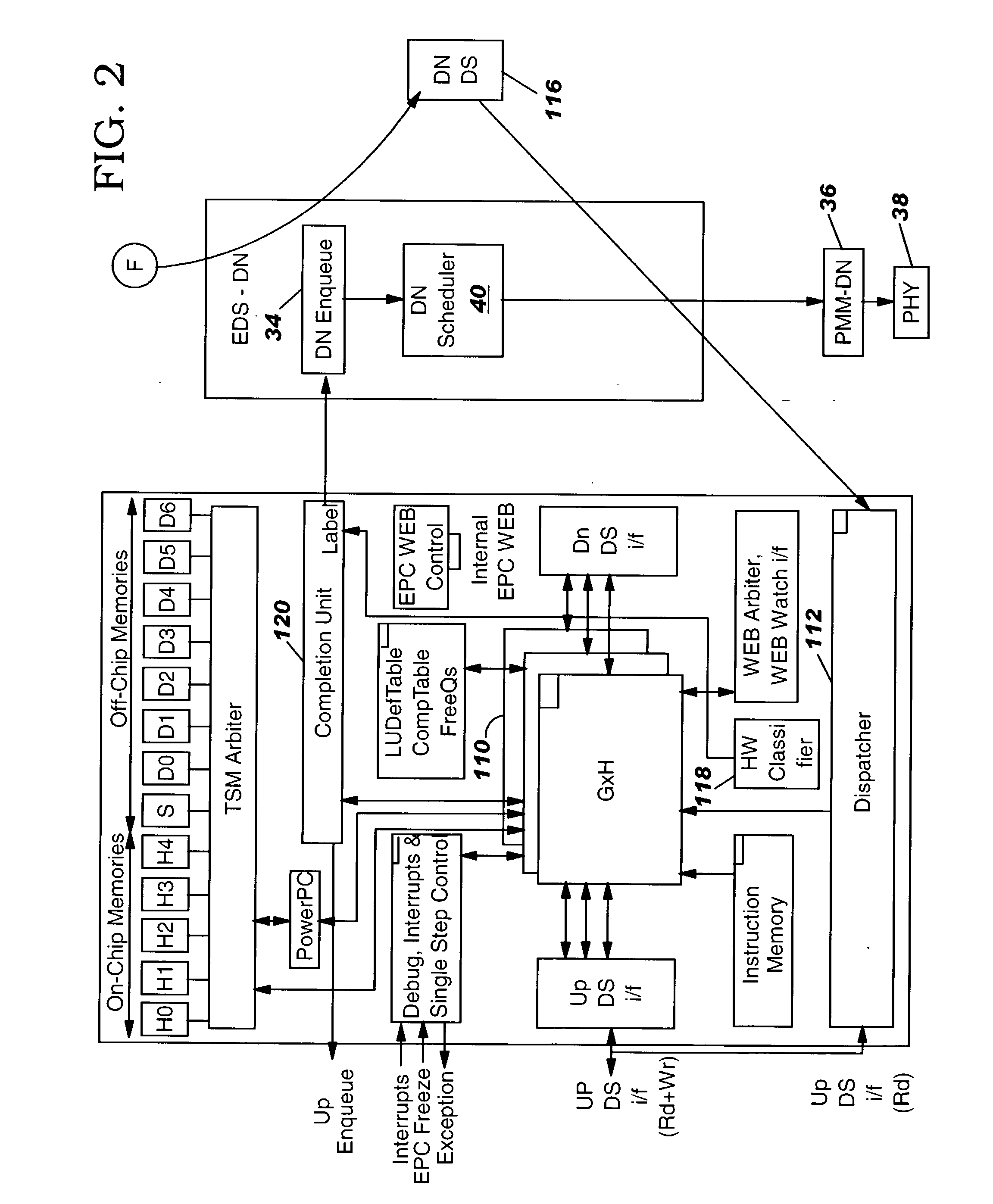

Apparatus and method to coordinate calendar searches in a network scheduler

Inactive | US20030058868A1 | Data switching by path configuration | Office automation | Array data structure | Finite-state machine

A system that indicates which frame should next be removed by a scheduler from flow queues within a network device, such as a router, network processor, and like devices is disclosed. The system includes a search engine that searches a set of calendars under the control of a Finite State Machine (FSM), a current pointer and input signals from an array and a clock line providing current time. The results of the search are loaded into a Winner Valid array and a Winner Location array. A final decision logic circuit parses information in the Winner Valid array and Winner Location array to generate a final Winner Valid Signal, the identity of the winning calendar and the winning location. Winning is used to define the status of the calendar in the calendar status array that is selected as a result of a search process being executed on a plurality of calendars in the calendar status array.

Owner:IBM CORP
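
A software analogue of the calendar search in the abstract above might look like the sketch below. The patent describes hardware controlled by a finite state machine, so this is only a loose illustration. The circular search from the current pointer, the rule that the lowest-numbered calendar wins, and the function names are all assumptions.

```python
def search_calendar(slots, current_pointer):
    """Circularly search one calendar, starting at the current pointer.

    slots -- list of 0/1 status flags, 1 meaning the slot holds a flow
    Returns (valid, location); location is None when nothing is found.
    """
    size = len(slots)
    for offset in range(size):
        location = (current_pointer + offset) % size
        if slots[location]:
            return True, location
    return False, None


def final_decision(calendars, current_pointer):
    """Search every calendar, then pick a single overall winner.

    Returns (winner_valid, winning_calendar, winning_location).
    """
    winner_valid = []
    winner_location = []
    for slots in calendars:
        valid, location = search_calendar(slots, current_pointer)
        winner_valid.append(valid)
        winner_location.append(location)

    # Assumed priority rule: the lowest-numbered calendar with a valid
    # winner takes precedence.
    for calendar, valid in enumerate(winner_valid):
        if valid:
            return True, calendar, winner_location[calendar]
    return False, None, None
```

The two intermediate lists play the role of the Winner Valid and Winner Location arrays, and `final_decision` stands in for the final decision logic.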

System and method for a location prediction-based network scheduler

Active | US9380487B2 | Easy to use | Network traffic/resource management | Digital computer details | Traffic capacity | Computer science

Embodiments are provided for traffic scheduling based on user equipment (UE) in wireless networks. A location prediction-based network scheduler (NS) interfaces with a traffic engineering (TE) function to enable location-prediction-based routing for UE traffic. The NS obtains location prediction information for a UE for a next time window comprising a plurality of next time slots, and obtains available network resource prediction for the next time slots. The NS then determines, for each of the next time slots, a weight value as a priority parameter for forwarding data to the UE, in accordance with the location prediction information and the available network resource prediction. The result for the first time slot is then forwarded from the NS to the TE function, which optimizes, for the first time slot, the weight value with a route and data for forwarding the data to the UE.

Owner:HUAWEI TECH CO LTD

Network scheduler for selectively supporting work conserving mode and network scheduling method thereof

Inactive | US7961620B2 | Effective bandwidth | Improve efficiency | Error prevention | Frequency-division multiplex details | Distributed computing | Network scheduler

Provided are a network scheduler and a network scheduling method capable of effectively managing network bandwidths by selectively supporting a work conserving mode to network entities by using an improved token bucket scheme. The network scheduler selectively supports a work conserving mode to network scheduling units (NSUs) serving as network entities by using a token bucket scheme, such that the network scheduler ensures an allocated network bandwidth or enables the NSUs to use a remaining bandwidth. The network scheduler manages the NSUs by classifying the NSUs into a green state, a red state, a yellow state, and a black state according to a token value, a selection / non-selection of the work conserving mode, and an existence / non-existence of the packet request to be processed.

Owner:ELECTRONICS & TELECOMM RES INST

Apparatus and method to coordinate calendar searches in a network scheduler

Inactive | US7283530B2 | Easy to adapt | Data switching by path configuration | Office automation | Array data structure | Finite-state machine

A system that indicates which frame should next be removed by a scheduler from flow queues within a network device, such as a router, network processor, and like devices is disclosed. The system includes a search engine that searches a set of calendars under the control of a Finite State Machine (FSM), a current pointer and input signals from an array and a clock line providing current time. The results of the search are loaded into a Winner Valid array and a Winner Location array. A final decision logic circuit parses information in the Winner Valid array and Winner Location array to generate a final Winner Valid Signal, the identity of the winning calendar and the winning location. Winning is used to define the status of the calendar in the calendar status array that is selected as a result of a search process being executed on a plurality of calendars in the calendar status array.

Owner:INT BUSINESS MASCH CORP

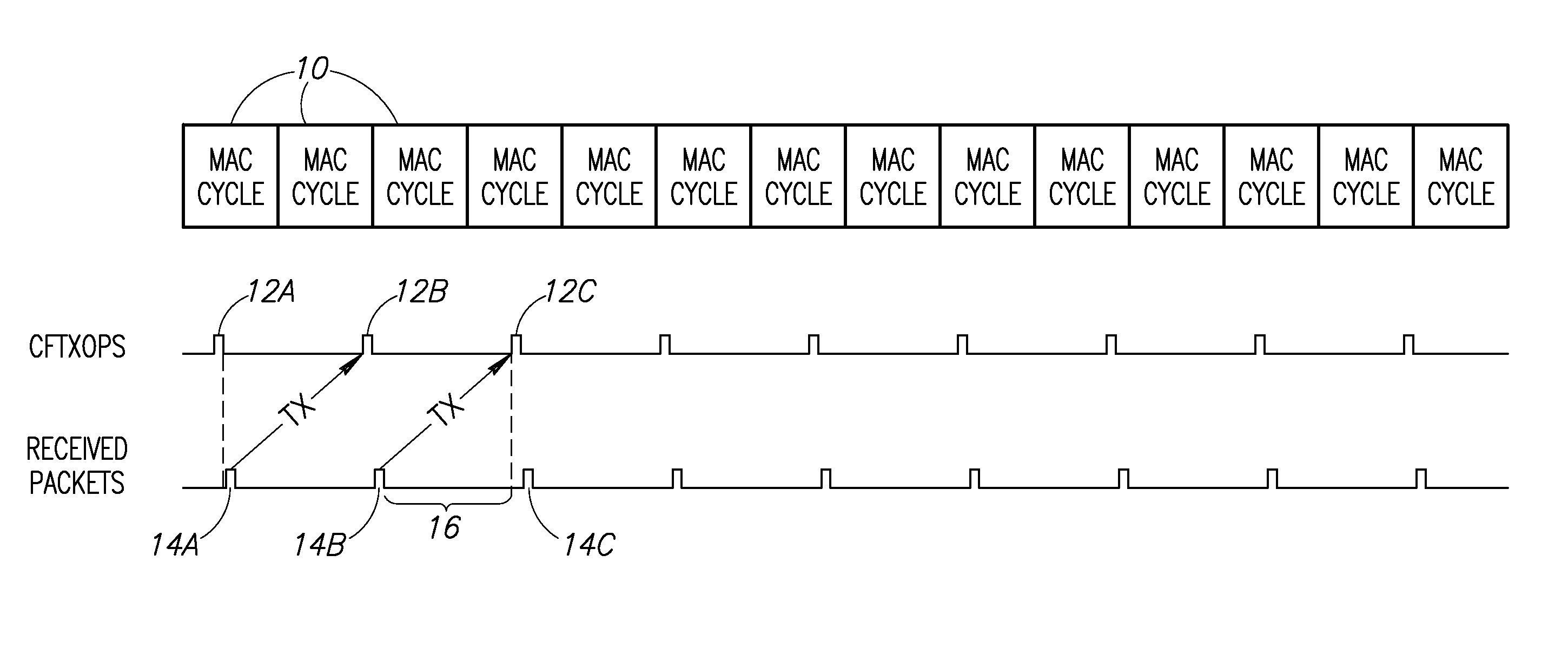

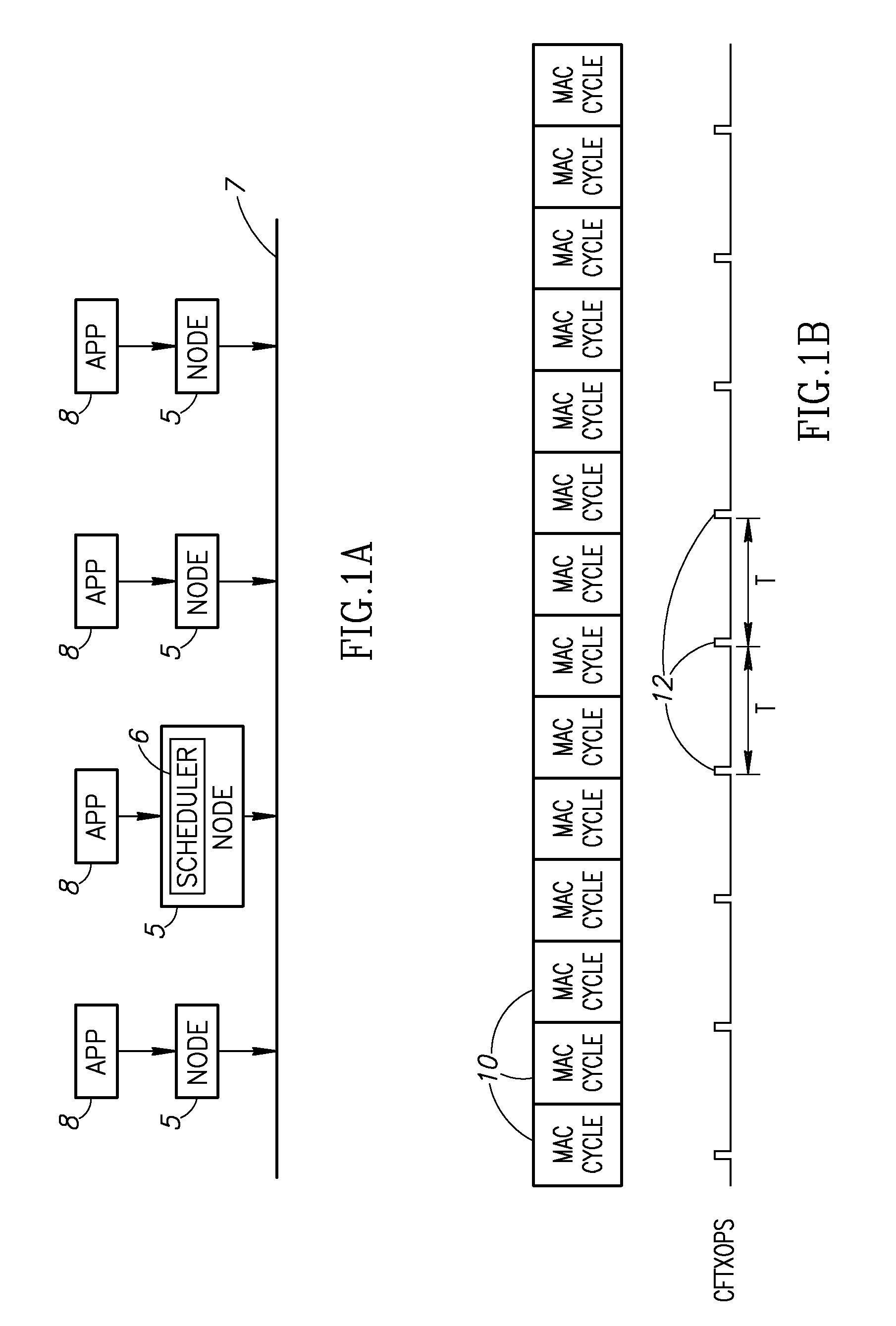

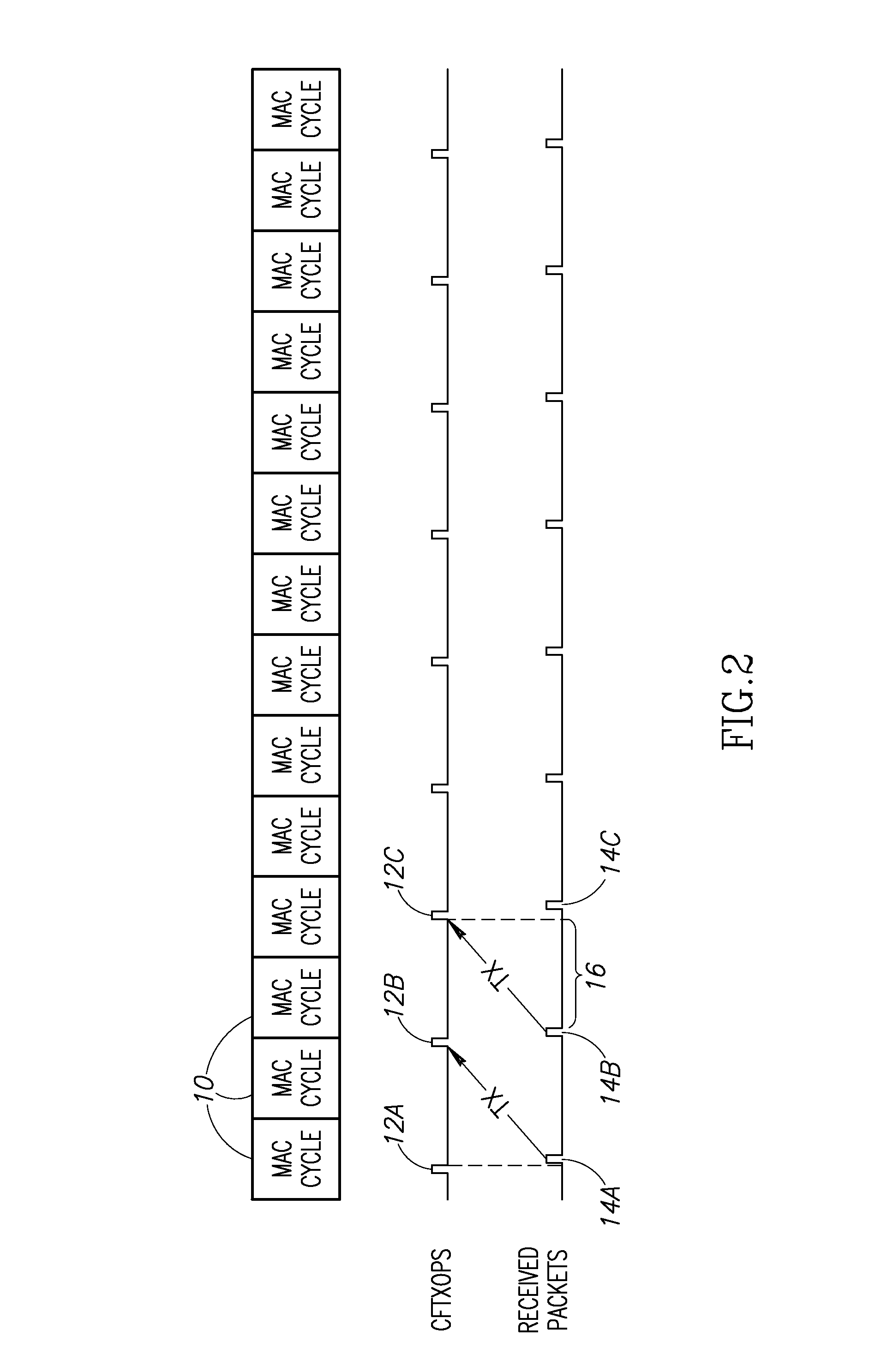

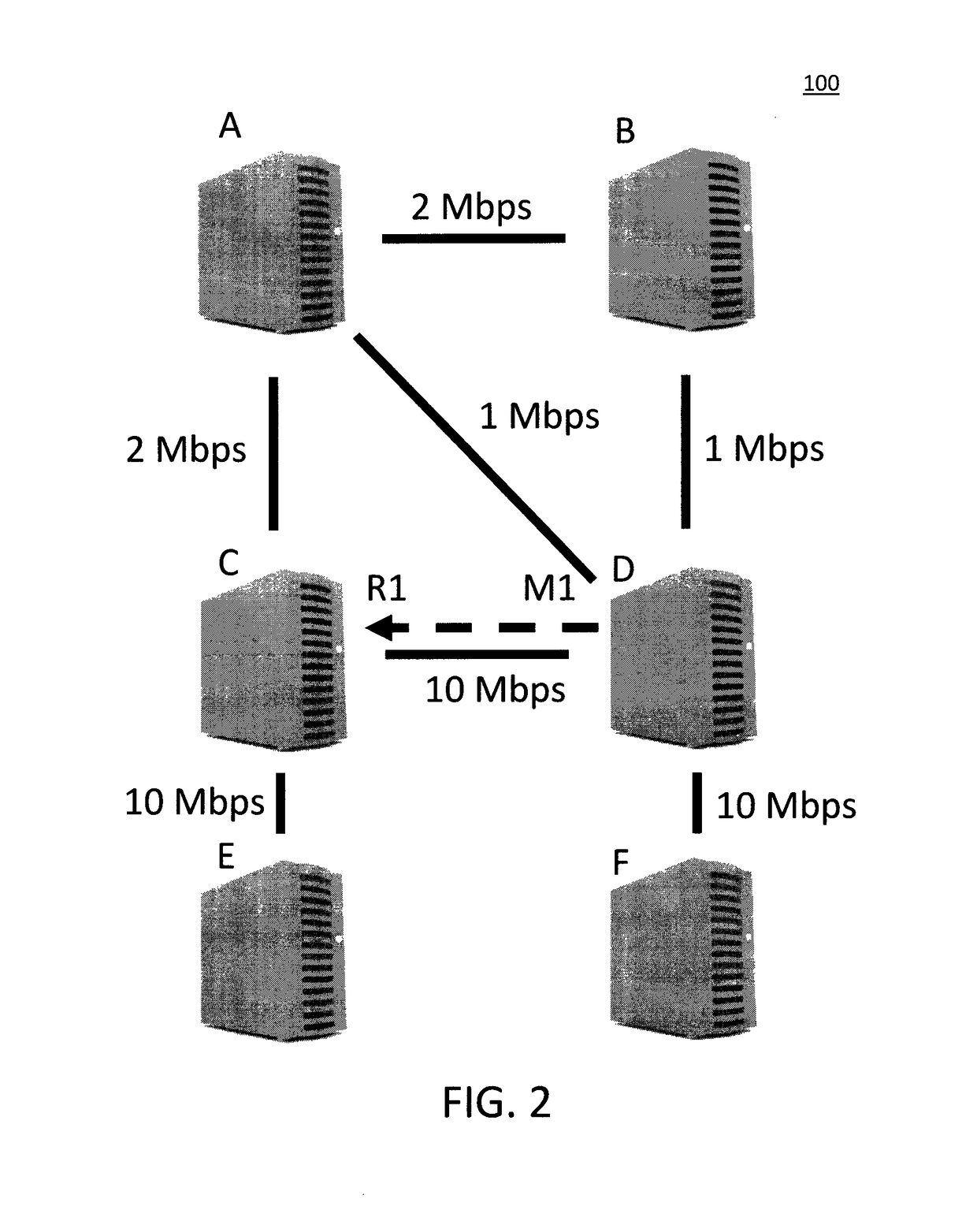

Allocation adjustment in network domains

Active | US9094232B2 | Delay minimization | Time-division multiplex | Network connections | Service flow | Media access

Owner:SIGMA DESIGNS ISRAEL S D I

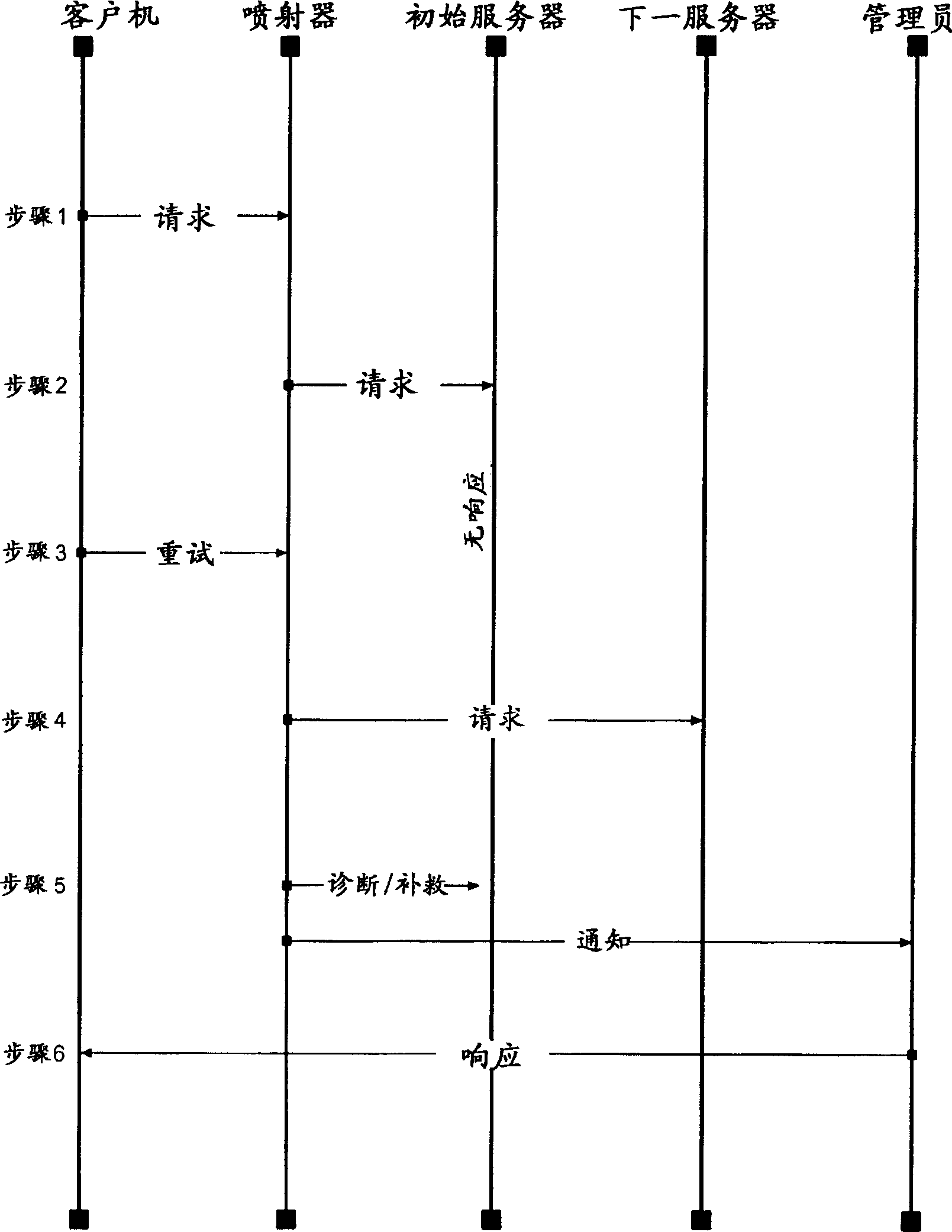

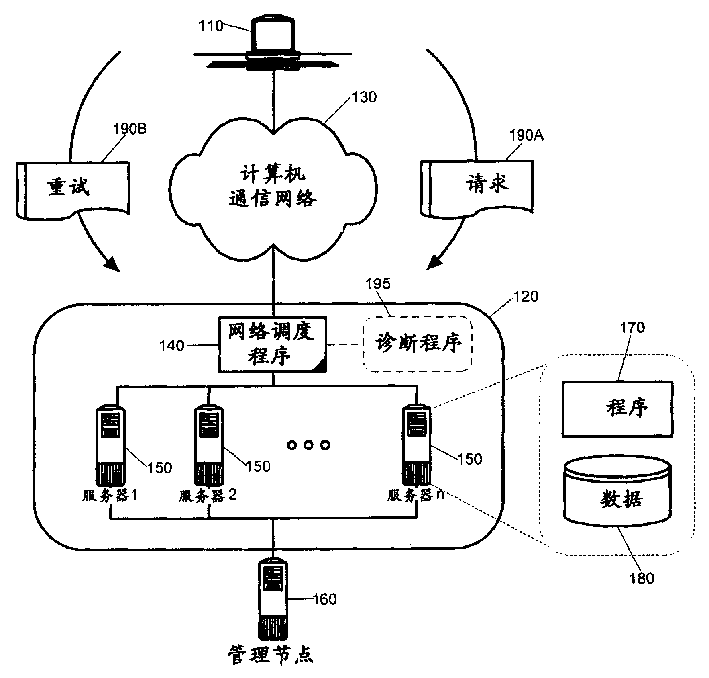

Self repairing method in automatic server field and server field

Inactive | CN1492348A | Multiple digital computer combinations | Non-redundant fault processing | Self-healing | Least recently frequently used

Owner:INT BUSINESS MASCH CORP

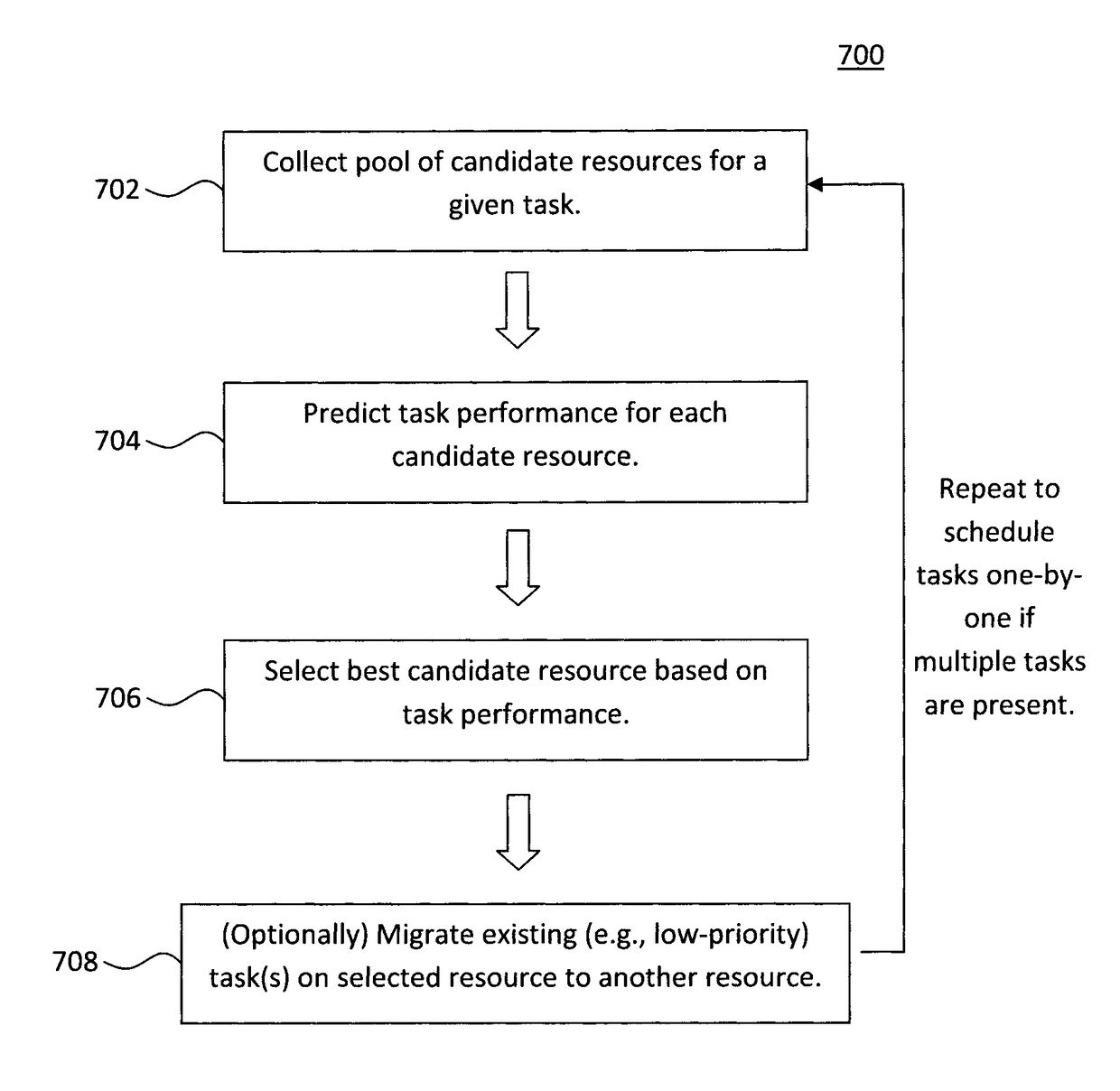

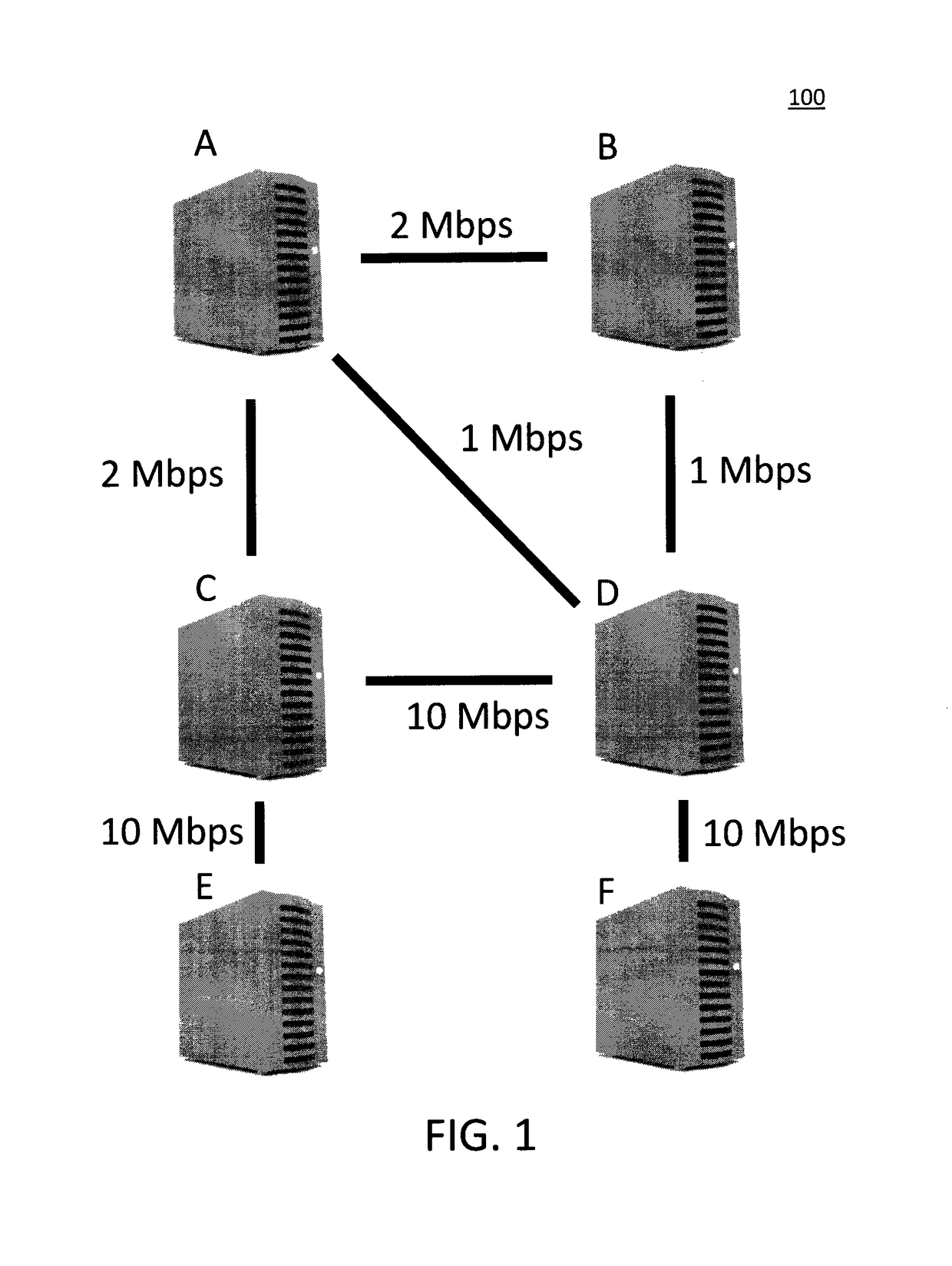

Joint network and task scheduling

Owner:INT BUSINESS MASCH CORP

Publishing a Disparate Live Media Output Stream using Pre-Encoded Media Assets

A media content packaging and distribution system that generates a plurality of disparate live media output streams to be viewed on a plurality of consumer devices, receives a programming schedule for a channel from a network scheduler. The programming schedule corresponds to a first manifest associated with a first pre-encoded media asset. Information related to a plurality of media segments from the first pre-encoded media asset indicated in the first manifest is inserted into a live output stream manifest. Transition occurs between first media segments of the plurality of media segments from a first data source to second media segments of the plurality of media segments from a second data source, different from the first data source, where the transition is based on additional information. Based on the transition, a disparate live media output stream is generated for a channel viewable on a consumer device via a media player.

Owner:TURNER BROADCASTING SYST INC

Publishing a disparate live media output stream using pre-encoded media assets

Owner:TURNER BROADCASTING SYST INC

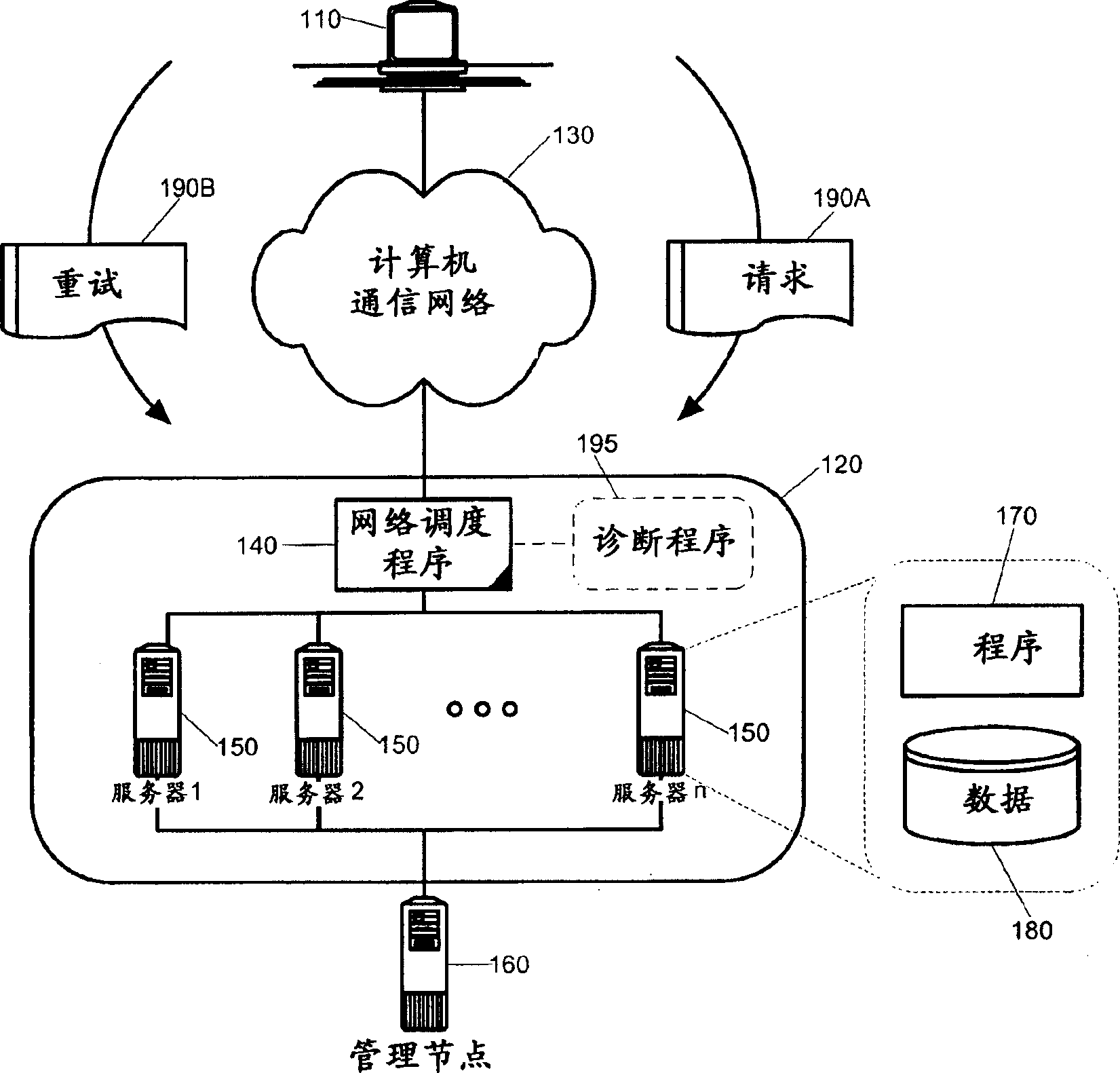

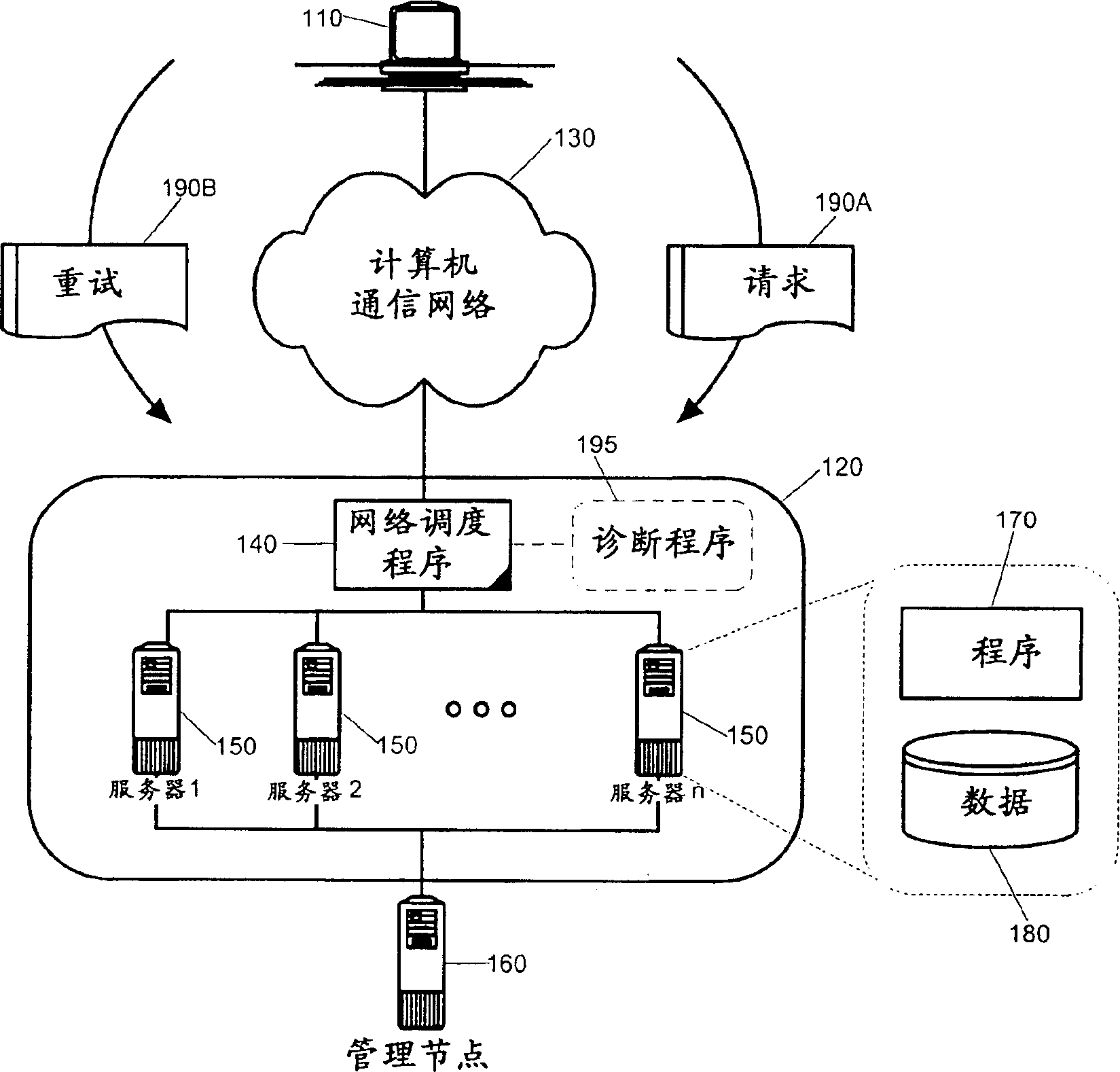

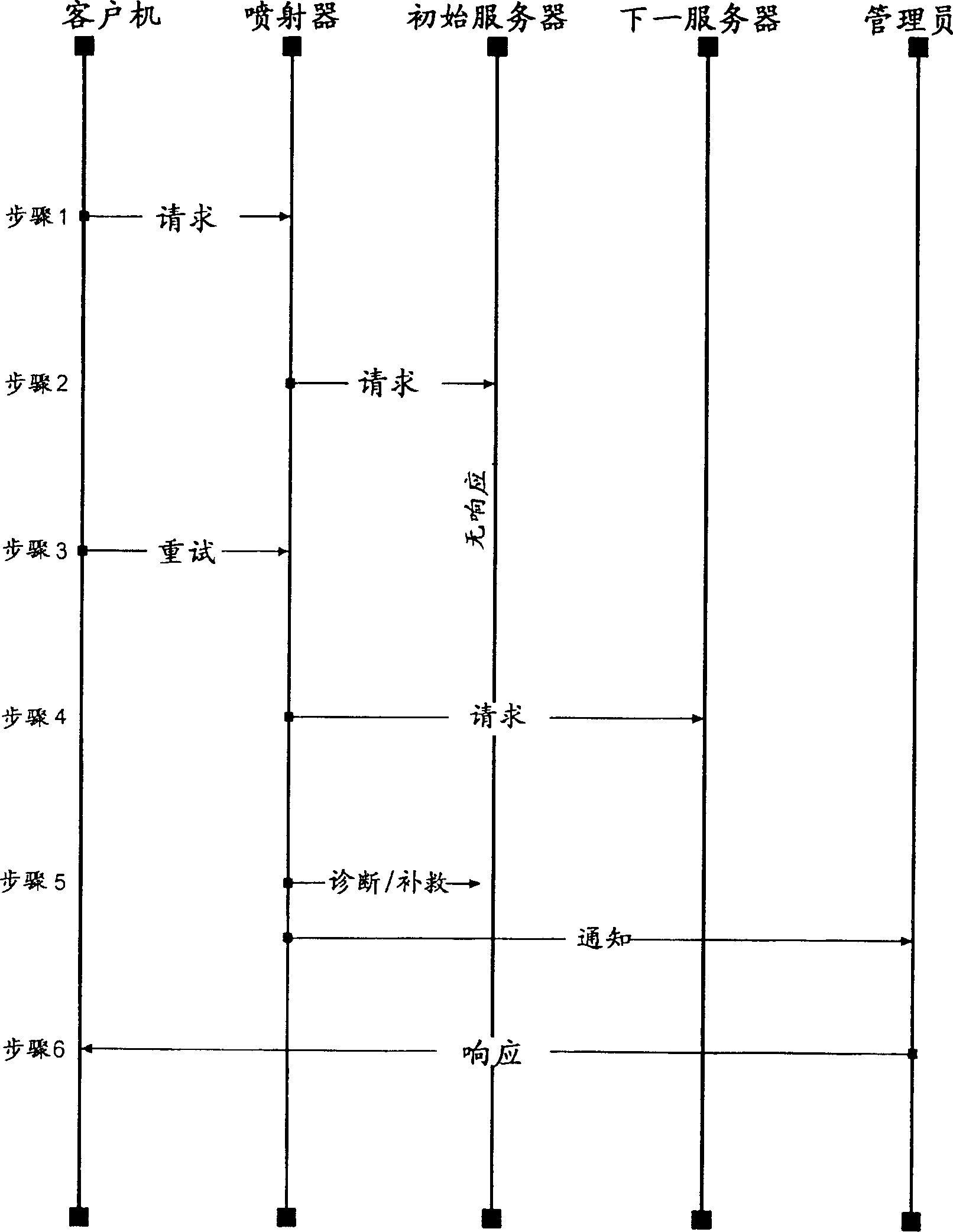

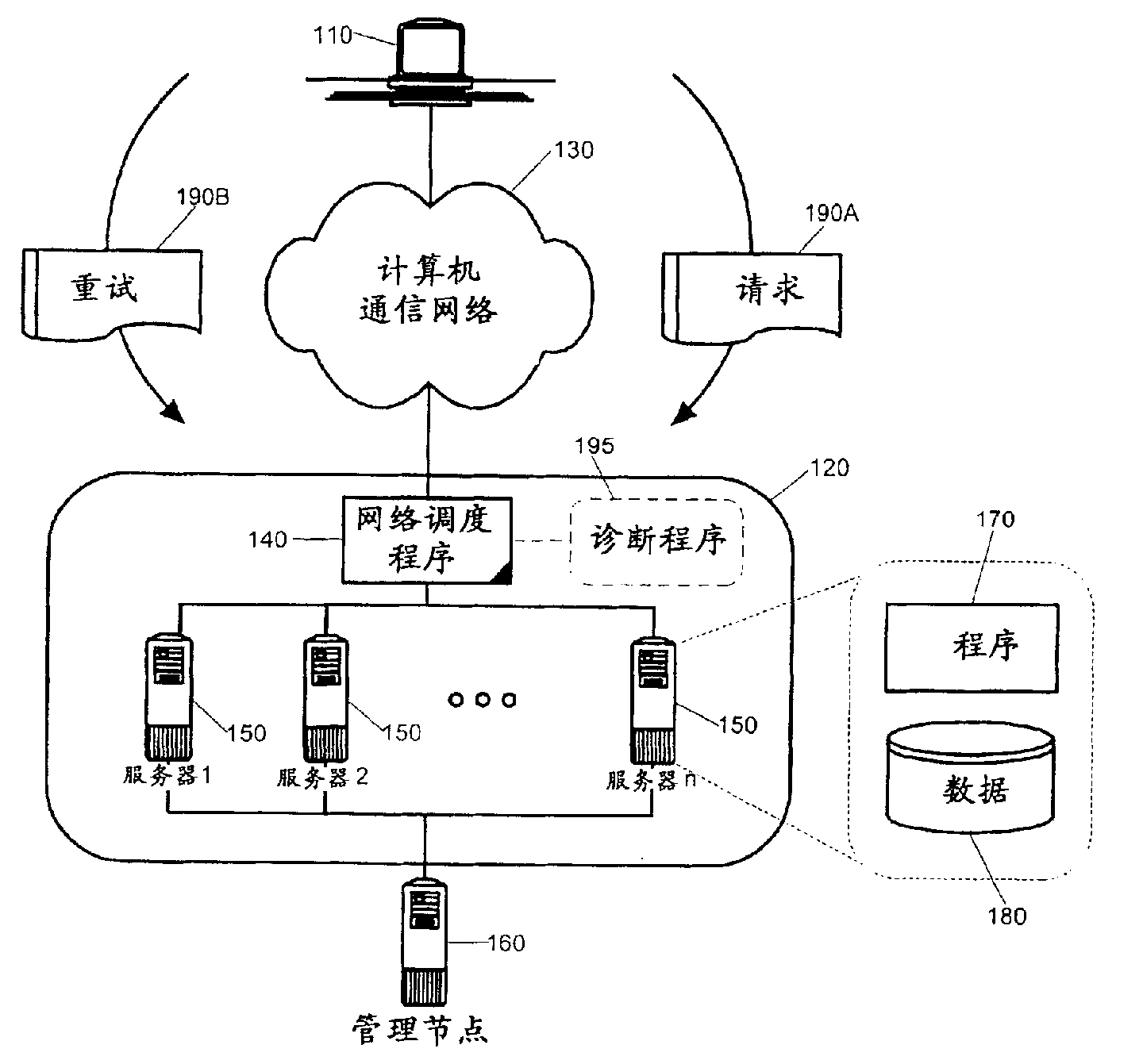

Self repairing method in automatic server field and server field

Inactive | CN1248132C | Multiple digital computer combinations | Non-redundant fault processing | Sprayer | Least recently frequently used

A method, system and apparatus for server failure diagnosis and self-healing in a server farm. An autonomic server farm which has been configured in accordance with the inventive arrangements can include a multiplicity of servers enabled to respond to requests received from clients which are external to the server farm. A resource director such as an IP sprayer or a network dispatcher can be configured to route requests to selected ones of the servers, in accordance with resource allocation algorithms such as random, round-robin and least recently used. Significantly, unlike conventional server farms whose management of failure diagnosis and self-healing relies exclusively upon the capabilities of the resource director, in the present invention, client-assisted failure detection logic can be coupled to the resource director so as to provide client-assisted management of failure diagnosis and self-healing.

Owner:INT BUSINESS MASCH CORP
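
The three resource allocation algorithms named in the abstract above (random, round-robin and least recently used) can each be sketched in a few lines of Python. The function and class names are illustrative assumptions. The client-assisted failure detection logic, which is the actual subject of the patent, is not modeled here.

```python
import itertools
import random


def pick_random(servers, rng):
    """Random: any server may receive the next request."""
    return rng.choice(servers)


def round_robin(servers):
    """Round-robin: an endless iterator cycling through servers in order."""
    return itertools.cycle(servers)


class LeastRecentlyUsed:
    """Least recently used: route to the server that has been idle longest."""

    def __init__(self, servers):
        # Front of the list is the least recently used server.
        self._order = list(servers)

    def touch(self, server):
        """Record that `server` just handled a request."""
        self._order.remove(server)
        self._order.append(server)

    def pick(self):
        """Choose the least recently used server and mark it as used."""
        server = self._order[0]
        self.touch(server)
        return server
```

Round-robin and least recently used behave identically when every request goes through the director. They diverge once a server is used through another path, which `touch` models.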
