Scheduling method and device, electronic equipment and readable storage medium

A scheduling method for electronic equipment, applied in fields such as multiprogramming arrangements, neural network learning methods, and program control design. It addresses the problems that data privacy cannot be guaranteed and that model service quality is greatly affected.

Examples

Example 1

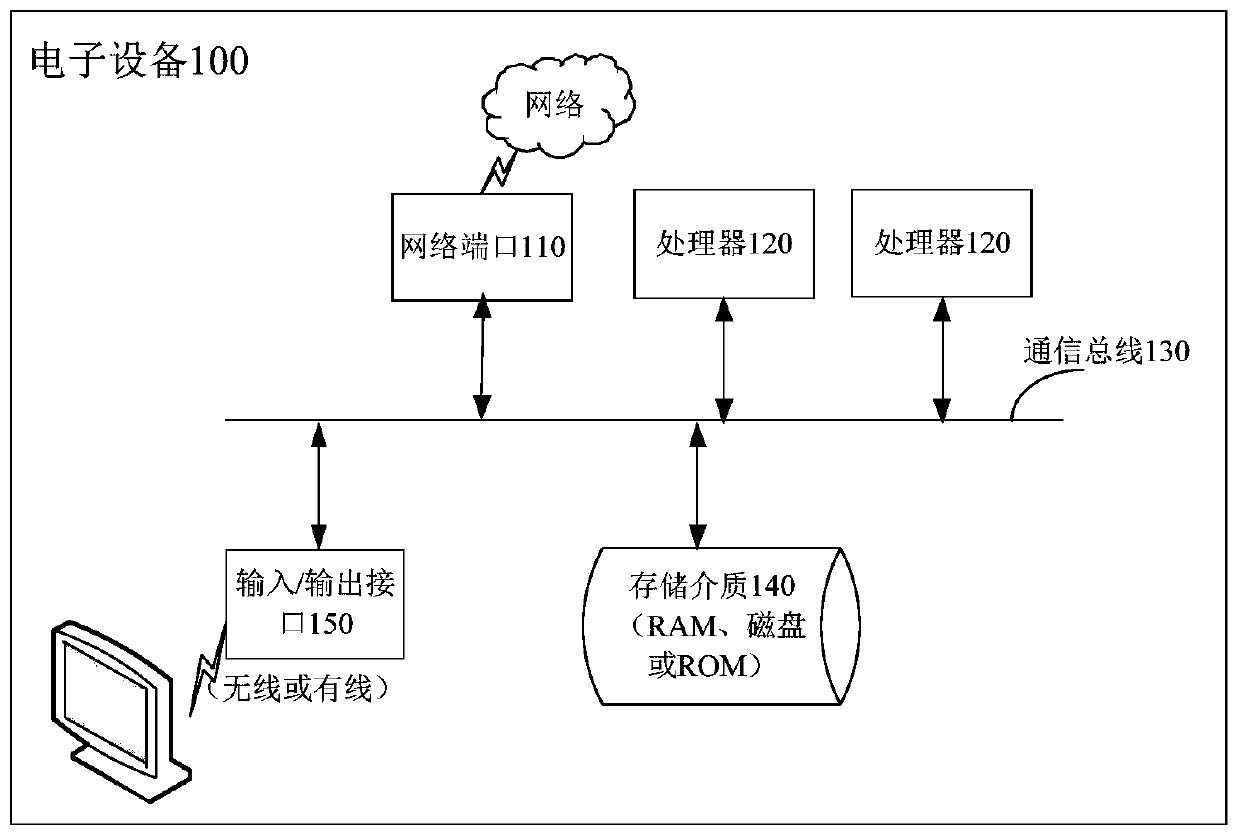

[0093] Figure 1 is a schematic diagram of exemplary hardware and software components of an electronic device 100 provided in an optional embodiment of the present application. The electronic device 100 may be a general-purpose computer, a special-purpose computer, or a mobile terminal. Although only one electronic device 100 is shown in the present application, for the sake of convenience, the functions described in the present application may also be implemented in a distributed manner on multiple similar platforms to execute tasks to be processed.

[0094] For example, the electronic device 100 may include a network port 110 connected to a network, one or more processors 120 for executing program instructions, a communication bus 130, and various forms of storage media 140, such as RAM, disk, or ROM, or any combination thereof. Exemplarily, the computer platform may also include program instructions stored in ROM, RAM, or other types of non-transitory ...

Example 2

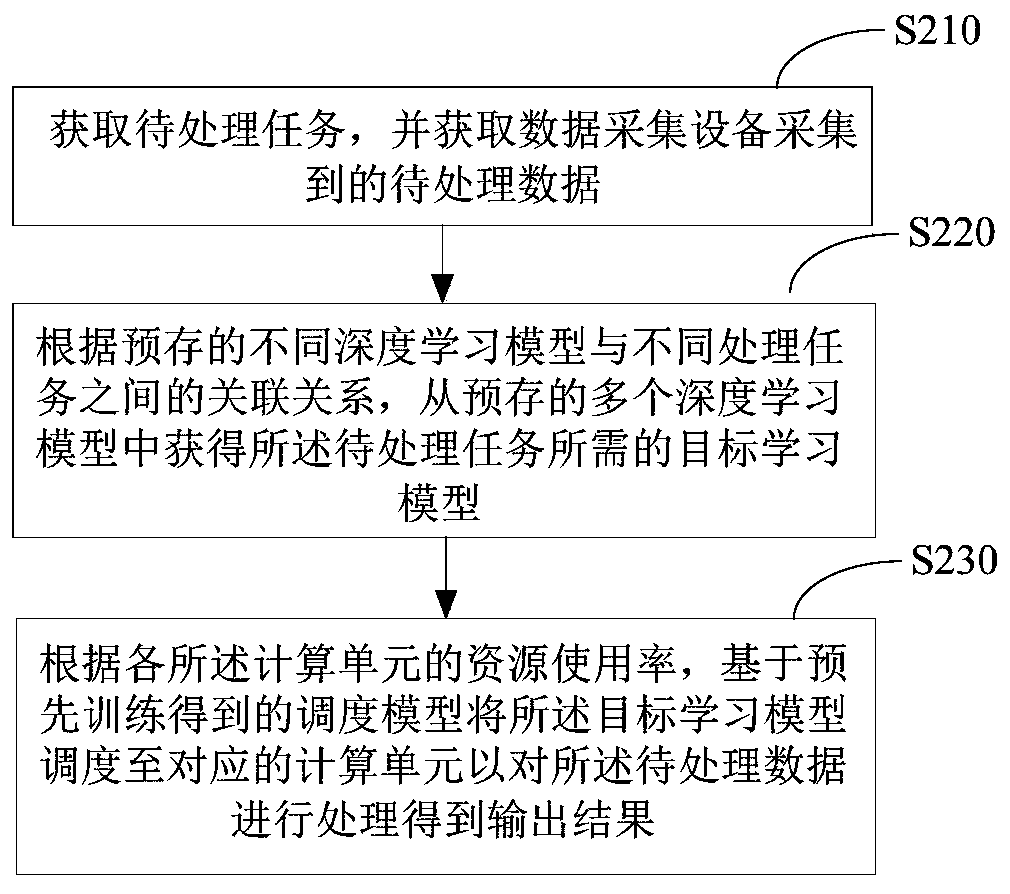

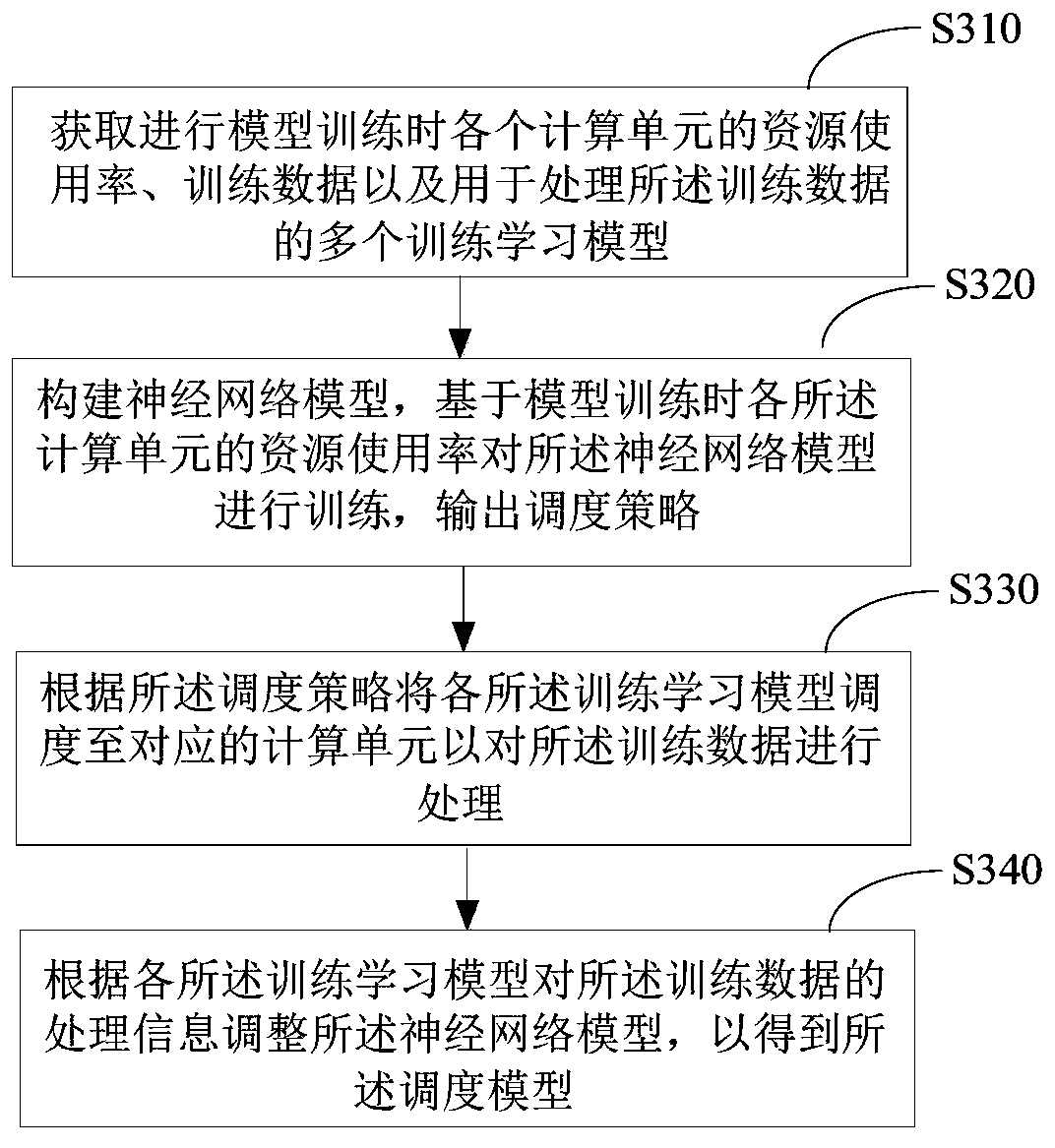

[0100] Figure 2 shows a schematic flowchart of a scheduling method according to some embodiments of the present application. The scheduling method provided in the present application can be applied to the above-mentioned electronic device 100. It should be understood that in other embodiments, the order of some steps in the scheduling method described in this embodiment may be exchanged according to actual needs, and some steps may be omitted. The detailed steps of the scheduling method are as follows.

[0101] Step S210, obtain the task to be processed and the data to be processed collected by the data collection device.

[0102] Step S220, according to the pre-stored association between different deep learning models and different processing tasks, obtain the target learning model required by the task to be processed from a plurality of pre-stored deep learning models.

[0103] Step S230, according to the resource utilization rate of e...
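
The lookup described in steps S210 and S220 can be illustrated with a minimal Python sketch. The task types, the model names, the `TASK_TO_MODEL` and `MODEL_STORE` dictionaries, and the `get_target_model` helper are all hypothetical and do not come from the application; plain callables stand in for deep learning models. Step S230 is not covered here.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class Task:
    """A task to be processed (S210). The field name is an assumption."""
    task_type: str


# Hypothetical pre-stored association between processing tasks and models (S220).
TASK_TO_MODEL: Dict[str, str] = {
    "face_recognition": "face_model",
    "object_detection": "detector_model",
}

# Hypothetical store of pre-stored models; simple callables stand in for real models.
MODEL_STORE: Dict[str, Callable[[Any], str]] = {
    "face_model": lambda data: f"faces found in {data}",
    "detector_model": lambda data: f"objects found in {data}",
}


def get_target_model(task: Task) -> Callable[[Any], str]:
    """Return the model associated with the task type, as in the S220 lookup."""
    try:
        model_name = TASK_TO_MODEL[task.task_type]
    except KeyError:
        raise ValueError(f"no model associated with task type {task.task_type!r}") from None
    return MODEL_STORE[model_name]


task = Task(task_type="object_detection")  # S210: task to be processed
data = "frame-001"                         # S210: placeholder for collected data
model = get_target_model(task)             # S220: select the target learning model
result = model(data)
```

Keeping the association in a dictionary separate from the model store is only one possible design; it lets several task types share one model without duplicating it.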

Example 3

[0142] Figure 8 shows a functional module block diagram of the scheduling device 800 in some embodiments of the present application. The functions implemented by the scheduling device 800 correspond to the steps performed by the above method. The device can be understood as the above-mentioned electronic device 100 or the processor 120 of the electronic device 100, or as a component that is independent of the electronic device 100 or the processor 120 and realizes the functions of the present application under the control of the electronic device 100. As shown in Figure 8, the scheduling device 800 may include an acquisition module 810, a target learning model acquisition module 820, and a scheduling module 830.

[0143] The acquisition module 810 is configured to obtain the task to be processed and the data to be processed collected by the data collection device. It can be understood that the acquisition module 810 can be used ...
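
The three-module composition of the scheduling device 800 could be sketched in Python roughly as follows. The class names, method names, and constructor arguments are assumptions made for illustration only. In particular, the `SchedulingModule` here is a placeholder that simply runs the selected model and does not reproduce the scheduling policy of the application.

```python
from typing import Any, Callable, Dict, Tuple

Model = Callable[[Any], Any]


class AcquisitionModule:
    """Stands in for module 810: obtains the task and the collected data."""

    def __init__(self, task_source: Callable[[], str], data_source: Callable[[], Any]):
        self._task_source = task_source
        self._data_source = data_source

    def acquire(self) -> Tuple[str, Any]:
        return self._task_source(), self._data_source()


class TargetModelAcquisitionModule:
    """Stands in for module 820: maps a task to its pre-stored model."""

    def __init__(self, models_by_task: Dict[str, Model]):
        self._models_by_task = models_by_task

    def get_model(self, task: str) -> Model:
        return self._models_by_task[task]


class SchedulingModule:
    """Stands in for module 830. Placeholder only: it runs the model directly."""

    def schedule(self, model: Model, data: Any) -> Any:
        return model(data)


class SchedulingDevice:
    """Hypothetical wiring of the three modules into one device."""

    def __init__(self, acquisition: AcquisitionModule,
                 model_acquisition: TargetModelAcquisitionModule,
                 scheduling: SchedulingModule):
        self.acquisition = acquisition
        self.model_acquisition = model_acquisition
        self.scheduling = scheduling

    def run_once(self) -> Any:
        task, data = self.acquisition.acquire()
        model = self.model_acquisition.get_model(task)
        return self.scheduling.schedule(model, data)


device = SchedulingDevice(
    AcquisitionModule(lambda: "classify", lambda: [1, 2, 3]),
    TargetModelAcquisitionModule({"classify": lambda d: len(d)}),
    SchedulingModule(),
)
output = device.run_once()
```

Passing each module into the device through its constructor keeps the modules replaceable, which matches the statement that the device may be the electronic device 100, its processor 120, or a separate component.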
