177 results about "Memory load" patented technology

Load memory is non-volatile storage for the user program, data and configuration. When a project is downloaded to the CPU, it is first stored in the Load memory area. This area is located either in a memory card (if present) or in the CPU.

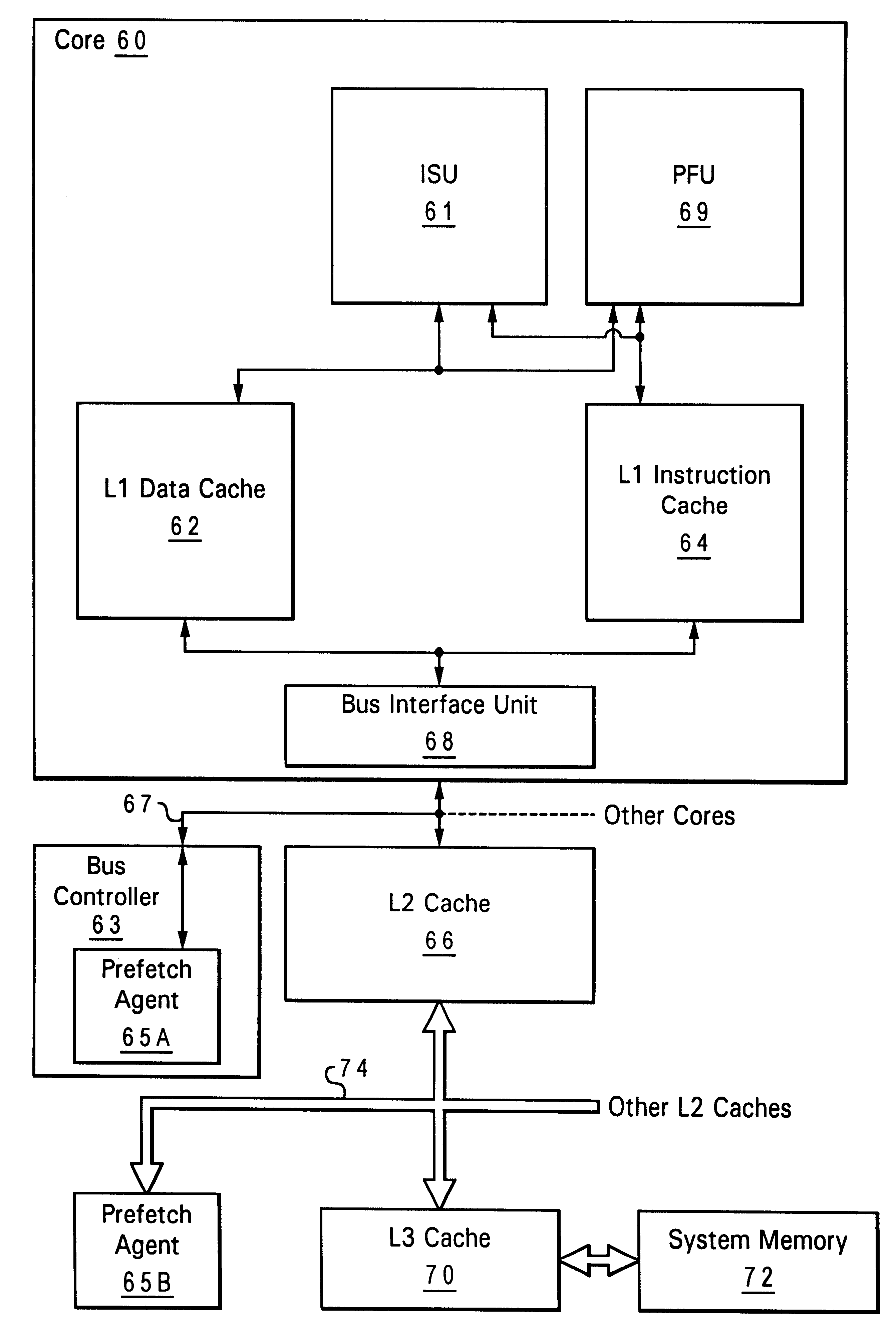

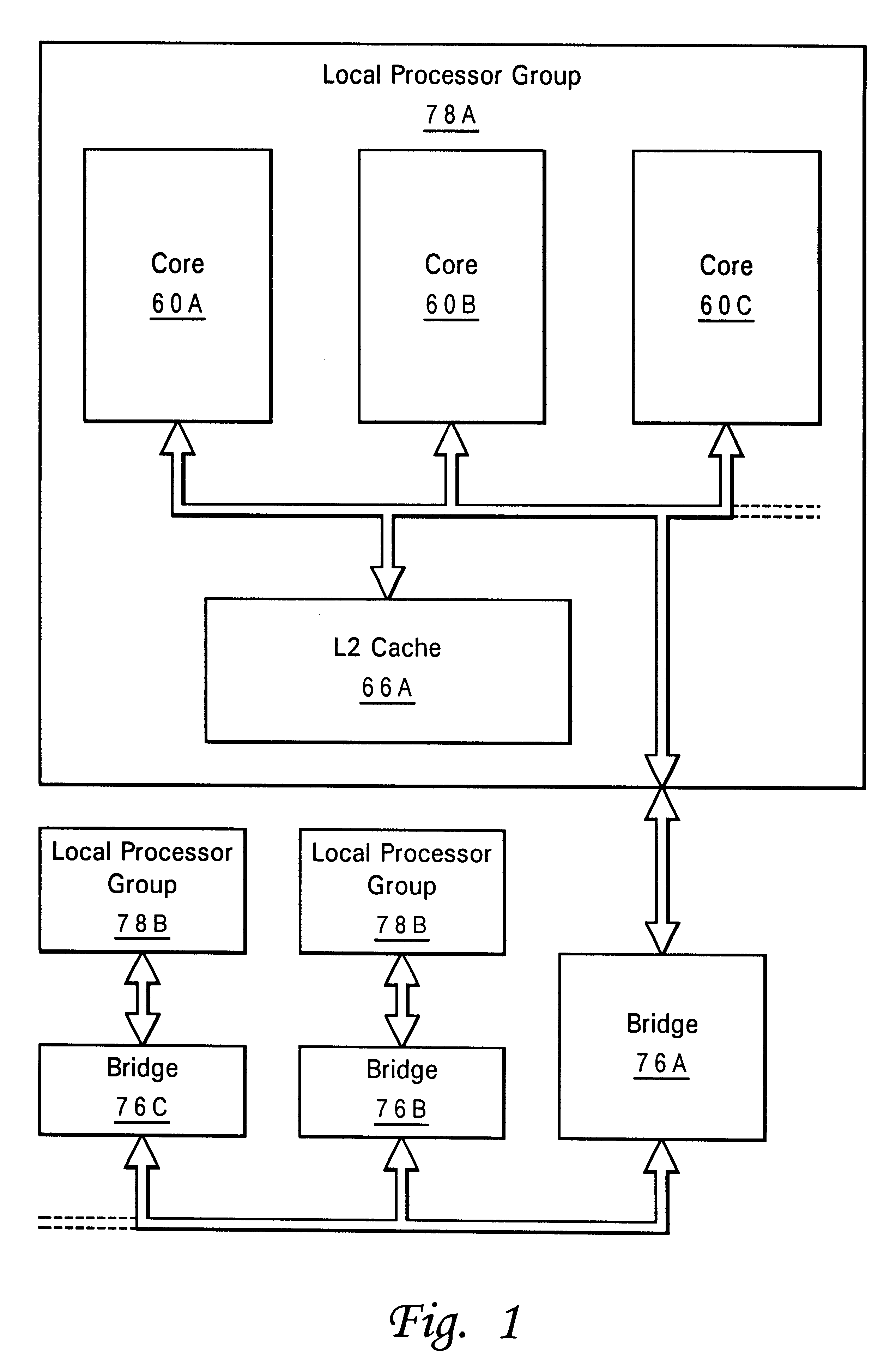

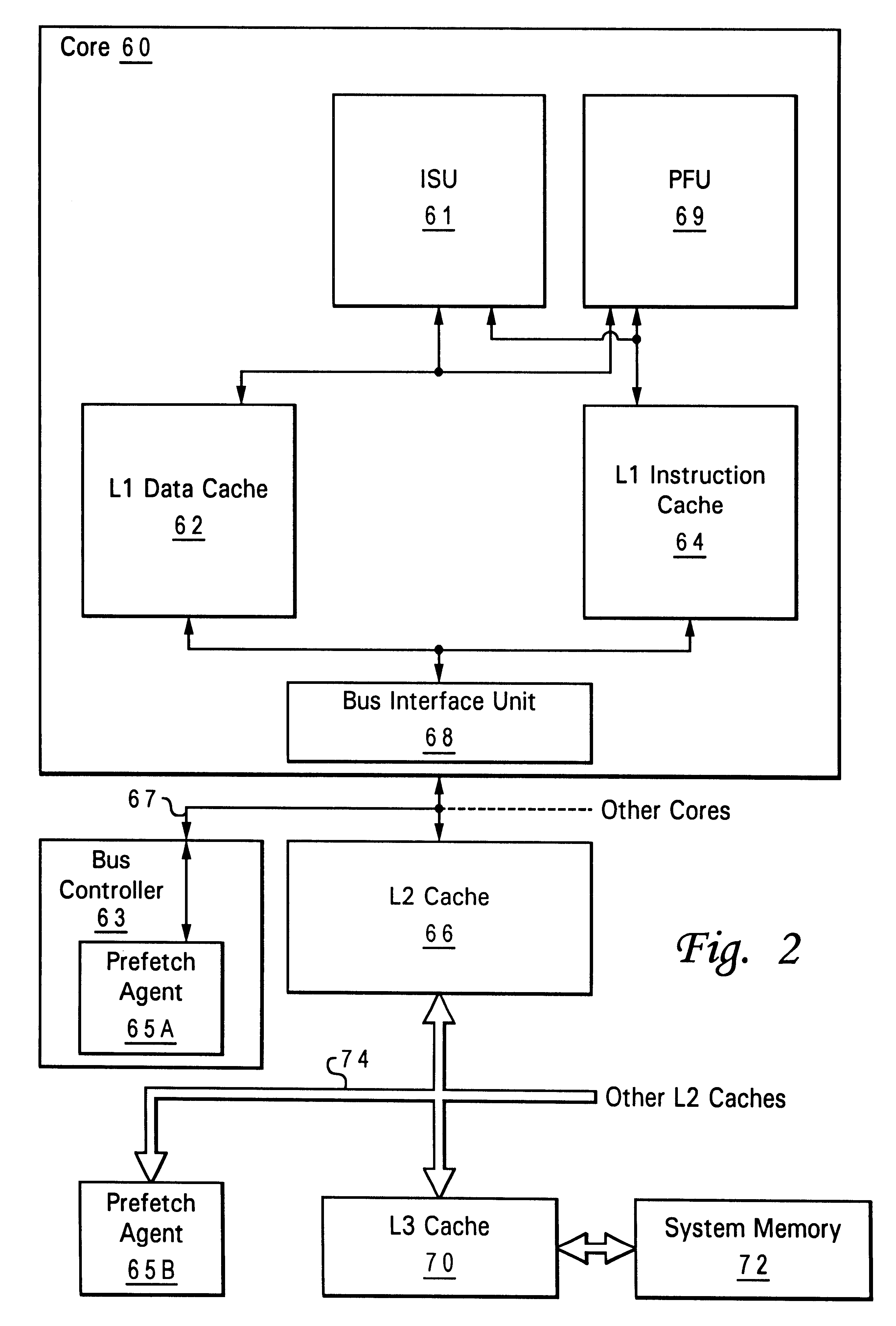

Programmable agent and method for managing prefetch queues

Inactive; US6470427B1; Efficient loading; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Computerized system; Procedural approach

A programmable agent and method for managing prefetch queues provide dynamically configurable handling of priorities in a prefetching subsystem for providing look-ahead memory loads in a computer system. When its queues are at capacity, an agent handling prefetches from memory either ignores new requests, forces the new requests to retry, or cancels a pending request in order to perform the new request. The behavior can be adjusted under program control by programming a register, or the control may be coupled to a load pattern analyzer. In addition, the behavior with respect to new requests can be set to different types depending on a phase of a pending request.
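The three full-queue behaviors described in this abstract can be illustrated with a minimal sketch. The names `FullPolicy` and `PrefetchQueue` are hypothetical, the `policy` attribute merely stands in for the programmable register, and evicting the oldest pending request on cancel is an assumption, since the abstract does not say which request is cancelled.

```python
from collections import deque
from enum import Enum


class FullPolicy(Enum):
    """Hypothetical encoding of the behavior when the queue is full."""
    IGNORE = "ignore"   # drop the new request
    RETRY = "retry"     # ask the requester to try again later
    CANCEL = "cancel"   # cancel a pending request to make room


class PrefetchQueue:
    def __init__(self, capacity, policy):
        self.capacity = capacity
        self.policy = policy      # stands in for the programmable register
        self.pending = deque()    # pending prefetch addresses, oldest first

    def submit(self, address):
        """Try to enqueue a prefetch and report what happened."""
        if len(self.pending) < self.capacity:
            self.pending.append(address)
            return "accepted"
        if self.policy is FullPolicy.IGNORE:
            return "ignored"
        if self.policy is FullPolicy.RETRY:
            return "retry"
        # CANCEL: evict the oldest pending request (an assumption of this sketch).
        self.pending.popleft()
        self.pending.append(address)
        return "accepted_after_cancel"
```

Because the policy is an ordinary attribute, it can be changed between calls, which loosely mirrors reprogramming the register at run time.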

Owner:LINKEDIN

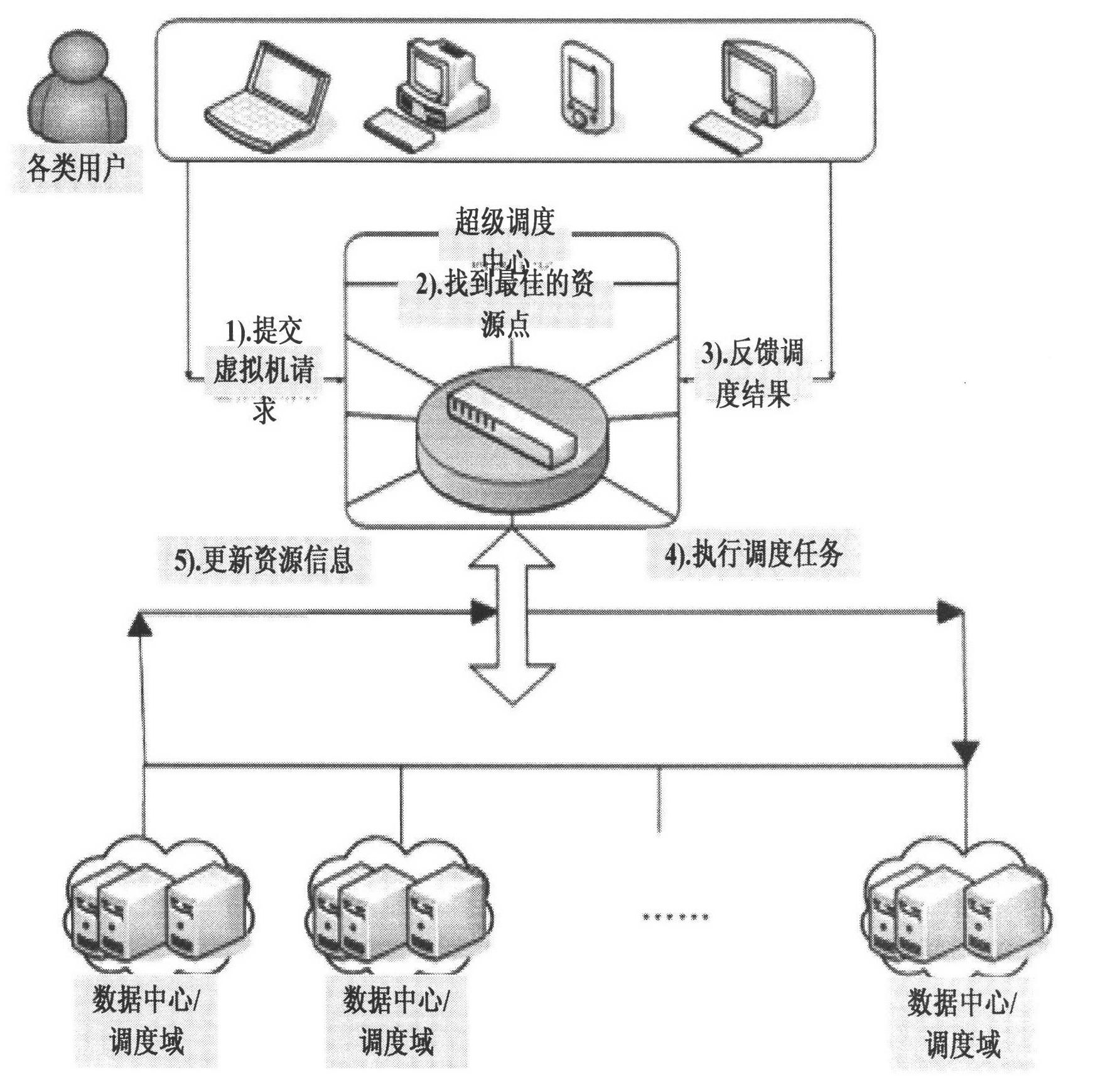

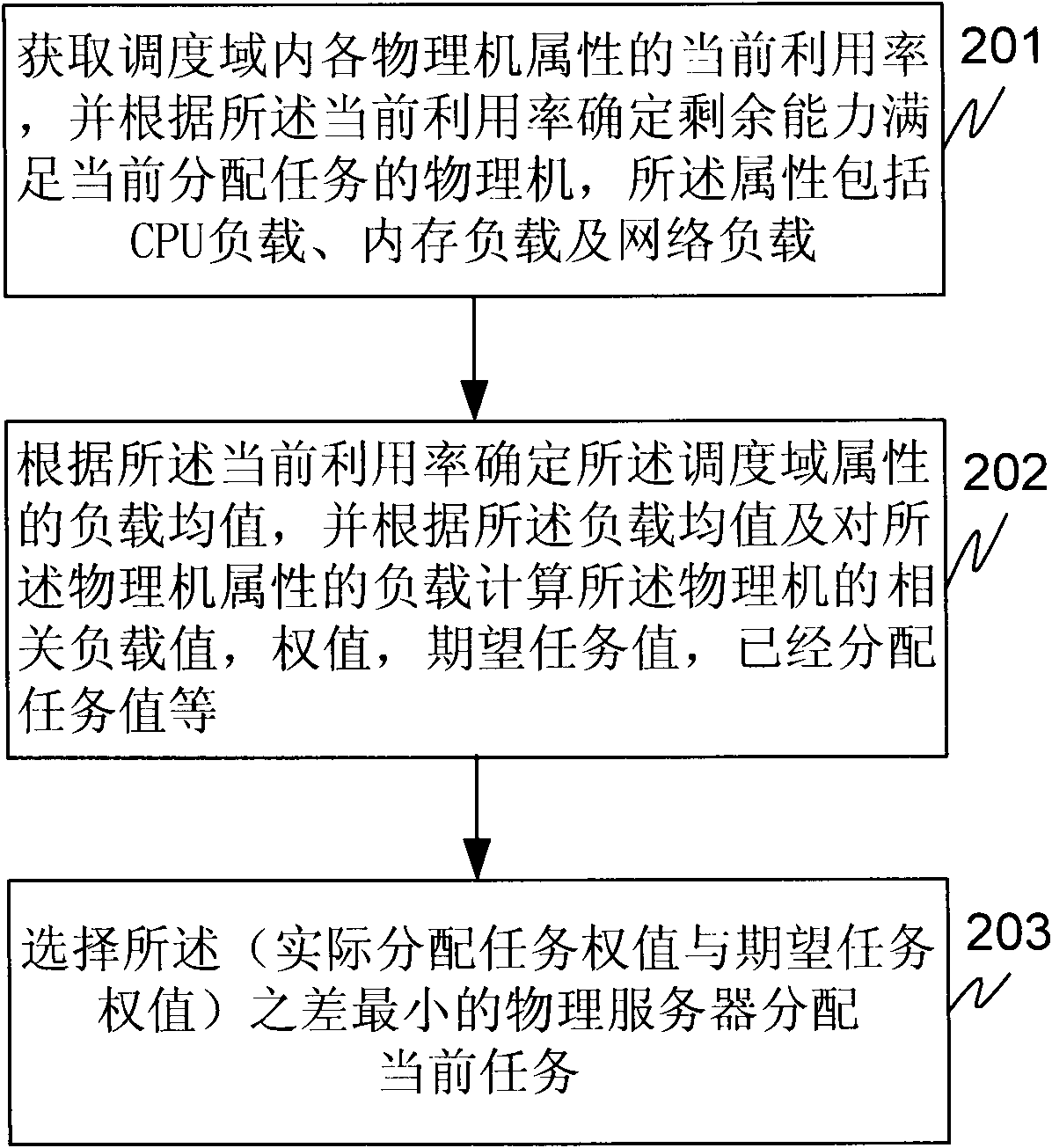

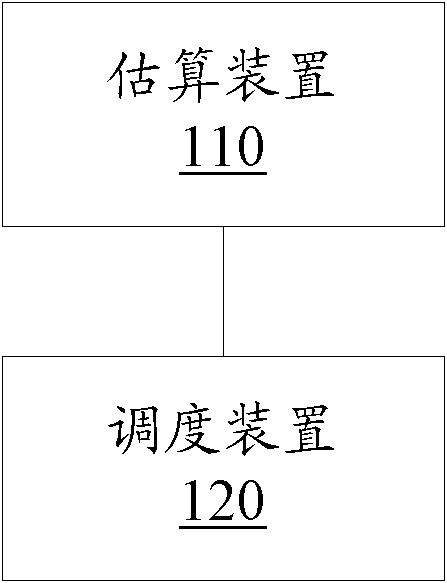

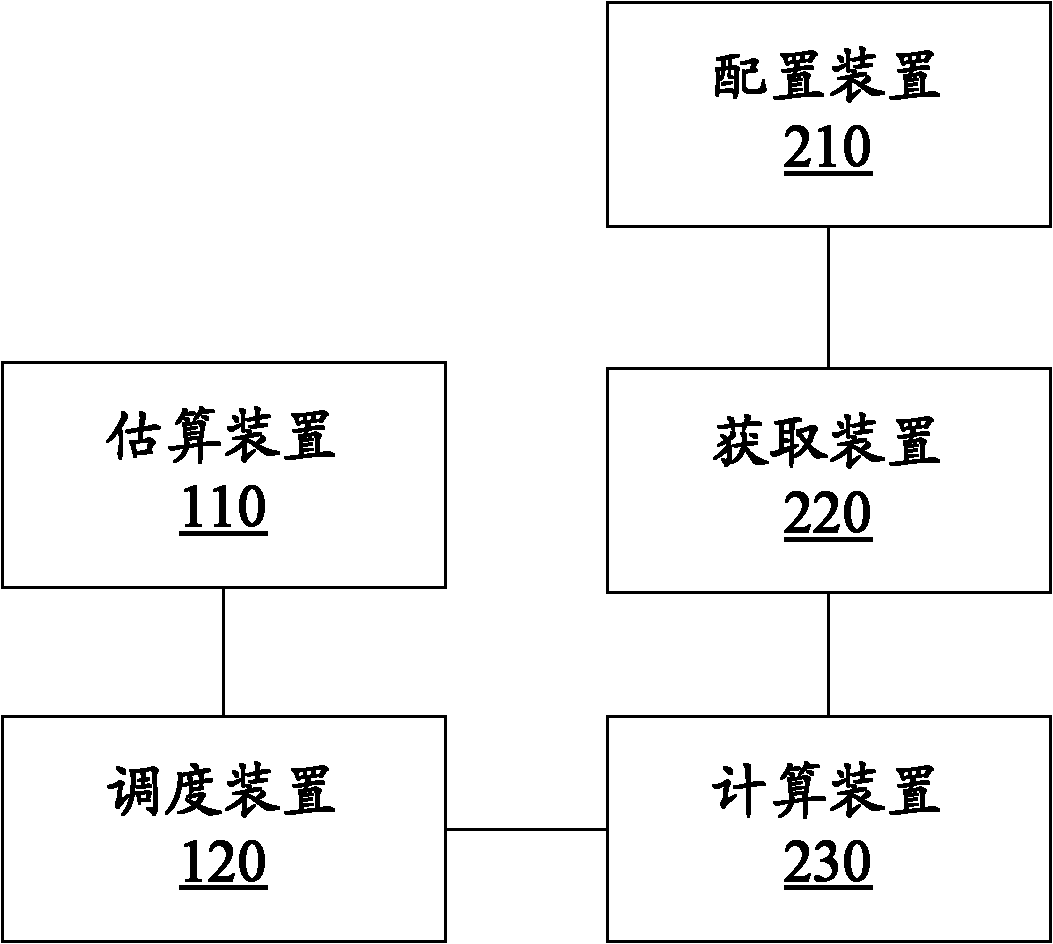

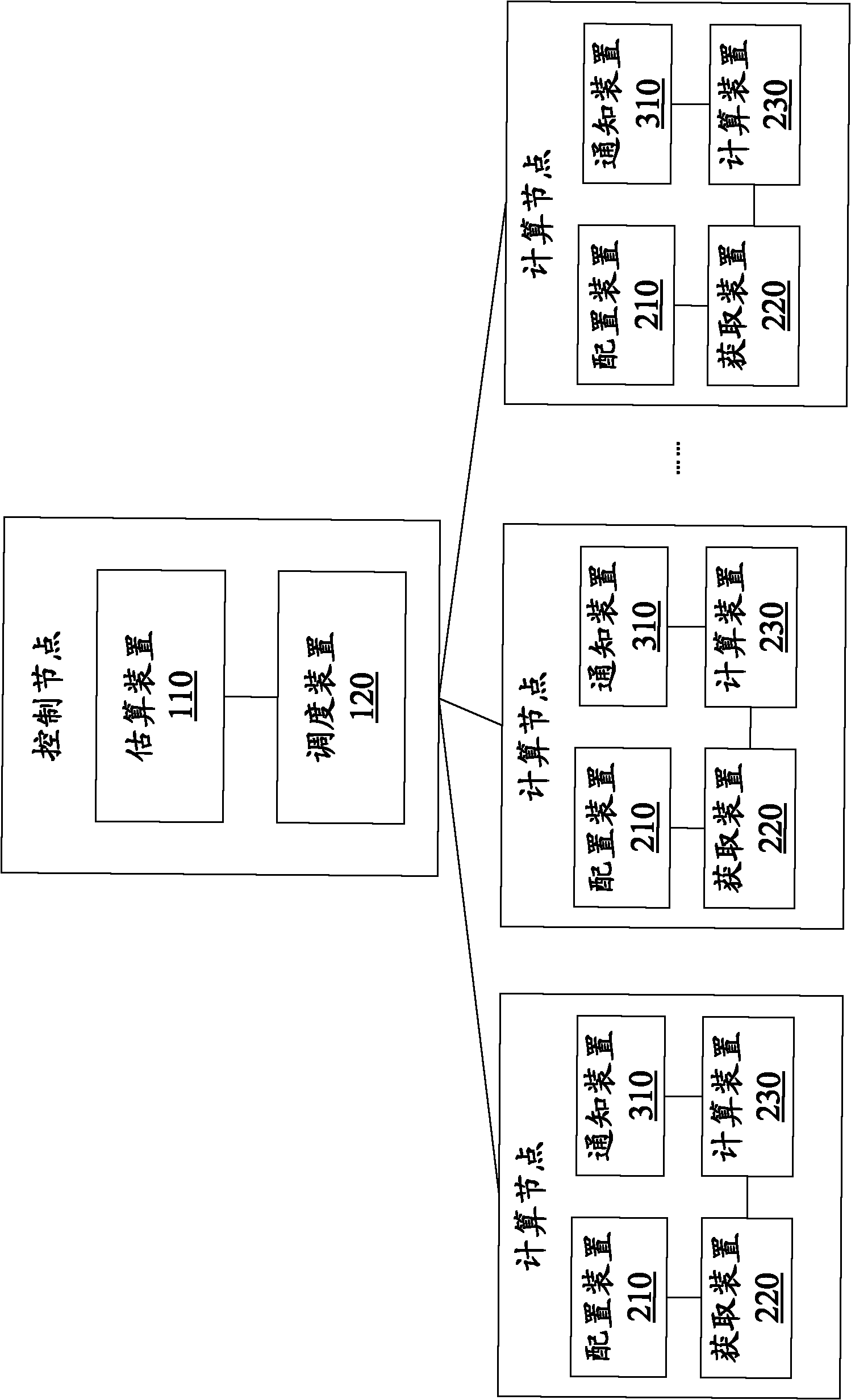

Method and device for realizing data center resource load balance in proportion to comprehensive allocation capability

Active; CN102185779A; Timely determination of load status; Solve load imbalance; Data switching networks; User needs; Data center

The invention relates to a method and a device for realizing data center resource load balance. The method of the technical scheme comprises the following steps of: acquiring the current utilization rates of attributes of each physical machine in a scheduling domain, and determining the physical machine for a currently allocated task according to the principle of fair distribution in proportion to the allocation capability of a server, an actual allocated task weight value and an expected task weight value, wherein the attributes comprise a central processing unit (CPU) load, a memory load and a network load; determining a mean load value of the attributes of the scheduling domain according to the current utilization rates, and calculating a difference between the actual allocated task weight value and expected task weight value of the physical machine according to the mean load value and predicted load values of the attributes of the physical machine; and selecting the physical machine of which the difference between the actual allocated task weight value and the expected task weight value is the smallest for the currently allocated task. The device provided by the invention comprises a selection control module, a calculation processing module and an allocation execution module. By the technical scheme provided by the invention, the problem of physical server load imbalance caused by inconsistency between user need provisions and physical server provisions can be solved.

Owner:田文洪

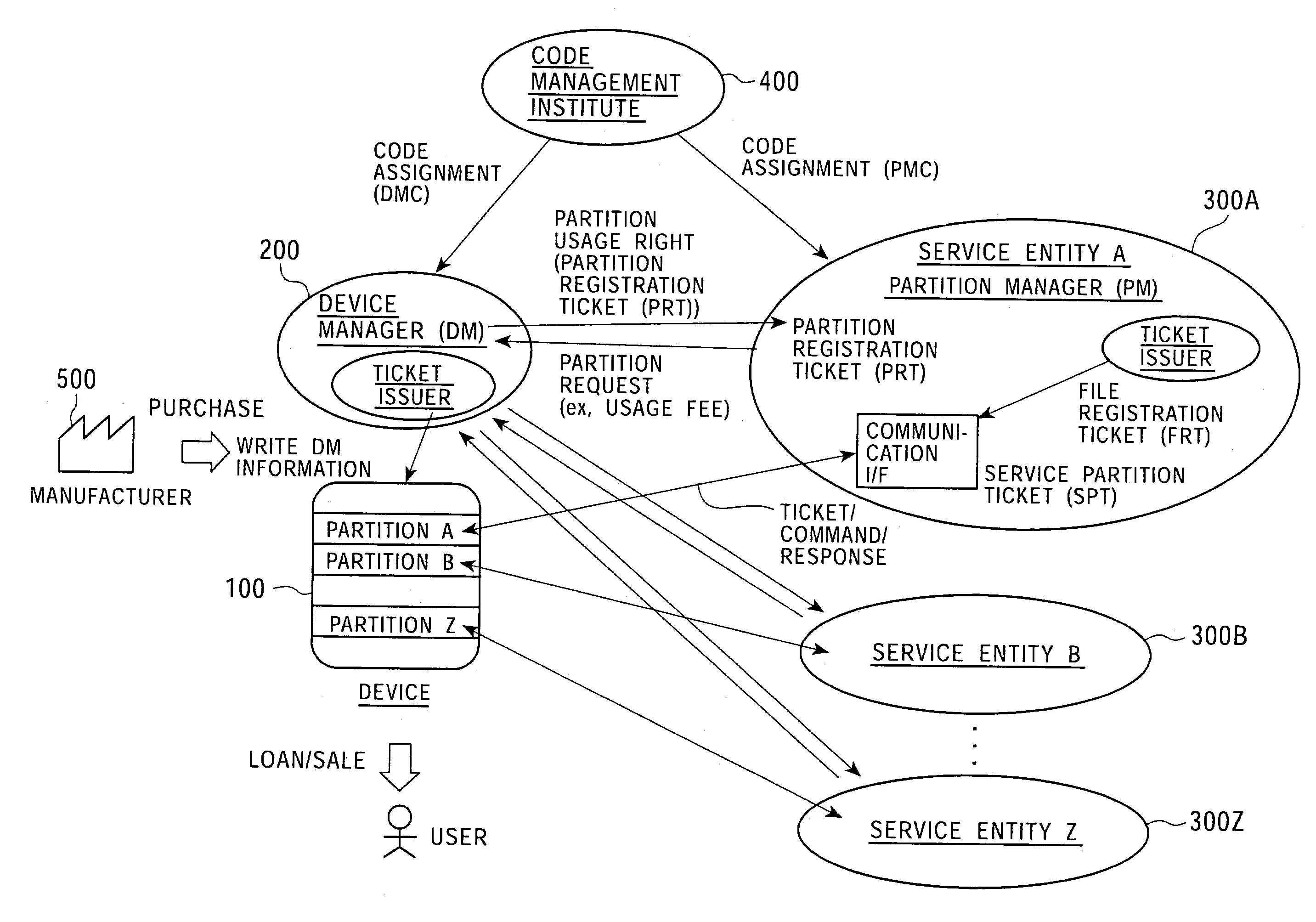

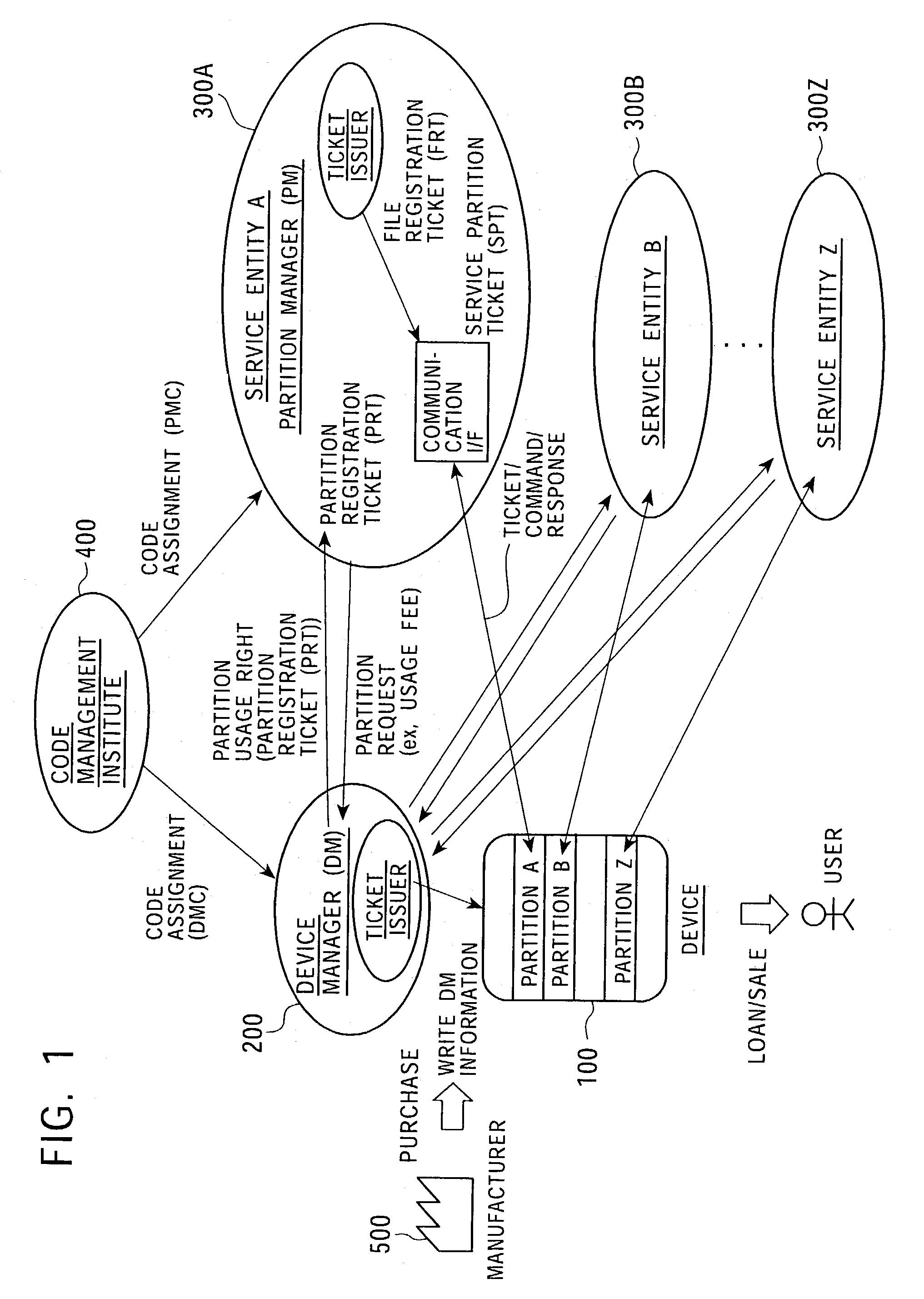

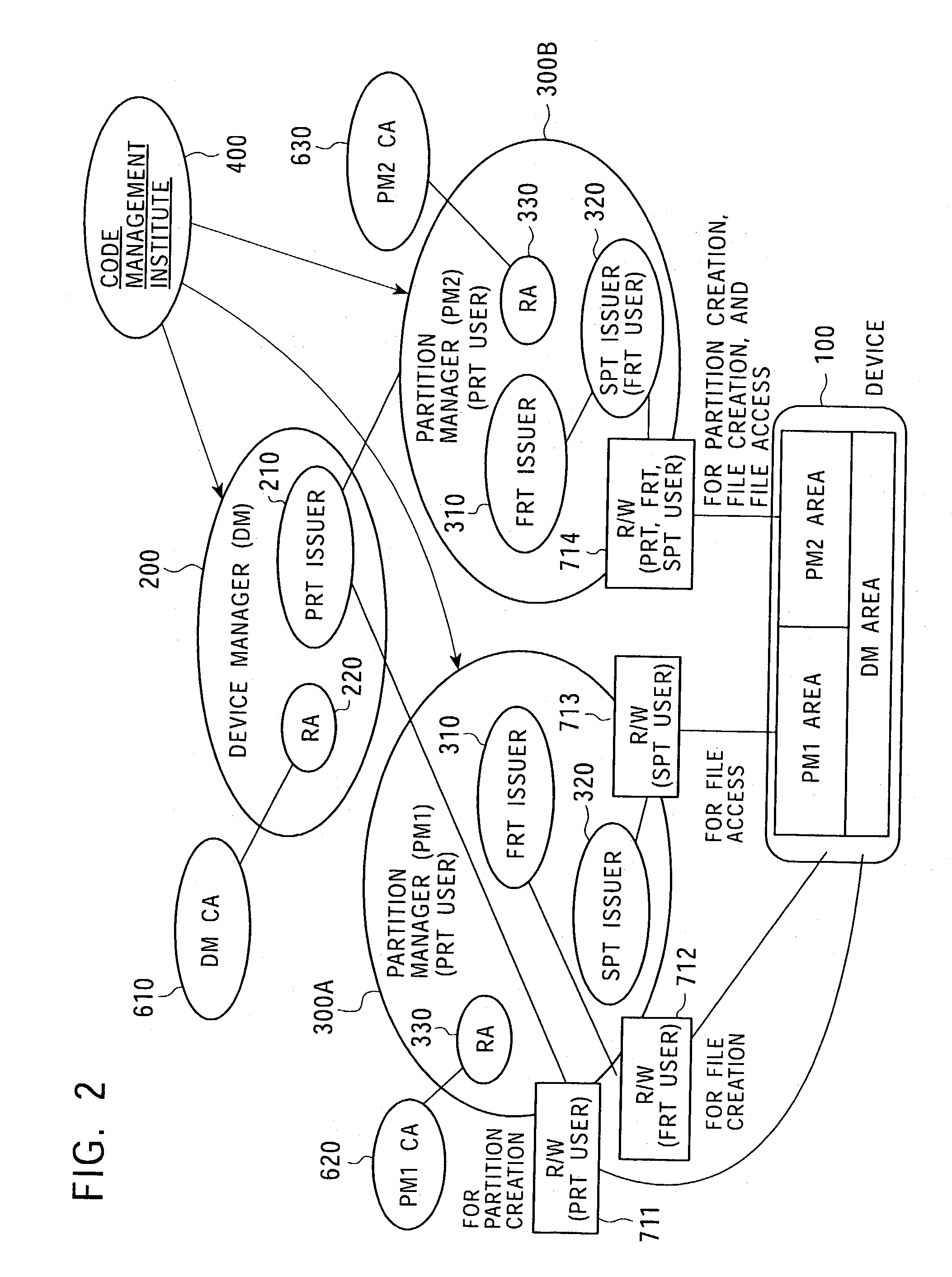

Memory access control system and management method using access control ticket

Inactive; US7225341B2; Implement configuration; User identity/authority verification; Unauthorized memory use protection; Computer hardware; Access control matrix

Provided is a memory access control system in which partitions, which are divided memory areas generated in a device, can be managed independently. In response to access to the divided memory areas, which are a plurality of partitions, various types of access control tickets are issued under the management of each device or partition manager, and processing based on rules indicated in each ticket is performed in a memory-loaded device. A memory has a partition, which serves as a memory area managed by the partition manager, and a device manager management area managed by the device manager. Accordingly, partition authentication and device authentication can be executed according to either a public-key designation method or a common-key designation method.

Owner:SONY CORP

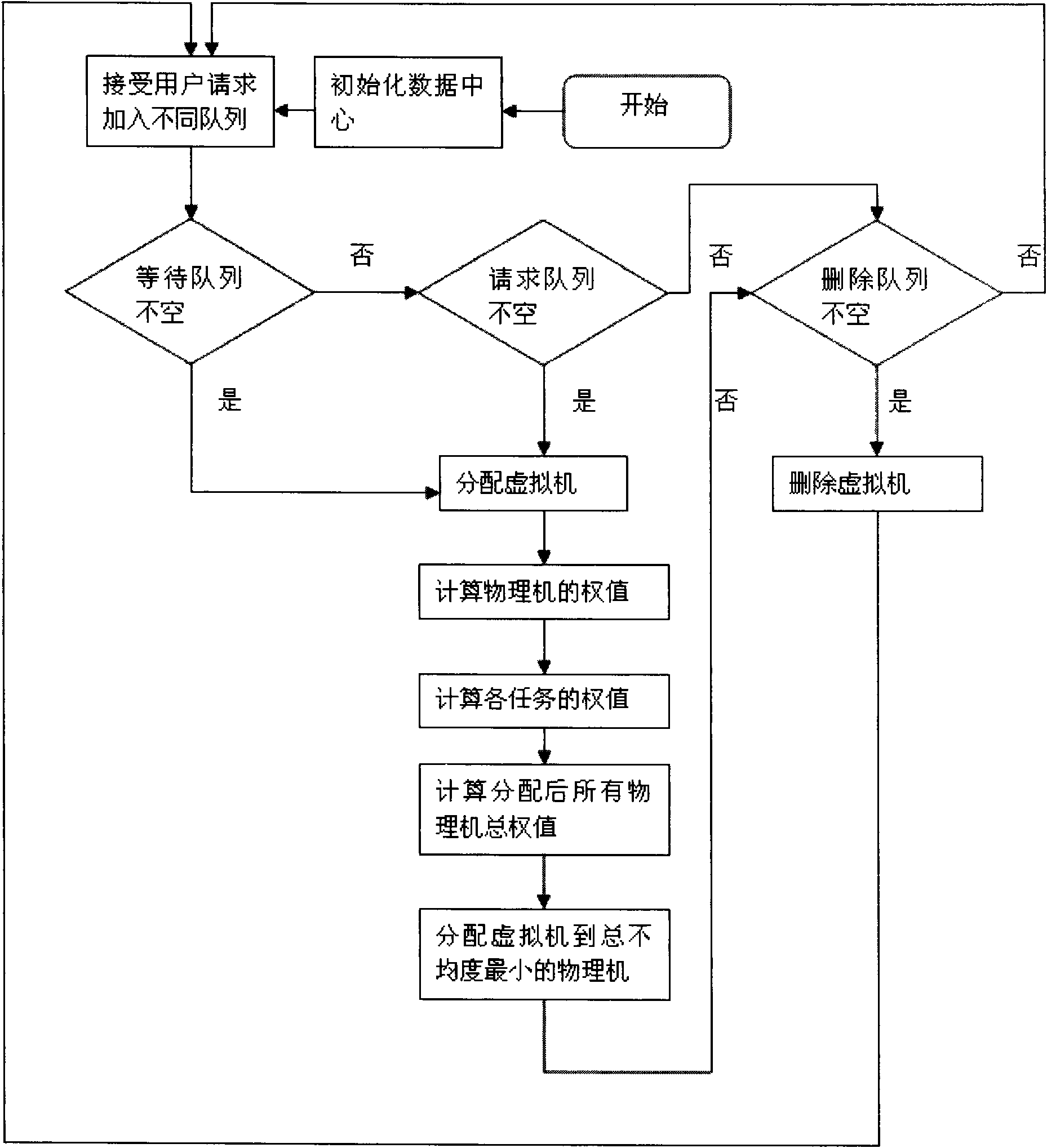

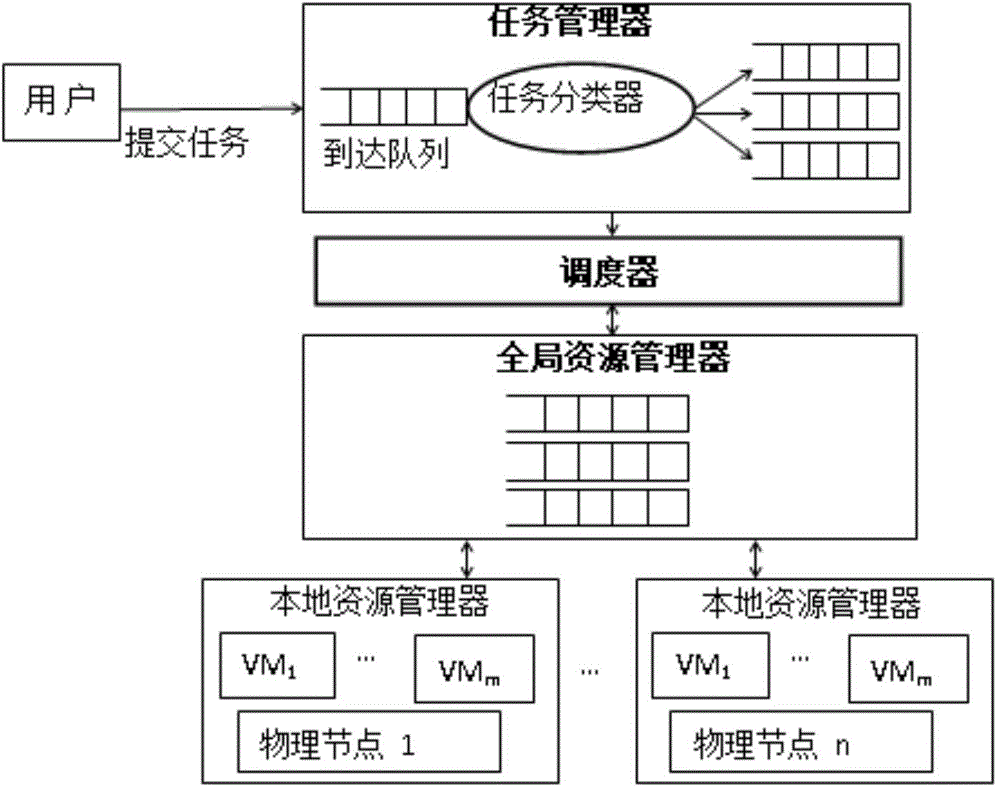

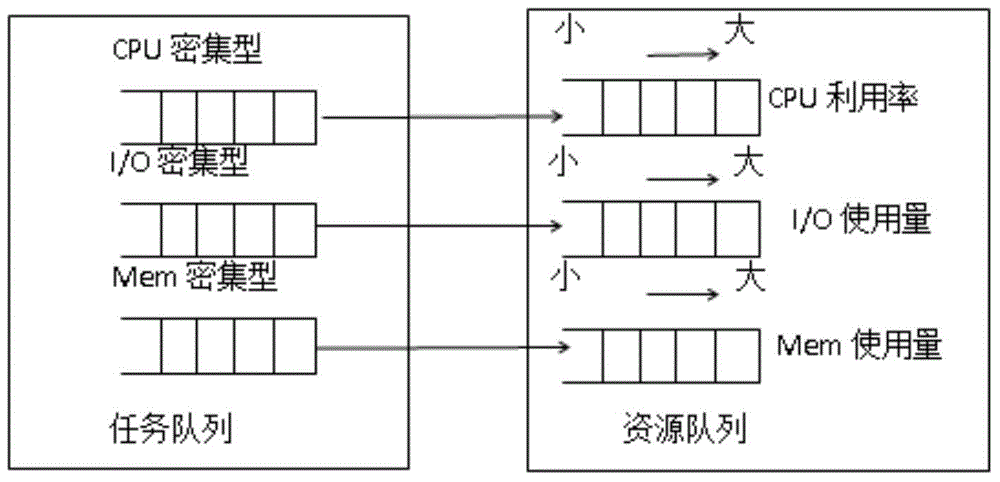

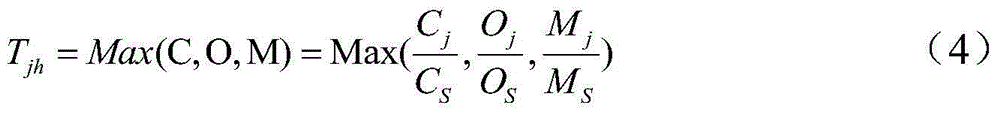

Multi-queue peak-alternation scheduling model and multi-queue peak-alternation scheduling method based on task classification in cloud computing

Active; CN104657221A; Simple method; No differentiation involved; Resource allocation; Resource utilization; Resource management

The invention discloses a multi-queue peak-alternation scheduling model and a multi-queue peak-alternation scheduling method based on task classification in cloud computing. The model comprises a task manager, a local resource manager, a global resource manager and a scheduler. The method comprises the following steps: first, according to each task's demand for resources, dividing tasks into a CPU (central processing unit) intensive type, an I/O (input/output) intensive type and a memory intensive type; sorting the resources according to their CPU, I/O and memory load; and staggering resource usage peaks during task scheduling by scheduling a task that is intensive in a given parameter to a resource with a relatively light load on that index, for example scheduling a CPU-intensive task to a resource with a relatively low CPU utilization rate. According to the model and the method disclosed by the invention, load balancing can be effectively realized, scheduling efficiency is improved and the resource utilization rate is increased.
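The classification-then-placement idea in this abstract can be sketched as follows. This is only an illustration under stated assumptions: the dictionary layout, the function names `classify` and `schedule`, and the rule of classifying a task by its largest demand are all hypothetical and are not taken from the patent.

```python
DIMENSIONS = ("cpu", "io", "mem")


def classify(task):
    """Return the dimension the task demands most (assumed classification rule)."""
    return max(DIMENSIONS, key=lambda d: task[d])


def schedule(task, resources):
    """Pick the resource whose current load is lightest in the task's dominant dimension."""
    kind = classify(task)
    best = min(resources, key=lambda r: r["load"][kind])
    return best["name"]


# Example data: loads and demands are fractions between 0 and 1.
resources = [
    {"name": "node-a", "load": {"cpu": 0.9, "io": 0.2, "mem": 0.5}},
    {"name": "node-b", "load": {"cpu": 0.1, "io": 0.8, "mem": 0.6}},
]
cpu_task = {"cpu": 0.7, "io": 0.1, "mem": 0.2}
io_task = {"cpu": 0.1, "io": 0.6, "mem": 0.3}
```

With this data, `schedule(cpu_task, resources)` selects `node-b` and `schedule(io_task, resources)` selects `node-a`, so each task lands where its dominant resource is least busy.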

Owner:GUANGDONG UNIV OF PETROCHEMICAL TECH

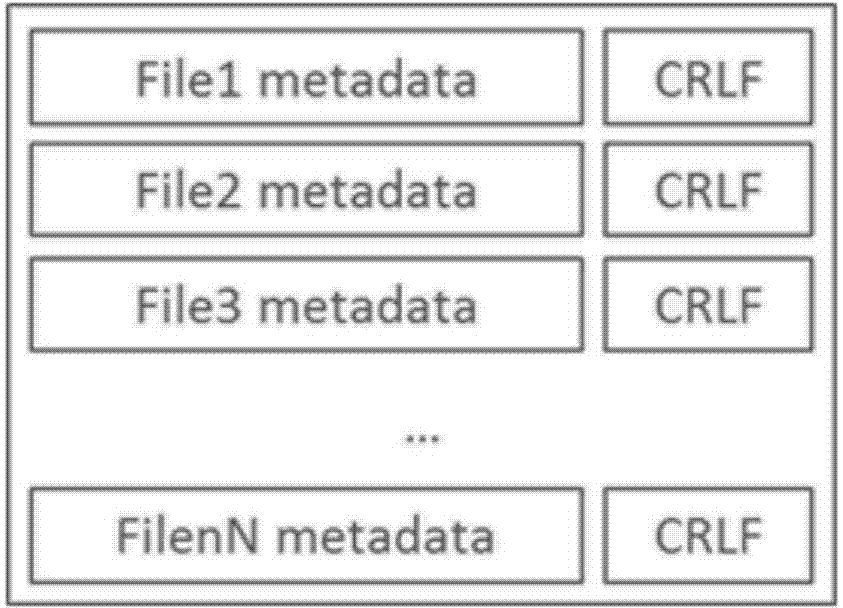

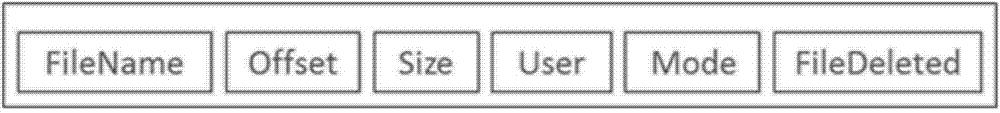

Method for storing and processing small log type files in Hadoop distributed file system

Inactive; CN104731921A; Solve inefficiency; Easy access; Special data processing applications; Distributed File System; File system

The invention relates to the field of computer HDFS, and discloses a method for storing and processing small log type files in a Hadoop distributed file system (HDFS). According to the method, files are combined in a nearby mode according to physical locations, and a Copy-On-Write mechanism is used for optimizing read-write of the small files; specifically, the small log type files are combined in a nearby mode according to a physical path, a client side reads and writes the combined files from a NameNode and metadata information of indexes of the combined files when reading and writing the small log type files, and then all the small log type file data are read and written from the combined files according to the indexes of the combined files. According to the new processing method of the small log type files, the memory load of the metadata of the small files is transferred from the NameNode to the client side, and the problem of low efficiency when the HDFS processes a large number of small files is effectively solved. The client side caches the metadata of the small files, so that the speed of access to the small files is improved, and a user does not need to send a metadata request to the NameNode when sequentially accessing small files which are adjacent in physical location.

Owner:JIANGSU R & D CENTER FOR INTERNET OF THINGS +2

Method and system for task scheduling

Active; CN102111337A; Solve the problem of effective utilization at the same time; Efficient use of; Data switching networks; Lower limit; Limit value

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

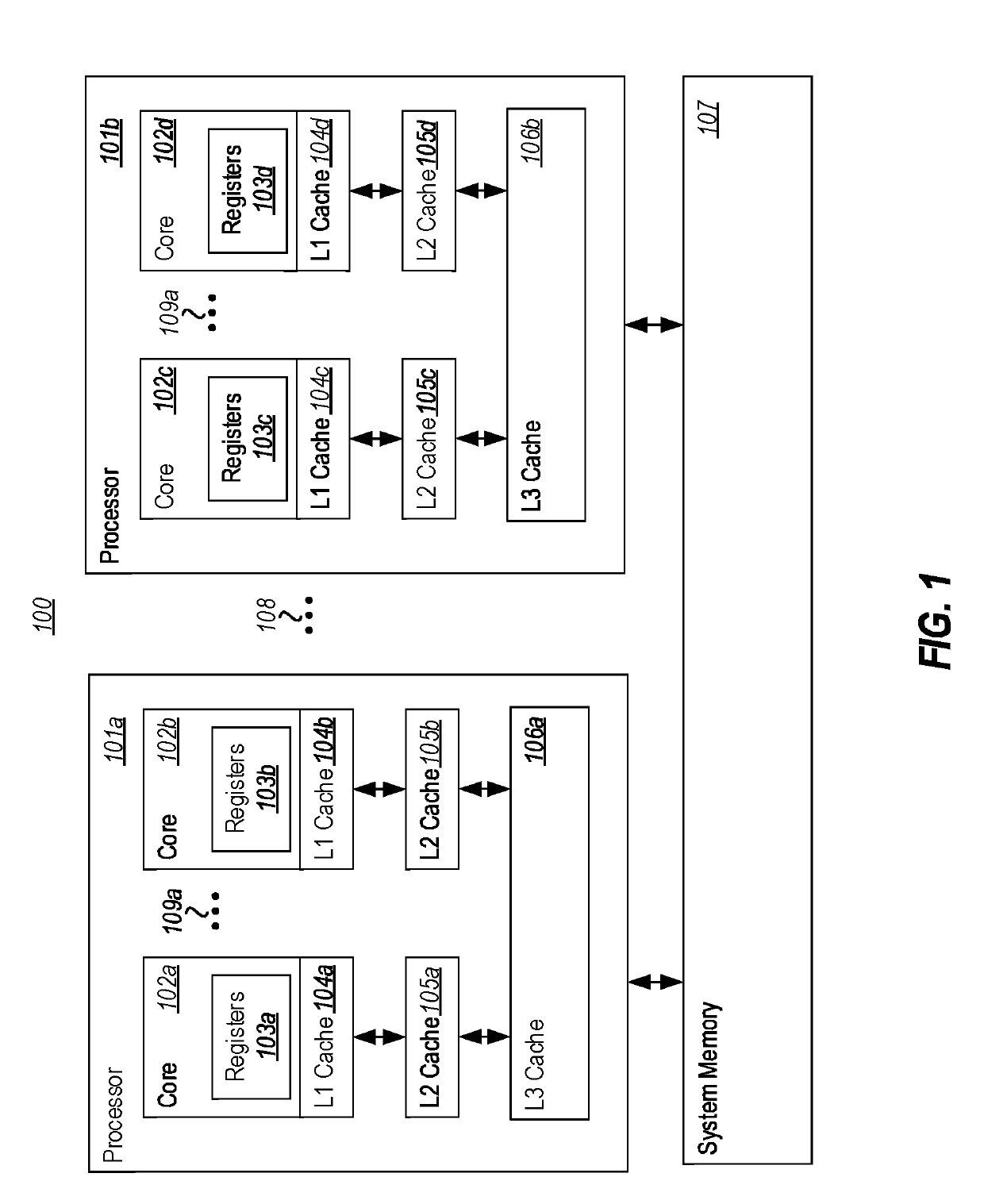

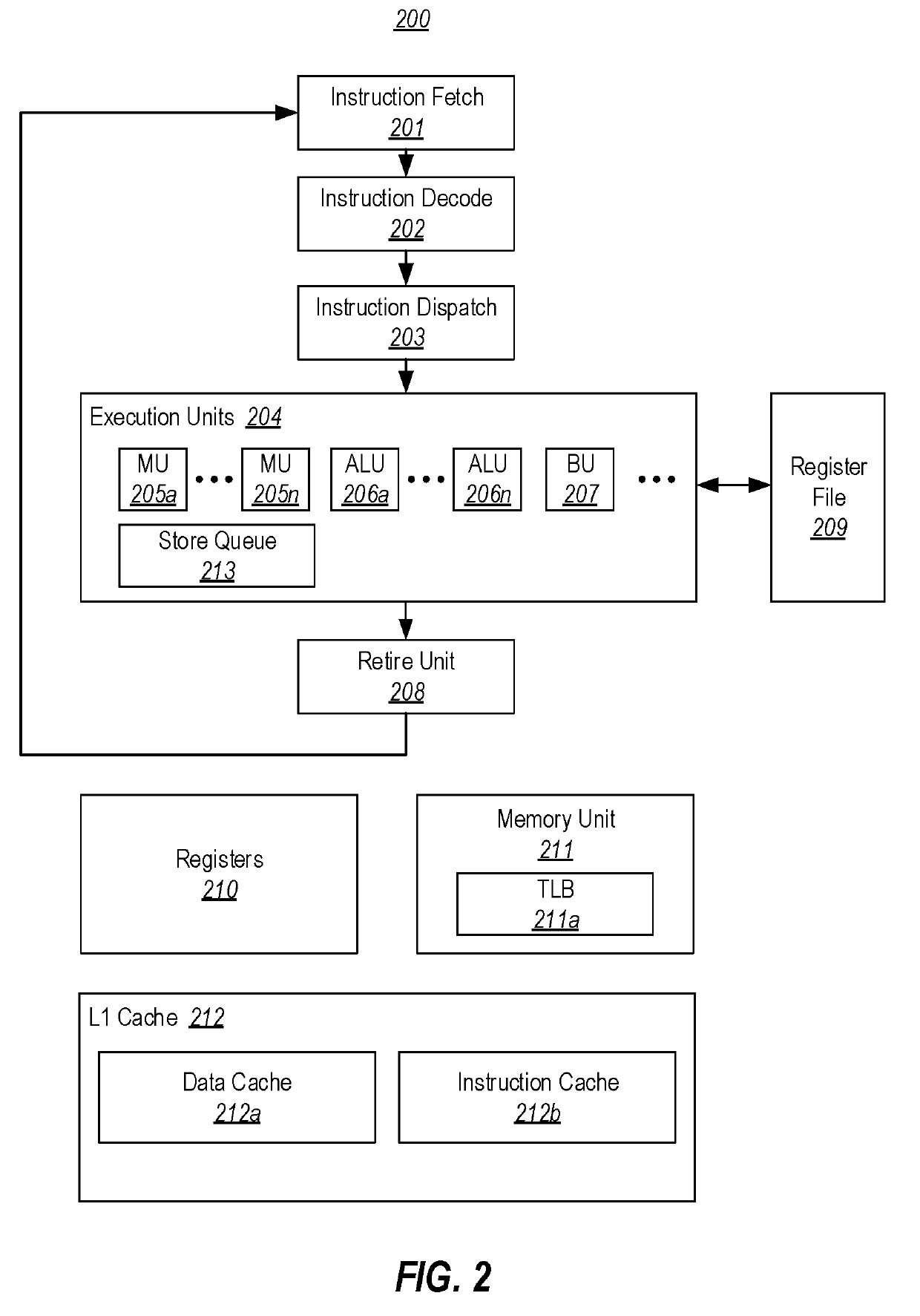

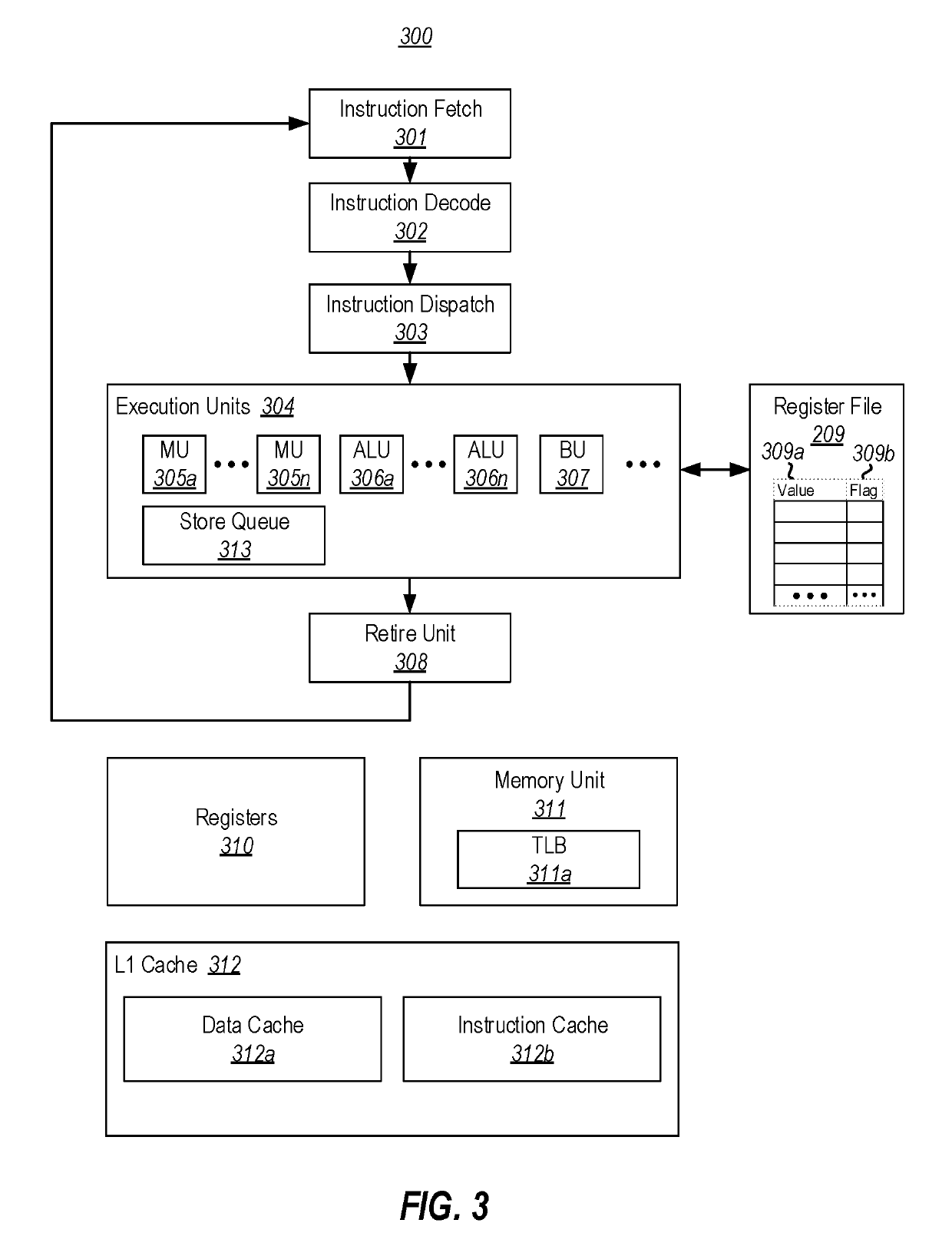

Speculative side-channel attack mitigations

Active; US20190114422A1; Memory architecture accessing/allocation; Register arrangements; Speculative execution; Term memory

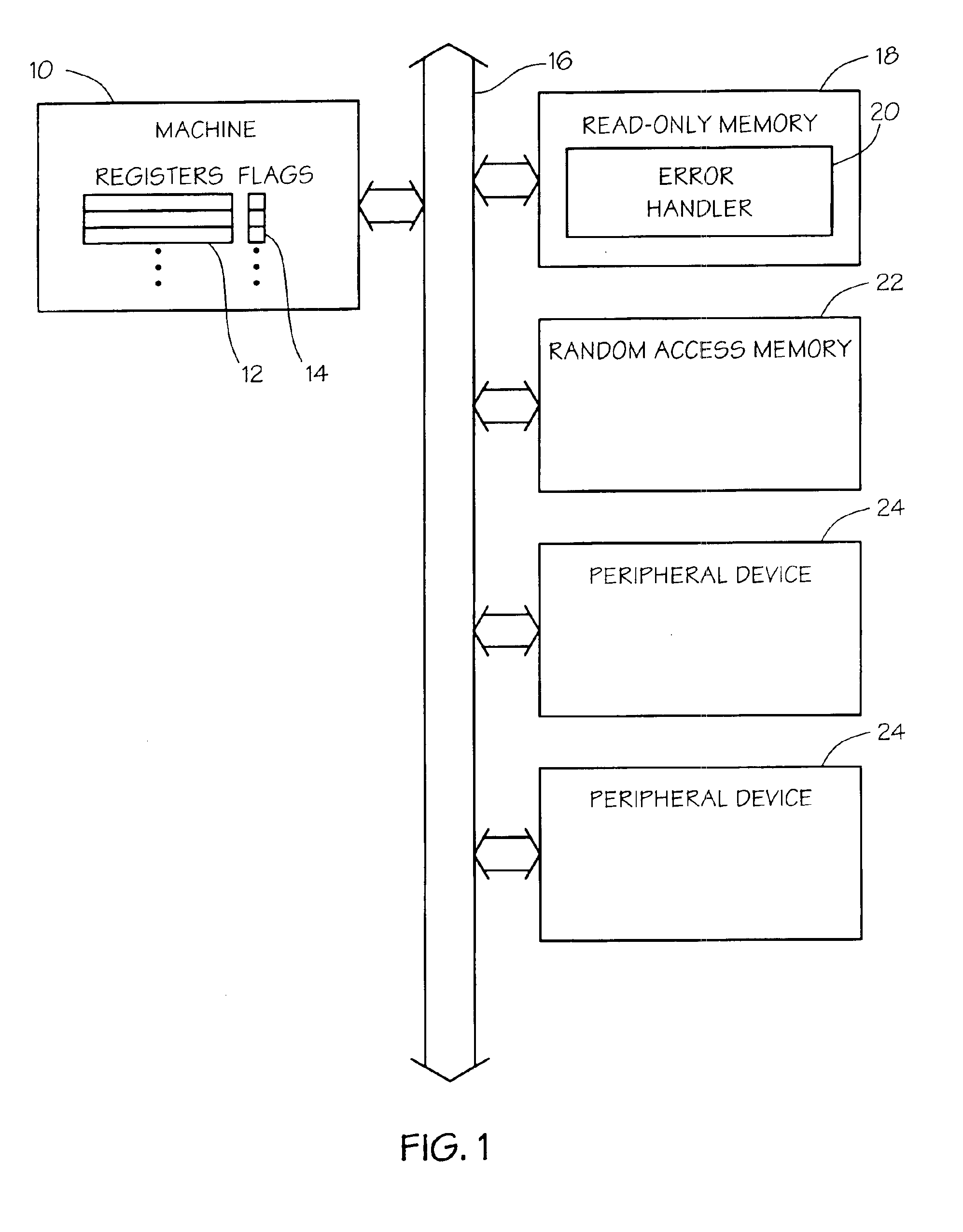

Preventing the observation of the side effects of mispredicted speculative execution flows using restricted speculation. In an embodiment a microprocessor comprises a register file including a plurality of entries, each entry comprising a value and a flag. The microprocessor (i) sets the flag corresponding to any entry whose value results from a memory load operation that has not yet been retired or cancelled, or results from a calculation that was derived from a register file entry whose corresponding flag was set, and (ii) clears the flag corresponding to any entry when the operation that generated the entry's value is retired. The microprocessor also comprises a memory unit that is configured to hold any memory load operation that uses an address whose value is calculated based on a register file entry whose flag is set, unless all previous instructions have been retired or cancelled.
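The flag propagation described in this abstract behaves like a simple taint-tracking scheme, which the following sketch models in software. The class `RegisterFile`, its method names, and the `all_prior_retired` parameter are hypothetical; a real microprocessor implements this in hardware, and the sketch only mirrors the set, propagate, clear and hold rules stated above.

```python
class RegisterFile:
    """Toy register file in which each entry has a value and a speculation flag."""

    def __init__(self, size):
        self.value = [0] * size
        self.flag = [False] * size

    def load(self, dst, memory, addr_reg, all_prior_retired=False):
        """Load memory[value[addr_reg]] into dst.

        Returns False (the load is held) when the address depends on a flagged
        entry and earlier instructions have not all been retired or cancelled.
        """
        if self.flag[addr_reg] and not all_prior_retired:
            return False
        self.value[dst] = memory[self.value[addr_reg]]
        self.flag[dst] = True  # value comes from a load that is not yet retired
        return True

    def add(self, dst, a, b):
        """Arithmetic result inherits the flag of any flagged source."""
        self.value[dst] = self.value[a] + self.value[b]
        self.flag[dst] = self.flag[a] or self.flag[b]

    def retire(self, reg):
        """Clear the flag once the operation that produced reg's value retires."""
        self.flag[reg] = False
```

In this model a second load whose address was derived from an unretired first load is held until the producing operations retire, which is the restriction the abstract relies on to hide the side effects of mispredicted speculation.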

Owner:MICROSOFT TECH LICENSING LLC

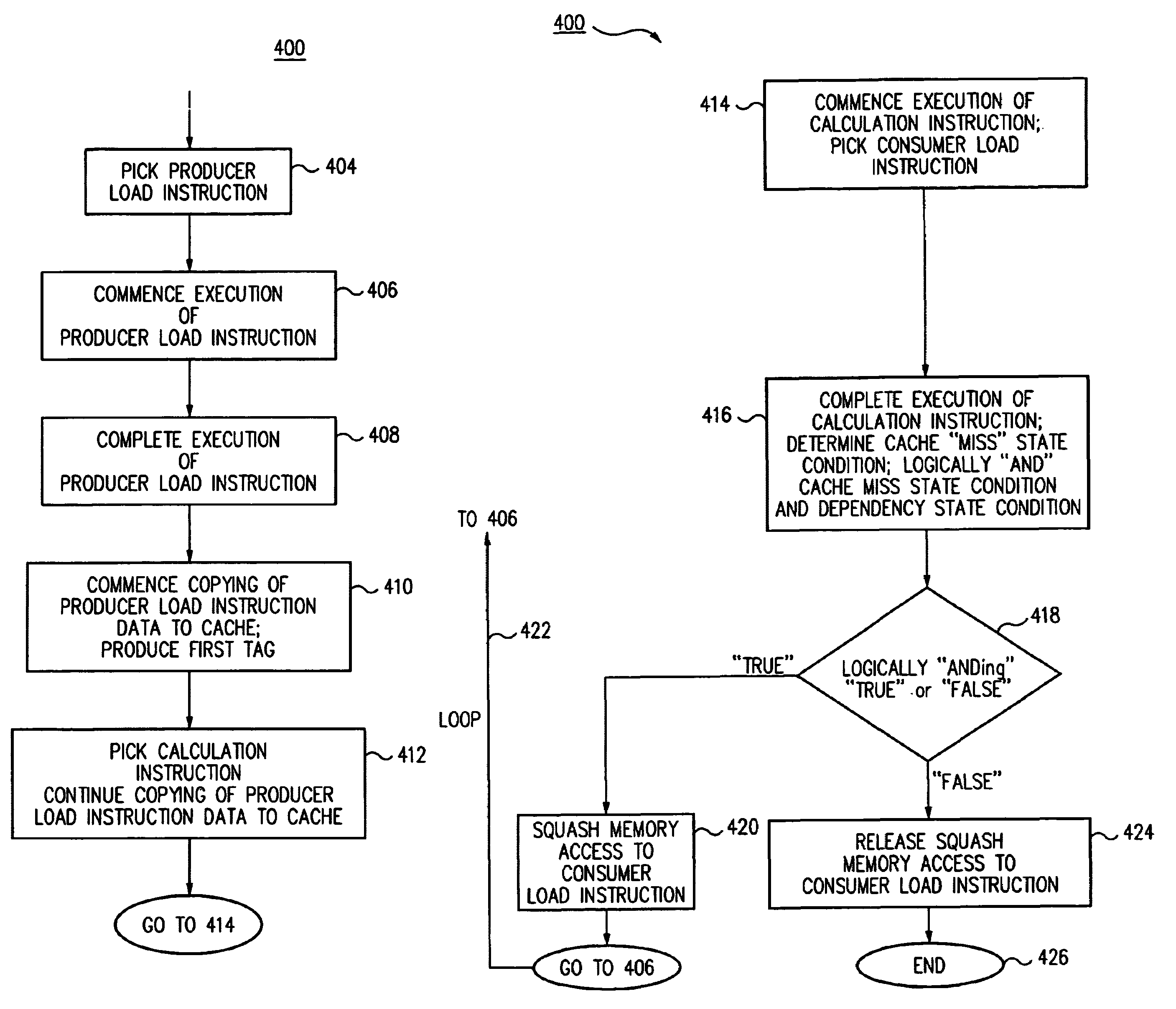

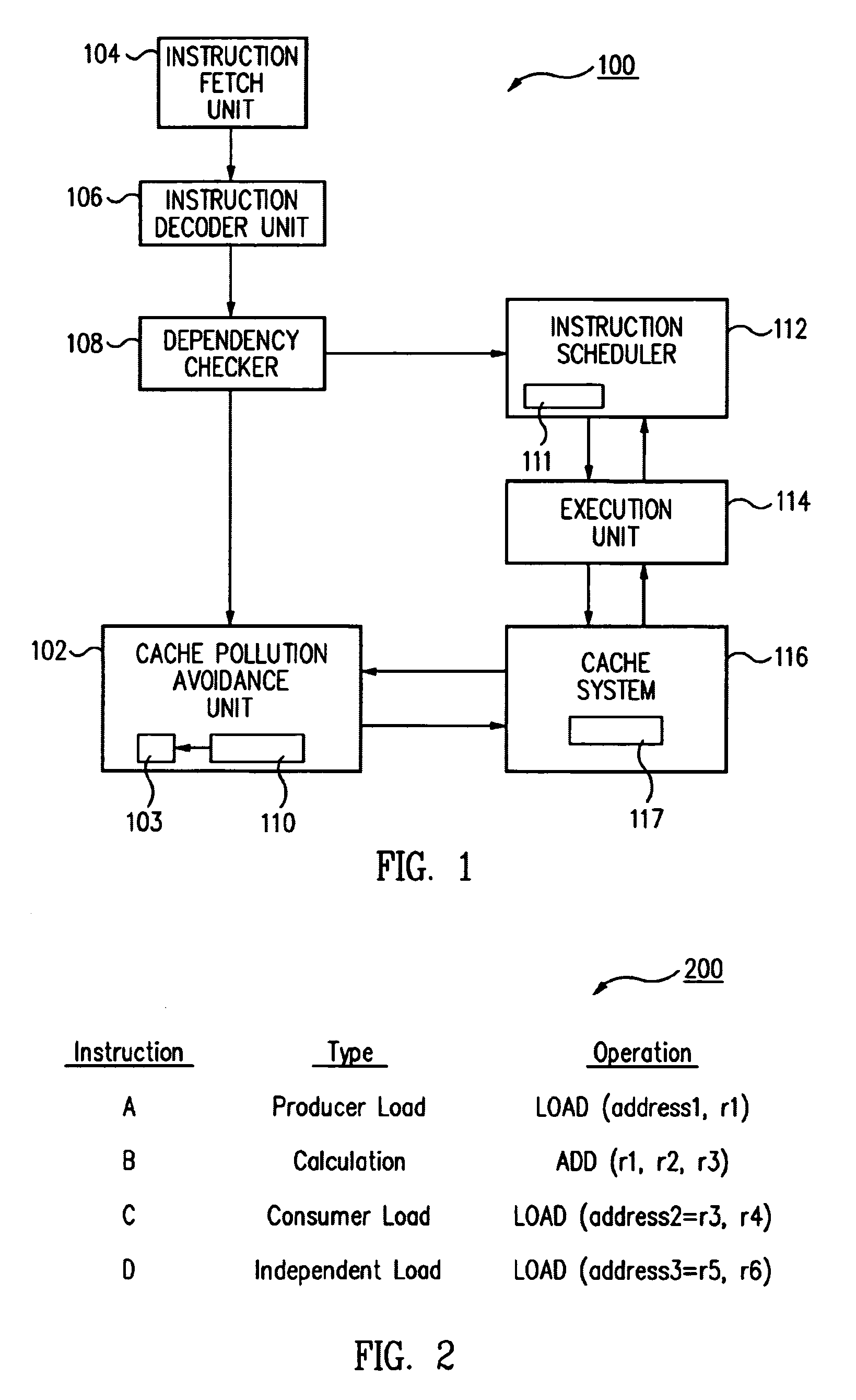

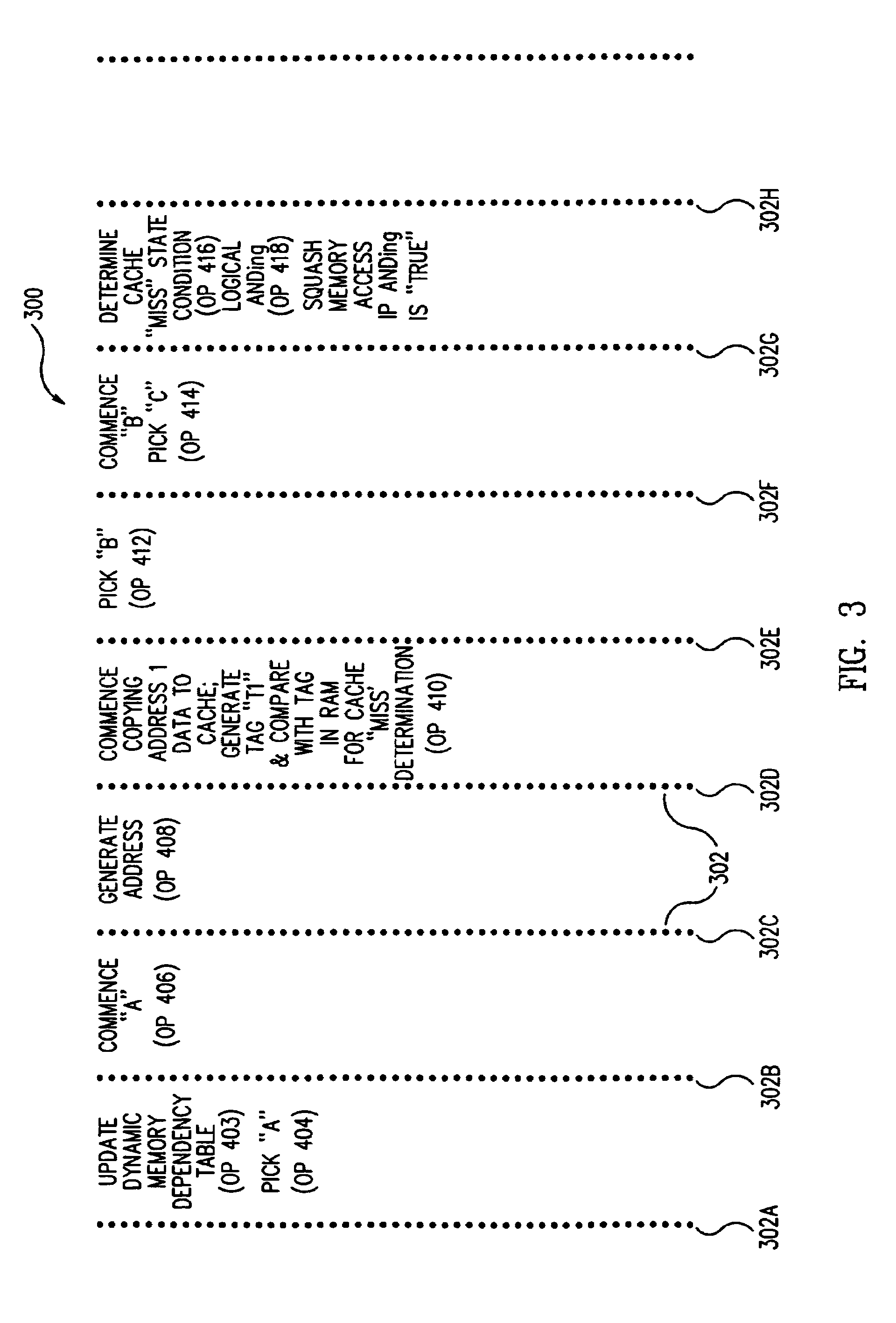

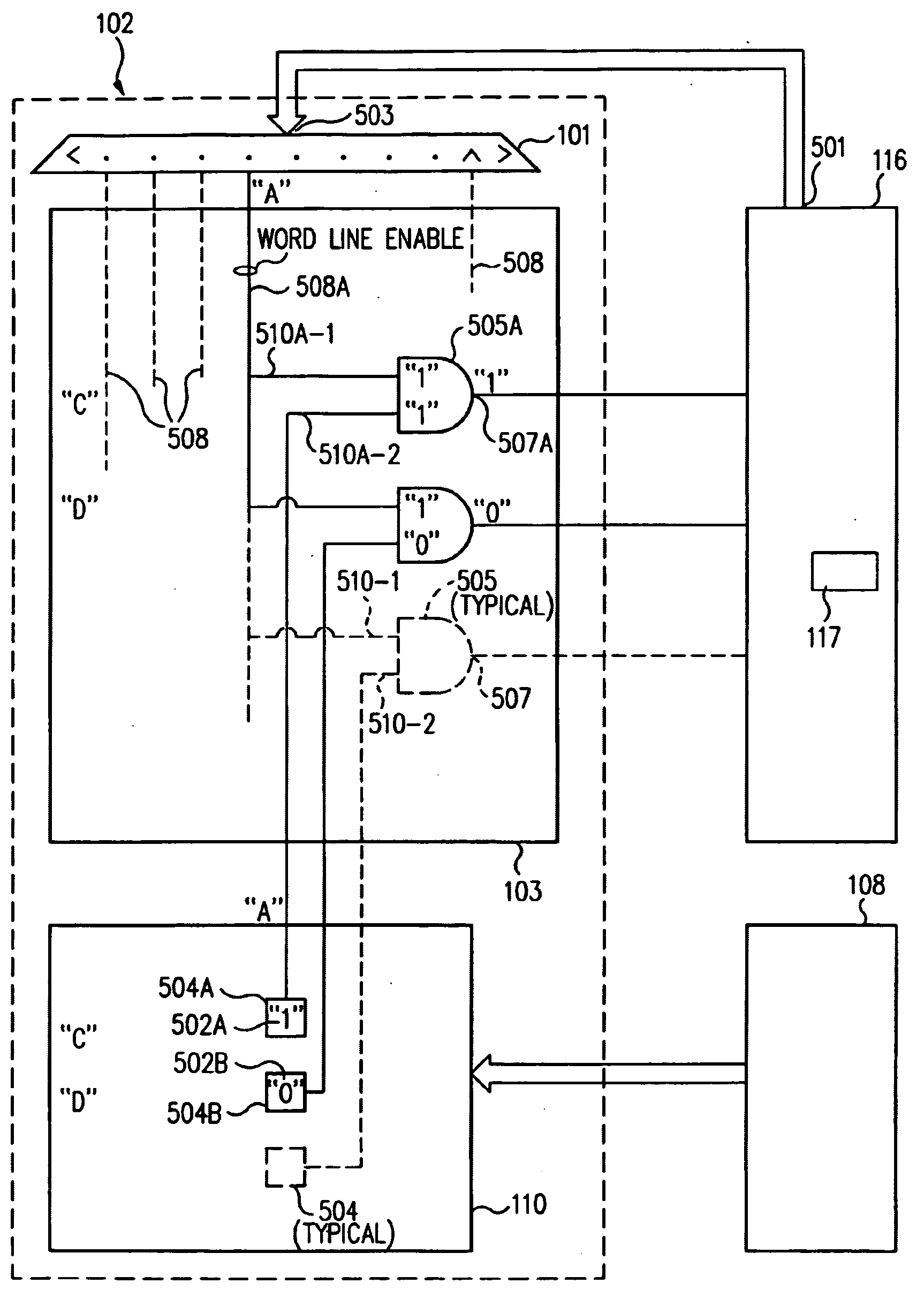

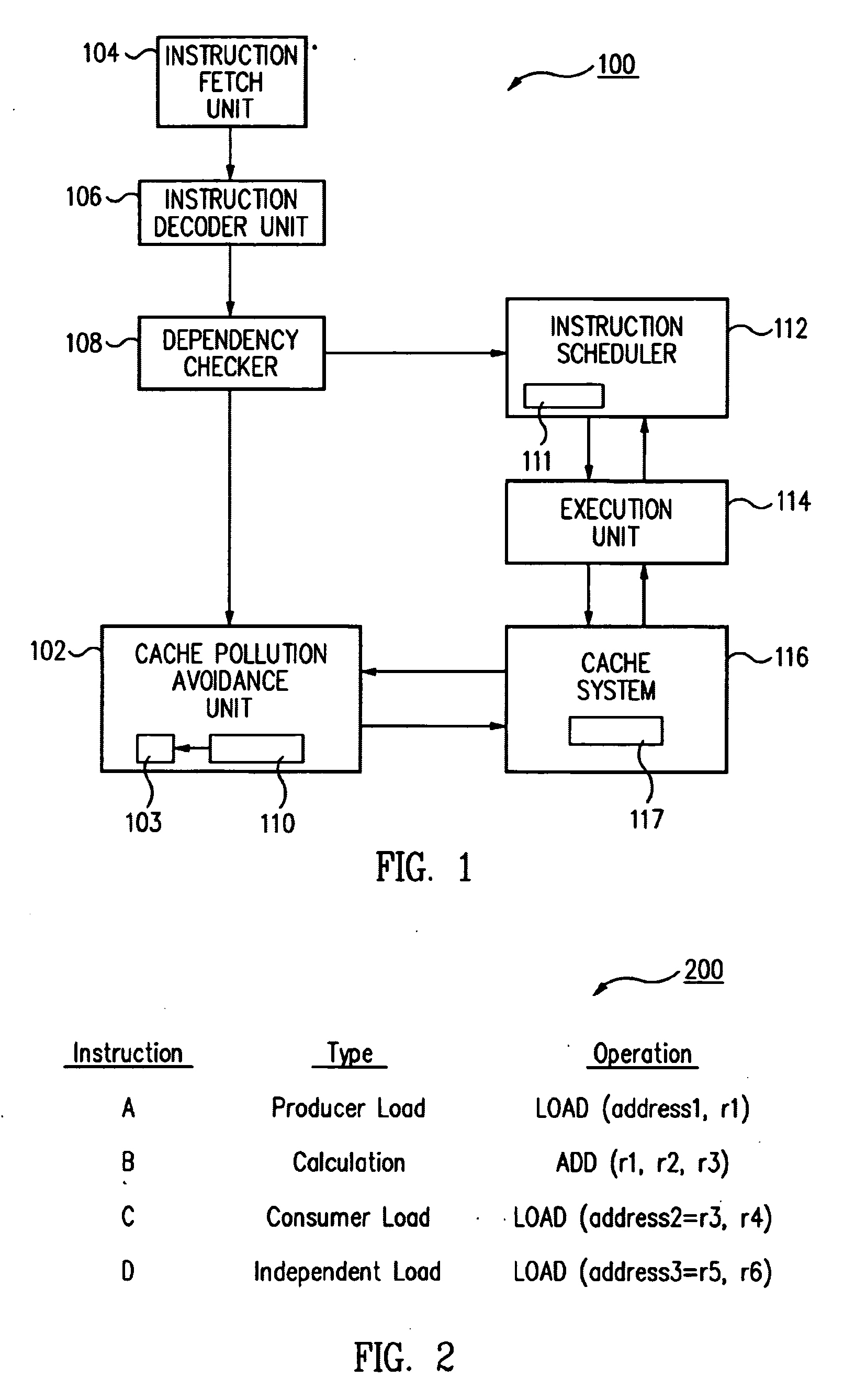

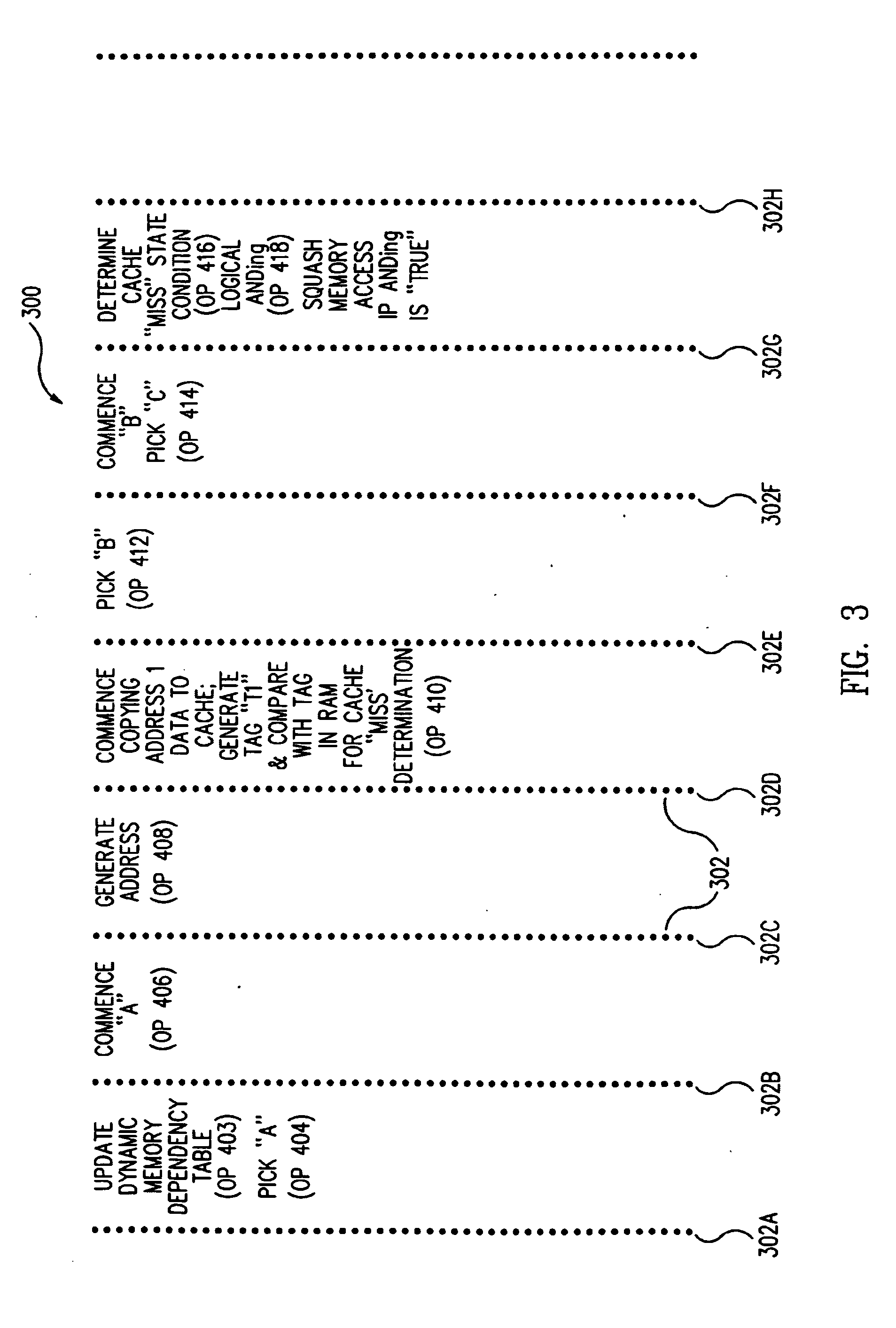

Method and apparatus for avoiding cache pollution due to speculative memory load operations in a microprocessor

Active; US7010648B2; Eliminate pollution; General purpose stored program computer; Concurrent instruction execution; Load instruction; Operand

A cache pollution avoidance unit includes a dynamic memory dependency table for storing a dependency state condition between a first load instruction and a sequentially later second load instruction, which may depend on the completion of execution of the first load instruction for operand data. The cache pollution avoidance unit logically ANDs the dependency state condition stored in the dynamic memory dependency table with a cache memory “miss” state condition returned by the cache pollution avoidance unit for operand data produced by the first load instruction and required by the second load instruction. If the logical ANDing is true, memory access to the second load instruction is squashed and the execution of the second load instruction is re-scheduled.
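The squash decision in this abstract reduces to a logical AND of two conditions, which can be sketched as below. The class name `DependencyTable`, the string load identifiers, and the `missed` set are hypothetical stand-ins for the hardware table and the cache miss signal.

```python
class DependencyTable:
    """Toy model of the dynamic memory dependency table."""

    def __init__(self):
        self._producer = {}  # later load id -> earlier load id it depends on

    def record(self, later_load, earlier_load):
        """Note that later_load needs operand data produced by earlier_load."""
        self._producer[later_load] = earlier_load

    def should_squash(self, load, missed):
        """True when the load depends on an earlier load AND that load missed in the cache."""
        producer = self._producer.get(load)
        depends = producer is not None
        producer_missed = producer in missed
        return depends and producer_missed
```

A load for which `should_squash` returns True would have its memory access suppressed and be rescheduled, so it does not bring a speculative line into the cache.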

Owner:ORACLE INT CORP

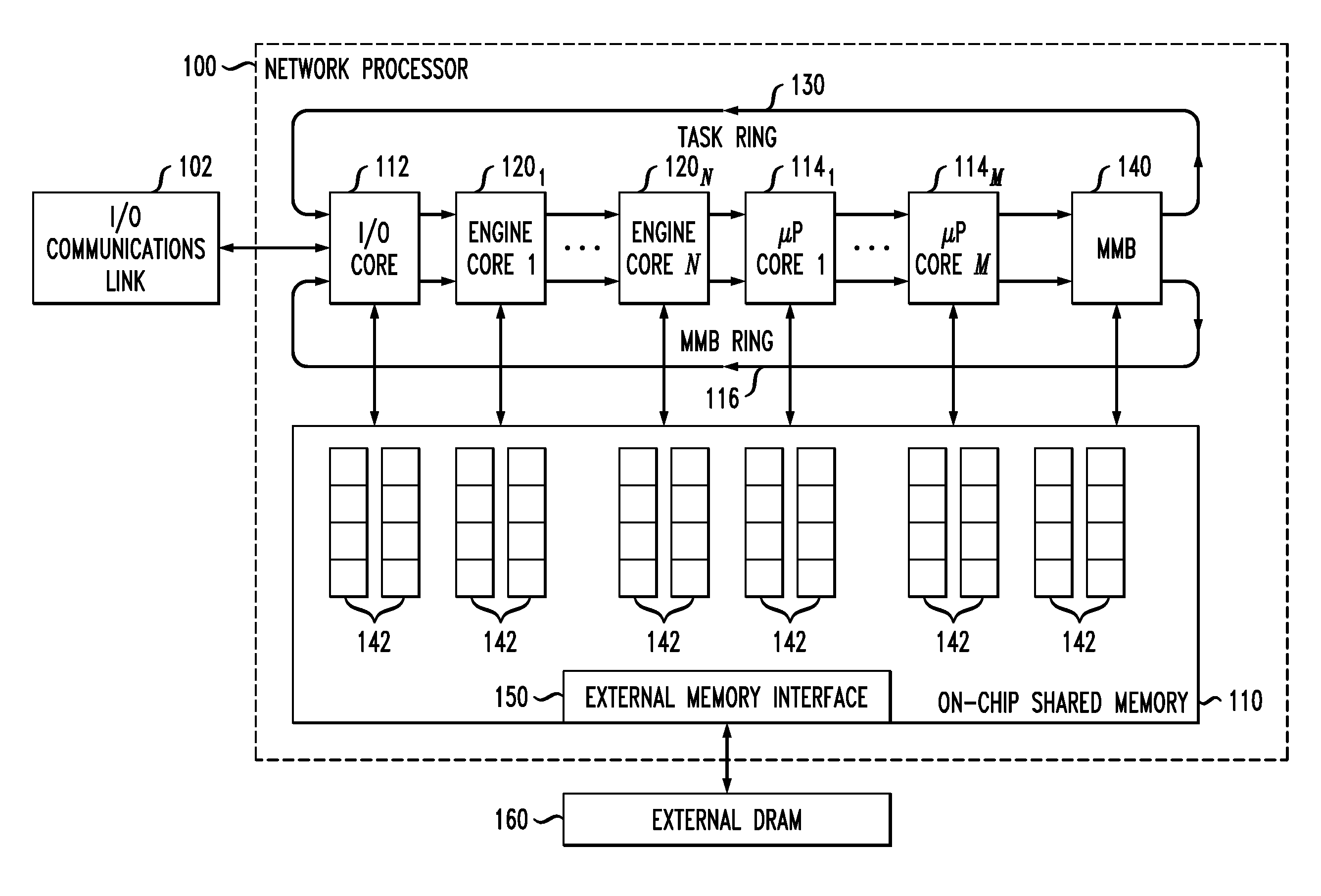

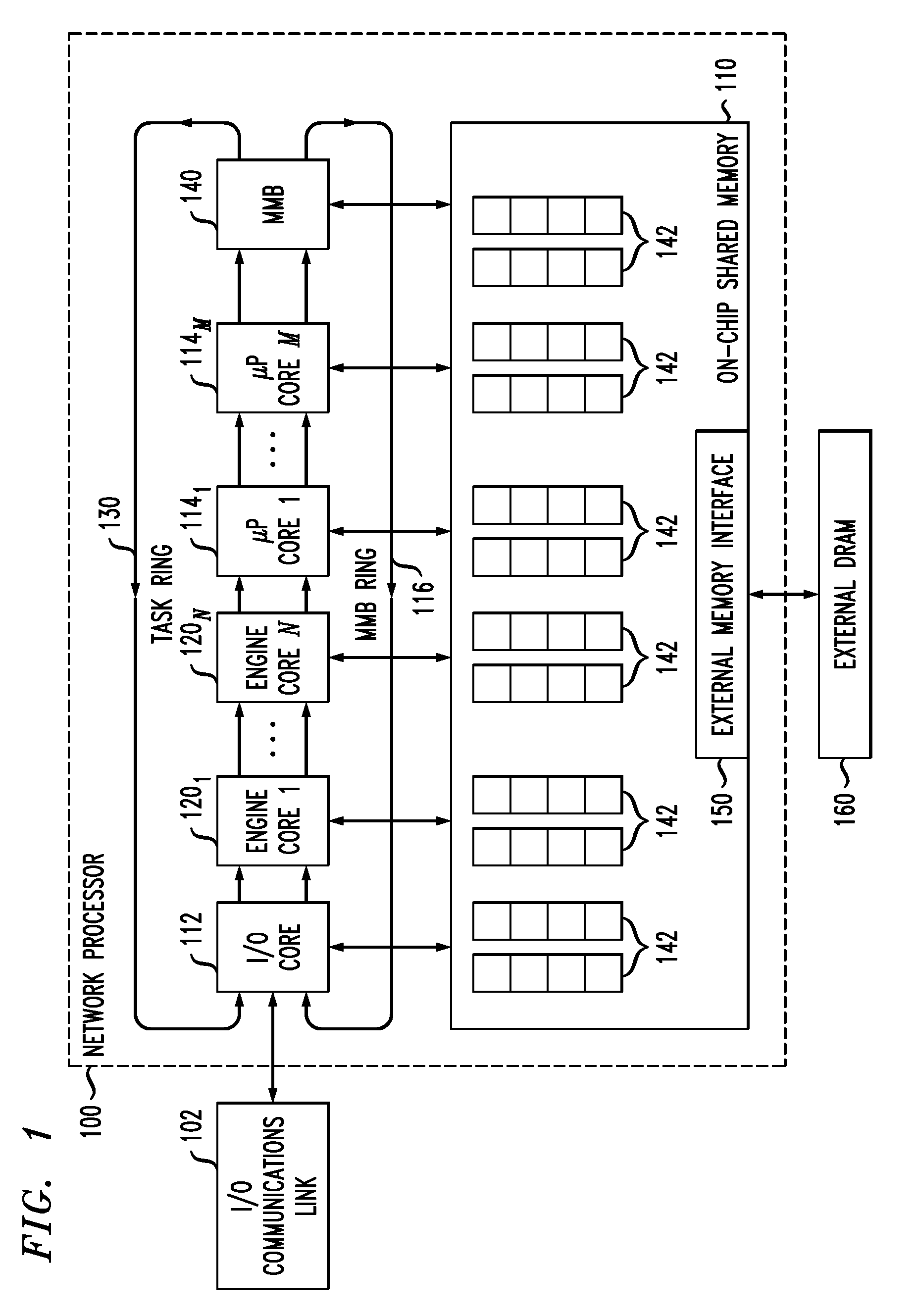

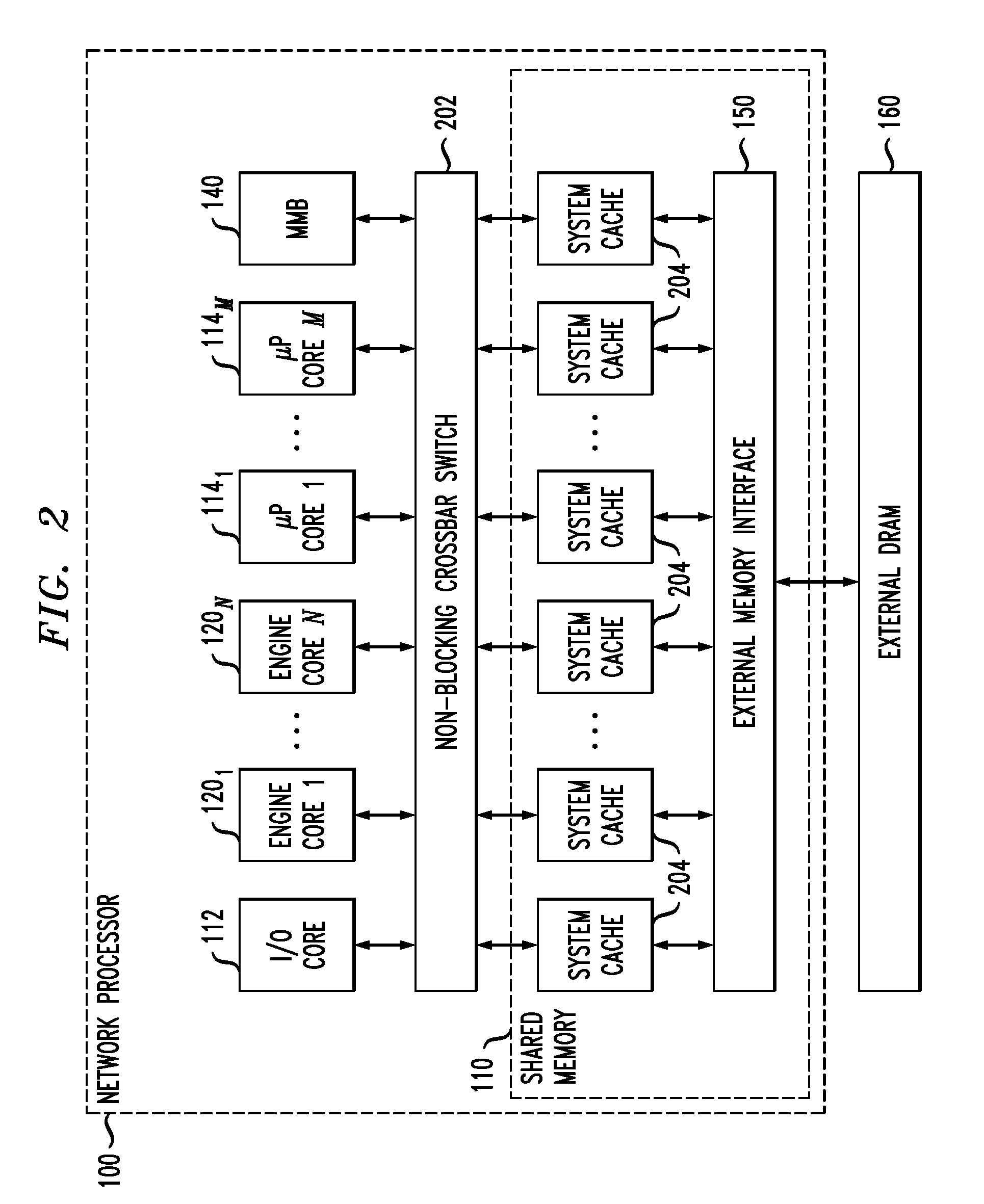

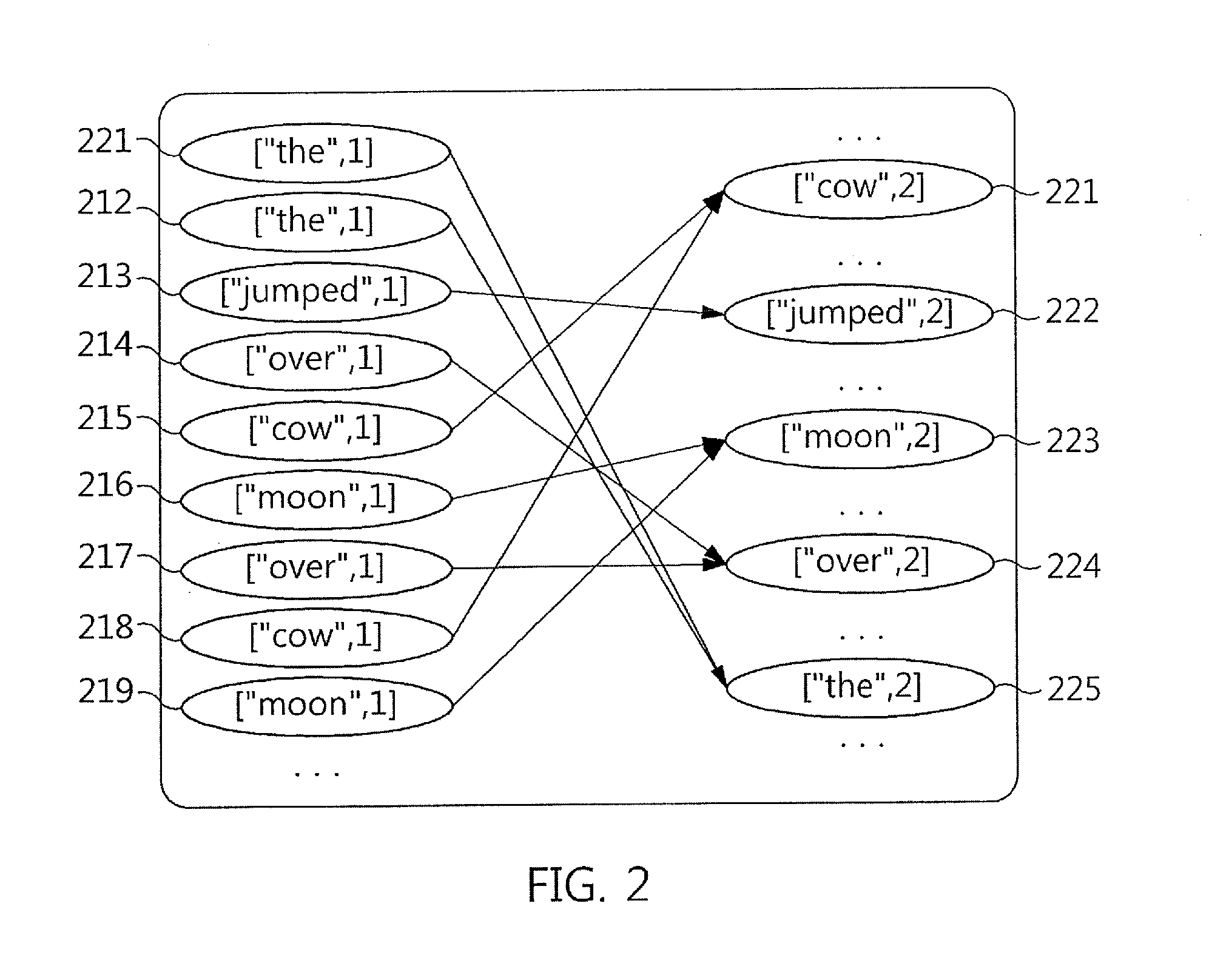

Network communications processor architecture with memory load balancing

Inactive; US8255644B2; Memory addressing/allocation/relocation; Digital computer details; Hash table; Memory load

Described embodiments provide a memory system including a plurality of addressable memory arrays. Data in the arrays is accessed by receiving a logical address of data in the addressable memory array and computing a hash value based on at least a part of the logical address. One of the addressable memory arrays is selected based on the hash value. Data in the selected addressable memory array is accessed using a physical address based on at least part of the logical address not used to compute the hash value. The hash value is generated by a hash function to provide essentially random selection of each of the addressable memory arrays.
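A minimal sketch of hash-based array selection follows. The bit widths, the XOR-fold hash, and the function names are assumptions chosen for illustration; the abstract does not specify the hash function or the address layout. The sketch hashes the upper address bits, derives the physical address from bits that include the low offset bits not fed to the hash, and keeps the mapping one-to-one.

```python
OFFSET_BITS = 12              # assumed: low bits never used by the hash
BANK_BITS = 2                 # assumed: log2 of the number of arrays
NUM_ARRAYS = 1 << BANK_BITS


def fold(x):
    """XOR-fold x into BANK_BITS bits (a stand-in hash, not the patented one)."""
    h = 0
    while x:
        h ^= x & (NUM_ARRAYS - 1)
        x >>= BANK_BITS
    return h


def map_address(logical):
    """Return (array_index, physical_address) for a logical address."""
    low = logical & ((1 << OFFSET_BITS) - 1)
    bank = (logical >> OFFSET_BITS) & (NUM_ARRAYS - 1)
    upper = logical >> (OFFSET_BITS + BANK_BITS)
    array_index = bank ^ fold(upper)           # hash value selects the array
    physical = (upper << OFFSET_BITS) | low    # built from the remaining bits
    return array_index, physical
```

Mixing the upper bits into the array index spreads strided access patterns across arrays, which is the load-balancing effect the abstract attributes to an essentially random selection.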

Owner:INTEL CORP

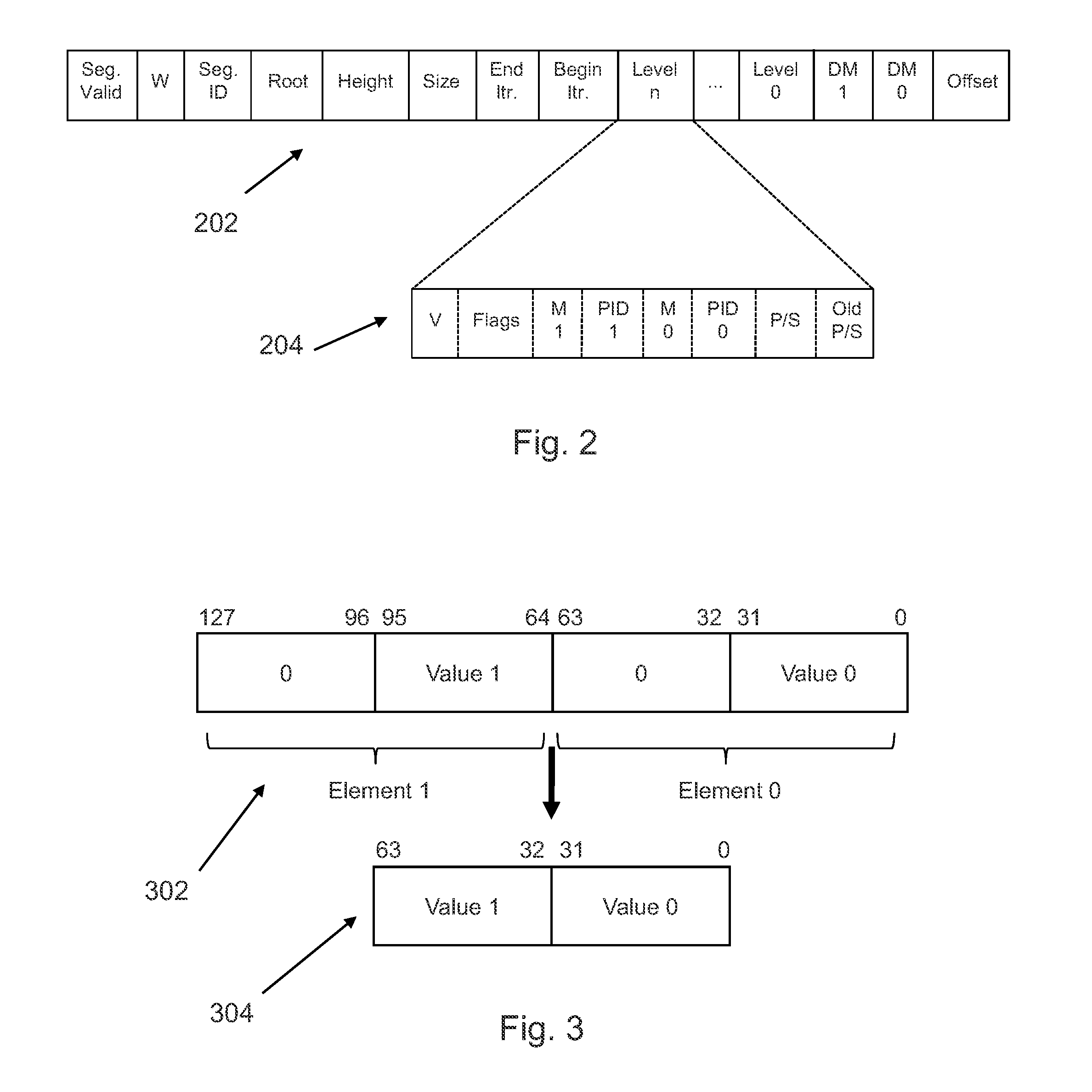

Iterator register for structured memory

Active; US20110010347A1; Memory architecture accessing/allocation; Digital data processing details; Theoretical computer science; Iterator

Loading data from a computer memory system is disclosed. A memory system is provided, wherein some or all data stored in the memory system is organized as one or more pointer-linked data structures. One or more iterator registers are provided. A first pointer chain is loaded, having two or more pointers leading to a first element of a selected pointer-linked data structure to a selected iterator register. A second pointer chain is loaded, having two or more pointers leading to a second element of the selected pointer-linked data structure to the selected iterator register. The loading of the second pointer chain reuses portions of the first pointer chain that are common with the second pointer chain.

Modifying data stored in a computer memory system is disclosed. A memory system is provided. One or more iterator registers are provided, wherein the iterator registers each include two or more pointer fields for storing two or more pointers that form a pointer chain leading to a data element. A local state associated with a selected iterator register is generated by performing one or more register operations relating to the selected iterator register and involving pointers in the pointer fields of the selected iterator register. A pointer-linked data structure is updated in the memory system according to the local state.

Owner:INTEL CORP

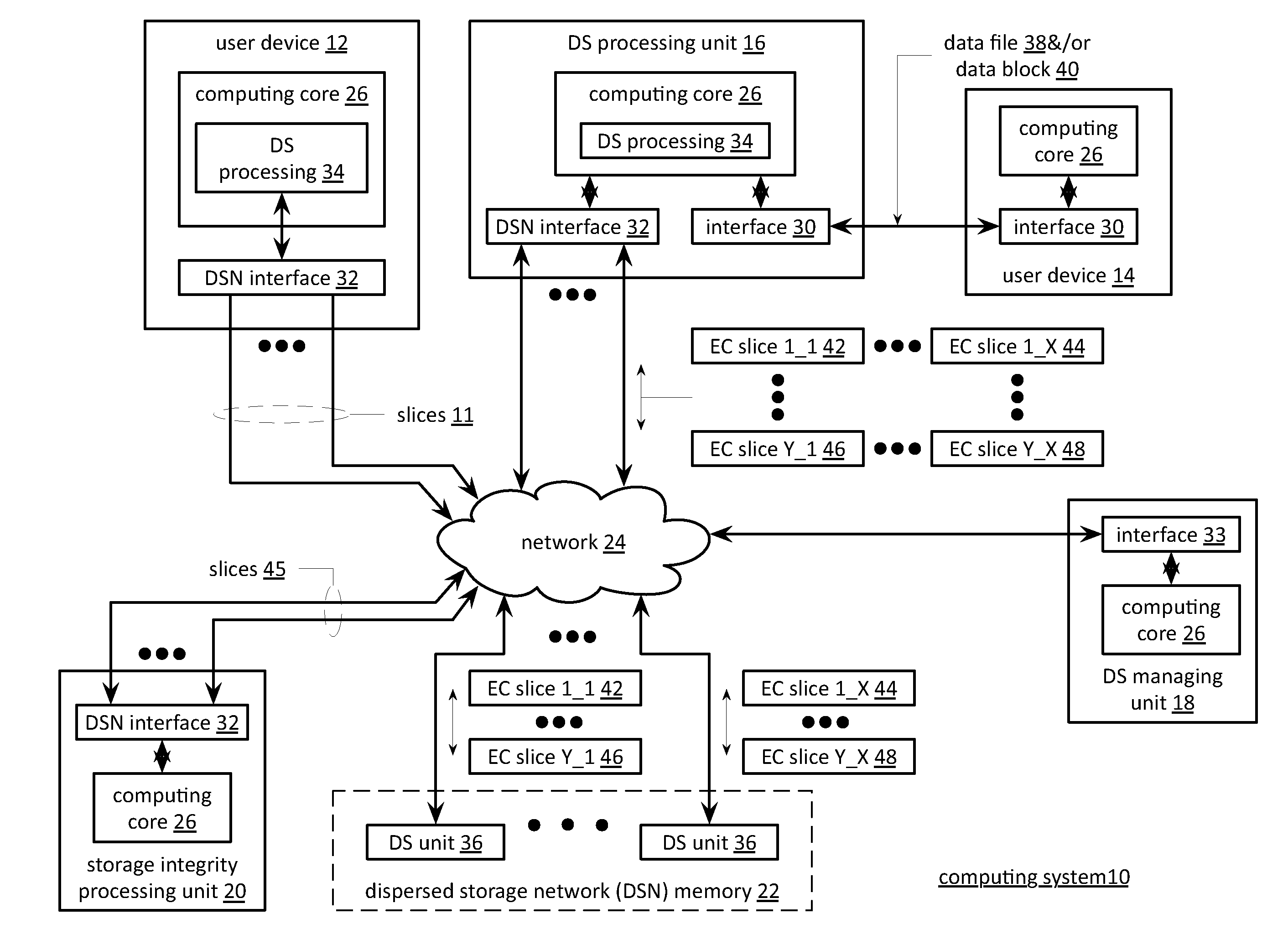

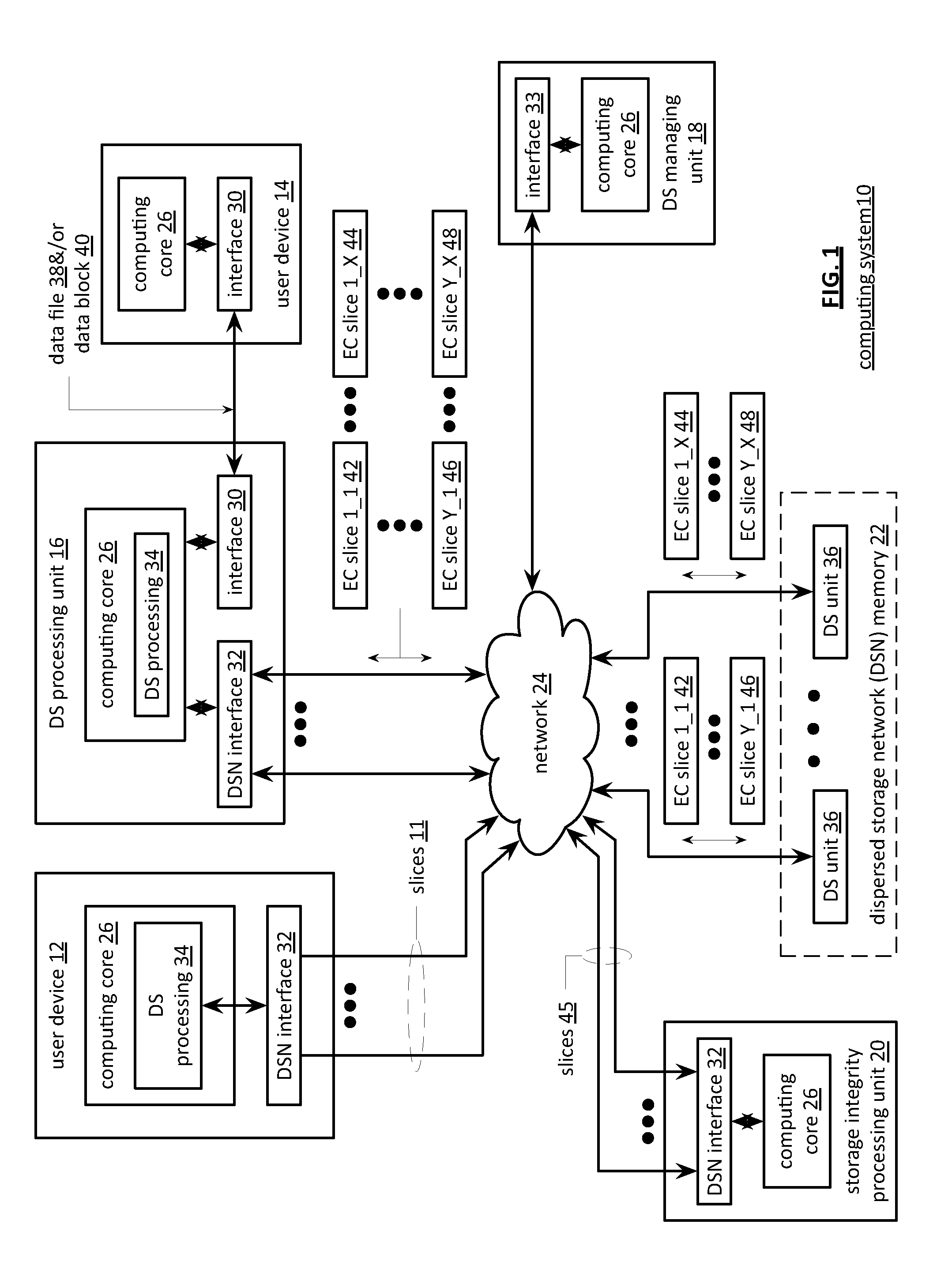

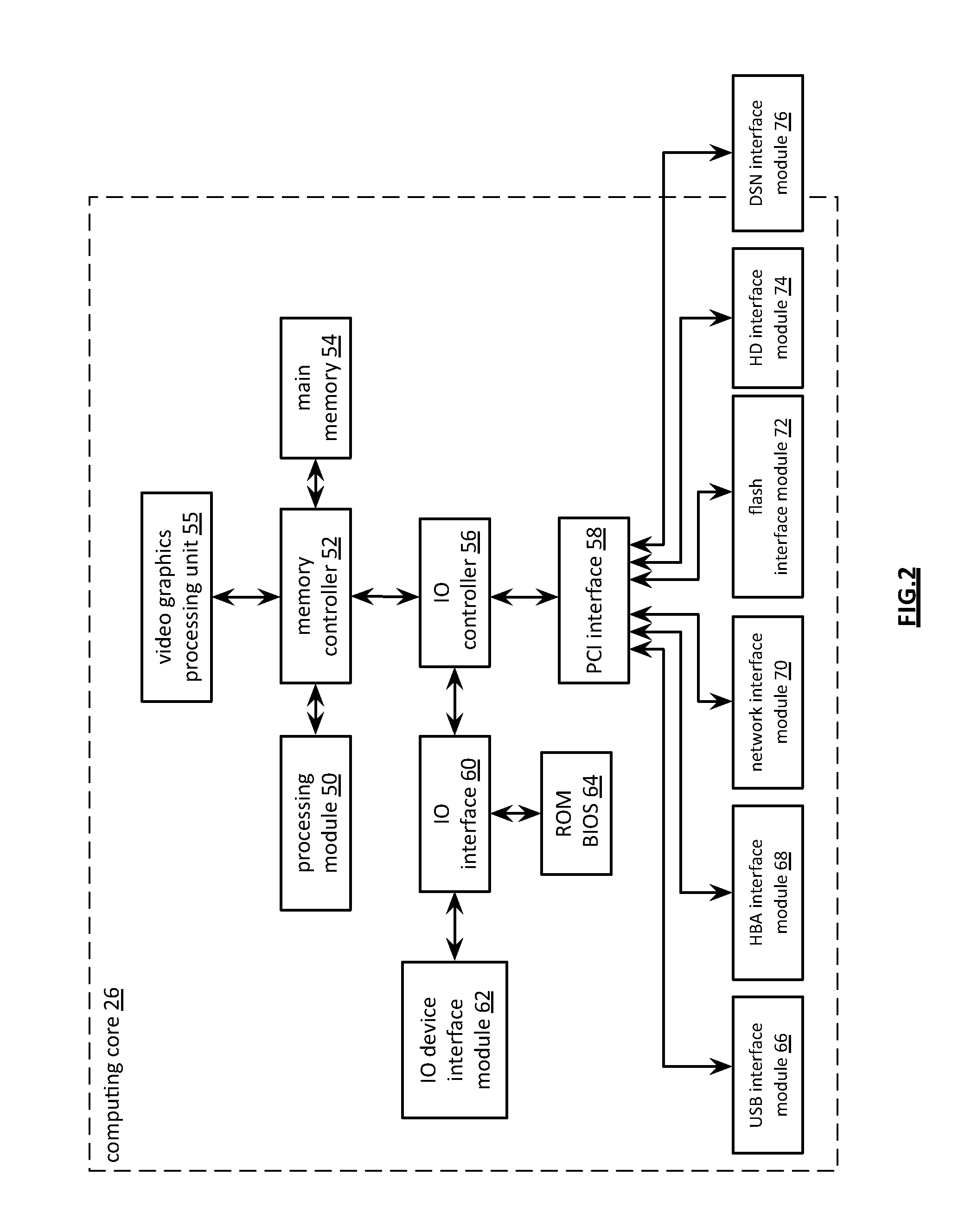

Memory utilization balancing in a dispersed storage network

Inactive; US20110289366A1; Multiple keys/algorithms usage; Redundant data error correction; Memory load; Dispersed storage

A method begins by a processing module identifying a memory loading mismatch between a first memory device and a second memory device of a dispersed storage unit, wherein the first memory device is assigned a first range of slice names and the second memory device is assigned a second range of slice names. The method continues with the processing module determining an estimated impact to reduce the memory loading mismatch and, when the estimated impact compares favorably to an impact threshold, modifying the first and second ranges of slice names to produce a first modified range of slice names for the first memory device and a second modified range of slice names for the second memory device based on the memory loading mismatch, and transferring one or more encoded data slices between the first and second memory devices in accordance with the first and second modified ranges of slice names.

Owner:PURE STORAGE

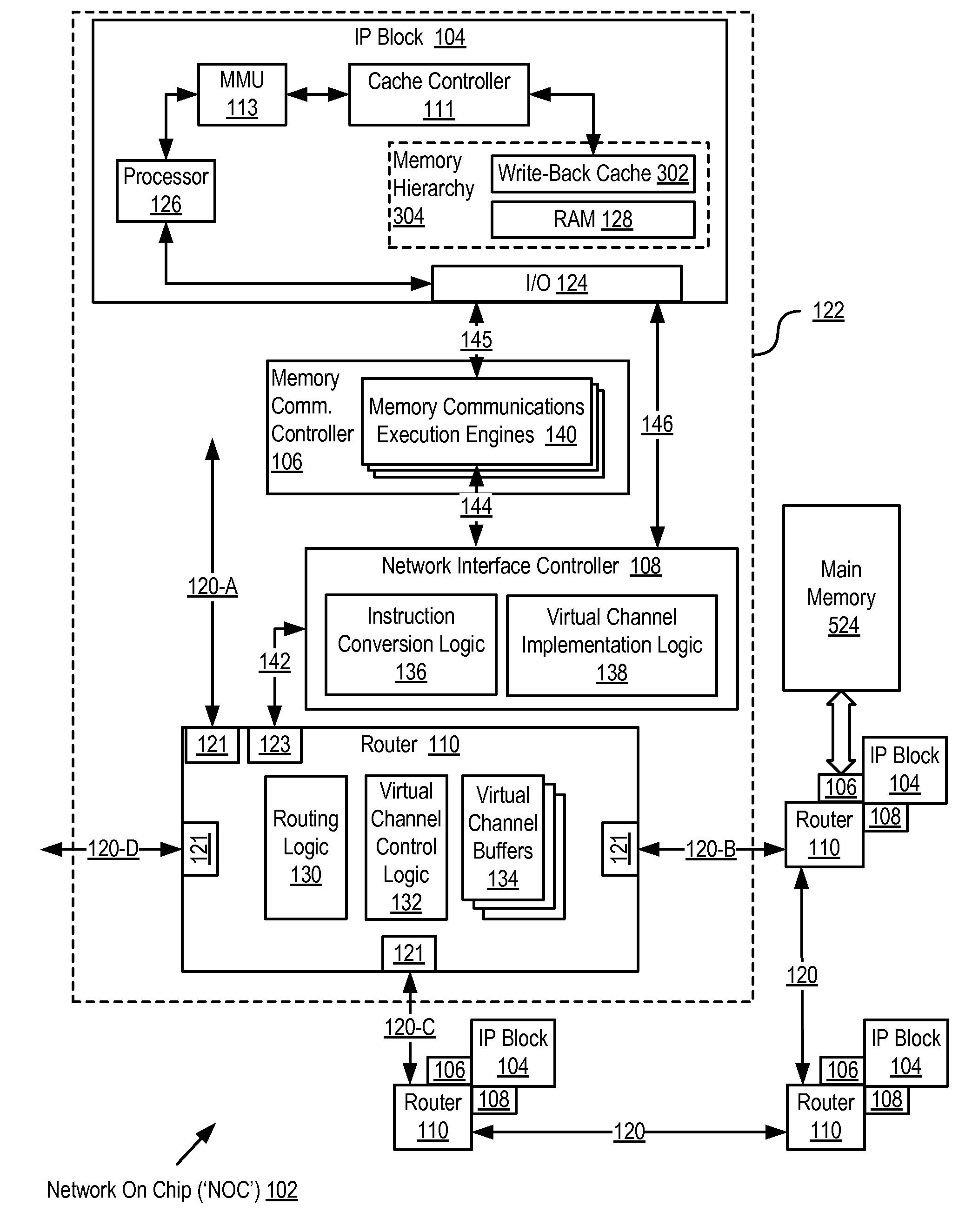

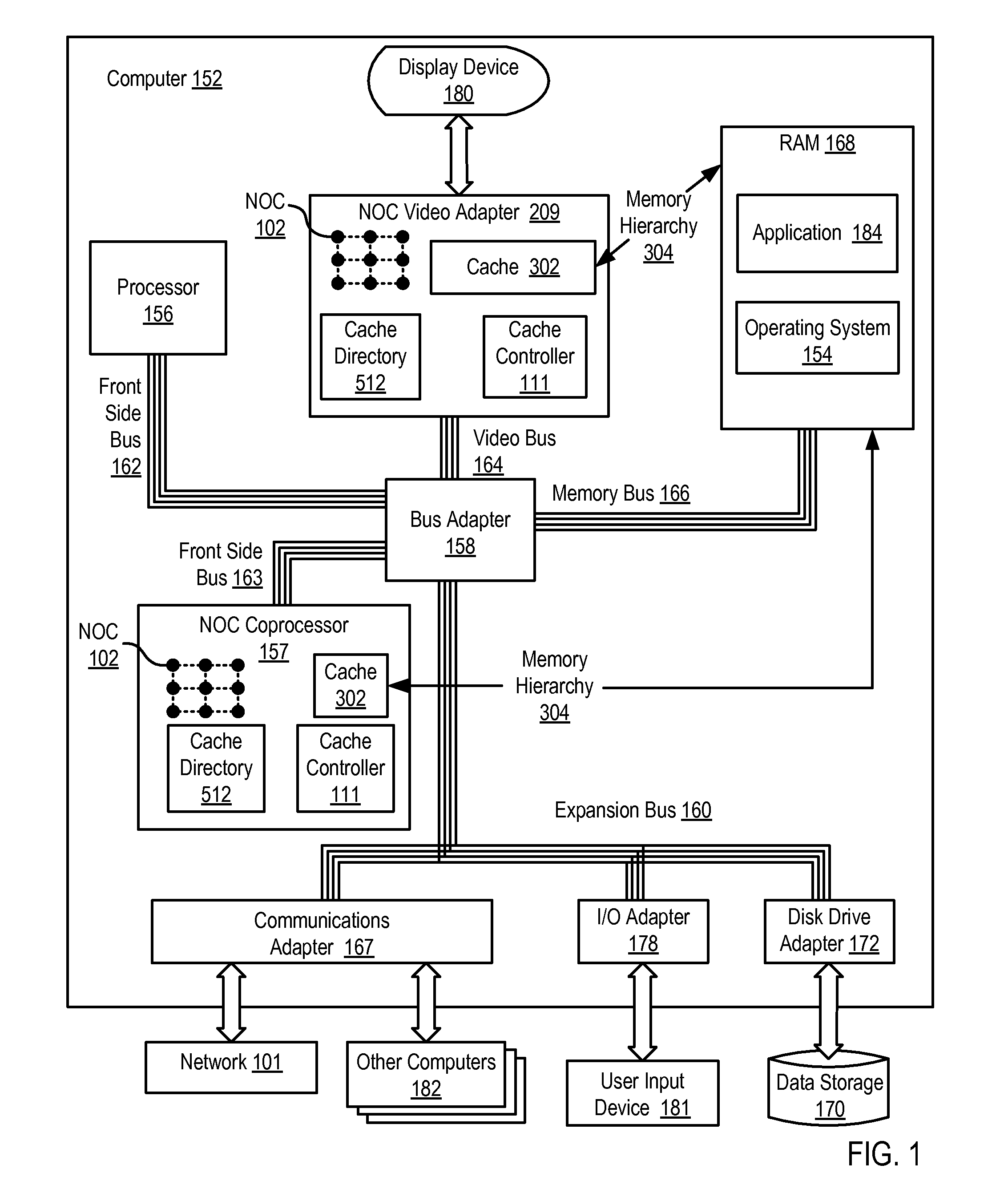

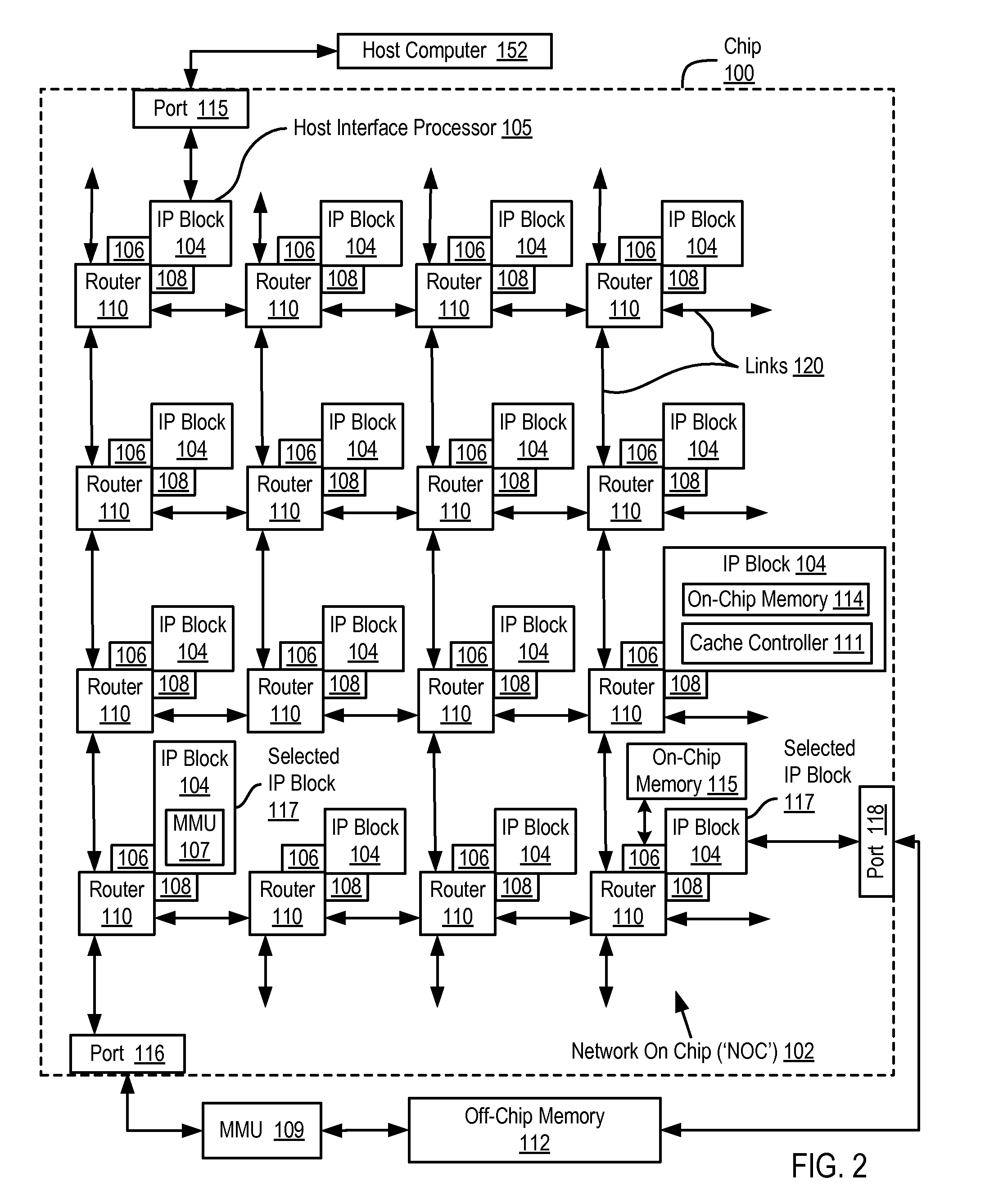

Administering Non-Cacheable Memory Load Instructions

Inactive; US20090287885A1; Memory addressing/allocation/relocation; Digital computer details; Memory address; Load instruction

Administering non-cacheable memory load instructions in a computing environment where cacheable data is produced and consumed in a coherent manner without harming performance of a producer, the environment including a hierarchy of computer memory that includes one or more caches backed by main memory, the caches controlled by a cache controller, at least one of the caches configured as a write-back cache. Embodiments of the present invention include receiving, by the cache controller, a non-cacheable memory load instruction for data stored at a memory address, the data treated by the producer as cacheable; determining by the cache controller from a cache directory whether the data is cached; if the data is cached, returning the data in the memory address from the write-back cache without affecting the write-back cache's state; and if the data is not cached, returning the data from main memory without affecting the write-back cache's state.
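The lookup path described in this abstract can be sketched with a toy cache controller. The class `CacheController`, the dictionary-based cache, and the method names are hypothetical; the point illustrated is only that a non-cacheable load returns the freshest data while leaving the write-back cache's contents and state untouched.

```python
class CacheController:
    """Toy controller for a write-back cache backed by main memory."""

    def __init__(self, main_memory):
        self.main_memory = main_memory  # address -> data
        self.cache = {}                 # address -> data, possibly newer than memory

    def cacheable_store(self, address, data):
        """Producer writes into the write-back cache; memory is updated later."""
        self.cache[address] = data

    def non_cacheable_load(self, address):
        """Return current data without allocating, evicting, or writing back."""
        if address in self.cache:
            return self.cache[address]      # hit: read the cached copy in place
        return self.main_memory[address]    # miss: read memory, do not fill the cache
```

Because a miss does not allocate a line and a hit does not trigger a write-back, the consumer's reads leave the producer's cache behavior unchanged.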

Owner:IBM CORP

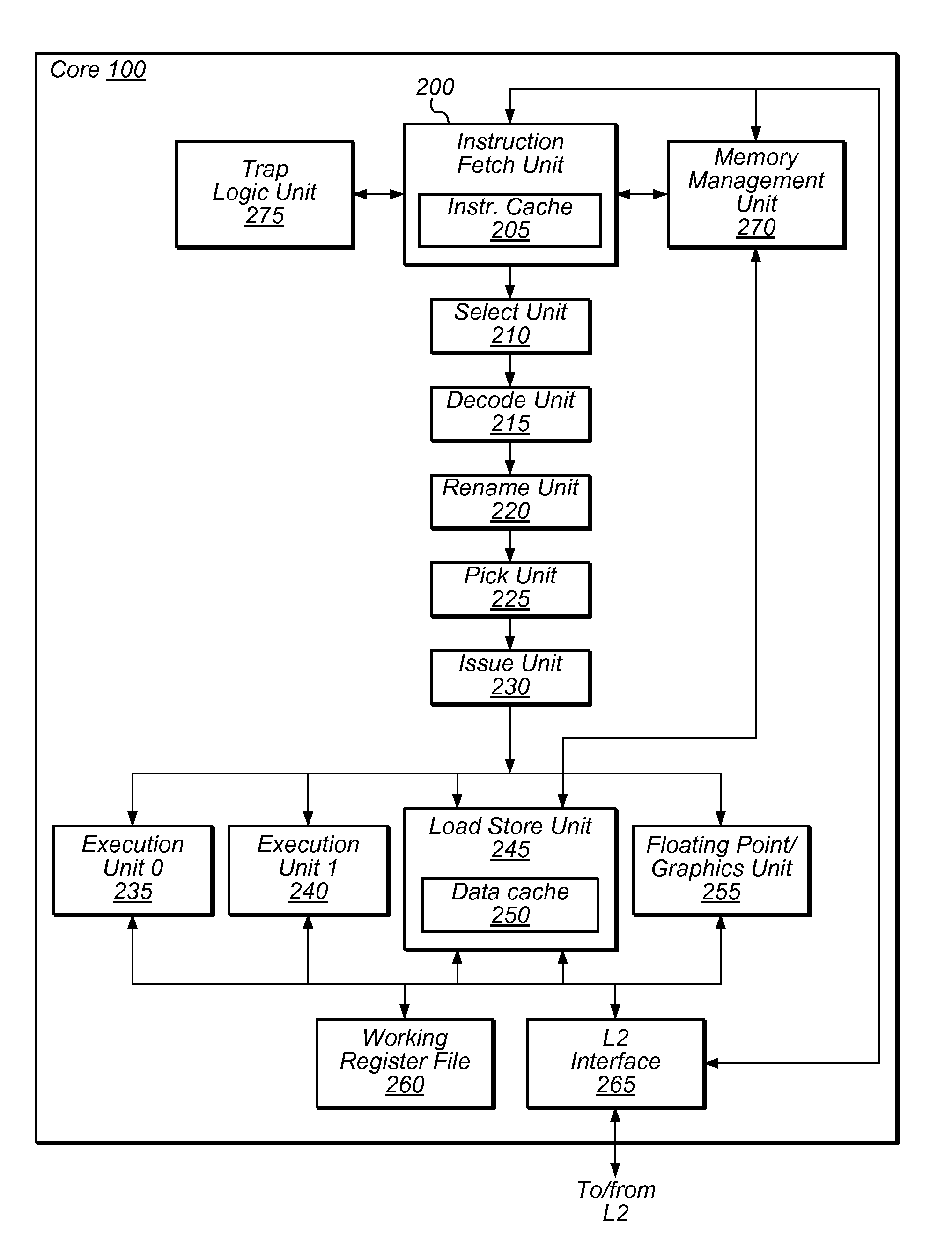

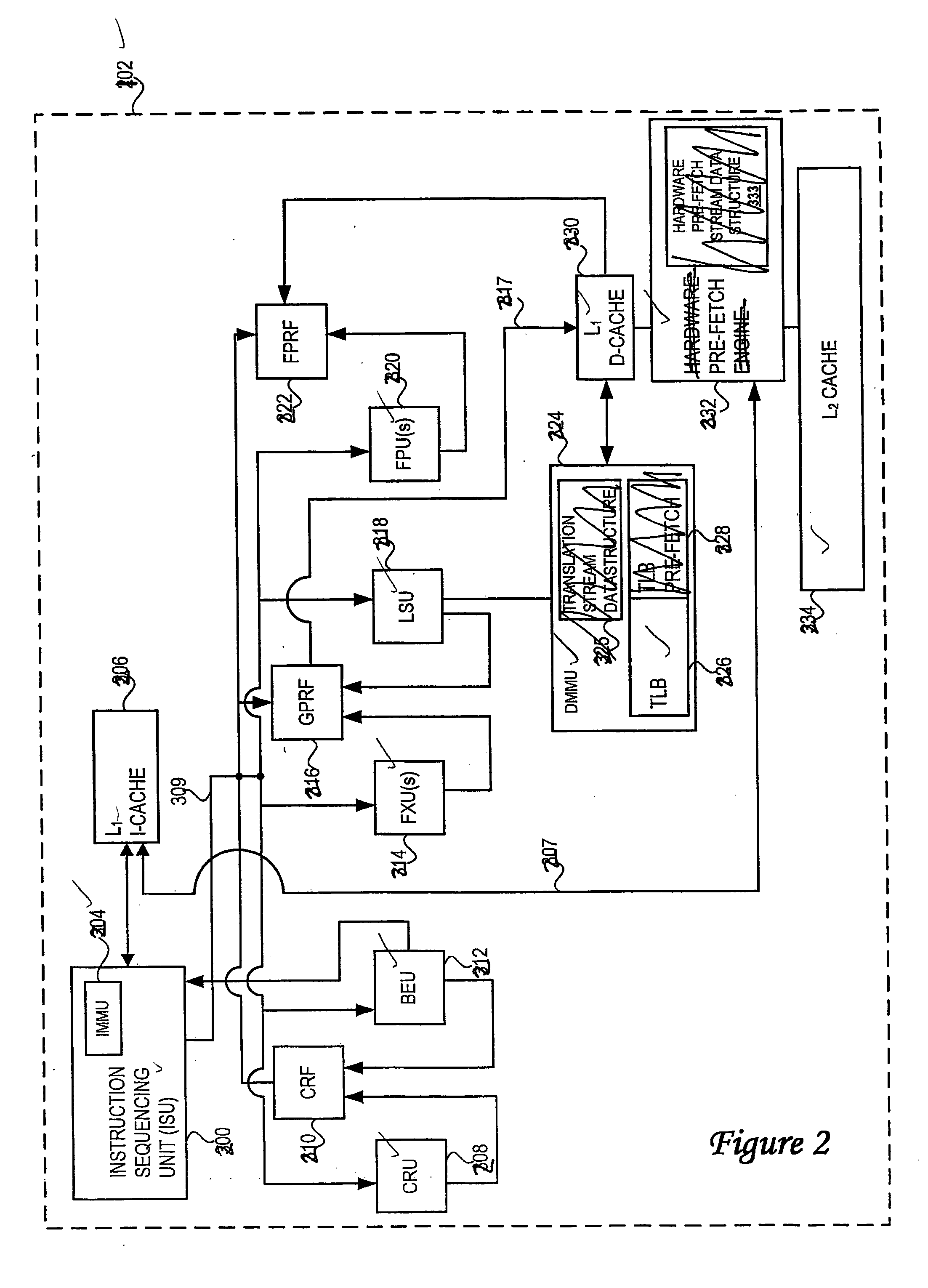

Space-efficient mechanism to support additional scouting in a processor using checkpoints

Active; US20110167243A1; Lower program execution times; Easy to operate; Register arrangements; Memory addressing/allocation/relocation; Processor register; Memory load

Techniques and structures are disclosed for a processor supporting checkpointing to operate effectively in scouting mode while a maximum number of supported checkpoints are active. Operation in scouting mode may include using bypass logic and a set of register storage locations to store and/or forward in-flight instruction results that were calculated during scouting mode. These forwarded results may be used during scouting mode to calculate memory load addresses for yet other in-flight instructions, and the processor may accordingly cause data to be prefetched from these calculated memory load addresses. The set of register storage locations may comprise a working register file or an active portion of a multiported register file.

Owner:ORACLE INT CORP

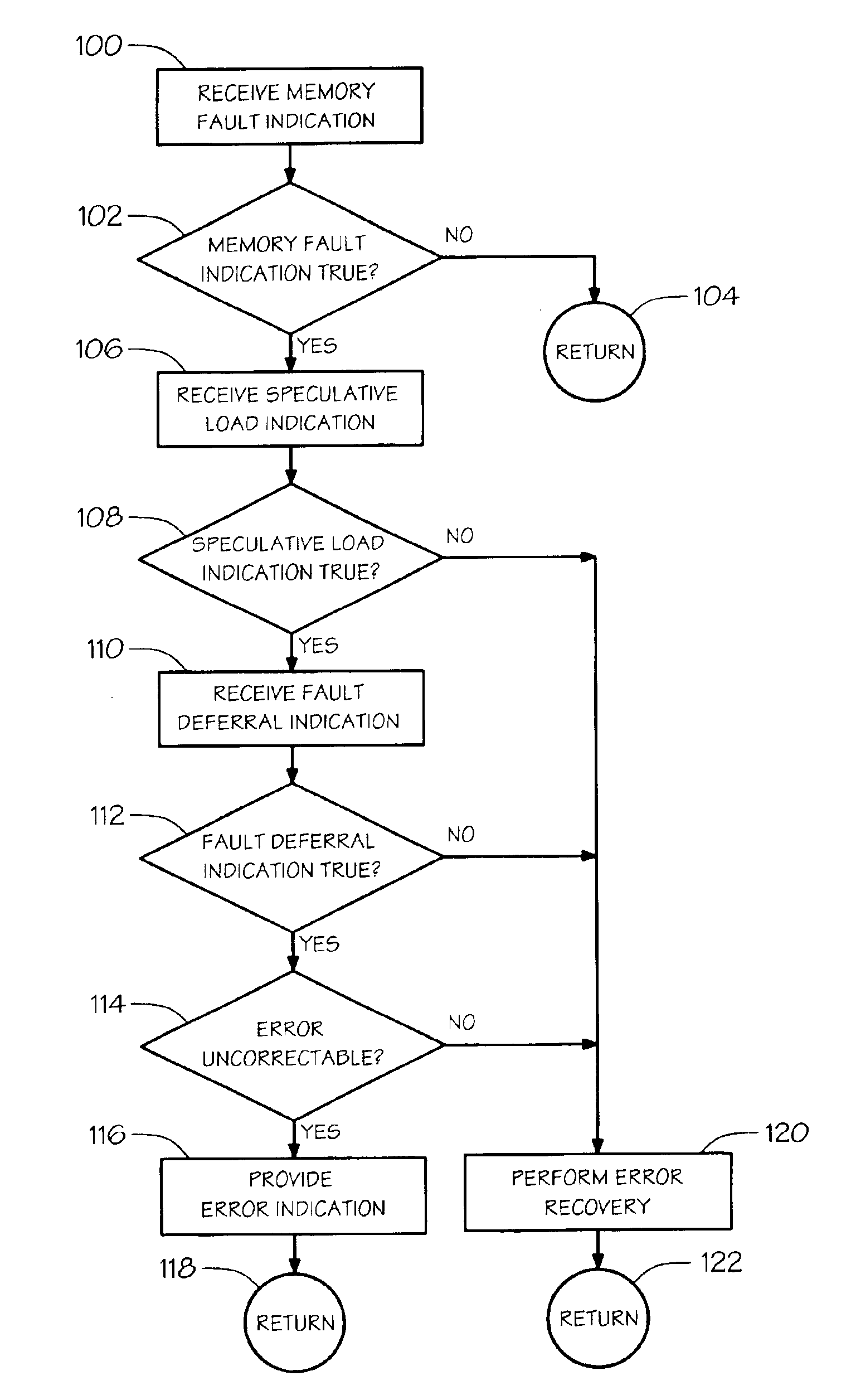

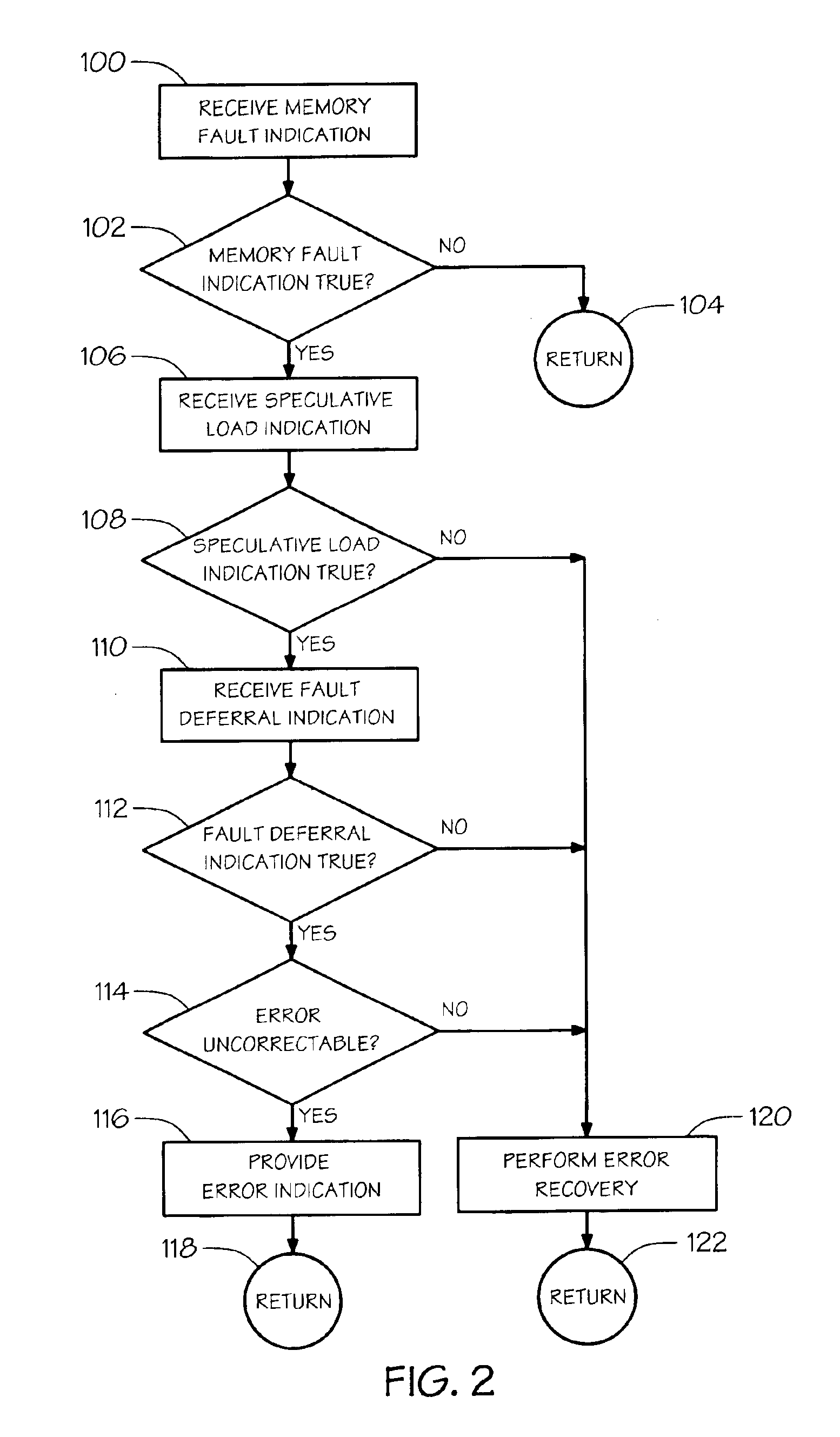

Error recovery for speculative memory accesses

A method of handling memory errors. A memory fault indication is received that is true if an error in the memory is detected while executing a memory load request to retrieve a value from the memory. A speculative load indication is received that is true if the memory load request was issued speculatively. If the memory fault indication is true and the speculative load indication is true, then an error indication that the returned value is invalid is provided, otherwise, error recovery is performed.

Owner:INTEL CORP

Method and apparatus for avoiding cache pollution due to speculative memory load operations in a microprocessor

Active; US20050055533A1; Eliminate cache pollution; Eliminate pollution; General purpose stored program computer; Concurrent instruction execution; Load instruction; Operand

A cache pollution avoidance unit includes a dynamic memory dependency table for storing a dependency state condition between a first load instruction and a sequentially later second load instruction, which may depend on the completion of execution of the first load instruction for operand data. The cache pollution avoidance unit logically ANDs the dependency state condition stored in the dynamic memory dependency table with a cache memory “miss” state condition returned by the cache pollution avoidance unit for operand data produced by the first load instruction and required by the second load instruction. If the logical ANDing is true, memory access to the second load instruction is squashed and the execution of the second load instruction is re-scheduled.

Owner:ORACLE INT CORP

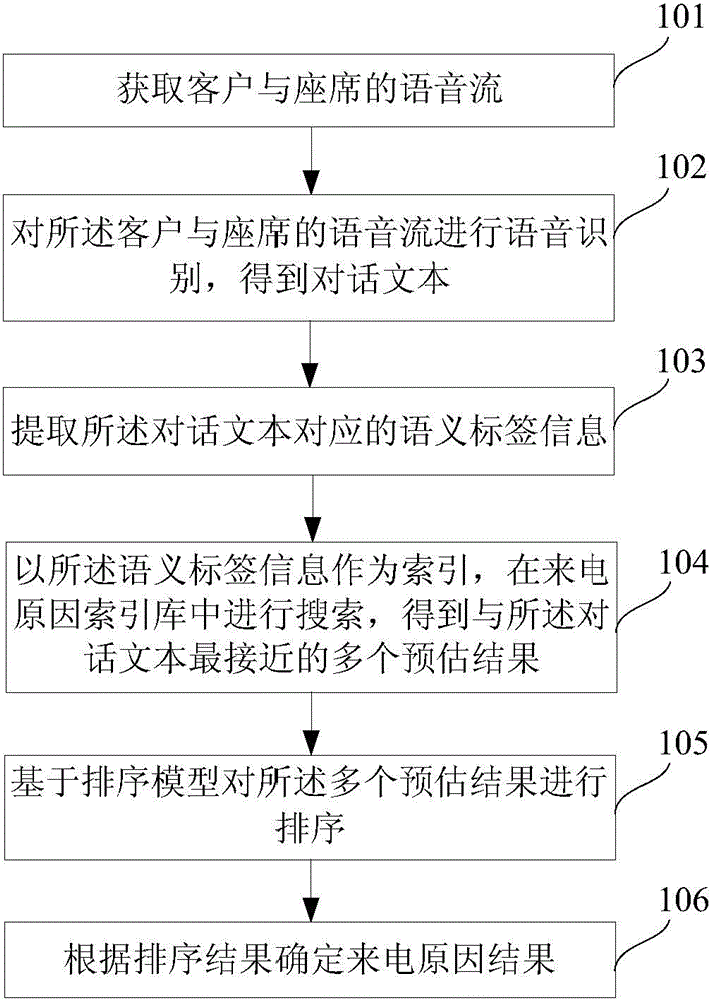

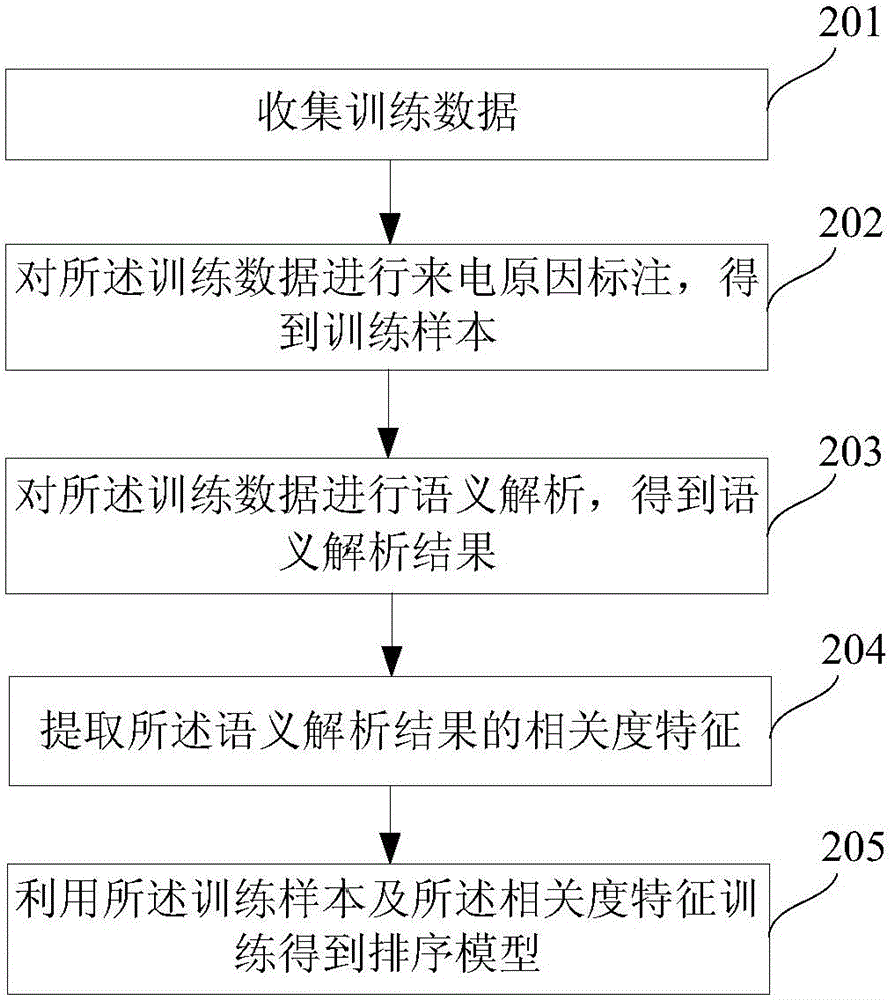

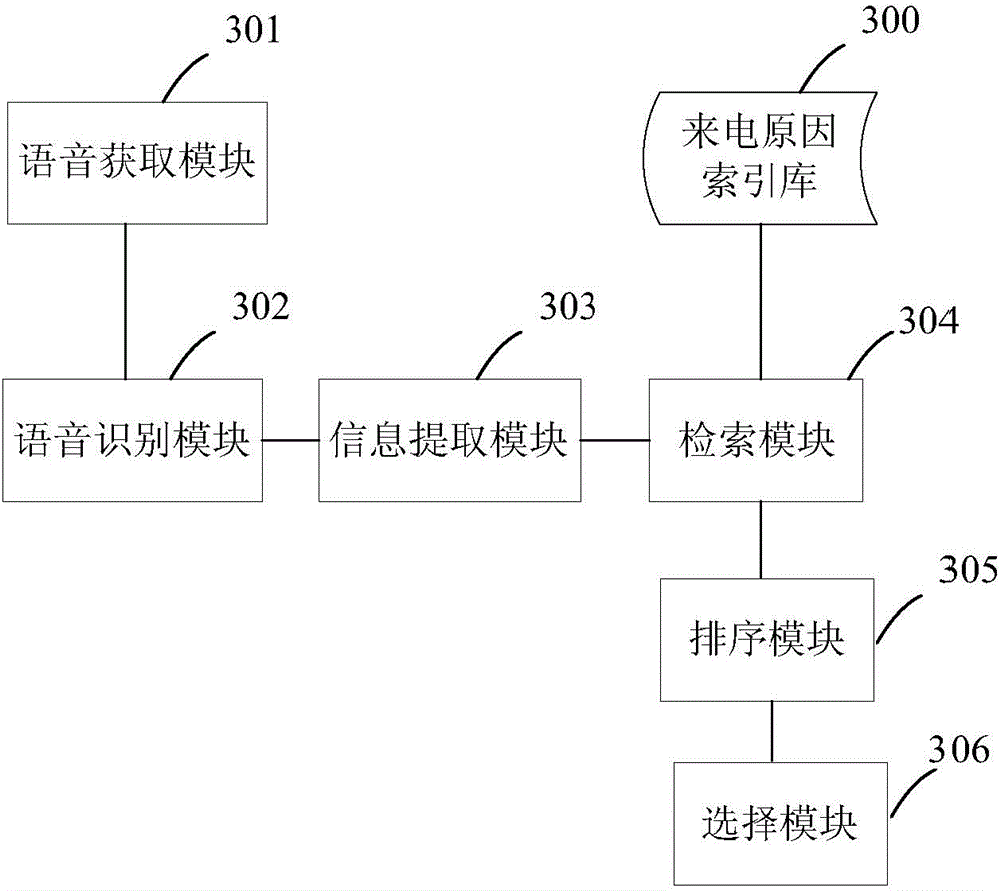

Method and device for achieving automatic classification of calling reasons

Active; CN104462600A; Avoid wrong choice; Avoider missed; Semantic analysis; Speech recognition; Speech identification; Data mining

The invention discloses a method and device for achieving automatic classification of calling reasons. The method comprises the steps of obtaining speech flow of clients and a telephone operator, carrying out speech recognition on the speech flow of the clients and the telephone operator to obtain conversation texts, extracting corresponding semantic tag information of the conversation texts, carrying out search in a calling reason index database with the semantic tag information as index to obtain multiple estimated results most proximate to the conversation texts, ranking the estimated results on the basis of a classification model, and confirming calling reason results according to the ranking result. By the adoption of the method and device, memory load and retrieval load of the telephone operator can be relieved, and the accuracy and comprehensiveness of recording of the calling reasons can be improved.

Owner:IFLYTEK CO LTD

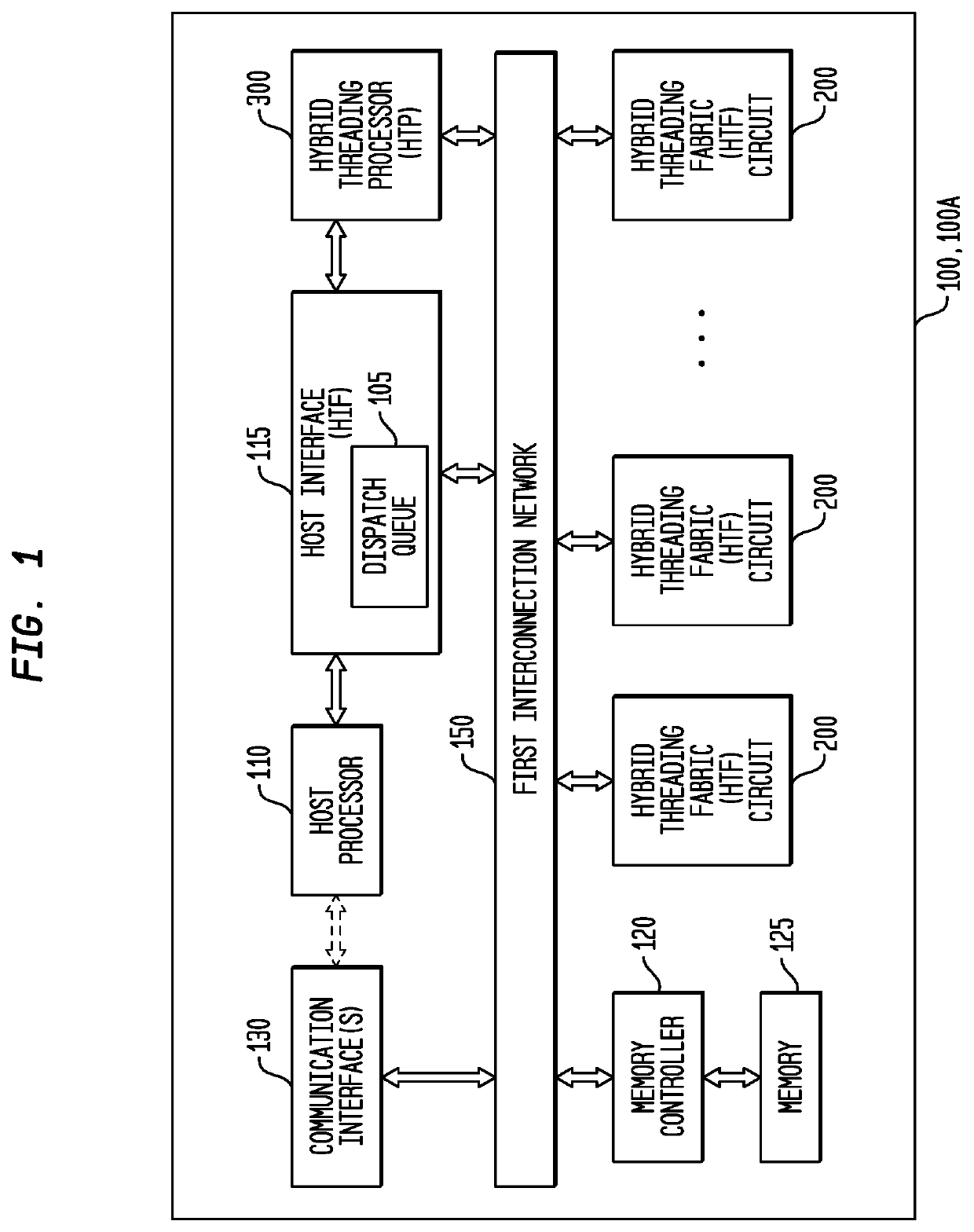

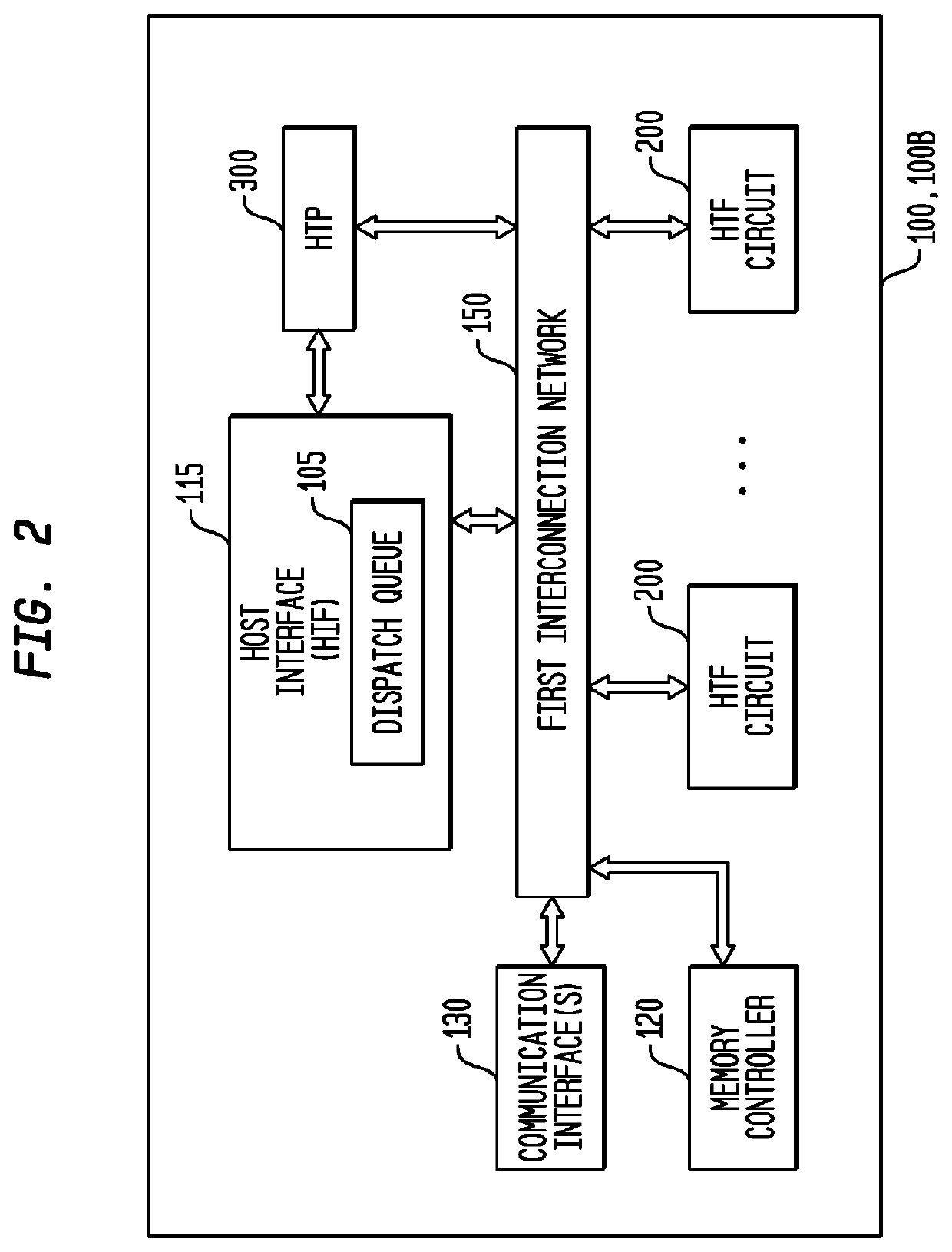

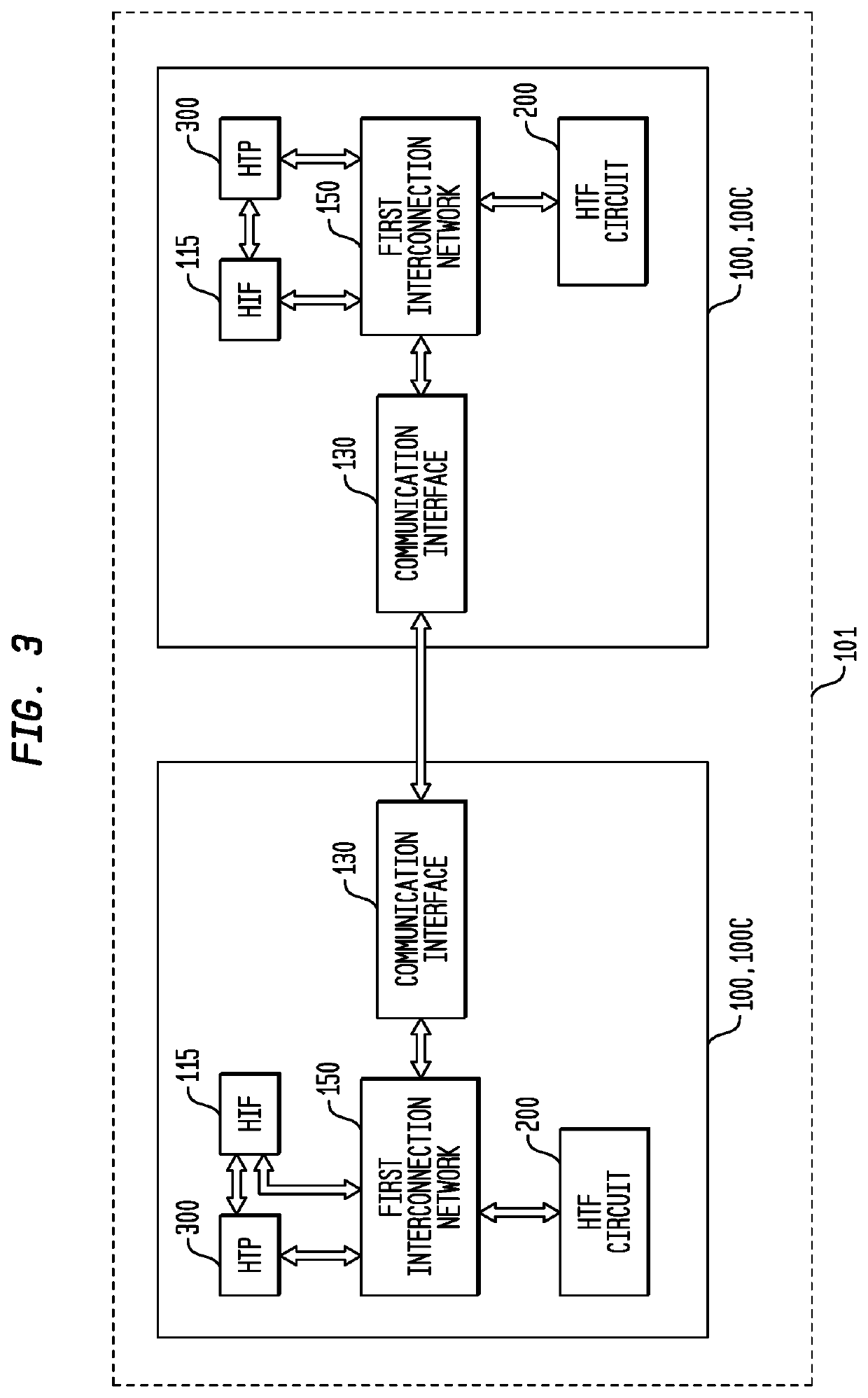

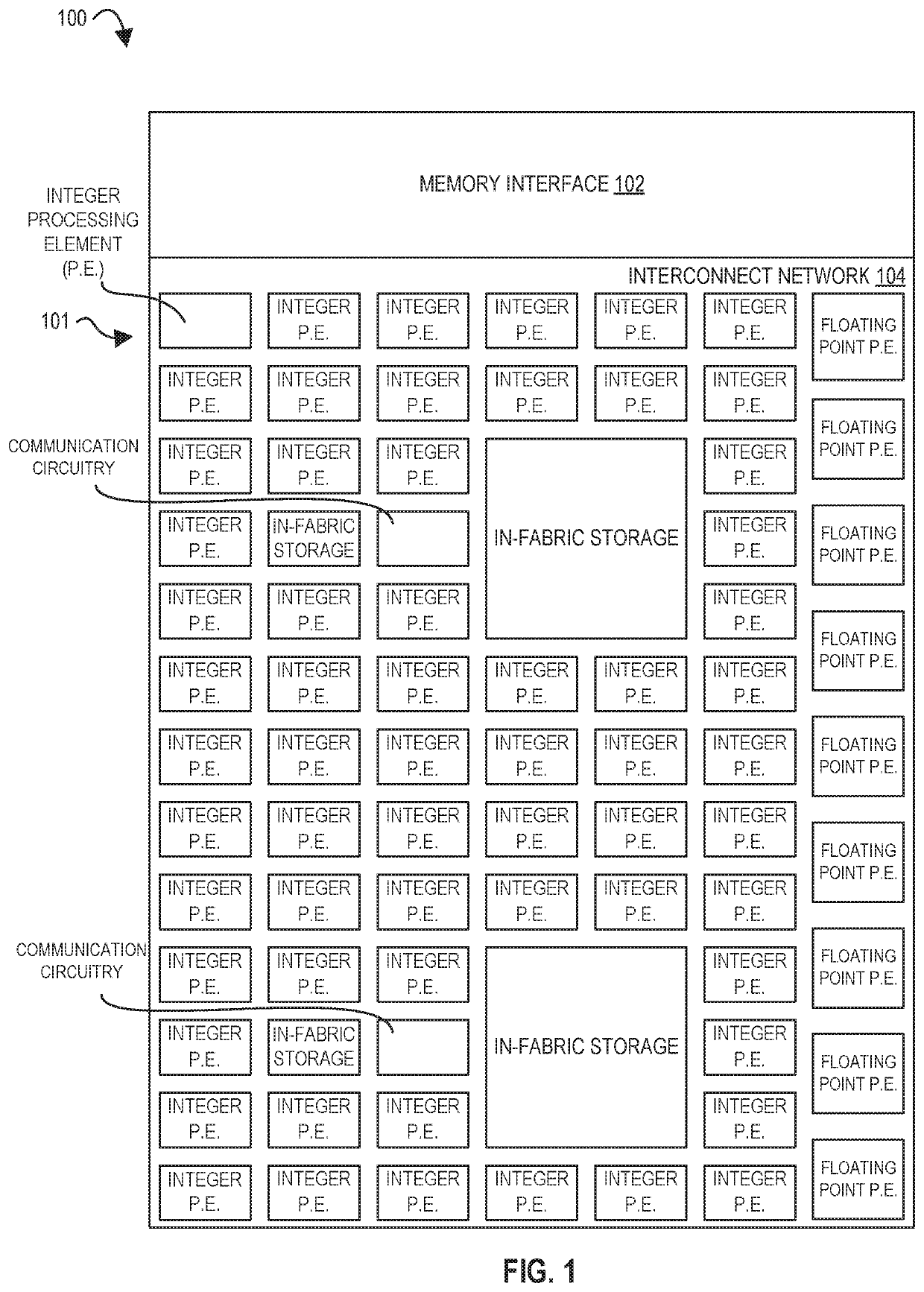

Memory Interface for a Multi-Threaded, Self-Scheduling Reconfigurable Computing Fabric

Pending; US20210064435A1; Fast execution; Resource allocation; Interprogram communication; Data pack; Memory interface

Representative apparatus, method, and system embodiments are disclosed for configurable computing. A representative memory interface circuit comprises: a plurality of registers storing a plurality of tables, a state machine circuit, and a plurality of queues. The plurality of tables include a memory request table, a memory request identifier table, a memory response table, a memory data message table, and a memory response buffer. The state machine circuit is adapted to receive a load request, and in response, to obtain a first memory request identifier from the load request, to store the first memory request identifier in the memory request identifier table, to generate one or more memory load request data packets having the memory request identifier for transmission to the memory circuit, and to store load request information in the memory request table. The plurality of queues store one or more data packets for transmission.

Owner:MICRON TECH INC

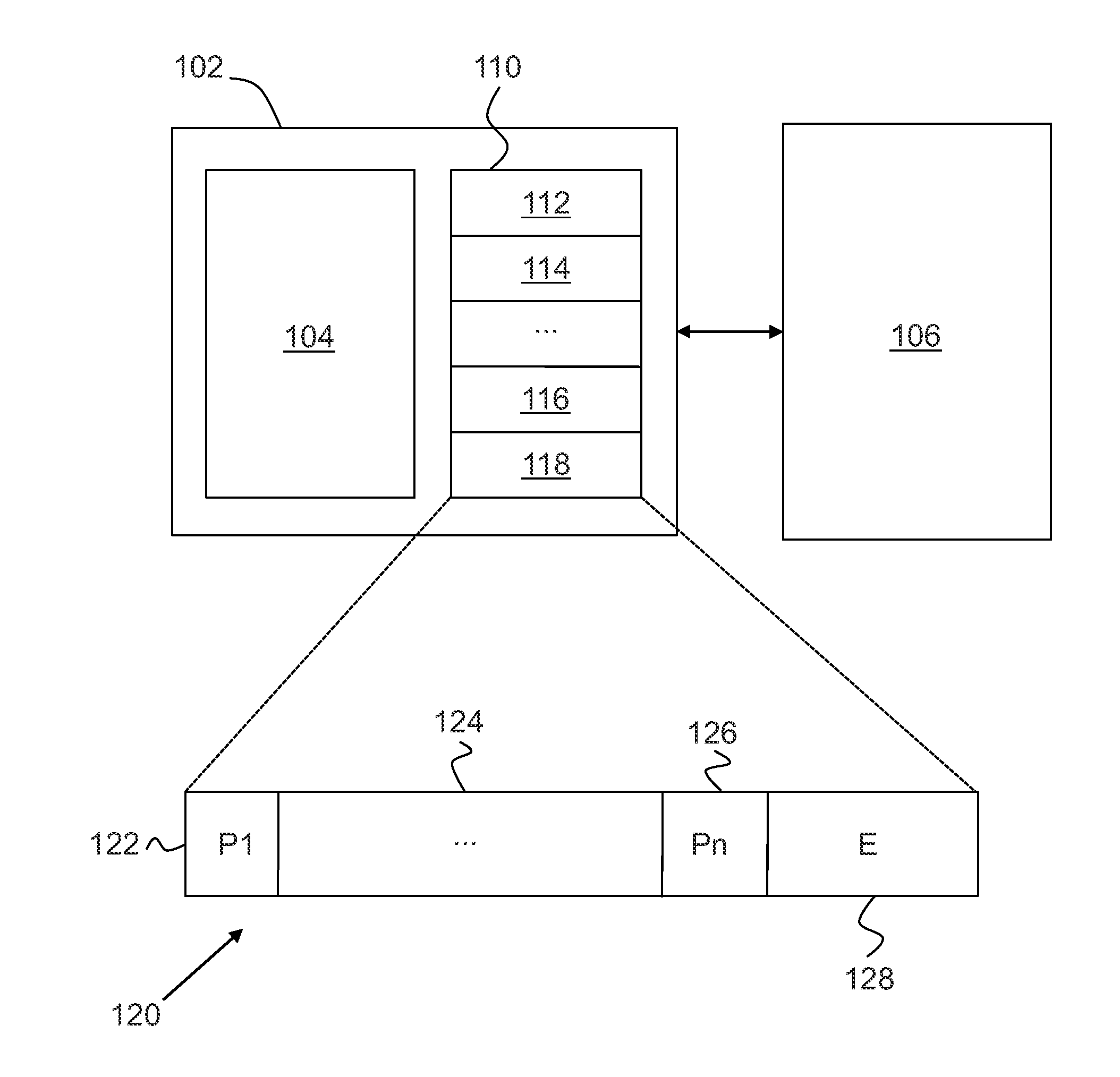

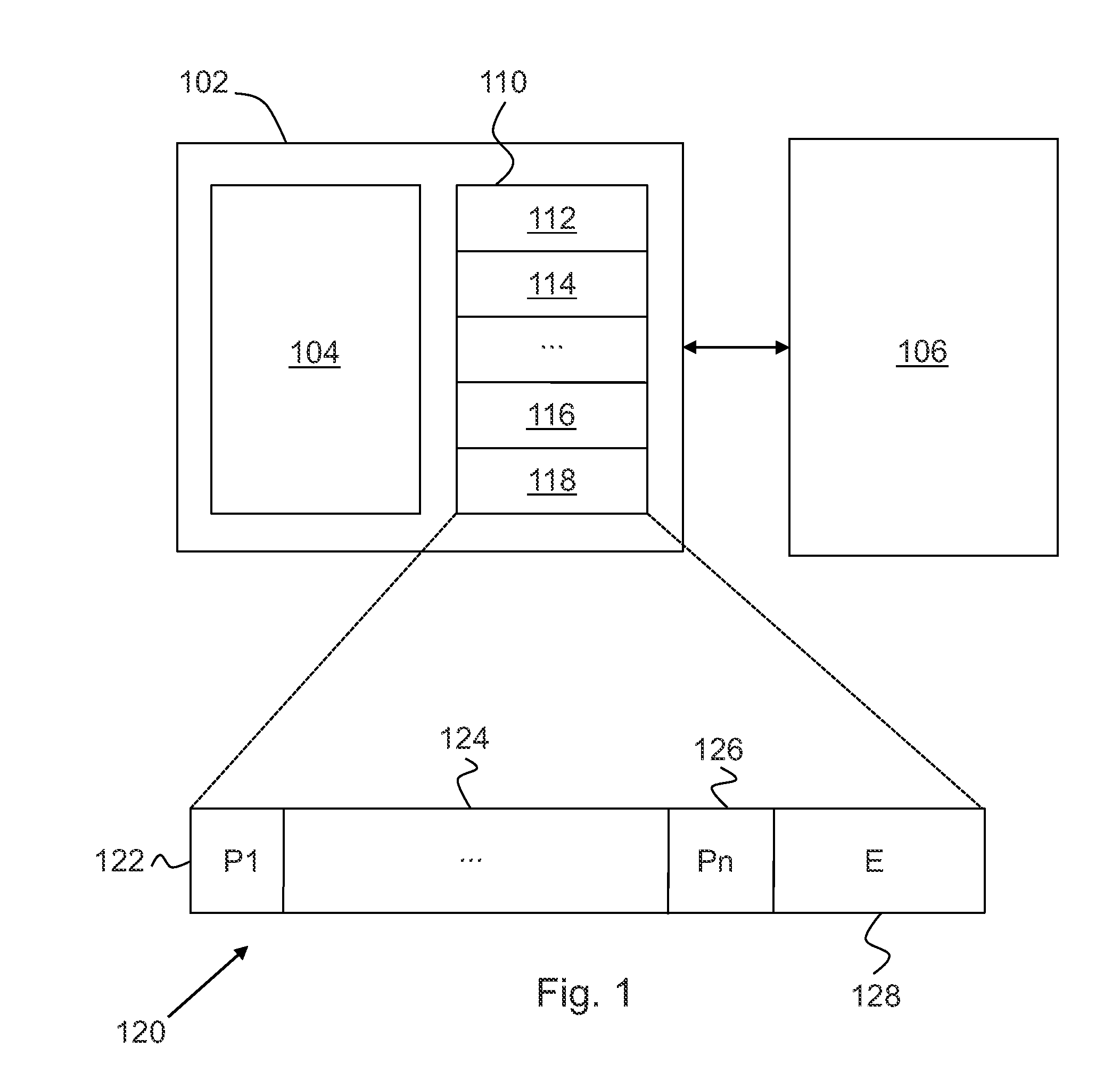

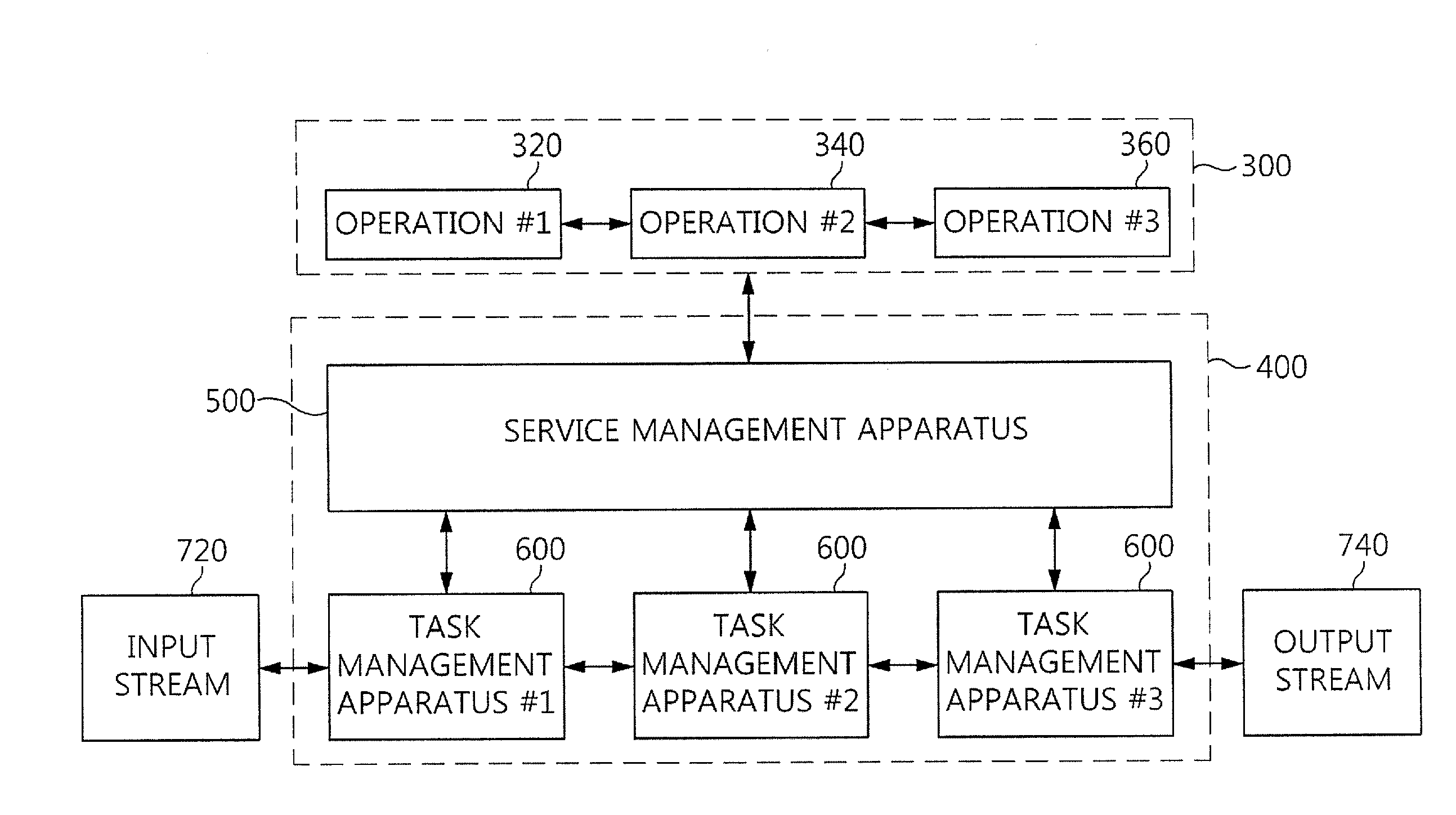

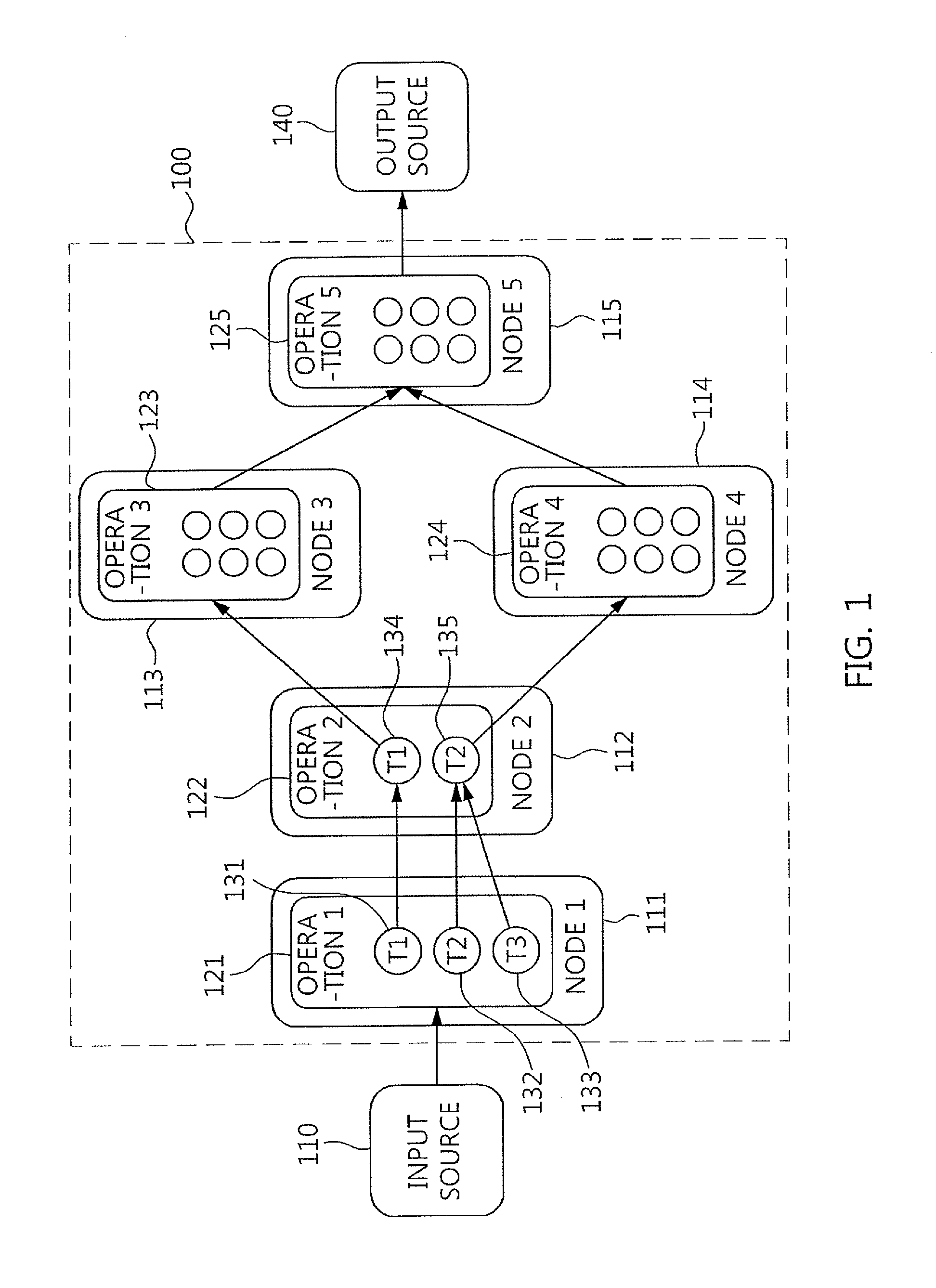

Apparatus and method for managing stream processing tasks

Inactive; US20140351820A1; Convenient and accurate; Data augmentation; Resource allocation; Memory systems; Management unit; Execution unit

An apparatus and method for managing stream processing tasks are disclosed. The apparatus includes a task management unit and a task execution unit. The task management unit controls and manages the execution of assigned tasks. The task execution unit executes the tasks in response to a request from the task management unit, collects a memory load state and task execution frequency characteristics based on the execution of the tasks, detects low-frequency tasks based on the execution frequency characteristics if it is determined that a shortage of memory has occurred based on the memory load state, assigns rearrangement priorities to the low-frequency tasks, and rearranges the tasks based on the assigned rearrangement priorities.

Owner:ELECTRONICS & TELECOMM RES INST

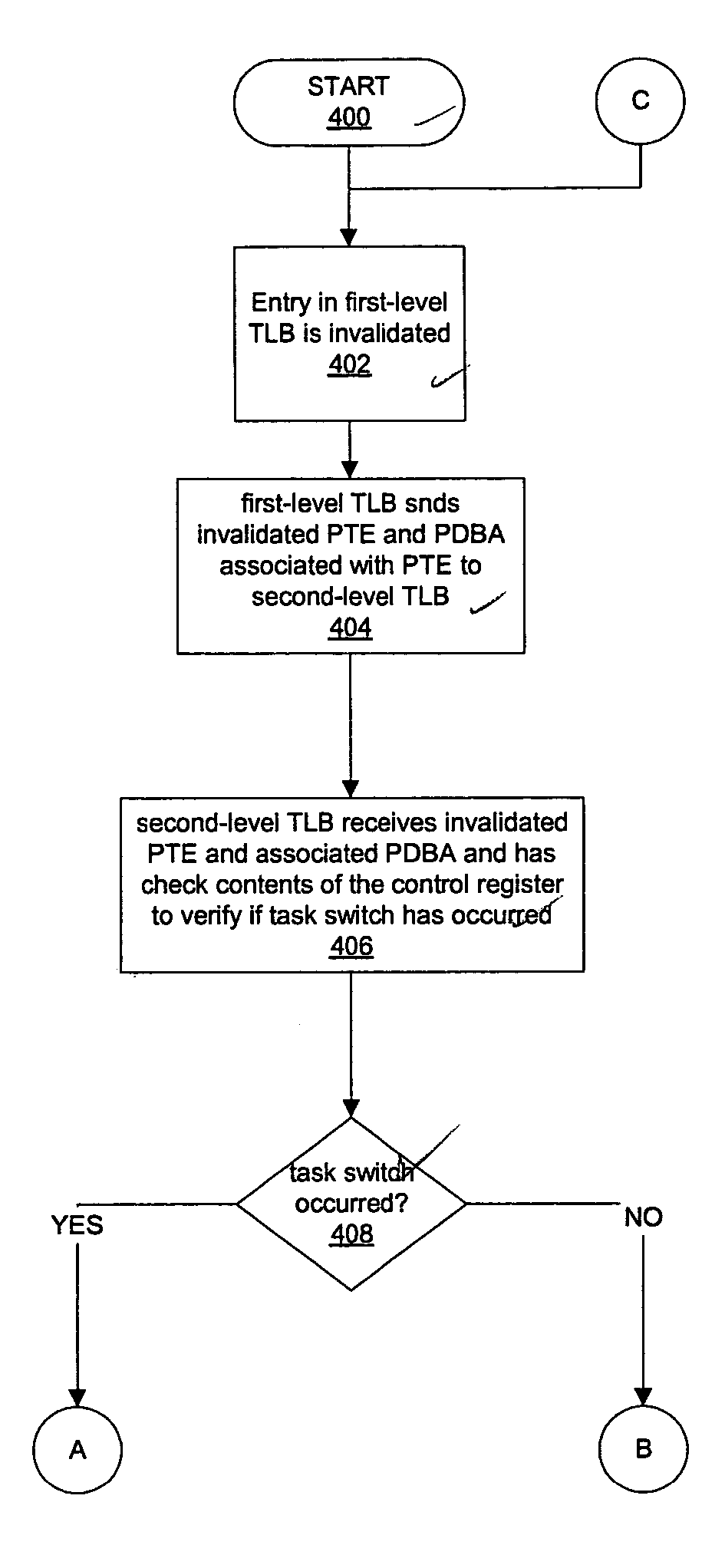

System and method of improving task switching and page translation performance utilizing a multilevel translation lookaside buffer

Active; US20060230252A1; Easy to switch; Memory architecture accessing/allocation; Multiprogramming arrangements; Data processing system; Data treatment

A system and method of improved task switching in a data processing system. First, a first-level cache memory casts out an invalidated page table entry and an associated first page directory base address to a second-level cache memory. Then, the second-level cache memory determines if a task switch has occurred. If a task switch has not occurred, first-level cache memory sends the invalidated page table entry to a current running task directory. If a task switch has occurred, first-level cache memory loads from the second-level cache directory a collection of page table entries related to a new task to enable improved task switching without requiring access to a page table stored in main memory to retrieve the collection of page table entries.

Owner:META PLATFORMS INC

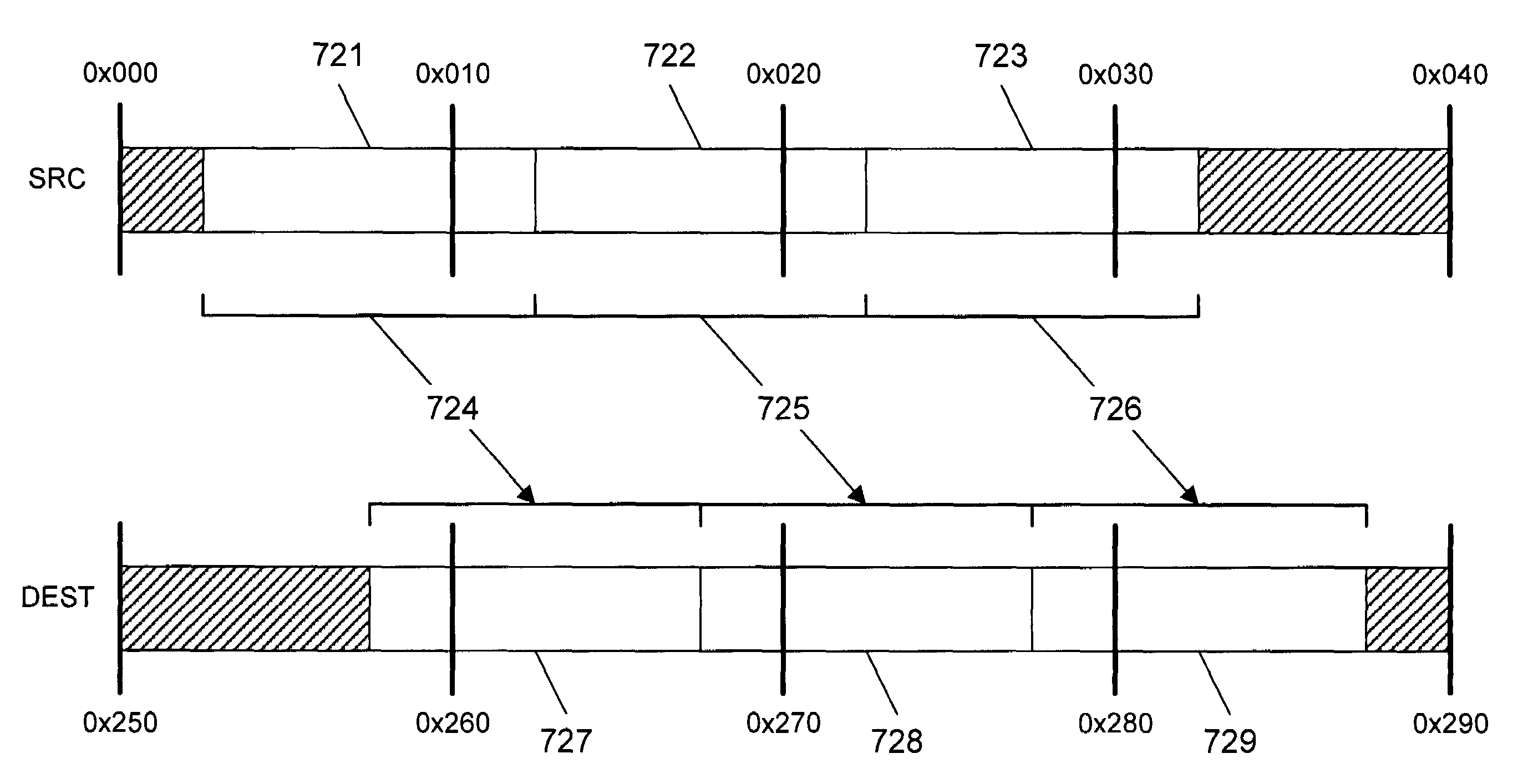

Superior misaligned memory load and copy using merge hardware

Inactive; US7340495B2; Register arrangements; Digital data processing details; Memory address; Parallel computing

Method, apparatus, and program means for performing misaligned memory load and copy using aligned memory operations together with a SIMD merge instruction. The method of one embodiment comprises determining whether a memory operation involves a misaligned memory address. The memory operation is performed with aligned memory accesses if the memory operation is determined as not involving a misaligned memory address. The memory operation is performed with an algorithm including a merge operation and aligned memory accesses if the memory operation is determined as involving a misaligned memory address.
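The aligned-loads-plus-merge approach can be modeled in a few lines. This is a software sketch only: the 16-byte width, the function names, and the use of byte slicing to imitate a SIMD merge instruction are assumptions, not details from the patent.

```python
WIDTH = 16  # assumed vector width in bytes


def aligned_load(memory, address):
    """Load WIDTH bytes from an address that must be WIDTH-aligned."""
    if address % WIDTH != 0:
        raise ValueError("address is not aligned")
    return memory[address:address + WIDTH]


def merge(low, high, shift):
    """Imitate a merge instruction: take WIDTH bytes starting `shift` bytes into low+high."""
    return (low + high)[shift:shift + WIDTH]


def load(memory, address):
    """Load WIDTH bytes from any address using only aligned memory accesses."""
    shift = address % WIDTH
    if shift == 0:
        return aligned_load(memory, address)   # aligned case: one access
    base = address - shift
    low = aligned_load(memory, base)
    high = aligned_load(memory, base + WIDTH)
    return merge(low, high, shift)             # misaligned case: two accesses and a merge
```

For example, with `memory = bytes(range(64))`, calling `load(memory, 5)` reads the aligned blocks at 0 and 16 and merges them into the 16 bytes starting at offset 5.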

Owner:INTEL CORP

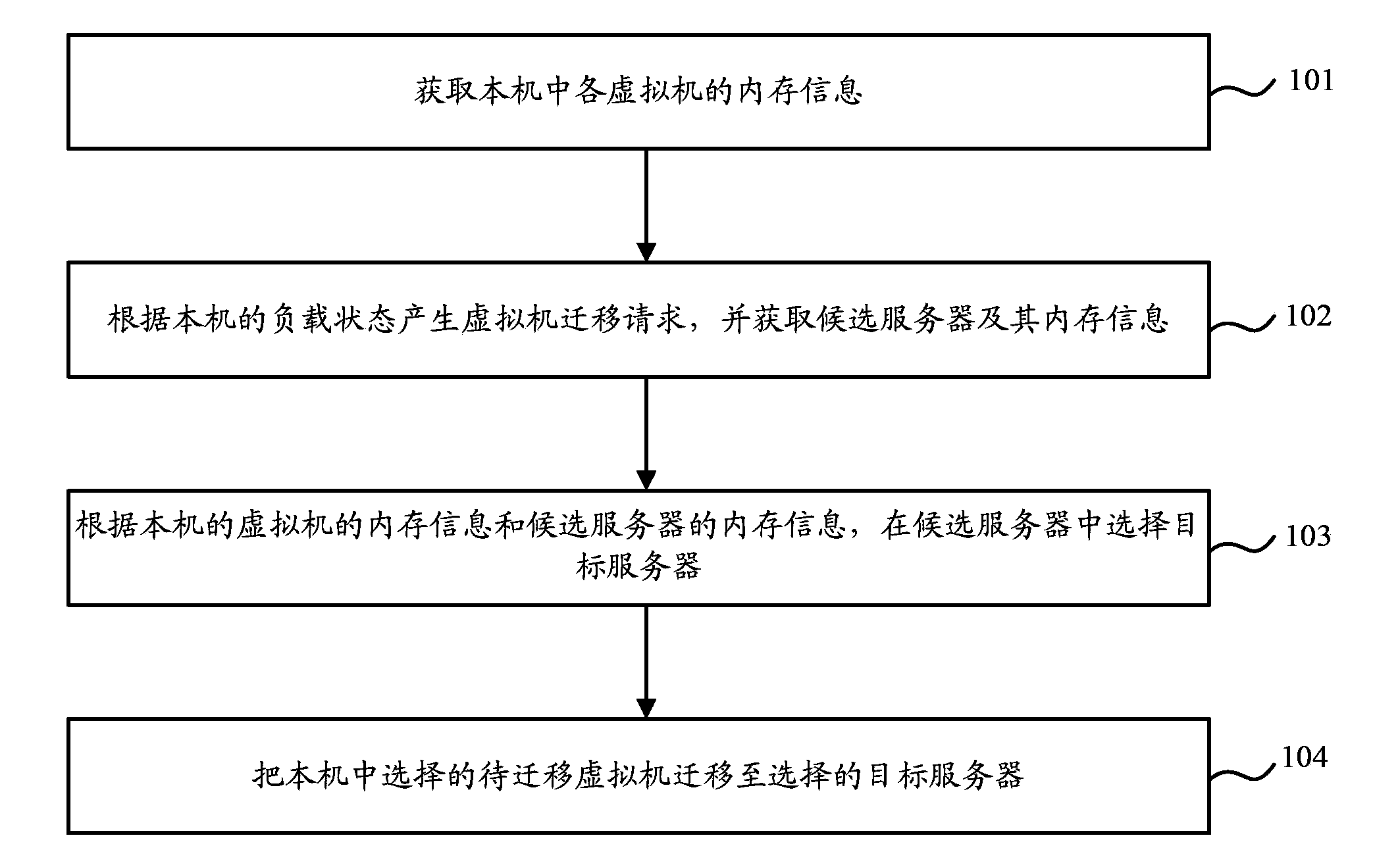

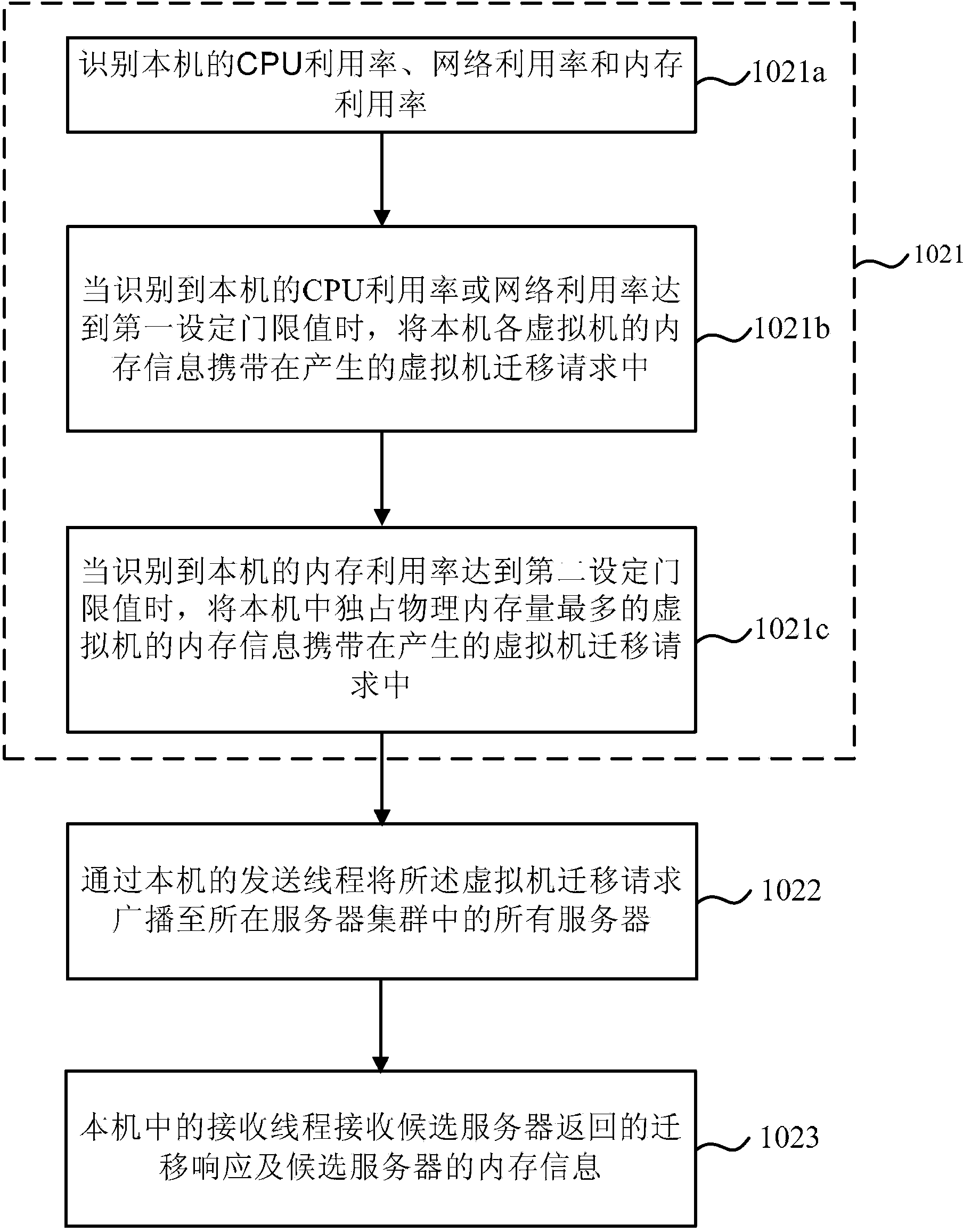

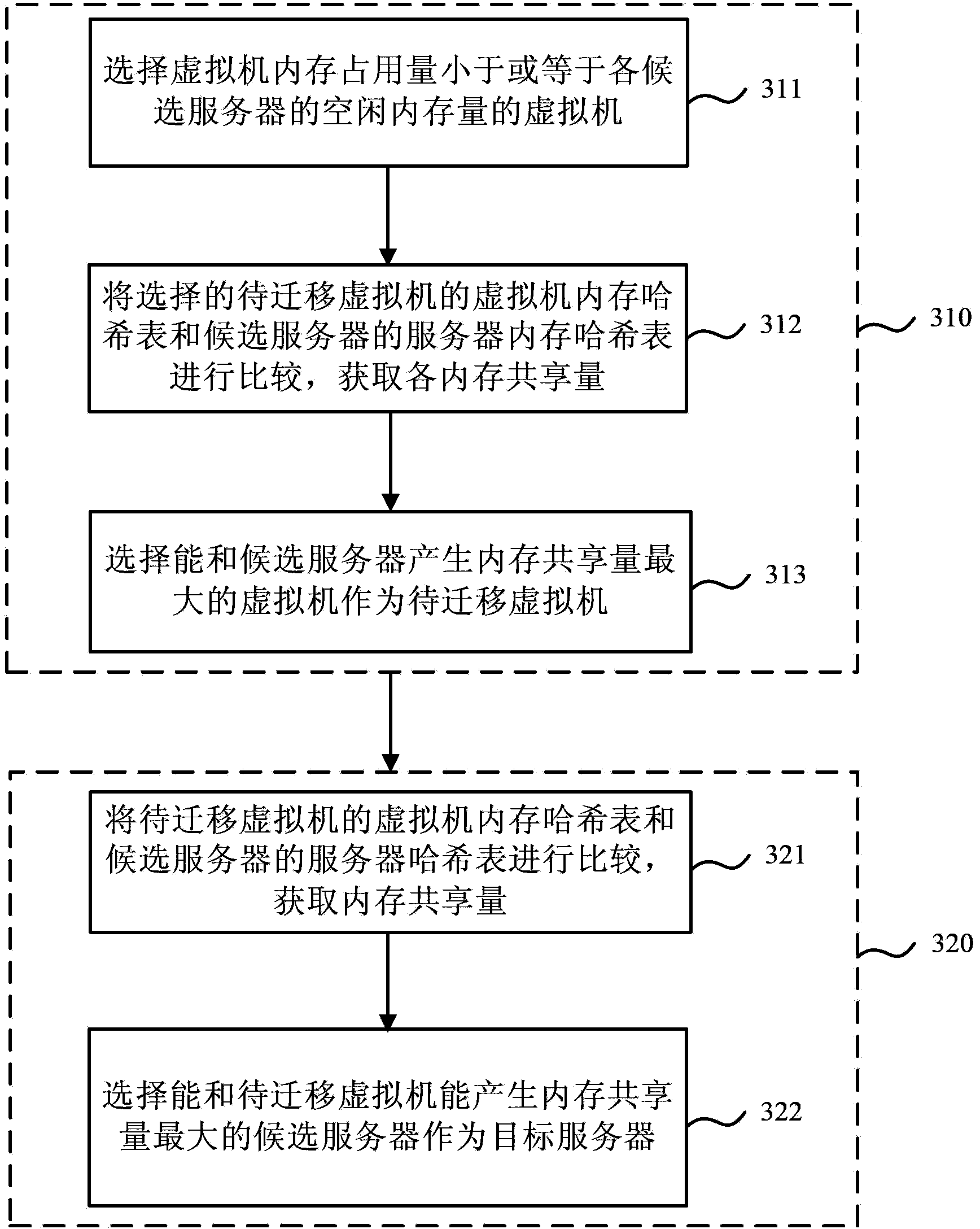

Virtual machine migration method and device

Inactive; CN103888501A; Solve the problem of not being able to get the expected free memory; Program initiation/switching; Transmission; High memory; Memory load

The embodiment of the invention provides a virtual machine migration method and device. The method comprises the steps that the memory information of each virtual machine in the machine is acquired; when the load of the machine is too heavy, a virtual machine migration request is generated, and candidate servers and the memory information thereof are acquired; the memory information of each virtual machine and the memory information of the candidate servers are compared, so that the virtual machine needing migration in the machine is determined and a target server is selected from the candidate servers; and the virtual machine needing migration in the machine is migrated to the selected target server. According to the embodiment of the invention, a system has a high memory utilization rate after virtual machine migration; a sharp increase in physical memory requirements is avoided; and when the memory load of a server is too heavy, the server can acquire the desired idle memory after virtual machine migration.

Owner:HUAWEI TECH CO LTD +1

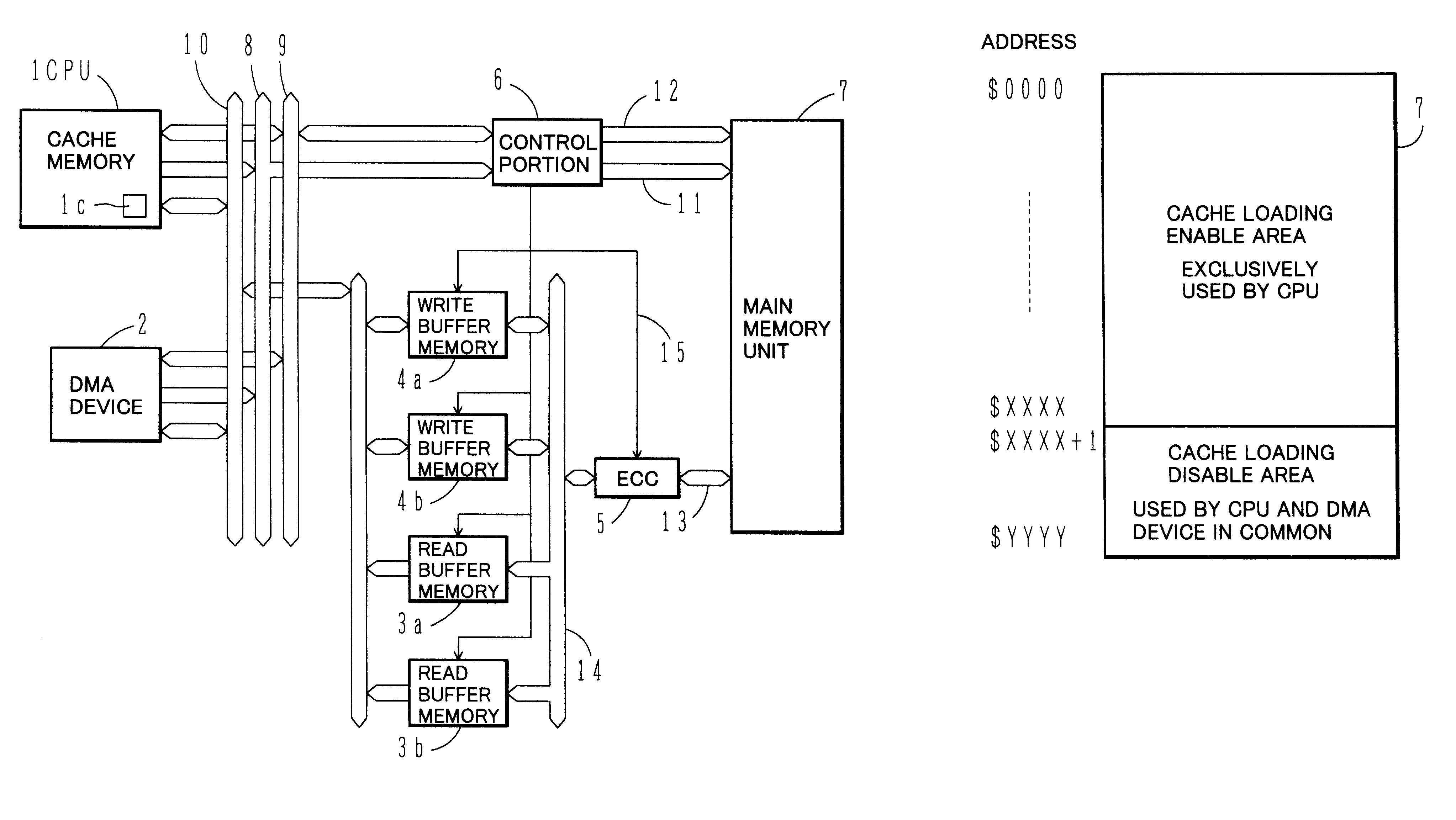

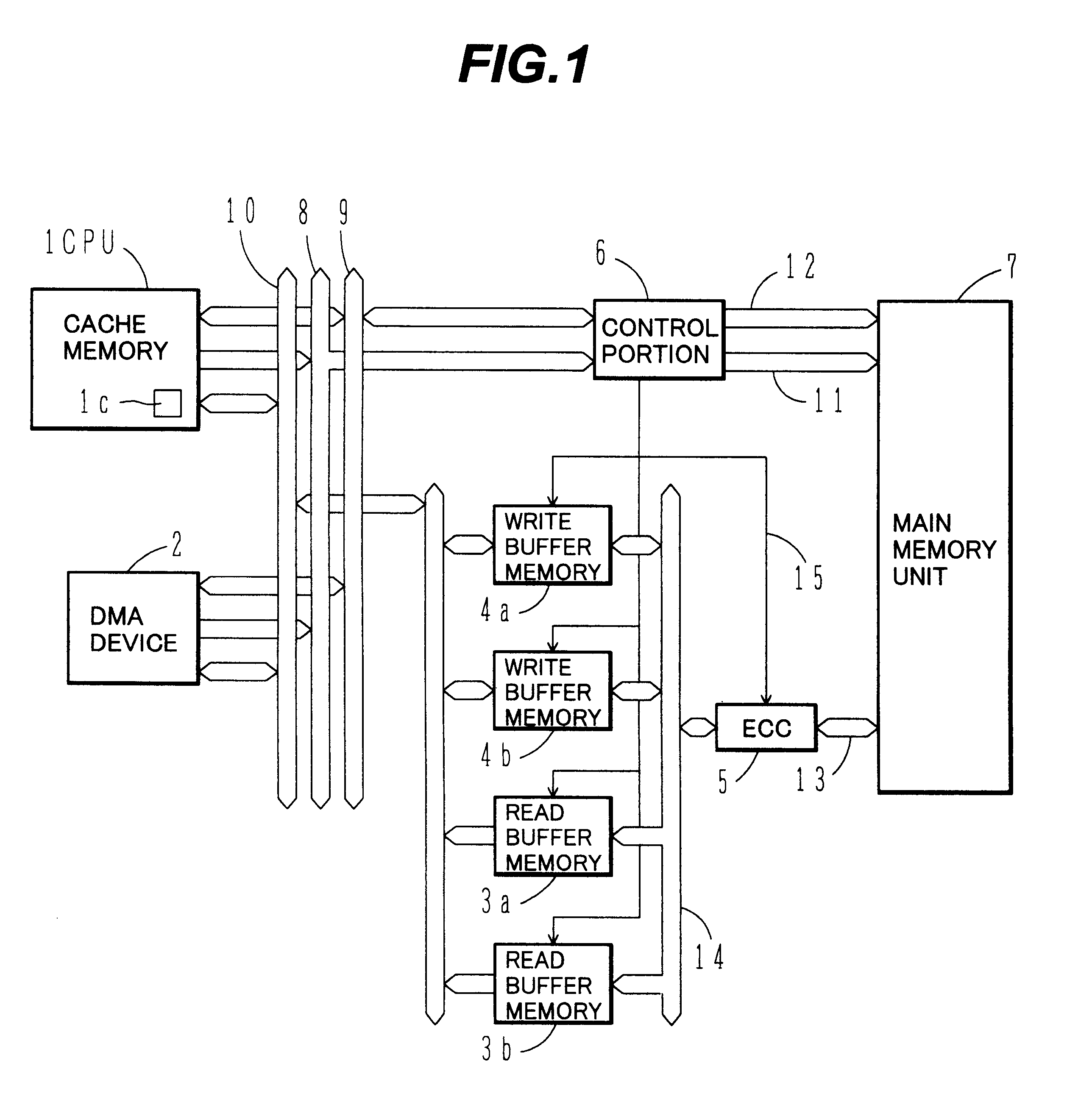

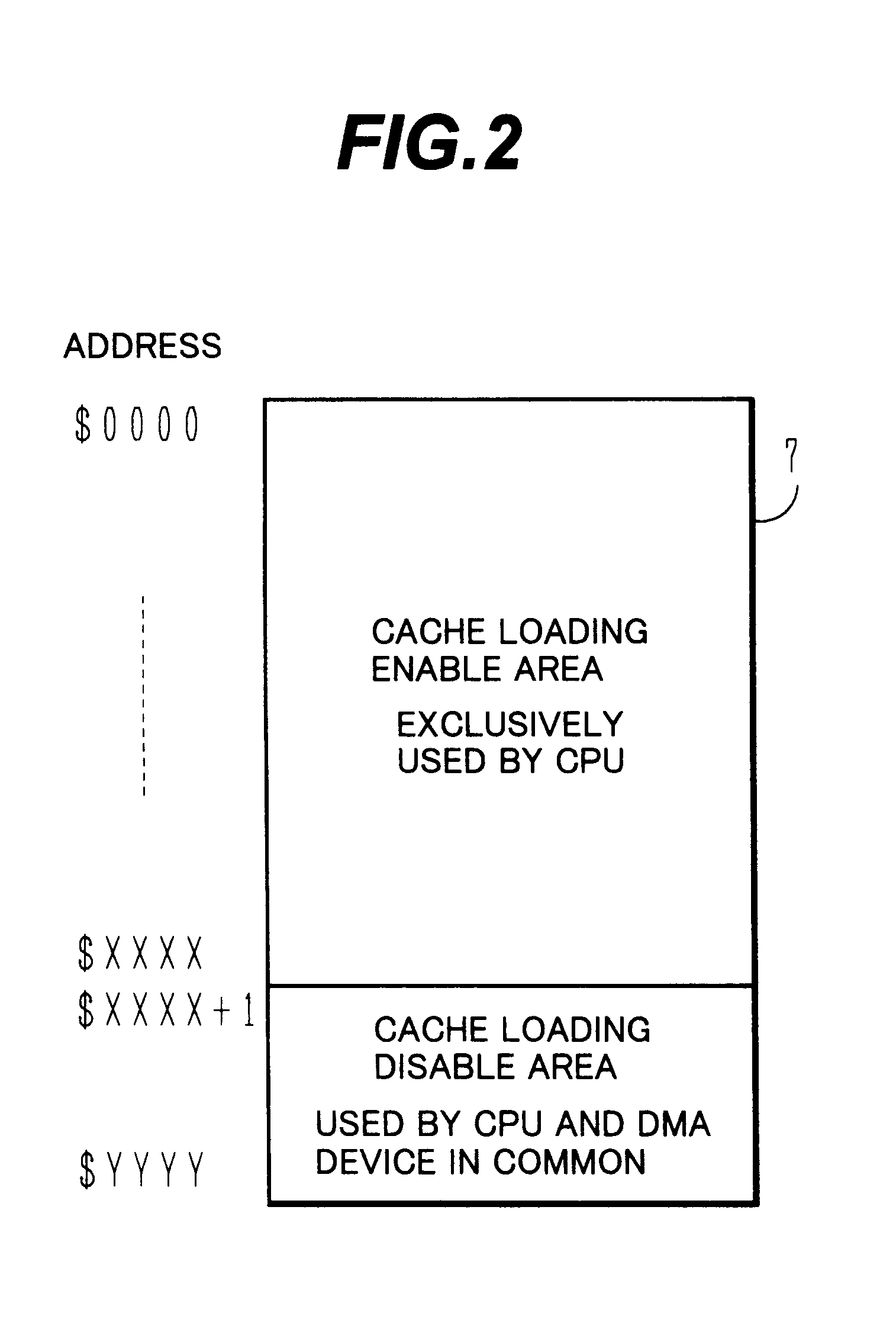

Main memory control apparatus for use in a memory having non-cacheable address space allocated to DMA accesses

Inactive; US6205517B1; Maintain consistency; Increase speed; Memory loss protection; Memory addressing/allocation/relocation; Write buffer; Data error

A CPU and a DMA device employ in common an address area from which data cannot be loaded into a cache memory. A read buffer memory and a write buffer memory loading therein data read from and written into that address area are provided. In read access from the CPU or DMA device to the common address area, if data to be accessed exists in any buffer memory, the data in the buffer memory is transferred to the CPU or DMA device. If data to be accessed does not exist in any buffer memory, data at addresses different from the read access address from the CPU or DMA device only in lower bits of fixed length is read from the main memory unit and then loaded into the buffer memory after performing data error checking and correcting of the read data.

Owner:HITACHI LTD

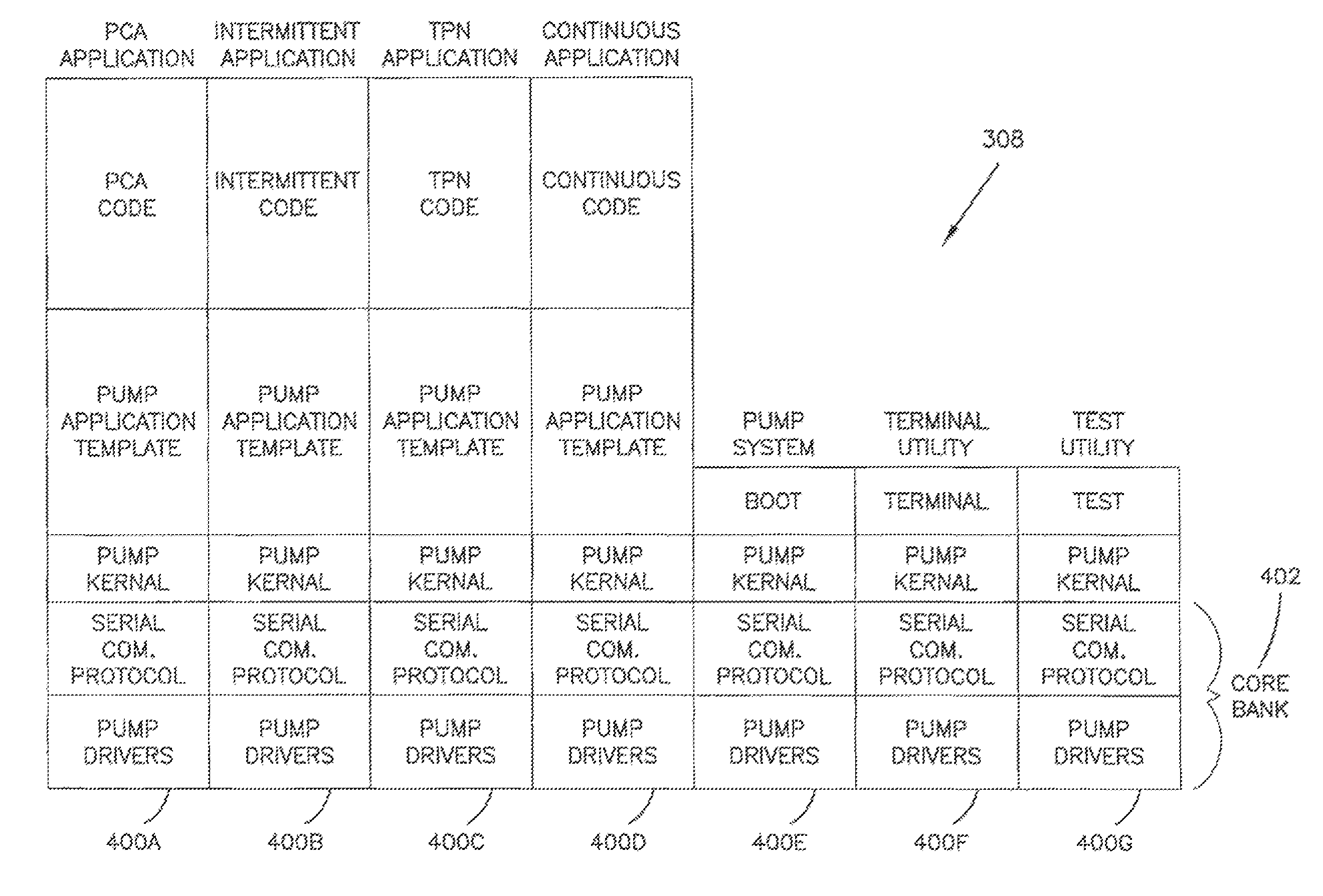

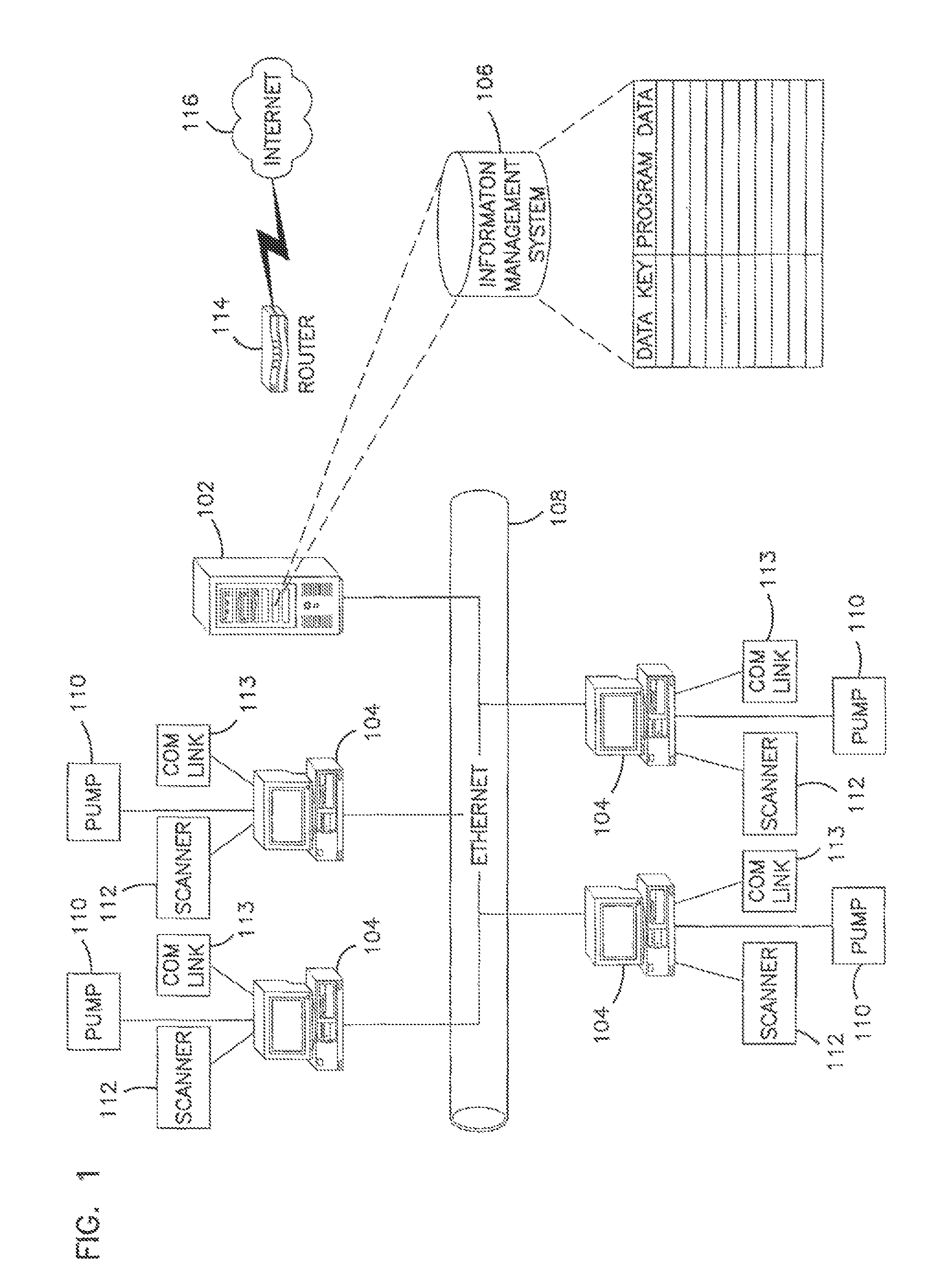

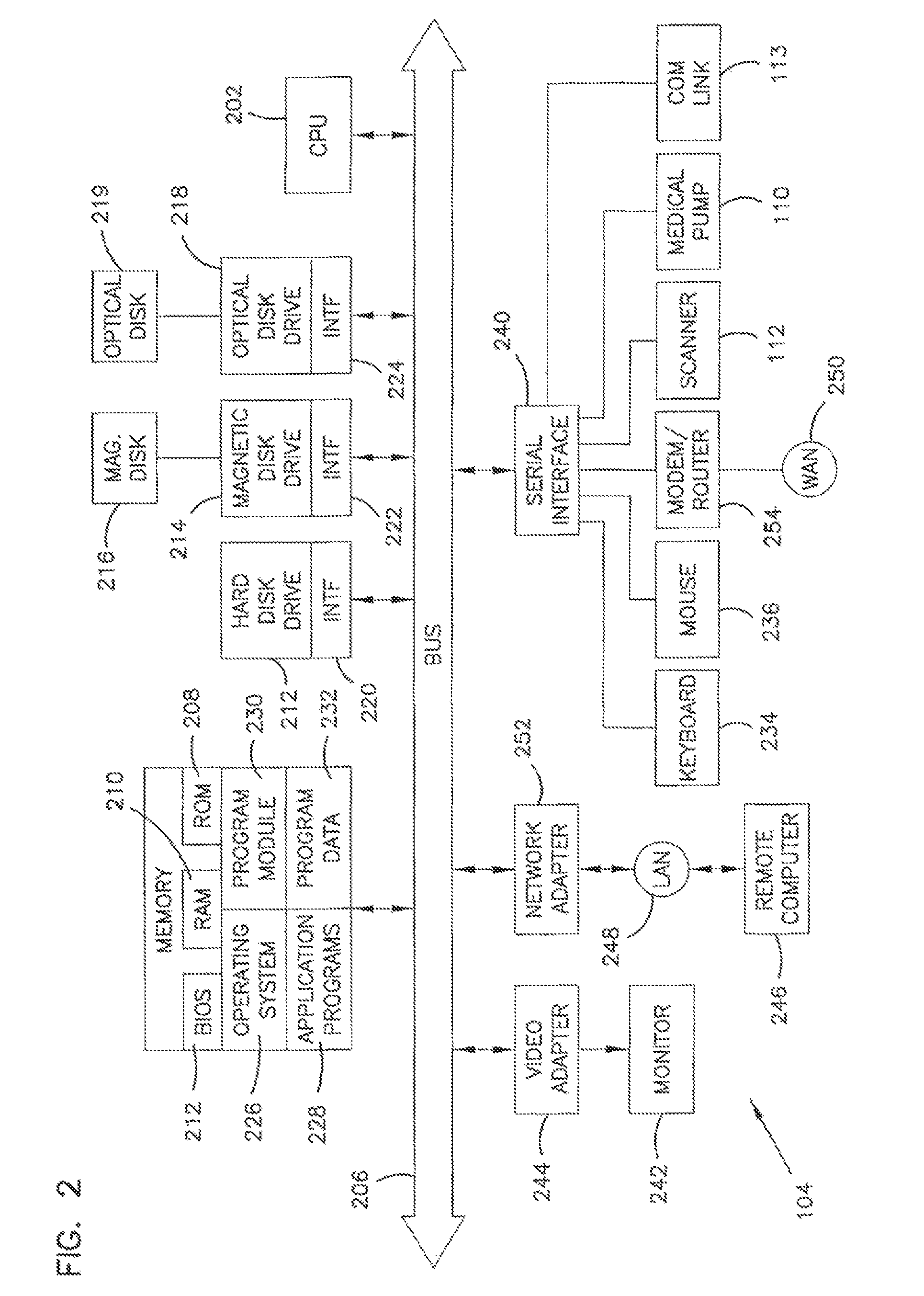

Processing program data for medical pumps

An apparatus for maintaining a library of program data for medical pumps, the apparatus comprising: memory loaded with a database, the database including a plurality of program data records and a plurality of data key records, each program data record containing a set of program data items, at least some of the program data items included in the database for controlling operation of a medical pump, each data key record containing a data key and each data key identifying one of the data program records; a database management system programmed to link a data key to a set of program data; and a scanner in data communication with the database management system, the database management system being further programmed to receive a code scanned by the scanner, save the code in a data key record, and link the code to a set of program data, the code being a data key.

Owner:SMITHS MEDICAL ASD INC

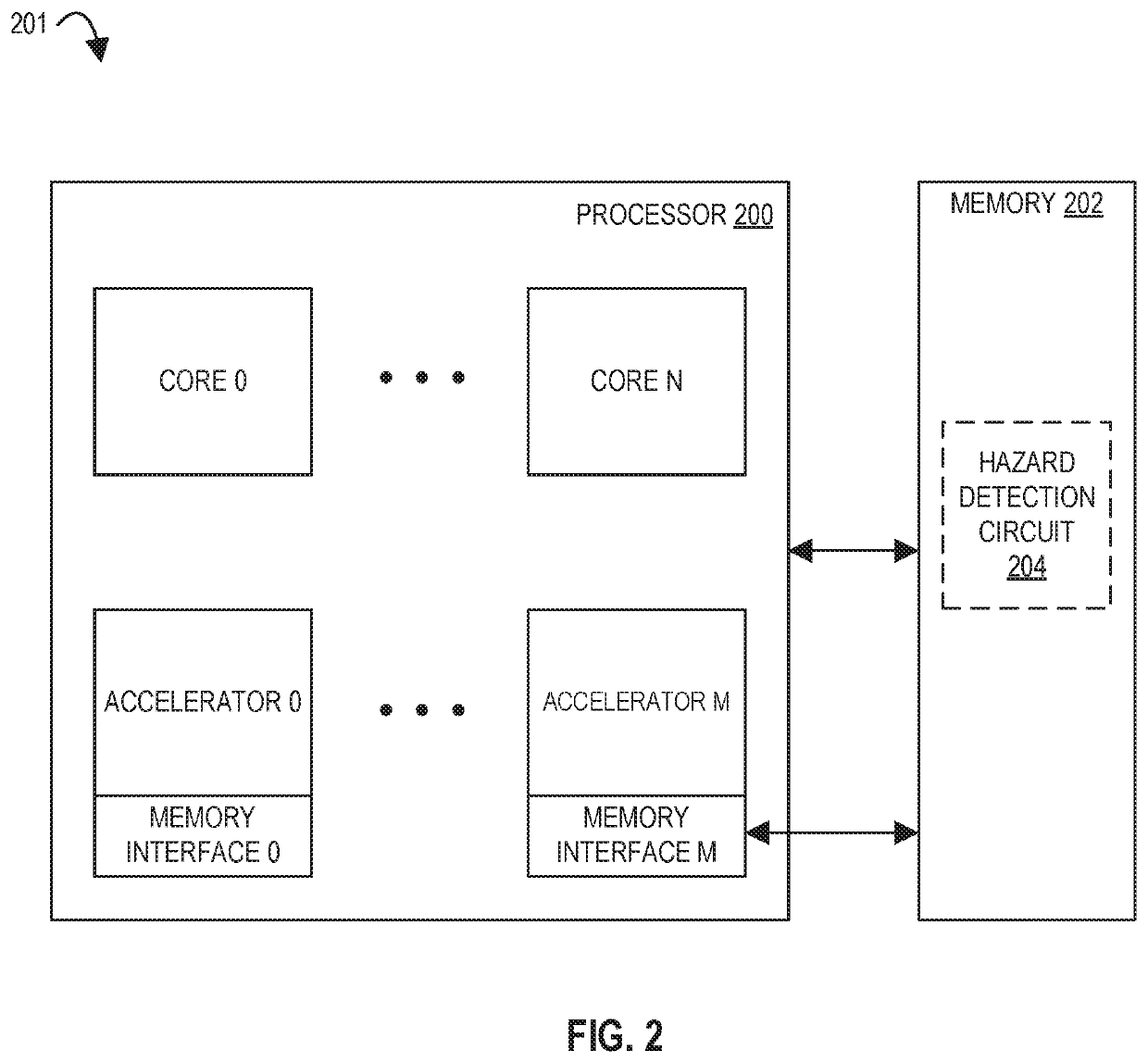

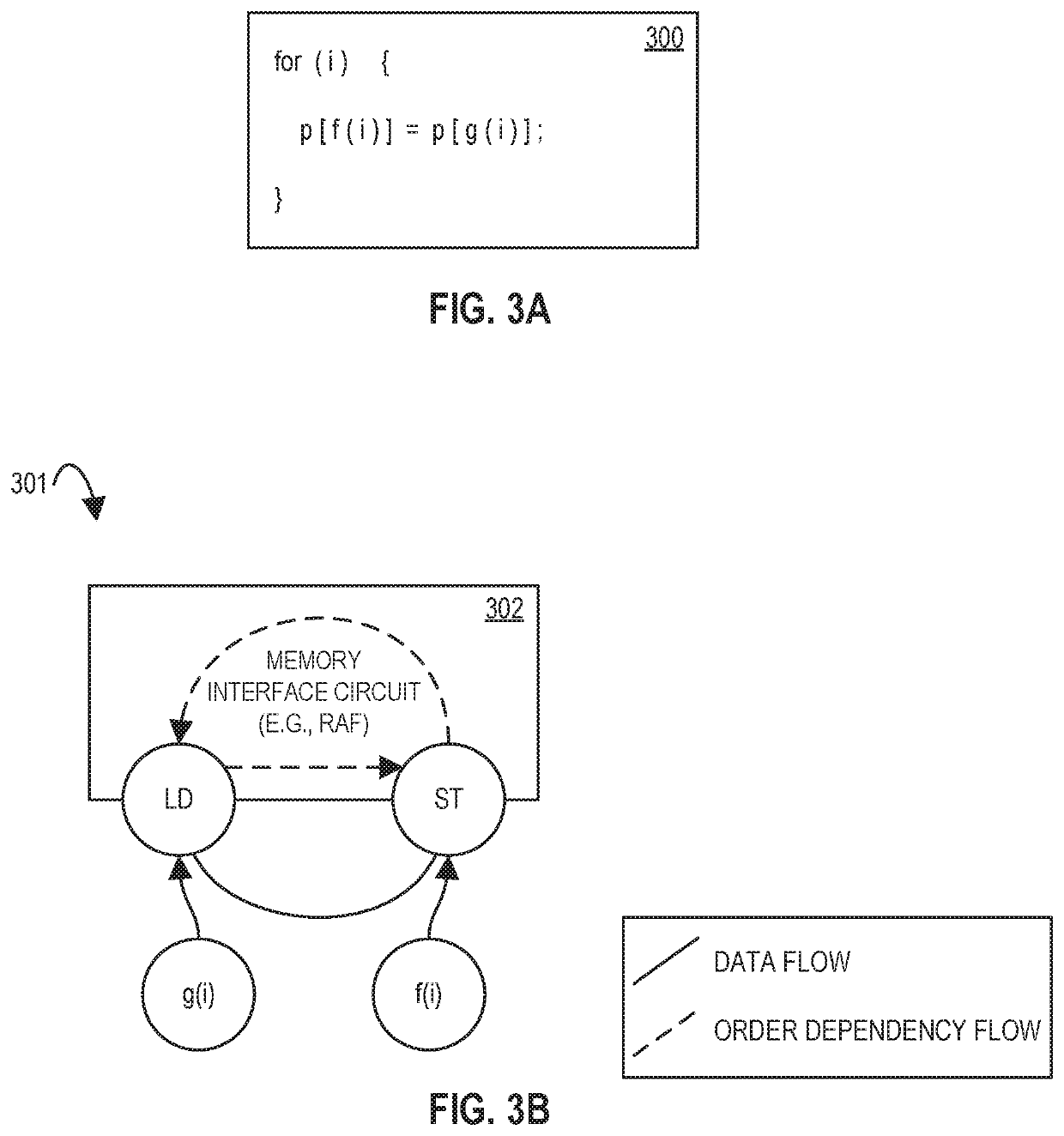

Memory circuits and methods for distributed memory hazard detection and error recovery

Active; US10515049B1; Memory architecture accessing/allocation; Read-only memories; Recovery method; Distributed memory

Methods and apparatuses relating to distributed memory hazard detection and error recovery are described. In one embodiment, a memory circuit includes a memory interface circuit to service memory requests from a spatial array of processing elements for data stored in a plurality of cache banks; and a hazard detection circuit in each of the plurality of cache banks, wherein a first hazard detection circuit for a speculative memory load request from the memory interface circuit, that is marked with a potential dynamic data dependency, to an address within a first cache bank of the first hazard detection circuit, is to mark the address for tracking of other memory requests to the address, store data from the address in speculative completion storage, and send the data from the speculative completion storage to the spatial array of processing elements when a memory dependency token is received for the speculative memory load request.

Owner:TAHOE RES LTD

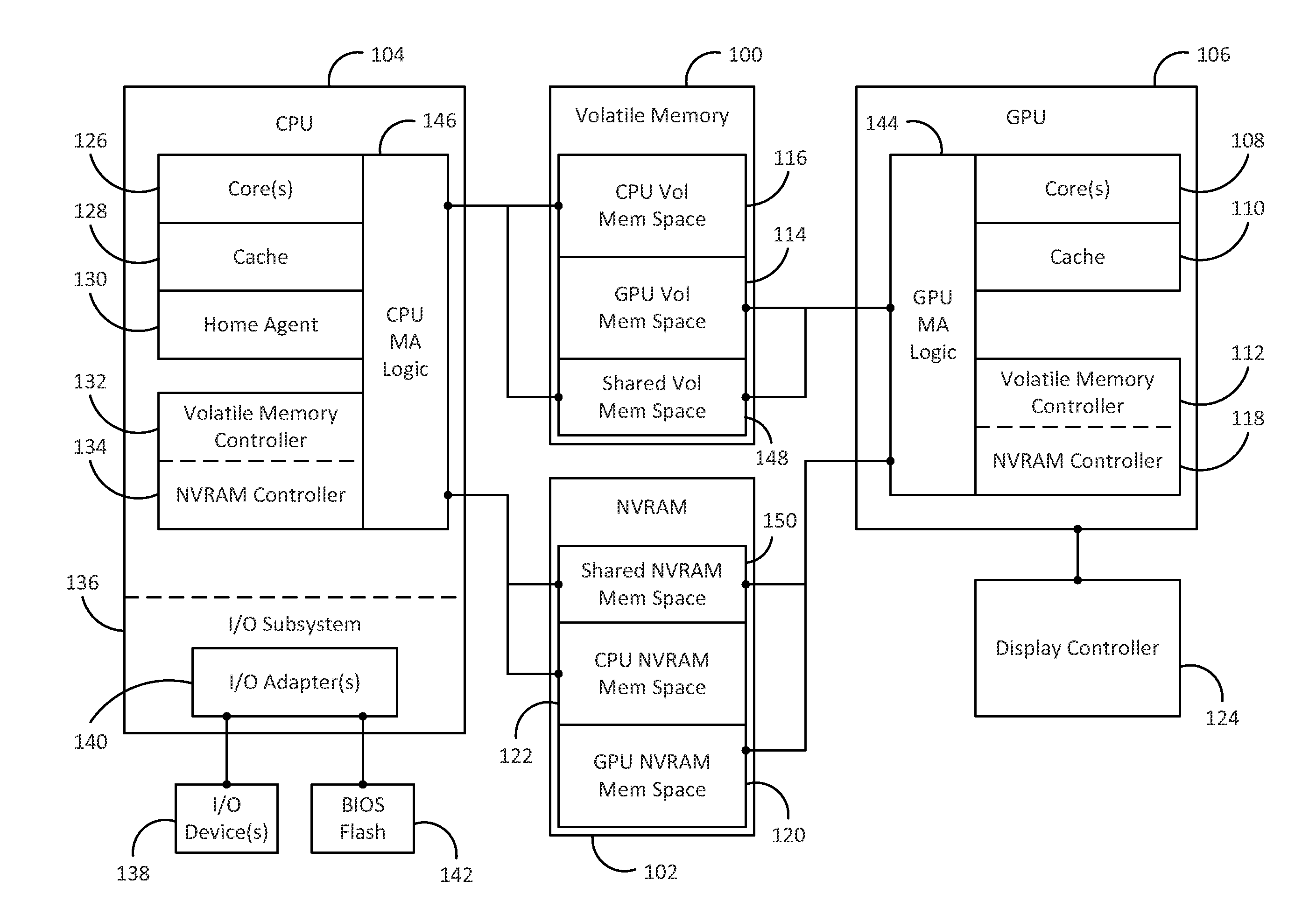

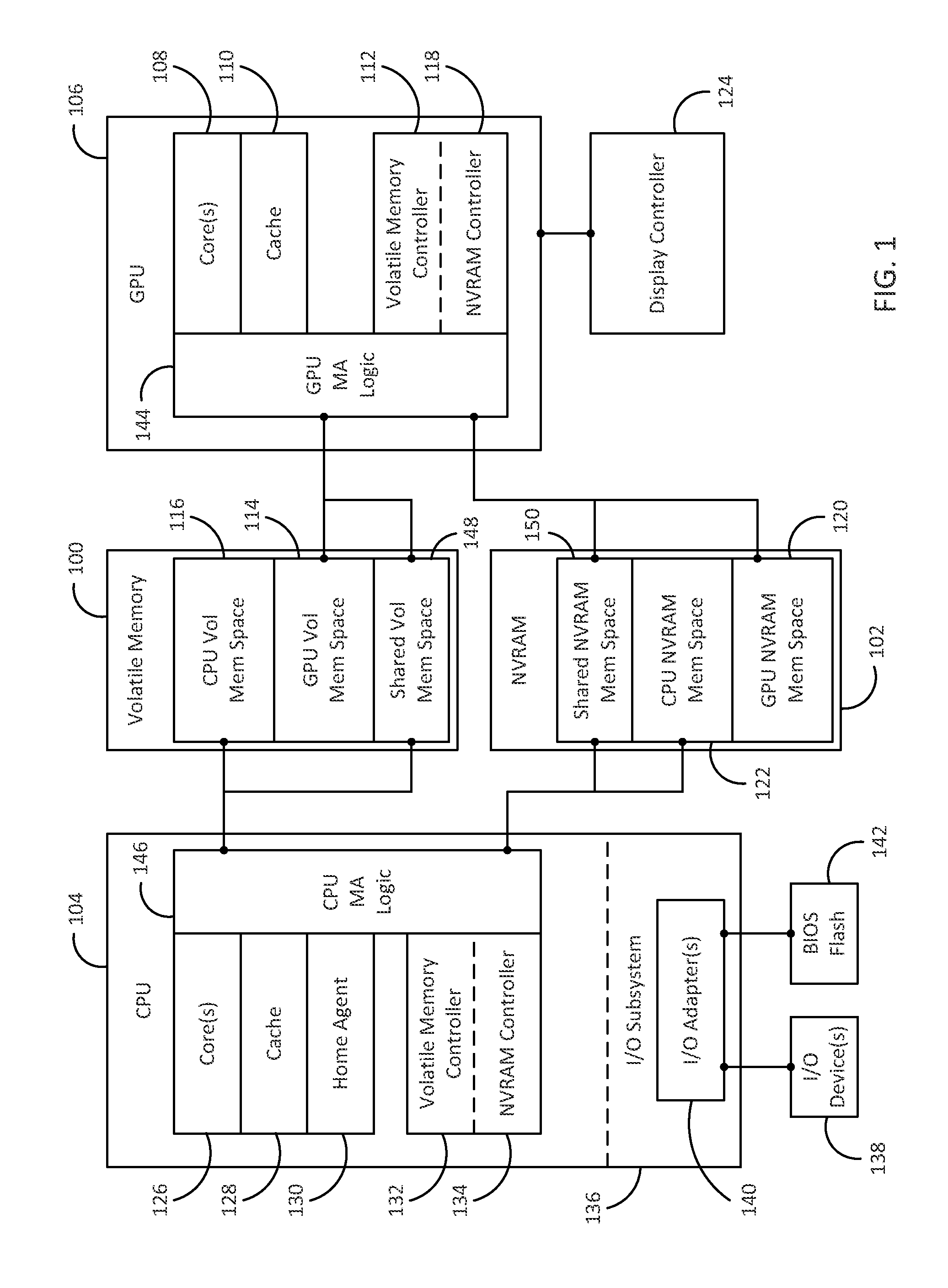

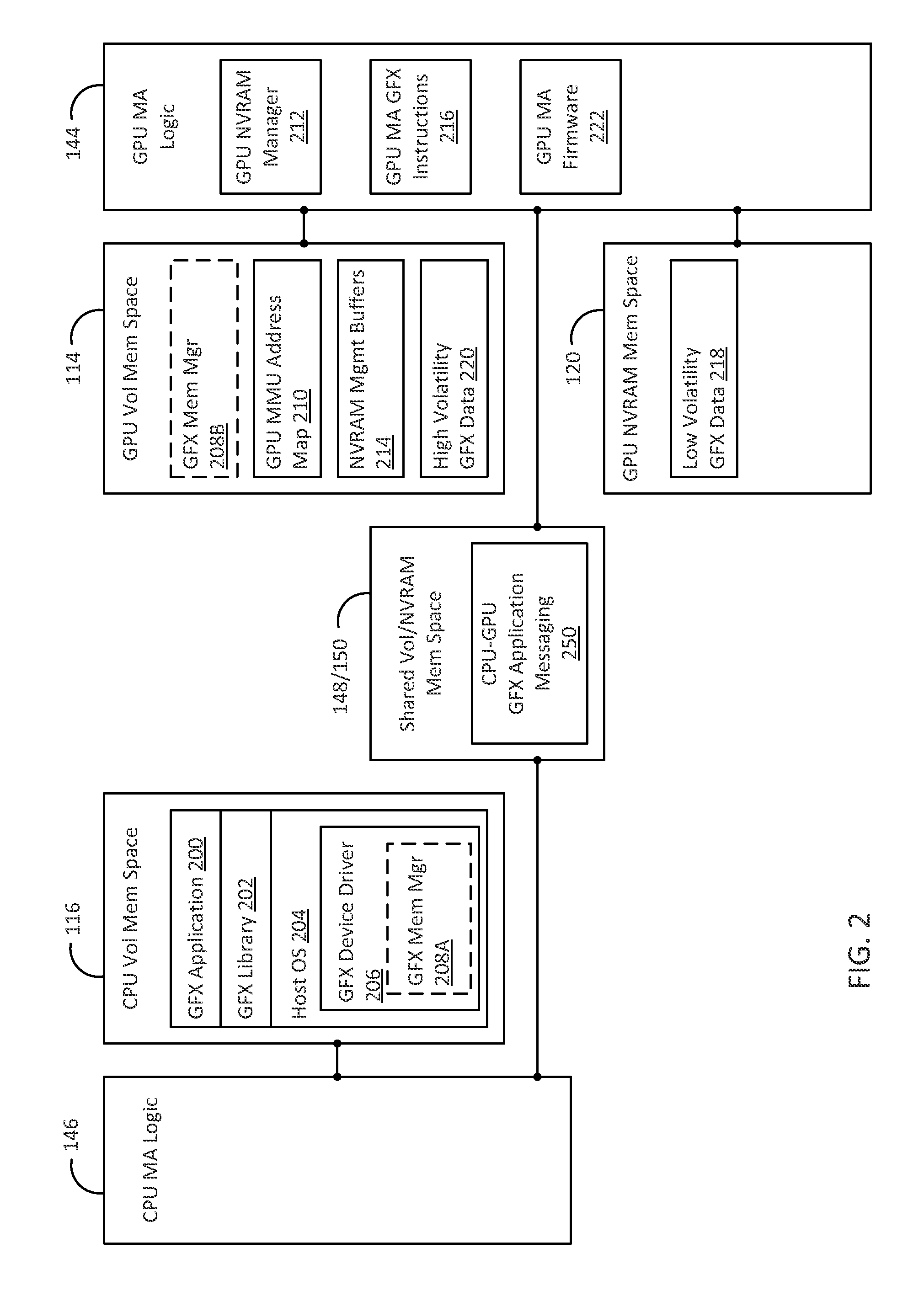

A method and device to augment volatile memory in a graphics subsystem with non-volatile memory

Methods and devices to augment volatile memory in a graphics subsystem with certain types of non-volatile memory are described. In one embodiment, a method includes storing one or more static or near-static graphics resources in a non-volatile random access memory (NVRAM). The NVRAM is directly accessible by a graphics processor using at least memory store and load commands. The method also includes a graphics processor executing a graphics application. The graphics processor sends a request using a memory load command for an address corresponding to at least one static or near-static graphics resource stored in the NVRAM. The method also includes directly loading the requested graphics resource from the NVRAM into a cache for the graphics processor in response to the memory load command.

Owner:TAHOE RES LTD

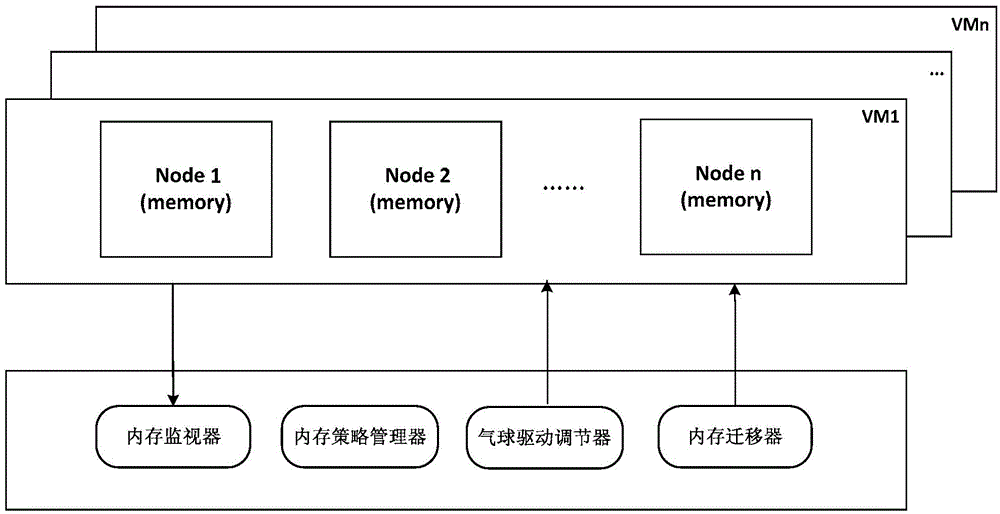

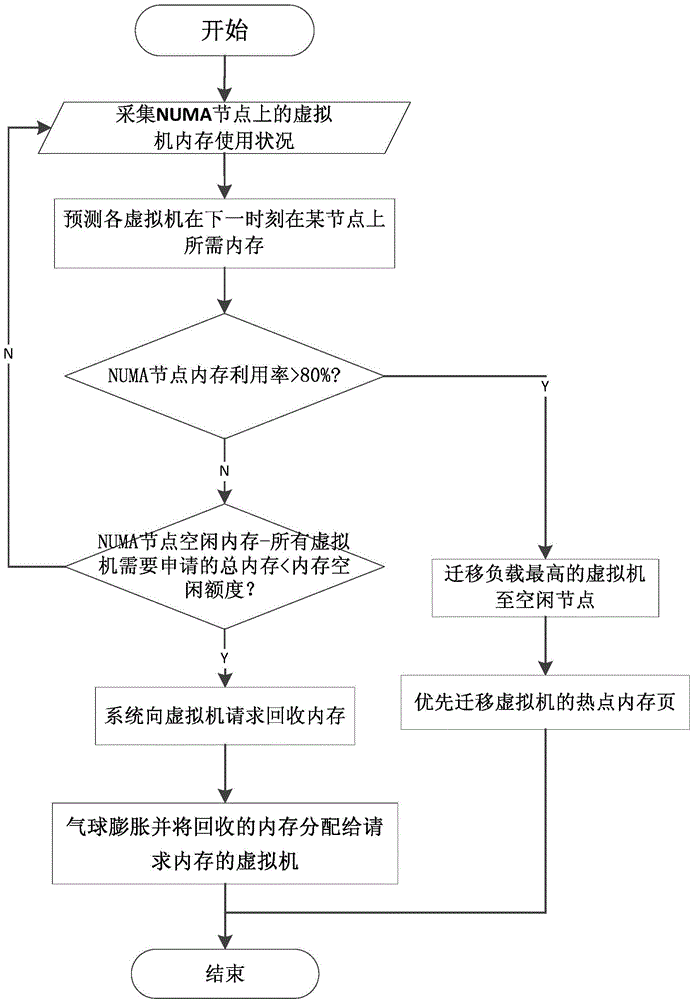

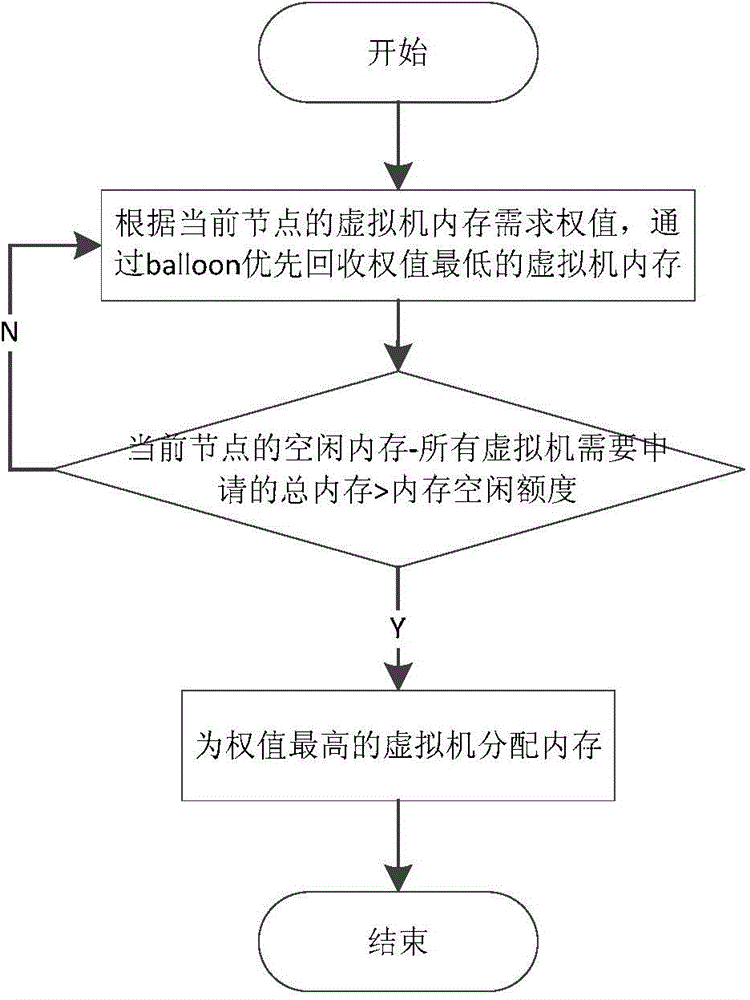

Virtualization-based method and device for adjusting QoS (quality of service) of node memory of NUMA (non uniform memory access architecture)

The invention discloses a virtualization-based method and device for adjusting QoS (quality of service) of node memory of NUMA (non uniform memory access architecture). The method includes: by acquiring the occupancy state of memory resources, predicting the memory required by every virtual machine in the future according to a certain rule so as to obtain the memory required at the next moment, and determining whether to adjust the balance of memory load to guarantee the QoS of the memory; when memory resources are insufficient, starting a memory balance adjusting operation, sensing the NUMA node according to a memory quotient proportion of every virtual machine so as to decide from which virtual machines memory is reclaimed and to which virtual machines memory is allocated, computing the sizes of the reclaimable memory and the allocatable memory, and sending a given optimal memory value for the operating system of every client down to an actual adjusting part. The technical problems that, during operation, a virtual machine cannot sense the memory usage state of the node where it is currently located and that the QoS of the memory cannot be adjusted from the perspective of the whole system are solved.

Owner:ZHEJIANG UNIV

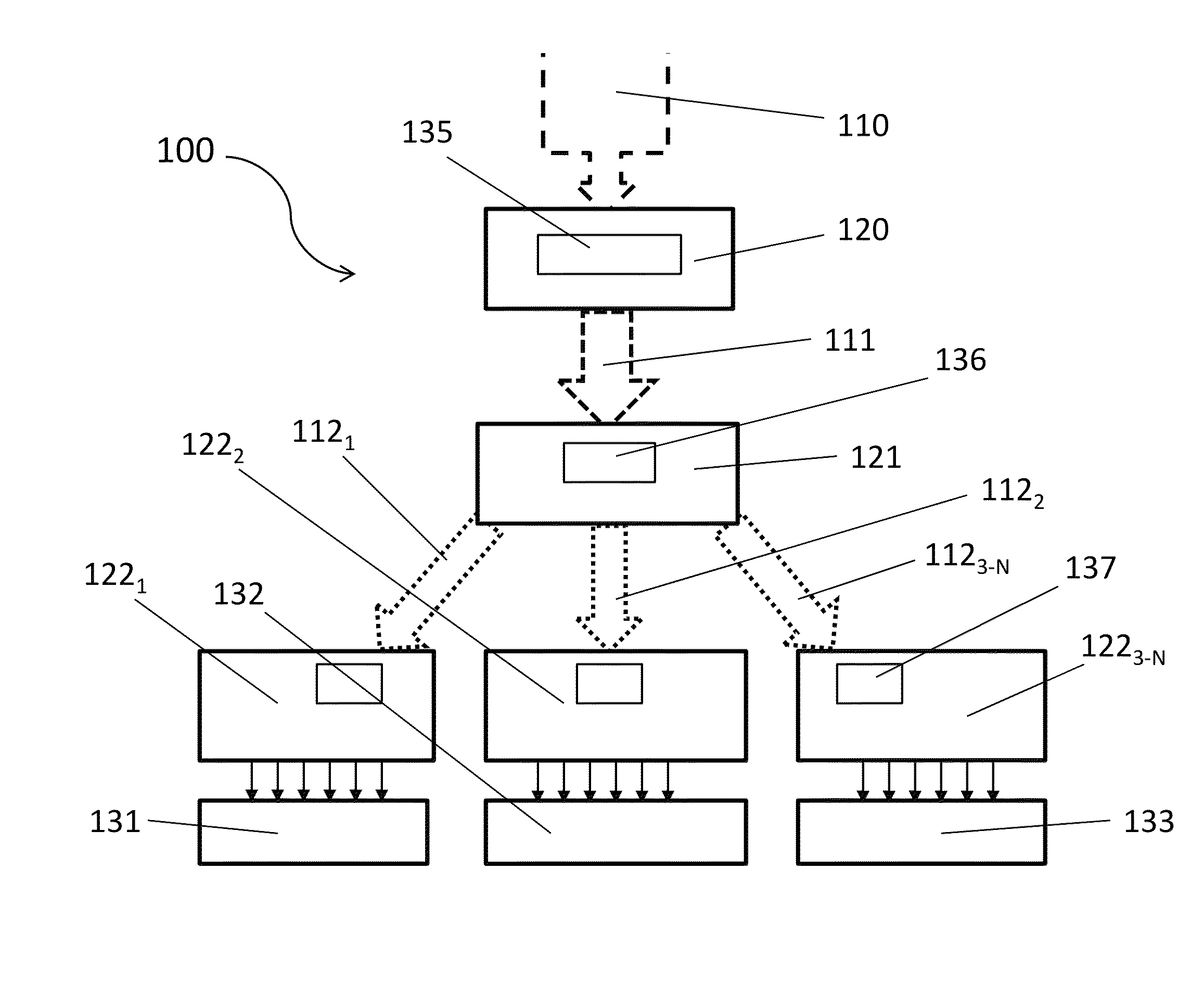

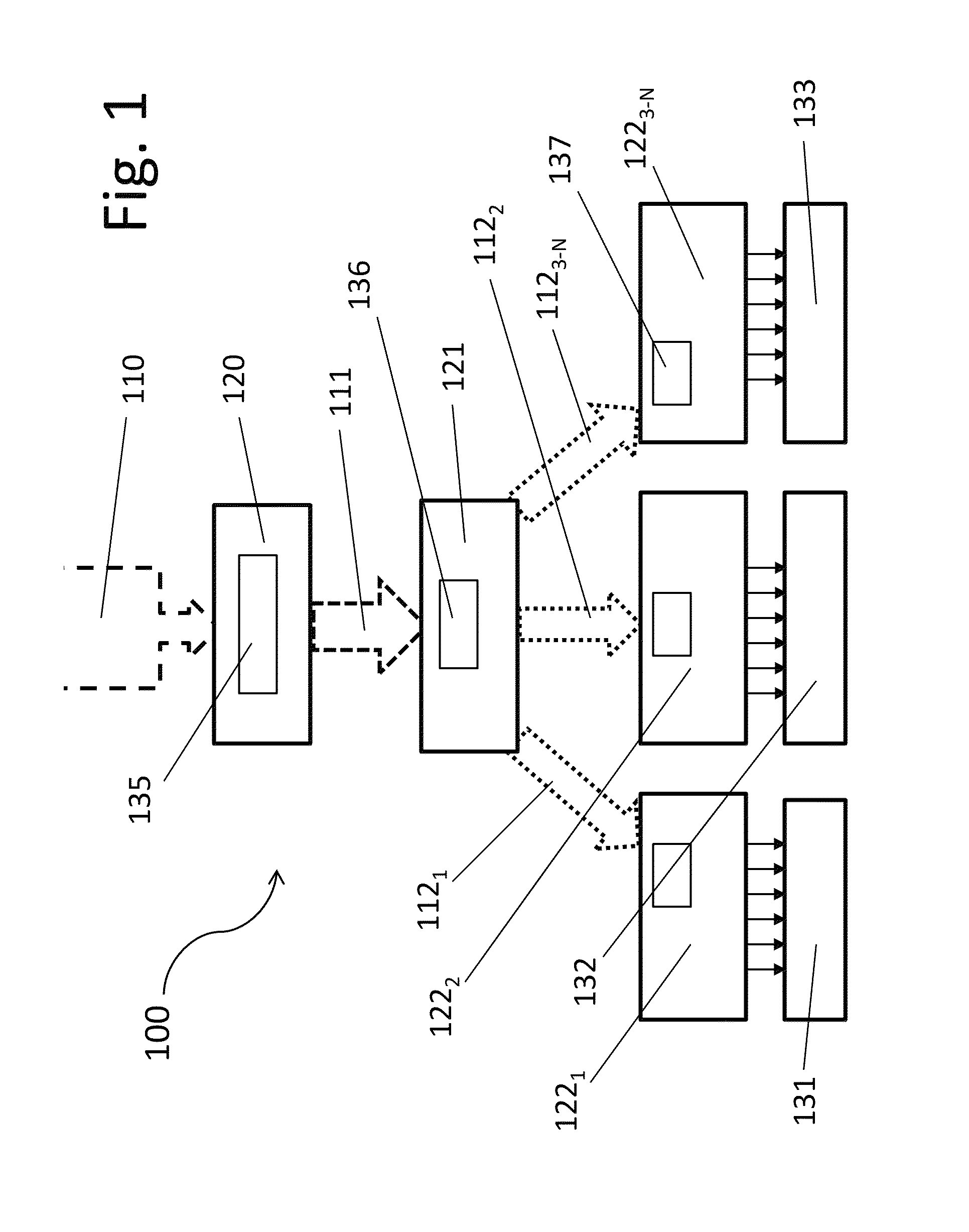

Resilient device authentication system with metadata binding

Active; US20160170907A1; Outweigh burden; Outweigh upfront cost; Memory architecture accessing/allocation; User identity/authority verification; Physical unclonable function; Authentication system

A resilient device authentication system for use with one or more managed devices each including a physical unclonable function (PUF), comprises: one or more verification authorities (VA) each including a processor and a memory loaded with a complete verification set (CVS) that includes hardware part-specific data associated with the managed devices' PUFs and metadata, the processor configured to create a limited verification set (LVS) through one-way algorithmic transformation of hardware part-specific data together with metadata from the loaded CVS so as to create a LVS representing both metadata and hardware part-specific data adequate to redundantly verify all of the hardware parts associated with the LVS; and one or more provisioning entities (PE) each connectable to a VA and including a processor and a memory loaded with a LVS, and configured to select a subset of the LVS so as to create an application limited verification set (ALVS). The system may also comprise one or more device management systems each connectable to a PE and to managed devices and including a memory configured to store an ALVS. The VA may also be configured to create a replacement LVS.

Owner:ANALOG DEVICES INC
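
As a rough illustration of the CVS, LVS and ALVS relationship described above, the sketch below derives a limited set by hashing each stored response together with metadata. The abstract only says "one-way algorithmic transformation"; the use of SHA-256, the challenge/response layout of the CVS, and the function names `derive_lvs`, `select_alvs` and `verify` are all assumptions made for this example.

```python
import hashlib


def derive_lvs(cvs, metadata):
    """Derive a limited verification set from a complete one.

    cvs: {device_id: [(challenge, response), ...]} with bytes values
    (a hypothetical layout). Each response is replaced by
    SHA-256(response || metadata), so the raw response is not stored.
    """
    return {
        device_id: [(c, hashlib.sha256(r + metadata).digest()) for c, r in pairs]
        for device_id, pairs in cvs.items()
    }


def select_alvs(lvs, device_ids):
    """Pick the subset of the LVS that one application needs."""
    return {d: lvs[d] for d in device_ids if d in lvs}


def verify(verification_set, device_id, challenge, response, metadata):
    """Check a response against the stored entries for that challenge."""
    expected = hashlib.sha256(response + metadata).digest()
    return any(c == challenge and h == expected
               for c, h in verification_set.get(device_id, []))
```

Keeping several challenge/response pairs per device is what allows redundant verification in this sketch; a production design would also need unambiguous encoding of the hashed fields and constant-time comparison.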

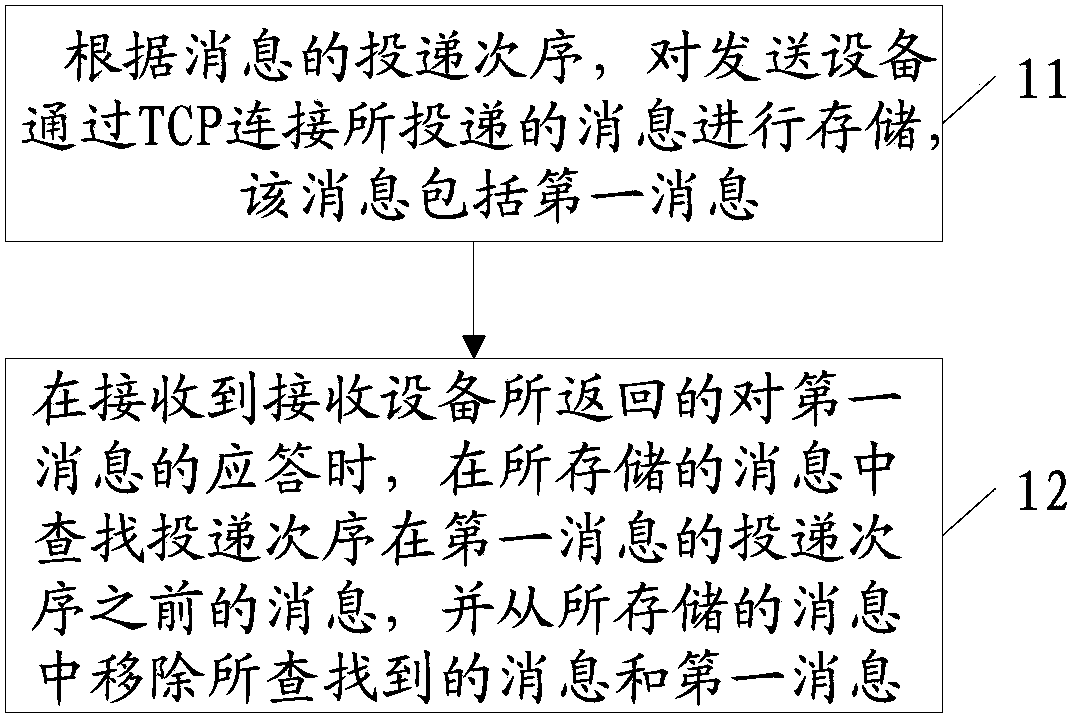

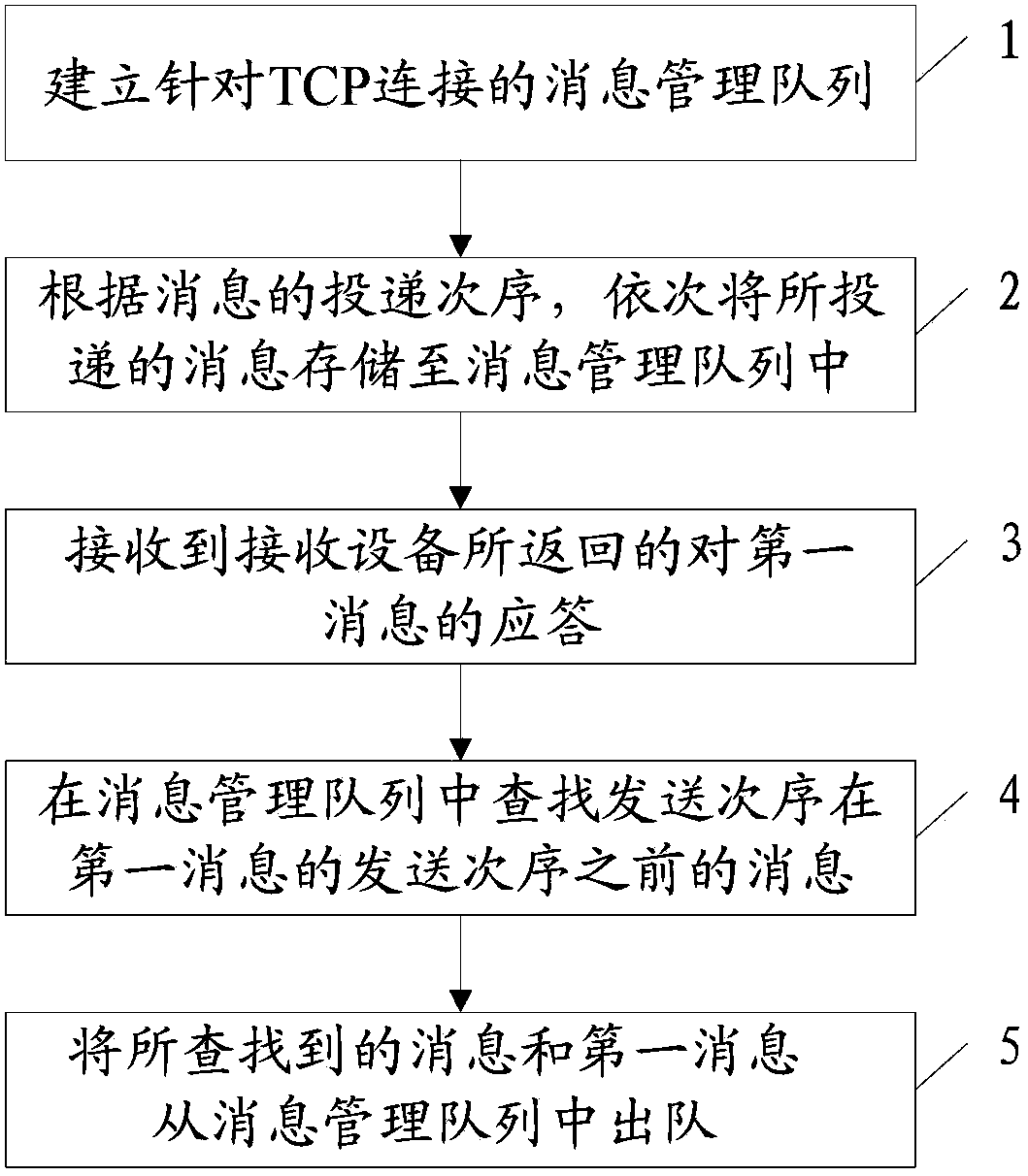

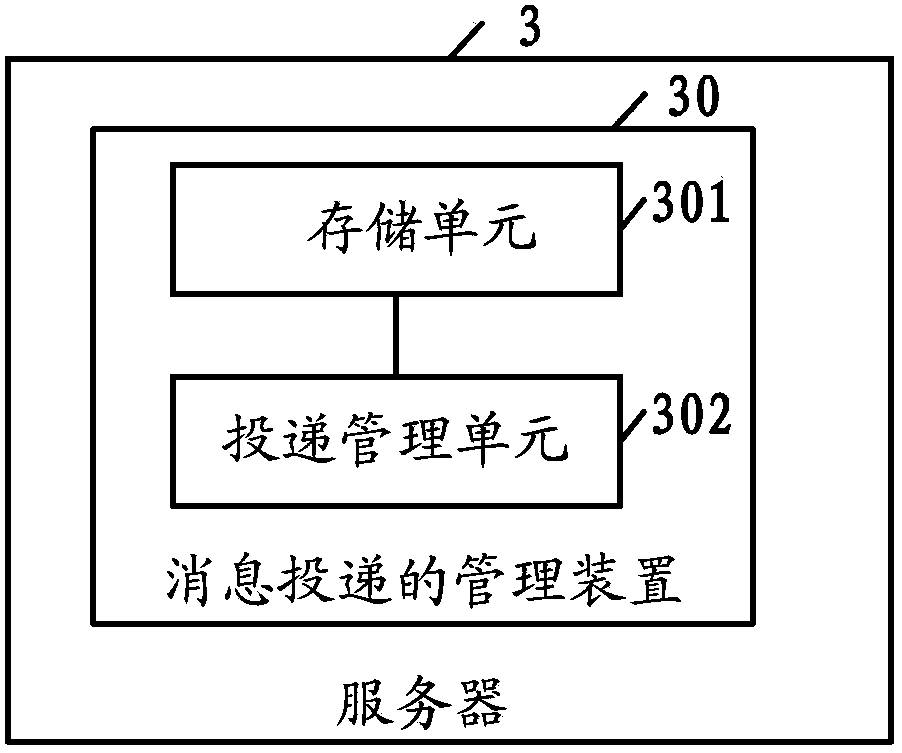

Management method for message delivery, server and system thereof

ActiveCN103532822AQuality improvementReduce memory pressureData switching networksMessage deliveryComputer science

The present invention discloses a management method for message delivery, and a server and system thereof. The method reduces the memory load of a server, accurately determines whether a message has been successfully transmitted, and improves message quality. According to one embodiment of the invention, the management method comprises the following steps: storing, in delivery order, the messages that delivery equipment delivers over a TCP connection, the messages including a first message; and, when the response returned by receiving equipment for the first message is received, searching the stored messages for the messages whose delivery order precedes that of the first message, confirming successful delivery of the found messages and the first message, and removing the found messages and the first message from the stored messages.

Owner:ULTRAPOWER SOFTWARE
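
The confirmation step described above amounts to a cumulative acknowledgment over an ordered store. A minimal sketch follows, assuming messages carry unique ids; the class name `DeliveryTracker` and its methods are hypothetical and not taken from the patent.

```python
from collections import OrderedDict


class DeliveryTracker:
    """Keeps sent-but-unconfirmed messages in delivery order."""

    def __init__(self):
        self._pending = OrderedDict()  # message id -> payload, oldest first

    def record_sent(self, msg_id, payload):
        self._pending[msg_id] = payload

    def on_response(self, msg_id):
        """Confirm msg_id and every message delivered before it.

        Returns the ids confirmed by this response, in delivery order.
        """
        if msg_id not in self._pending:
            return []  # unknown id, or already confirmed earlier
        confirmed = []
        while self._pending:
            oldest_id, _ = self._pending.popitem(last=False)
            confirmed.append(oldest_id)
            if oldest_id == msg_id:
                break
        return confirmed

    def pending_ids(self):
        return list(self._pending)
```

Because a TCP connection delivers data in order, a response for a later message implies the earlier ones arrived, so a single response lets the server drop several stored messages at once, which is where the memory saving comes from.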

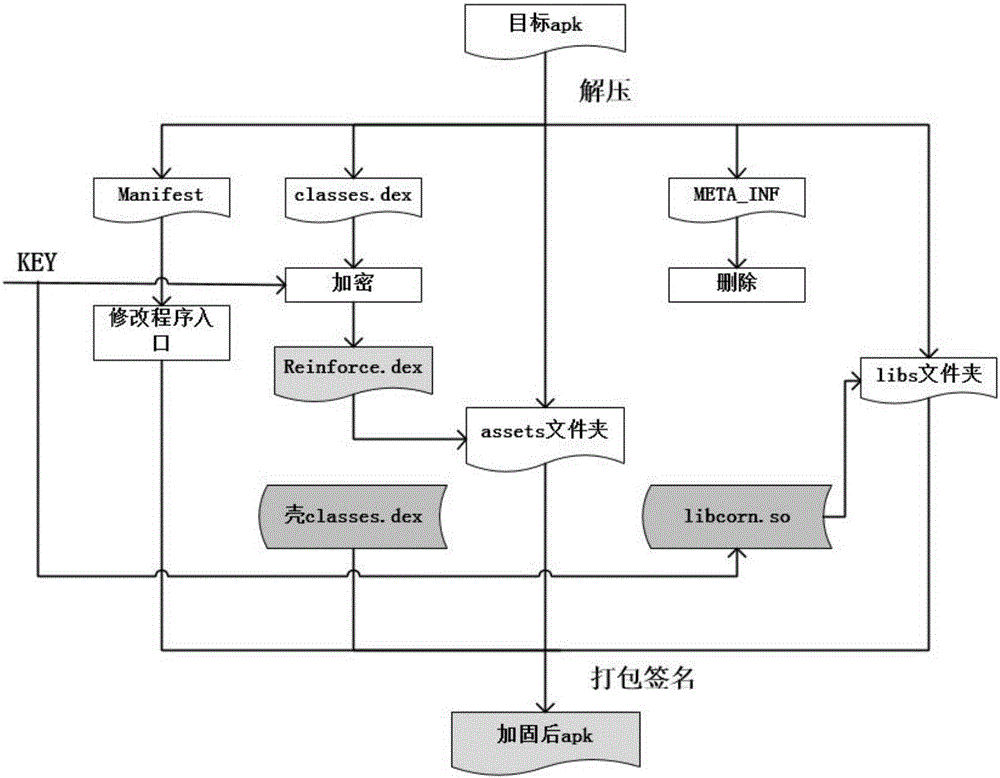

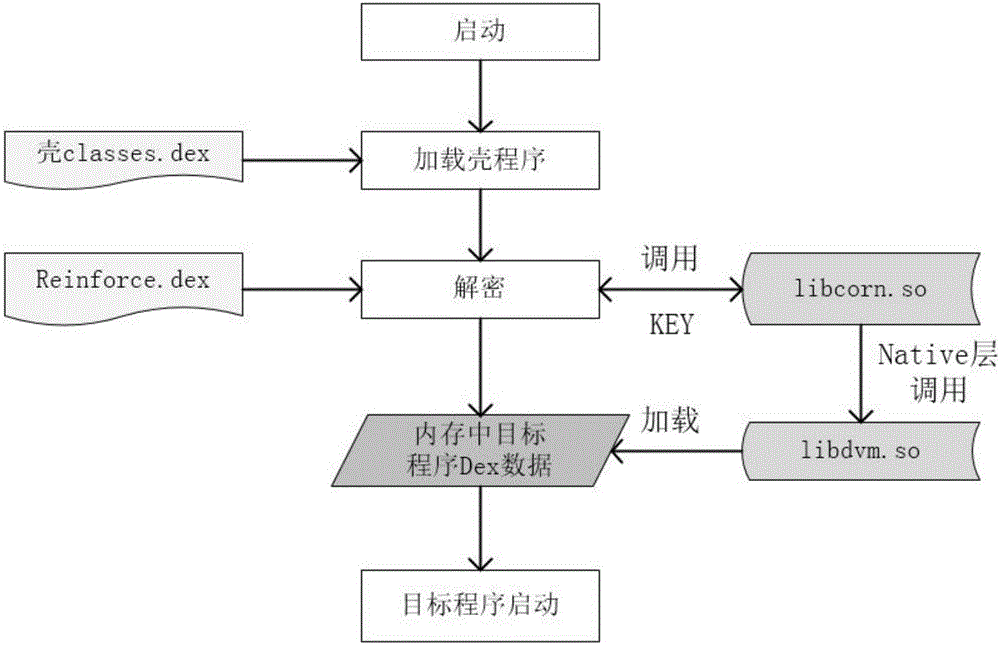

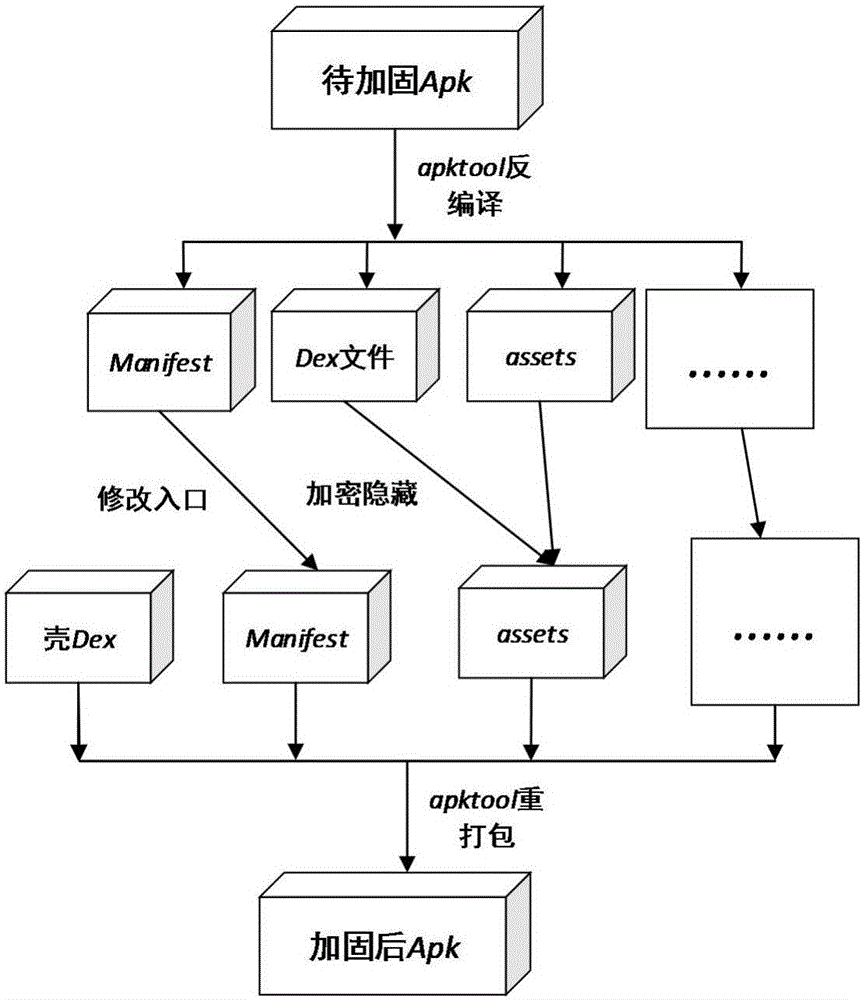

Android application software reinforcement protection method based on DexClassloader

InactiveCN106650330AGuaranteed confidentialityAvoid robberyDigital data protectionProgram/content distribution protectionPlaintextConfidentiality

The invention aims to provide a method for loading dex files from memory on the basis of DexClassLoader. An apk (Android package) is reinforced on a PC (personal computer), and the reinforced application is installed, unpacked (shelled), loaded and started on an Android terminal. The structure of the reinforced dex is completely different from that of a traditional dex file and cannot be successfully decompiled into readable source files. The reinforcement scheme protects the confidentiality of key data and thereby resists decompilation: when the ZjDroid unpacking tool is run against the reinforced apk, neither the source files nor the dex fragments of the source apk can be obtained. The memory loading process provided by the invention is effective in preventing Java-layer hooks, and it also avoids exposing the dex in plaintext on disk, where an attacker could steal it. Compared with the traditional way of loading from disk, the method greatly improves security.

Owner:清创网御(合肥)科技有限公司

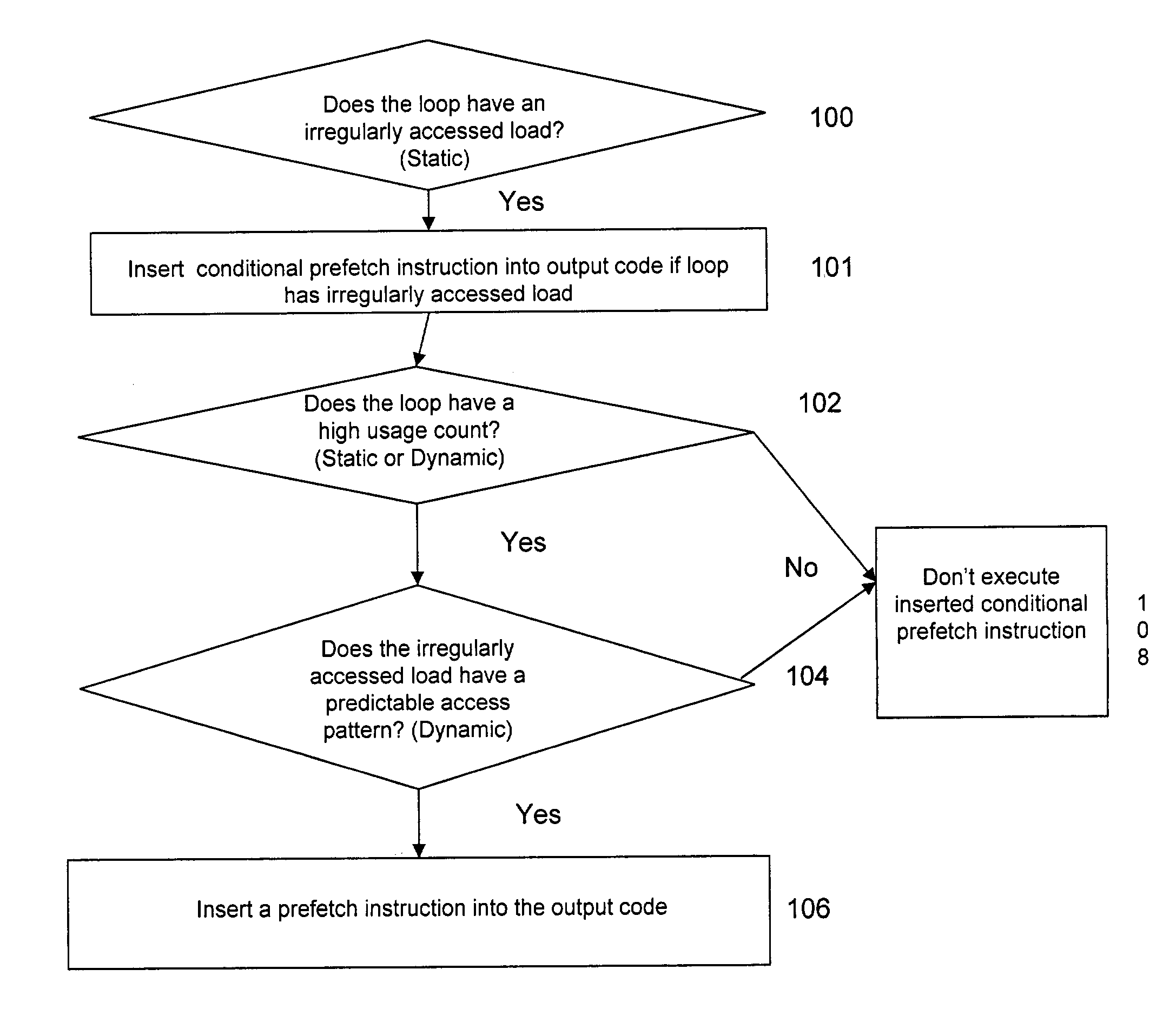

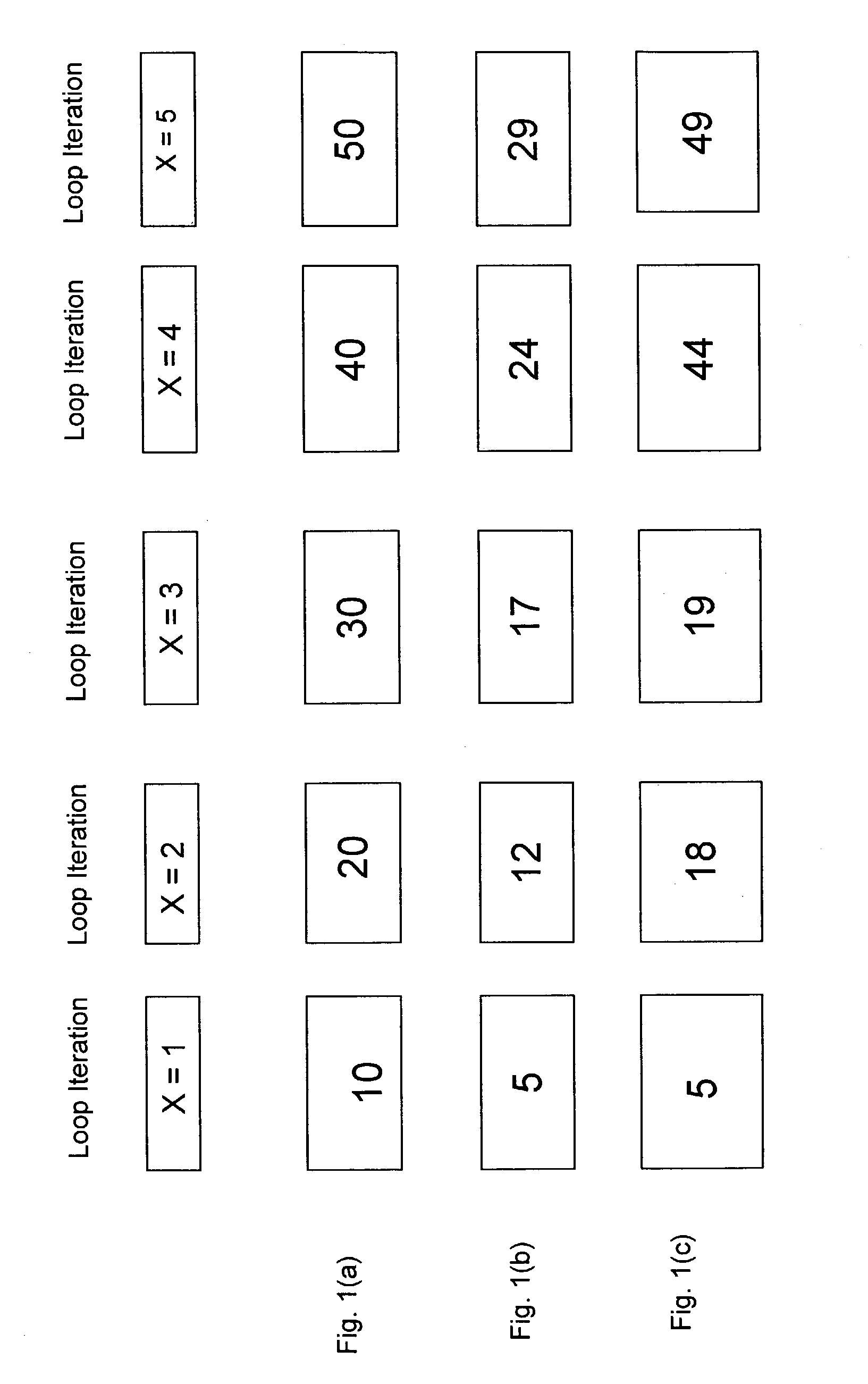

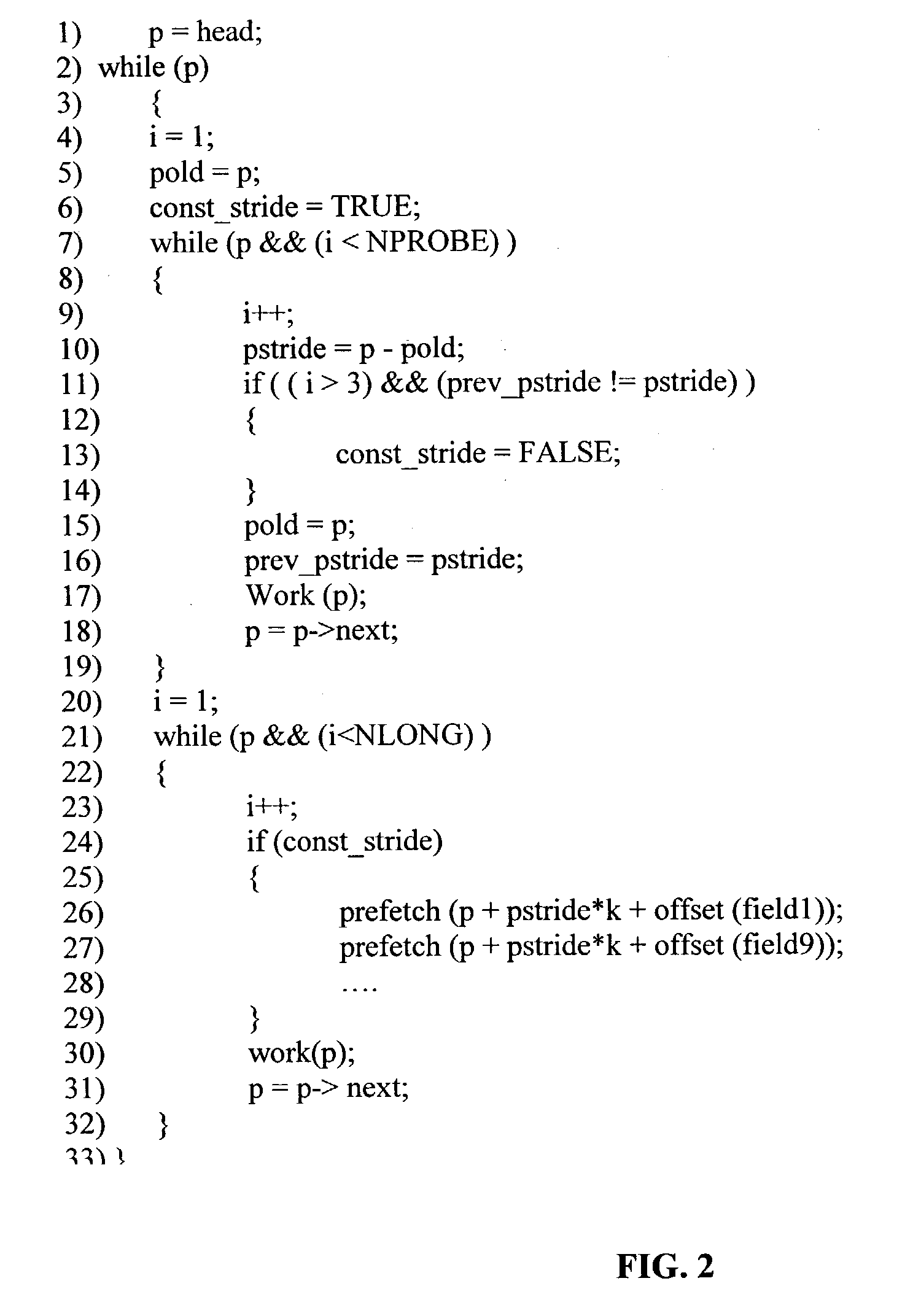

Adaptive prefetch for irregular access patterns

InactiveUS7155575B2Software engineeringMemory adressing/allocation/relocationParallel computingSelf adaptive

A computer program product determines whether a loop has a high usage count. If the computer program product determines the loop has a high usage count, the computer program product determines whether the loop has an irregularly accessed load. If the loop has an irregularly accessed load, the computer program product inserts pattern recognition code to calculate whether successive iterations of the irregular memory load in the loop have a predictable access pattern. The computer program product implants conditional adaptive prefetch code including a prefetch instruction into the output code.

Owner:INTEL CORP
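
To make the idea of inserted pattern-recognition code concrete, the sketch below simulates what such code might do at run time for one irregular load: it watches the addresses of successive iterations and only issues a prefetch once the stride has stayed constant for a few iterations. Constant-stride detection, the `confirm` and `distance` parameters, and the function name are assumptions for illustration; the abstract does not say which patterns are recognised.

```python
def adaptive_prefetch(addresses, confirm=2, distance=4):
    """Simulate per-iteration stride detection for one load.

    addresses: the address touched by the load in each loop iteration.
    Returns the addresses a conditional prefetch would be issued for.
    """
    prefetches = []
    prev_addr = None
    prev_stride = None
    streak = 0  # consecutive iterations whose stride matched the previous one
    for addr in addresses:
        if prev_addr is not None:
            stride = addr - prev_addr
            if stride == prev_stride and stride != 0:
                streak += 1
            else:
                streak = 0
            prev_stride = stride
            if streak >= confirm:
                # Pattern looks predictable: fetch `distance` iterations ahead.
                prefetches.append(addr + stride * distance)
        prev_addr = addr
    return prefetches
```

With a steady 64-byte stride the detector starts prefetching after the pattern is confirmed, while a scattered address sequence produces no prefetches, so the extra instructions cost little when the load really is unpredictable.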

