150 results about "Non-uniform memory access" patented technology

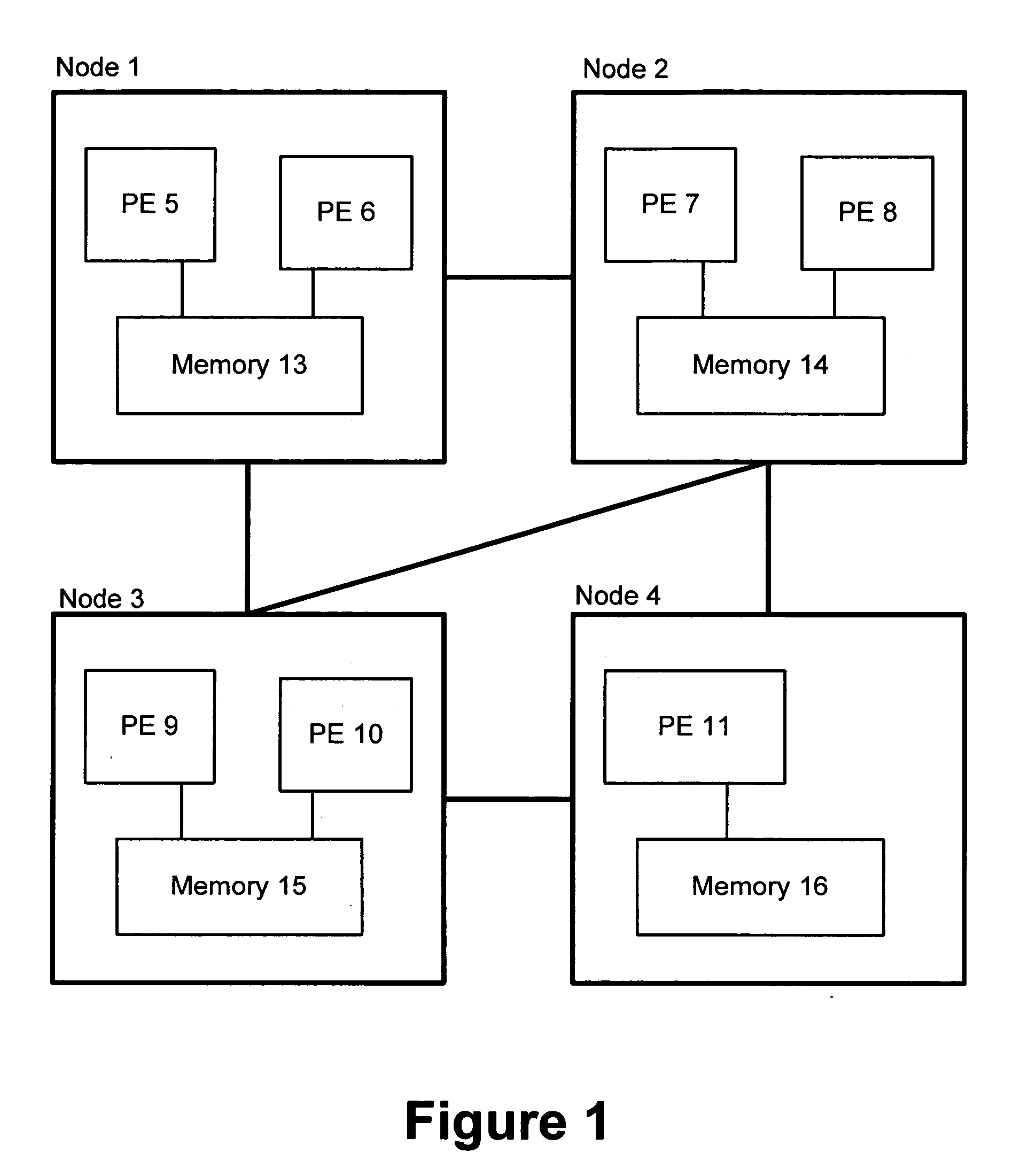

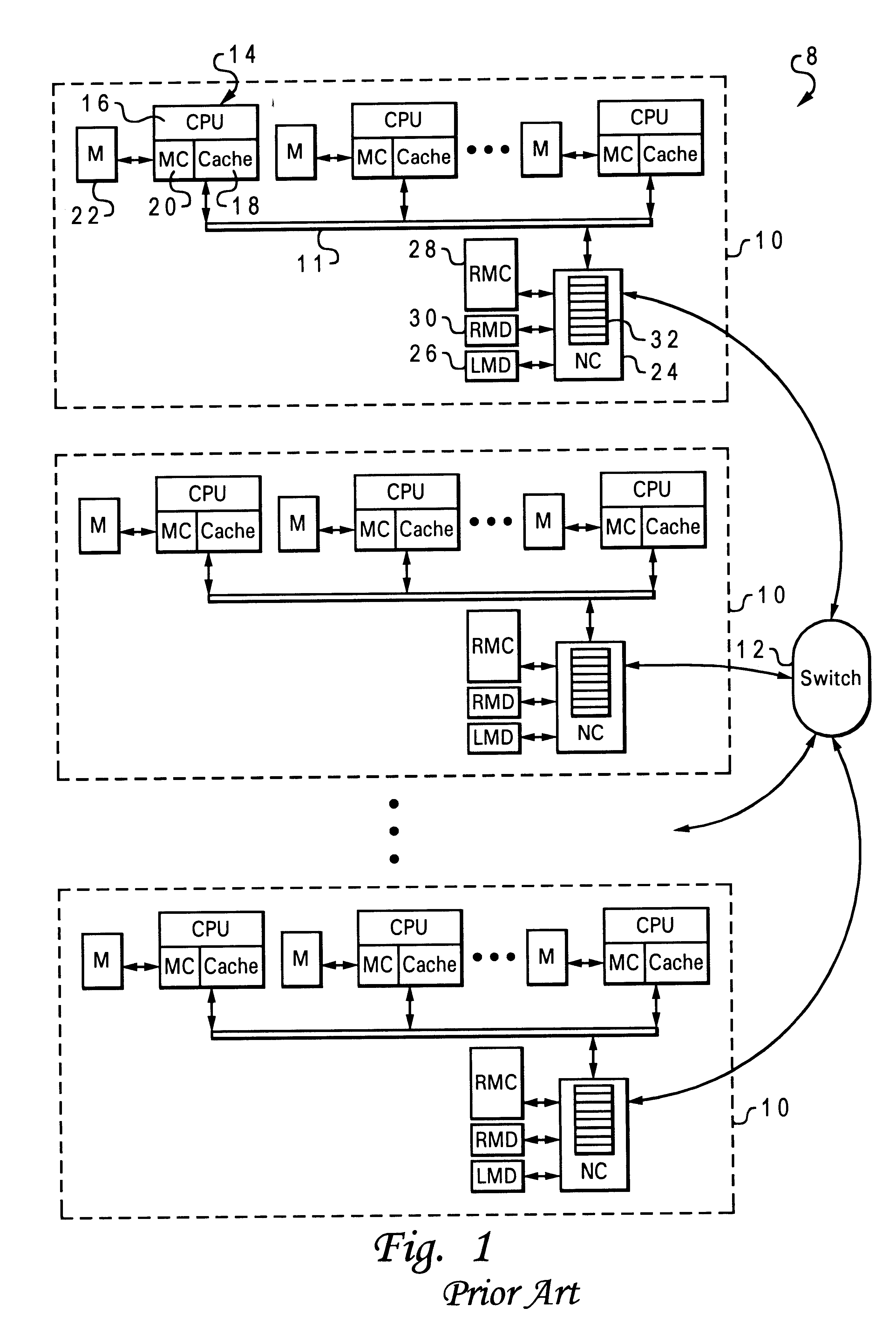

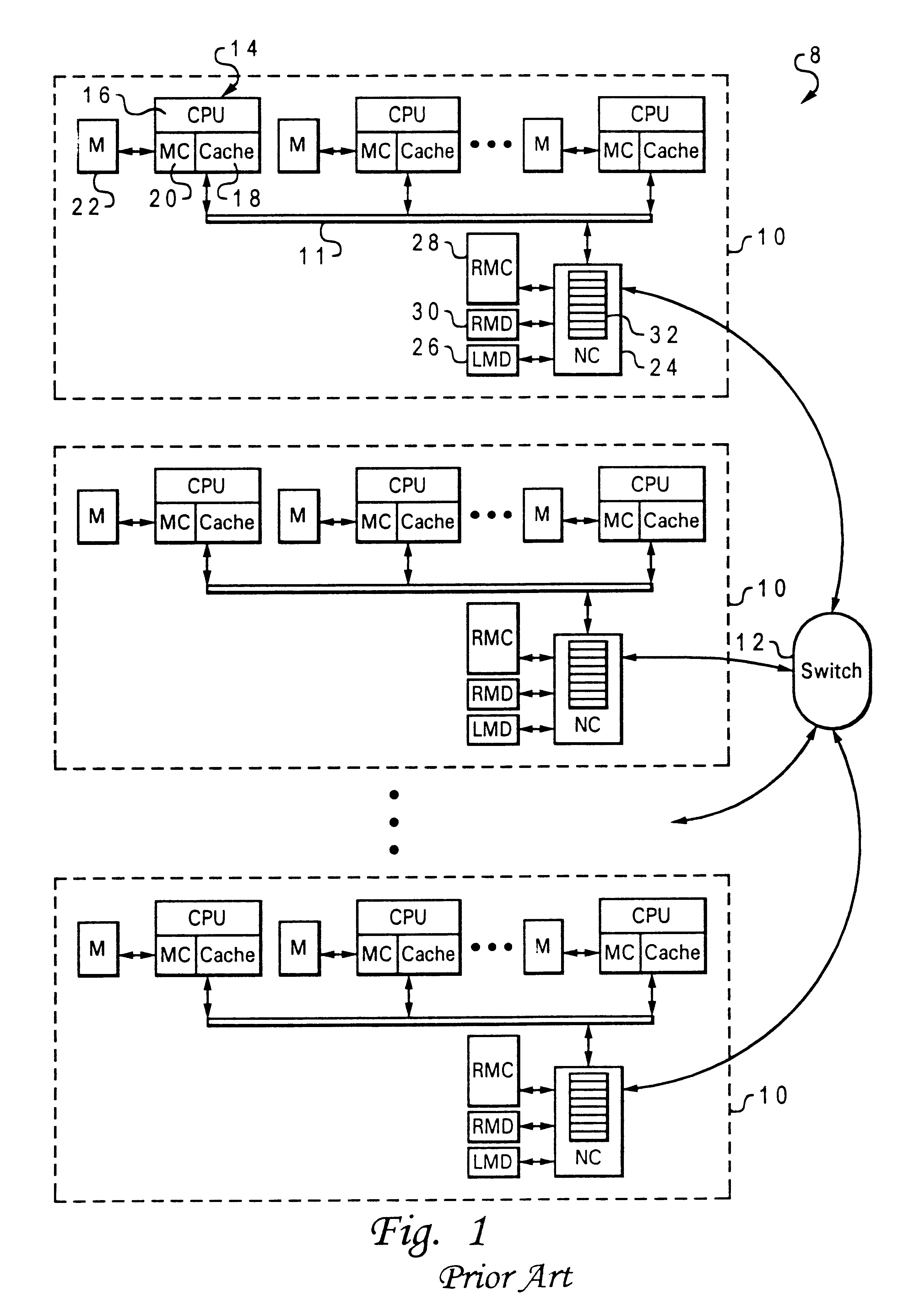

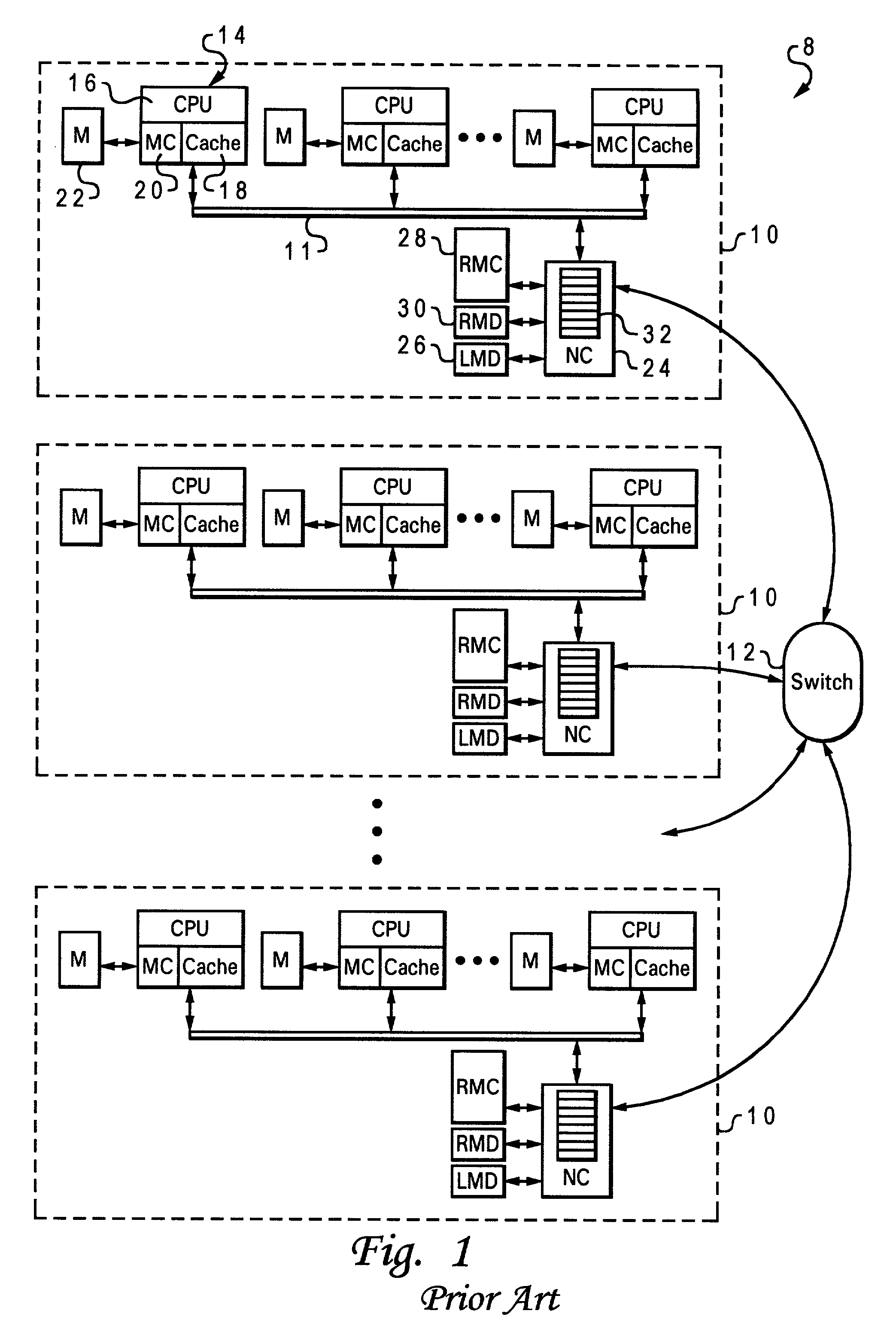

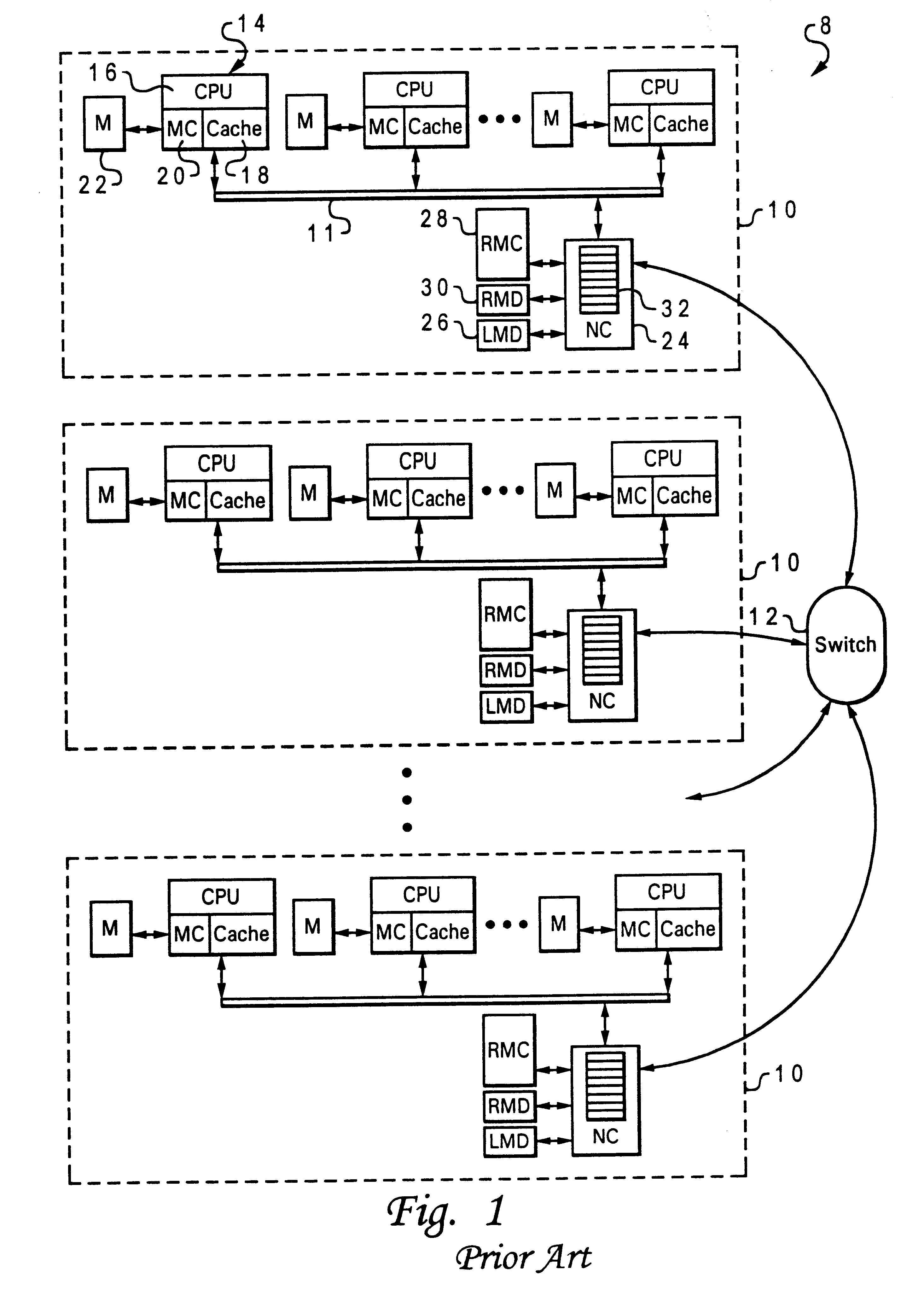

Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). The benefits of NUMA are limited to particular workloads, notably on servers where the data is often associated strongly with certain tasks or users.

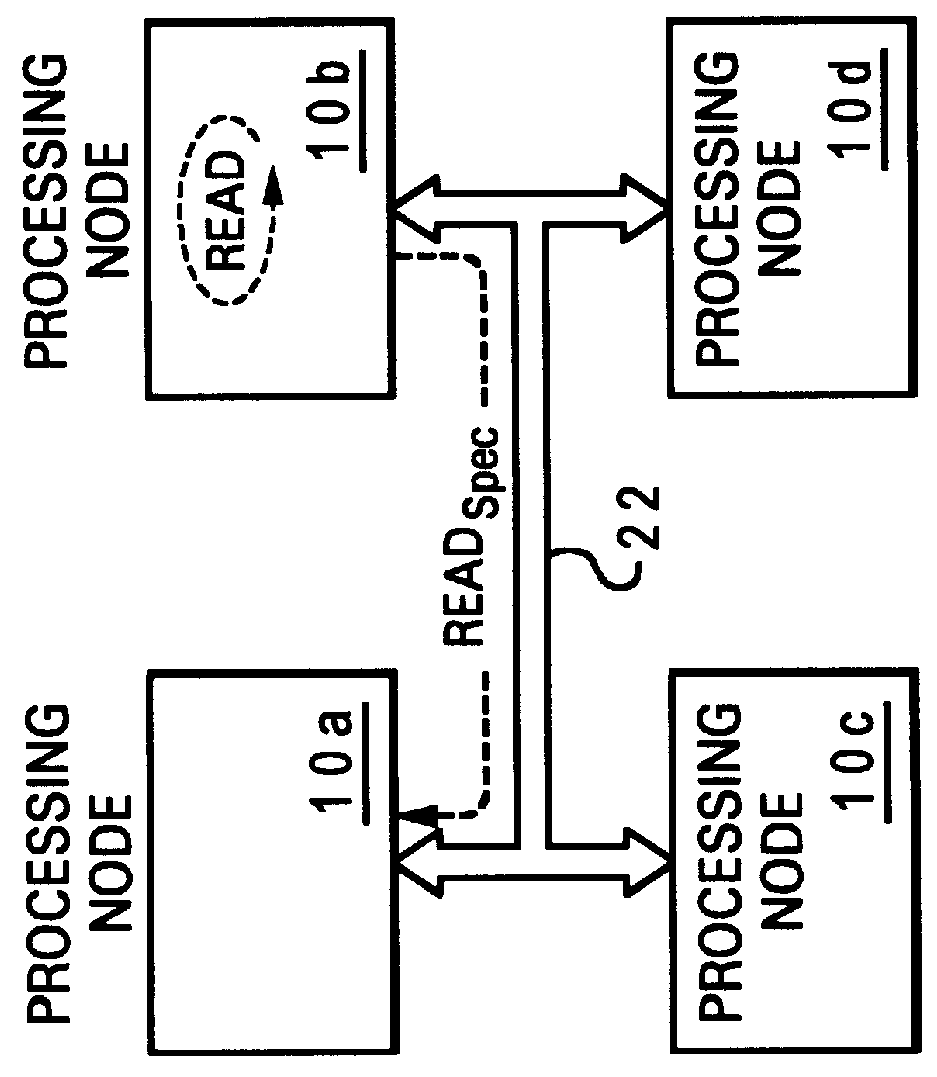

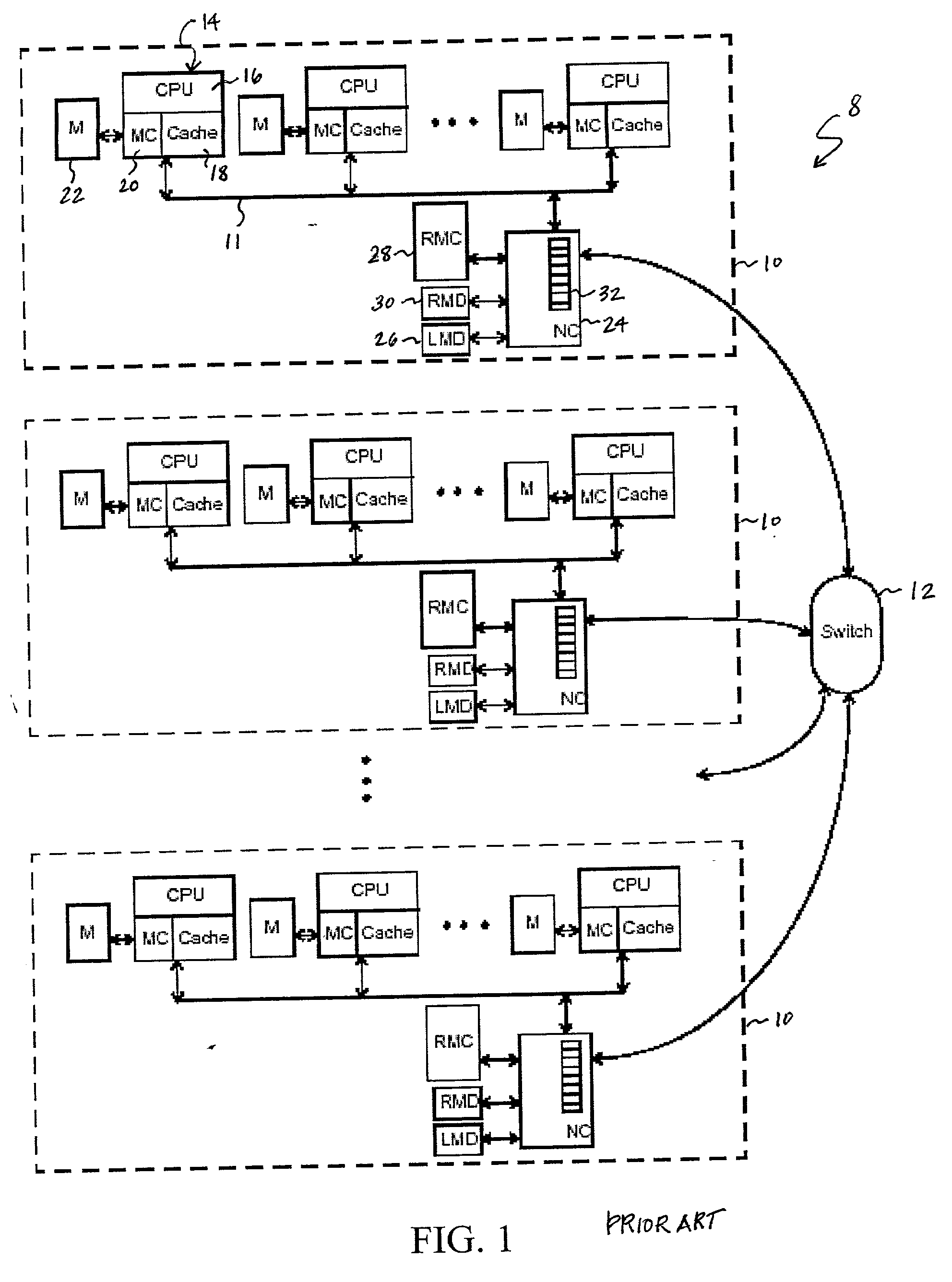

Non-uniform memory access (NUMA) data processing system that speculatively forwards a read request to a remote processing node

Inactive | US6338122B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Image resolution

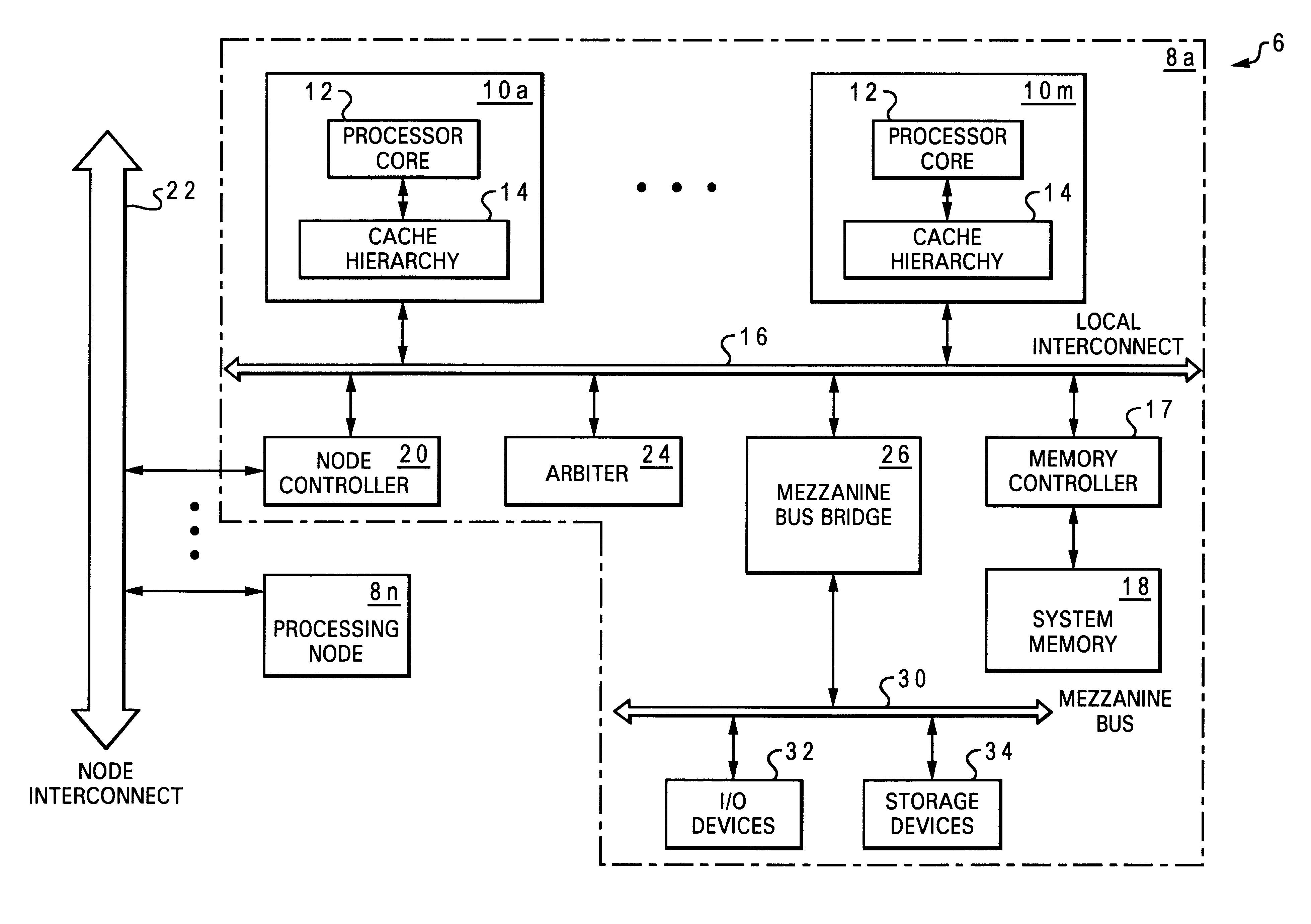

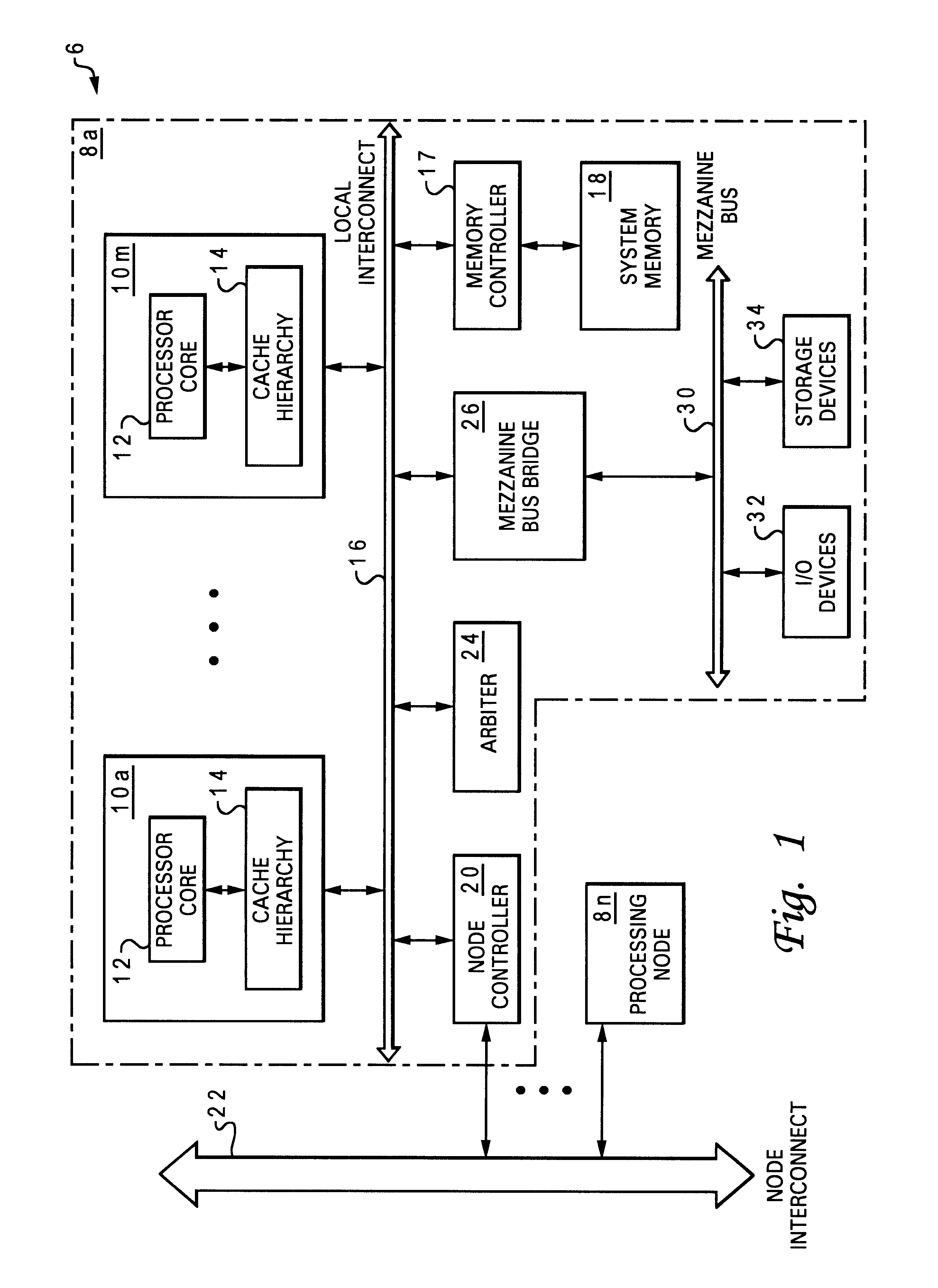

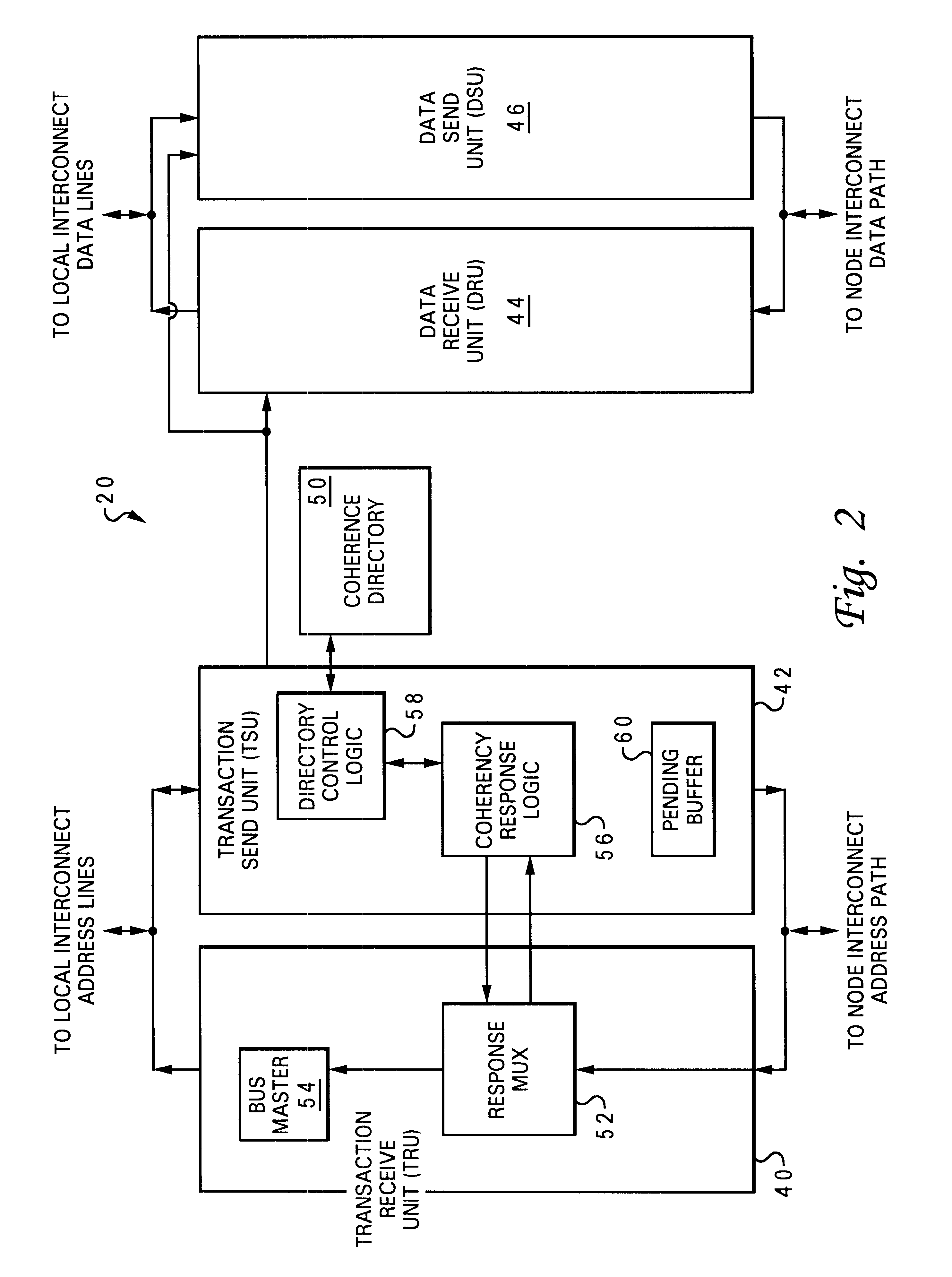

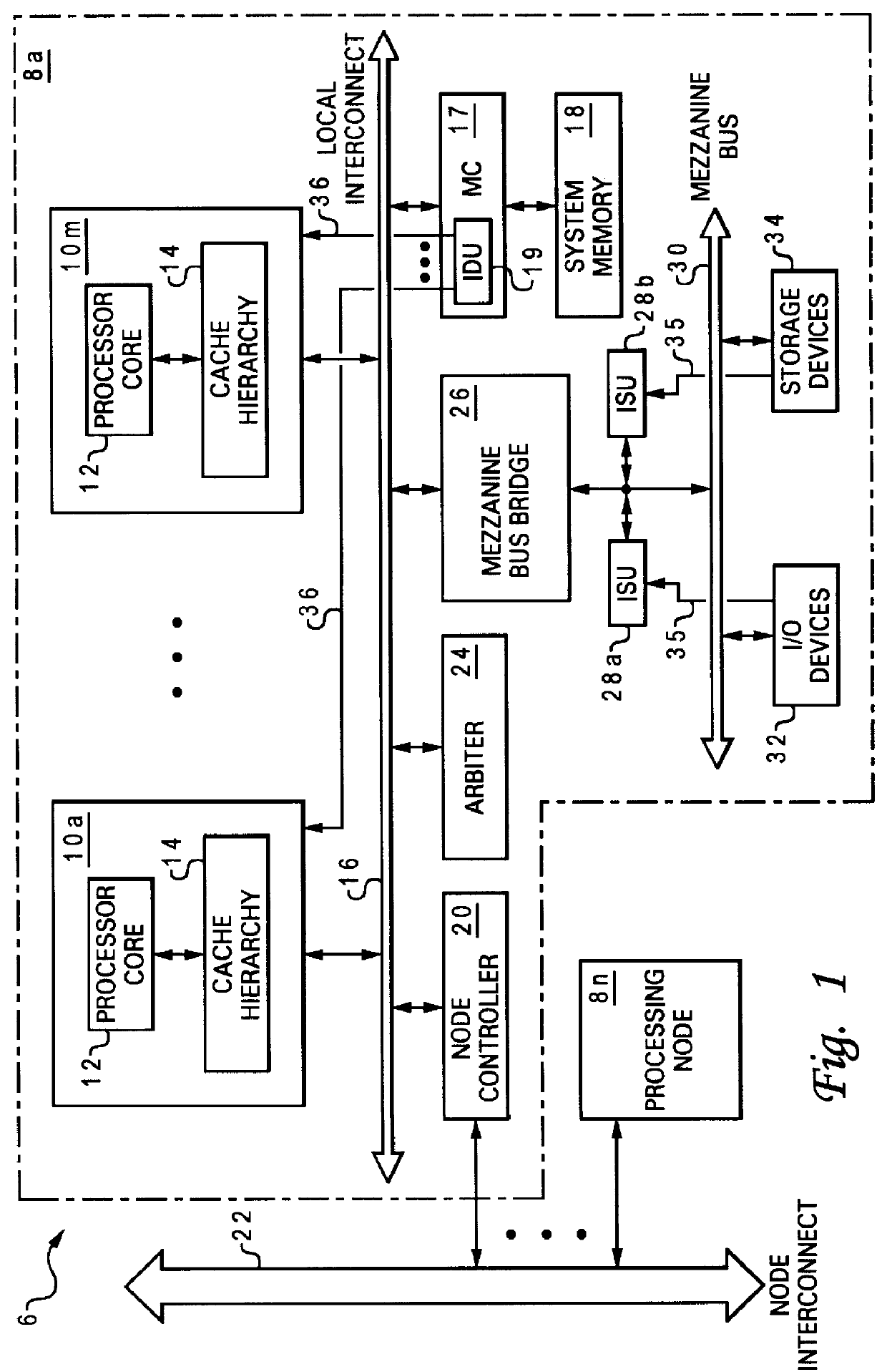

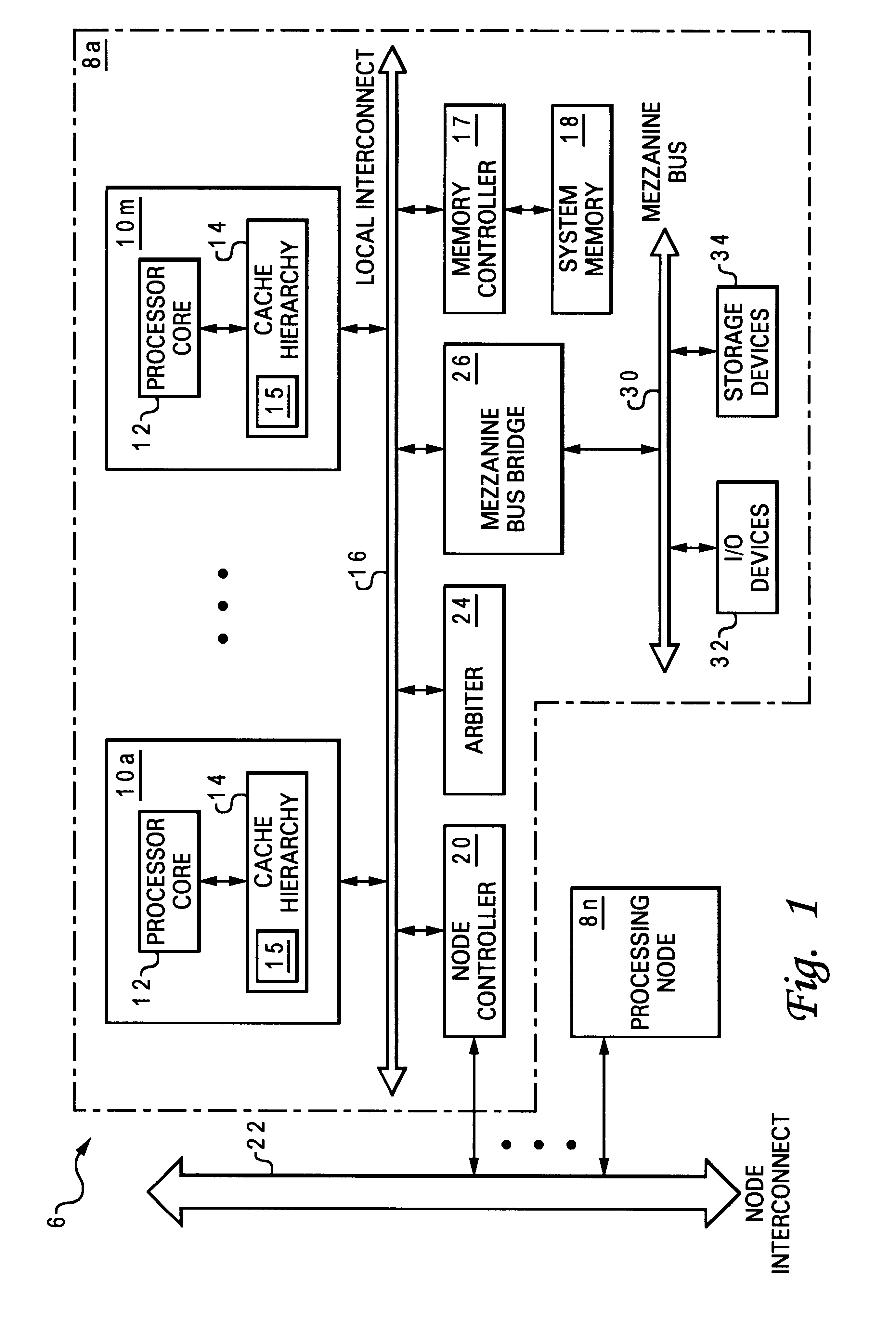

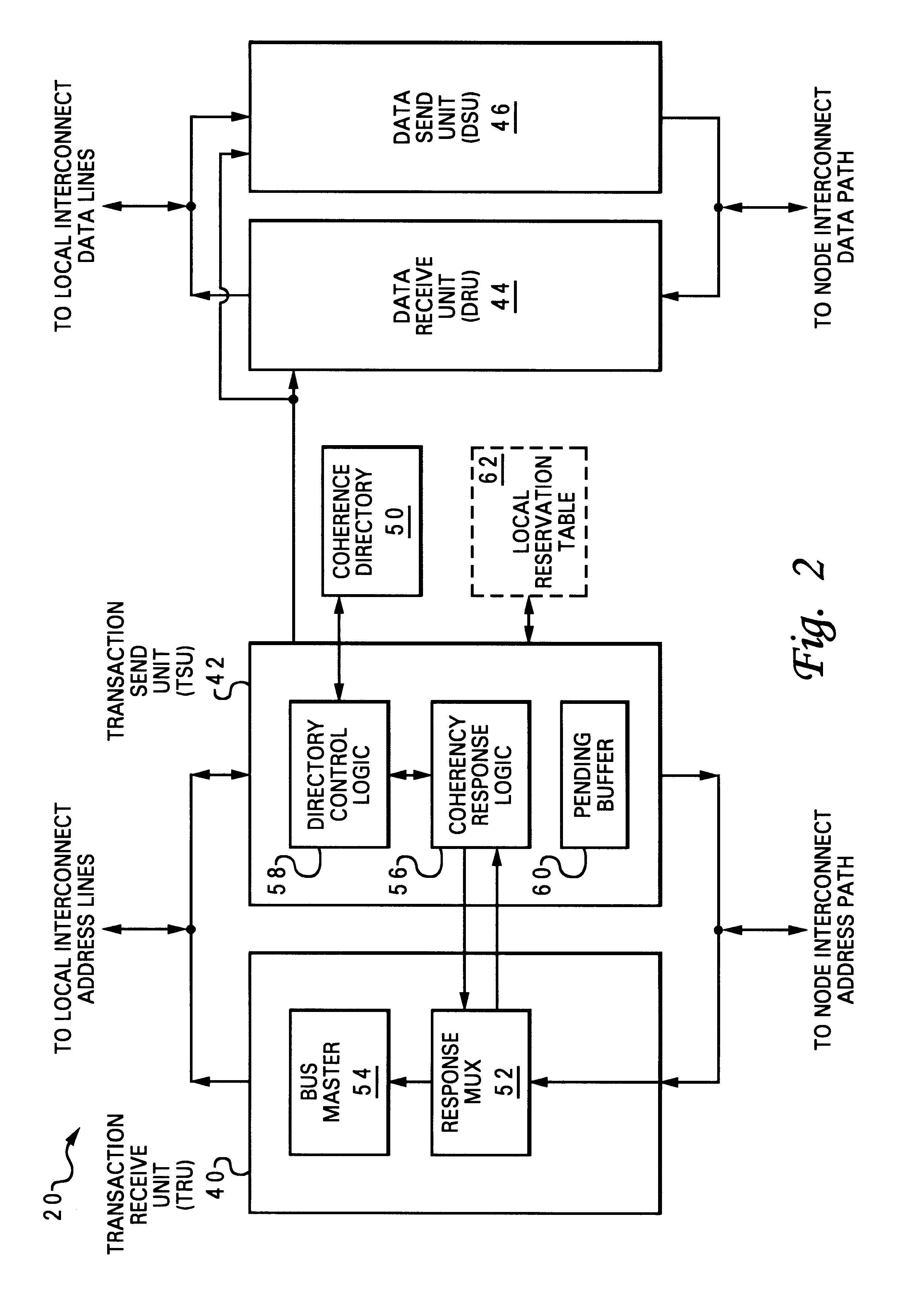

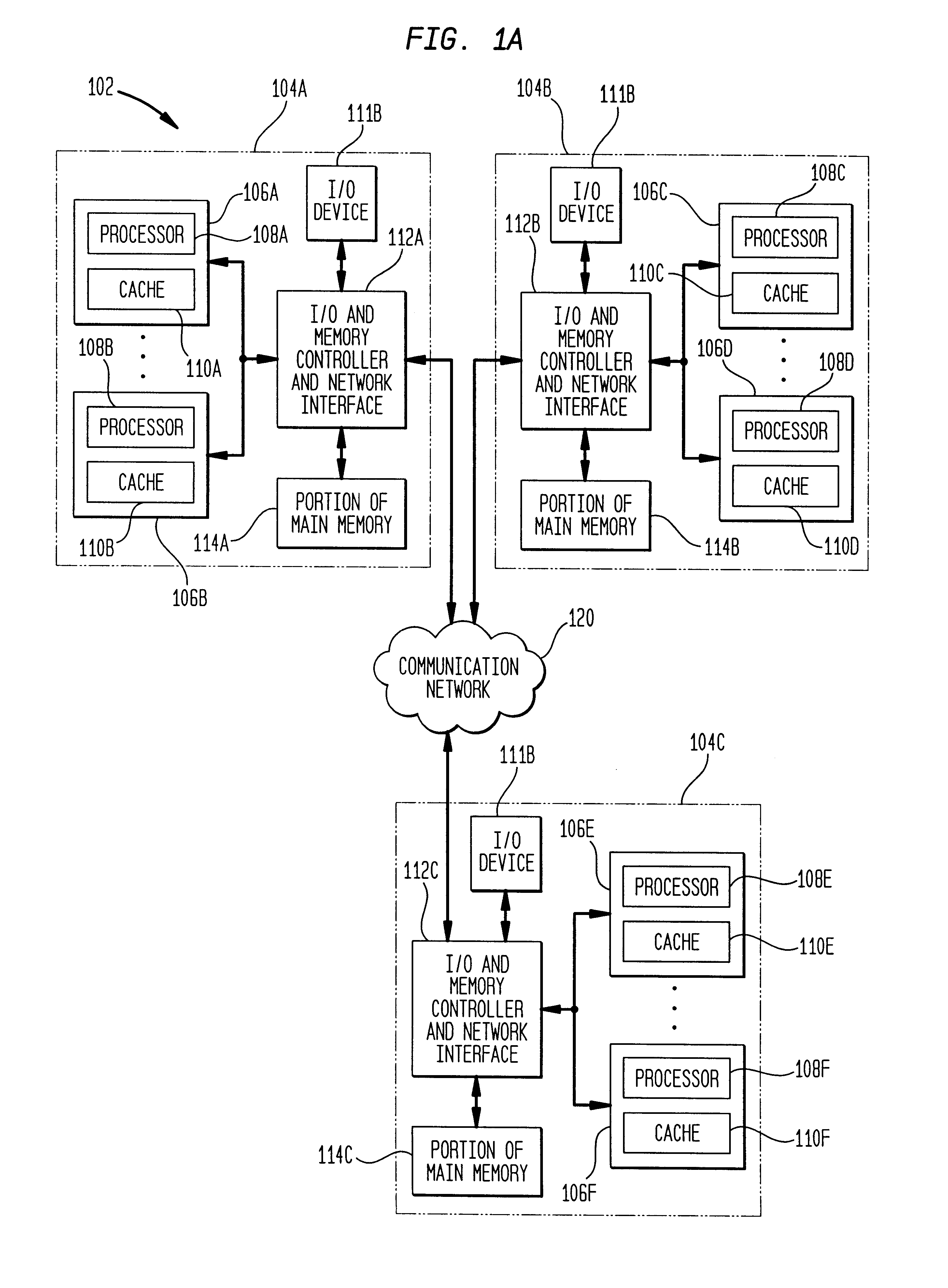

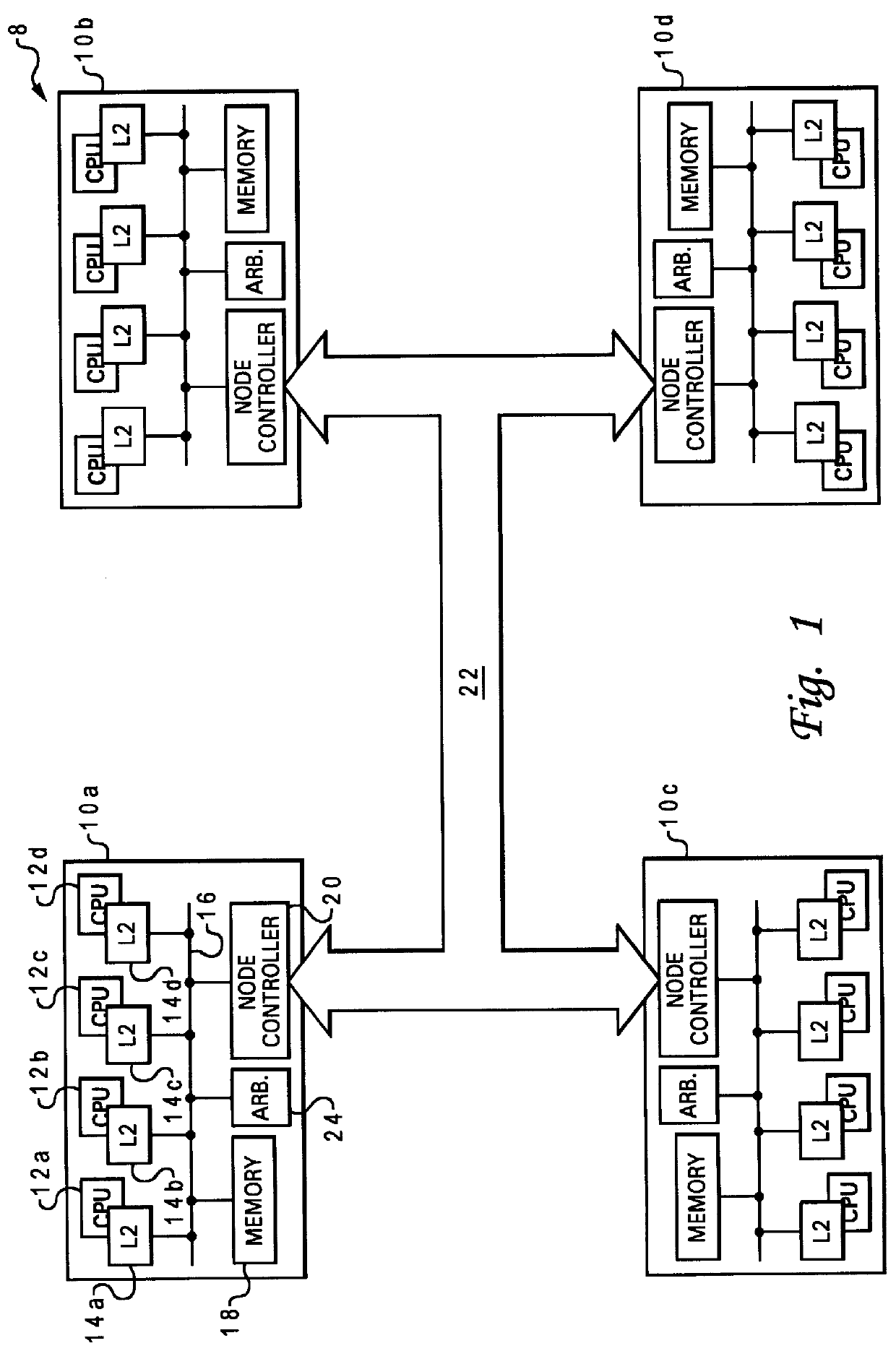

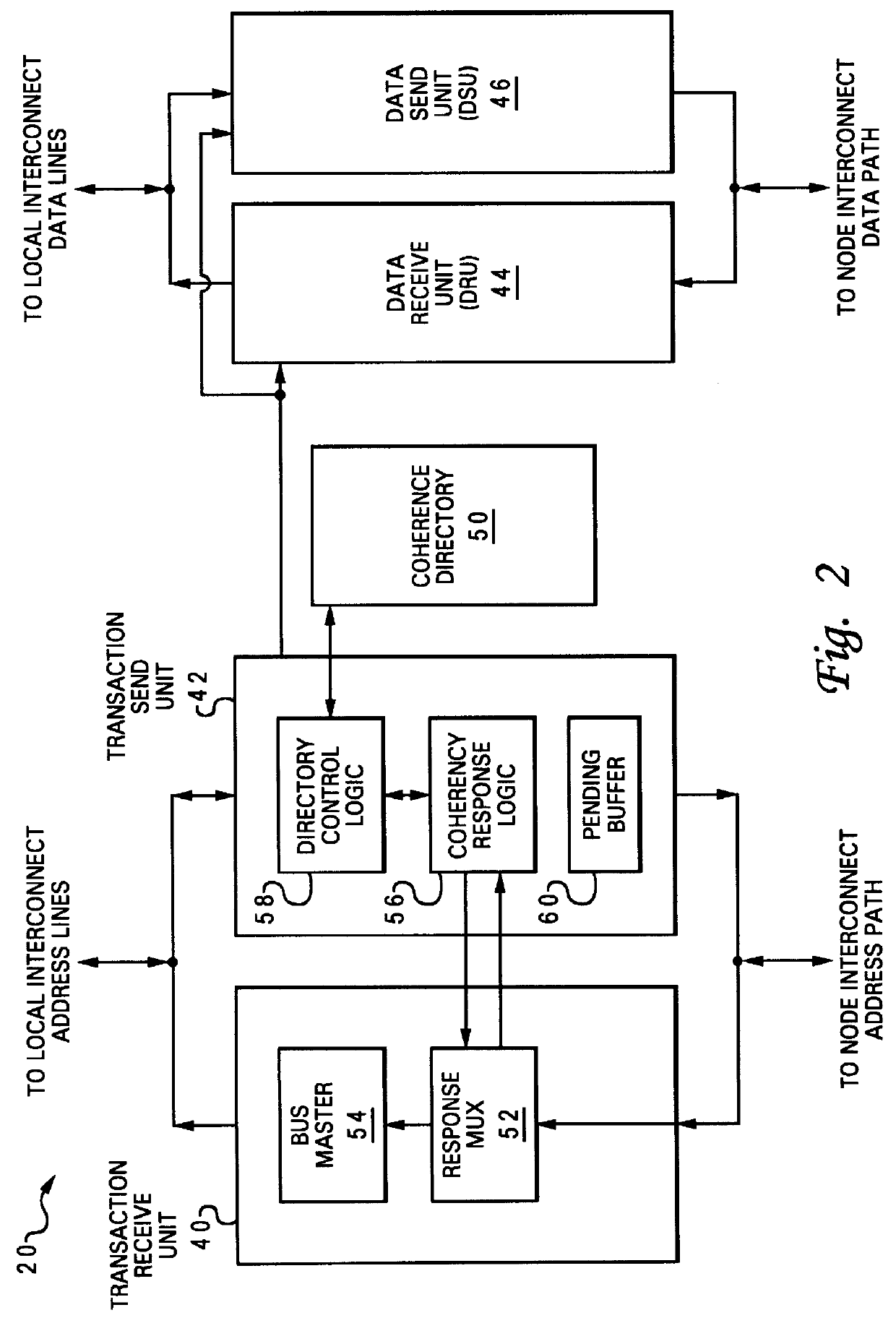

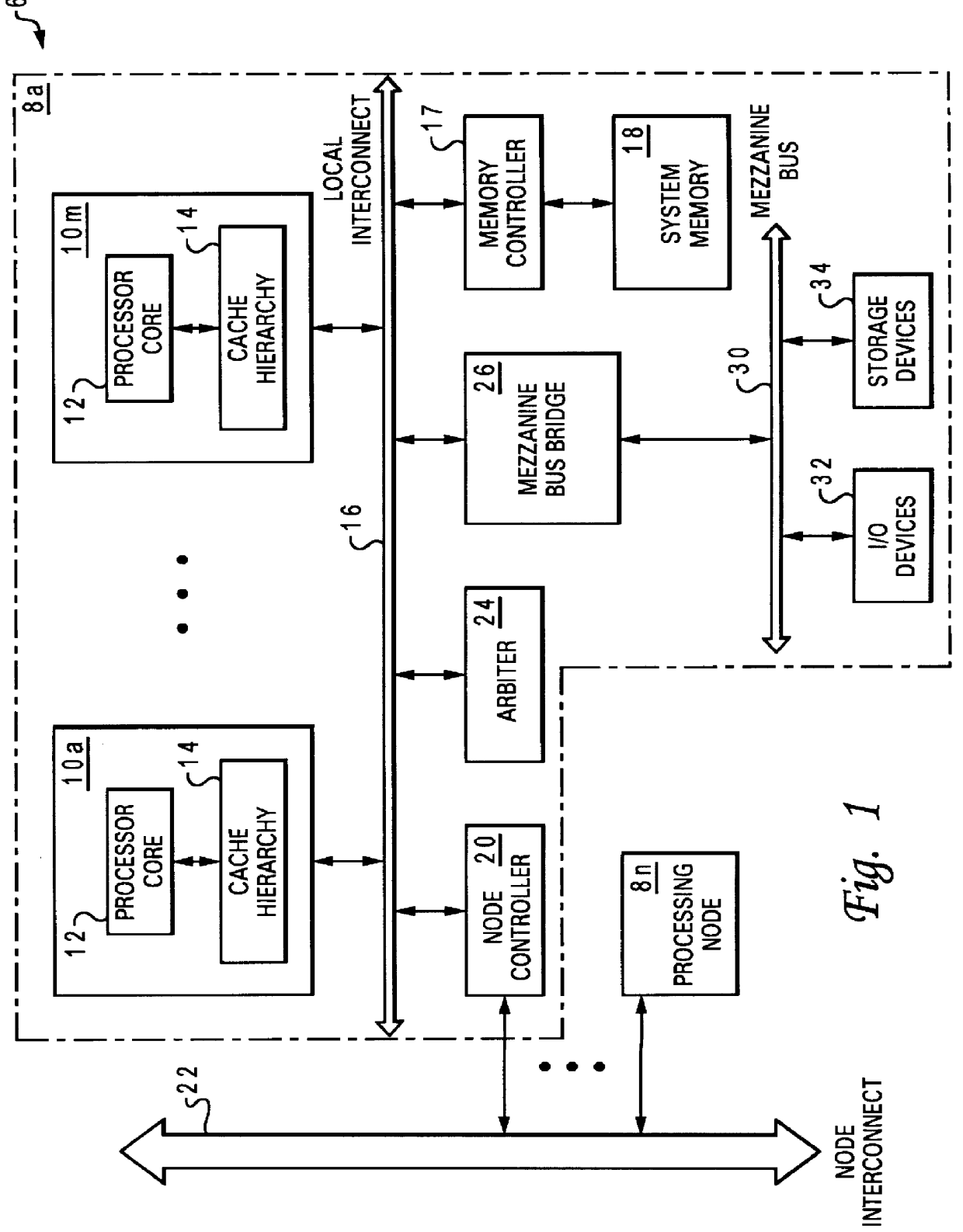

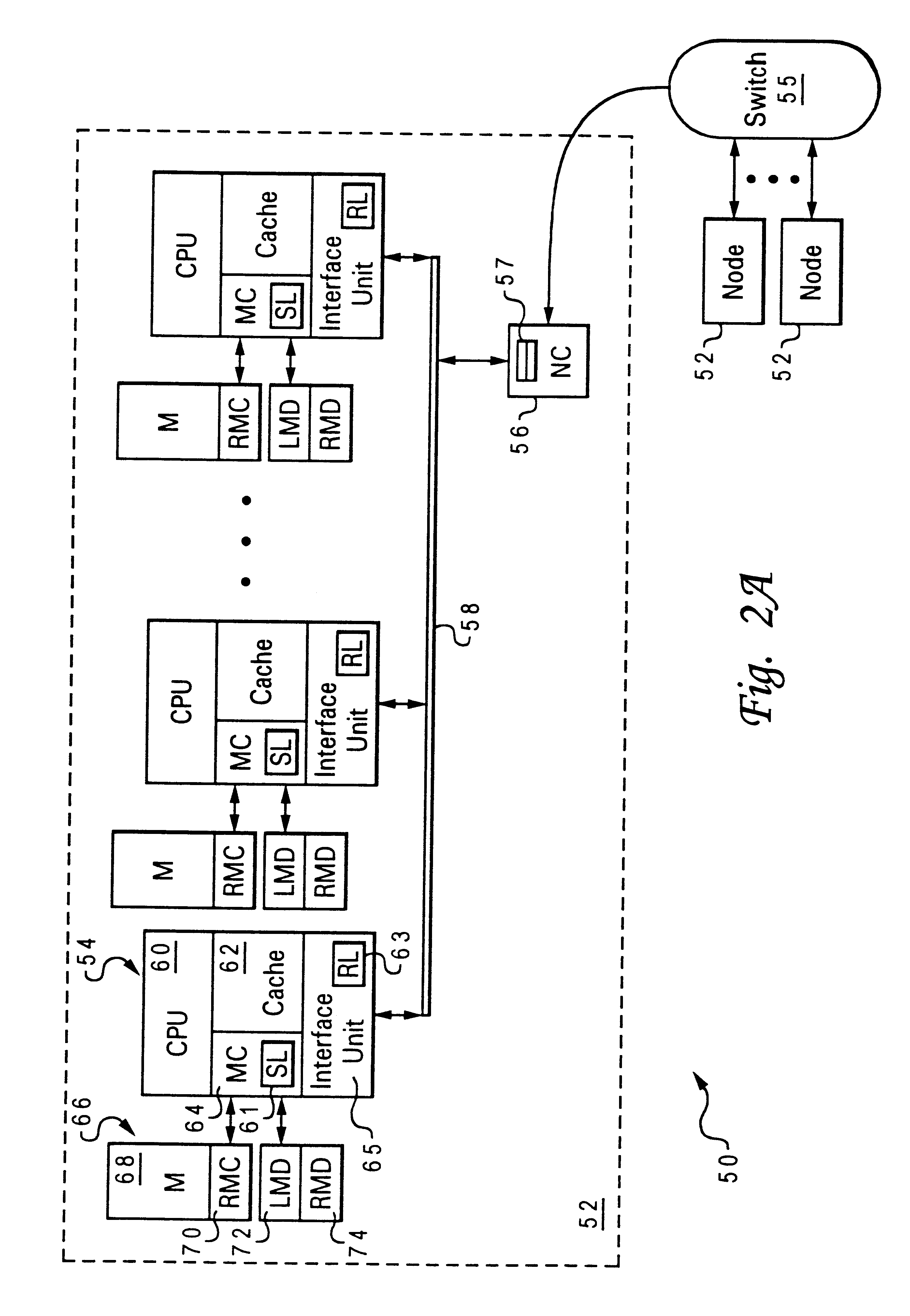

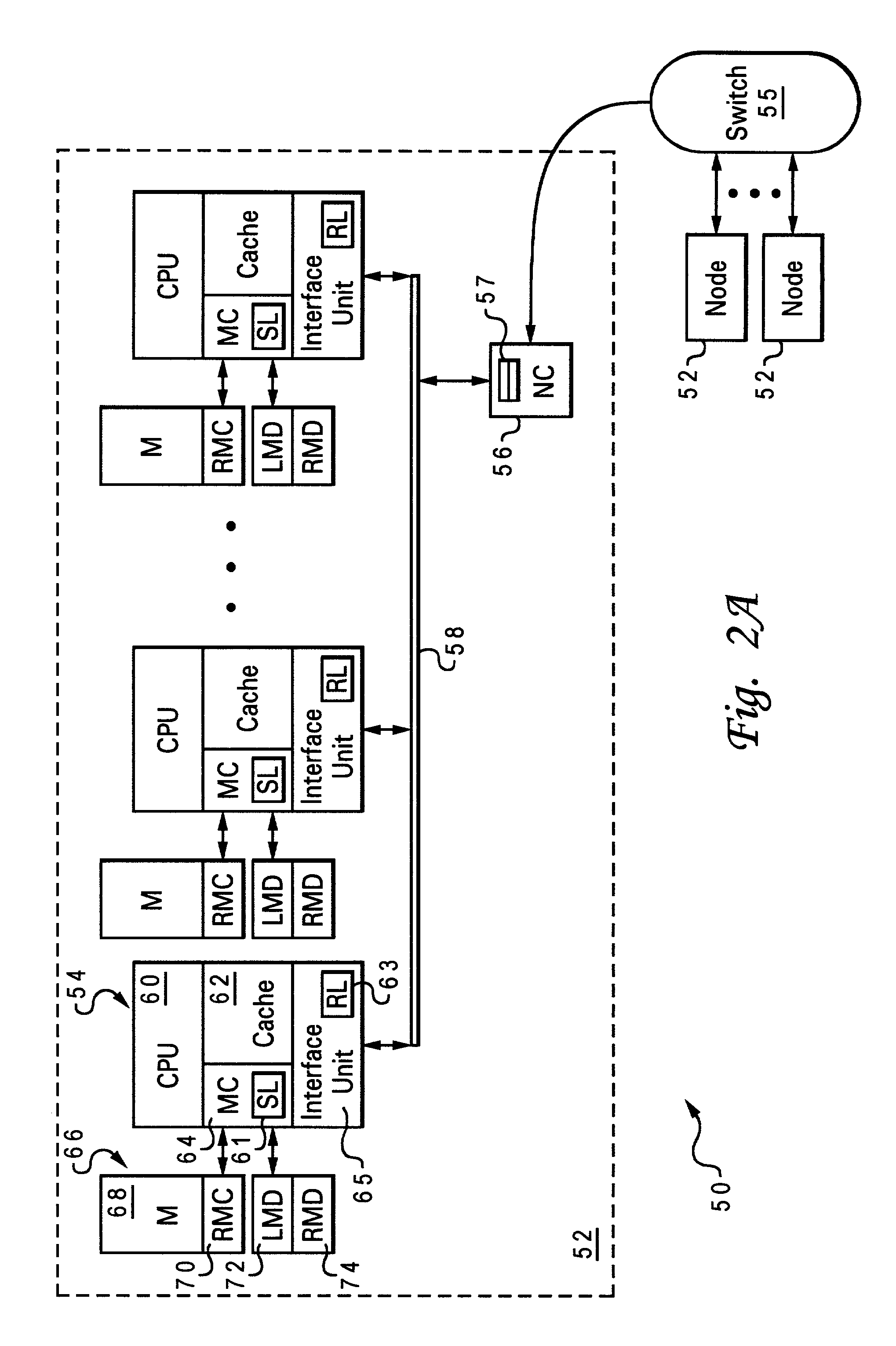

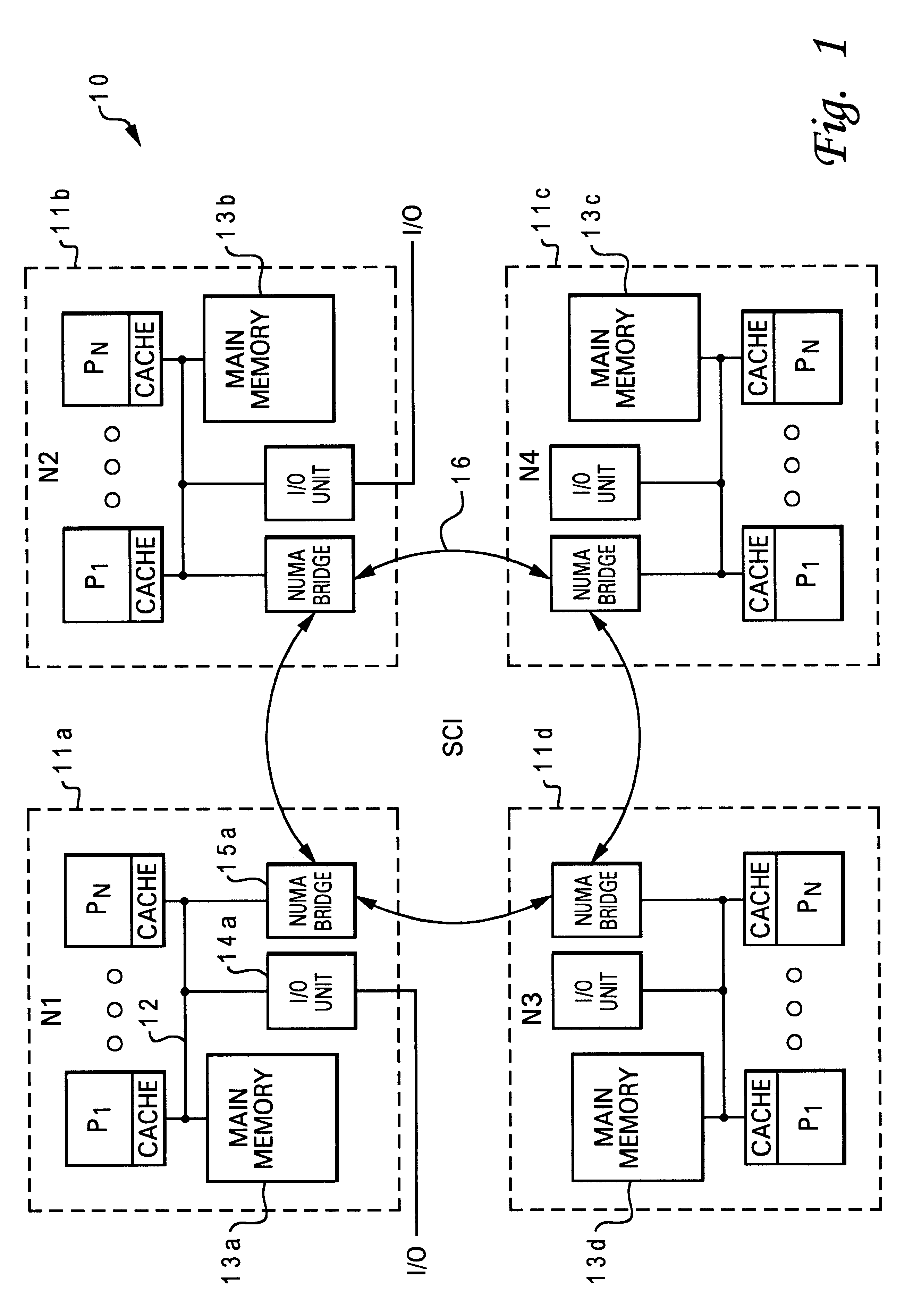

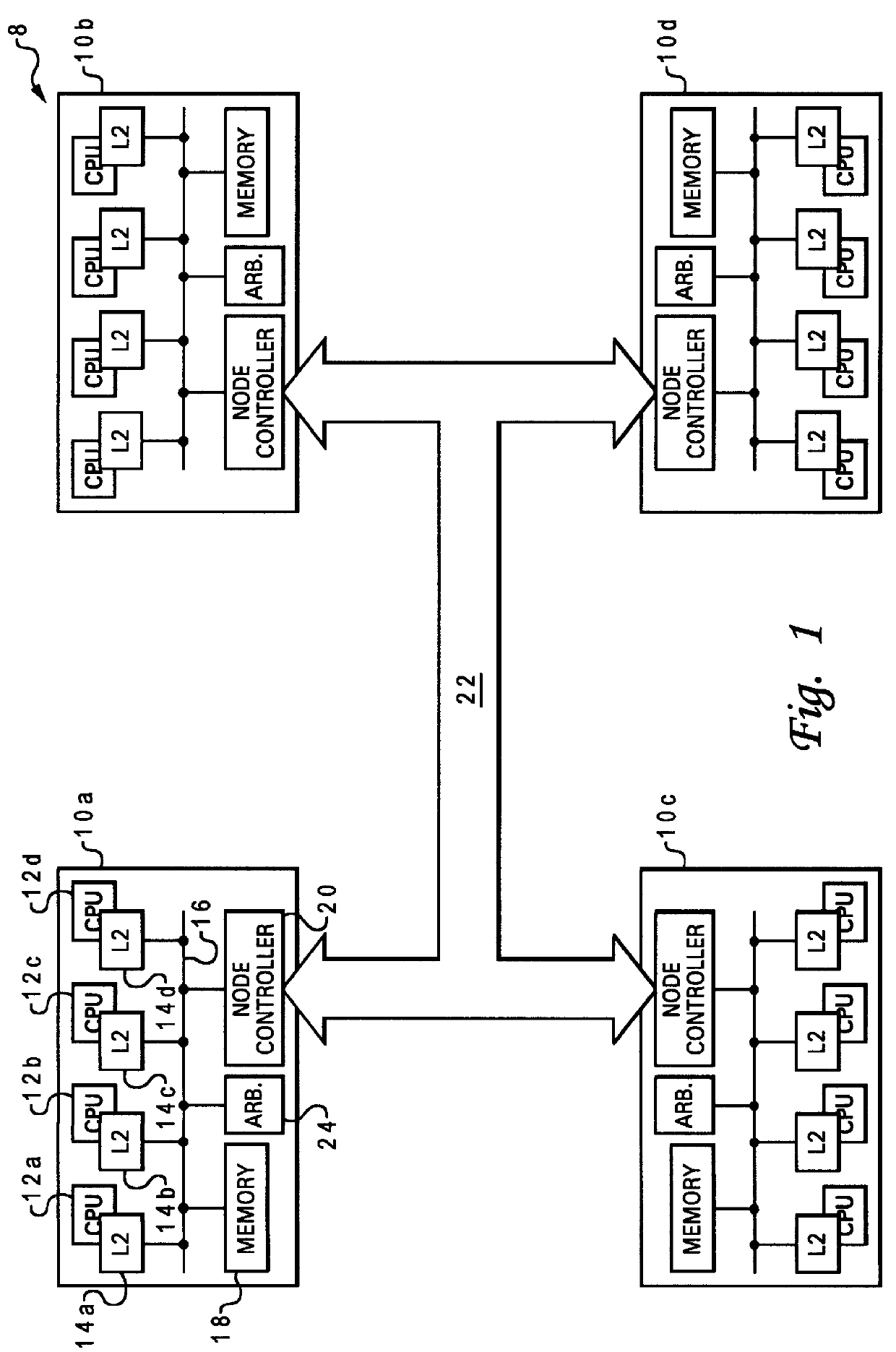

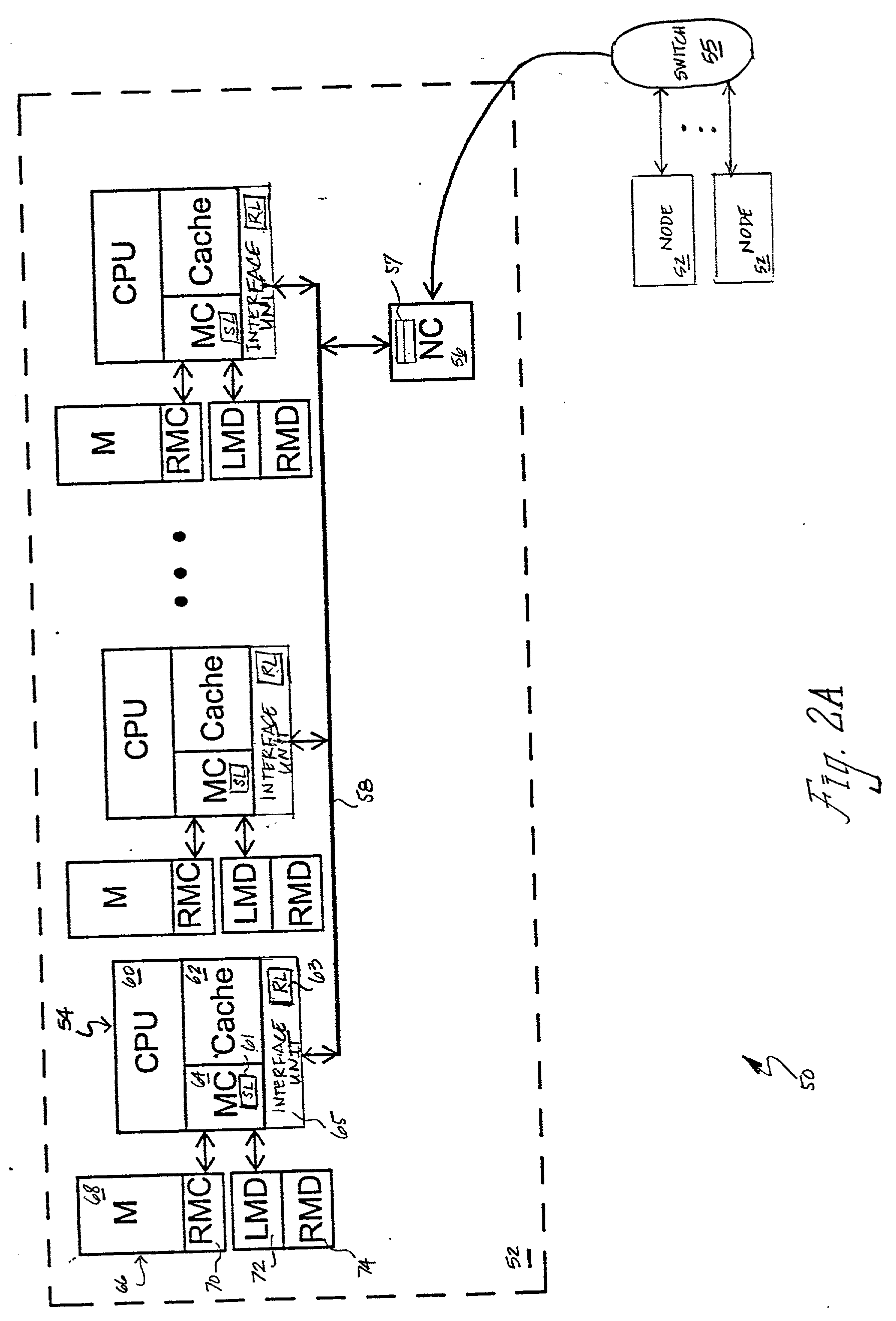

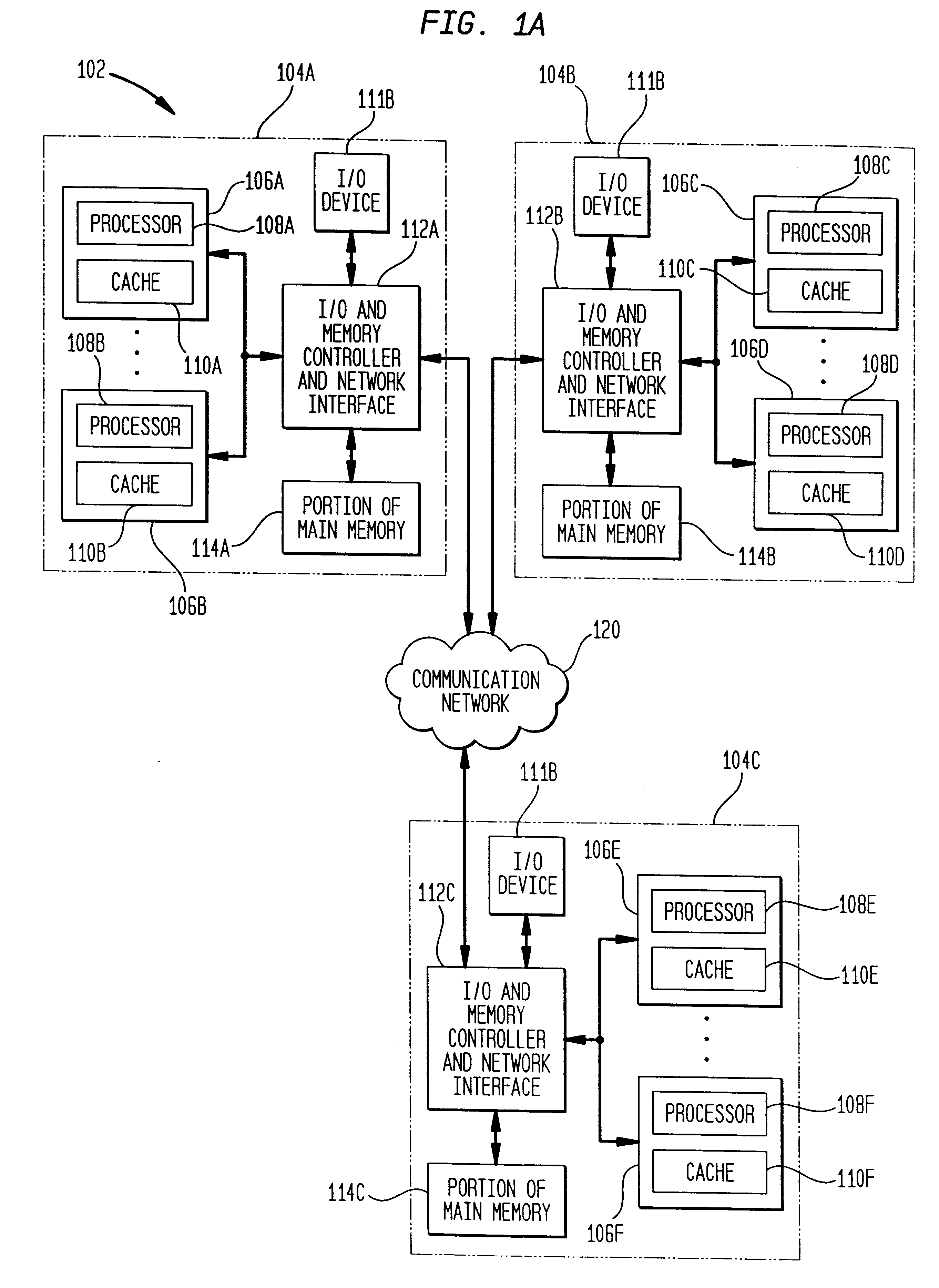

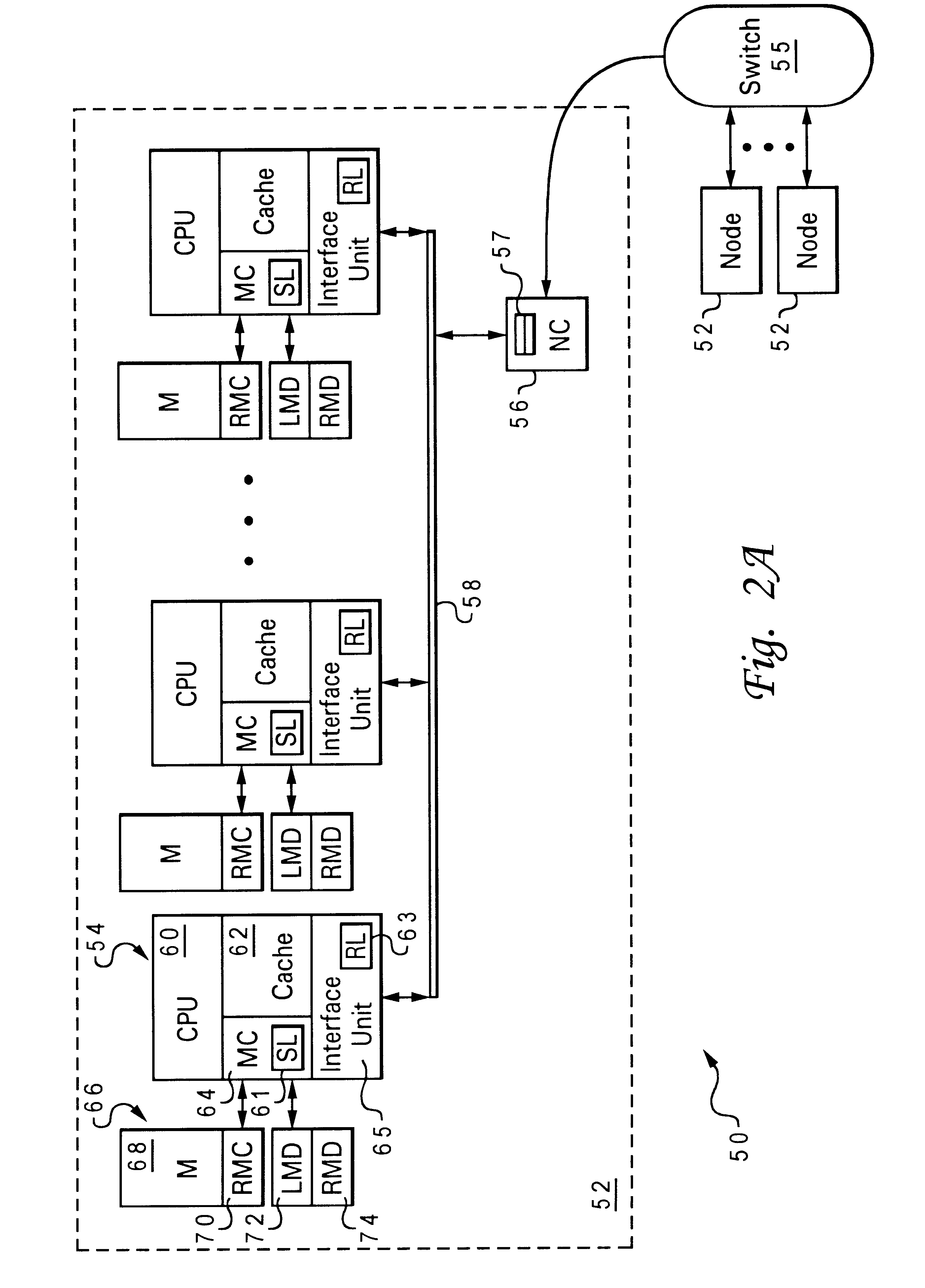

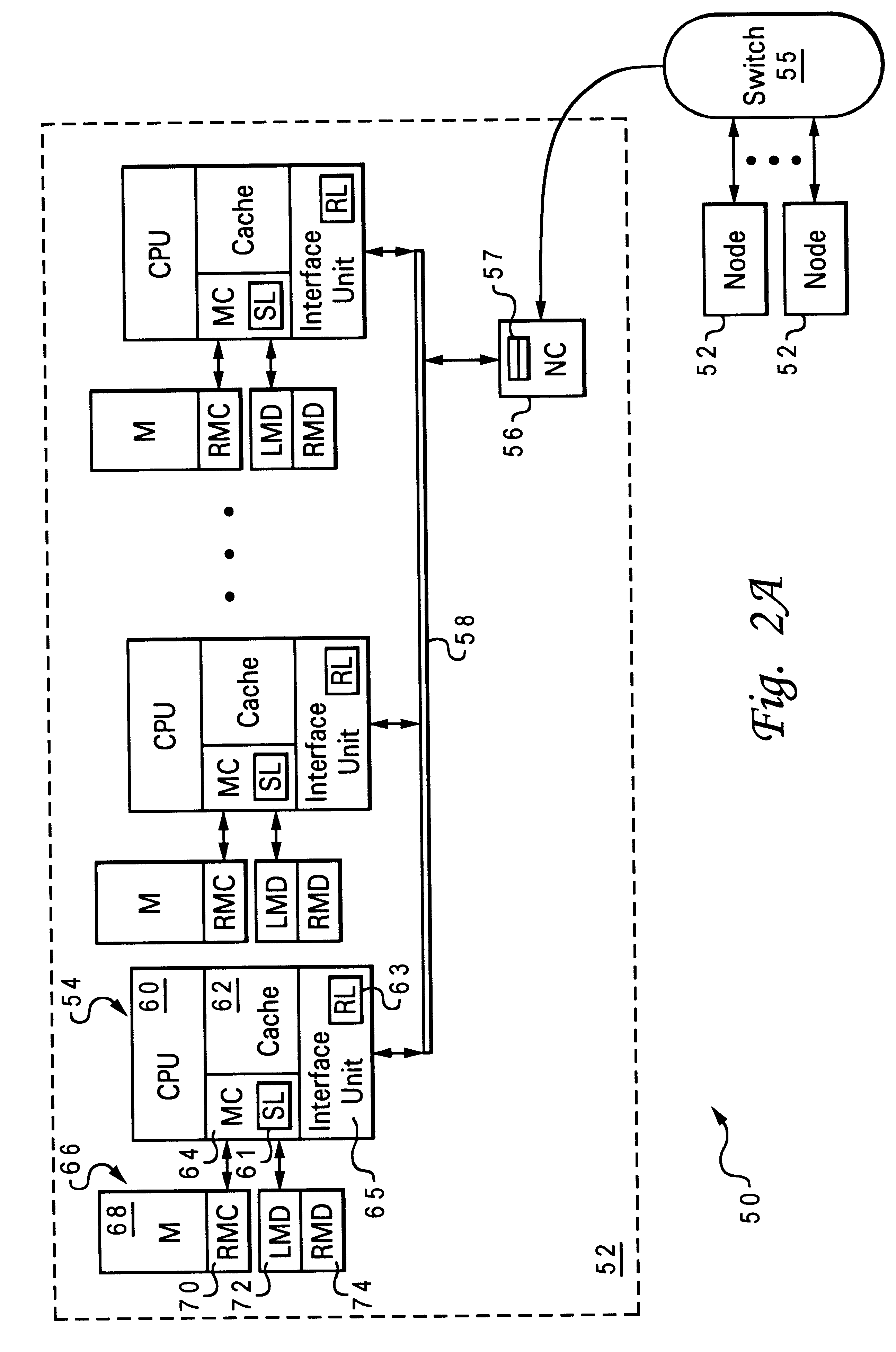

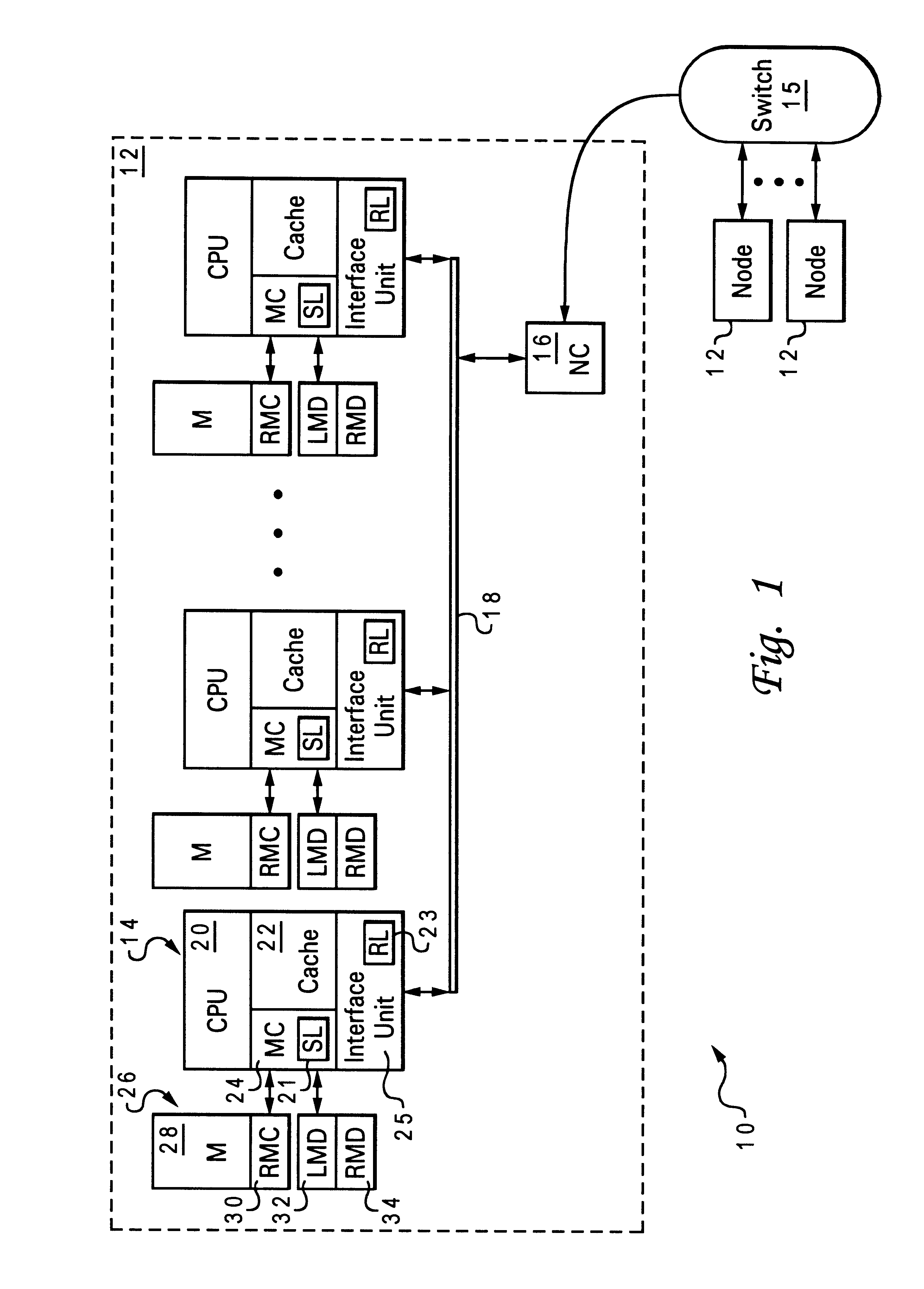

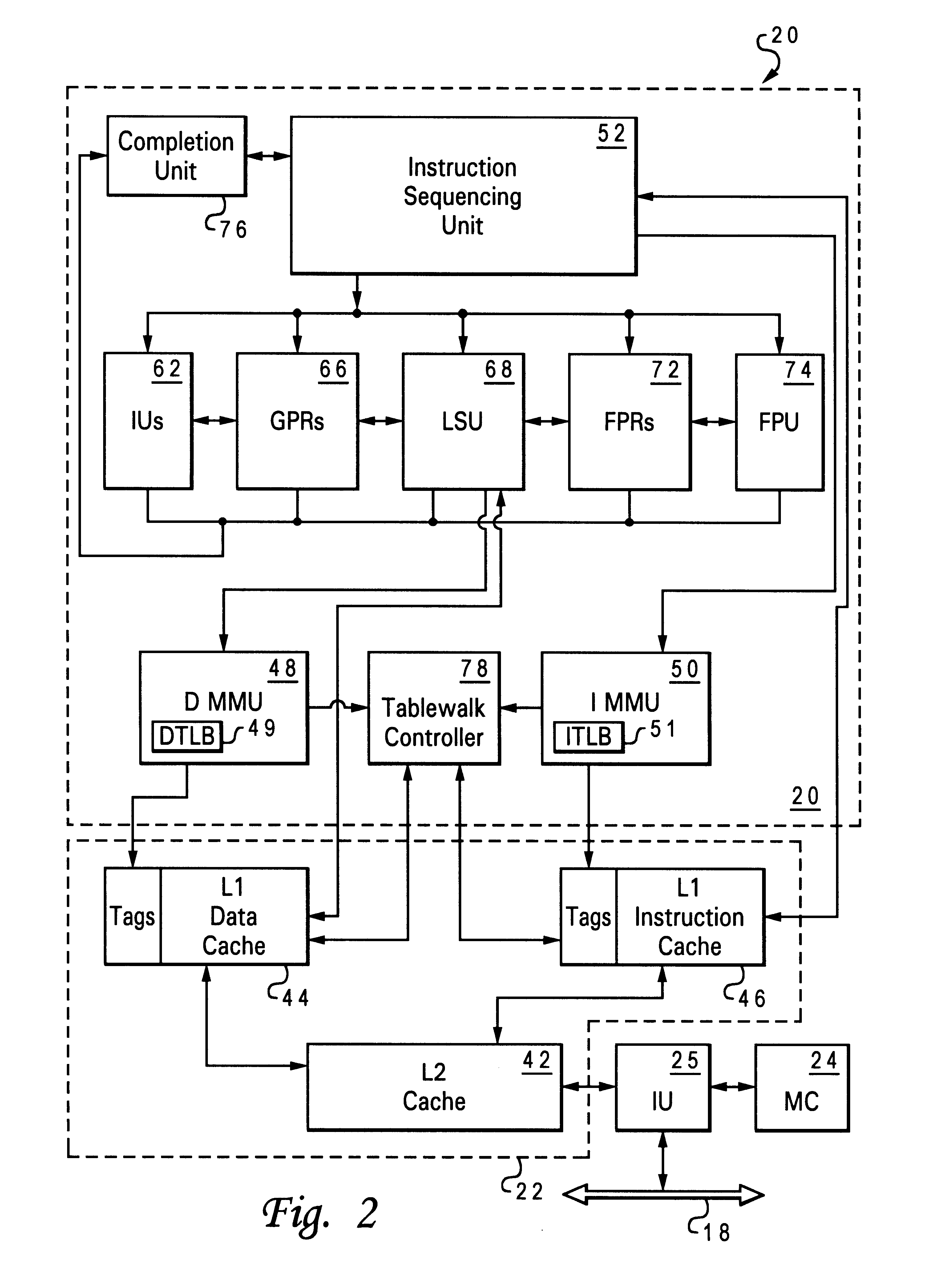

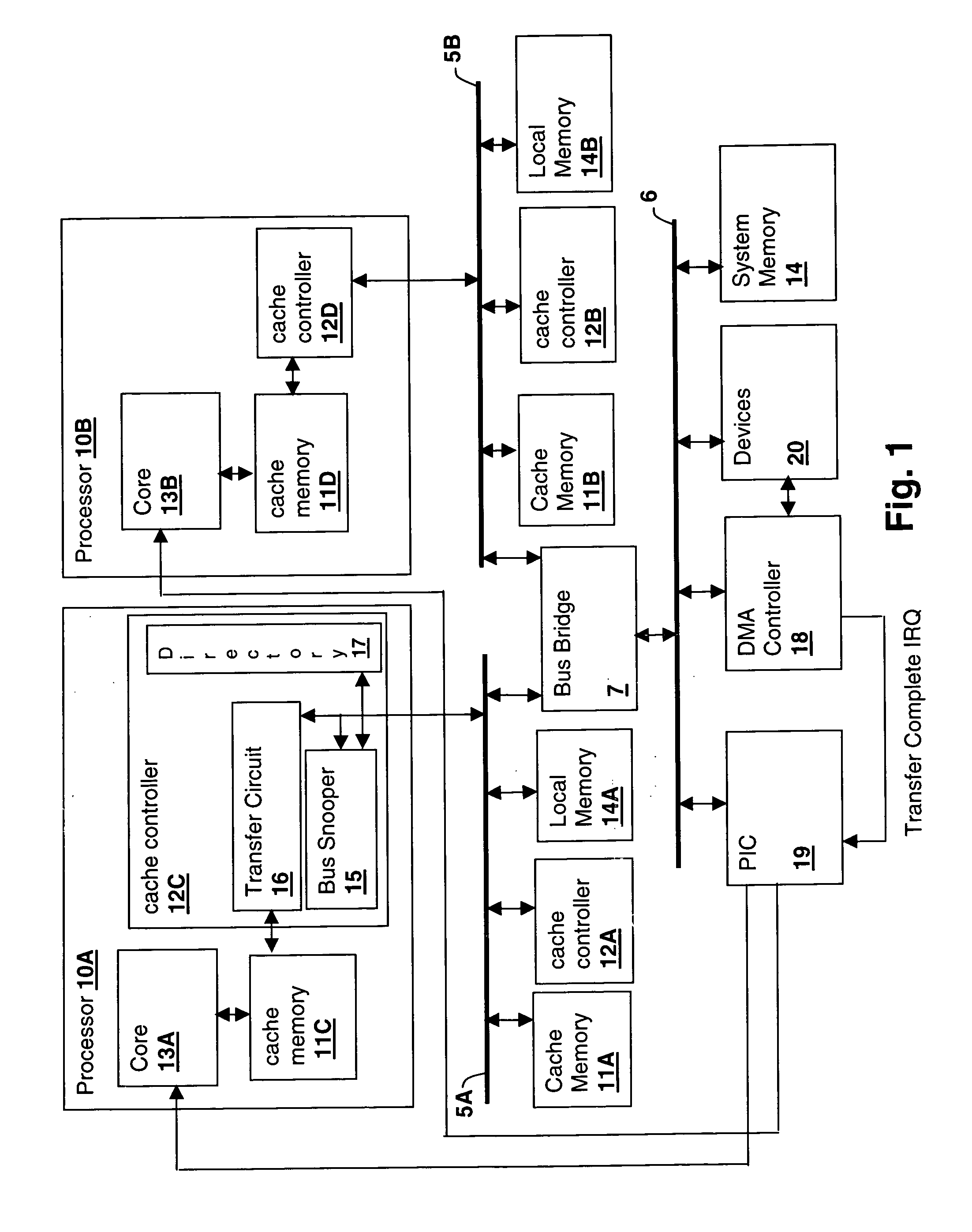

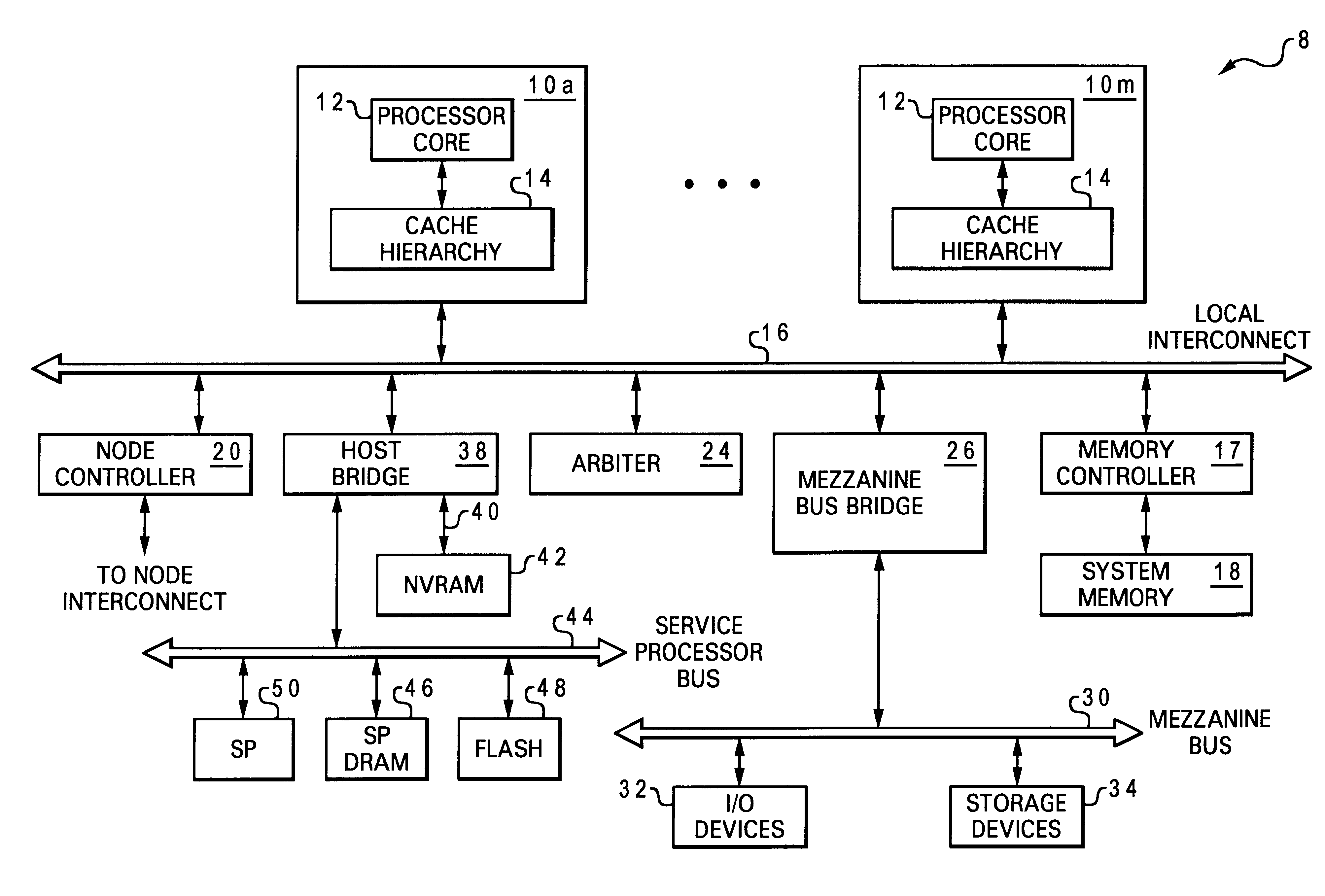

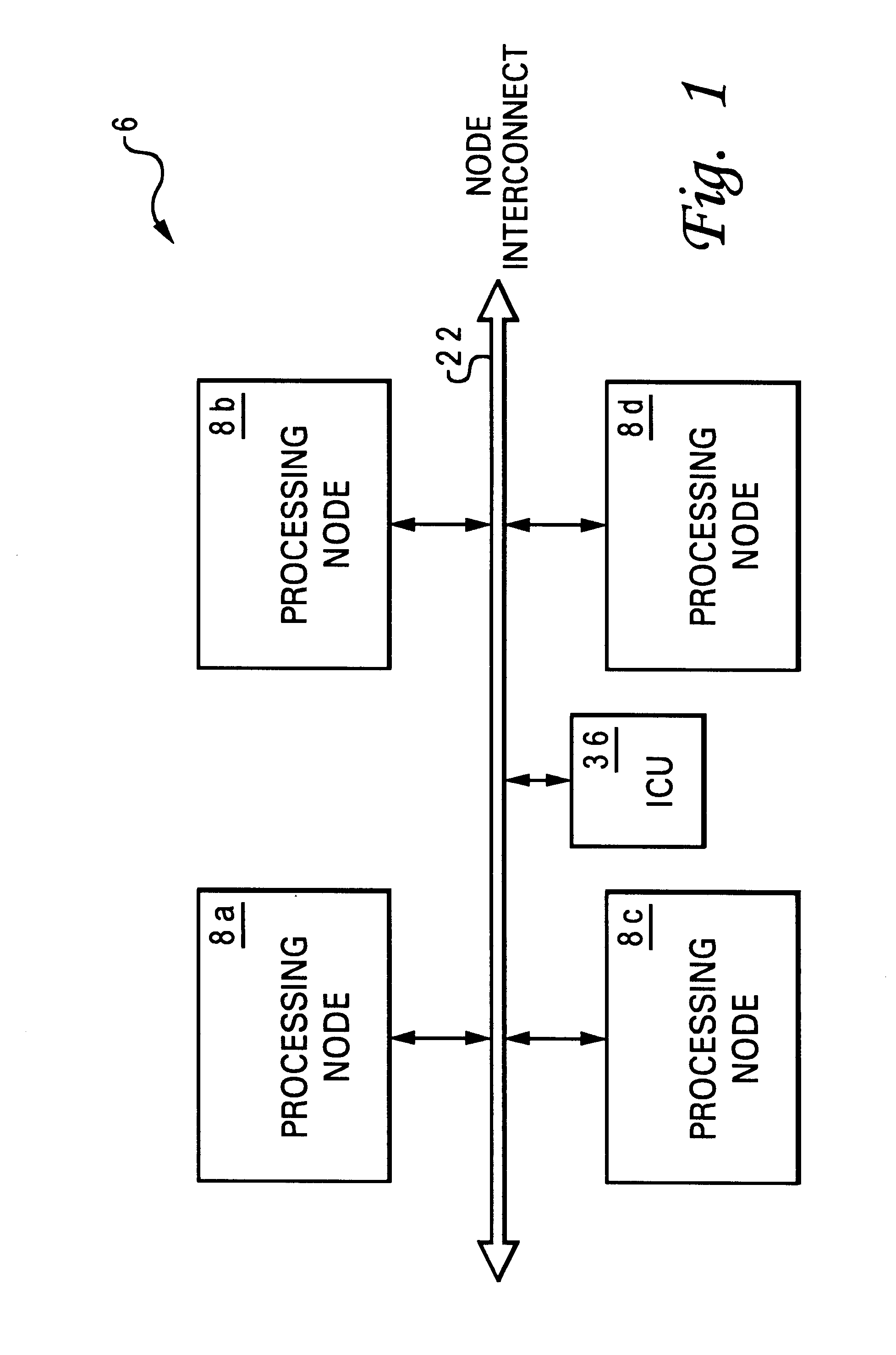

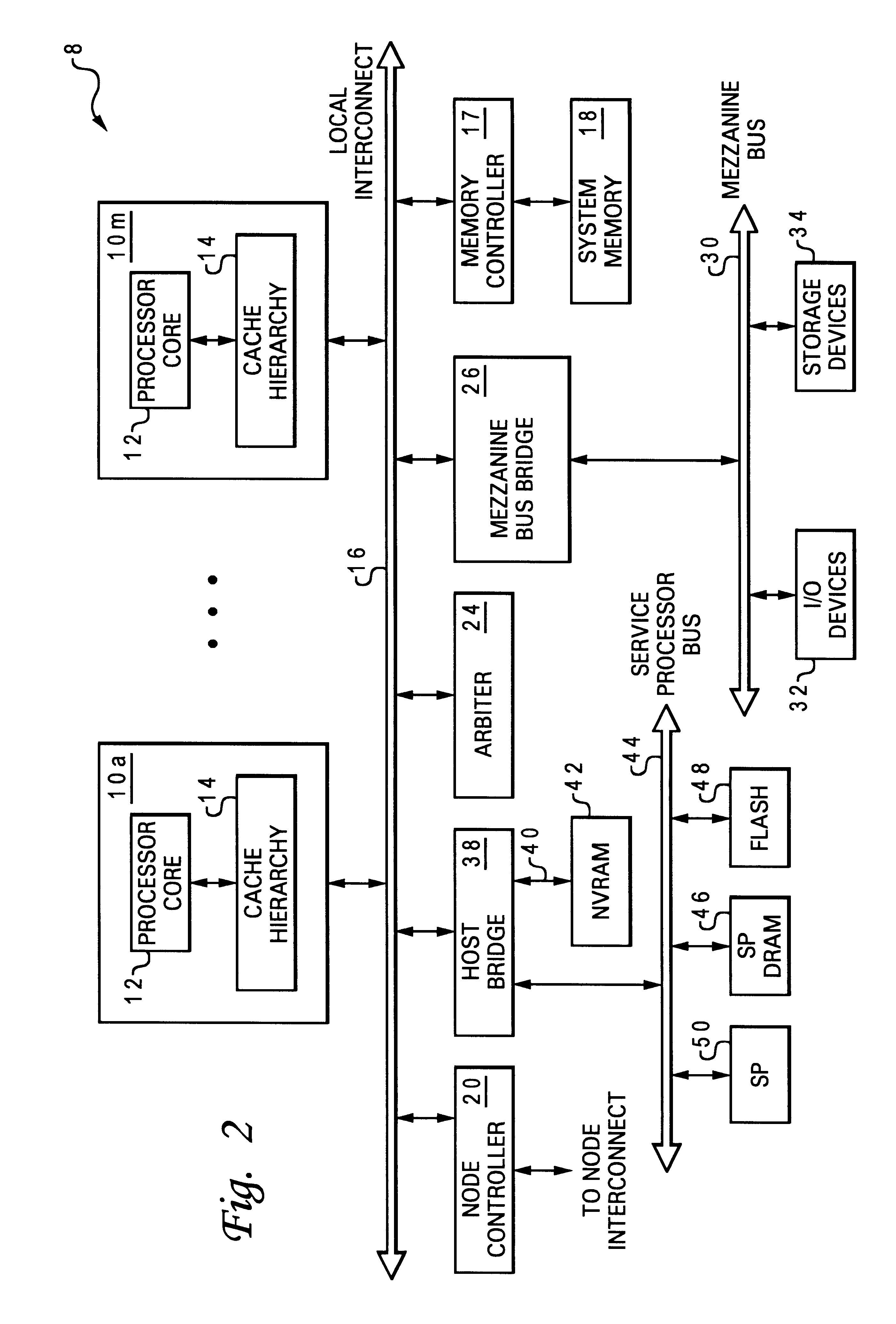

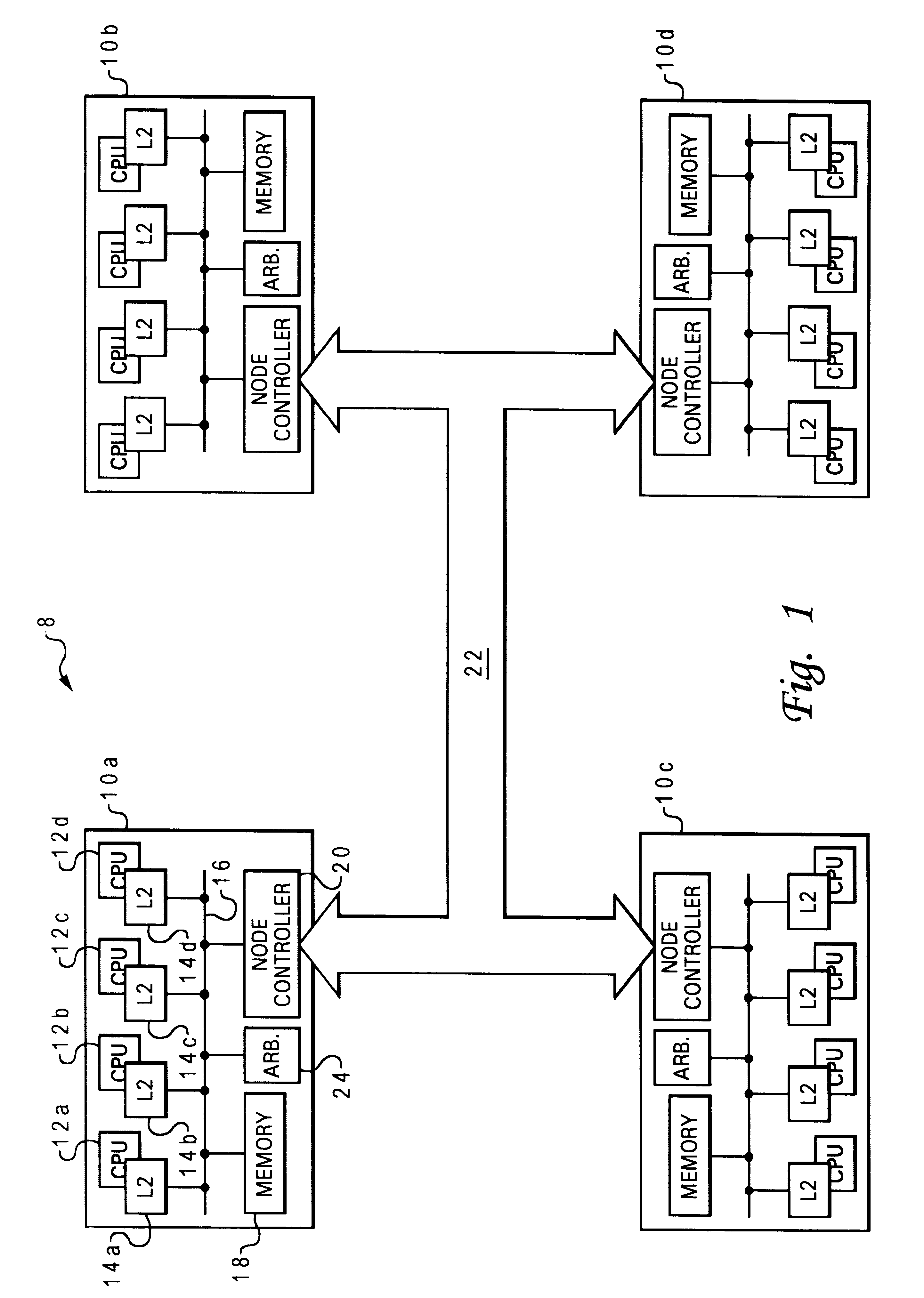

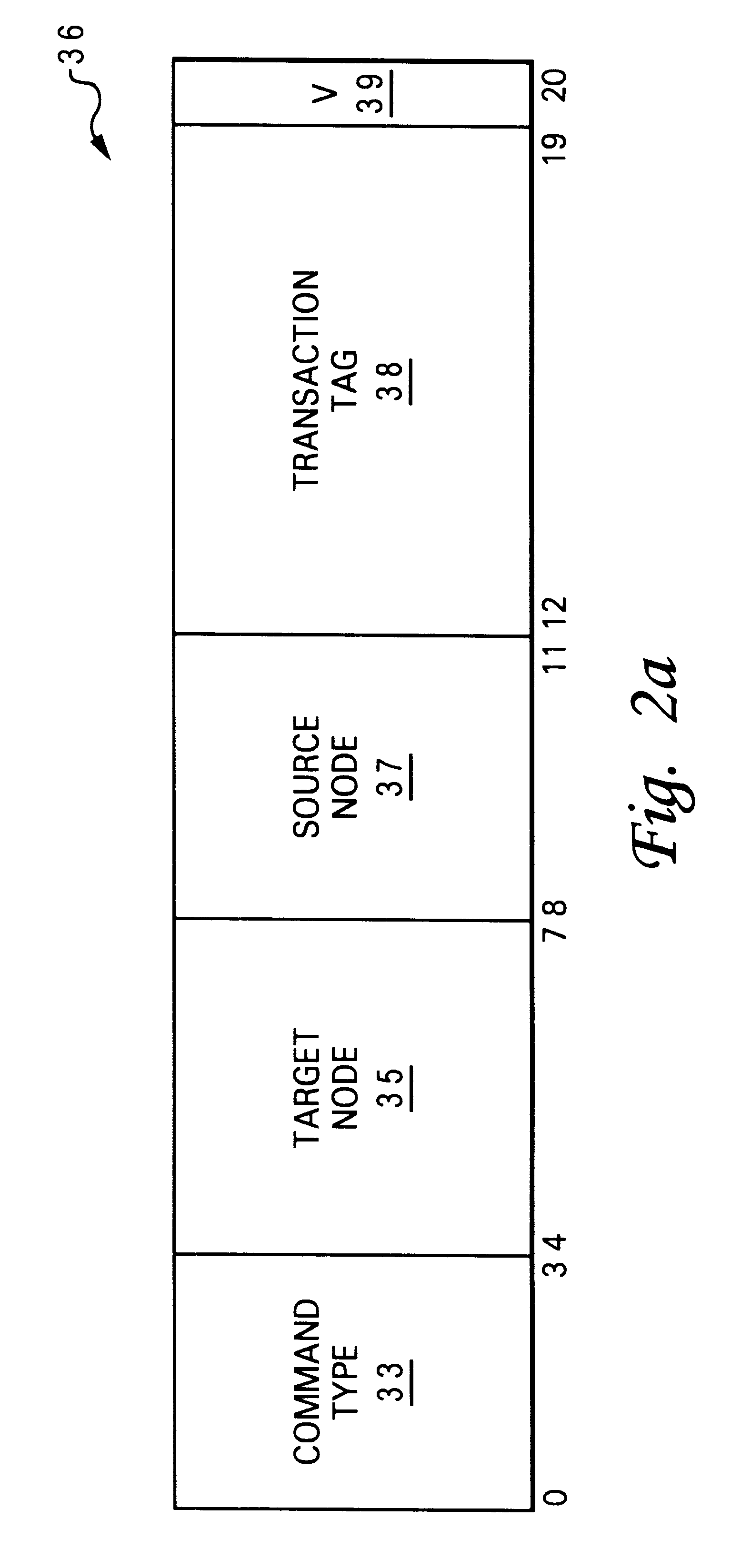

A non-uniform memory access (NUMA) computer system includes at least a local processing node and a remote processing node that are each coupled to a node interconnect. The local processing node includes a local interconnect, a processor and a system memory coupled to the local interconnect, and a node controller interposed between the local interconnect and the node interconnect. In response to receipt of a read request from the local interconnect, the node controller speculatively transmits the read request to the remote processing node via the node interconnect. Thereafter, in response to receipt of a response to the read request from the remote processing node, the node controller handles the response in accordance with a resolution of the read request at the local processing node. For example, in one processing scenario, data contained in the response received from the remote processing node is discarded by the node controller if the read request received a Modified Intervention coherency response at the local processing node.

Owner:IBM CORP
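
The speculative forwarding described in the abstract above can be illustrated with a minimal sketch. It is not the patented implementation: the `NodeController` class, the `LocalResponse` enum, and the dictionary standing in for the remote processing node are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum, auto


class LocalResponse(Enum):
    """Hypothetical outcomes of the read on the local interconnect."""
    MODIFIED_INTERVENTION = auto()  # a local cache holds the line modified
    NULL = auto()                   # no local cache can supply the line


@dataclass
class NodeController:
    """Toy node controller between the local and node interconnects."""
    remote_memory: dict  # stands in for the remote processing node

    def read(self, address, local_response, local_data=None):
        # Forward the request speculatively, before the local outcome is known.
        remote_data = self.remote_memory.get(address)

        # Handle the remote response according to the local resolution.
        if local_response is LocalResponse.MODIFIED_INTERVENTION:
            # A local cache supplied newer data, so the remote copy is discarded.
            return local_data
        return remote_data
```

In this sketch the remote lookup always happens, which mirrors the latency trade-off: the remote data is already on hand if it turns out to be needed, and is dropped otherwise.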

Interrupt architecture for a non-uniform memory access (NUMA) data processing system

Inactive | US6148361A | Small size | Promote dissemination | Program initiation/switching | General purpose stored program computer | Extensibility | Data processing system

A non-uniform memory access (NUMA) computer system includes at least two nodes coupled by a node interconnect, where at least one of the nodes includes a processor for servicing interrupts. The nodes are partitioned into external interrupt domains so that an external interrupt is always presented to a processor within the external interrupt domain in which the interrupt occurs. Although each external interrupt domain typically includes only a single node, interrupt channeling or interrupt funneling may be implemented to route external interrupts across node boundaries for presentation to a processor. Once presented to a processor, interrupt handling software may then execute on any processor to service the external interrupt. Servicing external interrupts is expedited by reducing the size of the interrupt handler polling chain as compared to prior art methods. In addition to external interrupts, the interrupt architecture of the present invention supports inter-processor interrupts (IPIs) by which any processor may interrupt itself or one or more other processors in the NUMA computer system. IPIs are triggered by writing to memory mapped registers in global system memory, which facilitates the transmission of IPIs across node boundaries and permits multicast IPIs to be triggered simply by transmitting one write transaction to each node containing a processor to be interrupted. The interrupt hardware within each node is also distributed for scalability, with the hardware components communicating via interrupt transactions conveyed across shared communication paths.

Owner:IBM CORP

Reservation management in a non-uniform memory access (NUMA) data processing system

Inactive | US6275907B1 | Memory addressing/allocation/relocation | Program control | Data processing system | Cache hierarchy

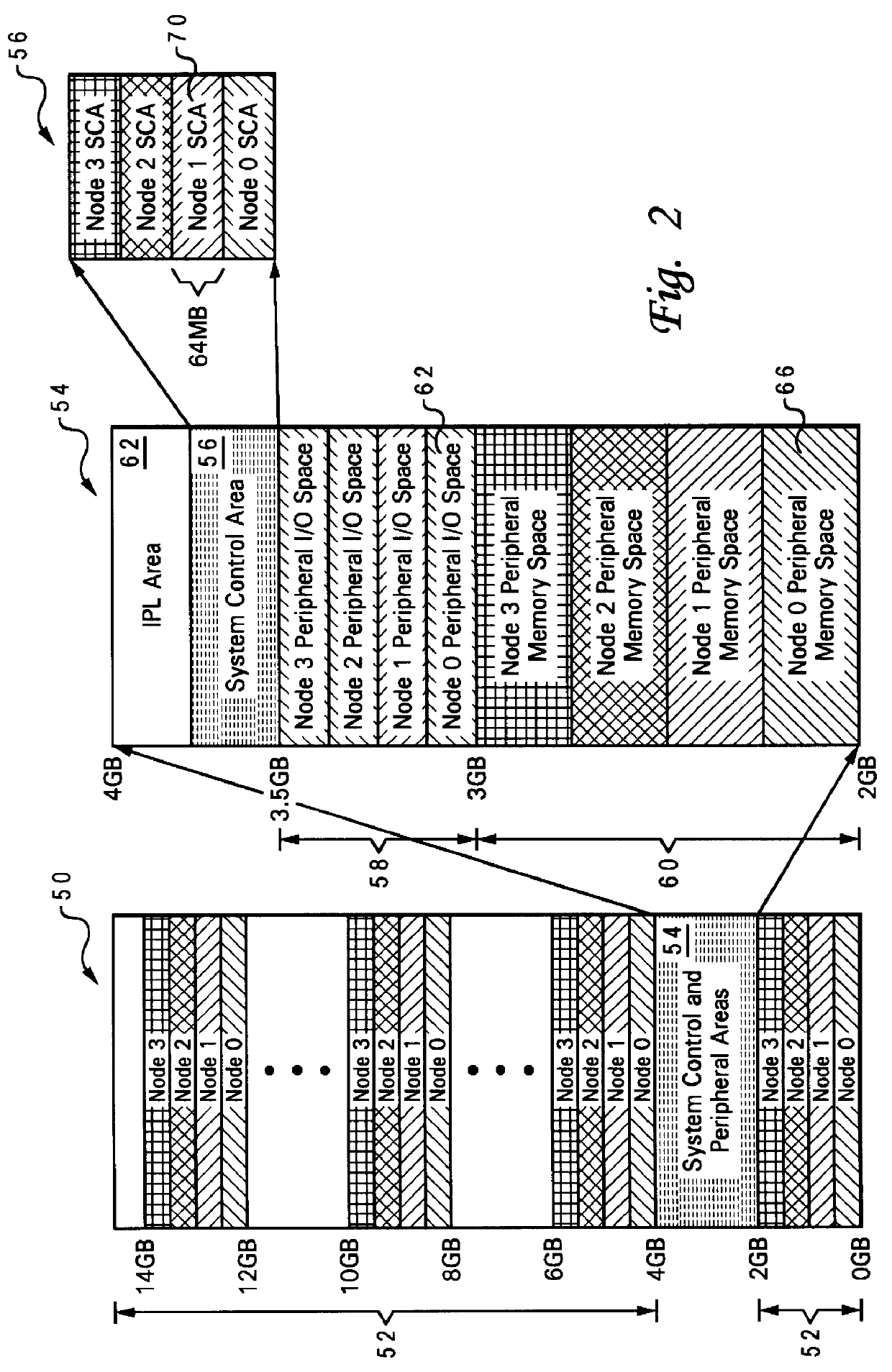

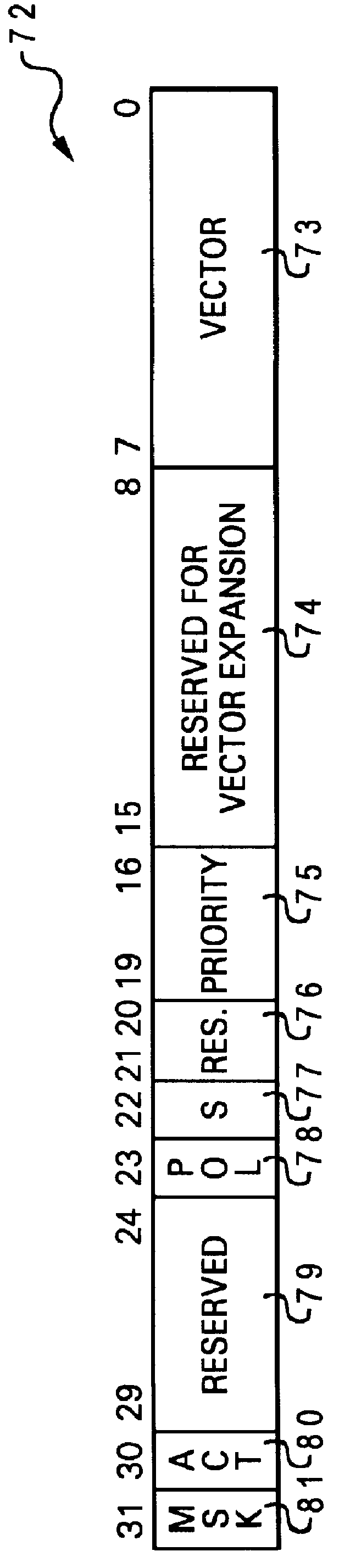

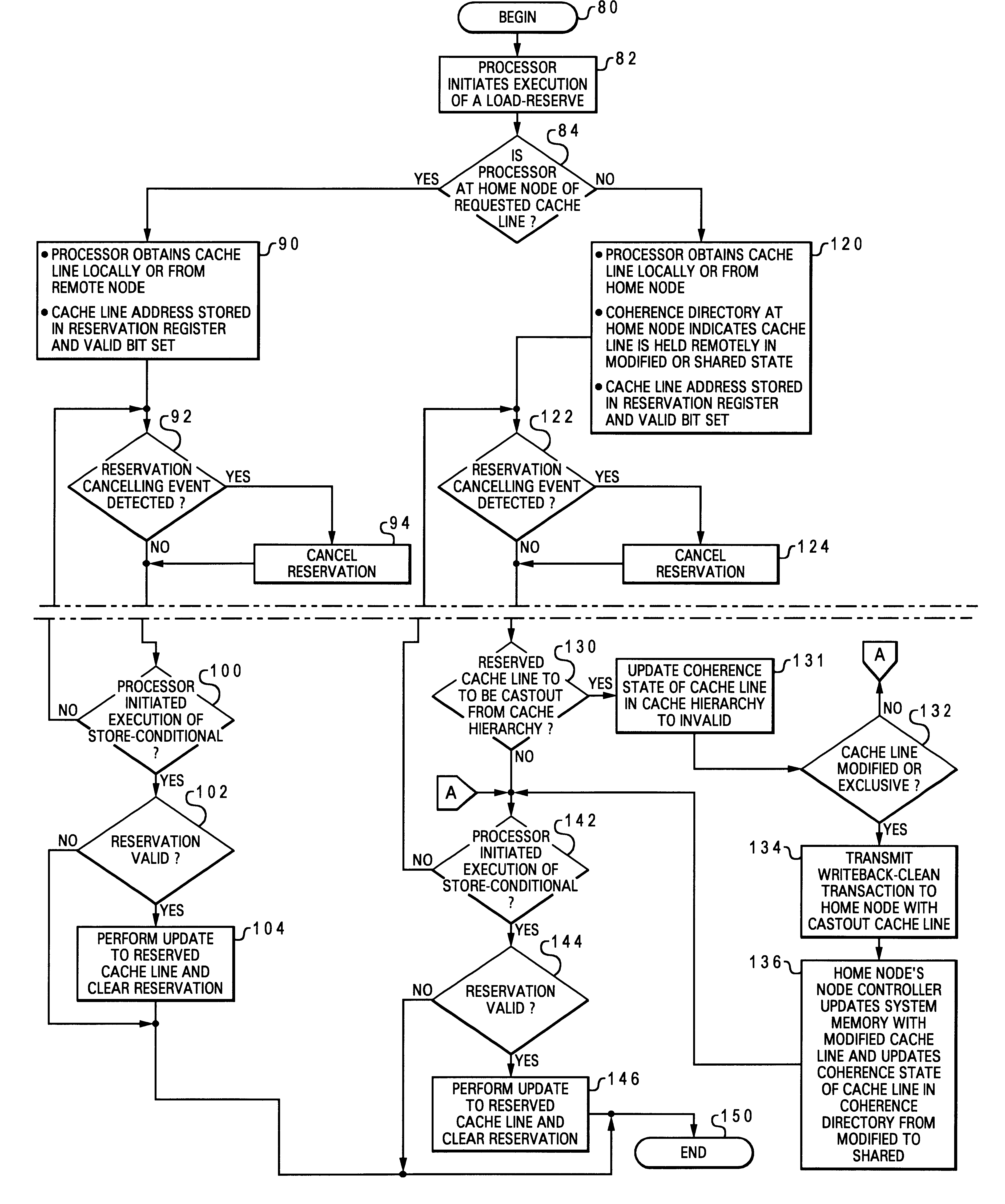

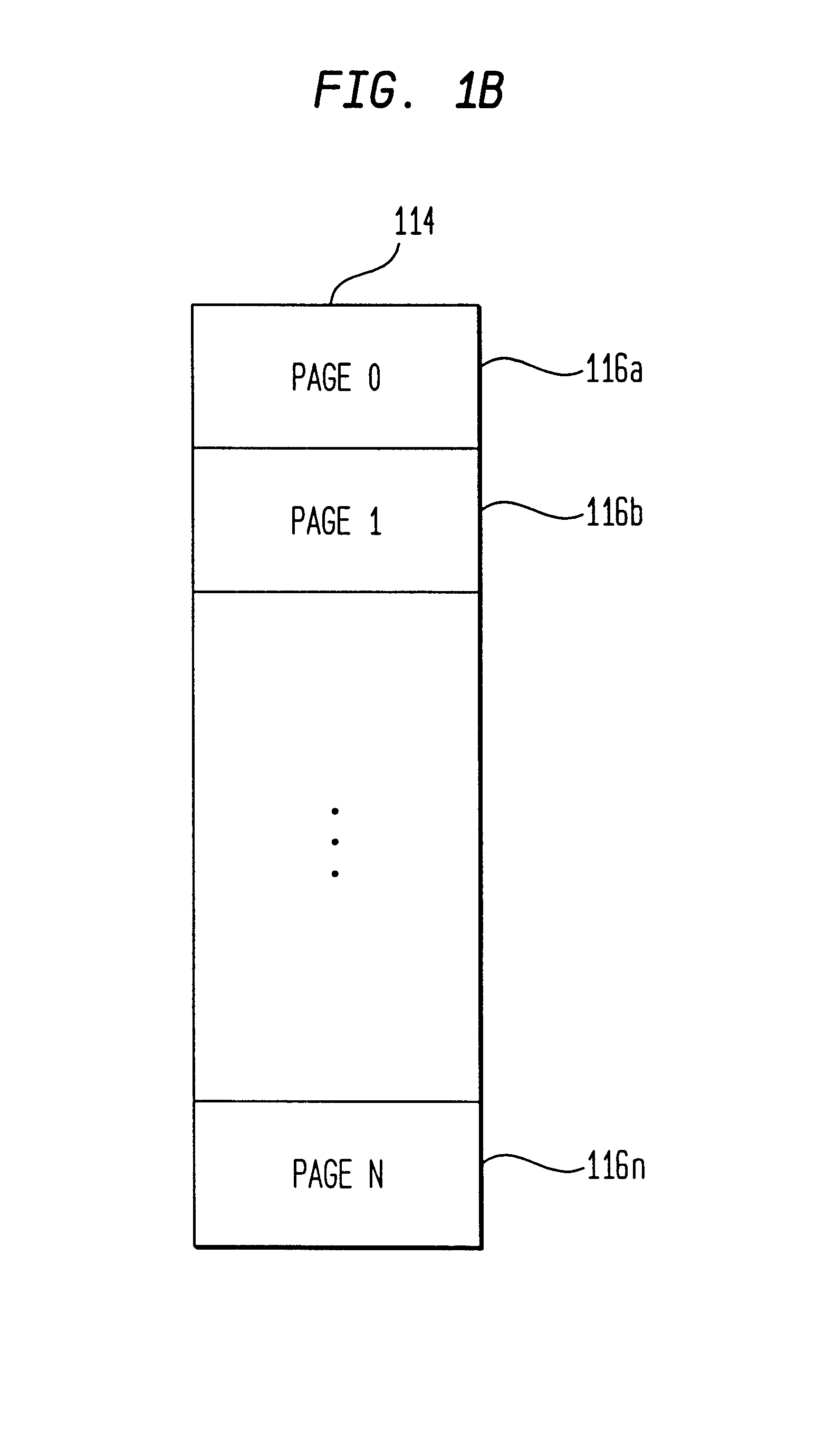

A non-uniform memory access (NUMA) computer system includes a plurality of processing nodes coupled to a node interconnect. The plurality of processing nodes include at least a remote processing node, which contains a processor having an associated cache hierarchy, and a home processing node. The home processing node includes a shared system memory containing a plurality of memory granules and a coherence directory that indicates possible coherence states of copies of memory granules among the plurality of memory granules that are stored within at least one processing node other than the home processing node. If the processor within the remote processing node has a reservation for a memory granule among the plurality of memory granules that is not resident within the associated cache hierarchy, the coherence directory associates the memory granule with a coherence state indicating that the reserved memory granule may possibly be held non-exclusively at the remote processing node. In this manner, the coherence mechanism can be utilized to manage processor reservations even in cases in which a reserving processor's cache hierarchy does not hold a copy of the reserved memory granule.

Owner:IBM CORP

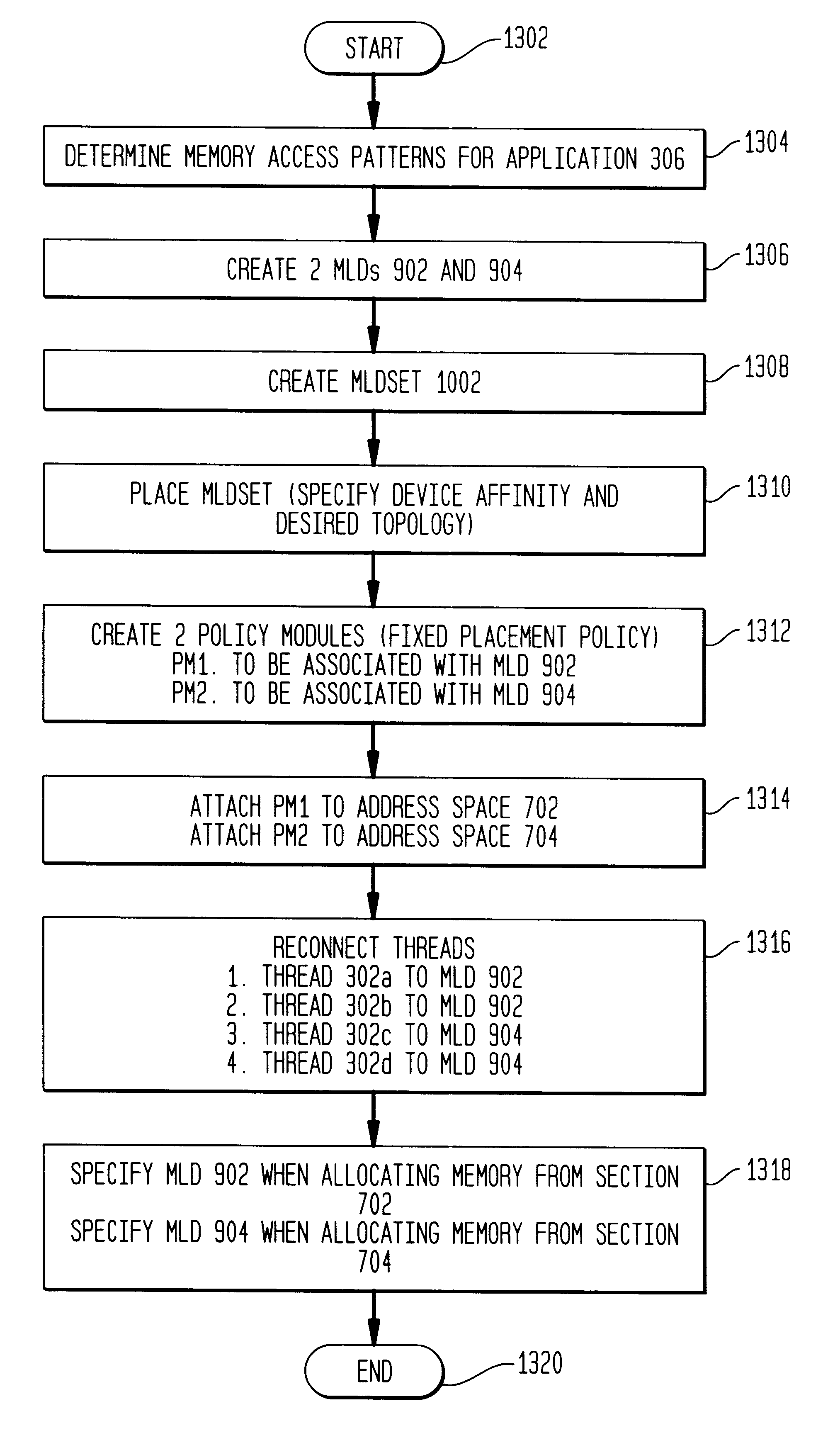

Method, system and computer program product for managing memory in a non-uniform memory access system

Inactive | US6289424B1 | Easy to carry | Memory architecture accessing/allocation | Resource allocation | Control system | Computerized system

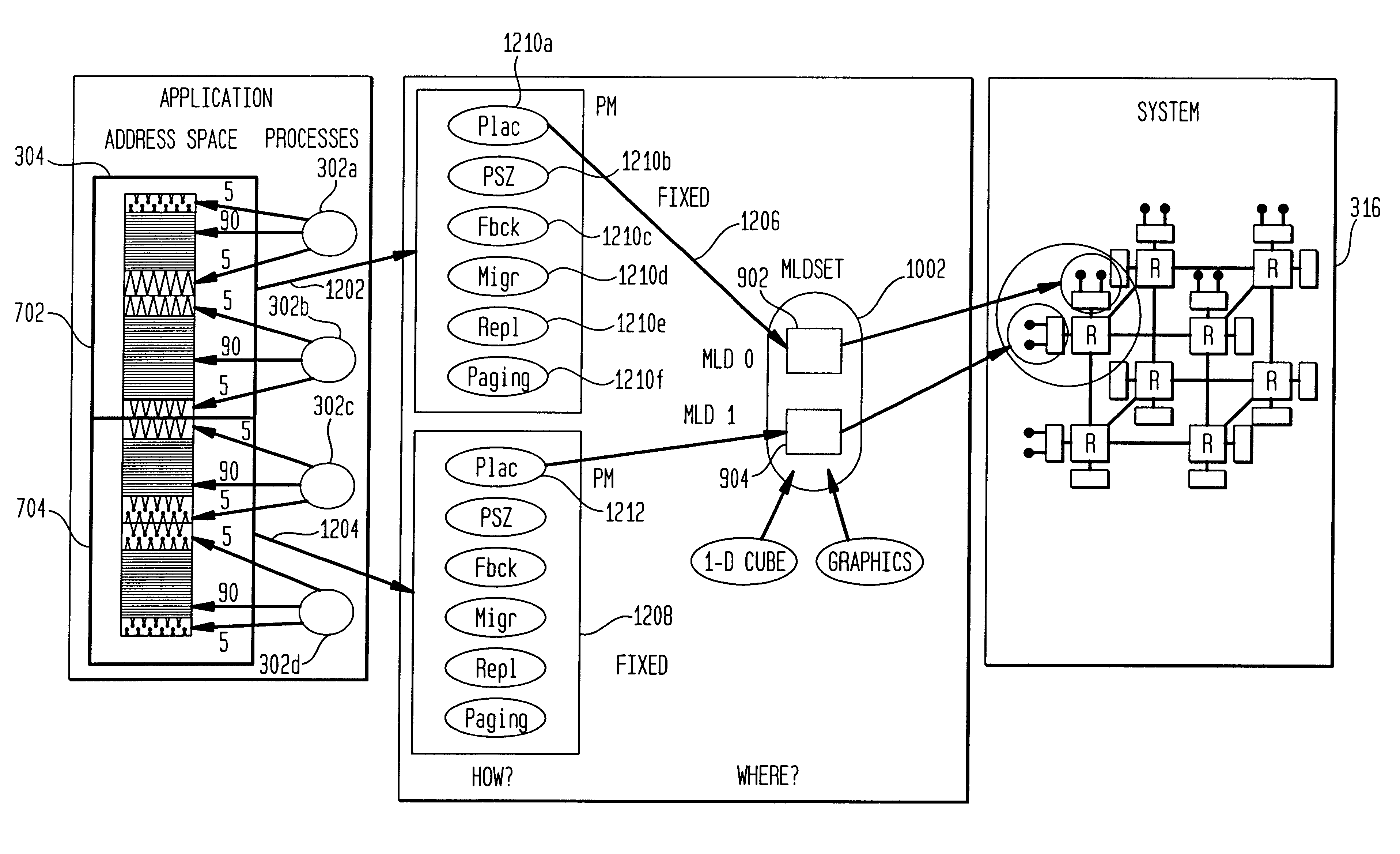

A memory management and control system that is selectable at the application level by an application programmer is provided. The memory management and control system is based on the use of policy modules. Policy modules are used to specify and control different aspects of memory operations in NUMA computer systems, including how memory is managed for processes running in NUMA computer systems. Preferably, each policy module comprises a plurality of methods that are used to control a variety of memory operations. Such memory operations typically include initial memory placement, memory page size, a migration policy, a replication policy and a paging policy. One method typically contained in policy modules is an initial placement policy. Placement policies may be based on two abstractions of physical memory nodes. These two abstractions are referred to herein as "Memory Locality Domains" (MLDs) and "Memory Locality Domain Sets" (MLDSETs). By specifying MLDs and MLDSETs, rather than physical memory nodes, application programs can be executed on different computer systems regardless of the particular node configuration and physical node topology employed by the system. Further, such application programs can be run on different machines without the need for code modification and/or re-compiling.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1

Decentralized global coherency management in a multi-node computer system

Inactive | US20030009637A1 | Memory addressing/allocation/relocation | Digital computer details | Computerized system | Consistency management

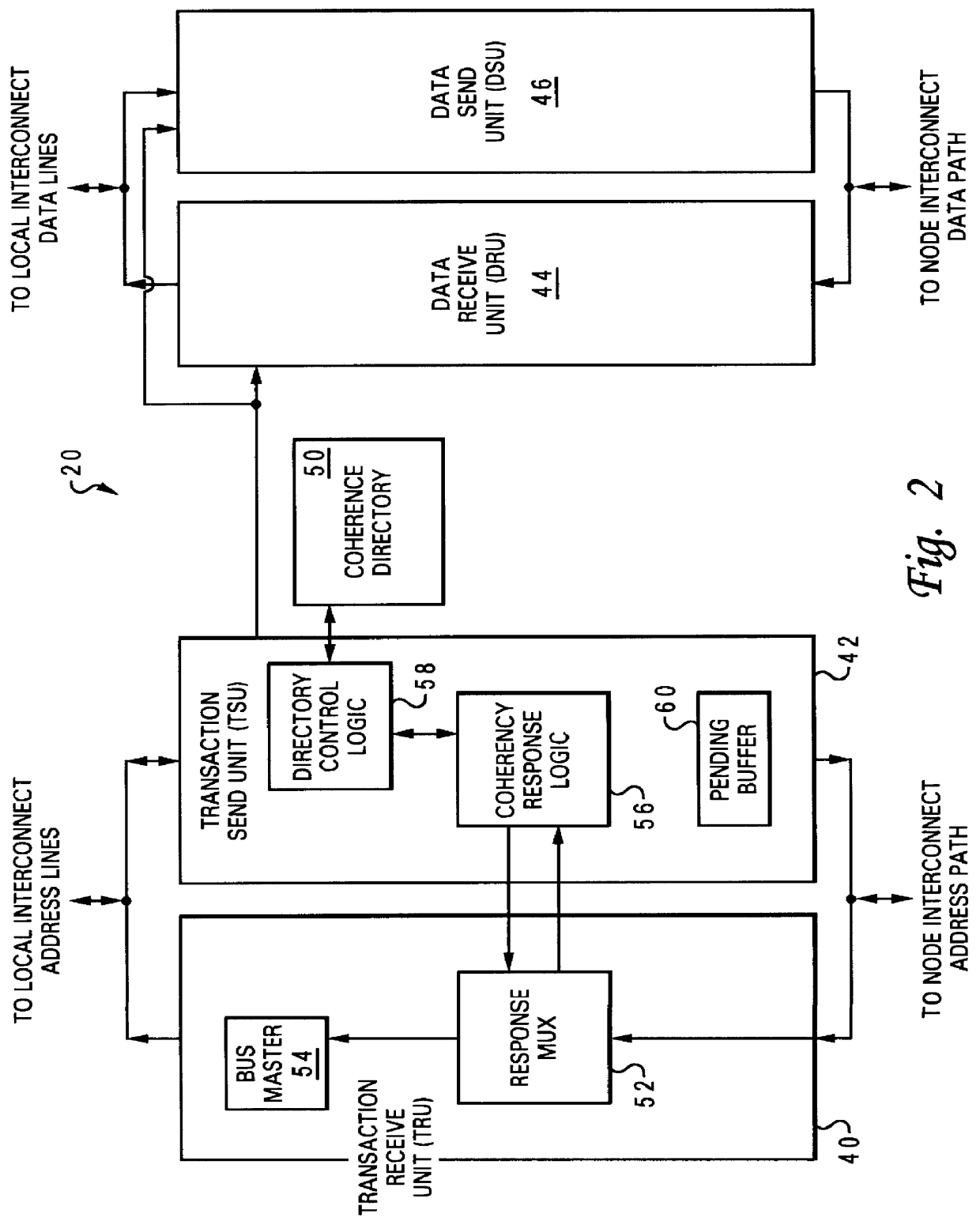

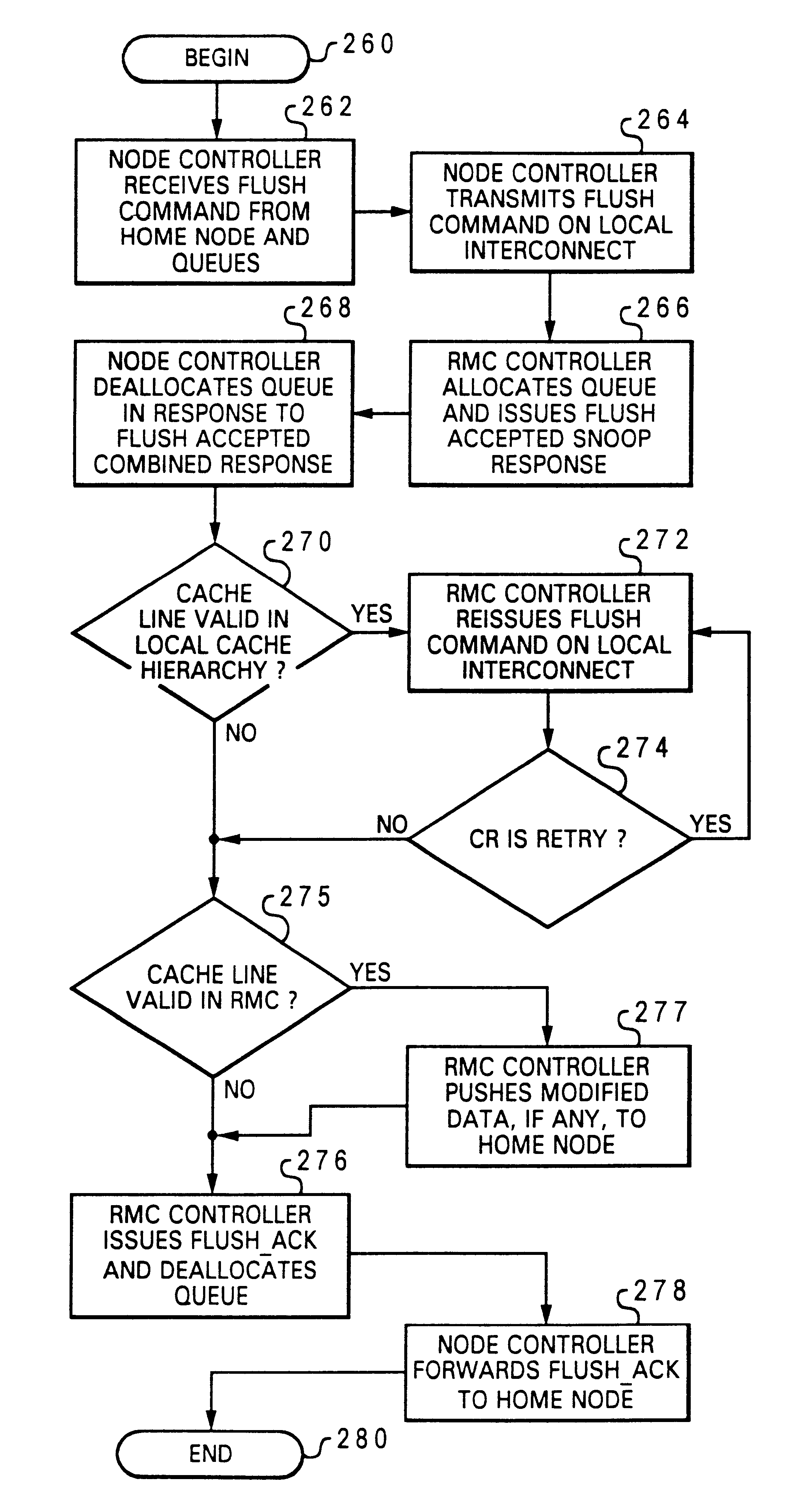

A non-uniform memory access (NUMA) computer system includes a first node and a second node coupled by a node interconnect. The second node includes a local interconnect, a node controller coupled between the local interconnect and the node interconnect, and a controller coupled to the local interconnect. In response to snooping an operation from the first node issued on the local interconnect by the node controller, the controller signals acceptance of responsibility for coherency management activities related to the operation in the second node, performs coherency management activities in the second node required by the operation, and thereafter provides notification of performance of the coherency management activities. To promote efficient utilization of queues within the node controller, the node controller preferably allocates a queue to the operation in response to receipt of the operation from the node interconnect and then deallocates the queue in response to transferring responsibility for coherency management activities to the controller.

Owner:IBM CORP

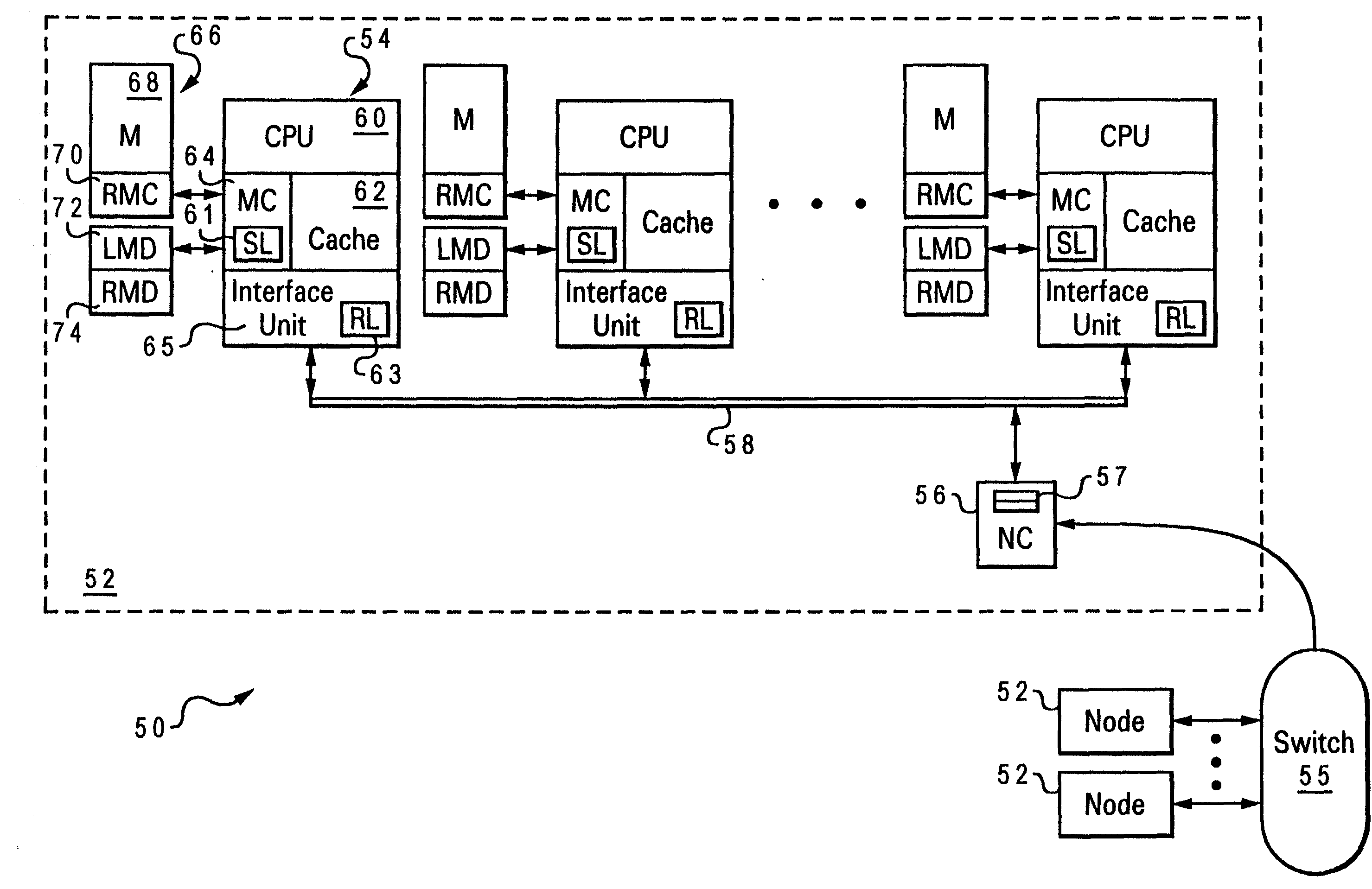

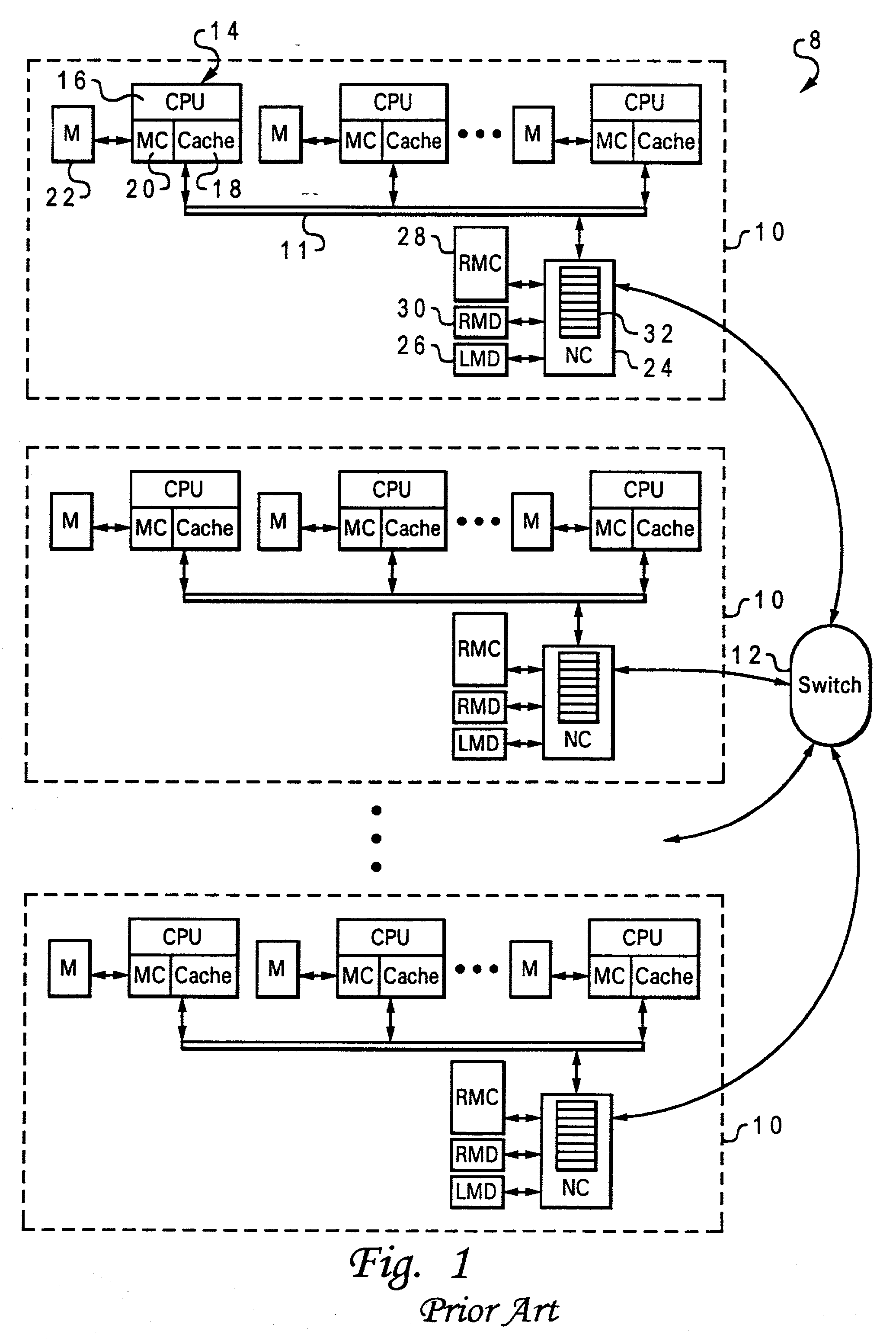

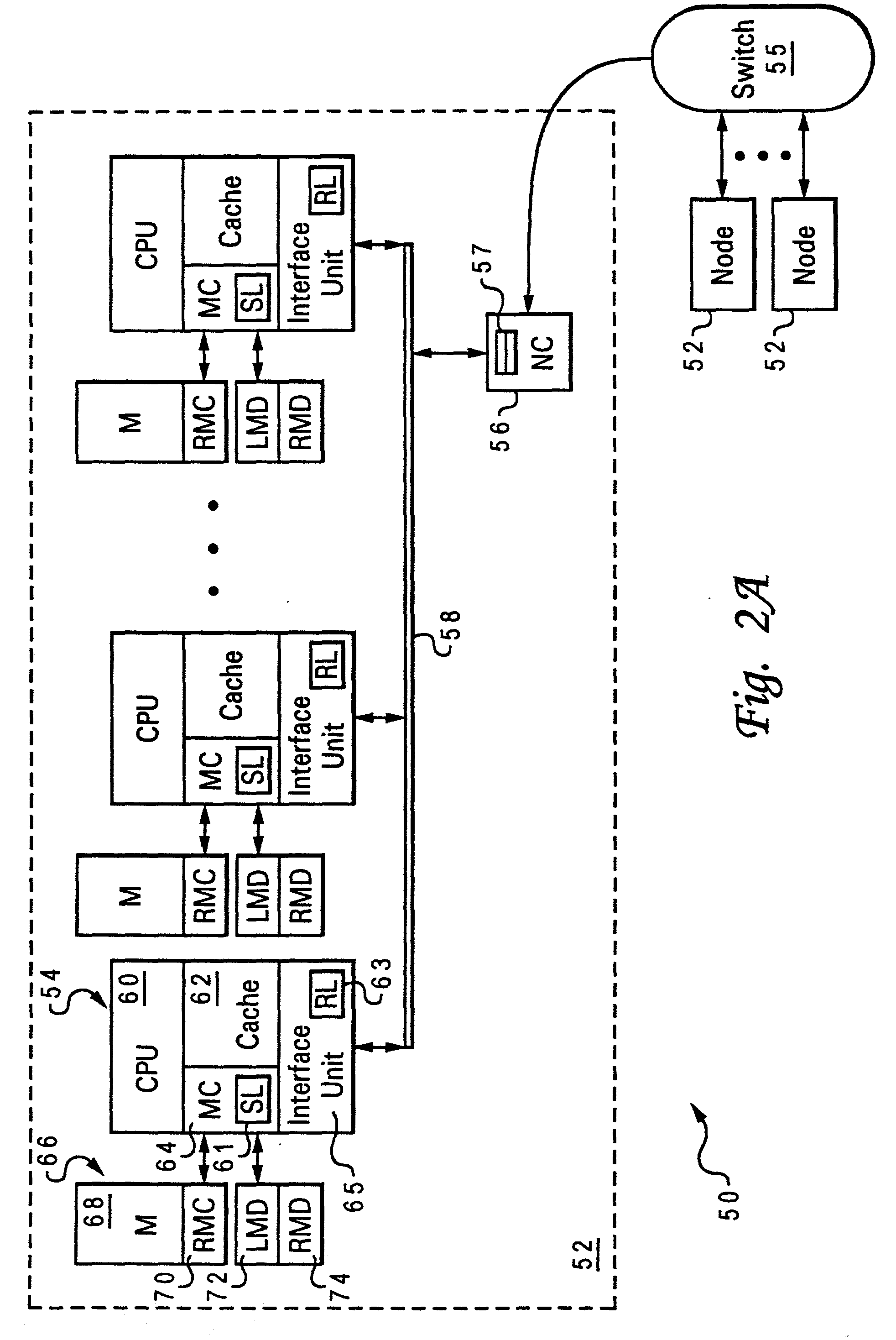

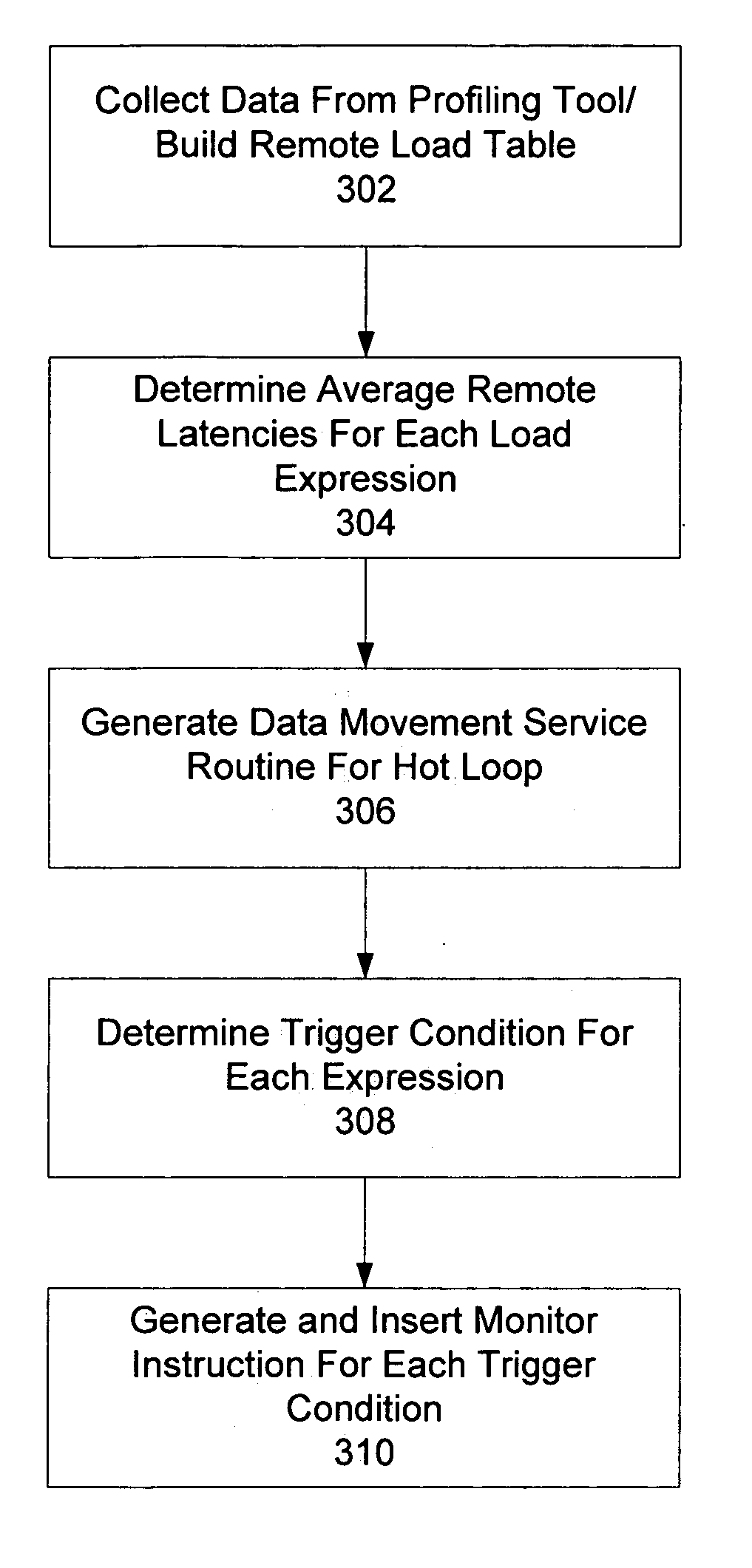

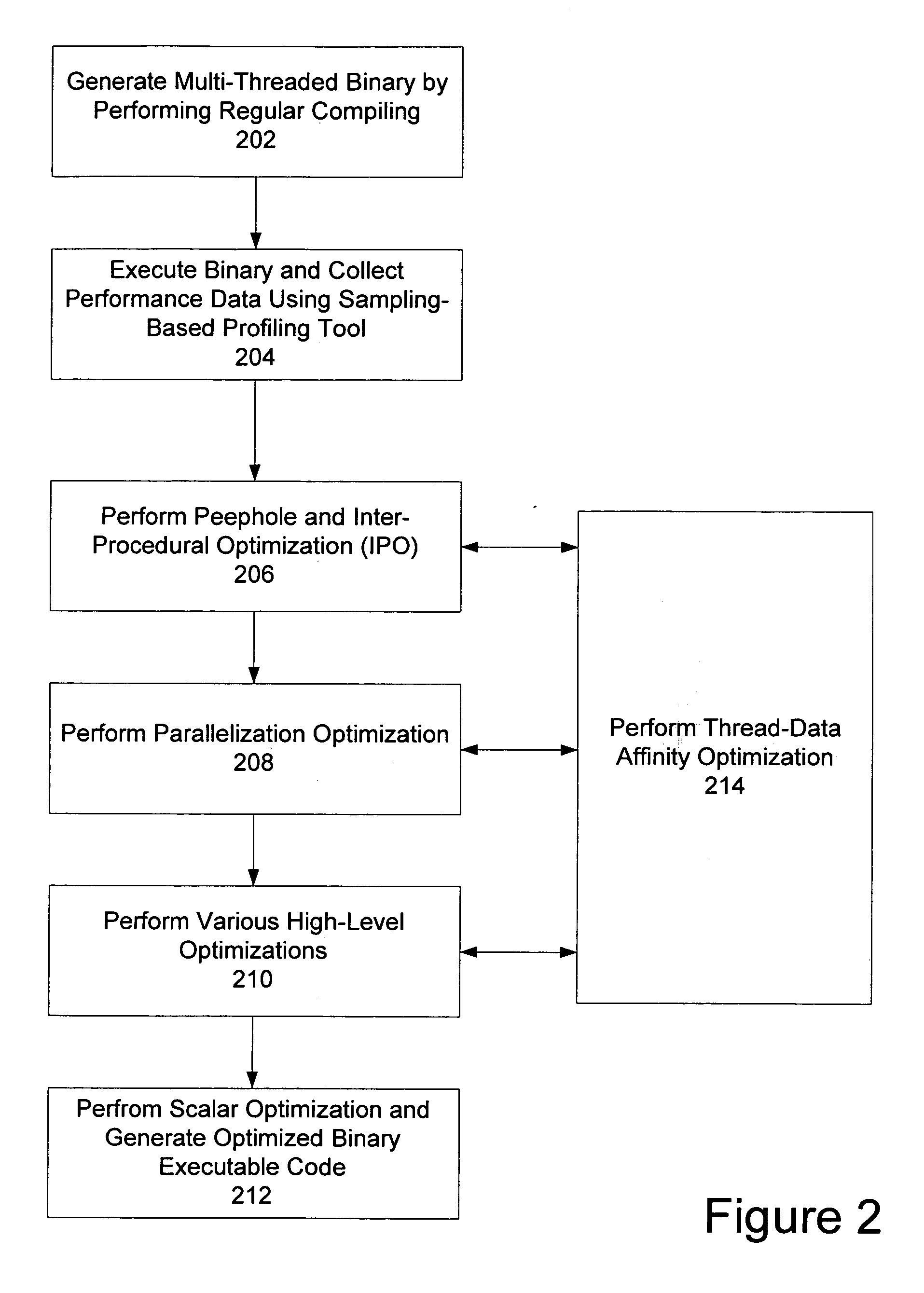

Thread-data affinity optimization using compiler

Inactive | US20070079298A1 | Compact integration | Specific program execution arrangements | Memory systems | Analysis data | Parallel computing

Thread-data affinity optimization can be performed by a compiler during the compiling of a computer program to be executed on a cache coherent non-uniform memory access (cc-NUMA) platform. In one embodiment, the present invention includes receiving a program to be compiled. The received program is then compiled in a first pass and executed. During execution, the compiler collects profiling data using a profiling tool. Then, in a second pass, the compiler performs thread-data affinity optimization on the program using the collected profiling data.

Owner:INTEL CORP

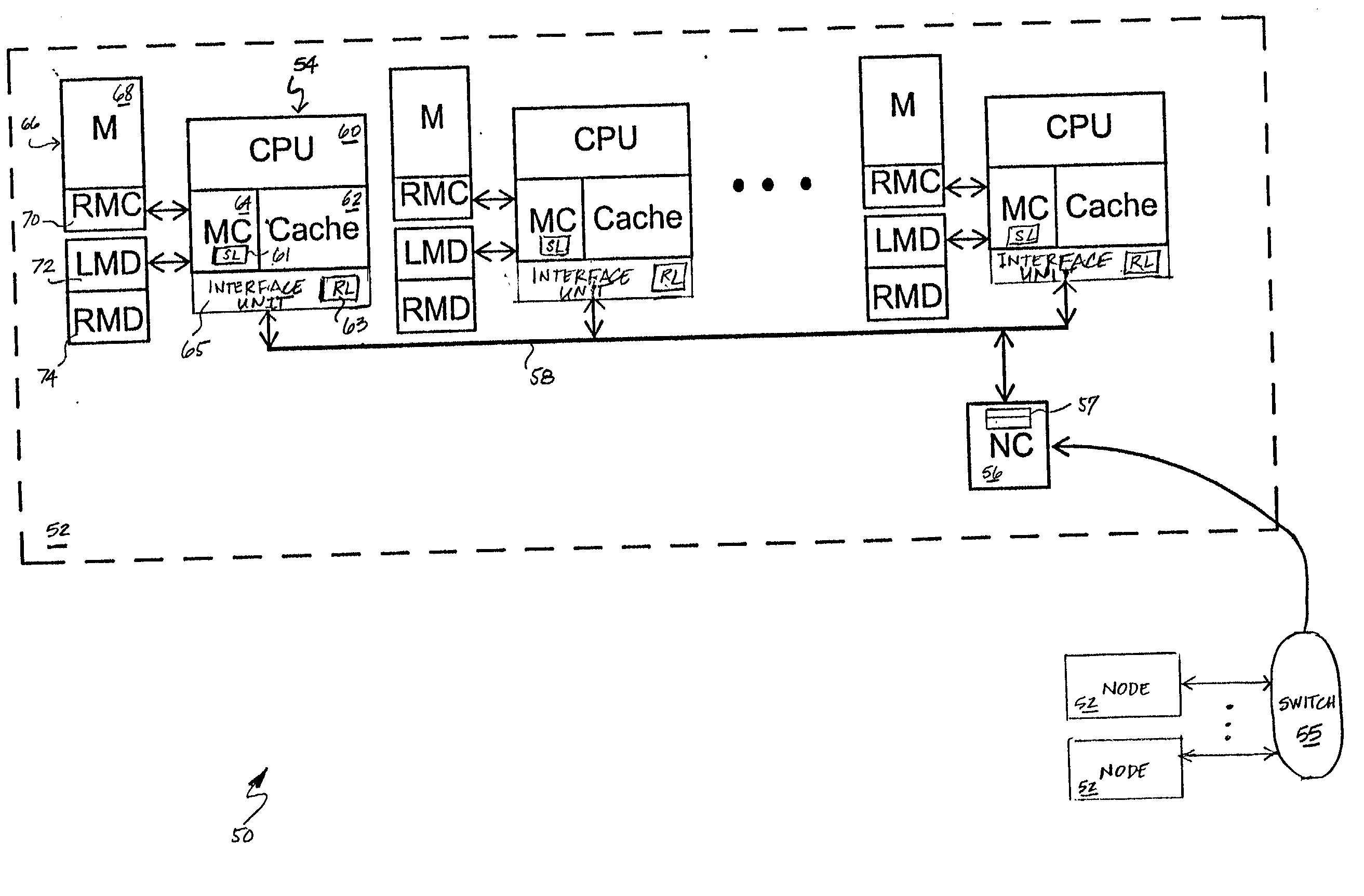

Non-uniform memory access (NUMA) data processing system that speculatively issues requests on a node interconnect

Inactive | US6067603A | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Computerized system

A computer system includes a node interconnect to which at least a first processing node and a second processing node are coupled. The first and the second processing nodes each include a local interconnect, a processor coupled to the local interconnect, a system memory coupled to the local interconnect, and a node controller interposed between the local interconnect and the node interconnect. In order to reduce communication latency, the node controller of the first processing node speculatively transmits request transactions received from the local interconnect of the first processing node to the second processing node via the node interconnect, where each such request transaction specifies an associated datum. The node controller of the second processing node handles each speculatively transmitted request transaction received in response to a directory state of its associated datum.

Owner:IBM CORP

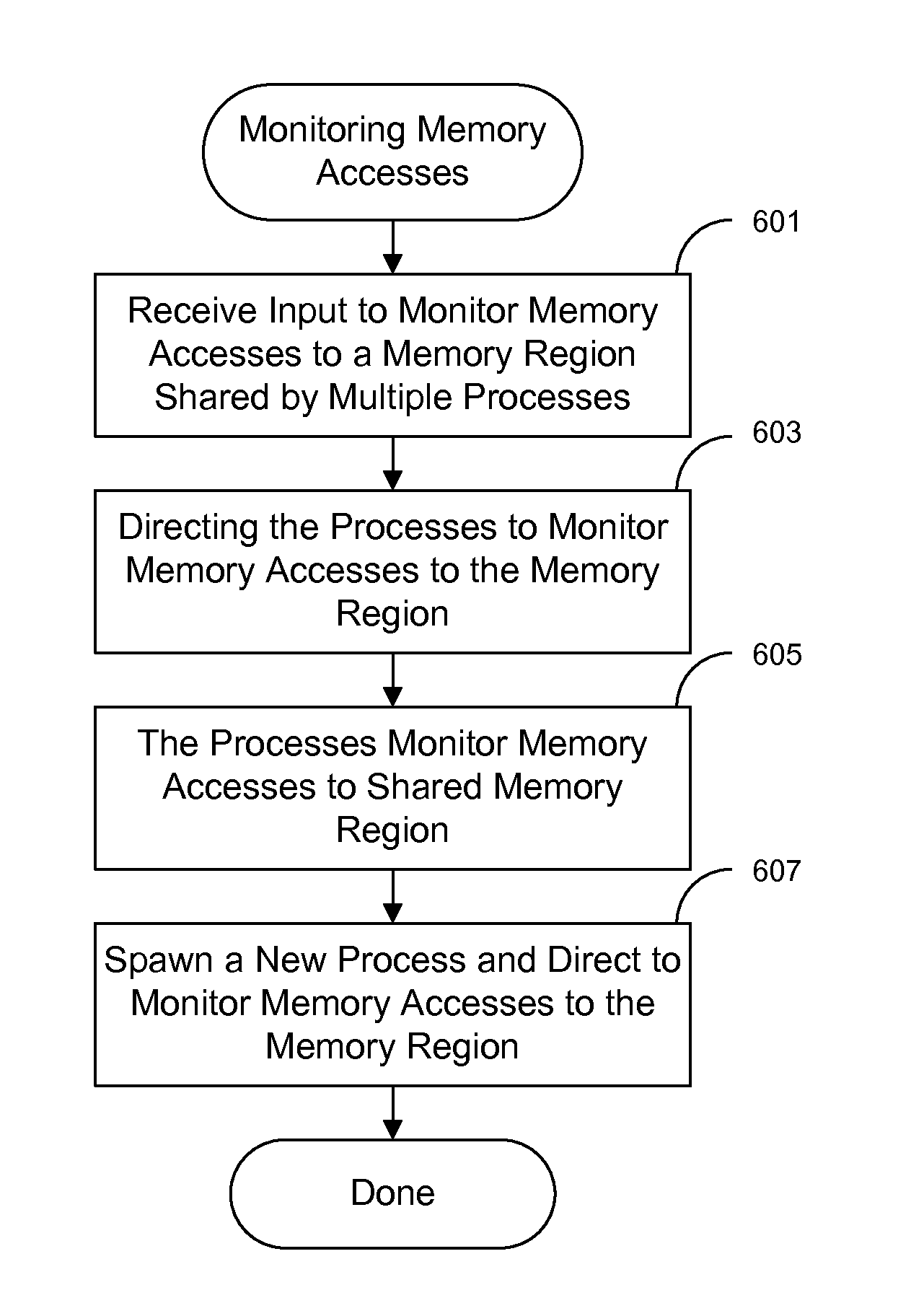

Monitoring memory accesses for computer programs

Active | US7669189B1 | Digital data information retrieval | Software testing/debugging | Parallel computing | Computer program

Techniques for monitoring memory accesses for computer programs are provided. A user can instruct a computer program to have one or more of its processes monitor memory accesses to a memory region. As memory accesses to the memory region occur, a log can be created that includes information concerning the memory accesses. The log can be analyzed in order to debug memory access bugs. Additionally, new processes can be spawned that monitor memory accesses in a way that is similar to existing processes.

Owner:ORACLE INT CORP

Non-uniform memory access (NUMA) data processing system with multiple caches concurrently holding data in a recent state from which data can be sourced by shared intervention

Inactive | US6108764A | Program synchronisation | Memory addressing/allocation/relocation | Data processing system | Cache hierarchy

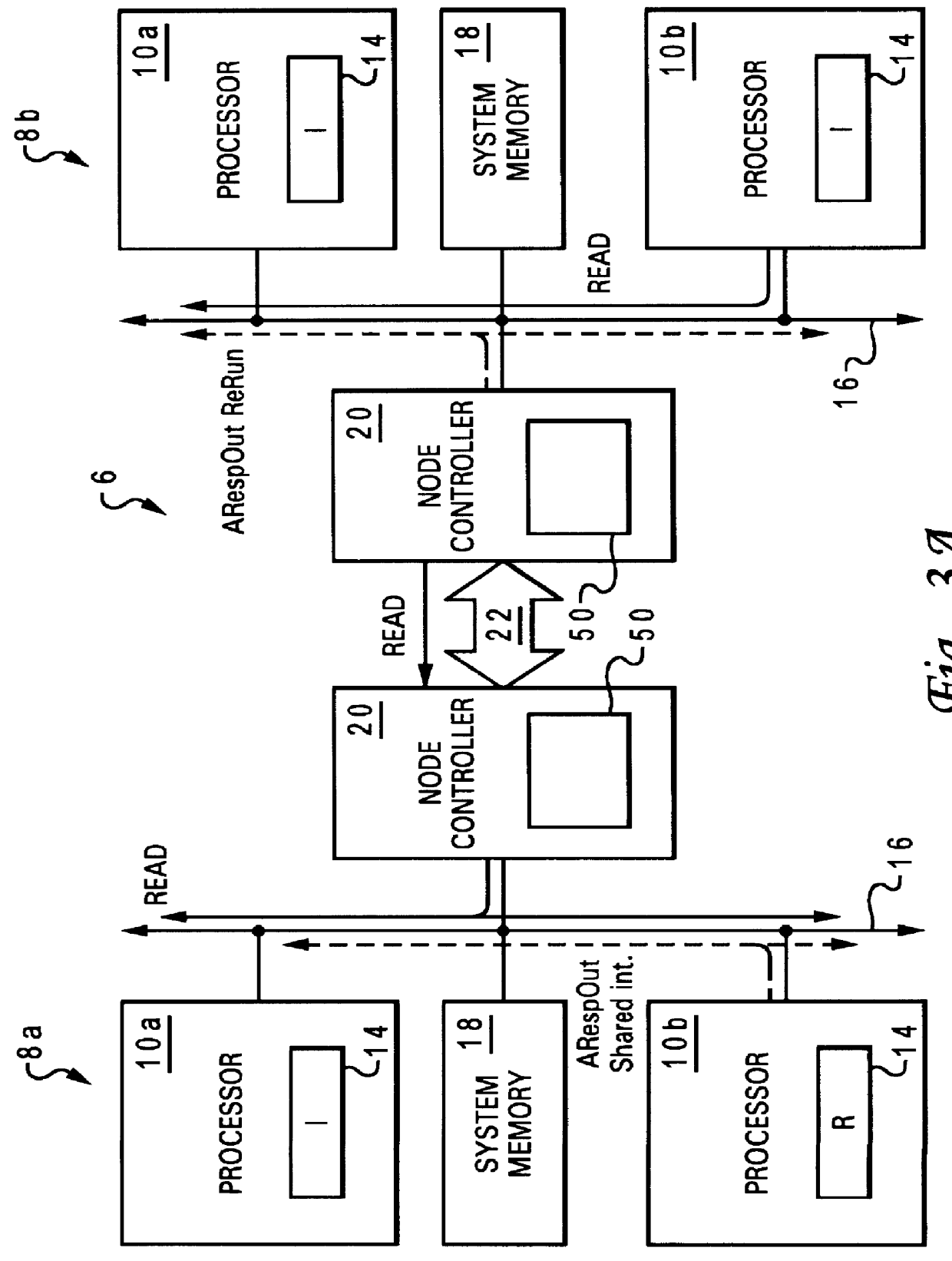

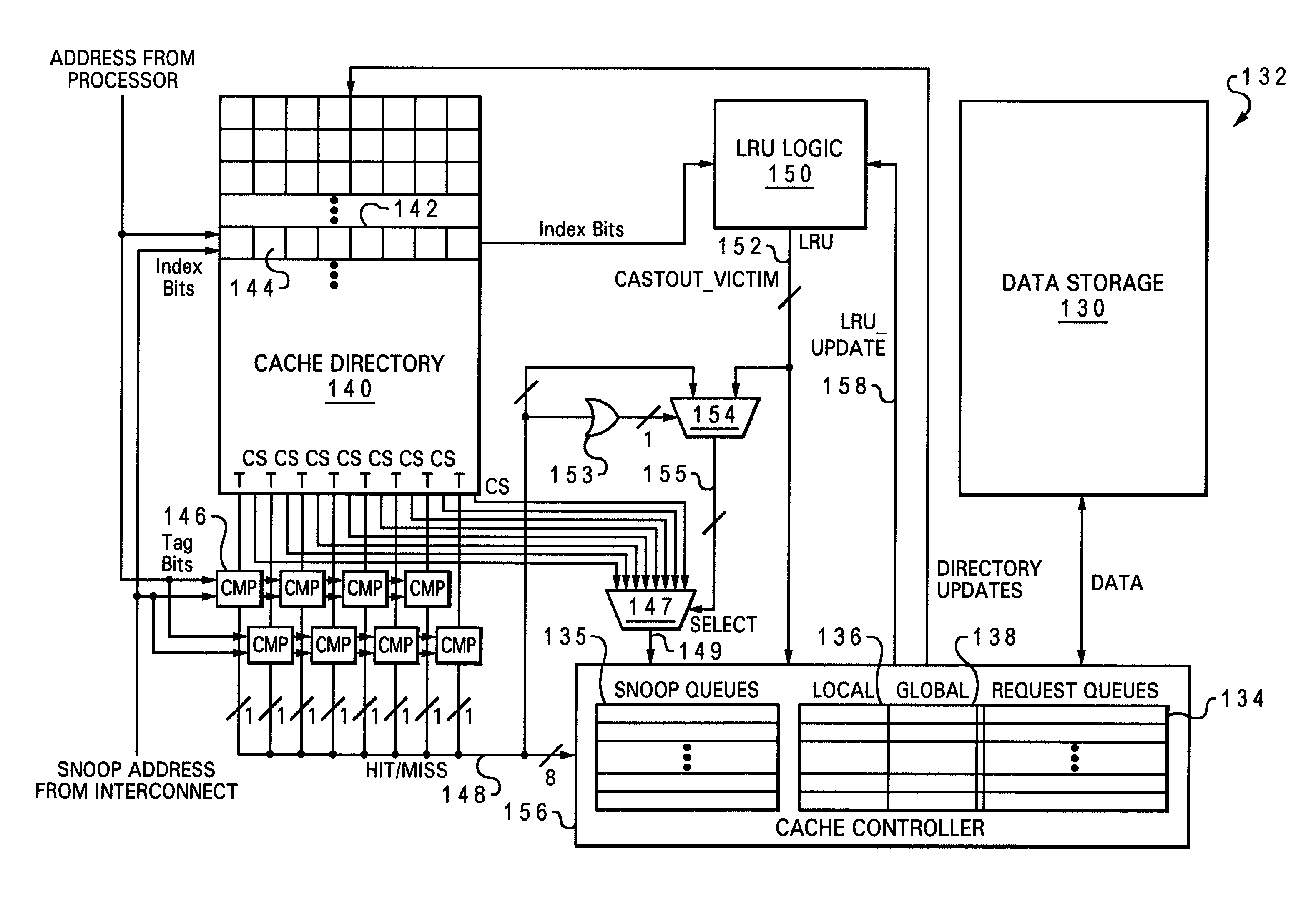

A non-uniform memory access (NUMA) computer system includes first and second processing nodes that are coupled together. The first processing node includes a system memory and first and second processors that each have a respective associated cache hierarchy. The second processing node includes at least a third processor and a system memory. If the cache hierarchy of the first processor holds an unmodified copy of a cache line and receives a request for the cache line from the third processor, the cache hierarchy of the first processor sources the requested cache line to the third processor and retains a copy of the cache line in a Recent coherency state from which the cache hierarchy of the first processor can source the cache line in response to subsequent requests.

Owner:IBM CORP

Two-stage request protocol for accessing remote memory data in a NUMA data processing system

Inactive | US6615322B2 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Computerized system

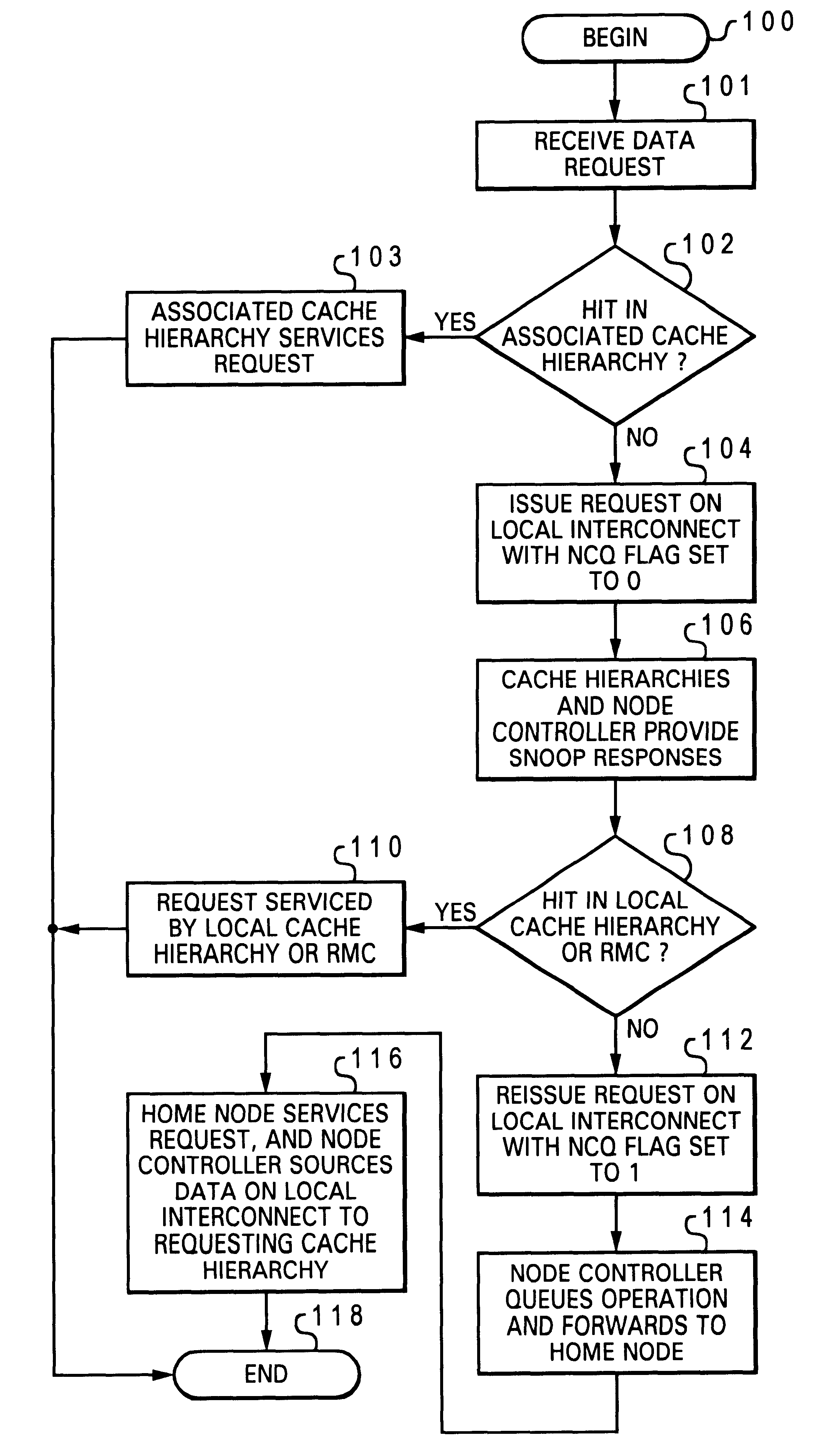

A non-uniform memory access (NUMA) computer system includes a remote node coupled by a node interconnect to a home node having a home system memory. The remote node includes a local interconnect, a processing unit and at least one cache coupled to the local interconnect, and a node controller coupled between the local interconnect and the node interconnect. The processing unit first issues, on the local interconnect, a read-type request targeting data resident in the home system memory with a flag in the read-type request set to a first state to indicate only local servicing of the read-type request. In response to inability to service the read-type request locally in the remote node, the processing unit reissues the read-type request with the flag set to a second state to instruct the node controller to transmit the read-type request to the home node. The node controller, which includes a plurality of queues, preferably does not queue the read-type request until receipt of the reissued read-type request with the flag set to the second state.

Owner:INT BUSINESS MASCH CORP
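
The two-stage request flow in the abstract above might be sketched as follows. This is a simplified model under stated assumptions, not the patented design: `RemoteNode`, its dictionary-based caches, and the list used as the node controller's queue are hypothetical stand-ins.

```python
class RemoteNode:
    """Toy remote node: local caches, a node controller queue, a home memory."""

    def __init__(self, local_caches, home_memory):
        self.local_caches = local_caches    # list of dicts: address -> data
        self.home_memory = home_memory      # dict standing in for the home node
        self.node_controller_queue = []     # queue entries held by the controller
        self.forwarded = 0                  # count of requests sent to the home node

    def read(self, address):
        # Stage 1: flag in its first state, meaning "service locally only".
        # The node controller does not queue the request at this point.
        for cache in self.local_caches:
            if address in cache:
                return cache[address], "local"

        # Stage 2: reissue with the flag in its second state. Only now does
        # the node controller allocate a queue entry and forward the request.
        self.node_controller_queue.append(address)
        data = self.home_memory[address]
        self.forwarded += 1
        self.node_controller_queue.remove(address)  # entry freed on completion
        return data, "home"
```

Deferring the queue allocation to the second stage keeps controller queues free for requests that actually need the node interconnect.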

Decentralized global coherency management in a multi-node computer system

Inactive | US6754782B2 | Memory addressing/allocation/relocation | Digital computer details | Computerized system | Consistency management

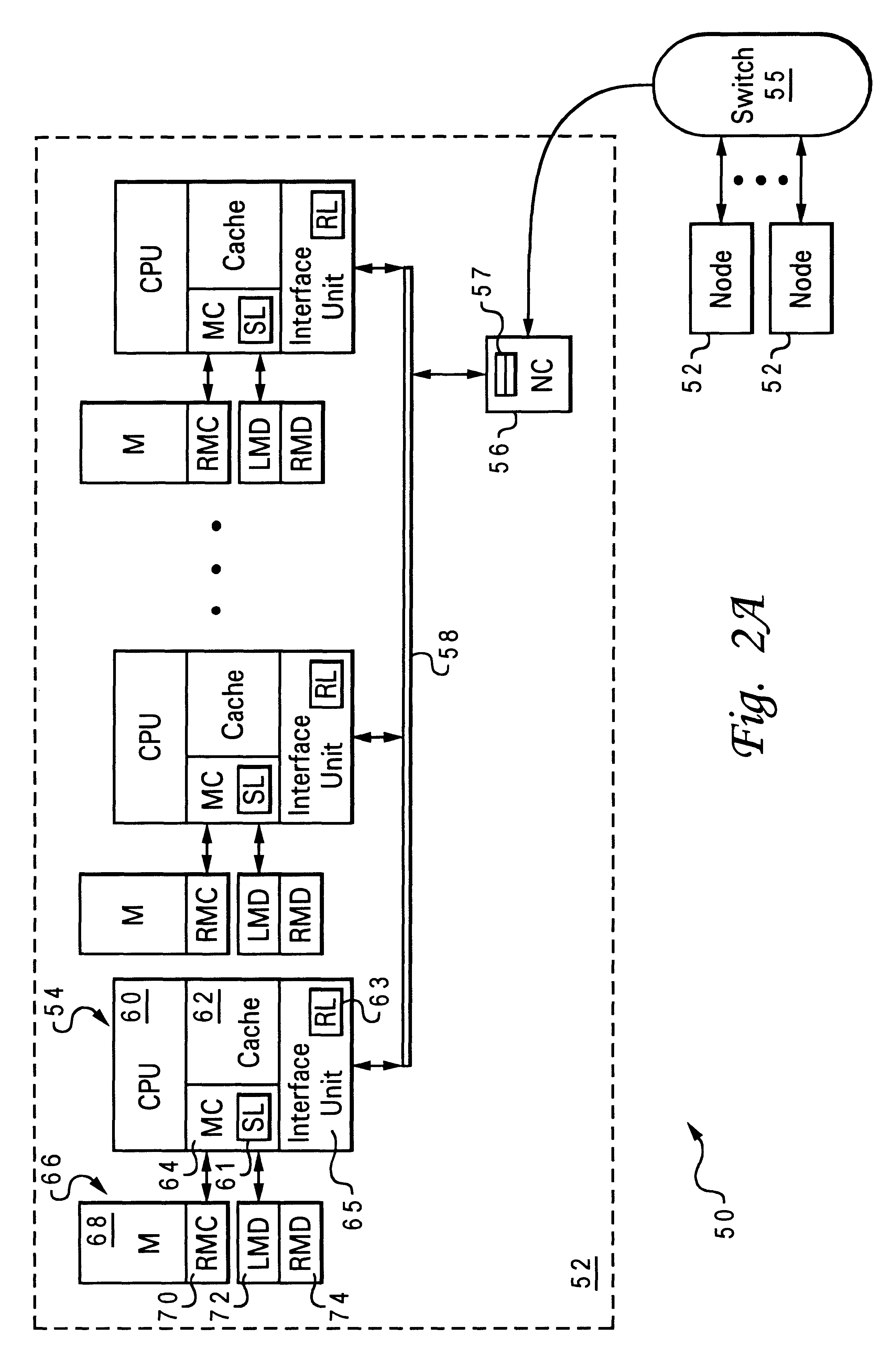

A non-uniform memory access (NUMA) computer system includes a first node and a second node coupled by a node interconnect. The second node includes a local interconnect, a node controller coupled between the local interconnect and the node interconnect, and a controller coupled to the local interconnect. In response to snooping an operation from the first node issued on the local interconnect by the node controller, the controller signals acceptance of responsibility for coherency management activities related to the operation in the second node, performs coherency management activities in the second node required by the operation, and thereafter provides notification of performance of the coherency management activities. To promote efficient utilization of queues within the node controller, the node controller preferably allocates a queue to the operation in response to receipt of the operation from the node interconnect and then deallocates the queue in response to transferring responsibility for coherency management activities to the controller.

Owner:INT BUSINESS MASCH CORP

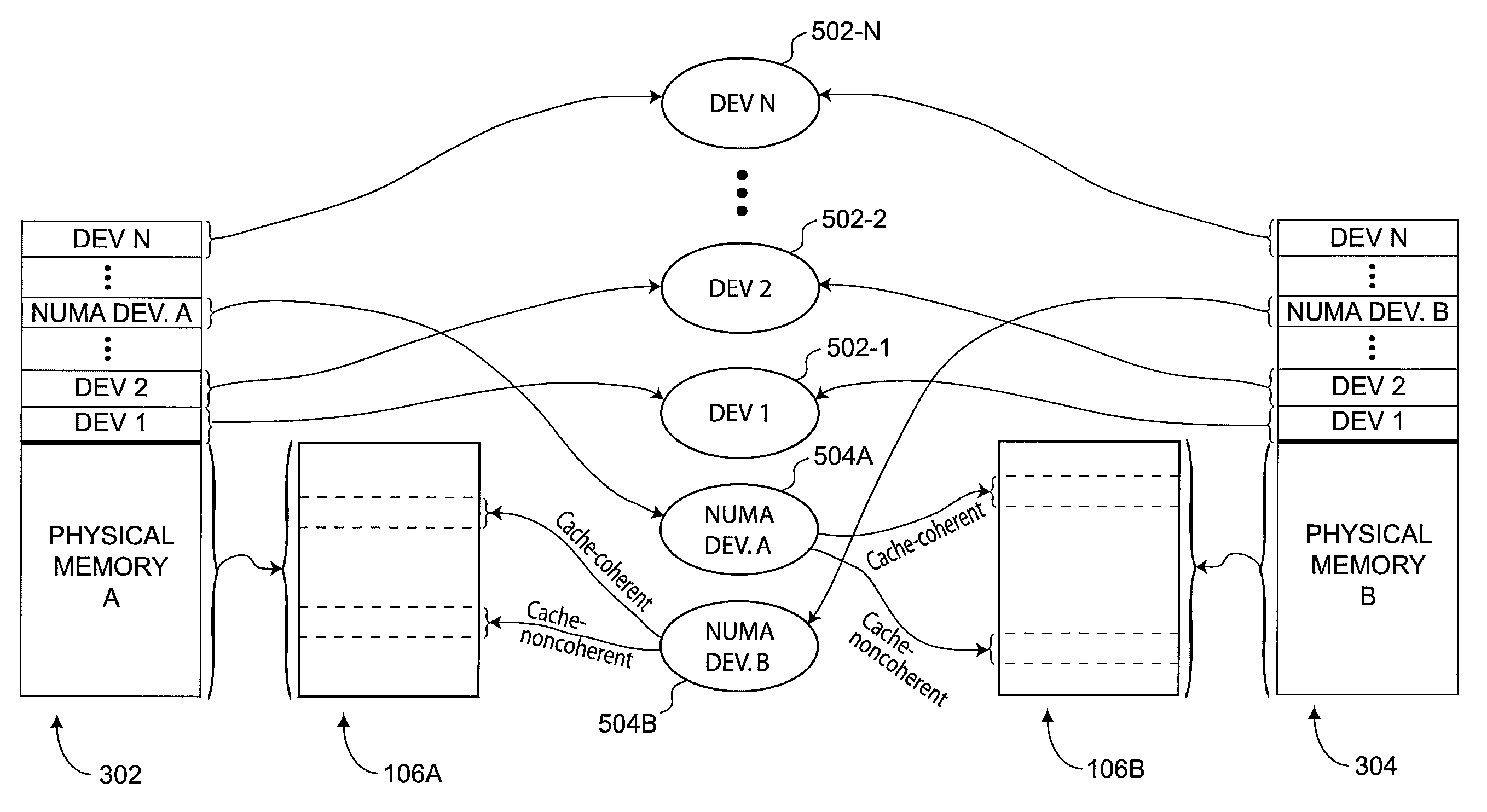

Non-uniform memory access (NUMA) data processing system having remote memory cache incorporated within system memory

A non-uniform memory access (NUMA) computer system and associated method of operation are disclosed. The NUMA computer system includes at least a remote node and a home node coupled to an interconnect. The remote node contains at least one processing unit coupled to a remote system memory, and the home node contains at least a home system memory. To reduce access latency for data from other nodes, a portion of the remote system memory is allocated as a remote memory cache containing data corresponding to data resident in the home system memory. In one embodiment, access bandwidth to the remote memory cache is increased by distributing the remote memory cache across multiple system memories in the remote node.

Owner:IBM CORP

Method and system for supporting software partitions and dynamic reconfiguration within a non-uniform memory access system

Inactive | US6334177B1 | Memory architecture accessing/allocation | Resource allocation | Parallel computing | Computerized system

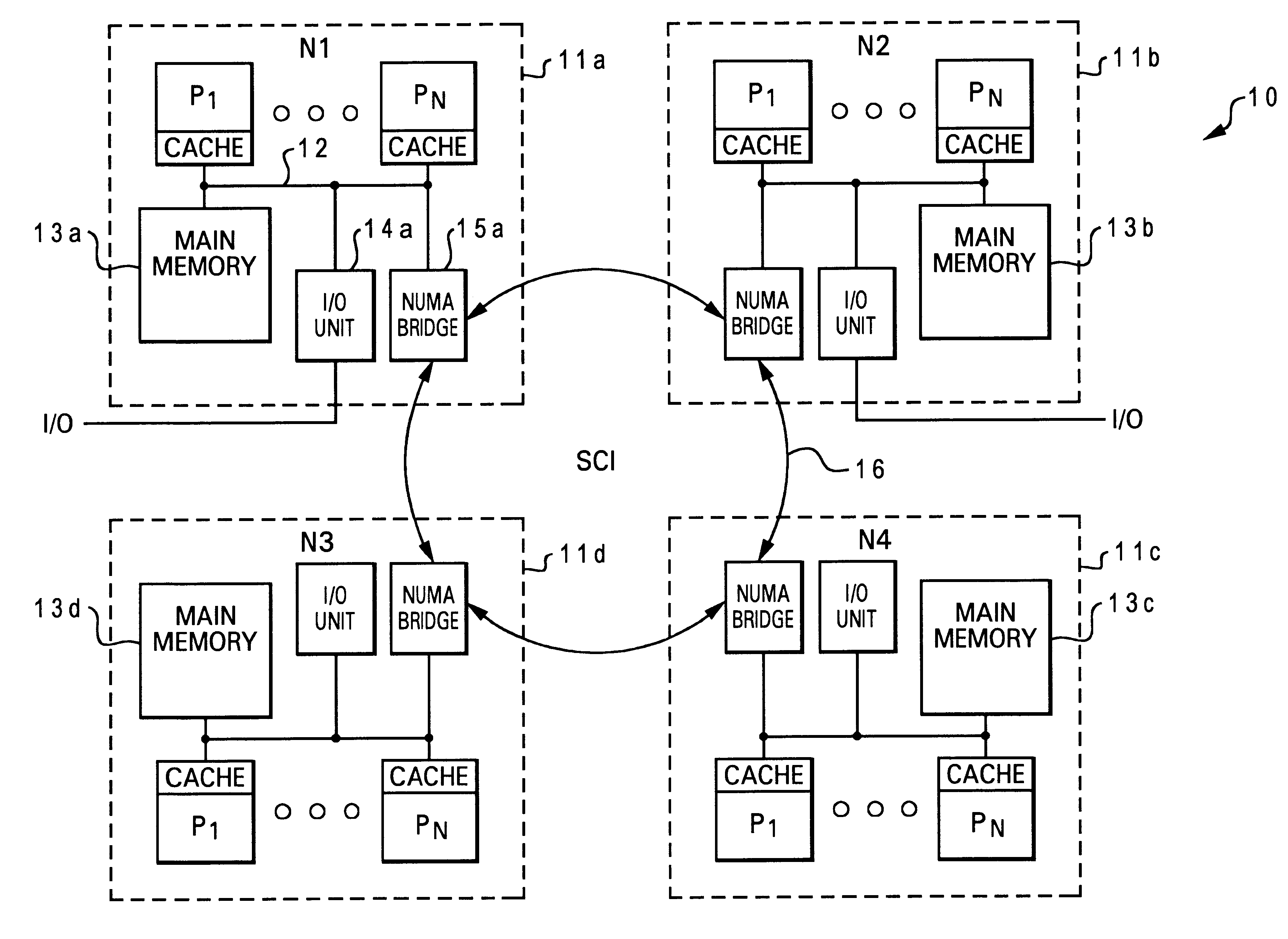

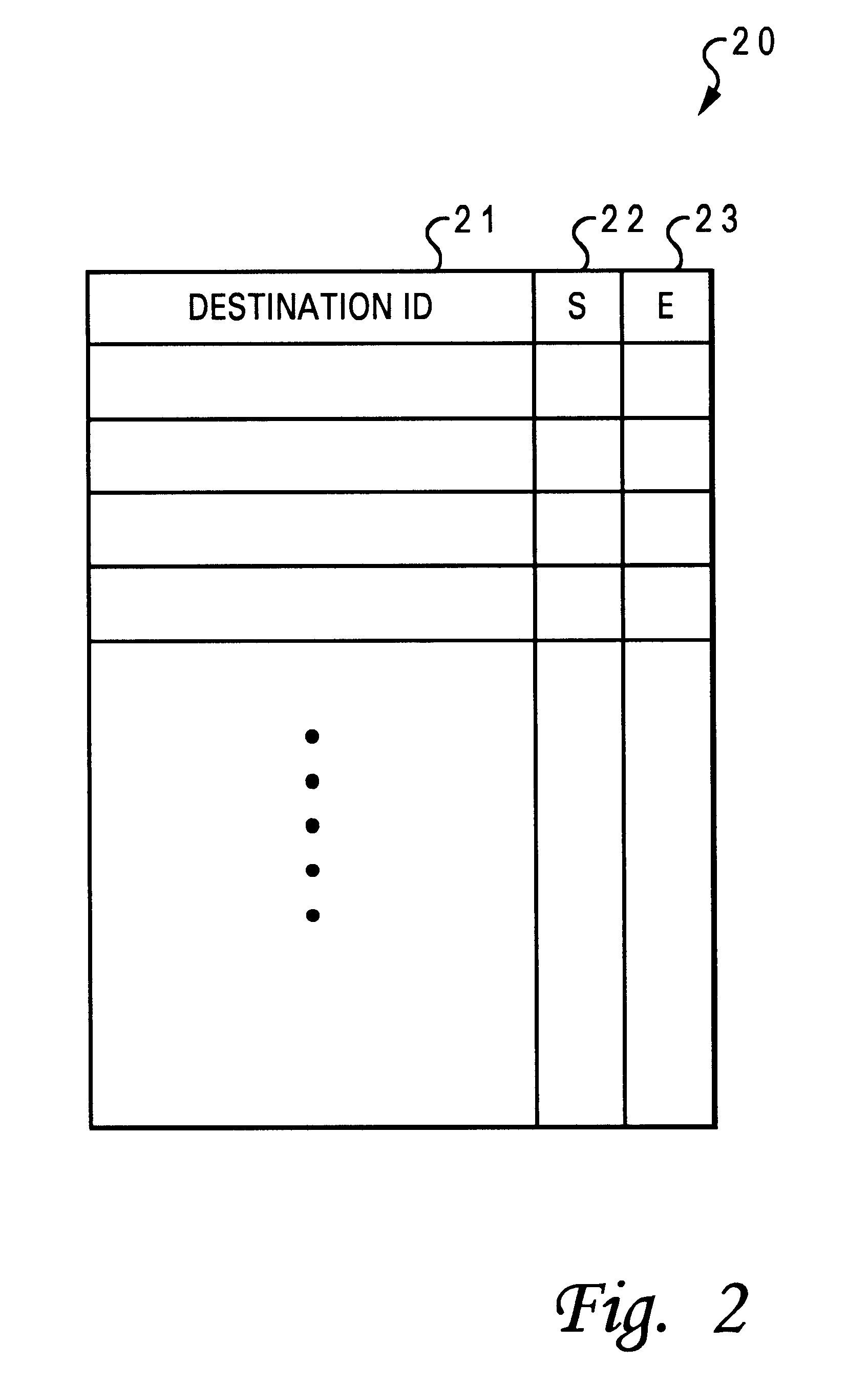

A method for supporting software partition and dynamic reconfiguration within a non-uniform memory access (NUMA) computer system is disclosed. A NUMA computer system includes multiple nodes coupled to an interconnect. Each of the nodes includes a NUMA bridge, a local system memory, and at least one processor having at least a local cache memory. Multiple groups of software partitions are formed within the NUMA computer system, and each of the software partitions is formed by a subset of the nodes. A destination map table is provided in a NUMA bridge of each of the nodes for keeping track of the nodes within a software partition. A command is forwarded to only the nodes within a software partition.

Owner:IBM CORP
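
As a rough illustration of the destination map table in the abstract above, the sketch below forwards a command only to nodes in the sender's partition. The `NumaBridge` class and its methods are hypothetical, and excluding the sending node from the recipients is an assumption made for the example.

```python
class NumaBridge:
    """Toy NUMA bridge holding a destination map table for its node."""

    def __init__(self, node_id, partition_members):
        self.node_id = node_id
        # Destination map table: IDs of the nodes in this node's partition.
        self.destination_map = set(partition_members)

    def reconfigure(self, partition_members):
        # Dynamic reconfiguration is modeled as replacing the table.
        self.destination_map = set(partition_members)

    def forward(self, command, all_nodes):
        # Deliver the command only to other nodes within the same partition.
        return [(node, command) for node in all_nodes
                if node in self.destination_map and node != self.node_id]
```

For example, a bridge on node 0 in partition {0, 1} would send a command to node 1 only, and after reconfiguration to {0, 2, 3} it would send to nodes 2 and 3.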

Non-uniform memory access (NUMA) data processing system that speculatively issues requests on a node interconnect

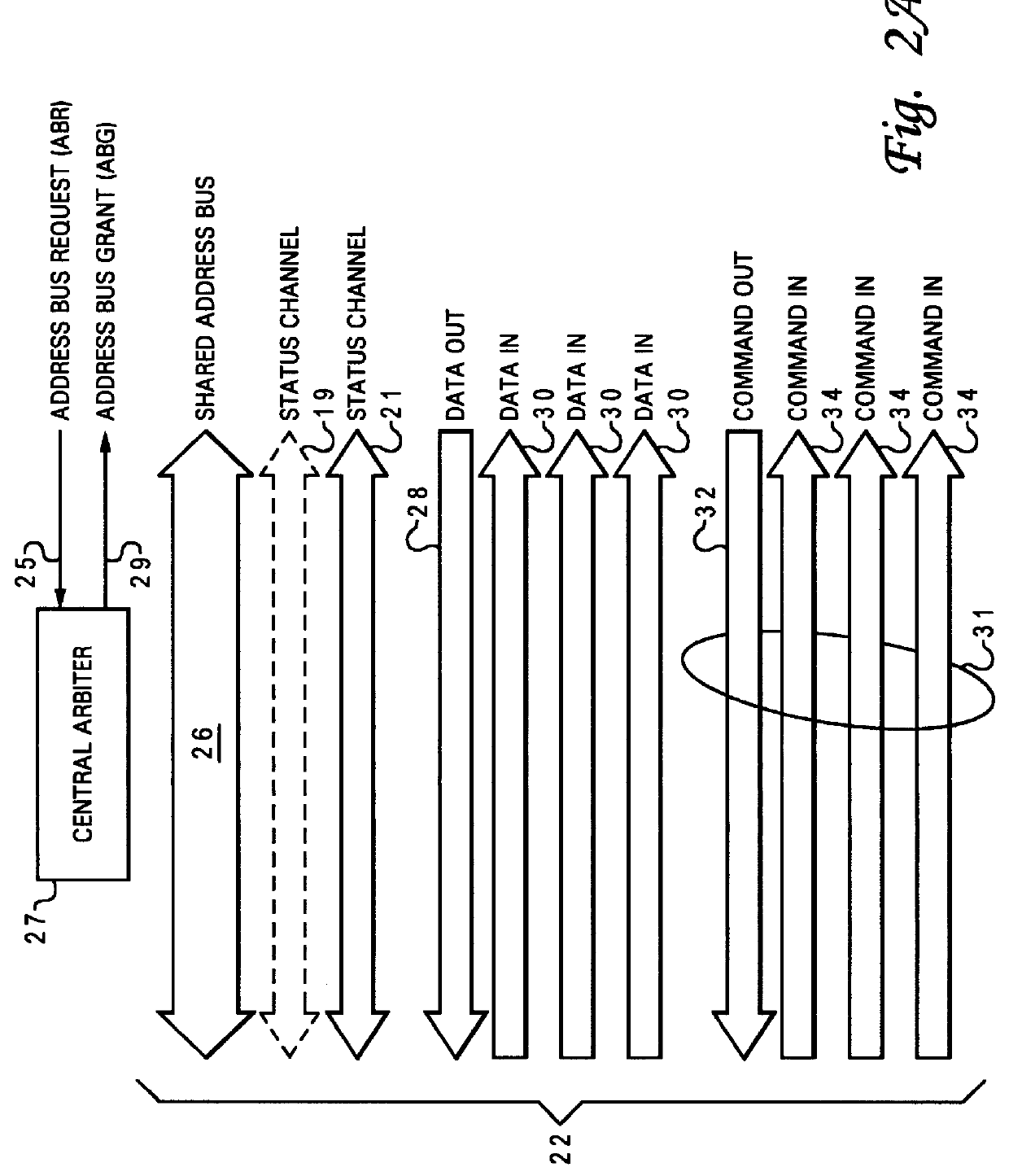

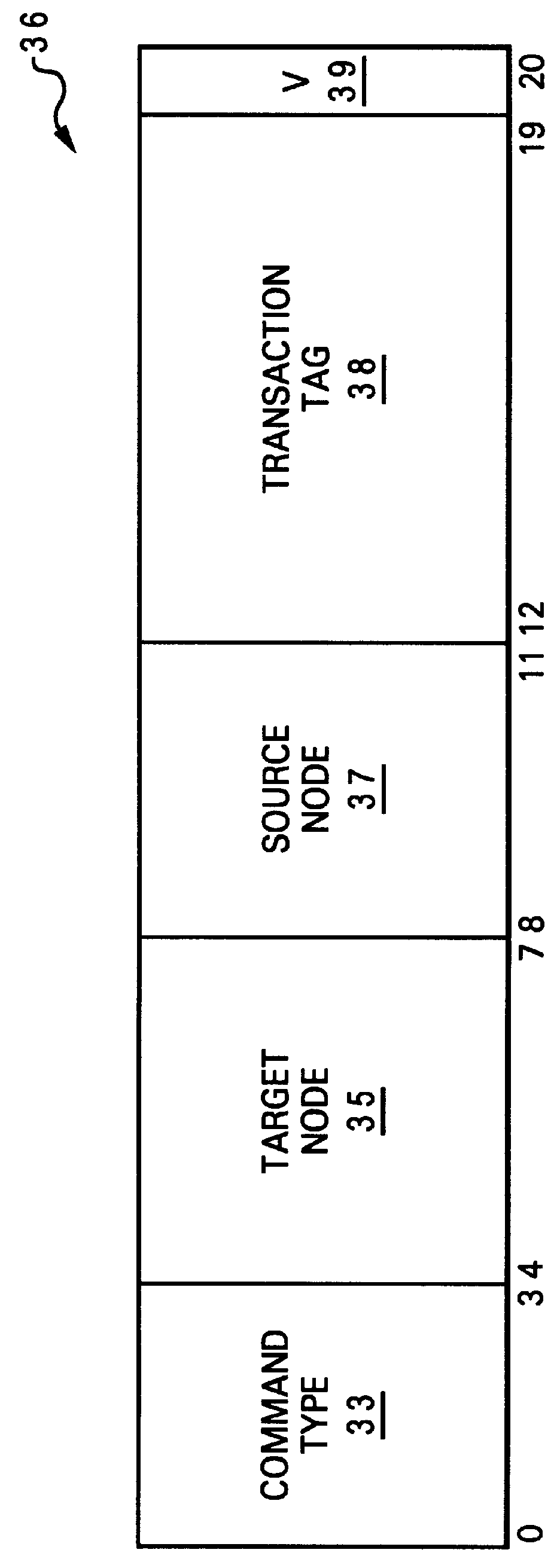

Inactive | US6081874A | Memory addressing/allocation/relocation | Digital computer details | Data processing system | Parallel computing

A non-uniform memory access (NUMA) data processing system includes a node interconnect to which at least a first processing node and a second processing node are coupled. The first and the second processing nodes each include a local interconnect, a processor coupled to the local interconnect, a system memory coupled to the local interconnect, and a node controller interposed between the local interconnect and the node interconnect. In order to reduce communication latency, the node controller of the first processing node speculatively transmits request transactions received from the local interconnect of the first processing node to the second processing node via the node interconnect. In one embodiment, the node controller of the first processing node subsequently transmits a status signal to the node controller of the second processing node in order to indicate how the request transaction should be processed at the second processing node.

Owner:IBM CORP

Dynamic history based mechanism for the granting of exclusive data ownership in a non-uniform memory access (NUMA) computer system

Inactive | US20030009641A1 | Program synchronisation | Memory addressing/allocation/relocation | Exclusive or | Data access

A non-uniform memory access (NUMA) computer system includes at least one remote node and a home node coupled by a node interconnect. The home node contains a home system memory and a memory controller. In response to receipt of a data request from a remote node, the memory controller determines whether to grant exclusive or non-exclusive ownership of requested data specified in the data request by reference to history information indicative of prior data accesses originating in the remote node. The memory controller then transmits the requested data and an indication of exclusive or non-exclusive ownership to the remote node.

Owner:IBM CORP
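
The abstract above does not say which history is kept or how it is used. The sketch below assumes one plausible policy purely for illustration: grant exclusive ownership when the requesting node wrote the line after its previous read. `HomeMemoryController` and its methods are hypothetical names.

```python
from collections import defaultdict


class HomeMemoryController:
    """Toy home-node memory controller with per-node access history."""

    def __init__(self, memory):
        self.memory = memory  # dict: address -> data
        # history[(node, address)] is True when that node wrote the line
        # after its last read. This policy is an assumption for illustration.
        self.wrote_after_read = defaultdict(bool)

    def record_write(self, node, address):
        self.wrote_after_read[(node, address)] = True

    def handle_read(self, node, address):
        # Grant exclusive ownership if history suggests a write will follow.
        exclusive = self.wrote_after_read[(node, address)]
        # Reset so that a single write does not grant exclusivity forever.
        self.wrote_after_read[(node, address)] = False
        return self.memory[address], exclusive
```

The returned pair corresponds to the requested data plus the indication of exclusive or non-exclusive ownership that the abstract says is sent to the remote node.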

Supporting a weak ordering memory model for a virtual physical address space that spans multiple nodes

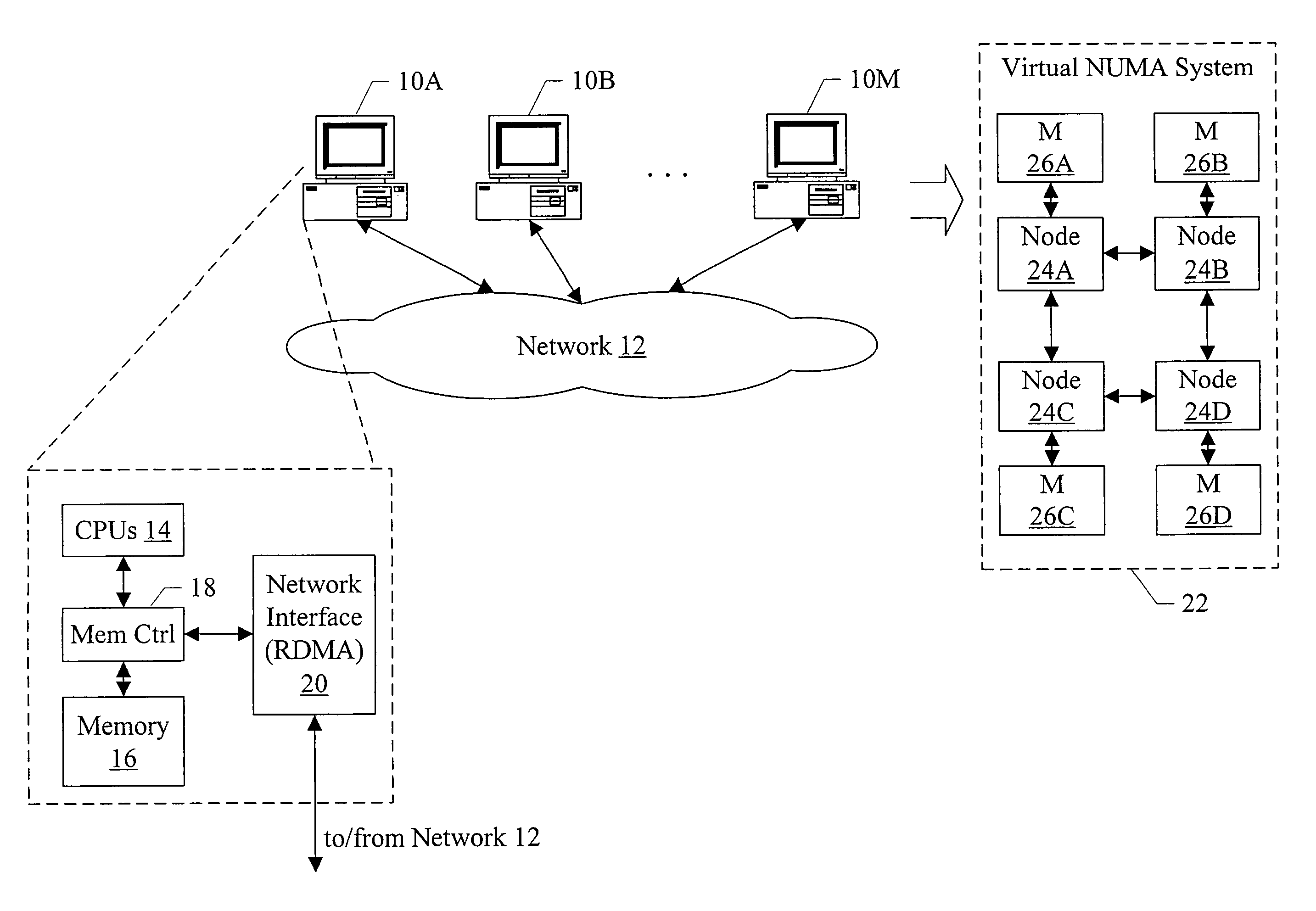

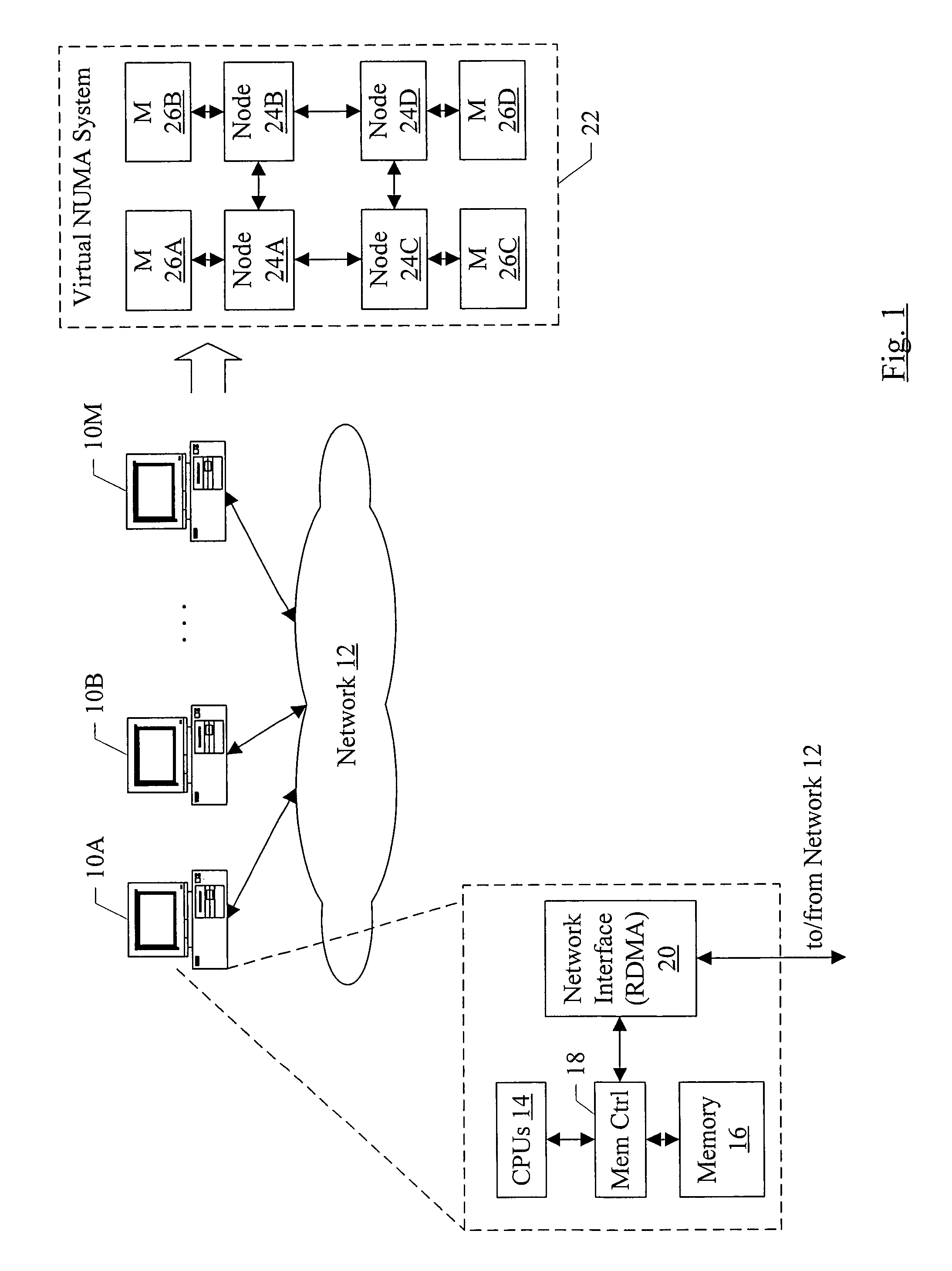

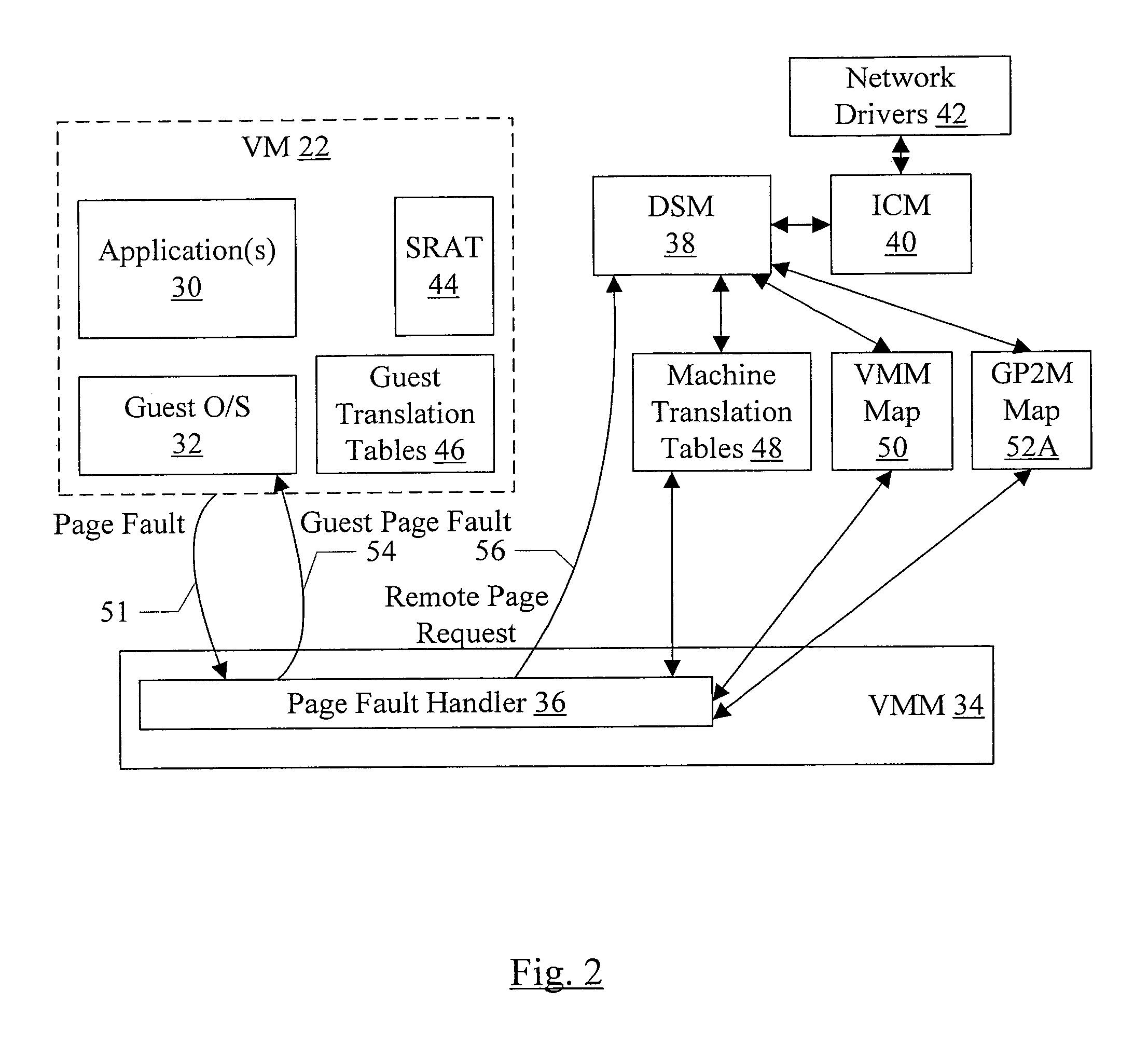

Inactive | US7702743B1 | Memory architecture accessing/allocation | Digital computer details | Computerized system | Ethernet

In one embodiment, a virtual NUMA system may be formed from multiple computer systems coupled to a network such as InfiniBand, Ethernet, etc. Each computer includes one or more software modules which present the resources of the computers as a virtual NUMA machine. The virtual machine is a non-uniform memory access (NUMA) machine comprising a plurality of nodes, each node having memory that is part of a distributed shared memory. Additionally, the virtual machine is coherent with a weakly ordered memory model. When executed in a current owner node of a first block in response to an ownership transfer request from a requesting node of the plurality of nodes for the first block, the software modules perform a synchronization operation if the first block has been modified in the current owner node.

Owner:SYMANTEC OPERATING CORP

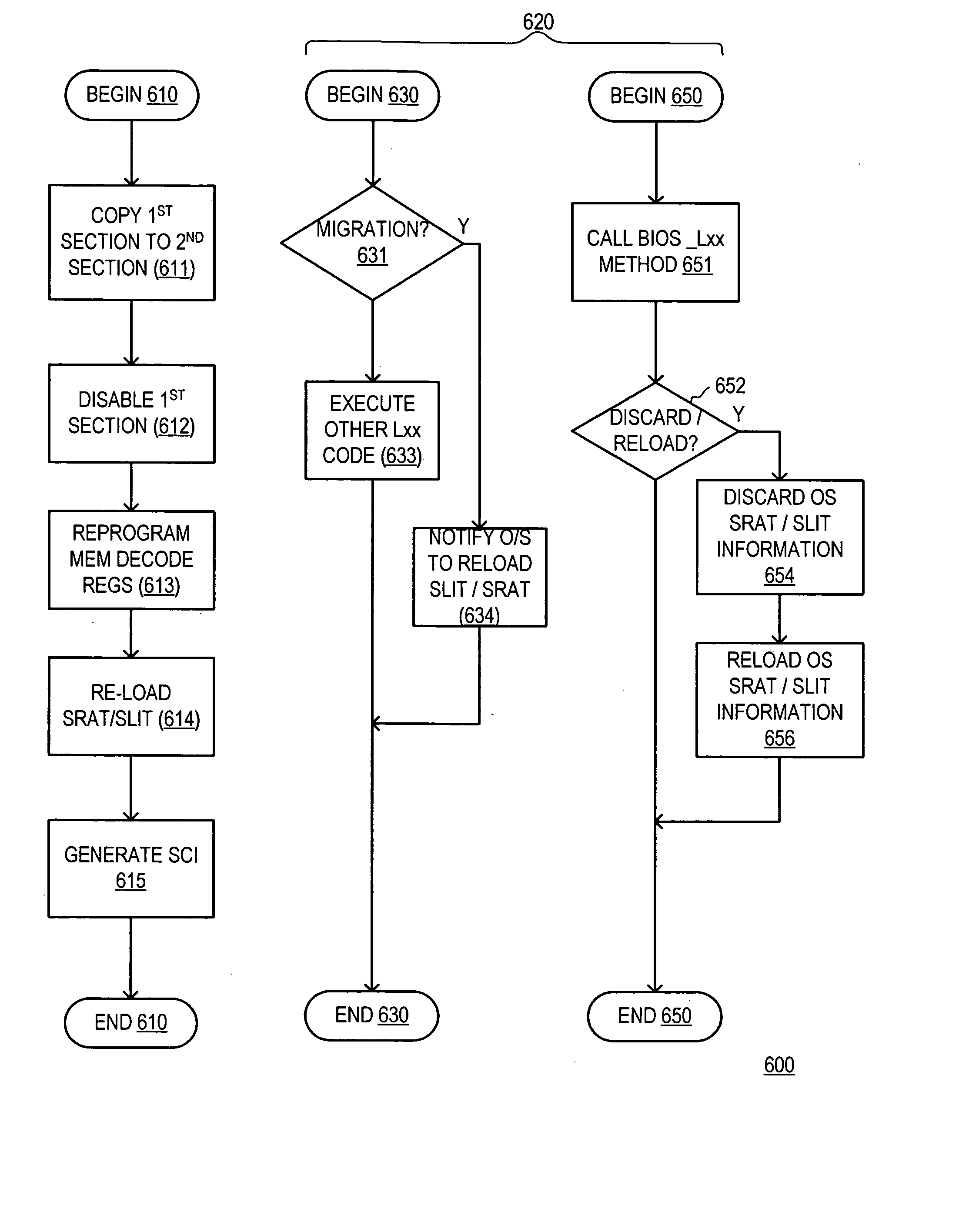

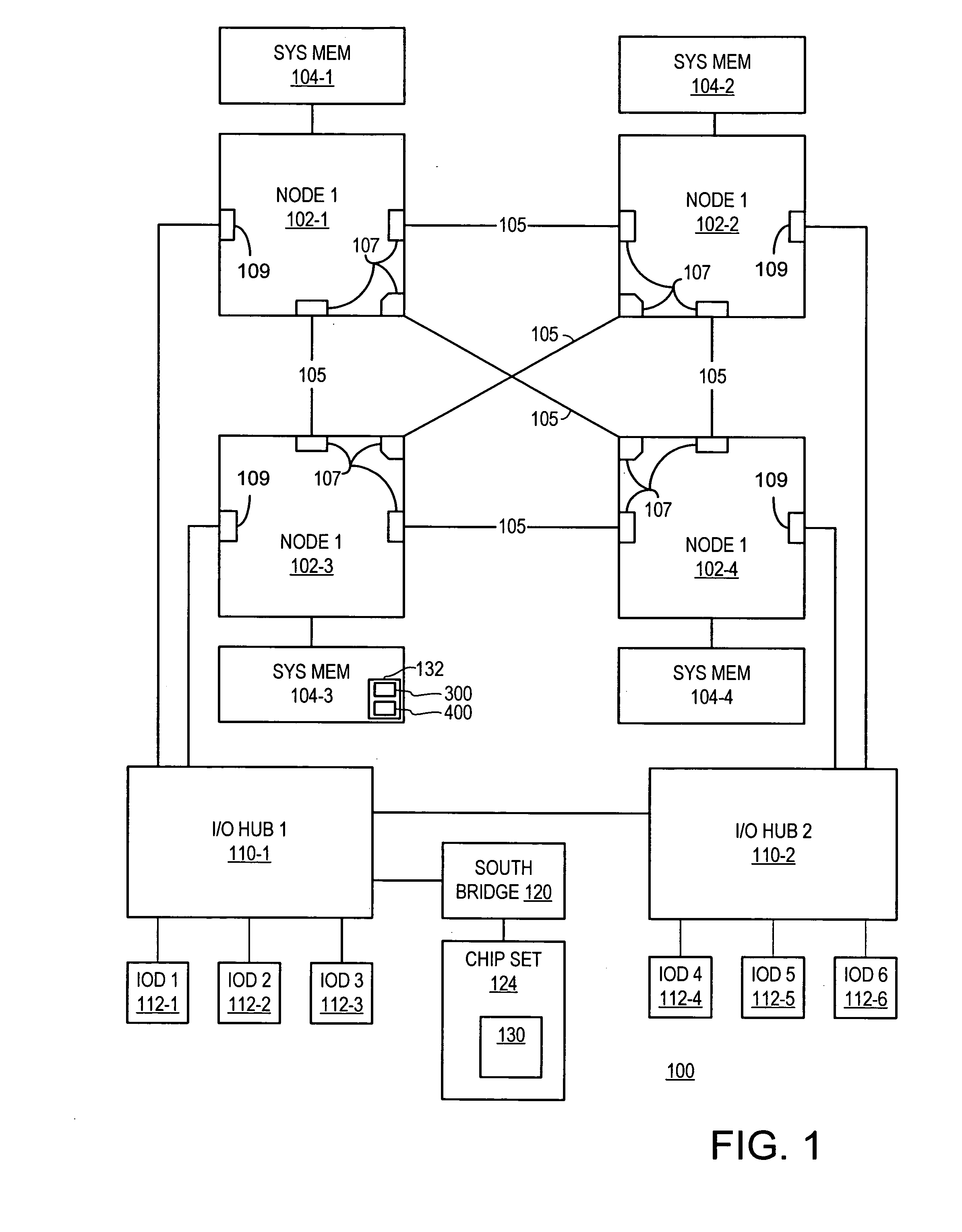

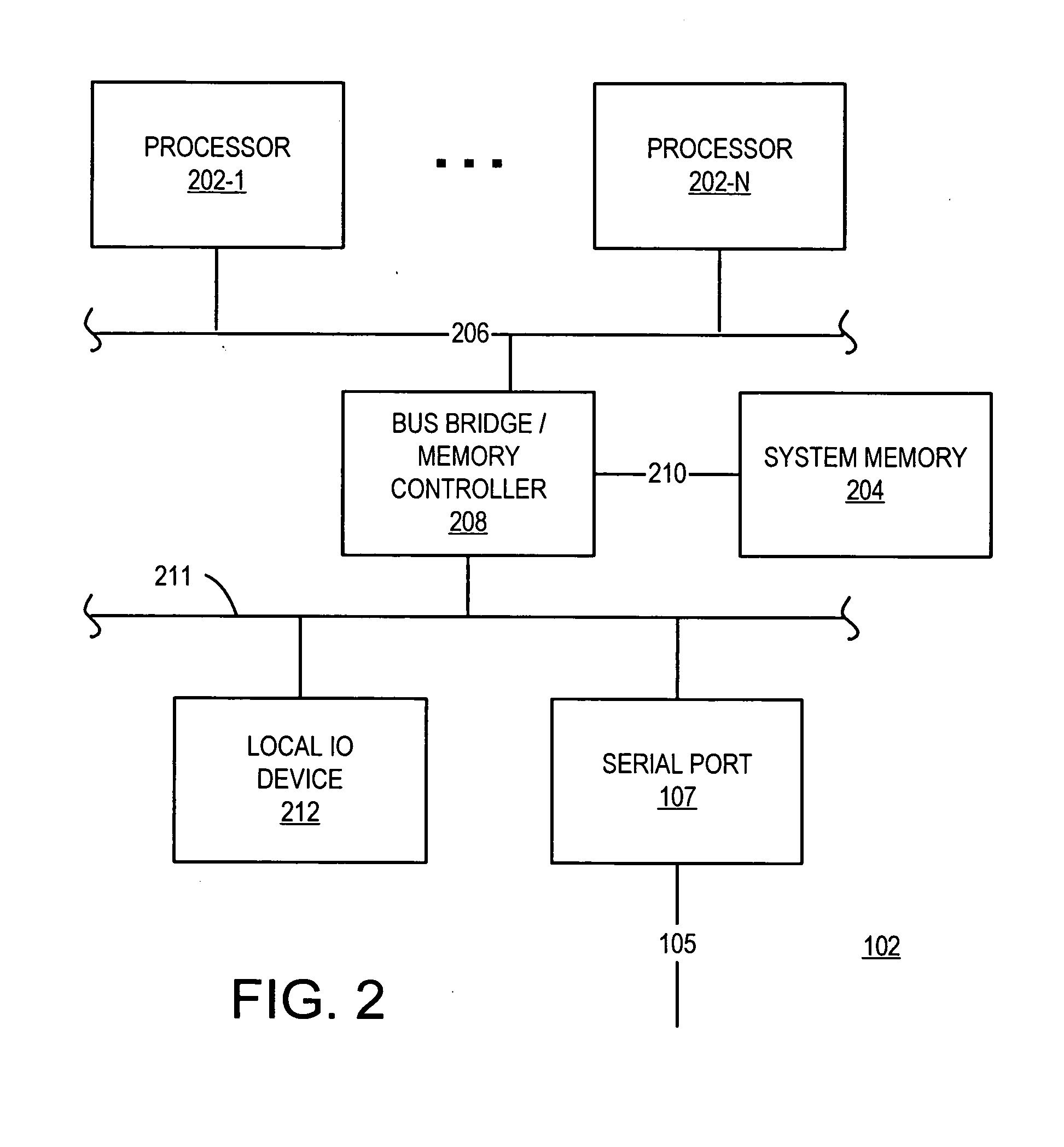

Modifying node descriptors to reflect memory migration in an information handling system with non-uniform memory access

An information handling system includes a first node and a second node. Each node includes a processor and a local system memory. An interconnect between the first node and the second node enables a processor on the first node to access system memory on the second node. The system includes affinity information that is indicative of a proximity relationship between portions of system memory and the system nodes. A BIOS module migrates a block from one node to another, reloads BIOS-visible affinity tables, and reprograms memory address decoders before calling an operating system affinity module. The affinity module modifies the operating system visible affinity information. The operating system then has accurate affinity information with which to allocate processing threads so that a thread is allocated to a node where memory accesses issued by the thread are local accesses.

Owner:DELL PROD LP

Method, system and computer program product for managing memory in a non-uniform memory access system

Inactive | US6336177B1 | Easy to carry | Memory architecture accessing/allocation | Resource allocation | Control system | Computerized system

A memory management and control system that is selectable at the application level by an application programmer is provided. The memory management and control system is based on the use of policy modules. Policy modules are used to specify and control different aspects of memory operations in NUMA computer systems, including how memory is managed for processes running in NUMA computer systems. Preferably, each policy module comprises a plurality of methods that are used to control a variety of memory operations. Such memory operations typically include initial memory placement, memory page size, a migration policy, a replication policy and a paging policy. One method typically contained in policy modules is an initial placement policy. Placement policies may be based on two abstractions of physical memory nodes. These two abstractions are referred to herein as "Memory Locality Domains" (MLDs) and "Memory Locality Domain Sets" (MLDSETs). By specifying MLDs and MLDSETs, rather than physical memory nodes, application programs can be executed on different computer systems regardless of the particular node configuration and physical node topology employed by the system. Further, such application programs can be run on different machines without the need for code modification and/or re-compiling.

Owner:MORGAN STANLEY +1

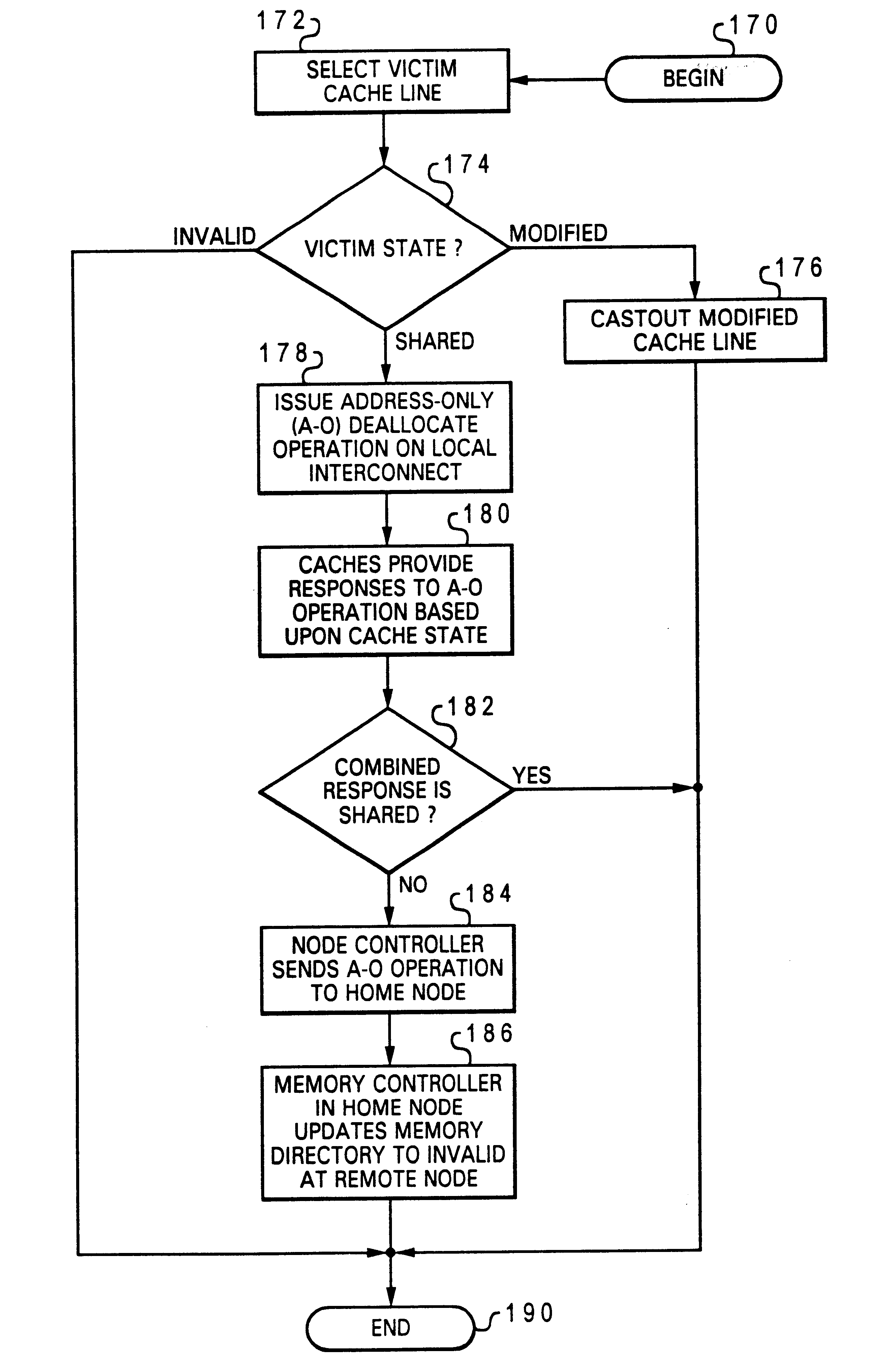

Non-uniform memory access (NUMA) data processing system that provides precise notification of remote deallocation of modified data

Owner:INT BUSINESS MASCH CORP

Non-uniform memory access (NUMA) data processing system that provides notification of remote deallocation of shared data

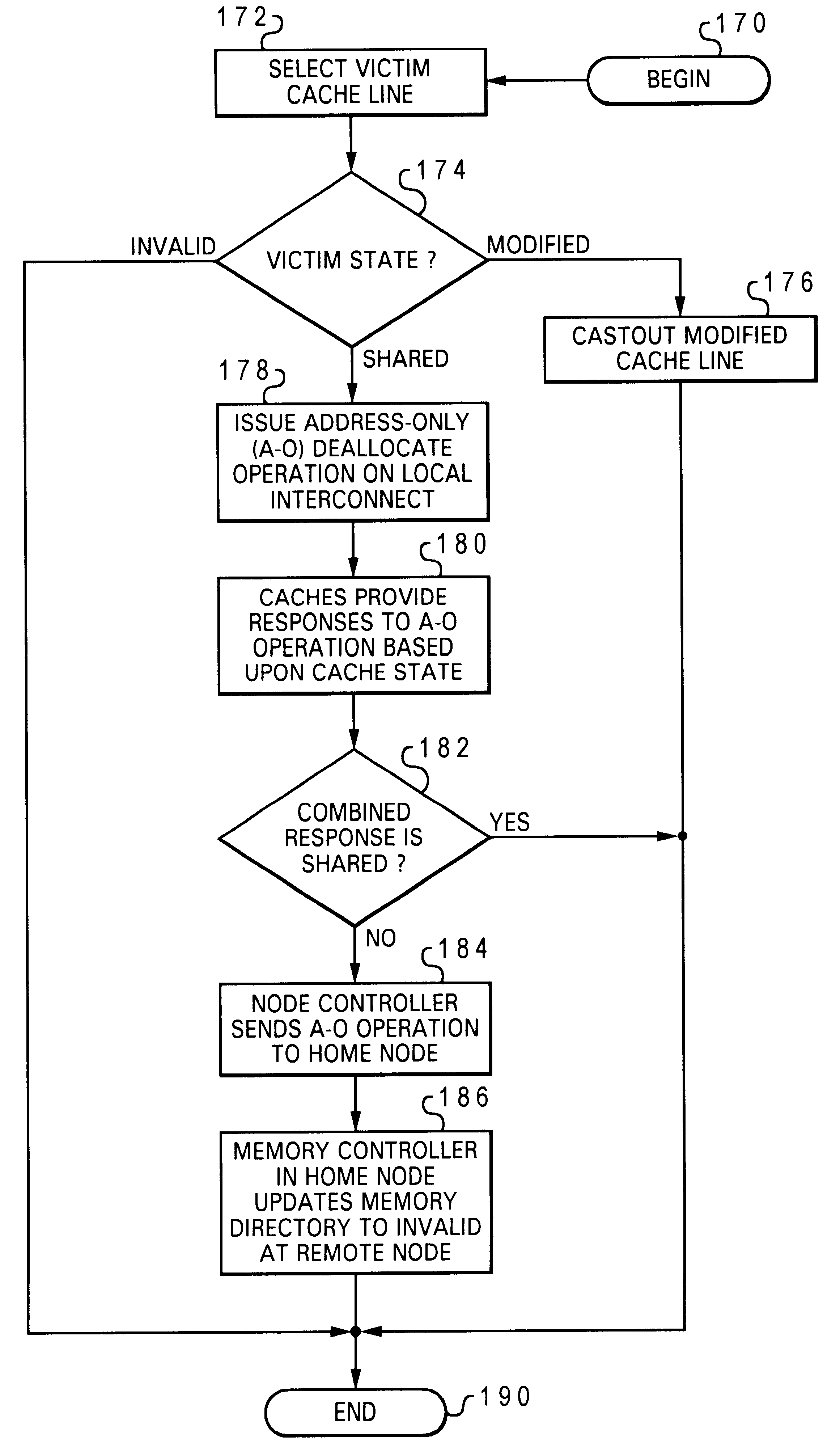

Inactive | US6633959B2 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Computerized system

A non-uniform memory access (NUMA) computer system includes a node interconnect to which a remote node and a home node are coupled. The home node contains a home system memory, and the remote node includes at least one processing unit and a cache. In response to the cache deallocating an unmodified cache line that corresponds to data resident in the home system memory, a cache controller of the cache issues a deallocate operation on a local interconnect of the remote node. In one embodiment, the deallocate operation is further transmitted to the home node via the node interconnect only in response to an indication, such as a combined response, that no other cache in the remote node caches the cache line. In response to receipt of the deallocate operation, a memory controller in the home node updates a local memory directory associated with the home system memory to indicate that the remote node does not hold a copy of the cache line.

Owner:IBM CORP
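
The deallocate notification in the abstract above can be modeled with a short sketch. The names `HomeDirectory` and `on_cache_deallocate` are hypothetical, and the "combined response" is reduced here to a simple check of the other caches in the remote node.

```python
class HomeDirectory:
    """Toy local memory directory at the home node."""

    def __init__(self):
        self.sharers = {}  # cache line address -> set of remote node IDs

    def record_share(self, line, node):
        self.sharers.setdefault(line, set()).add(node)

    def deallocate(self, line, node):
        # Note that the remote node no longer holds a copy of the line.
        self.sharers.get(line, set()).discard(node)


def on_cache_deallocate(line, node, other_local_caches, directory):
    """Handle a remote cache dropping an unmodified line.

    Returns True if the deallocate operation was sent on to the home node.
    """
    # Stand-in for the combined response: does any other cache in the
    # remote node still hold the line?
    if any(line in cache for cache in other_local_caches):
        return False  # the node still caches the line; home is not notified
    directory.deallocate(line, node)
    return True
```

Keeping the directory precise in this way lets the home node avoid sending invalidations to nodes that no longer cache the line.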

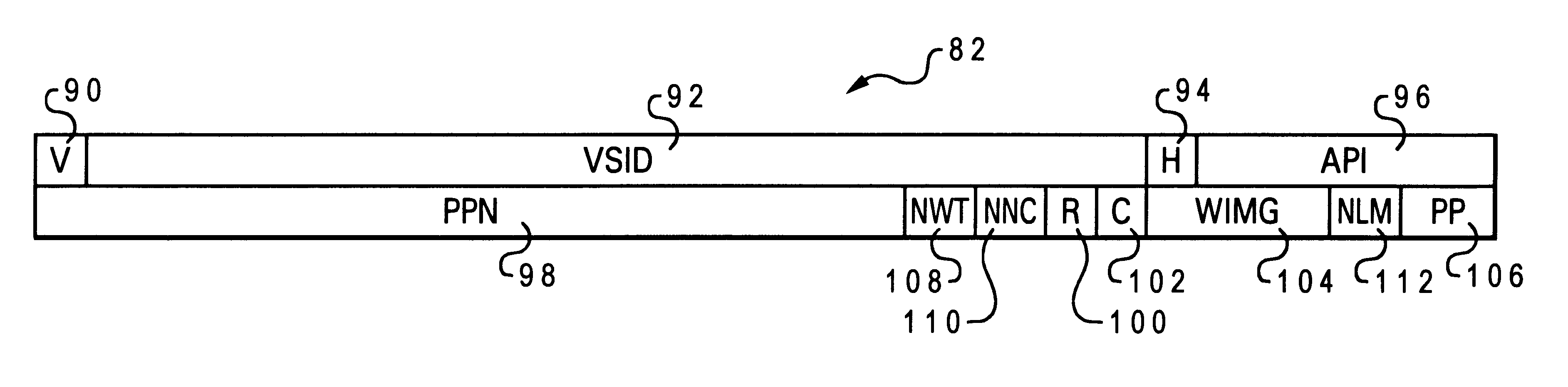

Non-uniform memory access (NUMA) data processing system having a page table including node-specific data storage and coherency control

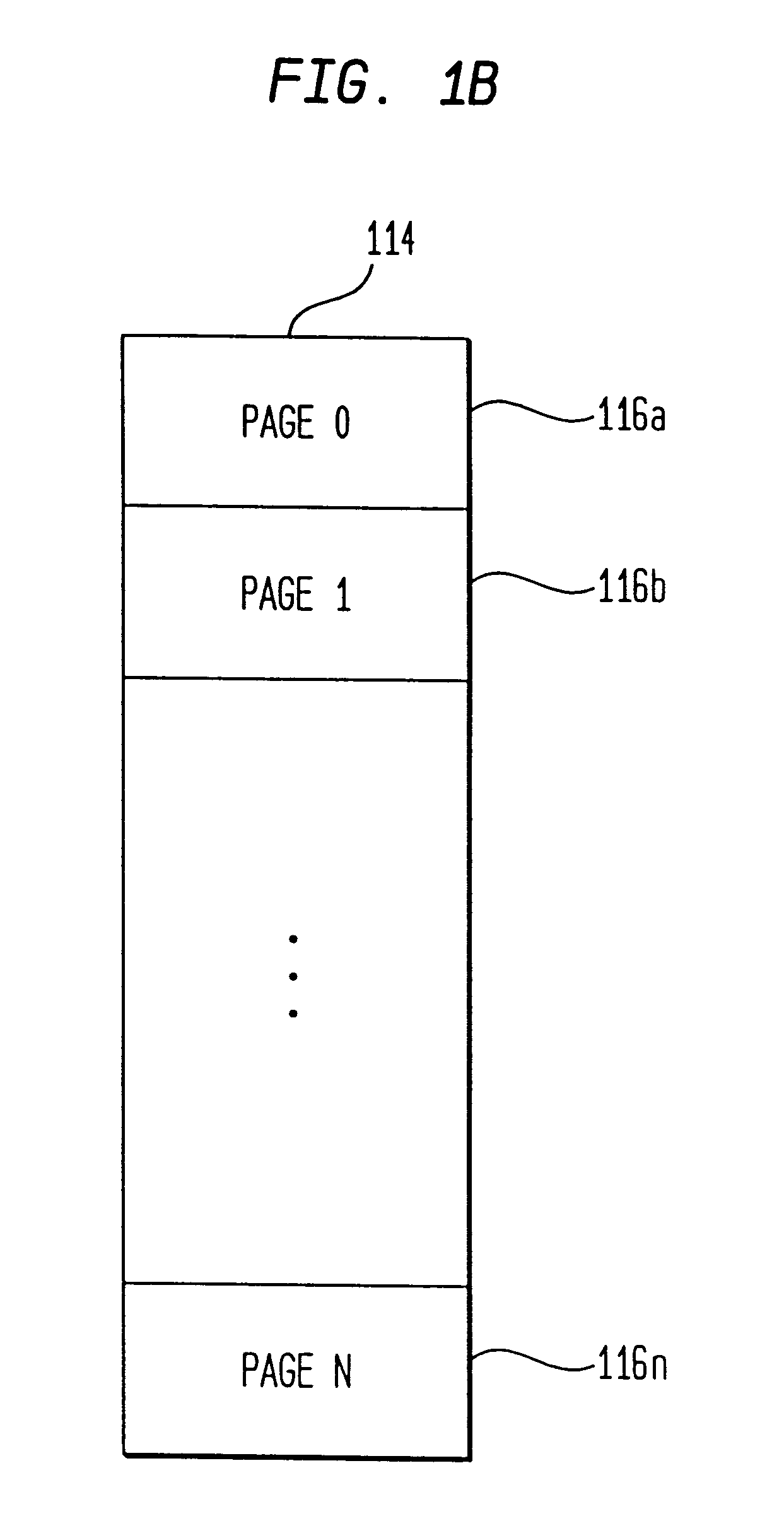

Inactive | US6658538B2 | Memory addressing/allocation/relocation | Multiple digital computer combinations | Data processing system | Physical address

A non-uniform memory access (NUMA) data processing system includes a plurality of nodes coupled to a node interconnect. The plurality of nodes contain a plurality of processing units and at least one system memory having a table (e.g., a page table) resident therein. The table includes at least one entry for translating a group of non-physical addresses to physical addresses that individually specifies control information pertaining to the group of non-physical addresses for each of the plurality of nodes. The control information may include one or more data storage control fields, which may include a plurality of write through indicators that are each associated with a respective one of the plurality of nodes. When a write through indicator is set, processing units in the associated node write modified data back to system memory in a home node rather than caching the data. The control information may further include a data storage control field comprising a plurality of non-cacheable indicators that are each associated with a respective one of the plurality of nodes. When a non-cacheable indicator is set, processing units in the associated node are instructed to not cache data associated with non-physical addresses within the group translated by reference to the table entry. The control information may also include coherency control information that individually indicates for each node whether or not inter-node coherency for data associated with the table entry will be maintained with software support.

Owner:GOOGLE LLC

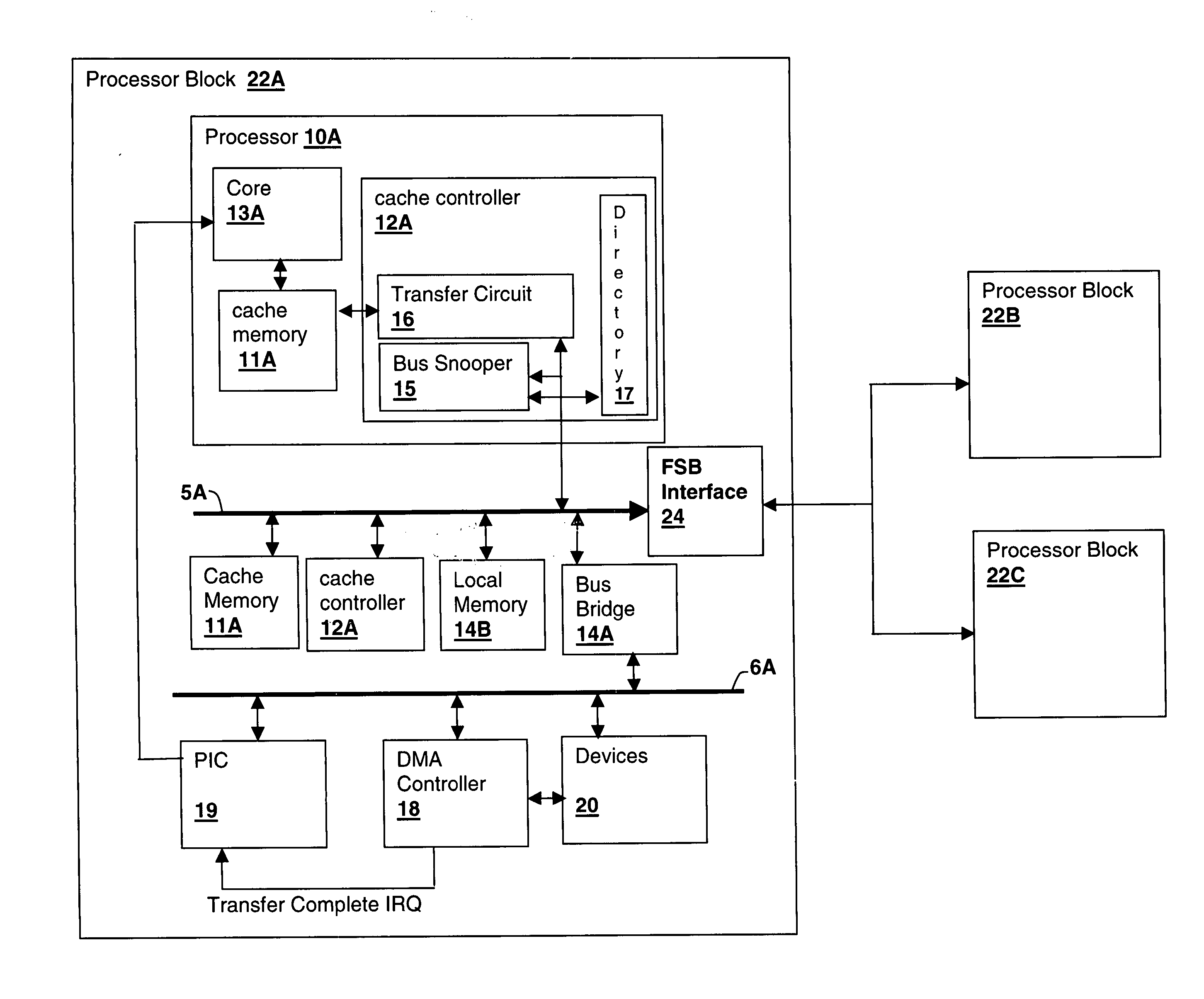

Method and system for managing cache injection in a multiprocessor system

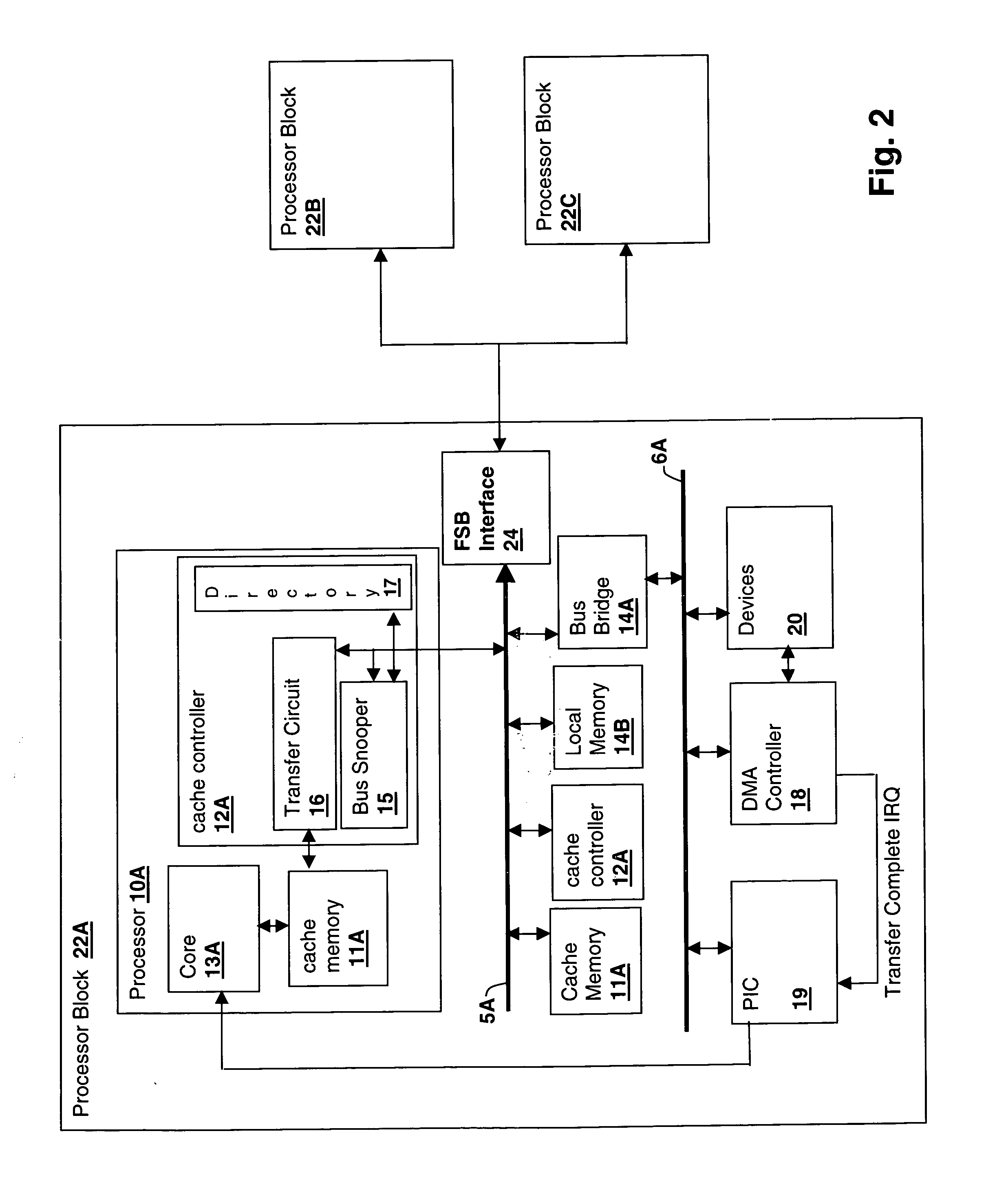

A method and apparatus for managing cache injection in a multiprocessor system reduces processing time associated with direct memory access transfers in a symmetrical multiprocessor (SMP) or a non-uniform memory access (NUMA) multiprocessor environment. The method and apparatus either detect the target processor for DMA completion or direct processing of DMA completion to a particular processor, thereby enabling cache injection to a cache that is coupled with the processor that executes the DMA completion routine processing the data injected into the cache. The target processor may be identified by determining the processor handling the interrupt that occurs on completion of the DMA transfer. Alternatively or in conjunction with target processor identification, an interrupt handler may queue a deferred procedure call to the target processor to process the transferred data. In NUMA multiprocessor systems, the completing processor/target memory is chosen for accessibility of the target memory to the processor and associated cache.

Owner:IBM CORP

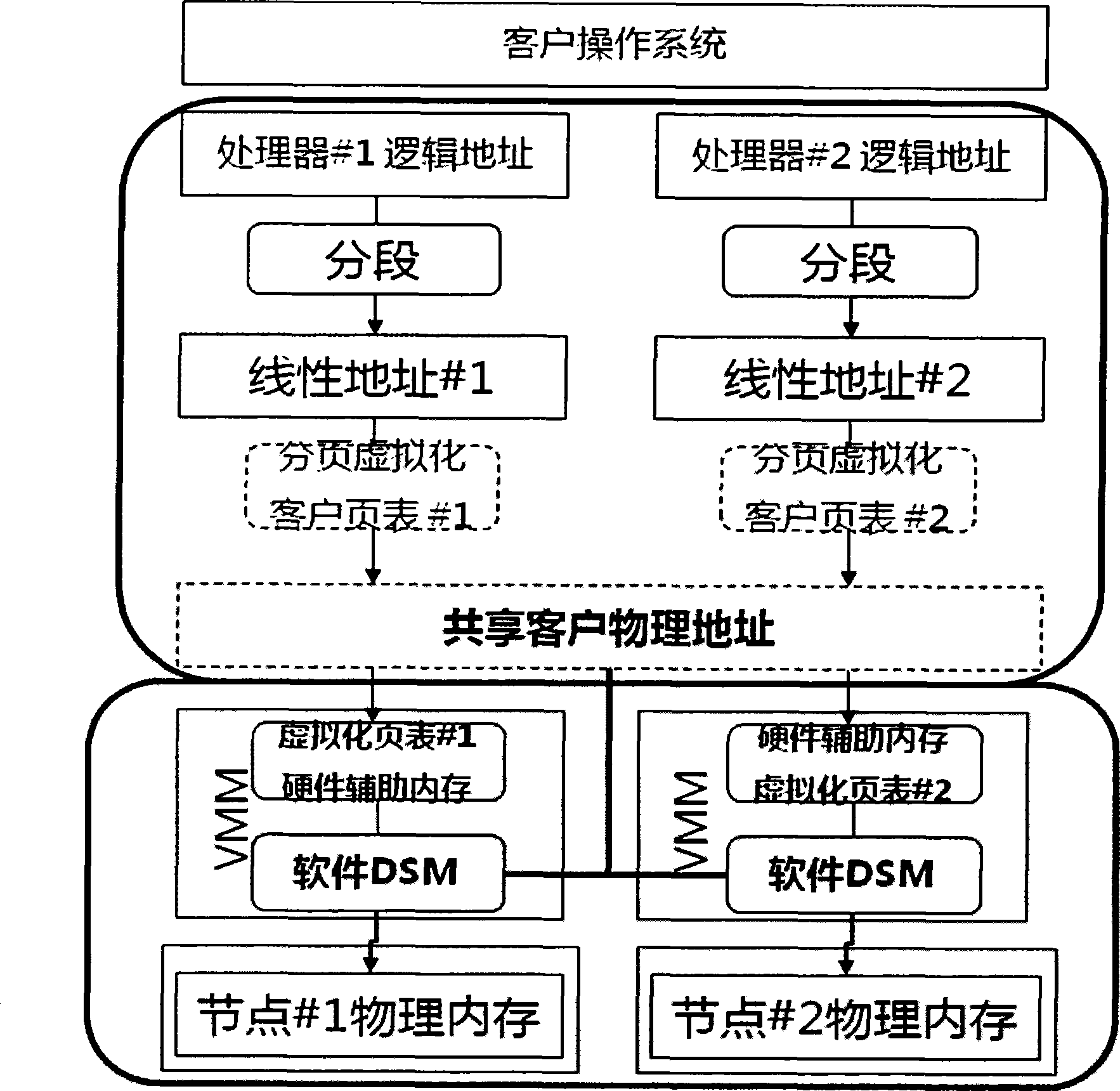

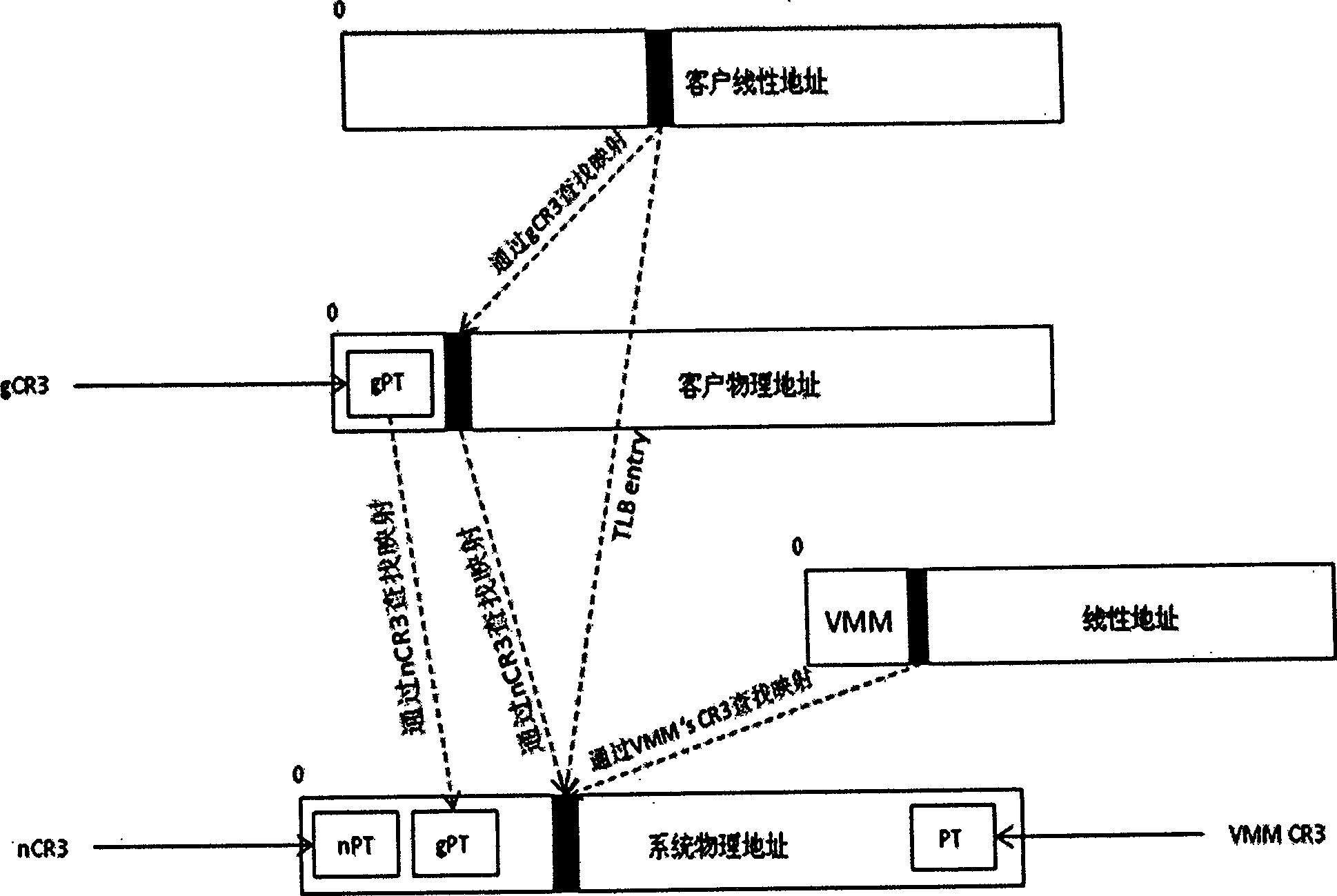

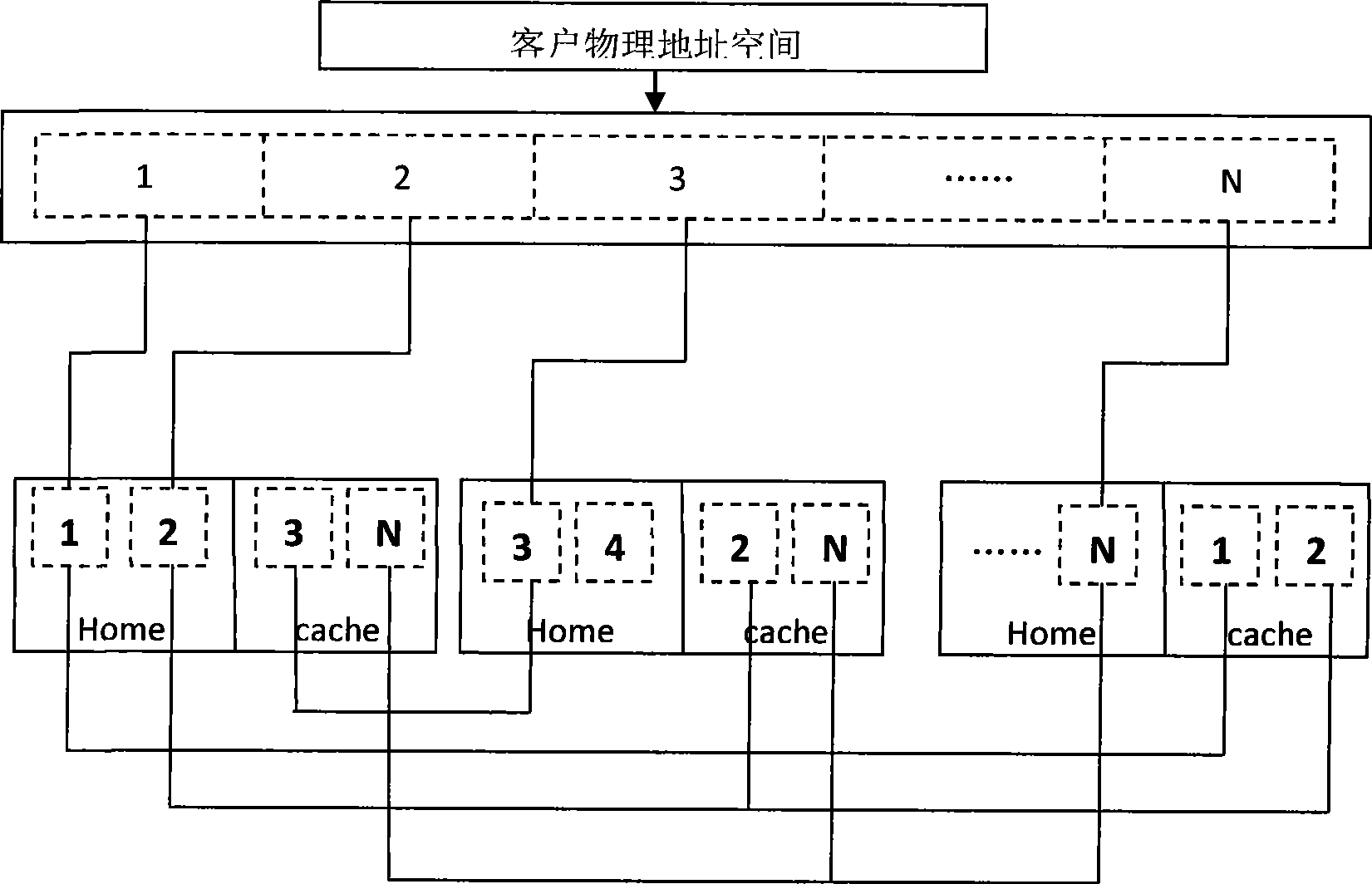

NUMA structure implementing method based on distributed internal memory virtualization

Inactive | CN101477496A | Guaranteed correctness | Improve manageability | Memory addressing/allocation/relocation | Unauthorized memory use protection | Internal memory | Virtualization

The invention discloses a method for implementing a NUMA (Non-Uniform Memory Access) structure based on distributed hardware-assisted memory virtualization. The method comprises four steps: step one, a preparation stage; step two, a normal work stage; step three, a stage in which NUMA processes local requests; and step four, a stage in which NUMA processes remote requests. The invention adopts the latest hardware-assisted memory virtualization technology and a distributed shared storage algorithm, provides a NUMA-structured shared single physical address space, and lets a guest operating system manage multi-host memory resources transparently and uniformly, thereby reducing the complexity of application programming and increasing the usability of system resources. Furthermore, the invention has favorable prospects for use and development.

Owner:HUAWEI TECH CO LTD

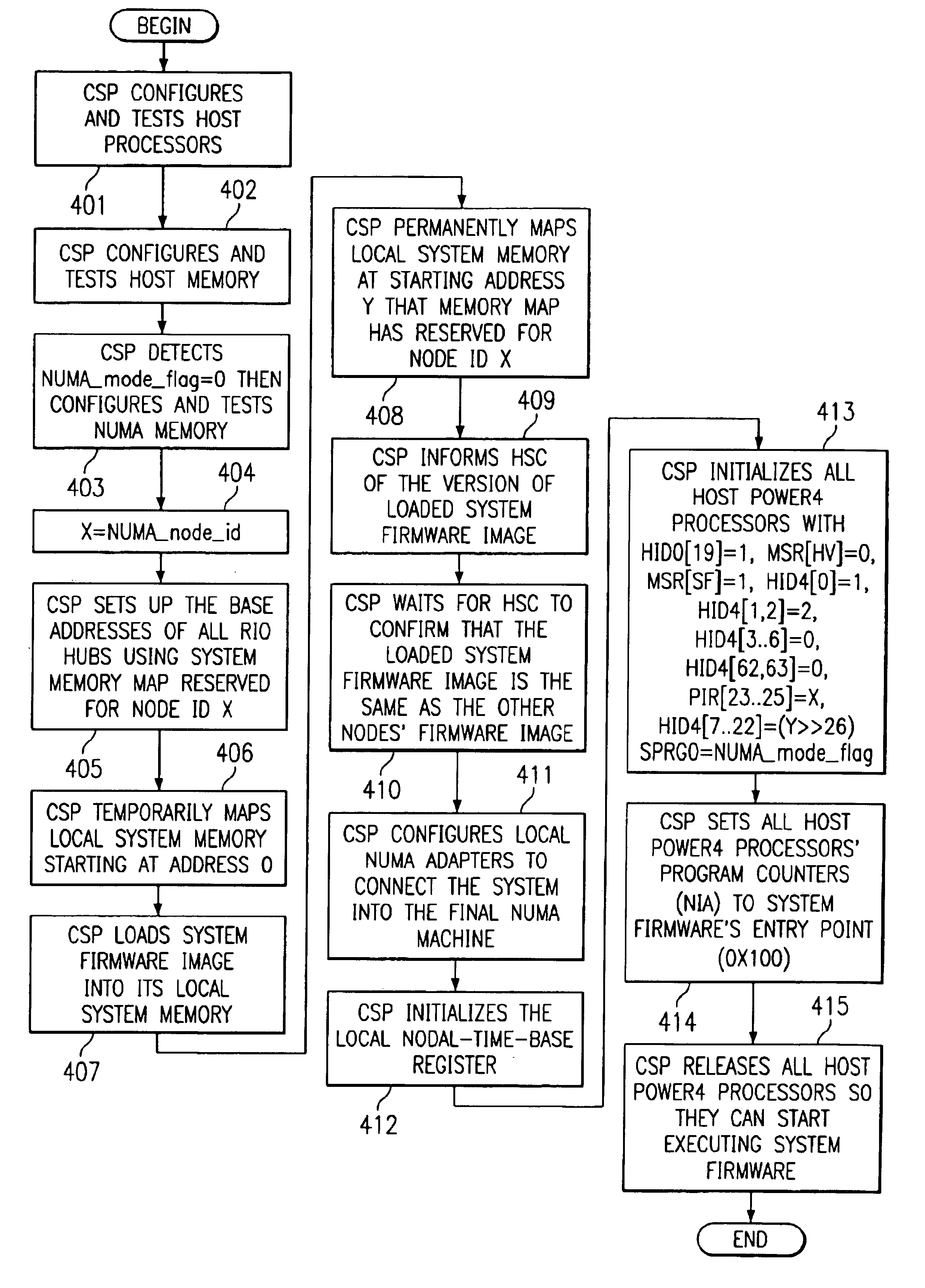

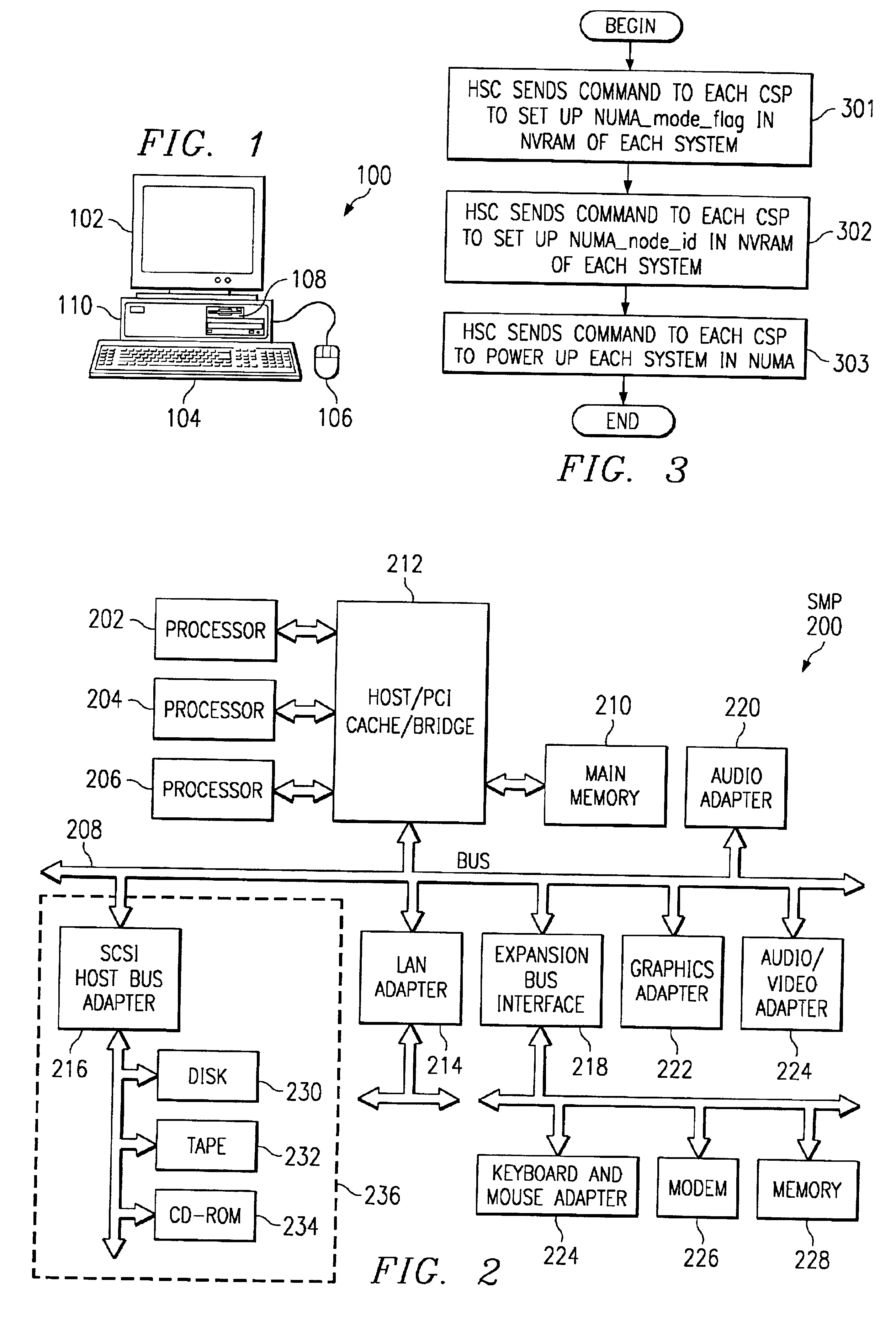

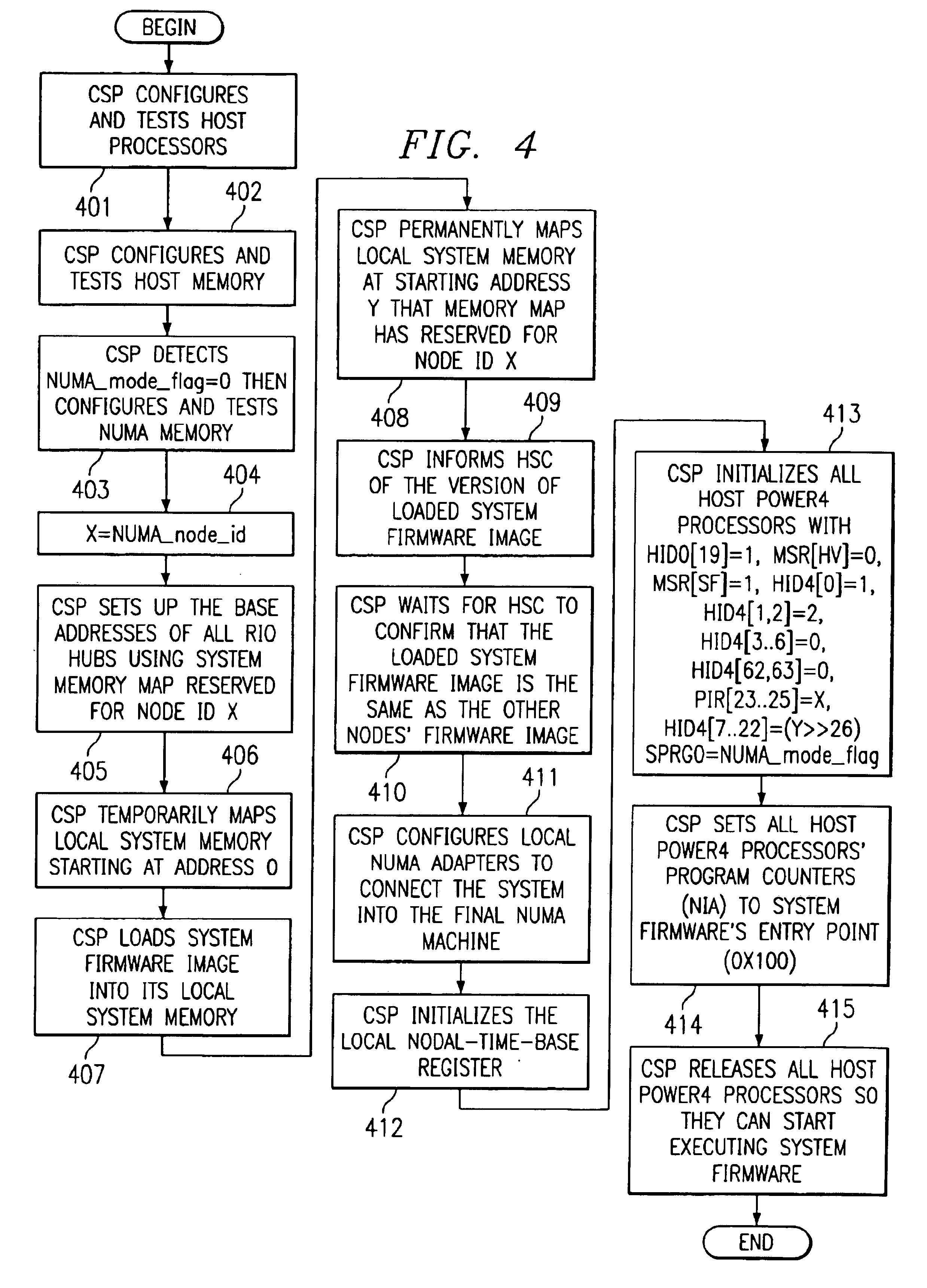

Method and apparatus to concurrently boot multiple processors in a non-uniform-memory-access machine

A method, apparatus and program for booting a non-uniform-memory-access (NUMA) machine are provided. The invention comprises configuring a plurality of standalone, symmetrical multiprocessing (SMP) systems to operate within a NUMA system. A master processor is selected within each SMP; the other processors in the SMP are designated as NUMA slave processors. A NUMA master processor is then chosen from the SMP master processors; the other SMP master processors are designated as NUMA slave processors. A unique NUMA ID is assigned to each SMP that will be part of the NUMA system. The SMPs are then booted in NUMA mode in one-pass with memory coherency established right at the beginning of the execution of the system firmware.

Owner:IBM CORP
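
The two-level master selection in the abstract above could look like the sketch below. The abstract does not state how masters are chosen, so picking the lowest processor ID and the lowest NUMA ID is purely an assumption, and `elect_masters` is a hypothetical helper.

```python
def elect_masters(smps):
    """Pick SMP masters and one NUMA master.

    smps maps a NUMA ID to the list of processor IDs in that SMP system.
    Choosing the lowest ID at each level is an assumed rule for illustration.
    """
    # One master per SMP; the SMP's other processors become NUMA slaves.
    smp_masters = {numa_id: min(cpus) for numa_id, cpus in smps.items()}
    # The NUMA master is chosen from among the SMP masters.
    numa_master = smp_masters[min(smp_masters)]
    # Every other processor, including the remaining SMP masters, is a slave.
    slaves = sorted(cpu for cpus in smps.values() for cpu in cpus
                    if cpu != numa_master)
    return smp_masters, numa_master, slaves
```

With `smps = {0: [4, 5], 1: [8, 9]}`, the SMP masters are processors 4 and 8, and processor 4 becomes the NUMA master.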

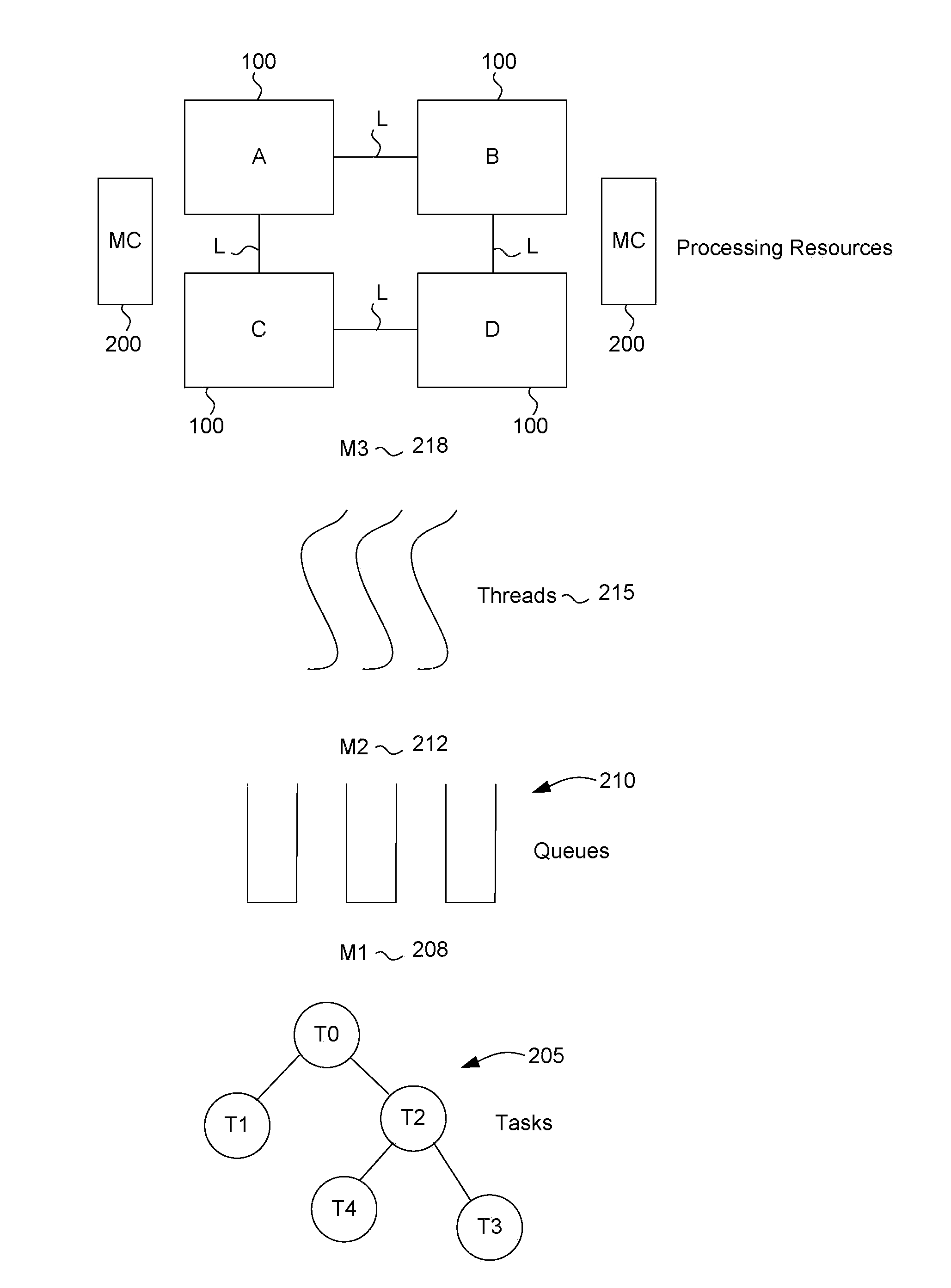

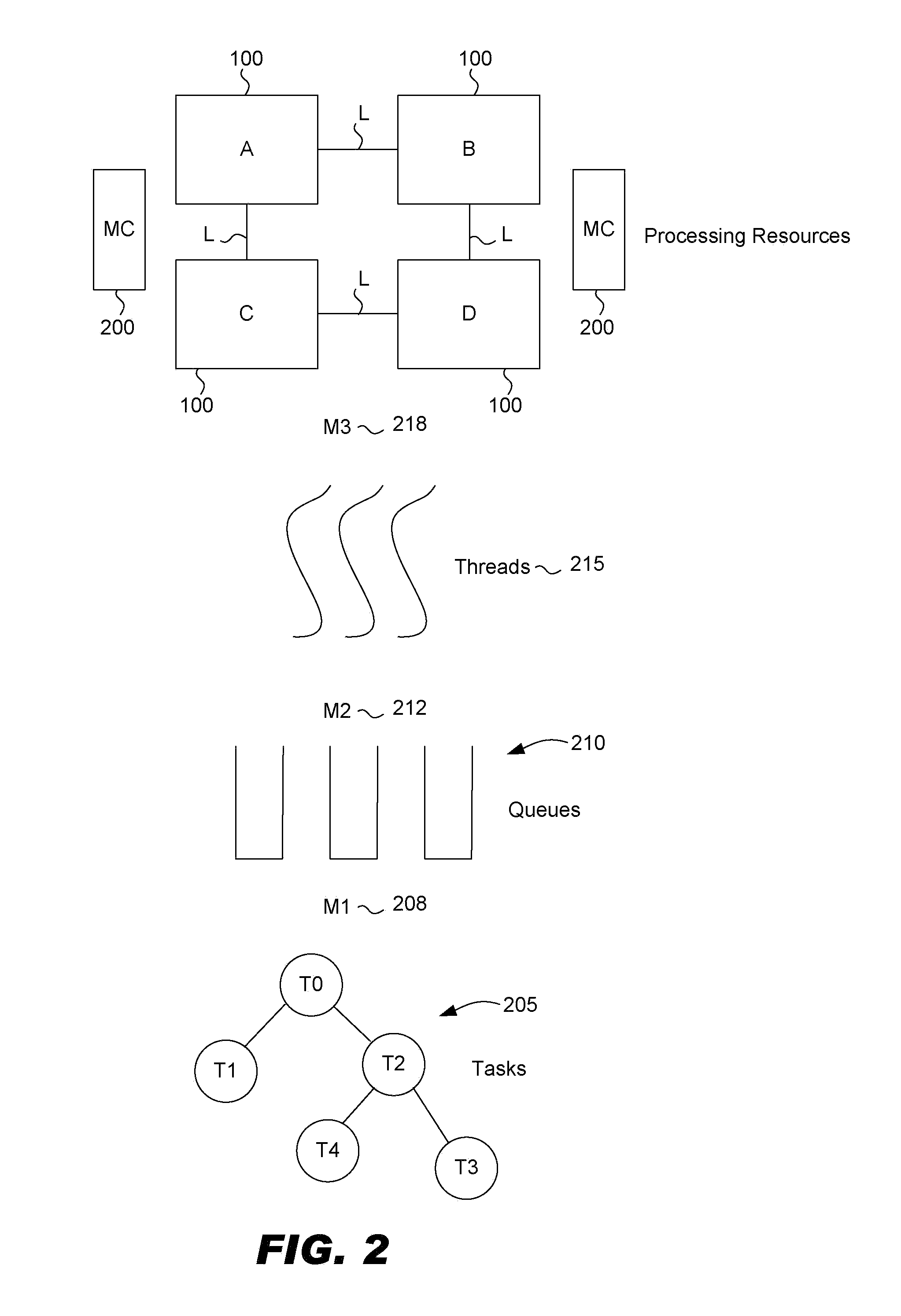

NUMA aware system task management

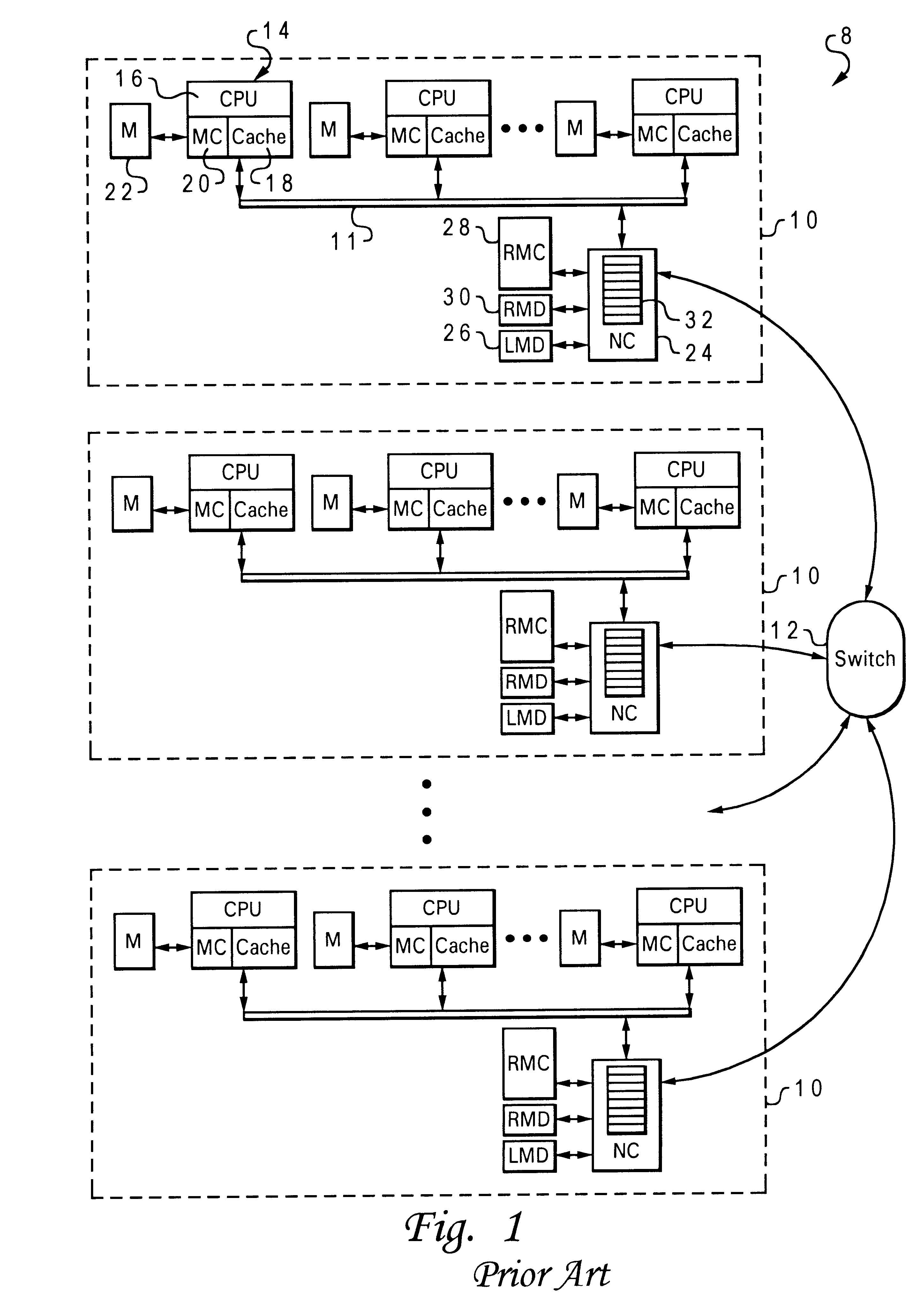

Active | US20120102500A1 | Reduces memory access cost | Minimal cost | Resource allocation | Memory systems | Memory controller | Majorization minimization

Task management in a Non-Uniform Memory Access (NUMA) architecture having multiple processor cores is aware of the NUMA topology. As a result, memory access penalties are reduced. Each processor is assigned to a zone allocated to a memory controller. The zone assignment is based on a cost function. In a default mode, a thread of execution attempts to perform work in a queue of the same zone as the processor to minimize memory access penalties. Additional work stealing rules may be invoked if there is no work for a thread to perform from its default zone queue.

Owner:SAMSUNG ELECTRONICS CO LTD
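
A minimal sketch of the zone queues and work stealing described in the abstract above follows. The cost matrix, the `assign_zones` helper, the `ZoneScheduler` class, and the rule of stealing from the longest queue are all assumptions made for illustration; the abstract does not specify them.

```python
from collections import deque


def assign_zones(cost):
    """Map each processor to its cheapest zone.

    cost[cpu][zone] is a hypothetical memory access cost matrix.
    """
    return {cpu: min(range(len(row)), key=row.__getitem__)
            for cpu, row in enumerate(cost)}


class ZoneScheduler:
    """Toy scheduler with one work queue per zone."""

    def __init__(self, cpu_to_zone, num_zones):
        self.cpu_to_zone = cpu_to_zone
        self.queues = [deque() for _ in range(num_zones)]

    def submit(self, zone, task):
        self.queues[zone].append(task)

    def next_task(self, cpu):
        # Default mode: take work from the processor's own zone queue.
        home = self.queues[self.cpu_to_zone[cpu]]
        if home:
            return home.popleft()
        # Assumed stealing rule: take from the tail of the longest queue.
        victim = max(self.queues, key=len)
        return victim.pop() if victim else None
```

Stealing from the tail while the owner takes from the head is a common work-stealing convention; it is used here only as a reasonable default.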

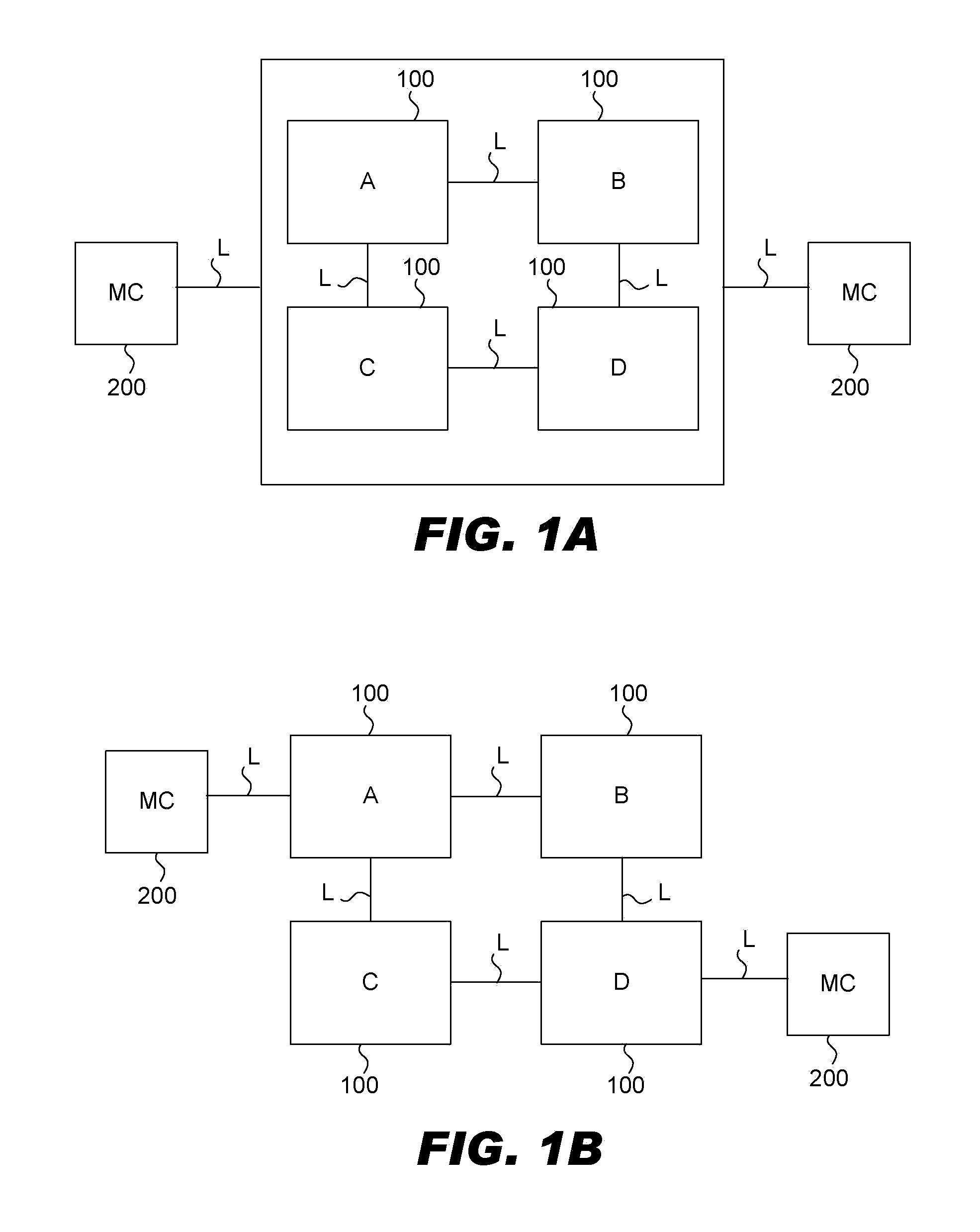

Interconnected processing nodes configurable as at least one non-uniform memory access (NUMA) data processing system

Inactive | US6421775B1 | General purpose stored program computer | Multiprogramming arrangements | Data processing system | Parallel computing

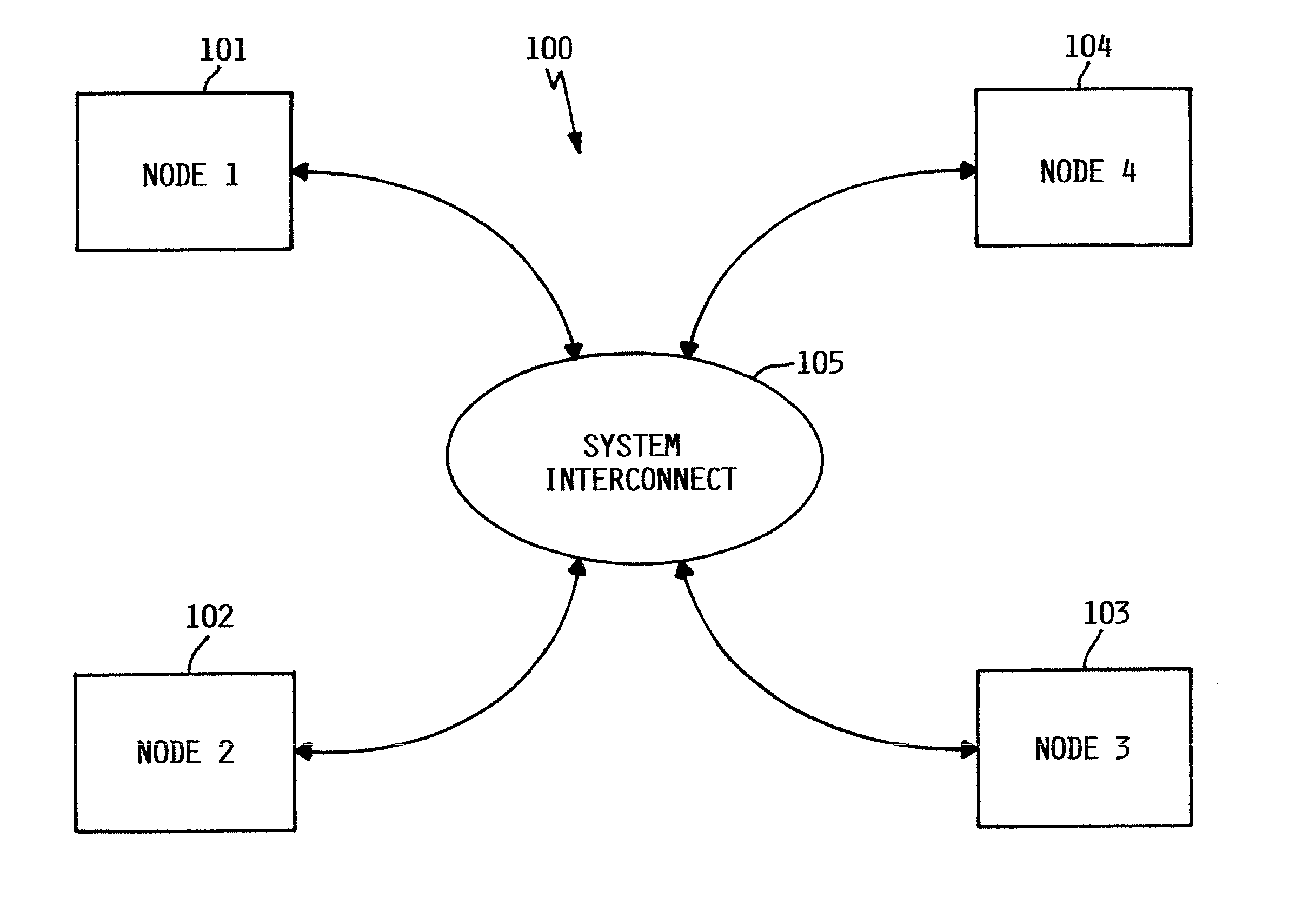

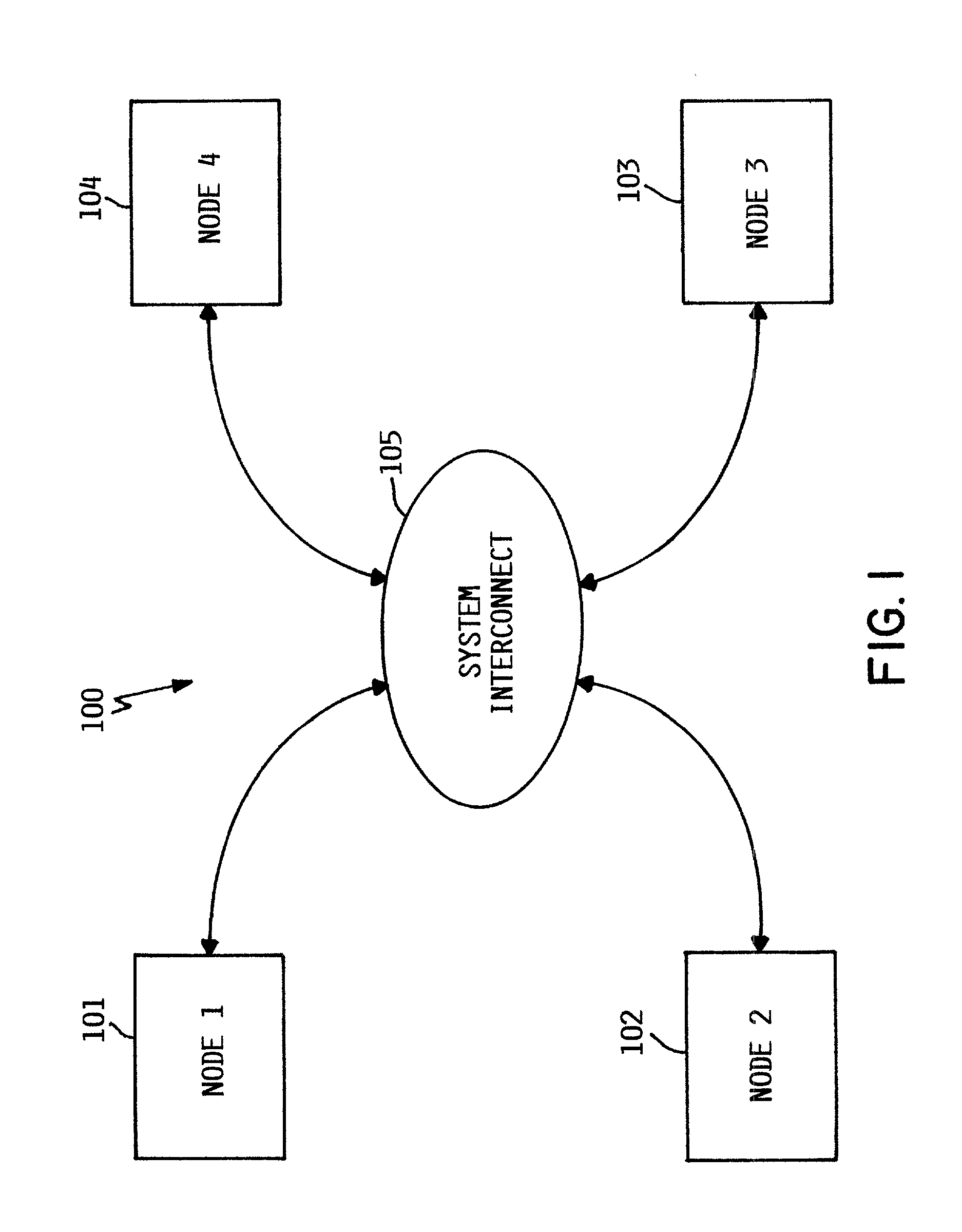

A data processing system includes a plurality of processing nodes that each contain at least one processor and data storage. The plurality of processing nodes are coupled together by a system interconnect. The data processing system further includes a configuration utility residing in data storage within at least one of the plurality of processing nodes. The configuration utility selectively configures the plurality of processing nodes into either a single non-uniform memory access (NUMA) system or into multiple independent data processing systems through communication via the system interconnect.

Owner:LINKEDIN

Method and apparatus for dispatching tasks in a non-uniform memory access (NUMA) computer system

Inactive | US7159216B2 | Avoid starvation | Short time | Program initiation/switching | Memory addressing/allocation/relocation | Distributed memory | Access time

A dispatcher for a non-uniform memory access computer system dispatches threads from a common ready queue not associated with any CPU, but favors the dispatching of a thread to a CPU having a shorter memory access time. Preferably, the system comprises multiple discrete nodes, each having a local memory and one or more CPUs. System main memory is a distributed memory comprising the union of the local memories. A respective preferred CPU and preferred node may be associated with each thread. When a CPU becomes available, the dispatcher gives at least some relative priority to a thread having a preferred CPU in the same node as the available CPU over a thread having a preferred CPU in a different node. This preference is relative, and does not prevent the dispatcher from overriding the preference to avoid starvation or other problems.

Owner:DAEDALUS BLUE LLC
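
The relative preference with a starvation override might look like the sketch below. It is a simplified illustration, not the patented dispatcher: the `waited` counter, the `max_wait` threshold, and the names `Thread` and `dispatch` are assumptions, and only the preferred node (not the preferred CPU) is modeled.

```python
from dataclasses import dataclass


@dataclass(eq=False)
class Thread:
    name: str
    preferred_node: int
    waited: int = 0      # dispatch rounds this thread has been passed over


def dispatch(ready, cpu_node, max_wait=3):
    """Pick and remove one thread from the common ready queue for a free CPU."""
    if not ready:
        return None
    starving = [t for t in ready if t.waited >= max_wait]
    if starving:
        # Override the node preference so no thread waits indefinitely.
        choice = max(starving, key=lambda t: t.waited)
    else:
        local = [t for t in ready if t.preferred_node == cpu_node]
        choice = local[0] if local else ready[0]
    ready.remove(choice)
    for t in ready:
        t.waited += 1
    return choice
```

The single shared `ready` list mirrors the common ready queue in the abstract; the node preference only changes which entry is picked from it.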

Optimized memory allocator for a multiprocessor computer system

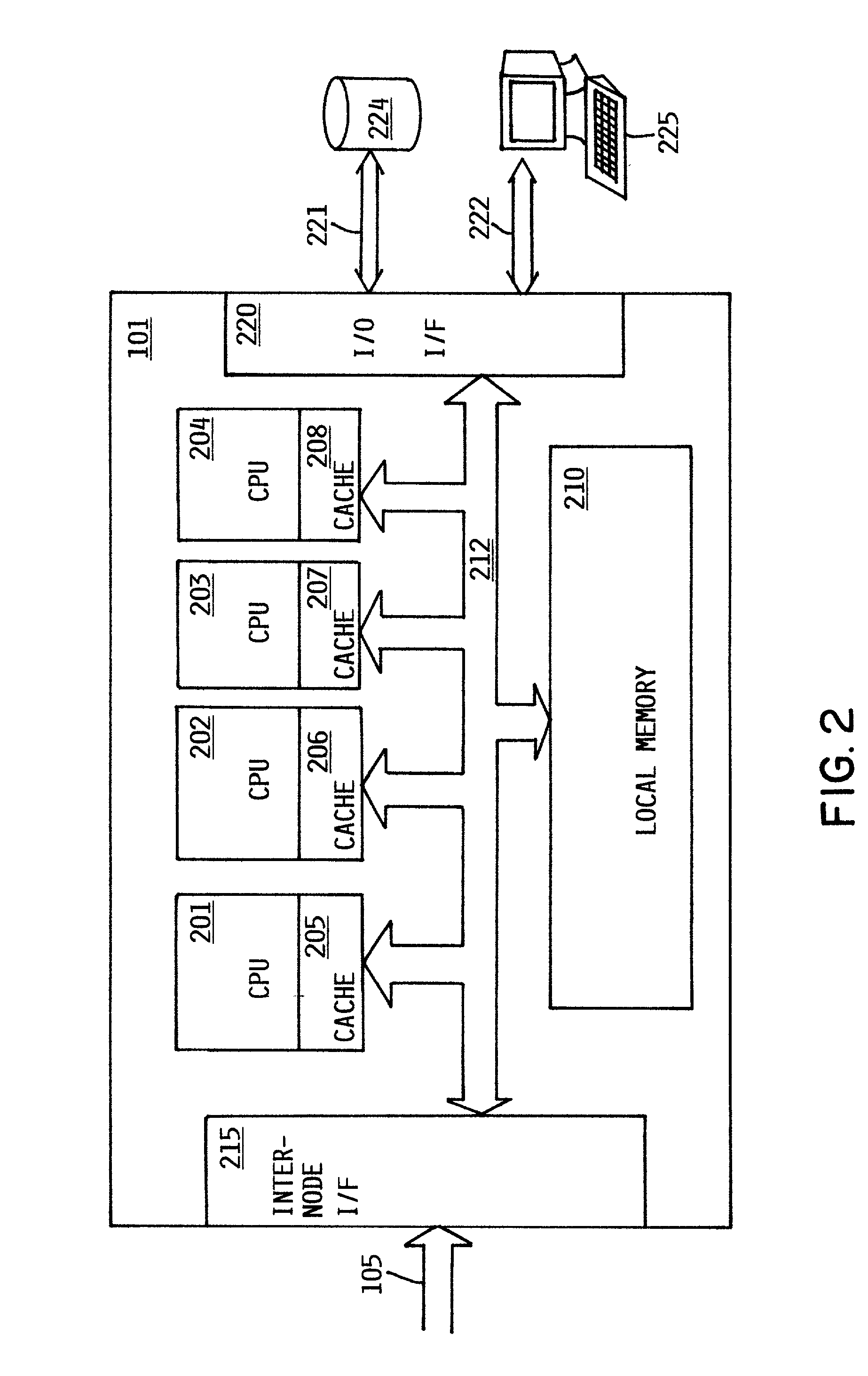

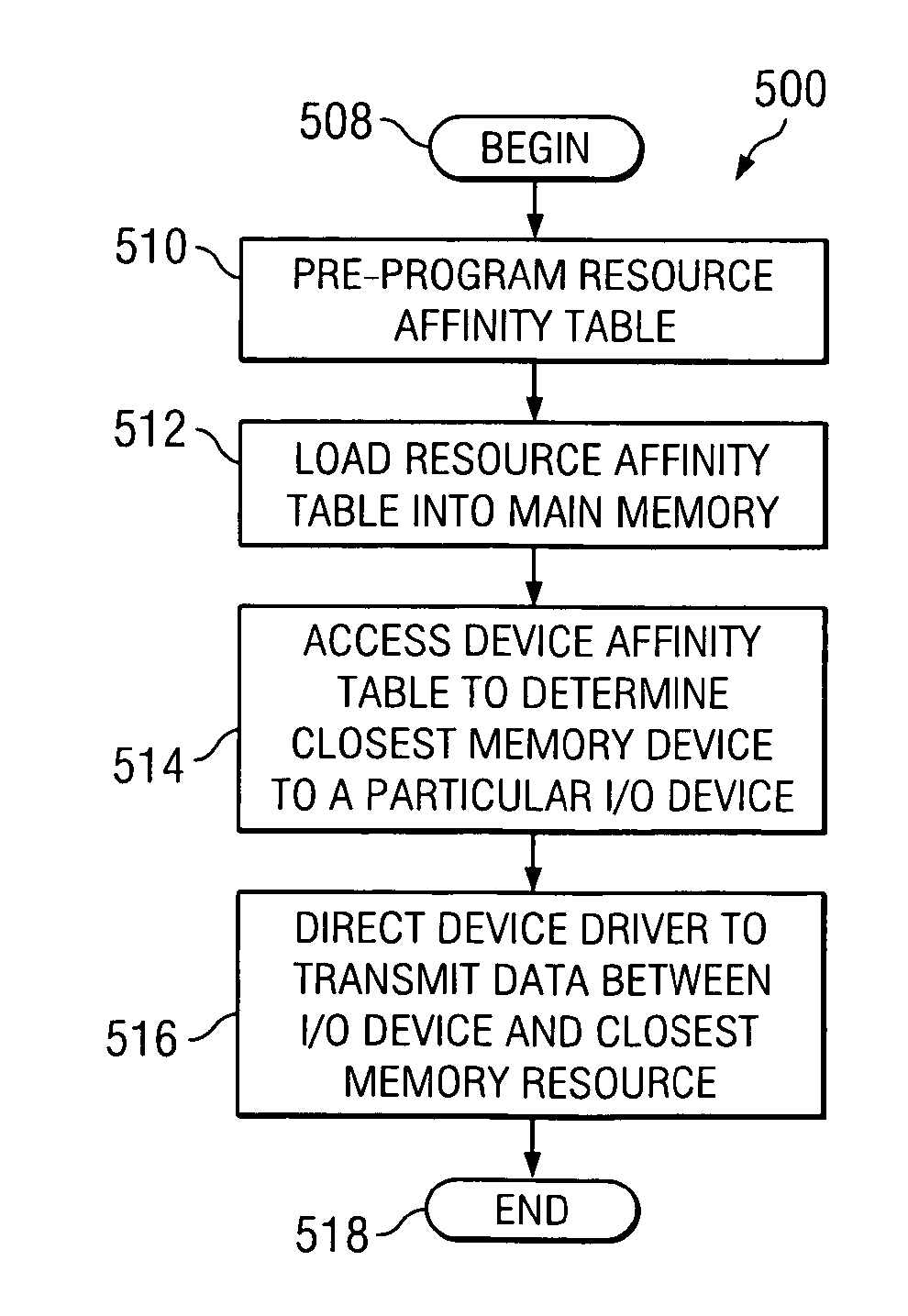

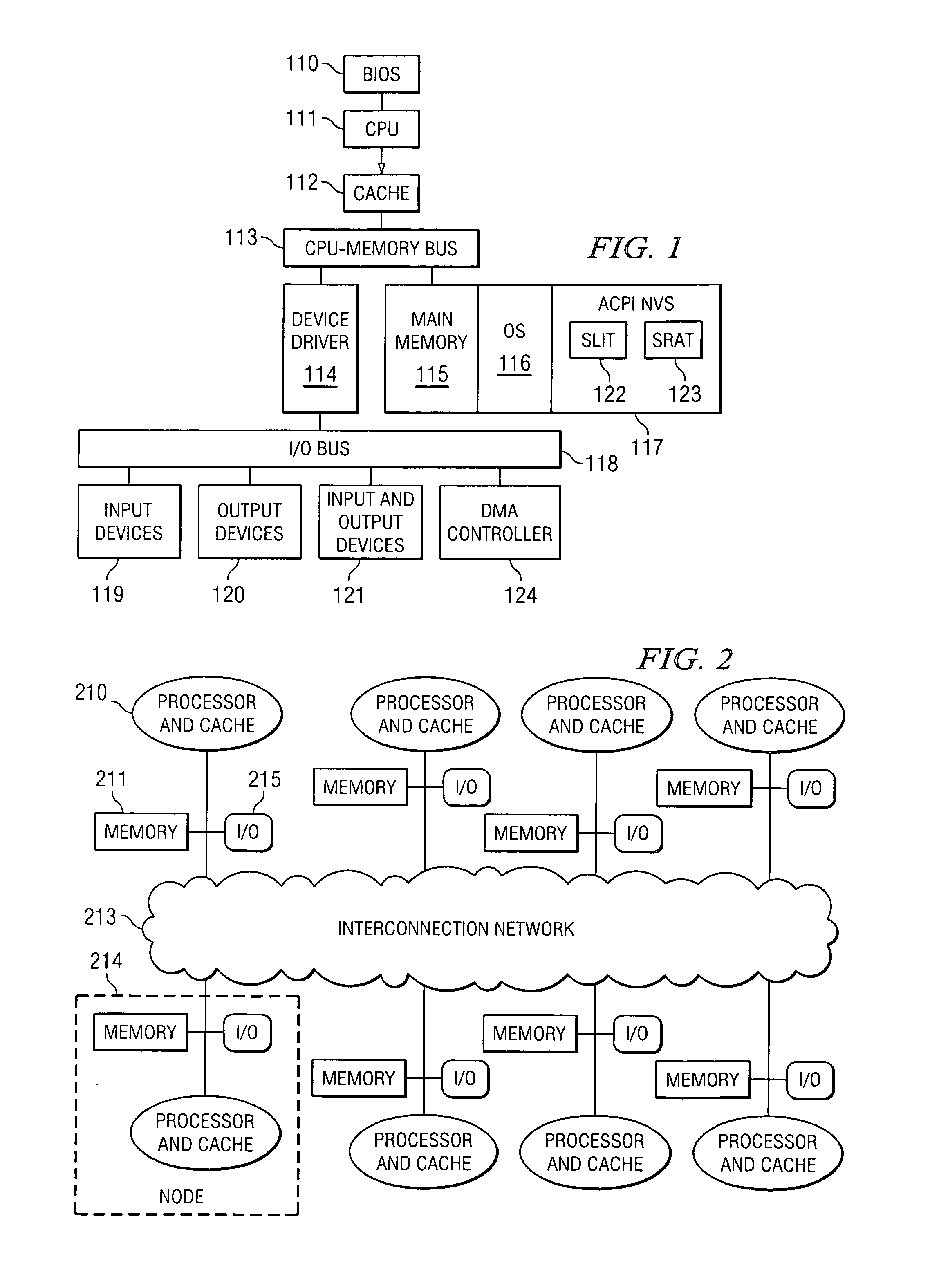

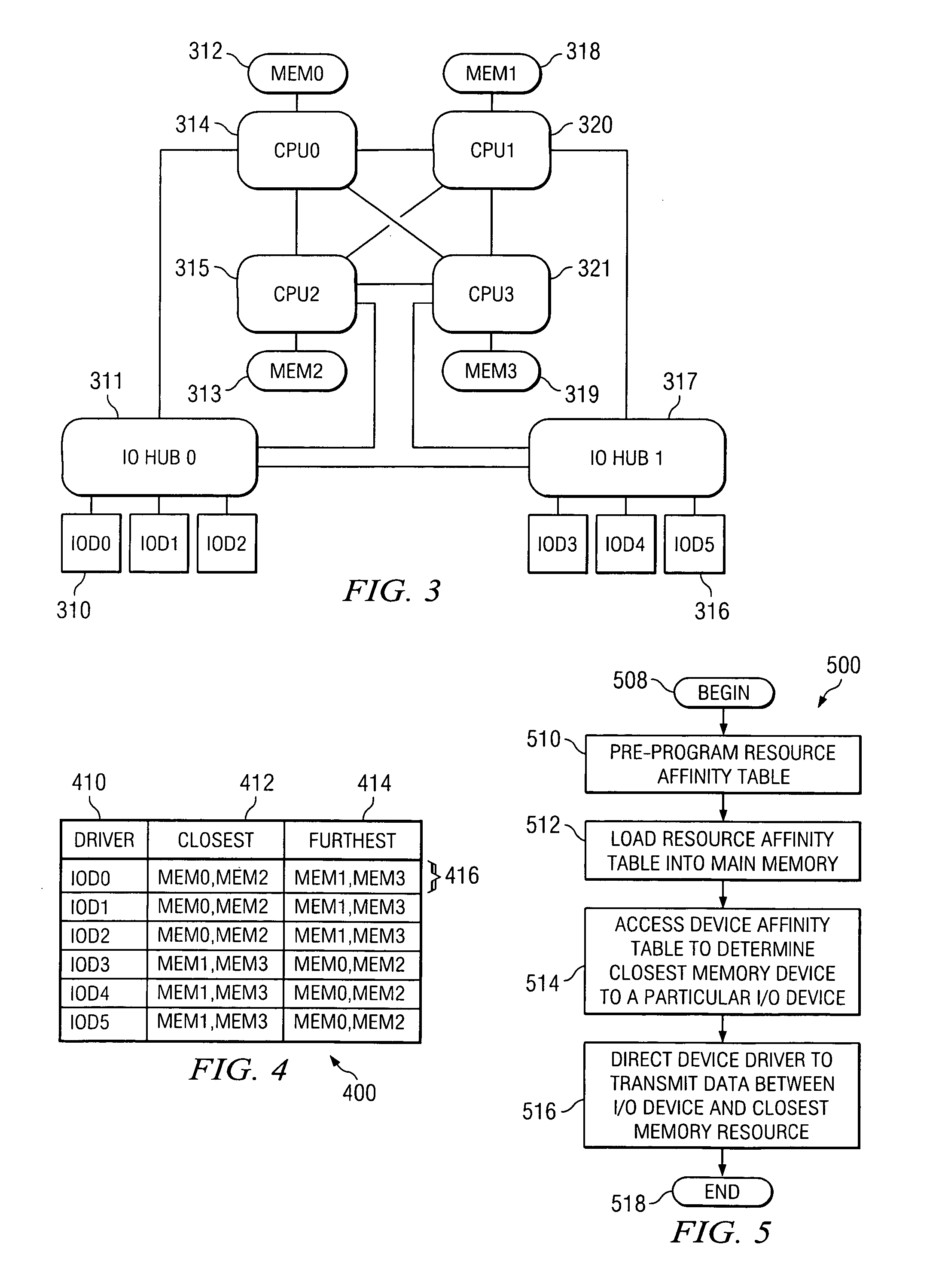

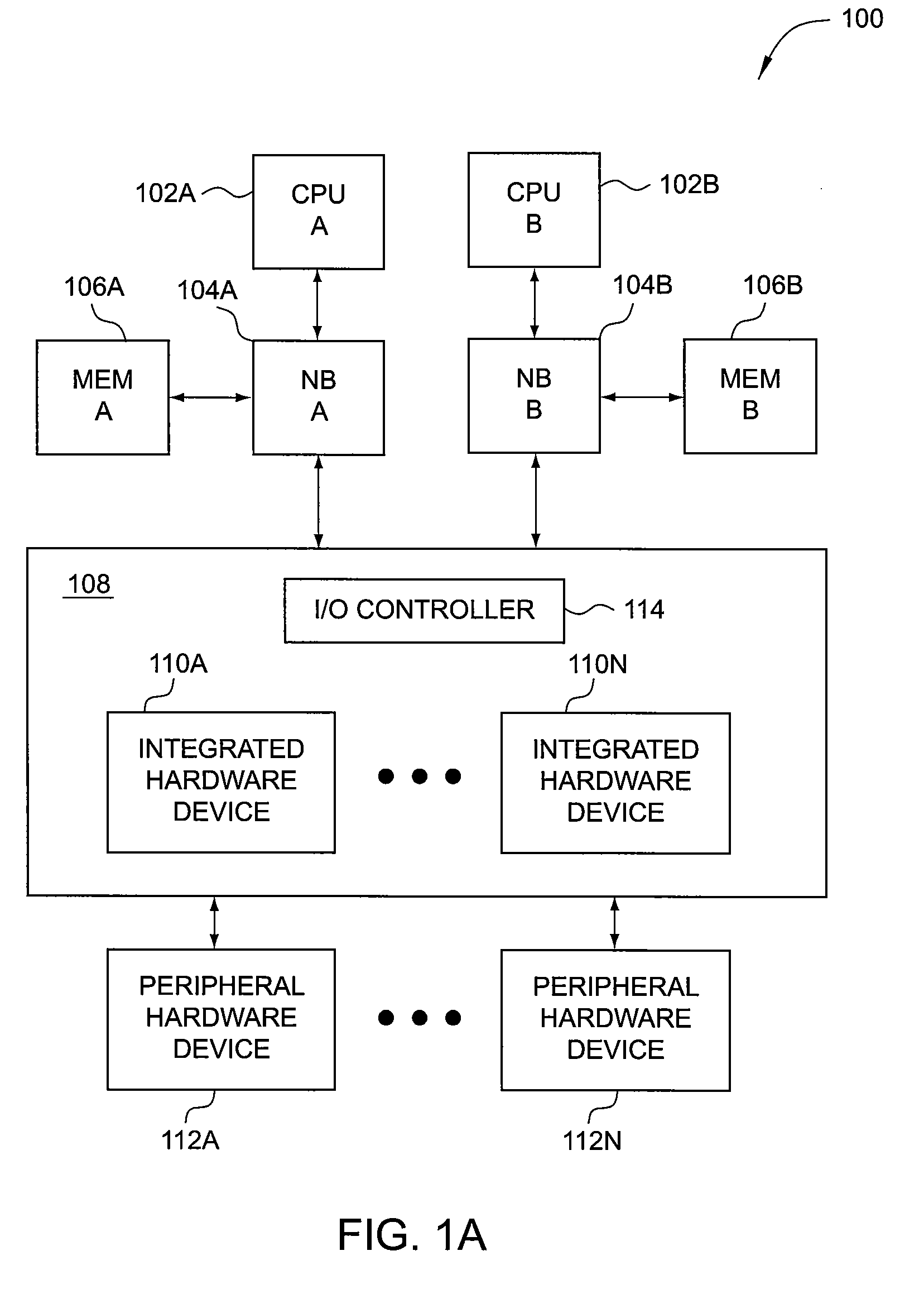

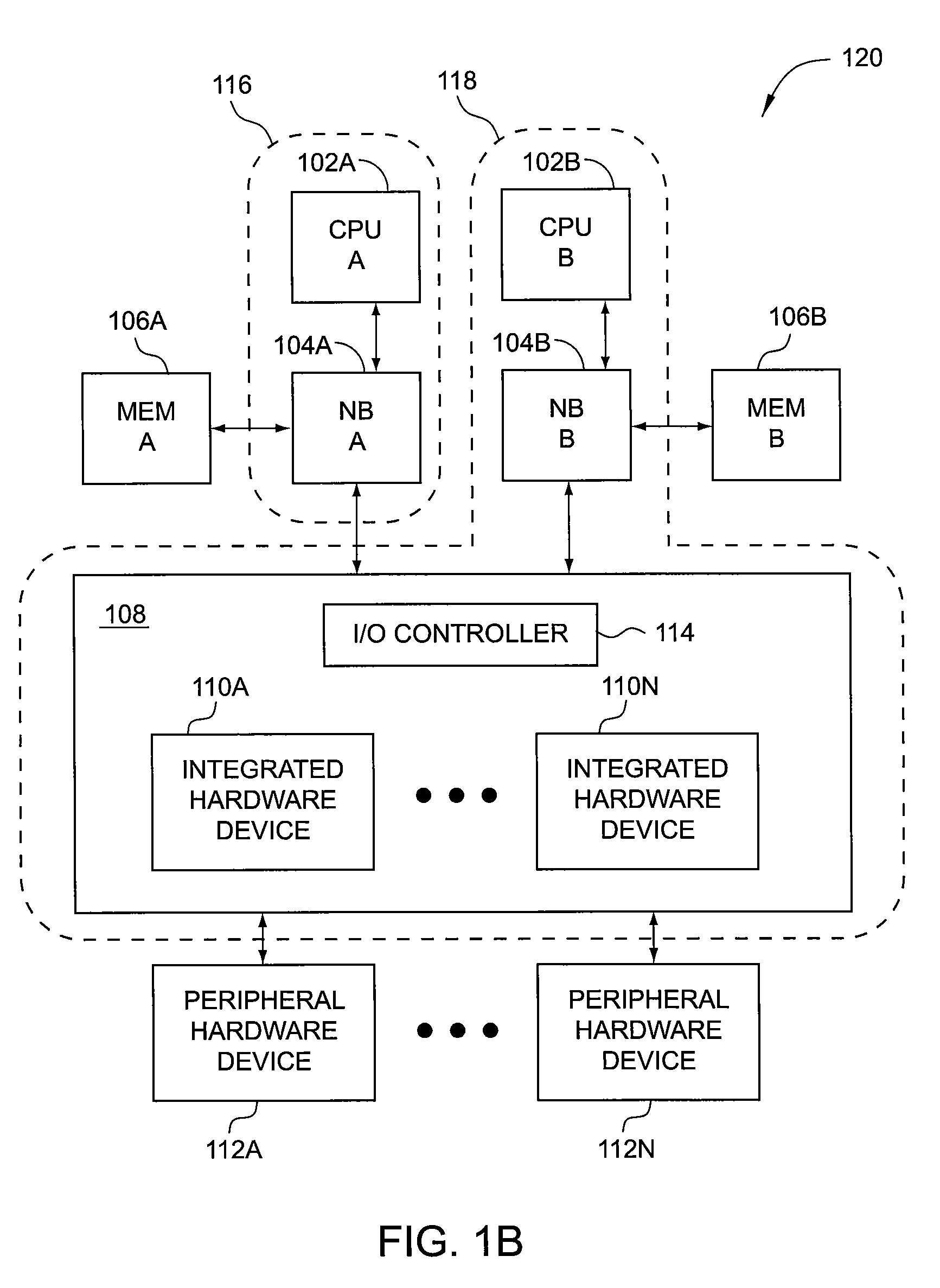

Active | US20070233967A1 | Maximize total data transmitted | Improving I/O performance measure | Memory architecture accessing/allocation | Memory systems | Access time | Multi processor

The present disclosure describes systems and methods for allocating memory in a multiprocessor computer system such as a non-uniform memory access (NUMA) machine having distributed shared memory. The systems and methods include allocating memory to input-output devices (I/O devices) based at least in part on which memory resource is physically closest to a particular I/O device. Through these systems and methods, memory is allocated more efficiently in a NUMA machine. For example, allocating memory to an I/O device that is on the same node as a memory resource reduces memory access time, thereby maximizing data transmission. The present disclosure further describes a system and method for improving performance in a multiprocessor computer system by utilizing a pre-programmed device affinity table. The system and method include listing the memory resources physically closest to each I/O device and accessing the device table to determine the closest memory resource to a particular I/O device. The system and method further include directing a device driver to transmit data between the I/O device and the closest memory resource.

Owner:DELL PROD LP
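
A device affinity table lookup could be sketched as below. The table contents, device names, and the fallback to the next-closest node when the closest one is full are assumptions made for illustration; the abstract only describes listing the closest memory resources and consulting the table.

```python
# Hypothetical pre-programmed table: device -> memory nodes, closest first.
AFFINITY_TABLE = {
    "nic0": [0, 1],
    "hba0": [1, 0],
}


def pick_node_for_device(device, free_bytes, size, table=AFFINITY_TABLE):
    """Return the closest node to `device` that can hold `size` bytes."""
    for node in table[device]:
        if free_bytes.get(node, 0) >= size:
            return node
    raise MemoryError(f"no node can hold {size} bytes for {device}")
```

For example, `pick_node_for_device("nic0", {0: 4096, 1: 8192}, 1024)` selects node 0, and a request too large for node 0 falls through to node 1.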

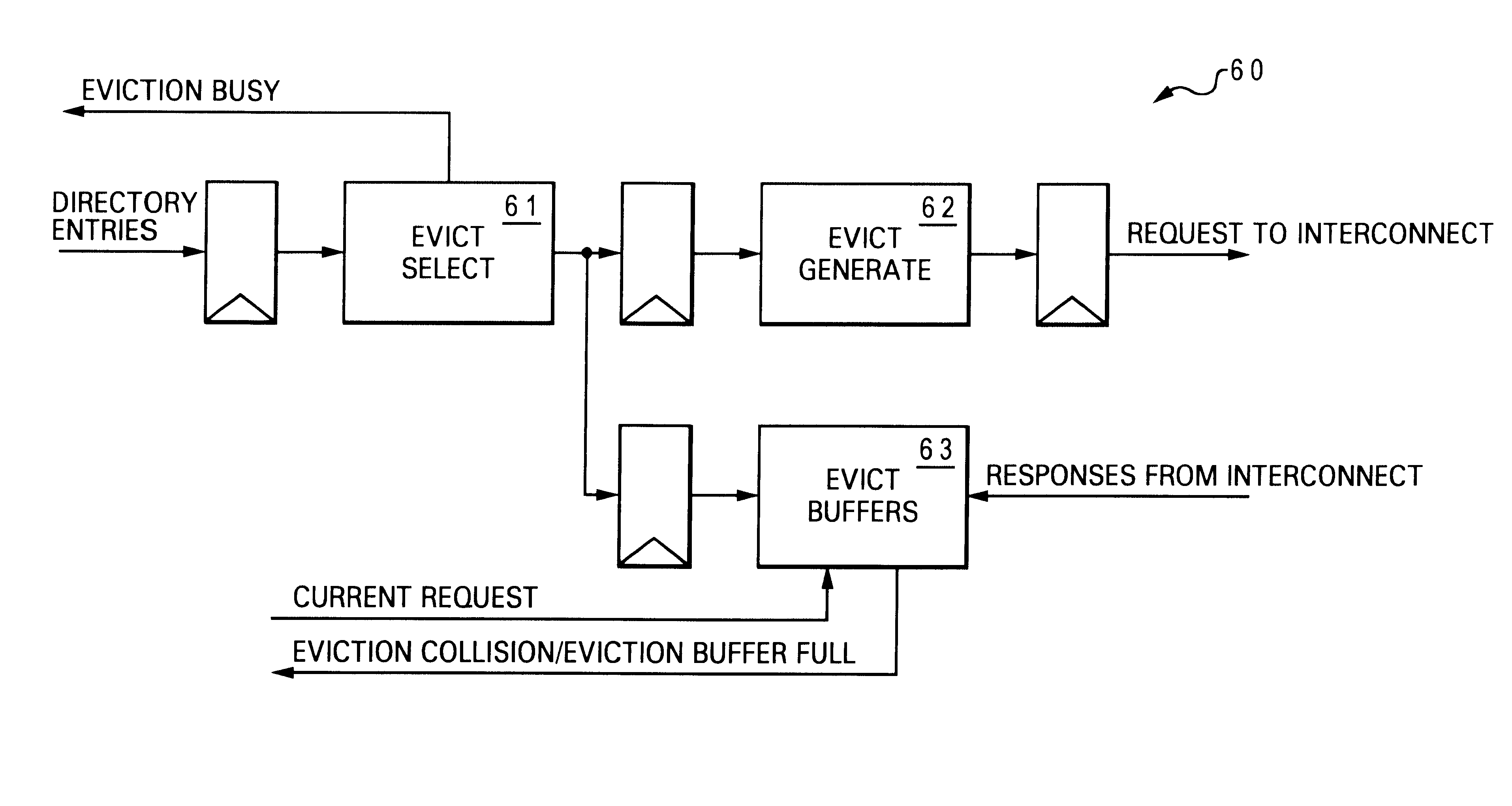

Method and system for providing an eviction protocol within a non-uniform memory access system

Inactive | US6266743B1 | Program synchronisation | Memory addressing/allocation/relocation | Computerized system | Computer science

A method and system for providing an eviction protocol within a non-uniform memory access (NUMA) computer system are disclosed. A NUMA computer system includes at least two nodes coupled to an interconnect. Each of the two nodes includes a local system memory. In response to a request for evicting an entry from a sparse directory, when the entry is associated with a modified cache line, a non-intervention writeback request is sent to the node having the modified cache line. After the data from the modified cache line has been written back to a local system memory of the node, the entry can then be evicted from the sparse directory. If the entry is associated with a shared line, an invalidation request is sent to all nodes that the directory entry indicates may hold a copy of the line. Once all invalidations have been acknowledged, the entry can be evicted from the sparse directory.

Owner:IBM CORP
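
The two eviction paths in this abstract can be sketched as follows. This is an illustrative model, not the patented protocol: `DirEntry`, `evict`, the message names, and the `send` callback (assumed to block until the remote node acknowledges) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DirEntry:
    state: str       # "modified" or "shared"
    holders: set     # node IDs that may hold the cache line


def evict(directory, line, send):
    """Evict `line` from the sparse directory after the required messages."""
    entry = directory[line]
    if entry.state == "modified":
        # Exactly one node holds the modified line; ask it to write back
        # to its local system memory without intervention.
        (owner,) = entry.holders
        send(owner, "writeback_no_intervention", line)
    elif entry.state == "shared":
        # Invalidate every possible sharer before dropping the entry.
        for node in sorted(entry.holders):
            send(node, "invalidate", line)
    else:
        raise ValueError(f"unexpected state {entry.state!r}")
    del directory[line]    # safe only once all sends have been acknowledged
```

Because `send` is assumed to return only after acknowledgement, the entry is removed strictly after the writeback or the invalidations complete.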

Chipset Support For Non-Uniform Memory Access Among Heterogeneous Processing Units

Active | US20100146222A1 | Memory addressing/allocation/relocation | Micro-instruction address formation | Chipset | Translation table

A method for providing a first processor access to a memory associated with a second processor is disclosed. The method includes receiving a first address map from the first processor that includes an MMIO aperture for a NUMA device, receiving a second address map from the second processor that includes MMIO apertures for hardware devices that the second processor is configured to access, and generating a global address map by combining the first and second address maps. The method further includes receiving an access request transmitted from the first processor to the NUMA device, generating a memory access request based on the access request and a translation table that maps a first address associated with the access request into a second address associated with the memory associated with the second processor, and routing the memory access request to the memory based on the global address map.

Owner:NVIDIA CORP
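
The combine, translate, and route steps can be sketched roughly as below. All addresses, range layouts, and function names are assumptions for illustration; in particular, listing the second processor's memory as a range in its address map is an assumption made so that the translated address can be routed.

```python
def build_global_map(first_map, second_map):
    """Combine two address maps of (start, end, target) half-open ranges."""
    merged = sorted(first_map + second_map)
    for (_s1, e1, _t1), (s2, _e2, _t2) in zip(merged, merged[1:]):
        if s2 < e1:
            raise ValueError("address maps overlap")
    return merged


def translate(addr, table):
    """Map an address in the NUMA device aperture to a memory address.

    `table` holds (src_start, size, dst_start) entries.
    """
    for src, size, dst in table:
        if src <= addr < src + size:
            return dst + (addr - src)
    raise LookupError(hex(addr))


def route(addr, global_map):
    """Find which target owns `addr` in the global address map."""
    for start, end, target in global_map:
        if start <= addr < end:
            return target
    raise LookupError(hex(addr))
```

An access to `0x1010` in a NUMA device aperture at `0x1000` would be translated by the entry `(0x1000, 0x1000, 0x8000)` to `0x8010` and then routed to whichever range in the global map contains that address.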

© 2025 PatSnap. All rights reserved.