416 patent results for "CPU load"

CPU load average is a metric Linux uses to report how busy the system's CPUs are. It counts the processes that are running as well as those waiting for CPU access. On Linux, processes in uninterruptible sleep (typically waiting for disk I/O) are counted too. The kernel reports 1-, 5- and 15-minute averages, and tracking them over time can help system administrators diagnose the cause of server slowdowns.
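
None of these figures is a plain arithmetic mean. Roughly every 5 seconds, the kernel folds the current count of active tasks into three exponentially damped moving averages. The sketch below uses floating point for readability, whereas the kernel itself uses fixed-point arithmetic. The function names are illustrative.

```python
import math

# Active tasks are sampled roughly every 5 seconds and folded into three
# exponentially damped moving averages with 1-, 5- and 15-minute windows.
SAMPLE_INTERVAL_S = 5.0
WINDOWS_MIN = (1, 5, 15)


def decay(window_min: float) -> float:
    """Fraction of the old average kept at each sample: exp(-interval / window)."""
    return math.exp(-SAMPLE_INTERVAL_S / (window_min * 60.0))


def update_load(loads: tuple, active_tasks: int) -> tuple:
    """Fold one sample of the active-task count into each of the three averages."""
    return tuple(
        old * decay(w) + active_tasks * (1.0 - decay(w))
        for old, w in zip(loads, WINDOWS_MIN)
    )


# One minute (12 samples) with 4 tasks constantly runnable on a previously idle machine.
loads = (0.0, 0.0, 0.0)
for _ in range(12):
    loads = update_load(loads, 4)
print(" ".join(f"{x:.2f}" for x in loads))
```

After one minute, the 1-minute figure has covered about 63% of the way to 4, while the 15-minute figure has barely moved. This lag is why the three numbers together indicate whether load is rising or falling. On a live Unix system, Python's `os.getloadavg()` returns the kernel's current three values.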

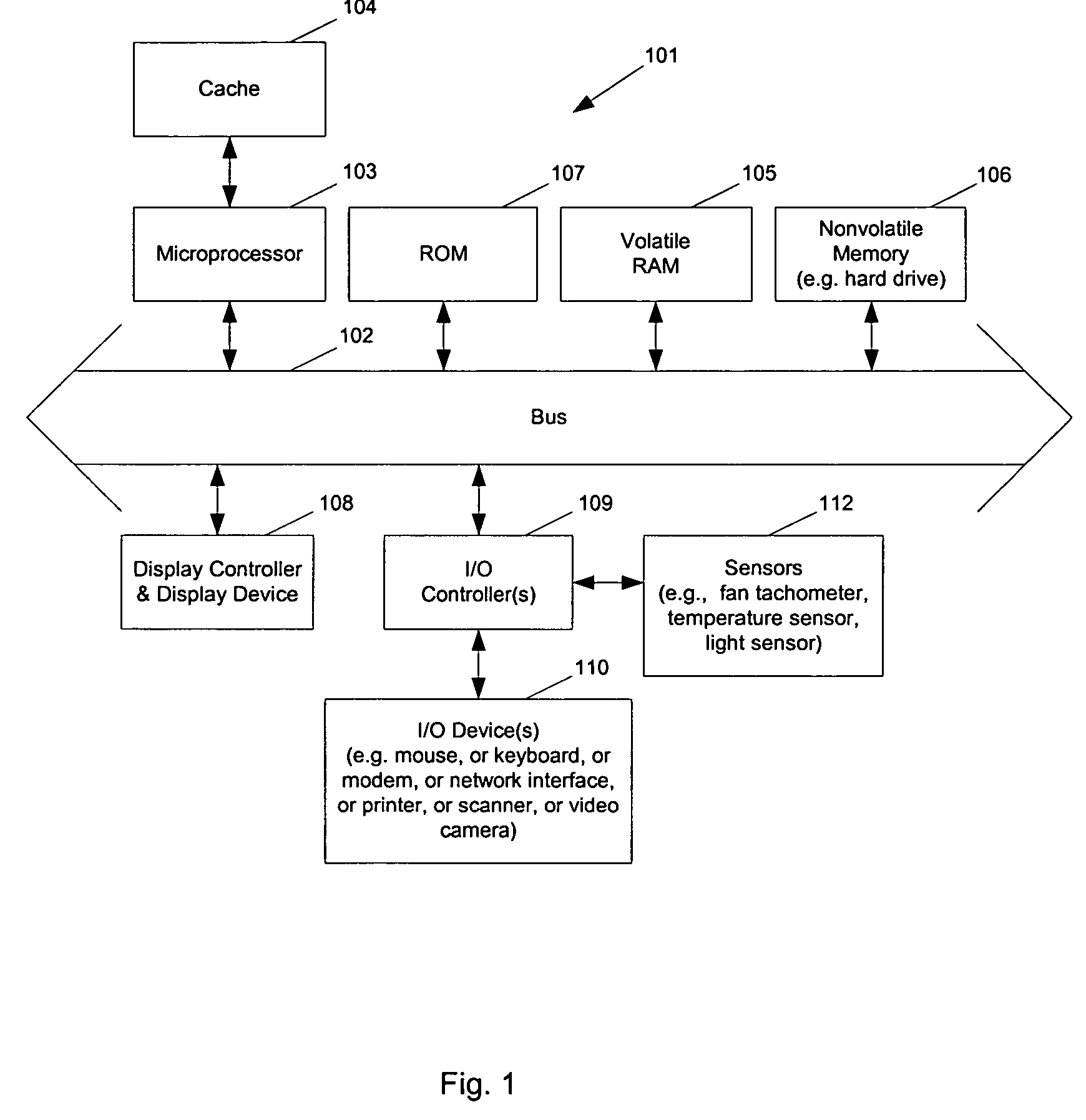

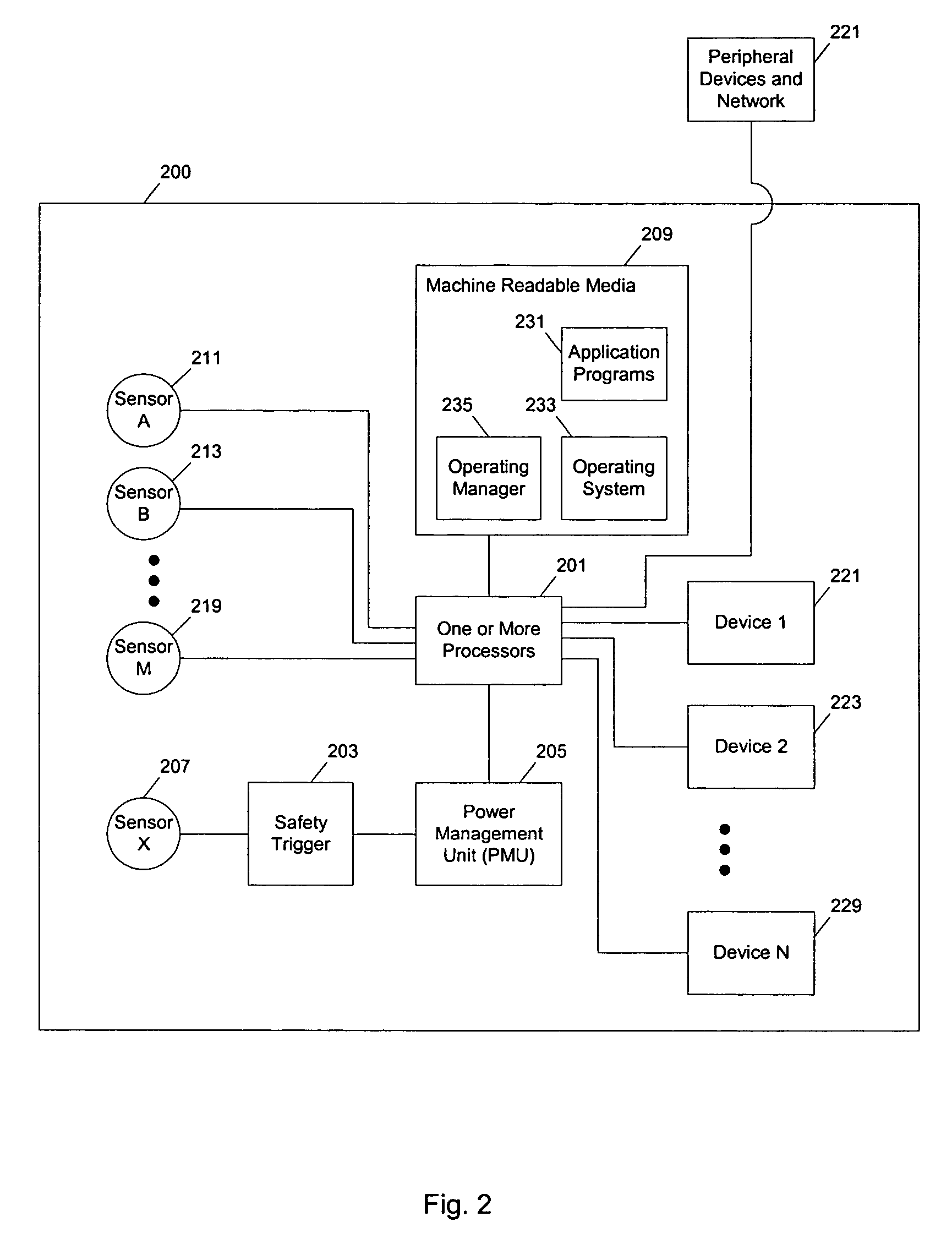

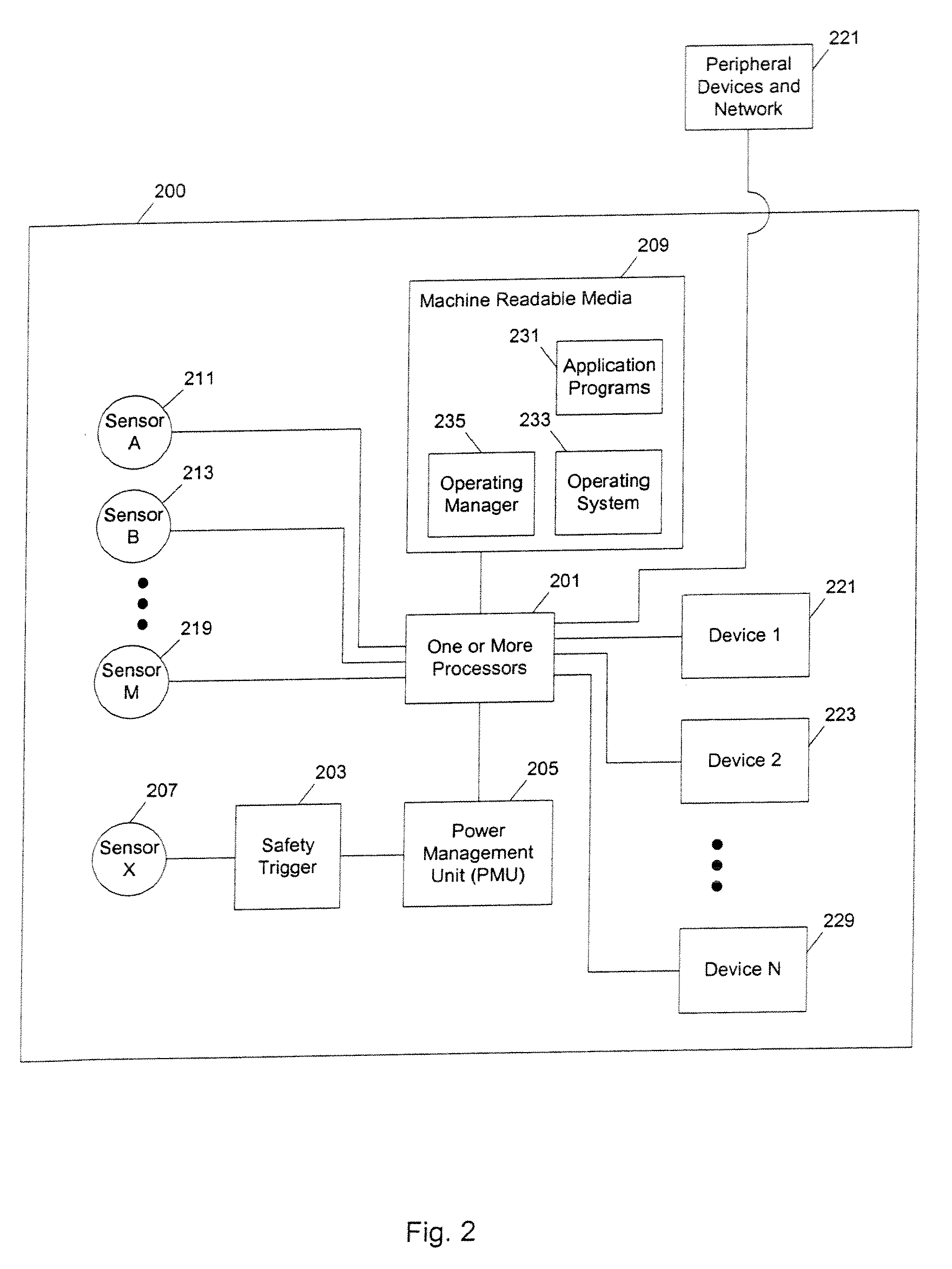

Methods and apparatuses for operating a data processing system

Active | US20050049729A1 | Tags: Balance performance; Shorten speed; Multiplex communication; Volume/mass flow measurement; Heat sensitive; Thermistor

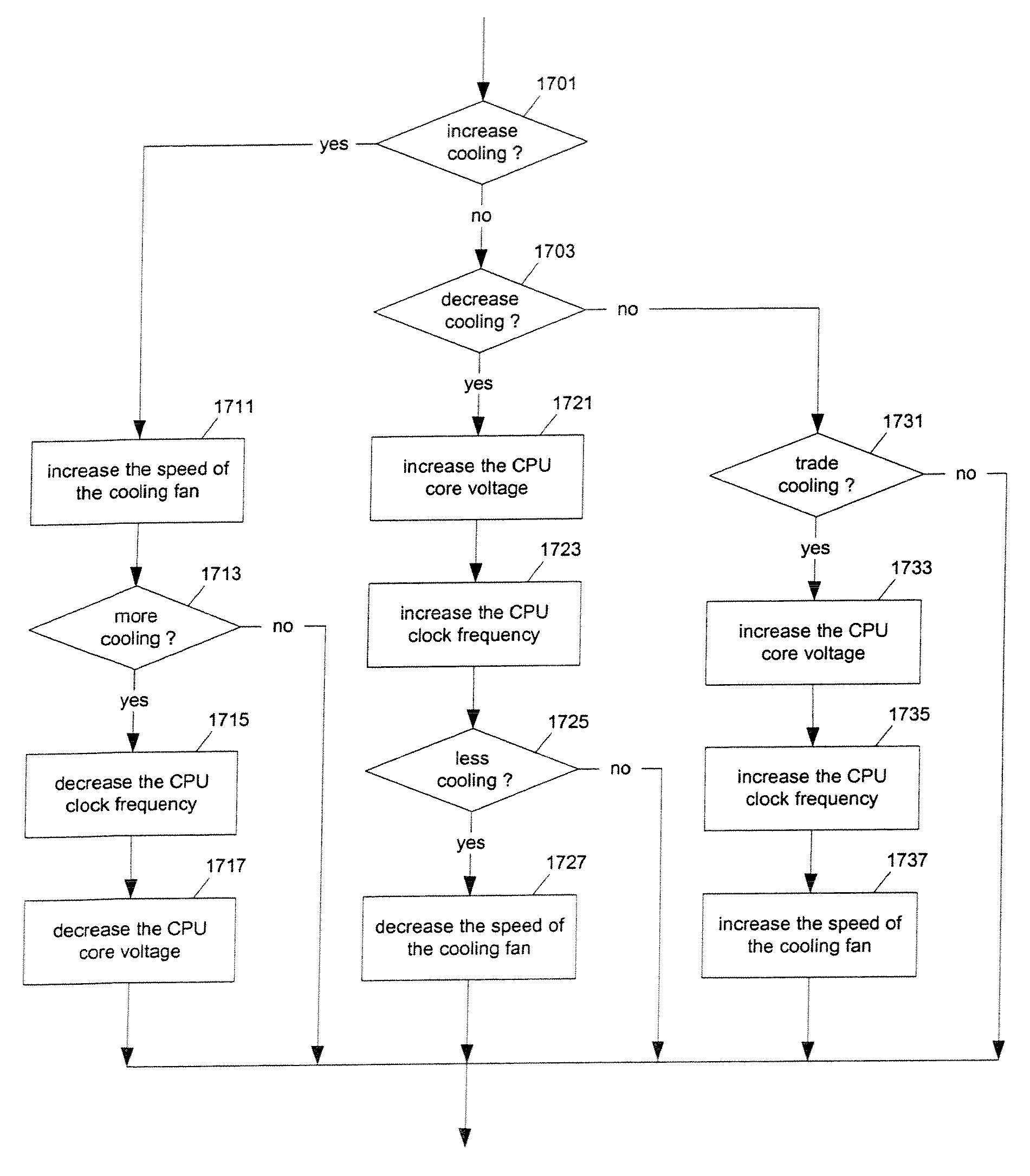

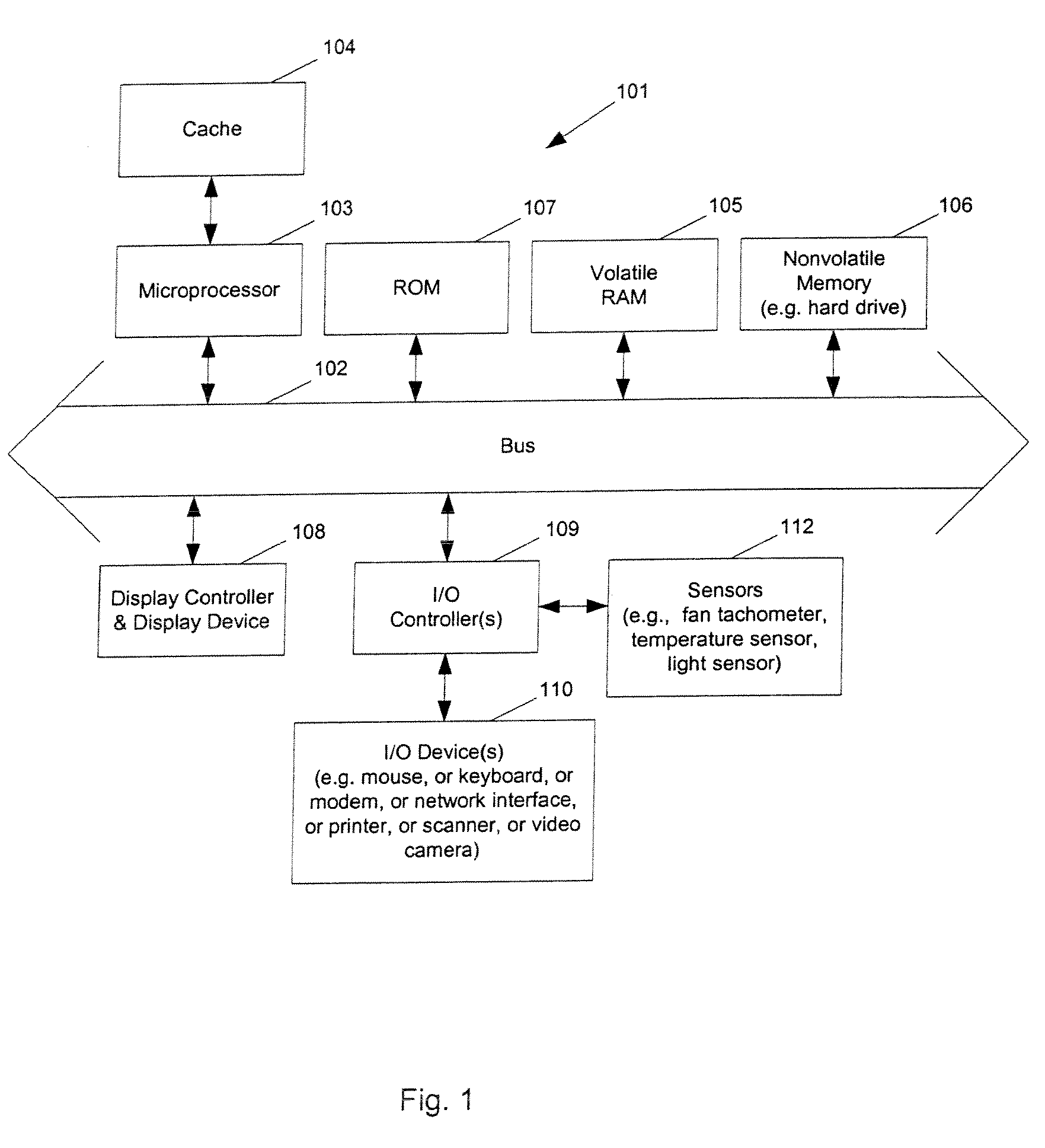

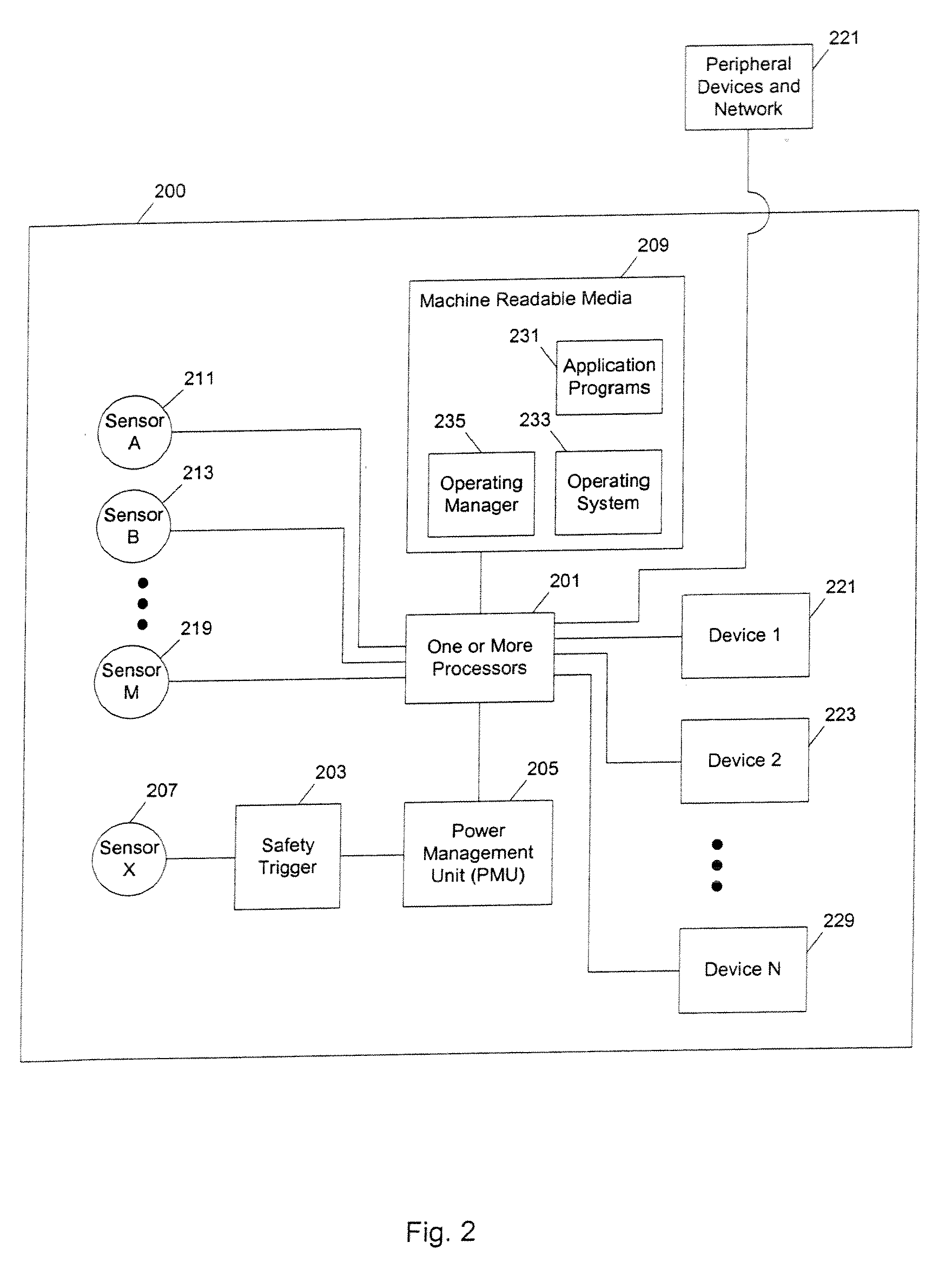

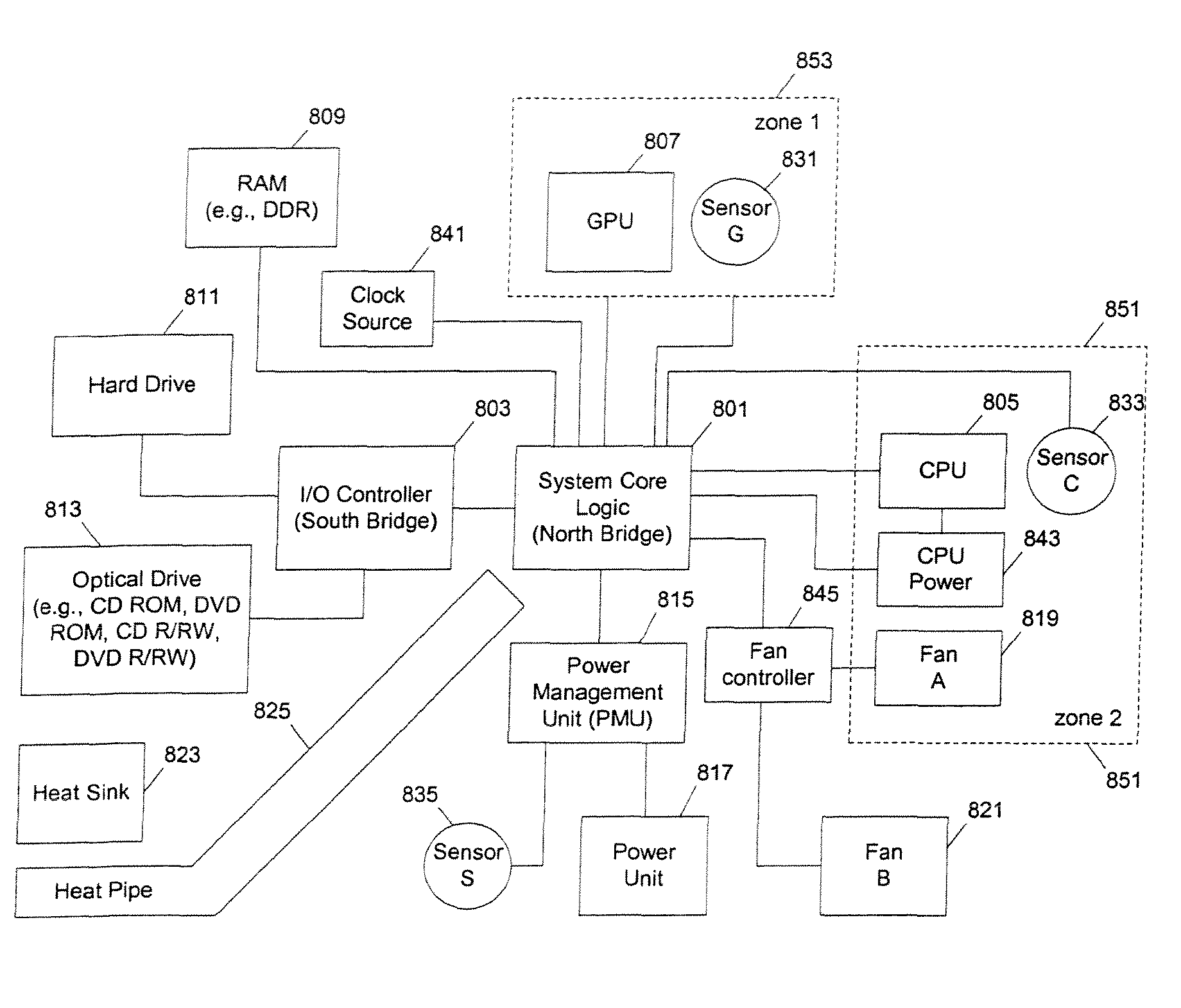

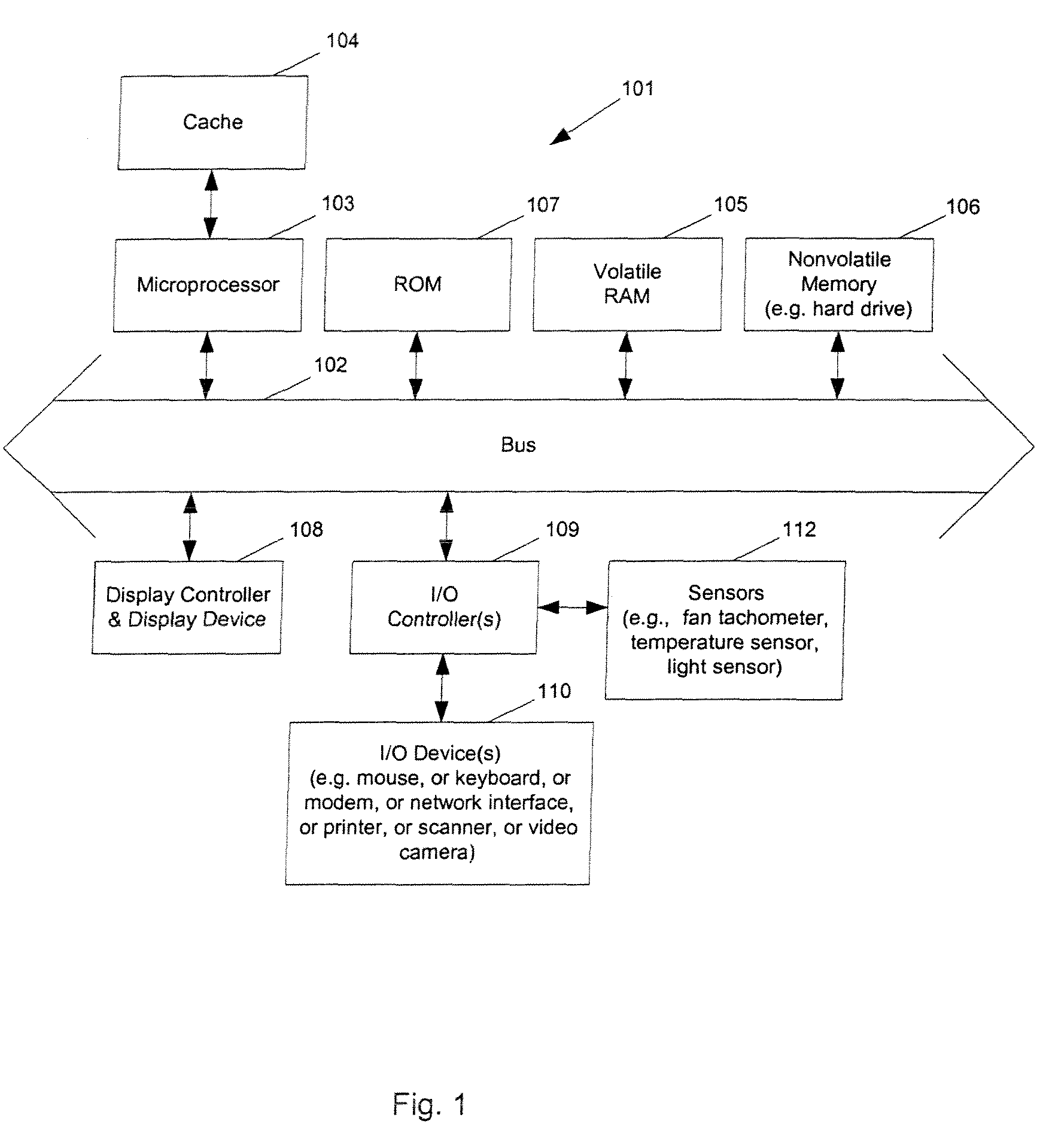

Methods and apparatuses to manage working states of a data processing system. At least one embodiment of the present invention includes a data processing system with one or more sensors (e.g., physical sensors such as tachometer and thermistors, and logical sensors such as CPU load) for fine grain control of one or more components (e.g., processor, fan, hard drive, optical drive) of the system for working conditions that balance various goals (e.g., user preferences, performance, power consumption, thermal constraints, acoustic noise). In one example, the clock frequency and core voltage for a processor are actively managed to balance performance and power consumption (heat generation) without a significant latency. In one example, the speed of a cooling fan is actively managed to balance cooling effort and noise (and / or power consumption).

Owner:APPLE INC
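
The abstract does not disclose a control law. As a hypothetical illustration only, the sketch below combines a proportional controller with a CPU-load feed-forward term. It shows how a physical sensor (temperature) and a logical sensor (CPU load) can jointly set fan speed to trade cooling against noise. Every constant and name is an assumption.

```python
from dataclasses import dataclass


@dataclass
class FanPolicy:
    """Hypothetical fan policy; all set points and gains are illustrative."""

    target_c: float = 70.0          # temperature above which extra cooling is applied
    min_rpm: int = 1200             # quiet floor (acoustic goal)
    max_rpm: int = 6000             # ceiling (noise / power limit)
    gain_rpm_per_c: float = 250.0   # proportional gain on temperature error
    load_ff_rpm: float = 1000.0     # feed-forward RPM added at 100% CPU load

    def fan_rpm(self, temp_c: float, cpu_load: float) -> int:
        """Combine a thermistor reading (deg C) and CPU load (0..1) into a fan speed."""
        error = max(temp_c - self.target_c, 0.0)
        rpm = self.min_rpm + self.gain_rpm_per_c * error + self.load_ff_rpm * cpu_load
        # Clamp so that noise and power consumption stay bounded.
        return int(min(max(rpm, self.min_rpm), self.max_rpm))


policy = FanPolicy()
print(policy.fan_rpm(temp_c=74.0, cpu_load=0.5))
```

The feed-forward term lets the fan start ramping as soon as CPU load rises, before the thermistor registers the resulting heat.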

Methods and apparatuses for controlling the temperature of a data processing system

Active | US7451332B2 | Tags: Low heat generation; Improve performance; Energy efficient ICT; Volume/mass flow measurement; Hard disc drive; Heat sensitive

Methods and apparatuses to manage working states of a data processing system. At least one embodiment of the present invention includes a data processing system with one or more sensors (e.g., physical sensors such as tachometer and thermistors, and logical sensors such as CPU load) for fine grain control of one or more components (e.g., processor, fan, hard drive, optical drive) of the system for working conditions that balance various goals (e.g., user preferences, performance, power consumption, thermal constraints, acoustic noise). In one example, the clock frequency and core voltage for a processor are actively managed to balance performance and power consumption (heat generation) without a significant latency. In one example, the speed of a cooling fan is actively managed to balance cooling effort and noise (and / or power consumption).

Owner:APPLE INC

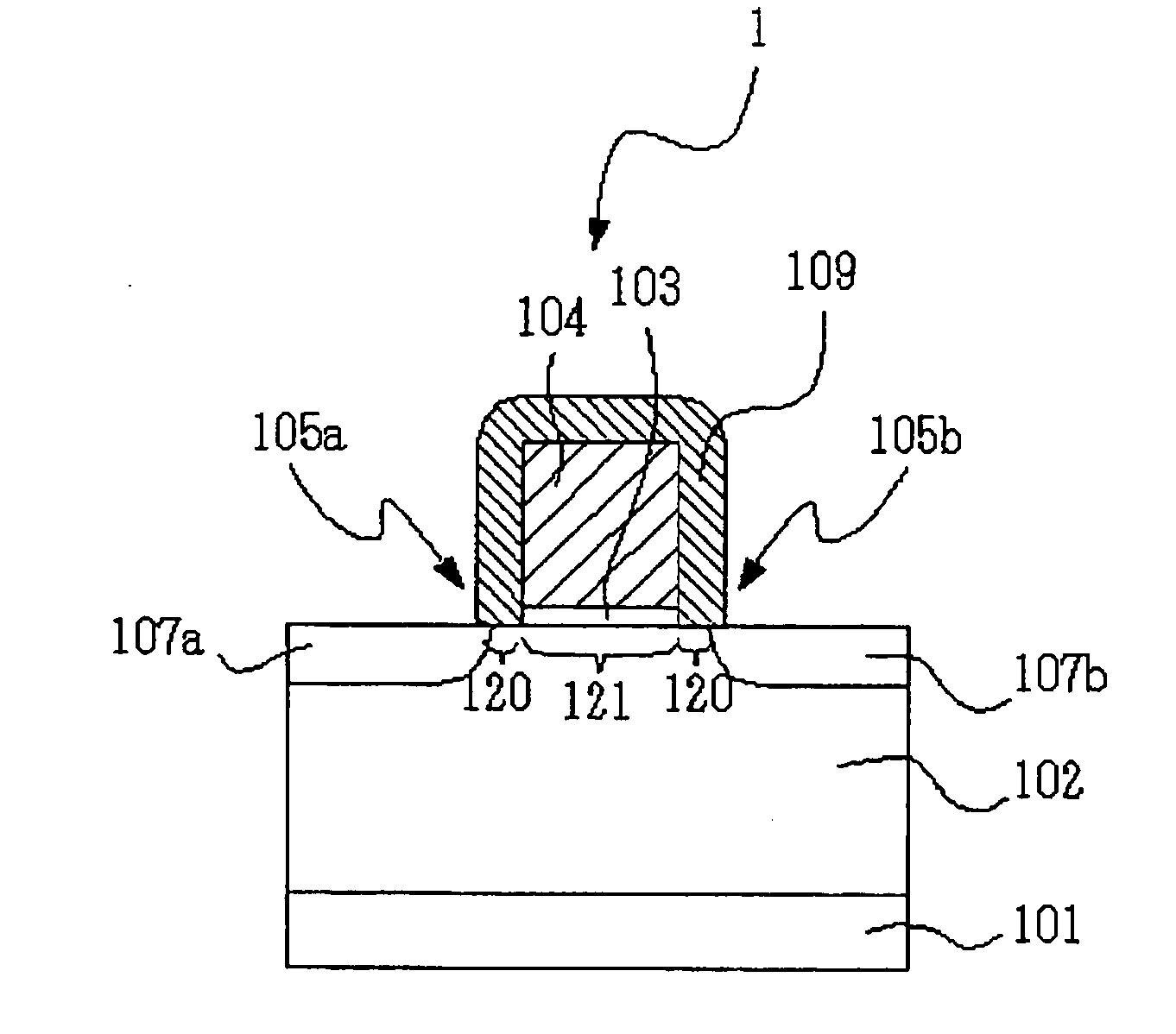

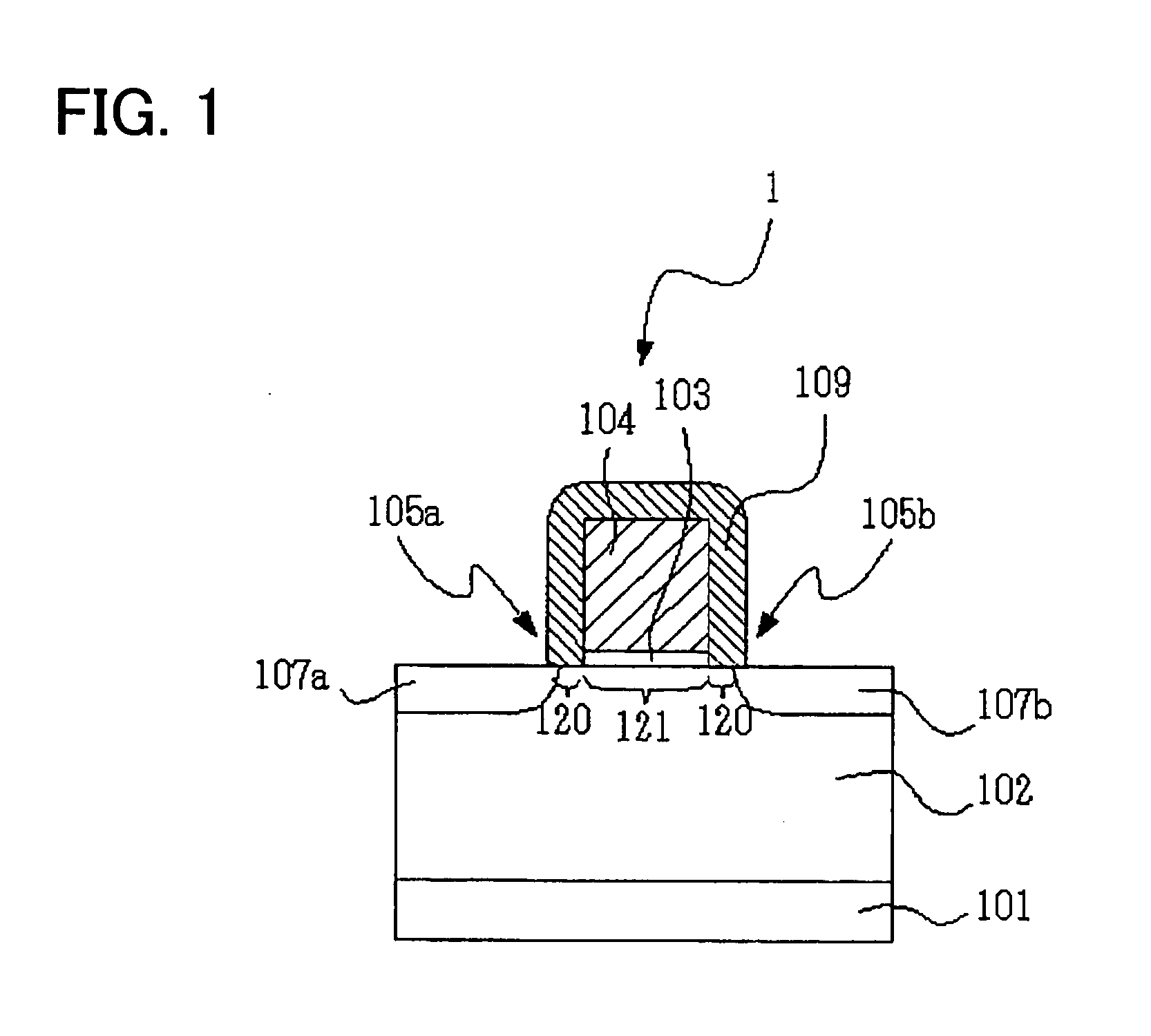

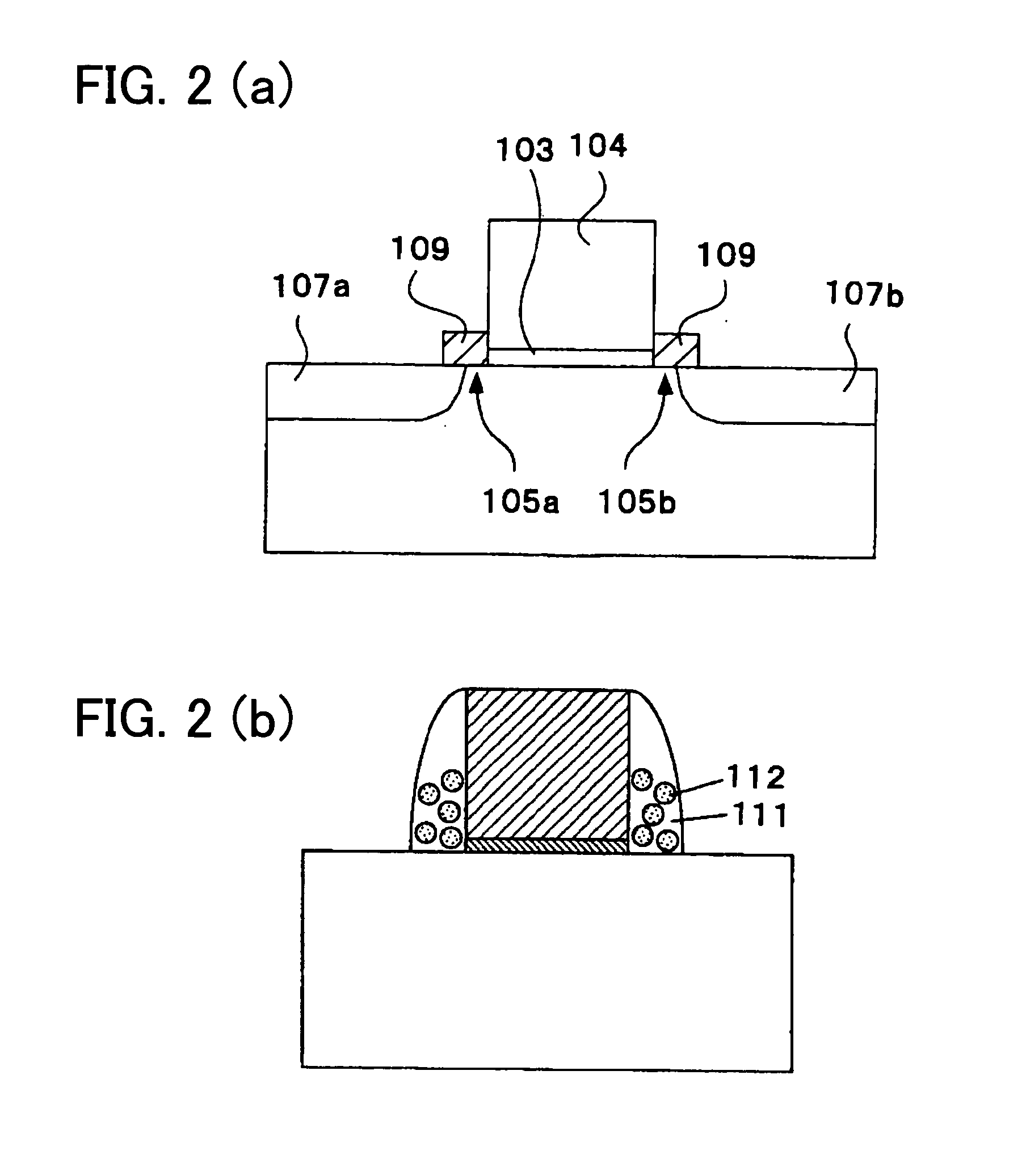

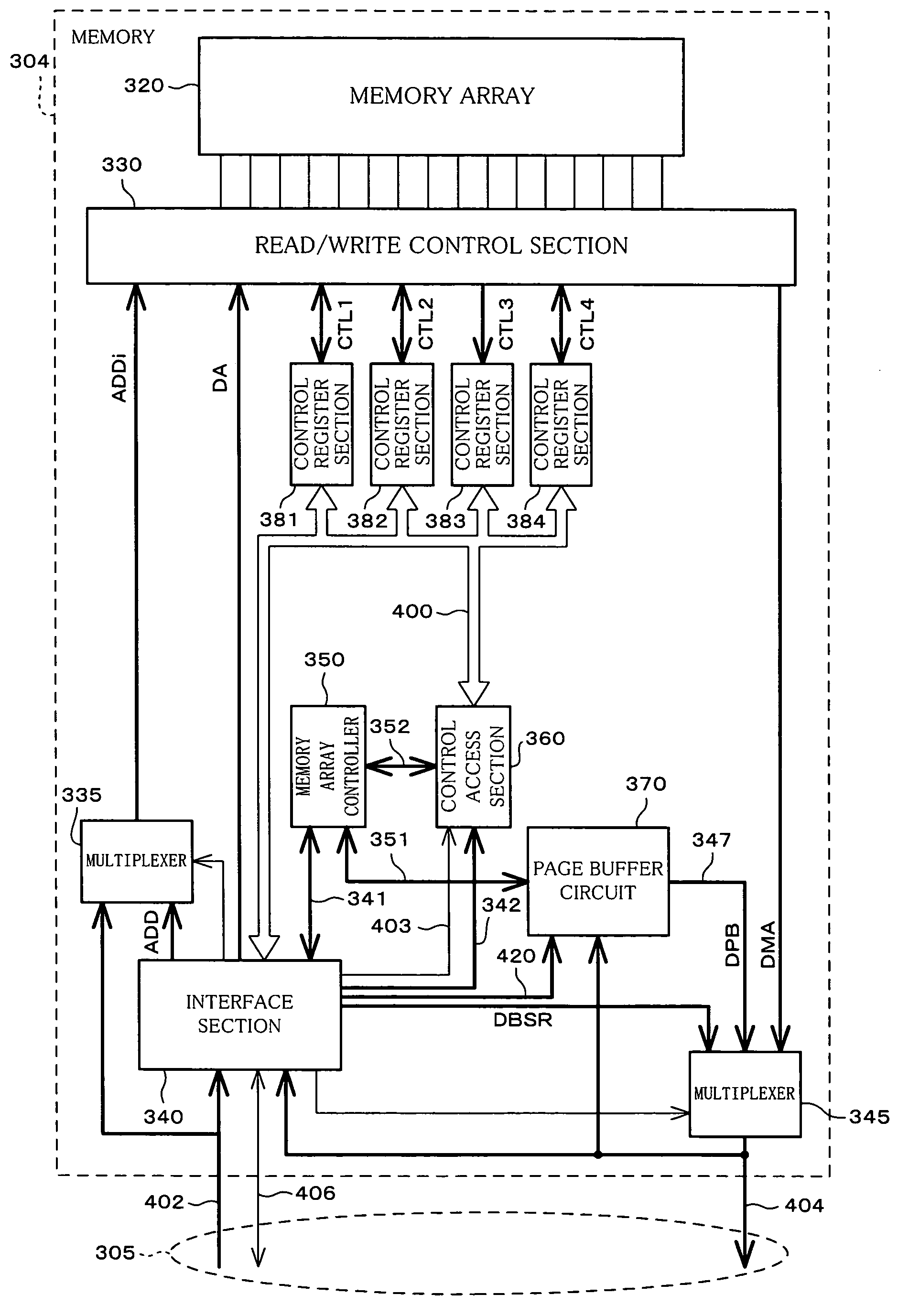

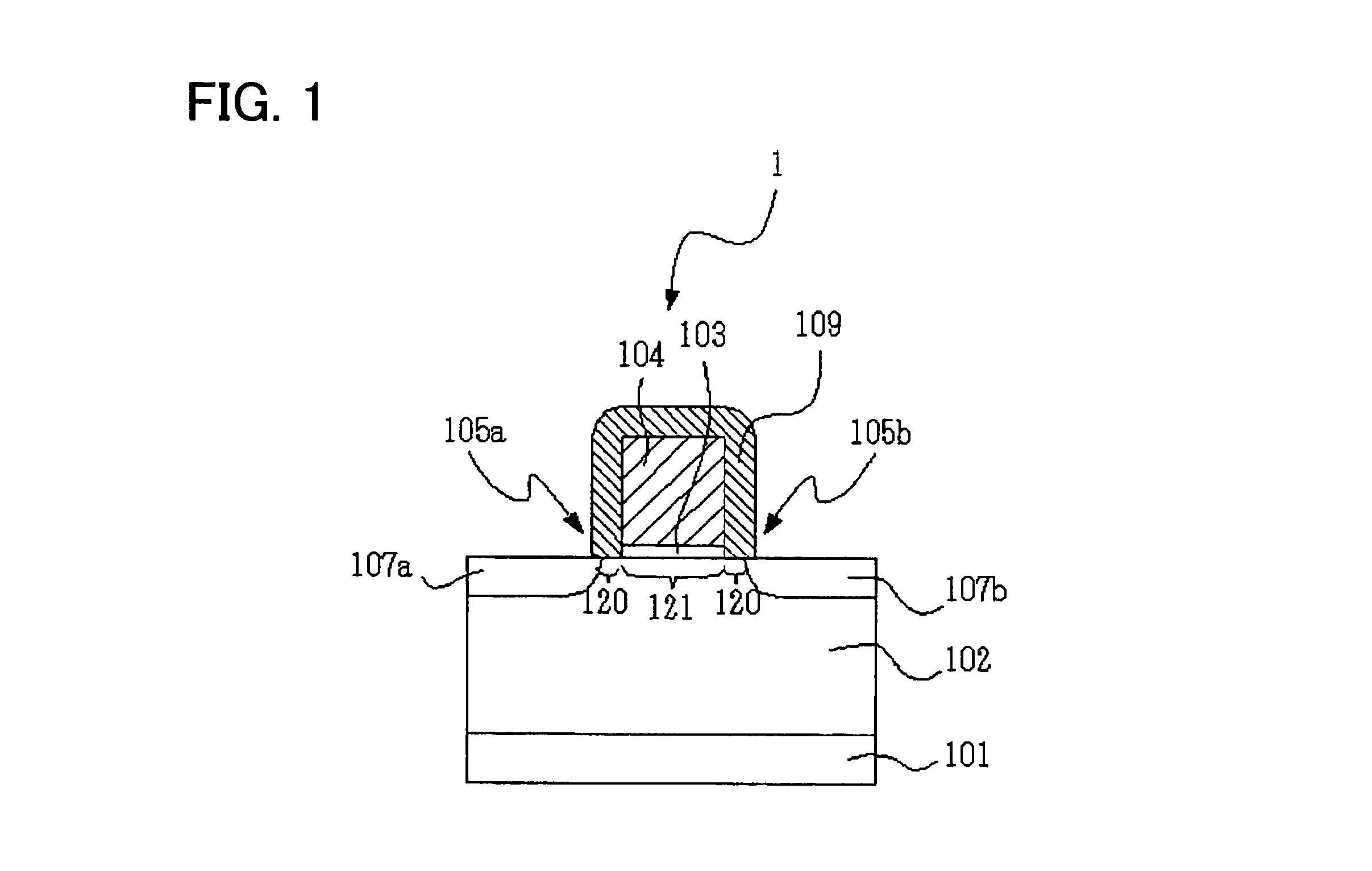

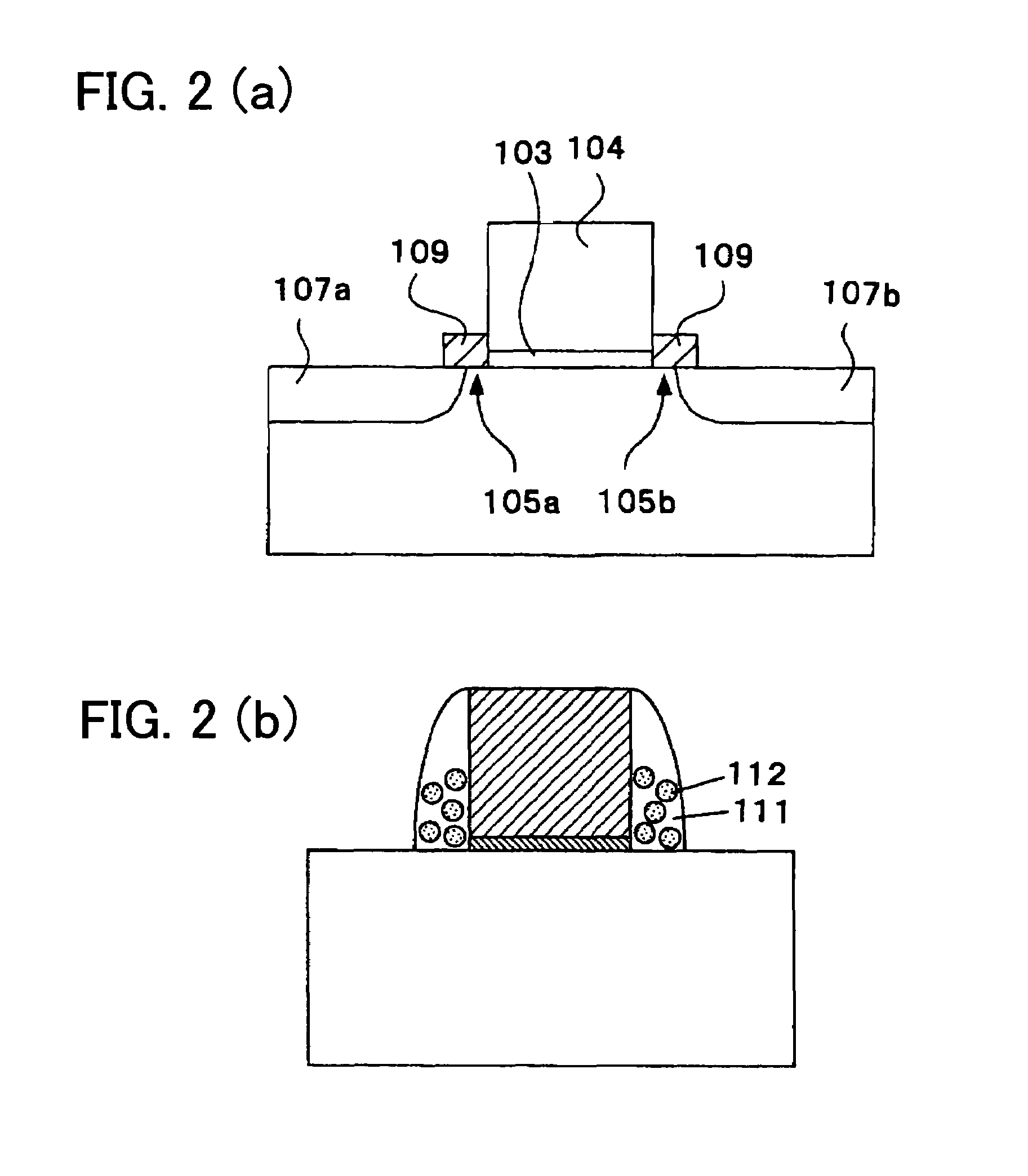

Writing control method and writing control system of semiconductor storage device, and portable electronic apparatus

Inactive | US20050002263A1 | Tags: Easily-realized finer; Speedup of writing; Transistor; Solid-state devices; Semiconductor storage devices; Engineering

A writing control system providing high-speed writing to a nonvolatile semiconductor storage device includes (a) a plurality of memory elements, each having: a gate electrode provided on a semiconductor layer with an intervening gate insulating film; a channel region provided beneath the gate electrode; diffusion regions provided on both sides of the channel region and having a polarity opposite to that of the channel region; and a memory functioning member, provided on both sides of the gate electrode, having a function of holding electric charges; (b) a memory array including a page buffer circuit; and (c) a CPU controlling writing to the memory array. The CPU loads a first plane of the page buffer circuit with a first byte of data and writes the first byte of data stored in the first plane. Further, the CPU writes a second byte of data into the second plane, and it writes the second byte stored in the second plane while writing the first byte stored in the first plane into the memory array.

Owner:SHARP KK
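
The following is a simplified simulation of a two-plane (ping-pong) page buffer. It assumes one reading of the abstract: the CPU loads the next byte into one plane while the byte in the other plane is being programmed into the array. Timing is modeled in abstract steps, not real device cycles, and the function name is hypothetical.

```python
from __future__ import annotations


def pipelined_write(data: bytes) -> tuple[bytes, list[tuple[int, str]]]:
    """Simulate double-buffered writes through a two-plane page buffer.

    In each step the byte loaded during the previous step is programmed into the
    memory array from its plane while the next byte is loaded into the other plane,
    so n bytes take n + 1 steps instead of 2n when load and program alternate.
    """
    planes: list[int | None] = [None, None]
    array = bytearray()
    log: list[tuple[int, str]] = []
    for step in range(len(data) + 1):
        if step > 0:
            prev = (step - 1) % 2
            array.append(planes[prev])  # program from the plane filled last step
            log.append((step, f"program byte {step - 1} from plane {prev}"))
        if step < len(data):
            planes[step % 2] = data[step]  # meanwhile fill the other plane
            log.append((step, f"load byte {step} into plane {step % 2}"))
    return bytes(array), log


written, events = pipelined_write(b"CPU")
for step, action in events:
    print(step, action)
```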

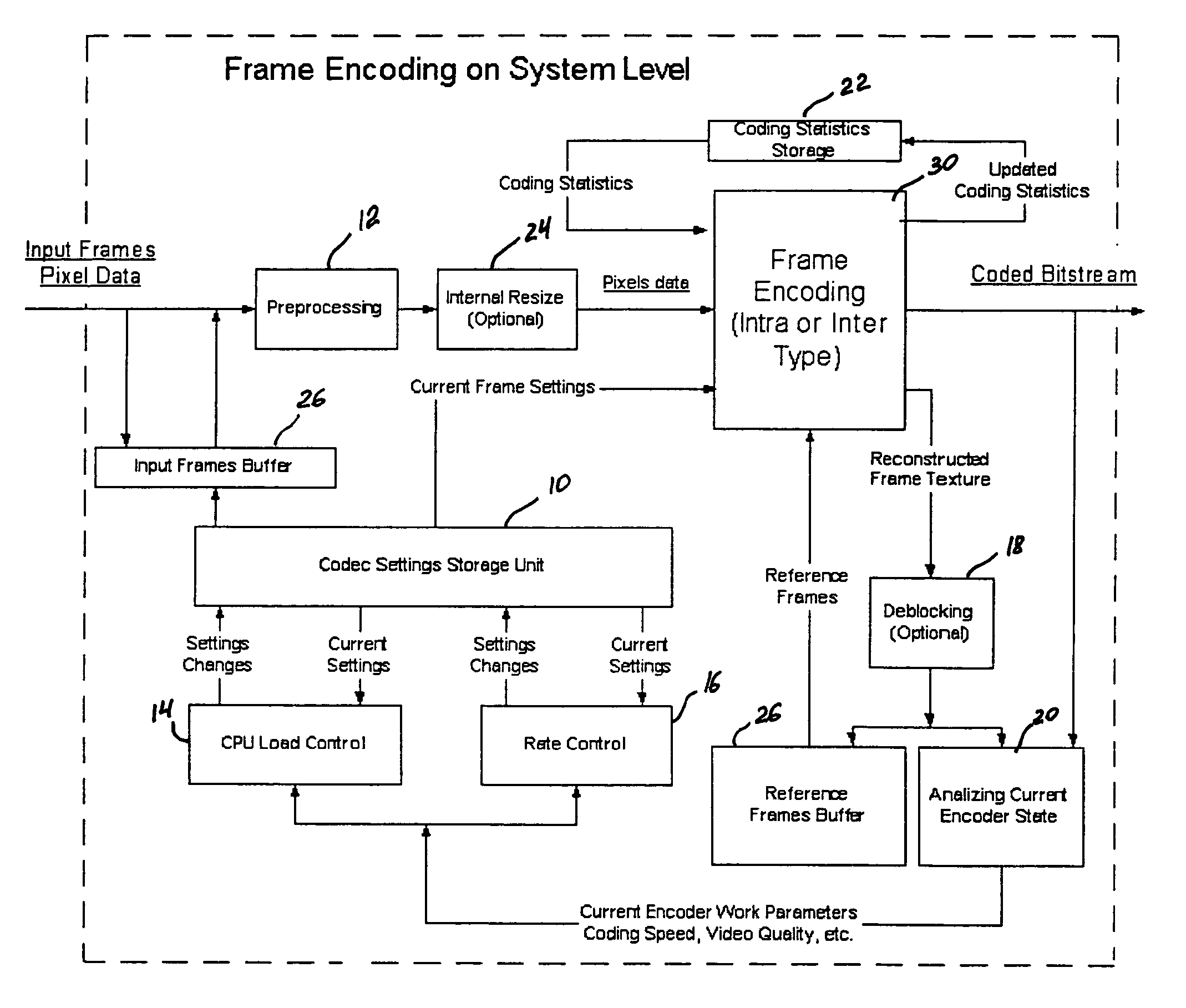

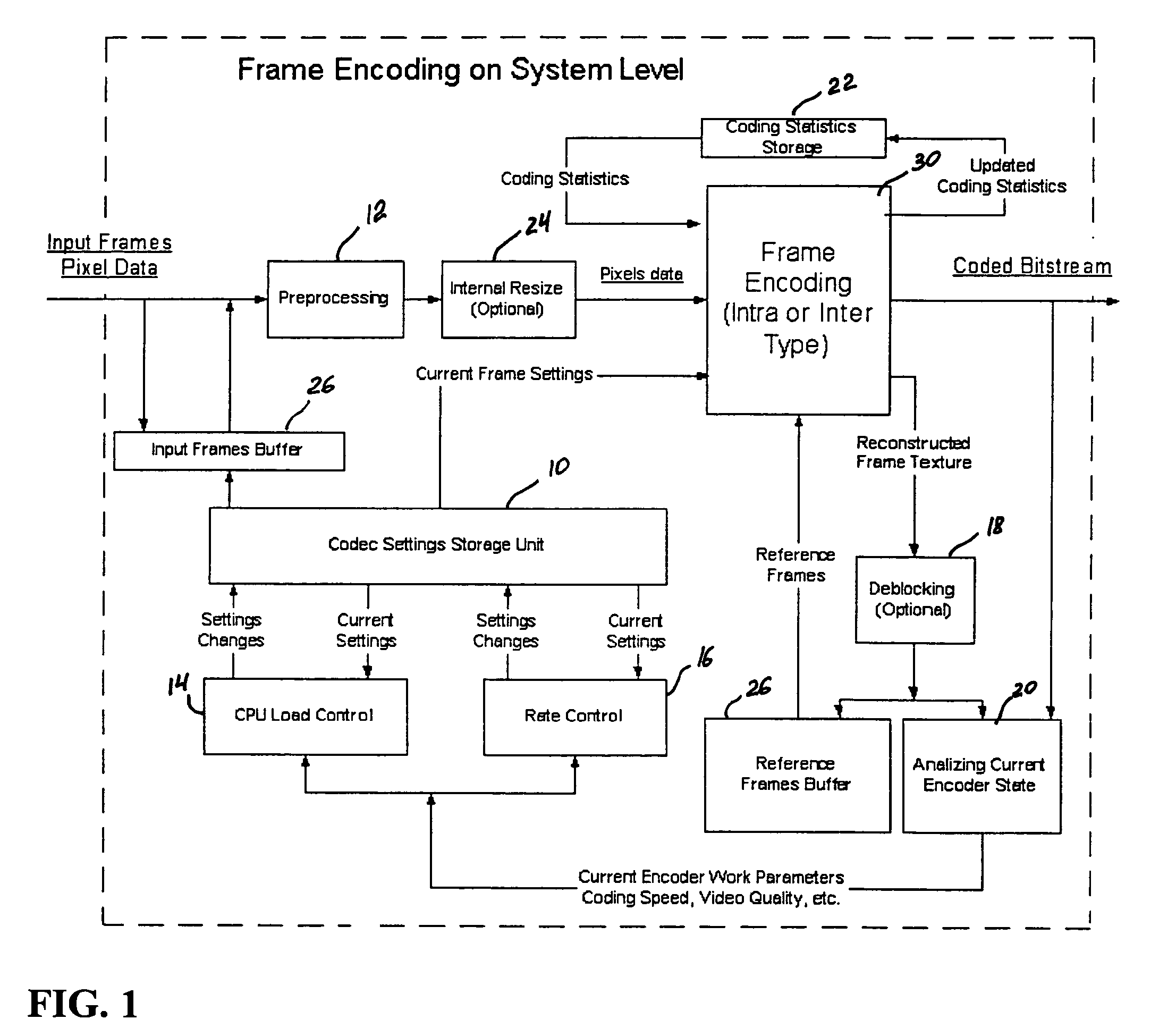

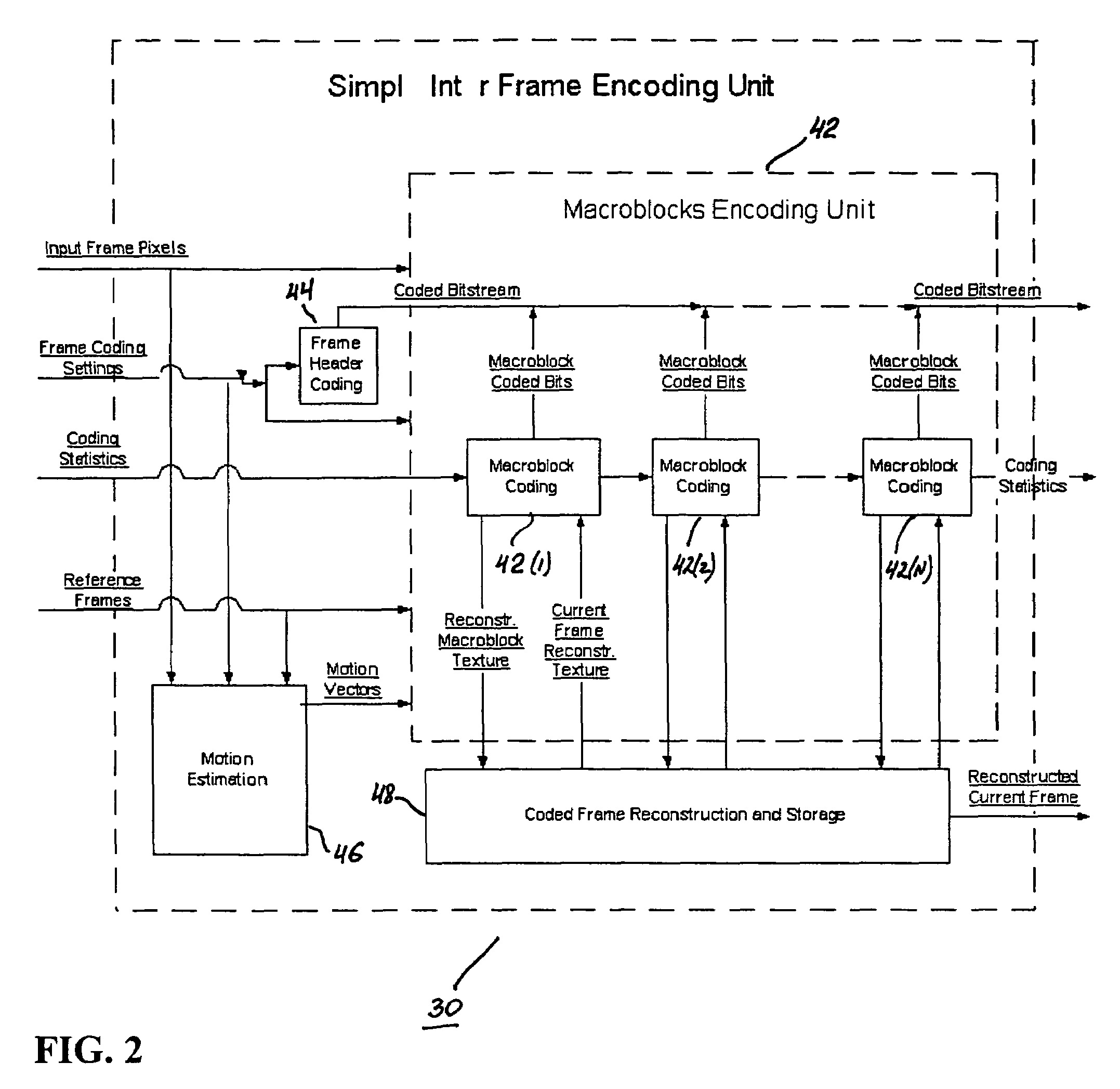

Real-time video coding/decoding

Owner:BEAMR IMAGING LTD

Writing control method and writing control system of semiconductor storage device, and portable electronic apparatus

Inactive | US7050337B2 | Tags: The implementation process is simple; Speedup of writing; Transistor; Solid-state devices; Semiconductor storage devices; HEMT circuits

A writing control system providing high-speed writing to a nonvolatile semiconductor storage device includes (a) a plurality of memory elements, each having: a gate electrode provided on a semiconductor layer with an intervening gate insulating film; a channel region provided beneath the gate electrode; diffusion regions provided on both sides of the channel region and having a polarity opposite to that of the channel region; and a memory functioning member, provided on both sides of the gate electrode, having a function of holding electric charges; (b) a memory array including a page buffer circuit; and (c) a CPU controlling writing to the memory array. The CPU loads a first plane of the page buffer circuit with a first byte of data and writes the first byte of data stored in the first plane. Further, the CPU writes a second byte of data into the second plane, and it writes the second byte stored in the second plane while writing the first byte stored in the first plane into the memory array.

Owner:SHARP KK

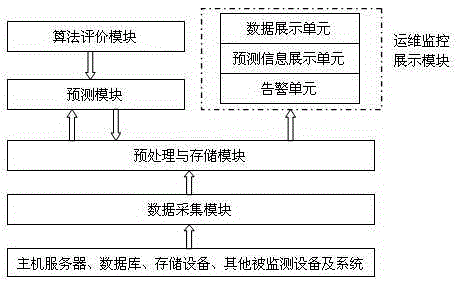

Operation and maintenance automation system and method

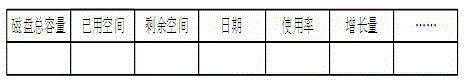

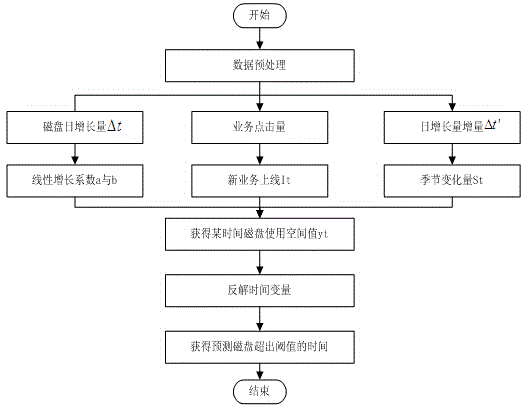

Active | CN105323111A | Tags: Easy to view; Improve the difficulty of operation and maintenance; Data switching networks; Prediction algorithms; Data acquisition

The invention discloses an operation and maintenance automation system and method. The system comprises a data acquisition module, a pre-processing and storage module, a prediction module, an algorithm evaluation module and an operation and maintenance monitoring management module. The data acquisition module acquires key performance indicators and running states of the monitored units in an operation and maintenance system through a network management protocol or log files. The pre-processing and storage module pre-processes the acquired data and stores it by category. The prediction module makes predictions, including CPU (Central Processing Unit) load prediction and disk load prediction, from the processed data. The algorithm evaluation module establishes evaluation criteria for the prediction algorithms and the prediction module, compares actual values with the predicted values, and establishes a self-learning process. The operation and maintenance monitoring management module interacts with operation and maintenance personnel. A load prediction mechanism and an algorithm prediction model are established to predict the resource usage of CPUs, memory, disks and the like. Alarm information is analyzed with reference to the load prediction results in order to provide decision support. Resource expansion and fault handling are carried out using scripts, an API (Application Programming Interface) and the like.

Owner:NANJING NARI GROUP CORP
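
The abstract does not name its prediction algorithms. As a sketch, two simple stand-ins are assumed: a moving average and exponential smoothing. Under this reading, the evaluation module scores each predictor by the mean absolute error between predicted and actual CPU load and keeps the most accurate one. All names and parameters are illustrative.

```python
from statistics import mean


def moving_average(history, window=3):
    """Predict the next value as the mean of the last `window` samples."""
    return mean(history[-window:])


def exp_smoothing(history, alpha=0.5):
    """Predict the next value with simple exponential smoothing."""
    level = history[0]
    for x in history[1:]:
        level = alpha * x + (1 - alpha) * level
    return level


PREDICTORS = {"moving_average": moving_average, "exp_smoothing": exp_smoothing}


def mae(predictor, series, warmup=3):
    """Mean absolute error of one-step-ahead predictions after a warm-up period."""
    return mean(abs(predictor(series[:t]) - series[t]) for t in range(warmup, len(series)))


def best_predictor(series, predictors=PREDICTORS):
    """Compare predicted with actual values and keep the most accurate predictor."""
    return min(predictors, key=lambda name: mae(predictors[name], series))


cpu_load = [10, 20, 30, 40, 50, 60]  # percent, a steadily rising load
print(best_predictor(cpu_load))
```

Re-running the comparison as new samples arrive gives a crude form of the self-learning loop: the chosen predictor can change when the load pattern does.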

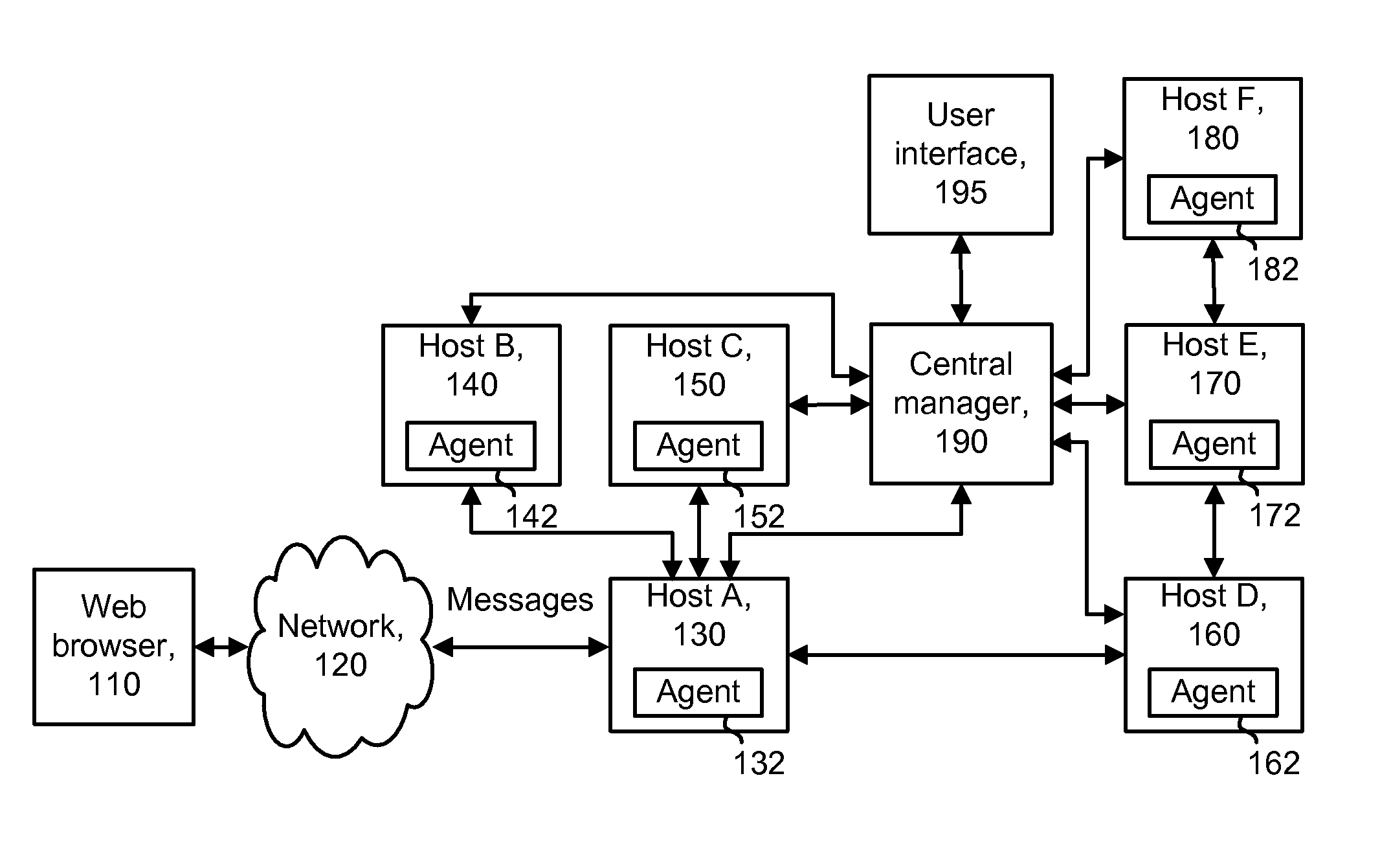

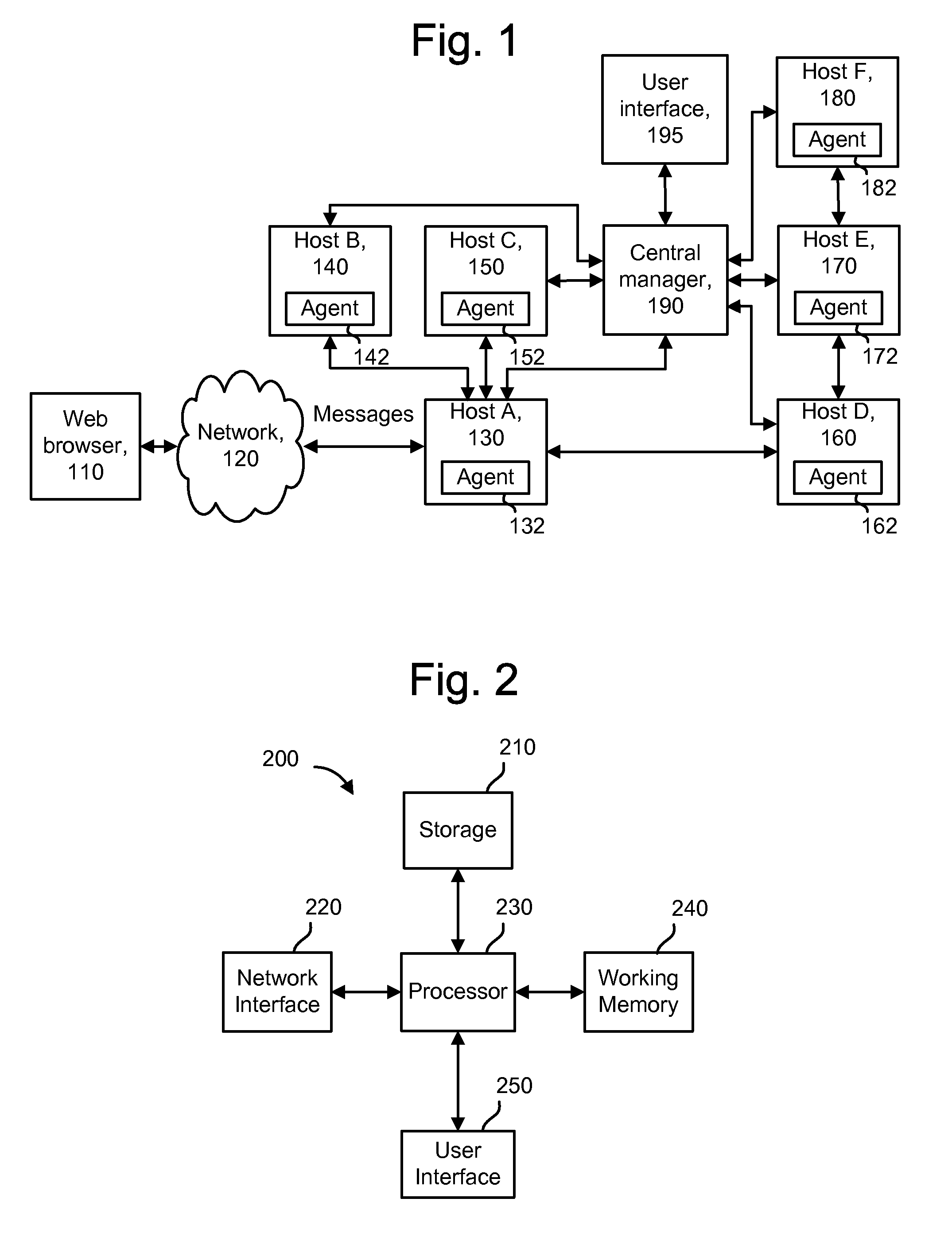

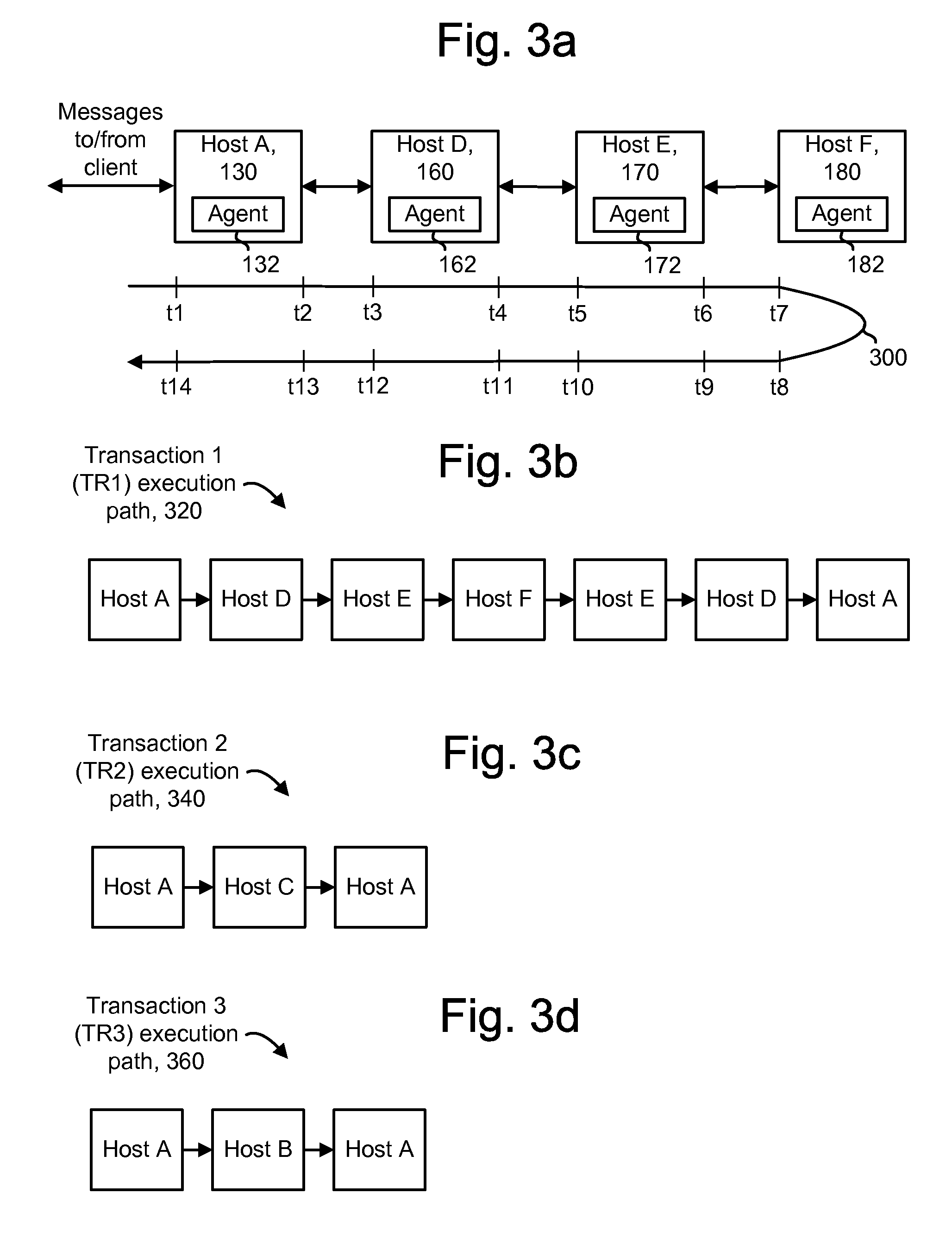

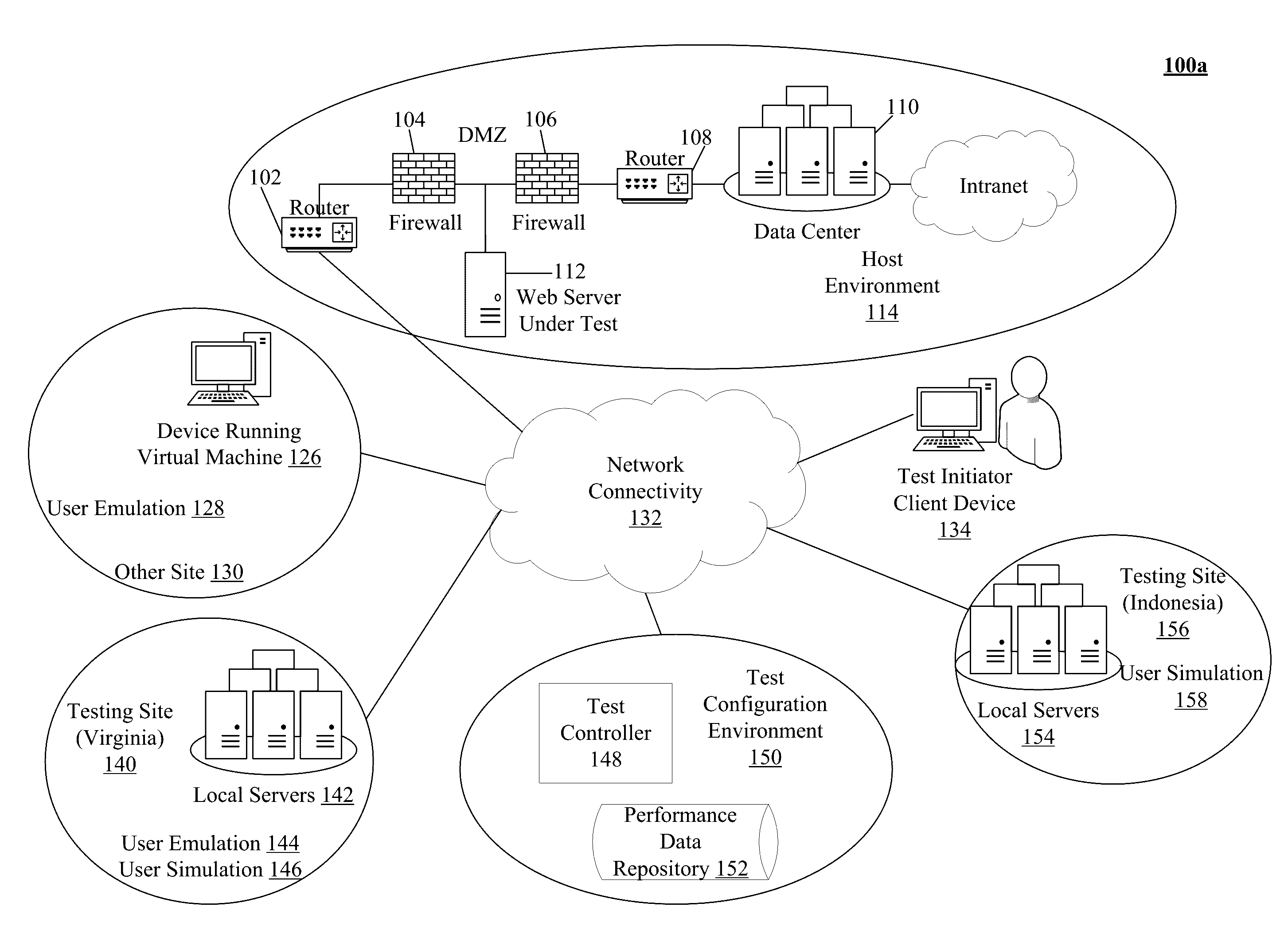

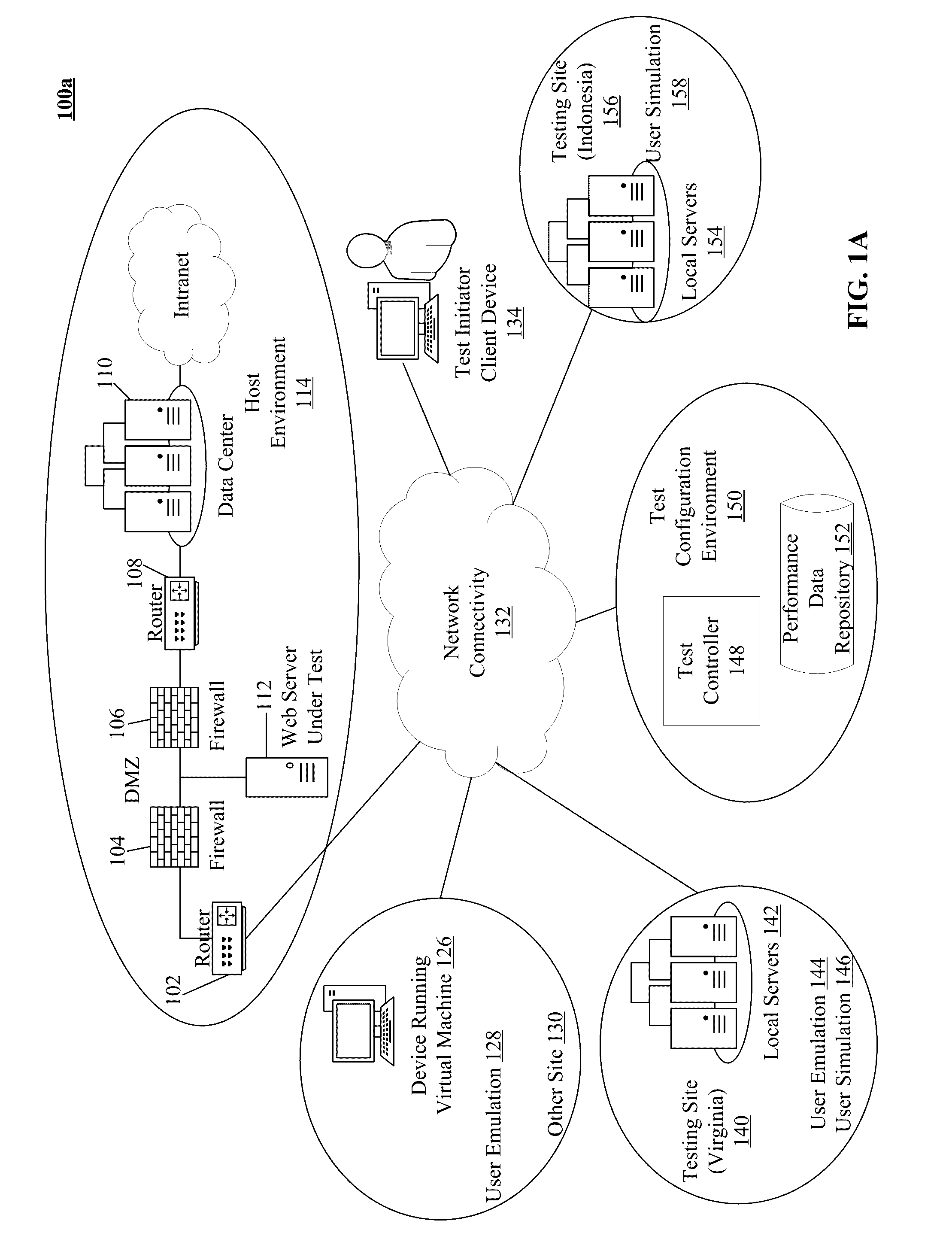

Automatic root cause analysis of performance problems using auto-baselining on aggregated performance metrics

Inactive | US20080235365A1 | Tags: Multiple digital computer combinations; Transmission; Drill down; Anomalous behavior

Anomalous behavior in a distributed system is automatically detected. Metrics are gathered for transactions, subsystems and / or components of the subsystems. The metrics can identify response times, error counts and / or CPU loads, for instance. Baseline metrics and associated deviation ranges are automatically determined and can be periodically updated. Metrics from specific transactions are compared to the baseline metrics to determine if an anomaly has occurred. A drill down approach can be used so that metrics for a subsystem are not examined unless the metrics for an associated transaction indicate an anomaly. Further, metrics for a component, application which includes one or more components, or process which includes one or more applications, are not examined unless the metrics for an associated subsystem indicate an anomaly. Multiple subsystems can report the metrics to a central manager, which can correlate the metrics to transactions using transaction identifiers or other transaction context data.

Owner:CA TECH INC
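
The sketch below shows auto-baselining with drill-down. It assumes the baseline is the historical mean and the deviation range is k standard deviations; the abstract fixes neither choice. Child nodes (subsystems, then components) are examined only when their parent is anomalous. Node names are hypothetical.

```python
from statistics import mean, stdev


def deviation_range(history, k=3.0):
    """Baseline band: mean +/- k standard deviations of past samples."""
    mu, sd = mean(history), stdev(history)
    return mu - k * sd, mu + k * sd


def is_anomalous(value, history, k=3.0):
    low, high = deviation_range(history, k)
    return not (low <= value <= high)


def drill_down(tree, current, history, k=3.0, path=()):
    """Walk transaction -> subsystem -> component, descending only into anomalies.

    `tree` maps a node name to a dict of its children; `current` and `history`
    map node names to the latest metric value and to past values.
    """
    found = []
    for name, children in tree.items():
        if is_anomalous(current[name], history[name], k):
            node = path + (name,)
            found.append(node)
            found.extend(drill_down(children, current, history, k, node))
    return found


# Response times in ms for one transaction, its subsystems and a component.
tree = {"checkout": {"db": {}, "web": {"app-server": {}}}}
history = {n: [100, 102, 98, 101, 99] for n in ("checkout", "db", "web", "app-server")}
current = {"checkout": 150, "db": 100, "web": 140, "app-server": 200}
print(drill_down(tree, current, history))
```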

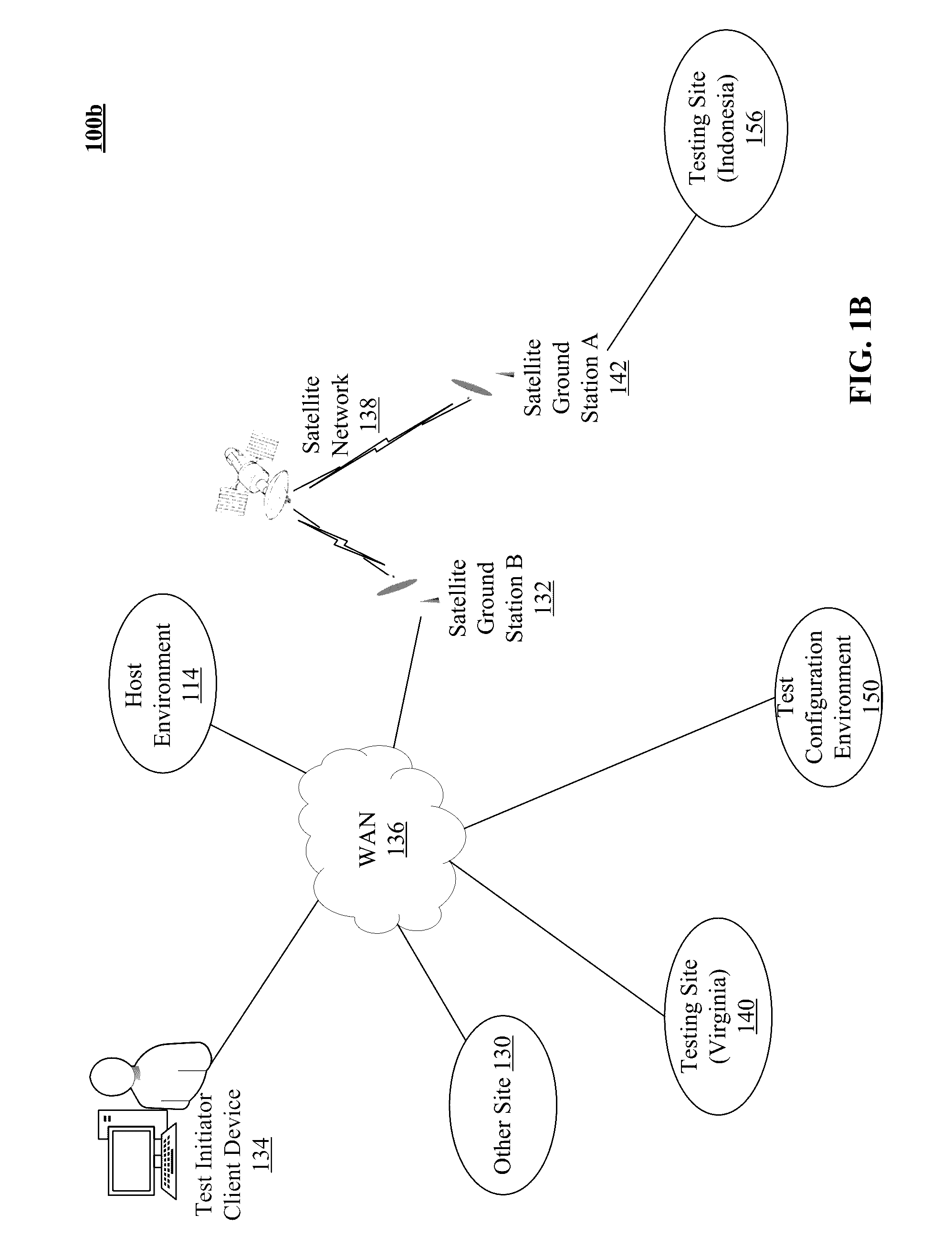

Method to configure monitoring thresholds using output of load or resource loadings

The technology disclosed enables the automatic definition of monitoring alerts for a web page across a plurality of variables such as server response time, server CPU load, network bandwidth utilization, response time from a measured client, network latency, server memory utilization, and the number of simultaneous sessions, amongst others. This is accomplished through the combination of load or resource loading and performance snapshots, where performance correlations allow for the alignment of operating variables. Performance data such as response time for the objects retrieved, number of hits per second, number of timeouts per second, and errors per second can be recorded and reported. This allows for the automated ranking of tens of thousands of web pages, with an analysis of the web page assets that affect performance, and the automatic alignment of performance alerts by resource participation.

Owner:SPIRENT COMM

Methods and apparatuses for operating a data processing system

Active | US20100117579A1 | Tags: Balance performance; Shorten speed; Digital data processing details; Temperature control; Hard disc drive; Thermistor

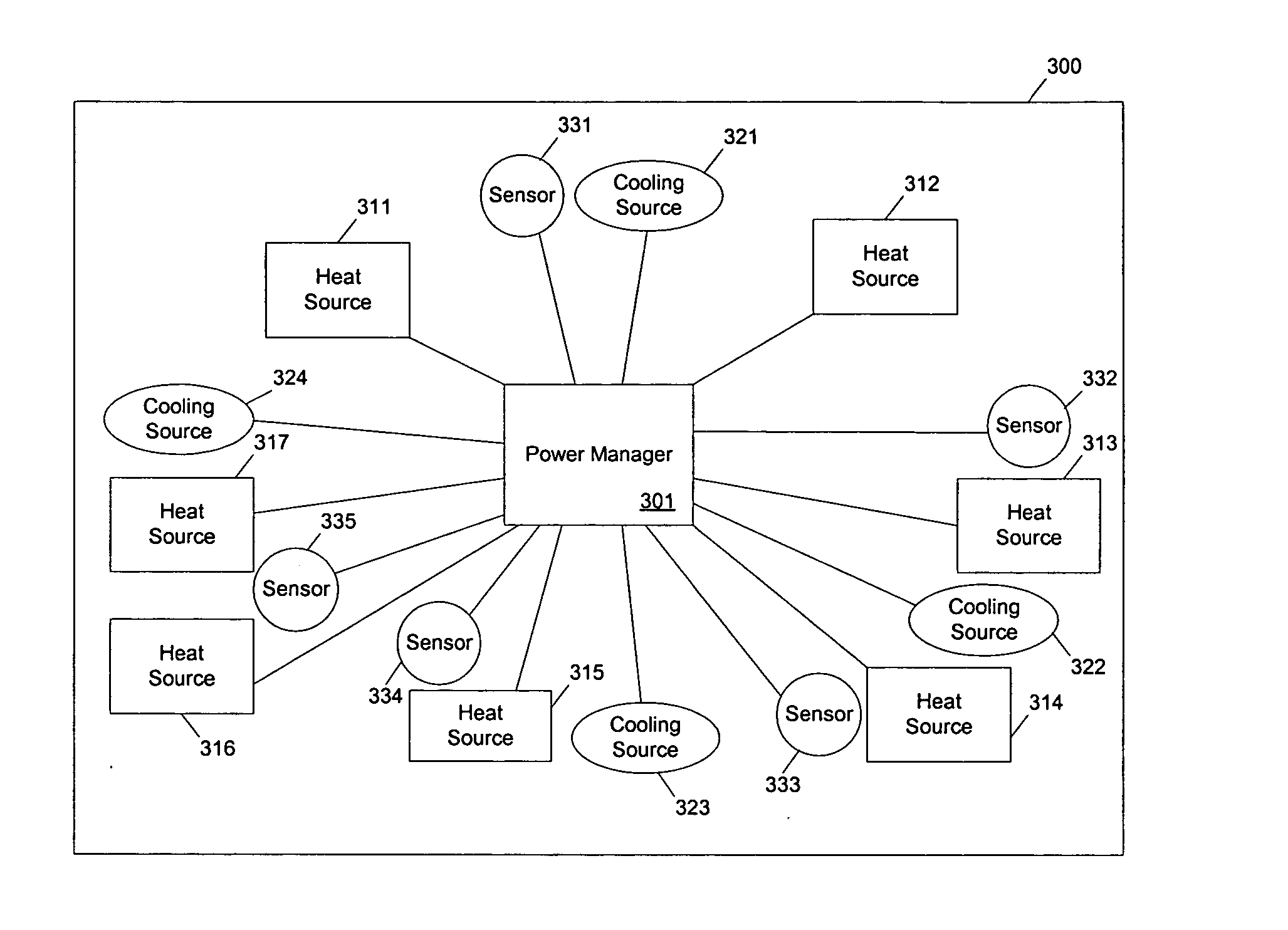

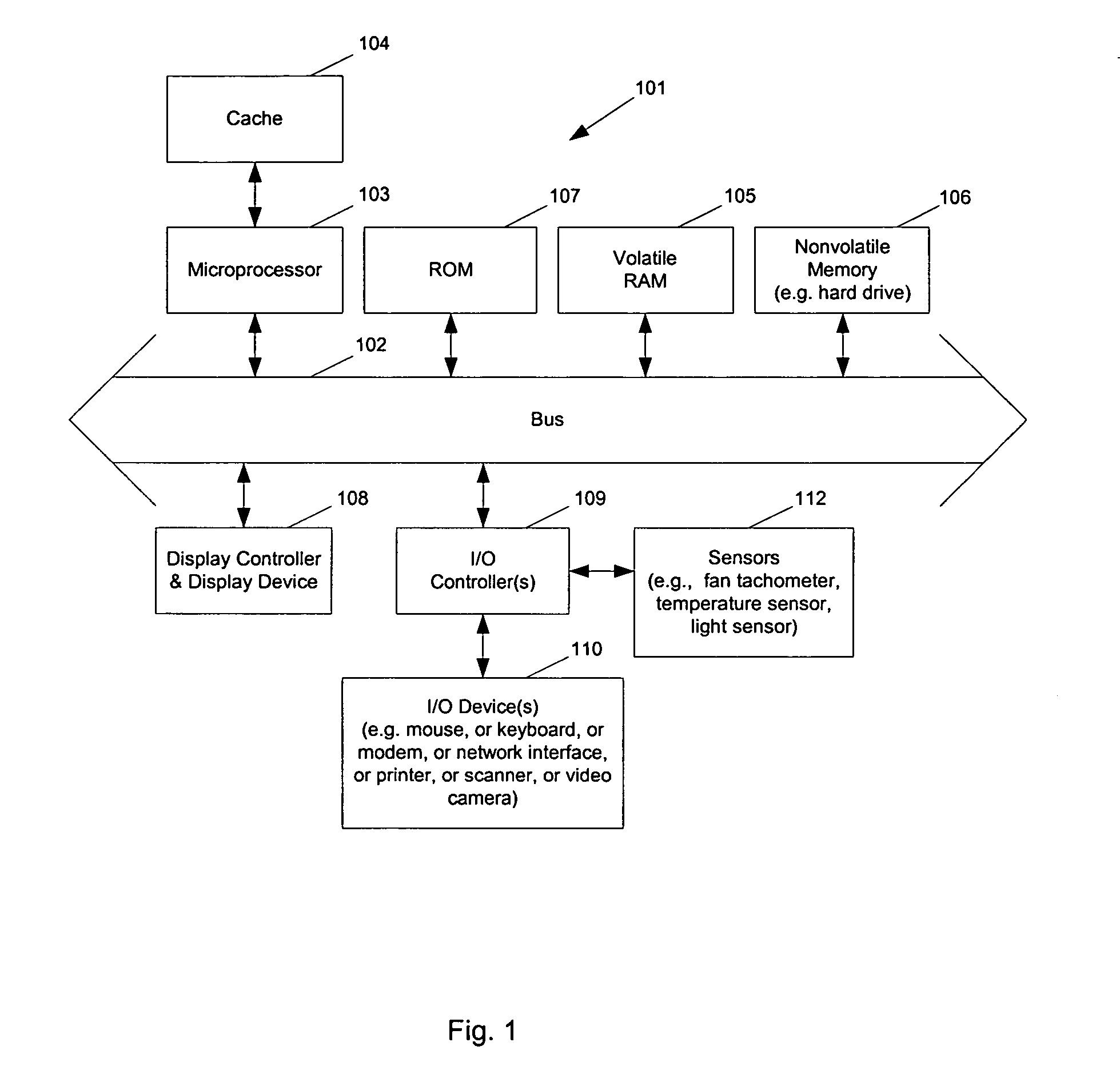

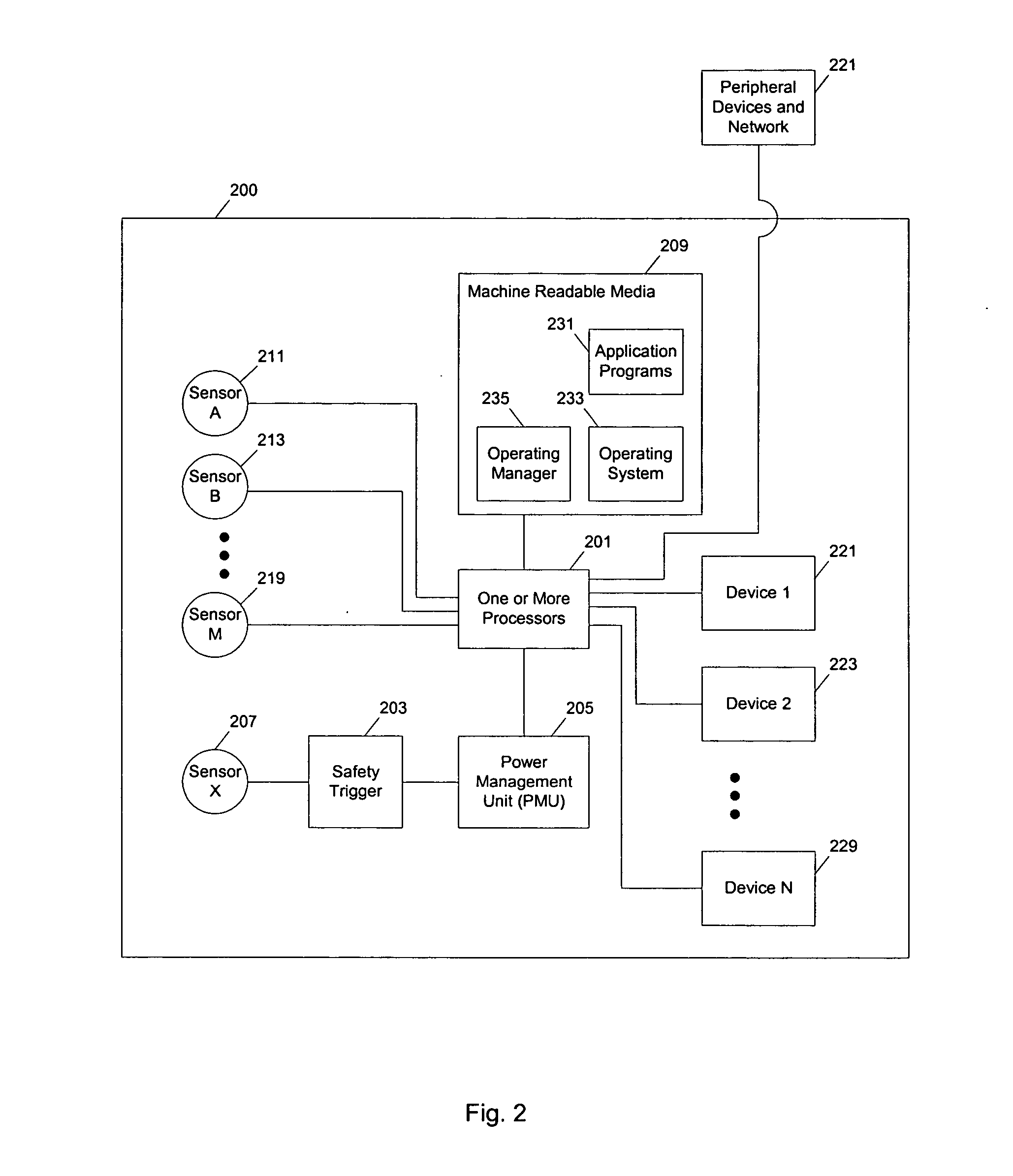

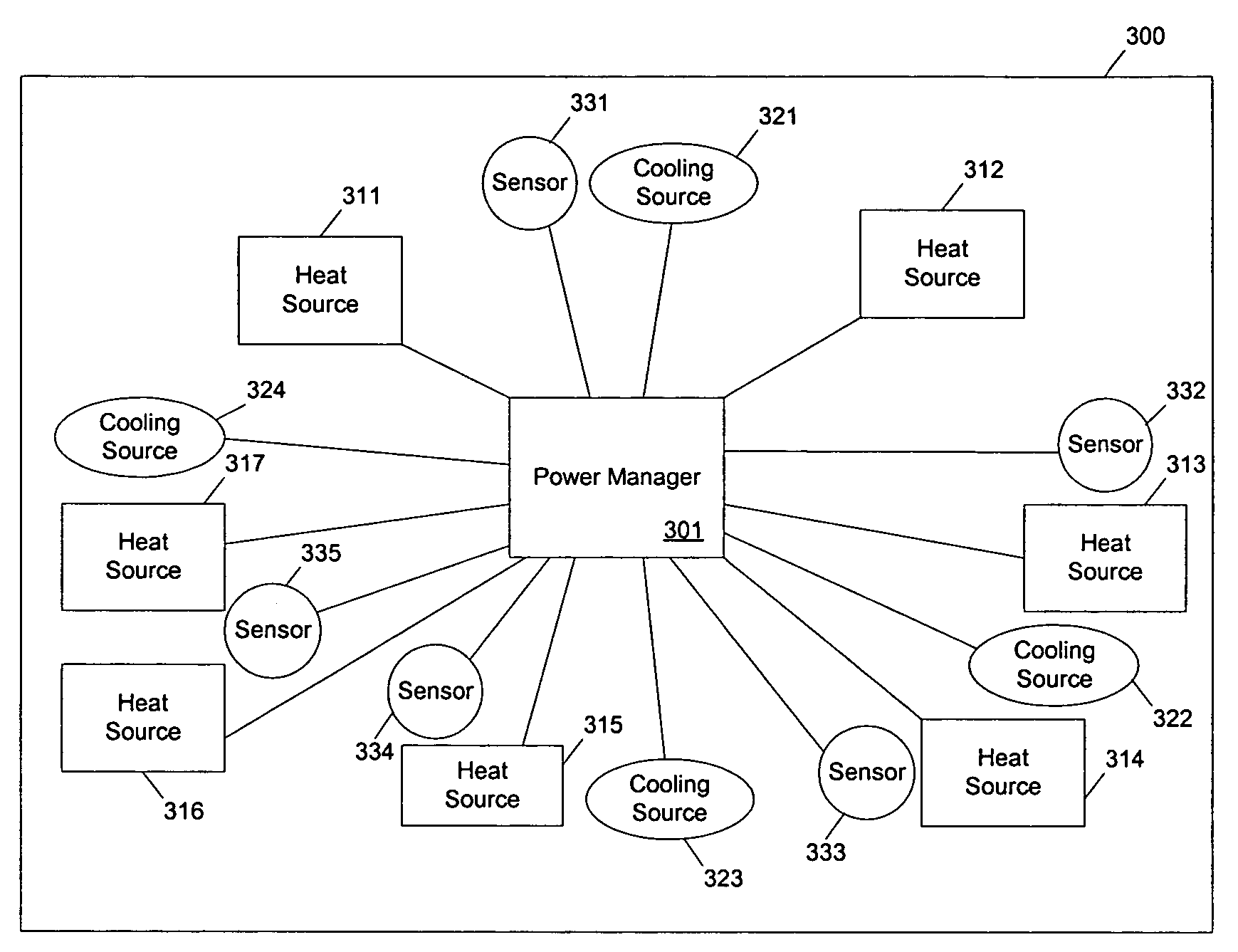

Methods and apparatuses to manage working states of a data processing system. At least one embodiment of the present invention includes a data processing system with one or more sensors (e.g., physical sensors such as tachometer and thermistors, and logical sensors such as CPU load) for fine grain control of one or more components (e.g., processor, fan, hard drive, optical drive) of the system for working conditions that balance various goals (e.g., user preferences, performance, power consumption, thermal constraints, acoustic noise). In one example, the clock frequency and core voltage for a processor are actively managed to balance performance and power consumption (heat generation) without a significant latency. In one example, the speed of a cooling fan is actively managed to balance cooling effort and noise (and / or power consumption).

Owner:APPLE INC

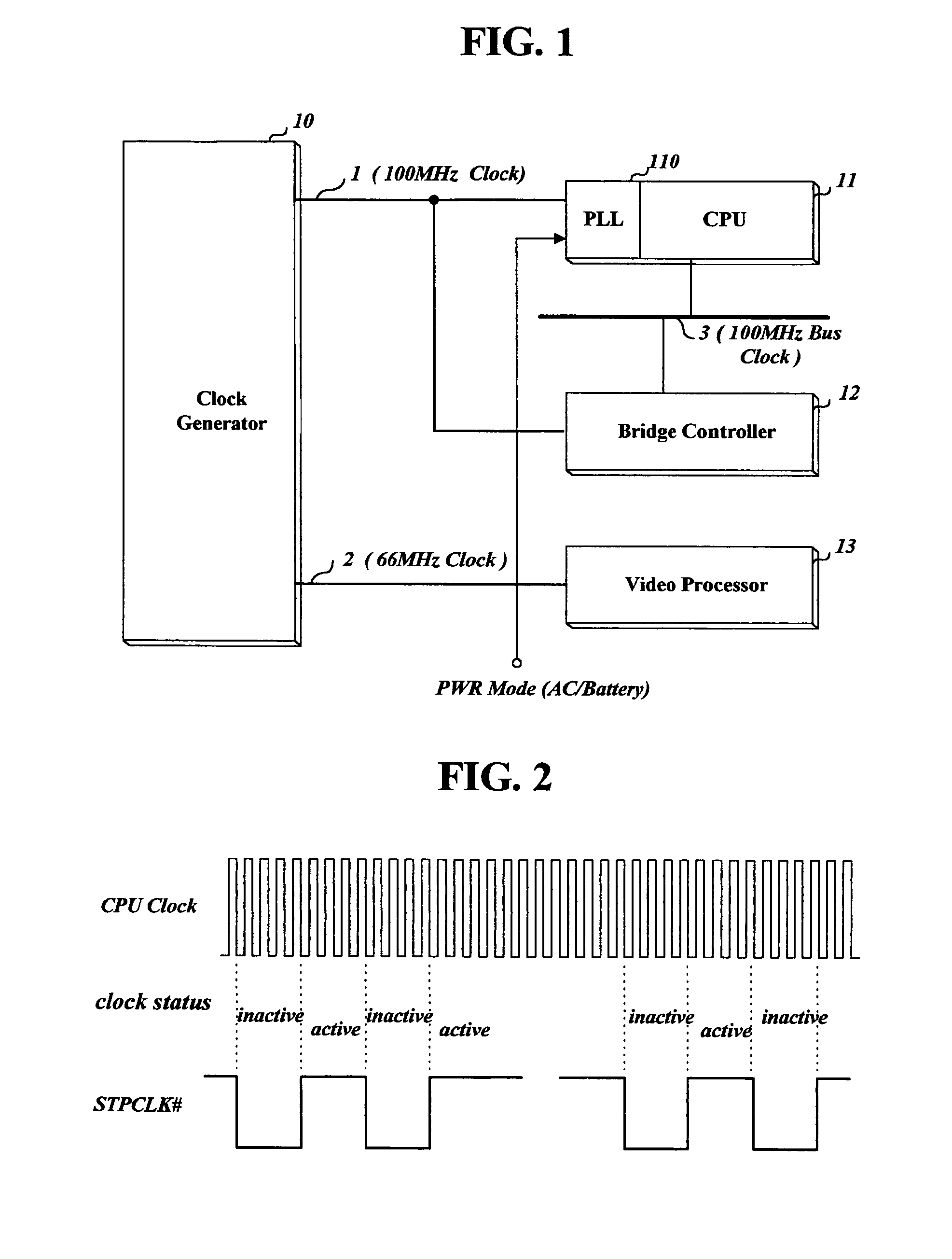

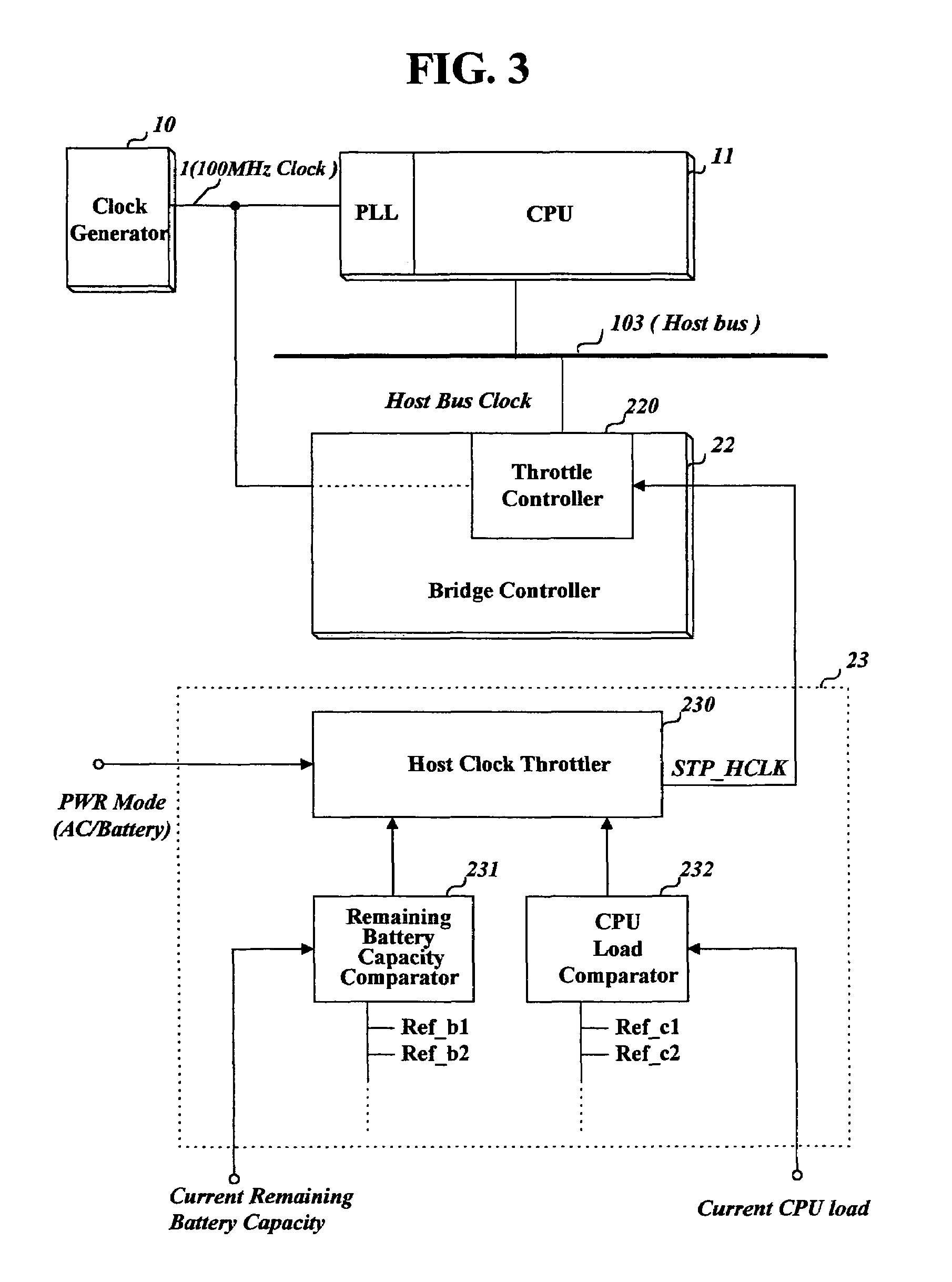

Bus clock controlling apparatus and method

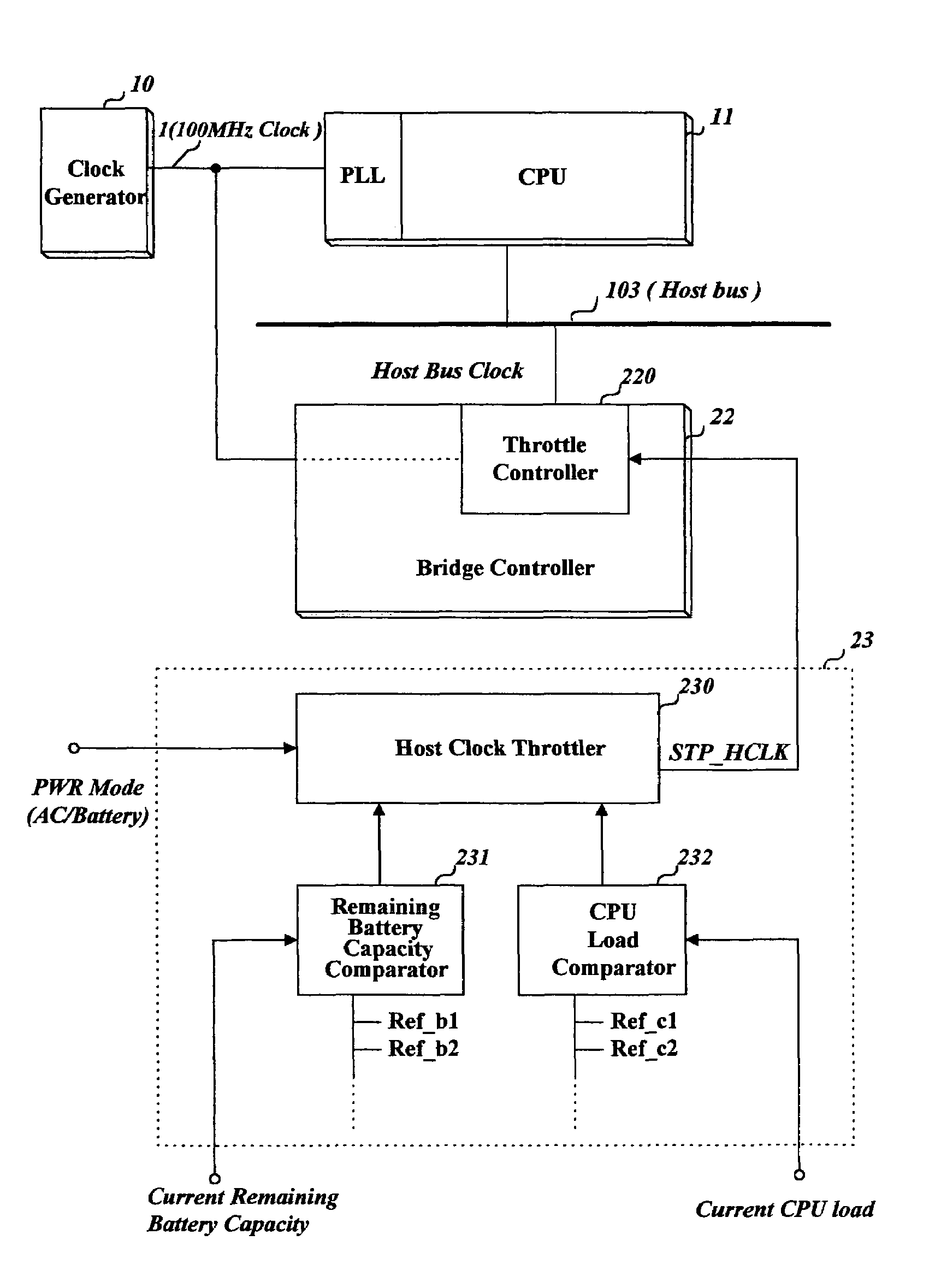

Inactive | US7069463B2 | Tags: Reduce power consumption; Energy efficient ICT; Error detection/correction; Current load; Data exchange

The present invention relates to an apparatus and method for throttling a clock of a bus used for data exchange between devices in a computer such as a portable computer or notebook. Methods according to the invention can set a throttle rate of a clock to a predetermined initial value, detect a current remaining battery capacity or a current load to the CPU, and adjust the set throttle rate to a prescribed or calculated value according to the detected remaining battery capacity or the CPU load. Thus, power consumption is reduced, and, in the case of a battery-powered computer, battery life and operating time are extended.

Owner:LG ELECTRONICS INC
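
The abstract gives no concrete thresholds. The hypothetical rule below illustrates the idea: start from an initial throttle rate, then adjust it from the remaining battery capacity and the CPU load. All numbers and function names are assumptions.

```python
def throttle_rate(battery_pct: float, cpu_load: float, initial: float = 0.0) -> float:
    """Fraction of bus clock cycles to suppress (0.0 = full speed); thresholds are illustrative."""
    rate = initial
    # Low remaining battery: throttle harder to extend operating time.
    if battery_pct < 20:
        rate = max(rate, 0.5)
    elif battery_pct < 50:
        rate = max(rate, 0.25)
    # Heavy CPU load: back off so the bus does not become the bottleneck.
    if cpu_load > 0.8:
        rate = min(rate, 0.125)
    return rate


def effective_bus_clock(base_hz: float, rate: float) -> float:
    """Average bus clock once the given fraction of cycles is suppressed."""
    return base_hz * (1.0 - rate)


rate = throttle_rate(battery_pct=40, cpu_load=0.3)
print(rate, effective_bus_clock(33_000_000, rate))
```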

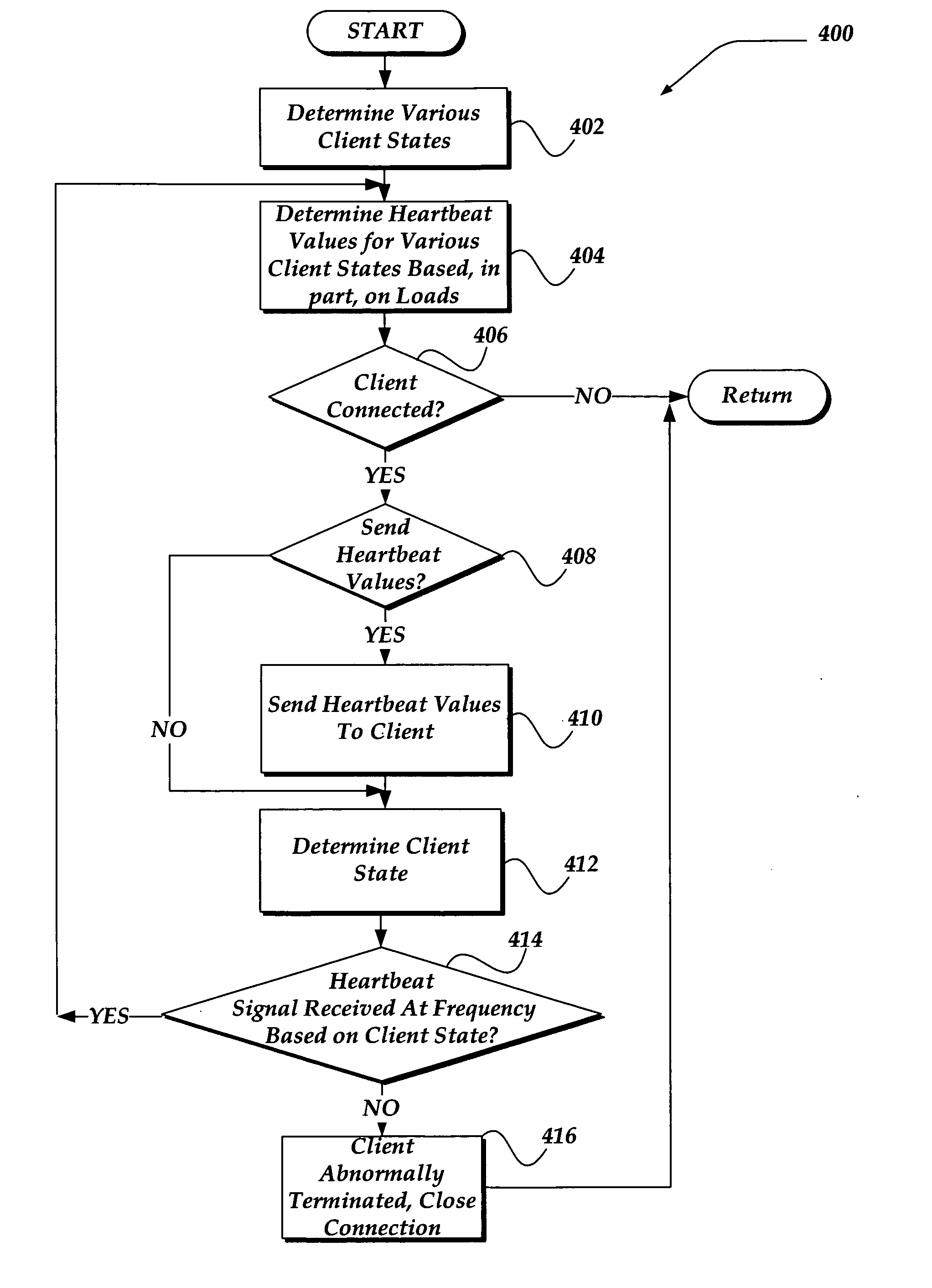

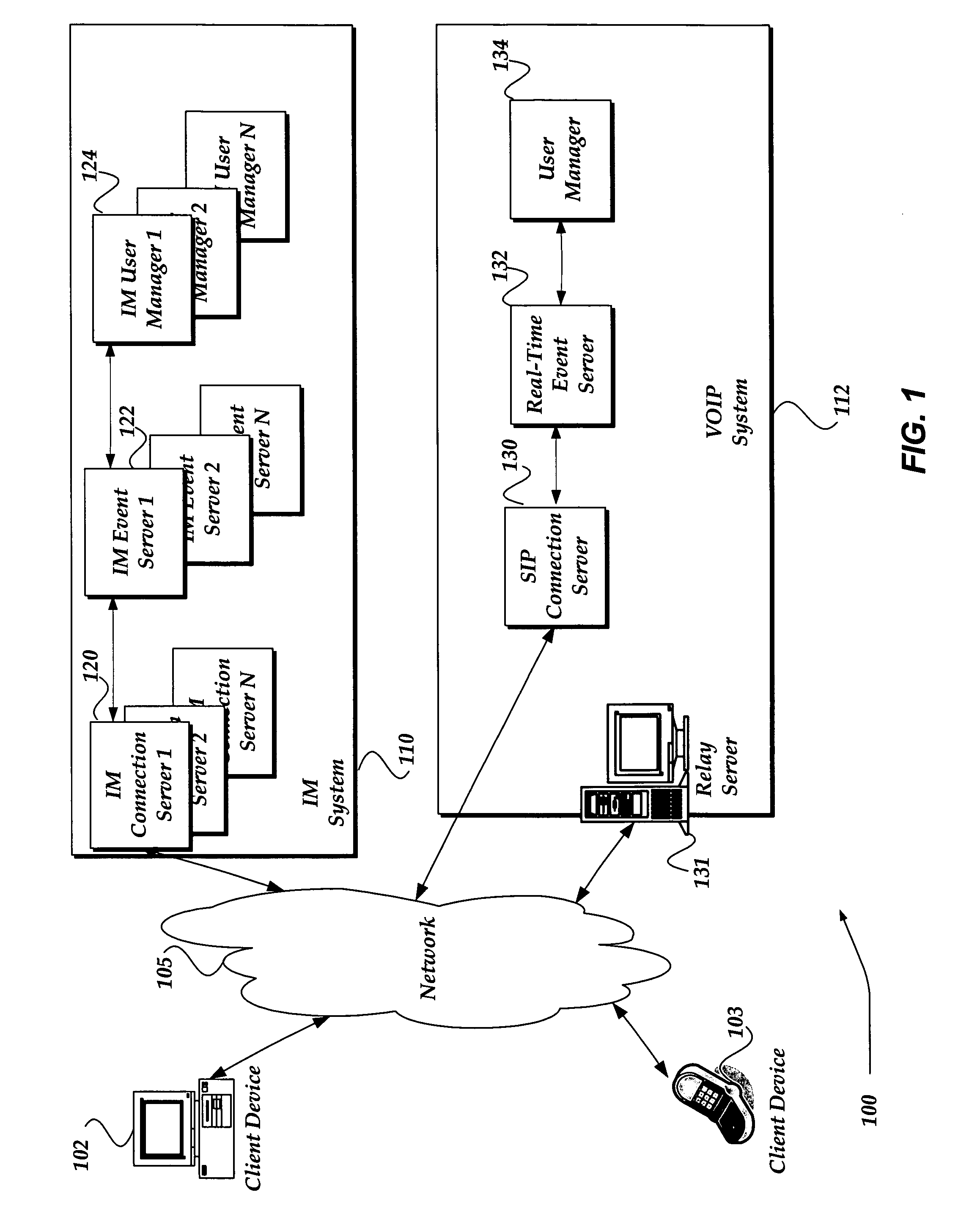

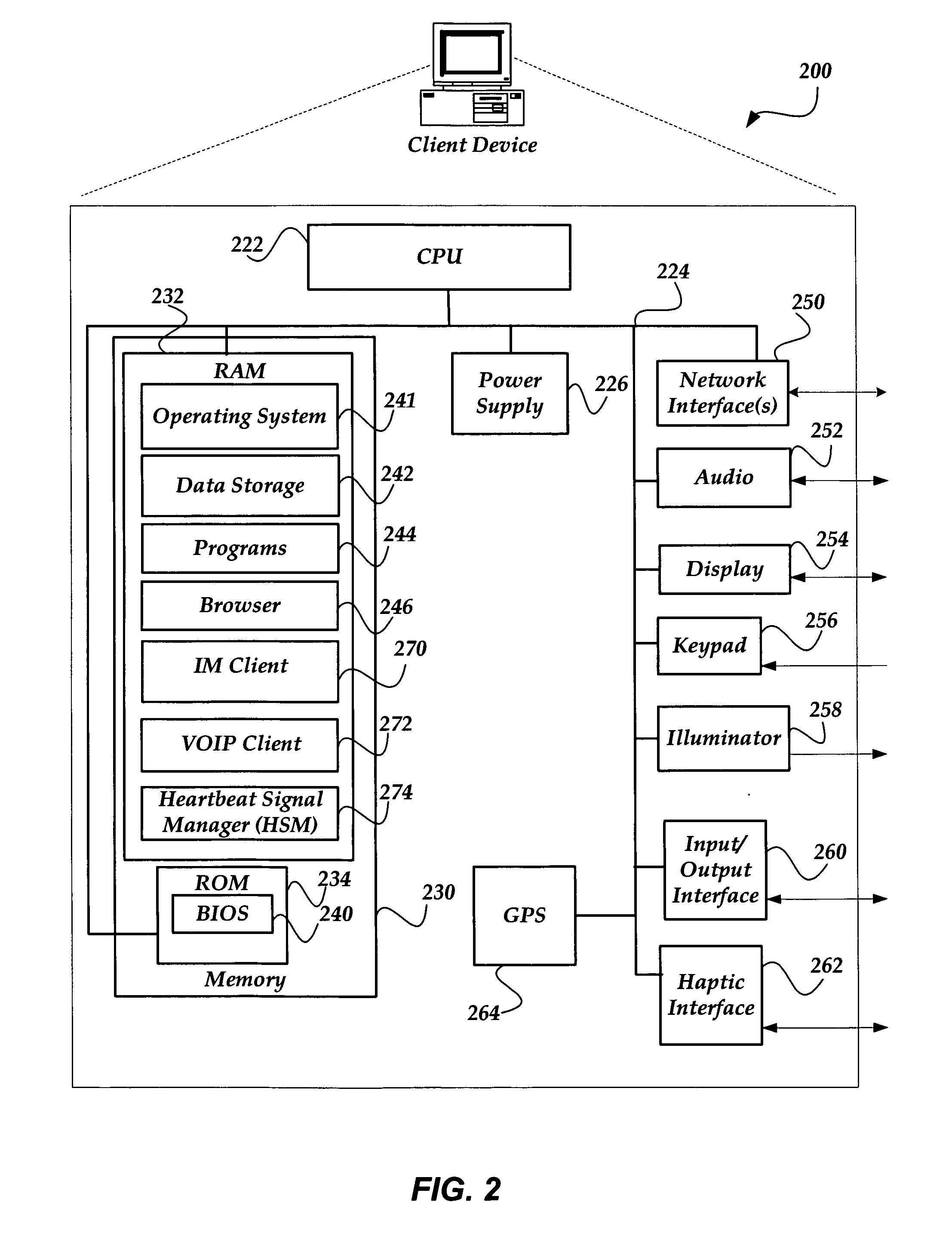

Efficiently detecting abnormal client termination

The invention is directed to managing an abnormal client termination of a network connection with a server. When a client establishes a connection with the server, the server provides various heartbeat values to the client. The server may also send heartbeat values at various other times based on a variety of factors. Heartbeat values may be based on a network load, a CPU load, or the like, as well as different states of the client. For example, one heartbeat value may be used when the client is in an idle state. Other heartbeat values may be used when the client is engaged in a VOIP session, in a videoconferencing session, a streaming video session, or the like. When the client changes state, a different heartbeat value may be used to automatically modify a frequency for sending the heartbeat signal to the server.

Owner:OATH INC
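
The following is a hypothetical sketch of state- and load-dependent heartbeat intervals. The per-state intervals and the load rule are assumptions, not values from the patent.

```python
from enum import Enum


class ClientState(Enum):
    IDLE = "idle"
    VOIP = "voip"
    VIDEOCONFERENCE = "videoconference"
    STREAMING = "streaming"


# Illustrative base heartbeat intervals in seconds for each client state.
BASE_INTERVAL_S = {
    ClientState.IDLE: 300,
    ClientState.VOIP: 30,
    ClientState.VIDEOCONFERENCE: 30,
    ClientState.STREAMING: 60,
}


def heartbeat_interval(state: ClientState, cpu_load: float, network_load: float) -> float:
    """Interval the server hands to the client; loads are fractions in 0..1."""
    base = BASE_INTERVAL_S[state]
    # When the server CPU or the network is busy, ask clients to beat less often.
    return base * (2.0 if max(cpu_load, network_load) > 0.8 else 1.0)


print(heartbeat_interval(ClientState.VOIP, cpu_load=0.9, network_load=0.2))
```

Short intervals during live sessions let the server notice a vanished client quickly. Long intervals while idle keep heartbeat traffic and server work low.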

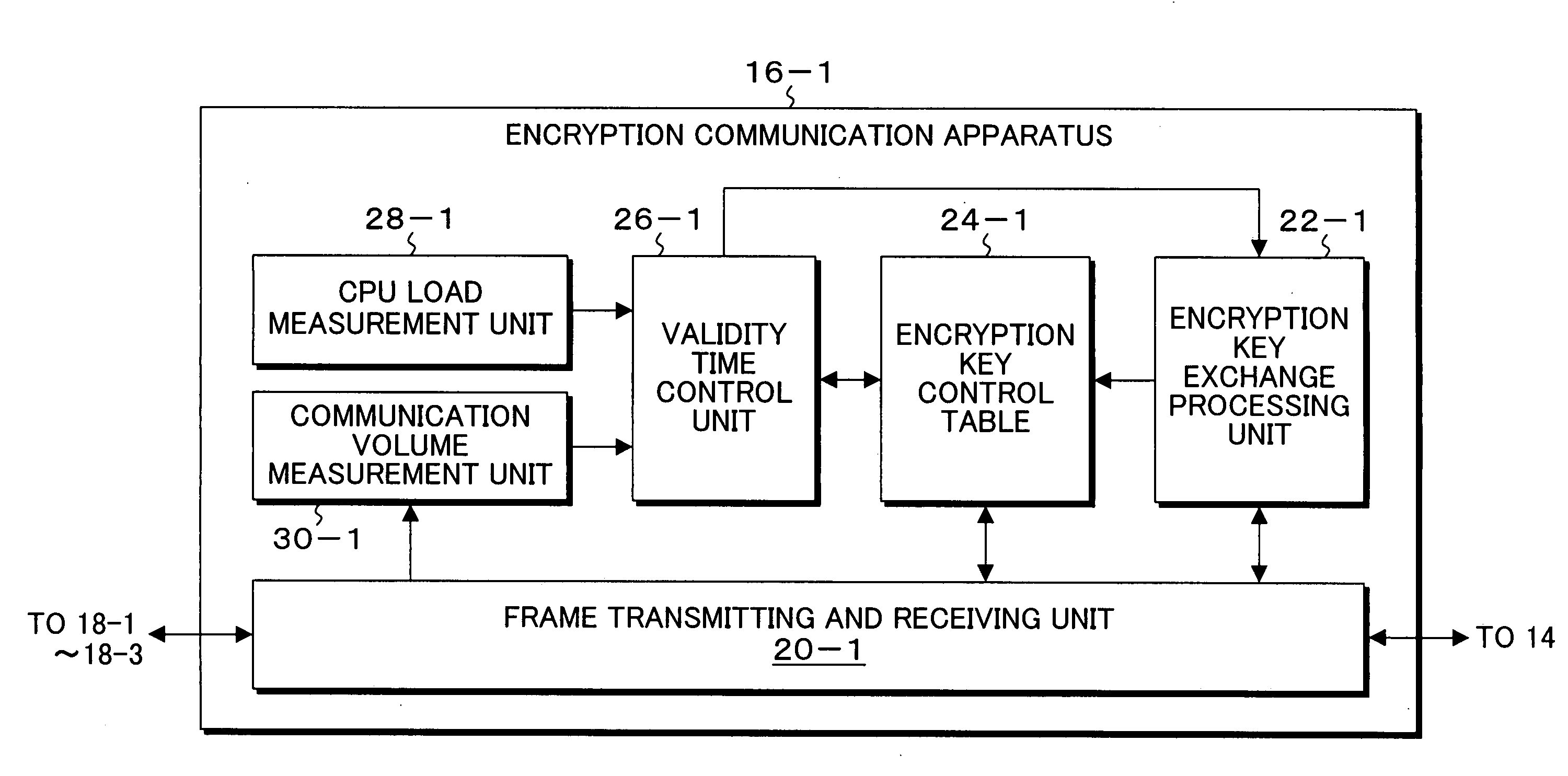

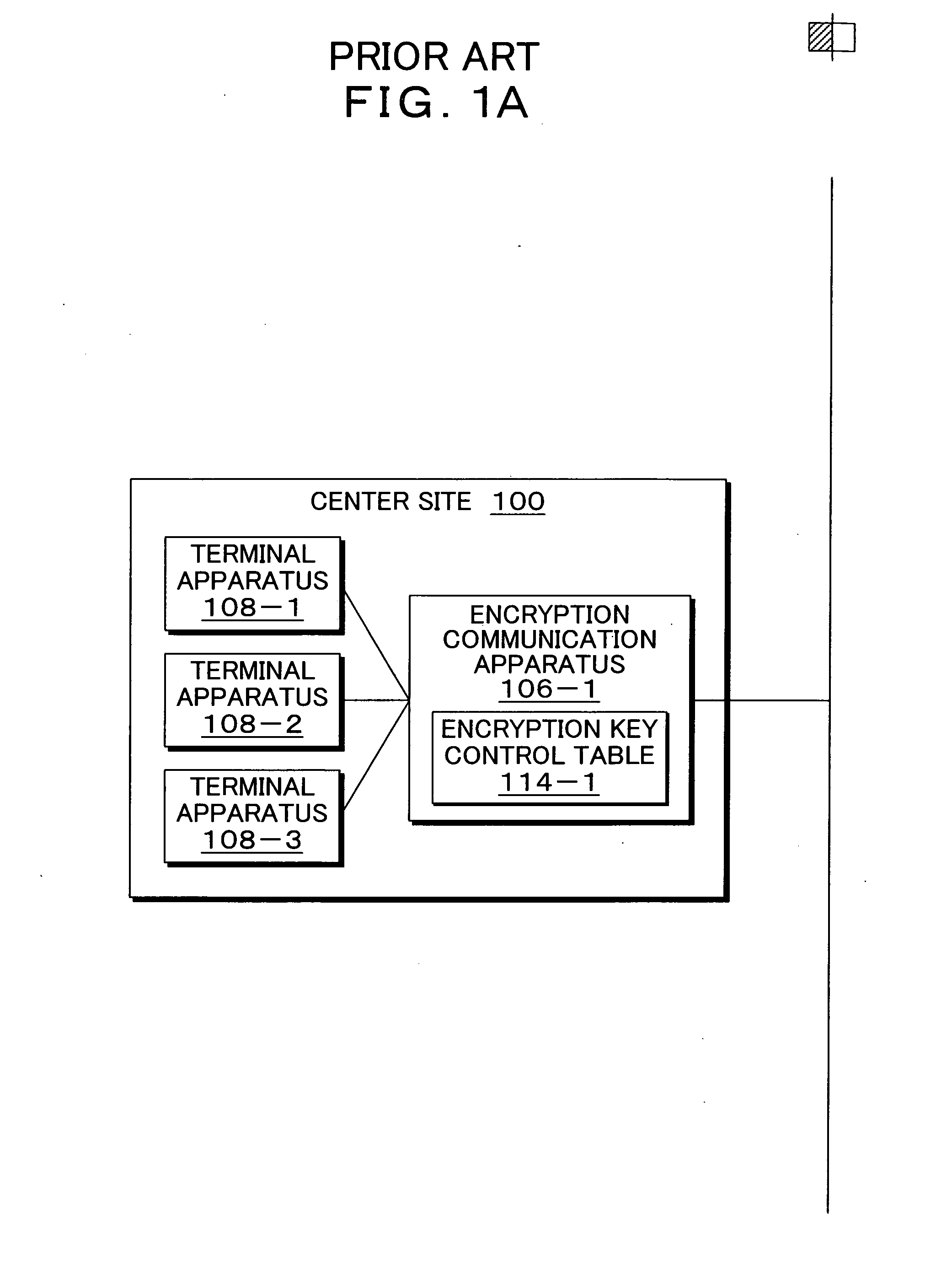

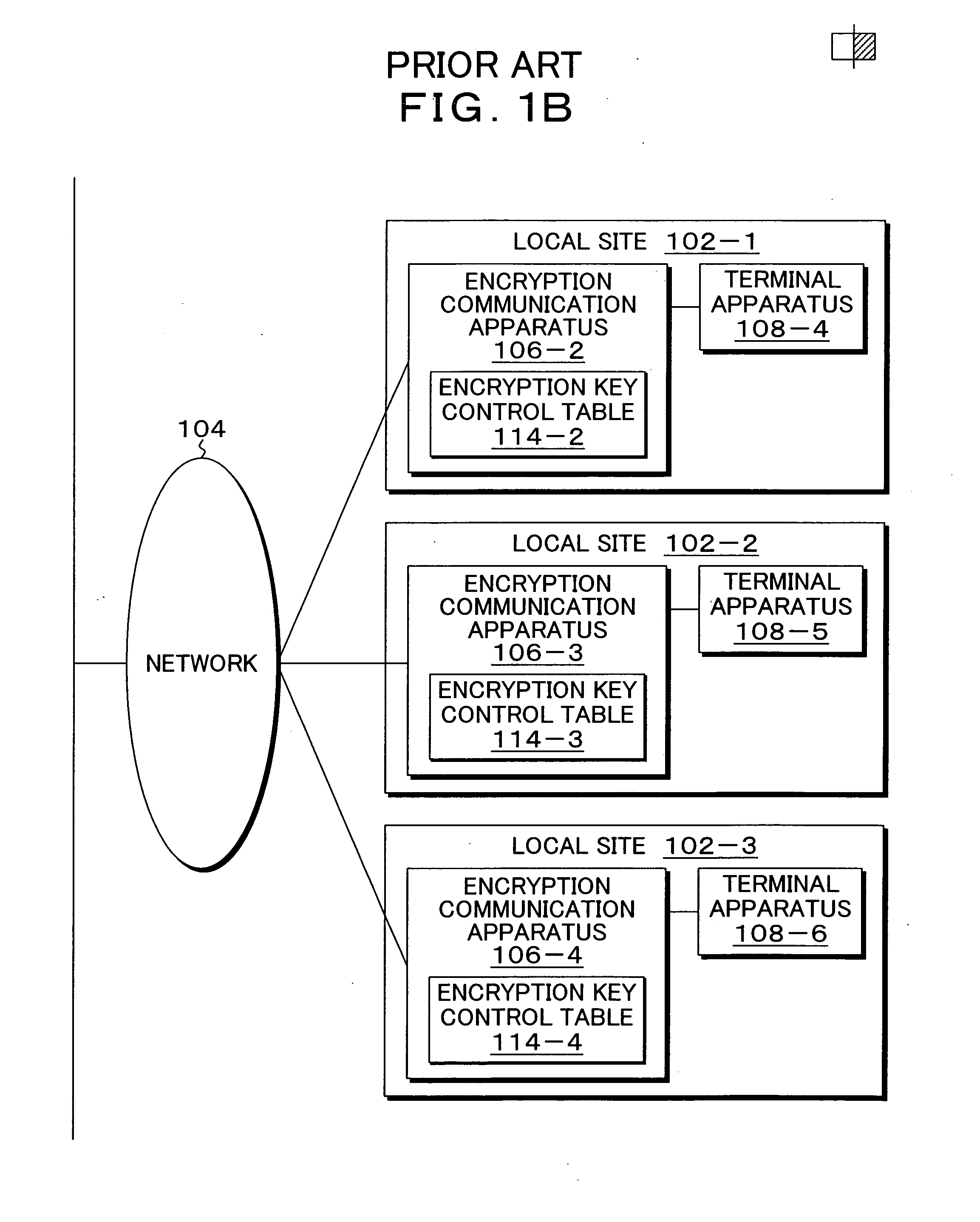

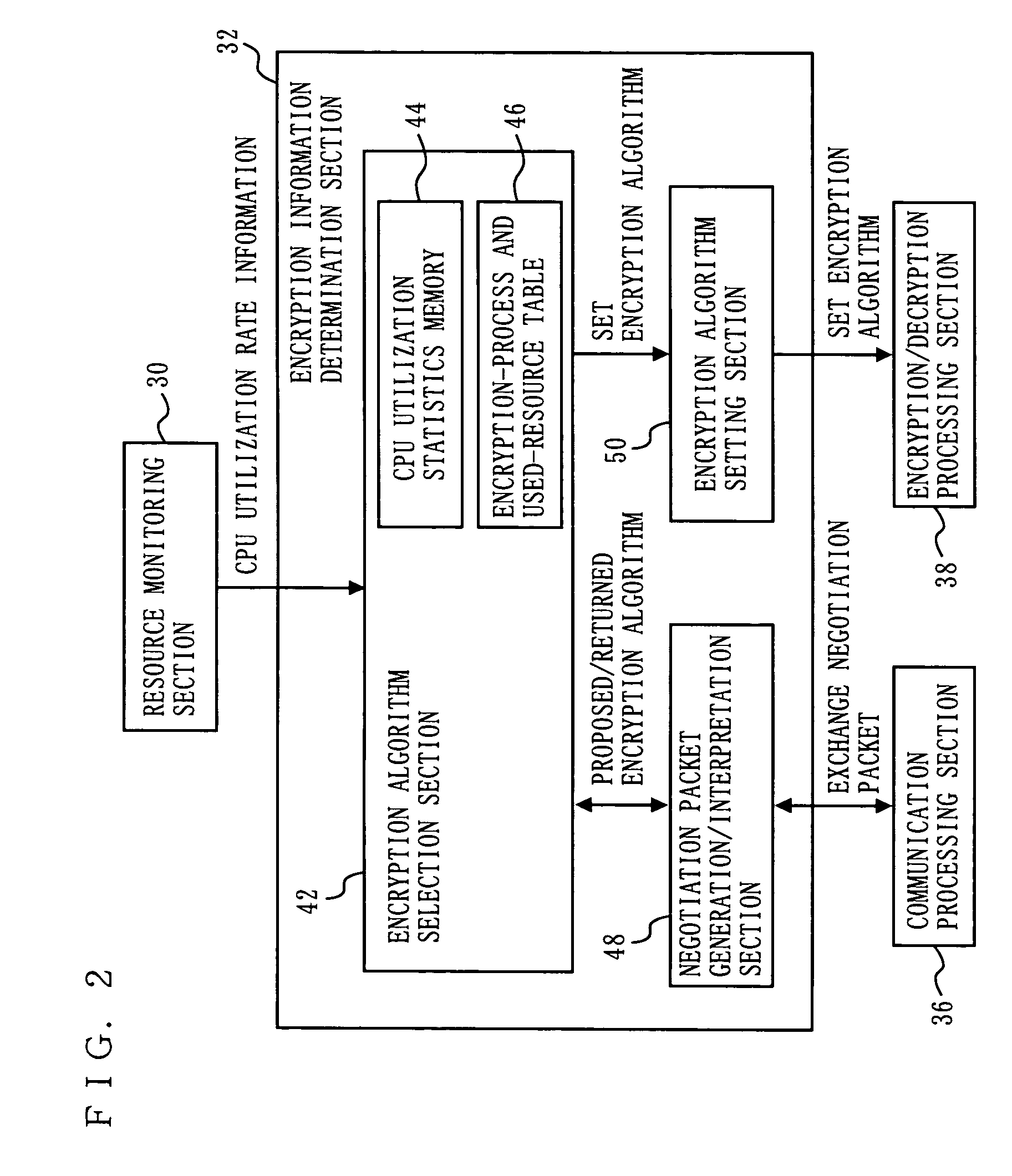

Encryption communication system, apparatus, method, and program

Inactive | US20080098226A1 | Tags: Avoid it happening again; User identity/authority verification; Computer hardware; Communications system

A plurality of encryption communication apparatuses, to which terminal apparatuses are connected, are connected via a network. Data received from the terminal apparatus that is the transmission source is encrypted by the encryption communication apparatus and transmitted to the other encryption communication apparatus. Data received from the other encryption communication apparatus is decrypted and transmitted to the terminal apparatus that is the transmission destination. Upon initiation of first communication with the other encryption communication apparatuses, the encryption communication apparatus generates and exchanges encryption keys according to an encryption key exchange protocol. It records the keys in the encryption key control table and sets a validity time to control each key. An encryption key is updated when its validity time is about to expire. However, even within the validity period, when the CPU load is determined to be low, the apparatus searches for the encryption key of a counterpart apparatus with a small communication volume and updates that key.

Owner:FUJITSU LTD
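
The sketch below shows this update policy. It assumes a simple table of per-peer entries and a fixed low-load threshold, both hypothetical.

```python
from dataclasses import dataclass


@dataclass
class KeyEntry:
    peer: str
    expires_at: float      # end of validity, in seconds on some monotonic clock
    traffic_bytes: int     # recent communication volume with this peer


def keys_to_update(table, now, cpu_load, low_load=0.3, near_expiry_s=60.0):
    """Return the peers whose keys should be renewed now."""
    # Always renew keys whose validity time is about to run out.
    due = [e.peer for e in table if e.expires_at - now <= near_expiry_s]
    if cpu_load < low_load:
        # Spare CPU: renew early the key of the quietest remaining peer.
        rest = [e for e in table if e.peer not in due]
        if rest:
            due.append(min(rest, key=lambda e: e.traffic_bytes).peer)
    return due


table = [KeyEntry("A", 1000, 500), KeyEntry("B", 5000, 50), KeyEntry("C", 5000, 900)]
print(keys_to_update(table, now=950, cpu_load=0.1))
```

Picking the quietest peer keeps the disruption of a mid-validity rekey small. It also shifts work away from the moment when many keys would otherwise expire together.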

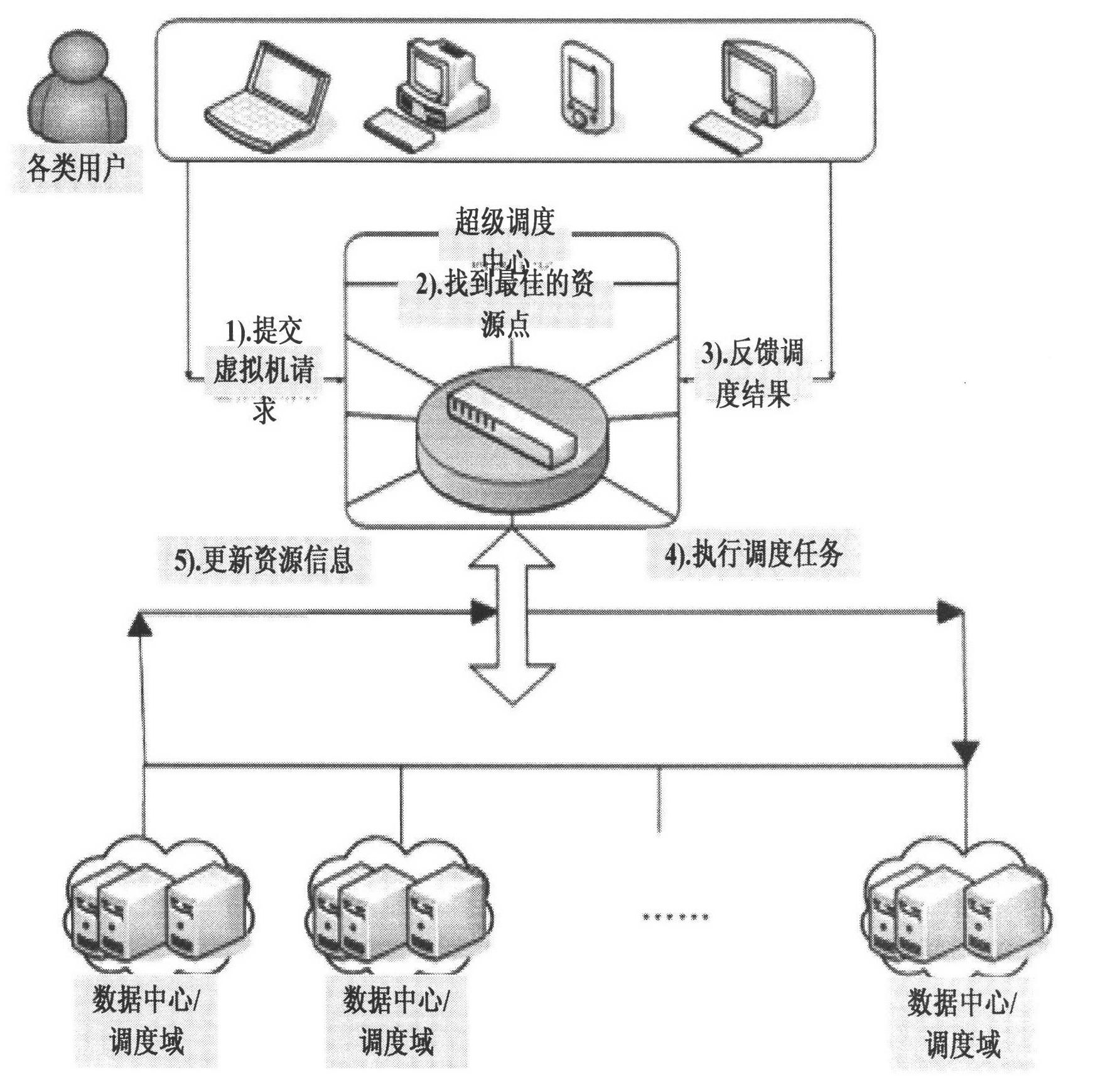

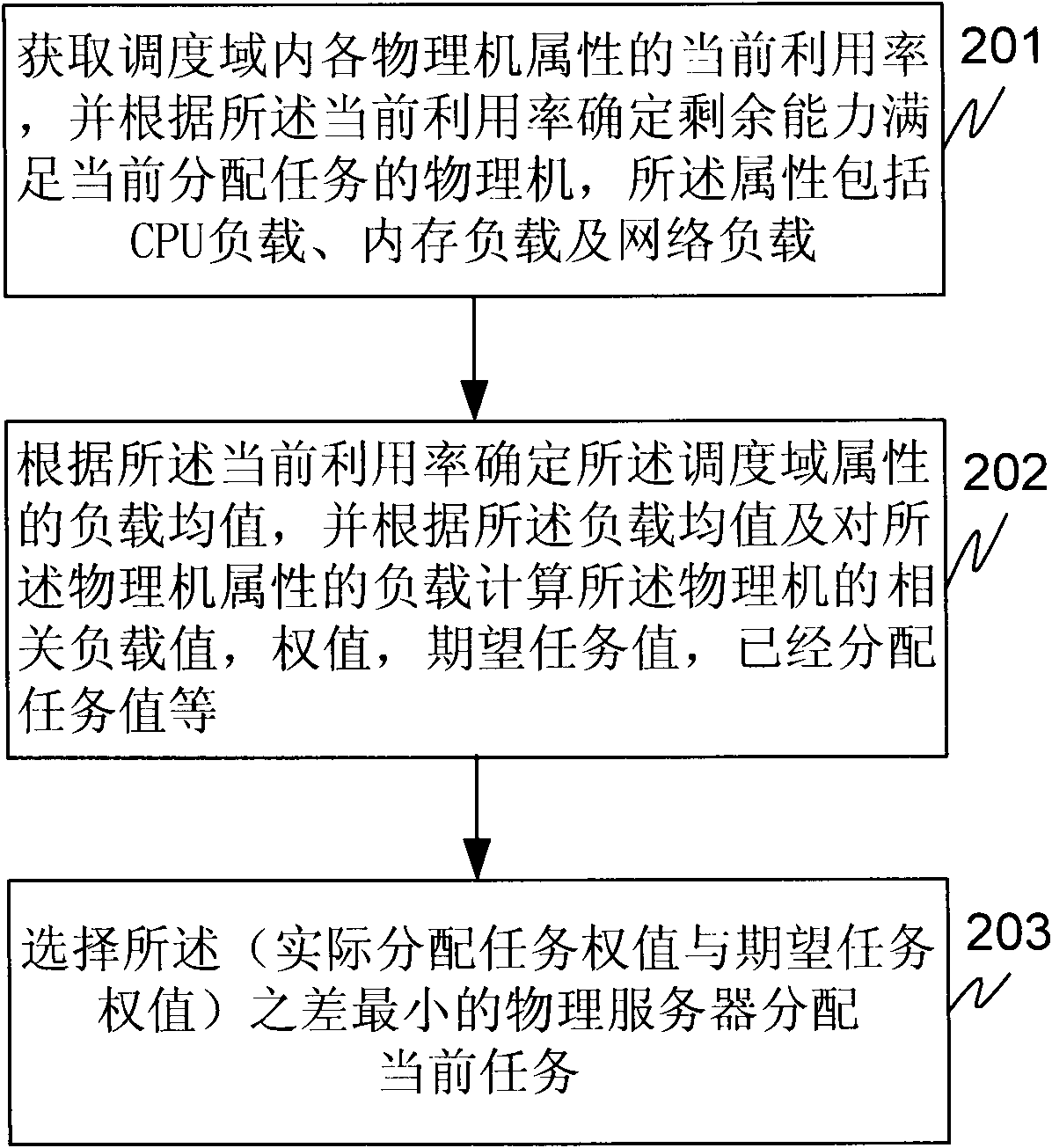

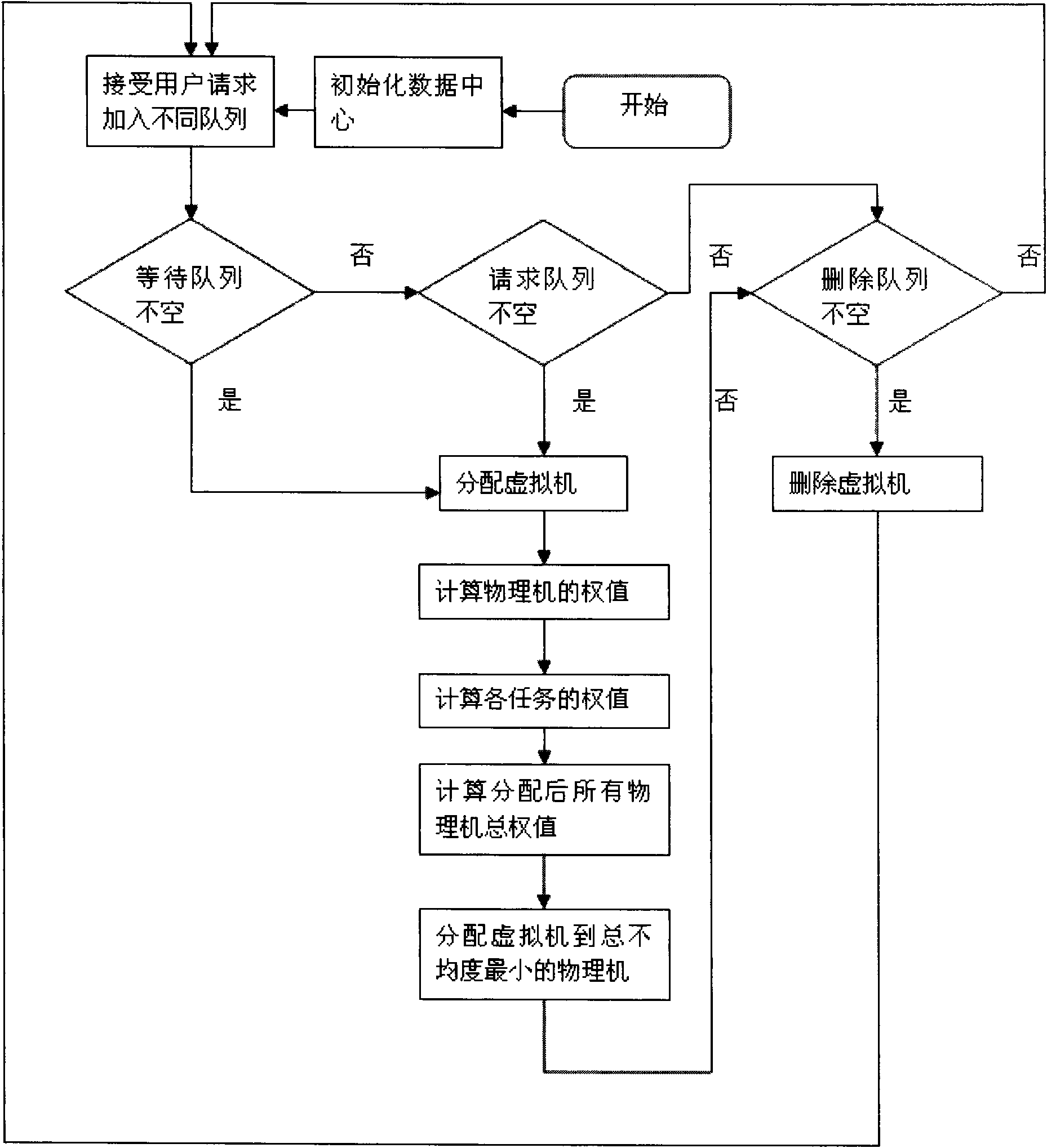

Method and device for realizing data center resource load balance in proportion to comprehensive allocation capability

Active | CN102185779A | Tags: Timely determination of load status; Solve load imbalance; Data switching networks; User needs; Data center

The invention relates to a method and a device for realizing data center resource load balance. The method first acquires the current utilization rates of the attributes of each physical machine in a scheduling domain, where the attributes comprise central processing unit (CPU) load, memory load and network load. It then determines the physical machine for the currently allocated task according to the principle of fair distribution in proportion to the allocation capability of each server, an actual allocated task weight value and an expected task weight value. To do so, it determines a mean load value for each attribute over the scheduling domain from the current utilization rates. From the mean load values and the predicted load values of each physical machine's attributes, it calculates the difference between that machine's actual allocated task weight value and expected task weight value. The physical machine with the smallest difference is selected for the currently allocated task. The device provided by the invention comprises a selection control module, a calculation processing module and an allocation execution module. The technical scheme solves the problem of physical server load imbalance caused by inconsistency between user demand provisions and physical server provisions.

Owner:田文洪
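
The abstract's weighting is only loosely specified. The sketch below adopts one hypothetical reading:

- A machine's expected share is its allocation capability divided by the total capability.
- Its actual share is the capacity-weighted mean load it currently carries.
- The task goes to the machine whose actual share exceeds its expected share by the least, that is, the most under-loaded machine relative to its capability.

The class and function names are illustrative.

```python
from dataclasses import dataclass

ATTRIBUTES = ("cpu", "mem", "net")


@dataclass
class Machine:
    name: str
    capacity: float   # relative allocation capability
    util: dict        # current utilization per attribute, 0..1


def mean_load(m: Machine) -> float:
    """Average utilization over the CPU, memory and network attributes."""
    return sum(m.util[a] for a in ATTRIBUTES) / len(ATTRIBUTES)


def pick_machine(machines: list) -> Machine:
    total_cap = sum(m.capacity for m in machines)
    total_load = sum(mean_load(m) * m.capacity for m in machines)
    if total_load == 0:
        return max(machines, key=lambda m: m.capacity)  # idle domain: biggest machine

    def gap(m: Machine) -> float:
        expected = m.capacity / total_cap                # fair share by capability
        actual = mean_load(m) * m.capacity / total_load  # share currently carried
        return actual - expected

    return min(machines, key=gap)


a = Machine("a", 1.0, {"cpu": 0.8, "mem": 0.8, "net": 0.8})
b = Machine("b", 1.0, {"cpu": 0.2, "mem": 0.2, "net": 0.2})
print(pick_machine([a, b]).name)
```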

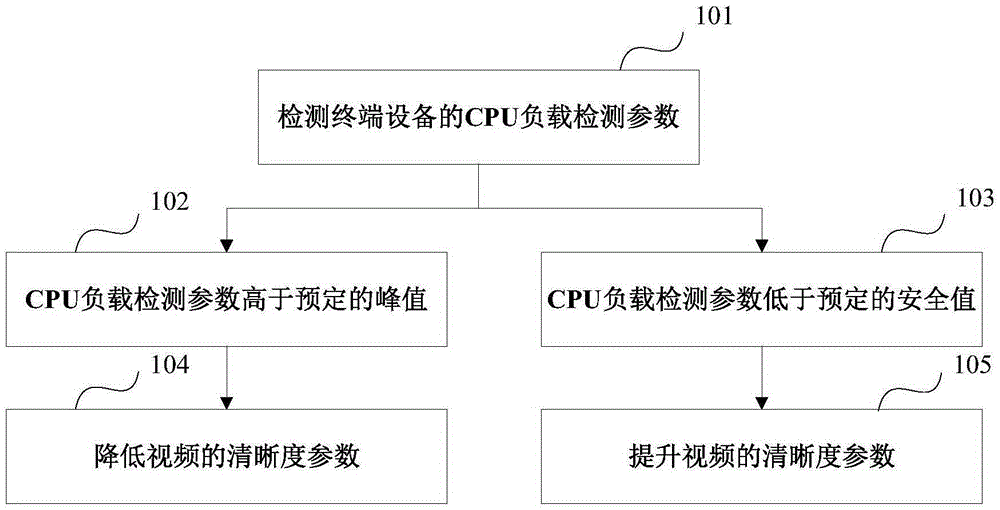

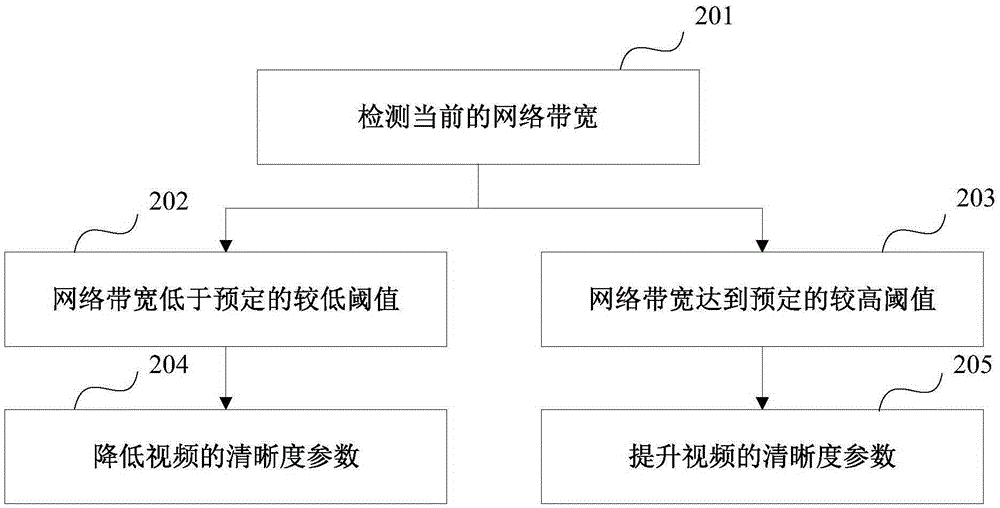

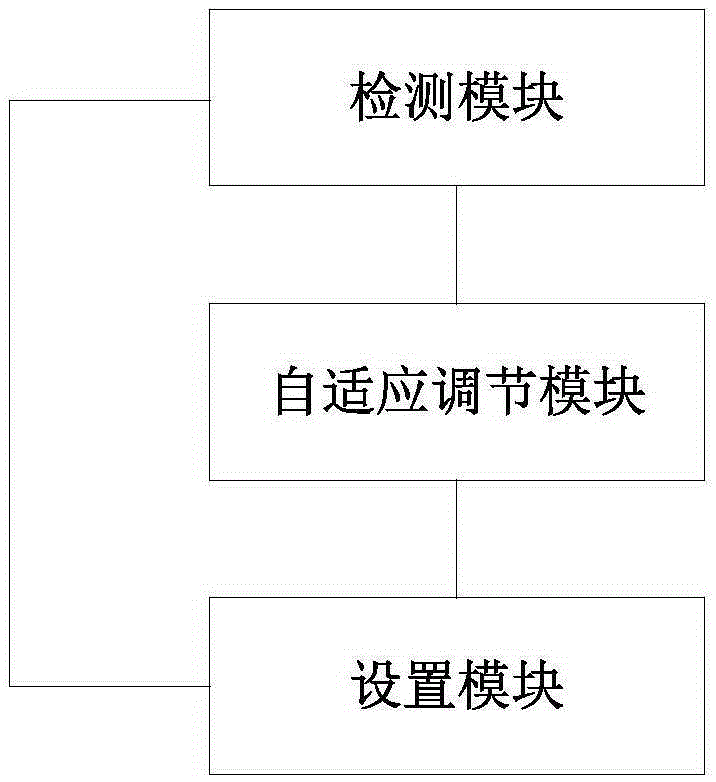

Adaptive video definition adjusting method, apparatus and terminal

Inactive | CN105657321A | Tags: Guaranteed uptime; Improve fluency; Supplementary picture signal insertion; Two-way working systems; Image resolution; Terminal equipment

The invention provides an adaptive video definition adjusting method, apparatus and terminal. The adaptive video definition adjusting method provided by the invention comprises the following steps: detecting a current CPU load detection parameter of a terminal device participating in a video call in a video call process; judging whether the current CPU load detection parameter reaches a preset peak value, and if judging that the current CPU load detection parameter reaches the preset peak value, reducing the definition parameter of the video according to a predetermined adjustment strategy, wherein the definition parameter of the video comprises resolution, a code rate and / or a frame rate of the video. According to the adaptive video definition adjusting method provided by the invention, the definition parameter of the video is dynamically and adaptively adjusted via CPU load detection and network bandwidth detection and the like, so as to guarantee the normal operation of the video call and guarantee better smoothness as well.

Owner:LE SHI ZHI ZIN ELECTRONIC TECHNOLOGY (TIANJIN) LTD
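
The sketch below shows the adjustment loop. The resolution/bitrate/frame-rate ladder and both thresholds are assumptions. Stepping back up once the load falls (hysteresis) is a further assumption that the abstract does not describe.

```python
# Illustrative definition ladder: (width, height, bitrate_kbps, fps), best first.
LADDER = [
    (1280, 720, 1500, 30),
    (960, 540, 900, 25),
    (640, 360, 500, 20),
    (320, 180, 200, 15),
]


def adjust_level(level: int, cpu_load: float, peak: float = 0.85, recover: float = 0.5) -> int:
    """Return the new ladder index given the CPU load measured during the call."""
    if cpu_load >= peak and level < len(LADDER) - 1:
        return level + 1          # preset peak reached: lower the definition
    if cpu_load < recover and level > 0:
        return level - 1          # plenty of headroom: raise it again (assumption)
    return level


level = 0
for load in (0.9, 0.92, 0.6, 0.3):
    level = adjust_level(level, load)
    print(load, LADDER[level])
```

The gap between `peak` and `recover` keeps the definition from oscillating when the load hovers near a single threshold.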

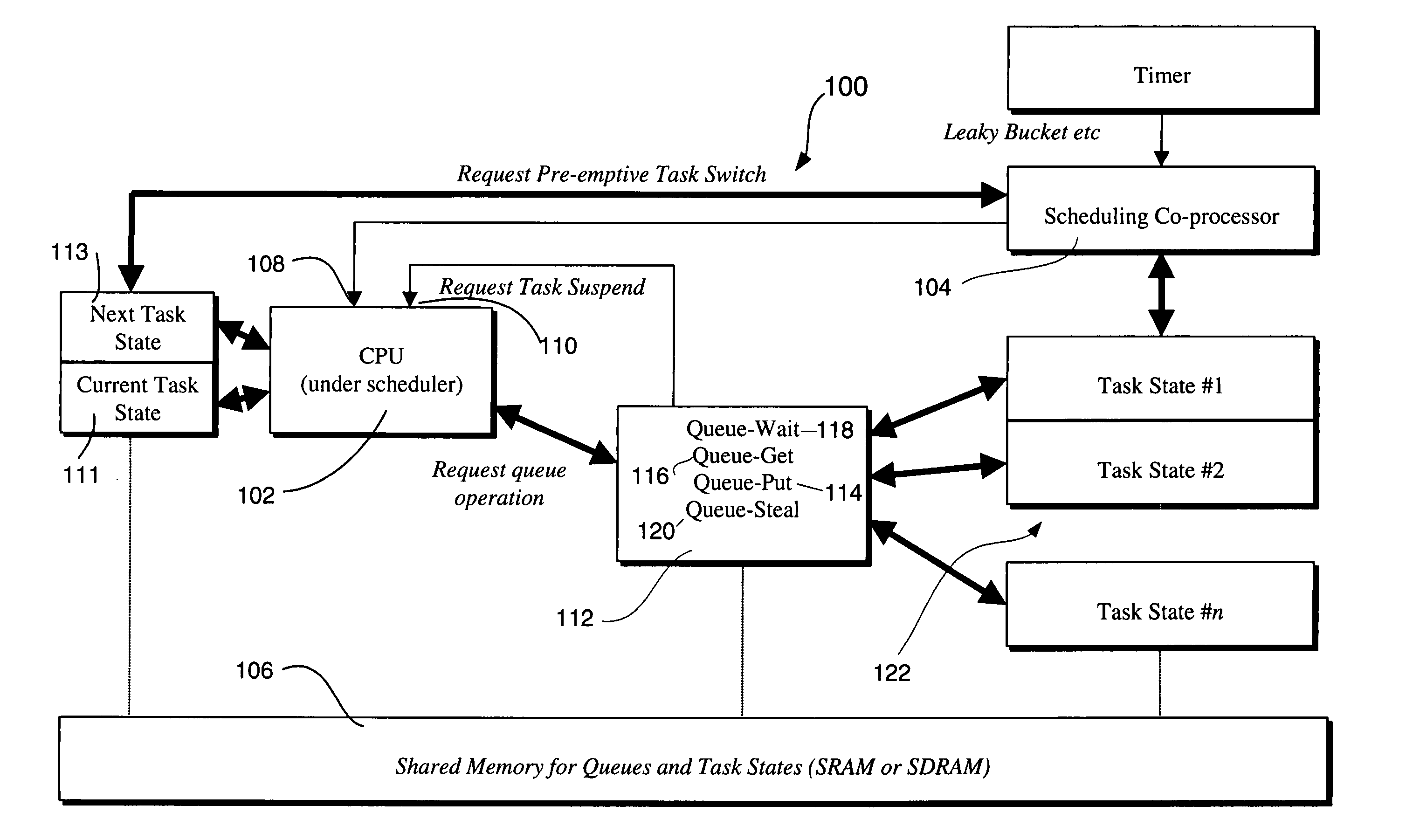

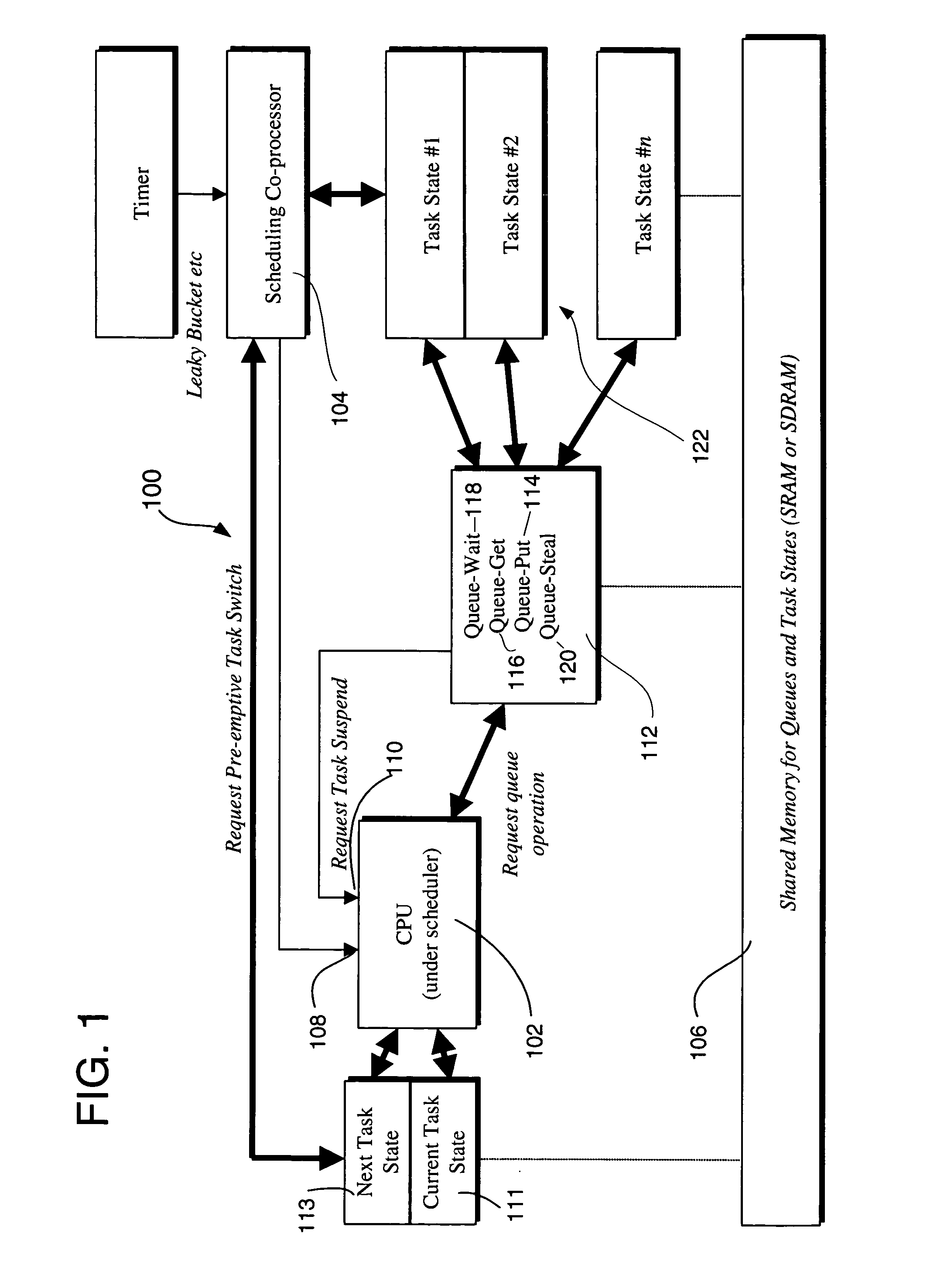

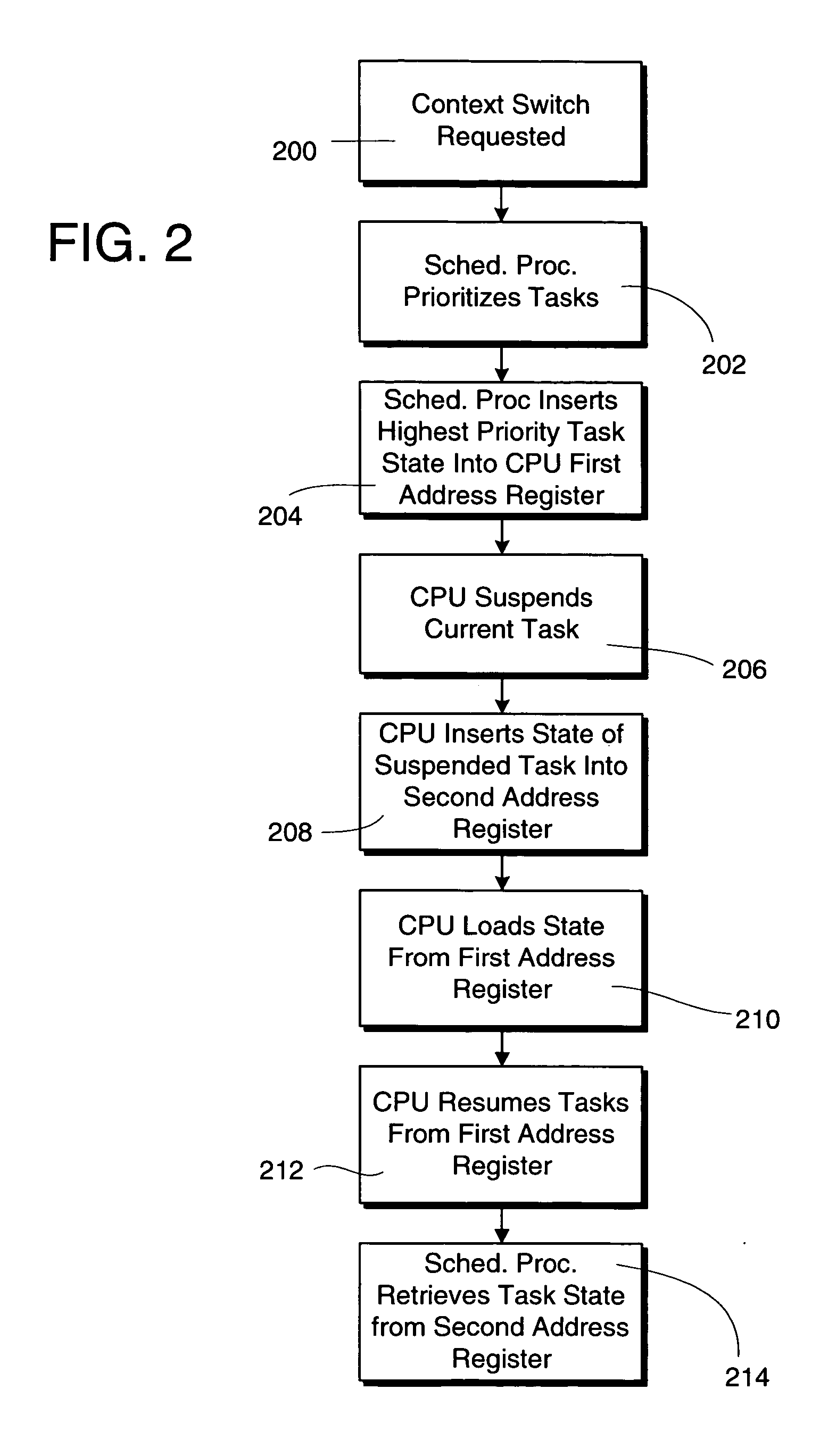

System and method for providing hardware-assisted task scheduling

A method, system and computer-readable medium for scheduling tasks, wherein a task switch request is initially received. A scheduling processor prioritizes the available tasks and inserts the state of the highest-priority task into a first address register associated with a CPU. Next, the CPU suspends the currently executing task and inserts the state of the suspended task into a second address register associated with the CPU. The CPU loads the task state from the first address register and resumes the loaded task. The scheduling processor then retrieves the task state from the second address register and schedules the retrieved task for subsequent execution.

Owner:MOORE MARK JUSTIN
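
The following is a software model of the register hand-off described in this abstract. The two "address registers" are plain attributes here, and the class names are hypothetical.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    priority: int   # larger value = more urgent


class Cpu:
    def __init__(self, current: Task):
        self.current = current
        self.next_reg = None    # "first address register": state to load next
        self.saved_reg = None   # "second address register": state of the suspended task

    def task_switch(self) -> None:
        self.saved_reg = self.current   # suspend and save the running task
        self.current = self.next_reg    # load and resume the prepared task
        self.next_reg = None


class SchedulingProcessor:
    def __init__(self):
        self._ready = []
        self._seq = itertools.count()   # tie-breaker keeps FIFO order among equal priorities

    def submit(self, task: Task) -> None:
        heapq.heappush(self._ready, (-task.priority, next(self._seq), task))

    def handle_switch_request(self, cpu: Cpu) -> None:
        # Highest priority first; raises IndexError if no task is ready.
        _, _, cpu.next_reg = heapq.heappop(self._ready)
        cpu.task_switch()
        self.submit(cpu.saved_reg)      # retrieve the suspended task and reschedule it
        cpu.saved_reg = None


sched = SchedulingProcessor()
cpu = Cpu(Task("idle", 0))
sched.submit(Task("network", 5))
sched.submit(Task("ui", 3))
sched.handle_switch_request(cpu)
print(cpu.current.name)
```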

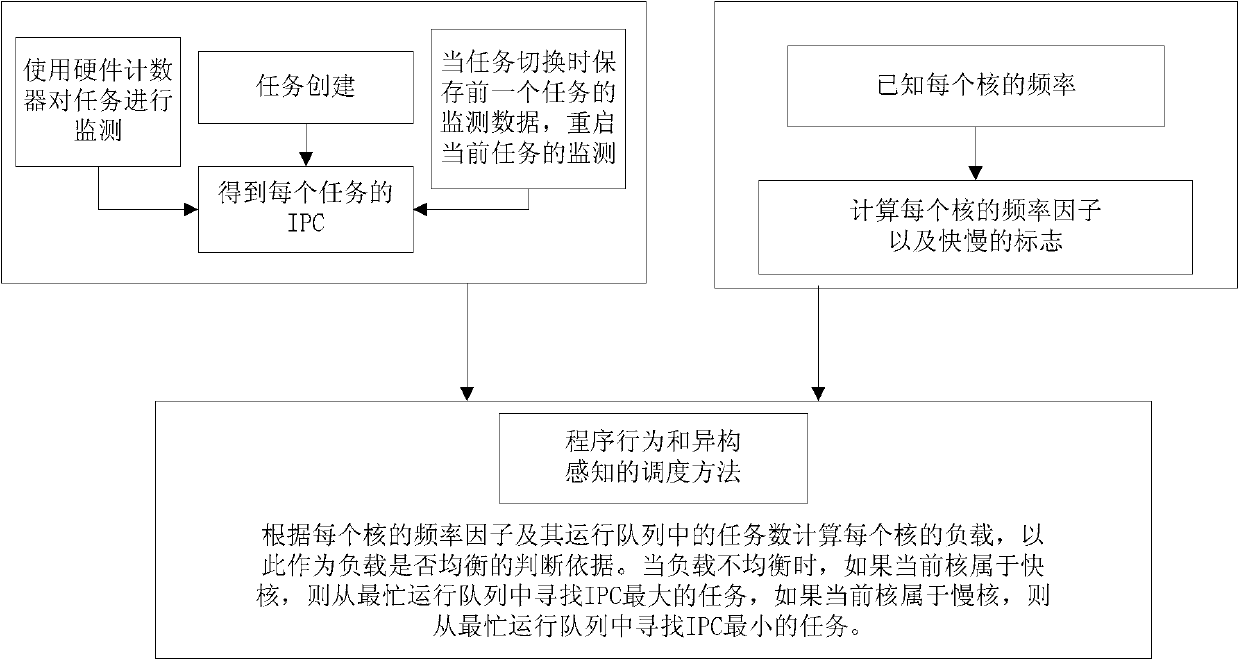

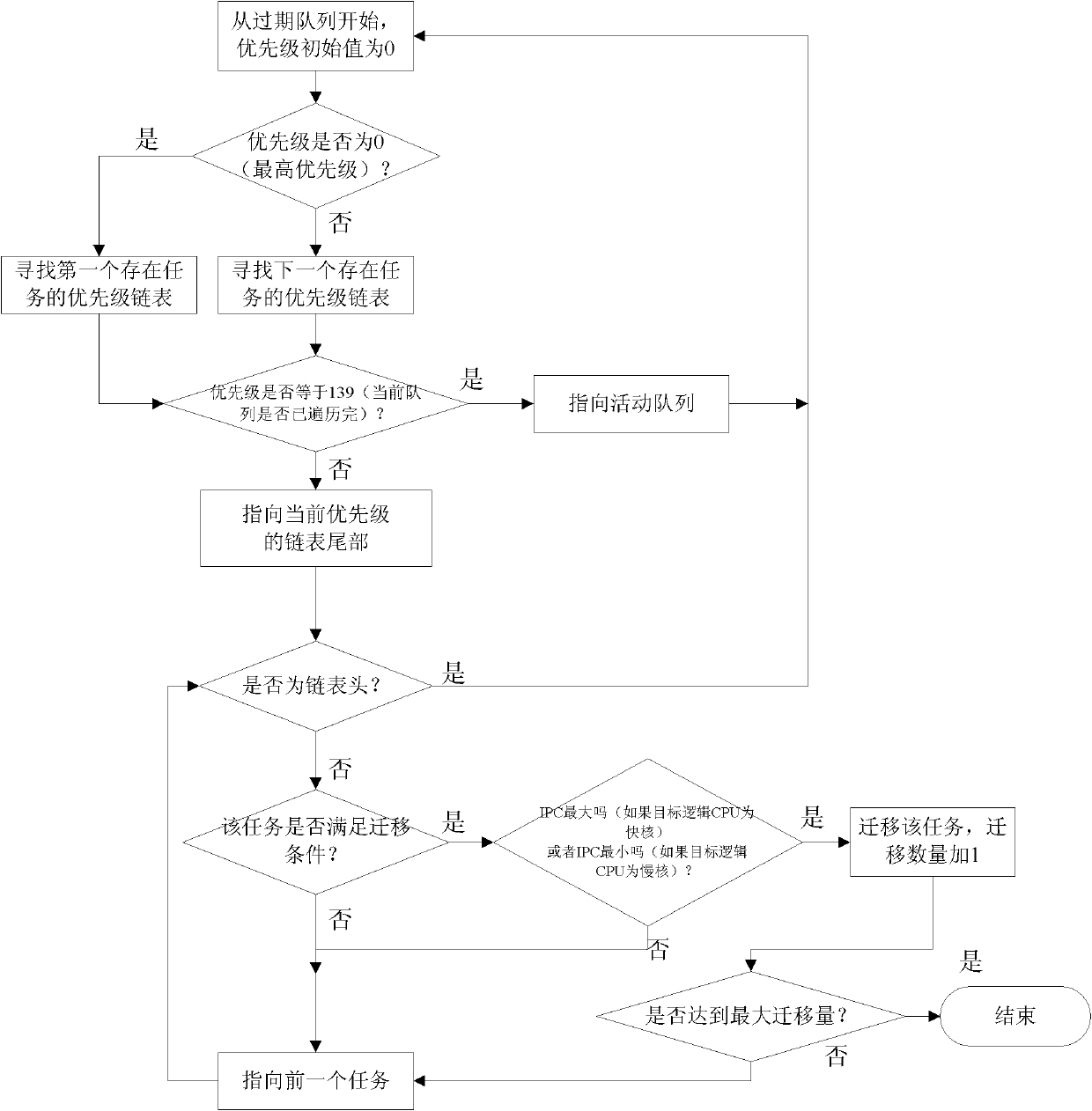

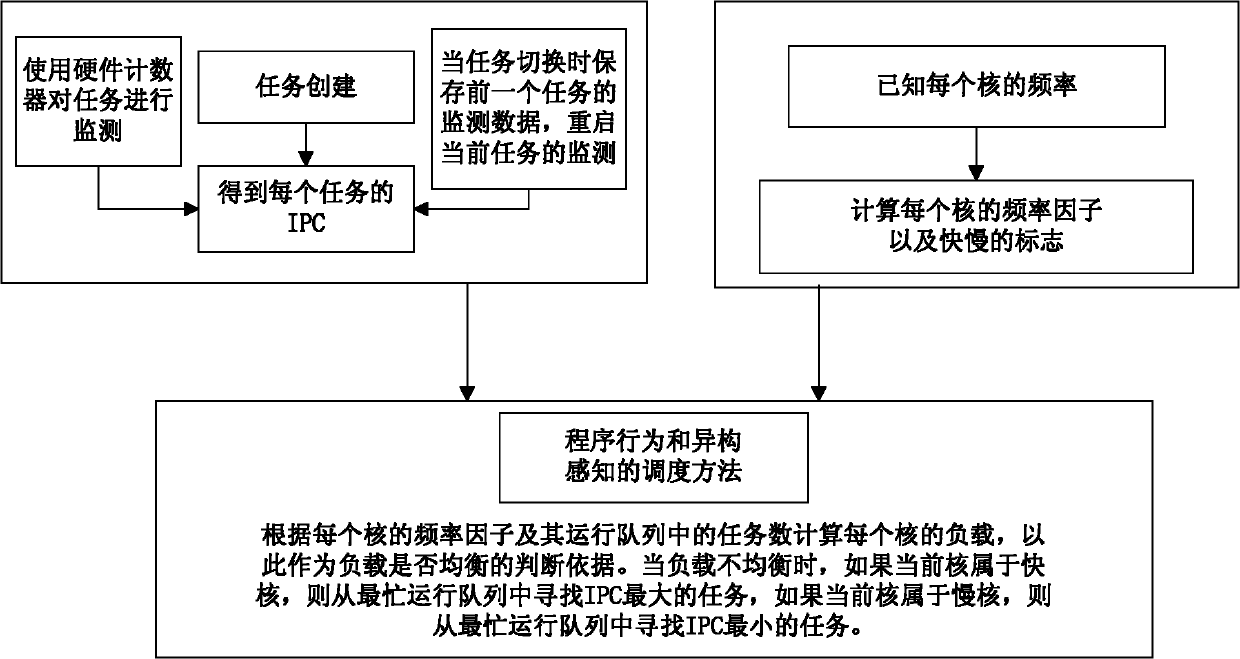

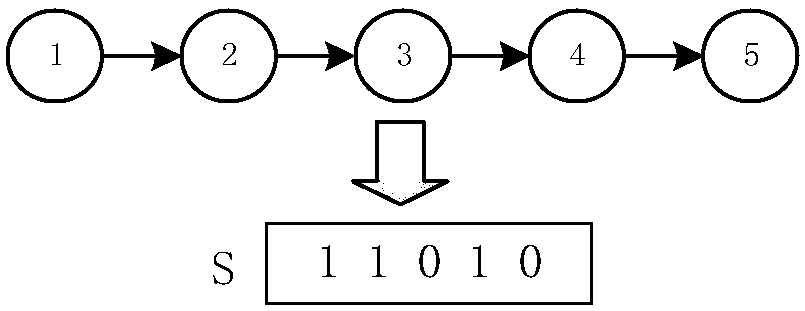

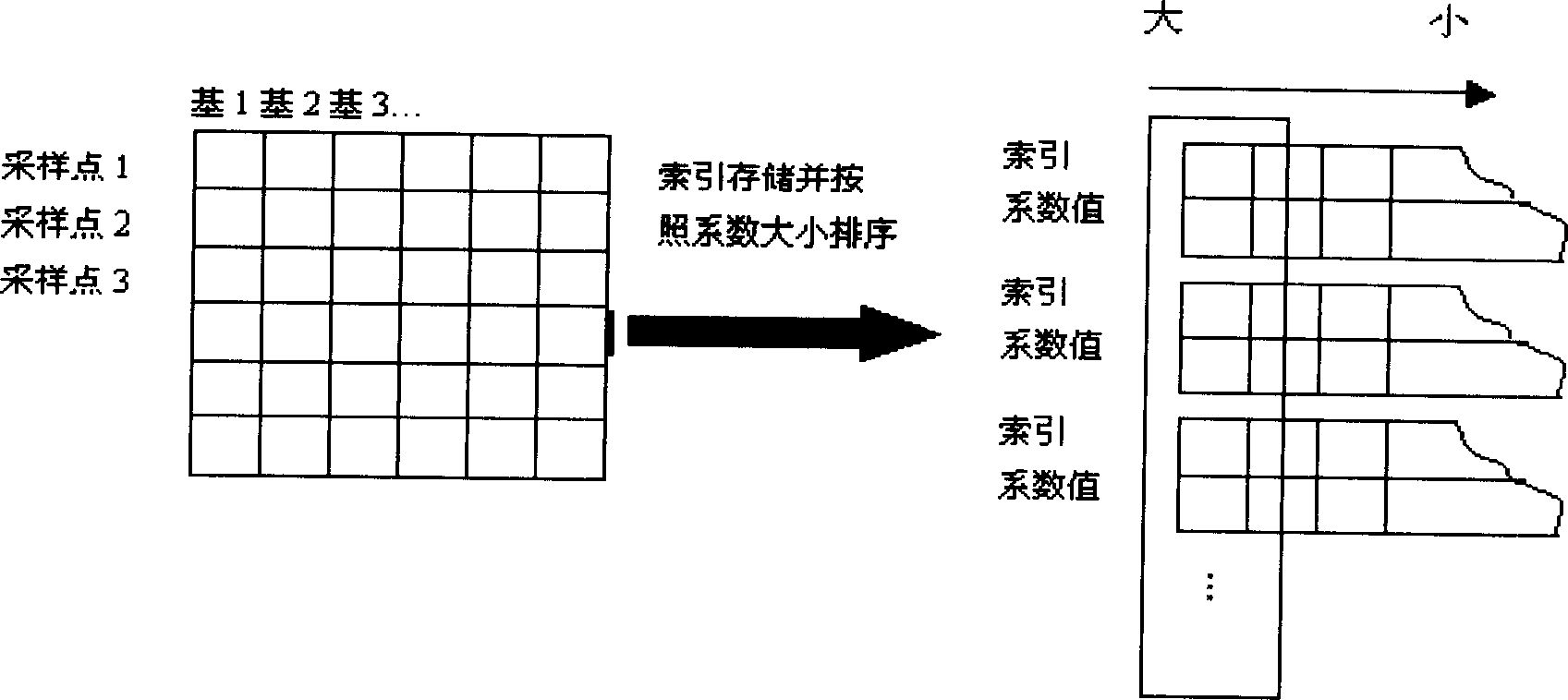

Load balancing method based on program behaviour online analysis under heterogeneous multi-core environment

Inactive | CN102184125A | Tags: High frequency; Bear more; Resource allocation; Multiple digital computer combinations; Domain model; Operational system

The invention discloses a load balancing method based on program behaviour online analysis under a heterogeneous multi-core environment, which comprises the steps of: being compatible with a heterogeneous multi-core environment of the traditional scheduling domain model, dynamically monitoring task characteristics, calculating a logic CPU load, and balancing loads of program behaviour perception. The load balancing method is completely compatible with the traditional operating system scheduling strategy, is simple and efficient, and is suitable for popularization; and an algorithm is tested on an actual software and hardware platform.

Owner:CAPITAL NORMAL UNIVERSITY

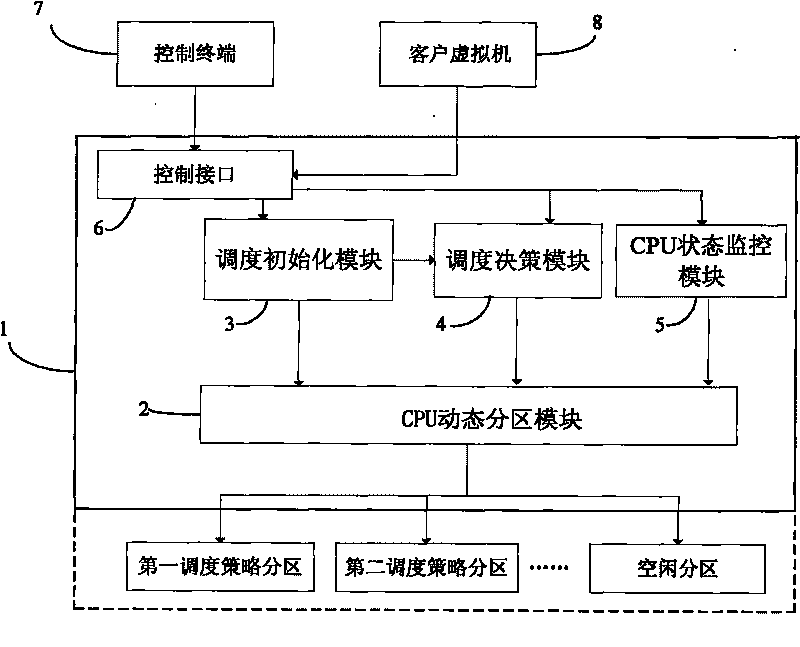

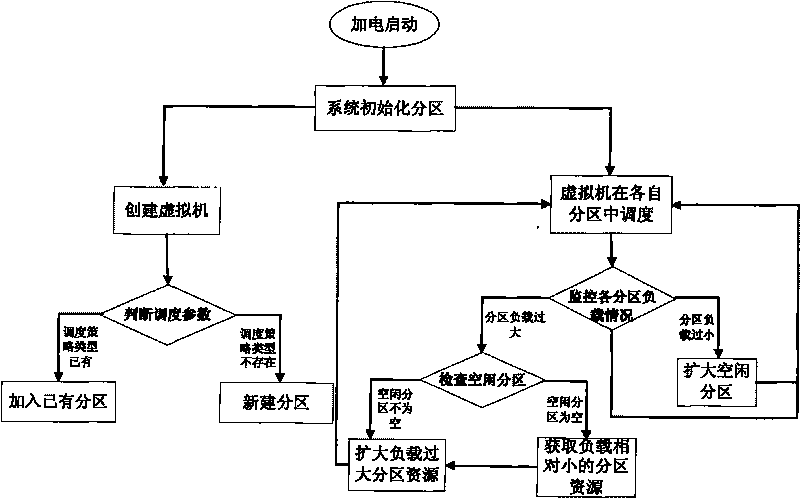

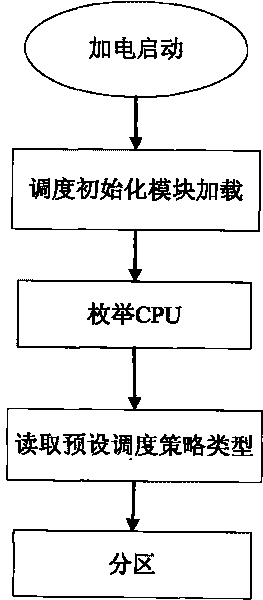

Dispatching method of virtual machine under multi-core environment

Inactive | CN101706743A | Tags: Improve scheduling efficiency; Take advantage of; Resource allocation; Software simulation/interpretation/emulation; Computer architecture; CPU load

The invention provides a dispatching method for virtual machines in a multi-core environment. When the system starts, the method divides the CPU resources into zones according to dispatching strategy type. While the system runs, it monitors the CPU load of every zone in real time and dynamically adjusts the amount of CPU resources in each zone. All dispatching within a zone uses the same dispatching strategy, which improves dispatching efficiency. Dynamically resizing the CPU resources of each zone balances resource load, makes full use of resources and reduces waste.

Owner:BEIHANG UNIV
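
The abstract does not say how zone sizes are recomputed. As an assumption, the sketch below reassigns CPUs to each zone in proportion to its measured demand. Each zone keeps a minimum of one CPU, and largest-remainder rounding ensures every CPU is assigned. Zone names are illustrative.

```python
def resize_zones(demand: dict, total_cpus: int, min_cpus: int = 1) -> dict:
    """Split total_cpus among zones in proportion to demand (busy CPUs per zone)."""
    names = list(demand)
    spare = total_cpus - min_cpus * len(names)
    if spare < 0:
        raise ValueError("not enough CPUs for the per-zone minimum")
    total_demand = sum(demand.values())
    # Proportional share of the spare CPUs; split evenly if nothing is busy.
    shares = {
        n: spare * (demand[n] / total_demand if total_demand else 1 / len(names))
        for n in names
    }
    alloc = {n: min_cpus + int(shares[n]) for n in names}
    leftover = total_cpus - sum(alloc.values())
    # Largest-remainder rounding; the stable sort breaks ties by zone order.
    by_remainder = sorted(names, key=lambda n: shares[n] - int(shares[n]), reverse=True)
    for n in by_remainder[:leftover]:
        alloc[n] += 1
    return alloc


print(resize_zones({"real-time": 6.0, "batch": 2.0}, total_cpus=8))
```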

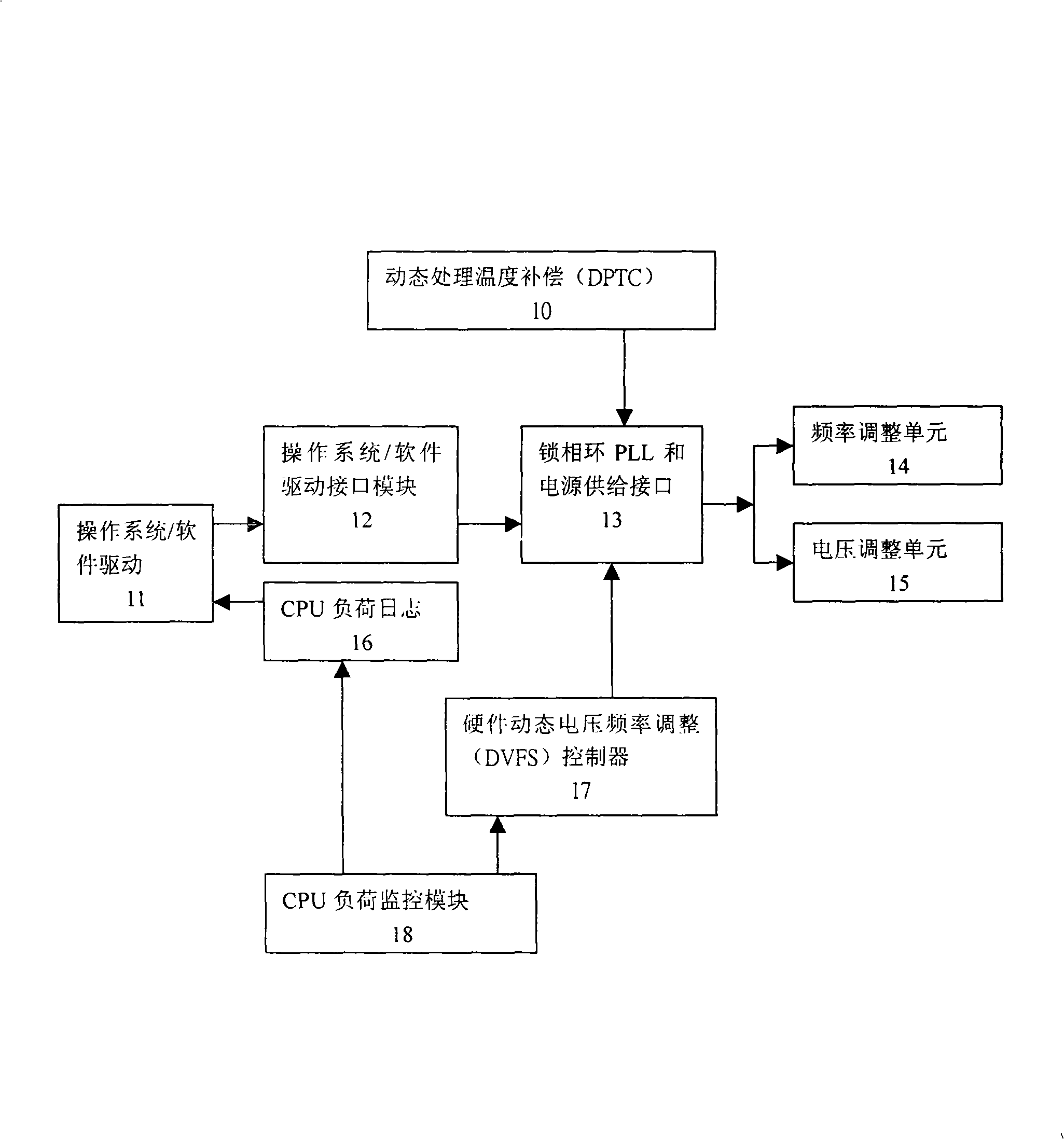

Method for regulating dynamic voltage frequency in power supply management technique

Inactive | CN101281415A | Tags: Improve the shortcomings of short use time, system stability, and poor reliability; Extended use time; Volume/mass flow measurement; Power supply for data processing; Operational system; Time of use

The invention relates to a dynamic voltage and frequency adjustment method for power management, which comprises the following steps:

1. Collect various hardware signals and write them to a hardware dynamic voltage-frequency adjustment controller and to a corresponding CPU load log register.
2. Read the CPU load log information via the operating system / software driver, analyze it, and input it into an interface module of the operating system / software driver.
3. Read the dynamic temperature compensation, the hardware dynamic voltage-frequency adjustment controller and the information processed by the interface module. After analysis by the relevant algorithm, output the results to the corresponding frequency adjustment and voltage adjustment units, so that the system voltage and frequency are adjusted dynamically in real time.

The invention adjusts the working frequency according to system performance demand and correspondingly lowers the working voltage, reducing dynamic power dissipation. It greatly remedies the short battery life and the poor system stability and reliability caused by traditional, simple power management techniques. The invention can be used in various embedded devices such as portable multimedia recorders/players and smartphones.

Owner:上海摩飞电子科技有限公司 (Shanghai Mofei Electronic Technology Co., Ltd.)
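
The patent's method also folds in temperature compensation, which the sketch below omits. The sketch is loosely modeled on the idea behind the Linux "ondemand" cpufreq governor: jump to the top frequency under high load and step down when load is low. The table of operating points and all thresholds are made up. Dynamic power follows the standard P ≈ C·V²·f relation, which is why lowering voltage together with frequency saves energy.

```python
# Hypothetical operating points: frequency in MHz -> core voltage in volts.
OPERATING_POINTS = {200: 0.90, 400: 0.95, 600: 1.05, 800: 1.15, 1000: 1.25}
FREQS = sorted(OPERATING_POINTS)


def next_operating_point(cur_mhz: int, load: float, up: float = 0.80, down: float = 0.30):
    """Pick the next (frequency, voltage) pair from the measured CPU load (0..1)."""
    i = FREQS.index(cur_mhz)
    if load > up:
        i = len(FREQS) - 1          # performance demand: go straight to the top
    elif load < down and i > 0:
        i -= 1                      # idle headroom: step down one level
    f = FREQS[i]
    return f, OPERATING_POINTS[f]


def dynamic_power_w(c_eff_farads: float, volts: float, hz: float) -> float:
    """Switching power P = C * V^2 * f."""
    return c_eff_farads * volts ** 2 * hz


print(next_operating_point(400, 0.9))
```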

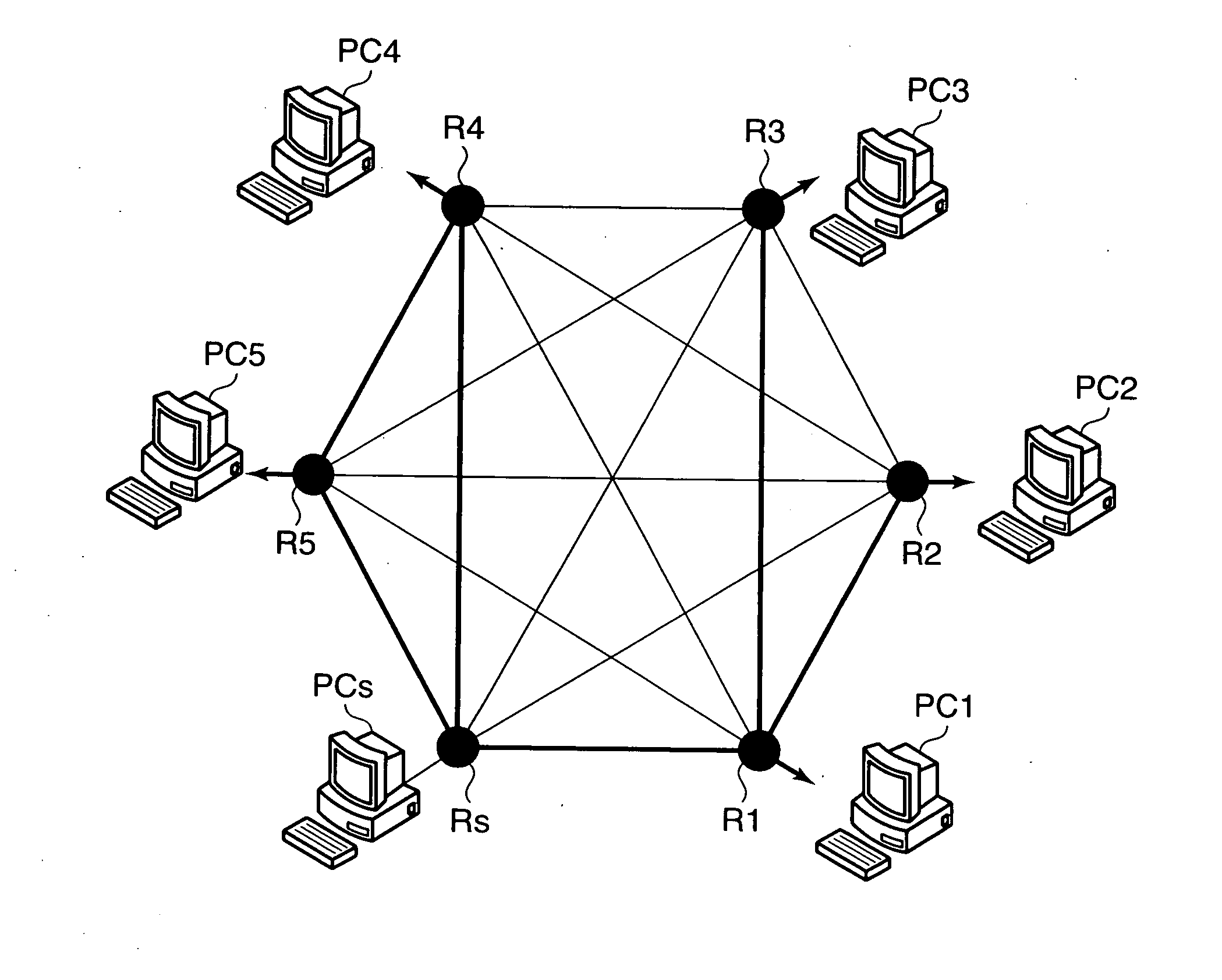

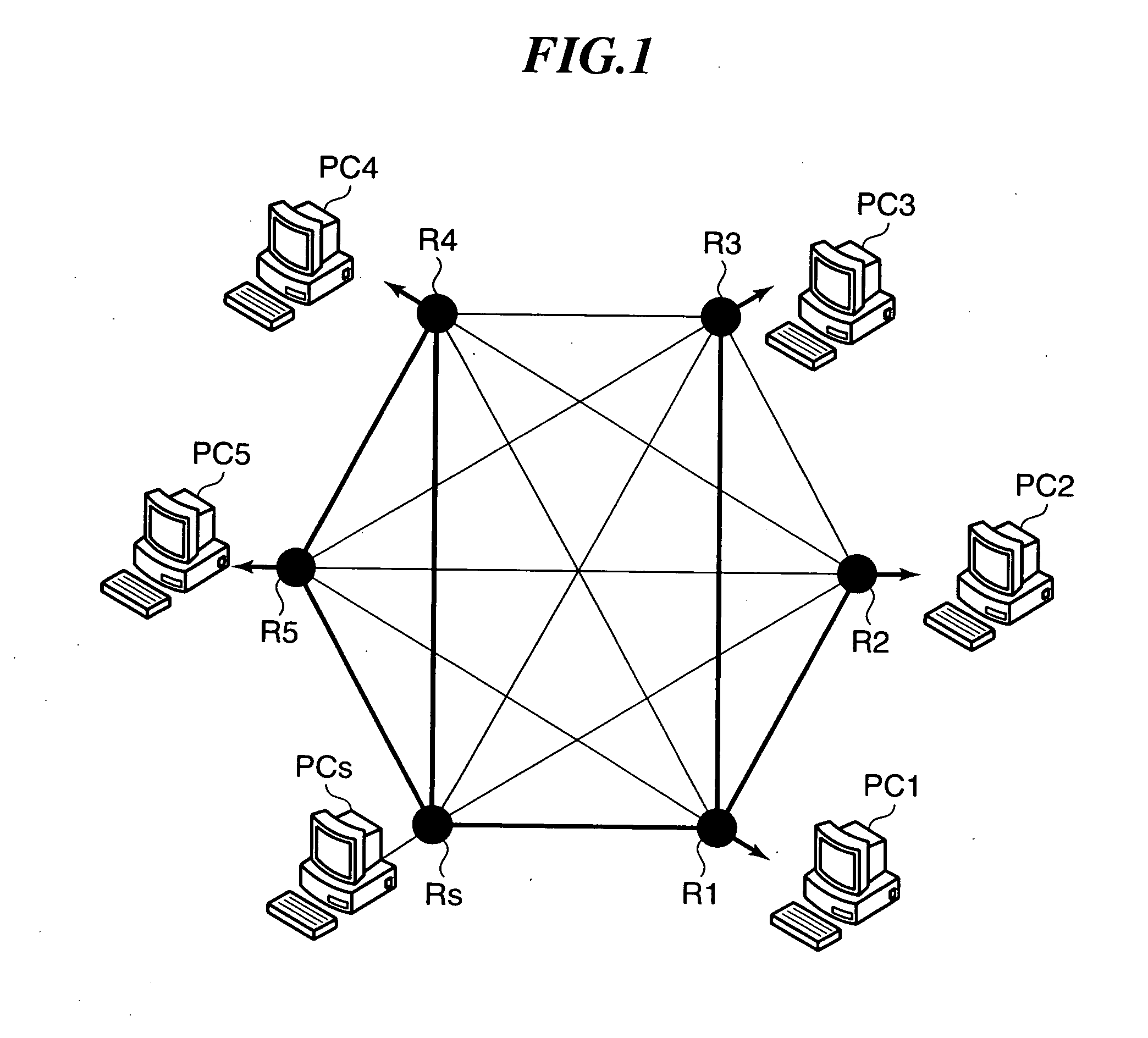

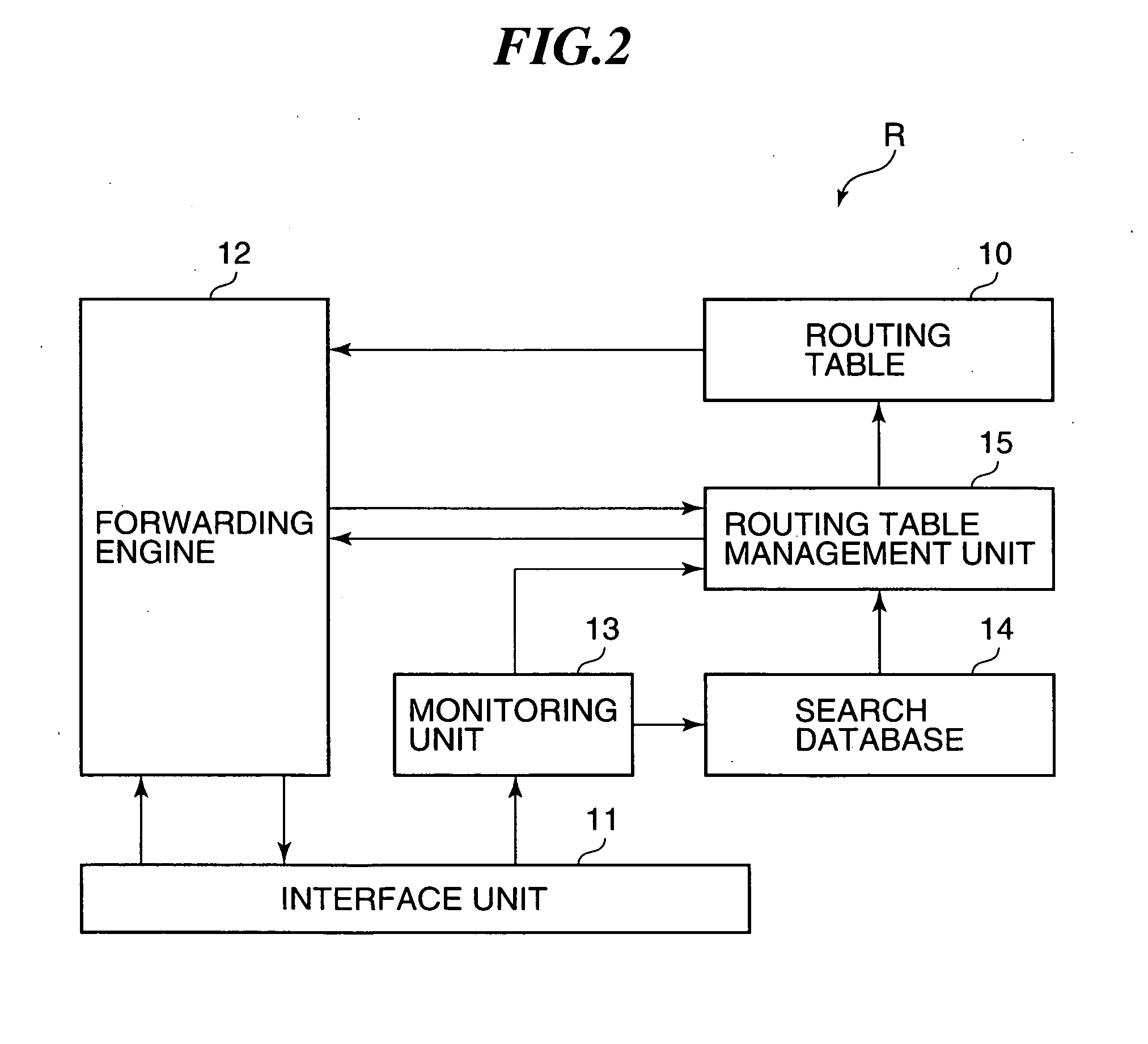

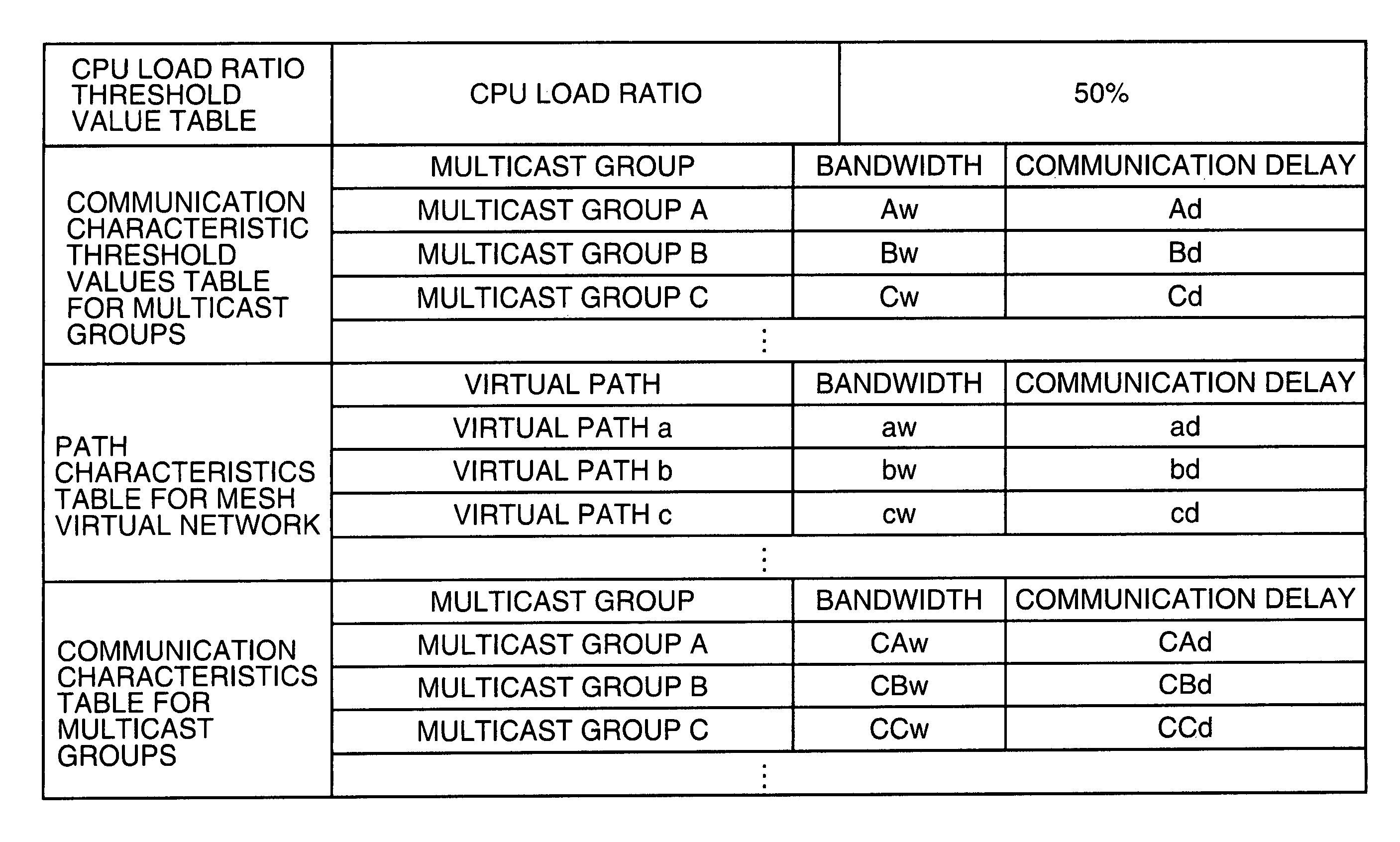

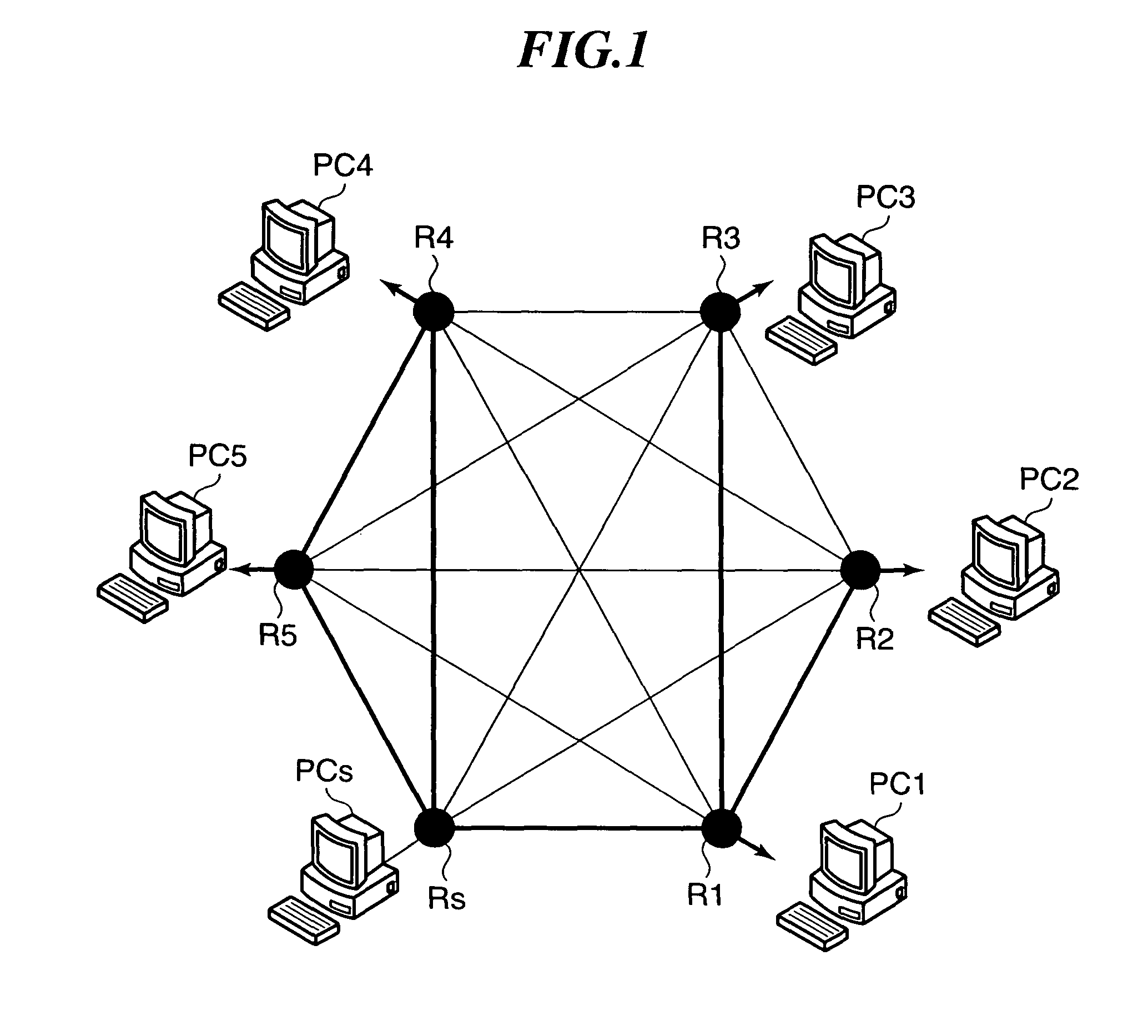

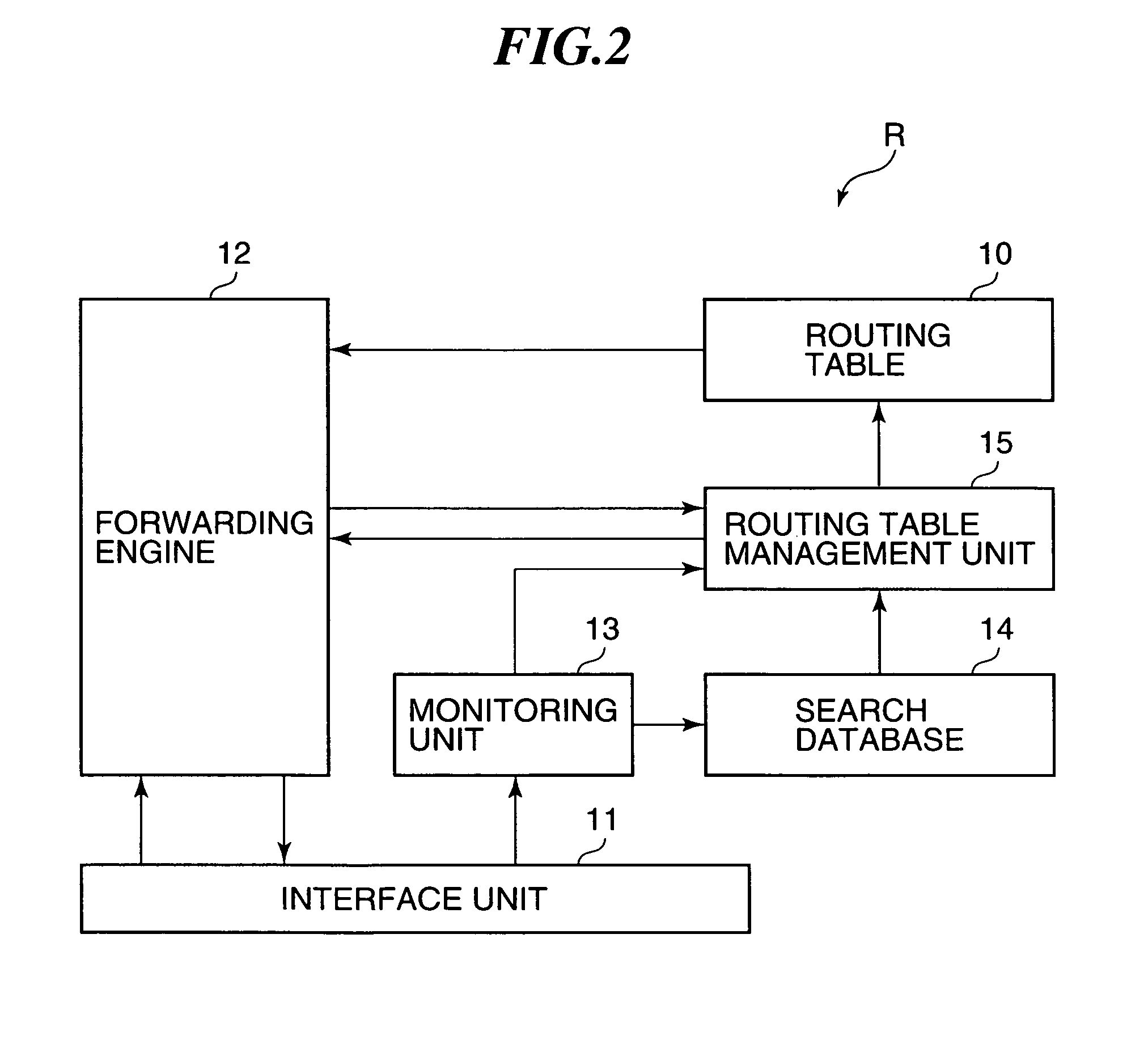

Multicast distribution system and multicast distribution method

Active | US20110044336A1 | Tags: Reduce bandwidth load; Ensure scalability; Data switching by path configuration; Wireless communication; Routing table; Distribution method

Provided is a multicast distribution system which can perform a multicast distribution by selecting a communication path satisfying a communication feature required by a multicast group. A distribution source router (Rs) performs a multicast distribution to routers (R1 to R5) constituting a multicast group based on an application via a mesh virtual network connected to a plurality of routers. When the CPU load ratio and the multicast group communication feature exceed threshold values, the distribution source router updates the number of branches and the depth of the multicast tree which distributes a packet from the router and starts reconfiguration of the multicast tree. When reconfiguring the multicast tree, the distribution source router references a path feature of the mesh virtual network, selects a communication path having a sufficient path feature with a higher priority, and generates a routing table based on the multicast tree.

Owner:YAMAHA CORP

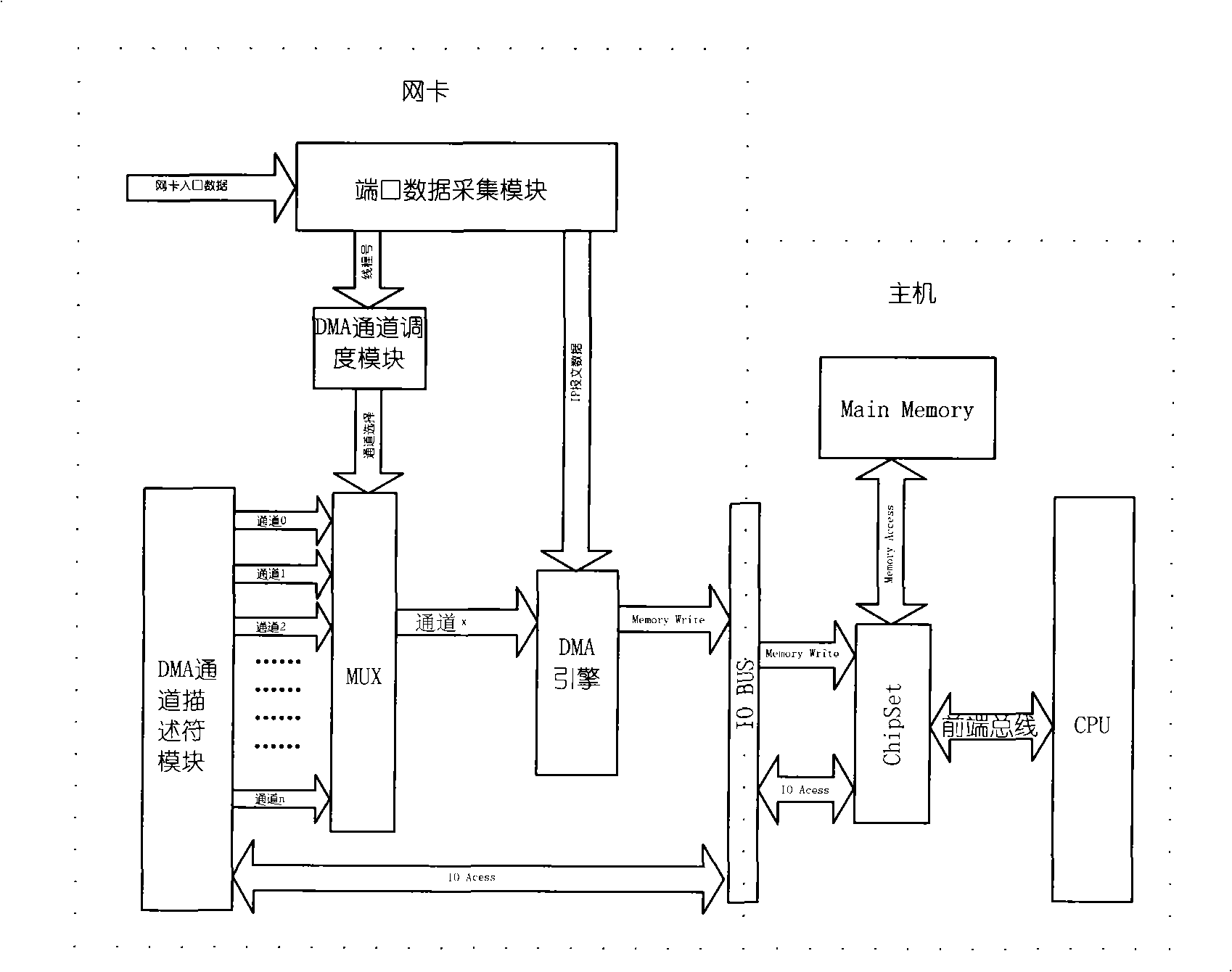

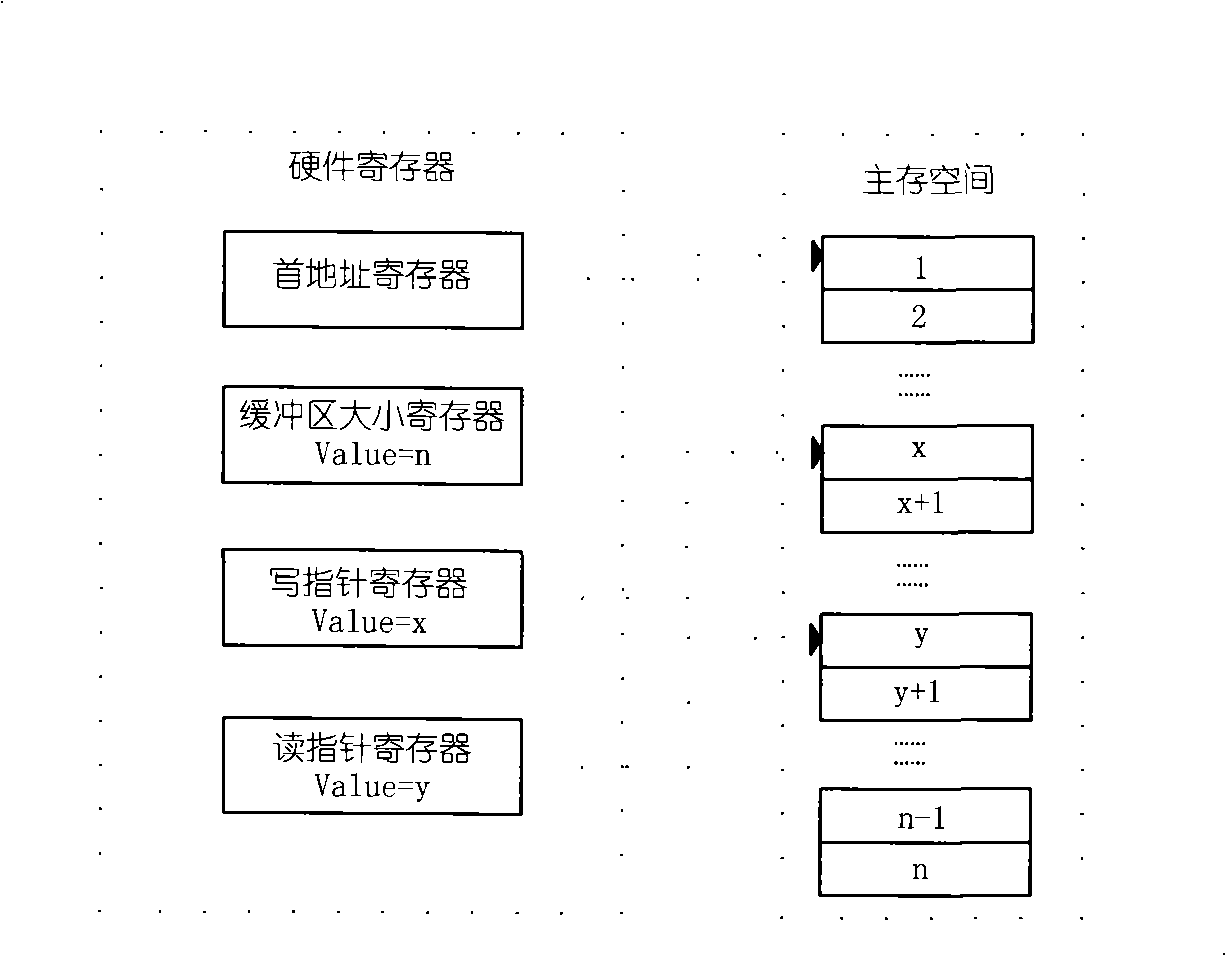

Hardware shunt method for IP packets

Active | CN101540727A | Tags: Improve performance; Avoid congestion; Resource allocation; Data switching networks; Hardware thread; Channel scheduling

The invention relates to a hardware shunt method for IP packets, in which received IP packets are shunted by the network card hardware. When the network card receives an IP packet, it extracts the source and destination addresses from the IP header and uses a hash algorithm to calculate the thread to which the packet belongs. A DMA channel scheduling module then starts a DMA engine according to the thread number to transfer the packet into the main-memory buffer belonging to that thread. To support this hardware shunt strategy, upper-layer software ensures that each thread has a dedicated main-memory buffer. The network card's IP packet threads correspond one-to-one with the host threads that process IP packets, and each host thread fetches data directly from its own memory buffer for processing. Intermediate data transfer therefore does not require the CPU, which reduces the CPU load. The method supports up to 1024 hardware threads, and even 4096 or 8192. The best configuration is one thread per CPU, so that each thread runs independently without mutual interference. Performance is best in this configuration because system resource sharing is minimized.

Owner:WUXI CITY CLOUD COMPUTING CENT
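
The following is a software sketch of the steering step. In the patent, the network card computes the hash in hardware, and the hash function is not given. Here, CRC-32 over the source and destination addresses stands in for it, and the buffer structure is hypothetical.

```python
import ipaddress
import zlib
from collections import defaultdict


def thread_for_packet(src: str, dst: str, n_threads: int) -> int:
    """Map a packet to a thread by hashing its IP source and destination addresses."""
    key = ipaddress.ip_address(src).packed + ipaddress.ip_address(dst).packed
    return zlib.crc32(key) % n_threads


def dispatch(packets, n_threads: int) -> dict:
    """Place each payload into the per-thread buffer its address pair hashes to."""
    buffers = defaultdict(list)
    for src, dst, payload in packets:
        buffers[thread_for_packet(src, dst, n_threads)].append(payload)
    return dict(buffers)


packets = [
    ("10.0.0.1", "10.0.0.9", b"a1"),
    ("10.0.0.2", "10.0.0.9", b"b1"),
    ("10.0.0.1", "10.0.0.9", b"a2"),
]
print(dispatch(packets, n_threads=4))
```

Because an address pair always hashes to the same thread, packets of one flow stay in order within a single buffer. Threads also never need to share a buffer, which matches the patent's goal of minimal resource sharing.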

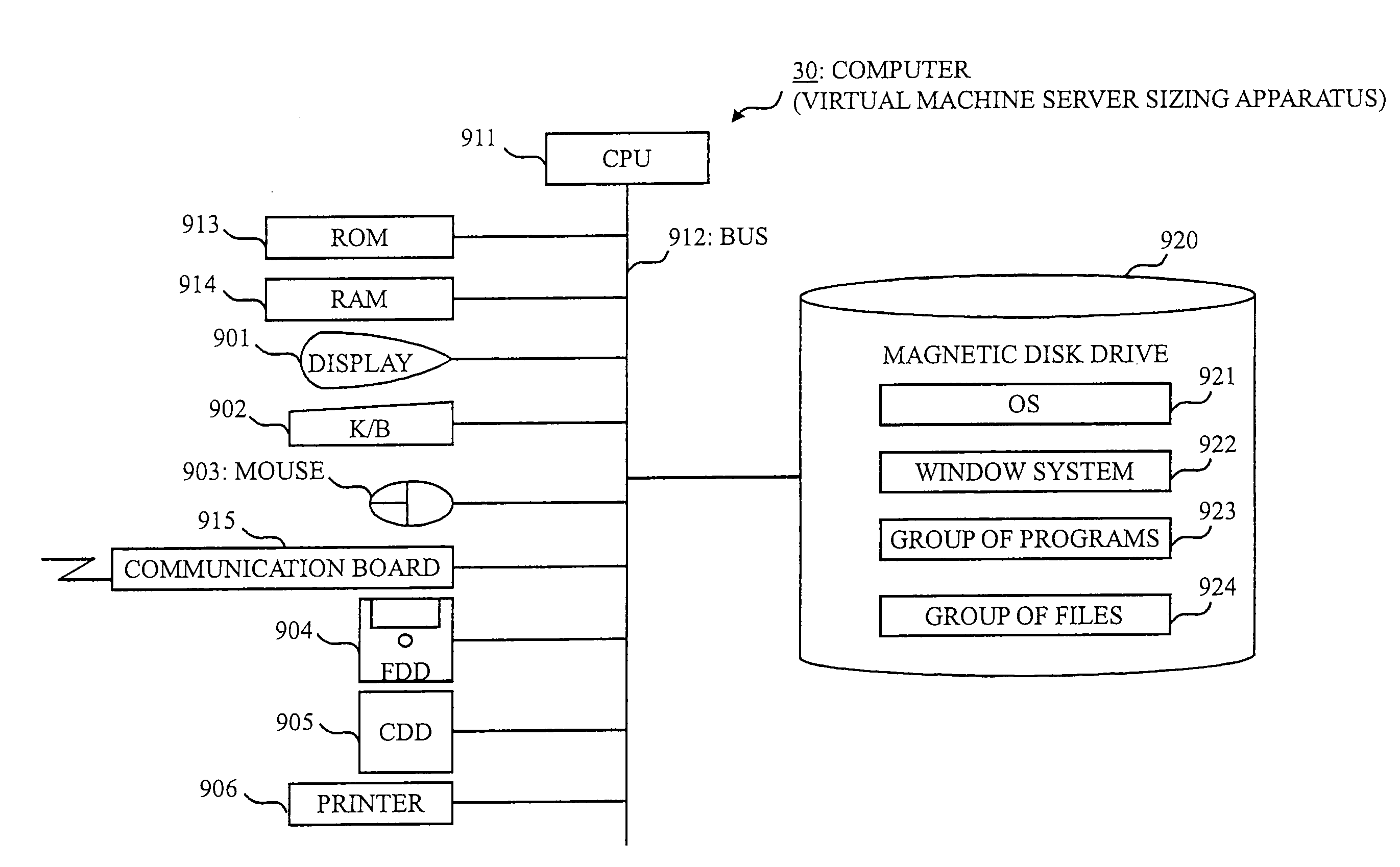

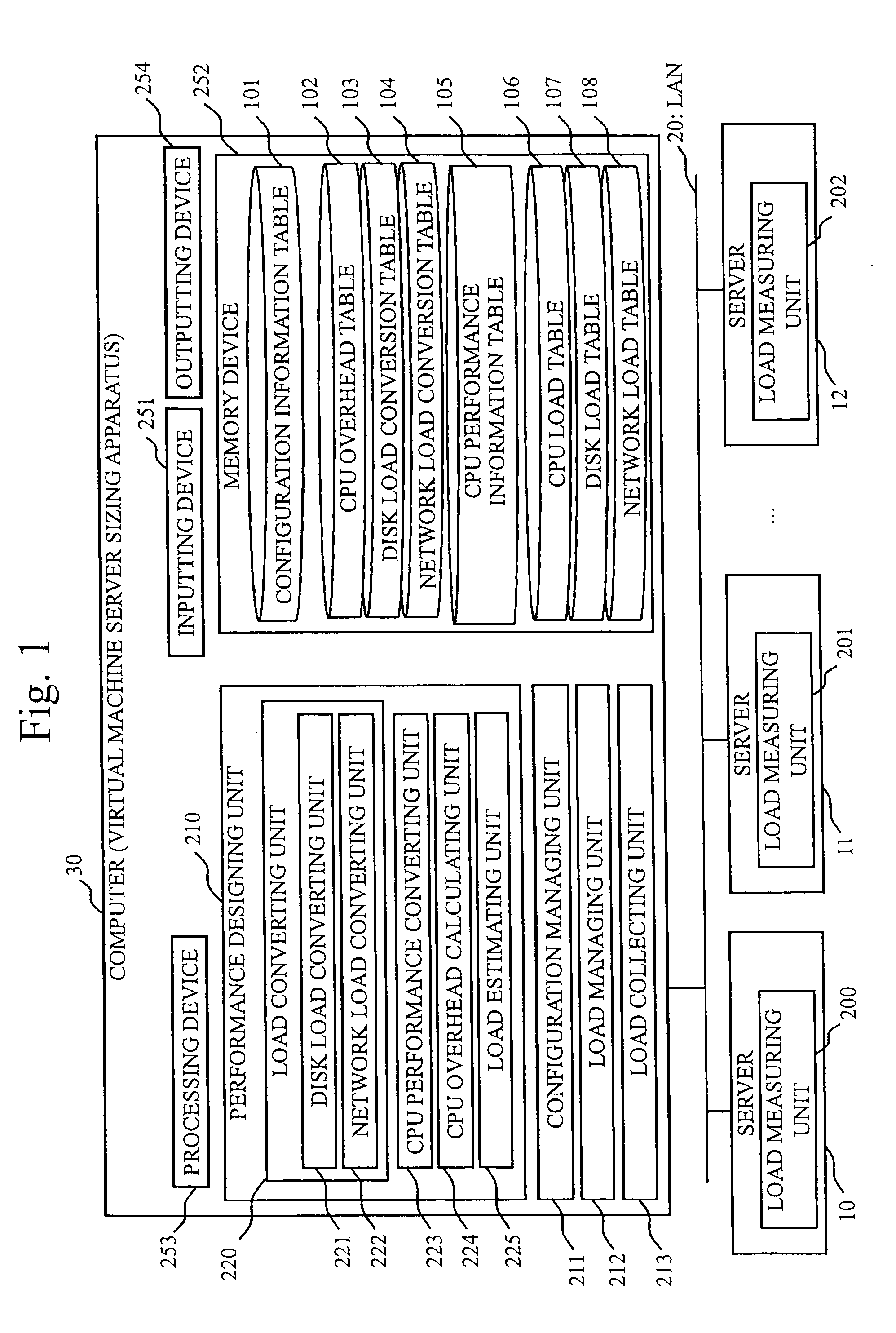

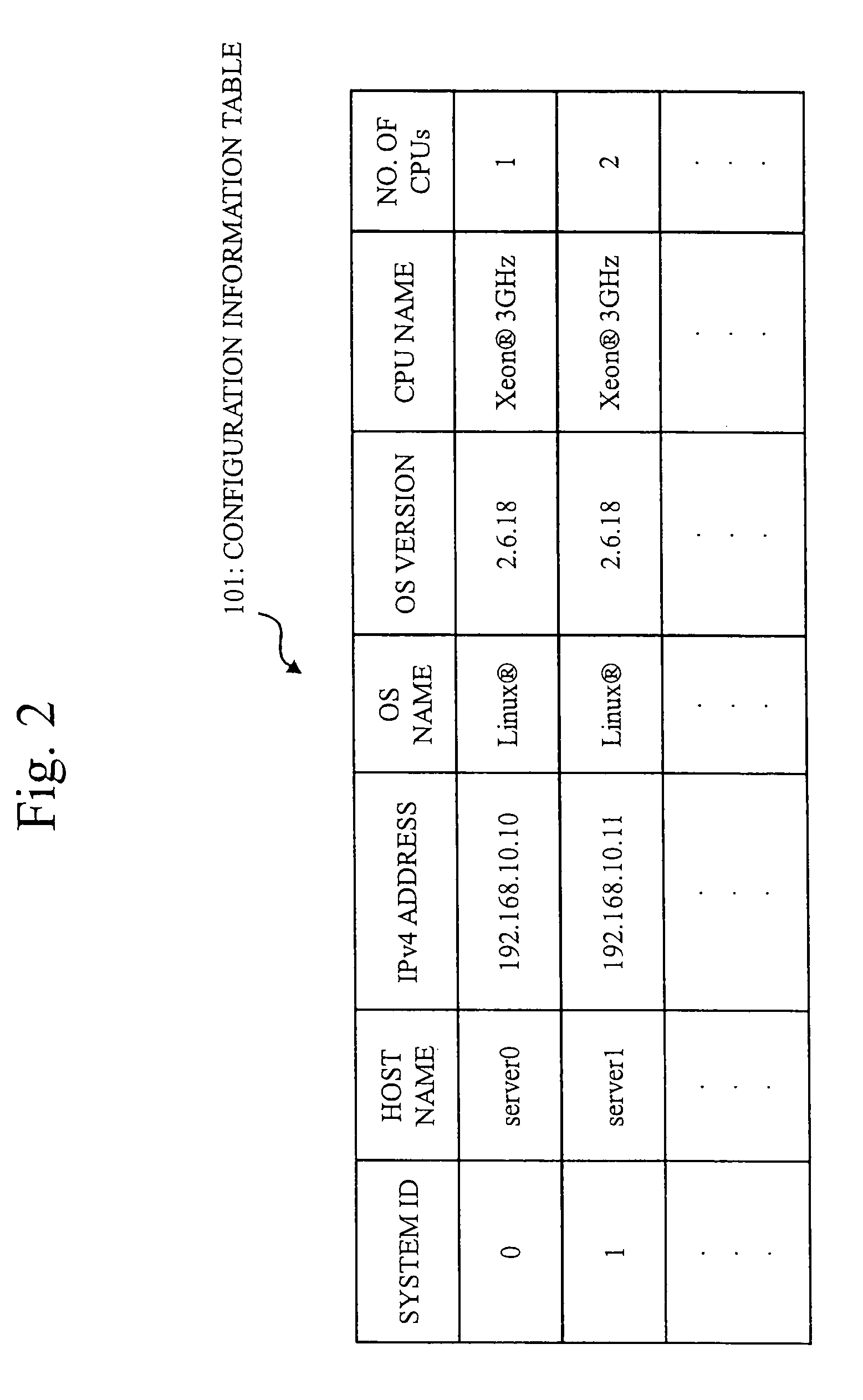

Virtual machine server sizing apparatus, virtual machine server sizing method, and virtual machine server sizing program

Inactive | US20090133018A1 | Tags: Improve estimation accuracy; Improve accuracy; Error detection/correction; Software simulation/interpretation/emulation; Virtualization; CPU load

The object is to improve the accuracy of CPU load estimation by calculating, from disk load and/or network load, the CPU load needed for I/O emulation in a virtualized environment. Consider estimating the CPU load of a server X that runs servers 10 to 12 as virtual servers. A CPU performance converting unit 223 obtains measured values of the CPU load of servers 10 to 12. A load converting unit 220 obtains an estimated value of the CPU load that server X incurs for disk and/or network I/O, derived from the disk load and/or network load of servers 10 to 12. A CPU overhead calculating unit 224 obtains a coefficient representing the CPU overhead caused by virtualization. A load estimating unit 225 estimates the CPU load of server X from the measured value, the estimated value and the coefficient.

Owner:MITSUBISHI ELECTRIC CORP
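
The abstract names the inputs but not the formula that combines them. The sketch below assumes the simplest combination:

host CPU load = overhead coefficient × (performance-converted measured CPU load + CPU load estimated from disk and network I/O)

The per-I/O CPU costs and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SourceServer:
    cpu_pct: float      # measured CPU load on its own hardware
    perf: float         # relative CPU performance of that hardware
    disk_iops: float
    net_mbps: float


def estimate_host_cpu_pct(
    servers,
    host_perf: float,
    overhead: float = 1.1,
    pct_per_kiops: float = 2.0,
    pct_per_100mbps: float = 1.5,
) -> float:
    # Convert each measured load to the target host's performance scale.
    converted = sum(s.cpu_pct * s.perf / host_perf for s in servers)
    # CPU needed to emulate each server's disk and network I/O once virtualized.
    io = sum(
        s.disk_iops / 1000 * pct_per_kiops + s.net_mbps / 100 * pct_per_100mbps
        for s in servers
    )
    # Coefficient for the remaining virtualization overhead.
    return overhead * (converted + io)


servers = [SourceServer(50, 1.0, 1000, 200), SourceServer(20, 2.0, 500, 100)]
print(round(estimate_host_cpu_pct(servers, host_perf=4.0), 1))
```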

Multicast distribution system and method for distributing data on a mesh virtual network

Active | US8089905B2 | Tags: Ensure scalability; Reduce bandwidth load; Data switching by path configuration; Multiple digital computer combinations; Routing table; Distribution system

Provided is a multicast distribution system which can perform a multicast distribution by selecting a communication path satisfying a communication feature required by a multicast group. A distribution source router (Rs) performs a multicast distribution to routers (R1 to R5) constituting a multicast group based on an application via a mesh virtual network connected to a plurality of routers. When the CPU load ratio and the multicast group communication feature exceed threshold values, the distribution source router updates the number of branches and the depth of the multicast tree which distributes a packet from the router and starts reconfiguration of the multicast tree. When reconfiguring the multicast tree, the distribution source router references a path feature of the mesh virtual network, selects a communication path having a sufficient path feature with a higher priority, and generates a routing table based on the multicast tree.

Owner:YAMAHA CORP

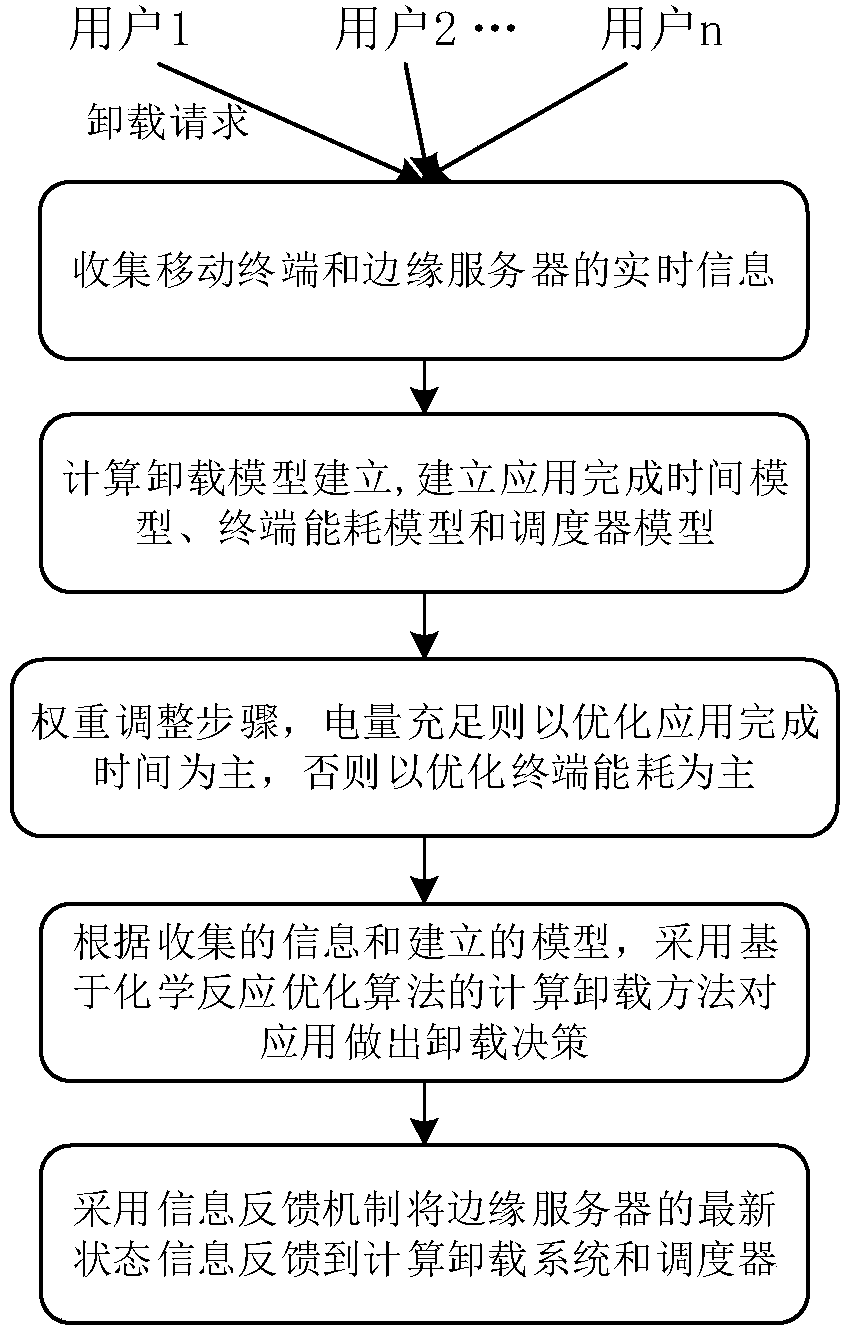

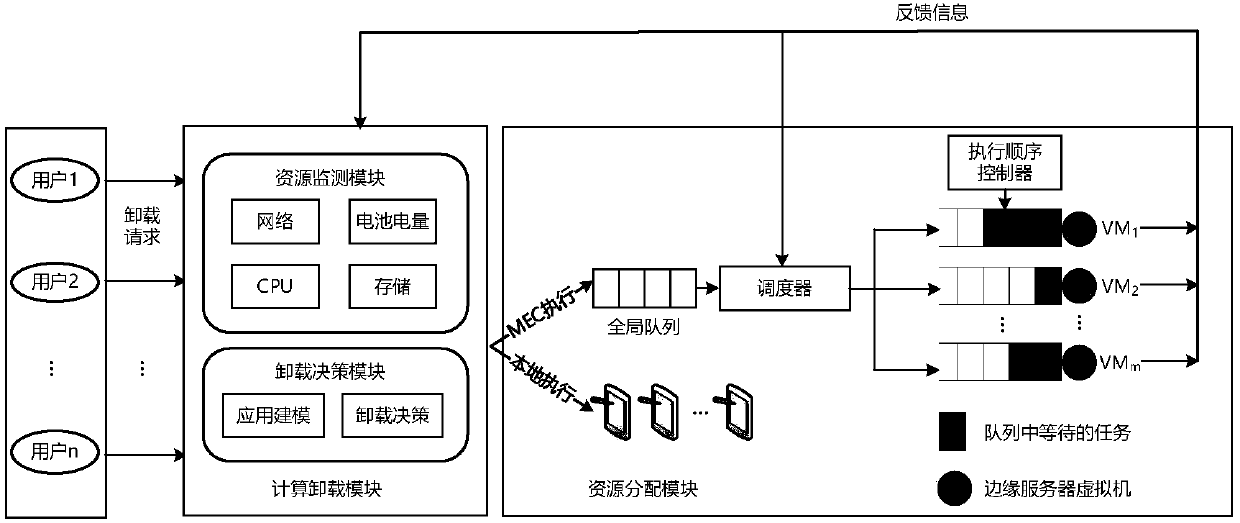

Multi-user computation offloading method and device based on chemical reaction optimization algorithm

Active | CN107911478A | Tags: Reduce consumption; Shorten completion time; Resource allocation; Transmission; Time information; Completion time

The invention discloses a multi-user computation offloading method and device based on a chemical reaction optimization algorithm. A computation offloading device collects real-time information about the mobile terminals and the edge server. This information includes each terminal's CPU load, remaining battery charge, network bandwidth and similar data, as well as the state of the edge server's virtual machines. The weights given to application completion time and terminal energy consumption are adjusted to build an application completion time model, a terminal energy consumption model and a scheduler module. Based on the collected real-time information and these models, an offloading decision is made using the multi-user offloading method based on chemical reaction optimization. This yields a near-optimal result for the application, improving application performance and reducing terminal energy consumption.

Owner:WUHAN UNIV OF TECH

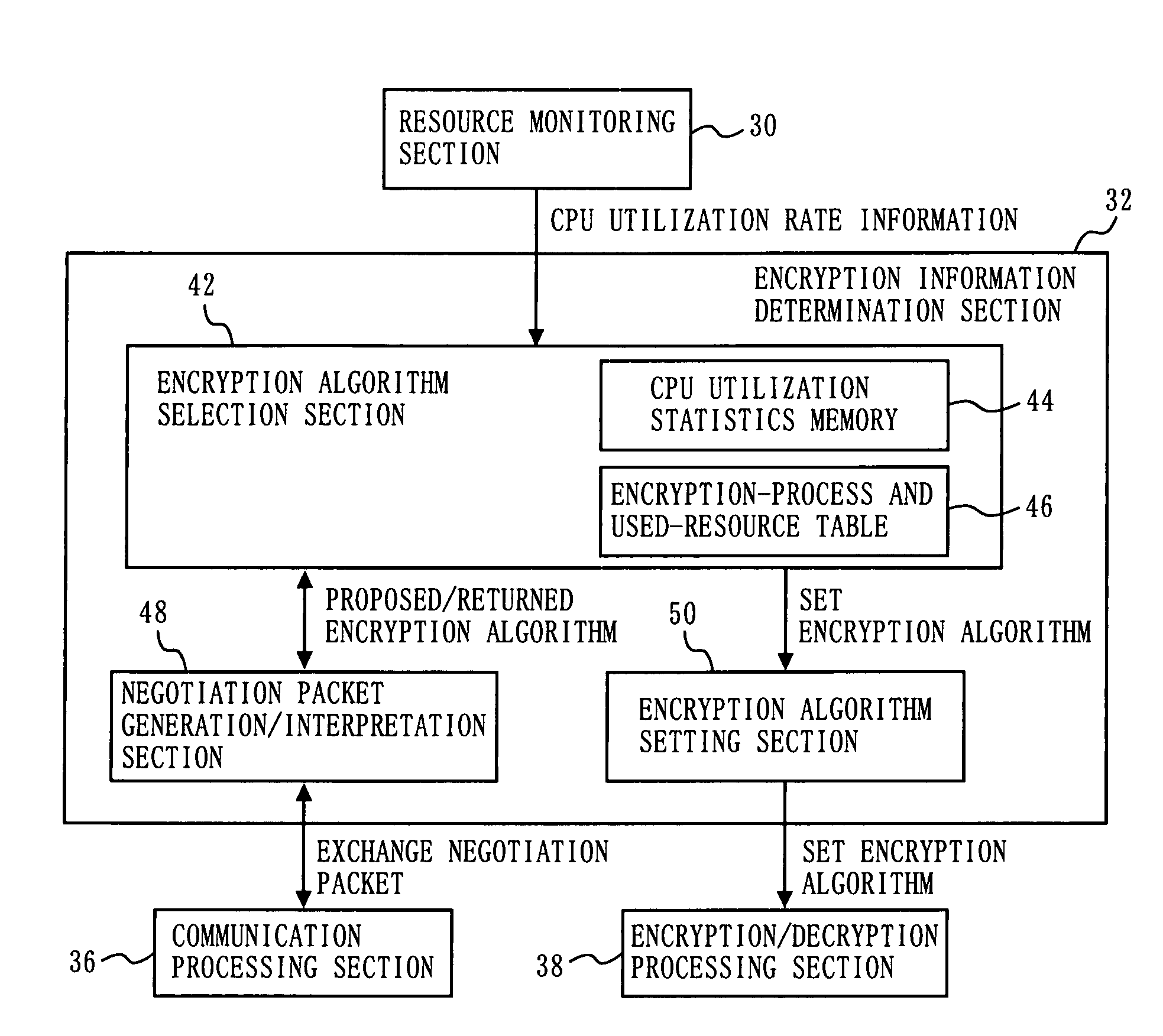

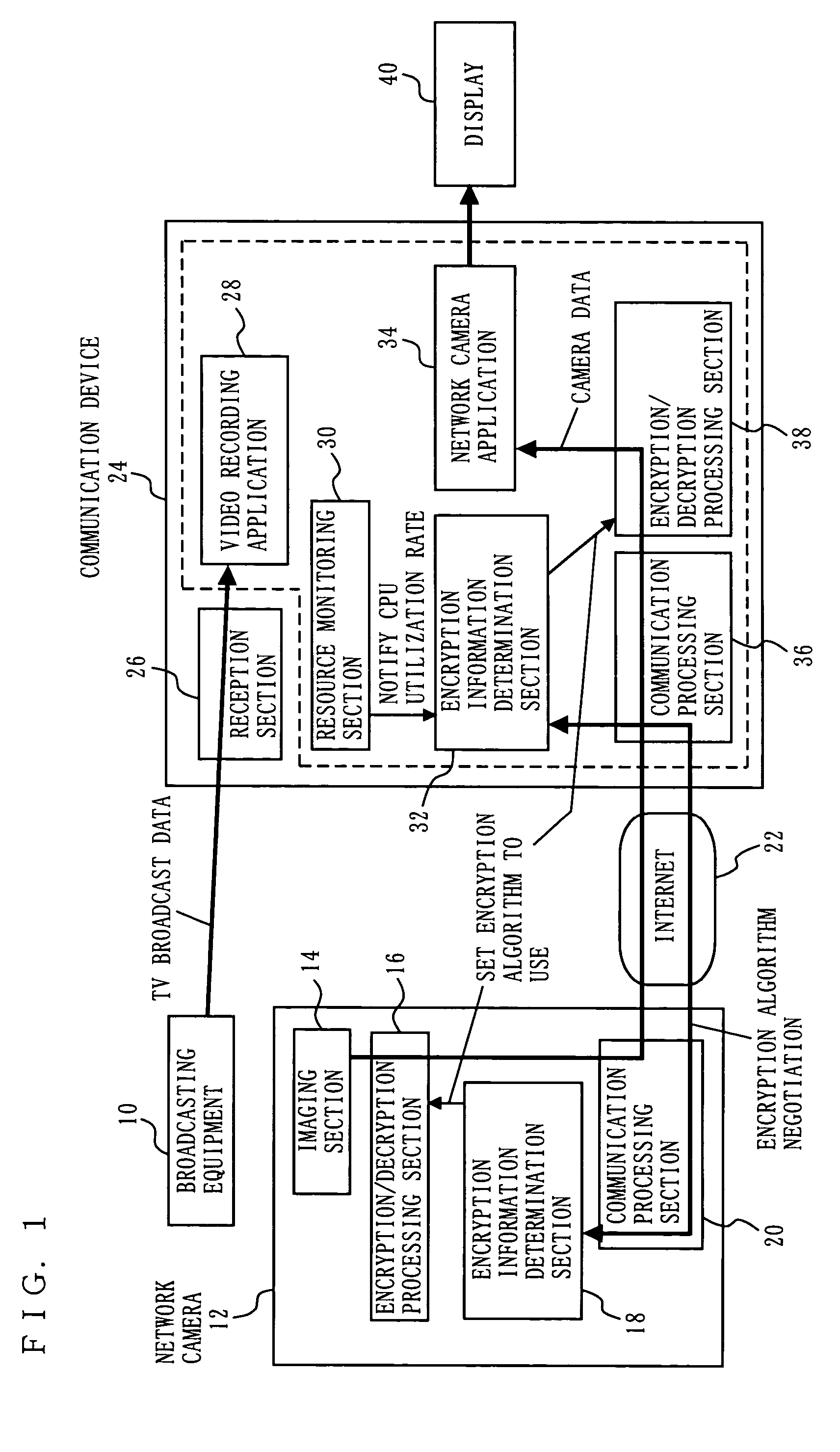

Communication device, communication system, and algorithm selection method

Inactive | US7313234B2 | Tags: Reduce load; Improve confidentiality; Key distribution for secure communication; Secret communication; Communications system; Low load

An encryption information determination section in a communication device negotiates an encryption algorithm to be used for encrypted communications with an encryption information determination section in a network camera, which is the communication counterpart. At this time, the encryption algorithm selected varies with the CPU load of the communication device: if the CPU utilization rate is high, a low-load encryption algorithm is selected, and if it is low, a high-load encryption algorithm is selected. An encryption / decryption processing section performs code processing using the encryption algorithm selected by the encryption information determination section.

Owner:PANASONIC CORP
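
The sketch below shows load-dependent algorithm negotiation. AES key sizes serve as the example ranking because AES-128, AES-192 and AES-256 use 10, 12 and 14 rounds respectively. The thresholds and the function name are hypothetical.

```python
# AES variants ranked by CPU cost (number of rounds per block).
AES_ROUNDS = {"AES-128": 10, "AES-192": 12, "AES-256": 14}


def choose_algorithm(cpu_util: float, peer_supported: set, high: float = 0.7, low: float = 0.3) -> str:
    """Pick a cipher both sides support: lighter when busy, heavier when idle."""
    common = sorted((a for a in AES_ROUNDS if a in peer_supported), key=AES_ROUNDS.get)
    if not common:
        raise ValueError("no algorithm in common with the peer")
    if cpu_util >= high:
        return common[0]            # high CPU utilization: low-load algorithm
    if cpu_util <= low:
        return common[-1]           # low CPU utilization: high-load algorithm
    return common[len(common) // 2]


print(choose_algorithm(0.9, {"AES-128", "AES-256"}))
```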

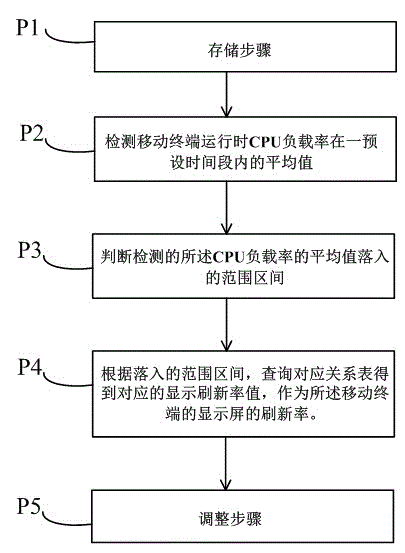

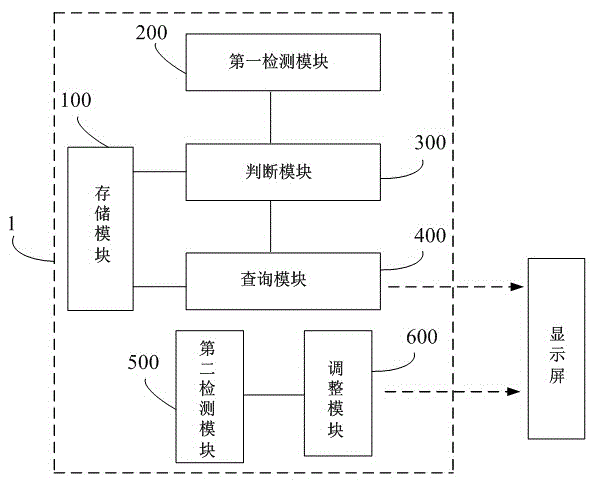

Control method and control device of display refresh rate of mobile terminal

Active | CN103151019A | Tags: Will not affect the user experience; Improve experience; Cathode-ray tube indicators; Substation equipment; Time range; Computer terminal

The invention discloses a method and a device for controlling the display refresh rate of a mobile terminal. The control method comprises four steps:

1. Store n sub-ranges defined within the 0 to 100% load-rate range of the mobile terminal's central processing unit (CPU), n display refresh rate values, and a correspondence table between the n sub-ranges and the n refresh rate values.
2. While the mobile terminal runs, detect the average CPU load rate over a preset time window.
3. Determine which sub-range contains the average detected in step 2.
4. Look up the correspondence table from step 1 to obtain the display refresh rate for that sub-range, and use it as the refresh rate of the mobile terminal's display.

Because the display refresh rate follows the CPU load rate dynamically while the terminal runs, the user experience is not affected and system energy consumption is reduced.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

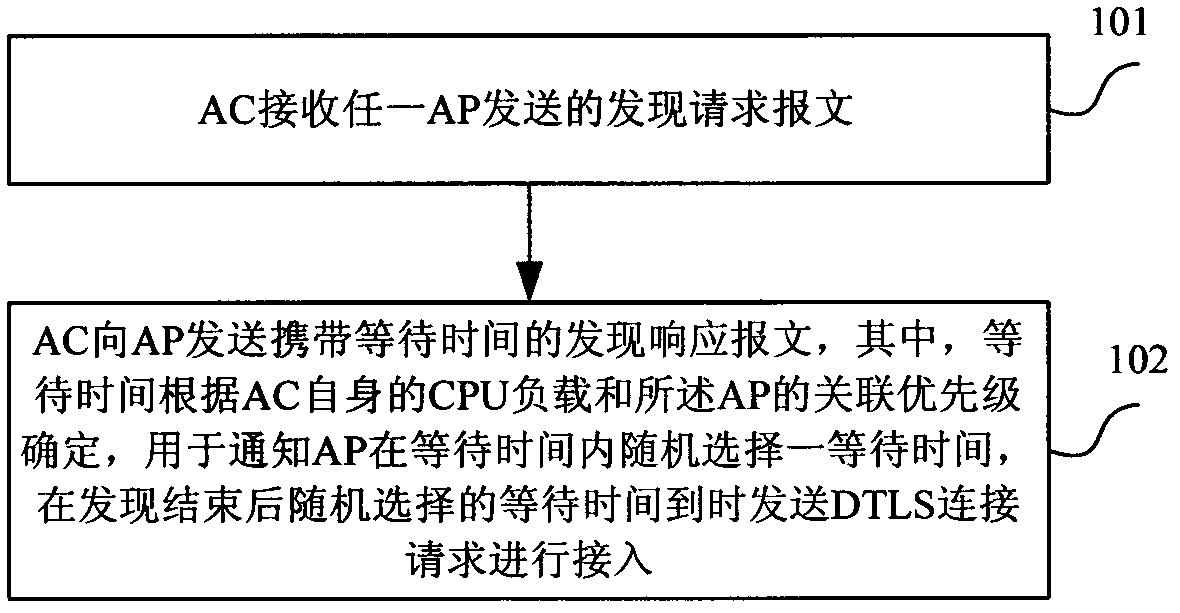

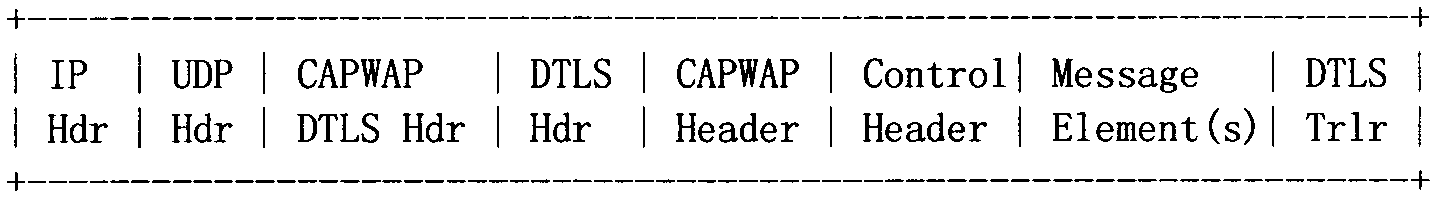

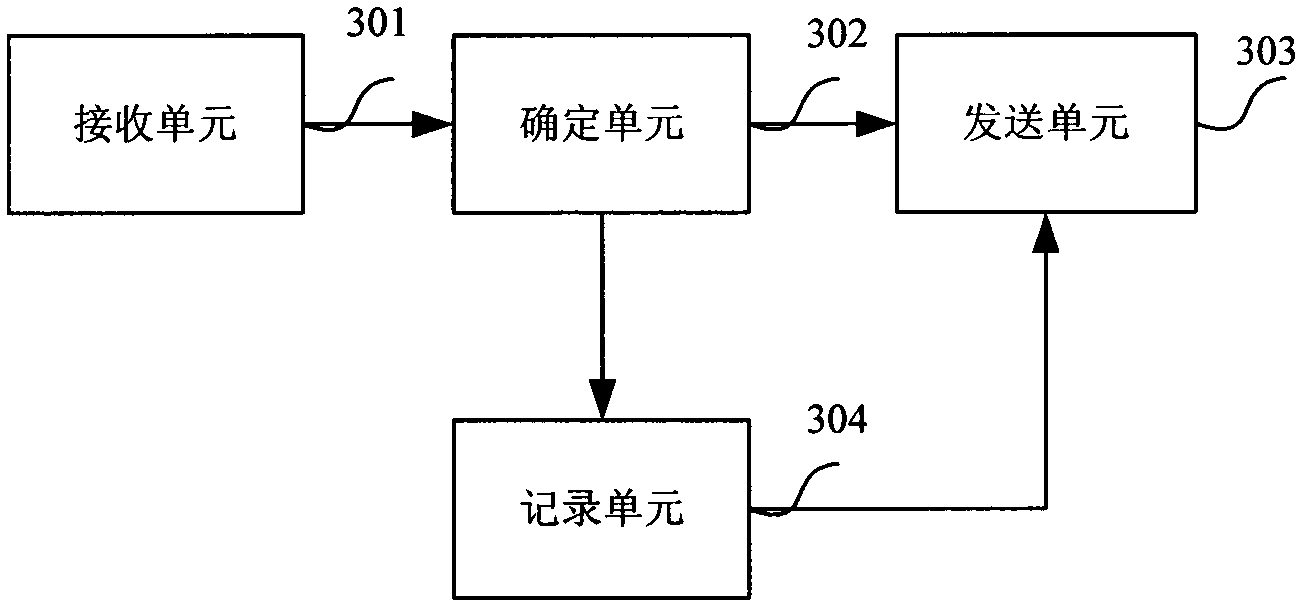

Method for connecting an access point (AP) to an access controller (AC) in a local area network, and corresponding AC and AP

Active · CN102316549A · Easy maintenance · Avoid association · Access restriction · Network topologies · CPU load · Computer science

The invention discloses a method for connecting an access point (AP) to an access controller (AC) in a local area network. The AC receives a discovery request message from an AP and replies with a discovery response message carrying a waiting time. The AC determines this waiting time from its own central processing unit (CPU) load and the association priority of the AP. The AP randomly selects a delay within the waiting time and, once that delay has elapsed after discovery completes, sends a datagram transport layer security (DTLS) connection request to access the AC. Based on the same inventive concept, the invention also provides the corresponding AC and AP. The method prevents a large number of APs from associating with the AC simultaneously and makes network maintenance easier.
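
The AC-side window calculation and the AP-side random delay might look like the sketch below. The abstract only says that the window depends on the AC's CPU load and the AP's association priority; the linear scaling, the 0-to-7 priority scale, and the 1000 ms base are assumptions made for illustration.

```python
import random


def waiting_window_ms(ac_cpu_load: float, ap_priority: int,
                      base_ms: int = 1000, max_priority: int = 7) -> int:
    """AC side: size of the waiting window sent in the discovery response.

    ac_cpu_load is a fraction in [0, 1]; ap_priority runs from 0 (lowest) to
    max_priority (highest). The scaling is hypothetical: a busier AC widens
    the window, and a higher-priority AP gets a narrower one.
    """
    if not 0.0 <= ac_cpu_load <= 1.0:
        raise ValueError("ac_cpu_load must be in [0, 1]")
    if not 0 <= ap_priority <= max_priority:
        raise ValueError("ap_priority out of range")
    load_factor = 1.0 + 4.0 * ac_cpu_load  # 1x when idle, up to 5x when saturated
    priority_factor = (max_priority - ap_priority + 1) / (max_priority + 1)
    return int(base_ms * load_factor * priority_factor)


def pick_delay_ms(window_ms: int, rng: random.Random) -> int:
    """AP side: random delay in [0, window_ms] before sending the DTLS request."""
    return rng.randint(0, window_ms)
```

Drawing one delay per AP from the window spreads DTLS connection requests across it instead of letting them all reach the AC at once, which is the effect the patent targets.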

Owner:NEW H3C TECH CO LTD

Methods and apparatuses for operating a data processing system

Active · US8237386B2 · Low heat generation · Improve performance · Temperature control · Digital data processing details · Hard disc drive · Thermistor

Methods and apparatuses to manage working states of a data processing system. At least one embodiment of the present invention includes a data processing system with one or more sensors (e.g., physical sensors such as tachometers and thermistors, and logical sensors such as CPU load) for fine-grained control of one or more components (e.g., processor, fan, hard drive, optical drive) under working conditions that balance various goals (e.g., user preferences, performance, power consumption, thermal constraints, acoustic noise). In one example, the clock frequency and core voltage of a processor are actively managed to balance performance against power consumption (heat generation) without significant latency. In another example, the speed of a cooling fan is actively managed to balance cooling effort against noise (and/or power consumption).
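
As a rough illustration of the kind of policy this abstract describes (not Apple's method), the sketch below picks the slowest frequency/voltage pair that keeps utilization under a target and drives a fan along a linear temperature curve. The operating-point table, the 80% utilization target, the 90 °C limit, and the fan curve are all hypothetical values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    freq_mhz: int
    core_mv: int  # core voltage paired with this frequency


# Hypothetical frequency/voltage table, slowest first; real values are
# chip-specific and not given in the abstract.
OPERATING_POINTS = (
    OperatingPoint(800, 900),
    OperatingPoint(1600, 1050),
    OperatingPoint(2400, 1200),
)


def select_operating_point(cpu_load: float, current: OperatingPoint,
                           temp_c: float, temp_limit_c: float = 90.0,
                           target_util: float = 0.8) -> OperatingPoint:
    """Pick the slowest point that keeps utilization at or below target_util.

    cpu_load is the busy fraction at the current frequency. At or above
    temp_limit_c the fastest point is withheld to cut heat (an assumed policy).
    """
    demand_mhz = cpu_load * current.freq_mhz
    allowed = OPERATING_POINTS if temp_c < temp_limit_c else OPERATING_POINTS[:-1]
    for point in allowed:
        if demand_mhz <= target_util * point.freq_mhz:
            return point
    return allowed[-1]  # demand exceeds every allowed point: run as fast as allowed


def fan_rpm(temp_c: float, quiet_below_c: float = 50.0, full_at_c: float = 90.0,
            min_rpm: int = 1200, max_rpm: int = 6000) -> int:
    """Linear fan curve: quiet floor when cool, full speed at the thermal limit."""
    if temp_c <= quiet_below_c:
        return min_rpm
    if temp_c >= full_at_c:
        return max_rpm
    frac = (temp_c - quiet_below_c) / (full_at_c - quiet_below_c)
    return round(min_rpm + frac * (max_rpm - min_rpm))
```

Voltage travels with frequency in each table entry, so lowering the clock for a light load also lowers the core voltage, which is where most of the power and heat savings come from.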

Owner:APPLE INC

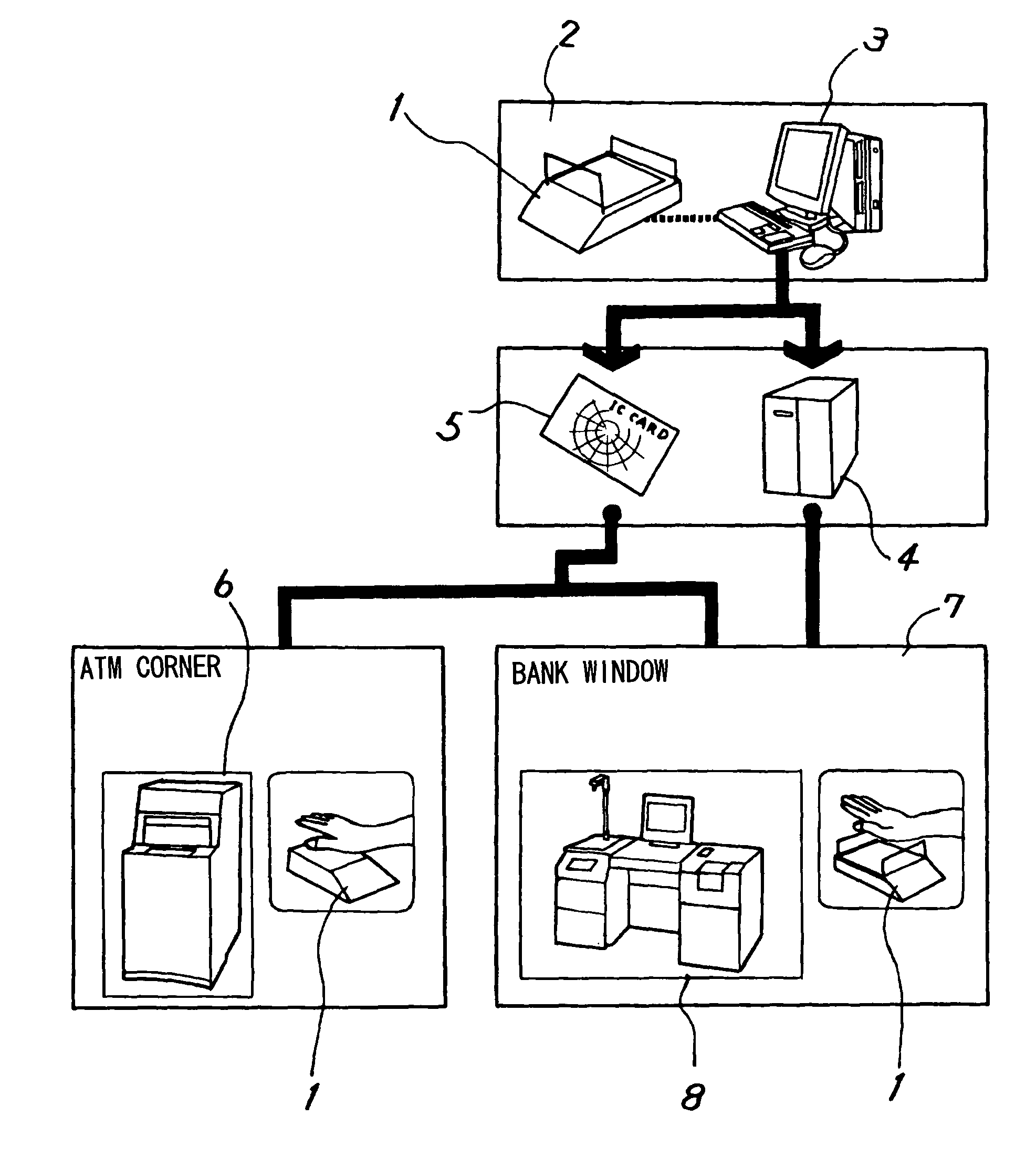

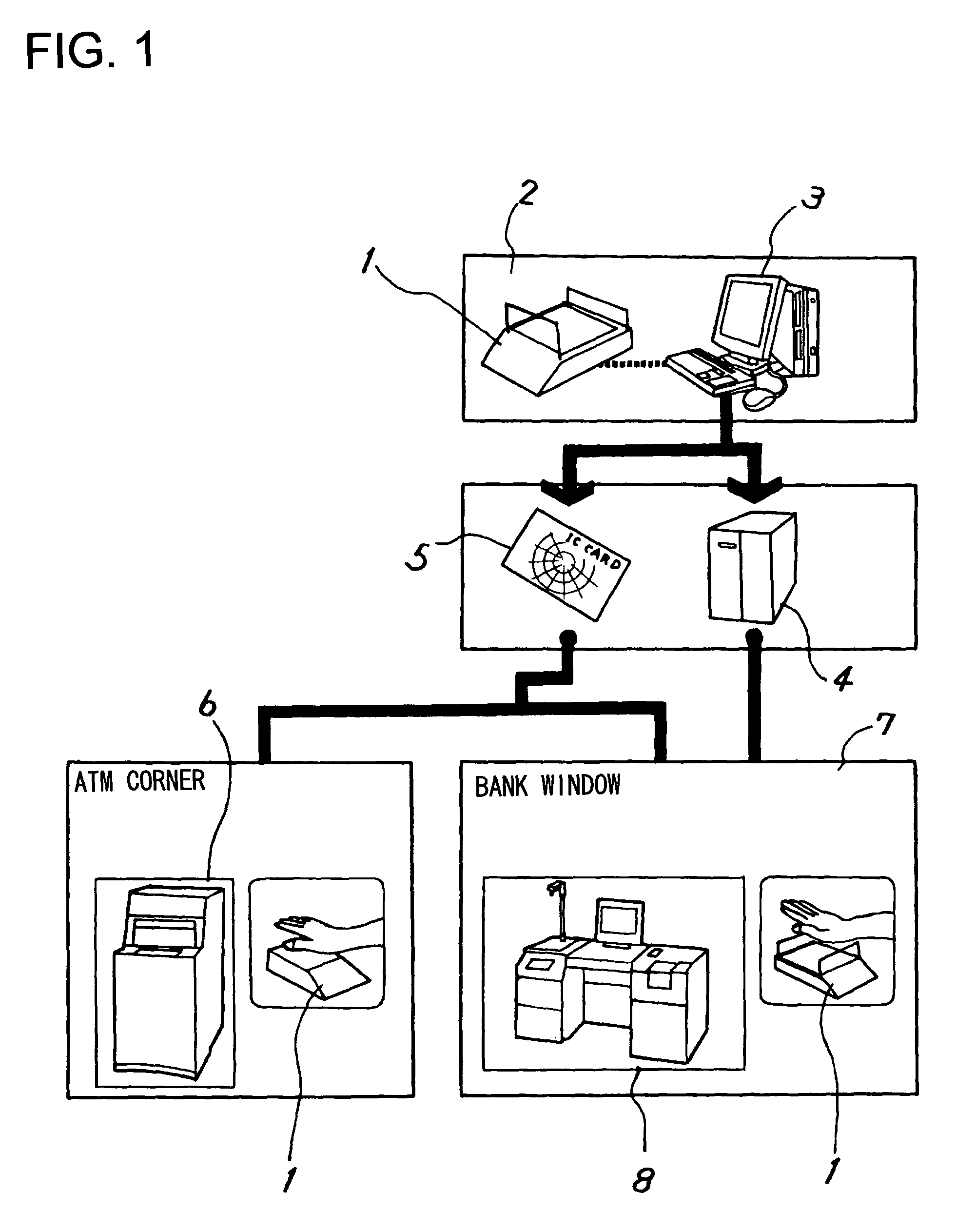

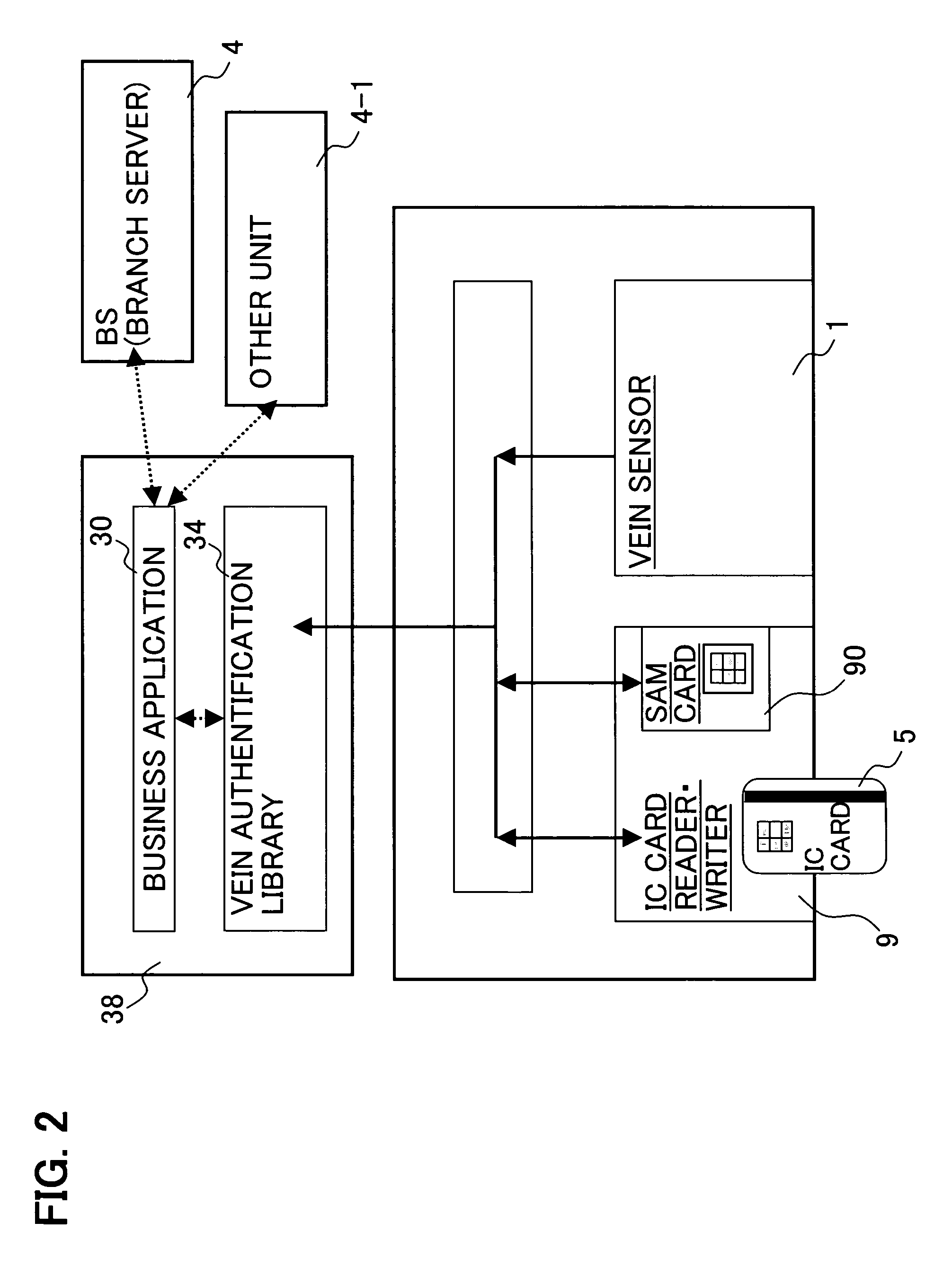

Biometrics authentication method and biometrics authentication device

Active · US7725733B2 · Reduce load · Improve security · Actuation objects · Carpet cleaners · Computer hardware · Biometric data

Owner:FUJITSU LTD +1

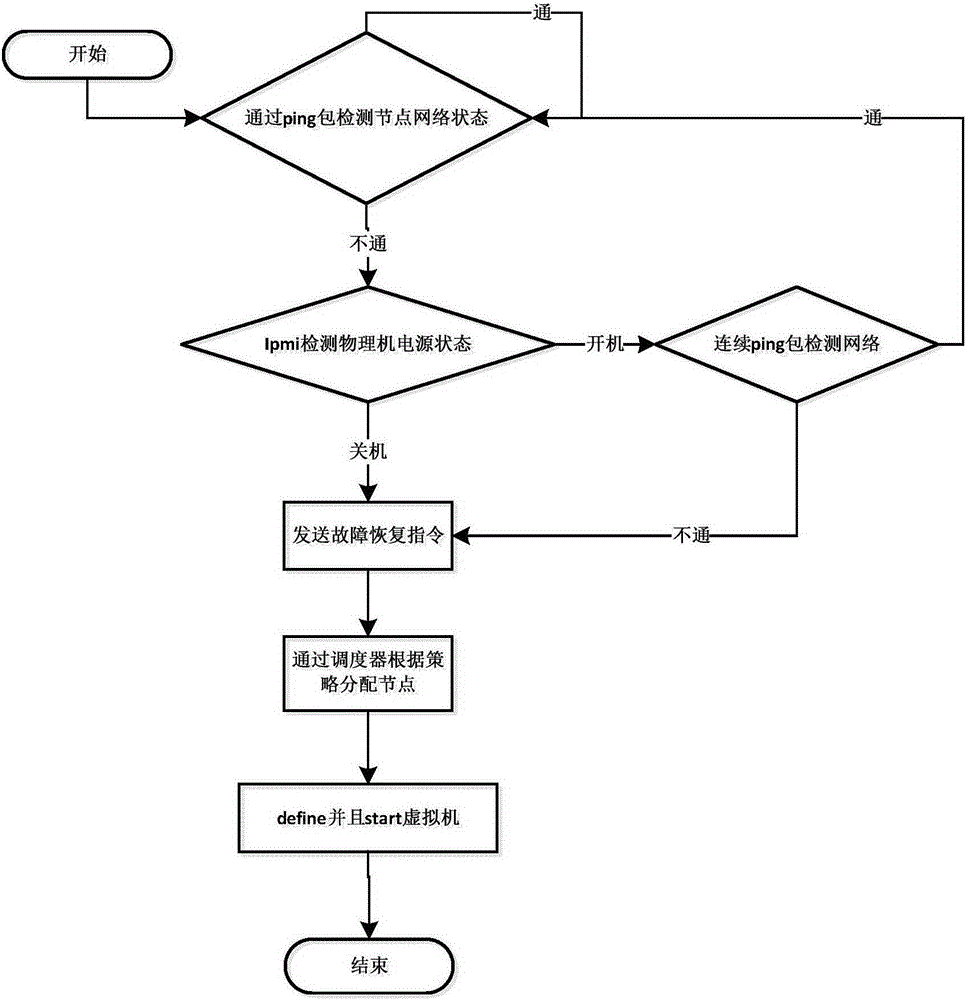

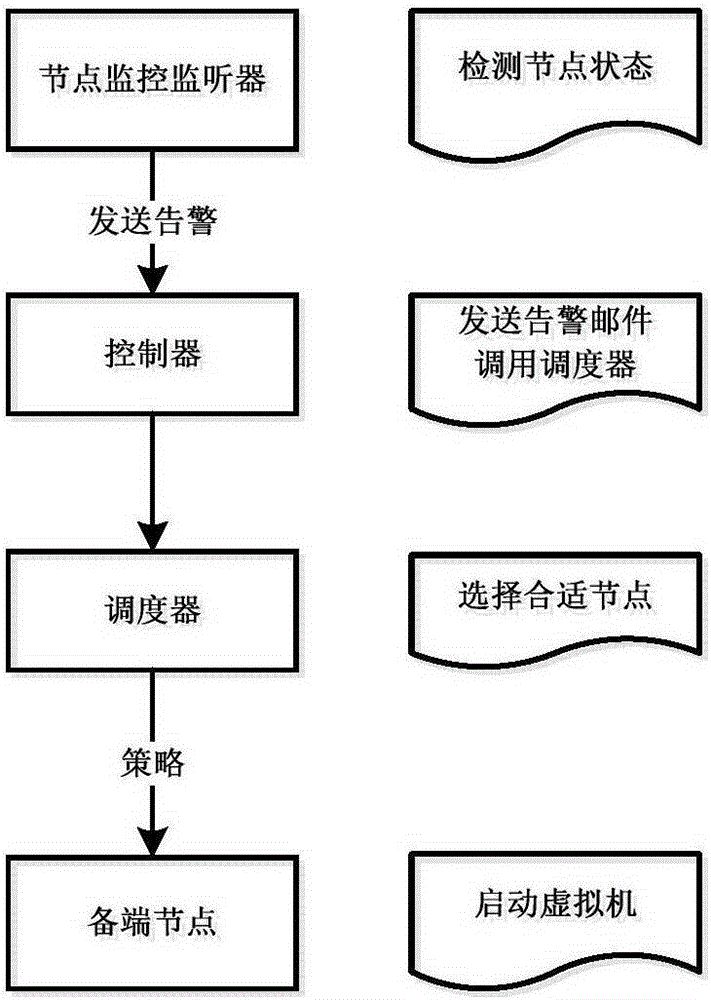

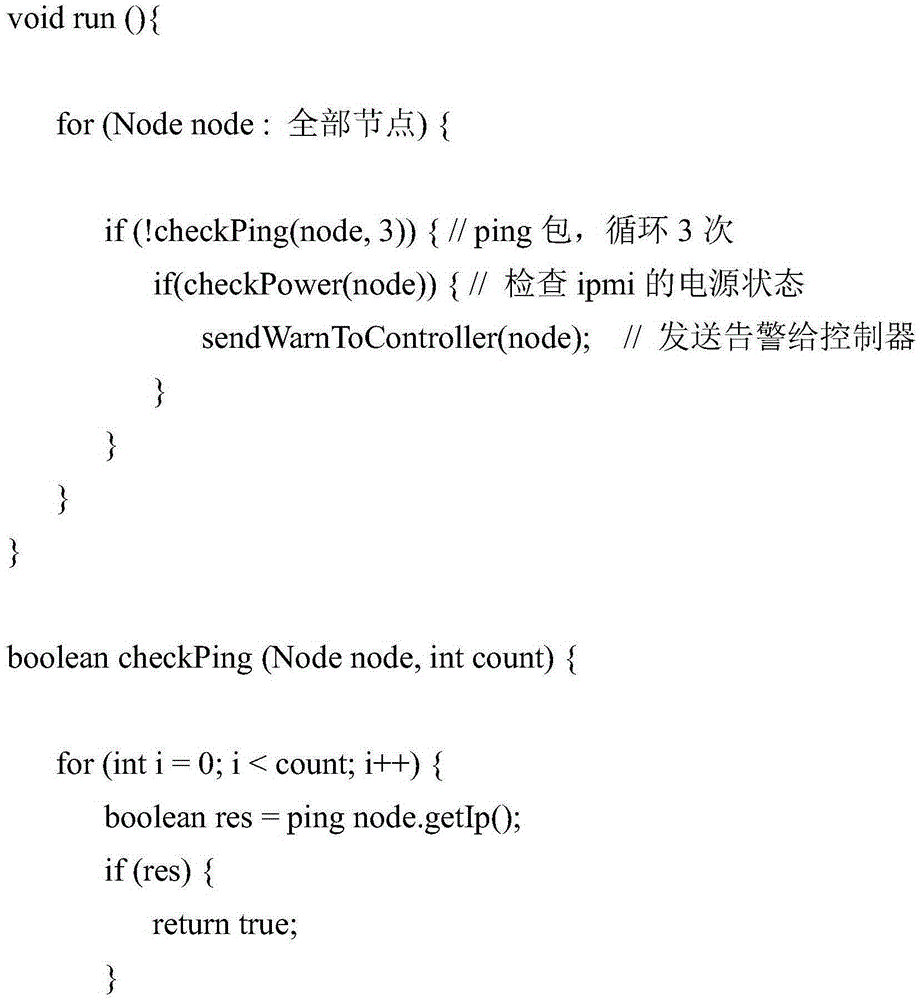

Virtual machine failure detection and recovery method

Inactive · CN105335214A · Prevent wrong migration · Guaranteed uptime · Fault response · Data switching networks · Recovery method · Downtime

The invention relates to the fields of cloud computing and network technology, and in particular to a virtual machine failure detection and recovery method. The method comprises the following steps: (1) deploying an NFS (Network File System) environment, creating a mount point on the NFS server, placing a VM image on that mount point, mounting the shared storage on all nodes, and creating a virtual machine from the image; (2) starting a node-detection timer that checks whether each node's network and power supply are in a normal state and, if not, sends a failure-recovery instruction to a scheduler; (3) the scheduler selecting an available node according to a preset CPU load policy and other scheduling rules; (4) recreating a virtual machine identical to the original from the configuration file generated when the original virtual machine was created; and (5) starting the recreated virtual machine. The method solves the problem that a virtual machine becomes unusable after its physical node goes down, and can be used for virtual machine failure detection and recovery.
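
Steps 2 and 3 can be sketched as a single detection pass that plans where each VM from a failed node should be recreated. The abstract does not define the "preset CPU load policy"; choosing the least-loaded healthy node below an 80% threshold is an assumption, and the `Node` type and the config format are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    name: str
    network_ok: bool
    power_ok: bool
    cpu_load: float  # fraction in [0, 1]
    vms: List[str] = field(default_factory=list)


def is_healthy(node: Node) -> bool:
    """Step 2: a node counts as failed if its network or power check fails."""
    return node.network_ok and node.power_ok


def pick_target(nodes: List[Node], max_load: float) -> Optional[Node]:
    """Step 3 (assumed policy): least-loaded healthy node below max_load."""
    candidates = [n for n in nodes if is_healthy(n) and n.cpu_load < max_load]
    return min(candidates, key=lambda n: n.cpu_load, default=None)


def plan_recovery(nodes: List[Node], vm_configs: Dict[str, dict],
                  max_load: float = 0.8) -> Tuple[list, List[str]]:
    """One timer tick: plan where to recreate each VM on a failed node.

    Returns (plans, pending). Each plan is (vm, target node name, saved config);
    the config is what step 4 would use to recreate the VM, whose image is
    reachable from any node through the shared NFS mount. VMs with no
    eligible target stay pending for the next tick.
    """
    plans, pending = [], []
    for node in nodes:
        if is_healthy(node):
            continue
        for vm in node.vms:
            target = pick_target(nodes, max_load)
            if target is None:
                pending.append(vm)
            else:
                plans.append((vm, target.name, vm_configs[vm]))
    return plans, pending
```

For brevity the sketch does not raise a target's load after each placement, so several VMs from one failed node could land on the same host; a real scheduler would refresh load figures between placements.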

Owner:G CLOUD TECH

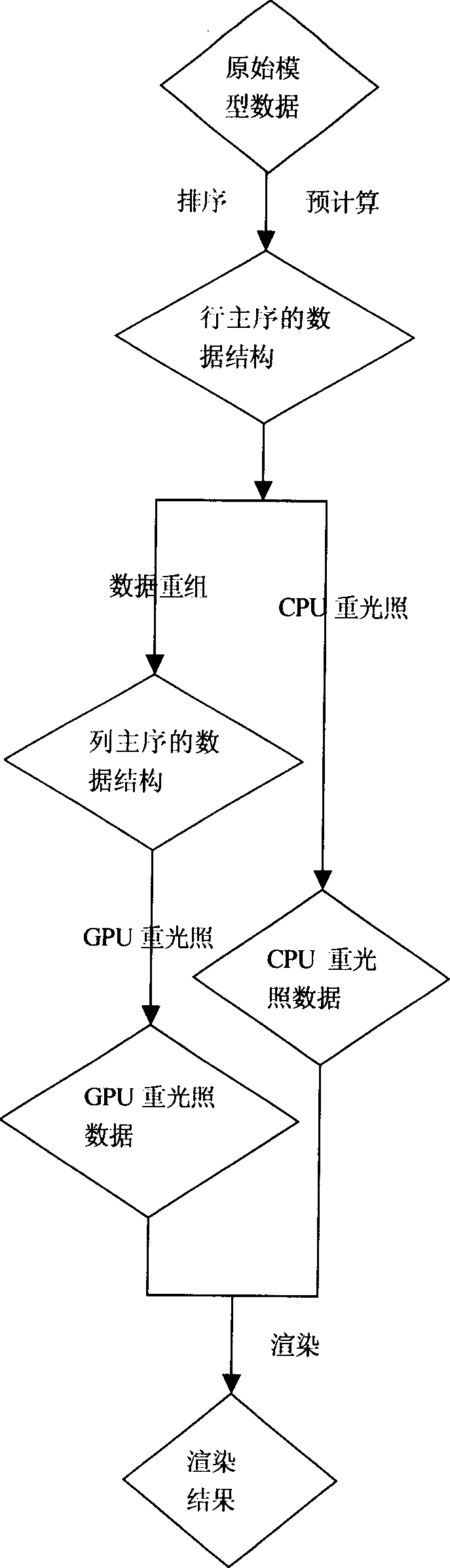

GPU-based method for rendering all-frequency shadows with precomputed radiance transfer

Inactive · CN1889128A · Reduce usage · Rendering speed maintained · 3D-image rendering · Illuminance · Transfer matrix

A GPU-based method for rendering all-frequency shadows with precomputed radiance transfer, comprising: (1) illuminating the scene with an environment illumination map to obtain the radiance transfer equation B = TL, where T is the radiance transfer matrix and L is the lighting matrix; (2) precomputing the radiance transfer matrix T; (3) compressing the precomputed T with a wavelet transform and quantization to obtain a sparse radiance transfer matrix; (4) reordering the sparse radiance transfer matrix from step (3) so that the most important entries come first; (5) applying a fast wavelet transform to L and quantizing and compressing it to obtain a sparse lighting matrix; and (6) performing fast sparse matrix multiplication of T and L on the GPU to complete relighting. The invention designs its data structures and algorithms around the GPU's parallel computing capability, striking a good balance between CPU load and GPU load, and between rendering speed and rendering quality. It reduces memory usage while preserving rendering quality, substantially increases rendering speed, and achieves real-time rendering of all-frequency shadows.
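
The core idea of steps 3, 5, and 6, computing B = TL in a wavelet basis where both T and L are sparse, can be sketched on the CPU in pure Python. Because the orthonormal Haar transform preserves dot products, each entry of B equals the dot product of the transformed row of T with the transformed L, and dropping small coefficients makes both sides sparse. This omits the quantization, coefficient reordering, and GPU kernels the abstract describes; the Haar basis, top-k truncation, and one-row-per-output-sample layout are assumptions.

```python
import math
from typing import Dict, List, Sequence


def haar(vec: Sequence[float]) -> List[float]:
    """Orthonormal 1D Haar wavelet transform; len(vec) must be a power of two."""
    n = len(vec)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    out = list(vec)
    s = 1.0 / math.sqrt(2.0)
    length = n
    while length > 1:
        half = length // 2
        avg = [(out[2 * i] + out[2 * i + 1]) * s for i in range(half)]
        diff = [(out[2 * i] - out[2 * i + 1]) * s for i in range(half)]
        out[:length] = avg + diff  # coarse averages first, details after
        length = half
    return out


def keep_largest(coeffs: Sequence[float], k: int) -> Dict[int, float]:
    """Nonlinear approximation: keep the k largest-magnitude nonzero coefficients."""
    order = sorted(range(len(coeffs)), key=lambda i: abs(coeffs[i]), reverse=True)
    return {i: coeffs[i] for i in order[:k] if coeffs[i] != 0.0}


def precompute(transport_rows: Sequence[Sequence[float]], k: int) -> List[Dict[int, float]]:
    """Offline: wavelet-transform and truncate each row of T."""
    return [keep_largest(haar(row), k) for row in transport_rows]


def relight(sparse_rows: List[Dict[int, float]], light: Sequence[float], k: int) -> List[float]:
    """Runtime: transform and truncate L, then take sparse dot products for B = TL."""
    light_w = keep_largest(haar(light), k)
    return [sum(v * light_w[i] for i, v in row.items() if i in light_w)
            for row in sparse_rows]
```

Only coefficients present in both sparse vectors contribute to each product, which is what lets the runtime multiply stay cheap as the lighting changes.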

Owner:BEIHANG UNIV


© 2025 PatSnap. All rights reserved.