406 results about "Parallel process" patented technology

Parallel process is a phenomenon noted between therapist and supervisor, whereby the therapist recreates, or parallels, the client's problems by way of relating to the supervisor. The client's transference and the therapist's countertransference thus re-appear in the mirror of the therapist/supervisor relationship.

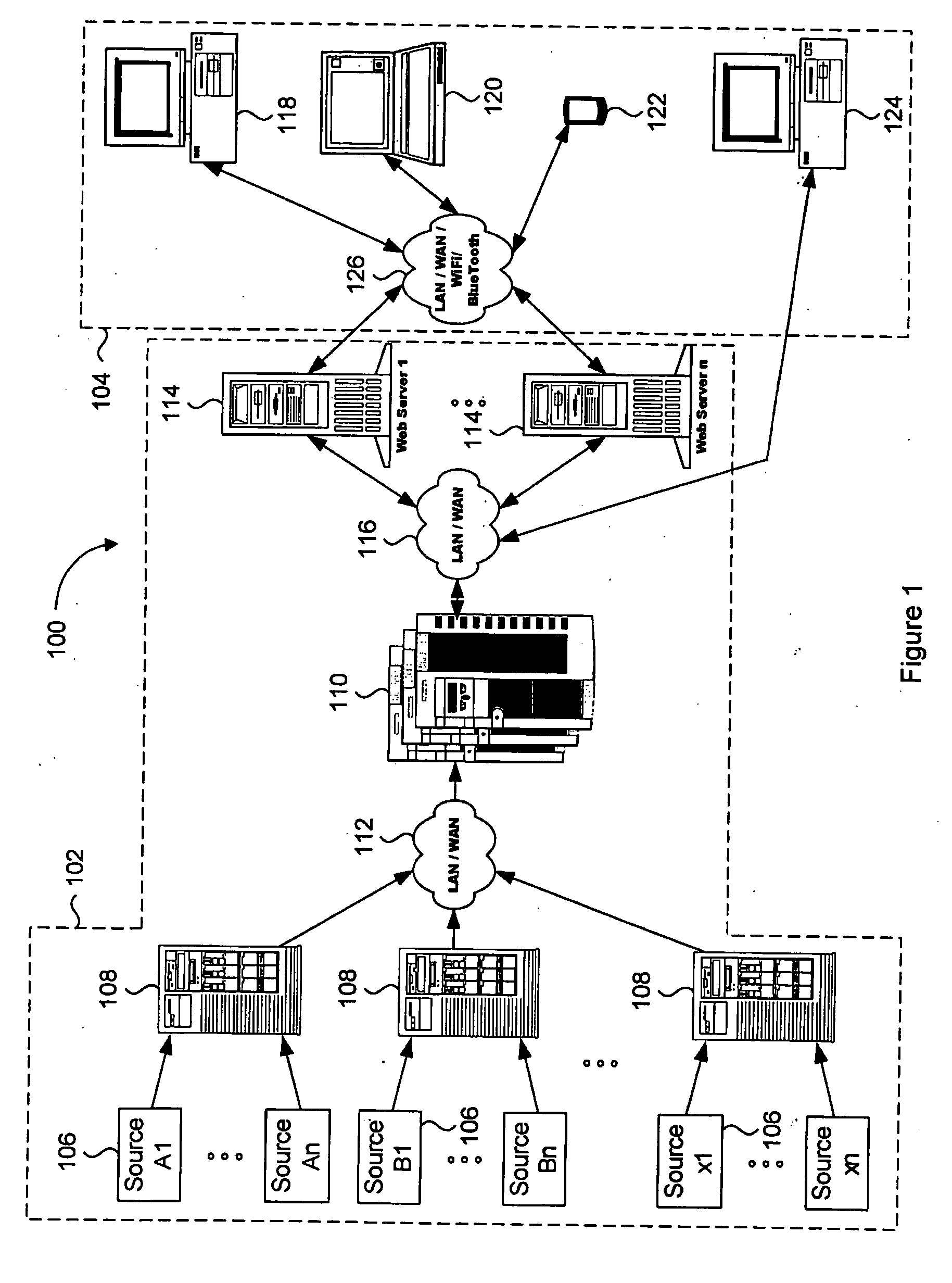

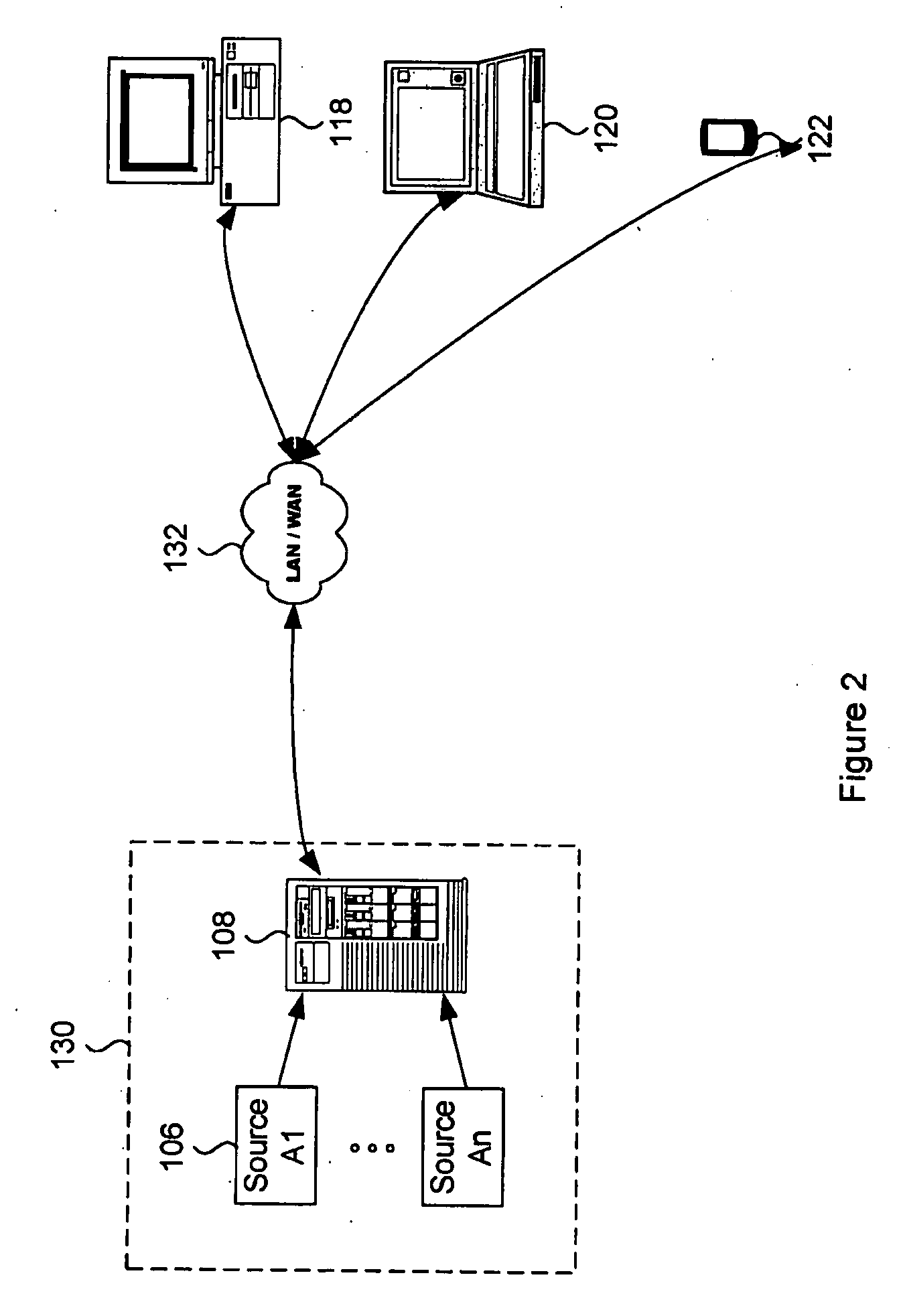

System and method for real-time media searching and alerting

Inactive | US20080072256A1 | Television system details; Metadata video data retrieval; Closed captioning; Radio broadcasting

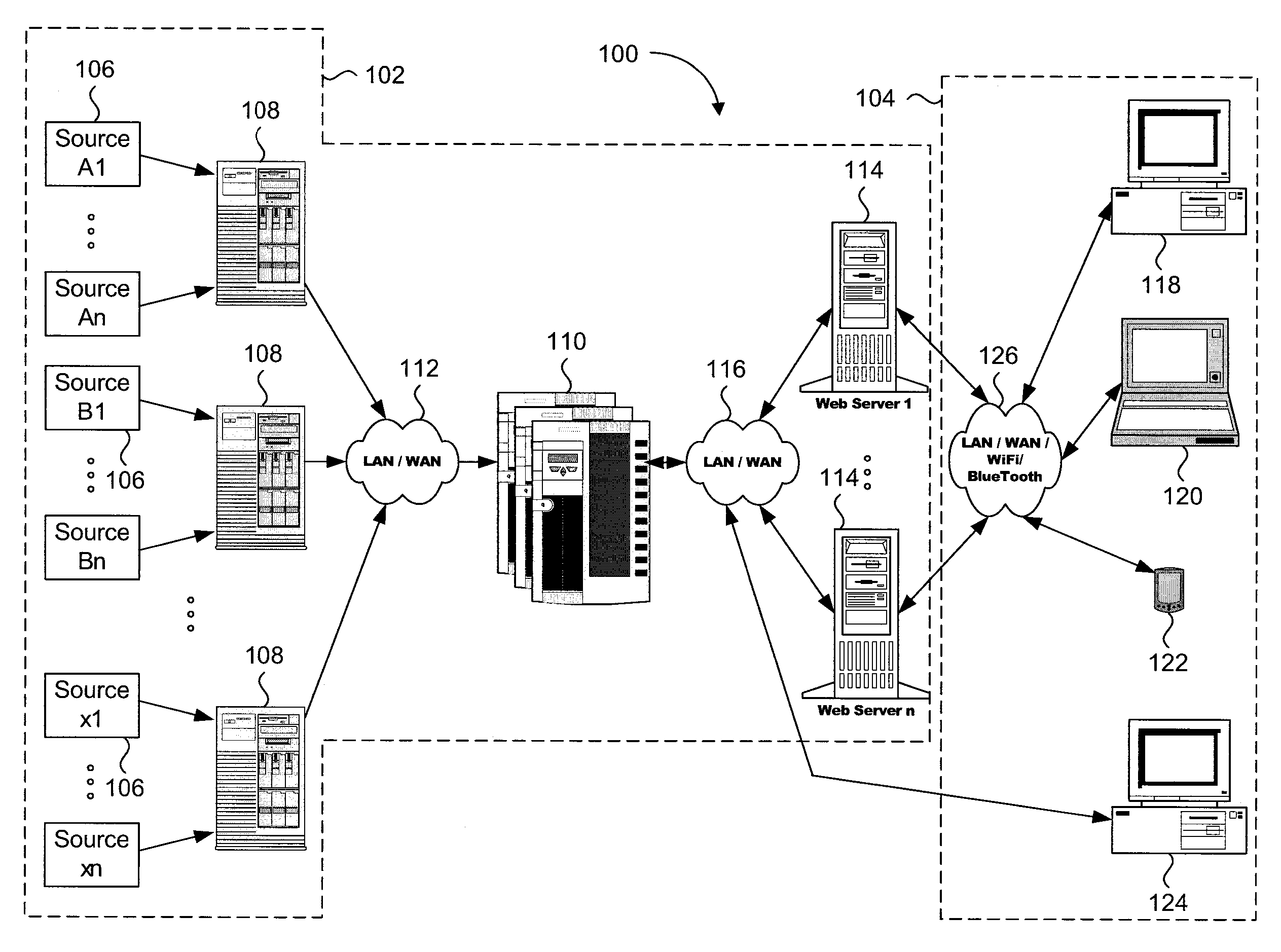

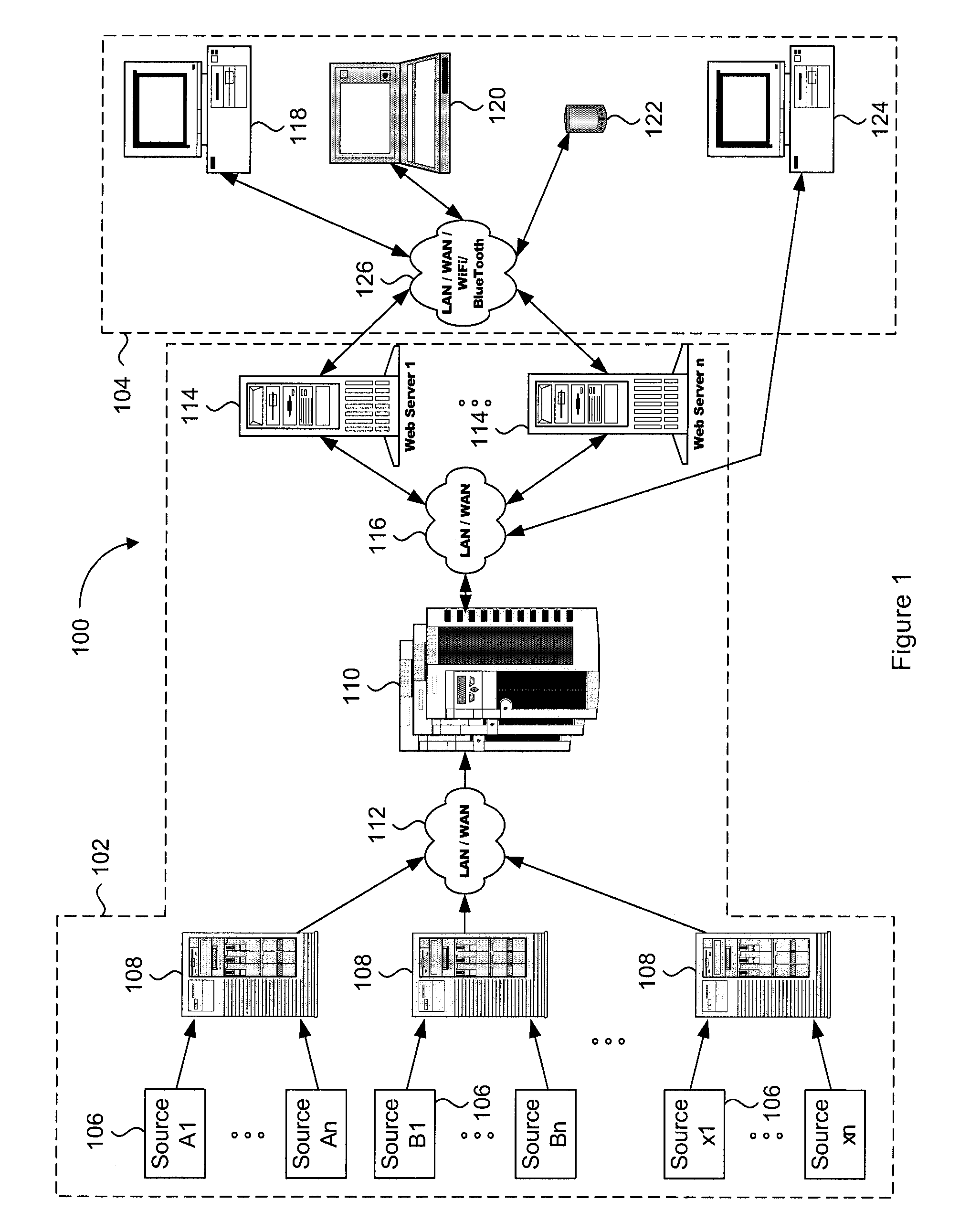

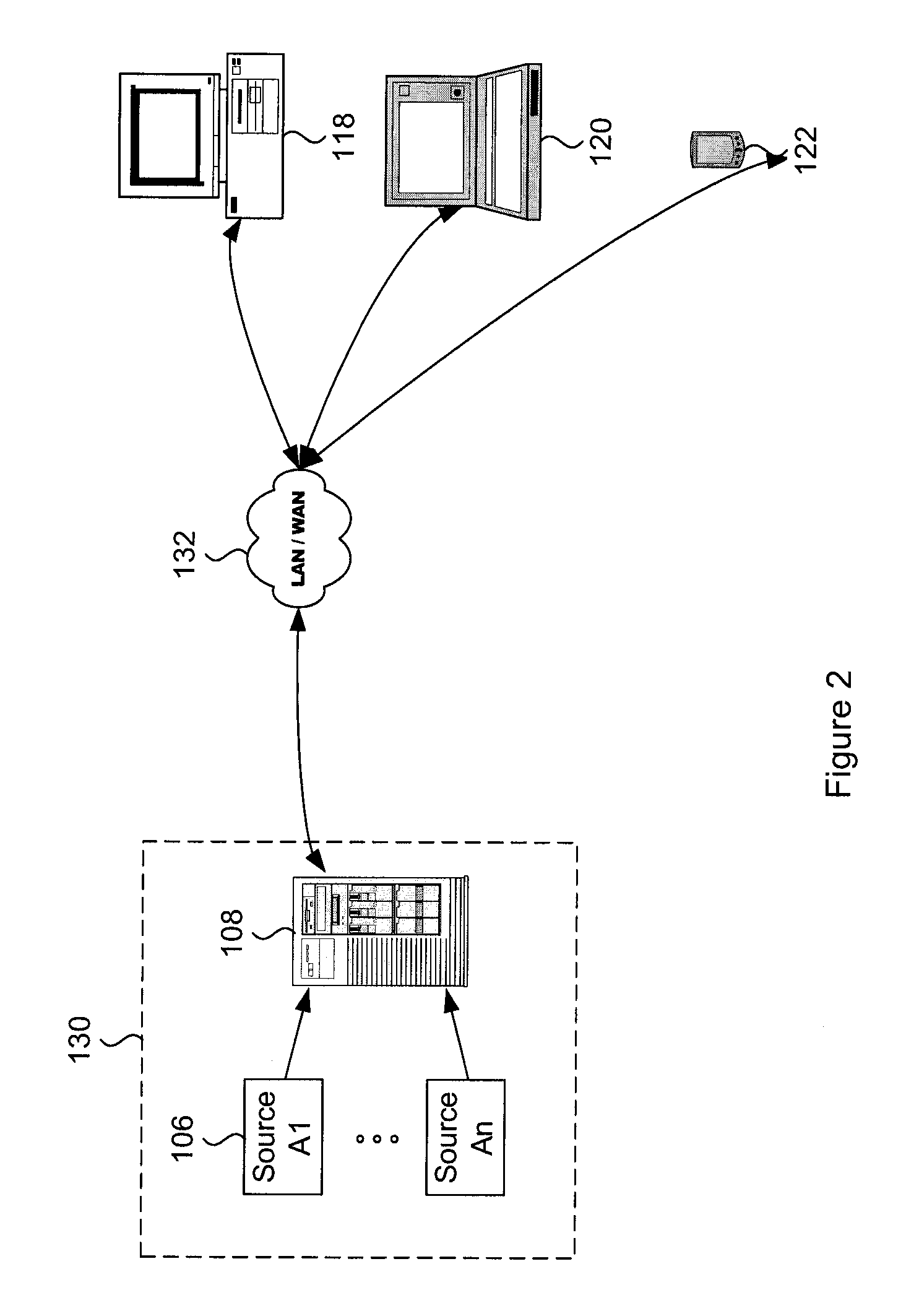

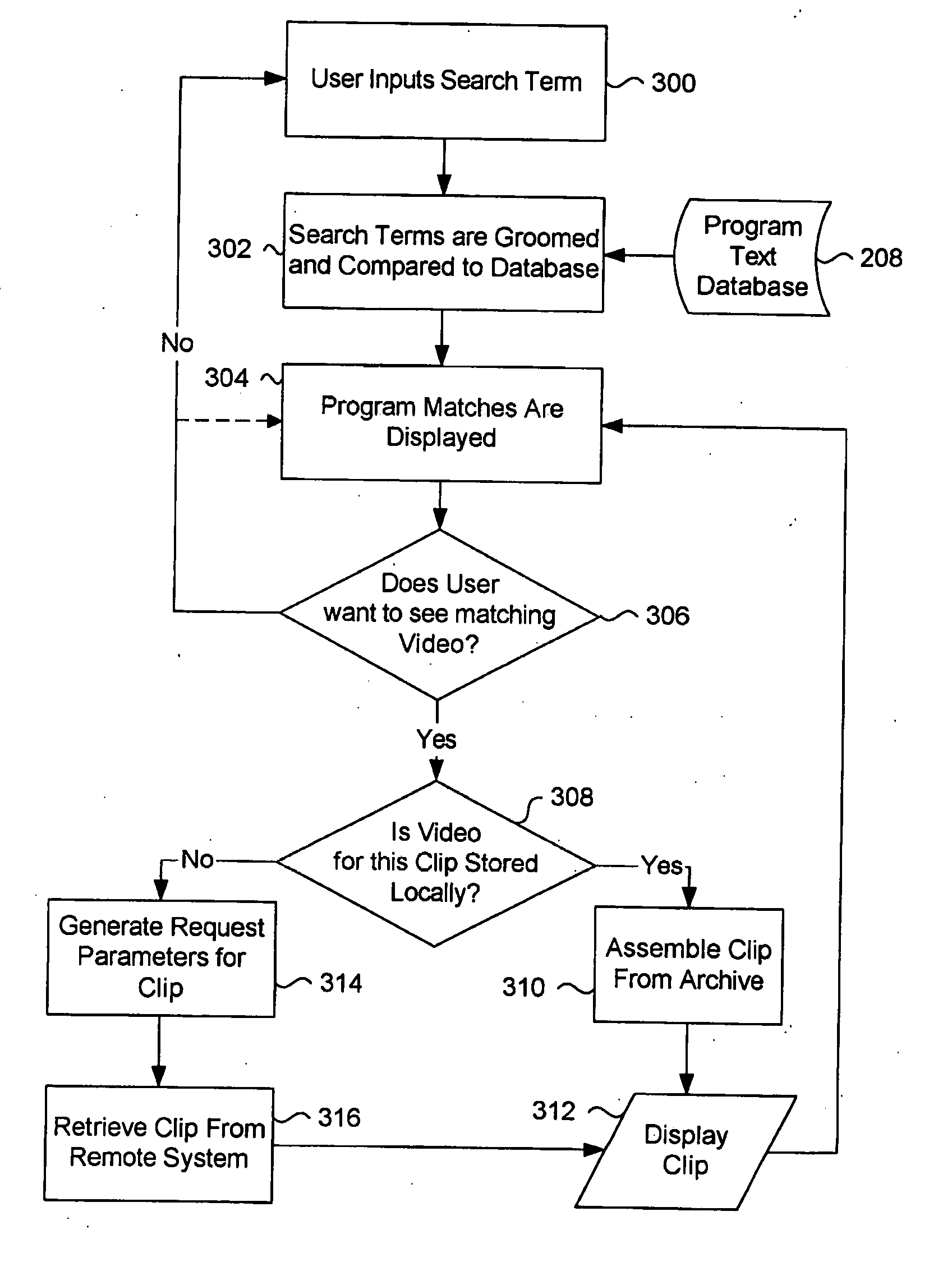

A method and system for continually storing and cataloguing streams of broadcast content, allowing real-time searching and real-time display of results across all catalogued video. A bank of video recording devices stores and indexes all video content from any number of broadcast sources. The video is stored along with associated program information such as program name, description, airdate and channel. A parallel process obtains the text of the program, either from the closed-captioning data stream or by using a speech-to-text system. Once the text is decoded, stored and indexed, users can search against it and immediately view matching video along with its associated text and broadcast information. Users can also retrieve programs by other attributes, such as airdate, originating station, program name and program description. An alerting mechanism scans all content in real time and can be configured to notify users by various means when specified search criteria occur in the video stream. The system is preferably designed for publicly available broadcast video content, but can also be used to catalogue private video, such as conference speeches, or audio-only content, such as radio broadcasts.

Owner:DNA 13
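The abstract pairs two cooperating paths: text recovered from closed captions (or speech-to-text) feeds a searchable index, while an alerting mechanism checks each new piece of text against stored criteria. The minimal Python sketch below assumes the caption text already arrives decoded in timestamped segments and that a query matches when all of its words occur in one segment; the class and method names (`CaptionIndex`, `ingest`, `add_alert`, `search`) are hypothetical, not taken from the patent.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lower-case word tokens; a deliberately simple stand-in for real text normalization."""
    return re.findall(r"[a-z0-9']+", text.lower())


class CaptionIndex:
    """Hypothetical inverted index over caption segments, with real-time alerts."""

    def __init__(self):
        self.postings = defaultdict(set)  # word -> {(channel, airdate, offset_s)}
        self.alerts = {}                  # alert name -> set of required words
        self.fired = []                   # (alert name, channel, offset_s)

    def add_alert(self, name, query):
        self.alerts[name] = set(tokenize(query))

    def ingest(self, channel, airdate, offset_s, text):
        """Index one decoded caption segment and check it against every alert."""
        words = set(tokenize(text))
        for word in words:
            self.postings[word].add((channel, airdate, offset_s))
        for name, required in self.alerts.items():
            if required and required <= words:
                self.fired.append((name, channel, offset_s))

    def search(self, query):
        """Return segments containing every query word, sorted by key."""
        terms = tokenize(query)
        if not terms:
            return []
        hits = set(self.postings.get(terms[0], set()))
        for term in terms[1:]:
            hits &= self.postings.get(term, set())
        return sorted(hits)
```

Because alerts are evaluated inside `ingest`, a match is reported as soon as the segment is indexed rather than by a later scan of stored content.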

System and method for real-time media searching and alerting

Inactive | US20050198006A1 | Television system details; Metadata video data retrieval; Radio broadcasting; Closed captioning

A method and system for continually storing and cataloguing streams of broadcast content, allowing real-time searching and real-time display of results across all catalogued video. A bank of video recording devices stores and indexes all video content from any number of broadcast sources. The video is stored along with associated program information such as program name, description, airdate and channel. A parallel process obtains the text of the program, either from the closed-captioning data stream or by using a speech-to-text system. Once the text is decoded, stored and indexed, users can search against it and immediately view matching video along with its associated text and broadcast information. Users can also retrieve programs by other attributes, such as airdate, originating station, program name and program description. An alerting mechanism scans all content in real time and can be configured to notify users by various means when specified search criteria occur in the video stream. The system is preferably designed for publicly available broadcast video content, but can also be used to catalogue private video, such as conference speeches, or audio-only content, such as radio broadcasts.

Owner:DNA13

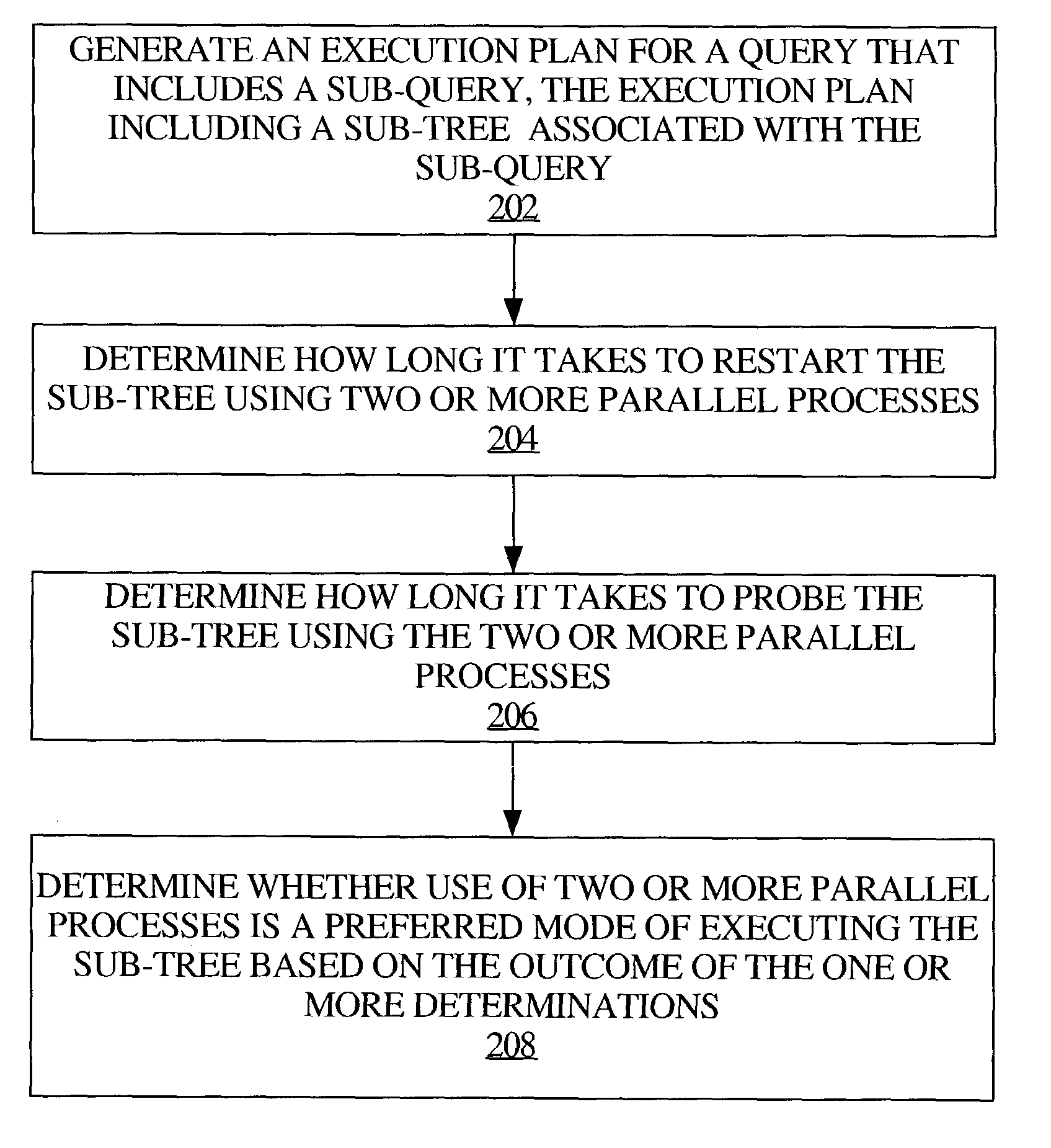

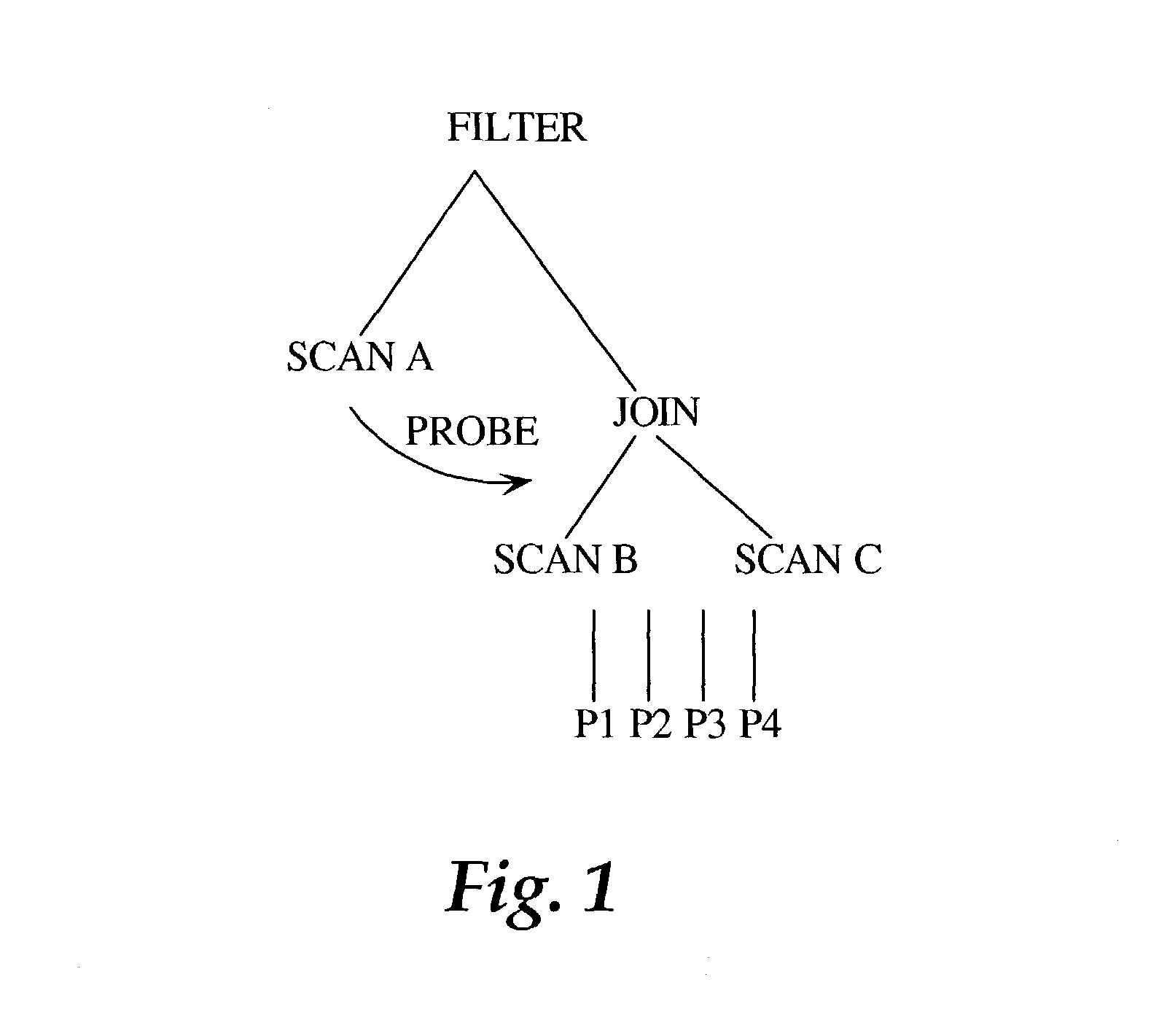

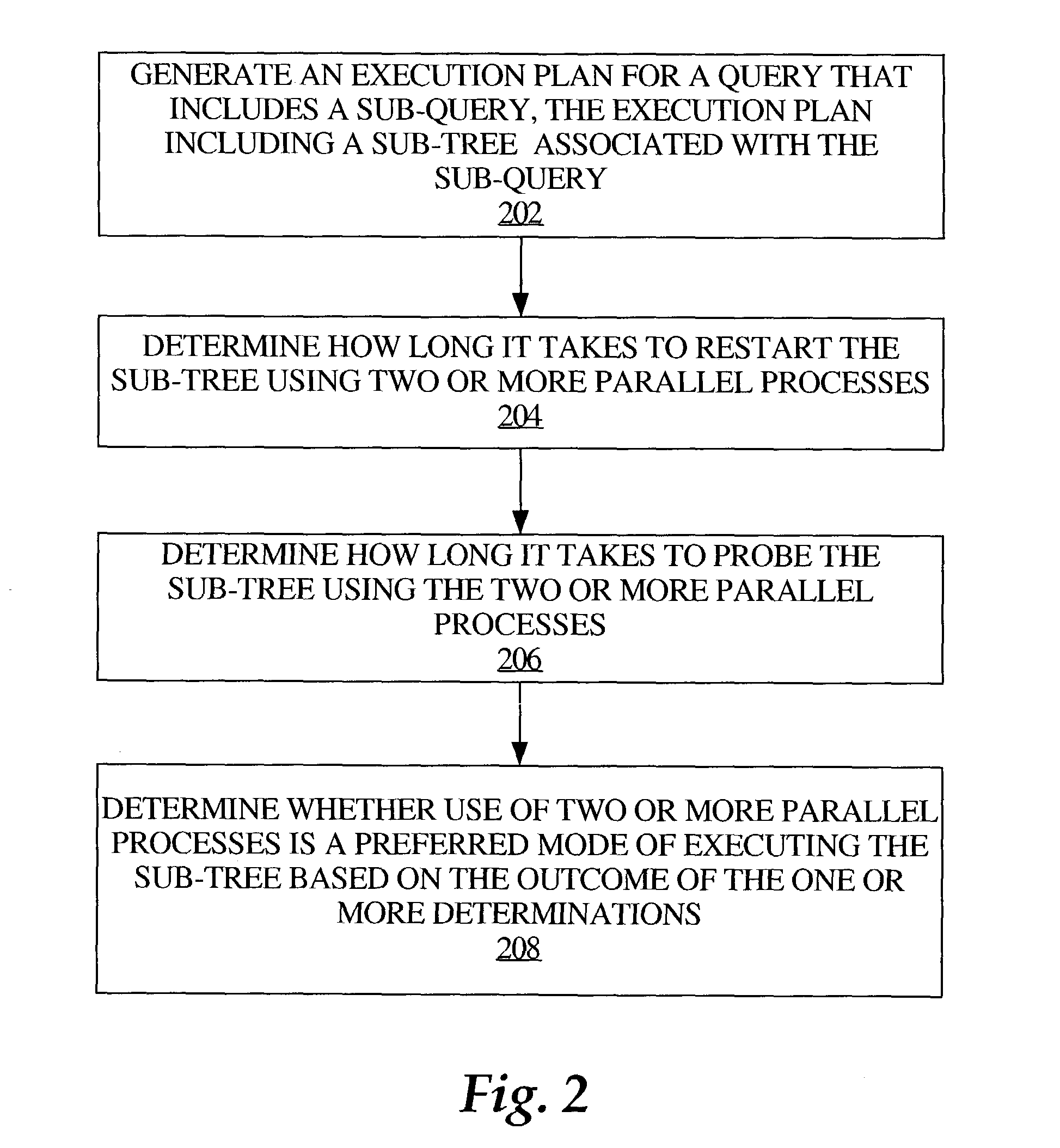

Dynamic optimization for processing a restartable sub-tree of a query execution plan

Active | US7051034B1 | Digital data information retrieval; Data processing applications; Execution plan; Dynamical optimization

Execution of a restartable sub-tree of a query execution plan comprises determining whether use of parallel processes is a preferred or optimal mode of executing the sub-tree. The determination is based, at least in part, on how long it takes to restart the sub-tree using two or more parallel processes and / or how long it takes to probe the sub-tree, i.e., to fetch a row that meets one or more conditions or correlations associated with the sub-query, using the two or more parallel processes. Thus, a dynamic computational cost-based operation is described, which determines at query runtime whether to execute the restartable sub-tree using a single server process or multiple parallel server processes.

Owner:ORACLE INT CORP
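The runtime decision described above reduces to comparing estimated totals for the two modes. A sketch, assuming the optimizer already has per-restart and per-probe cost estimates for serial and parallel execution and knows roughly how many restarts and probes to expect; `SubtreeCosts` and `choose_mode` are hypothetical names, and the linear cost model is an illustrative simplification rather than Oracle's formula.

```python
from dataclasses import dataclass


@dataclass
class SubtreeCosts:
    """Hypothetical per-operation cost estimates, in seconds."""
    restart_serial: float
    probe_serial: float
    restart_parallel: float  # includes the cost of re-engaging the parallel servers
    probe_parallel: float


def choose_mode(costs: SubtreeCosts, restarts: int, probes: int) -> str:
    """Pick the cheaper execution mode for a restartable sub-tree."""
    serial = restarts * costs.restart_serial + probes * costs.probe_serial
    parallel = restarts * costs.restart_parallel + probes * costs.probe_parallel
    return "parallel" if parallel < serial else "serial"
```

With cheap parallel probes but expensive parallel restarts, many restarts with few probes favour a single server process, while probe-heavy workloads favour the parallel servers.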

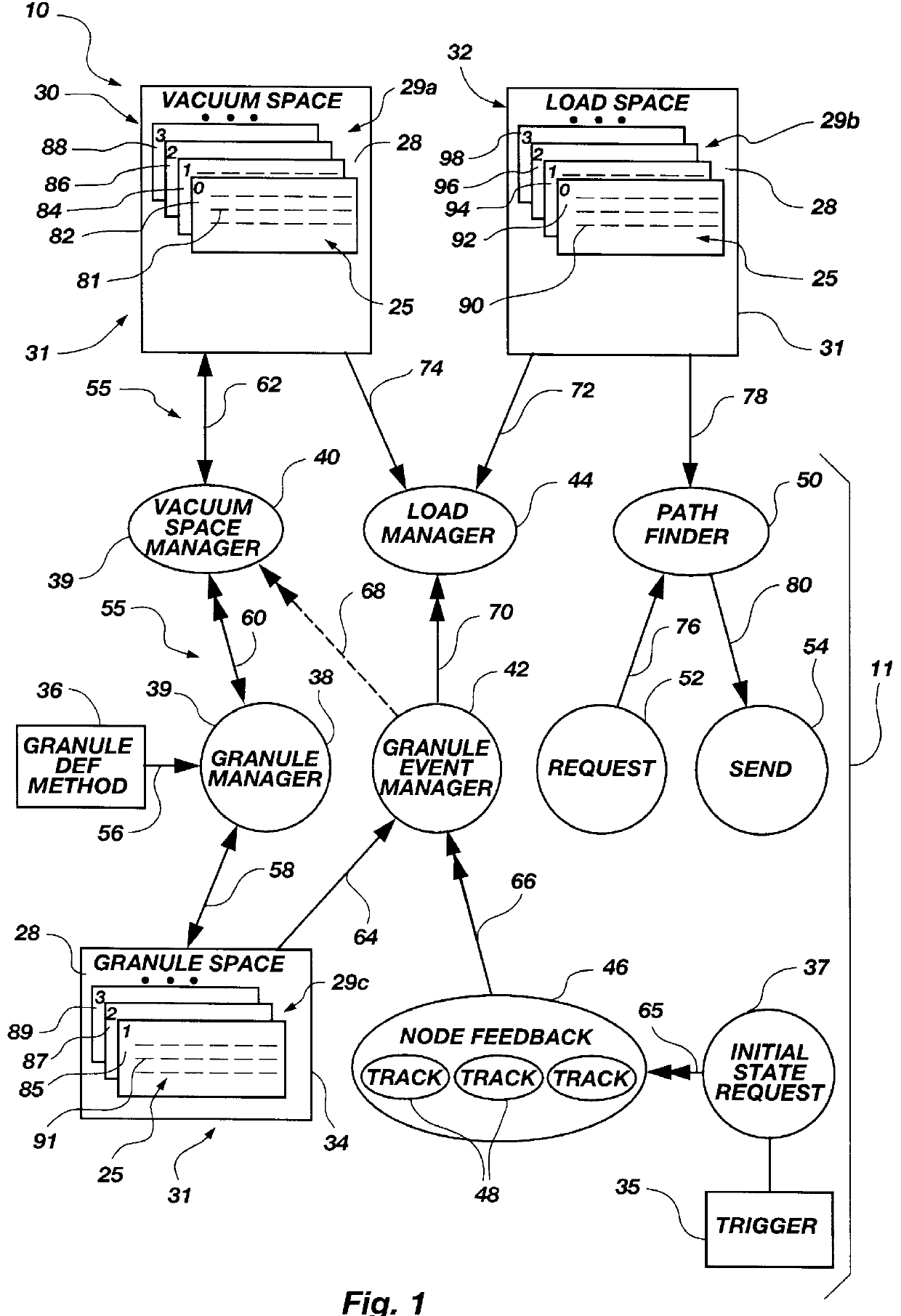

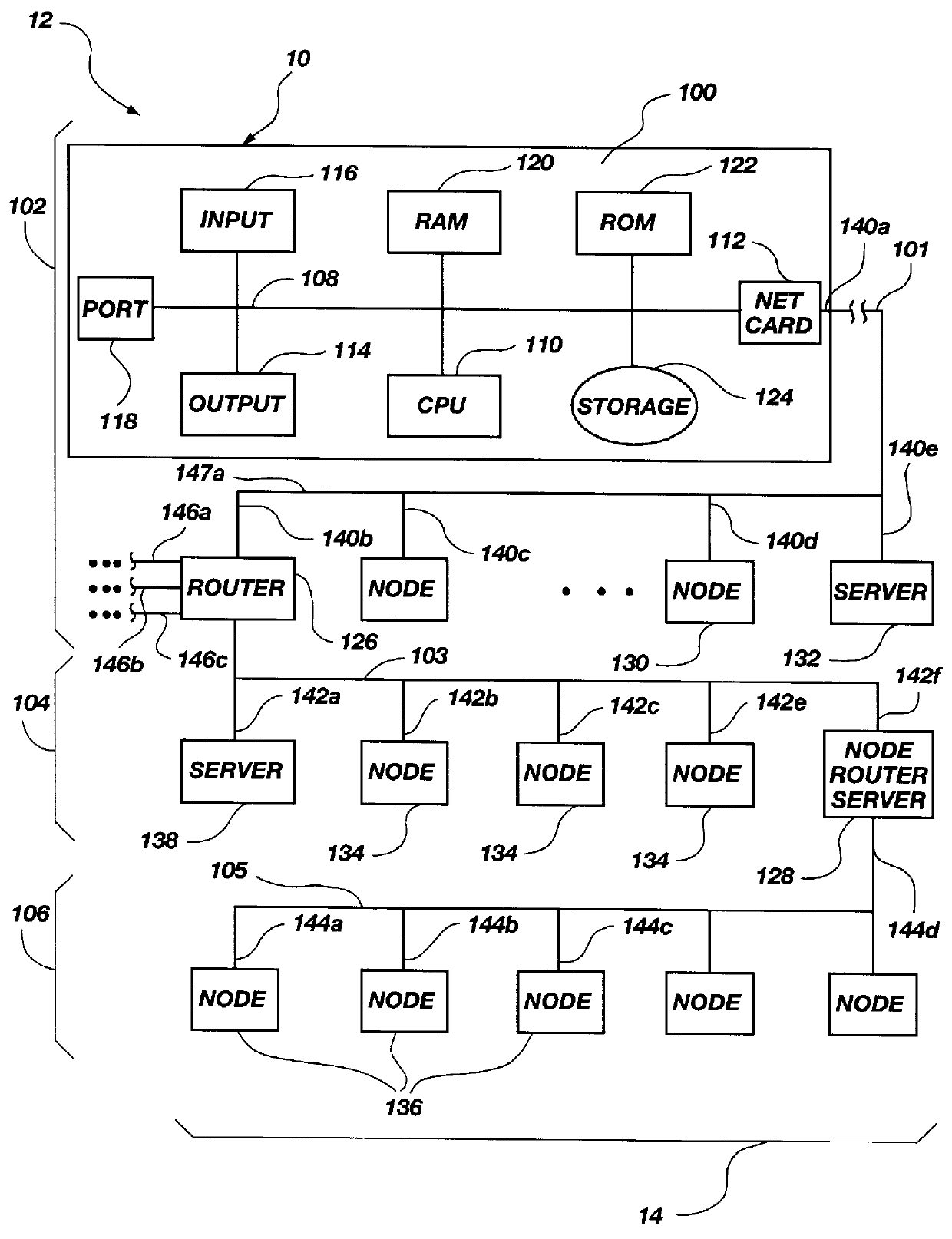

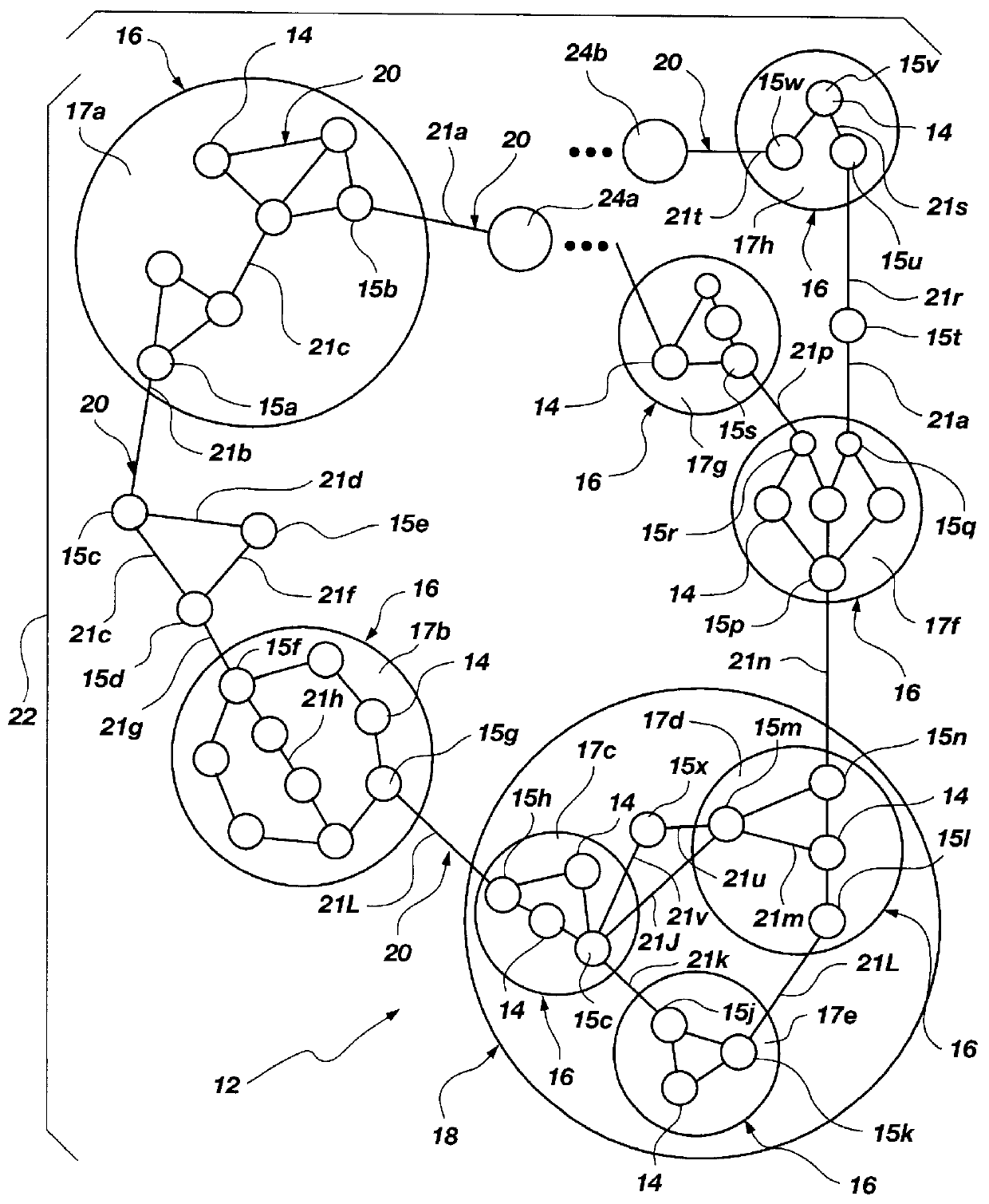

Extrinsically influenced near-optimal path apparatus and method

Inactive | US6067572A | Rapidly and automatically determine; Rapidly and automatically designate; Error prevention; Frequency-division multiplex details; Wavefront; Operational system

A method and apparatus for dynamically providing a path through a network of nodes or granules may use a limited, advanced look at potential steps along a plurality of available paths. Given an initial position, at an initial node or granule within a network, and some destination node or granule in the network, all nodes or granules may be represented in a connected graph. An apparatus and method may evaluate current potential paths, or edges between nodes still considered to lie in potential paths, according to some cost or distance function associated therewith. In evaluating potential paths or edges, the apparatus and method may consider extrinsic data which influences the cost or distance function for a path or edge. Each next edge may lie ahead across the advancing "partial" wavefront, toward a new candidate node being considered for the path. With each advancement of the wavefront, one or more potential paths, previously considered, may be dropped from consideration. Thus, a "partial" wavefront, limited in size (number of nodes and connecting edges) continues to evaluate some number of the best paths "so far." The method deletes worst paths, backs out of cul-de-sacs, and penalizes turning around. The method and apparatus may be implemented to manage a computer network, a computer internetwork, parallel processors, parallel processes in a multi-processing operating system, a smart scissor for a drawing application, and other systems of nodes.

Owner:ORACLE INT CORP
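The limited "partial" wavefront behaves much like a beam search: only the best few partial paths survive each advancement. This Python sketch assumes a weighted adjacency dictionary and models the extrinsic data as a per-edge cost multiplier; it forbids revisiting nodes instead of merely penalizing turning around, and all names (`wavefront_path`, `beam_width`, `extrinsic`) are hypothetical.

```python
import heapq


def wavefront_path(graph, start, goal, beam_width=3, extrinsic=None, max_steps=100):
    """Beam-limited path search; returns (cost, path) or None if no path is found.

    graph: {node: {neighbour: base_cost}}
    extrinsic: {(node, neighbour): multiplier} applied to the base edge cost
    """
    extrinsic = extrinsic or {}
    frontier = [(0.0, [start])]          # the current partial wavefront
    best = None
    for _ in range(max_steps):
        candidates = []
        for cost, path in frontier:
            node = path[-1]
            for nbr, base in graph.get(node, {}).items():
                if nbr in path:          # never step back onto the path
                    continue
                new_cost = cost + base * extrinsic.get((node, nbr), 1.0)
                if nbr == goal:
                    if best is None or new_cost < best[0]:
                        best = (new_cost, path + [nbr])
                else:
                    candidates.append((new_cost, path + [nbr]))
        # Paths that could not be extended (cul-de-sacs) are not carried over,
        # and paths already costlier than the best complete path are dropped.
        if best is not None:
            candidates = [c for c in candidates if c[0] < best[0]]
        if not candidates:
            break
        # Keep only the best few partial paths: the limited-size wavefront.
        frontier = heapq.nsmallest(beam_width, candidates, key=lambda c: c[0])
    return best
```

Raising `beam_width` trades speed for a better chance of finding the true optimum; a width of 1 degenerates to a greedy walk.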

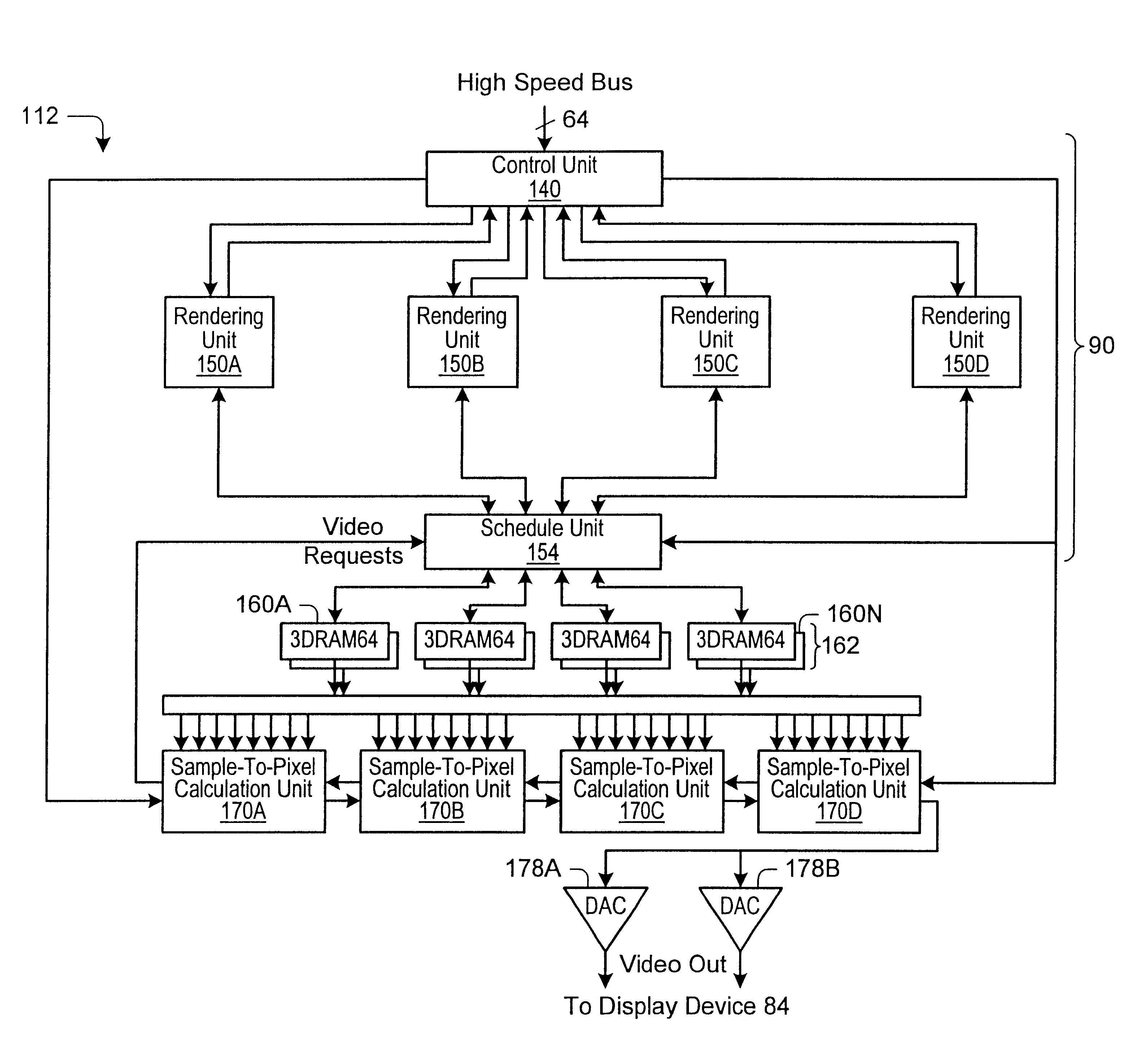

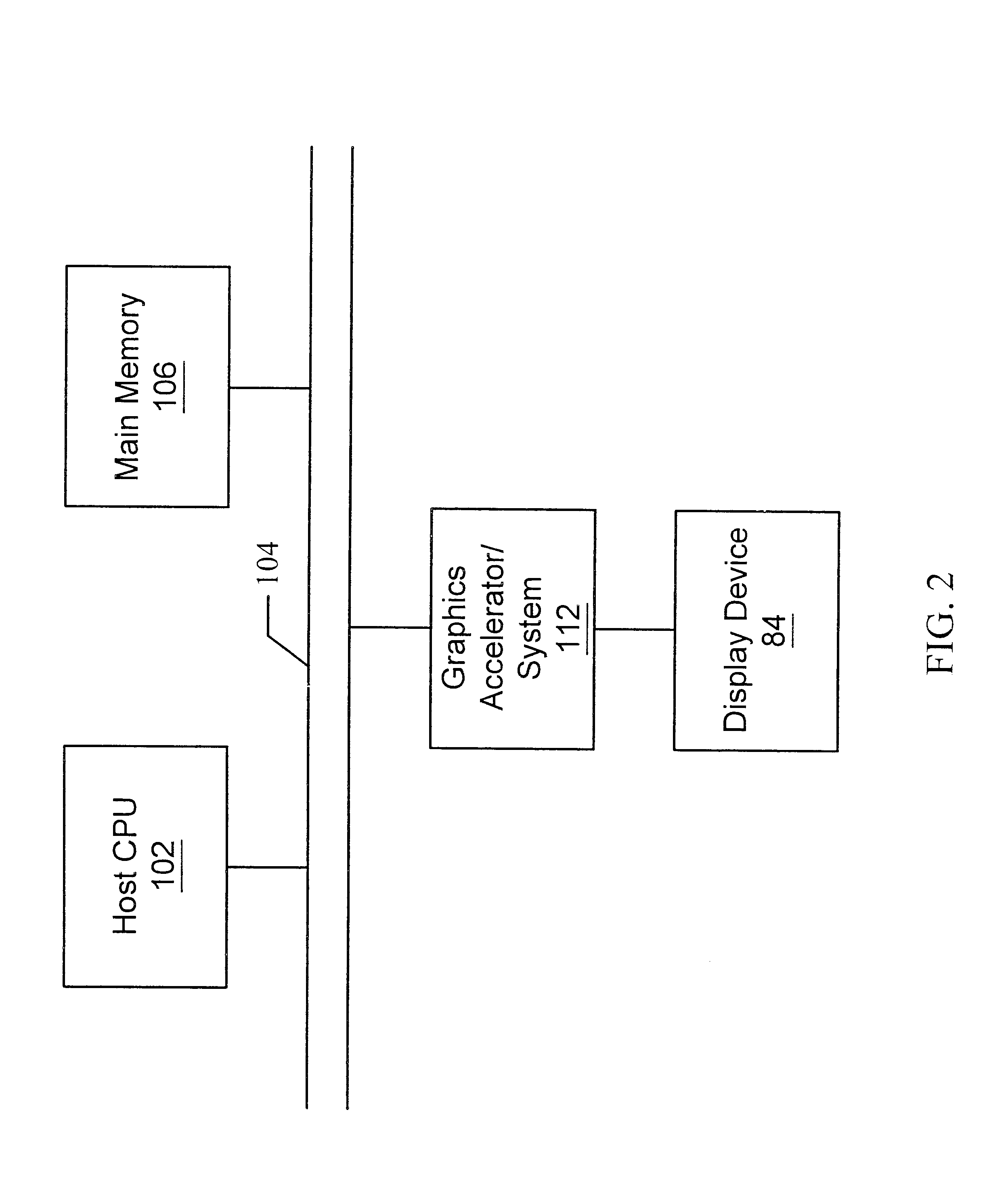

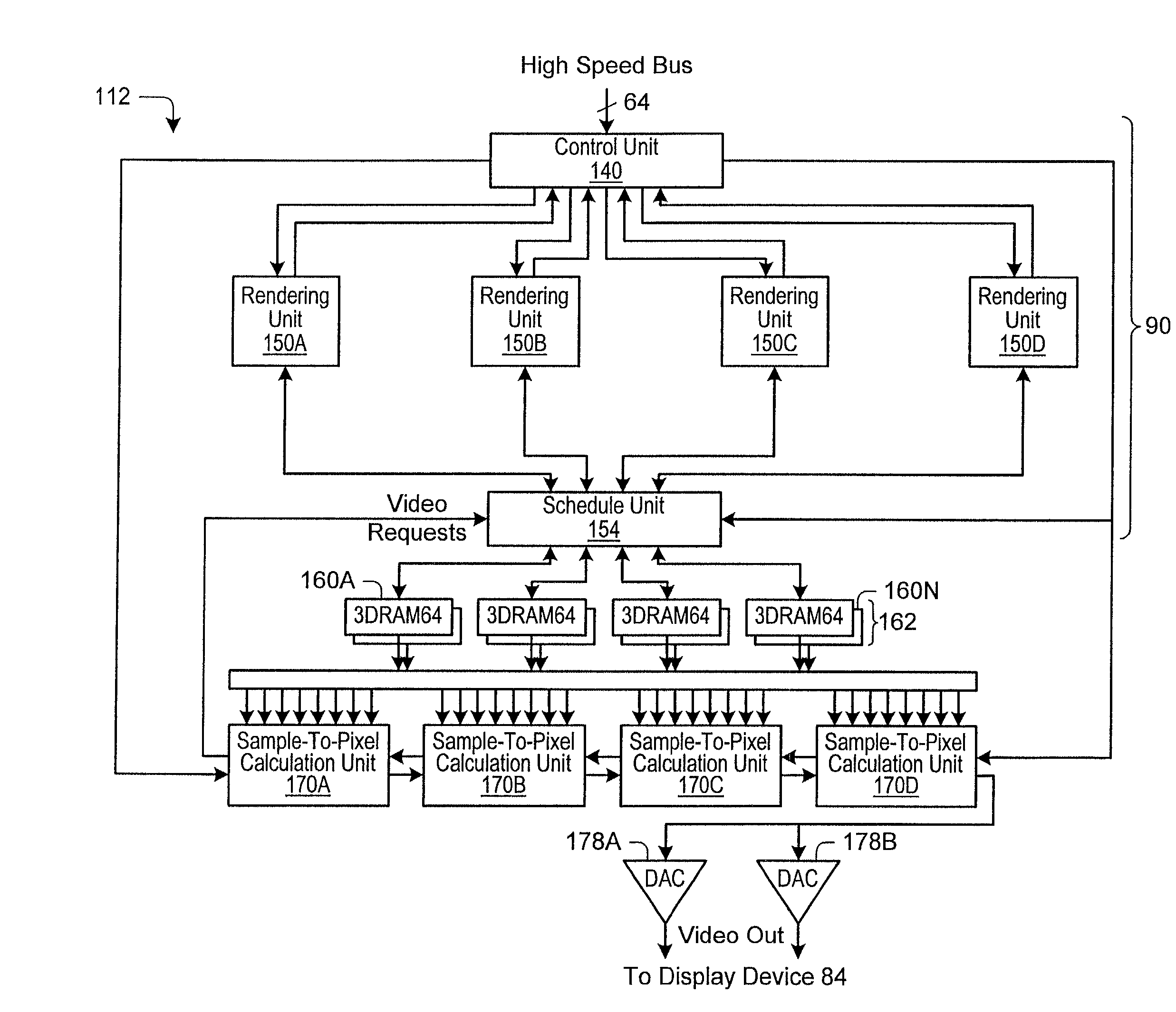

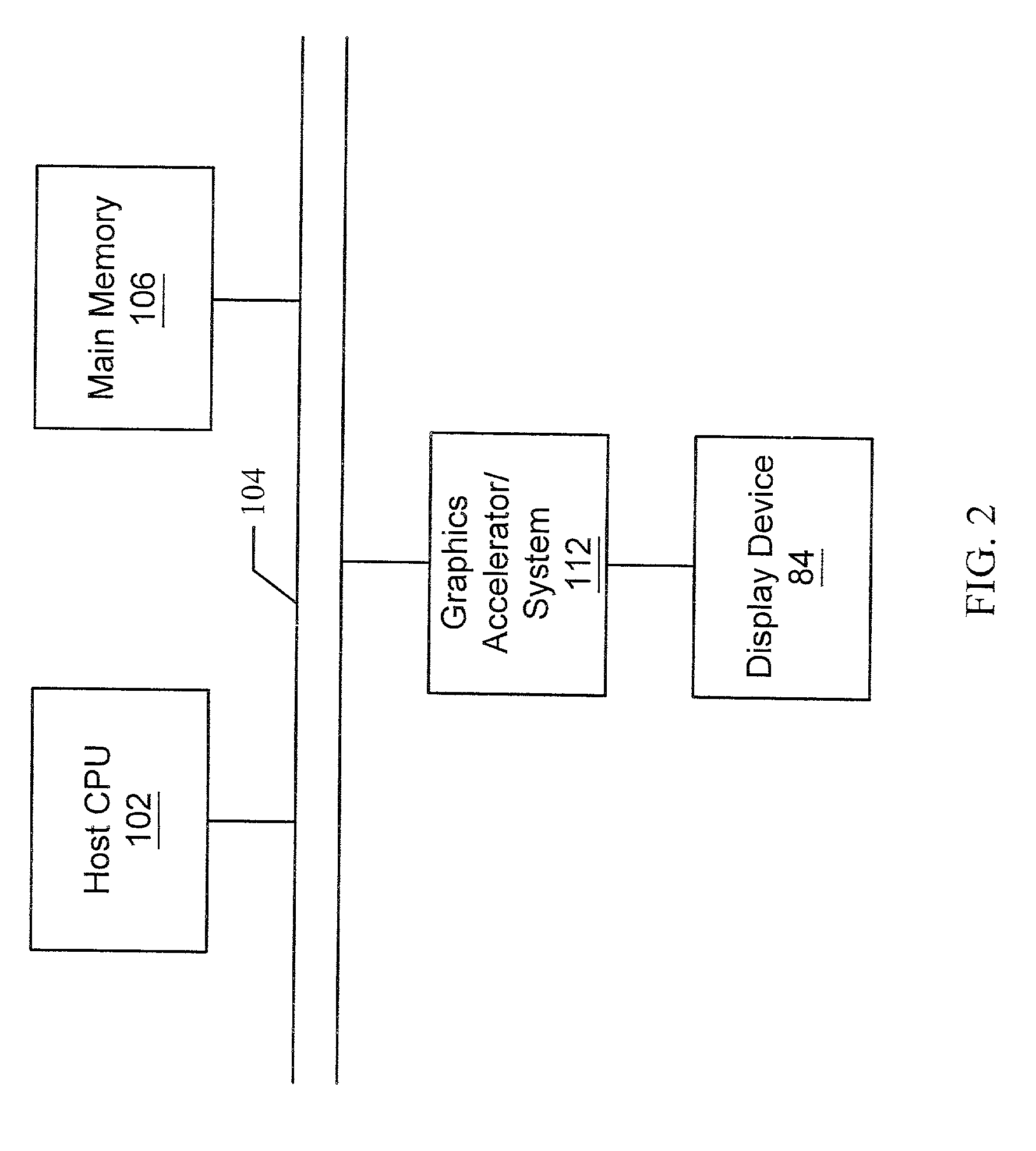

Graphics system configured to parallel-process graphics data using multiple pipelines

Inactive | US6801202B2 | Program initiation/switching; Multiple digital computer combinations; Computational science; Graphics

Owner:ORACLE INT CORP

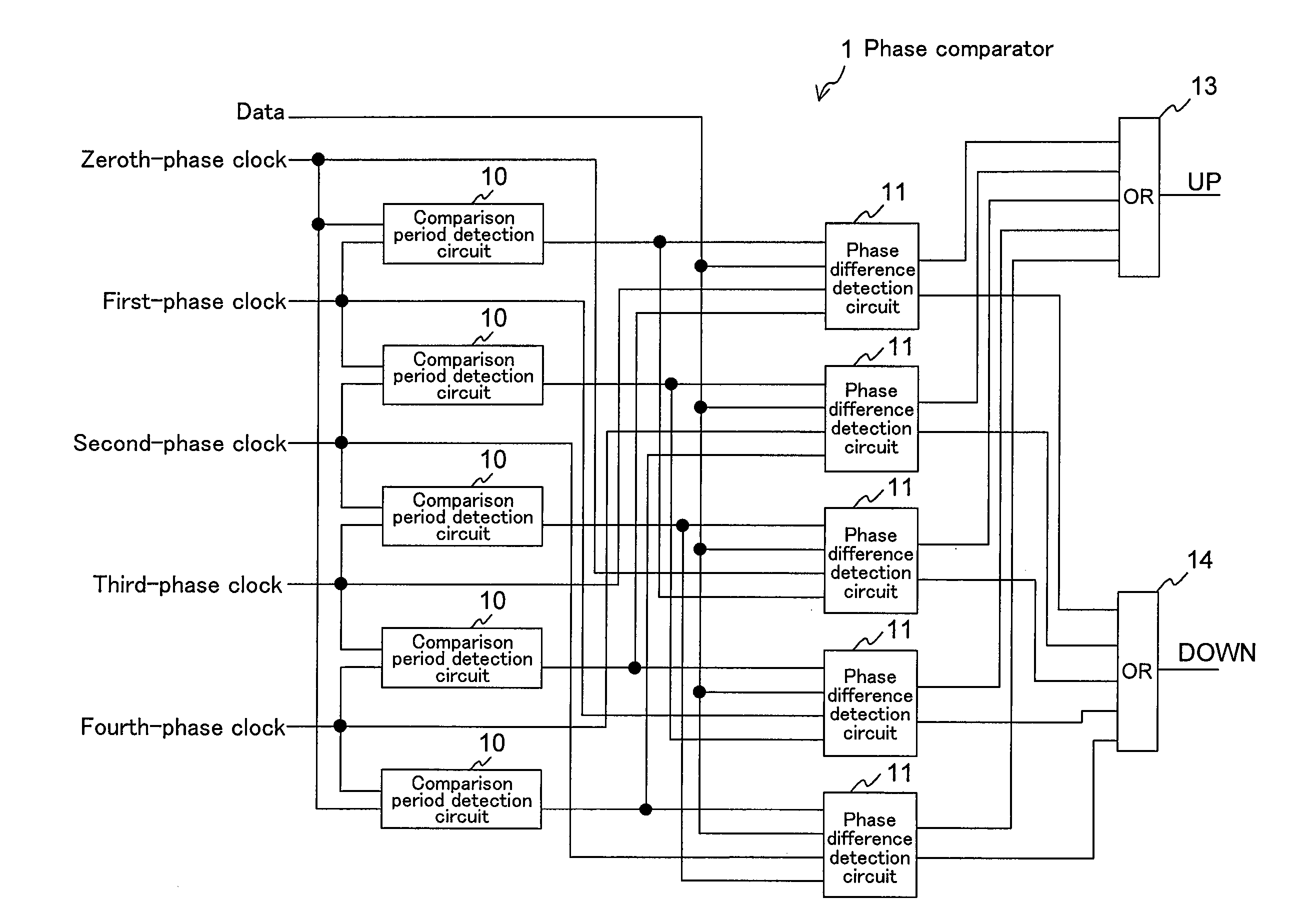

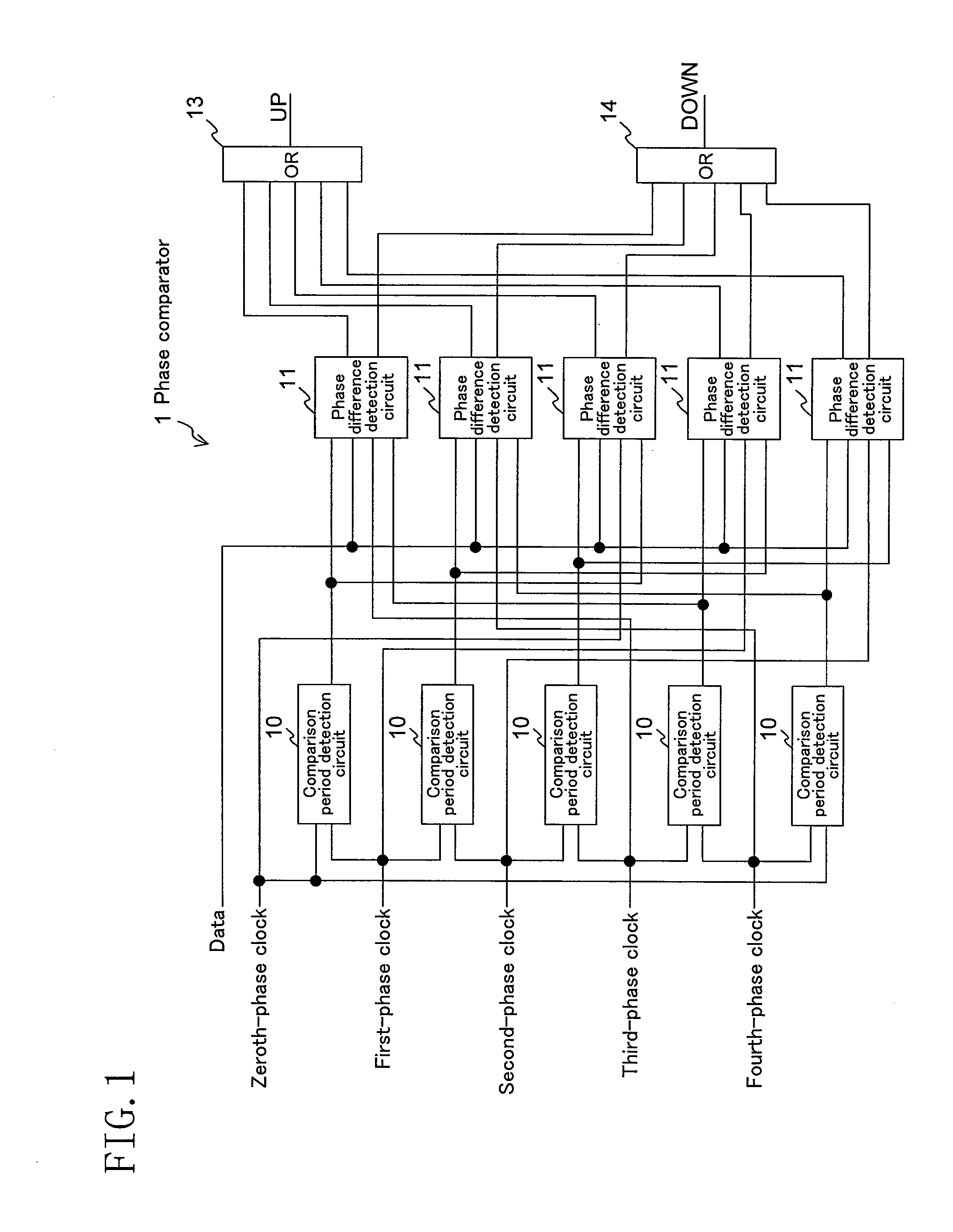

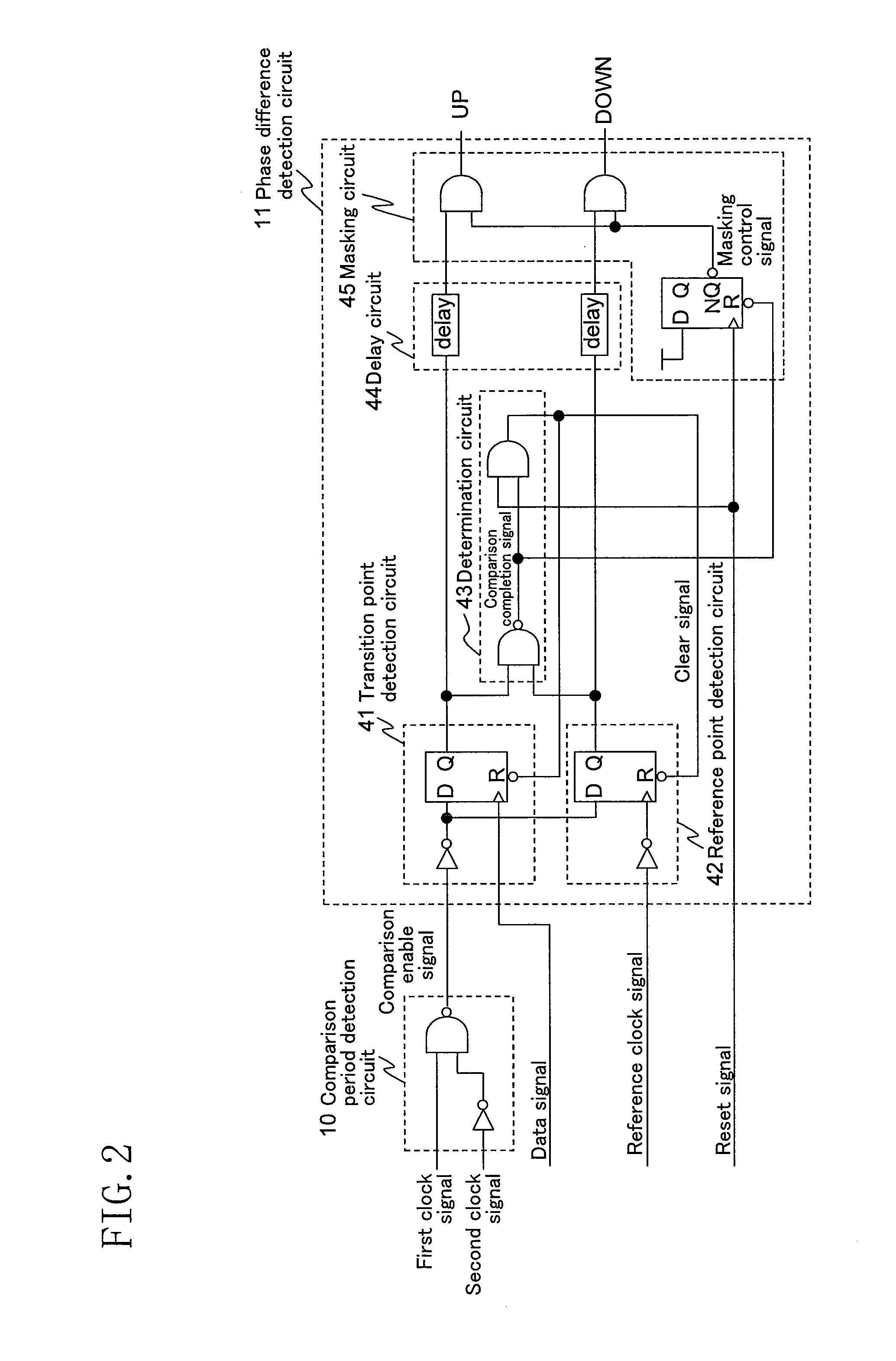

Phase comparator and regulation circuit

Active | US20090262876A1 | Output be stop; Stable phase comparison; Pulse automatic control; Angle demodulation by phase difference detection; Parallel processing; Computer science

A phase comparison process in a timing recovery process for high-speed data communication defines a data window and compares the phase of a clock in the window with the phase of an edge of data so as to realize a parallel process, wherein the phase comparison and the process of determining whether a data edge lies within the window are performed in parallel to each other, and the phase comparison result is output only if the data edge lies within the window. With this configuration, it is possible to perform an accurate phase comparison process with no errors without requiring high-precision delay circuits.

Owner:SOCIONEXT INC
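The key idea is that the phase comparison and the window test are independent, so neither has to wait for the other, and the window result only gates whether the comparison is output. A behavioural sketch in Python, assuming edge times are given as fractions of a unit interval and using a hypothetical sign convention (+1 when the data edge follows the clock edge); real hardware would use flip-flops and logic gates, not floating-point times.

```python
from typing import Optional, Tuple


def gated_phase_compare(edge_t: float, clock_t: float,
                        window: Tuple[float, float]) -> Optional[int]:
    """+1 if the data edge follows the clock edge, -1 if it precedes it, 0 if aligned;
    None (no output) when the data edge lies outside the window."""
    # The two evaluations below do not depend on each other, which is what lets
    # hardware compute them side by side; Python simply evaluates both.
    phase = (edge_t > clock_t) - (edge_t < clock_t)
    in_window = window[0] <= edge_t < window[1]
    return phase if in_window else None
```

Edges outside the window (for example, glitches near the bit boundary) produce no output at all, so they cannot push the recovered clock in the wrong direction.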

Graphics system configured to parallel-process graphics data using multiple pipelines

Inactive | US20020085007A1 | Program initiation/switching; Multiple digital computer combinations; Graphics; Computational science

A method and computer graphics system capable of implementing multiple pipelines for the parallel processing of graphics data. For certain data, a requirement may exist that the data be processed in order. The graphics system may use a set of tokens to reliably switch between ordered and unordered data modes. Furthermore, the graphics system may be capable of super-sampling and performing real-time convolution. In one embodiment, the computer graphics system may comprise a graphics processor, a sample buffer, and a sample-to-pixel calculation unit. The graphics processor may be configured to receive graphics data and to generate a plurality of samples for each of a plurality of frames. The sample buffer, which is coupled to the graphics processor, may be configured to store the samples. The sample-to-pixel calculation unit is programmable to generate a plurality of output pixels by filtering the rendered samples using a filter.

Owner:ORACLE INT CORP

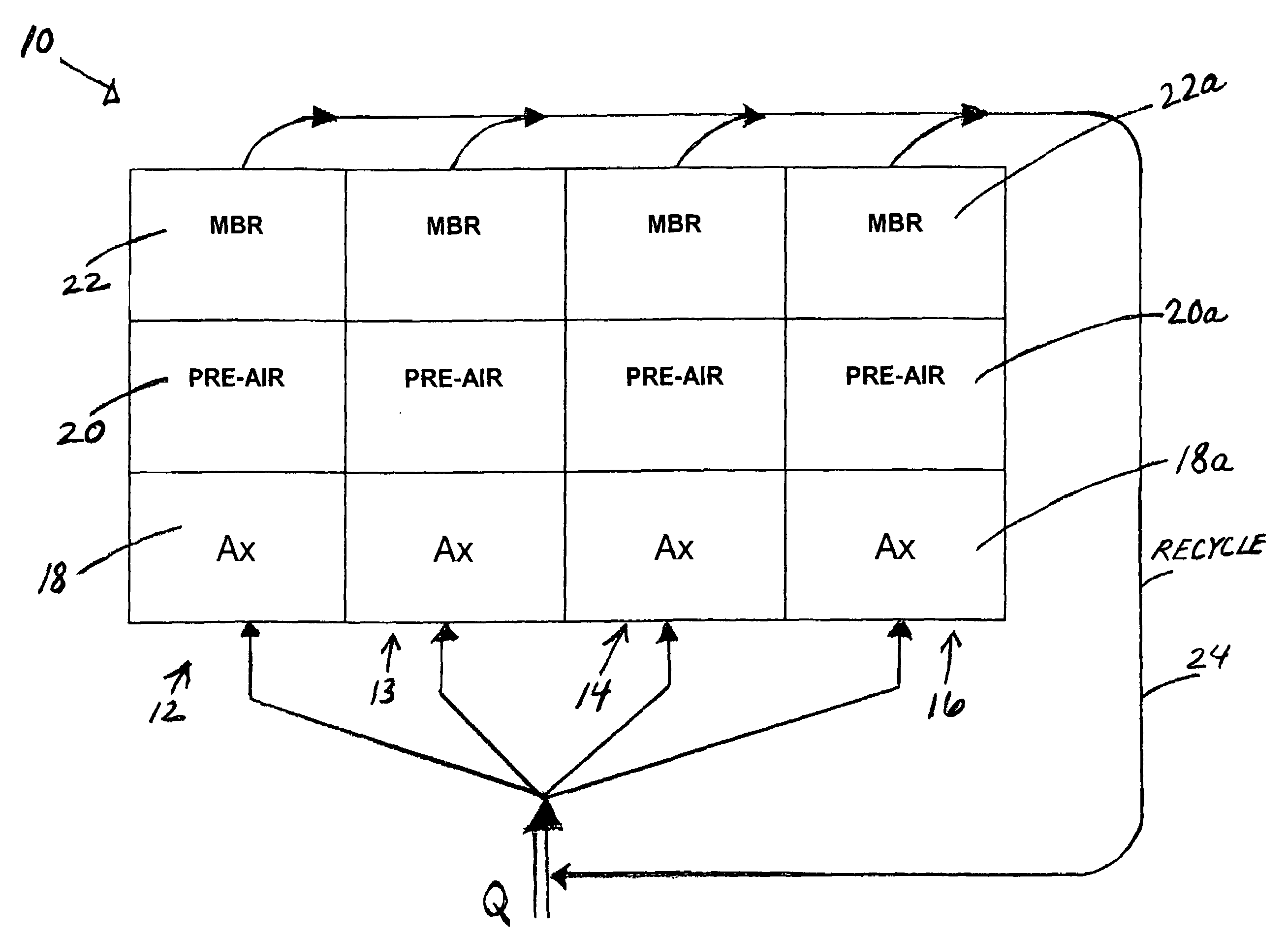

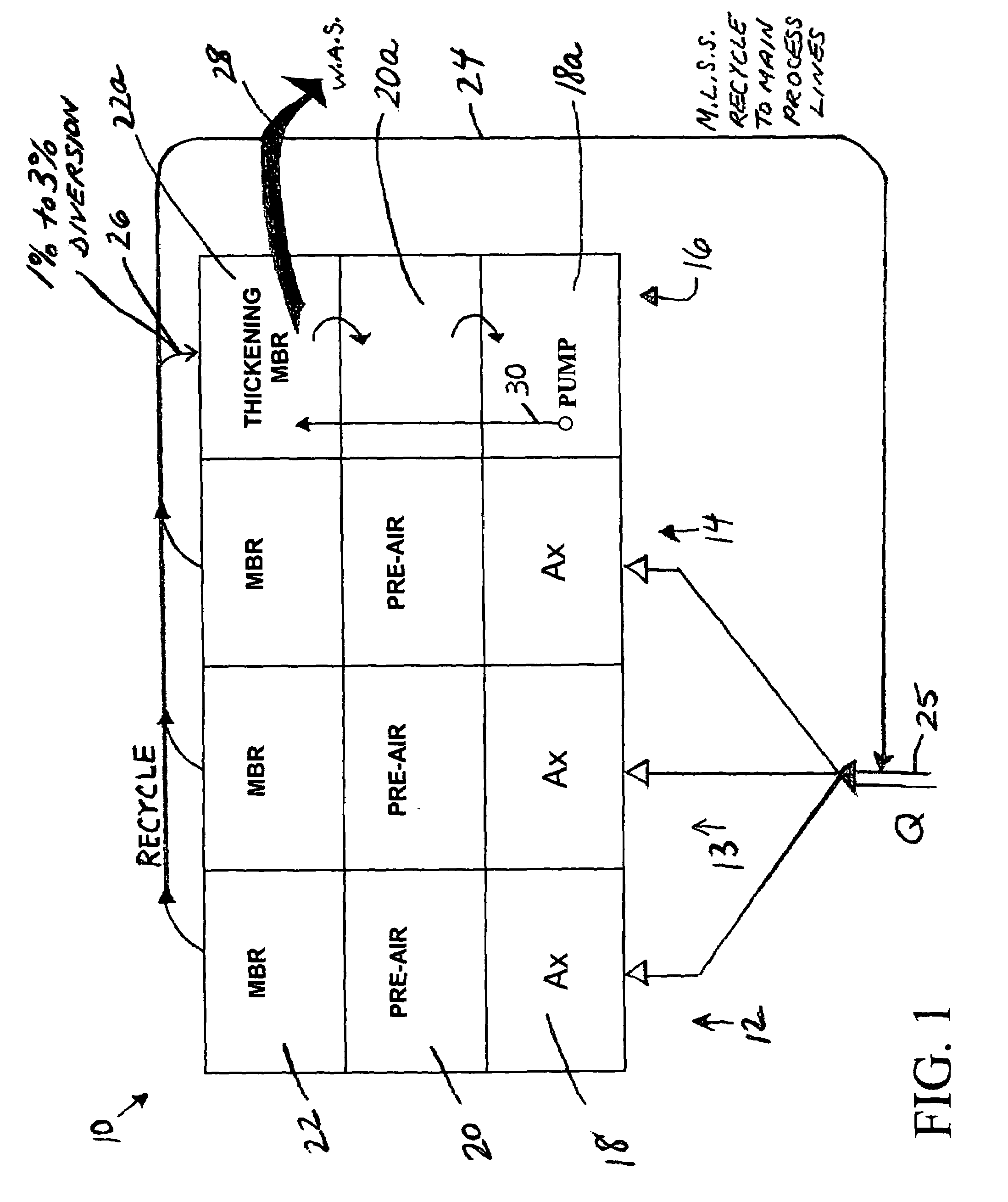

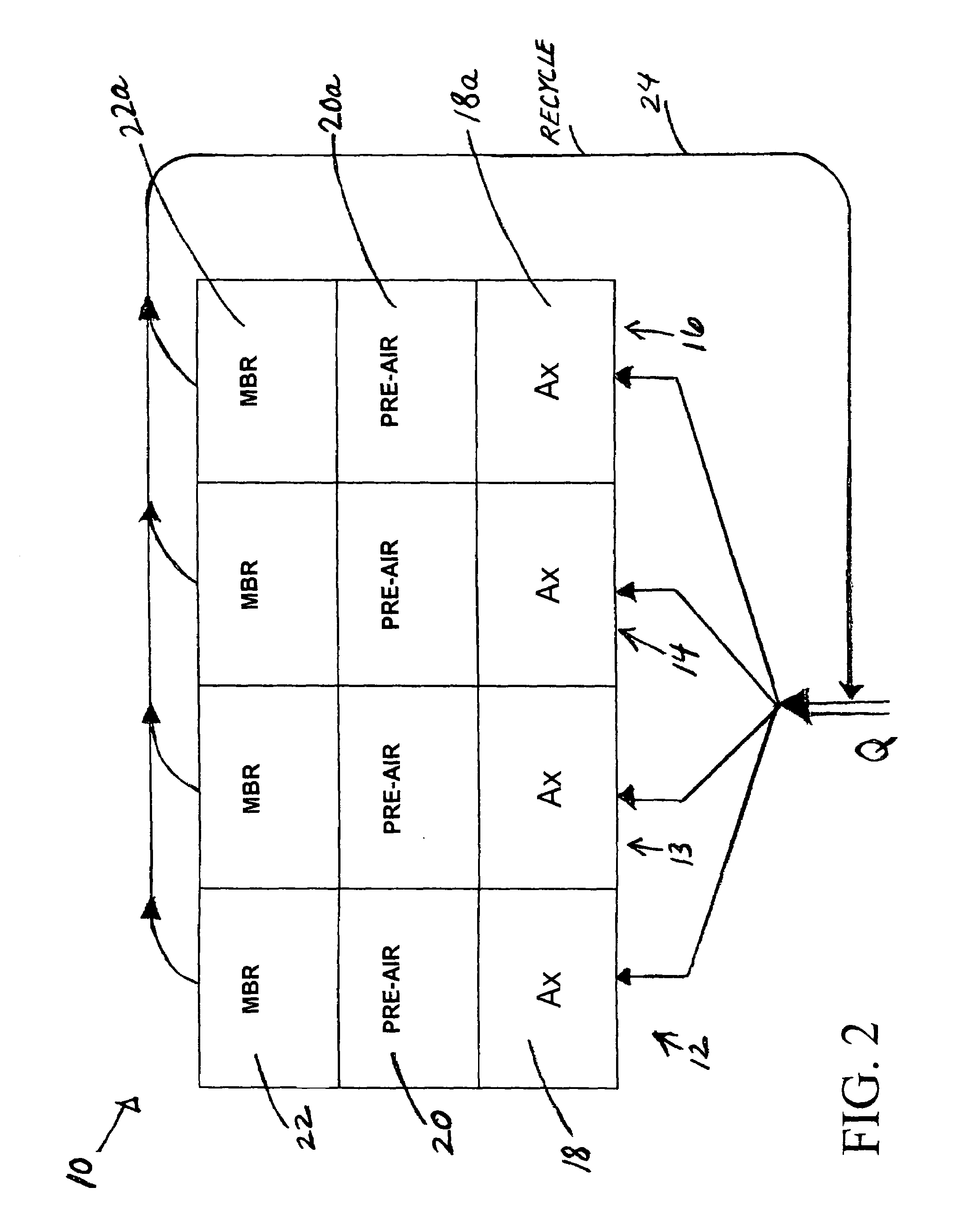

Wastewater treatment system with membrane separators and provision for storm flow conditions

Active | US7147777B1 | Increase biomass; Treatment using aerobic processes; Treatment with aerobic and anaerobic processes; Streamflow; Treatment system

In a wastewater treatment system and process utilizing membrane bioreactors (MBRs), multiple parallel series of tanks or stages each include an MBR stage. Under conditions of normal flow volume into the system, influent passes through several parallel series of stages or process lines, which might include, for example, an anoxic stage, an aeration stage and an MBR stage. From the MBR stages, a portion of the mixed liquor suspended solids (M.L.S.S.) is cycled through one or more thickening MBRs of similar process lines for further thickening, processing and digesting of the sludge, while the majority of the M.L.S.S. is recycled back into the main process lines. During peak flow conditions, such as storm conditions in a combined storm water/wastewater system, all of the series of stages, including their thickening MBRs, are operated in parallel to accept the peak flow, which is more than twice the normal flow. M.L.S.S. is recycled from all MBR stages to the upstream end of each of the parallel process lines, mixing with influent wastewater, and the last one or several process lines no longer act to digest the sludge. Another advantage is that, because thickened sludge is held in the last process line of basins, which ordinarily act to digest the sludge, there is always sufficient biomass in the system to handle peak flow; this biomass is available if needed for a sudden heavy flow or an event that might bring a toxic condition into the main basins.

Owner:OVIVO INC

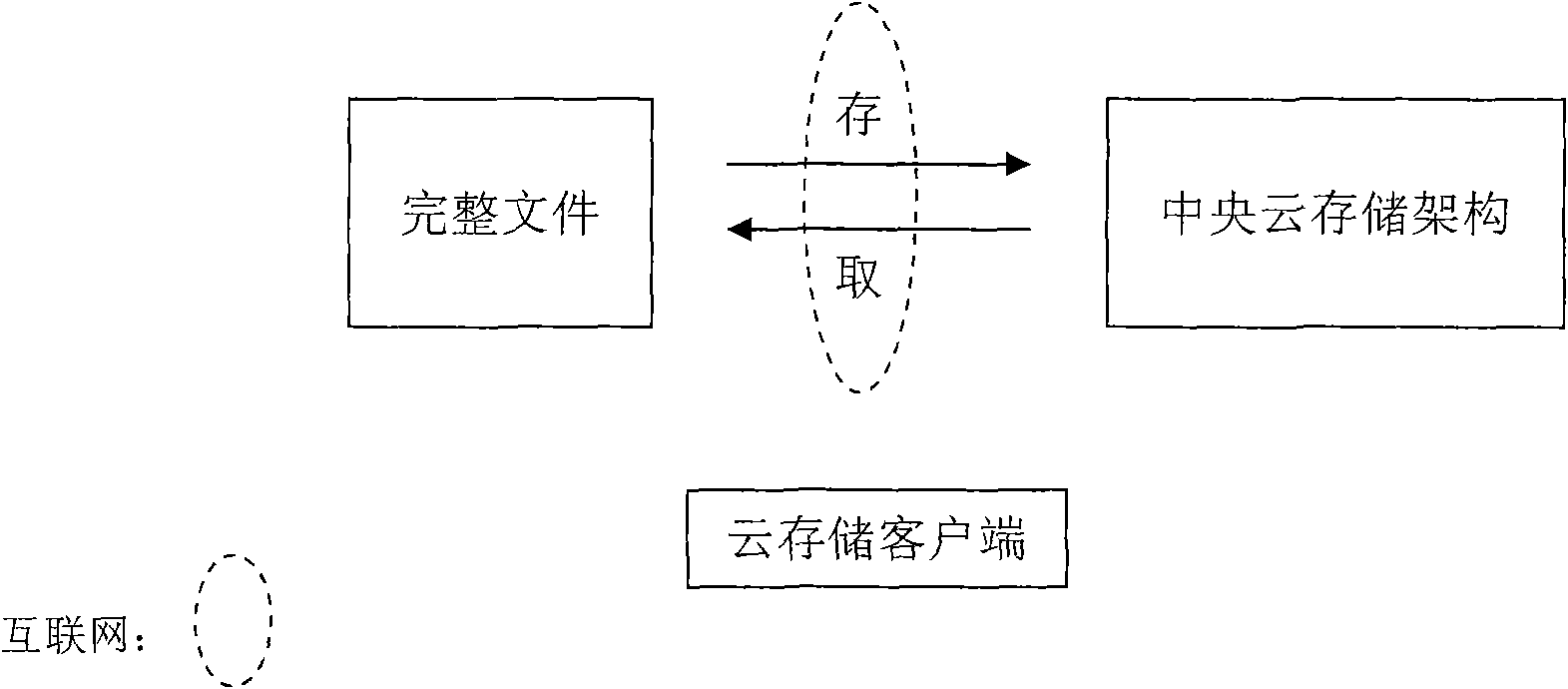

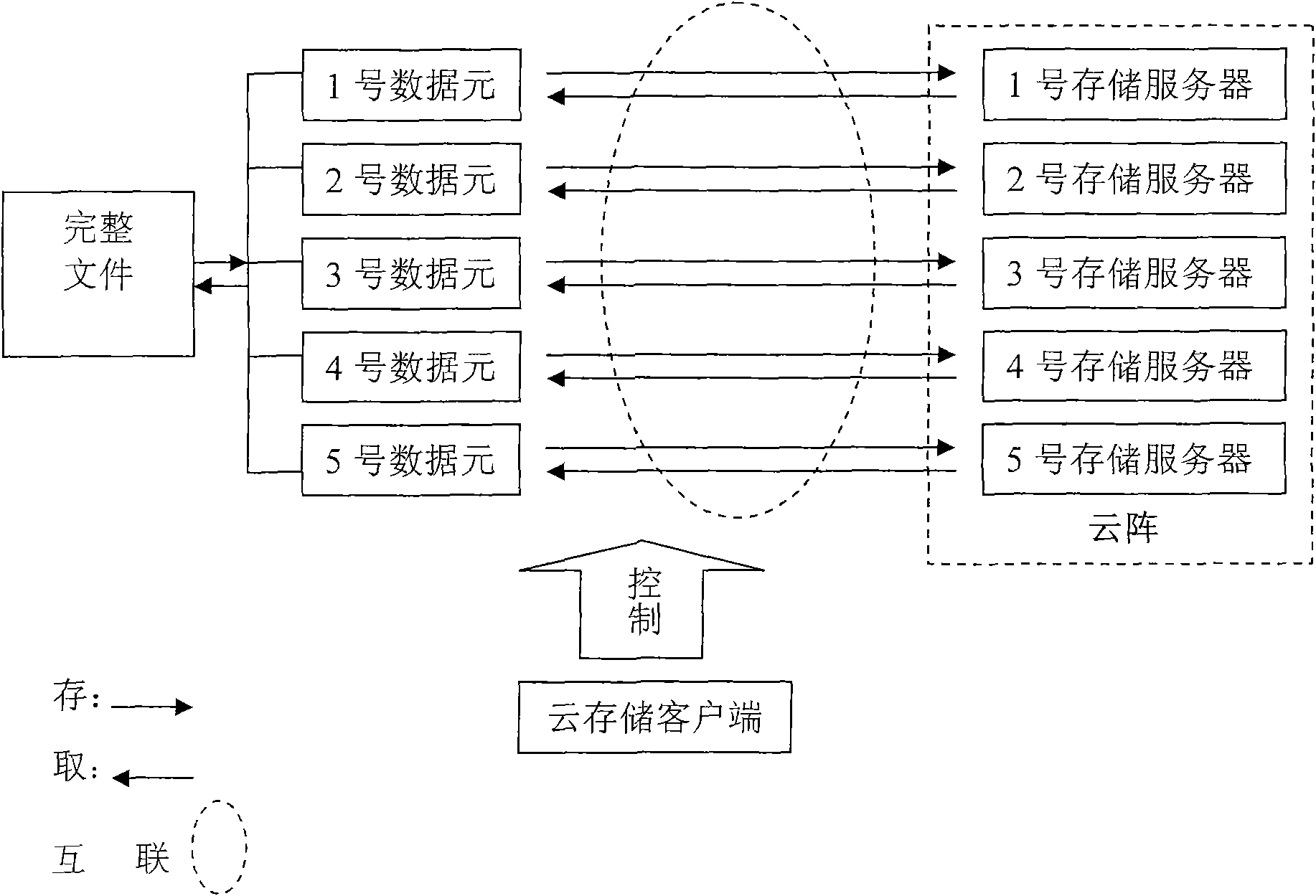

Method for structuring parallel system for cloud storage

The invention is mainly characterized as follows: (1) a novel technical approach to decentralizing centralized network storage is established: before being stored, a master file is broken by a program into a plurality of sub-files, called data elements, which are the smallest units of storage; (2) the prior centralized network storage method is replaced by cloud array storage technology, in which a cloud array consists of a plurality of servers assigned specific serial numbers, an access relation between the data elements and the servers is established according to those serial numbers, and the original file is broken into data elements that are stored across the cloud arrays; (3) the data elements are stored in a multi-process parallel mode, in which one process stores one data element, so N processes store N data elements, and the parallel processes increase storage speed; (4) the data elements are encrypted and compressed, and the original password is not stored; and (5) the servers run the Unix operating system. The method can effectively address the security of cloud storage data and is of significant value for cloud storage architecture and application development.

Owner:何吴迪
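Points (1) to (3) describe splitting a master file into serial-numbered data elements, mapping each serial number to a server, and storing the elements concurrently, one worker per element. A minimal sketch under stated assumptions: dictionaries stand in for servers, the server is chosen as `serial % len(servers)` (a hypothetical mapping), threads stand in for the separate processes, and `zlib` compression is shown while the encryption step is omitted.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def split_into_elements(data: bytes, n: int) -> list:
    """Break a master file into at most n data elements of near-equal size."""
    if not data:
        return []
    size = -(-len(data) // n)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def store_parallel(data: bytes, servers: list, n: int) -> int:
    """Store each element on server (serial % len(servers)), one worker per element."""
    elements = split_into_elements(data, n)

    def store(serial: int, element: bytes) -> None:
        servers[serial % len(servers)][serial] = zlib.compress(element)

    with ThreadPoolExecutor(max_workers=max(1, len(elements))) as pool:
        list(pool.map(store, range(len(elements)), elements))  # re-raises worker errors
    return len(elements)


def load(servers: list, count: int) -> bytes:
    """Reassemble the original file from its serial-numbered elements."""
    return b"".join(zlib.decompress(servers[s % len(servers)][s]) for s in range(count))
```

Reassembly only needs the element count and the serial-number-to-server mapping, since each serial number identifies both the element's position and its server.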

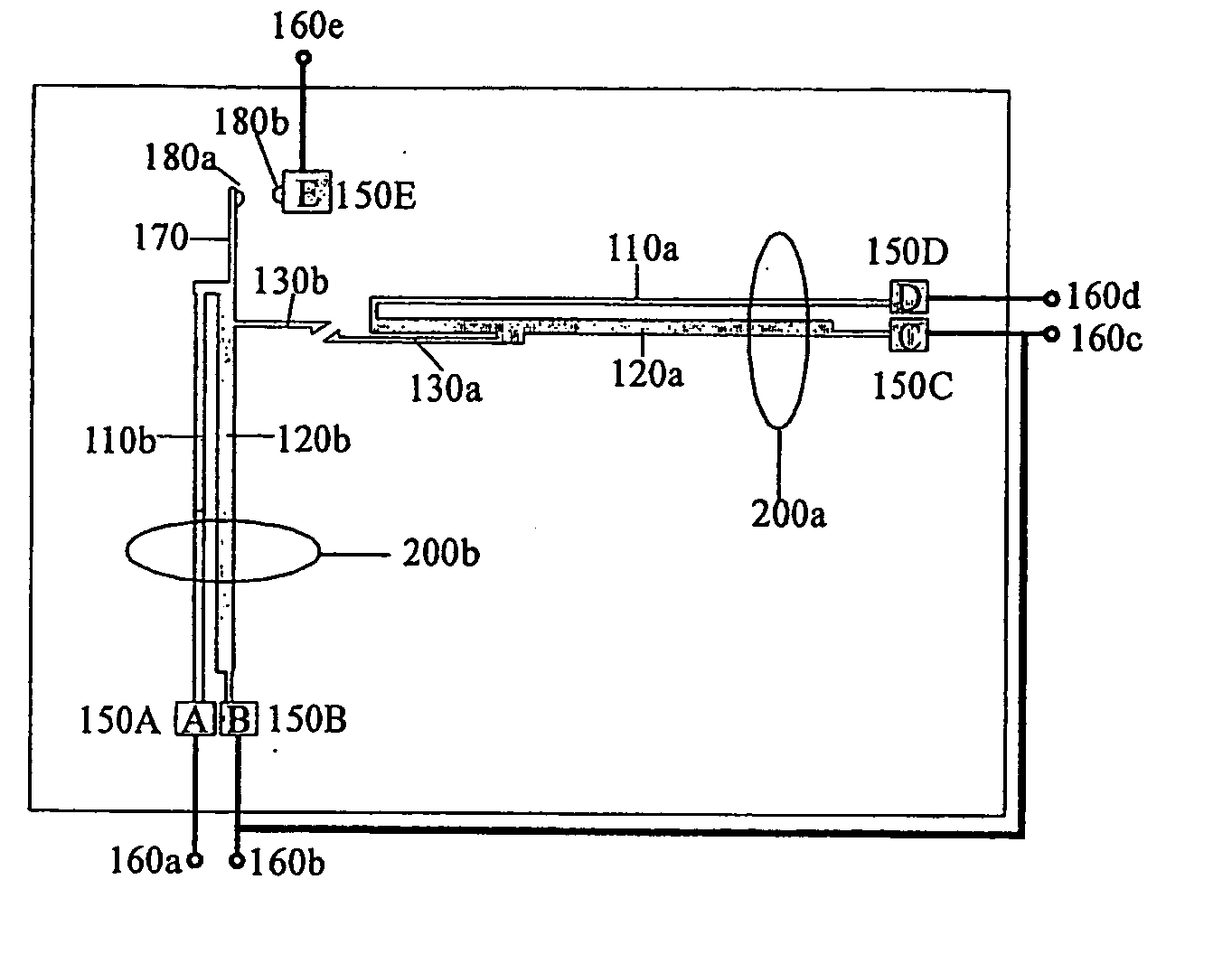

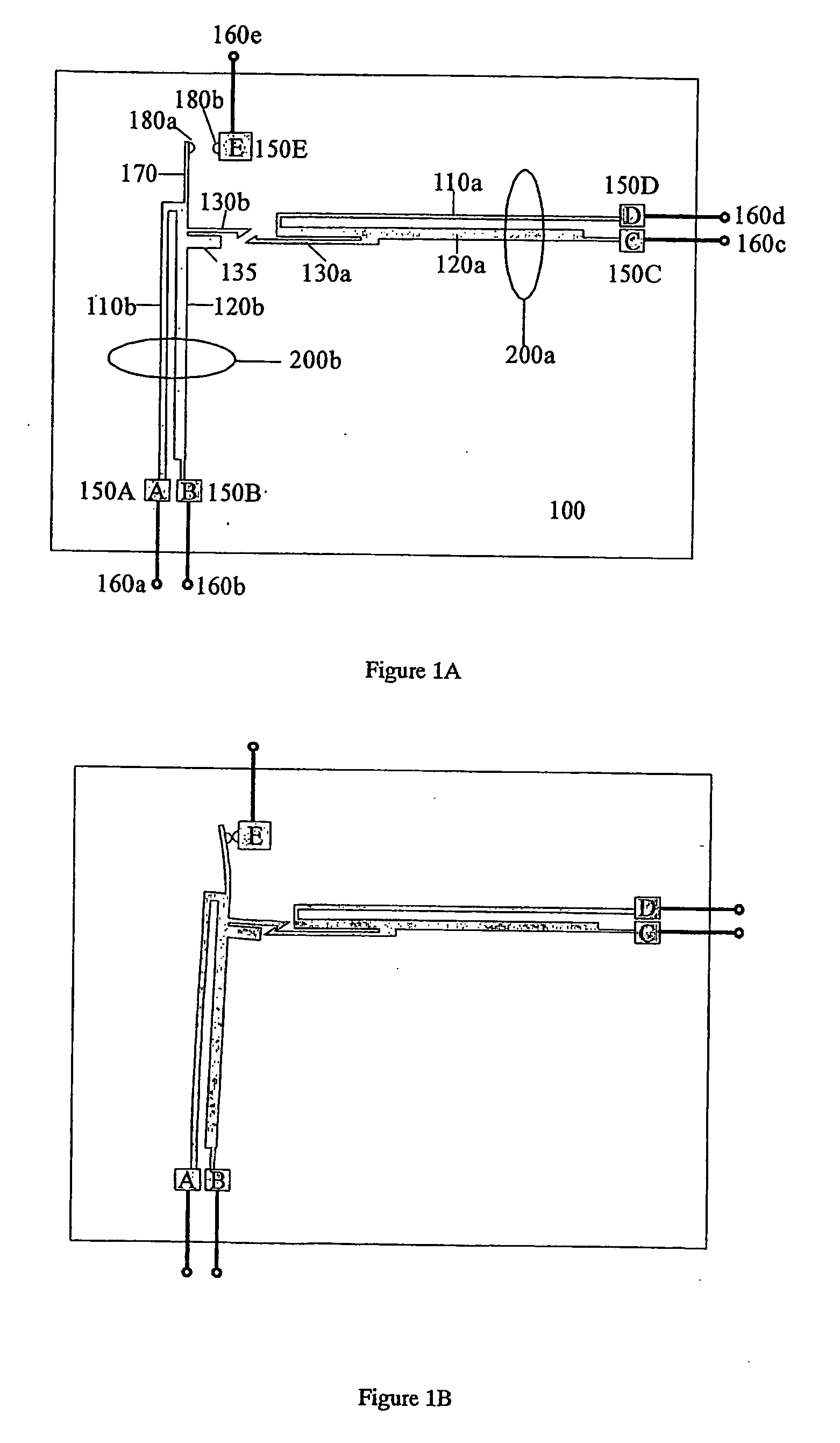

Microengineered self-releasing switch

A MEMS (microelectromechanical system) electrical switch device is provided for circuit protection applications. The device includes a mechanical latching mechanism by which the switch is held in the closed position, and a mechanism by which this latch is released when the load current passing through the device reaches or exceeds some desired magnitude. In addition, a mechanism is provided by which the switch may be reset to its closed position by applying an electrical control voltage to certain terminals of the device. A number of these devices, or arrays of these devices, can be fabricated by parallel processes on a single substrate, and photolithography can be employed to define the mechanical structures described above. Other embodiments include additional electrical isolation of the resetting mechanism, enhancement of the separation distance of the contact points of the switch in the open position, and prevention of arcing at the latch mechanism. A method of fabricating the device is provided. A method of using the aforementioned device is also provided.

Owner:YEATMAN ERIC

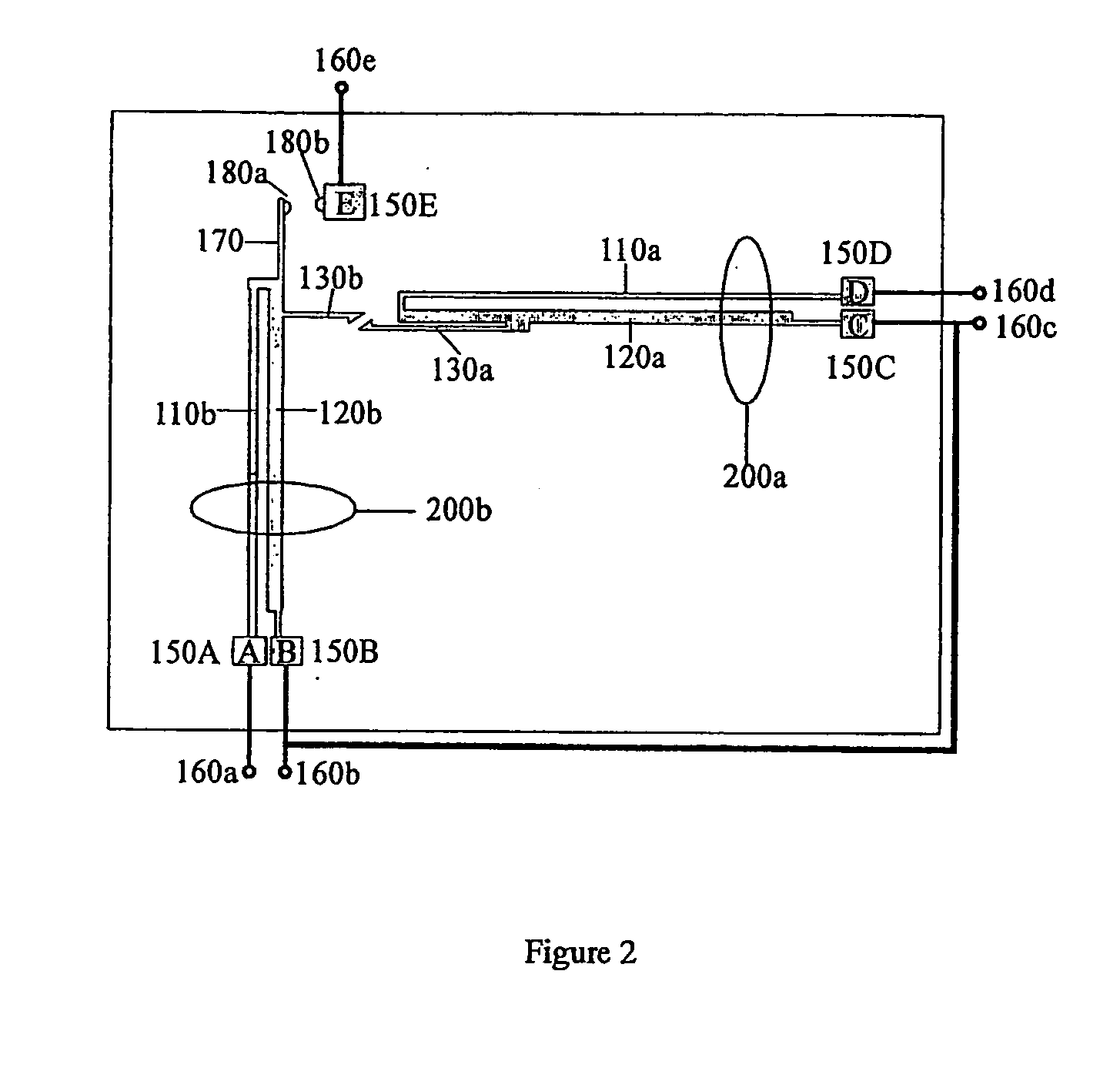

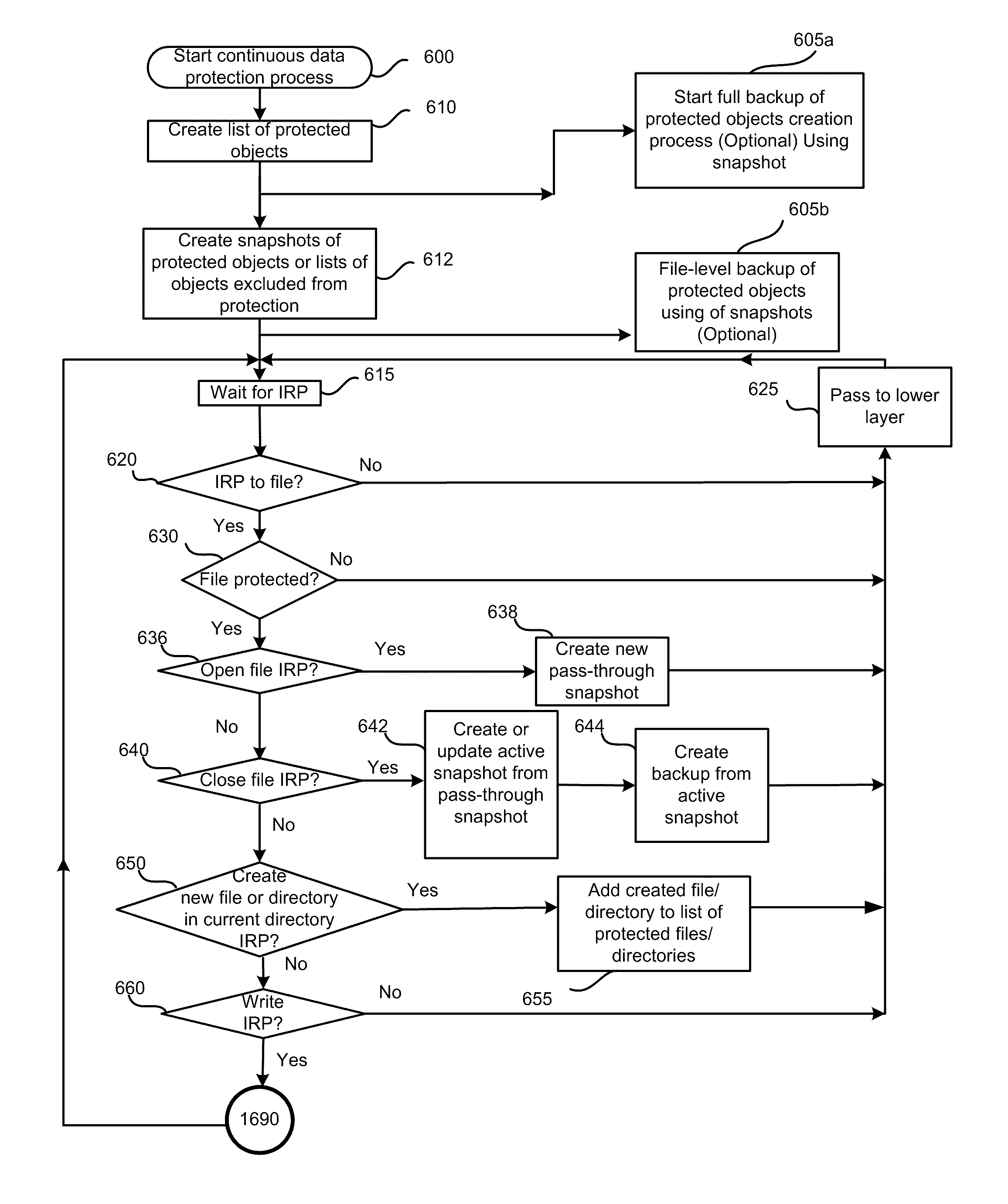

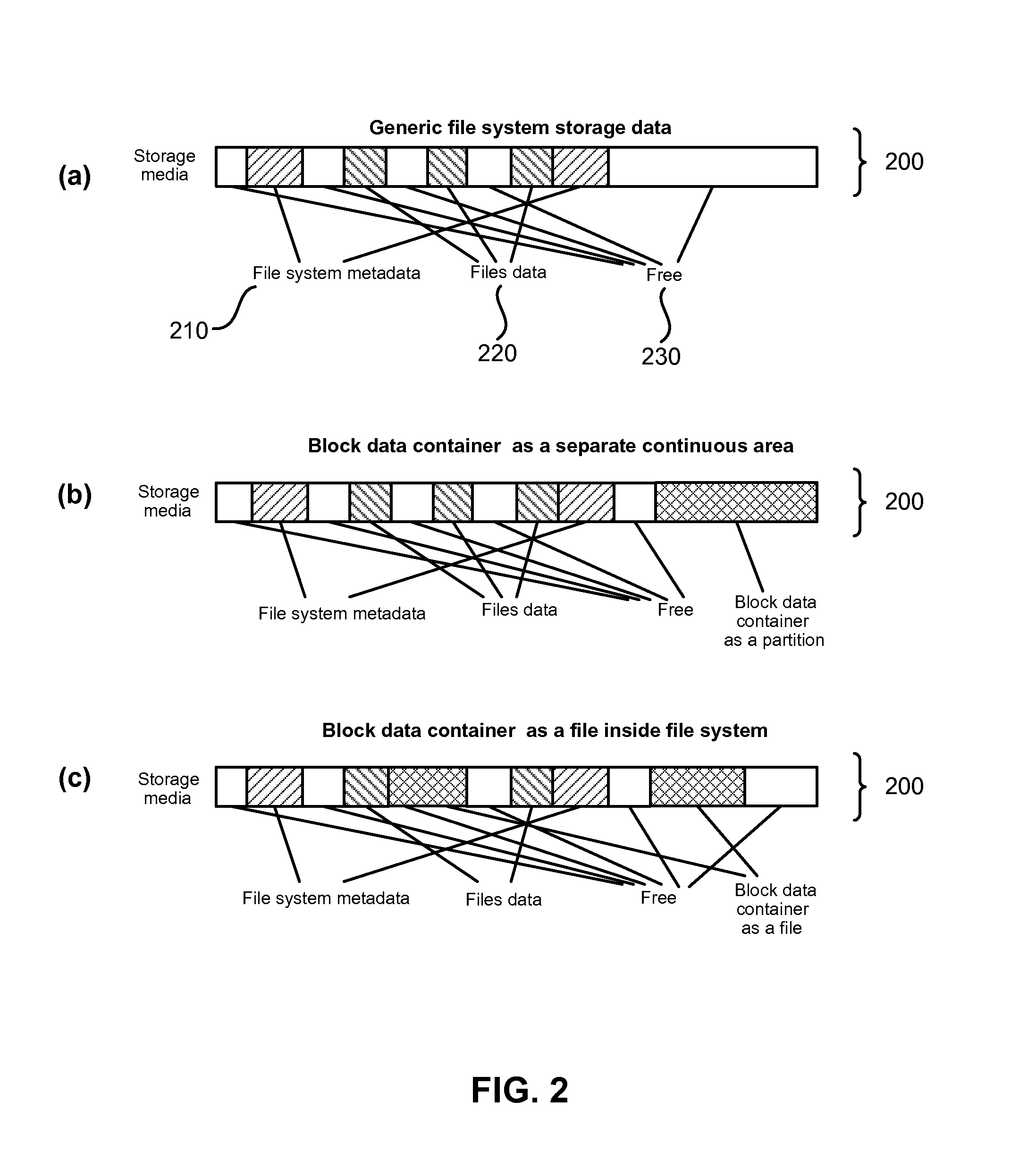

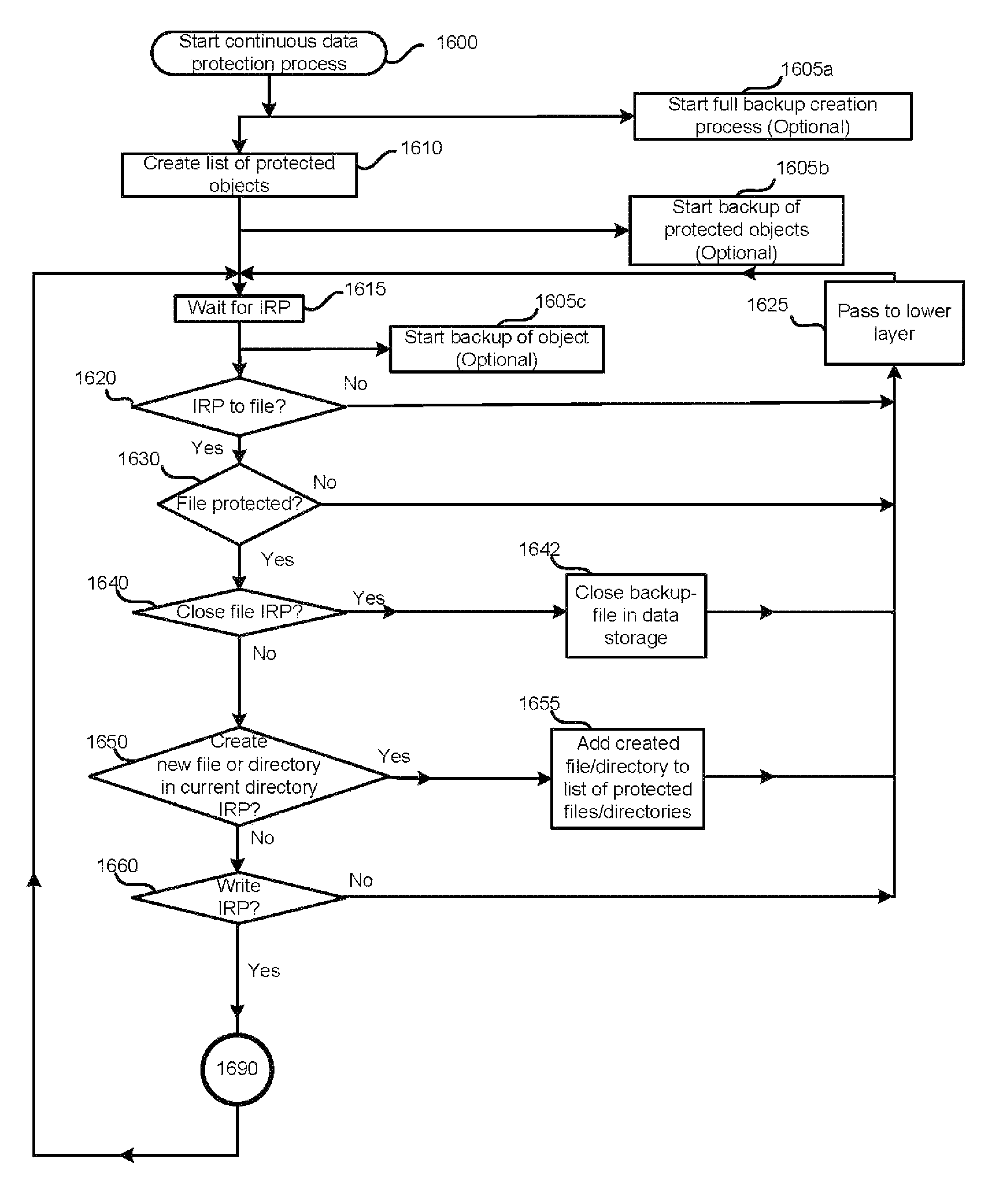

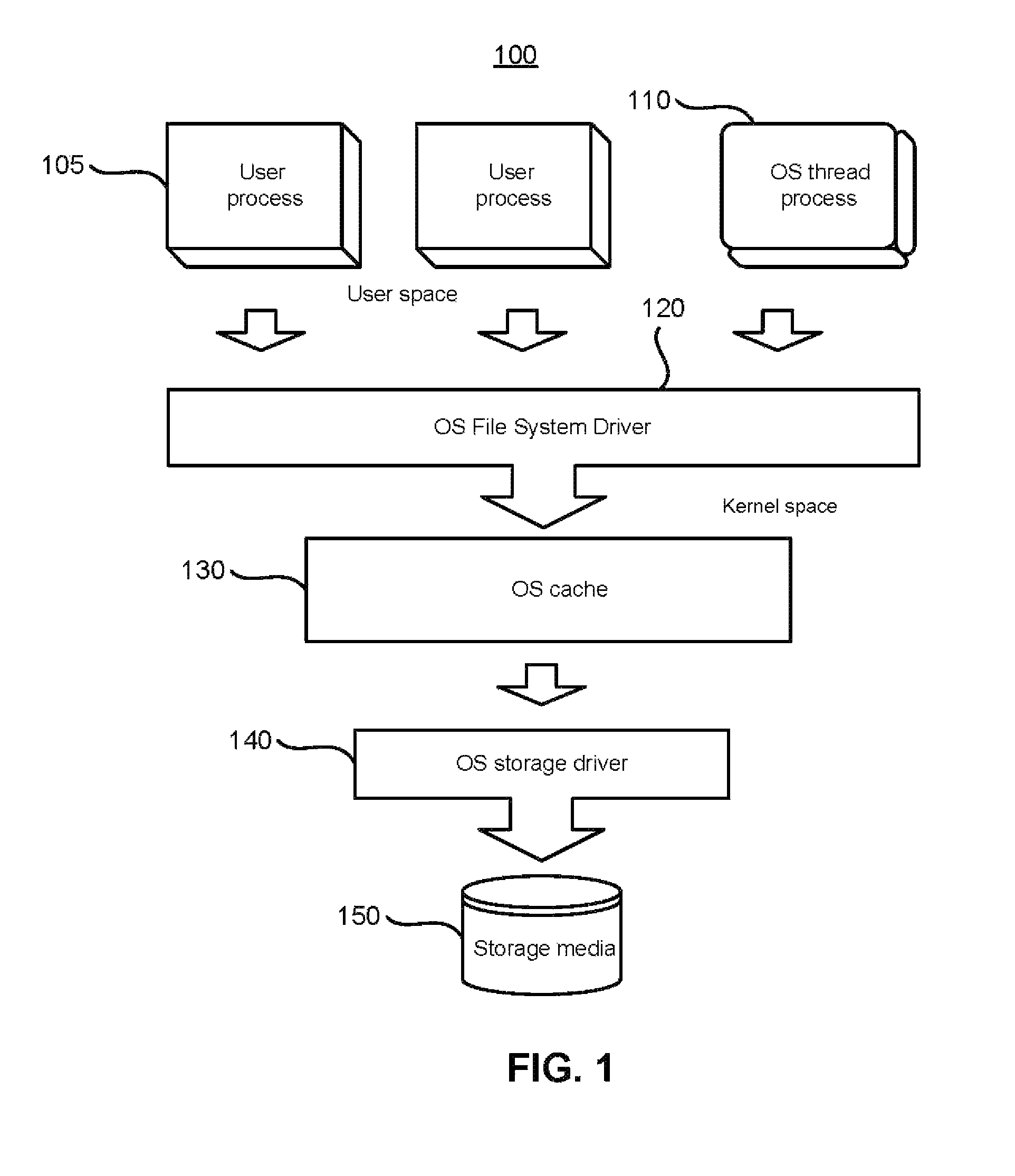

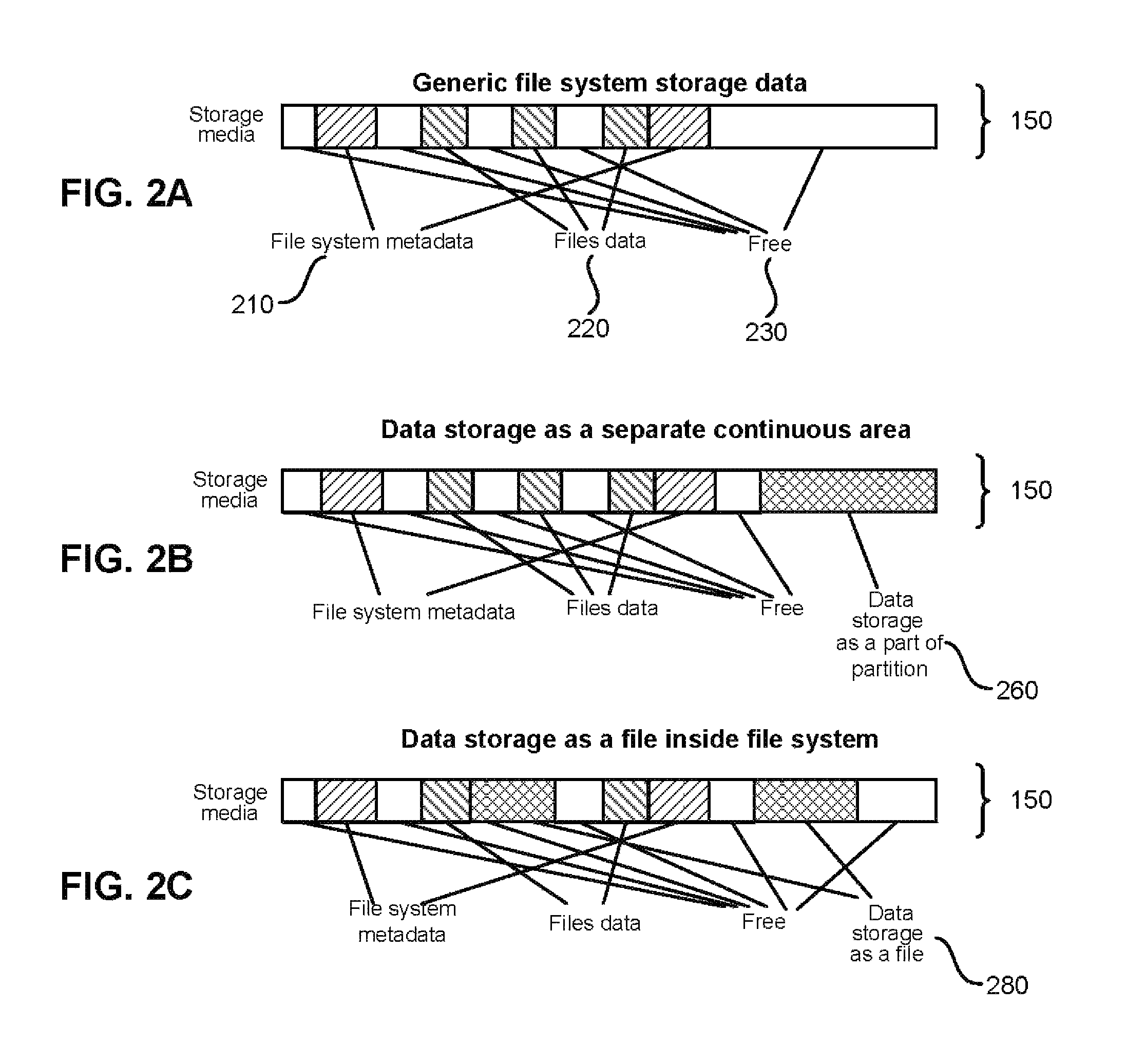

File-level continuous data protection with access to previous versions

Active | US8005797B1 | View effectively; Overcome disadvantages; Digital data processing details; Error detection/correction; File system; Data memory

A system for continuous data protection includes a storage device and a backup storage device. The continuous data protection procedure is performed as two parallel processes: creating an initial backup by copying data as files/directories from the storage device into the backup storage device, and copying the data to be written to the data storage, as part of a file/directory, into the incremental backup. When a write command is directed to a file system driver, it is intercepted and redirected to the backup storage, and the data to be written in accordance with the write request is written to the incremental backup on the backup storage. If the write command is also directed to data (a file/directory) that has been identified for backup but has not yet been backed up, the identified data is copied from the storage device to the intermediate storage device. Then the write command is executed on the identified file/directory on the storage device, and the file/directory is copied from the intermediate storage device.

Owner:MIDCAP FINANCIAL TRUST

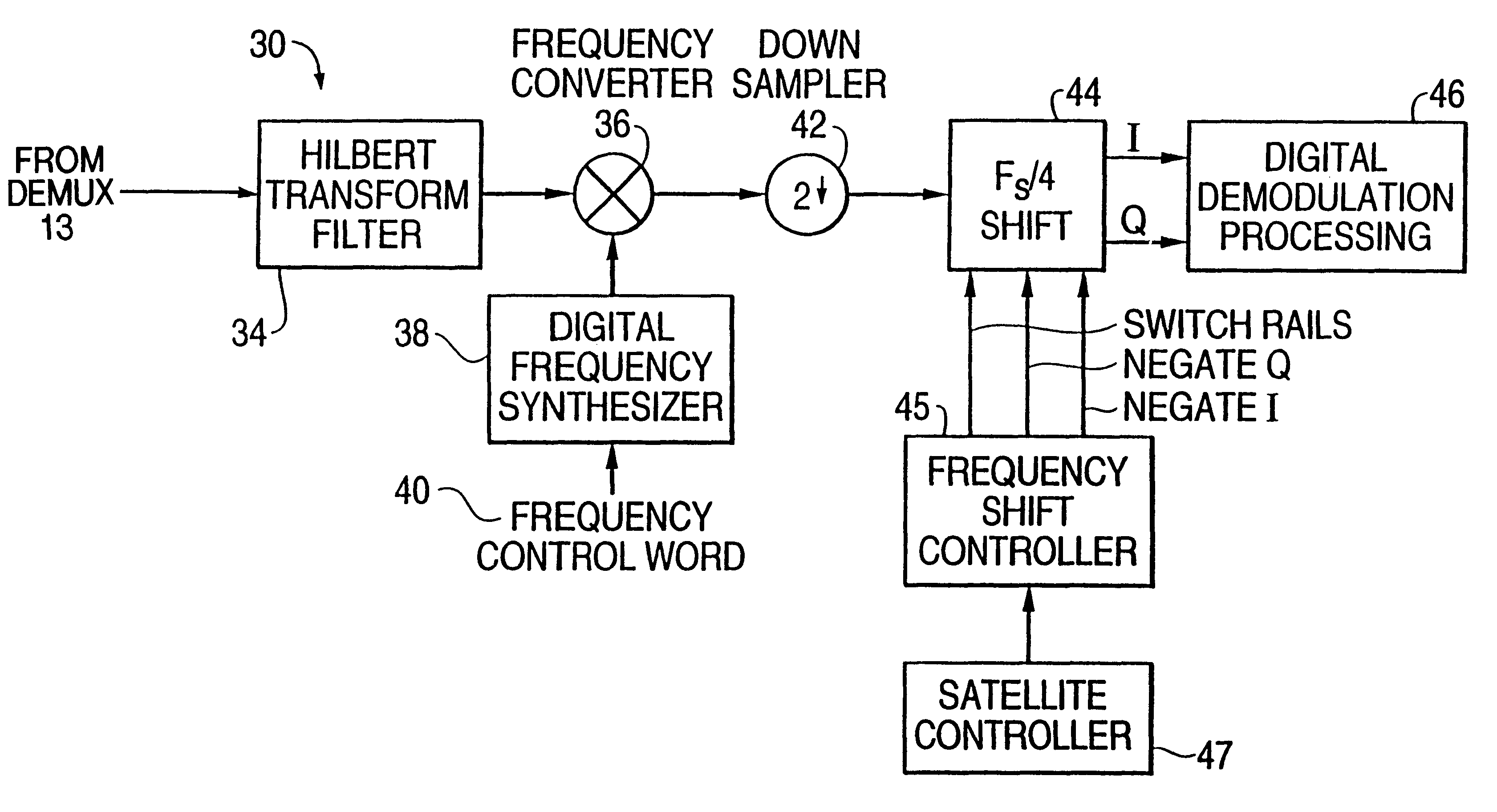

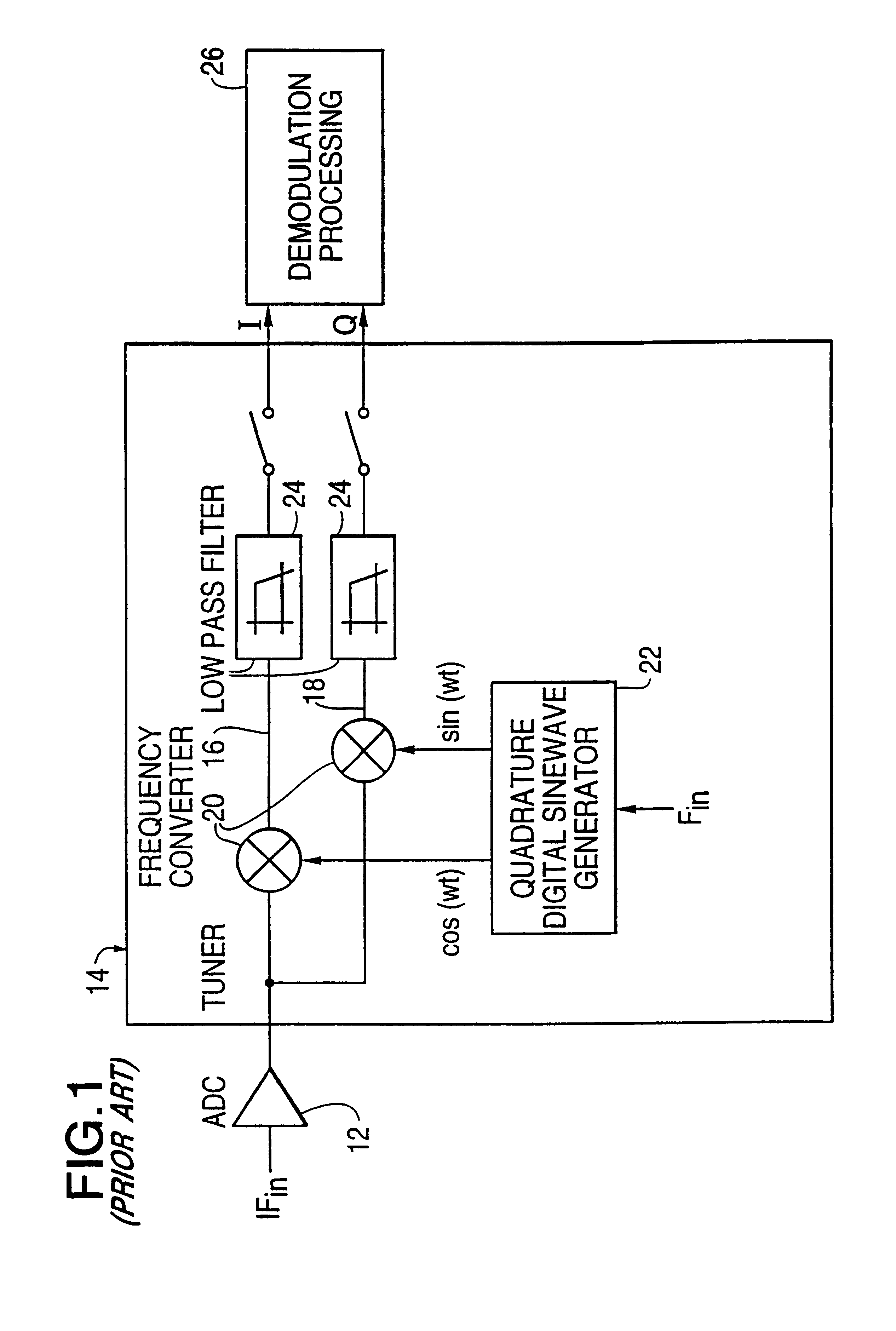

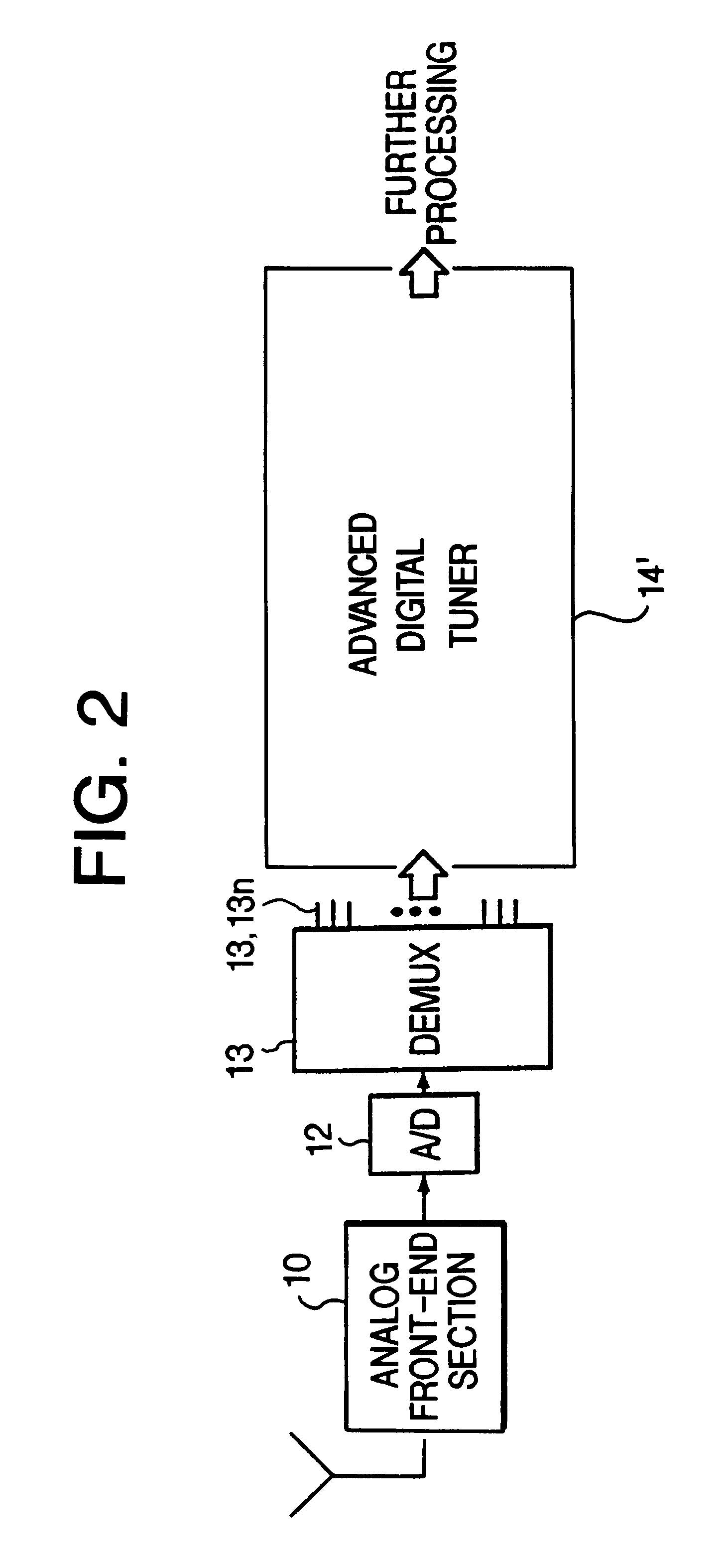

Wideband parallel processing digital tuner

Inactive | US6263195B1 | High speed data rate; Avoid disadvantages; Polarisation/directional diversity; Phase-modulated carrier systems; Digital data; Frequency spectrum

A wideband digital tuner (14') has an analog front-end section (10), a high speed analog-to-digital converter (12), demultiplexer (13) and a plurality of filters (341-342) arranged in a parallel input architecture to process wideband digital data received at extremely high sampling rates, such as at 2 Gsps (giga-samples per second). The tuner greatly attenuates an undesired spectral half of the wide bandwidth digital spectrum of the incoming digital signal using a complex band-pass filter, such as a Hilbert Transform filter. The tuner places the remaining half of the wide bandwidth digital spectrum of the incoming digital signal at complex baseband and down samples by 2. The architecture of the tuner can be partitioned into two separate halves which are hardware copies of each other.

Owner:NORTHROP GRUMMAN SYST CORP

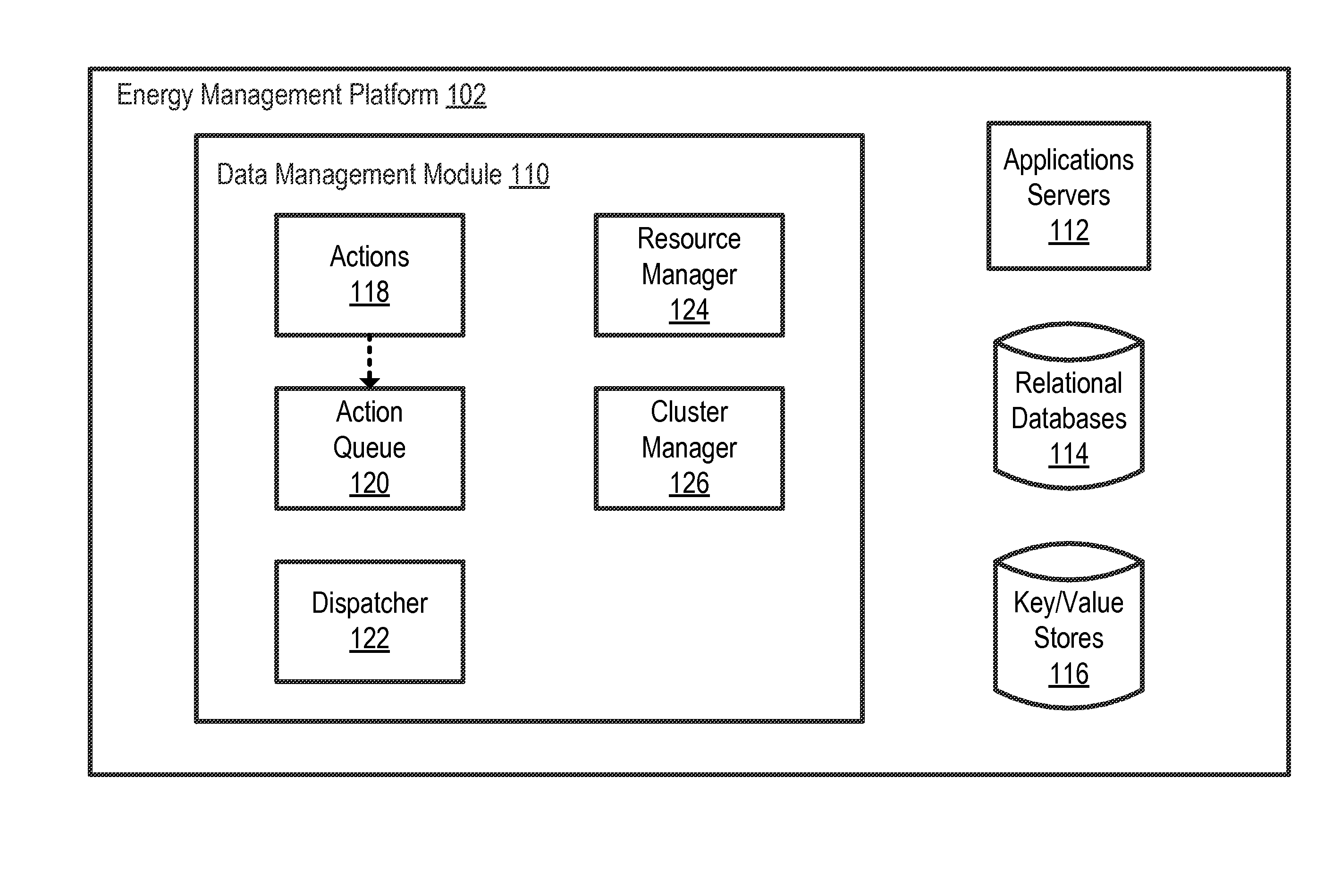

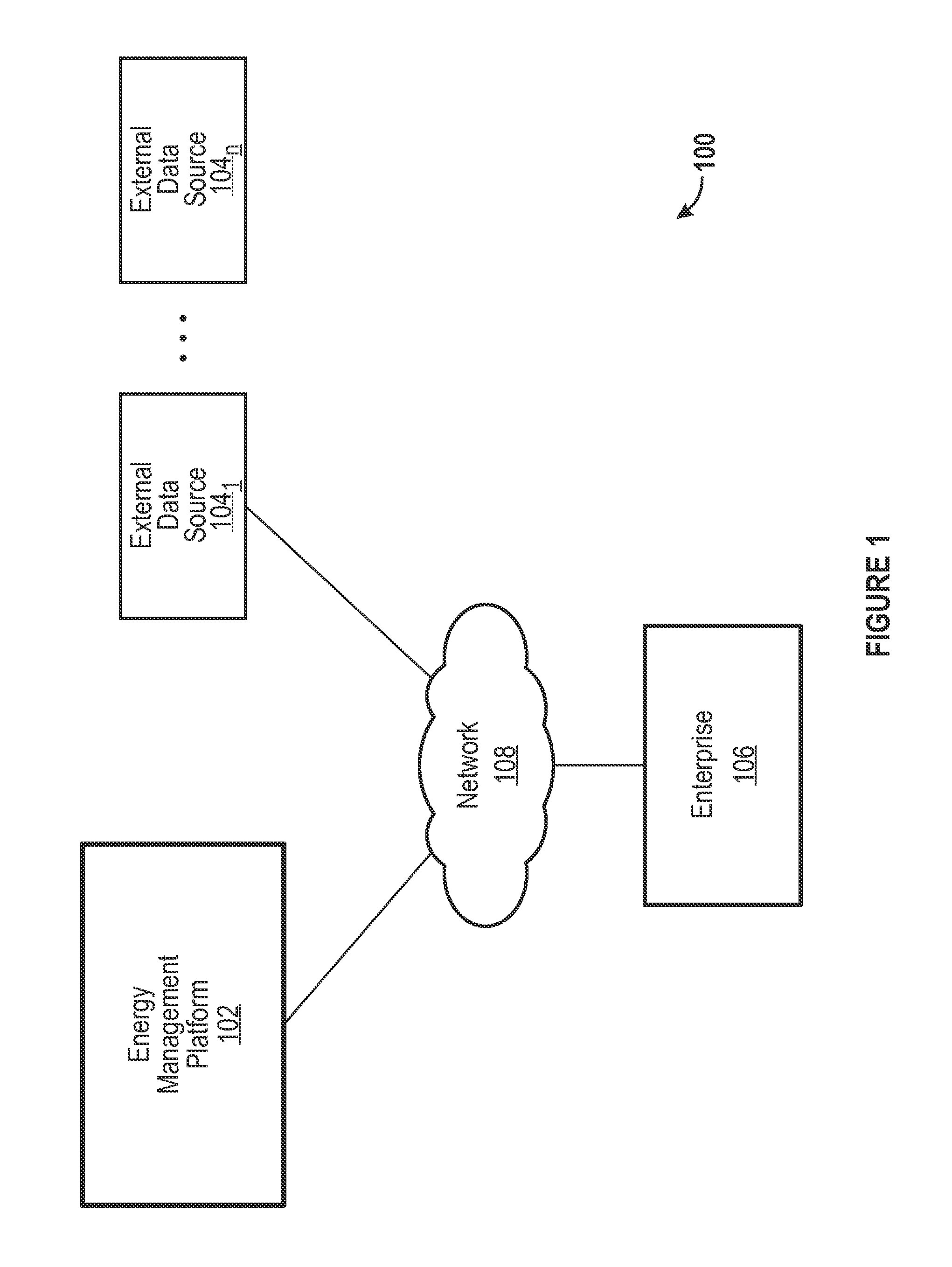

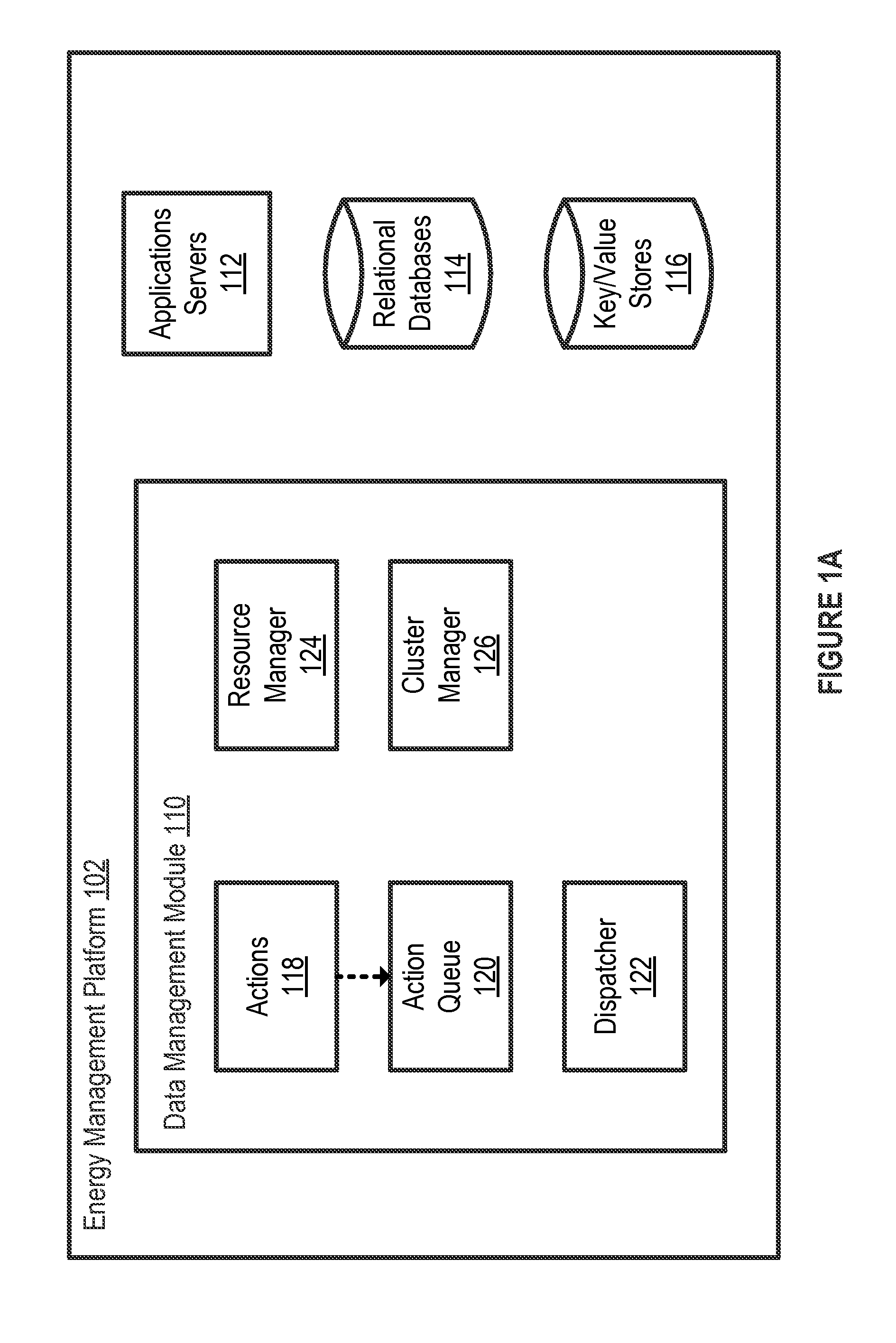

Systems and methods for processing data relating to energy usage

Inactive | US20150120224A1 | Increasing computing demand; Electric devices; Relational databases; Third party; Computerized system

Processing of data relating to energy usage. First data relating to energy usage is loaded for analysis by an energy management platform. Second data relating to energy usage is stream processed by the energy management platform. Third data relating to energy usage is batch parallel processed by the energy management platform. Additional computing resources, owned by a third party separate from an entity that owns the computer system that supports the energy management platform, are provisioned based on increasing computing demand. Existing computing resources owned by the third party are released based on decreasing computing demand.

Owner:C3 AI INC

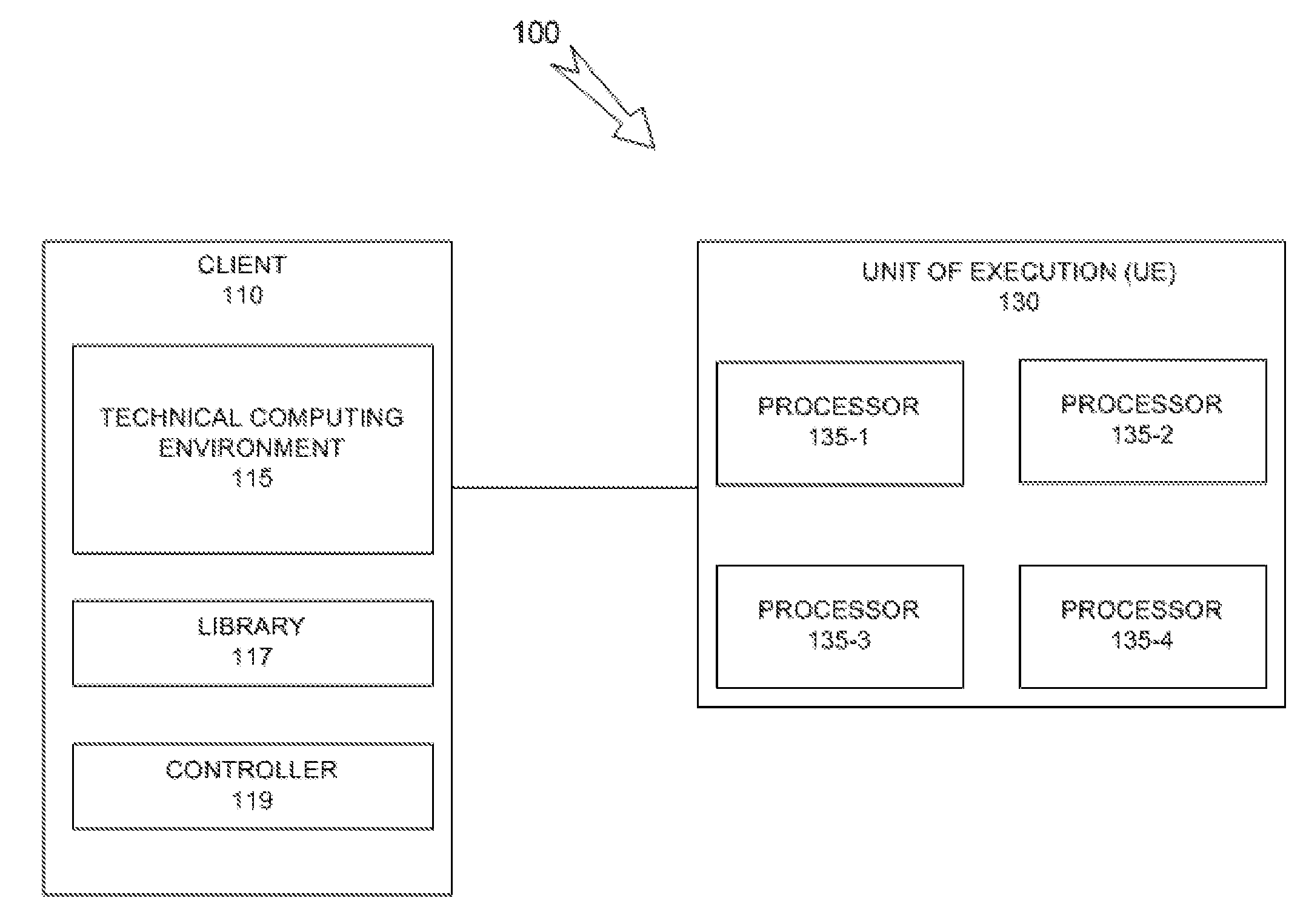

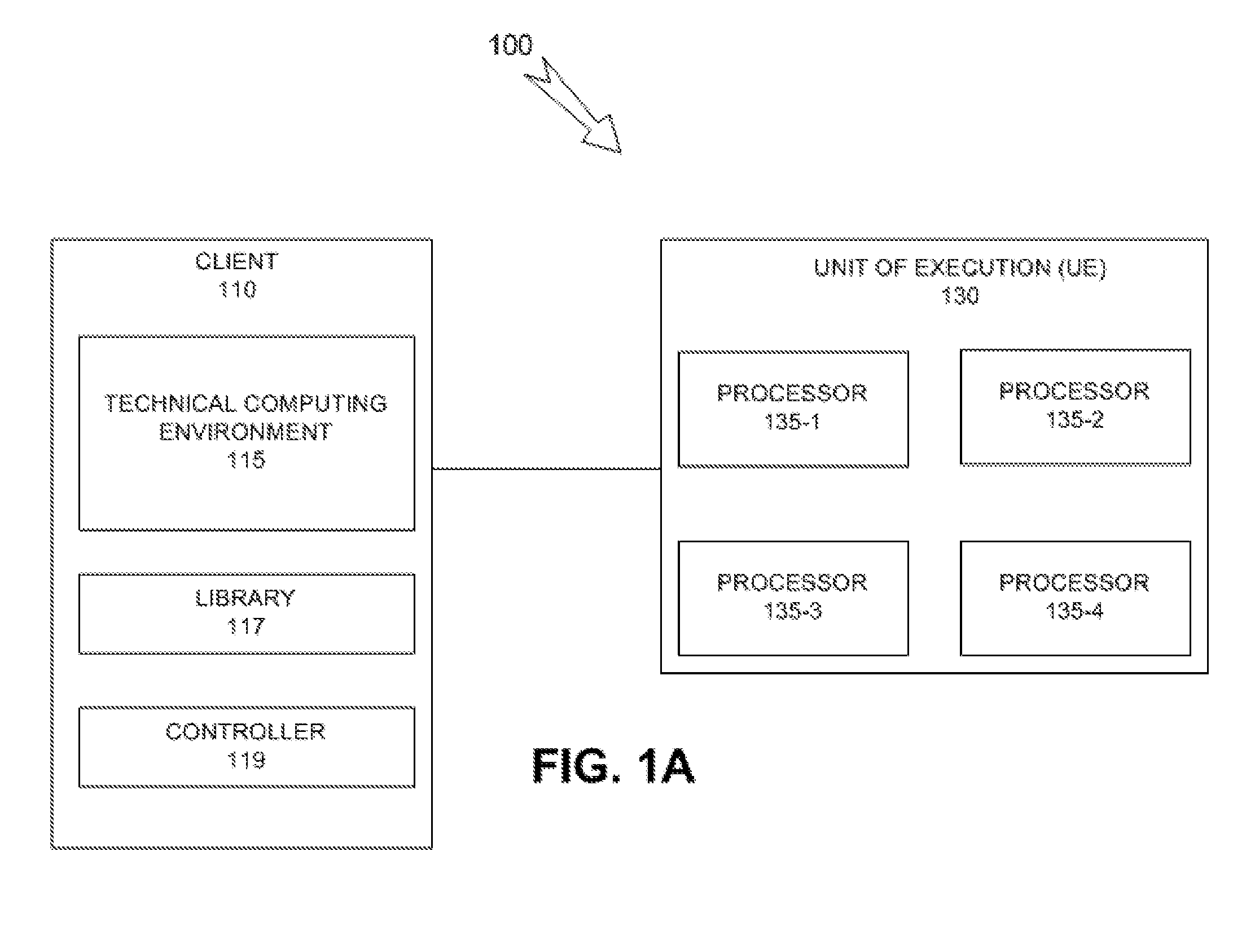

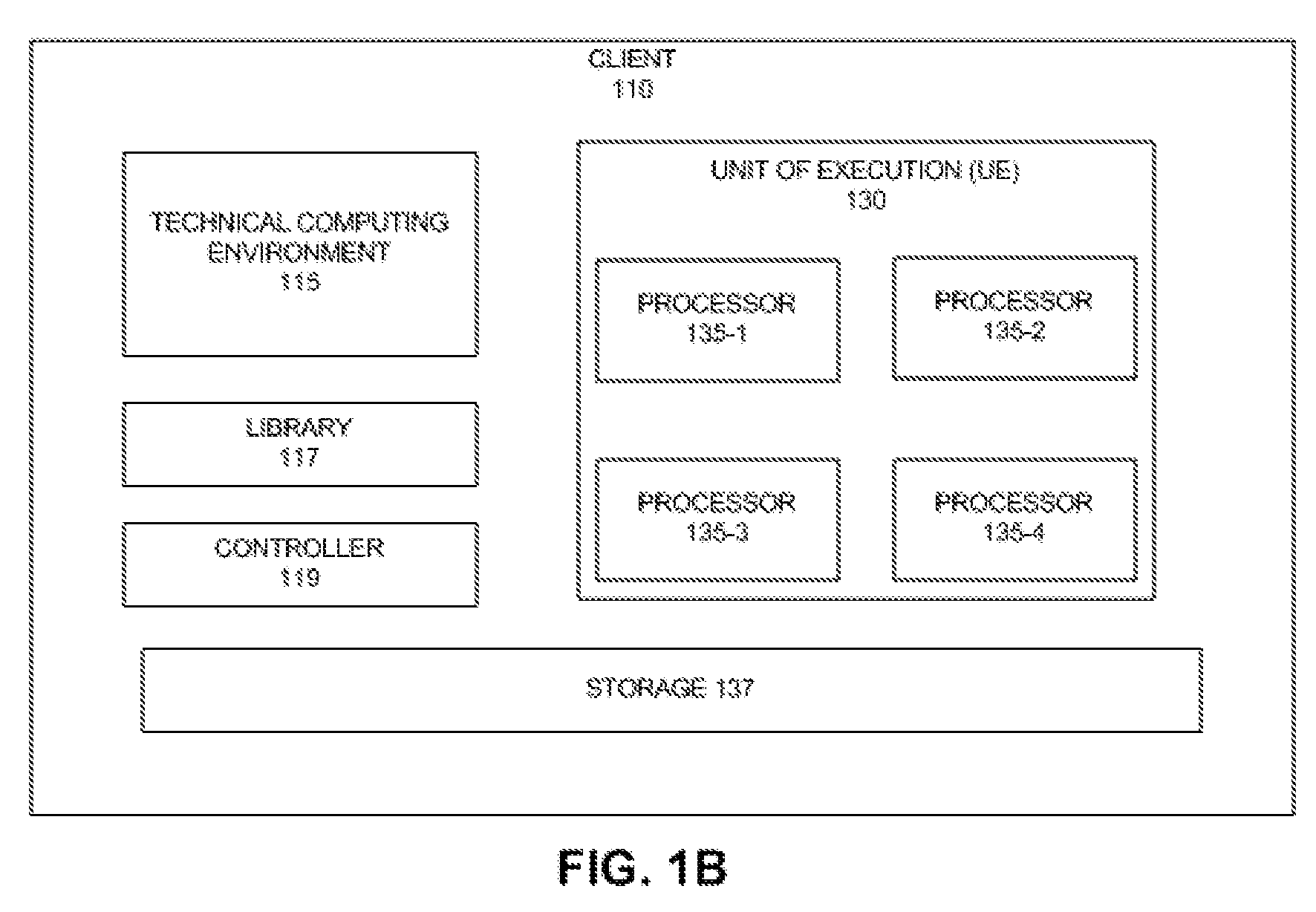

Bi-directional communication in a parallel processing environment

A system receives an instruction from a technical computing environment, and commences parallel processing on behalf of the technical computing environment based on the received instruction. The system also sends a query, related to the parallel processing, to the technical computing environment, receives an answer associated with the query from the technical computing environment, and generates a result based on the parallel processing. The system further sends the result to the technical computing environment, where the result is used by the technical computing environment to perform an operation.

Owner:THE MATHWORKS INC

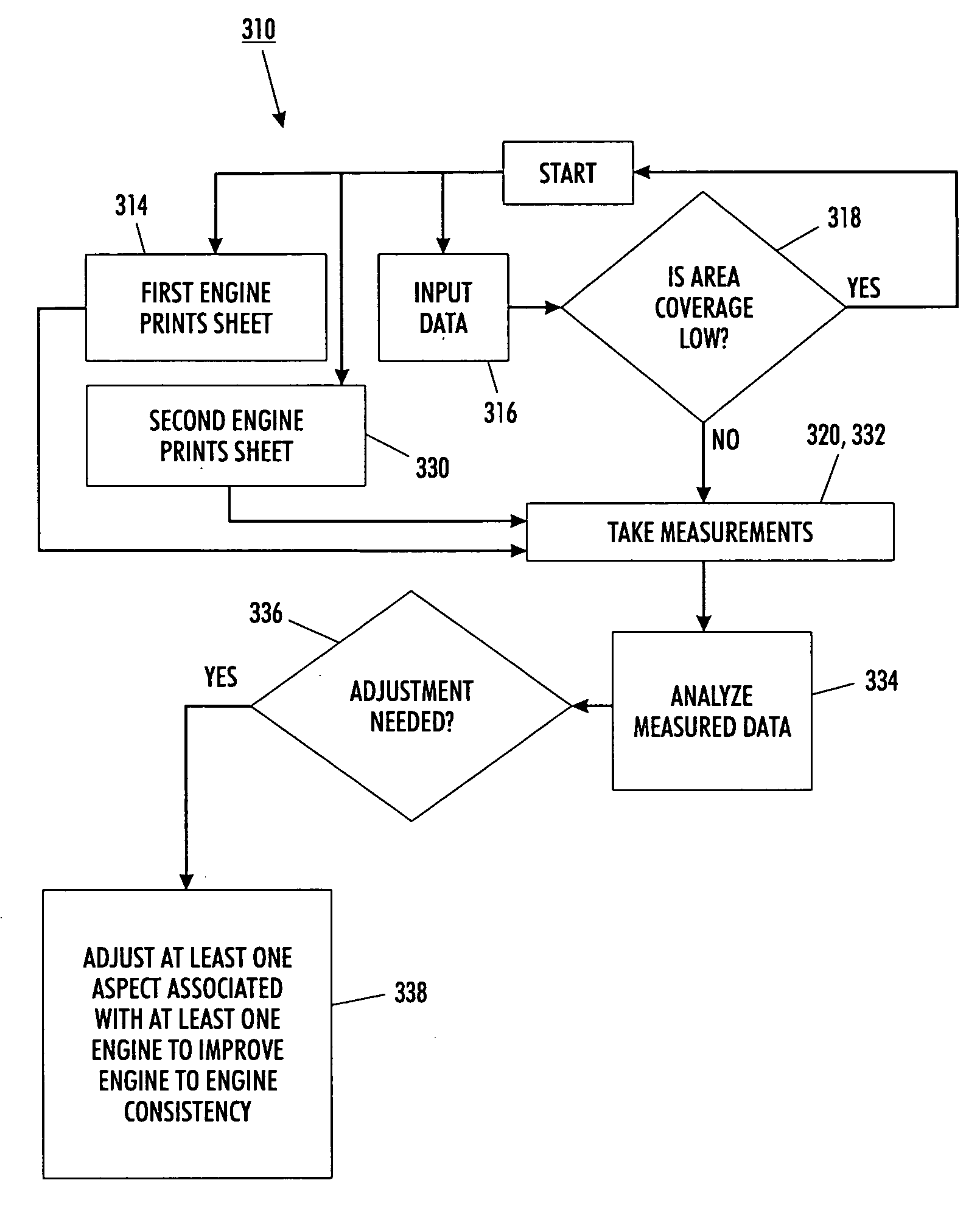

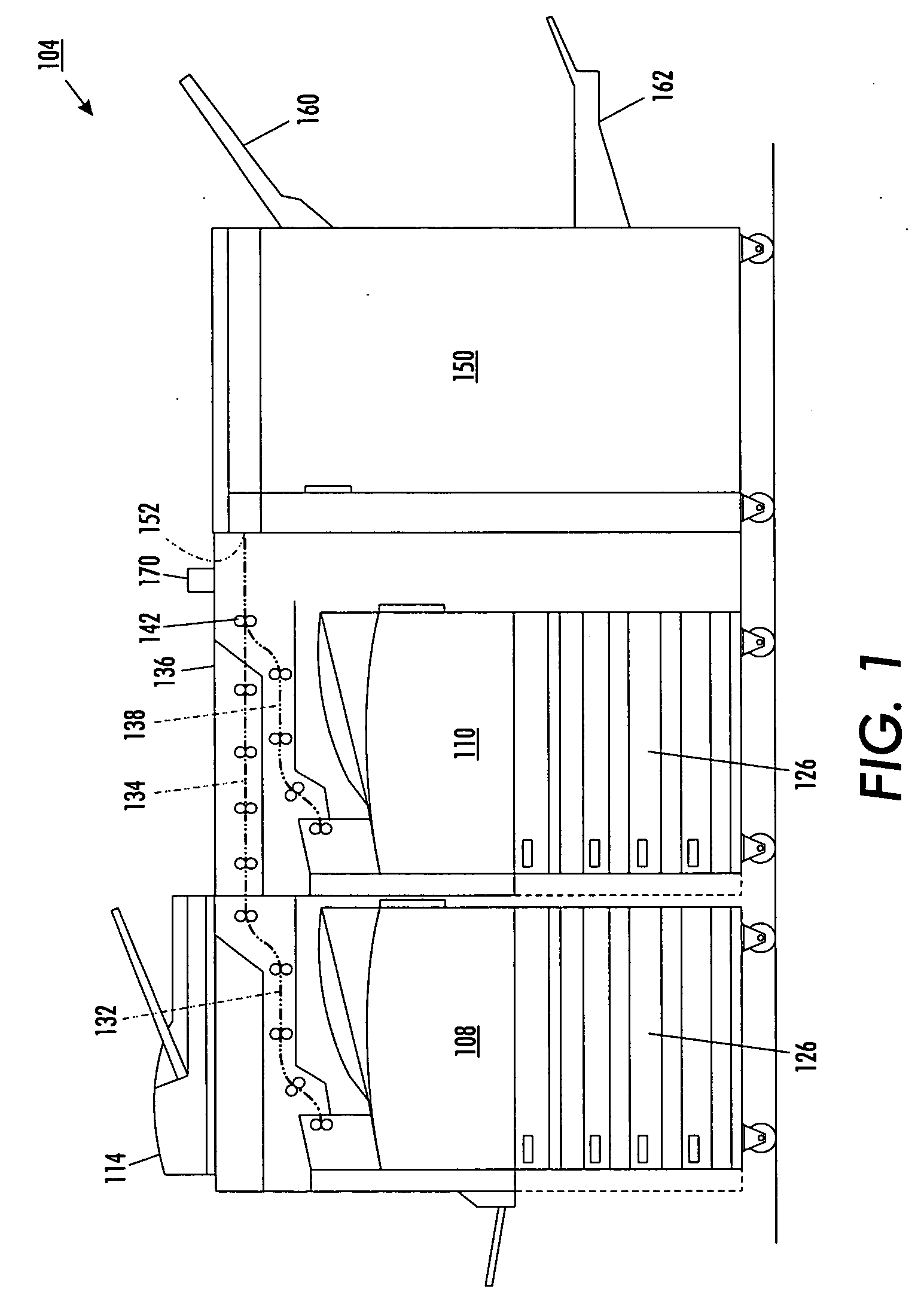

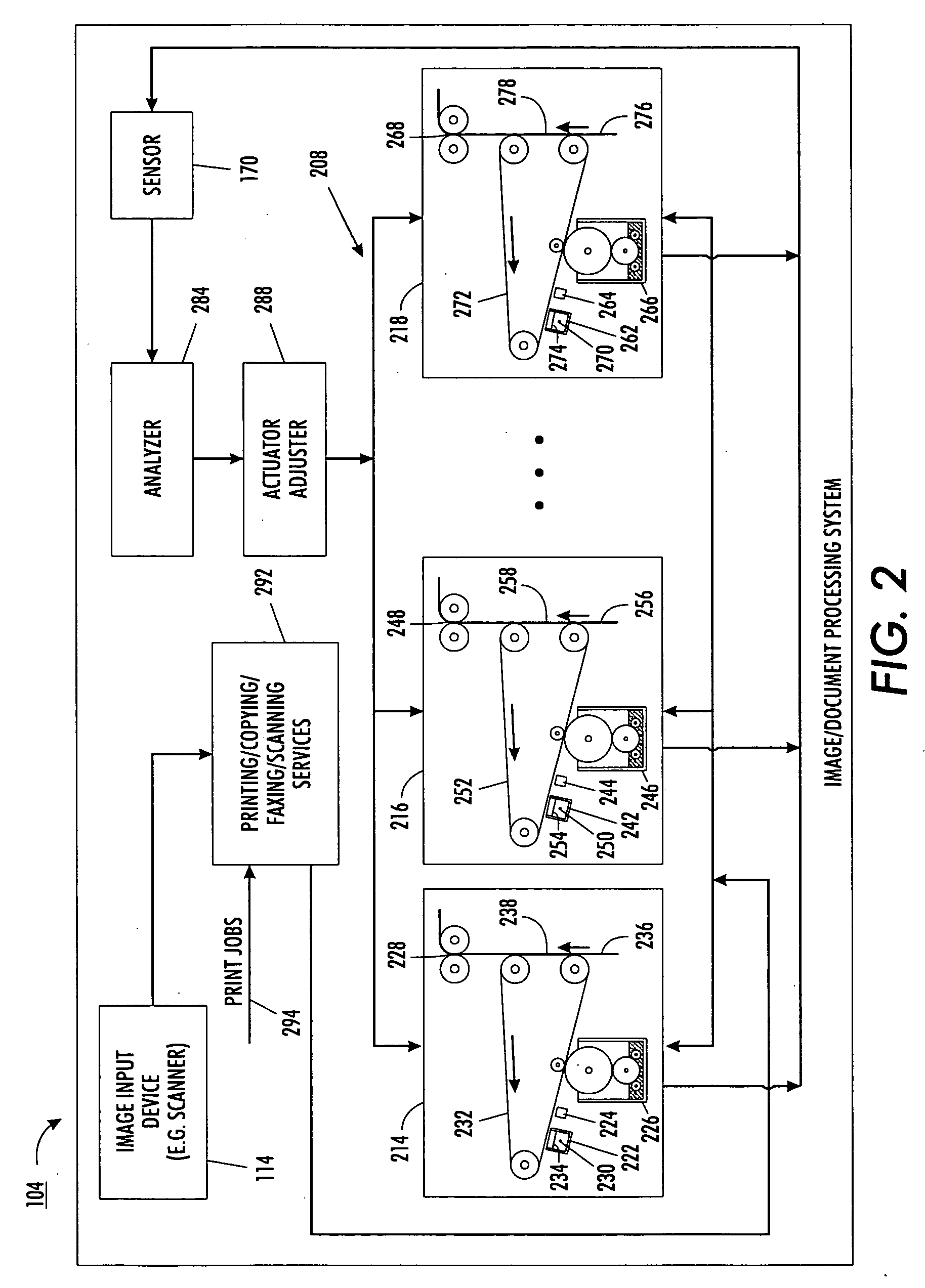

Image quality control method and apparatus for multiple marking engine systems

A full width array CCD sensor is incorporated in the media path to monitor fused pages by calculating area coverage from multiple engines in a Tightly Integrated Parallel Process (TIPP) architecture. With knowledge of the area coverage differences between print engines for a given pixel count to the ROS, a relative density difference of each engine is determined. Based on the determined relative density difference, an adjustment is calculated and applied to the engine with the largest error to match the area coverage(s) of the other engine(s).

Owner:XEROX CORP
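The correction step compares each engine's measured area coverage with the others and adjusts only the worst one. A sketch, assuming the coverage values were measured for the same pixel count sent to the ROS and that the correction can be expressed as a multiplicative scale; `density_correction` and the scale factor are hypothetical, not Xerox's calibration model.

```python
def density_correction(coverage: dict) -> tuple:
    """Return (engine, relative_difference, scale) for the engine furthest from the mean.

    coverage: {engine_name: measured area coverage}; needs at least two engines.
    """
    mean = sum(coverage.values()) / len(coverage)
    worst = max(coverage, key=lambda e: abs(coverage[e] - mean))
    others = [c for e, c in coverage.items() if e != worst]
    target = sum(others) / len(others)          # what the other engines print
    relative_difference = (coverage[worst] - target) / target
    scale = target / coverage[worst]            # hypothetical multiplicative correction
    return worst, relative_difference, scale
```

Only one engine is changed per pass, so the other engines serve as the reference that the adjusted engine is pulled towards.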

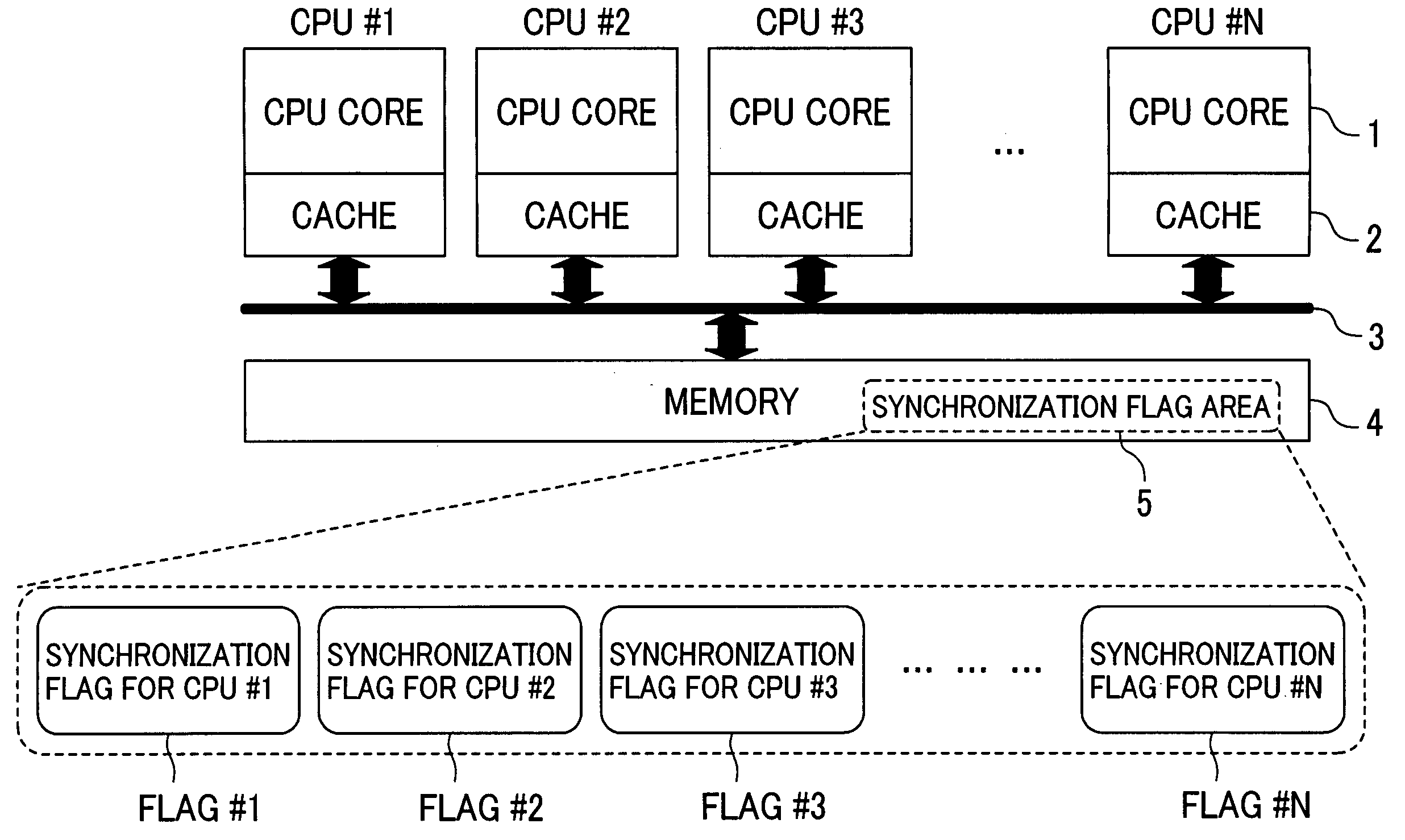

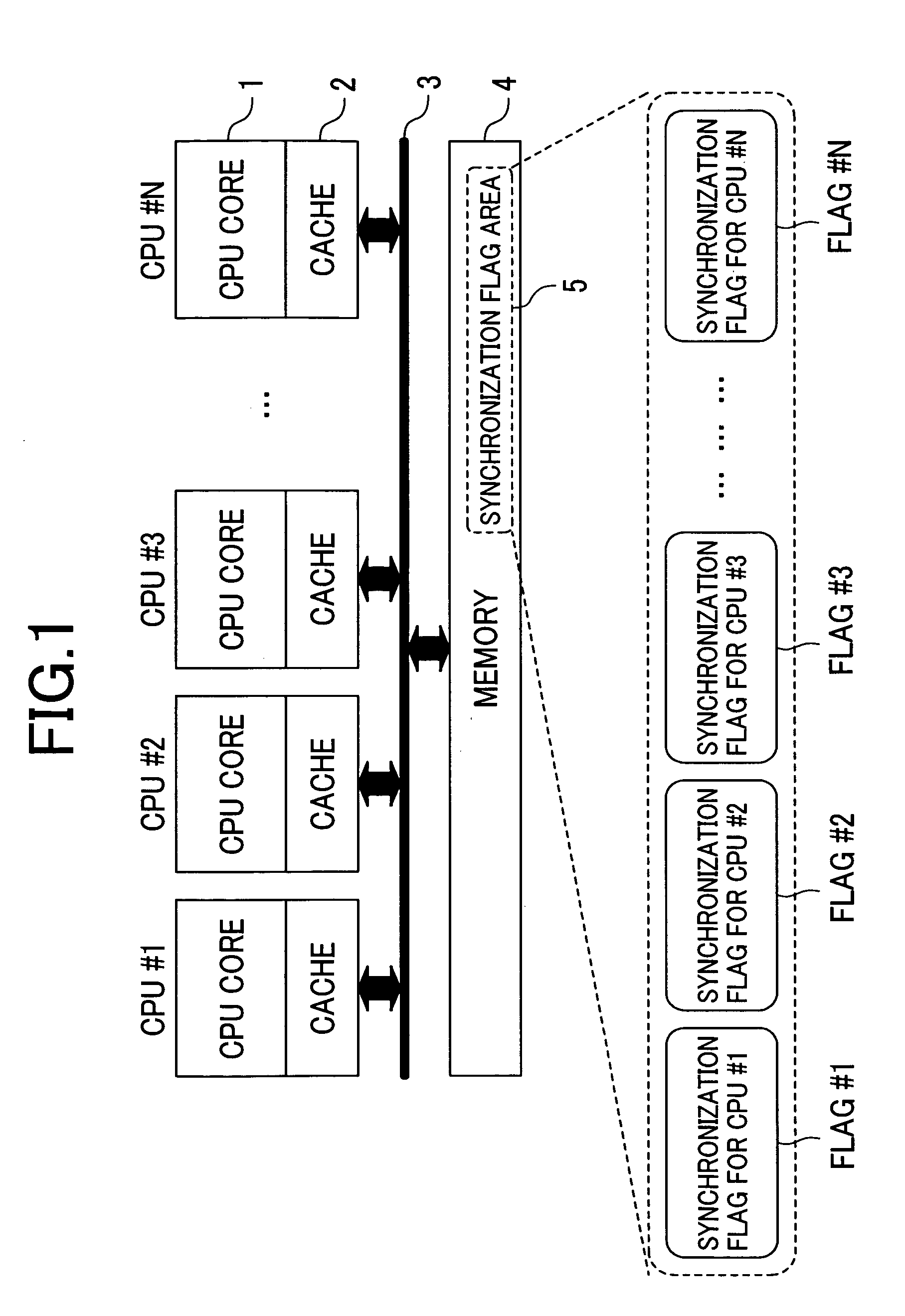

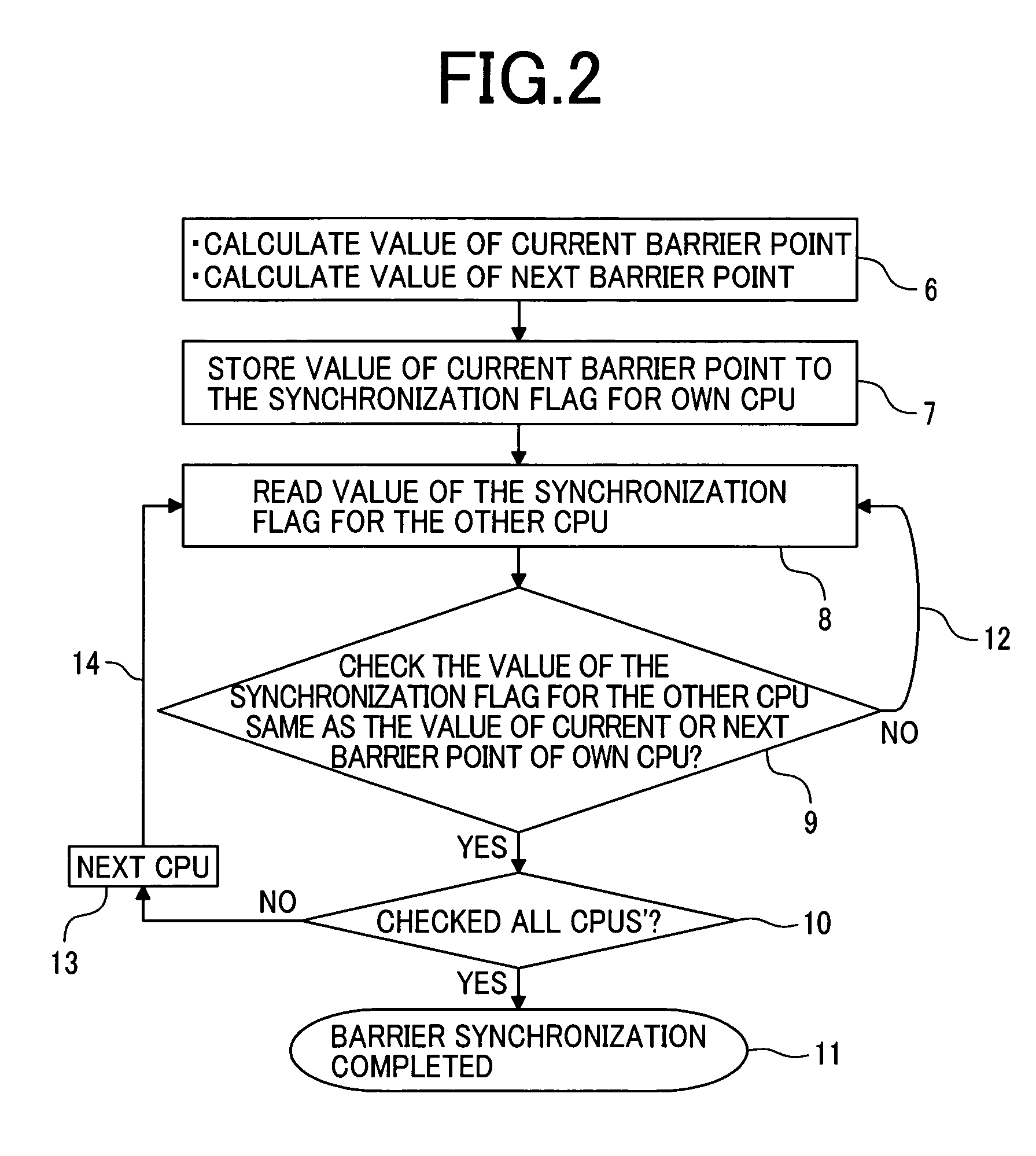

Method for synchronizing processors in a multiprocessor system

Inactive | US20050050374A1 | Improve parallel processing capabilities; Program synchronisation; Memory addressing/allocation/relocation; Multi processor; Software update

High-speed barrier synchronization among multiple processors is achieved by reducing the overhead of parallel processing without adding any dedicated hardware mechanism. A synchronization flag area is allocated in shared memory to indicate the synchronization point each processor has reached on its way to completing the barrier; software updates the flag area according to each processor's execution state, and each processor compares the flag areas of the other processors taking part in the barrier synchronization.

Owner:HITACHI LTD
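The barrier above needs nothing beyond a shared flag area: each processor records the synchronization point it has reached and then waits until every other processor's flag shows at least that point. A Python sketch, assuming threads stand in for processors and that point numbers increase monotonically so the flags never need resetting; `FlagBarrier` and `run_phases` are hypothetical names, and real multiprocessors would also need memory-ordering guarantees that CPython's global interpreter lock happens to provide here.

```python
import threading
import time


class FlagBarrier:
    """Barrier built only from a shared flag area: one slot per participant."""

    def __init__(self, n: int):
        self.flags = [0] * n  # flags[pid] = last synchronization point reached by pid

    def wait(self, pid: int, point: int) -> None:
        self.flags[pid] = point          # publish own progress (software update)
        while min(self.flags) < point:   # compare with every other participant's flag
            time.sleep(0)                # yield instead of spinning hot


def run_phases(pid, barrier, n, phases, done, lock, violations):
    """Demo worker: do some 'work' per phase, then synchronize."""
    for point in range(1, phases + 1):
        with lock:
            done[point] += 1
        barrier.wait(pid, point)
        with lock:
            if done[point] != n:         # someone passed the barrier too early
                violations.append((pid, point))
```

Because each processor writes only its own slot, no lock or atomic read-modify-write is needed on the flag area itself; the `lock` in `run_phases` protects only the demo's bookkeeping.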

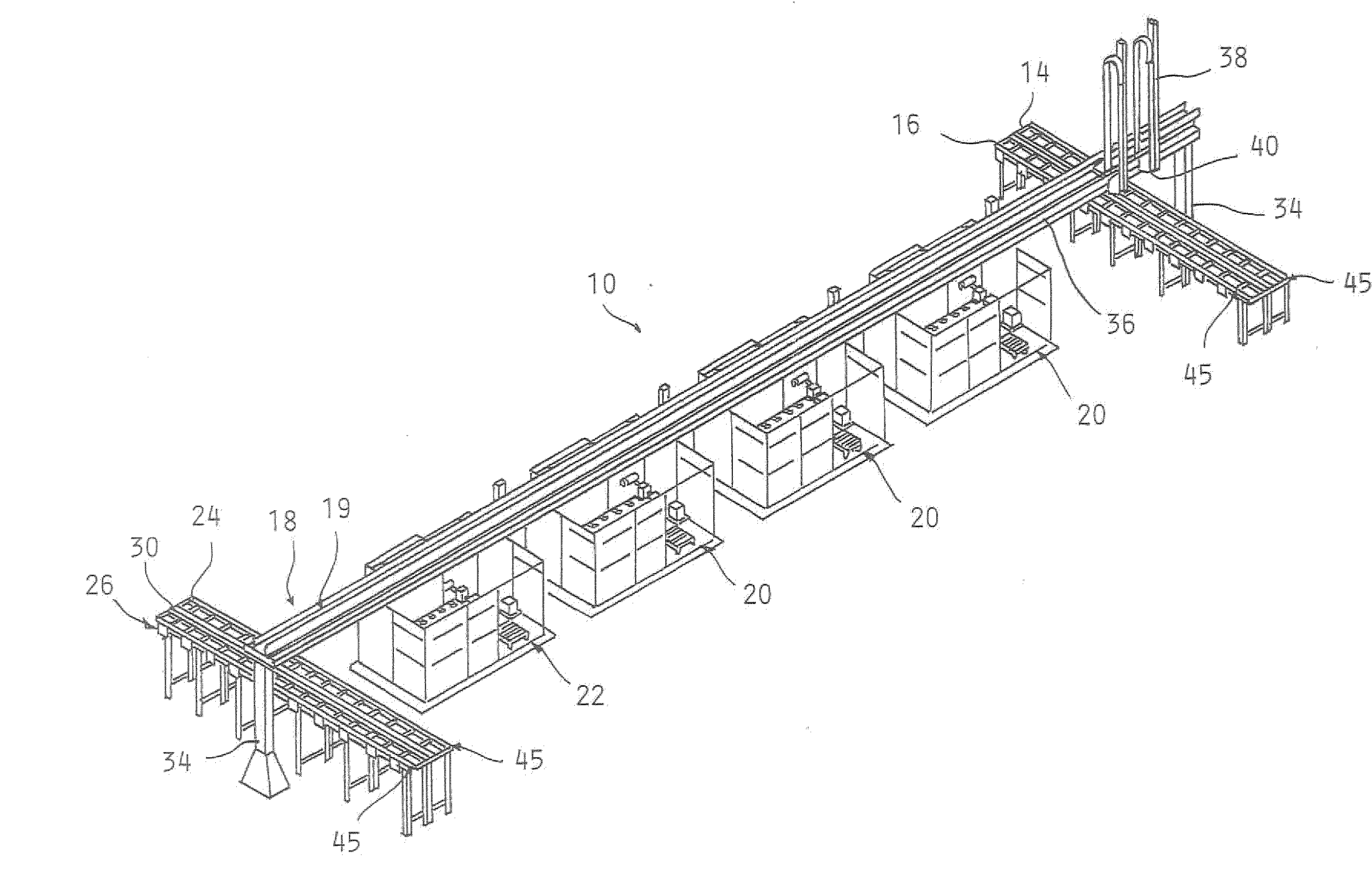

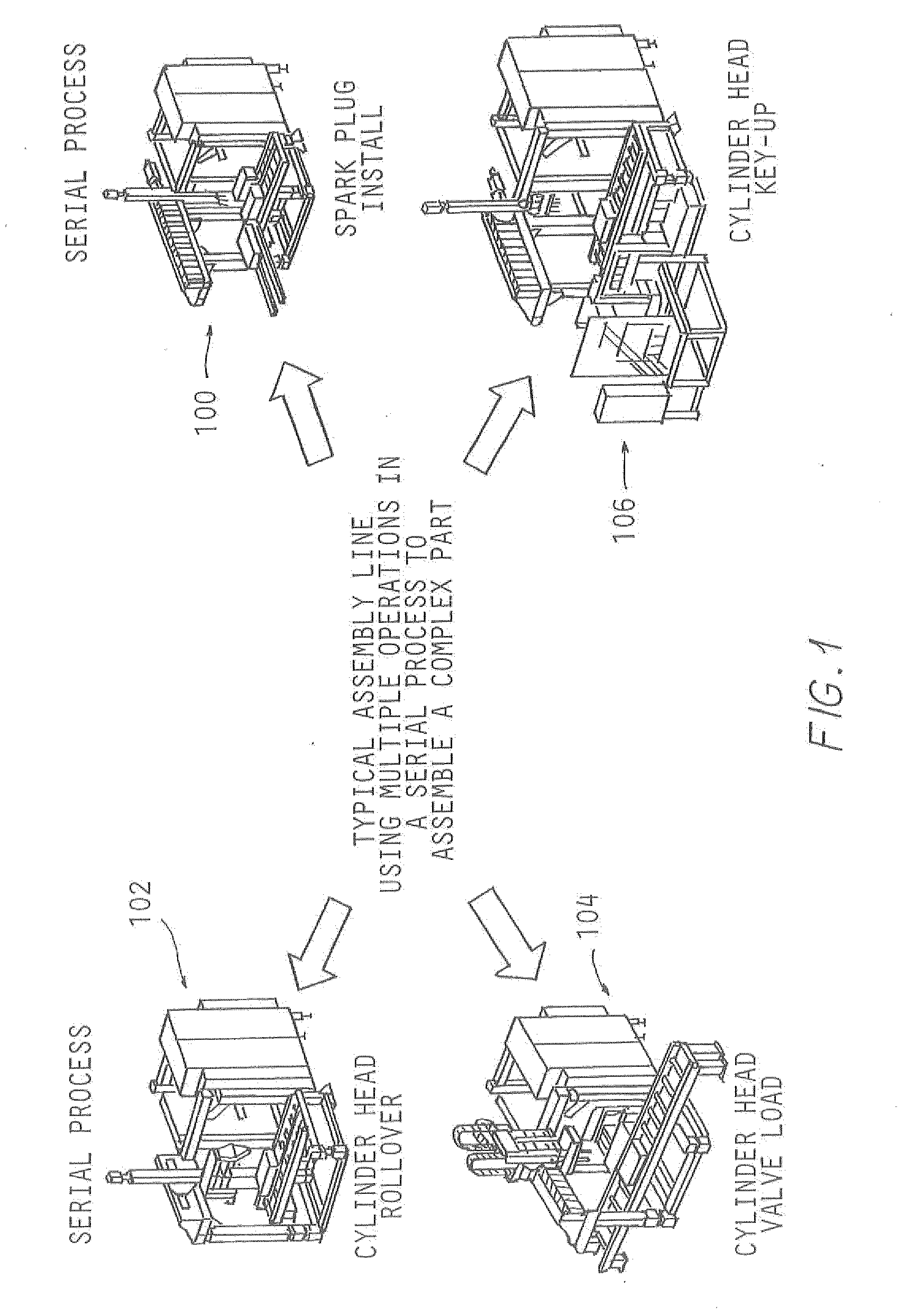

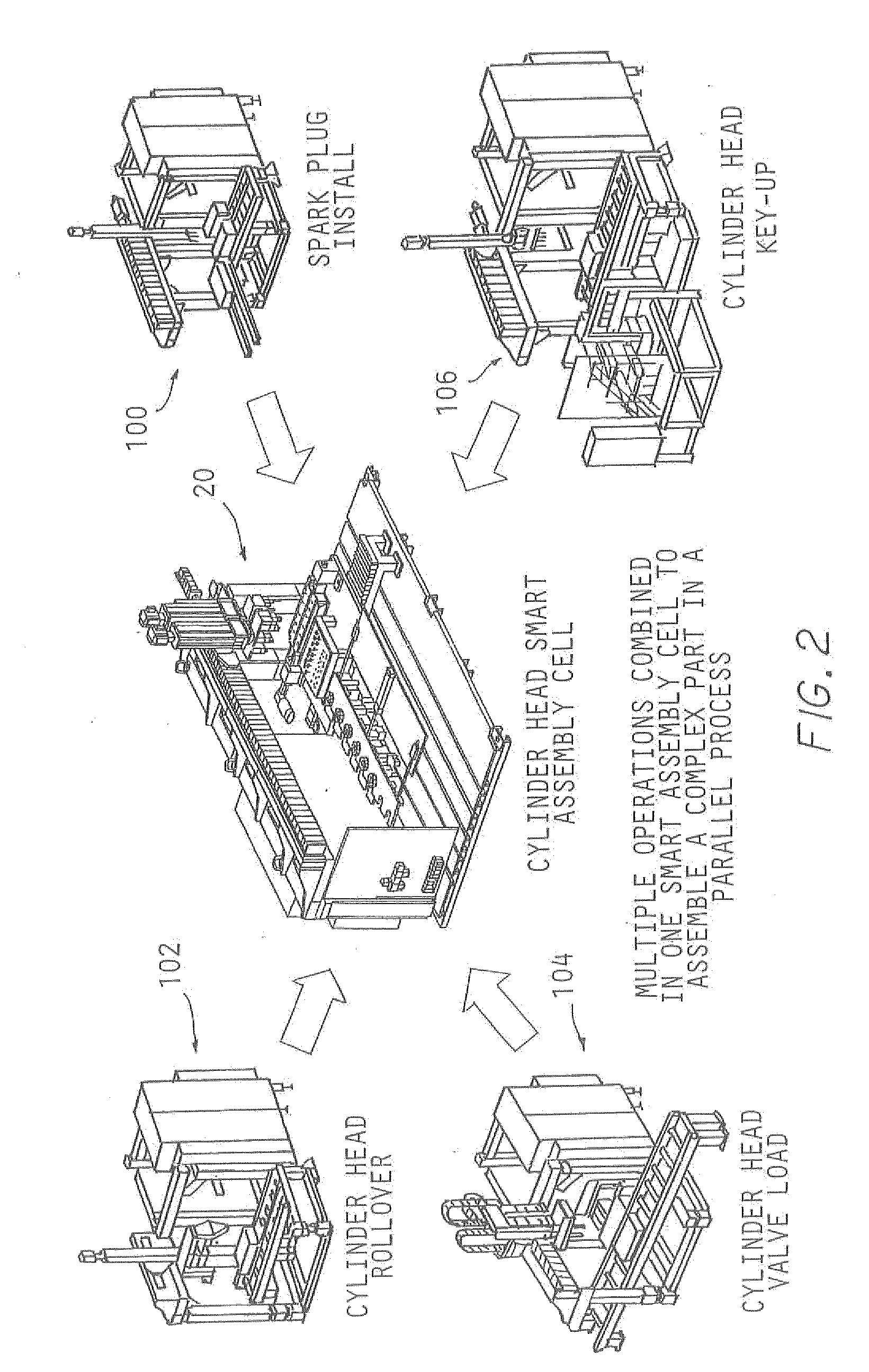

Method and apparatus for assembling a complex product in a parallel process system

A method and apparatus for assembling a complex product in a parallel process system, in which a collection of components is provided for assembling the complex product. The collection of components is transferred by a transport system to one of a plurality of similar computerized assembly cells, which automatically assemble the components into the complex product. The complex product is then transferred from the assembly cell to a computerized test cell, where it is tested to ensure proper dimensioning and functioning. Finally, the complex product is transferred from the test cell via the transport system either to a part reject area or conveyor, if it is defective, or to an automatic dunnage load or part return system, if it is not.

Owner:COMAU LLC

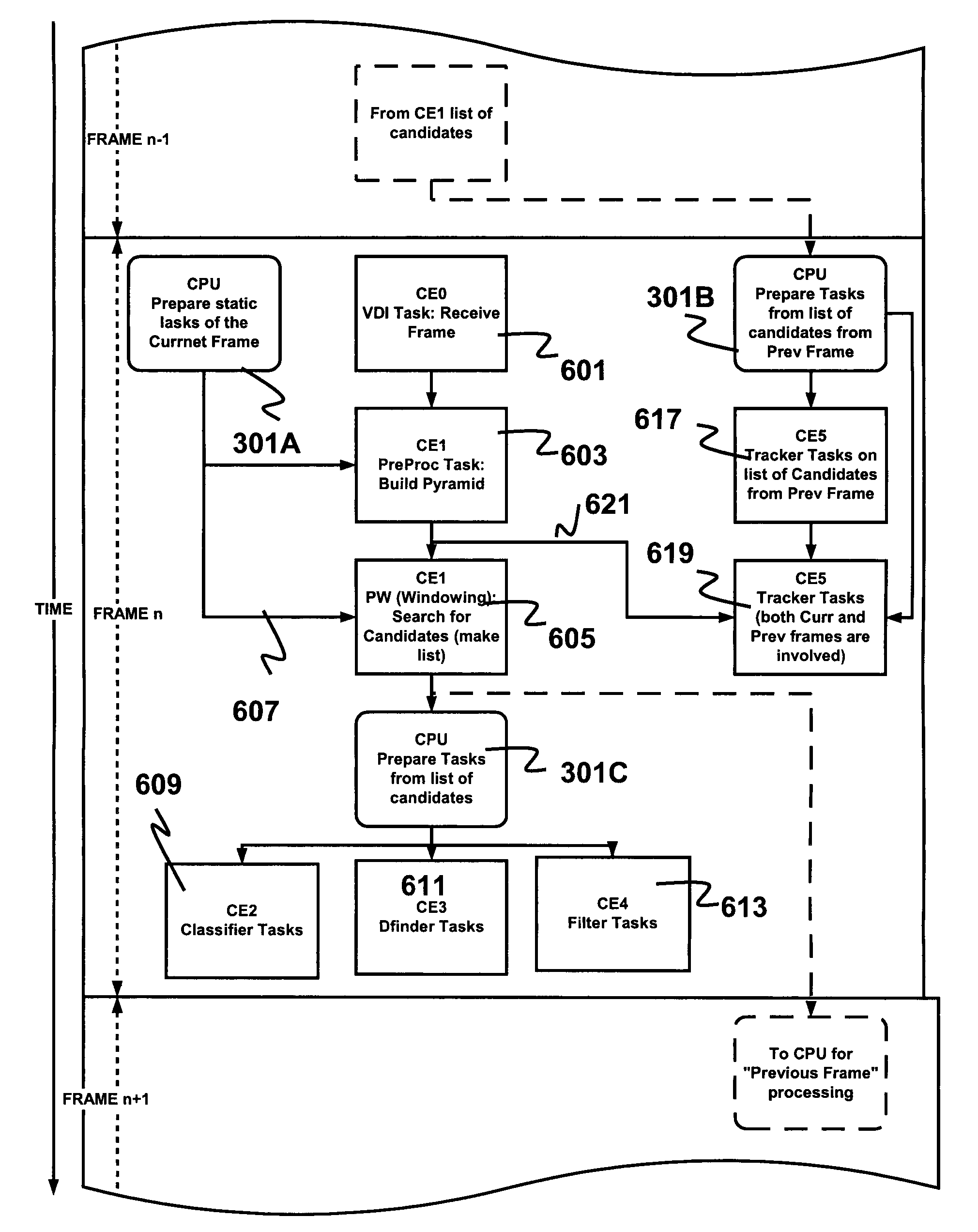

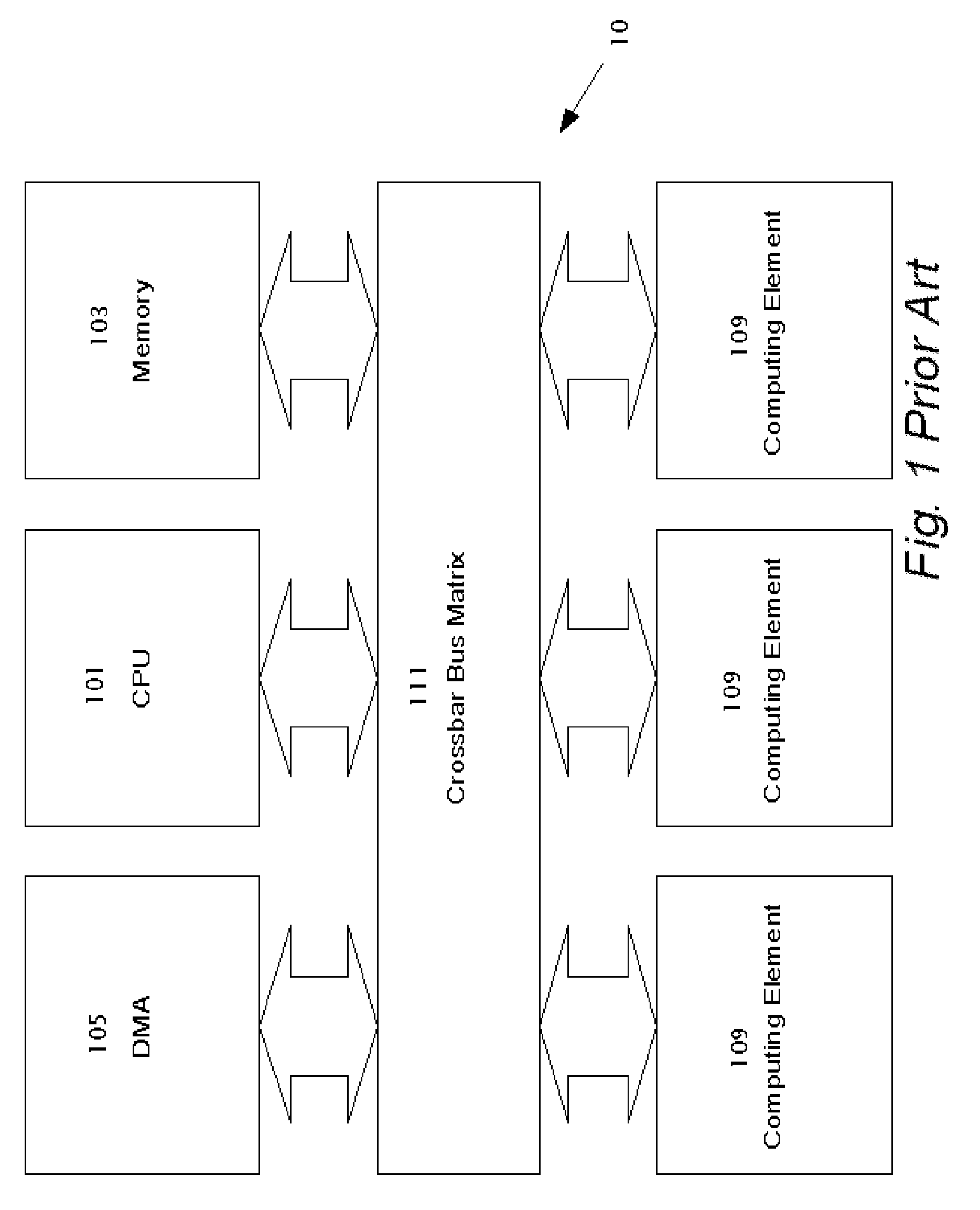

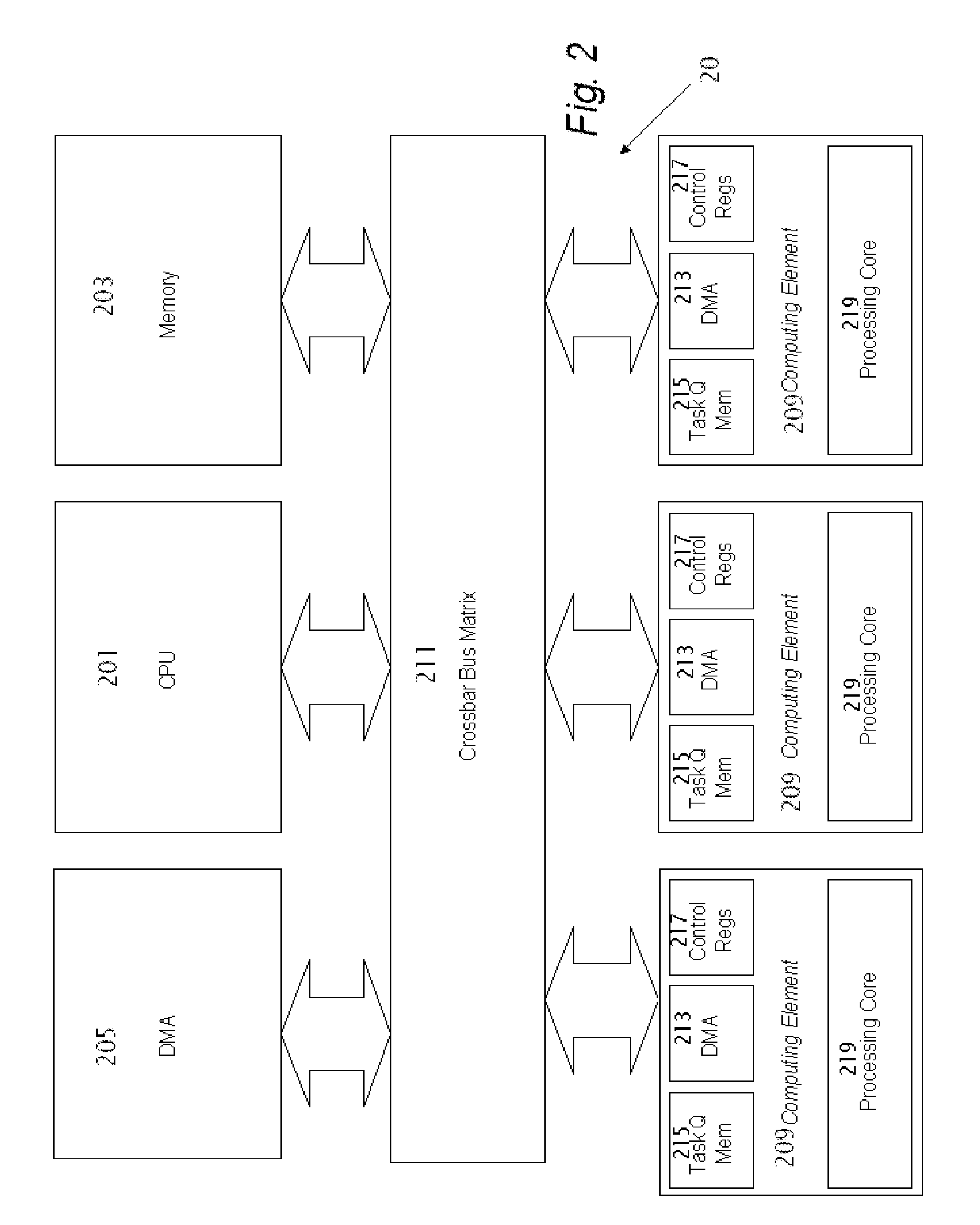

Scheduling of Multiple Tasks in a System Including Multiple Computing Elements

Inactive | US20090300629A1 | Insufficient capacity; Multiprogramming arrangements; Specific program execution arrangements; Direct memory access; Control system

A method for controlling parallel process flow in a system including a central processing unit (CPU) attached to and accessing system memory, and multiple computing elements. The computing elements (CEs) each include a computational core, local memory and a local direct memory access (DMA) unit. The CPU stores in the system memory multiple task queues in a one-to-one correspondence with the computing elements. Each task queue, which includes multiple task descriptors, specifies a sequence of tasks for execution by the corresponding computing element. Upon programming the computing element with task queue information of the task queue, the task descriptors of the task queue in system memory are accessed. The task descriptors of the task queue are stored in the local memory of the computing element. The accessing and the storing of the data by the CEs is performed using the local DMA unit. When the tasks of the task queue are executed by the computing element, the execution is typically performed in parallel by at least two of the computing elements. The CPU is interrupted respectively by the computing elements only upon their fully executing the tasks of their respective task queues.

Owner:MOBILEYE TECH
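The flow above can be modelled with one thread per computing element: the CPU prepares one task queue per element in shared memory, each element copies its descriptors locally and runs them, and the CPU is notified exactly once per element. A sketch under stated assumptions: a Python list copy stands in for the local DMA transfer, a `queue.Queue` stands in for the interrupt line, and `TaskDescriptor`, `computing_element` and `run_queues` are hypothetical names.

```python
import queue
import threading
from dataclasses import dataclass
from typing import Callable


@dataclass
class TaskDescriptor:
    fn: Callable[[int], int]   # hypothetical: the task body
    arg: int                   # hypothetical: the task's input


def computing_element(ce_id, system_queues, interrupts, results):
    # Copy this CE's task descriptors out of "system memory" (stand-in for local DMA).
    local_memory = list(system_queues[ce_id])
    results[ce_id] = [d.fn(d.arg) for d in local_memory]
    interrupts.put(ce_id)      # exactly one "interrupt", after the whole queue is done


def run_queues(system_queues):
    """CPU side: start one CE per queue, then block until each CE has interrupted once."""
    interrupts = queue.Queue()
    results = {}
    ces = [threading.Thread(target=computing_element,
                            args=(i, system_queues, interrupts, results))
           for i in range(len(system_queues))]
    for ce in ces:
        ce.start()
    completed = [interrupts.get() for _ in ces]
    for ce in ces:
        ce.join()
    return results, completed
```

The CPU receives one notification per queue rather than one per task, which is the point of handing each element a whole queue at once.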

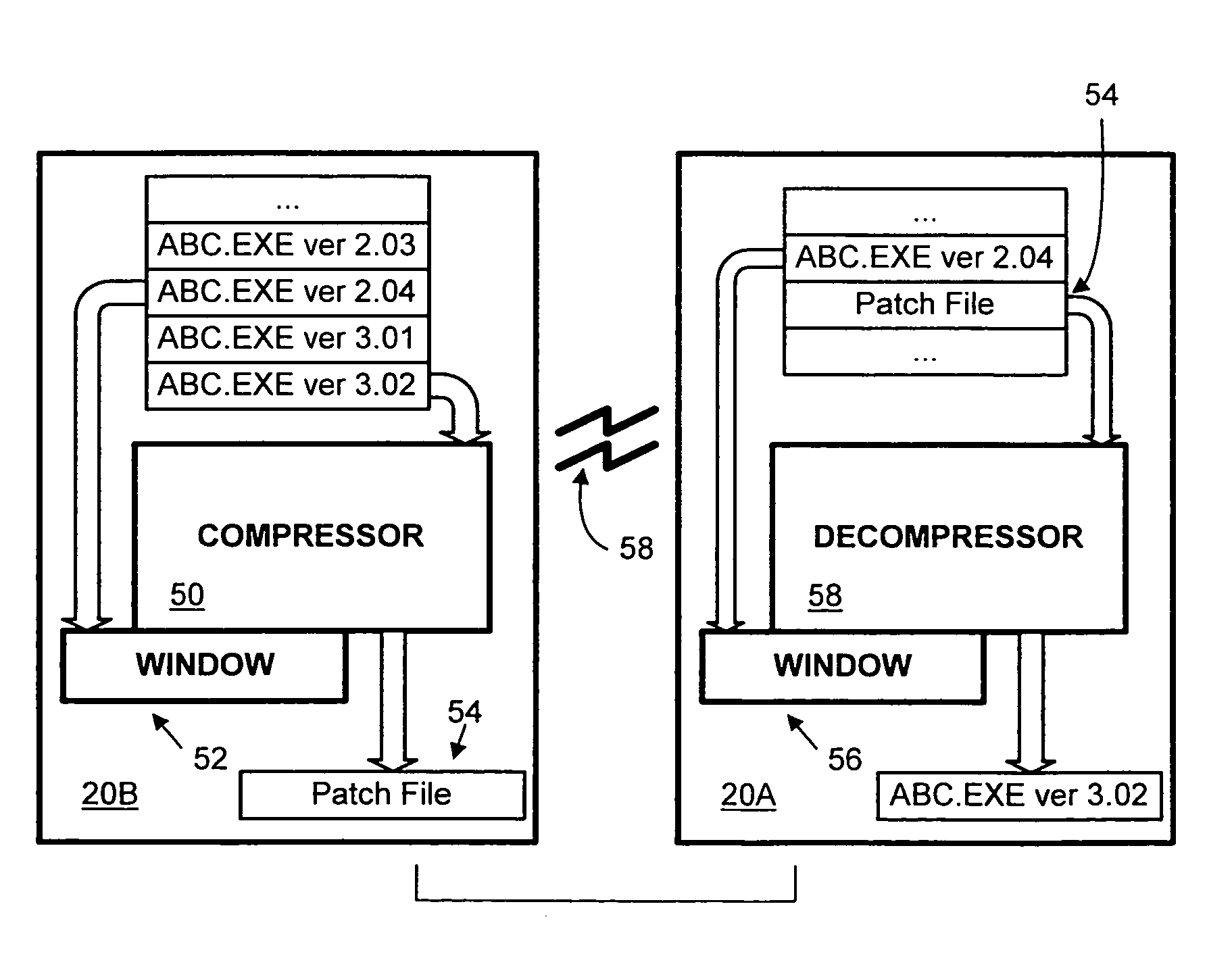

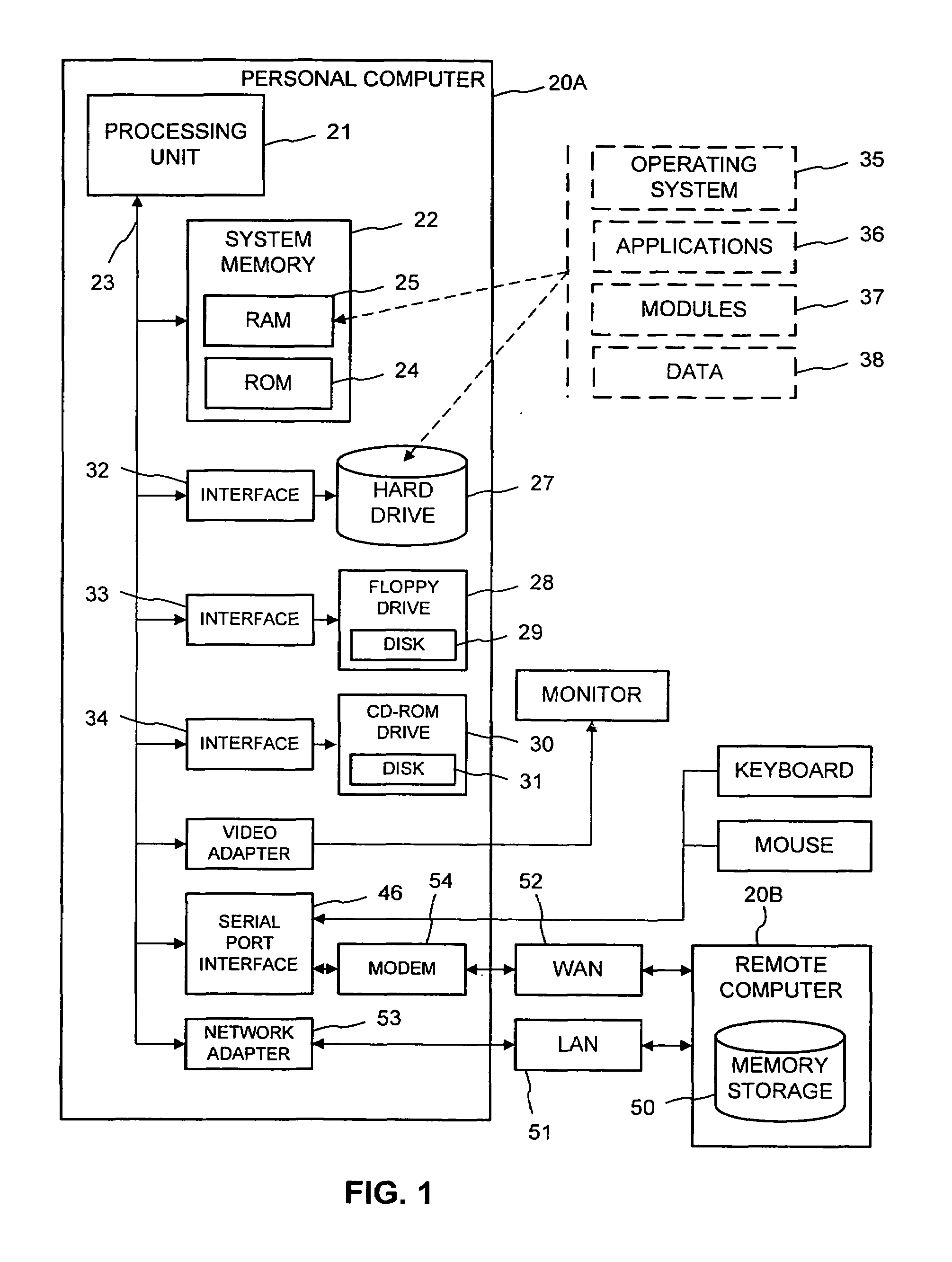

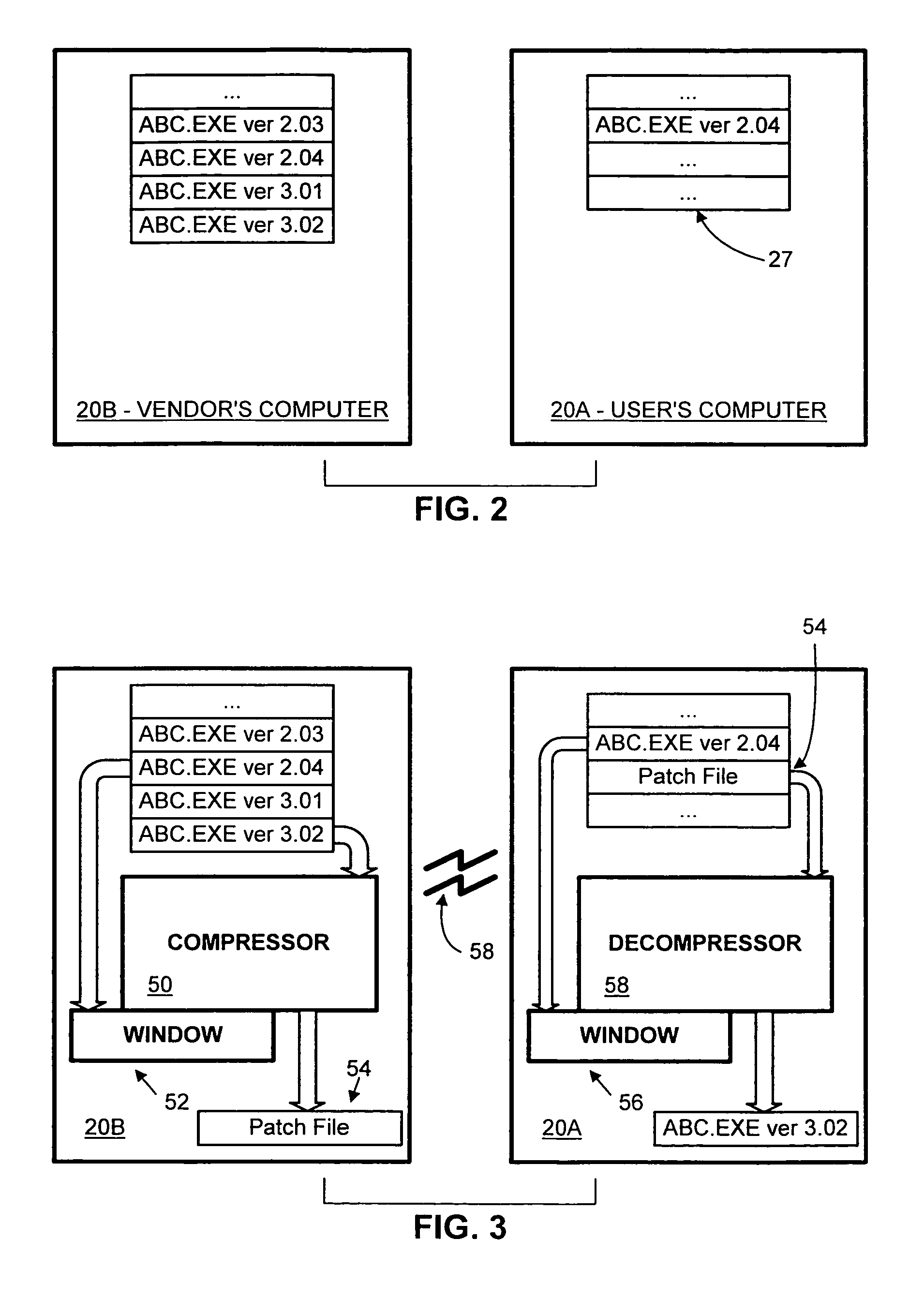

Method and system for updating software with smaller patch files

Inactive | US6938109B1 | More output; Small size; Data processing applications; Program control using stored programs; Reduced size; Parallel processing

Rather than comparing an old file with a new file to generate a set of patching instructions, and then compressing the patching instructions to generate a compact patch file for transmission to a user, a patch file is generated in a single operation. A compressor is pre-initialized in accordance with the old version of the file (e.g. in an LZ77 compressor, the history window is pre-loaded with the file). The pre-initialized compressor then compresses the new file, producing a patch file from which the new file can be generated. At the user's computer, a parallel process is performed, with the user's copy of the old file being used to pre-initialize a decompressor to which the patch file is then input. The output of the decompressor is the new file. The patch files generated and used in these processes are of significantly reduced size when compared to the prior art. Variations between copies of the old file as installed on different computers are also addressed, so that a single patch file can be applied irrespective of such variations. By so doing, the need for a multi-version patch file to handle such installation differences is eliminated, further reducing the size of the patch file when compared with prior art techniques. Such variations are addressed by “normalizing” the old file prior to application of the patch file. A temporary copy of the old file is typically made, and locations within the file at which the data may be unpredictable due to idiosyncrasies of the file's installation are changed to known or predictable values.

Owner:MICROSOFT TECH LICENSING LLC
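Python's standard `zlib` module can reproduce the core idea directly, because DEFLATE is LZ77-based and `zlib.compressobj` / `zlib.decompressobj` accept a preset dictionary through the `zdict` argument. A minimal sketch, assuming the old file fits in DEFLATE's 32 KiB window (for larger files only the last 32 KiB of the dictionary can be referenced) and omitting the normalization step; `make_patch` and `apply_patch` are hypothetical names.

```python
import zlib


def make_patch(old: bytes, new: bytes) -> bytes:
    """Compress `new` with the compressor's history pre-loaded with `old`."""
    comp = zlib.compressobj(level=9, zdict=old)
    return comp.compress(new) + comp.flush()


def apply_patch(old: bytes, patch: bytes) -> bytes:
    """The parallel process on the user's side: same pre-load, then decompress."""
    decomp = zlib.decompressobj(zdict=old)
    return decomp.decompress(patch) + decomp.flush()
```

Unchanged regions of the new file become back-references into the old file, so the patch mostly encodes the differences.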

Machine, computer program product and method to carry out parallel reservoir simulation

Owner:SAUDI ARABIAN OIL CO

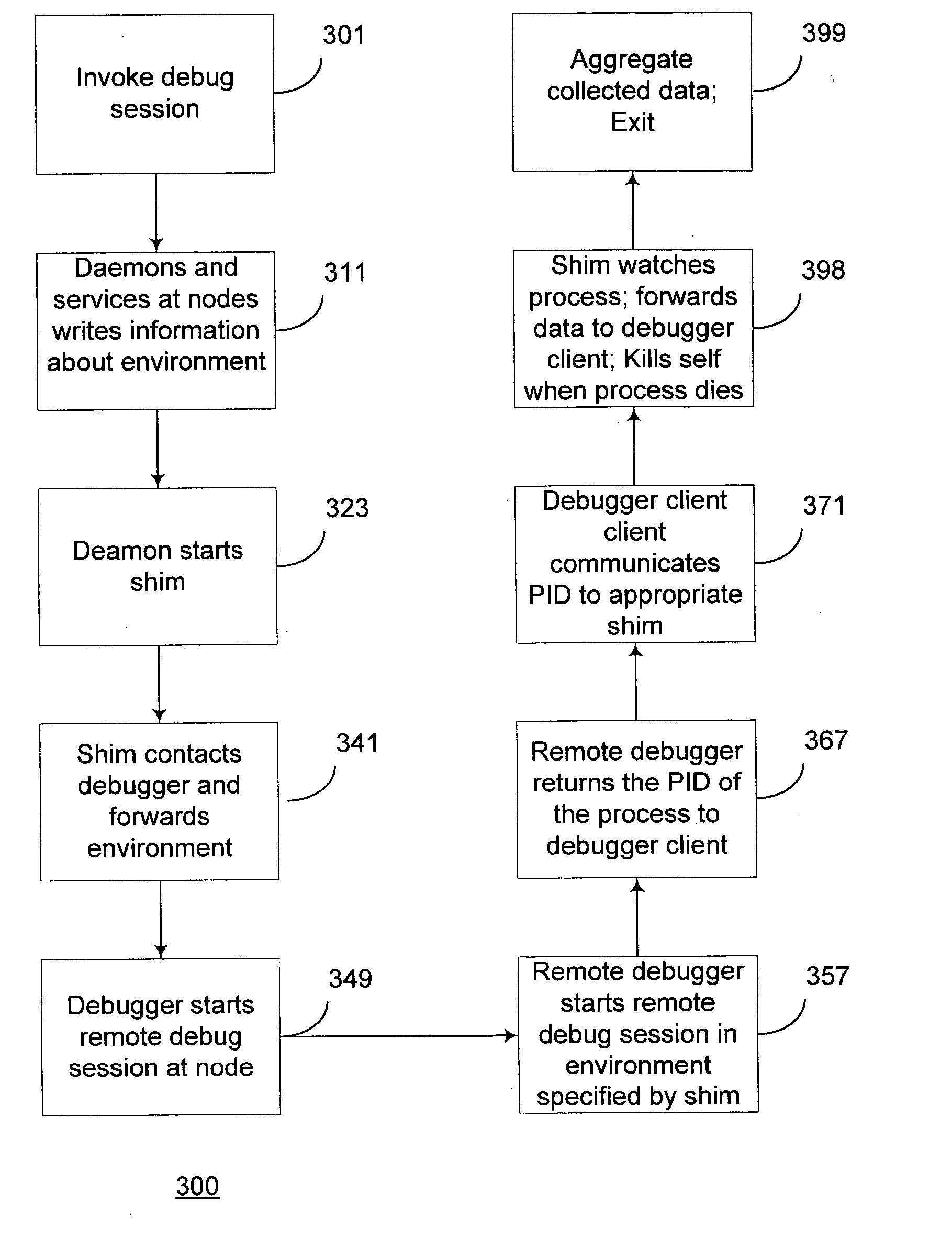

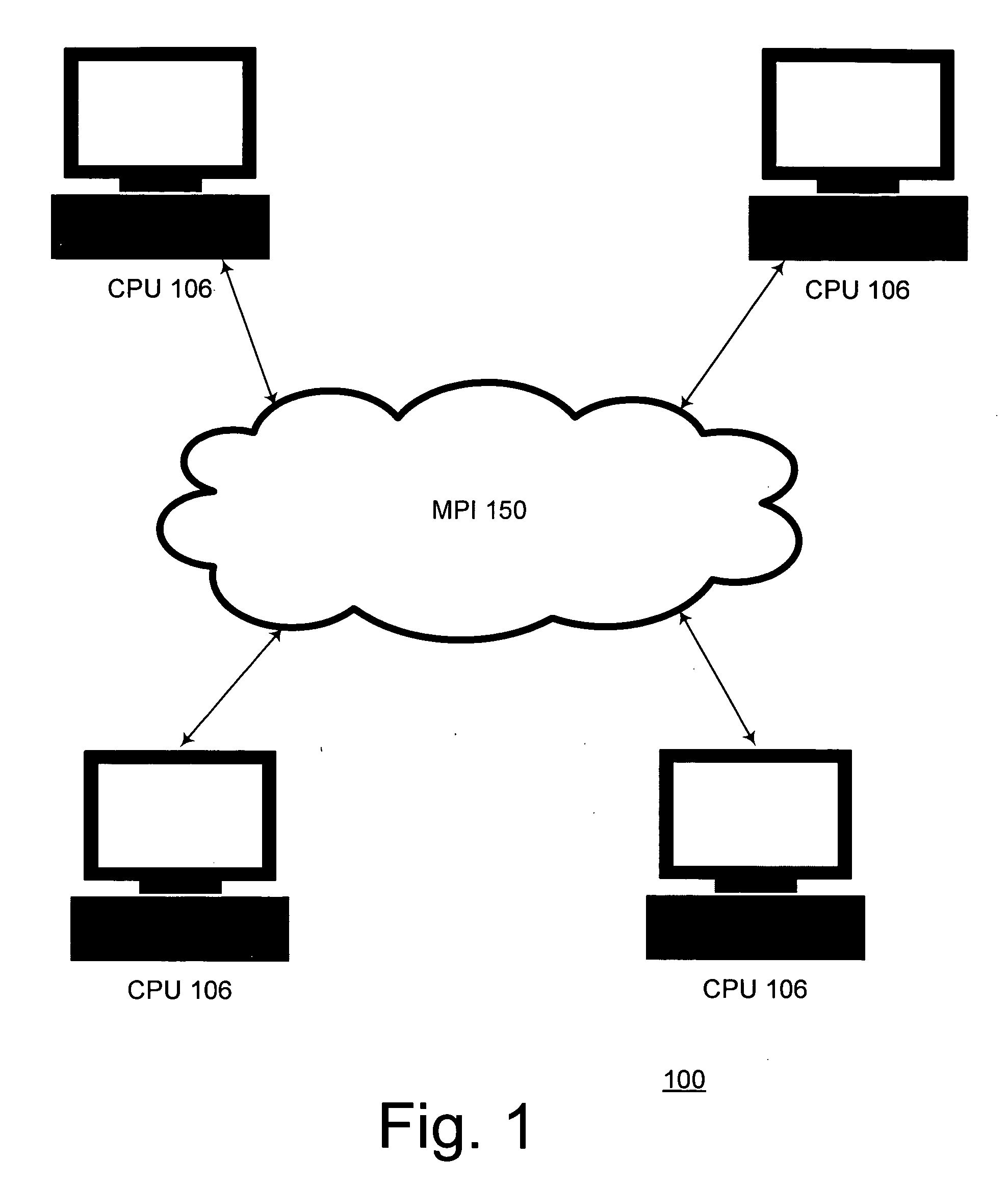

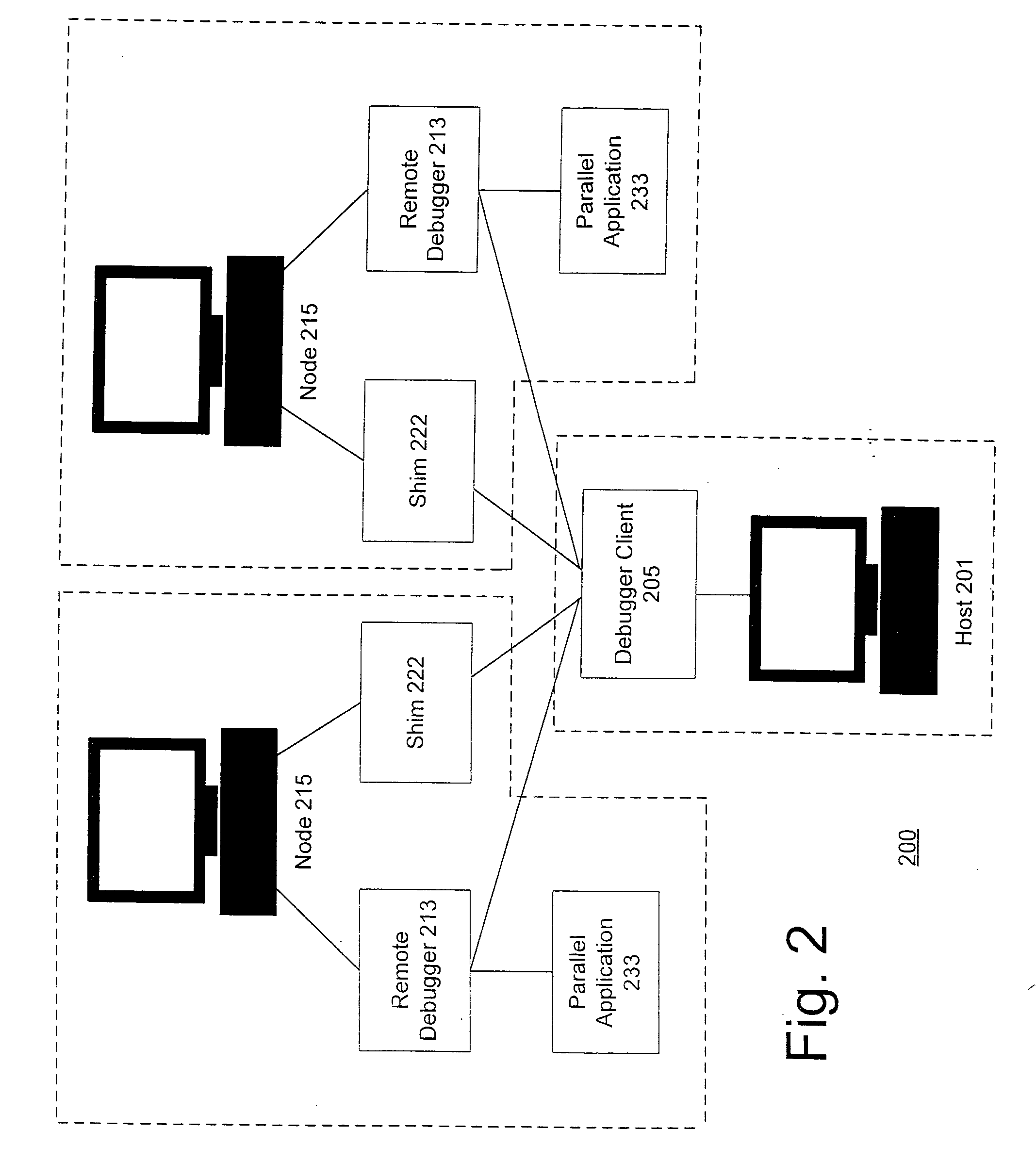

Parallel debugger

Active | US20060048098A1 | Error detection/correction; Specific program execution arrangements; Parallel computing; Debugger

A debugger attaches to a parallel process that is executing simultaneously on various nodes of a computing cluster. Using a shim that executes at each node to monitor each of the processes, the parallel process is debugged such that neither the process nor the particular message-passing system implemented on the cluster needs to know of the existence or details of the debugger.

Owner:MICROSOFT TECH LICENSING LLC

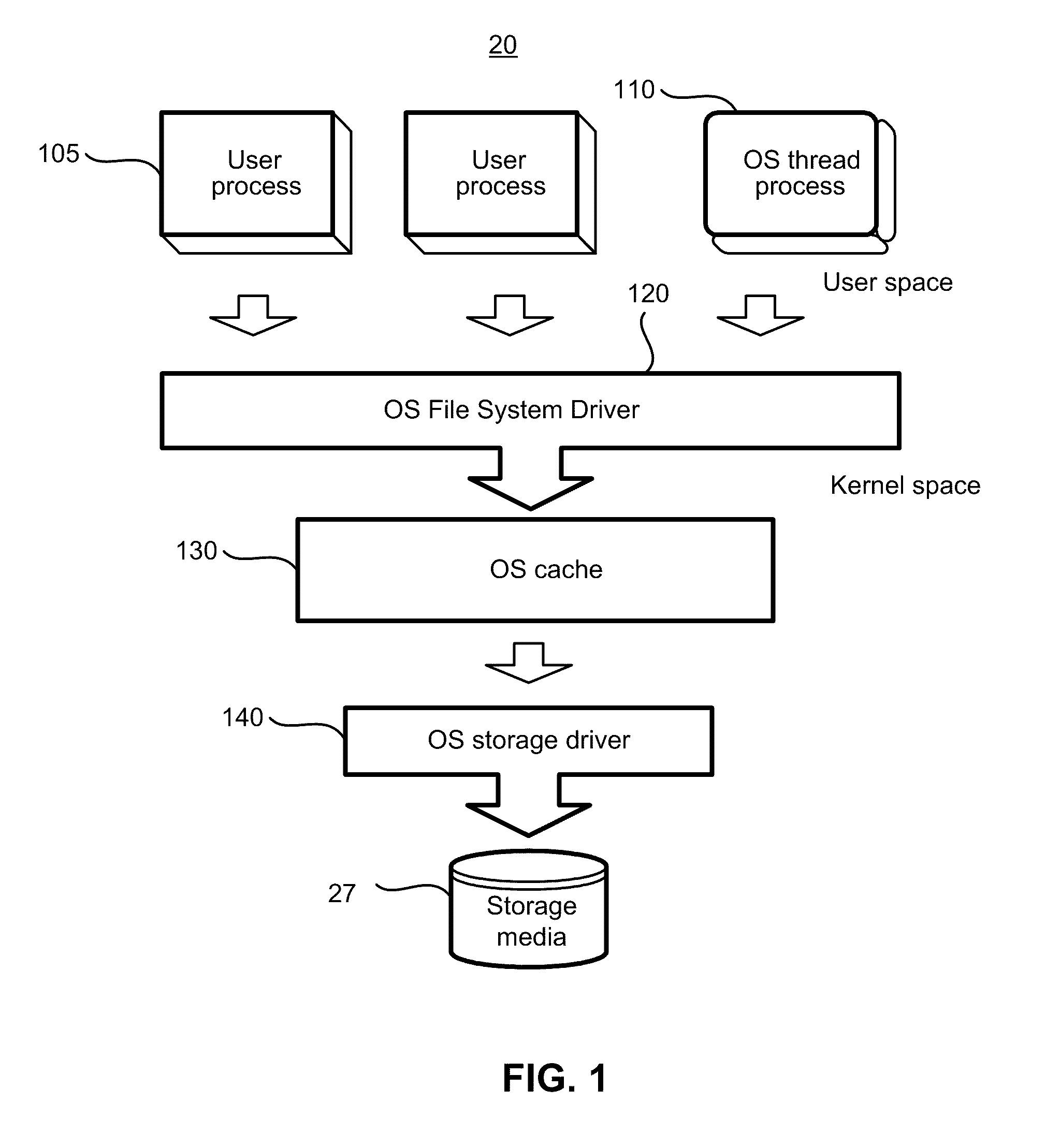

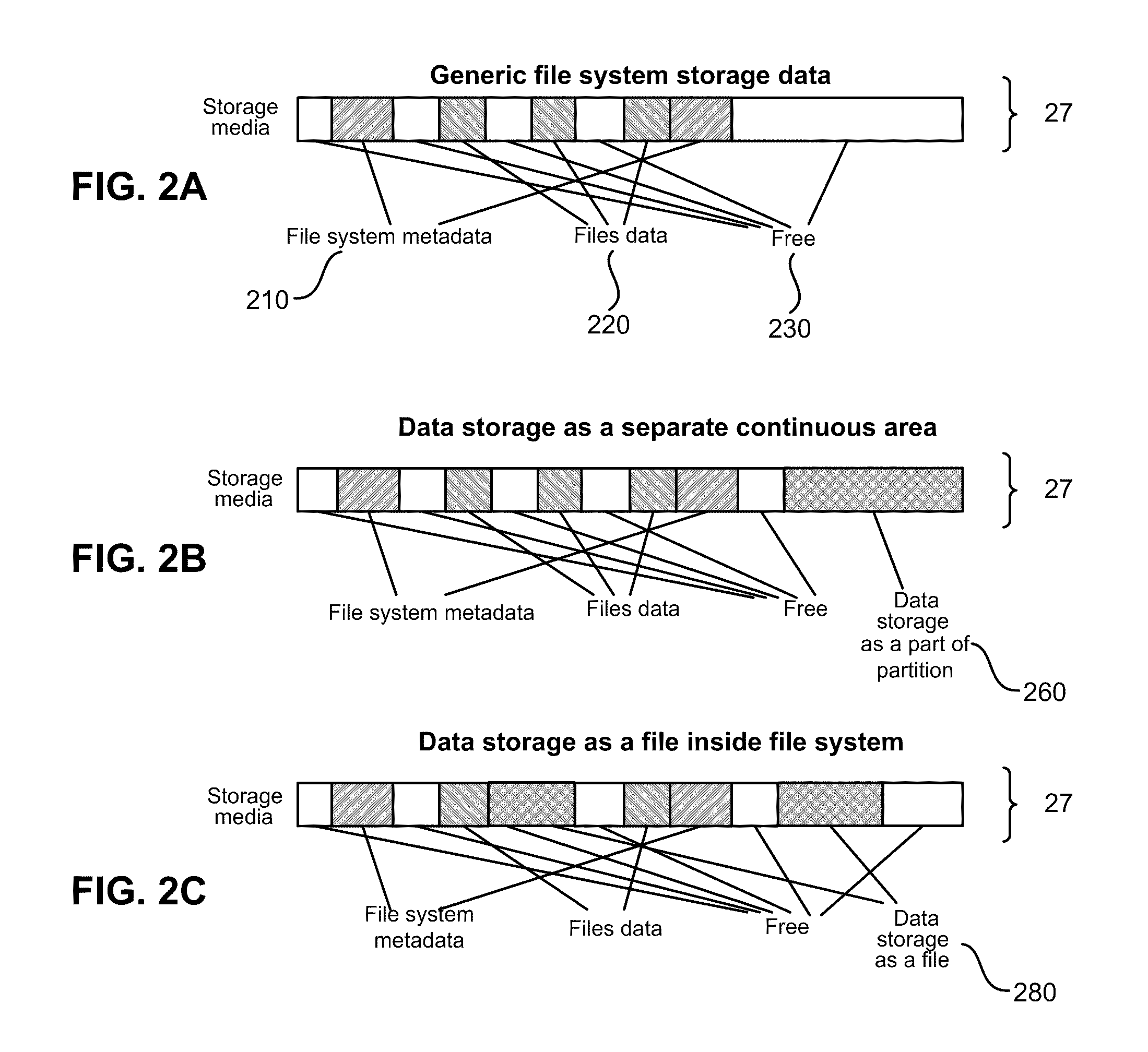

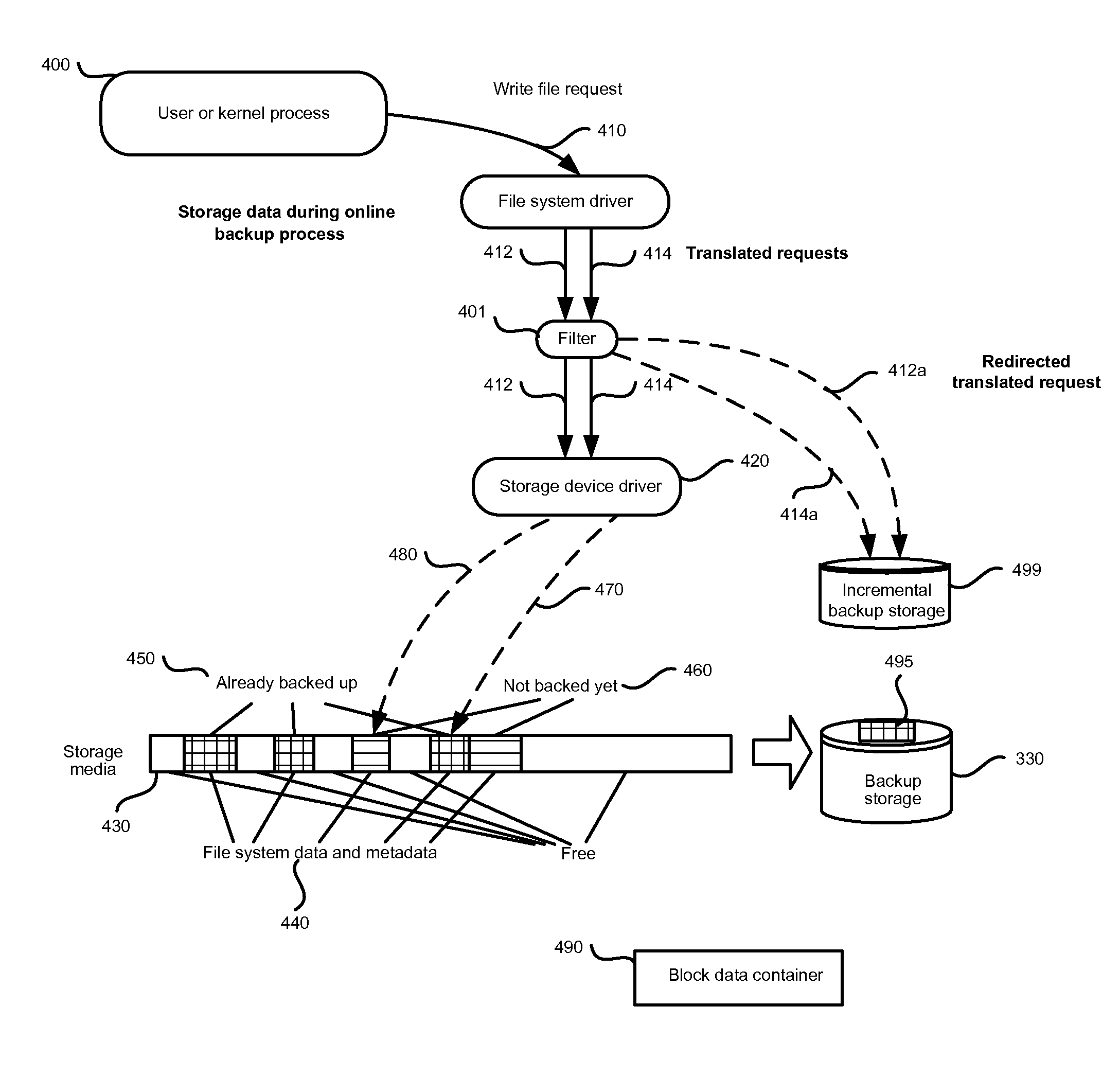

Method and system for continuous data protection

Active | US8051044B1 | Digital data processing details; Error detection/correction; Data capacity; Incremental backup

Continuous data protection is performed as two parallel processes: copying data blocks from the storage device into the backup storage device (creating the initial backup), and copying each data block to be written to the data storage into the incremental backup. When a write command is directed to a data storage block, it is intercepted and redirected to the backup storage, and the data to be written in accordance with the write request is written to the incremental backup on the backup storage. If the write command is also directed to a data storage block that has been identified for backup but has not yet been backed up, the identified block is copied from the storage device to the intermediate storage device, the write command is executed on that block on the storage device, and the block is copied from the intermediate storage device to the backup storage device. If an error occurs while accessing a block on the storage device, the block is marked as invalid. During the initial backup, the system suspends write commands to the storage device if the intermediate storage device has reached a selected data capacity, and copies a selected amount of data from the intermediate storage device to the backup storage device.

Owner:MIDCAP FINANCIAL TRUST
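The interplay between the initial-backup process and intercepted writes is a copy-on-write scheme. A sketch, assuming dictionaries stand in for the storage, backup and intermediate devices and that a missing block represents a read error; `BlockProtector` and its methods are hypothetical, and the capacity-based suspension of writes is not modelled.

```python
class BlockProtector:
    """Hypothetical model: dicts stand in for the storage, backup and intermediate devices."""

    def __init__(self, storage: dict):
        self.storage = storage      # block number -> bytes on the live device
        self.backup = {}            # initial (point-in-time) backup
        self.incremental = []       # every intercepted write, in order
        self.intermediate = {}      # original blocks saved just before overwrite
        self.backed_up = set()
        self.invalid = set()

    def backup_step(self, block: int) -> None:
        """One step of the initial-backup process."""
        if block in self.backed_up:
            return
        try:
            self.backup[block] = self.storage[block]
        except KeyError:            # stand-in for a read error on the device
            self.invalid.add(block)
        self.backed_up.add(block)

    def write(self, block: int, data: bytes) -> None:
        """Intercepted write: log it, and preserve the original if not yet backed up."""
        self.incremental.append((block, data))
        if block in self.storage and block not in self.backed_up:
            self.intermediate[block] = self.storage[block]     # copy original aside
            self.storage[block] = data                         # execute the write
            self.backup[block] = self.intermediate.pop(block)  # move original to backup
            self.backed_up.add(block)
        else:
            self.storage[block] = data
```

However writes and backup steps interleave, the backup ends up holding the contents as they were when protection started, while the incremental log records every later change.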

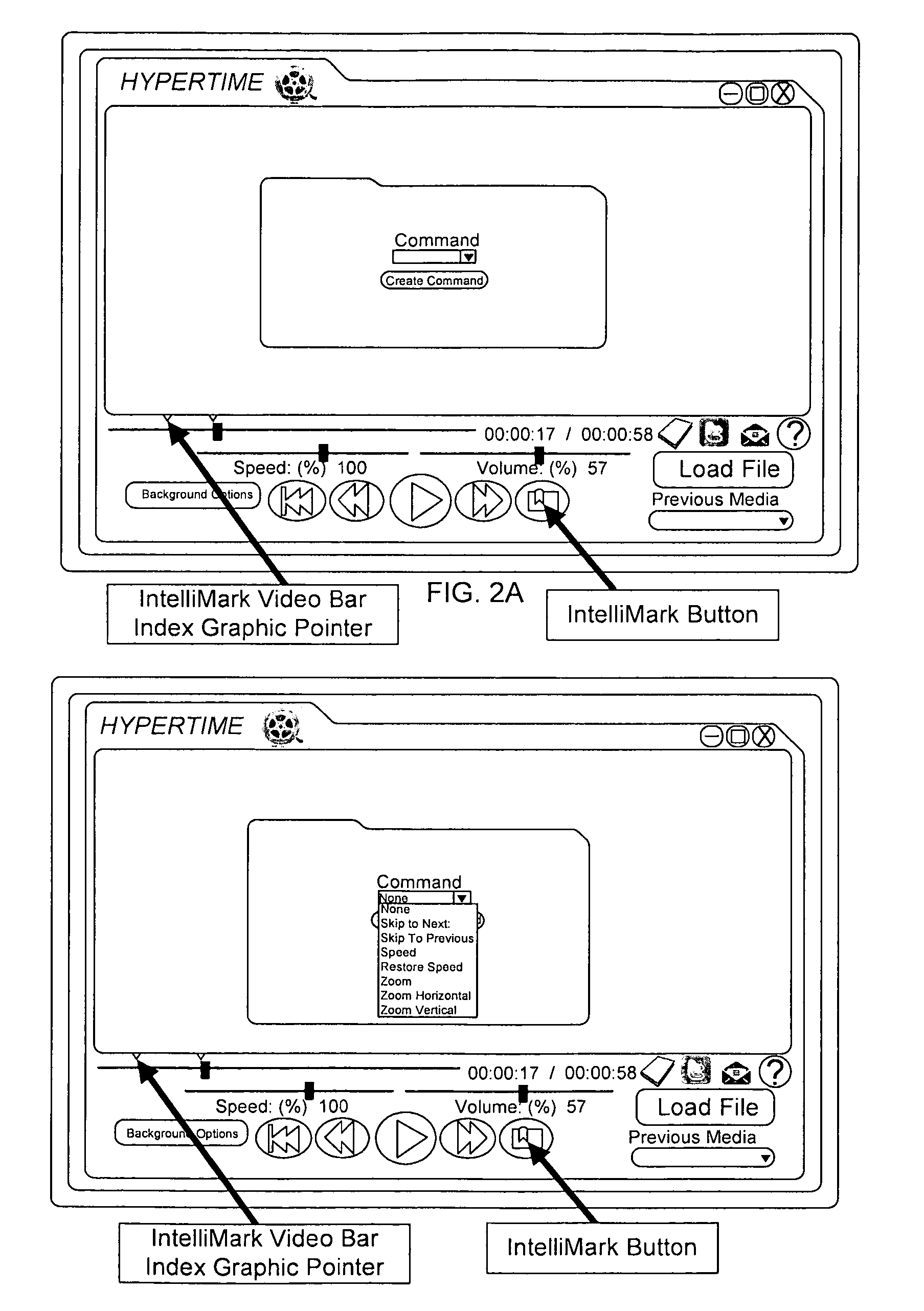

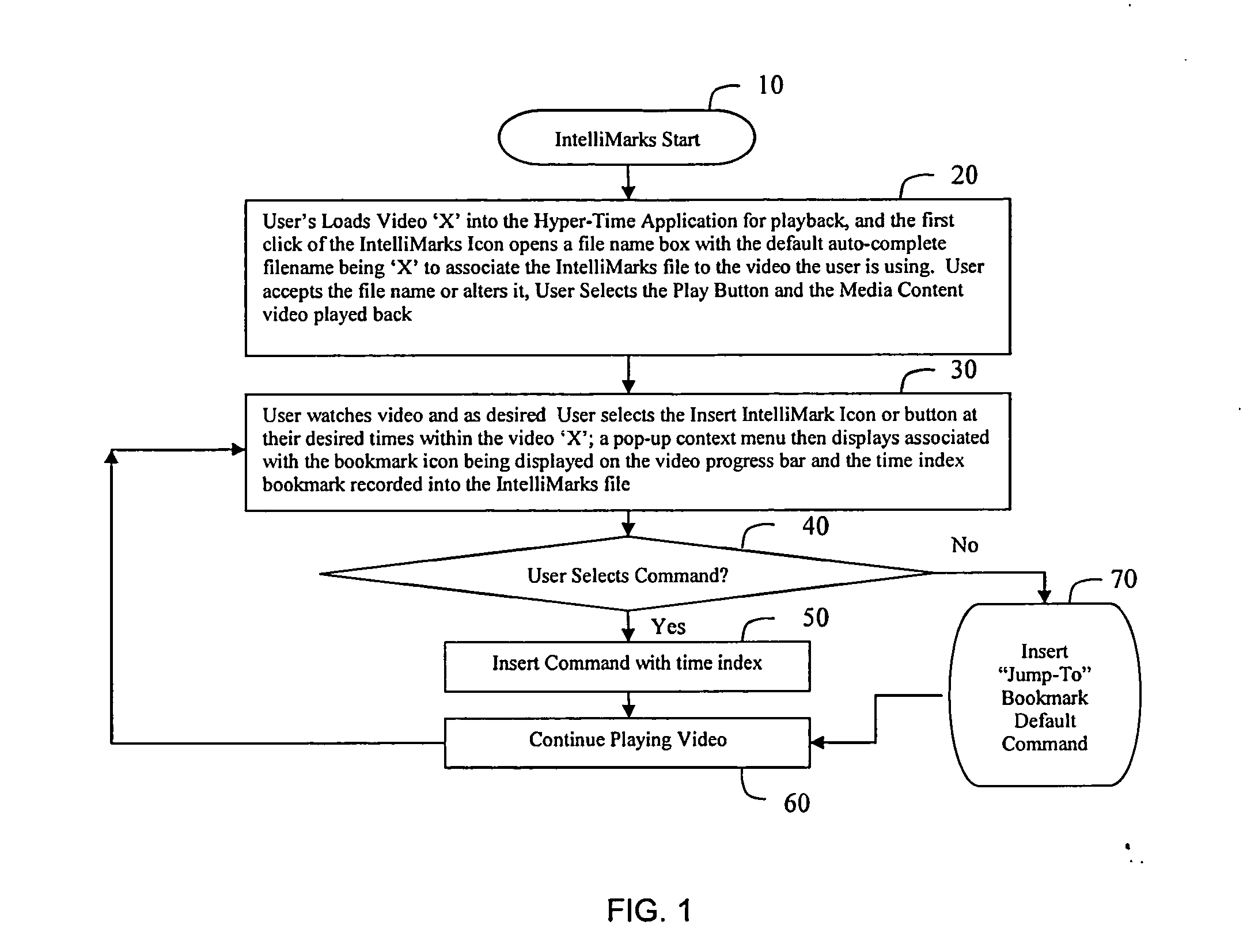

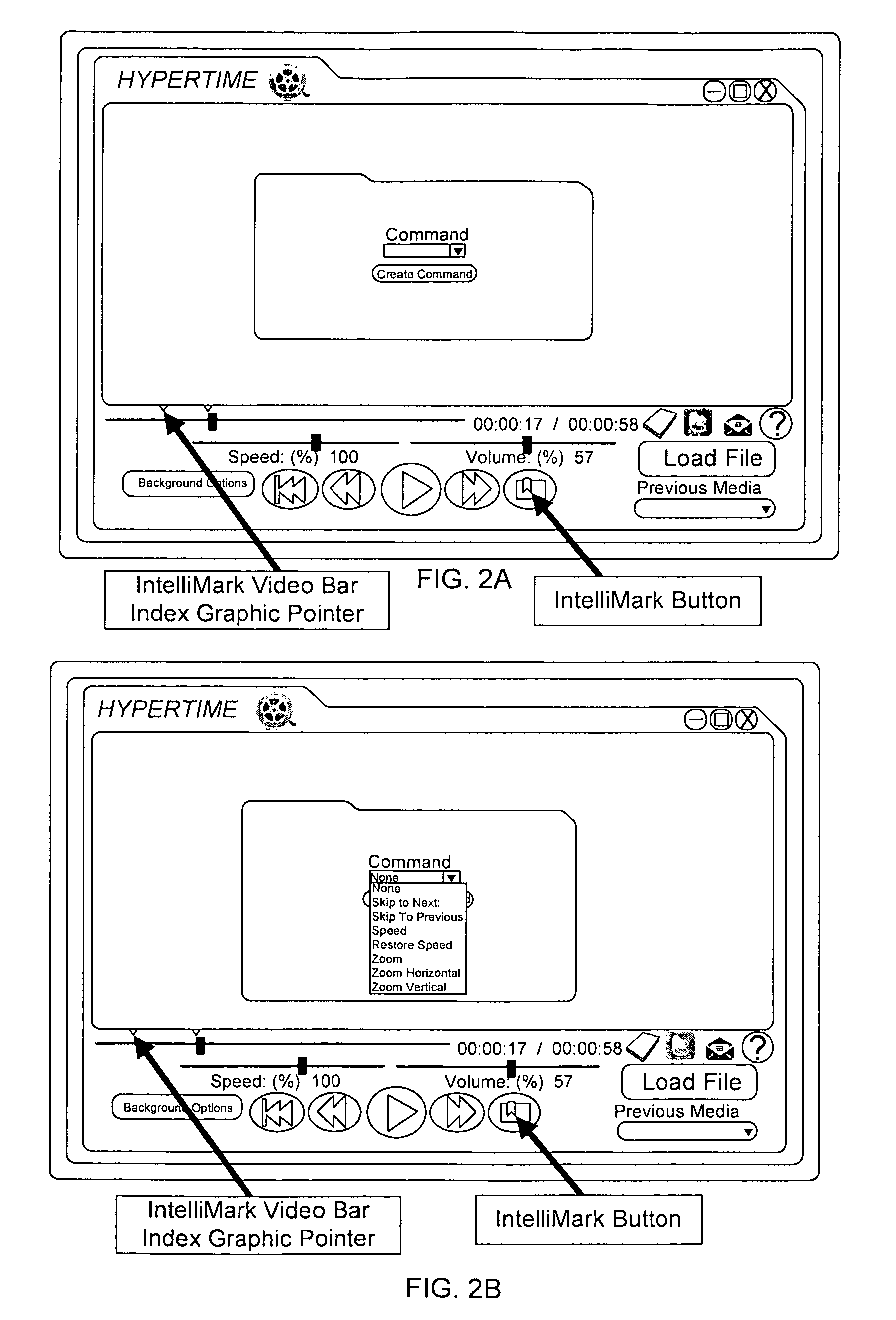

Intellimarks universal parallel processes and devices for user controlled presentation customizations of content playback intervals, skips, sequencing, loops, rates, zooms, warpings, distortions, and synchronized fusions

Active | US20140186010A1 | Easy to implement; Enhancement to conventional telepresence; Electronic editing digitised analogue information signals; Color television signals processing; Parallel processing; Running time

Improved automated methods of dynamically customizing the displayed presentation of media content playback and/or live streams, allowing users to add, remove, change, and/or fuse displayed information. Specifically, one or more users are able to add, remove, or move IntelliMarks (Intelligent Bookmarks): separate, parallel temporal bookmarks with associated dynamic run-time display manipulations. They are stored in separate file(s) that are NOT part of the media content or live stream and are kept within media players, enabling customized viewings without violating copyrights or terms of use for the underlying unaltered, uncopied original media content. In addition to an individual user customizing his own viewing experience, by sharing his IntelliMarks file(s) with others who have access to the same media content, those others can experience the customized playback as constructed by other users. Further, they can evolve their own interpretations and share them back with the original user and/or others.

Owner:VR REHAB INC +2

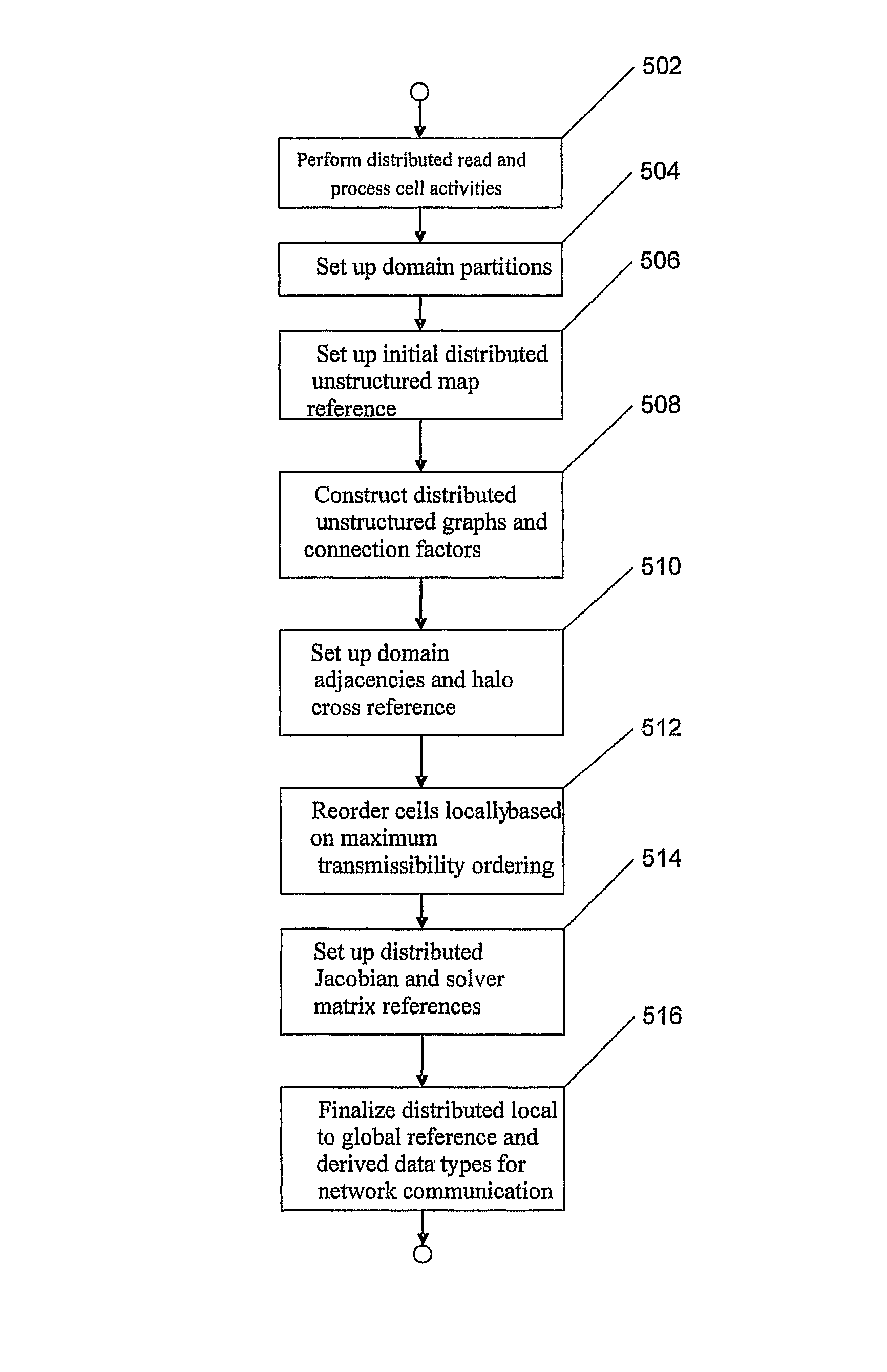

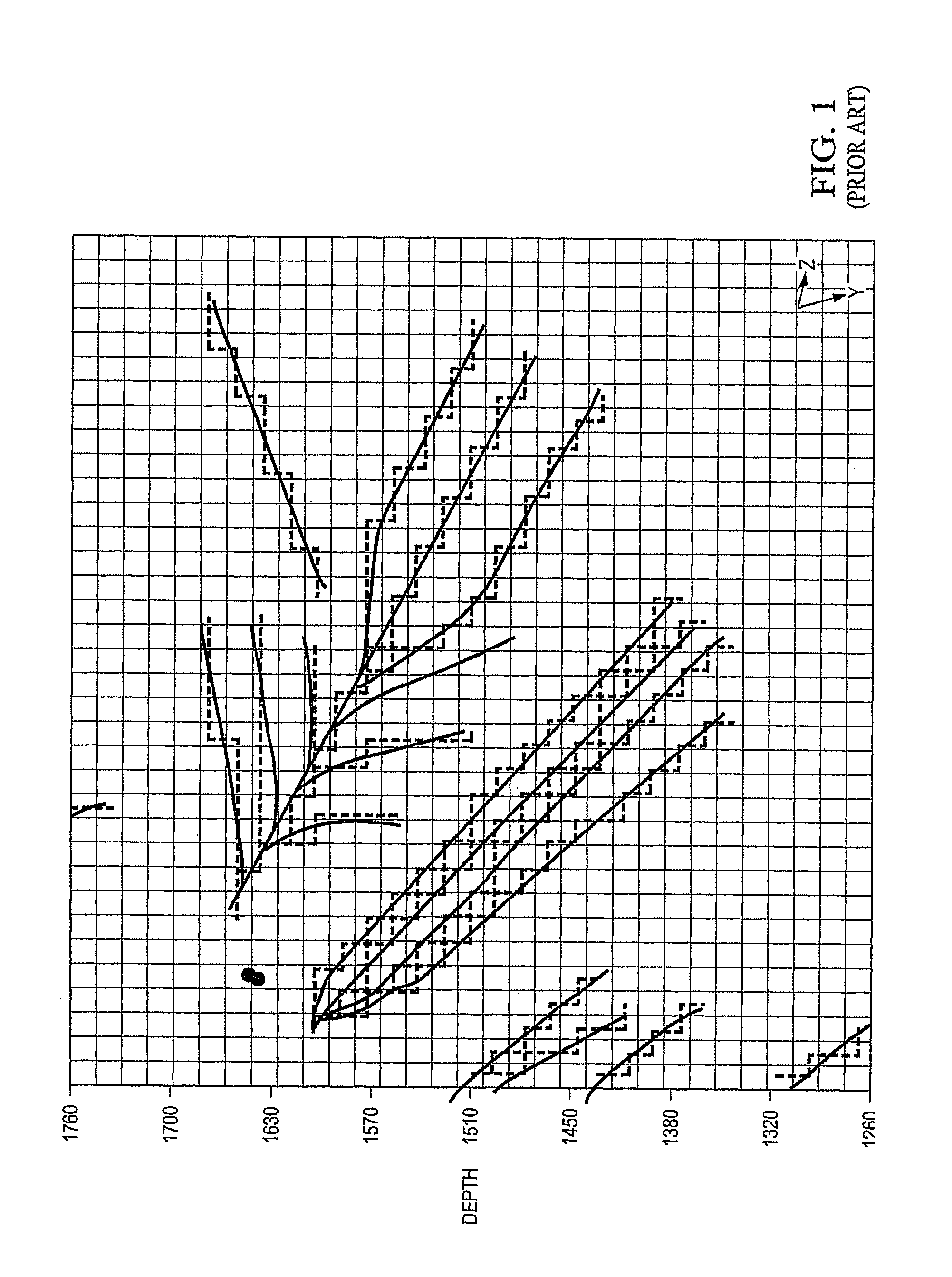

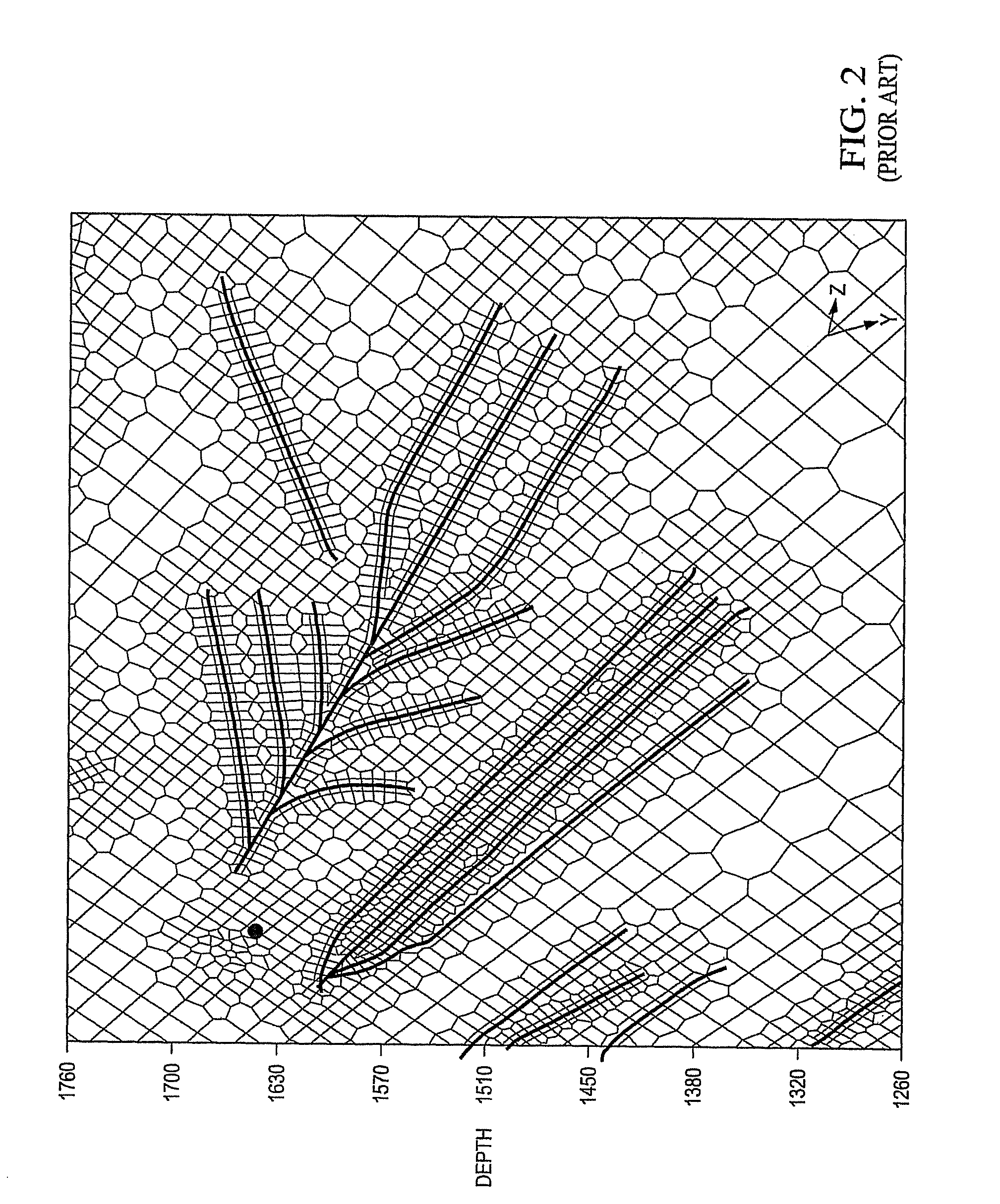

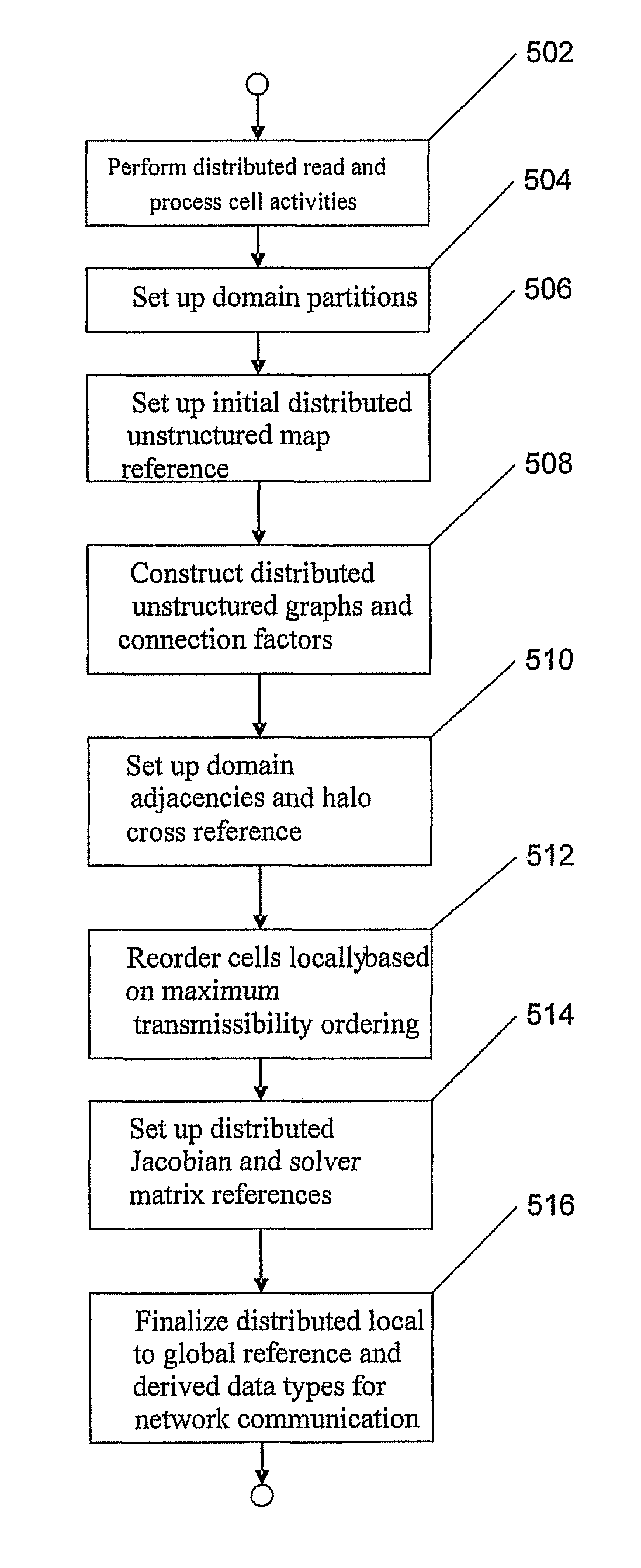

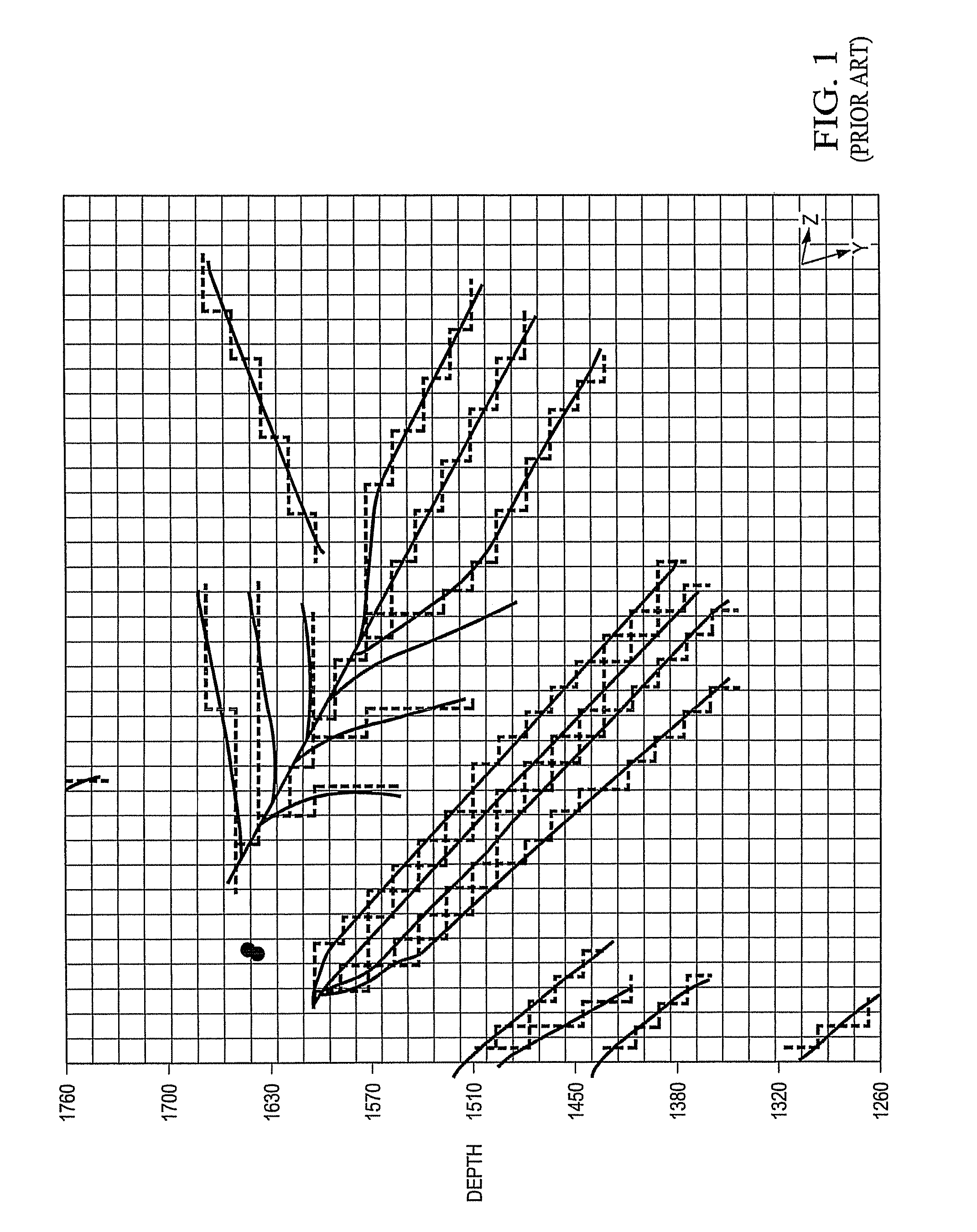

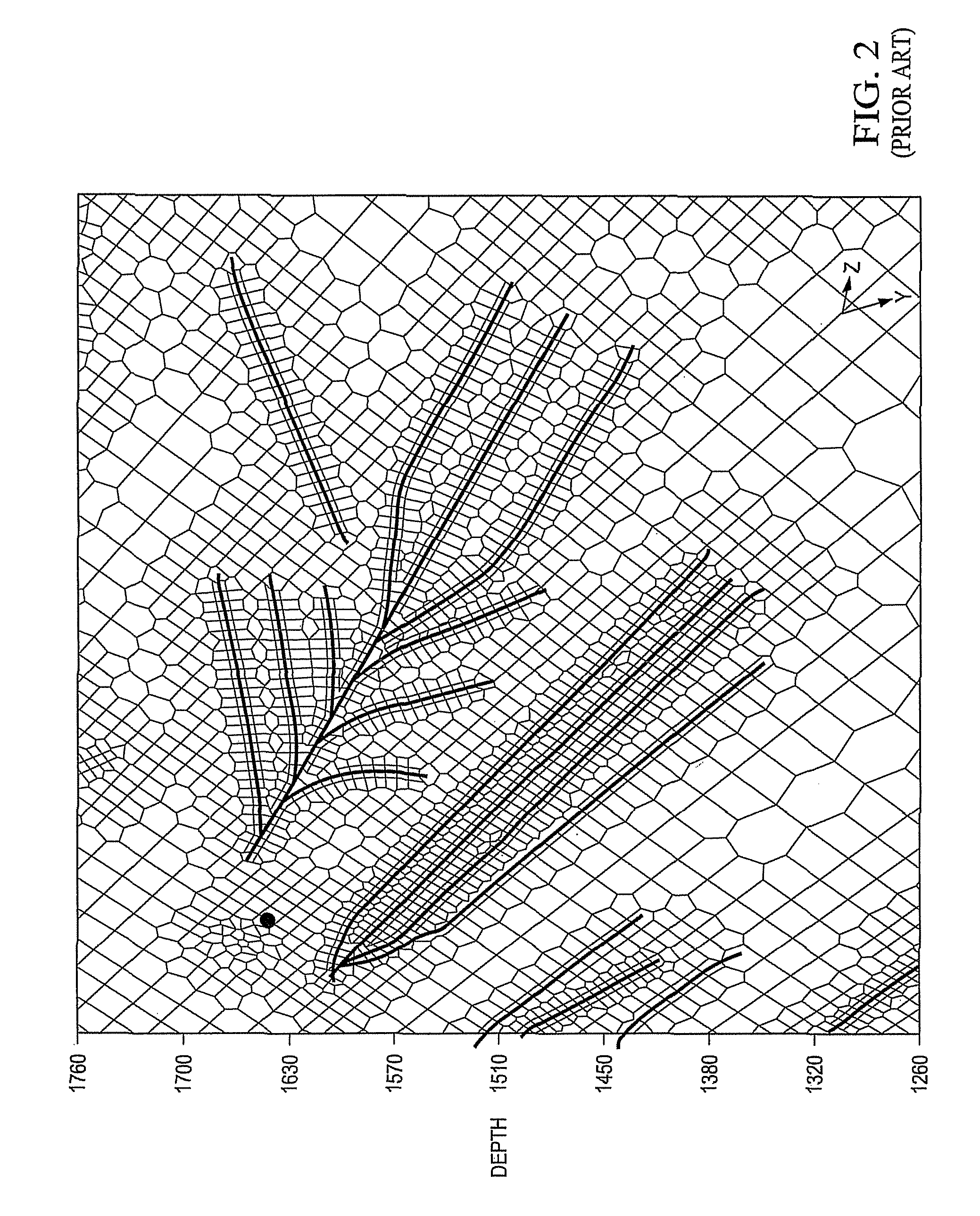

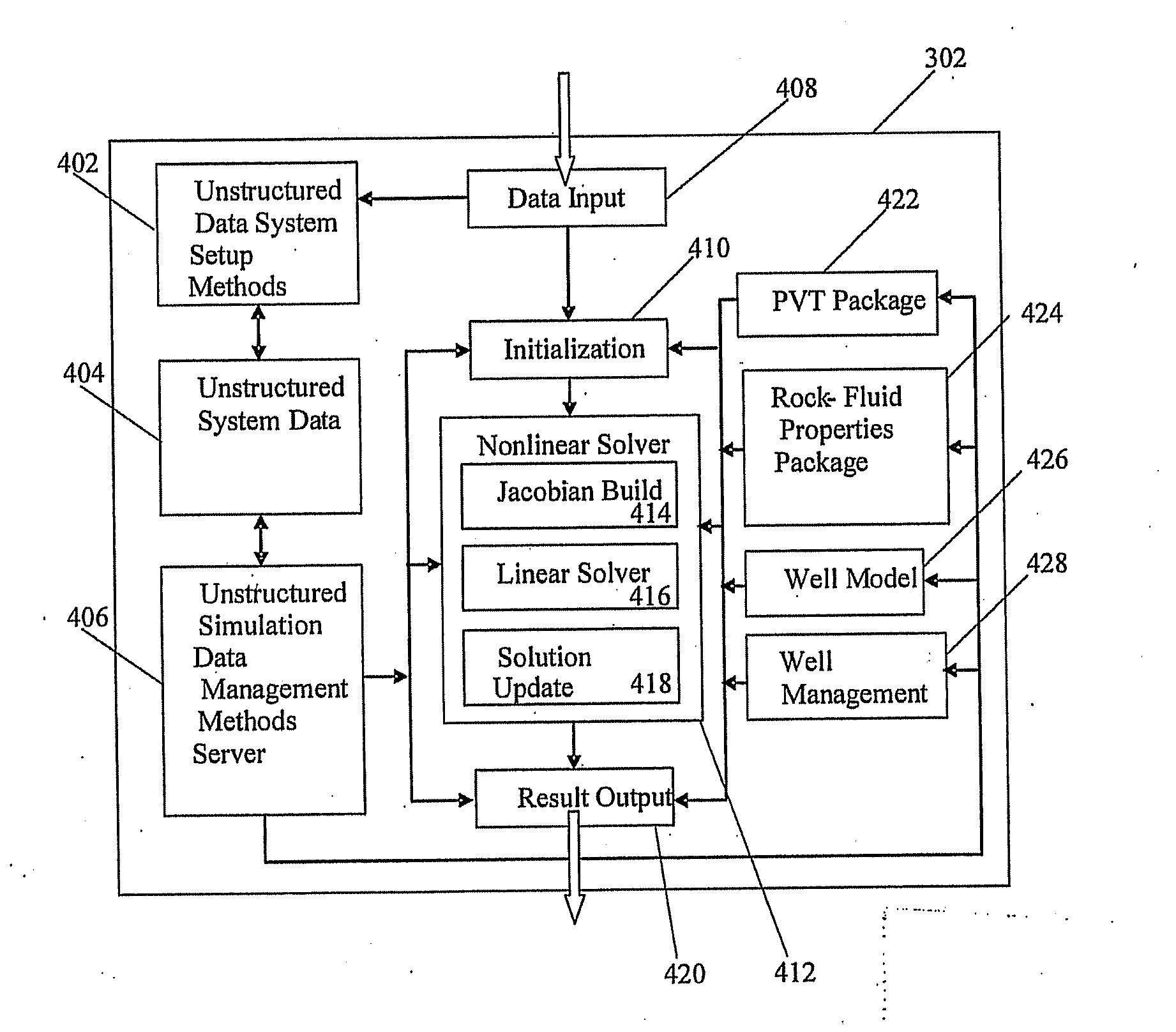

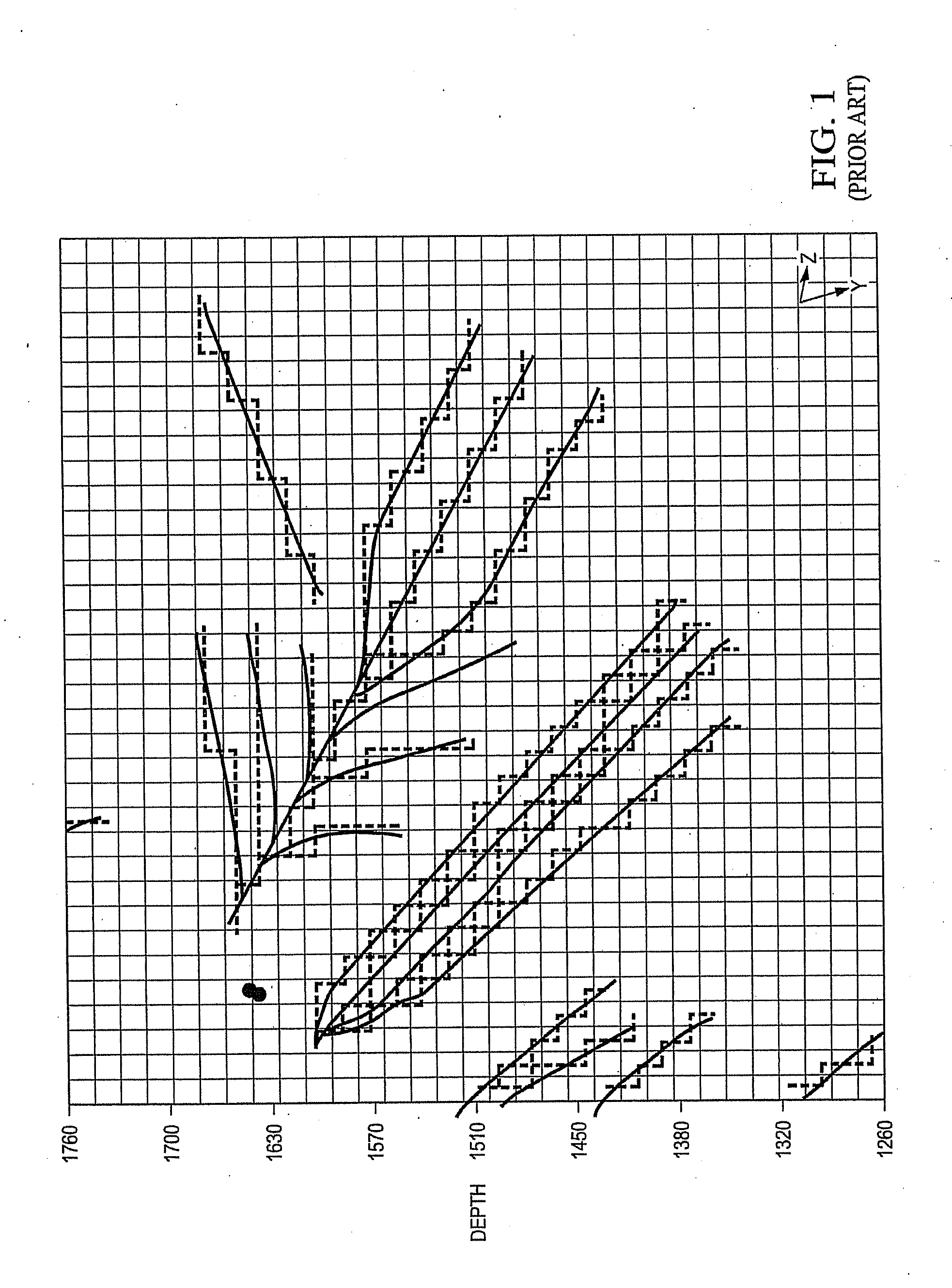

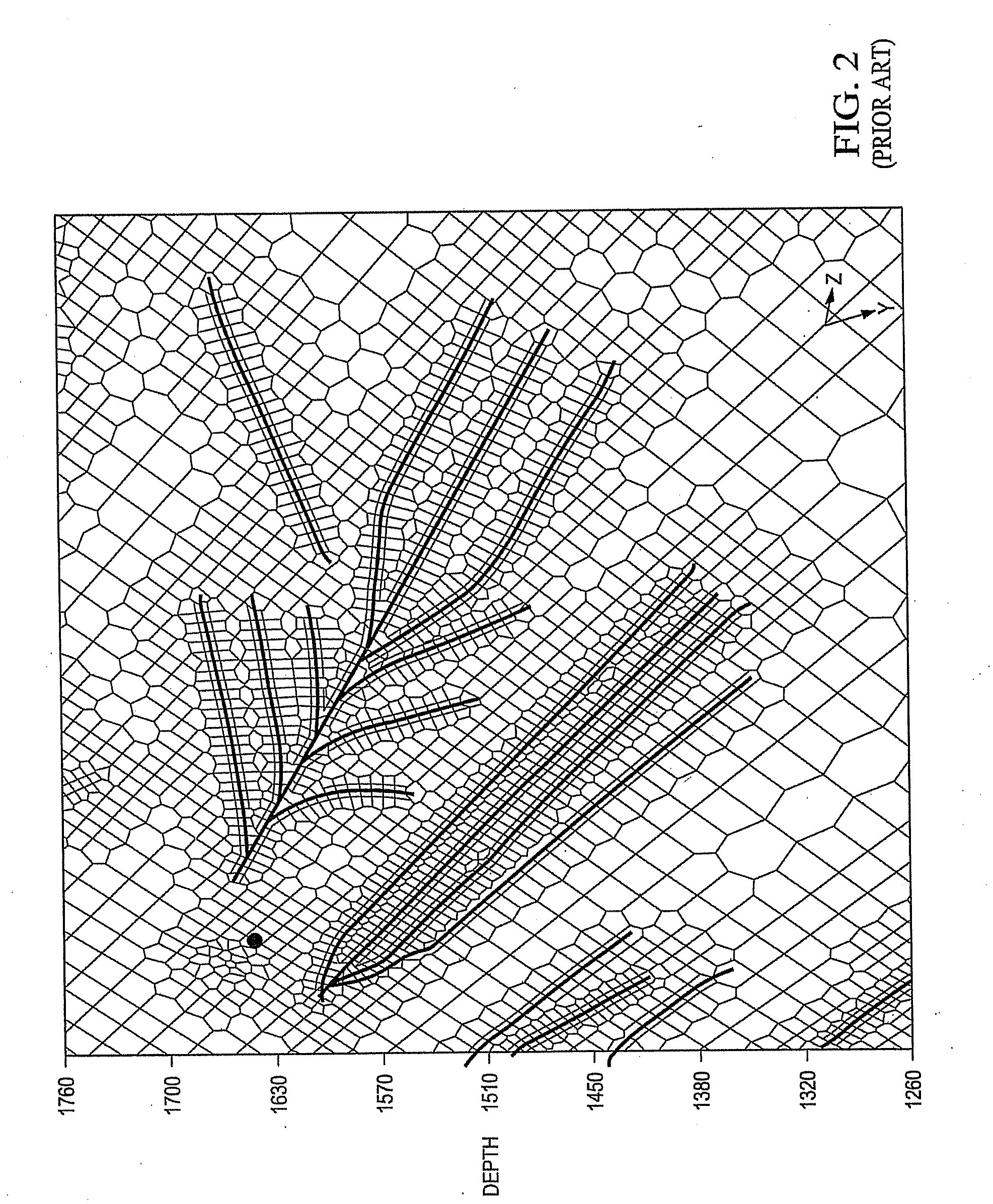

Machine, computer program product and method to generate unstructured grids and carry out parallel reservoir simulation

Active | US8386227B2 | Design optimisation/simulation; Special data processing applications; Cell index; Parallel processing

A machine, computer program product, and method to enable scalable parallel reservoir simulations for a variety of simulation model sizes are described herein. Some embodiments of the disclosed invention include a machine, methods, and implemented software for performing parallel processing of a grid defining a reservoir or oil / gas field using a plurality of sub-domains for the reservoir simulation, a parallel process of re-ordering a local cell index for each of the plurality of cells using characteristics of the cell and location within the at least one sub-domain and a parallel process of simulating at least one production characteristic of the reservoir.

Owner:SAUDI ARABIAN OIL CO
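The abstract does not say which cell characteristics drive the local reordering, so the sketch below assumes one common choice in parallel simulators: number interior cells first and cells on a sub-domain boundary last, typically so that boundary data can be exchanged while interior work proceeds. It partitions a 2-D grid into vertical strips and reorders each strip concurrently; the function names and the strip partitioning are hypothetical, and threads stand in for parallel processes.

```python
from concurrent.futures import ThreadPoolExecutor


def partition_strips(nx: int, ny: int, n_sub: int) -> list:
    """Split an nx-by-ny grid into n_sub vertical strips (lists of (i, j) cells)."""
    width = -(-nx // n_sub)  # ceiling division
    return [[(i, j) for i in range(k * width, min((k + 1) * width, nx)) for j in range(ny)]
            for k in range(n_sub)]


def reorder_local(cells: list, nx: int) -> dict:
    """Assign local indices: interior cells first, sub-domain boundary cells last."""
    owned = set(cells)

    def on_boundary(cell) -> bool:
        i, j = cell
        # Strips split the grid along i only, so only i-neighbours can be foreign.
        return any(0 <= i + di < nx and (i + di, j) not in owned for di in (-1, 1))

    ordered = sorted(cells, key=lambda c: (on_boundary(c), c))
    return {cell: idx for idx, cell in enumerate(ordered)}


def reorder_all(nx: int, ny: int, n_sub: int) -> list:
    """Run the per-sub-domain reordering concurrently, one task per sub-domain."""
    strips = partition_strips(nx, ny, n_sub)
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda cells: reorder_local(cells, nx), strips))
```

Each sub-domain's reordering depends only on its own cells and the partition, so the tasks need no communication with each other.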

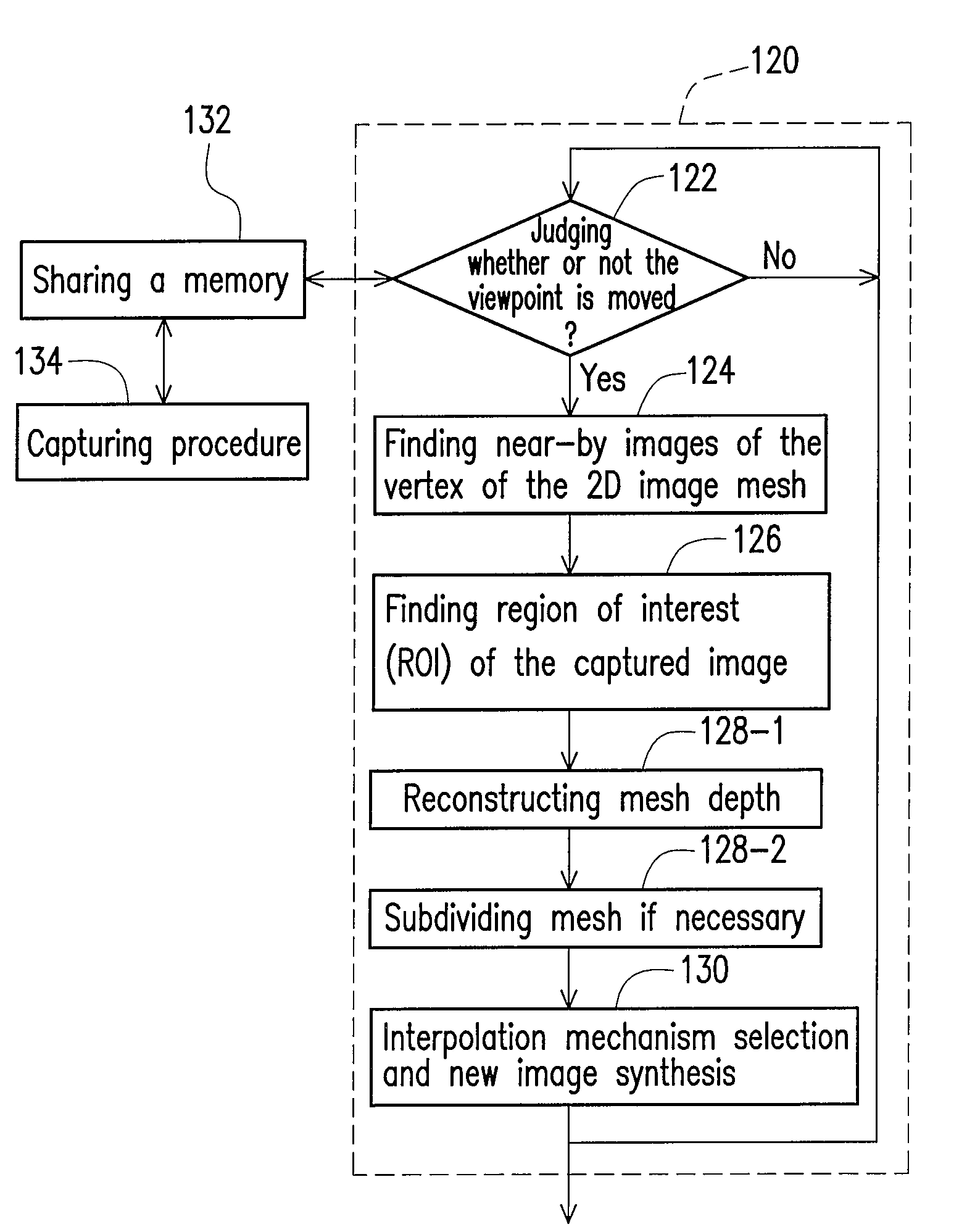

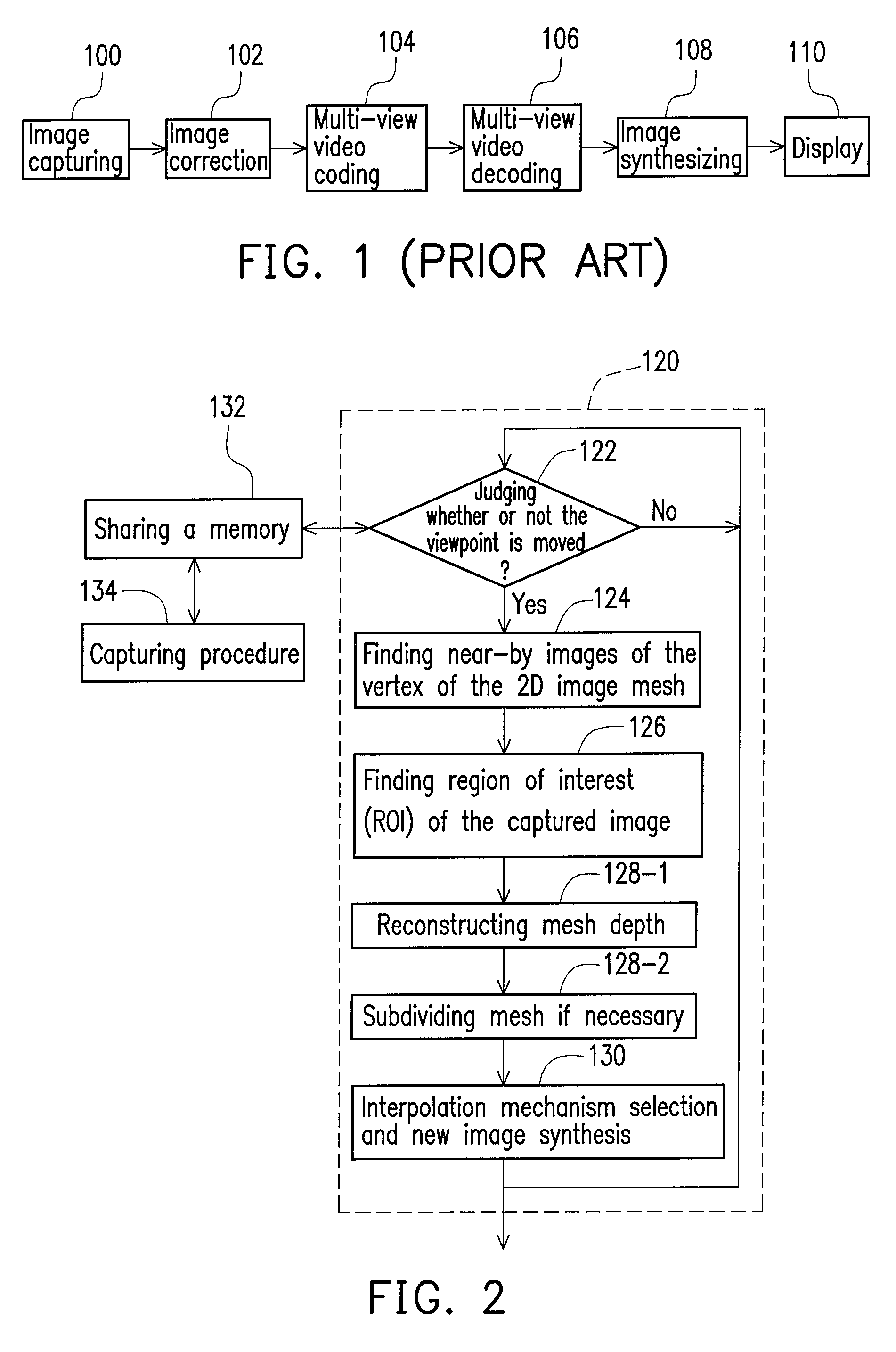

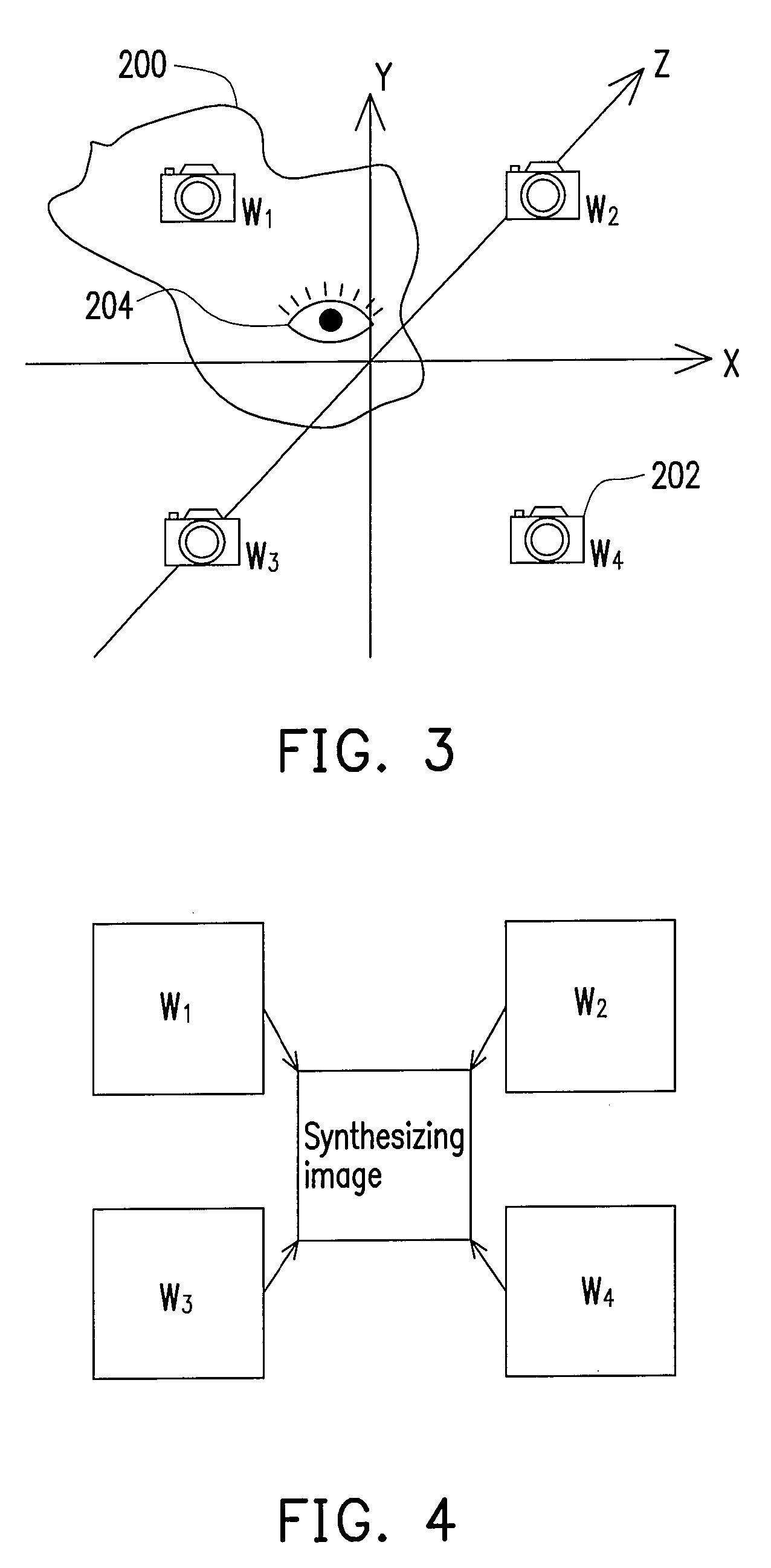

Parallel processing method for synthesizing an image with multi-view images

Inactive | US20090207179A1 | Good effect; Improve visual effects; Electric digital data processing; Architecture with multiple processing units; Viewpoints; Computer graphics (images)

A parallel processing method for synthesizing multi-view images is provided, in which at least a portion of the following steps may be processed in parallel. First, multiple reference images are input, each taken from a corresponding reference viewing angle. Next, an intended synthesized image corresponding to a viewpoint and an intended viewing angle is determined. Next, the intended synthesized image is divided into multiple meshes with multiple vertices; the vertices are divided into several vertex groups, and each vertex together with the viewpoint forms a view direction. Next, the view direction is used to find several nearby images among the reference images for synthesizing an image at the novel viewing angle. After the foregoing steps are fully or partially processed by the parallel processing mechanism, the separate results are combined for use in the next processing stage.

Owner:IND TECH RES INST
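The "find nearby images" step can be run independently for each vertex group and the partial results merged afterwards. A sketch, assuming each reference image is summarized by a viewing-direction vector and that "nearby" means the smallest angle to the vertex's view direction; `nearby_images` and `process_groups` are hypothetical, and threads stand in for the parallel processing units.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def angle_between(u, v):
    """Angle in radians between two 3-D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return math.acos(max(-1.0, min(1.0, dot / norms)))  # clamp rounding error


def nearby_images(vertex, viewpoint, ref_dirs, k):
    """Indices of the k reference images whose direction best matches the view direction."""
    view_dir = tuple(p - q for p, q in zip(vertex, viewpoint))
    ranked = sorted(range(len(ref_dirs)), key=lambda r: angle_between(view_dir, ref_dirs[r]))
    return ranked[:k]


def process_groups(vertex_groups, viewpoint, ref_dirs, k=2):
    """Handle each vertex group concurrently, then merge the separate results."""
    def work(group):
        return {v: nearby_images(v, viewpoint, ref_dirs, k) for v in group}

    combined = {}
    with ThreadPoolExecutor() as pool:
        for partial in pool.map(work, vertex_groups):
            combined.update(partial)
    return combined
```

The merged dictionary is what a following blending stage would consume; grouping vertices keeps each task large enough to be worth dispatching.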

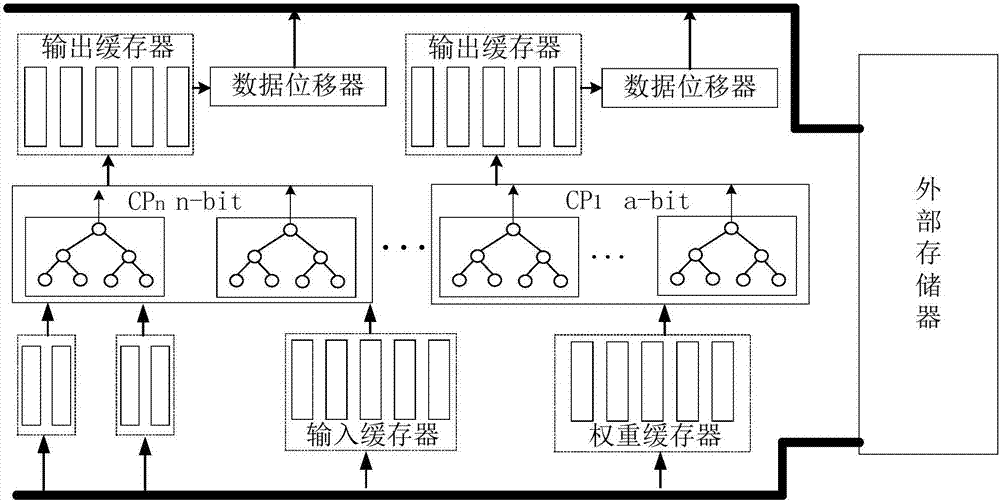

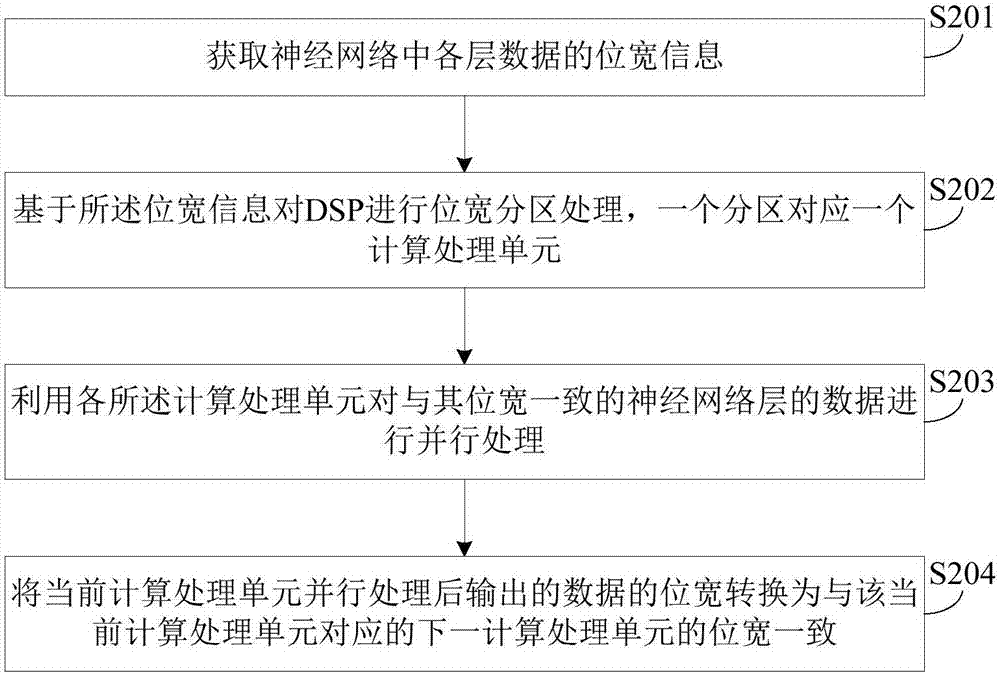

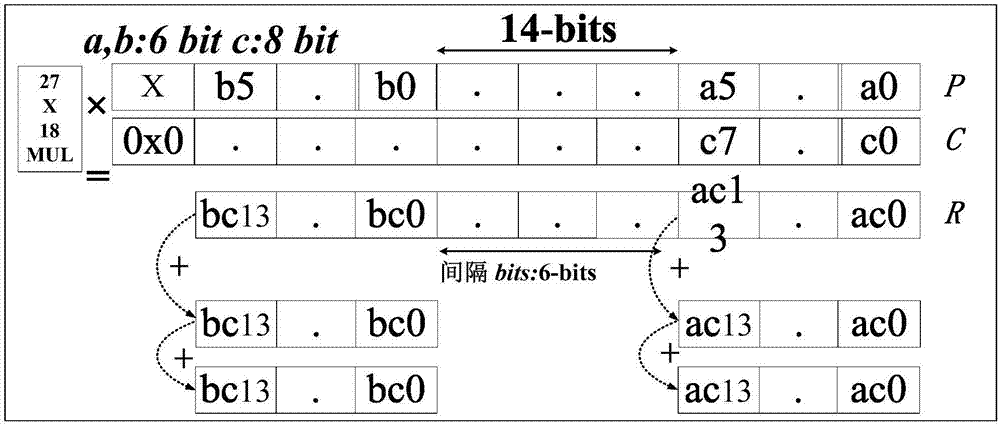

Neural network accelerator for bit width partitioning and implementation method of neural network accelerator

Active | CN107451659A | Increase profit; Improve resource usage efficiency; Physical realisation; External storage; Neural network

The present invention provides a neural network accelerator based on bit width partitioning and an implementation method for the accelerator. The accelerator includes a plurality of computing and processing units with different bit widths, input buffers, weight buffers, output buffers, data shifters and an off-chip memory. Each computing and processing unit obtains data from its corresponding input buffer and weight buffer and performs parallel processing on the data of a neural network layer whose bit width matches that of the unit. The data shifters convert the bit width of the data output by the current computing and processing unit into the bit width of the next computing and processing unit. The off-chip memory stores data both before and after processing by the computing and processing units. With the disclosed accelerator and implementation method, multiply-accumulate operations can be performed on multiple short-bit-width data items, which increases DSP utilization, and computing and processing units (CPs) with different bit widths perform the parallel computation of each layer of the neural network, which improves the computing throughput of the accelerator.

Owner:TSINGHUA UNIV
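The claim that several short-bit-width multiply-accumulates can share one DSP is commonly illustrated with operand packing. The sketch below assumes unsigned operands and a wide multiplier: it packs two short weights into one operand so that a single multiply yields two products in separate bit lanes. It also models the data shifter as a plain bit shift between widths. Both are assumptions for illustration; the abstract does not state how the patented accelerator packs operands or converts bit widths, and the names `packed_mac`, `shift_to_width`, `bits`, and `lane_bits` are hypothetical.

```python
def packed_mac(activations, weights_a, weights_b, bits=4, lane_bits=16):
    """Two unsigned dot products, sum(x*wa) and sum(x*wb), with one multiply per element.

    Weight wb sits in the high lane, so x * (wa + (wb << lane_bits)) equals
    x*wa + ((x*wb) << lane_bits). The low-lane sum must stay below
    2**lane_bits, otherwise it would carry into the high lane.
    """
    limit = 1 << bits
    for v in (*activations, *weights_a, *weights_b):
        if not 0 <= v < limit:
            raise ValueError(f"{v} does not fit in {bits} unsigned bits")
    if len(activations) * (limit - 1) ** 2 >= 1 << lane_bits:
        raise ValueError("lane too narrow: low-lane sum could overflow")
    acc = 0
    for x, wa, wb in zip(activations, weights_a, weights_b):
        packed_w = wa | (wb << lane_bits)  # two short weights in one wide operand
        acc += x * packed_w                # one wide multiply-accumulate
    mask = (1 << lane_bits) - 1
    return acc & mask, acc >> lane_bits    # (low lane, high lane)


def shift_to_width(value, from_bits, to_bits):
    """Rescale an unsigned value between bit widths by shifting (assumed data-shifter model)."""
    if to_bits >= from_bits:
        return value << (to_bits - from_bits)
    return value >> (from_bits - to_bits)  # drop low-order bits
```

For example, `packed_mac([3, 5, 15], [2, 4, 15], [1, 0, 7])` returns `(251, 108)`, the two dot products obtained from three wide multiplies instead of six narrow ones, and `shift_to_width(200, 8, 4)` returns `12`.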

Machine, computer program product and method to carry out parallel reservoir simulation

A machine, computer program product, and method that enable scalable parallel reservoir simulation across a variety of simulation model sizes are described. Some embodiments include a machine, methods, and software for: parallel processing of a grid defining a reservoir or oil/gas field, using a plurality of sub-domains for the reservoir simulation; a parallel process that re-orders the local cell index of each of the plurality of cells using the characteristics of the cell and its location within the at least one sub-domain; and a parallel process that simulates at least one production characteristic of the reservoir.

Owner:SAUDI ARABIAN OIL CO
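The sub-domain decomposition and parallel re-ordering of local cell indices can be sketched for a simple structured grid. The abstract does not say which cell characteristics drive the ordering, so this sketch assumes one common choice: interior cells are numbered first and cells bordering another sub-domain last, which lets a solver overlap halo exchange with interior work. The strip partitioning, the thread pool, and every function name are hypothetical; a production simulator would more likely distribute sub-domains across MPI processes.

```python
from concurrent.futures import ThreadPoolExecutor


def partition_strips(nx, ny, n_sub):
    """Split an nx-by-ny grid into n_sub vertical strips of (i, j) cells."""
    bounds = [k * nx // n_sub for k in range(n_sub + 1)]
    return [[(i, j) for i in range(bounds[k], bounds[k + 1]) for j in range(ny)]
            for k in range(n_sub)]


def reorder_local(cells, nx):
    """Map each owned cell to a local index: interior cells first, then boundary cells."""
    owned = set(cells)

    def borders_other_subdomain(cell):
        i, j = cell
        # Strips are split along i, so only i-neighbors can lie in another sub-domain.
        return any(0 <= ni < nx and (ni, j) not in owned for ni in (i - 1, i + 1))

    interior = sorted(c for c in cells if not borders_other_subdomain(c))
    boundary = sorted(c for c in cells if borders_other_subdomain(c))
    return {cell: idx for idx, cell in enumerate(interior + boundary)}


def reorder_all(nx, ny, n_sub):
    """Re-order every sub-domain's local indices in parallel, one task per sub-domain."""
    subdomains = partition_strips(nx, ny, n_sub)
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda cells: reorder_local(cells, nx), subdomains))
```

For a 4-by-1 grid split into two sub-domains, `reorder_all(4, 1, 2)` numbers cell `(1, 0)` last in the first sub-domain and cell `(2, 0)` last in the second, because those are the cells on the shared face.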

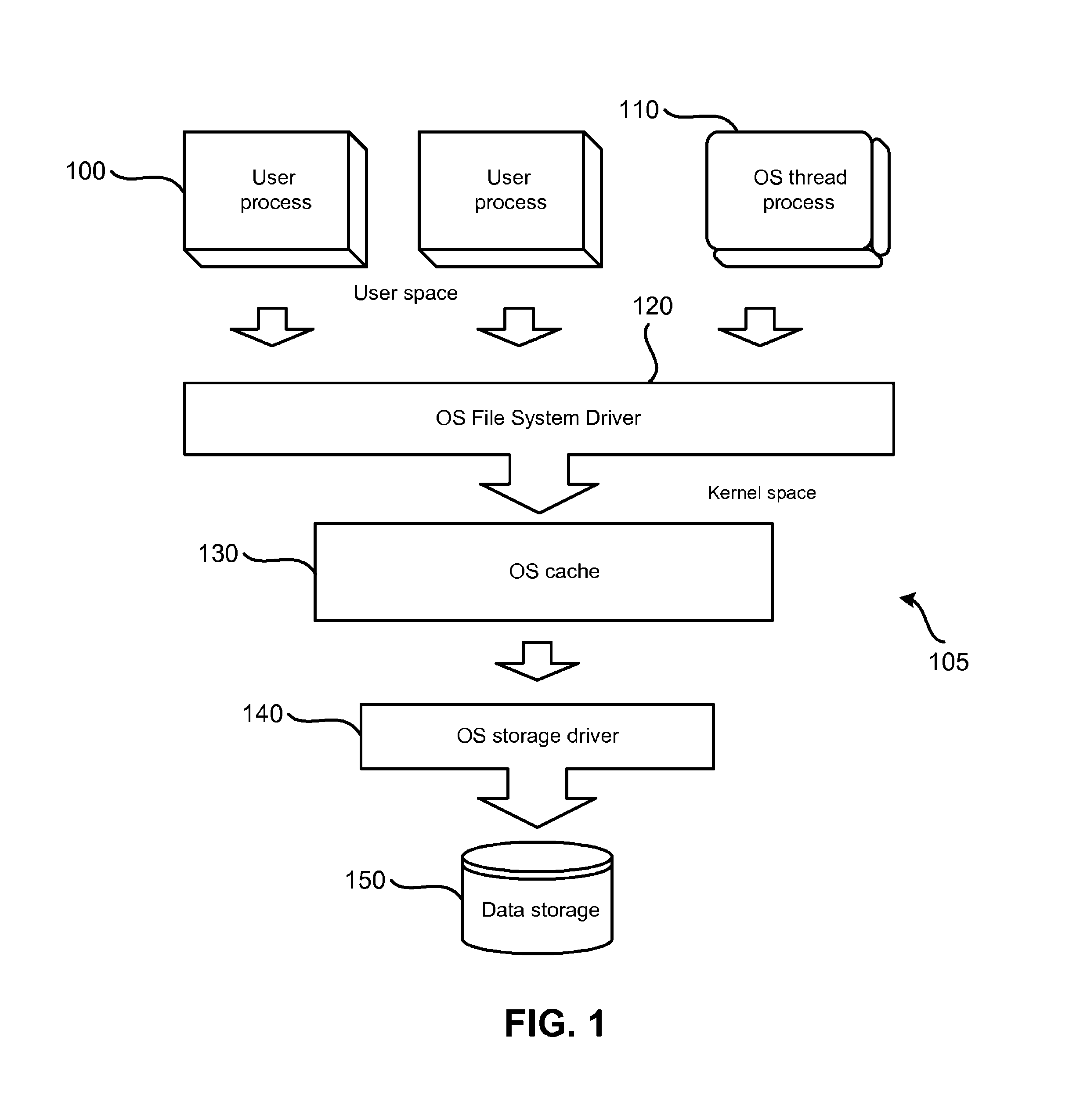

Method and system for file-level continuous data protection

Active · US8296264B1 · Digital data information retrieval · Digital data processing details · File system · Data memory

Continuous data protection is performed as two parallel processes: creating an initial backup by copying data, as files/directories, from the storage device into the backup storage; and copying the data being written to the data storage, as part of a file/directory, into the incremental backup. Alternatively, it can be performed as a single process: copying the data being written to the data storage as part of a file/directory on the storage. A write command to a file system driver is intercepted and redirected to the backup, and the data is written to the incremental backup. If the write command is also directed to data (a file/directory) that has not yet been backed up, that data is copied from the storage device to intermediate storage. The write command is then executed on the file/directory on the storage device, and the file/directory is copied from the intermediate storage.

Owner:MIDCAP FINANCIAL TRUST
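The interception logic in the abstract is a copy-before-write scheme, and it can be modeled with in-memory dictionaries standing in for the storage device, the initial backup, the intermediate storage, and the incremental backup. This is a simplified sketch under that assumption, not the patented file-system driver: the class and method names are hypothetical, and `backup_step` stands in for one iteration of the background initial-backup process.

```python
class FileLevelCDP:
    """Toy model of file-level continuous data protection (hypothetical names)."""

    def __init__(self, storage):
        self.storage = storage        # path -> bytes on the protected device
        self.initial_backup = {}      # files captured by the initial backup
        self.intermediate = {}        # originals saved just before an overwrite
        self.incremental = []         # (path, data) for every intercepted write

    def backup_step(self, path):
        """Background initial-backup process: copy one file if not yet backed up."""
        if path not in self.initial_backup:
            self.initial_backup[path] = self.storage[path]

    def intercept_write(self, path, data):
        """Handle a write command intercepted at the file system driver."""
        self.incremental.append((path, data))  # redirect the write to the incremental backup
        if path in self.storage and path not in self.initial_backup:
            # Preserve the original of a file the initial backup has not reached yet.
            self.intermediate[path] = self.storage[path]
        self.storage[path] = data              # execute the write on the device
        if path in self.intermediate:
            # Finish backing up the original from intermediate storage.
            self.initial_backup[path] = self.intermediate.pop(path)
```

Even when a file is overwritten before the initial backup reaches it, its pre-write contents end up in `initial_backup`, while every new version is logged in `incremental`.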

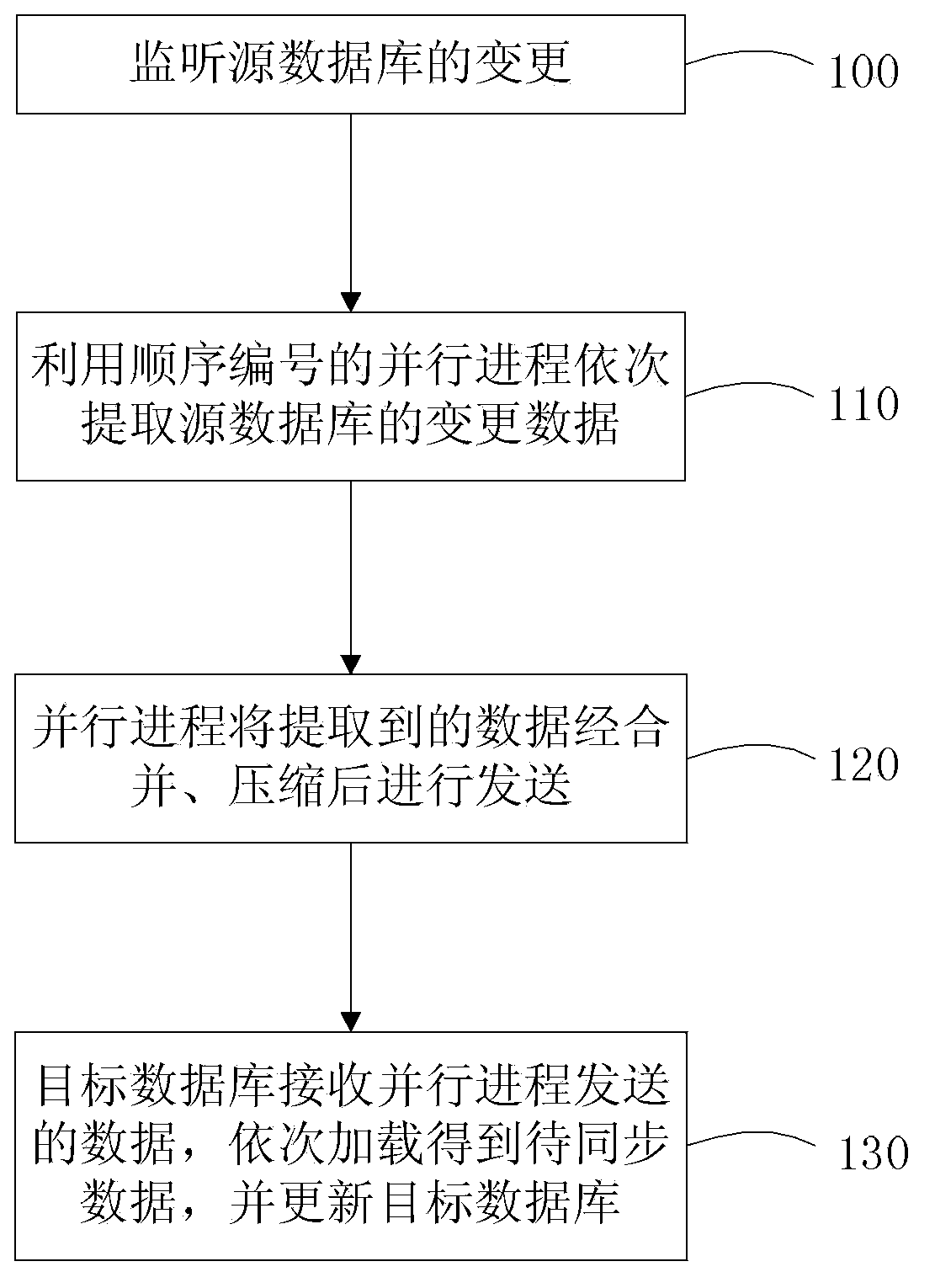

Cross-room database synchronization method and system

Inactive · CN103778136A · Avoid accumulation · Data transfer minimization · Redundant operation error correction · Special data processing applications · Data synchronization · Parallel processing

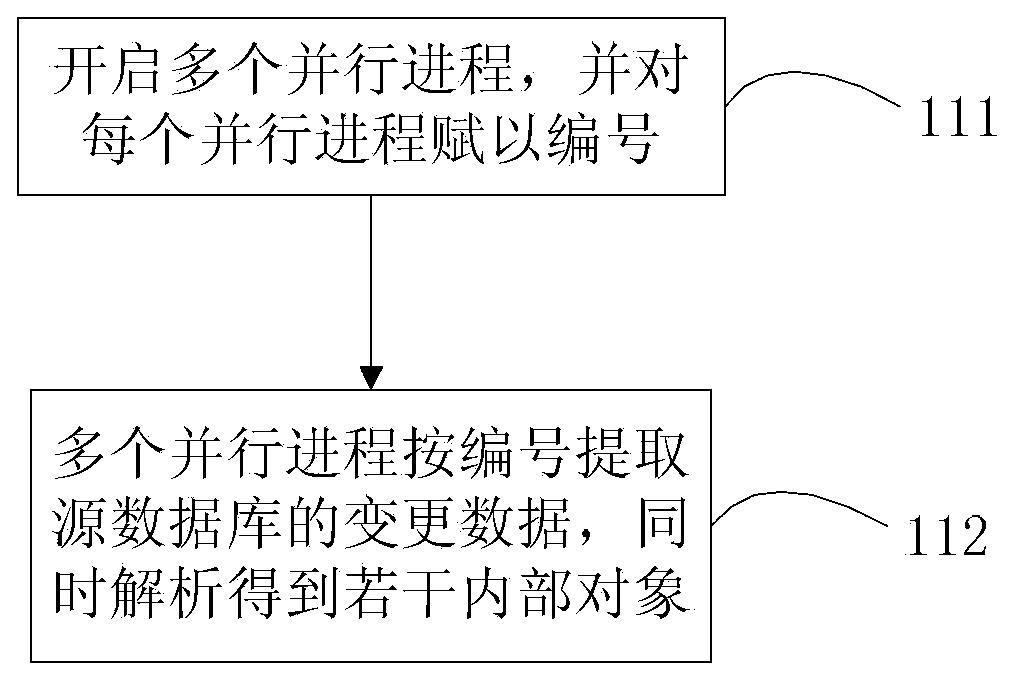

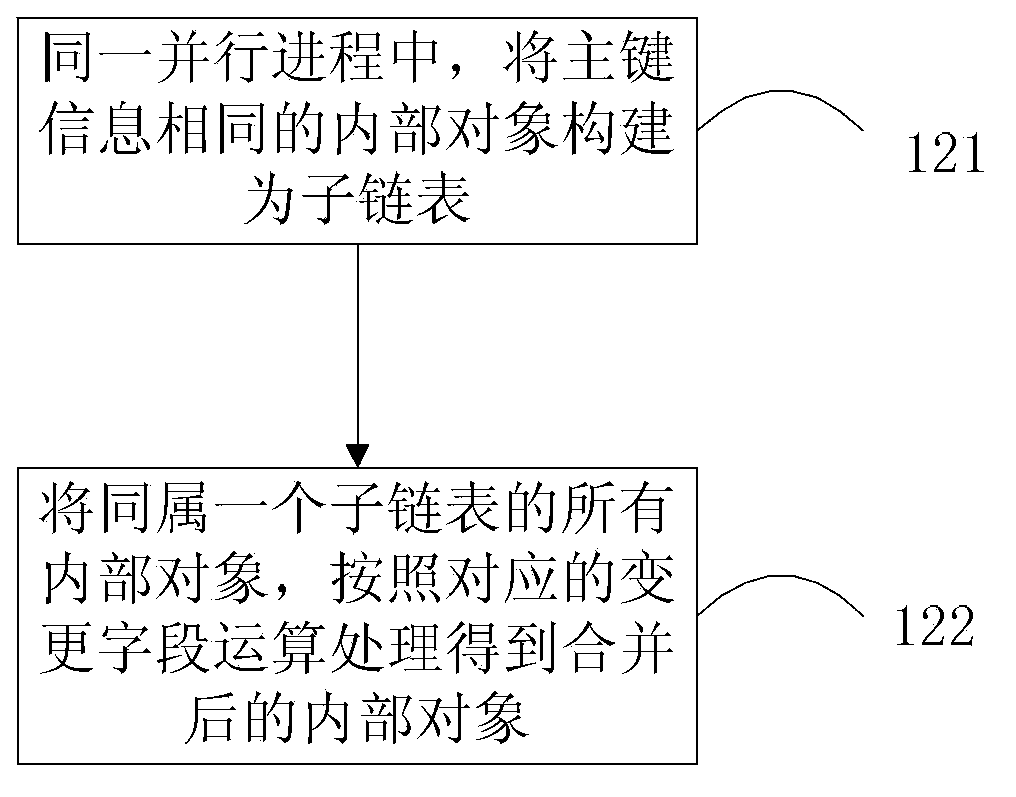

The invention discloses a cross-room database synchronization method for synchronizing data from a source database to a target database in a different machine room. The method comprises: step 100, monitoring changes to the source database at the source database terminal; step 110, extracting the change data of the source database in turn through a plurality of serially numbered parallel processes; step 120, combining and compressing the extracted data and sending it through the parallel processes; and step 130, receiving the data sent by the parallel processes at the target database terminal, loading it in the order of the parallel processes to obtain the data to be synchronized, and updating the target database with that data. The method and device adopt multi-threaded parallel processing and exploit the characteristics of SQL (Structured Query Language) semantic execution; through a complete transmission scheme of data combination, compression and parallel processing, they alleviate the synchronization delay of cross-room and cross-regional networks.

Owner:ALIBABA GRP HLDG LTD
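Steps 110 to 130 can be sketched in Python. The sketch assumes that change records are SQL strings, that "extracting in turn" means change k goes to process k mod N, that "combination" is simple batching, and that compression uses `zlib` over a JSON payload; the target re-interleaves the batches by process number to restore the source order. Threads stand in for the parallel processes, and all function names are hypothetical.

```python
import json
import zlib
from concurrent.futures import ThreadPoolExecutor


def extract(changes, worker_id, n_workers):
    """Step 110: process `worker_id` takes every n_workers-th change, in turn."""
    return changes[worker_id::n_workers]


def pack(worker_id, batch):
    """Step 120: combine the batch into one payload and compress it."""
    payload = json.dumps({"worker": worker_id, "changes": batch}).encode("utf-8")
    return zlib.compress(payload)


def unpack(packet):
    """Target side: decompress a packet into (worker_id, changes)."""
    message = json.loads(zlib.decompress(packet).decode("utf-8"))
    return message["worker"], message["changes"]


def sync(changes, n_workers, apply):
    """Extract and send in parallel, then load in process-number order (step 130)."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        packets = list(pool.map(
            lambda w: pack(w, extract(changes, w, n_workers)), range(n_workers)))
    # Packets may arrive in any order; reversing simulates out-of-order delivery.
    batches = dict(unpack(p) for p in reversed(packets))
    # Interleave round-robin by process number to restore the source order.
    longest = max(len(batch) for batch in batches.values())
    for k in range(longest):
        for w in range(n_workers):
            if k < len(batches[w]):
                apply(batches[w][k])
```

Running `sync` on seven changes with three processes calls `apply` on them in their original order, even though the packets are unpacked in reverse; ordering by process number is what makes parallel extraction safe for SQL statements whose effects depend on execution order.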

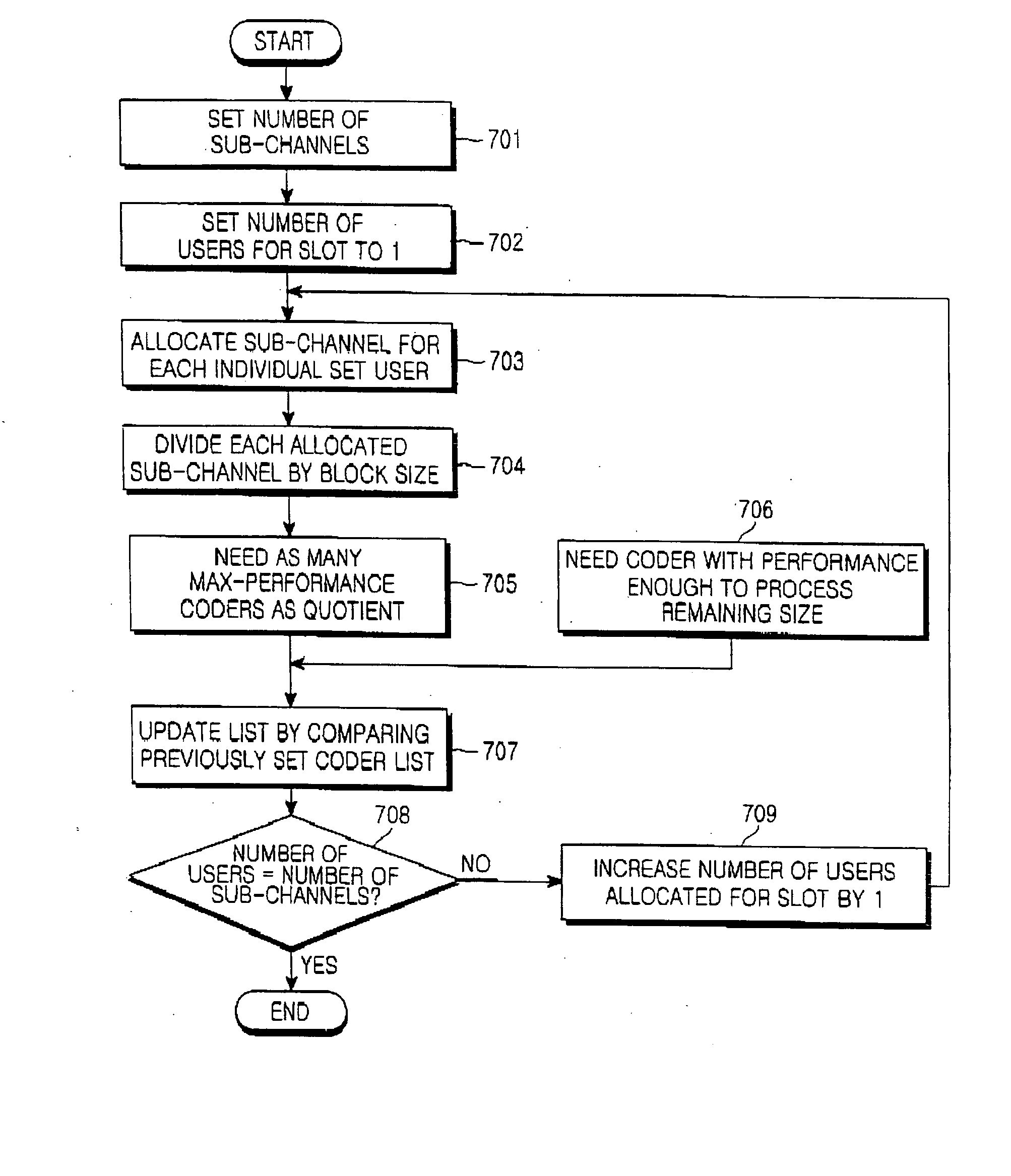

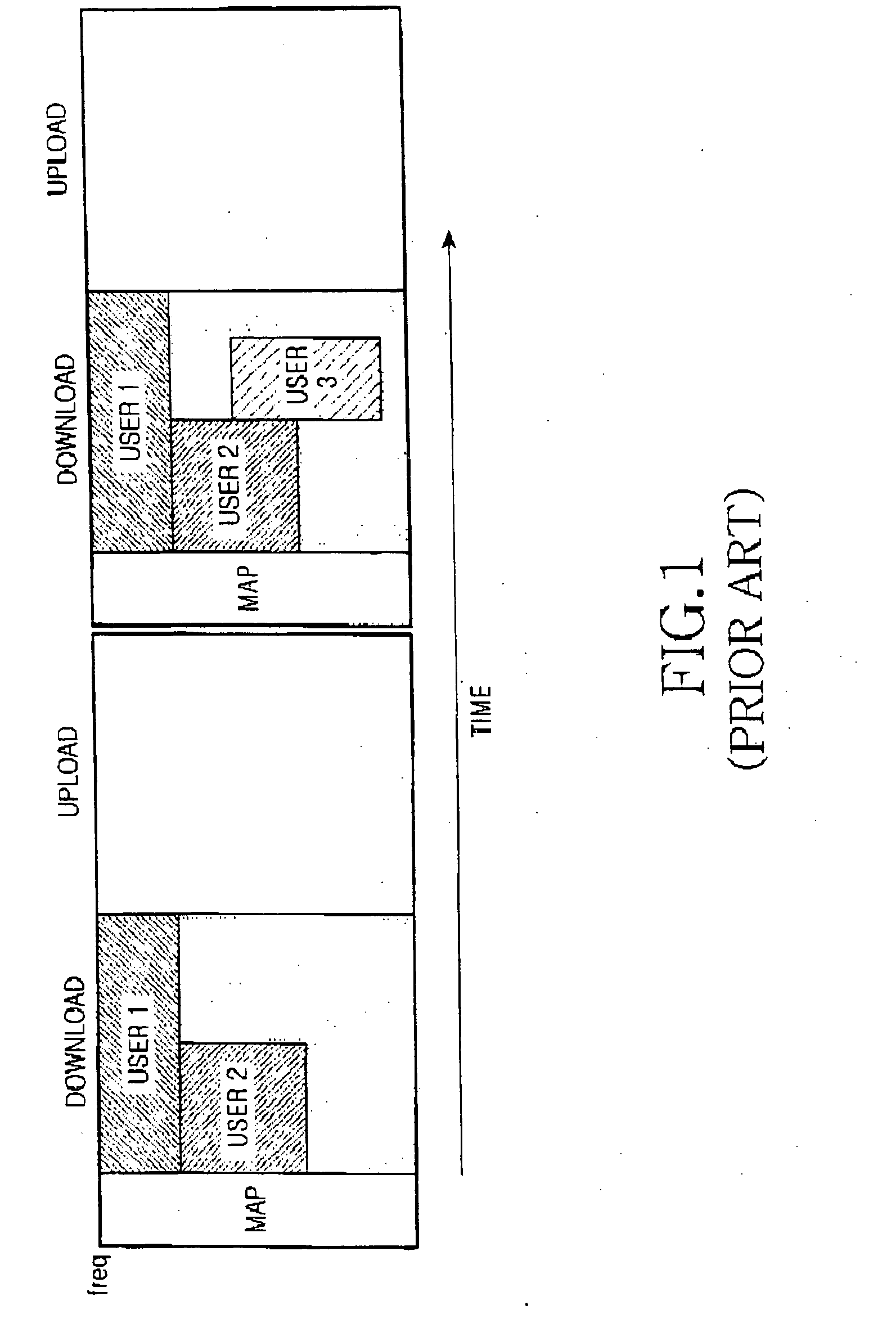

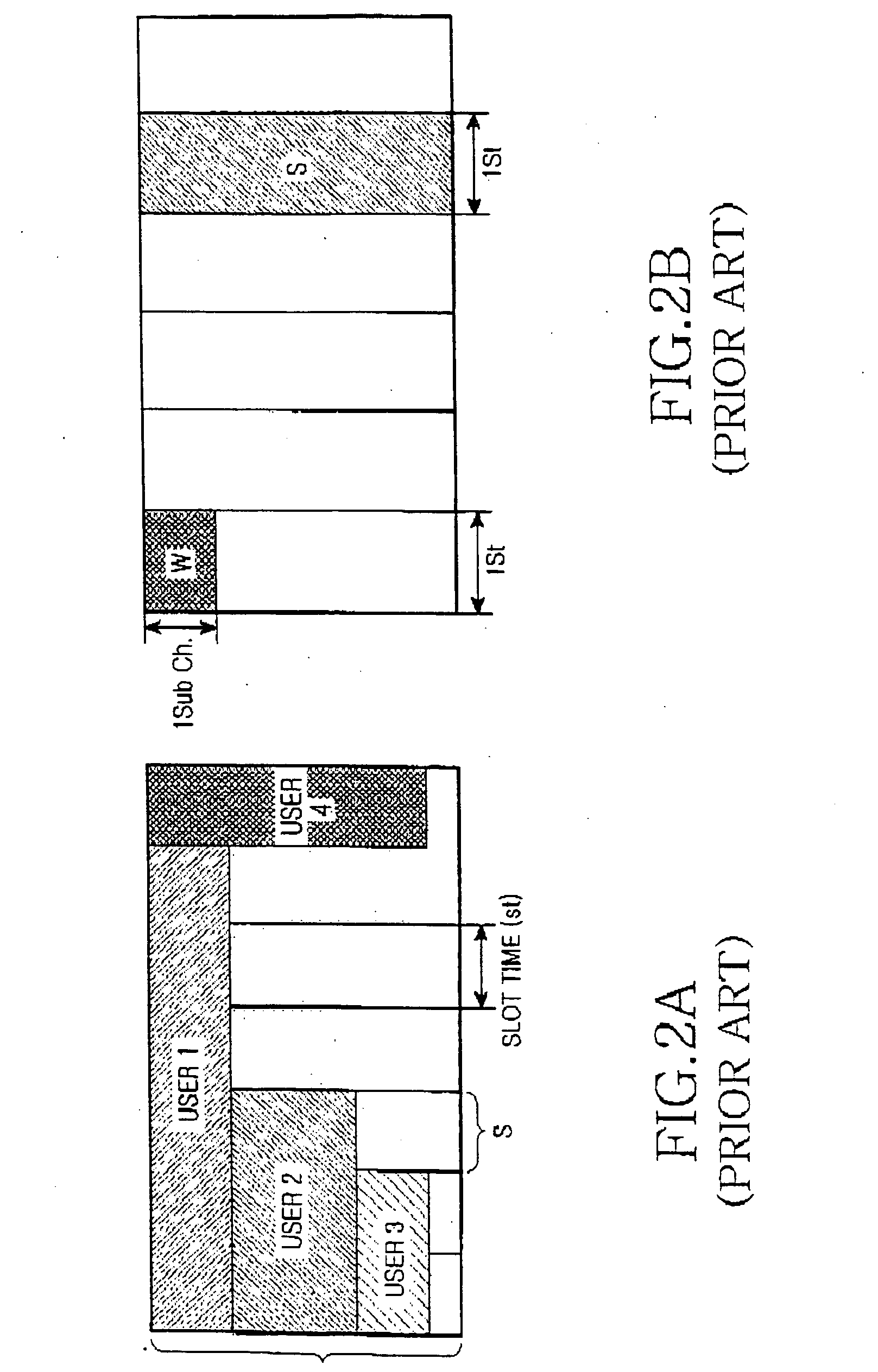

Coding/decoding apparatus for orthogonal frequency division multiple access communication system and method for designing the same

Inactive · US20070016413A1 · Maximizing number · Reduce the number · Error prevention · Transmission path division · Communications system · Encoder decoder

Provided is a coding/decoding apparatus for an orthogonal frequency division multiple access (OFDMA) communication system that takes multiple users into account and uses a block coding/decoding scheme. In the apparatus, a mapper determines the paths through which each user's information will be provided, according to the states of the sub-channels allocated to the users. A coding/decoding unit processes the users' information in parallel through the paths selectively connected by the mapper. The coding/decoding unit includes coders/decoders whose performance is lower than the maximum processing performance, which is determined by taking into account the size of a processing block and the number of sub-channels.

Owner:SAMSUNG ELECTRONICS CO LTD
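One way to picture the mapper is as a load balancer that spreads the users' sub-channel load across several coders, each with a capacity below the worst-case total. The abstract does not specify the mapping policy; the greedy least-loaded rule below, the capacity unit (sub-channels per coder), and the function name are assumptions for illustration only.

```python
def map_users_to_coders(user_subchannels, n_coders, coder_capacity):
    """Assign each user to a coder path, heaviest users first, least-loaded coder wins.

    user_subchannels: dict of user -> number of allocated sub-channels.
    coder_capacity: sub-channels one coder can handle (below the worst-case total).
    """
    load = [0] * n_coders
    paths = {c: [] for c in range(n_coders)}
    for user, n_sub in sorted(user_subchannels.items(), key=lambda kv: -kv[1]):
        coder = min(range(n_coders), key=lambda c: load[c])
        # If the least-loaded coder cannot take this user, no coder can.
        if load[coder] + n_sub > coder_capacity:
            raise ValueError(f"user {user!r} does not fit on any coder")
        load[coder] += n_sub
        paths[coder].append(user)
    return paths, load
```

Four users needing 15 sub-channels in total fit on two coders that each handle only 8 (`paths == {0: ["u1", "u4"], 1: ["u2", "u3"]}`), illustrating how a mapper can let coders with less than the worst-case capacity share the load.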

© 2025 PatSnap. All rights reserved.