269 results about "Closed captioning" patented technology

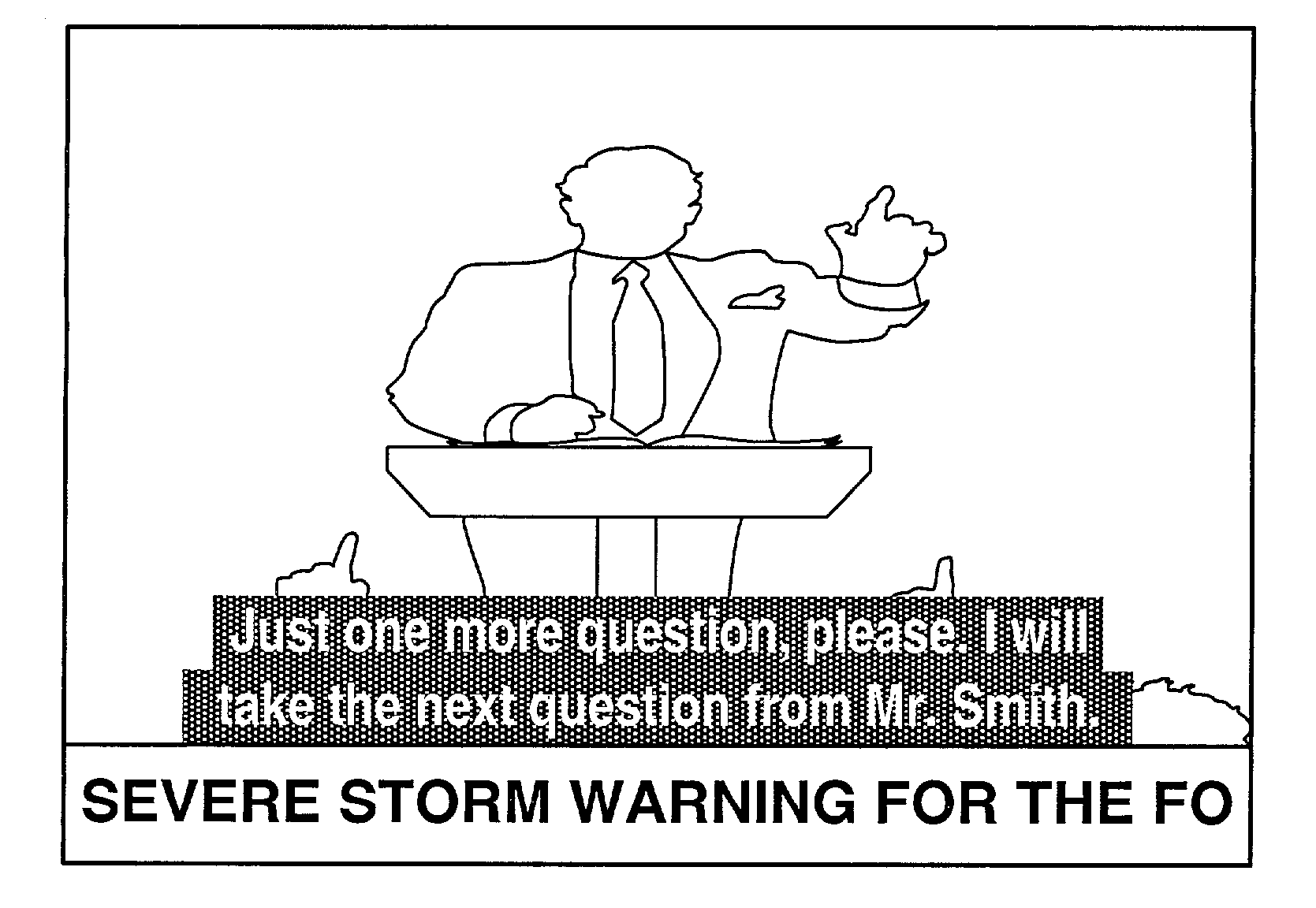

Closed captioning (CC) and subtitling are both processes of displaying text on a television, video screen, or other visual display to provide additional or interpretive information. Both are typically used as a transcription of the audio portion of a program as it occurs (either verbatim or in edited form), sometimes including descriptions of non-speech elements. Other uses have been to provide a textual alternative language translation of a presentation's primary audio language that is usually burned-in (or "open") to the video and unselectable. HTML5 defines subtitles as a "transcription or translation of the dialogue ... when sound is available but not understood" by the viewer (for example, dialogue in a foreign language) and captions as a "transcription or translation of the dialogue, sound effects, relevant musical cues, and other relevant audio information ... when sound is unavailable or not clearly audible" (for example, when audio is muted or the viewer is deaf or hard of hearing).

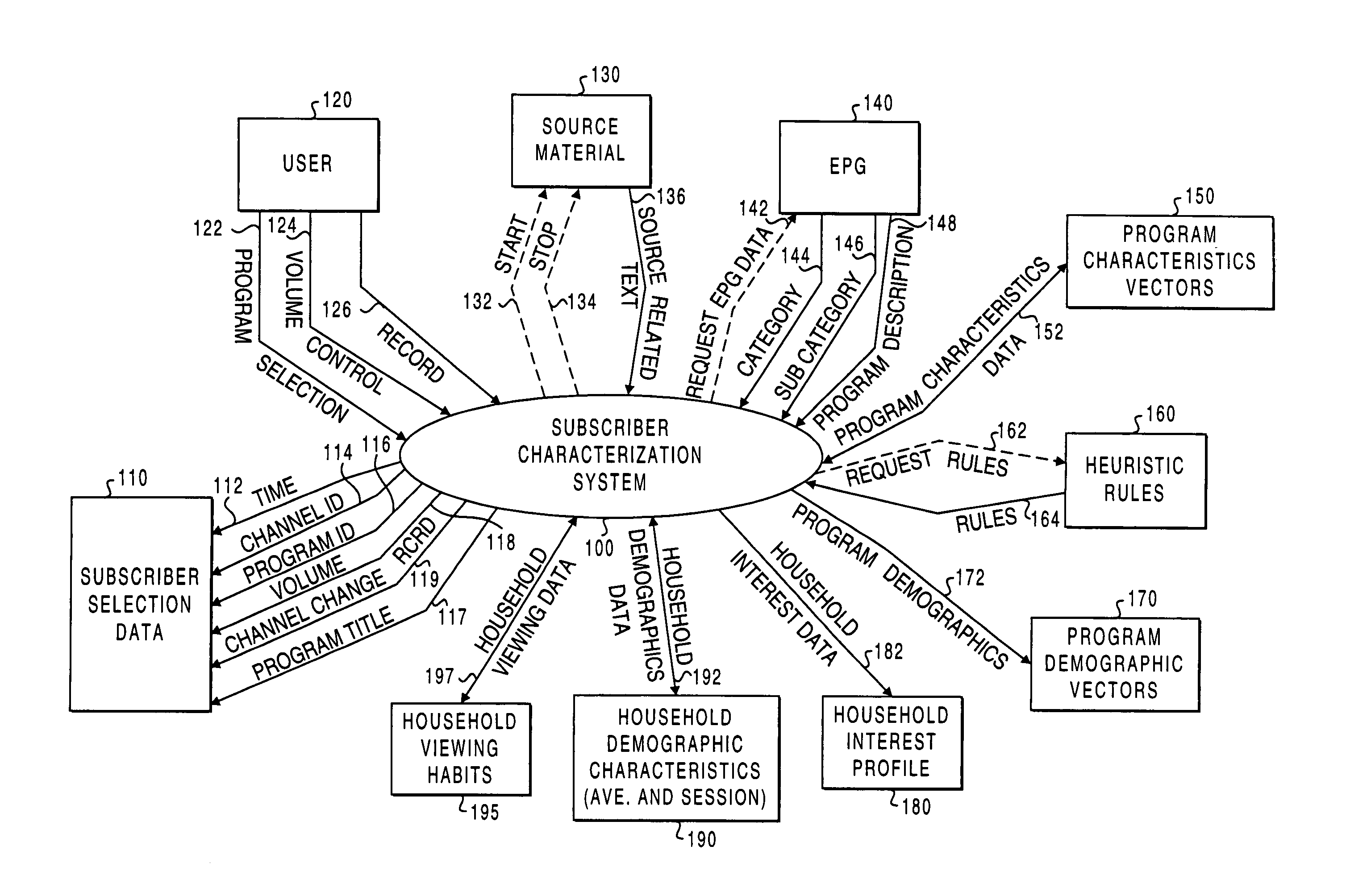

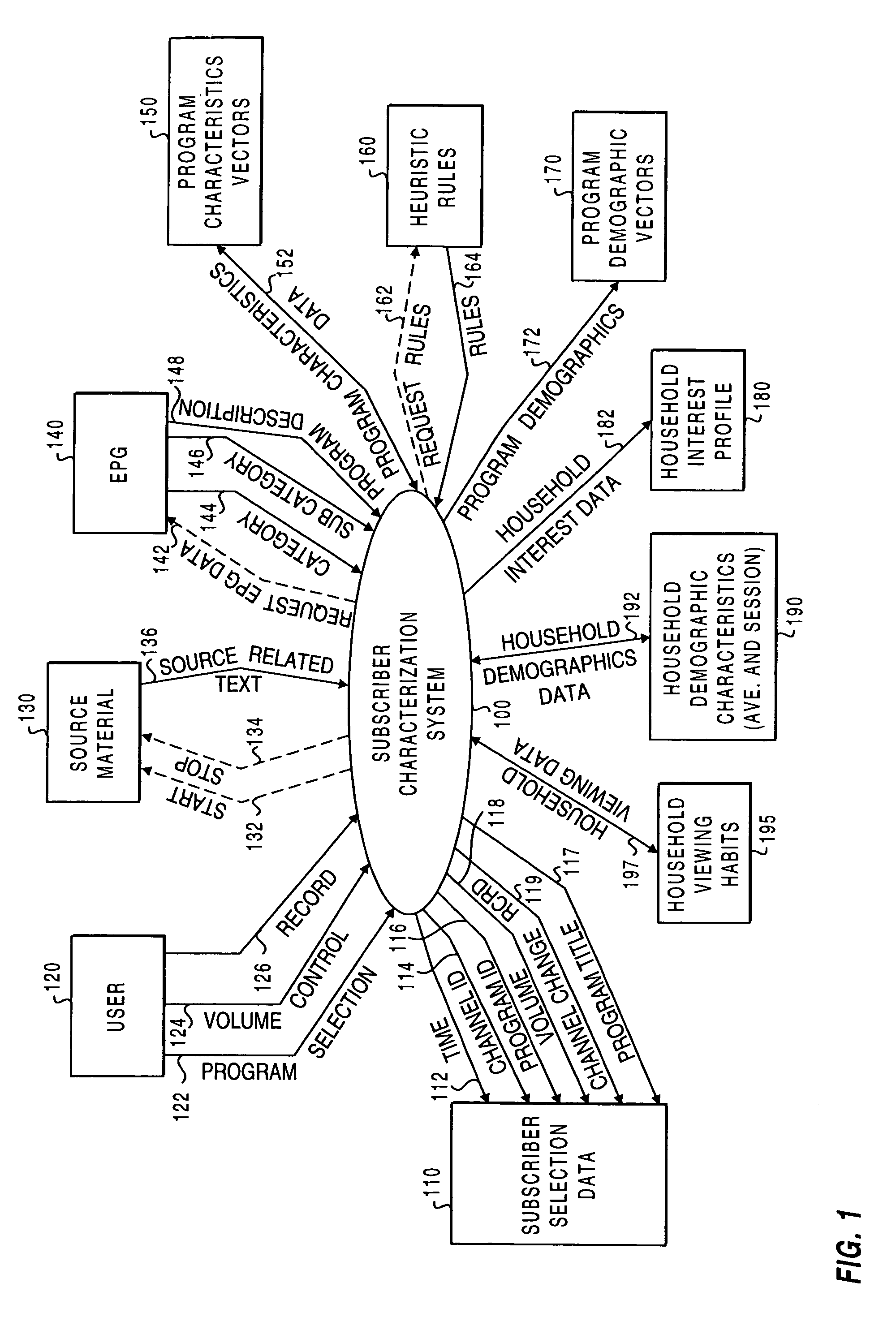

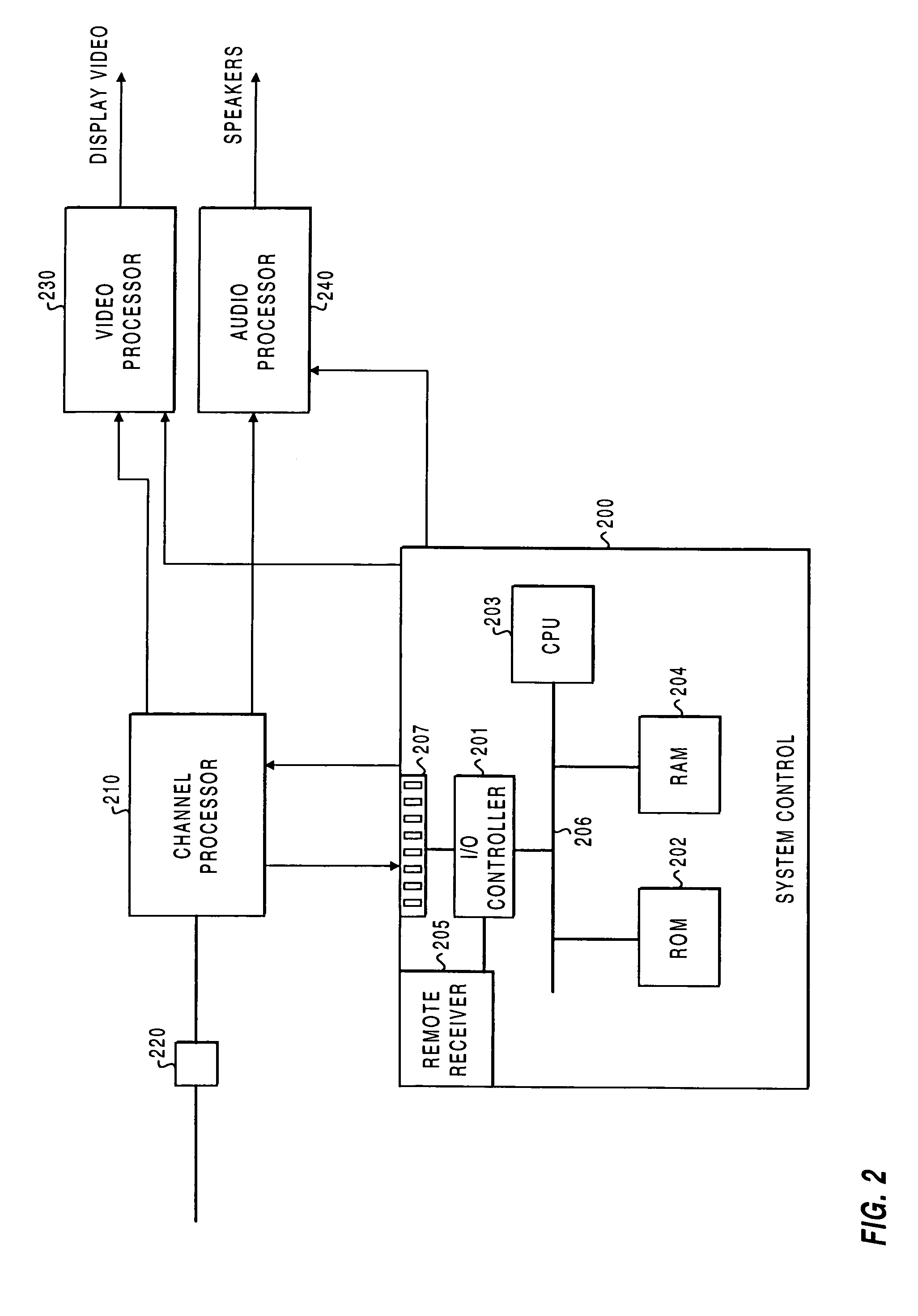

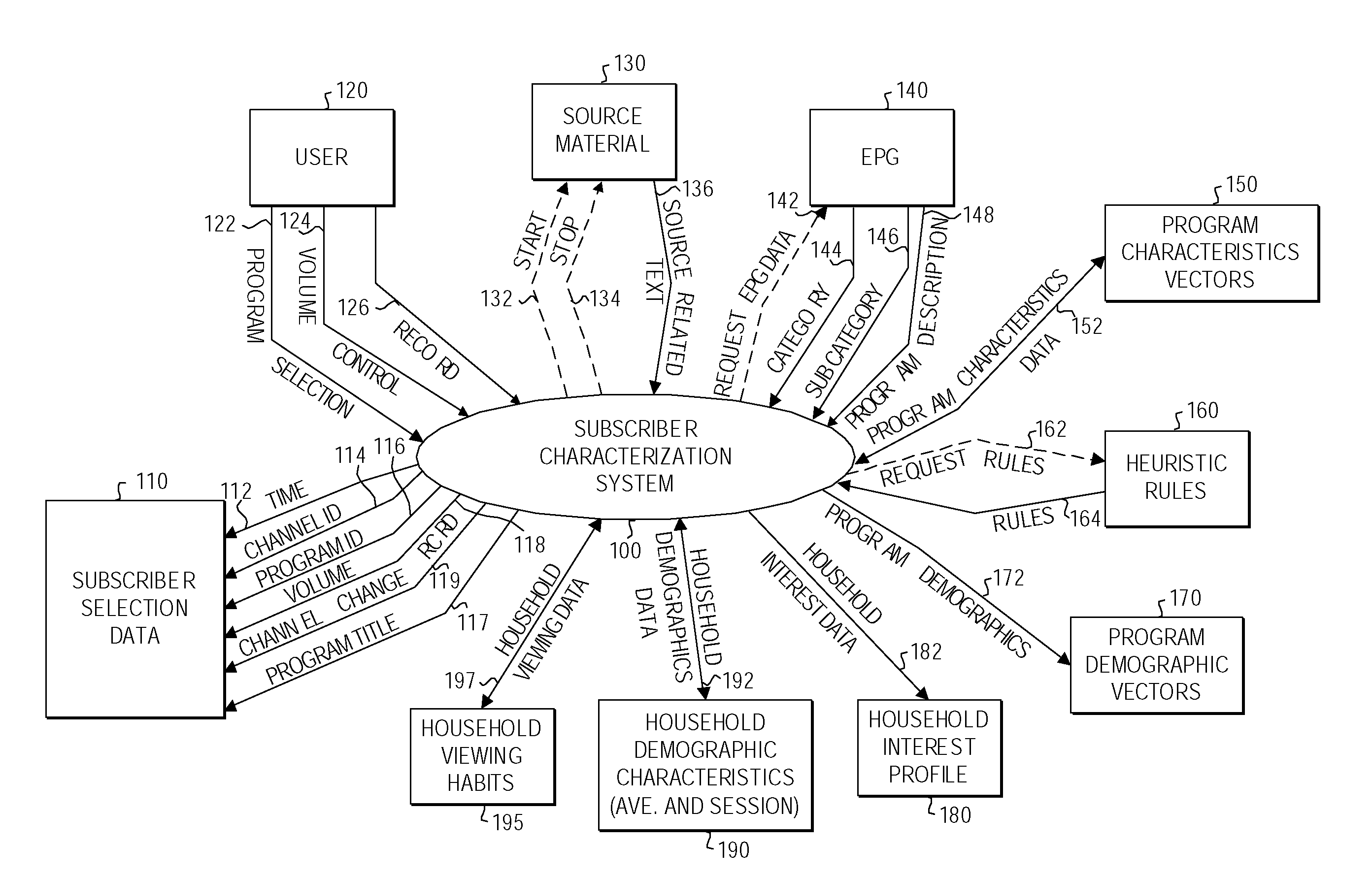

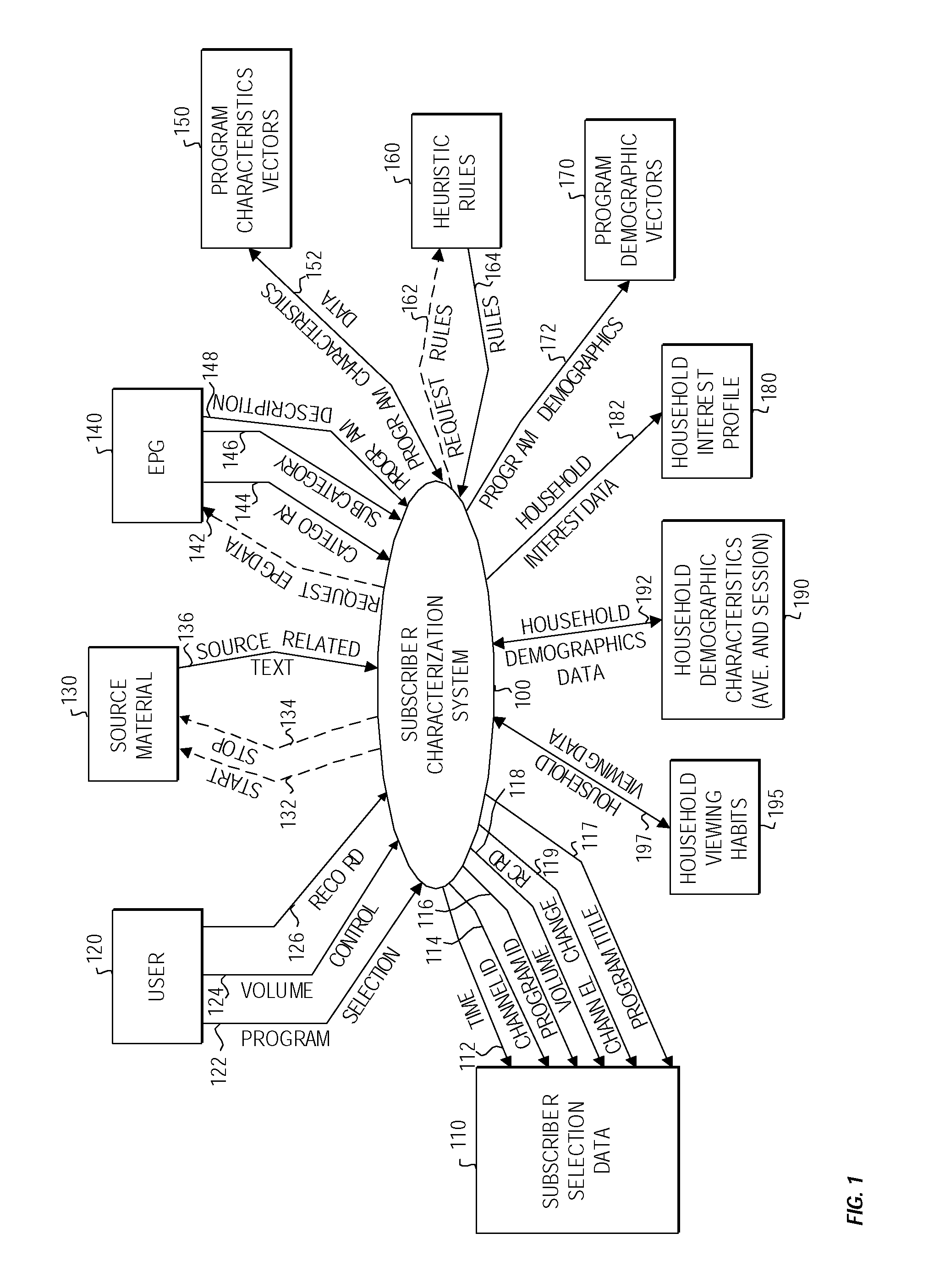

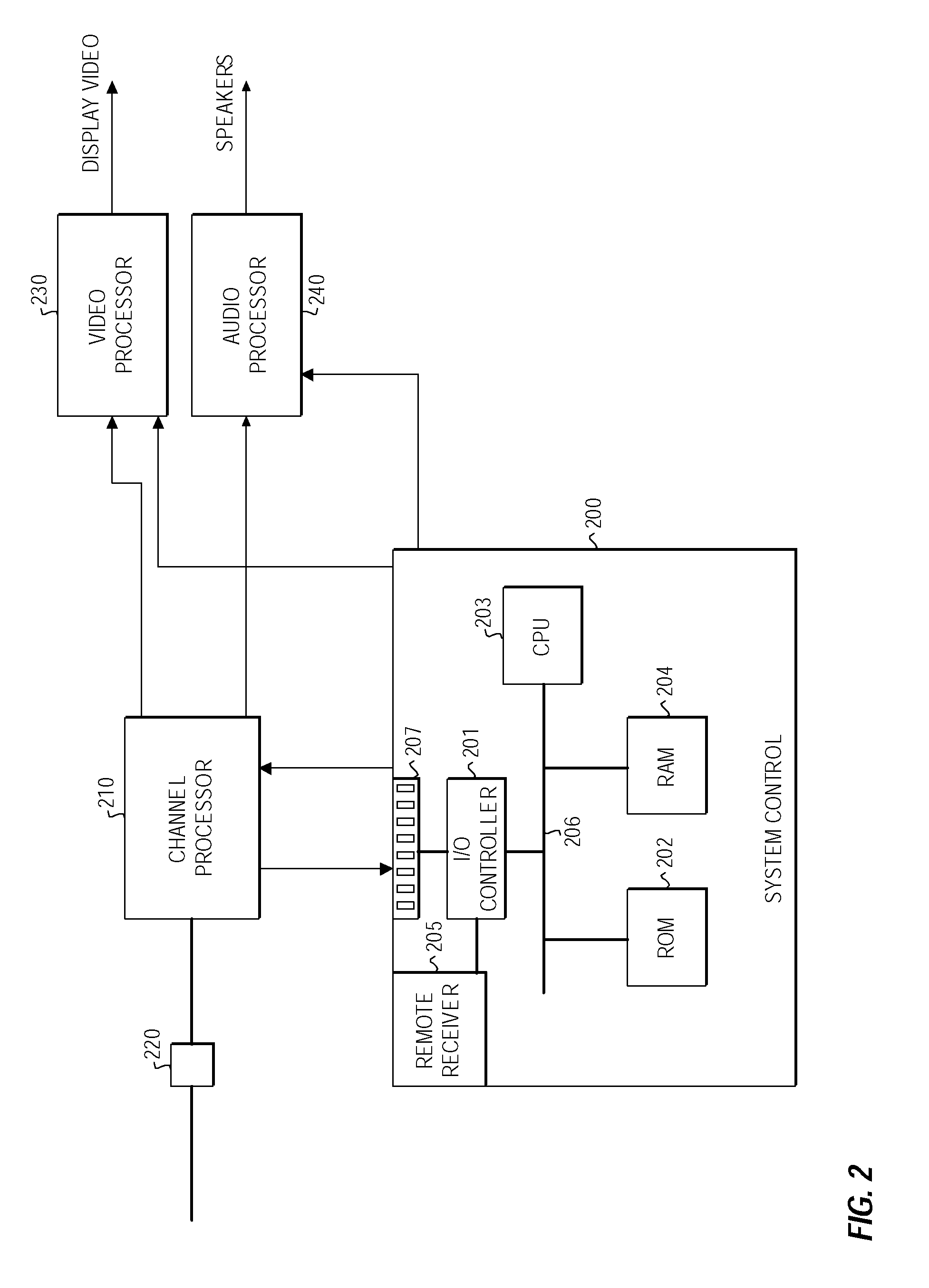

Subscriber characterization system

Inactive · US7150030B1 · Television system details · Specific information broadcast systems · Programming language · Feature vector

A subscriber characterization system is presented in which the subscriber's selections are monitored, including monitoring of the time duration programming is watched, the volume at which the programming is listened to, and any available information regarding the type of programming, including category and sub-category of the programming. The characterization system can extract textual information related to the programming from closed captioning data, electronic program guides, or other text sources associated with the programming. The extracted information is used to form program characteristics vectors. The programming characteristics vectors can be used in combination with the subscriber selection data to form a subscriber profile. Heuristic rules indicating the relationships between programming choices and demographics can be applied to generate additional probabilistic information regarding demographics and programming and product interests.

Owner:PRIME RES ALLIANCE E LLC

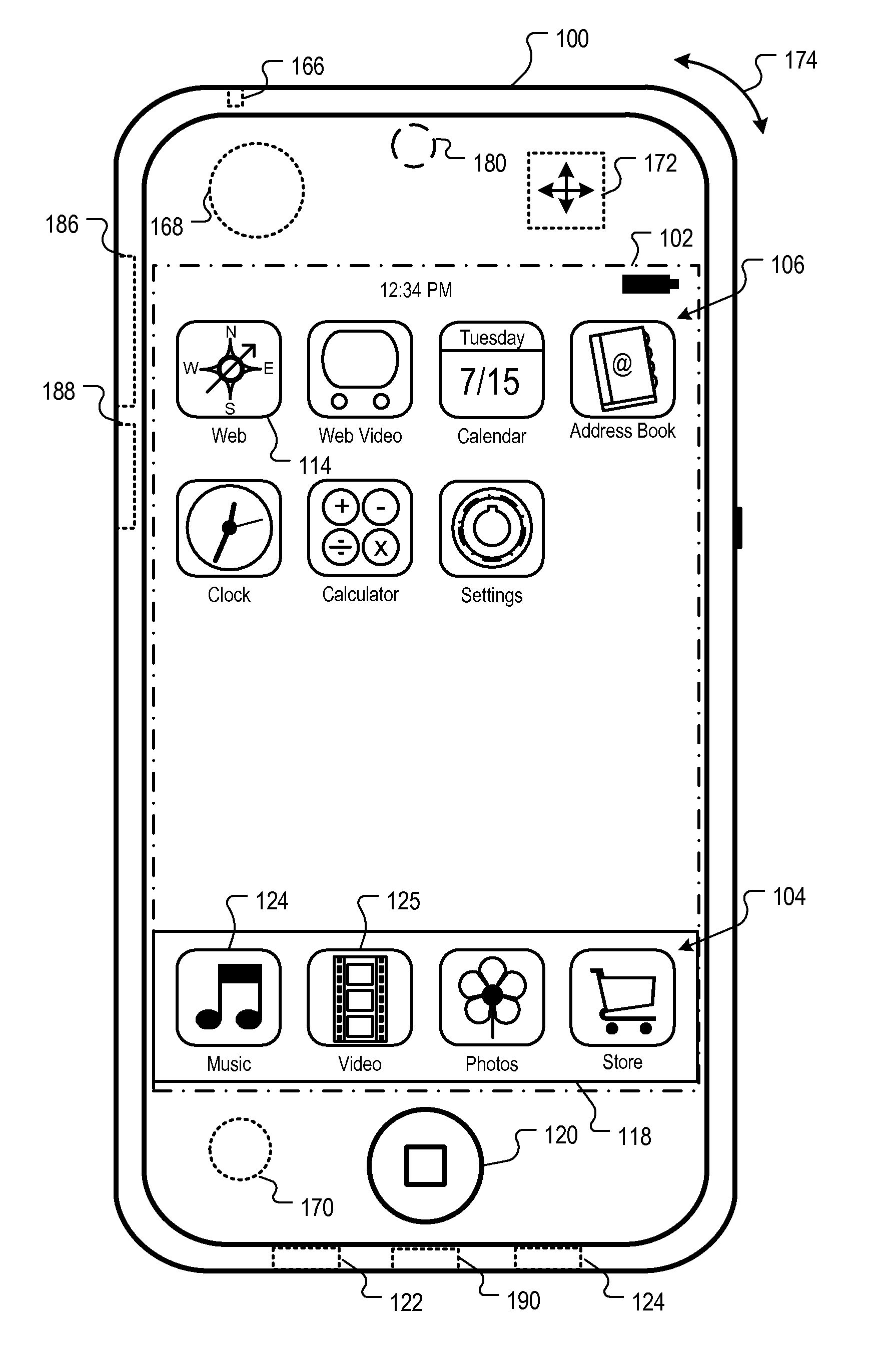

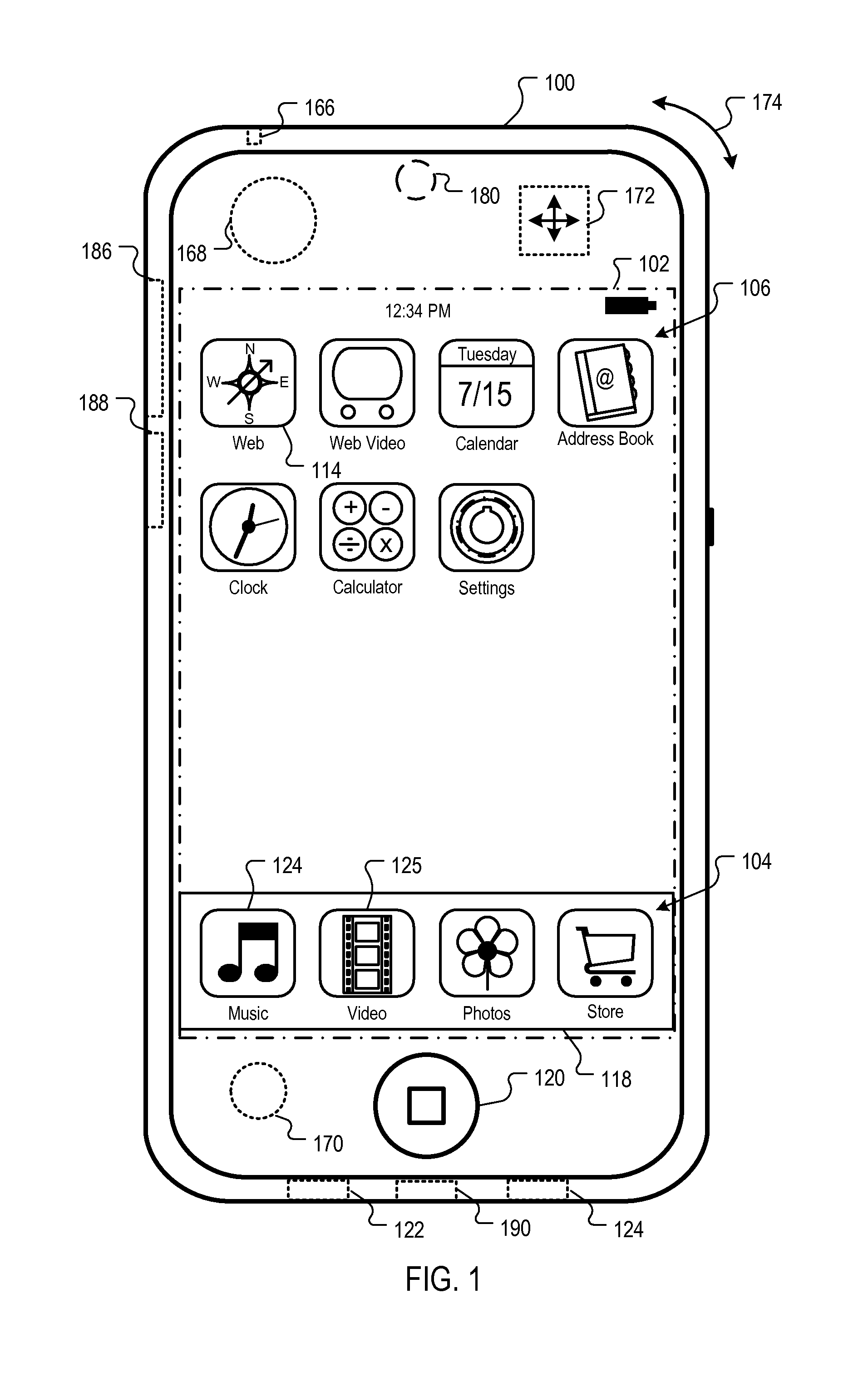

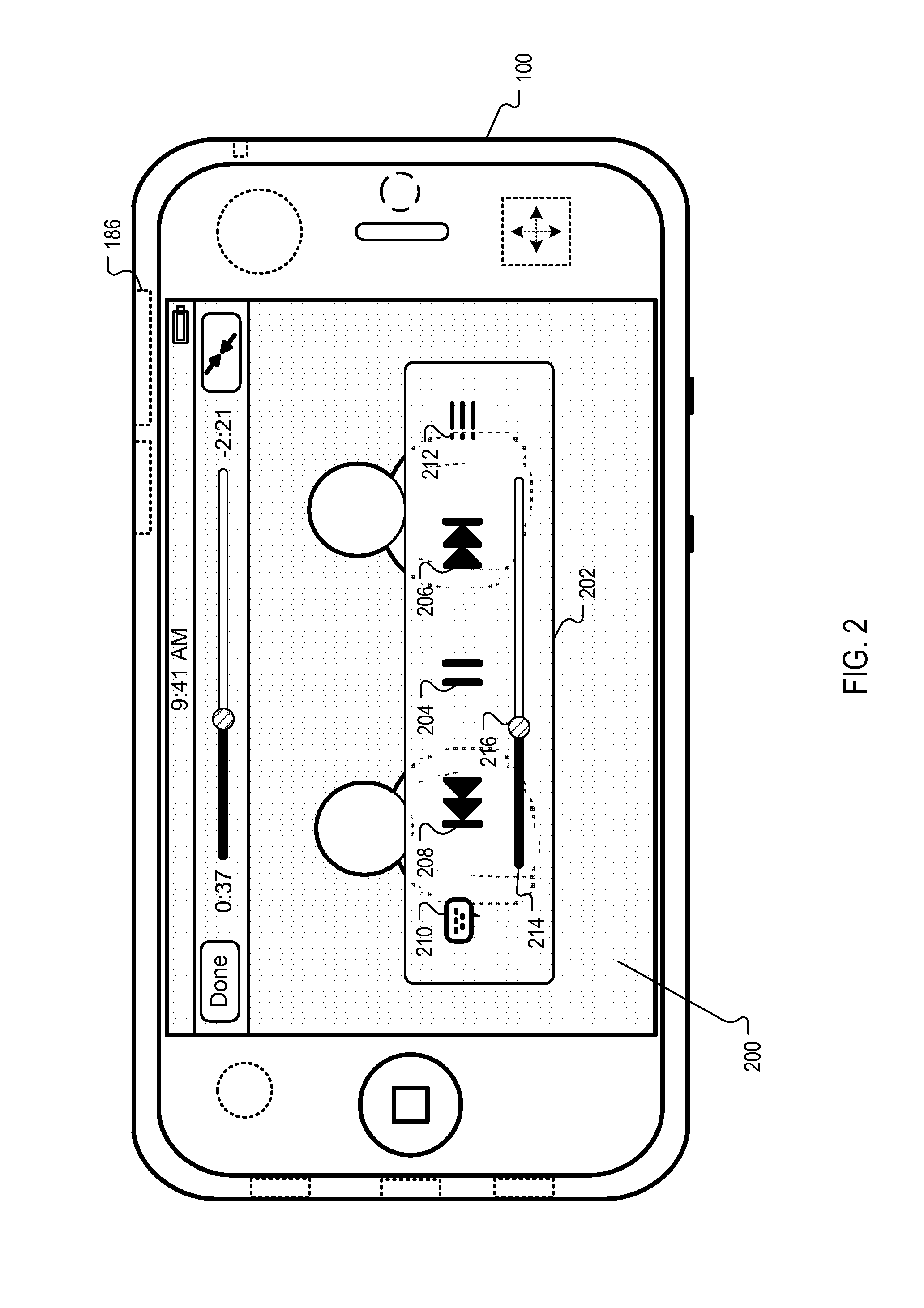

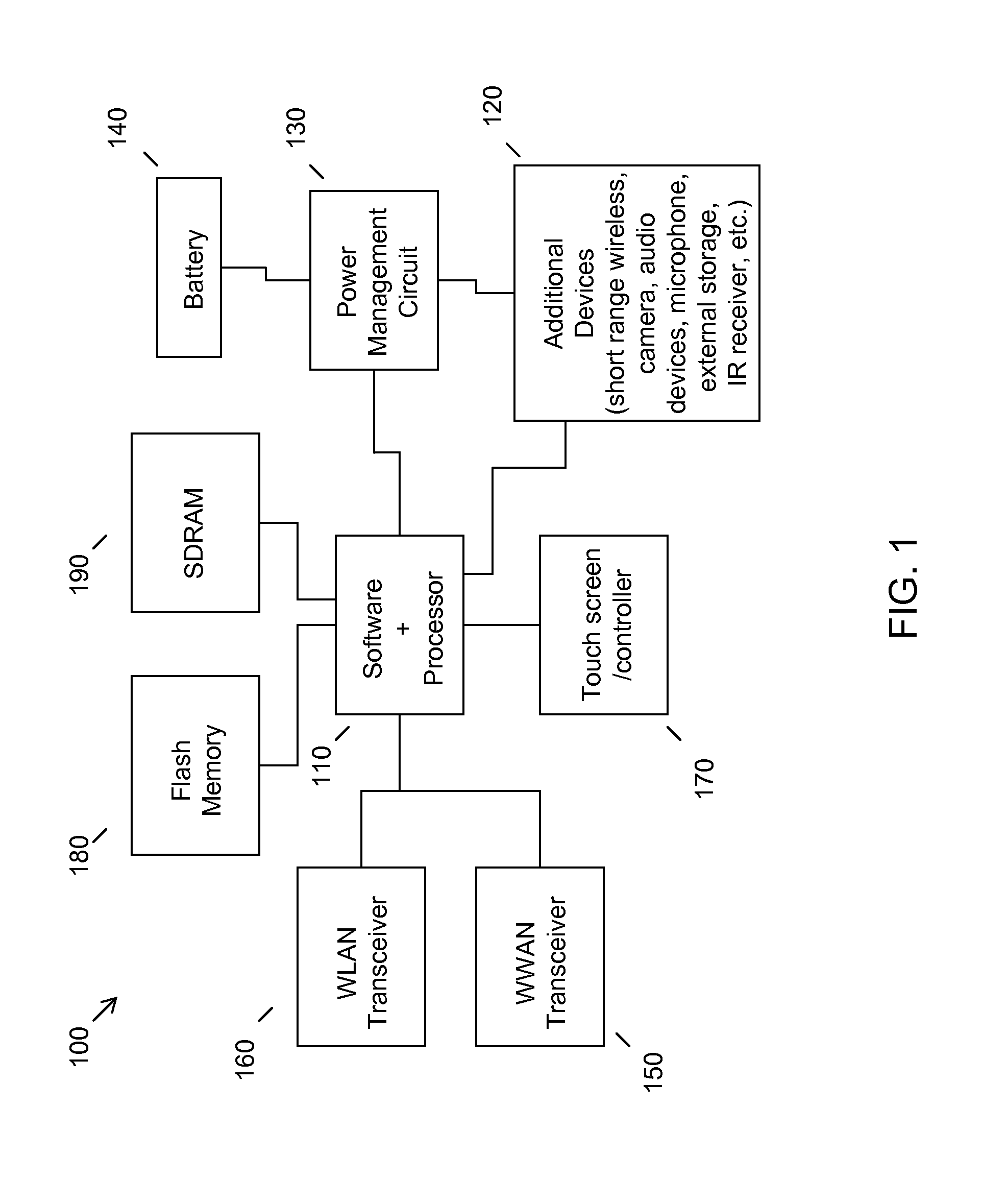

Ambient noise based augmentation of media playback

Active · US8977974B2 · Television system details · Batteries circuit arrangements · Environmental noise · Closed captioning

Ambient noise sampled by a mobile device from a local environment is used to automatically trigger actions associated with content currently playing on the mobile device. In some implementations, subtitles or closed captions associated with the currently playing content are automatically invoked and displayed on a user interface based on a level of ambient noise. In some implementations, audio associated with the currently playing content is adjusted or muted. Actions can be automatically triggered based on a comparison of the sampled ambient noise, or an audio fingerprint of the sampled ambient noise, with reference data, such as a reference volume level or a reference audio fingerprint. In some implementations, a reference volume level can be learned on the mobile device based on ambient noise samples.

Owner:APPLE INC
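
The noise-triggered captioning described in the abstract above can be illustrated with a short sketch. It is not the patented implementation: the class name `CaptionTrigger`, the RMS level measure, the `margin` factor, and the moving-average learning rule are all assumptions chosen for illustration. It only shows the general idea of comparing a sampled ambient level against a reference level that is learned from earlier samples.

```python
import math


def rms_level(samples):
    """Root-mean-square level of a block of audio samples (floats in [-1, 1])."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


class CaptionTrigger:
    """Hypothetical helper: turn captions on when ambient noise exceeds a reference."""

    def __init__(self, initial_reference=0.1, margin=1.5, smoothing=0.1):
        self.reference = initial_reference  # assumed starting reference level
        self.margin = margin                # factor above the reference that counts as noisy
        self.smoothing = smoothing          # weight given to each new sample when learning

    def learn(self, samples):
        """Update the reference level with an exponential moving average."""
        level = rms_level(samples)
        self.reference = (1 - self.smoothing) * self.reference + self.smoothing * level

    def captions_needed(self, samples):
        """True when the sampled ambient level is above reference * margin."""
        return rms_level(samples) > self.reference * self.margin


trigger = CaptionTrigger()
quiet = [0.01, -0.02, 0.015, -0.01]
loud = [0.6, -0.7, 0.65, -0.5]
print(trigger.captions_needed(quiet))
print(trigger.captions_needed(loud))
```

A fingerprint-based comparison, which the abstract also mentions, would replace `rms_level` with a feature extractor and is not covered by this sketch.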

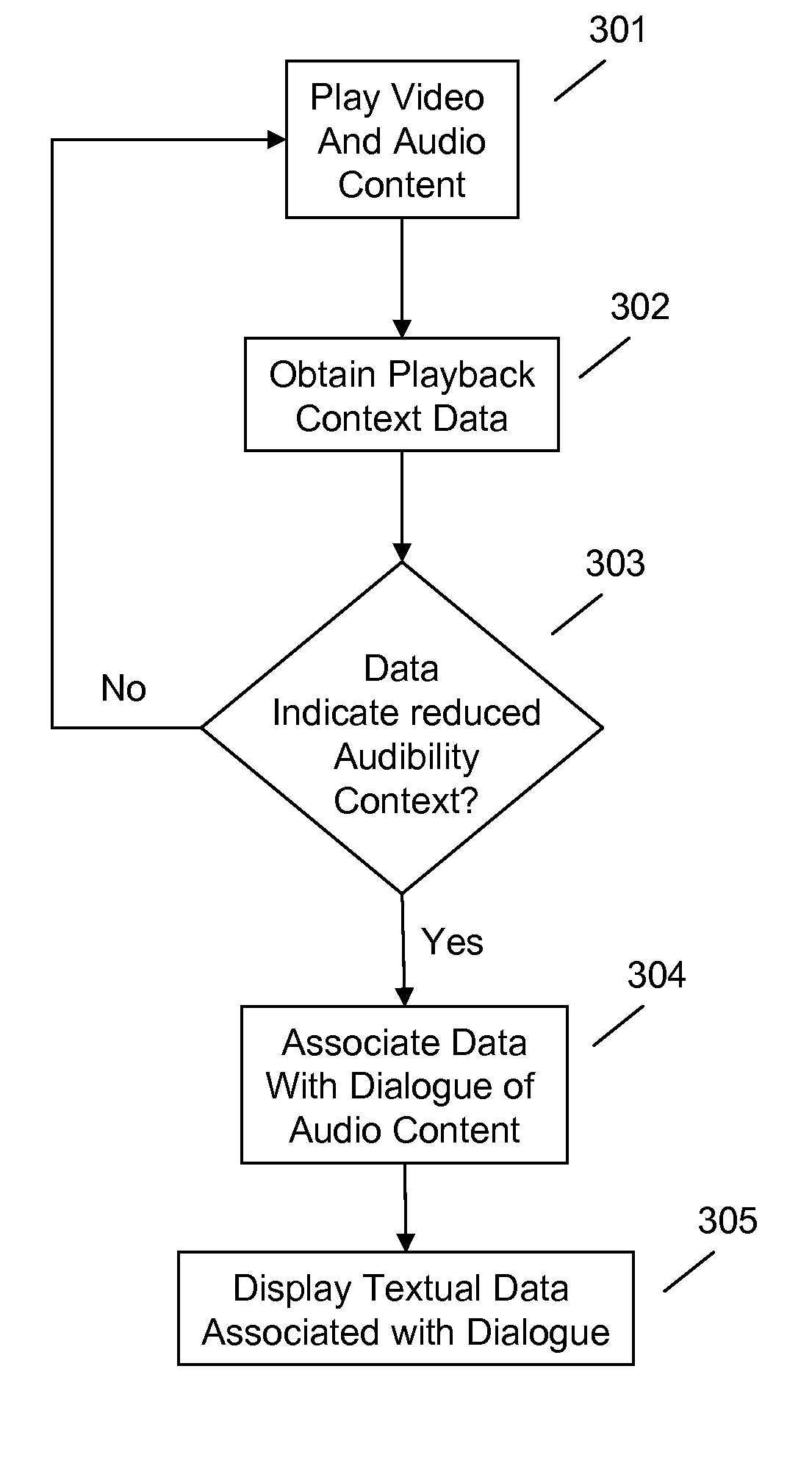

Intelligent closed captioning

Active · US20160014476A1 · Reduced audibility context · Electrical cable transmission adaptation · Selective content distribution · Closed captioning · Display device

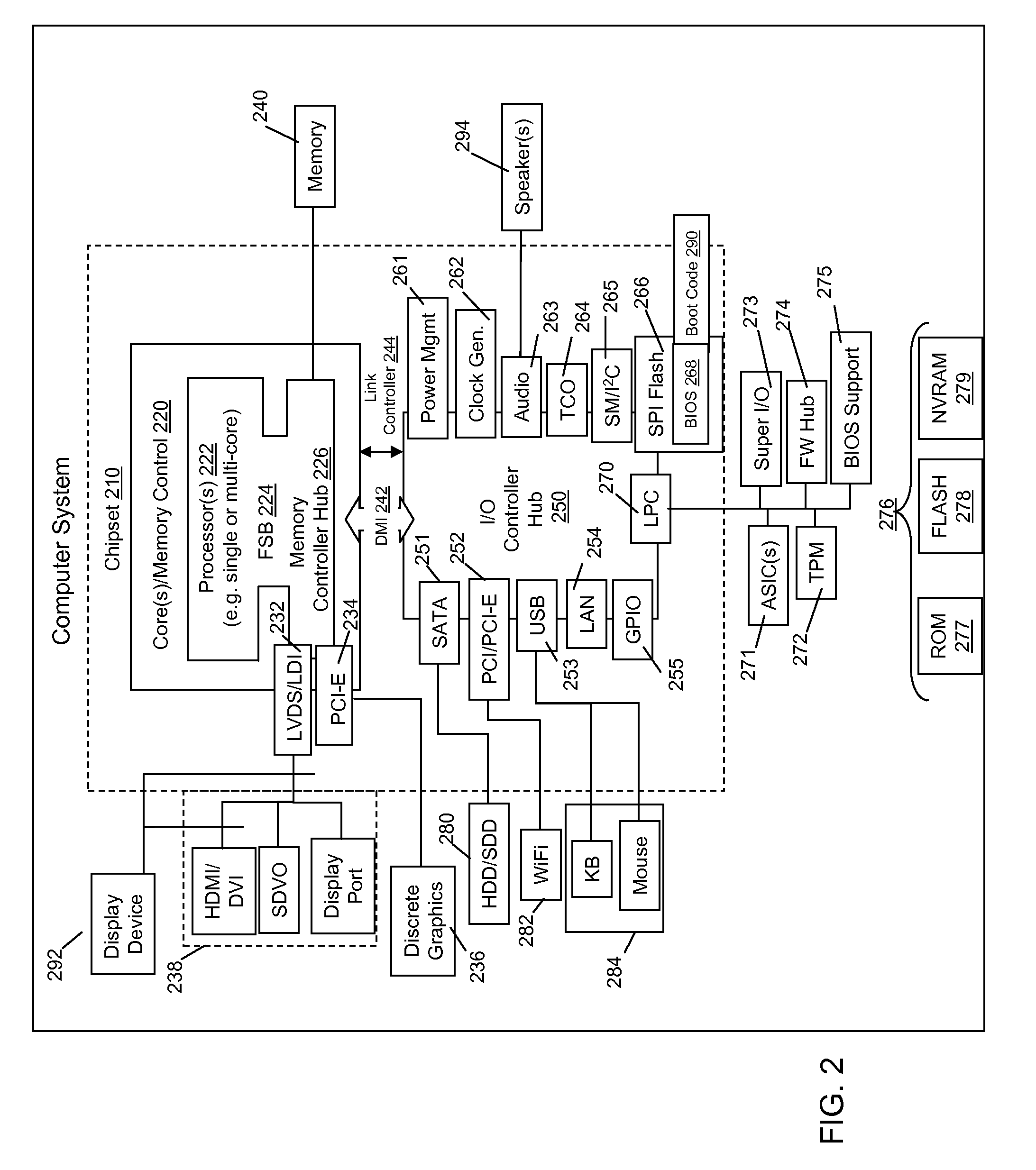

An aspect provides a method, including: playing, on a display device, video content; providing, using at least one speaker, audio content associated with the video content; obtaining, from an external source, data relating to playback context; determining, using a processor, that the data relating to playback context is associated with a reduced audibility context; and providing, on the display device, textual data associated with dialogue of the video content. Other aspects are described and claimed.

Owner:LENOVO (SINGAPORE) PTE LTD

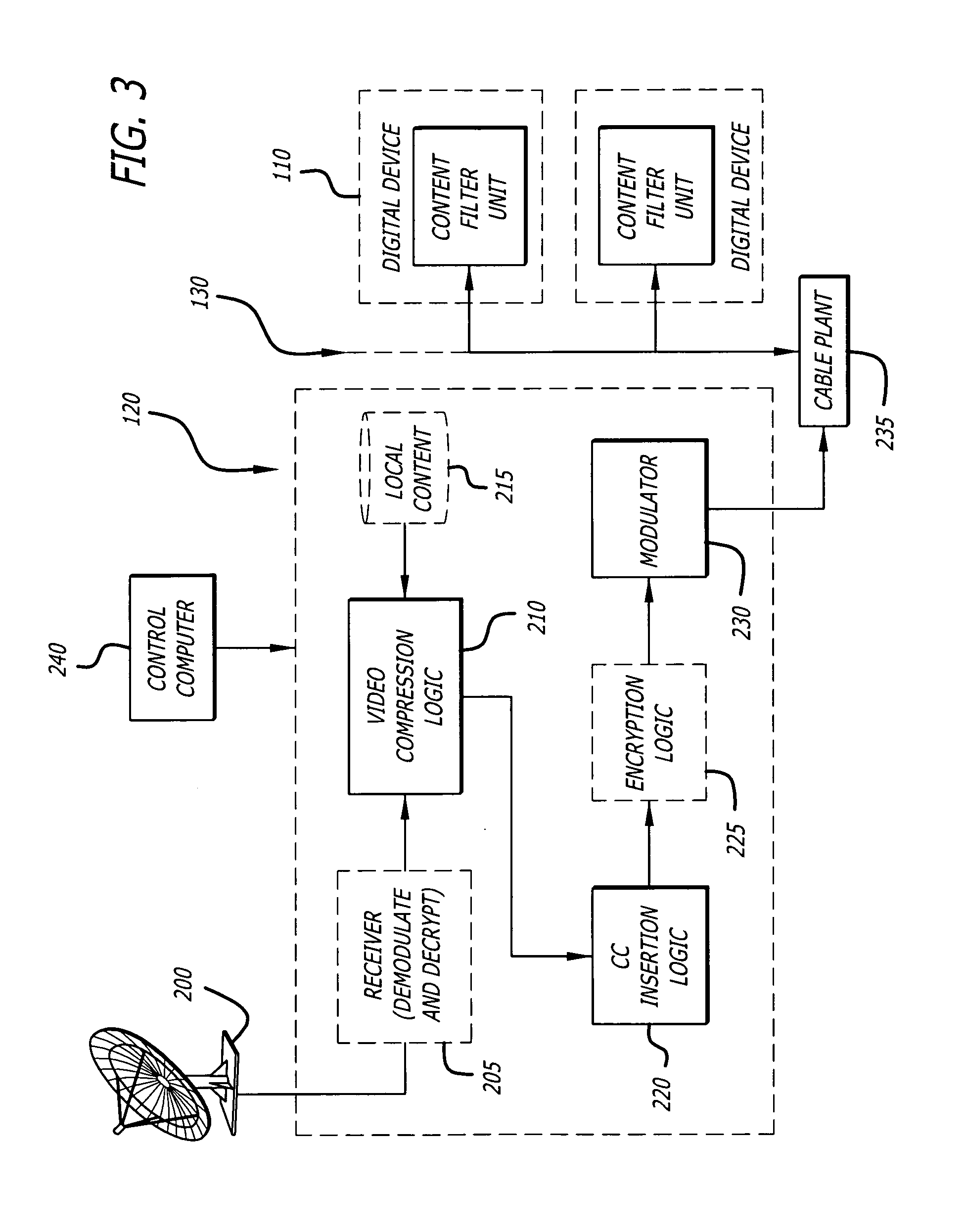

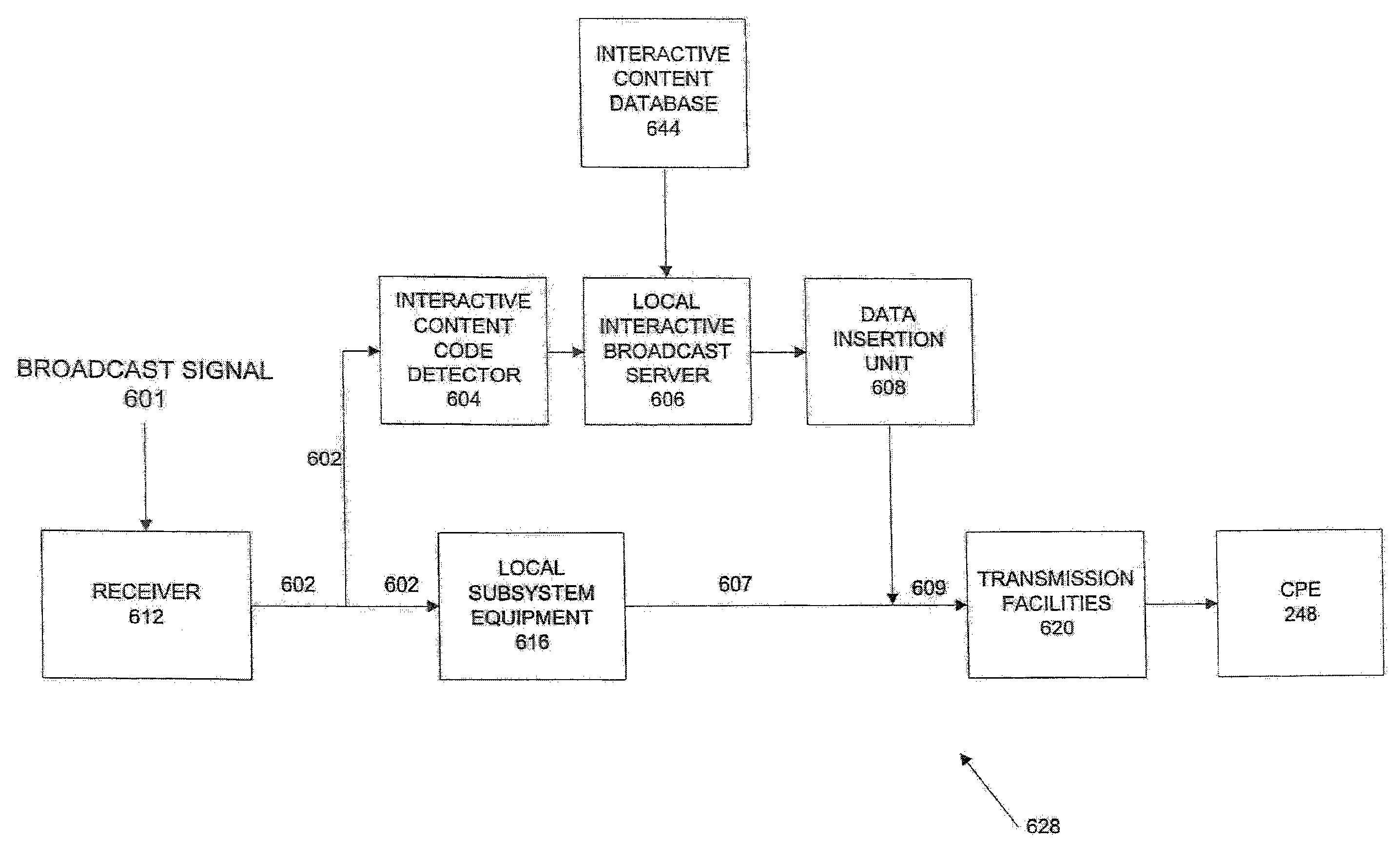

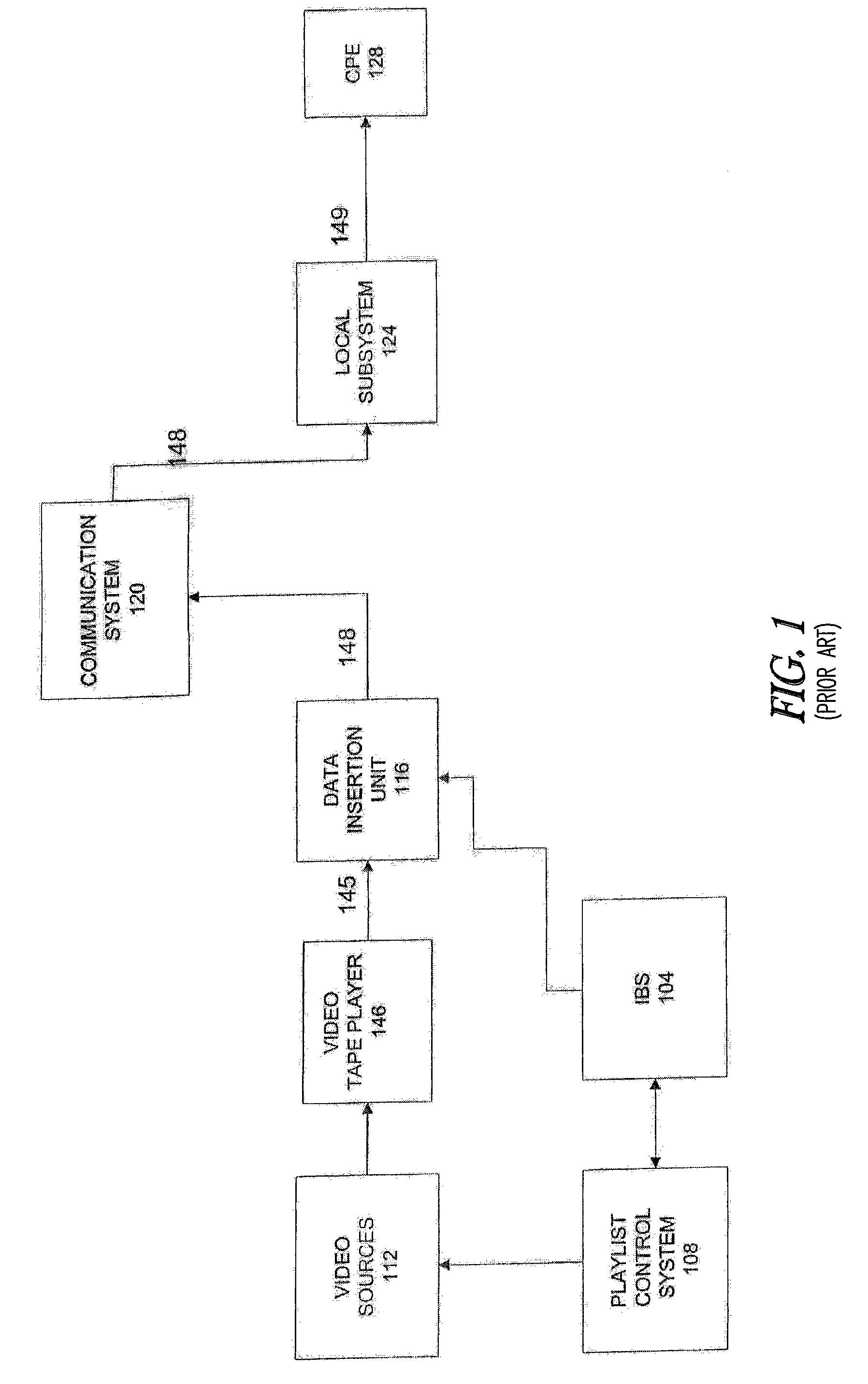

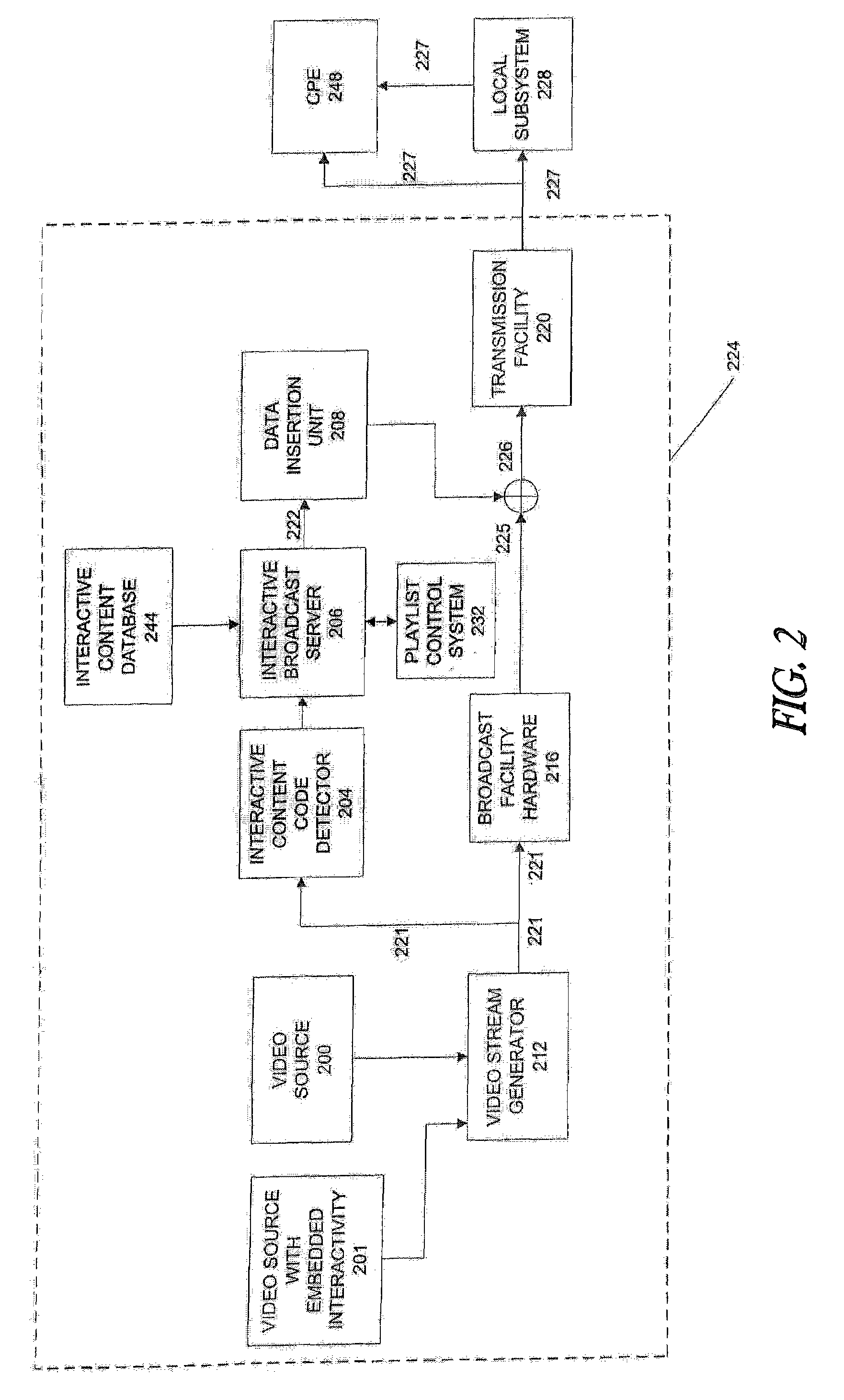

Interactive content delivery methods and apparatus

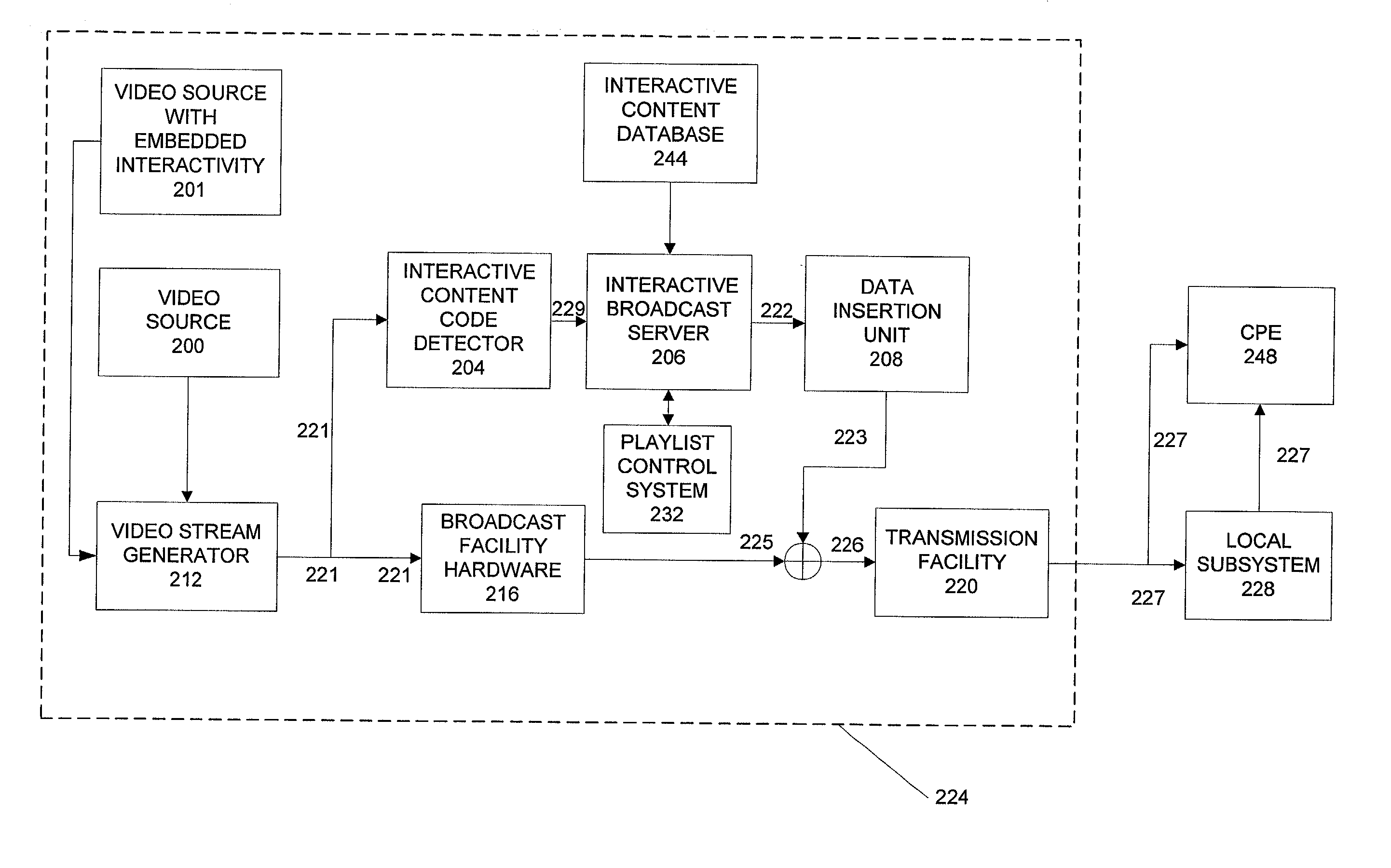

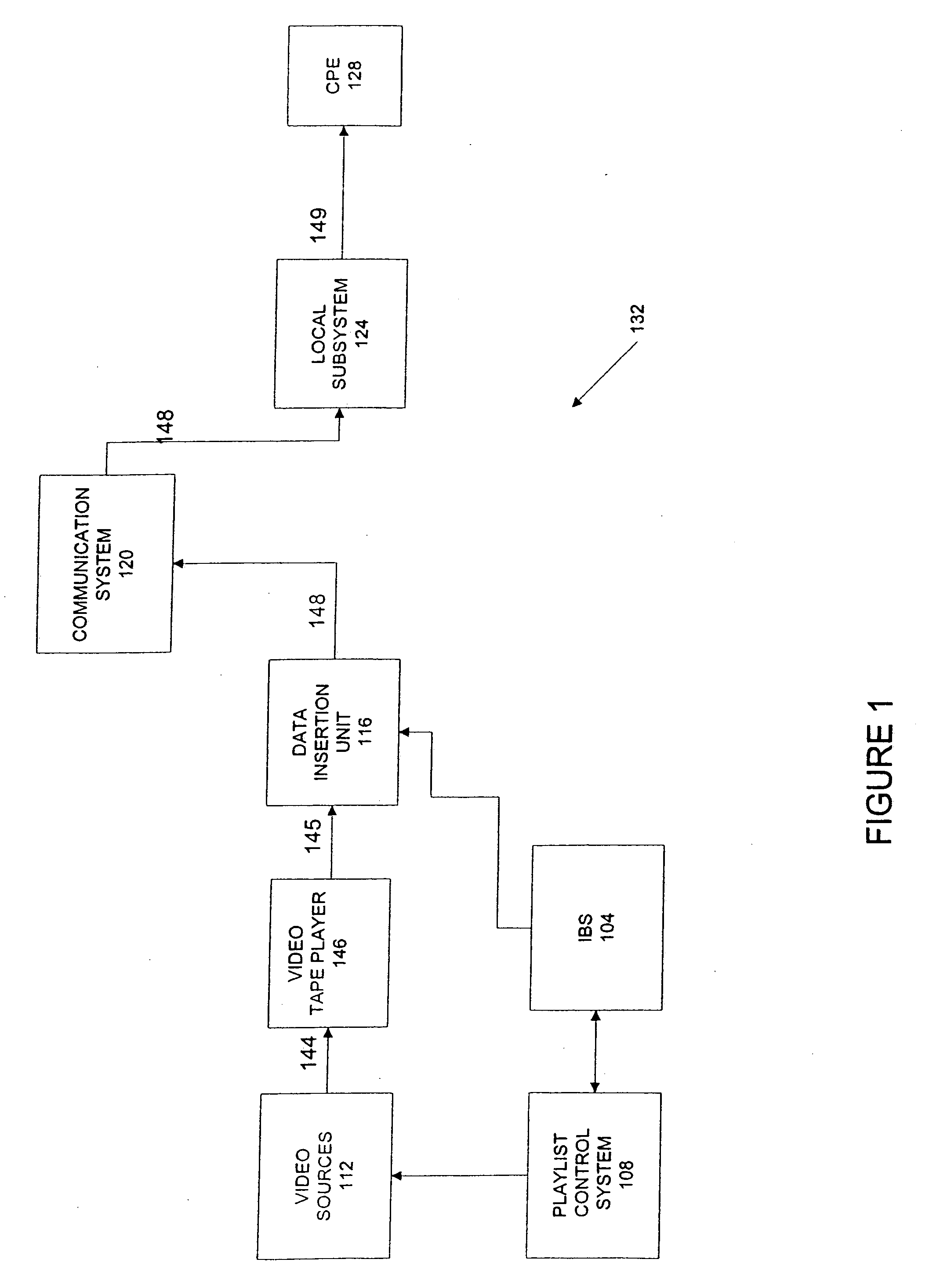

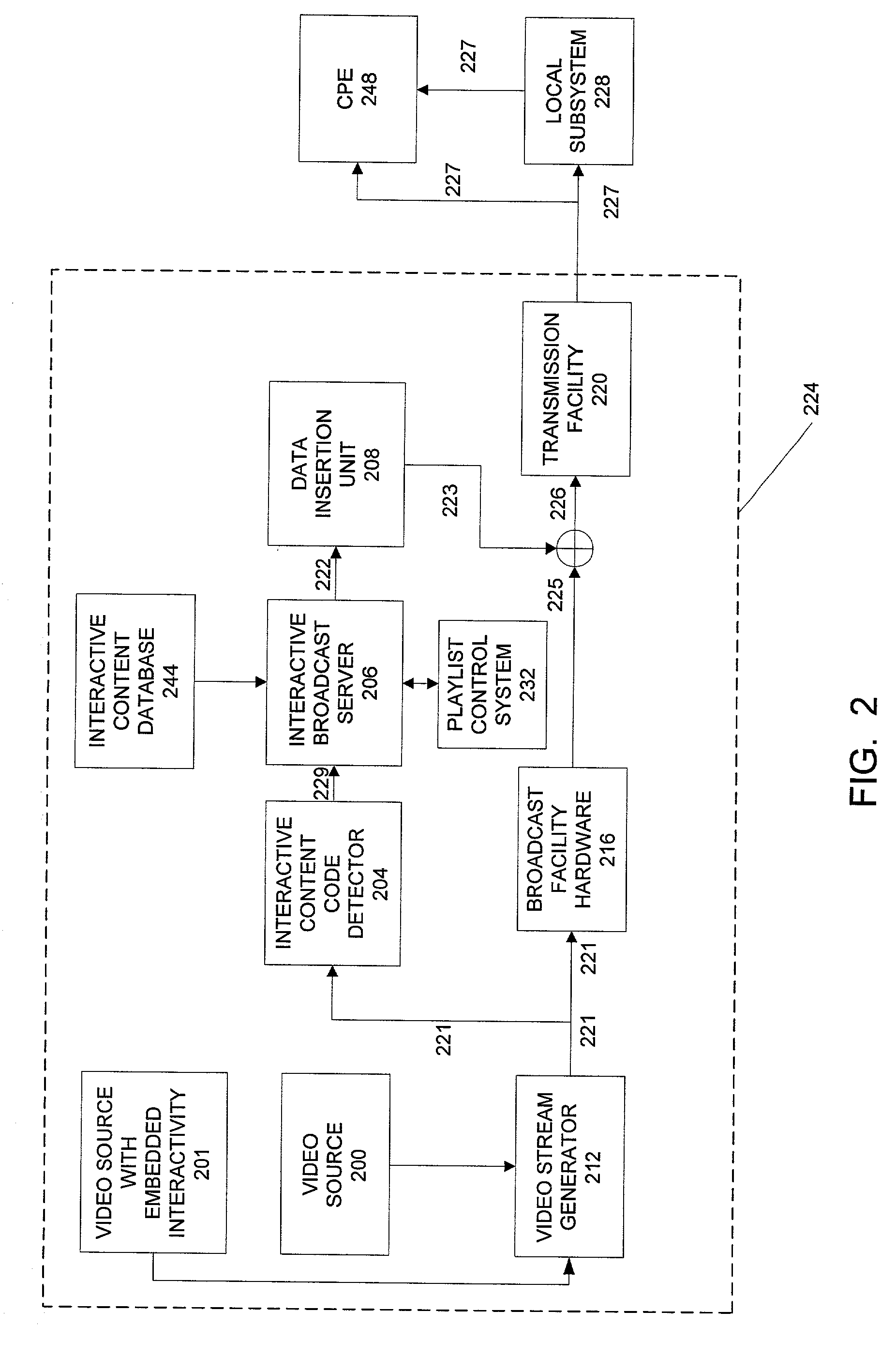

Inactive · US20070130581A1 · Preserve interactivity · Broadcast with distribution · Analogue secrecy/subscription systems · Closed captioning · Interactive content

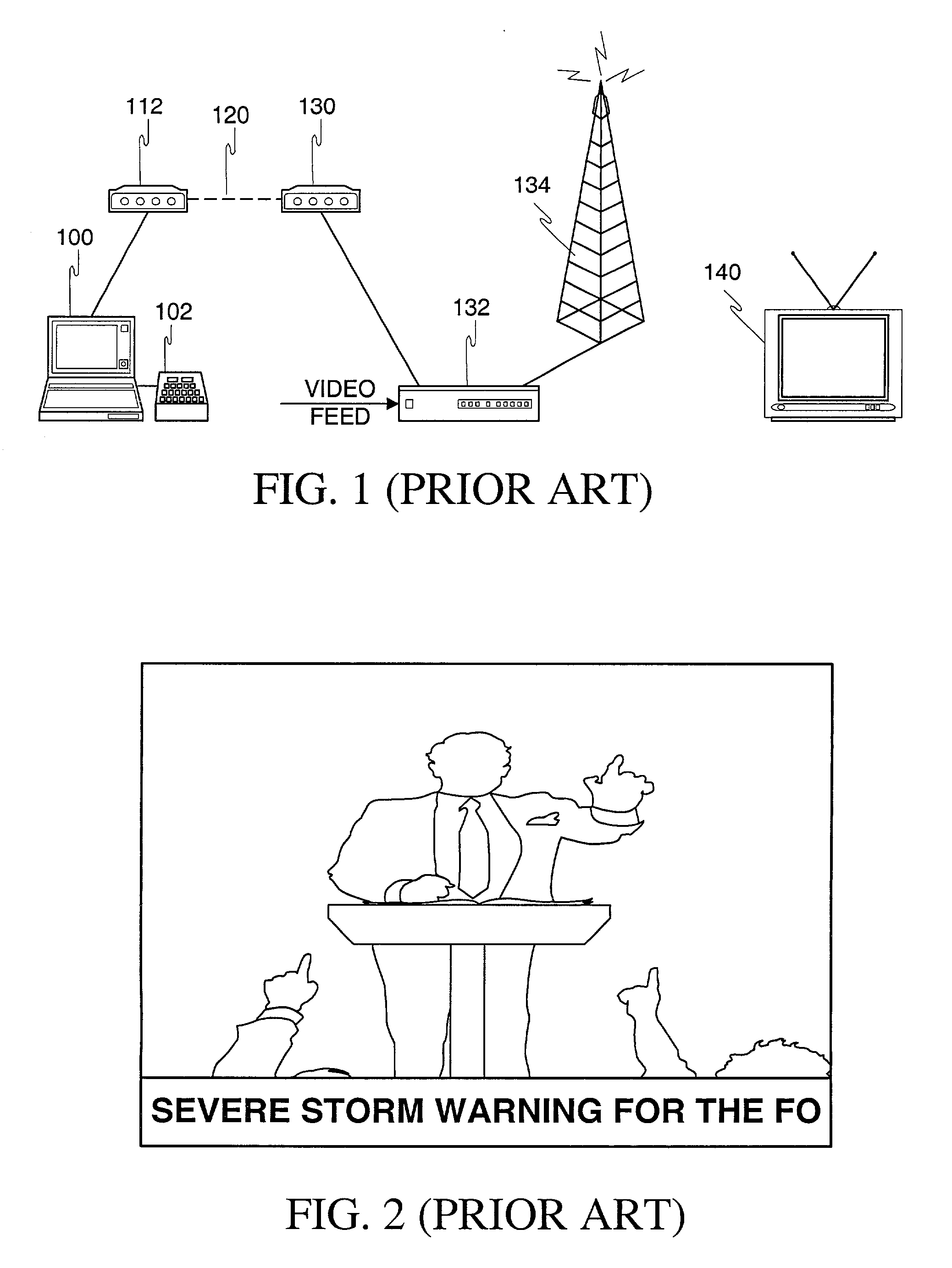

Interactive content preservation and customization technology is placed at the broadcast facility to ensure reliable transmission of the interactive content to a local subsystem. An interactive content code detector detects interactive content codes in the video stream at the broadcast facility. The interactive content code detector is placed in the transmission path before the video stream is transmitted to broadcast facility hardware that may strip out, destroy, corrupt or otherwise modify the interactive content and interactive content codes. Once an interactive content code detector detects a code, an interactive broadcast server determines what action to take, and instructs a data insertion unit accordingly. The interactive content codes or interactive content may be placed in a portion of the video that is guaranteed by the broadcast facility to be transmitted, for example, the closed caption region of the vertical blanking interval.

Owner:OPEN TV INC

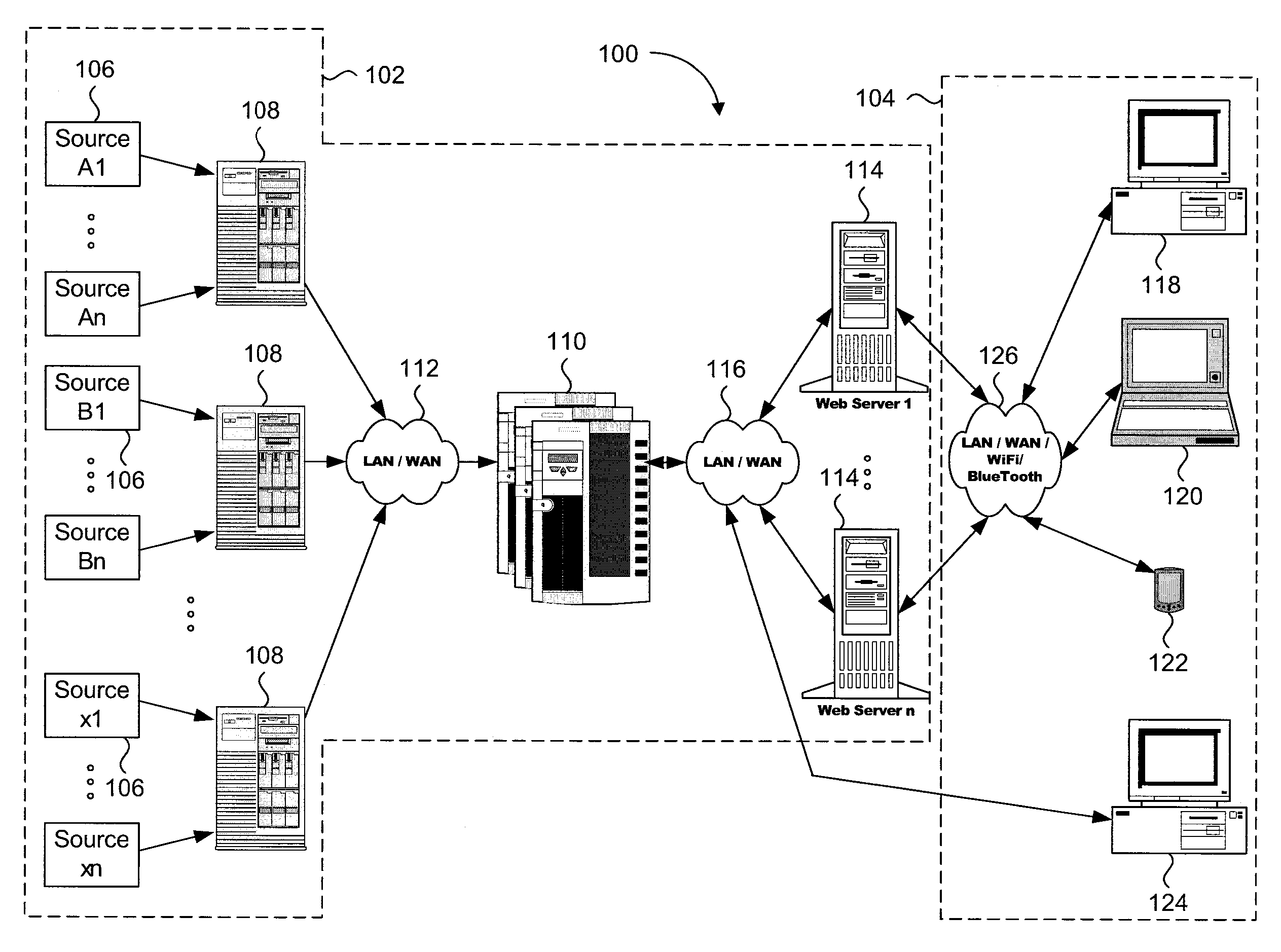

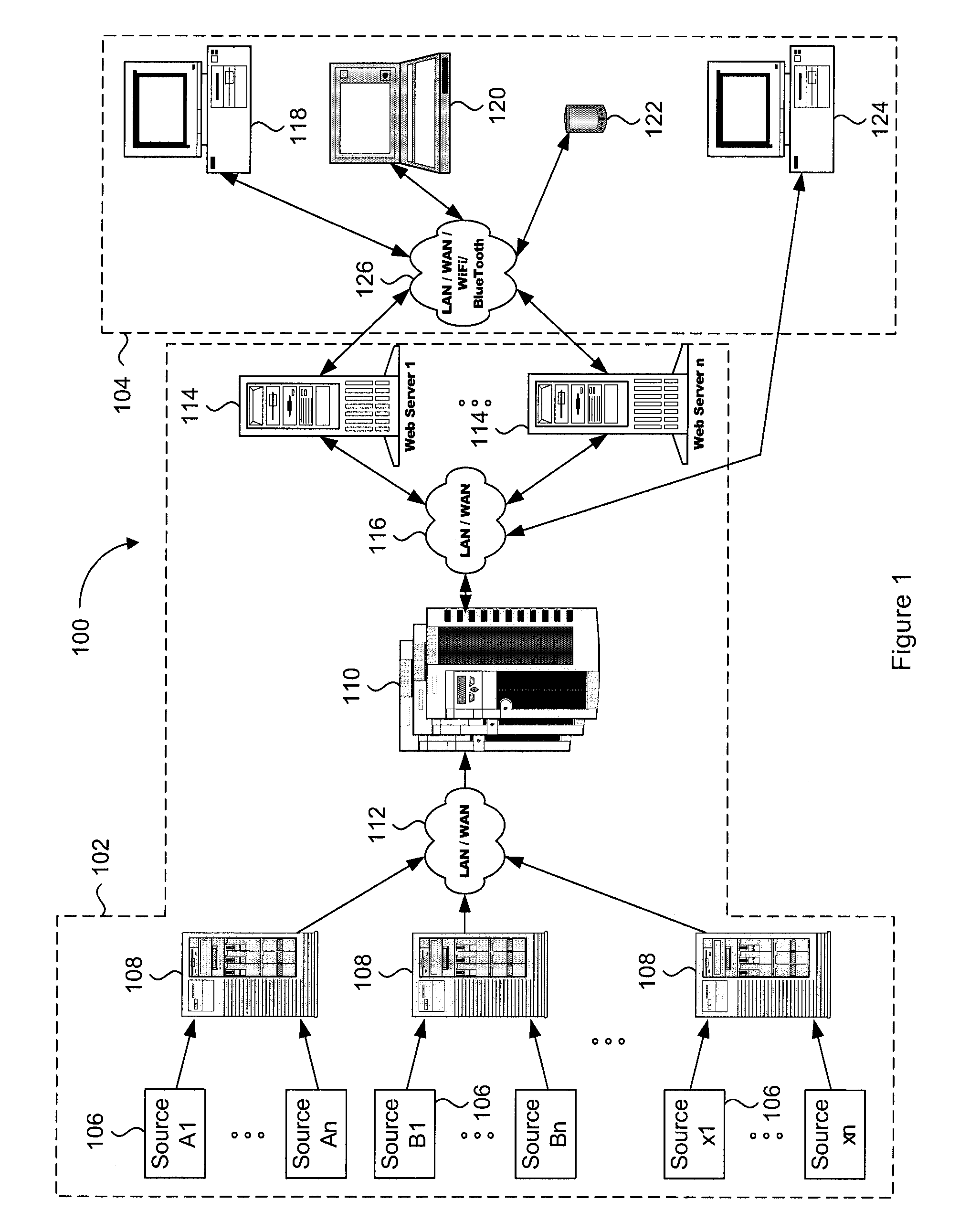

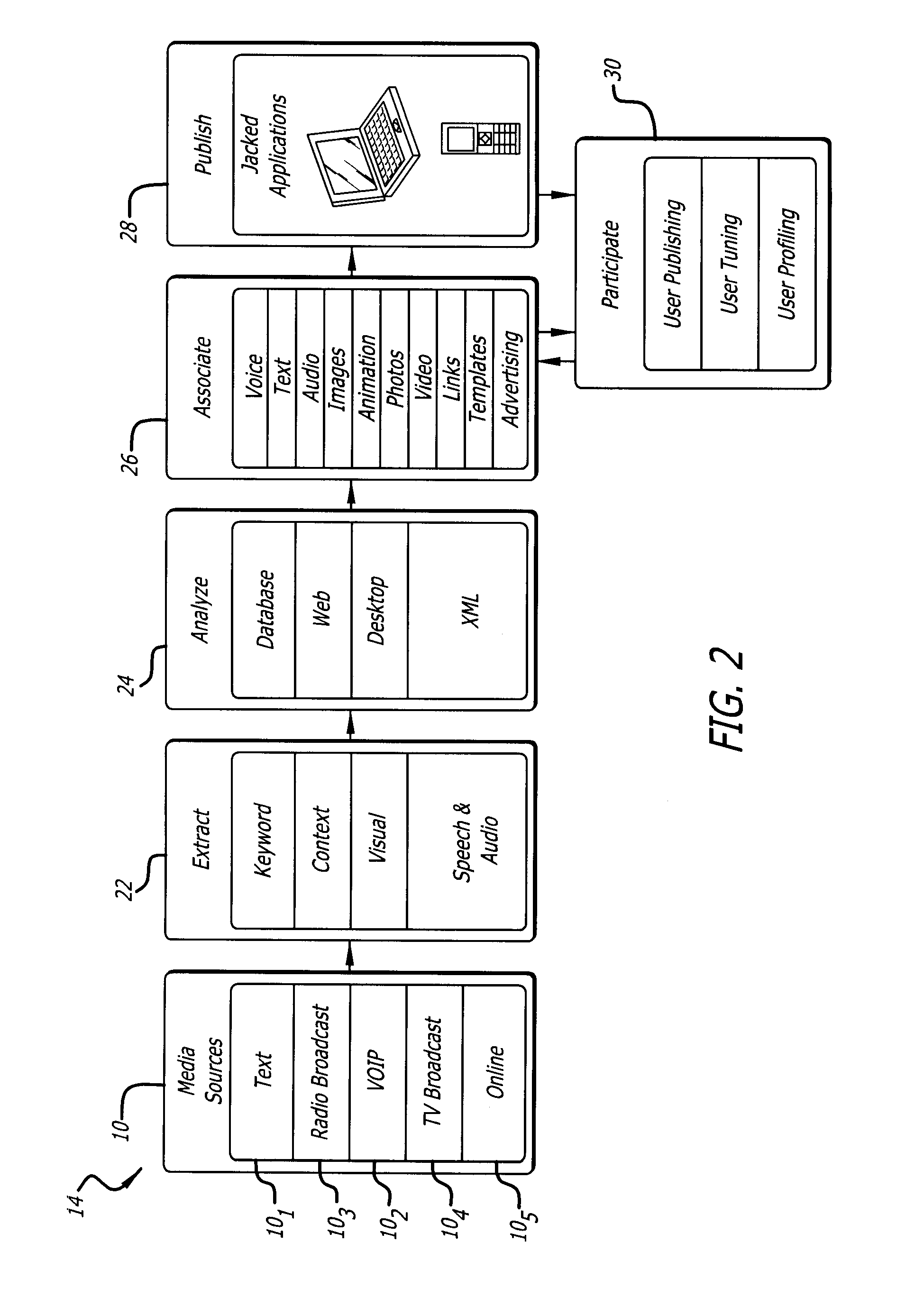

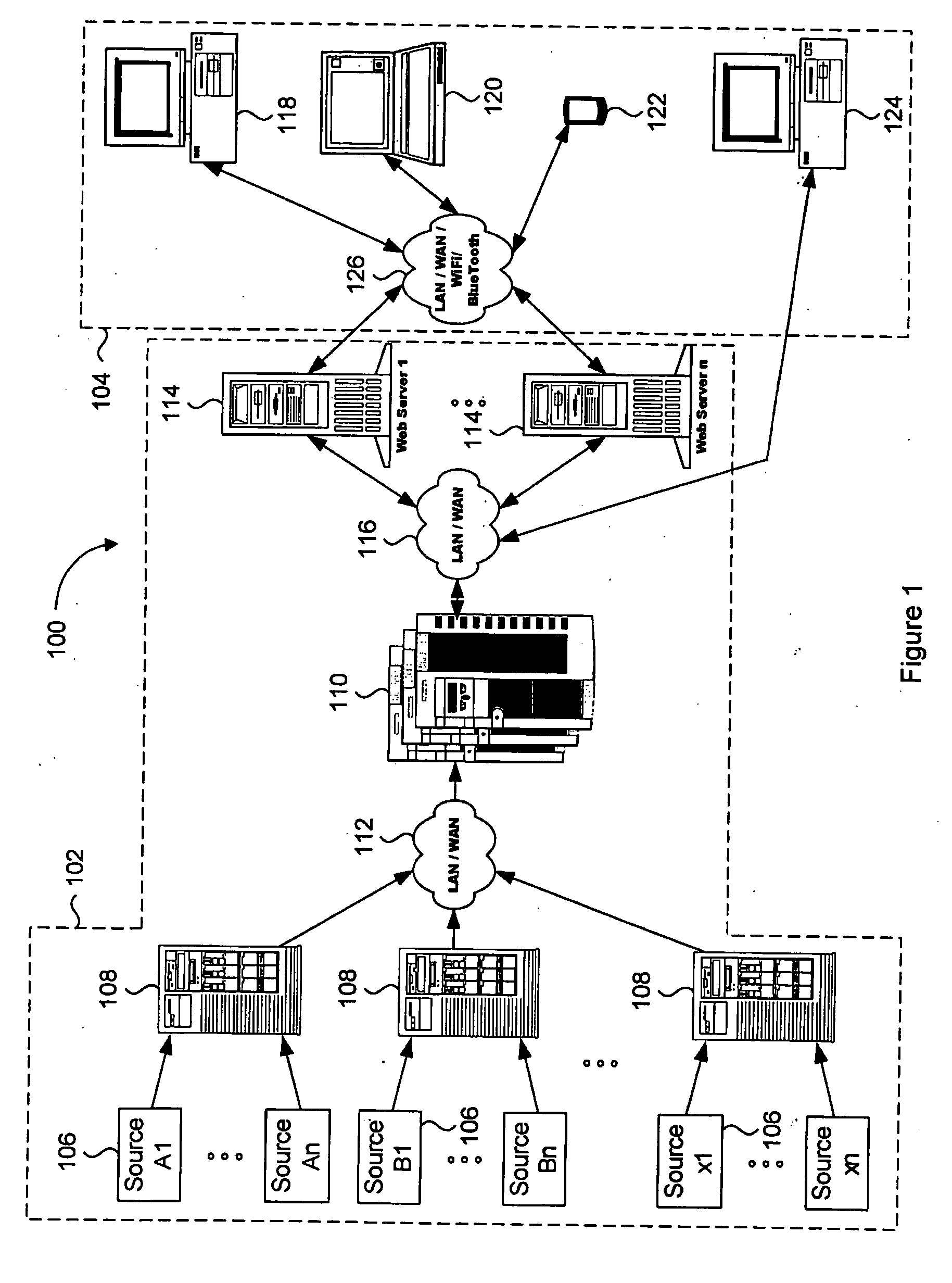

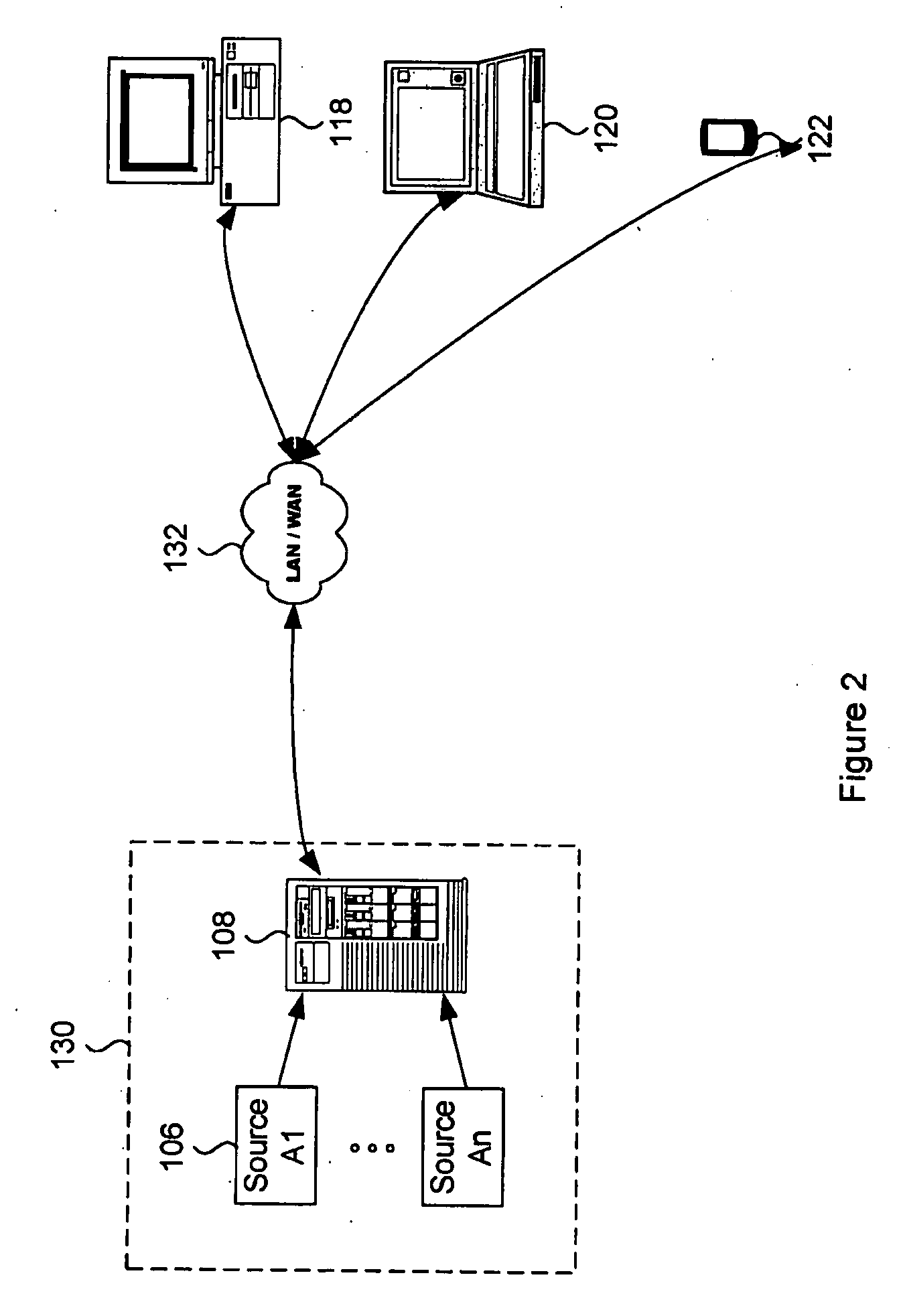

System and method for real-time media searching and alerting

Inactive · US20080072256A1 · Television system details · Metadata video data retrieval · Closed captioning · Radio broadcasting

A method and system for continually storing and cataloguing streams of broadcast content, allowing real-time searching and real-time results display of all catalogued video. A bank of video recording devices stores and indexes all video content on any number of broadcast sources. This video is stored along with the associated program information such as program name, description, airdate and channel. A parallel process obtains the text of the program, either from the closed captioning data stream, or by using a speech-to-text system. Once the text is decoded, stored, and indexed, users can then perform searches against the text, and view matching video immediately along with its associated text and broadcast information. Users can retrieve program information by other methods, such as by airdate, originating station, program name and program description. An alerting mechanism scans all content in real-time and can be configured to notify users by various means upon the occurrence of a specified search criterion in the video stream. The system is preferably designed to be used on publicly available broadcast video content, but can also be used to catalog private video, such as conference speeches or audio-only content such as radio broadcasts.

Owner:DNA 13
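
The index-then-alert flow in the abstract above can be sketched roughly as follows. This is an illustration under stated assumptions, not the patented system: `CaptionMonitor`, its method names, and the single-word matching rule are hypothetical, and caption text is assumed to arrive already decoded as plain strings tagged with a channel and a time offset.

```python
import re
from collections import defaultdict


class CaptionMonitor:
    """Hypothetical sketch: index caption text as it arrives and raise alerts."""

    def __init__(self):
        self.index = defaultdict(list)  # word -> [(channel, seconds), ...]
        self.alerts = {}                # search term -> list of subscribed users

    def subscribe(self, user, term):
        """Register a user to be notified when `term` appears in any caption."""
        self.alerts.setdefault(term.lower(), []).append(user)

    def ingest(self, channel, seconds, text):
        """Index one caption line and return (user, term, channel, seconds) alerts."""
        words = set(re.findall(r"[a-z0-9']+", text.lower()))
        notifications = []
        for word in sorted(words):
            self.index[word].append((channel, seconds))
            for user in self.alerts.get(word, []):
                notifications.append((user, word, channel, seconds))
        return notifications

    def search(self, term):
        """Return every (channel, seconds) position where `term` was captioned."""
        return list(self.index.get(term.lower(), []))


monitor = CaptionMonitor()
monitor.subscribe("alice", "merger")
hits = monitor.ingest("CH5", 12.0, "The merger was announced today.")
print(hits)
print(monitor.search("announced"))
```

A production system would also store the program metadata the abstract lists (name, description, airdate, channel) alongside each position; the sketch keeps only channel and time to stay short.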

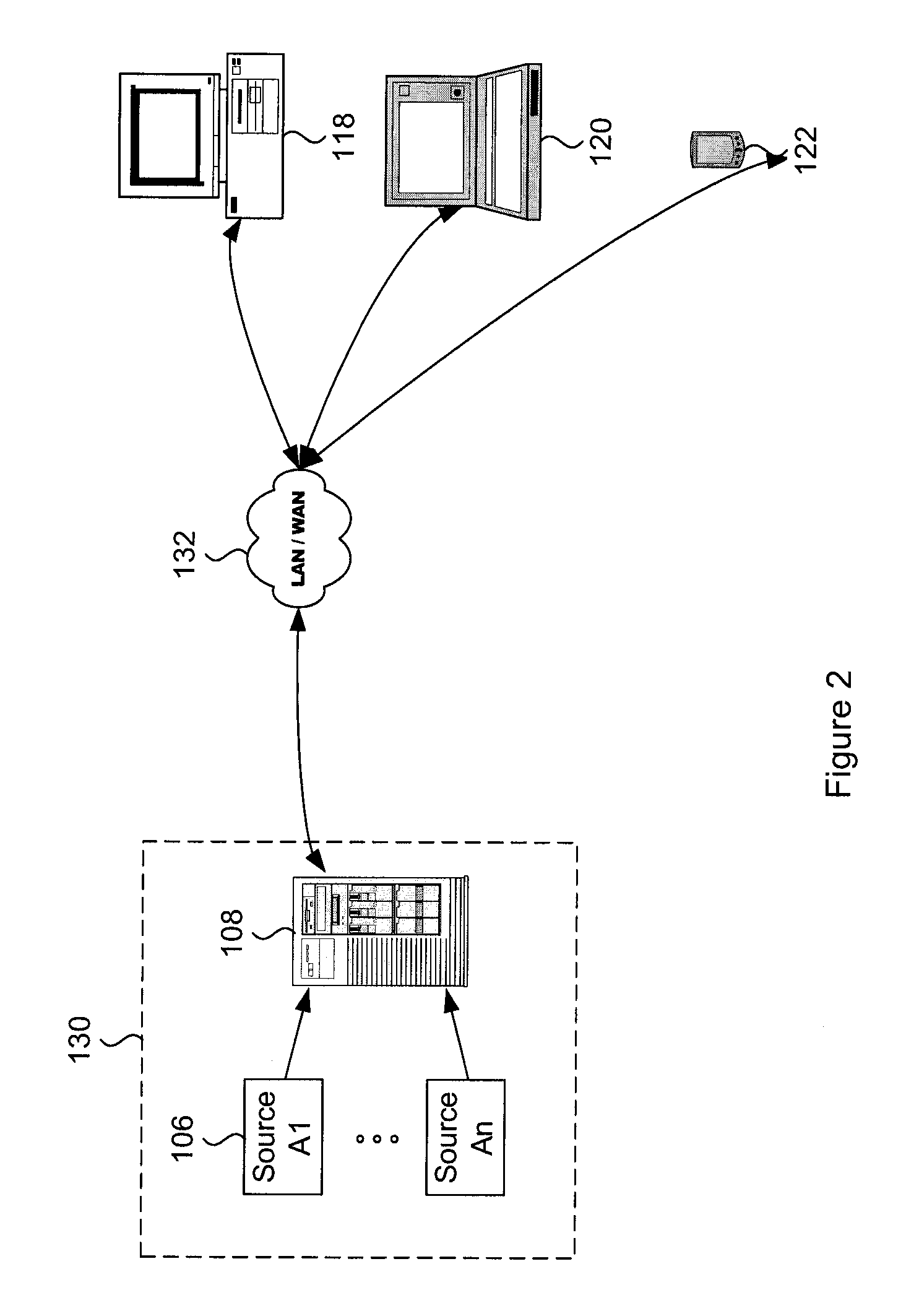

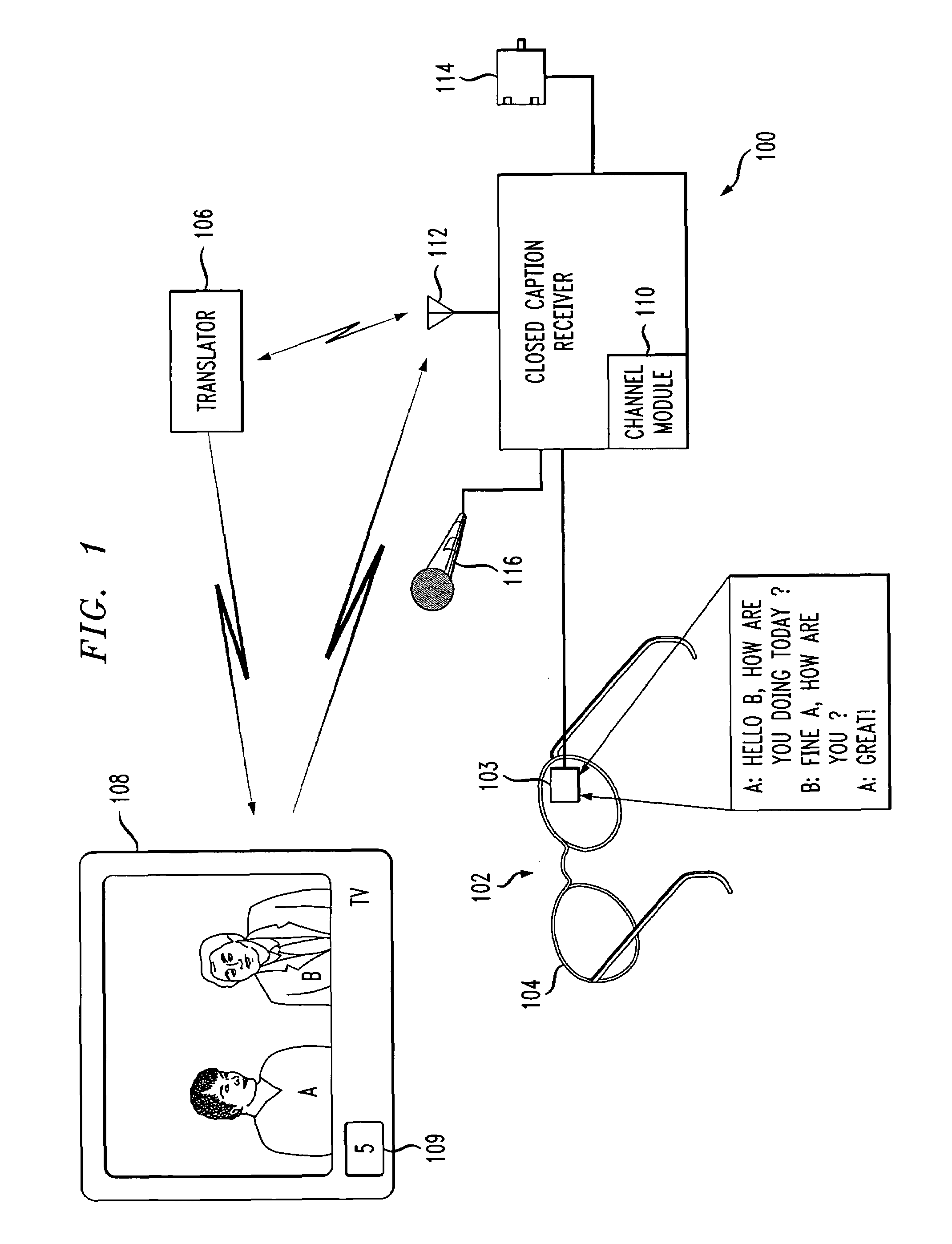

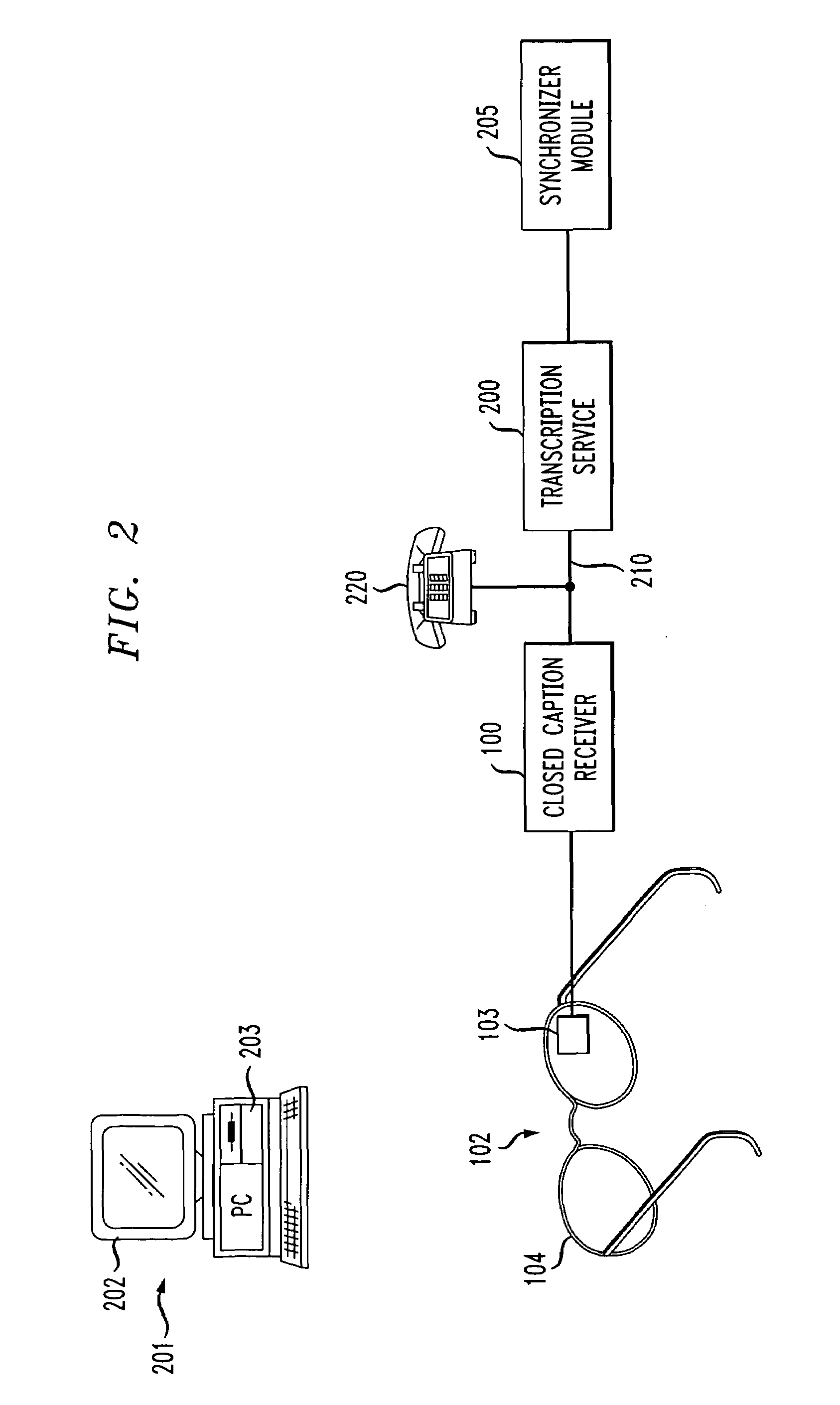

Universal closed caption portable receiver

Active · US7221405B2 · Reading comfort · Television system details · Picture reproducers using cathode ray tubes · Closed captioning · Computer monitor

Methods and apparatus for portable and universal receipt of closed captioning services by a user are provided in accordance with the invention. The invention permits a user to receive closed captioning services wherever he or she may be viewing video content with an audio component in accordance with a video / audio content display system (e.g., television set, computer monitor, movie theater), regardless of whether the content display system provides closed captioning capabilities. Also, the invention permits a user to receive closed captioning services independent of the video / audio content display system that they are using to view the video content. In one illustrative aspect of the present invention, a portable and universal closed caption receiving device (closed caption receiver) is provided for: (i) receiving a signal, which includes closed captions, from a closed caption provider while the user watches a program on a video / audio content display system; (ii) extracting the closed captions; and (iii) providing the closed captions to a head mounted display for presentation to the user so that the user may view the program and, at the same time, view the closed captions in synchronization with the video content of the program.

Owner:RAKUTEN GRP INC

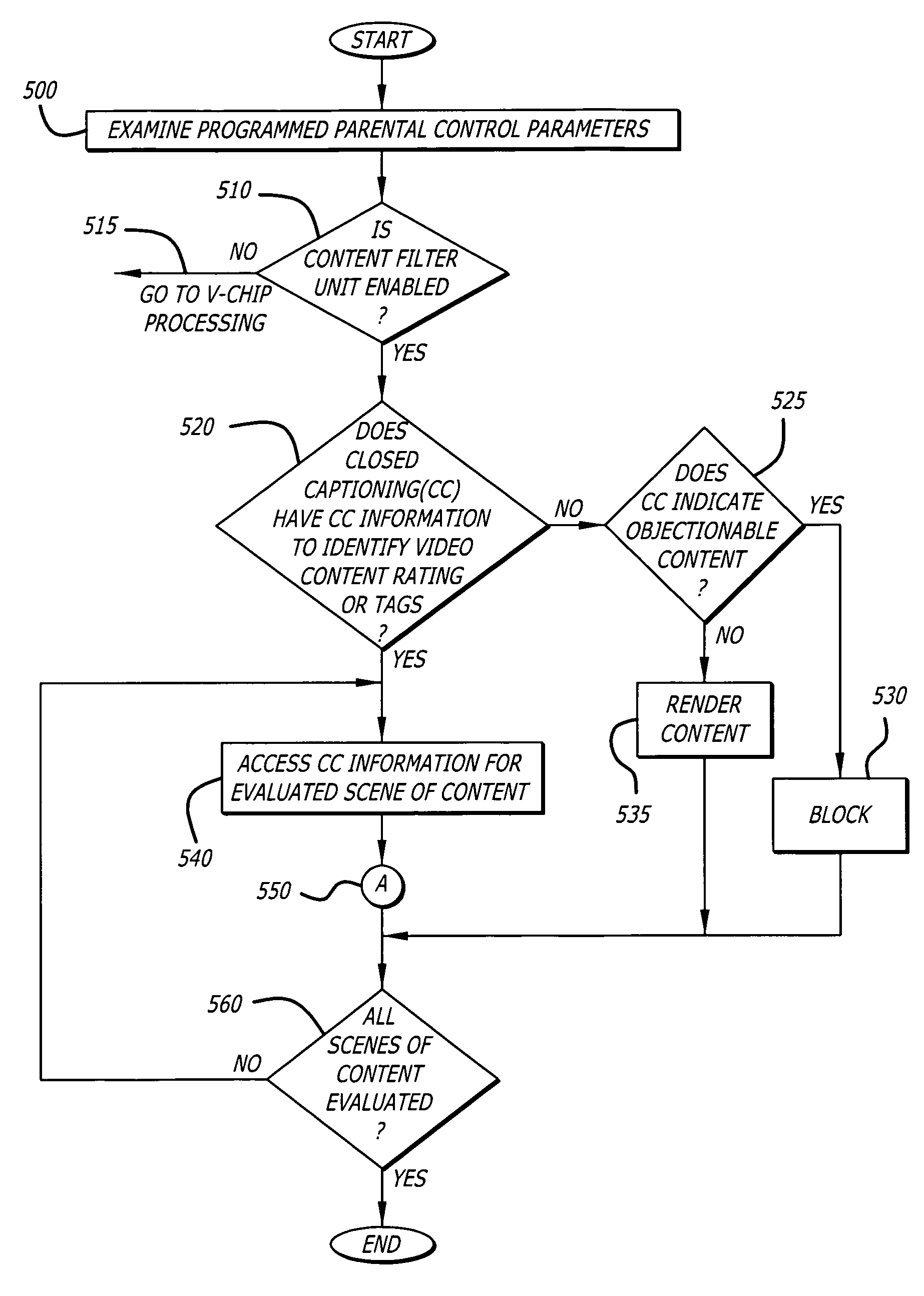

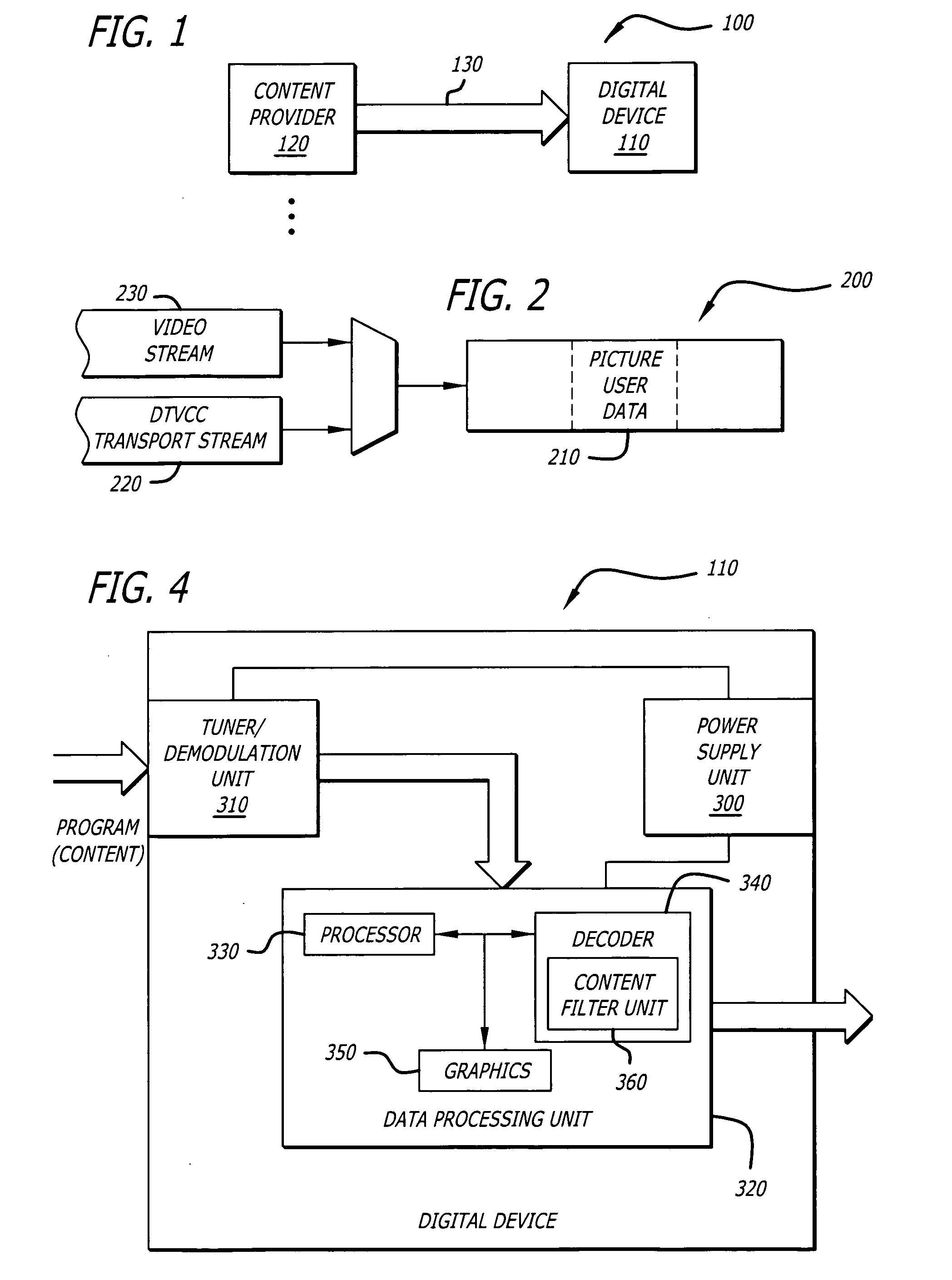

Parental control of displayed content using closed captioning

Active · US20070204288A1 · Television system details · Picture reproducers using cathode ray tubes · Closed captioning · Computer graphics (images)

According to one embodiment, a method for blocking scenes with objectionable content comprises receiving incoming content, namely a scene of a program. Thereafter, using closed captioning information, a determination is made if the scene of the program includes objectionable content, and if so, blocking the scene from being displayed.

Owner:SONY CORP +1

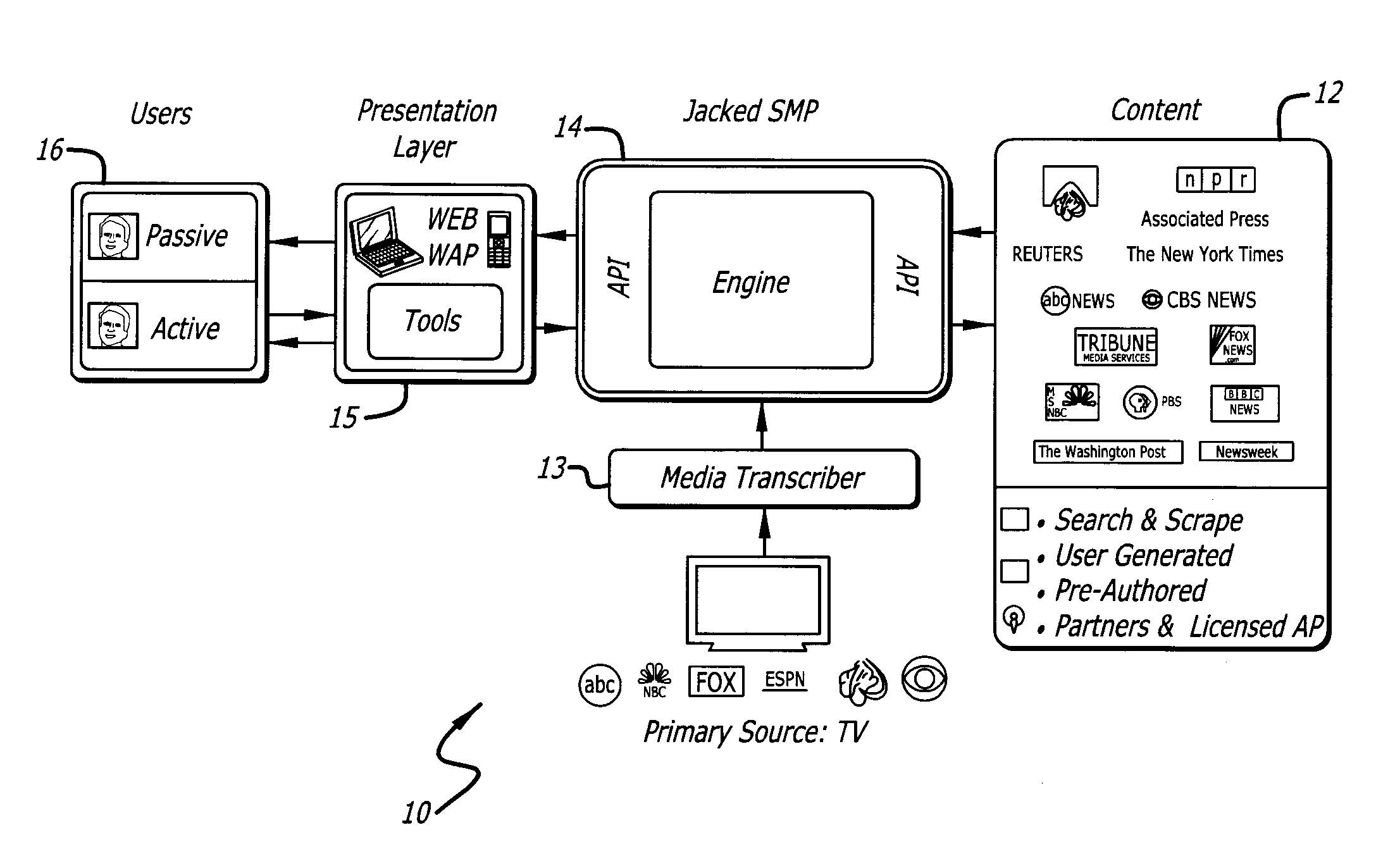

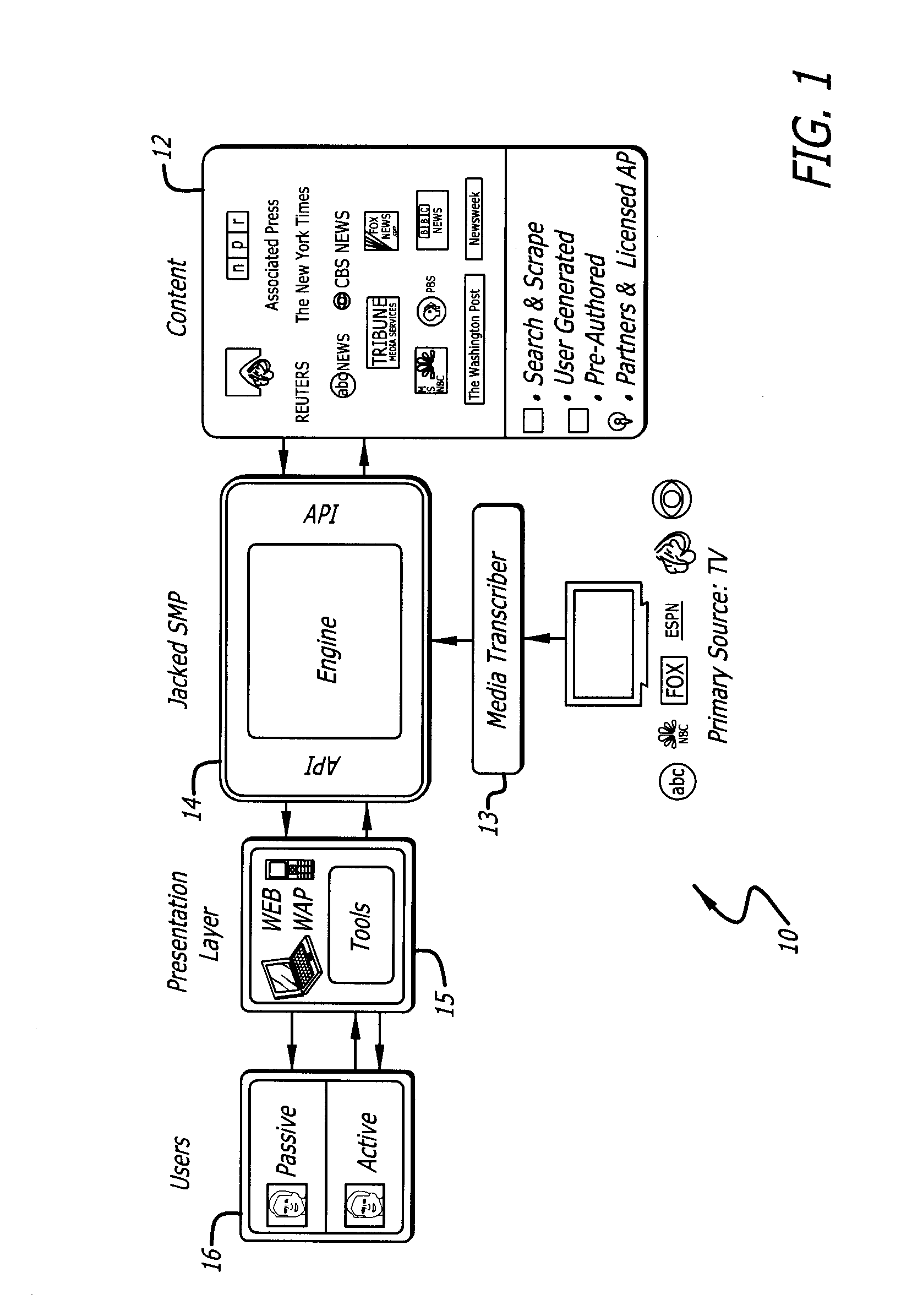

System for providing secondary content based on primary broadcast

Inactive · US20080082922A1 · Enhance and optimize experience · Television systems · Selective content distribution · Closed captioning · Broadcasting

The system provides a computer based presentation synchronized to a broadcast and not merely to an event. The system includes a customizable interface that uses a broadcast and a plurality of secondary sources to present data and information to a user to enhance and optimize a broadcast experience. The system defines templates that represent a customizable content interface for a user. In one embodiment, the templates comprise triggers, sources, widgets, and filters. In one embodiment the system receives the closed captioning feed (cc feed) of a broadcast and mines the text of the cc feed to identify keywords and triggers that will cause the retrieval, generation, and / or display of content related to the keywords and triggers. The system can also use speech recognition to supplement, or to replace, the cc feed and identify key words and triggers used to initiate content.

Owner:ROUNDBOX

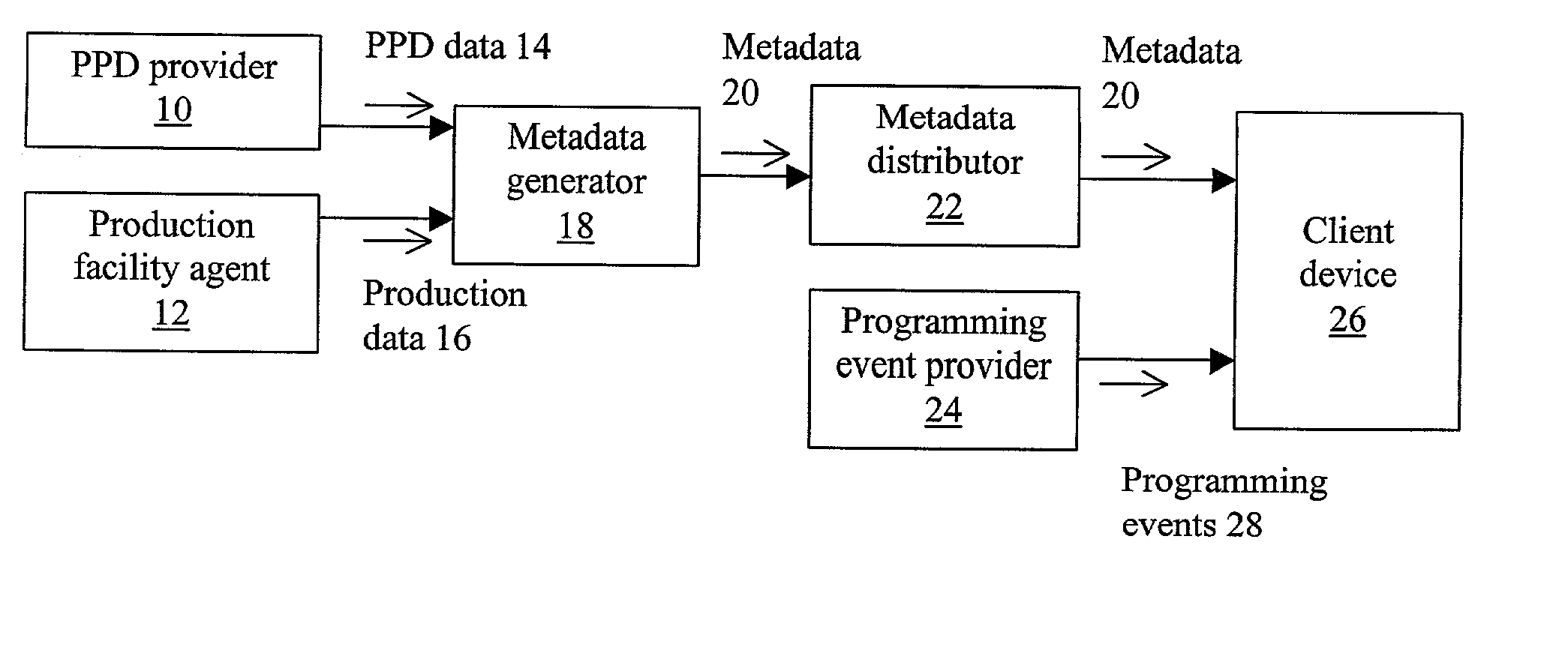

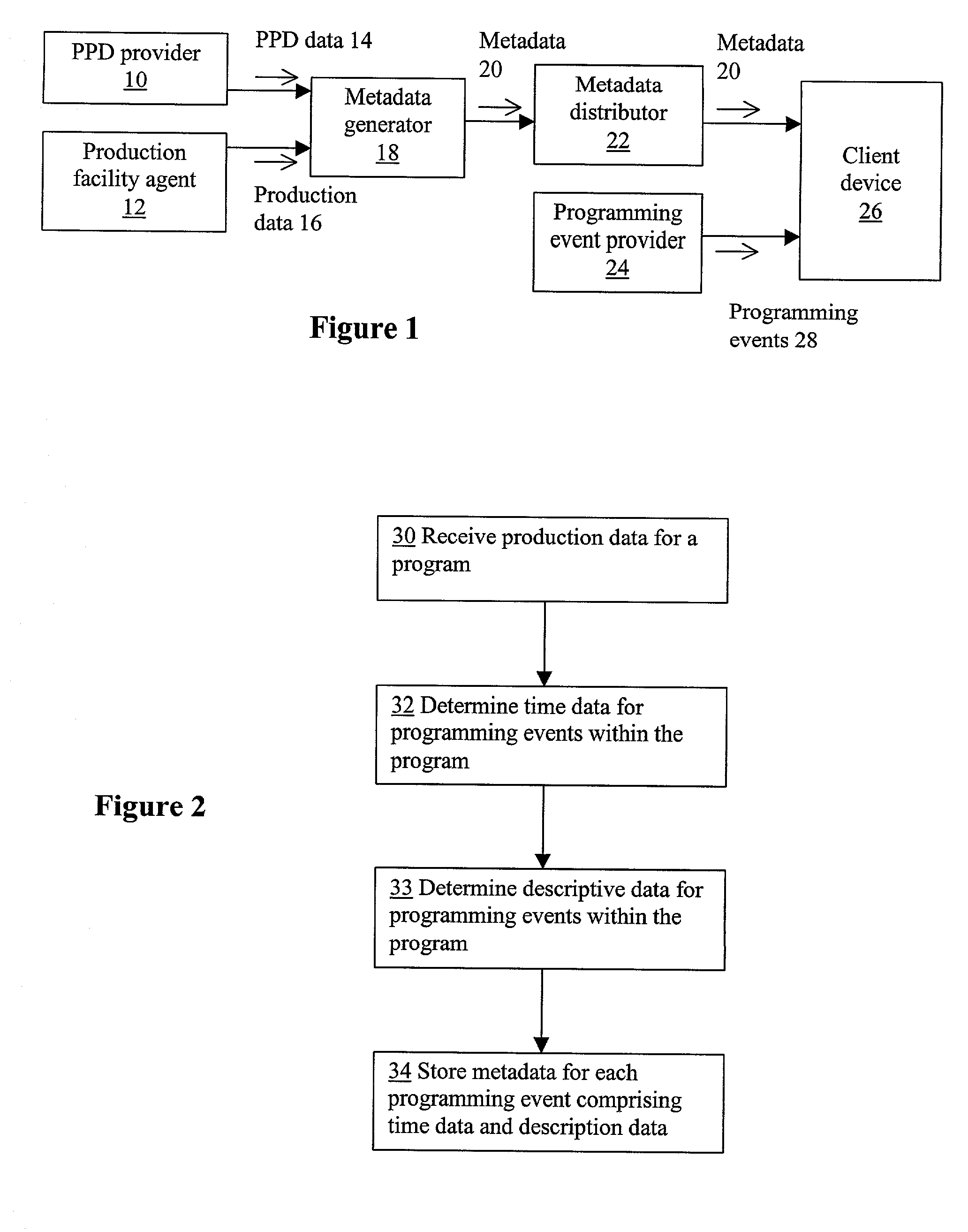

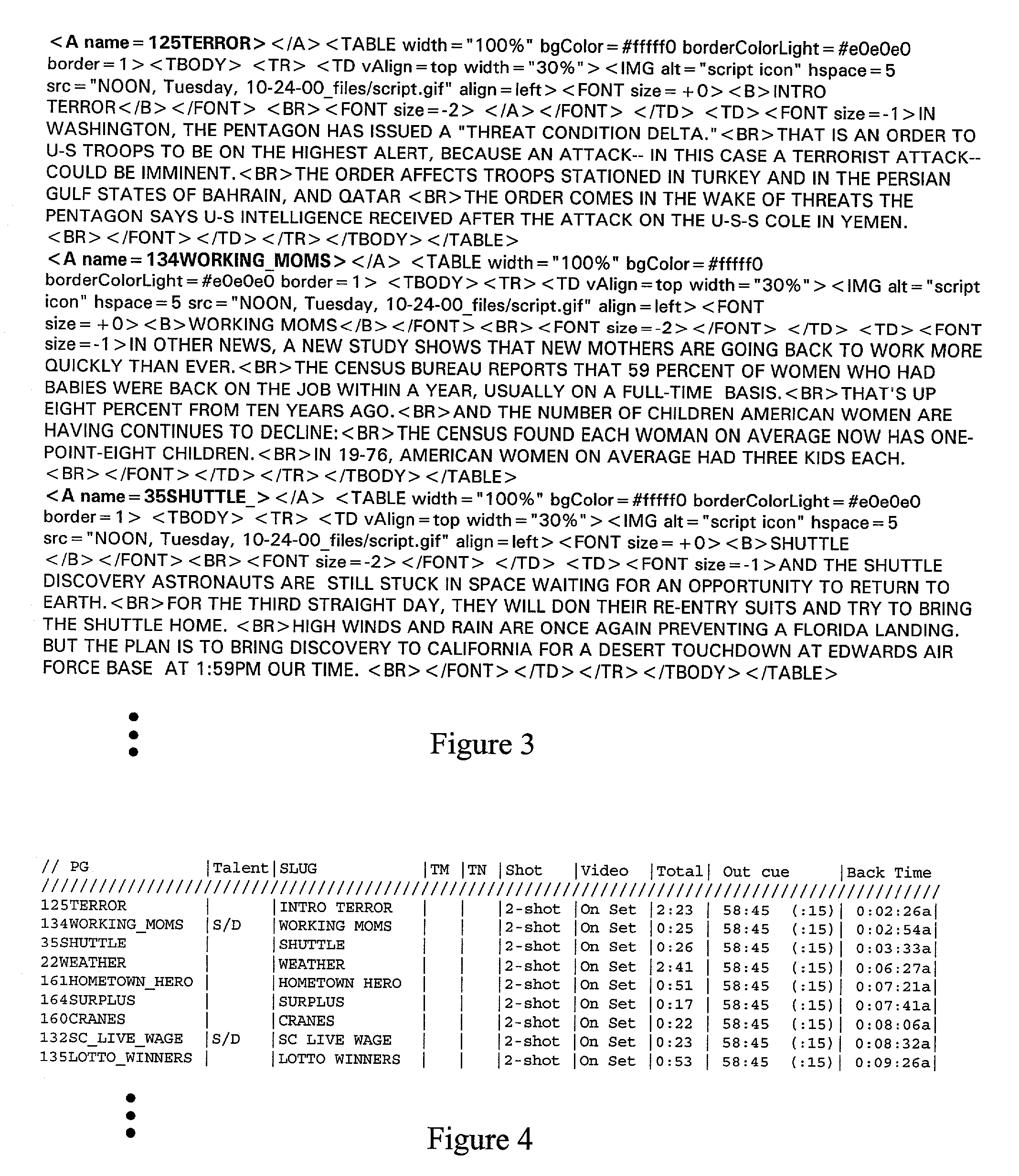

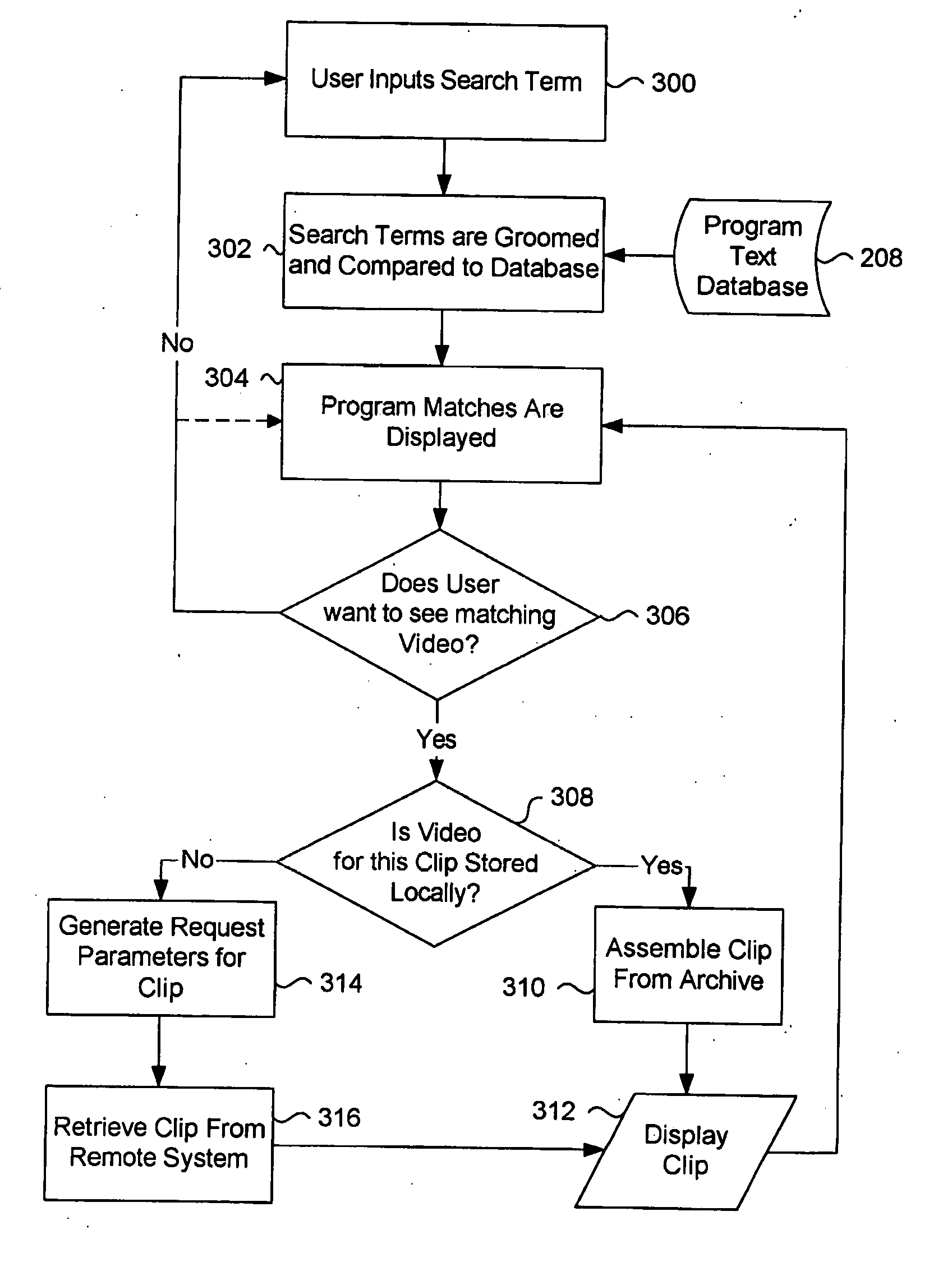

System and method for providing timing data for programming events

Inactive · US20020088009A1 · Television system details · Picture reproducers using cathode ray tubes · Programming language · Closed captioning

A video production facility system may produce closed caption data that includes text data and timing data. The system may receive script data for a video program. The system may then determine identifiers for individual programming events that make up the program. The system may then produce closed caption data for the program. The closed caption data may include text data corresponding to the script data, and timing data provided at locations that correspond to the beginning of each of the programming events. The timing data may comprise an identifier of the corresponding programming event. Further timing data may be provided that marks the ends of programming events, or that marks individual segments within a programming event. Related embodiments may pertain to systems that implement such processing. The closed caption data may be synchronized to a video signal based on the display of corresponding text by a teleprompter system that is used in the production of the video program. Timing data may alternatively be provided within the video signal itself.

Owner:MEEVEE

System and method for real-time media searching and alerting

Inactive · US20050198006A1 · Television system details · Metadata video data retrieval · Radio broadcasting · Closed captioning

A method and system for continually storing and cataloguing streams of broadcast content, allowing real-time searching and real-time results display of all catalogued video. A bank of video recording devices stores and indexes all video content on any number of broadcast sources. This video is stored along with the associated program information such as program name, description, airdate and channel. A parallel process obtains the text of the program, either from the closed captioning data stream, or by using a speech-to-text system. Once the text is decoded, stored, and indexed, users can then perform searches against the text, and view matching video immediately along with its associated text and broadcast information. Users can retrieve program information by other methods, such as by airdate, originating station, program name and program description. An alerting mechanism scans all content in real-time and can be configured to notify users by various means upon the occurrence of a specified search criterion in the video stream. The system is preferably designed to be used on publicly available broadcast video content, but can also be used to catalog private video, such as conference speeches or audio-only content such as radio broadcasts.

Owner:DNA13

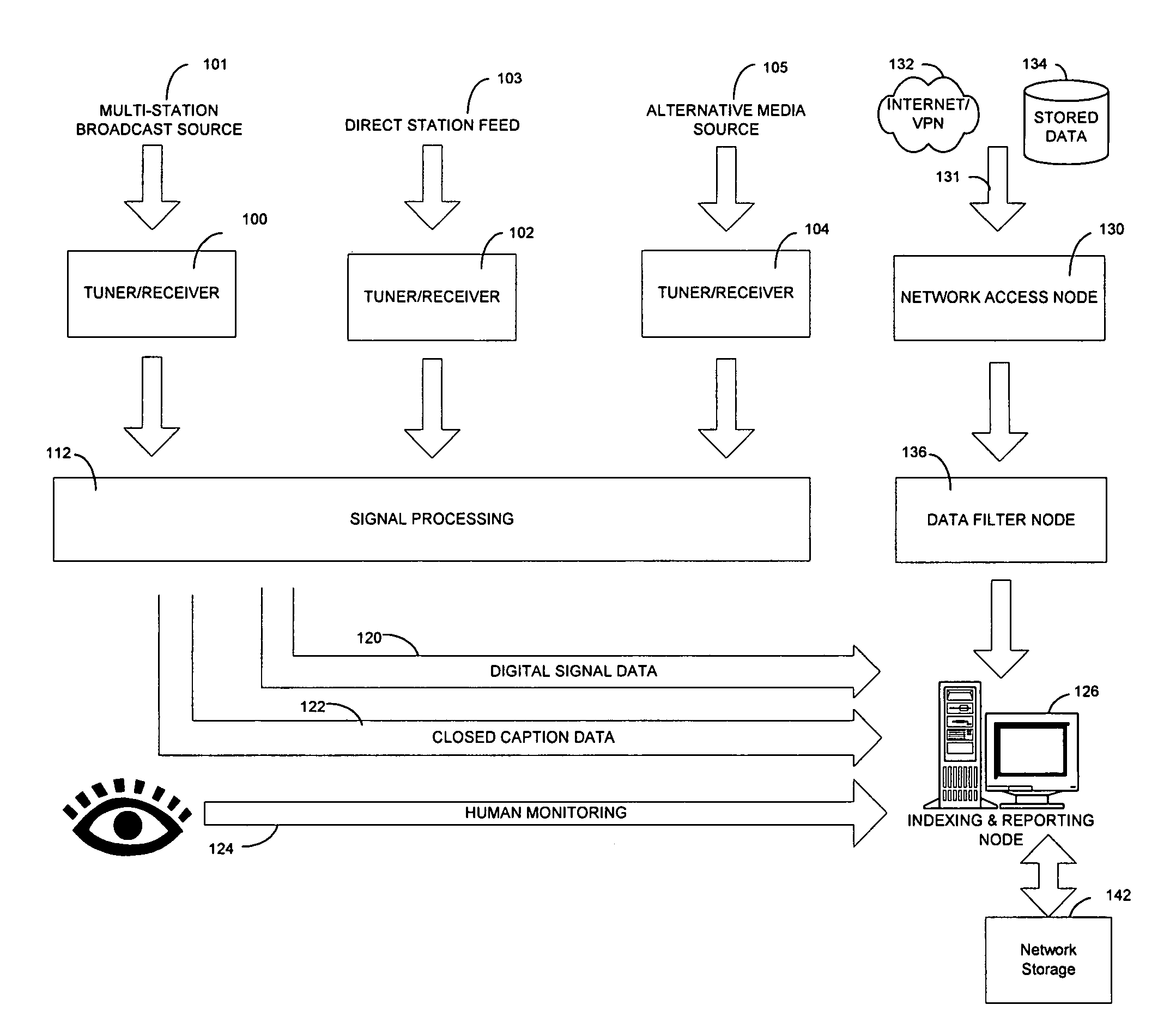

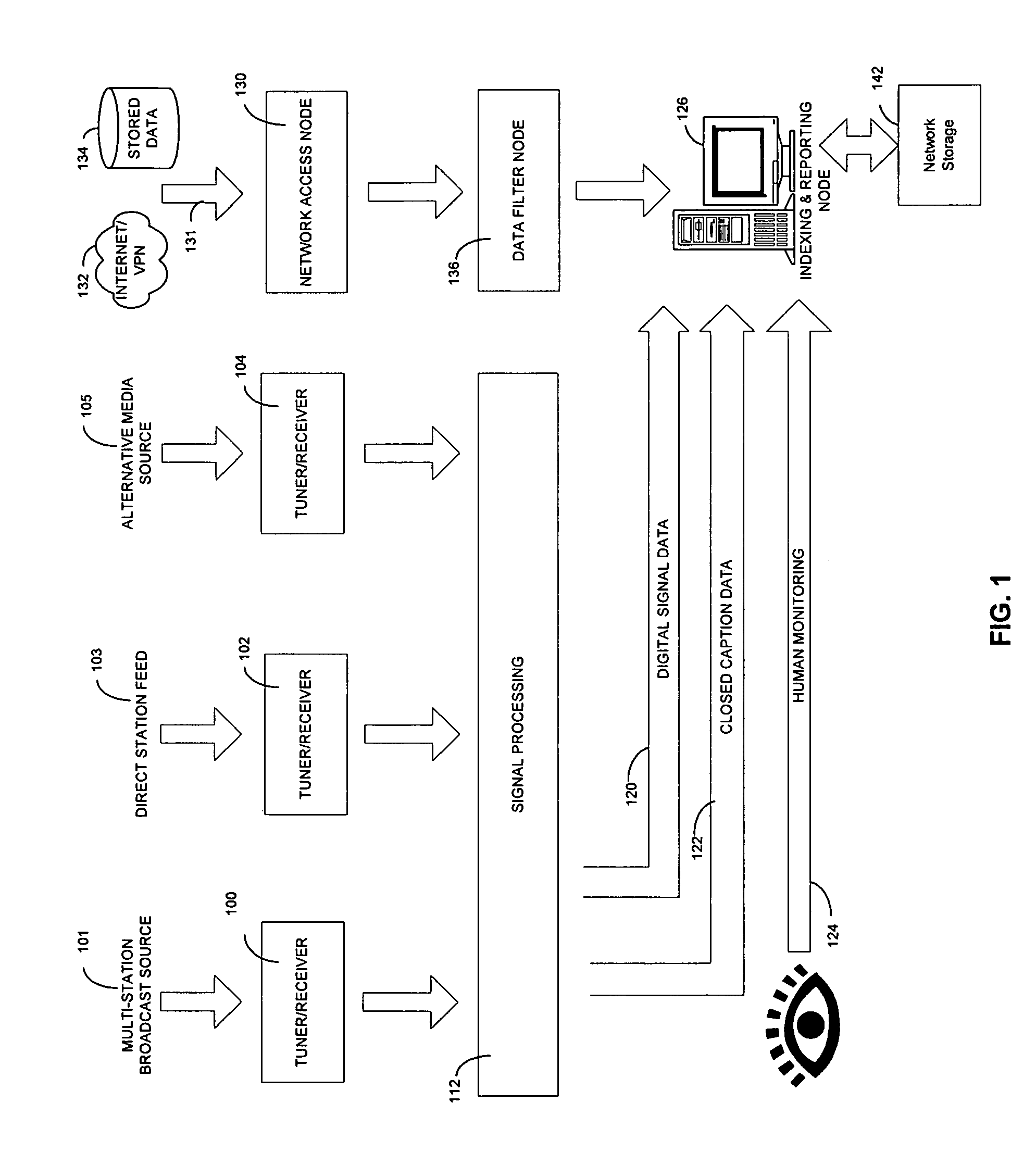

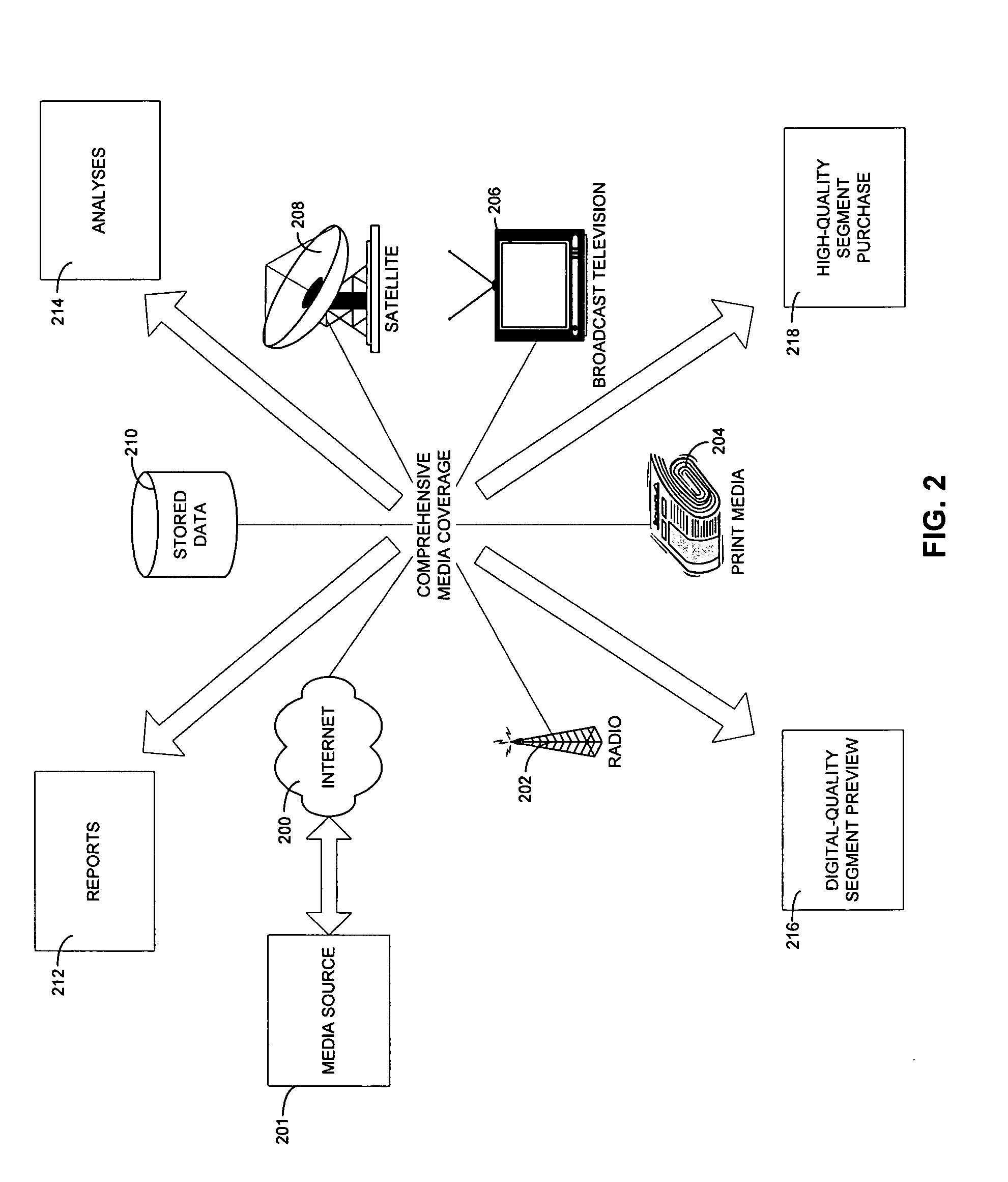

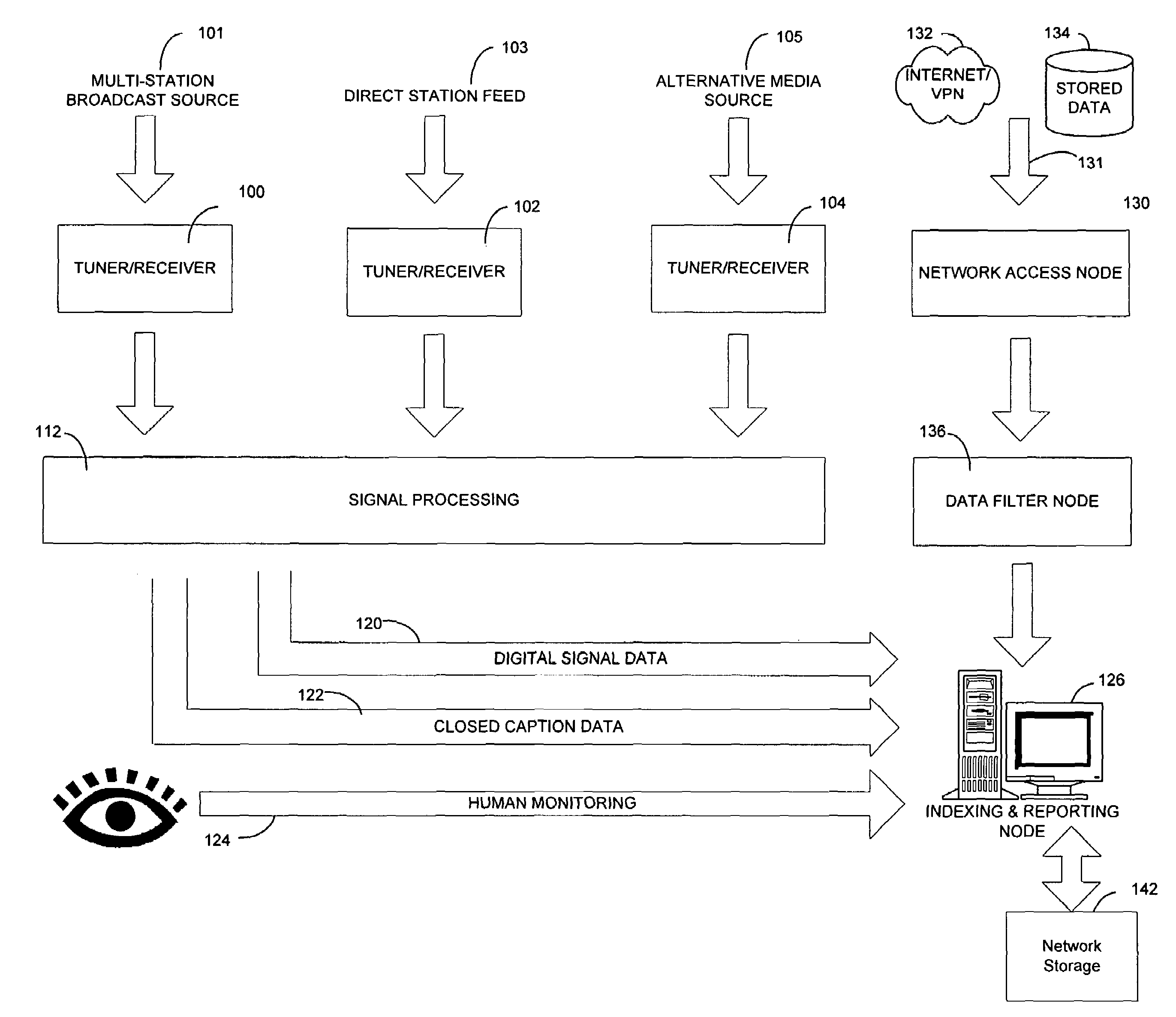

Method for integrated media preview, analysis, purchase, and display

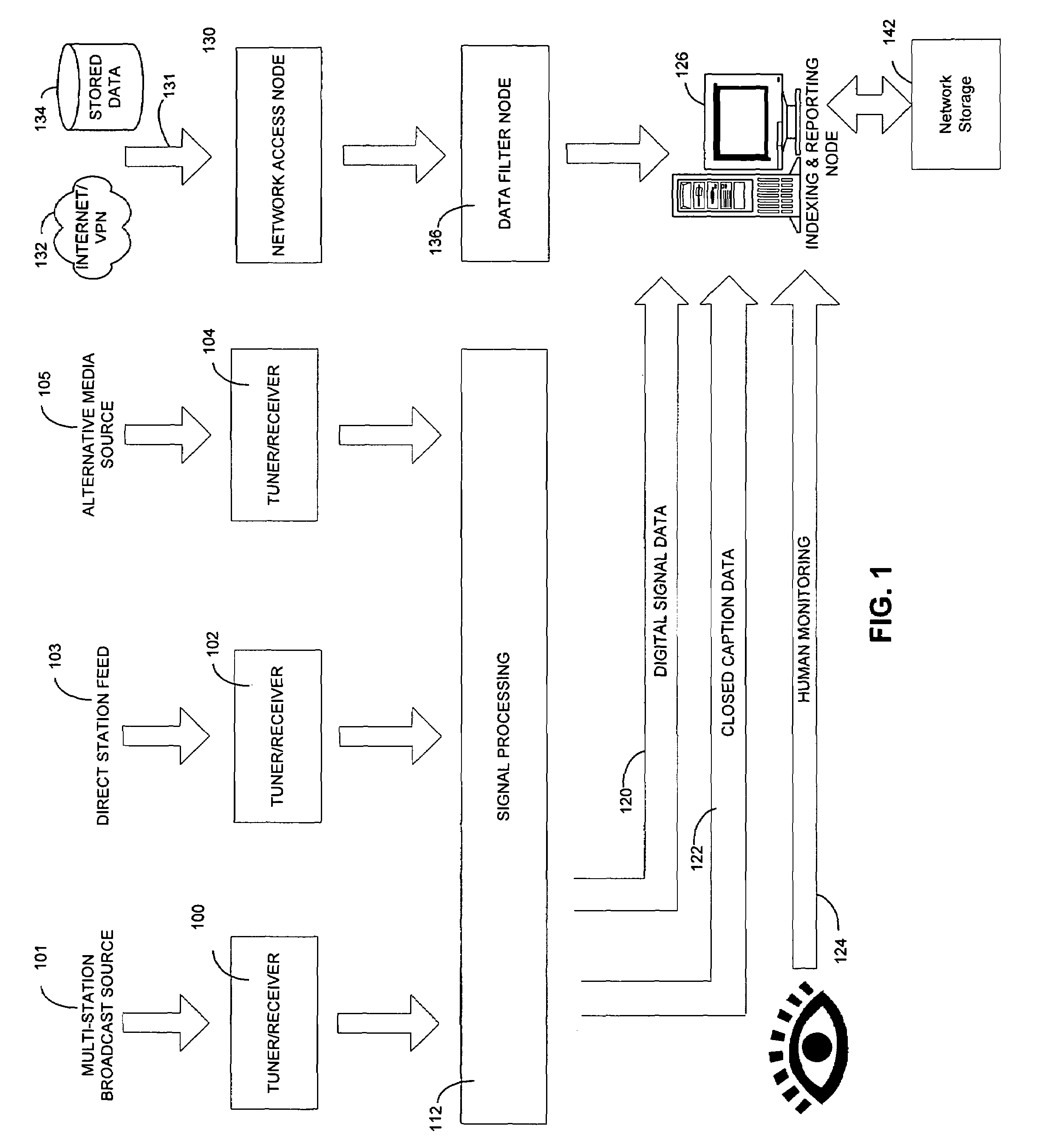

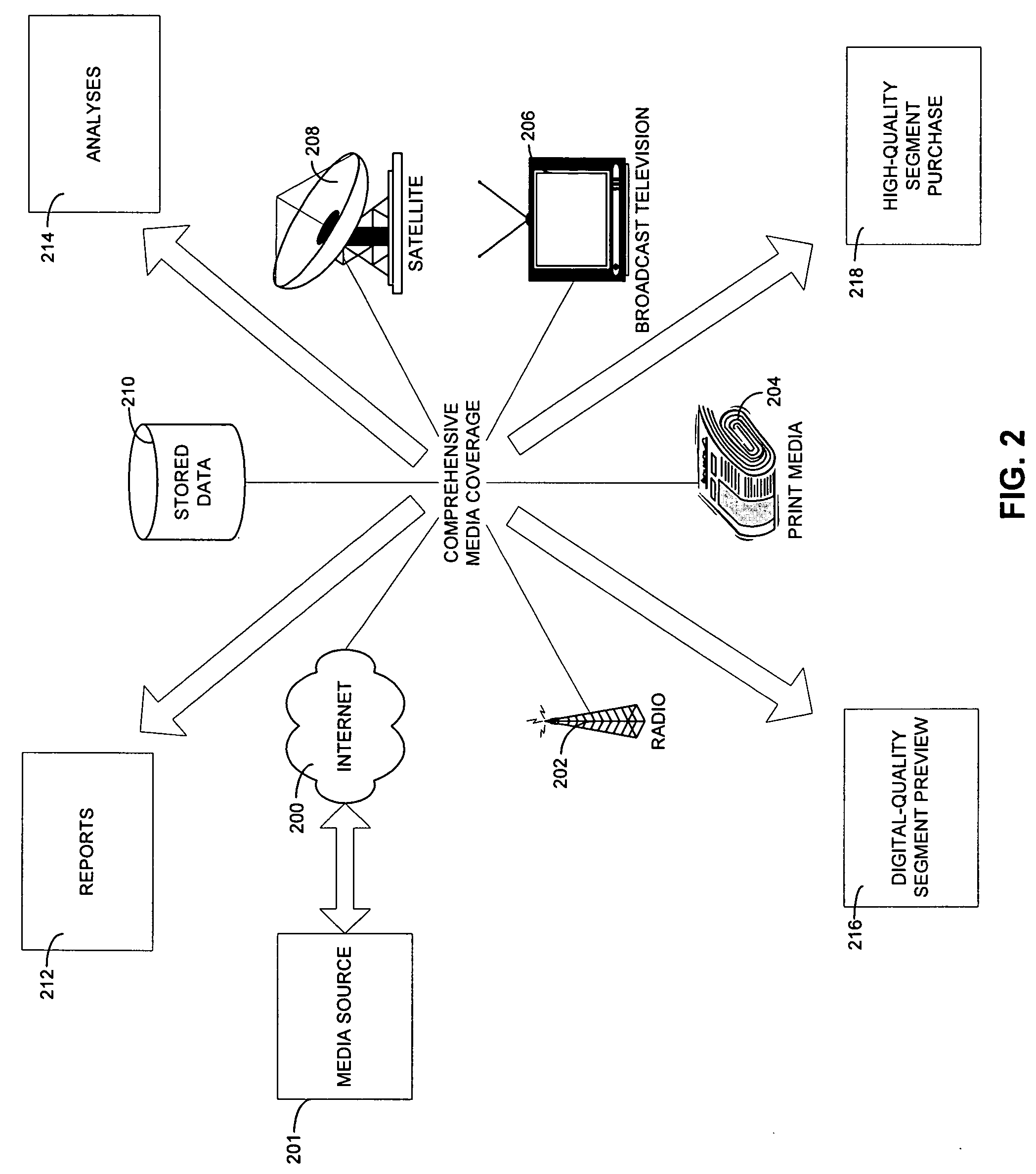

Inactive · US20070203945A1 · Efficient and effective · Marketing · Special data processing applications · Closed captioning · Human monitoring

Systems and methods for integrated media monitoring are disclosed. The present invention enables users to analyze how a product or service is being advertised or otherwise conveyed to the general public. Via strategically placed servers, the present invention captures multiple types and sources of media for storage and analysis. Analysis includes both closed captioning analysis and human monitoring. Media search parameters are received over a network and a near real-time hit list of occurrences of the parameters is produced and presented to a requesting user. Options for previewing and purchasing matching media segments are presented, along with corresponding reports and coverage analyses. Reports indicate the effectiveness of advertising, the tonality of editorials, and other information useful to a user looking to understand how a product or service is being conveyed to the public via the media.

Owner:VMS MONITORING SERVICES OF AMERICA INC

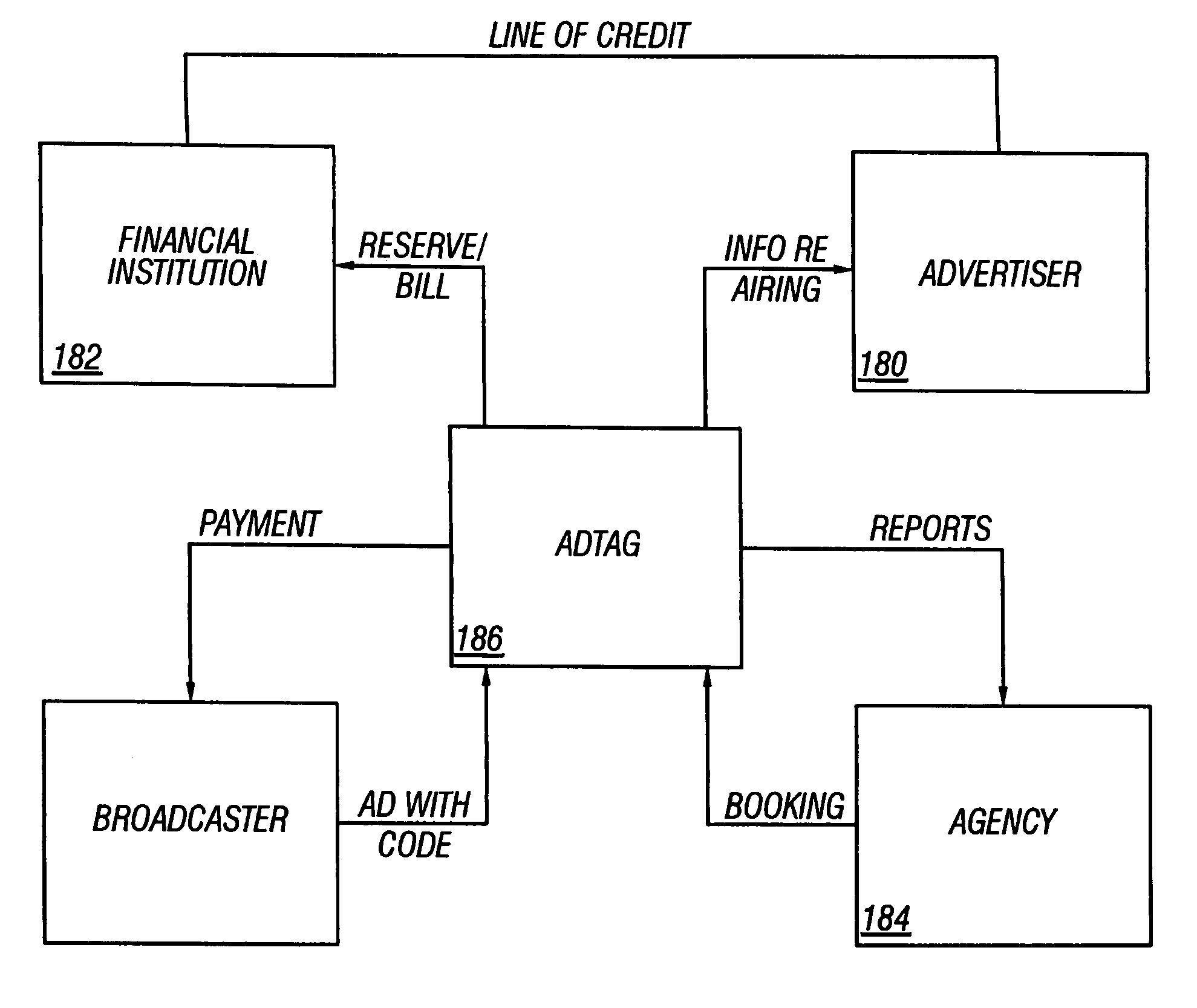

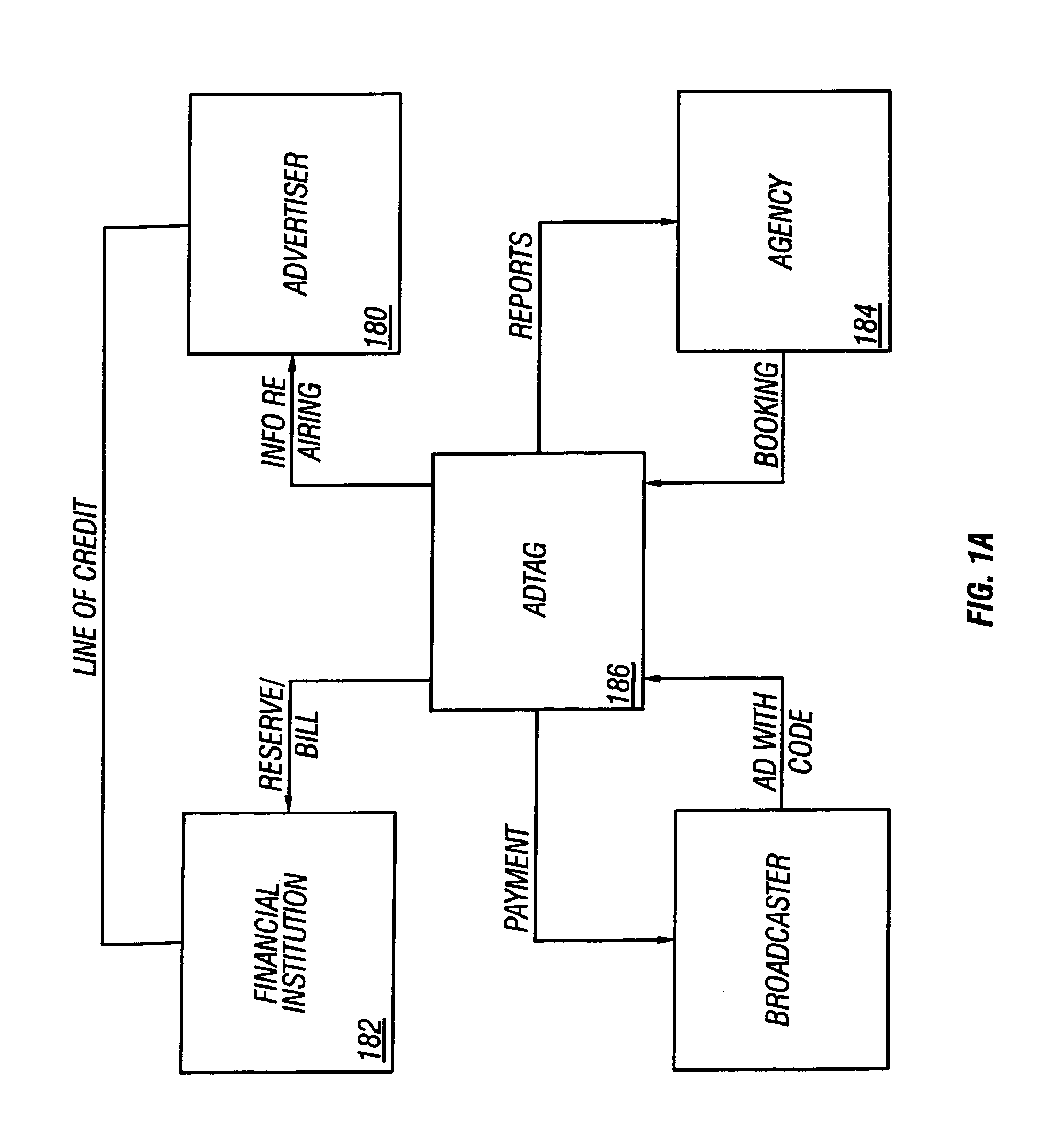

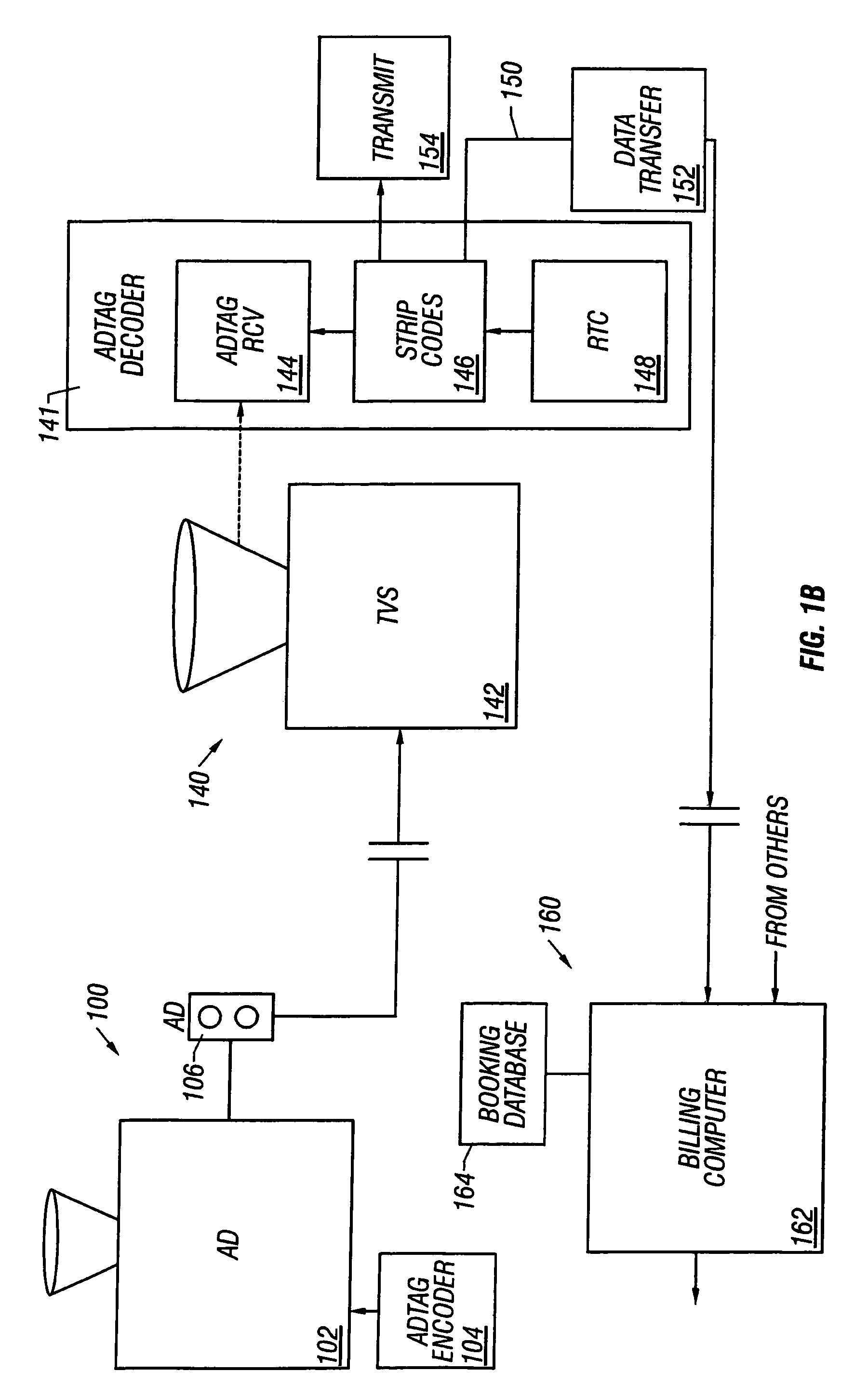

Television advertising automated billing system

Inactive · US7039930B1 · Avoid possibility · Accurate accounting · Advertisements · Picture reproducers using cathode ray tubes · Payment · Closed captioning

Advertising is marked with a code in a way which makes it difficult to fool the system. The advertising is marked with a code at the time the advertising is produced. Then, when the advertising is broadcast, the code on the advertising is analyzed. Different security measures can be used, including producing the code in the closed captioning so that many different people can see the code, or comparing codes in one part of the signal with a code in another part of the signal. Measures are taken to prevent the code from being used to detect commercials. According to another part of this system, a paradigm for a clearinghouse is disclosed in which the user signs up with the clearinghouse, obtains a line of credit, and the advertiser, the agency, and the ad producer also subscribe to the service. When the ad is actually aired, the payment can be automatically transferred.

Owner:CALIFORNIA INST OF TECH

Advertisement monitoring system

Inactive · US7690013B1 · Broadcast information monitoring · Two-way working systems · Closed captioning · Monitoring system

An advertising monitoring system is presented in which subscriber selections including channel changes are monitored, and in which information regarding an advertisement is extracted from text related to the advertisement. The text related to the advertisement is in the form of closed caption text, data transmitted with the advertisement, or other associated text. A record of the effectiveness of the advertisement is created in which measurements of the percentage of the advertisement which was viewed are stored. Such records allow a manufacturer or advertiser to determine if their advertisement is being watched by subscribers. The system can be realized in a client-server mode in which subscriber selection requests are transmitted to a server for fulfillment, in which case the advertisement monitoring takes place at the server side.

Owner:PRIME RES ALLIANCE E LLC

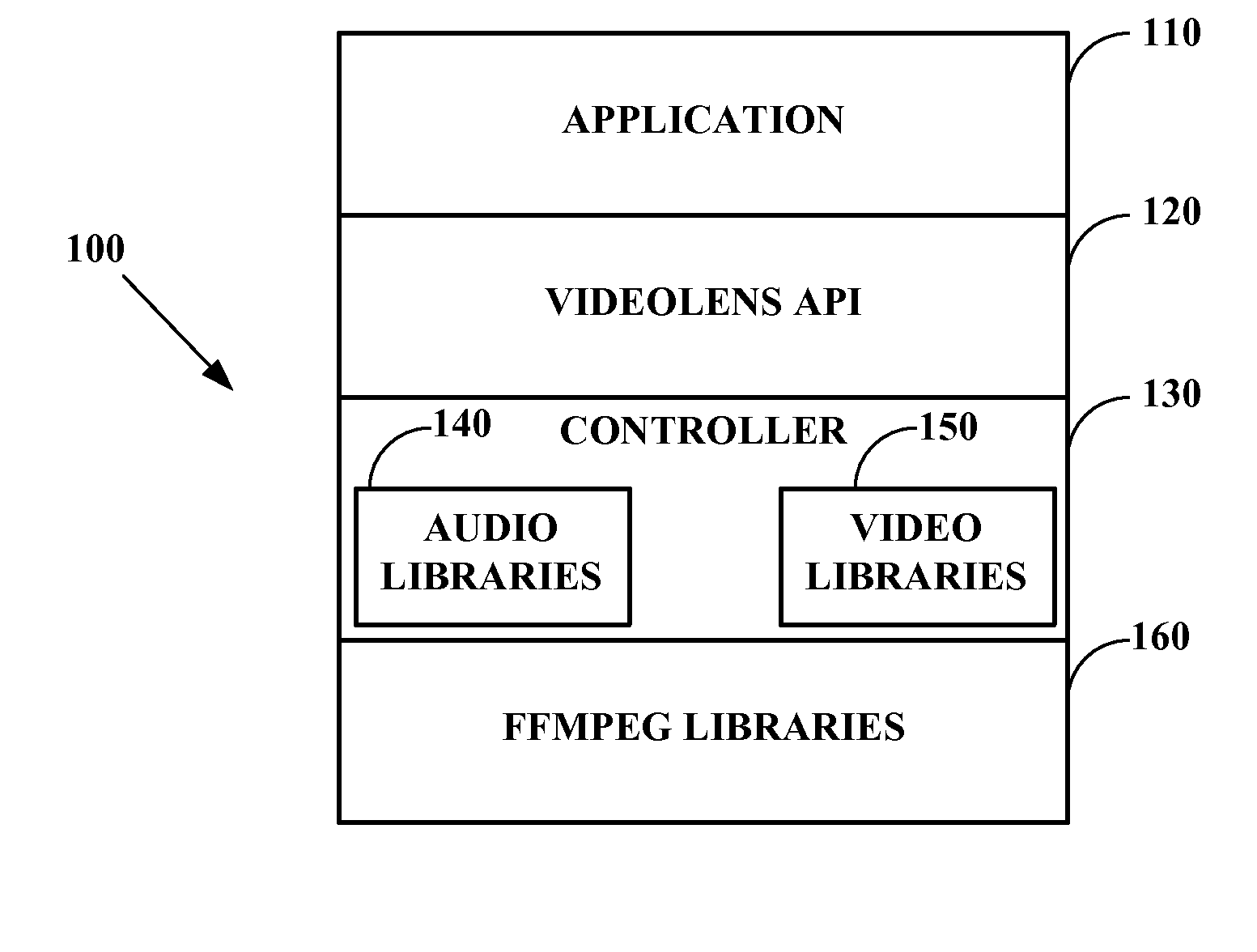

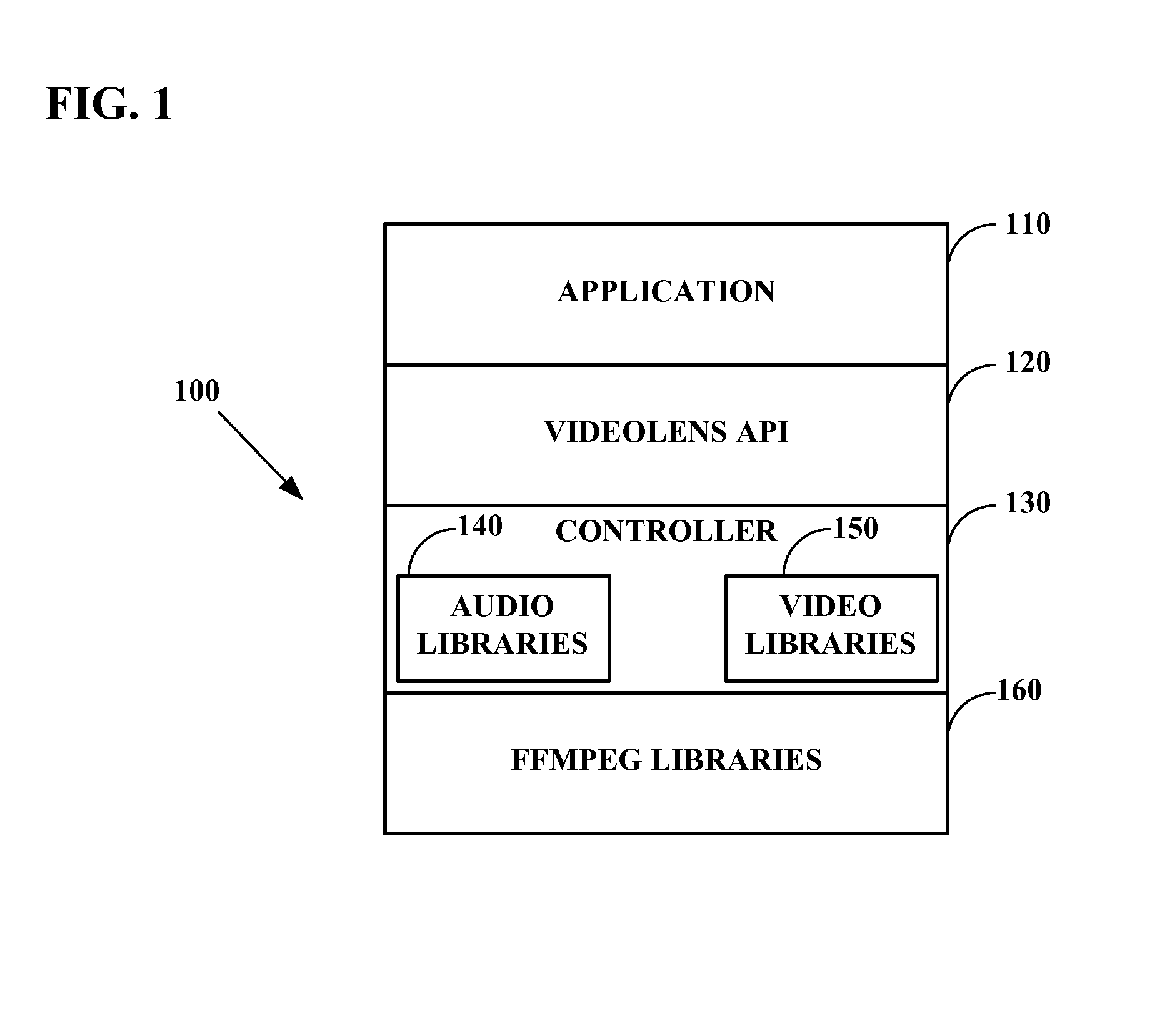

Extended videolens media engine for audio recognition

Inactive · US20130006625A1 · Improve recognition accuracy · Speech recognition · Selective content distribution · Closed captioning · Network media

A system, method, and computer program product for automatically analyzing multimedia data audio content are disclosed. Embodiments receive multimedia data, detect portions having specified audio features, and output a corresponding subset of the multimedia data and generated metadata. Audio content features including voices, non-voice sounds, and closed captioning, from downloaded or streaming movies or video clips are identified as a human probably would do, but in essentially real time. Particular speakers and the most meaningful content sounds and words and corresponding time-stamps are recognized via database comparison, and may be presented in order of match probability. Embodiments responsively pre-fetch related data, recognize locations, and provide related advertisements. The content features may be also sent to search engines so that further related content may be identified. User feedback and verification may improve the embodiments over time.

Owner:SONY CORP

Interactive content delivery methods and apparatus

Inactive · US7631338B2 · Reliable transmission · Broadcast with distribution · Analogue secrecy/subscription systems · Closed captioning · Interactive content

Interactive content preservation and customization technology is placed at the broadcast facility to ensure reliable transmission of the interactive content to a local subsystem. An interactive content code detector detects interactive content codes in the video stream at the broadcast facility. The interactive content code detector is placed in the transmission path before the video stream is transmitted to broadcast facility hardware that may strip out, destroy, corrupt or otherwise modify the interactive content and interactive content codes. Once an interactive content code detector detects a code, an interactive broadcast server determines what action to take, and instructs a data insertion unit accordingly. The interactive content codes or interactive content may be placed in a portion of the video that is guaranteed by the broadcast facility to be transmitted, for example, the closed caption region of the vertical blanking interval.

Owner:OPEN TV INC

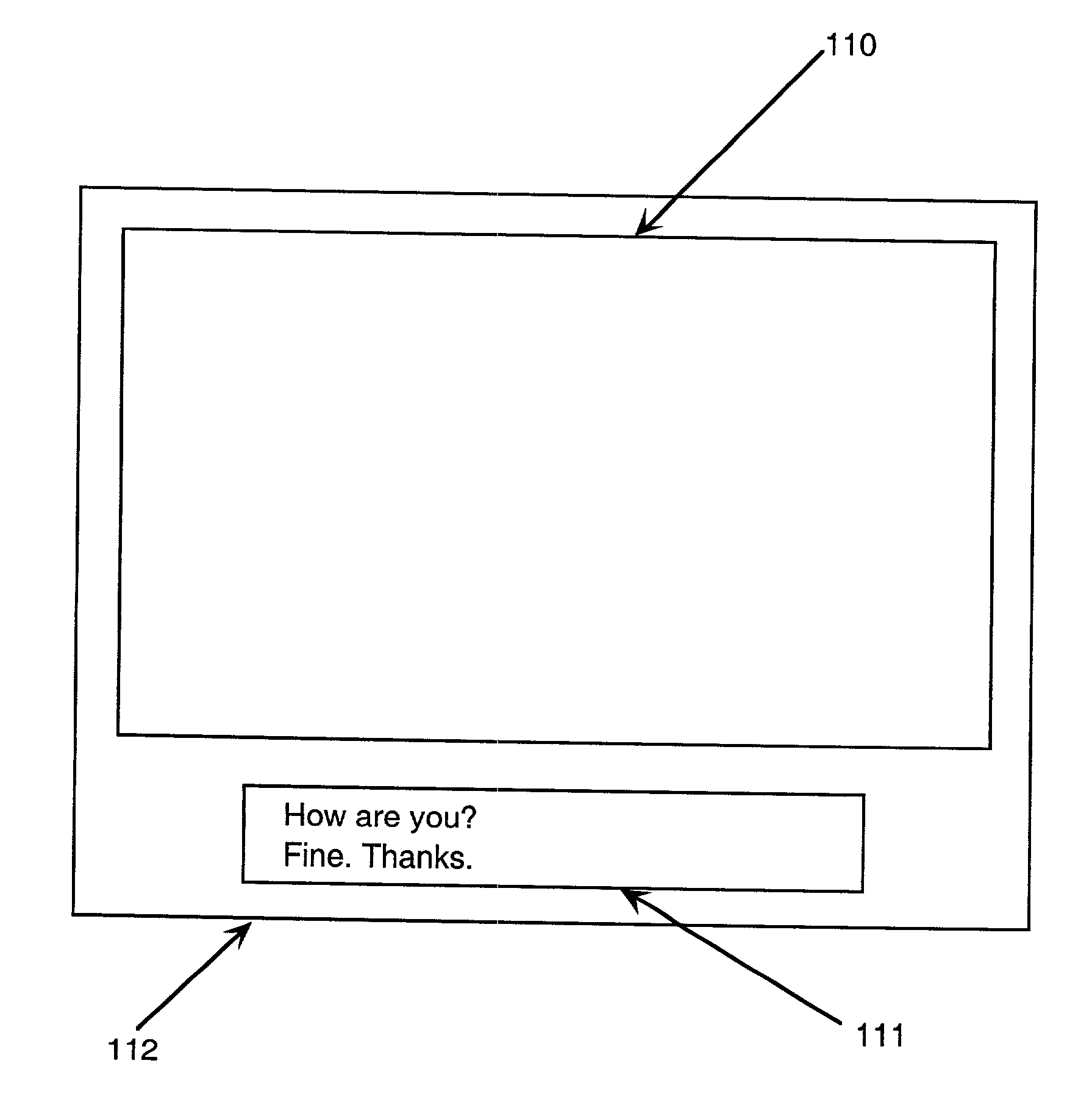

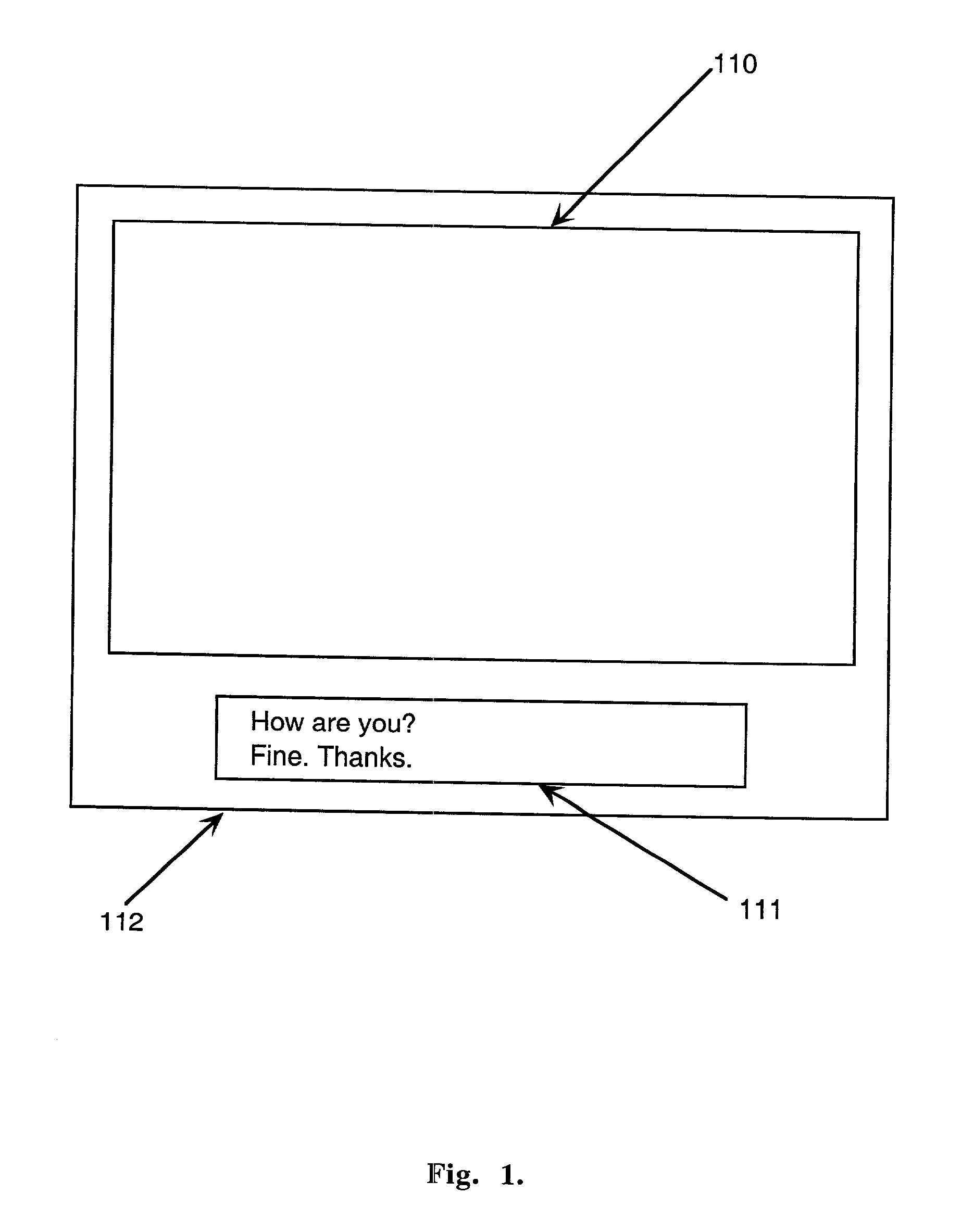

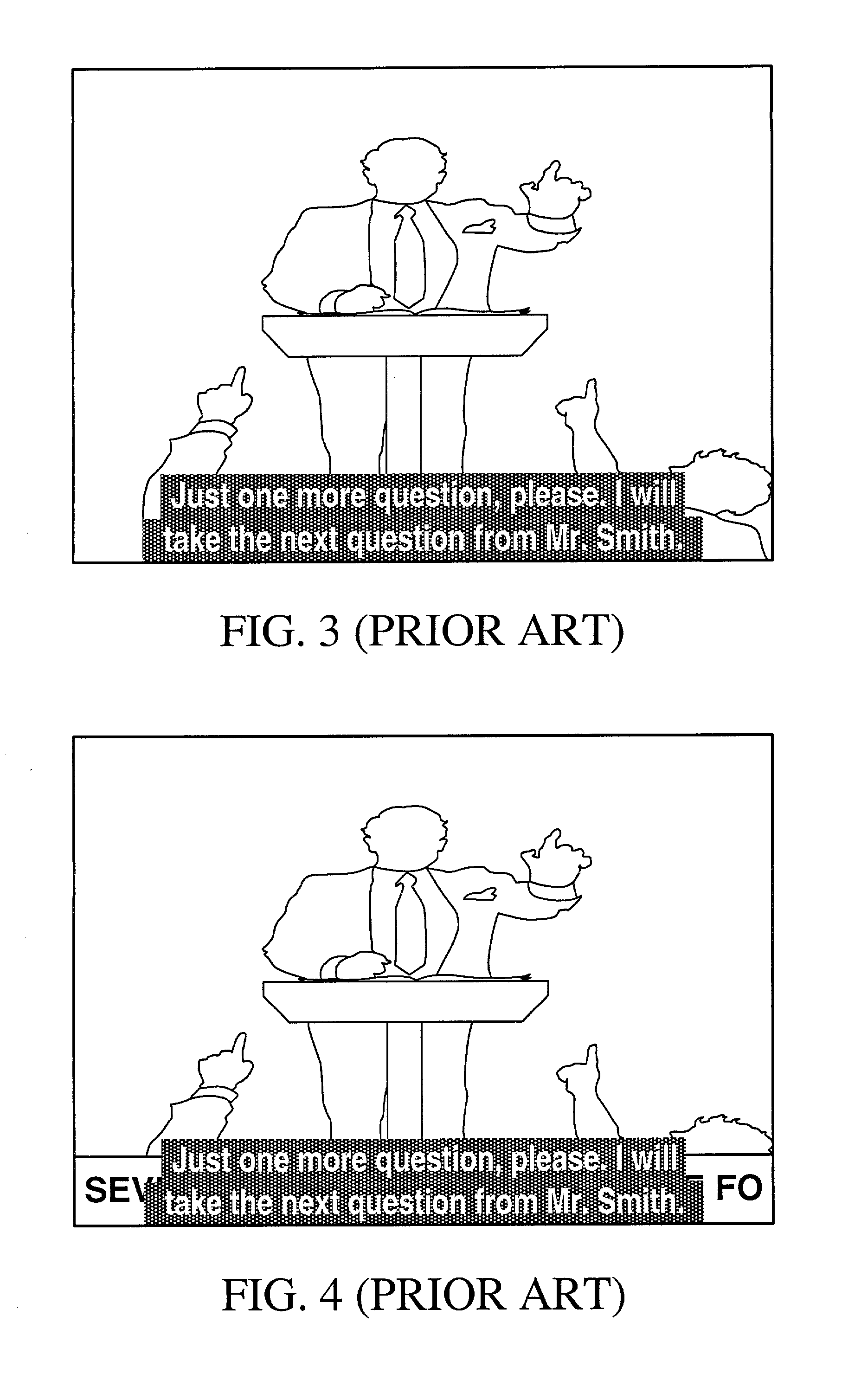

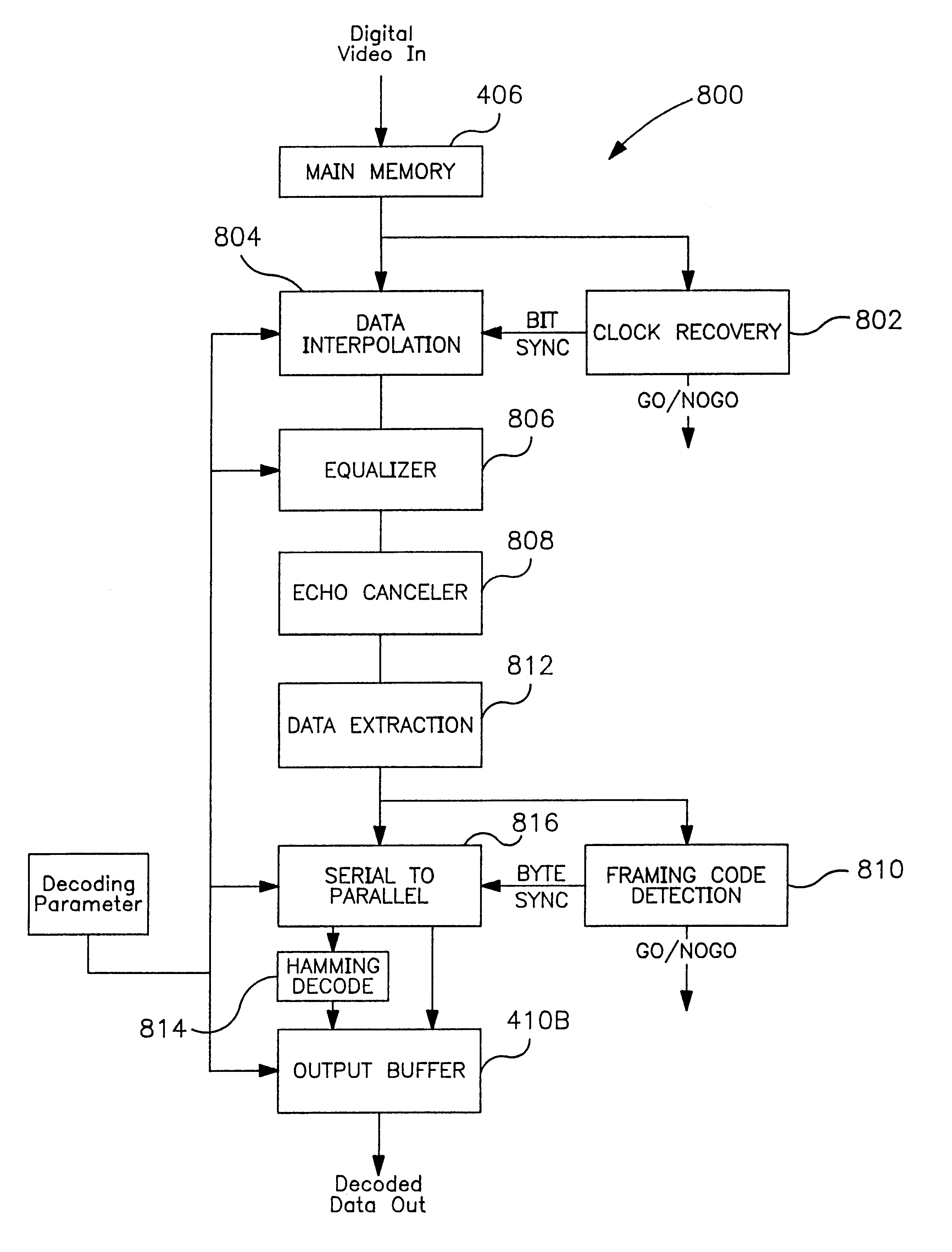

Video display apparatus with separate display means for textual information

Inactive · US20030128296A1 · Effective display · Television system details · Color signal processing circuits · Closed captioning · Television receivers

A video display apparatus with separate display means is provided so that the textual information can be displayed without preventing viewers from watching the full picture. The separate display means can be provided for a television receiver so that textual information, which includes closed caption text and subtitles, can be displayed on the separate display means without occupying the picture area. The separate display means can be provided for a screen in a movie theater so that subtitles can be displayed on the separate display means when a foreign movie is played. The separate display unit can be used to display other textual information, including a channel number, a name of the broadcasting station, the title of the current program and the remaining time of the current program. Furthermore, the separate display unit can display information on the local weather and the local time.

Owner:IND ACADEMIC CORP FOUND YONSEI UNIV

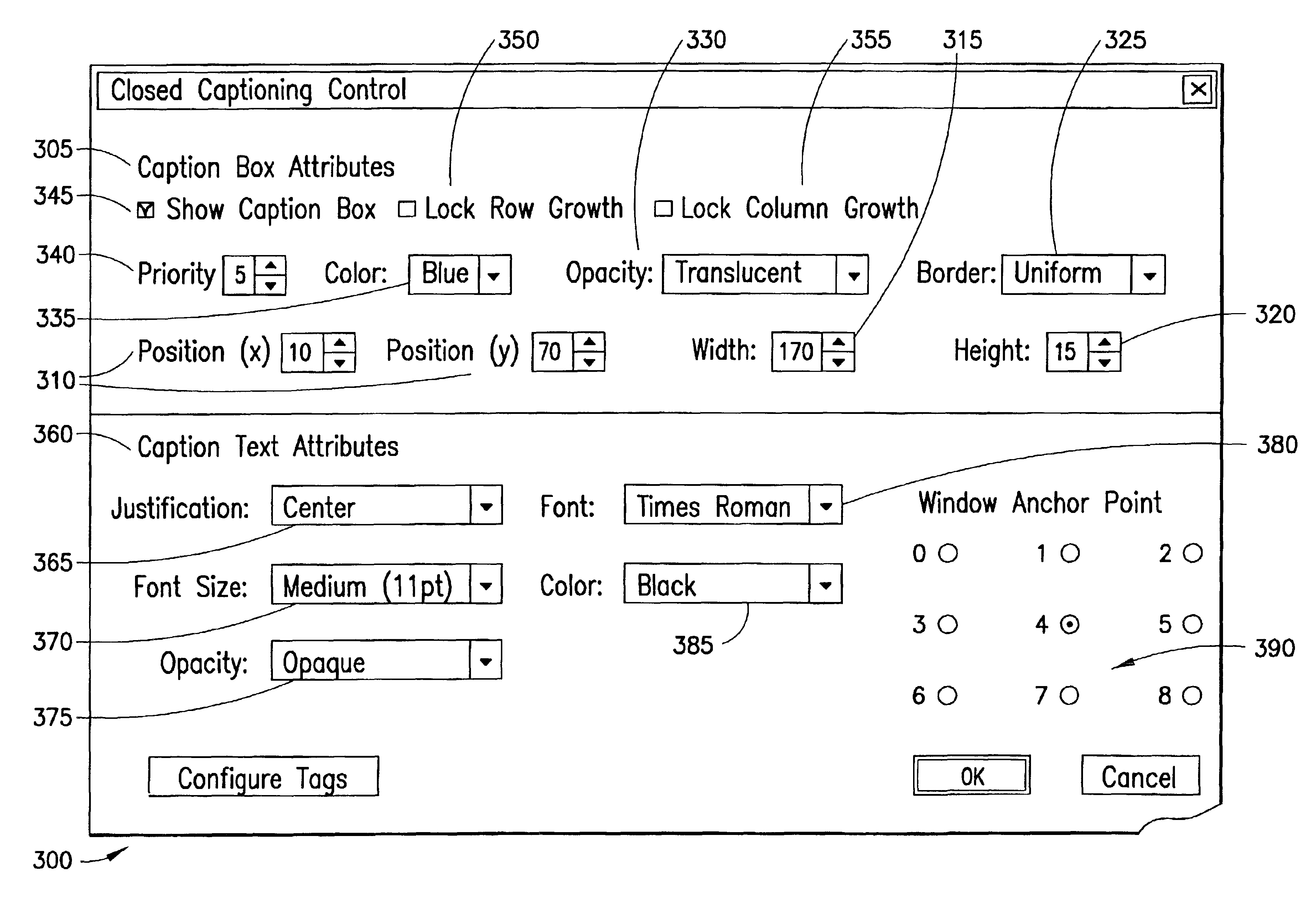

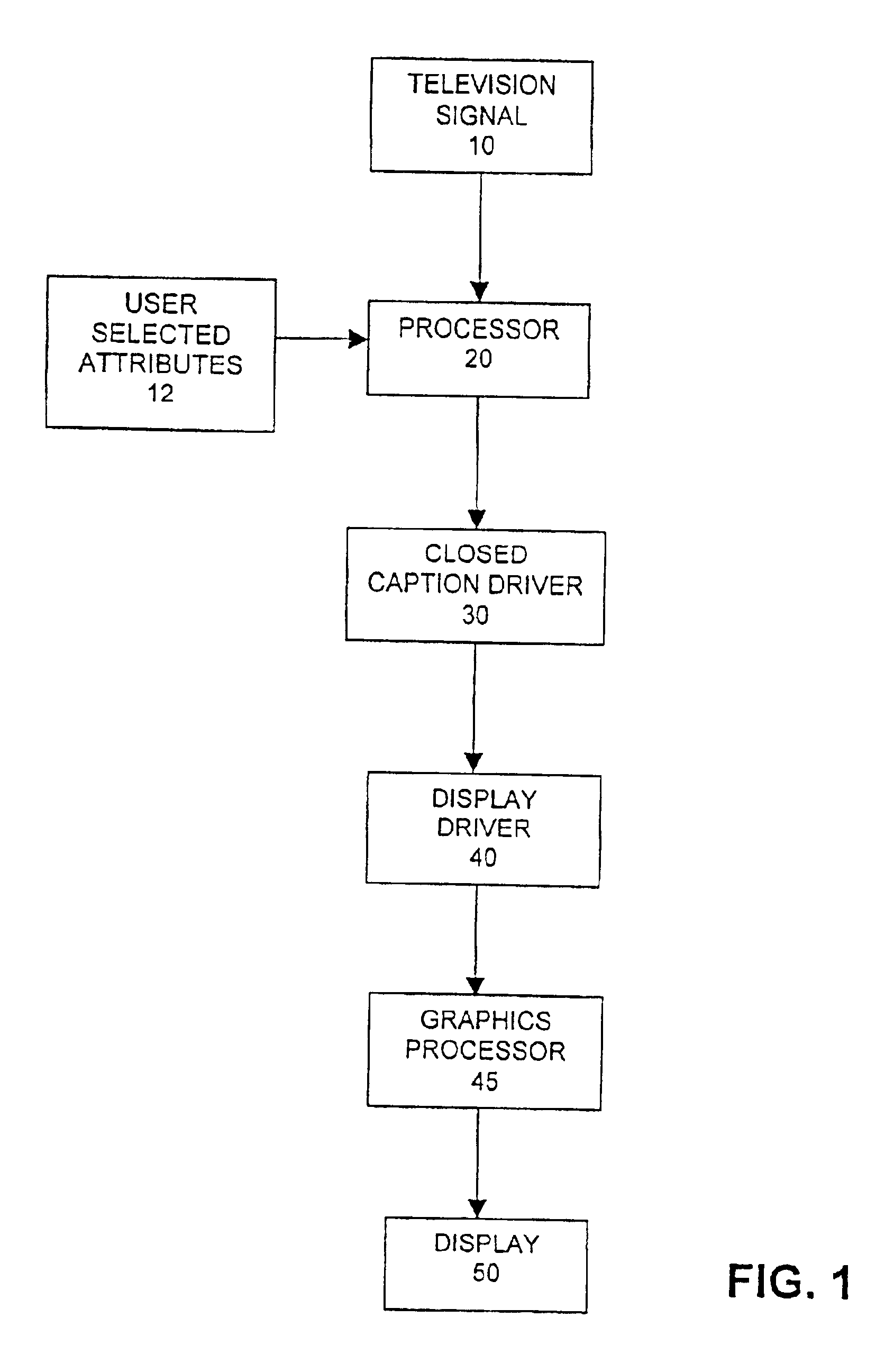

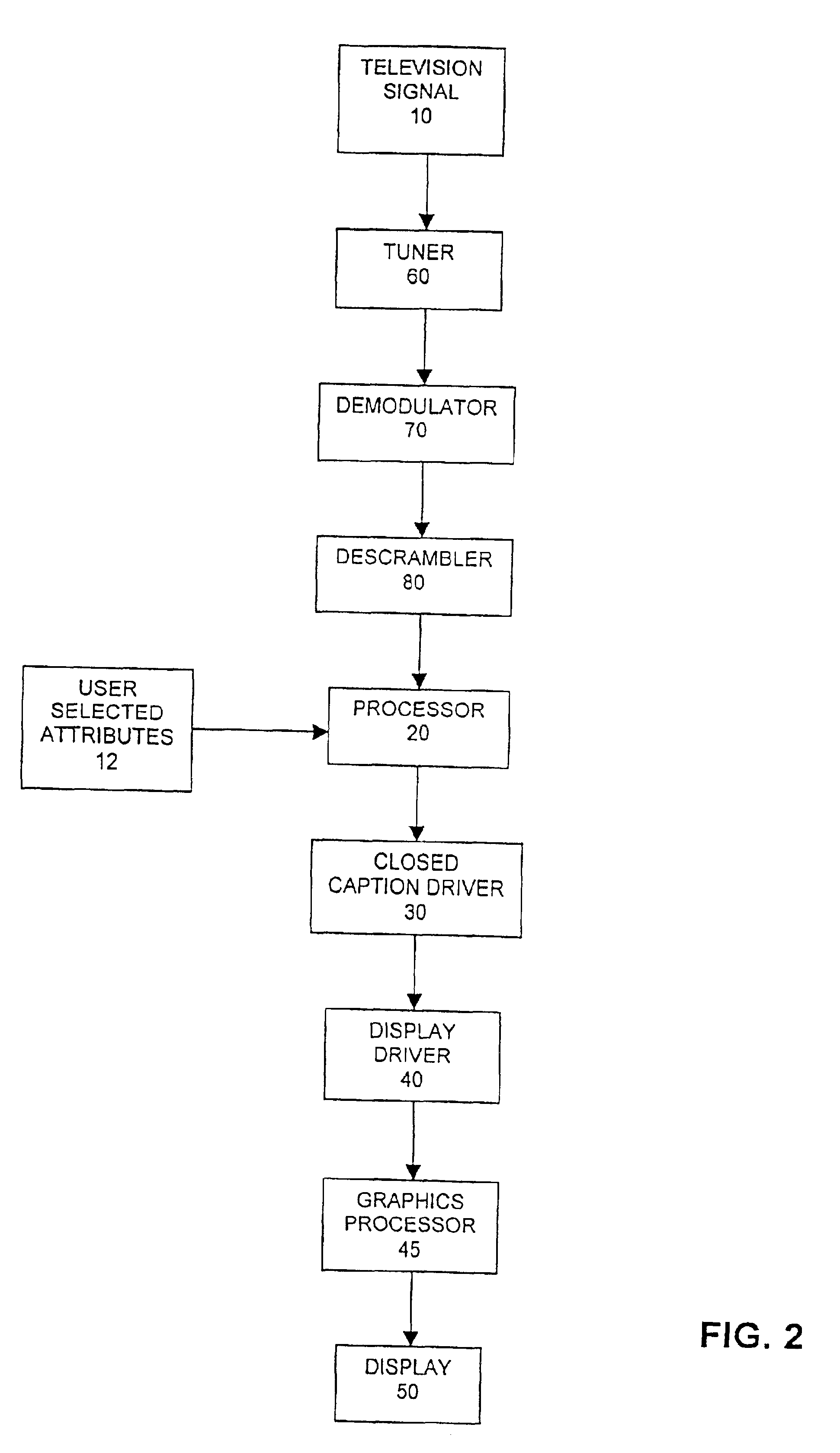

Methods and apparatus for the provision of user selected advanced close captions

Inactive · US7050109B2 · Television system details · Picture reproducers using cathode ray tubes · Graphics · Closed captioning

User customizable advanced closed caption capabilities are provided using existing closed caption information contained in a television signal. The invention allows the user to override the closed caption presentation format as selected by the originator (e.g., programmer or broadcaster), in order to select alternate presentation attributes based on the user's preference. Closed caption information is extracted (e.g., by a closed caption processor) from a television signal, which television signal also contains corresponding audiovisual programming. The processor determines whether one or more user selected attributes have been set. At least one user selected attribute is applied to at least a portion of the closed caption information via a closed caption driver. The closed caption information is displayed via a display driver and graphics processor on a display device in accordance with the user selected attributes via a graphical overlay on top of the audiovisual programming.

Owner:VIZIO INC

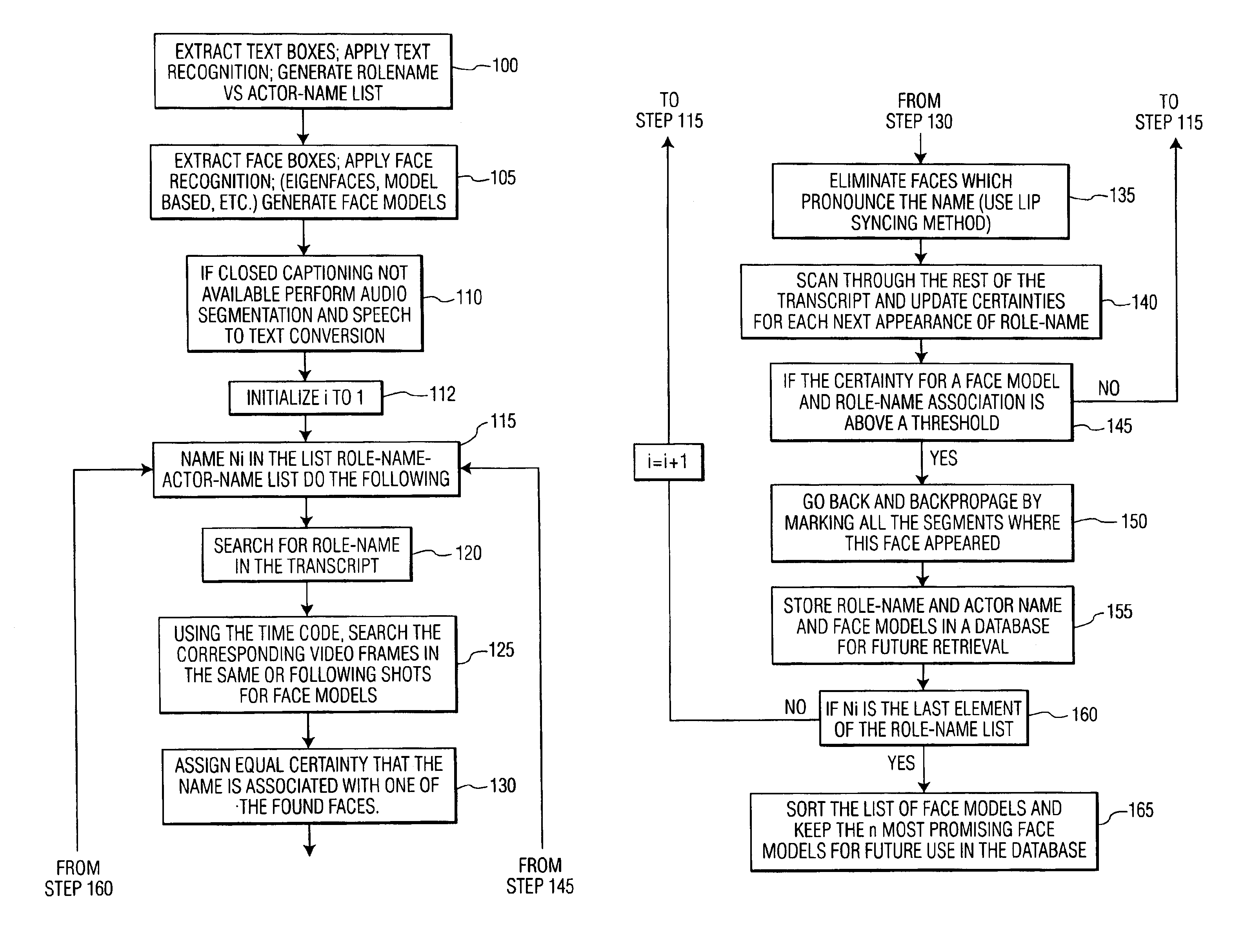

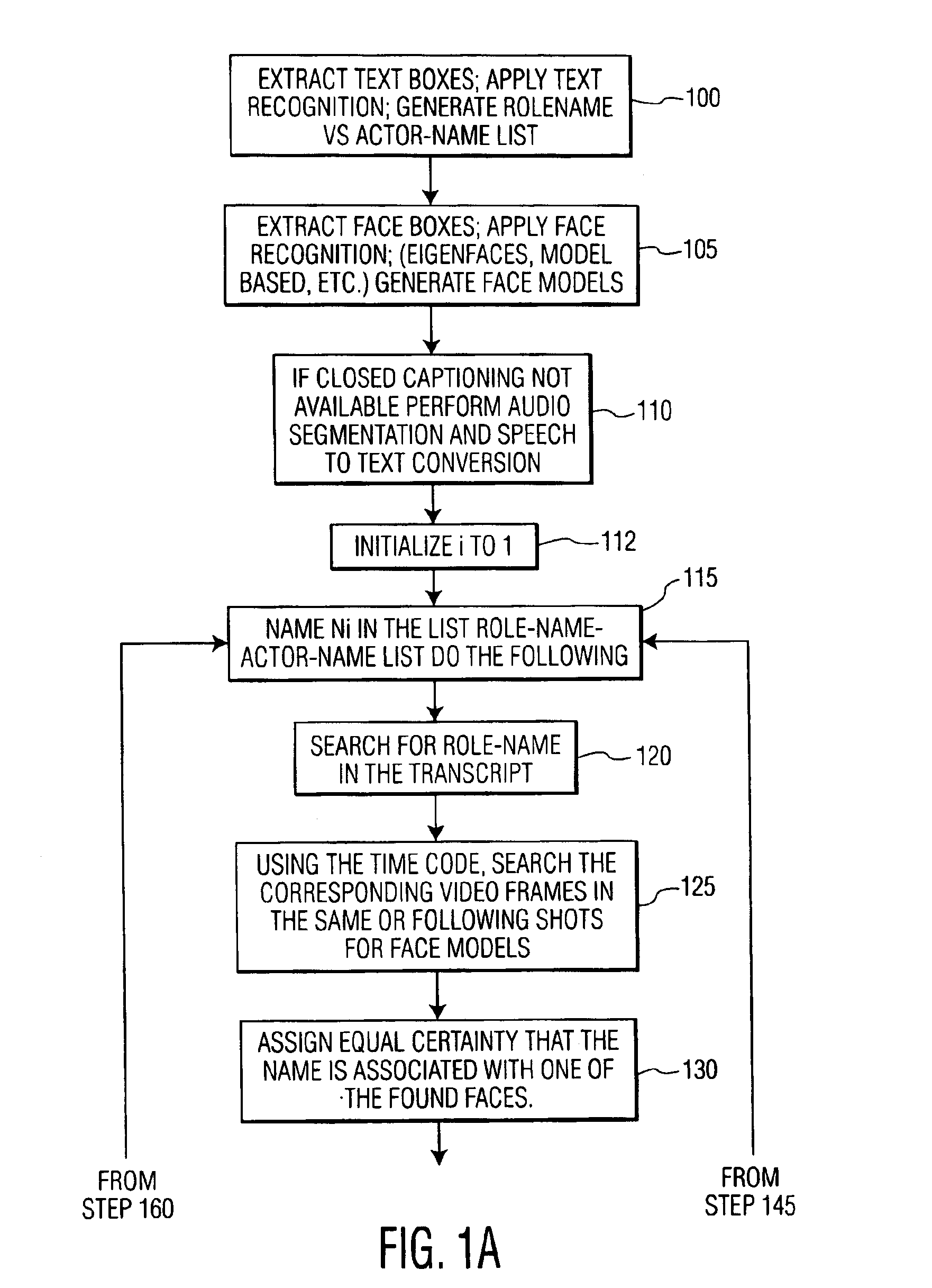

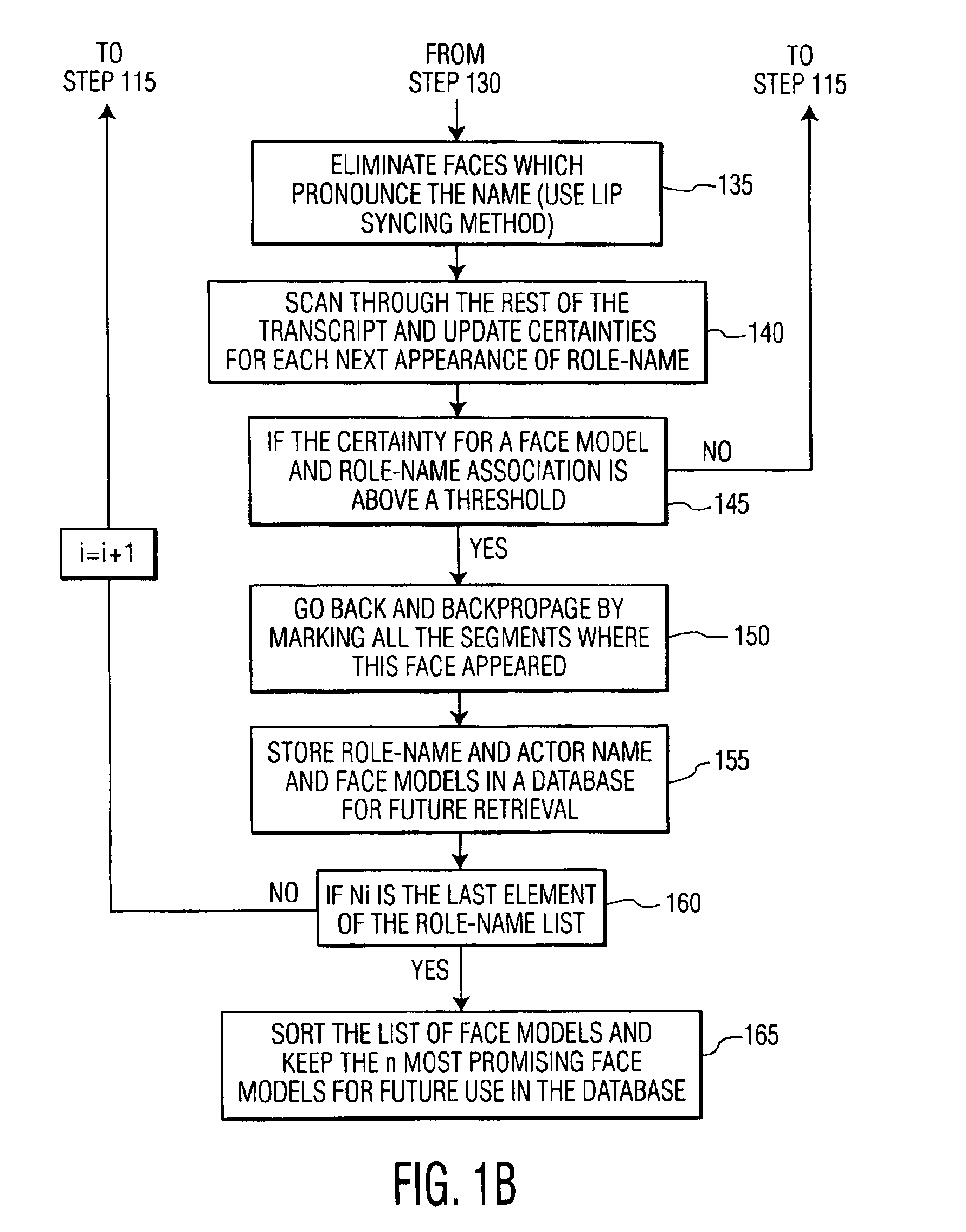

Method and system for name-face/voice-role association

Inactive · US6925197B2 · Digital data information retrieval · Data processing applications · Text recognition · Video sequence

A method for providing name-face / voice-role association includes determining whether a closed captioned text accompanies a video sequence, providing one of text recognition and speech to text conversion to the video sequence to generate a role-name versus actor-name list from the video sequence, extracting face boxes from the video sequence and generating face models, searching a predetermined portion of text for an entry on the role-name versus actor-name list, searching video frames for face models / voice models that correspond to the text searched by using a time code so that the video frames correspond to portions of the text where role-names are detected, assigning an equal level of certainty for each of the face models found, using lip reading to eliminate face models found that pronounce a role-name corresponding to said entry on the role-name versus actor-name list, scanning a remaining portion of text provided and updating a level of certainty for said each of the face models previously found. Once a particular face model / voice model and role-name association has reached a threshold the role-name, actor name, and particular face model / voice model is stored in a database and can be displayed by a user when the threshold for the particular face model has been reached. Thus the user can query information by entry of role-name, actor name, face model, or even words spoken by the role-name as a basis for the association. A system provides hardware and software to perform these functions.

Owner:UNILOC 2017 LLC

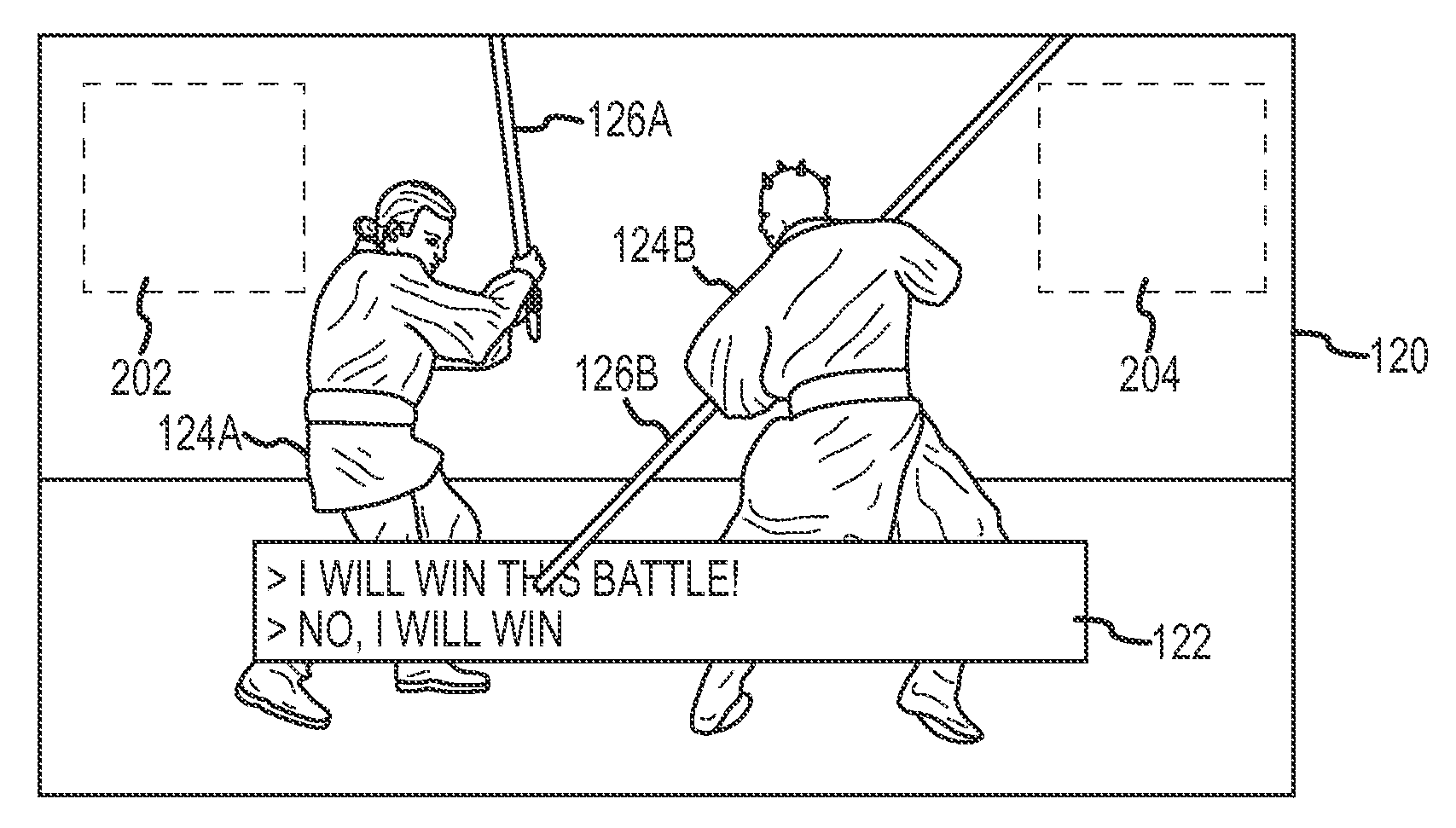

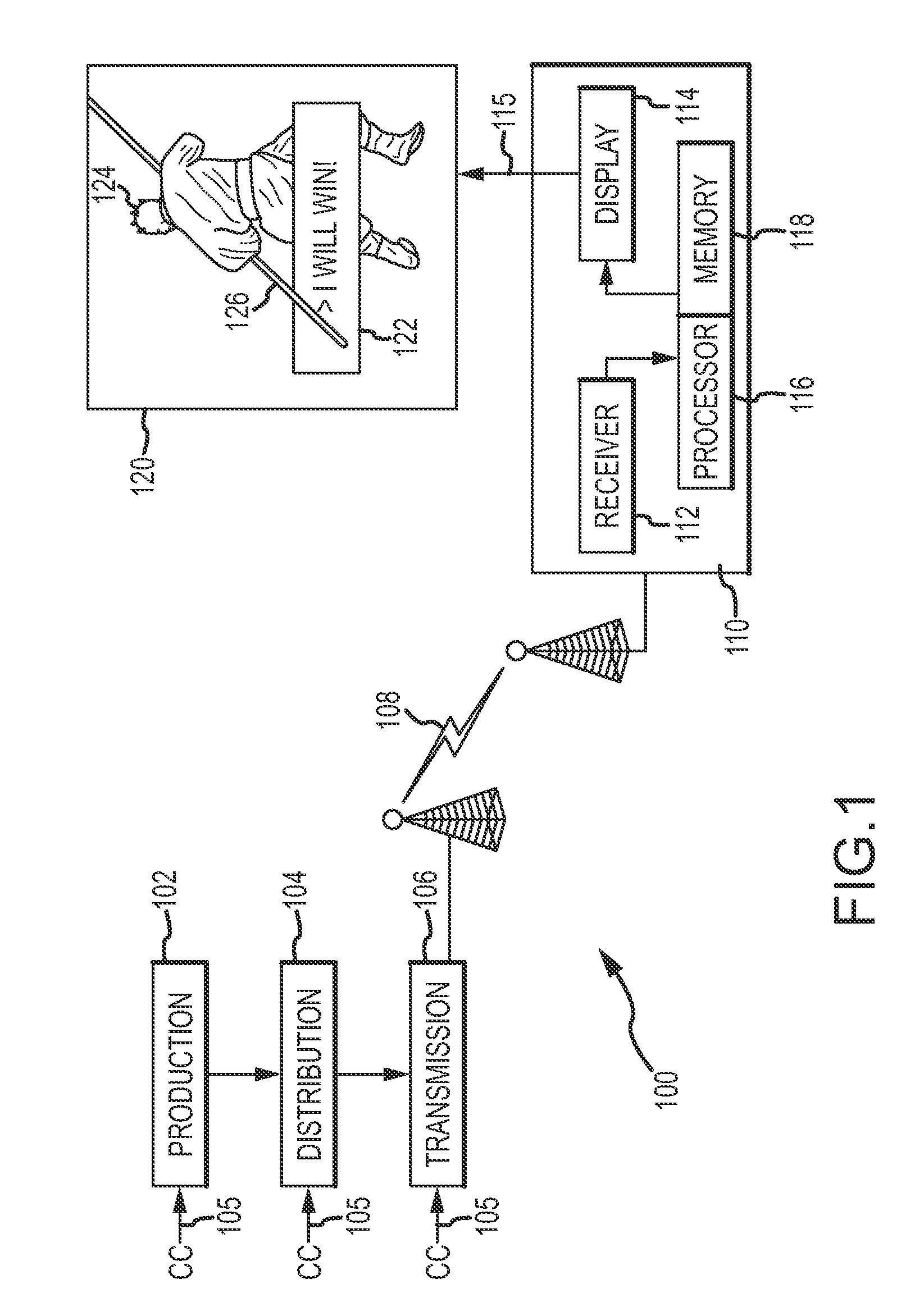

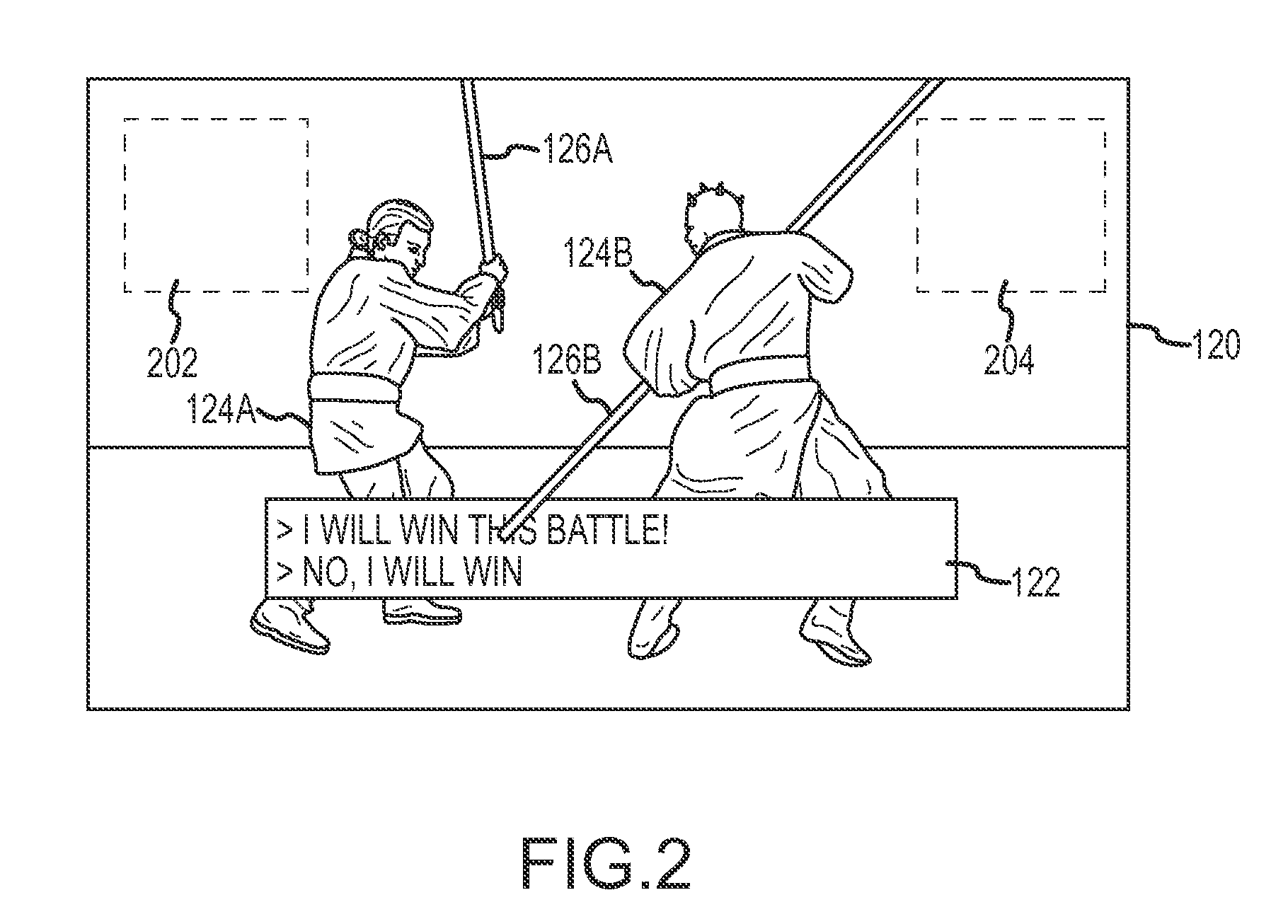

Systems and methods for providing closed captioning in three-dimensional imagery

Active · US20100188572A1 · High level of configurability · Improve the level of · Television system details · Television system scanning details · Closed captioning · Computer graphics (images)

Systems and methods are presented for processing three-dimensional (3D or 3-D) or pseudo-3D programming. The programming includes closed caption (CC) information that includes caption data and a location identifier that specifies a location for the caption data within the 3D programming. The programming information is processed to render the caption data at the specified location and to present the programming on the display. By encoding location identification information into the three-dimensional programming, a high level of configurability can be provided and the 3D experience can be preserved while captions are displayed.

Owner:DISH TECH L L C

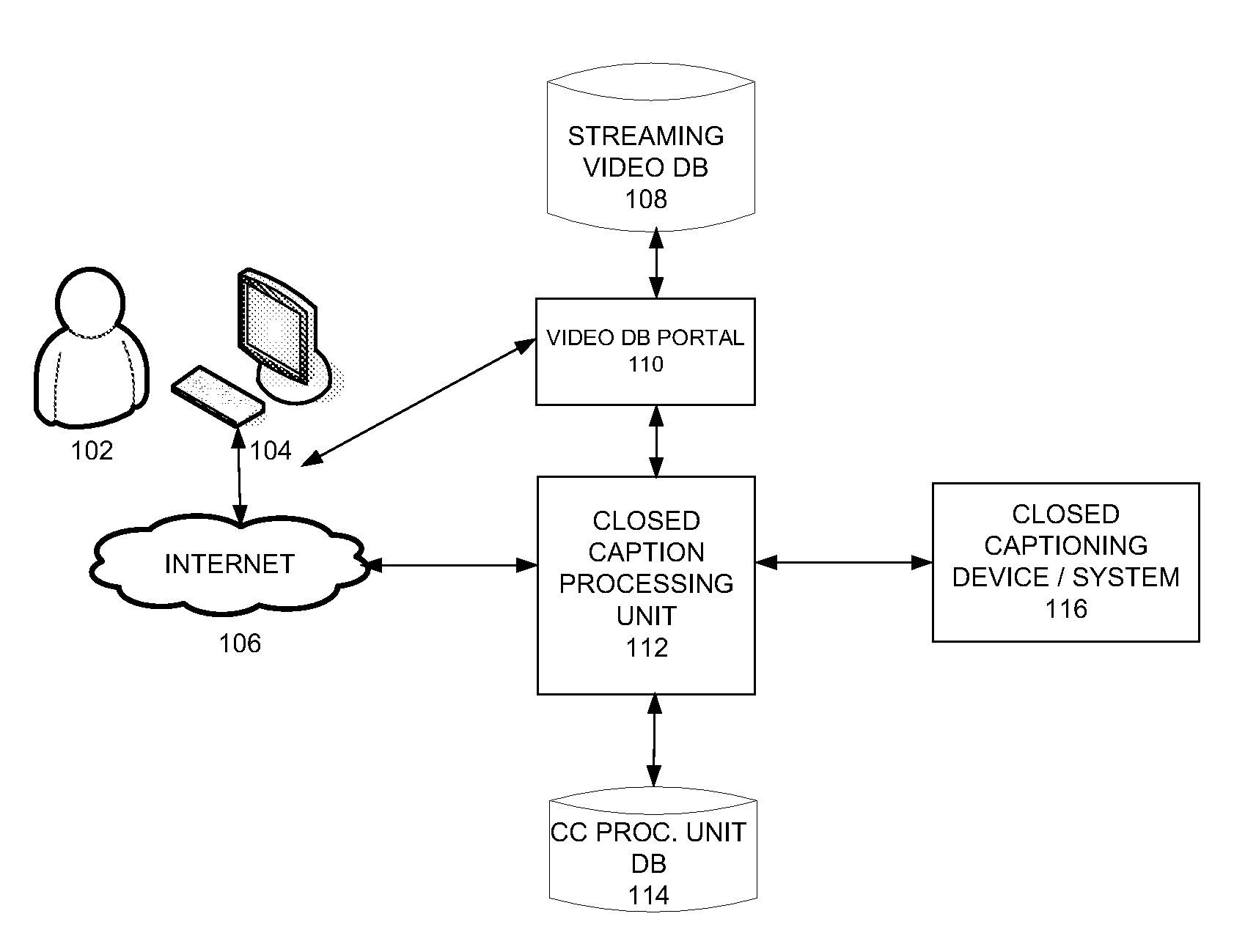

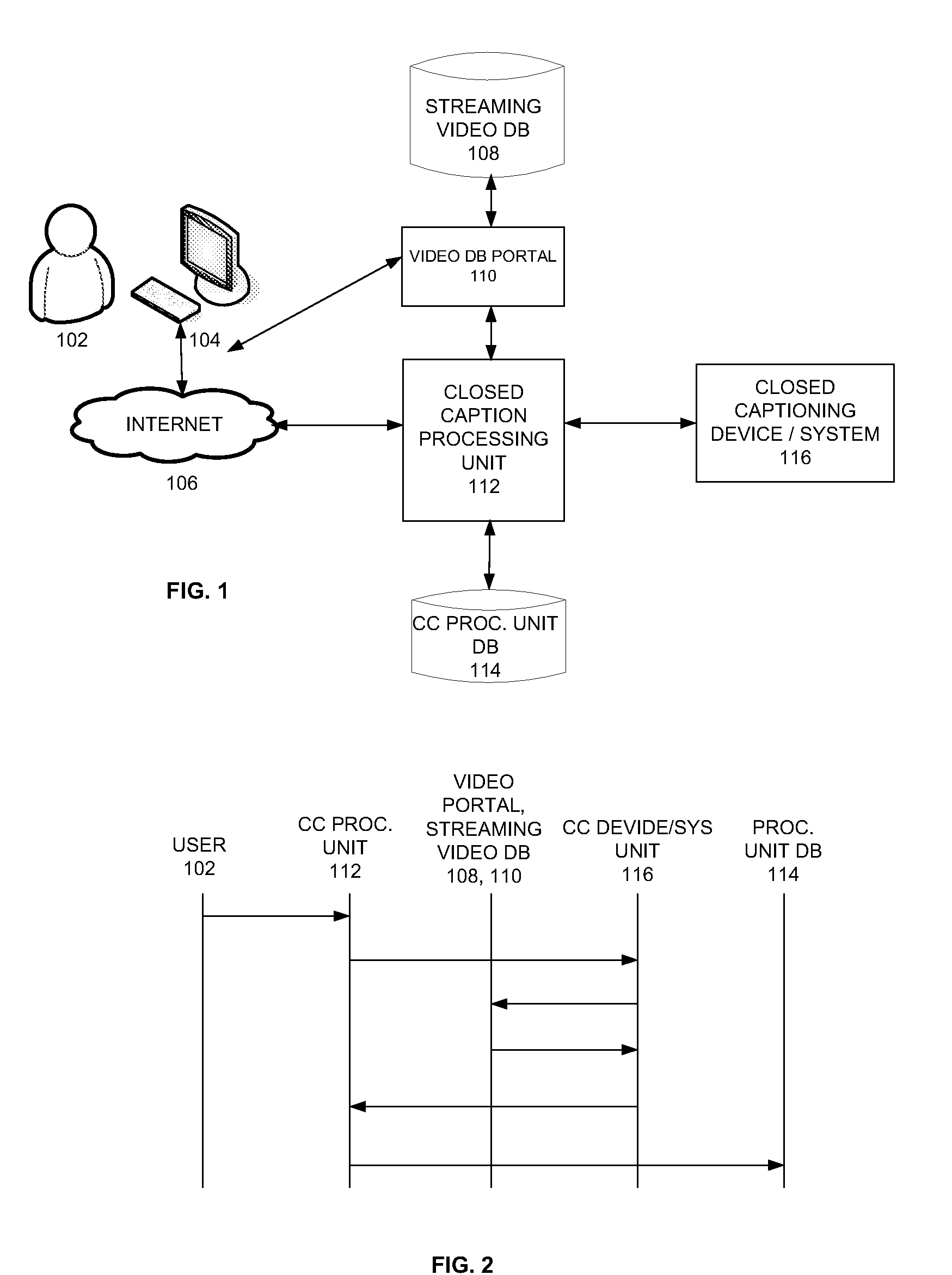

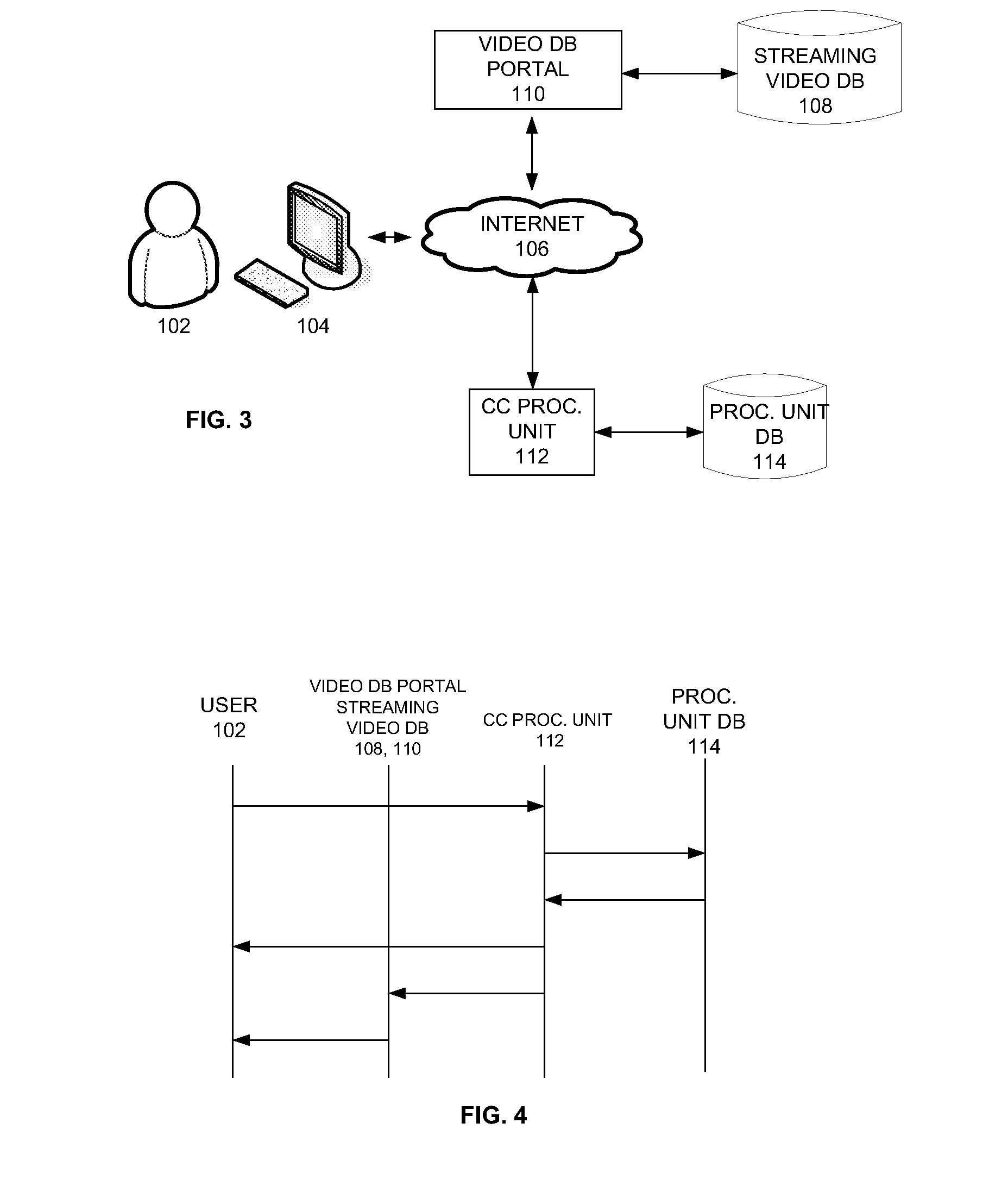

Text data for streaming video

Inactive · US20080284910A1 · Load large · Television system details · Picture reproducers using cathode ray tubes · Closed captioning · Text string

In a system and method providing a video with closed captioning, a processor may: provide a first website user interface adapted for receiving a user request for generation of closed captioning, the request referencing a multimedia file provided by a second website; responsive to the request: transcribe audio associated with the video into a series of closed captioning text strings arranged in a text file; for each of the text strings, store in the text file respective data associating the text string with a respective portion of the video; and store, for retrieval in response to a subsequent request made to the first website, the text file and a pointer associated with the text file and referencing the text file with the video; and / or providing the text file to an advertisement engine for obtaining an advertisement based on the text file and that is to be displayed with the video.

Owner:ERSKINE JOHN +2

Closed Captioned Telephone and Computer System

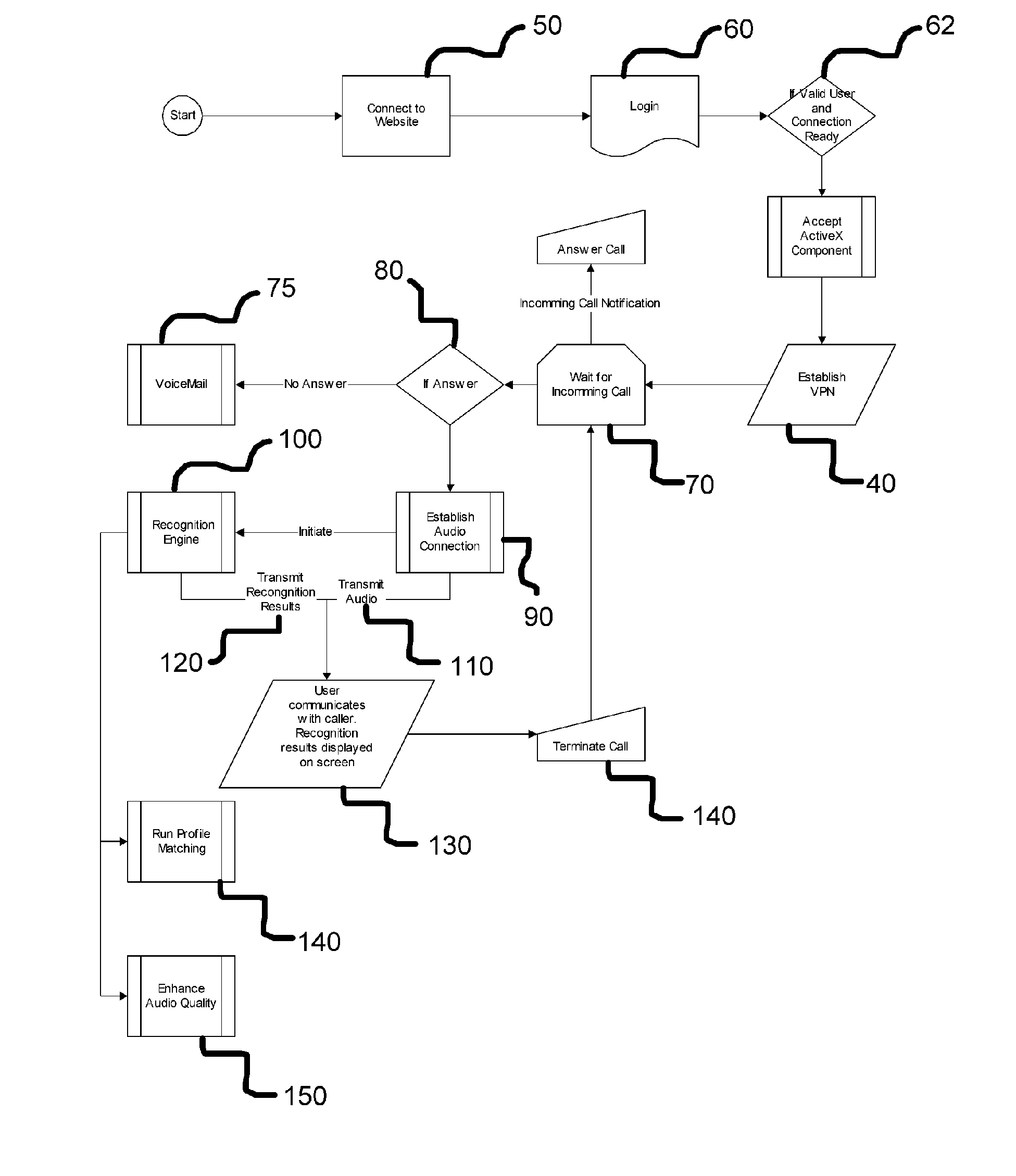

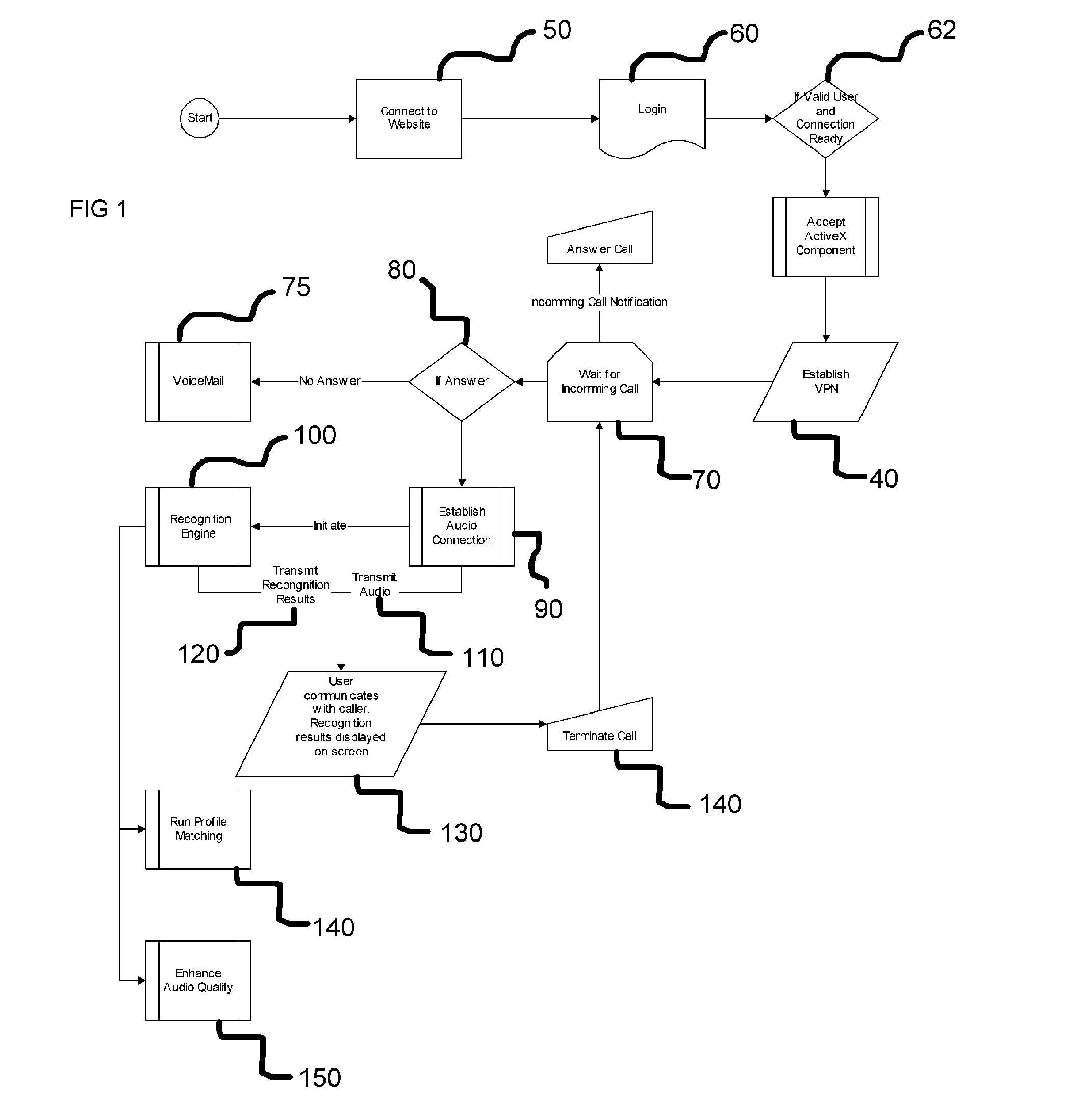

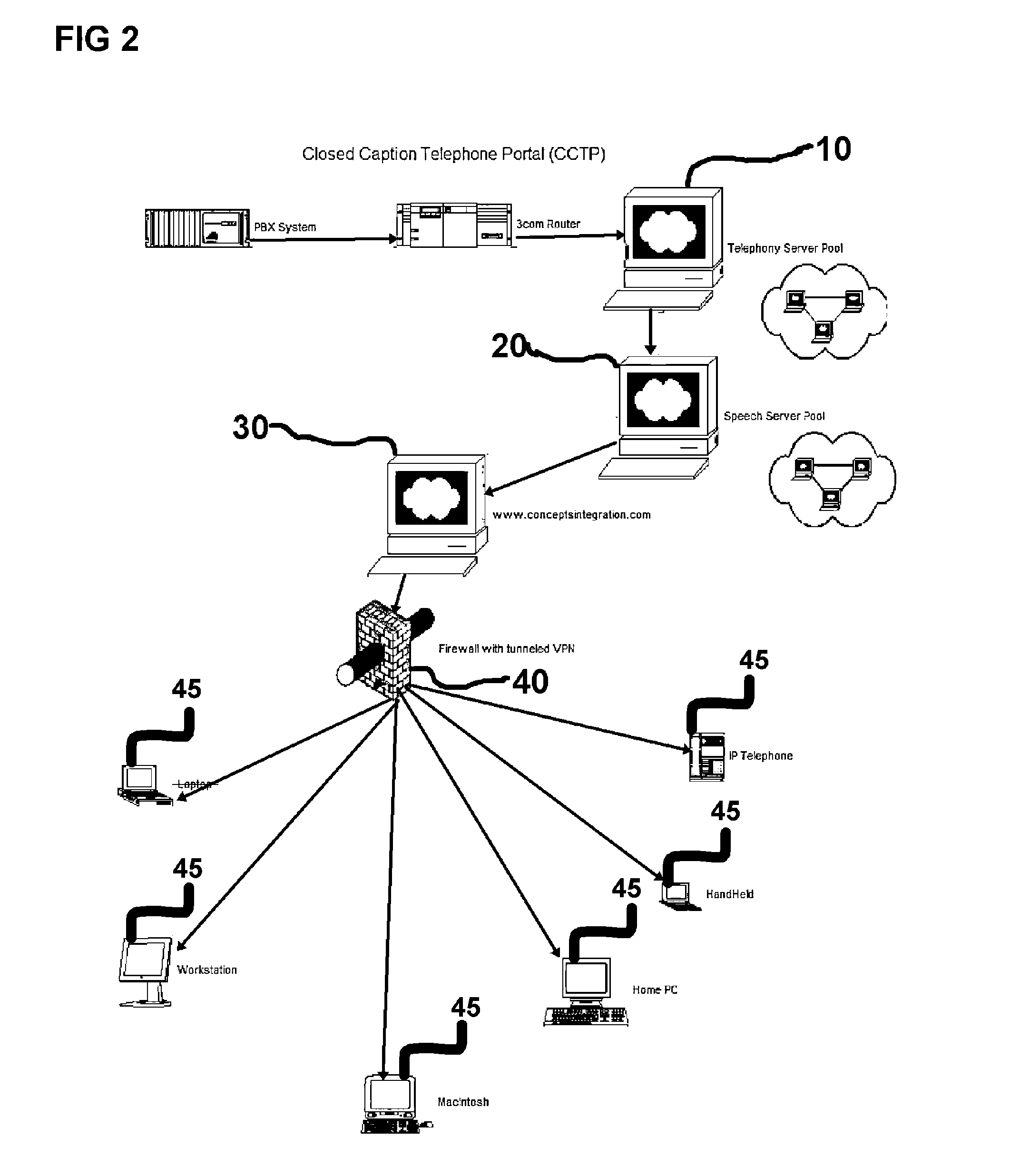

Inactive · US20050226398A1 · Increase current · Improve performance · Telephonic communication · Wide area networks · Closed captioning · Private network

A Closed Caption Telephony Portal (CCTP) computer system that provides real-time online telephony services that include utilizing speech recognition technology to extend telephone communication through closed captioning services to all incoming and outgoing phone calls. Phone calls are forwarded to the CCTP system using services provided by a telephone carrier. The CCTP system is completely transportable and can be utilized on any computer system, Internet connection, and standard Internet browser. Employing an HTML / Java based desktop interface, the CCTP system enables users to make and receive telephone calls, receive closed captioning of conversations, and use voice dialing and voice driven telephone functionality. Additional features allow call hold, call waiting, caller ID, and conference calling. To use the CCTP system, a user logs in with his or her username and password, and this process immediately sets up a Virtual Private Network (VPN) between the client computer and the server.

Owner:BOJEUN MARK C

Method and apparatus for control of closed captioning

Inactive · US20030169366A1 · Television system details · Picture reproducers using cathode ray tubes · Closed captioning · Control data

A system for performing closed captioning enables a caption prepared remotely by a captioner to be repositioned by someone other than the captioner, such as by a program originator. This capability is particularly useful when, for example, the program originator wishes to include a banner in a video but also wishes to avoid having a closed caption interfere with the banner. In one illustrative system, the program originator is a broadcast station that includes a conventional encoder and a broadcast station computer. In one arrangement, control data generated at the station computer is incorporated into the caption data by the station computer. In another arrangement, the control data is sent from the station computer to the captioner computer, which incorporates the control data into the caption data.

Owner:VITAC CORP

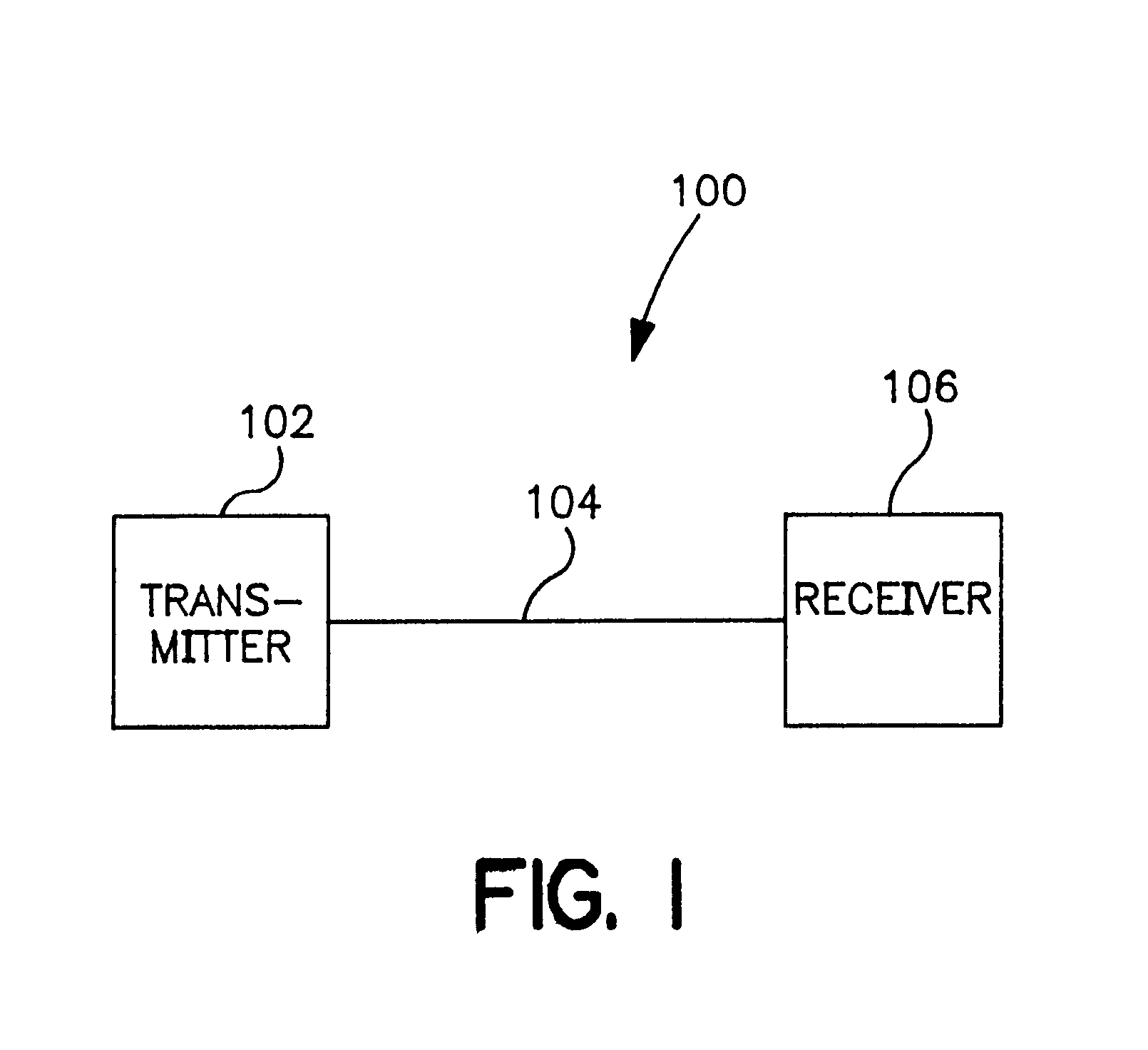

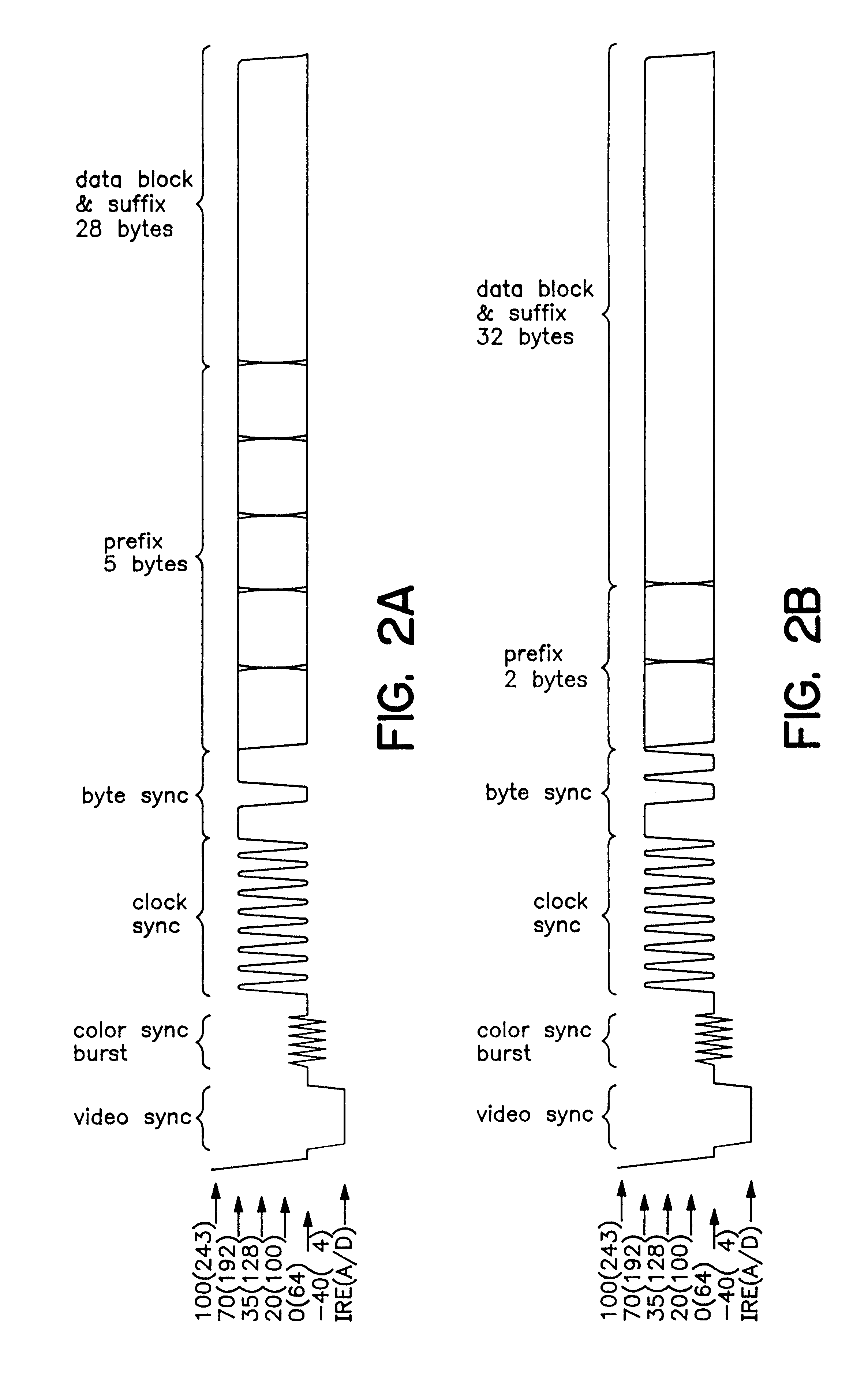

Method and system for decoding data in a signal

Inactive · US6239843B1 · Television system details · Picture reproducers using cathode ray tubes · Hamming code · Closed captioning

A decoding system comprises a software-oriented implementation of the decoding process for extracting binary teletext and closed caption data from a signal. The decoder system according to one embodiment includes a processing unit which implements both a closed caption module and a teletext module. The decoder system calls the closed caption module to detect a clock synchronization signal associated with closed caption data. Upon finding the clock synchronization signal, the closed caption module synchronizes to the signal, identifies a framing code, and extracts the closed caption data. Similarly, the decoder system may call the teletext module for the teletext lines, which also synchronizes using a teletext clock synchronization signal. When the clock synchronization signal is detected, the teletext module interpolates the incoming data to reconstruct the data signal. The data signal is then processed to normalize the amplitude and reduce the "ghost" effects of signal echoes prior to the slicing of the data signal. The data is also suitably subjected to Hamming code error detection to ensure the accuracy of the data.

Owner:WAVO CORP
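
The abstract above mentions Hamming code error detection on the sliced data. As a rough illustration of how a Hamming check locates a corrupted bit, the sketch below uses the textbook Hamming(7,4) code. This is an assumption made for clarity: it does not reproduce the bit layout or code parameters of any teletext standard, and the function names are hypothetical.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits [d1, d2, d3, d4] as 7 bits [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # parity over codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over codeword positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(code_bits):
    """Return (data_bits, error_position); error_position is 0 when no error is found."""
    c = list(code_bits)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3  # syndrome read as a 1-based bit position
    if position:
        c[position - 1] ^= 1         # correct the single flipped bit
    return [c[2], c[4], c[5], c[6]], position


codeword = hamming74_encode([1, 0, 1, 1])
corrupted = list(codeword)
corrupted[4] ^= 1  # simulate a single-bit error at position 5
print(hamming74_decode(corrupted))
```

With three parity bits the code can correct any single-bit error in a 7-bit word; two simultaneous errors would be miscorrected, which is why practical broadcast formats typically add a further overall parity bit.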

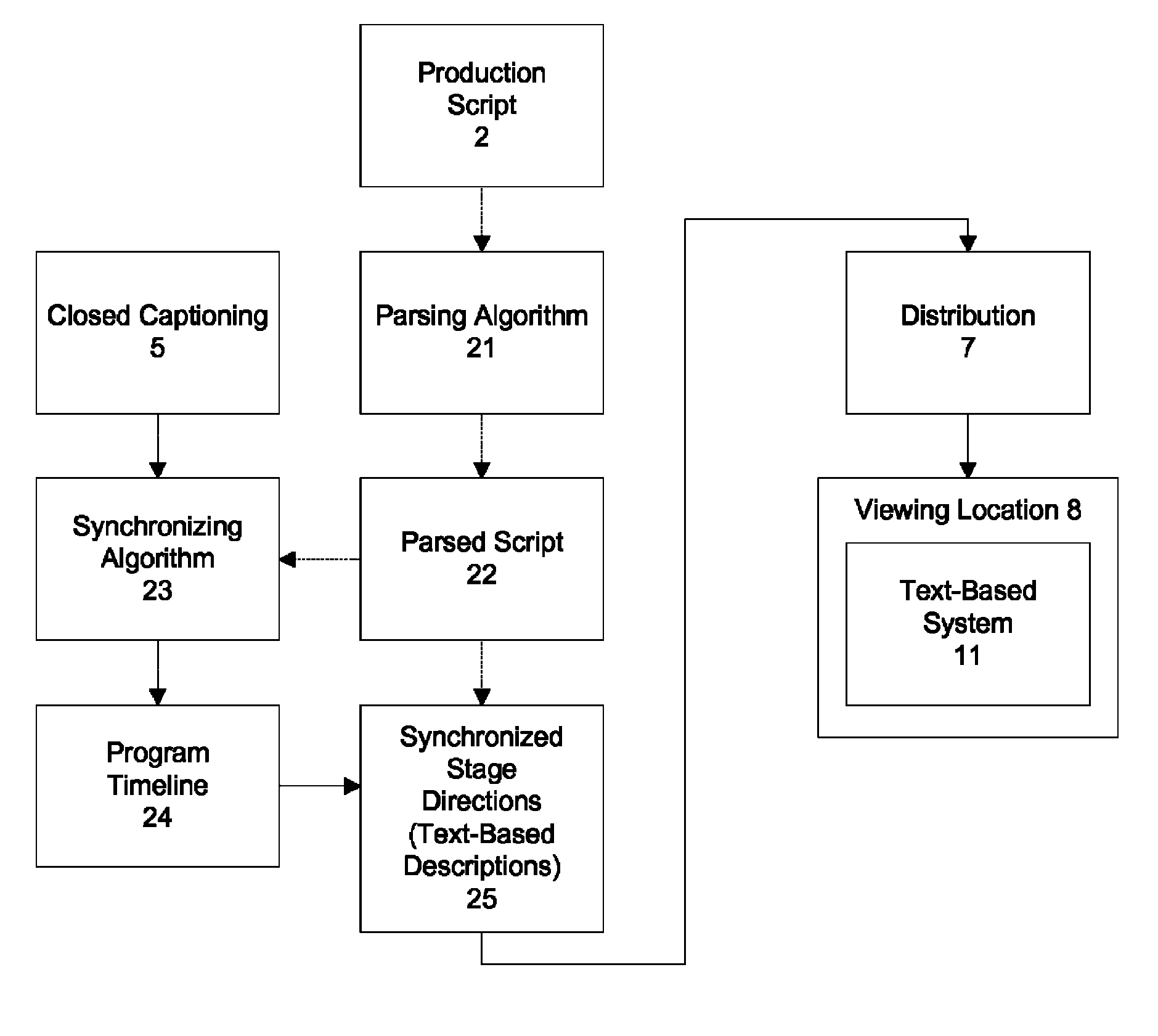

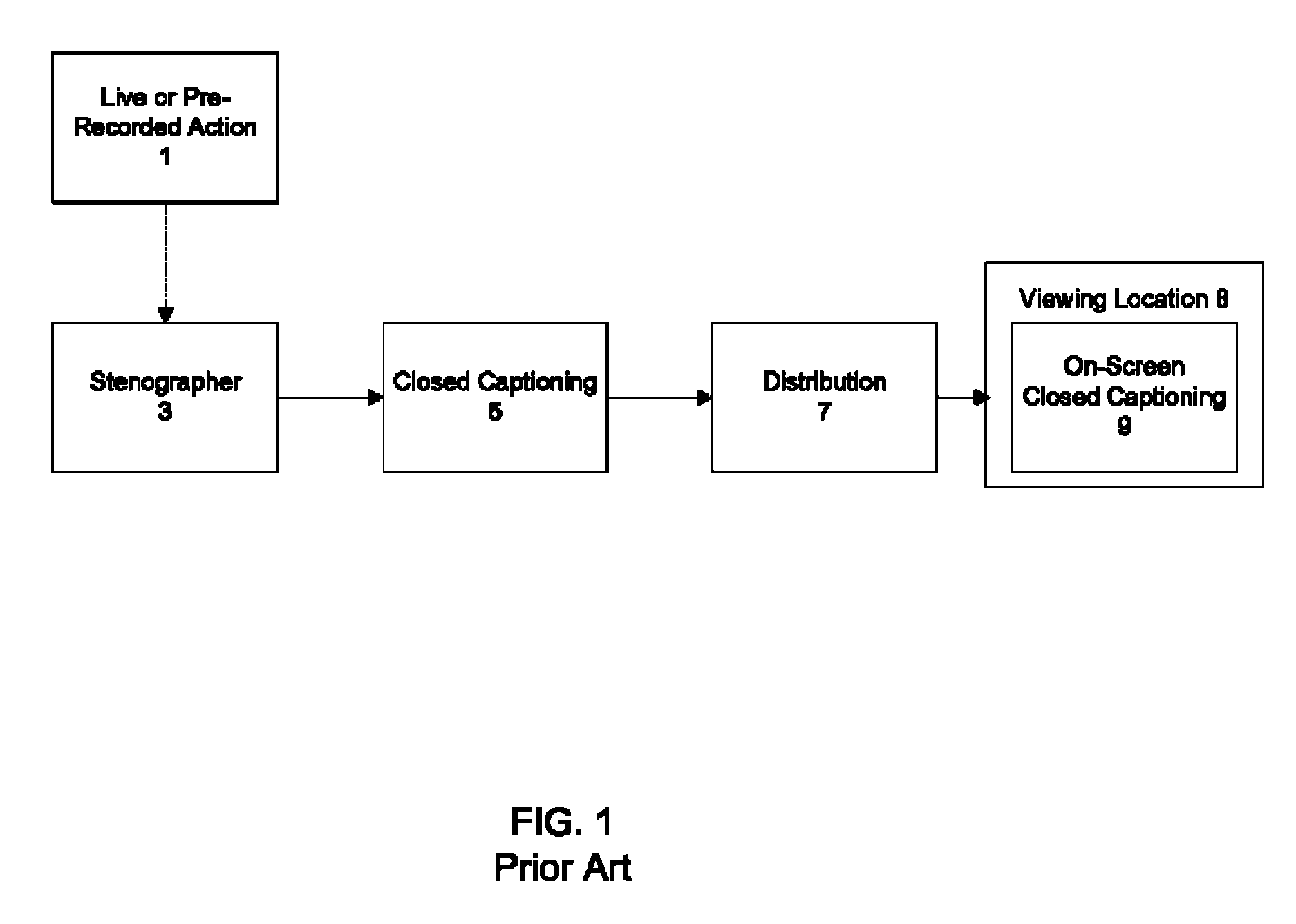

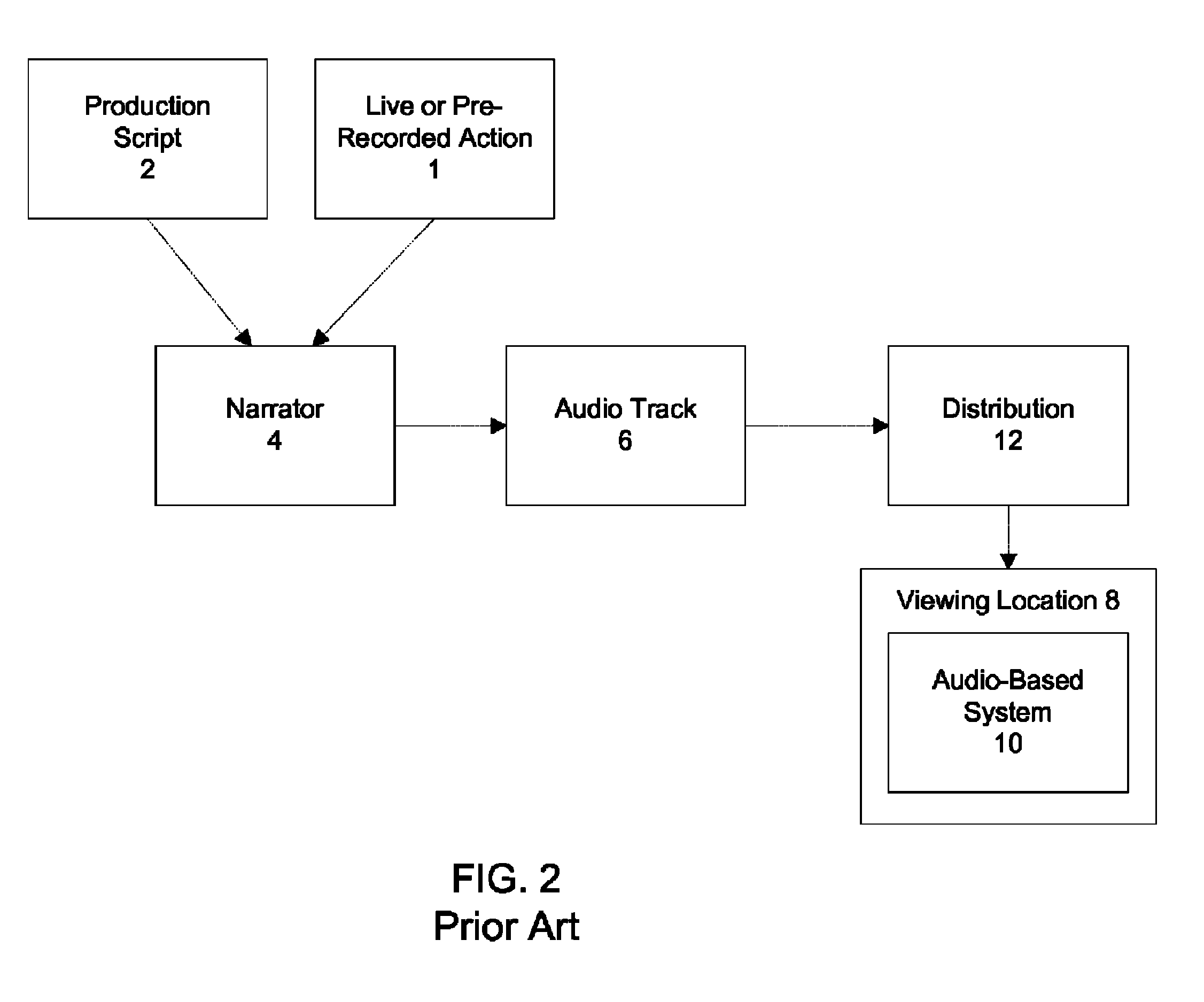

Method and process for text-based assistive program descriptions for television

Active · US8497939B2 · Less-time-consuming and expensive · Maintain normal · Television system details · Recording carrier details · Closed captioning · Visually impaired

In a system and method of producing time-synchronous textual descriptions of stage directions in live or pre-recorded productions to provide assistive program descriptions to visually-impaired consumers, a processor may parse stage directions from a production script and synchronize the parsed stage directions with closed captioning streams. The method may include viewing a live or pre-recorded production, creating textual descriptions of the stage directions using a stenography system, and outputting the textual descriptions to an output stream separate from that of dialogue descriptions. In addition, the method may include creating audio descriptions of the stage directions, and converting the audio descriptions to textual descriptions of the stage directions using a voice-recognition system. Further, the method may include distributing the synchronized stage directions, and receiving and decoding the synchronized stage directions using a text-based system.

Owner:HOME BOX OFFICE INC

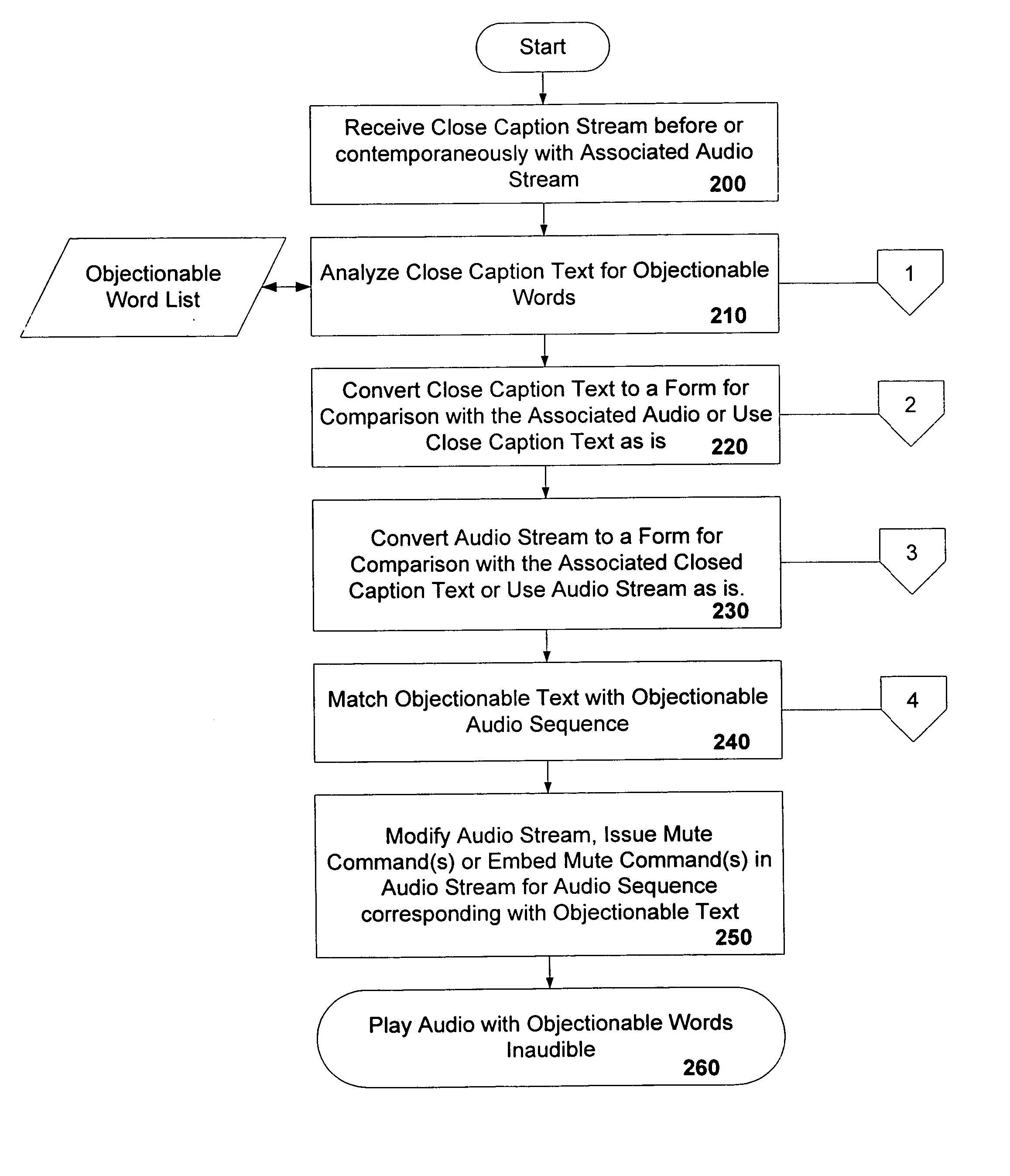

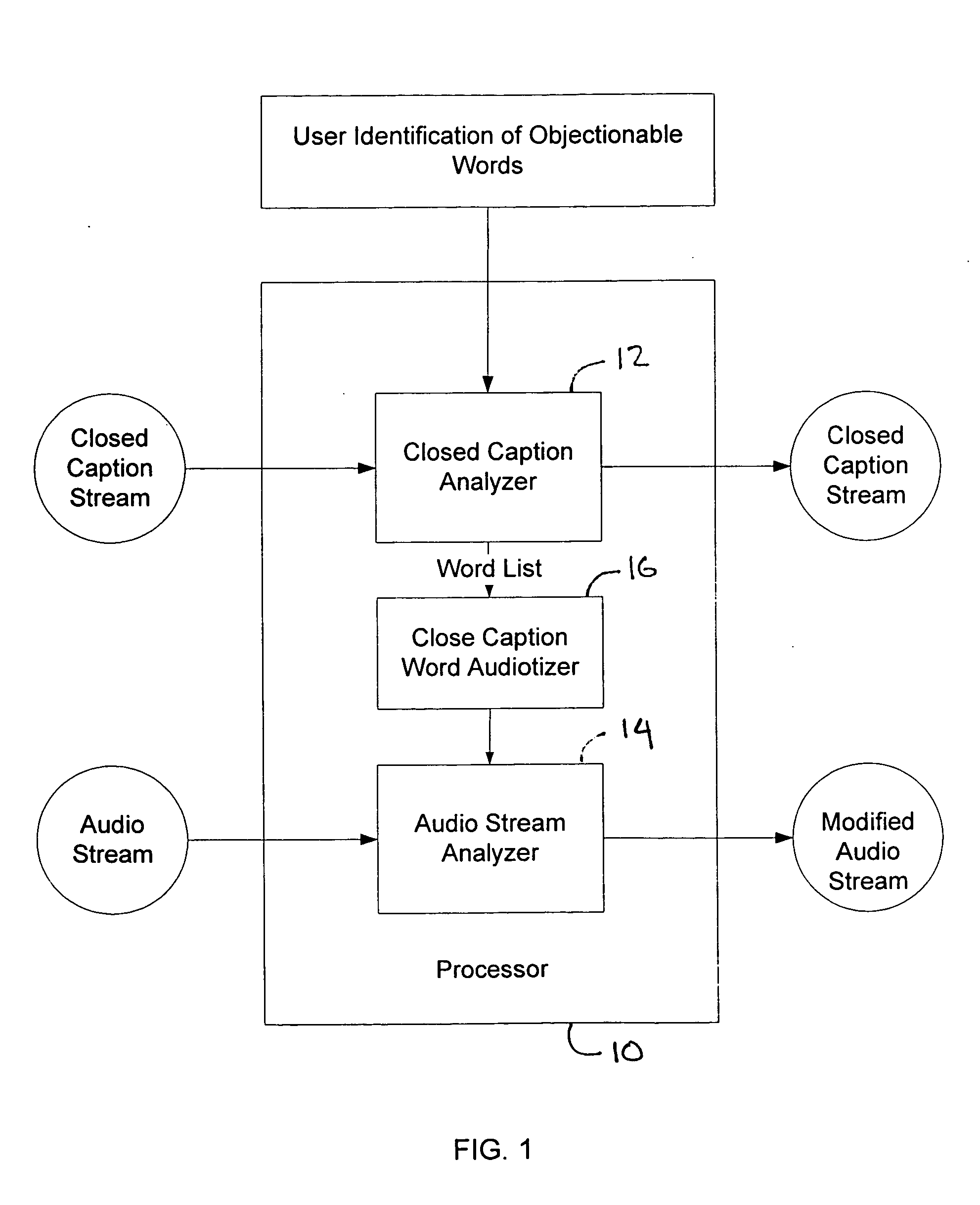

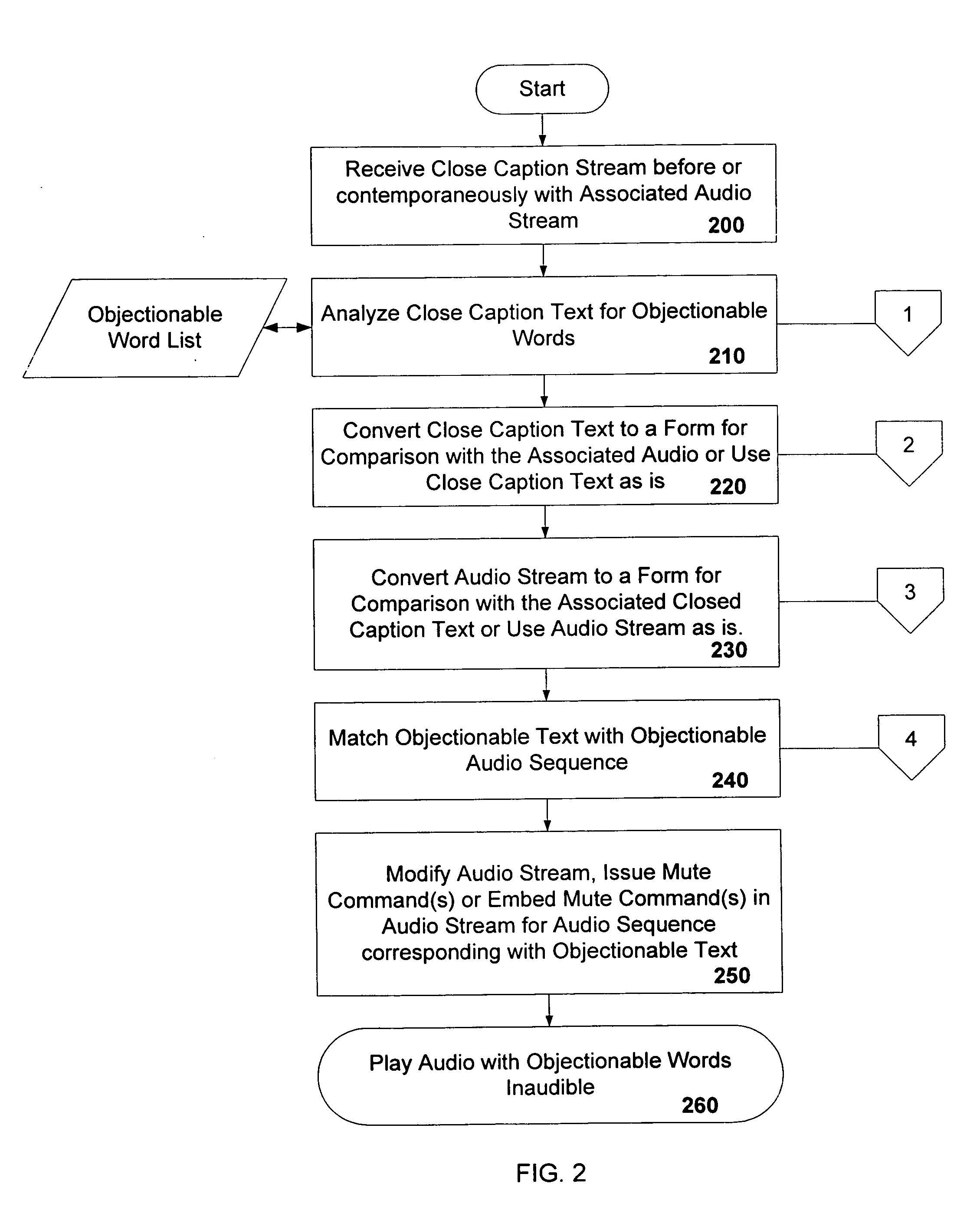

Method and apparatus for controlling play of an audio signal

Inactive · US20050086705A1 · Television system details · Picture reproducers using cathode ray tubes · Closed captioning · Audio frequency

Apparatus and methods conforming to the present invention comprise a method of controlling playback of an audio signal through analysis of a corresponding closed caption signal in conjunction with analysis of the corresponding audio signal. Objectionable text or other specified text in the closed caption signal is identified through comparison with user identified objectionable text. Upon identification of the objectionable text, the audio signal is analyzed to identify the audio portion corresponding to the objectionable text. Upon identification of the audio portion, the audio signal may be controlled to mute the audible objectionable text.

Owner:CLEARPLAY INC
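
A simplified sketch of the caption-driven muting idea in the abstract above follows. It makes a significant simplifying assumption: instead of analyzing the audio signal to find the exact portion to mute, as the abstract describes, it mutes the whole time span of any caption cue that contains a listed word, plus a small padding. The function name `mute_intervals`, the cue format, and the `padding` parameter are hypothetical.

```python
def mute_intervals(cues, blocked_words, padding=0.25):
    """Return merged (start, end) intervals, in seconds, that should be muted.

    cues: list of (start_seconds, end_seconds, caption_text) tuples.
    blocked_words: user-supplied words to match, case-insensitively.
    """
    blocked = {w.lower() for w in blocked_words}
    hits = []
    for start, end, text in cues:
        # Strip common punctuation so "darn!" still matches "darn".
        words = {w.strip(".,!?;:\"'").lower() for w in text.split()}
        if words & blocked:
            hits.append((max(0.0, start - padding), end + padding))

    # Merge overlapping or touching intervals so the mute does not flicker.
    hits.sort()
    merged = []
    for start, end in hits:
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


cues = [
    (1.0, 2.0, "Well, darn it!"),
    (2.25, 3.0, "Darn again."),
    (10.0, 11.0, "Hello there."),
]
print(mute_intervals(cues, ["darn"]))
```

Because captions often lead or lag the spoken words, cue-level muting is coarse; aligning the word within the audio itself, as the abstract describes, would narrow each interval.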

Method for integrated media monitoring, purchase, and display

Inactive | US20070204285A1 | Digital data information retrieval | Analogue secrecy/subscription systems | Closed captioning | Multiple forms

A method for integrated media monitoring is disclosed, wherein multiple forms of media are monitored and searched according to user defined criteria. The method may be used by a business to understand how a product or service is being received by the general public. Monitoring includes analysis of closed captioning data and human monitoring so as to provide a business with a full understanding of advertising and editorial effectiveness. A user provides media search parameters via a network, and a near real-time hit list is produced and presented to the requesting user. Options for previewing and purchasing matching media segments are presented, along with corresponding reports and coverage analyses. Previewing can occur via a streamed video format, whereas purchasing allows for high quality video download. Reports include information about how the product was conveyed, audience watching, and value to the business. Reports can be created by the system or by the user, formatted for presentation, purchased, and downloaded.

Owner:VMS MONITORING SERVICES OF AMERICA INC

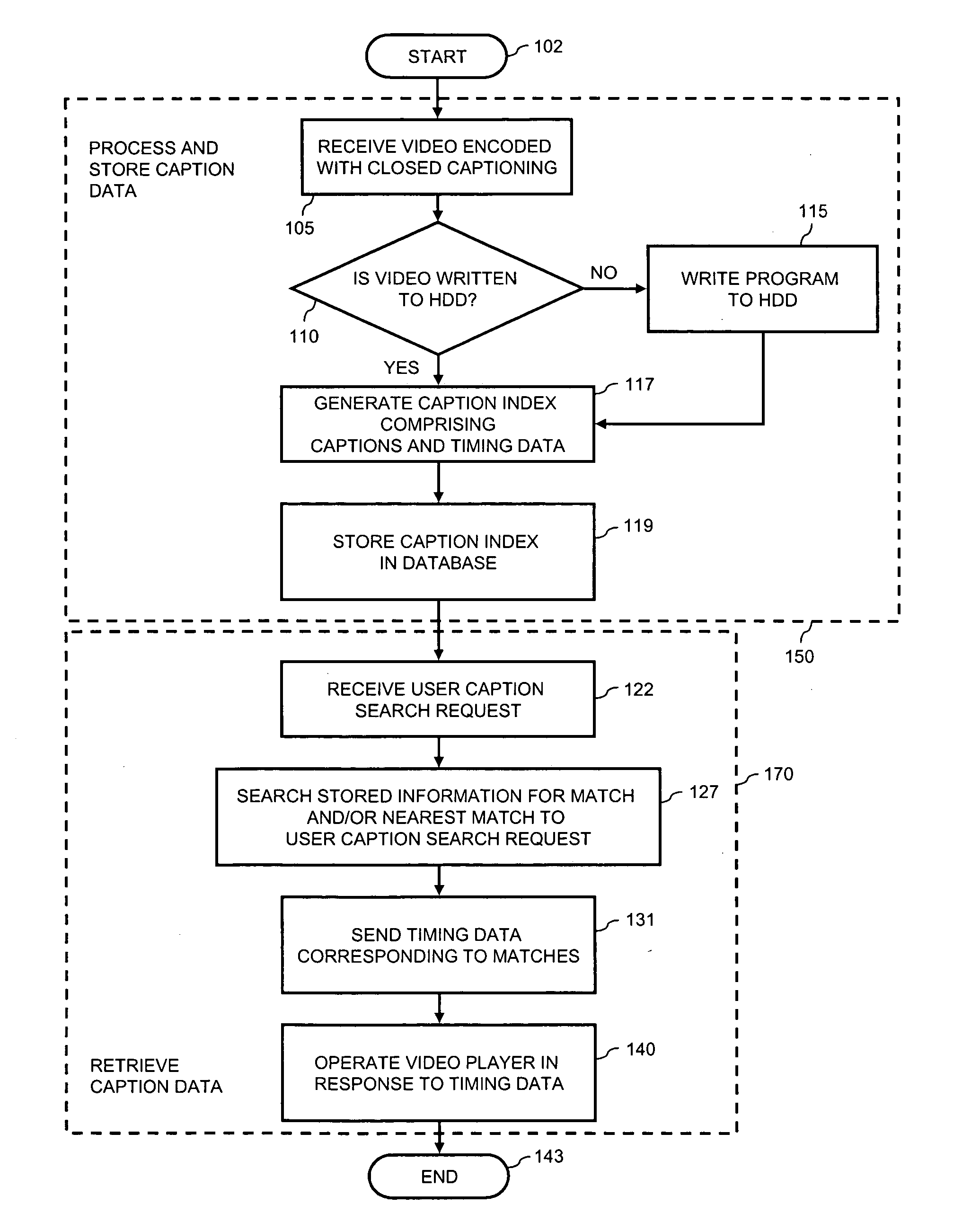

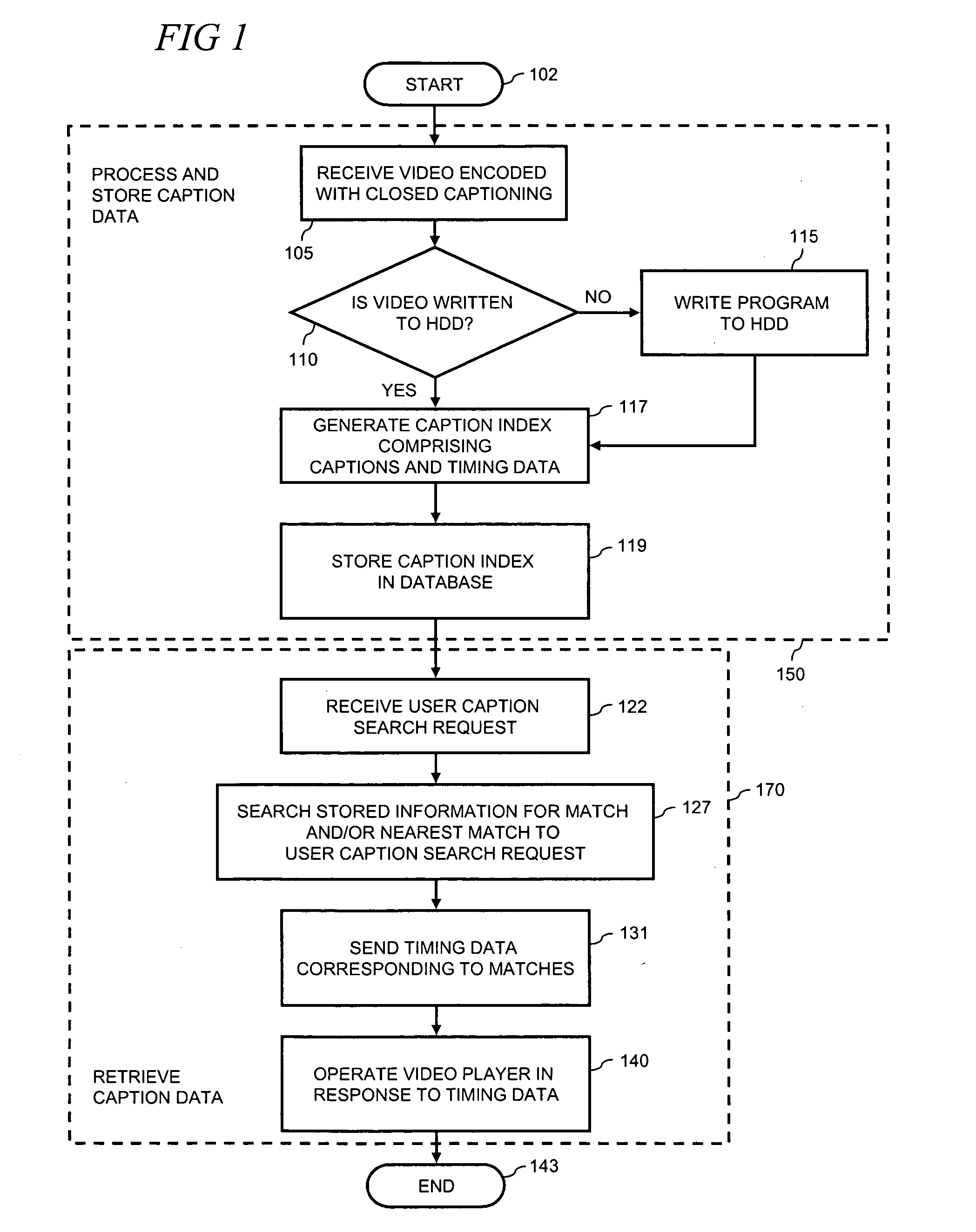

Navigating recorded video using closed captioning

Inactive | US20070154171A1 | Television system details | Color television signals processing | Closed captioning | Frame based

Video navigation is provided where a video stream encoded with captioning is received. A user-searchable captioning index comprising the captioning and synchronization data indicative of synchronization between the video stream and the captioning is generated. In illustrative examples, the synchronization is time-based, video-frame-based, or marker-based.

Owner:GENERAL INSTR CORP
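
A user-searchable captioning index with time-based synchronization, as described in the abstract above, might look roughly like the following sketch. It assumes captions have already been decoded into (time in seconds, text) pairs; `build_caption_index` and `search` are hypothetical helper names, and a simple inverted index is only one possible design.

```python
import re
from collections import defaultdict


def build_caption_index(captions):
    """Map each caption word to the timestamps (seconds) where it appears.

    captions: iterable of (time_seconds, text) pairs (assumed input format).
    """
    index = defaultdict(list)
    for time_s, text in captions:
        # set() so a word repeated within one caption is recorded once
        for word in set(re.findall(r"[a-z0-9']+", text.lower())):
            index[word].append(time_s)
    return index


def search(index, term):
    """Return the sorted timestamps at which the term was captioned."""
    return sorted(index.get(term.lower(), []))


captions = [
    (12.0, "Welcome to the evening news"),
    (47.5, "Weather: rain is expected"),
    (93.0, "More rain in the weather forecast"),
]
index = build_caption_index(captions)
print(search(index, "weather"))
```

The returned timestamps are seek targets for the recorded video. A frame-based or marker-based variant would store frame numbers or marker identifiers in place of seconds.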

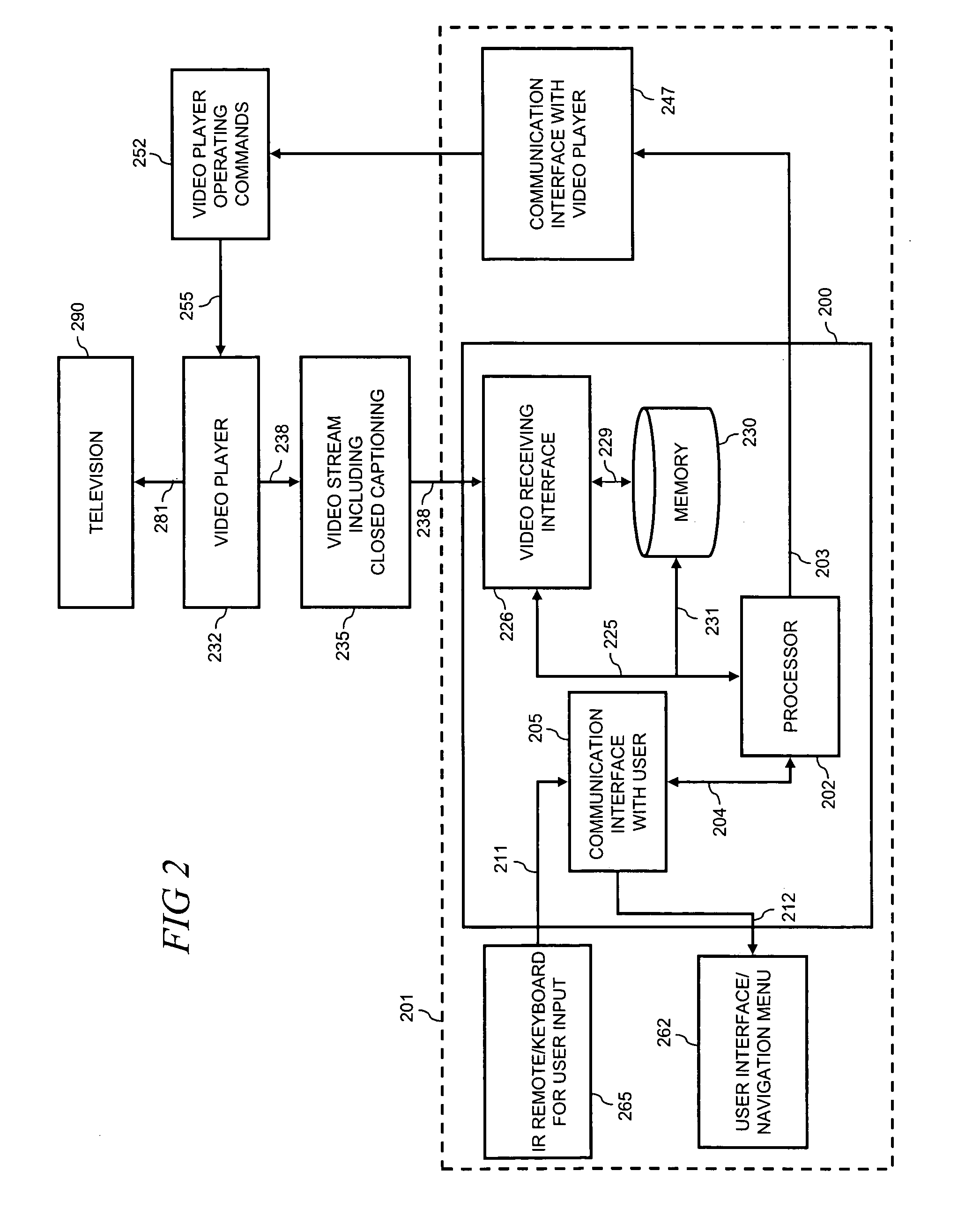

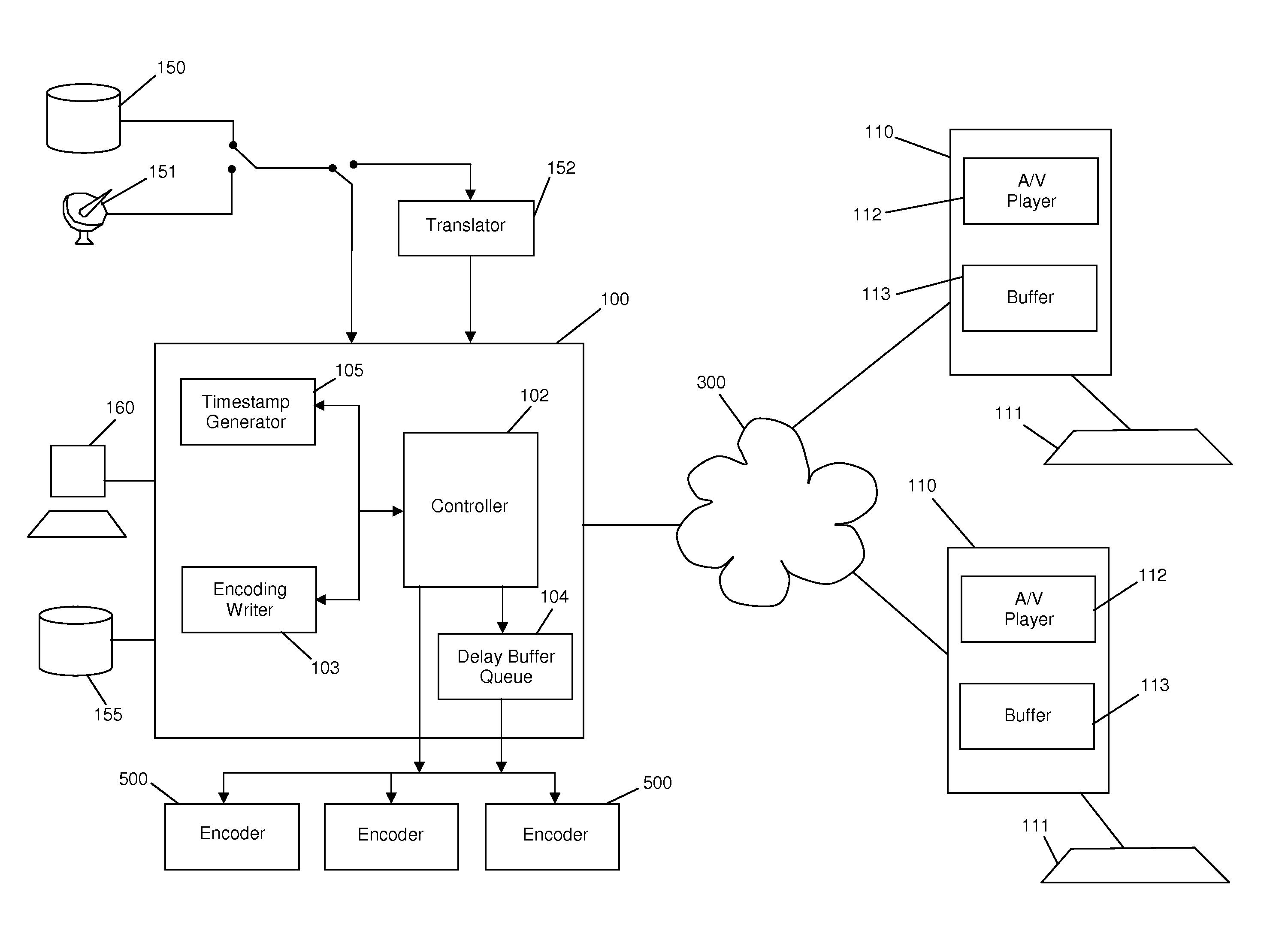

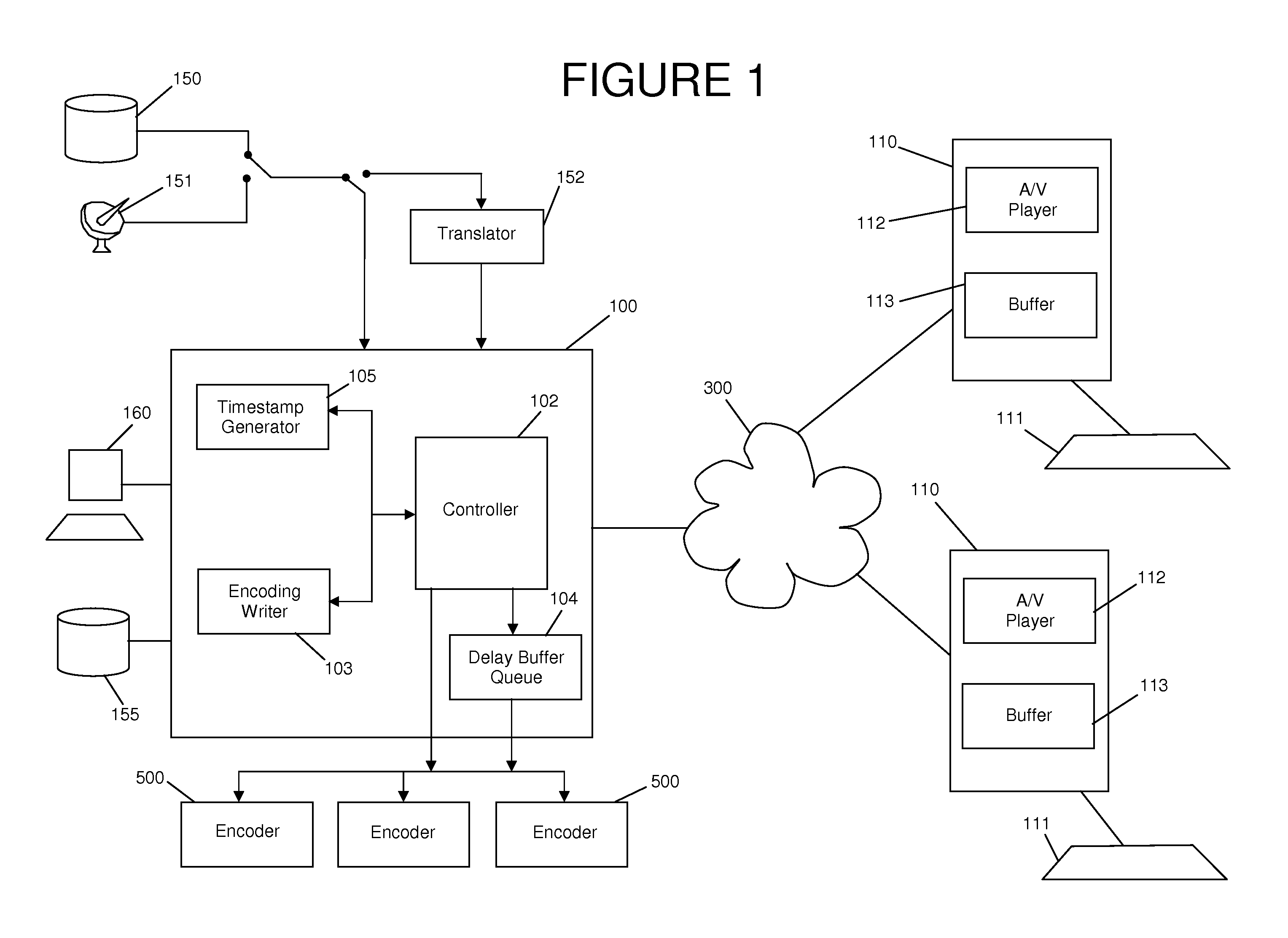

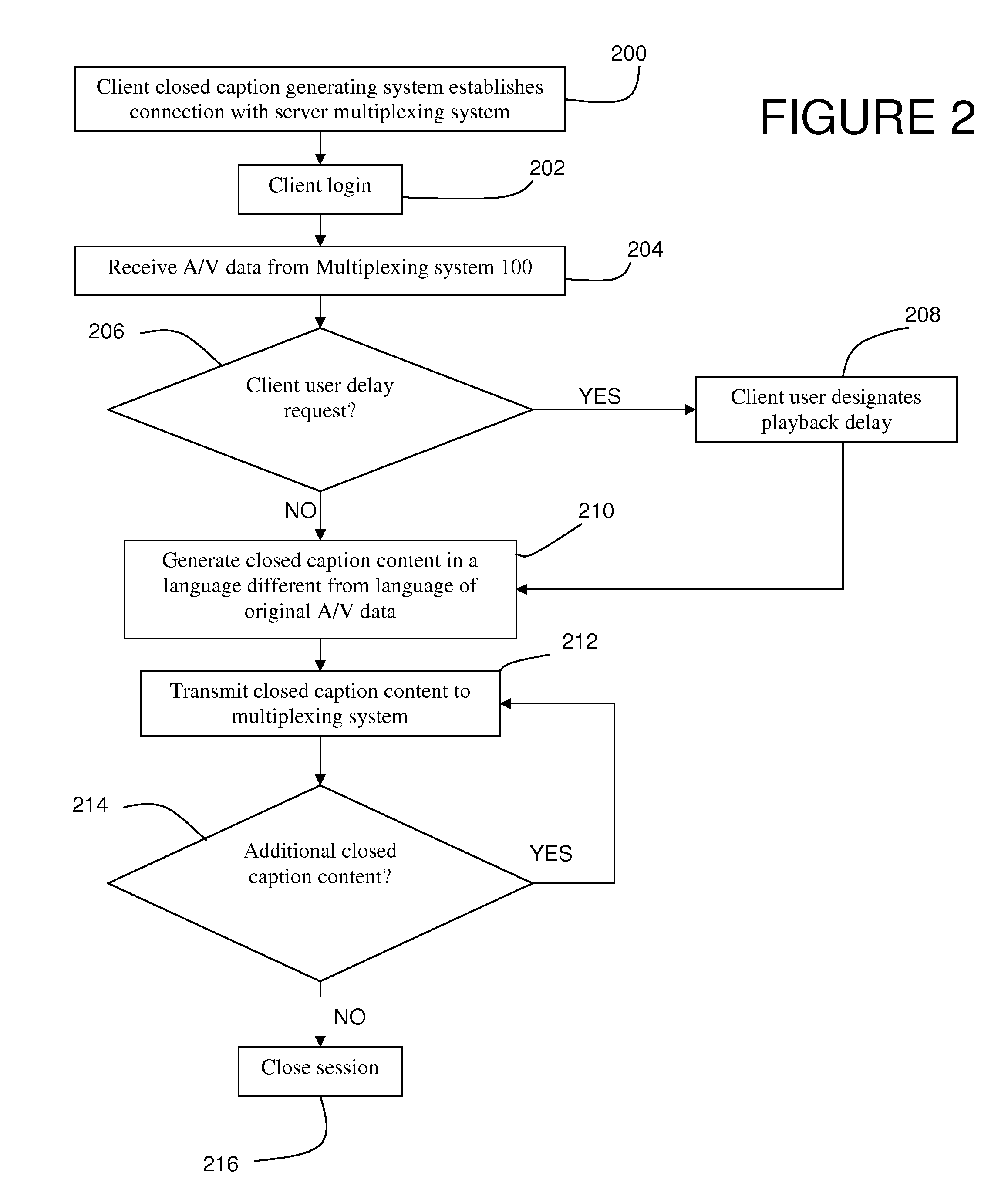

Multi-lingual transmission and delay of closed caption content through a delivery system

Inactive | US20100194979A1 | Natural language translation | Television system details | Multiplexing | Closed captioning

Disclosed is a system and method of blending multiple closed caption language streams into a single output for transmission using closed caption encoding devices. Closed caption signals are created by remote closed caption generating systems that are connected to an input device, such as a stenography keyboard or voice recognition system. The closed caption signals are generated at multiple remote closed caption generating systems and in different languages, and are then independently transmitted to a multiplexing system where they are properly identified and blended into a single output data stream. The single output data stream is then delivered to a closed caption encoding device via a connection such as an Ethernet or serial connection.

Owner:XORBIT
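
The blending of several language streams into one identified output stream can be sketched as a timestamp-ordered merge. This is an illustration under stated assumptions, not the patented multiplexer: each stream is assumed to be a list of (timestamp, text) packets already sorted by time, and `multiplex` is a hypothetical name.

```python
import heapq


def multiplex(streams):
    """Blend per-language caption streams into one time-ordered stream.

    streams: dict mapping a language code to a list of (timestamp, text)
    packets, each list sorted by timestamp (assumed input format).
    Each output packet is tagged with its language so that a downstream
    encoder can tell the streams apart.
    """
    tagged = [
        [(ts, lang, text) for ts, text in packets]
        for lang, packets in streams.items()
    ]
    # Merge on the timestamp only; ties keep the order of the input streams.
    return list(heapq.merge(*tagged, key=lambda packet: packet[0]))


streams = {
    "en": [(0.0, "Hello"), (2.0, "Good evening")],
    "es": [(0.5, "Hola"), (2.5, "Buenas noches")],
}
blended = multiplex(streams)
for packet in blended:
    print(packet)
```

In a live setting the inputs would arrive incrementally over the network rather than as complete lists, so a real multiplexer would need buffering and a delay policy that this sketch omits.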

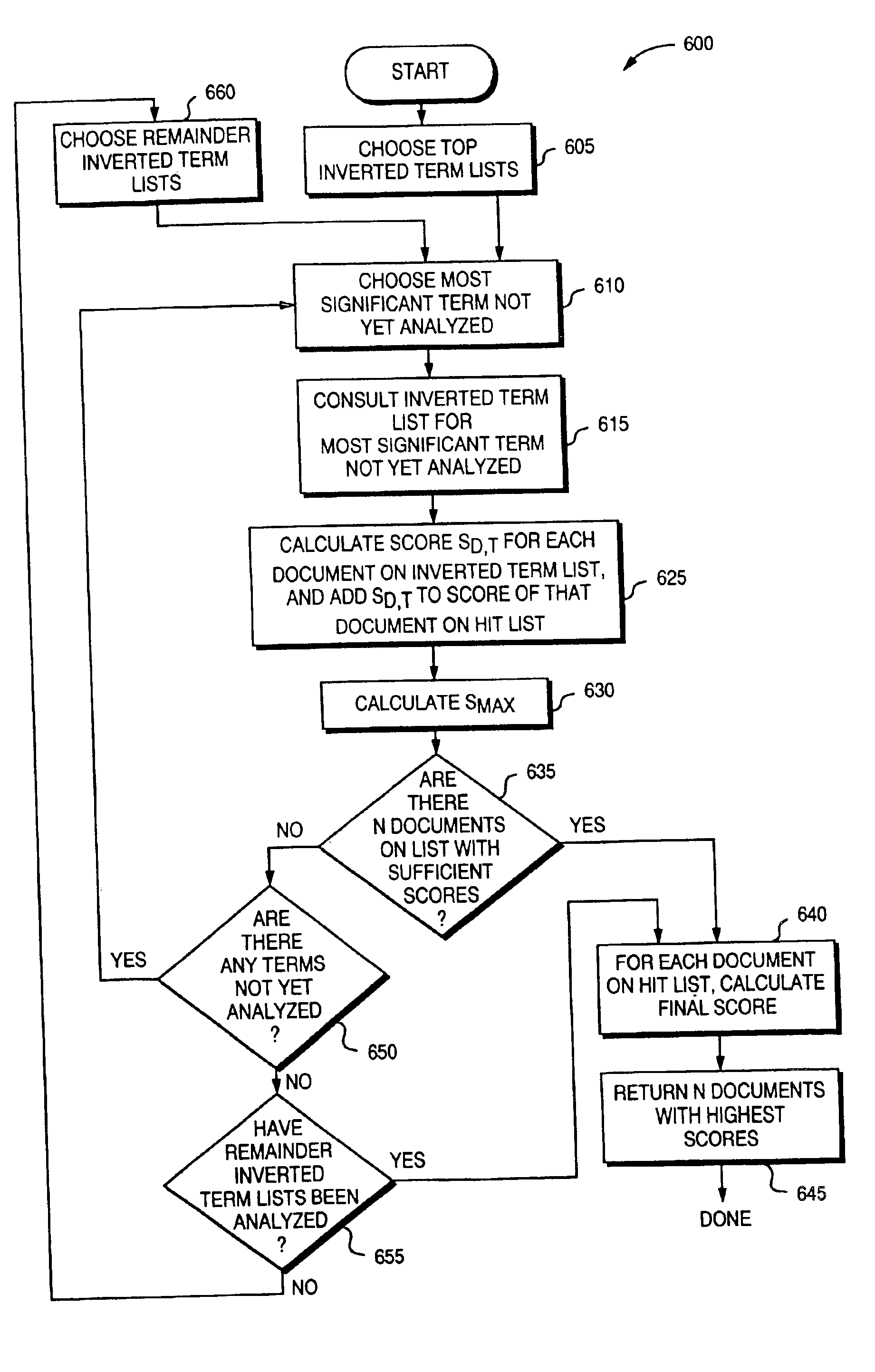

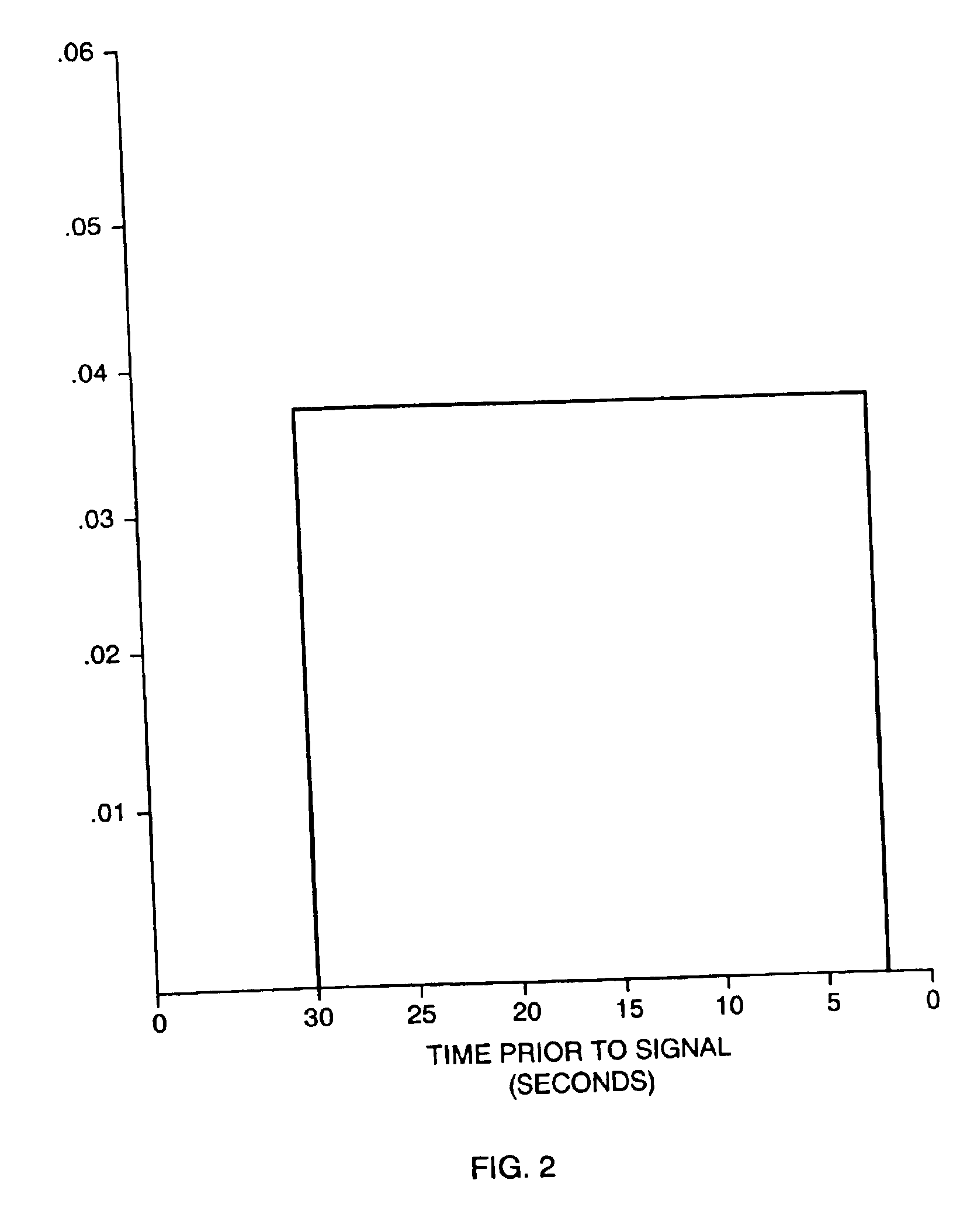

Hyper video: information retrieval using realtime buffers

Inactive | US6965890B1 | Solve insufficient capacity | Data processing applications | Multimedia data retrieval | Closed captioning | Document preparation

Disclosed is a method and device for selecting documents, such as Web pages or sites, for presentation to a user, in response to a user expression of interest, during the course of presentation to the user of a document, such as a video or audio selection, whose content varies with time. The method takes advantage of information retrieval techniques to select documents related to the portion of the temporal document in which the user has expressed interest. The method generates the search query to use to select documents by reference to text associated with the portion of the temporal document in which the user has expressed interest, as by using the closed caption text associated with the video, or by using speech recognition techniques. The method further uses a weighting function to weigh the terms used in the search query, depending on their temporal relationship to the user expression of interest. The method uses a buffer to save necessary information about the temporal occurrence of terms in the temporal document.

Owner:LEVEL 3 COMM LLC
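
The buffer and temporal weighting function described in the abstract above can be sketched as follows. The abstract does not specify the weighting function, so the exponential half-life decay used here is an assumption, as are the `TermBuffer` class, its method names, and the default window and half-life values.

```python
from collections import defaultdict, deque


class TermBuffer:
    """Keeps recently captioned terms with their times (hypothetical design)."""

    def __init__(self, window=30.0):
        self.window = window   # seconds of history to retain
        self.items = deque()   # (time_seconds, term), oldest first

    def add(self, time_s, term):
        """Record a term and evict entries older than the window."""
        self.items.append((time_s, term.lower()))
        while self.items and self.items[0][0] < time_s - self.window:
            self.items.popleft()

    def weighted_query(self, interest_time, half_life=10.0):
        """Weight each buffered term by its closeness to the moment of interest.

        Assumed weighting: a term's weight halves for every `half_life`
        seconds between its occurrence and the user's expression of interest.
        """
        weights = defaultdict(float)
        for time_s, term in self.items:
            age = abs(interest_time - time_s)
            weights[term] += 0.5 ** (age / half_life)
        return sorted(weights.items(), key=lambda kv: kv[1], reverse=True)


buf = TermBuffer(window=30.0)
for time_s, term in [(0.0, "election"), (10.0, "senate"), (20.0, "budget")]:
    buf.add(time_s, term)

query = buf.weighted_query(interest_time=20.0)
print(query)
```

The highest-weighted terms would then be passed to a search engine as the query; terms spoken closest to the user's click dominate, while older terms still contribute context.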

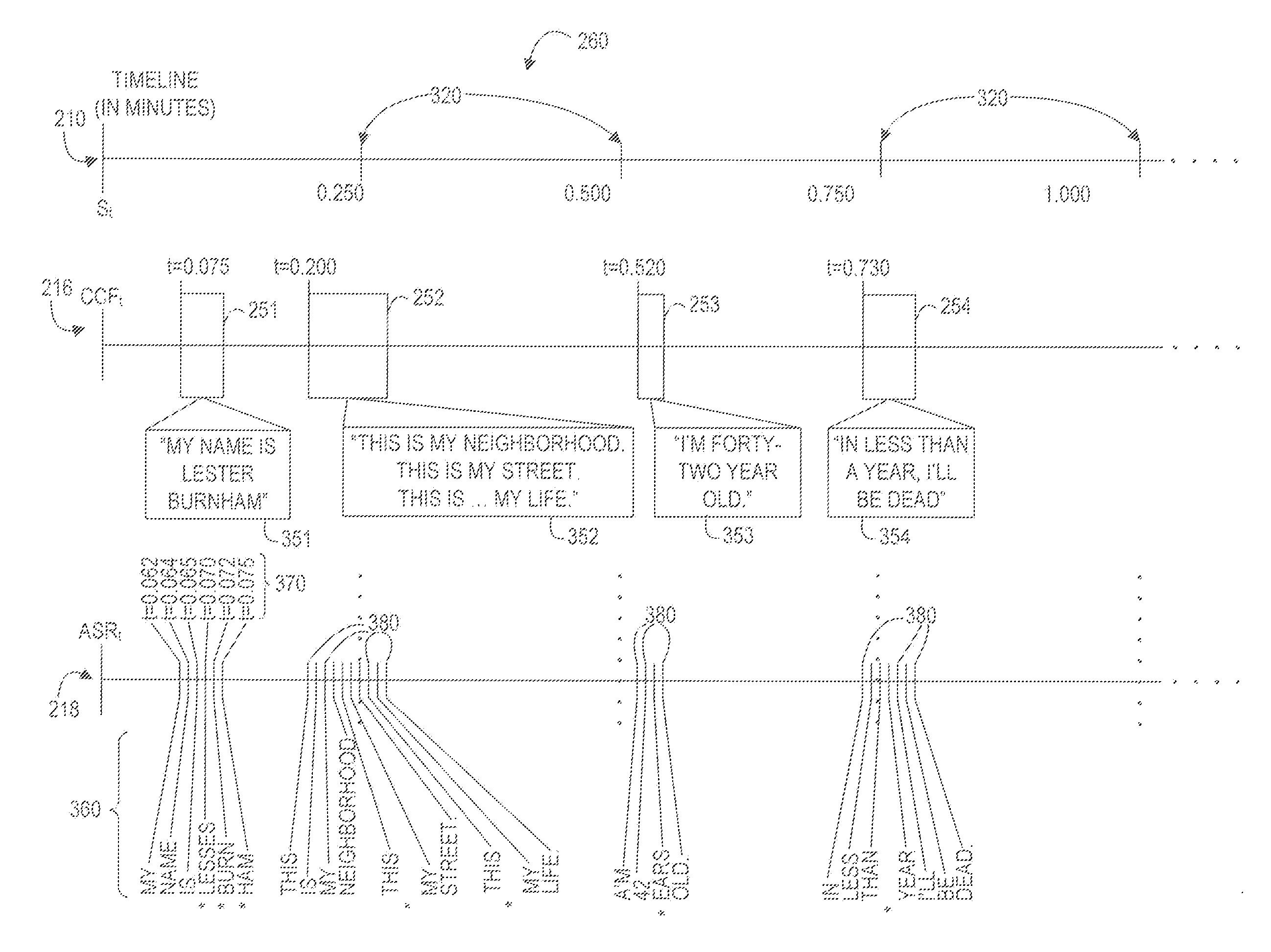

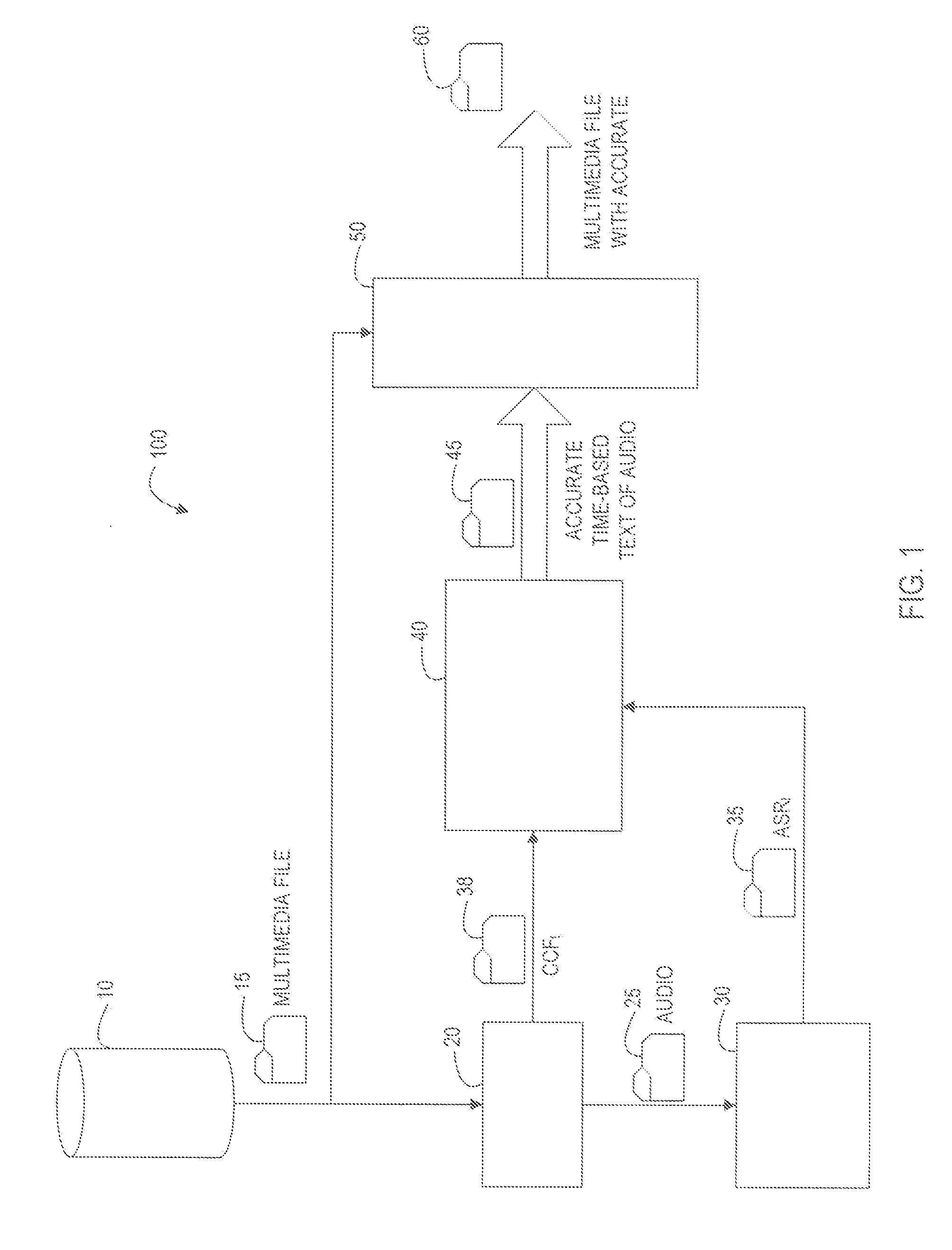

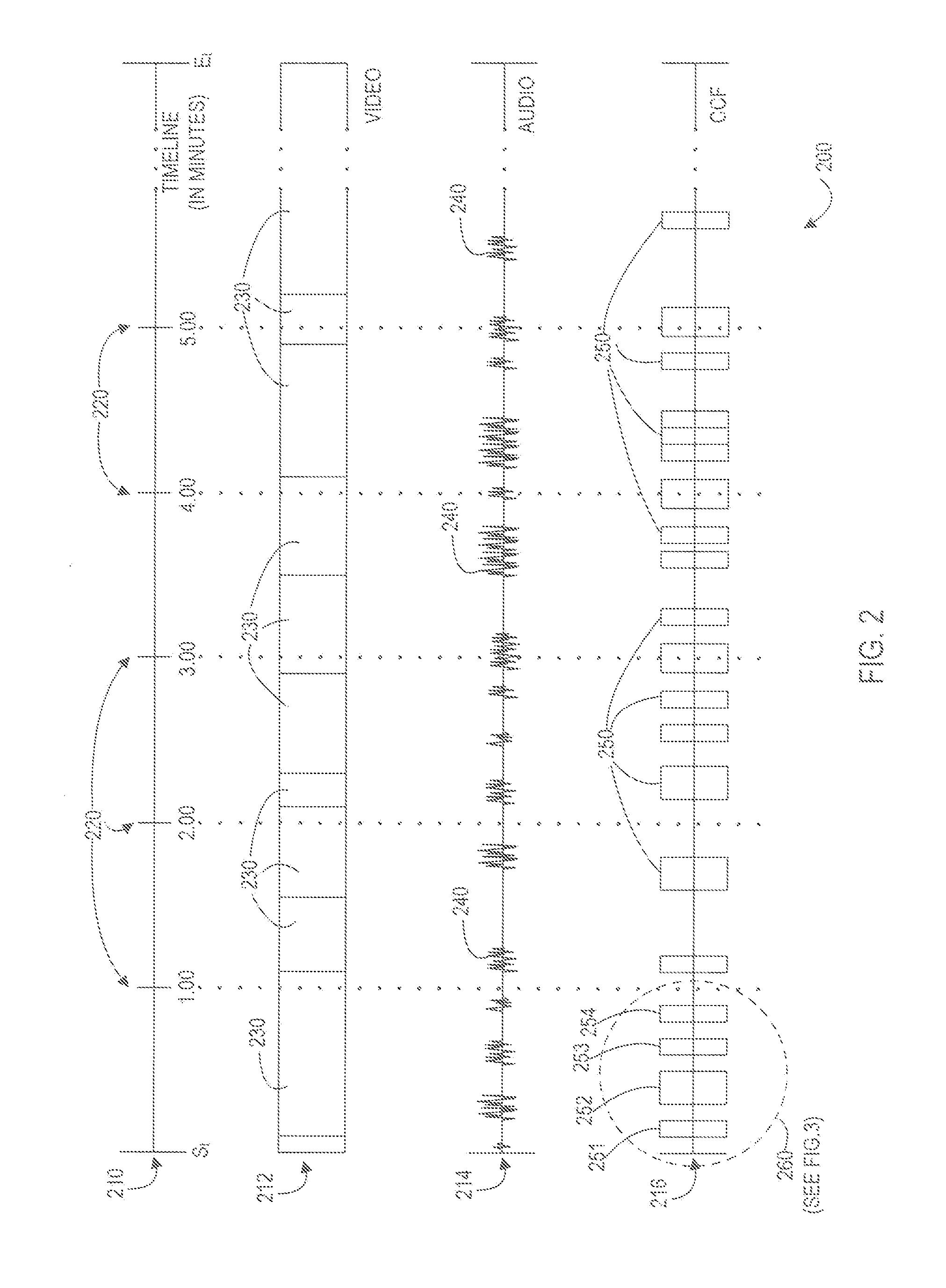

Timeline Alignment for Closed-Caption Text Using Speech Recognition Transcripts

Active | US20110134321A1 | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Closed captioning | Speech sound

Methods, systems, and computer program products for synchronizing text with audio in a multimedia file, wherein the multimedia file is defined by a timeline having a start point, an end point, and respective points in time therebetween. An N-gram analysis is used to compare each word of a closed-captioned text associated with the multimedia file with words generated by an automated speech recognition (ASR) analysis of the audio of the multimedia file. The result is an accurate, time-based metadata file in which each closed-captioned word is associated with the point on the timeline at which the word is actually spoken in the audio and occurs within the video.

Owner:ROVI PROD CORP
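
The N-gram comparison in the abstract above can be illustrated with a simplified sketch. It assumes the ASR output is a list of (word, time) pairs and transfers a timestamp to a caption word only when an N-gram containing it matches exactly one position in the ASR output; `align_by_ngrams` is a hypothetical name, and the patented method is likely more elaborate.

```python
def align_by_ngrams(caption_words, asr_words, n=3):
    """Assign ASR timestamps to caption words via unambiguous N-gram matches.

    caption_words: list of words from the closed-caption text.
    asr_words: list of (word, time_seconds) pairs from speech recognition.
    Returns a list of (caption_word, time or None).
    """
    asr_tokens = [word.lower() for word, _ in asr_words]

    # Record every position at which each ASR N-gram occurs.
    positions = {}
    for i in range(len(asr_tokens) - n + 1):
        positions.setdefault(tuple(asr_tokens[i:i + n]), []).append(i)

    cap_tokens = [word.lower() for word in caption_words]
    times = [None] * len(cap_tokens)
    for i in range(len(cap_tokens) - n + 1):
        hits = positions.get(tuple(cap_tokens[i:i + n]), [])
        if len(hits) == 1:  # trust only matches that occur exactly once
            for k in range(n):
                times[i + k] = asr_words[hits[0] + k][1]
    return list(zip(caption_words, times))


caption = ["the", "quick", "brown", "fox", "jumps"]
asr = [("the", 0.0), ("quick", 0.4), ("brown", 0.8), ("box", 1.2), ("jumps", 1.6)]
aligned = align_by_ngrams(caption, asr)
print(aligned)
```

Here the ASR misrecognition of "fox" as "box" leaves "fox" and "jumps" without timestamps. One way to fill such gaps would be to interpolate between the nearest aligned neighbors, though that step is not shown.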
