Patents

Literature

1533results about "Architecture with multiple processing units" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

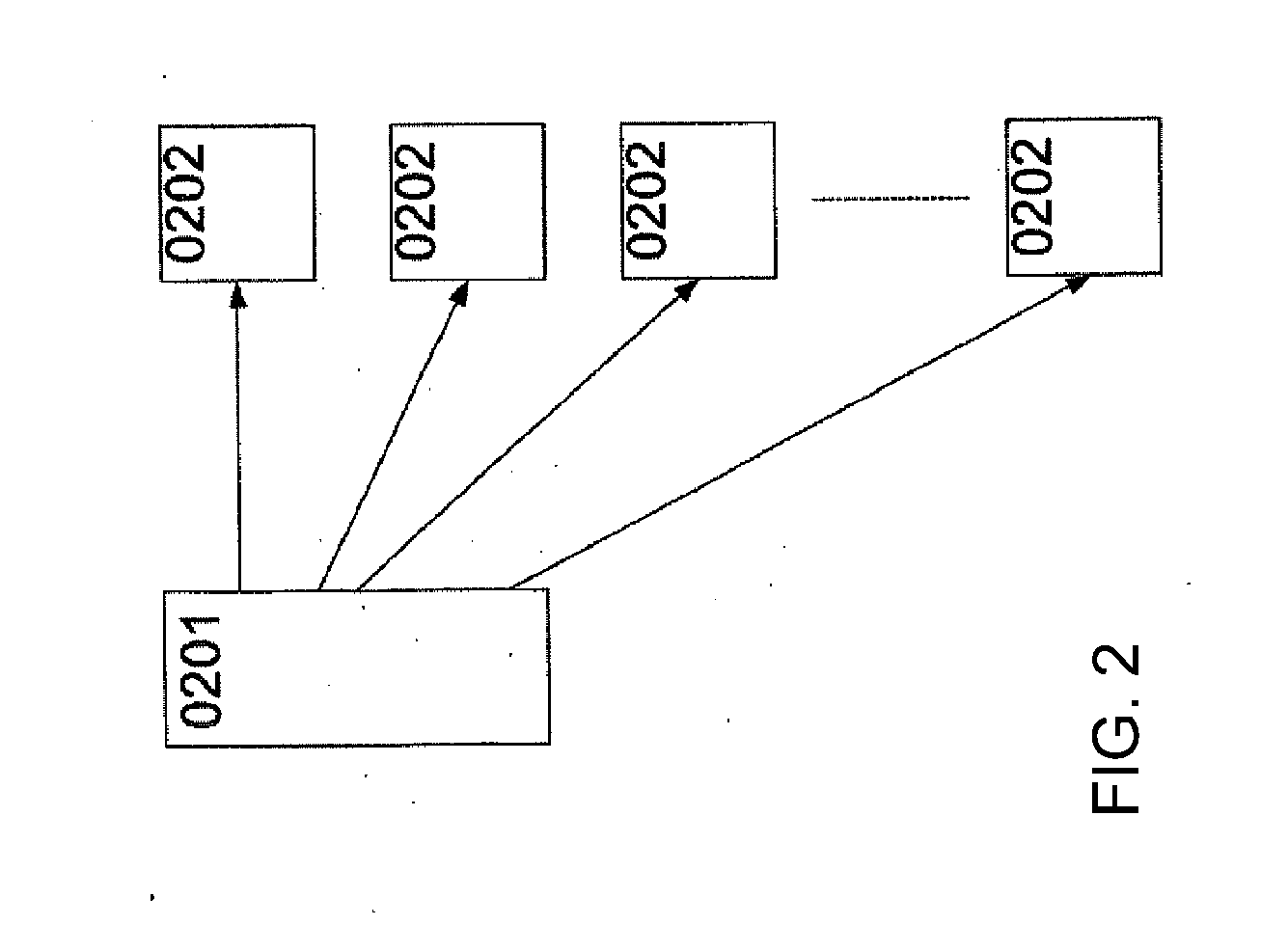

Distributed sensing techniques for mobile devices

ActiveUS20050093868A1Input/output for user-computer interactionServices signallingHuman–computer interactionMobile device

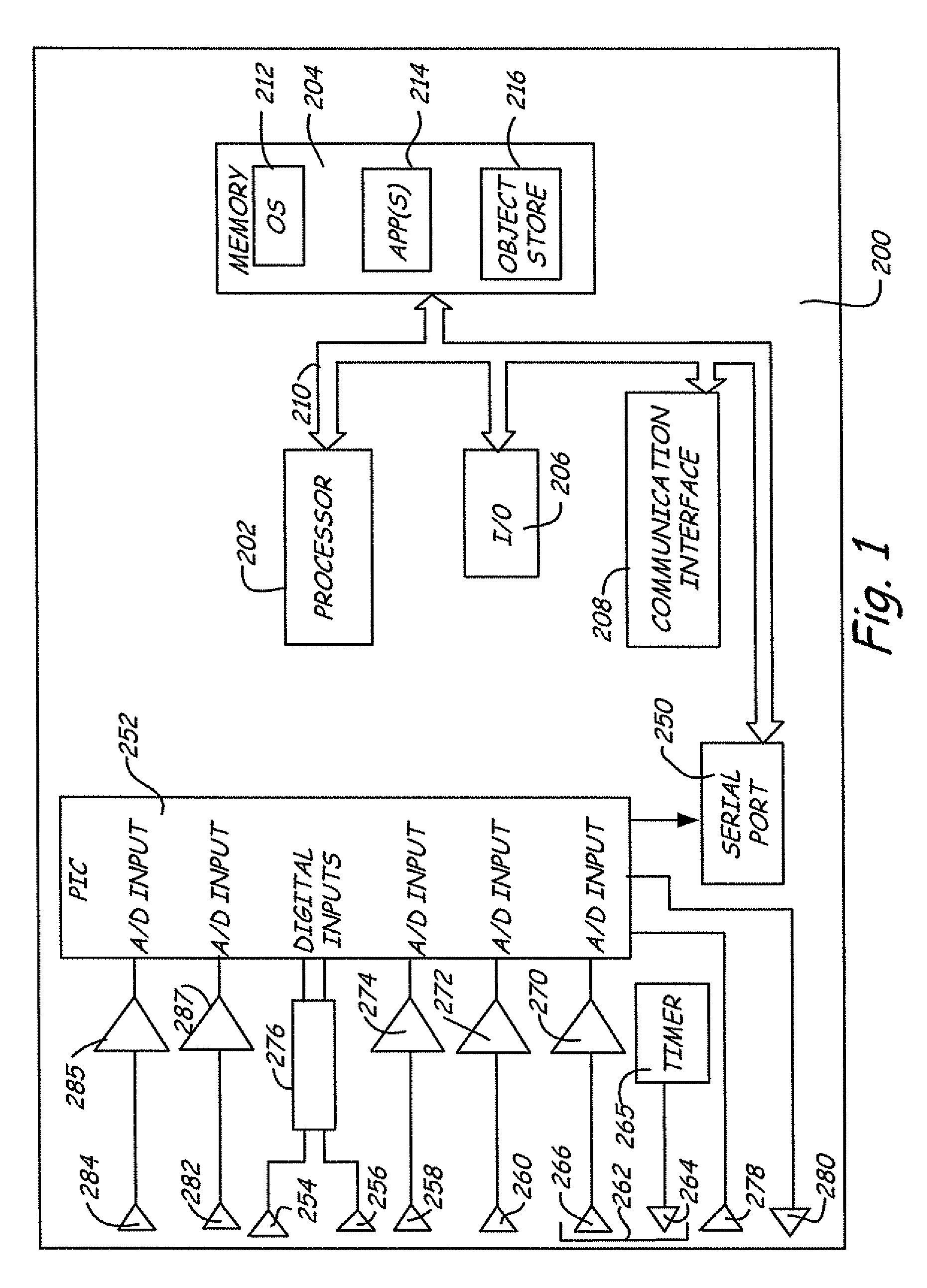

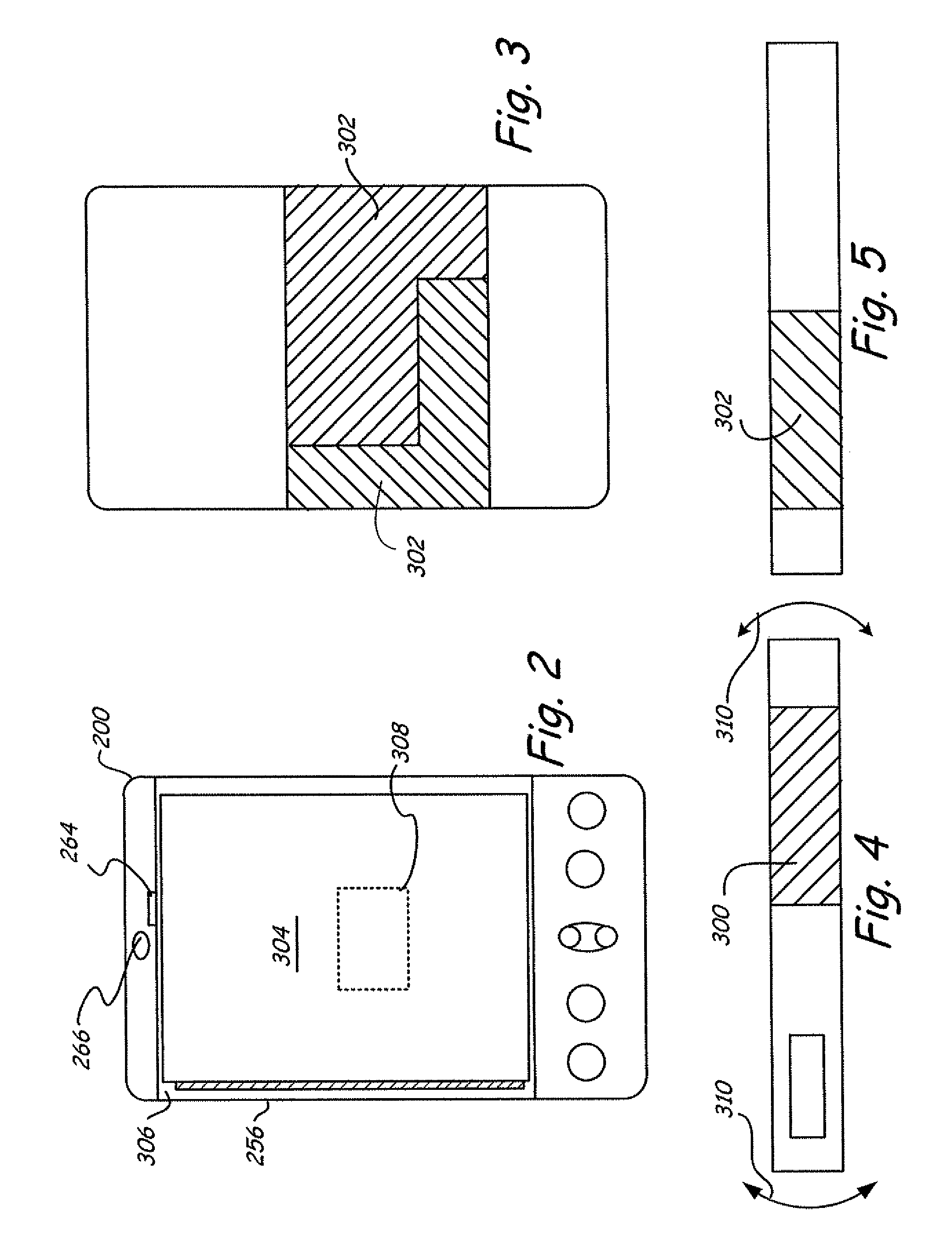

Methods and apparatus of the invention allow the coordination of resources of mobile computing devices to jointly execute tasks. In the method, a first gesture input is received at a first mobile computing device. A second gesture input is received at a second mobile computing device. In response, a determination is made as to whether the first and second gesture inputs form one of a plurality of different synchronous gesture types. If it is determined that the first and second gesture inputs form the one of the plurality of different synchronous gesture types, then resources of the first and second mobile computing devices are combined to jointly execute a particular task associated with the one of the plurality of different synchronous gesture types.

Owner:MICROSOFT TECH LICENSING LLC

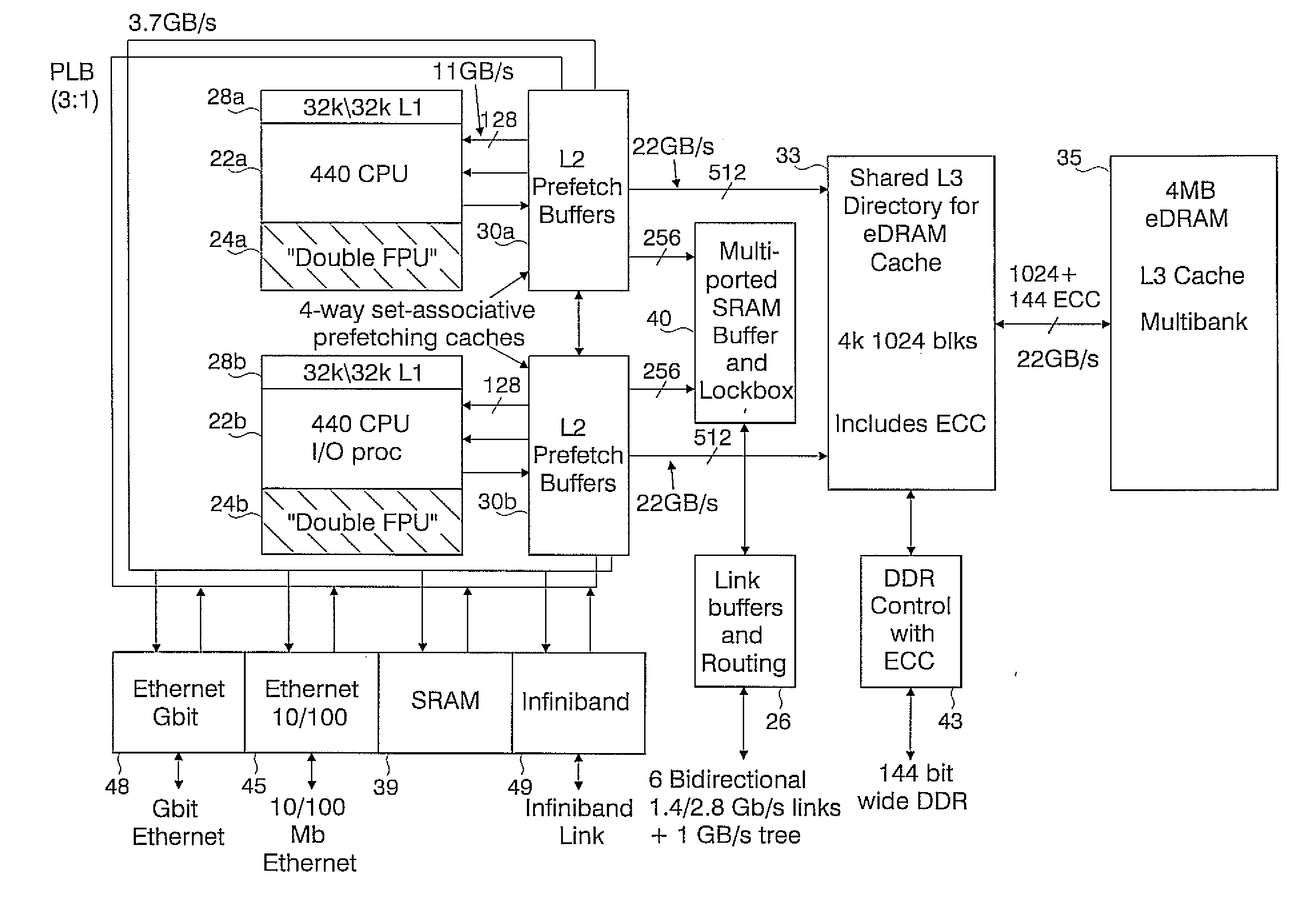

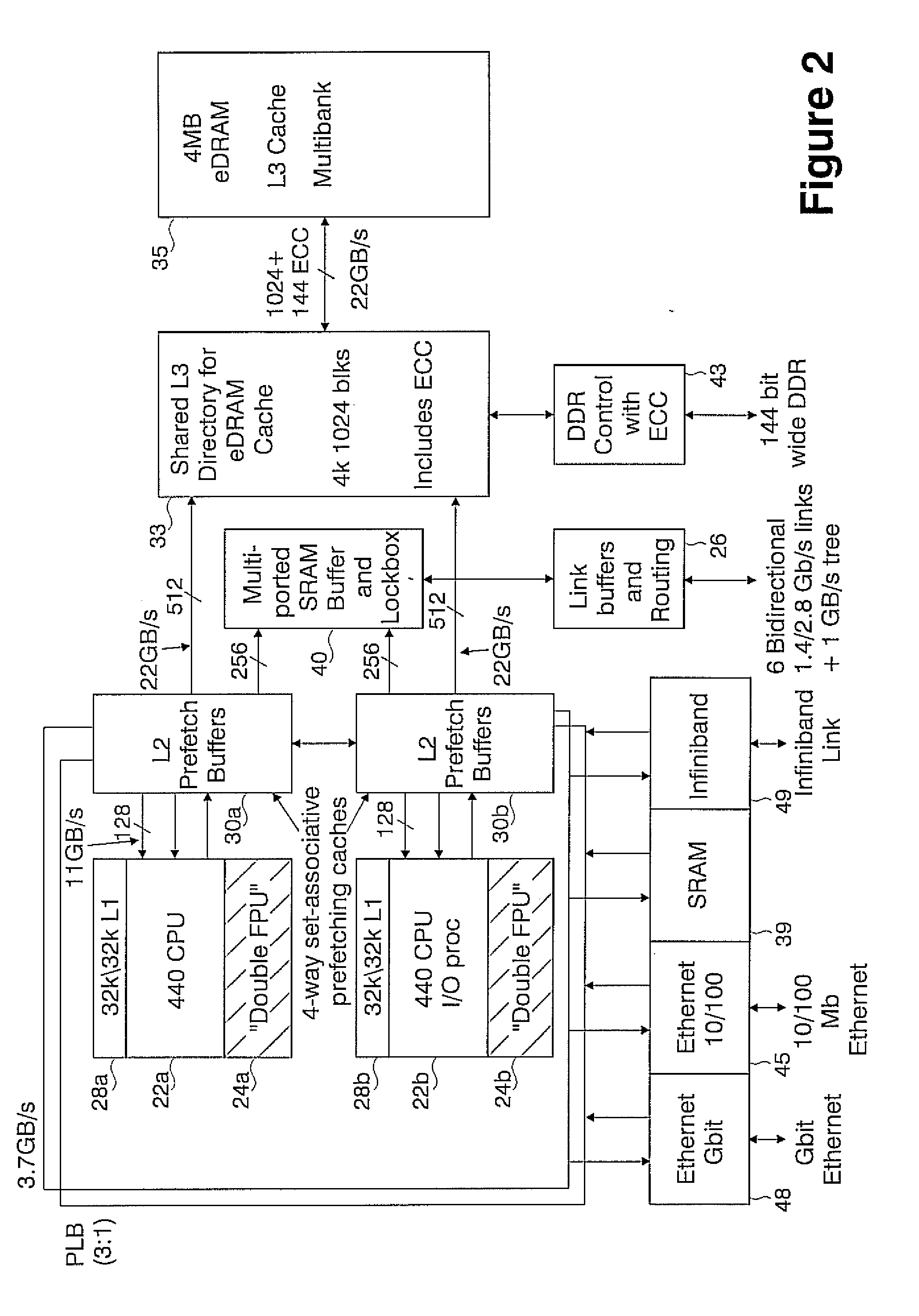

Novel massively parallel supercomputer

InactiveUS20090259713A1Low costReduced footprintError preventionProgram synchronisationSupercomputerPacket communication

Owner:INT BUSINESS MASCH CORP

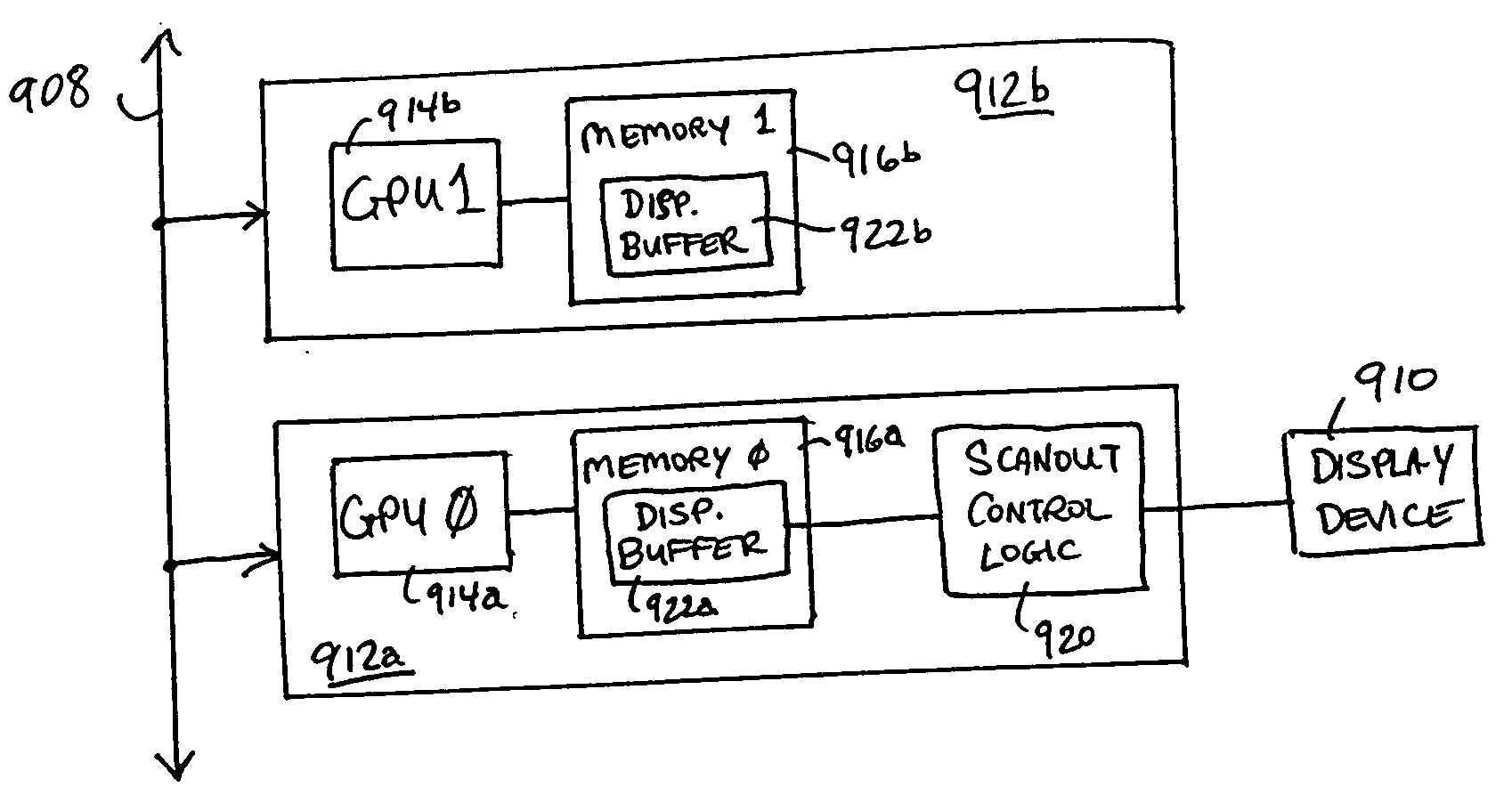

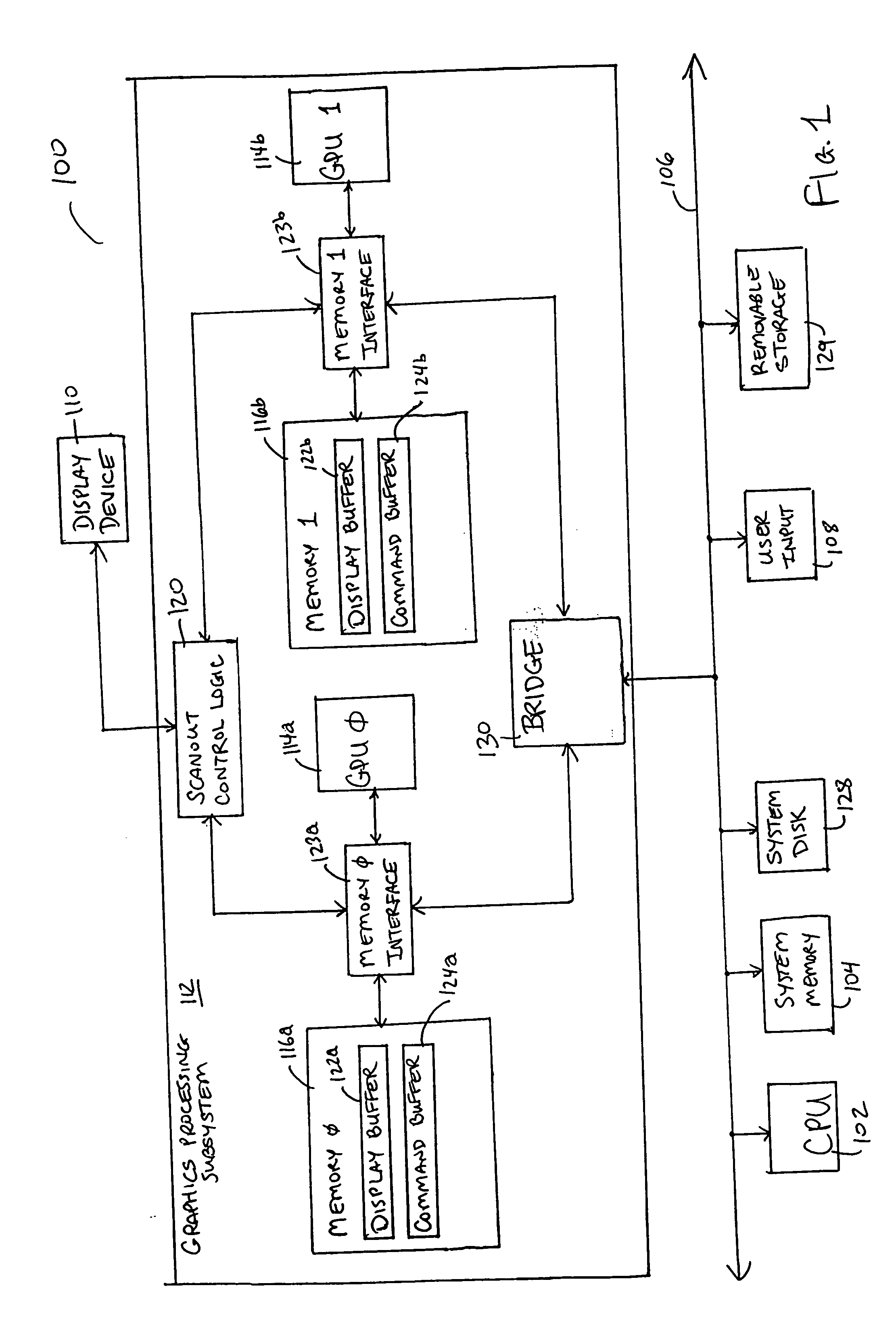

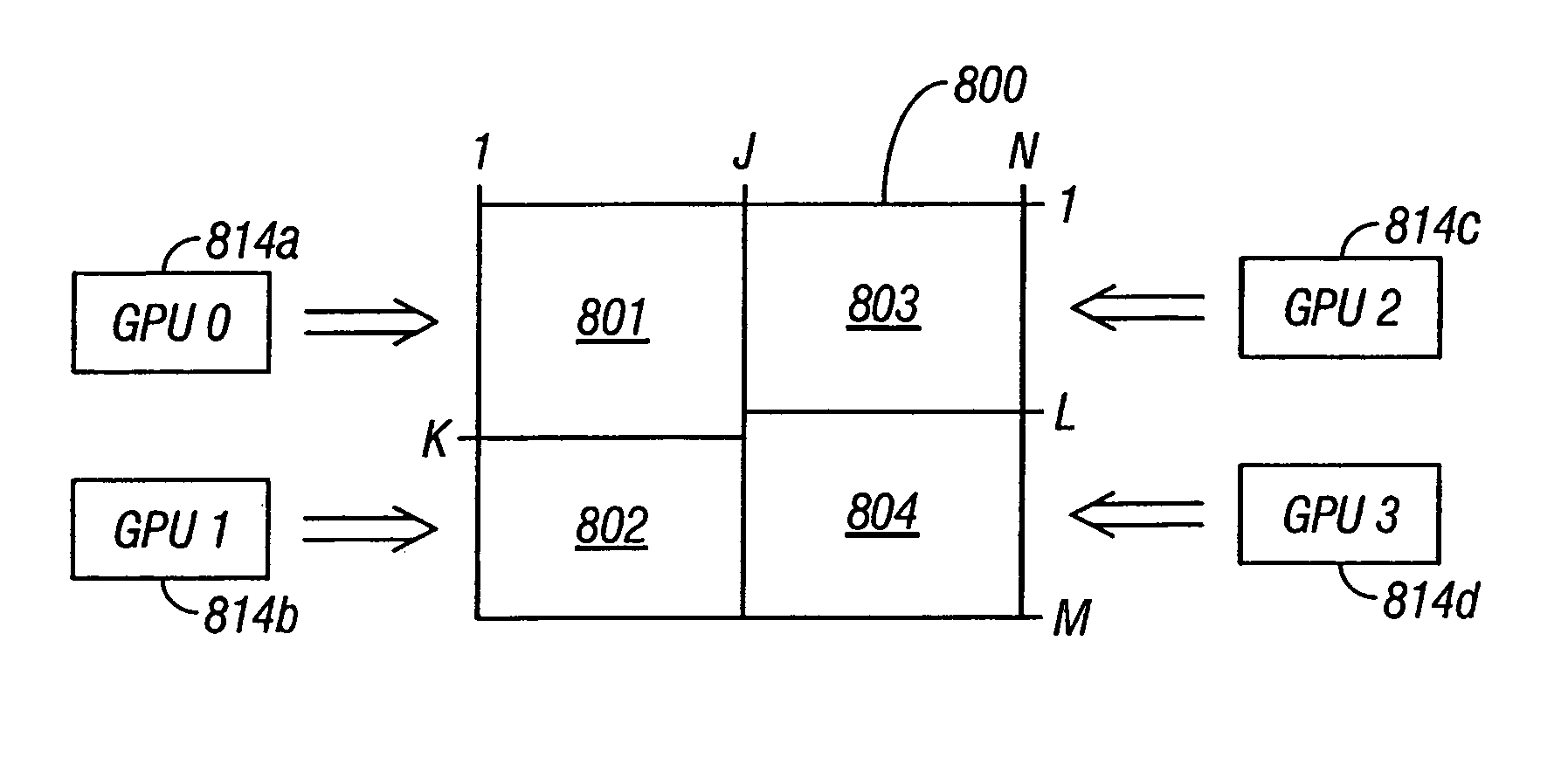

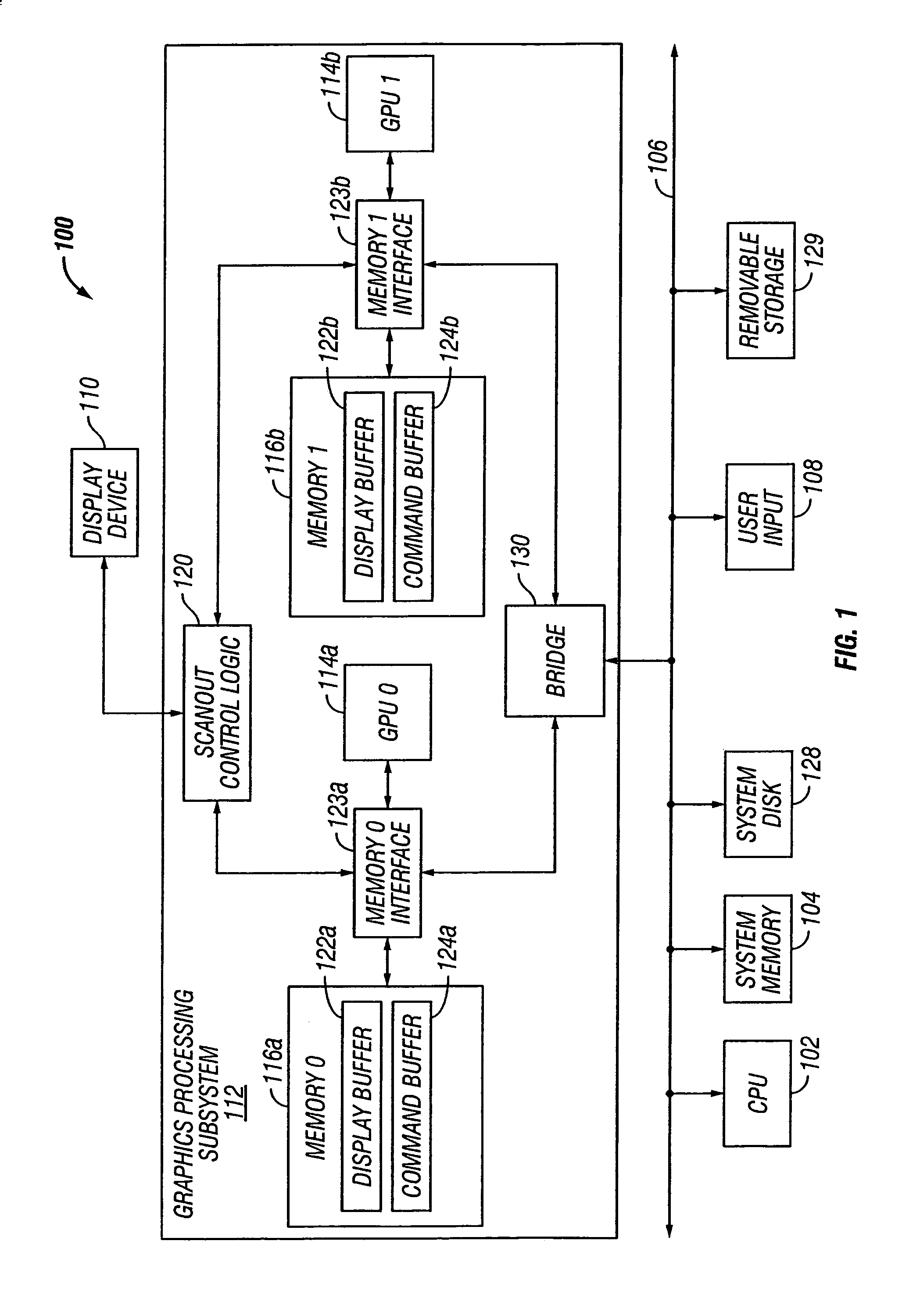

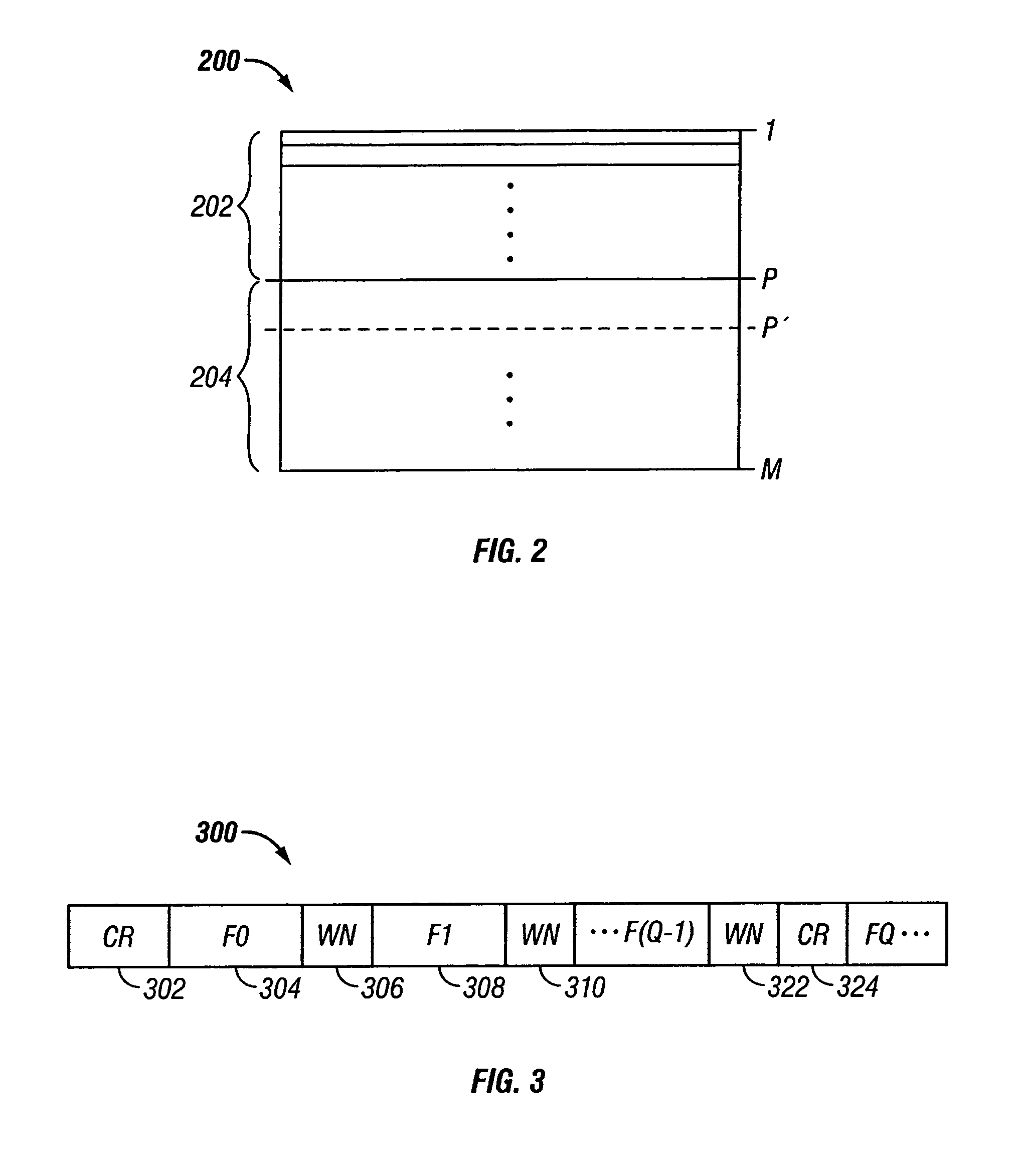

Adaptive load balancing in a multi-processor graphics processing system

ActiveUS20050041031A1Increase in sizeSmall sizeCathode-ray tube indicatorsMultiple digital computer combinationsGraphicsMulti processor

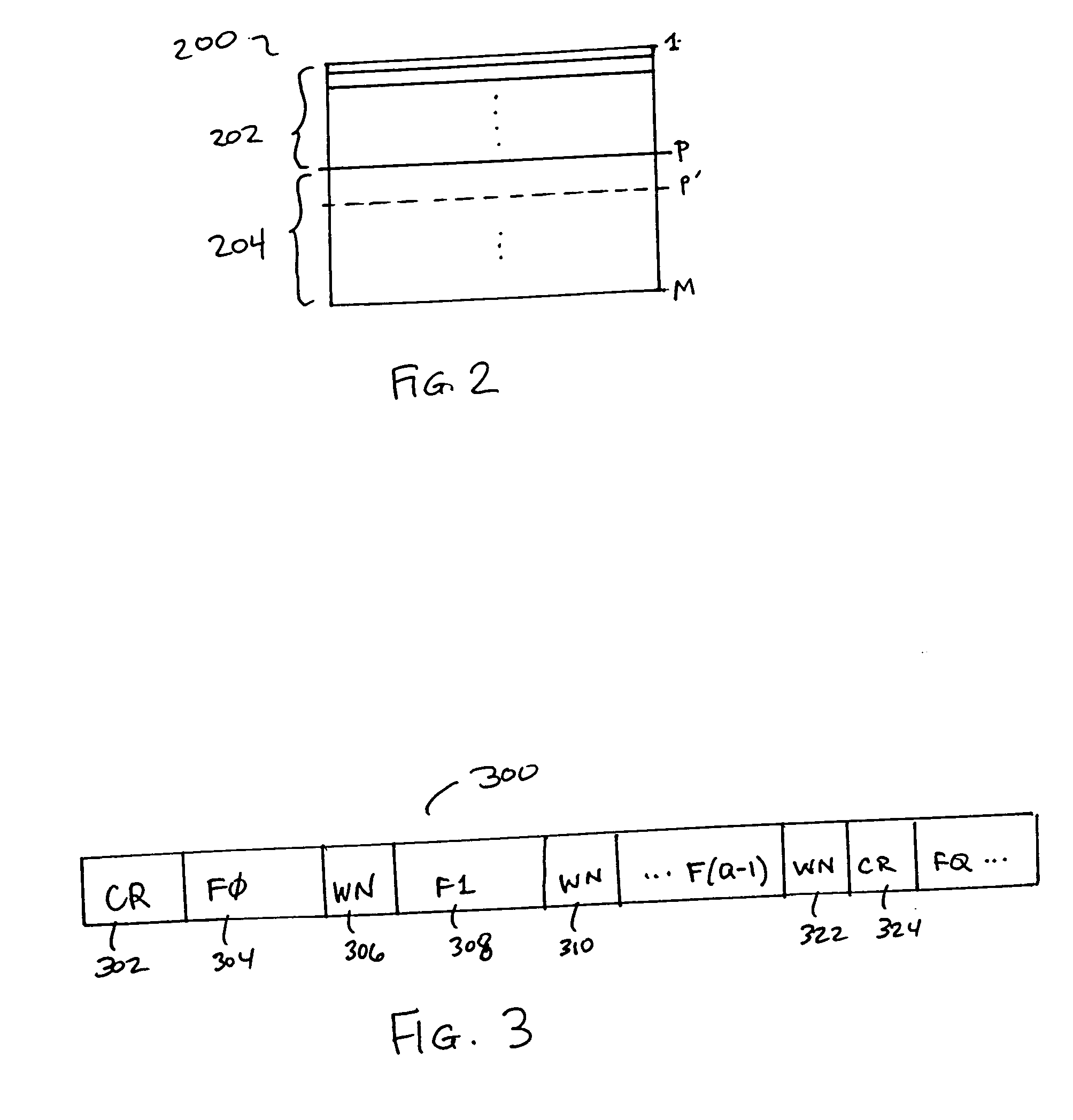

Systems and methods for balancing a load among multiple graphics processors that render different portions of a frame. A display area is partitioned into portions for each of two (or more) graphics processors. The graphics processors render their respective portions of a frame and return feedback data indicating completion of the rendering. Based on the feedback data, an imbalance can be detected between respective loads of two of the graphics processors. In the event that an imbalance exists, the display area is re-partitioned to increase a size of the portion assigned to the less heavily loaded processor and to decrease a size of the portion assigned to the more heavily loaded processor.

Owner:NVIDIA CORP

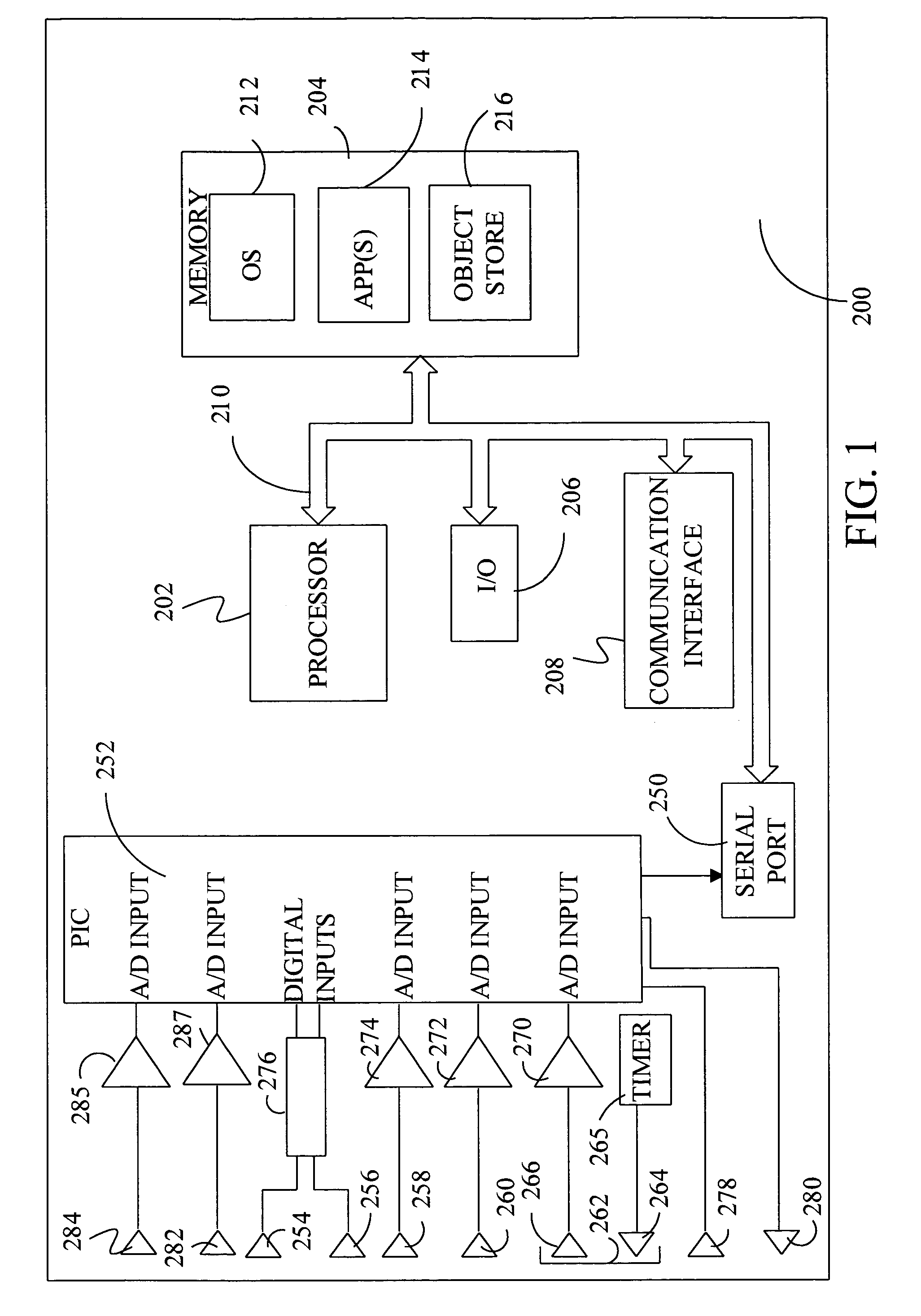

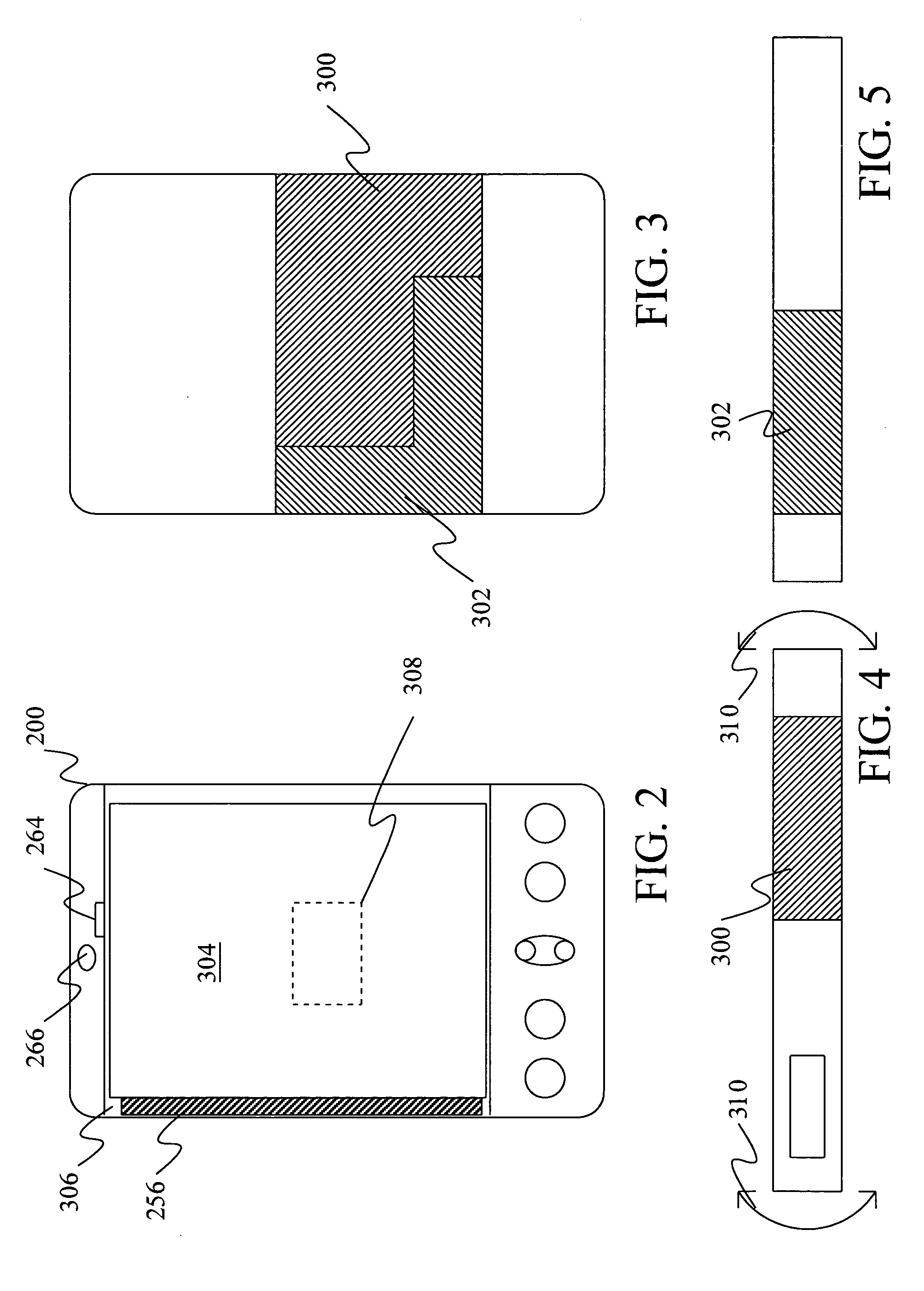

Distributed sensing techniques for mobile devices

ActiveUS7532196B2Input/output for user-computer interactionServices signallingHuman–computer interactionMobile device

Methods and apparatus of the invention allow the coordination of resources of mobile computing devices to jointly execute tasks. In the method, a first gesture input is received at a first mobile computing device. A second gesture input is received at a second mobile computing device. In response, a determination is made as to whether the first and second gesture inputs form one of a plurality of different synchronous gesture types. If it is determined that the first and second gesture inputs form the one of the plurality of different synchronous gesture types, then resources of the first and second mobile computing devices are combined to jointly execute a particular task associated with the one of the plurality of different synchronous gesture types.

Owner:MICROSOFT TECH LICENSING LLC

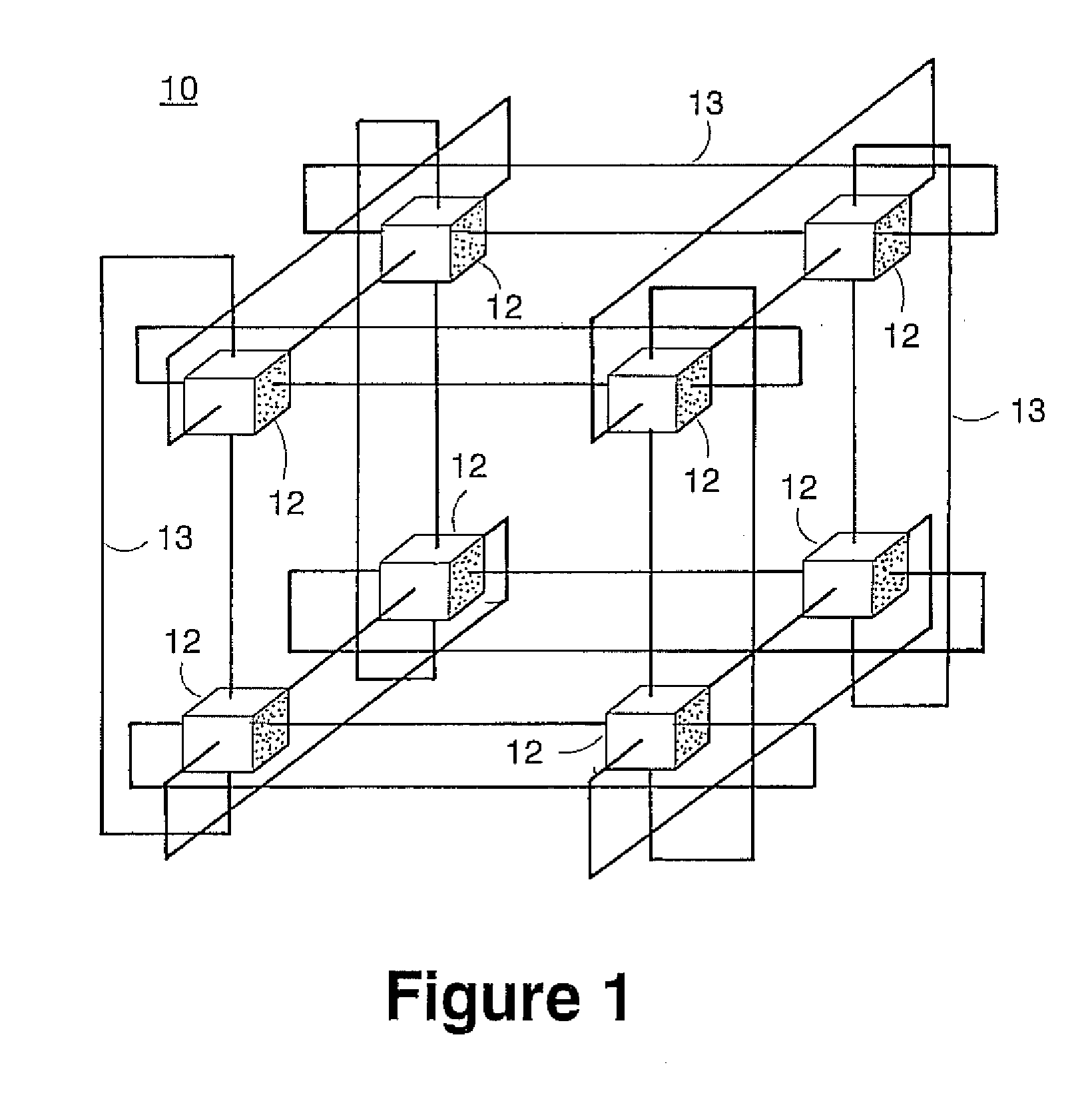

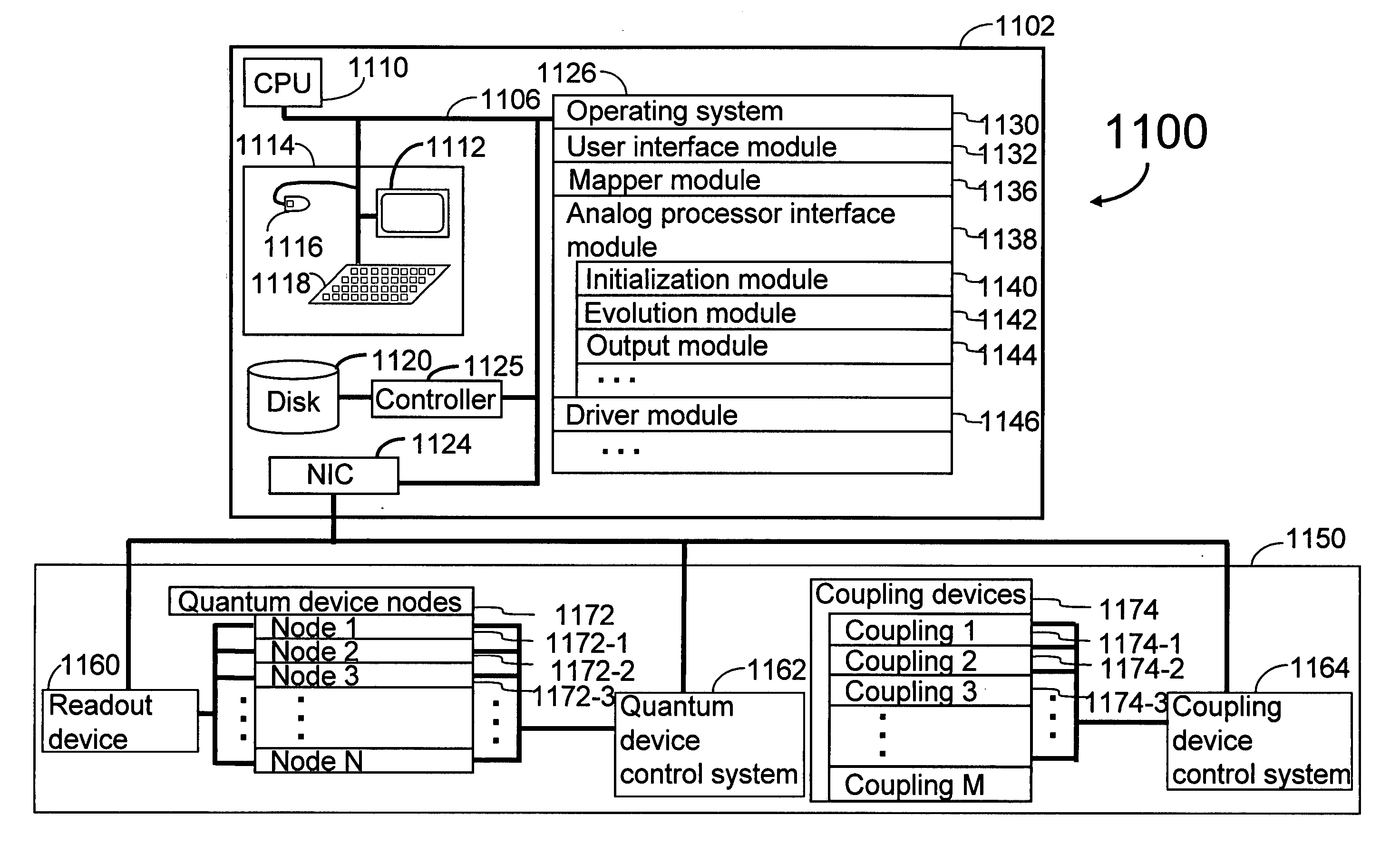

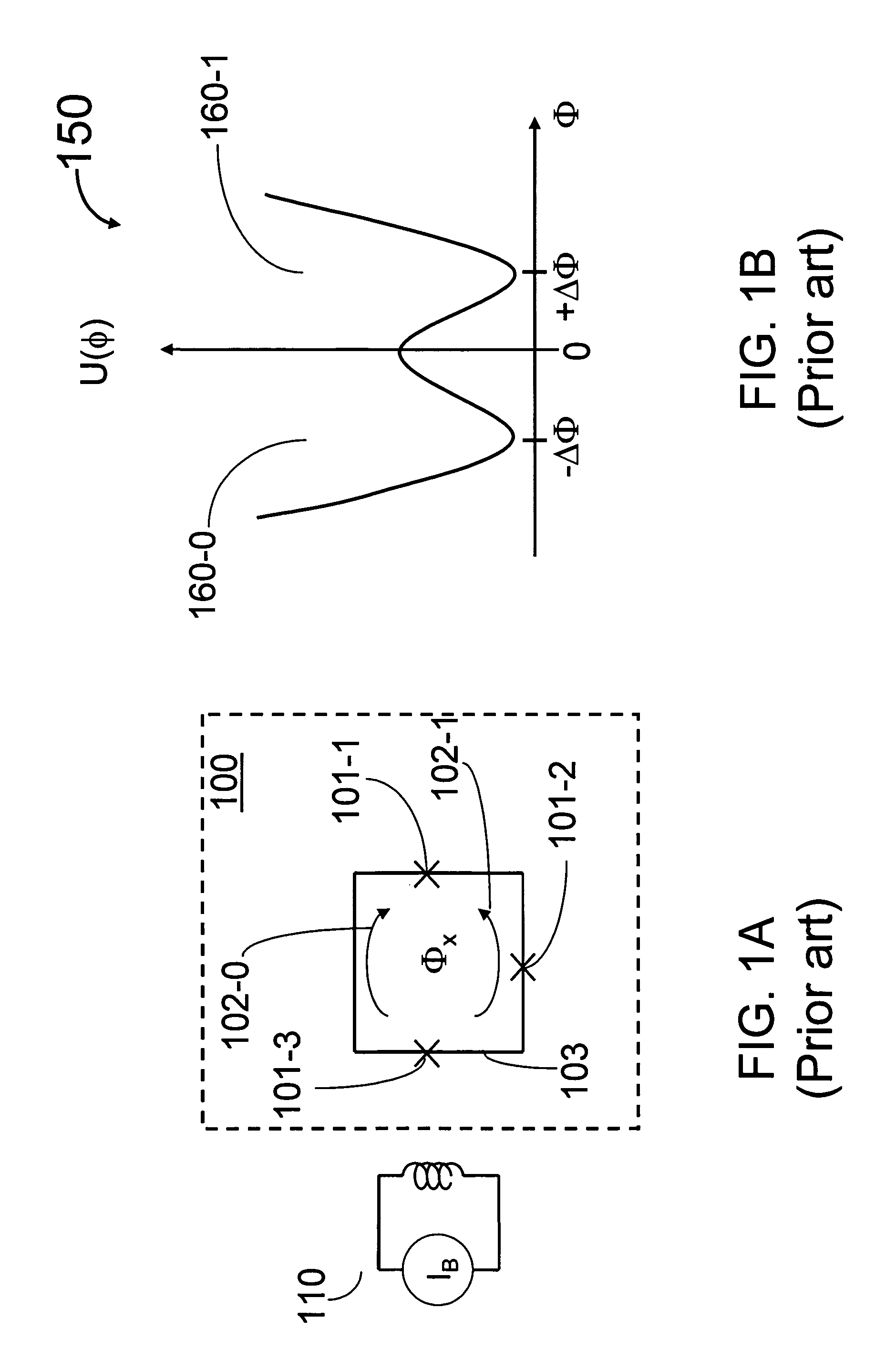

Analog processor comprising quantum devices

Owner:D WAVE SYSTEMS INC

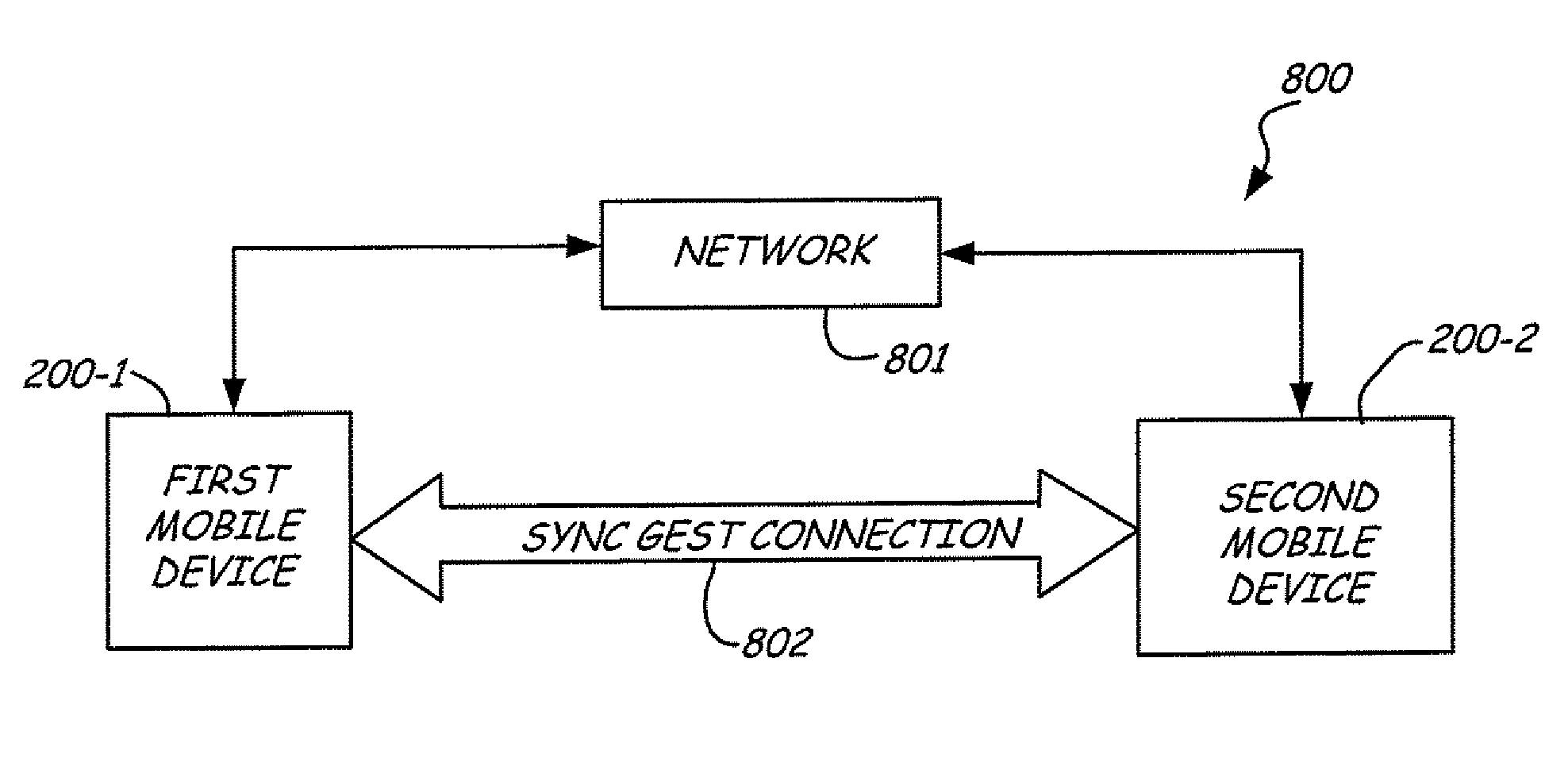

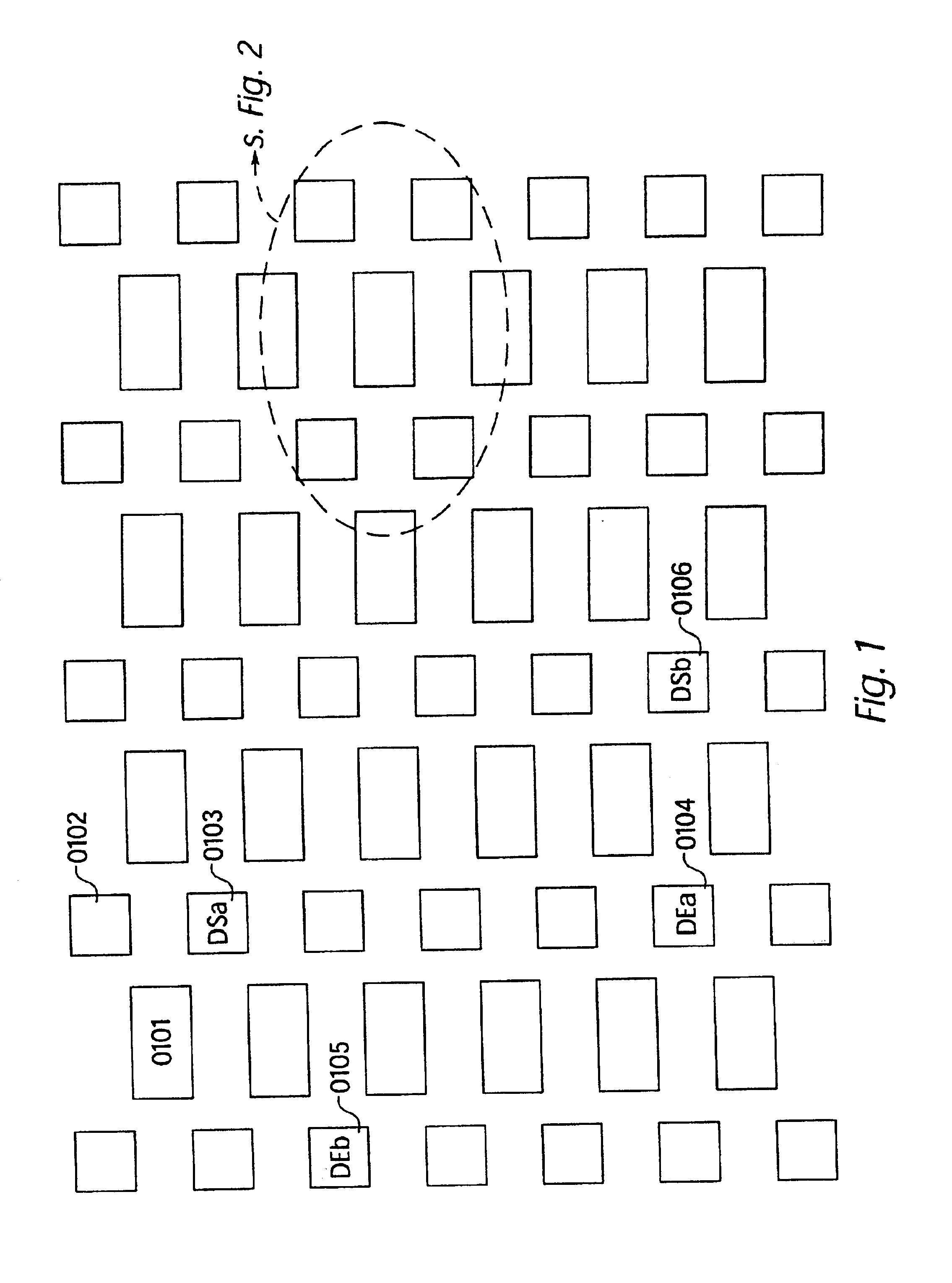

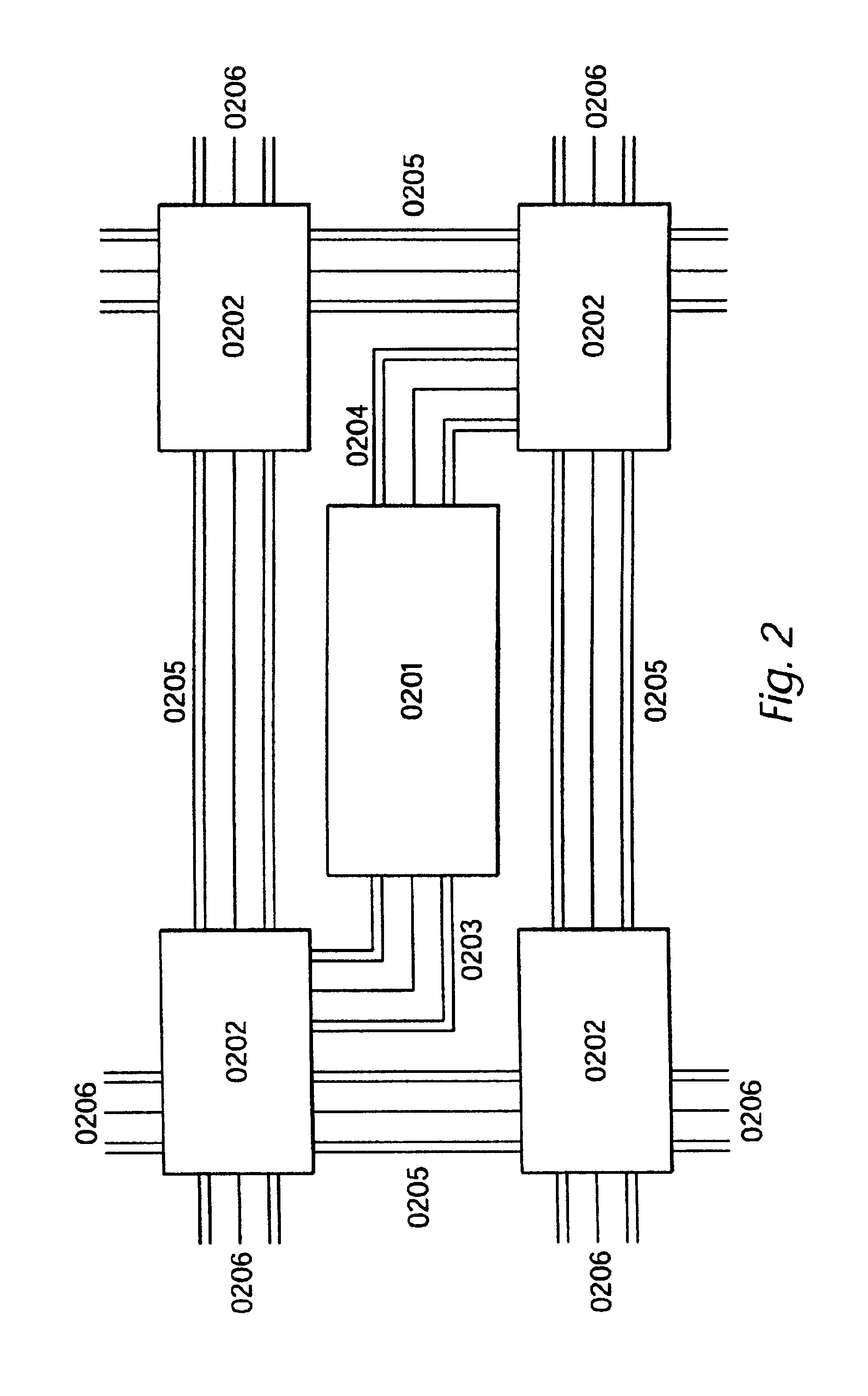

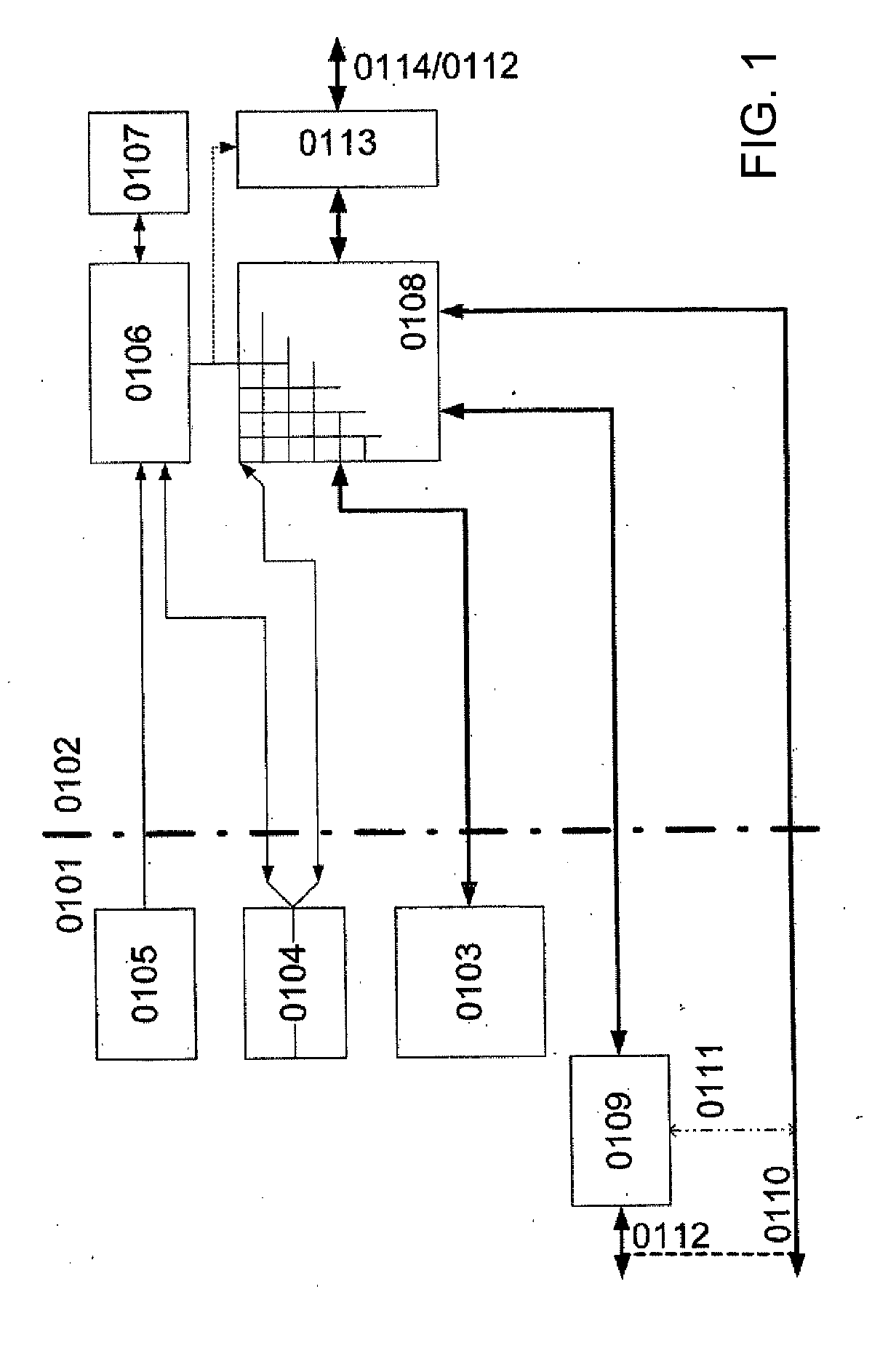

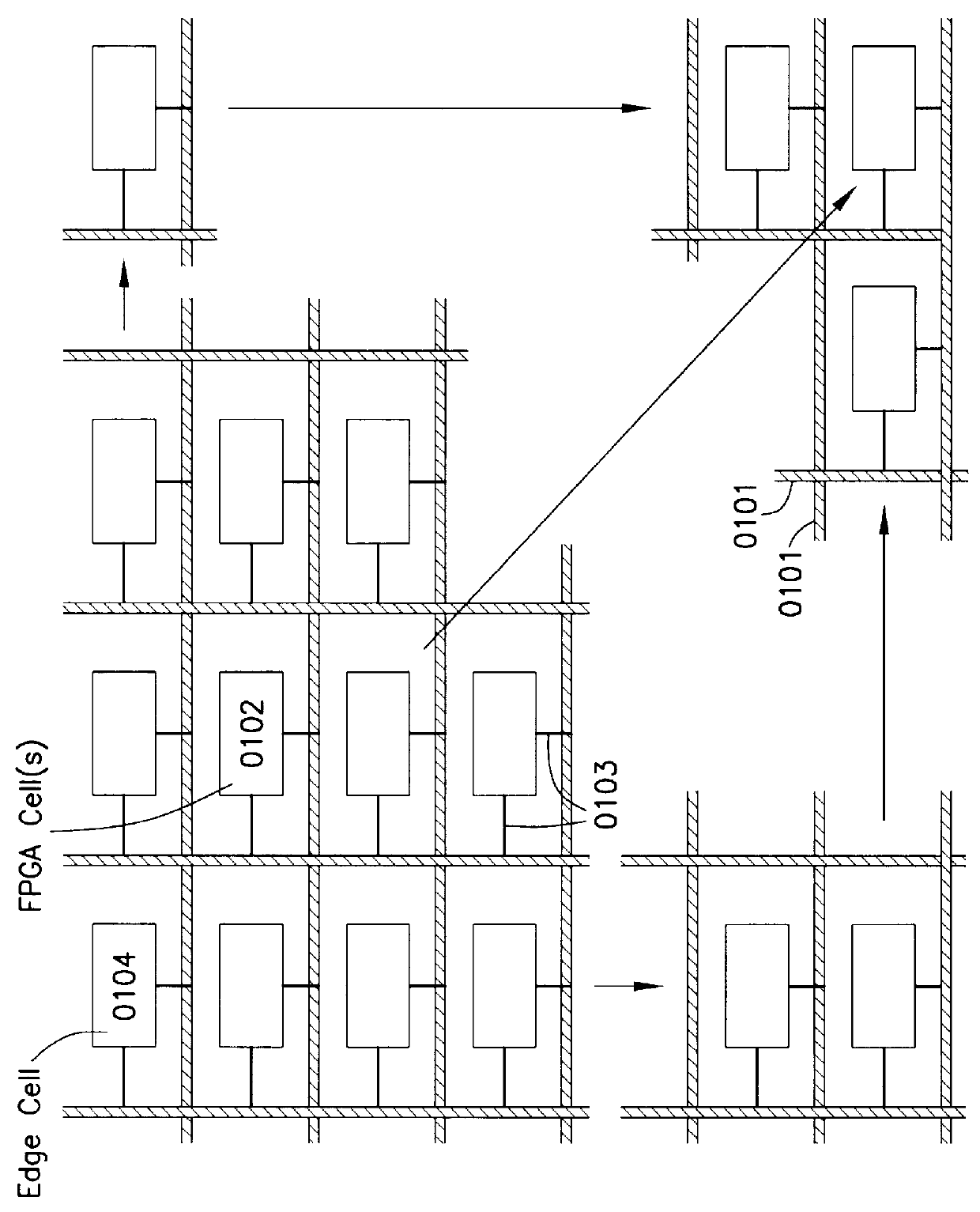

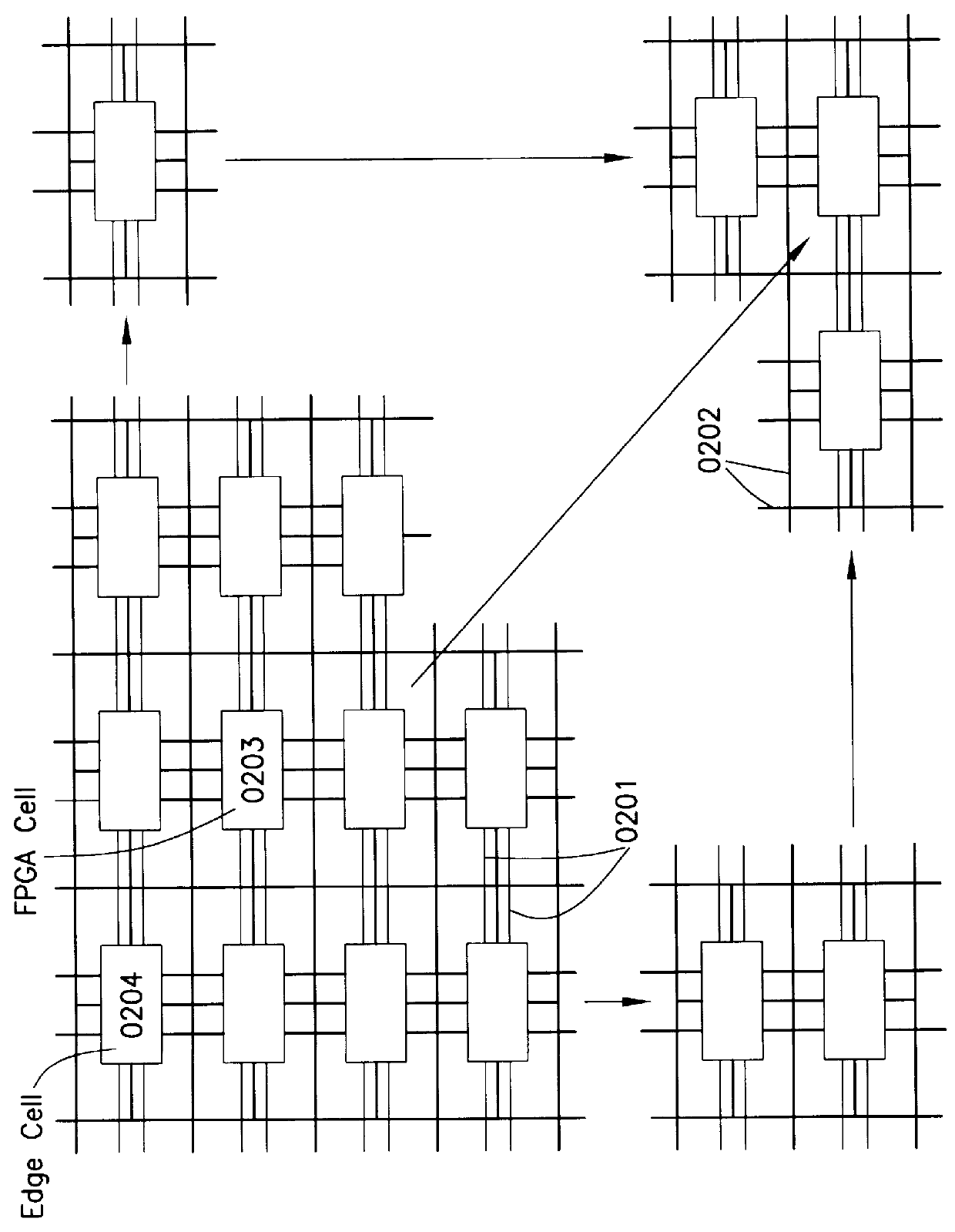

Internal bus system for DFPS and units with two- or multi-dimensional programmable cell architectures, for managing large volumes of data with a high interconnection complexity

InactiveUS7010667B2Multiple digital computer combinationsArchitecture with single central processing unitNetwork packetInterconnection

An internal bus system for DFPs and units with two- or multi-dimensional programmable cell architectures, for managing large volumes of data with a high interconnection complexity. The bus system can transmit data between a plurality of function blocks, where multiple data packets can be on the bus at the same time. The bus system automatically recognizes the correct connection for various types of data or data transmitters and sets it up.

Owner:PACT XPP TECH

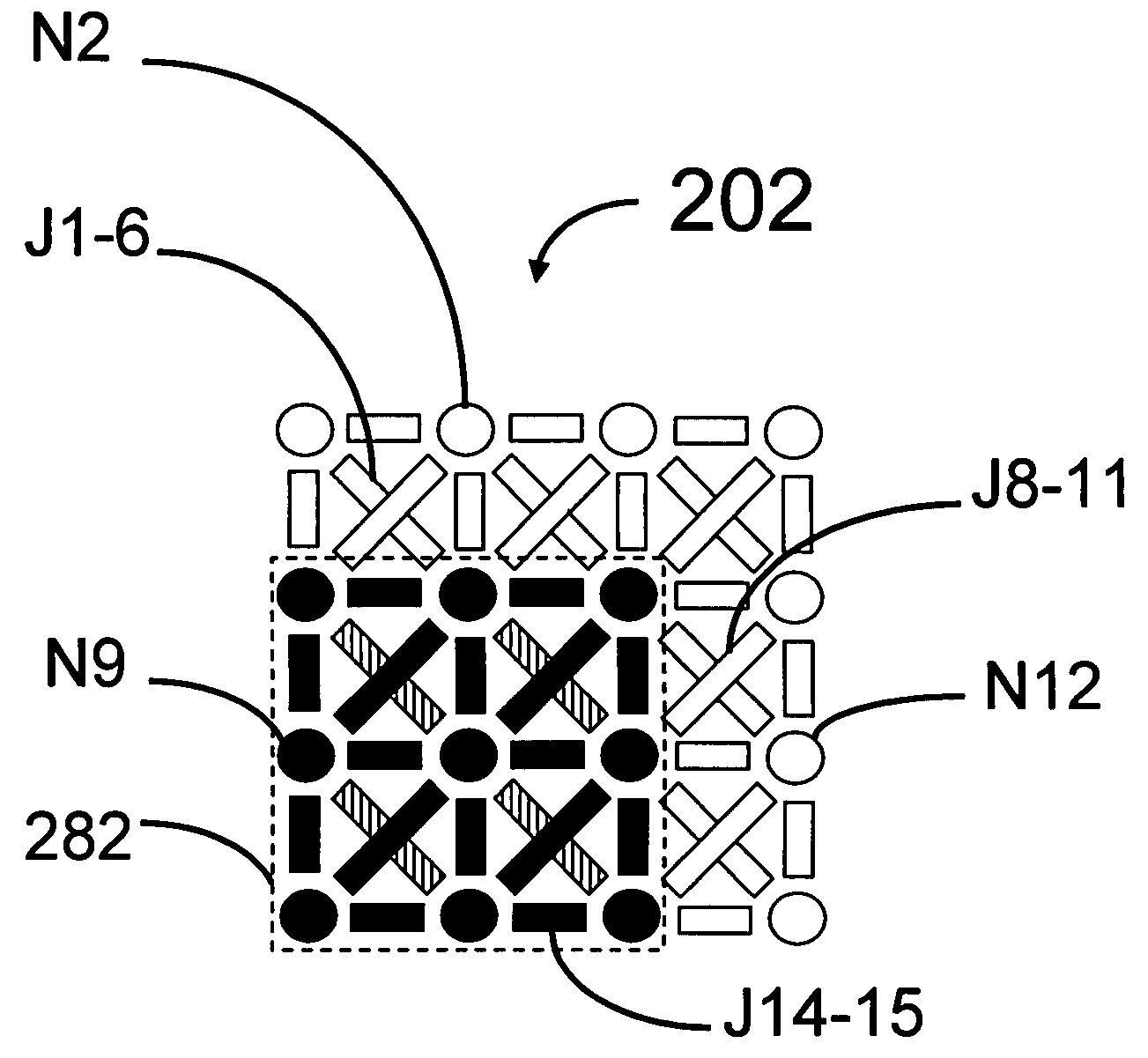

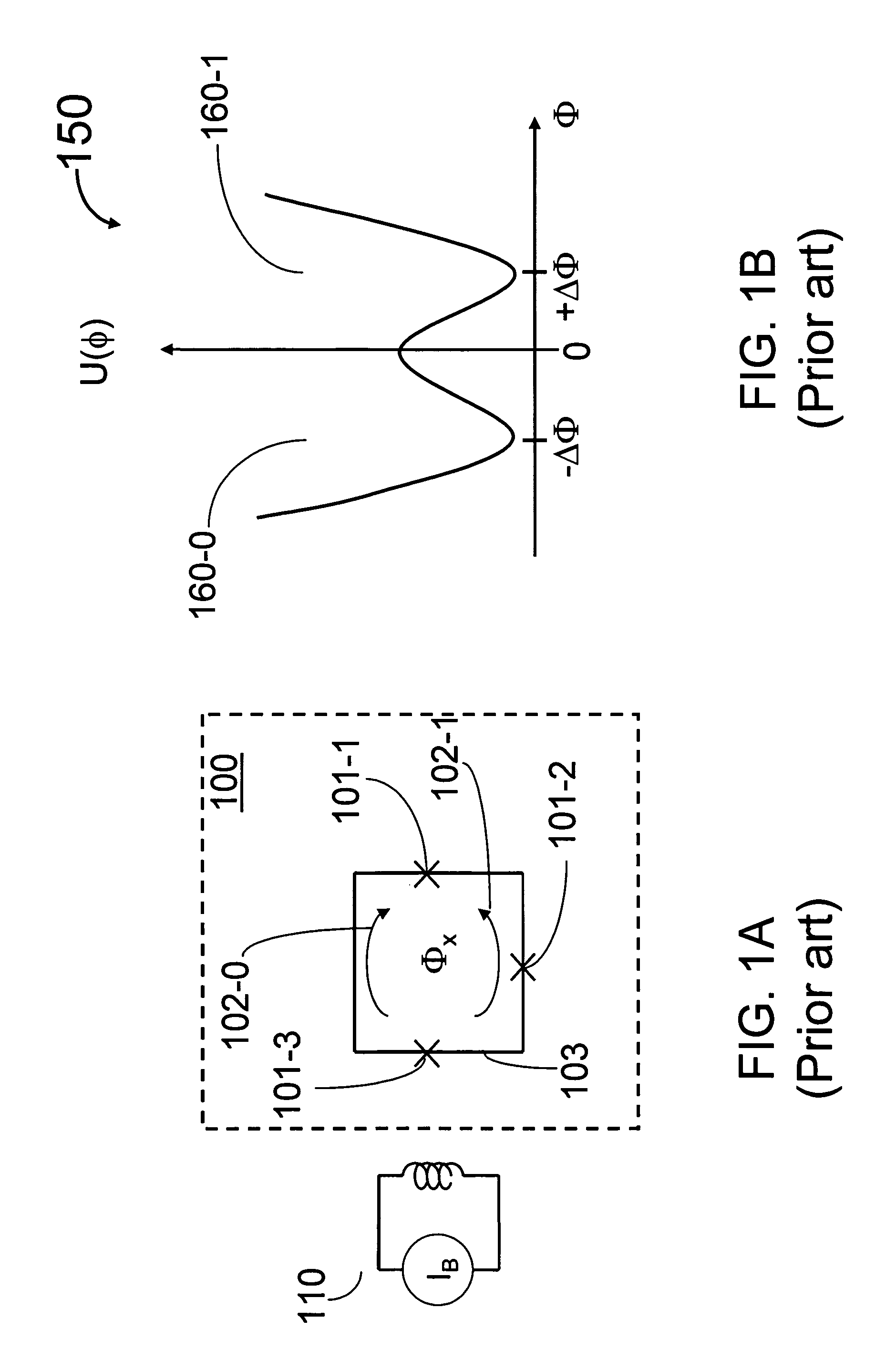

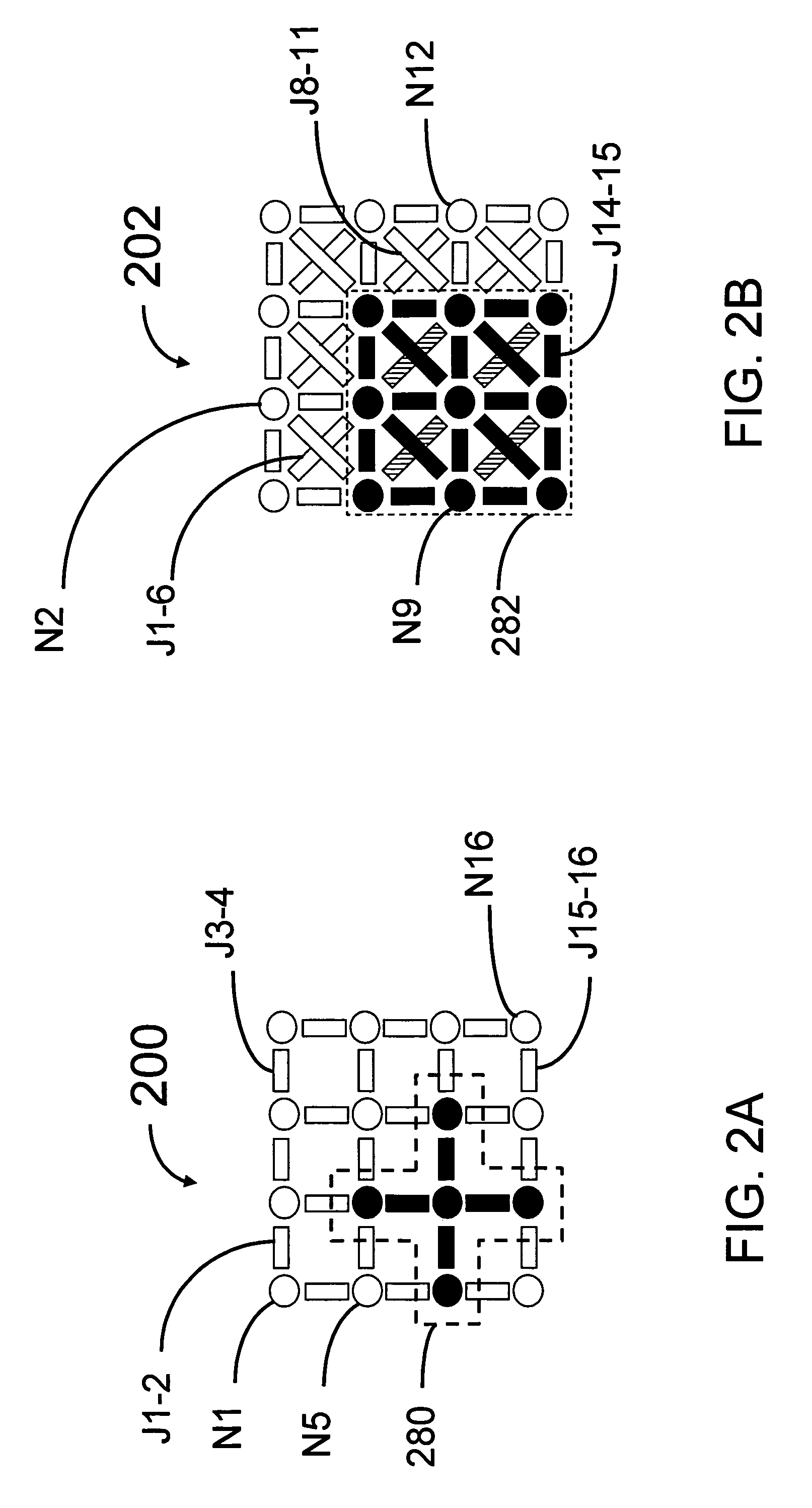

Analog processor comprising quantum devices

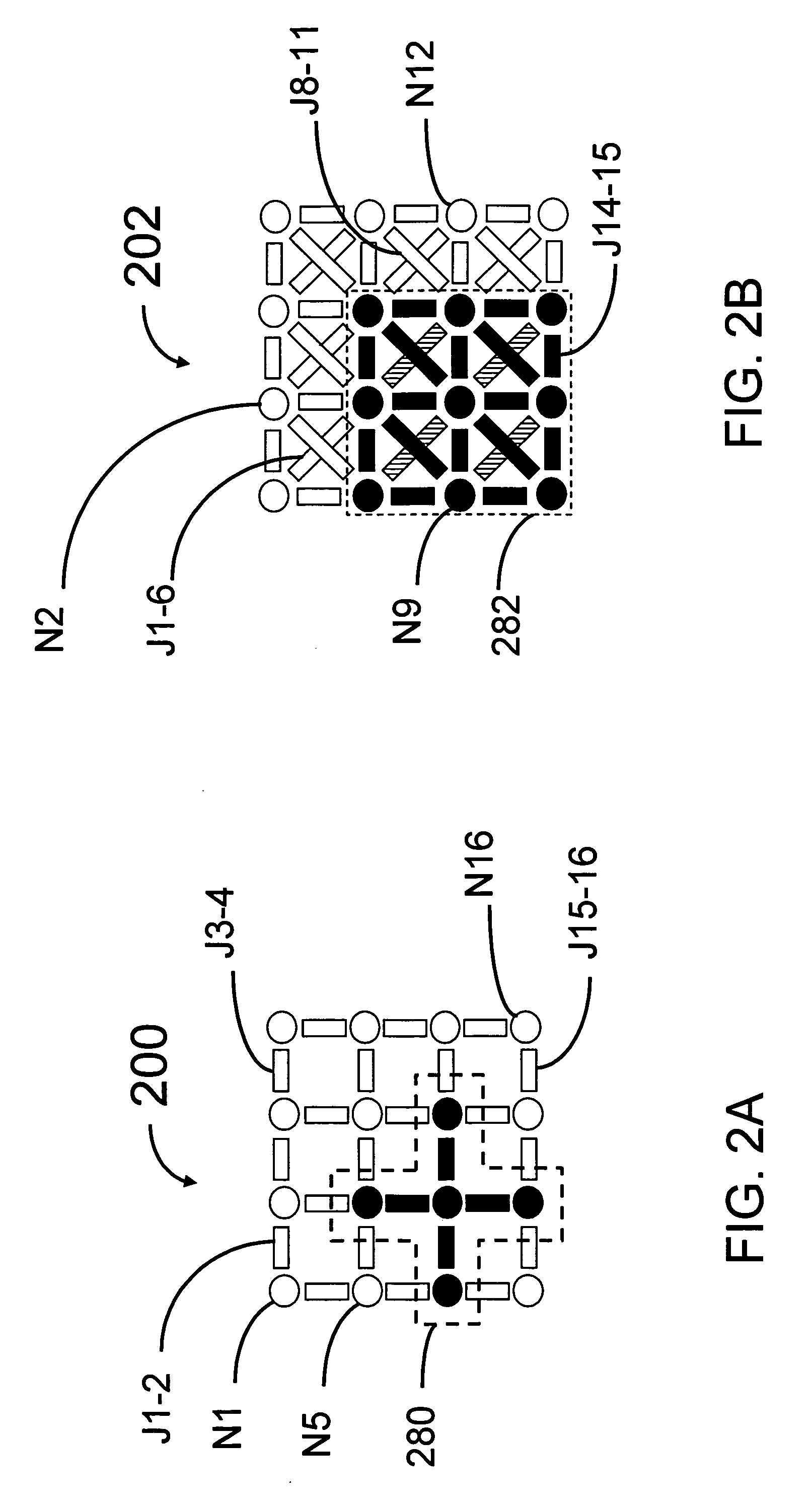

Analog processors for solving various computational problems are provided. Such analog processors comprise a plurality of quantum devices, arranged in a lattice, together with a plurality of coupling devices. The analog processors further comprise bias control systems each configured to apply a local effective bias on a corresponding quantum device. A set of coupling devices in the plurality of coupling devices is configured to couple nearest-neighbor quantum devices in the lattice. Another set of coupling devices is configured to couple next-nearest neighbor quantum devices. The analog processors further comprise a plurality of coupling control systems each configured to tune the coupling value of a corresponding coupling device in the plurality of coupling devices to a coupling. Such quantum processors further comprise a set of readout devices each configured to measure the information from a corresponding quantum device in the plurality of quantum devices.

Owner:D WAVE SYSTEMS INC

SIMD datapath coupled to scalar/vector/address/conditional data register file with selective subpath scalar processing mode

InactiveUS6839828B2Not compromise SIMD data processing performanceReduce consumptionRegister arrangementsDigital data processing detailsProcessor registerOperation mode

There is provided a processor designed to operate in a plurality of modes for processing vector and scalar instructions. Register files are each for storing scalar and vector data and address information. A parallel vector unit, coupled to the register files, includes functional units configurable to operate in a vector operation mode and a scalar operation mode. The vector unit includes an apparatus for tightly coupling the functional units to perform an operation specified by a current instruction. Under a vector operation mode, the vector unit performs, in parallel, a single vector operation on a plurality of data elements. The operations performed on the plurality of data elements are each performed by a different functional unit of the vector unit. Under a scalar operation mode, the vector unit performs a scalar operation on a data element received from the register files in a functional unit within the vector unit.

Owner:INTEL CORP

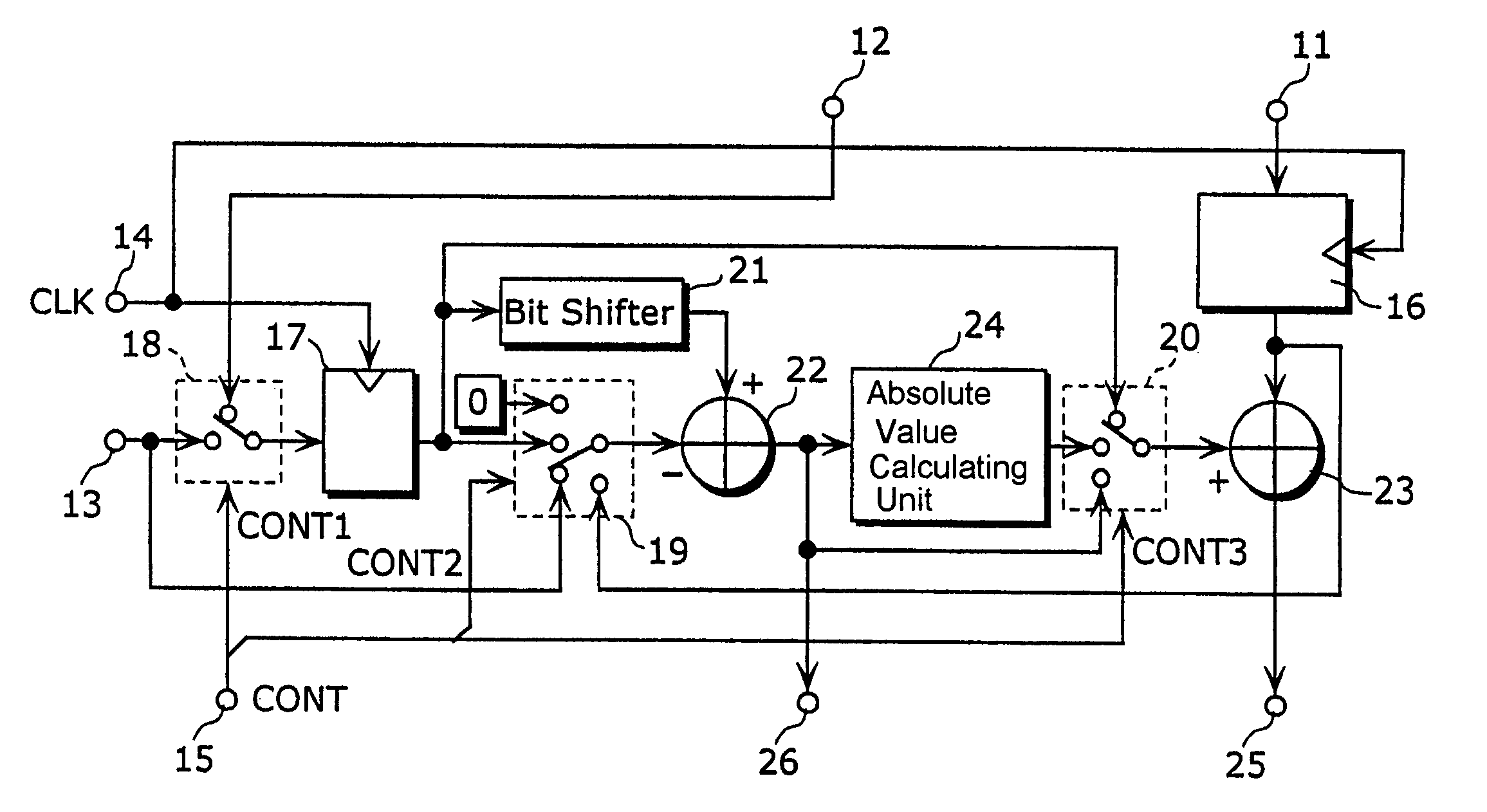

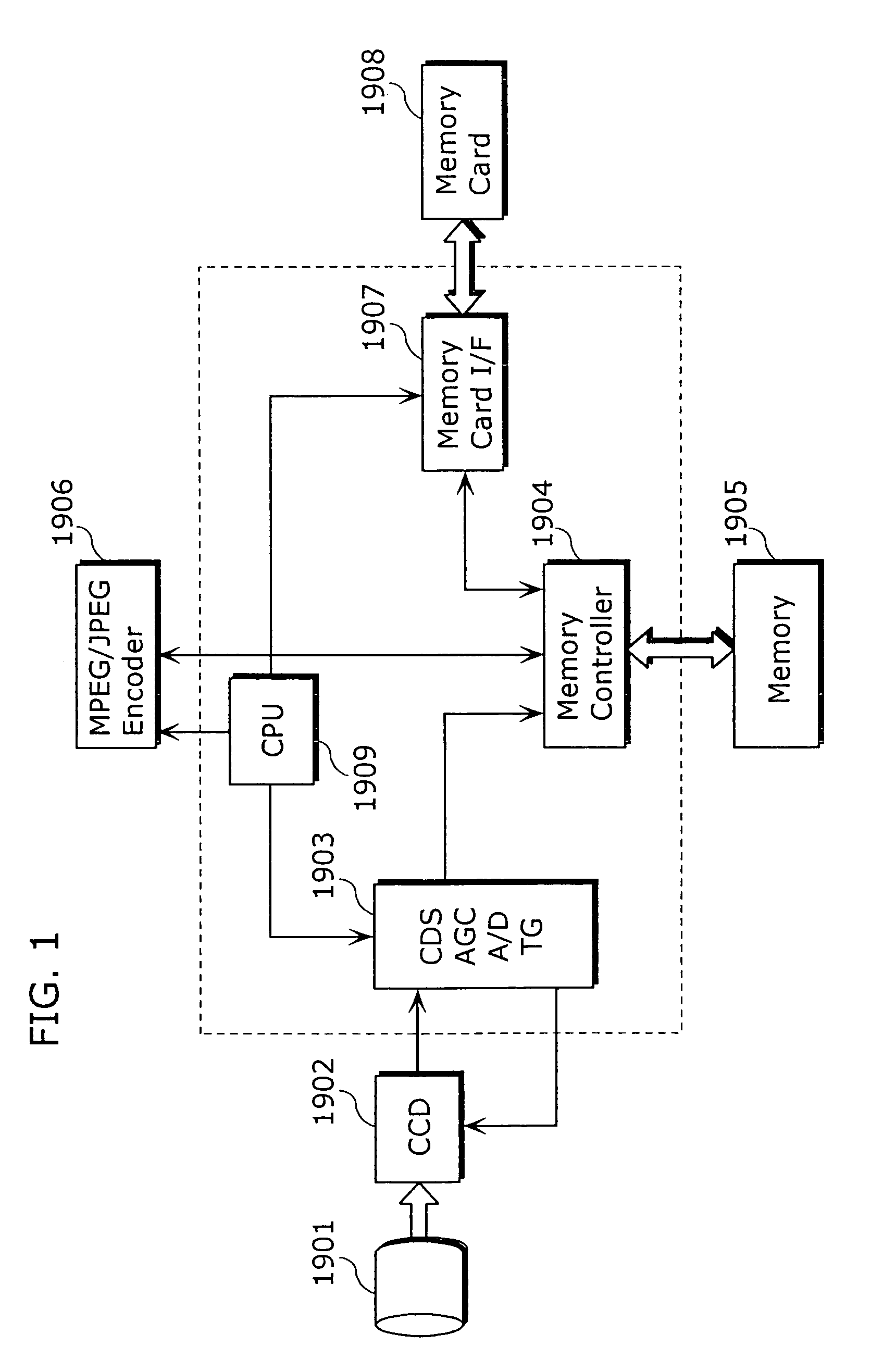

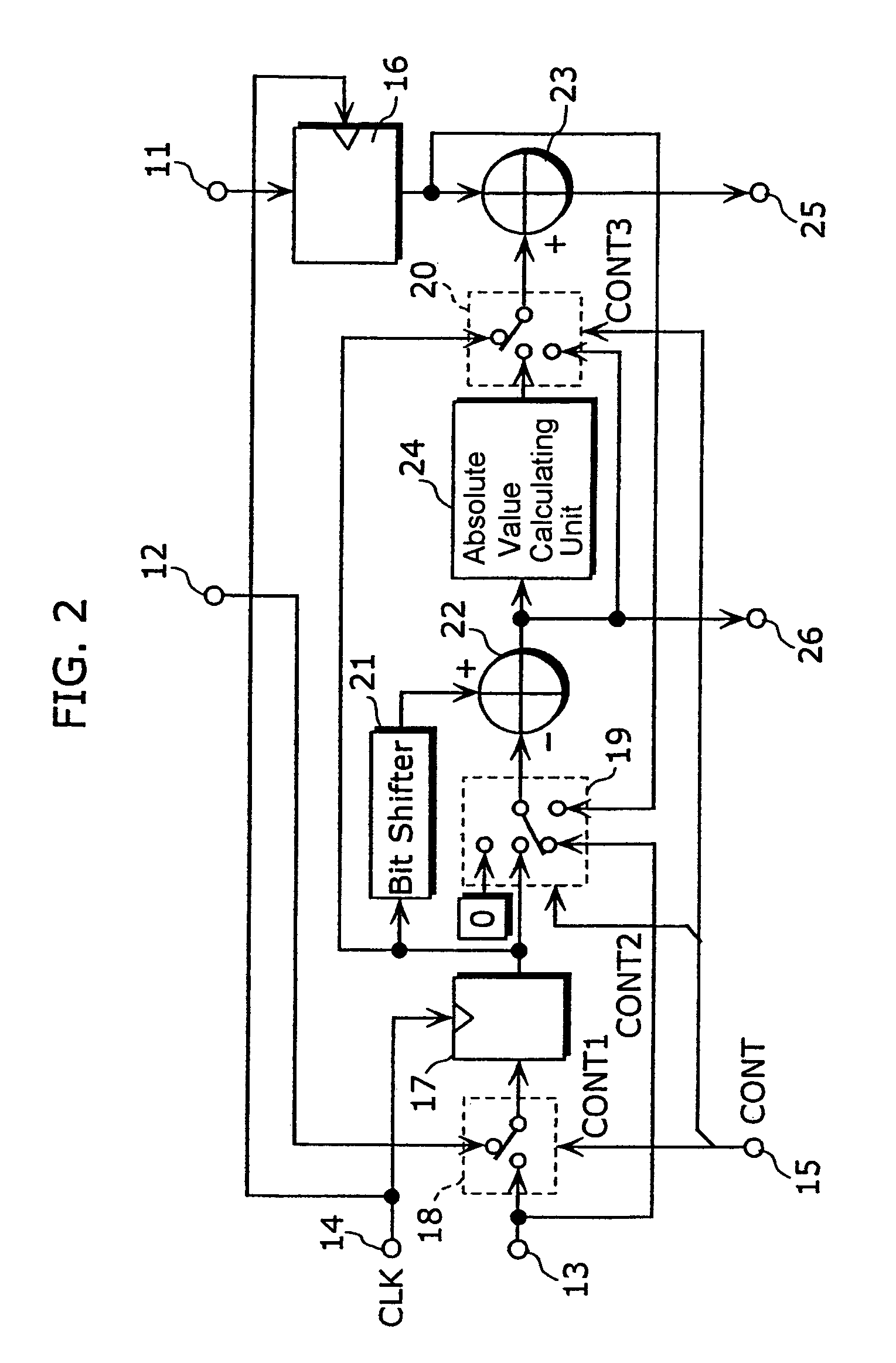

Arithmetic processing apparatus

InactiveUS7412470B2MiniaturizationReduce power consumptionDigital data processing detailsPicture reproducers using cathode ray tubesProcessor registerComputer science

The arithmetic processing apparatus of the present invention is an arithmetic processing apparatus that can be reconfigured in accordance with a processing mode and has a plurality of arranged unit arithmetic circuits. Each unit arithmetic circuit includes at least one input terminal, at least one output terminal, a first register which holds data, an adder which calculates a sum of two pieces of data, a second register which holds data, a bit shifter which shifts data left or right, a subtractor which calculates a difference between two pieces of data, an absolute value calculating unit which calculates an absolute value of data, and a path setting unit which sets a path according to the processing mode connecting among these circuit elements.

Owner:GK BRIDGE 1

Adaptive load balancing in a multi-processor graphics processing system

ActiveUS7075541B2Increase in sizeSmall sizeCathode-ray tube indicatorsMultiple digital computer combinationsGraphicsMulti processor

Systems and methods for balancing a load among multiple graphics processors that render different portions of a frame. A display area is partitioned into portions for each of two (or more) graphics processors. The graphics processors render their respective portions of a frame and return feedback data indicating completion of the rendering. Based on the feedback data, an imbalance can be detected between respective loads of two of the graphics processors. In the event that an imbalance exists, the display area is re-partitioned to increase a size of the portion assigned to the less heavily loaded processor and to decrease a size of the portion assigned to the more heavily loaded processor.

Owner:NVIDIA CORP

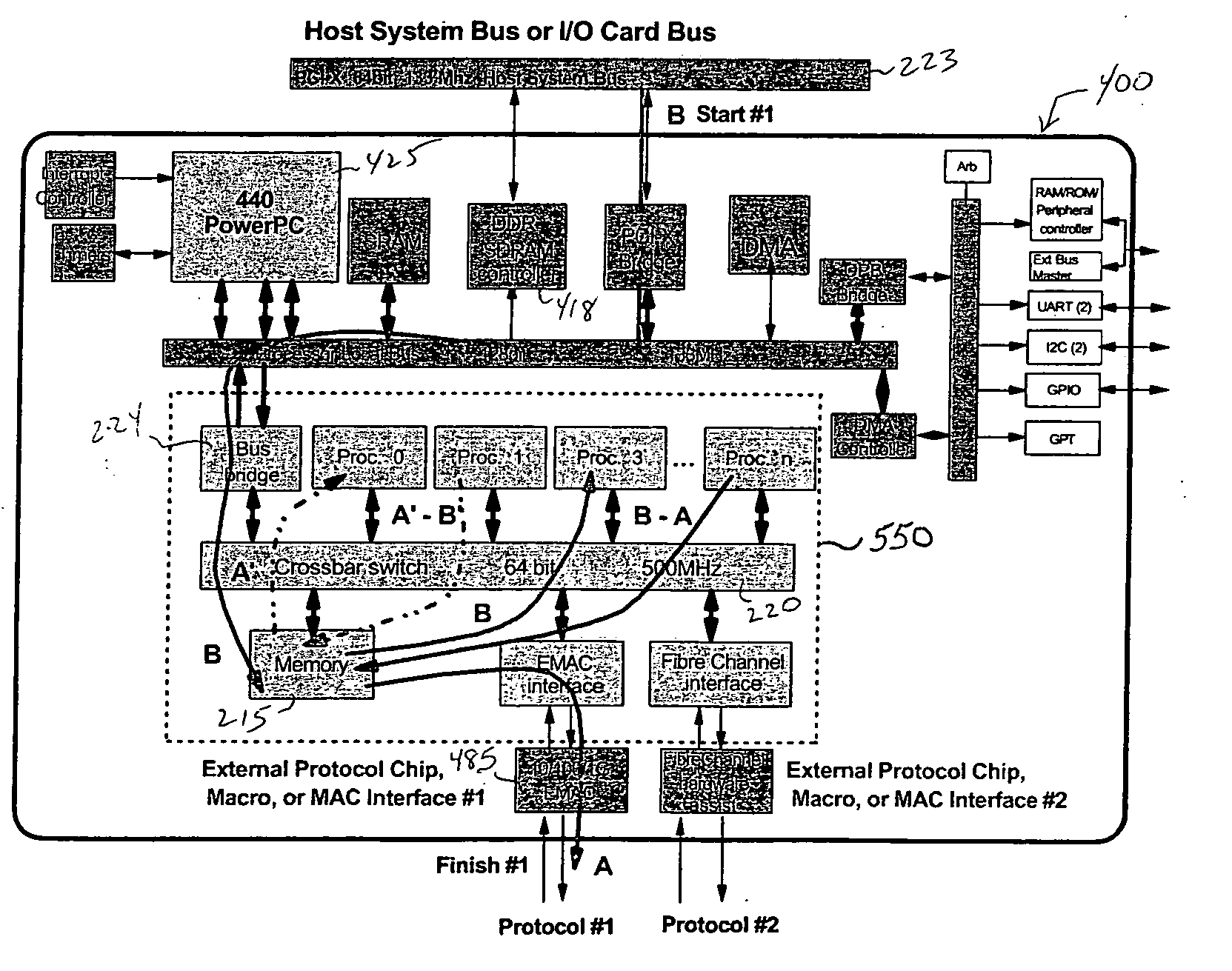

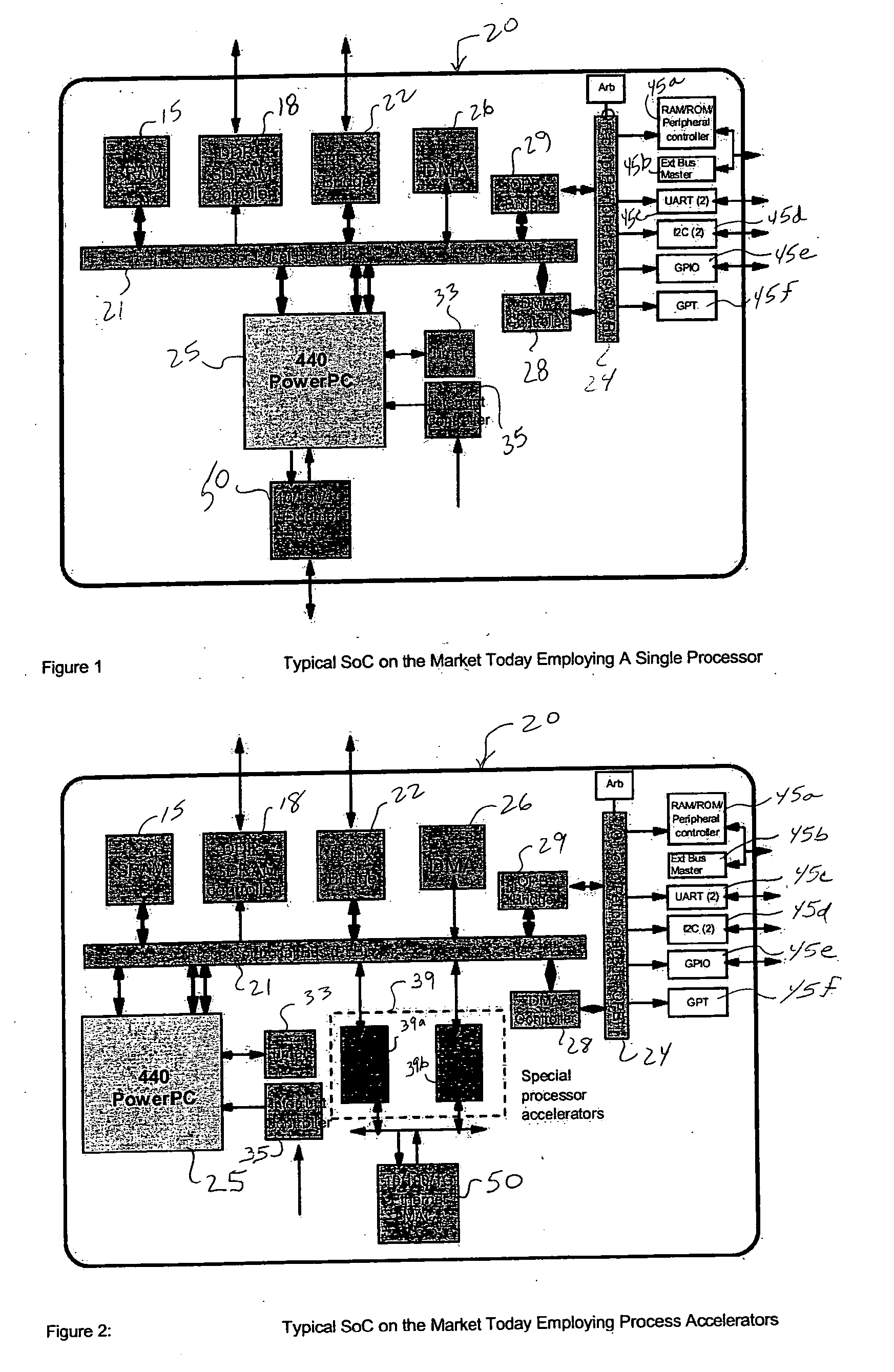

Single chip protocol converter

ActiveUS20050021874A1Increase the number ofHigh bandwidthConcurrent instruction executionMultiple digital computer combinationsSingle chipMultiprocessing

A single chip protocol converter integrated circuit (IC) capable of receiving packets generating according to a first protocol type and processing said packets to implement protocol conversion and generating converted packets of a second protocol type for output thereof, the process of protocol conversion being performed entirely within the single integrated circuit chip. The single chip protocol converter can be further implemented as a macro core in a system-on-chip (SoC) implementation, wherein the process of protocol conversion is contained within a SoC protocol conversion macro core without requiring the processing resources of a host system. Packet conversion may additionally entail converting packets generated according to a first protocol version level and processing the said packets to implement protocol conversion for generating converted packets according to a second protocol version level, but within the same protocol family type. The single chip protocol converter integrated circuit and SoC protocol conversion macro implementation include multiprocessing capability including processor devices that are configurable to adapt and modify the operating functionality of the chip.

Owner:MICROSOFT TECH LICENSING LLC

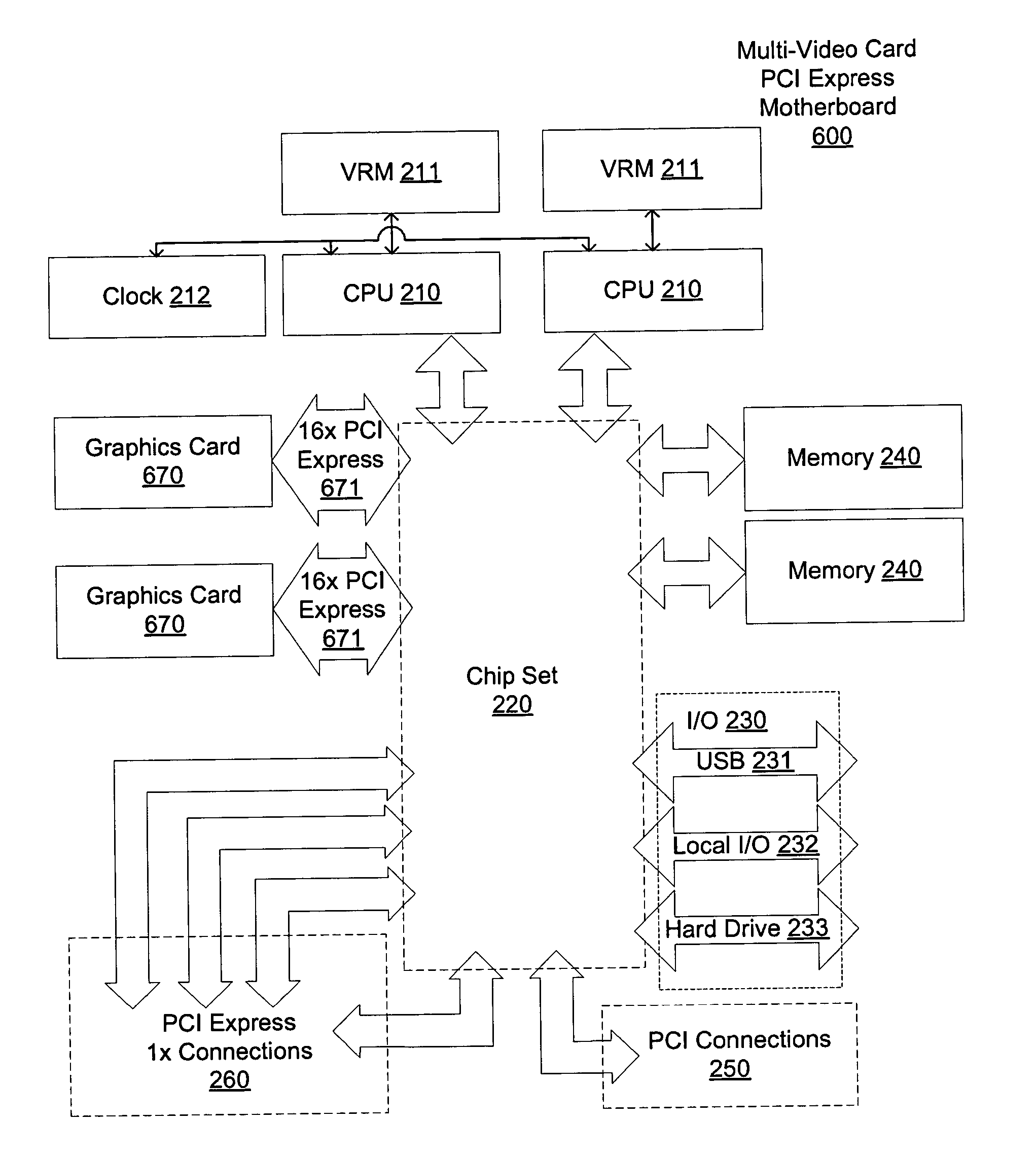

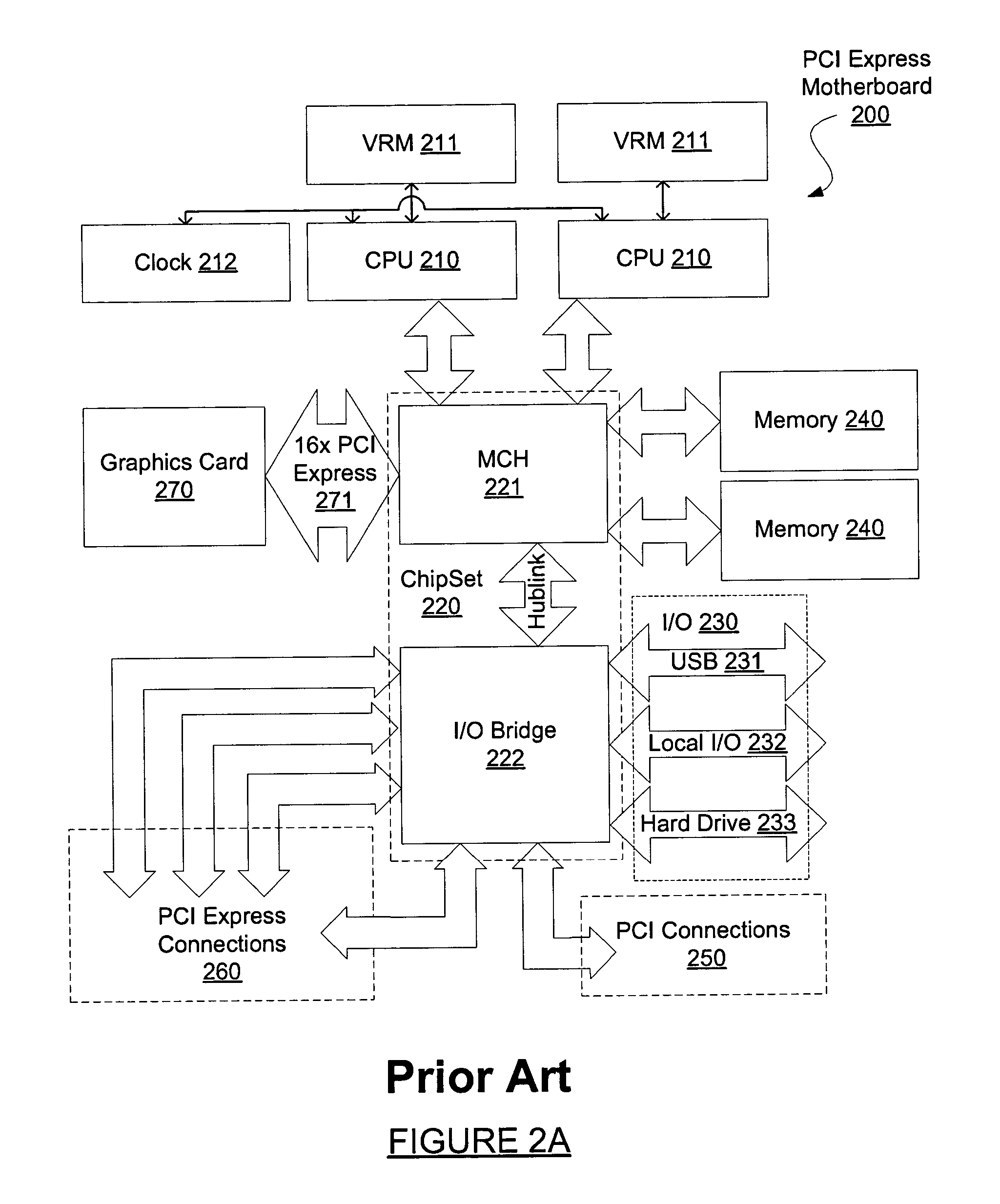

Motherboard for supporting multiple graphics cards

ActiveUS20050088445A1Cathode-ray tube indicatorsMultiple digital computer combinationsGraphicsScalable system

The present invention provides a motherboard that uses a high-speed, scalable system bus such as PCI Express® to support two or more high bandwidth graphics slots, each capable of supporting an off-the-shelf video controller. The lanes from the motherboard chipset may be directly routed to two or more graphics slots. For instance, the chipset may route (1) thirty-two lanes into two ×16 graphics slots; (2) twenty-four lanes into one ×16 graphics slot and one ×8 graphics slot (the ×8 slot using the same physical connector as a ×16 graphics slot but with only eight active lanes); or (3) sixteen lanes into two ×8 graphics slots (again, physically similar to a ×16 graphics slot but with only eight active lanes). Alternatively, a switch can convert sixteen lanes coming from the chipset root complex into two ×16 links that connect to two ×16 graphics slots. Each and every embodiment of the present invention is agnostic to a specific chipset.

Owner:DELL MARKETING

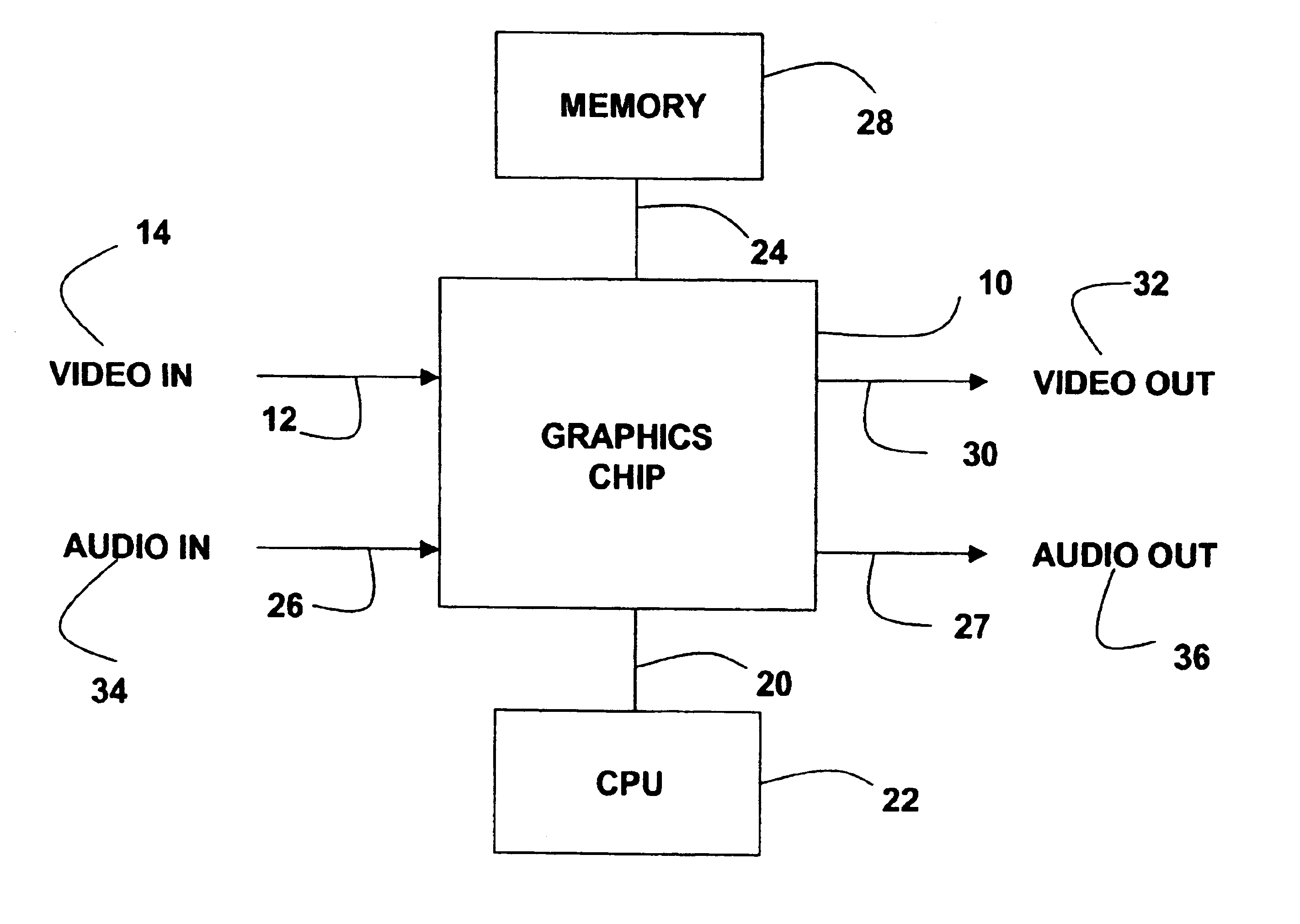

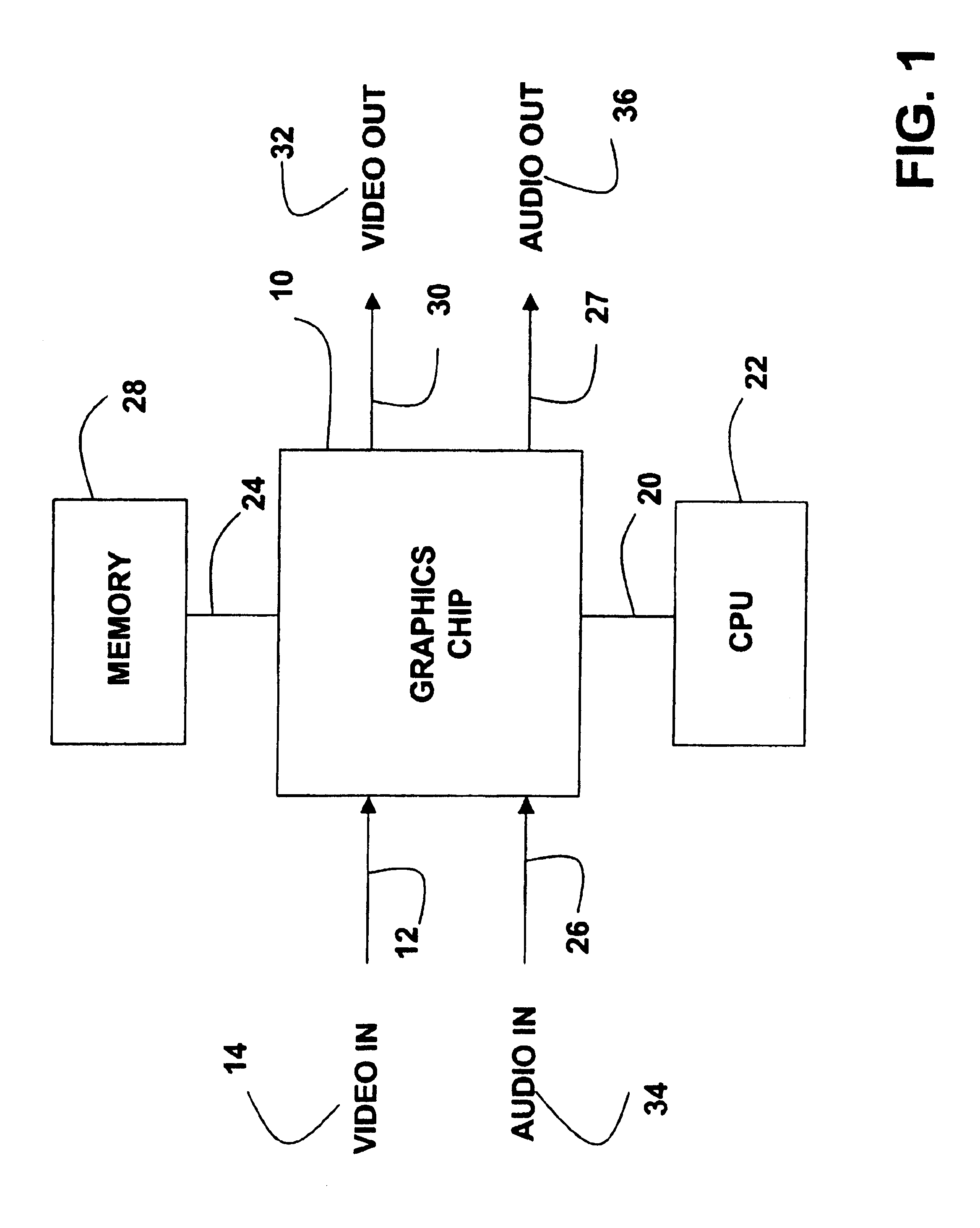

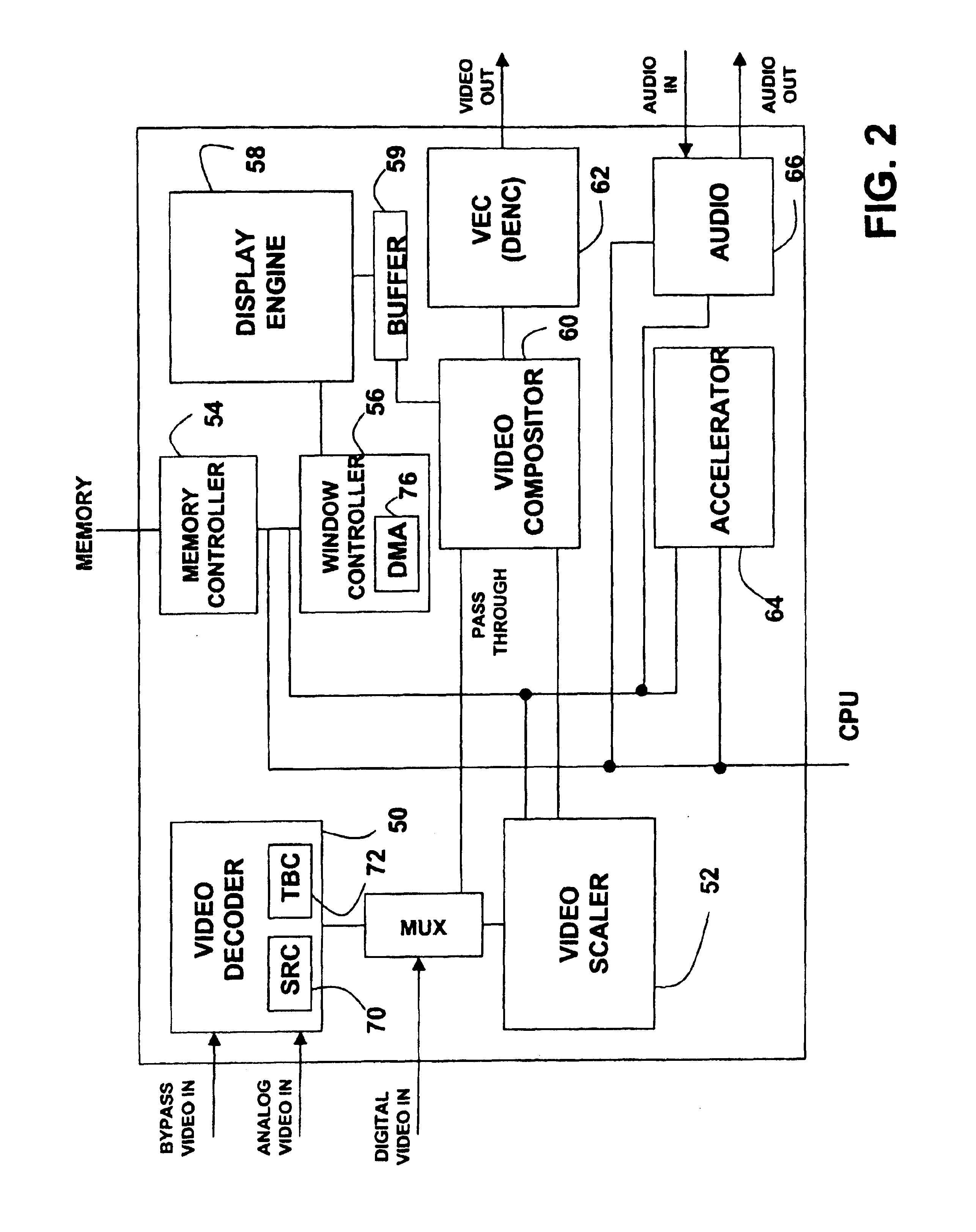

Video, audio and graphics decode, composite and display system

InactiveUS6853385B1Minimum total system costLow costTelevision system detailsTelevision system scanning detailsDigital videoDigital audio signals

A video, audio and graphics system uses multiple transport processors to receive in-band and out-of-band MPEG Transport streams, to perform PID and section filtering as well as DVB and DES decryption and to de-multiplex them. The system processes the PES into digital audio, MPEG video and message data. The system is capable of decoding multiple MPEG SLICEs concurrently. Graphics windows are blended in parallel, and blended with video using alpha blending. During graphics processing, a single-port SRAM is used equivalently as a dual-port SRAM. The video may include both analog video, e.g., NTSC / PAL / SECAM / S-video, and digital video, e.g., MPEG-2 video in SDTV or HDTV format. The system has a reduced memory mode in which video images are reduced in half in horizontal direction only during decoding. The system is capable of receiving and processing digital audio signals such as MPEG Layer 1 and Layer 2 audio and Dolby AC-3 audio, as well as PCM audio signals. The system includes a memory controller. The system includes a system bridge controller to interface a CPU with devices internal to the system as well as peripheral devices including PCI devices and I / O devices such as RAM, ROM and flash memory devices. The system is capable of displaying video and graphics in both the high definition (HD) mode and the standard definition (SD) mode. The system may output an HDTV video while converting the HDTV video and providing as another output having an SDTV format or another HDTV format.

Owner:AVAGO TECH INT SALES PTE LTD

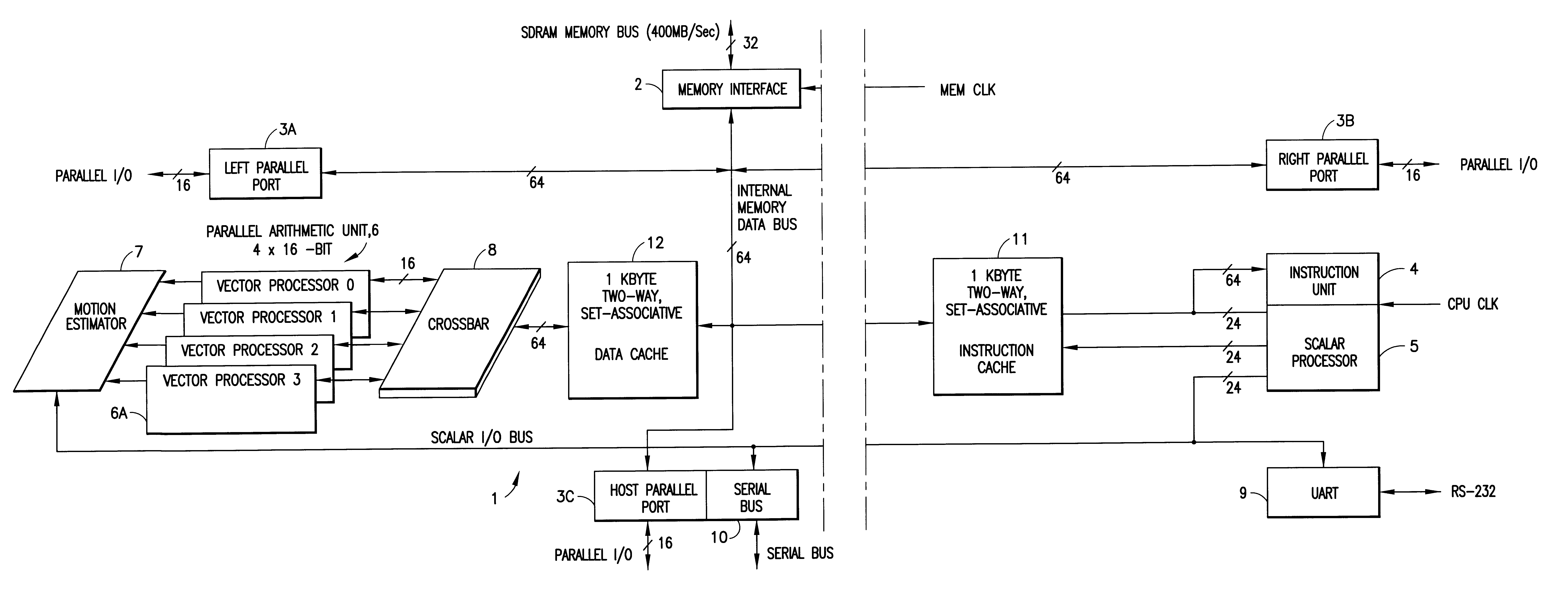

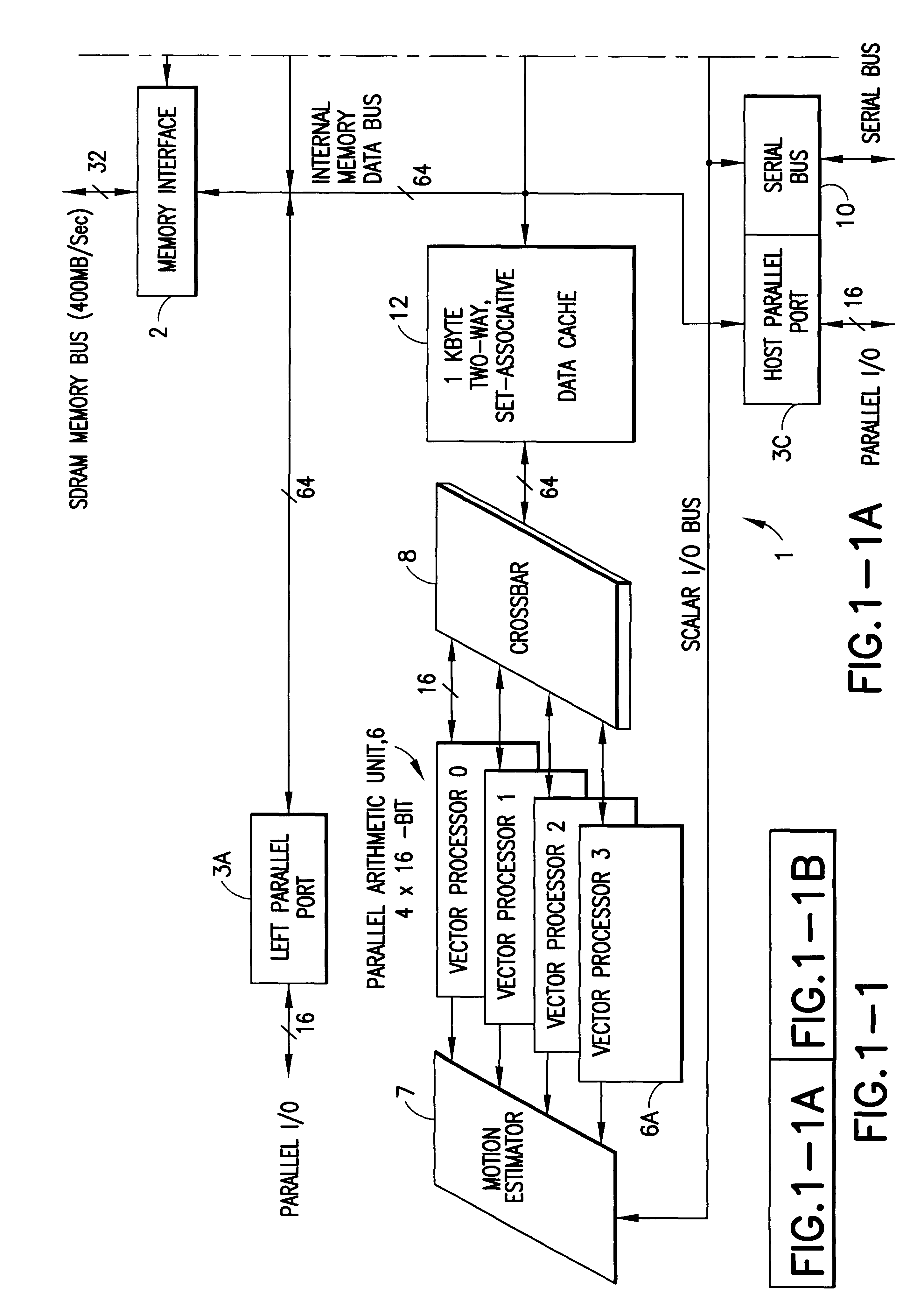

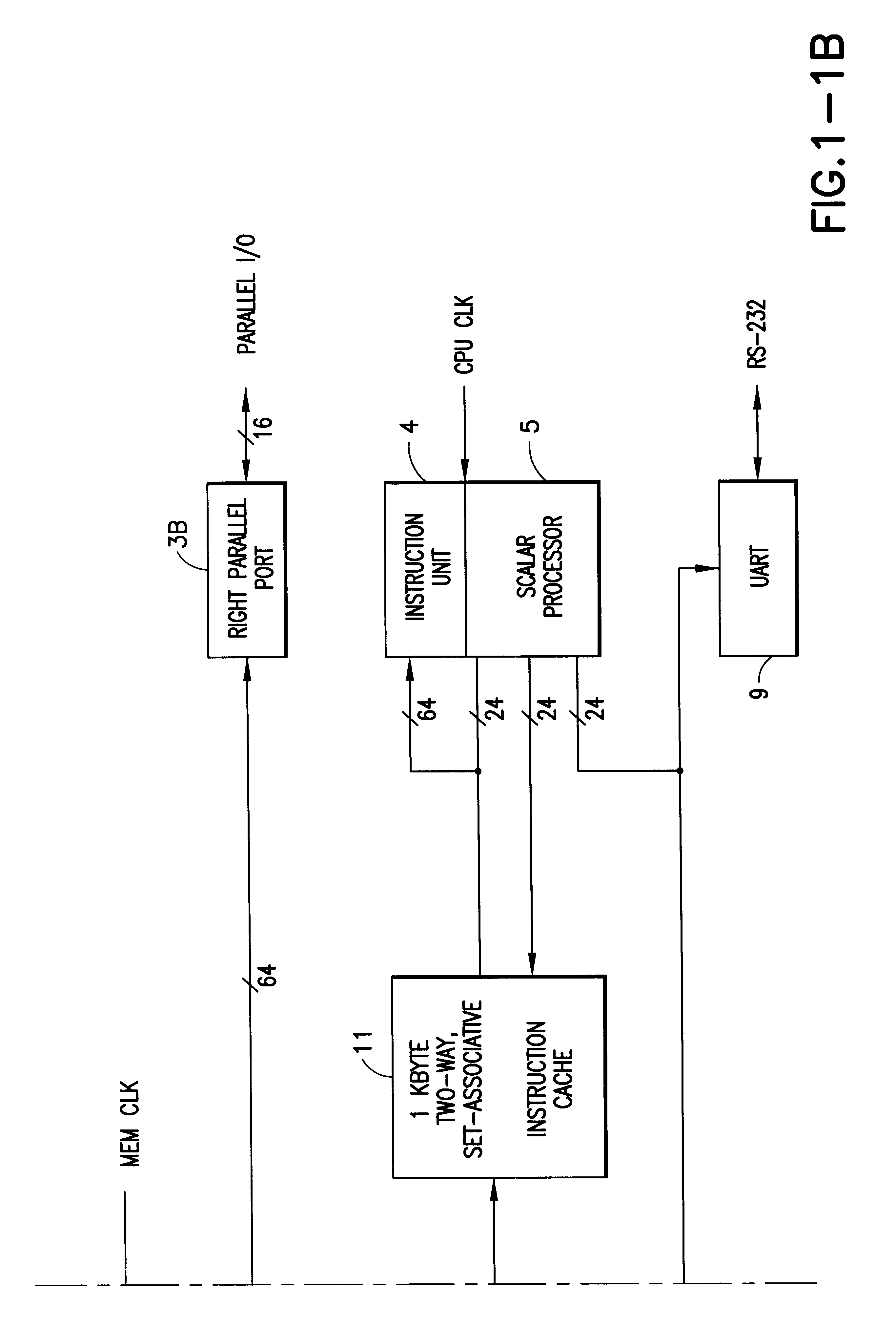

Digital signal processor containing scalar processor and a plurality of vector processors operating from a single instruction

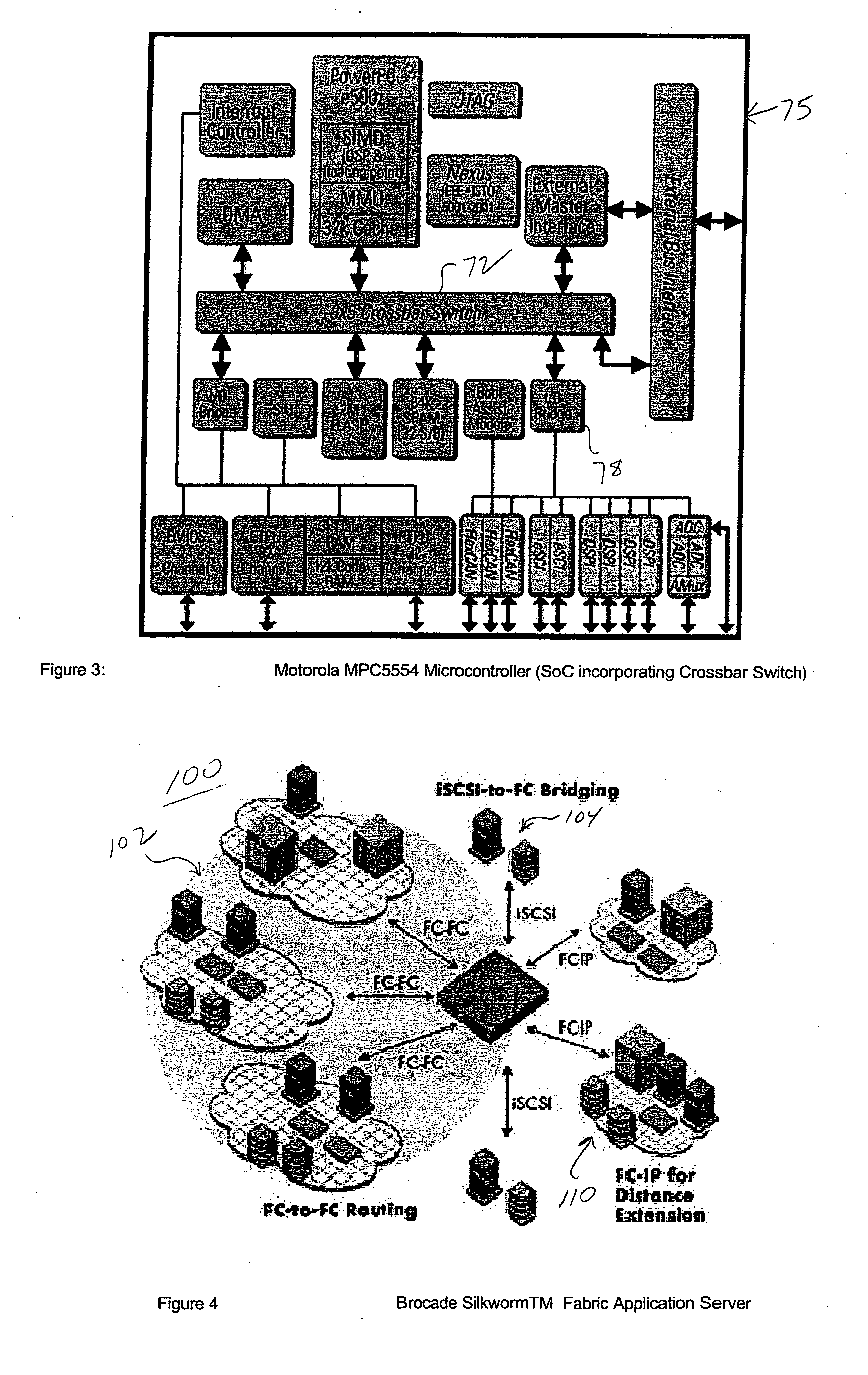

InactiveUS6317819B1Register arrangementsMemory adressing/allocation/relocationCrossbar switchDigital data

A digital data processor integrated circuit (1) includes a plurality of functionally identical first processor elements (6A) and a second processor element (5). The first processor elements are bidirectionally coupled to a first cache (12) via a crossbar switch matrix (8). The second processor element is coupled to a second cache (11). Each of the first cache and the second cache contain a two-way, set-associative cache memory that uses a least-recently-used (LRU) replacement algorithm and that operates with a use-as-fill mode to minimize a number of wait states said processor elements need experience before continuing execution after a cache-miss. An operation of each of the first processor elements and an operation of the second processor element are locked together during an execution of a single instruction read from the second cache. The instruction specifies, in a first portion that is coupled in common to each of the plurality of first processor elements, the operation of each of the plurality of first processor elements in parallel. A second portion of the instruction specifies the operation of the second processor element. Also included is a motion estimator (7) and an internal data bus coupling together a first parallel port (3A), a second parallel port (3B), a third parallel port (3C), an external memory interface (2), and a data input / output of the first cache and the second cache.

Owner:CUFER ASSET LTD LLC

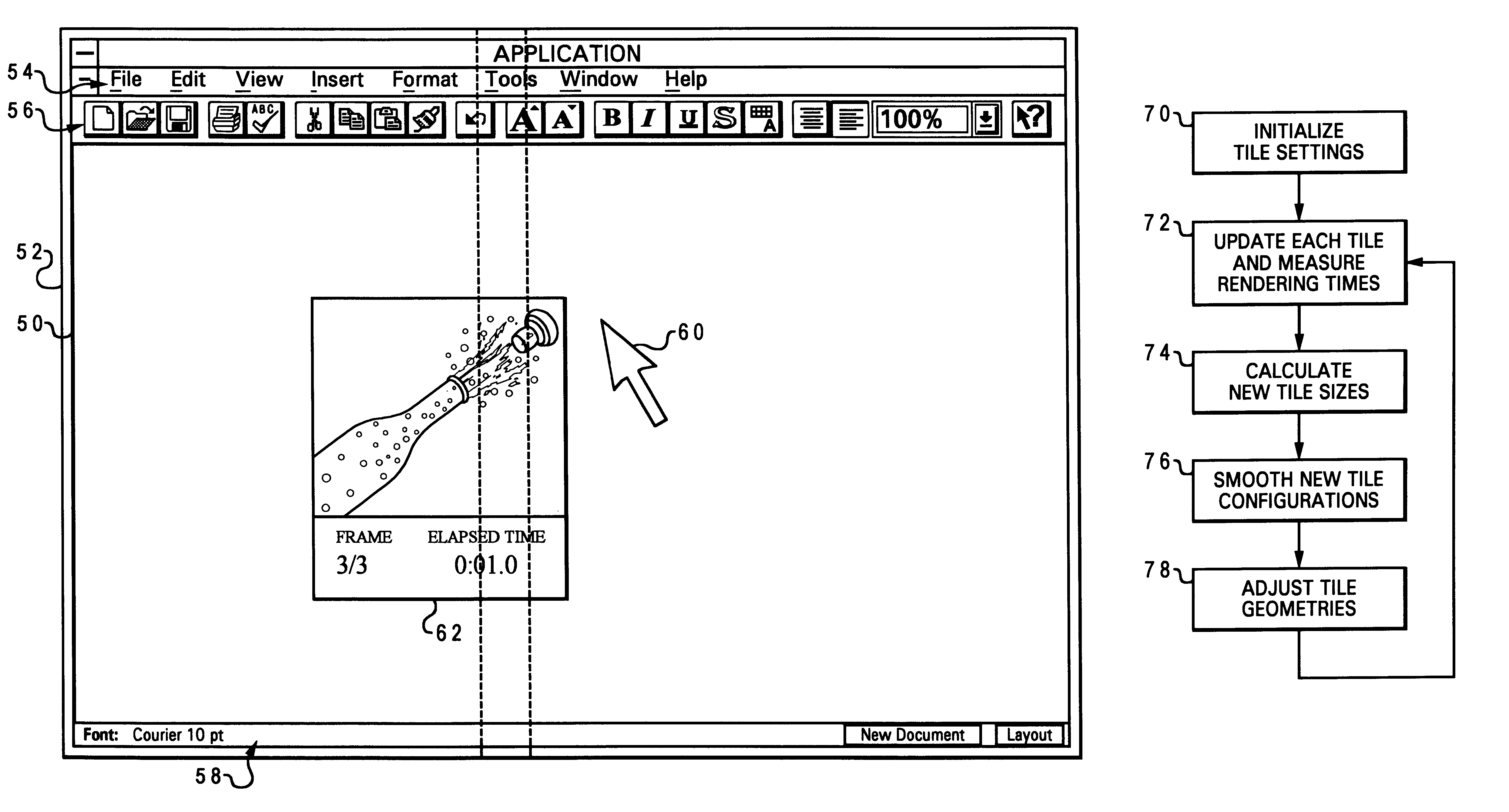

Dynamic balancing of graphics workloads using a tiling strategy

InactiveUS6191800B1Cathode-ray tube indicatorsElectric digital data processingGraphicsComputerized system

A method of generating graphic images on a display device of a computer system, by dividing the viewable area of the display device into a plurality of tiles, assigning each of the tiles to respective rendering processes, rendering a frame on the display device using the rendering processes, and adjusting the sizes of the tiles in response to the rendering step. The method then uses the adjusted tile sizes to render the next frame. The tile areas are preferably constrained from becoming too small. The adjusted tile sizes may optionally be smoothed, such as by averaging in weighted sizes from previous frames. The tile sizes are adjusted by measuring the rendering times required for each rendering process to complete a respective portion of the frame, and then resizing the tiles based on the measured rendering times.

Owner:IBM CORP

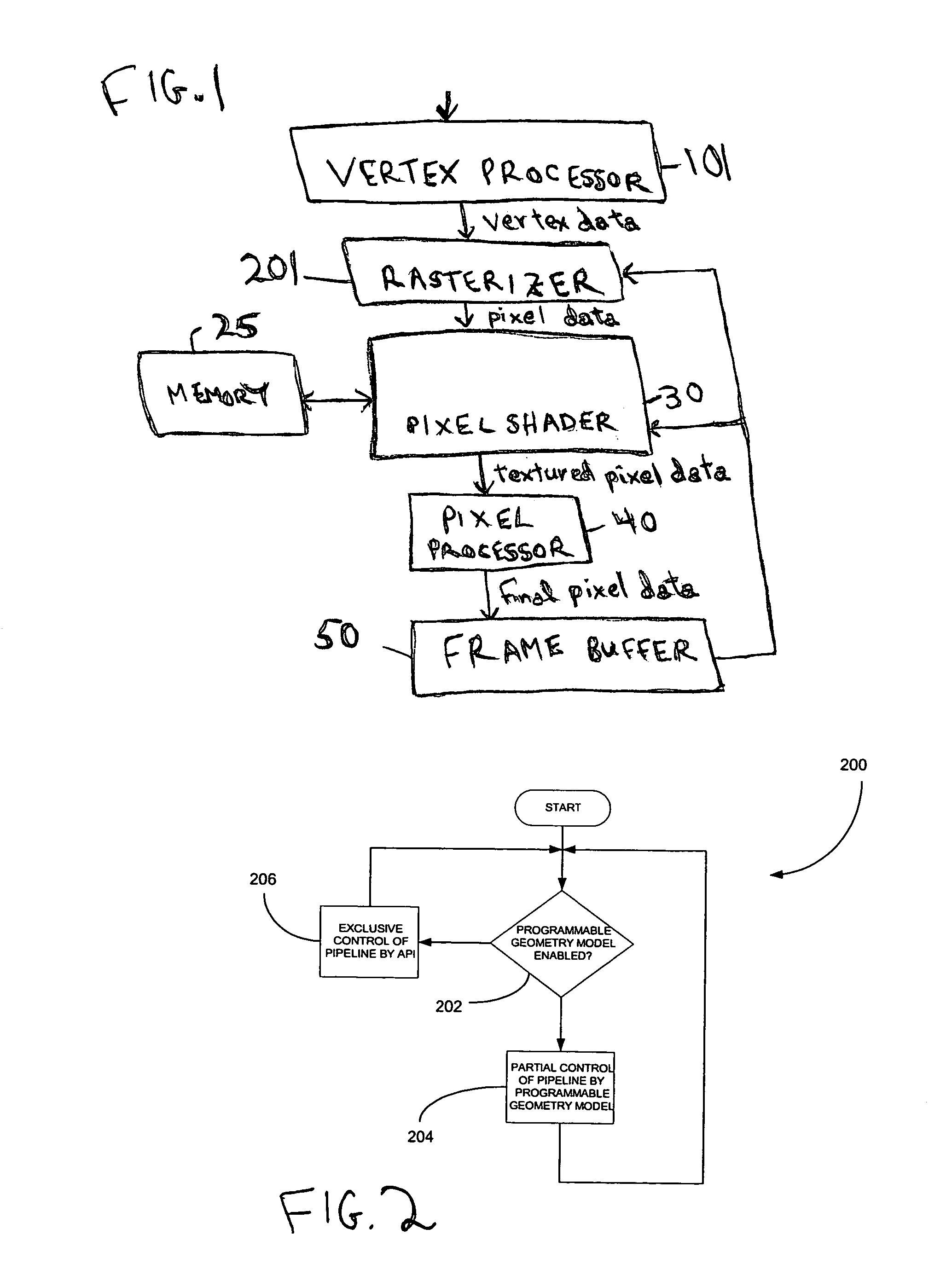

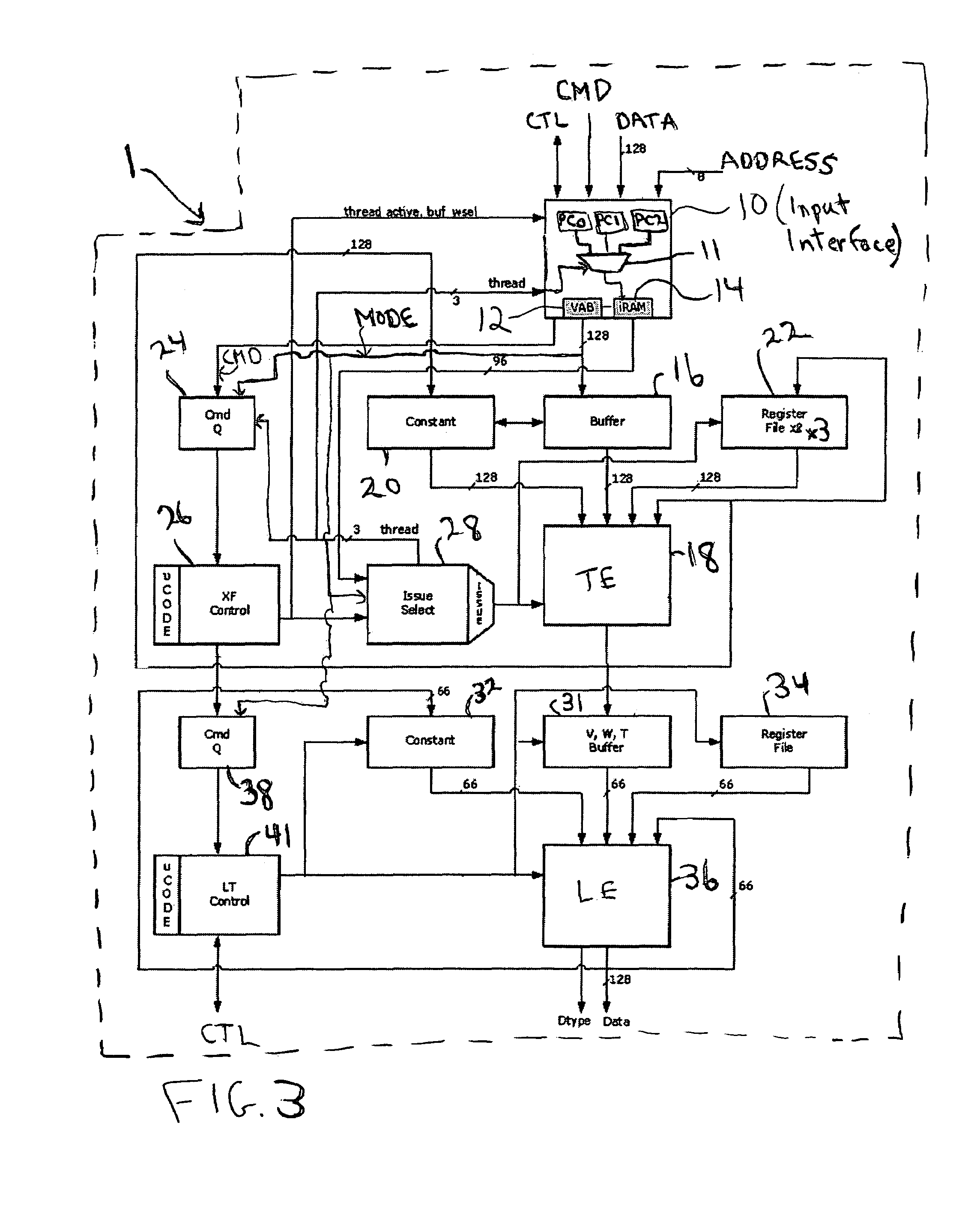

Method and system for programmable pipelined graphics processing with branching instructions

InactiveUS6947047B1Avoid confictMultiple digital computer combinationsProcessor architectures/configurationGraphicsMode control

A programmable, pipelined graphics processor (e.g., a vertex processor) having at least two processing pipelines, a graphics processing system including such a processor, and a pipelined graphics data processing method allowing parallel processing and also handling branching instructions and preventing conflicts among pipelines. Preferably, each pipeline processes data in accordance with a program including by executing branch instructions, and the processor is operable in any one of a parallel processing mode in which at least two data values to be processed in parallel in accordance with the same program are launched simultaneously into multiple pipelines, and a serialized mode in which only one pipeline at a time receives input data values to be processed in accordance with the program (and operation of each other pipeline is frozen). During parallel processing mode operation, mode control circuitry recognizes and resolves branch instructions to be executed (before processing of data in accordance with each branch instruction starts) and causes the processor to operate in the serialized mode when (and preferably only for as long as) necessary to prevent any conflict between the pipelines due to branching. In other embodiments, the processor is operable in any one of a parallel processing mode and a limited serialized mode in which operation of each of a sequence of pipelines (or pipeline sets) pauses for a limited number of clock cycles. The processor enters the limited serialized mode in response to detecting a conflict-causing instruction that could cause a conflict between resources shared by the pipelines during parallel processing mode operation.

Owner:NVIDIA CORP

Scheduling in a multicore processor

ActiveUS20070220517A1Improve application performanceIncrease speedEnergy efficient ICTConcurrent instruction executionProcessor elementMulti-core processor

A method and computer-usable medium including instructions for performing a method for scheduling executable transactions within a multicore processor comprising a plurality of processor elements. The method includes listing, using at least one distribution queue, a portion of the executable transactions in order of eligibility for execution. A plurality of executable transaction schedulers are provided, wherein each executable transaction scheduler includes a scheduling process for determining a most eligible executable transaction for execution from at least one candidate executable transaction ready for execution. The executable transaction schedulers are linked together to provide a multilevel scheduler. The most eligible executable transaction is output from the multilevel scheduler to the at least one distribution queue.

Owner:SYNOPSYS INC +1

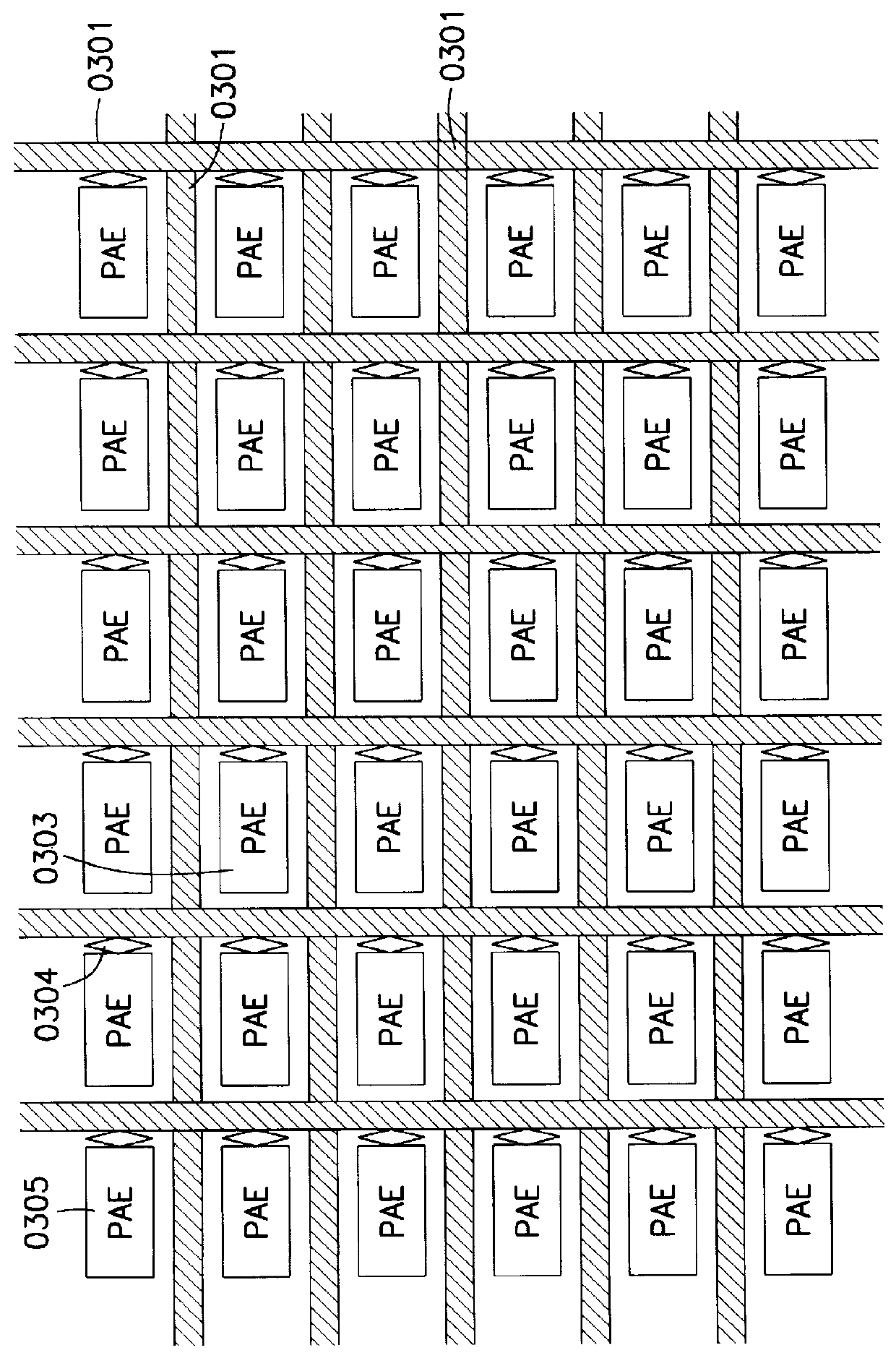

Data Processing System Having Integrated Pipelined Array Data Processor

InactiveUS20150106596A1Improve executionImprove performanceMemory architecture accessing/allocationInstruction analysisData processing systemParallel computing

A data processing system having a data processing core and integrated pipelined array data processor and a buffer for storing list of algorithms for processing by the pipelined array data processor.

Owner:SCIENTIA SOL MENTIS AG

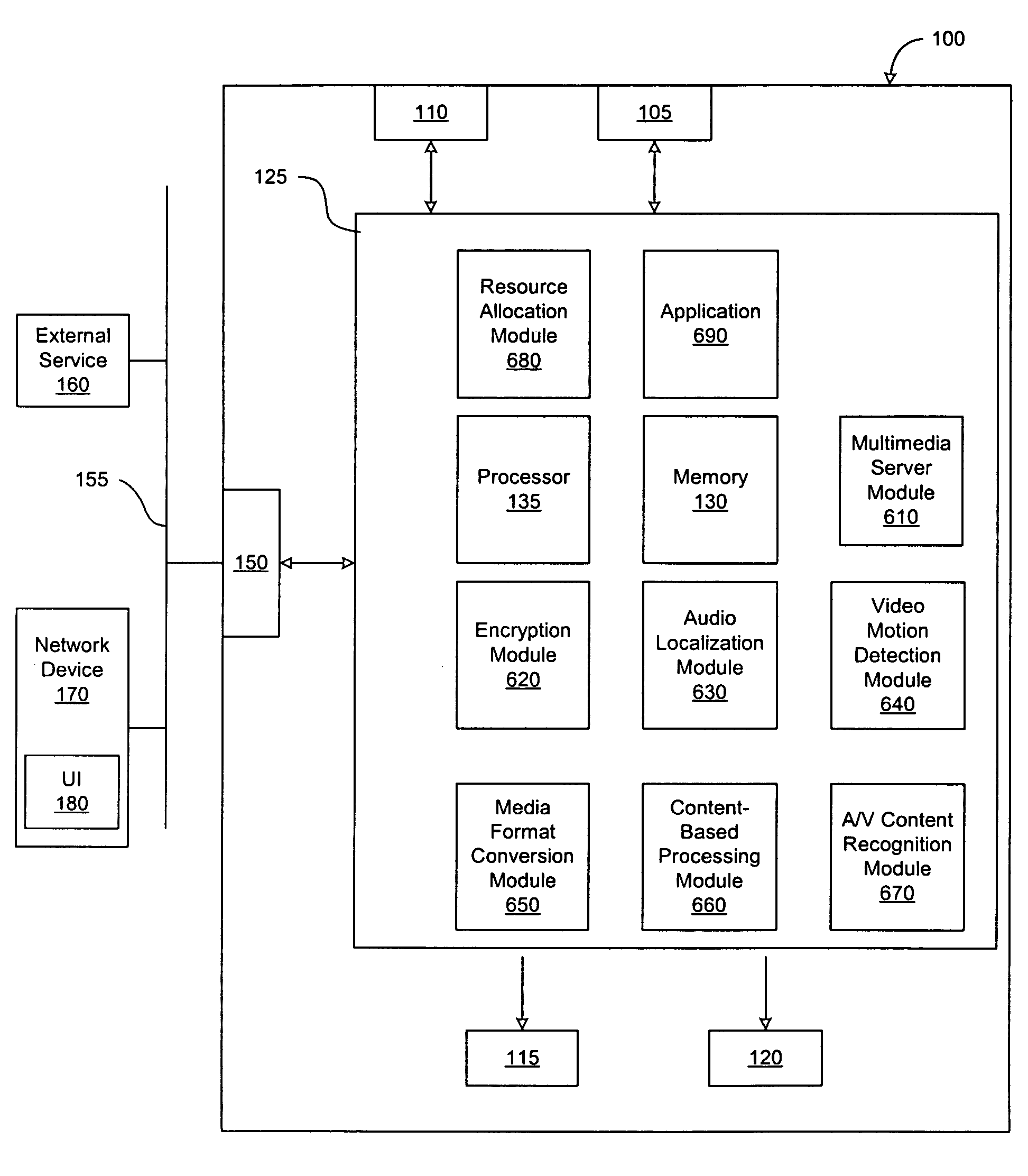

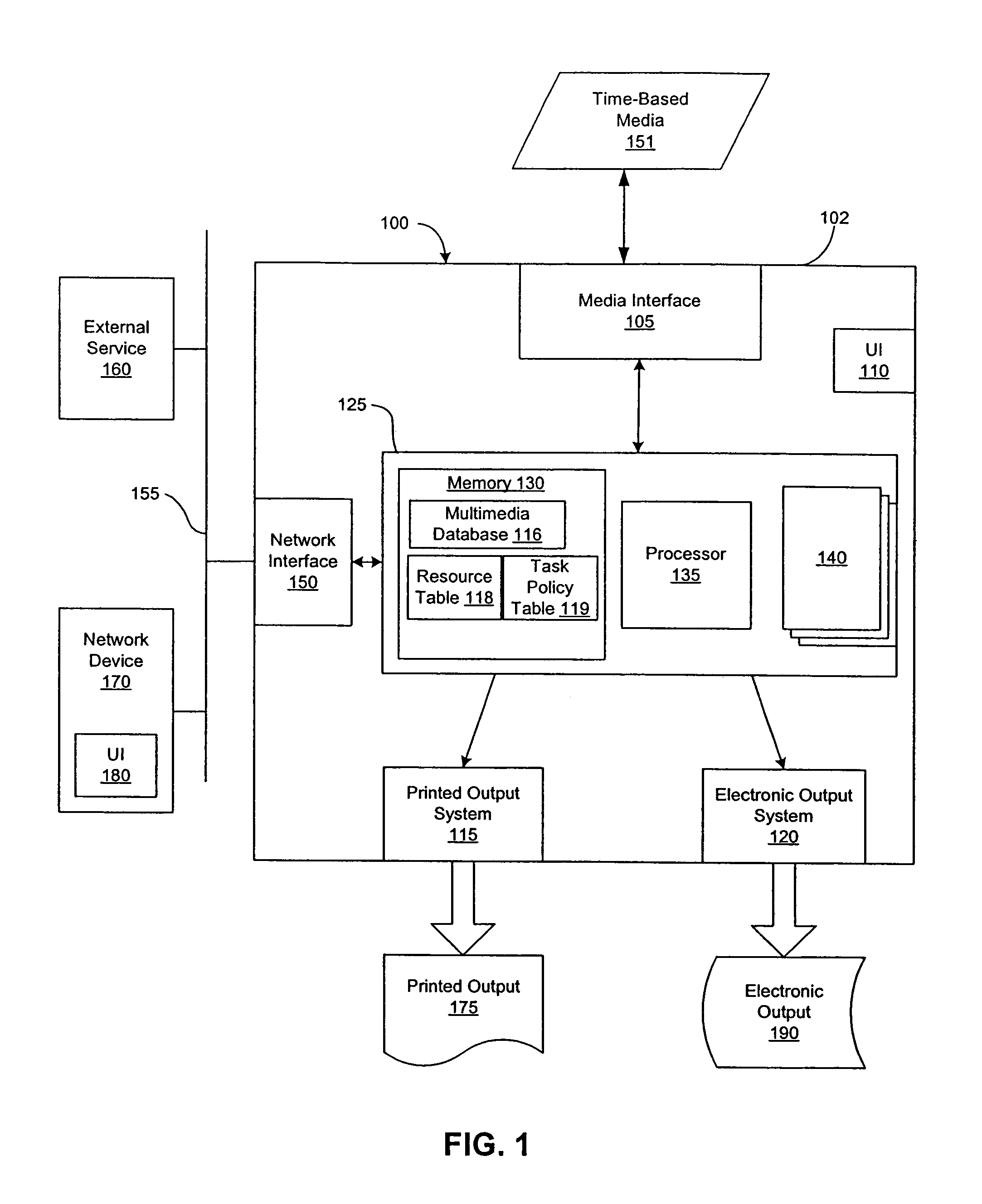

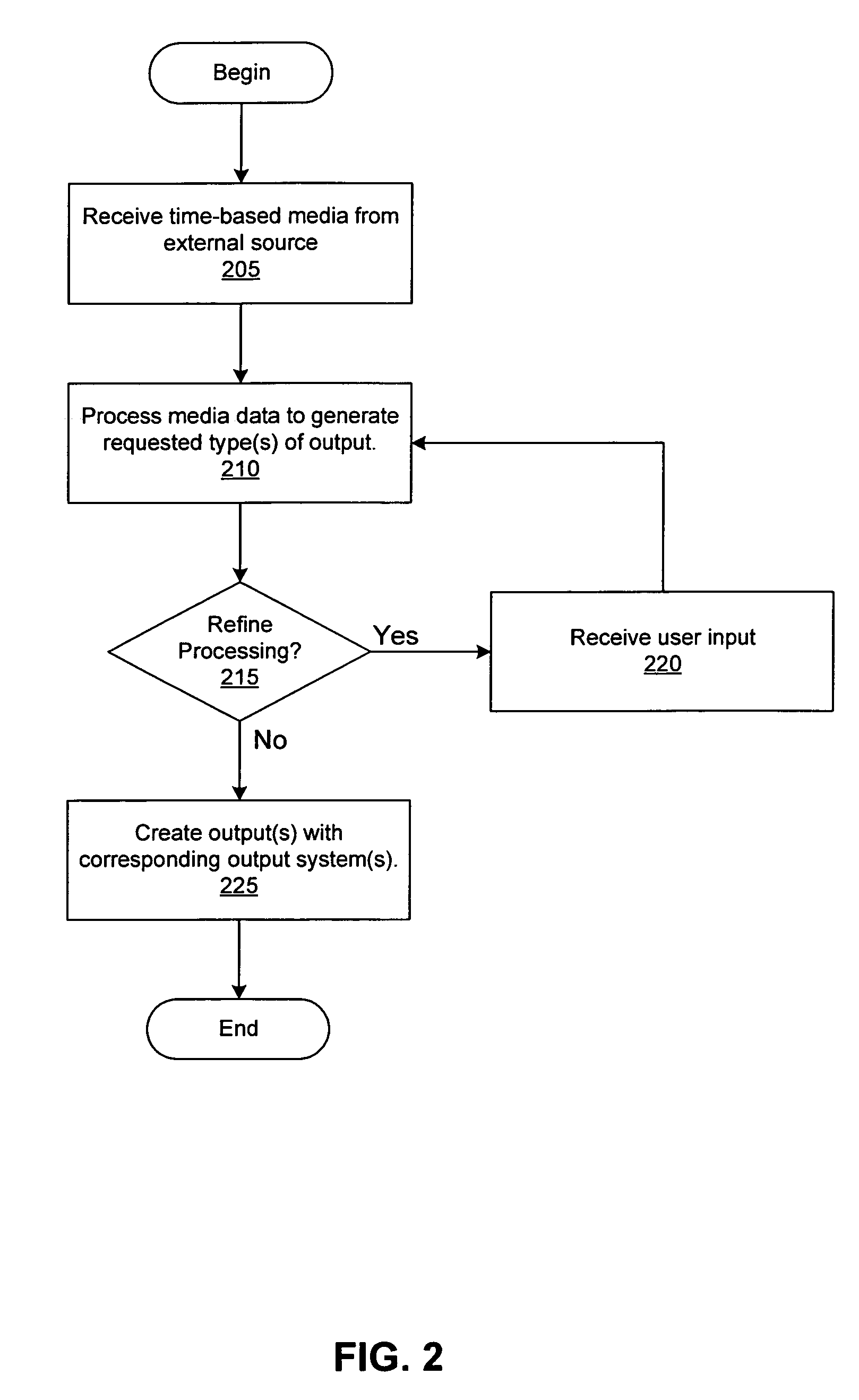

Stand alone multimedia printer with user interface for allocating processing

InactiveUS7508535B2Improve representationMeet needsVisual presentationOther printing apparatusCommunication interfaceWeb service

Owner:RICOH KK

I/O and memory bus system for DFPs and units with two- or multi-dimensional programmable cell architectures

InactiveUS6119181ADigital storageArchitecture with single central processing unitComputer architectureMemory bus

A uniform bus system is provided which operates without any special consideration by a programmer. Memories and peripheral may be connected to this bus system without any special measures. Likewise, units may be cascaded with the help of the bus system. The bus system combines a number of internal lines, and leads them as a bundle to terminals. The bus system control is predefined and does not require any influence by the programmer. Any number of memories, peripherals or other units can be connected to the bus system.

Owner:SCIENTIA SOL MENTIS AG

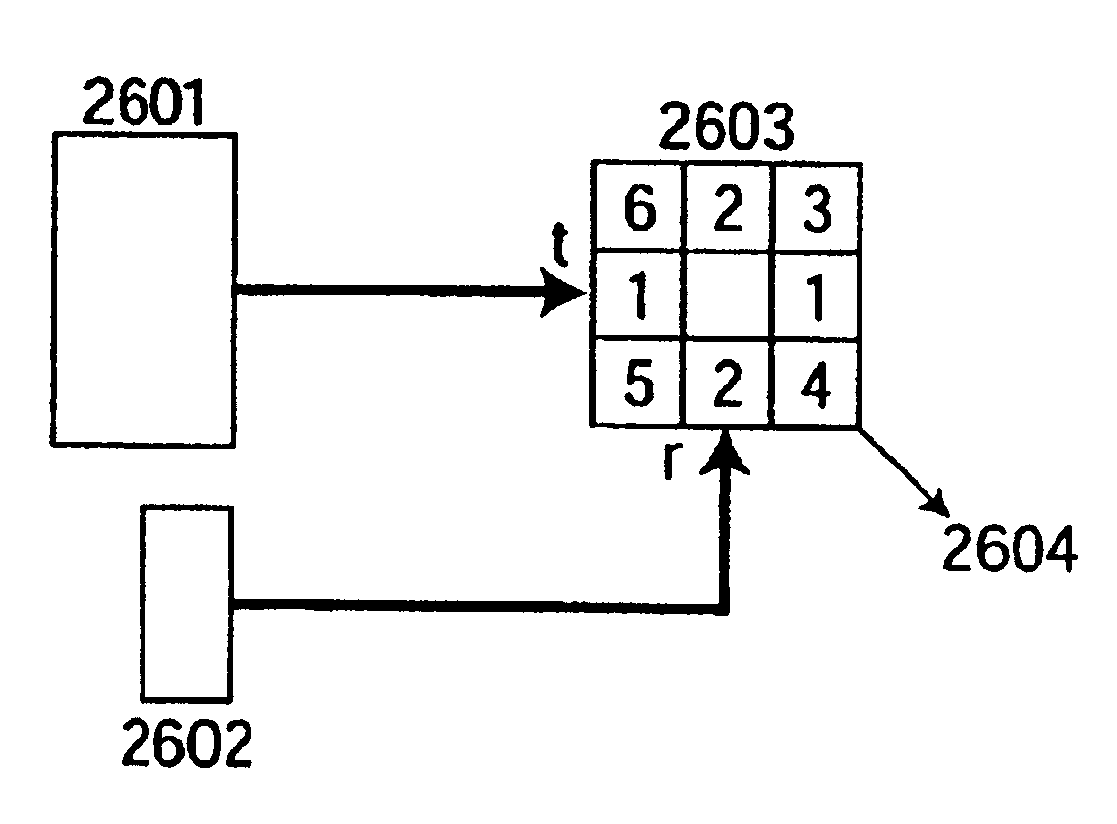

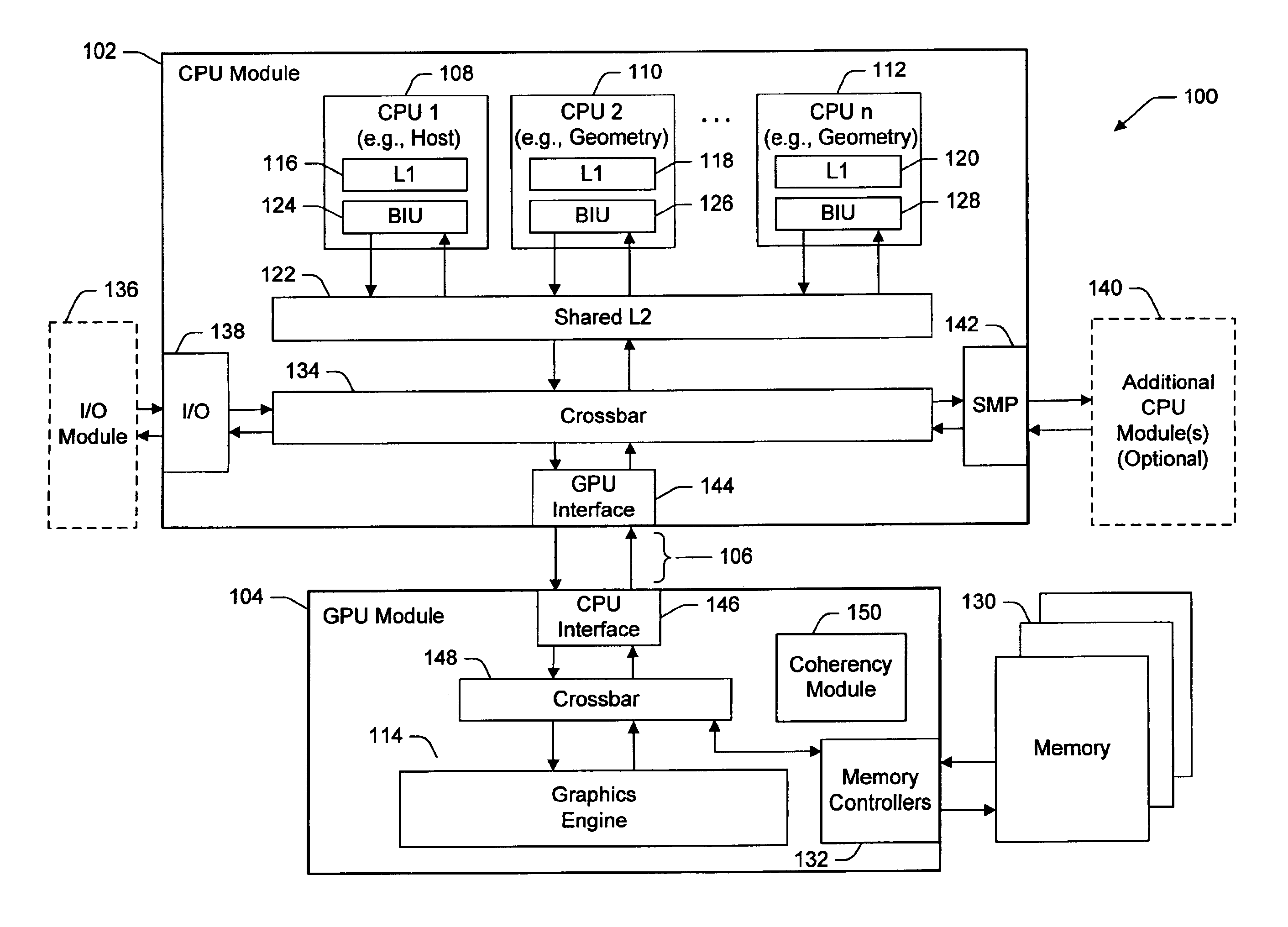

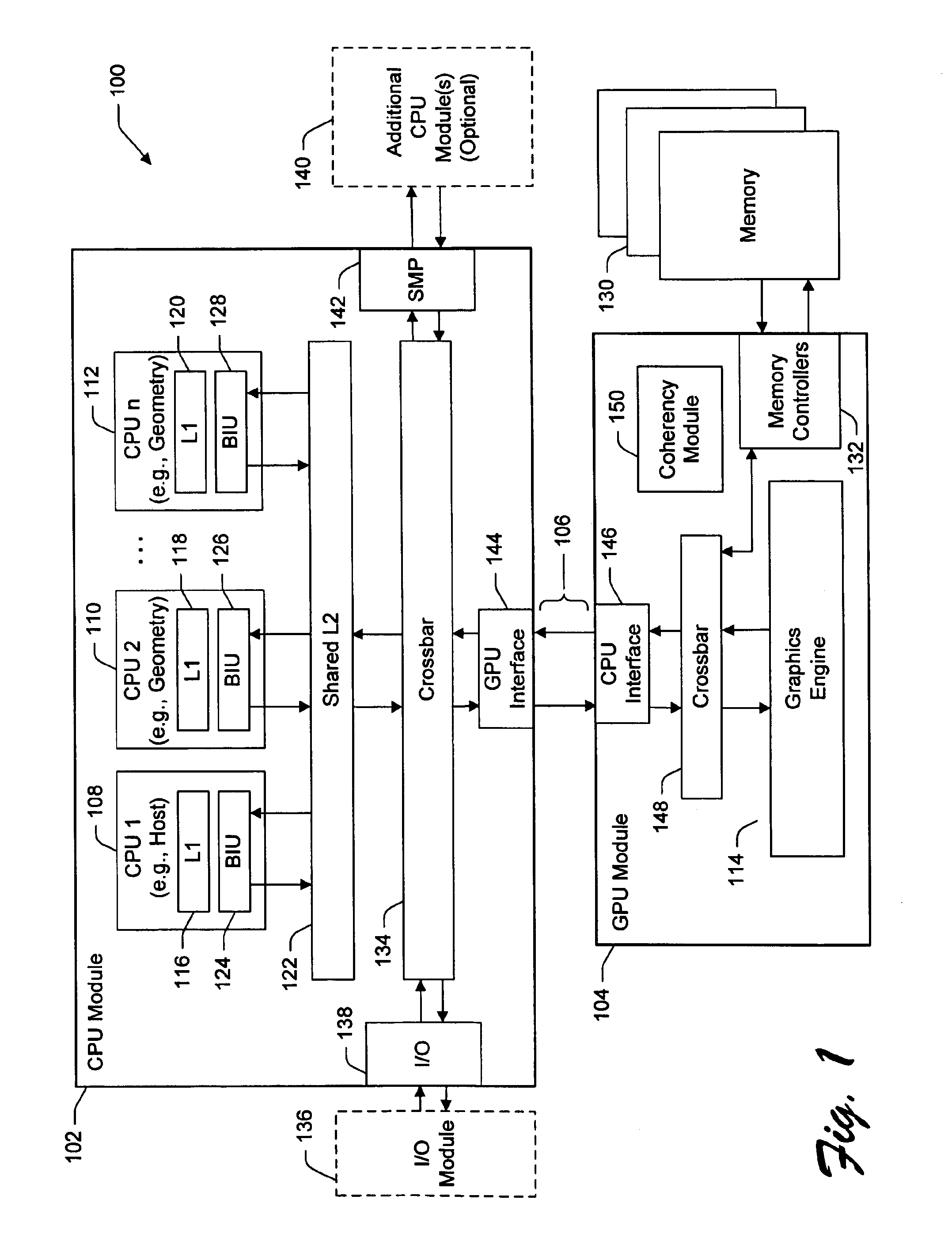

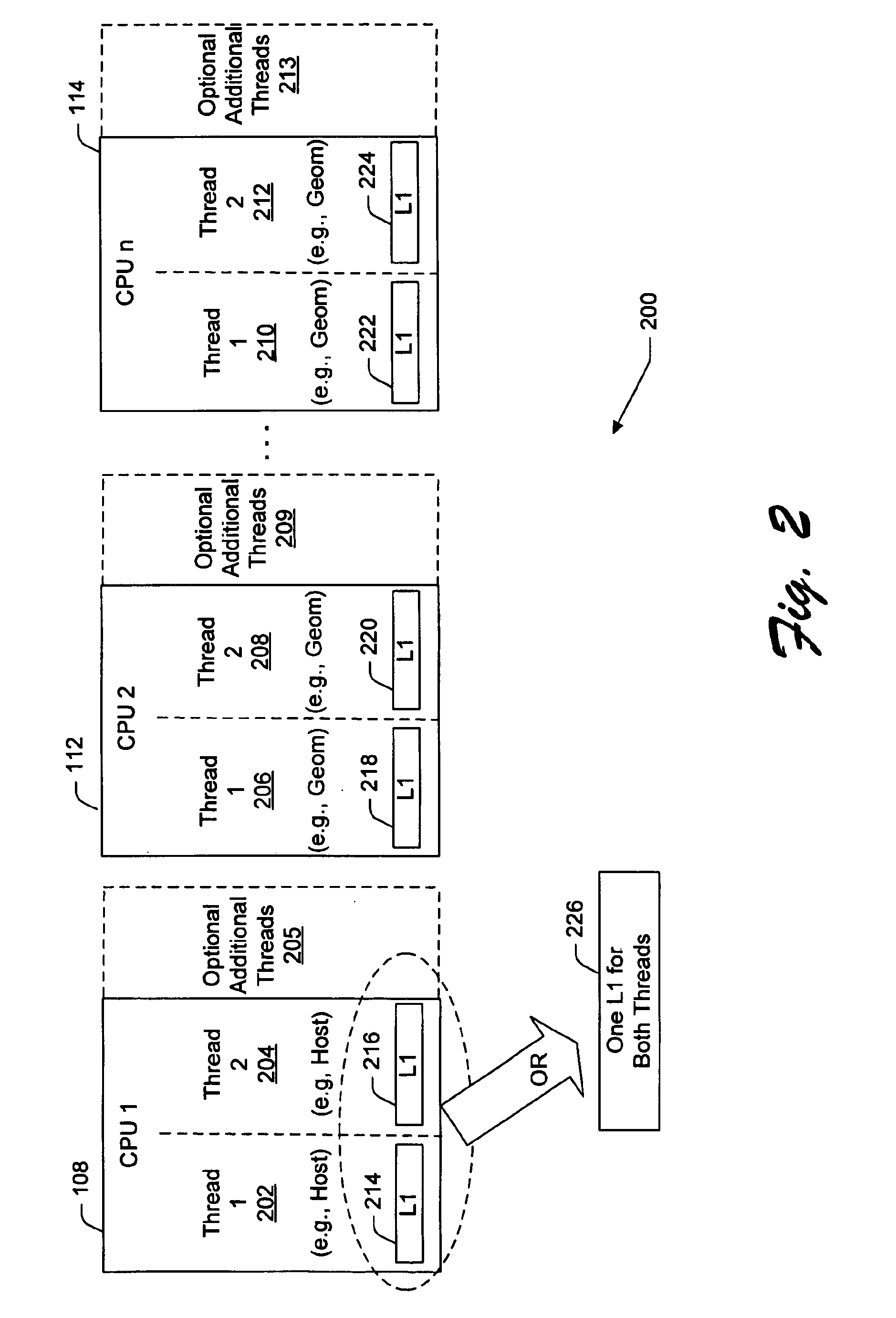

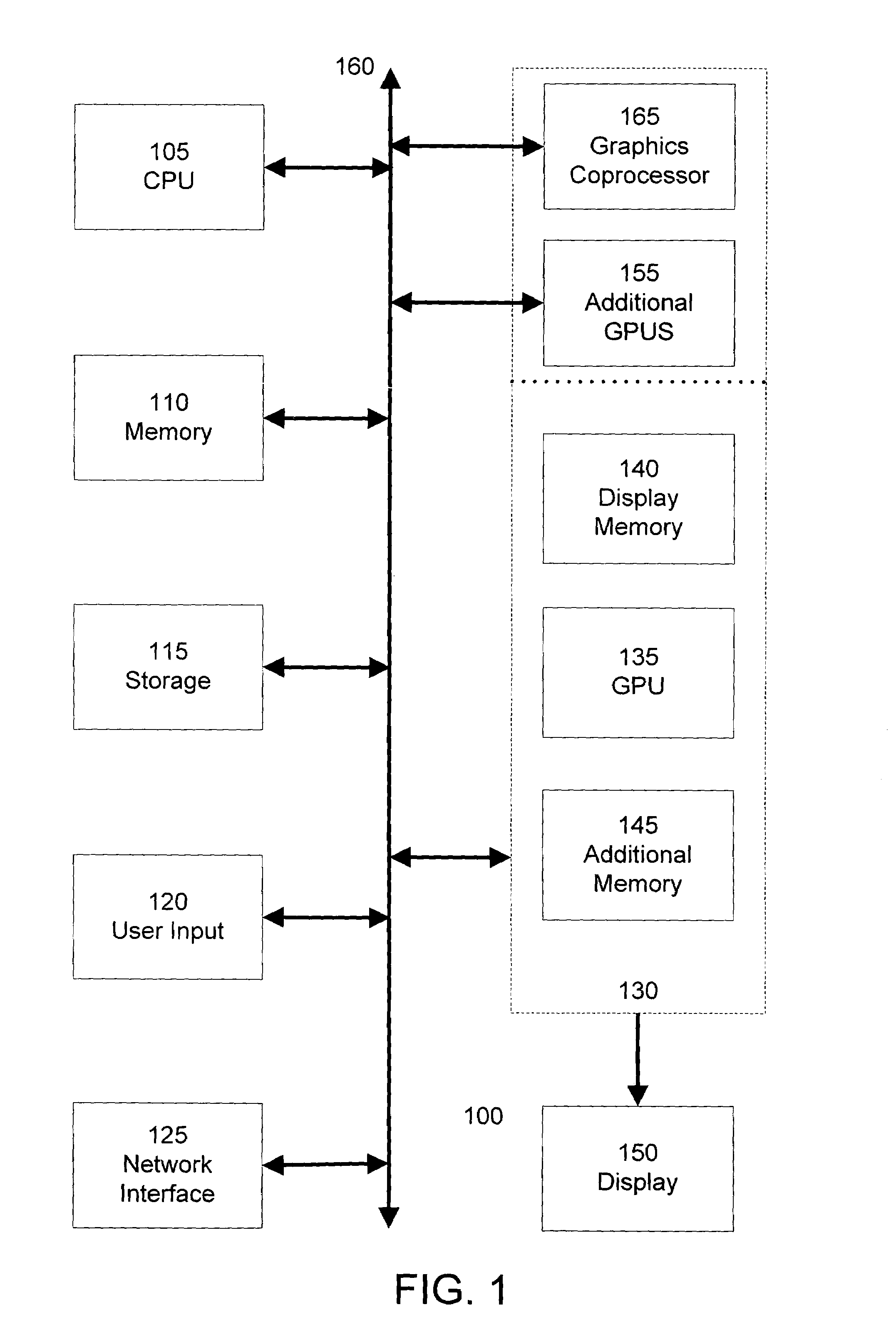

System and method for parallel execution of data generation tasks

InactiveUS6862027B2Reduce storage requirementsReduces deleterious bandwidth restrictionResource allocationMemory adressing/allocation/relocationGraphicsParallel computing

A CPU module includes a host element configured to perform a high-level host-related task, and one or more data-generating processing elements configured to perform a data-generating task associated with the high-level host-related task. Each data-generating processing element includes logic configured to receive input data, and logic configured to process the input data to produce output data. The amount of output data is greater than an amount of input data, and the ratio of the amount of input data to the amount of output data defines a decompression ratio. In one implementation, the high-level host-related task performed by the host element pertains to a high-level graphics processing task, and the data-generating task pertains to the generation of geometry data (such as triangle vertices) for use within the high-level graphics processing task. The CPU module can transfer the output data to a GPU module via at least one locked set of a cache memory. The GPU retrieves the output data from the locked set, and periodically forwards a tail pointer to a cacheable location within the data-generating elements that informs the data-generating elements of its progress in retrieving the output data.

Owner:MICROSOFT TECH LICENSING LLC

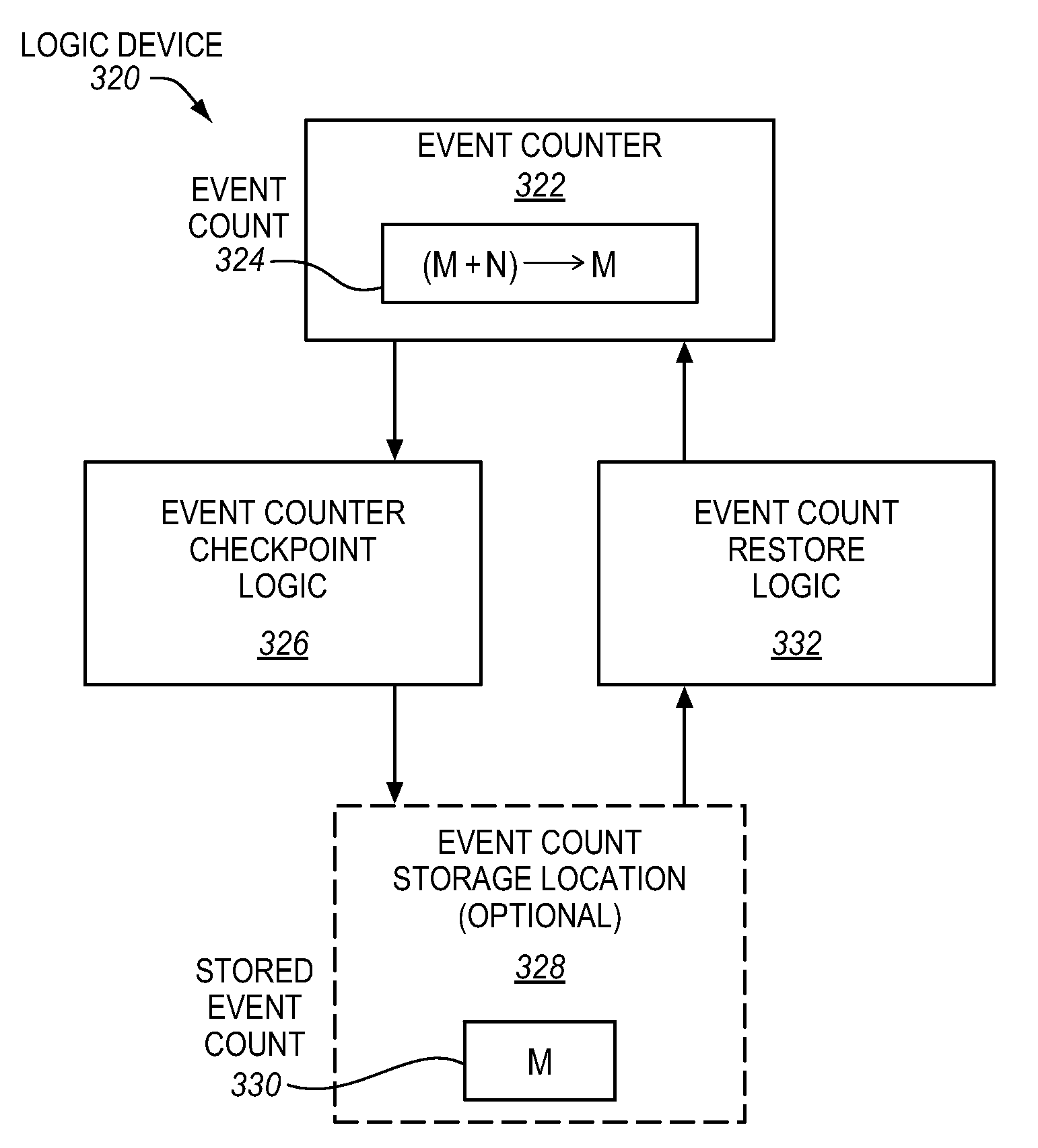

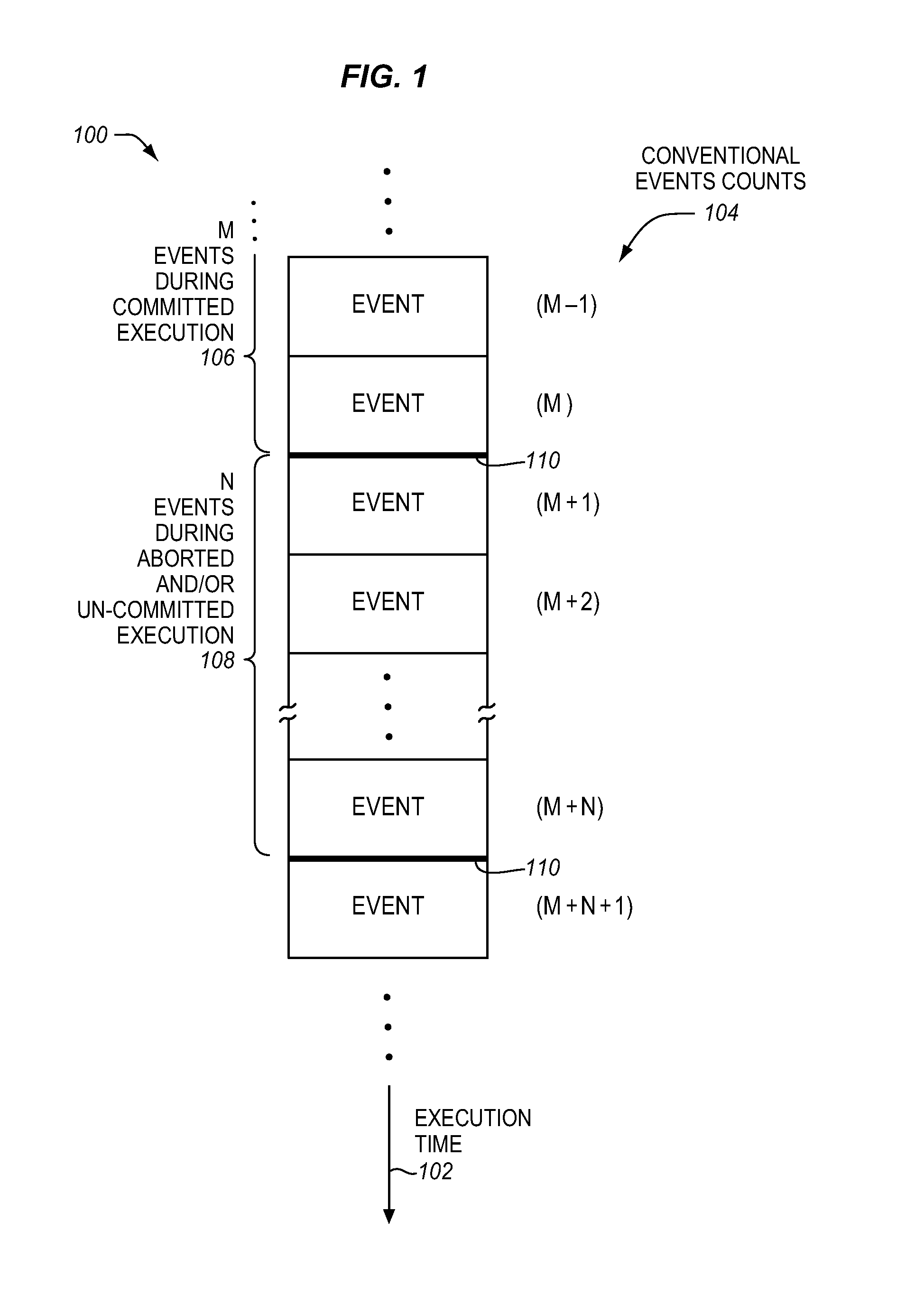

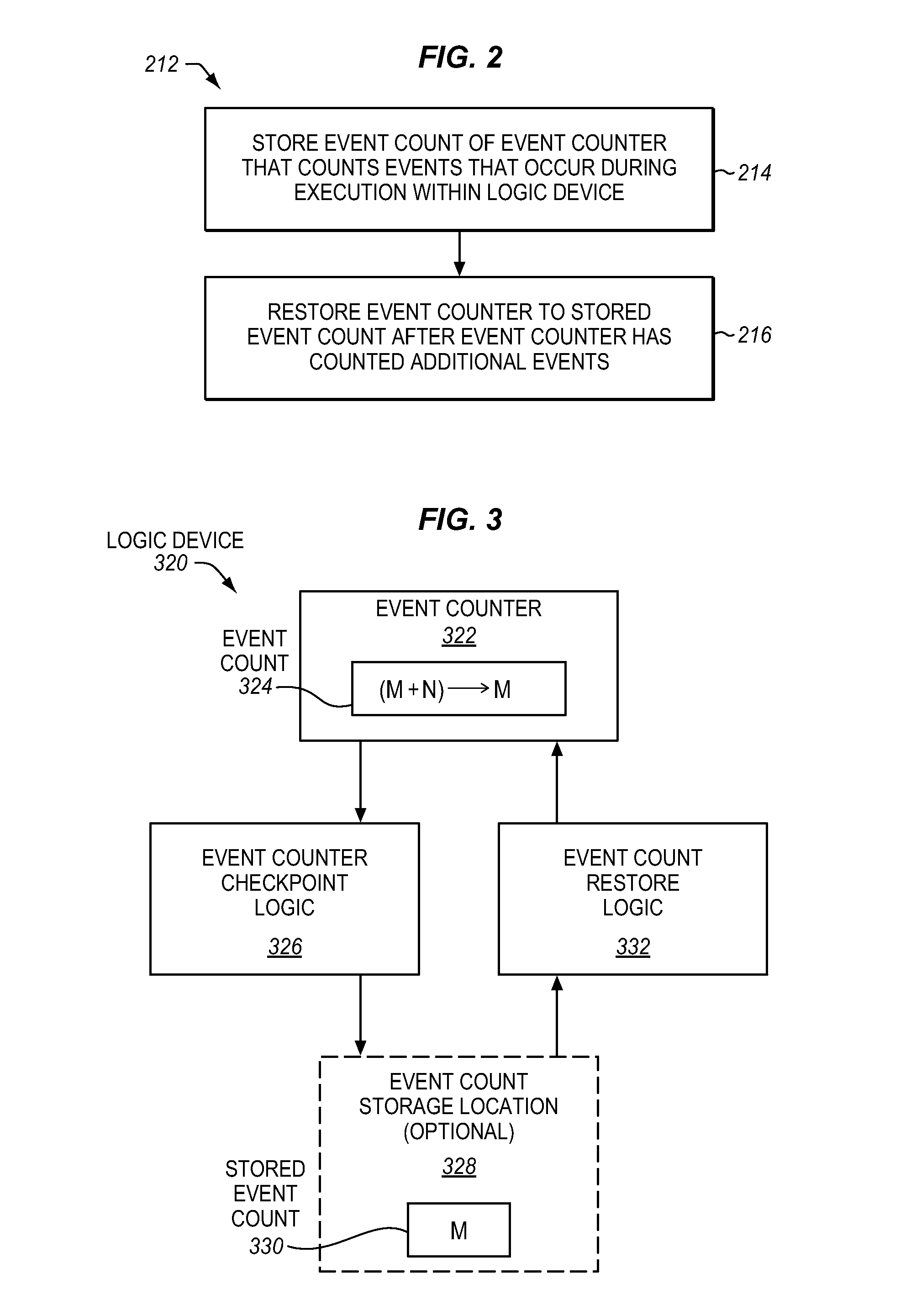

Method, apparatus, and system for speculative execution event counter checkpointing and restoring

InactiveUS20120227045A1Memory architecture accessing/allocationError detection/correctionSpeculative executionExecution cycle

An apparatus, method, and system are described herein for providing programmable control of performance / event counters. An event counter is programmable to track different events, as well as to be checkpointed when speculative code regions are encountered. So when a speculative code region is aborted, the event counter is able to be restored to it pre-speculation value. Moreover, the difference between a cumulative event count of committed and uncommitted execution and the committed execution, represents an event count / contribution for uncommitted execution. From information on the uncommitted execution, hardware / software may be tuned to enhance future execution to avoid wasted execution cycles.

Owner:INTEL CORP

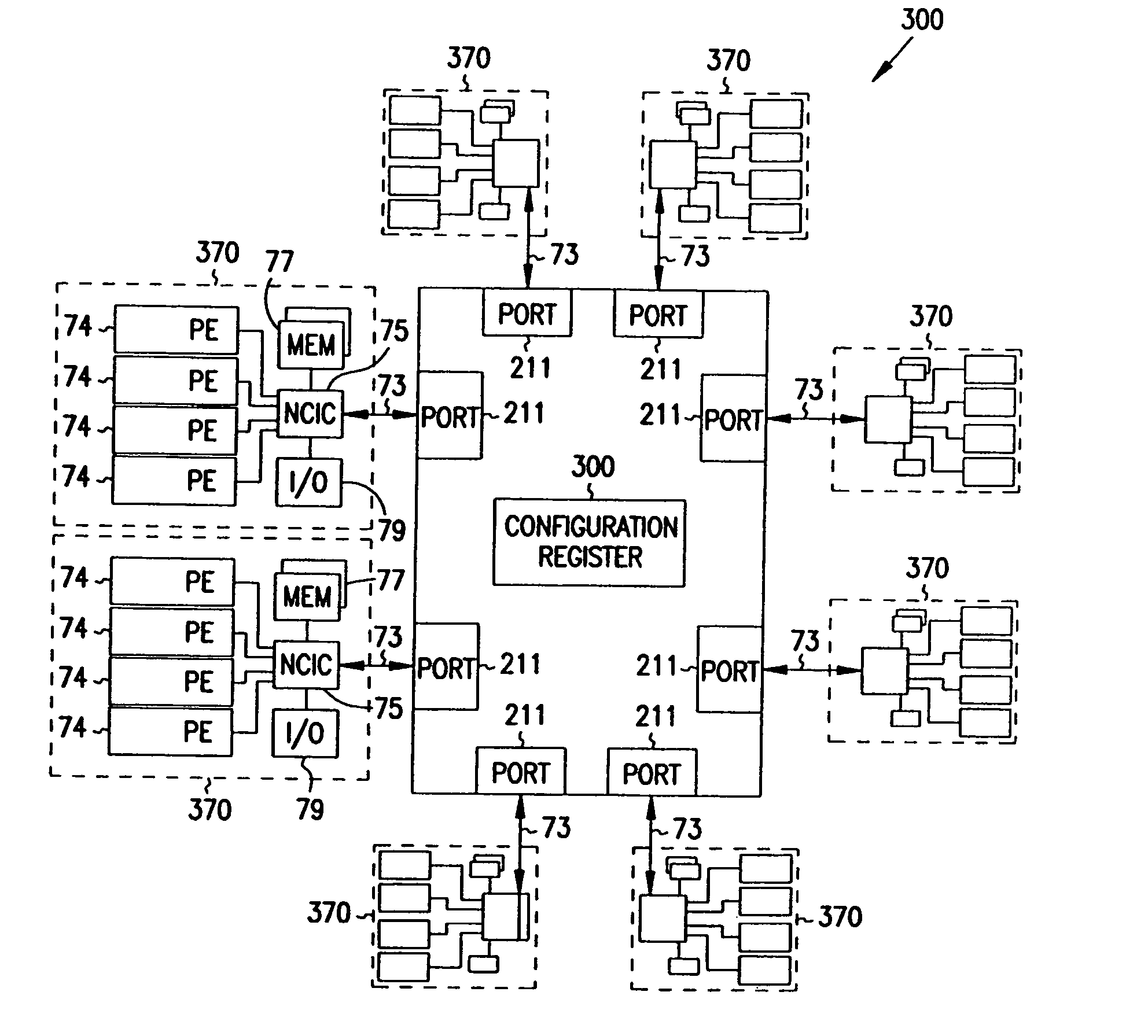

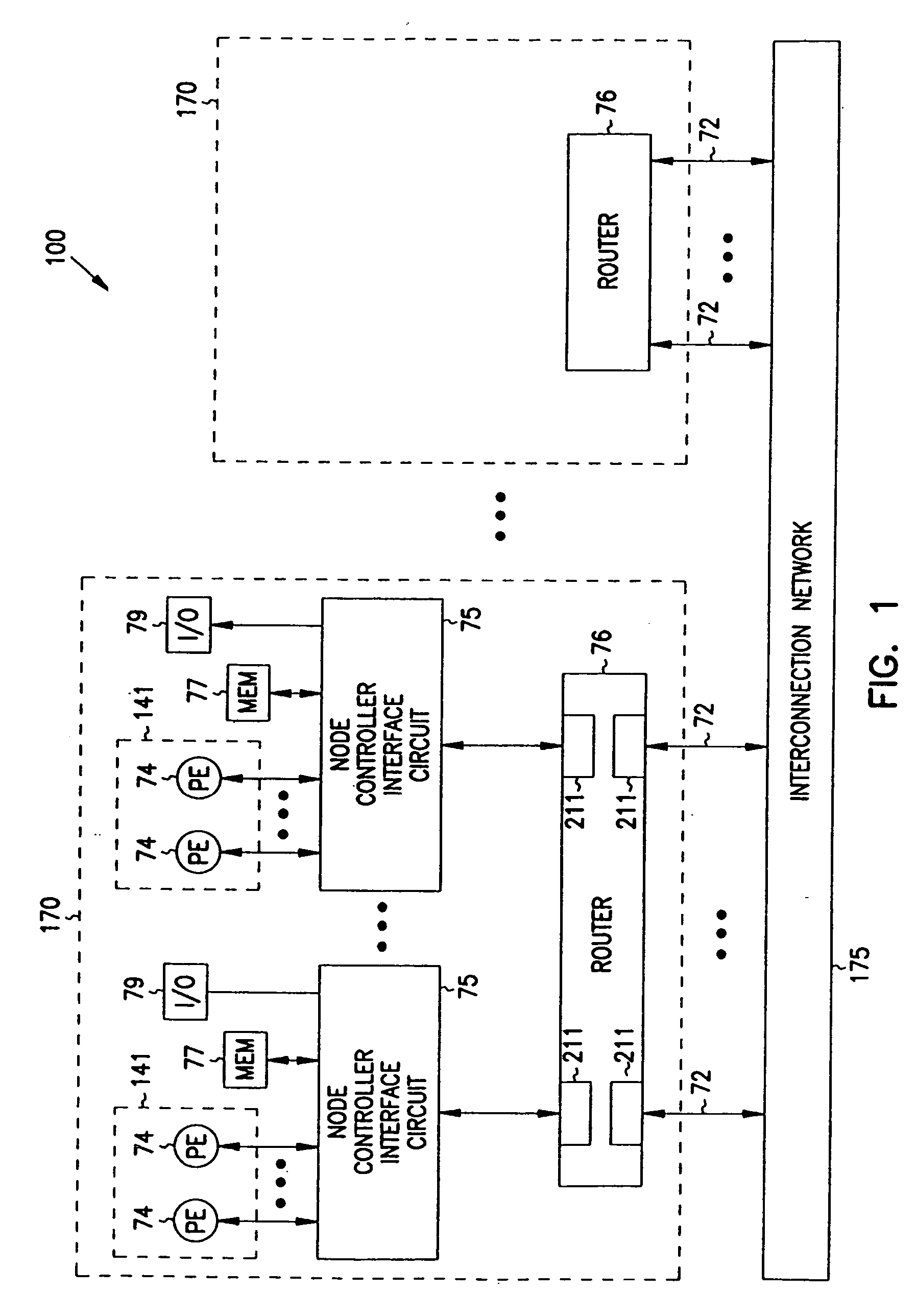

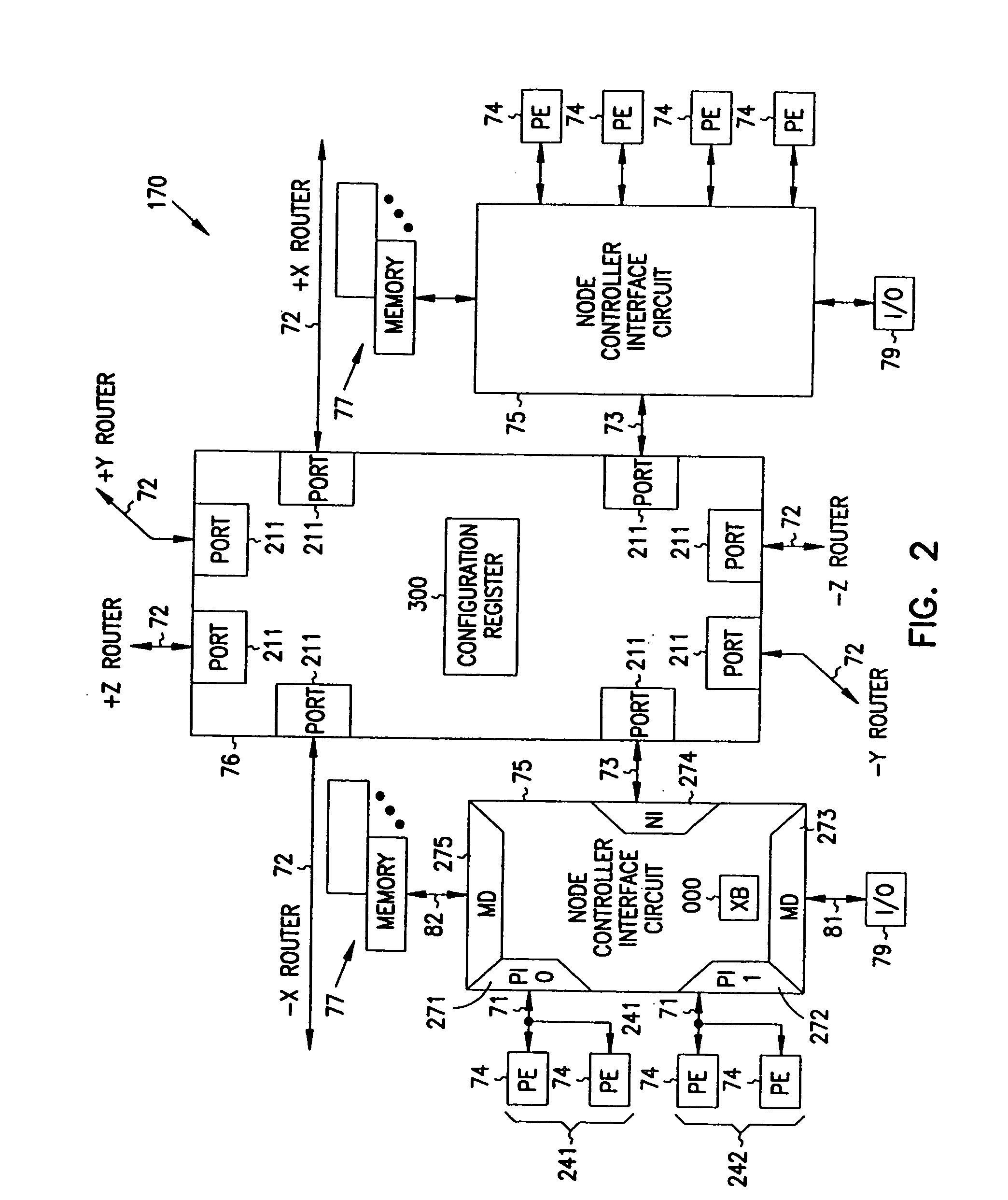

Multiprocessor node controller circuit and method

InactiveUS20050053057A1Ease of parallel processingImprove welfareMultiplex system selection arrangementsMemory adressing/allocation/relocationMemory addressCrossbar switch

Improved method and apparatus for parallel processing. One embodiment provides a multiprocessor computer system that includes a first and second node controller, a number of processors being connected to each node controller, a memory connected to each controller, a first input / output system connected to the first node controller, and a communications network connected between the node controllers. The first node controller includes: a crossbar unit to which are connected a memory port, an input / output port, a network port, and a plurality of independent processor ports. A first and a second processor port connected between the crossbar unit and a first subset and a second subset, respectively, of the processors. In some embodiments of the system, the first node controller is fabricated onto a single integrated-circuit chip. Optionally, the memory is packaged on plugable memory / directory cards wherein each card includes a plurality of memory chips including a first subset dedicated to holding memory data and a second subset dedicated to holding directory data. Further, the memory port includes a memory data port including a memory data bus and a memory address bus coupled to the first subset of memory chips, and a directory data port including a directory data bus and a directory address bus coupled to the second subset of memory chips. In some such embodiments, the ratio of (memory data space) to (directory data space) on each card is set to a value that is based on a size of the multiprocessor computer system.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1

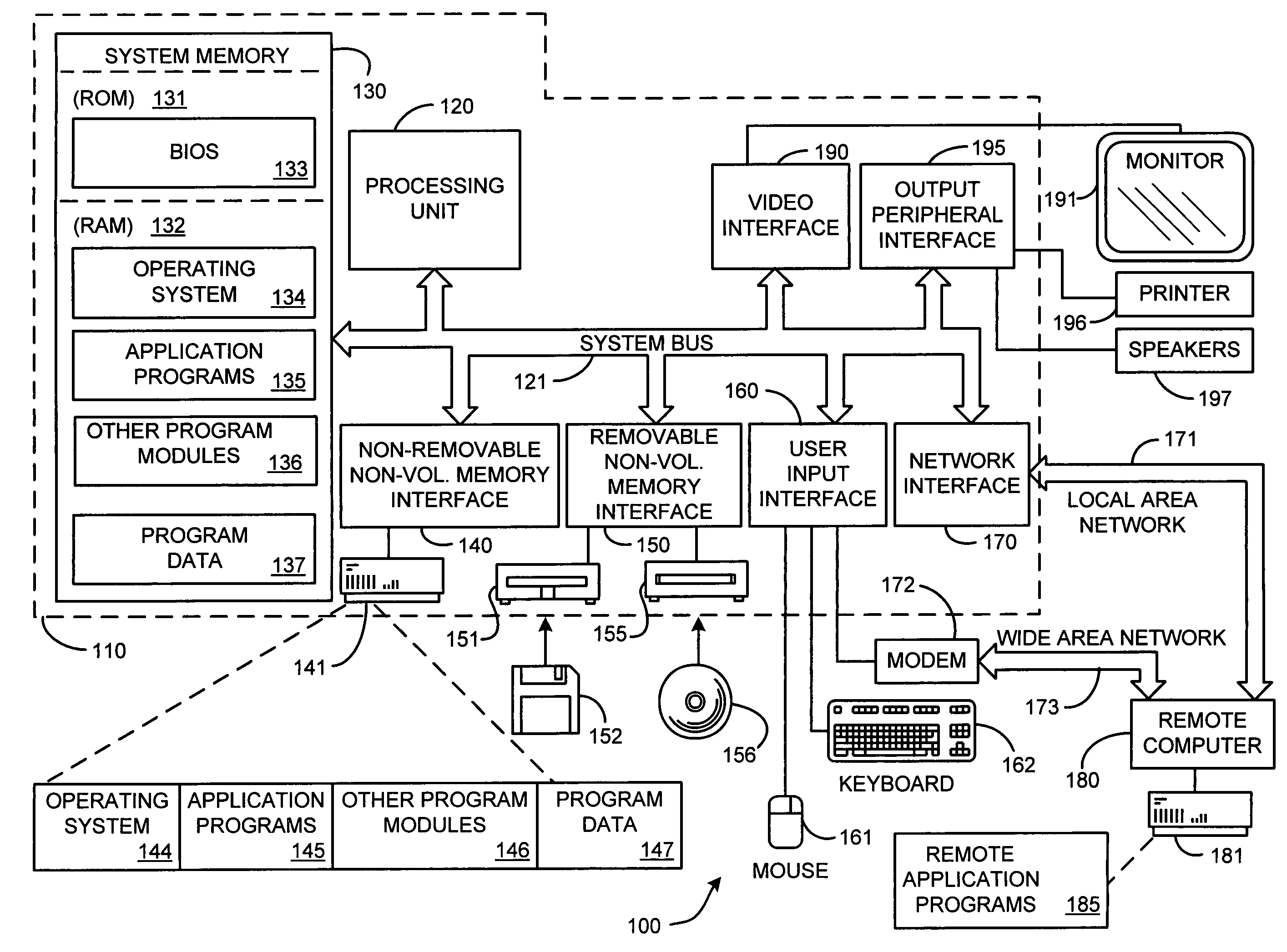

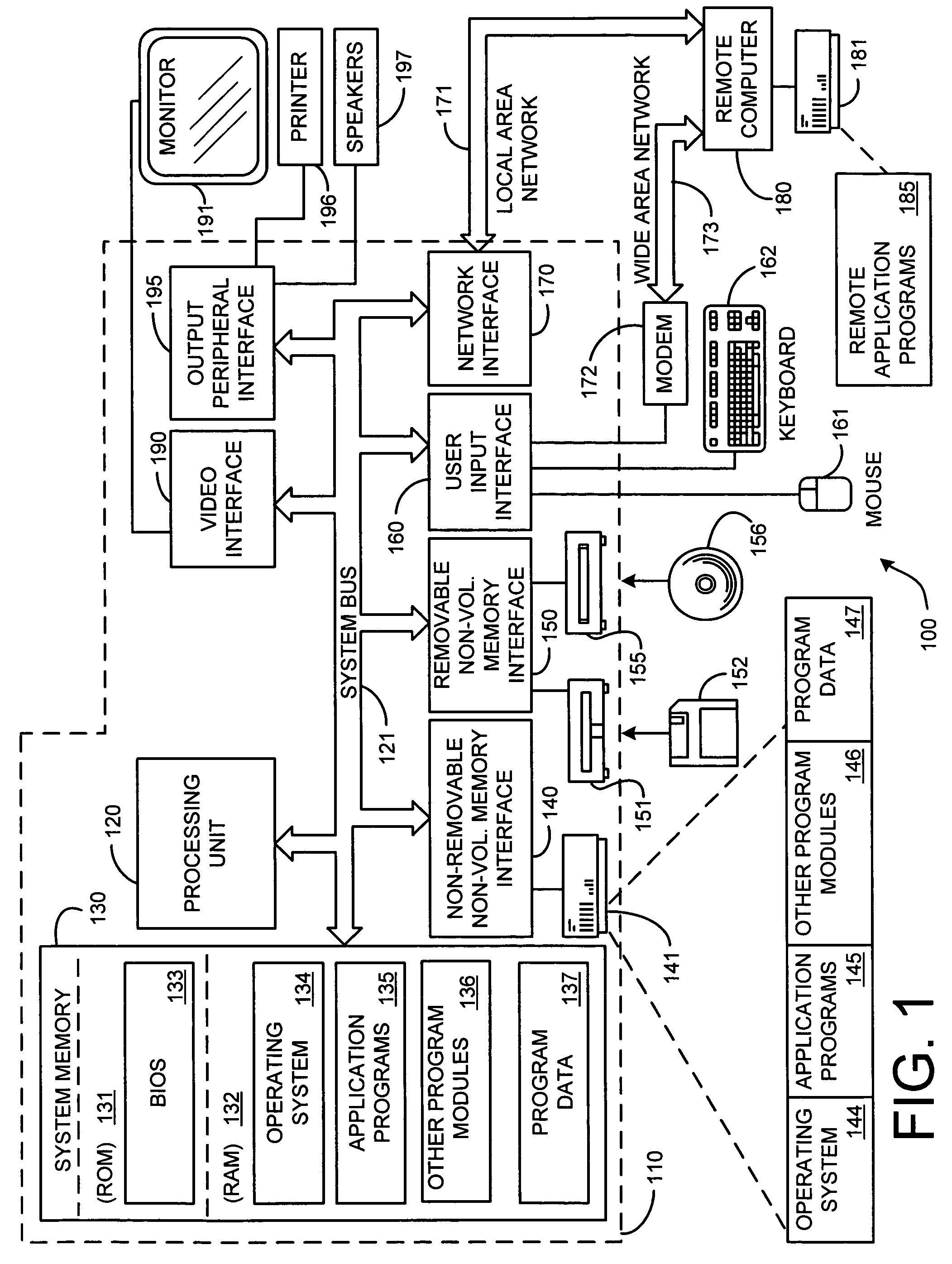

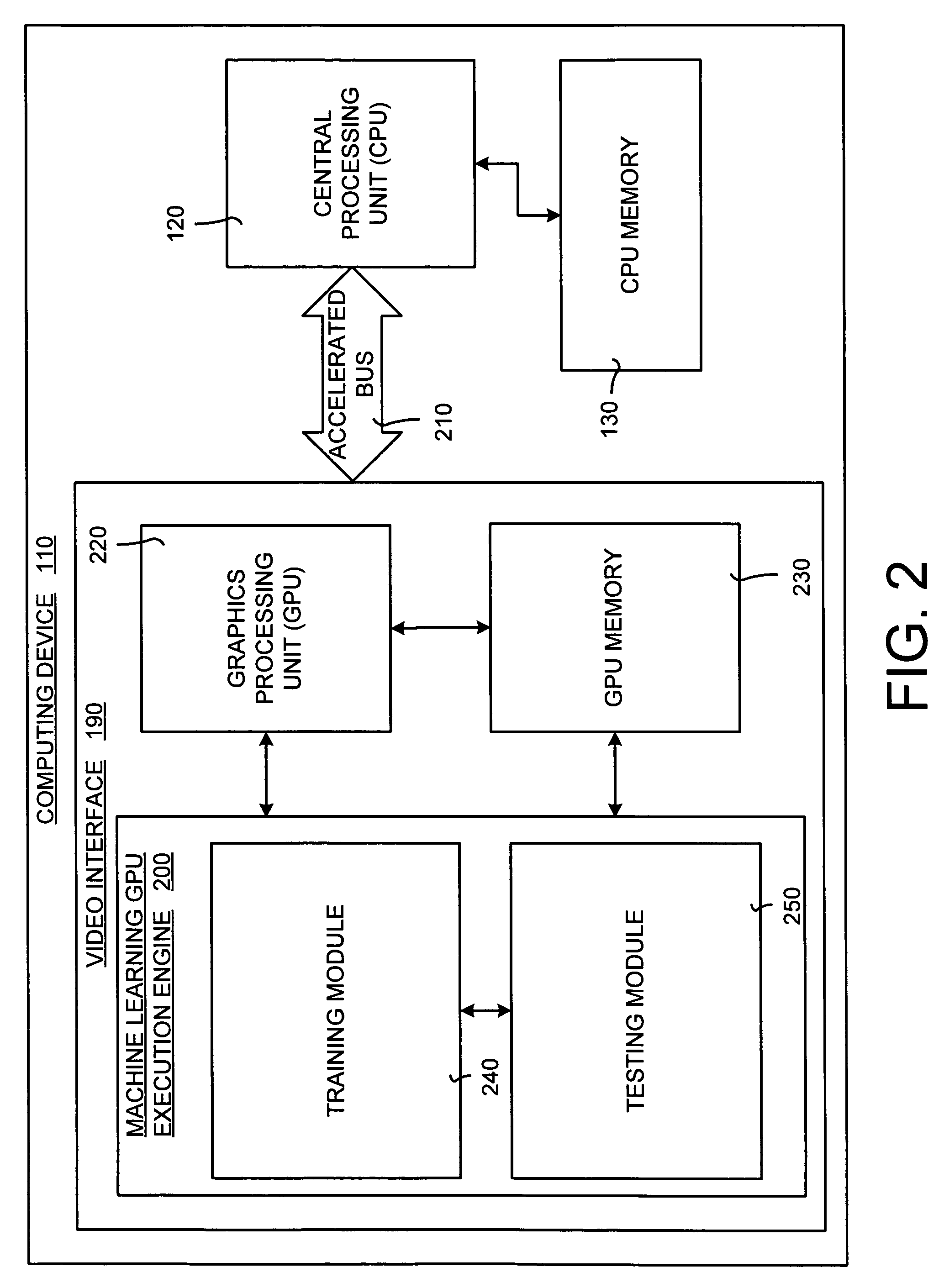

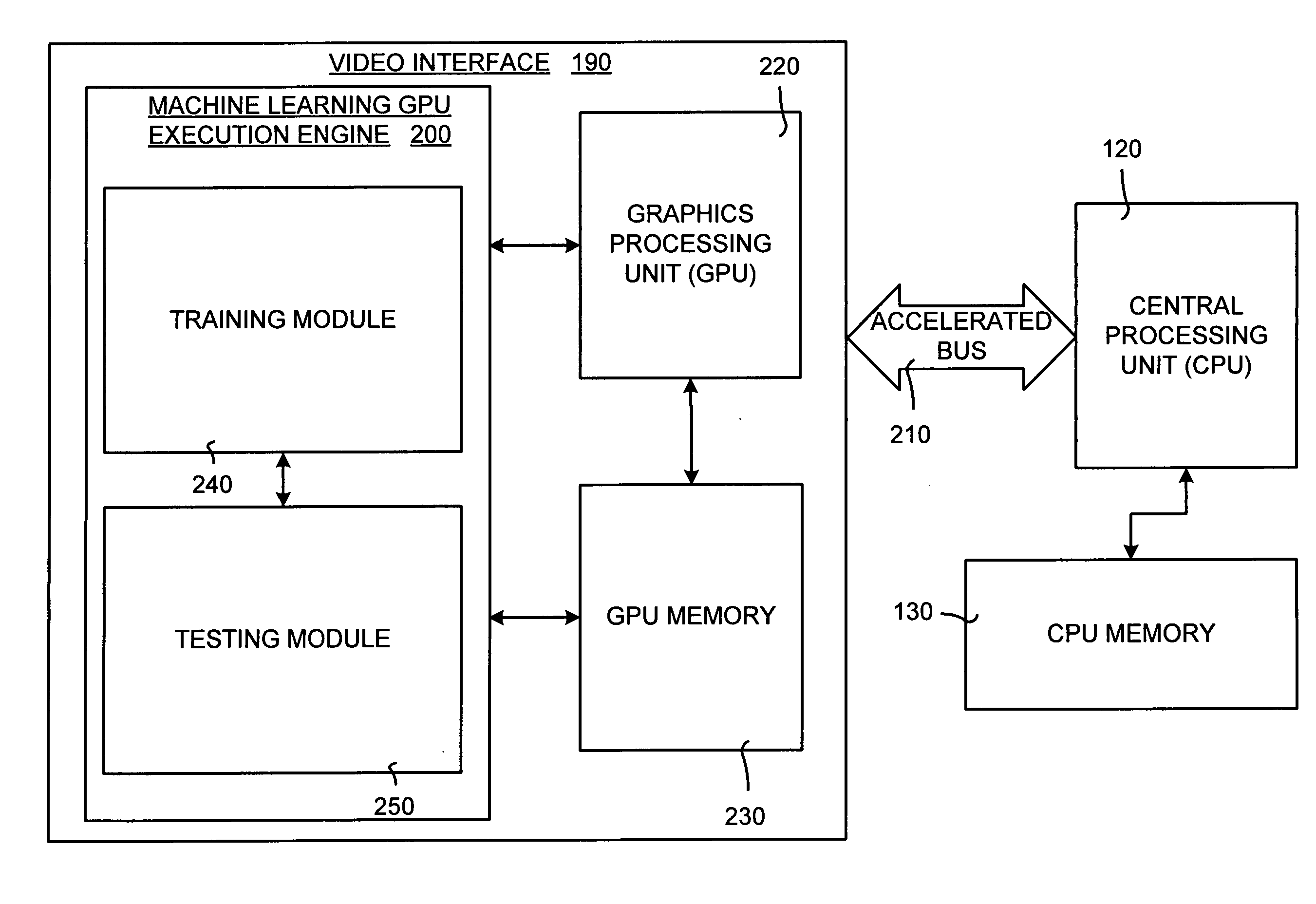

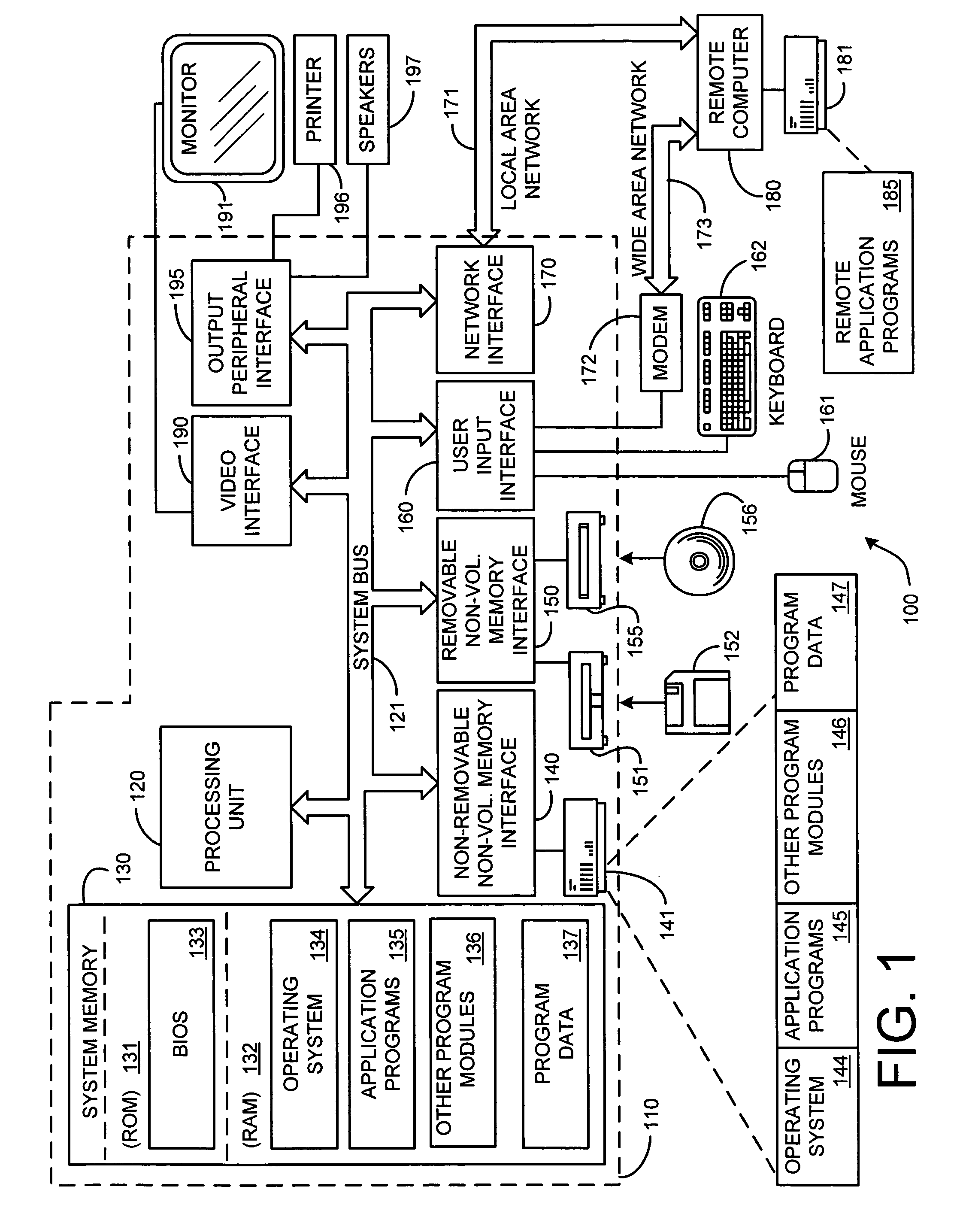

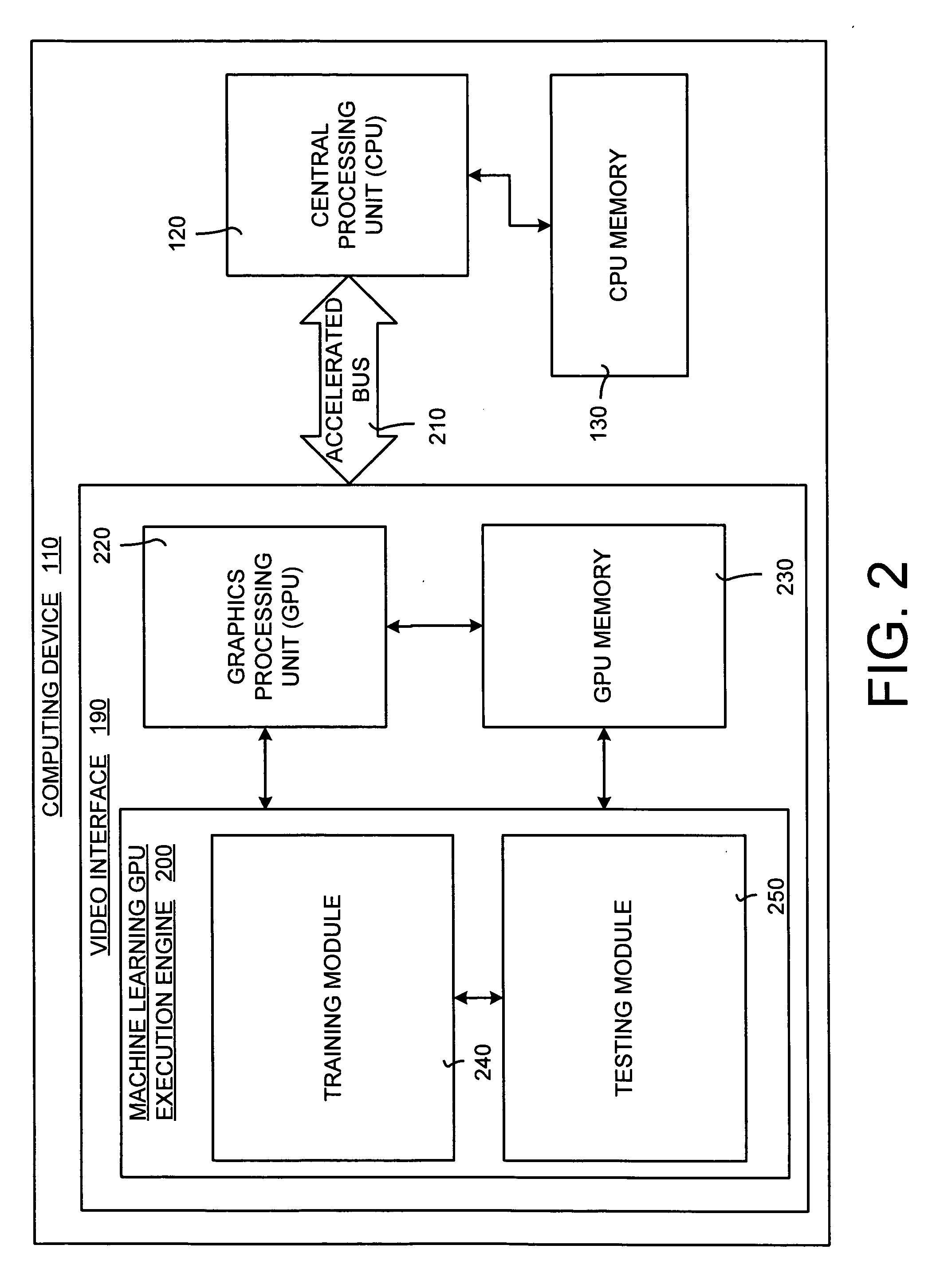

System and method for accelerating and optimizing the processing of machine learning techniques using a graphics processing unit

ActiveUS7219085B2Alleviates computational limitationMore computationCharacter and pattern recognitionKnowledge representationGraphicsTheoretical computer science

A system and method for processing machine learning techniques (such as neural networks) and other non-graphics applications using a graphics processing unit (GPU) to accelerate and optimize the processing. The system and method transfers an architecture that can be used for a wide variety of machine learning techniques from the CPU to the GPU. The transfer of processing to the GPU is accomplished using several novel techniques that overcome the limitations and work well within the framework of the GPU architecture. With these limitations overcome, machine learning techniques are particularly well suited for processing on the GPU because the GPU is typically much more powerful than the typical CPU. Moreover, similar to graphics processing, processing of machine learning techniques involves problems with solving non-trivial solutions and large amounts of data.

Owner:MICROSOFT TECH LICENSING LLC

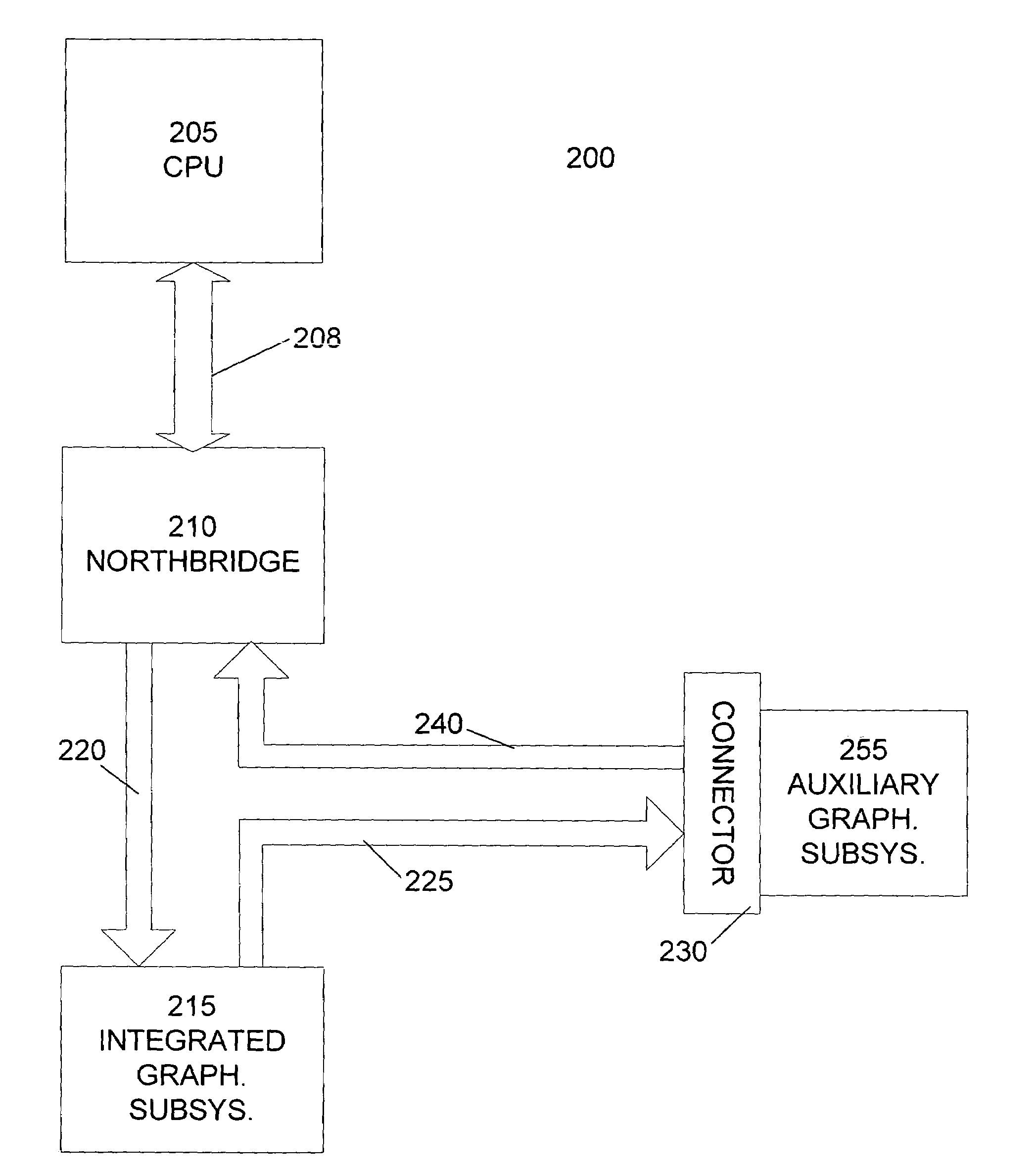

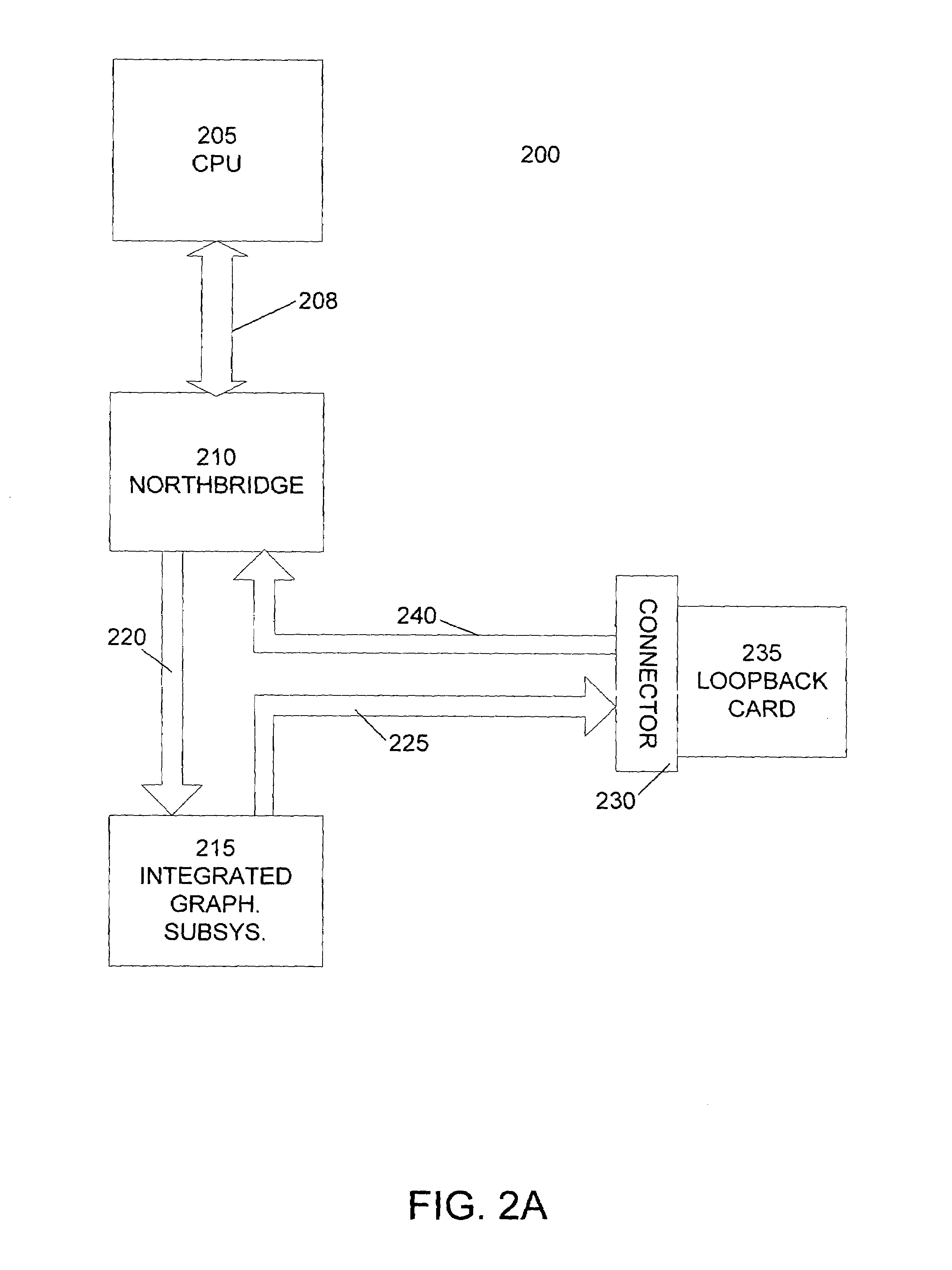

Point-to-point bus bridging without a bridge controller

ActiveUS6985152B2Multiple digital computer combinationsArchitecture with multiple processing unitsGraphicsComputerized system

A computer system includes an integrated graphics subsystem and a graphics connector for attaching either an auxiliary graphics subsystem or a loopback card. A first bus connection communicates data from the computer system to the integrated graphics subsystem. With a loopback card in place, data travels from the integrated graphics subsystem back to the computer system via a second bus connection. When the auxiliary graphics subsystem is attached, the integrated graphics subsystem operates in a data forwarding mode. Data is communicated to the integrated graphics subsystem via the first bus connection. The integrated graphics subsystem then forwards data to the auxiliary graphics subsystem. A portion of the second bus connection communicates data from the auxiliary graphics subsystem back to the computer system. The auxiliary graphics subsystem communicates display information back to the integrated graphics subsystem, where it is used to control a display device.

Owner:NVIDIA CORP

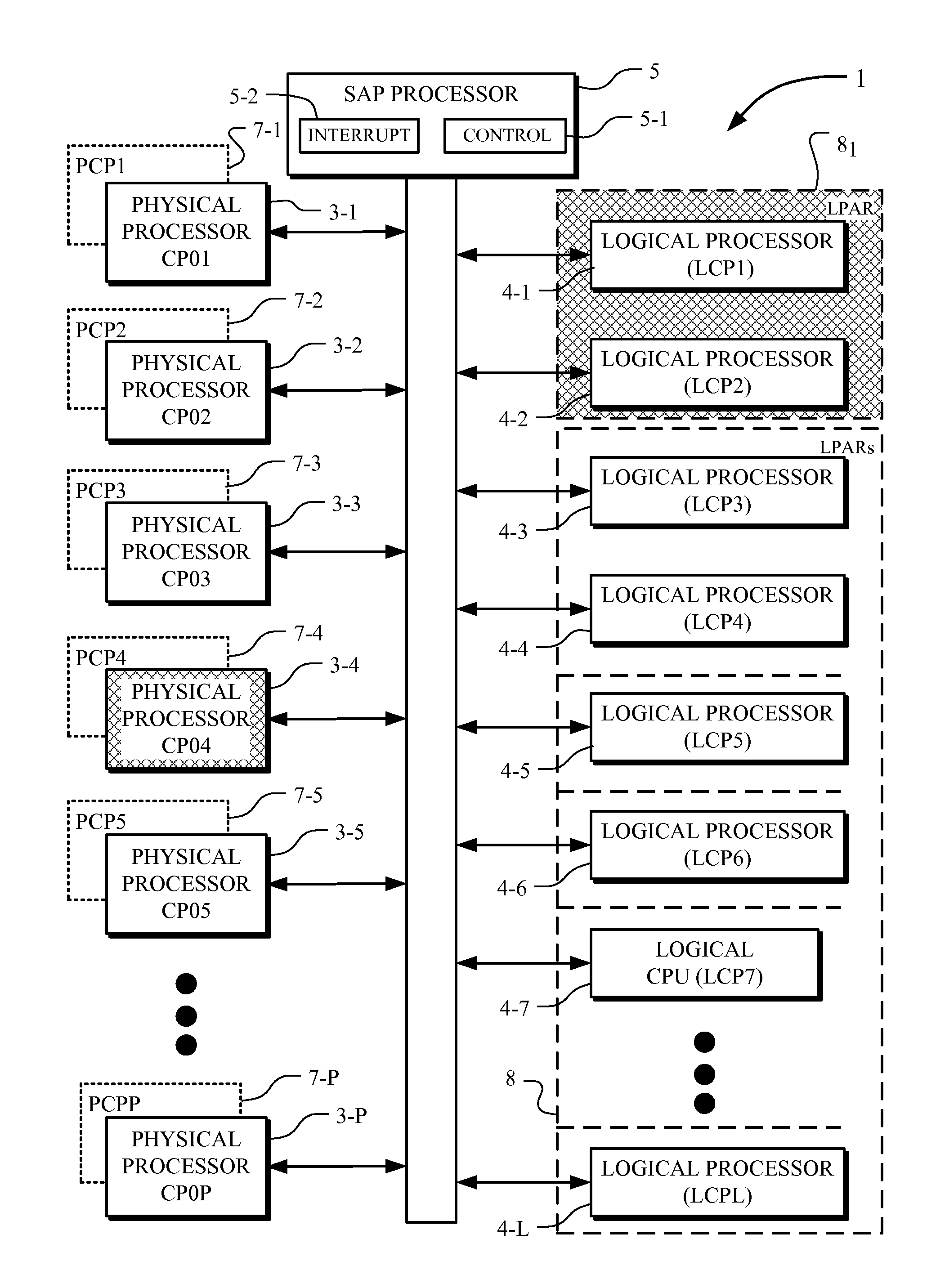

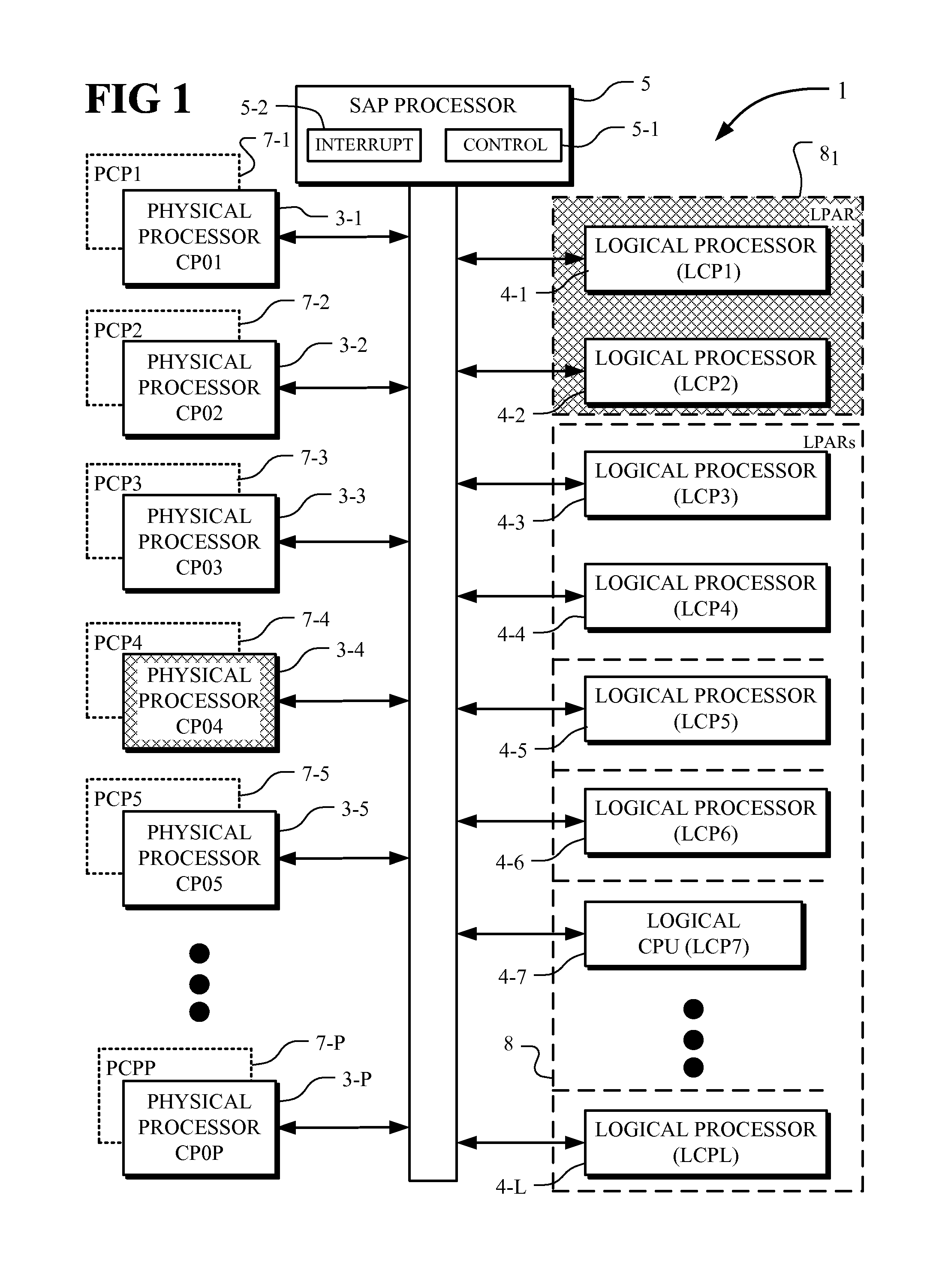

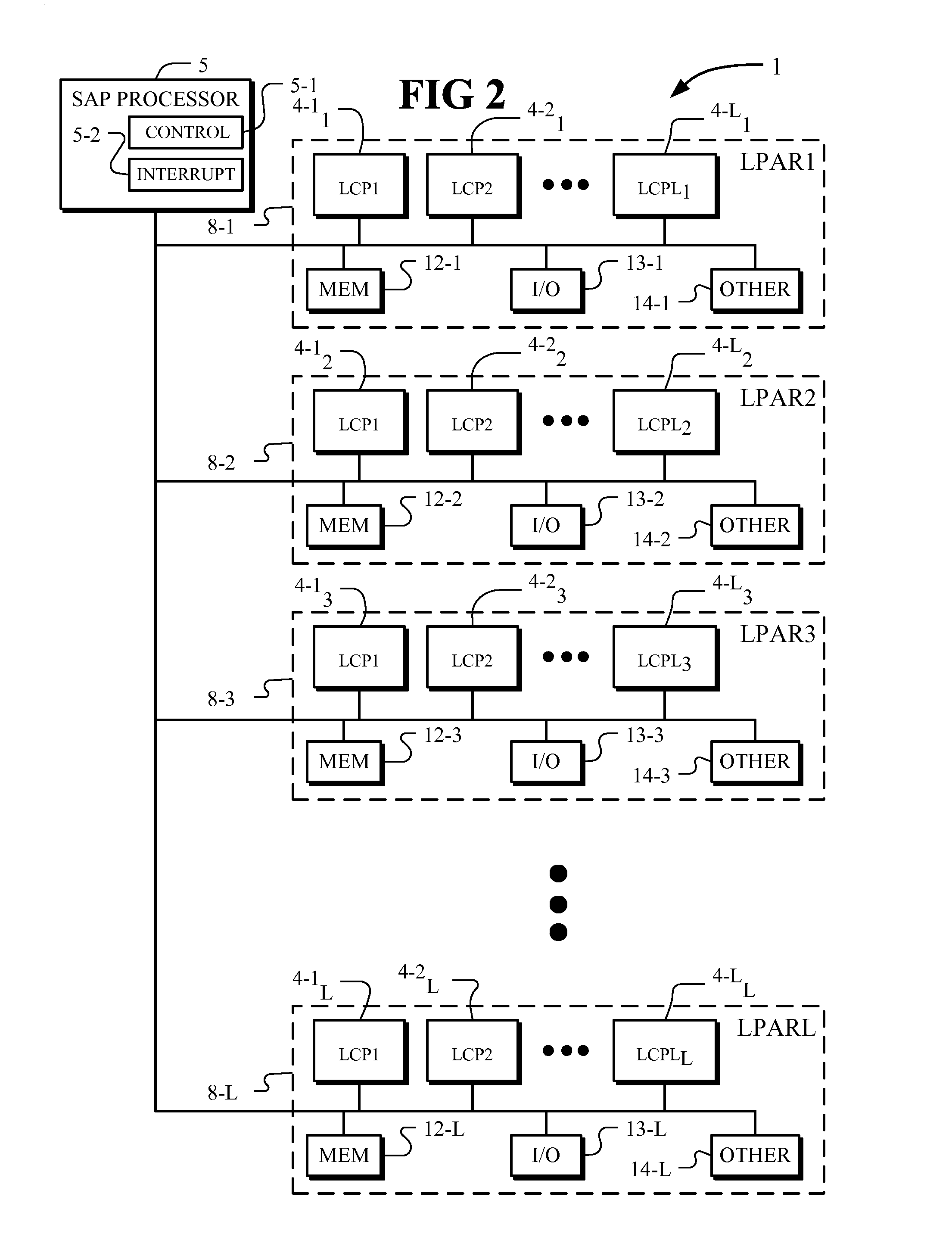

Processor exclusivity in a partitioned system

ActiveUS20090013153A1Eliminating all formMeet changing requirementsSoftware simulation/interpretation/emulationMemory systemsVirtualizationComputerized system

A computer system including a plurality of physical processors (CPs) having physical processor performances (PCPs), a plurality of logical processors (LCPs), a plurality of logical partitions (LPARs) where each partition includes one or more of the logical processors (LCPs), and a system assist processor having a control element. The control element controls the virtualization of the physical processors (CPs), the logical partitions (LPARs) and the logical processors (LCPs) and allocates the physical processor performances (PCPs) to the logical partitions (LPARs). The control element operates to exclusively bind logical processors (LCPs) to the physical processors (CPs). For a logical processor (LCP) exclusively bound to a physical processor (CP), the logical processor (LCP) has exclusive use of the underlying physical processor (CP) and no other logical processor (LCP) can be dispatched on the underlying physical processor (CP) even if the underlying physical processor (CP) is otherwise available.

Owner:IBM CORP

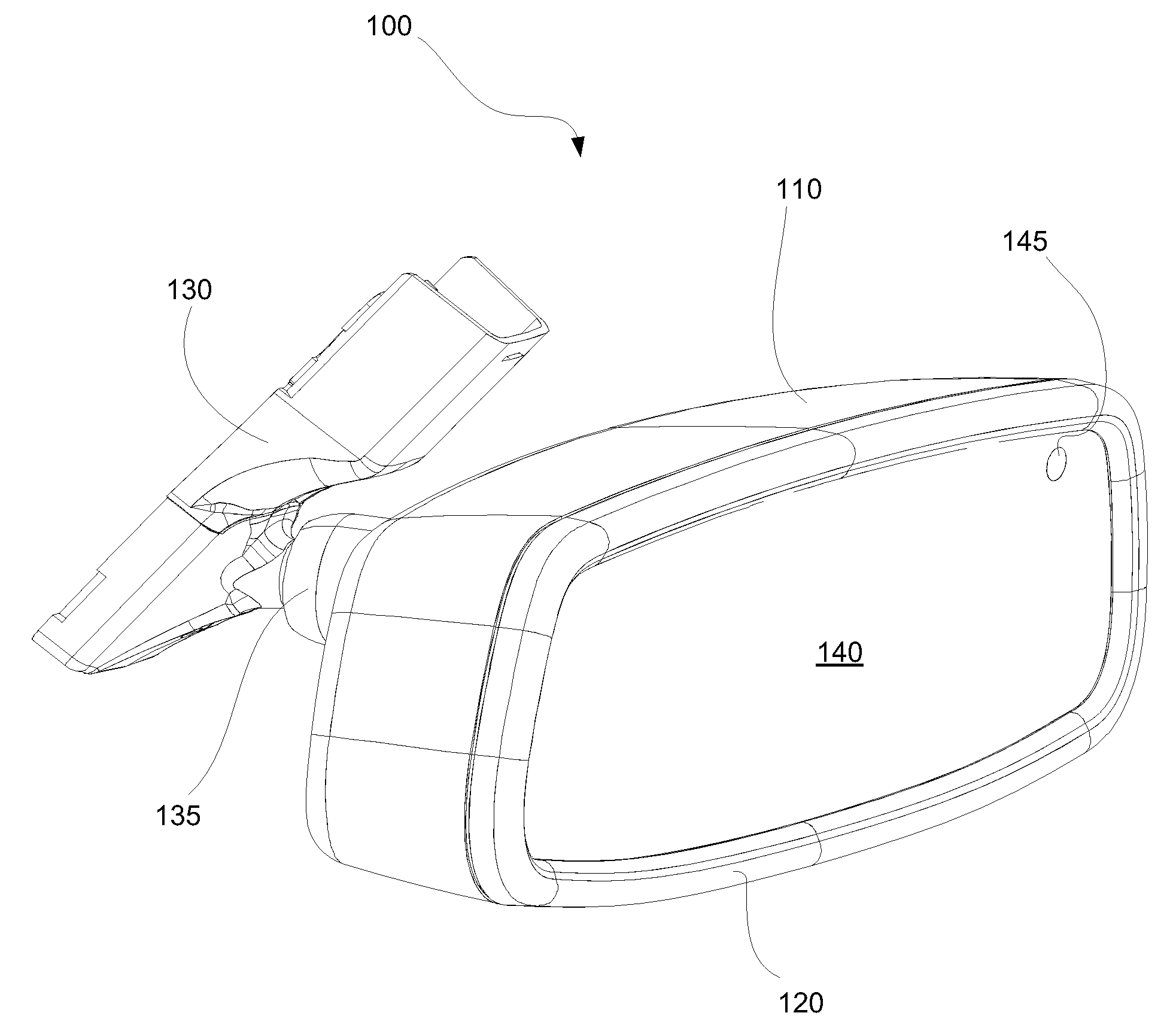

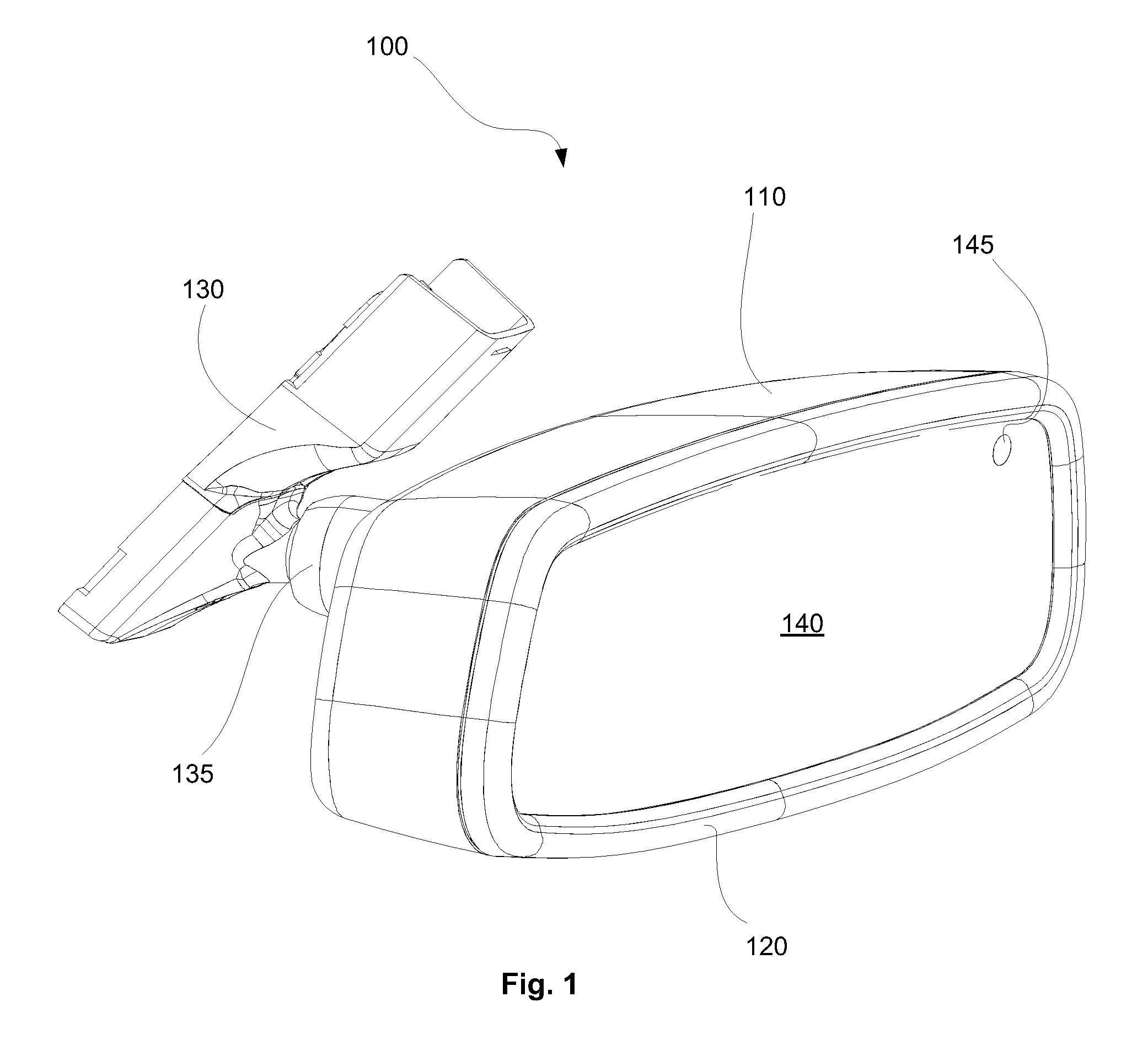

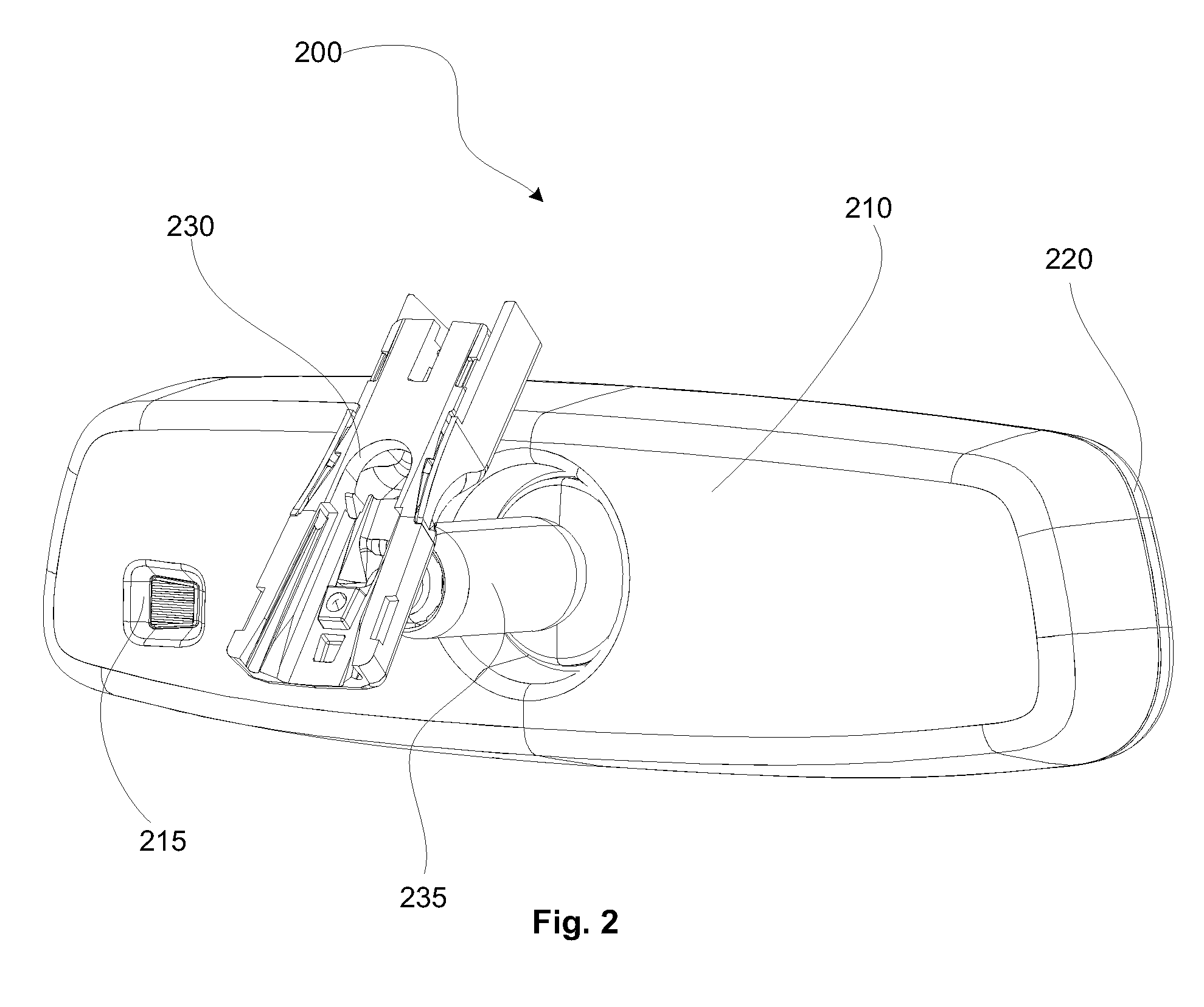

Discrete LED backlight control for a reduced power LCD display system

ActiveUS20100045899A1Optimizes system power savingMeasurement apparatus componentsStatic indicating devicesSimulationLow dynamic range

Backlit LCD displays are becoming commonplace within many vehicle applications. The unique advantage of this invention is that it optimizes system power savings for display of low dynamic range (LDR) images by dynamically controlling spatially adjustable backlighting. This is accomplishes through use of a control technique that takes into account the sequential nature of the video display process.

Owner:GENTEX CORP

System and method for accelerating and optimizing the processing of machine learning techniques using a graphics processing unit

ActiveUS20050125369A1Alleviates computational limitationImprove data accessCharacter and pattern recognitionKnowledge representationGraphicsArtificial intelligence

A system and method for processing machine learning techniques (such as neural networks) and other non-graphics applications using a graphics processing unit (GPU) to accelerate and optimize the processing. The system and method transfers an architecture that can be used for a wide variety of machine learning techniques from the CPU to the GPU. The transfer of processing to the GPU is accomplished using several novel techniques that overcome the limitations and work well within the framework of the GPU architecture. With these limitations overcome, machine learning techniques are particularly well suited for processing on the GPU because the GPU is typically much more powerful than the typical CPU. Moreover, similar to graphics processing, processing of machine learning techniques involves problems with solving non-trivial solutions and large amounts of data.

Owner:MICROSOFT TECH LICENSING LLC

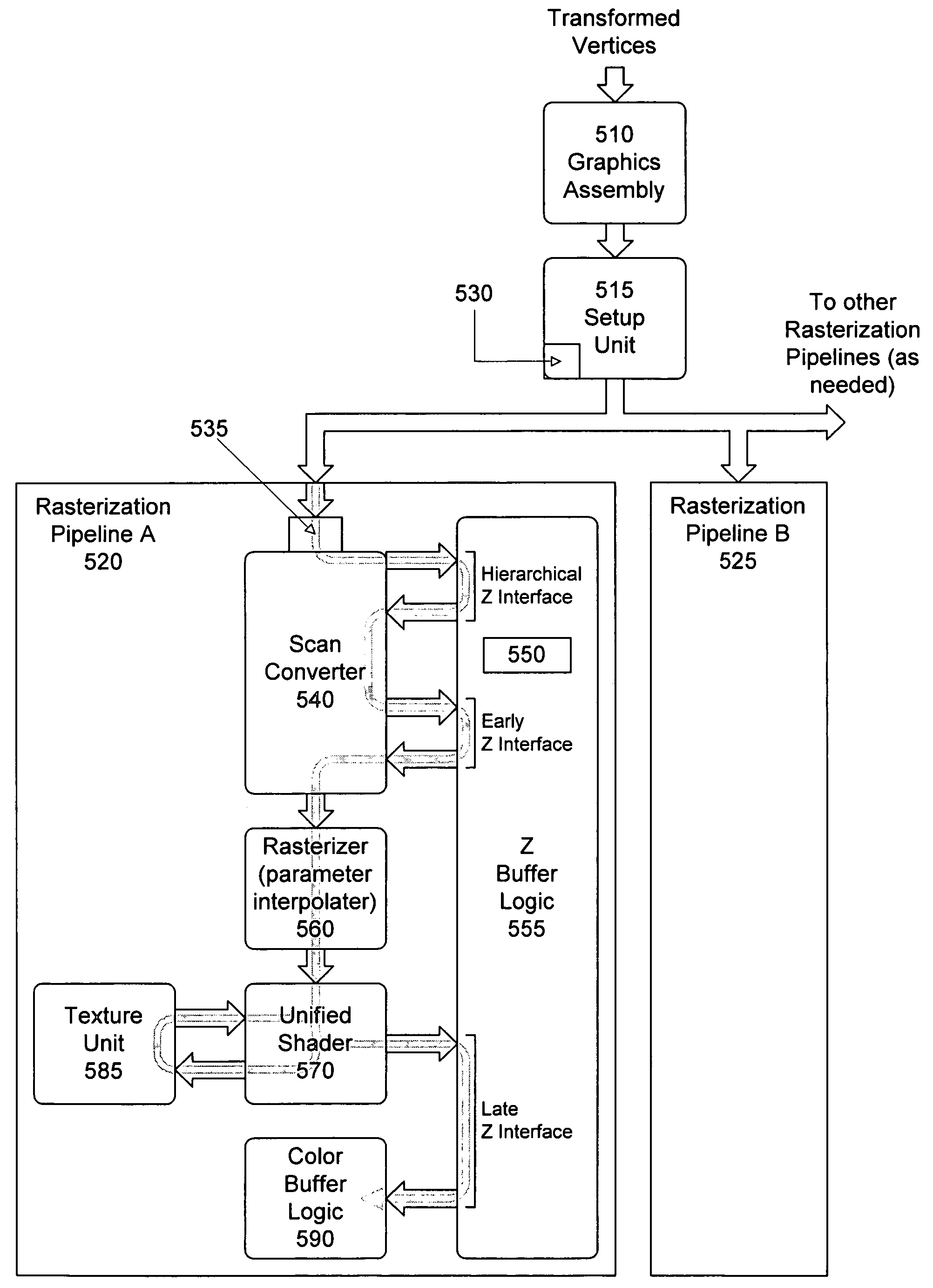

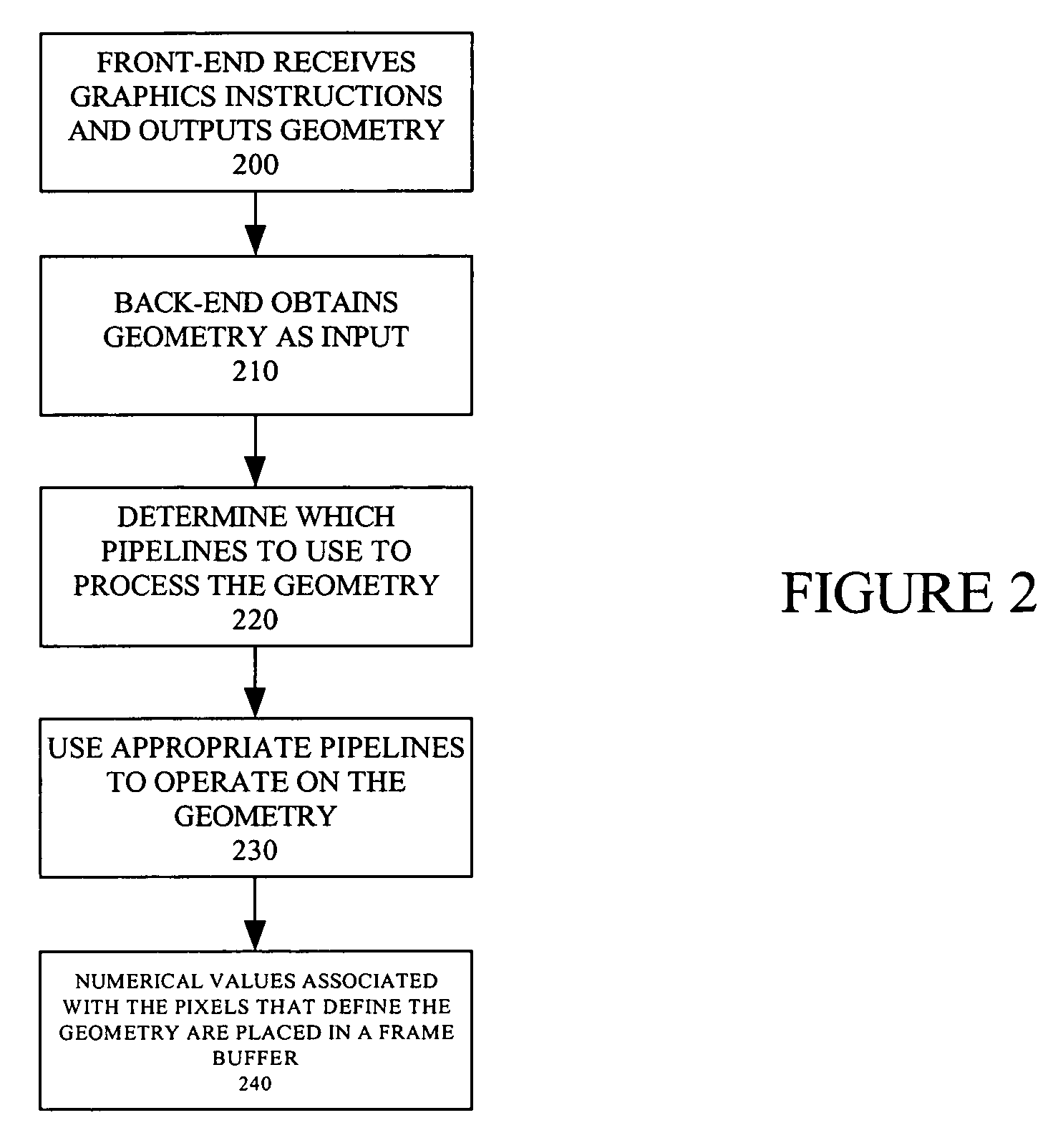

Parallel pipeline graphics system

ActiveUS7633506B1Precise definitionCathode-ray tube indicatorsProcessor architectures/configurationComputational scienceScan conversion

The present invention relates to a parallel pipeline graphics system. The parallel pipeline graphics system includes a back-end configured to receive primitives and combinations of primitives (i.e., geometry) and process the geometry to produce values to place in a frame buffer for rendering on screen. Unlike prior single pipeline implementation, some embodiments use two or four parallel pipelines, though other configurations having 2^n pipelines may be used. When geometry data is sent to the back-end, it is divided up and provided to one of the parallel pipelines. Each pipeline is a component of a raster back-end, where the display screen is divided into tiles and a defined portion of the screen is sent through a pipeline that owns that portion of the screen's tiles. In one embodiment, each pipeline comprises a scan converter, a hierarchical-Z unit, a z buffer logic, a rasterizer, a shader, and a color buffer logic.

Owner:ATI TECH INC

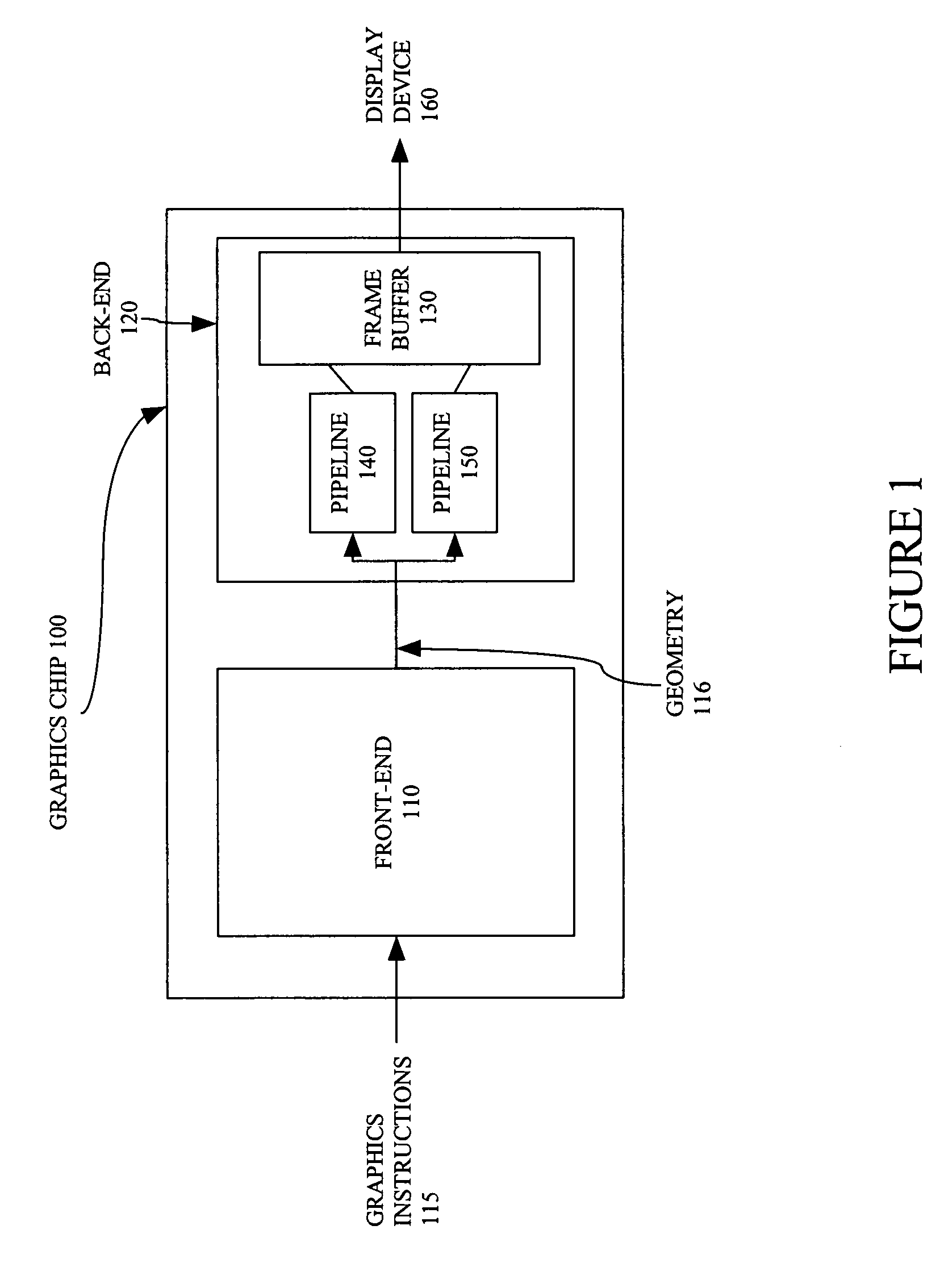

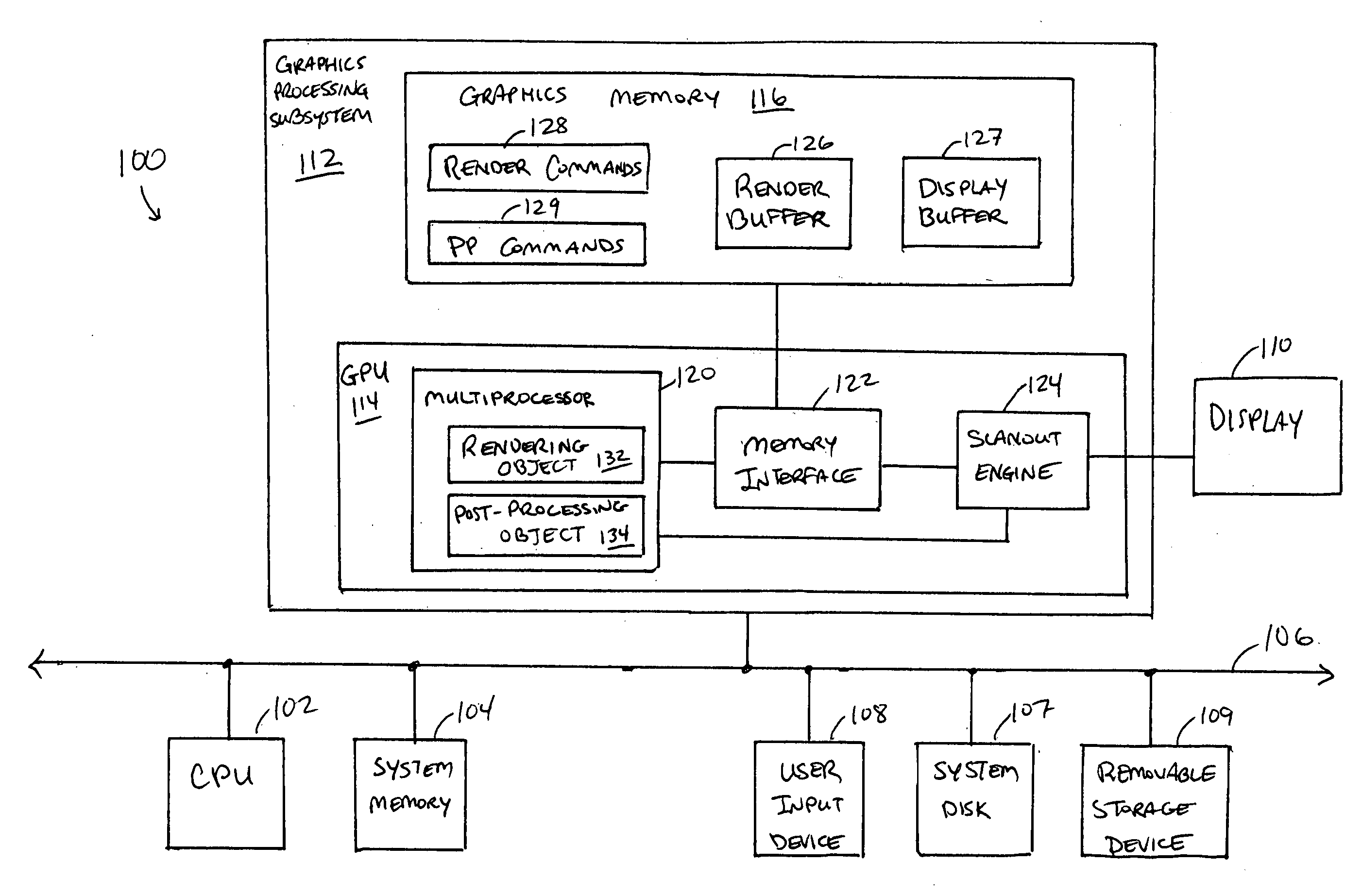

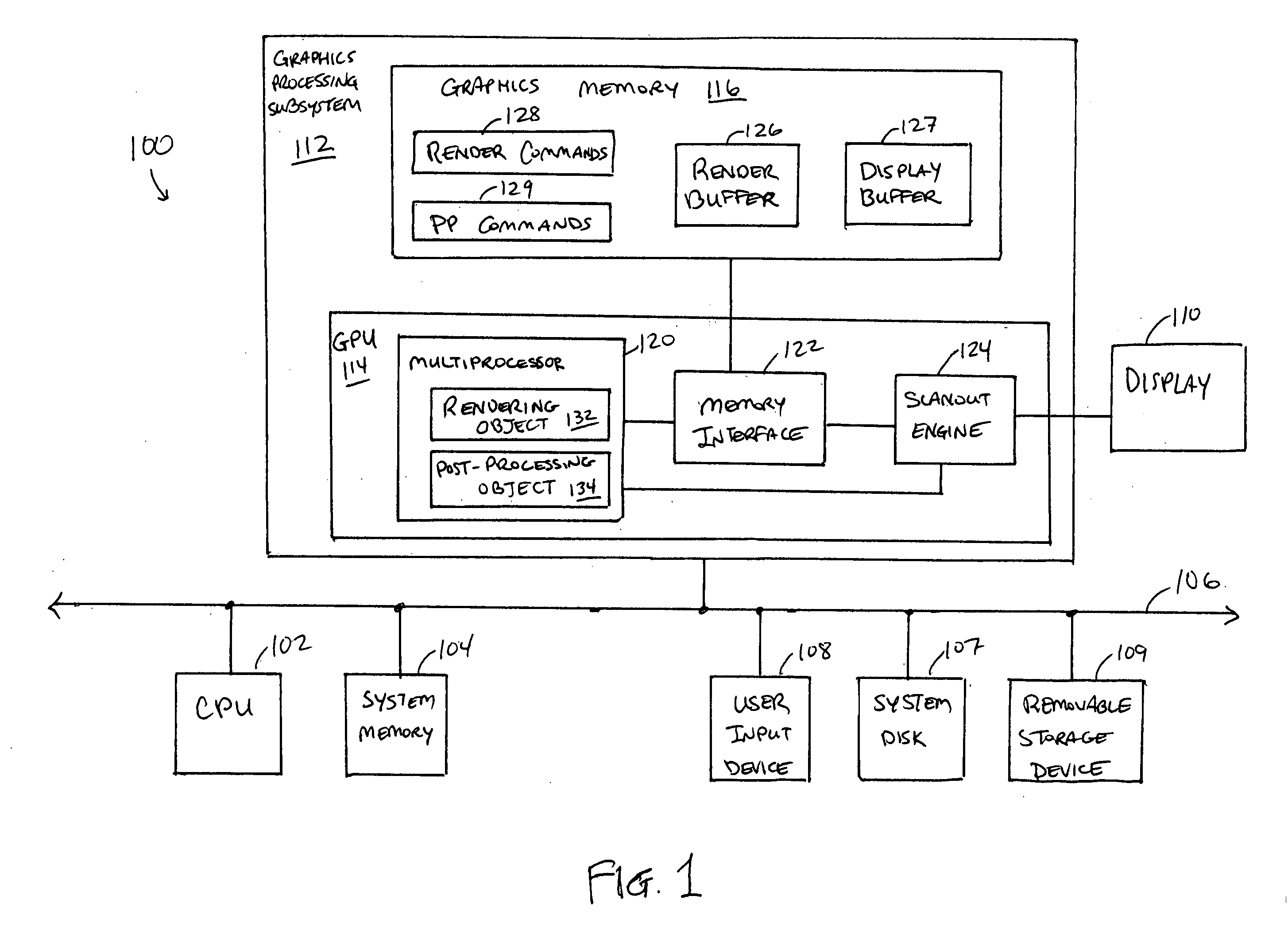

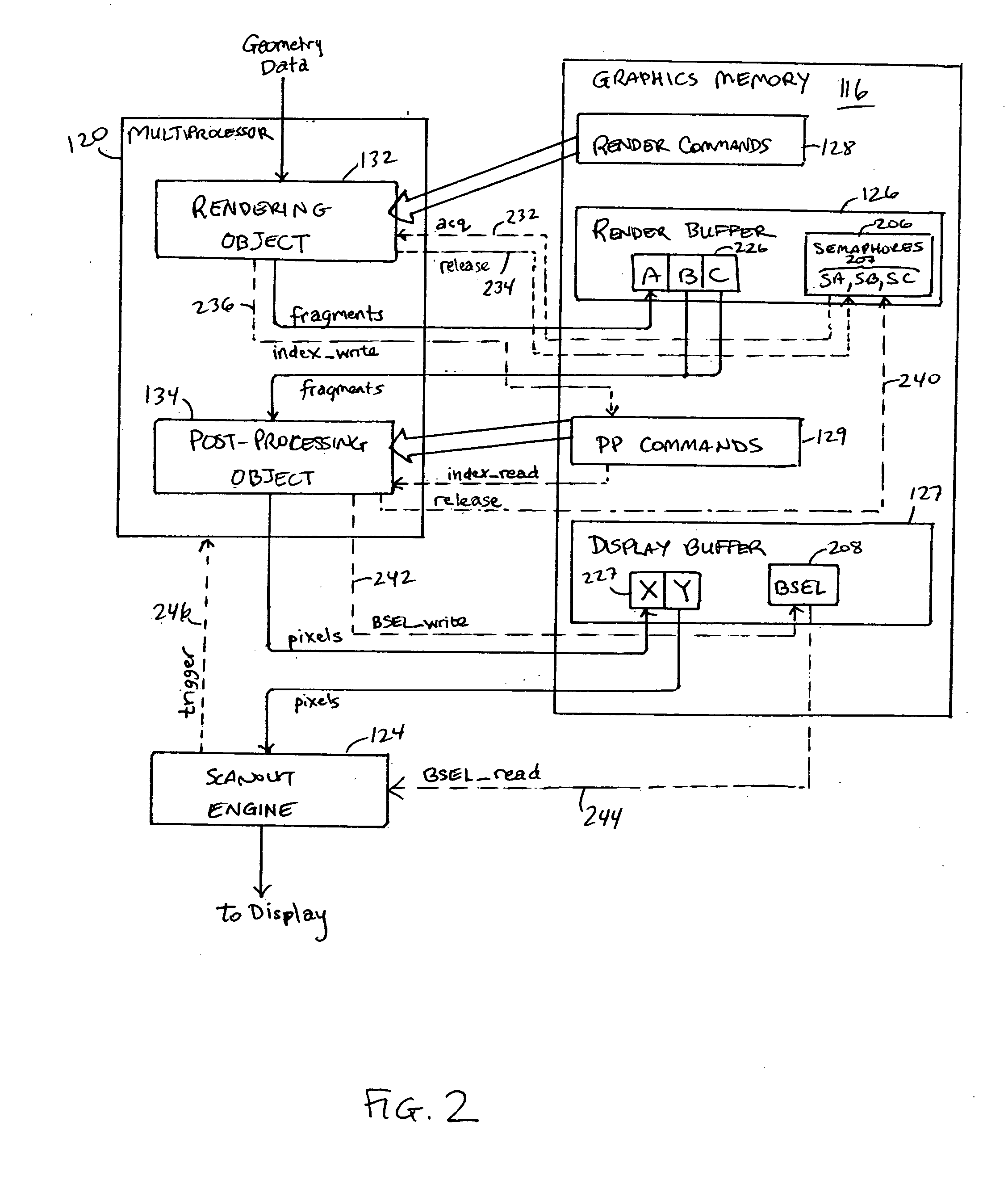

Real-time display post-processing using programmable hardware

ActiveUS20060132491A1Image memory managementMultiprogramming arrangementsDisplay deviceProgrammable hardware

In a graphics processor, a rendering object and a post-processing object share access to a host processor with a programmable execution core. The rendering object generates fragment data for an image from geometry data. The post-processing object operates to generate a frame of pixel data from the fragment data and to store the pixel data in a frame buffer. In parallel with operations of the host processor, a scanout engine reads pixel data for a previously generated frame and supplies the pixel data to a display device. The scanout engine periodically triggers the host processor to operate the post-processing object to generate the next frame. Timing between the scanout engine and the post-processing object can be controlled such that the next frame to be displayed is ready in a frame buffer when the scanout engine finishes reading a current frame.

Owner:NVIDIA CORP

Popular searches

Features

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

Why Patsnap Eureka

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Social media

Patsnap Eureka Blog

Learn More Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com